
73 results about "Posted write" patented technology


A posted write is a computer bus write transaction in which the initiator does not wait for a completion response indicating whether the write succeeded or failed. The CPU assumes that the write cycle will complete with zero wait states and continues without waiting for the completion signal. This speeds up writes considerably: the initiator does not stall on the completion response, and the datapath can be pipelined more deeply with little performance penalty.
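The difference between a posted and a non-posted write can be sketched with a toy bus model. This is only an illustration: the `Device` and `Bus` classes and their methods are hypothetical names, and the rule that reads drain earlier posted writes is an assumption modeled on PCI-style ordering.

```python
from collections import deque


class Device:
    """Hypothetical target device with a small register file."""

    def __init__(self):
        self.regs = {}

    def write(self, addr, value):
        self.regs[addr] = value
        return "OK"  # completion status seen only by non-posted writes

    def read(self, addr):
        return self.regs.get(addr, 0)


class Bus:
    """Toy bus: posted writes are queued, non-posted writes return a completion."""

    def __init__(self, device):
        self.device = device
        self.posted = deque()

    def posted_write(self, addr, value):
        # Fire and forget: the initiator gets no completion and continues at once.
        self.posted.append((addr, value))

    def flush(self):
        # Deliver queued posted writes to the device in order.
        while self.posted:
            addr, value = self.posted.popleft()
            self.device.write(addr, value)

    def non_posted_write(self, addr, value):
        # Earlier posted writes go first, then the initiator waits for the status.
        self.flush()
        return self.device.write(addr, value)

    def read(self, addr):
        # Assumed ordering rule: a read does not pass earlier posted writes.
        self.flush()
        return self.device.read(addr)
```

In this model `posted_write` returns immediately with no status, so a failure on the device side would go unnoticed by the initiator unless it later reads back or polls the device.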

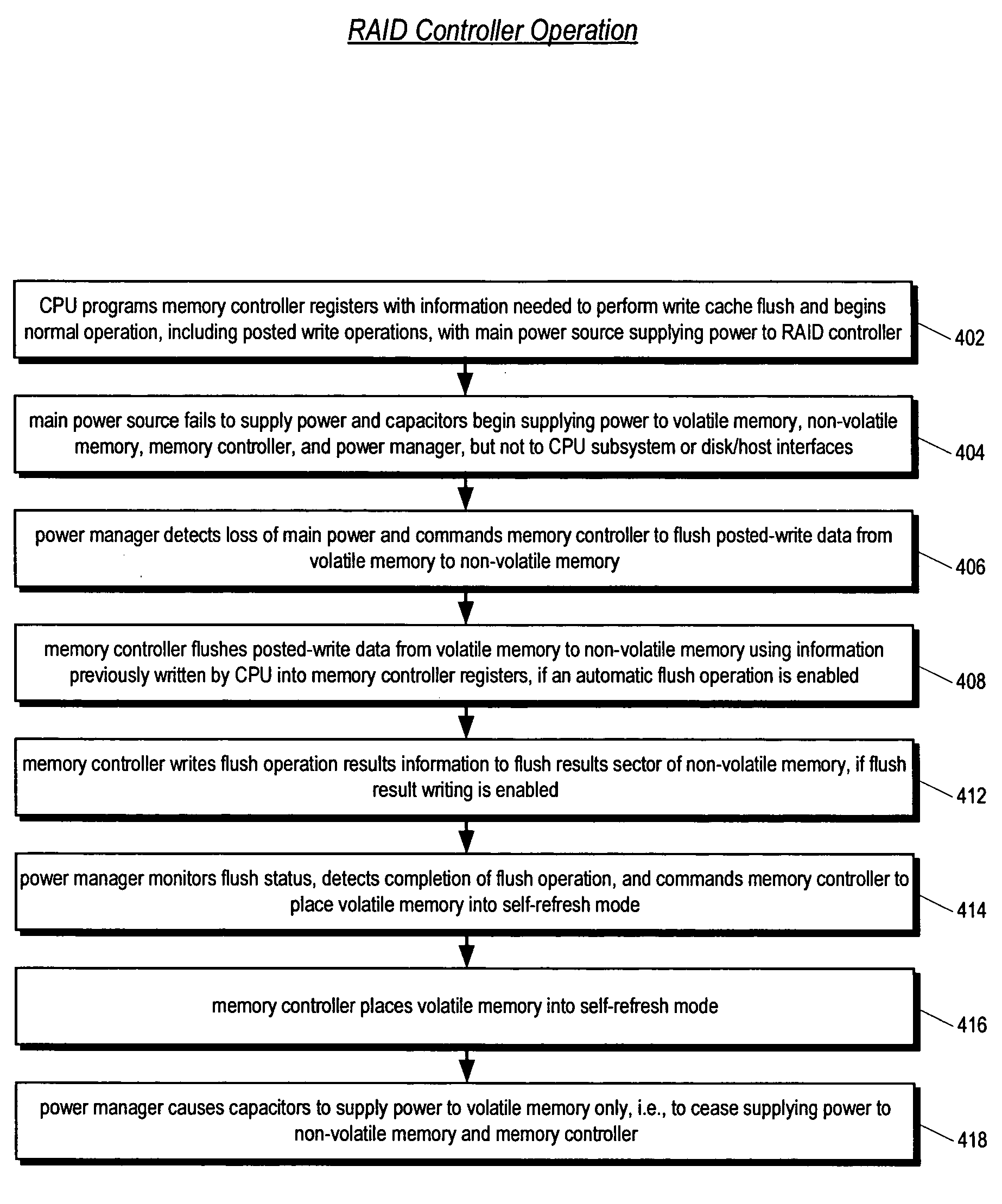

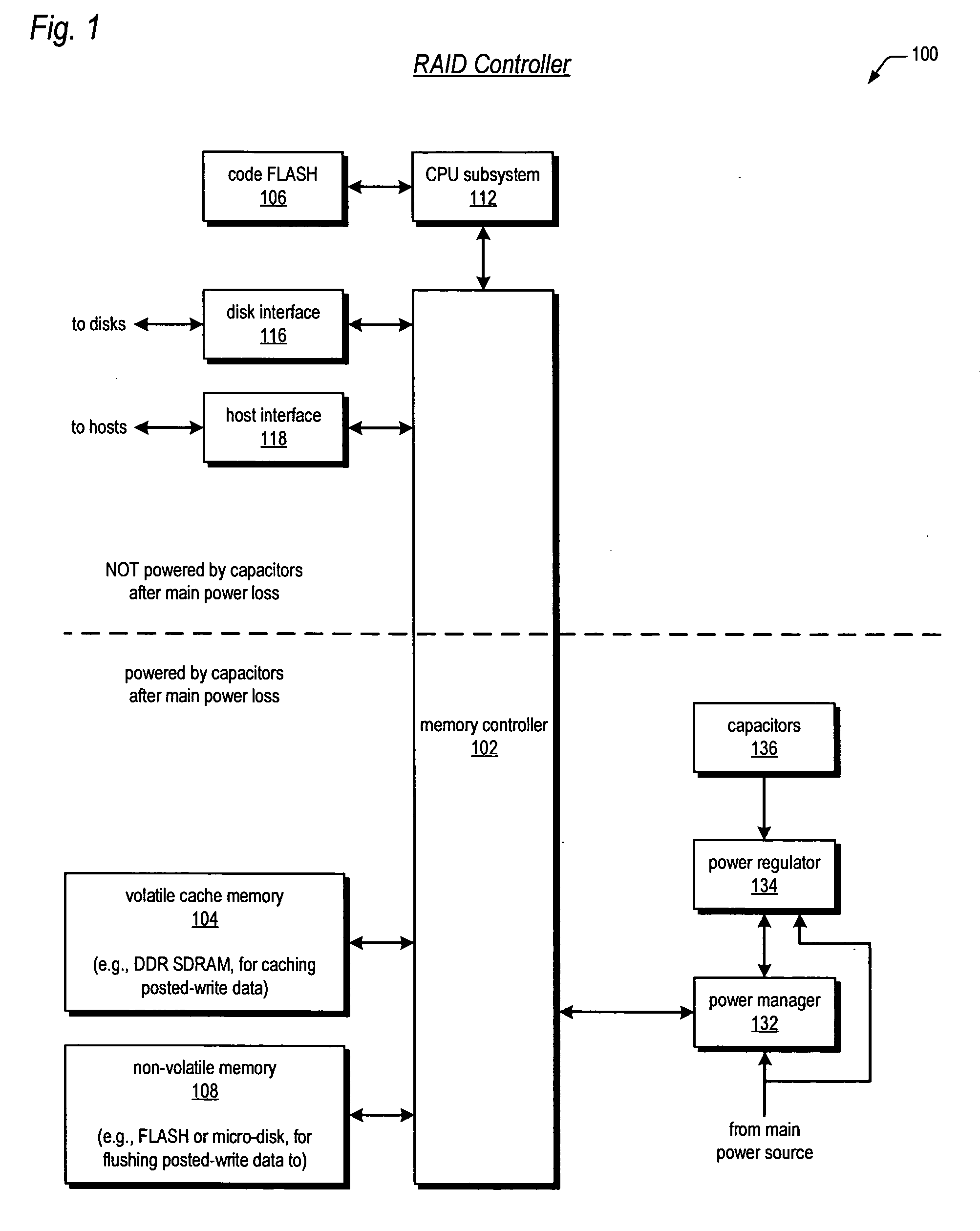

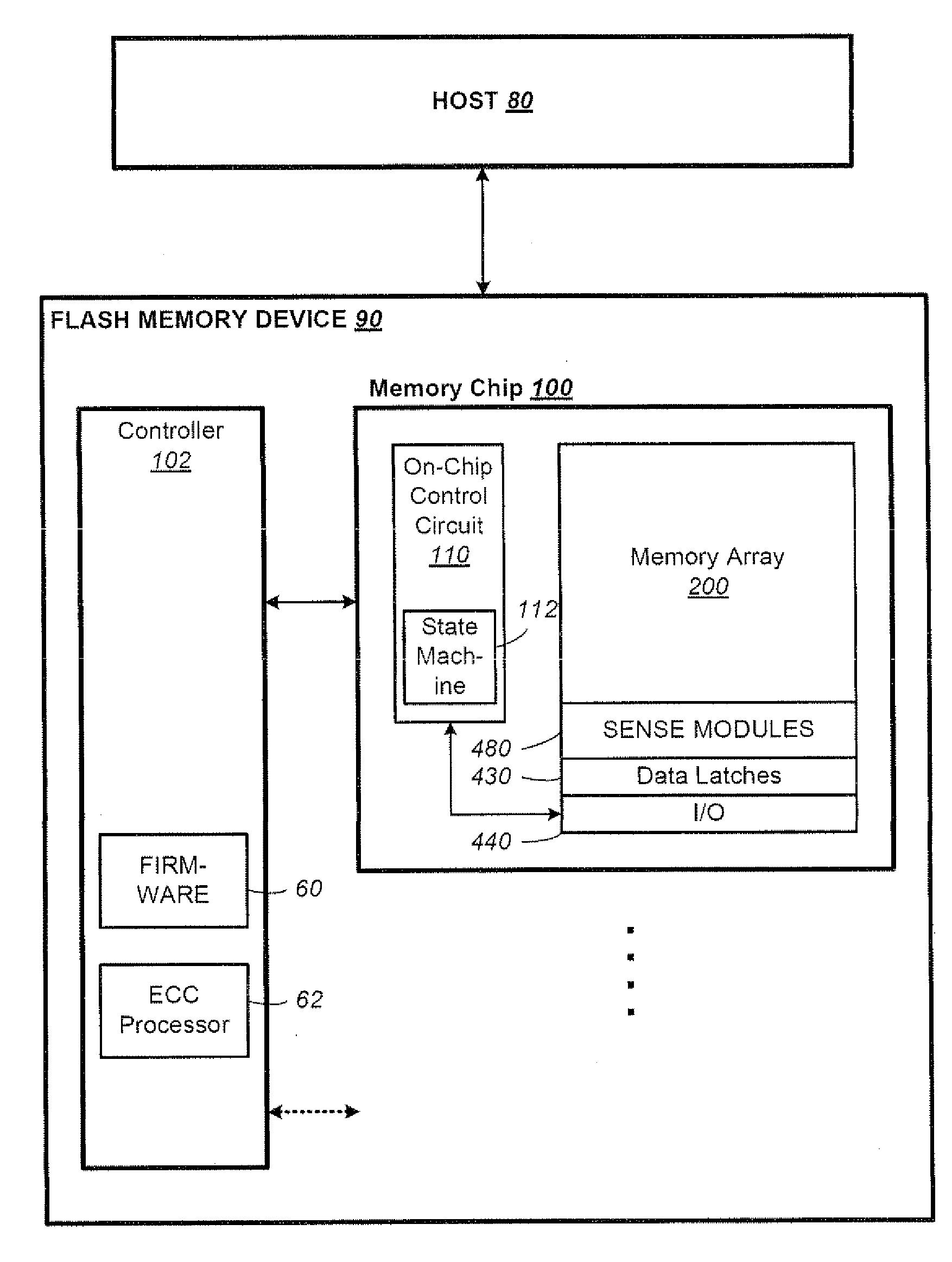

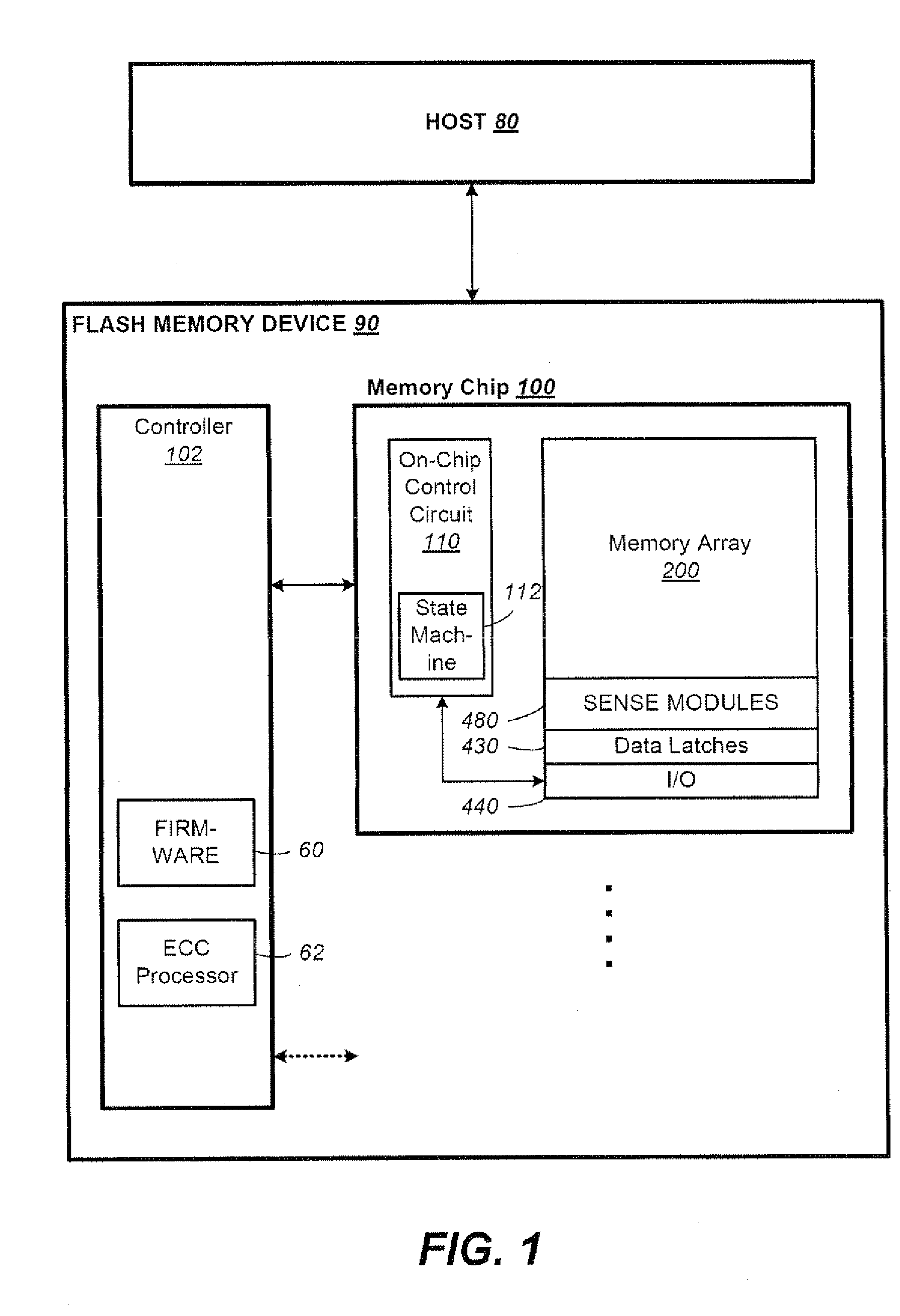

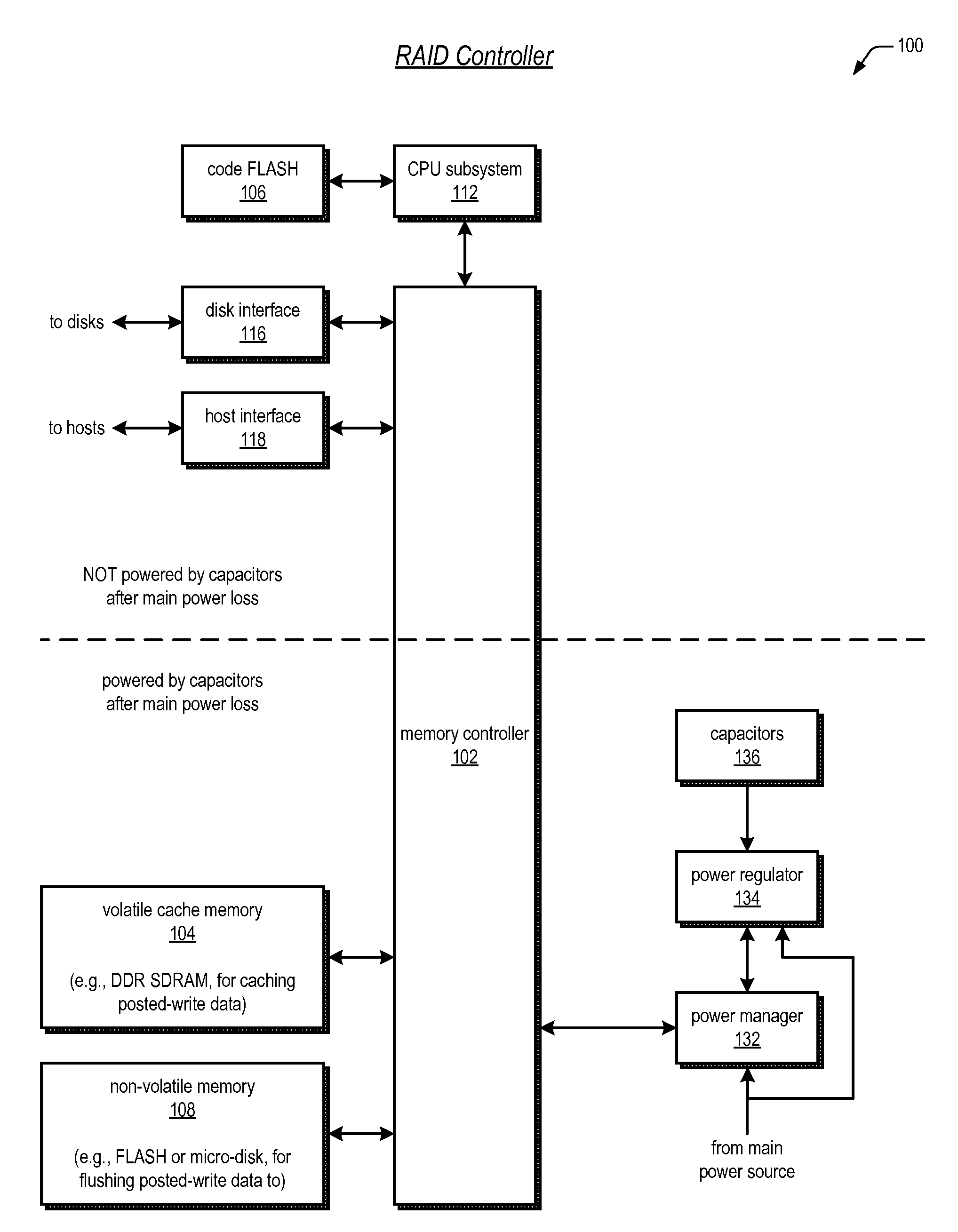

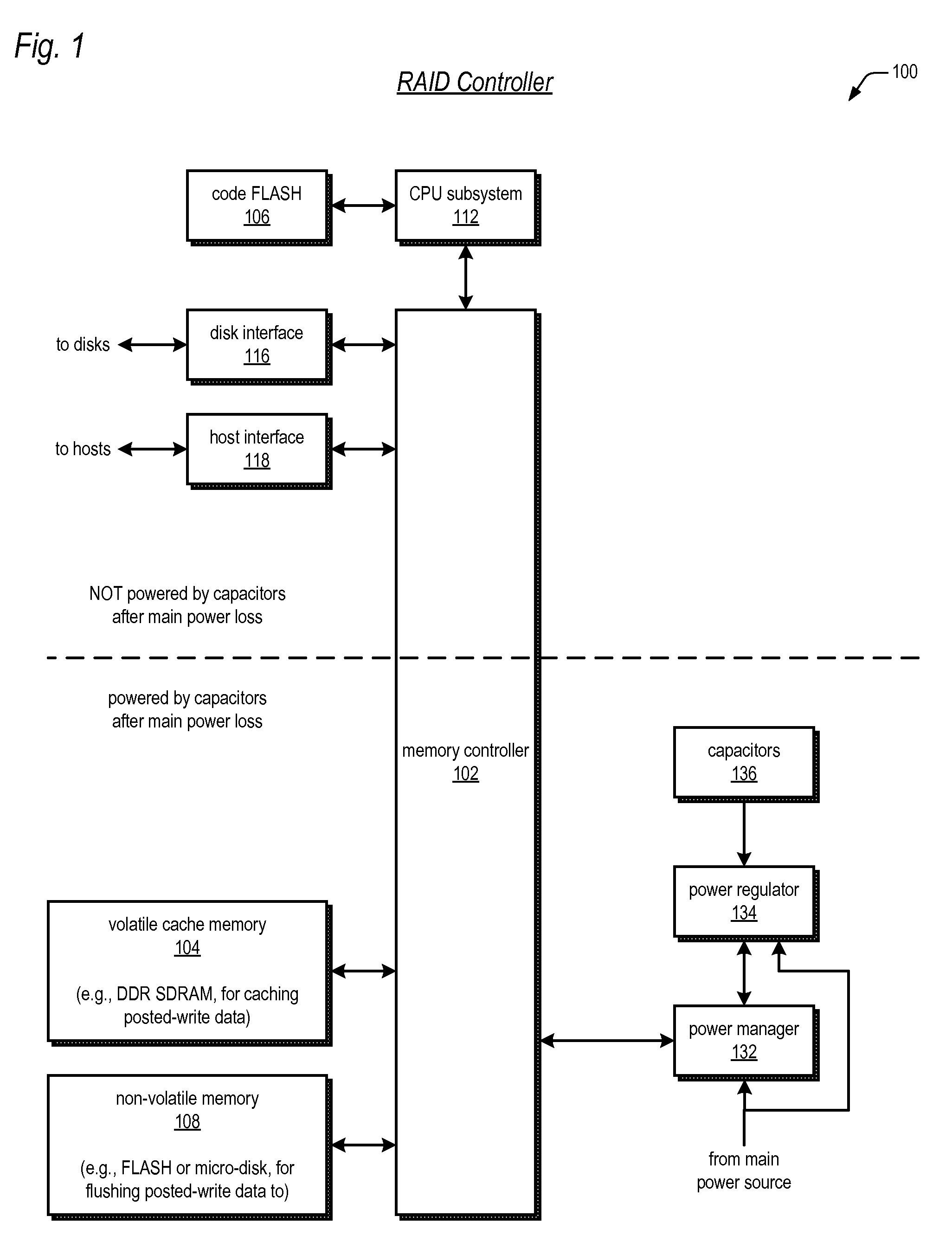

Raid controller using capacitor energy source to flush volatile cache data to non-volatile memory during main power outage

Inactive | US20060015683A1 | Reduces amount of energy storage capacity requirement | Less expensive | Energy efficient ICT | Memory loss protection | RAID | Stored energy

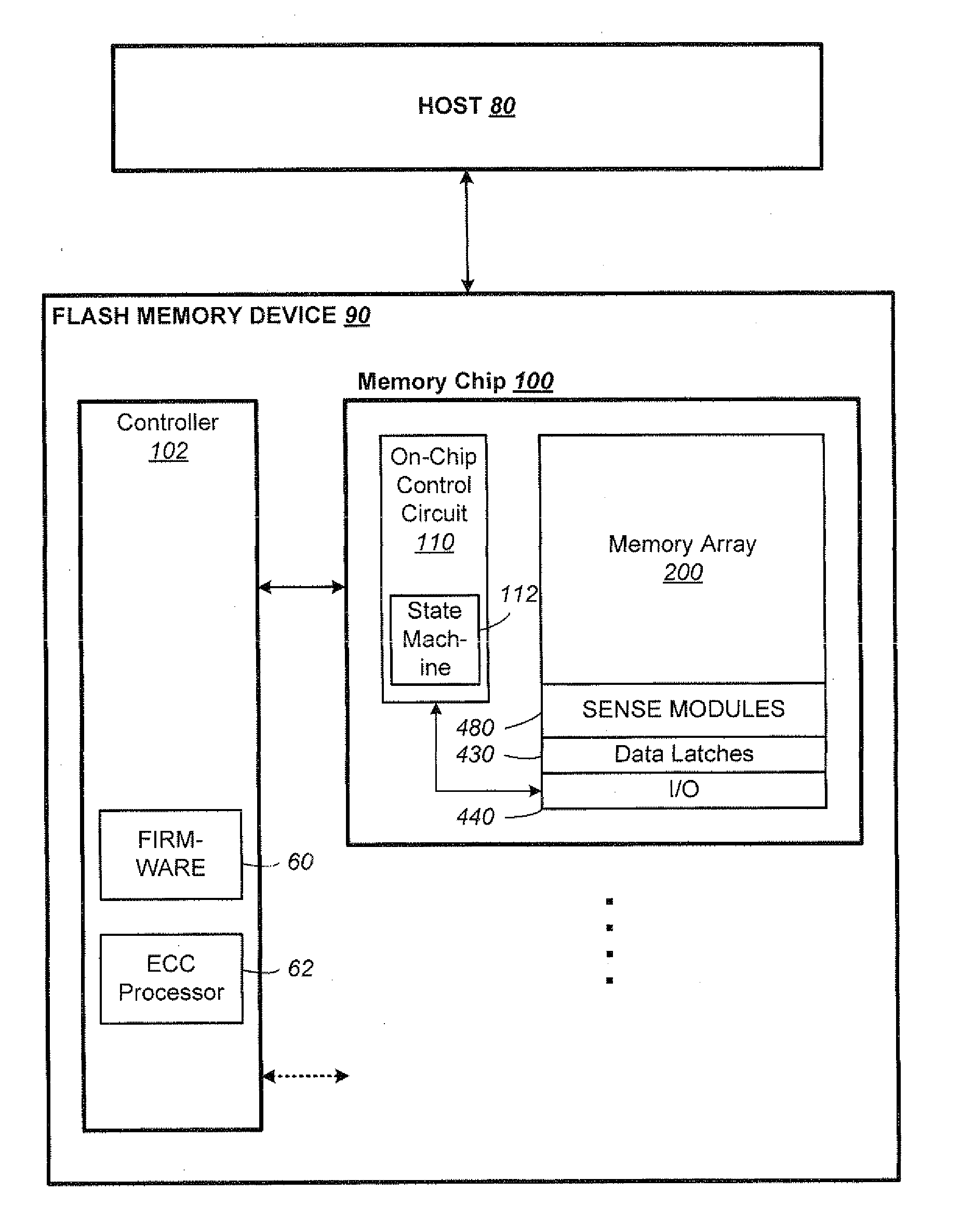

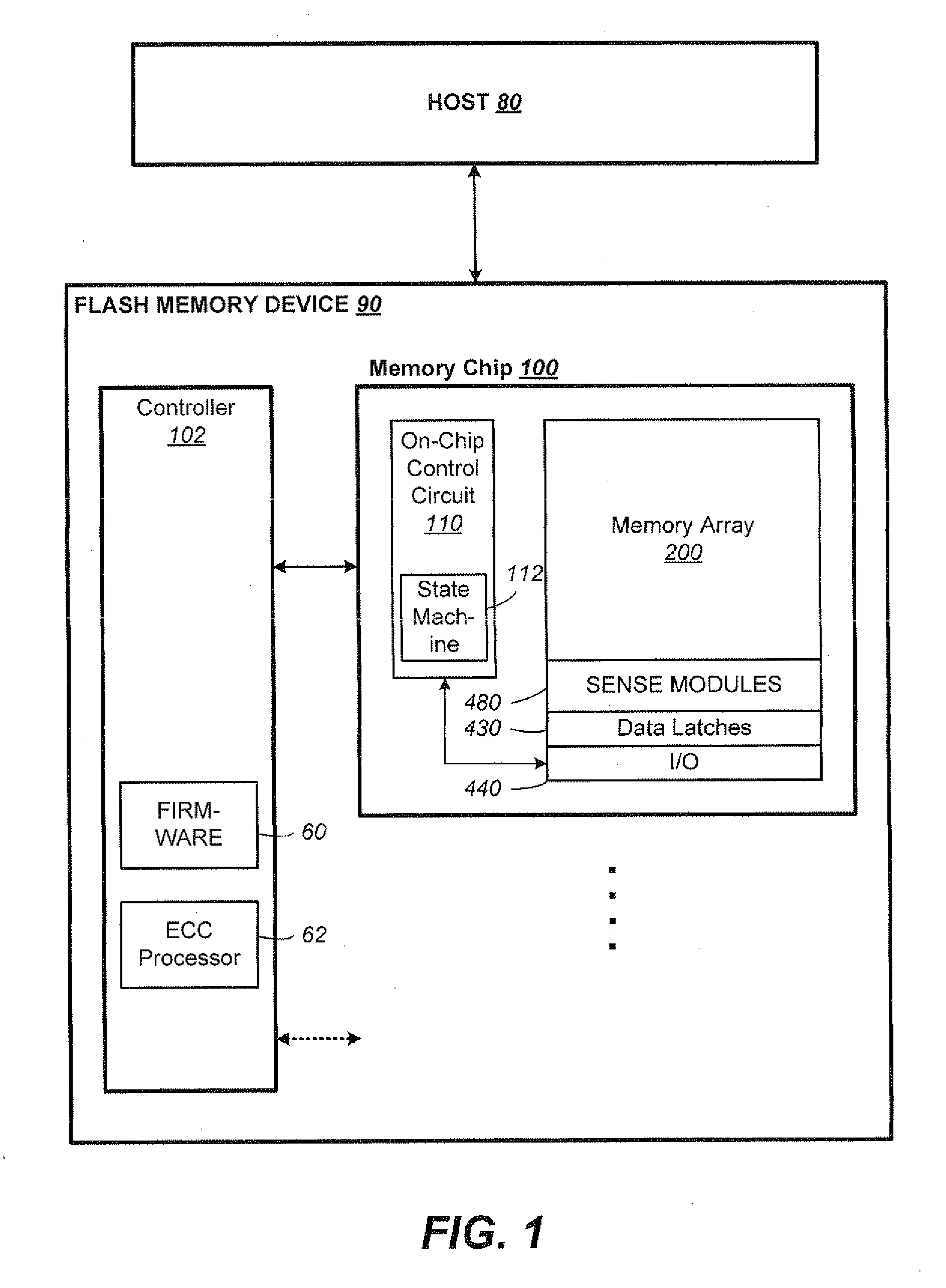

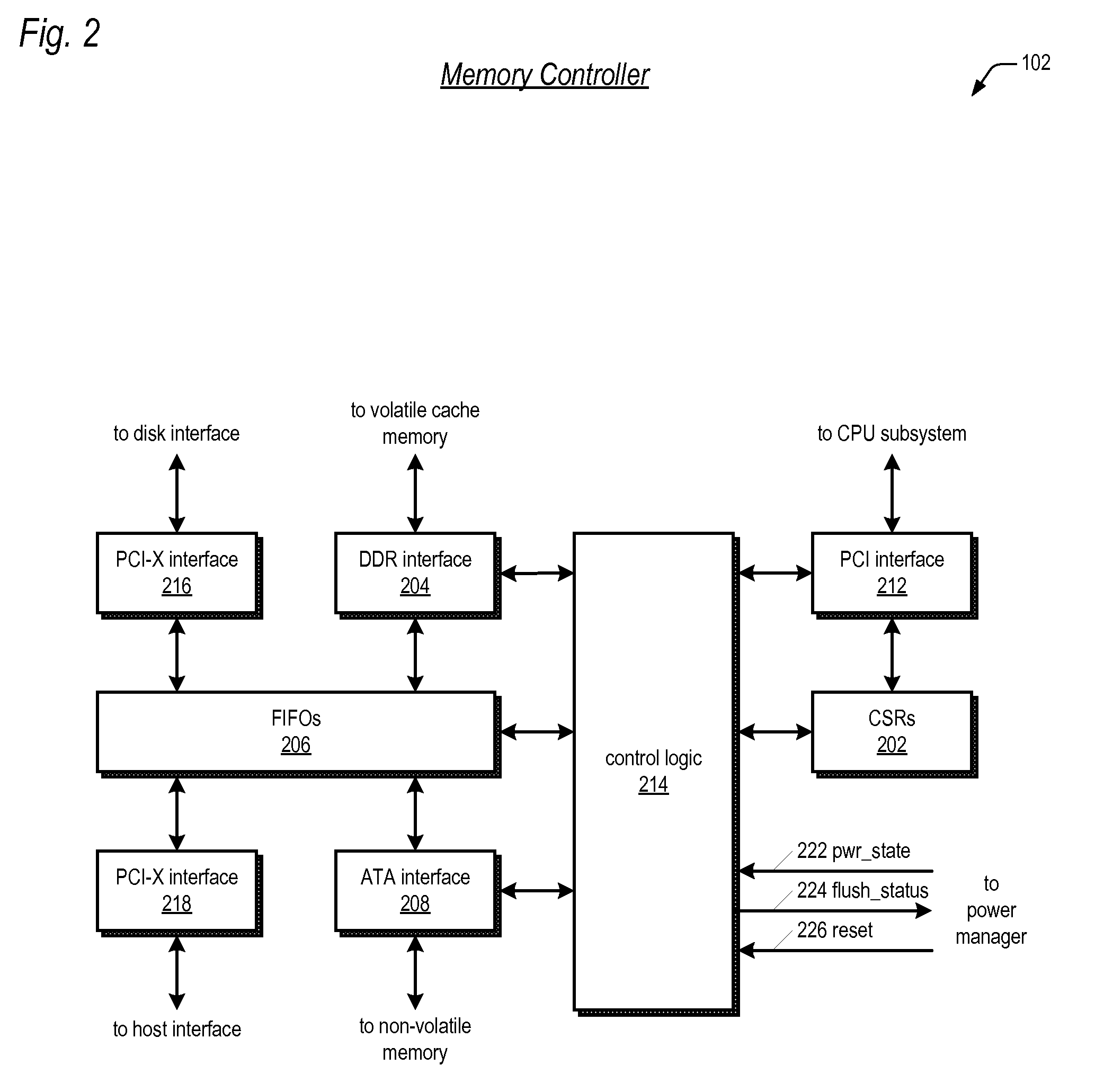

A write-caching RAID controller is disclosed. The controller includes a CPU that manages transfers of posted-write data from host computers to a volatile memory and transfers of the posted-write data from the volatile memory to storage devices when a main power source is supplying power to the RAID controller. A memory controller flushes the posted-write data from the volatile memory to the non-volatile memory when main power fails, during which time capacitors provide power to the memory controller, volatile memory, and non-volatile memory, but not to the CPU, in order to reduce the energy storage requirements of the capacitors. During main power provision, the CPU programs the memory controller with information needed to perform the flush operation, such as the location and size of the posted-write data in the volatile memory and various flush operation characteristics.

Owner:DOT HILL SYST

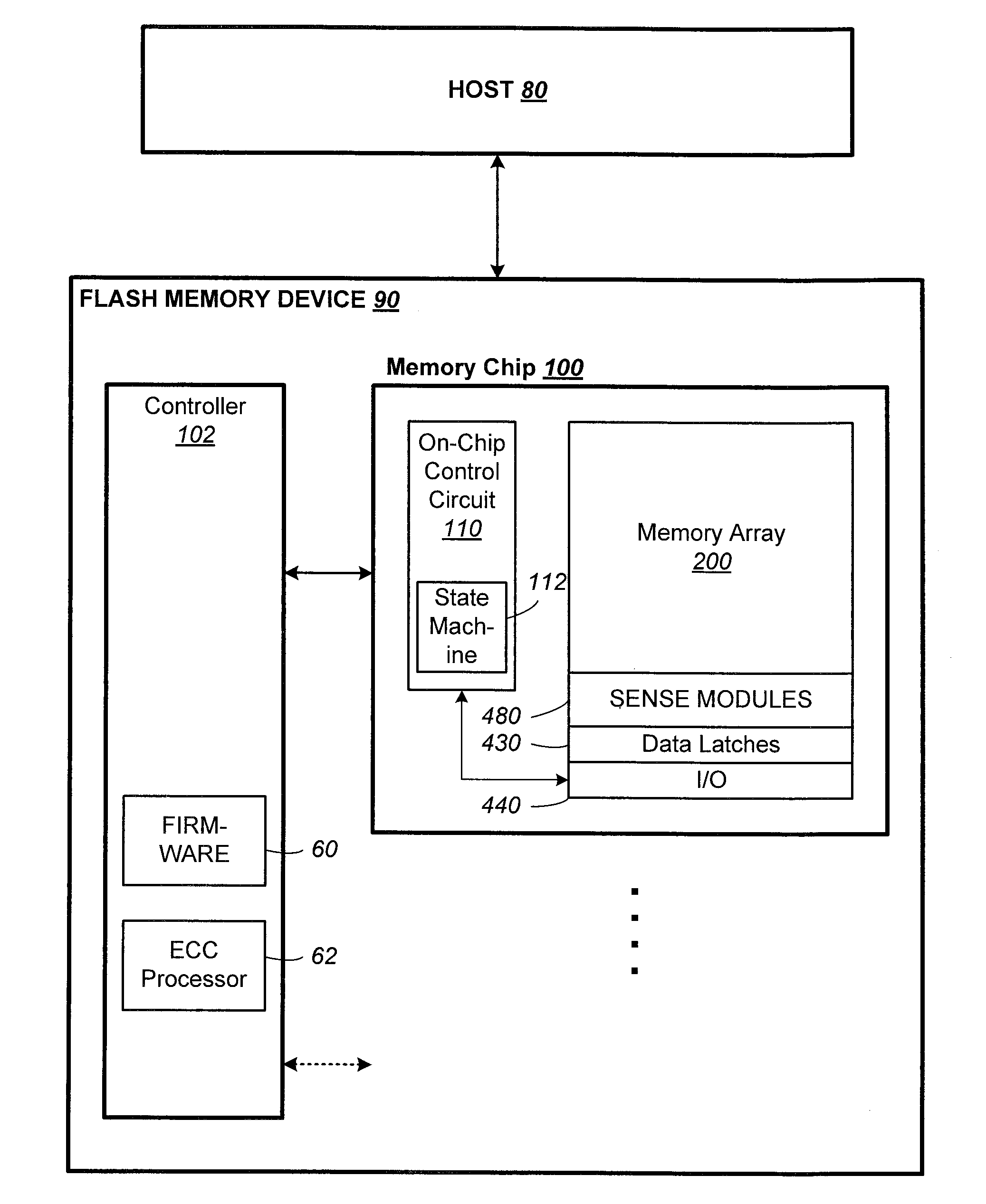

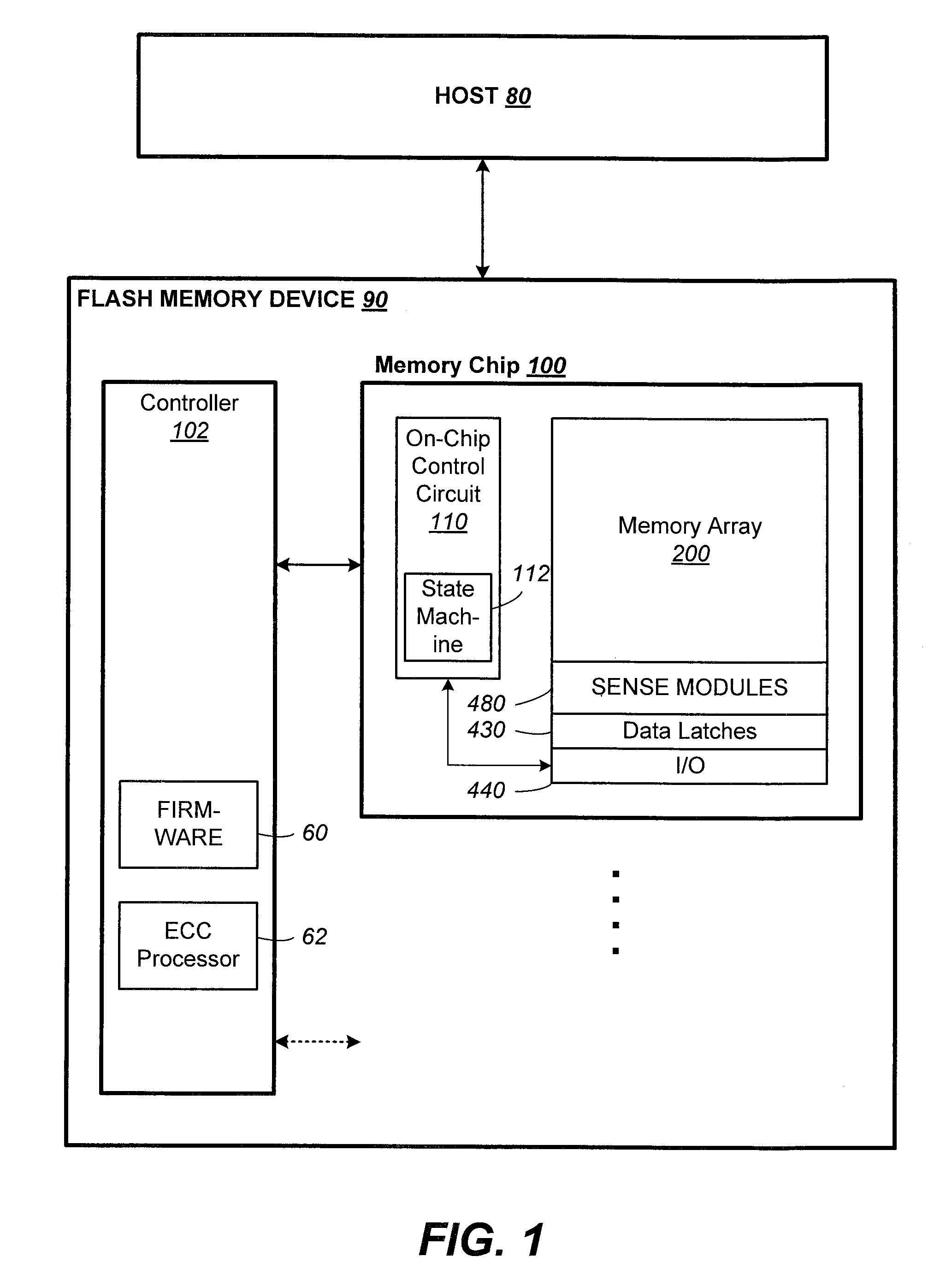

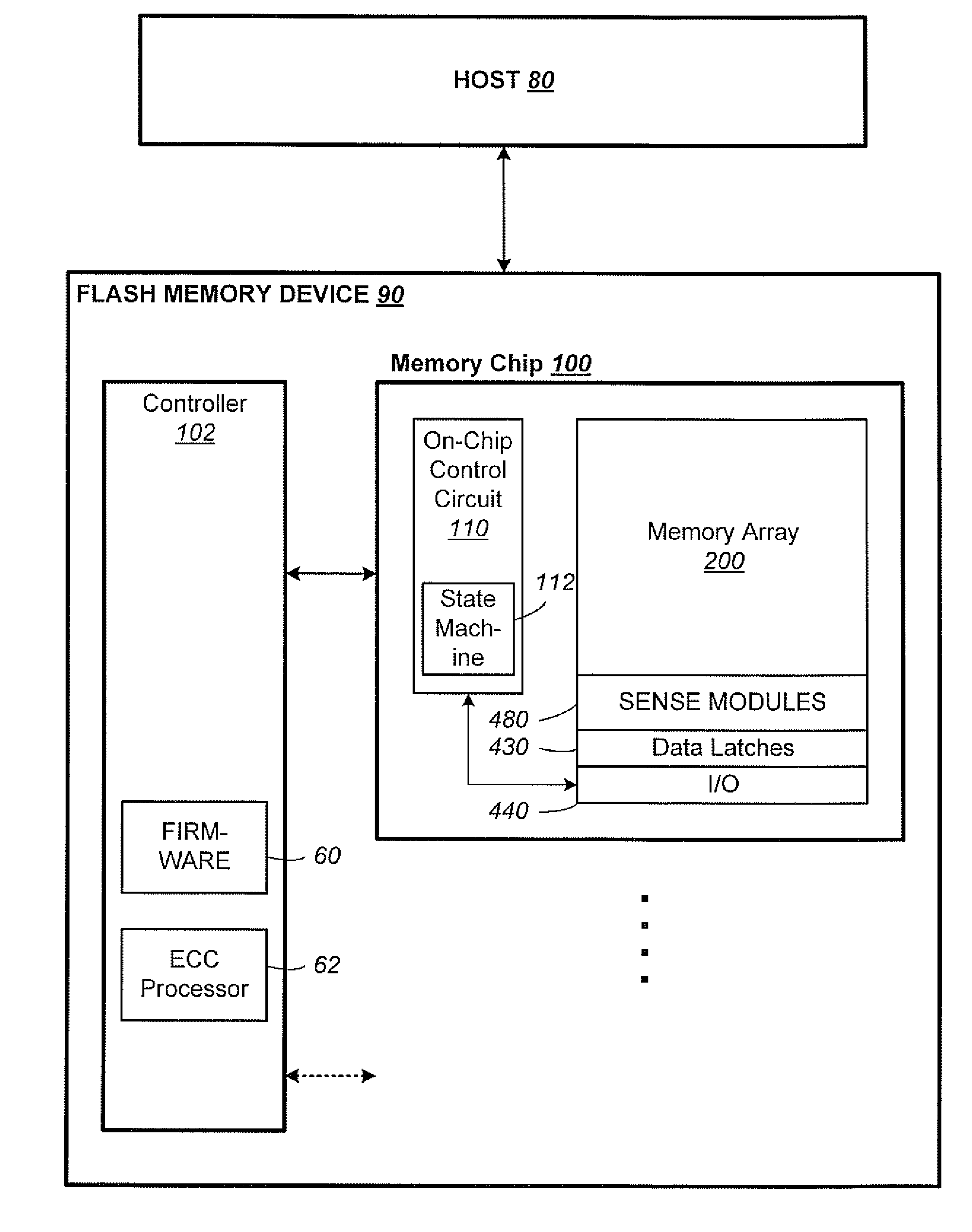

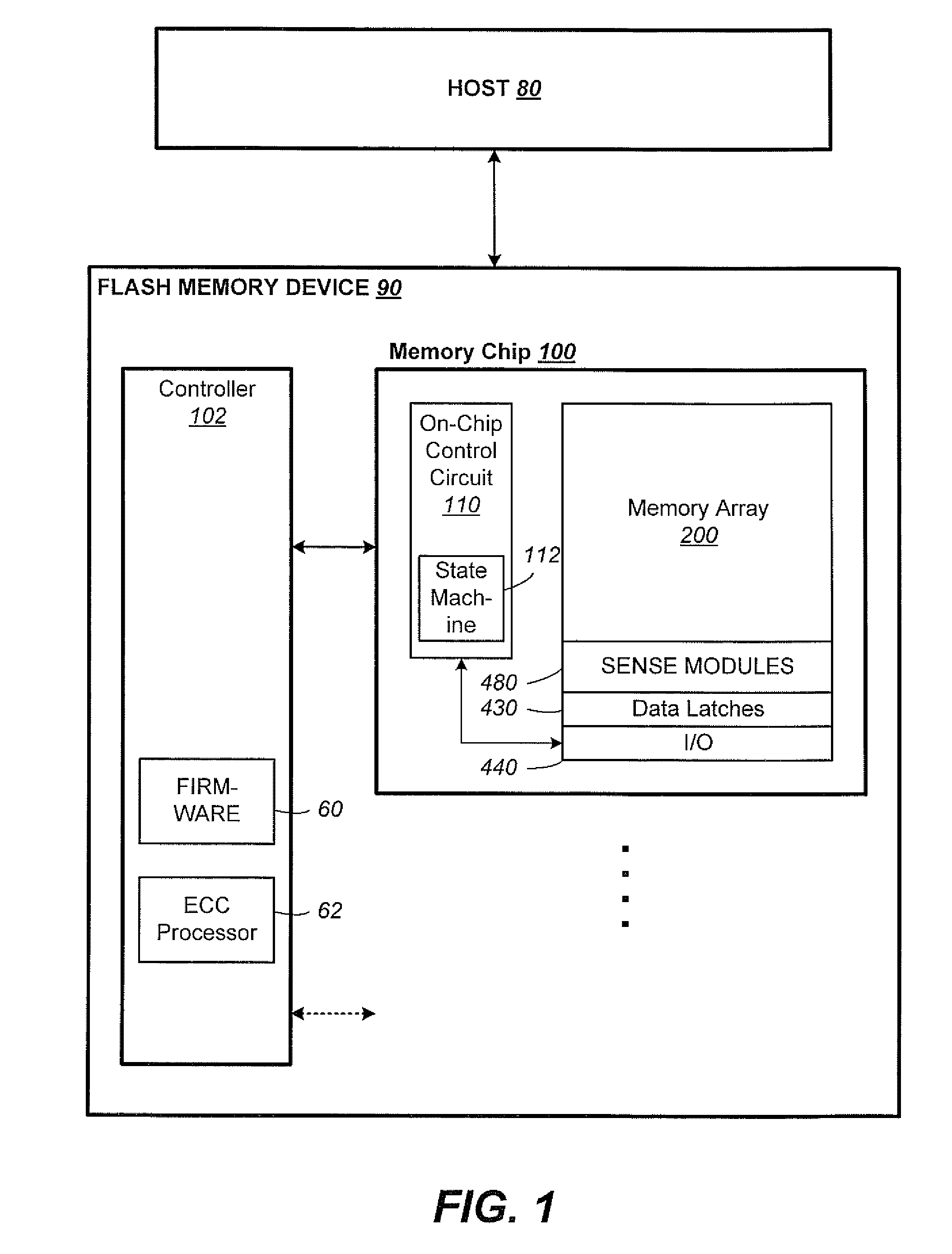

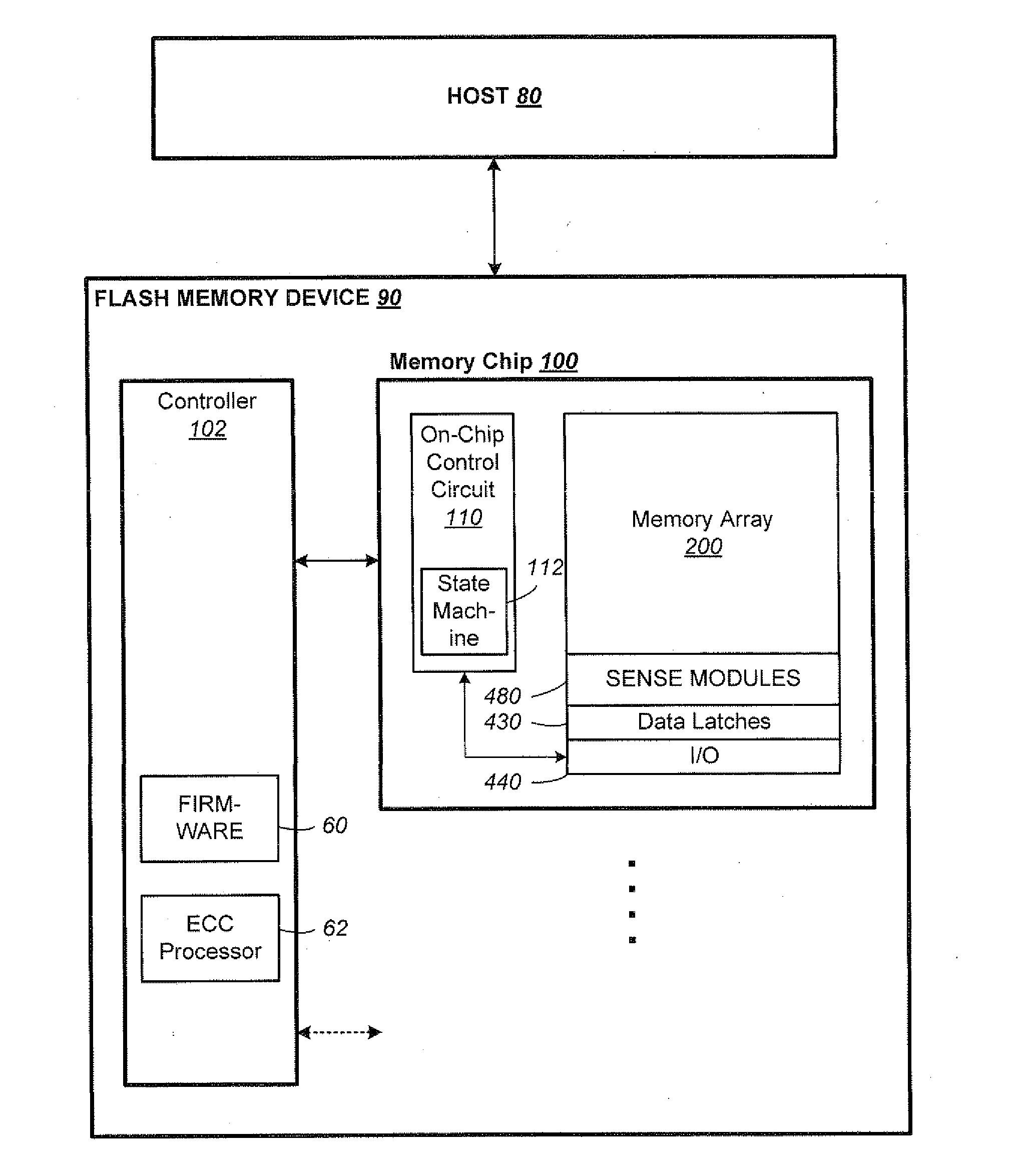

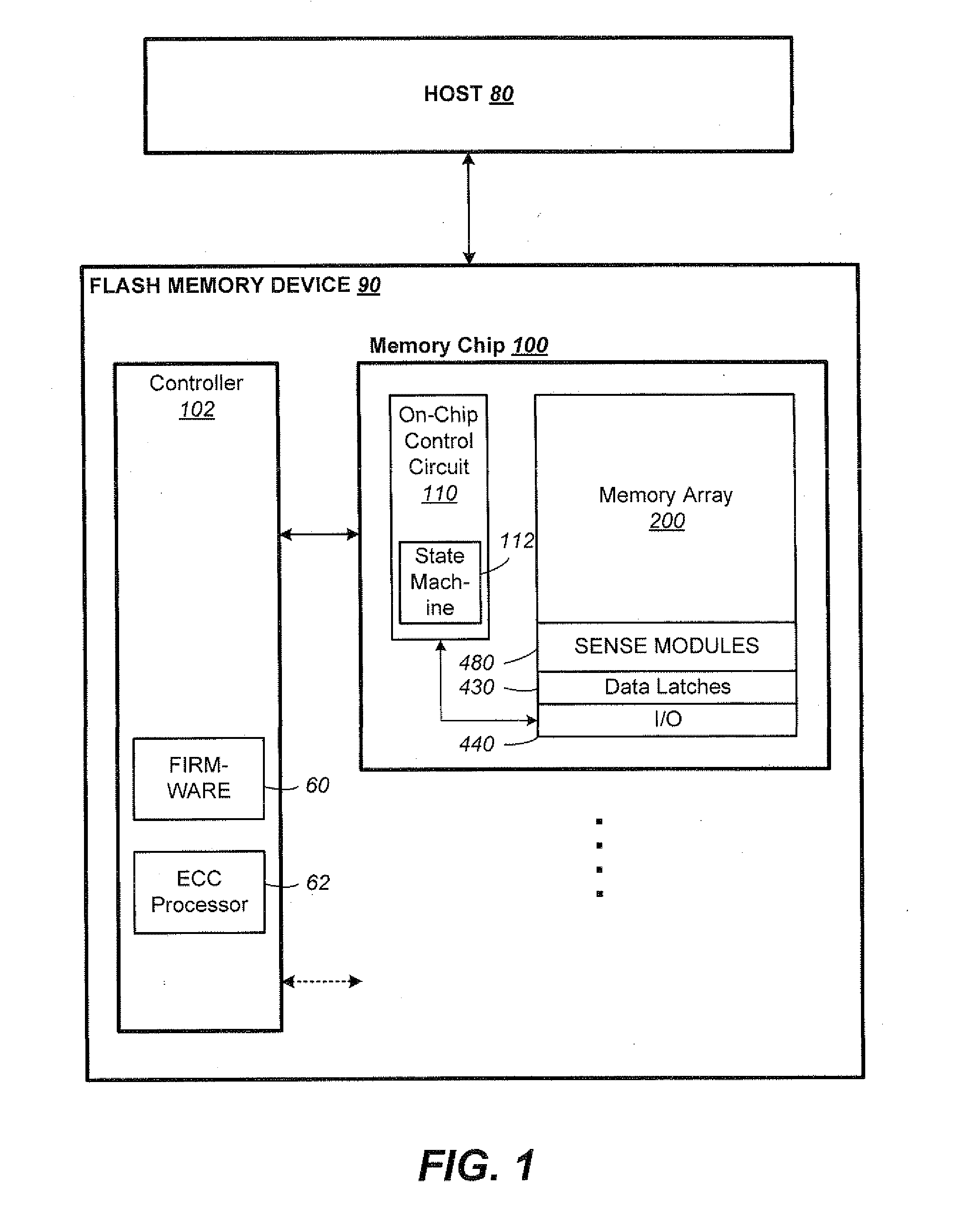

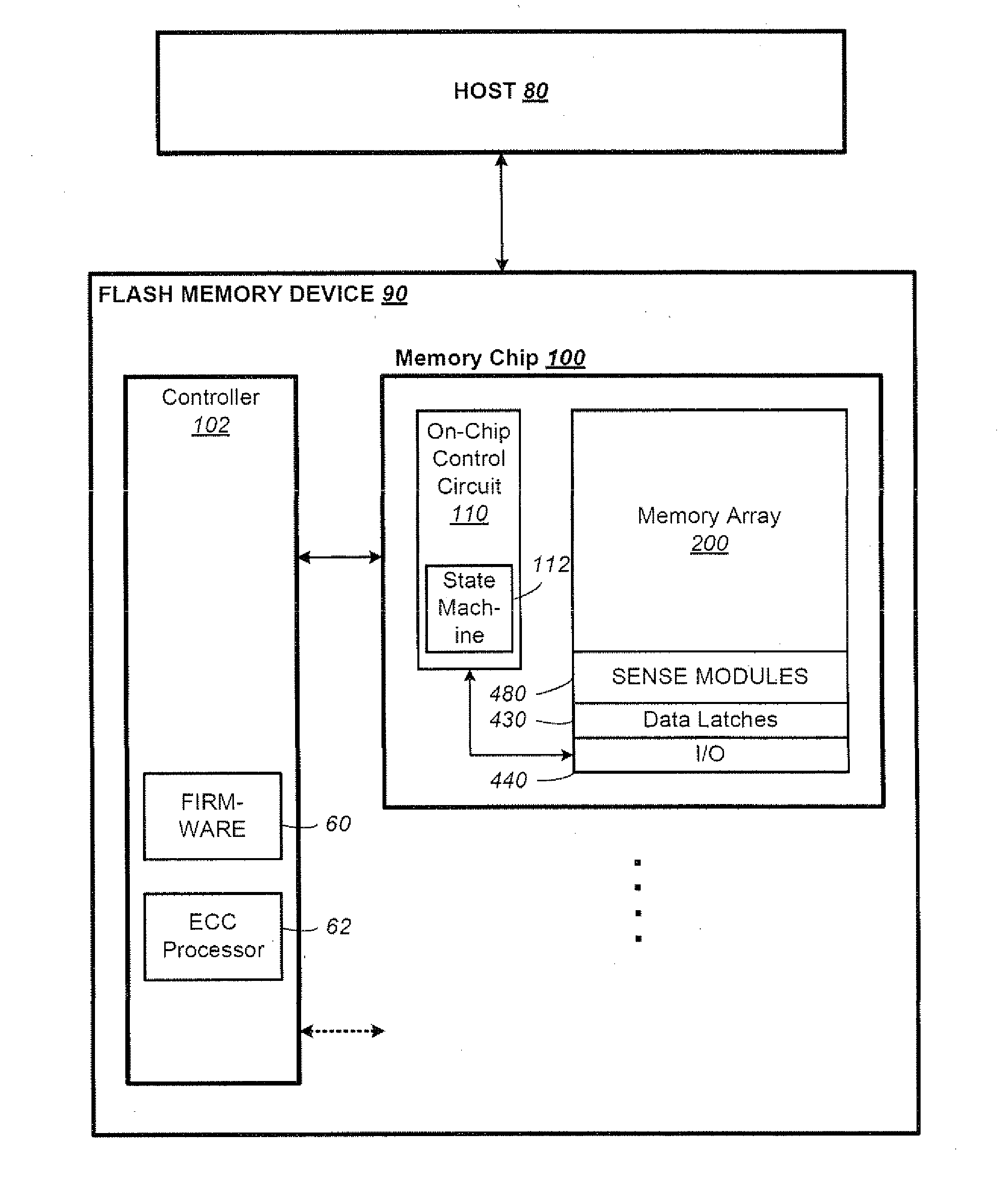

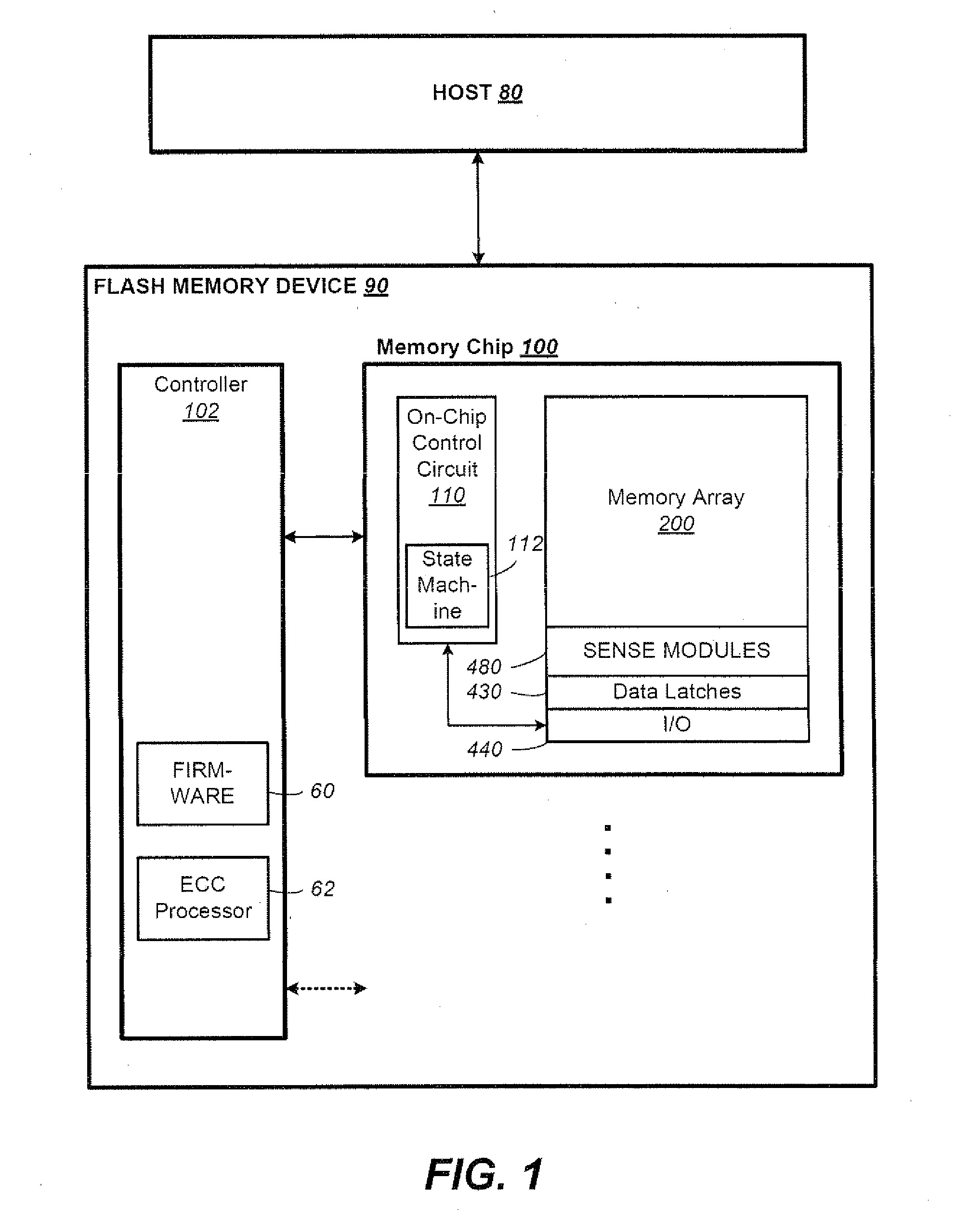

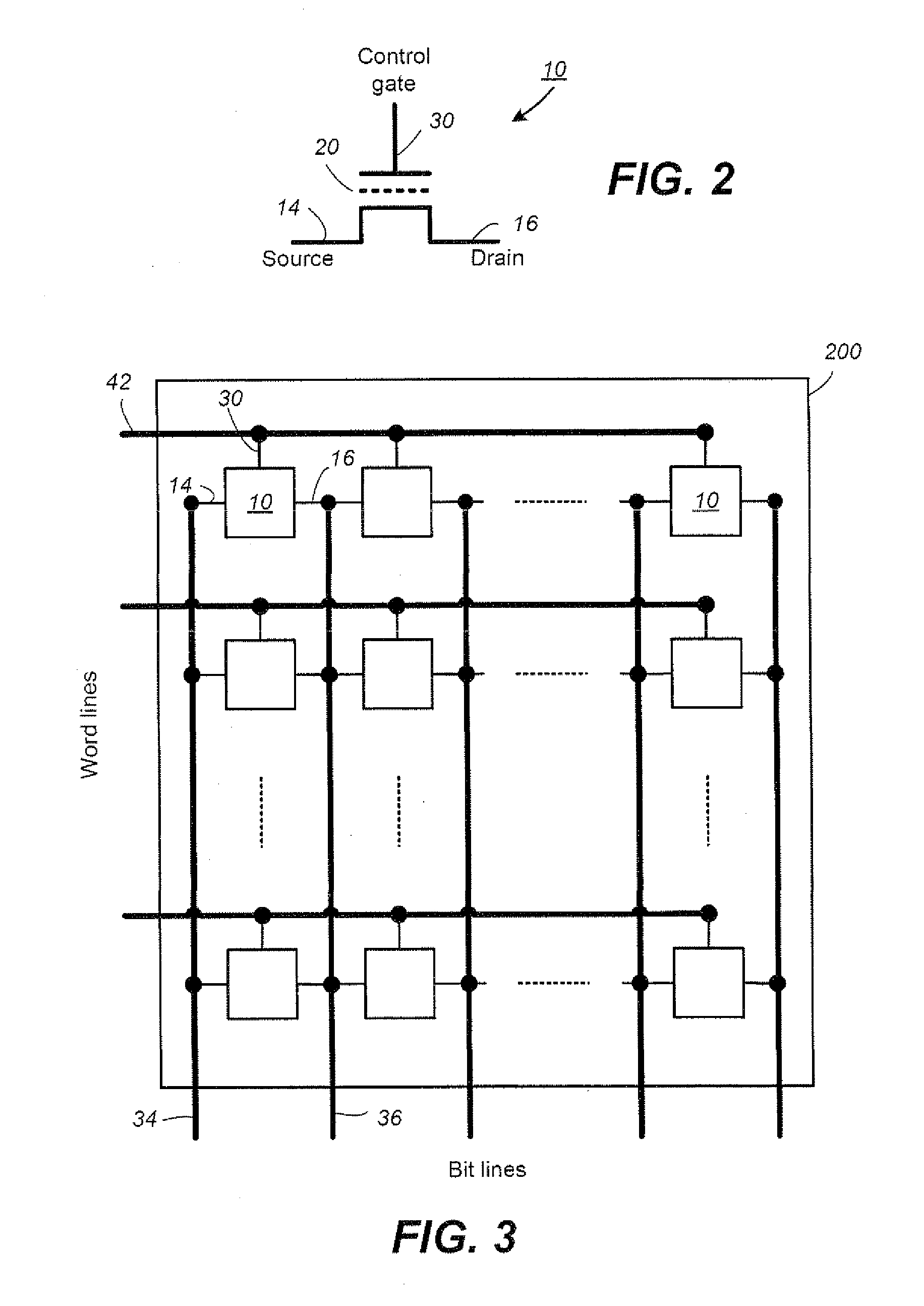

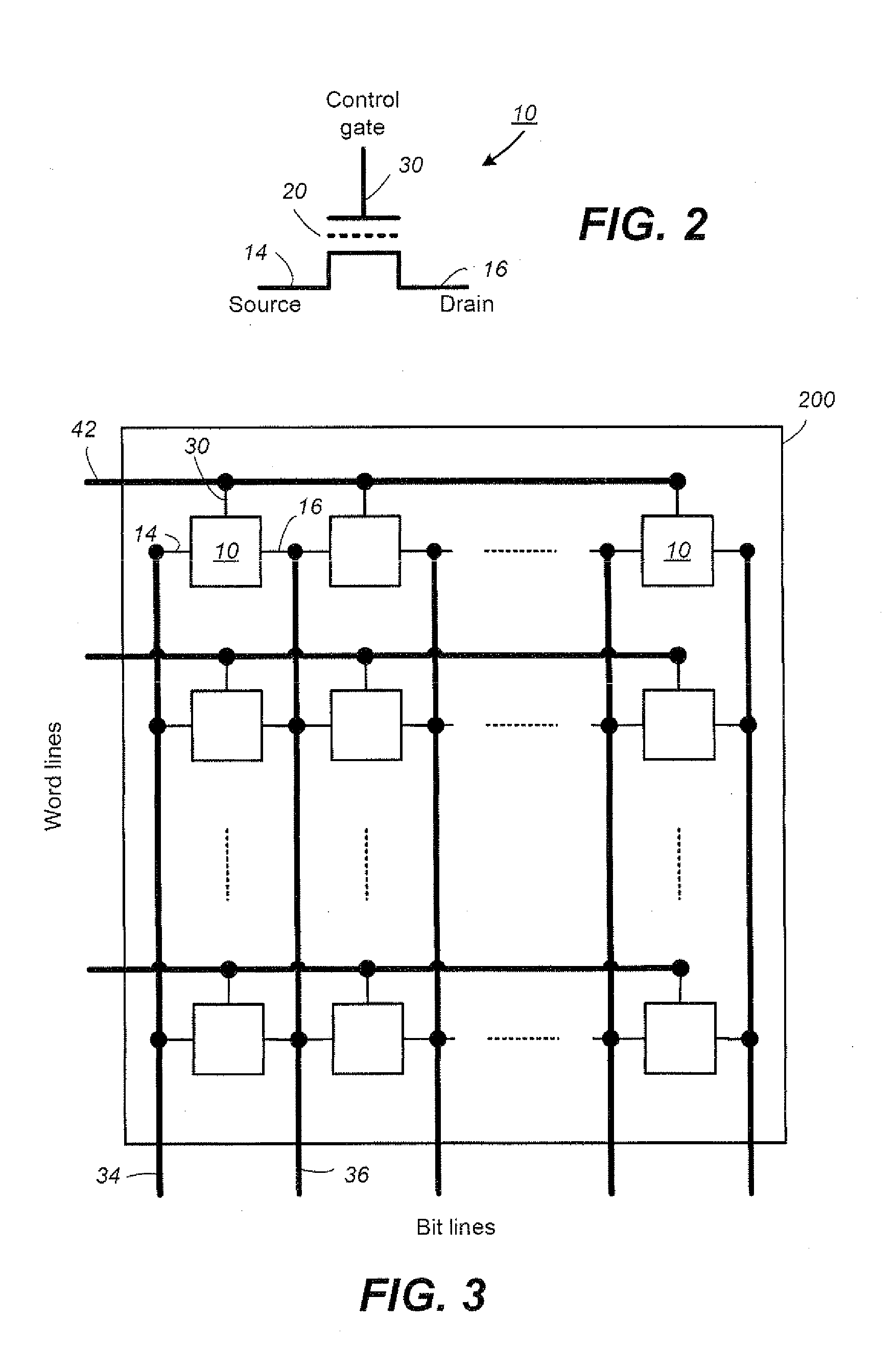

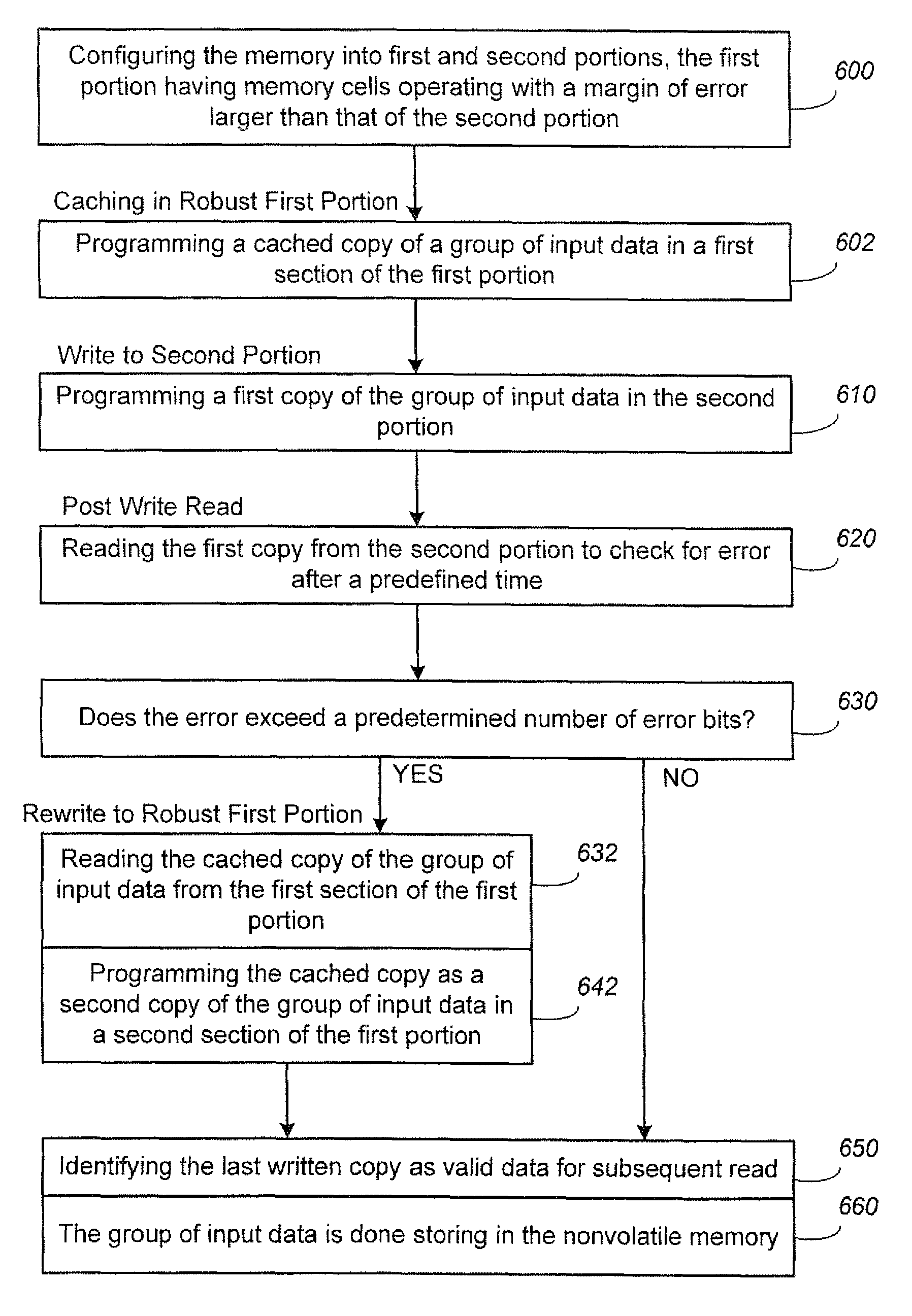

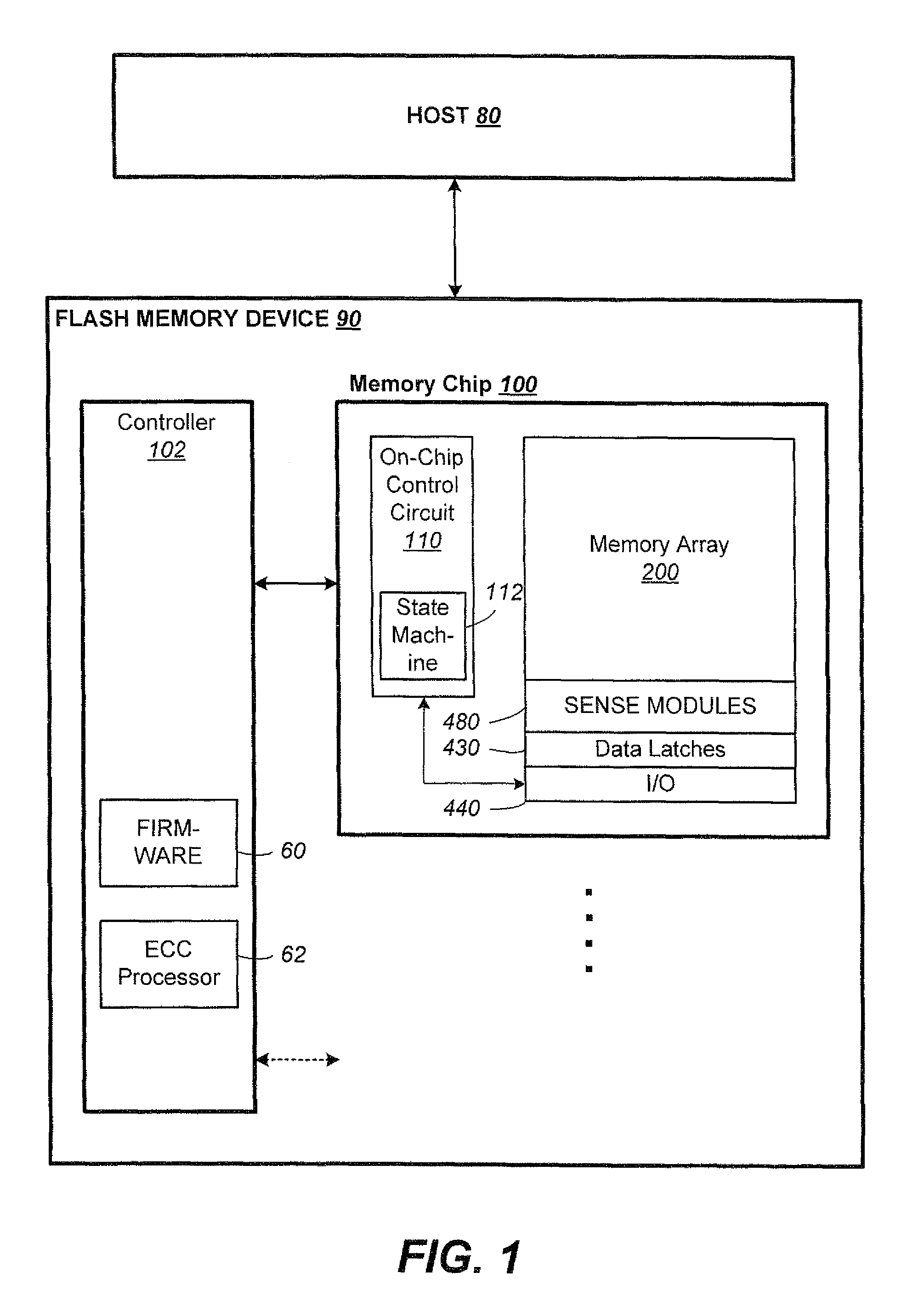

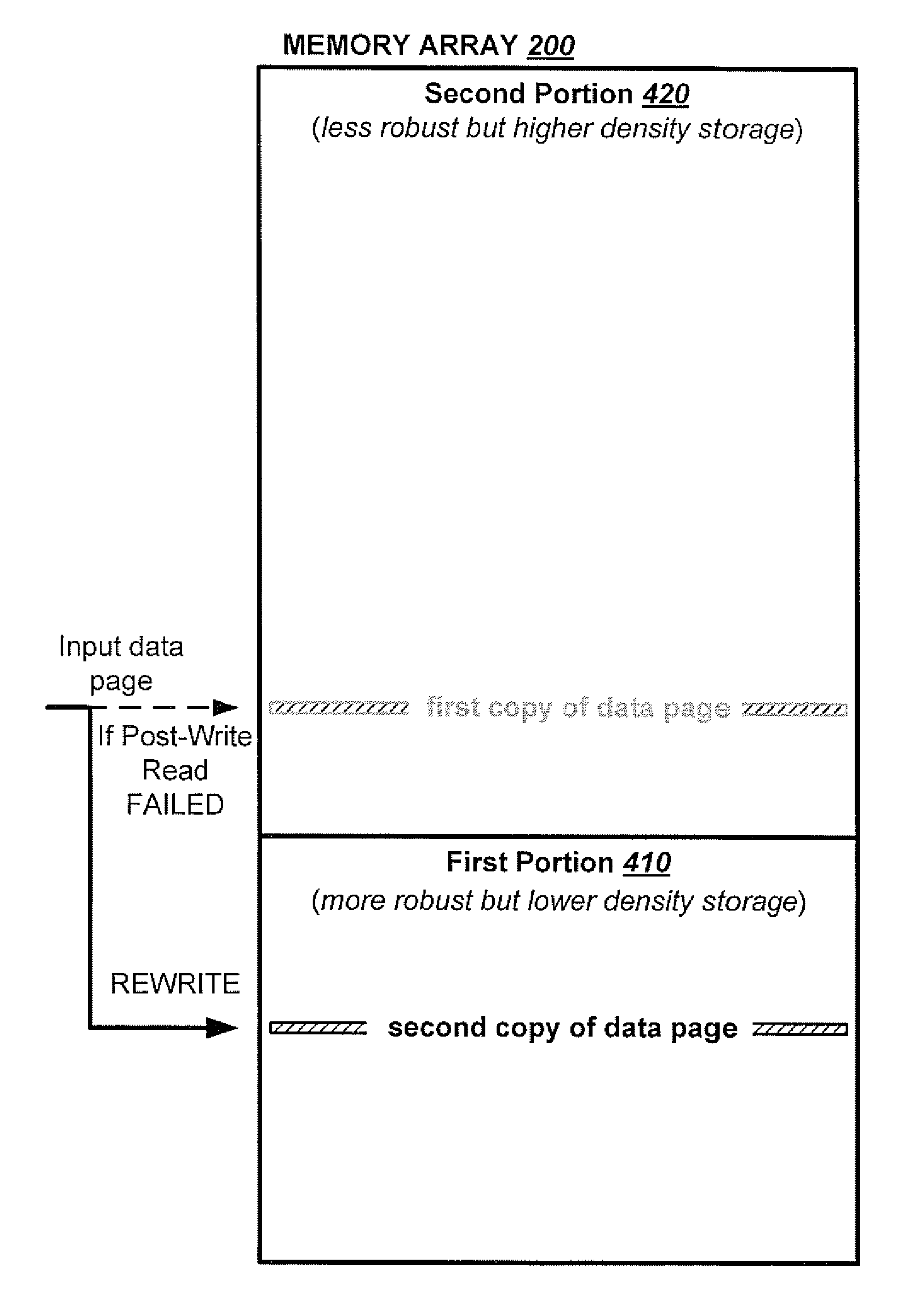

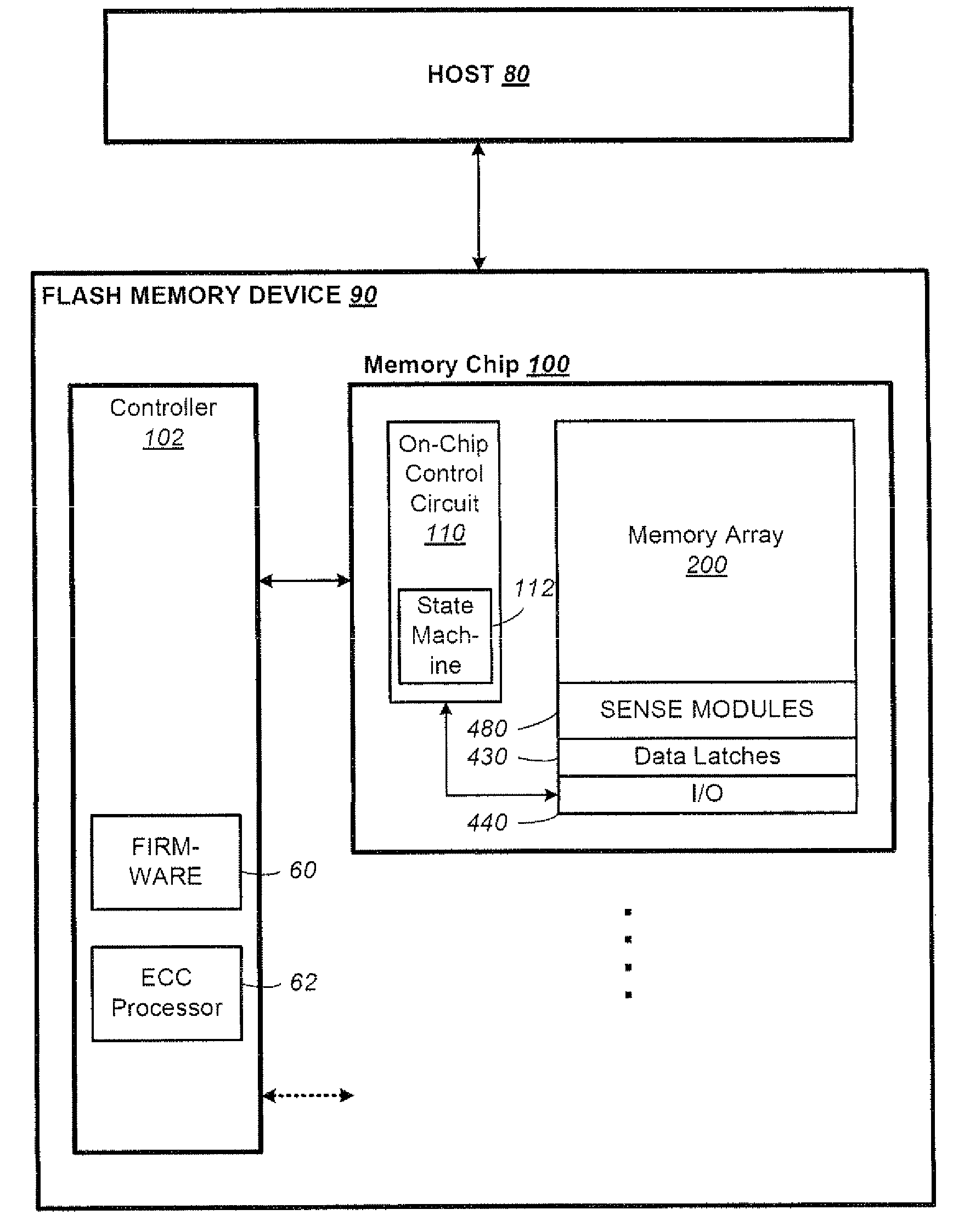

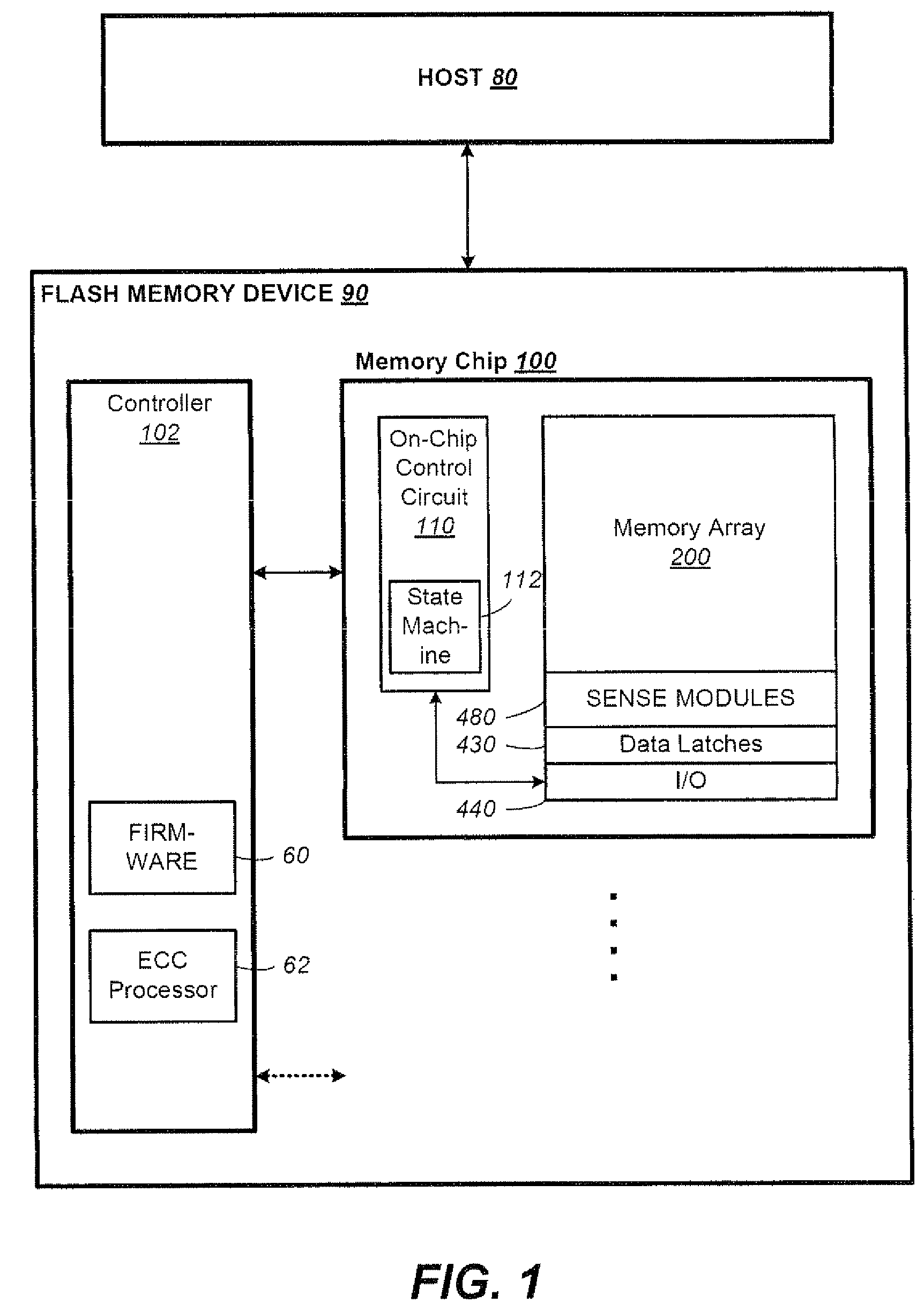

Non-Volatile Memory And Method With Post-Write Read And Adaptive Re-Write To Manage Errors

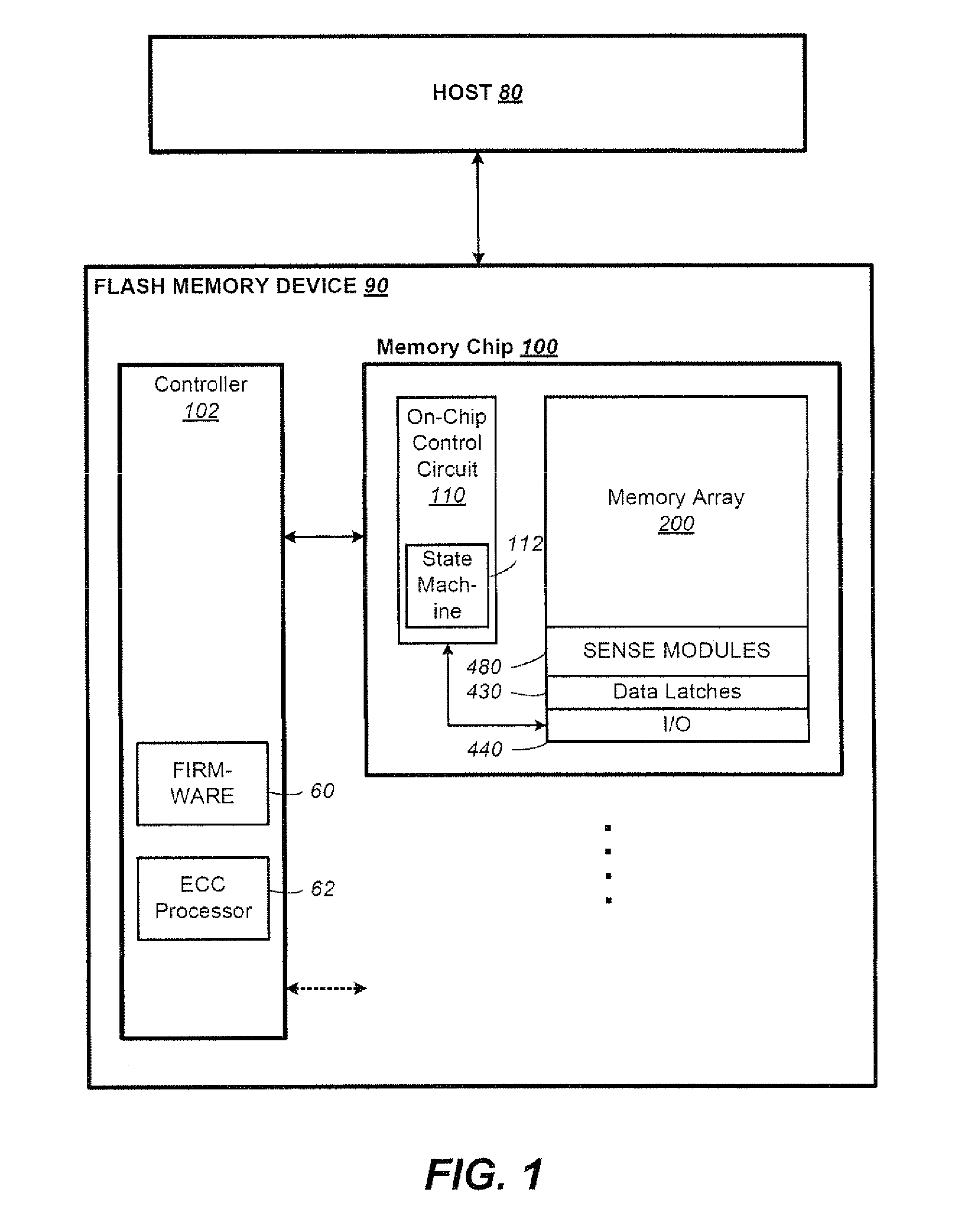

Active | US20110099460A1 | Small margin | Improve performance | Memory loss protection | Read-only memories | High density | Low density

Data errors in non-volatile memory inevitably increase with usage and with higher density of bits stored per cell. The memory is configured to have a first portion operating with less error but of lower density storage, and a second portion operating with a higher density but less robust storage. Input data is written and staged in the first portion before being copied to the second portion. An error management scheme checks the quality of the copied data for excessive error bits. The copying and checking are repeated on a different location in the second portion until either a predetermined quality is satisfied or the number of repeats exceeds a predetermined limit. The error management is not started when a memory is new with little or no errors, but is started after the memory has aged by a predetermined amount, as determined by the number of erase/program cycles it has experienced.

Owner:SANDISK TECH LLC
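The copy, check and repeat loop described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: `FakeDenseMemory`, `copy_with_check` and all parameter names are hypothetical, the quality check is a plain bit comparison against the staged data, and the failing block is simulated by flipping every bit.

```python
def count_error_bits(expected: bytes, actual: bytes) -> int:
    """Number of bit positions where the two buffers differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(expected, actual))


class FakeDenseMemory:
    """Hypothetical stand-in for the high-density portion; 'bad' locations corrupt data."""

    def __init__(self, bad_locations=()):
        self.cells = {}
        self.bad = set(bad_locations)

    def write(self, location, data):
        if location in self.bad:
            data = bytes(b ^ 0xFF for b in data)  # flip every bit to simulate a failing block
        self.cells[location] = data

    def read(self, location):
        return self.cells[location]


def copy_with_check(staged, dense, locations, max_error_bits, max_repeats,
                    cycle_count=0, start_after_cycles=0):
    """Copy staged data into the dense portion; return the location used or None.

    None means the repeat limit was reached, so the staged copy in the
    robust portion remains the only trusted one.
    """
    check_enabled = cycle_count >= start_after_cycles  # young memory skips the check
    for attempt, location in enumerate(locations):
        if attempt >= max_repeats:
            break
        dense.write(location, staged)
        if not check_enabled:
            return location
        if count_error_bits(staged, dense.read(location)) <= max_error_bits:
            return location
    return None
```

For example, with location 0 marked bad, `copy_with_check(b"abcd", FakeDenseMemory({0}), [0, 1, 2], max_error_bits=2, max_repeats=3)` fails the check at location 0 and settles on location 1.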

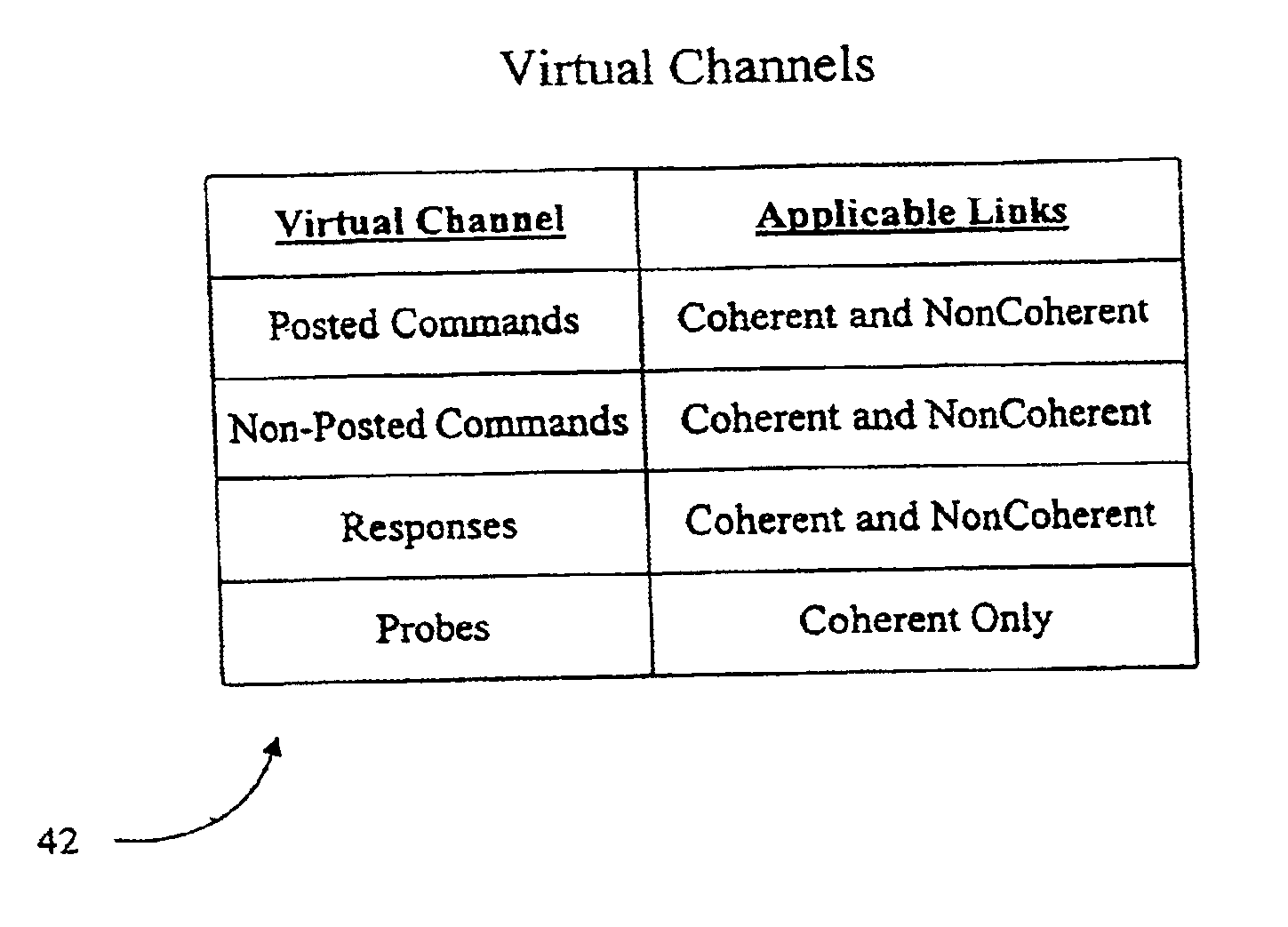

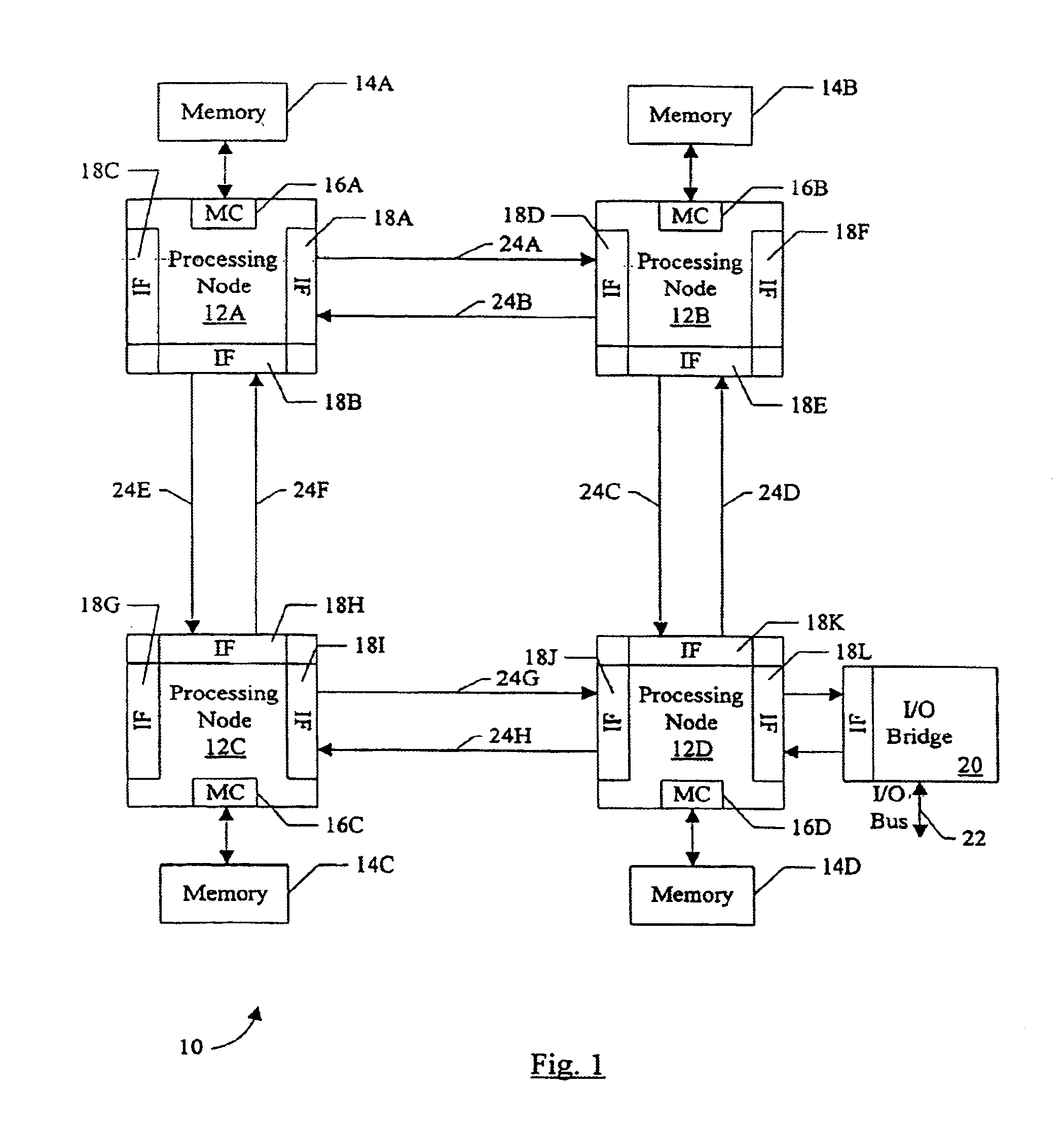

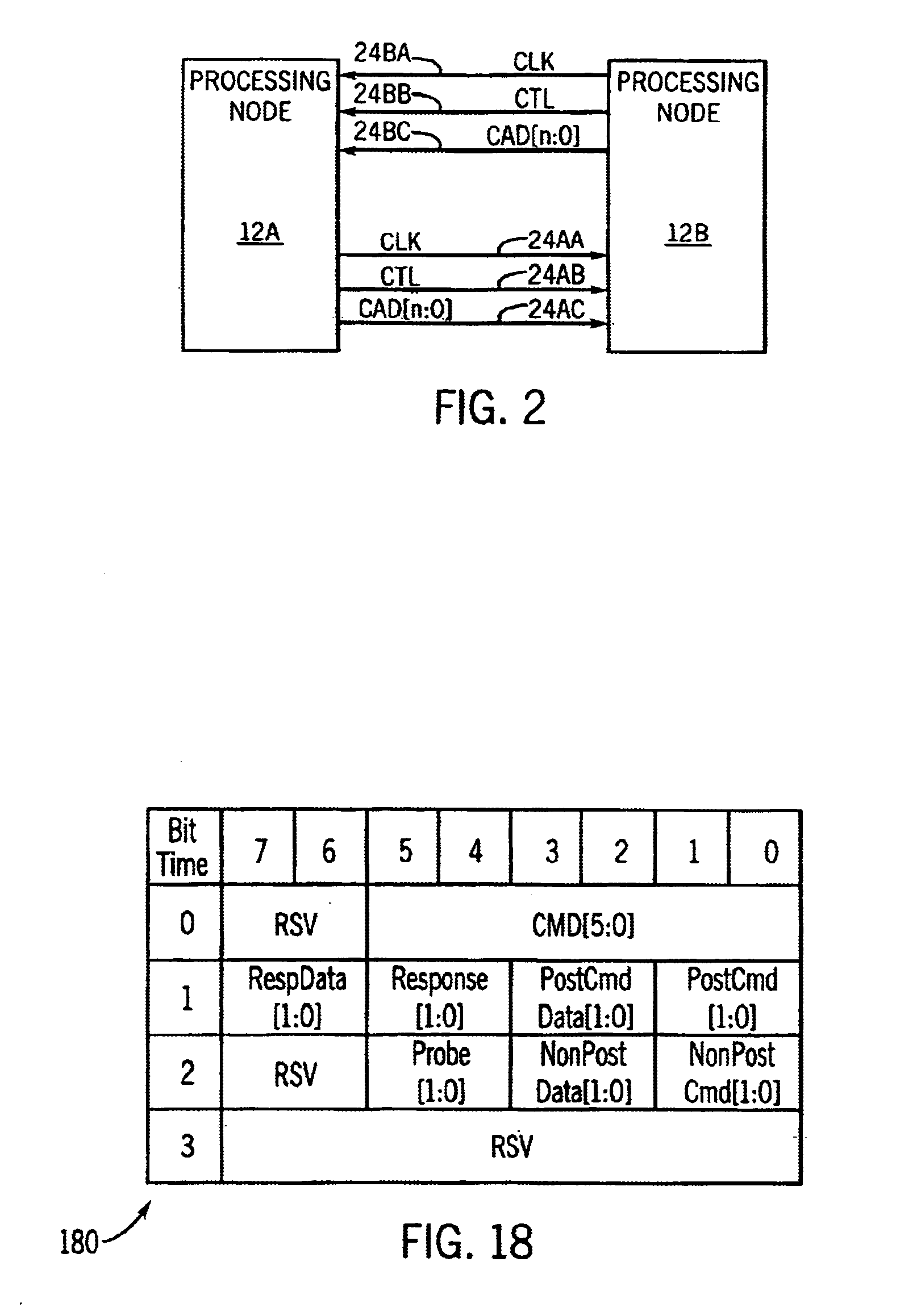

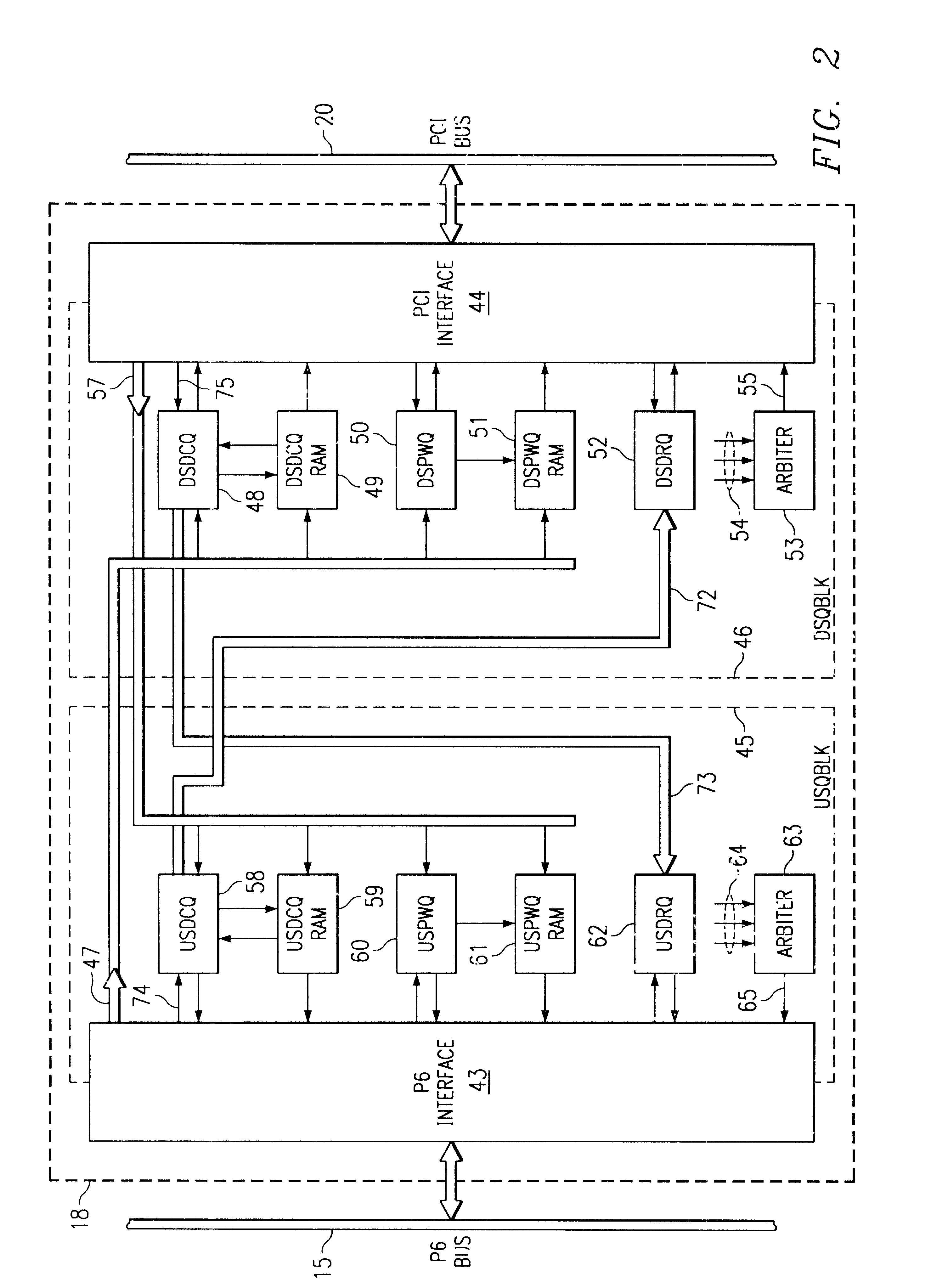

System and method for implementing a separate virtual channel for posted requests in a multiprocessor computer system

Inactive | US6950438B1 | Avoiding certain deadlock | Deadlock-free operation | Time-division multiplex | Data switching by path configuration | Multi processor | Computer compatibility

A computer system employs virtual channels and allocates different resources to the virtual channels. More particularly, the computer system provides a posted commands virtual channel separate from the non-posted commands virtual channel for routing posted and non-posted commands or requests through coherent and noncoherent fabrics within the computer system. Because separate resources are allocated to the virtual channels in the computer system, posted requests may be allowed to become unordered with other requests from the same source. Implementation of a separate posted commands virtual channel may allow the computer system to maintain compatibility with I/O systems in which posted write requests may become unordered with previous posted requests (e.g., the Peripheral Component Interconnect Bus, or PCI). Implementation of the separate posted commands virtual channel thus may assist in providing deadlock-free operation.

Owner:ALPHA PROCESSOR +2

Non-Volatile Memory And Method With Post-Write Read And Adaptive Re-Write To Manage Errors

Active | US20110099418A1 | Small margin | Reduce memory cost | Memory addressing/allocation/relocation | Read-only memories | High density | Quality assurance

Data errors in non-volatile memory inevitably increase with usage and with higher density of bits stored per cell. For acceptable quality assurance, conventional error correction codes (“ECC”) have to correct a maximum number of error bits up to the far tail end of a statistical population. The present memory is configured to have a first portion operating with less error but of lower density storage, and a second portion operating with a higher density but less robust storage. If excessive error bits (at the far tail-end) occur after writing a group of data to the second portion, the data is adaptively rewritten to the first portion, which will produce fewer error bits. Preferably, the data is initially written to a cache also in the first portion to provide source data for any rewrites. Thus, a more efficient ECC that does not need to correct for the far tail end can be used.

Owner:SANDISK TECH LLC

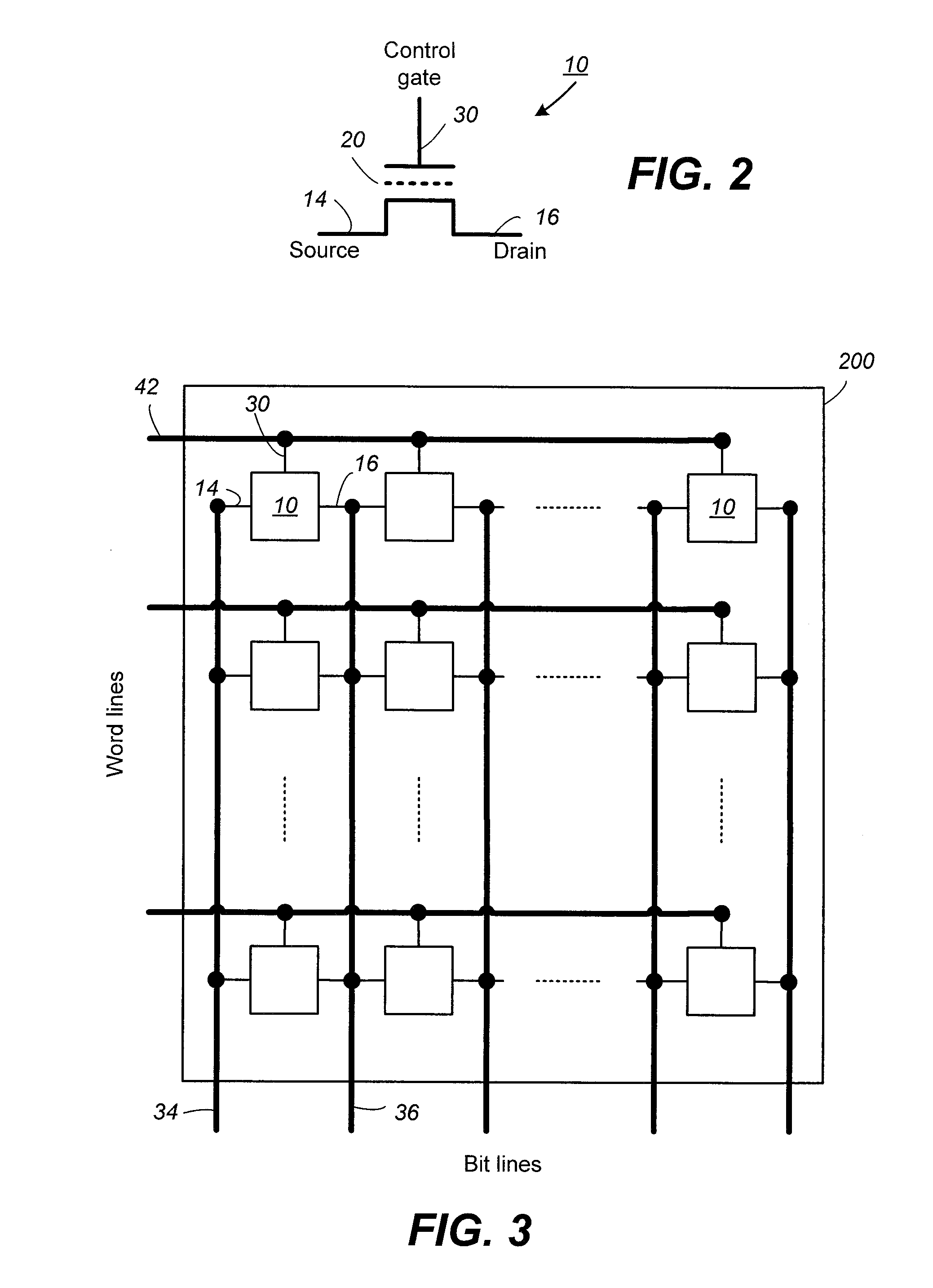

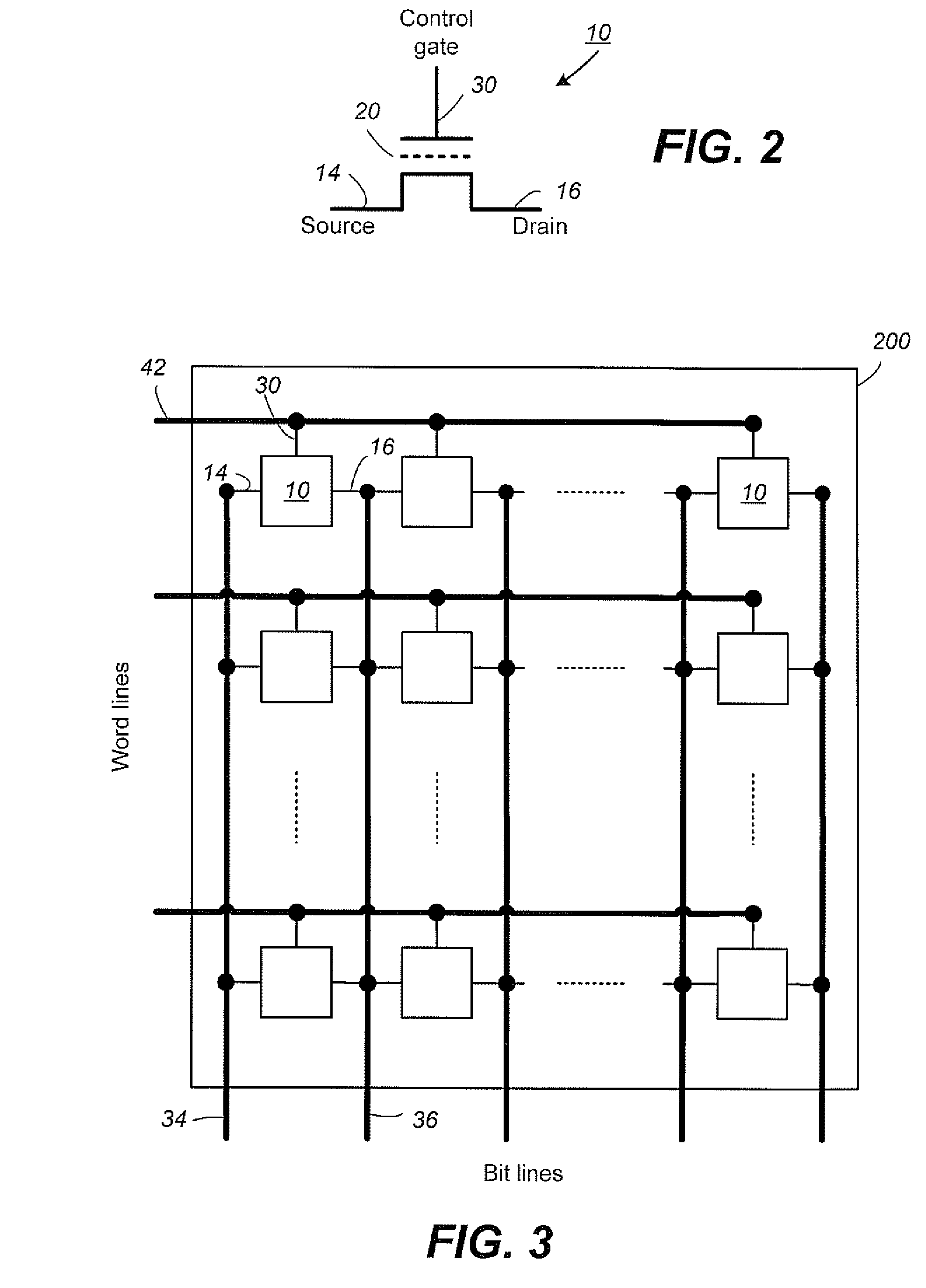

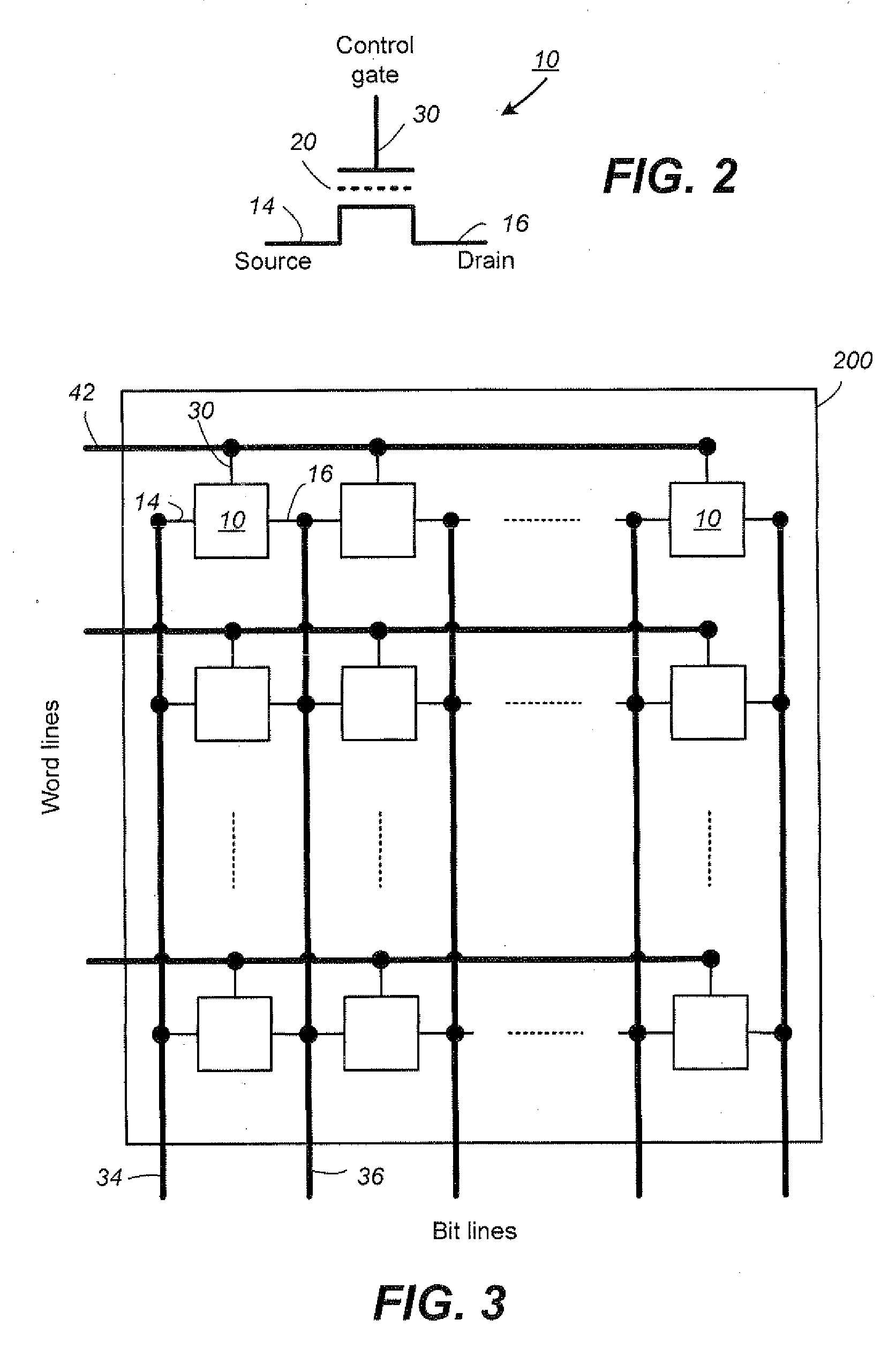

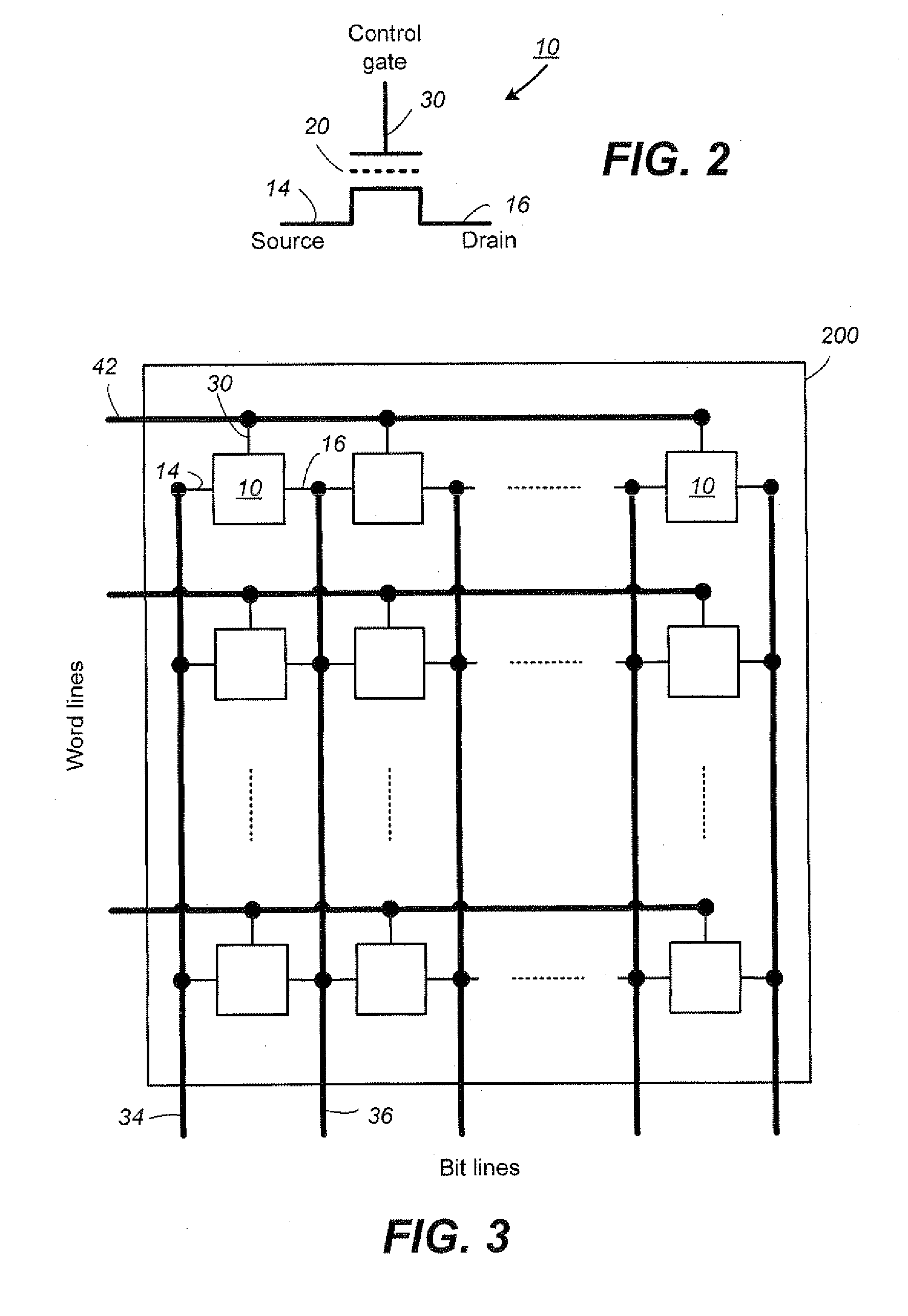

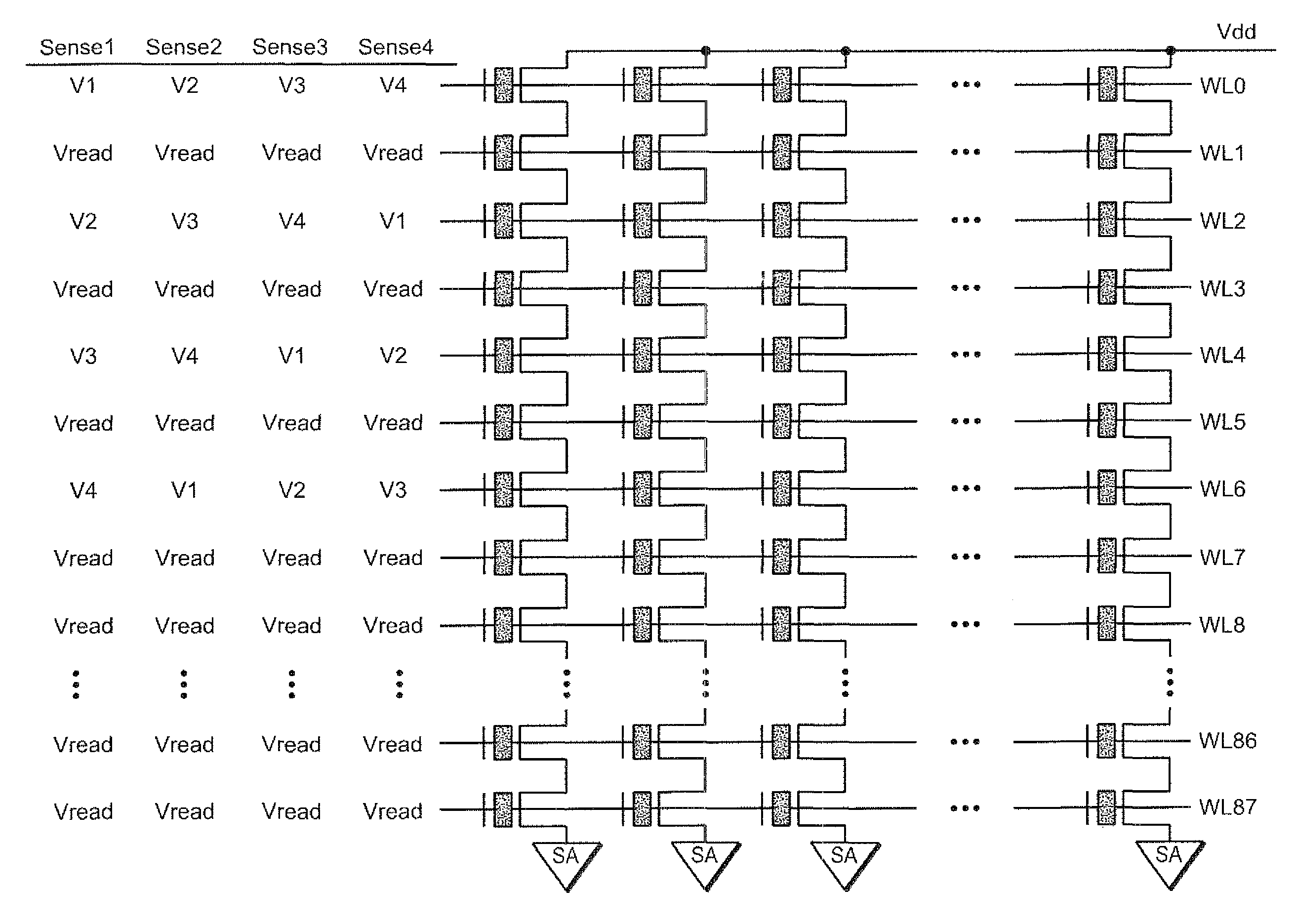

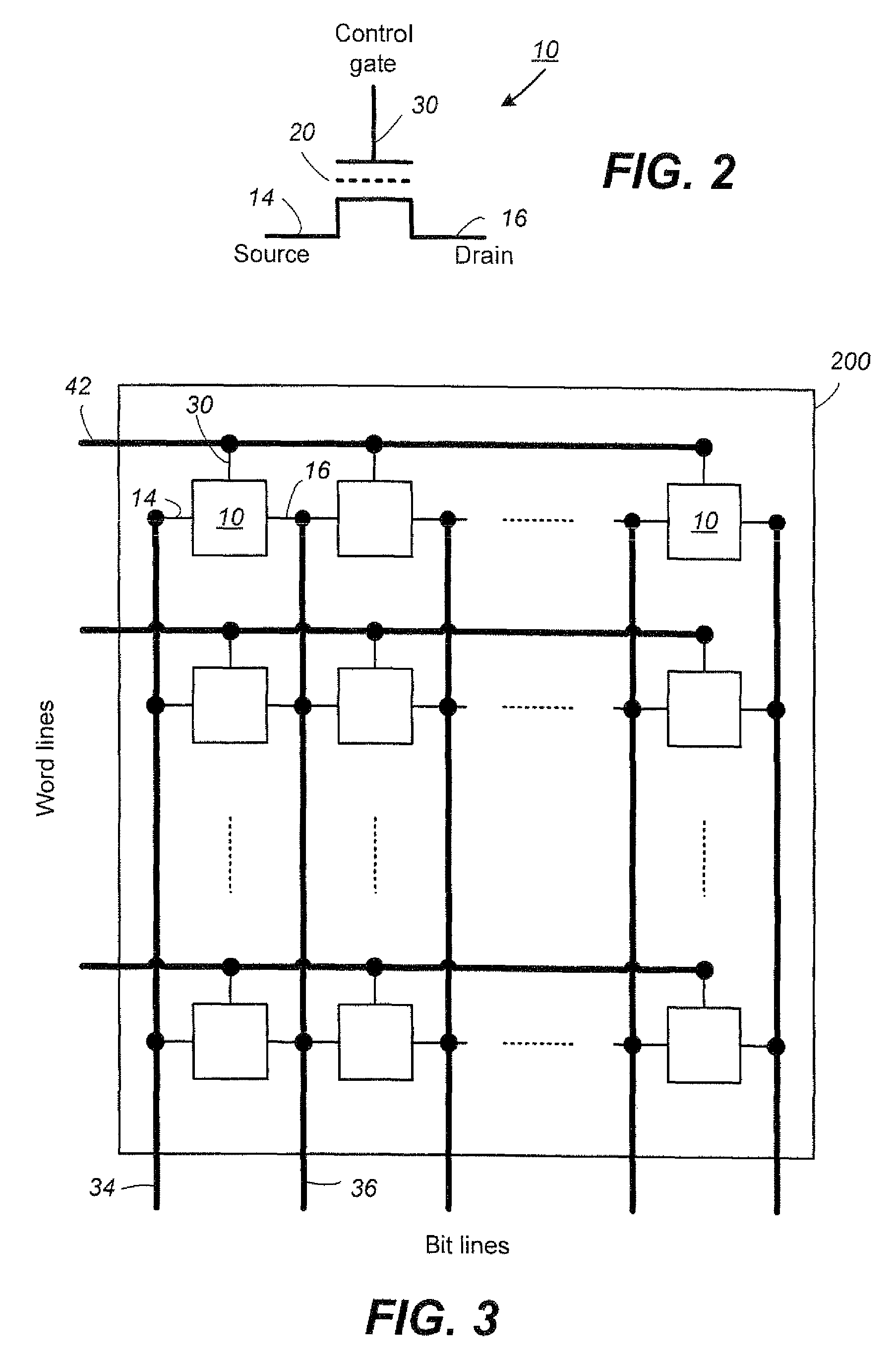

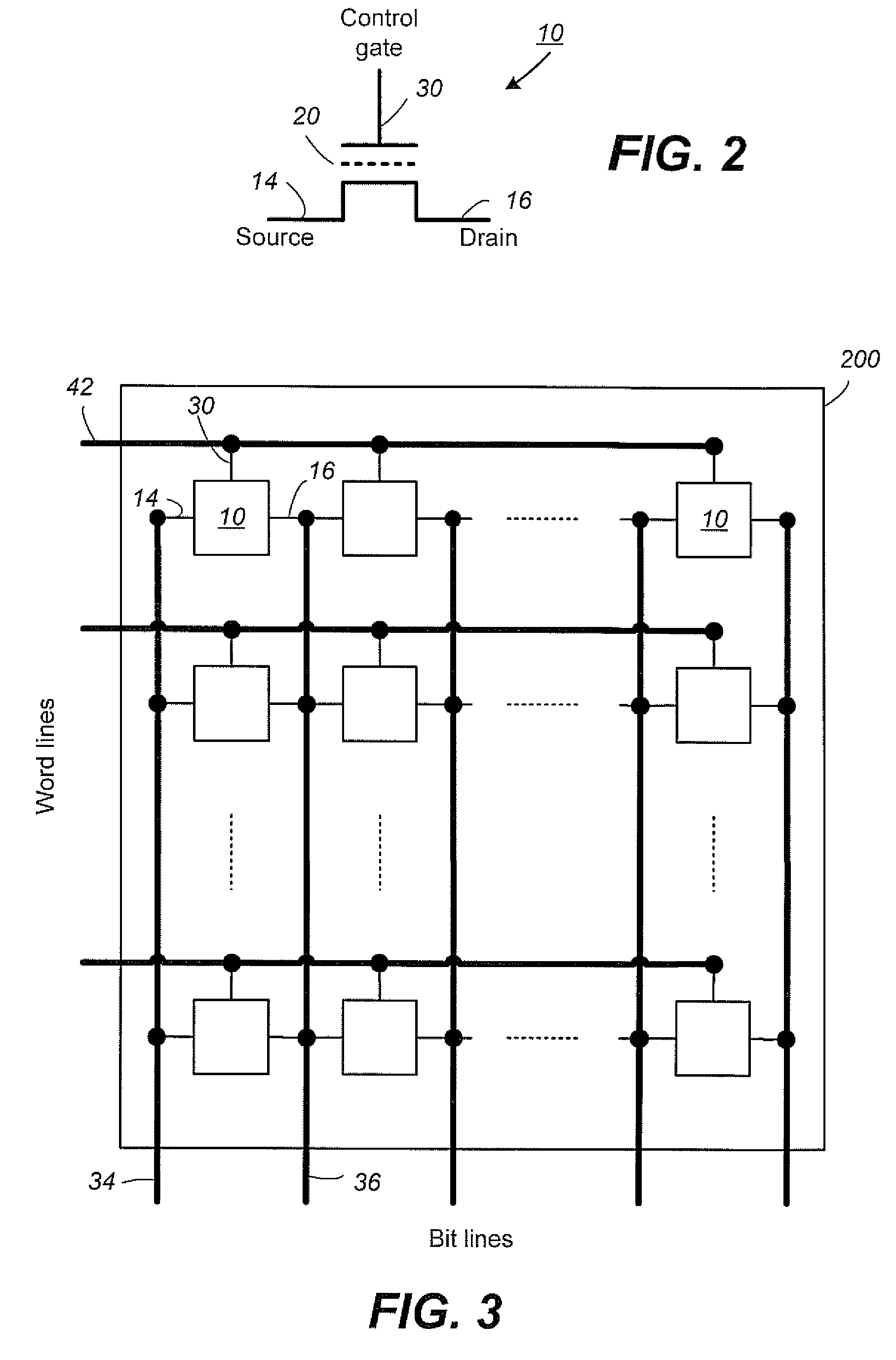

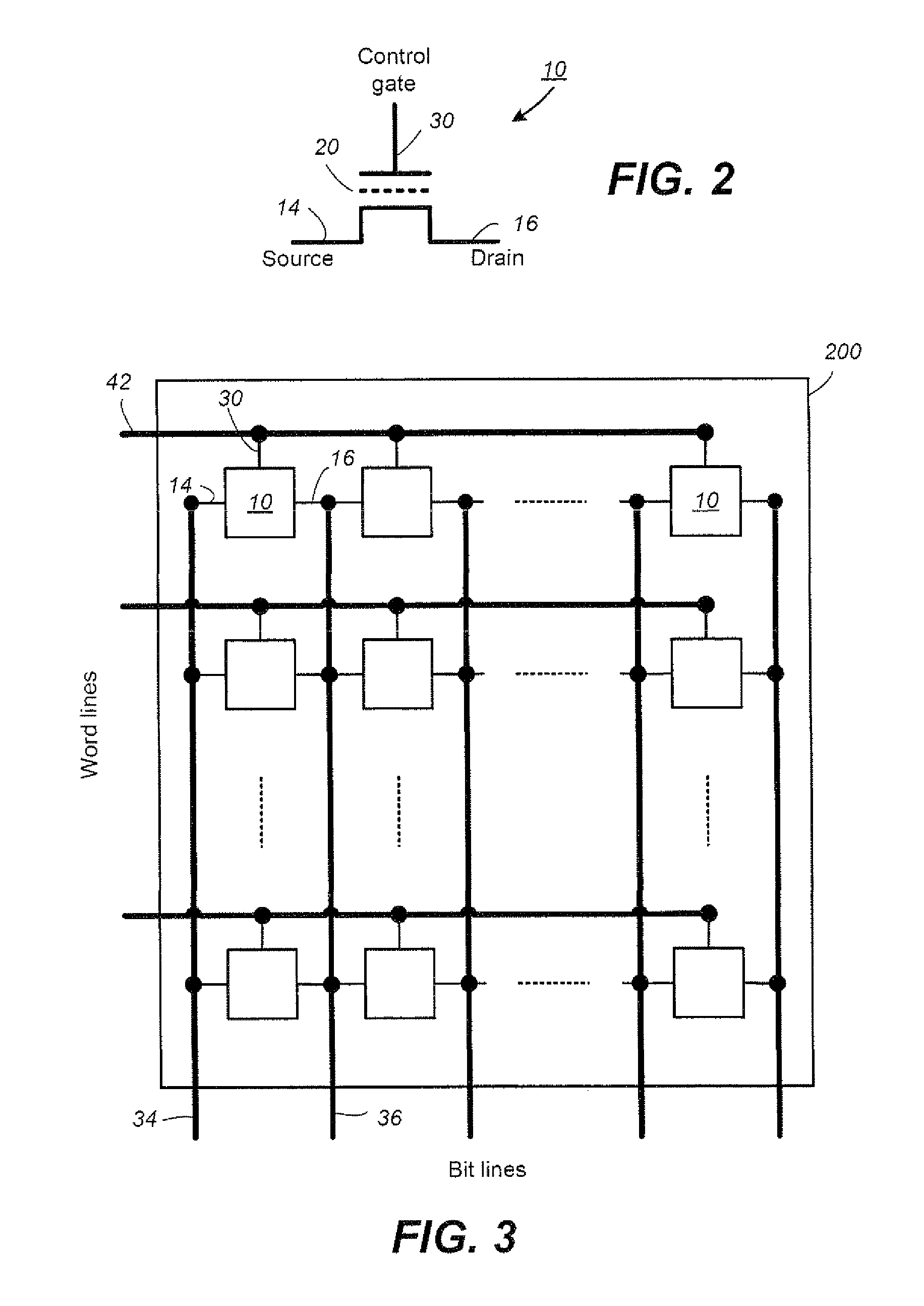

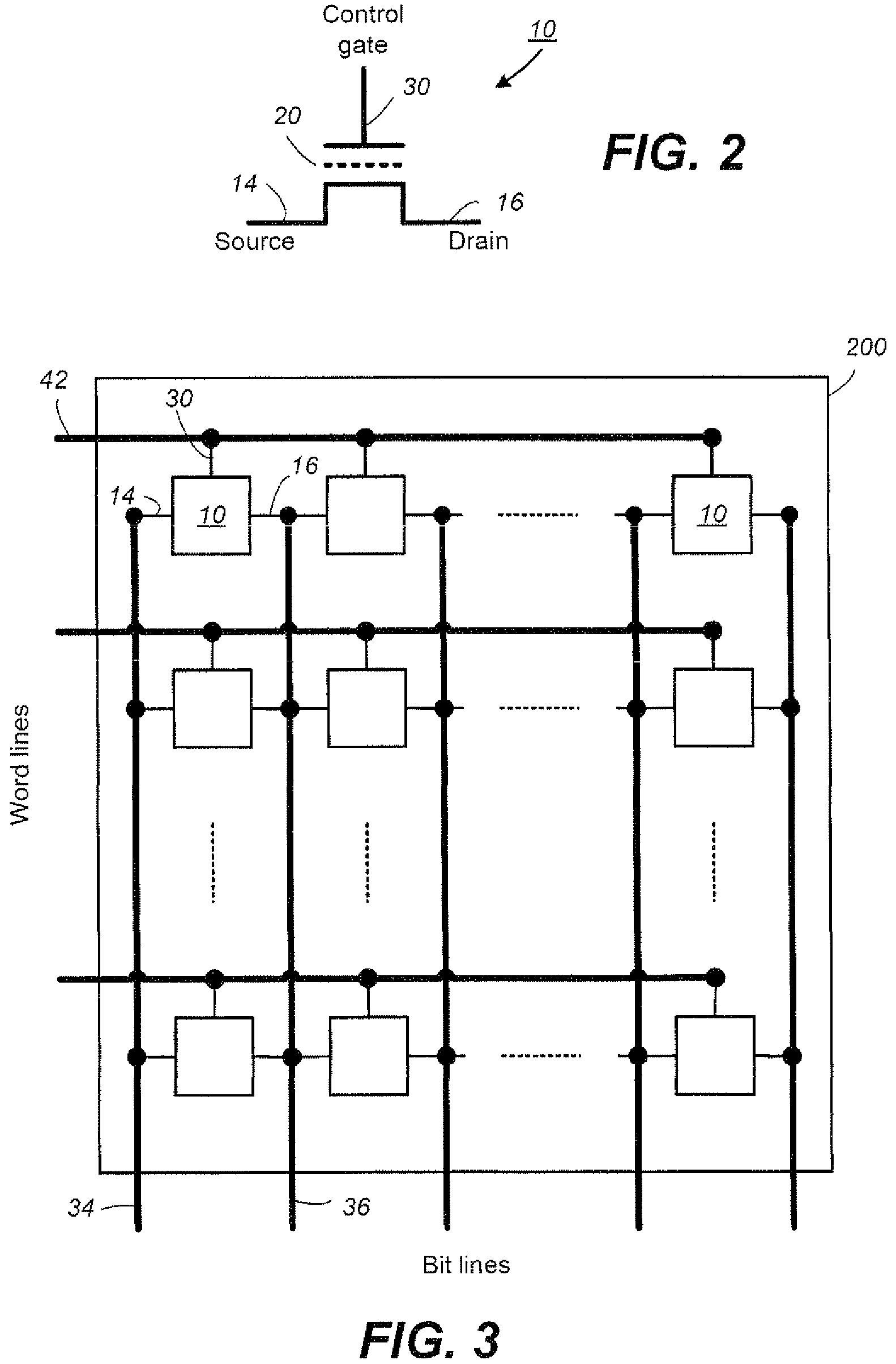

Simultaneous Sensing of Multiple Wordlines and Detection of NAND Failures

Techniques for a post-write read are presented. In an exemplary embodiment, a combined simultaneous sensing of multiple word lines is used in order to identify a problem in one or more of these word lines. That is, sensing voltages are concurrently applied to the control gates of more than one memory cell whose resultant conductance is measured on the same bit line. The combined sensing result is used for measuring certain statistics of the cell voltage distribution (CVD) of multiple word lines and comparing them to the expected values. If the measured statistics differ from the expected values, this may indicate that one or more of the sensed word lines exhibits a failure, and a more thorough examination of the group of word lines can be performed.

Owner:SANDISK TECH LLC

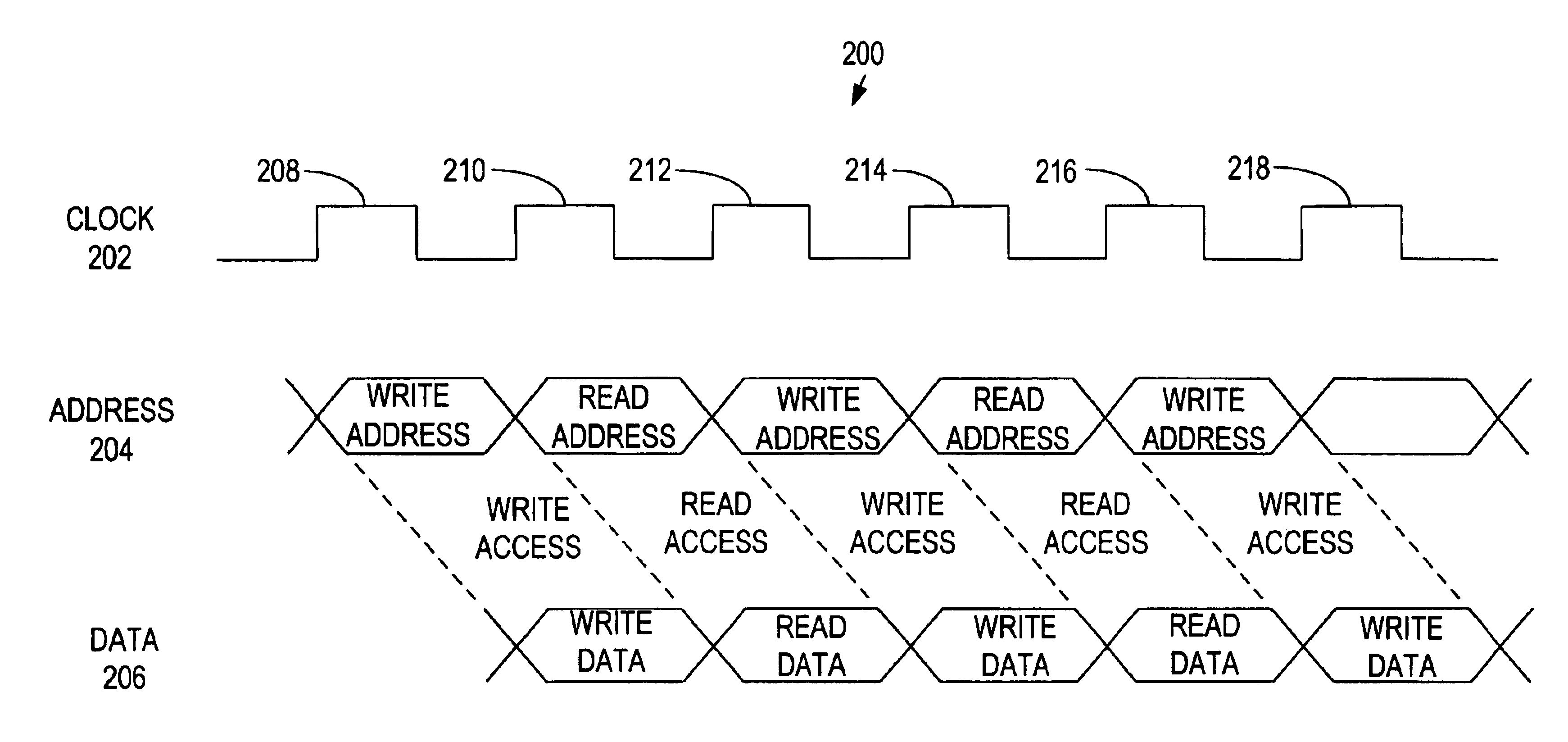

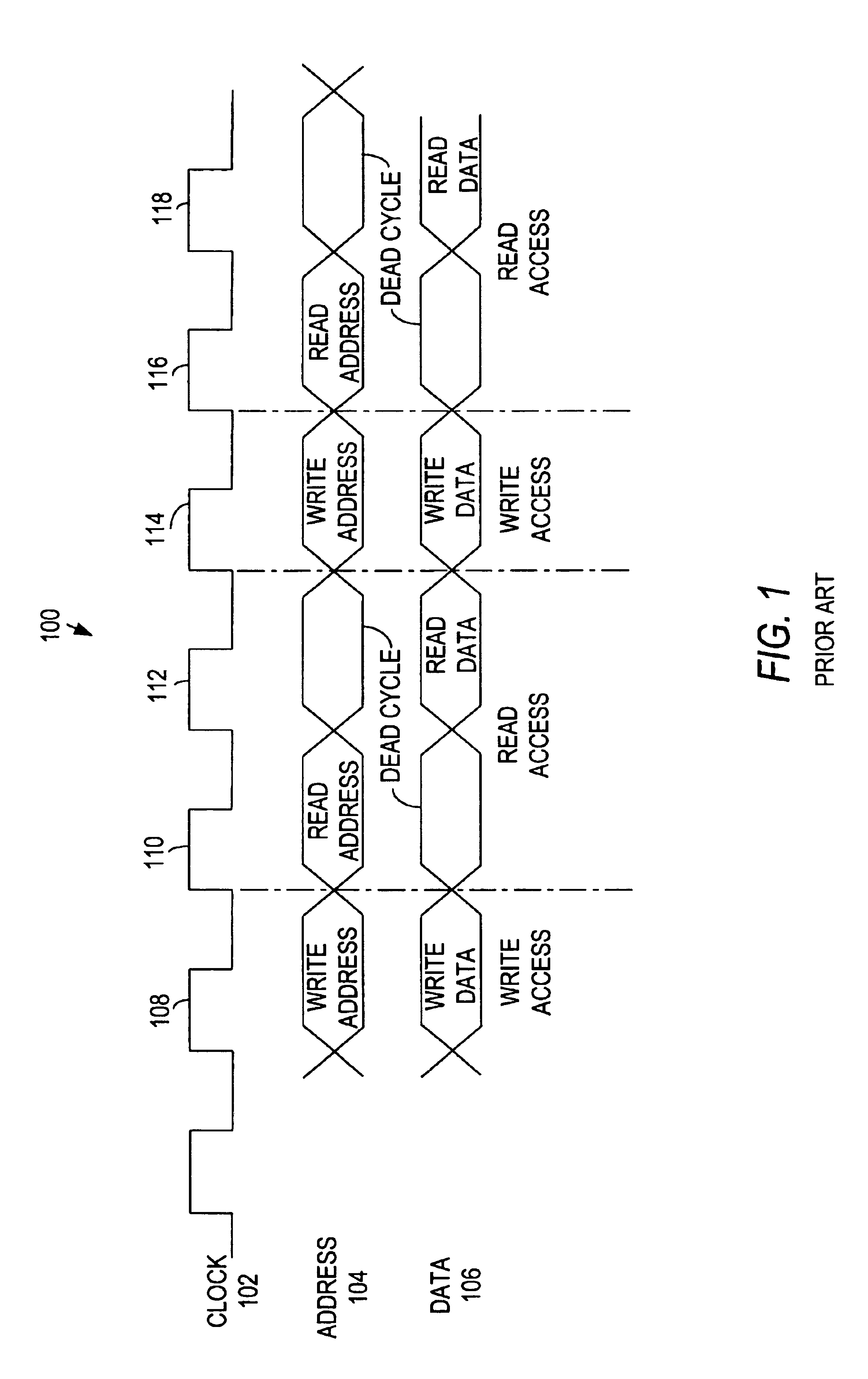

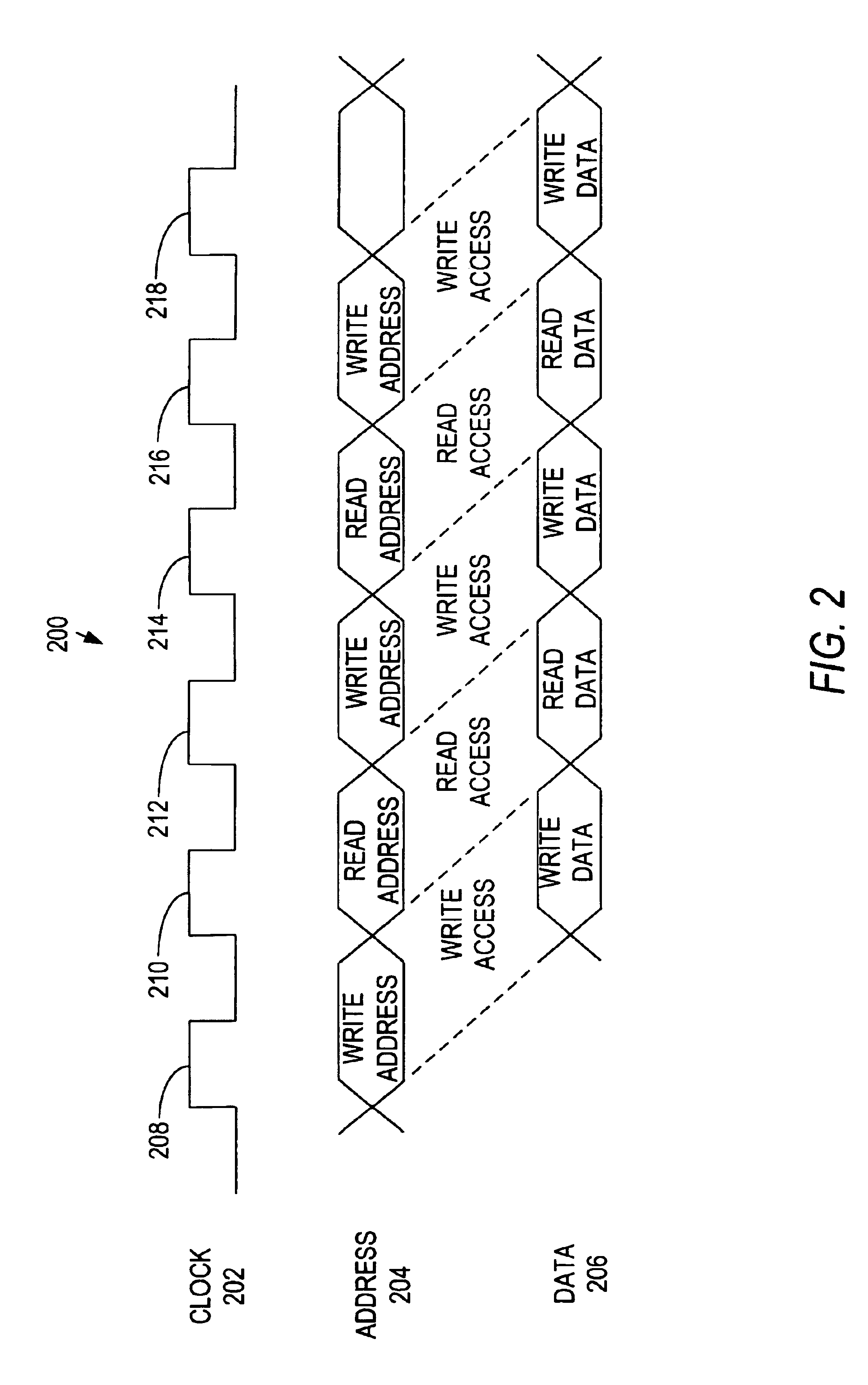

Multi-bank memory accesses using posted writes

Inactive | US6938142B2 | Reduce read-write access delay | Reduce read-write access delay and write-read access delay | Memory addressing/allocation/relocation | Digital storage | Operating system | Posted write

Systems and methods for reducing delays between successive write and read accesses in multi-bank memory devices are provided. Computer circuits modify the relative timing between addresses and data of write accesses, reducing delays between successive write and read accesses. Memory devices that interface with these computer circuits use posted write accesses to effectively return the modified relative timing to its original timing before processing the write access.

Owner:ROUND ROCK RES LLC

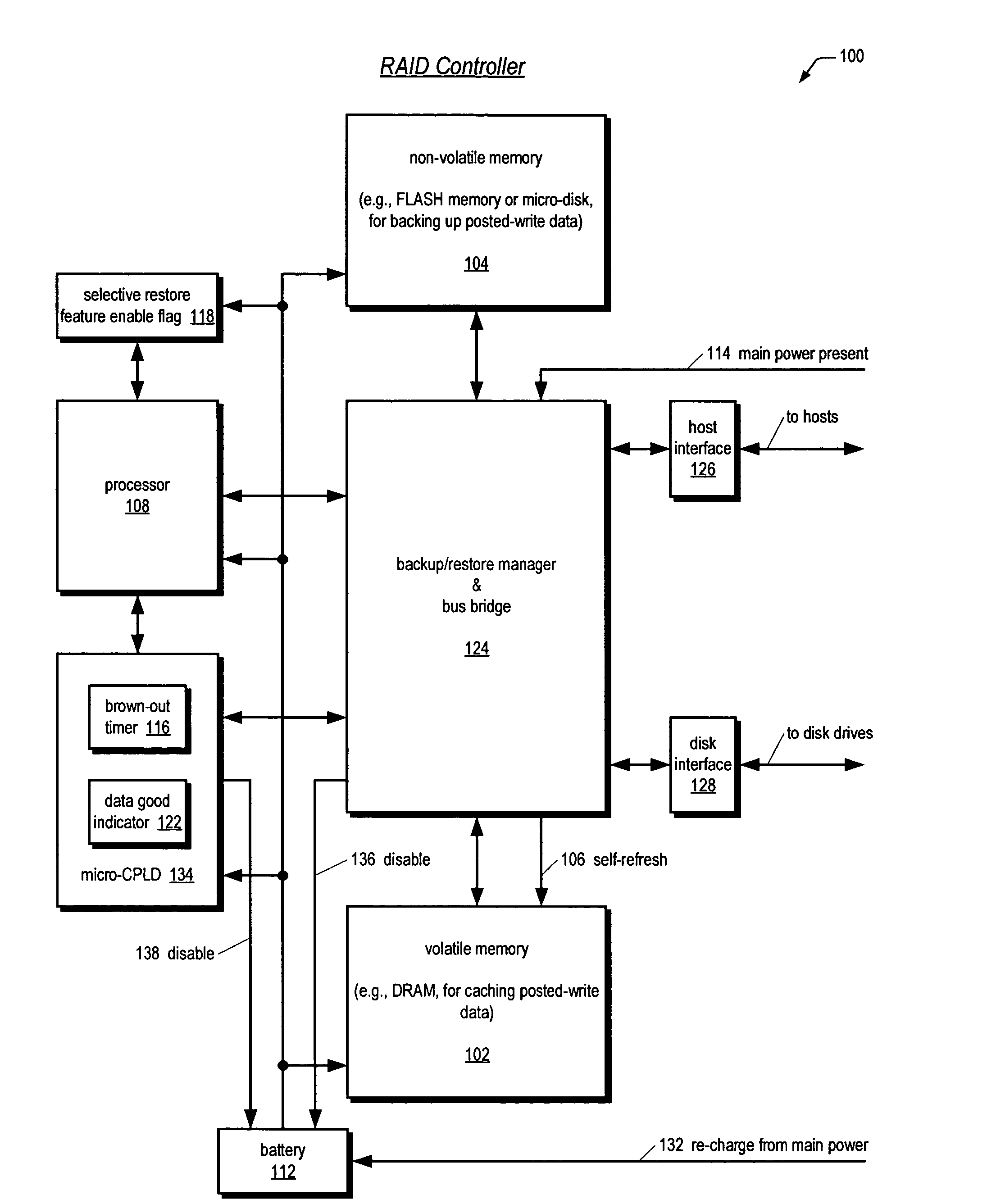

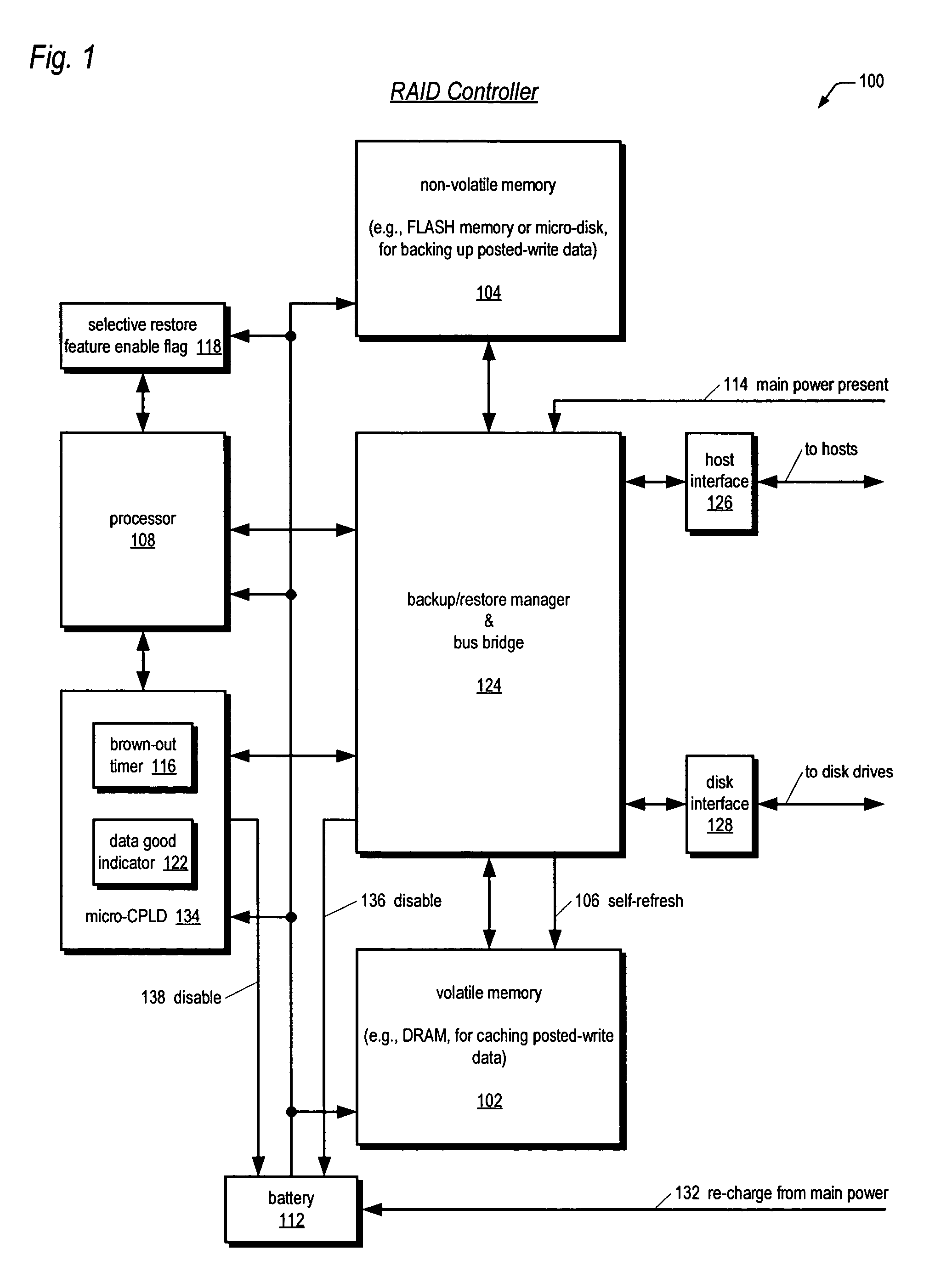

Apparatus and method in a cached raid controller utilizing a solid state backup device for improving data availability time

Active | US20050283648A1 | Improve data availability | Shorten the time | Energy efficient ICT | Digital data processing details | RAID | Control store

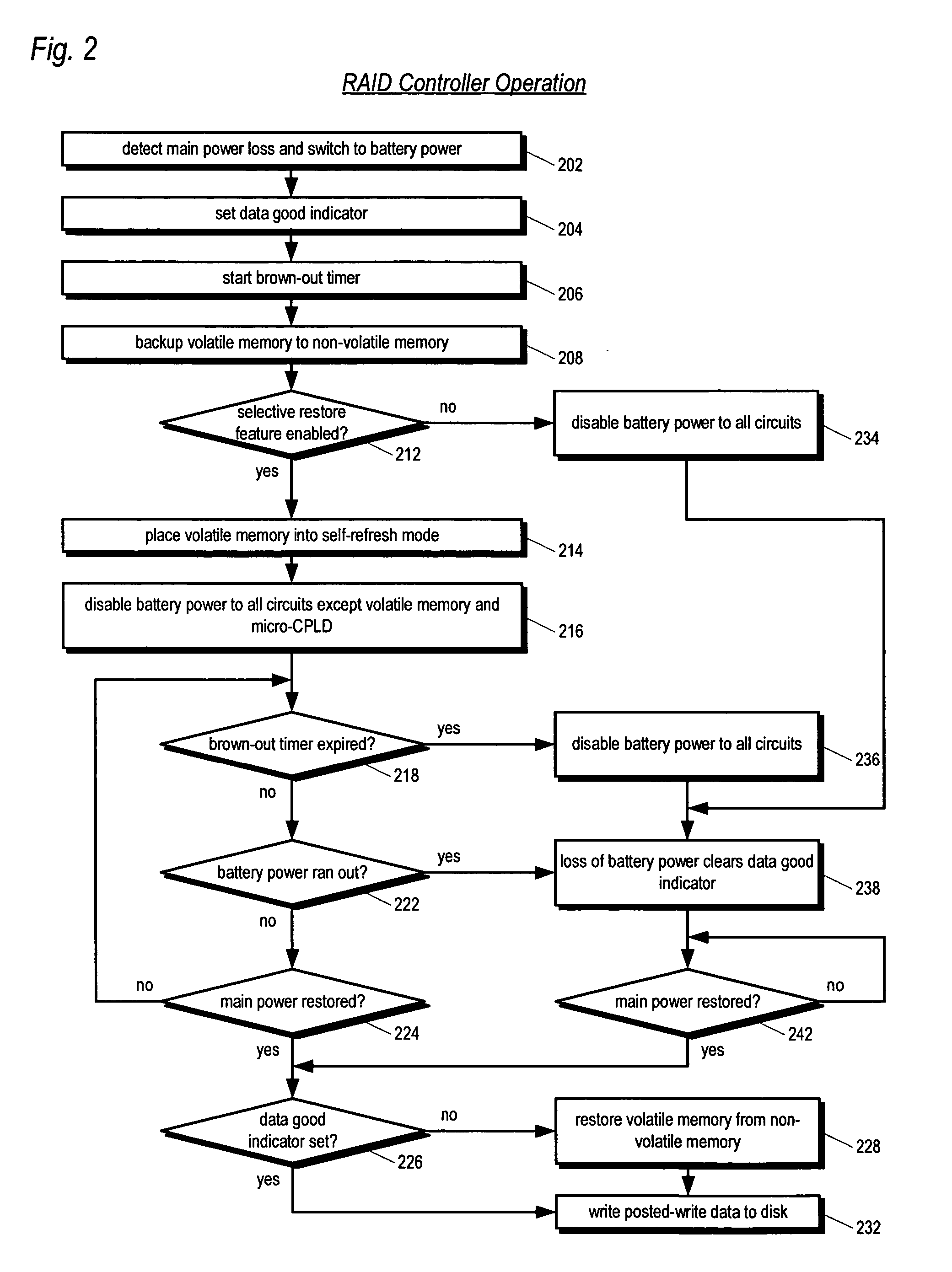

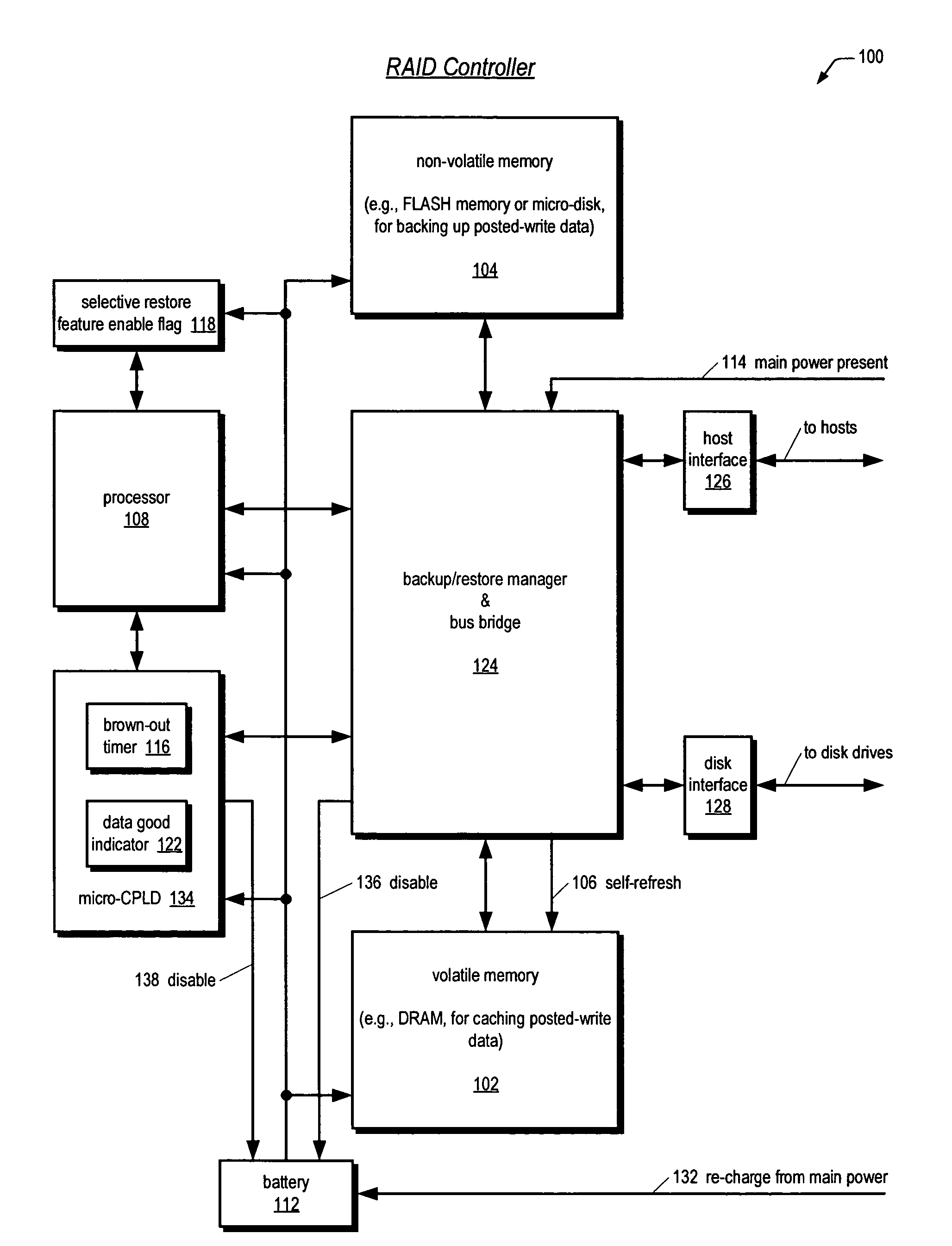

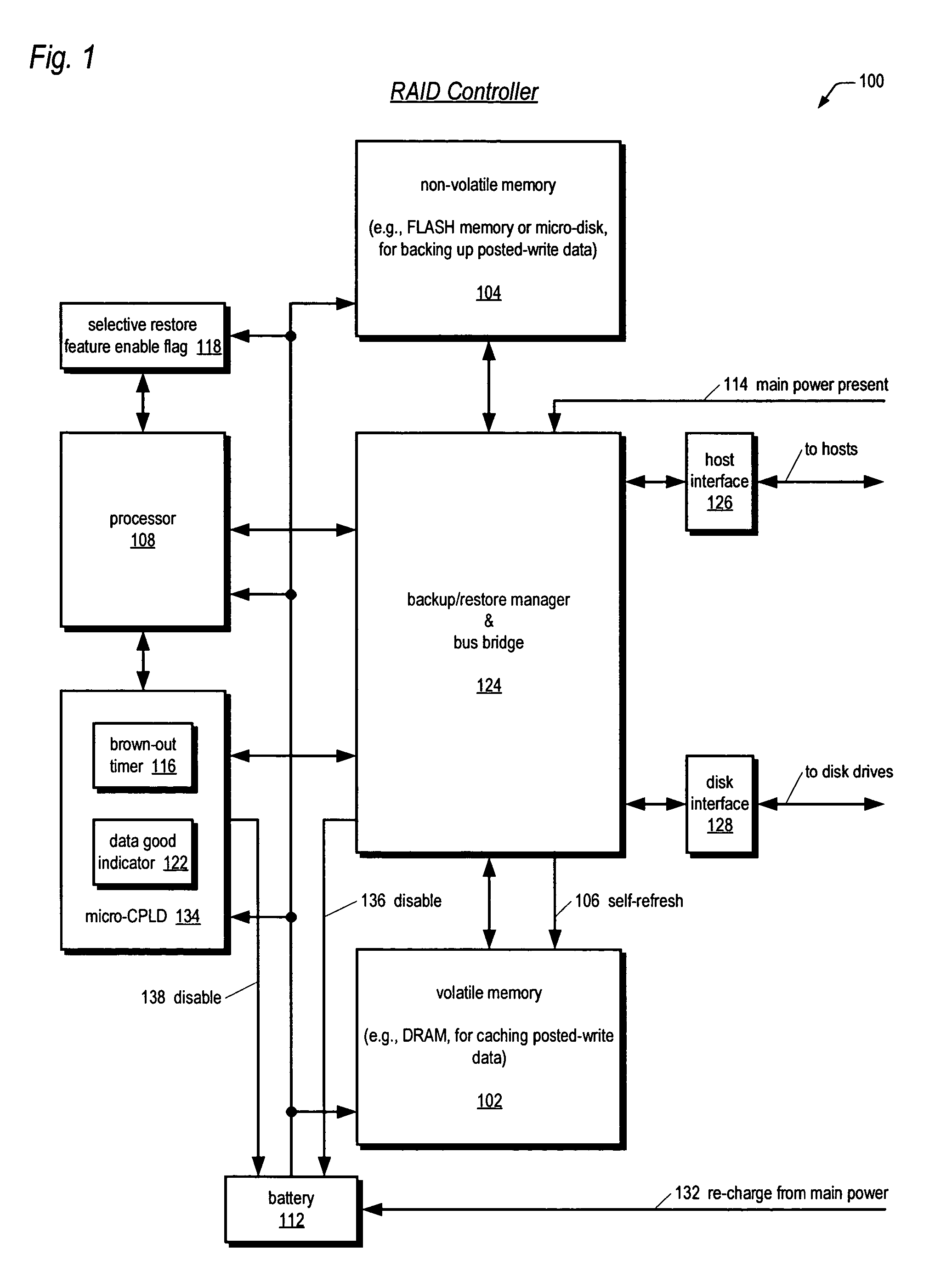

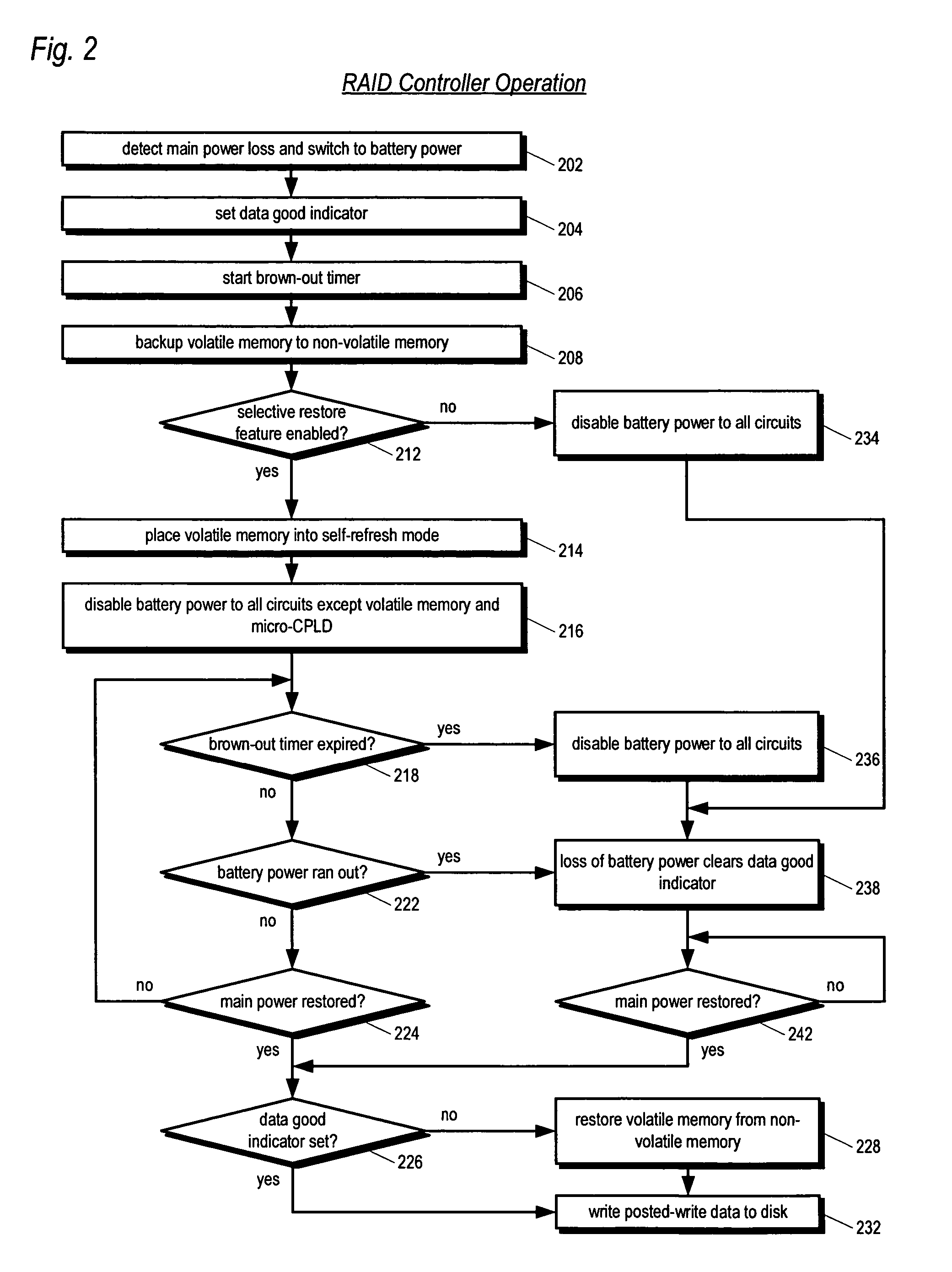

An apparatus for reducing data unavailability time after a loss of main power in a storage controller is described. The controller backs up its volatile memory containing posted-write data to a non-volatile memory upon detecting a loss of main power. The controller continues to provide battery power to the volatile memory to sustain the posted-write data. If the battery is able to supply power to the volatile memory until main power is restored, the controller forgoes restoring the posted-write data to the volatile memory from the non-volatile memory. By not incurring the restore time, which may be substantial if the volatile memory is large since read rates from volatile memories are typically slow, the data unavailability time is reduced. The selective restore feature is user-disableable and also includes a brown-out timer for allowing a user to specify how long to battery-power the volatile memory if the feature is enabled.

Owner:DOT HILL SYST

Sending packets using optimized pio write sequences without sfences

Active | US20150378737A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Network packet | Posted write

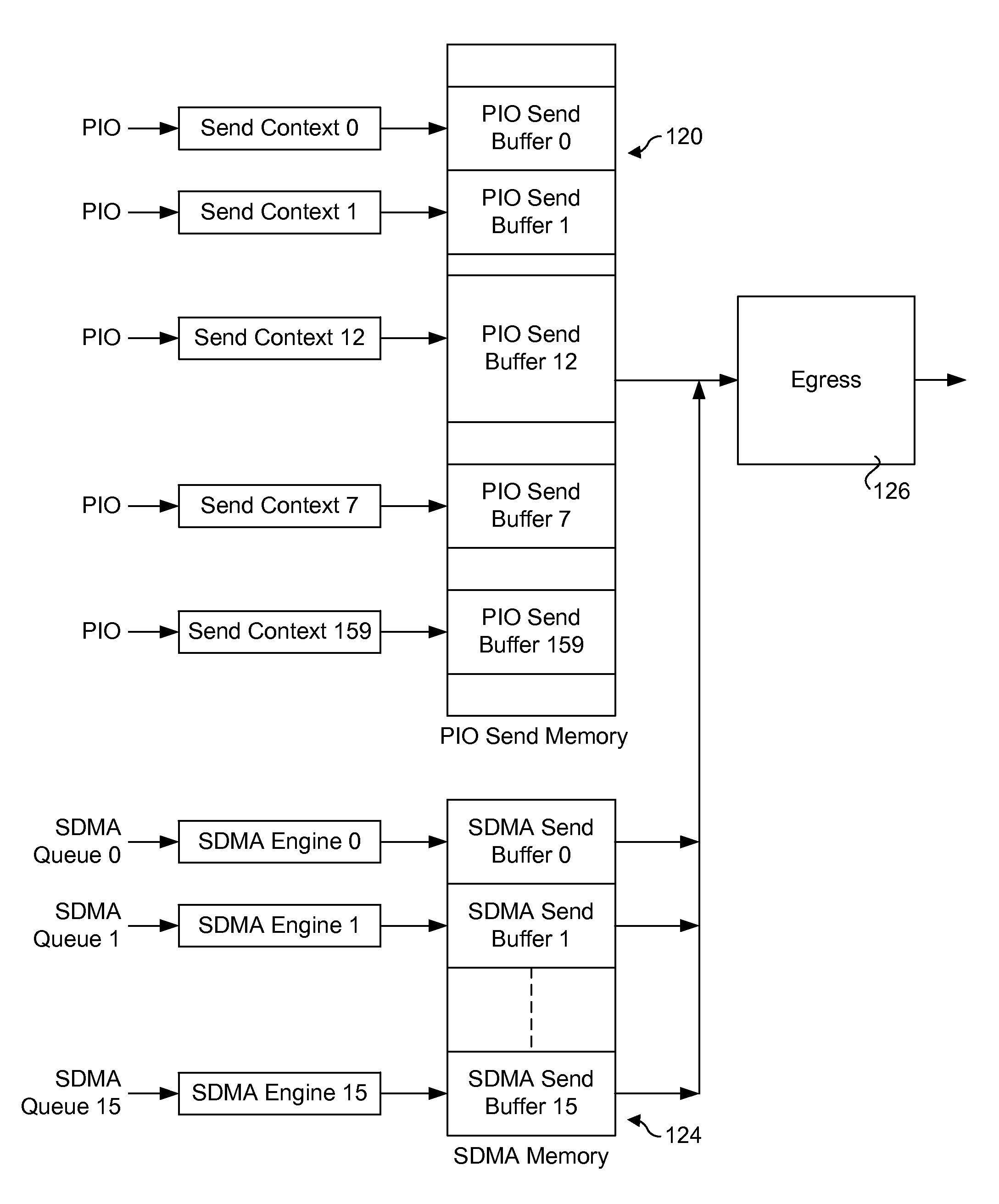

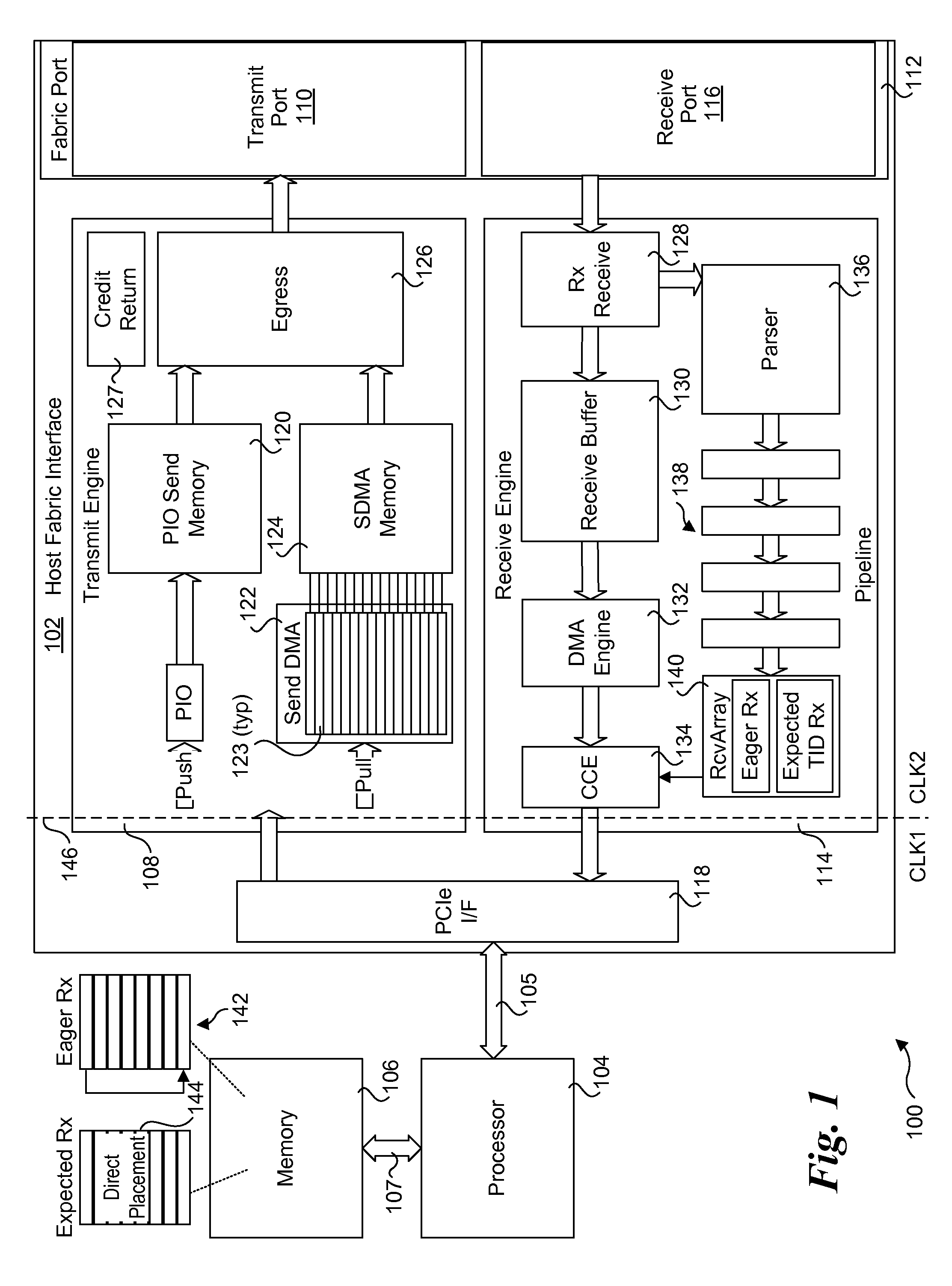

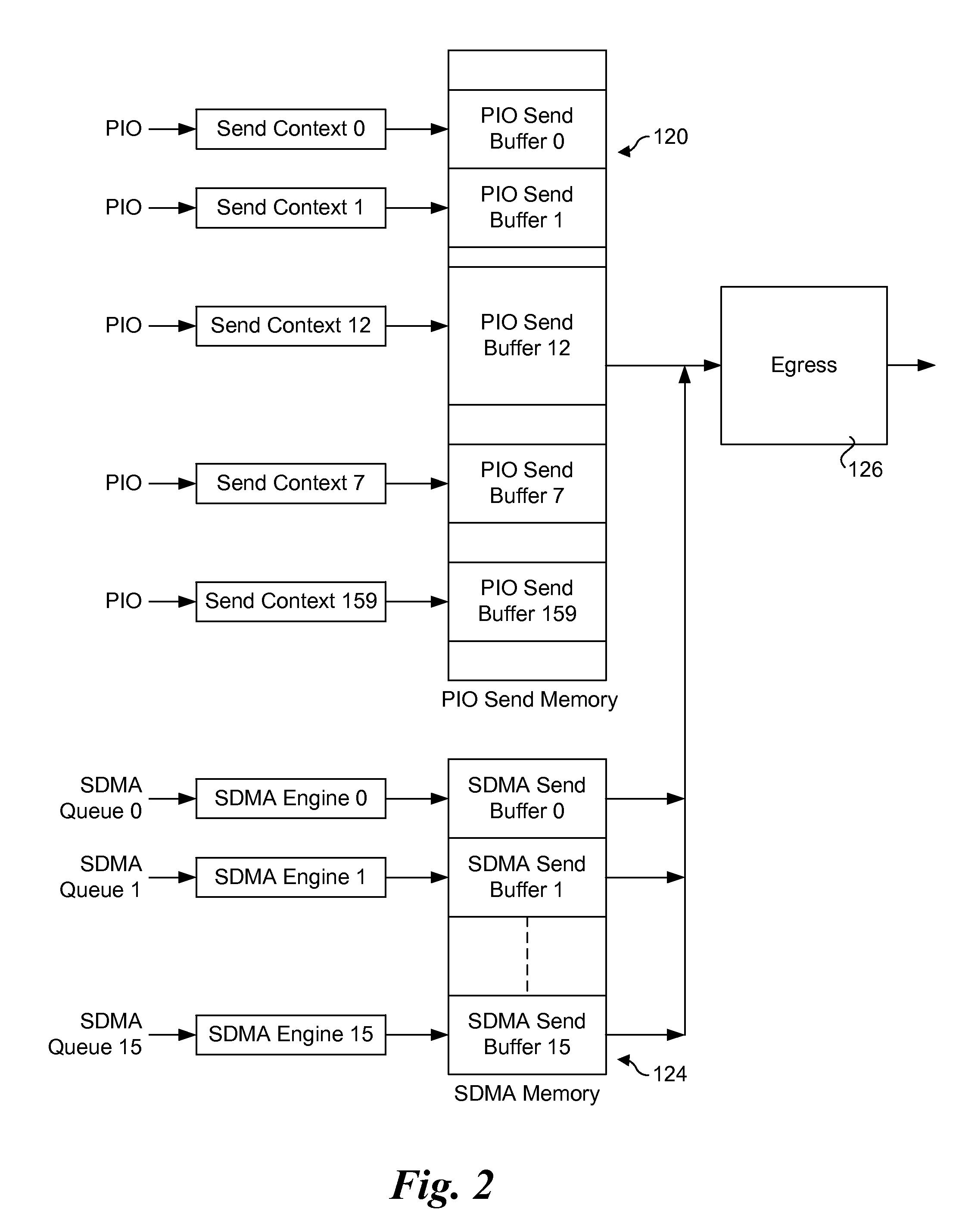

Method and apparatus for sending packets using optimized PIO write sequences without sfences. Sequences of Programmed Input/Output (PIO) write instructions to write packet data to a PIO send memory are received at a processor supporting out of order execution. The PIO write instructions are received in an original order and executed out of order, with each PIO write instruction writing a store unit of data to a store buffer or a store block of data to the store buffer. Logic is provided for the store buffer to detect when store blocks are filled, resulting in the data in those store blocks being drained via PCIe posted writes that are written to send blocks in the PIO send memory at addresses defined by the PIO write instructions. Logic is employed for detecting the fill size of packets and when a packet's send blocks have been filled, enabling the packet data to be eligible for egress.

Owner:INTEL CORP

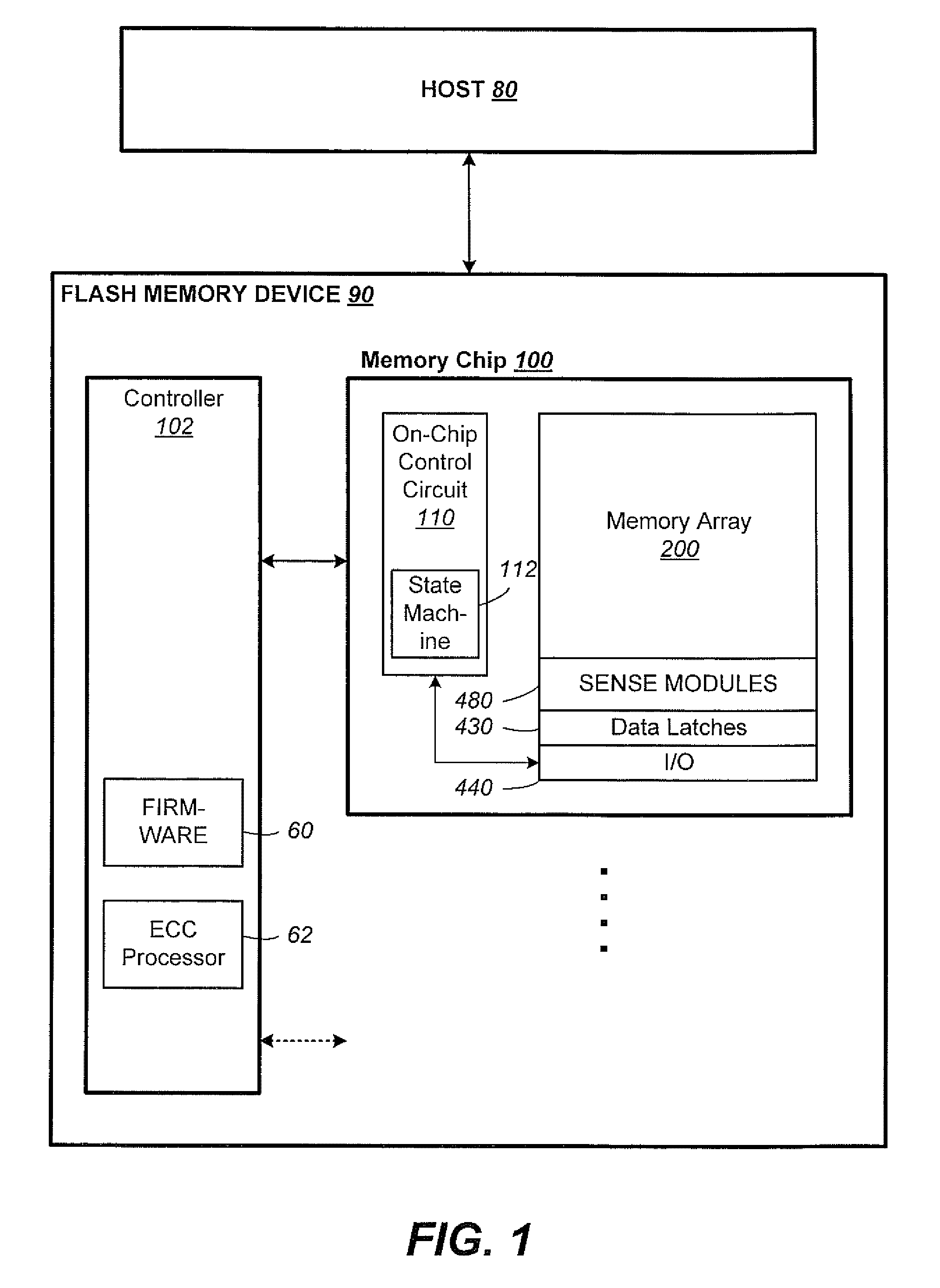

Post-Write Read in Non-Volatile Memories Using Comparison of Data as Written in Binary and Multi-State Formats

Inactive | US20130031431A1 | Error detection/correction | Read-only memories | File comparison | Non-volatile memory

Techniques for a post-write read are presented. In an exemplary embodiment, host data is initially written into the non-volatile memory in binary form, such as a non-volatile binary cache. It is then subsequently written from the binary section into a multi-state non-volatile section of the memory. After being written in multi-state format, pages of data from a multi-state block can then be checked against their source pages in the binary section to verify the quality of the multi-state write. This process can be performed on the memory device itself, without transferring the pages out to the controller.

Owner:SANDISK TECH LLC

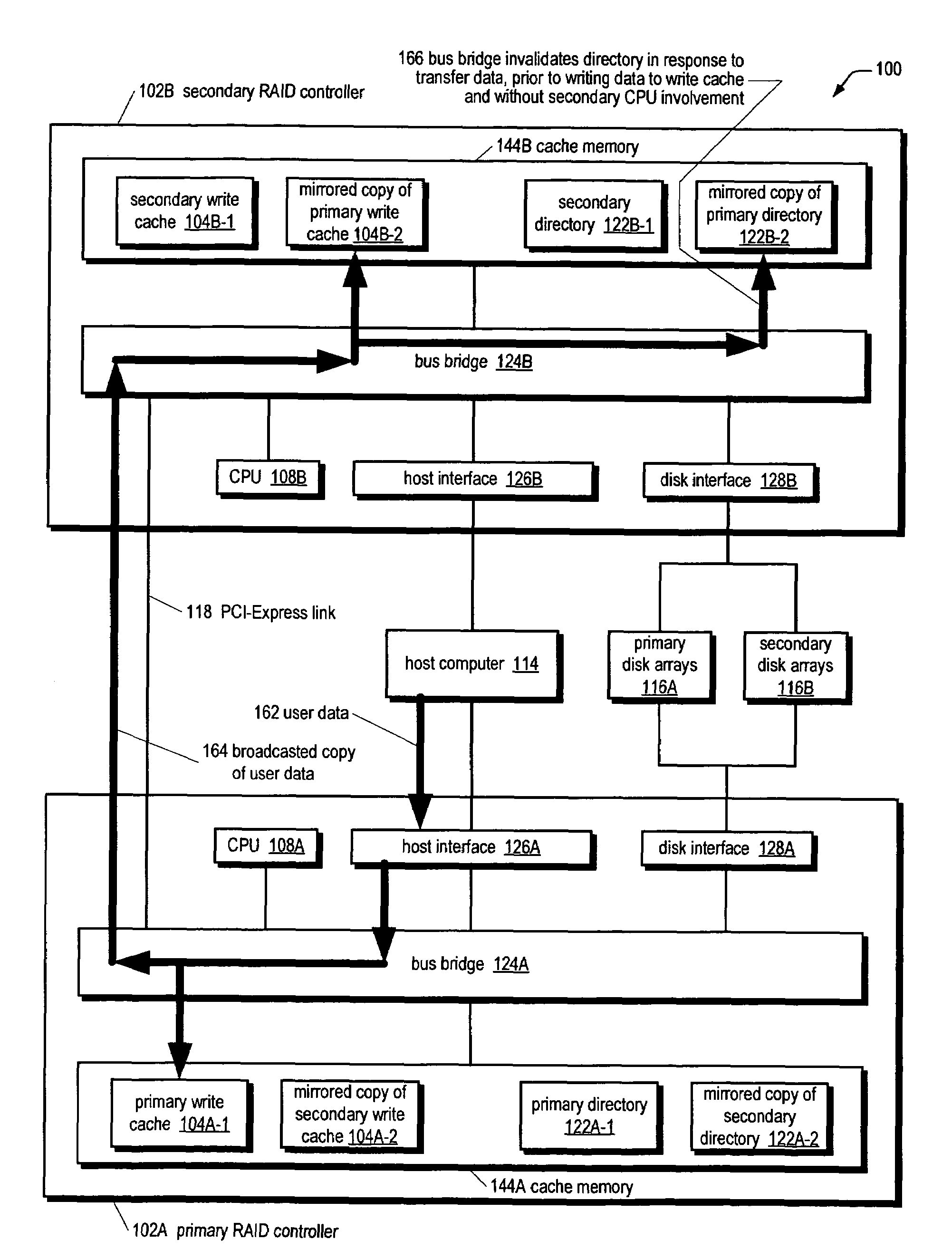

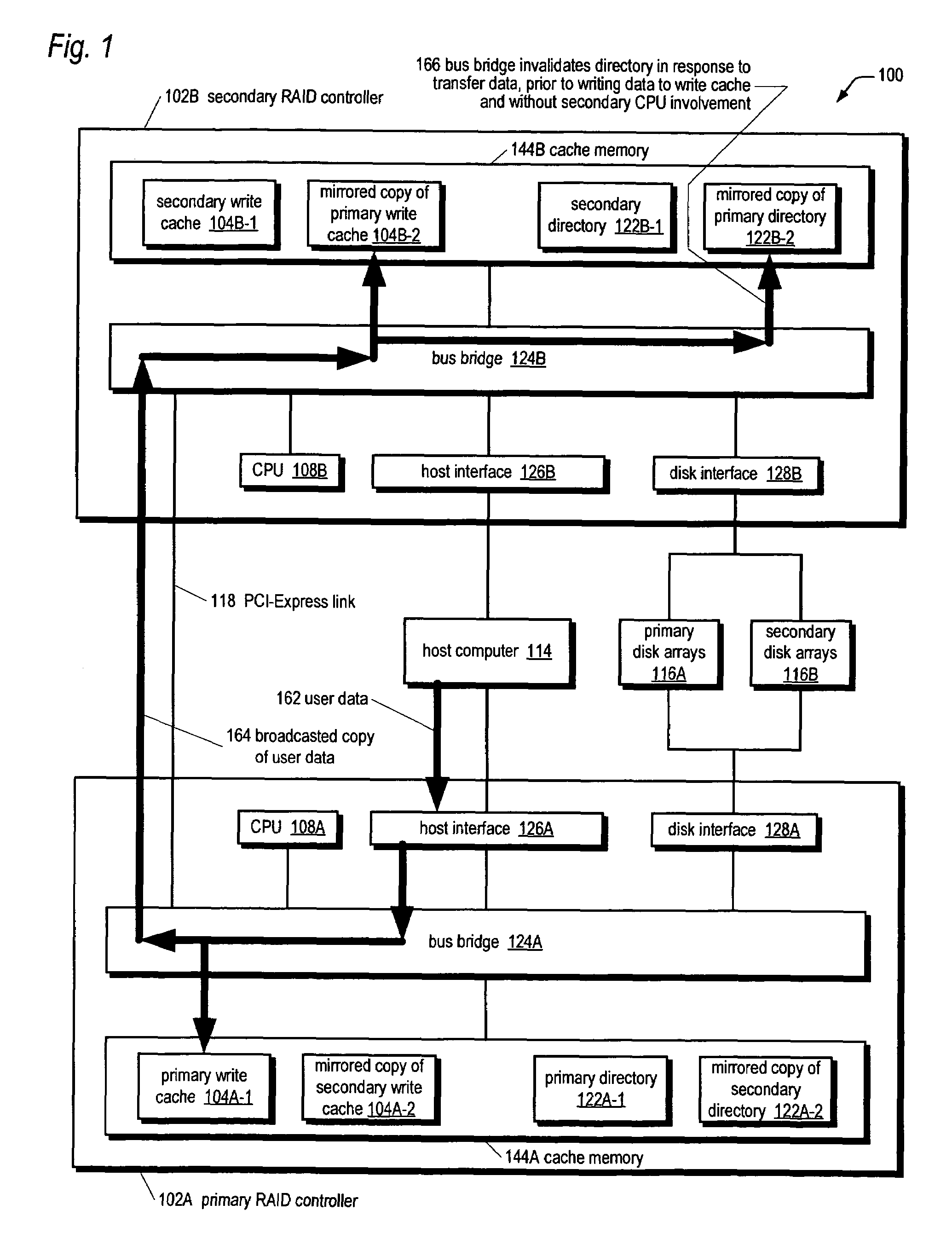

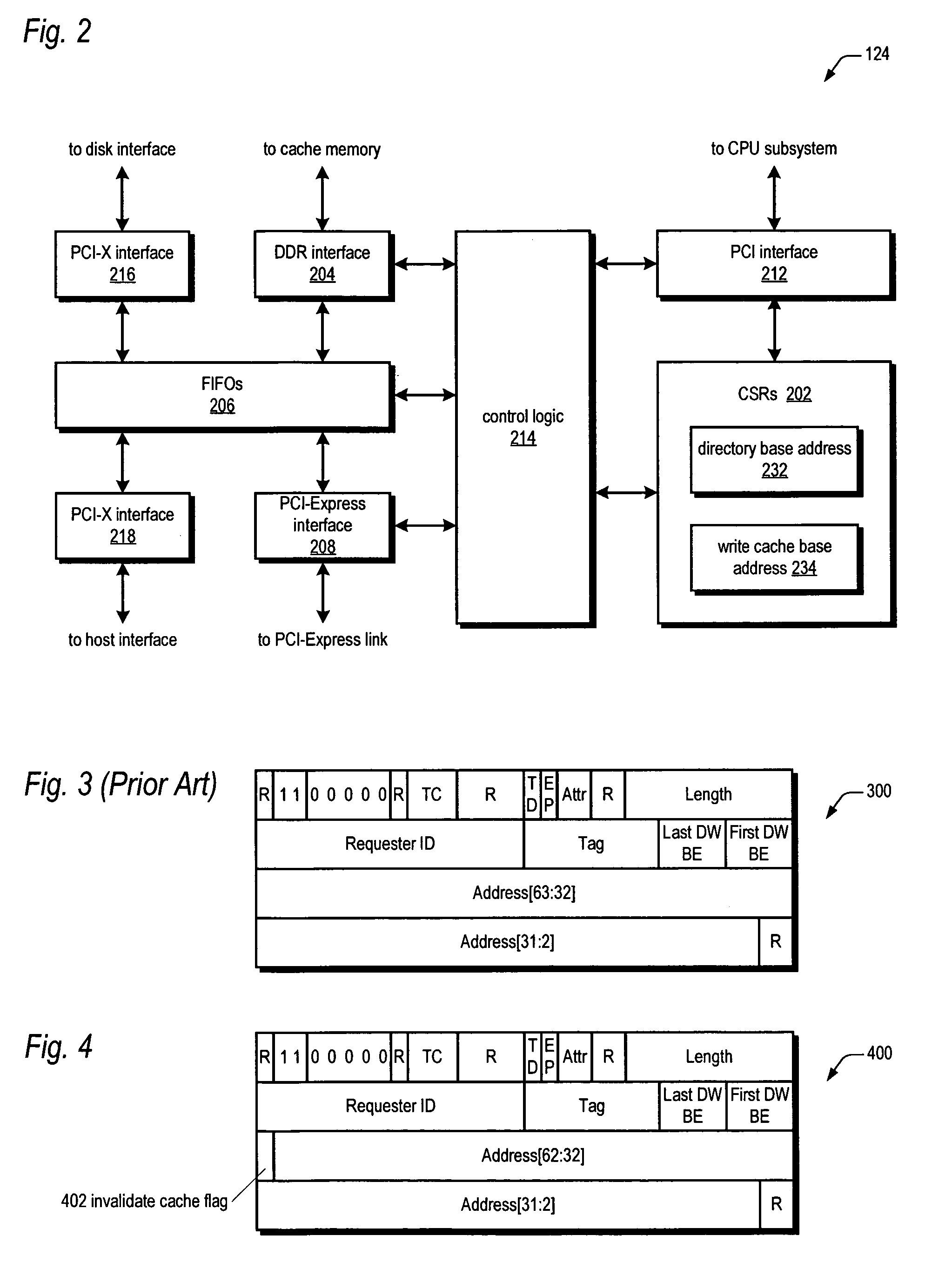

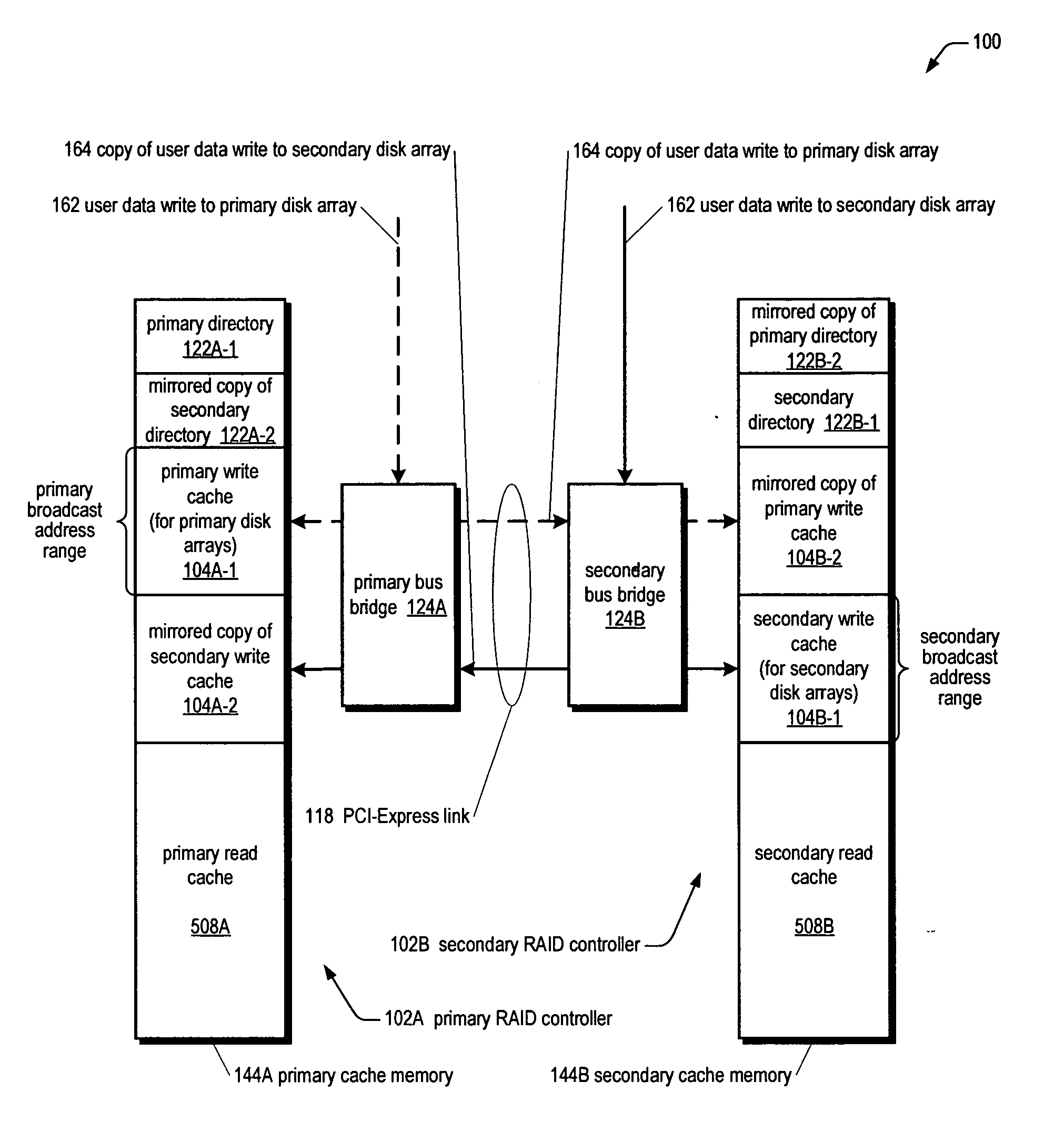

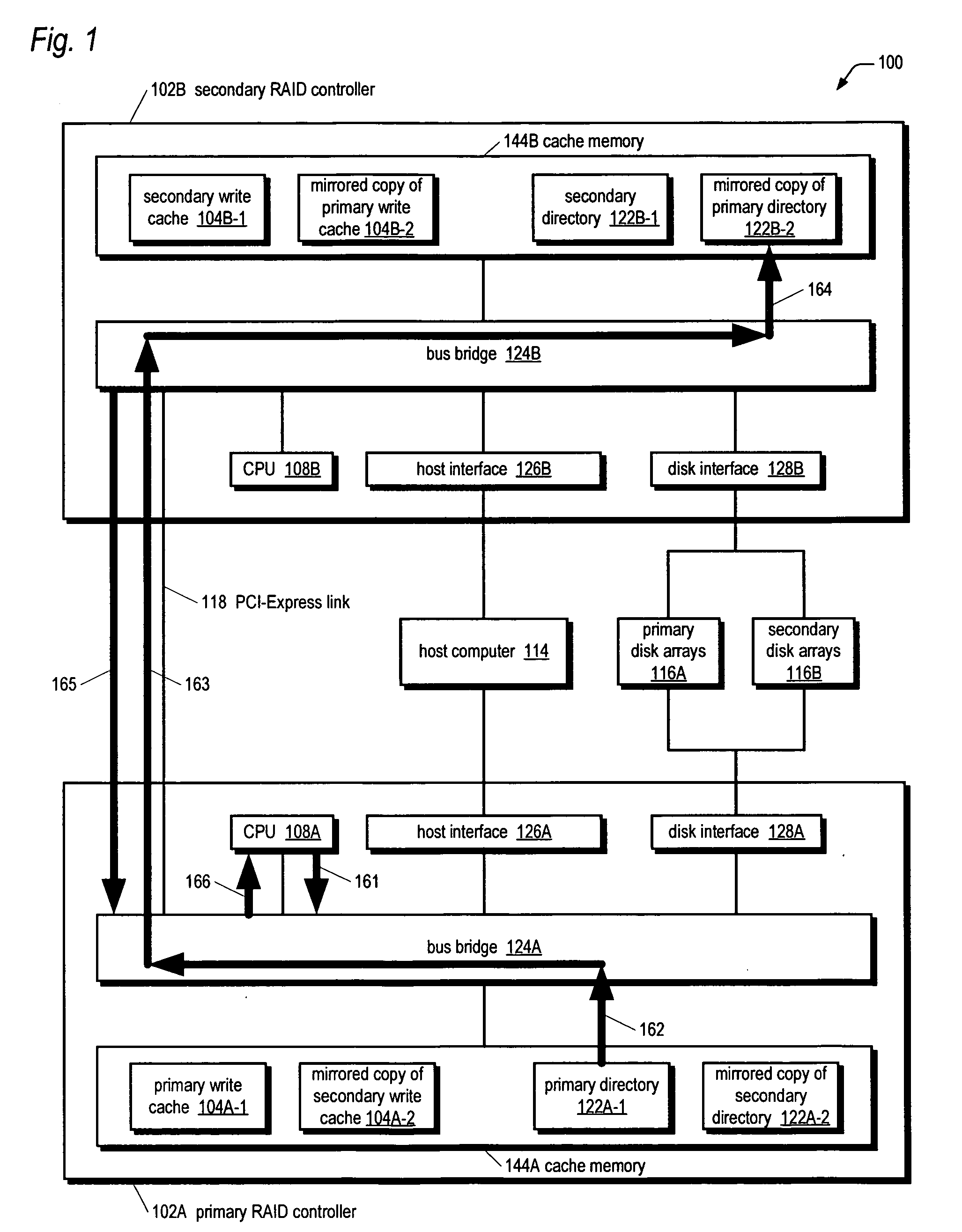

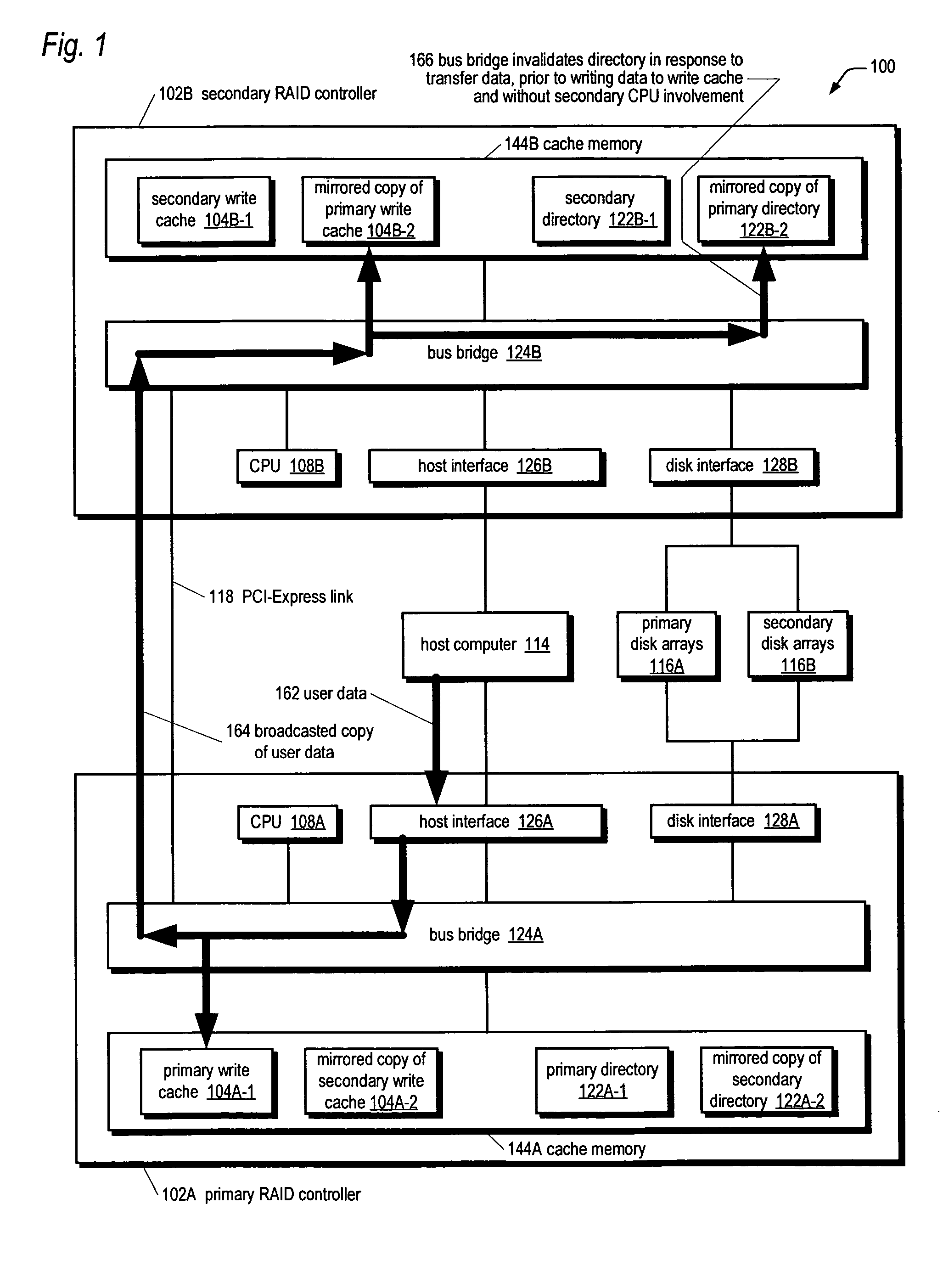

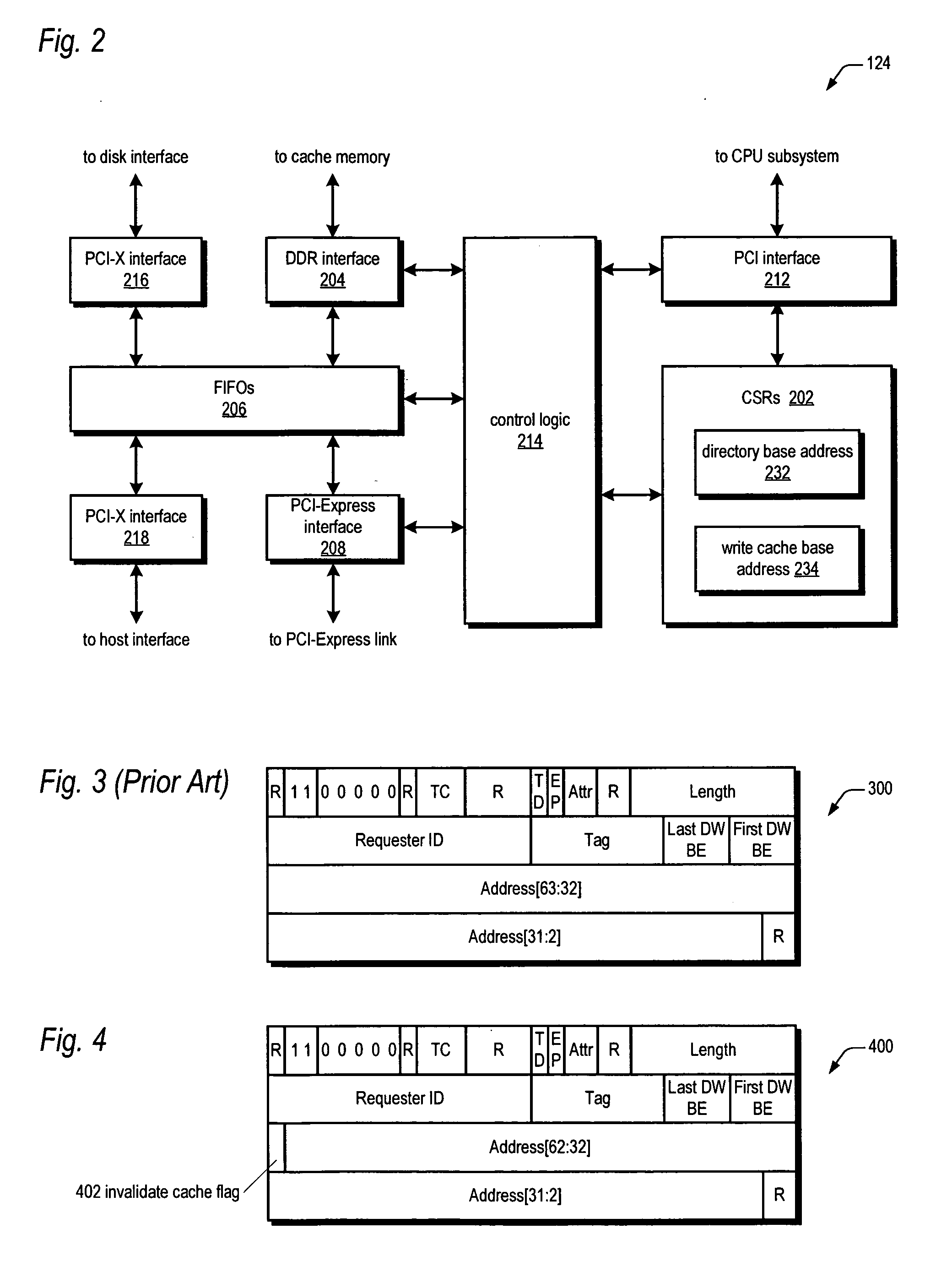

RAID system for performing efficient mirrored posted-write operations

Inactive | US7340555B2 | Reduce need | Memory architecture accessing/allocation | Error detection/correction | RAID | PCI Express

A bus bridge on a primary RAID controller receives user write data from a host and writes the data to its write cache and also broadcasts the data over a high speed link (e.g., PCI-Express) to a secondary RAID controller's bus bridge, which writes the data to its mirroring write cache. However, before writing the data, the second bus bridge automatically invalidates the cache buffers to which the data is to be written, which alleviates the primary controller's CPU from sending a message to the secondary controller's CPU to instruct it to invalidate the cache buffers. The secondary controller CPU programs its bus bridge at boot time with the base address of its mirrored write cache to enable it to detect that the cache buffer needs invalidating in response to the broadcast write, and with the base address of its directory that includes the cache buffer valid bits.

Owner:DOT HILL SYST

Data Recovery for Defective Word Lines During Programming of Non-Volatile Memory Arrays

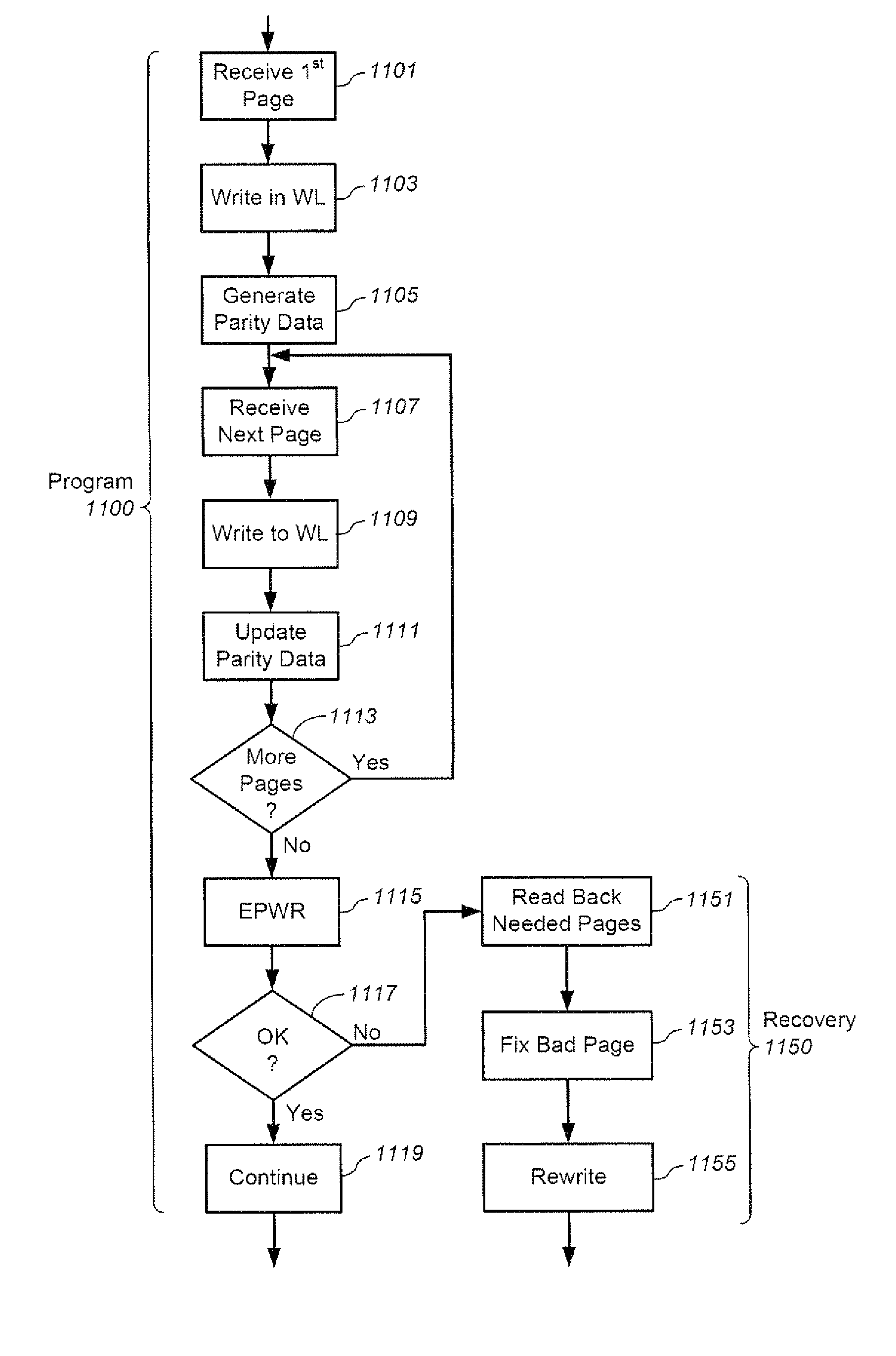

The recovery of data during programming, such as in the case of a broken word-line, is considered. The arrangement described assumes that k pages may be corrupted when the system finishes programming a block. These corrupted pages can then be recovered using an erasure code. In order to recover any k pages, the system will compute and temporarily store k parity pages in the controller. These k parity pages may be computed on-the-fly as the data pages are received from the host. Once programming of the block is finished, a post-write read may be done in order to validate that the data is stored reliably. If no problem is detected during this post-write read (EPWR), then the parity pages in the controller may be discarded. In case a problem is detected, and data in up to k pages is corrupt on some bad word-lines, then the missing data is recovered using the k parity pages that are stored in the controller and using the other non-corrupted pages that are read from the block of the memory array and decoded. Once the recovery is complete, the block can be reprogrammed, and the temporary parity pages in the controller may be discarded upon successful reprogramming.

Owner:SANDISK TECH LLC
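The parity-based recovery in this abstract can be illustrated for the simplest case, k = 1, where the erasure code reduces to a single XOR parity page. The abstract does not say which erasure code is used for general k, so this sketch swaps in plain XOR parity as an assumption; `ParityAccumulator` and `recover_page` are hypothetical names.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length pages."""
    return bytes(x ^ y for x, y in zip(a, b))


class ParityAccumulator:
    """Keeps one running XOR parity page, updated as each data page arrives."""

    def __init__(self, page_size: int):
        self.parity = bytes(page_size)  # all zeros to start

    def add(self, page: bytes) -> None:
        self.parity = xor_bytes(self.parity, page)


def recover_page(read_pages, bad_index: int, parity: bytes) -> bytes:
    """Rebuild the page at bad_index from the parity and the other good pages."""
    rebuilt = parity
    for index, page in enumerate(read_pages):
        if index != bad_index:
            rebuilt = xor_bytes(rebuilt, page)
    return rebuilt


# Example: three host pages, the second one is lost on a bad word line.
host_pages = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
acc = ParityAccumulator(page_size=2)
for page in host_pages:
    acc.add(page)  # computed on the fly while programming

read_back = [host_pages[0], b"\x00\x00", host_pages[2]]  # index 1 is corrupt
restored = recover_page(read_back, bad_index=1, parity=acc.parity)
```

Recovering more than one corrupt page per block would need a stronger code (for example a Reed-Solomon style code), which is outside this sketch.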

Non-Volatile Memory and Method with Accelerated Post-Write Read Using Combined Verification of Multiple Pages

Active | US20130031430A1 | High error rate | Digital storage | Redundant hardware error correction | Combinational verification | Operating system

A post-write read operation, using a combined verification of multiple pages of data, is presented. In a simultaneous verification of multiple pages in a block, the controller evaluates a combined function of the multiple pages, instead of evaluating each page separately. In one exemplary embodiment, the combined function is formed by XORing the pages together. Such a combined verification of multiple pages based on the read data can significantly reduce the controller involvement, lowering the required bus and ECC bandwidth for a post-write read and hence allow efficient post-write reads when the number of dies is large.

Owner:SANDISK TECH LLC
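The idea of verifying several pages through one combined function can be sketched with XOR. This is an illustration only: it assumes the checker has access to the source pages and compares XOR results bit by bit, which substitutes for whatever check the patent actually applies to the combined page. The function names are hypothetical.

```python
from functools import reduce


def xor_pages(pages):
    """XOR equal-length pages together into one combined page."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*pages))


def combined_check(source_pages, read_pages, max_error_bits):
    """Verify all pages at once by comparing the XOR of what was read
    with the XOR of what was written."""
    difference = xor_pages(list(source_pages) + list(read_pages))
    error_bits = sum(bin(byte).count("1") for byte in difference)
    return error_bits <= max_error_bits
```

One limitation of this simplified form: errors that hit the same bit position in an even number of pages cancel in the XOR and go undetected, so a combined check of this kind trades some coverage for reduced bus and controller work.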

Combined simultaneous sensing of multiple wordlines in a post-write read (PWR) and detection of NAND failures

Techniques for a post-write read are presented. In an exemplary embodiment, a combined simultaneous sensing of multiple word lines is used in order to identify a problem in one or more of these word lines. That is, sensing voltages are concurrently applied to the control gates of more than one memory cell whose resultant conductance is measured on the same bit line. The combined sensing result is used for measuring certain statistics of the cell voltage distribution (CVD) of multiple word lines and comparing them to the expected values. If the measured statistics differ from the expected values, this may indicate that one or more of the sensed word lines exhibits a failure, and a more thorough examination of the group of word lines can be performed.

Owner:SANDISK TECH LLC

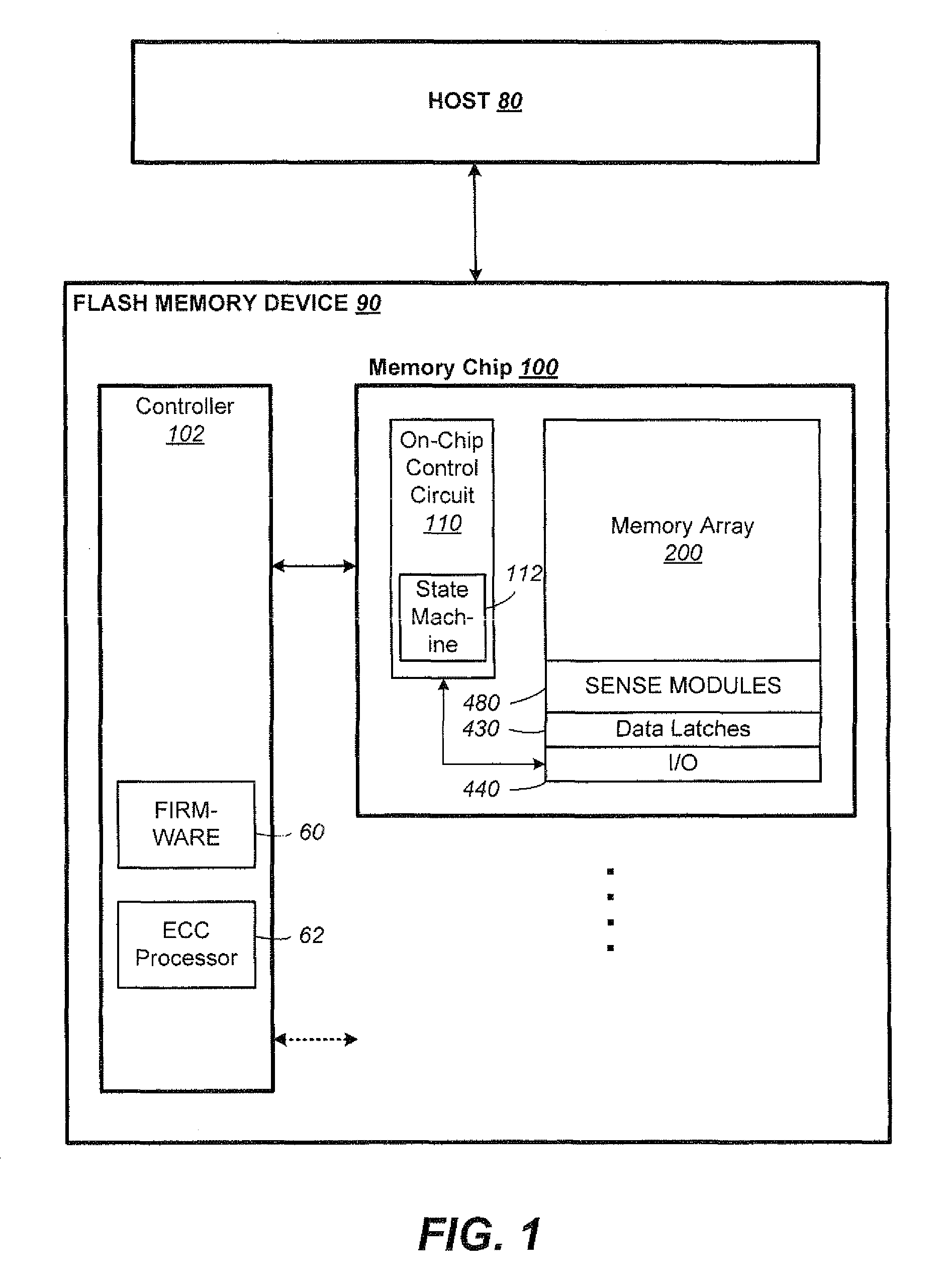

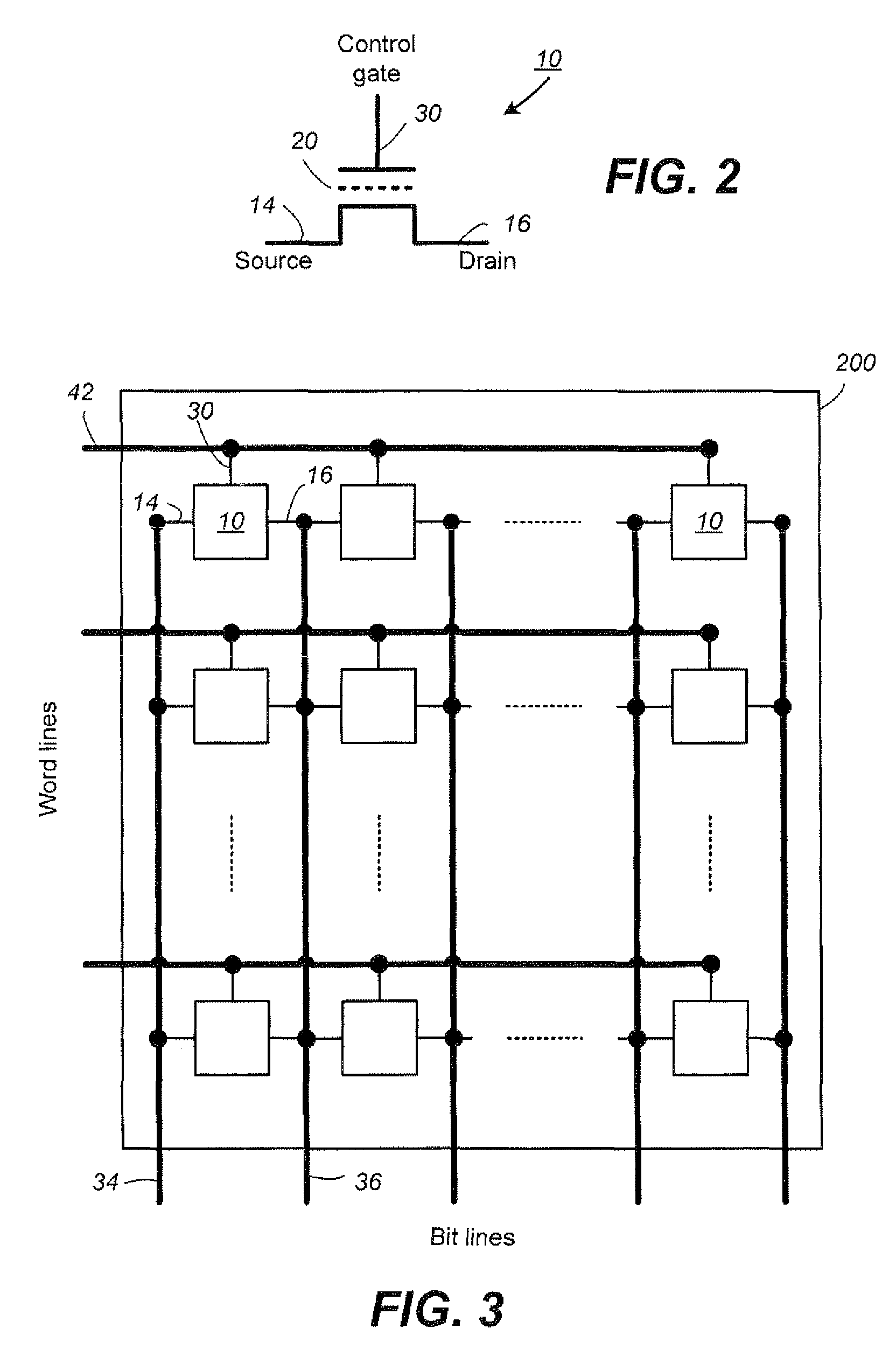

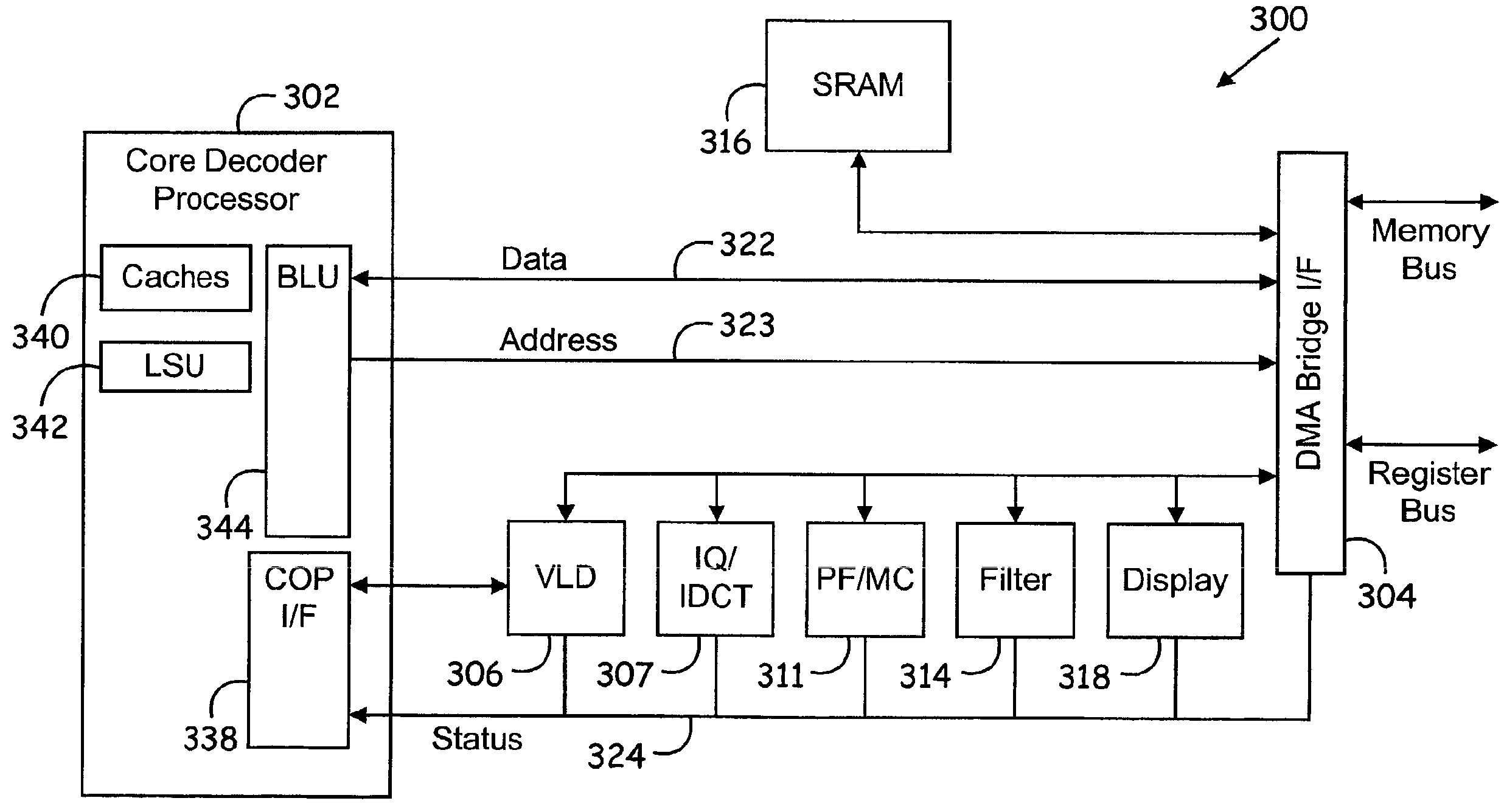

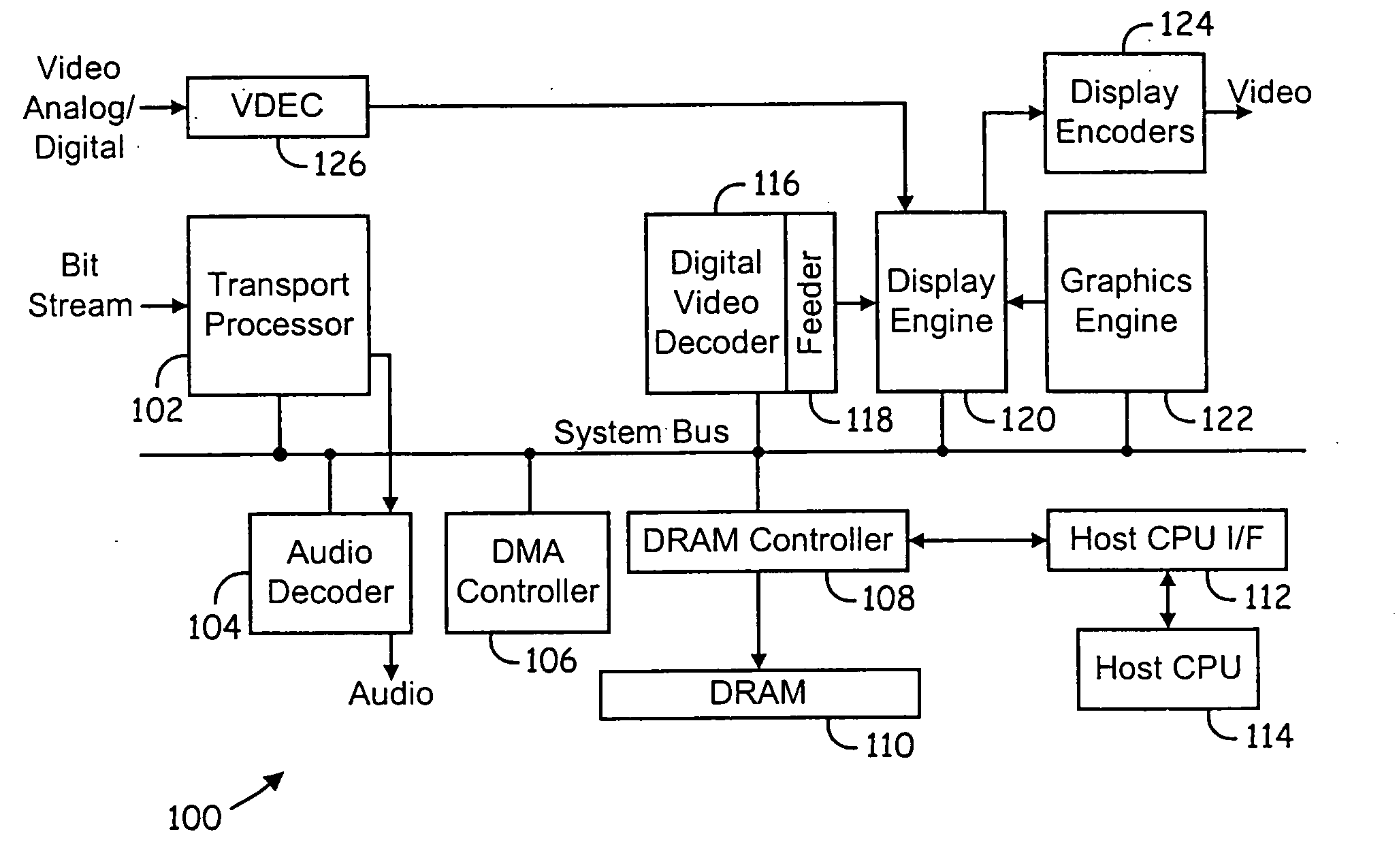

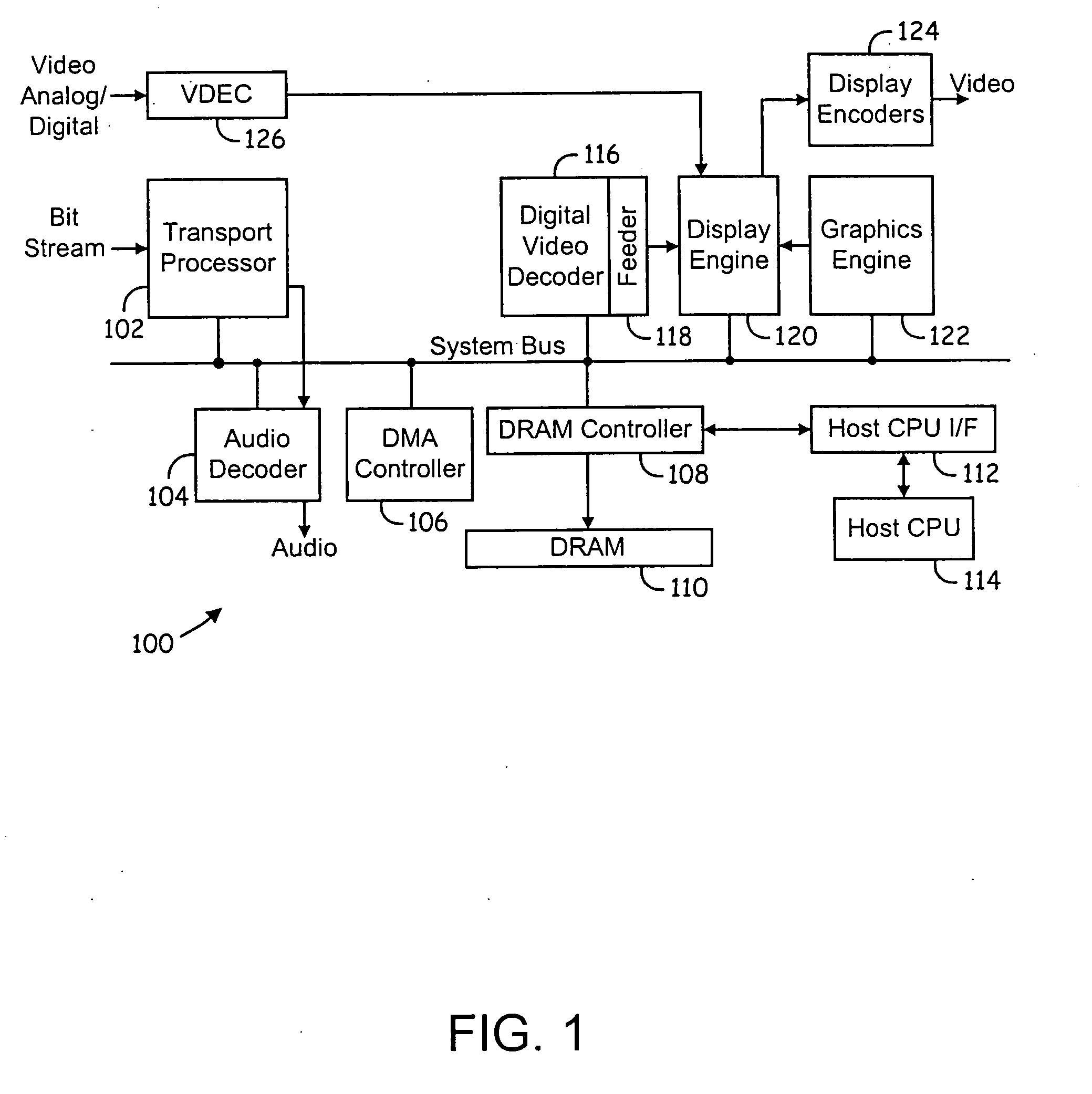

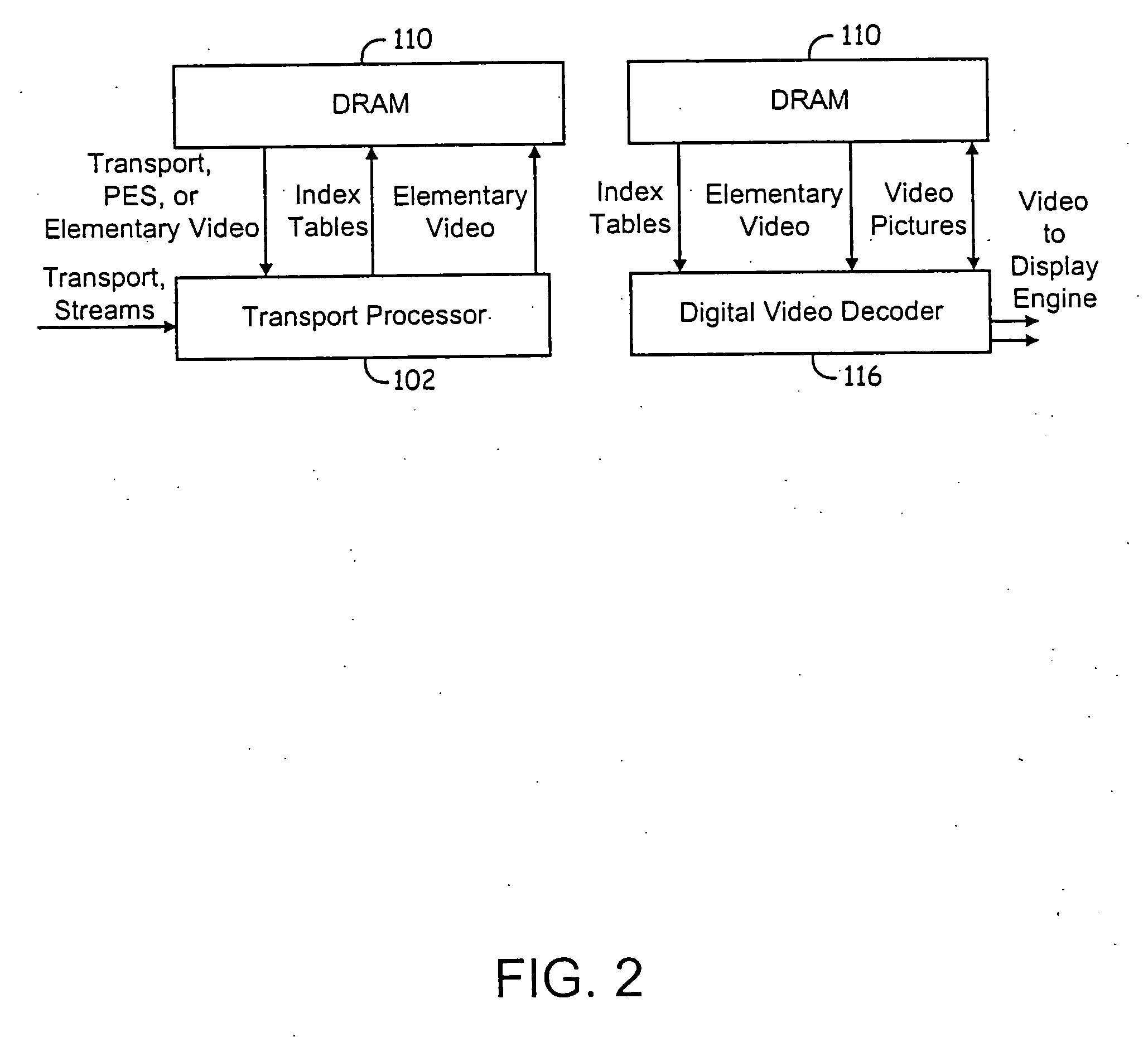

Method of communicating between modules in a decoding system

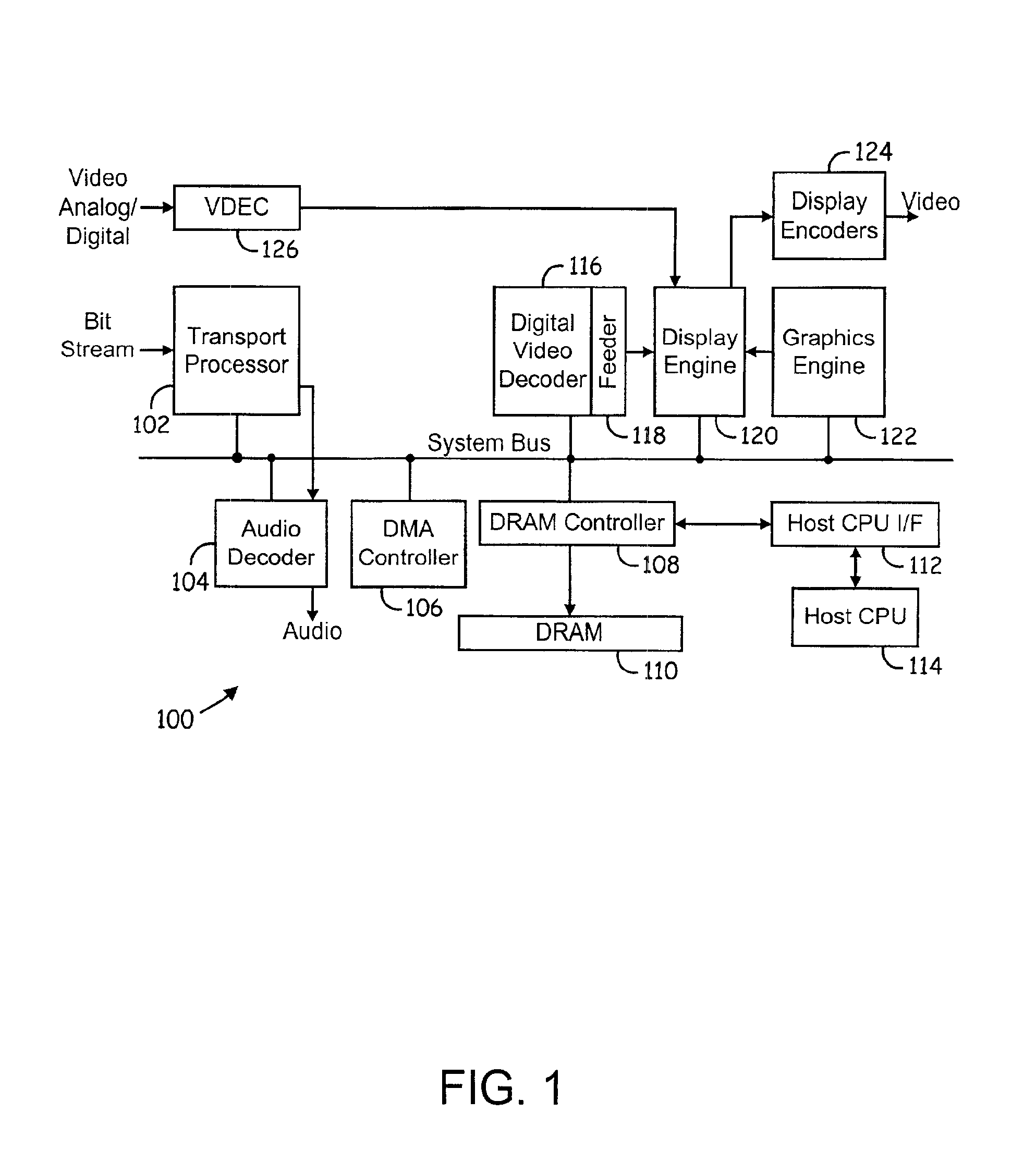

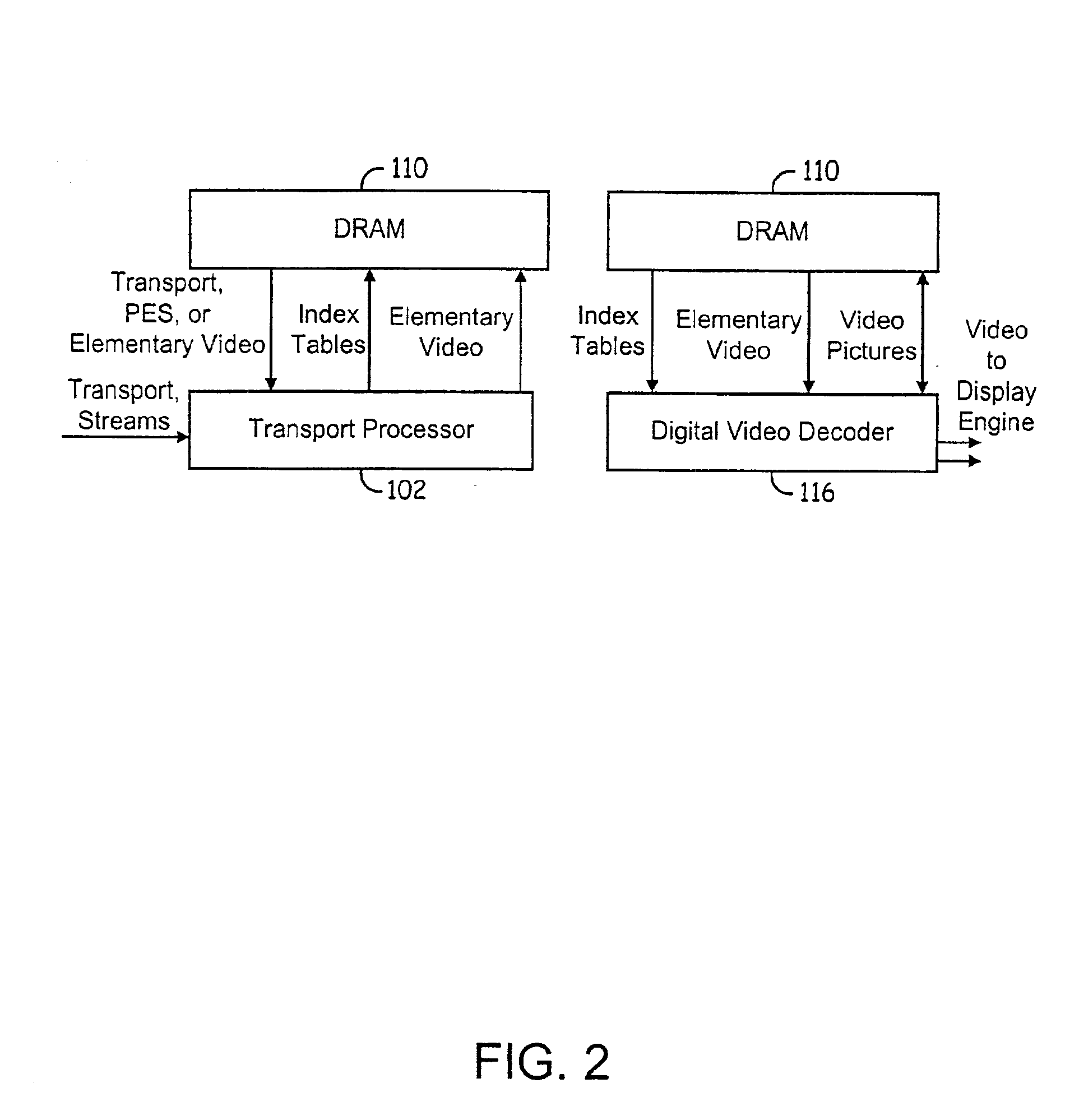

Active | US6963613B2 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Computer module | Variable length

Means of communicating between modules in a decoding system. A variable-length decoding accelerator communicates with a core decoder processor via a co-processor interface. In one embodiment, other decoding accelerators, in addition to the variable-length decoder, are adapted to provide status data indicative of their status to a co-processor status register. In another embodiment, a decoding accelerator is controlled by providing commands to the accelerator via posted write operations and polling the accelerator to determine whether the command has been performed. In still another embodiment, a first hardware accelerator communicates with a core decoder processor via a co-processor interface and other decoding accelerators, in addition to the first hardware accelerator, are adapted to provide status data indicative of their status to a co-processor status register.

Owner:AVAGO TECH INT SALES PTE LTD
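The embodiment that issues commands through posted writes and then polls for completion can be sketched as below. The `Accelerator` class, its fixed cycle count and the `run_command` helper are all hypothetical; the sketch only shows why polling is needed when the write itself returns no completion.

```python
class Accelerator:
    """Hypothetical decoding accelerator that needs a few cycles per command."""

    def __init__(self, cycles_per_command=3):
        self.cycles_per_command = cycles_per_command
        self.busy_cycles = 0
        self.completed = 0

    def post_command(self, command):
        # Posted write to the command register: nothing is returned to the caller.
        self.busy_cycles = self.cycles_per_command

    def tick(self):
        # Advance the accelerator by one cycle.
        if self.busy_cycles > 0:
            self.busy_cycles -= 1
            if self.busy_cycles == 0:
                self.completed += 1

    def status_done(self):
        # Status register read used for polling.
        return self.busy_cycles == 0


def run_command(accelerator, command, max_polls=100):
    """Post a command, then poll the status until it is done; return the poll count."""
    accelerator.post_command(command)
    for polls in range(1, max_polls + 1):
        accelerator.tick()
        if accelerator.status_done():
            return polls
    raise TimeoutError("accelerator did not finish within max_polls")
```

Because the core processor is not stalled by the write, it could do other work between polls; this sketch simply polls once per simulated cycle.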

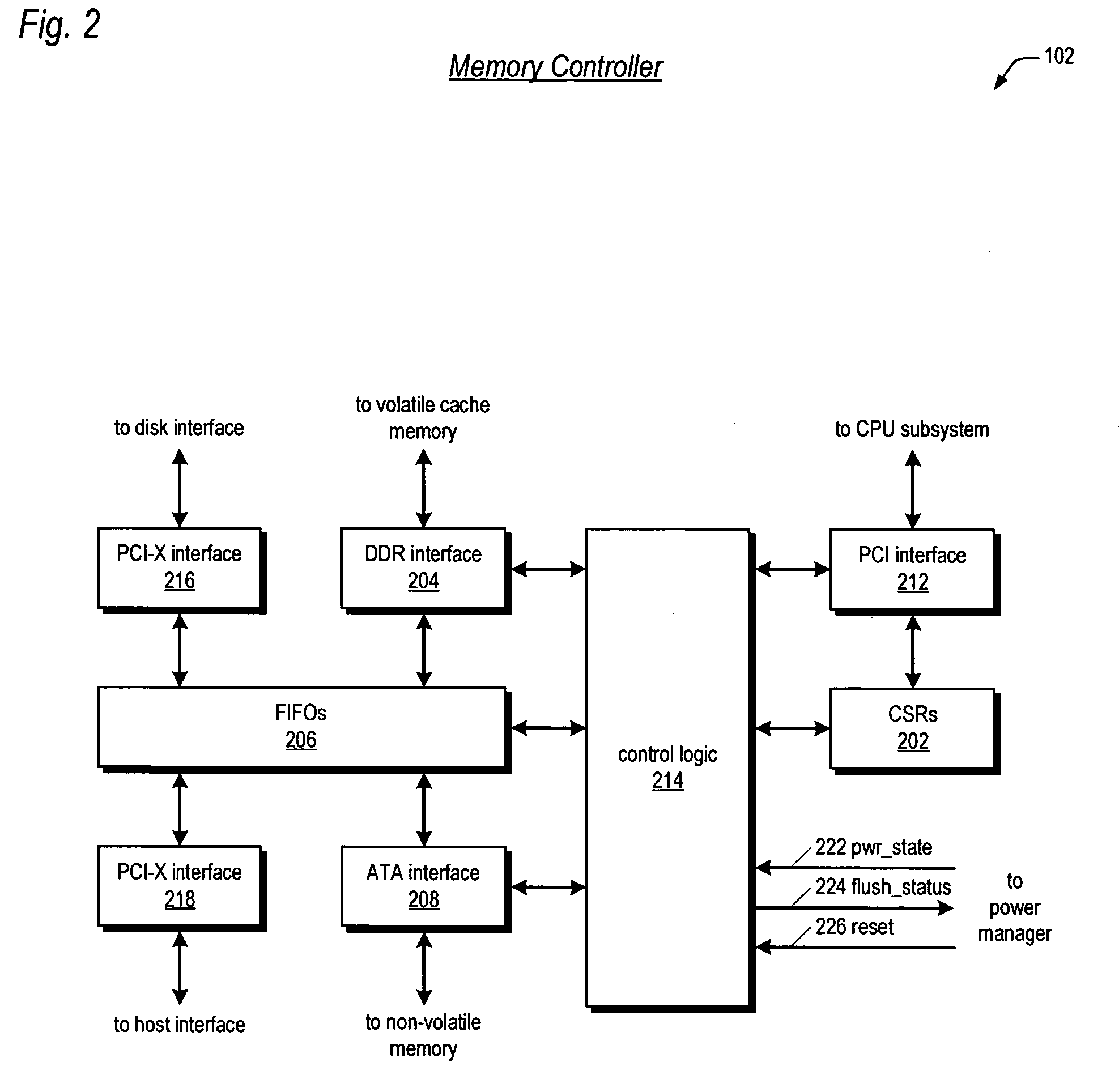

Raid controller using capacitor energy source to flush volatile cache data to non-volatile memory during main power outage

Active | US20080215808A1 | Reduces amount of energy storage capacity requirement | Less expensive | Energy efficient ICT | Error detection/correction | RAID | Memory controller

A write-caching RAID controller includes a CPU that manages transfers of posted-write data from host computers to a volatile memory and transfers of the posted-write data from the volatile memory to a redundant array of storage devices when a main power source is supplying power to the RAID controller. A memory controller transfers the posted-write data received from the host computers to the volatile memory and transfers the posted-write data from the volatile memory for transfer to the redundant array of storage devices as managed by the CPU. The memory controller flushes the posted-write data from the volatile memory to the non-volatile memory when main power fails, during which time capacitors provide power to the memory controller, volatile memory, and non-volatile memory, but not to the CPU, in order to reduce the energy storage requirements of the capacitors. During main power provision, the CPU programs the memory controller with information needed to perform the flush operation, such as the location and size of the posted-write data in the volatile memory and various flush operation characteristics.

Owner:DOT HILL SYST

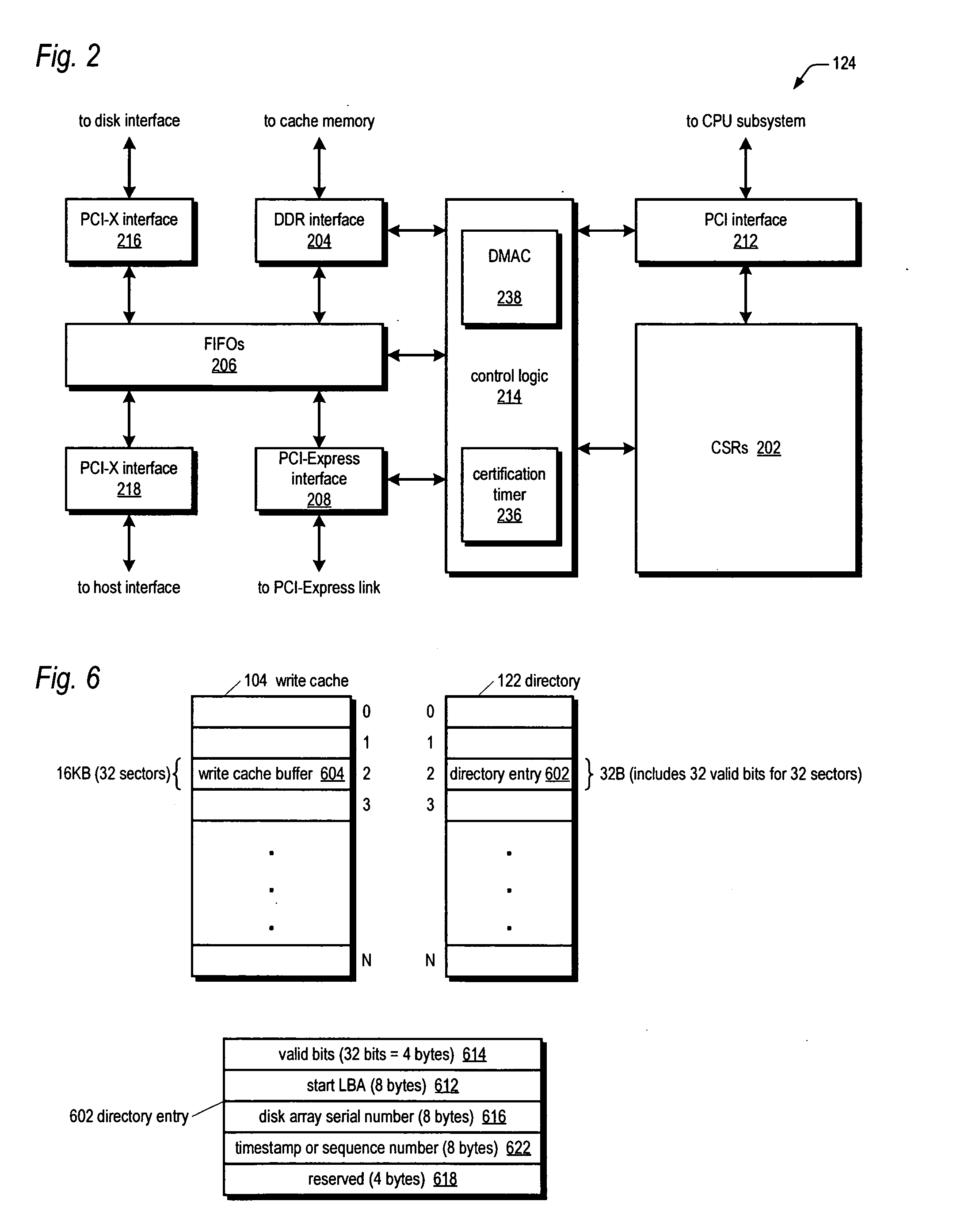

Certified memory-to-memory data transfer between active-active raid controllers

Inactive | US20060106982A1 | Easy to operate | Memory loss protection | Error detection/correction | RAID | PCI Express

A system for performing an efficient mirrored posted-write operation having first and second RAID controllers in communication via a PCI-Express link is disclosed. The first bus bridge transmits a PCI-Express memory write request TLP to the second bus bridge. The TLP header includes an indication of whether the first CPU requests a certification that certifies the payload data has been written to the second write cache memory. If the indication requests the certification, the second bus bridge automatically transmits the certification to the first bus bridge independent of the second CPU, after writing the payload data to the second write cache memory. The first bus bridge generates an interrupt to the first CPU in response to receiving the certification. The certified transfer may be used to validate and/or invalidate mirrored copies of a write cache directory on the RAID controllers, among other uses.

Owner:DOT HILL SYST

RAID system for performing efficient mirrored posted-write operations

Inactive | US20060277347A1 | Reduce need | Memory architecture accessing/allocation | Error detection/correction | RAID | PCI Express

A bus bridge on a primary RAID controller receives user write data from a host and writes the data to its write cache and also broadcasts the data over a high speed link (e.g., PCI-Express) to a secondary RAID controller's bus bridge, which writes the data to its mirroring write cache. However, before writing the data, the second bus bridge automatically invalidates the cache buffers to which the data is to be written, which alleviates the primary controller's CPU from sending a message to the secondary controller's CPU to instruct it to invalidate the cache buffers. The secondary controller CPU programs its bus bridge at boot time with the base address of its mirrored write cache to enable it to detect that the cache buffer needs invalidating in response to the broadcast write, and with the base address of its directory that includes the cache buffer valid bits.

Owner:DOT HILL SYST

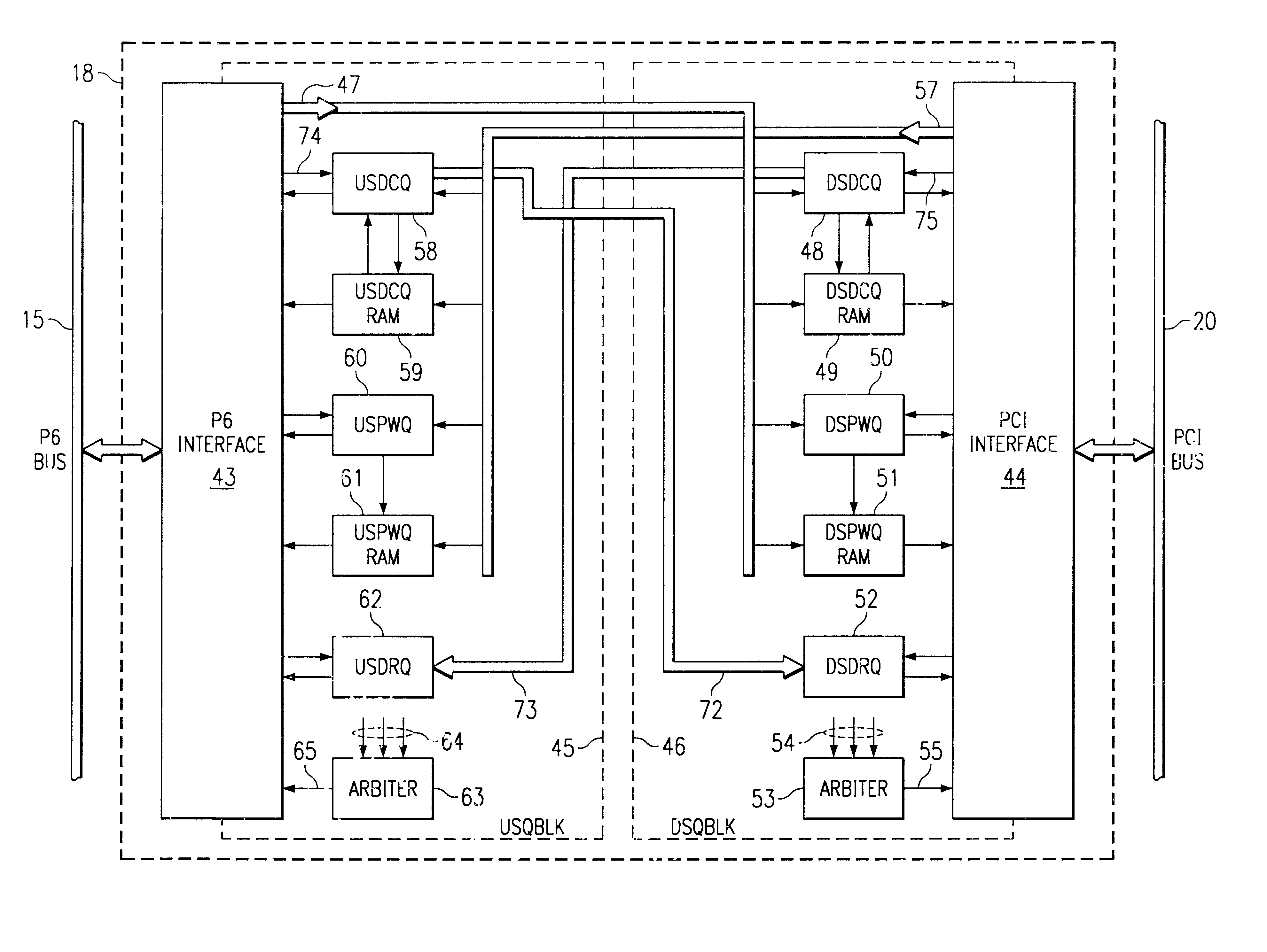

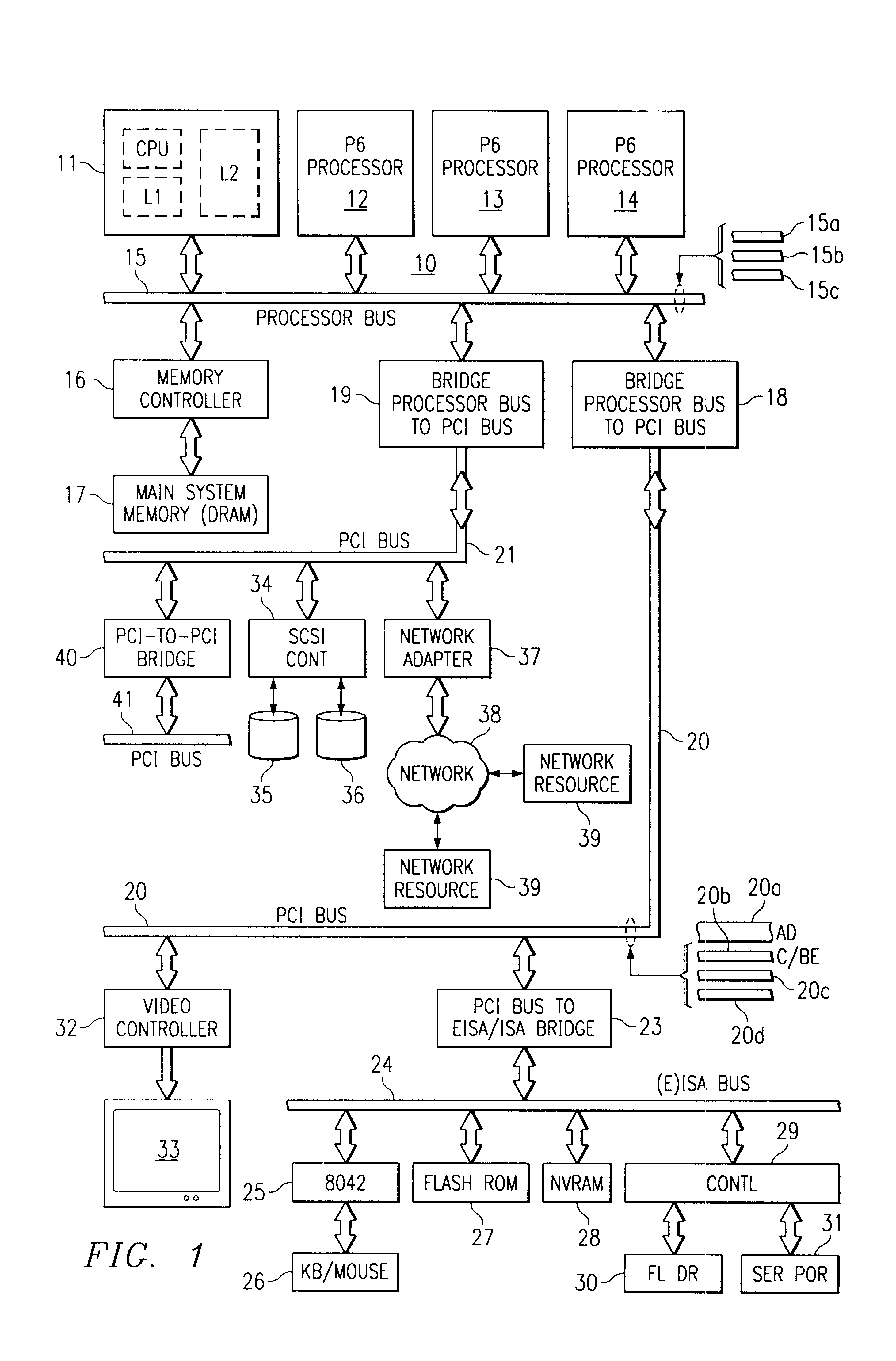

Bus-to-bus bridge in computer system, with fast burst memory range

Inactive | USRE37980E1 | Way of increase | Easy to handle | Electric digital data processing | Memory address | Computerized system

A computer system has a processor bus under control of the microprocessor itself, and this bus communicates with main memory, providing high-performance access for most cache fill operations. In addition, the system includes one or more expansion buses, preferably of the PCI type in the example embodiment. A host-to-PCI bridge is used for coupling the processor bus to the expansion bus. Other buses may be coupled to the PCI bus via PCI-to-(E)ISA bridges, for example. The host-to-PCI bridge contains queues for posted writes and delayed read requests. All transactions are queued going through the bridge, upstream or downstream. The system bus is superpipelined, in that transactions overlap. Fast burst transactions are allowed between the bridge and main memory, i.e., requests which can be satisfied without deferring or retrying are applied to the system bus without waiting to get a response from the target. A range of addresses (e.g., system memory addresses) is defined to be a fast burst range, and any address in this range is treated differently compared to addresses outside the range. The bridge is programmed, by configuration cycles, to establish this fast burst range, within which it is known that an out-of-order response will not be received. When a transaction reaches a bridge interface from the PCI bus, and it is recognized that the address is within the fast burst range, then the fast burst mode is allowed, and write or read requests can be issued without waiting for the snoop phase, since there is no possibility of defer or retry.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Configurable accelerated post-write read to manage errors

Data errors in non-volatile memory inevitably increase with usage and with higher density of bits stored per cell. The memory is configured to have a first portion operating with less error but of lower density storage, and a second portion operating with a higher density but less robust storage. An error management provides reading and checking the copy after copying to the second portion. If the copy has excessive error bits, it is repeated in a different location either in the second or first portion. The reading and checking of the copy is accelerated by reading only a sample of it. The sample is selected from a subset of the copy having its own ECC, where the sample selected depends on the count of erase-program cycles that a block has experienced, where different count ranges can use different samples.

Owner:SANDISK TECH LLC

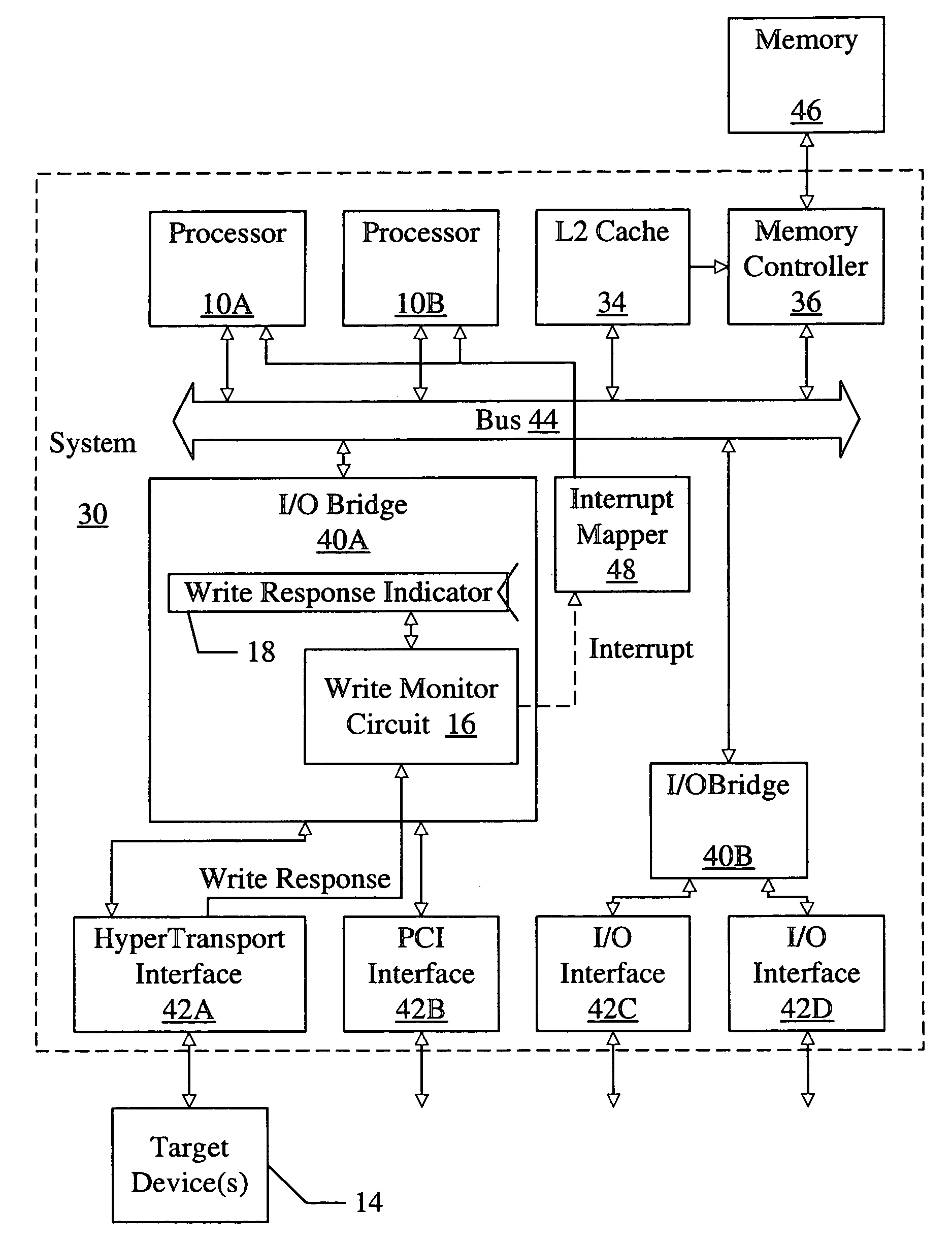

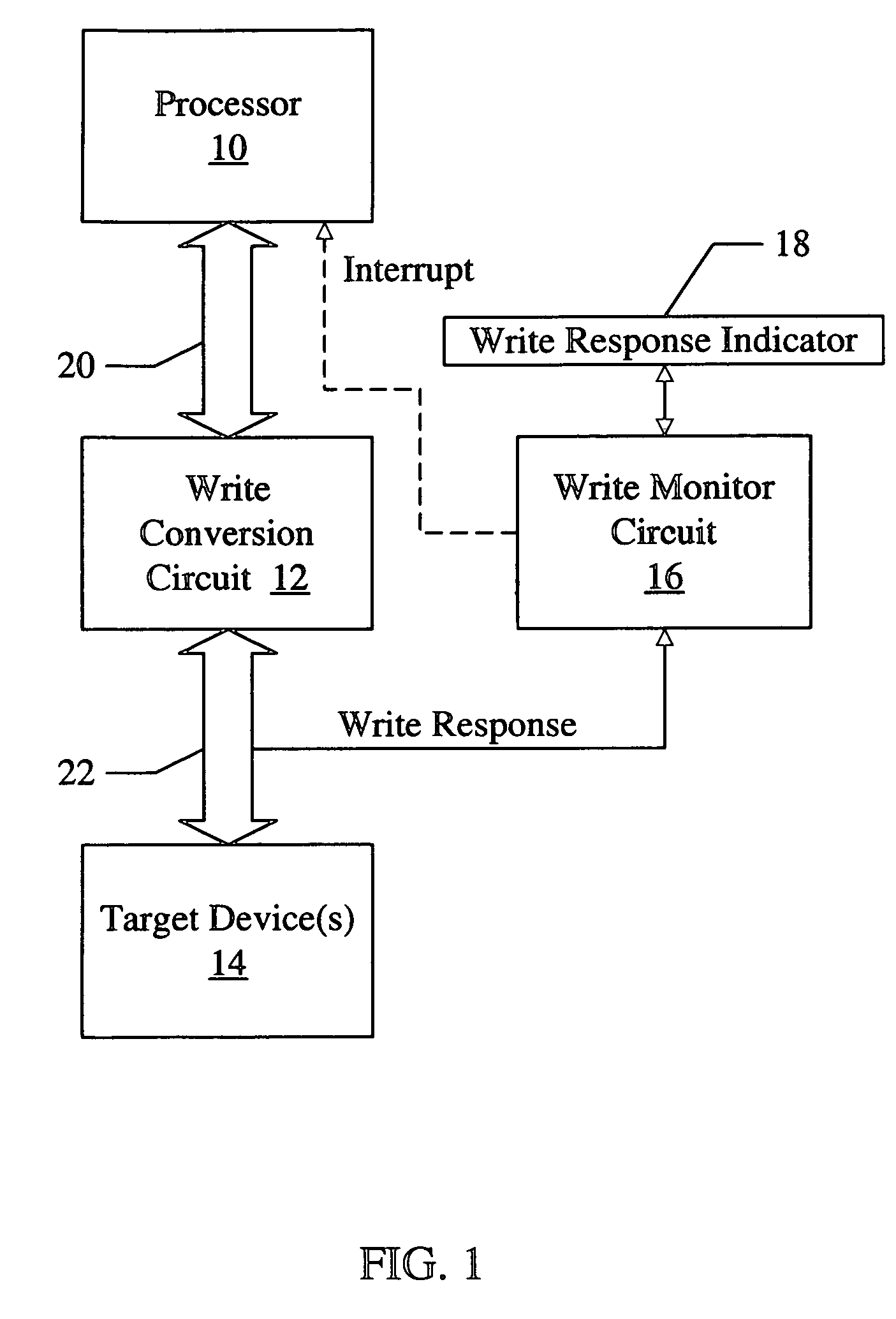

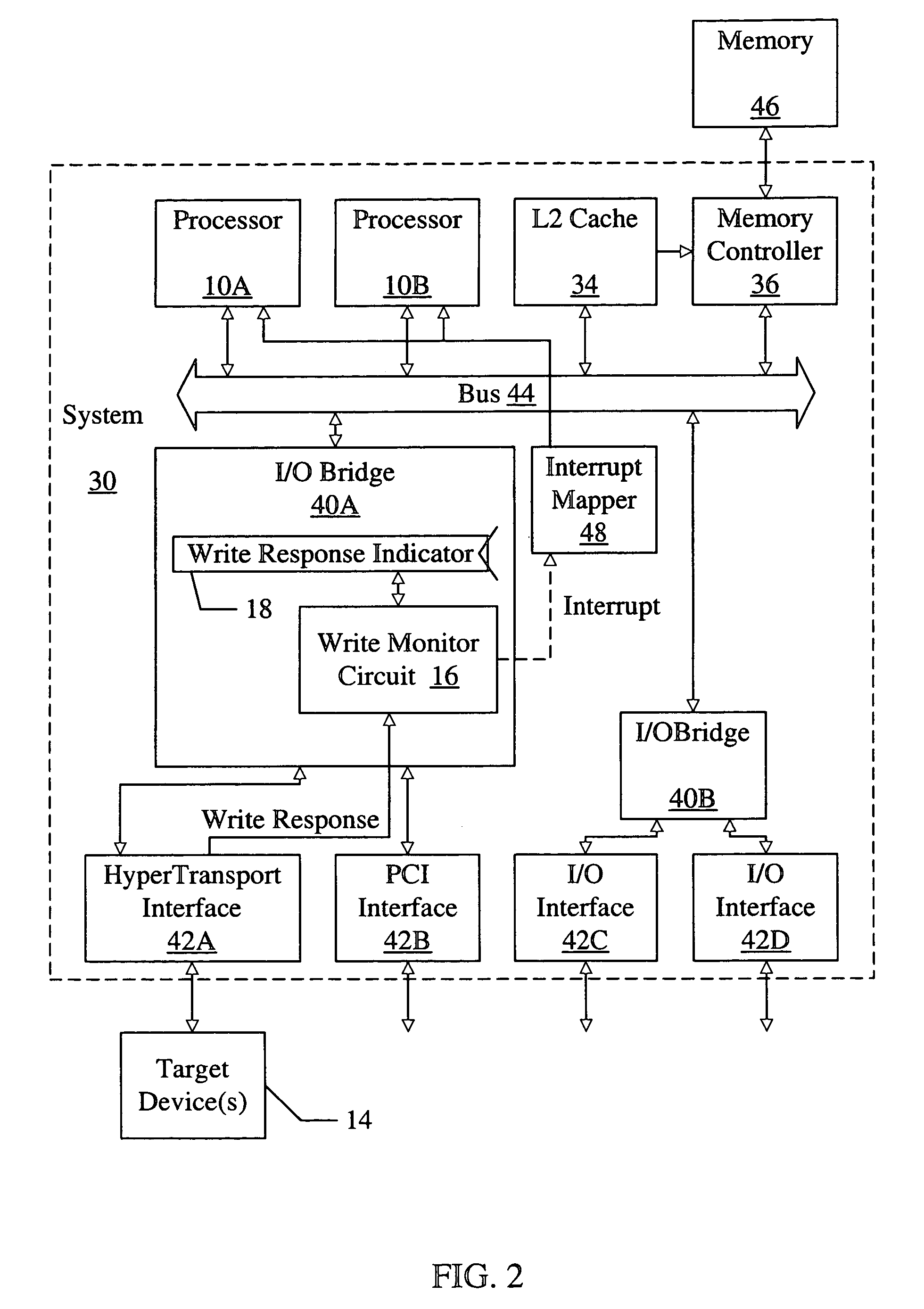

Tracking a non-posted writes in a system using a storage location to store a write response indicator when the non-posted write has reached a target device

Active | US7003615B2 | Memory systems | Input/output processes for data processing | Location tracking | Operating system

Owner:AVAGO TECH INT SALES PTE LTD

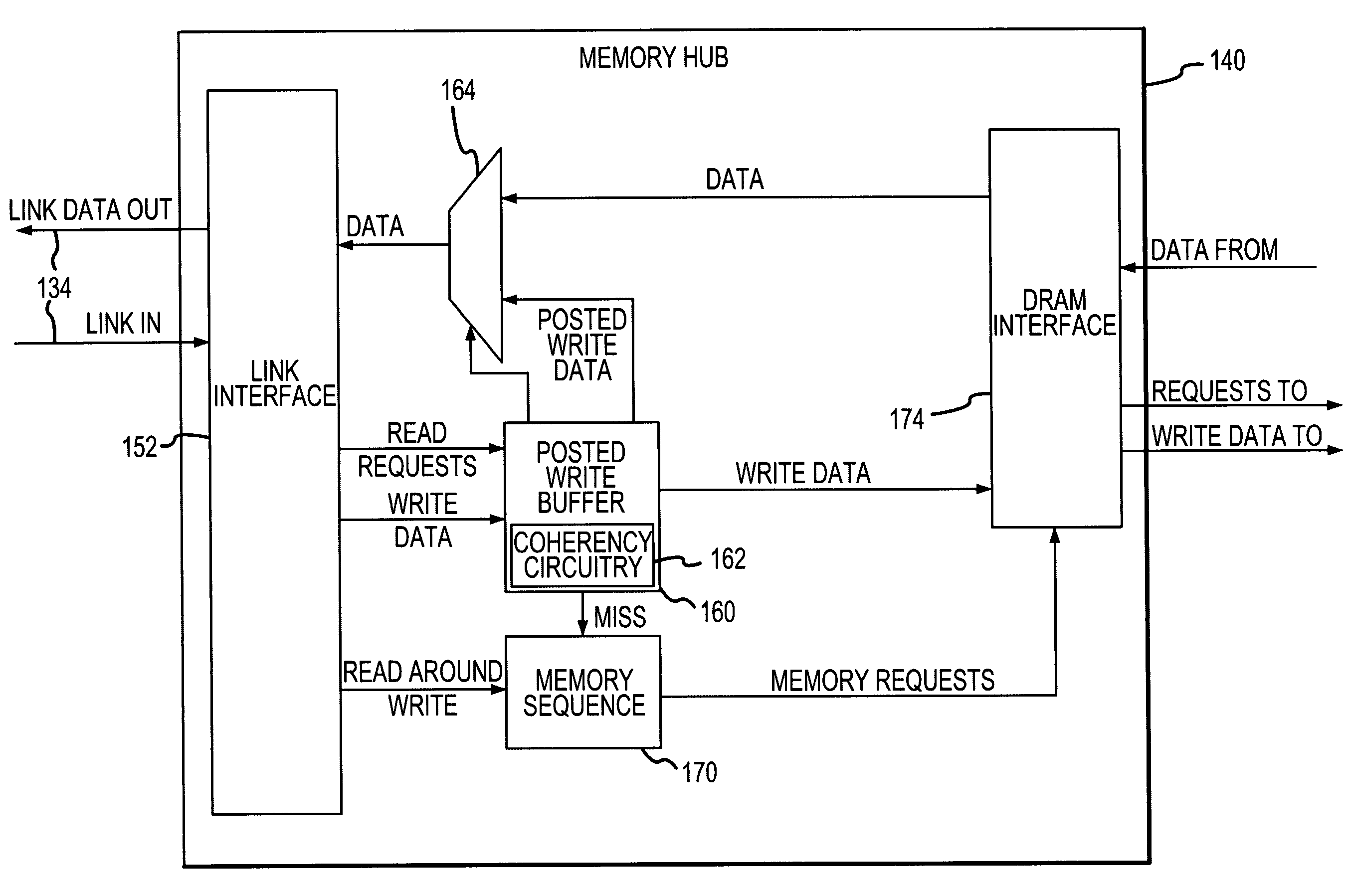

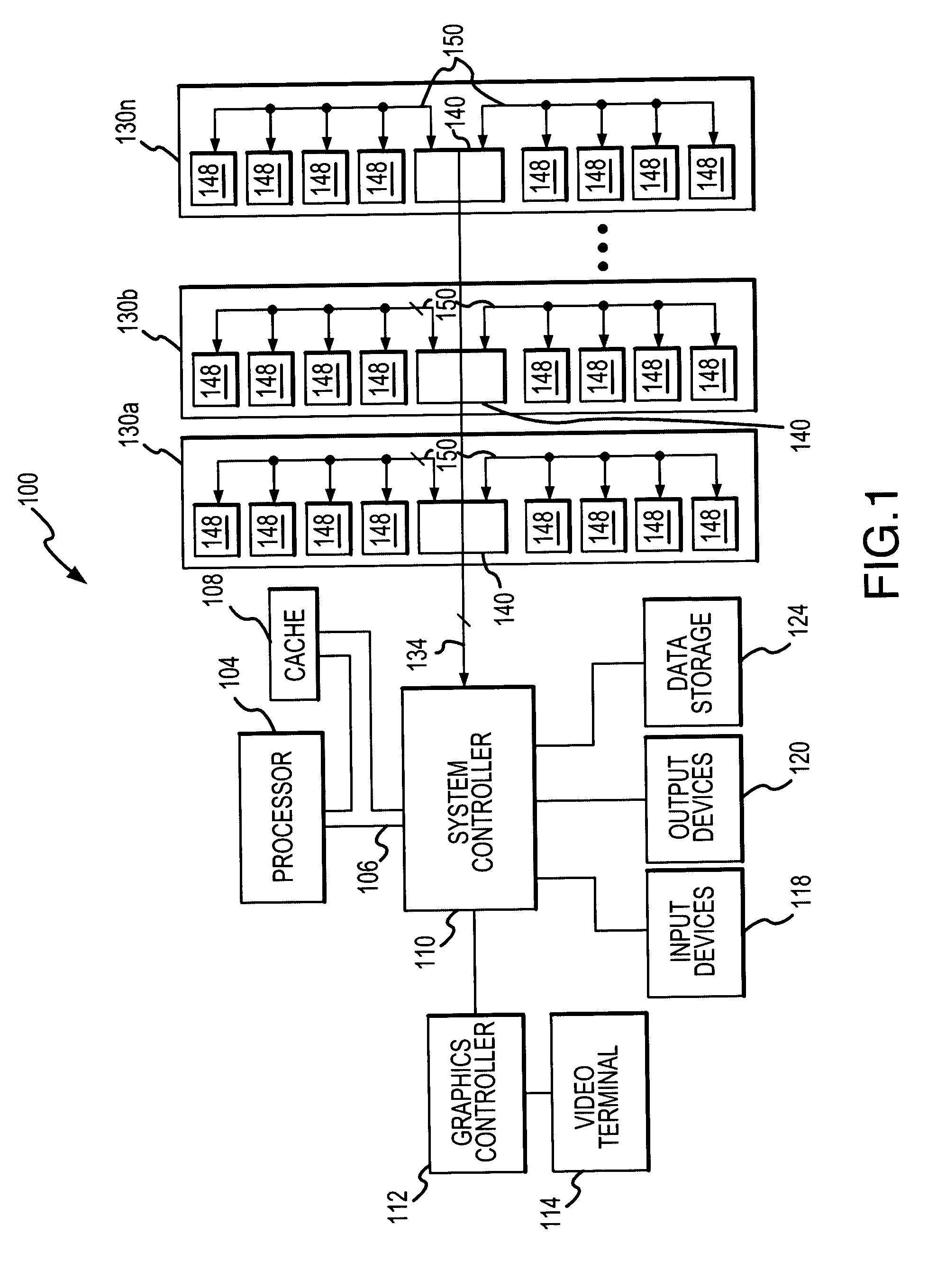

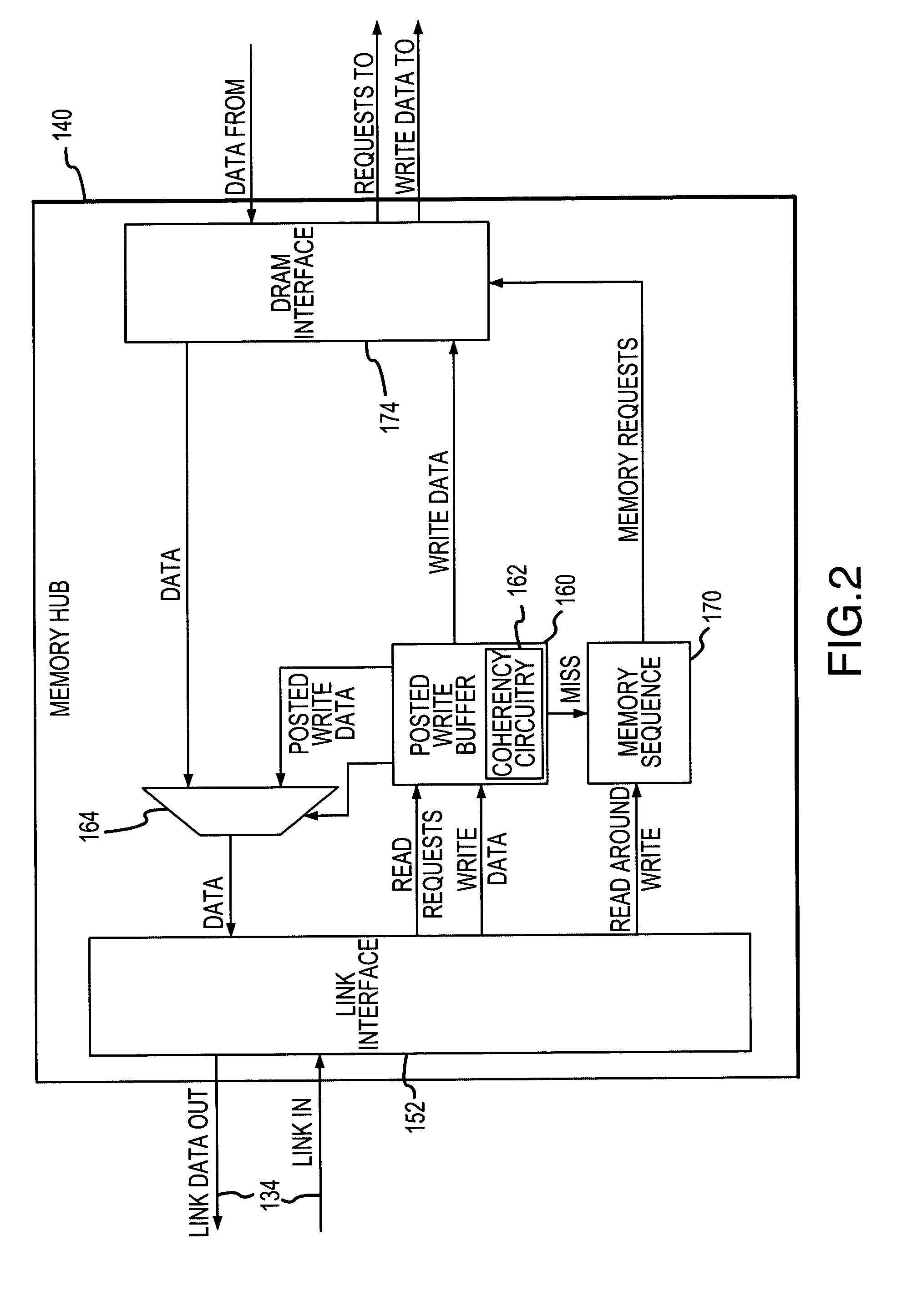

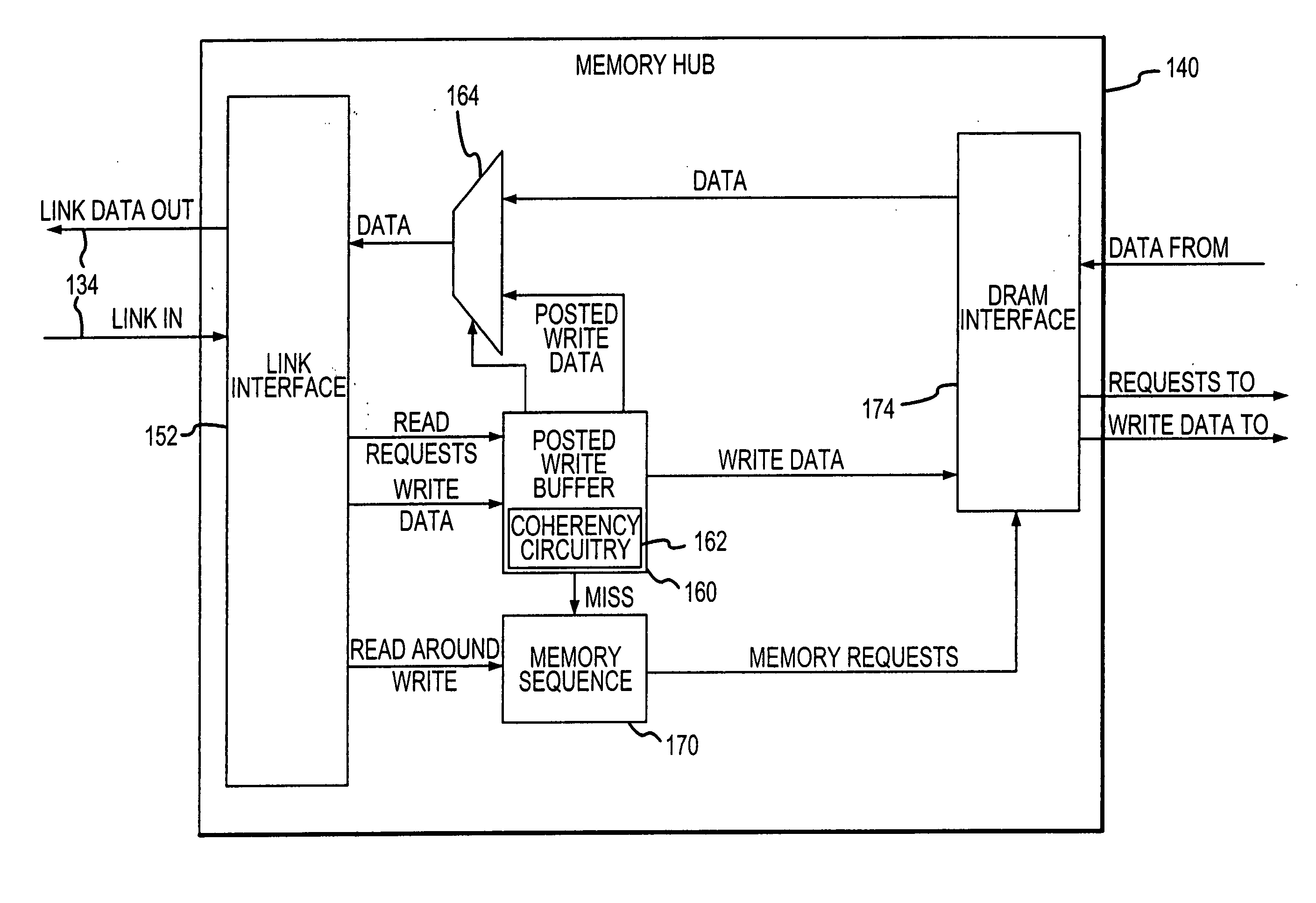

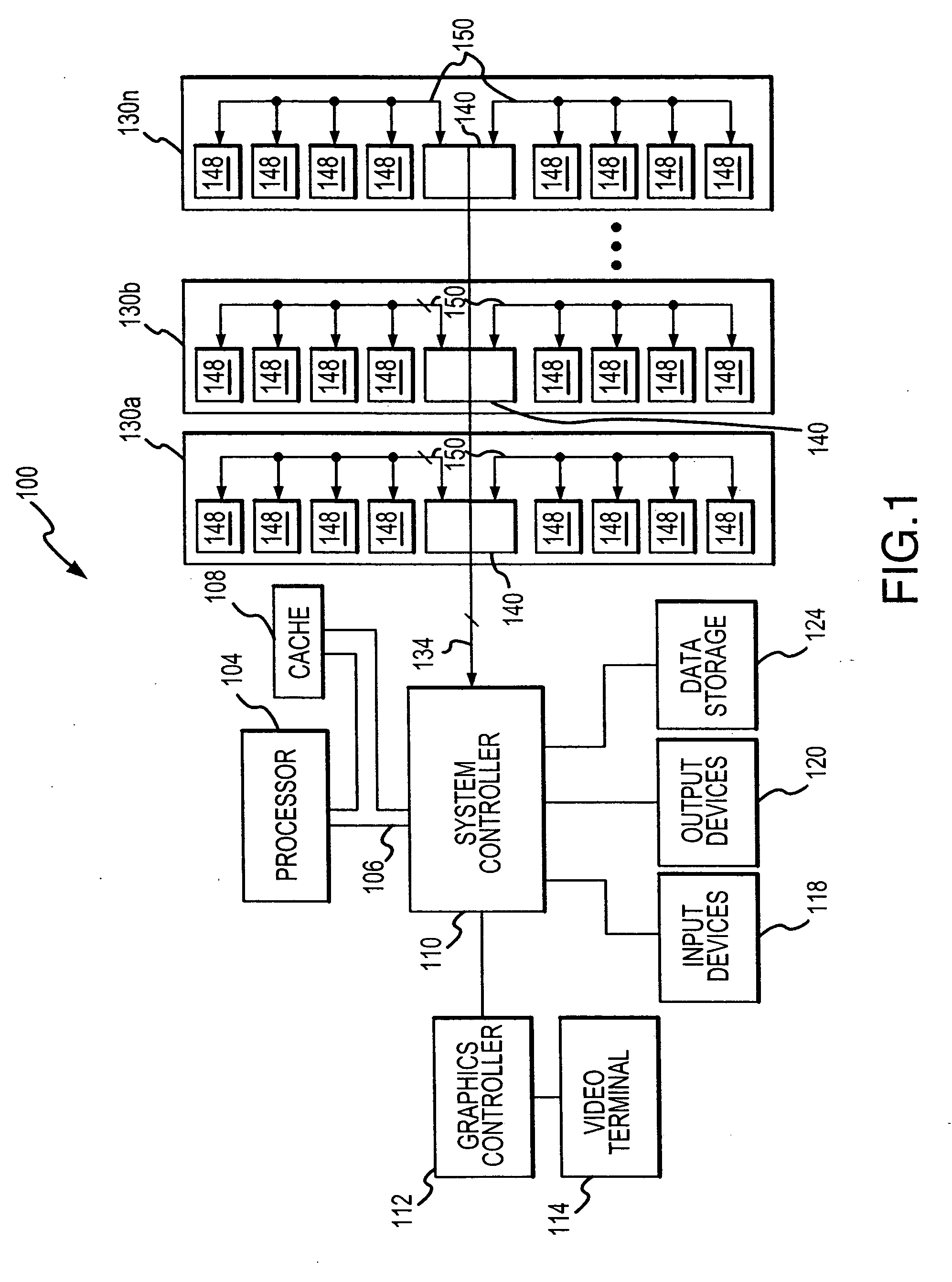

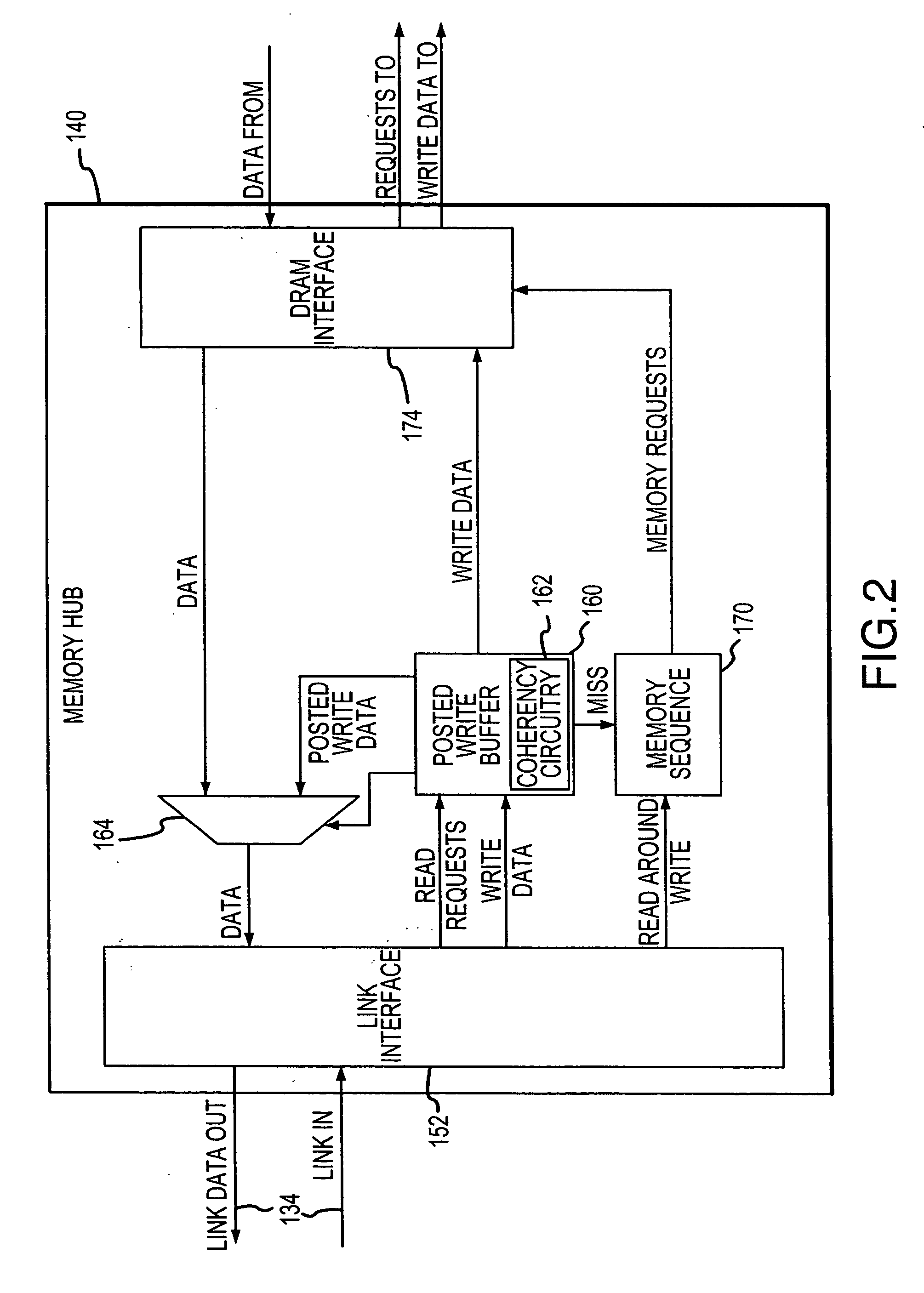

Posted write buffers and methods of posting write requests in memory modules

A memory module includes a memory hub coupled to several memory devices. The memory hub includes a posted write buffer that stores write requests so that subsequently issued read requests can first be coupled to the memory devices. The write request addresses are also posted in the buffer and compared to subsequent read request addresses. In the event of a positive comparison indicating that a read request is directed to an address to which an earlier write request was directed, the read data are provided from the buffer. When the memory devices are not busy servicing read requests, the write requests can be transferred from the posted write buffer to the memory devices. The write requests may also be accumulated in the posted write buffer until either a predetermined number of write requests have been accumulated or the write requests have been posted for a predetermined duration.

Owner:ROUND ROCK RES LLC
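A posted write buffer with read forwarding, as this abstract describes it, can be sketched in a few lines. The class and its names are hypothetical, the memory devices are modeled as a plain dictionary, and only the count-based drain trigger is implemented; the time-based trigger and the "devices idle" trigger are left out. Coalescing repeated writes to one address is a simplification of this sketch, not something the abstract states.

```python
class PostedWriteBuffer:
    """Holds writes back so reads can go first; forwards buffered data on address hits."""

    def __init__(self, memory, max_pending=4):
        self.memory = memory            # dict standing in for the memory devices
        self.pending = {}               # address -> newest posted value, in arrival order
        self.max_pending = max_pending  # drain once this many writes have accumulated

    def write(self, address, value):
        # Post the write; a newer write to the same address replaces the older one.
        self.pending.pop(address, None)
        self.pending[address] = value
        if len(self.pending) >= self.max_pending:
            self.drain()

    def read(self, address):
        # Address comparison: serve the read from the buffer if a write is still posted.
        if address in self.pending:
            return self.pending[address]
        return self.memory.get(address)

    def drain(self):
        # Transfer posted writes to the memory devices, oldest first.
        for address, value in self.pending.items():
            self.memory[address] = value
        self.pending.clear()
```

The address comparison in `read` is what keeps the scheme correct: without it, a read issued right after a posted write to the same address would return stale data from the memory devices.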

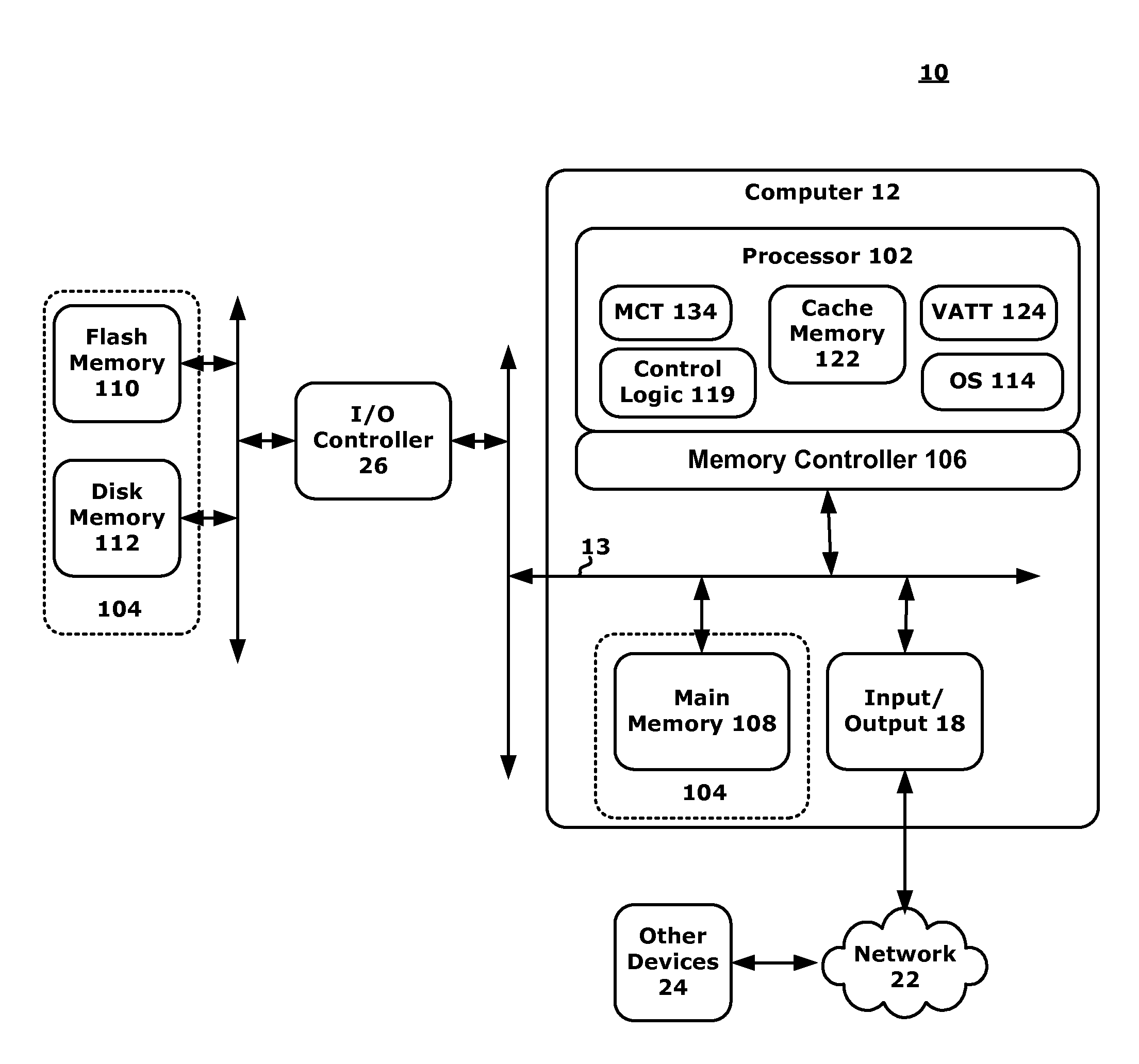

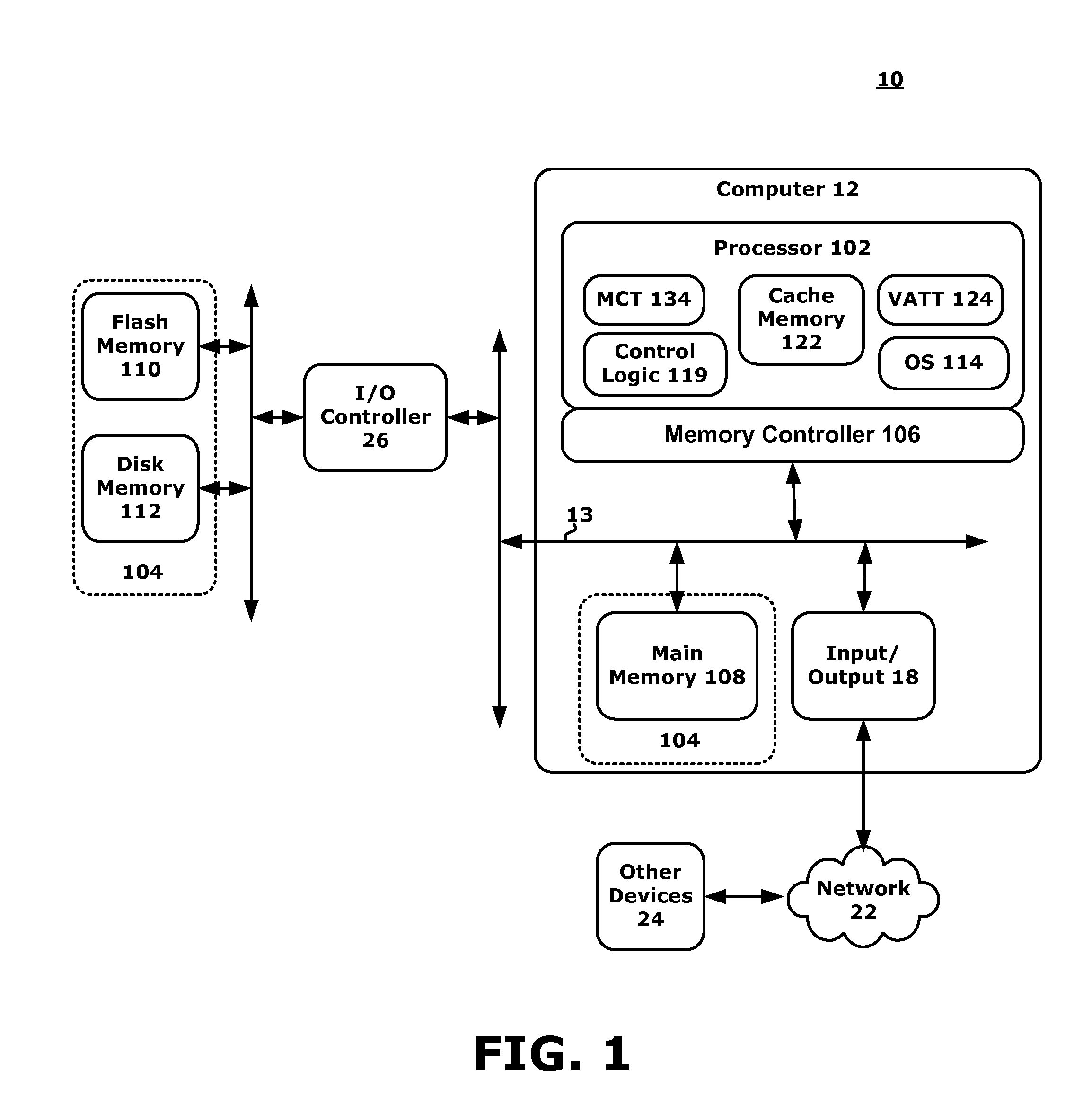

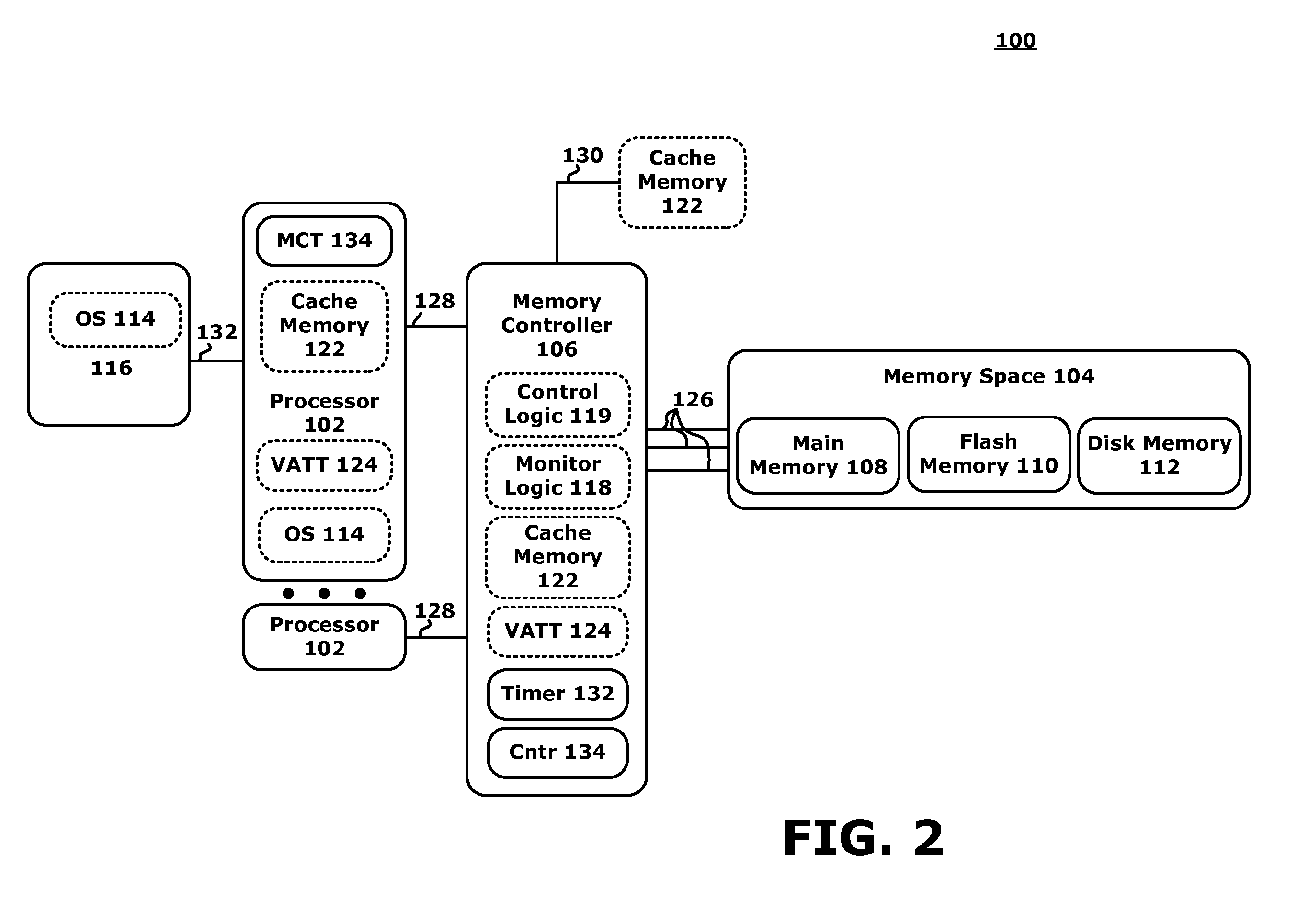

Memory Architecture with Policy Based Data Storage

Inactive | US20120066473A1 | Efficient storage | Energy efficient ICT | Memory addressing/allocation/relocation | Memory type | Memory controller

A computing system and methods for memory management are presented. A memory or an I/O controller receives a write request where the data to be written is associated with an address. Hint information may be associated with the address and may relate to memory characteristics such as historical, O/S direction, data priority, job priority, job importance, job category, memory type, I/O sender ID, latency, power, write cost, or read cost components. The memory controller may interrogate the hint information to determine where (e.g., what memory type or class) to store the associated data. Data is therefore efficiently stored within the system. The hint information may also be used to track post-write information and may be interrogated to determine if a data migration should occur and to which new memory type or class the data should be moved.

Owner:IBM CORP

Non-volatile memory and method with post-write read and adaptive re-write to manage errors

Active | US8214700B2 | Small margin | Reduce memory cost | Read-only memories | Digital storage | High density | Quality assurance

Data errors in non-volatile memory inevitably increase with usage and with higher density of bits stored per cell. For acceptable quality assurance, conventional error correction codes (“ECC”) have to correct a maximum number of error bits up to the far tail end of a statistical population. The present memory is configured to have a first portion operating with less error but of lower density storage, and a second portion operating with a higher density but less robust storage. If excessive error bits (at the far tail-end) occur after writing a group of data to the second portion, the data is adaptively rewritten to the first portion, which will produce fewer error bits. Preferably, the data is initially written to a cache also in the first portion to provide source data for any rewrites. Thus, a more efficient ECC that does not need to correct for the far tail end can be used.

Owner:SANDISK TECH LLC

Data recovery for defective word lines during programming of non-volatile memory arrays

The recovery of data during programming, such as in the case of a broken word-line, is considered. The arrangement described assumes that k pages may be corrupted when the system finishes programming a block. These corrupted pages can then be recovered using an erasure code. In order to recover any k pages, the system will compute and temporarily store k parity pages in the controller. These k parity pages may be computed on-the-fly as the data pages are received from the host. After programming the block, if a problem is detected in a post-write read, and data in up to k pages is corrupt on some bad word-lines, then the missing data is recovered using the k parity pages that are stored in the controller and using the other non-corrupted pages that are read from the block of the memory array and decoded.

Owner:SANDISK TECH LLC

Method of communicating between modules in a decoding system

Inactive | US20060056517A1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Coprocessor | Variable length

Means of communicating between modules in a decoding system. A variable-length decoding accelerator communicates with a core decoder processor via a co-processor interface. In one embodiment, other decoding accelerators, in addition to the variable-length decoder, are adapted to provide status data indicative of their status to a co-processor status register. In another embodiment, a decoding accelerator is controlled by providing commands to the accelerator via posted write operations and polling the accelerator to determine whether the command has been performed. In still another embodiment, a first hardware accelerator communicates with a core decoder processor via a co-processor interface and other decoding accelerators, in addition to the first hardware accelerator, are adapted to provide status data indicative of their status to a co-processor status register.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Posted write buffers and method of posting write requests in memory modules

A memory module includes a memory hub coupled to several memory devices. The memory hub includes a posted write buffer that stores write requests so that subsequently issued read requests can first be coupled to the memory devices. The write request addresses are also posted in the buffer and compared to subsequent read request addresses. In the event of a positive comparison indicating that a read request is directed to an address to which an earlier write request was directed, the read data are provided from the buffer. When the memory devices are not busy servicing read requests, the write requests can be transferred from the posted write buffer to the memory devices. The write requests may also be accumulated in the posted write buffer until either a predetermined number of write requests have been accumulated or the write requests have been posted for a predetermined duration.

Owner:ROUND ROCK RES LLC

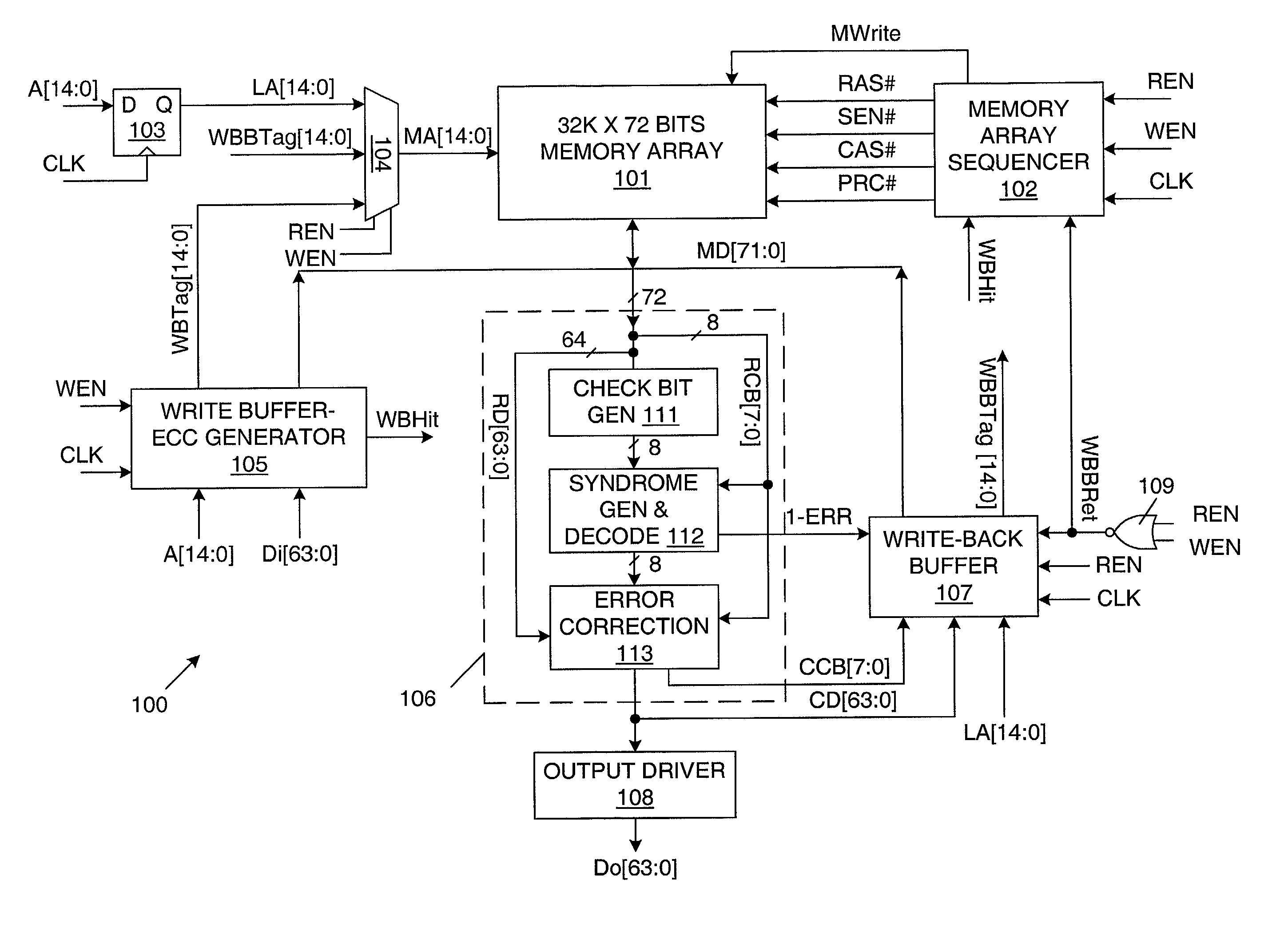

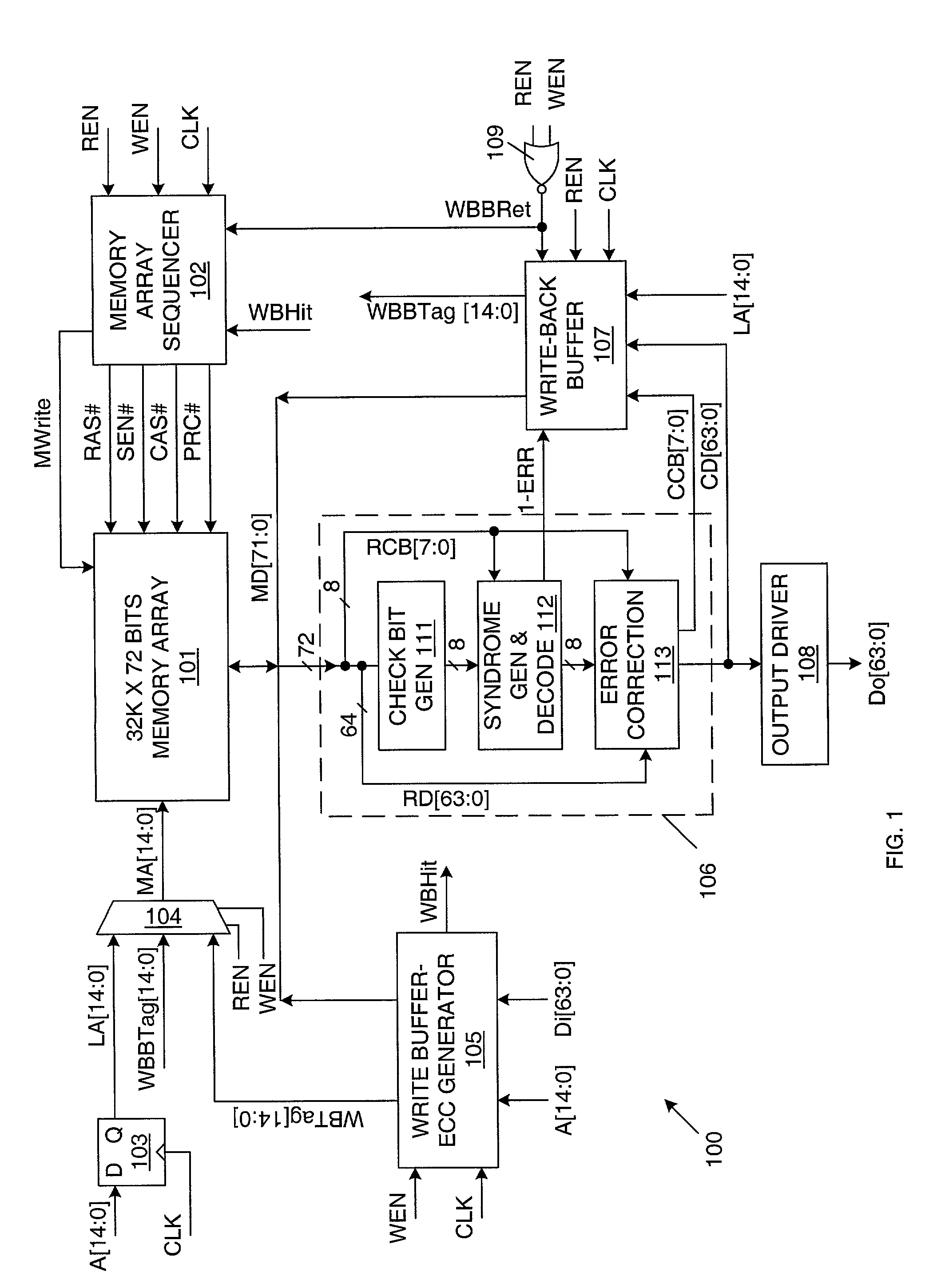

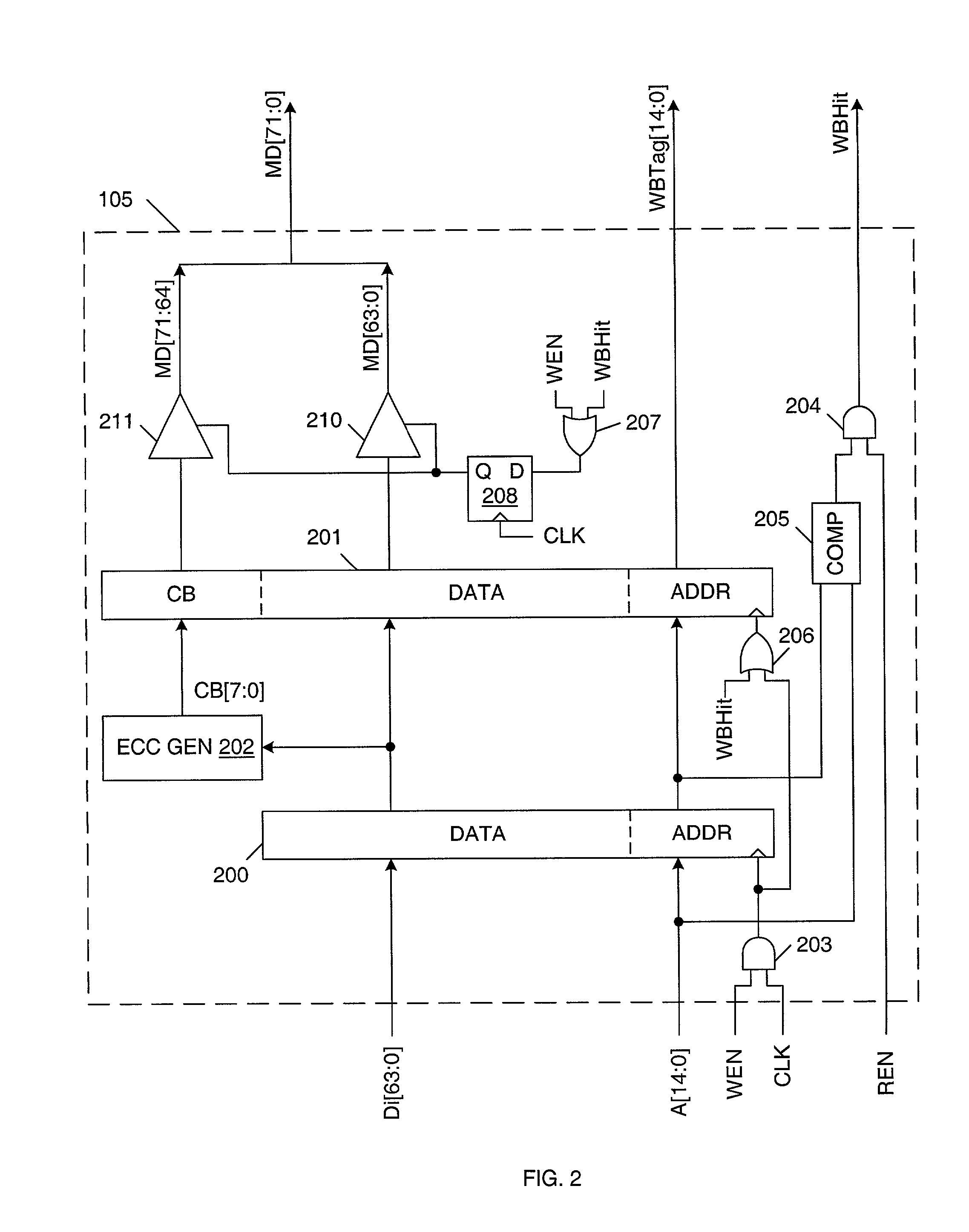

Error correcting memory and method of operating same

Inactive | US7051264B2 | Shorten the time | Eliminate the effects of | Code conversion | Coding details | Write buffer | External storage

A memory device that uses error correction code (ECC) circuitry to improve the reliability of the memory device in view of single-bit errors caused by hard failure or soft error. A write buffer is used to post write data, so that ECC generation and memory write array operation can be carried out in parallel. As a result there is no penalty in write latency or memory cycle time due to ECC generation. A write-back buffer is used to post corrected ECC words during read operations, so that write-back of corrected ECC words does not need to take place during the same cycle that data is read. Instead, write-back operations are performed during idle cycles when no external memory access is requested, such that the write back operation does not impose a penalty on memory cycle time or affect memory access latency.

Owner:MOSYS INC

Apparatus and method in a cached raid controller utilizing a solid state backup device for improving data availability time

Active | US7380055B2 | Shorten the time | Improve data availability | Energy efficient ICT | Digital data processing details | RAID | Control store

An apparatus for reducing data unavailability time after a loss of main power in a storage controller is described. The controller backs up its volatile memory containing posted-write data to a non-volatile memory upon detecting a loss of main power. The controller continues to provide battery power to the volatile memory to sustain the posted-write data. If the battery is able to supply power to the volatile memory until main power is restored, the controller forgoes restoring the posted-write data to the volatile memory from the non-volatile memory. By not incurring the restore time, which may be substantial if the volatile memory is large since read rates from volatile memories are typically slow, the data unavailability time is reduced. The selective restore feature is user-disableable and also includes a brown-out timer for allowing a user to specify how long to battery-power the volatile memory if the feature is enabled.

Owner:DOT HILL SYST

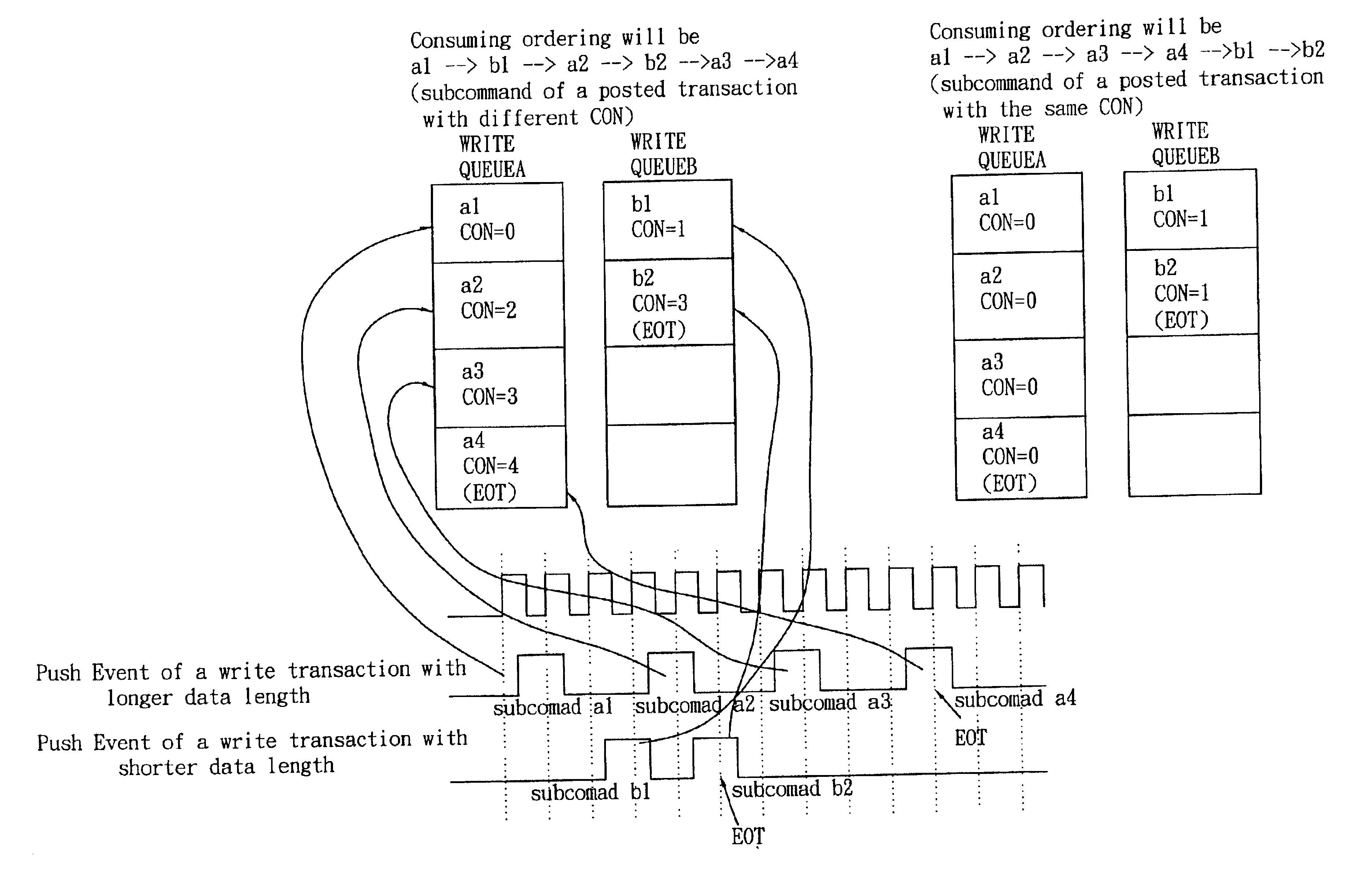

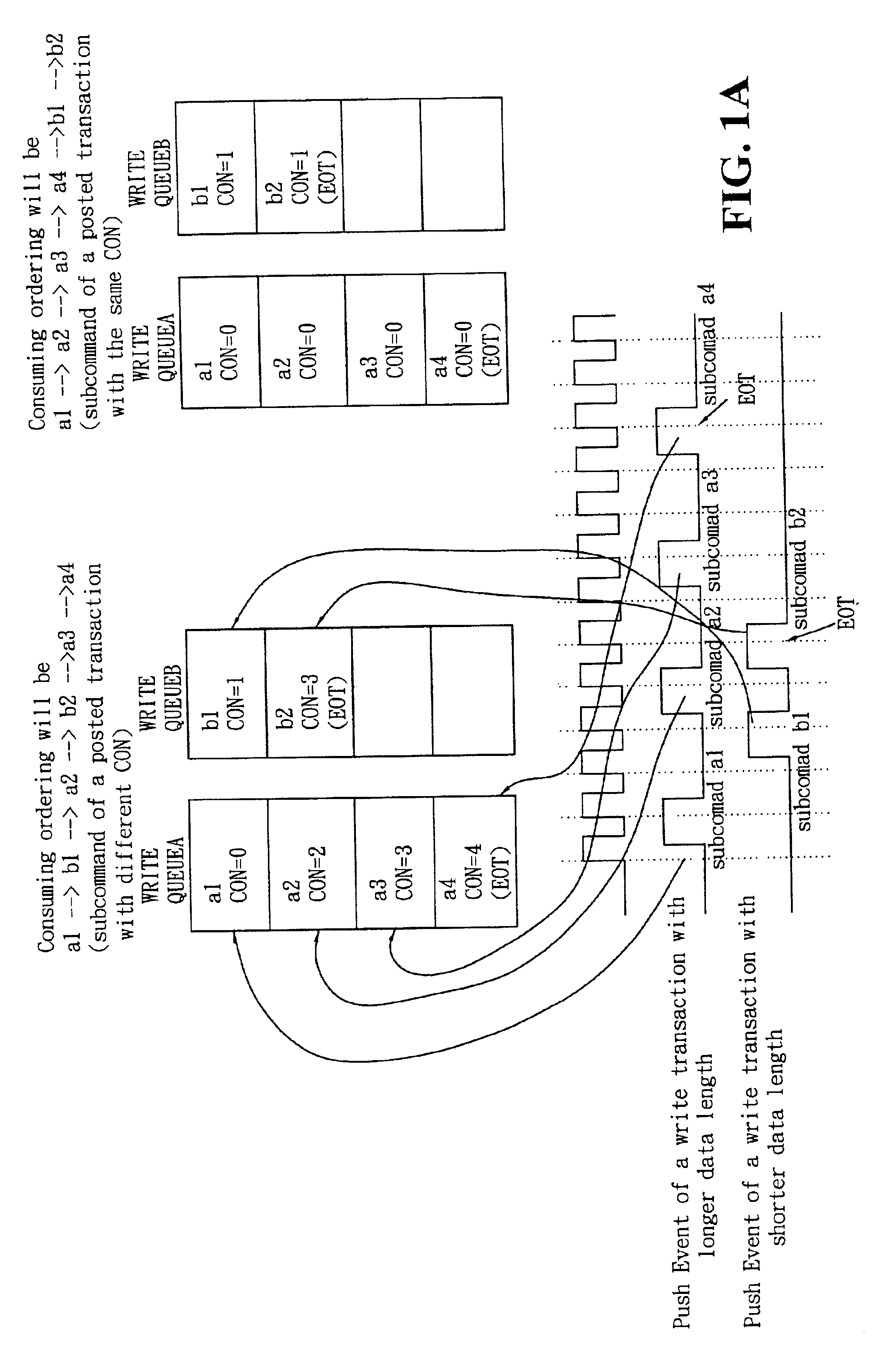

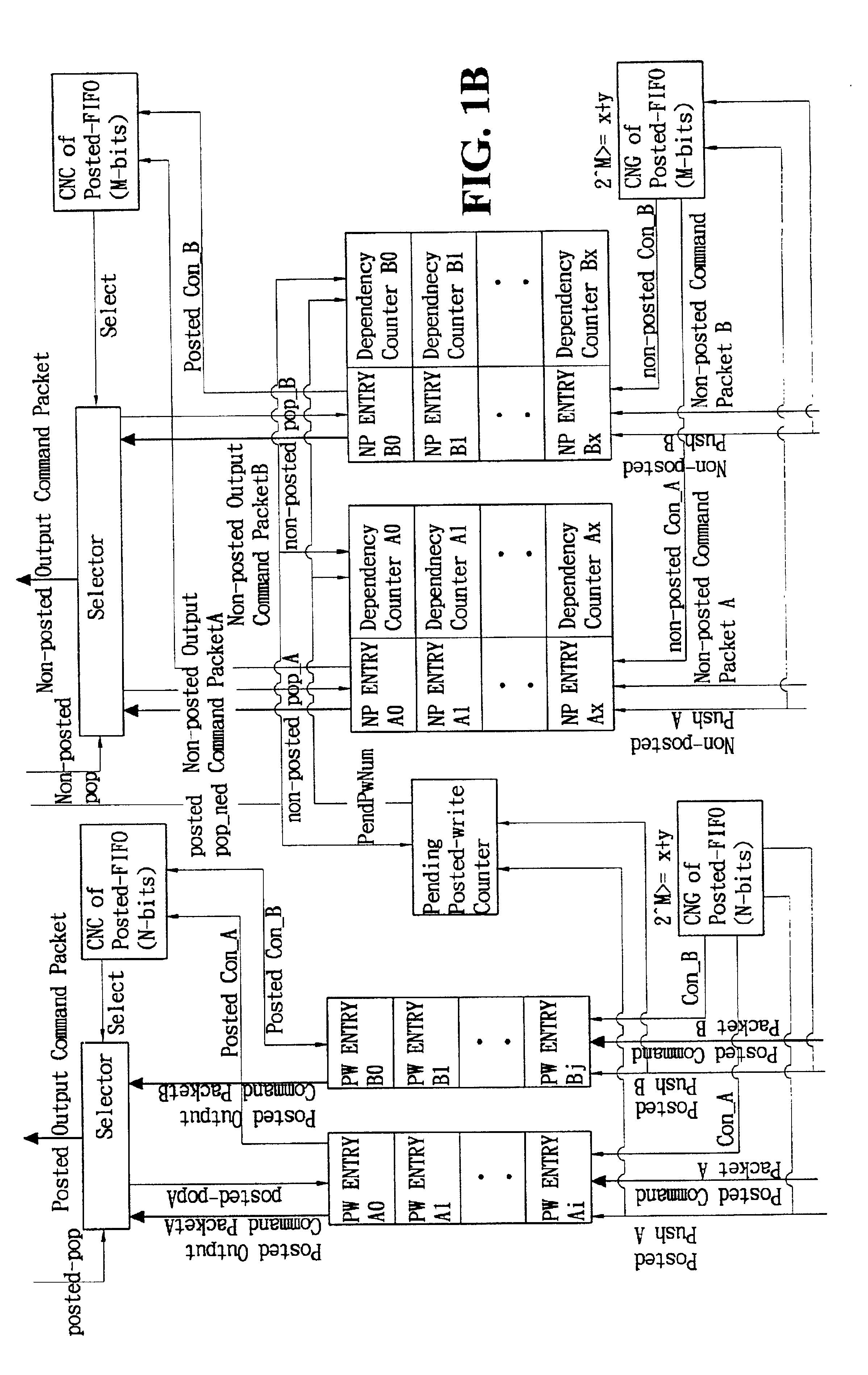

Command order maintenance scheme for multi-in/multi-out FIFO in multi-threaded I/O links

Inactive | US6862673B2 | Avoid loss | Overcomes drawback | Memory systems | Input/output processes for data processing | Number generator | Multiple input

A mechanism for maintaining the first-in first-out order of commands in a multiple-input and multiple-output buffer structure includes a command number generator for generating and assigning a command number to each command entering the buffer structure, and a command number comparator for comparing the command number of the outgoing command at each buffer in the buffer structure to determine which command should exit. Both the command number generator and the command comparator have a cyclic counter whose period is greater than or equal to the total number of allowable buffer entries in the buffer structure. For maintaining order of posted and non-posted command queues, a pending posted write counter is used in the posted command queue to record the number of pending posted write commands, and each entry in the non-posted command queue is associated with a dependency counter.

Owner:SILICON INTEGRATED SYSTEMS
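The command-number scheme in this abstract can be sketched with two cyclic counters, one on the input side and one on the output side. This is a minimal model under assumptions: `OrderedMultiFifo` and its methods are hypothetical names, the period is set equal to the total capacity, and the posted and non-posted dependency counters are not modeled.

```python
from collections import deque


class OrderedMultiFifo:
    """Several FIFO buffers that together release commands in global arrival order."""

    def __init__(self, num_buffers, depth):
        self.buffers = [deque() for _ in range(num_buffers)]
        self.depth = depth
        self.period = num_buffers * depth  # cyclic period >= total allowable entries
        self.next_number = 0               # command number generator
        self.expected = 0                  # command number comparator

    def push(self, buffer_index, command):
        buffer = self.buffers[buffer_index]
        if len(buffer) >= self.depth:
            raise OverflowError("buffer is full")
        buffer.append((self.next_number, command))
        self.next_number = (self.next_number + 1) % self.period

    def pop(self):
        # Only the buffer whose head carries the expected number may release it.
        for buffer in self.buffers:
            if buffer and buffer[0][0] == self.expected:
                self.expected = (self.expected + 1) % self.period
                return buffer.popleft()[1]
        return None  # structure is empty
```

The period requirement matters because command numbers are reused after wrap-around: with a period at least as large as the total capacity, no two commands in flight can share a number, so the comparator always finds a unique match.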

Non-volatile memory and method with accelerated post-write read using combined verification of multiple pages

A post-write read operation, using a combined verification of multiple pages of data, is presented. In a simultaneous verification of multiple pages in a block, the controller evaluates a combined function of the multiple pages, instead of evaluating each page separately. In one exemplary embodiment, the combined function is formed by XORing the pages together. Such a combined verification of multiple pages based on the read data can significantly reduce the controller involvement, lowering the required bus and ECC bandwidth for a post-write read and hence allow efficient post-write reads when the number of dies is large.

Owner:SANDISK TECH LLC
