136 results for "Multimodal data" patented technology

A multimodel database is a data processing platform that supports multiple data models, which define the parameters for how the information in a database is organized and arranged. Being able to incorporate multiple models into a single database lets information technology (IT) teams and other users meet various application requirements without...

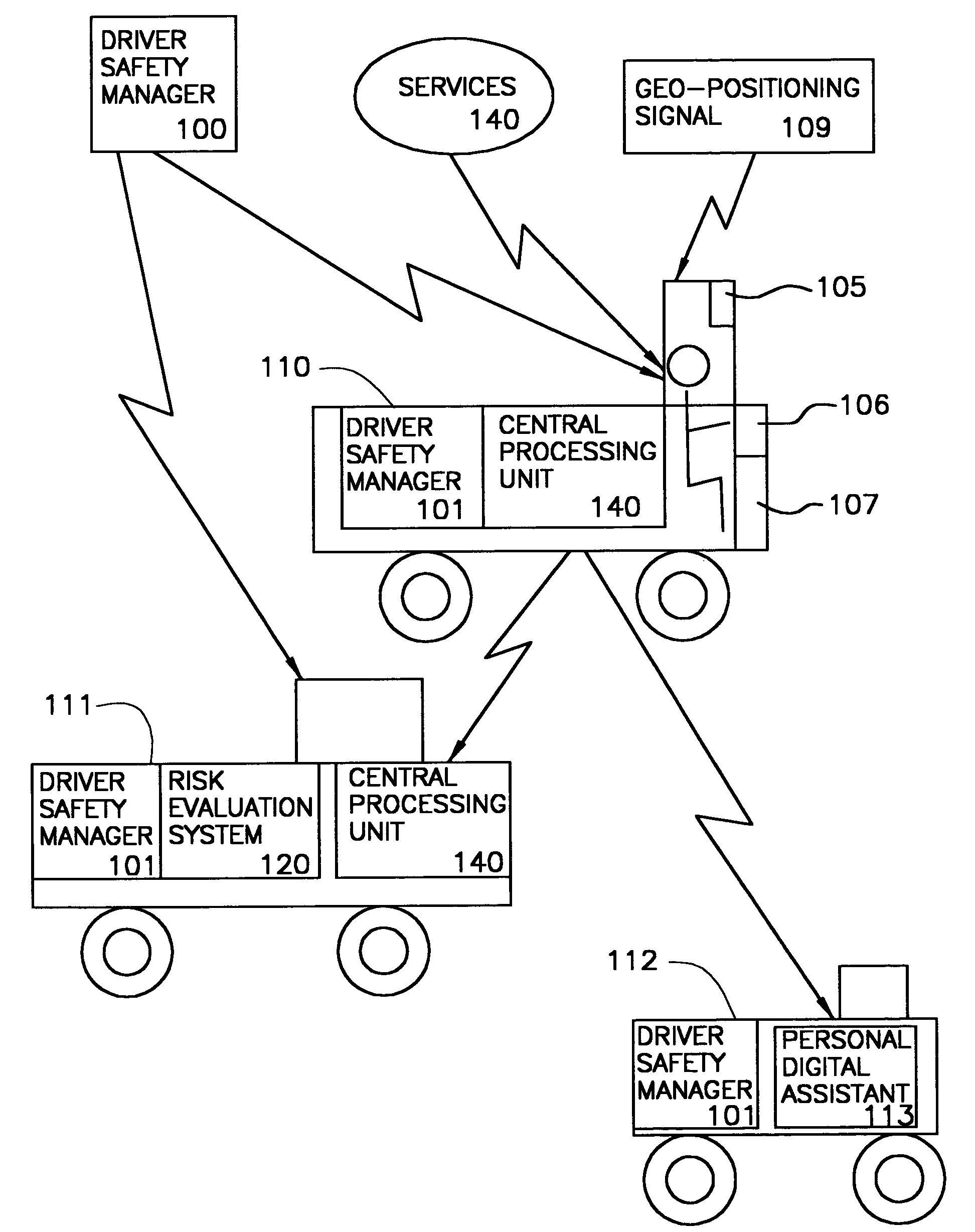

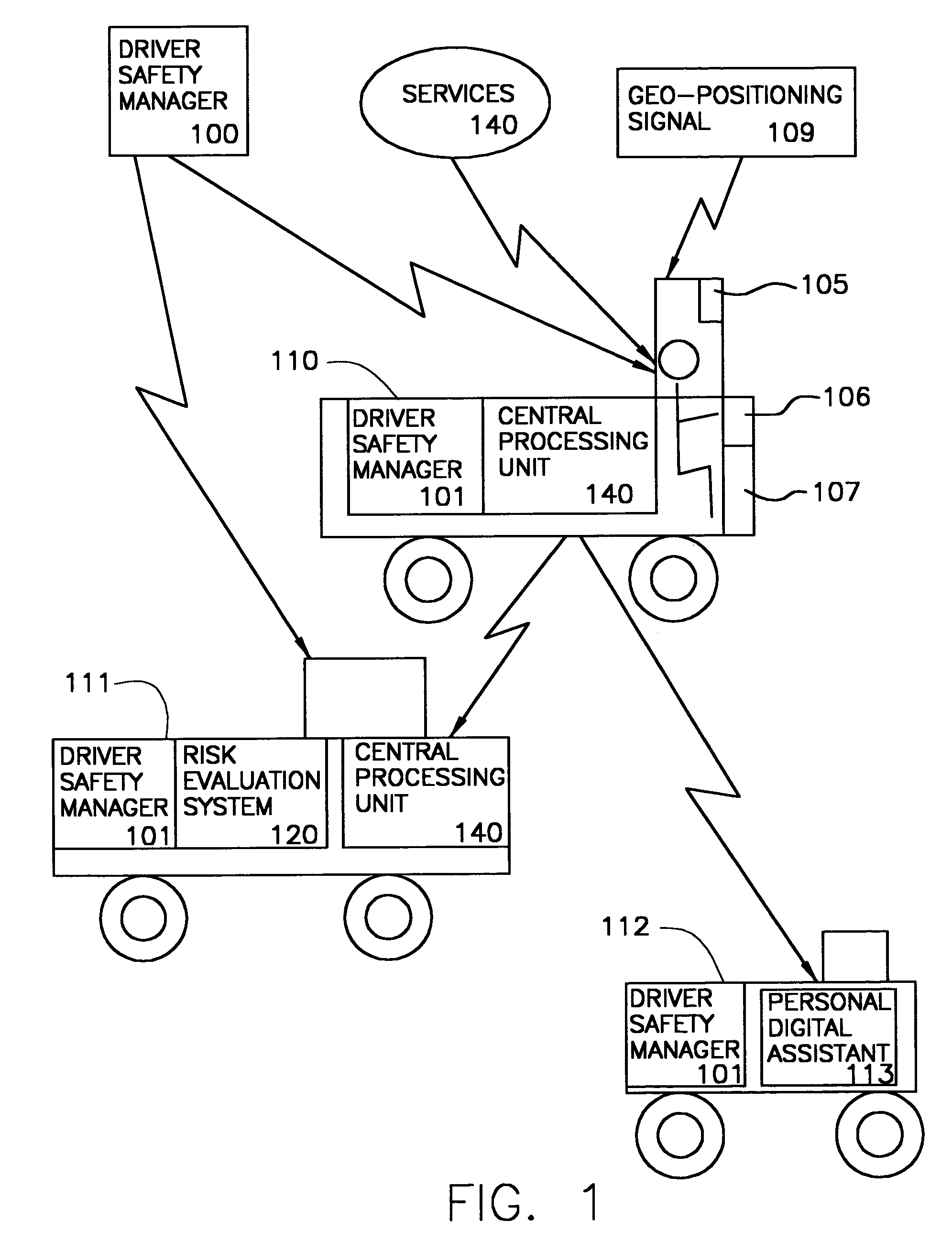

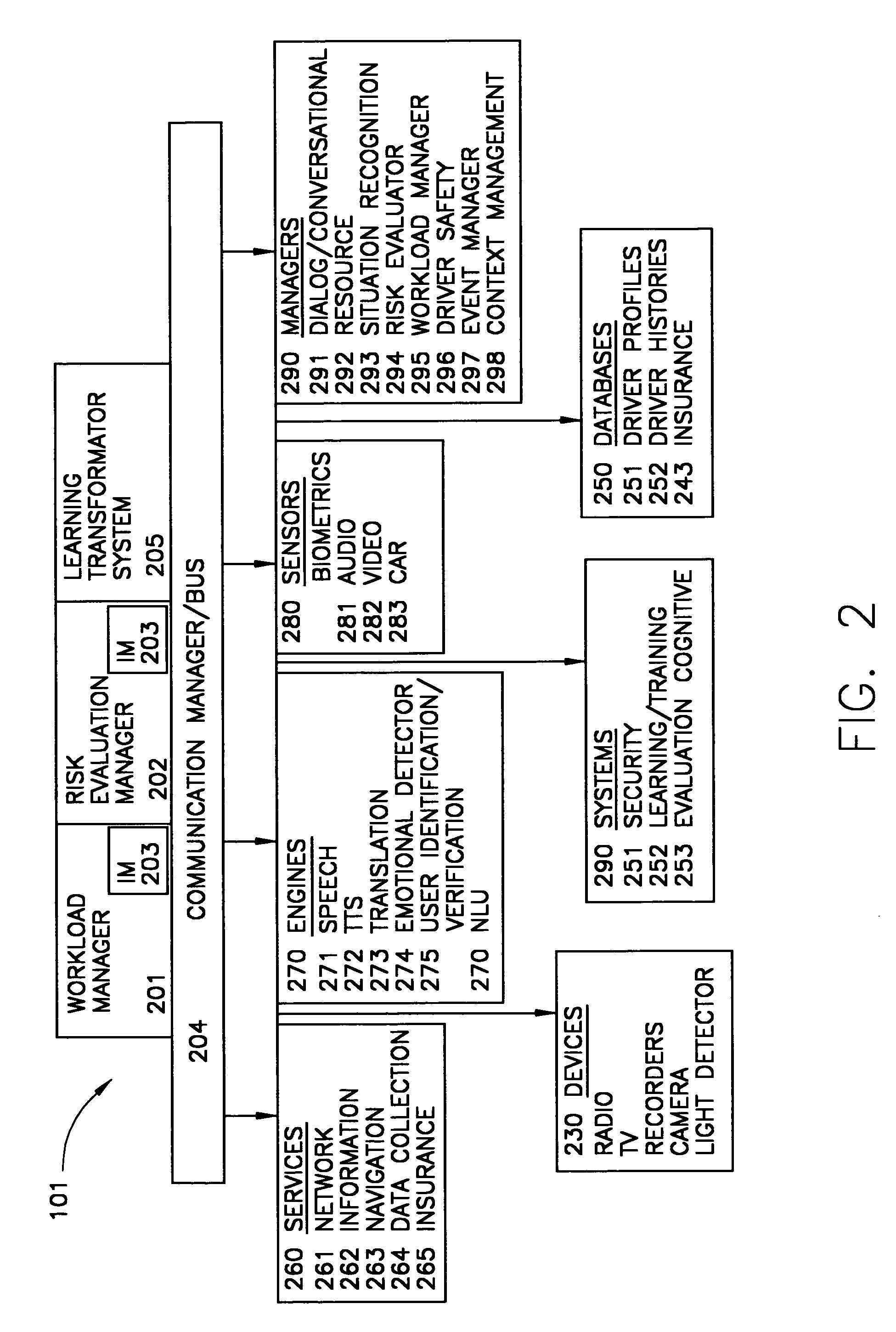

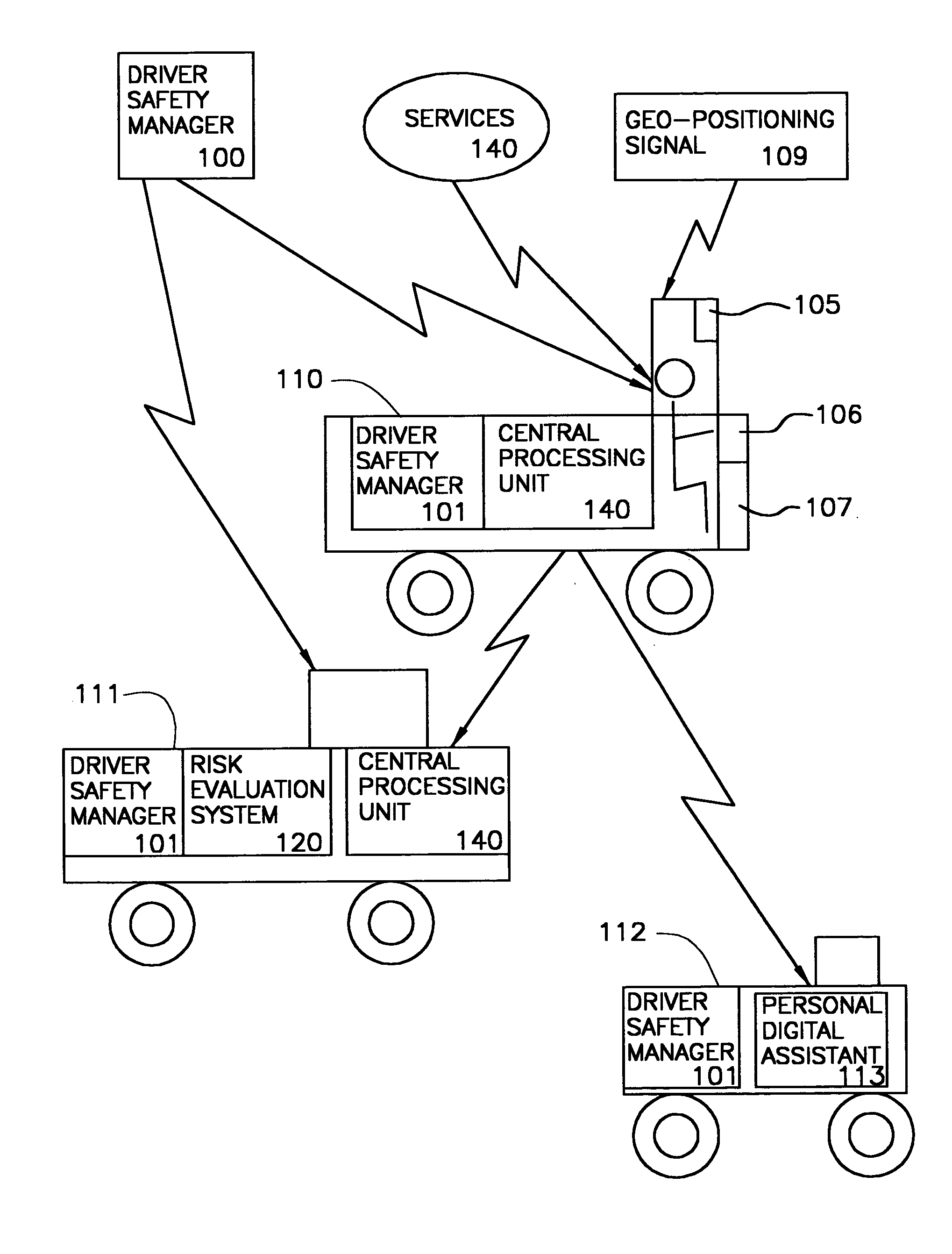

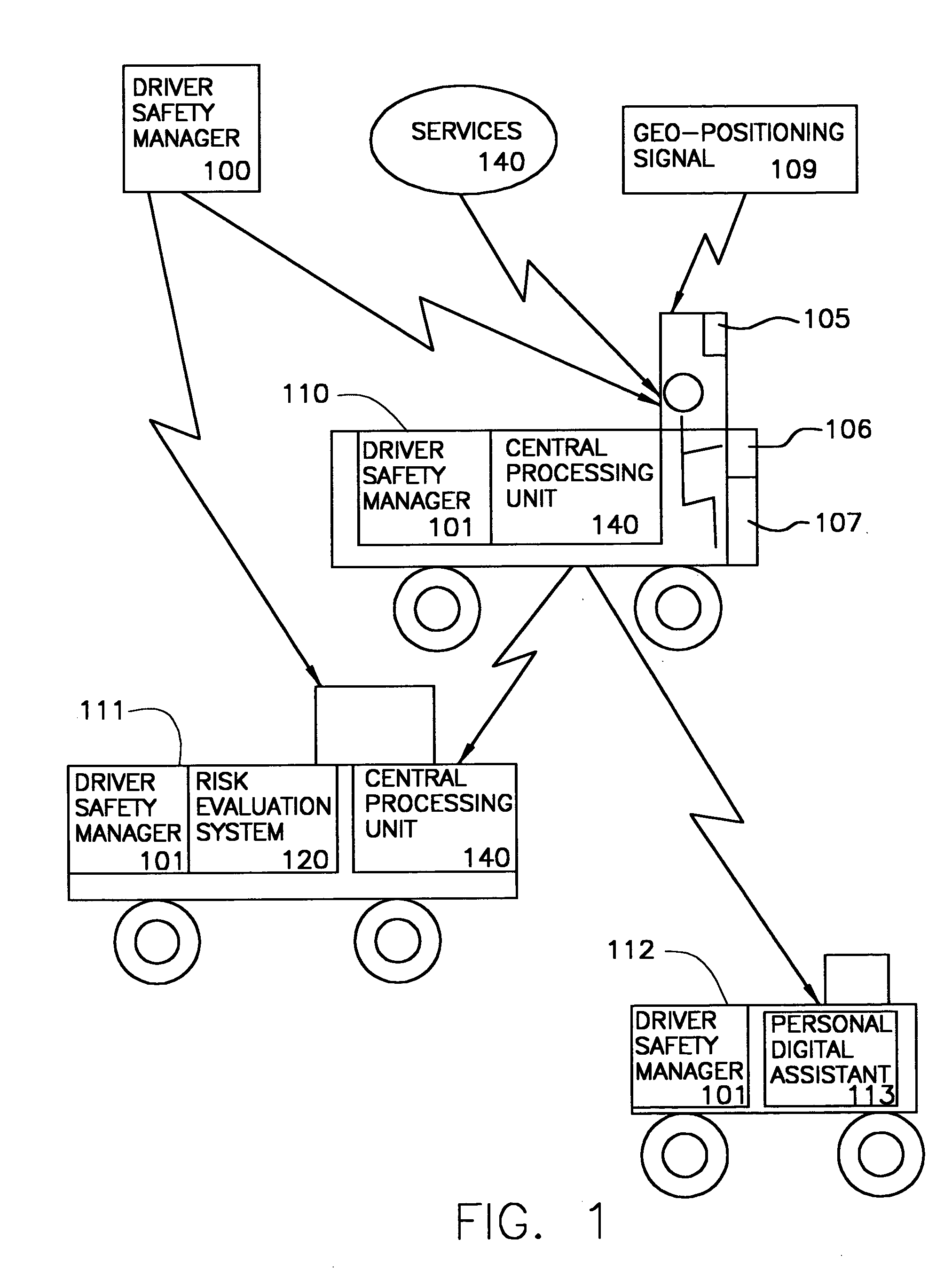

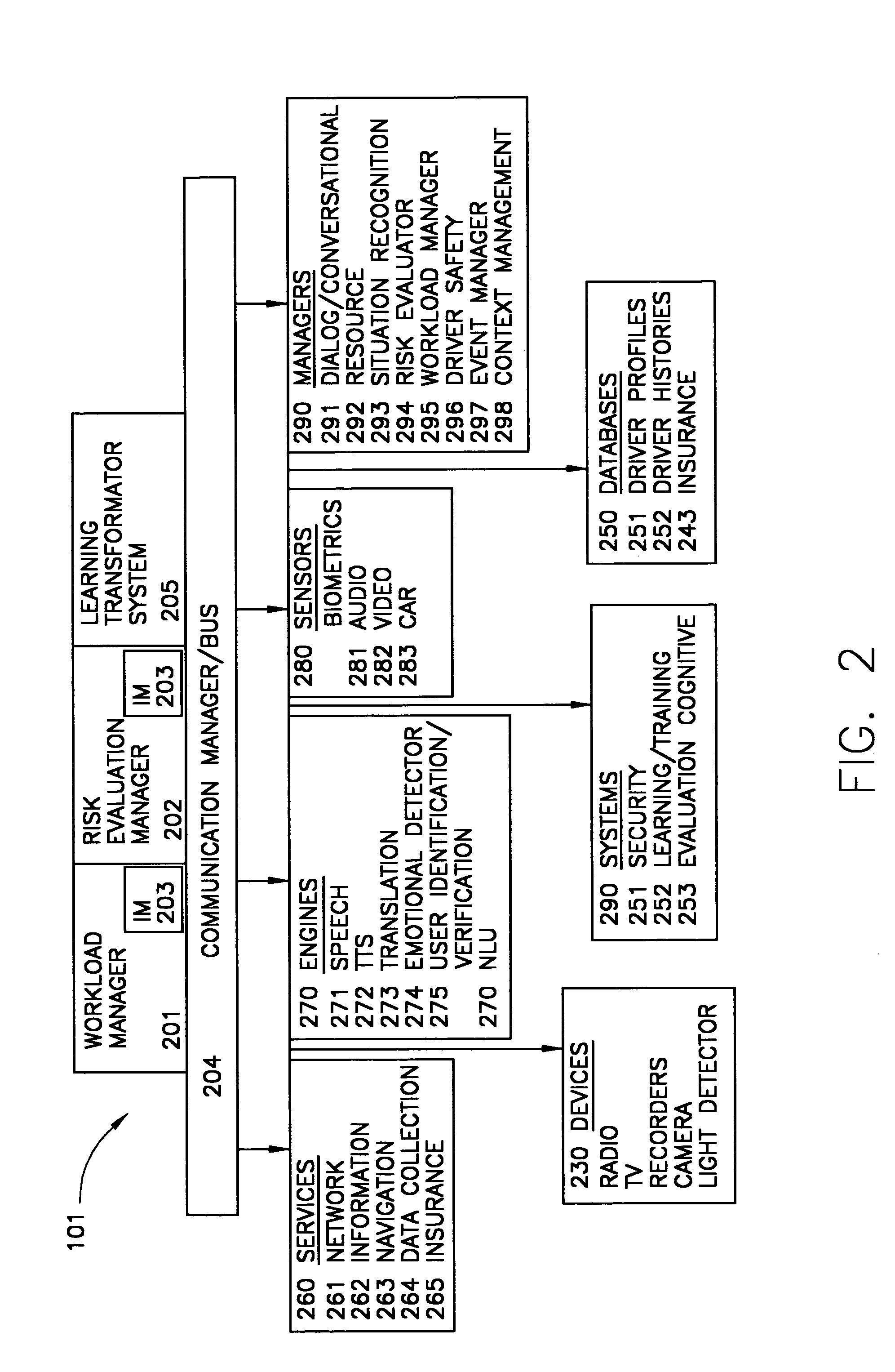

Driver safety manager

Active | US7349782B2 | Vehicle testing; Registering/indicating working of vehicles; Driver/operator; Multimodal data

A unified approach that permits the consideration of different issues and problems that affect driving safety. Particularly, there is proposed herein the creation of a driver safety manager (DSM). The driver safety manager embraces numerous different factors, multimodal data, processes, internal and external systems and the like associated with driving.

Owner:WAYMO LLC

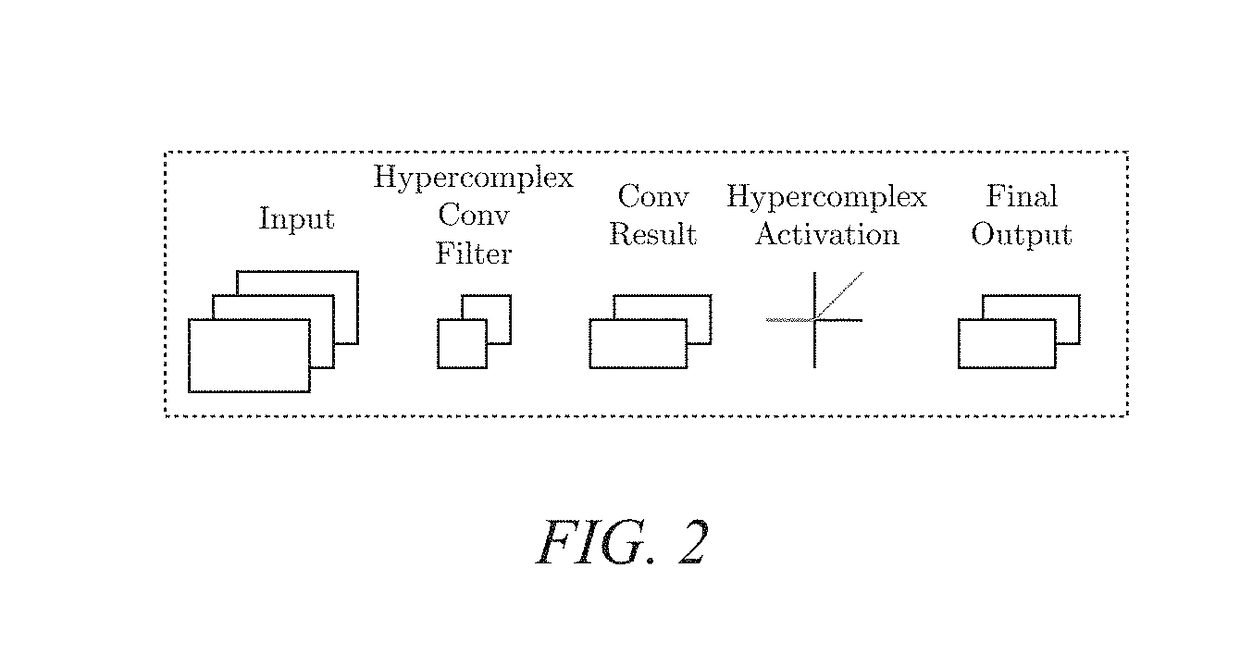

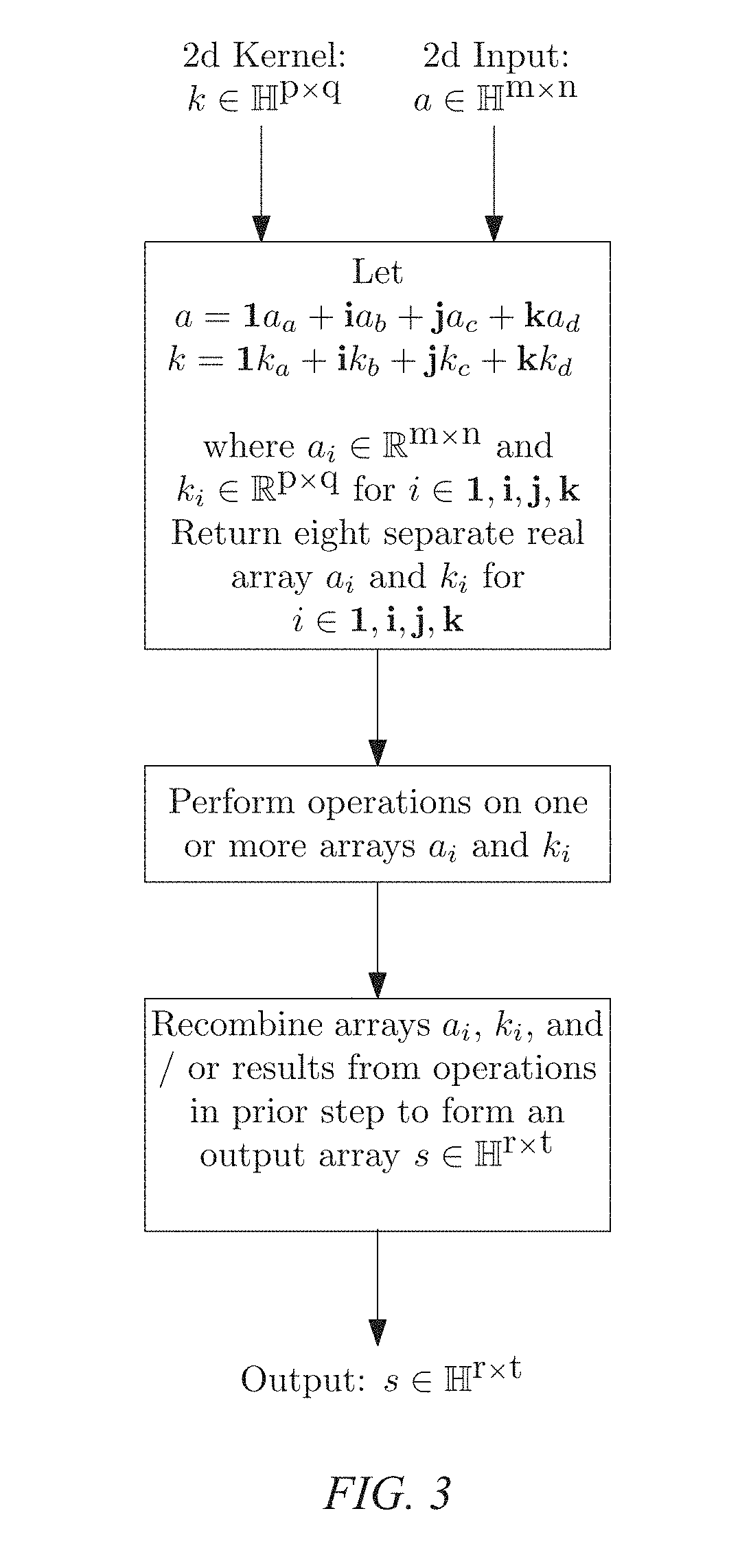

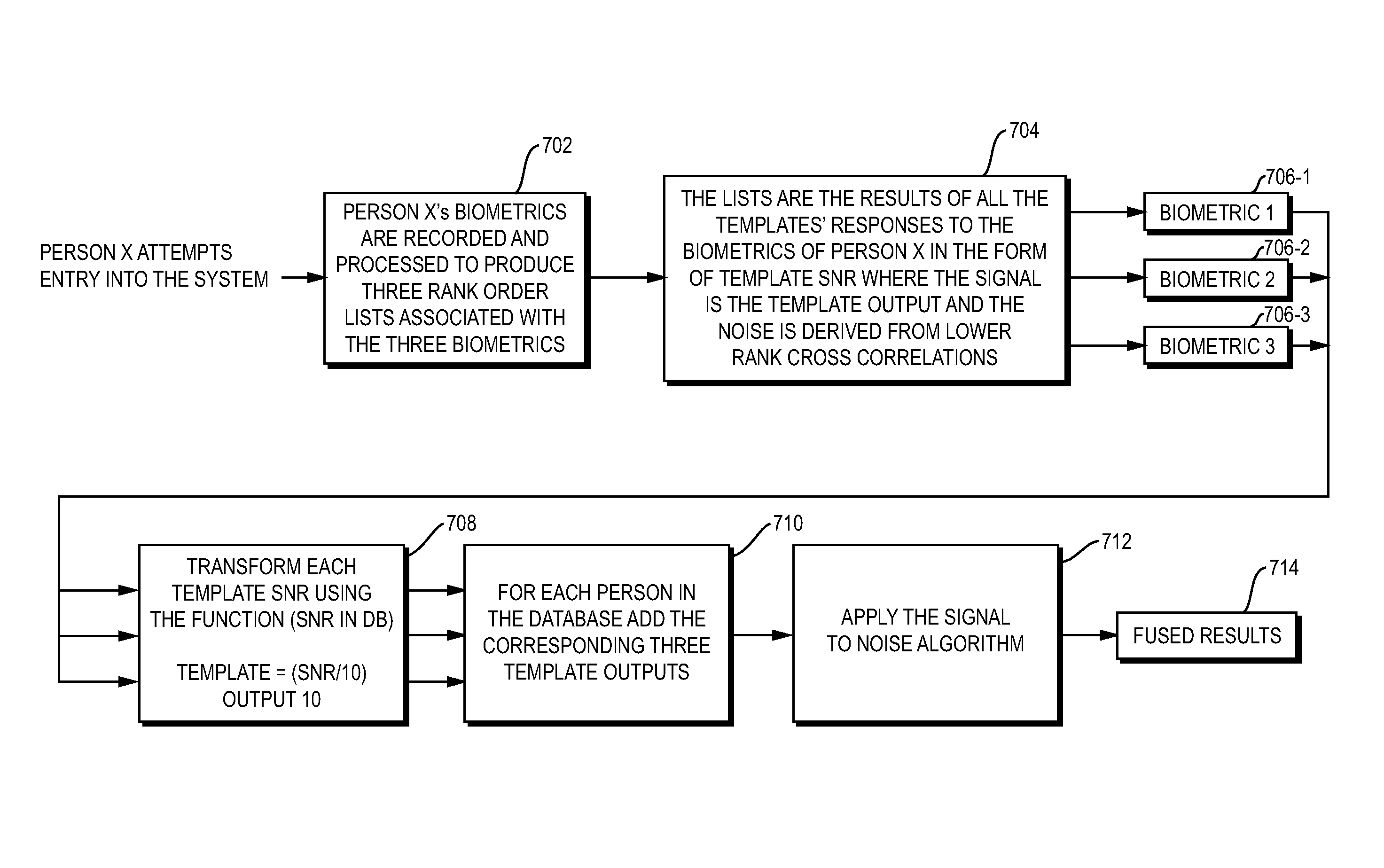

Hypercomplex deep learning methods, architectures, and apparatus for multimodal small, medium, and large-scale data representation, analysis, and applications

A method and system for creating hypercomplex representations of data includes, in one exemplary embodiment, at least one set of training data with associated labels or desired response values, transforming the data and labels into hypercomplex values, methods for defining hypercomplex graphs of functions, training algorithms to minimize the cost of an error function over the parameters in the graph, and methods for reading hierarchical data representations from the resulting graph. Another exemplary embodiment learns hierarchical representations from unlabeled data. In another exemplary embodiment, the method and system may be employed for biometric identity verification by combining multimodal data collected using many sensors, including, for example, anatomical characteristics, behavioral characteristics, demographic indicators, and artificial characteristics. In other exemplary embodiments, the system and method may learn hypercomplex function approximations in one environment and transfer the learning to other target environments. Other exemplary applications of the hypercomplex deep learning framework include: image segmentation; image quality evaluation; image steganalysis; face recognition; event embedding in natural language processing; machine translation between languages; object recognition; medical applications such as breast cancer mass classification; multispectral imaging; audio processing; color image filtering; and clothing identification.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST
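
The abstract does not say which hypercomplex algebra is used. As a minimal sketch, assuming quaternions (a common four-dimensional hypercomplex system), the core operation of such a network is the Hamilton product, which lets a single weight act jointly on several channels of an input. The `Quaternion` class and `quaternion_neuron` function are hypothetical illustrations, not the patentee's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quaternion:
    """Hypercomplex number w + x*i + y*j + z*k (assumed algebra)."""
    w: float
    x: float
    y: float
    z: float

    def __add__(self, other: "Quaternion") -> "Quaternion":
        return Quaternion(self.w + other.w, self.x + other.x,
                          self.y + other.y, self.z + other.z)

    def __mul__(self, other: "Quaternion") -> "Quaternion":
        # Hamilton product; it is non-commutative (i*j = k, but j*i = -k).
        a1, b1, c1, d1 = self.w, self.x, self.y, self.z
        a2, b2, c2, d2 = other.w, other.x, other.y, other.z
        return Quaternion(
            a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
            a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
            a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
            a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
        )


def quaternion_neuron(weights, inputs, bias):
    """Hypothetical unit: bias plus the sum of weight * input products."""
    total = bias
    for w, x in zip(weights, inputs):
        total = total + w * x
    return total


# Encode an RGB pixel as the pure quaternion (0, r, g, b) so that one
# quaternion weight mixes all three color channels at once.
pixel = Quaternion(0.0, 0.8, 0.2, 0.1)
weight = Quaternion(0.5, 0.0, 0.0, 0.5)
out = quaternion_neuron([weight], [pixel], Quaternion(0.0, 0.0, 0.0, 0.0))
print(out)
```

A trainable layer would add a nonlinearity and learn the weights by gradient descent; this only shows the forward arithmetic.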

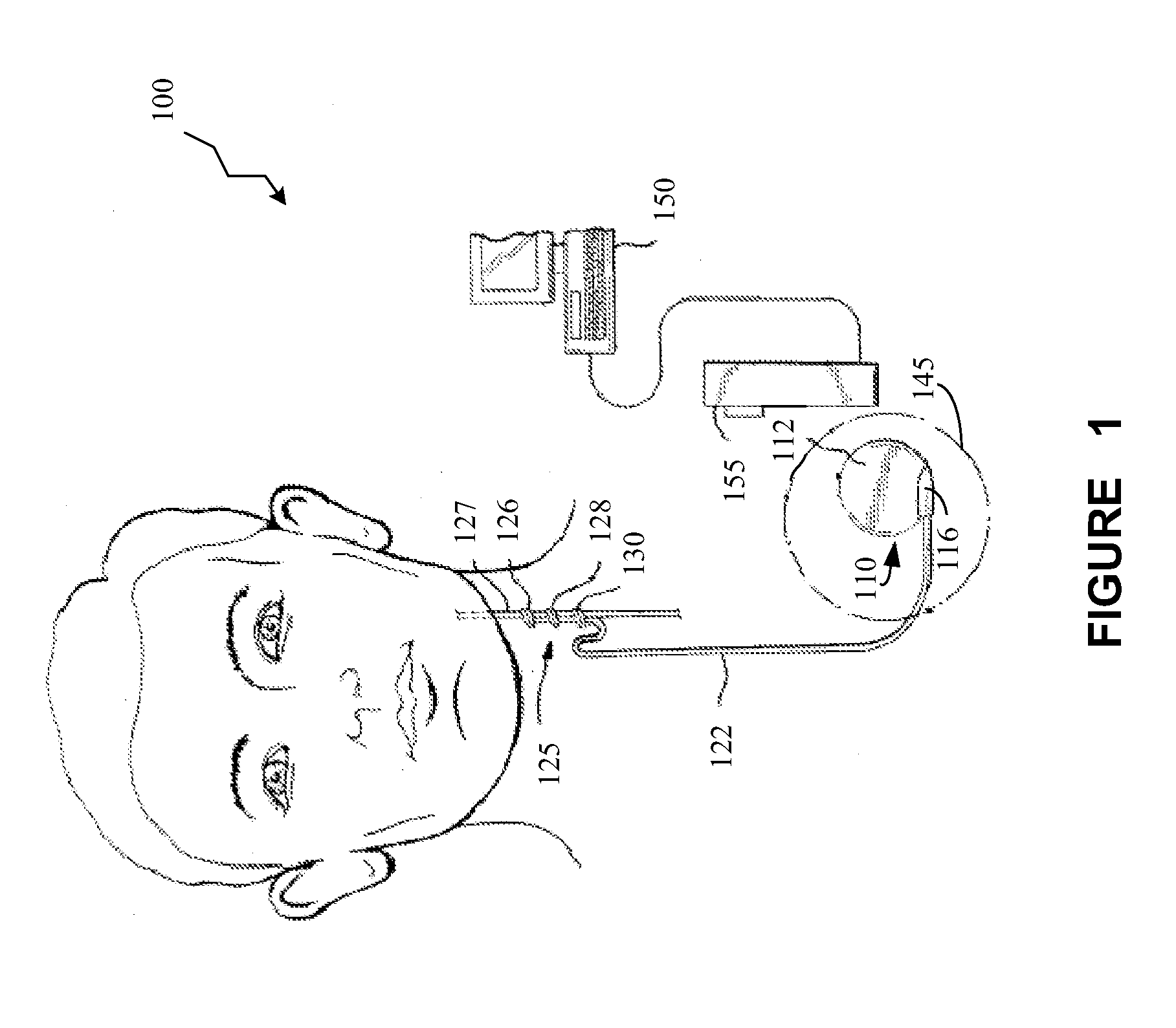

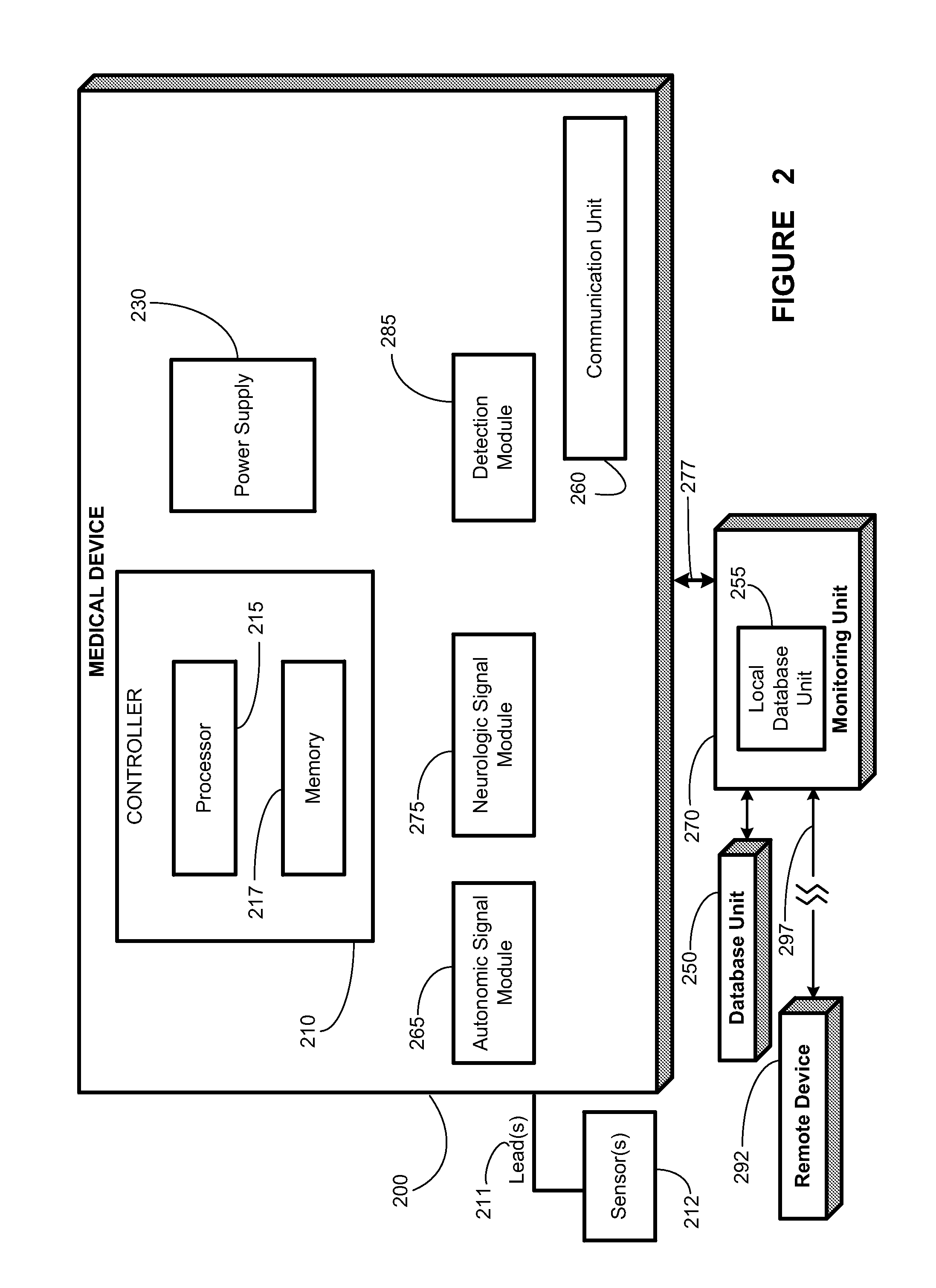

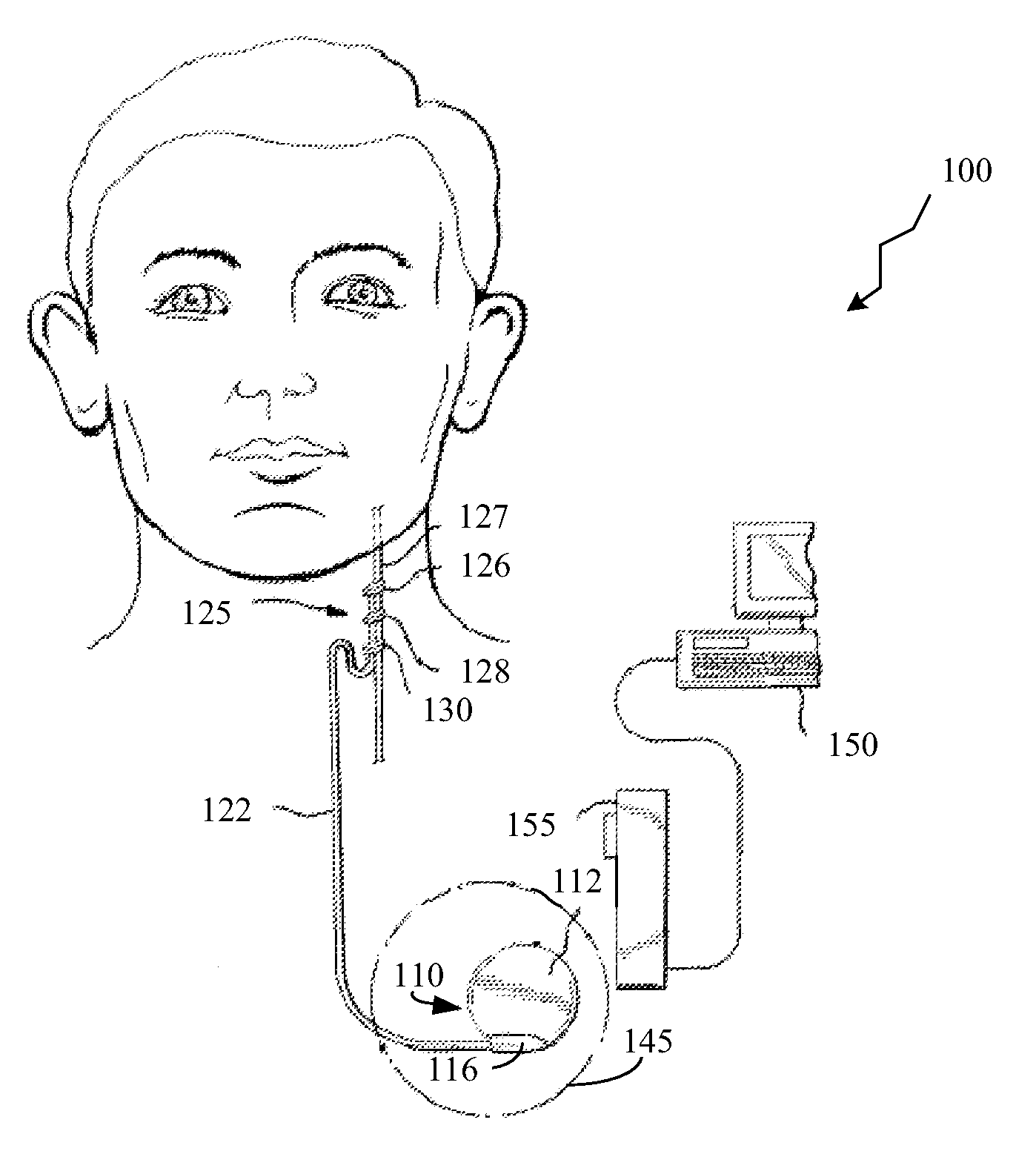

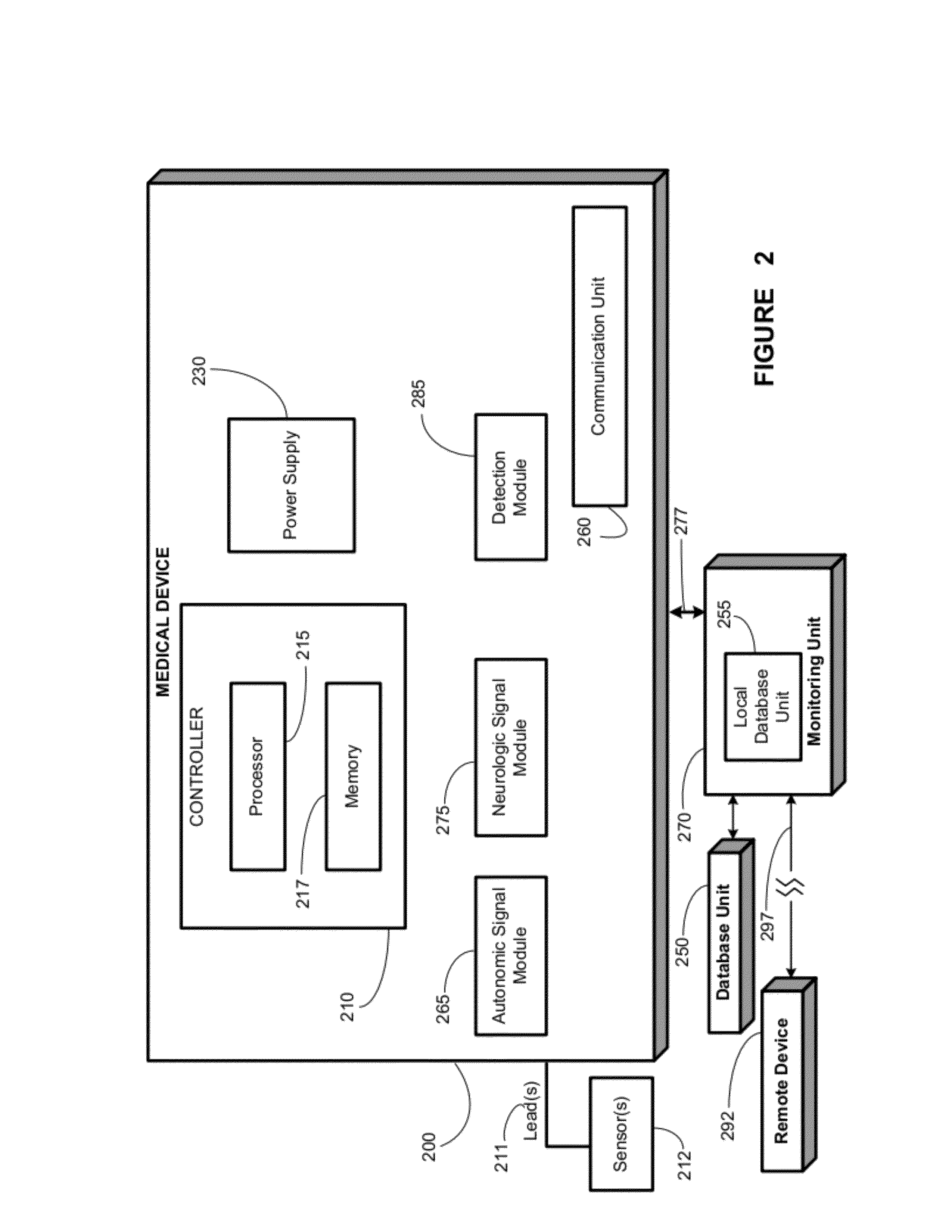

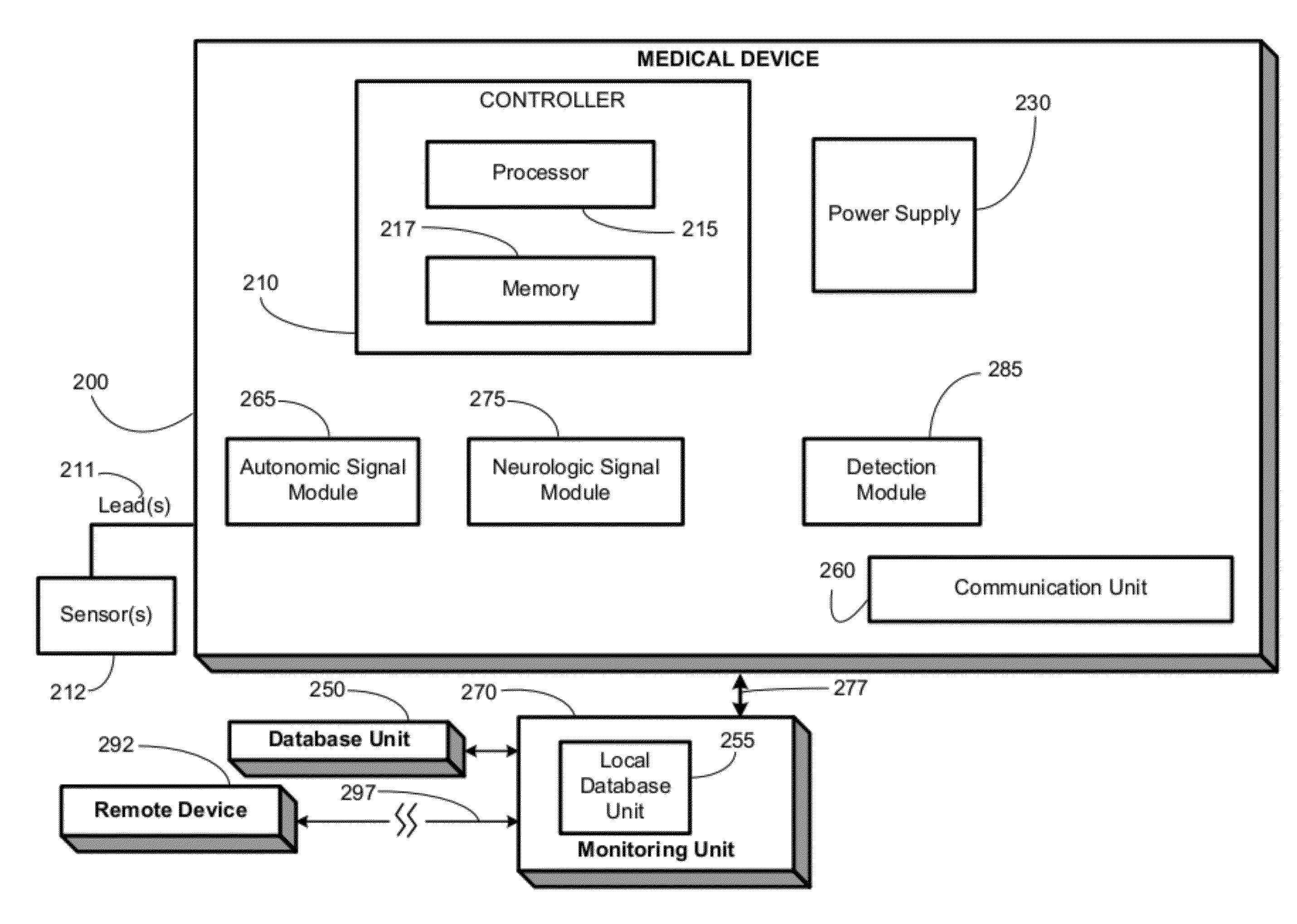

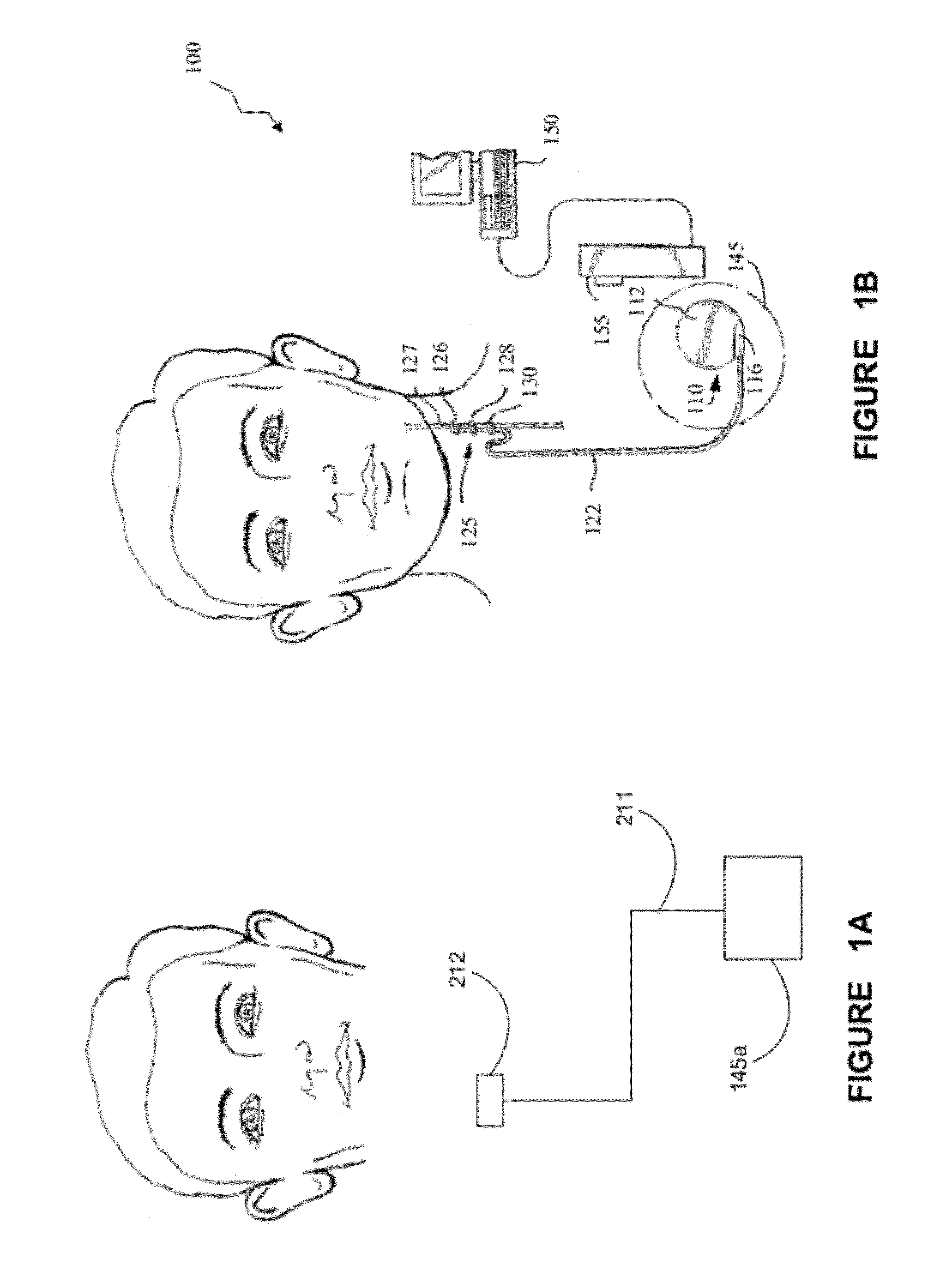

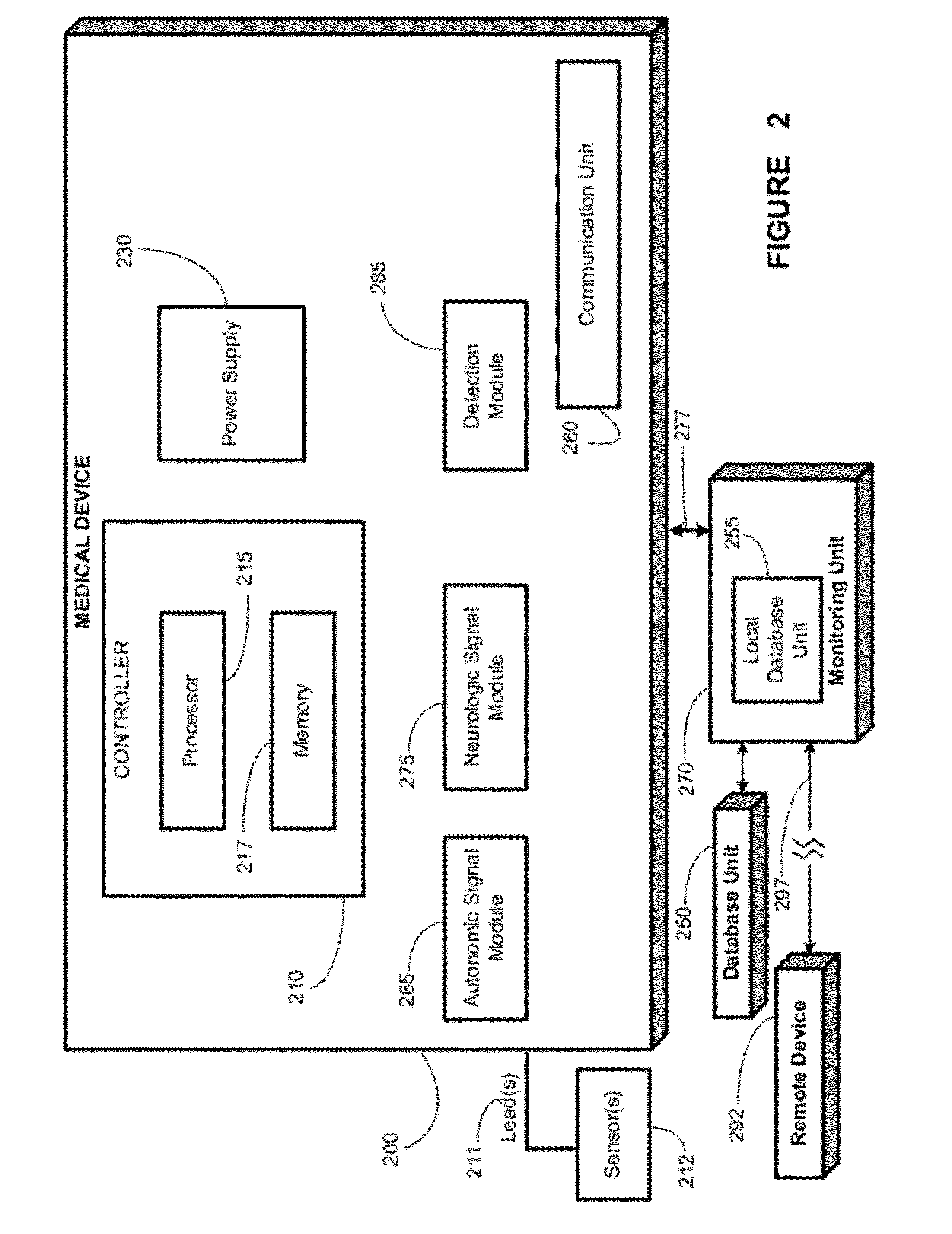

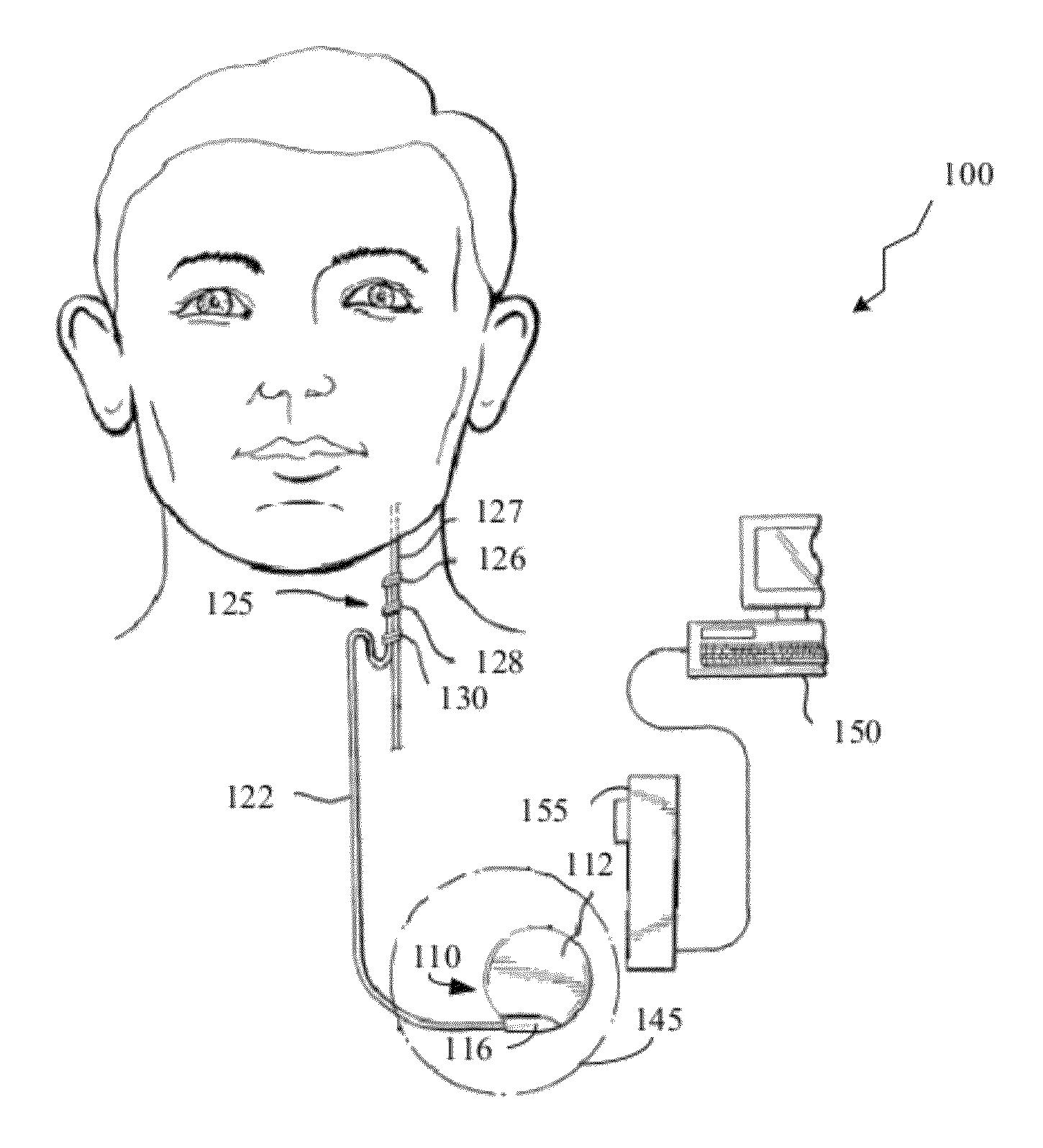

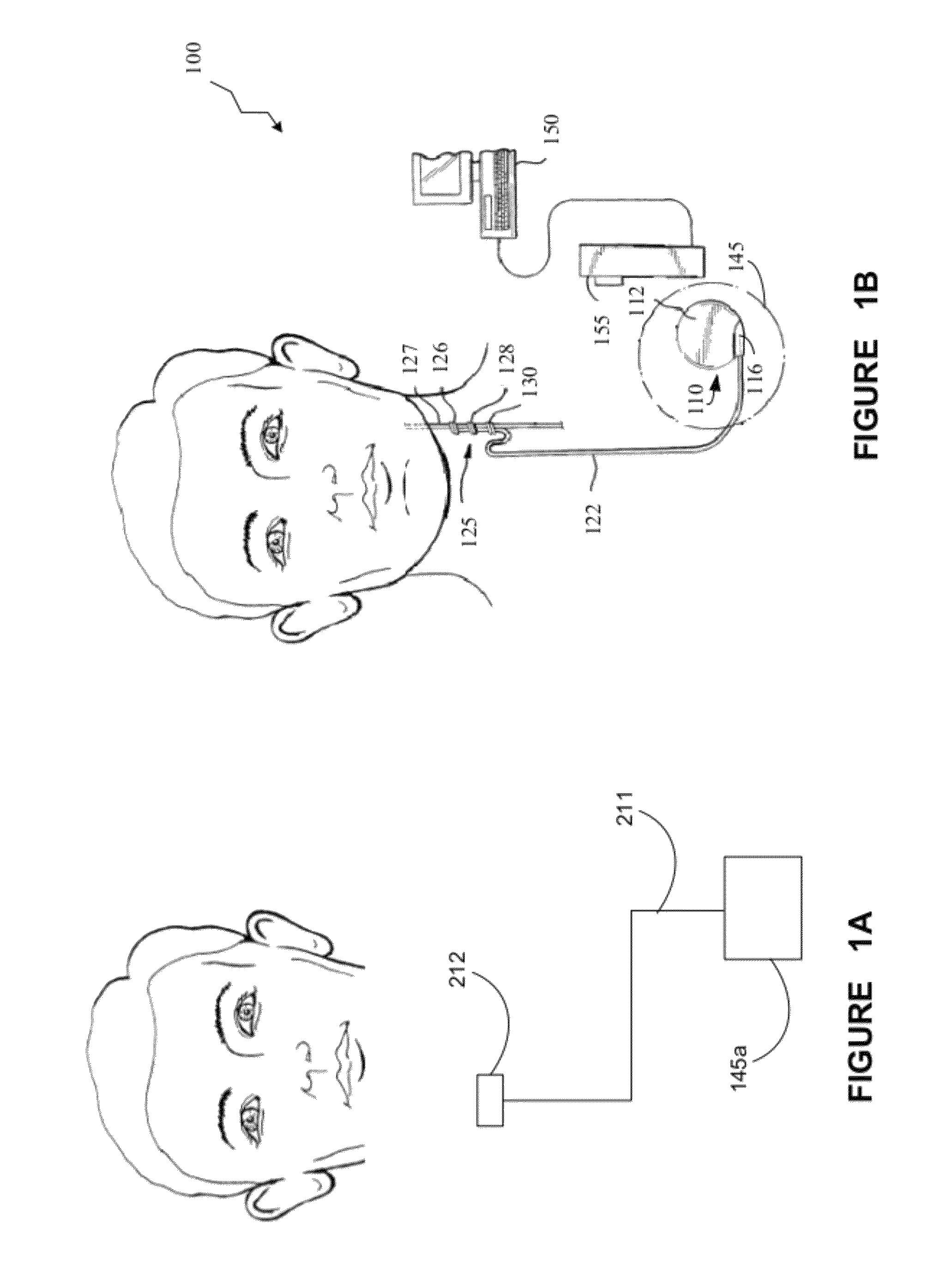

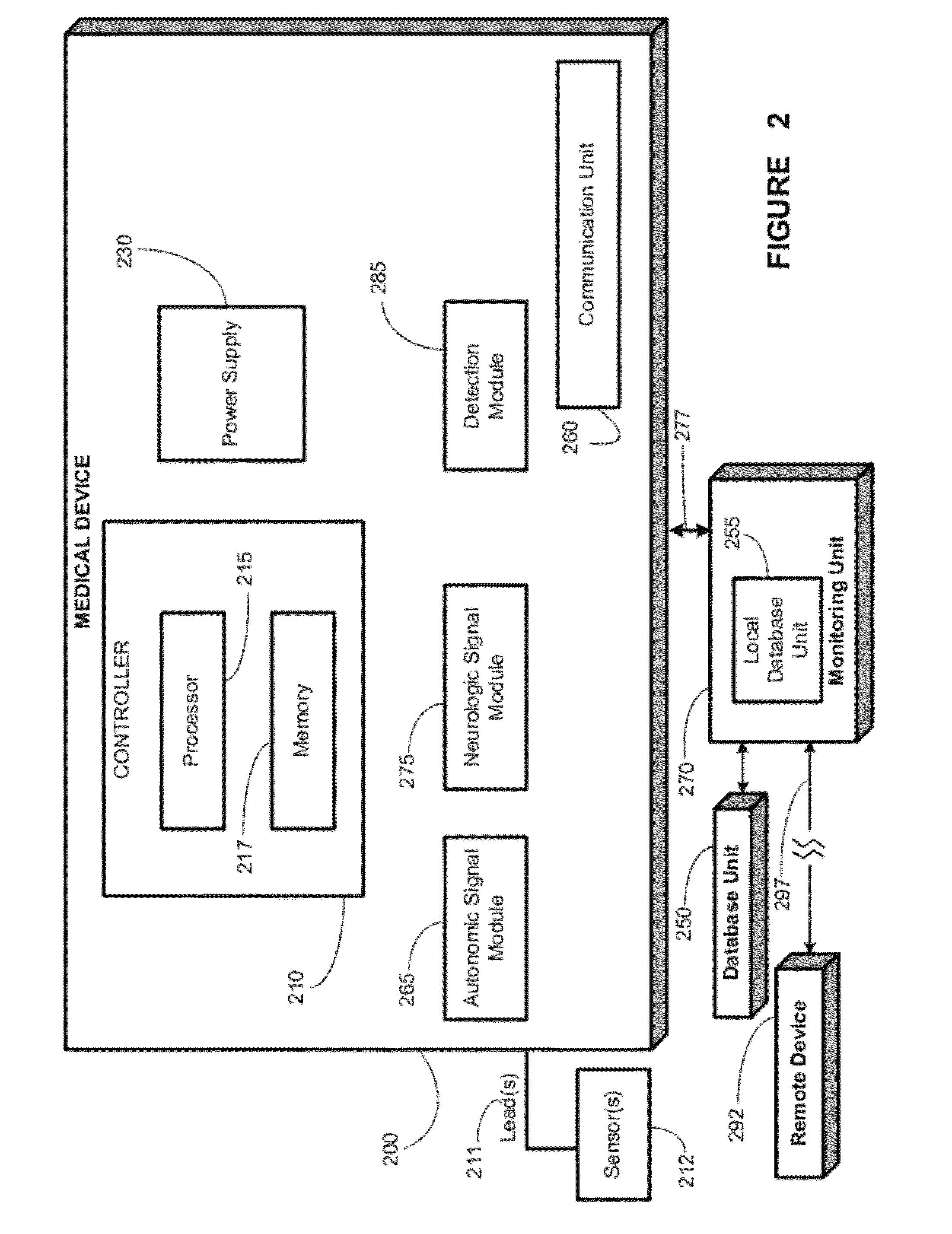

Detecting, quantifying, and/or classifying seizures using multimodal data

Active | US20120083700A1 | Decrease in carbon dioxide content; Electroencephalography; Electrotherapy; Medical equipment; Medicine

Methods, systems, and apparatus for detecting an epileptic event, for example, a seizure in a patient using a medical device. The determination is performed by providing an autonomic signal indicative of the patient's autonomic activity; providing a neurologic signal indicative of the patient's neurological activity; and detecting an epileptic event based upon the autonomic signal and the neurologic signal.

Owner:FLINT HILLS SCI L L C
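
The detection rule in this abstract combines an autonomic signal with a neurologic signal. A minimal sketch, assuming heart rate as the autonomic signal, an EEG band-power trace as the neurologic signal, and an illustrative rule that flags an event only when both rise above their recent baseline by fixed relative thresholds. The function names and threshold values are hypothetical; they are neither clinical values nor taken from the patent.

```python
from statistics import mean


def relative_change(window, baseline):
    """(mean(window) - mean(baseline)) / mean(baseline); baseline mean must be nonzero."""
    base = mean(baseline)
    return (mean(window) - base) / base


def detect_epileptic_event(heart_rate, eeg_power, baseline_len=5,
                           window_len=3, hr_threshold=0.2, eeg_threshold=1.0):
    """Return sample indices at which BOTH signals indicate an event.

    heart_rate: autonomic signal, e.g. beats per minute per sample.
    eeg_power:  neurologic signal, e.g. EEG band power per sample.
    Each window is compared with the baseline_len samples just before it.
    All thresholds are illustrative assumptions, not clinical values.
    """
    detections = []
    for end in range(baseline_len + window_len, len(heart_rate) + 1):
        start = end - window_len
        hr_base = heart_rate[start - baseline_len:start]
        eeg_base = eeg_power[start - baseline_len:start]
        autonomic = relative_change(heart_rate[start:end], hr_base) > hr_threshold
        neurologic = relative_change(eeg_power[start:end], eeg_base) > eeg_threshold
        if autonomic and neurologic:
            detections.append(end - 1)  # index of the window's last sample
    return detections


heart_rate = [70, 70, 70, 70, 70, 70, 90, 95, 100]
eeg_power = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 3.0, 3.5, 4.0]
print(detect_epileptic_event(heart_rate, eeg_power))  # [7, 8]
```

Because the rule requires agreement between the two signals, a heart-rate rise alone (for example, from exercise) is not flagged.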

Driver safety manager

Active | US20050192730A1 | Vehicle testing; Registering/indicating working of vehicles; Driver/operator; Multimodal data

A unified approach that permits the consideration of different issues and problems that affect driving safety. Particularly, there is proposed herein the creation of a driver safety manager (DSM). The driver safety manager embraces numerous different factors, multimodal data, processes, internal and external systems and the like associated with driving.

Owner:WAYMO LLC

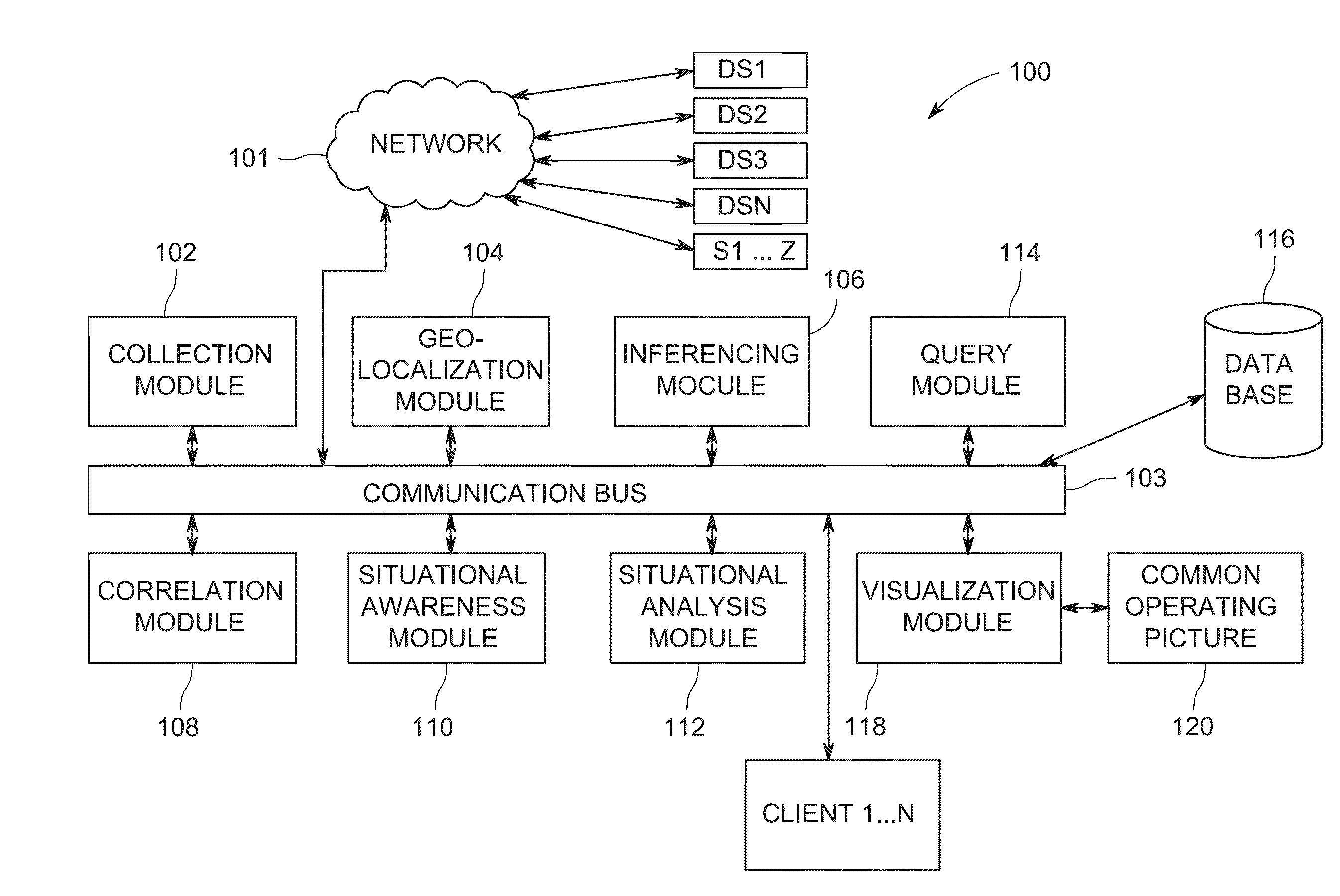

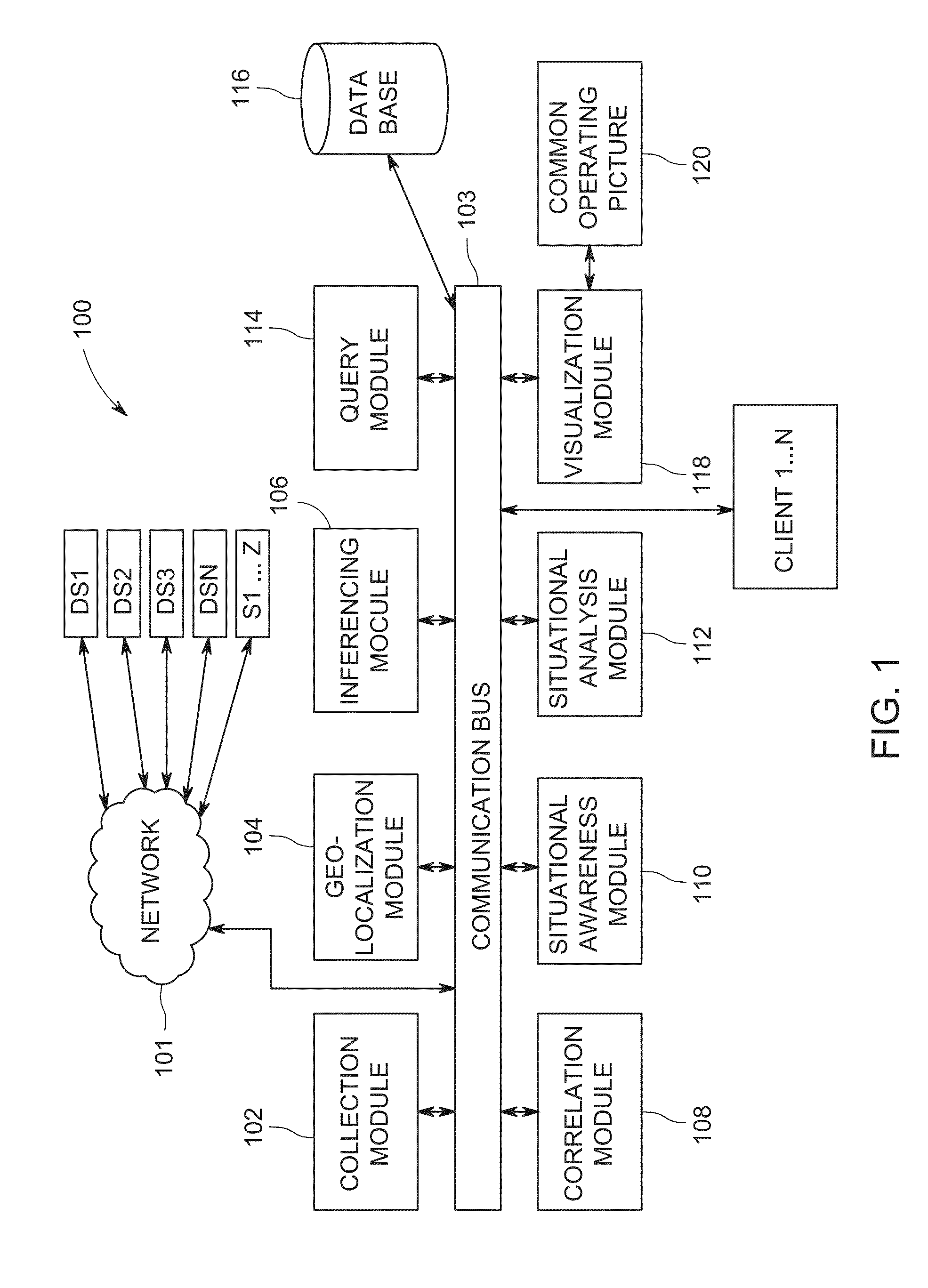

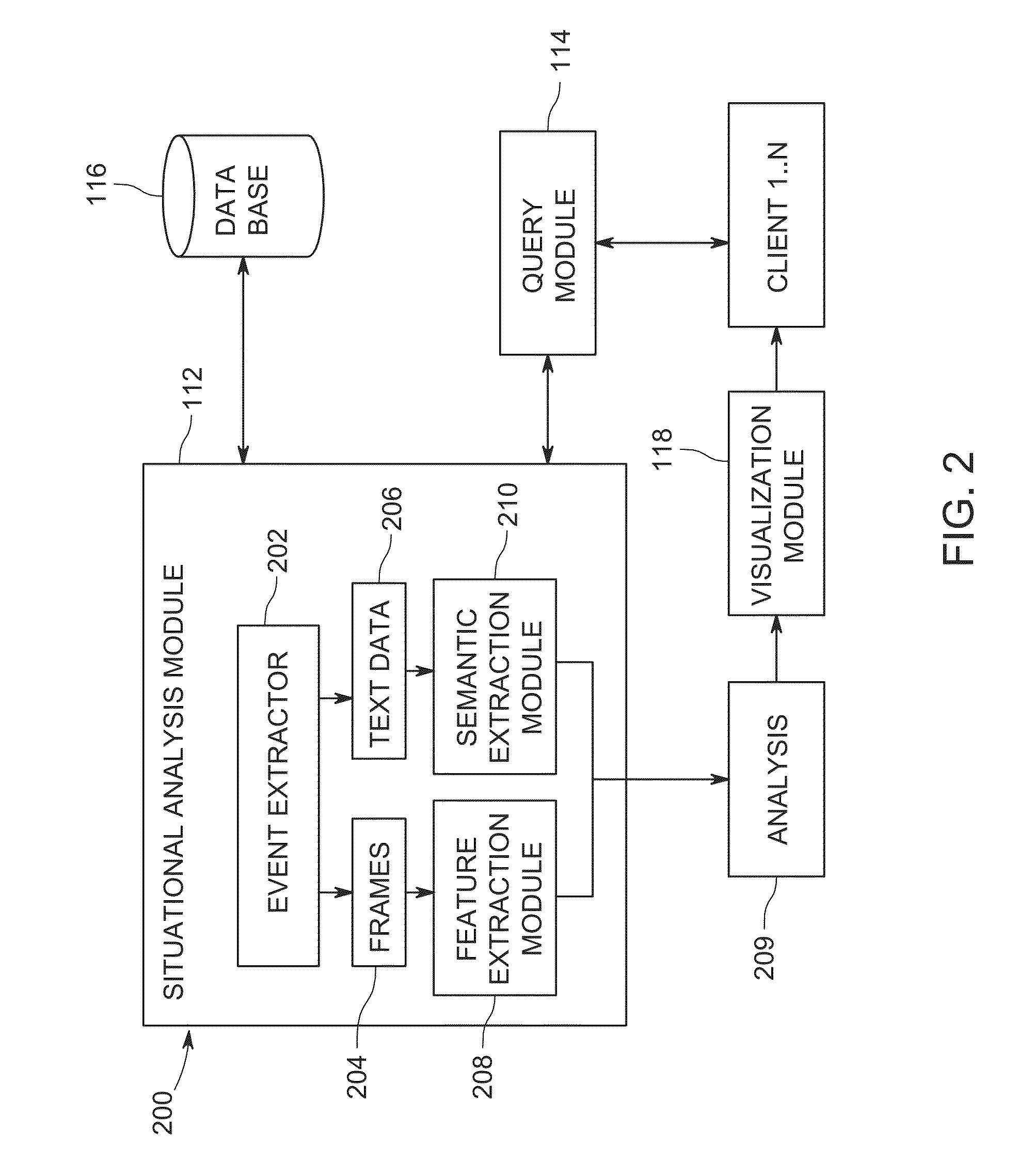

Method and apparatus for correlating and viewing disparate data

Active | US20160110433A1 | Natural language translation; Digital data information retrieval; Multimodal data; Multi modal data

Methods and apparatuses of the present invention generally relate to generating actionable data based on multimodal data from unsynchronized data sources. In an exemplary embodiment, the method comprises receiving multimodal data from one or more unsynchronized data sources, extracting concepts from the multimodal data, the concepts comprising at least one of objects, actions, scenes and emotions, indexing the concepts for searchability; and generating actionable data based on the concepts.

Owner:SRI INTERNATIONAL
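
The indexing step can be illustrated with an inverted index. A minimal sketch, assuming concept extraction has already produced labels for each observation and that each unsynchronized source's clock offset relative to a shared reference clock is known. `Observation`, `build_concept_index`, the source names and the offsets are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    source: str          # hypothetical source name, e.g. "camera-1"
    local_time: float    # timestamp on the source's own clock (seconds)
    concepts: tuple      # extracted objects, actions, scenes or emotions


def build_concept_index(observations, clock_offsets):
    """Map each lower-cased concept to time-sorted (common_time, source) hits.

    clock_offsets[source] is an assumed correction that maps the source's
    local clock onto a shared reference clock (0.0 if not listed).
    """
    index = defaultdict(list)
    for obs in observations:
        common_time = obs.local_time + clock_offsets.get(obs.source, 0.0)
        for concept in obs.concepts:
            index[concept.lower()].append((common_time, obs.source))
    for hits in index.values():
        hits.sort()
    return dict(index)


observations = [
    Observation("camera-1", 10.0, ("car", "running")),
    Observation("audio-1", 3.0, ("siren", "car")),
    Observation("camera-1", 12.0, ("Car",)),
]
index = build_concept_index(observations, {"audio-1": 5.0})
print(index["car"])  # [(8.0, 'audio-1'), (10.0, 'camera-1'), (12.0, 'camera-1')]
```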

Incident prediction and response using deep learning techniques and multimodal data

Active | US20170091617A1 | Efficient method; Neural learning methods; Restricted Boltzmann machine; Multimodal data

Owner:IBM CORP

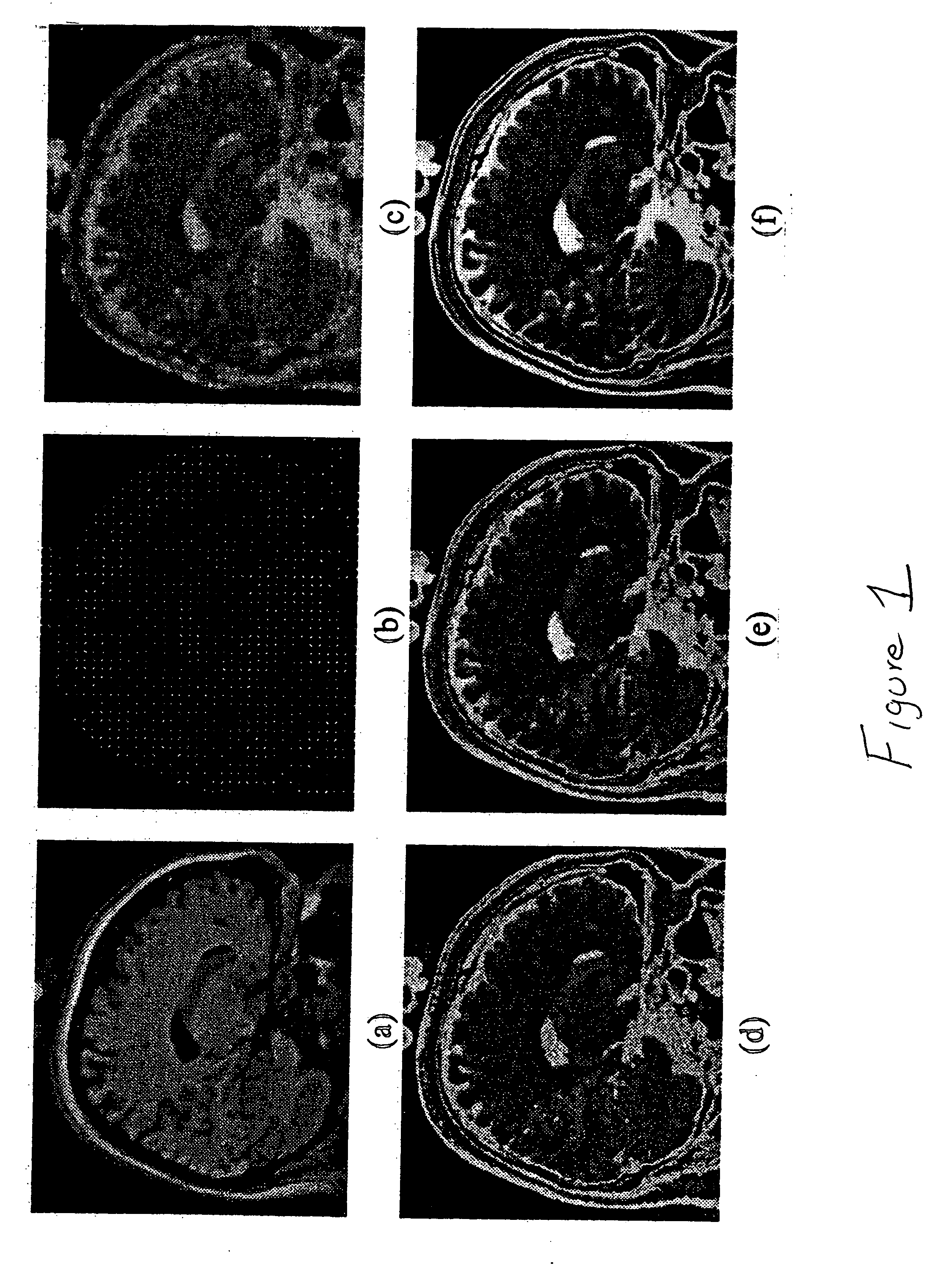

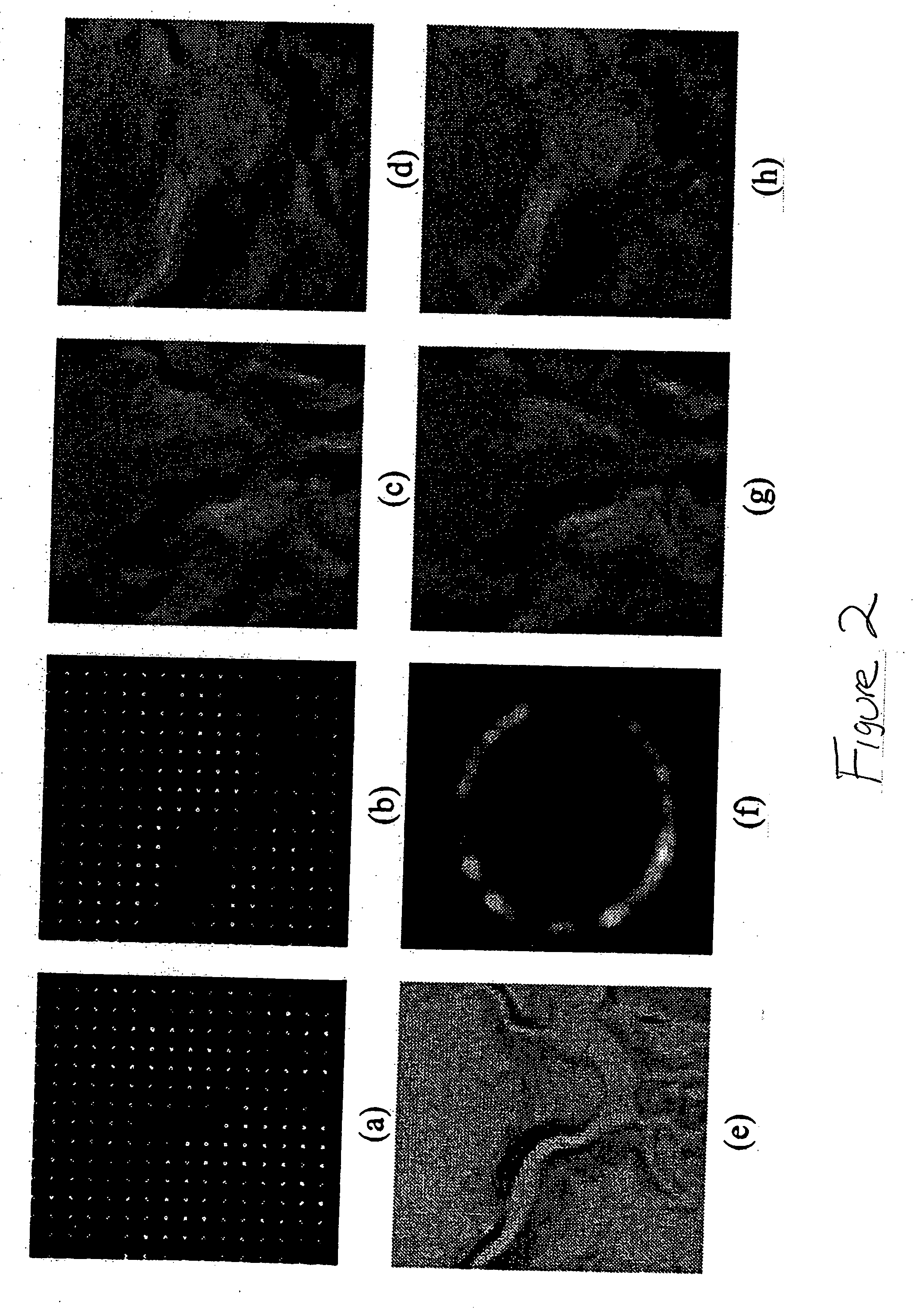

Enhanced multi-protocol analysis via intelligent supervised embedding (empravise) for multimodal data fusion

Active | US20140037172A1 | Image analysis; Character and pattern recognition; Algorithm; Dimensionality reduction

The present invention provides a system and method for analysis of multimodal imaging and non-imaging biomedical data, using a multi-parametric data representation and integration framework. The present invention makes use of (1) dimensionality reduction to account for differing dimensionalities and scale in multimodal biomedical data, and (2) a supervised ensemble of embeddings to accurately capture maximum available class information from the data.

Owner:RUTGERS THE STATE UNIV

Detecting, quantifying, and/or classifying seizures using multimodal data

Active | US20120083701A1 | Ultrasonic/sonic/infrasonic diagnostics; Electrotherapy; Cardiac activity; Multimodal data

A method comprising: receiving at least one of a signal relating to a first cardiac activity and a signal relating to a first body movement from a patient; triggering at least one of a test of the patient's responsiveness, awareness, a second cardiac activity, a second body movement, a spectral analysis test of the second cardiac activity, and a spectral analysis test of the second body movement, based on at least one of the signal relating to the first cardiac activity and the signal relating to the first body movement; determining an occurrence of an epileptic event based at least in part on said one or more triggered tests; and performing a further action in response to said determination of said occurrence of said epileptic event. Further methods allow classification of epileptic events. Apparatus and systems capable of implementing the method are also disclosed.

Owner:FLINT HILLS SCI L L C

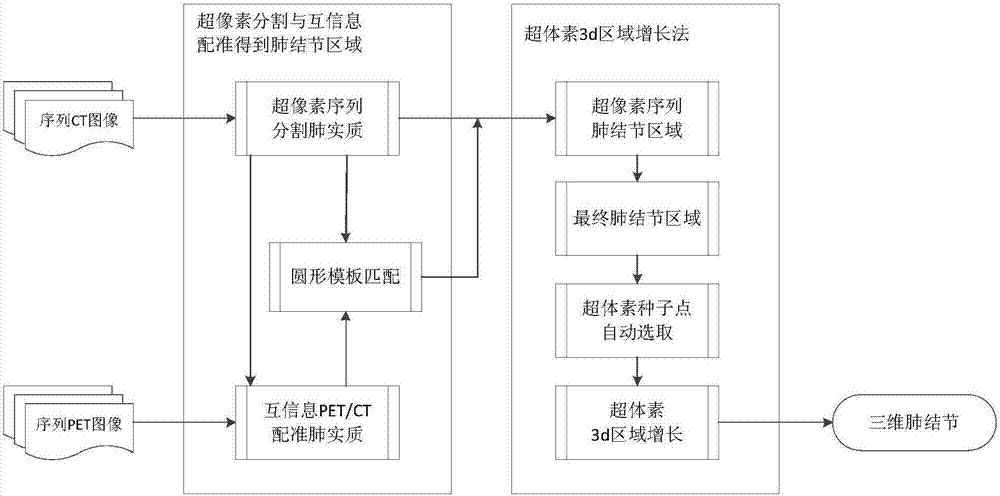

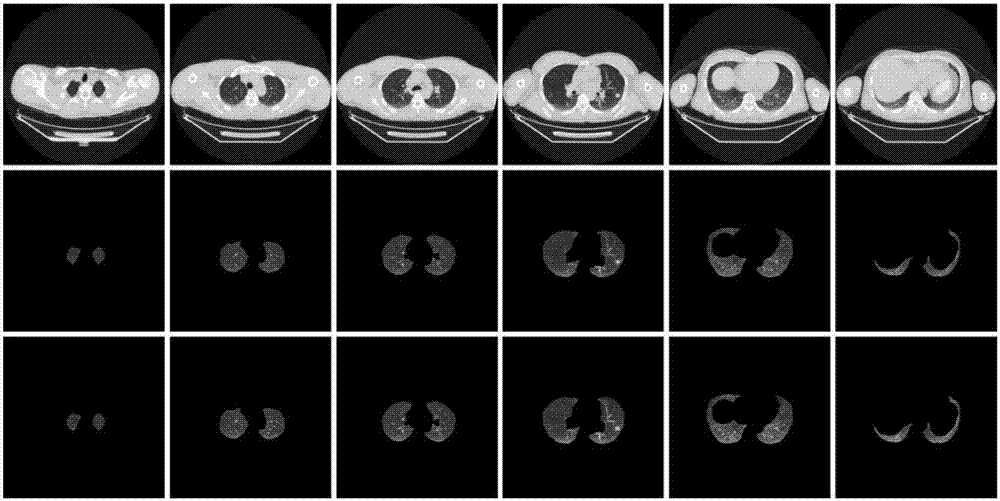

Supervoxel sequence lung image 3D pulmonary nodule segmentation method based on multimodal data

Active | CN107230206A | Understand intuitive; Improve the quality of surgery; Image enhancement; Image analysis; Pulmonary nodule; Diagnostic Radiology Modality

The invention discloses a supervoxel sequence lung image 3D pulmonary nodule segmentation method based on multimodal data. The method comprises the following steps: A) extracting sequence lung parenchyma images through superpixel segmentation and self-generating neuronal forest clustering; B) registering the sequence lung parenchyma images through PET/CT multimodal data based on mutual information; C) marking and extracting an accurate sequence pulmonary nodule region through a multi-scale variable circular template matching algorithm; and D) carrying out three-dimensional reconstruction on the sequence pulmonary nodule images through a supervoxel 3D region growth algorithm to obtain the final three-dimensional shape of the pulmonary nodules. The method forms a 3D reconstruction of the pulmonary nodules through the supervoxel 3D region growth algorithm and can reflect the dynamic relation between pulmonary lesions and surrounding tissues, so that the shape, size and appearance of the pulmonary nodules, as well as their adhesion to the surrounding pleura or blood vessels, can be observed visually.

Owner:TAIYUAN UNIV OF TECH
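
Step B registers the PET and CT data by maximizing mutual information. The sketch below shows the idea in one dimension, assuming already-quantized intensity labels and a search over integer shifts only; real PET/CT registration is three-dimensional and interpolates between voxels. Because mutual information depends only on how labels co-occur, it still finds the alignment when the two modalities map the same tissue to very different intensities. The function names and the sample data are hypothetical.

```python
import math
from collections import Counter


def mutual_information(a, b):
    """Mutual information (nats) between two equal-length label sequences,
    estimated from their joint histogram."""
    n = len(a)
    joint = Counter(zip(a, b))
    pa, pb = Counter(a), Counter(b)
    mi = 0.0
    for (x, y), count in joint.items():
        pxy = count / n
        mi += pxy * math.log(pxy / ((pa[x] / n) * (pb[y] / n)))
    return mi


def register_by_shift(fixed, moving, max_shift):
    """Return the shift s in [-max_shift, max_shift] that maximizes MI when
    fixed[i] is paired with moving[i + s]. The same central window of
    `fixed` is scored for every s, so the scores are comparable.
    Assumes len(moving) == len(fixed)."""
    window = range(max_shift, len(fixed) - max_shift)
    f = [fixed[i] for i in window]
    best_shift, best_mi = 0, -math.inf
    for s in range(-max_shift, max_shift + 1):
        m = [moving[i + s] for i in window]
        mi = mutual_information(f, m)
        if mi > best_mi:
            best_shift, best_mi = s, mi
    return best_shift


# Hypothetical quantized tissue labels along one scan line.
base = [0, 1, 2, 3, 1, 0, 3, 2, 2, 0, 1, 3, 0, 2, 1, 3]
ct = base[2:16]                          # "CT" view of the line
pet_map = {0: 40, 1: 200, 2: 90, 3: 10}  # same tissues, different intensities
pet = [pet_map[v] for v in base[:14]]    # "PET" view, offset by 2 samples
print(register_by_shift(ct, pet, max_shift=3))  # 2
```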

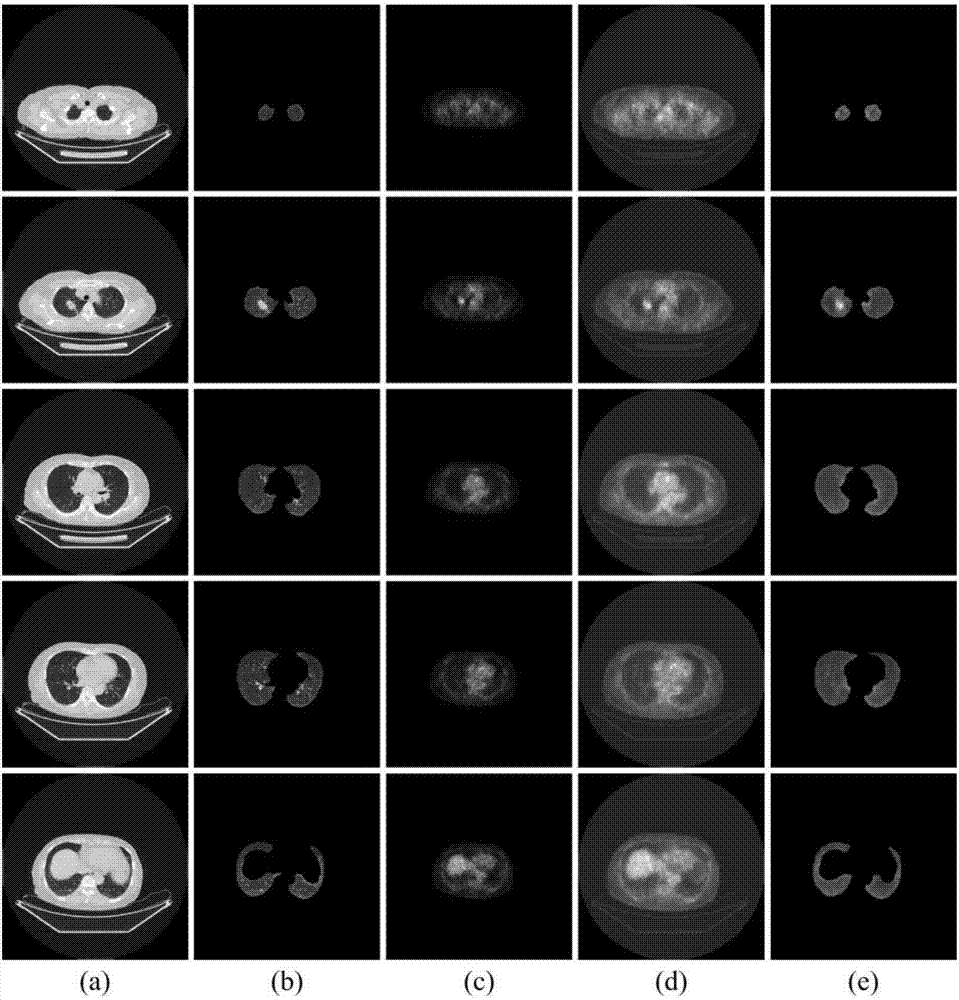

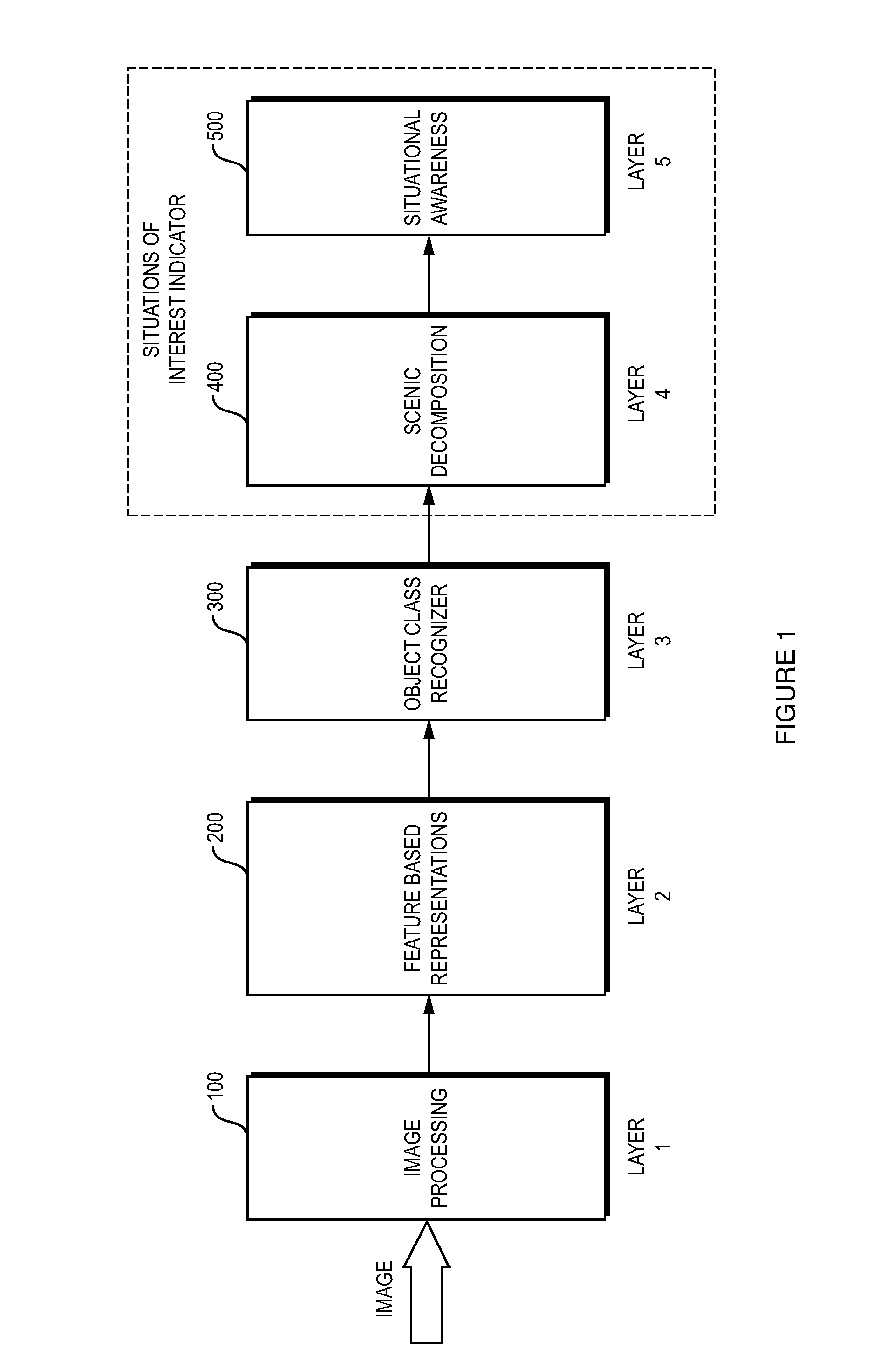

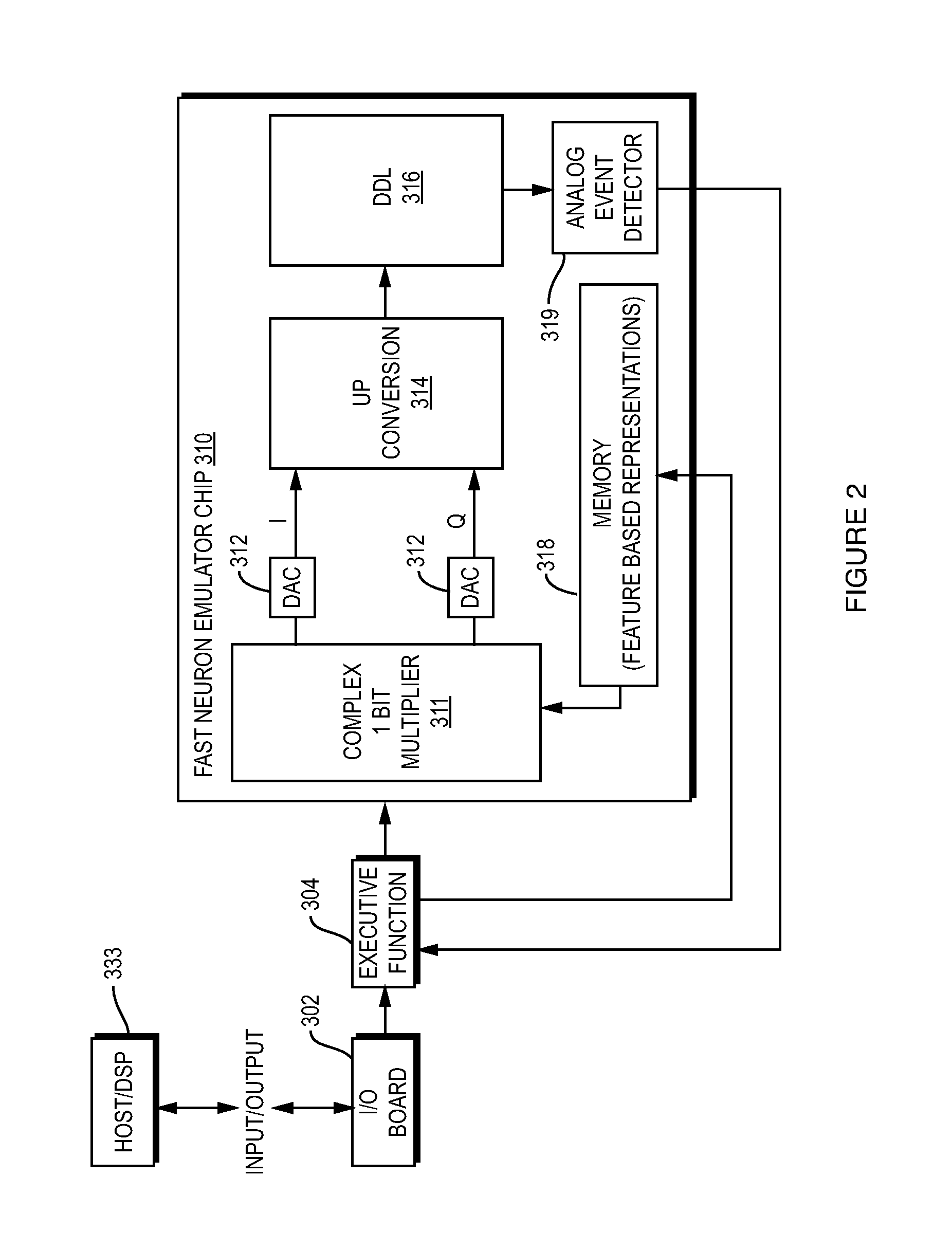

Neuromorphic parallel processor

Active | US8401297B1 | Improve performance; High biometric recognition; Character and pattern recognition; Neural architectures; Object Class; Multimodal data

A neuromorphic parallel image processing approach that has five (5) functional layers. The first performs a frequency domain transform on the image data, generating multiple scales and feature-based representations that are independent of orientation. The second layer is populated with feature-based representations. In the third layer, an object class recognizer layer, the representations are fused using a neuromorphic parallel processor. Fusion of multimodal data can achieve high-confidence biometric recognition.

Owner:AMI RES & DEV

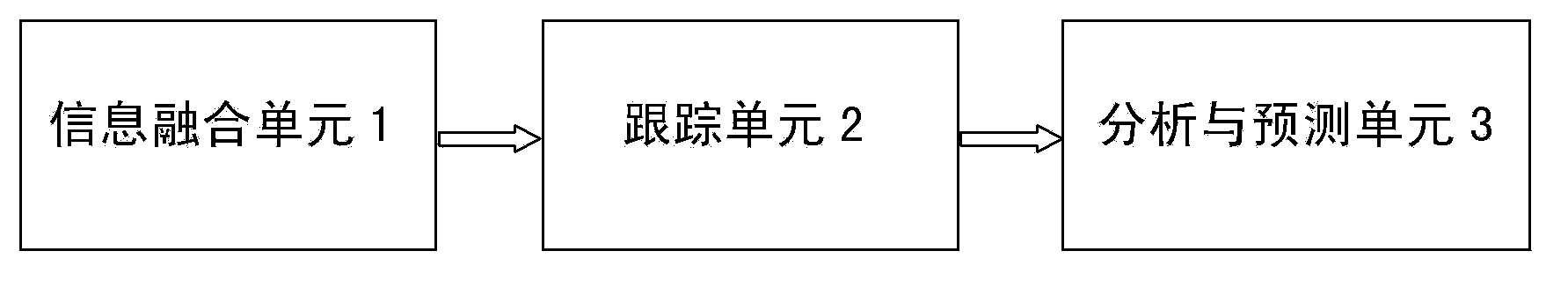

Analysis and prediction system for cooperative correlative tracking and global situation of network social events

Inactive | CN103455705A | Improve tracking accuracy; Easy to understand; Special data processing applications; Space-property; Information integration

The invention relates to an analysis and prediction system for cooperative correlative tracking and global situation of network social events. The system comprises an information fusion unit, a tracking unit, and an analysis and prediction unit. The information fusion unit fuses multimodal features of network social event data to obtain fused information from the multimodal data of social events, thereby establishing a semantic description model for the multimodal data of cross-social events. The tracking unit, connected to the information fusion unit, acquires semantic correlative tracking data for every aspect of the social events, based on the semantic description model and on the cross-modal, cross-platform, and cross-time-and-space properties of network social events whose content includes rich multimedia information. The analysis and prediction unit, connected to the tracking unit, produces social-event-based global situation analysis and prediction data from the semantic correlative tracking data.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

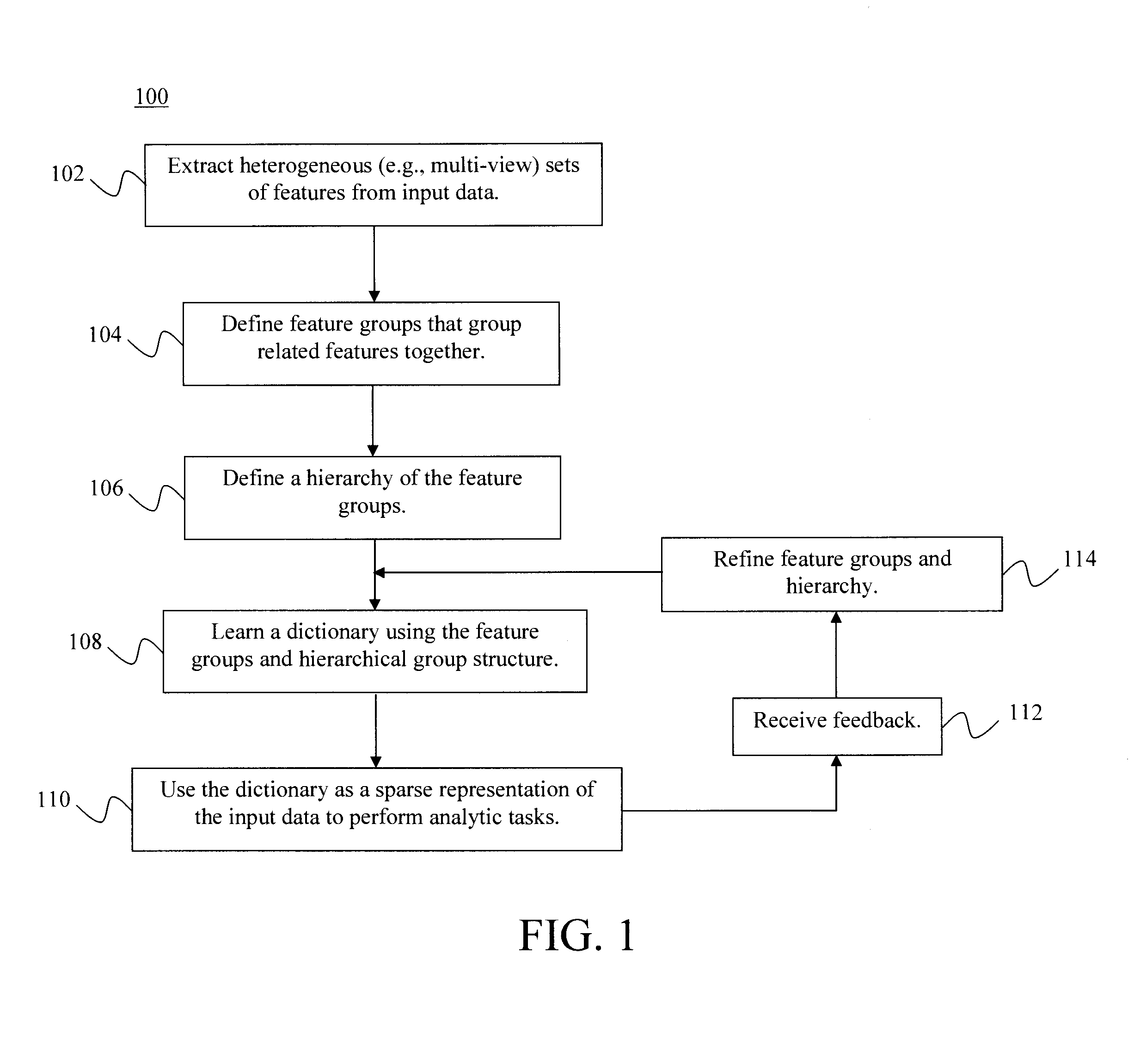

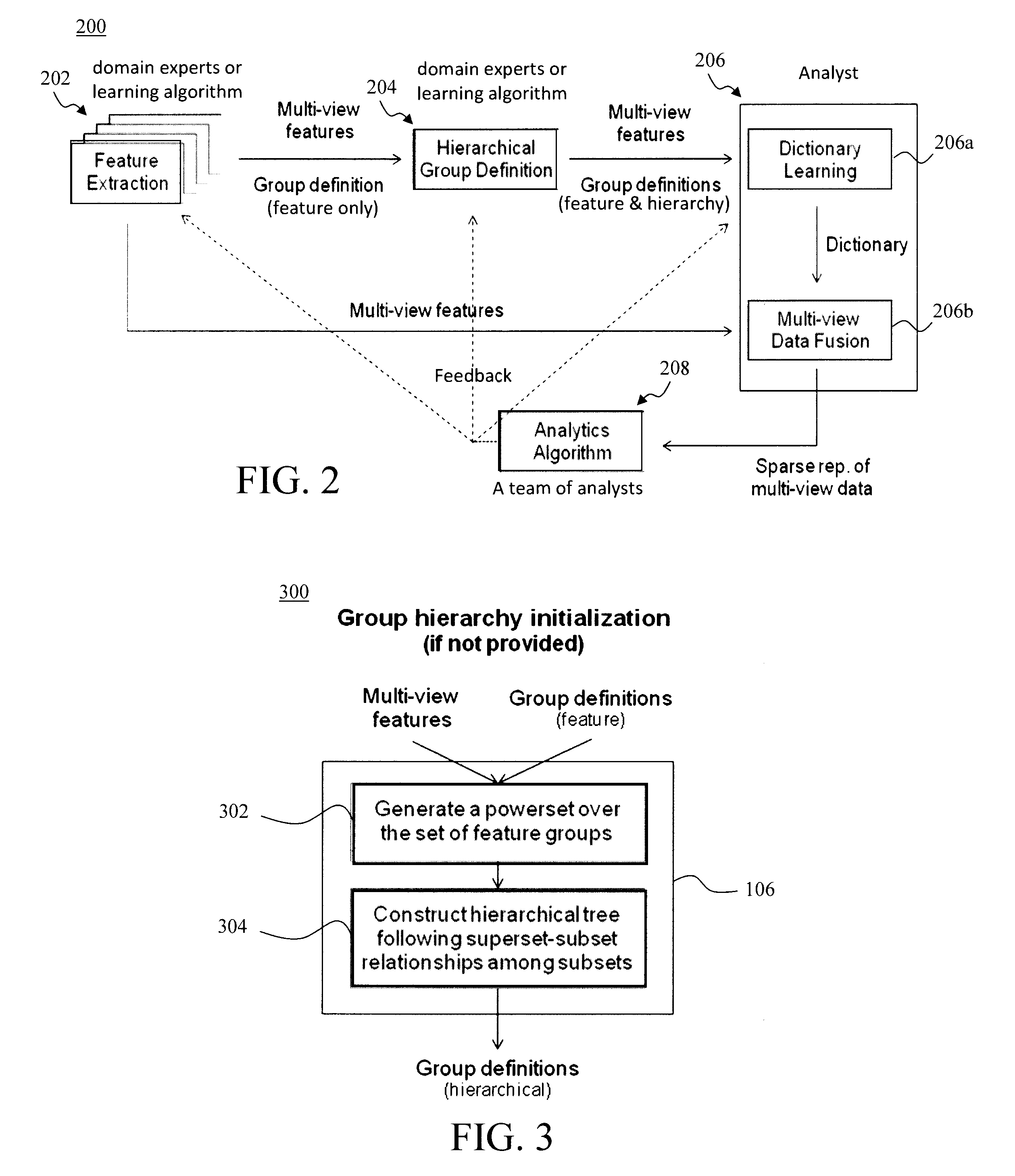

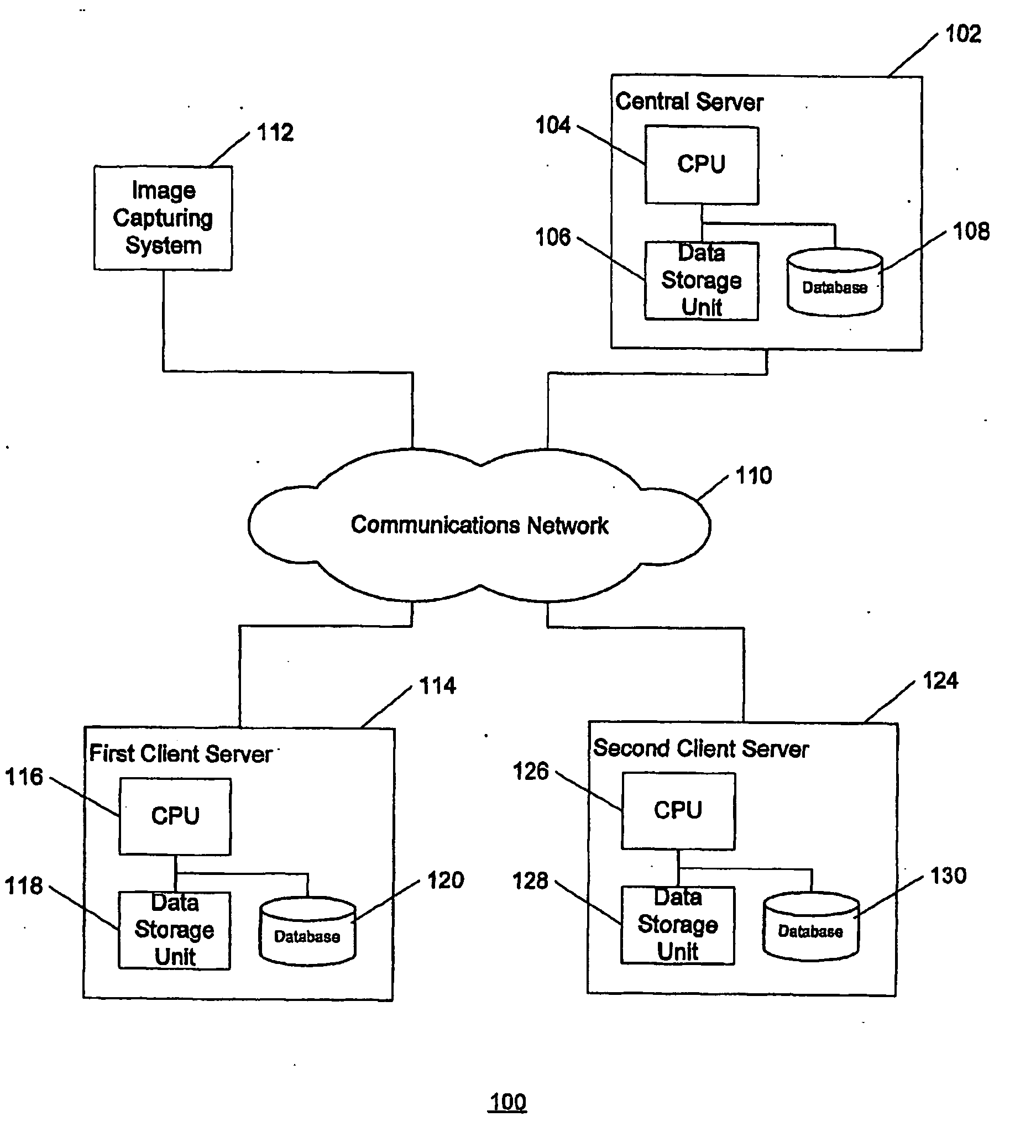

Multimodal Data Fusion by Hierarchical Multi-View Dictionary Learning

Active | US20160283858A1 | Digital data information retrieval; Character and pattern recognition; Dictionary learning; Multimodal data

Techniques for multimodal data fusion having a multimodal hierarchical dictionary learning framework that learns latent subspaces with hierarchical overlaps are provided. In one aspect, a method for multi-view data fusion with hierarchical multi-view dictionary learning is provided which includes the steps of: extracting multi-view features from input data; defining feature groups that group together the multi-view features that are related; defining a hierarchical structure of the feature groups; and learning a dictionary using the feature groups and the hierarchy of the feature groups. A system for multi-view data fusion with hierarchical multi-view dictionary learning is also provided.

Owner:IBM CORP

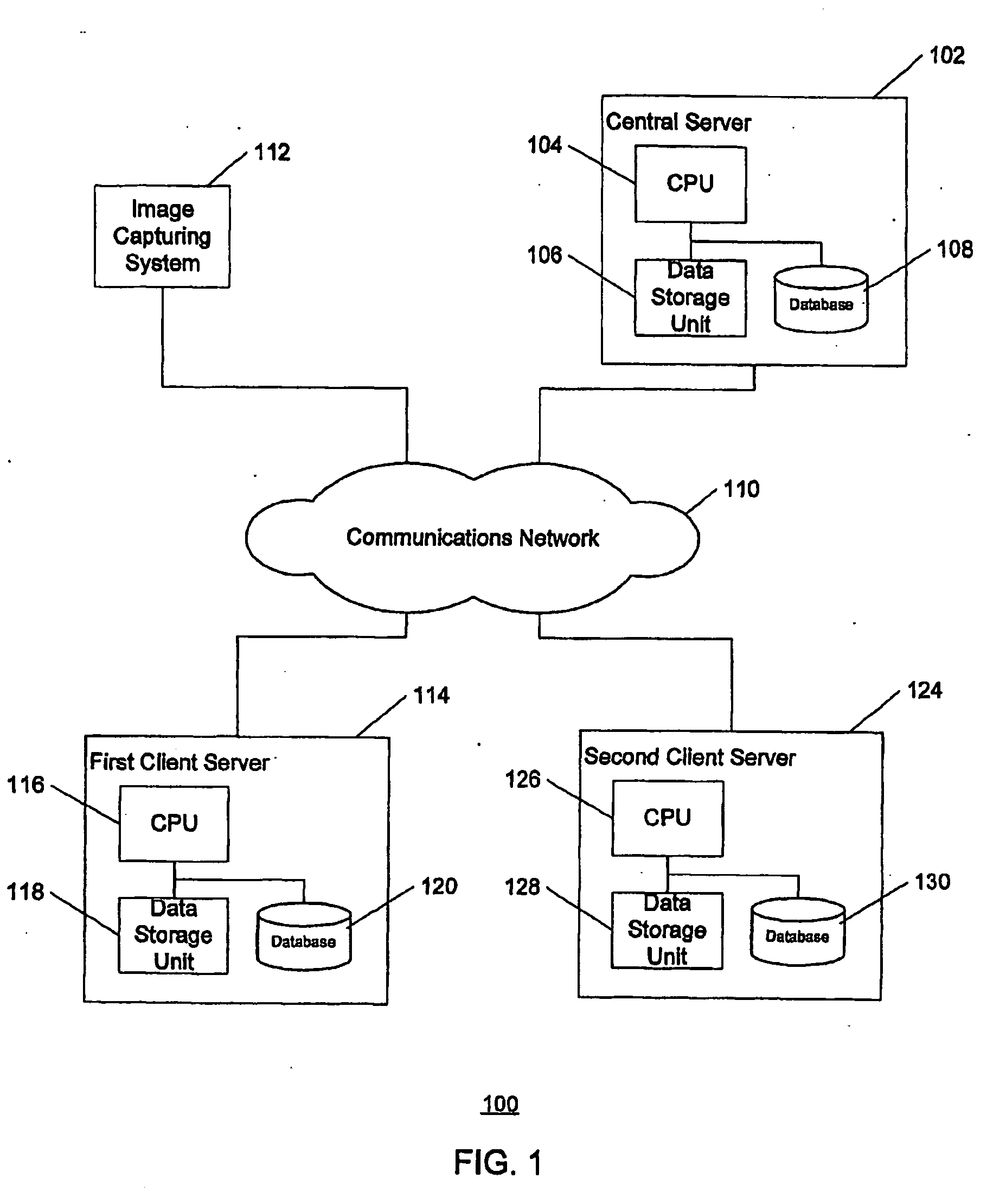

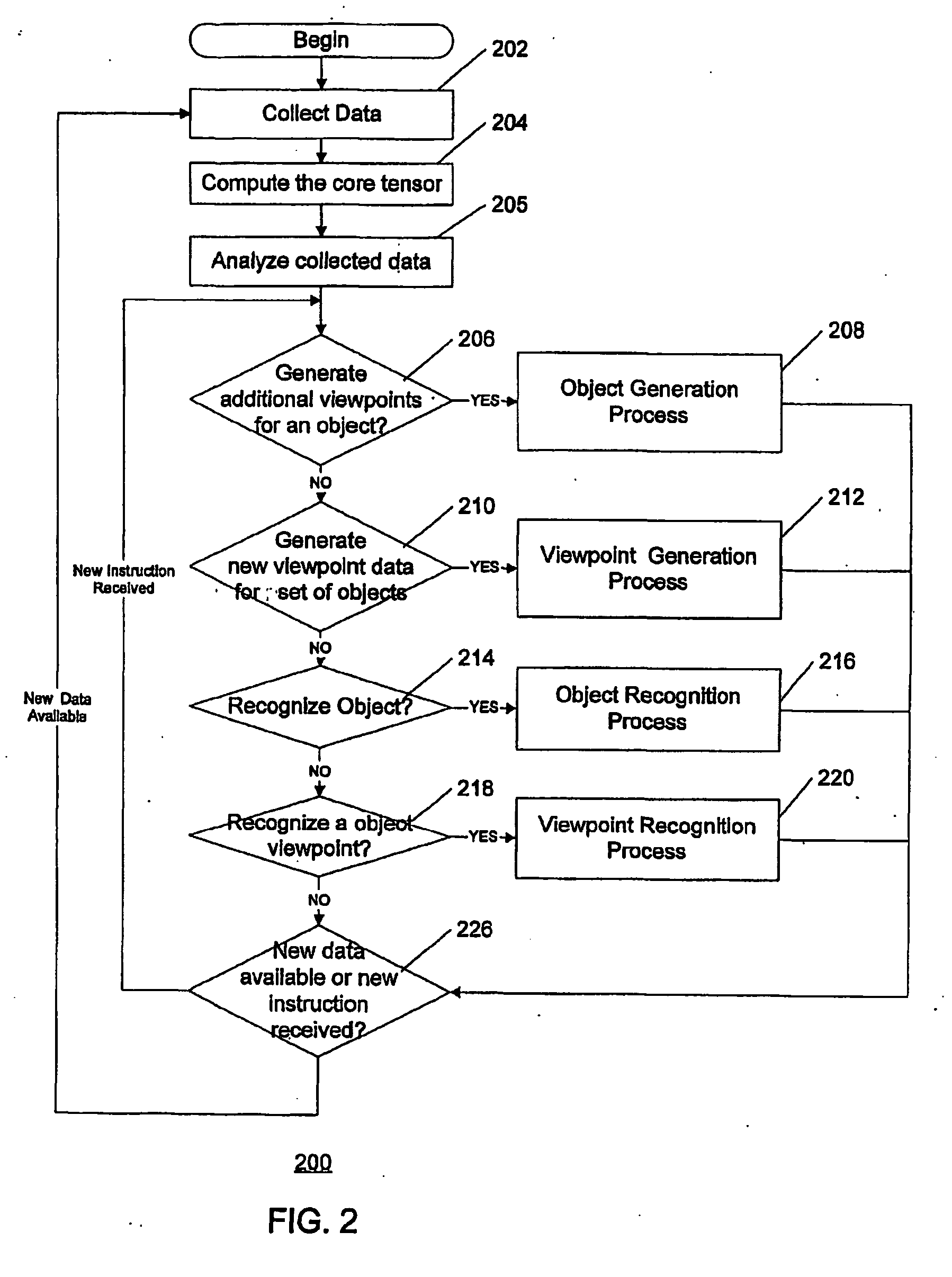

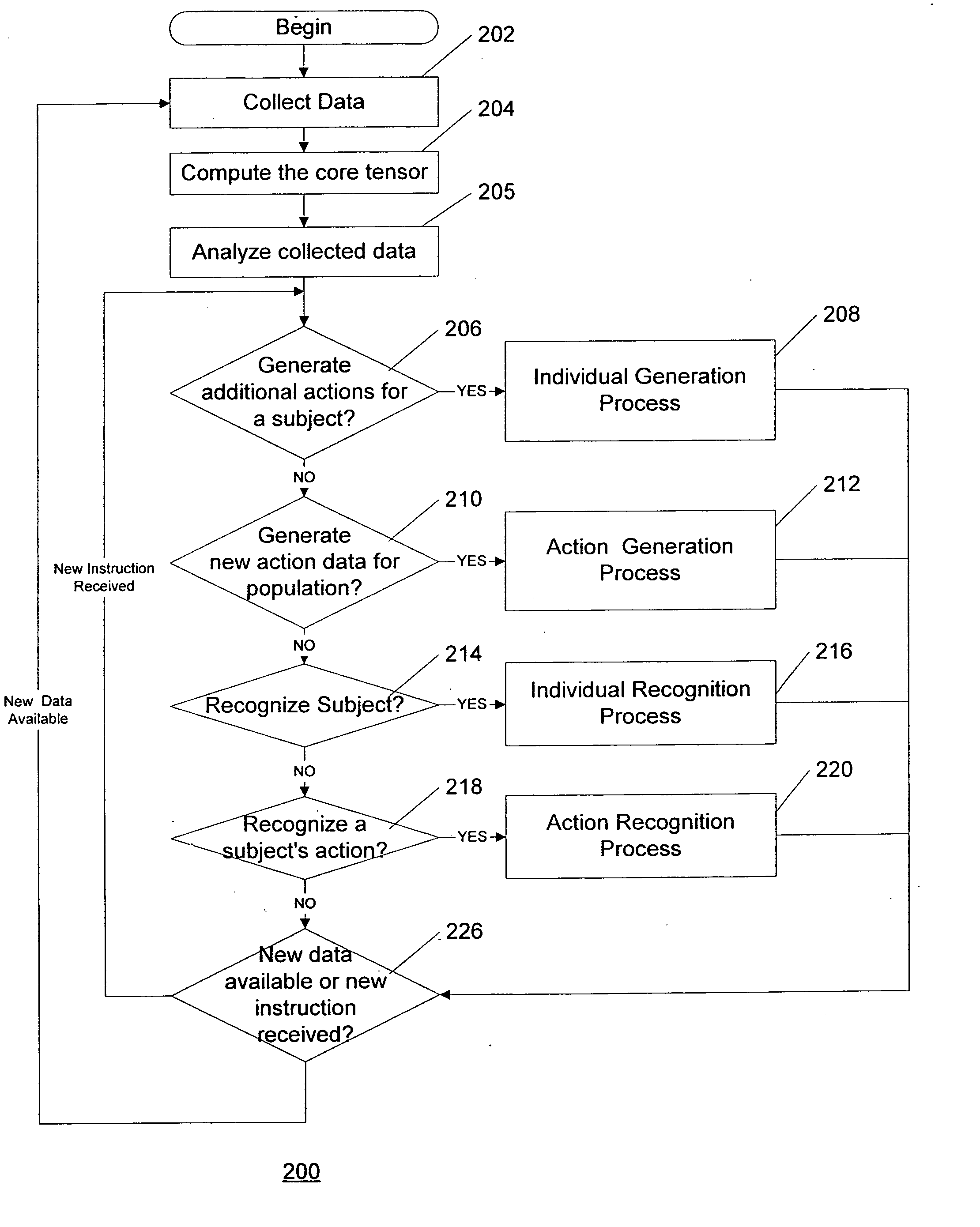

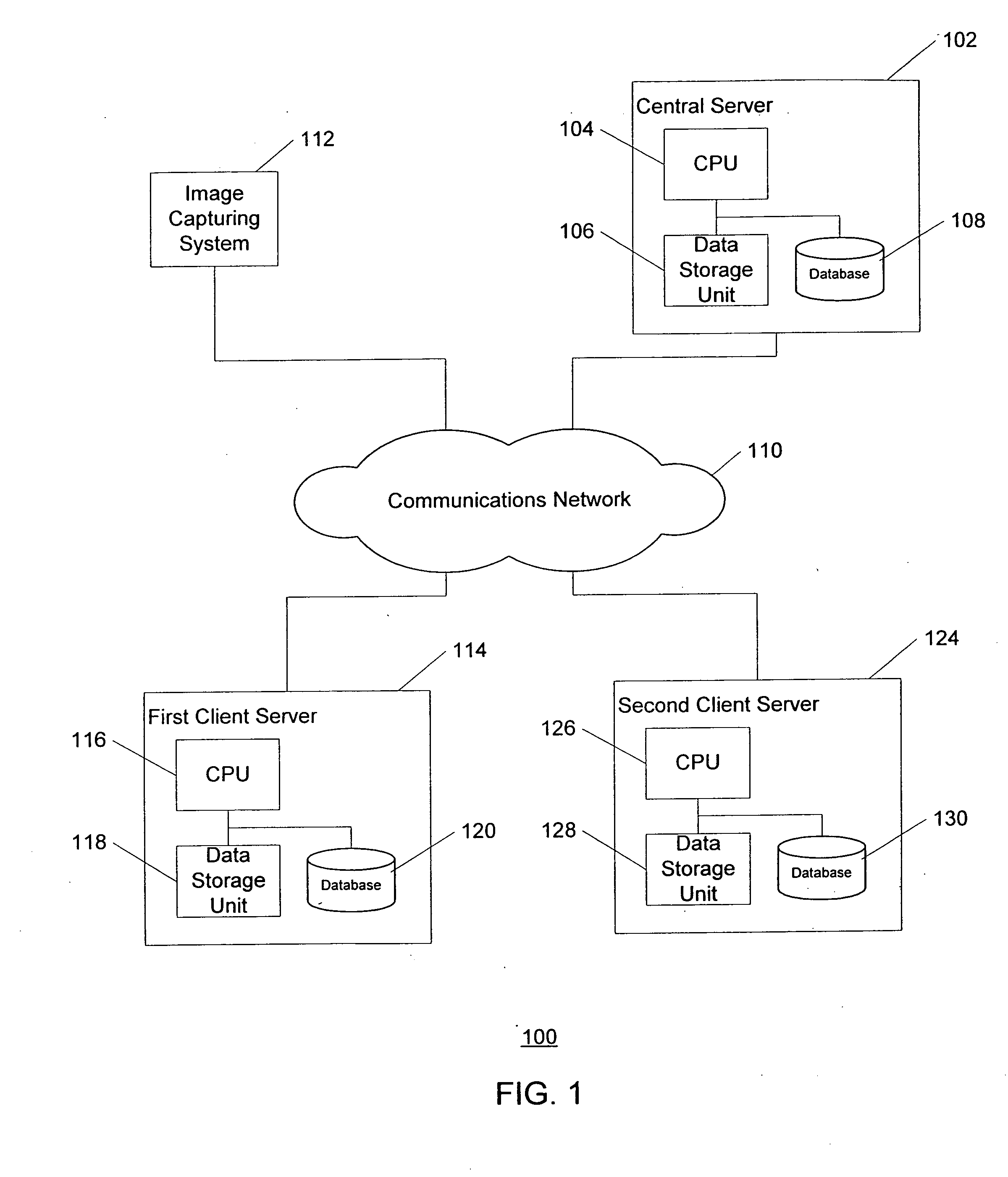

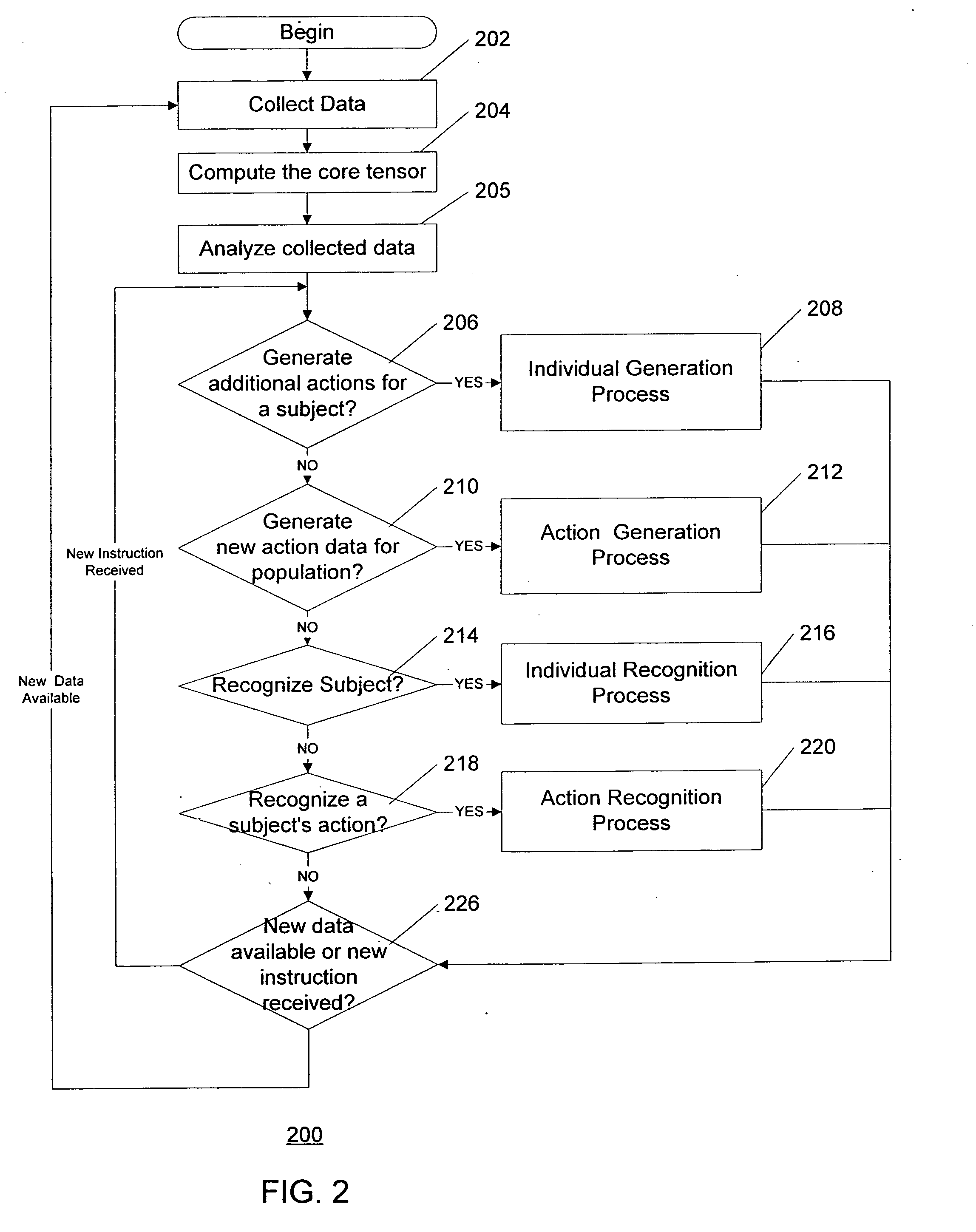

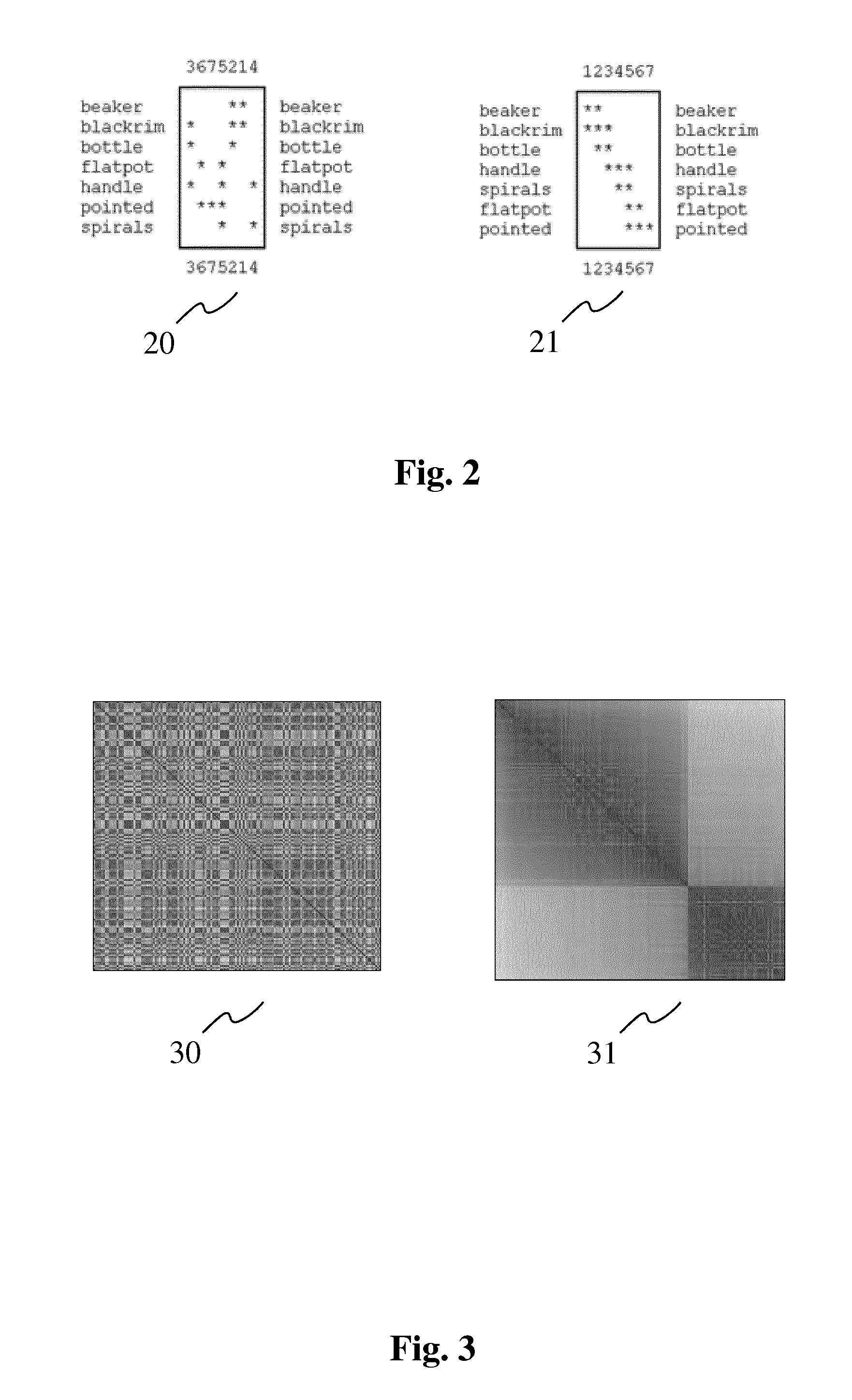

Logic arrangement, data structure, system and method for multilinear representation of multimodal data ensembles for synthesis, rotation and compression

Inactive | US20060143142A1 | Reduce dimensionality; Full dimensionality; Digital computer details; Character and pattern recognition; Viewpoints; Dimensionality reduction

A data structure, method, storage medium and logic arrangement are provided for use in collecting and analyzing multilinear data describing various characteristics of different objects. In particular it is possible to recognize an unknown object or an unknown viewpoint of an object, as well as synthesize a known viewpoint never before recorded of an object, and reduce the amount of stored data describing an object or viewpoint by using dimensionality reduction techniques, and the like.

Owner:NEW YORK UNIV

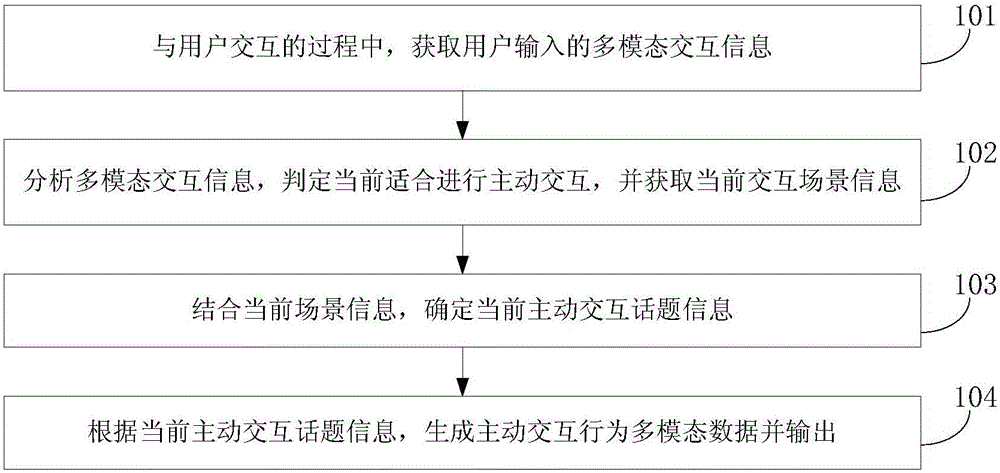

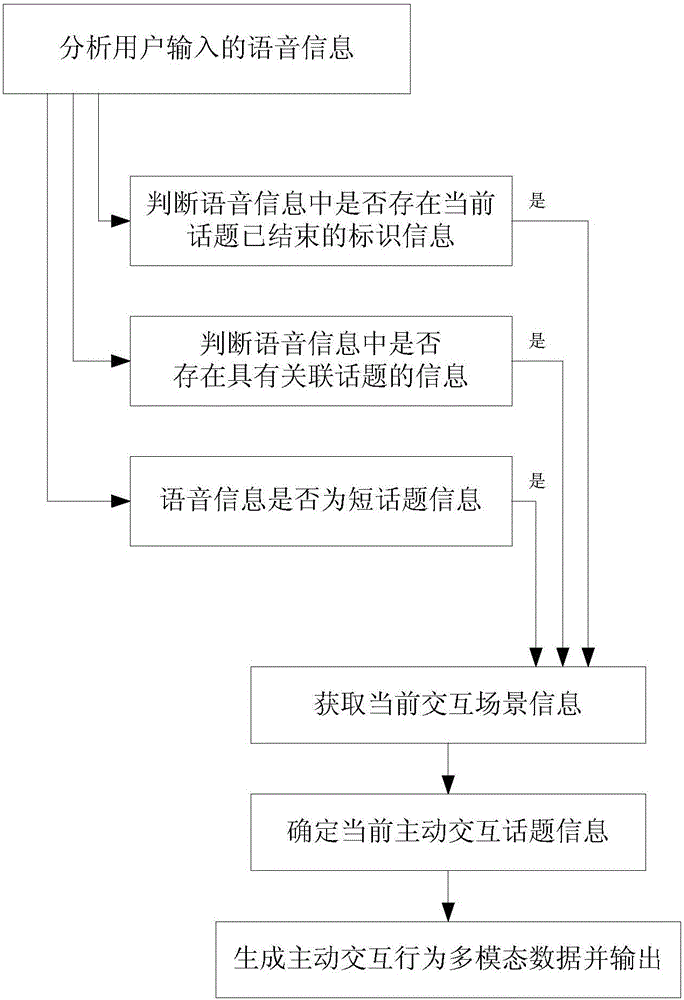

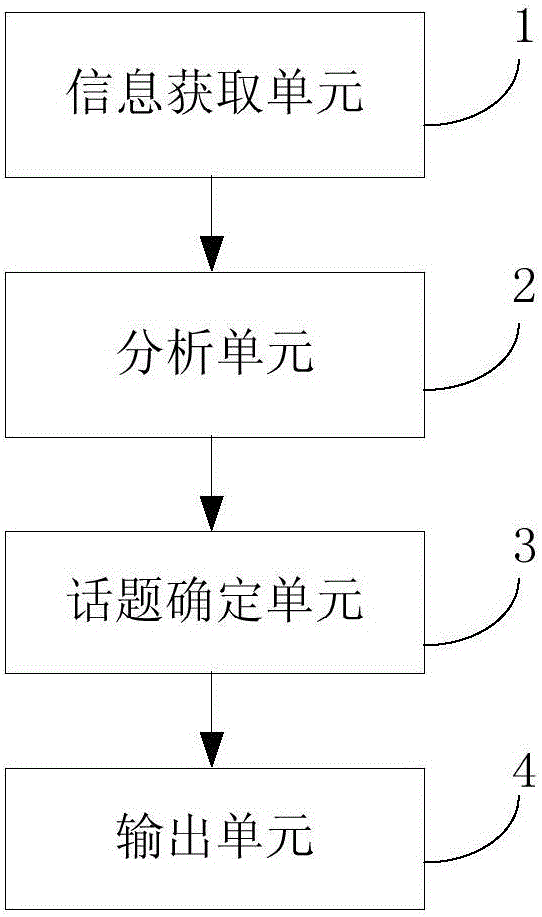

Interactive method and device for intelligent robot

The invention discloses an interactive method and device for an intelligent robot, belongs to the technical field of robots, and solves the technical problem that conventional interaction methods for intelligent robots cannot initiate interaction actively. The method comprises the following steps: during interaction with a user, acquiring multimodal interactive information input by the user; analyzing the multimodal interactive information, determining that active interaction is currently appropriate, and acquiring current interactive scene information; determining current active interactive topic information by combining the current interactive scene information; and generating active interactive behavior multimodal data according to the current active interactive topic information and outputting the active interactive behavior multimodal data.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

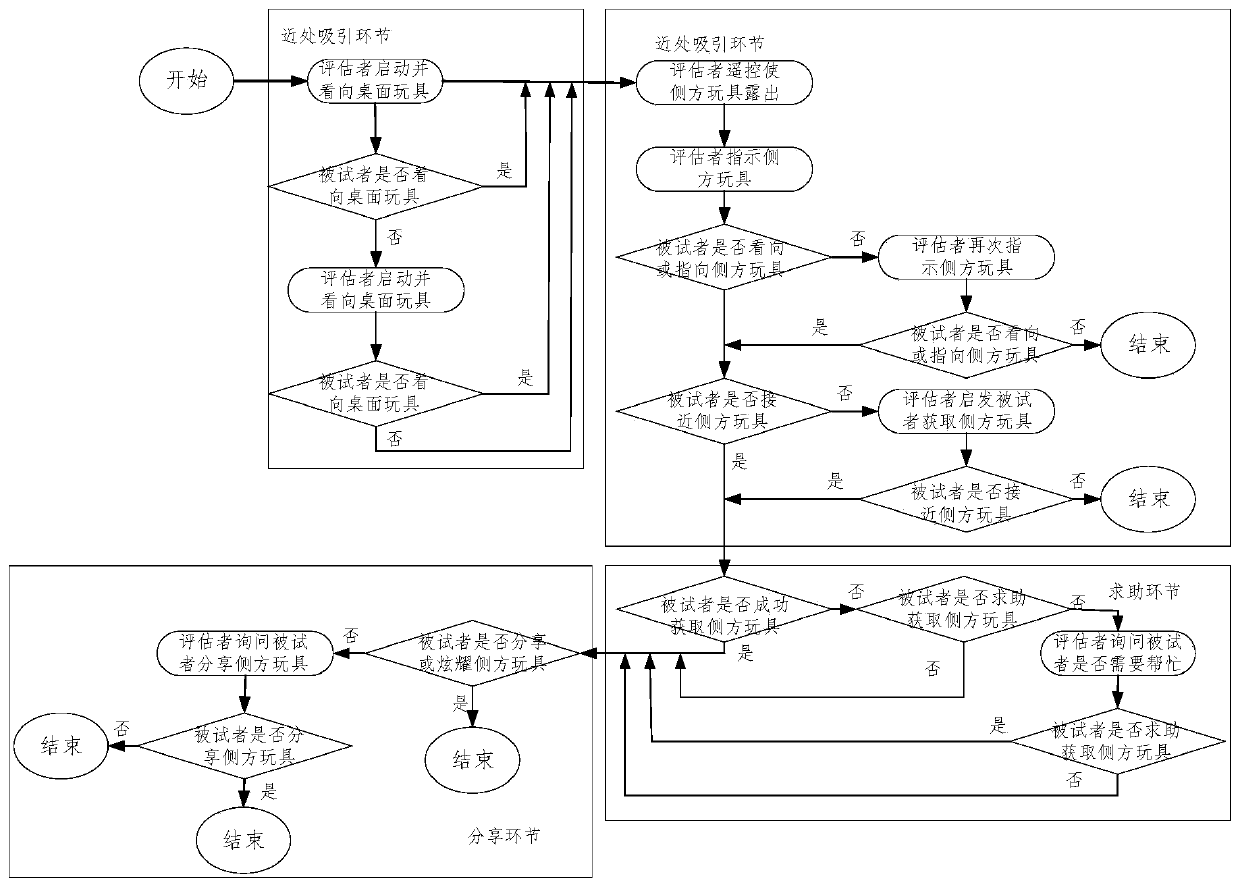

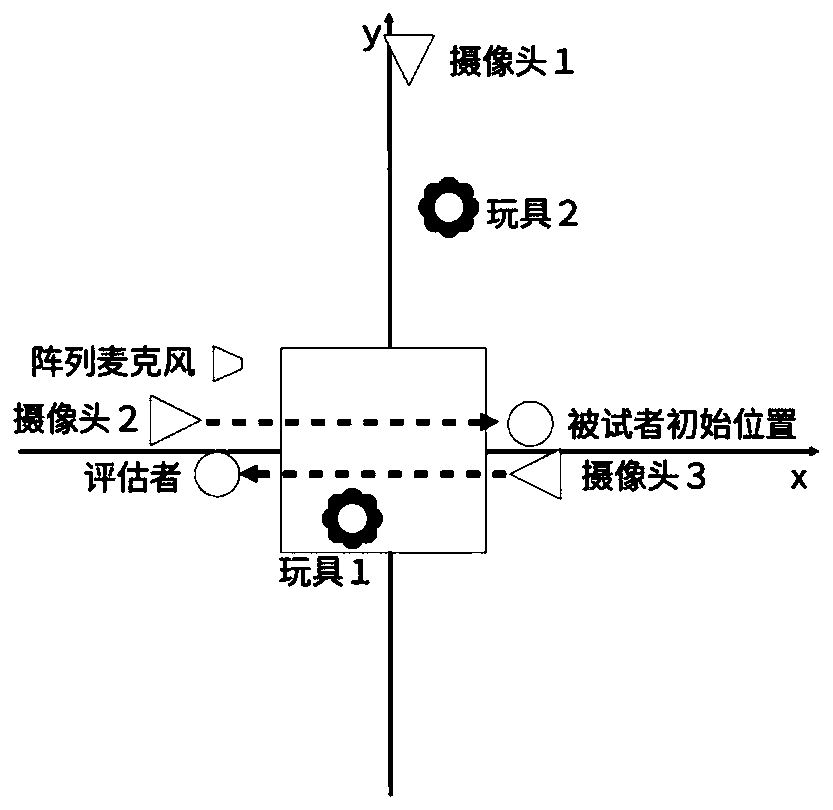

Early-stage autism screening system based on joint attention ability test and audio-video behavior analysis

Active | CN110313923A | Restore natural performance; More room for self-expression; Health-index calculation; Sensors; Data acquisition; Data acquisition module

The invention discloses an early-stage autism screening system based on a joint attention ability test and audio-video behavior analysis. Audio-video multimodal data of an evaluator and a testee is collected and analyzed so as to evaluate and predict autism spectrum disorders. The system includes a data acquisition module, a preprocessing module, a feature extraction module, a training classification module and a prediction module. The data acquisition module performs multi-view, multi-channel collection of the audio-video multimodal data of the evaluator and the testee during a test; the preprocessing module synchronizes the collected audio-video data, uses voice recognition to detect and mark the time at which the evaluator issues a command, and extracts and analyzes the audio and video after that point in time; the feature extraction module extracts features from the preprocessed audio-video data, obtaining speech content, facial emotions and other features; the training classification module uses the extracted combined features as input to train a machine learning classifier, yielding a classifier model for predicting autism; and, using the trained classifier model, the prediction module performs autism classification and prediction for the testee whose data is collected.

Owner:DUKE KUNSHAN UNIVERSITY +1

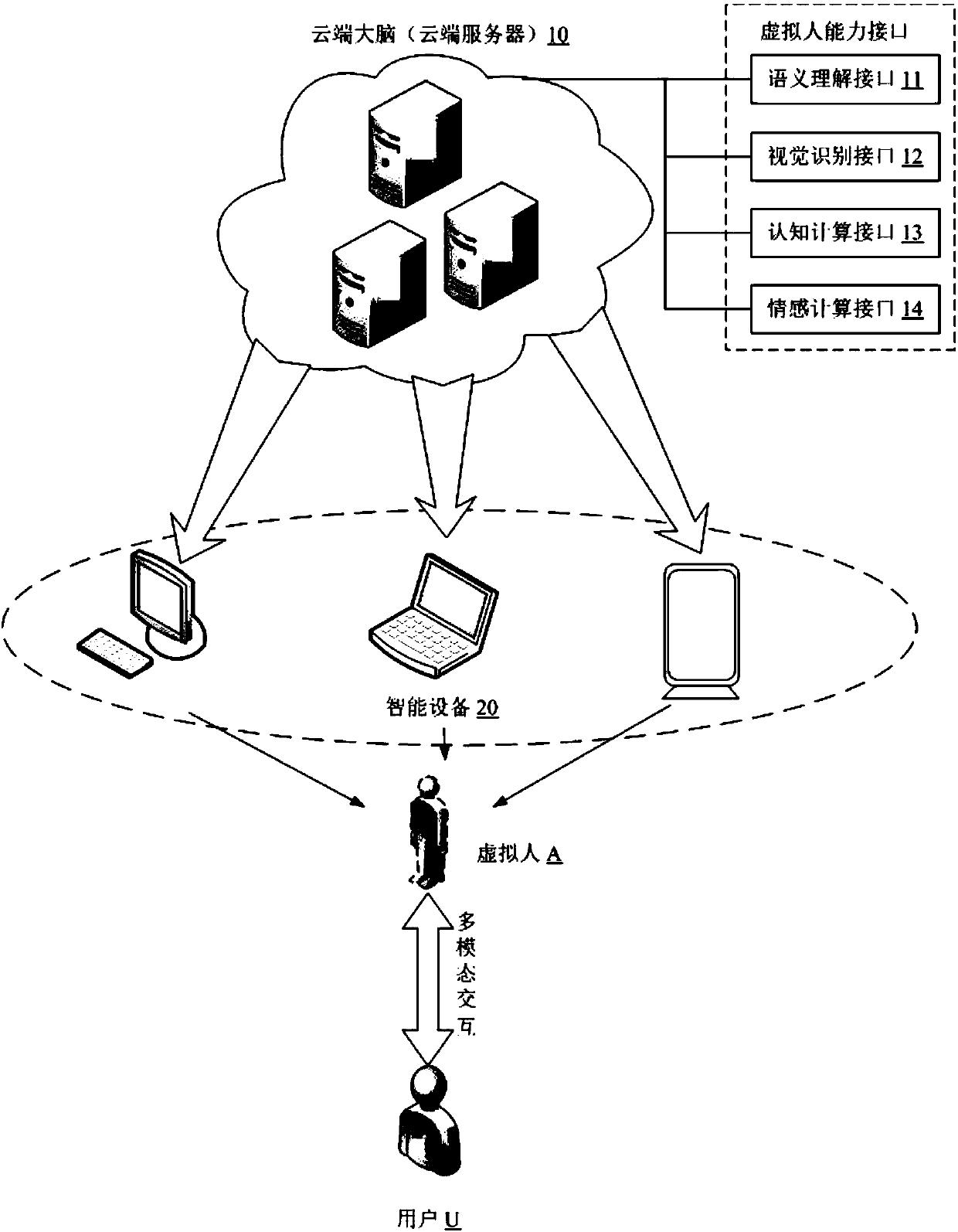

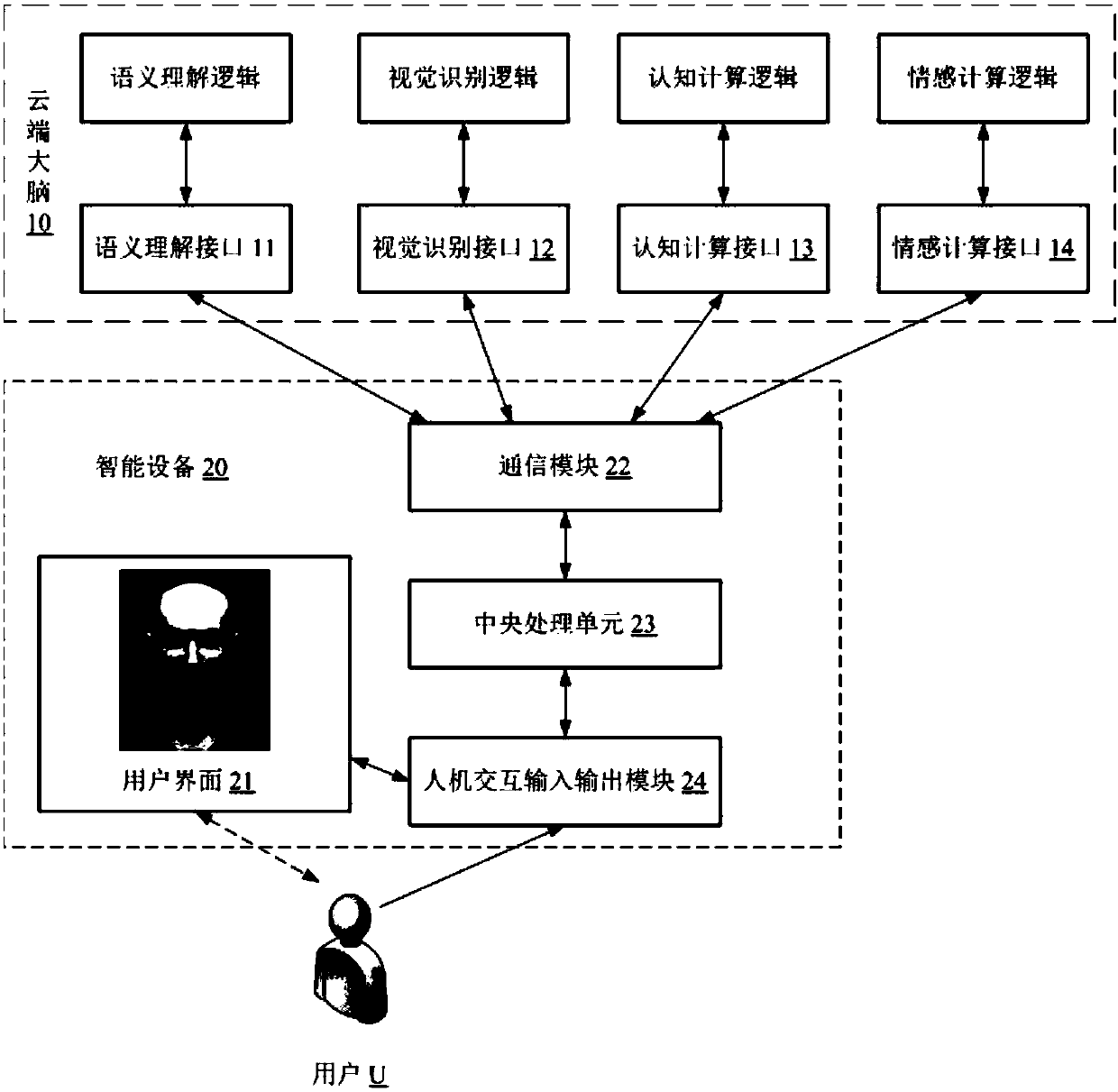

Virtual human visual processing method and system based on multimodal interaction

Inactive | CN107765856A | Meet needs; Improve experience; Input/output for user-computer interaction; Sound input/output; Multimodal data; User needs

The invention discloses a virtual human visual processing method and system based on multimodal interaction. A virtual human runs on an intelligent device. The method comprises the following steps: when the virtual human is in an awakened state, the virtual human, which has a specific personality and attributes, is displayed in a preset display region; multimodal data is acquired, wherein the multimodal data comprises data from the surroundings and multimodal input data from interaction with a user; a virtual human ability interface is called to analyze the multimodal input data, and multimodal output data is decided; relative position information is used to calculate execution parameters for turning the head of the virtual human towards a target face; and the execution parameters are displayed in the preset display region. With this embodiment, when the virtual human interacts with the user, it is ensured that the virtual human tracks the user's face at all times to carry out face-to-face multimodal interaction, meeting the user's needs and improving the user experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

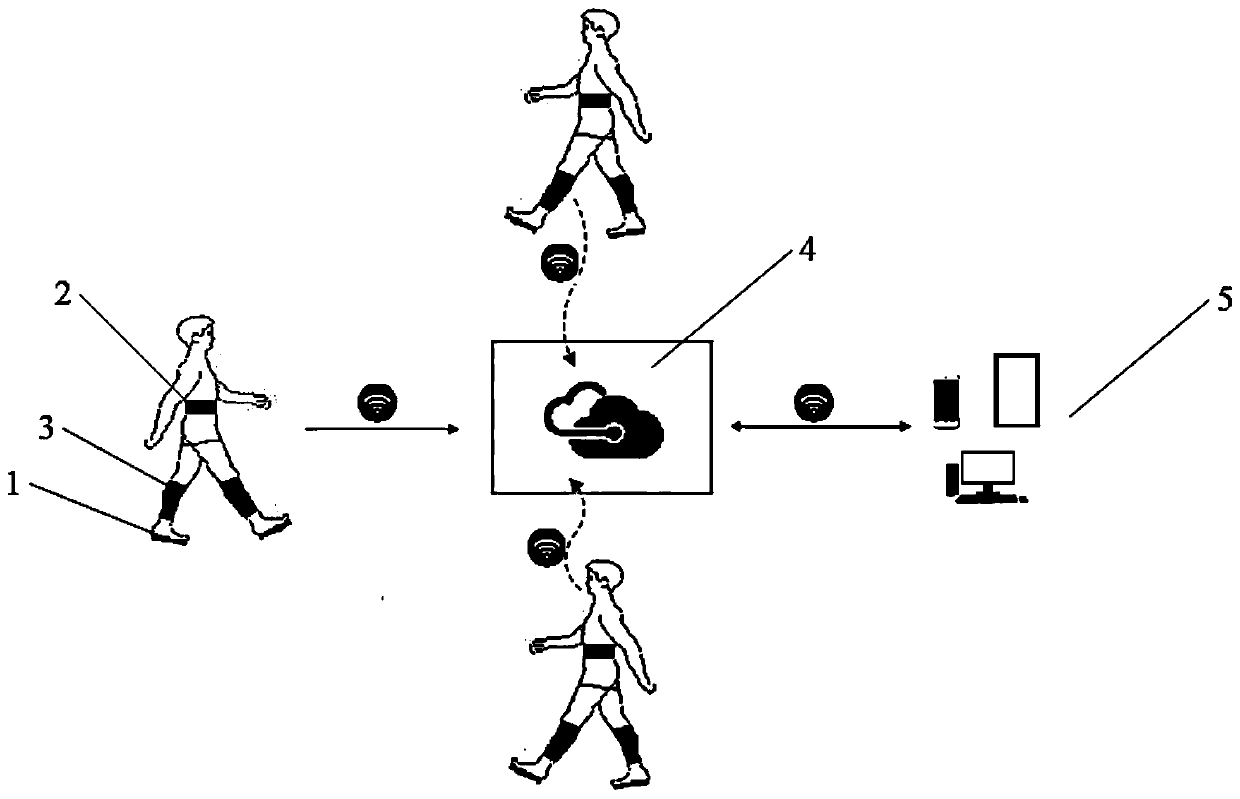

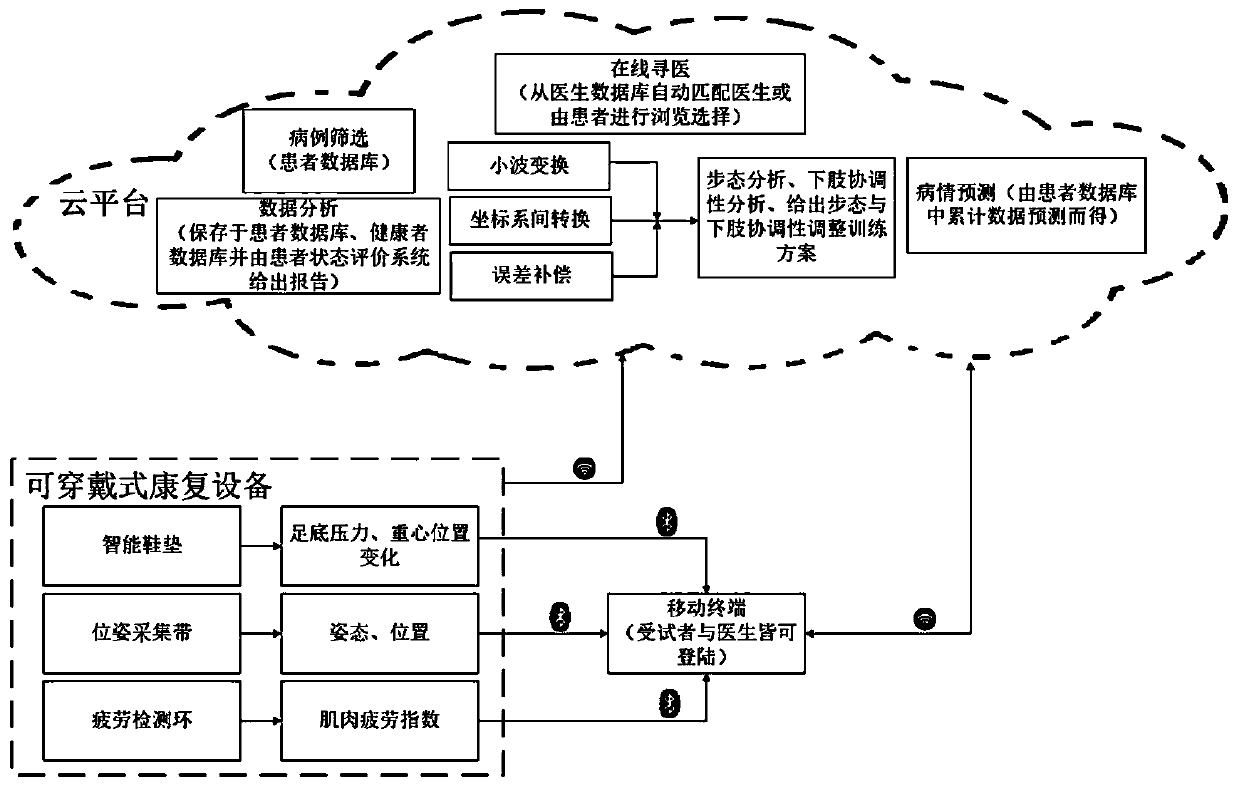

Coordinated rehabilitation training platform for human gait and lower limbs based on cloud platform

The invention discloses a coordinated rehabilitation training platform for human gait and lower limbs based on a cloud platform. The platform comprises wearable rehabilitation equipment, a cloud platform and a mobile terminal. Through a wearable intelligent insole, a posture detection belt and a fatigue detection device, plantar pressure, left and right foot barycenter data, position and posture data, and muscle fatigue data of a patient during rehabilitation training are collected in real time and transmitted synchronously to the cloud platform; by storing and processing the collected multimodal data on the cloud platform, evaluation reports on gait rehabilitation training and lower limb coordination rehabilitation training can be produced promptly, and the corresponding rehabilitation scheme can be revised; a user selects the rehabilitation training scheme and manages the rehabilitation training process through the mobile terminal; and the platform further provides a plurality of rehabilitation training modes, as well as remote video supervision by doctors, after-the-fact guidance and online medical search, making rehabilitation training more efficient, scientific and convenient.

Owner:ZHENGZHOU UNIV

Logic arrangement, data structure, system and method for multilinear representation of multimodal data ensembles for synthesis, recognition and compression

Inactive | US20050210036A1 | Reduce dimensionality; Image analysis; Digital data processing details; Dimensionality reduction; Multimodal data

A data structure, method, storage medium and logic arrangement are provided for use in collecting and analyzing multilinear data describing various characteristics of different objects. In particular it is possible to recognize an unknown individual, an unknown object, an unknown action being performed by an individual, or an unknown expression being formed by an individual, as well as to synthesize a known action never before recorded as being performed by an individual, synthesize an expression never before recorded as being formed by an individual, and reduce the amount of stored data describing an object or action by using dimensionality reduction techniques, and the like.

Owner:NEW YORK UNIV

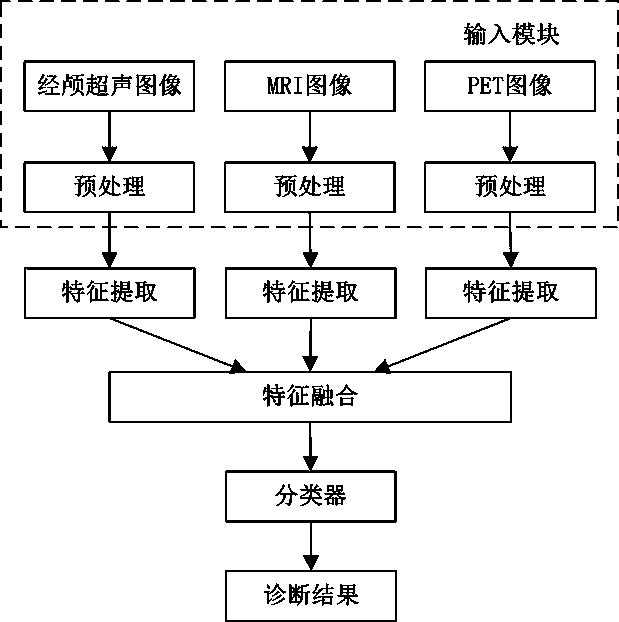

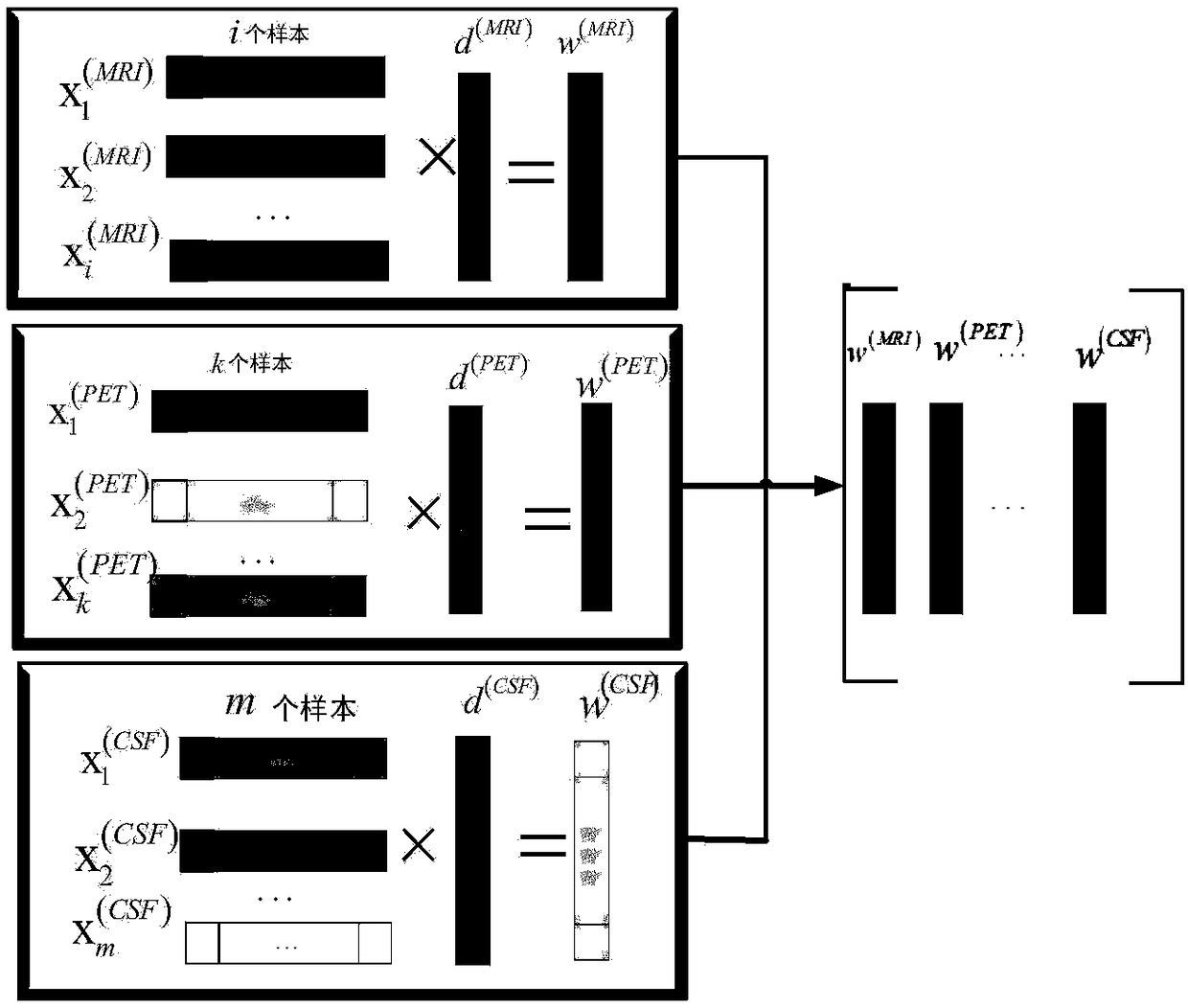

Multimodal-medical-image-based auxiliary diagnosis system and method for Parkinson's disease

Inactive | CN108961215A | Stable support; Image enhancement; Image analysis; Diagnostic Radiology Modality; Multimodal data

The invention discloses a multimodal-medical-image-based auxiliary diagnosis system and method for Parkinson's disease. The system is composed of an input module, a feature extraction module, a feature fusion module, and a diagnosis module. In the method, after an ultrasonic image, an MRI image and a PET image are obtained, features are extracted from the three channels of data using different methods, and the extracted features are fused using different fusion methods; the diagnosis module processes the features of the three modalities by means of ensemble learning to obtain a diagnosis result, which serves as an auxiliary reference for the doctor's diagnosis. For early-stage diagnosis of Parkinson's disease, the multimodal data are analyzed quantitatively, which makes the system and method valuable for early diagnosis: diagnostic accuracy is improved and the operator's subjective judgment error is reduced. Moreover, early clinical intervention becomes possible, reducing later-stage disability, so the system provides auxiliary guidance.

Owner:SHANGHAI UNIV

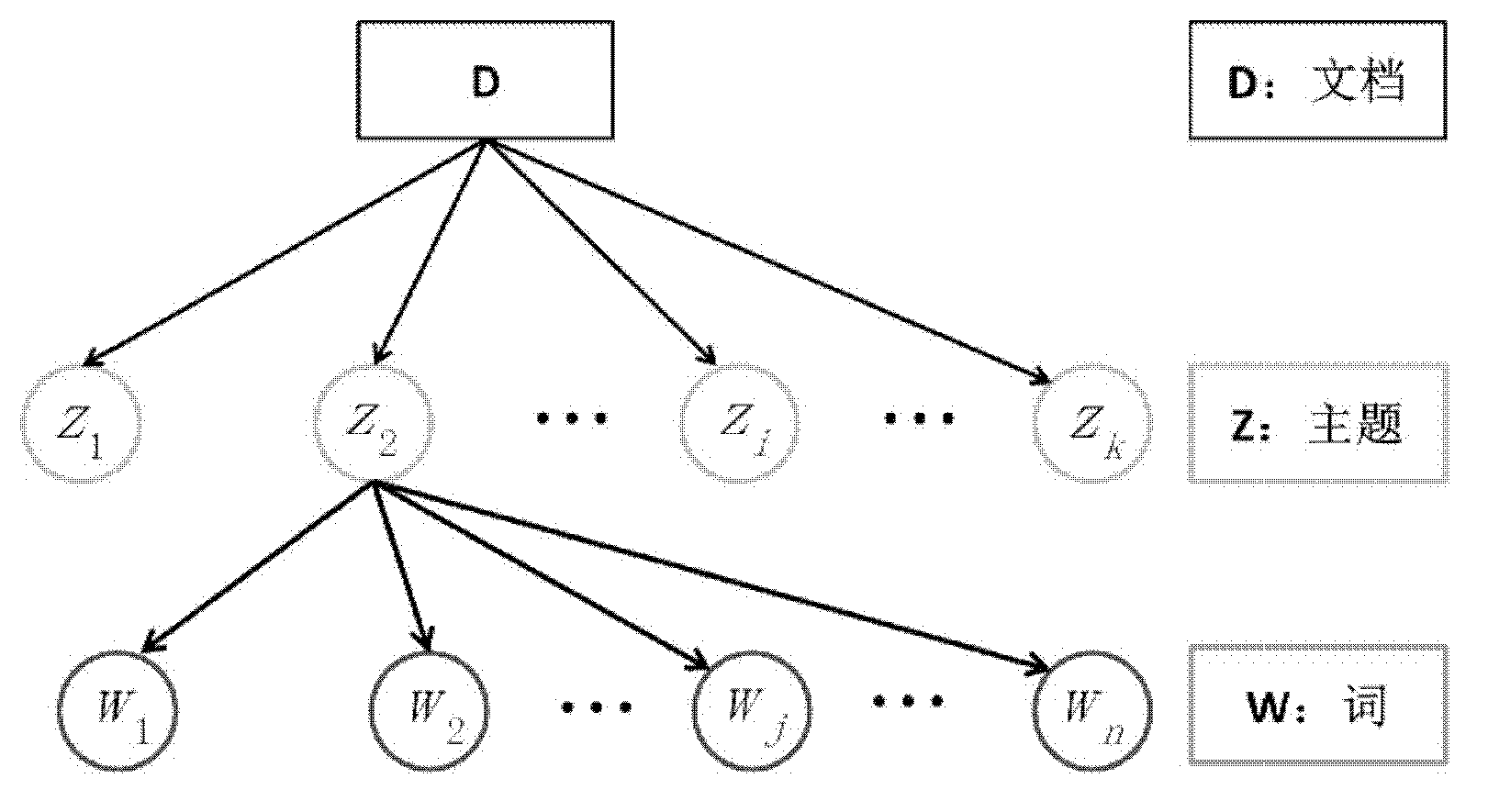

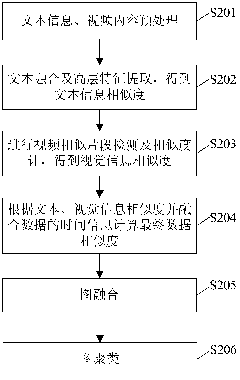

Cross-media topic detection method and device based on multimodal information fusion and graph clustering

Active | CN103995804A | Character and pattern recognition; Special data processing applications; Temporal information; Feature extraction

The invention discloses a cross-media topic detection method and device based on multimodal information fusion and graph clustering. The method includes the following steps: first, text information and video content are preprocessed; second, text fusion and high-level feature extraction are performed to obtain text information similarity; third, similar video clips are detected to obtain visual information similarity; fourth, the final data similarity is calculated from the text information similarity, the visual information similarity and the time information of the fused data; fifth, graph fusion and graph clustering are performed according to the final data similarity, completing topic detection. The method effectively avoids the over-segmentation and over-generation problems caused by hard quantization on a time axis, as well as the problem that existing topic detection methods cannot be transferred to topic detection on multimodal data from different multimedia sources.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
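
Steps four and five can be sketched as a weighted fusion of similarities followed by graph clustering. This is a minimal sketch under stated assumptions: the weights, the exponential time decay, the threshold, and the use of connected components (computed with union-find) as the clustering step are illustrative choices, not the method claimed in the patent.

```python
import math


def fused_similarity(text_sim, visual_sim, dt_hours,
                     w_text=0.5, w_visual=0.3, w_time=0.2, tau_hours=24.0):
    """Weighted fusion of text, visual and temporal similarity.

    text_sim and visual_sim are assumed to lie in [0, 1]; temporal
    similarity decays exponentially with the time gap. Weights are illustrative.
    """
    time_sim = math.exp(-abs(dt_hours) / tau_hours)
    return w_text * text_sim + w_visual * visual_sim + w_time * time_sim


def cluster_topics(n_items, pair_scores, threshold=0.5):
    """Connect item pairs whose fused score reaches `threshold` and return the
    connected components (one list of item ids per topic), via union-find."""
    parent = list(range(n_items))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for (i, j), score in pair_scores.items():
        if score >= threshold:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(n_items):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Items 0-2 are posts and clips about one event; item 3 is unrelated.
scores = {
    (0, 1): fused_similarity(0.8, 0.6, dt_hours=2),
    (1, 2): fused_similarity(0.7, 0.5, dt_hours=5),
    (0, 3): fused_similarity(0.1, 0.0, dt_hours=200),
}
print(cluster_topics(4, scores))  # [[0, 1, 2], [3]]
```

Connected components merge items transitively, so items 0 and 2 share a topic even though no direct score links them; a stricter clustering method would avoid that chaining.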

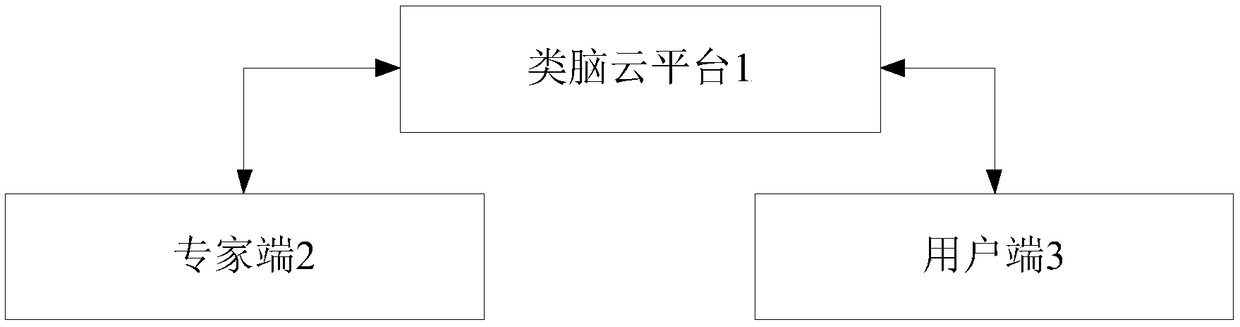

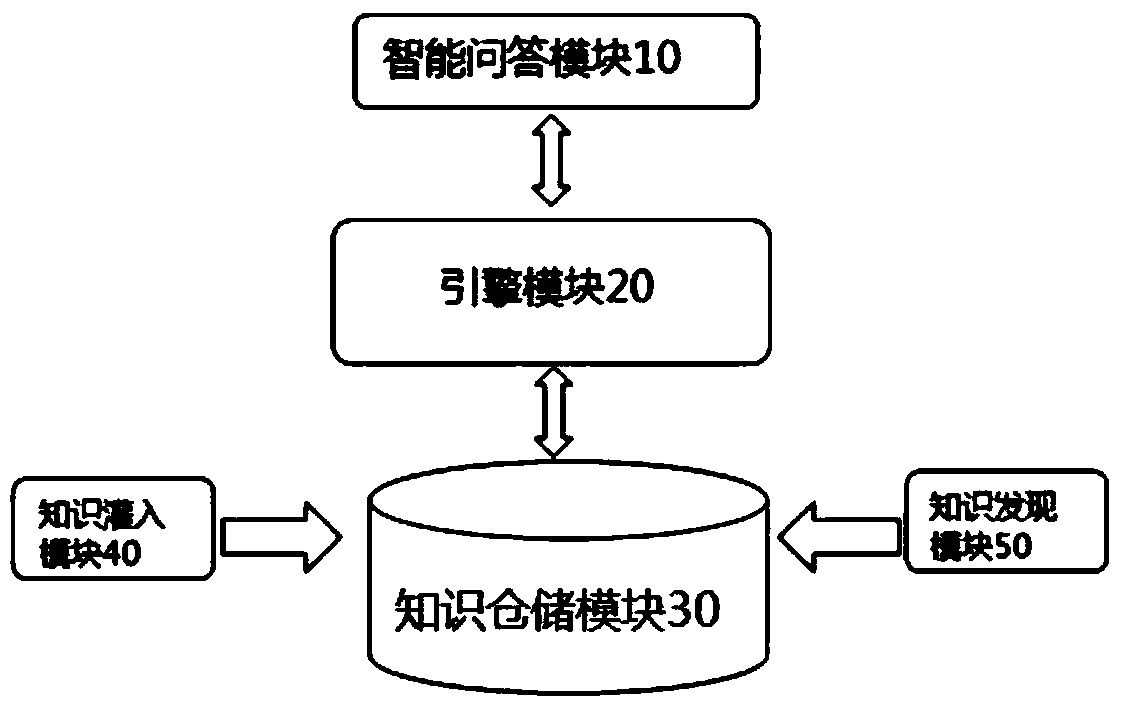

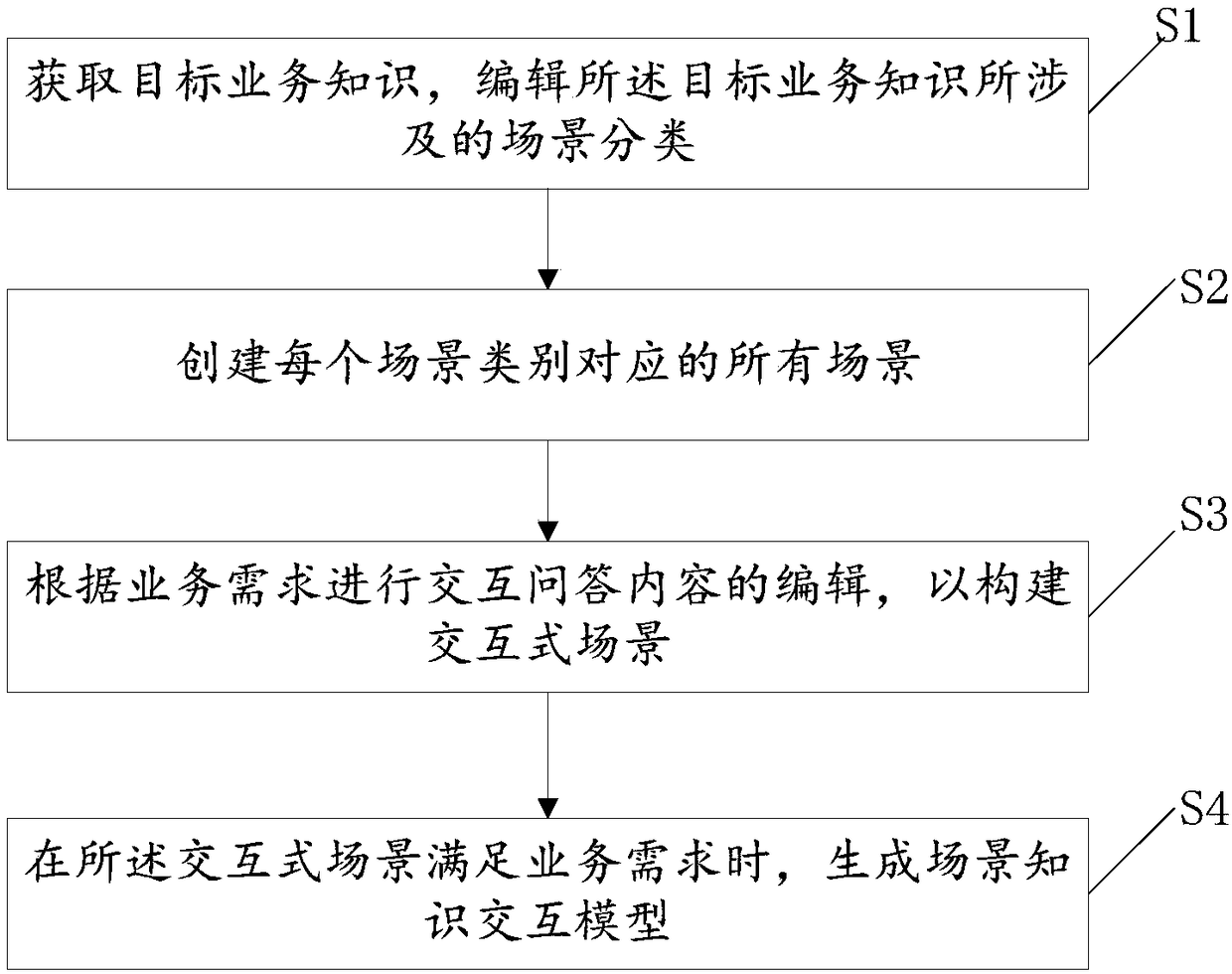

An expert service robot cloud platform

Inactive | CN109145168A | Improve experience; Organized memory; Other databases querying; Machine learning; Service experience; Interaction interface

The invention discloses an expert service robot cloud platform comprising a brain-like cloud platform, an expert terminal and a user terminal. The brain-like cloud platform is connected to the user terminal through a human-computer interaction interface, and is used for receiving the multimodal data sent by the user terminal and conducting sessions with the user according to various preset knowledge; sessions include greetings, question-and-answer sessions and multi-round conversations. The user terminal is used for sending multimodal data to the brain-like cloud platform through the human-computer interaction interface and for receiving the data returned by the brain-like cloud platform through the same interface. The expert terminal is used by experts to inject relevant expert knowledge data into the brain-like cloud platform. The technical solution of the invention can provide efficient and accurate intelligent sessions for users and improve user experience.

Owner:广州极天信息技术股份有限公司 (Guangzhou Jitian Information Technology Co., Ltd.)

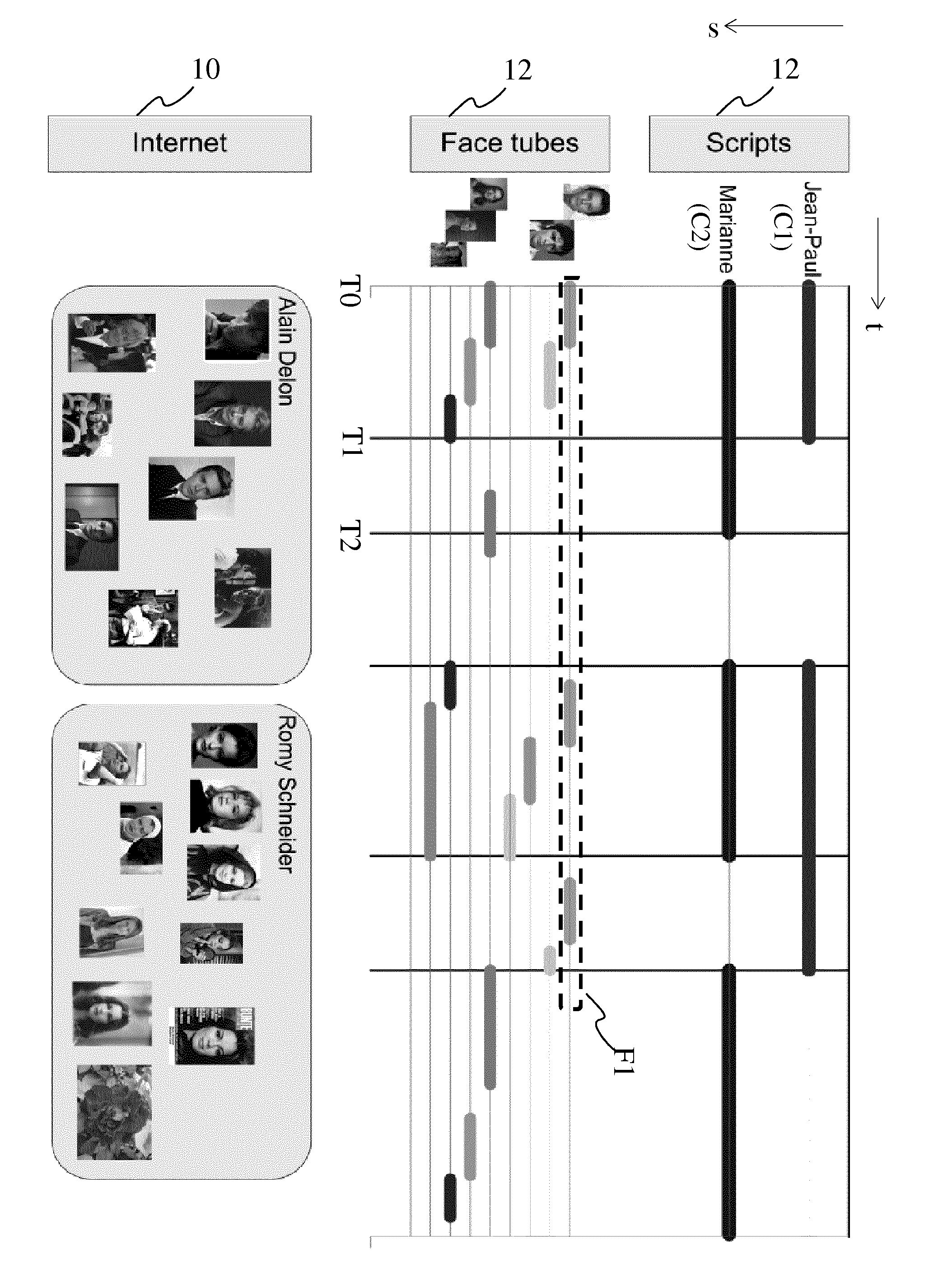

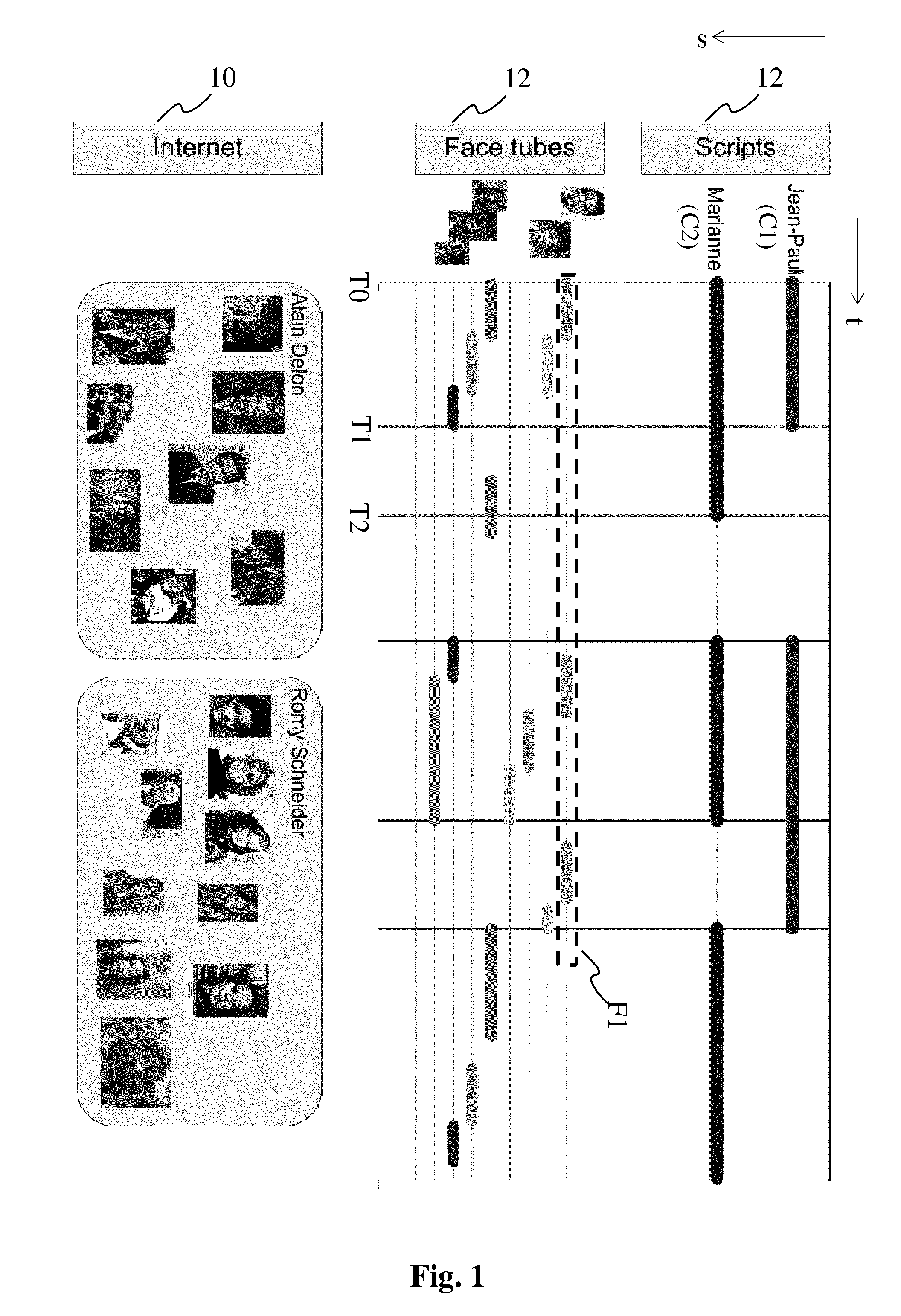

Method for identifying objects in an audiovisual document and corresponding device

Inactive | US20150356353A1 | Character and pattern recognition; Speech recognition; Audiovisual document; Cluster algorithm

The invention relates to the technical field of recognition of objects in audiovisual documents. The method uses multimodal data that is collected and stored in a similarity matrix. A level of similarity is determined for each matrix cell. Then a clustering algorithm is applied to cluster the information comprised in the similarity matrix. Clusters are identified, each identified cell cluster identifying an object in the audiovisual document.

Owner:THOMSON LICENSING SA

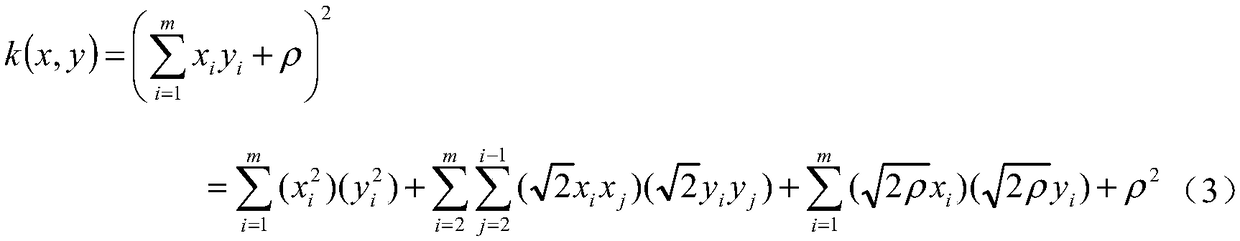

Multi-modal data analysis method and system based on high Laplacian regularization and low-rank representation

Active | CN109215780A | Avoid locality; Effective diagnosis; Character and pattern recognition; Medical automated diagnosis; Support vector machine; Multimodal data

The invention discloses a multi-modal data analysis method and system based on high Laplacian regularization and low-rank representation and belongs to the field of multimodal data analysis. The invention aims to capture the global linear structure and nonlinear geometric structure of multimodal data. The method includes the following steps: multi-modal data are processed to obtain a plurality of data matrices; low-rank representation and a Laplacian regularization term are combined to construct a non-negative sparse hyper-Laplacian regularization and low-rank representation model, which learns each data matrix to obtain a high Laplacian regularization and low-rank subspace; learning is performed on the basis of this subspace and a support vector machine to obtain a plurality of classifiers; and the classifiers vote to produce a final classifier. The system includes a data processing module, a data analysis module, a classification module, and a voting module. The method captures the global linear structure and nonlinear geometric structure of the multimodal data.

Owner:QILU UNIV OF TECH
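
The final voting step can be sketched as follows. Because low-rank and hyper-Laplacian subspace learning is beyond a short example, a nearest-centroid classifier stands in for the per-modality support vector machine, and each modality's feature vectors are assumed to be given directly. All names and data are hypothetical.

```python
from collections import Counter
from math import dist


def fit_nearest_centroid(samples, labels):
    """Stand-in for a per-modality SVM: store the mean vector of each class."""
    counts = Counter(labels)
    sums = {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for k, v in enumerate(x):
            acc[k] += v
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Label of the centroid closest (Euclidean distance) to x."""
    return min(centroids, key=lambda y: dist(centroids[y], x))


def vote(models, inputs):
    """Majority vote over one classifier per modality. On a tie, the label
    that was predicted first wins (Counter.most_common ordering)."""
    ballots = [predict(m, x) for m, x in zip(models, inputs)]
    return Counter(ballots).most_common(1)[0][0]


labels = ["control", "control", "patient", "patient"]
# Hypothetical per-modality feature vectors for the same four subjects.
mri = [[1, 1], [1, 2], [5, 5], [6, 5]]
pet = [[0, 0], [0, 1], [3, 3], [4, 3]]
clinical = [[10], [11], [20], [21]]
models = [fit_nearest_centroid(d, labels) for d in (mri, pet, clinical)]
print(vote(models, [[5, 4], [0, 0], [19]]))  # patient
```

Here the PET-based classifier disagrees with the other two, and the vote overrides it.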

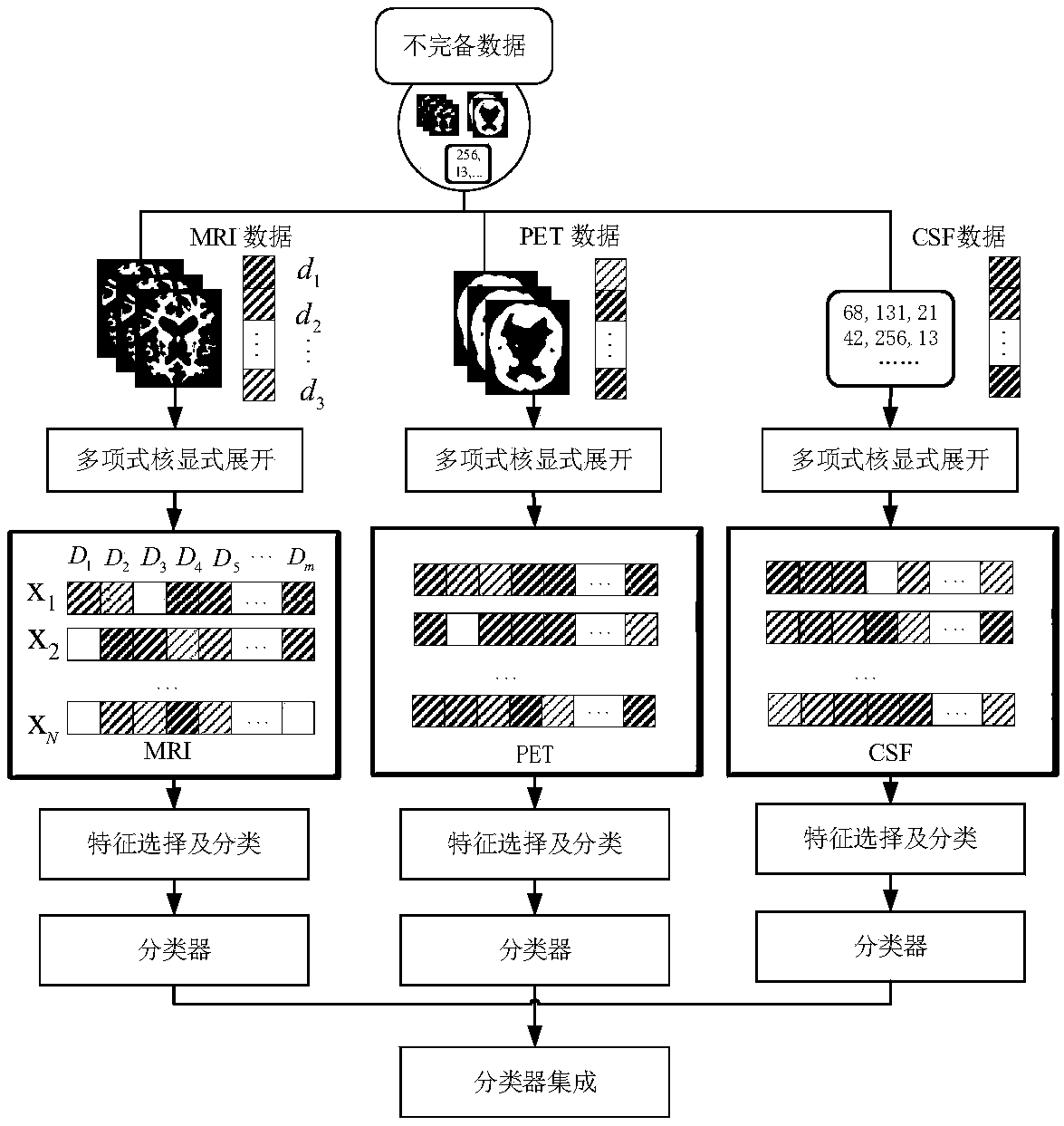

Multi-modal data classification method based on feature selection

Inactive | CN109359685A | Accurate classification; Facilitate early diagnosis and treatment; Character and pattern recognition; Multimodal data; Data information

The invention provides a multimodal data classification method based on feature selection. The method comprises the following steps: collecting and processing multimodal data; expanding and representing the data by explicit nonlinear kernel expansion to obtain combined high-order disease features; quickly identifying key features in the high-dimensional feature space with a feature selection method; constructing an ensemble learning model; and performing image classification. The method makes full use of the data information in each modality and improves classification accuracy.

Owner:XIAN UNIV OF POSTS & TELECOMM

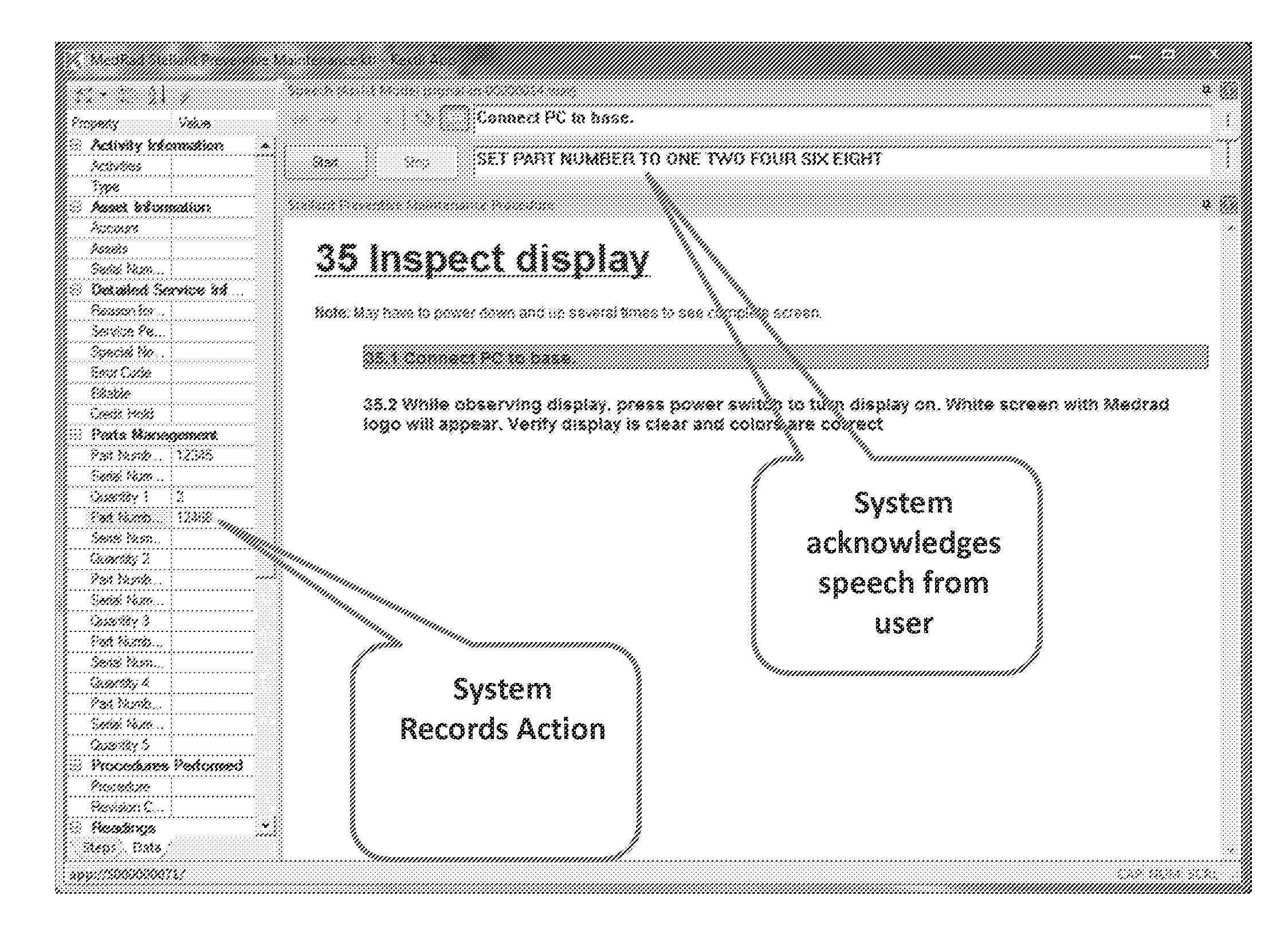

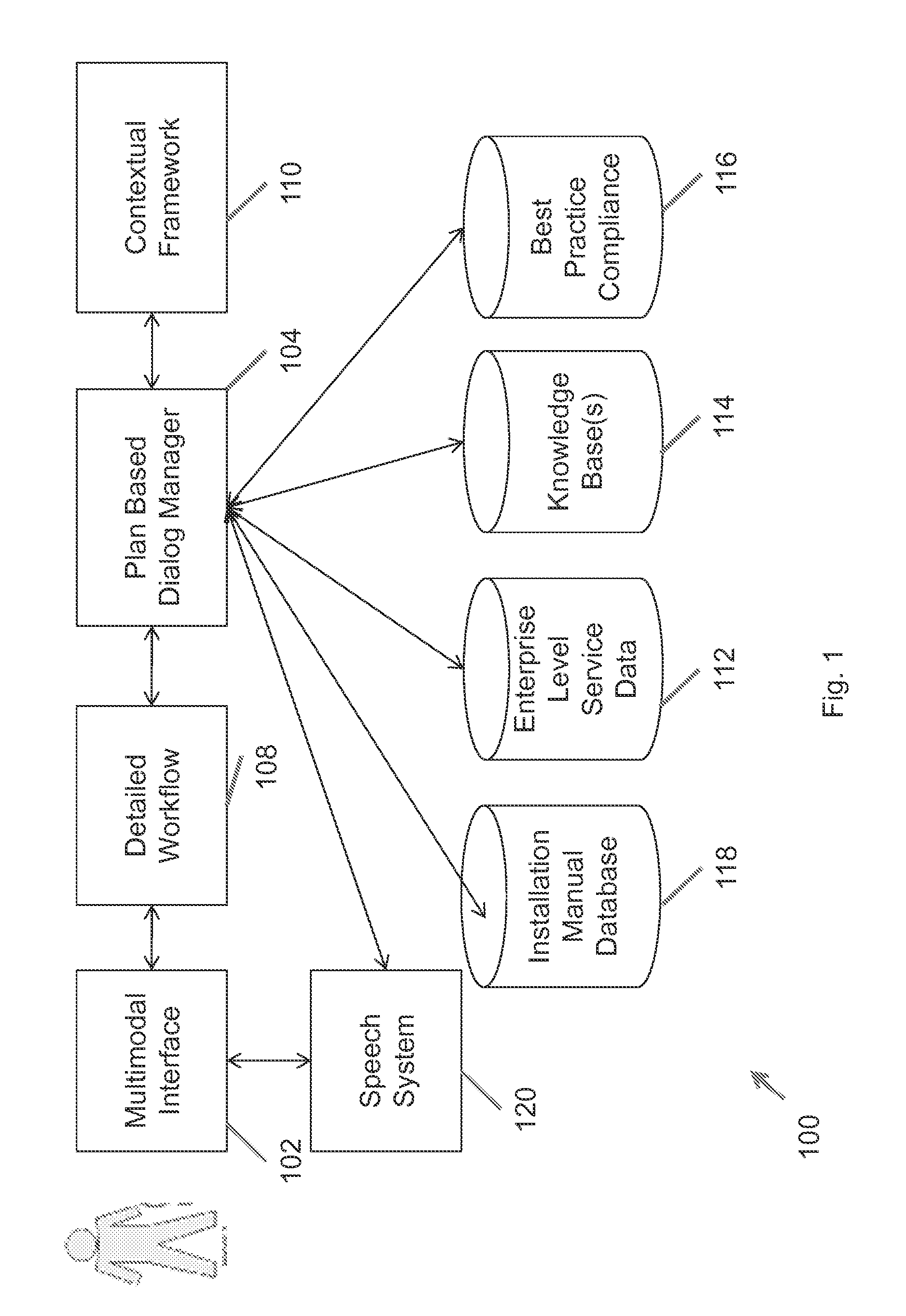

Systems and methods for voice-guided operations

Inactive | US20130204619A1 | Easy to use; Natural language data processing; Speech recognition; Multimodal data; Speech sound

A method includes transforming textual material data into a multimodal data structure including a plurality of classes selected from the group consisting of output, procedural information, and contextual information to produce transformed textual data, storing the transformed textual data on a memory device, retrieving, in response to a user request via a multimodal interface, requested transformed textual data and presenting the retrieved transformed textual data to the user via the multimodal interface.

Owner:KEXTIL

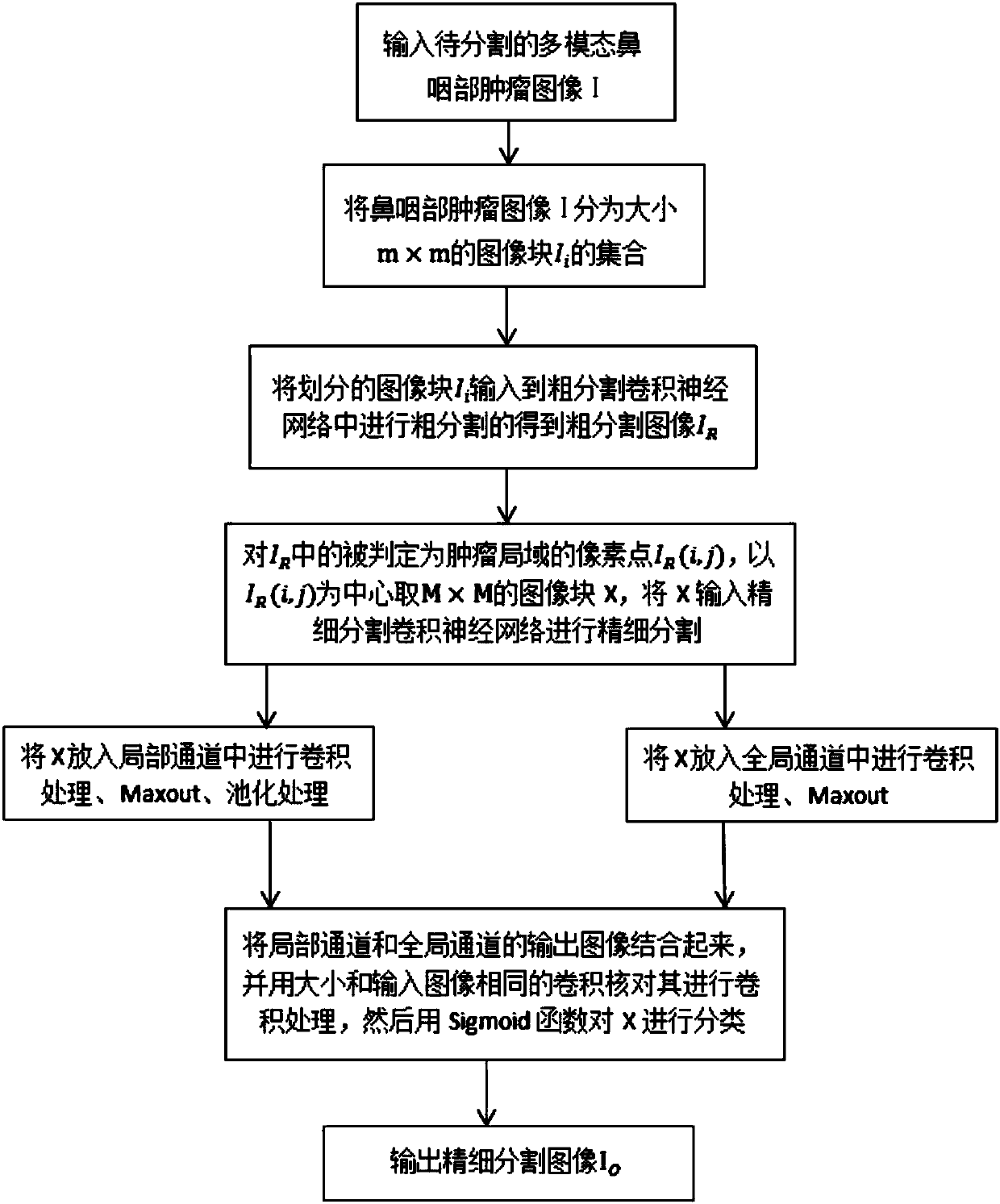

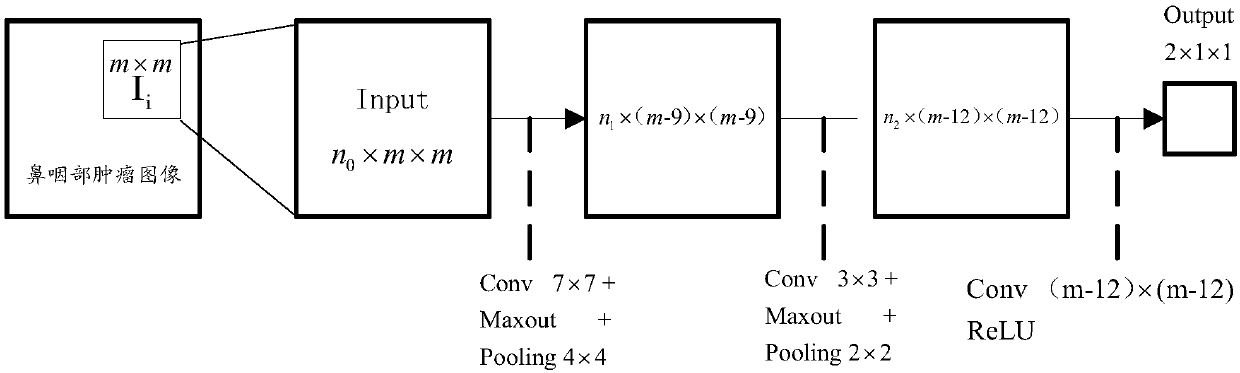

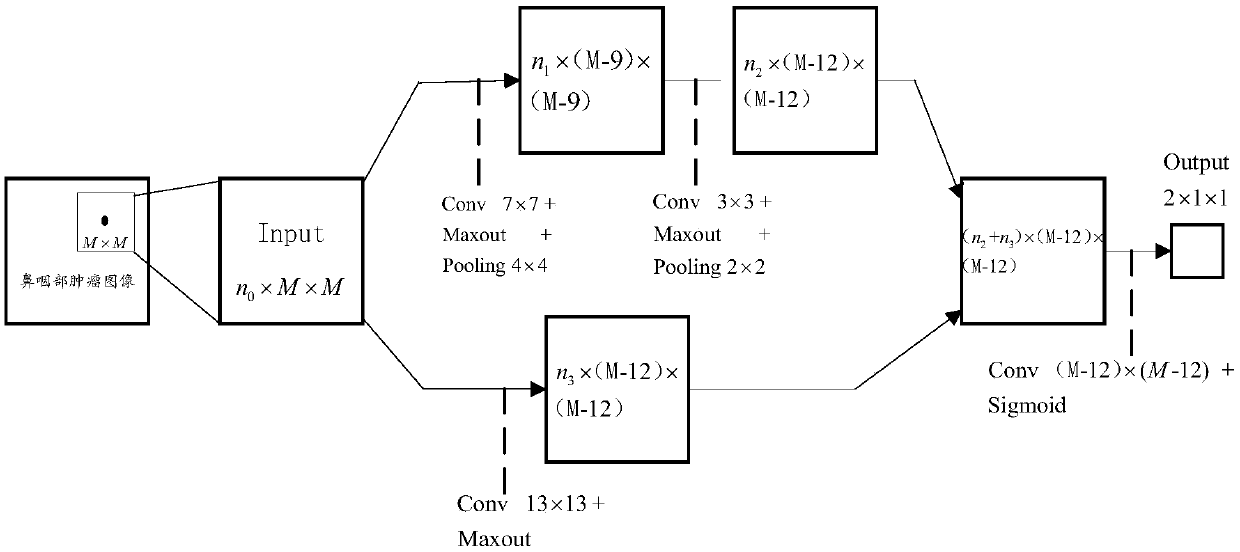

CNN-based multimodal nasopharyngeal tumor joint segmentation method

Active | CN107610129A | Solve problems that are too complex to be applied to clinical treatment; Simple structure; Image analysis; Neural architectures; Nasopharyngeal tumors; Multimodal data

The present invention relates to a CNN-based multimodal nasopharyngeal tumor joint segmentation method. The method comprises: segmenting a multimodal nasopharyngeal tumor image into image blocks of the same size; inputting the image blocks into a rough-segmentation CNN to obtain rough segmented images and identify the image blocks determined to be tumor areas; inputting the rough segmented images into a fine-segmentation CNN; and carrying out fine segmentation on each pixel in the image blocks determined to be tumor areas, determining the category of each pixel, and finally obtaining pixel-level finely segmented images. The segmentation method of the present invention does not need to determine the category of all the pixels in the nasopharyngeal tumor image, only those in a few lesion areas, thereby greatly improving the efficiency of the segmentation algorithm and shortening the segmentation time; in addition, because both multimodal data and CNNs are used for image segmentation, richer information of the tumor image to be segmented is preserved, which improves the segmentation accuracy of the algorithm.

Owner:SICHUAN UNIV
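
The coarse-to-fine idea, where per-pixel work is done only inside blocks the coarse stage flags as tumor, can be sketched without neural networks. In this minimal sketch, simple intensity thresholds stand in for the rough and fine CNNs, the multimodal images are assumed to be co-registered and fused by pixel-wise averaging, and image sides are assumed to be multiples of the block size. All names, thresholds and data are hypothetical.

```python
def fuse_modalities(*modalities):
    """Hypothetical early fusion: pixel-wise mean of co-registered images."""
    return [[sum(px) / len(px) for px in zip(*rows)] for rows in zip(*modalities)]


def block_origins(height, width, size):
    """Top-left corners of non-overlapping size x size blocks.
    Assumes height and width are multiples of size."""
    for r in range(0, height, size):
        for c in range(0, width, size):
            yield r, c


def coarse_to_fine(image, size=2, block_threshold=0.3, pixel_threshold=0.5):
    """Return (mask, pixels_examined). A block-mean threshold stands in for the
    rough-segmentation CNN; a per-pixel threshold stands in for the fine CNN,
    which runs only inside blocks the coarse stage flagged as tumor."""
    height, width = len(image), len(image[0])
    mask = [[0] * width for _ in range(height)]
    examined = 0
    for r, c in block_origins(height, width, size):
        block = [image[i][j] for i in range(r, r + size) for j in range(c, c + size)]
        if sum(block) / len(block) <= block_threshold:
            continue  # coarse stage: not tumor, skip per-pixel work
        for i in range(r, r + size):
            for j in range(c, c + size):
                examined += 1
                mask[i][j] = int(image[i][j] > pixel_threshold)
    return mask, examined


# Two hypothetical co-registered 4x4 modalities (e.g. two MRI sequences).
t1 = [[0.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.9, 0.8],
      [0.0, 0.0, 0.2, 0.9]]
t2 = [[0.1, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.7, 0.8],
      [0.0, 0.0, 0.0, 0.7]]
mask, examined = coarse_to_fine(fuse_modalities(t1, t2))
print(mask, examined)
```

Only 4 of the 16 pixels reach the fine stage in this example, which is the efficiency argument the abstract makes.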

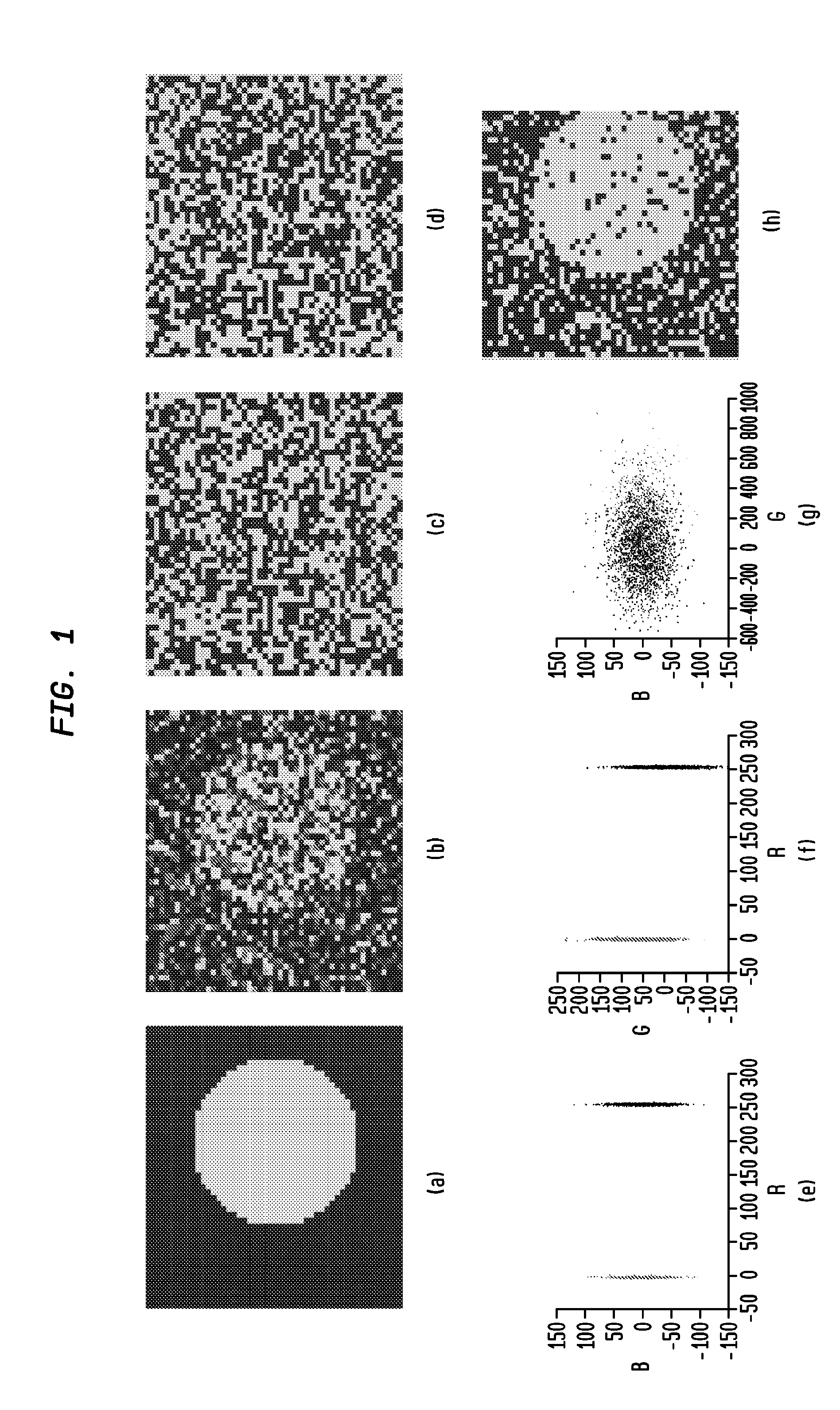

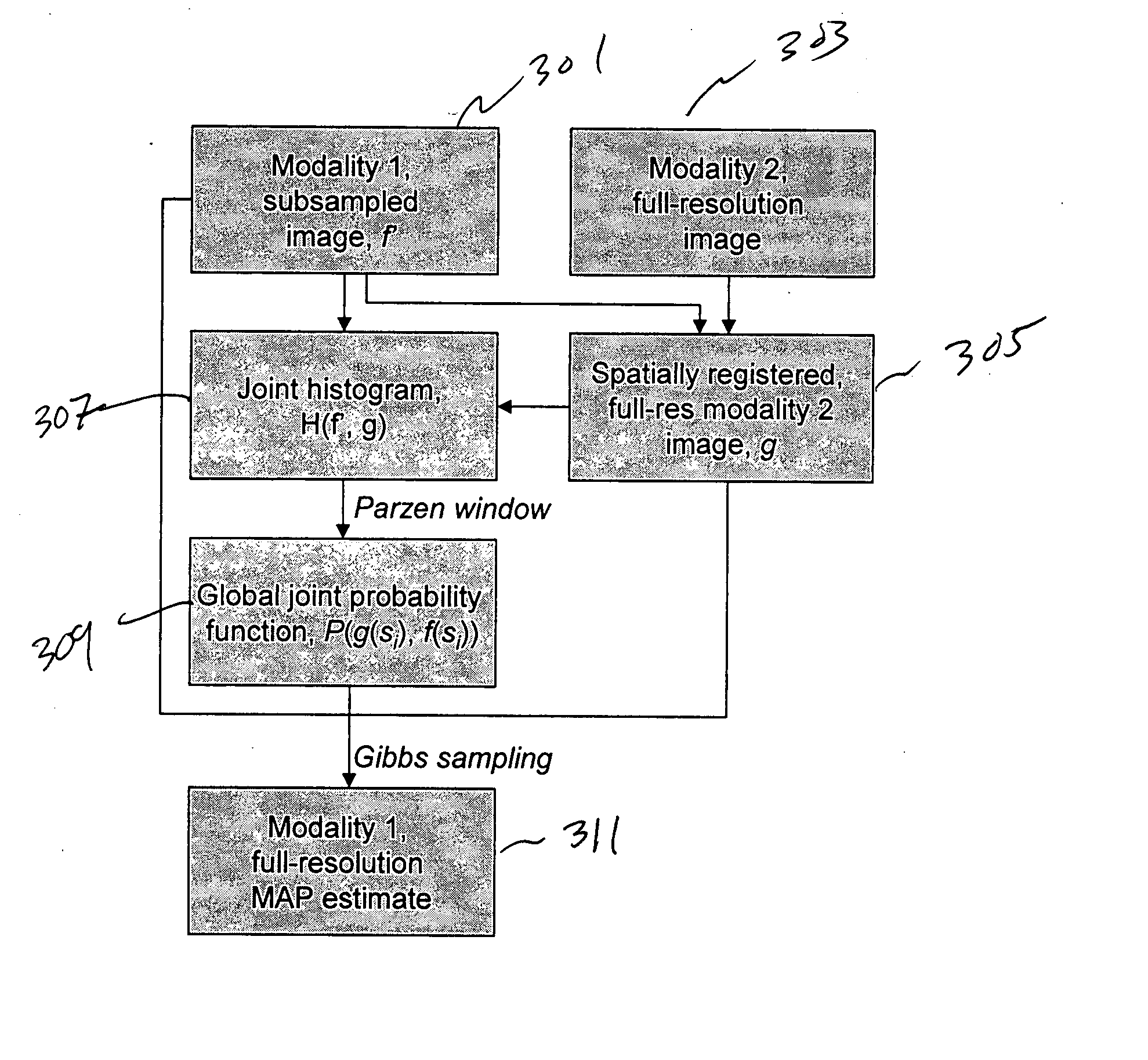

Method and apparatus for propagating high resolution detail between multimodal data sets

Active | US20070104393A1 | High resolution; Reduce resolution; Geometric image transformation; Character and pattern recognition; Data set; Image resolution

A dataset describing an entity in a first modality at a first, high resolution is used to enhance the resolution of a dataset describing the same entity in a second modality at a lower resolution. The two datasets of different modalities are spatially registered to each other. From this information, a joint histogram of the values in the two datasets is computed to provide a raw analysis of how the intensities in the first dataset correspond to intensities in the second dataset. This is converted into a joint probability of possible intensities for the missing pixels in the low-resolution dataset as a function of the intensities of the corresponding pixels in the high-resolution dataset, providing a very rough estimate of the intensities of the missing pixels in the low-resolution dataset. Then, an objective function is defined over the set of possible new values that gives preference to datasets consistent with (1) the joint probability distributions, (2) the existing values in the low-resolution dataset, and (3) smoothness throughout the dataset. Finally, an annealing or similar iterative method is used to minimize the objective function and find an optimal solution over the entire dataset.

Owner:HONEYWELL INT INC
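
The joint-histogram step, which yields the rough initial estimate for the missing low-resolution values, can be sketched in one dimension. This assumes the two datasets are already registered sample-for-sample and quantized to discrete levels, and it fills each gap with the most probable low-resolution value given the high-resolution value; the smoothness-regularized objective and annealing stage described in the abstract are not shown. The function name and data are hypothetical.

```python
from collections import Counter, defaultdict


def estimate_missing(high, low):
    """Rough initial estimate for missing (None) entries of `low`.

    Builds the joint histogram of (high, low) value pairs where both are
    known, then fills each gap with argmax_l P(low = l | high = h).
    Assumes both sequences are registered sample-for-sample and quantized.
    """
    joint = defaultdict(Counter)  # high value -> counts of co-occurring low values
    for h, l in zip(high, low):
        if l is not None:
            joint[h][l] += 1

    filled = []
    for h, l in zip(high, low):
        if l is not None:
            filled.append(l)  # keep the measured low-resolution value
        elif joint[h]:
            filled.append(joint[h].most_common(1)[0][0])
        else:
            filled.append(None)  # this high value was never co-observed
    return filled


high = [1, 1, 2, 2, 3, 3, 1, 2, 4]
low = [10, None, 20, None, None, 30, None, None, None]
print(estimate_missing(high, low))  # [10, 10, 20, 20, 30, 30, 10, 20, None]
```

In the described approach this estimate is only the starting point: the objective combining the joint probabilities, the existing low-resolution values and smoothness is then minimized iteratively.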

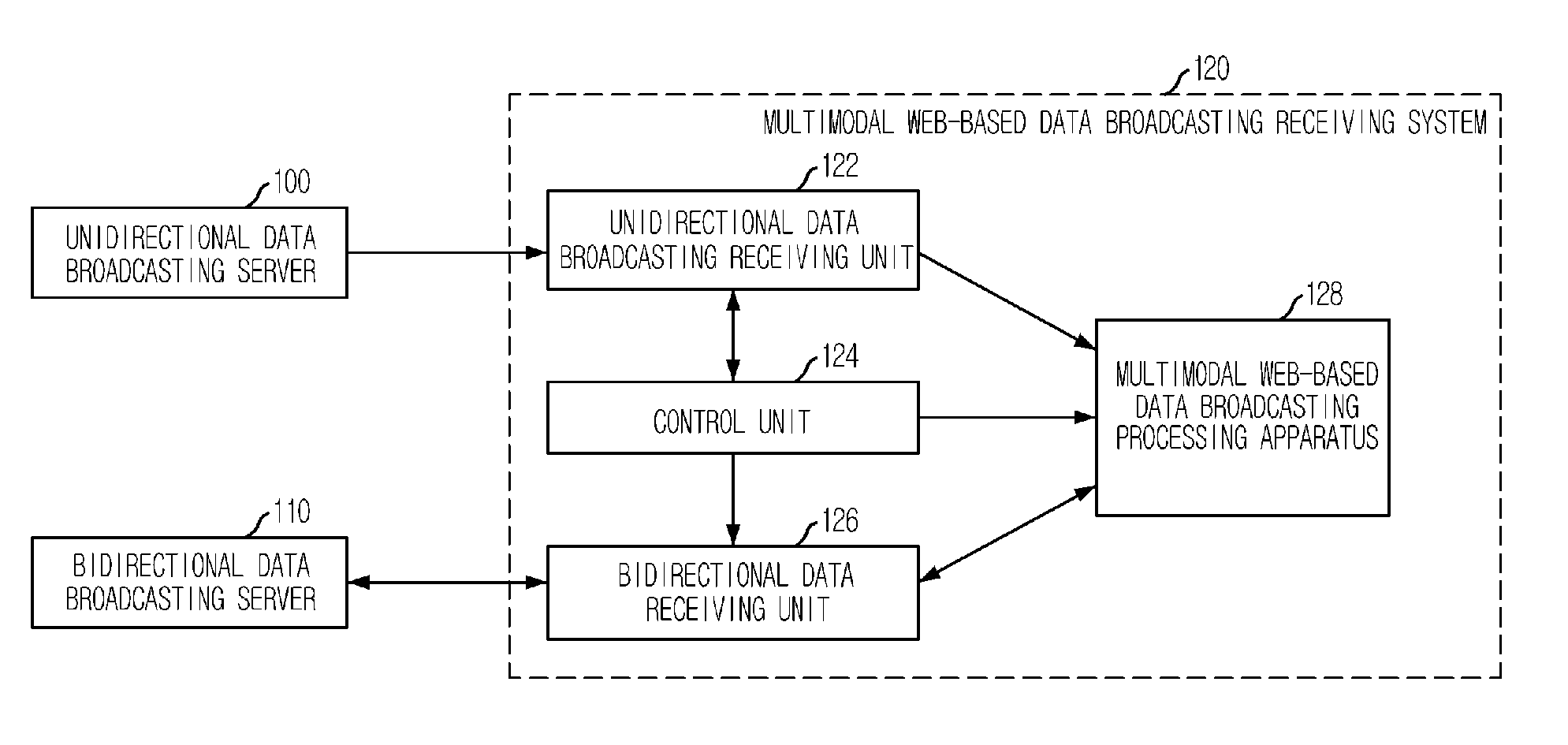

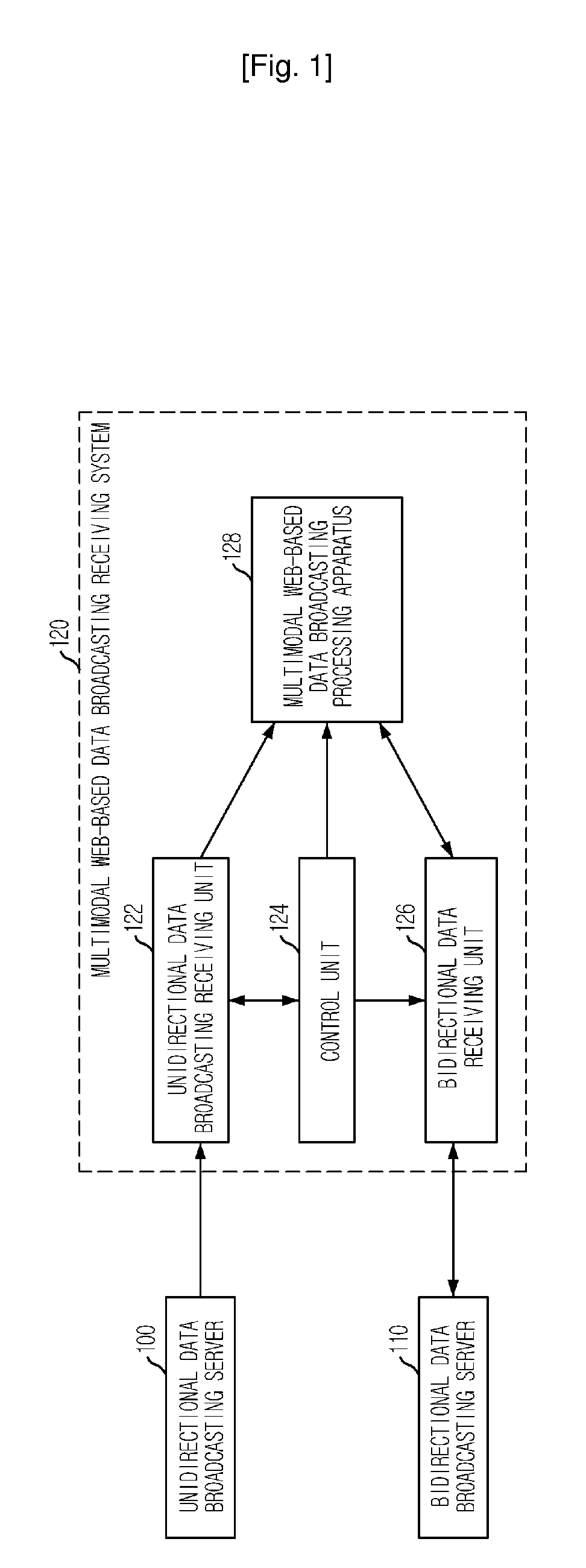

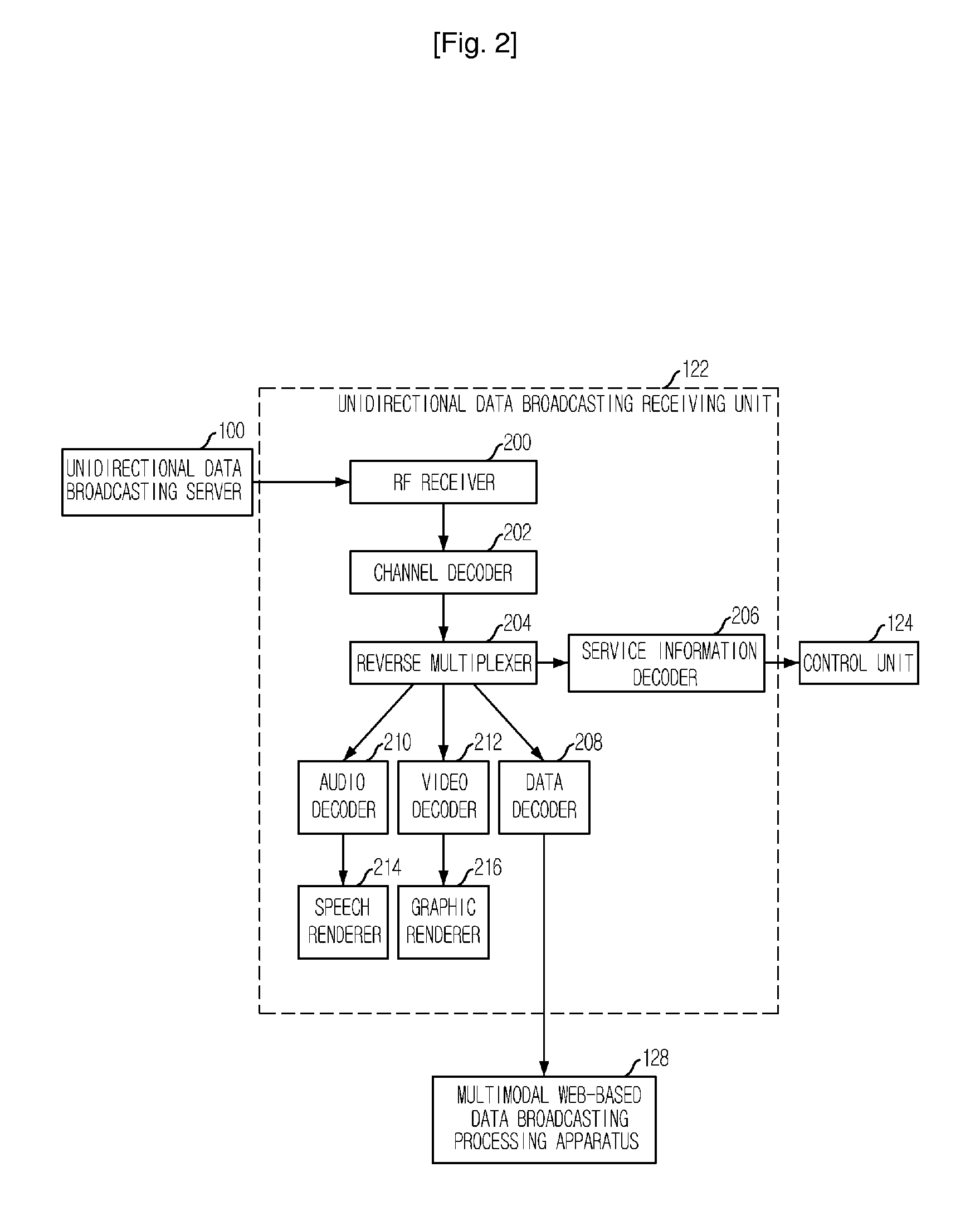

Apparatus and Method for Processing Multimodal Data Broadcasting and System and Method for Receiving Multimodal Data Broadcasting

Inactive | US20070258701A1 | Reduce chance; Television system details; Pulse modulation television signal transmission; Graphics; Multimodal data

Disclosed are an apparatus and a method for processing multimodal data broadcasting and a system and a method for receiving multimodal data broadcasting. The multimodal data broadcasting processing apparatus includes: at least one interfacing unit for receiving contents transferred from a data broadcasting server and storing the contents; a multimodal browsing unit for parsing the stored contents, classifying the contents and interpreting the classified contents to generate instructions and data necessary for running a corresponding browsing unit; a graphic browsing unit for generating a graphic output signal according to the instructions and data necessary for running the graphic browsing unit; a voice browsing unit for generating a speech output signal according to the instructions and data necessary for running the voice browsing unit; a graphic output unit for outputting graphics according to the graphic output signal; and a speech output unit for outputting speech according to the speech output signal.

Owner:ELECTRONICS & TELECOMM RES INST

© 2025 PatSnap. All rights reserved.