
658 results about "Multi modal data" patented technology


Multi-modal data collection simply describes using more than one data-collection technology to accomplish a task. It can easily be argued that we have been doing multi-modal data collection for decades. Technically speaking, entering information on a keypad is a form of data collection,...

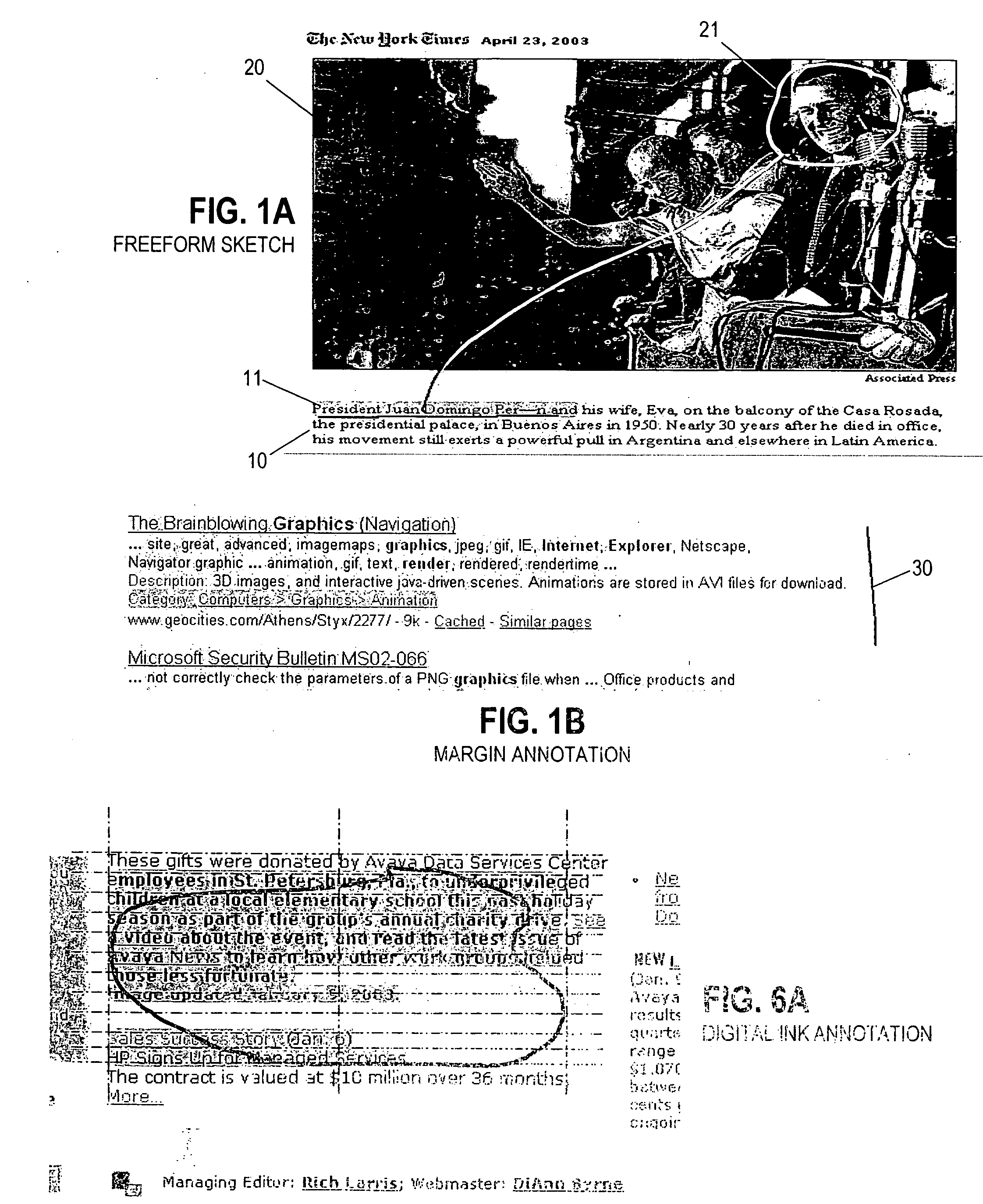

Sharing inking during multi-modal communication

Voice input is captured by a microphone connected to a standard sound card. Ink is captured using an input device such as a mouse, a tablet PC, or the pen/stylus of a personal digital assistant (PDA). The sound card converts the captured voice input into speech data, which is forwarded to an indexer module, where it is temporally indexed to ink obtained from an ink capture module via an input device. The indexed ink/speech data is then stored in a memory module for subsequent user access. When a user selects the ink, for example with the PDA's pen/stylus, the speech data indexed to that ink is played; that is, the multi-modal data is retrieved. The listener can enter ink on a document based on the content of the voice input.

Owner:AVAYA INC
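A minimal sketch of the temporal indexing step, assuming each ink stroke carries a capture timestamp and the speech data is stored as timed segments. `SpeechSegment`, `InkSpeechIndex`, and `audio_id` are hypothetical names, not the patent's. Selecting a stroke returns the speech segment that was playing when the stroke was drawn:

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class SpeechSegment:
    start: float   # seconds from session start
    end: float
    audio_id: str  # hypothetical handle to the stored speech data


class InkSpeechIndex:
    """Maps ink strokes to the speech segment playing when they were drawn."""

    def __init__(self, segments):
        # Keep segments sorted by start time so lookups can use binary search
        self.segments = sorted(segments, key=lambda s: s.start)
        self.starts = [s.start for s in self.segments]
        self.stroke_to_segment = {}

    def add_stroke(self, stroke_id, timestamp):
        # Last segment that starts at or before the stroke's timestamp
        i = bisect_right(self.starts, timestamp) - 1
        if i >= 0 and timestamp <= self.segments[i].end:
            self.stroke_to_segment[stroke_id] = self.segments[i]

    def speech_for_stroke(self, stroke_id):
        # None means the stroke was drawn while nobody was speaking
        return self.stroke_to_segment.get(stroke_id)


index = InkSpeechIndex([SpeechSegment(0.0, 4.5, "clip-a"), SpeechSegment(5.0, 9.0, "clip-b")])
index.add_stroke("stroke-1", 6.2)  # drawn during clip-b
index.add_stroke("stroke-2", 4.8)  # drawn in the pause between clips
```

Binary search over segment start times keeps each lookup logarithmic in the number of segments; in this sketch, strokes drawn during silence are simply left unindexed.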

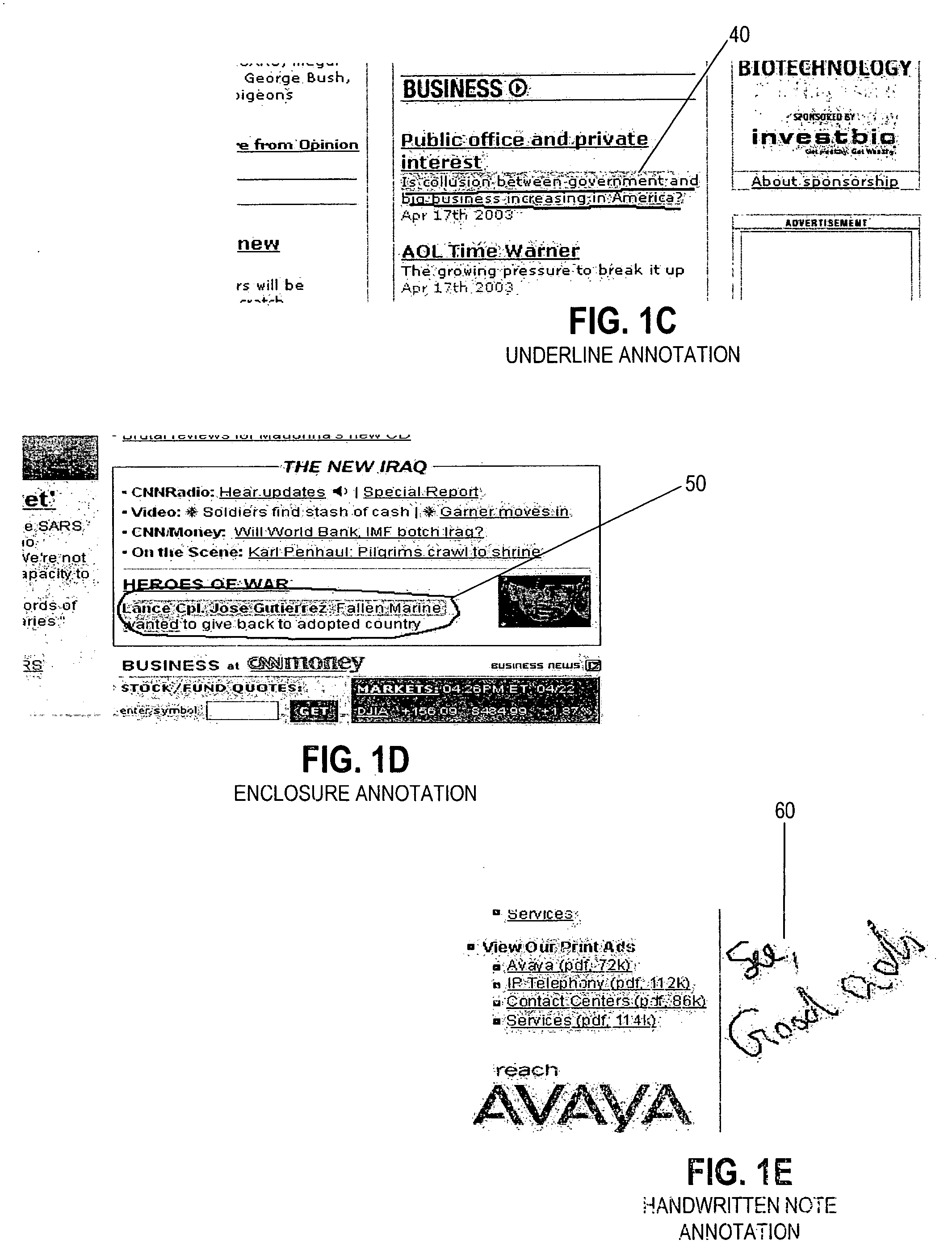

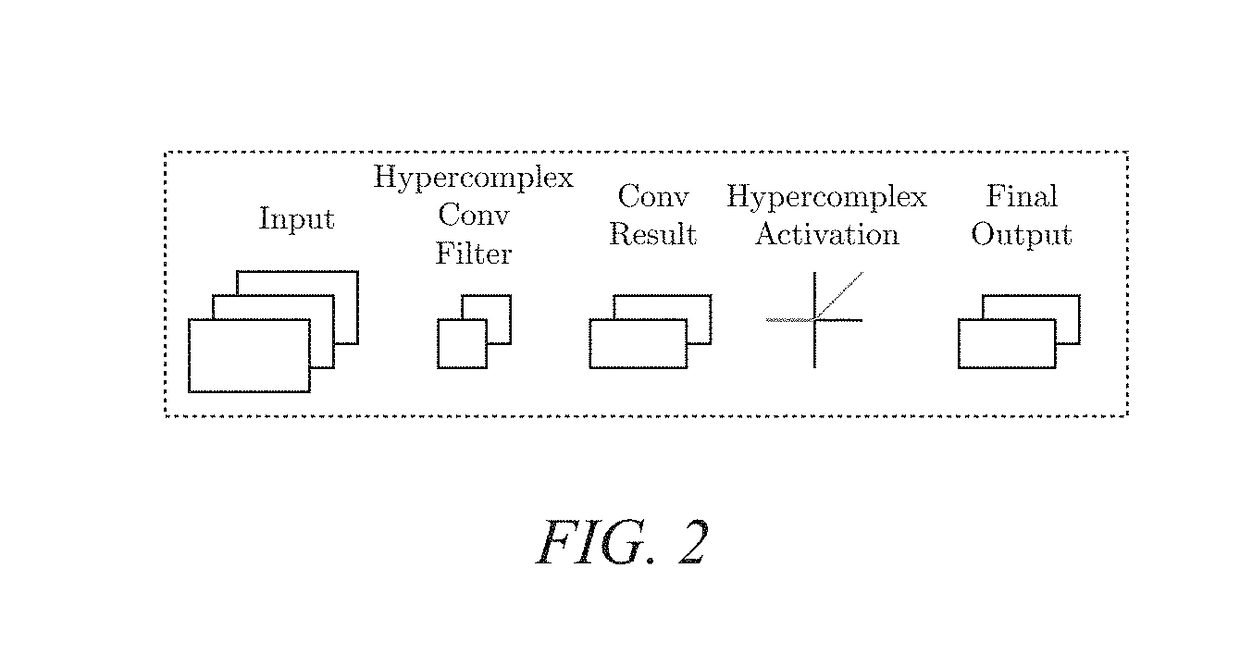

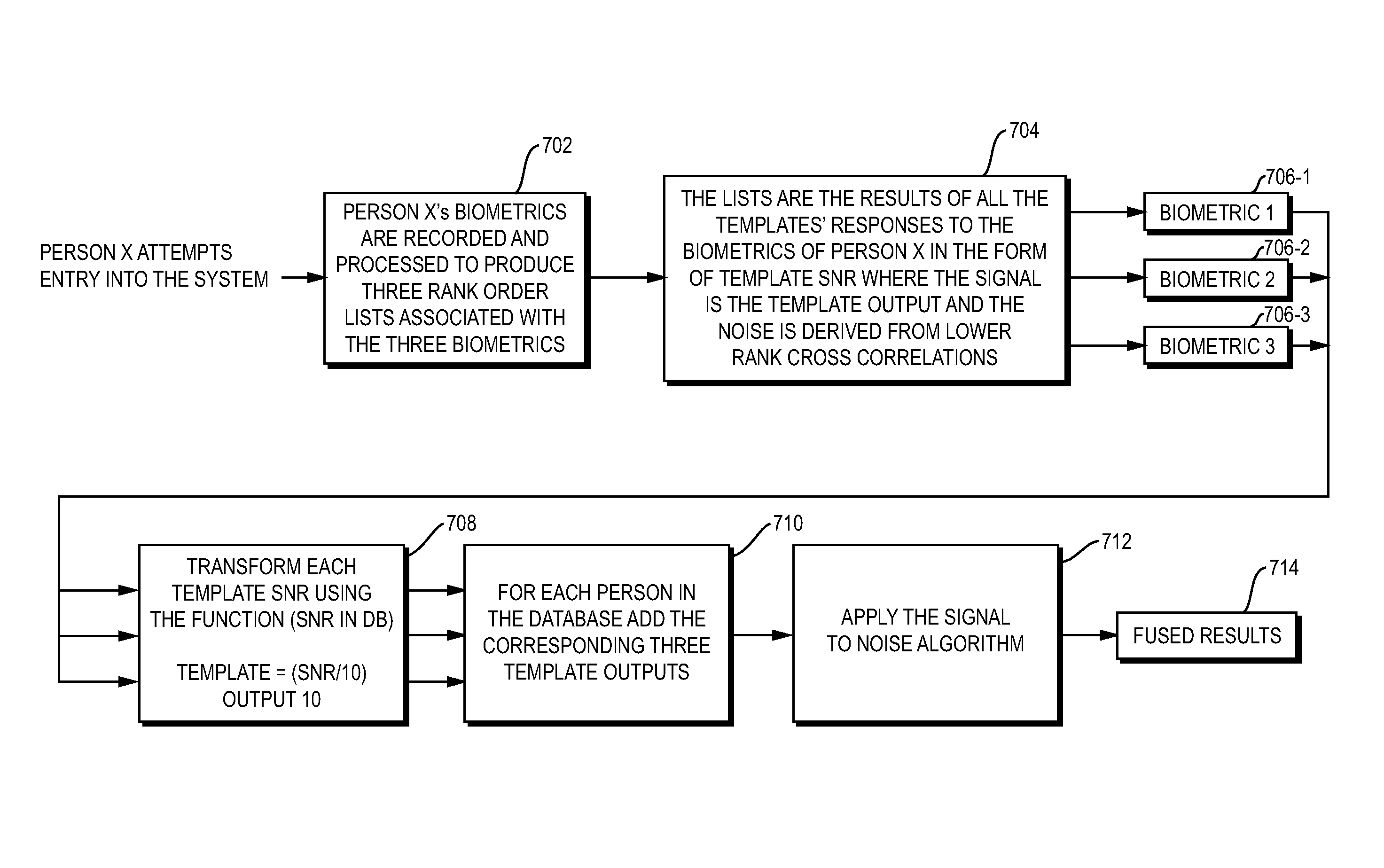

Hypercomplex deep learning methods, architectures, and apparatus for multimodal small, medium, and large-scale data representation, analysis, and applications

A method and system for creating hypercomplex representations of data includes, in one exemplary embodiment, at least one set of training data with associated labels or desired response values; transformation of the data and labels into hypercomplex values; methods for defining hypercomplex graphs of functions; training algorithms that minimize the cost of an error function over the parameters in the graph; and methods for reading hierarchical data representations from the resulting graph. Another exemplary embodiment learns hierarchical representations from unlabeled data. In another exemplary embodiment, the method and system may be employed for biometric identity verification by combining multimodal data collected using many sensors, including, for example, anatomical characteristics, behavioral characteristics, demographic indicators, and artificial characteristics. In other exemplary embodiments, the system and method may learn hypercomplex function approximations in one environment and transfer the learning to other target environments. Other exemplary applications of the hypercomplex deep learning framework include image segmentation, image quality evaluation, image steganalysis, face recognition, event embedding in natural language processing, machine translation between languages, object recognition, medical applications such as breast cancer mass classification, multispectral imaging, audio processing, color image filtering, and clothing identification.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST
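Hypercomplex networks are often built on quaternions, with the real multiplication inside a dense layer replaced by the Hamilton product. The abstract does not say which hypercomplex algebra is used, so the quaternion formulation below is an assumption, and `Quaternion` and `quaternion_linear` are illustrative names. The product is non-commutative, which the usage lines check:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quaternion:
    w: float
    x: float
    y: float
    z: float

    def __mul__(self, o: "Quaternion") -> "Quaternion":
        # Hamilton product: every output component mixes all four inputs
        return Quaternion(
            self.w * o.w - self.x * o.x - self.y * o.y - self.z * o.z,
            self.w * o.x + self.x * o.w + self.y * o.z - self.z * o.y,
            self.w * o.y - self.x * o.z + self.y * o.w + self.z * o.x,
            self.w * o.z + self.x * o.y - self.y * o.x + self.z * o.w,
        )


def quaternion_linear(weights, inputs):
    """One output unit of a hypothetical quaternion dense layer: sum_j W_j * x_j."""
    acc = Quaternion(0.0, 0.0, 0.0, 0.0)
    for wq, xq in zip(weights, inputs):
        p = wq * xq
        acc = Quaternion(acc.w + p.w, acc.x + p.x, acc.y + p.y, acc.z + p.z)
    return acc


i = Quaternion(0, 1, 0, 0)
j = Quaternion(0, 0, 1, 0)
ij = i * j  # equals k
ji = j * i  # equals -k, so the product is not commutative
```

Because each Hamilton product mixes all four components, one quaternion weight couples four related channels (a common choice is to place a pixel's R, G, and B values in the three imaginary parts) using a quarter of the parameters of an equivalent real-valued 4x4 block.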

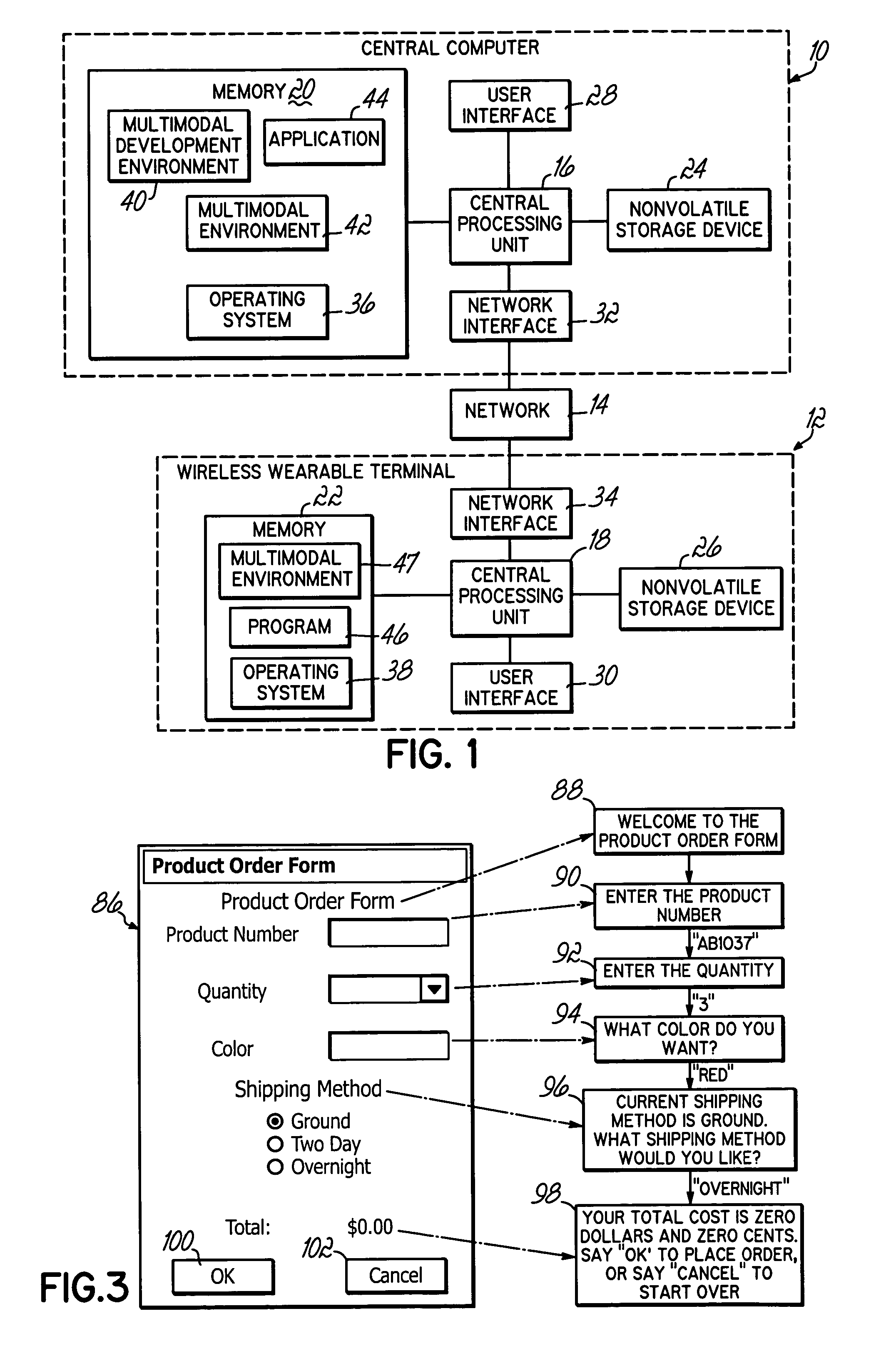

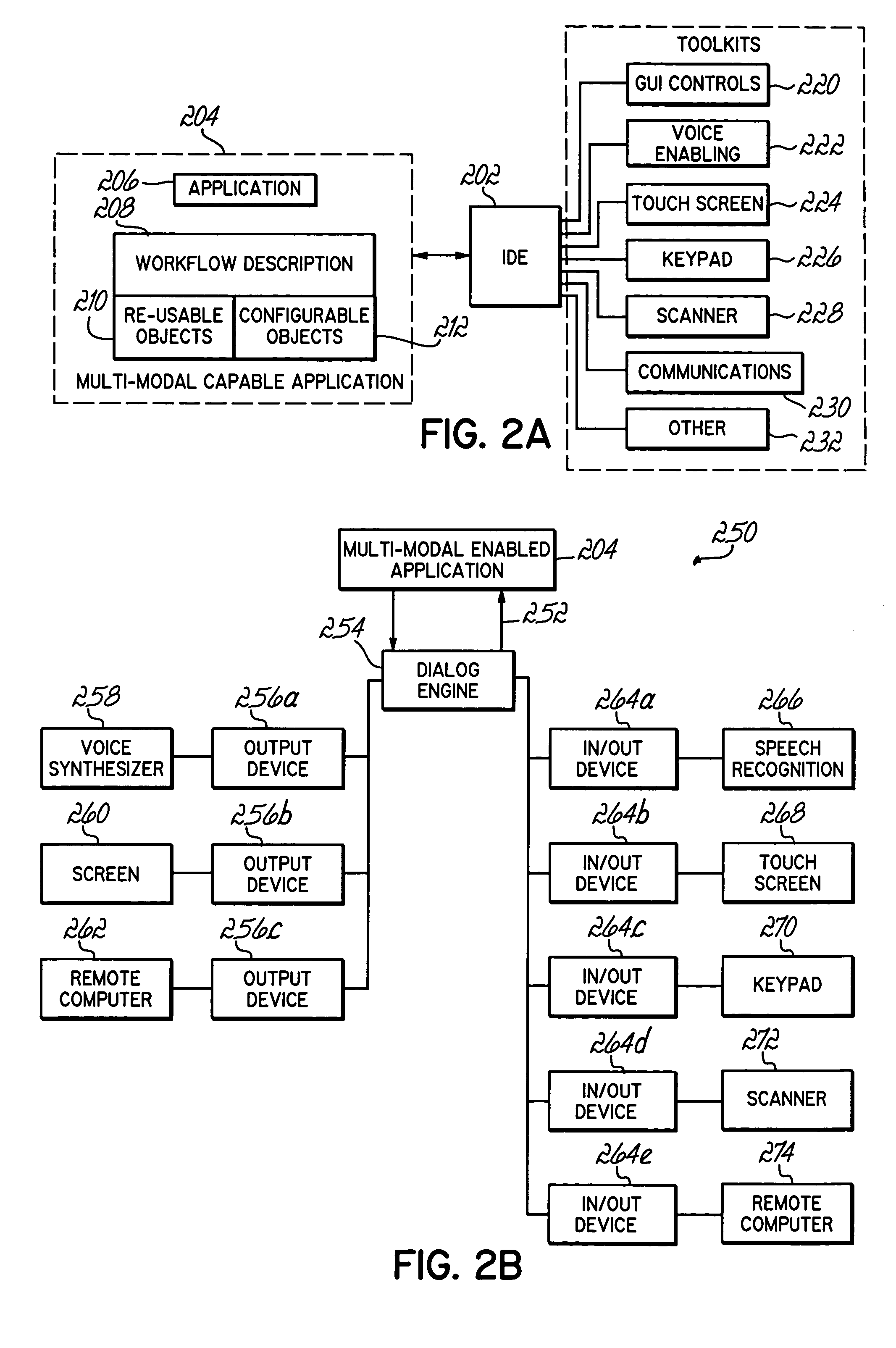

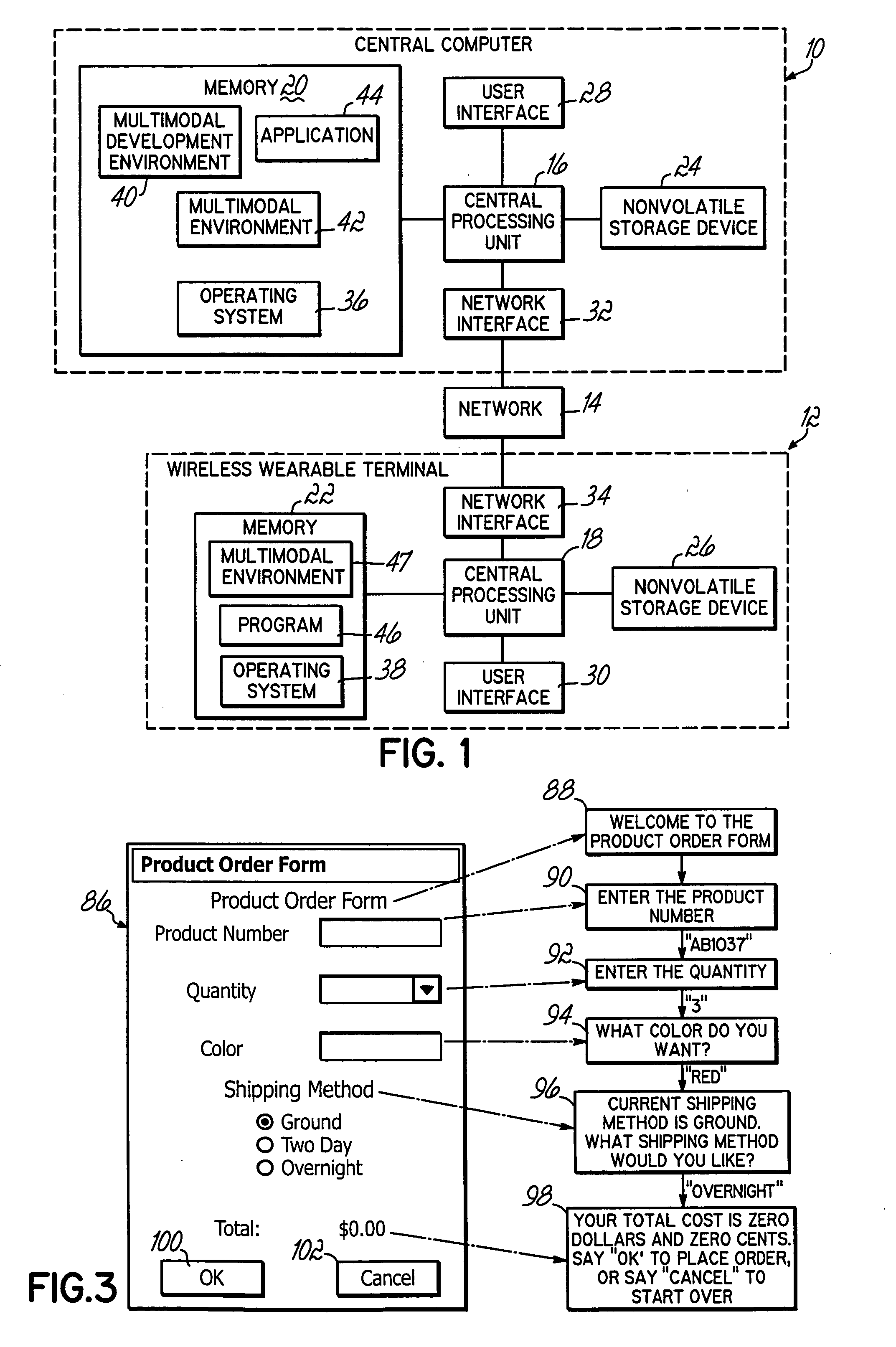

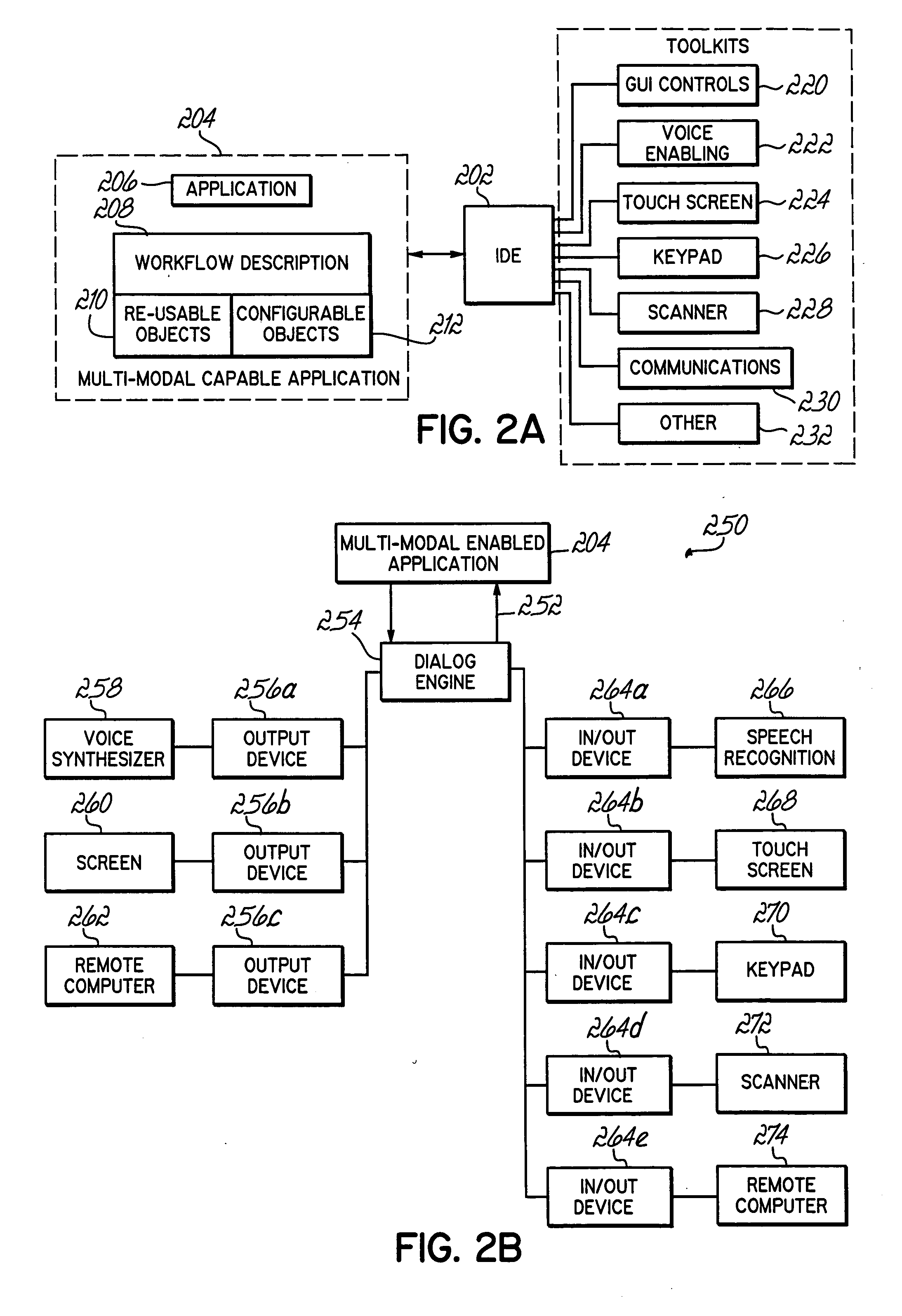

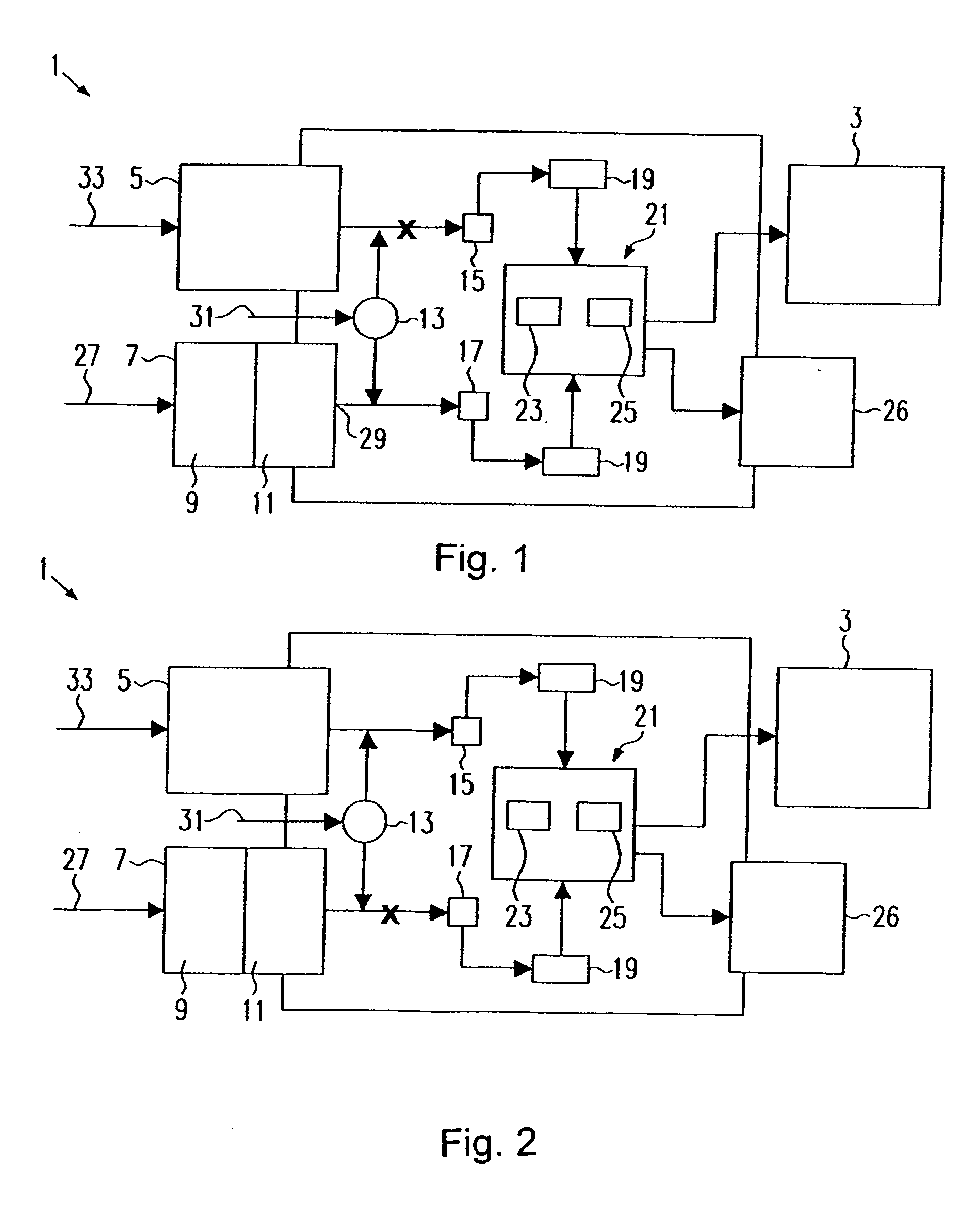

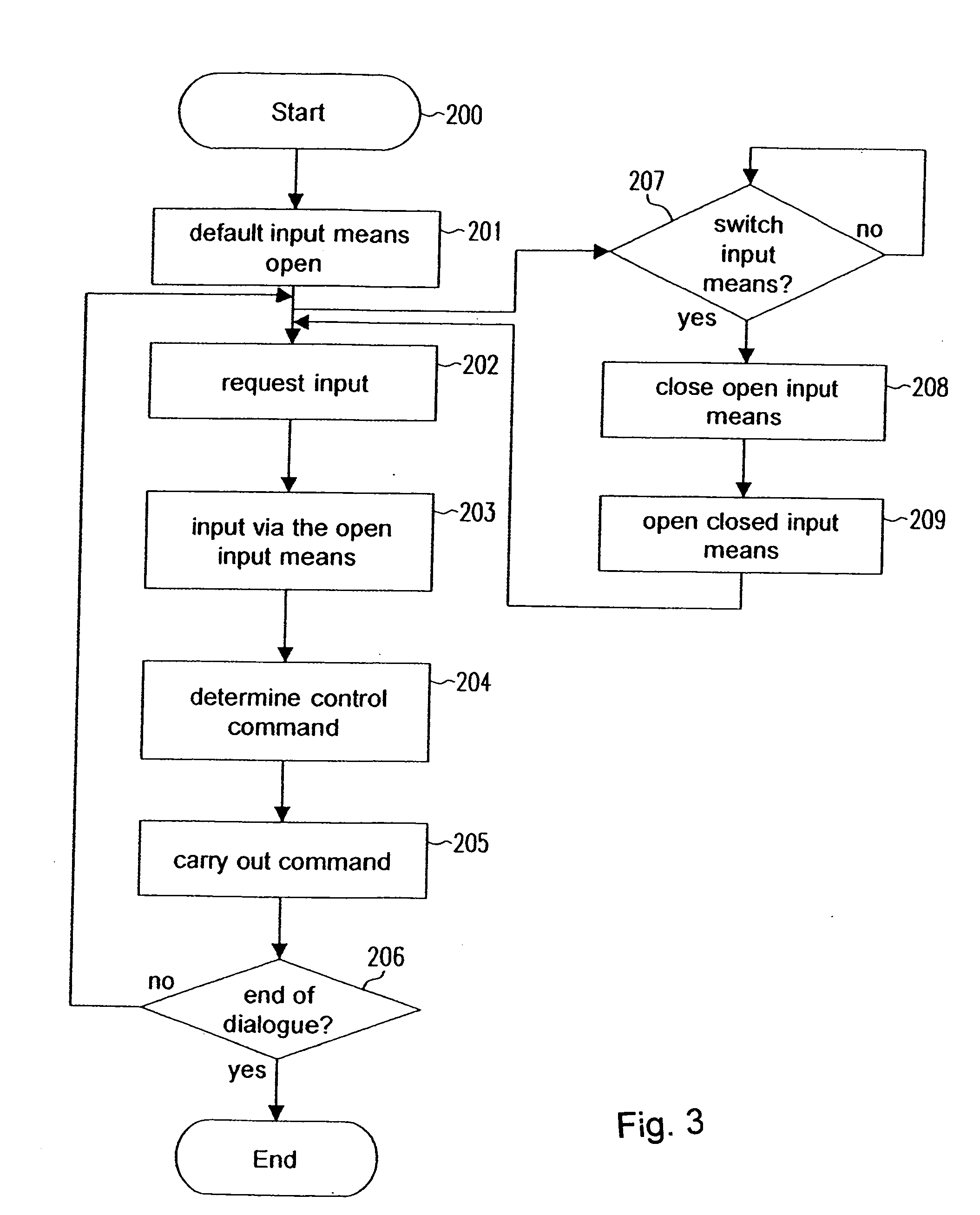

Method and system for integrating multi-modal data capture device inputs with multi-modal output capabilities

Inactive · US20050010892A1 · Software engineering · Specific program execution arrangements · Graphics · Graphical user interface

A dialog engine includes methods for integrating multi-modal data capture device inputs with multimodal output capabilities in which a work flow description is extracted from objects in a graphical user interface and a multi-modal user interface is defined. The dialog engine synchronizes the flow of information, in accordance with the work flow description, between input / output devices and an application.

Owner:VOCOLLECT

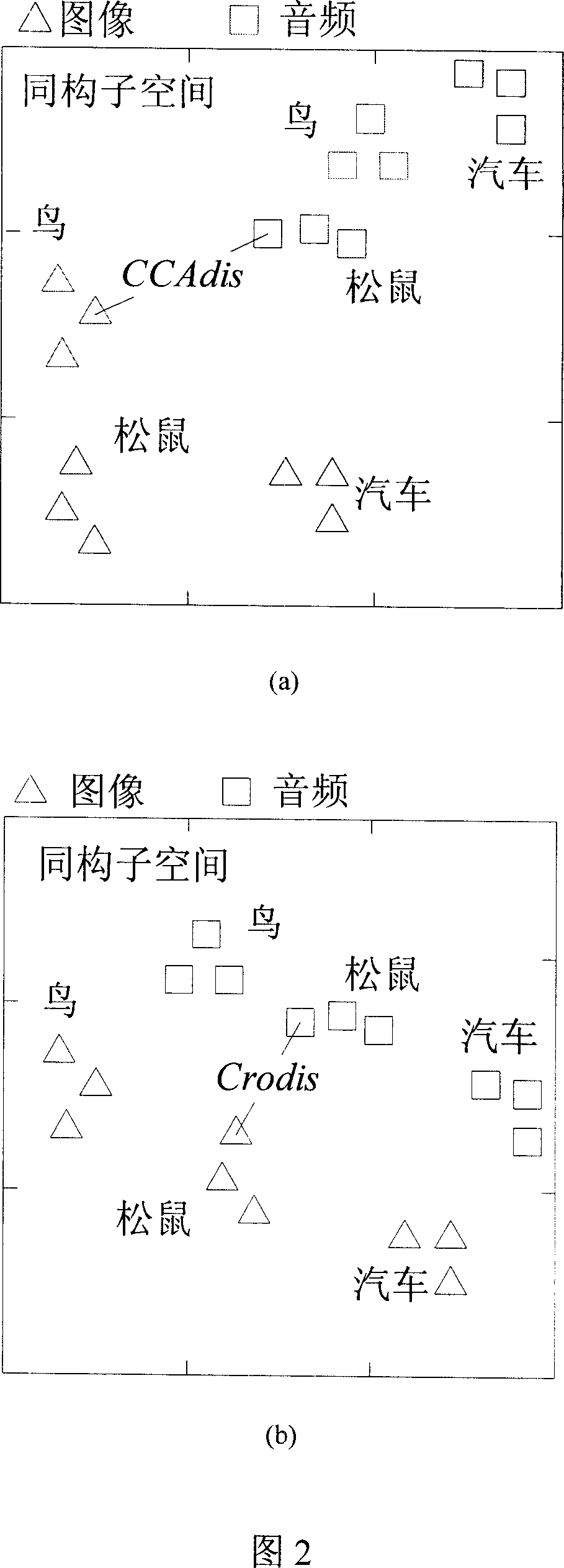

Transmedia searching method based on content correlation

Inactive · CN101021849A · Resolving heterogeneity · Special data processing applications · Data set · Space mapping

This invention discloses a cross-media search method based on content correlation. It applies canonical correlation analysis to the content features of media data in different modalities: a subspace mapping algorithm simultaneously maps the visual feature vectors of image data and the auditory feature vectors of audio data into a low-dimensional isomorphic subspace. The correlation between data of different modalities is then measured with a general distance function, and the topological structure of the multi-modal data set in the subspace is adjusted to improve cross-media search efficiency.

Owner:ZHEJIANG UNIV

3D convolutional neural network sign language identification method integrated with multi-modal data

Active · CN107679491A · Overcoming feature · Overcome precision · Character and pattern recognition · Nerve network · Computation complexity

The invention discloses a 3D convolutional neural network sign language recognition method that integrates multi-modal data. The method constructs a deep neural network, extracts features from an infrared image and a contour image of the gesture along both the spatial and temporal dimensions of the video, and fuses the outputs of the two networks, which operate on different data formats, to produce the final sign language classification. The method can accurately extract limb-movement trajectory information from the two data formats and effectively reduces the computational complexity of the model. Because it uses a deep learning strategy to integrate the classification results of the two networks, it avoids the misclassification a single classifier suffers when data is missing, making the model more robust to the illumination and background noise of different scenes.

Owner:HUAZHONG NORMAL UNIV

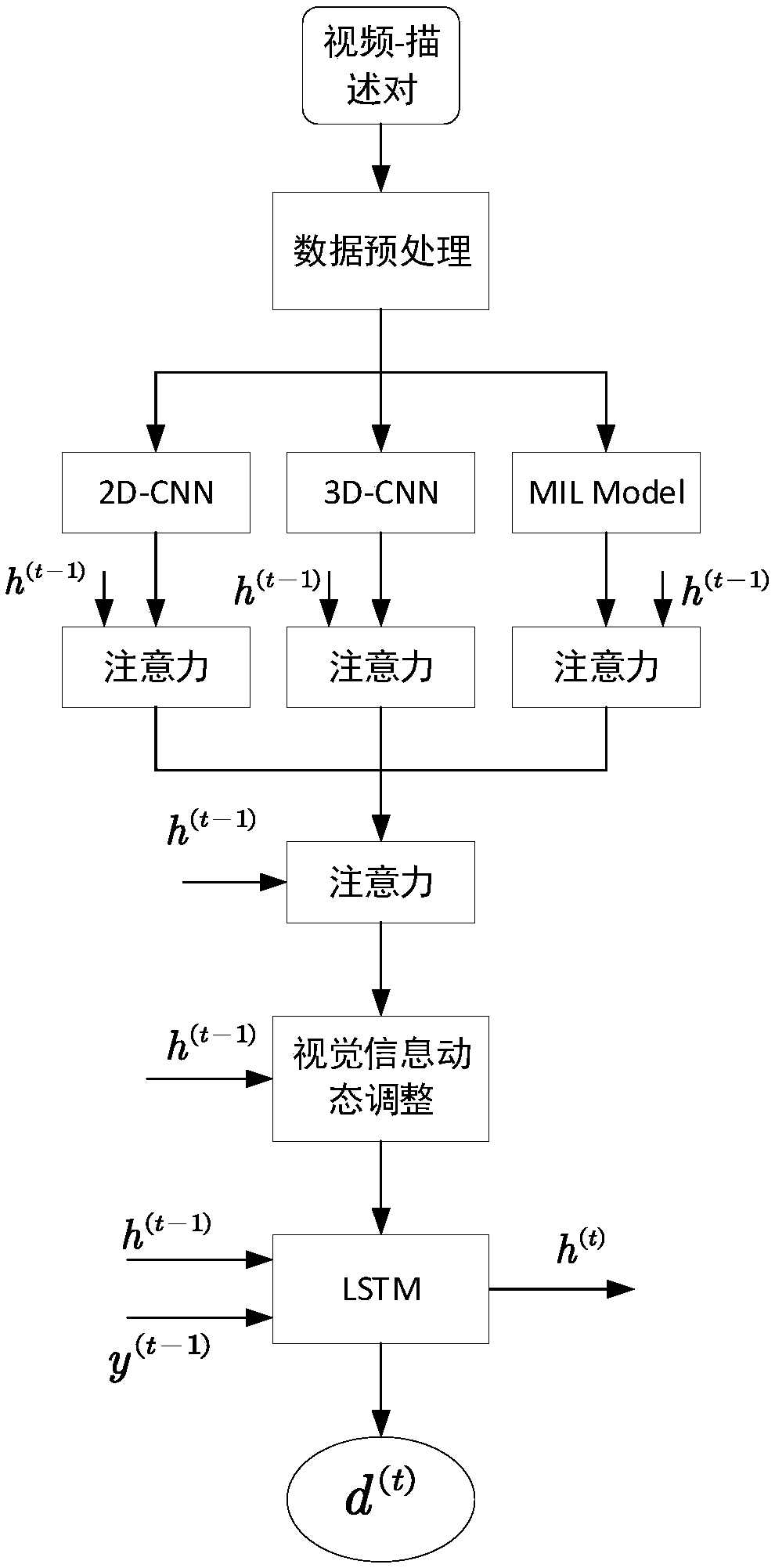

A combined video description method based on multi-modal features and multi-layer attention mechanism

Active · CN109344288A · Improve accuracy · Video data querying · Neural architectures · Feature data · Visual perception

The invention discloses a combined video description method based on multi-modal features and a multi-layer attention mechanism. First, the method collects the words appearing in the description sentences into a vocabulary and assigns each word an index to facilitate vector representation. It then extracts three kinds of feature data: semantic attribute features, image information features extracted by a 2D CNN, and video motion information features extracted by a 3D CNN. These multi-modal data are dynamically fused through the multi-layer attention mechanism to obtain visual information, and the use of that visual information is adjusted according to the current context. Finally, the words of the video description are generated from the current context and the visual information. By fusing the video's multi-modal features through the multi-layer attention mechanism before generating its semantic description, the invention effectively improves the accuracy of video description.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
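The fusion step can be sketched as a single attention layer over the three feature types, assuming all features have already been projected to a common dimension and that relevance is scored by a dot product with the decoder's current context. The patent's multi-layer mechanism would stack such layers; the scoring rule, dimensions, and names here are illustrative assumptions:

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(context, features):
    """Fuse per-modality feature vectors using weights scored against the context."""
    # Assumed scoring rule: dot product between decoder context and each modality
    weights = softmax([dot(context, f) for f in features])
    dim = len(features[0])
    fused = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return fused, weights


# Hypothetical 2-D features for: semantic attributes, 2D-CNN image, 3D-CNN motion
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context = [2.0, 0.0]  # current decoder state (illustrative)
fused, weights = attend(context, features)
```

As the context changes at each decoding step, the weights shift between modalities, which is how the visual information used for each generated word can be adjusted.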

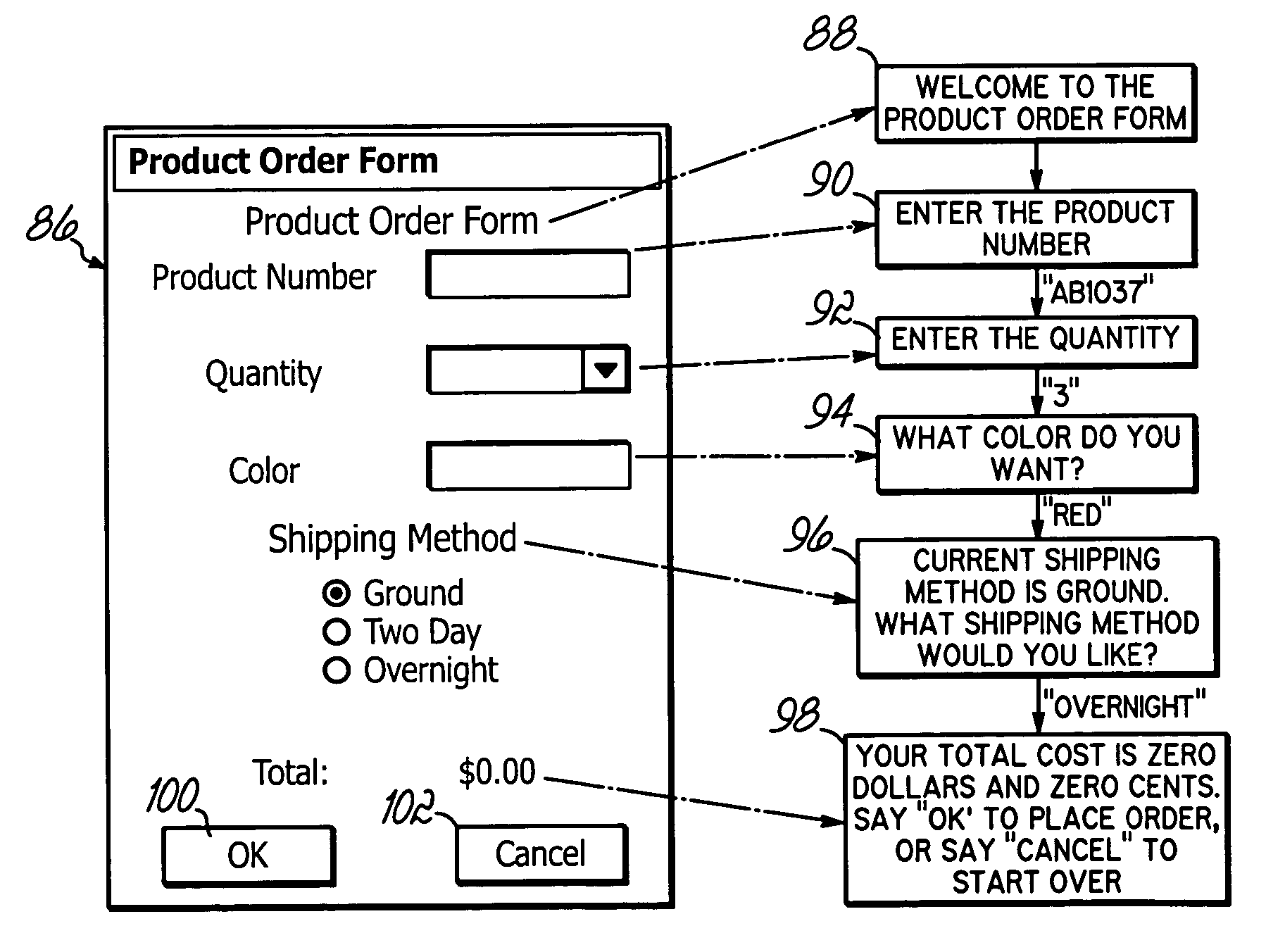

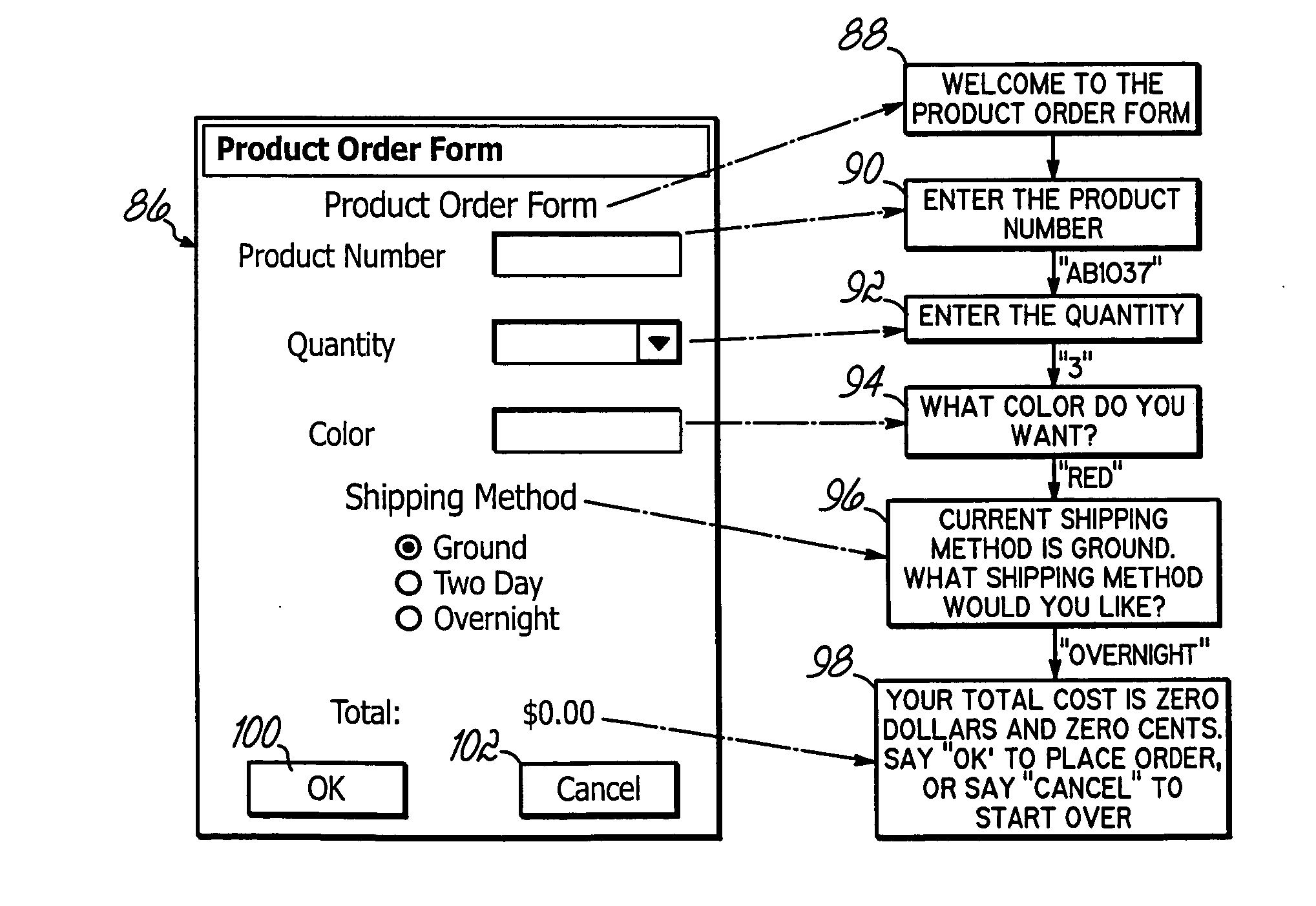

Method and system for intelligent prompt control in a multimodal software application

Inactive · US20050010418A1 · Speech recognition · Input/output processes for data processing · Graphics · Graphical user interface

Dialog manager and methods for integrating multi-modal data capture device inputs or speech recognition inputs with speech output capabilities. A work flow description is extracted from objects in a graphical user interface and a multi-modal user interface is defined. A dialog engine synchronizes the flow of information, in accordance with the work flow description, between input / output devices and an application. The prompts for inputting data, which are output via a plurality of peripheral devices, are controlled in an intelligent manner by the dialog engine based on the input state of the peripheral devices. Functionality such as barge-in, prompt-holdoff, priority prompts, and talk-ahead is provided.

Owner:VOCOLLECT
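A minimal sketch of priority prompts and barge-in, assuming prompts sit in a priority queue and that a barge-in discards every pending prompt with priority 0 while keeping higher-priority ones. `PromptController` and that policy are hypothetical; prompt-holdoff and talk-ahead are not modeled:

```python
import heapq
import itertools


class PromptController:
    """Queues spoken prompts; higher priority plays first, and user input can barge in."""

    def __init__(self):
        self._queue = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order within a priority
        self.spoken = []

    def enqueue(self, text, priority=0):
        # heapq is a min-heap, so negate priority to pop the highest first
        heapq.heappush(self._queue, (-priority, next(self._order), text))

    def on_user_input(self):
        # Barge-in (assumed policy): drop routine prompts, keep priority > 0 ones
        self._queue = [item for item in self._queue if -item[0] > 0]
        heapq.heapify(self._queue)

    def play_next(self):
        if not self._queue:
            return None
        _, _, text = heapq.heappop(self._queue)
        self.spoken.append(text)
        return text


c = PromptController()
c.enqueue("Say the quantity")
c.enqueue("Battery low", priority=5)
c.enqueue("Say the check digit")
first = c.play_next()   # the priority prompt jumps the queue
second = c.play_next()  # then routine prompts in arrival order
c.on_user_input()       # user starts talking: the remaining routine prompt is dropped
third = c.play_next()
```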

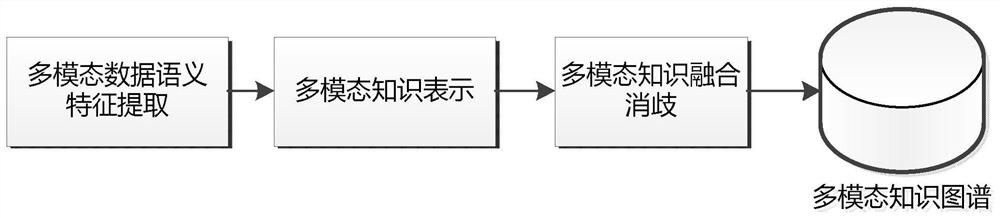

Multi-modal knowledge graph construction method

Pending · CN112200317A · Rich knowledge type · Three-dimensional knowledge type · Knowledge representation · Special data processing applications · Feature extraction · Engineering

The invention discloses a multi-modal knowledge graph construction method and relates to knowledge engineering technology in the field of big data. The method is implemented through the following technical scheme. First, multi-modal semantic features are extracted with a multi-modal data feature representation model: pre-trained feature extraction models are built for text, images, audio, video, and other modalities, and semantic features are extracted from each single modality separately. Second, the different data types are projected into the same vector space using unsupervised graph, attribute graph, heterogeneous graph, and other embedding methods, yielding a cross-modal knowledge representation. On this basis, the two knowledge graphs to be fused and aligned are each converted into vector representations; then, using the resulting multi-modal knowledge representation, the mapping between entity pairs across the knowledge graphs is learned from prior alignment data, completing multi-modal knowledge fusion and disambiguation. The results are decoded and mapped to the corresponding nodes in the knowledge graphs, generating a new fused graph together with its entities and attributes.

Owner:10TH RES INST OF CETC
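The alignment step can be illustrated with greedy nearest-neighbour matching, assuming the entities of both graphs have already been embedded in the shared vector space. The patent learns the entity-pair mapping from prior alignment data; the cosine-similarity rule, the `threshold` value, and the entity names below are assumptions for illustration:

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def align_entities(graph_a, graph_b, threshold=0.8):
    """Pair each entity in graph_a with its most similar entity in graph_b."""
    pairs = {}
    for name_a, vec_a in graph_a.items():
        best_name, best_sim = None, threshold
        for name_b, vec_b in graph_b.items():
            sim = cosine(vec_a, vec_b)
            if sim >= best_sim:  # only candidates at or above the threshold qualify
                best_name, best_sim = name_b, sim
        if best_name is not None:
            pairs[name_a] = best_name
    return pairs


# Hypothetical embeddings: text-derived entities vs. image-derived entities
graph_a = {"eiffel_tower": [0.9, 0.1, 0.0], "louvre": [0.1, 0.9, 0.1]}
graph_b = {"img_0412": [0.85, 0.15, 0.05], "img_0977": [0.0, 1.0, 0.0], "img_1203": [0.0, 0.0, 1.0]}
pairs = align_entities(graph_a, graph_b)
```

Entities with no candidate above the threshold stay unaligned, which leaves room for the disambiguation step instead of forcing a match.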

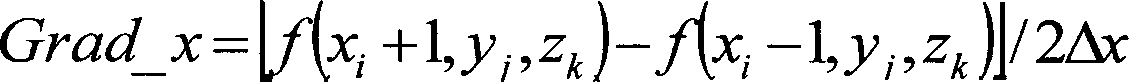

Multi-modality medical data three-dimensional visual method

Inactive · CN1818974A · Narrow search · High speed · Image analysis · 2D-image generation · Diagnostic Radiology Modality · Normalized mutual information

The invention relates to a three-dimensional visualization method for multi-modal medical volume data. Most existing technologies are based on 2D-2D or 2D-3D registration and do not provide an integrated process that both registers and fuses medical volume data for display. The method comprises multi-resolution registration based on normalized mutual information and fused display based on single-pass multi-modal direct volume rendering. The registration part has three components: coordinate transformation, the registration criterion, and multi-resolution optimization. The display part has five components: transfer function definition, illumination model calculation, image compositing, single-pass multi-modal rendering of the multi-modal data, and fused display. The invention realizes an integrated process of registering and fusing medical volume data for display and is a genuinely three-dimensional multi-modal visualization method.

Owner:HANGZHOU DIANZI UNIV
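The normalized mutual information criterion named in the abstract can be computed from a joint intensity histogram. This sketch uses the common definition NMI(A, B) = (H(A) + H(B)) / H(A, B), which ranges from 1 for independent volumes to 2 for volumes related one-to-one; volumes are given as flat lists of 8-bit intensities, and the bin count is an assumed parameter. A registration loop would search over coordinate transforms, at several resolutions, for the one that maximizes this value:

```python
import math
from collections import Counter


def entropy(counts, n):
    # Shannon entropy (natural log) of a histogram with n total samples
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def normalized_mutual_information(a, b, bins=8, max_value=256):
    """NMI(A, B) = (H(A) + H(B)) / H(A, B) over co-located voxel intensities."""
    if len(a) != len(b) or not a:
        raise ValueError("volumes must be non-empty and contain the same number of voxels")
    # Quantize intensities into `bins` histogram bins
    qa = [v * bins // max_value for v in a]
    qb = [v * bins // max_value for v in b]
    n = len(a)
    h_joint = entropy(Counter(zip(qa, qb)), n)
    if h_joint == 0:
        raise ValueError("NMI is undefined when both volumes are constant")
    return (entropy(Counter(qa), n) + entropy(Counter(qb), n)) / h_joint


fixed = [0, 64, 128, 192] * 4
moving_aligned = list(fixed)
moving_unrelated = [0] * 4 + [64] * 4 + [128] * 4 + [192] * 4
nmi_aligned = normalized_mutual_information(fixed, moving_aligned)      # 2.0
nmi_unrelated = normalized_mutual_information(fixed, moving_unrelated)  # close to 1.0
```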

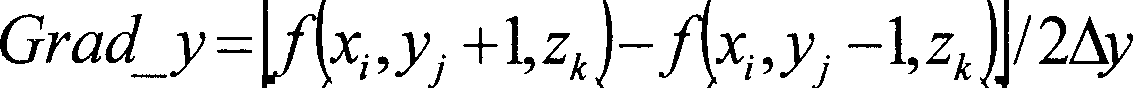

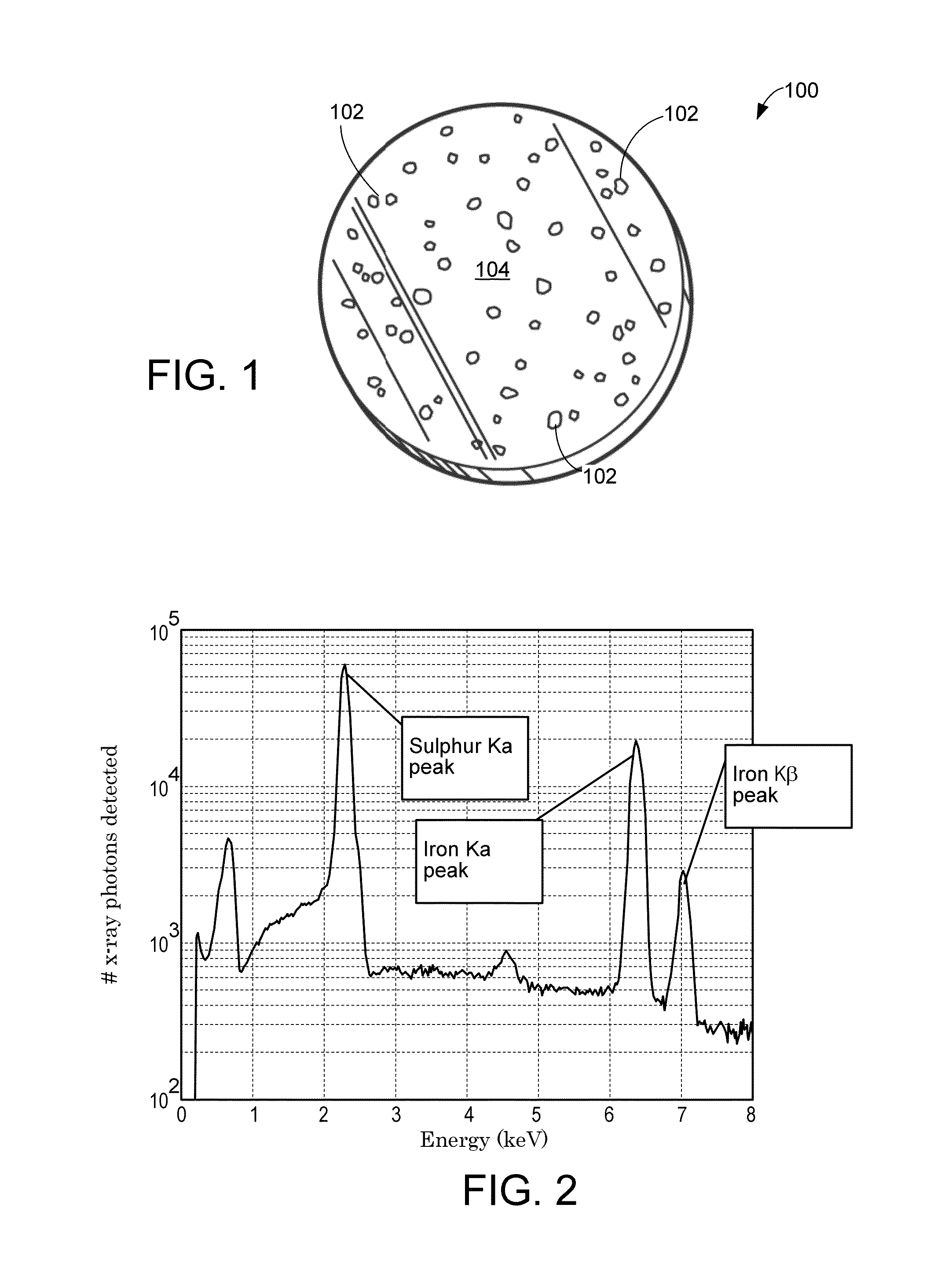

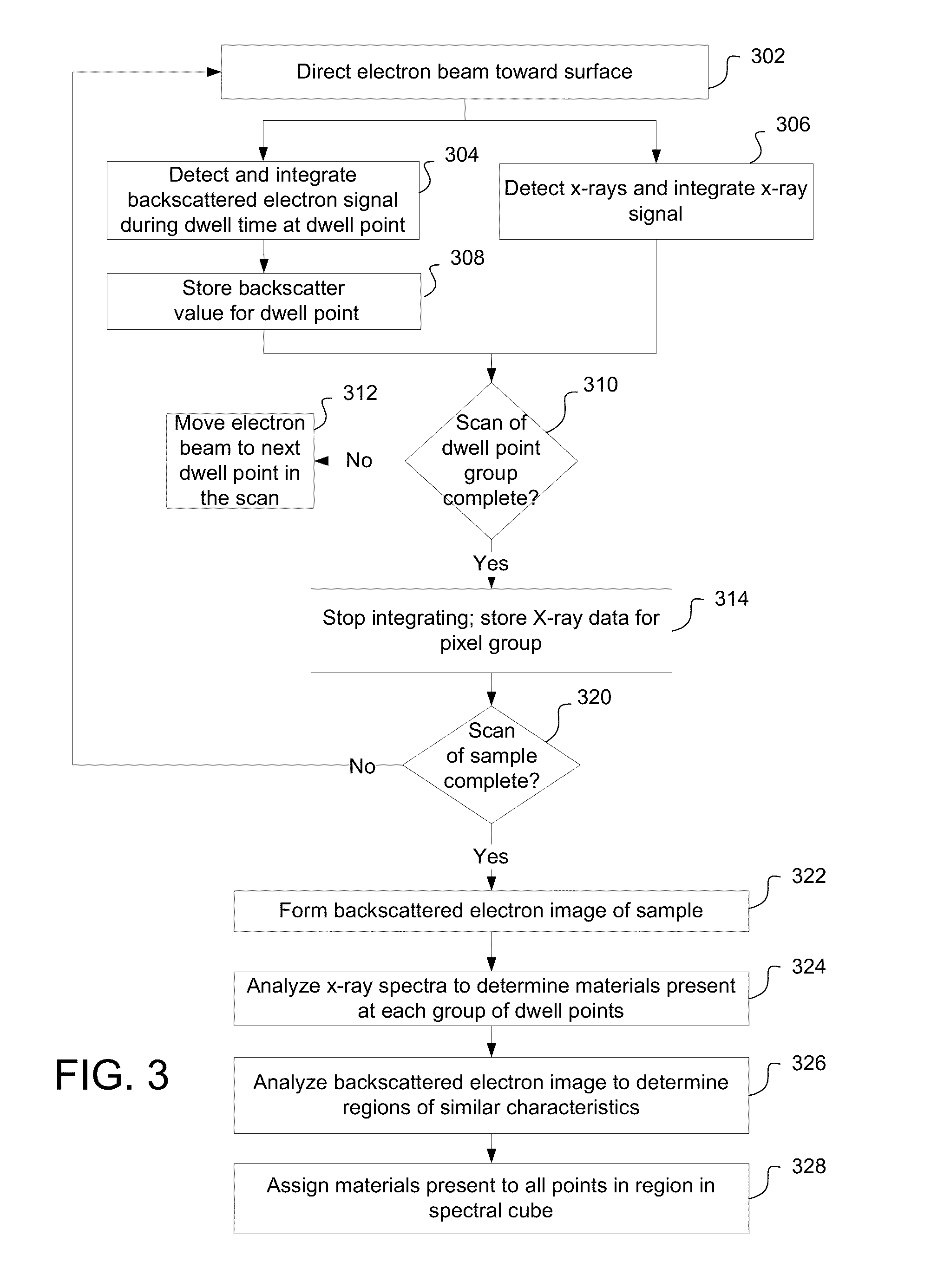

Clustering of multi-modal data

Active · US20130015351A1 · Easy access · High resolution · Material analysis using wave/particle radiation · Electric discharge tubes · Analysis data · Acquisition time

Information from multiple detectors acquiring different types of information is combined to determine one or more properties of a sample more efficiently than the properties could be determined using a single type of information from a single type of detector. In some embodiments, information is collected simultaneously from the different detectors, which can greatly reduce data acquisition time. In some embodiments, information from different points on the sample is grouped based on information from one type of detector, and information from the second type of detector related to these points is combined, for example, to create a single spectrum from the second detector for a region of common composition as determined by the first detector. In some embodiments, data collection is adaptive; that is, the data is analyzed during collection to determine whether enough data has been collected to determine a desired property with the desired confidence.

Owner:FEI CO
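The grouping idea can be sketched as follows, assuming the first detector yields one scalar reading per point and the second yields a spectrum (a list of channel counts). Quantizing the first reading into bins is a stand-in for whatever clustering the patent uses; `combine_spectra` and `bin_width` are hypothetical:

```python
def combine_spectra(points, bin_width=0.1):
    """Group points by first-detector reading and sum their second-detector spectra.

    points: iterable of (first_detector_value, second_detector_spectrum) pairs.
    Returns {group_index: (summed_spectrum, point_count)}.
    """
    groups = {}
    for value, spectrum in points:
        # Assumed grouping rule: readings that fall in the same bin are treated
        # as one region of common composition
        key = round(value / bin_width)
        summed, count = groups.get(key, ([0] * len(spectrum), 0))
        groups[key] = ([s + c for s, c in zip(summed, spectrum)], count + 1)
    return groups


points = [(0.52, [1, 0, 3]), (0.49, [0, 2, 1]), (0.91, [4, 0, 0])]
result = combine_spectra(points)  # first two points share a group
```

Summing a group's spectra pools its counts, improving counting statistics at the cost of spatial resolution within the group.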

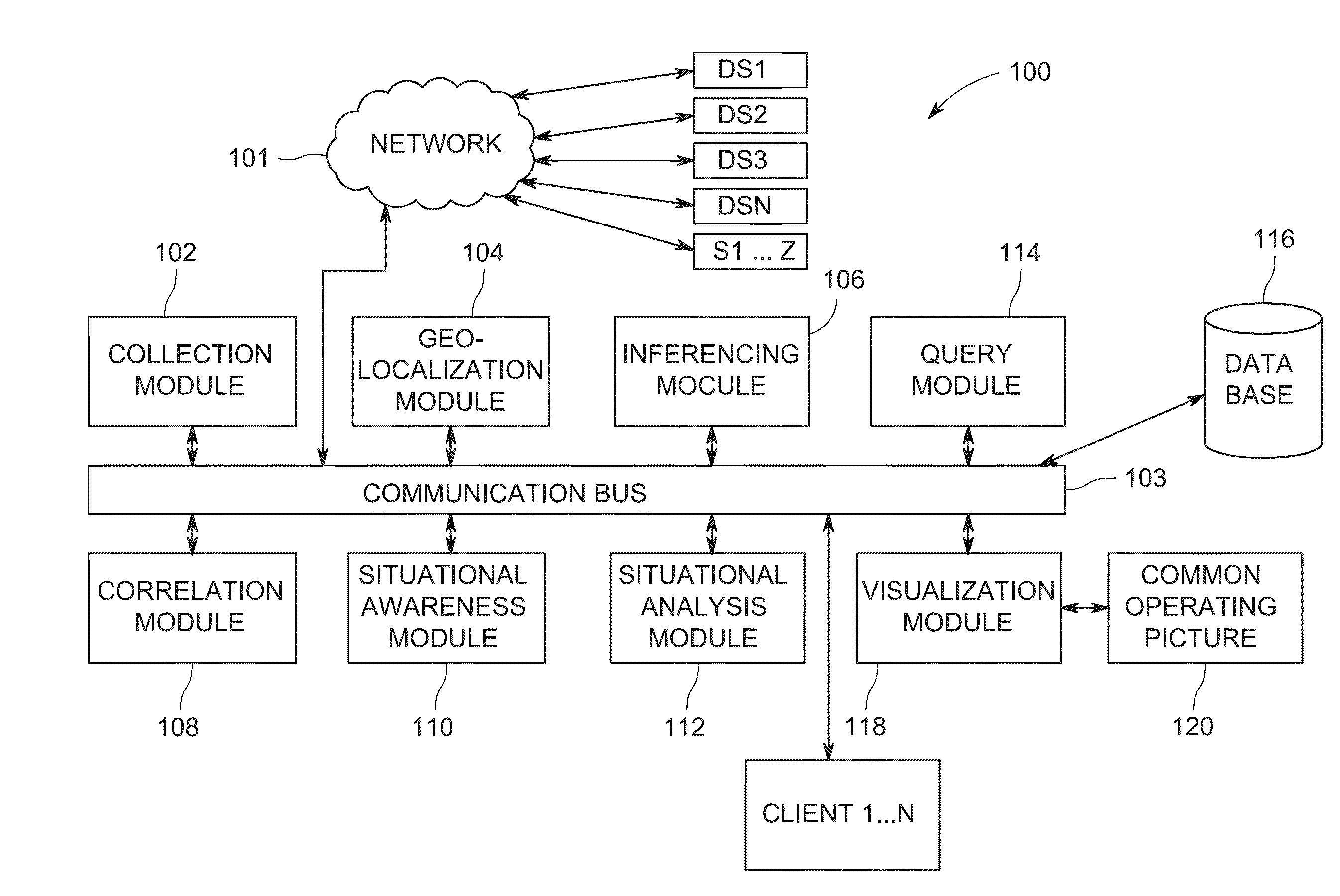

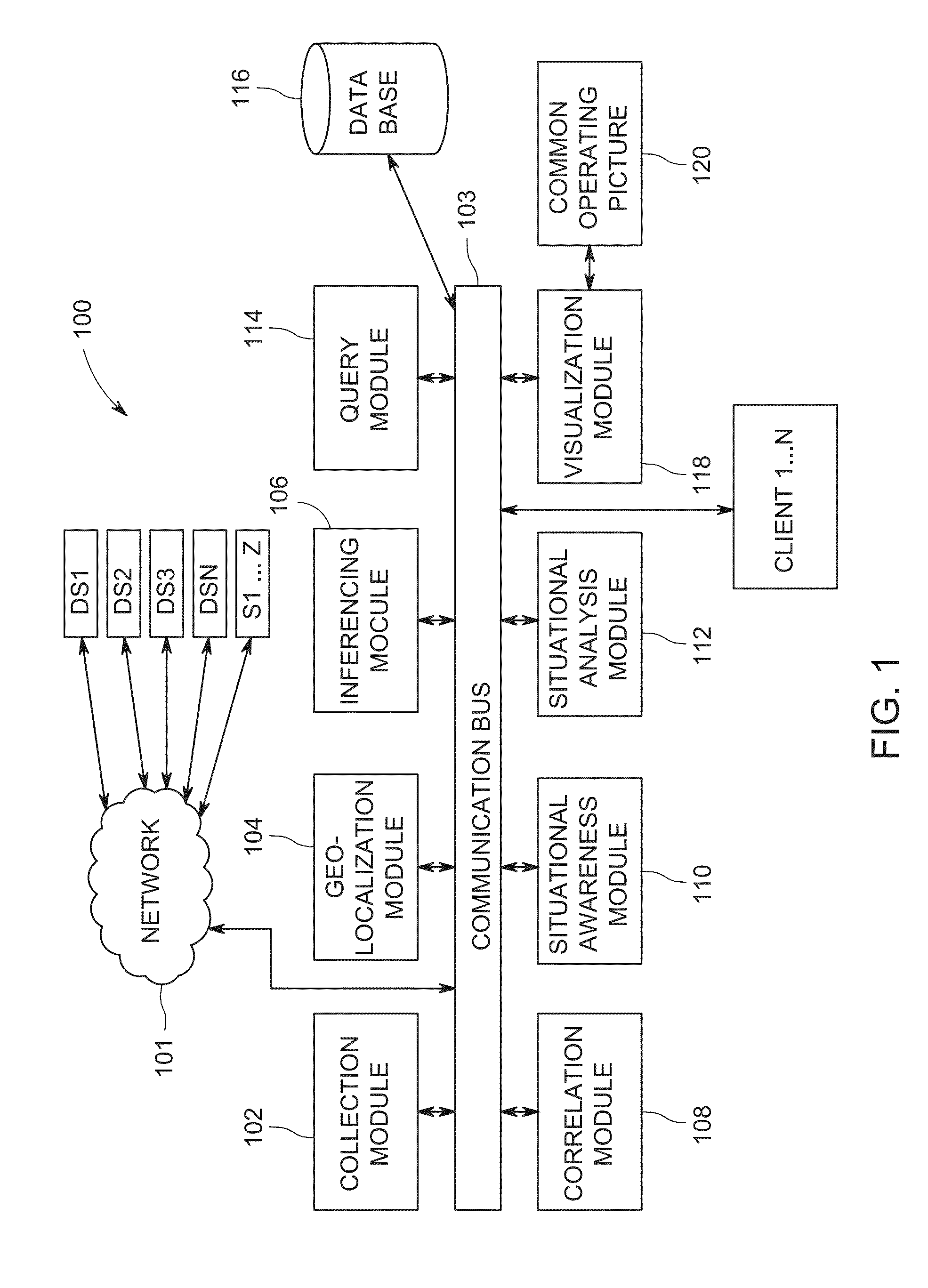

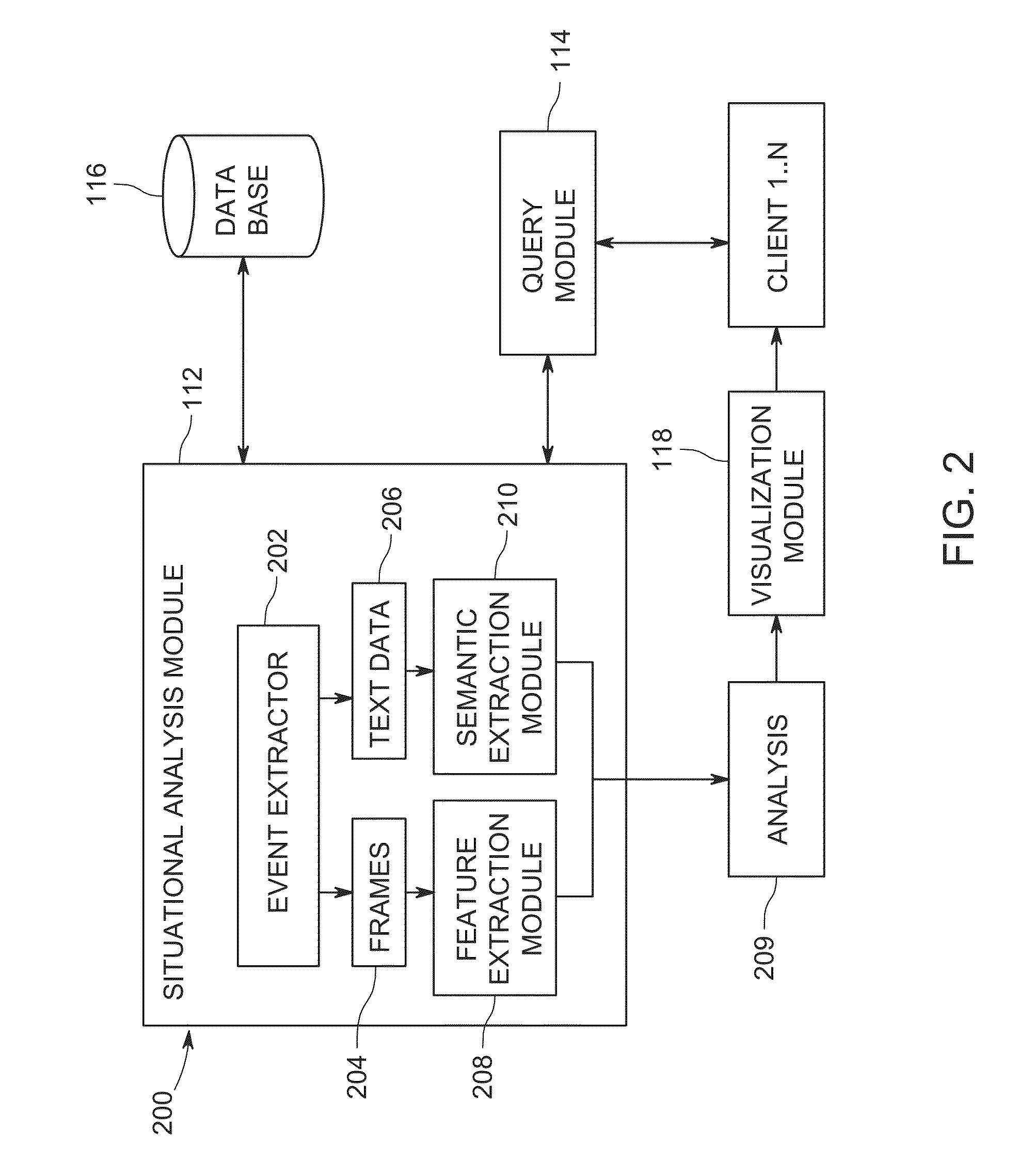

Method and apparatus for correlating and viewing disparate data

Active · US20160110433A1 · Natural language translation · Digital data information retrieval · Multimodal data · Multi modal data

Methods and apparatuses of the present invention generally relate to generating actionable data based on multimodal data from unsynchronized data sources. In an exemplary embodiment, the method comprises receiving multimodal data from one or more unsynchronized data sources, extracting concepts from the multimodal data, the concepts comprising at least one of objects, actions, scenes and emotions, indexing the concepts for searchability; and generating actionable data based on the concepts.

Owner:SRI INTERNATIONAL

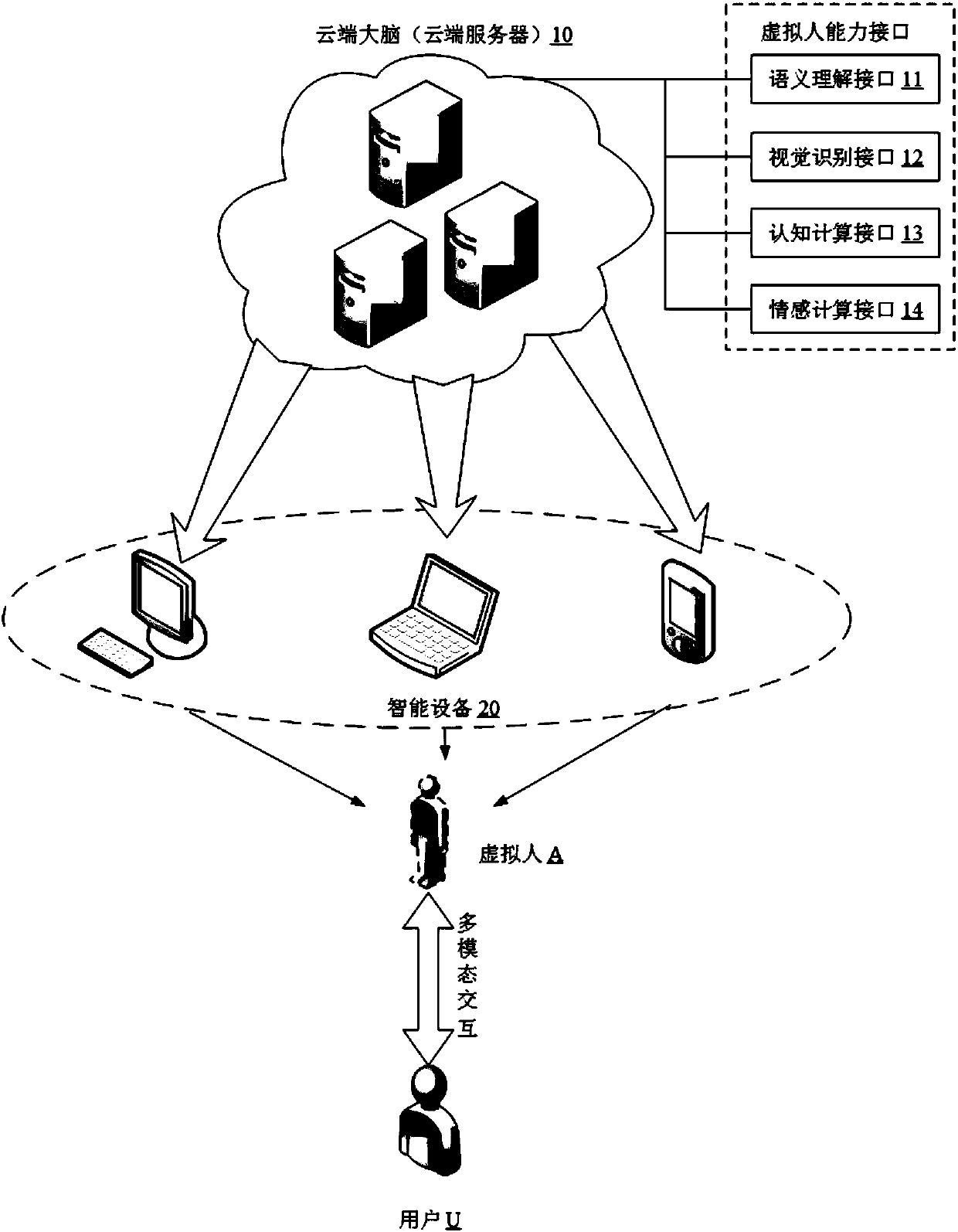

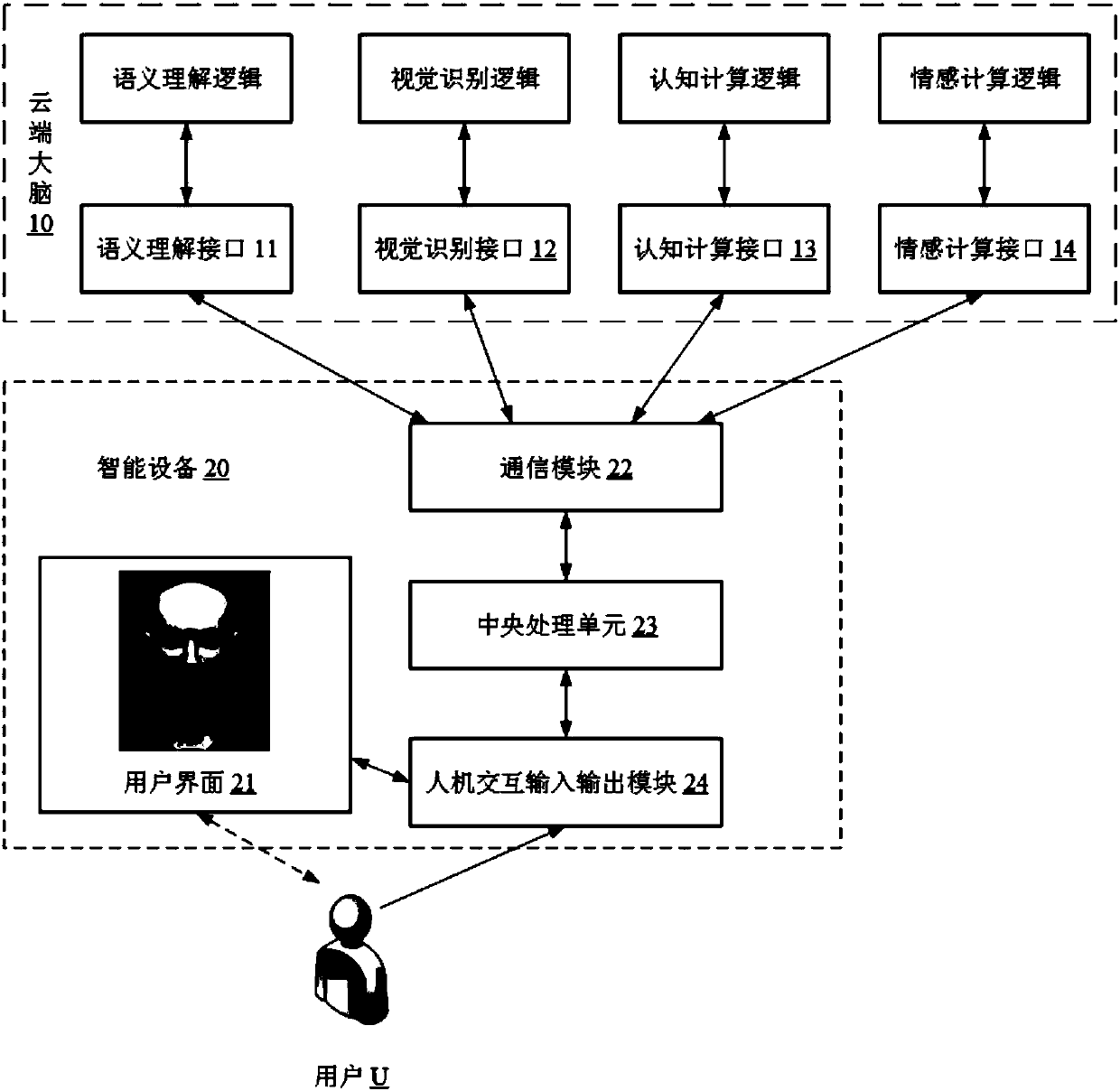

Virtual person-based multi-mode interactive processing method and system

Pending · CN107765852A · Meet needs · Improve experience · Input/output for user-computer interaction · Graph reading · Data matching · User needs

The invention discloses a virtual person-based multi-modal interaction processing method and system. The virtual person runs on a smart device. The method comprises the following steps: waking the virtual person so that it is displayed in a preset display region, the virtual person having a specific character and attributes; obtaining multi-modal data, which include data from the surrounding environment and multi-modal input data from interaction with the user; calling a virtual person capability interface to analyze the multi-modal data and decide on multi-modal output data; matching the multi-modal output data to execution parameters for the virtual person's mouth shape, facial expression, head movement, and limb movement; and presenting those execution parameters in the preset display region. When the virtual person interacts with the user, voice, facial expression, emotion, head, and limb movement are fused to present a vivid, fluent character interaction, meeting user needs and improving the user experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

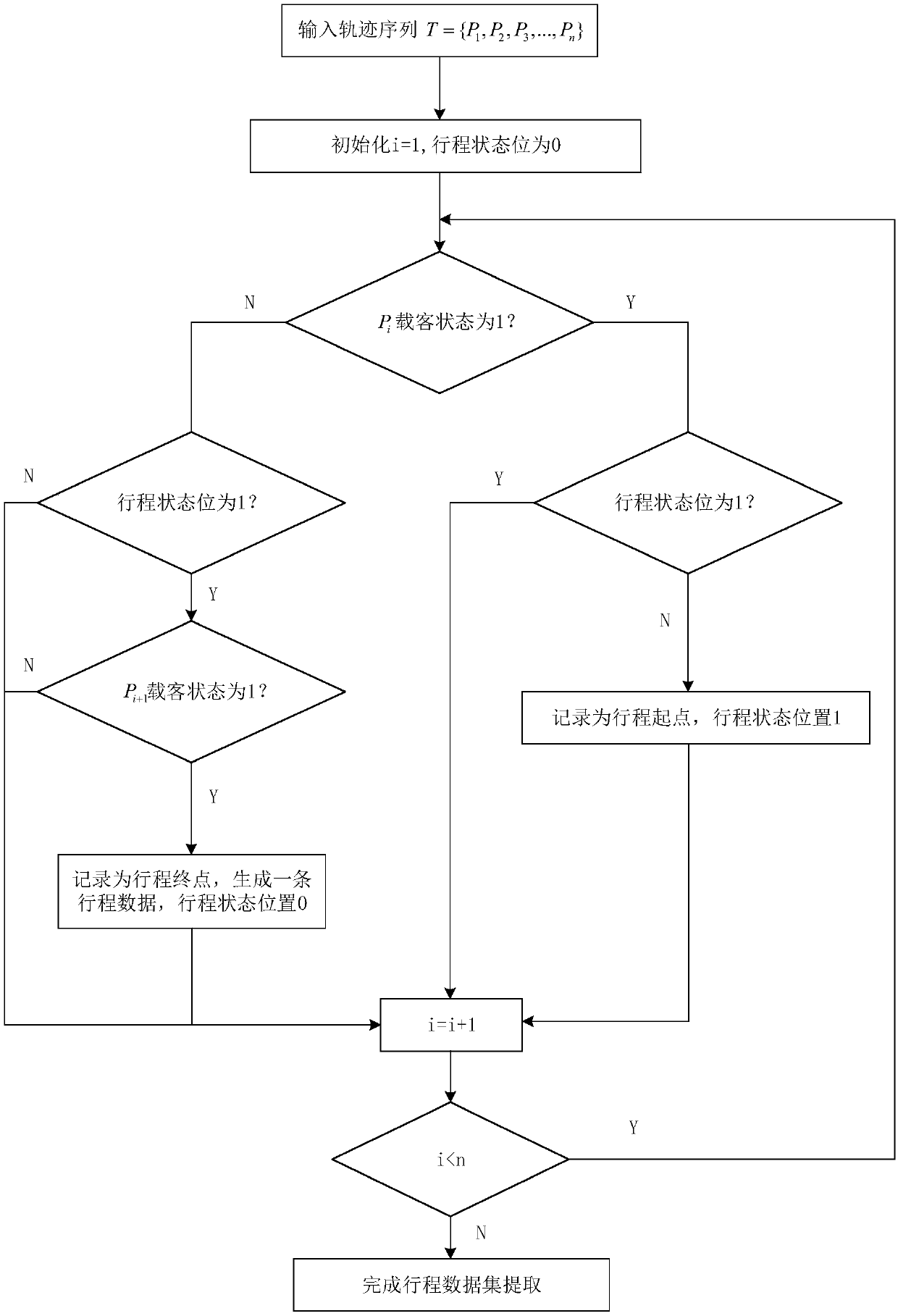

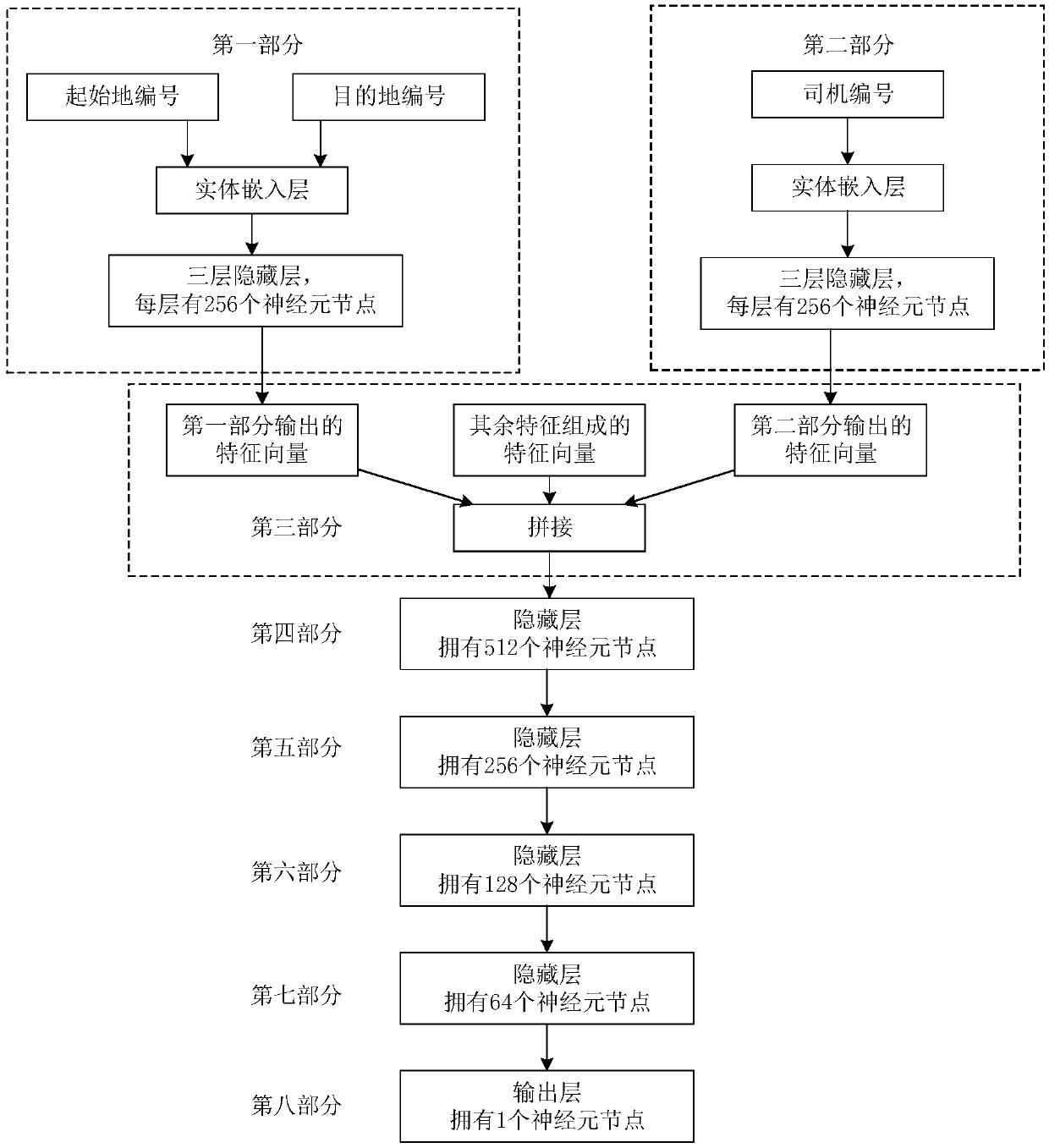

A travel time prediction method based on multi-modal data fusion and multi-model integration

Active · CN109670277A · Reduce computational cost · Fast operation · Forecasting · Design optimisation/simulation · Network model · Gps trajectory

The invention discloses a travel time prediction method based on multi-modal data fusion and multi-model integration. The method comprises: a multi-modal data preprocessing module, which extracts taxi trip data from taxi GPS trajectory data according to the passenger-carrying state; a multi-modal data analysis, feature extraction, and feature fusion module, which extracts feature sub-vectors from fields of the taxi trajectory data, weather data, driver profile data, and the like, and concatenates them; and a multi-model integration module, which builds a gradient boosting decision tree model and a deep neural network model separately and integrates their predictions using a decision tree model. By fusing multi-modal data such as taxi trajectory data, weather data, and driver profile data, the method fully extracts and mines the factors that influence travel time, and the decision-tree-based integrated model achieves higher travel time prediction accuracy at lower computational cost.

Owner:NANJING UNIV OF POSTS & TELECOMM

Incident prediction and response using deep learning techniques and multimodal data

Active · US20170091617A1 · Efficient method · Neural learning methods · Restrict boltzmann machine · Multimodal data

Owner:IBM CORP

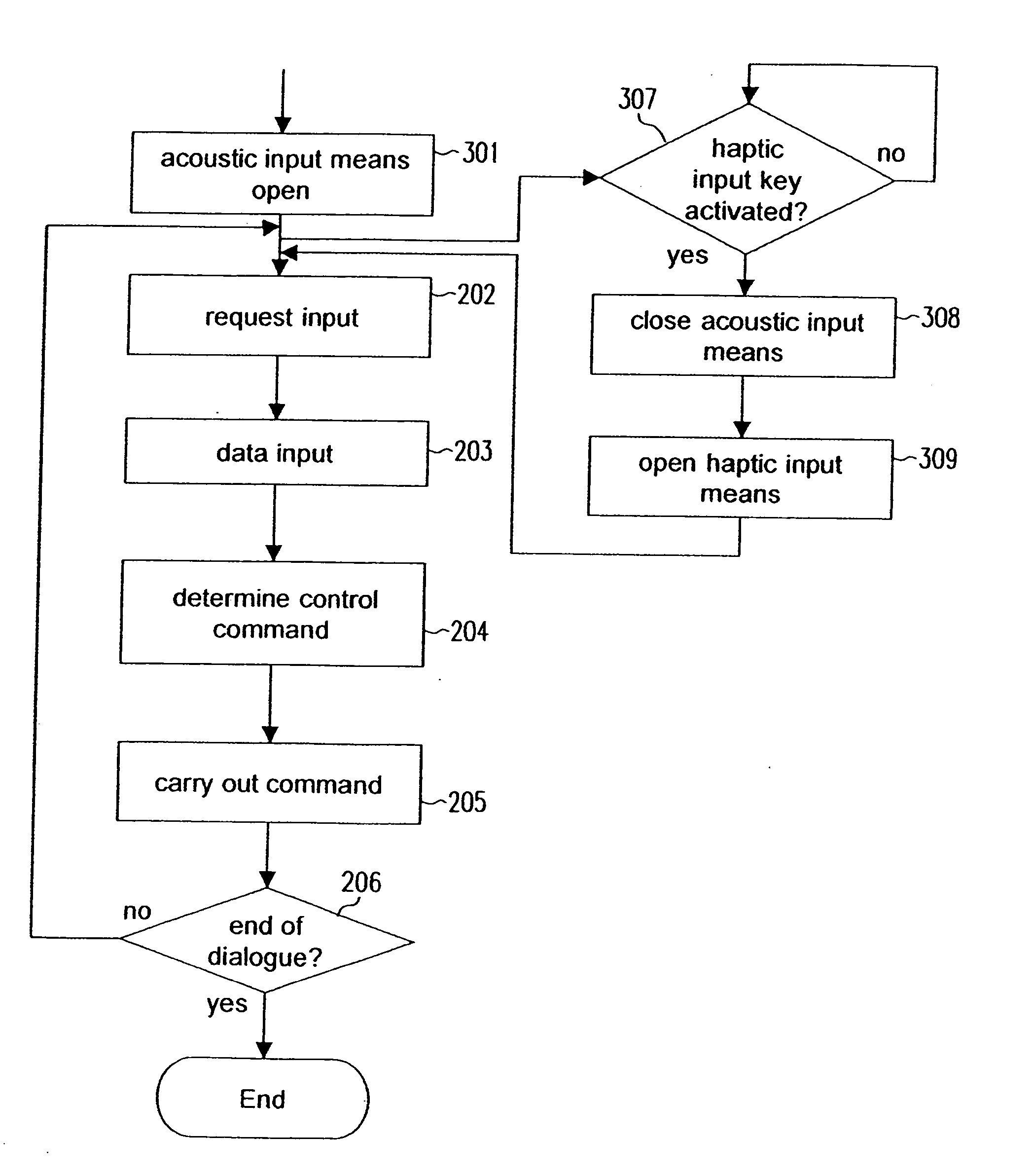

Multi-modal data input

Active · US20050171664A1 · Controlling traffic signals · Electric signal transmission systems · Control system · Data loss

A control system processes commands received from a user and may control one or more devices within a vehicle. A switch allows the control system to choose one of multiple input channels, and the method that receives the data selects a channel through a process that minimizes data loss. Switching occurs upon a user request, when the control system does not recognize an input, or when interference masks a user request.

Owner:HARMAN BECKER AUTOMOTIVE SYST
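A minimal sketch of the switching rules listed above, assuming a simple round-robin fallback when input is not recognized or interference is detected. The patent's loss-minimizing channel selection is not modeled, and `InputSwitch` and the channel names are hypothetical:

```python
class InputSwitch:
    """Chooses among input channels and falls back when input is unusable."""

    def __init__(self, channels):
        self.channels = list(channels)  # e.g. ["speech", "touch", "buttons"]
        self.active = 0

    @property
    def current(self):
        return self.channels[self.active]

    def _advance(self):
        # Assumed fallback policy: move to the next channel in a fixed cycle
        self.active = (self.active + 1) % len(self.channels)

    def handle(self, recognized, interference=False, user_request=None):
        if user_request is not None:
            # An explicit user request always selects that channel
            self.active = self.channels.index(user_request)
        elif not recognized or interference:
            self._advance()
        return self.current


s = InputSwitch(["speech", "touch", "buttons"])
r1 = s.handle(recognized=True)                        # stays on speech
r2 = s.handle(recognized=False)                       # unrecognized input: switch
r3 = s.handle(recognized=True, user_request="speech")  # user asks for speech
r4 = s.handle(recognized=True, interference=True)      # noise masks input: switch
```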

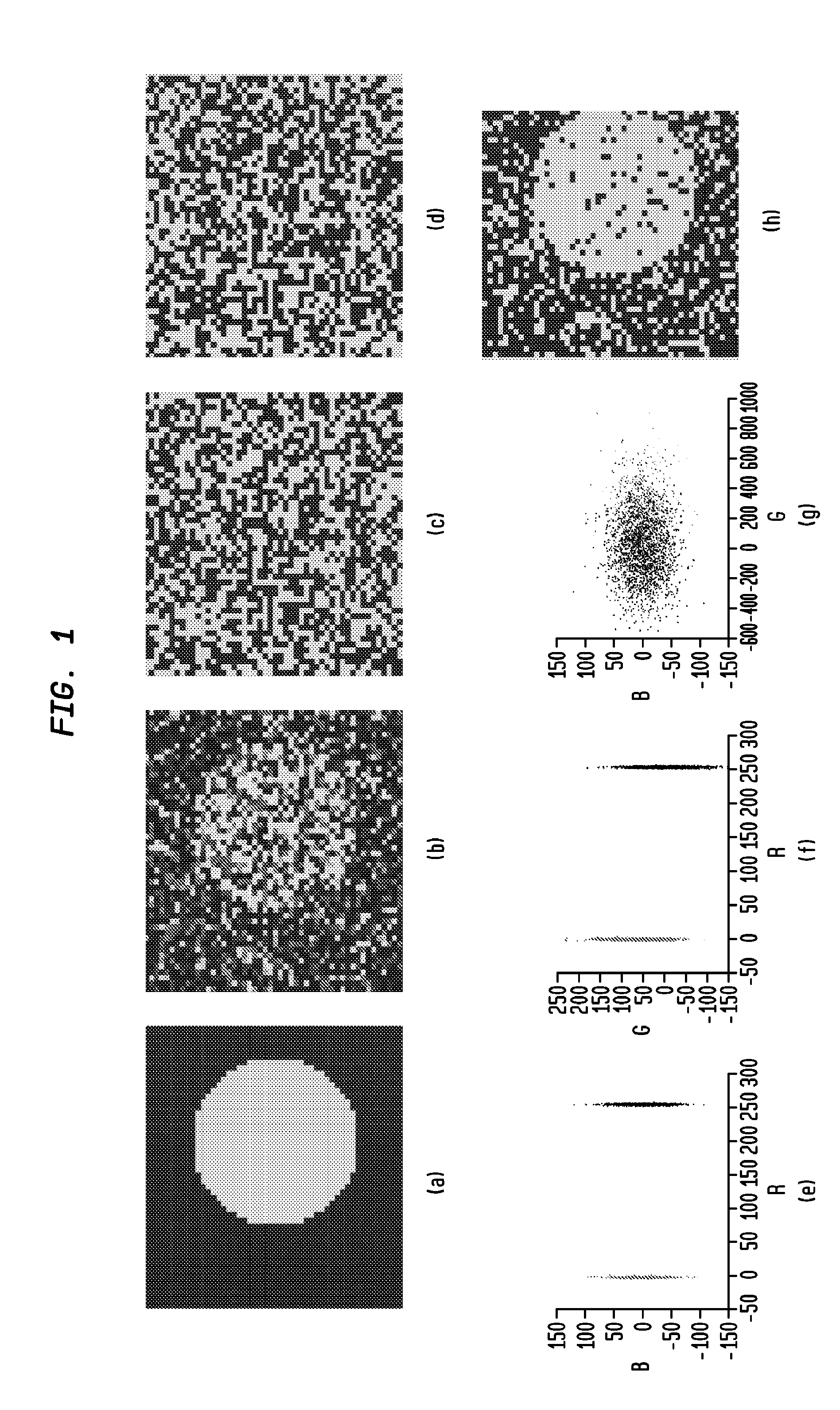

Enhanced multi-protocol analysis via intelligent supervised embedding (empravise) for multimodal data fusion

Active · US20140037172A1 · Image analysis · Character and pattern recognition · Algorithm · Dimensionality reduction

The present invention provides a system and method for analysis of multimodal imaging and non-imaging biomedical data, using a multi-parametric data representation and integration framework. The present invention makes use of (1) dimensionality reduction to account for differing dimensionalities and scale in multimodal biomedical data, and (2) a supervised ensemble of embeddings to accurately capture maximum available class information from the data.

Owner:RUTGERS THE STATE UNIV

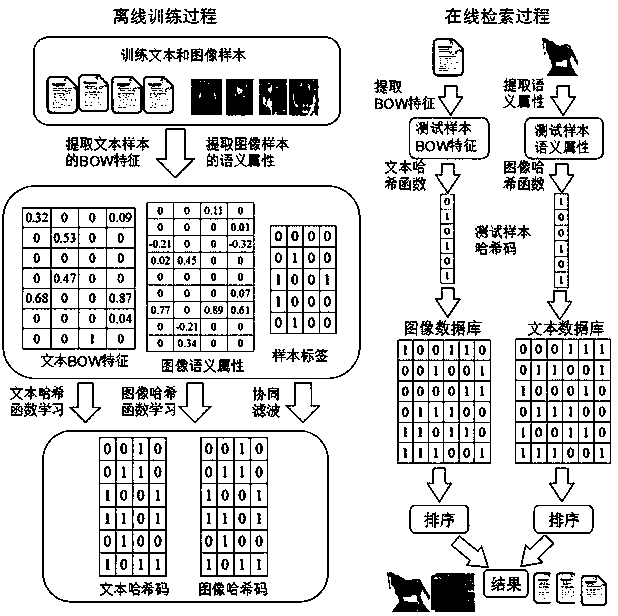

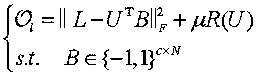

Discrete supervision cross-modal hashing retrieval method based on semantic alignment

Active · CN107729513A · Improve performance · Bridging the Heterogeneous Gap · Special data processing applications · Hash function · Semantic alignment

The invention discloses DSAH (discrete semantic alignment hashing), a cross-modal retrieval method based on semantic alignment. During training, the heterogeneity gap between modalities is reduced using image attributes and modality-aligned semantic information. To reduce memory overhead and training time, a latent semantic space is learned by collaborative filtering, and the relationship between the hash codes and the labels is built directly. Finally, to reduce quantization error, a discrete optimization method is proposed to obtain a better-performing hash function. During retrieval, samples in the test set are mapped to a binary space by the hash function, the Hamming distance between the binary code of a query sample and each heterogeneous sample to be retrieved is calculated, and the top-ranked samples are returned in ascending order of distance. Experimental results on two representative multi-modal data sets demonstrate the superior performance of DSAH.

Owner:LUDONG UNIVERSITY
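The retrieval stage reduces to ranking binary codes by Hamming distance. In this sketch, hash codes are Python integers, ties are broken by item id, and the codes themselves are made up; learning the hash function (the training half of DSAH) is not shown:

```python
def hamming(a: int, b: int) -> int:
    # Number of differing bits between two binary codes
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code, database, top_k=3):
    """Return the top_k database ids in ascending order of Hamming distance."""
    scored = sorted(database.items(), key=lambda item: (hamming(query_code, item[1]), item[0]))
    return [item_id for item_id, _ in scored[:top_k]]


# Hypothetical 4-bit codes for text samples, queried with an image's code
codes = {"txt_a": 0b1011, "txt_b": 0b1001, "txt_c": 0b0100, "txt_d": 0b1111}
top = rank_by_hamming(0b1011, codes)
```

XOR plus a bit count is why hashing-based retrieval is cheap: comparing two codes costs a few machine operations regardless of the original feature dimension.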

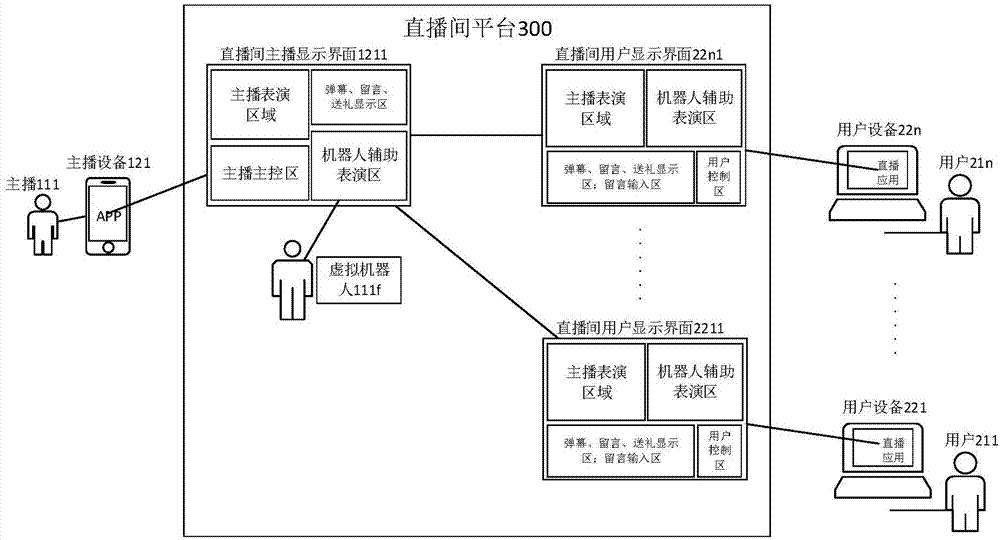

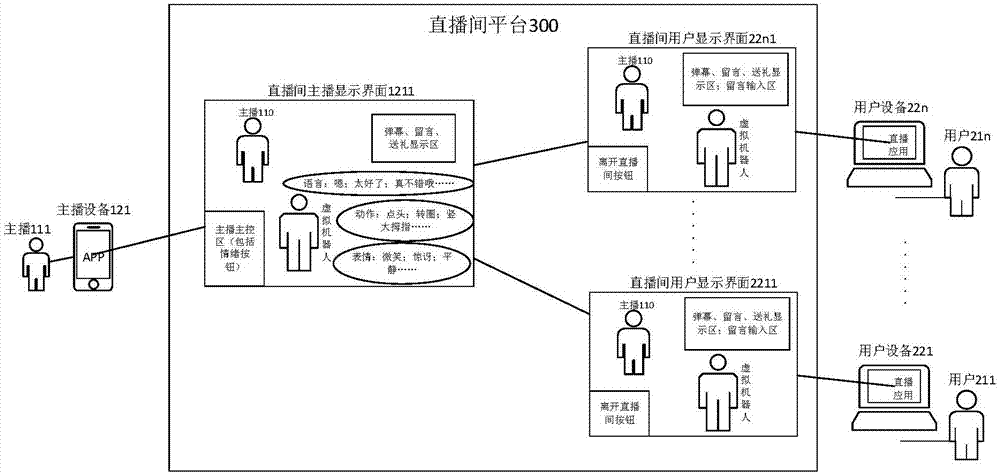

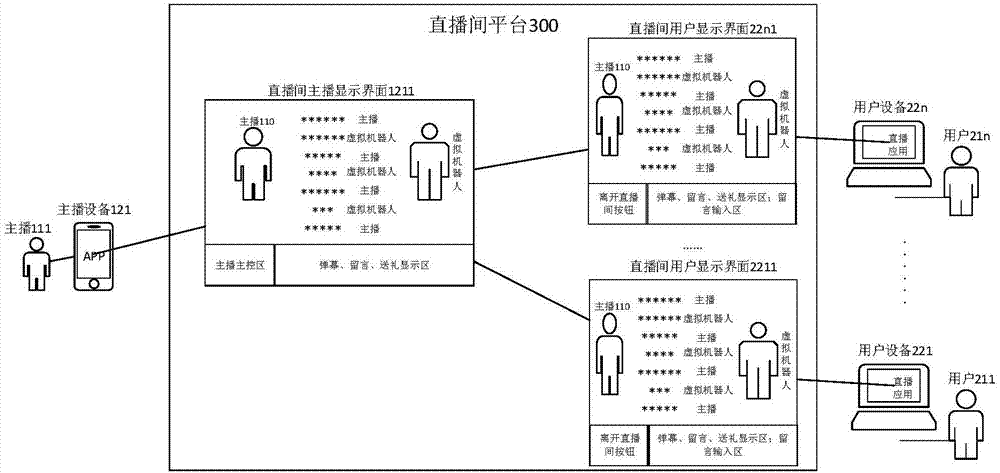

Virtual robot multi-mode interaction method and system applied to video live-broadcasting platform

Active · CN107423809A · Enhance interest · Guaranteed stickiness · Input/output for user-computer interaction · Artificial life · Virtual robot · Multiple modes

The invention discloses a virtual robot multi-modal interaction method and system applied to a video live-streaming platform. A live-streaming platform application accesses a virtual robot that has multi-modal interaction capabilities. The multi-modal interaction method comprises: displaying a virtual robot with a specific appearance in a preset display area, entering a default live-streaming assistance mode, and receiving in real time the multi-modal data and multi-modal instructions entered in a live-streaming room; analyzing the multi-modal data and instructions and determining a target live-streaming assistance mode using the virtual robot's multi-modal interaction capabilities; and starting the target assistance mode so that the virtual robot carries out multi-modal interaction and display according to it. By switching among live-streaming modes to present different kinds of multi-modal interaction, the method increases user interest, maintains user stickiness, and improves the user experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

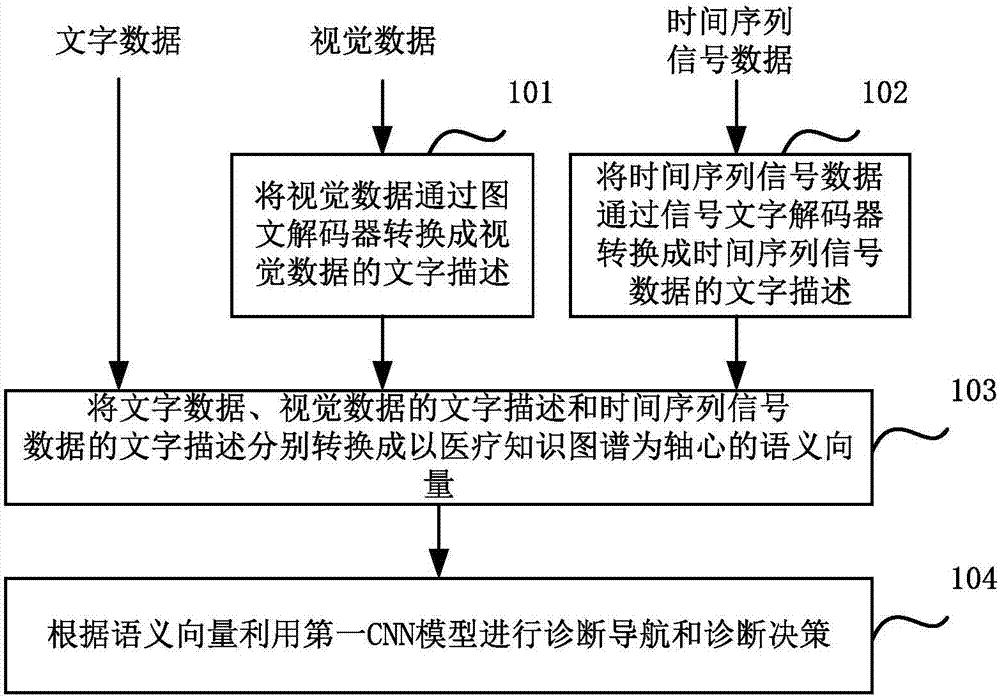

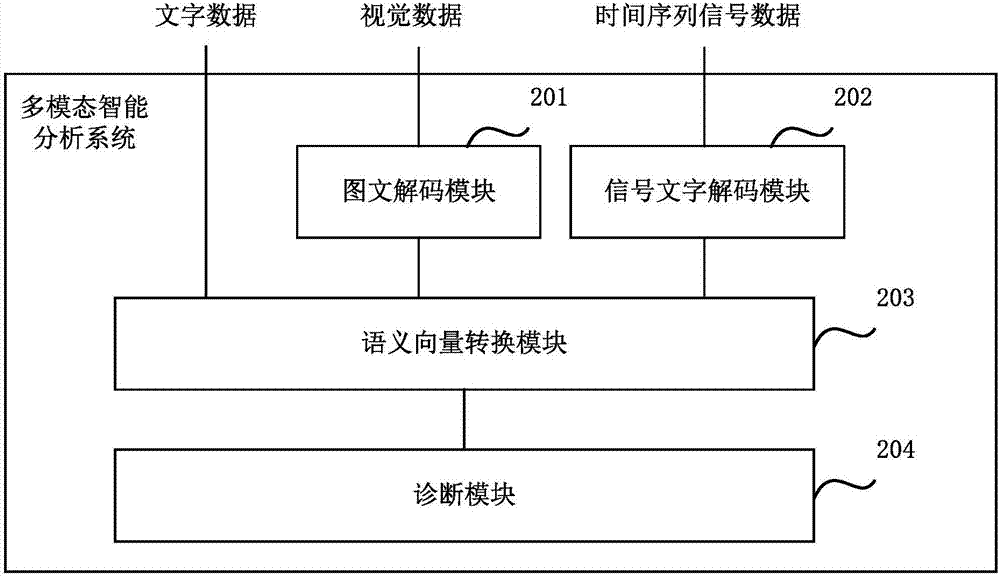

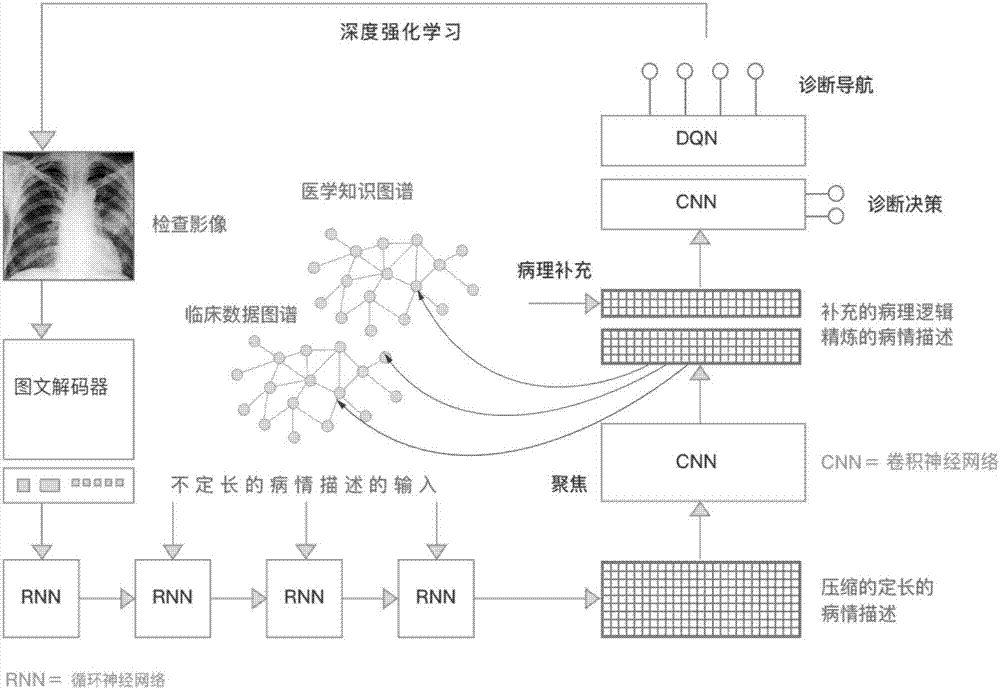

Multimode intelligent analysis method and system

Active · CN107247881A · Best diagnostic path · Improve accuracy · Special data processing applications · Semantic vector · Disease description

The invention relates to a multi-modal intelligent analysis method and system. A disease description is treated as multi-modal data comprising text data, time-series signal data, and visual data, and the method can be applied to diagnosis navigation and diagnostic decision-making according to the patient's condition. The method includes: converting the visual data into a text description through an image-to-text decoder; converting the time-series signal into a text description through a signal-to-text decoder; converting the text data, the text description of the visual data, and the text description of the time-series signal into semantic vectors anchored on a medical knowledge graph, which describes the topological relations among symptoms, laboratory indicators, diseases, drugs, and surgical procedures; and applying a convolutional neural network (CNN) model to the semantic vectors to perform diagnosis navigation and diagnostic decision-making. According to the embodiment, an optimal diagnostic path and a highly accurate diagnostic result can be obtained.

Owner:北京大数医达科技有限公司 (Beijing Dashu Yida Technology Co., Ltd.)

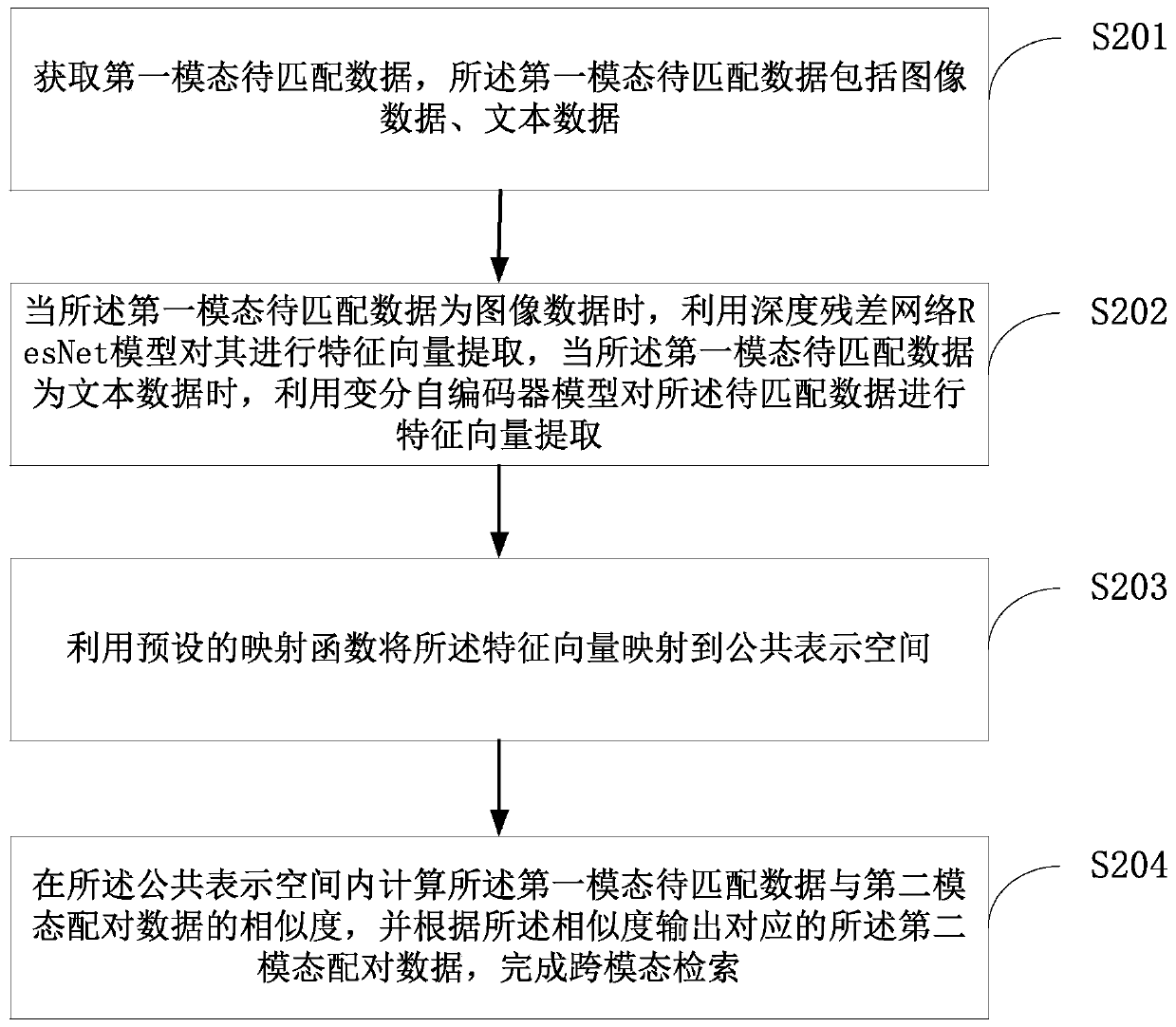

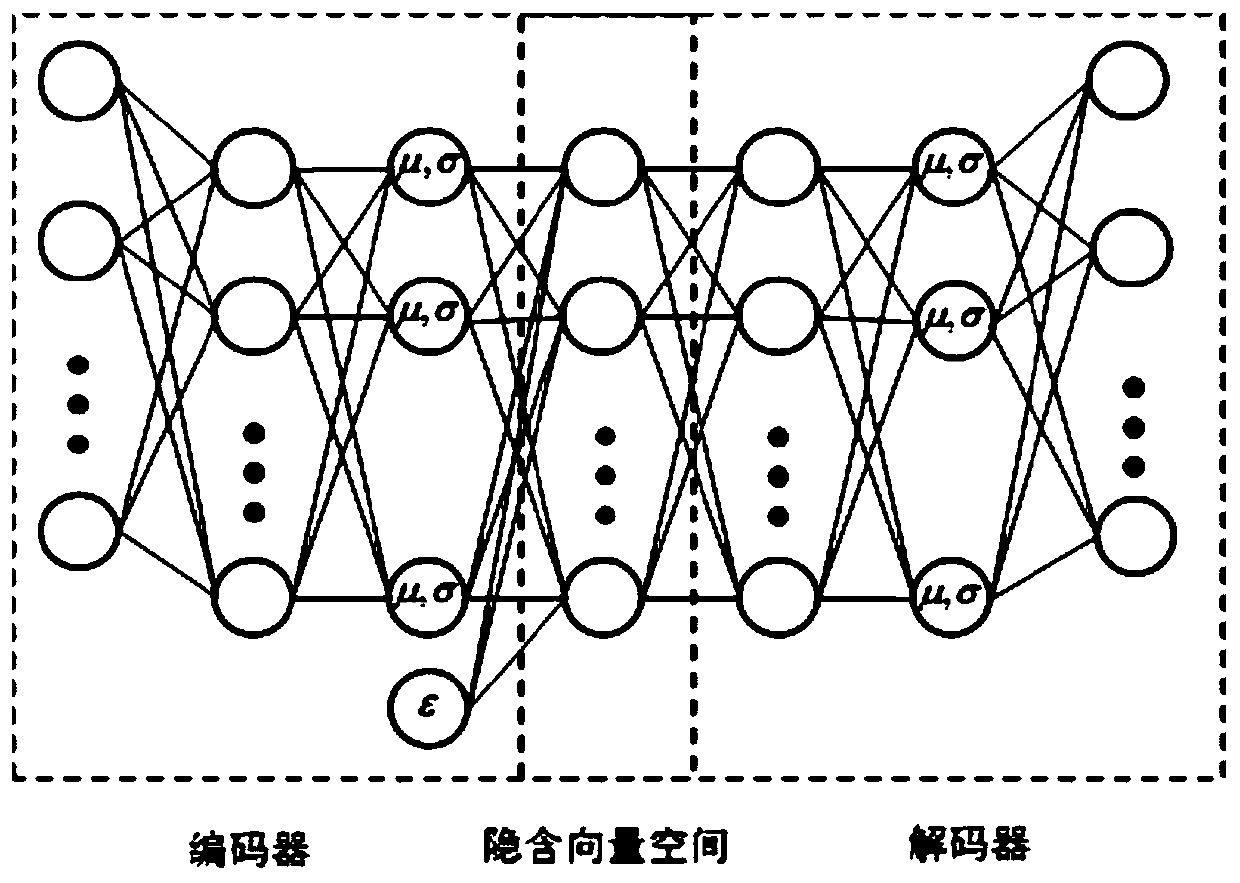

Cross-modal retrieval method and device, computer equipment and storage medium

Active · CN109783655A · Simple structure · Easy to train · Multimedia data querying · Neural architectures · Feature vector · Feature extraction

The invention relates to the technical field of multi-modal data retrieval, in particular to a cross-modal retrieval method and device, computer equipment, and a storage medium. The method comprises: obtaining first-modality data to be matched, which may be image data or text data; when the data is image data, extracting a feature vector with a deep residual network (ResNet) model, and when it is text data, extracting a feature vector with a variational autoencoder model; mapping the feature vector into a common representation space with a preset mapping function; and calculating the similarity between the first-modality data and the second-modality candidate data in the common representation space, then outputting the matching second-modality data according to the similarity to complete cross-modal retrieval. Data features are extracted more fully, and retrieval accuracy is improved.

Owner:XIDIAN UNIV
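The retrieval steps can be sketched with fixed linear mapping functions, assuming the ResNet and variational-autoencoder features have already been extracted. The short vectors and mapping matrices below are hypothetical stand-ins, and cosine similarity is an assumed choice of similarity measure:

```python
import math


def project(matrix, vec):
    # Linear mapping into the common space: one output dimension per matrix row
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return 0.0 if na == 0 or nb == 0 else sum(x * y for x, y in zip(a, b)) / (na * nb)


def retrieve(image_feature, text_features, w_img, w_txt):
    """Return the id of the text whose projection is most similar to the image's."""
    query = project(w_img, image_feature)
    scores = {tid: cosine(query, project(w_txt, f)) for tid, f in text_features.items()}
    return max(scores, key=scores.get), scores


# Stand-ins: a 3-D image feature (in place of a ResNet vector) and 2-D text
# features (in place of VAE latents), with hypothetical mapping matrices
w_img = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
w_txt = [[1.0, 0.0], [0.0, 1.0]]
texts = {"caption_1": [0.1, 1.0], "caption_2": [1.0, 0.0]}
best, scores = retrieve([0.2, 0.5, 0.4], texts, w_img, w_txt)
```

The two encoders can have different output sizes because each modality gets its own mapping matrix; only the common space needs a shared dimension.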

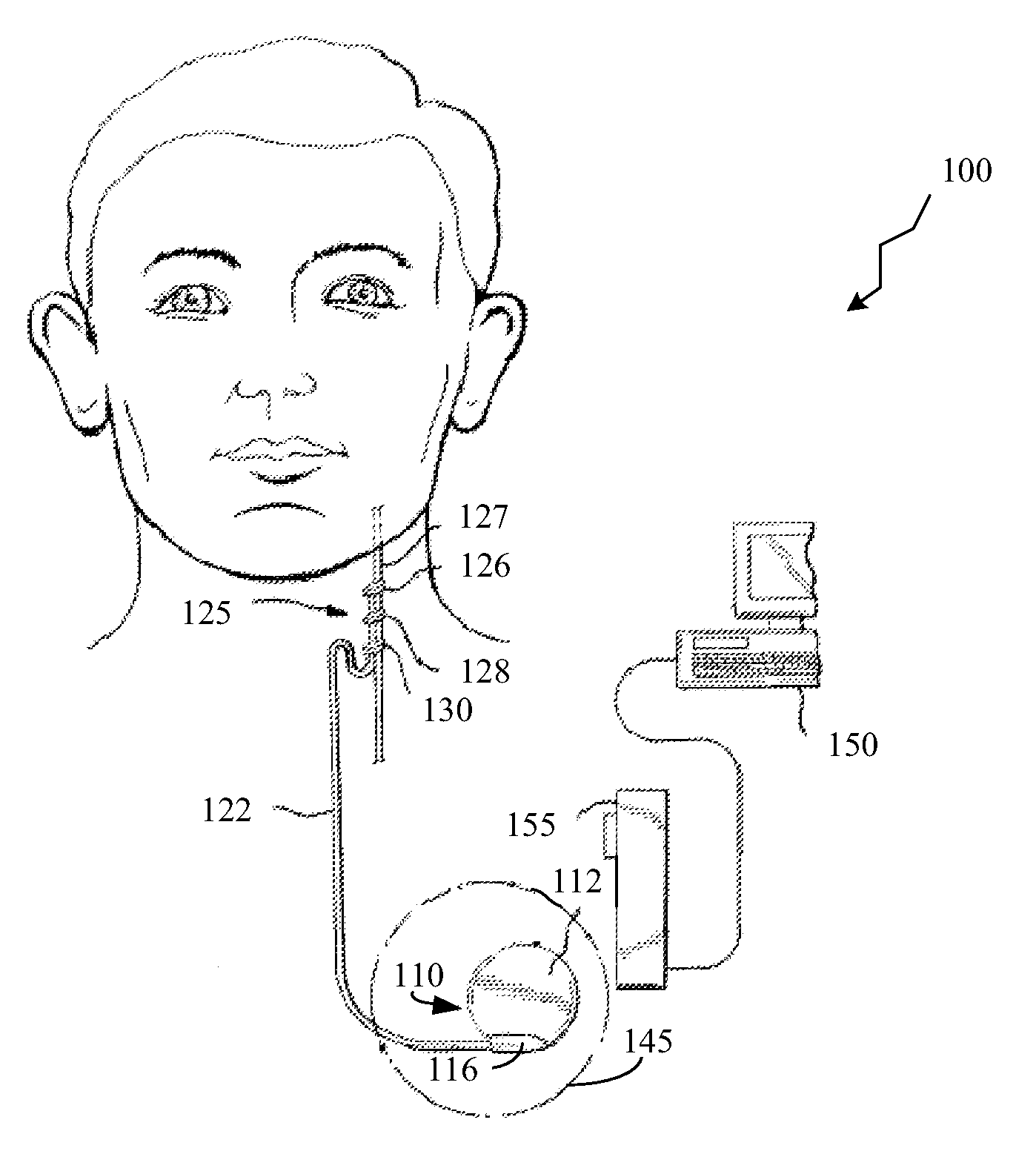

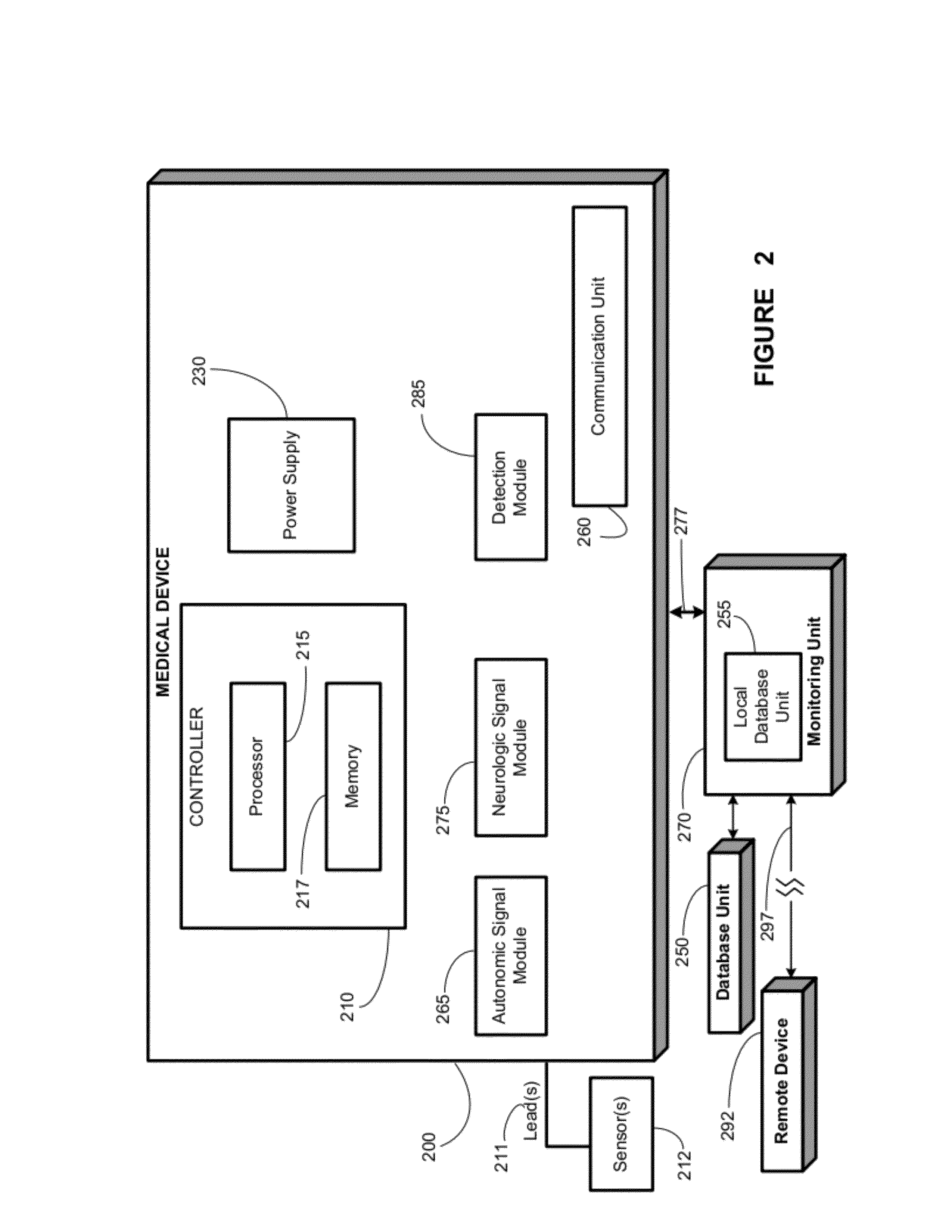

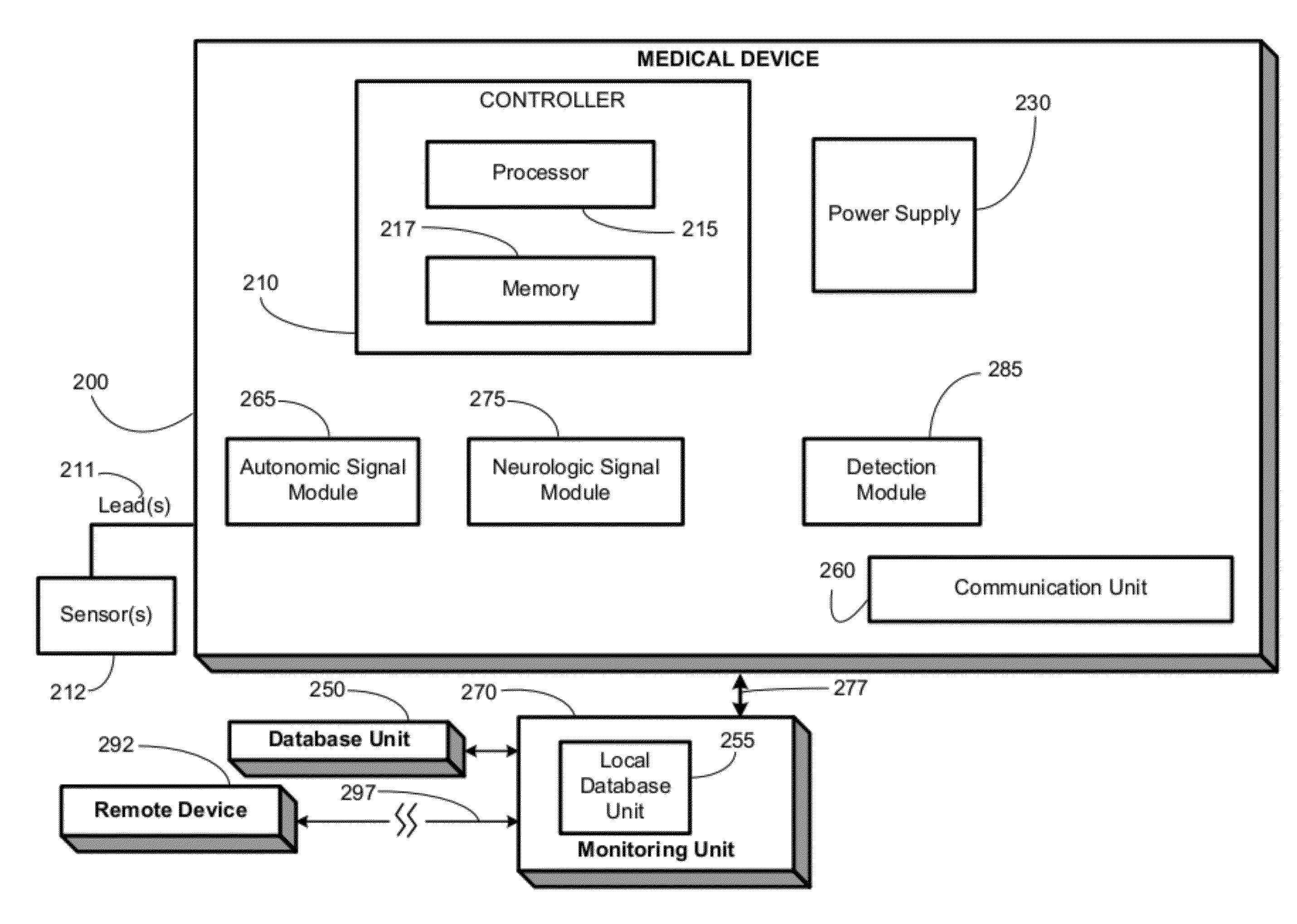

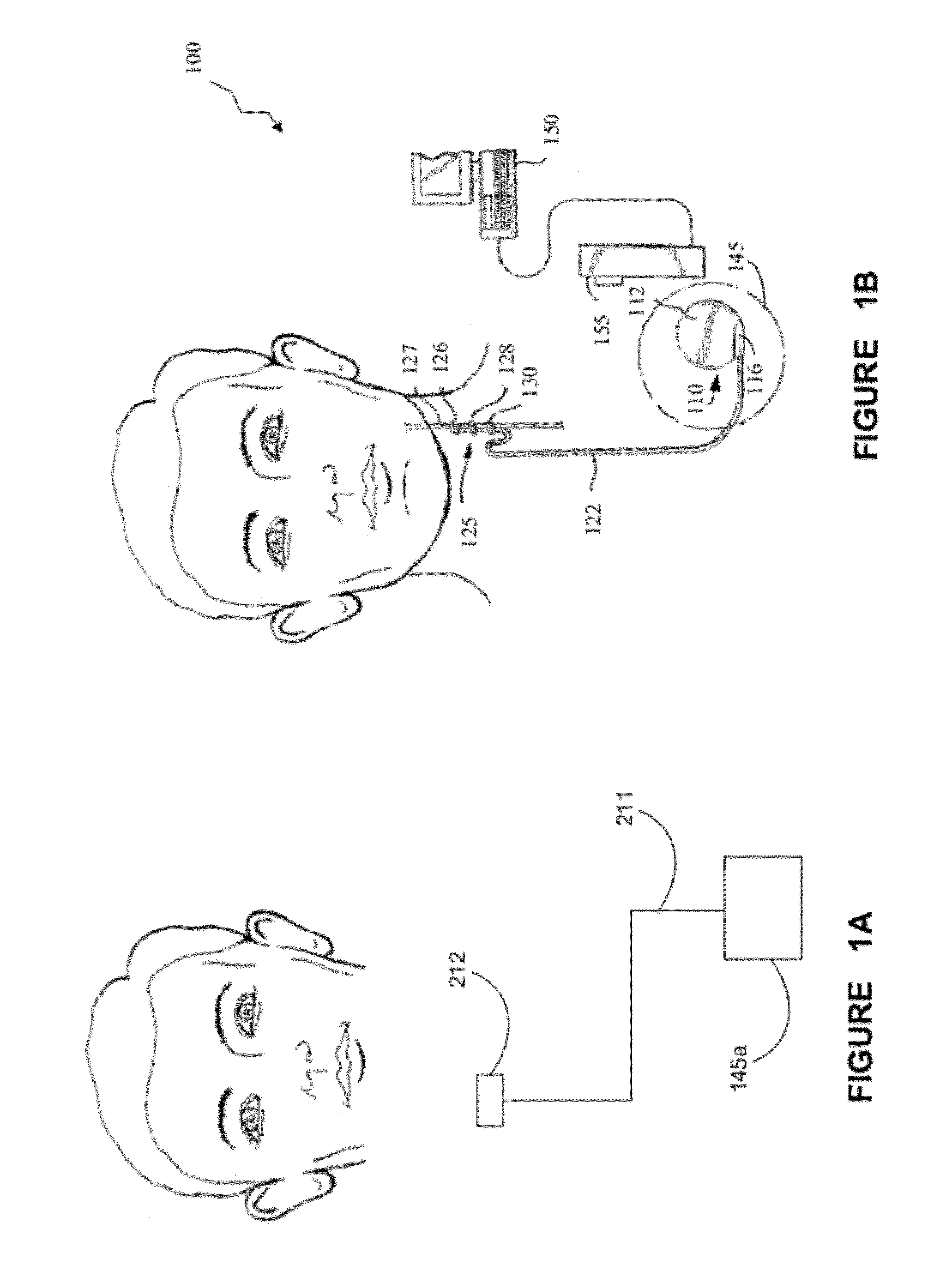

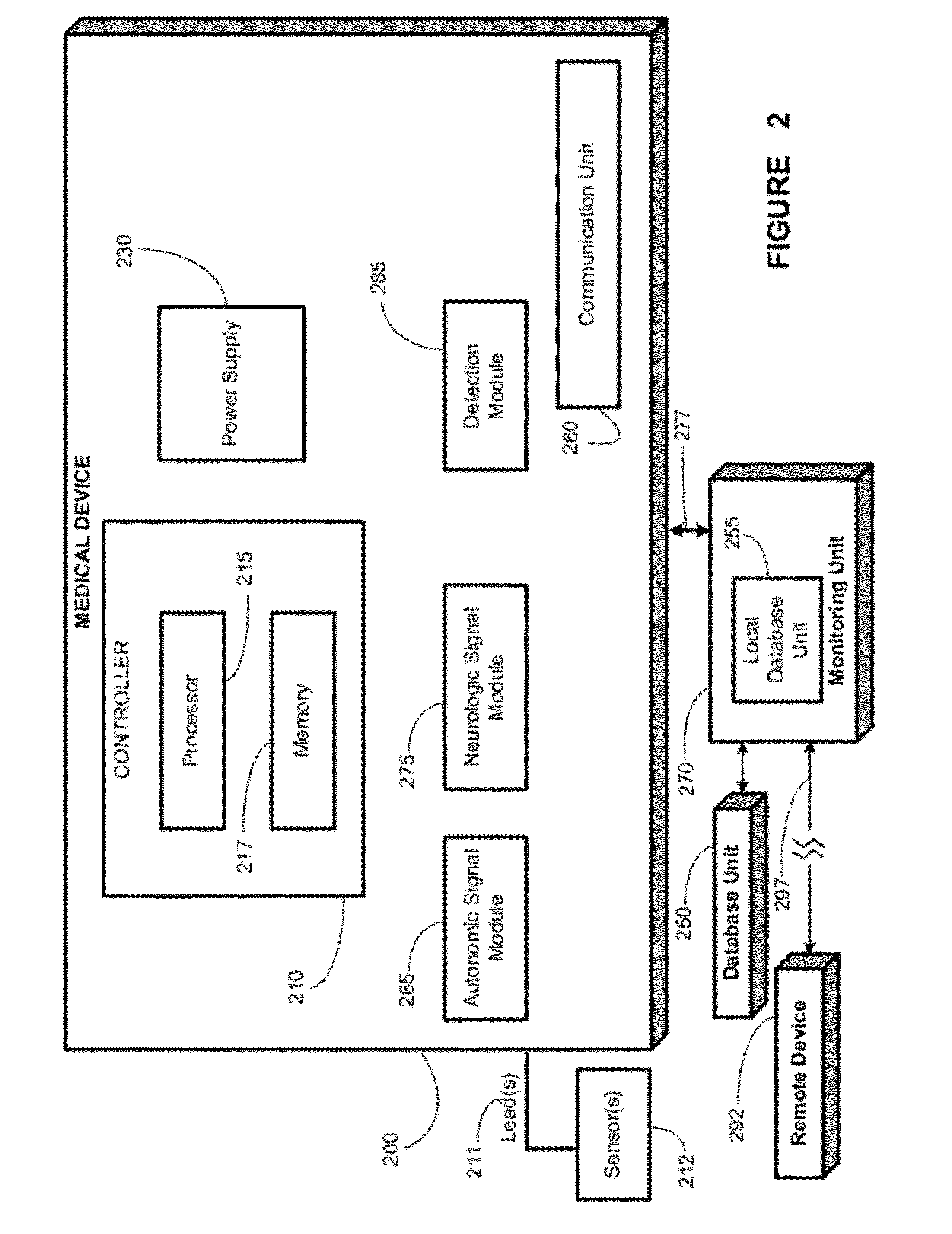

Detecting, quantifying, and/or classifying seizures using multimodal data

Methods, systems, and apparatus for detecting an epileptic event, for example, a seizure in a patient using a medical device. The determination is performed by providing an autonomic signal indicative of the patient's autonomic activity; providing a neurologic signal indicative of the patient's neurological activity; and detecting an epileptic event based upon the autonomic signal and the neurologic signal.

Owner:FLINT HILLS SCI L L C
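A minimal sketch of the two-signal rule, assuming heart rate as the autonomic signal and an EEG band-power value as the neurologic signal, each compared against a patient baseline. `detect_epileptic_event` is a hypothetical name, and the ratios `hr_ratio` and `eeg_ratio` are illustrative placeholders, not values from the patent:

```python
def detect_epileptic_event(heart_rates, eeg_power, hr_baseline, eeg_baseline,
                           hr_ratio=1.2, eeg_ratio=2.0):
    """Return sample indices where both autonomic and neurologic signals are elevated."""
    events = []
    for t, (hr, eeg) in enumerate(zip(heart_rates, eeg_power)):
        autonomic = hr >= hr_ratio * hr_baseline     # e.g. ictal tachycardia
        neurologic = eeg >= eeg_ratio * eeg_baseline  # e.g. elevated band power
        if autonomic and neurologic:
            events.append(t)
    return events


heart_rates = [70, 72, 95, 98, 74]       # beats per minute
eeg_power = [1.0, 2.5, 2.6, 1.1, 3.0]    # arbitrary units
events = detect_epileptic_event(heart_rates, eeg_power, hr_baseline=72, eeg_baseline=1.0)
```

Requiring agreement between the two signals means an isolated heart-rate rise (sample 3) or an isolated EEG spike (samples 1 and 4) is not flagged on its own.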

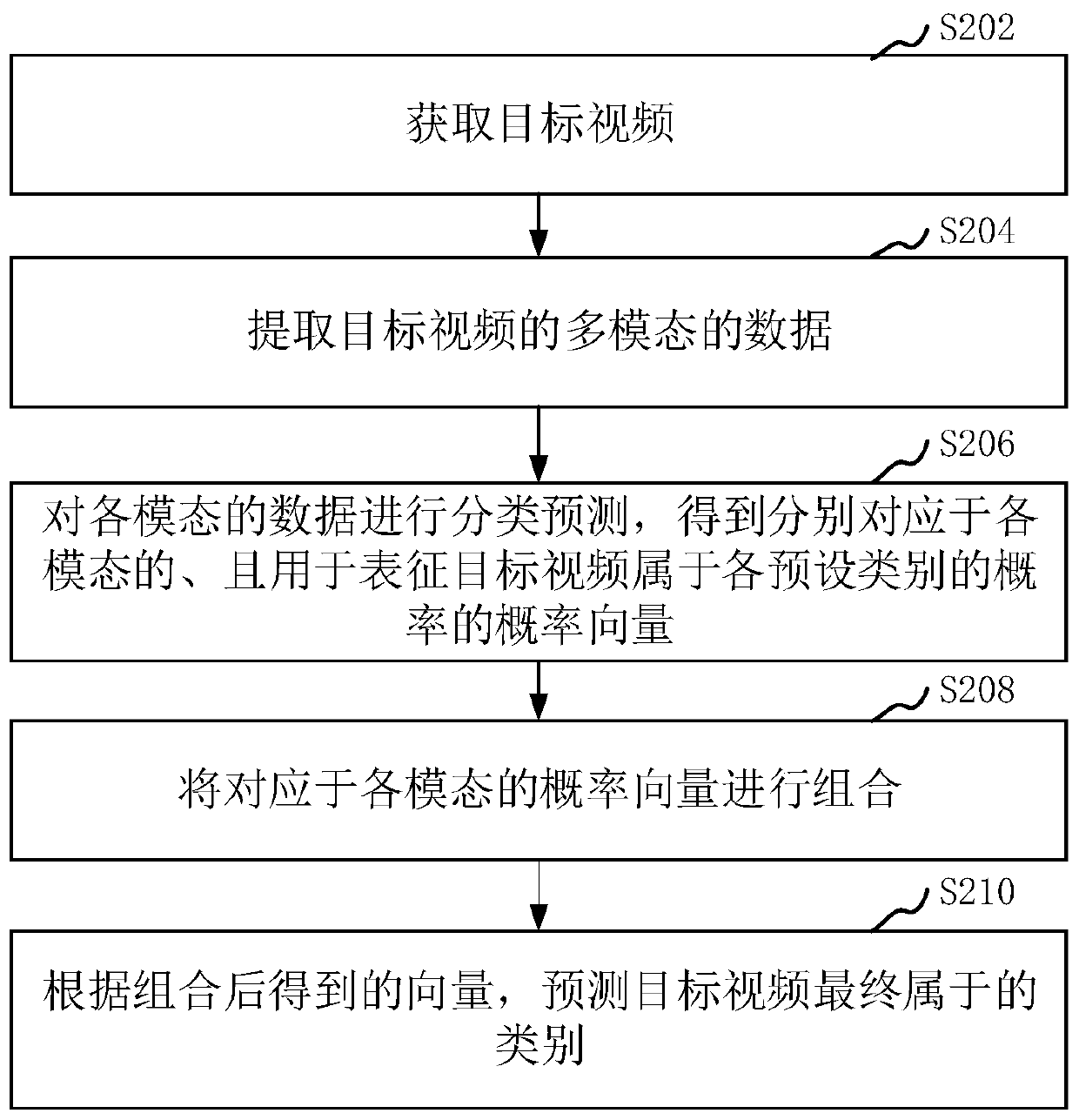

Video classification processing method and device, computer equipment and storage medium

Active · CN110162669A · Improve accuracy · Increase the amount of information · Video data clustering/classification · Character and pattern recognition · Computer graphics (images) · Computer vision

The invention relates to a video classification processing method and device, computer equipment and a storage medium. The method comprises the steps of obtaining a target video; extracting multi-modal data of the target video; performing classification prediction on the data of each mode to obtain probability vectors which respectively correspond to each mode and are used for representing the probability that the target video belongs to each preset category; combining the probability vectors corresponding to the modalities; and predicting the final category of the target video according to the combined vectors. According to the scheme, the accuracy of video classification can be improved.

Owner:深圳市雅阅科技有限公司 (Shenzhen Yayue Technology Co., Ltd.)
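The combination step can be sketched as a weighted average of the per-modality probability vectors followed by an argmax. The abstract does not specify how the vectors are combined (they could, for example, be concatenated and fed to another classifier), so the averaging rule, weights, and category names are assumptions:

```python
def fuse_and_predict(modality_probs, categories, weights=None):
    """Weighted average of per-modality probability vectors, then argmax."""
    names = list(modality_probs)
    if weights is None:
        weights = {name: 1.0 for name in names}  # equal weighting by default
    total = sum(weights[name] for name in names)
    fused = [
        sum(weights[name] * modality_probs[name][i] for name in names) / total
        for i in range(len(categories))
    ]
    best = max(range(len(categories)), key=lambda i: fused[i])
    return categories[best], fused


categories = ["sports", "music", "news"]
probs = {
    "visual": [0.6, 0.3, 0.1],
    "audio": [0.2, 0.7, 0.1],
    "text": [0.5, 0.2, 0.3],
}
label, fused = fuse_and_predict(probs, categories)
weighted_label, _ = fuse_and_predict(probs, categories, {"visual": 1.0, "audio": 3.0, "text": 1.0})
```

With equal weights the visual and text modalities outvote audio; trusting audio more flips the prediction, which shows why the weighting (or a learned combiner) matters.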

Detecting, quantifying, and/or classifying seizures using multimodal data

Active · US20120083701A1 · Ultrasonic/sonic/infrasonic diagnostics · Electrotherapy · Cardiac activity · Multimodal data

A method, comprising receiving at least one of a signal relating to a first cardiac activity and a signal relating to a first body movement from a patient; triggering at least one of a test of the patient's responsiveness, awareness, a second cardiac activity, a second body movement, a spectral analysis test of the second cardiac activity, and a spectral analysis test of the second body movement, based on at least one of the signal relating to the first cardiac activity and the signal relating to the first body movement; determining an occurrence of an epileptic event based at least in part on said one or more triggered tests; and performing a further action in response to said determination of said occurrence of said epileptic event. Further methods allow classification of epileptic events. Apparatus and systems capable of implementing the method are also described.

Owner:FLINT HILLS SCI L L C

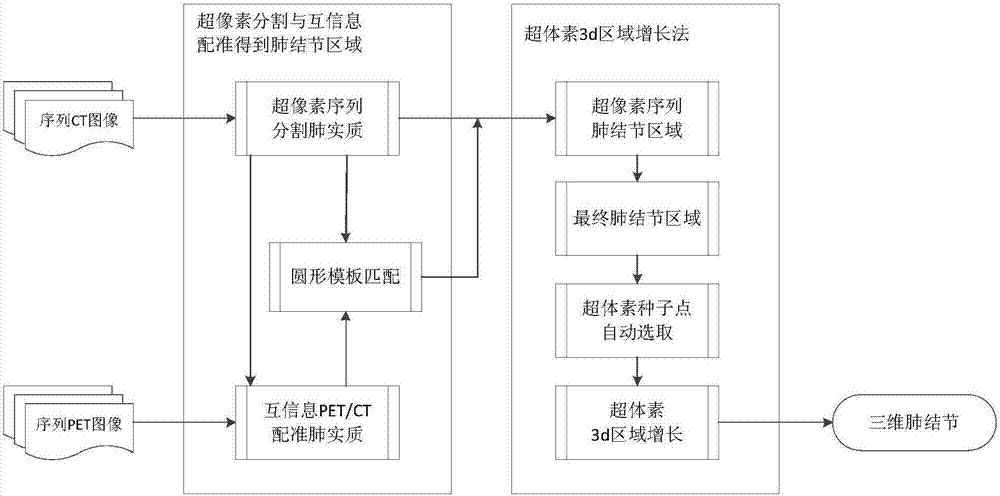

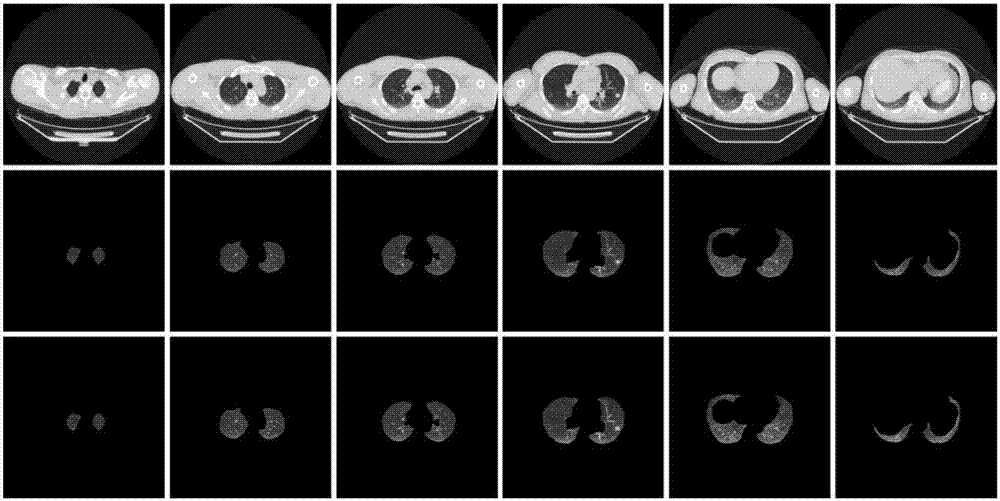

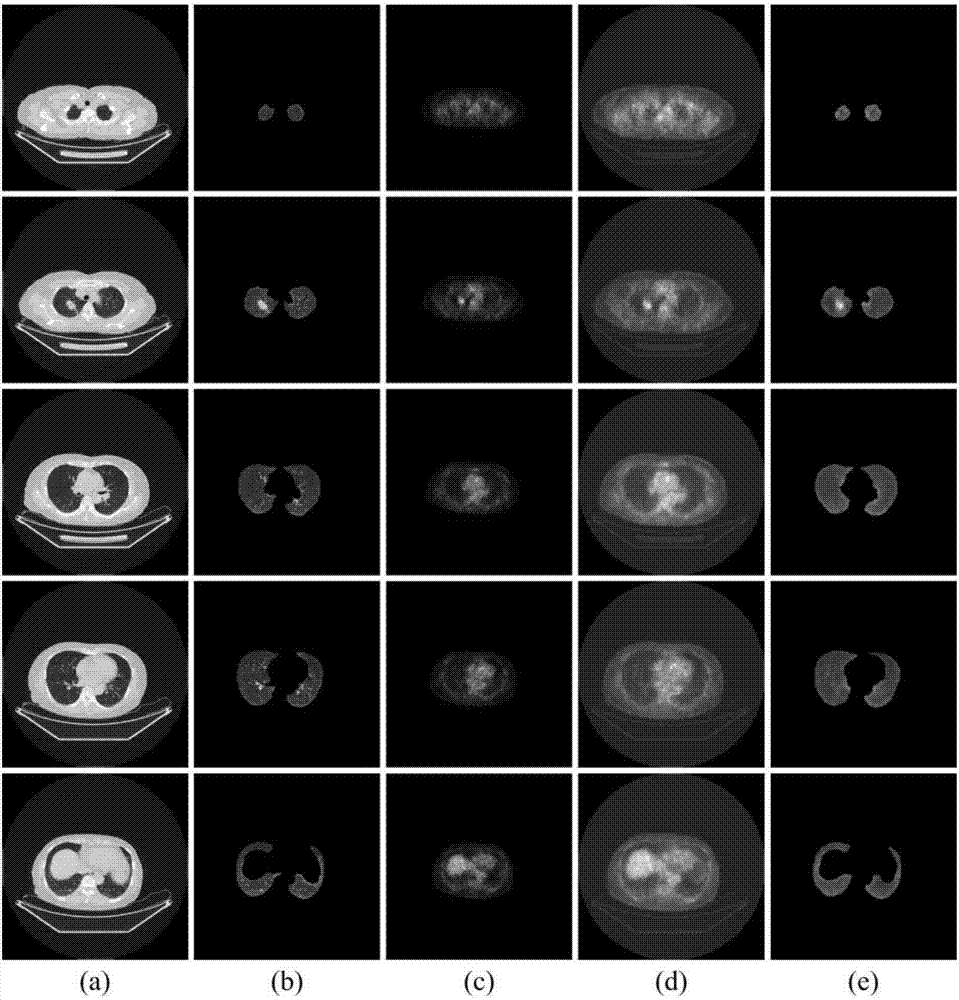

Supervoxel sequence lung image 3D pulmonary nodule segmentation method based on multimodal data

Active · CN107230206A · Understand intuitive · Improve the quality of surgery · Image enhancement · Image analysis · Pulmonary nodule · Diagnostic Radiology Modality

The invention discloses a supervoxel-based 3D pulmonary nodule segmentation method for sequential lung images using multimodal data. The method comprises the following steps: A) extracting sequential lung parenchyma images through superpixel segmentation and self-generating neural forest clustering; B) registering the sequential lung parenchyma images with PET/CT multimodal data based on mutual information; C) marking and extracting accurate sequential pulmonary nodule regions with a multi-scale variable circular template matching algorithm; and D) performing three-dimensional reconstruction of the sequential pulmonary nodule images with a supervoxel 3D region growing algorithm to obtain the final three-dimensional shape of the nodules. Because the supervoxel 3D region growing algorithm forms a 3D reconstruction of the nodules, the method can reflect the dynamic relationship between pulmonary lesions and the surrounding tissue, so that the shape, size, and appearance of the nodules, as well as their adhesion to the surrounding pleura or blood vessels, can be observed intuitively.

Owner:TAIYUAN UNIV OF TECH
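The final reconstruction step can be illustrated with plain voxel-level region growing on a 3D grid, used here as a simpler stand-in for supervoxel region growing. Starting from a seed inside the nodule, the sketch collects 6-connected voxels whose intensity lies in an assumed `[low, high]` window; `region_grow_3d` is a hypothetical name:

```python
from collections import deque

# Offsets to the six face-adjacent neighbours of a voxel
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def region_grow_3d(volume, seed, low, high):
    """Return the set of voxels 6-connected to seed with values in [low, high]."""
    depth, rows, cols = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = seed
    if not (low <= volume[z0][y0][x0] <= high):
        return set()
    region = {seed}
    queue = deque([seed])
    while queue:  # breadth-first search outward from the seed
        z, y, x = queue.popleft()
        for dz, dy, dx in NEIGHBOURS:
            nz, ny, nx = z + dz, y + dy, x + dx
            if (0 <= nz < depth and 0 <= ny < rows and 0 <= nx < cols
                    and (nz, ny, nx) not in region
                    and low <= volume[nz][ny][nx] <= high):
                region.add((nz, ny, nx))
                queue.append((nz, ny, nx))
    return region


# Two 3x3 slices: a connected bright blob in one corner and an isolated bright voxel
volume = [
    [[100, 100, 0], [100, 0, 0], [0, 0, 100]],
    [[100, 0, 0], [0, 0, 0], [0, 0, 0]],
]
region = region_grow_3d(volume, seed=(0, 0, 0), low=80, high=120)
```

A supervoxel version would run the same breadth-first search over a graph of supervoxels instead of individual voxels.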

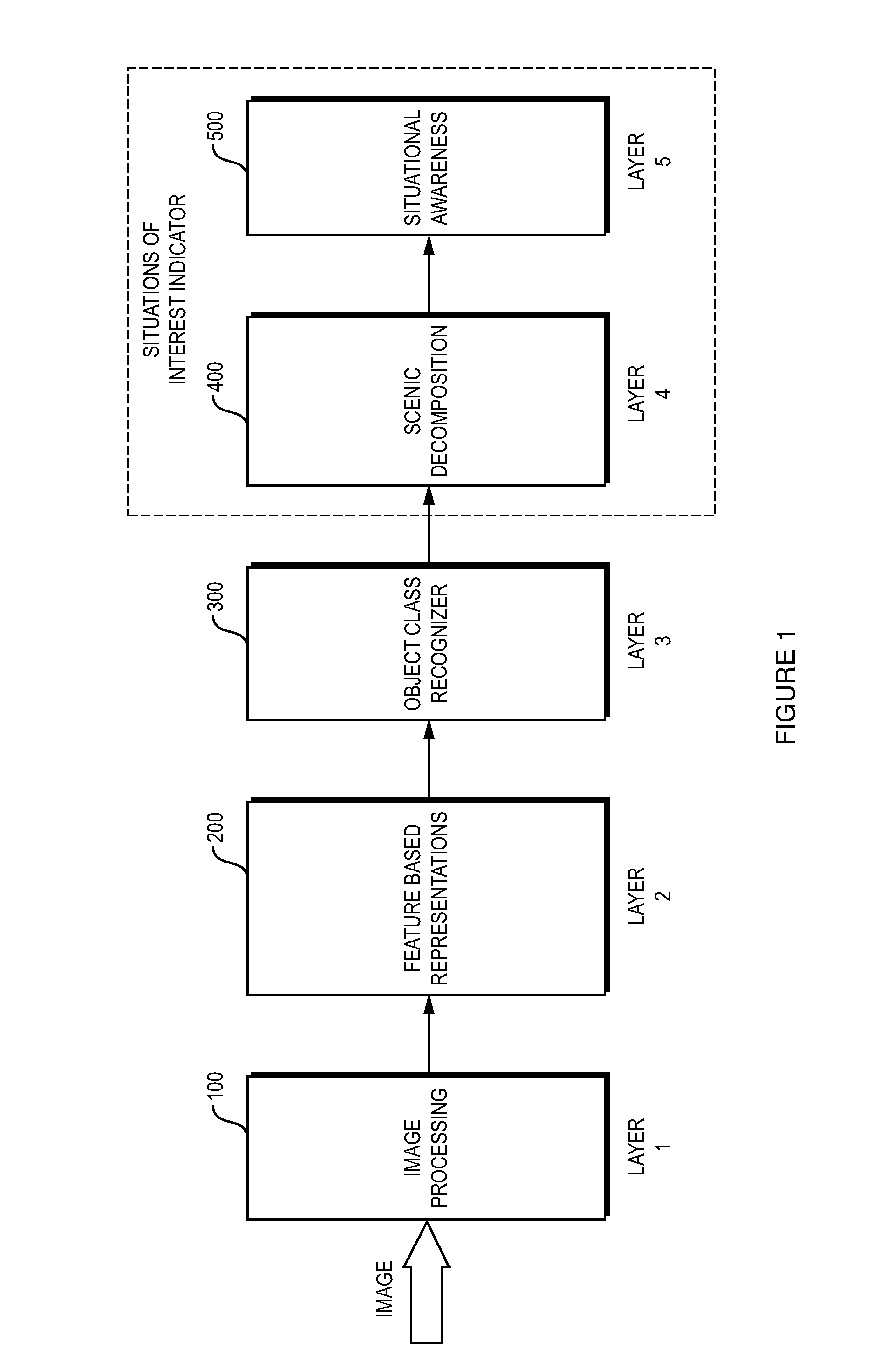

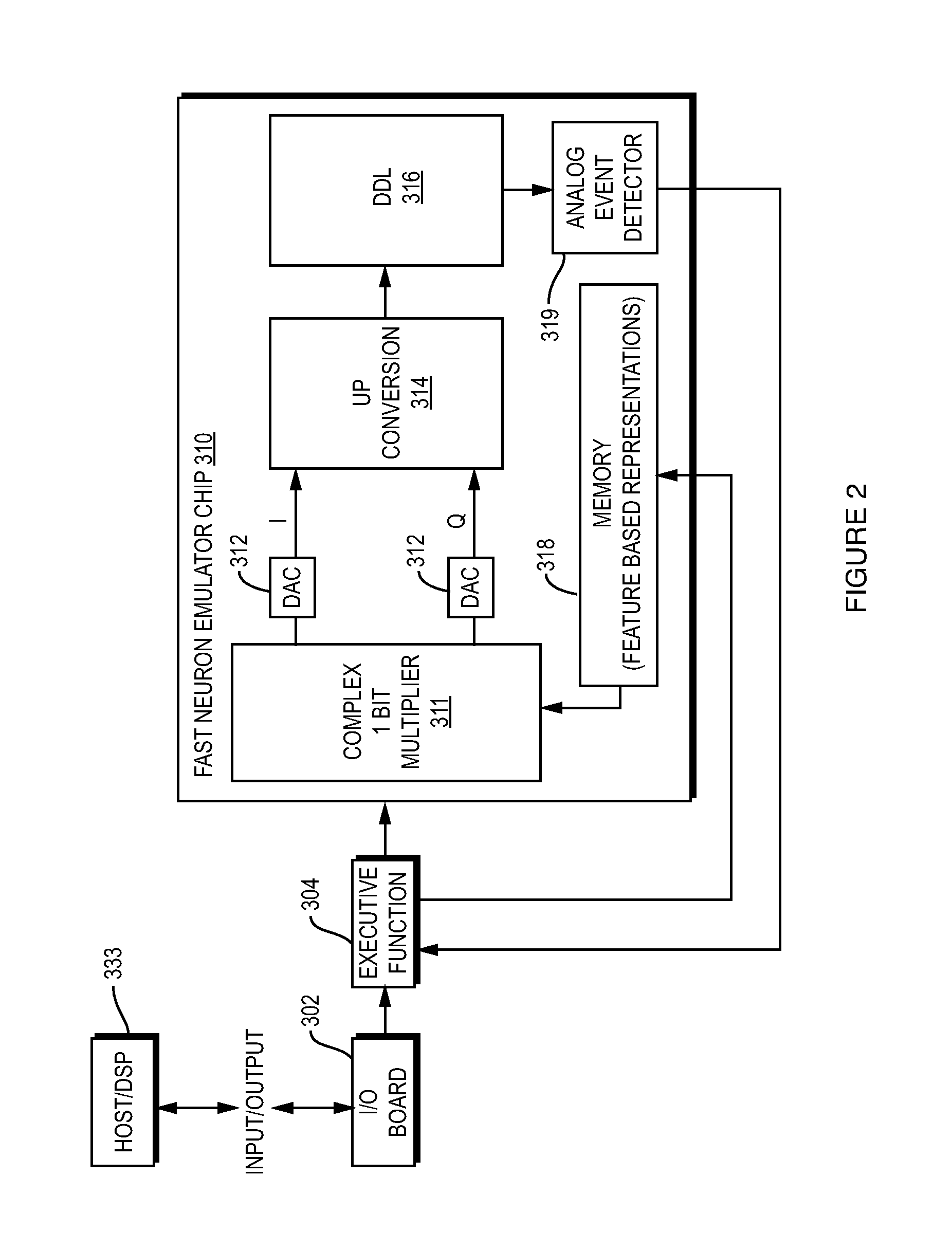

Neuromorphic parallel processor

Active · US8401297B1 · Improve performance · High biometric recognition · Character and pattern recognition · Neural architectures · Object Class · Multimodal data

A neuromorphic parallel image processing approach with five functional layers. The first layer performs a frequency-domain transform on the image data, generating multiple scales and feature-based representations that are independent of orientation. The second layer is populated with feature-based representations. In the third layer, an object class recognizer layer, the representations are fused using a neuromorphic parallel processor. Fusion of multimodal data can achieve high-confidence biometric recognition.

Owner:AMI RES & DEV

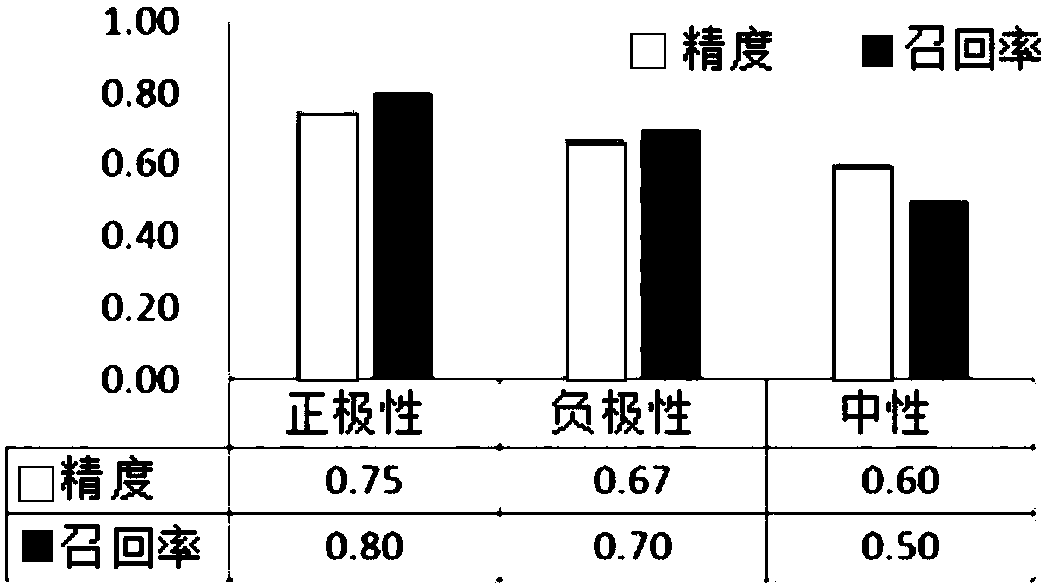

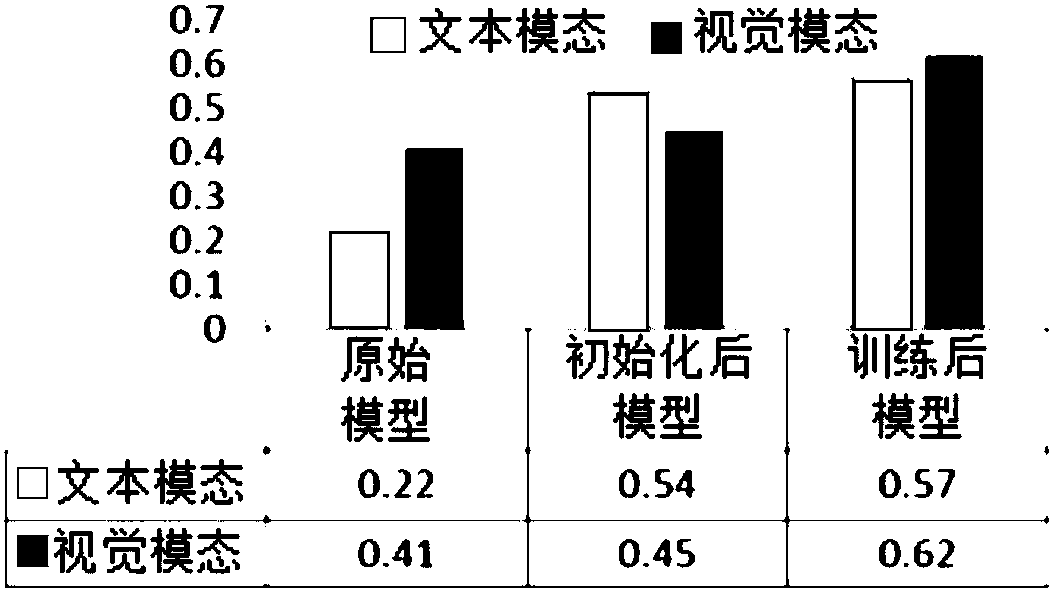

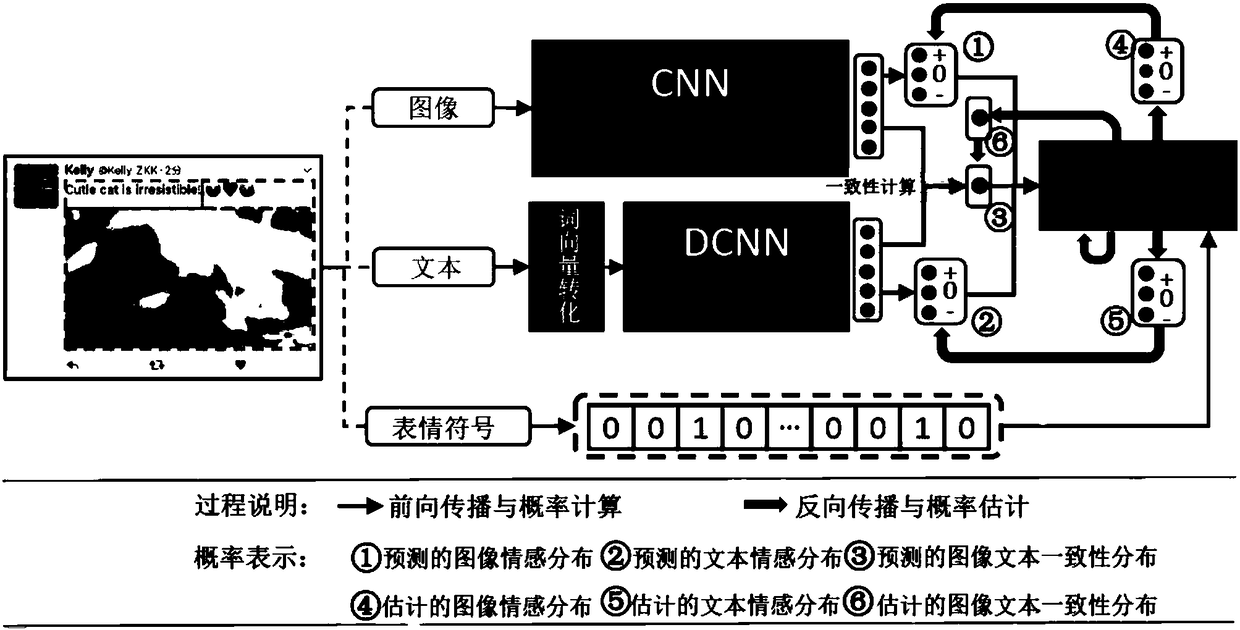

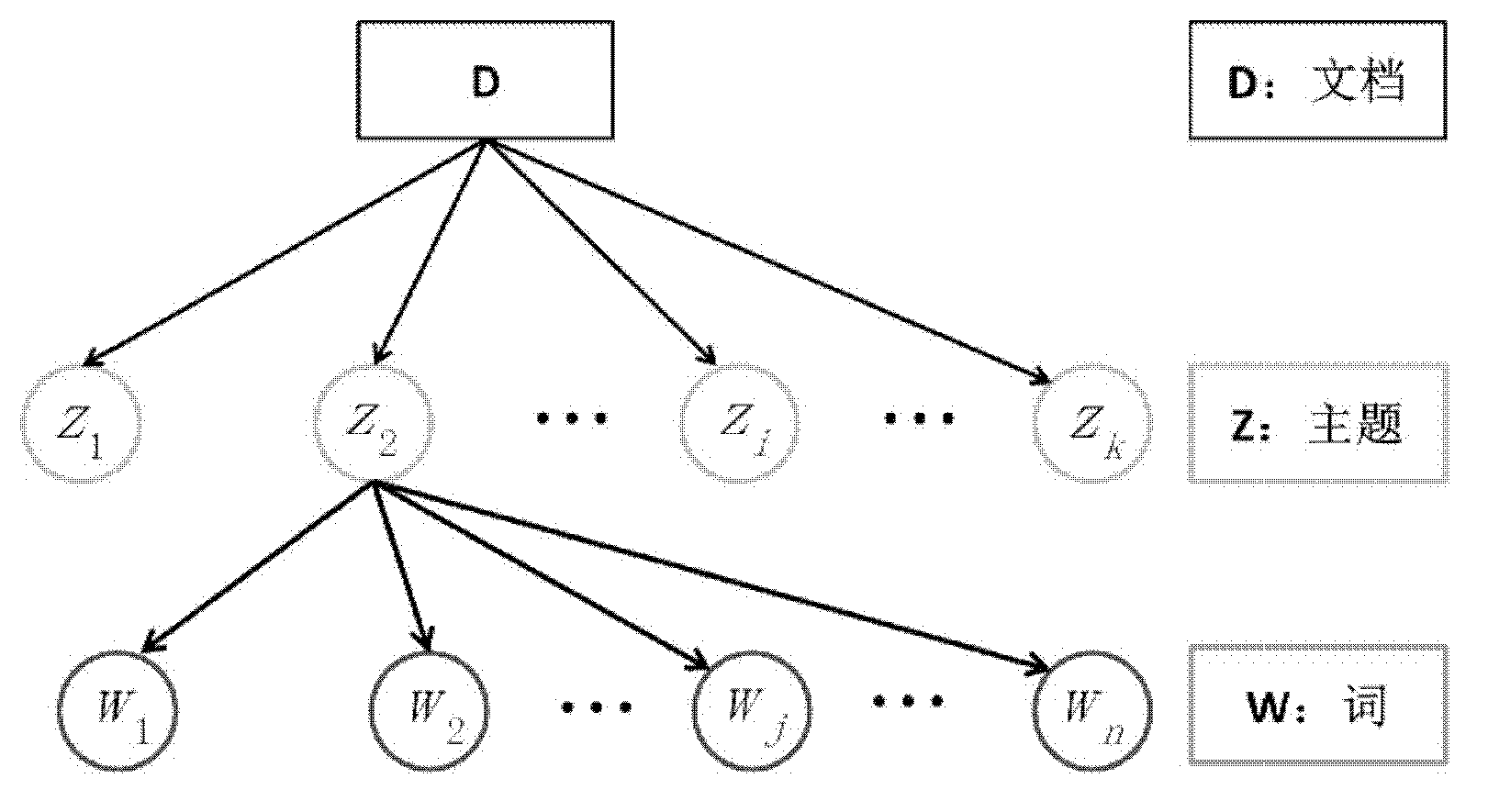

Micro-blog emotion prediction method based on weak supervised type multi-modal deep learning

Inactive · CN108108849A · Improve the effect of sentiment classification · Solving Multimodal Discriminative Representations · Web data indexing · Forecasting · Microblogging · Predictive methods

The invention discloses a microblog sentiment prediction method based on weakly supervised multi-modal deep learning and relates to the field of multi-modal sentiment analysis. The method comprises the following steps: preprocessing microblog multi-modal data; weakly supervised training of a multi-modal deep learning model; and microblog sentiment prediction with the trained model. The method addresses the problems of multi-modal discriminative representation and limited data labels that arise in prior-art sentiment prediction for multi-channel microblog content, and produces a final multi-modal sentiment class prediction. Accuracy, which reflects the agreement between the predicted microblog sentiment polarity and the pre-labeled sentiment category, is used as the evaluation metric. Because the correlation among modalities is taken into account, performance is substantially improved, the best overall multi-modal results are achieved, and good classification is obtained across the different sentiment categories. Weakly supervised training noticeably improves the sentiment classification of the initial text and image models.

Owner:XIAMEN UNIV

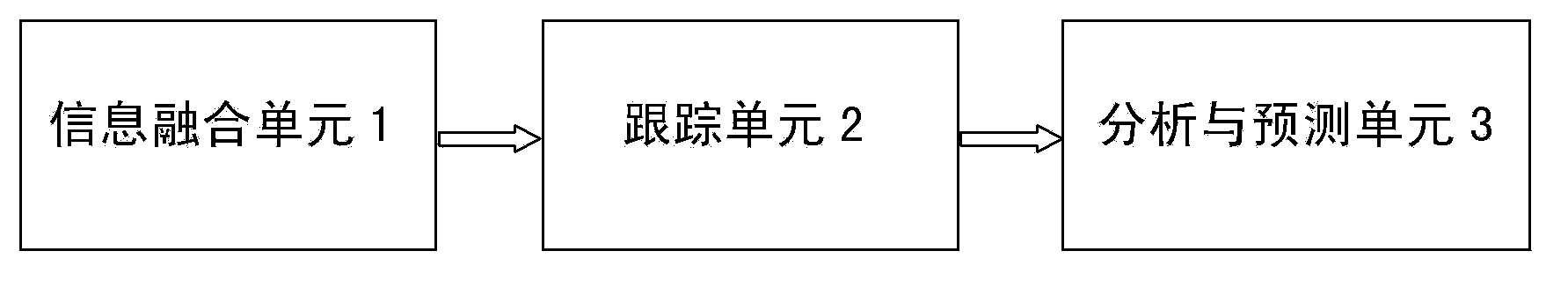

Analysis and prediction system for cooperative correlative tracking and global situation of network social events

Inactive · CN103455705A · Improve tracking accuracy · Easy to understand · Special data processing applications · Space - property · Information integration

The invention relates to a system for cooperative correlated tracking and global situation analysis and prediction of online social events. The system comprises an information fusion unit, a tracking unit, and an analysis and prediction unit. The information fusion unit fuses the multimodal features of online social event data to obtain fused information about the events' multimodal data, thereby establishing a semantic description model for multimodal data across social events. The tracking unit, connected to the information fusion unit, handles online content rich in multimedia information: based on that semantic description model, it acquires semantically correlated tracking data for every aspect of the social events, exploiting their cross-modal, cross-platform, and cross-spatiotemporal properties. The analysis and prediction unit, connected to the tracking unit, produces event-based global situation analysis and prediction data from the semantically correlated tracking data.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

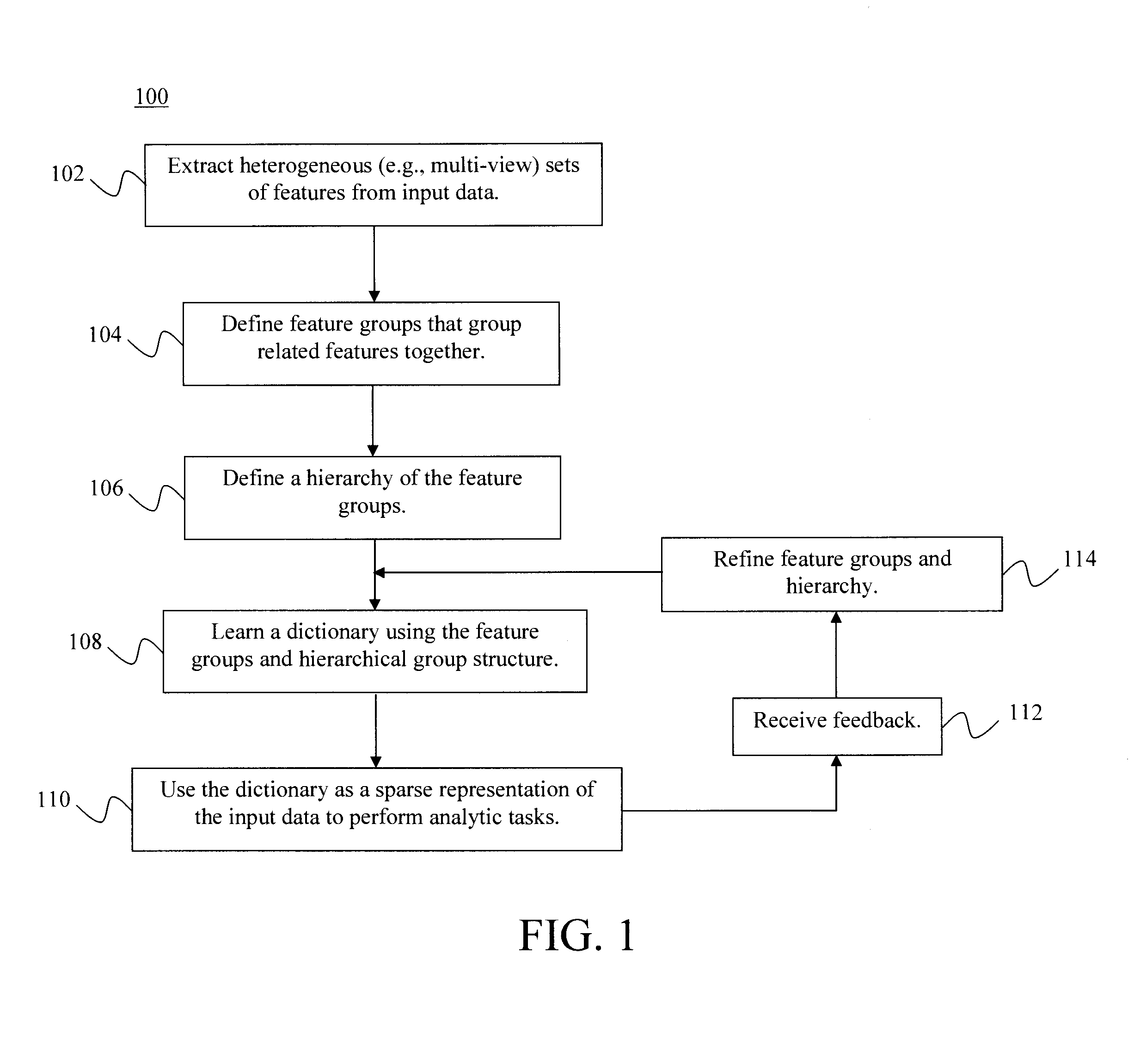

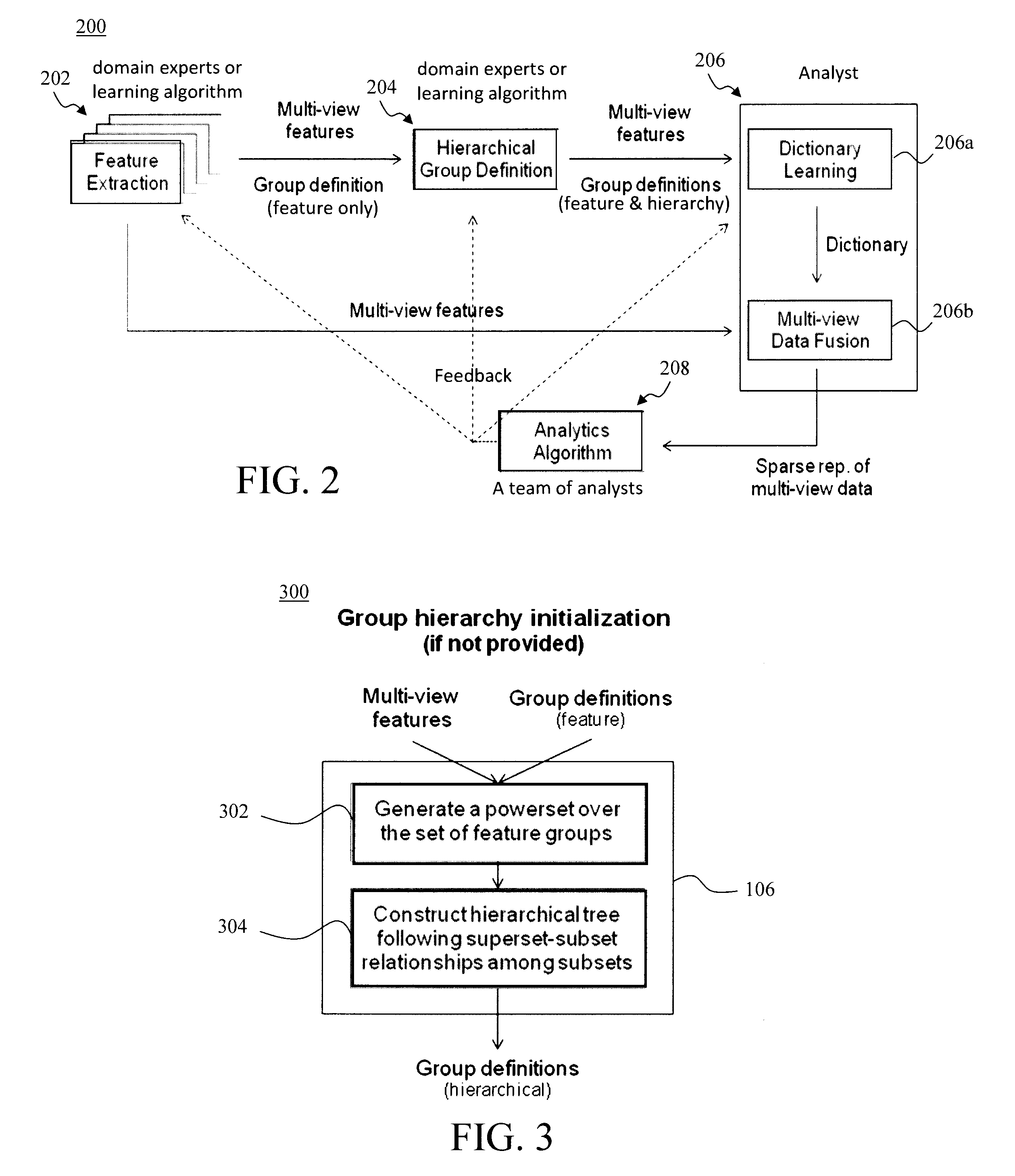

Multimodal Data Fusion by Hierarchical Multi-View Dictionary Learning

Active · US20160283858A1 · Digital data information retrieval · Character and pattern recognition · Dictionary learning · Multimodal data

Techniques for multimodal data fusion having a multimodal hierarchical dictionary learning framework that learns latent subspaces with hierarchical overlaps are provided. In one aspect, a method for multi-view data fusion with hierarchical multi-view dictionary learning is provided which includes the steps of: extracting multi-view features from input data; defining feature groups that group together the multi-view features that are related; defining a hierarchical structure of the feature groups; and learning a dictionary using the feature groups and the hierarchy of the feature groups. A system for multi-view data fusion with hierarchical multi-view dictionary learning is also provided.

Owner:IBM CORP

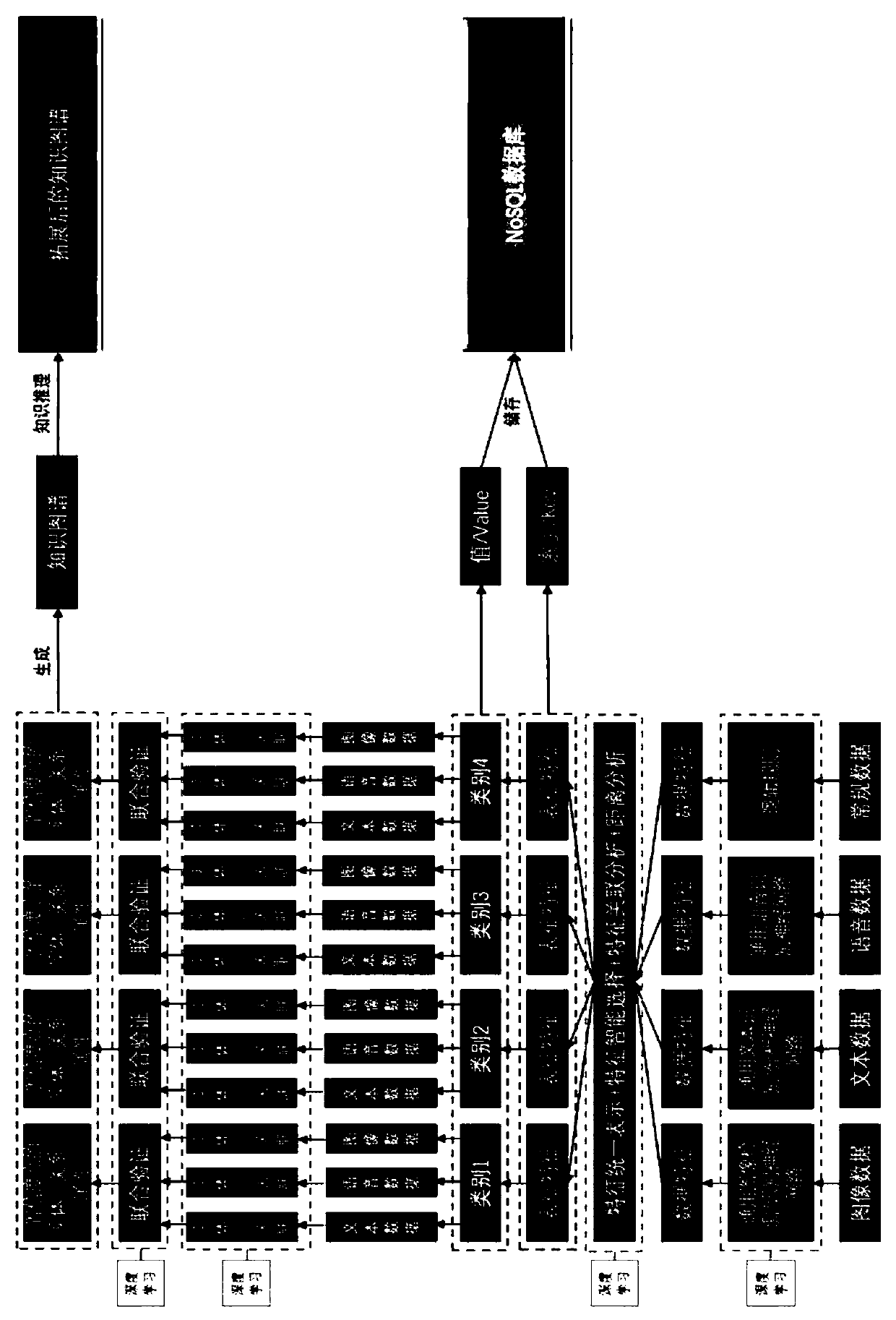

A knowledge graph system construction method

Active · CN109697233A · Improve build efficiency · Solve problems · Energy efficient computing · Semantic tool creation · Closed loop · Data acquisition

The invention provides a knowledge graph system construction method. The method comprises the following steps: data acquisition, feature extraction, feature characterization, feature storage, knowledge graph foundation building, and knowledge graph construction. With this method, multimedia data can be associated to construct the knowledge graph and managed and used in a unified way; the problem of insufficient training data is addressed; the accuracy of key-information extraction for the knowledge graph is improved; and construction efficiency is increased. The knowledge graph is associated with the underlying multi-modal data through entity-attribute feature association and key-value pair storage, rapid retrieval is achieved through a data decomposition and positioning algorithm, and a closed-loop system enables rapid iterative evolution and refinement of the whole system, providing strong support for a variety of downstream applications.

Owner:CETC BIGDATA RES INST CO LTD

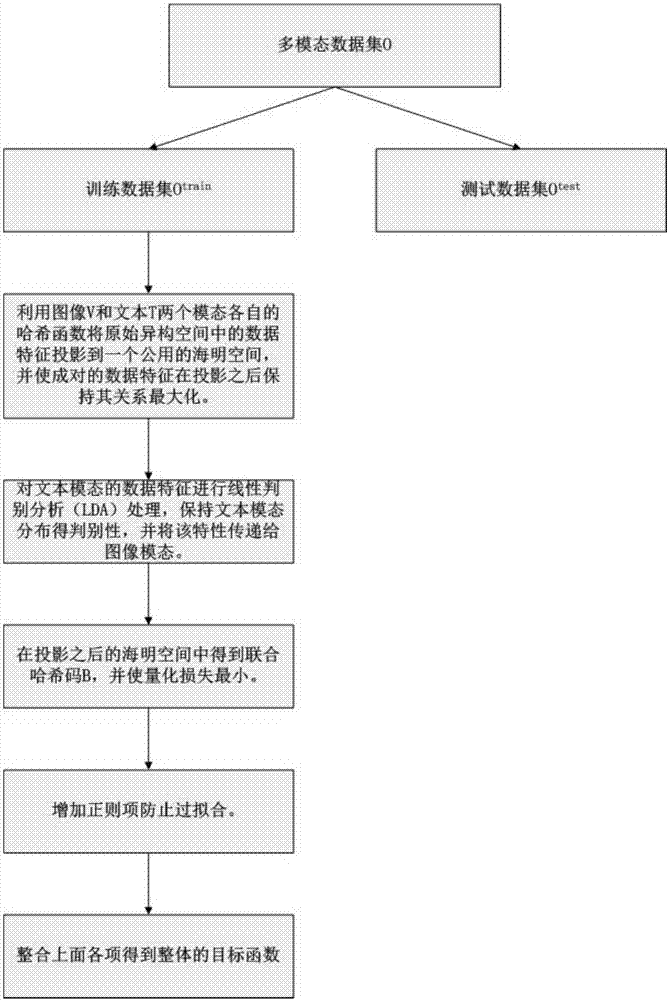

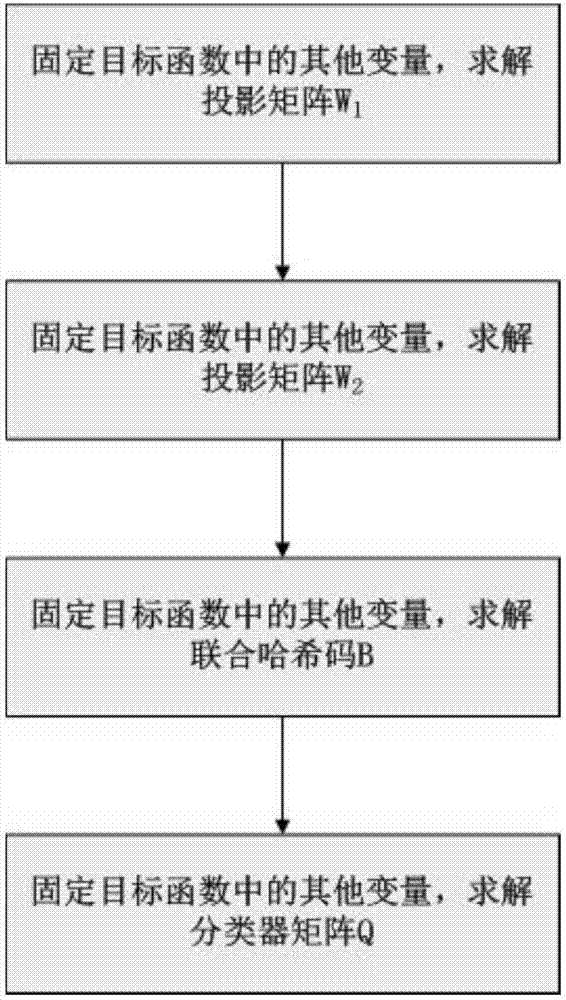

Discriminative association maximization hash-based cross-mode retrieval method

Active · CN107402993A · Improve performance · Reduce consumption · Character and pattern recognition · Special data processing applications · Hat matrix · Data set

The invention provides a cross-modal retrieval method based on discriminative association maximization hashing. The method comprises: performing multi-modal extraction on a training data set to obtain a multi-modal training data set; building a discriminative-association-maximization hashing objective function on that data set; solving the objective function to obtain projection matrices that map images and texts into a common Hamming space, together with joint hash codes for image-text pairs; projecting a test data set into the common Hamming space and quantizing it with a hash function to obtain hash codes for the test samples; and performing cross-modal retrieval based on the hash codes. The method improves cross-media retrieval efficiency and accuracy.

Owner:SHANDONG NORMAL UNIV
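The hashing step can be sketched as a linear projection followed by sign quantization, assuming the projection matrices have already been obtained by solving the objective function. The matrices and features below are made-up values chosen so that a matching image-text pair lands on the same code; `hash_code` is a hypothetical name, and the bit order and treating zero as a positive sign are arbitrary choices:

```python
def hash_code(projection, features):
    """Project features into the common Hamming space and quantize each output by sign."""
    bits = 0
    for row in projection:
        value = sum(w * x for w, x in zip(row, features))
        # Sign quantization: non-negative -> 1, negative -> 0
        bits = (bits << 1) | (1 if value >= 0 else 0)
    return bits


# Hypothetical modality-specific projections into a shared 2-bit space
p_img = [[1.0, -1.0, 0.0], [0.0, 1.0, -1.0]]
p_txt = [[1.0, 0.0], [0.0, -1.0]]

img_code = hash_code(p_img, [0.9, 0.2, 0.1])  # image features
txt_code = hash_code(p_txt, [0.4, -0.3])      # its matching caption
other_code = hash_code(p_txt, [-0.5, 0.2])    # an unrelated caption
```

Once both modalities share a code space, cross-modal retrieval compares codes with Hamming distance, so an image query can rank texts directly.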


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com