76 results about "Lip feature" patented technology

Ophthalmic structure

A ring for dilating a pupil during an ophthalmic procedure includes a series of spaced supports for engaging an iris perimeter. The supports are plate elements which form an open pocket directed outwardly for engaging the iris. The sides of the ring form a primary plane, and the plates are located in respective planes above and below the primary plane. The outer periphery of the top and bottom plates forms a lip feature which is the opening to the pocket which retains the iris.

Owner:OASIS MEDICAL

Adaptive lip language interaction method and interaction apparatus

Inactive | CN106504751A | Improve hit rate | Not easily affected by strength | Character and pattern recognition | Speech recognition | Color image | Human body

The invention discloses an adaptive lip language interaction method and interaction apparatus. The adaptive lip language interaction method comprises the following steps: obtaining a depth image of a target human body object and an infrared image or a color image of the target human body object; obtaining lip area images of the target human body object respectively from the depth image and the infrared image or the color image; extracting lip features from the lip area images, and performing lip language identification after fusion processing is performed on the lip features extracted from the depth image and the infrared image or from the depth image and the color image; and converting a lip language identification result into a corresponding operation instruction, and according to the operation instruction, performing interaction. Such a mode is not easily affected by environment such as light intensity, the hit rate of image identification can be effectively improved, the hit rate of lip language identification is further improved, and the execution efficiency and the operation accuracy of the interaction can be finally effectively improved.

Owner:SHENZHEN ORBBEC CO LTD

Snap-in slot mount RFI/EMI clips

Active | US6946598B1 | Reduce the amount required | Facilitate EMI gasket installation process | Screening gaskets/seals | Rack/frame construction | Health risk | Lip feature

An EMI shielding gasket for reducing the amount of force required during the mounting process. The present invention provides an extended lip feature on the gasket to facilitate the installation process and to function as a lead-in, such that less force is required by the assembler when mounting the EMI gasket. As less manipulation may be required to install the gaskets, gaskets may be mounted correctly more often, thereby preventing the occurrence of EMI leakage. The present invention also reduces the possibility that the EMI gaskets may become damaged due to excess manipulation, and assemblers may experience fewer physical problems related to manipulating the gaskets. By reducing the amount of force required to mount the EMI gaskets, the present invention may increase productivity by decreasing assembly time, decrease rework of improperly assembled or damaged gaskets, and reduce health risks and subsequent insurance claims of assemblers during manufacturing.

Owner:ORACLE INT CORP

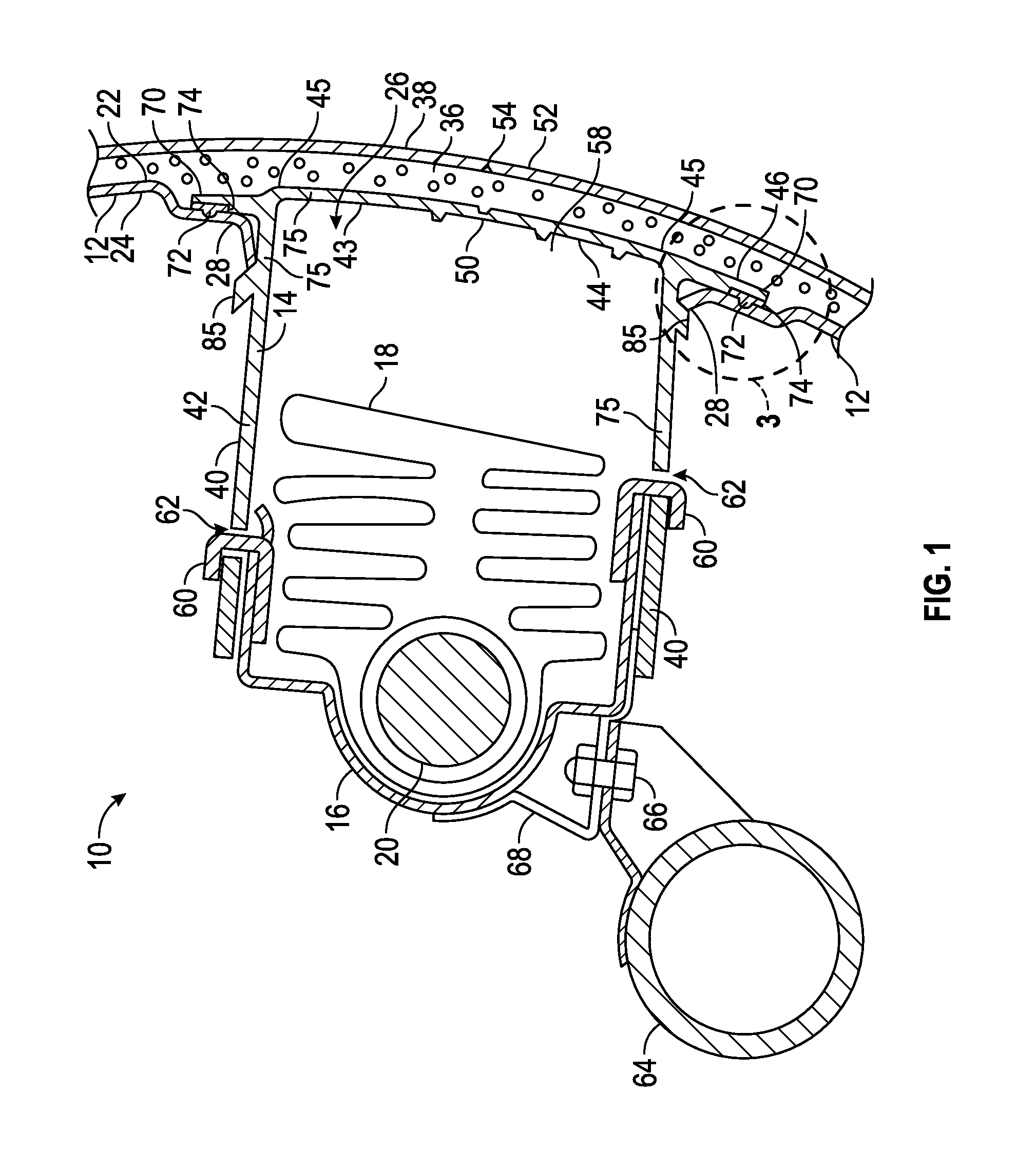

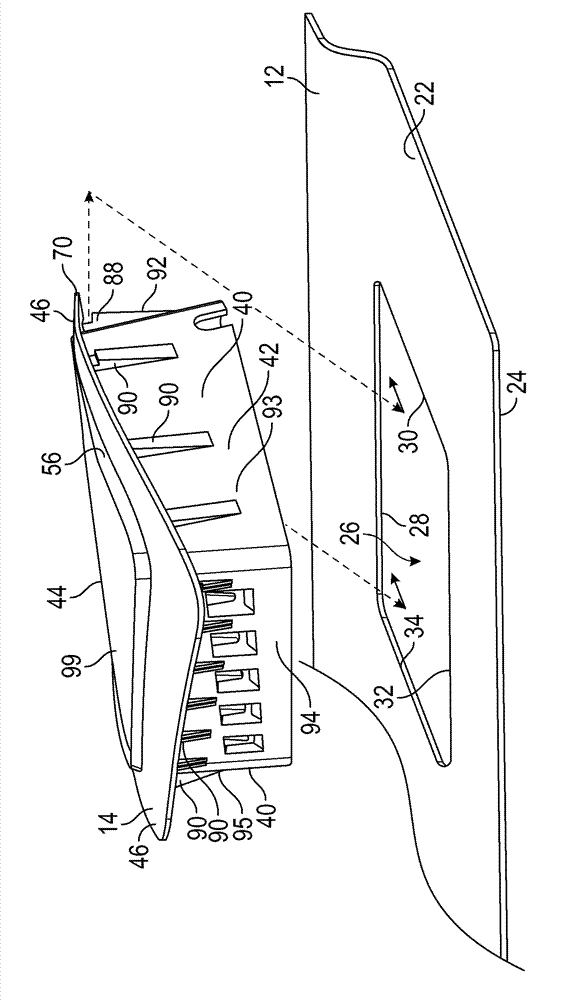

Foam-in-place interior panels having integrated airbag door for motor vehicles and methods for making the same

Interior panels having integrated airbag doors for motor vehicles and methods for making such interior panels are provided herein. In one example, an interior panel comprises a substrate having outer and inner surfaces and an opening extending therethrough. An airbag chute-door assembly is mounted to the substrate and comprises a chute wall that at least partially surrounds an interior space. A door flap portion is pivotally connected to the chute wall and at least partially covers the opening. A perimeter flange extends from the chute wall and has a flange section that overlies the outer surface of the substrate. A molded-in lip feature extends from the flange section and contacts the outer surface to form a seal between the flange section and the substrate. A skin covering extends over the substrate and a foam is disposed between the skin covering and the substrate.

Owner:FAURECIA INTERIOR SYST

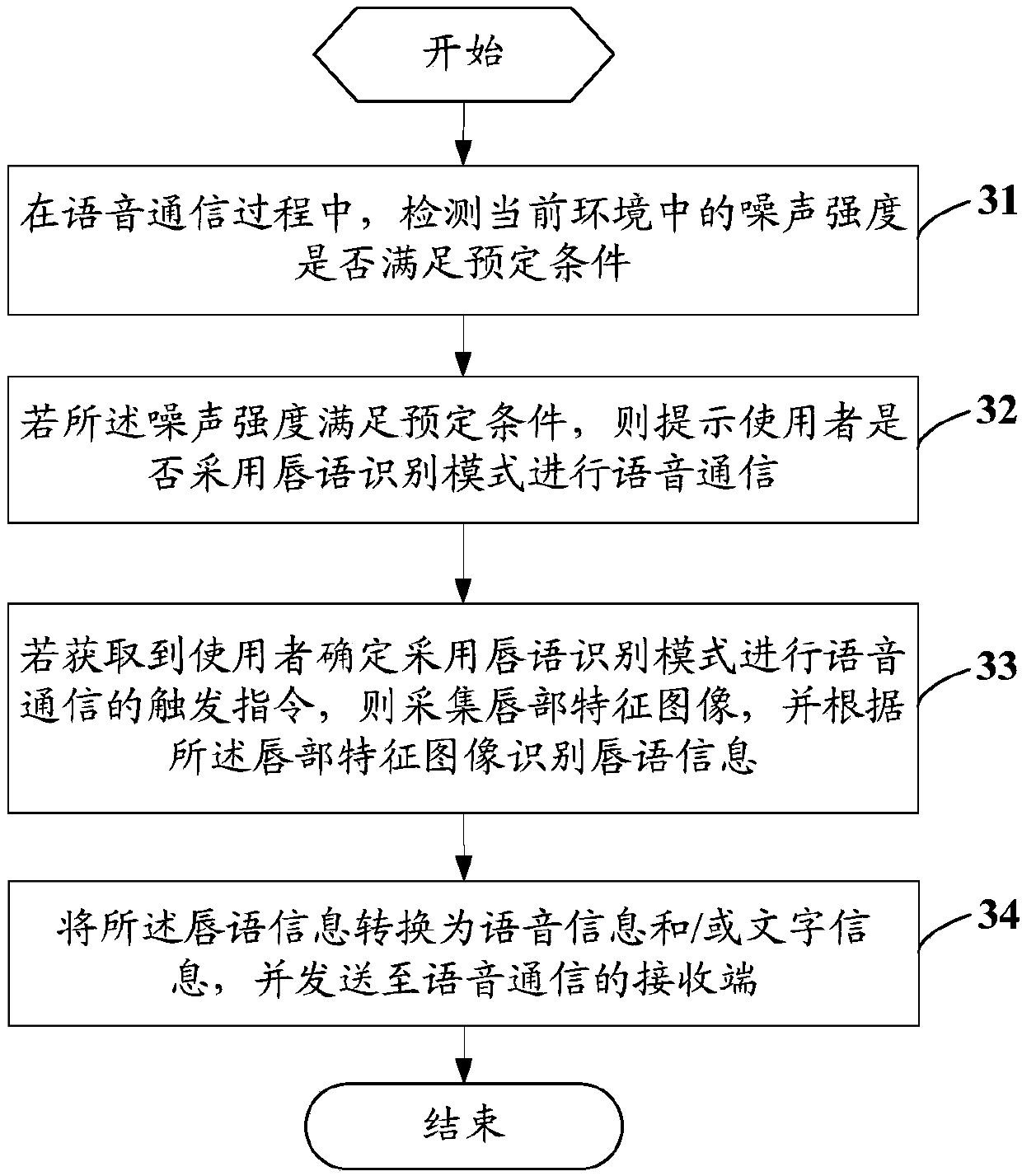

Voice recognition method, mobile terminal and computer readable storage medium

Inactive | CN107799125A | Improve communication quality | Character and pattern recognition | Speech recognition | Communication quality | Voice communication

The invention discloses a voice recognition method, a mobile terminal and a computer readable storage medium. The method comprises the steps of detecting whether the noise intensity in the current environment meets a preset condition during voice communication; acquiring a lip feature image if the noise intensity meets the preset condition; recognizing lip language information according to the lip feature image; converting the lip language information into voice information and/or text information; and sending the voice information and/or text information to the voice communication receiving end. The receiving end thus receives accurate voice information and/or text information even in a noisy environment, so that the communication quality is improved.

Owner:VIVO MOBILE COMM CO LTD
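
The noise check in the voice recognition abstract above can be illustrated with a minimal sketch. The abstract does not say how noise intensity is measured or what the preset condition is, so the RMS level in dBFS, the function names `rms_dbfs` and `should_use_lip_reading`, and the -20 dBFS threshold are all assumptions made for illustration.

```python
import math


def rms_dbfs(samples):
    """Return the RMS level, in dBFS, of float samples in the range [-1.0, 1.0]."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")
    return 10.0 * math.log10(mean_square)


def should_use_lip_reading(ambient_samples, threshold_dbfs=-20.0):
    """Hypothetical preset condition: ambient noise at or above the threshold."""
    return rms_dbfs(ambient_samples) >= threshold_dbfs
```

When the check returns `True`, a terminal following the abstract would start capturing lip feature images; the capture and recognition steps are outside this sketch.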

Lip language recognition method and device based on deep learning

Pending | CN109637521A | Improve recognition accuracy | Improve speech recognition accuracy | Character and pattern recognition | Speech recognition | Feature vector | Lip feature

The invention relates to a lip language recognition method and device based on deep learning. The method includes the following steps: a voice signal and a video of a user are obtained, the video being shot of the user's face while the user utters the voice signal; the voice signal is recognized by voice recognition technology to obtain a first text; a to-be-recognized lip image sequence is obtained from the video; a lip feature vector is extracted from the to-be-recognized lip image sequence, and a second text is obtained according to the lip feature vector; and the first text is corrected according to the second text to obtain the text corresponding to the user's voice signal. The technical scheme addresses the low accuracy of prior-art voice recognition in noisy environments.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

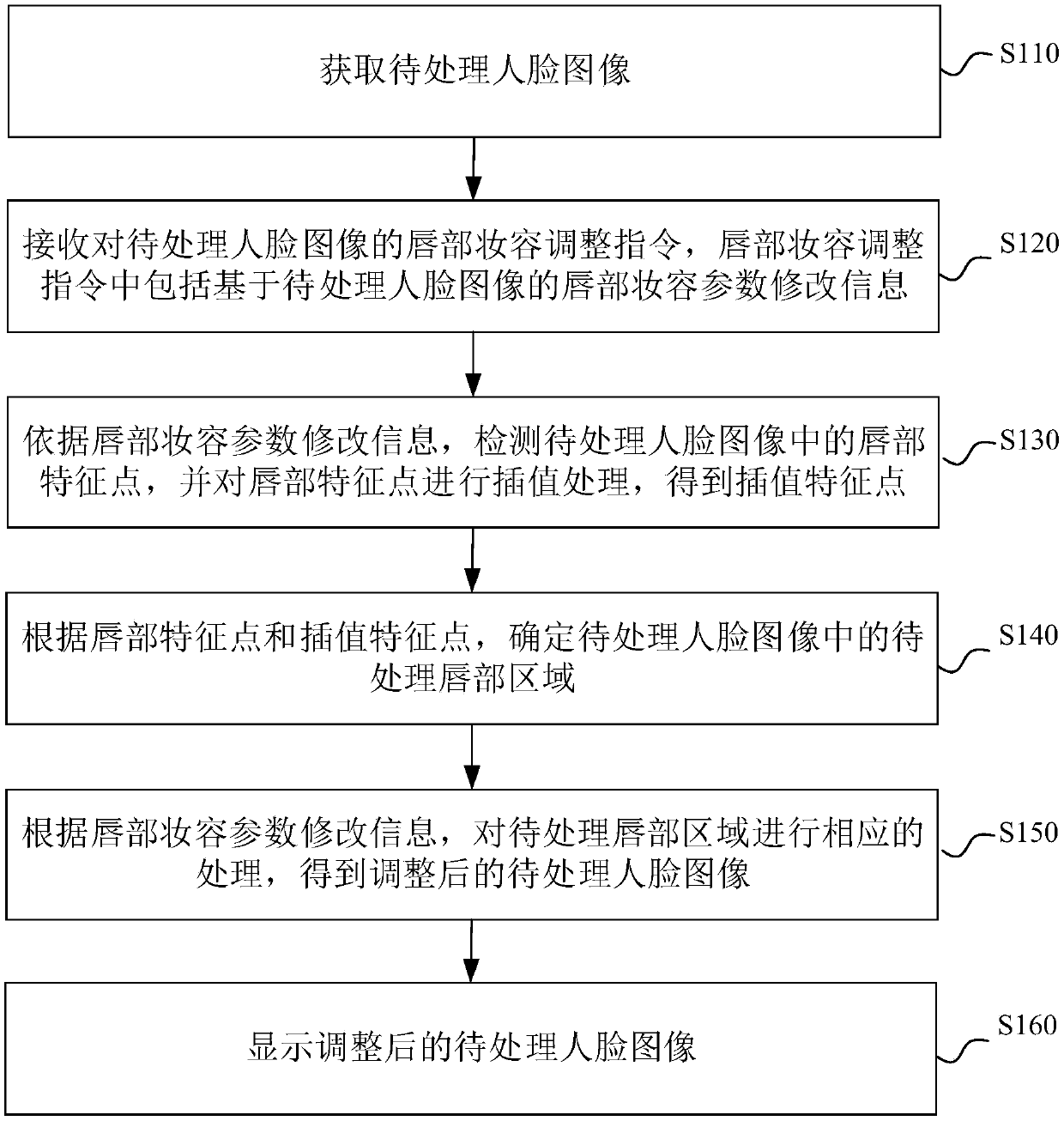

Face image processing method and device, electronic equipment and computer storage medium

Pending | CN109584180A | Reduce processing time | Improve user experience | Image enhancement | Image analysis | Imaging processing | Lip feature

The embodiment of the invention provides a face image processing method and device, electronic equipment and a computer storage medium. The method comprises the following steps: receiving a lip makeup adjustment instruction, which includes lip makeup parameter modification information for the to-be-processed face image; detecting lip feature points in the to-be-processed face image; performing interpolation processing on the lip feature points to obtain interpolation feature points; determining a lip region based on the lip feature points and the interpolation feature points; and processing the lip region according to the lip makeup parameter modification information to obtain an adjusted face image. According to the scheme of the embodiment, when the lip makeup adjustment instruction is received, the to-be-processed face image can be processed according to that instruction, so the lip makeup of the user in the image can be adjusted with one key; the user does not need to edit the lip makeup of the face image manually, the processing time is shortened, and the user experience is improved.

Owner:SHENZHEN LIANMENG TECH CO LTD
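
The interpolation step in the face image processing abstract above can be sketched as follows. The abstract does not name the interpolation scheme, so linear interpolation along a closed lip contour is an assumption, and `interpolate_contour` and its `per_segment` parameter are hypothetical names.

```python
def interpolate_contour(points, per_segment=1):
    """Insert evenly spaced points between consecutive points of a closed contour.

    points: list of (x, y) lip feature points in contour order.
    per_segment: number of interpolated points added on each segment (assumed value).
    """
    if per_segment < 0:
        raise ValueError("per_segment must be non-negative")
    result = []
    count = len(points)
    for i in range(count):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % count]  # wrap around to close the contour
        result.append((x0, y0))
        for j in range(1, per_segment + 1):
            t = j / (per_segment + 1)
            result.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return result
```

The denser contour returned here could then be filled to obtain the lip region; a smoother curve (for example a spline) would be an equally plausible reading of the abstract.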

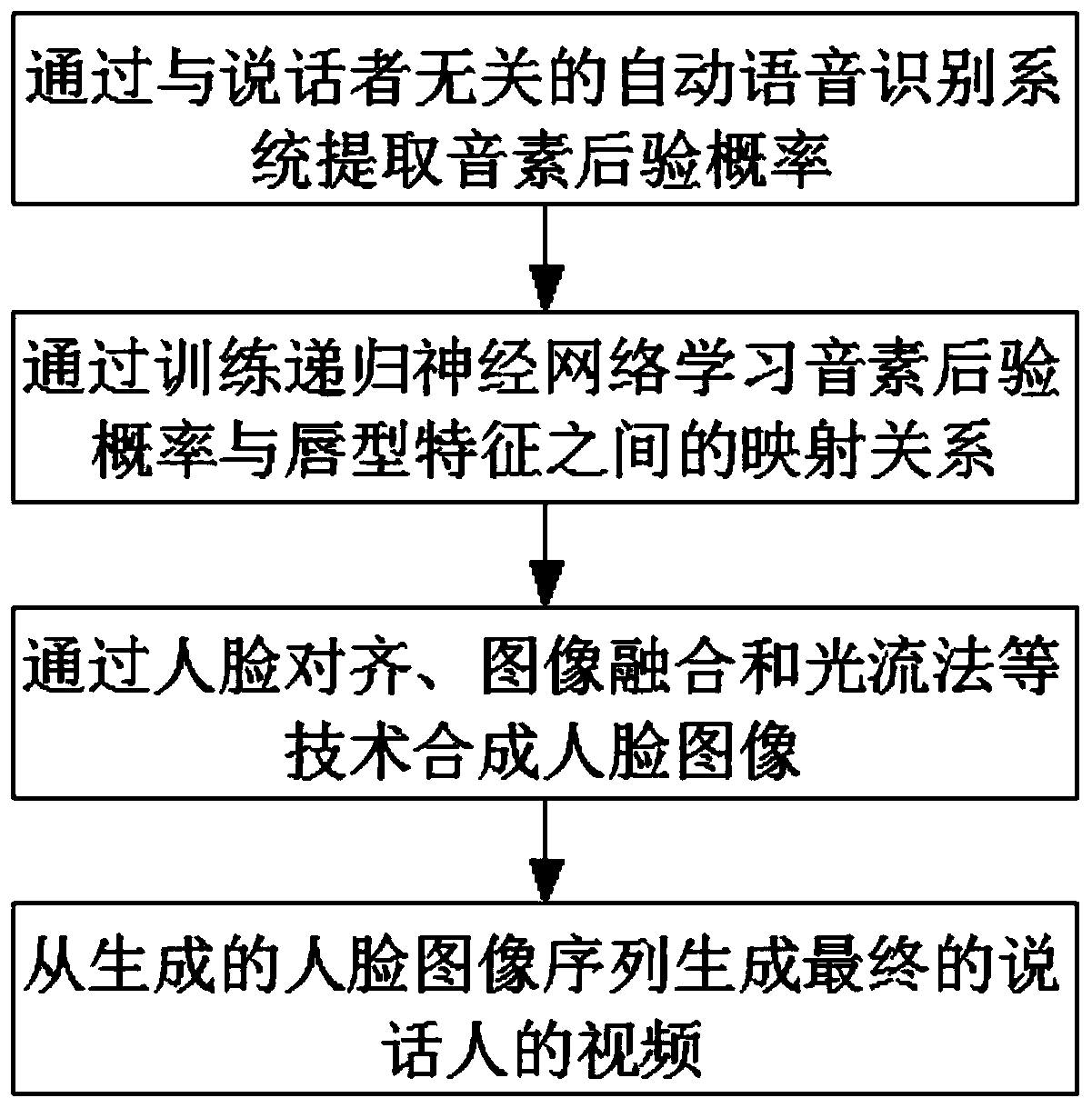

Personalized voice and video generation system based on phoneme posterior probability

Pending | CN110880315A | Reduce data volume requirements | Synchronize Lip Shapes | Speech recognition | Speech synthesis | Personalization | Lip feature

The invention discloses a personalized voice and video generation system based on phoneme posterior probability. The system mainly performs the following steps: S1, extracting the phoneme posterior probability through an automatic speech recognition system; S2, training a recurrent neural network to learn the mapping between phoneme posterior probability and lip features, so that the network outputs the corresponding lip features for the input audio of any target speaker; S3, synthesizing the lip features into corresponding face images through face alignment, image fusion, an optical flow method and other technologies; and S4, generating the final speaker speech video from the generated face sequence through dynamic programming and other technologies. The invention relates to the technical field of speech synthesis and speech conversion. Because the lip shape is generated from the phoneme posterior probability, the amount of video data required from the target speaker is greatly reduced; meanwhile, the video of the target speaker can be generated directly from text content, and the speaker's audio does not need to be recorded separately.

Owner:深圳市声希科技有限公司

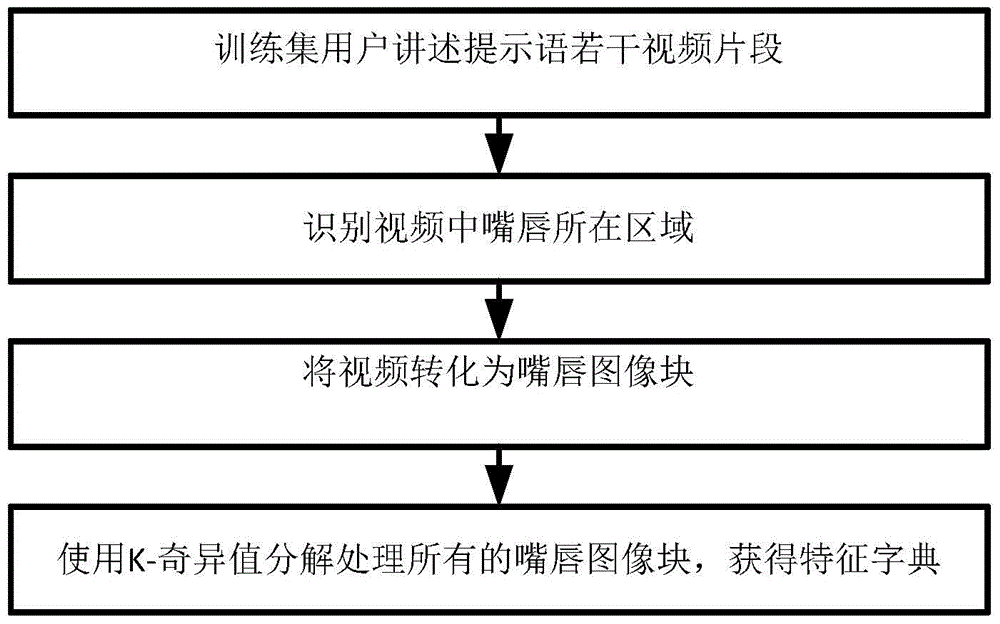

Method for lip feature-based identity authentication based on sparse coding

Inactive | CN105787428A | Improve execution efficiency | Improve robustness | Character and pattern recognition | Digital data authentication | Identity recognition | Lip feature

The invention provides a method for lip feature identity authentication based on sparse coding. The method includes the following steps: first, extracting a lip image block from an input video according to the position of the lip; second, establishing a group of sparse coding feature dictionaries, reconstructing the lip image block, and calculating the reconstruction errors; third, identifying and authenticating the identity of the speaking user according to the reconstruction errors. The method considers both the physiological structure of the lip and the speaker's behavioral habits, obtains more information from lip features, and increases identification accuracy. The sparse coding algorithm copes better with the noise caused by the environment and by the speaker's personal factors, and is strongly robust. Because the method uses sparse coding reconstruction and the reconstruction errors as the basis for its decision, it executes efficiently and quickly on a computer.

Owner:SHANGHAI JIAO TONG UNIV +1

Foam-in-place interior panels having integrated airbag doors including multi-shot injection molded airbag chute-door assemblies for motor vehicles and methods for making the same

Active | US9039036B1 | Pedestrian/occupant safety arrangement | Domestic articles | Mobile vehicle | Interior space

Interior panels having integrated airbag doors for motor vehicles and methods for making such interior panels are provided herein. In one example, an interior panel comprises a substrate having outer and inner surfaces and an opening extending therethrough. A multi-shot injection molded airbag chute-door assembly is mounted to the substrate and comprises a chute wall that at least partially surrounds an interior space. A door flap portion is pivotally connected to the chute wall and at least partially covers the opening. A perimeter flange extends from the chute wall and has a flange section that overlies the outer surface of the substrate. A molded-in lip feature extends from the flange section and contacts the outer surface to form a seal between the flange section and the substrate. A skin covering extends over the substrate and a foam is disposed between the skin covering and the substrate.

Owner:FAURECIA INTERIOR SYST

Video synthesis method and device, equipment and storage medium

Active | CN112866586A | Improve viewing experience | Television system details | Color television details | Computer graphics (images) | Synthesis methods

The embodiment of the invention discloses a video synthesis method and device, equipment and a storage medium. The obtained to-be-synthesized text can be a text in any language, the to-be-synthesized video can be a video clip selected by a user and including any anchor image, an audio stream is automatically generated according to the obtained to-be-synthesized text, and video features and lip features are generated based on an audio-free video stream in the to-be-synthesized video, audio features and mouth shape features are generated according to the audio stream, a mouth and lip mapping relation is determined based on the mouth shape features and lip features, a video sequence with consistent mouth and lip is determined according to the mouth and lip mapping relation, and further, a target synthetic video is generated according to the fused video sequence, so that human face and lip actions in the whole target synthetic video are kept consistent. The anchor lip movement in the target synthetic video is kept natural and consistent, and the target synthetic video conforming to the willingness of the user is generated, so that the watching experience of the user is improved.

Owner:北京中科闻歌科技股份有限公司

Collapsible music stand extension device

A collapsible device that can be quickly and easily mounted to certain types of music stands to allow viewing of up to six sheets of paper side by side. The device consists principally of four panel sections, two of which, in the expanded position, hang from the top horizontal edge of the music stand via lips provided for this purpose. Two lower panels, each attached via hinge to the upper panel directly above it, also include lip features at the bottom of the device for holding the sheets of paper. In the expanded position, the four panels cover and extend the viewable plane of the stand. The device can be folded on two perpendicular axes for compact storage and transport. Usable width combined with ease of transport and deployment differentiate the device from prior art, allowing the device to be treated as part of a musician's personal gear.

Owner:MARCHIONE THOMAS RICHARD

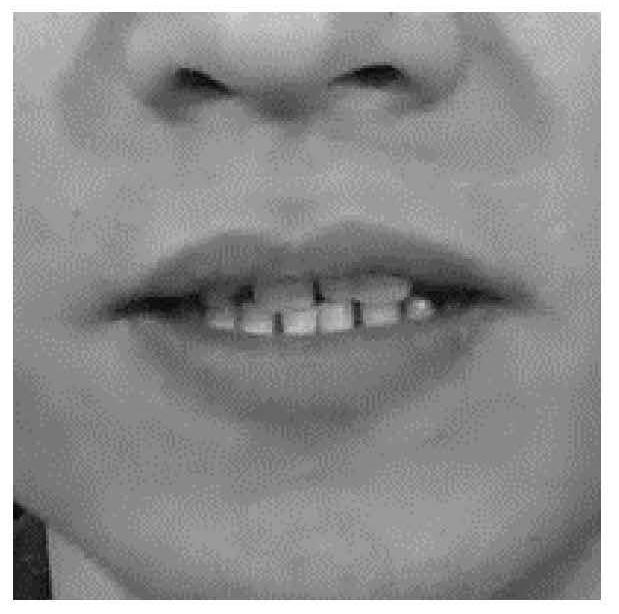

Tooth identification method, device and system

Active | CN106446800A | Quick and accurate identification | Character and pattern recognition | Lip feature | Lightness

The embodiment of the invention provides a tooth identification method, device and system. The method comprises the following steps: determining, from the obtained coordinates of lip feature points, a target image that contains the teeth, which gives the approximate tooth area; converting the target image into a preset color space that is sensitive to luminance, exploiting the fact that teeth are bright on the luminance channel; obtaining a second masking grayscale image from a luminance grayscale image and a first masking grayscale image; obtaining a lip probability image from a chromaticity image and a saturation image; and finally obtaining a third masking grayscale image from the second masking grayscale image and the lip probability image. The pixel values of the tooth part in the third masking grayscale image differ clearly from the pixel values elsewhere, and the area formed by the pixels whose values are greater than or equal to a third preset value is taken as the tooth area. In this way the teeth can be identified quickly and accurately.

Owner:北京贝塔科技有限公司

Infant emotion acquisition method and system

Inactive | CN107133593A | Easy to manage | Acquiring/recognising facial features | Pattern recognition | Crucial point

The invention discloses an infant emotion acquisition method and system. The method includes the steps of acquiring a face image of an infant; detecting a face position in the face image, and positioning eyebrow key points, eye key points and lip key points; obtaining eyebrow features according to the eyebrow key points, obtaining eye features according to the eye key points and obtaining lip features according to the lip key points; and determining the emotion of the infant through the eyebrow features, eye features and lip features. The method can realize the acquisition of the emotion of the infant and facilitates the management of emotions of the infant.

Owner:湖南科乐坊教育科技股份有限公司

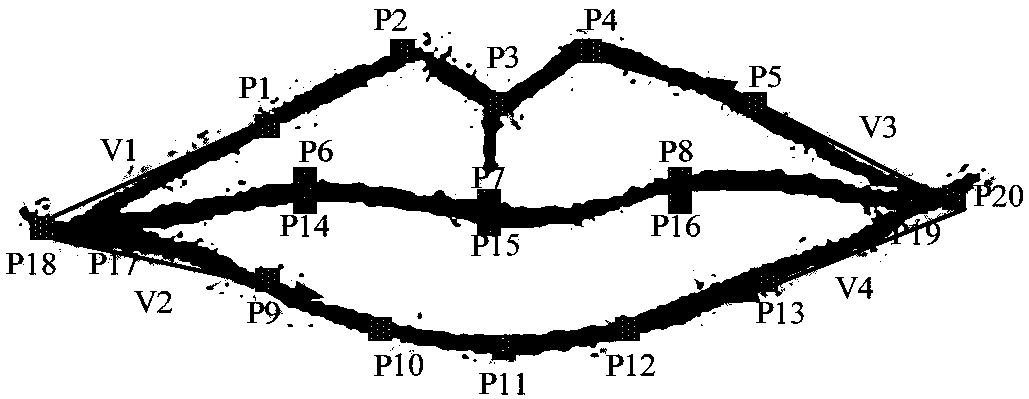

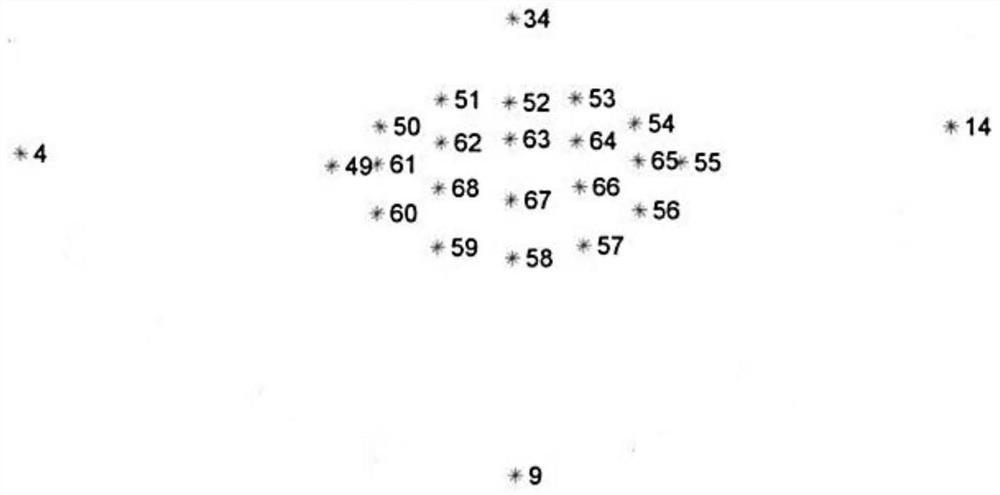

Method, apparatus for analyzing lip motion and storage medium

Active | CN107633205A | Real-time capture | Realize analysis | Character and pattern recognition | Lip feature | Computer vision

The invention discloses a method and apparatus for analyzing lip motion, and a storage medium. The method includes the following steps: acquiring a real-time image photographed by a camera apparatus and extracting a real-time face image from it; inputting the real-time face image to a pre-trained lip average model and identifying t lip feature points that indicate the position of the lips in the real-time face image; determining a lip region based on the t lip feature points, inputting the lip region to a pre-trained lip classification model, and determining whether the lip region is a human lip region; and, if it is, calculating the moving direction and moving distance of the lips in the real-time face image from the x and y coordinates of the t lip feature points. Based on the coordinates of the lip feature points, the method can calculate the motion information of the lips in the real-time face image, analyze the lip region, and capture lip motion in real time.

Owner:PING AN TECH (SHENZHEN) CO LTD

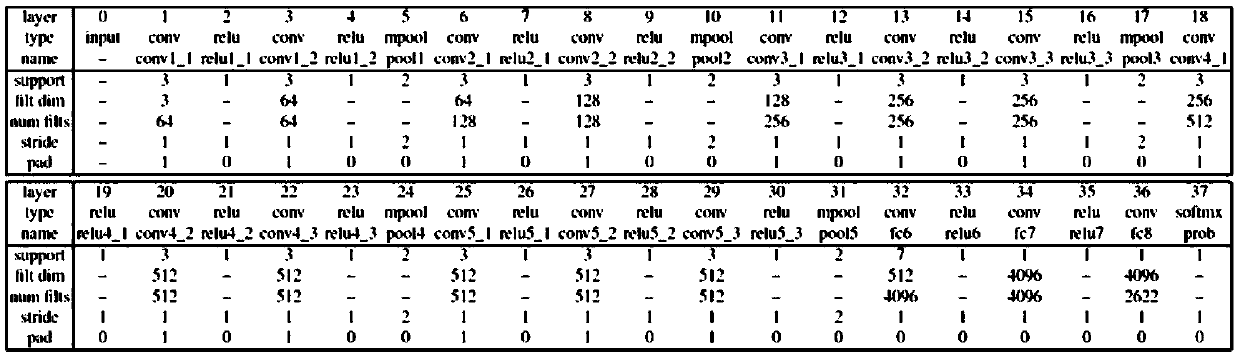

Chinese lip language recognition method and device based on hybrid convolutional neural network

Pending | CN111401250A | Quick identification | High speed | Character and pattern recognition | Neural architectures | Face detection | Lip feature

The invention discloses a Chinese lip language recognition method and device based on a hybrid convolutional neural network, and belongs to the field of machine vision and deep learning. The method comprises the following steps: acquiring the facial image information of a speaker through a camera; detecting and cropping a lip image sequence from the face image information with a face detector; extracting lip features from the lip image sequence with a hybrid convolutional neural network; inputting the lip features into a Bi-GRU model to obtain a recognition probability result for the phoneme units; inputting that probability result into a connectionist temporal classification (CTC) classifier to obtain a phoneme unit classification result; and processing the classification result of the phoneme units with a decoding method that introduces an attention mechanism. The method addresses the problem that existing network frameworks cannot recognize logographic languages such as Chinese, makes it possible to apply lip language recognition technology in real scenes, and can be widely used in the field of computer vision.

Owner:NORTHEASTERN UNIV
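
The CTC stage named in the abstract above produces a label per frame that still contains repeats and blanks. The sketch below shows standard greedy CTC collapsing (take the best label per frame, merge consecutive repeats, drop blanks). It is not the attention-based decoder the abstract describes, and the label set and blank index are assumptions made for illustration.

```python
def ctc_greedy_decode(frame_probs, labels, blank=0):
    """Collapse per-frame probabilities into a label sequence (greedy CTC decoding).

    frame_probs: list of per-frame probability lists, one entry per label.
    labels: label names indexed like the probabilities; labels[blank] is the CTC blank.
    """
    # Best label index for each frame.
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    decoded = []
    previous = None
    for index in best_path:
        # Keep a label only when it differs from the previous frame and is not blank.
        if index != previous and index != blank:
            decoded.append(labels[index])
        previous = index
    return decoded
```

A blank between two identical labels keeps both, which is how CTC represents a genuinely repeated phoneme unit.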

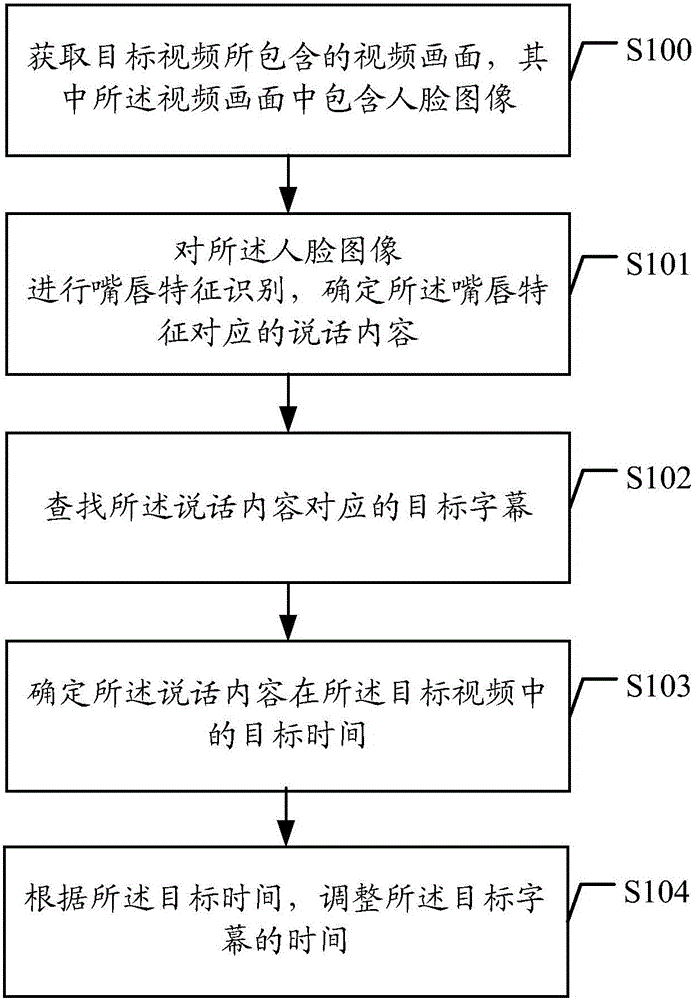

Subtitle correction method and terminal

Inactive | CN105100647A | Automatic time adjustment | Improve consistency | Television system details | Color television details | Computer terminal | Lip feature

The invention discloses a subtitle correction method and a terminal. The method comprises that a video frame, which is contained by a target video and comprises a face image, is obtained; the lip feature of the face image is identified, and speaking content corresponding to the lip feature is determined; target subtitles corresponding to the speaking content are searched; target time of the speaking content in the target video is determined; and time of the target subtitles is adjusted according to the target time. According to the invention, time of the target subtitles can be adjusted automatically, and the subtitles are more consistent with the video time.

Owner:SHENZHEN GIONEE COMM EQUIP
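
The last two steps of the subtitle correction abstract above (finding the target subtitle and adjusting its time) can be sketched as follows. The `Subtitle` type, the case-insensitive text match, and the choice to preserve the subtitle's duration are assumptions; the abstract states only that the time is adjusted according to the target time.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Subtitle:
    text: str
    start: float  # seconds from the beginning of the video
    end: float


def find_subtitle(subtitles, spoken_text):
    """Return the first subtitle whose text matches the lip-read content, else None."""
    wanted = spoken_text.strip().casefold()
    for subtitle in subtitles:
        if subtitle.text.strip().casefold() == wanted:
            return subtitle
    return None


def align_subtitle(subtitle, target_start):
    """Shift the subtitle to start at target_start, keeping its duration unchanged."""
    offset = target_start - subtitle.start
    return replace(subtitle, start=target_start, end=subtitle.end + offset)
```

For example, a subtitle shown from 10.0 s to 12.5 s whose words are actually spoken at 11.0 s would be moved to 11.0 s through 13.5 s.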

Voice lip fitting method and system and storage medium

The invention relates to a voice lip shape fitting method. The method comprises the following steps: collecting image data and voice data of a target person video data set; extracting a lip feature vector of a target person in the image data; extracting a voice feature vector of a target person in the voice data; training a multi-scale fusion convolutional neural network by taking the voice feature vector as an input and the lip feature vector as an output; and inputting a to-be-fitted voice feature vector of the target person into the multi-scale fusion convolutional neural network, generating a fitted lip feature vector by the multi-scale fusion convolutional neural network, outputting the fitted lip feature vector, and fitting the lip based on the lip feature vector.

Owner:SUN YAT SEN UNIV

Lip characteristic and deep learning based smiling face recognition method

Inactive | CN105956570A | Improve recognition accuracy | Suppresses the effects of non-Gaussian noise | Acquiring/recognising facial features | Feature vector | Positive sample

The invention discloses a smiling face recognition method based on lip features and deep learning. The method first crops positive sample images (containing a smiling face) and negative sample images (without a smiling face) to obtain lip image training samples, extracts a feature vector from each lip image training sample, and trains a deep neural network on those feature vectors. For an image to be recognized, the lip feature vector of the human face is obtained in the same way and input into the trained deep neural network, which outputs whether the face is smiling. By combining lip features with the feature learning capacity of the deep neural network, the method improves smiling face recognition accuracy under complicated conditions; by improving the overall cost function used to train the deep neural network, it suppresses the influence of non-Gaussian noise and further improves recognition accuracy.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Lip motion capturing method and device and storage medium

The invention discloses a lip motion capturing method and device and a storage medium. The lip motion capturing method comprises the steps of acquiring a real-time image taken by a camera shooting device, and extracting a real-time face image from the real-time image; inputting the real-time face image into a pre-trained lip average model, and recognizing t lip feature points representing a lip position in the real-time face image; and calculating the motion direction and the motion distance of a lip in the real-time face image according to x, y coordinates of the t lip feature points in the real-time face image. The motion information of the lip in the real-time face image is calculated according to the coordinates of the lip feature points, and real-time capturing for lip motions is achieved.

Owner:PING AN TECH (SHENZHEN) CO LTD
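
The final step of the lip motion capturing abstract above derives a motion direction and distance from the x, y coordinates of the t lip feature points. The abstract does not give the formula, so the sketch below assumes the displacement of the point centroid between two frames; `centroid` and `lip_motion` are hypothetical names.

```python
import math


def centroid(points):
    """Mean (x, y) of a non-empty list of lip feature points."""
    count = len(points)
    return (sum(x for x, _ in points) / count, sum(y for _, y in points) / count)


def lip_motion(previous_points, current_points):
    """Return (distance, direction_degrees) of the lip centroid between two frames.

    The direction is measured from the +x axis; in image coordinates y grows
    downward, so a positive angle points toward the bottom of the frame.
    """
    (px, py), (cx, cy) = centroid(previous_points), centroid(current_points)
    dx, dy = cx - px, cy - py
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx))
```

Per-point displacements, rather than a single centroid, would be another reasonable way to read the same step.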

Mouth-movement-identification-based video marshalling method

Inactive | CN104298961A | High sensitivity | Improve viewability | Character and pattern recognition | Color image | Feature vector

Disclosed in the invention is a mouth-movement-identification-based video marshalling method. Based on how the hue (H), saturation (S) and brightness (V) components are distributed differently over lip-color and skin-color areas in a color image, three color feature vectors are selected; a Fisher classifier performs classification and threshold segmentation, and the resulting binary image undergoes filtering and area connection processing; the lip feature is matched with the lip features of animation pictures in a material library; and a transition image between two frames is obtained by image interpolation synthesis, thereby realizing automatic video marshalling. Constructing the Fisher classifier from suitably selected color information in the HSV color space yields more information for segmenting lip-color and skin-color areas and makes mouth matching feature extraction more reliable and adaptive in complex environments. Moreover, the image interpolation technology generates the transition image between two matched video frames, which improves the sensitivity and viewing quality of the video marshalling and produces smooth, complete video content.

Owner:COMMUNICATION UNIVERSITY OF CHINA
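
Two building blocks of the video marshalling abstract above can be sketched with the Python standard library: converting a pixel to its H, S, V components, and synthesizing a transition frame between two matched frames. The Fisher classifier itself is not shown, and the linear cross-fade in `blend_frames` is an assumption, since the abstract does not specify the interpolation method.

```python
import colorsys


def rgb_to_hsv_vector(pixel):
    """Convert an 8-bit (r, g, b) pixel to an (h, s, v) tuple with components in [0, 1]."""
    r, g, b = (channel / 255.0 for channel in pixel)
    return colorsys.rgb_to_hsv(r, g, b)


def blend_frames(frame_a, frame_b, t=0.5):
    """Linearly interpolate two equally sized frames (lists of rows of RGB tuples).

    t=0 returns frame_a's colors, t=1 returns frame_b's colors.
    """
    return [
        [
            tuple(round((1 - t) * a + t * b) for a, b in zip(pixel_a, pixel_b))
            for pixel_a, pixel_b in zip(row_a, row_b)
        ]
        for row_a, row_b in zip(frame_a, frame_b)
    ]
```

The H, S, V values per pixel are the kind of color feature vector a lip-versus-skin classifier could be trained on.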

Foam-in-place interior panels having integrated airbag door for motor vehicles and methods for making the same

Interior panels having integrated airbag doors for motor vehicles and methods for making such interior panels are provided herein. In one example, an interior panel comprises a substrate having outer and inner surfaces and an opening extending therethrough. An airbag chute-door assembly is mounted to the substrate and comprises a chute wall that at least partially surrounds an interior space. A door flap portion is pivotally connected to the chute wall and at least partially covers the opening. A perimeter flange extends from the chute wall and has a flange section that overlies the outer surface of the substrate. A molded-in lip feature extends from the flange section and contacts the outer surface to form a seal between the flange section and the substrate. A skin covering extends over the substrate and a foam is disposed between the skin covering and the substrate.

Owner:FAURECIA INTERIOR SYST

Methods and Devices for Implementing an Improved Rongeur

A rongeur which functions to act on (or bite) bone while approaching the bone surface from an axial orientation is provided. In one embodiment, a rongeur is provided with a rotatably/pivotally coupled cutting jaw component to cause the cutting jaw to exert force on the bone. The rongeur may include an axially sliding component which provides axial force and actuates a cutting jaw such that it pivots toward a bone surface. Additionally, a lip feature may be provided which creates an opposing surface, so that the cutting jaw pivots toward that surface in order to bite the bone. The lip feature may act as a shim between dura mater tissue and the inferior surface of a bone. An axial rongeur may be configured to bite and remove bone when placed under the surface of a patient's skin (e.g. after axially traversing a distance under the skin).

Owner:OSTEOMED

Lip language recognition method combining graph neural network and multi-feature fusion

Active | CN112861791A | Accurate identification | Accurate extraction | Character and pattern recognition | Neural architectures | Data set | Network generation

The invention discloses a lip language recognition method combining a graph neural network and multi-feature fusion. The method comprises the following steps: extracting and constructing a face change sequence; marking face feature points; correcting the lip deflection angle; performing pre-processing through a trained lip semantic segmentation network; training a lip language recognition network through a graph structure of the single-frame feature point relationship and a graph structure of the adjacent-frame feature point relationship; and finally generating a lip language recognition result through the trained lip language recognition network. CNN lip features, obtained by CNN extraction and feature fusion on the recognition network data set and the lip semantic segmentation network data set, are fused with GNN lip features, obtained by GNN extraction and fusion on the lip region feature points, and the result is input into a BiGRU for recognition. This addresses the difficulty of extracting time-sequence features and the sensitivity of lip feature extraction to external factors. The method effectively extracts both the static features of the lip and the dynamic features of lip change, and offers strong lip change feature extraction and high recognition accuracy.

Owner:HEBEI UNIV OF TECH

Intelligent wheelchair man-machine interaction method based on double-mixture lip feature extraction

Active | CN103425987A | Overcome the effects of recognition errors | Improve recognition rate | Character and pattern recognition | Feature vector | Wheelchair

The invention discloses an intelligent wheelchair man-machine interaction method based on double-mixture lip feature extraction, and relates to the field of feature extraction and recognition control in lip recognition technology. The method first applies DT_CWT filtering to the lip image, then applies a DCT to the lip feature vector extracted by the DT_CWT, so that the extracted lip features are concentrated in the large coefficients obtained after the DCT. The resulting feature vector contains the largest amount of lip information while its dimensionality is reduced. Because the DT_CWT is approximately translation invariant, the feature values of the same lip at different positions in the ROI differ little after DT_CWT filtering, which eliminates recognition errors caused by position offset of the lip within the ROI. The method greatly improves the lip recognition rate and the robustness of the lip recognition system.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
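
The energy-compaction idea in the abstract above (features concentrated in a few large DCT coefficients) can be sketched with an unnormalized DCT-II, $X_k = \sum_{n=0}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \frac{1}{2}\right) k\right]$. The DT_CWT stage is not implemented here; the input is assumed to be an already filtered feature vector, and keeping the largest-magnitude coefficients is one interpretation of the dimensionality reduction the abstract mentions.

```python
import math


def dct2(signal):
    """Unnormalized DCT-II of a 1-D sequence."""
    size = len(signal)
    return [
        sum(x * math.cos(math.pi / size * (n + 0.5) * k) for n, x in enumerate(signal))
        for k in range(size)
    ]


def keep_largest(coefficients, count):
    """Return (index, value) pairs for the `count` largest-magnitude coefficients."""
    order = sorted(
        range(len(coefficients)), key=lambda k: abs(coefficients[k]), reverse=True
    )
    return [(k, coefficients[k]) for k in sorted(order[:count])]
```

A smooth input concentrates its energy in the low-index coefficients, so a short list of retained pairs can stand in for the full vector.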

Lip-based person recognition method, device, program, medium, and electronic apparatus

The embodiment of the invention provides a lip-based person recognition method and device, a computer program product, a computer readable storage medium and electronic equipment. The person recognition method comprises the following steps: performing face detection on a to-be-recognized image, and extracting a lip region image from the to-be-recognized image according to the face detection result; performing feature extraction on the lip region image to obtain lip features; and performing person recognition on the to-be-recognized image according to the lip features. With the technical scheme of the embodiment, the lip area of the person can be accurately identified by exploiting how well lip features discriminate a person's mouth, and the accuracy of person recognition is further improved.

Owner:BEIJING D EAR TECH

Method for converting lip image feature into speech coding parameter

Active | CN108538283A | Easy to construct training | Character and pattern recognition | Neural architectures | Feature vector | Transformation of text

The invention relates to a method for converting a lip image feature into a speech coding parameter, comprising the following steps: 1) constructing a speech coding parameter converter including an input cache and a trained predictor, successively receiving lip feature vectors in chronological order, and storing the lip feature vectors in the input cache of the converter; 2) at regular intervals, inputting the k latest lip feature vectors cached at the current time into the predictor as a short-time vector sequence, and obtaining a predicted result, which is the coding parameter vector of one speech frame; and 3) enabling the speech coding parameter converter to output the predicted result. Compared with the prior art, the method requires no intermediate characters, has high conversion efficiency, and facilitates training.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER
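The cache-and-predict loop described in steps 1) to 3) can be sketched as below. The class name `SpeechParamConverter`, the `stride` parameter (how many new vectors arrive between predictions) and the averaging `mean_predictor` are all assumptions made for illustration; the patent's predictor is a trained model, which is replaced here by a trivial stand-in.

```python
from collections import deque

class SpeechParamConverter:
    """Buffers lip feature vectors and periodically runs a predictor
    on the k most recent ones."""

    def __init__(self, predictor, k, stride):
        self.predictor = predictor      # callable: list of vectors -> vector
        self.k = k                      # window length (number of cached vectors)
        self.stride = stride            # new vectors between two predictions
        self.cache = deque(maxlen=k)    # input cache; oldest vectors drop out
        self._since_last = 0

    def push(self, lip_vec):
        """Store one vector; return a prediction when one is due, else None."""
        self.cache.append(list(lip_vec))
        self._since_last += 1
        if len(self.cache) == self.k and self._since_last >= self.stride:
            self._since_last = 0
            return self.predictor(list(self.cache))
        return None

def mean_predictor(window):
    """Stand-in predictor (assumption): per-dimension mean of the window."""
    dim = len(window[0])
    return [sum(v[d] for v in window) / len(window) for d in range(dim)]

conv = SpeechParamConverter(mean_predictor, k=3, stride=2)
outputs = [conv.push([float(t)]) for t in range(1, 6)]
```

With `k=3` and `stride=2`, the first prediction is emitted once three vectors are cached, and a new one follows every two vectors after that.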

Far field voice noise reduction method, system and terminal and computer readable storage medium

Active | CN108615534A | Improve noise reduction | Easy to understand implementation | Speech recognition | Lip feature | Noise reduction

The invention provides a far field voice noise reduction method, system and terminal and a computer readable storage medium. The far field voice noise reduction method comprises the following steps: acquiring the lip features of the user and identifying the feature position value of the lip features; comparing the feature position value with a standard position value to judge whether the lip is in the moving state; determining the spatial position value of the lip in the moving state; receiving an audio signal from the direction of the lip in the moving state according to the spatial position value; and performing audio processing on the audio signal. The standard position value is obtained through statistics and is the position value of the lip features when the lip is in the static state. With the far field voice noise reduction method, the actual speaker can be identified, voice can be received from the direction of the actual speaker, and audio processing can then be performed, so that far field voice noise reduction performance in a noisy environment is enhanced.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
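The comparison between the feature position value and the standard position value in the far field method above can be sketched as follows. Several things here are assumptions: the feature position value is reduced to a single scalar called `opening`, the standard value is the mean of samples taken while the lips are at rest, the `tolerance` threshold is arbitrary, and the spatial position is reduced to an azimuth angle computed from hypothetical `x` and `z` coordinates. Steering the microphones and the audio processing itself are not shown.

```python
import math
from statistics import mean

def standard_position(static_samples):
    """Standard value obtained statistically from lips at rest (here: the mean)."""
    return mean(static_samples)

def is_lip_moving(value, standard, tolerance):
    """Lips count as moving when the value deviates from the standard
    by more than the tolerance."""
    return abs(value - standard) > tolerance

def azimuth_deg(x, z):
    """Horizontal angle of a point relative to the device's forward (z) axis."""
    return math.degrees(math.atan2(x, z))

def pick_speaker(candidates, standard, tolerance):
    """Return the azimuth of the first candidate whose lips are moving,
    or None if nobody is speaking."""
    for c in candidates:
        if is_lip_moving(c["opening"], standard, tolerance):
            return azimuth_deg(c["x"], c["z"])
    return None

standard = standard_position([4.0, 5.0, 6.0])
people = [
    {"opening": 5.2, "x": -1.0, "z": 1.0},   # lips close to the resting value
    {"opening": 12.0, "x": 1.0, "z": 1.0},   # lips clearly open
]
direction = pick_speaker(people, standard, tolerance=2.0)
```

The returned angle is the direction in which audio would be received; a real system would track several feature points over time rather than a single scalar per frame.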

Lip language recognition method and system based on cross-modal attention enhancement

The invention discloses a lip language recognition method and system based on cross-modal attention enhancement. The method comprises the steps of extracting a lip image sequence and lip motion information; obtaining the corresponding lip feature sequence and lip motion sequence through a pre-trained feature extractor; inputting the obtained feature sequences into a cross-modal attention network to obtain a lip enhancement feature sequence; and, through a multi-branch attention mechanism, establishing the temporal relevance of the intra-modal feature sequence and selecting the relevant input information at the output end. The method takes the relevance between time sequence information into account: optical flow is computed on adjacent frames to obtain the motion information between visual features, and the lip visual features are represented, fused and enhanced using this motion information, so that the context information within the modality is fully utilized. Finally, the multi-branch attention mechanism performs correlation representation and selection of the intra-modal features, which improves lip reading recognition accuracy.

Owner:HUNAN UNIV
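The cross-modal enhancement step above can be illustrated with plain scaled dot-product attention, where each lip feature vector acts as a query over the motion feature sequence. This is a simplified sketch, not the patented network: it has no learned projection matrices, a single attention branch, and the residual addition used to form the enhanced feature is an assumption.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_modal_attention(lip_seq, motion_seq):
    """For every lip vector (query), attend over motion vectors (keys and
    values) and add the attended motion summary back to the lip vector."""
    d = len(lip_seq[0])
    enhanced = []
    for q in lip_seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in motion_seq]
        weights = softmax(scores)
        attended = [
            sum(weights[t] * motion_seq[t][j] for t in range(len(motion_seq)))
            for j in range(d)
        ]
        enhanced.append([qi + ai for qi, ai in zip(q, attended)])
    return enhanced

# Three frames of lip features and two motion vectors (one per adjacent
# frame pair, as optical flow would give); the values are made up.
lip = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
motion = [[1.0, 1.0], [1.0, 1.0]]
out = cross_modal_attention(lip, motion)
```

The two sequences may have different lengths, which matches the case where motion is computed between adjacent frames and therefore has one fewer element than the image sequence.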

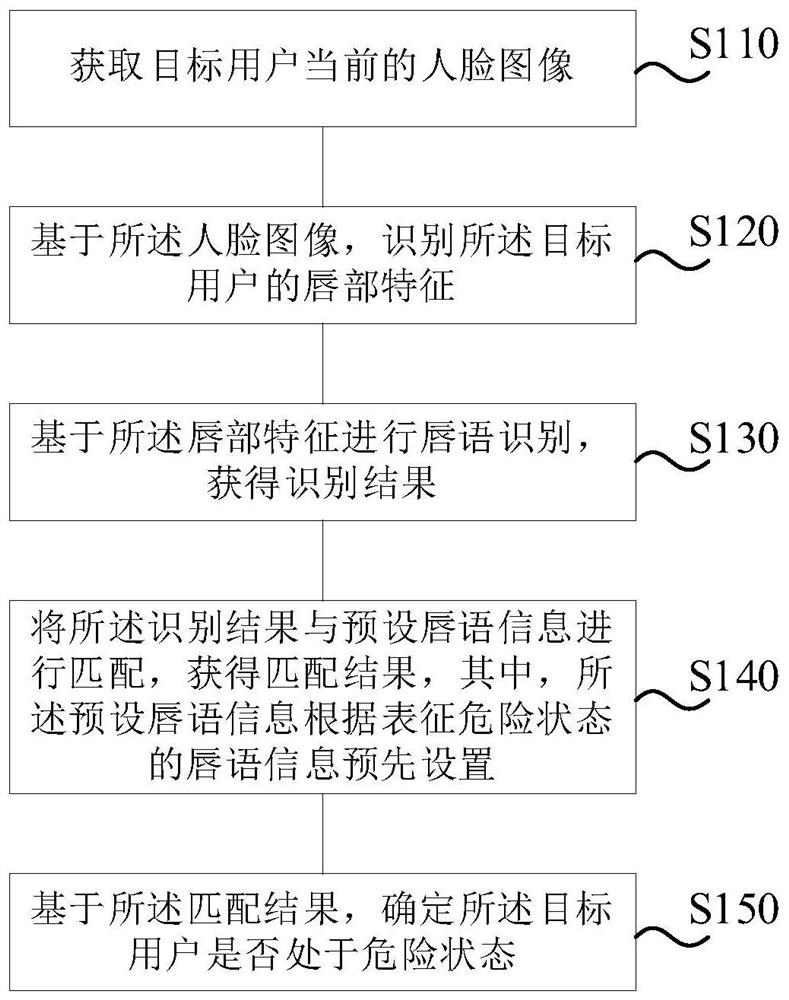

Dangerous state recognition method and device, electronic equipment and storage medium

Pending | CN112132095A | Easy to report | Improve security | Data processing applications | Semantic analysis | Engineering | Lip feature

The invention discloses a dangerous state recognition method and device, electronic equipment and a storage medium, and relates to the technical field of safety. The method comprises the steps of obtaining a current face image of a target user; identifying lip features of the target user based on the face image; performing lip language identification based on the lip features to obtain an identification result; matching the identification result with preset lip language information to obtain a matching result, wherein the preset lip language information is set in advance according to lip language information representing a dangerous state; and determining whether the target user is in the dangerous state based on the matching result. Therefore, whether the user is in a dangerous state can be determined based on the lip action of the user.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
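The final matching step of the dangerous state method above can be sketched as a comparison between the recognized text and a preset list. The phrases in `PRESET_PHRASES`, the substring matching rule and the function names are all hypothetical; the abstract does not specify how the preset lip language information is stored or matched, and the lip language identification itself is assumed to have already produced a text string.

```python
# Hypothetical preset lip language information representing a dangerous state.
PRESET_PHRASES = ("help me", "call the police")

def normalize(text):
    """Lowercase the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())

def is_dangerous(recognized, presets=PRESET_PHRASES):
    """True when any preset phrase occurs in the recognized lip language text."""
    text = normalize(recognized)
    return any(normalize(p) in text for p in presets)

alert = is_dangerous("  Please HELP   me now")
calm = is_dangerous("good morning")
```

A deployed system would more likely use a similarity score with a threshold, since lip reading output is noisy; exact substring matching is used here only to keep the example small.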
