701 results for "Eyebrow" patented technology

The eyebrow is an area of thick, delicate hair above the eye that follows the shape of the lower margin of the brow ridges of some mammals. The most recent research suggests that in humans its primary function is to allow for a wider range of ‘non-verbal’ communication. It is common for people to modify their eyebrows by means of hair removal and makeup.

Near-infrared method and system for use in face detection

Active | US7027619B2 | Improve accuracy, Reliable face detection, Image analysis, Person identification, Pattern recognition, Face detection

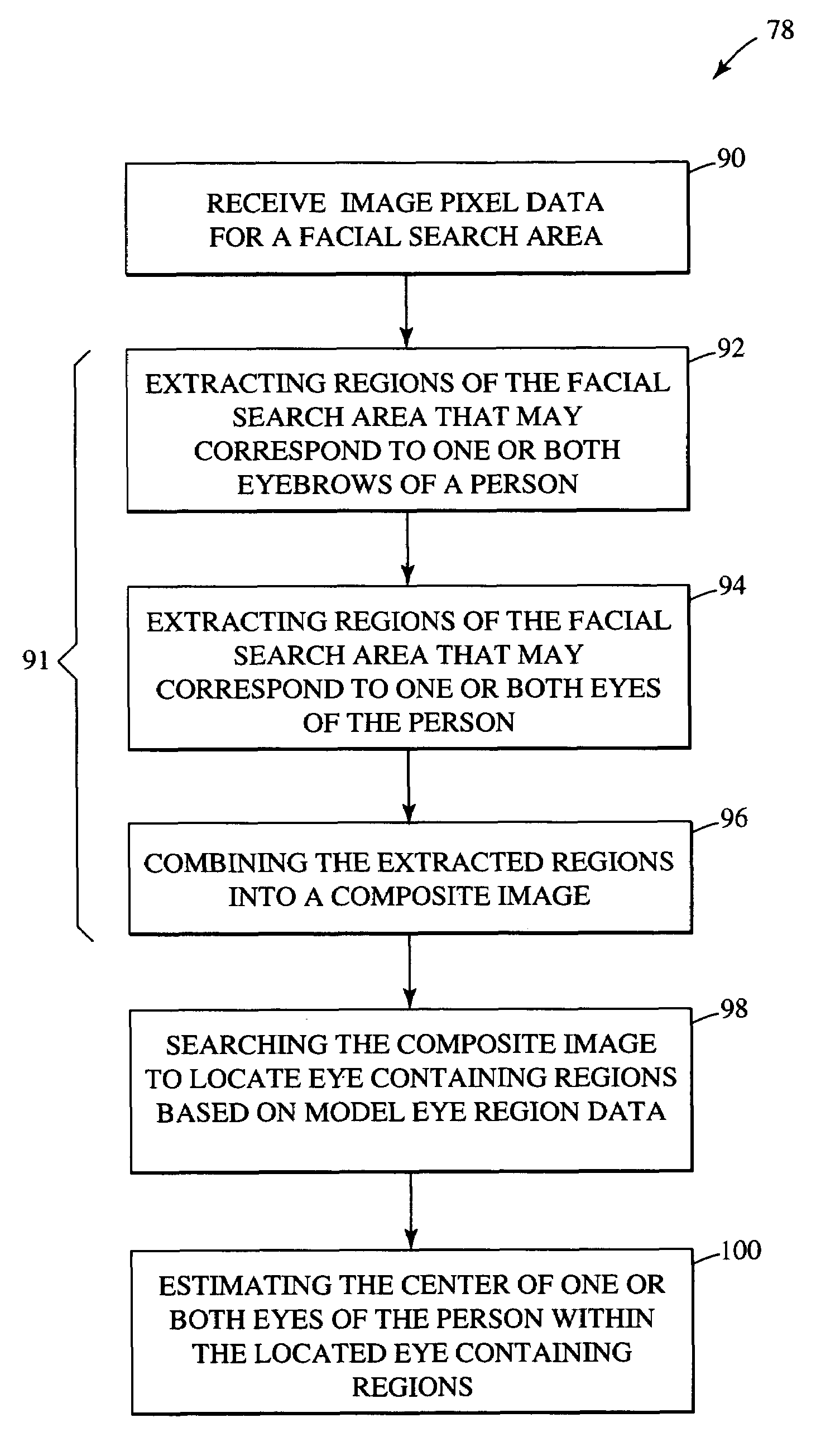

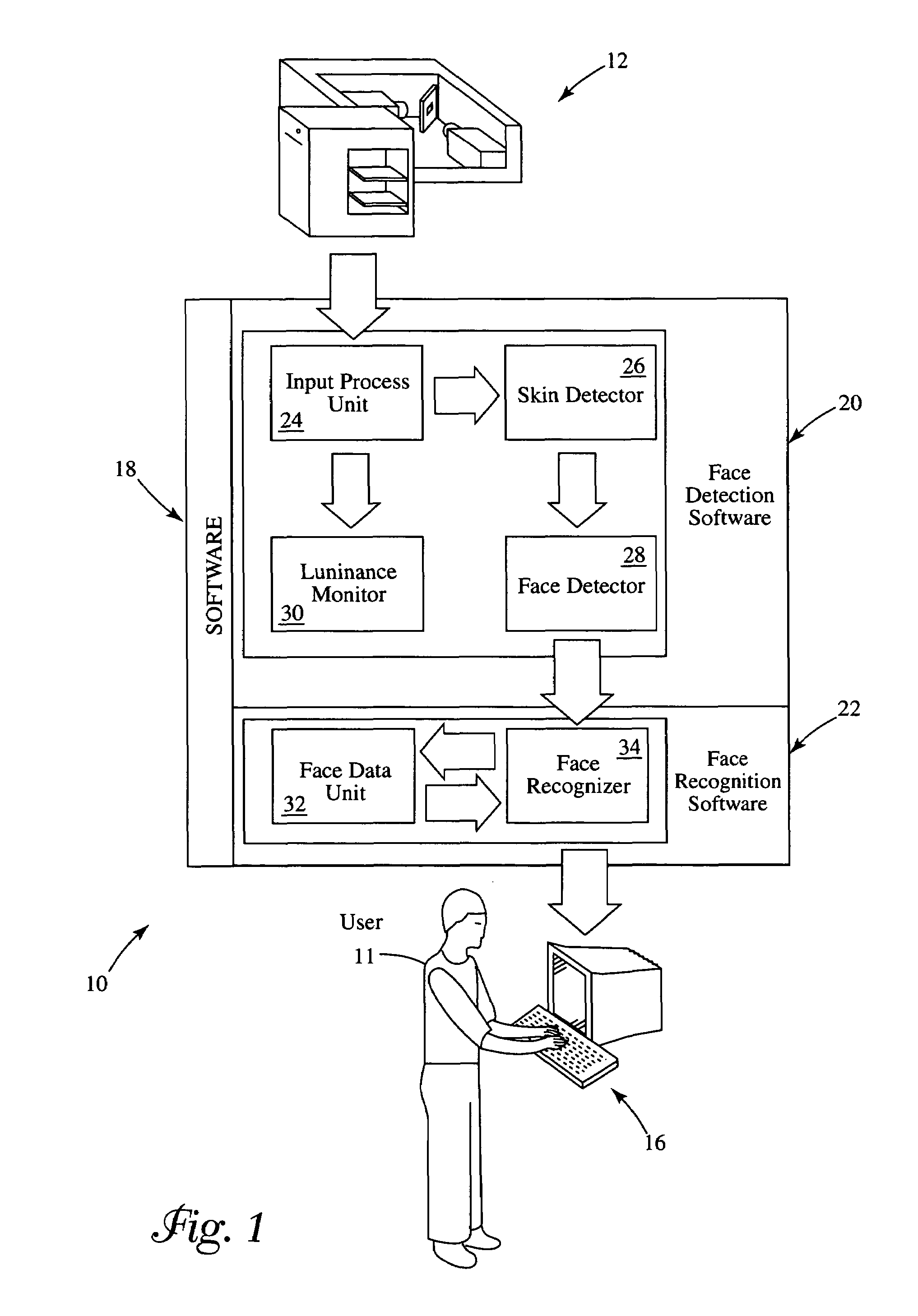

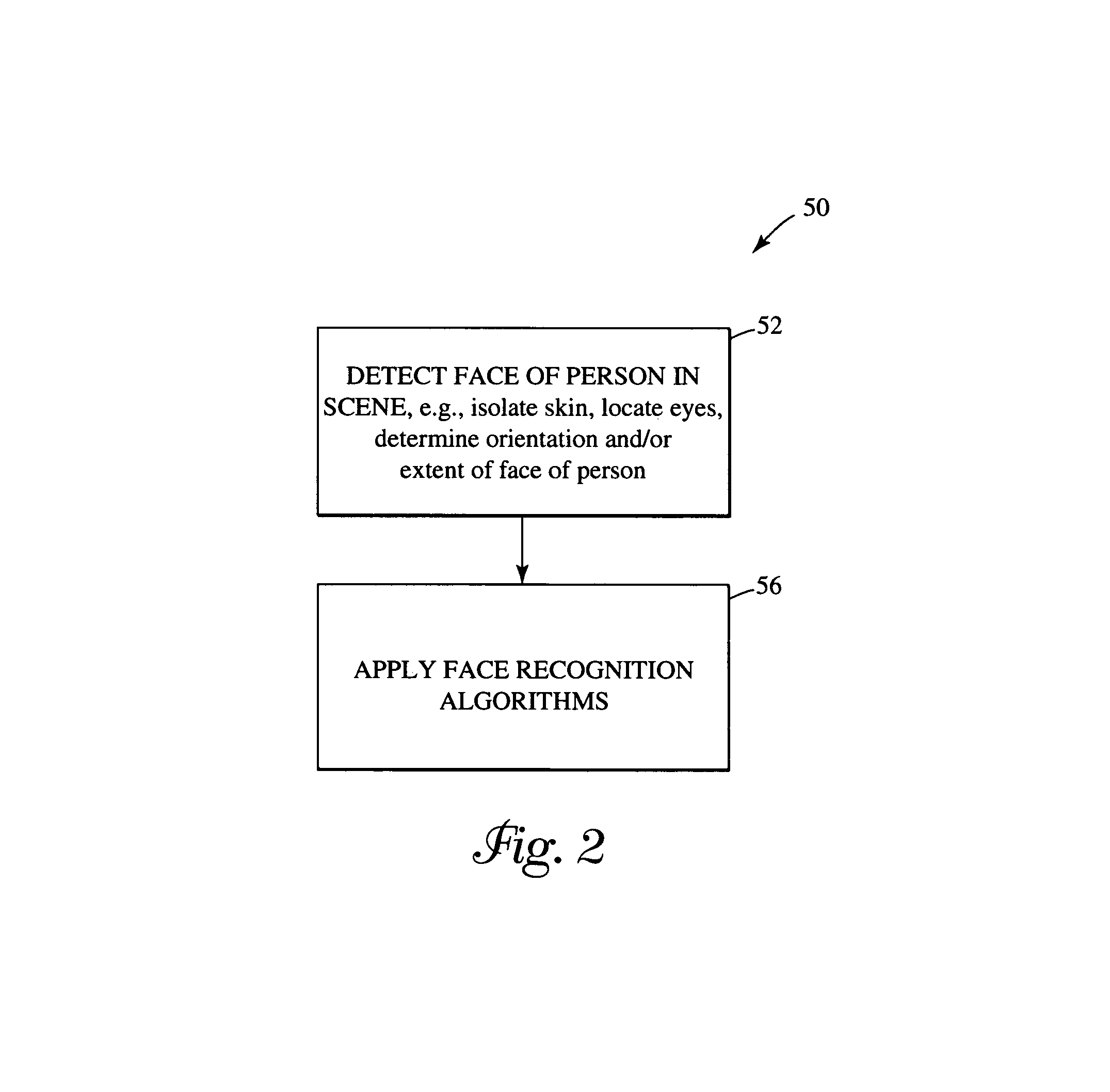

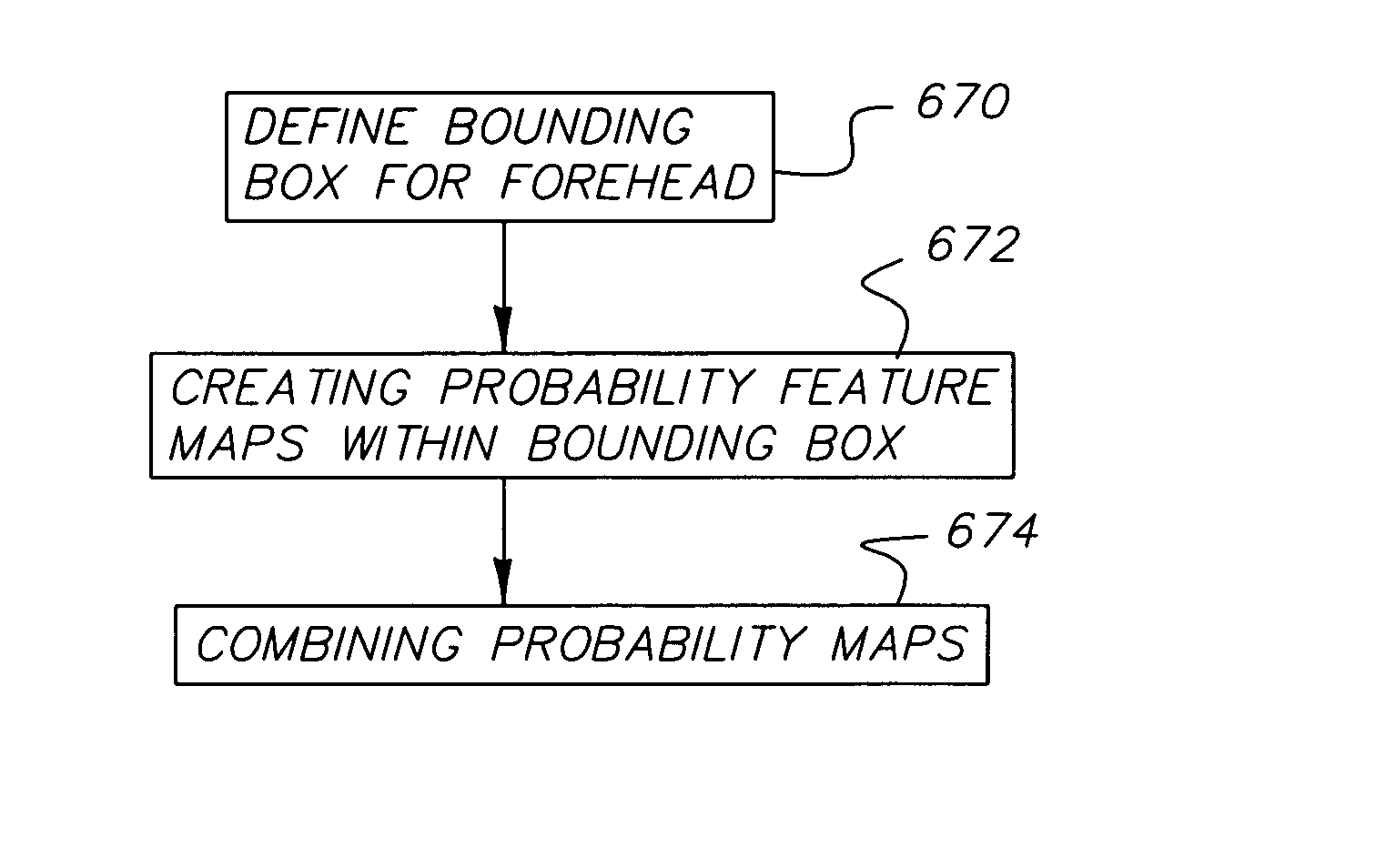

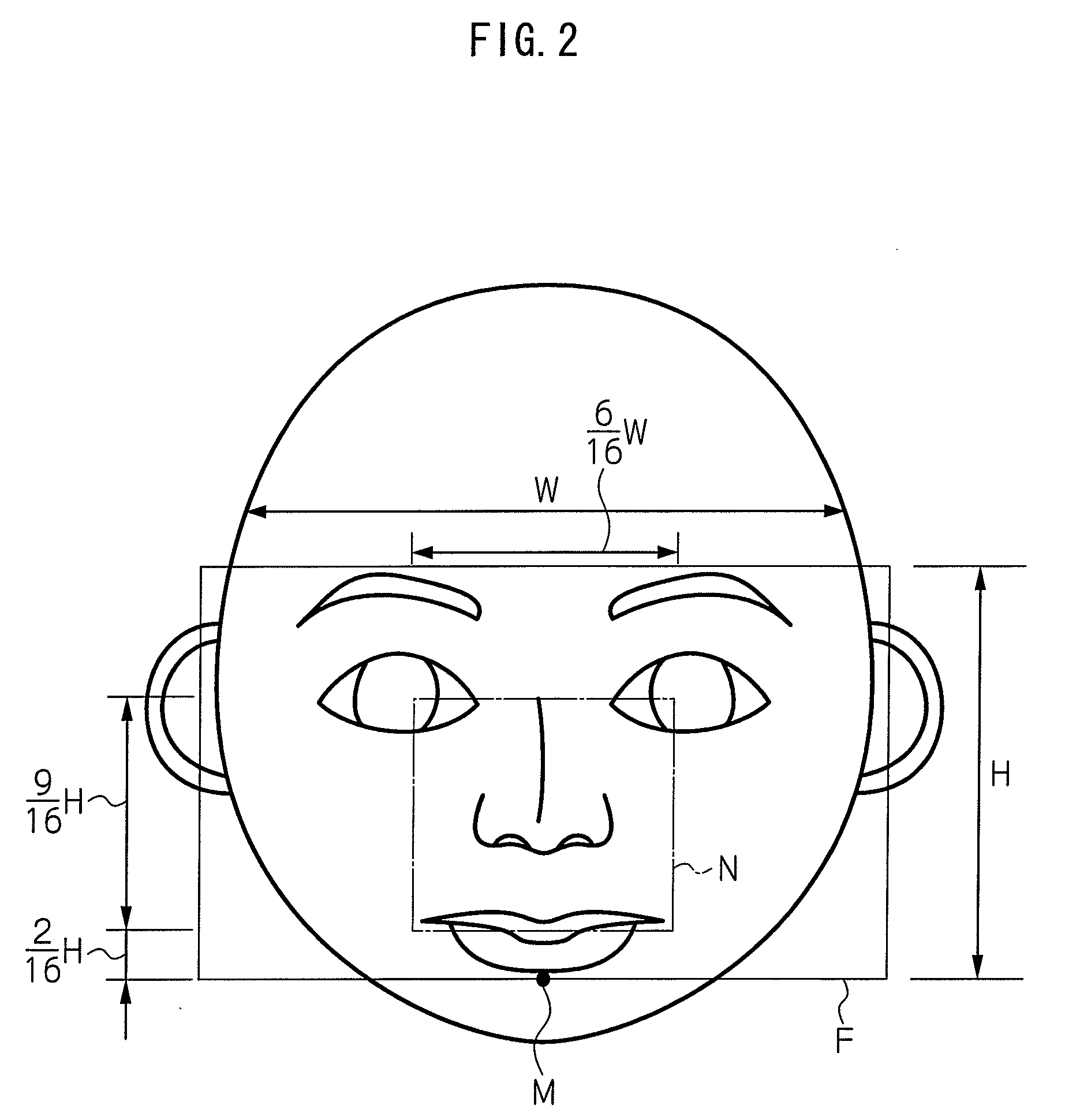

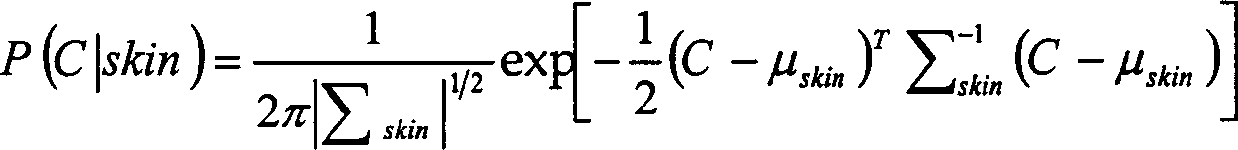

A method and system for use in detecting a face of a person includes providing image data representative of reflected light of a scene in at least one bandwidth within a reflected infrared radiation range. Regions of the scene in the image data which may correspond to one or both eyebrows of a person and which may correspond to one or both eyes of the person are extracted from the data. The extracted regions which may correspond to one or both eyebrows of the person are combined with the extracted regions that may correspond to one or both eyes of the person resulting in a composite feature image. The composite feature image is searched based on model data representative of an eye region to detect one or both eyes of the person.

Owner:HONEYWELL INT INC
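
To make the composite-feature-image step concrete, here is a minimal Python sketch. It assumes the extracted eyebrow and eye candidates are already binary masks of equal size and that the eye-region model is a small binary template; each window is scored by the fraction of pixels that agree with the model in an exhaustive search. The names `composite_feature_image` and `search_eye_region` and the agreement score are illustrative assumptions, not the matching criterion disclosed in US7027619B2.

```python
import numpy as np


def composite_feature_image(eyebrow_mask: np.ndarray, eye_mask: np.ndarray) -> np.ndarray:
    """Combine candidate eyebrow and eye regions into one binary feature image."""
    return np.logical_or(eyebrow_mask, eye_mask).astype(np.uint8)


def search_eye_region(composite: np.ndarray, model: np.ndarray) -> tuple[int, int, float]:
    """Slide a binary eye-region model over the composite image.

    Returns (row, col, score) of the best window, where score is the fraction
    of model pixels that agree with the composite image (1.0 = perfect match).
    """
    H, W = composite.shape
    h, w = model.shape
    best = (0, 0, -1.0)
    for r in range(H - h + 1):
        for c in range(W - w + 1):
            window = composite[r:r + h, c:c + w]
            score = float(np.mean(window == model))
            if score > best[2]:  # keep the first best-scoring window
                best = (r, c, score)
    return best
```

The exhaustive search costs O(H·W·h·w); a practical detector would restrict it to the face region found earlier.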

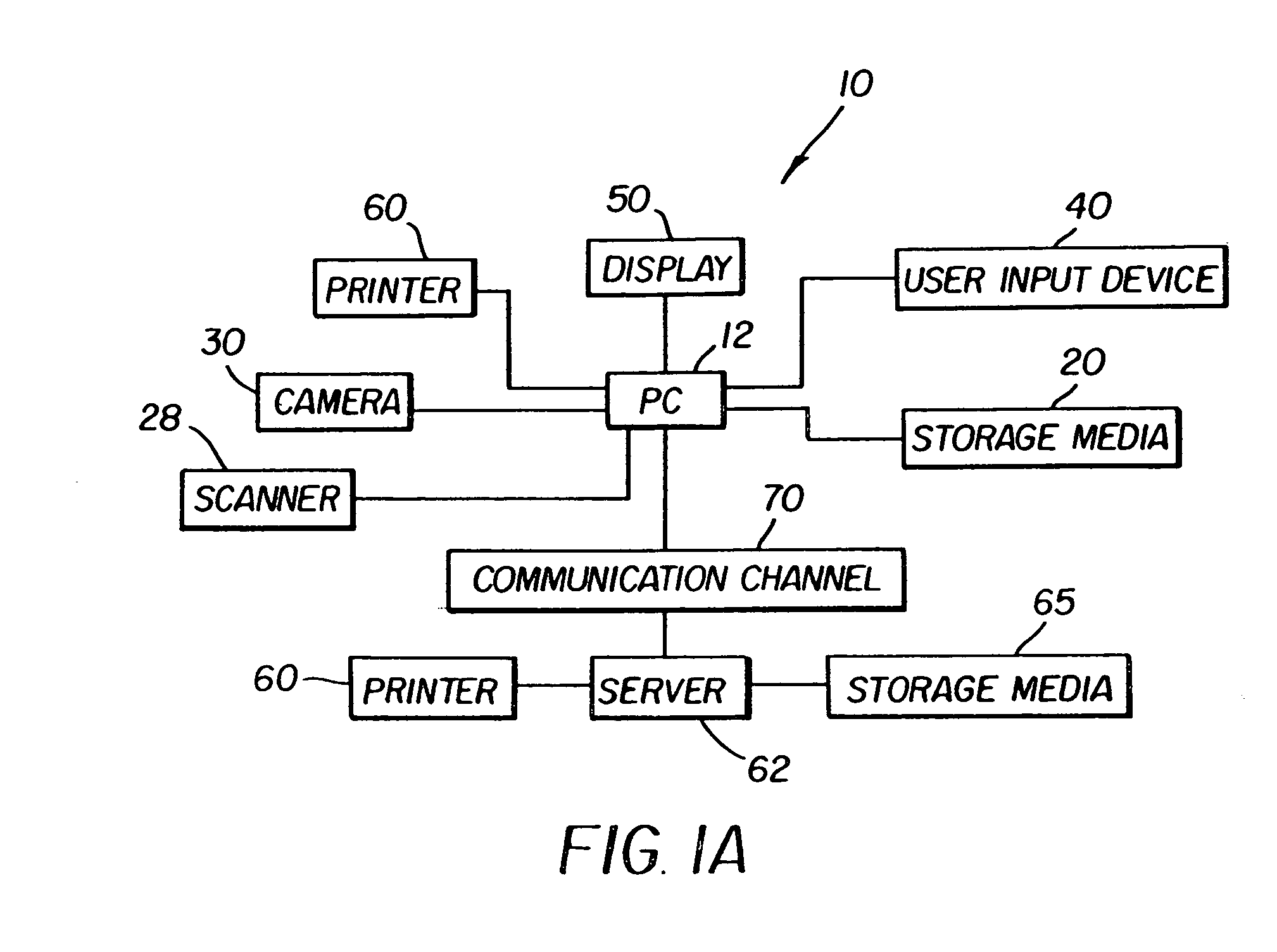

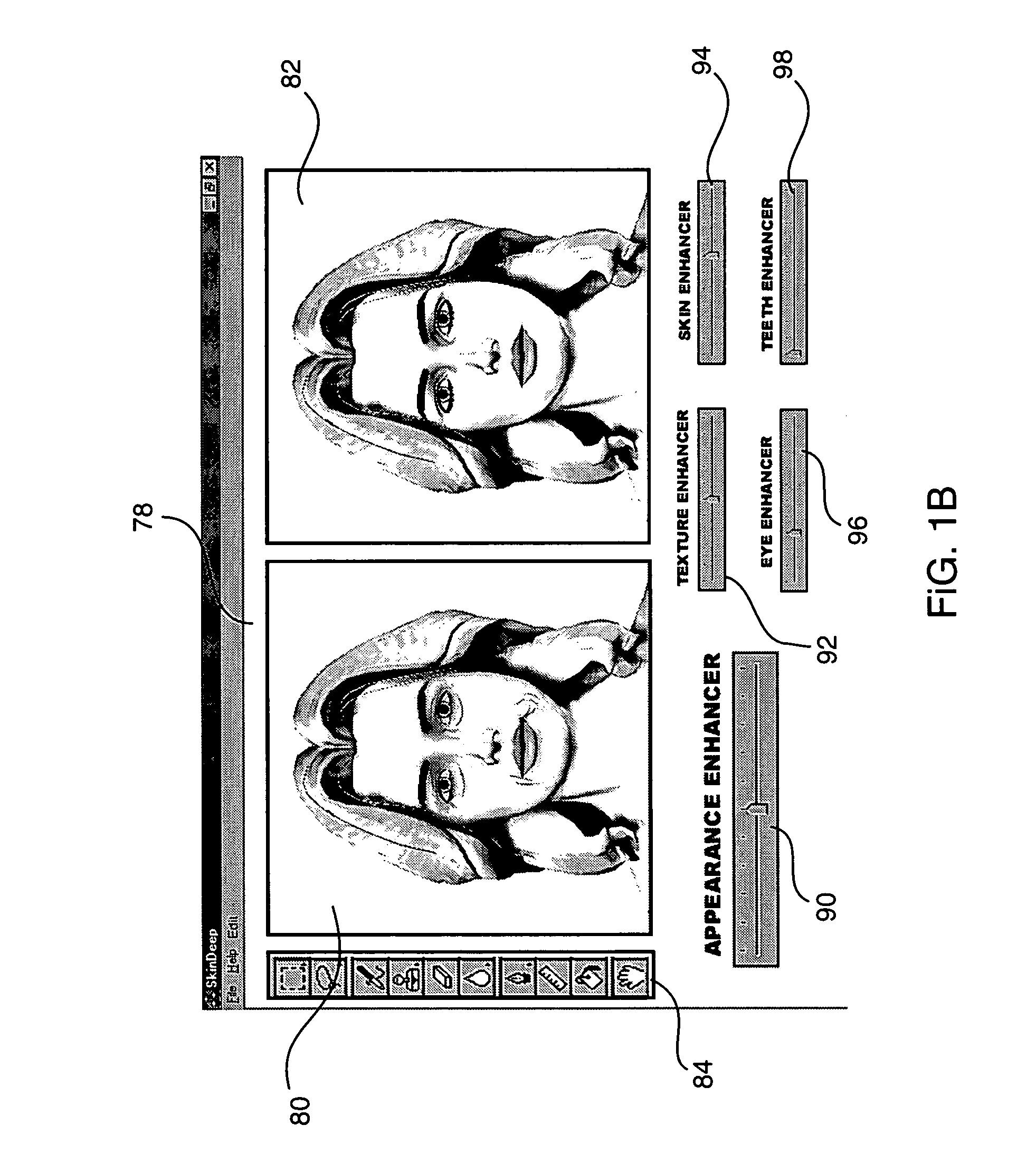

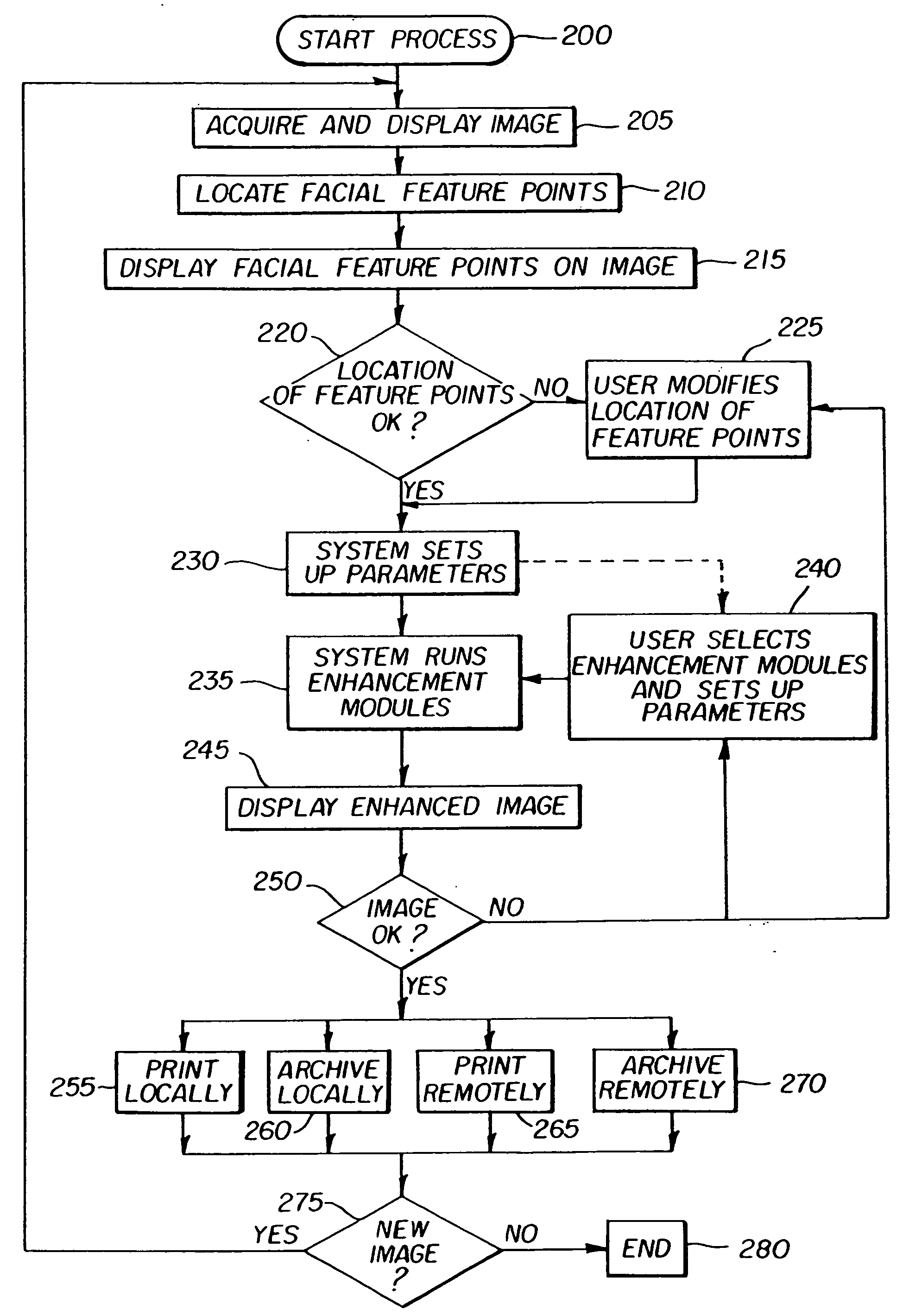

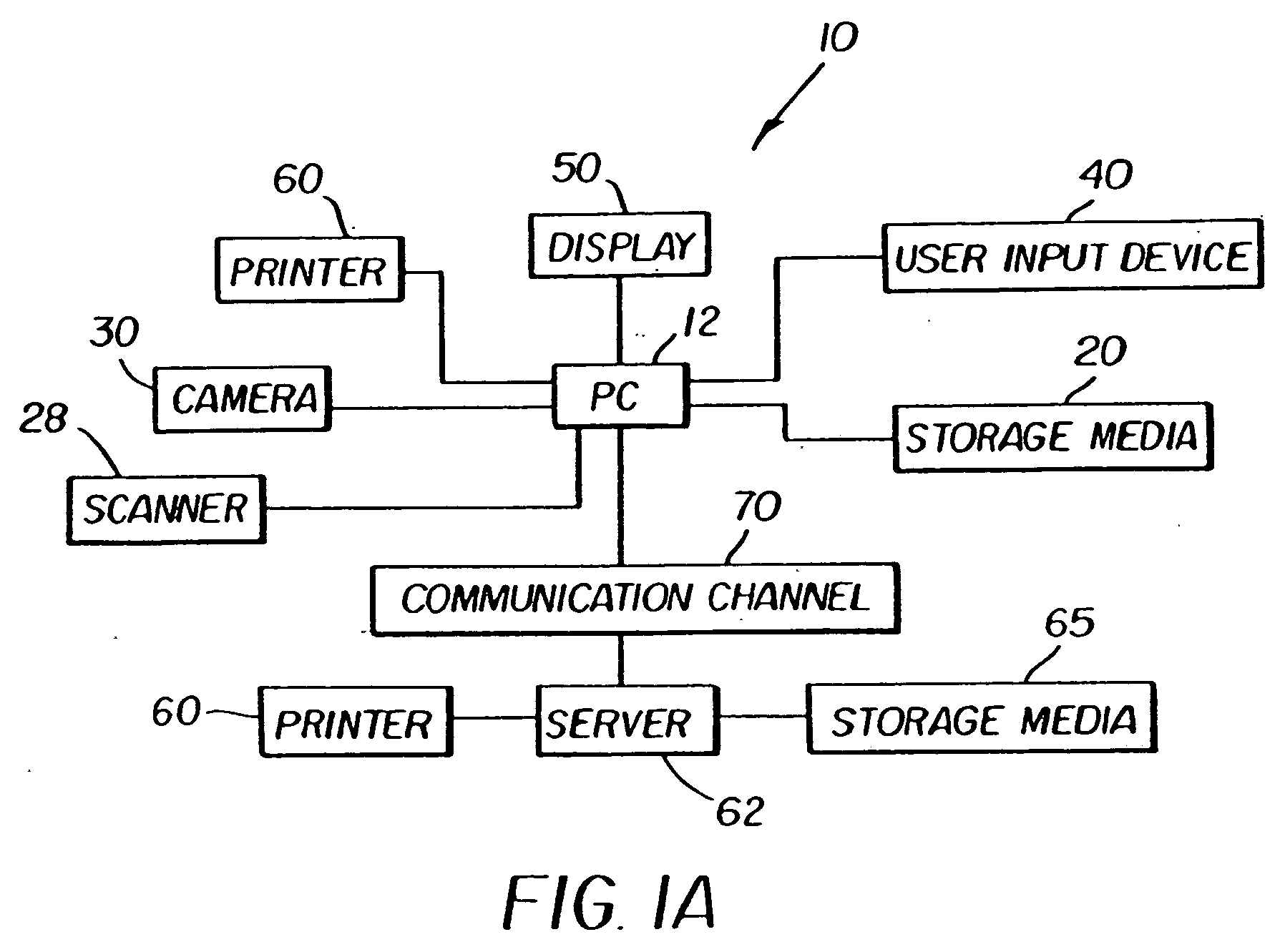

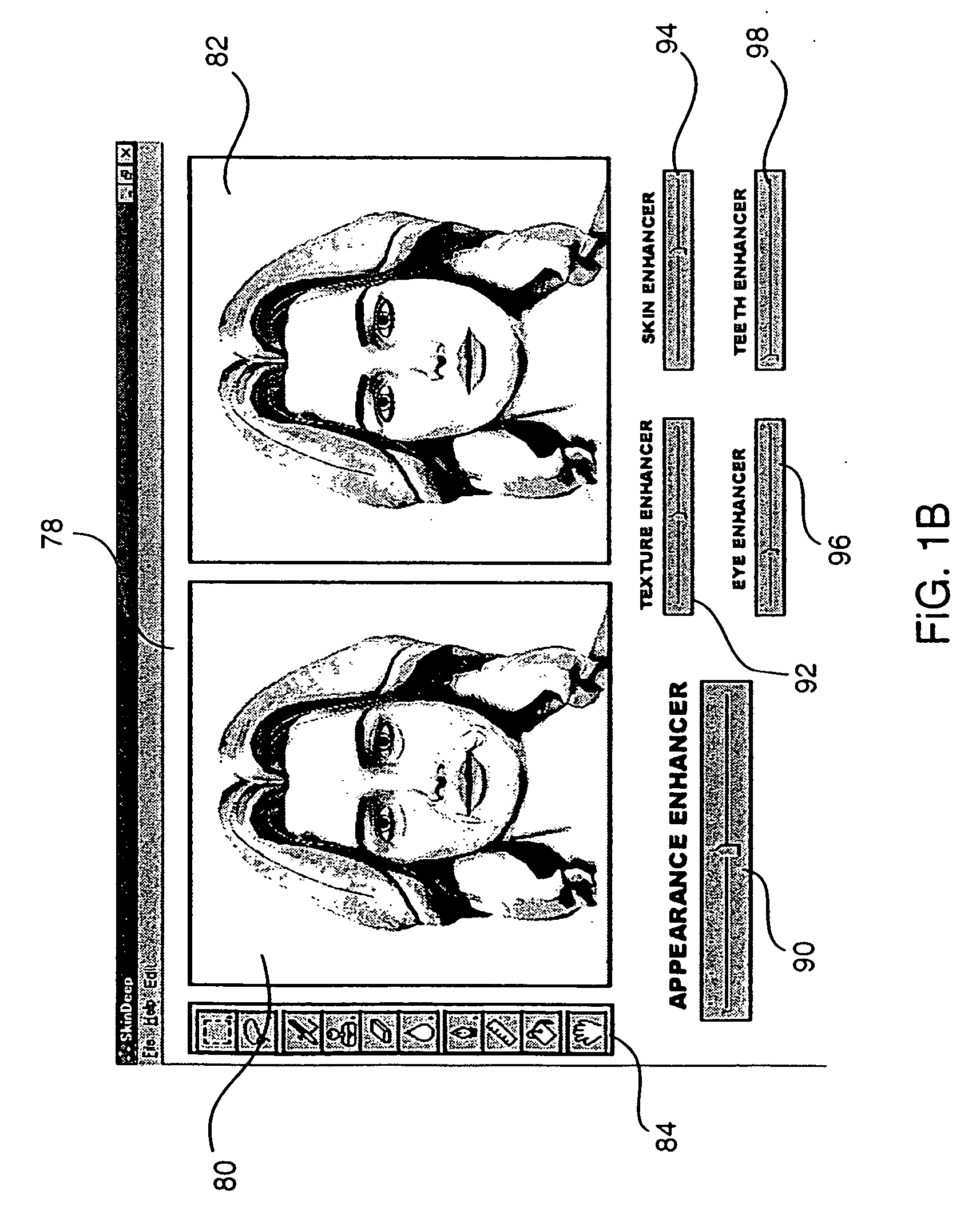

Method and system for enhancing portrait images that are processed in a batch mode

Inactive | US7039222B2 | Efficiently us, Image enhancement, Television system details, Batch processing, Digital image

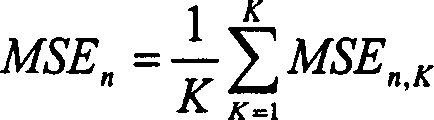

A batch processing method for enhancing an appearance of a face located in a digital image, where the image is one of a large number of images that are being processed through a batch process, comprises the steps of: (a) providing a script file that identifies one or more original digital images that have been selected for enhancement, wherein the script file includes an instruction for the location of each original digital image; (b) using the instructions in the script file, acquiring an original digital image containing one or more faces; (c) detecting a location of facial feature points in the one or more faces, said facial feature points including points identifying salient features including one or more of skin, eyes, eyebrows, nose, mouth, and hair; (d) using the location of the facial feature points to segment the face into different regions, said different regions including one or more of skin, eyes, eyebrows, nose, mouth, neck and hair regions; (e) determining one or more facially relevant characteristics of the different regions; (f) based on the facially relevant characteristics of the different regions, selecting one or more enhancement filters each customized especially for a particular region and selecting the default parameters for the enhancement filters; (g) executing the enhancement filters on the particular regions, thereby producing an enhanced digital image from the original digital image; (h) storing the enhanced digital image; and (i) generating an output script file having instructions that indicate one or more operations in one or more of the steps (c)–(f) that have been performed on the enhanced digital image.

Owner:MONUMENT PEAK VENTURES LLC
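
The script-driven loop of steps (a), (b) and (i) can be sketched in Python. The input script format (one image location per line, `#` for comments) and the JSON output script are assumptions made for illustration; `run_batch` and the `enhance` callable are hypothetical names, with `enhance` standing in for the per-image work of steps (c) to (g).

```python
import json
from typing import Callable, List


def run_batch(script_text: str, enhance: Callable[[str], List[str]]) -> str:
    """Process every image listed in a (hypothetical) input script.

    script_text: one image location per line; blank lines and '#' comments are skipped.
    enhance: enhances one image and returns the names of the operations it
             performed (stands in for steps (c) to (g)).
    Returns an output script, here JSON, recording the operations per image.
    """
    records = []
    for line in script_text.splitlines():
        location = line.strip()
        if not location or location.startswith("#"):
            continue
        operations = enhance(location)
        records.append({"image": location, "operations": operations})
    return json.dumps(records, indent=2)
```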

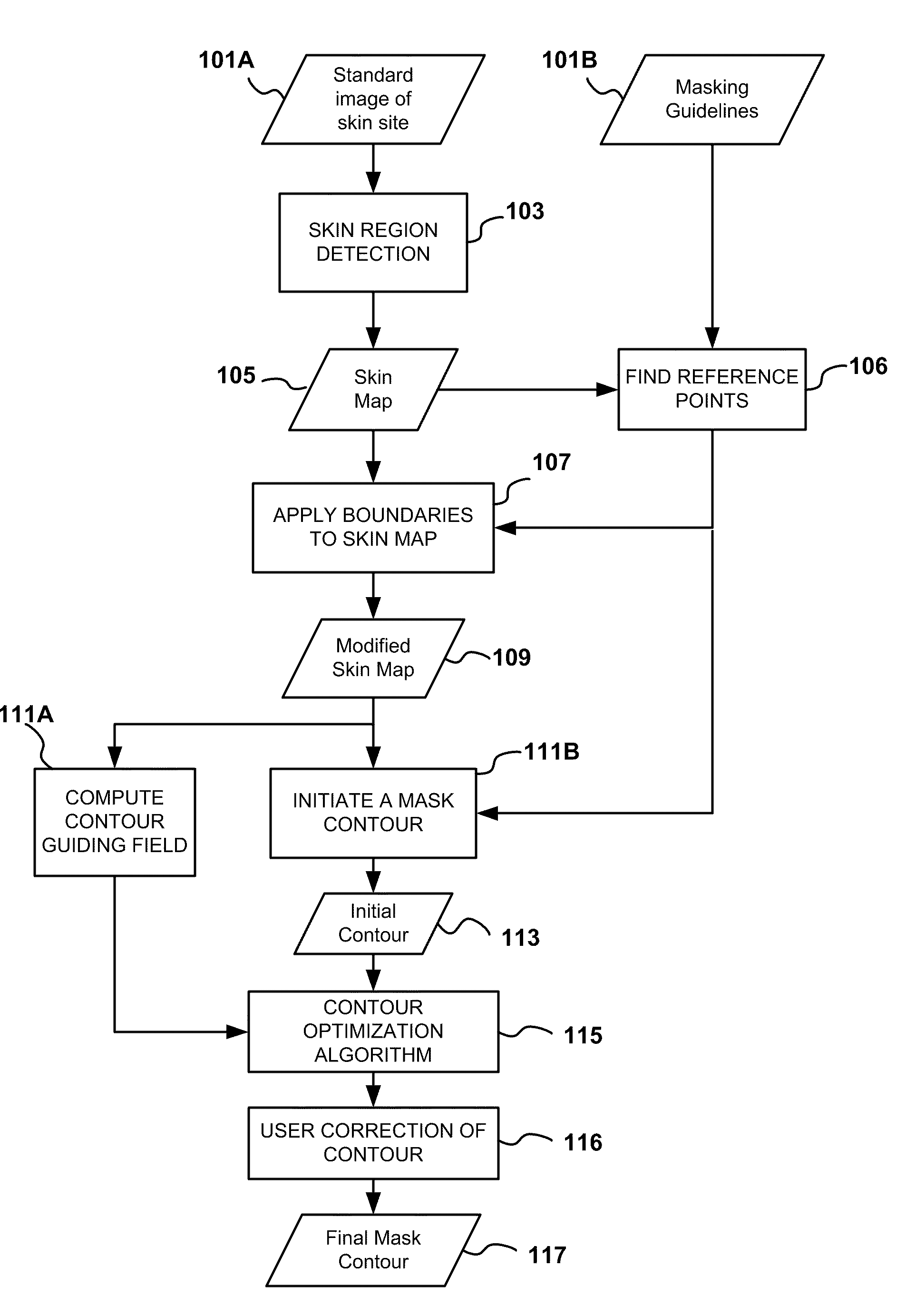

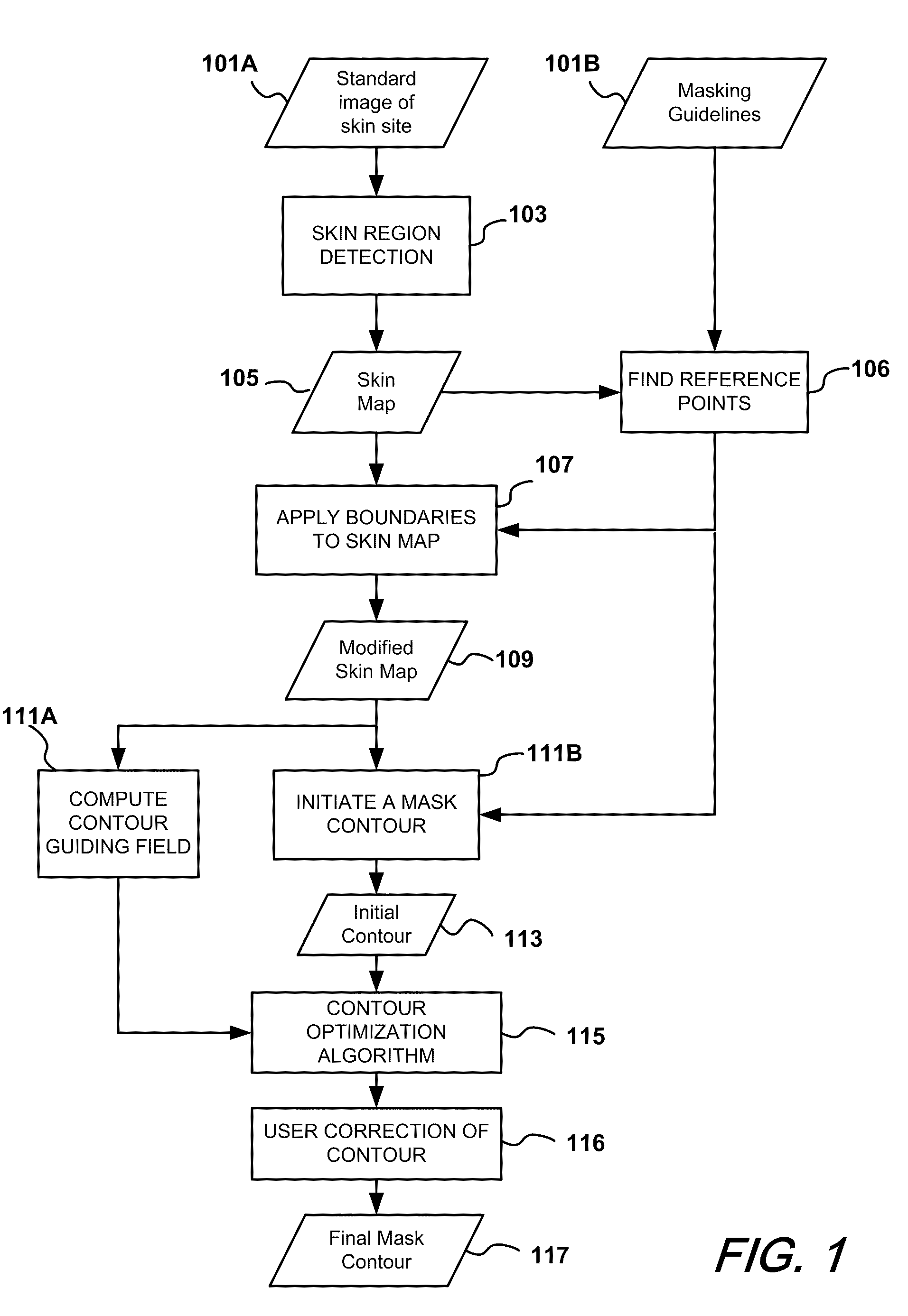

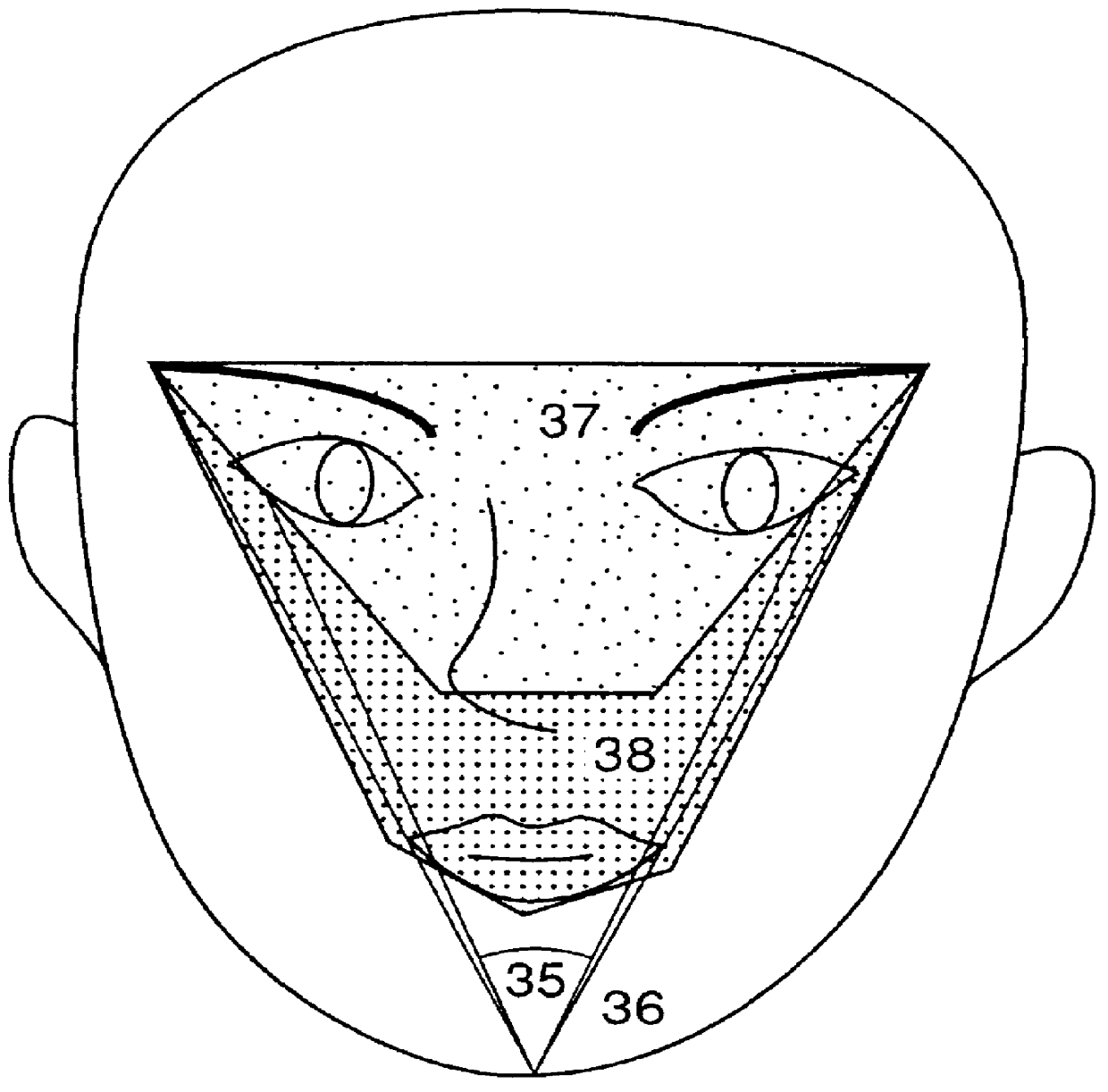

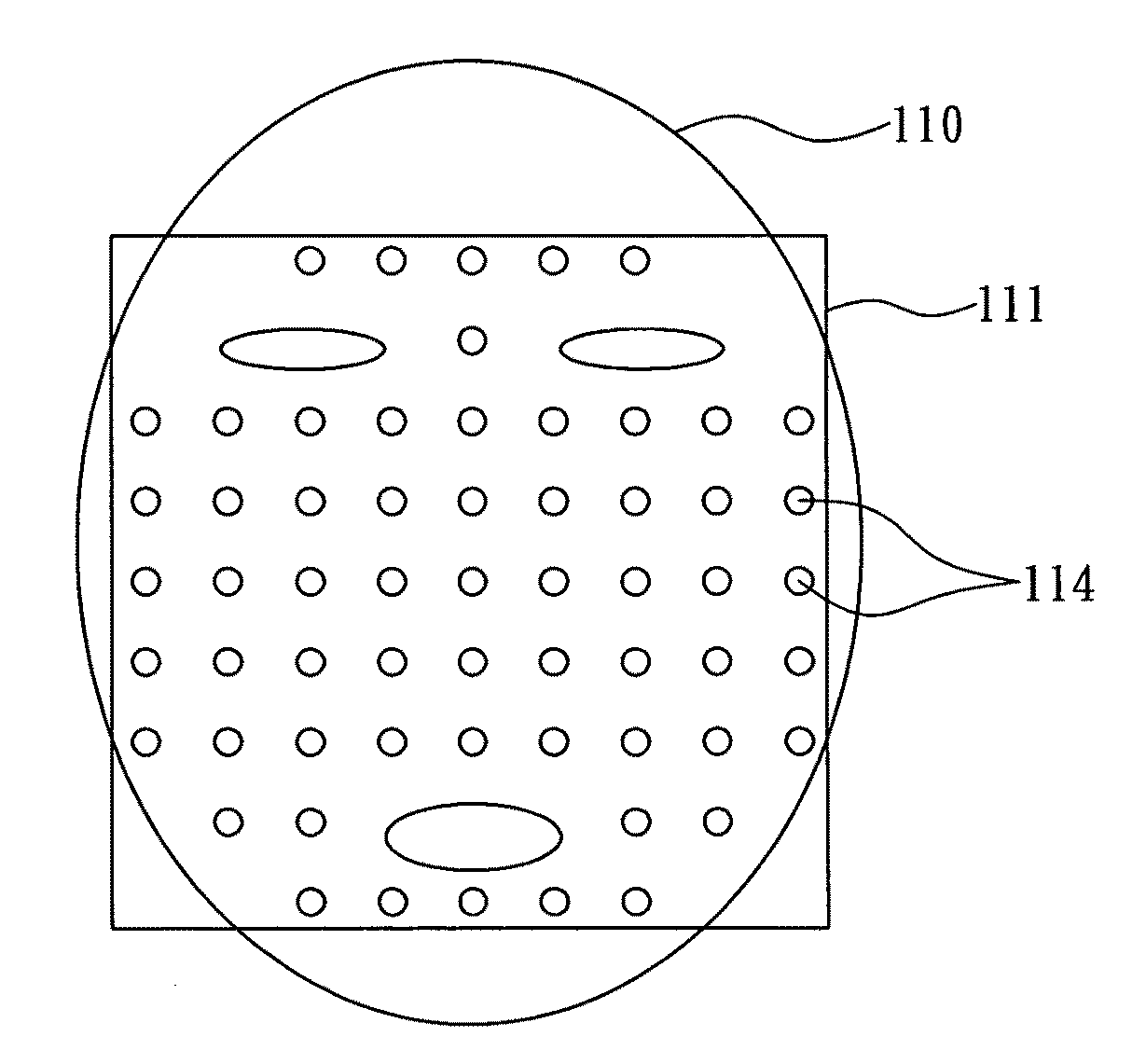

Automatic mask design and registration and feature detection for computer-aided skin analysis

Active | US20090196475A1 | Avoiding skin regions not useful or amenable, Character and pattern recognition, Diagnostic recording/measuring, Diagnostic Radiology Modality, Nose

Methods and systems for automatically generating a mask delineating a region of interest (ROI) within an image containing skin are disclosed. The image may be of an anatomical area containing skin, such as the face, neck, chest, shoulders, arms or hands, among others, or may be of portions of such areas, such as the cheek, forehead, or nose, among others. The mask that is generated is based on the locations of anatomical features or landmarks in the image, such as the eyes, nose, eyebrows and lips, which can vary from subject to subject and image to image. As such, masks can be adapted to individual subjects and to different images of the same subjects, while delineating anatomically standardized ROIs, thereby facilitating standardized, reproducible skin analysis over multiple subjects and / or over multiple images of each subject. Moreover, the masks can be limited to skin regions that include uniformly illuminated portions of skin while excluding skin regions in shadow or hot-spot areas that would otherwise provide erroneous feature analysis results. Methods and systems are also disclosed for automatically registering a skin mask delineating a skin ROI in a first image captured in one imaging modality (e.g., standard white light, UV light, polarized light, multi-spectral absorption or fluorescence imaging, etc.) onto a second image of the ROI captured in the same or another imaging modality. Such registration can be done using linear as well as non-linear spatial transformation techniques.

Owner:CANFIELD SCI
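
A landmark-based mask can be illustrated by rasterizing a polygon whose vertices are detected landmarks and subtracting a second polygon around a feature to exclude (for example an eye). This is a minimal sketch using the even-odd ray-casting rule on pixel centres; `polygon_mask` is a hypothetical name, and the sketch does not reproduce Canfield's actual mask-design rules or its illumination checks.

```python
import numpy as np


def polygon_mask(shape: tuple[int, int], polygon: list[tuple[float, float]]) -> np.ndarray:
    """Rasterize a polygon of (x, y) landmark vertices into a boolean mask (even-odd rule)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    px, py = xs + 0.5, ys + 0.5  # test pixel centres
    inside = np.zeros(shape, dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through each pixel centre?
        crosses = (y1 > py) != (y2 > py)
        with np.errstate(divide="ignore", invalid="ignore"):
            # x where the edge meets that line (inf/nan for horizontal edges, which never cross)
            x_int = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            inside ^= crosses & (px < x_int)
    return inside
```

A skin ROI that avoids an eye is then `polygon_mask(shape, face_pts) & ~polygon_mask(shape, eye_pts)`.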

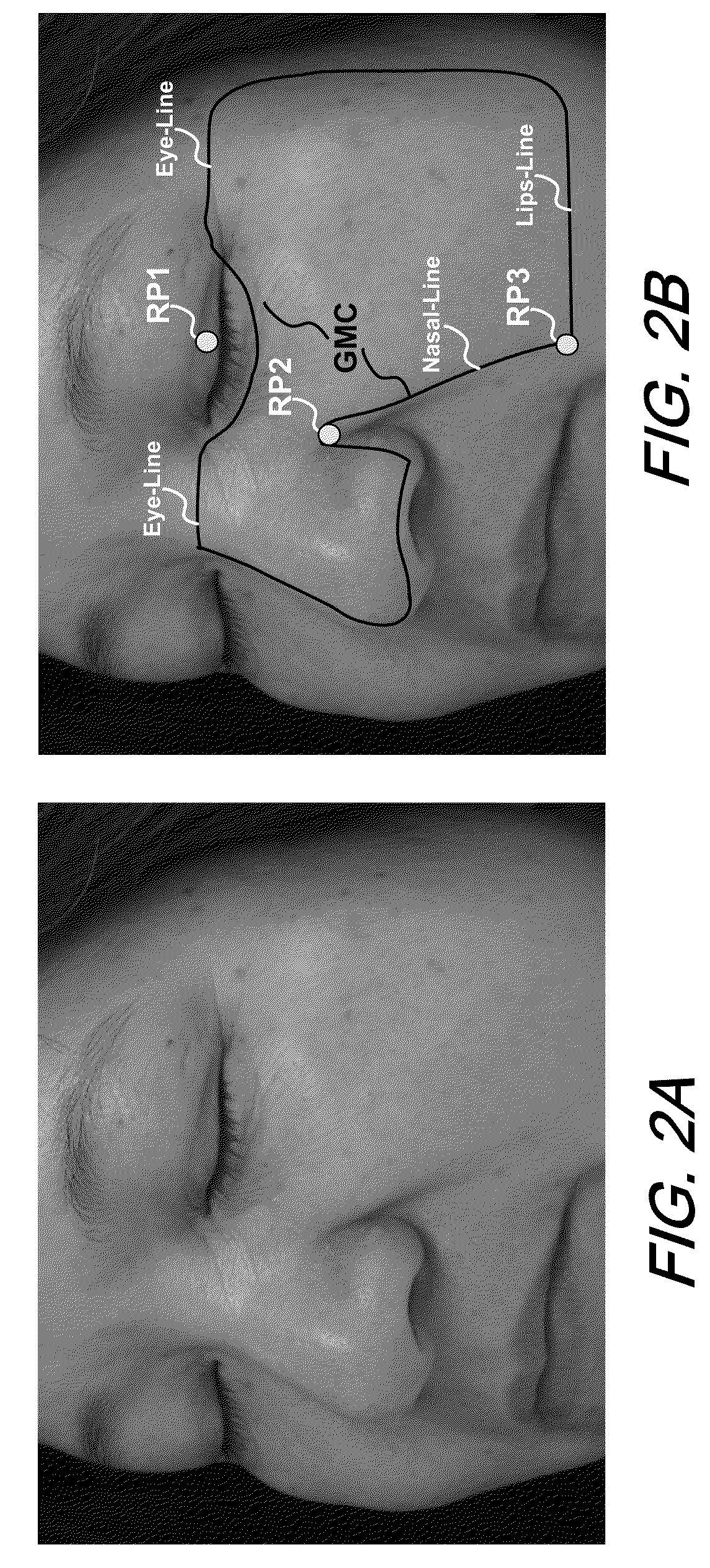

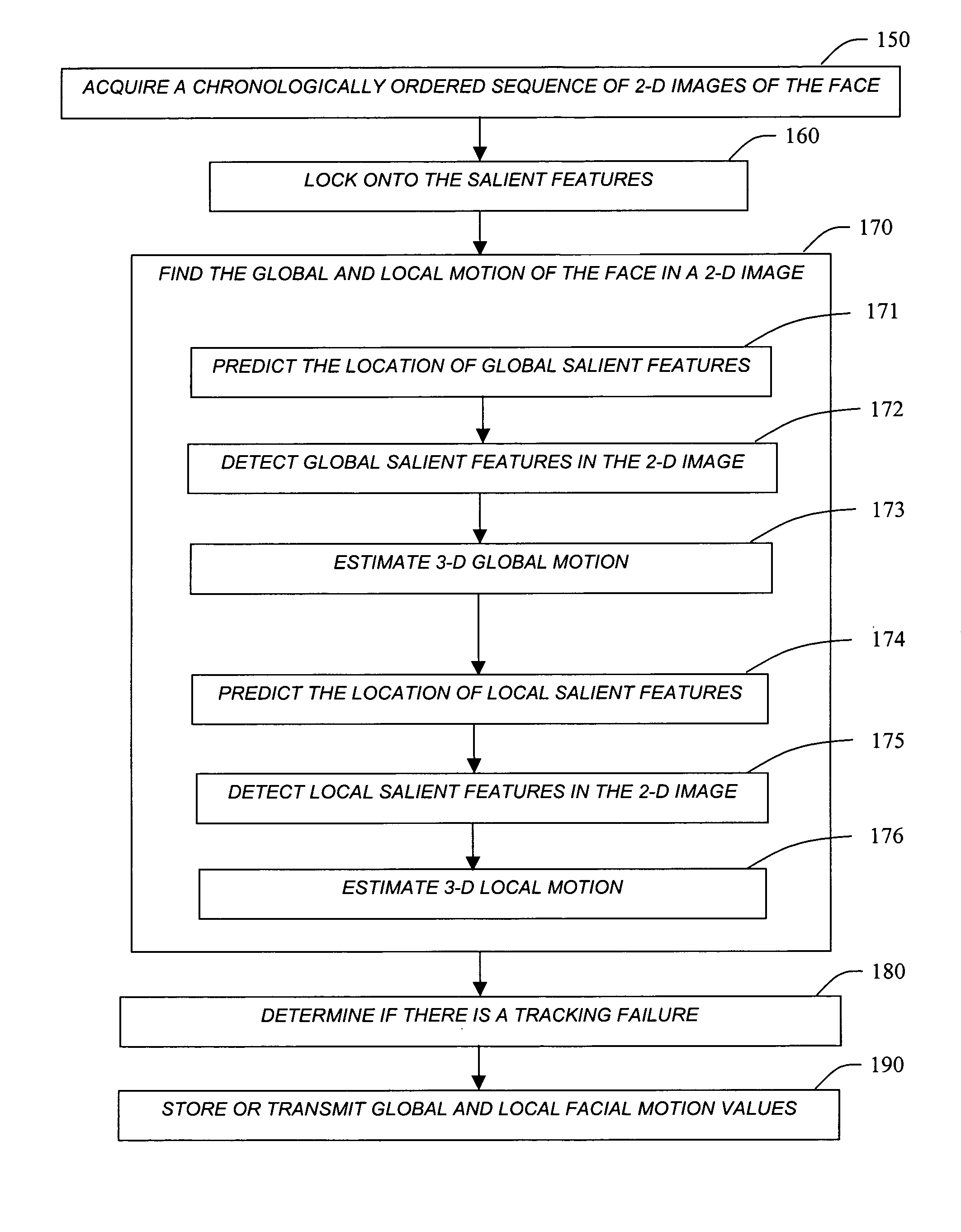

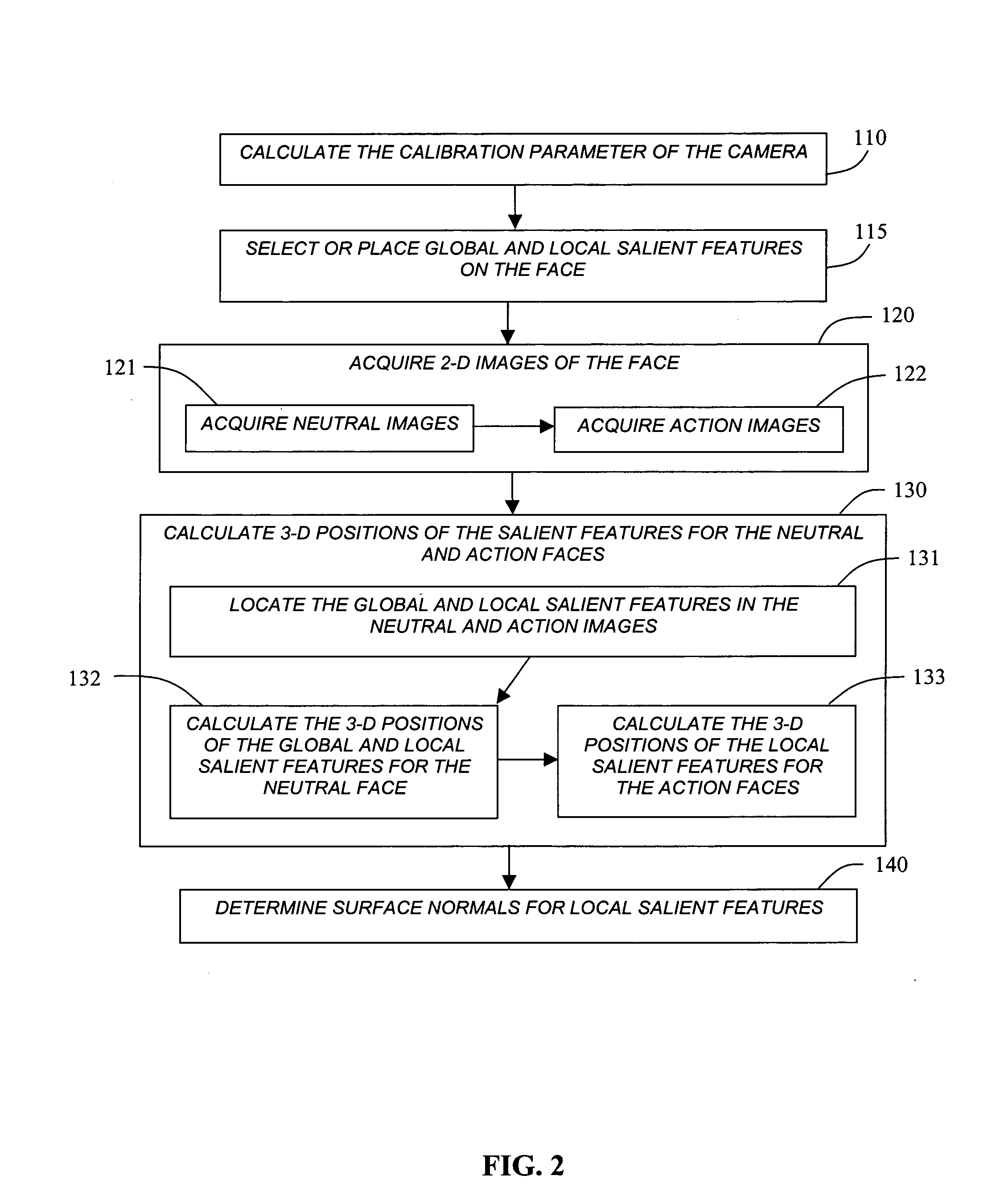

Method for tracking motion of a face

Inactive | US7127081B1 | Satisfies need, Television system details, Image analysis, Pattern recognition, Animation

Owner:MOMENTUM AS +1

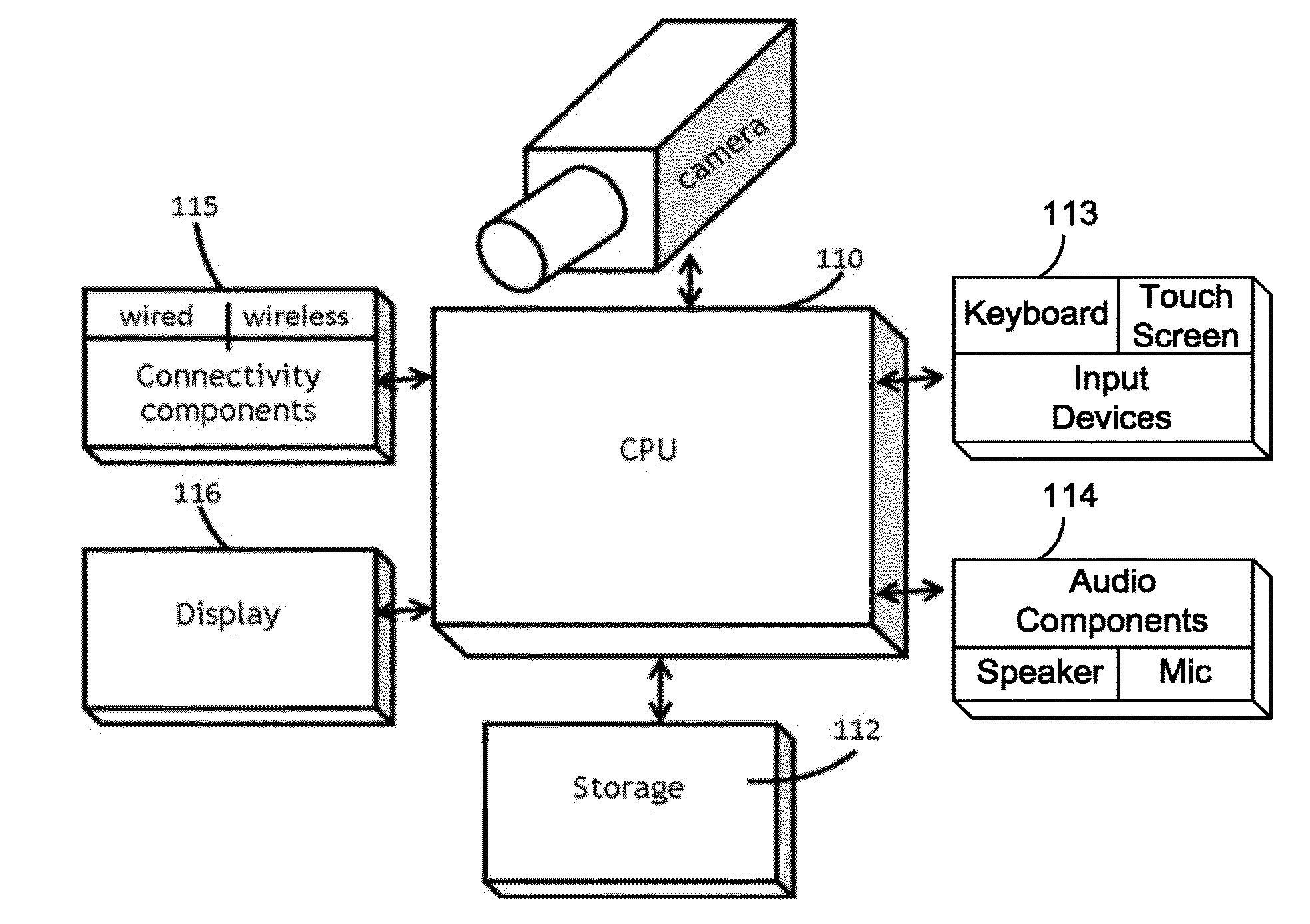

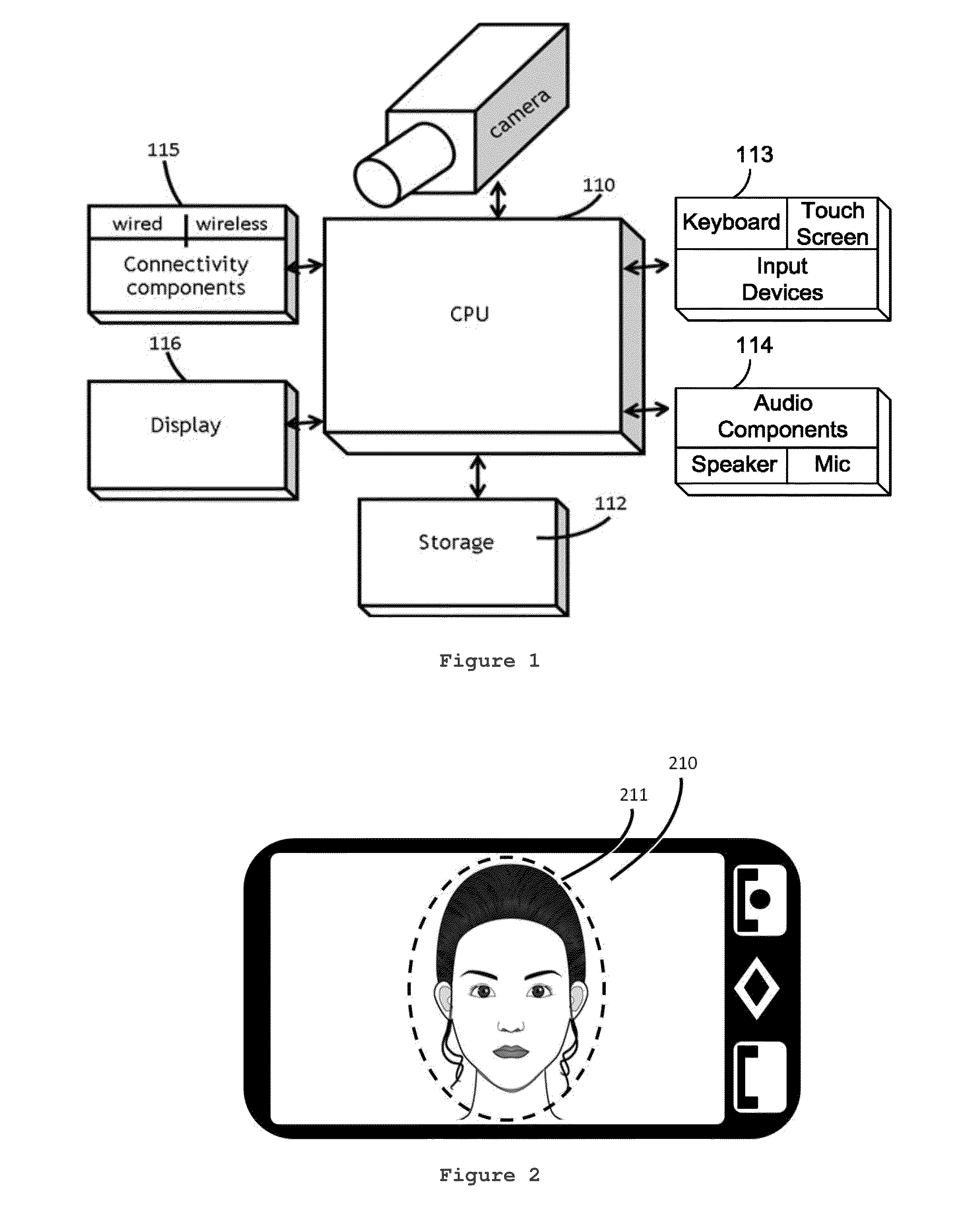

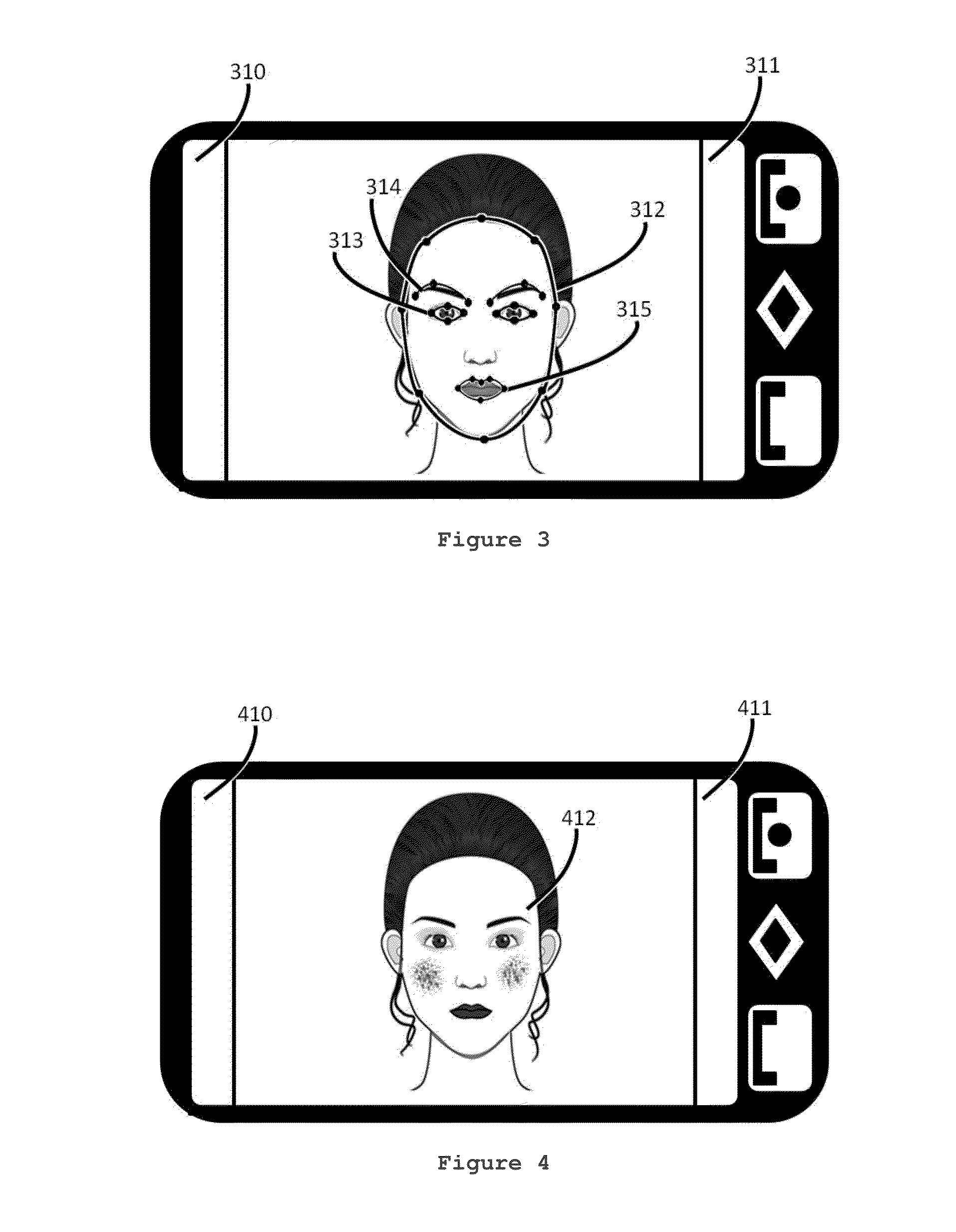

Method and system for make-up simulation on portable devices having digital cameras

Inactive | US20130169827A1 | Low cost, Minimizing effort, Television system details, 2D-image generation, Touchscreen, Application areas

A system and method are capable of applying virtual make-up to images obtained with portable devices that have a digital camera. The method automatically detects points of interest (eyes, mouth, eyebrows, face contour) in the user's face image, allowing the user to apply make-up virtually with the fingers on a touch screen. A further algorithm prevents the make-up from “blurring” when it is applied with the user's fingers. The system allows virtual make-up faces to be created with high accuracy. To carry out the virtual make-up, methods were created for automatically detecting points of interest in the facial image, for applying transparency to the image to simulate the make-up, and for restricting the make-up application area to the region where the points of interest are found. The system allows cosmetics to be tested on facial images, helping the user choose cosmetic colors before actually applying them.

Owner:SAMSUNG ELECTRONICSA AMAZONIA
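
Transparency-based make-up confined to a region can be illustrated with plain alpha blending. This sketch assumes a float RGB image in [0, 1] and a boolean mask of the region derived from the detected points of interest; `apply_virtual_makeup` and its `opacity` parameter are hypothetical, and the patent's own transparency method may differ.

```python
import numpy as np


def apply_virtual_makeup(image, region_mask, color, opacity=0.4):
    """Blend a cosmetic colour into the pixels inside region_mask only.

    image: float array (H, W, 3) in [0, 1]; region_mask: bool array (H, W);
    color: RGB triple in [0, 1]; opacity: 0 (no make-up) to 1 (solid colour).
    Pixels outside the mask are returned unchanged.
    """
    out = image.copy()
    tint = np.asarray(color, dtype=float)
    out[region_mask] = (1.0 - opacity) * image[region_mask] + opacity * tint
    return out
```

Because the blend is applied only where the mask is true, finger strokes that wander outside the detected region leave the image untouched.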

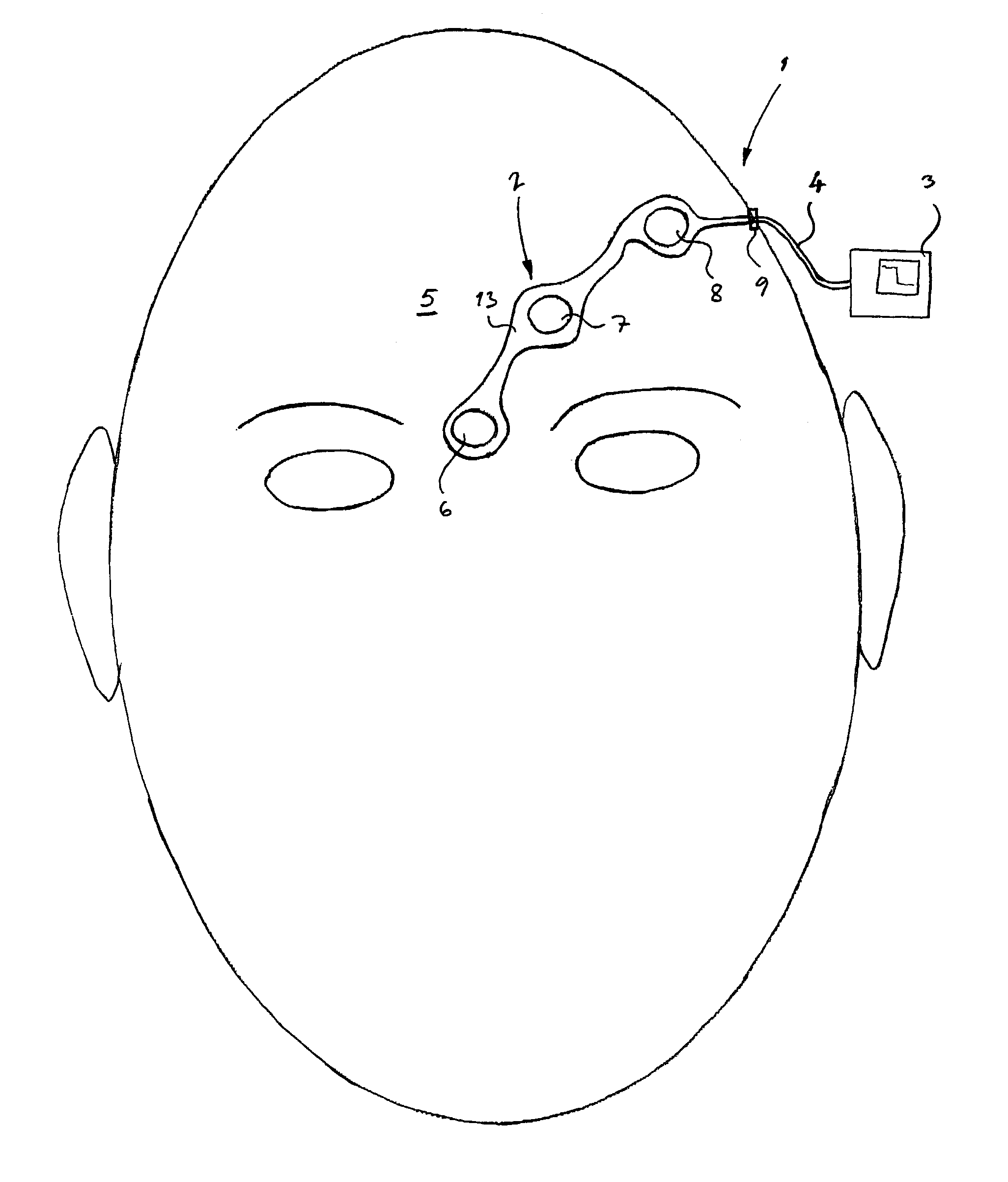

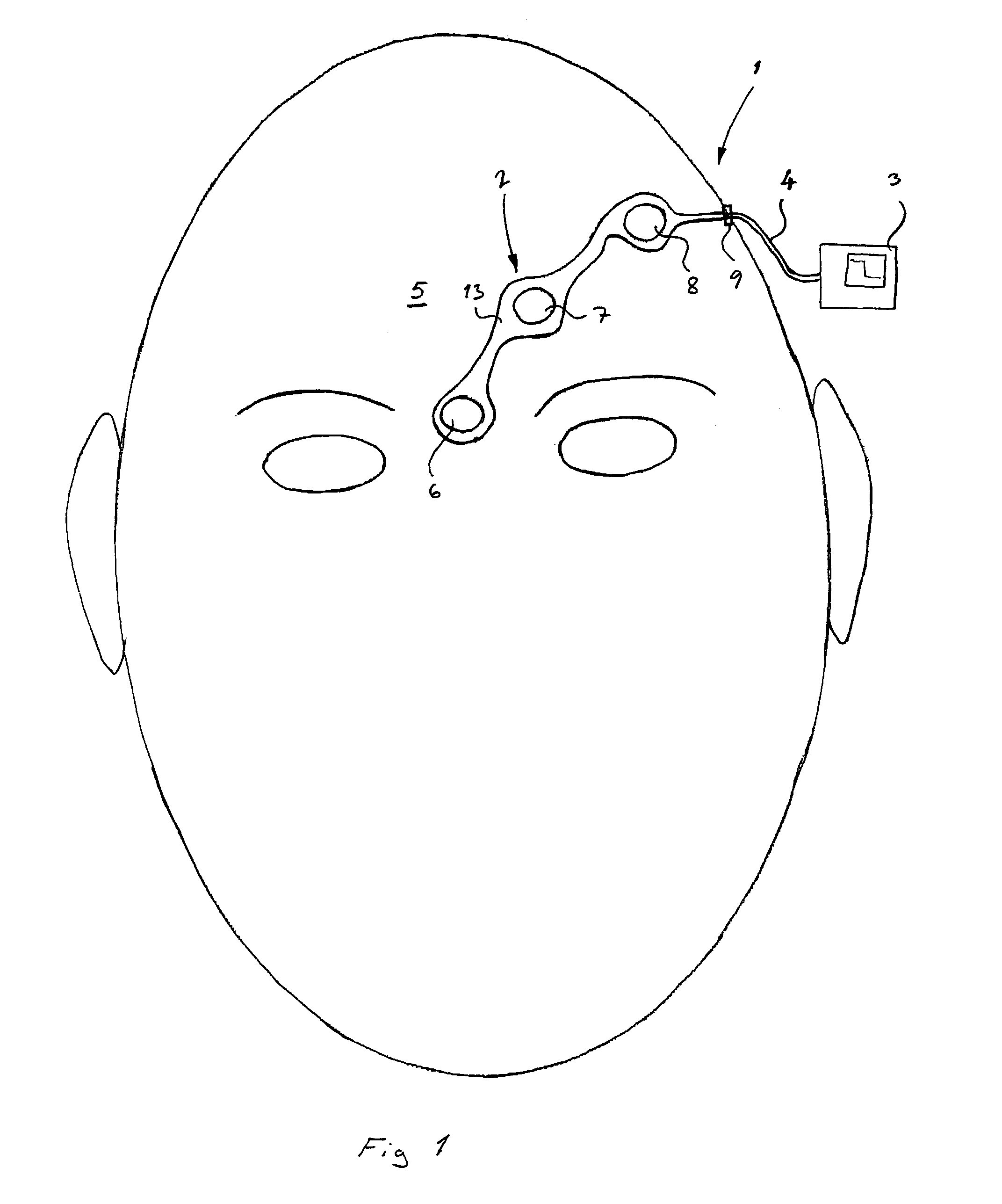

Method of positioning electrodes for central nervous system monitoring and sensing pain reactions of a patient

Active | US7130673B2 | Better measurement results, Practical and convenient, Electroencephalography, Electromyography, Medicine, Forehead

A method concerning measurements by using an electrode array comprising three electrodes for central nervous system (CNS) monitoring from the forehead of a patient's head. A first electrode of said three electrodes is positioned between the eyebrows or immediately above the eyebrows of the patient. A third electrode of said three electrodes is positioned apart from the first electrode on the hairless fronto-lateral area of the patient's forehead. A second electrode is positioned between the first and the third electrodes on the forehead of the patient.

Owner:GE HEALTHCARE FINLAND

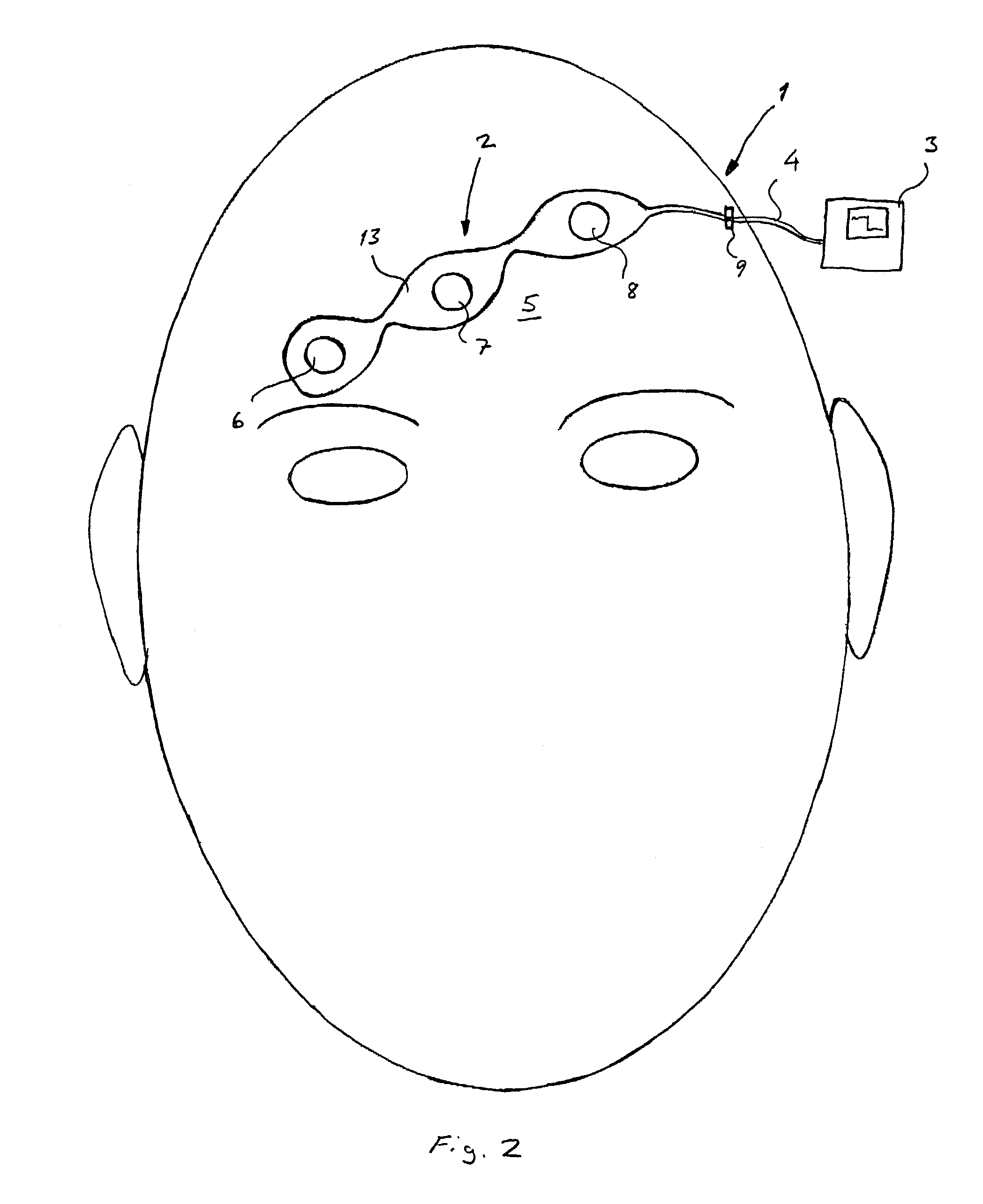

Method for classifying features and a map representing the features

Inactive | US6091836A | Exact and easy creation, Easy to determine, Character and pattern recognition, Packaging toiletries, Pattern recognition, Nose

A method for classifying a face in which facial features are analyzed so that the face is appropriately classified or recognized, in order to facilitate the exact and easy creation of a made-up facial image. A first index represents either the length of the face or the configuration of its formational elements, which include an eye, an eyebrow, a mouth and a nose. A second index represents either the contour of the face or the contour of each of its formational elements. Using the first index and the second index, a face is classified into one of several feature groups, each of which gives a similar impression.

Owner:SHISEIDO CO LTD

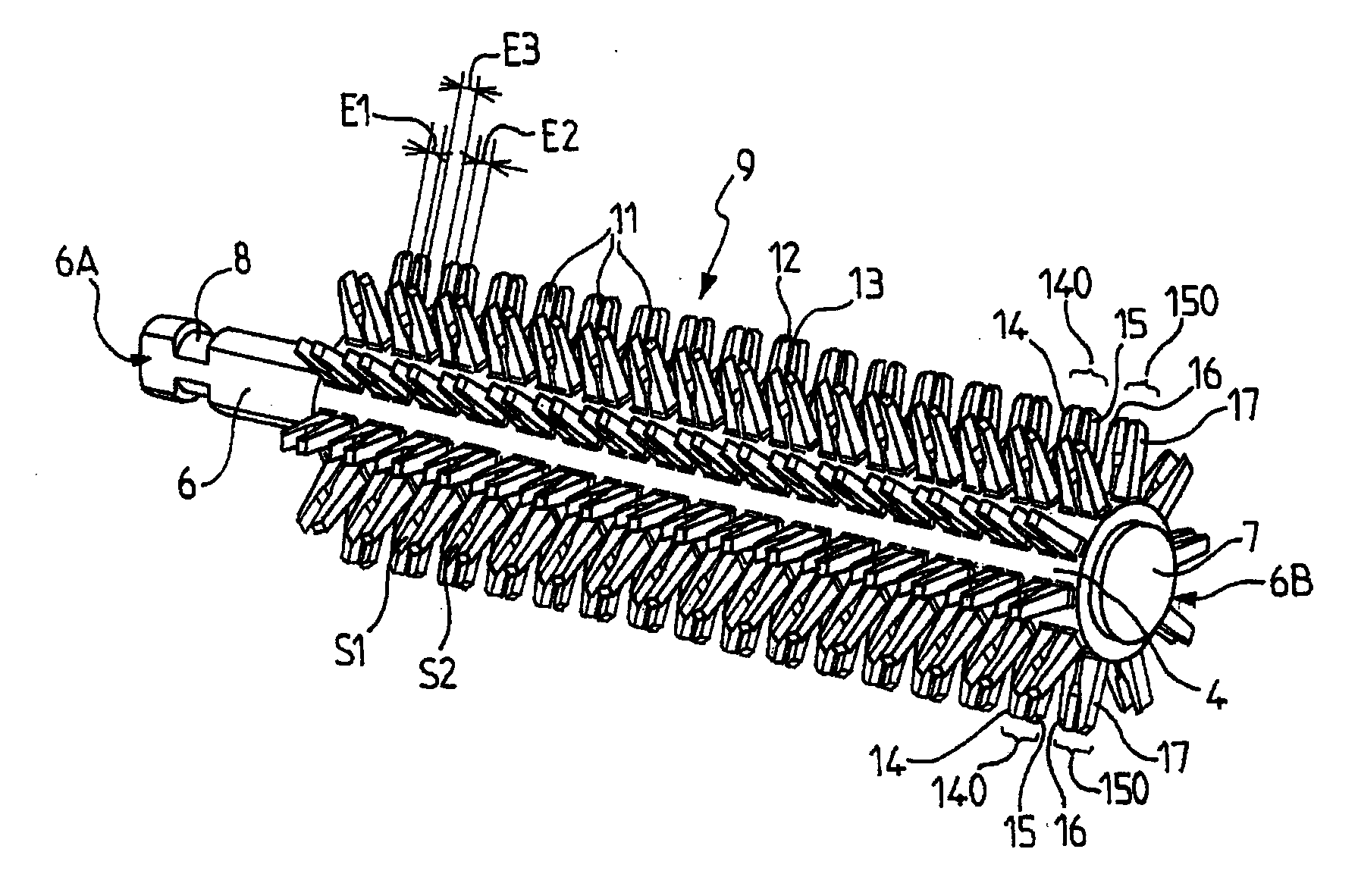

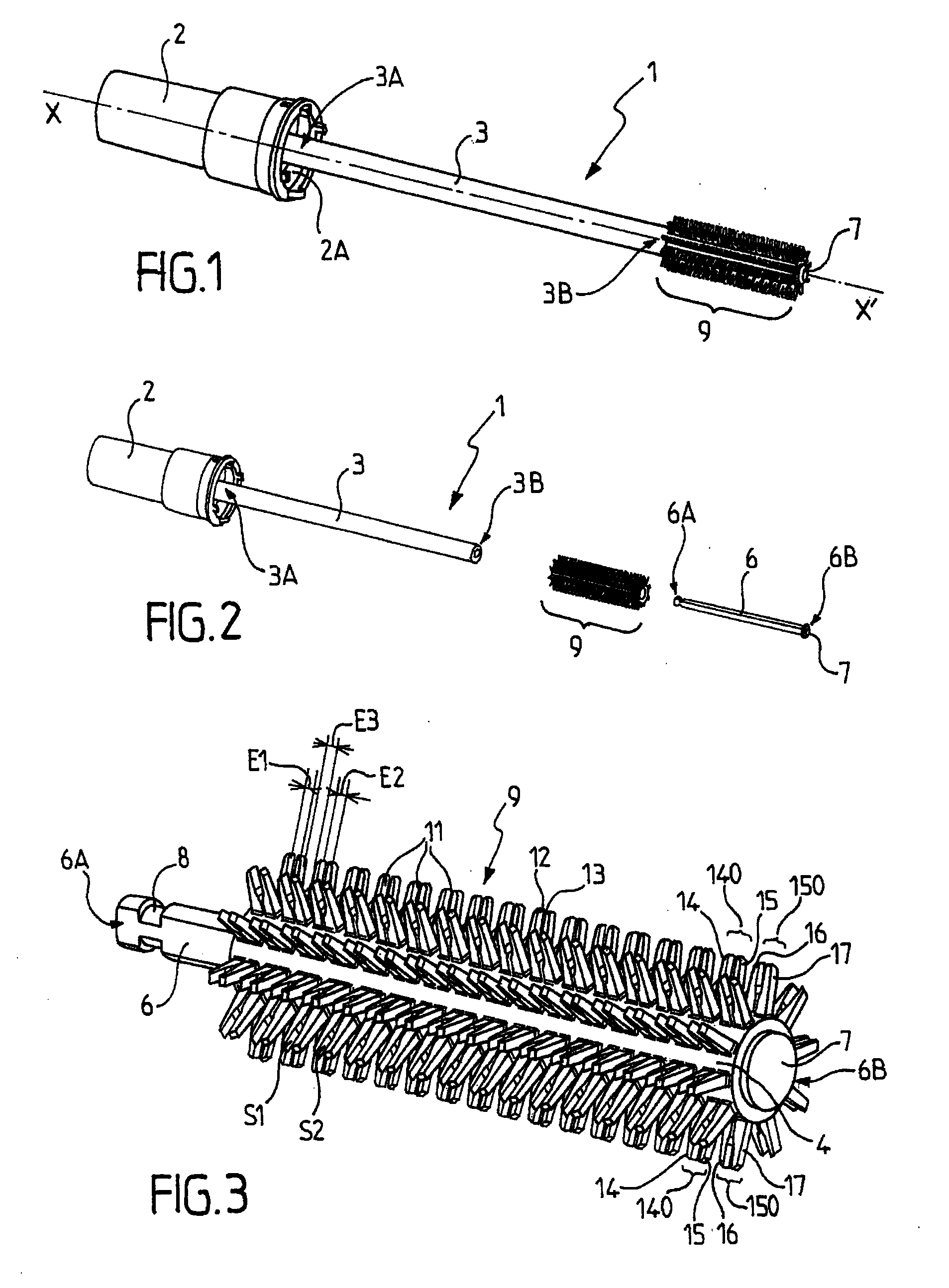

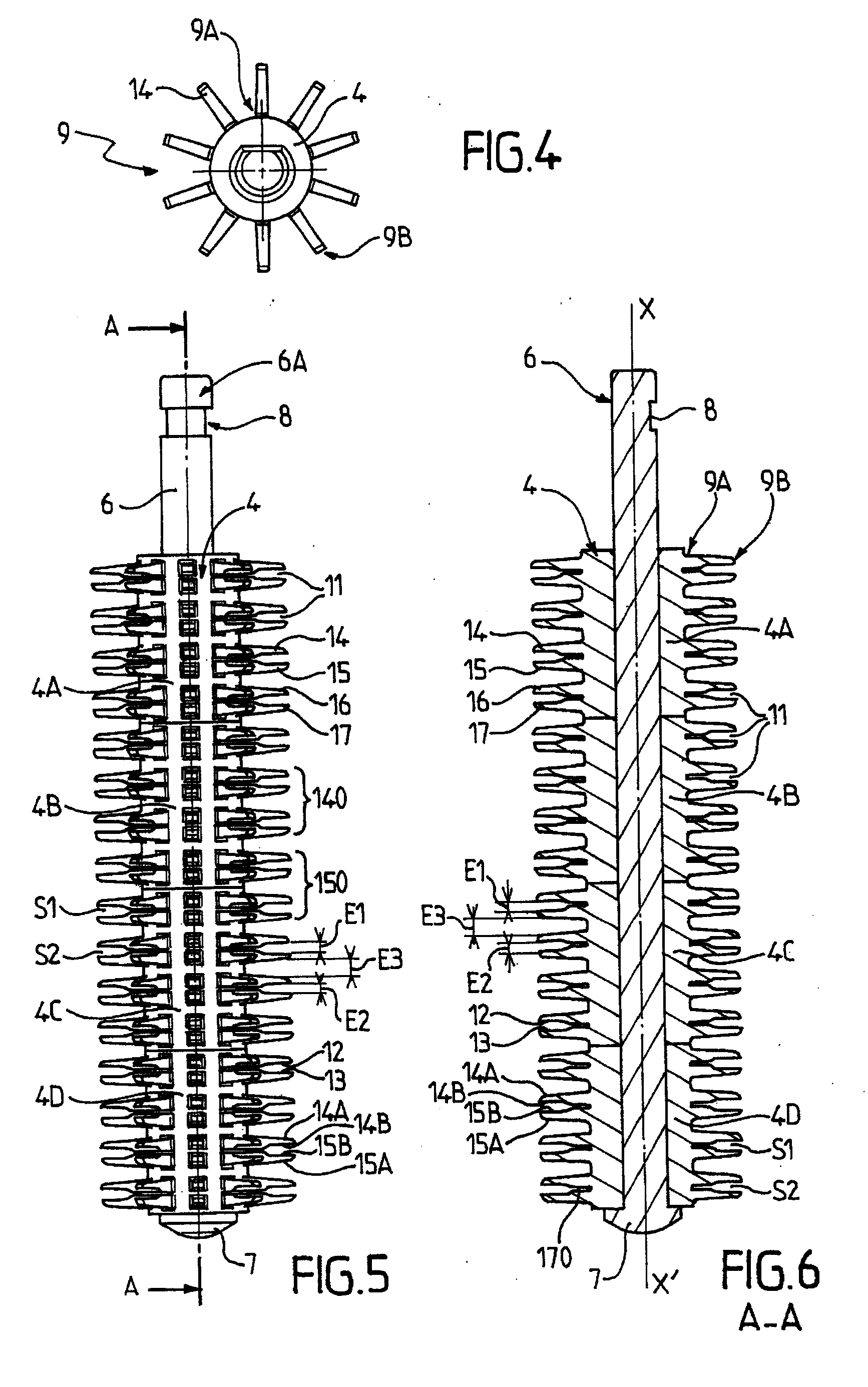

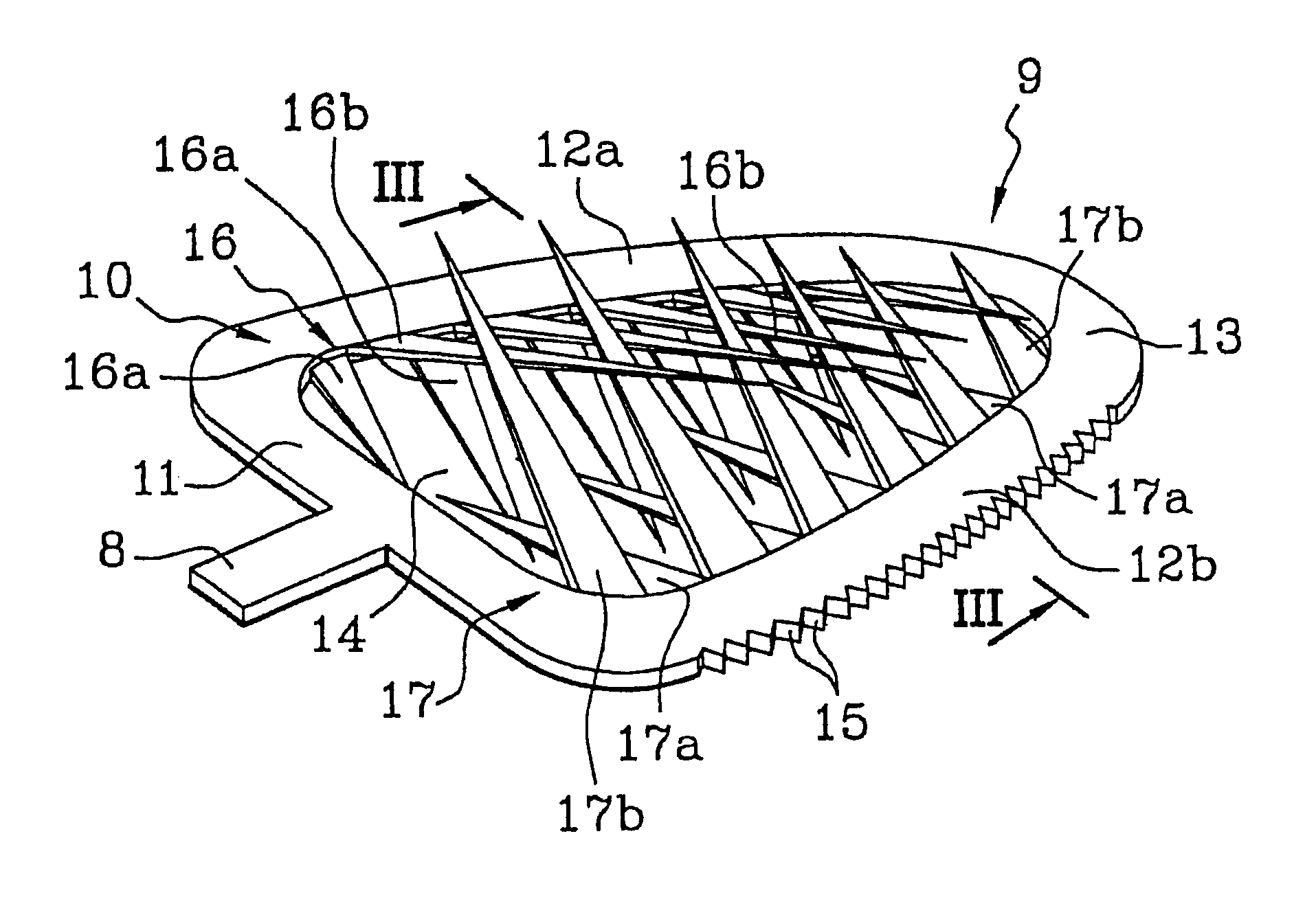

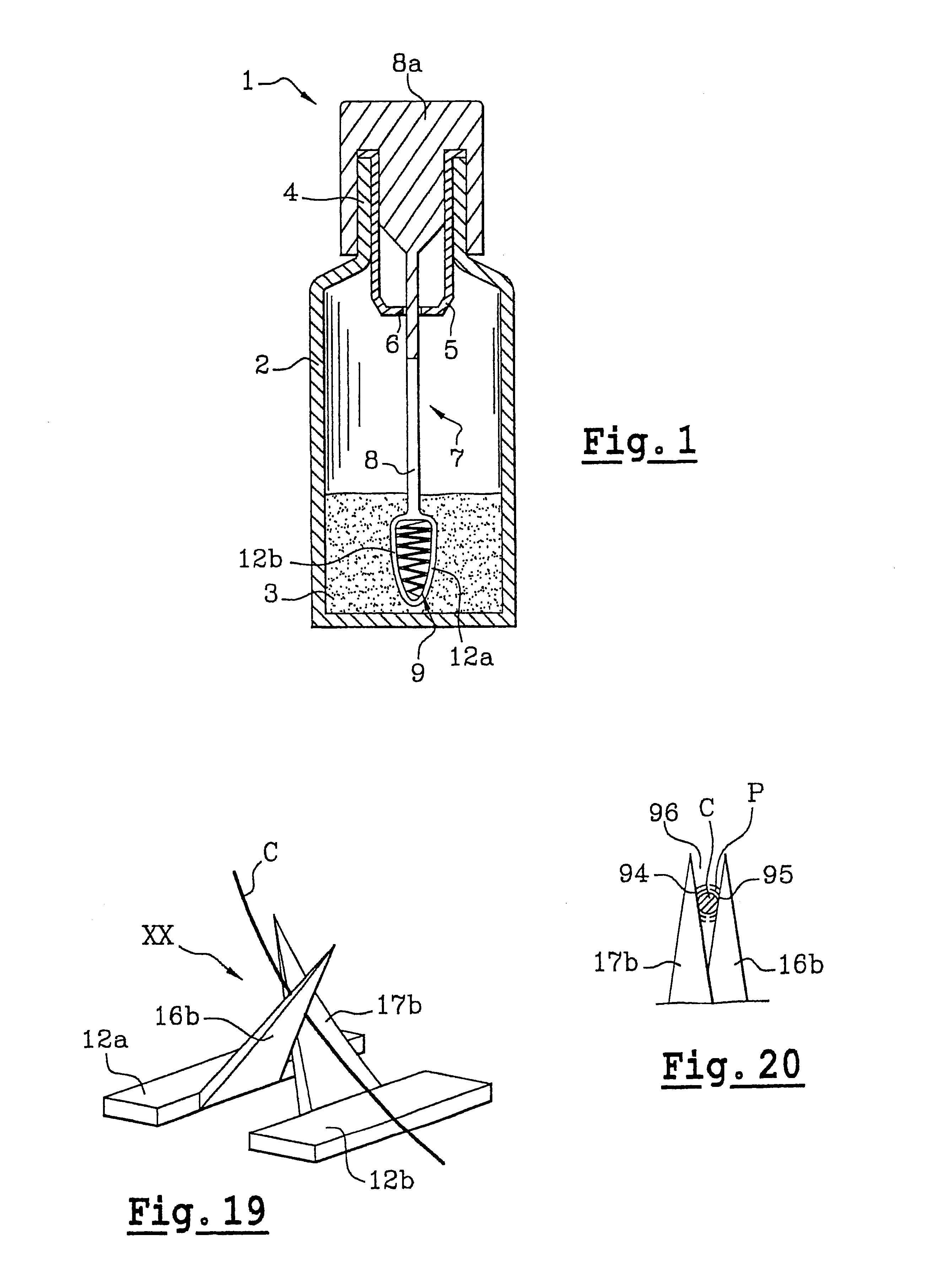

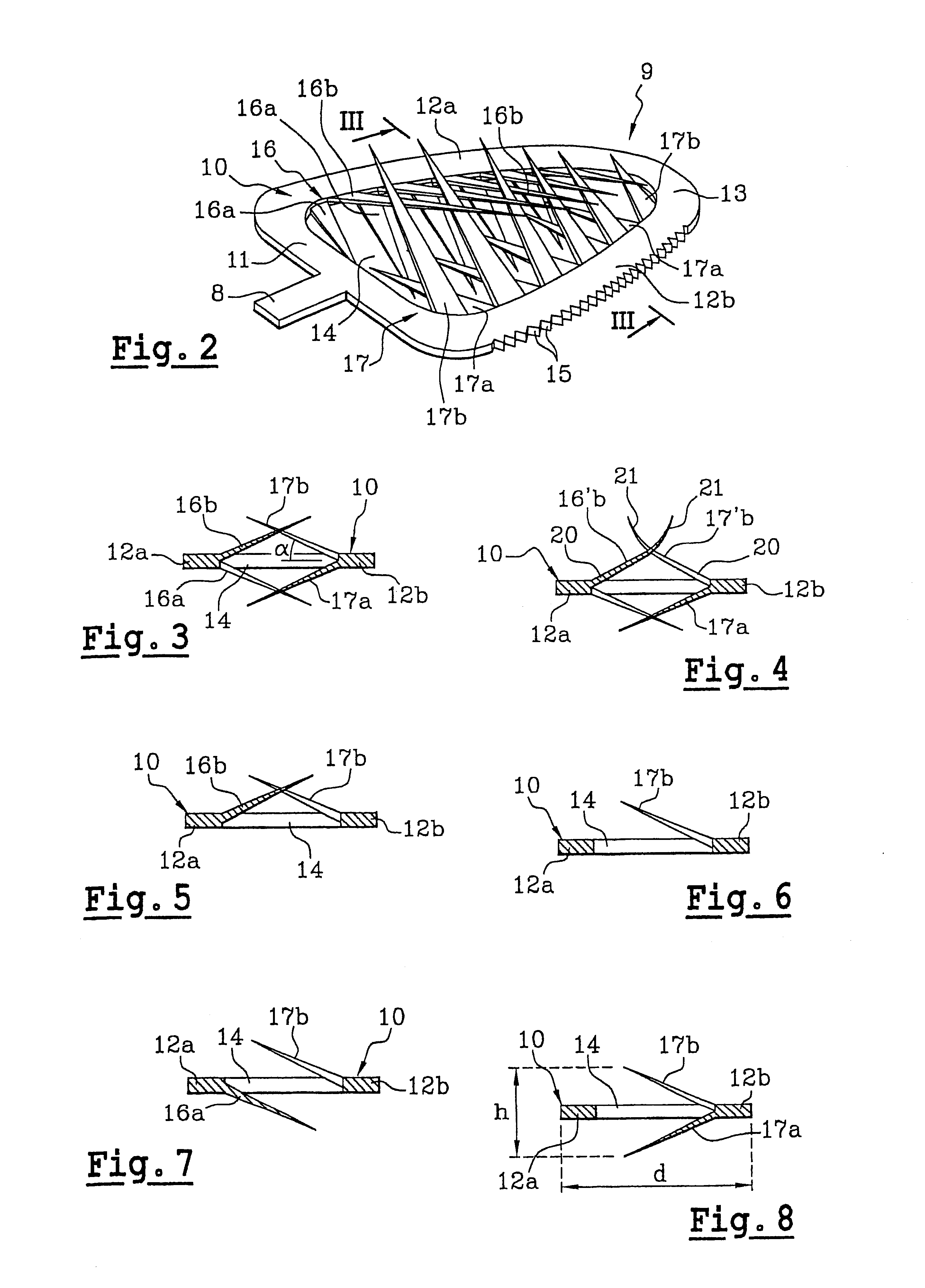

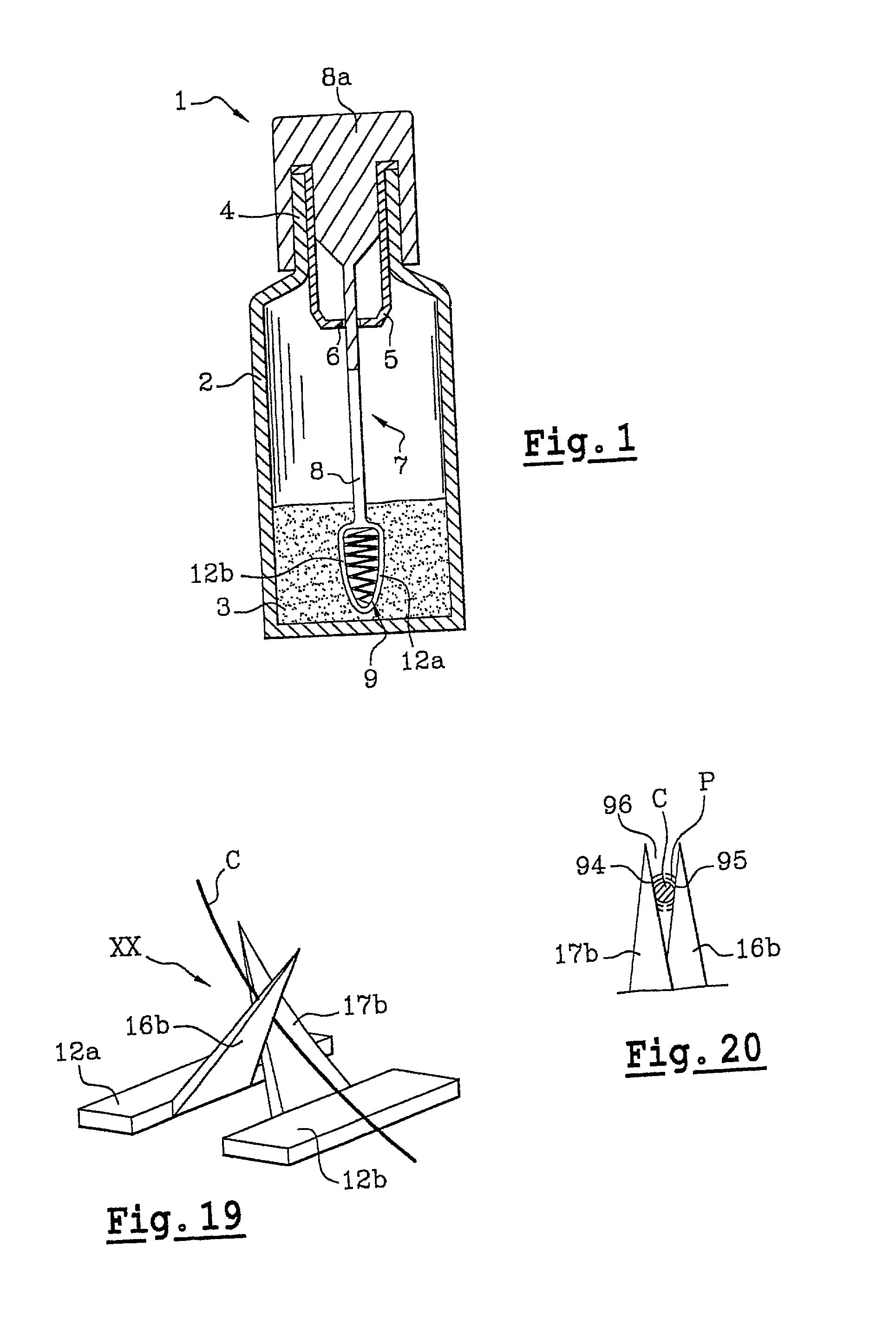

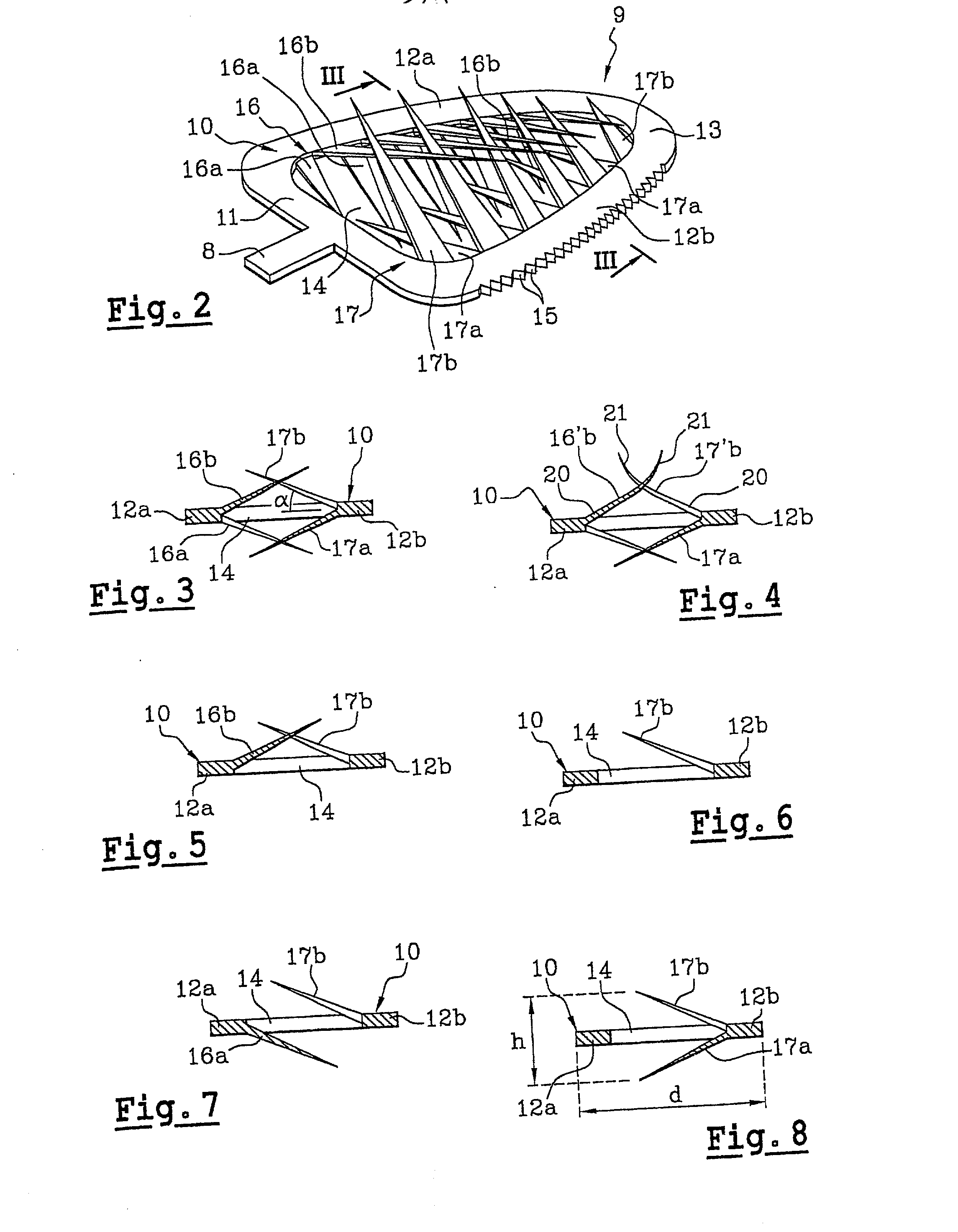

Instrument for applying a composition on the eyelashes or eyebrows

Inactive | US20070062552A1 | Accurate collection, Avoid Overloading, Brushes, Packaging toiletries, Eyelash, Biomedical engineering

An instrument for applying a liquid or semi-liquid composition on the eyelashes or the eyebrows, comprising a core extending in an axial direction, and at least first, second, third, and fourth projections projecting from the core, wherein the first and second projections that form a first group of projections are mutually spaced apart by a first spacing to define a first interstitial gap in the form of a sheet extending at least locally in a plane that is substantially perpendicular to the axial direction, the first interstitial gap being shaped and dimensioned to retain the composition therein for the purpose of being applied to the eyelashes or the eyebrows, the third and fourth projections that form a second group of projections being mutually spaced apart by a second spacing to define a second interstitial gap in the form of a sheet that extends at least locally in a plane that is substantially perpendicular to the axial direction, the second interstitial gap being shaped and dimensioned to retain the composition therein for application on the eyelashes or eyebrows, the first and second groups being mutually spaced apart by a third spacing substantially greater than both the first and the second spacings.

Owner:YVES SAINT LAURENT PARFUMS SOCIETE ANONYME

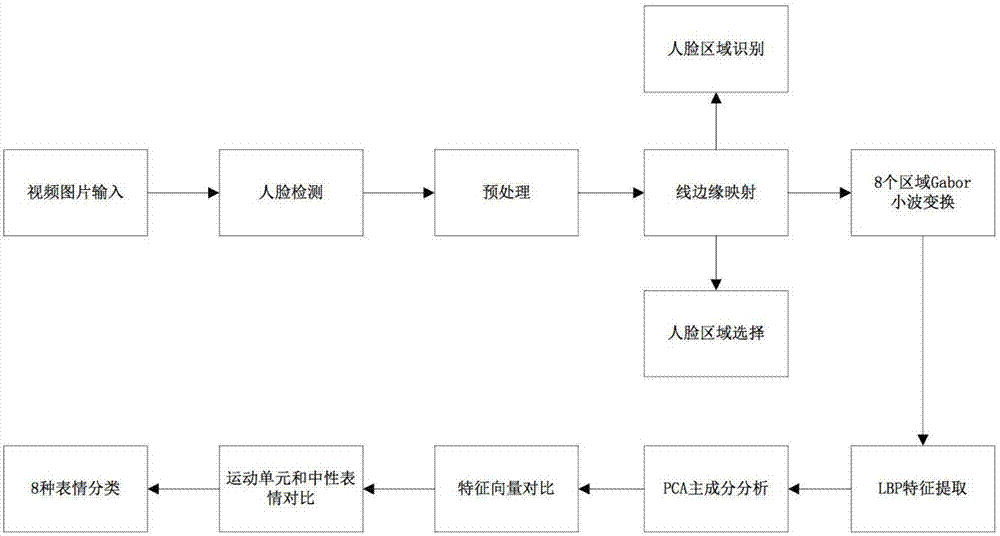

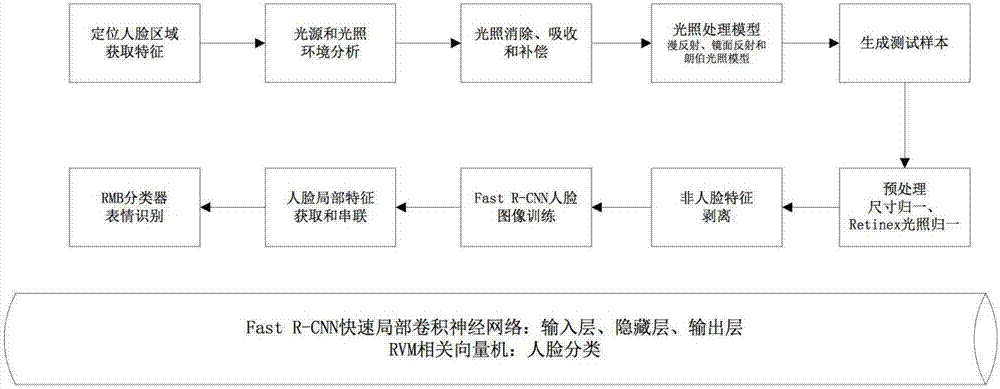

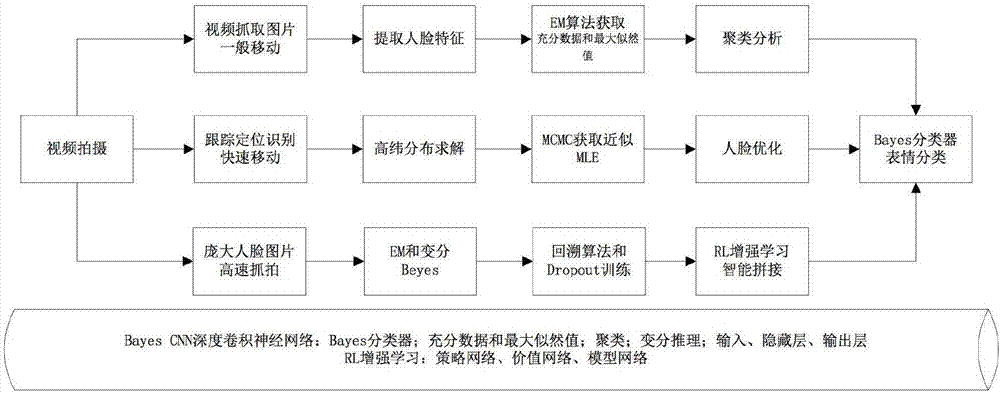

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing, Improve accuracy, Image enhancement, Image analysis, Nerve network, Facial region

The invention discloses a human face emotion recognition method for complex environments on mobile embedded devices. In the method, the human face is divided into main areas (forehead, eyebrows and eyes, cheeks, nose, mouth and chin), which are further subdivided into 68 feature points. Based on these feature points, and in order to improve the emotion recognition rate, accuracy and reliability in various environments, different methods are used under different conditions: a face and expression feature classification method under normal conditions; a Faster R-CNN face-region convolutional neural network method under lighting, reflection and shadow conditions; a combination of a Bayesian network, a Markov chain and variational inference under complex conditions of motion, jitter, shaking and movement; and a combination of a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, the backpropagation algorithm and dropout under conditions of partially visible faces, multiple faces and noisy backgrounds. The facial expression recognition performance, accuracy and reliability can thus be improved effectively.

Owner:深圳帕罗人工智能科技有限公司 (Shenzhen Paluo Artificial Intelligence Technology Co., Ltd.)

Device for applying a substance to the eyelashes or the eyebrows

Inactive | US6655390B2 | Avoid insufficient length, Comfortable to use, Bristle, Hair combs, Eyelash, Biomedical engineering

Owner:LOREAL SA

Health and cosmetic composition and regime for stimulating hair growth and thickening on the head, including the scalp, eyelashes, and eyebrows, and which discourages hair loss

Inactive | US20080275118A1 | Preventing hair loss, Promotes hair growth, Cosmetic preparations, Biocide, Eyelash, Hair growth

This invention relates to compositions and processes for stimulating the growth of mammalian hair comprising the topical application of compositions comprising a hair growth stimulating and/or hair loss prevention agent, and a hair and/or skin lightening and/or neutralization agent, in association with a topical pharmaceutical carrier. Unlike other products, the composition of the invention can be used on dyed/treated hair without affecting the color of the hair.

Owner:SHAW MARI M

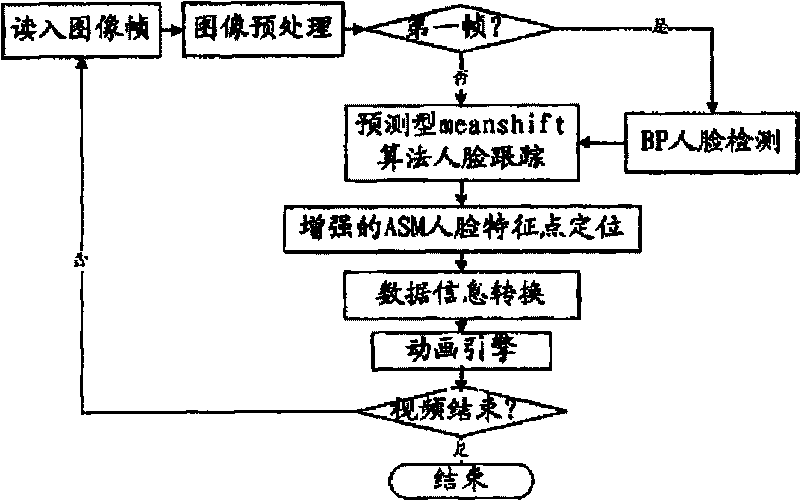

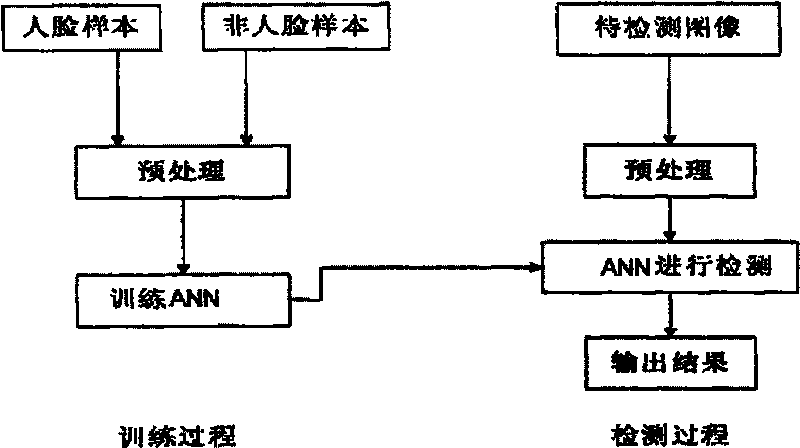

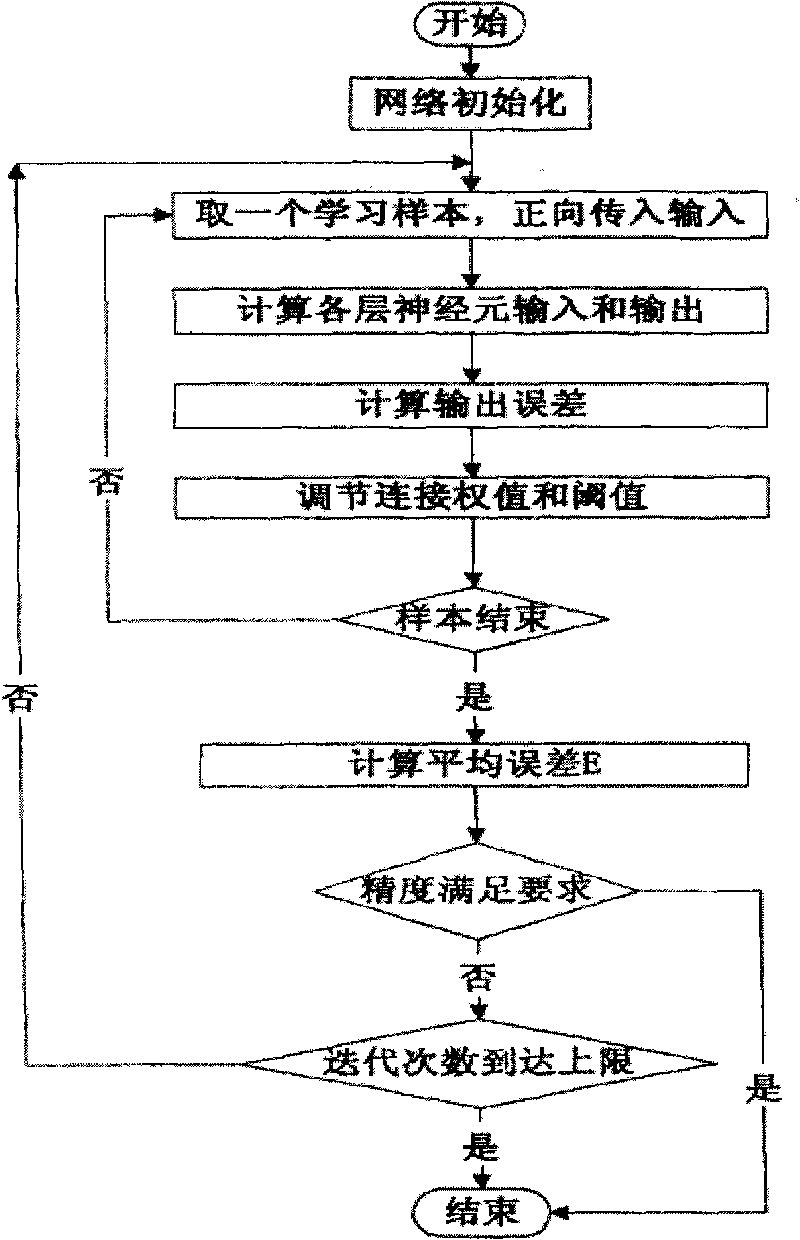

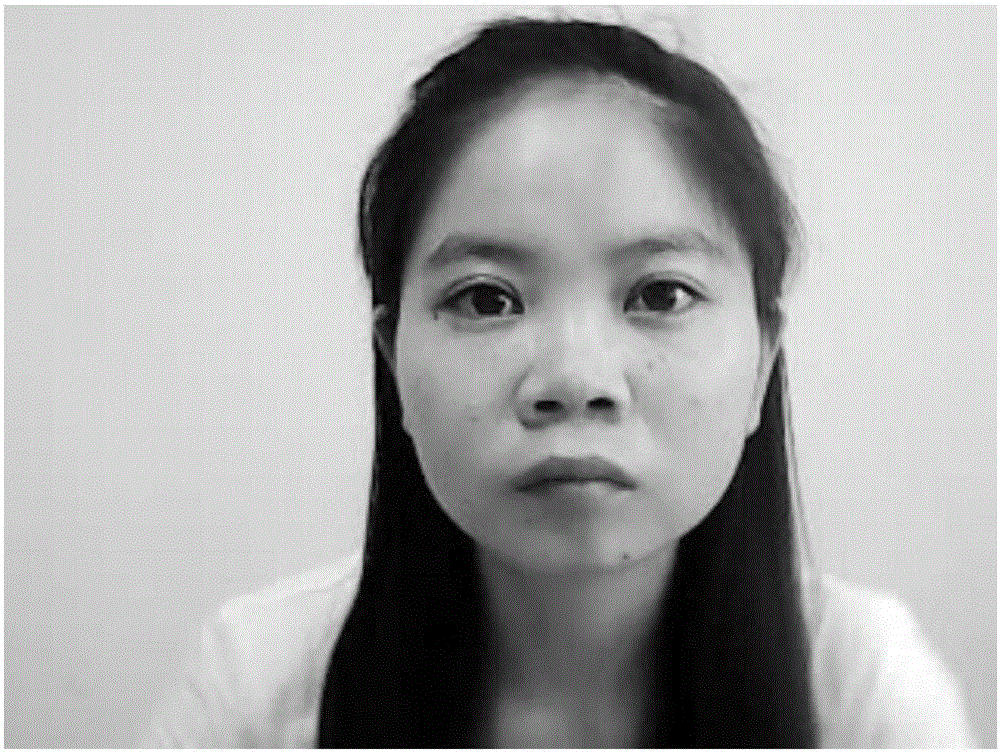

Video-based 3D human face expression cartoon driving method

Inactive | CN101739712A | Sufficient prior knowledge, Good tracking result, Character and pattern recognition, 3D-image rendering, Face detection, Pattern recognition

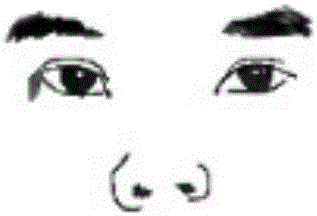

The invention discloses a video-based 3D human face expression cartoon driving method, comprising the following steps: (1) image preprocessing, in which image quality is improved through light compensation, Gaussian smoothing and morphological operations on the gray-level image; (2) BP face detection, in which a face in the video is detected by a BP (backpropagation) neural network algorithm and its position and size are returned, providing a smaller search range for the facial feature point positioning of the next step so that real-time performance is ensured; (3) ASM facial feature point positioning and tracking, in which feature point information for the face shape, eyes, eyebrows, mouth and nose is extracted precisely through an enhanced active shape model algorithm and a predictive mean-shift algorithm, and the exact positions are returned; and (4) data conversion, in which the data acquired in the feature point positioning and tracking step is converted to obtain the motion information of the face. The method can overcome the shortcomings of the prior art and can achieve real-time, live-driven face cartoon animation.

Owner:SICHUAN UNIV
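
Step (4) is described only at a high level. One plausible reading, sketched here under stated assumptions, is to express each frame's tracked feature points as displacements from the first frame, scaled by the inter-ocular distance so that the motion does not depend on how large the face appears. The choice of the first frame as reference, the normalization, and the name `landmark_motion` are all assumptions.

```python
import numpy as np


def landmark_motion(frames, left_eye_idx, right_eye_idx):
    """Convert tracked feature points into per-frame motion relative to the first frame.

    frames: array-like (T, N, 2) of (x, y) feature points from the tracker.
    left_eye_idx, right_eye_idx: indices of two eye points used for scale.
    Returns an array (T, N, 2) of displacements divided by the first frame's
    inter-ocular distance.
    """
    frames = np.asarray(frames, dtype=float)
    ref = frames[0]
    scale = np.linalg.norm(ref[right_eye_idx] - ref[left_eye_idx])
    return (frames - ref) / scale
```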

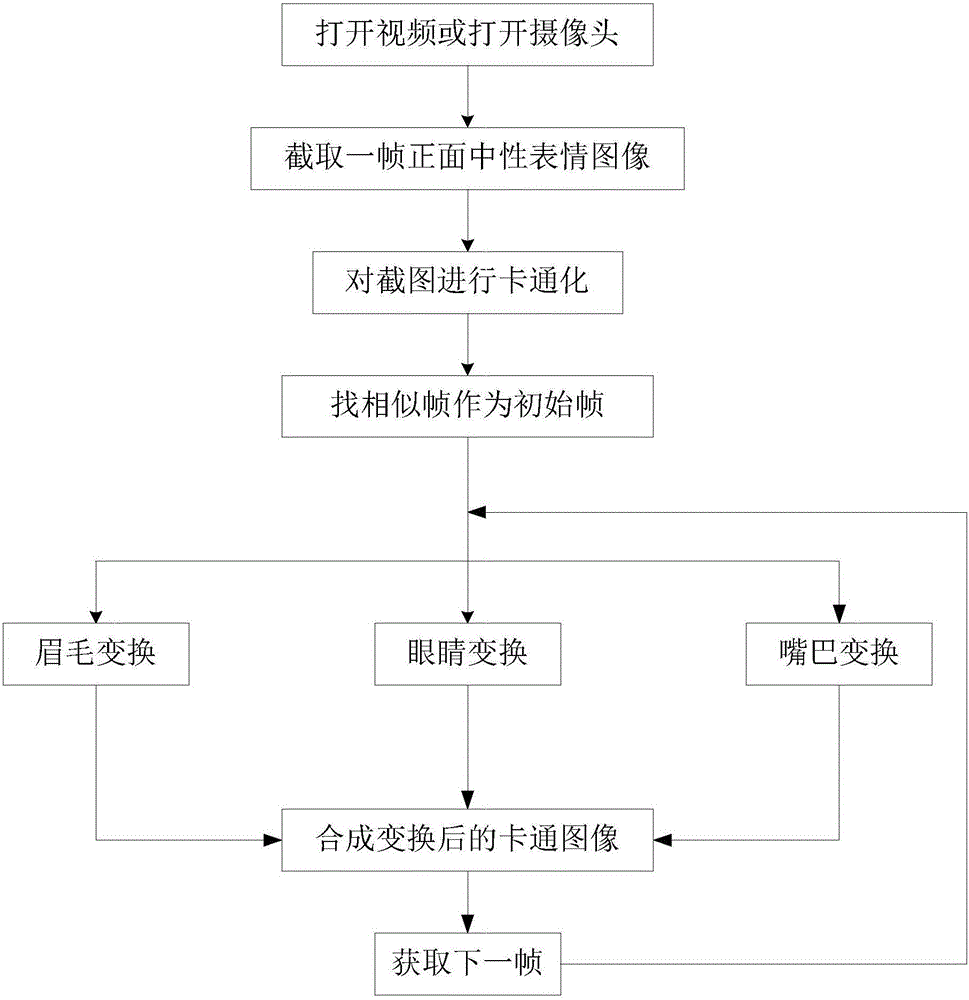

Video face cartoon animation generation method

The present invention discloses a video face cartoon animation generation method in the field of image processing technology. The method comprises the following steps: first, a frontal, neutral-expression frame is captured from the input video and cartoonized, and the eyebrow contour points, the eye contour points and the maximum height difference h between the upper and lower eyelids when the eyes are open are recorded; next, a frame similar to the neutral-expression image is found and used as the initial conversion frame; based on the feature points of the previous frame, the feature points of the next frame are determined, including the corresponding conversion of the eyebrows, eyes and mouth, and the converted cartoon images are synthesized; the next frame is then obtained and the above steps are repeated, and the resulting consecutive cartoon frames are output to form the cartoon animation. The method generates cartoon animation faster and with better results.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
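
The recorded eyelid height difference h gives a natural normalizer for how open the eye is in later frames. This minimal sketch assumes the eyelid positions are vertical coordinates taken from the tracked eye contour points; the name `eye_openness` and the clamping to [0, 1] are illustrative choices rather than steps stated in the abstract.

```python
def eye_openness(upper_lid_y: float, lower_lid_y: float, h: float) -> float:
    """Ratio of the current eyelid gap to h, the gap in the neutral open-eye frame.

    The result is clamped to [0, 1] so it can directly scale the height of the
    cartoon eye (0 = closed, 1 = as open as in the neutral frame).
    """
    if h <= 0:
        raise ValueError("h must be positive")
    gap = abs(lower_lid_y - upper_lid_y)
    return max(0.0, min(1.0, gap / h))
```

For example, a cartoon eye drawn 12 pixels tall in the neutral frame would be drawn `12 * eye_openness(...)` pixels tall in the current frame.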

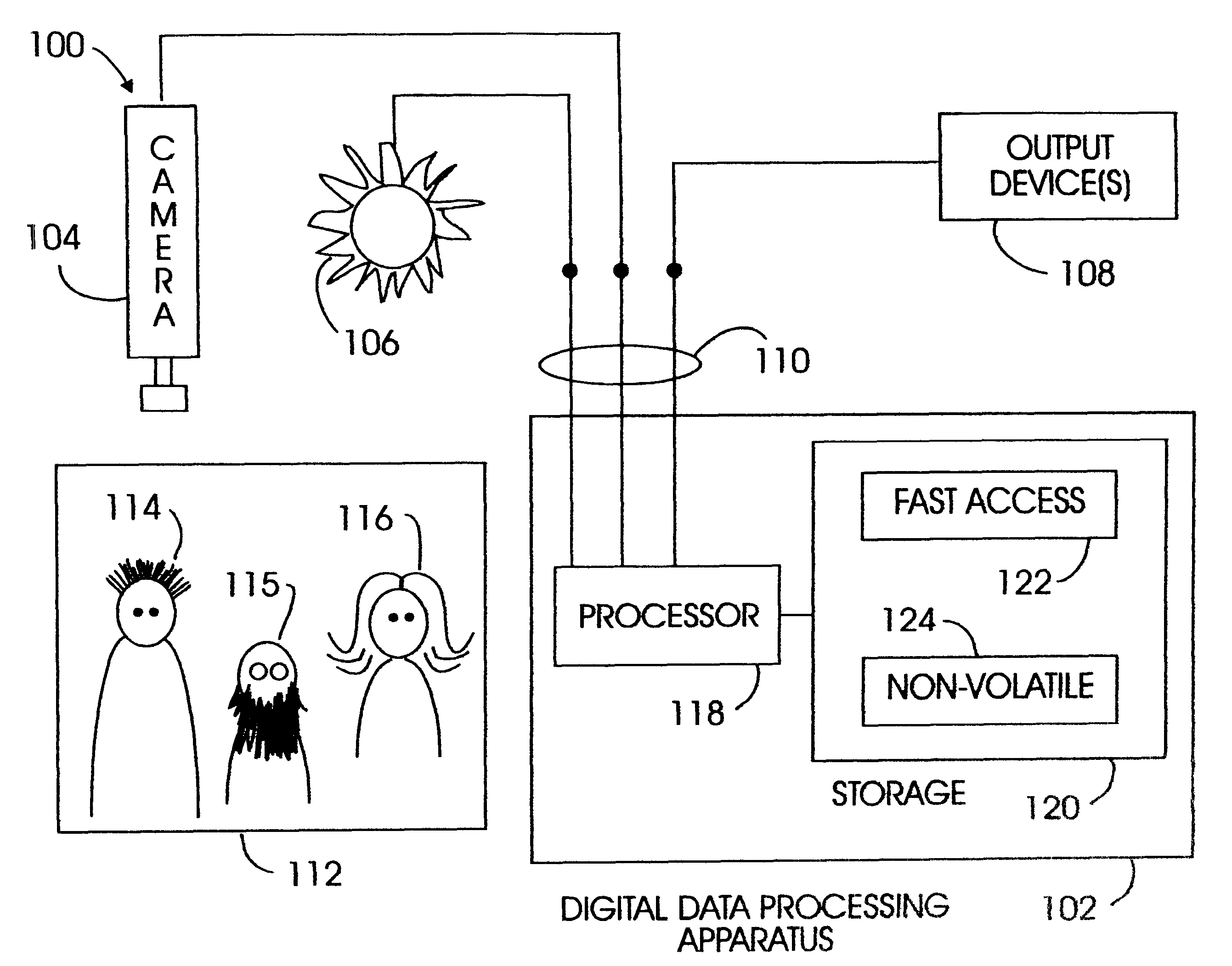

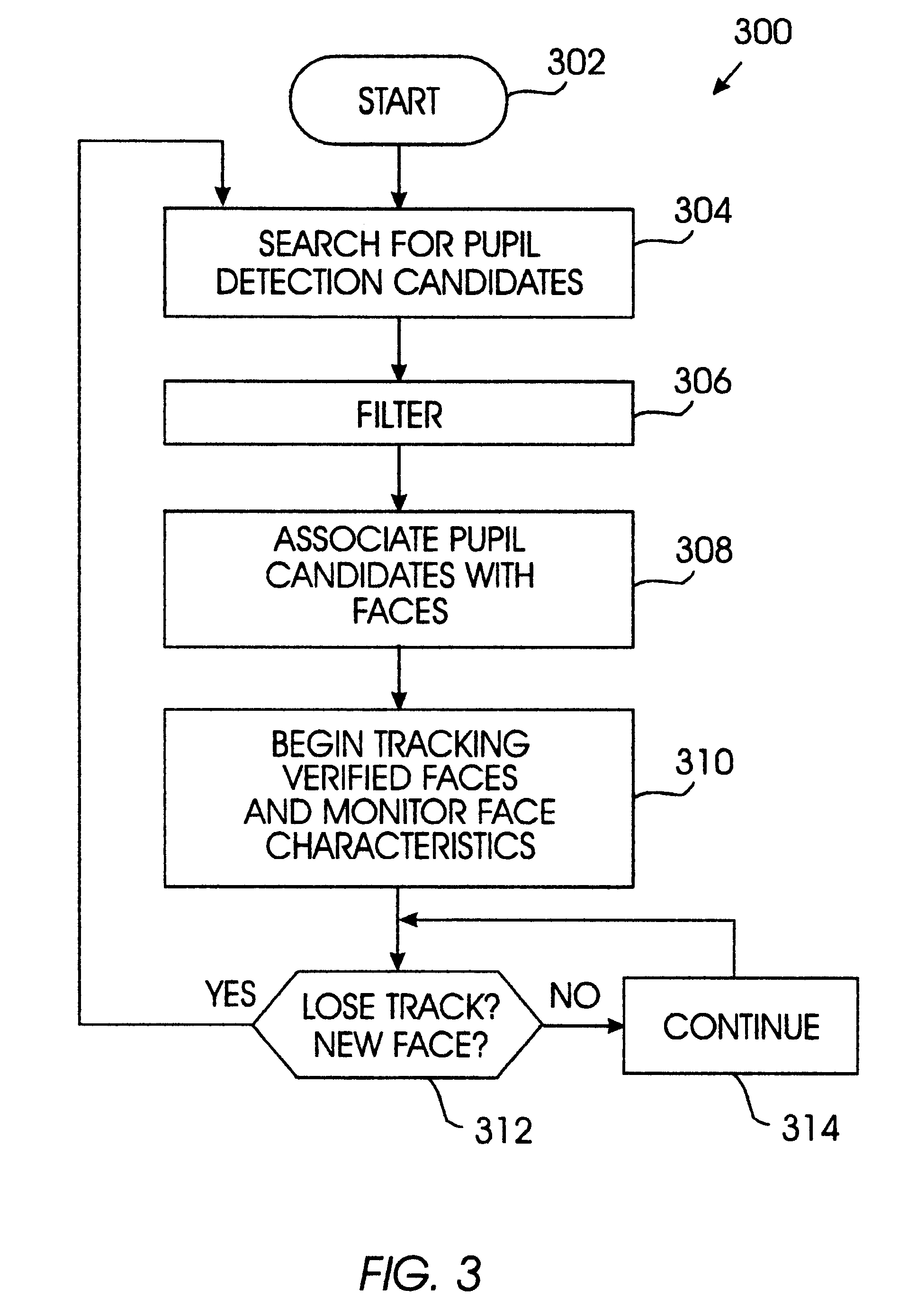

Method and apparatus for associating pupils with subjects

Inactive | US6539100B1 | Input/output for user-computer interaction, Image enhancement, Pattern recognition, Camera image

A method and apparatus analyzes a scene to determine which pupils correspond to which subjects. First, a machine-readable representation of the scene, such as a camera image, is generated. Although more detail may be provided, this representation minimally depicts certain visually perceivable characteristics of multiple pupil candidates corresponding to multiple subjects in the scene. A machine such as a computer then examines various features of the pupil candidates. The features under analysis include (1) visually perceivable characteristics of the pupil candidates at one given time ("spatial cues"), and (2) changes in visually perceivable characteristics of the pupil candidates over a sampling period ("temporal cues"). The spatial and temporal cues may be used to identify associated pupil pairs. Some exemplary spatial cues include interocular distance, shape, height, and color of potentially paired pupils. In addition to features of the pupils themselves, spatial cues may also include nearby facial features such as presence of a nose / mouth / eyebrows in predetermined relationship to potentially paired pupils, a similarly colored iris surrounding each of two pupils, skin of similar color nearby, etc. Some exemplary temporal cues include motion or blinking of paired pupils together, etc. With the foregoing examination, each pupil candidate can be associated with a subject in the scene.

Owner:IPG HEALTHCARE 501 LTD
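
Pairing by spatial cues can be illustrated with two of the cues named above, interocular distance and height. This sketch scores every candidate pair, keeps the plausible ones, and assigns pairs greedily from the lowest cost. The thresholds, the cost function and the greedy assignment are assumptions, `pair_pupils` is a hypothetical name, and temporal cues such as blinking together are not modelled.

```python
import math
from itertools import combinations


def pair_pupils(candidates, expected_iod, max_dy=5.0, tol=0.3):
    """Greedily pair pupil candidates using interocular distance and height.

    candidates: list of (x, y) pupil centres.
    A pair is plausible if the pupils are at a similar height (|dy| <= max_dy)
    and their distance is within tol * expected_iod of expected_iod.
    Returns index pairs, lowest cost first; each candidate is used at most once.
    """
    scored = []
    for i, j in combinations(range(len(candidates)), 2):
        (x1, y1), (x2, y2) = candidates[i], candidates[j]
        dist = math.hypot(x2 - x1, y2 - y1)
        dy = abs(y2 - y1)
        if dy <= max_dy and abs(dist - expected_iod) <= tol * expected_iod:
            cost = abs(dist - expected_iod) / expected_iod + dy / max_dy
            scored.append((cost, i, j))
    pairs, used = [], set()
    for _cost, i, j in sorted(scored):
        if i not in used and j not in used:
            pairs.append((i, j))
            used.update((i, j))
    return pairs
```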

Device for applying a substance to the eyelashes or the eyebrows

Inactive | US20020005209A1 | Sufficient length of time, Comfortable to use, Bristle, Hair combs, Eyelash, Biomedical engineering

Owner:LOREAL SA

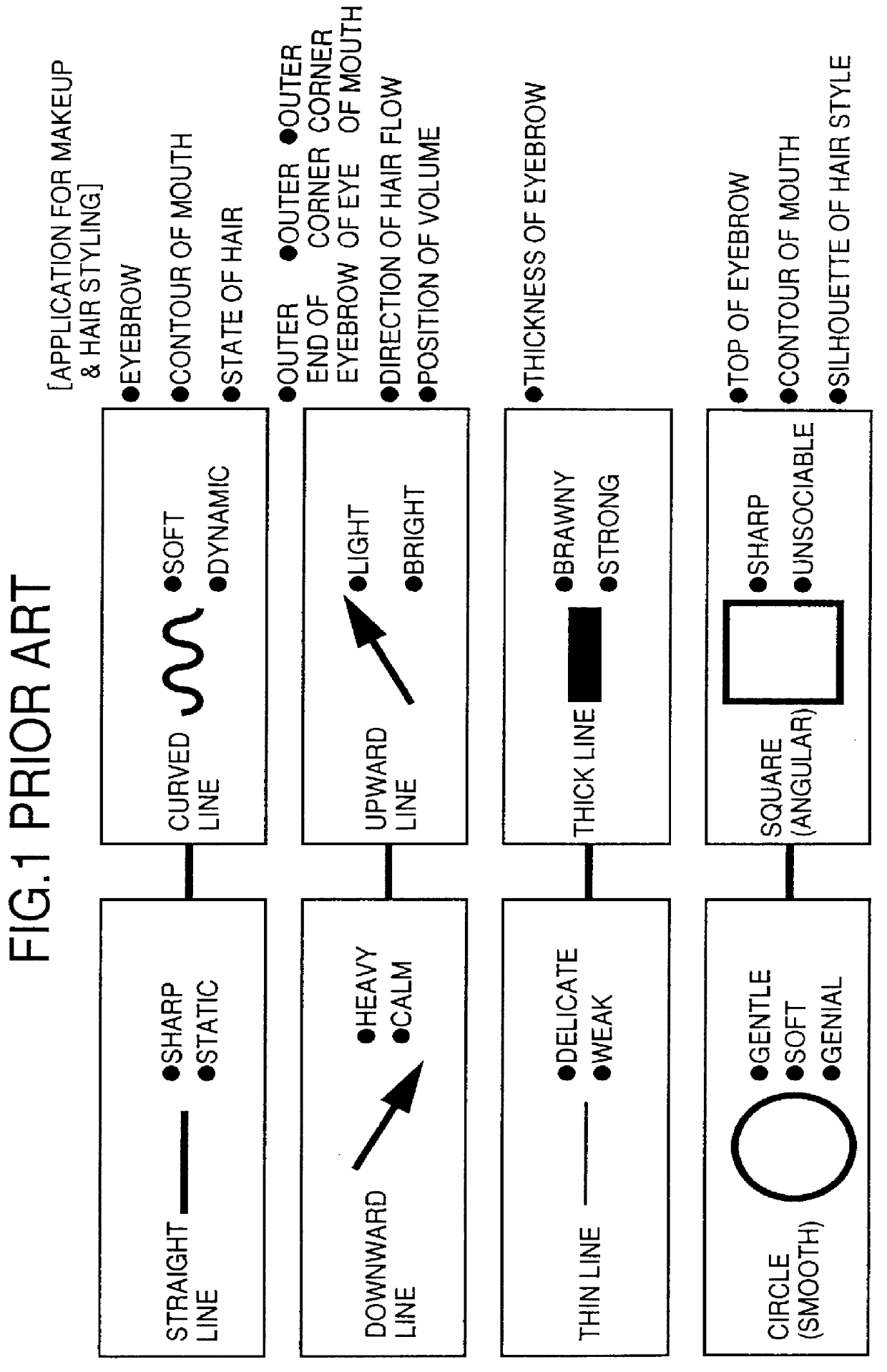

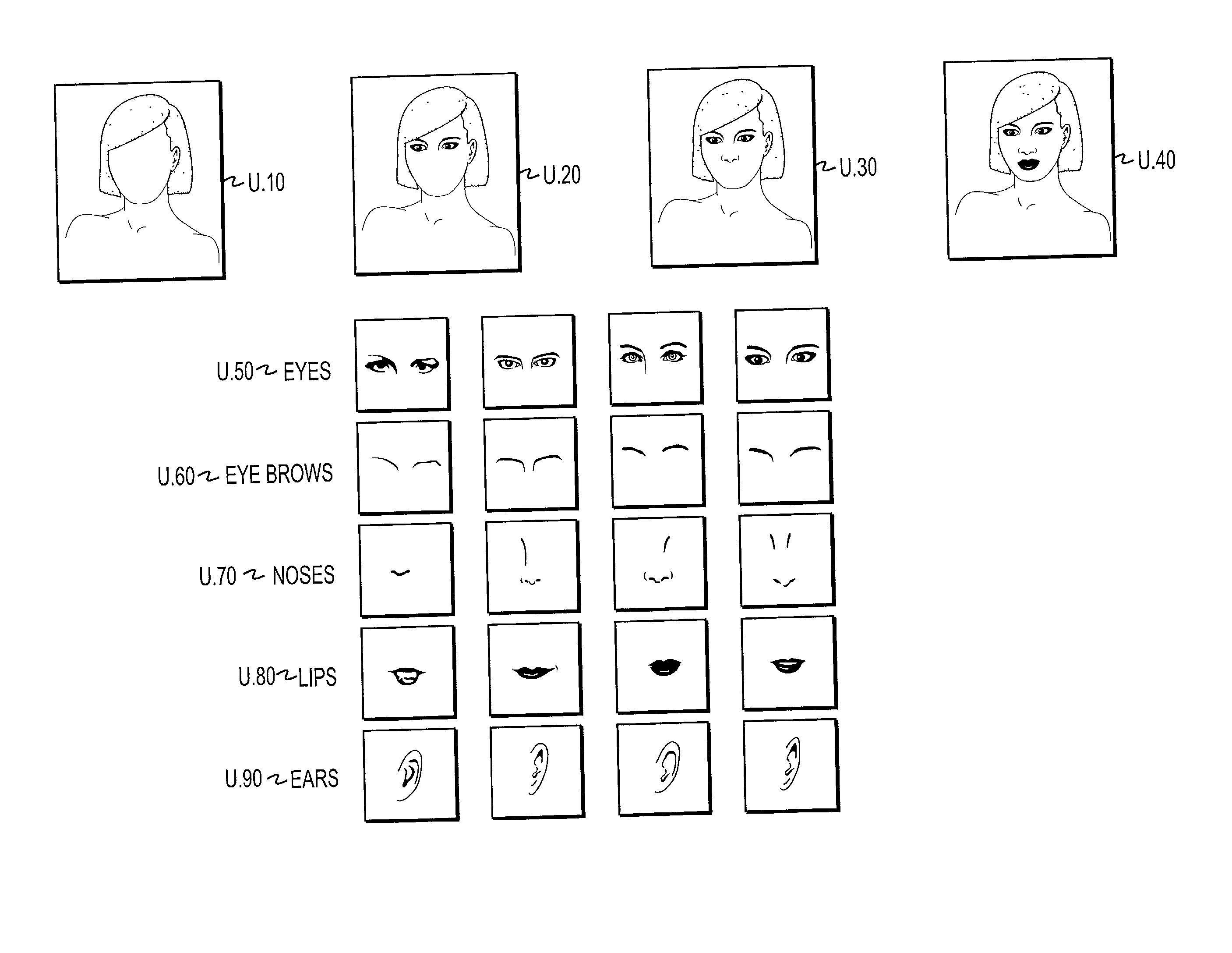

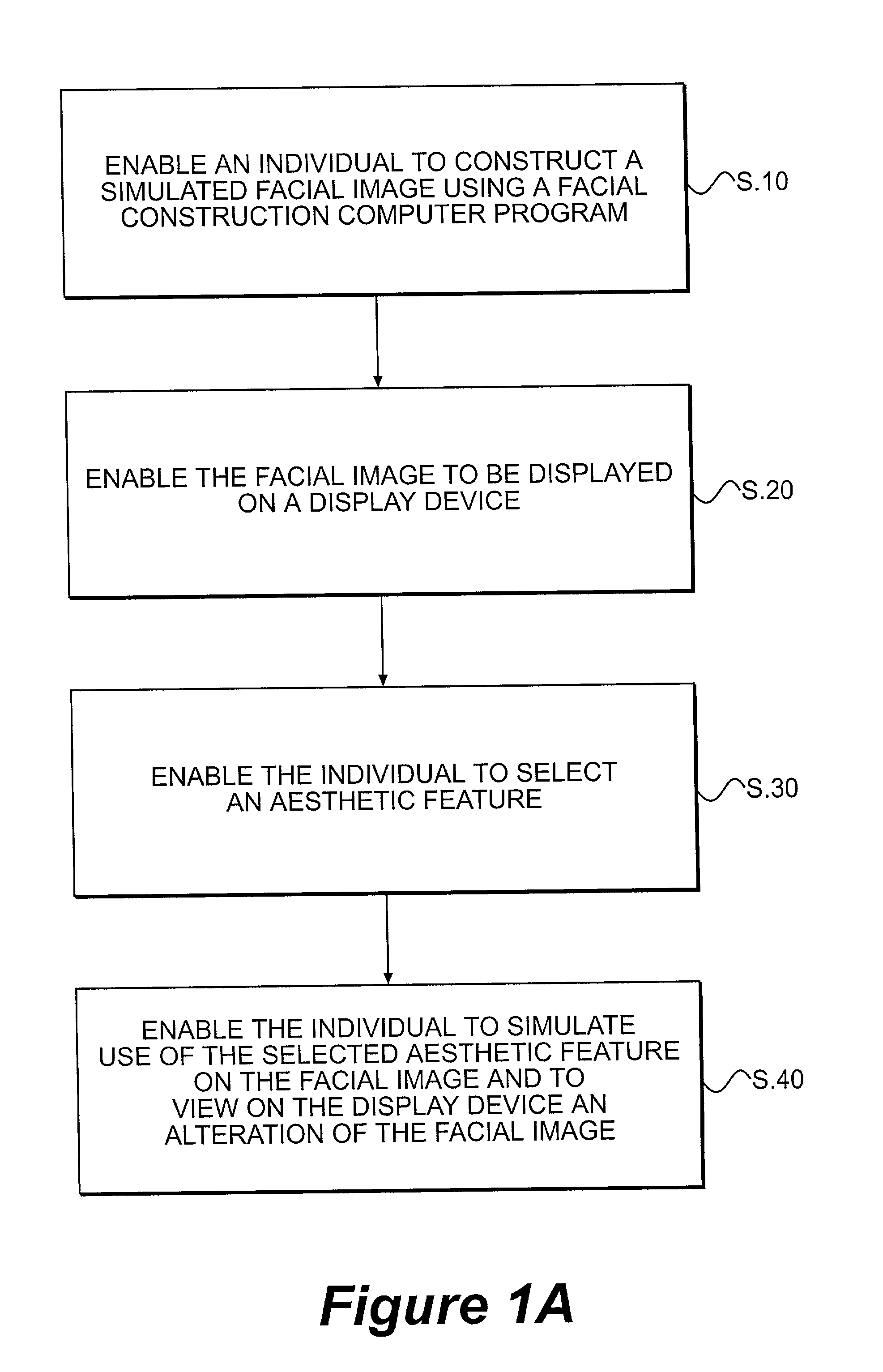

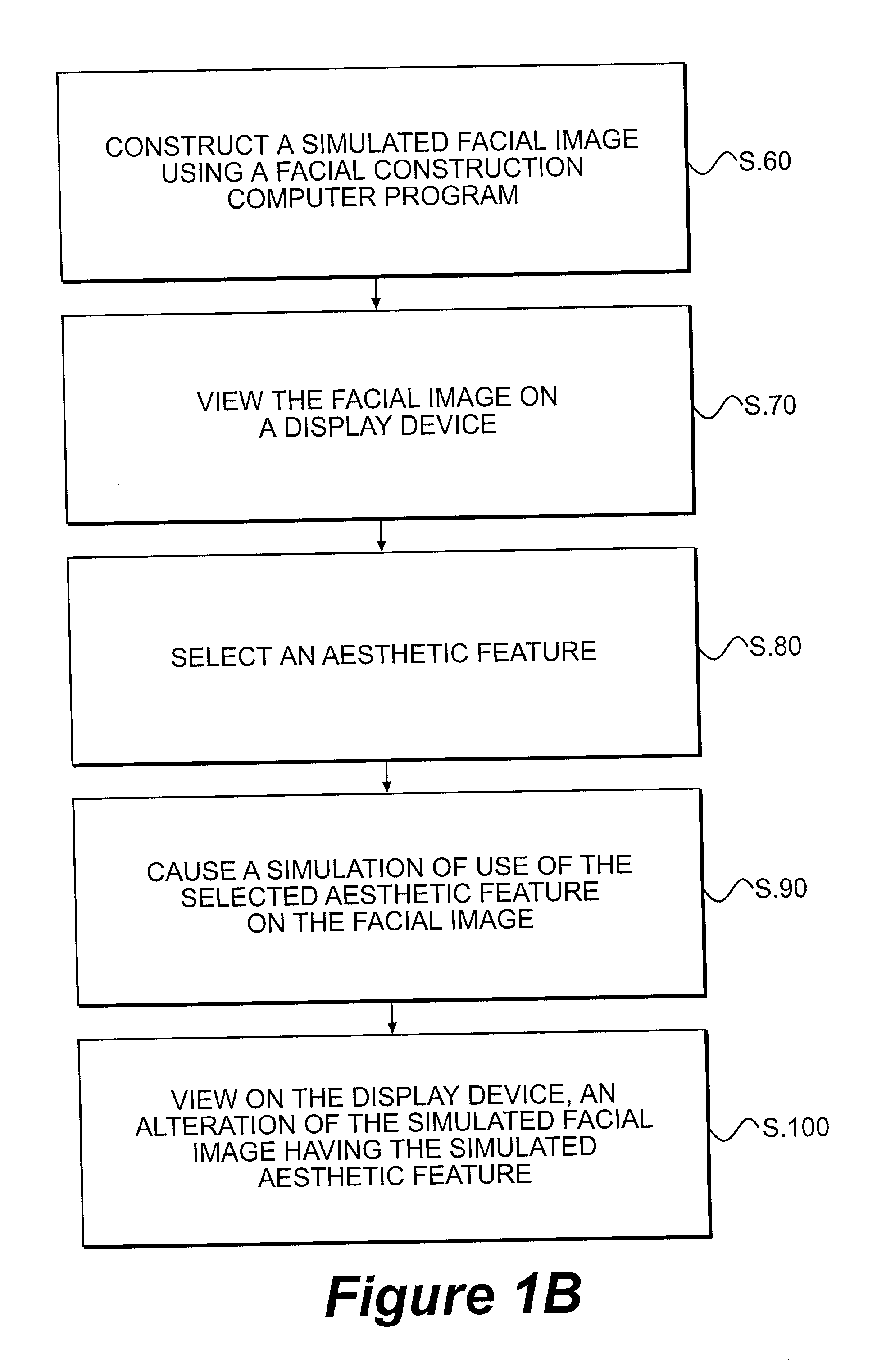

Simulation of an aesthetic feature on a facial image

Inactive | US20030065255A1 | Enabling use, Easy construction, Medical simulation, Television system details, Computer graphics (images), Nose

Disclosed methods and systems enable simulated use of an aesthetic feature on a simulated facial image. One of the methods involves enabling an individual to construct a simulated facial image using a facial construction computer program, wherein the facial construction computer program permits the individual to select at least one of a head, eyes, nose, lips, ears, and eyebrows. The method also involves enabling the simulated facial image to be displayed on a display device and enabling the individual to select an aesthetic feature. The method further involves enabling the individual to simulate use of the selected aesthetic feature on the simulated facial image and to view on the display device an alteration of the simulated facial image having the simulated aesthetic feature.

Owner:LOREAL SA +1

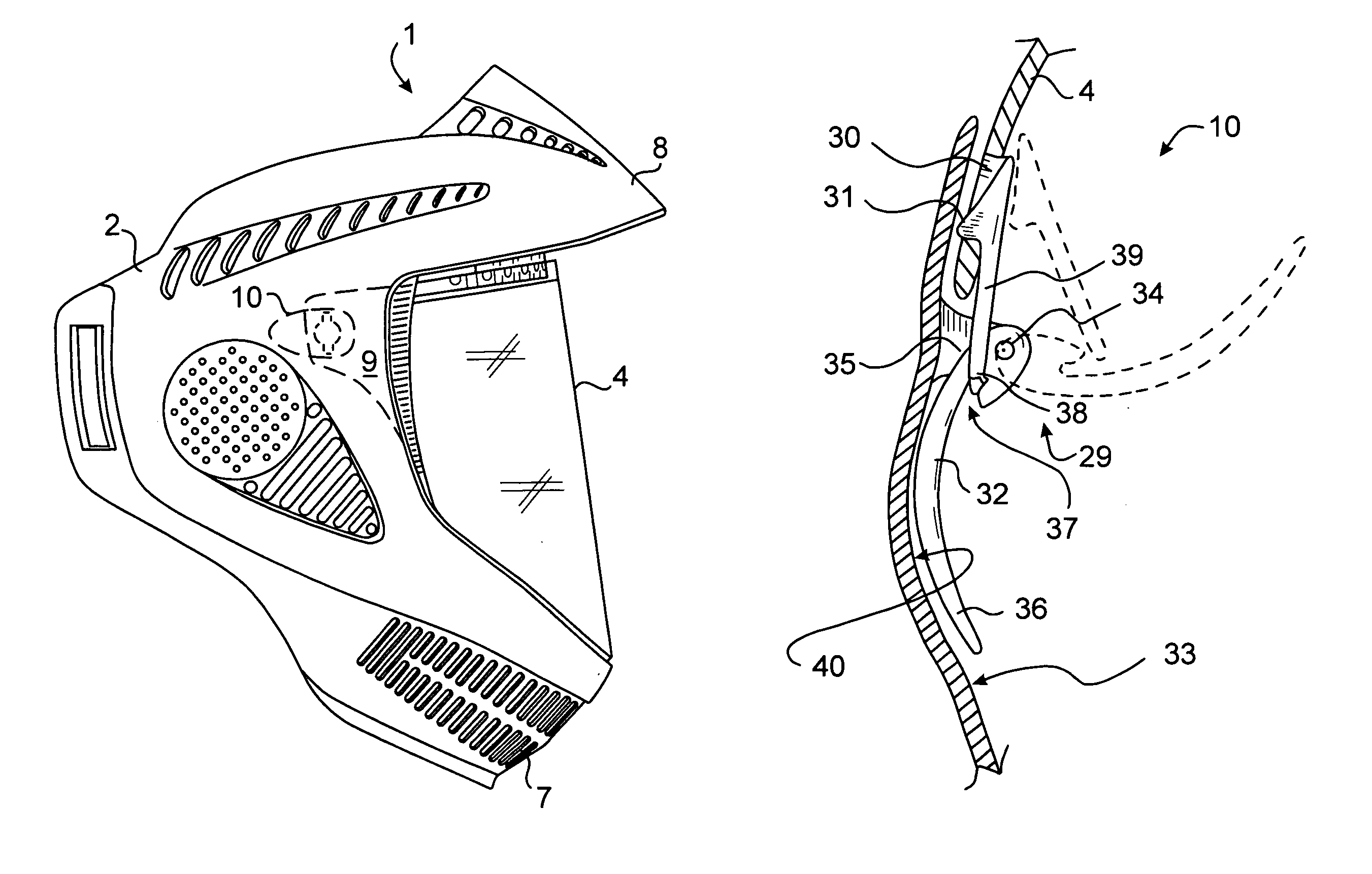

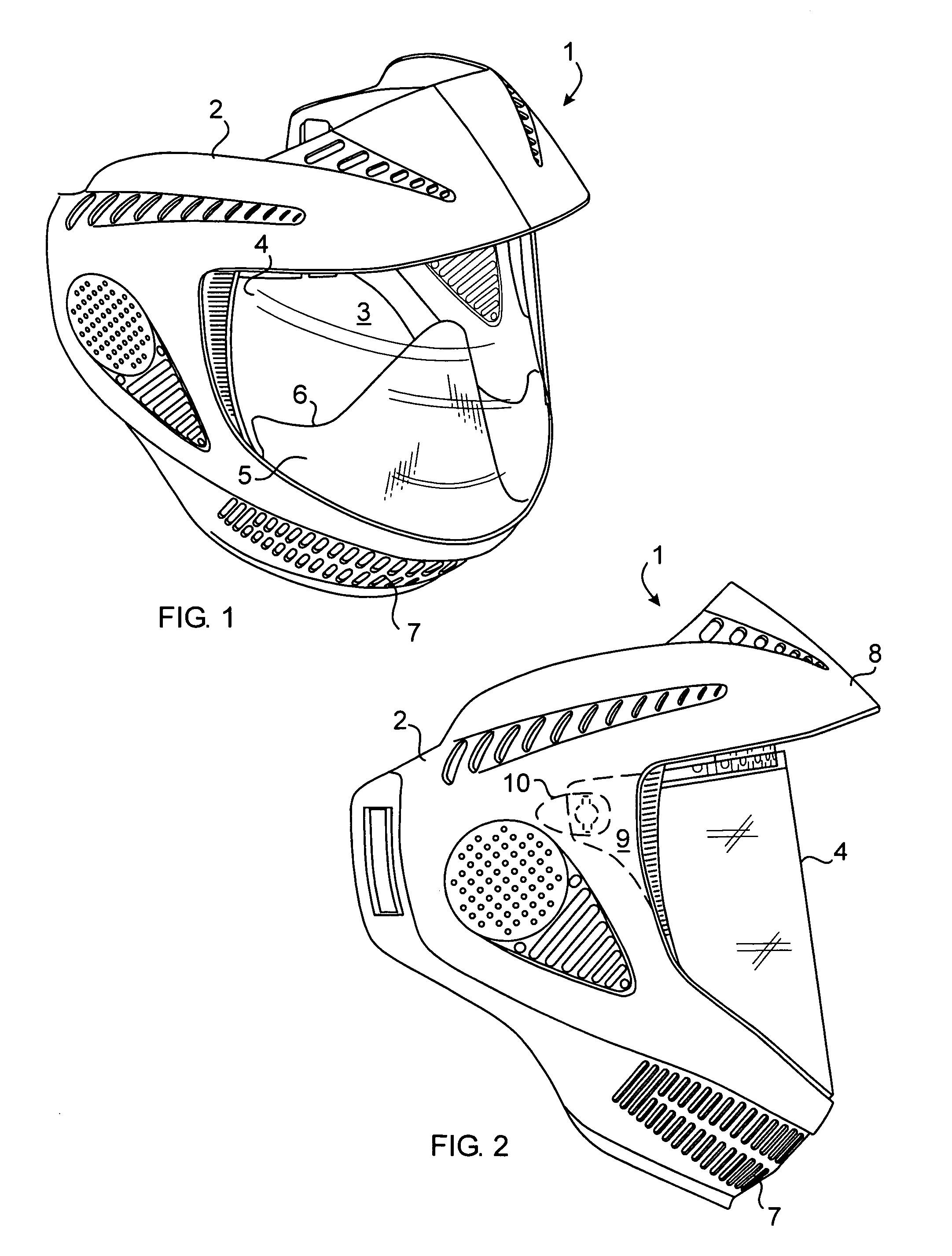

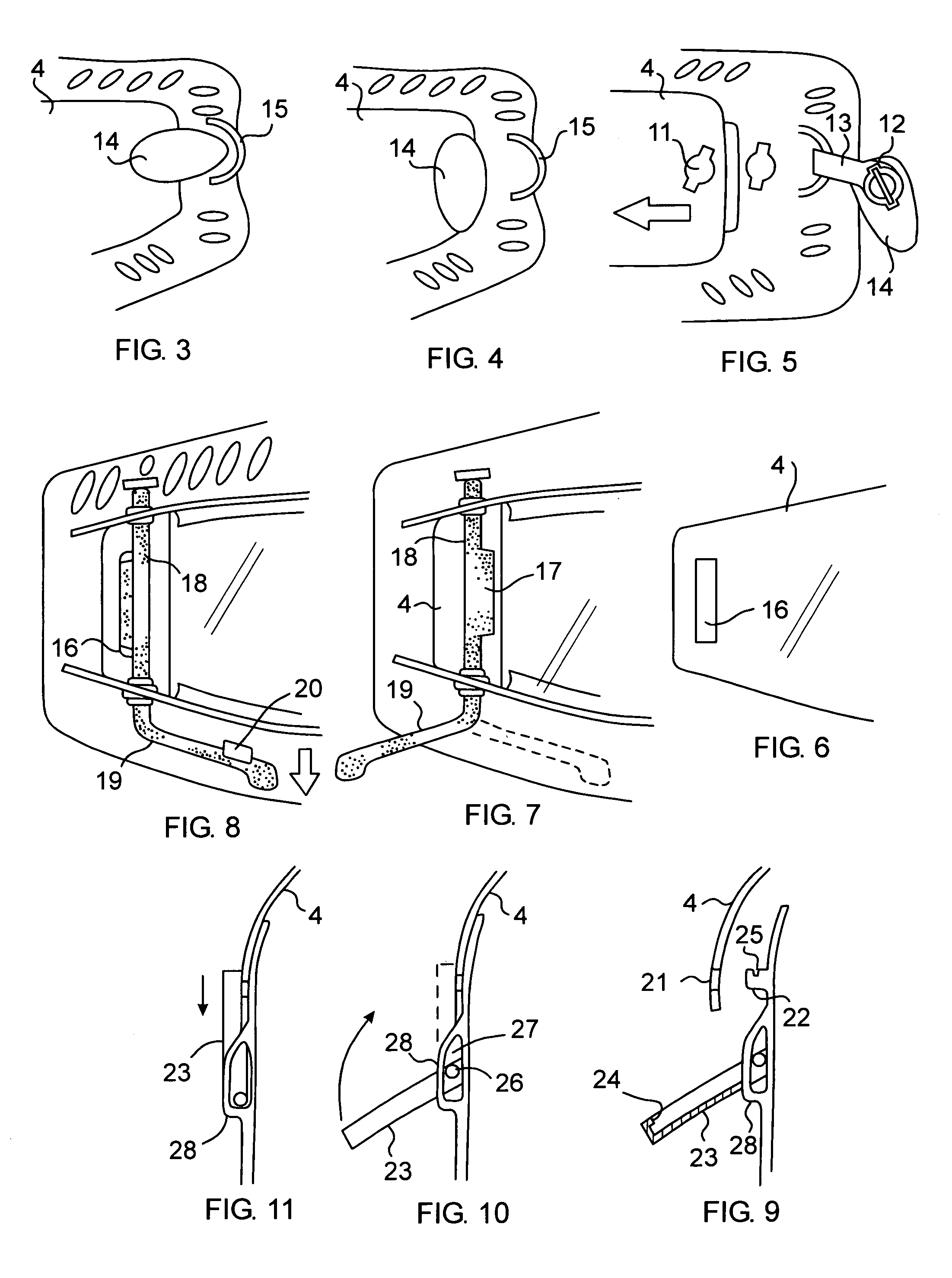

Face mask with detachable eye shield

In a face mask for use in the practice of paint-ball games or other such activities requiring effective shielding of the face, a large unitary lens is applied to an aperture extending from the brow to the chin and from one temple to the other. The lens is secured by a pair of releasable clips that can be quickly manipulated for cleaning or replacement of the lens in a matter of seconds. A semi-conical breath deflector extends behind the lens from the nose bridge to the base of the lens channeling the breath away from the lens and towards ventilating slits in the base of the mask. The slant of the deflector avoids any obstruction of the field of vision such as created by the lens frame of conventional goggles and face masks.

Owner:JT SPORTS

Method and system for enhancing portrait images that are processed in a batch mode

Inactive | US20060153470A1 | Efficiently us, Image enhancement, Television system details, Batch processing, Digital image

Owner:MONUMENT PEAK VENTURES LLC

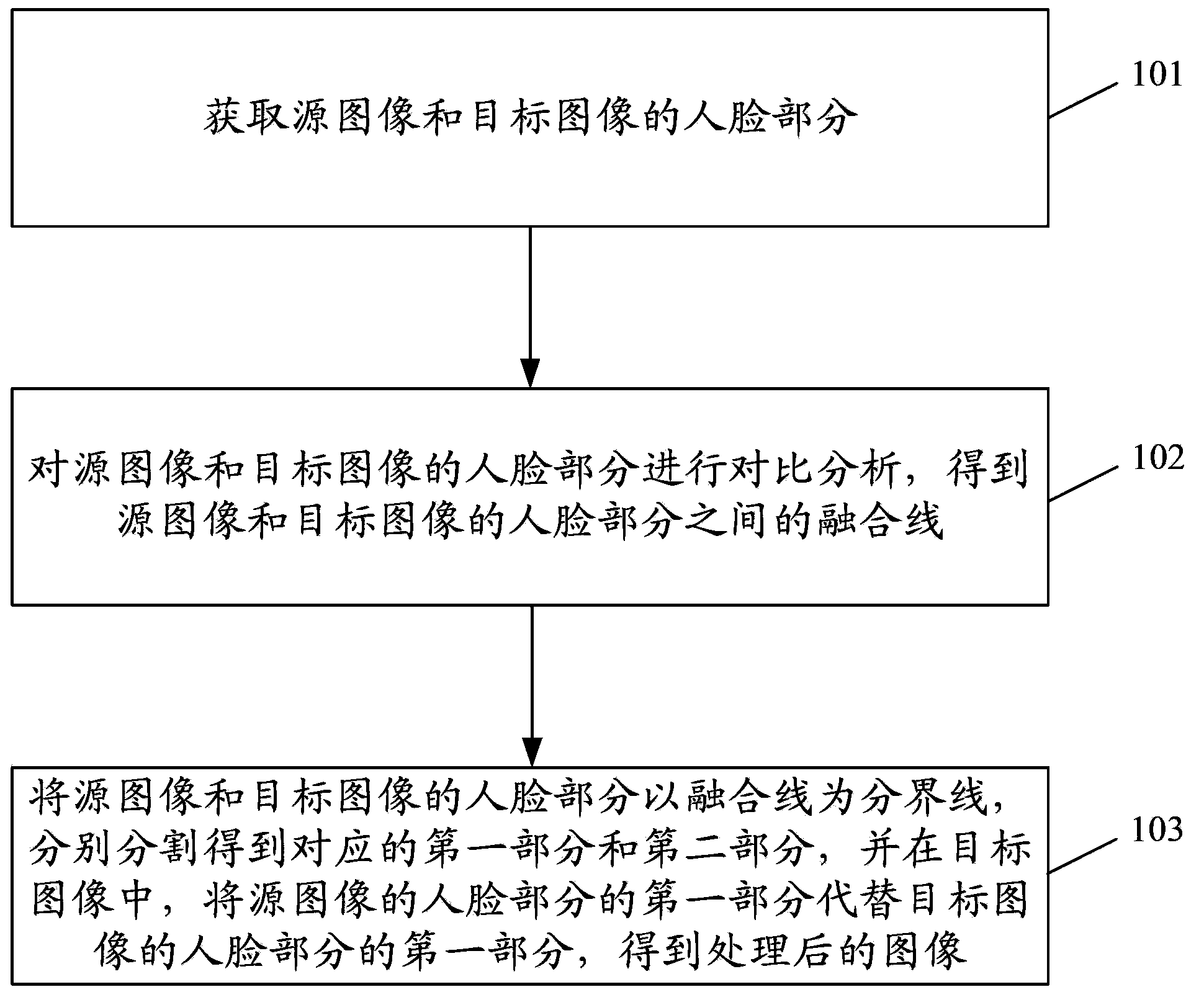

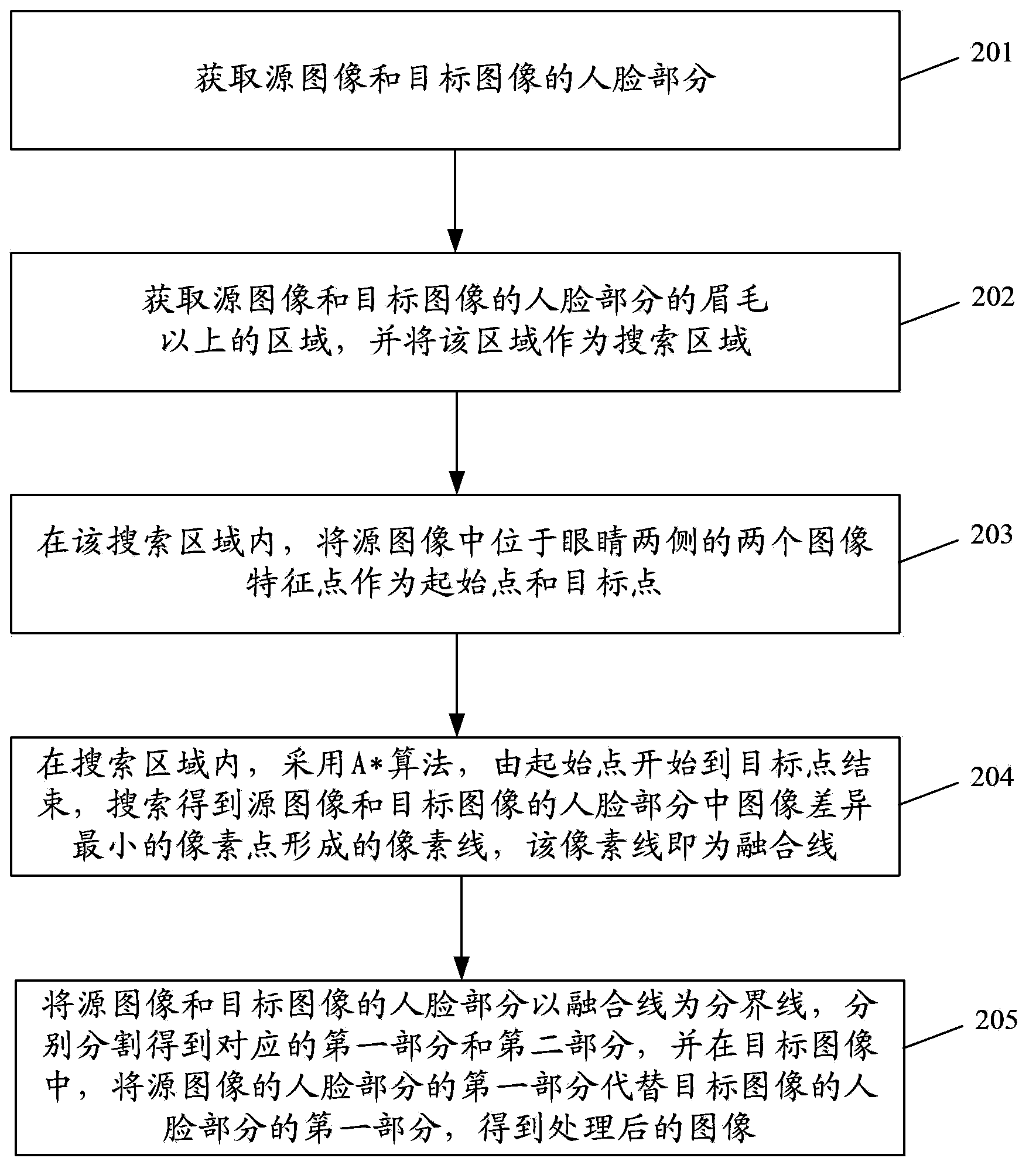

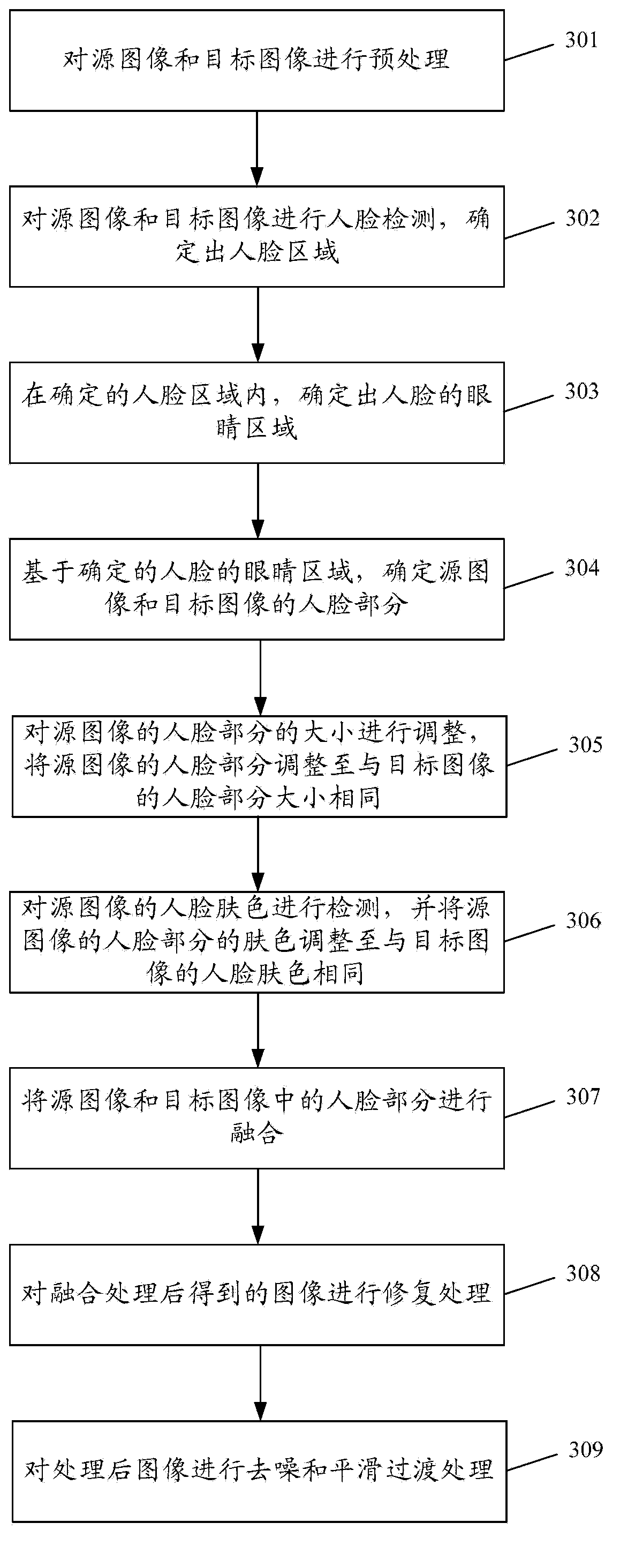

Face image processing method

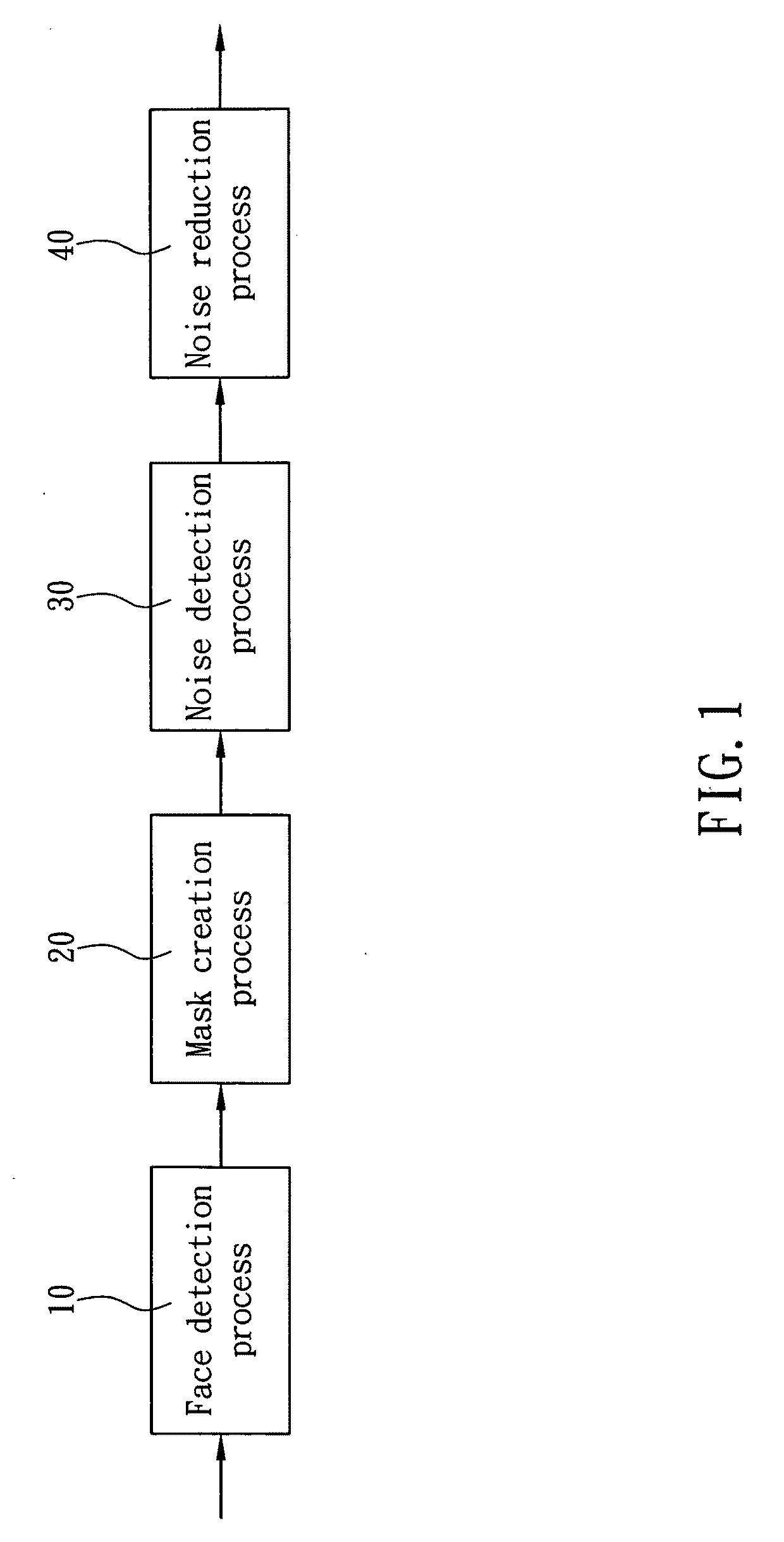

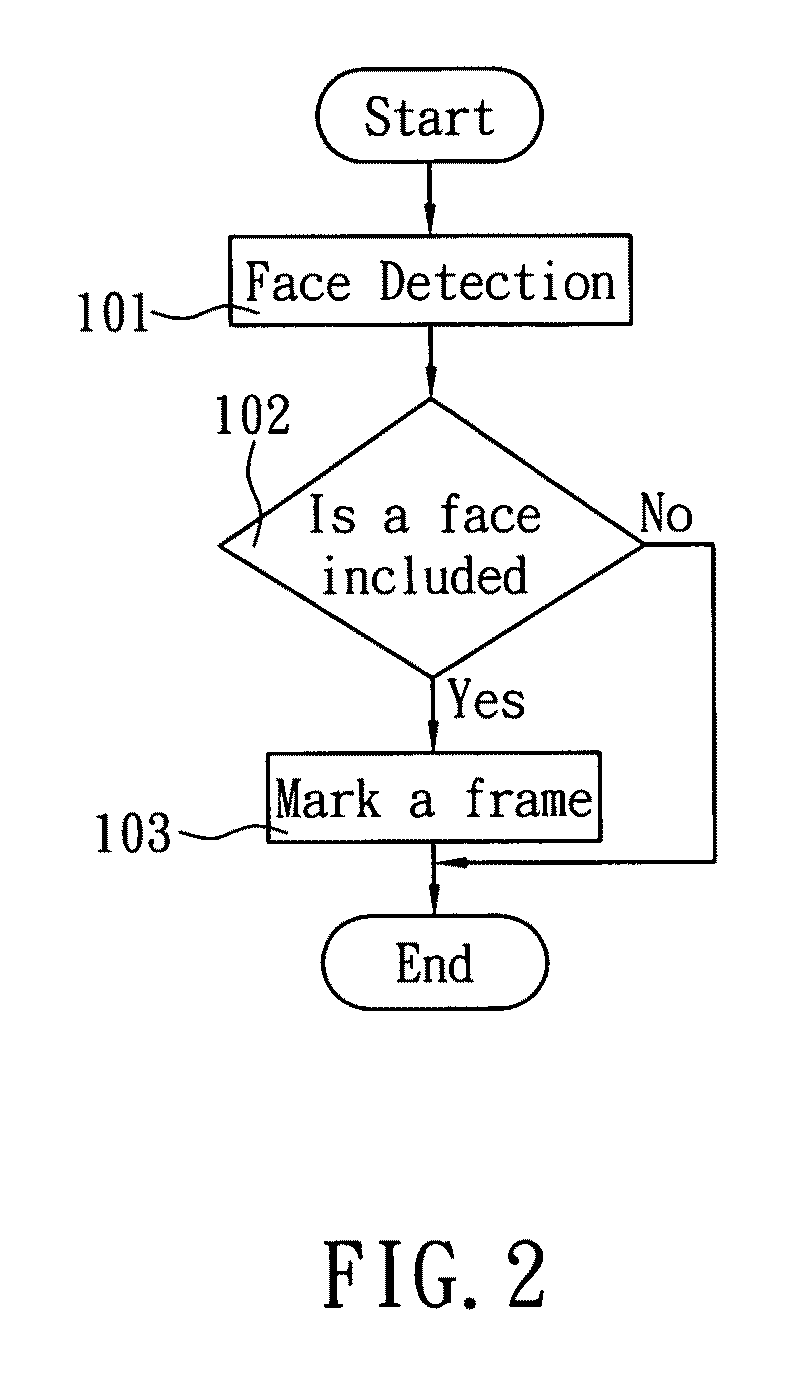

Active | US20100177981A1 | Easy to remove, Simple process and operation, Image enhancement, Image analysis, Face detection, Imaging processing

A face image processing method is applied to an electronic device so that the device can automatically perform face detection on a digital image to obtain the face image within it; perform skin color detection on the face image to exclude non-skin features such as eyes, eyeglasses, eyebrows, a moustache, the mouth and nostrils, and form a skin mask over the area of the face image that has skin color; and finally filter the area of the face image covered by the skin mask to remove high-, mid- and low-frequency noise of abnormal skin color, thereby quickly removing blemishes and dark spots in that area.

Owner:ARCSOFT CORP LTD
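
The skin-mask-then-filter idea can be sketched with a chrominance threshold and a smoothing filter applied only under the mask. This sketch assumes RGB input in 0 to 255, uses the JPEG (JFIF) RGB-to-YCbCr conversion, and uses Cb in [77, 127] and Cr in [133, 173], a frequently cited heuristic skin range rather than the patent's own criterion. A single box blur stands in for the patent's multi-band (high, mid and low frequency) filtering; both function names are hypothetical.

```python
import numpy as np


def skin_mask_ycbcr(rgb):
    """Mark pixels whose Cb/Cr chrominance falls in an assumed skin range."""
    rgb = rgb.astype(float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return (cb >= 77) & (cb <= 127) & (cr >= 133) & (cr <= 173)


def smooth_skin(rgb, mask, k=1):
    """Replace masked pixels by the mean of their (2k+1)x(2k+1) neighbourhood.

    Pixels outside the mask (eyes, eyebrows, hair, ...) are left unchanged.
    """
    img = rgb.astype(float)
    padded = np.pad(img, ((k, k), (k, k), (0, 0)), mode="edge")
    acc = np.zeros_like(img)
    for dy in range(2 * k + 1):
        for dx in range(2 * k + 1):
            acc += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    blurred = acc / (2 * k + 1) ** 2
    out = img.copy()
    out[mask] = blurred[mask]
    return out
```

The unweighted box blur lets dark non-skin neighbours bleed into adjacent skin pixels; a fuller implementation would average only over masked neighbours.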

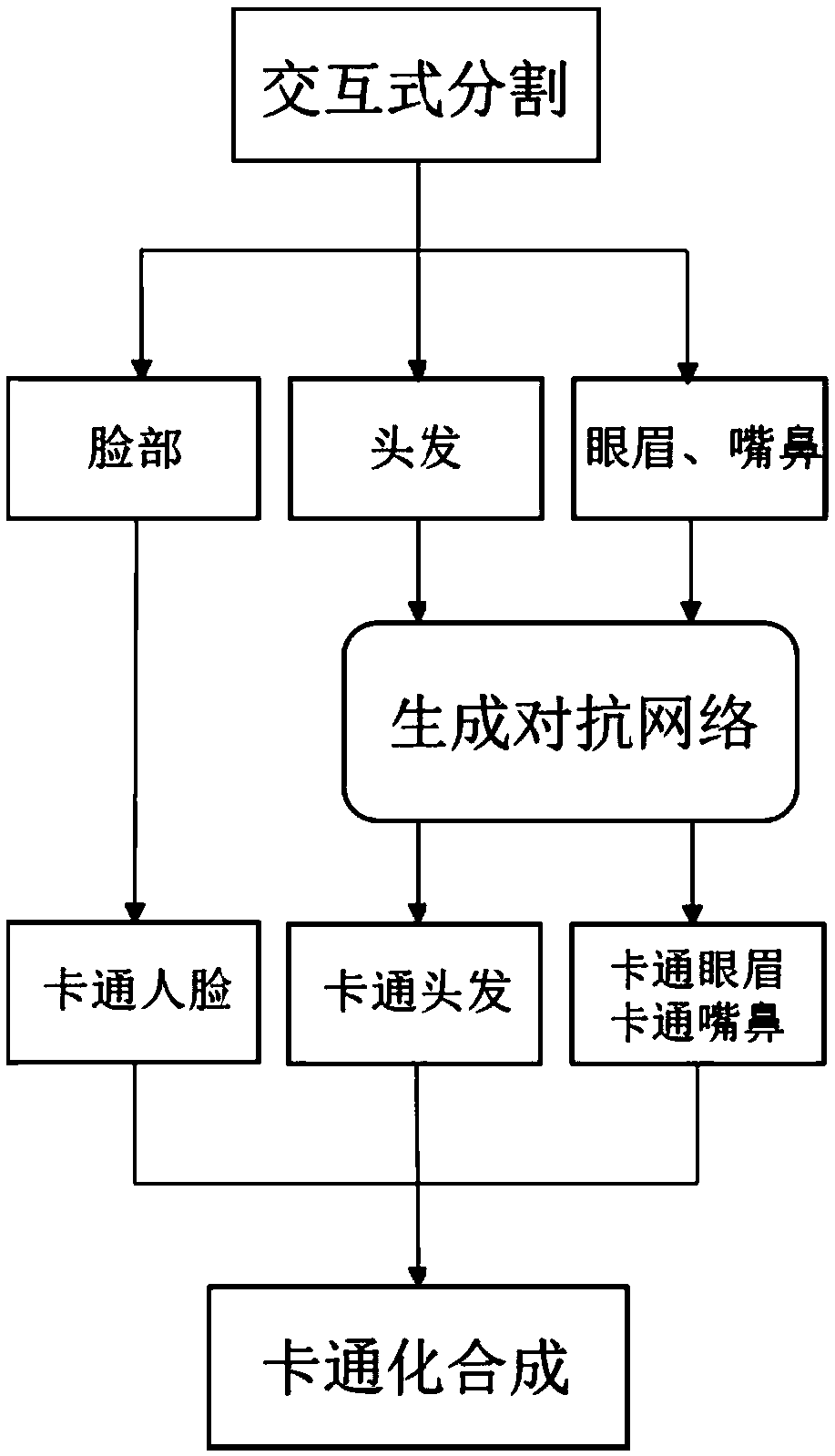

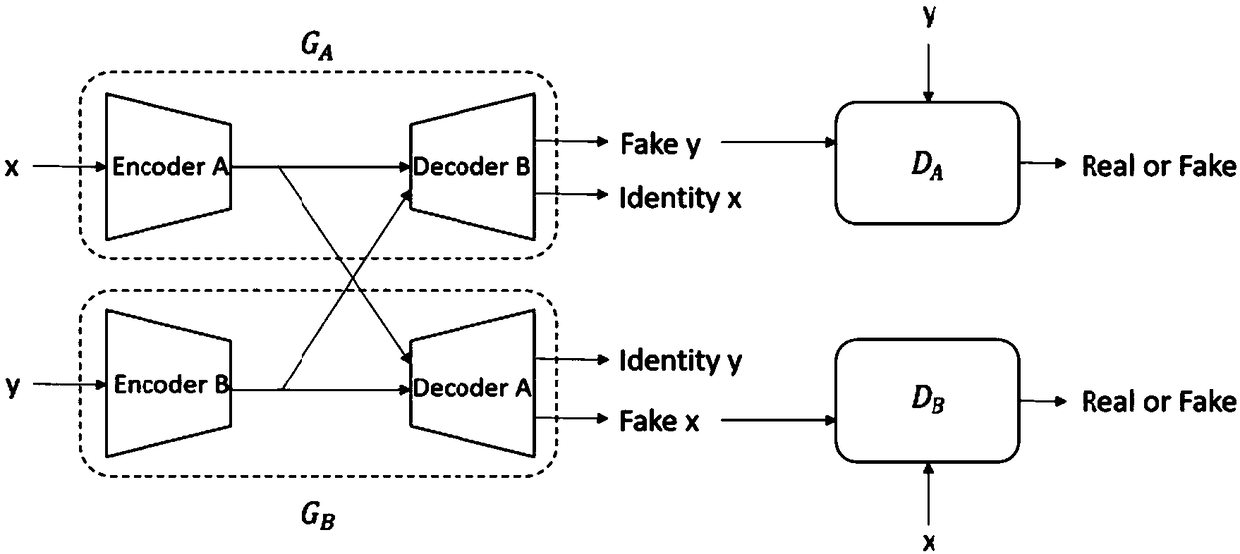

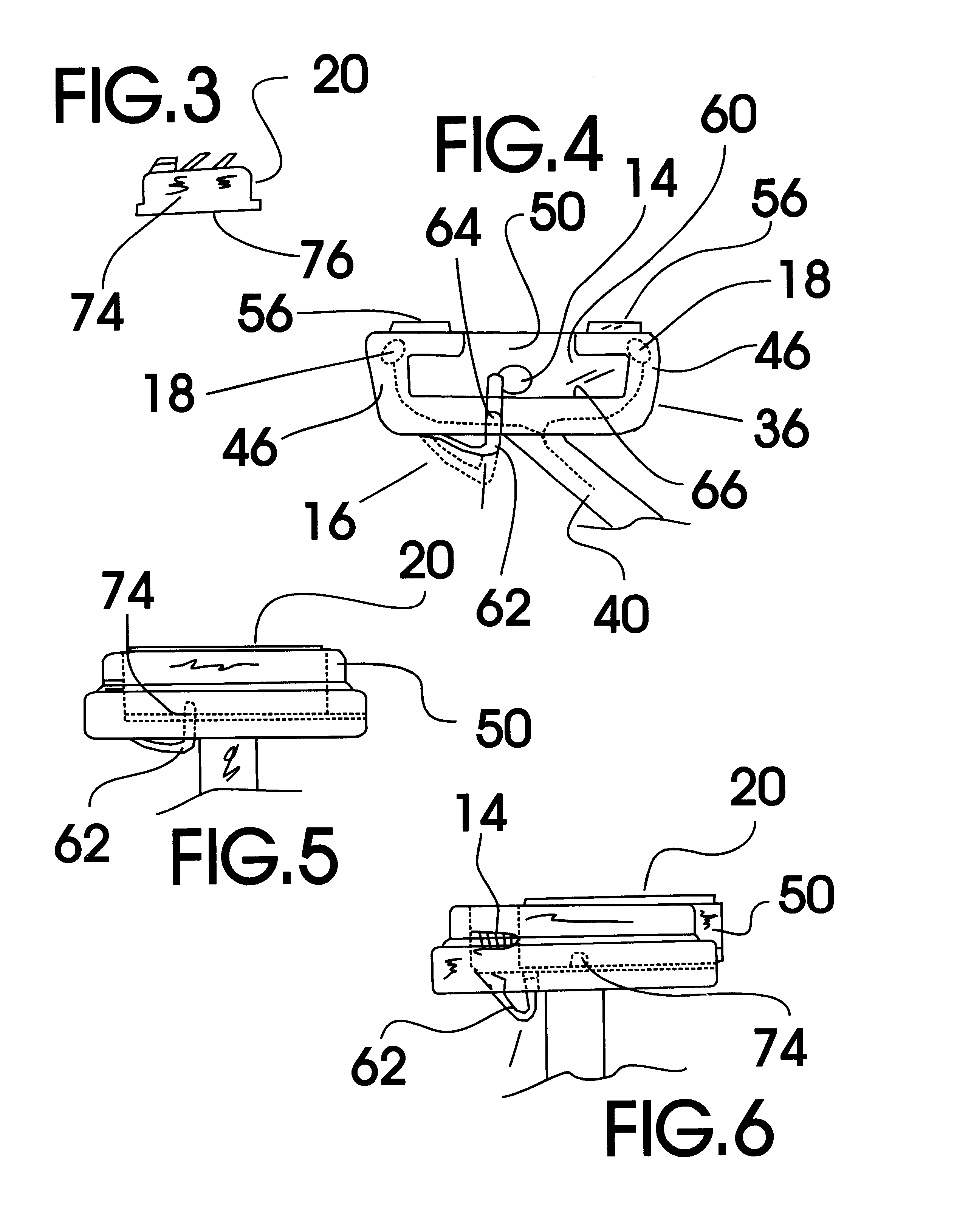

An interactive face cartoon method based on generative adversarial networks

Active | CN109376582A | Eliminate differences, Character and pattern recognition, Neural architectures, Pattern recognition, Nose

The invention discloses an interactive face cartoon method based on generative adversarial networks. The image to be processed is first segmented interactively into eyebrow-eye, mouth-nose, hair and face images; the eyebrow-eye, mouth-nose and hair images are then fed into three trained generation models (eye, mouth-nose and hair, respectively), which output the corresponding cartoon facial-feature images. The cartoon face is obtained directly by cartoonizing the face image. The facial features are then composited onto the cartoon face and the hair effect is superimposed to produce the final cartoon image. The invention combines the advantages of interactive segmentation and generative adversarial networks: interactive segmentation isolates the hair, face shape and facial features, eliminating differences between training samples caused by different backgrounds, while the generative adversarial networks convert the style of each part and retain as much detail as possible, such as the corners of the eyes and mouth.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

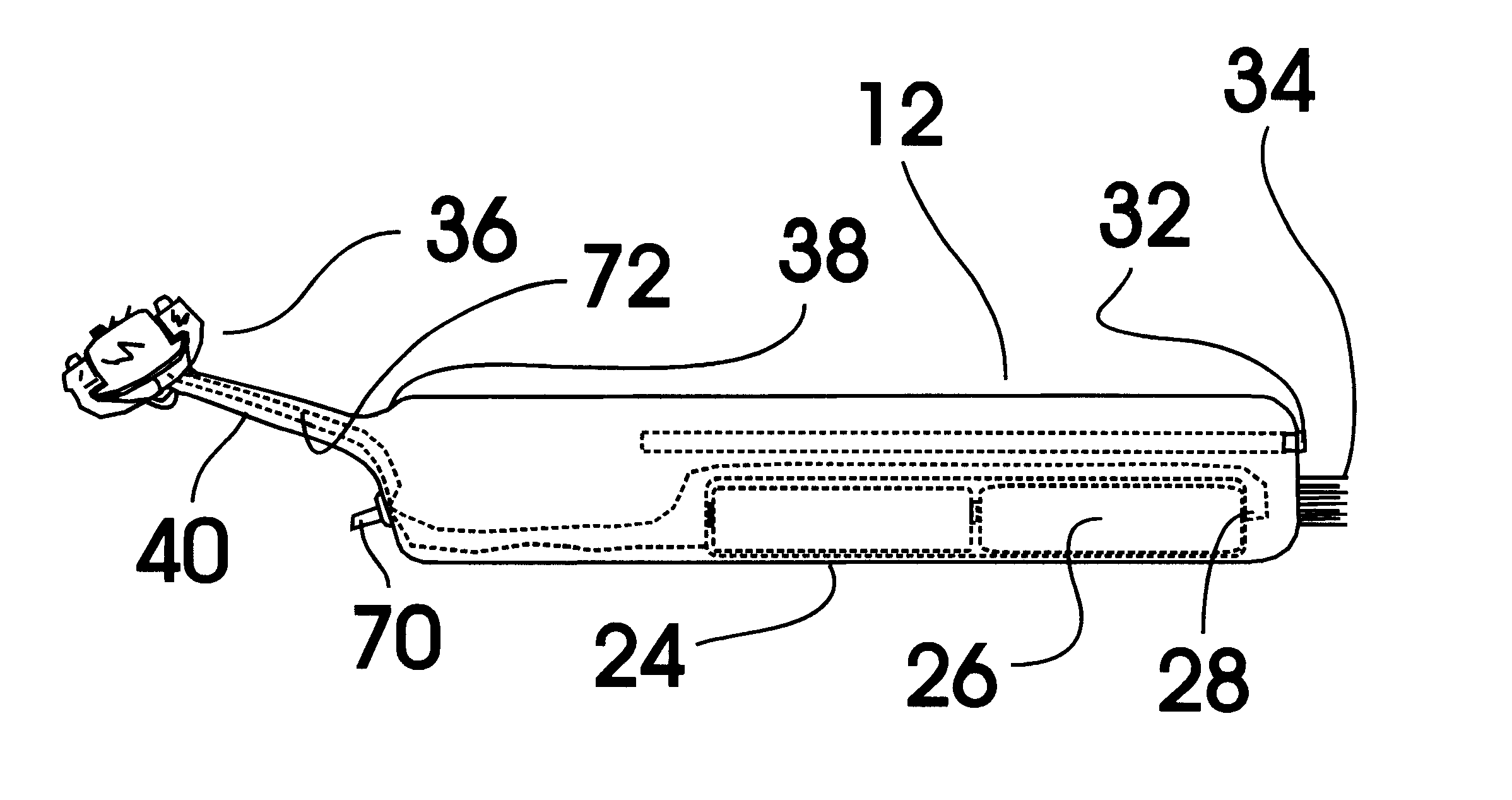

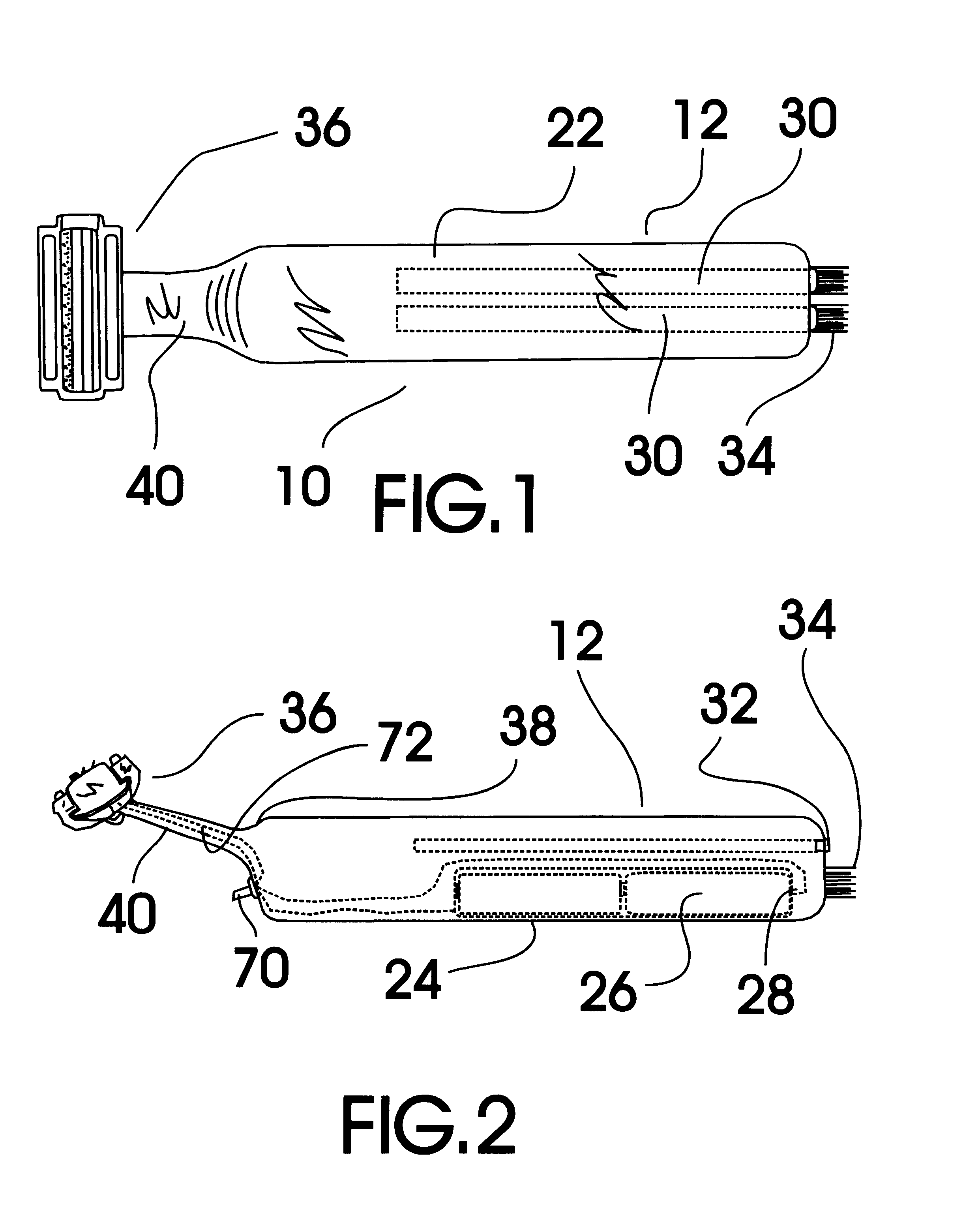

Eyebrow grooming tool

An eyebrow grooming tool that includes a molded plastic handle and a razor blade cartridge holding assembly in connection with the plastic handle. The razor blade cartridge holding assembly is provided with a light source shining through two elongated transparent windows positioned on either side of the razor blade cartridge for illuminating the eyebrow to be trimmed. A brush for grooming the eyebrow before and after trimming by the razor assembly is provided at the back end of the handle.

Owner:AUDET DIANE L

Image processing method and image processing device

Active | CN103839223A | Improve deformation processing effect, Good splicing effect, Image enhancement, Image analysis, Imaging processing, Eyebrow

The embodiment of the invention provides an image processing method and an image processing device. The method comprises the following steps: the face parts of a source image and a target image are acquired; the two face parts are compared to obtain a fusion line between them, wherein the fusion line is the pixel line with the minimum image difference between the two face parts in the areas above the eyebrows, and is located in those areas; and the face parts of the source and target images are each segmented along the fusion line into a first part and a second part, and in the target image the first part of the target face is replaced with the first part of the source face to obtain a processed image, the first part being the part that contains the nose. The image processing method and device can be applied where the face in a target image is replaced with the face from a source image, and can improve the result after replacement.

Owner:HUAWEI TECH CO LTD +1
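
The fusion-line search can be sketched as choosing the row above the eyebrows where the two faces differ least, then splicing the source's lower part into the target. This sketch assumes the two face crops are already aligned, grayscale and the same size; it treats the fusion line as a single image row (the abstract allows a general pixel line) and uses the summed absolute difference as the image-difference measure. `find_fusion_row` and `swap_lower_face` are hypothetical names.

```python
import numpy as np


def find_fusion_row(src_face, dst_face, brow_row):
    """Return the row above the eyebrows where source and target differ least.

    src_face, dst_face: aligned grayscale face crops of equal shape.
    brow_row: top row of the eyebrows; only rows 0..brow_row-1 are searched.
    """
    diff = np.abs(src_face[:brow_row].astype(float) - dst_face[:brow_row].astype(float))
    return int(np.argmin(diff.sum(axis=1)))


def swap_lower_face(src_face, dst_face, brow_row):
    """Replace the target's part below the fusion row (the part containing the nose)."""
    row = find_fusion_row(src_face, dst_face, brow_row)
    out = dst_face.copy()
    out[row:] = src_face[row:]
    return out, row
```

Cutting where the two images already agree keeps the seam in the forehead as inconspicuous as possible.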

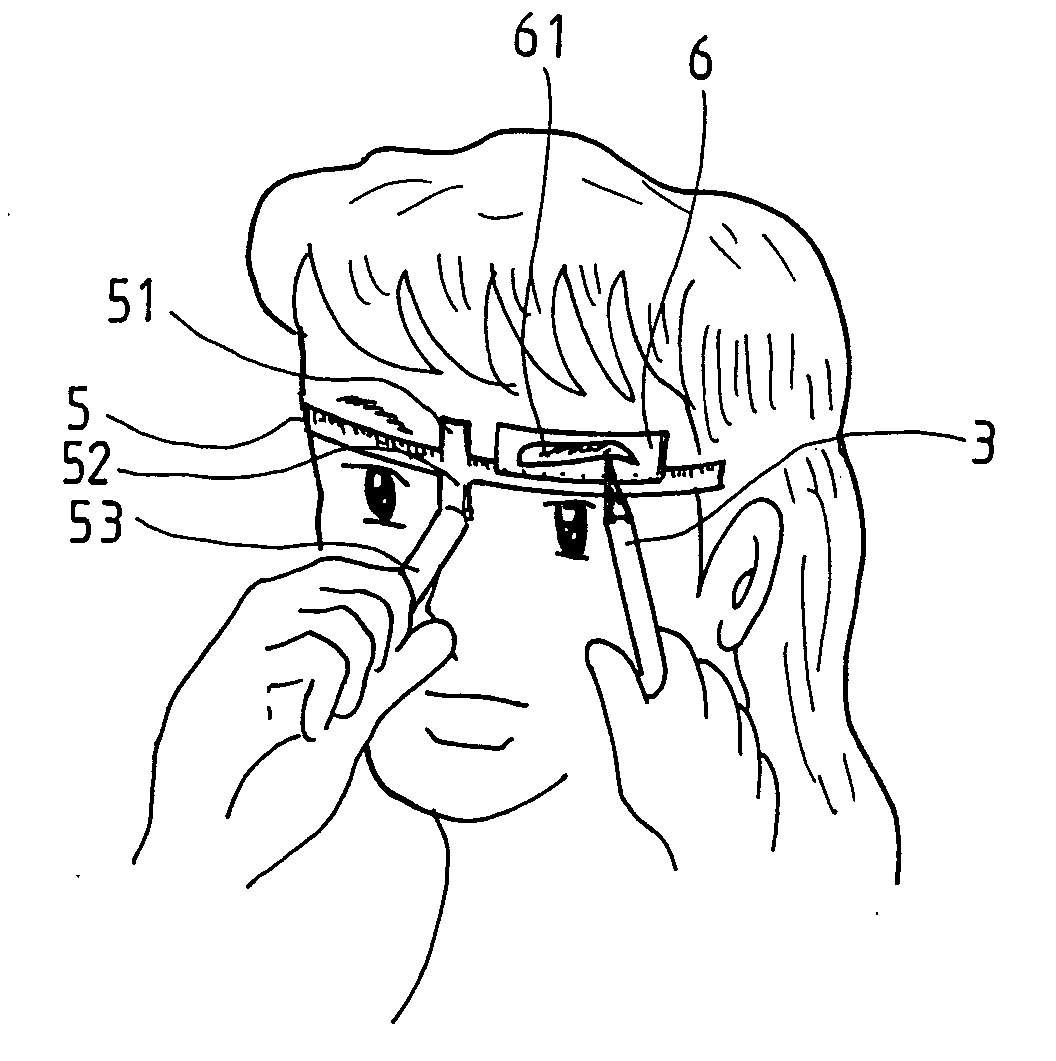

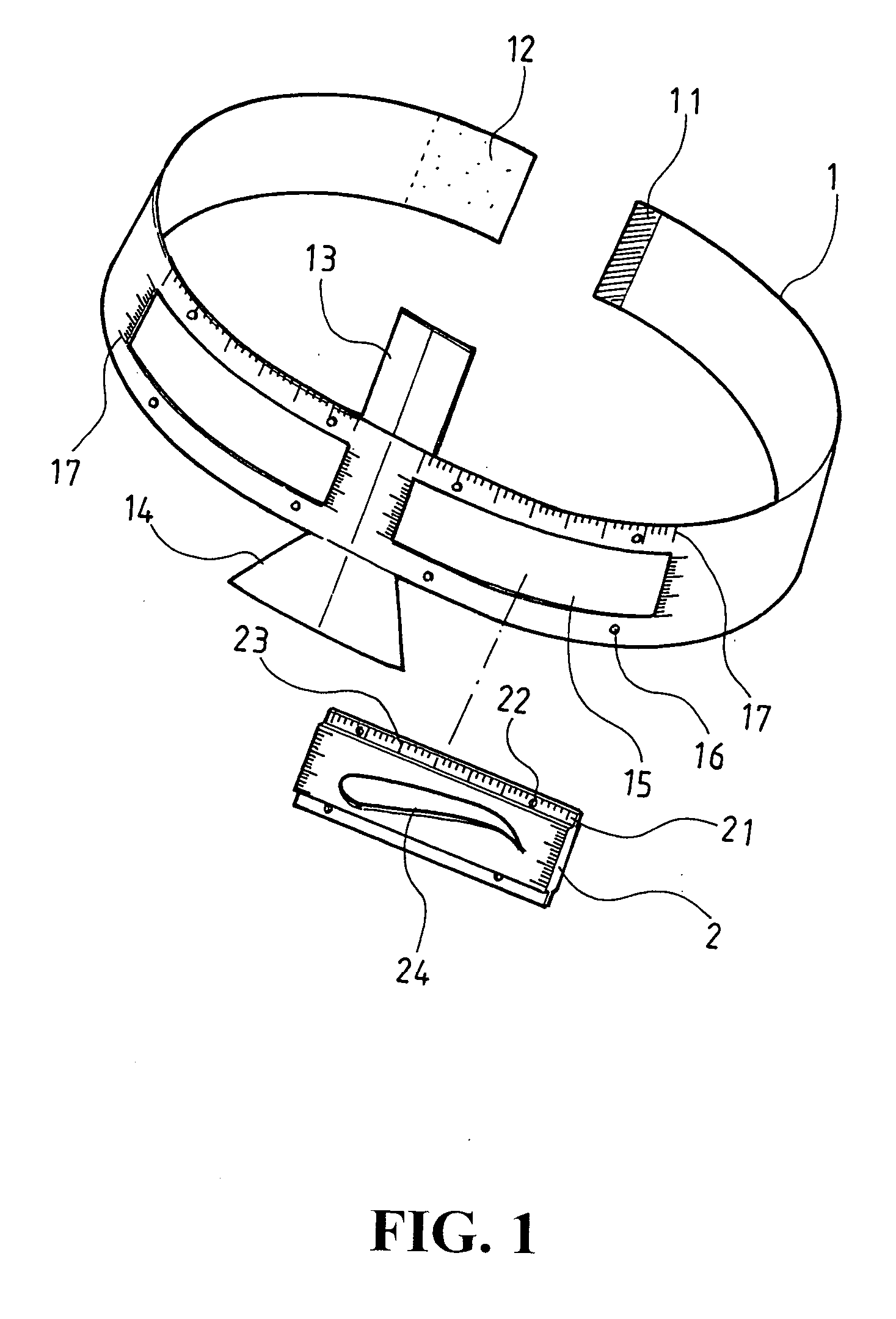

Stencil strap for eyebrow stenciling

Inactive | US20070006748A1 | Liquid surface applicators, Stencilling apparatus, Engineering, Mechanical engineering

A stencil strap for eyebrow stenciling is disclosed. The strap comprises a strap body whose two ends carry male and female adhesive plates and whose central upper and lower edges carry an eyebrow-center and nose positioning plate, wherein each lateral side of the positioning plate has an elongated opening whose upper and lower edges are provided with a plurality of engaging hooks; and a plurality of stencils, each having a circumferential edge with a stepped section, the stepped section being provided with fastening holes corresponding to the engaging hooks of the strap body, wherein the surface of each stencil has stenciling openings of various shapes.

Owner:LIU CHEN HAI

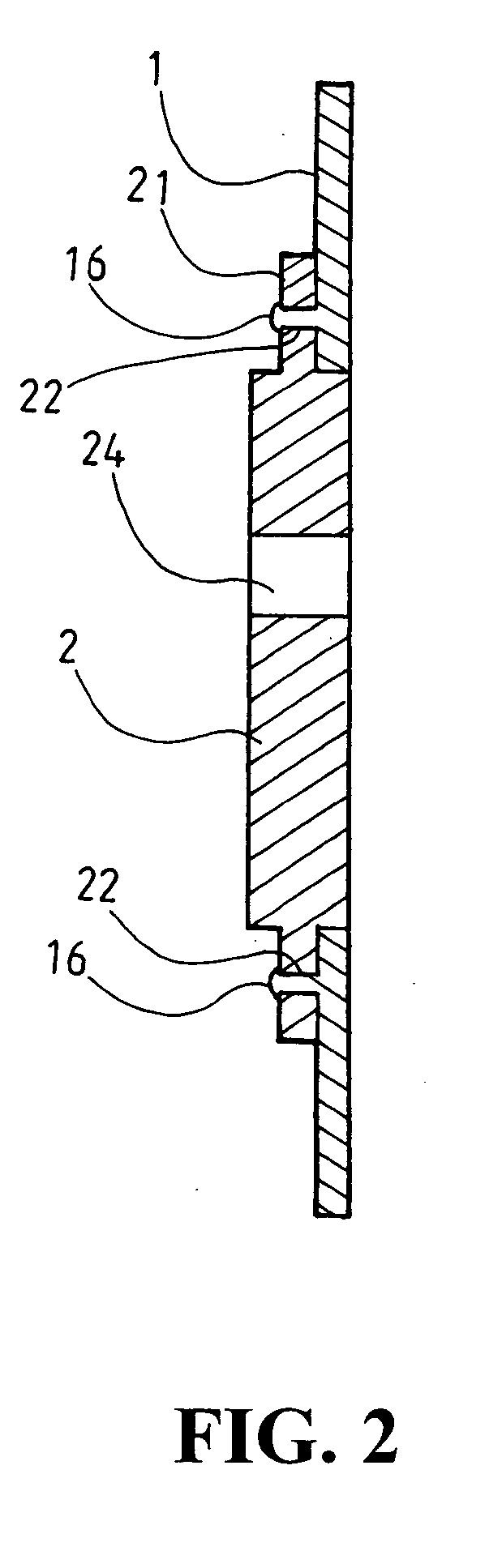

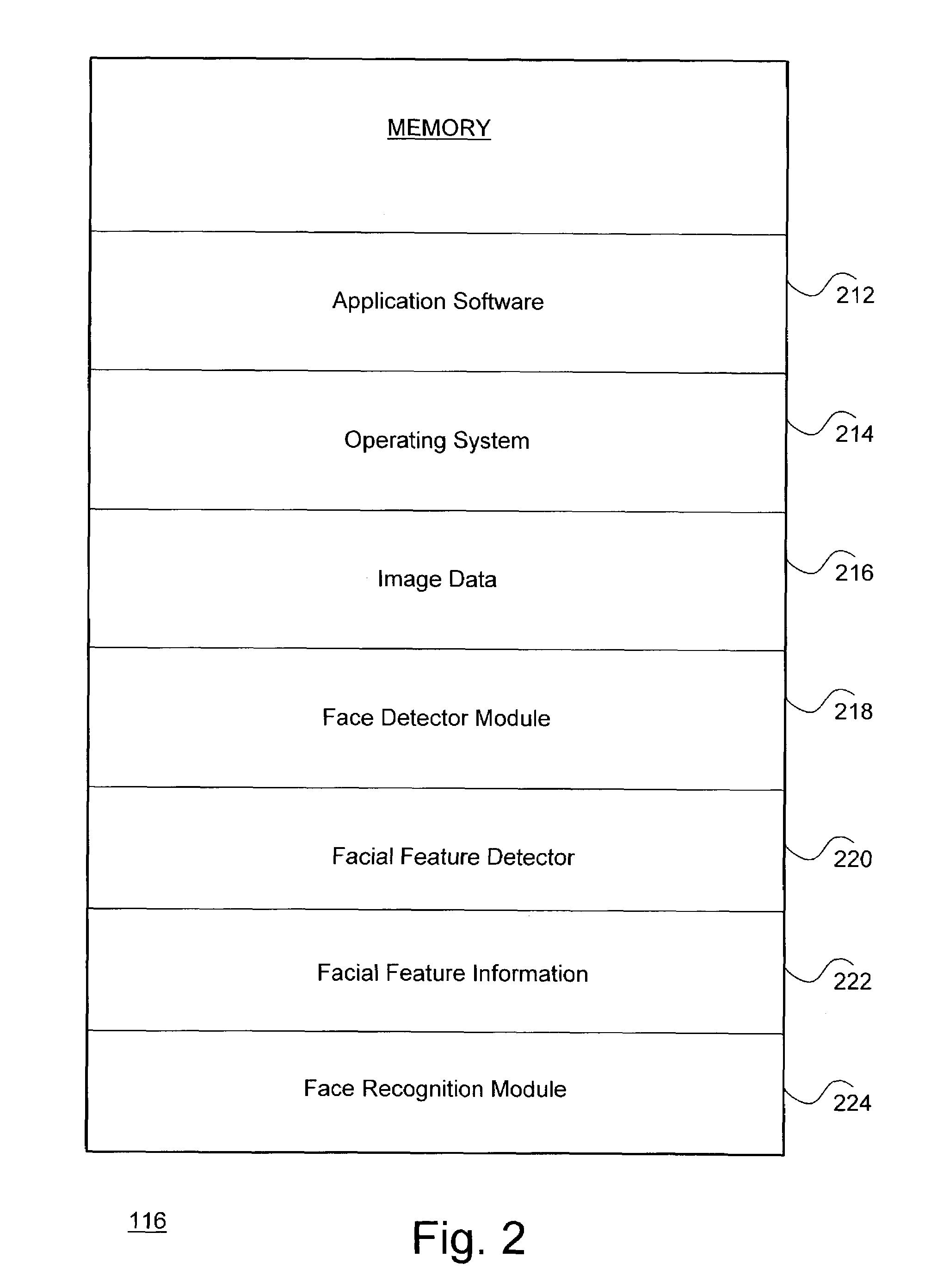

System and method for effectively extracting facial feature information

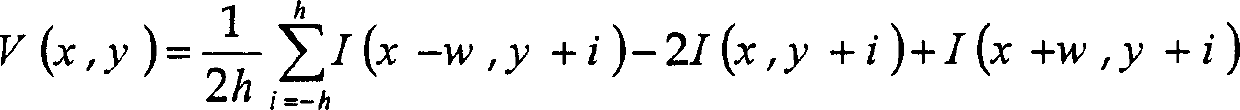

Active | US7239726B2 | Efficient extraction, Accurately determined, Character and pattern recognition, Vertical projection, Nostril

A system and method for effectively extracting facial feature information from image data may include a facial feature detector configured to generate facial feature information. The facial feature detector may perform an eye-area vertical projection procedure for generating eye information and eyebrow information. The facial feature detector may utilize an eyebrow filter to detect eyebrow location coordinates and eyebrow slope characteristics. Similarly, the facial feature detector may utilize an iris filter to detect iris location coordinates. The facial feature detector may also perform a mouth-area vertical projection procedure for generating corresponding nose / nostril location information and mouth / lip location information.

Owner:SONY CORP +1
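
An eye-area projection can be illustrated by averaging intensity along each row of a grayscale eye-area crop: eyebrows and eyes are darker than the surrounding skin, so they show up as valleys in the profile. This sketch interprets the projection that way and assumes the crop contains one eyebrow above one eye and that these are the two darkest valleys; `eyebrow_and_eye_rows` is a hypothetical name, and Sony's eyebrow and iris filters are not modelled.

```python
import numpy as np


def eyebrow_and_eye_rows(eye_area):
    """Locate the eyebrow and eye rows from a projection profile.

    eye_area: 2-D grayscale crop. Each row's mean intensity forms the profile;
    the two deepest local minima are returned top to bottom as
    (eyebrow_row, eye_row).
    """
    profile = eye_area.astype(float).mean(axis=1)
    minima = [r for r in range(1, len(profile) - 1)
              if profile[r] < profile[r - 1] and profile[r] <= profile[r + 1]]
    if len(minima) < 2:
        raise ValueError("fewer than two dark bands found")
    deepest = sorted(minima, key=lambda r: profile[r])[:2]
    brow, eye = sorted(deepest)
    return brow, eye
```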

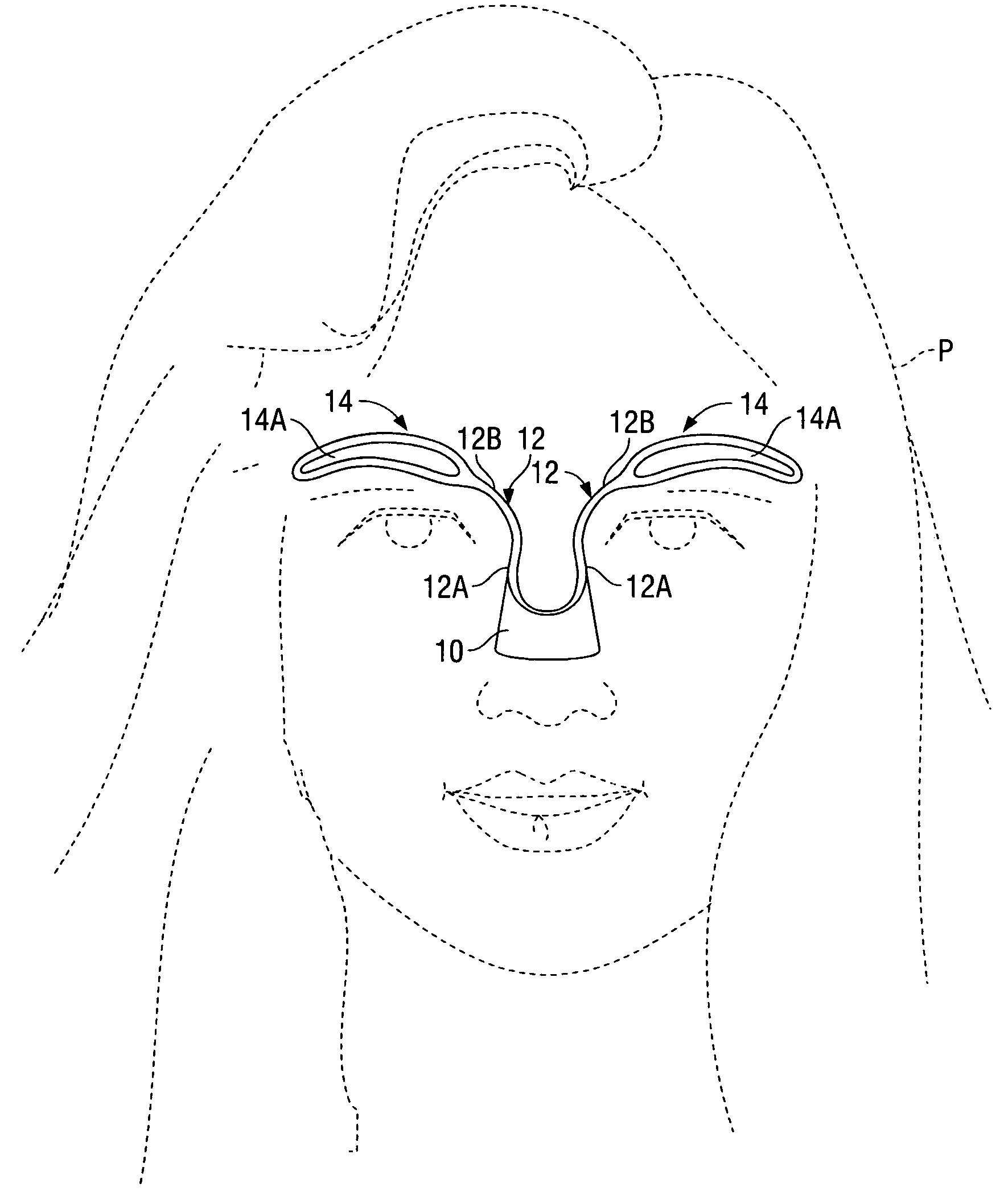

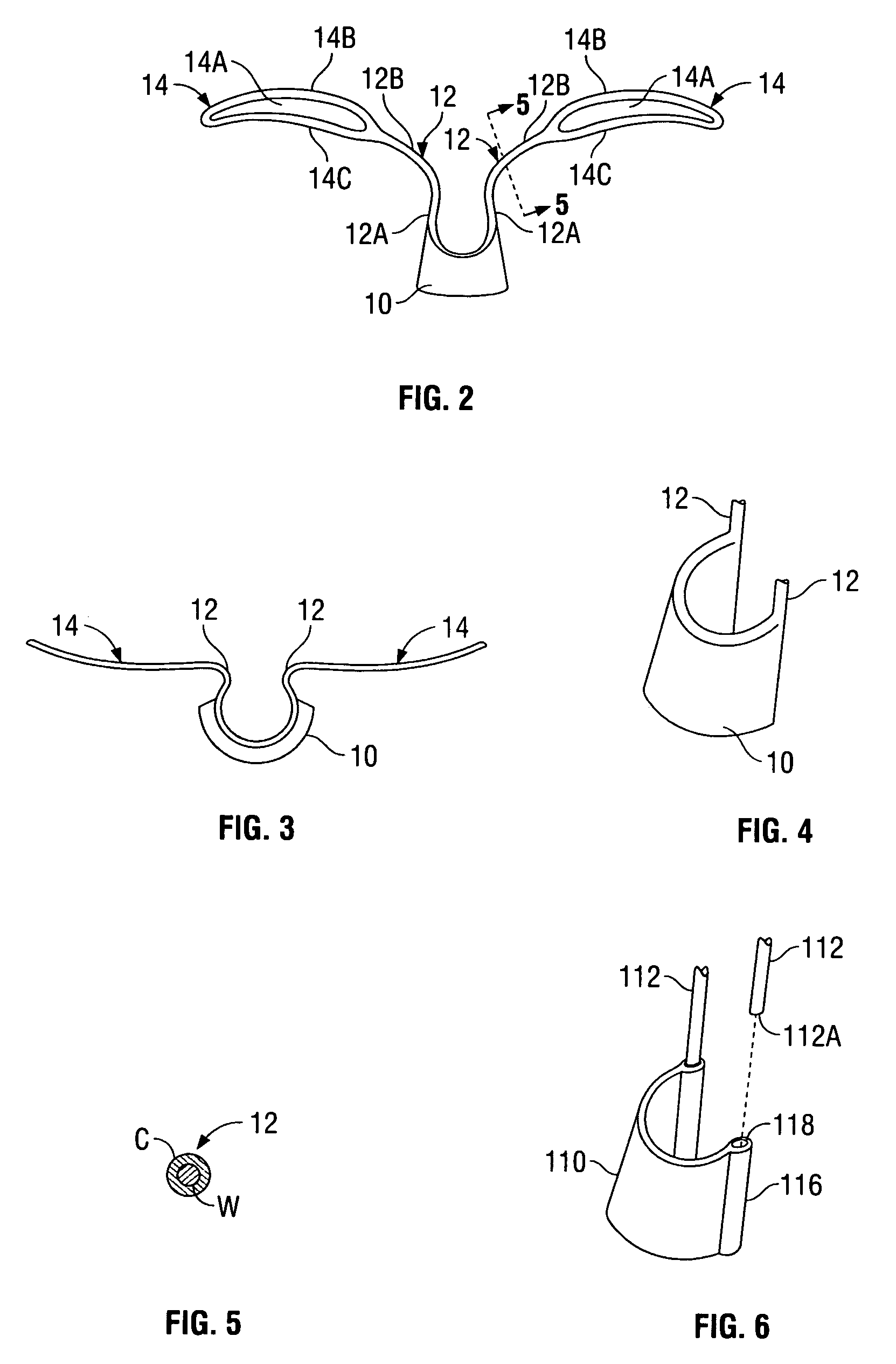

Template and method for applying makeup to eyebrows

Active | US7219674B1 | Conveniently and effectively apply makeup, Alteration can be prevented, Packaging toiletries, Packaging cosmetics, Nose, Biomedical engineering

An eyebrow template can be used to apply makeup to a person's eyebrows. The template has a saddle that is shaped to be placed against the bridge of the person's nose. The template also has a pair of guides, each attached to the saddle and each having a guide opening for guiding the application of makeup to the person's eyebrows. After the saddle is placed against the bridge of the person's nose, the guides are adjusted to outline the person's eyebrows. Makeup is then applied to the person's eyebrows, using the guides to direct the application.

Owner:SHELLEY DEBORAH

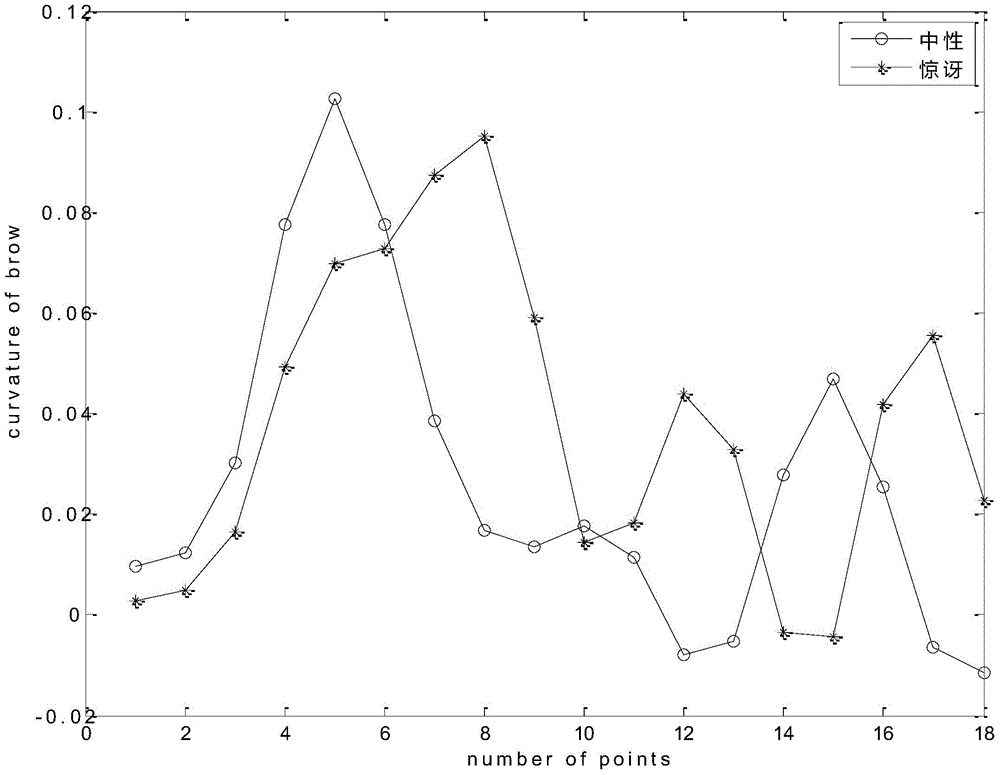

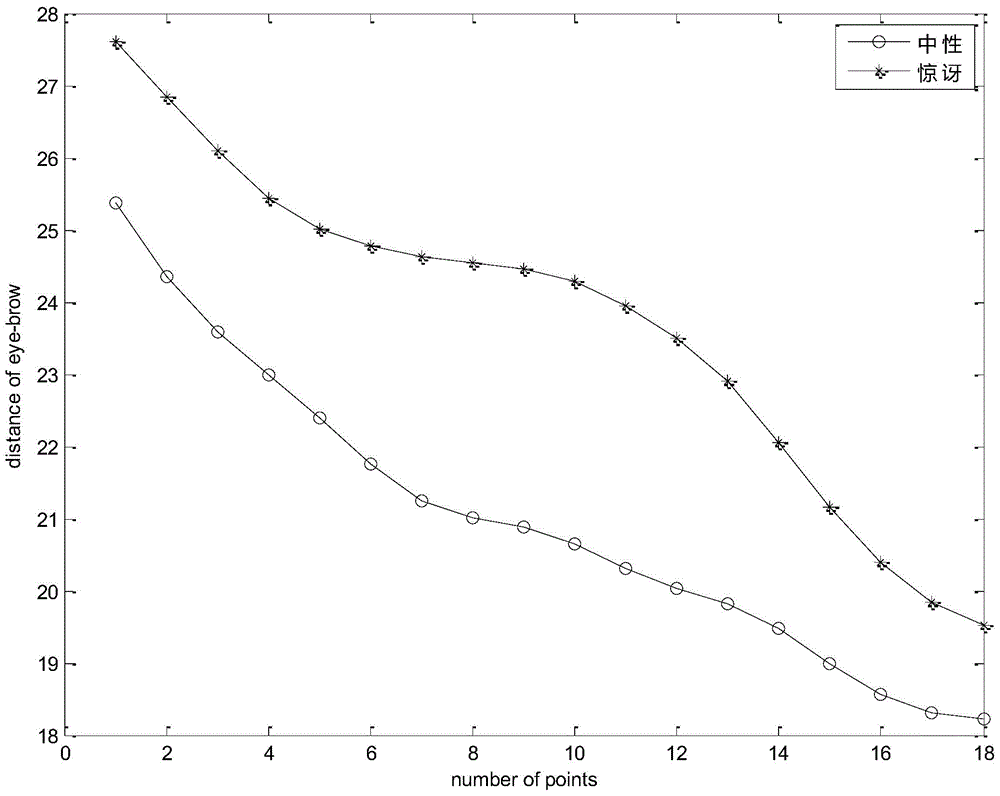

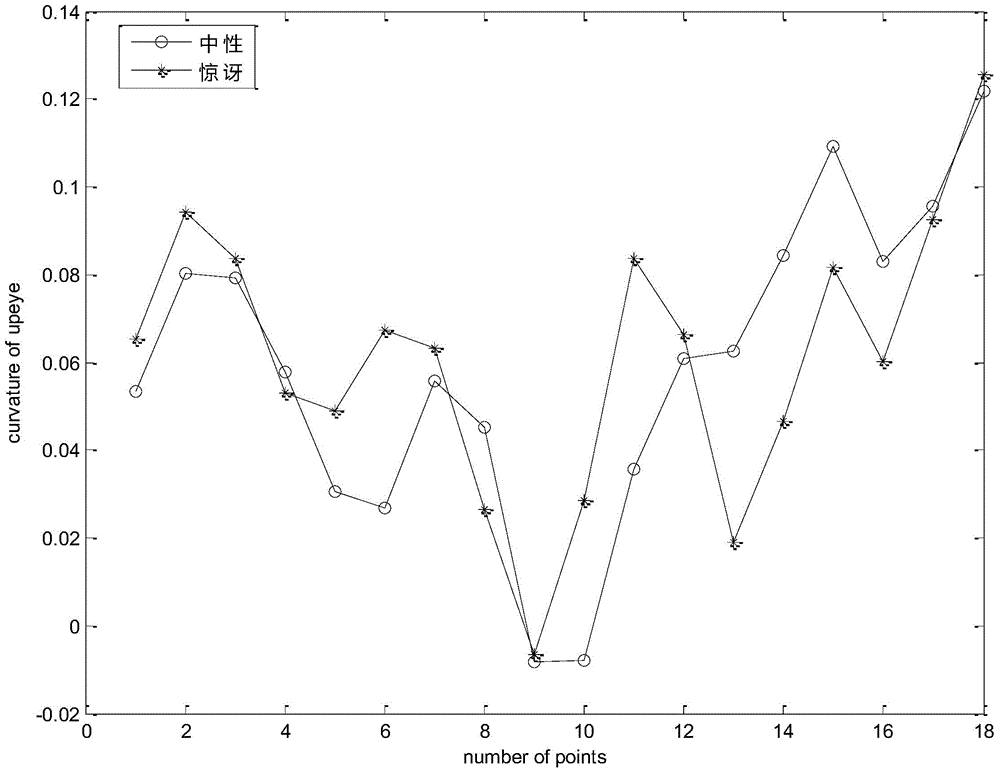

Active-shape-model-algorithm-based method for analyzing face expression

Inactive | CN104951743A | Realize expression recognition, Verify validity, Character and pattern recognition, Pattern recognition, Nose

The invention relates to an active-shape-model-algorithm-based method for analyzing facial expressions. The method comprises: storing or selecting a facial expression database and selecting some or all of its facial expressions as training images; locating feature points on the training images with the active shape model algorithm, the feature points being located on the eyebrows, eyes, nose and mouth of each training image and forming contour data of those features; training on the data to obtain numerical constraint conditions for each expression; and establishing a mathematical model of facial expressions from these numerical constraint conditions, on which face identification is then based.

Owner:SUZHOU UNIV
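
Active shape model training is usually built on a point distribution model: aligned training shapes are flattened into vectors, and principal component analysis yields a mean shape plus modes of variation, so any plausible shape is the mean plus a weighted sum of modes. This sketch assumes the shapes are already aligned (Procrustes alignment is not shown). How the patent derives its per-expression "numerical constraint conditions" is not described; in standard ASM the mode weights b_i are commonly limited to about ±3√λ_i. The function names are hypothetical.

```python
import numpy as np


def build_shape_model(shapes, n_modes=2):
    """Build a point distribution model from aligned landmark shapes.

    shapes: array-like (M, N, 2) of M training shapes with N landmarks each.
    Returns (mean, modes, variances): the mean shape vector of length 2N,
    the first n_modes principal modes as rows, and their variances.
    """
    X = np.asarray(shapes, dtype=float).reshape(len(shapes), -1)  # (M, 2N)
    mean = X.mean(axis=0)
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    variances = s ** 2 / (len(X) - 1)
    return mean, vt[:n_modes], variances[:n_modes]


def synthesize(mean, modes, b):
    """Generate a shape vector x = mean + b @ modes from mode weights b."""
    return mean + np.asarray(b, dtype=float) @ modes
```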

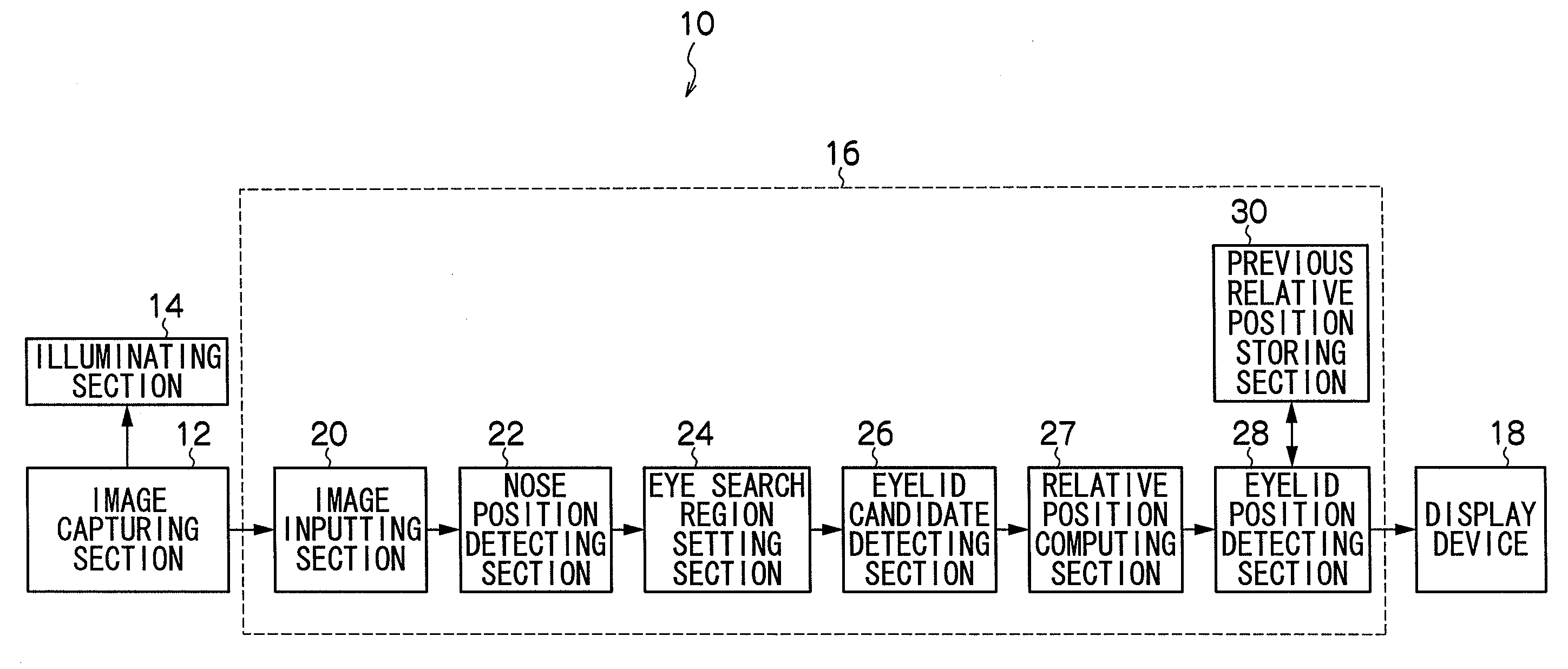

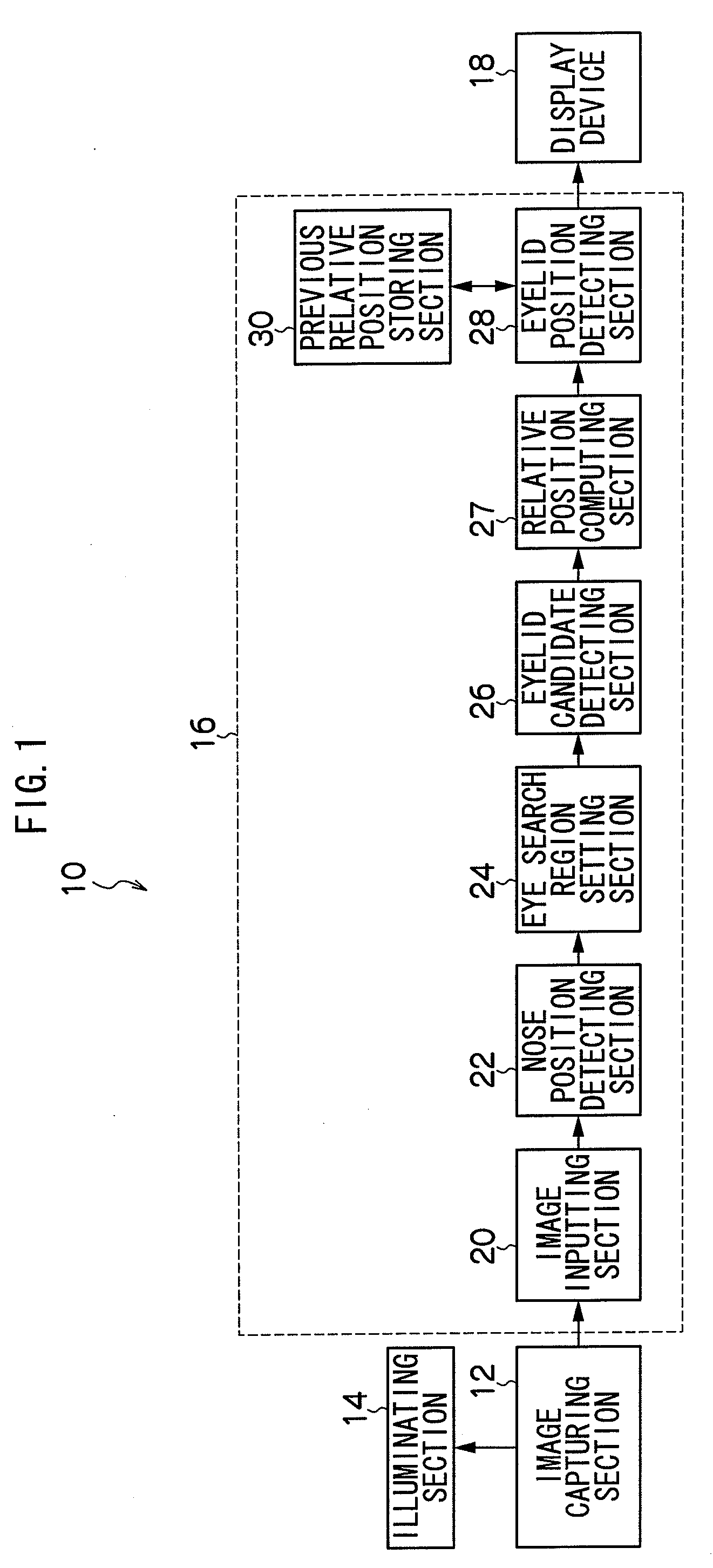

Face region detecting device, method, and computer readable recording medium

Active | US20080232650A1 | Character and pattern recognition, Eye diagnostics, Pattern recognition, Location detection

An image capturing section captures a face of an observed person. A position detecting section detects, from a face image expressing the face captured by the image capturing section, one of a position expressing a characteristic of a nose and a position expressing a characteristic of a region between eyebrows. A computing section computes a past relative position of a predetermined region of the face that is based on the position detected in the past by the position detecting section. A region position detecting section detects a position of the predetermined region on the basis of the past relative position computed by the computing section and the current position detected by the position detecting section.

Owner:AISIN SEIKI KK +1
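
In its simplest form, locating a region from a past relative position means remembering the offset between the anchor (nose or between-eyebrows point) and the region in a past frame, then applying that offset to the anchor detected now. This sketch assumes the offset is a pure translation with no change of scale or head rotation; `predict_region_position` is a hypothetical name.

```python
def predict_region_position(anchor_now, anchor_past, region_past):
    """Locate a face region from the current anchor and a remembered relative offset.

    anchor_now, anchor_past: (x, y) of the nose or between-eyebrows point in the
    current and a past frame; region_past: (x, y) of the predetermined region
    (e.g. an eye) in that same past frame.
    """
    dx = region_past[0] - anchor_past[0]
    dy = region_past[1] - anchor_past[1]
    return (anchor_now[0] + dx, anchor_now[1] + dy)
```

A detector would then search for the region only in a small window around the predicted position.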

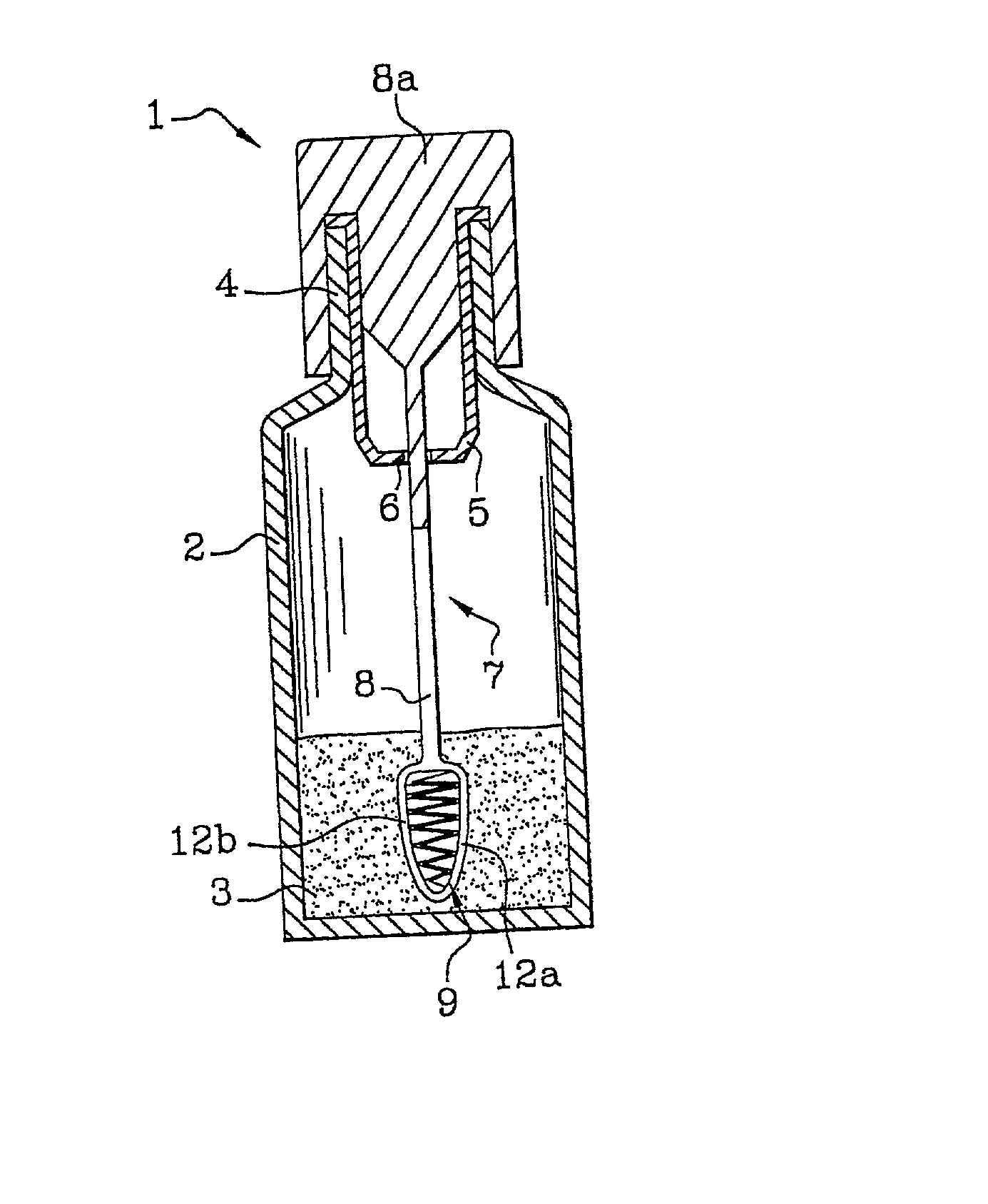

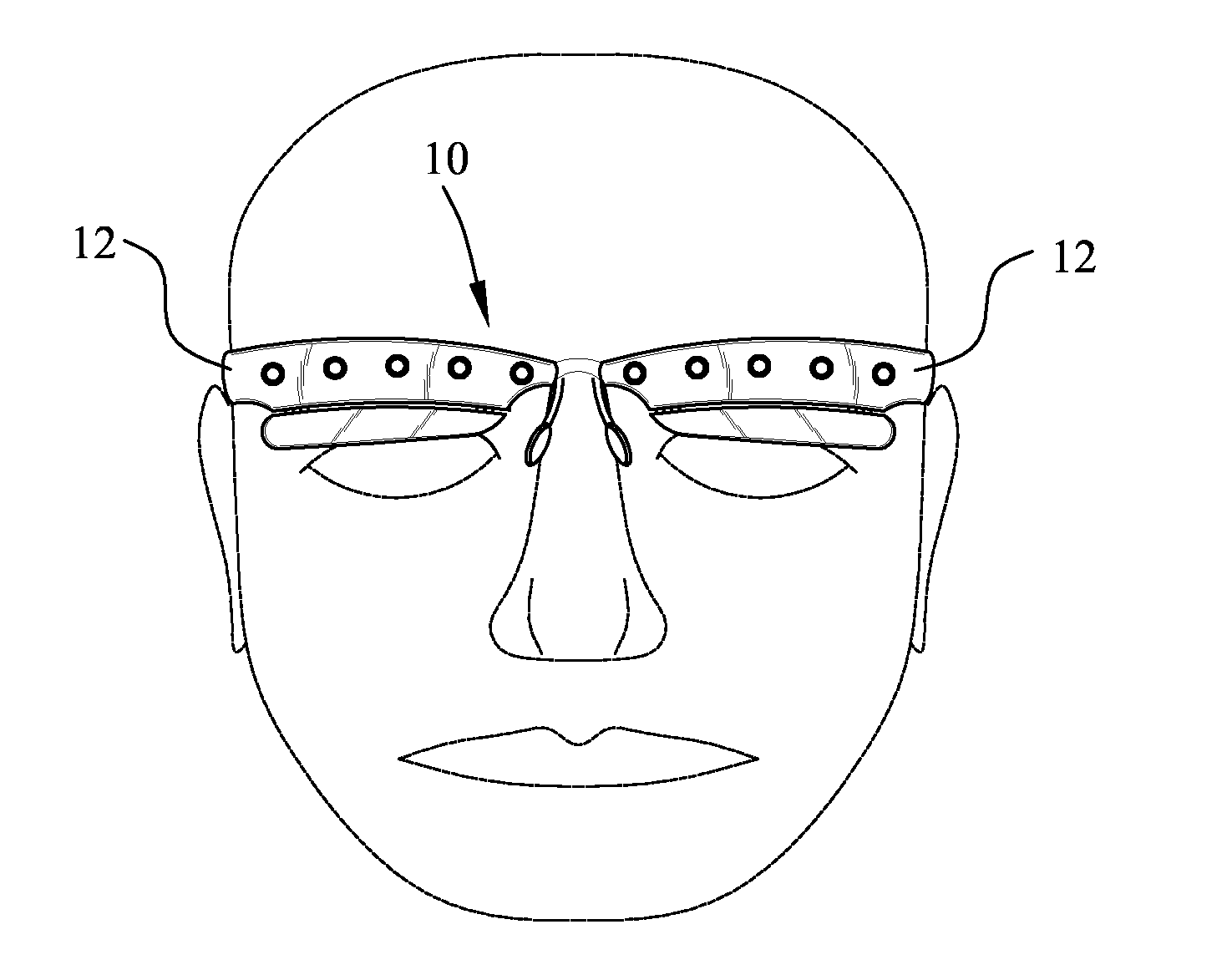

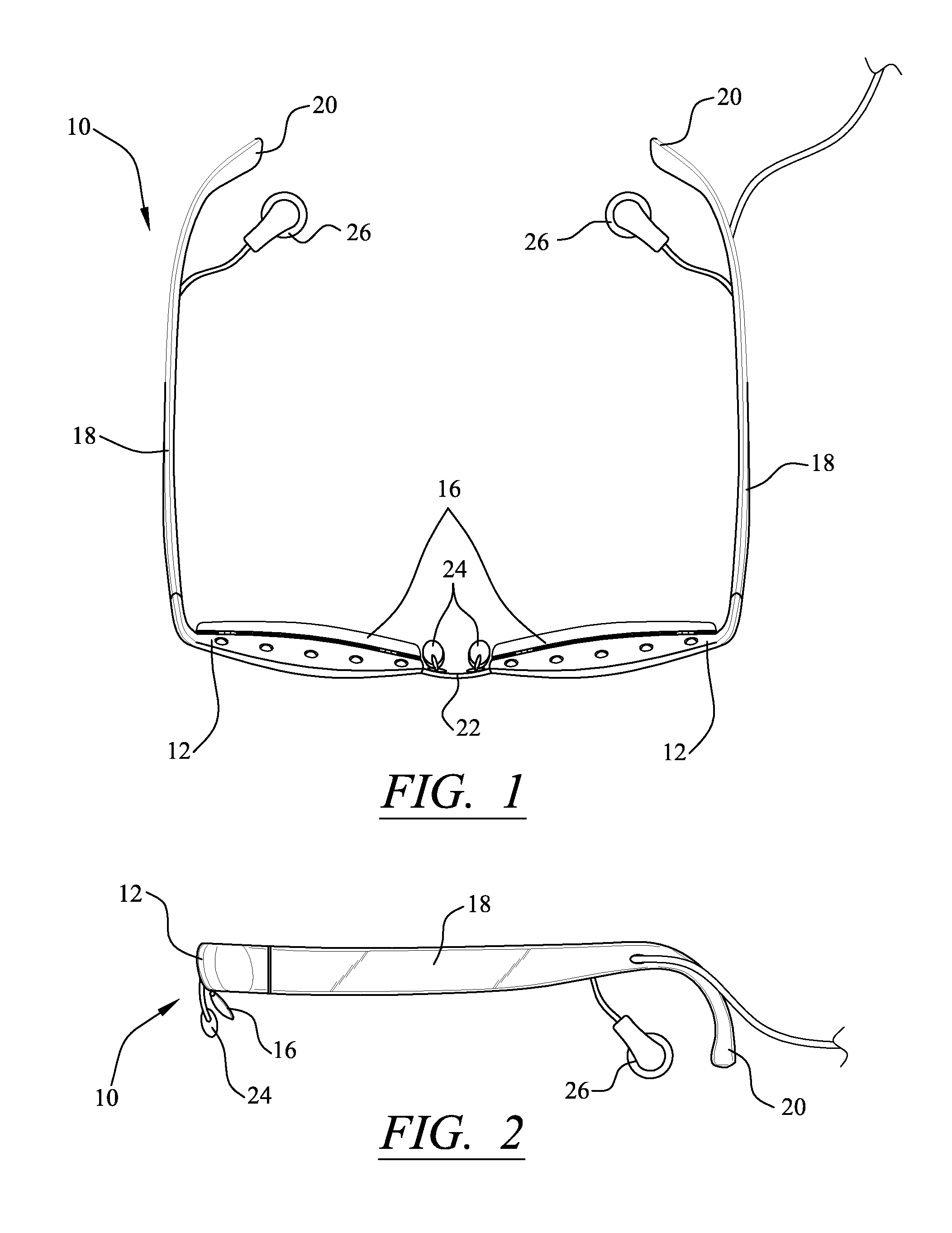

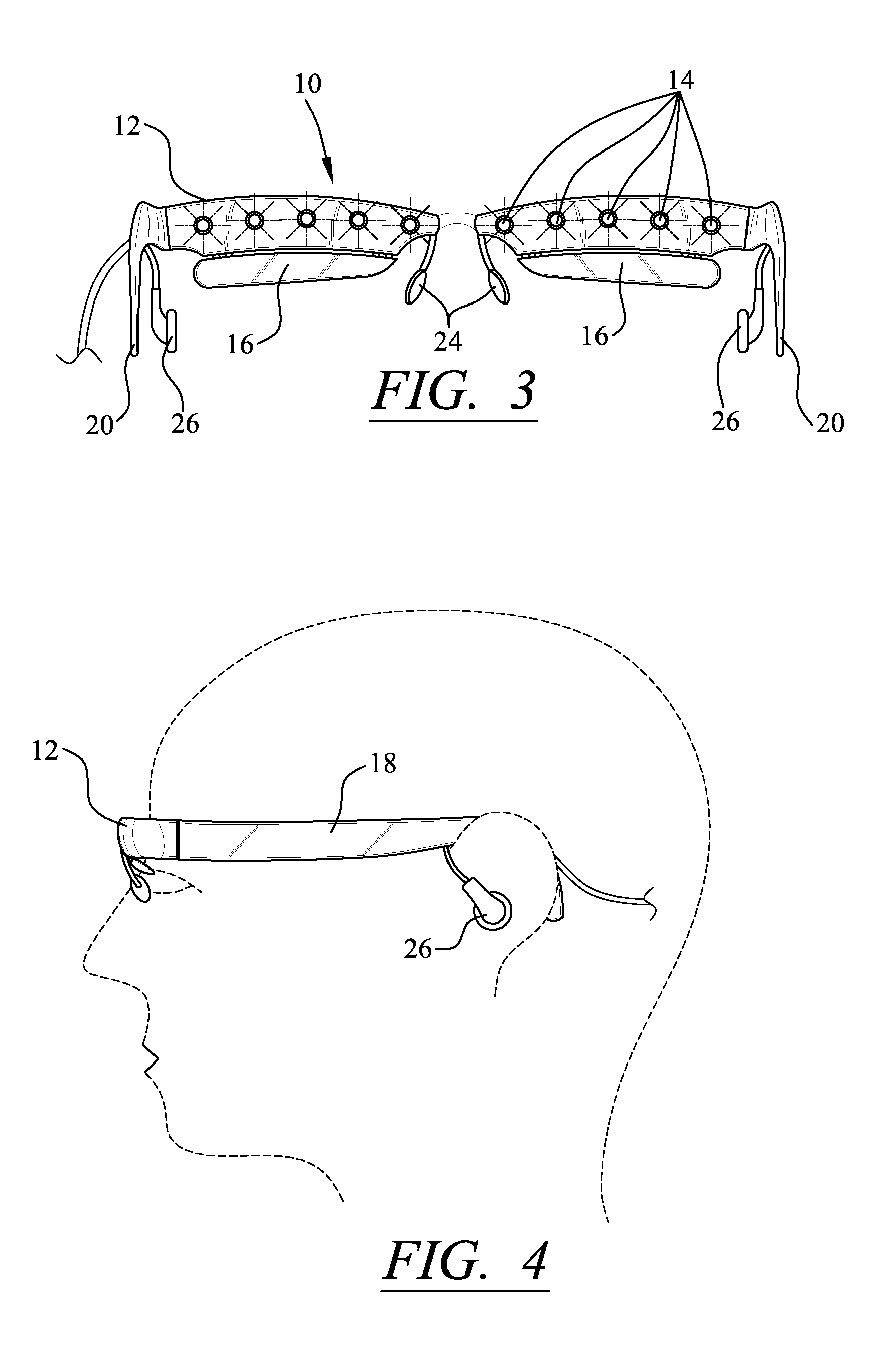

Device for promoting growth of eyebrow hair

Inactive | US20130041432A1 | Promote growth, Equally distributed, Diagnostics, Surgery, Biostimulation, Primary battery

A device for promoting eyebrow hair growth includes an array of light-generating sources, such as LEDs, laser diodes and IPLs, which are housed within a brow plate, for providing evenly distributed light to a user's eyebrows at a low-level output and a wavelength suitable for stimulating hair growth. This photo-biostimulation process promotes hair growth in the directed region by producing an increase in ATP and keratin production, enhancement in blood flow and circulation, as well as an increase in collagen production. Temple arm members with corresponding ear pieces or a headband are used to support the device on a user's head. The light-generating sources may be powered by an internal power source, such as a rechargeable battery or disposable batteries, located within the headband or temple arm members, or by an external power source, such as a plug used in connection with an AC outlet.

Owner:APIRA SCI

Method and system for intensifying human image pattern

A retouching method for enhancing the appearance of a face located in a digital image involves acquiring a digital image containing one or more faces and detecting the locations of facial feature points in the faces, where the facial feature points include points identifying salient features such as skin, eyes, eyebrows, nose, mouth, and hair. The locations of the facial feature points are used to segment the face into different regions such as skin, eyes, eyebrows, nose, mouth, neck and hair regions. Facially relevant characteristics of the different regions are determined and, based on these characteristics, an ensemble of enhancement filters is selected, each customized especially for a particular region, together with default parameters for the enhancement filters. The enhancement filters are then executed on the particular regions, thereby producing an enhanced digital image from the digital image.

Owner:MONUMENT PEAK VENTURES LLC
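
Selecting region-specific filters and their default parameters from measured characteristics can be sketched as a lookup plus a rule for the default strength. The region-to-filter table, the single "roughness" characteristic and the strength formula are all hypothetical; the abstract states only that filters are chosen per region from facially relevant characteristics.

```python
# Hypothetical mapping from a segmented facial region to the filter applied to it.
FILTER_BY_REGION = {
    "skin": "texture_smoothing",
    "eyes": "whitening",
    "eyebrows": "shape_cleanup",
    "mouth": "color_enhancement",
}


def select_filters(region_stats):
    """Choose one filter per region and derive its default strength.

    region_stats: {region_name: roughness in [0, 1]} measured on the segmented face.
    Returns {region_name: (filter_name, strength)}; regions without a registered
    filter (e.g. hair here) are left untouched.
    """
    plan = {}
    for region, roughness in region_stats.items():
        name = FILTER_BY_REGION.get(region)
        if name is None:
            continue
        # Rougher regions get a stronger default, capped at 1.0.
        plan[region] = (name, min(1.0, 0.2 + 0.8 * roughness))
    return plan
```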

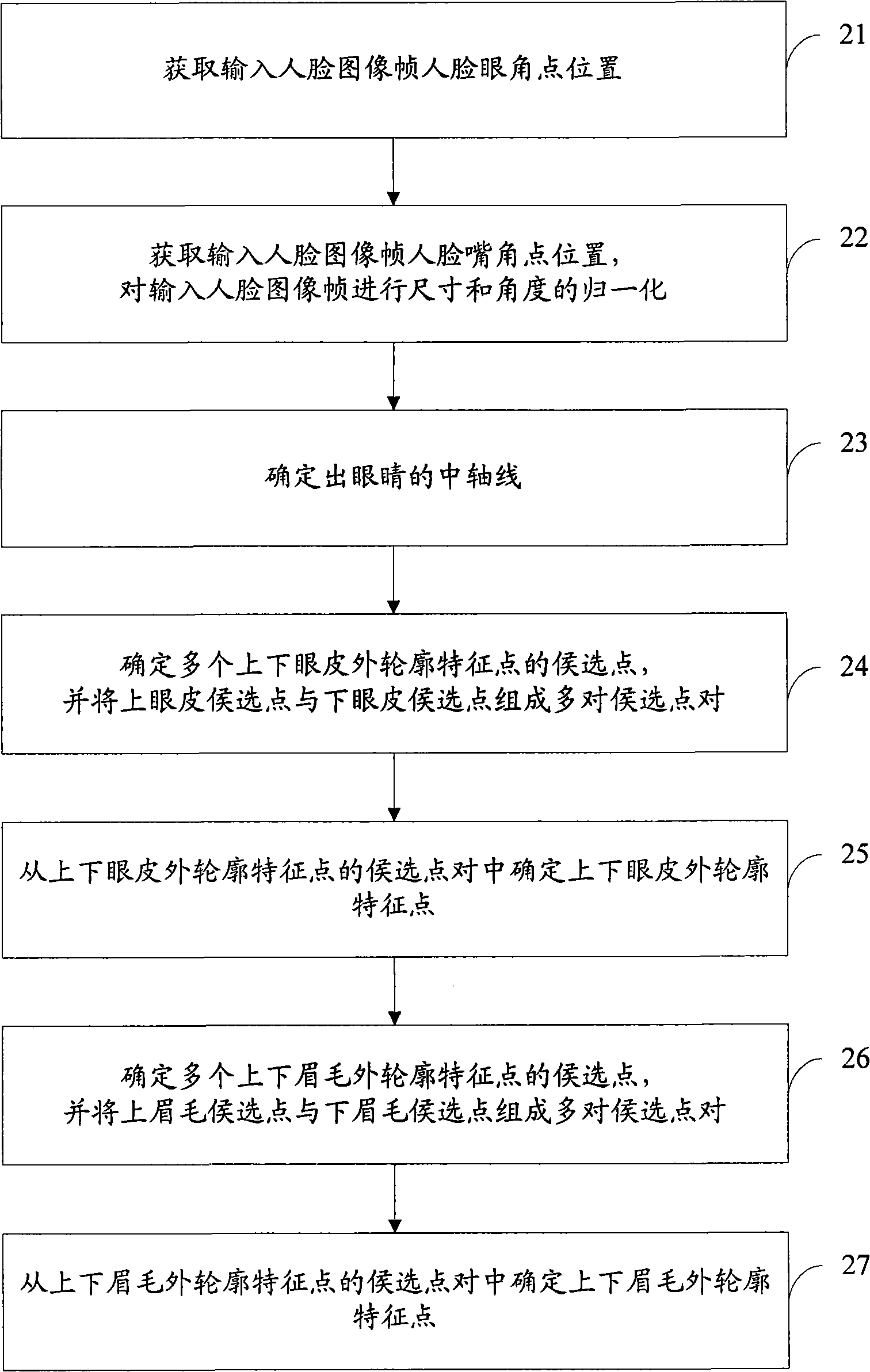

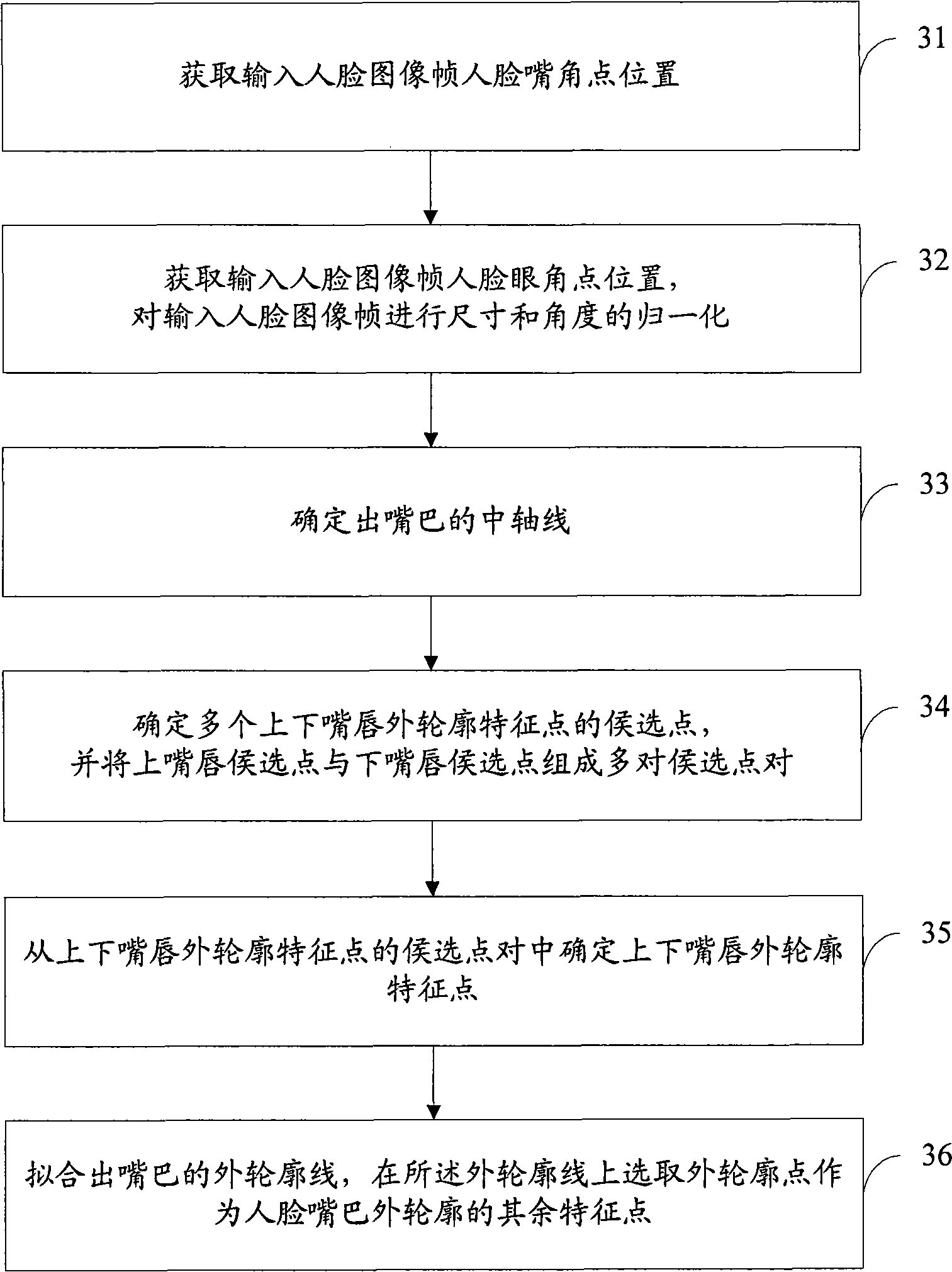

Human face critical organ contour characteristic points positioning and tracking method and device

The invention discloses a method and device for positioning and tracking the outer contour feature points of key facial organs, comprising the following steps: first, the positions of the eye corner (canthus) points and mouth corner points on the face are obtained; the medial axes of the eyes and the mouth are identified from these positions; the outer contour feature points of the eyes and eyebrows are identified on the medial axis of the eyes, and the outer contour feature points of the mouth are identified on the medial axis of the mouth. On this basis, the outer contour feature points of the key facial organs are positioned and tracked consistently. The technical solution provided by the embodiment of the invention solves the problem of inaccurate positioning of the outer contour feature points of the eyes, mouth and eyebrows when people make various facial expressions. Based on the positioned feature points, existing two- and three-dimensional face models can be driven in real time, and the facial expressions and motions of a person in front of a camera, such as frowning, blinking and opening the mouth, can be simulated in real time, creating realistic and vivid face animations.

Owner:BEIJING VIMICRO ARTIFICIAL INTELLIGENCE CHIP TECH CO LTD
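
The abstract does not define the "medial axis" precisely. This sketch assumes the axis of an eye is the segment joining its two canthi and builds evenly spaced search segments perpendicular to it; eyelid contour points would then be searched close to the axis along each segment, and eyebrow points further out on the upper side. `search_lines_from_canthi` and its parameters are hypothetical.

```python
import math


def search_lines_from_canthi(inner, outer, n=3, length=20.0):
    """Build perpendicular search segments along the line joining two eye corners.

    inner, outer: (x, y) canthus points. Returns n segments ((x0, y0), (x1, y1)),
    evenly spaced strictly between the corners, each extending `length` pixels
    to either side of the canthus axis.
    """
    ax, ay = outer[0] - inner[0], outer[1] - inner[1]
    norm = math.hypot(ax, ay)
    nx, ny = -ay / norm, ax / norm  # unit normal to the canthus axis
    segments = []
    for k in range(1, n + 1):
        t = k / (n + 1)
        cx, cy = inner[0] + t * ax, inner[1] + t * ay
        segments.append(((cx - length * nx, cy - length * ny),
                         (cx + length * nx, cy + length * ny)))
    return segments
```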

© 2025 PatSnap. All rights reserved.