422 results about "Subtitle" patented technology

Subtitles are text derived from either a transcript or screenplay of the dialog or commentary in films, television programs, video games, and the like. They are usually displayed at the bottom of the screen, but can appear at the top if the bottom already contains text. They can be a written translation of dialog in a foreign language, or a written rendering of the dialog in the same language, with or without added information that helps viewers follow the dialog when they are deaf or hard of hearing, cannot understand the spoken dialog, or have trouble recognizing accents.

Playback apparatus, program, playback method

Inactive · US20060078301A1 · Diminished attractiveness · Maintain the level of quality assurance · Television system details · Color television signals processing · Subtitle · Engineering

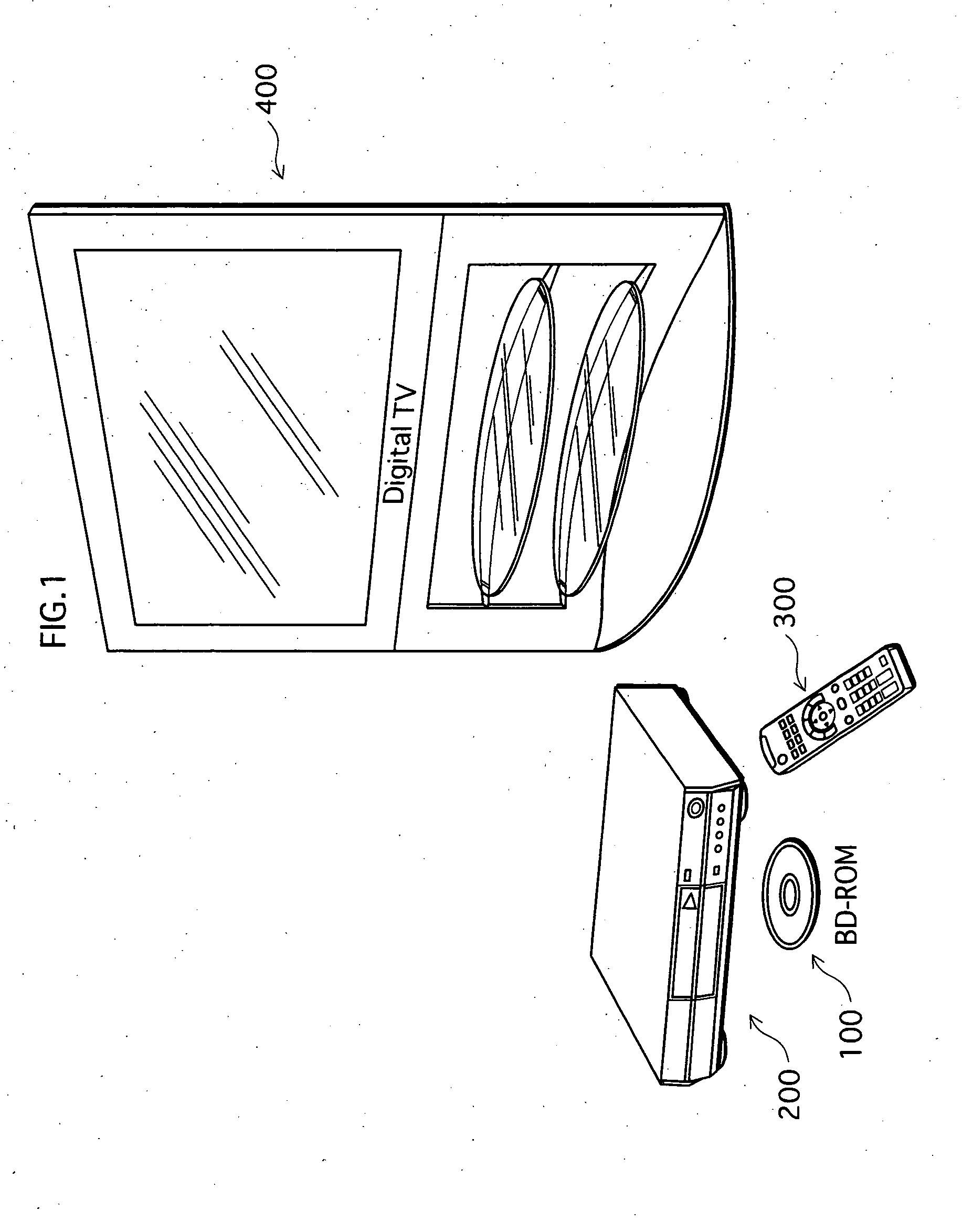

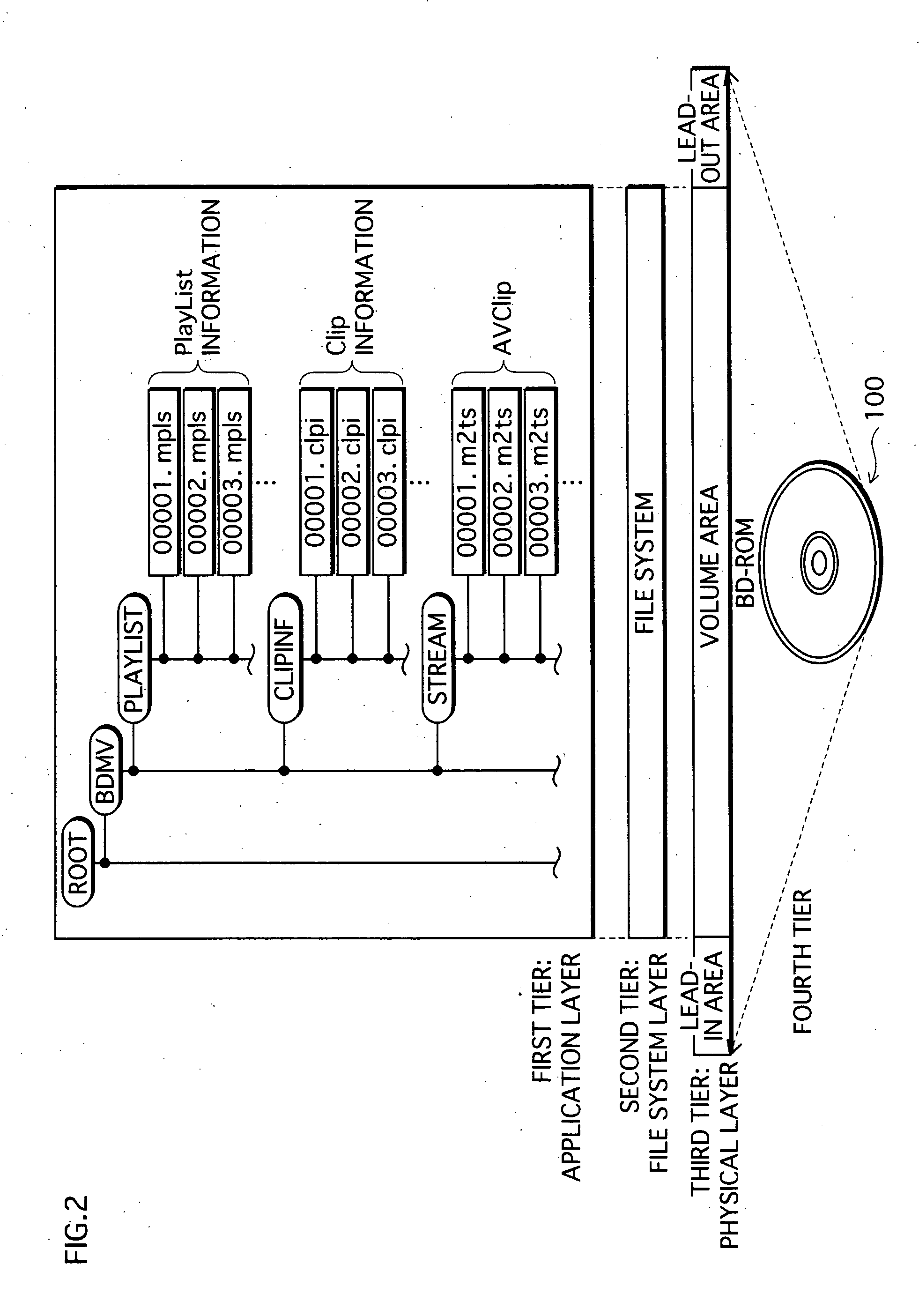

A BD-ROM playback apparatus plays back a text subtitle stream during a playback of moving pictures. A reserved area of a PSR stores therein, as a supplementary language, an identifier of a language in which the playback apparatus is not capable of decoding a text subtitle stream, due to inconvenience caused during display of the text subtitle. The set-up unit 32 inquires of a user whether the user wishes to increase the number of languages in which subtitles are displayable even if inconvenience may be caused during the display. In the case where the user performs an affirmative operation in response to the inquiry, the stream selection unit 17 selects a text subtitle stream corresponding to the supplementary language from the plurality of text subtitle streams.

Owner:PANASONIC CORP

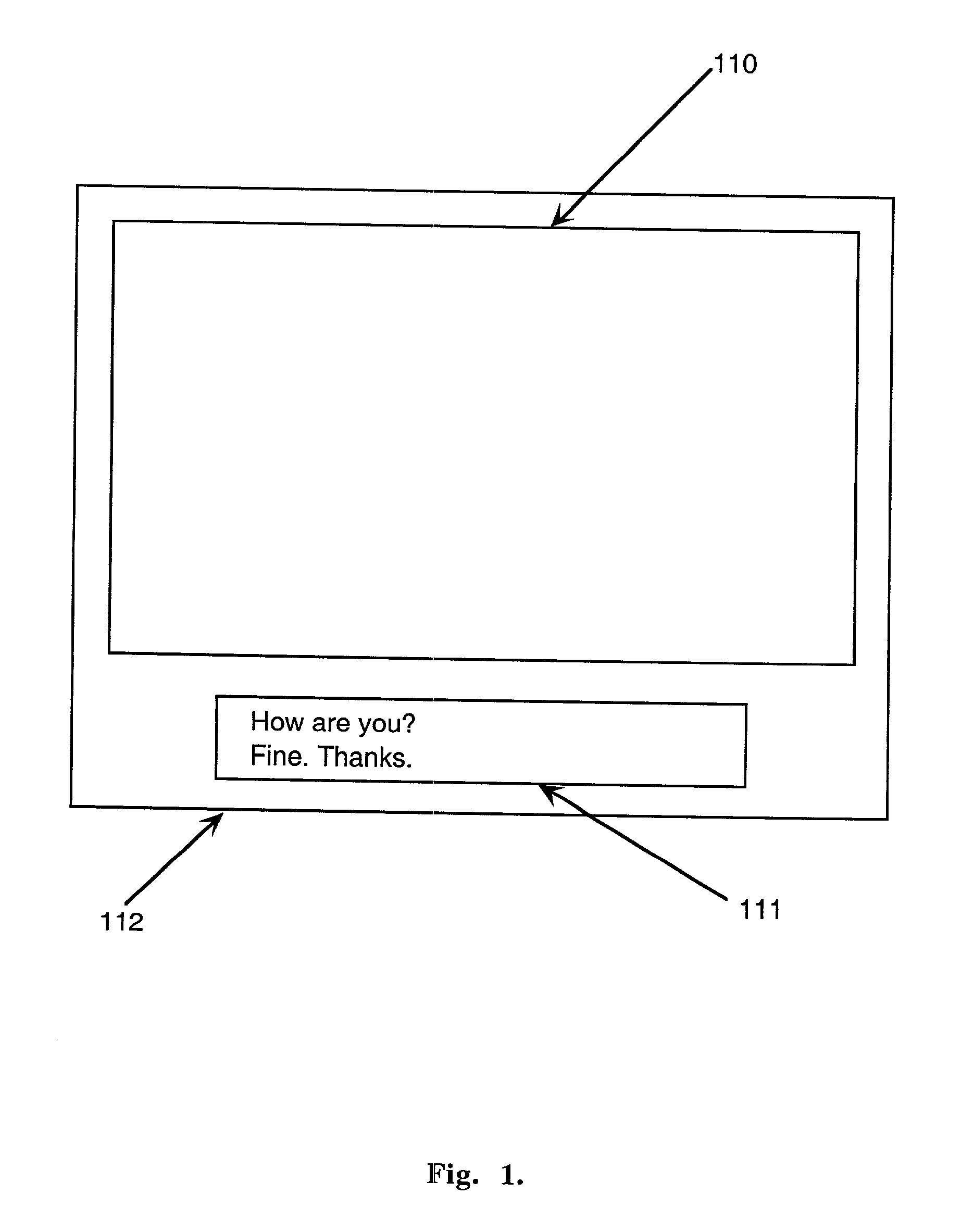

Video display apparatus with separate display means for textual information

Inactive · US20030128296A1 · Effective display · Television system details · Color signal processing circuits · Closed captioning · Television receivers

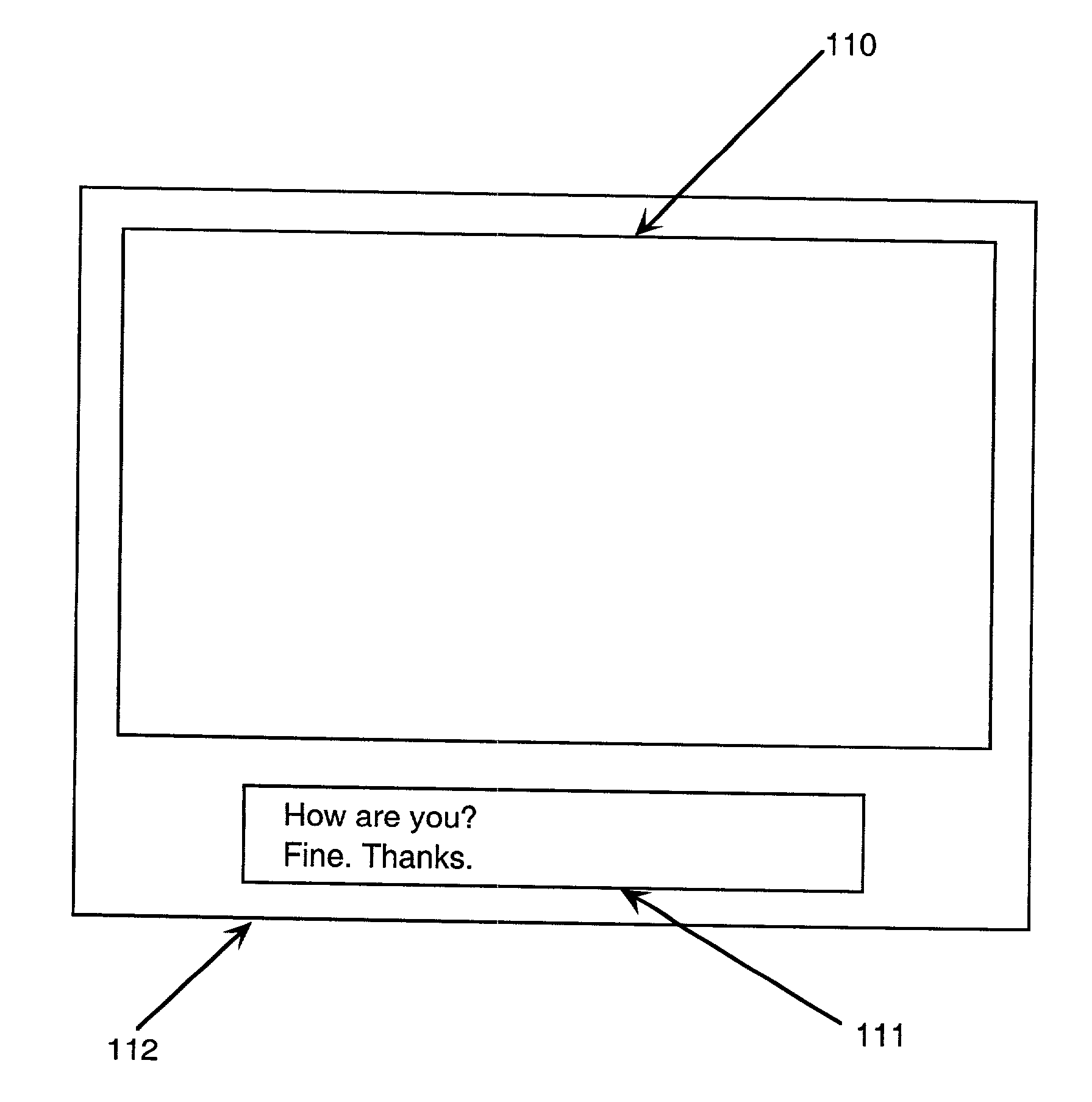

A video display apparatus with separate display means is provided so that textual information can be displayed without preventing viewers from watching the full picture. The separate display means can be provided for a television receiver so that textual information, which includes closed caption text and subtitles, can be displayed on the separate display means without occupying the picture area. The separate display means can be provided for a screen in a movie theater so that subtitles can be displayed on the separate display means when a foreign movie is played. The separate display unit can be used to display other textual information, including a channel number, the name of the broadcasting station, the title of the current program and the remaining time of the current program. Furthermore, the separate display unit can display the local weather and the local time.

Owner:IND ACADEMIC CORP FOUND YONSEI UNIV

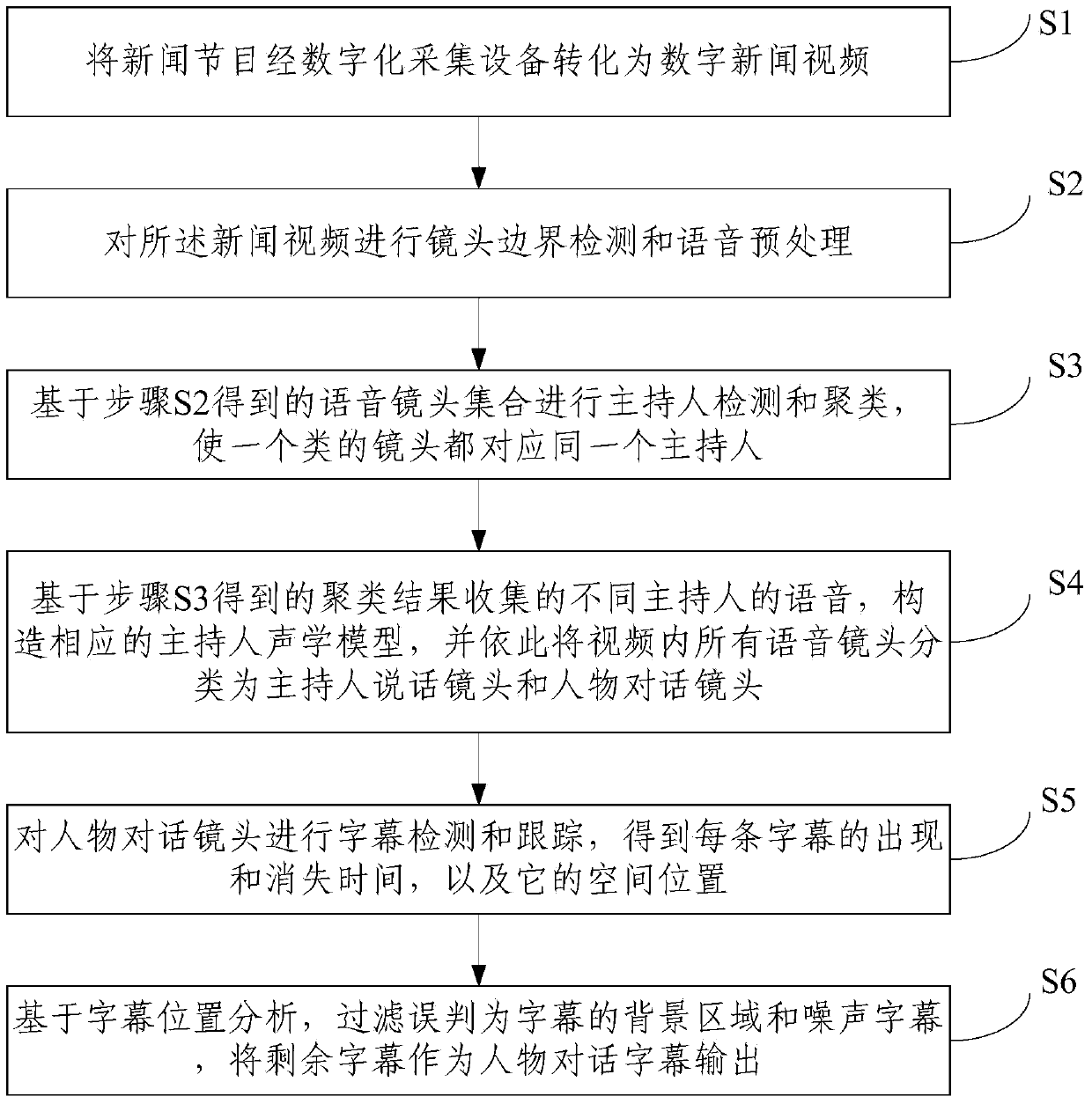

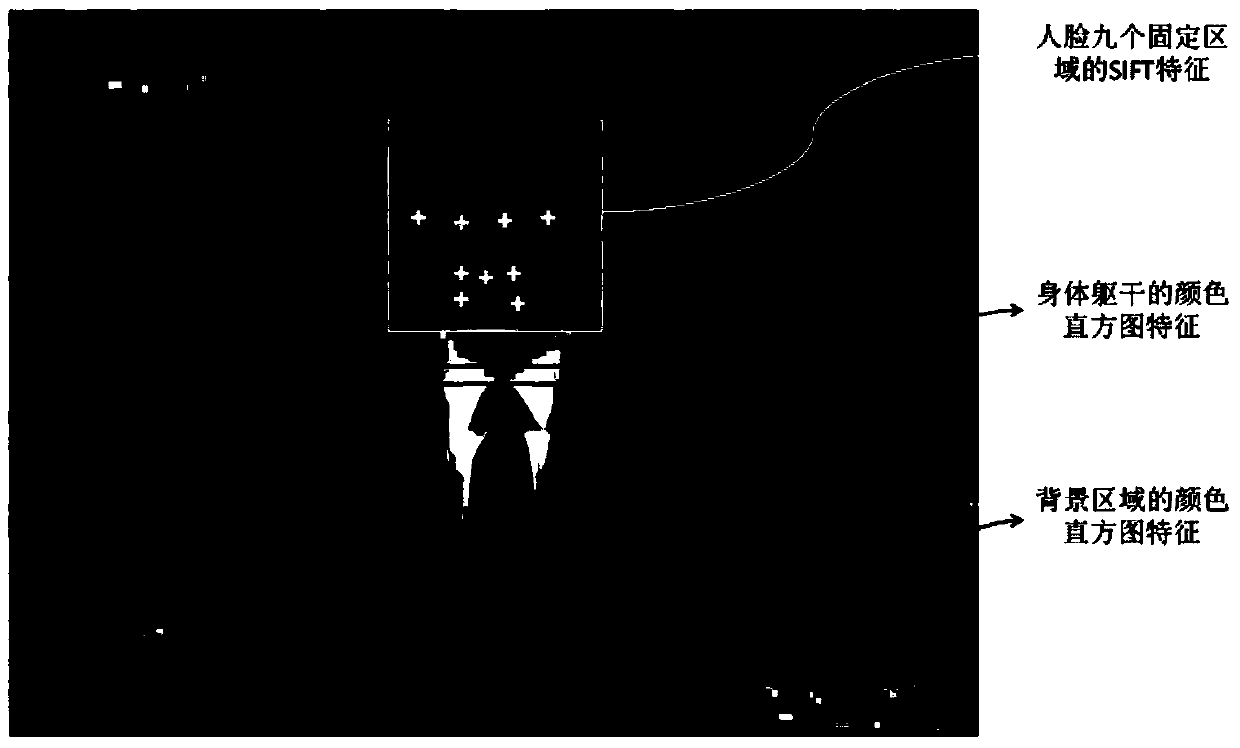

Character dialogue subtitle extraction method oriented to news video

Active · CN103856689A · Solve extraction difficulties · Television system details · Character and pattern recognition · Subtitle · Acoustic model

The invention discloses a character dialogue subtitle extraction method oriented to a news video. The method includes the steps that firstly, a news program is converted into a digital news video through a digital acquisition device; secondly, shot boundary detection and voice preprocessing are conducted on the news video; thirdly, host detection and clustering are conducted based on voice shot sets acquired in the second step, and then shots in the same class are made to all correspond to a same host; fourthly, based on voice of different hosts acquired through a clustering result acquired in the third step, corresponding host acoustic models are built, and all voice shots in the video are divided into the host speaking shots and the character dialogue shots; fifthly, subtitle detection and tracking are conducted on the character dialogue shots, and the appearance time, disappearance time and the space position of each subtitle are acquired; sixthly, based on subtitle position analysis, background regions and noise subtitles misjudged as subtitles are filtered out, and the remaining subtitles are used as character dialogue subtitles for output.

Owner:北京中科模识科技有限公司

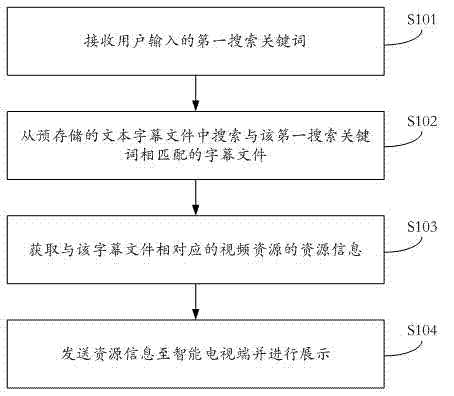

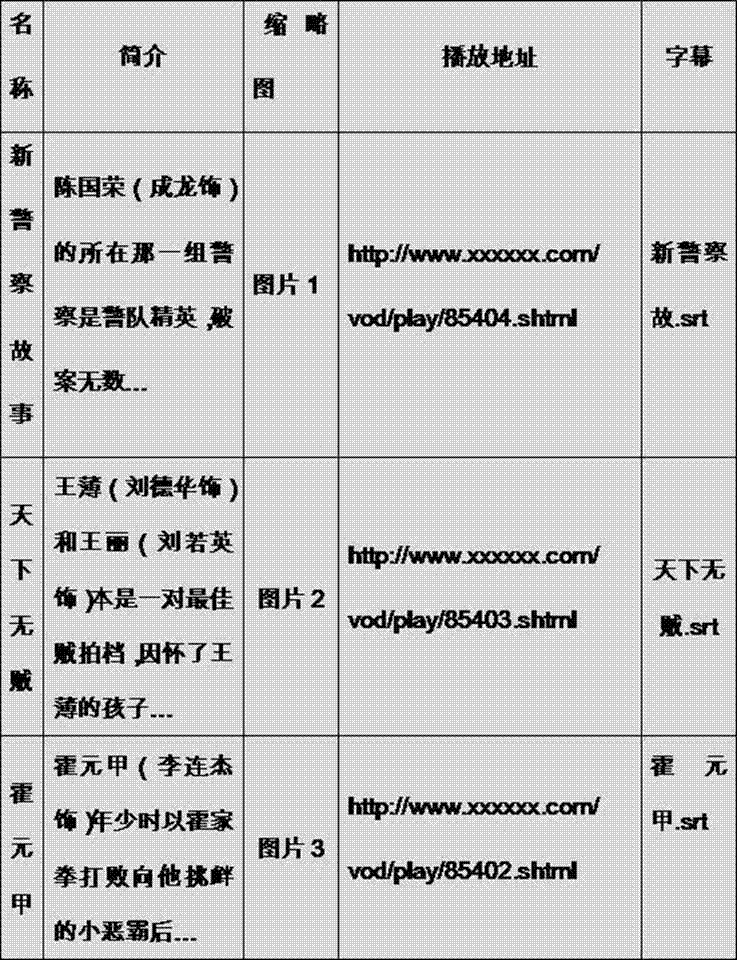

Intelligent television video resource searching method and system

Inactive · CN103686200A · Implement voice search · Easy to search · Selective content distribution · Special data processing applications · Subtitle · Resource information

The invention discloses an intelligent television video resource searching method and system. The intelligent television video resource searching method comprises receiving a first searching key word input through a user; searching a subtitle file which is matched with the first searching key word in a prestored text subtitle file; obtaining video resource information which is corresponding to the subtitle file; sending the resource information to an intelligent television terminal and displaying. The intelligent television video resource searching method is convenient and flexible due to the fact that the user can search videos according to words in the videos.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD
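
The search flow in the abstract above (keyword in, matching subtitle file, corresponding video resource out) can be sketched roughly as follows. This is only an illustration, not the patented implementation: the `SubtitleFile` record, the `video_id` key, and the `resources` lookup table are hypothetical names, and plain case-insensitive substring matching is an assumed stand-in for whatever matching the system actually uses.

```python
from dataclasses import dataclass


@dataclass
class SubtitleFile:
    video_id: str  # hypothetical key linking the subtitle file to a video
    text: str      # prestored subtitle text for that video


def search_videos(keyword, subtitle_files, resources):
    """Return resource info for each video whose subtitle text contains keyword.

    `resources` is an assumed dict mapping video_id -> resource information.
    """
    needle = keyword.casefold()
    hits = []
    for sub in subtitle_files:
        # Assumption: a simple substring test stands in for "matching".
        if needle in sub.text.casefold():
            hits.append(resources[sub.video_id])
    return hits
```

A caller would pass the user's search keyword together with the prestored subtitle files, then send the returned resource information to the television terminal for display.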

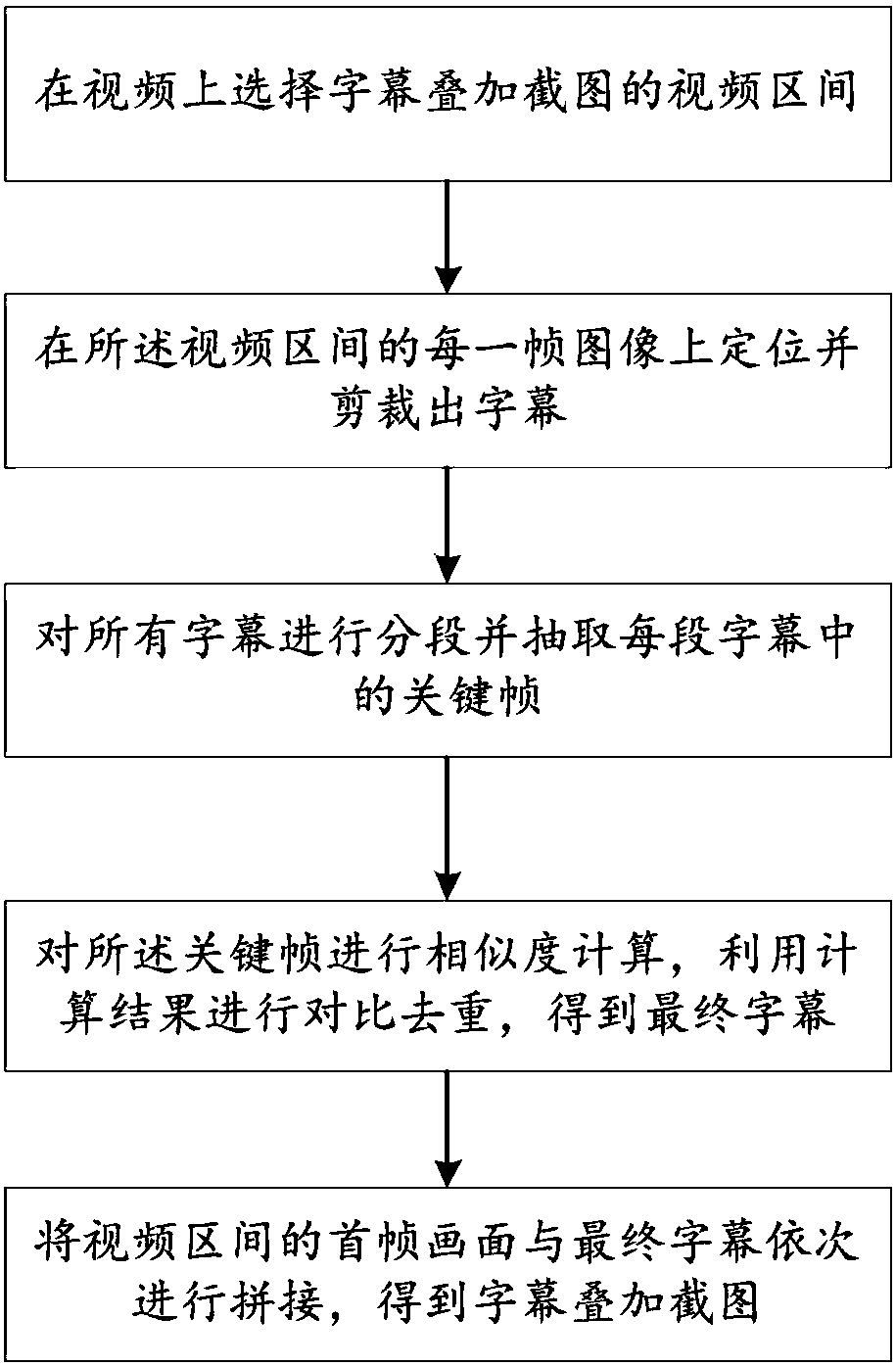

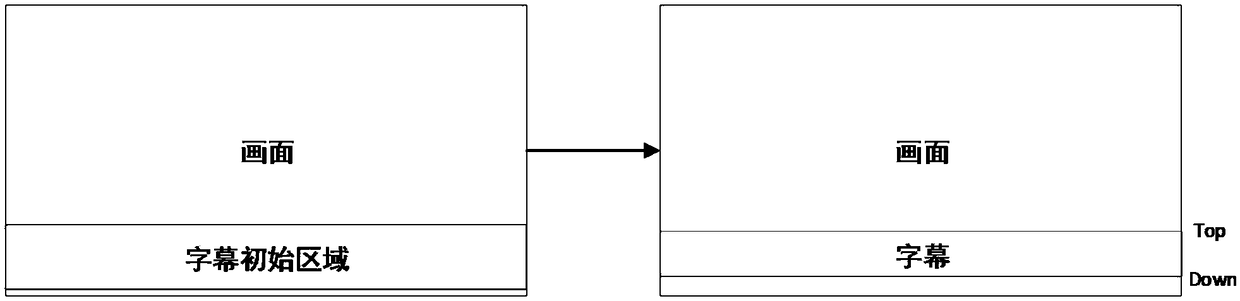

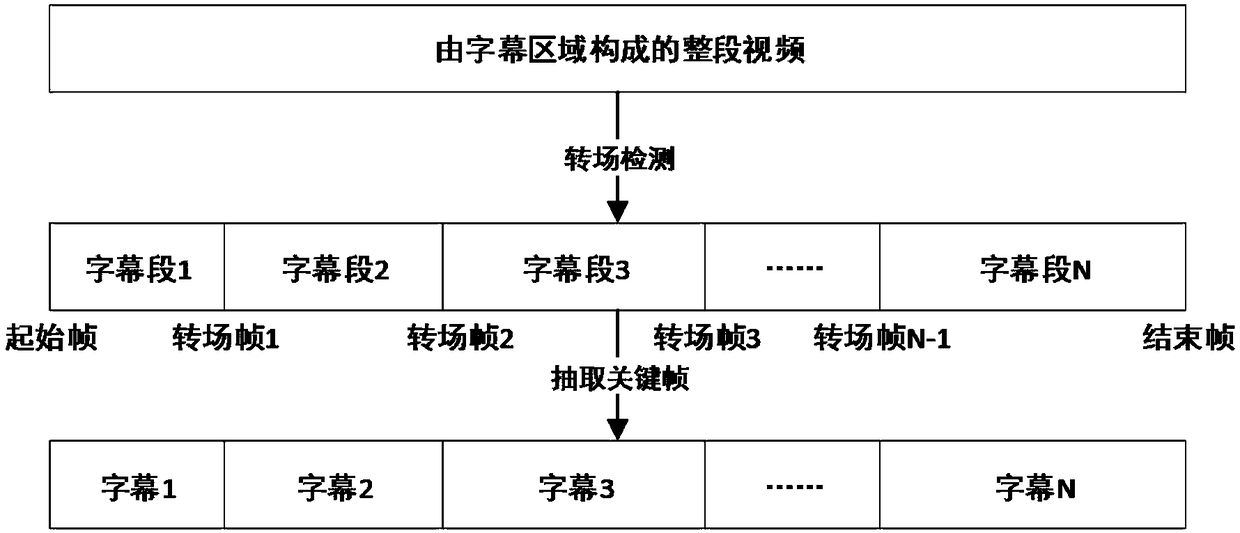

Method for realizing subtitle overlay screenshot based on deep learning

Active · CN108347643A · Improve accuracy · Improve image stitching effect · Selective content distribution · Deep learning · Key frame

The invention discloses a method for realizing subtitle overlay screenshot based on deep learning, and belongs to the field of media technology. The method comprises the following steps: selecting a video interval for subtitle overlay screenshot from a video; locating and cutting out subtitles on each frame of image in the video interval; segmenting all subtitles and extracting key frames in each subtitle; performing similarity calculation on the key frames, and performing comparative duplication elimination according to a calculation result to obtain final subtitles; and stitching a first frame of picture in the video interval with the final subtitles in sequence to obtain a subtitle overlay screenshot. The method is low in error rate, high in processing efficiency and high in automation degree.

Owner:CHENGDU SOBEY DIGITAL TECH CO LTD

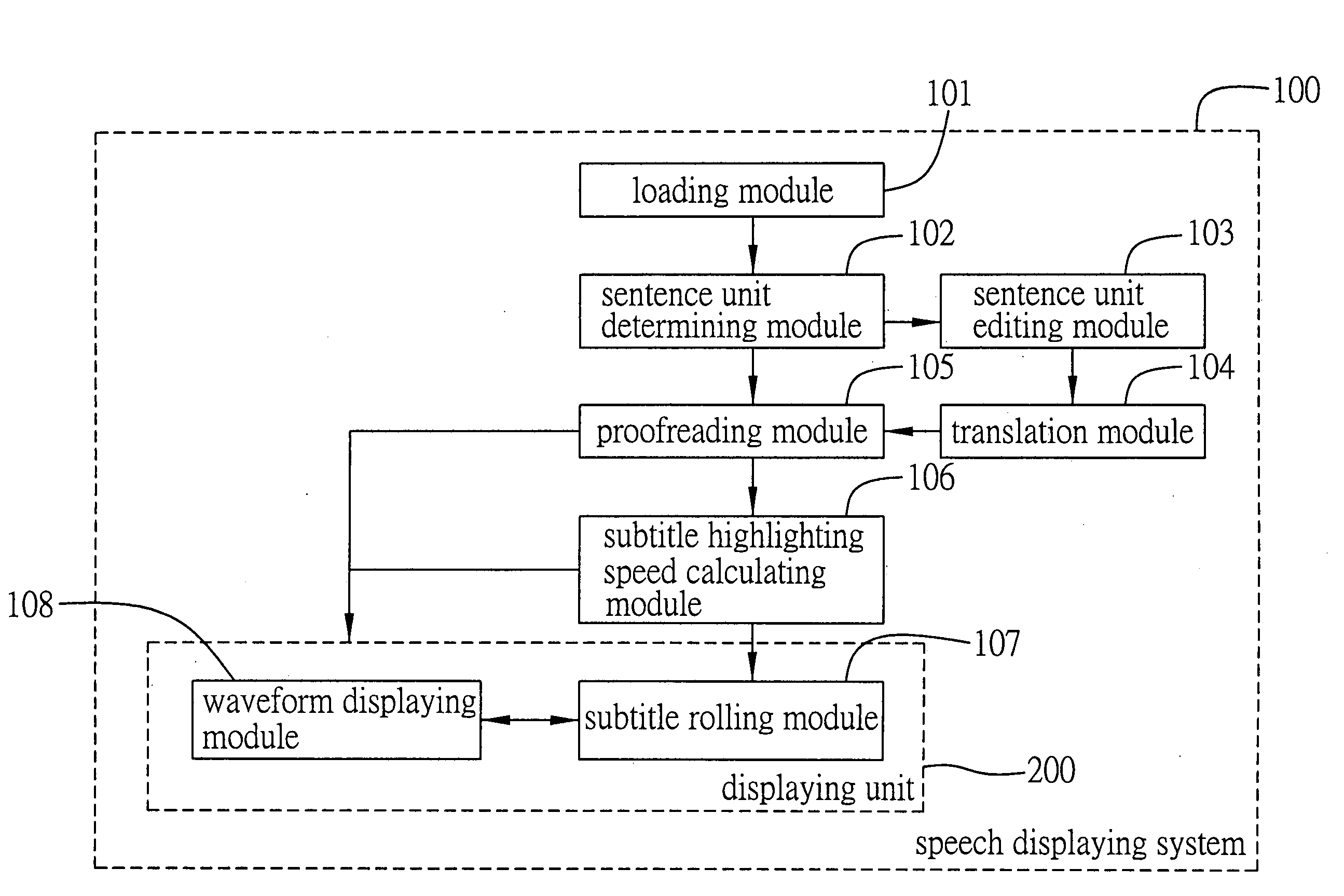

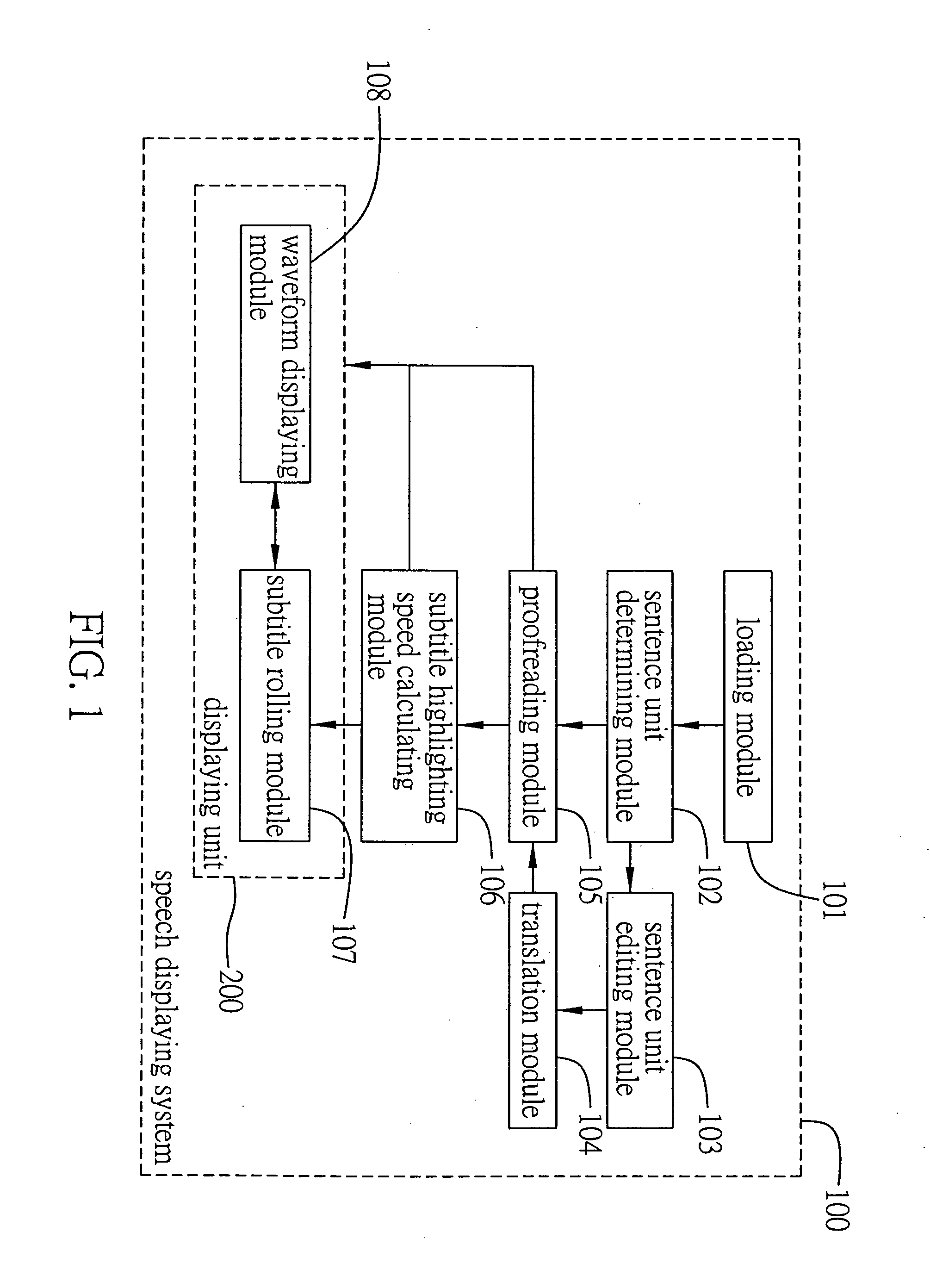

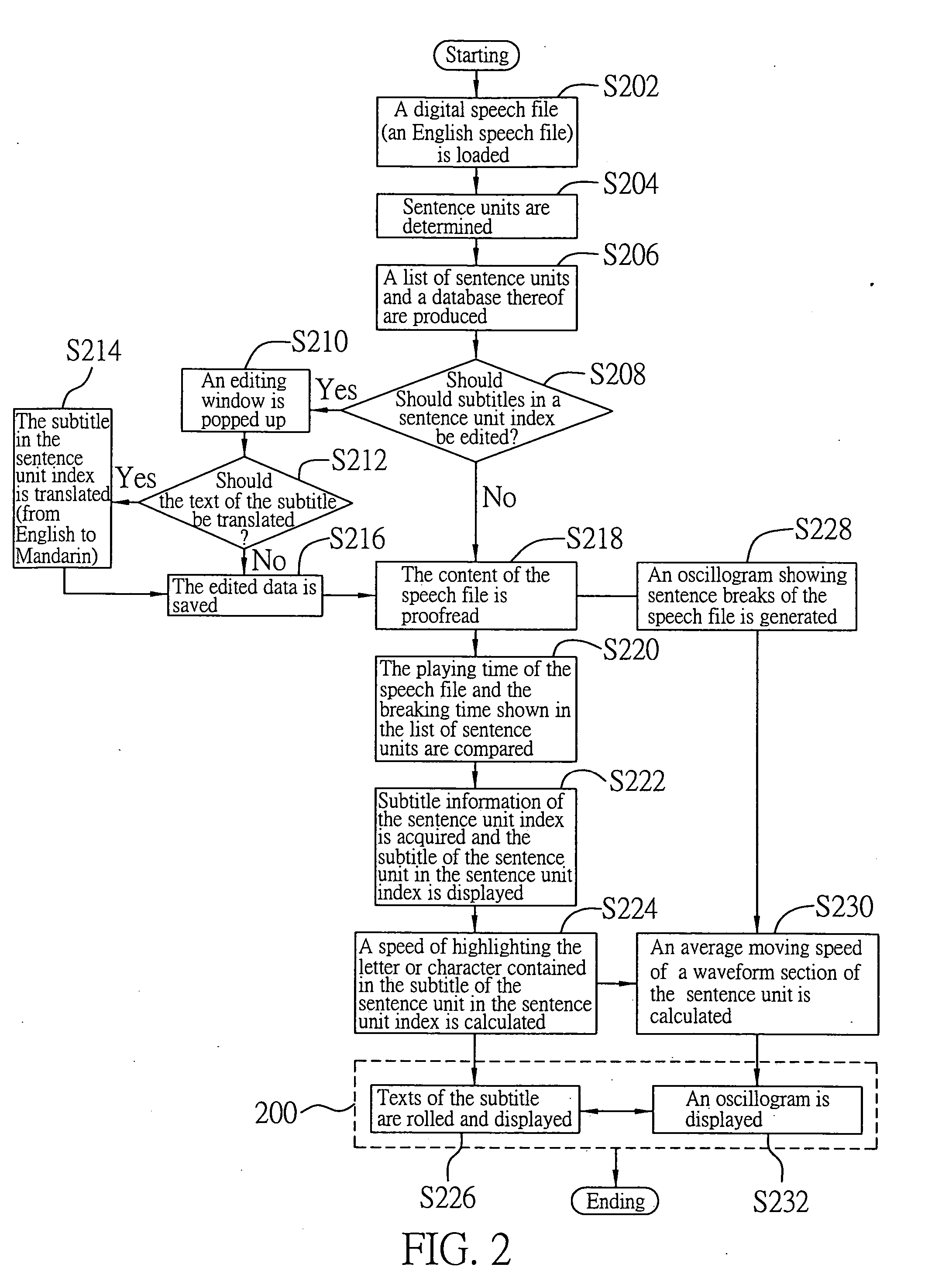

Speech displaying system and method

Active · US20060074690A1 · Easy to practice · Natural language translation · Analogue secrecy/subscription systems · Speech sound · Lettering

A speech displaying system and method can display playing progress by waveform and synchronously display text of a speech file using rolling subtitles when playing the speech file. After the speech file is loaded via a loading module, a sentence unit determining module partitions content of the speech file into a plurality of sentence units to produce a list of sentence units. A subtitle highlighting speed calculating module calculates a speed of highlighting every single letter or character contained in the subtitles in the sentence unit index for a sentence unit. A subtitle rolling module displays content of the list of sentence units. When the speech file is played, the subtitles in the sentence unit index are clearly marked, and every letter or character of the subtitles is highlighted. A waveform displaying module marks positions of sentence pauses and playing progress on an oscillogram for the speech file by lines.

Owner:INVENTEC CORP

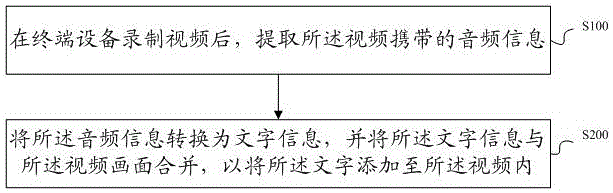

Method and system for automatically adding subtitles

Inactive · CN106851401A · Easy to use · Easy to share · Selective content distribution · Subtitle · Terminal equipment

The invention discloses a method and system for automatically adding subtitles. The method comprises the steps of after a terminal device records a video, extracting audio information carried in the video; and converting the audio information into text information and combining the text information and the video images, thereby adding texts to the video. According to the method and the system, after the terminal device records the video, audios are automatically extracted from the video, the audios are converted into the texts, and text subtitles and the original video are combined to form a new video containing the subtitles, so the video carrying the subtitles is obtained, a user can share the video conveniently, and the usage of the user is facilitated.

Owner:HUIZHOU TCL MOBILE COMM CO LTD

Video caption recognition method and device, equipment and storage medium

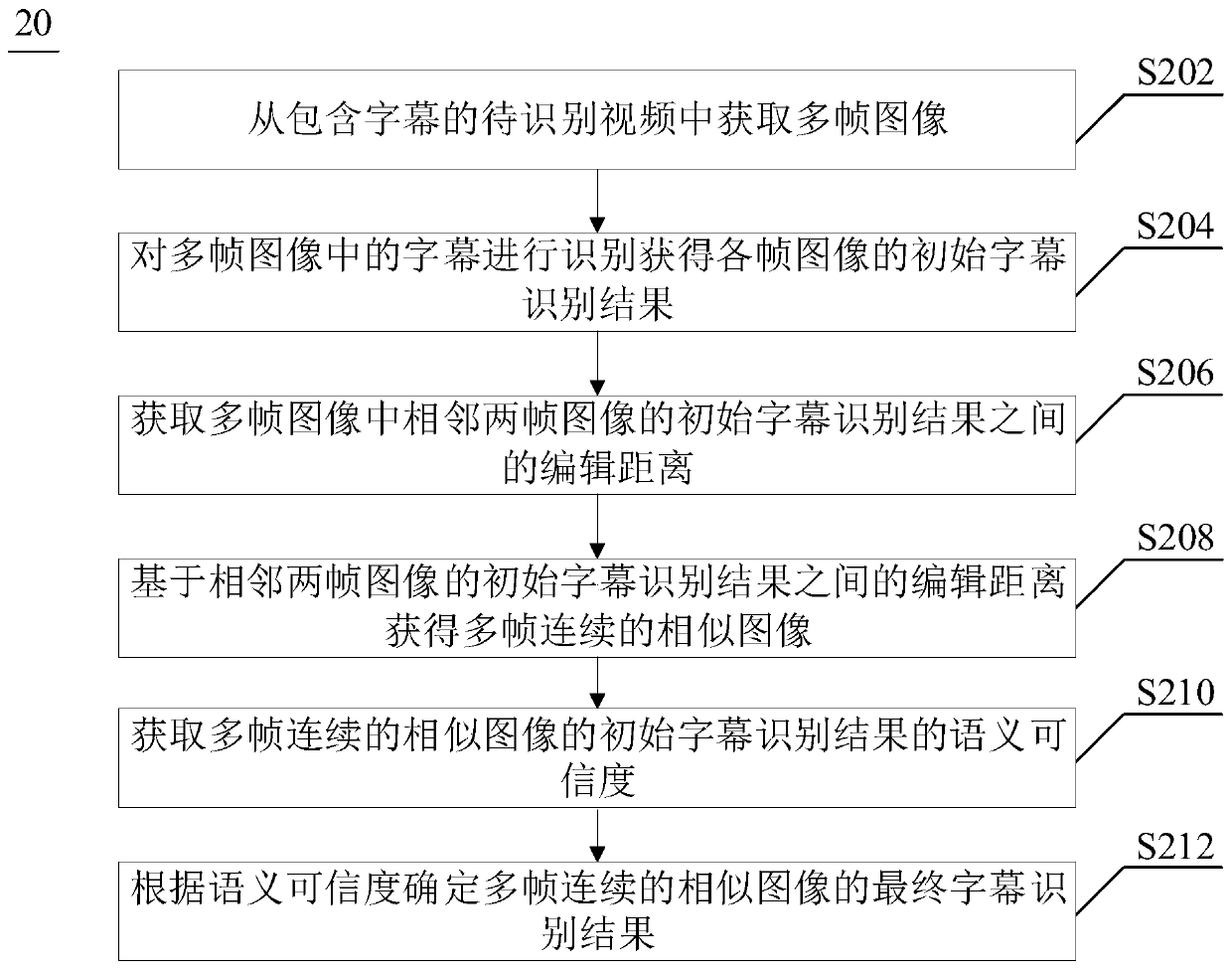

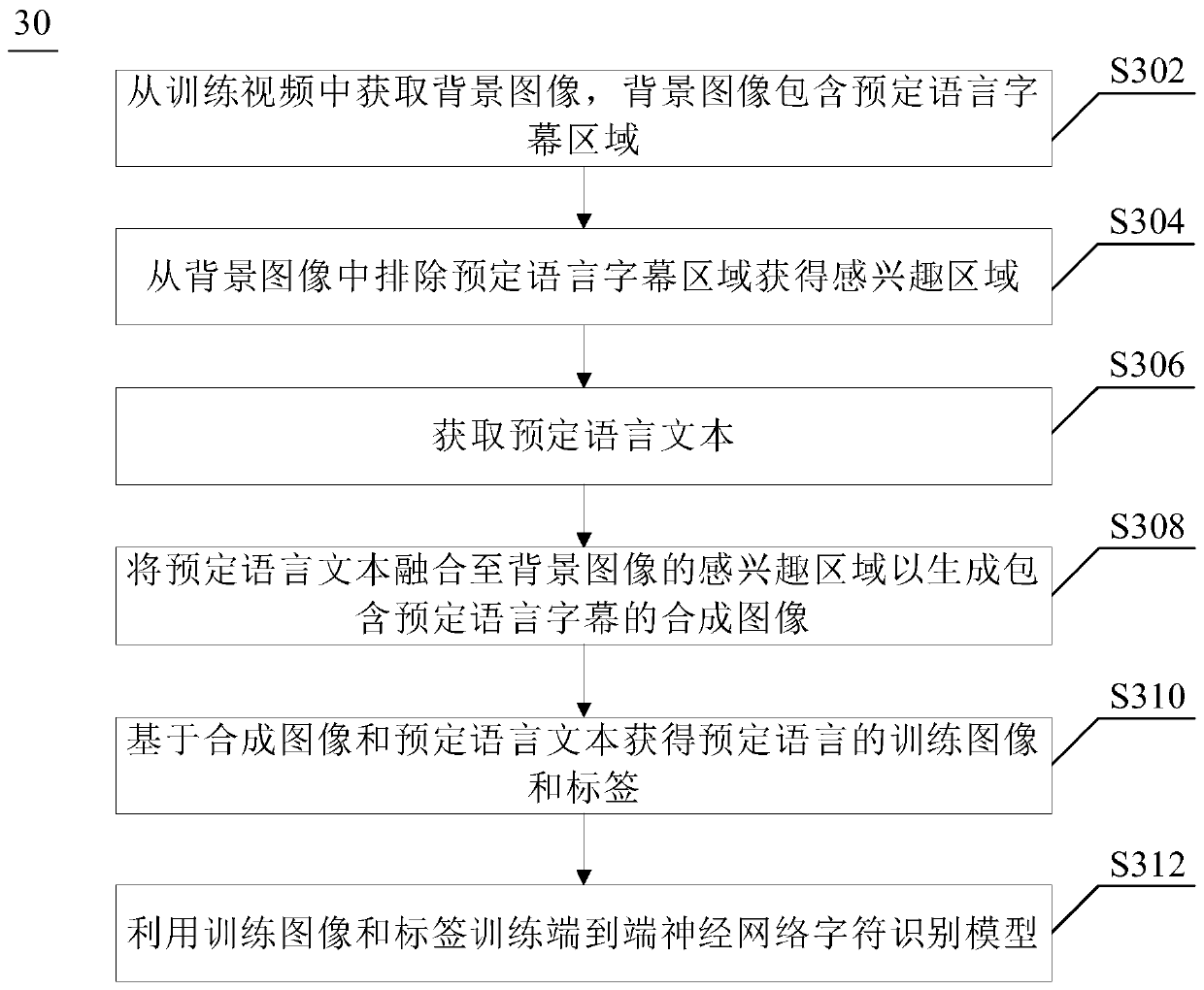

Active · CN111582241A · Improve accuracy · Neural architectures · Character recognition · Visual technology · Computer graphics (images)

The invention provides a video caption recognition method and device, equipment and a storage medium, and relates to the technical field of computer vision. The method comprises the following steps: acquiring multiple frames of images from a to-be-identified video containing subtitles; identifying subtitles in the multiple frames of images to obtain an initial subtitle identification result of each frame of image; obtaining an editing distance between initial subtitle identification results of two adjacent frames of images in the multiple frames of images; obtaining a plurality of frames of continuous similar images based on the editing distance between the initial subtitle recognition results of the two adjacent frames of images; obtaining semantic credibility of an initial subtitle recognition result of the multi-frame continuous similar images; and determining a final caption identification result of the multi-frame continuous similar images according to the semantic credibility. The invention improves the accuracy of the identification result of the video subtitles to a certain extent.

Owner:TENCENT TECH (SHENZHEN) CO LTD
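
The grouping step in the abstract above (edit distance between the recognition results of adjacent frames, then one final result per run of similar frames) might look like the sketch below. It is a minimal illustration under assumptions: the `max_distance` threshold is made up, and the `score` callable is a hypothetical placeholder for the "semantic credibility" measure, which the abstract does not specify.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def group_similar_frames(texts, max_distance=2):
    """Group consecutive per-frame results whose adjacent edit distance is small."""
    groups = []
    for text in texts:
        if groups and edit_distance(groups[-1][-1], text) <= max_distance:
            groups[-1].append(text)
        else:
            groups.append([text])
    return groups


def pick_result(group, score):
    """Pick the candidate with the highest score (hypothetical credibility function)."""
    return max(group, key=score)
```

In practice `score` could be a language-model likelihood or similar; here it is left to the caller so the sketch does not assume any particular model.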

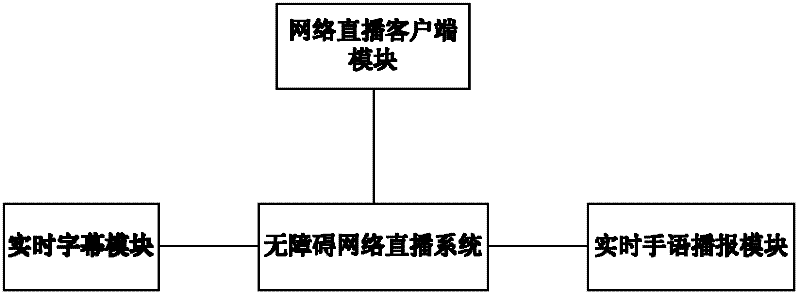

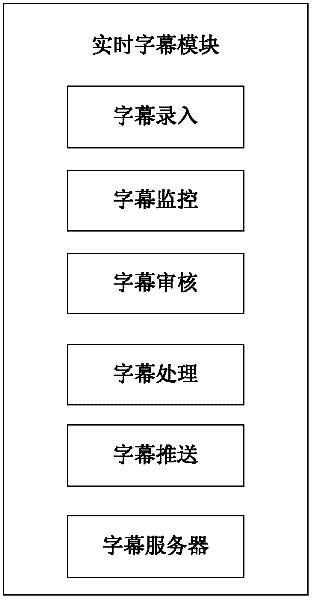

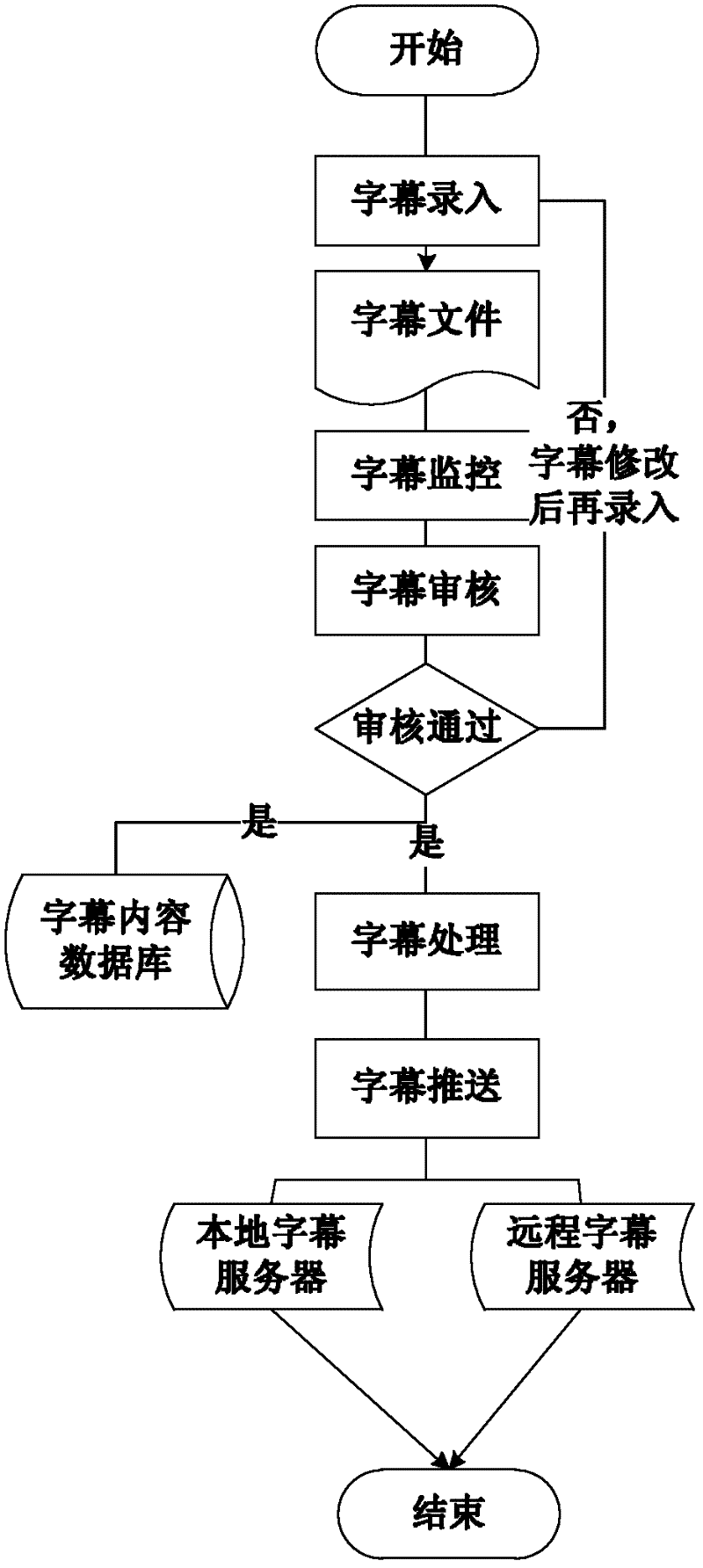

Method and system for adding real-time subtitle and sign language services to live program based on P2P (Peer-to-Peer) network

Inactive · CN102655606A · Real-time · Achieve accuracy · Selective content distribution · Telecommunications · Synchronization networks

The invention provides a method and system for adding real-time subtitle and sign language services to a live program based on a P2P (Peer-to-Peer) network. The method is characterized by comprising the following steps of: 1) making corresponding real-time subtitles according to a television direct transmission or field of the live program; 2) making the corresponding real-time sign language according to the television direct transmission or field of the live program; and 3) respectively synchronizing the subtitle and the sign language with the network live program, and playing. The system is used for realizing the method to the network live programs, and comprises a real-time subtitle module for generating the real-time subtitle for a live video program, a real-time sign language module for generating a real-time sign language for the live video program, and a network live client module for directly broadcasting a network program, synchronizing a network live program with the real-time subtitle, and synchronizing the network live program and a real-time sign language video.

Owner:ZHEJIANG UNIV

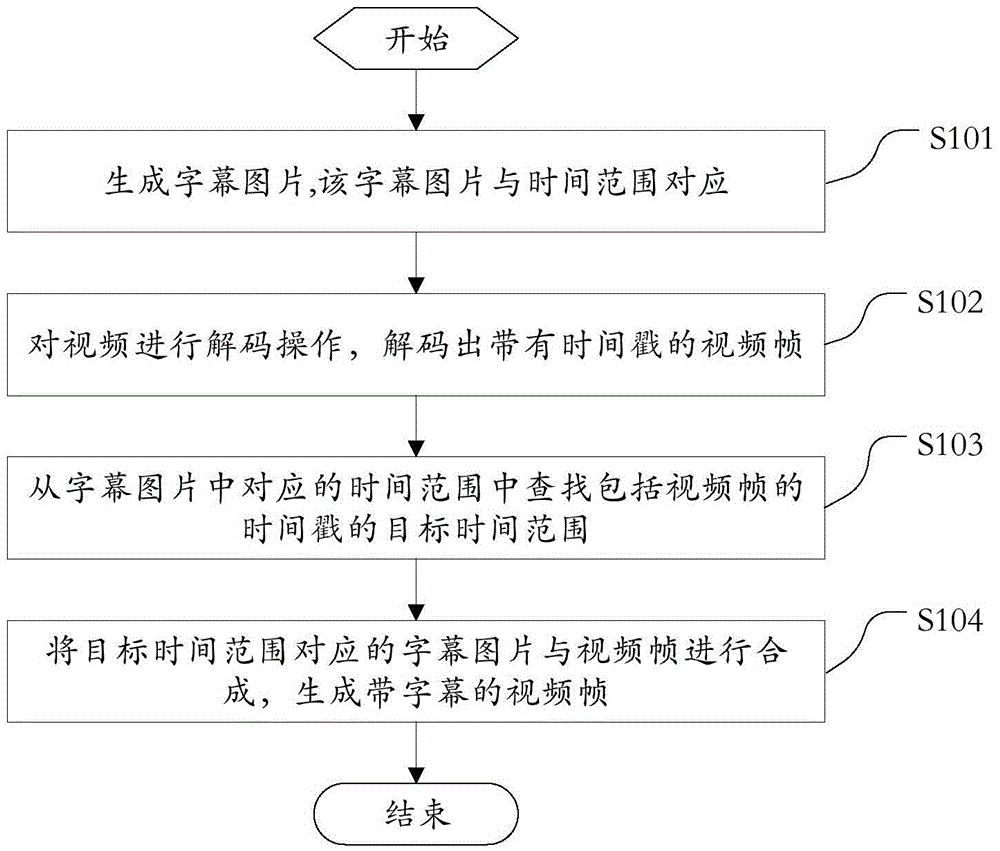

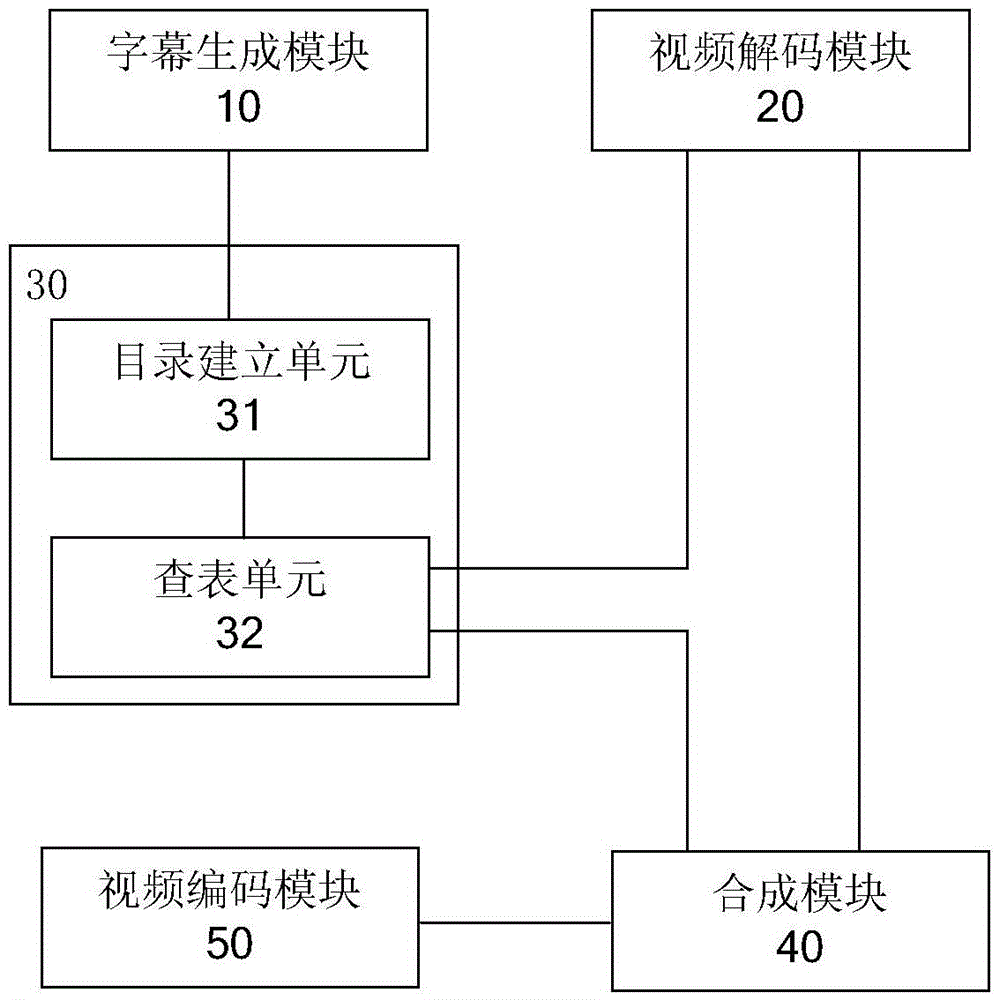

Video subtitle adding method, device and terminal

Inactive · CN105979169A · Meet the needs of quickly adding subtitles · Simple technical solution · Television system details · Color television details · Time range · Timestamp

The present invention provides a method, device and terminal for adding subtitles to video. First, a number of transparent subtitle pictures are generated, each named after its time range. For example, if T1 and T2 are two time points with T1<=T2, the picture T1-T2.png holds the subtitles to be displayed in the video during the time range from T1 to T2. Next, the video to which subtitles will be added is decoded, and the time stamp of each decoded video frame is compared with the time ranges of the subtitle pictures. The subtitle picture whose time range includes the time stamp of the video frame is then synthesized with that frame to obtain a video frame with subtitles, and the video with subtitles is obtained by encoding these frames. Because the technical solution proposed by the present invention is concise, fast, and consumes few resources, it can meet the requirement of quickly adding subtitles to videos on a mobile terminal.

Owner:LETV INFORMATION TECH BEIJING
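
The lookup at the heart of the abstract above (find the subtitle picture whose T1-T2 time range contains a frame's time stamp) can be sketched as below. The abstract does not say how T1 and T2 are encoded in the file name, so this sketch assumes integer milliseconds, as in `1000-2000.png`; decoding, compositing, and encoding are left out.

```python
import re

# Assumption: file names look like "<start_ms>-<end_ms>.png".
NAME_RE = re.compile(r"^(\d+)-(\d+)\.png$")


def parse_range(filename):
    """Extract the (start, end) time range encoded in a subtitle picture name."""
    match = NAME_RE.match(filename)
    if match is None:
        raise ValueError(f"not a T1-T2.png name: {filename!r}")
    start, end = int(match.group(1)), int(match.group(2))
    if start > end:
        raise ValueError(f"T1 must be <= T2: {filename!r}")
    return start, end


def subtitle_for_frame(timestamp_ms, filenames):
    """Return the first picture whose range includes the frame time stamp, else None."""
    for name in filenames:
        start, end = parse_range(name)
        if start <= timestamp_ms <= end:
            return name
    return None
```

For a long video, sorting the ranges once and using binary search would avoid rescanning the list for every frame; the linear scan is kept here for clarity.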

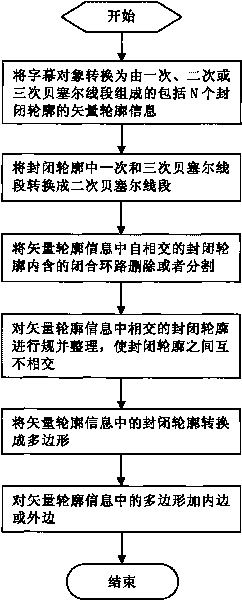

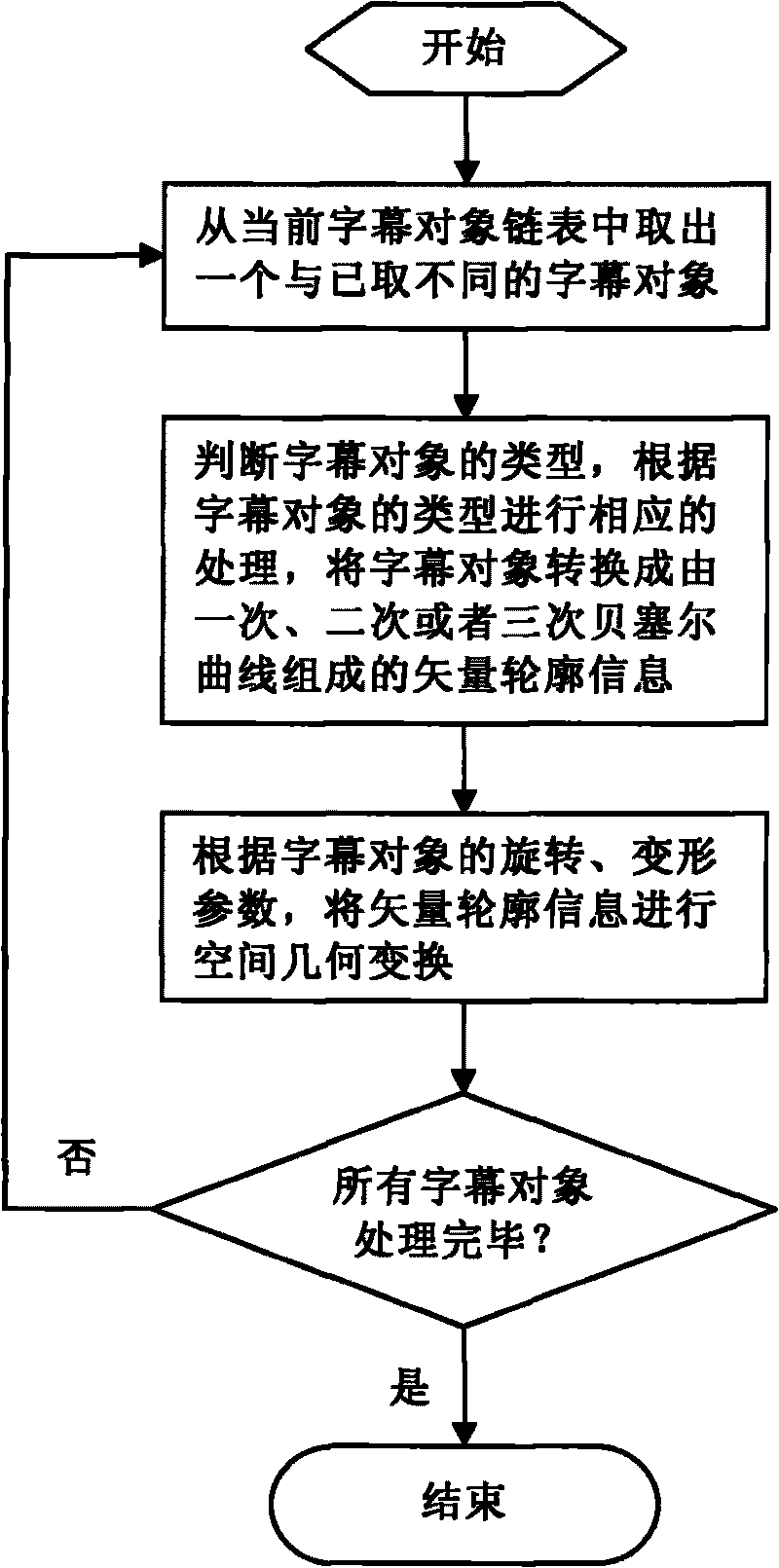

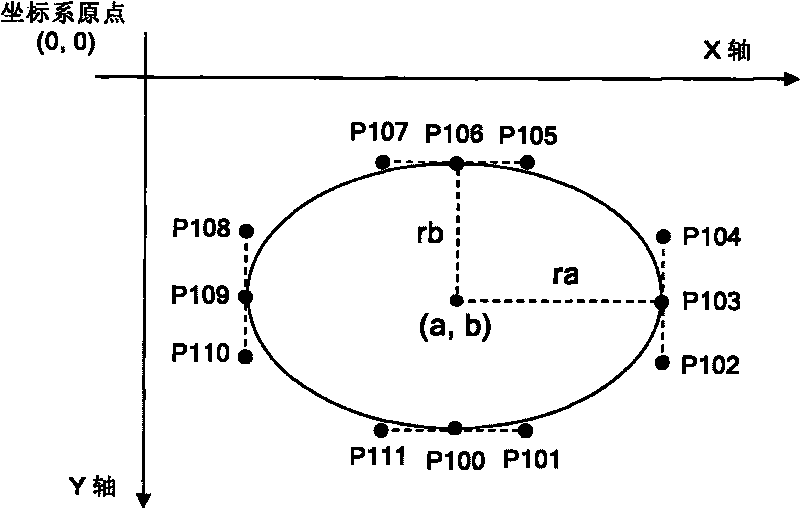

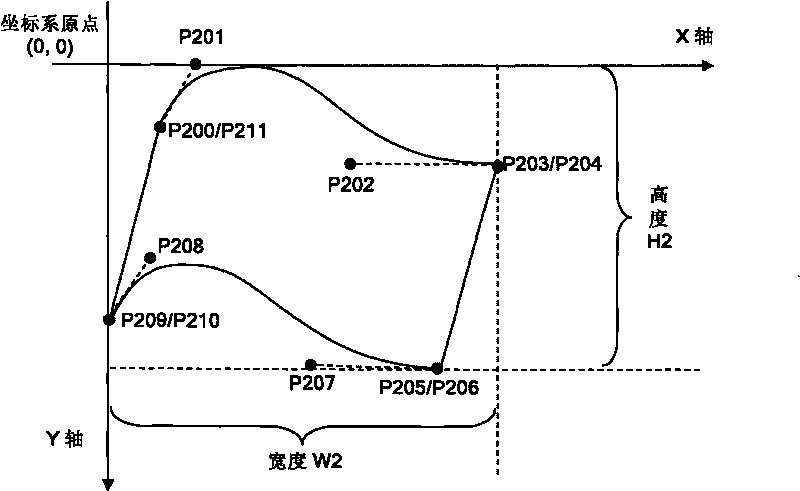

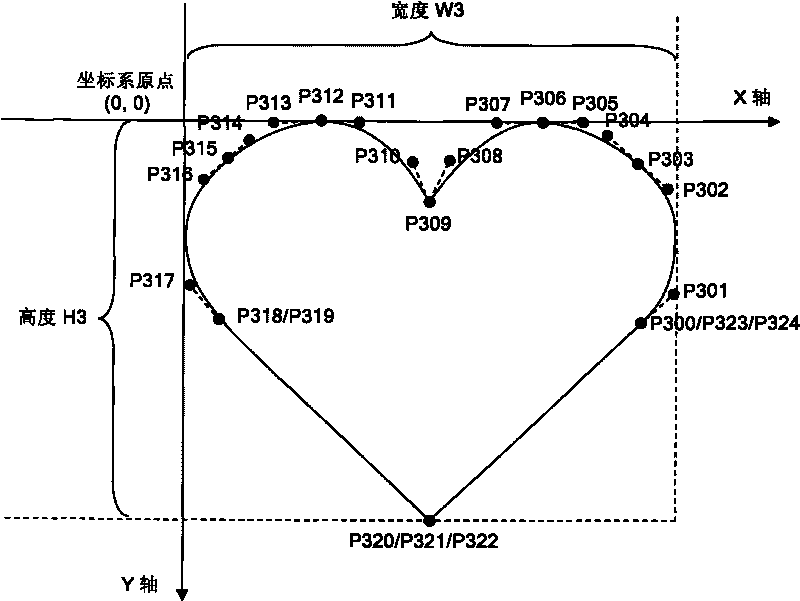

Subtitle dramatizing method based on closed outline of Bezier

Inactive · CN101764945A · Improve efficiency · Improve performance · Image enhancement · Television system details · Broadcasting · Computer science

The invention discloses a subtitle dramatizing method based on the closed outline of Bezier, which belongs to the technical field of subtitle editing and broadcasting of a television program manufacture and broadcasting agent in television and film industries. The method of the invention comprises the following steps: firstly, converting subtitle objects into vector outline information which consists of first power, second power or third power Bezier line sections and comprises N closed vector outlines; then, converting the first power and the third power Bezier line sections into second power Bezier line sections; next, deleting or cutting the closed annular paths contained in the self intersected closed outlines in the vector outline information; regulating and sorting the intersected closed outlines in the vector outline information so that the closed outlines are not intersected mutually; and finally, converting the intersected closed outlines in the vector outline information into polygons, and dramatizing the subtitles after adding the inner edges or the outer edges on the polygons. When being adopted, the method of the invention can improve the subtitle dramatizing efficiency, can enhance the subtitle dramatizing effect, and can meet the high-grade application requirement of the subtitle.

Owner:北京市文化科技融资租赁股份有限公司

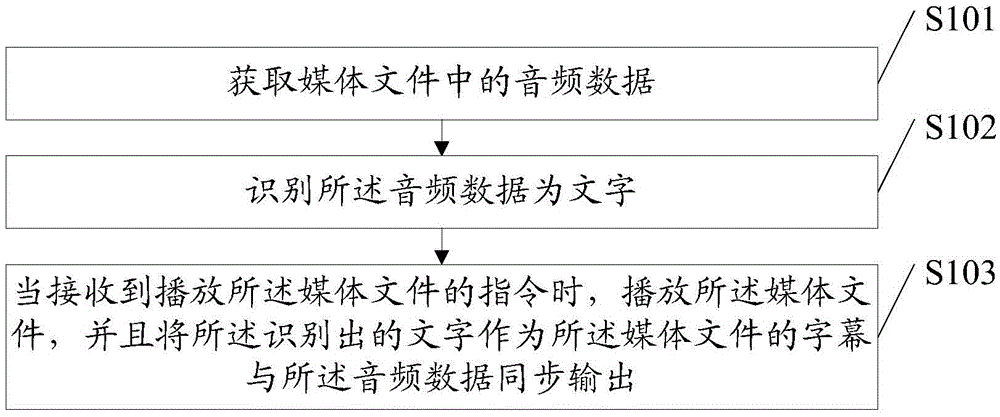

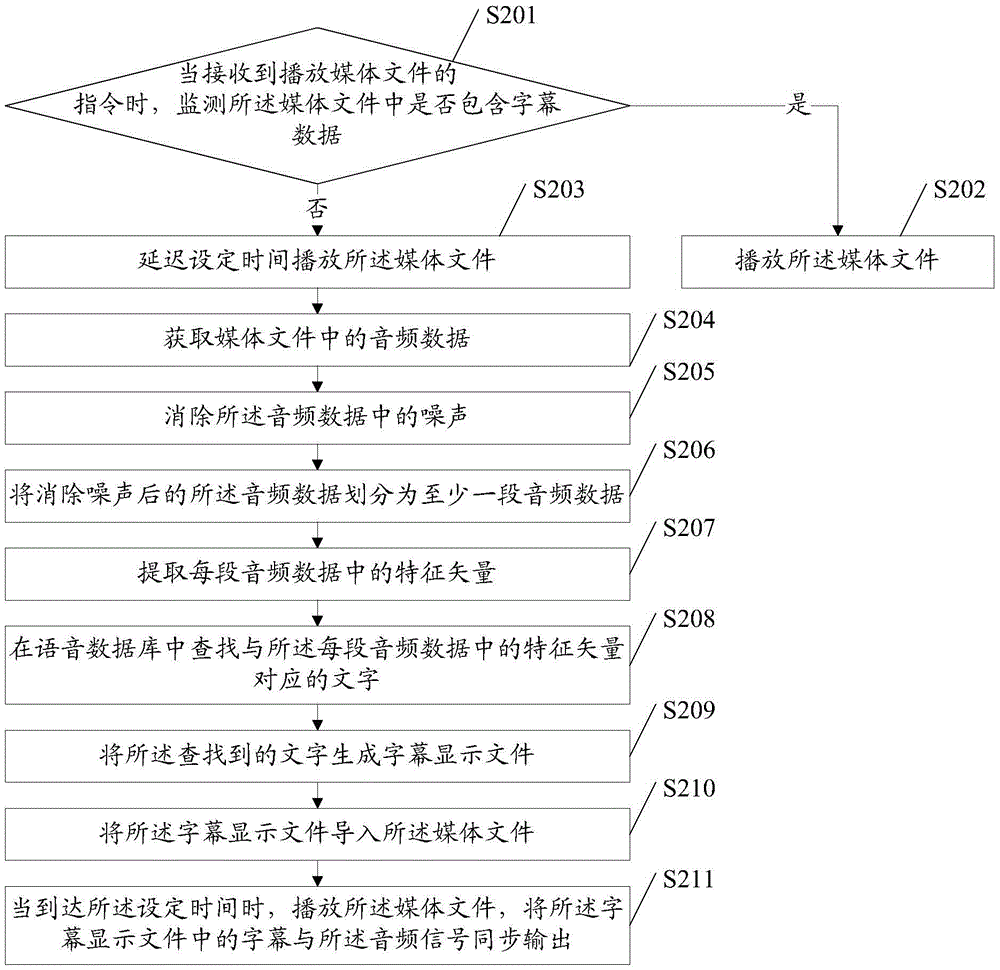

Subtitle output method and device

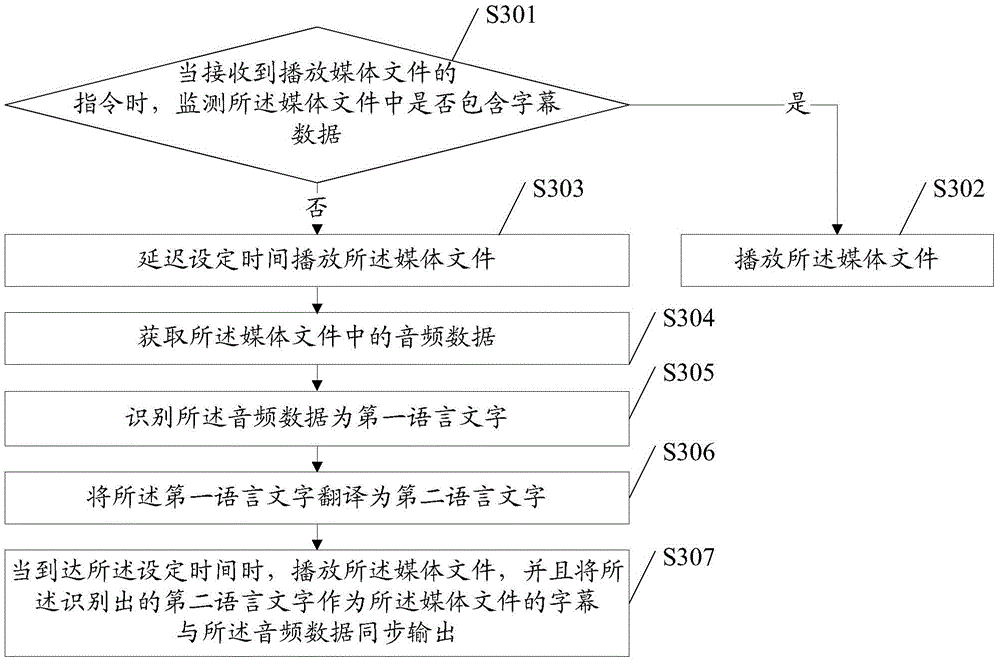

The embodiment of the invention discloses a subtitle output method and device. Audio data in a media file are acquired and the audio data are identified as characters. When an instruction of playing the media file is received, the media file is played, and the identified characters act as subtitles of the media file to be synchronously outputted with the audio data so as to provide the subtitles to the media file without subtitles and provide more information to users.

Owner:MEIZU TECH CO LTD

Method and device for displaying live broadcast subtitles, server and medium

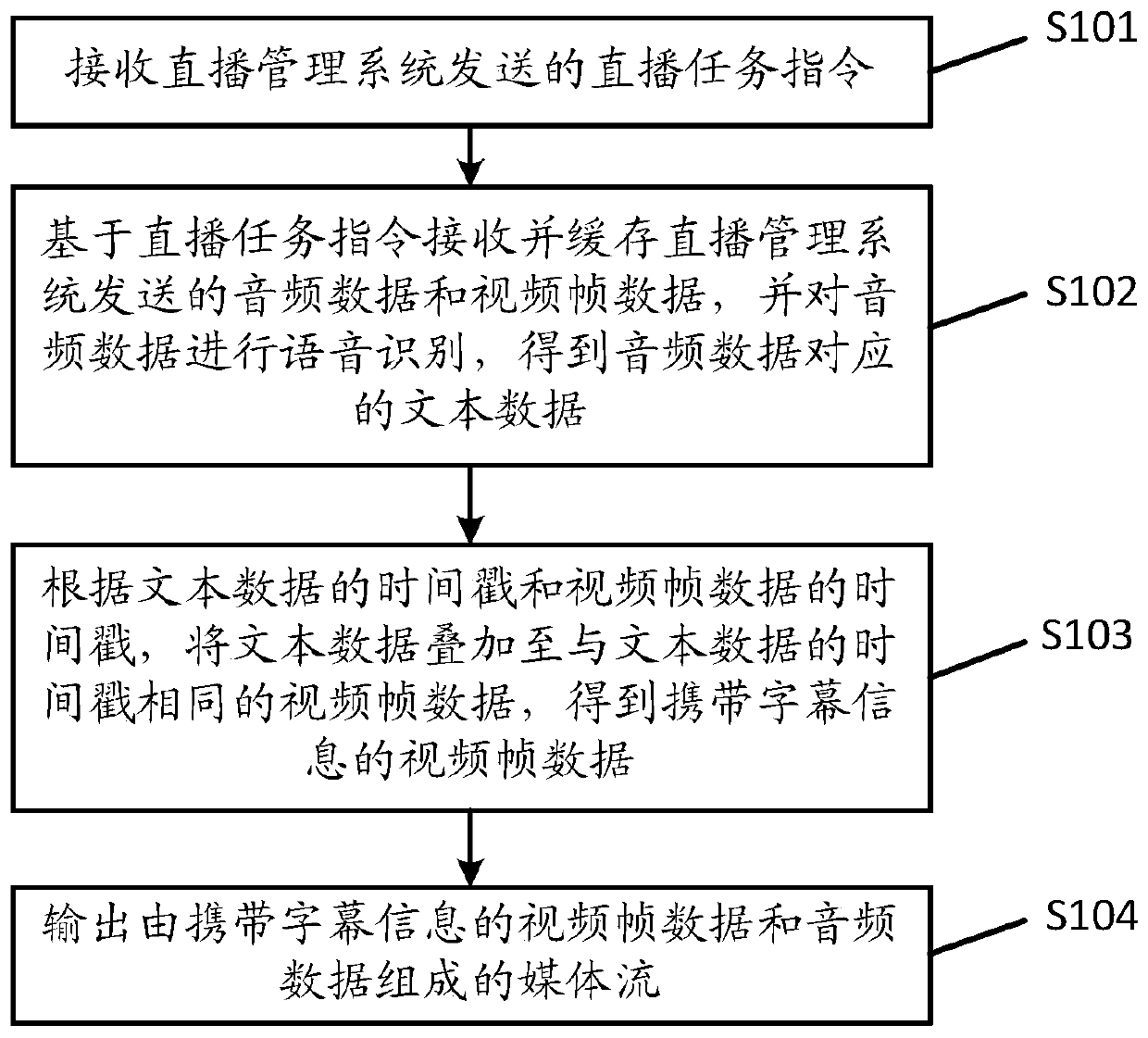

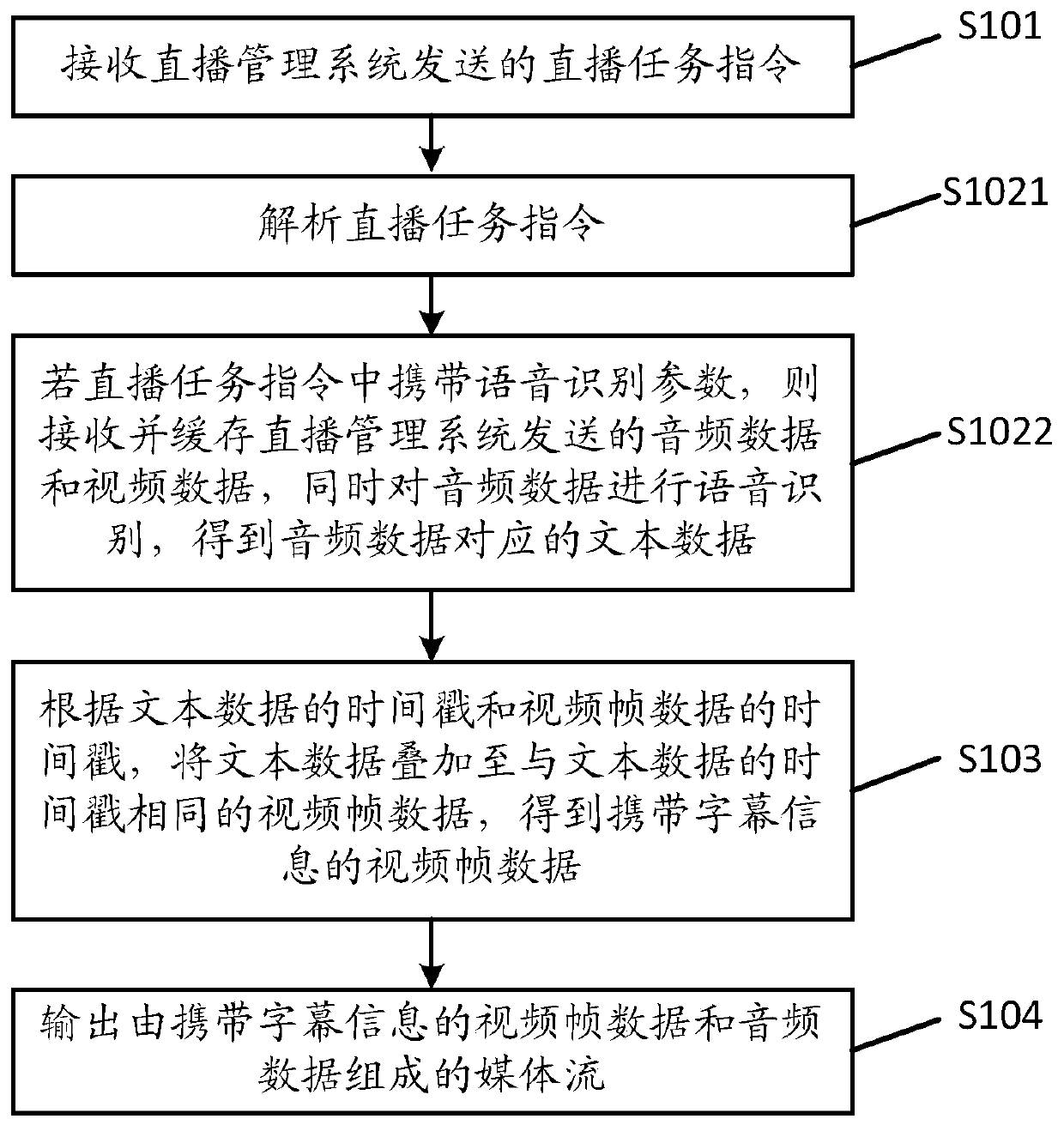

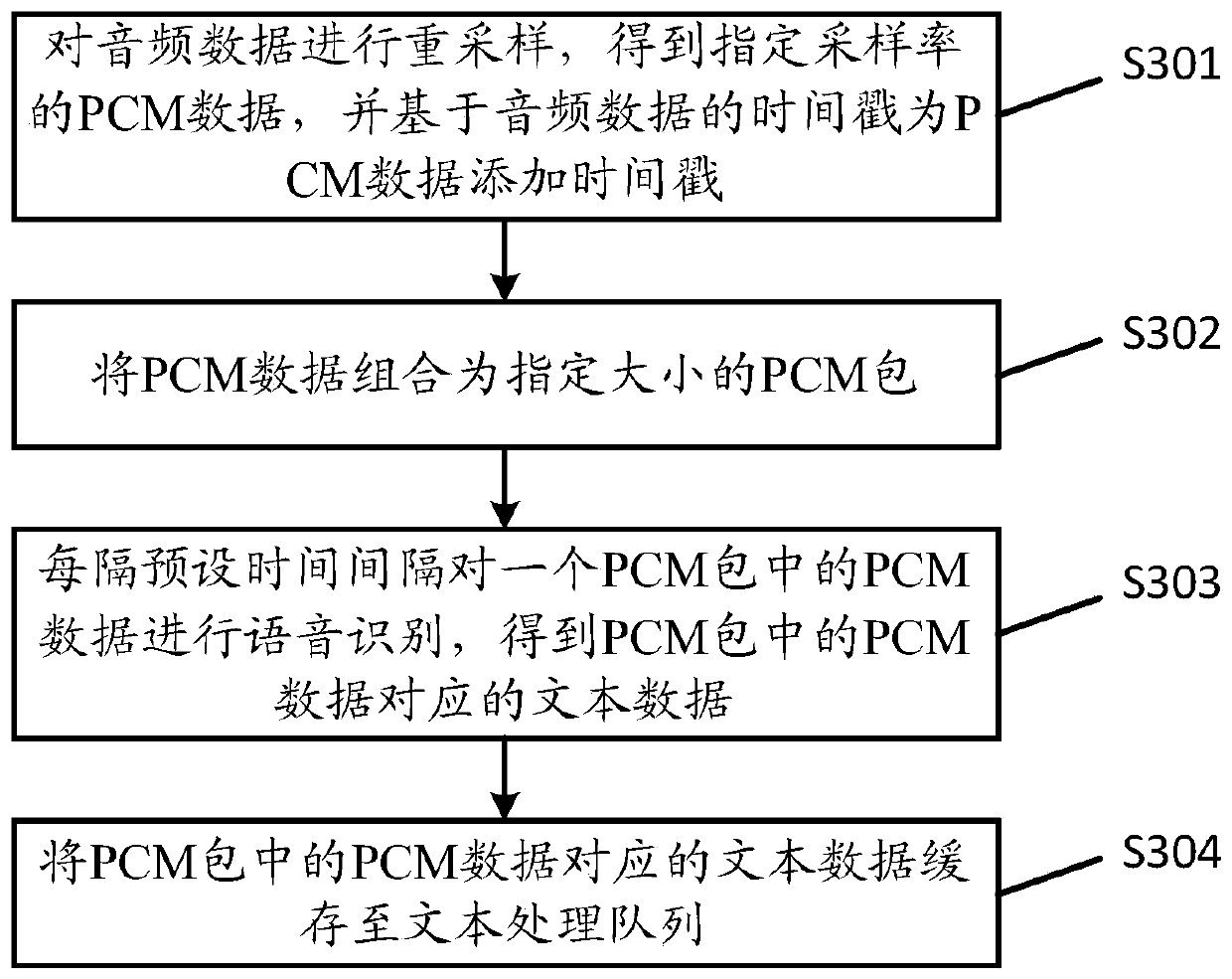

The embodiment of the invention provides a method and a device for displaying live broadcast subtitles, a server and a medium, and relates to the technical field of information processing. The method according to the embodiment comprises the steps of receiving a live broadcast task instruction sent by a live broadcast management system; receiving and caching audio data and video frame data sent by the live broadcast management system based on the live broadcast task instruction; performing voice recognition on the audio data to obtain text data corresponding to the audio data; according to the timestamp of the text data and the timestamp of the video frame data, superposing the text data on the video frame data with the same timestamp as the text data to obtain video frame data carrying the subtitle information; and outputting a media stream composed of the video frame data carrying the subtitle information and the audio data. By adopting the method, subtitles can be displayed for the live video.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD

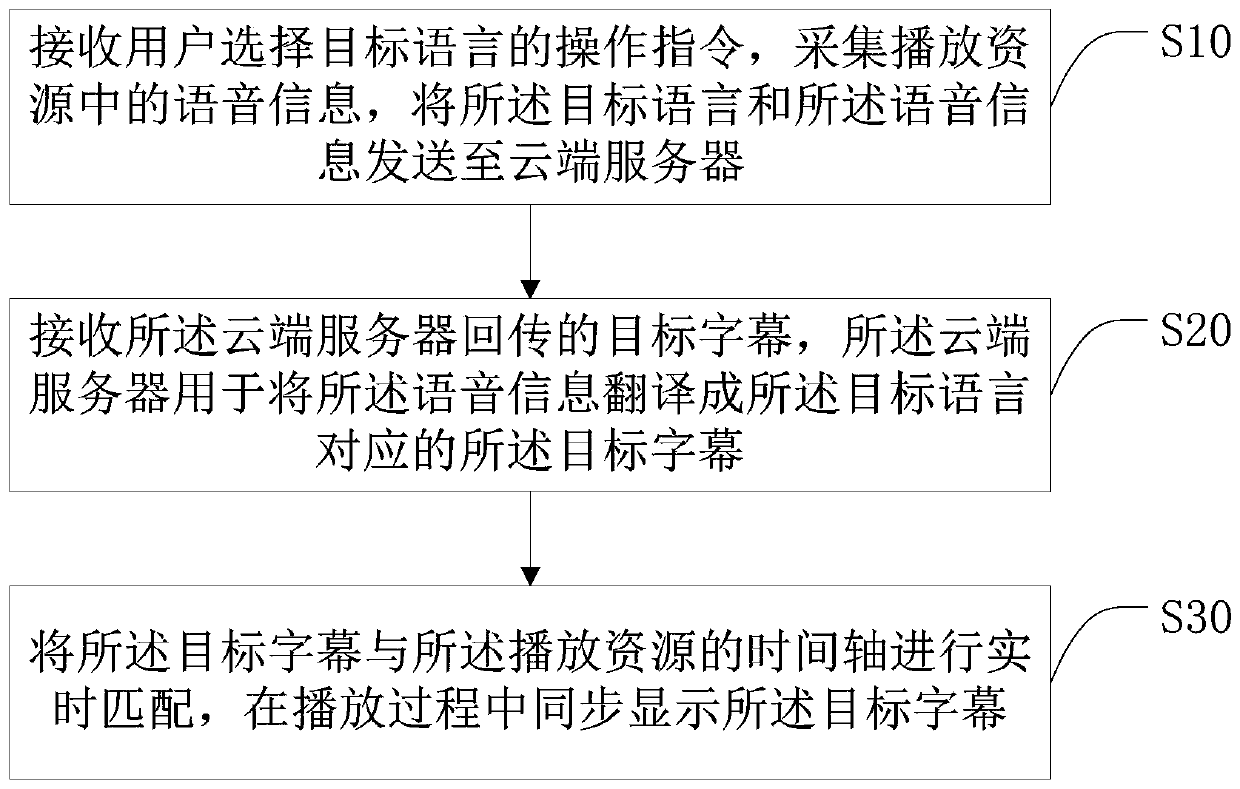

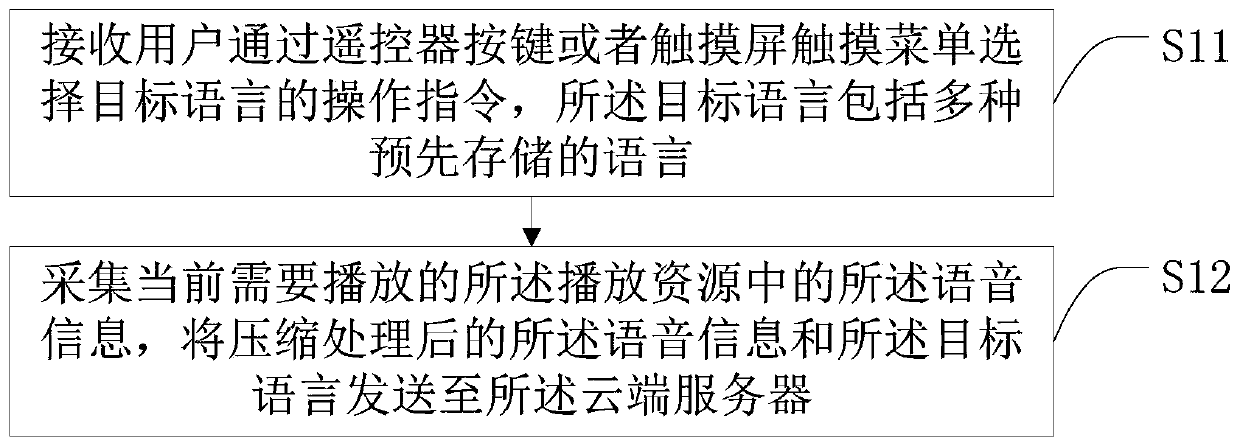

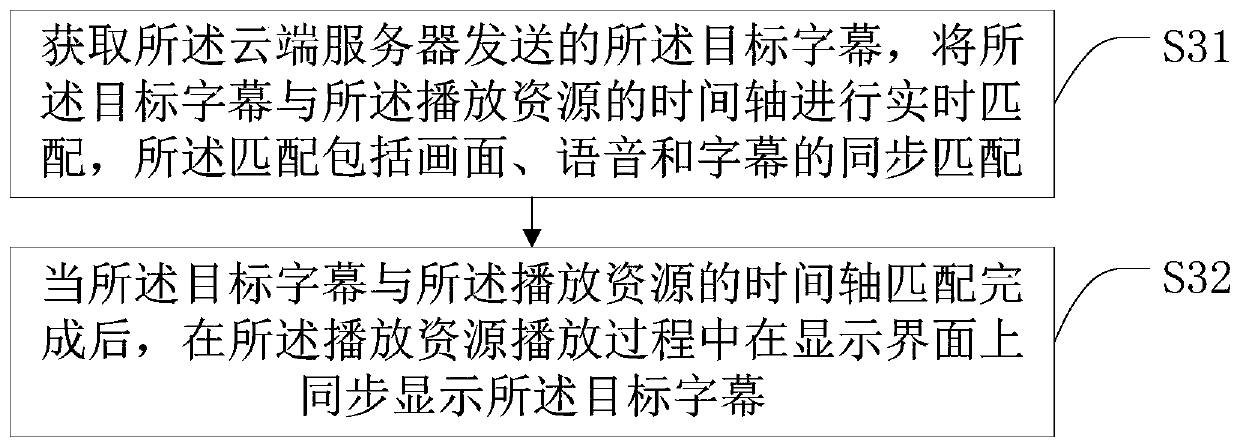

Simultaneous subtitle translation method, smart television and storage medium

Inactive · CN110769265A · Easy to understand · Easy to watch · Selective content distribution · Subtitle · Engineering

The invention discloses a simultaneous subtitle translation method, a smart television and a storage medium. The method comprises the steps: receiving an operation instruction of a user for selecting a target language, collecting voice information in a playing resource, and transmitting the target language and the voice information to a cloud server; receiving target subtitles returned by the cloud server, wherein the cloud server is used for translating the voice information into the target subtitles corresponding to the target language; and matching the target caption with the time axis of the playing resource in real time, and synchronously displaying the target caption in the playing process. According to the invention, the voice information in the playing resources is collected; according to language subtitles required by a user, the voice information is sent to a cloud server to be synchronously translated into target subtitles corresponding to a target language selected by the user, and the target subtitles are synchronously displayed during playing, so that the user can easily understand content information expressed by audios and videos of different languages, and convenient watching or learning is realized.

Owner:SHENZHEN SKYWORTH RGB ELECTRONICS CO LTD

Event-driven streaming media interactivity

Aspects described herein may provide systems, methods, and devices for facilitating language learning using videos. Subtitles may be displayed in a first, target language or a second, native language during display of the video. On a pause event, both the target language subtitle and the native language subtitle may be displayed simultaneously to facilitate understanding. While paused, a user may select an option to be provided with additional contextual information indicating usage and context associated with one or more words of the target language subtitle. The user may navigate through previous and next subtitles with additional contextual information while the video is paused. Other aspects may allow users to create auto-continuous video loops of definable duration, to generate video segments by searching an entire database of subtitle text, and to create, save, share, and search video loops.

Owner:FLICKRAY INC

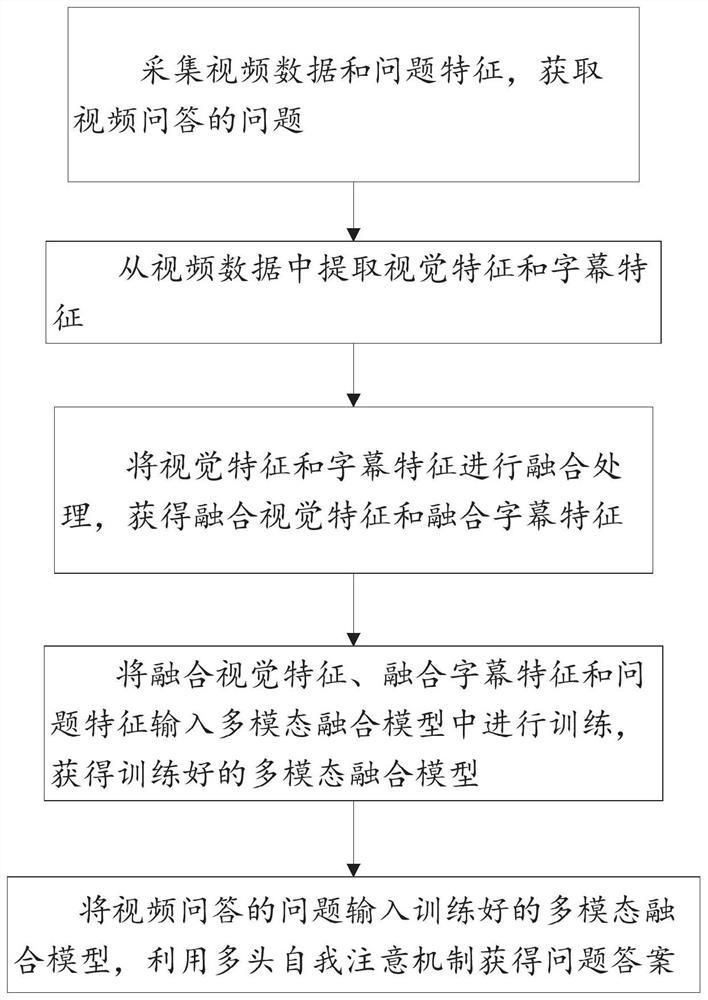

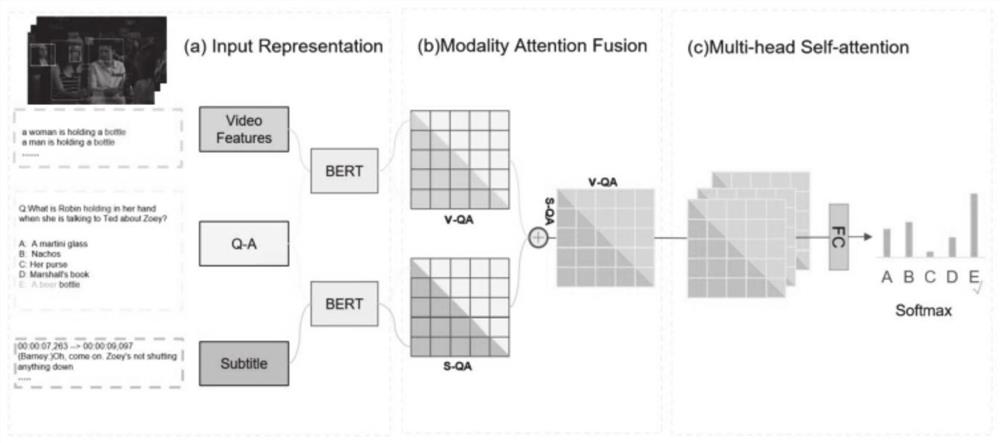

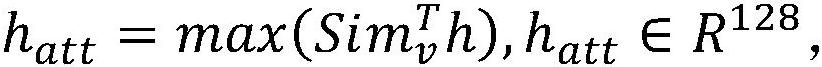

Method and system for improving video question-answering precision based on multi-modal fusion model

Active · CN112559698A · Improve accuracy · Accurate capture · Digital data information retrieval · Natural language data processing · Pattern recognition · Subtitle

The invention provides a method and system for improving video question-answering precision based on a multi-modal fusion model, and the method comprises the steps: collecting video data and question features, and obtaining a video question-answering question; extracting visual features and subtitle features from the video data; performing fusion processing on the visual features and the subtitle features to obtain fused visual features and fused subtitle features; inputting the fused visual features, the fused subtitle features and the question features into a multi-modal fusion model for training to obtain a trained multi-modal fusion model; and inputting the video question-answering question into the trained multi-modal fusion model to obtain an answer to the question. Different target entity instances are focused on for different questions according to the characteristics of the questions, so that the accuracy of selecting answers by the model is improved.

Owner:SHANDONG NORMAL UNIV

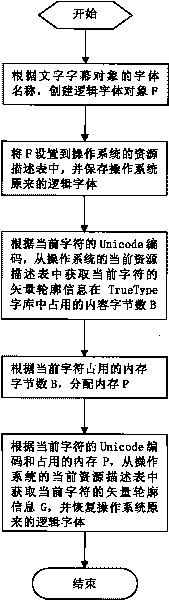

Method for dividing quadratic Bezier curve in subtitle object vector outline by intersection points

Inactive · CN101764943A · Improve subsequent rendering efficiency · Enhance the final rendering · Television system details · Color television details · Computer graphics (images) · Algorithm

The invention discloses a method for dividing a quadratic Bezier curve in a subtitle object vector outline by intersection points, belonging to the subtitle editing and broadcasting technical field of TV program making and broadcasting organizations in the radio industry. In the method, the intersection points S(0) to S(n-1) of each quadratic Bezier line segment with the other quadratic Bezier line segments in the subtitle object vector outline are calculated; the line segment B is divided at point S(0) into line segments B1 and B1'; the line segment B1' is divided at point S(1) into line segments B2 and B2'; and so on, until the line segment Bn-1' is divided at point S(n-1) into line segments Bn and Bn', finally yielding n+1 divided line segments. The method benefits follow-up rendering of the subtitle object and enhances its final rendering effect.

Owner:北京市文化科技融资租赁股份有限公司
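
The successive division described in the abstract above can be illustrated with de Casteljau subdivision, the standard way to split a Bezier curve at a parameter value. The sketch assumes each intersection point S(i) is already known as a curve parameter t in the open interval (0, 1), with all parameters distinct; finding the intersections themselves is not shown, and the function names are illustrative only.

```python
def lerp(p, q, t):
    """Linear interpolation between 2D points p and q."""
    return (p[0] + (q[0] - p[0]) * t, p[1] + (q[1] - p[1]) * t)


def split_quadratic(curve, t):
    """Split a quadratic Bezier (p0, p1, p2) at parameter t via de Casteljau."""
    p0, p1, p2 = curve
    a = lerp(p0, p1, t)
    b = lerp(p1, p2, t)
    mid = lerp(a, b, t)  # the point on the curve at parameter t
    return (p0, a, mid), (mid, b, p2)


def split_at_parameters(curve, params):
    """Split one curve at n parameters in (0, 1), yielding n + 1 pieces.

    After each cut the remainder is re-parameterized, so every later
    parameter is rescaled to the remaining piece before cutting again.
    """
    pieces = []
    rest = curve
    consumed = 0.0
    for t in sorted(params):
        local = (t - consumed) / (1.0 - consumed)
        head, rest = split_quadratic(rest, local)
        pieces.append(head)
        consumed = t
    pieces.append(rest)
    return pieces
```

Each cut peels the next piece off the front of the remainder, mirroring the B1/B1', B2/B2' sequence in the abstract, and adjacent pieces share an endpoint exactly.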

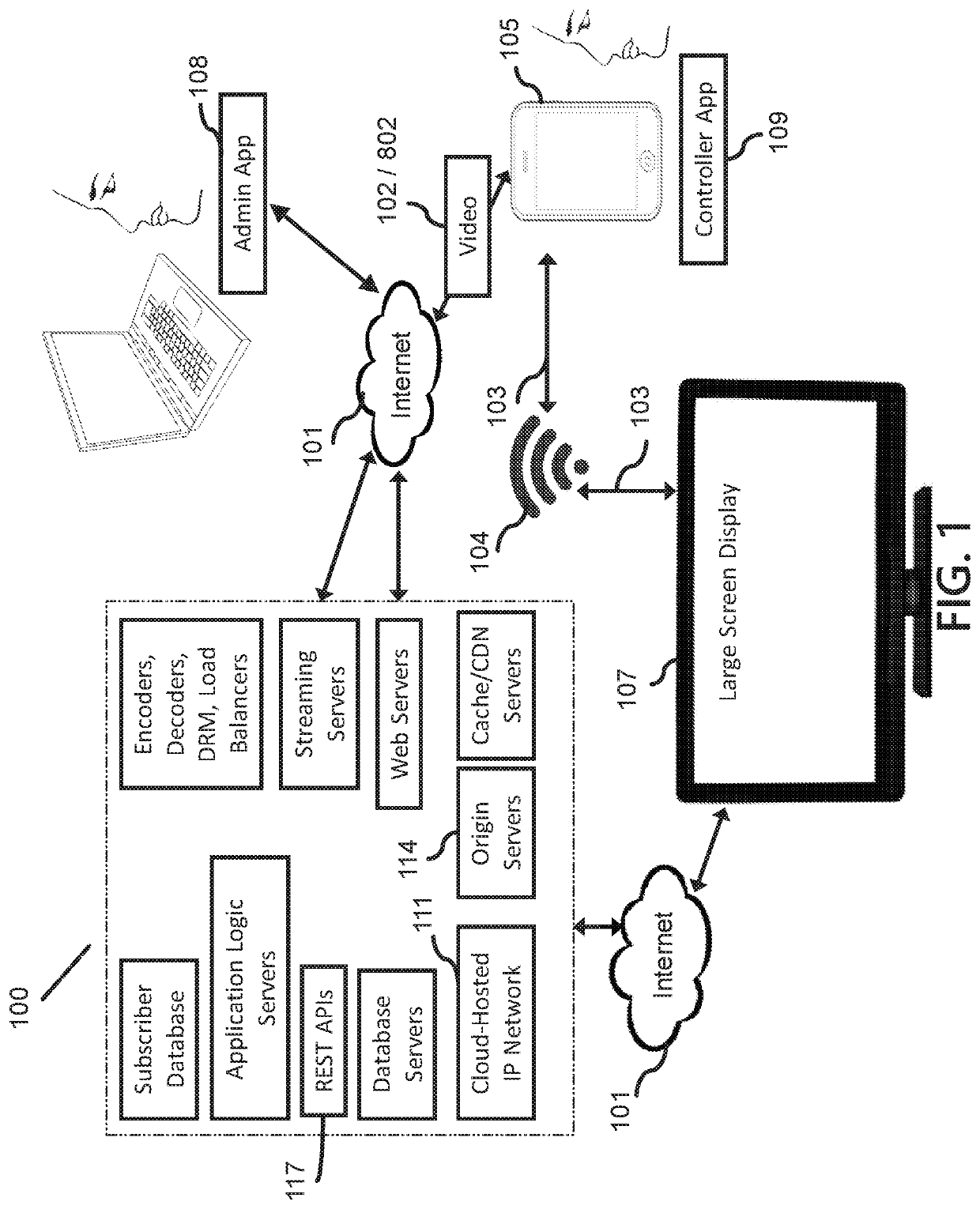

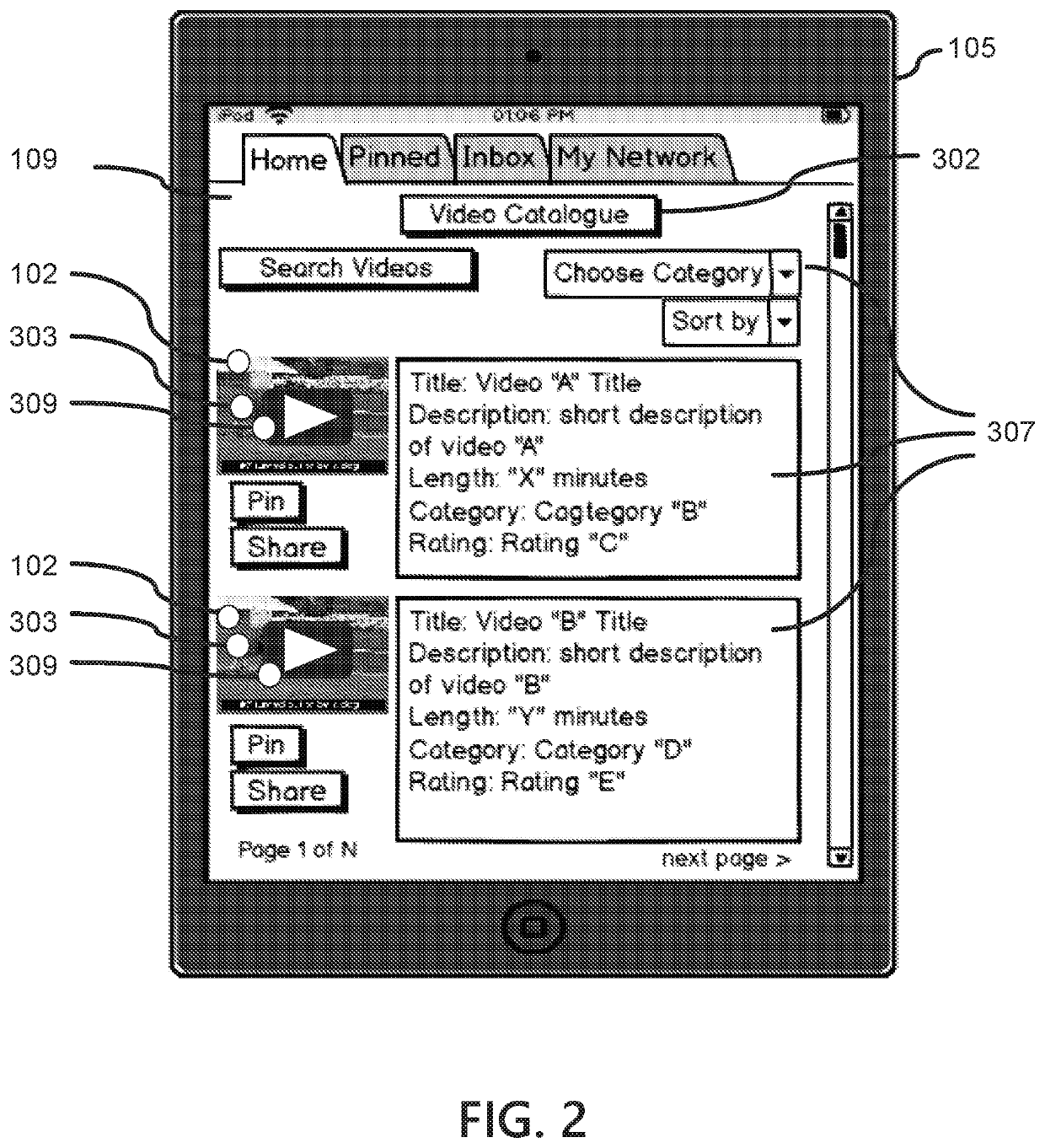

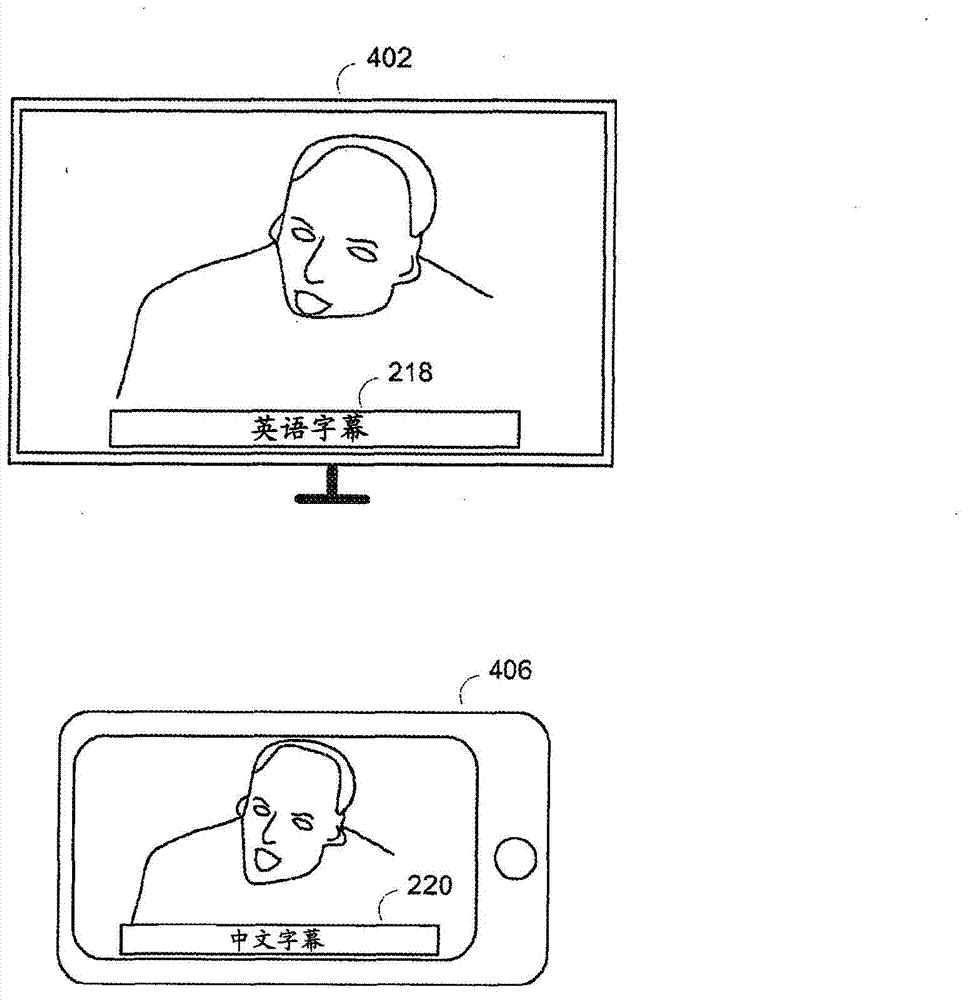

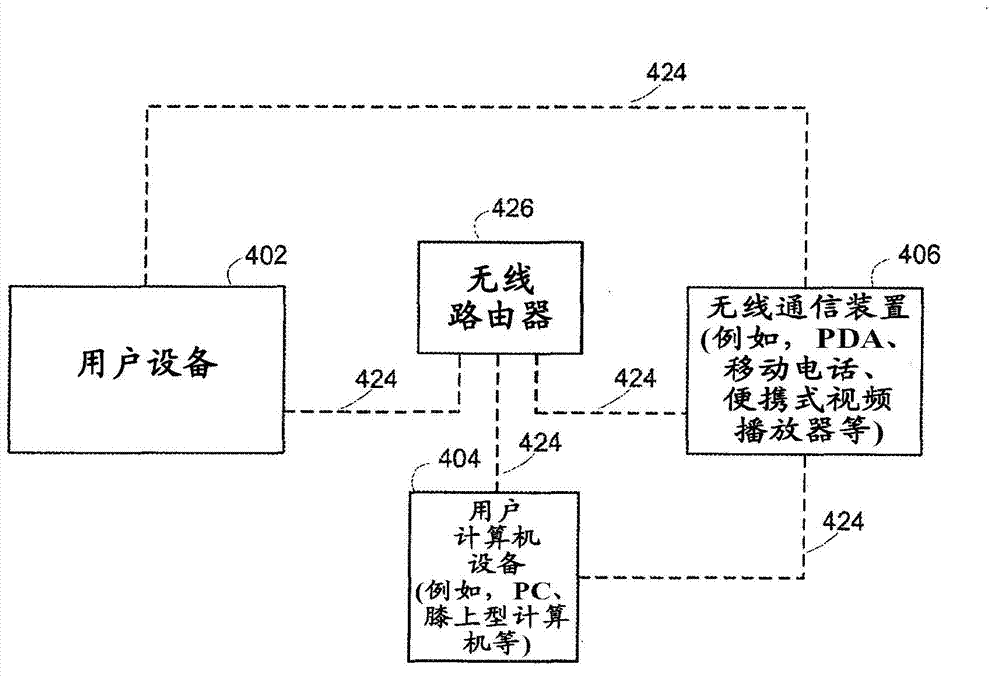

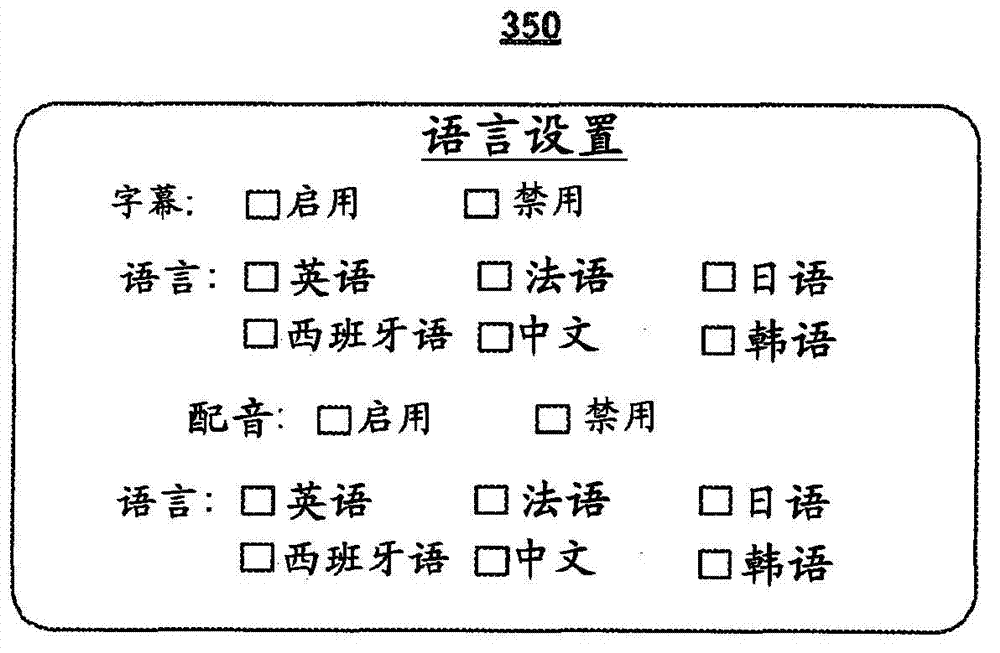

Systems and methods for providing media guidance application functionality using a wireless communications device

A wireless communications device provides users with opportunities to access interactive media guidance or other applications and to control user equipment and interactive media guidance applications. In an exemplary embodiment, if the wireless communications device goes outside of a predetermined range from the user equipment, content that was playing on user equipment may automatically be streamed to the wireless communications device. In another exemplary embodiment, users can play a program with subtitles in one language on user equipment while simultaneously playing the same program with subtitles in another language on the wireless communications device. In yet another exemplary embodiment, users can access a surfing guide application which allows browsing of screenshots of programs playing on broadcast channels on the wireless communications device.

Owner:ROVI GUIDES INC

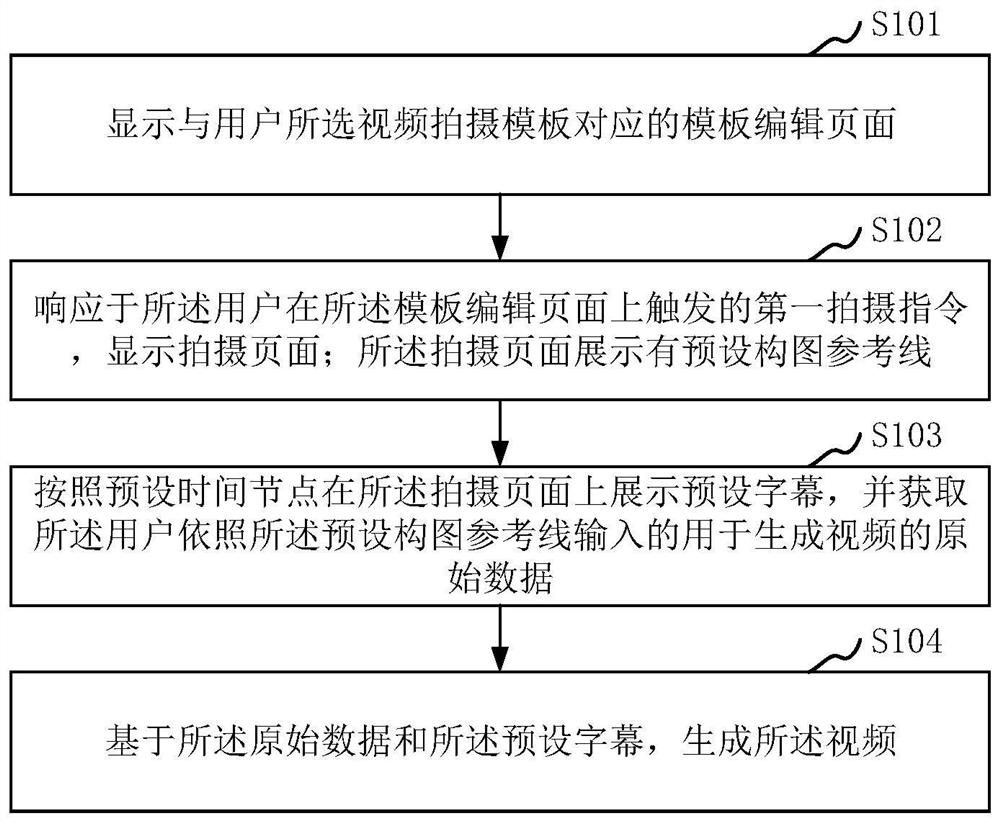

Video generation method and device, computer equipment and storage medium

Inactive · CN112422831A · Save time editing clips · Improve production efficiency · Television system details · Color television details · Computer hardware · Original data

The invention relates to a video generation method and device, computer equipment and a storage medium. By adopting the method and the device, a user does not need to spend a long time on editing, and does not need to make subtitles synchronized with a time axis, so that a large amount of video editing time is saved for the user, and the video making efficiency is improved. The method comprises the steps of displaying a template editing page corresponding to a video shooting template selected by a user, and displaying a shooting page in response to a first shooting instruction triggered by the user on the template editing page, wherein a preset composition reference line is displayed on the shooting page; displaying preset subtitles on the shooting page according to a preset time node, and obtaining original data which is input by the user according to the preset composition reference line and is used for generating a video; and generating a video based on the original data and the preset subtitles.

Owner:GUANGZHOU PACIFIC COMP INFORMATION CONSULTING CO LTD

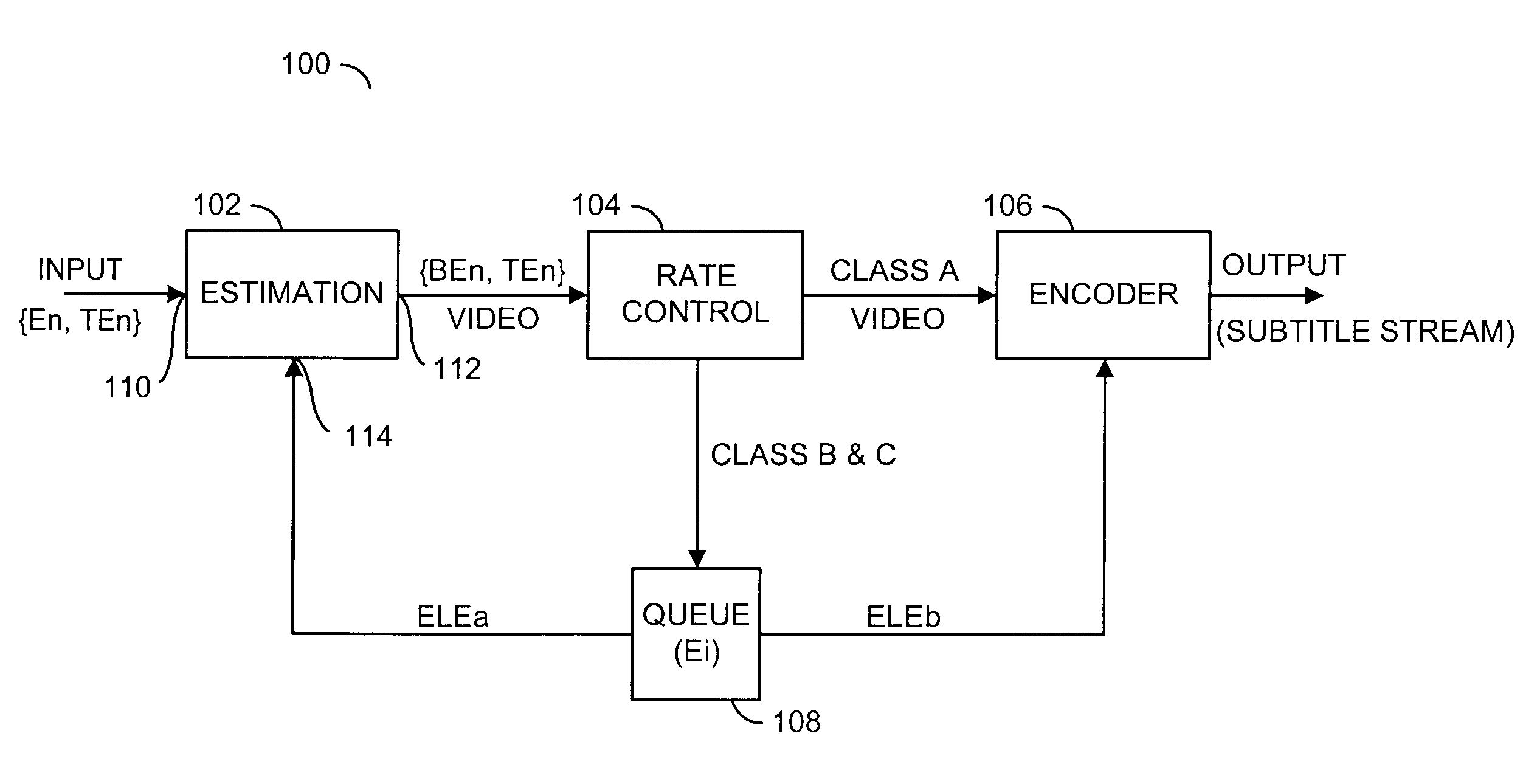

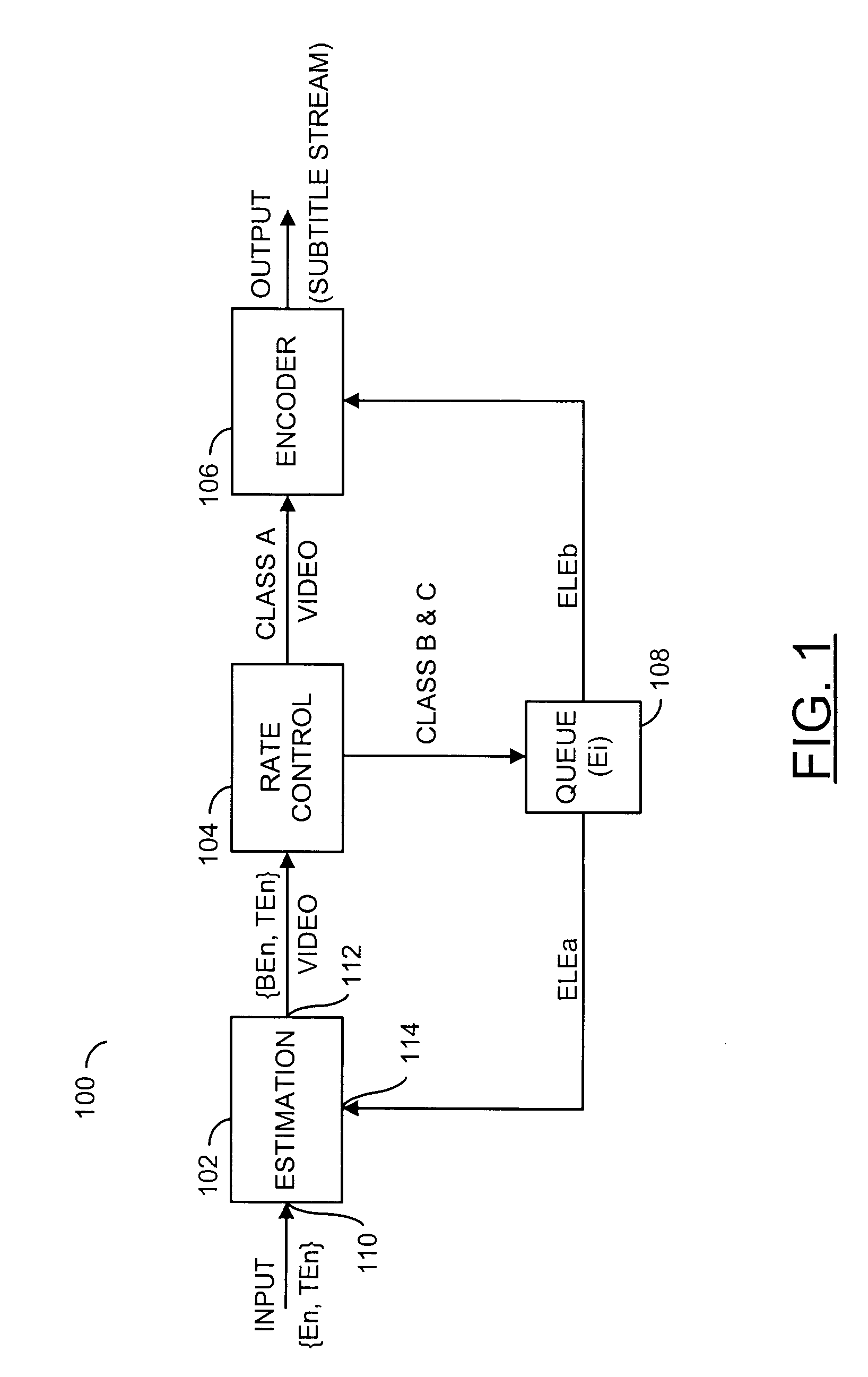

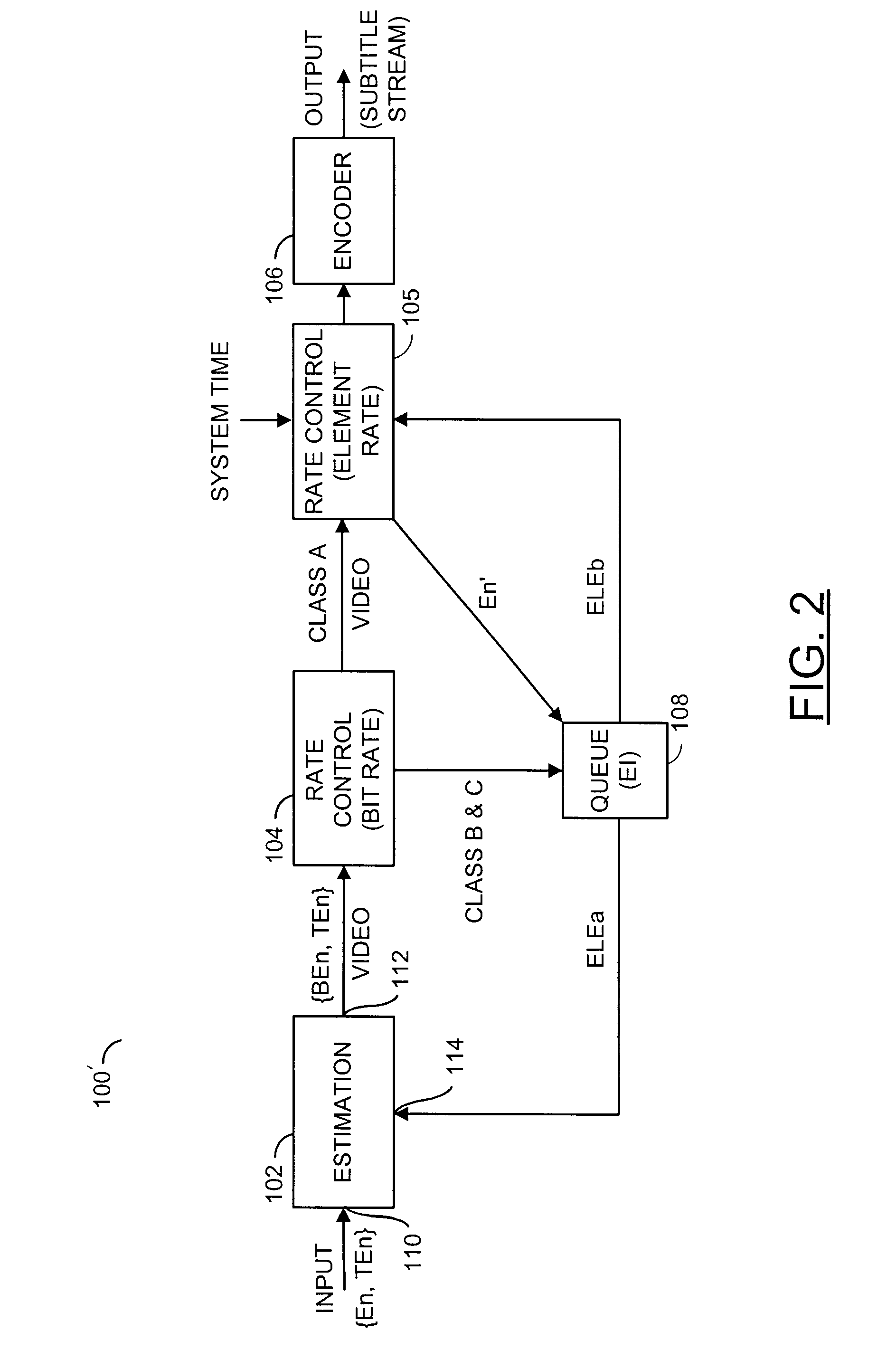

Rate control for real time transcoding of subtitles for application with limited memory

Active · US20090219440A1 · Minimal amount of circuitry · Television system details · Electronic editing digitised analogue information signals · Computer hardware · Computer architecture

An apparatus comprising an estimation circuit, a rate control circuit, a queue circuit, and an encoder circuit. The estimation circuit may be configured to generate a size value in response to an input signal comprising (i) a plurality of frames and (ii) a plurality of embedded subtitle elements. The rate control circuit may be configured to (i) generate a control signal, (ii) pass through the plurality of frames, (iii) present a first one or more of subtitle elements for current processing in response to the size value, and (iv) present a second one or more of subtitle elements for subsequent processing. The queue circuit may be configured to (i) receive the second one or more subtitle elements, (ii) present the second one or more of subtitle elements for current processing when the control signal is in a first state and (iii) hold a second one or more subtitle elements for subsequent processing when the control signal is in a second state. The encoder circuit may be configured to generate an output signal in response to (i) the plurality of frames, (ii) the first one or more subtitle elements, and (iii) the second one or more subtitle elements.

Owner:AVAGO TECH INT SALES PTE LTD
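
The abstract above describes hardware circuits, but the underlying idea (process the subtitle elements that fit the current size budget and hold the rest in a queue for later) can be loosely illustrated in software. The sketch below is an analogy under assumptions, not the claimed apparatus: the per-frame `budget`, the `(name, size)` tuples, and the strict first-in, first-out policy are all hypothetical choices.

```python
from collections import deque


def schedule(frames, budget):
    """Decide which subtitle elements are emitted with each frame.

    `frames` is a list with one entry per frame; each entry is a list of
    (name, estimated_size) subtitle elements arriving with that frame.
    Elements that do not fit the per-frame budget wait in a FIFO queue.
    Note: an element larger than `budget` would block the queue forever;
    a real design would need to handle that case.
    """
    queue = deque()
    emitted_per_frame = []
    for arriving in frames:
        queue.extend(arriving)
        remaining = budget
        emitted = []
        # Emit from the head of the queue while the next element still fits.
        while queue and queue[0][1] <= remaining:
            name, size = queue.popleft()
            remaining -= size
            emitted.append(name)
        emitted_per_frame.append(emitted)
    return emitted_per_frame
```

Keeping the queue strictly ordered preserves subtitle order at the cost of occasionally leaving budget unused, which seems a reasonable trade-off when memory is limited.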

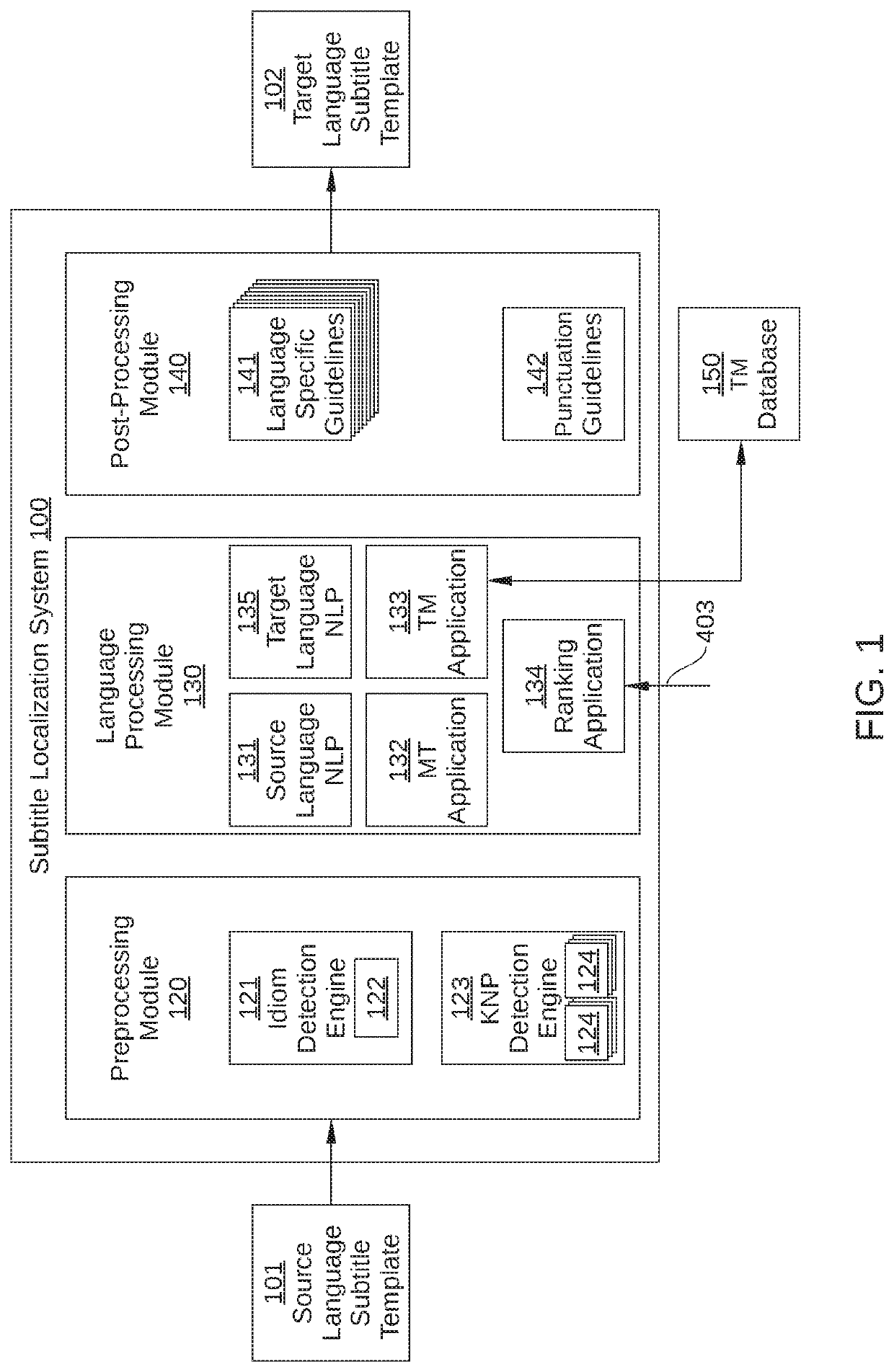

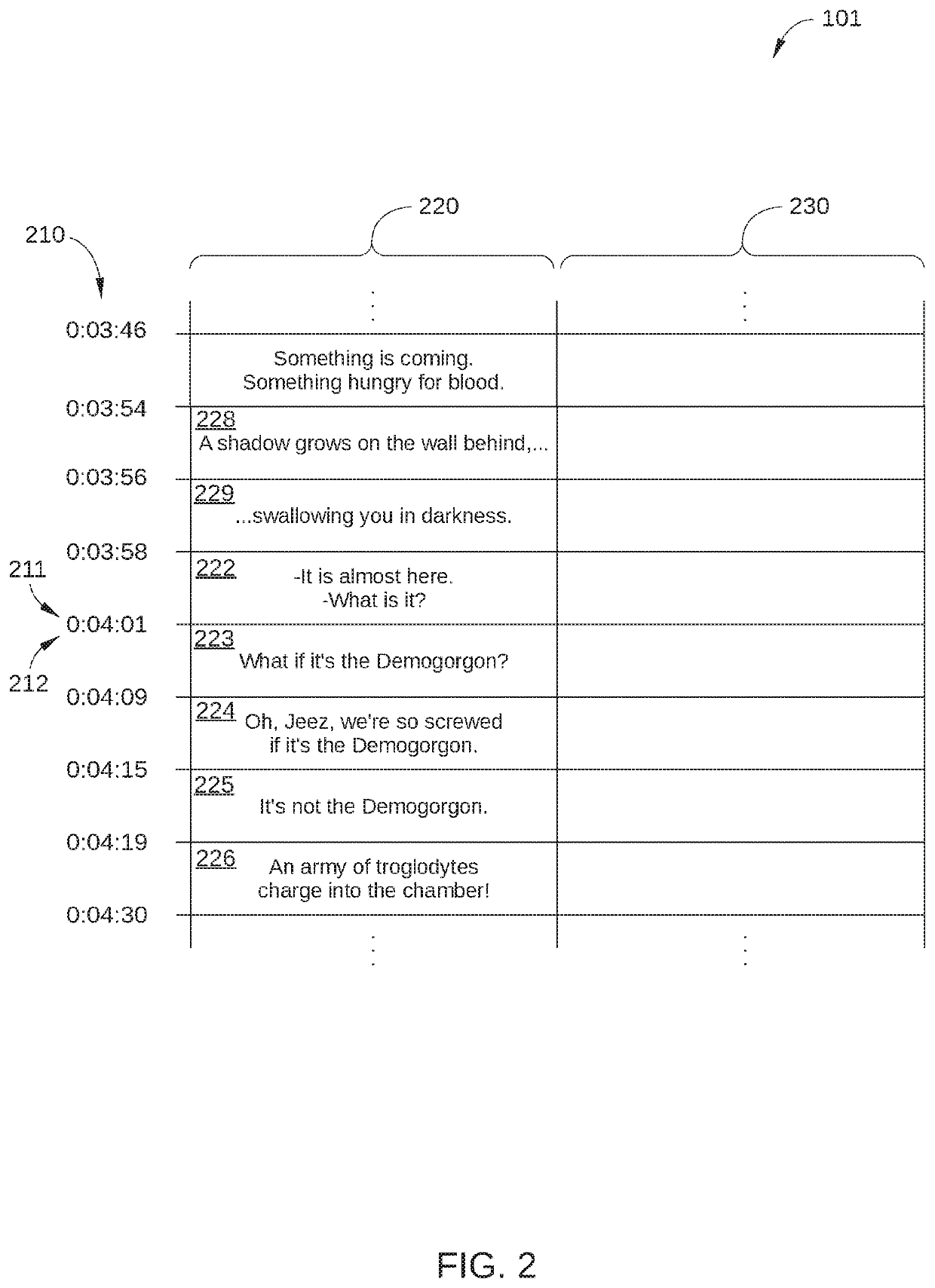

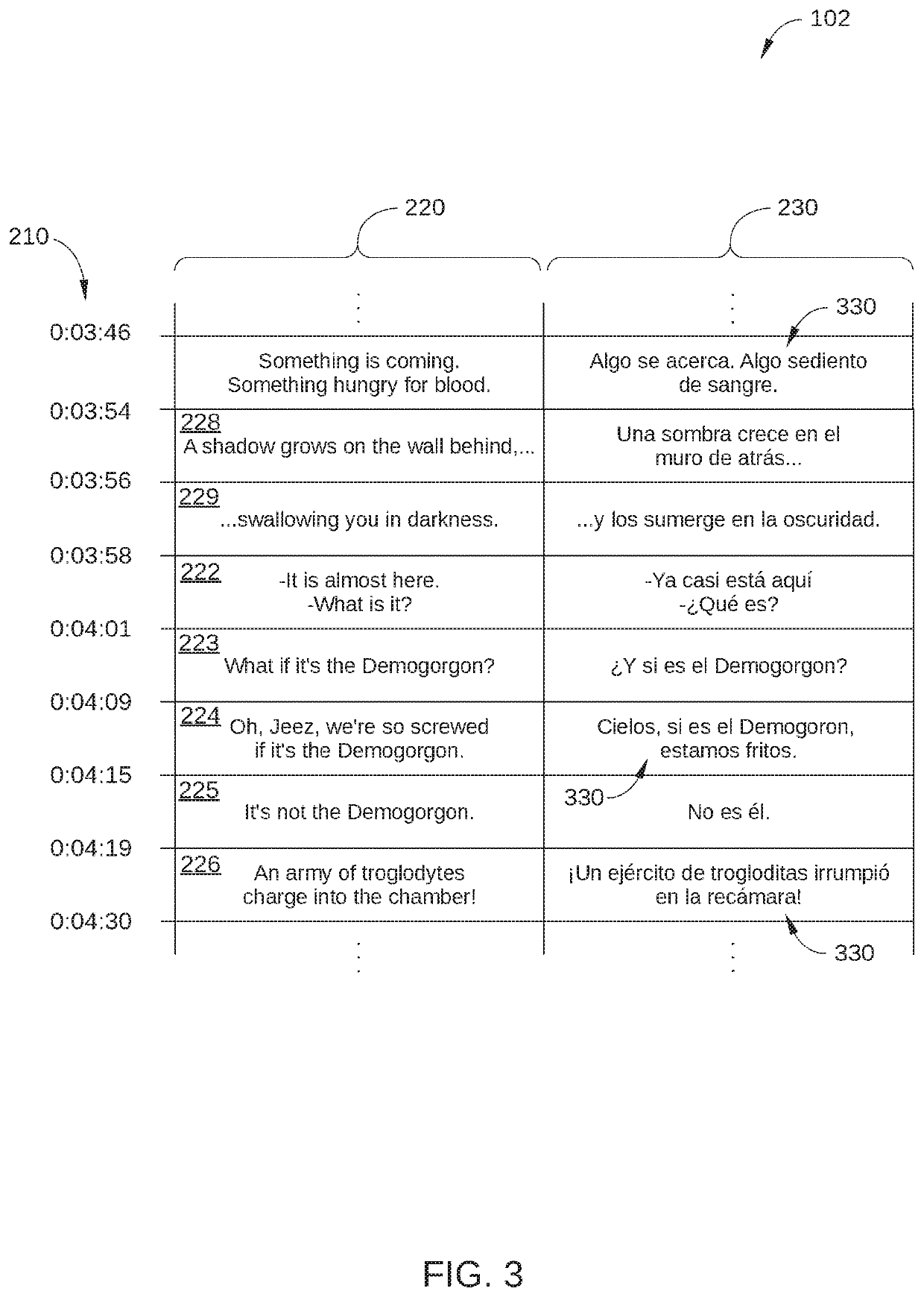

Machine-assisted translation for subtitle localization

Active · US20200394271A1 · High-quality subtitle · Natural language translation · Speech recognition · Natural language processing · Subtitle

One embodiment of the present disclosure sets forth a technique for generating translation suggestions. The technique includes receiving a sequence of source-language subtitle events associated with a content item, where each source-language subtitle event includes a different textual string representing a corresponding portion of the content item, generating a unit of translatable text based on a textual string included in at least one source-language subtitle event from the sequence, translating, via software executing on a machine, the unit of translatable text into target-language text, generating, based on the target-language text, at least one target-language subtitle event associated with a portion of the content item corresponding to the at least one source-language subtitle event, and generating, for display, a subtitle presentation template that includes the at least one target-language subtitle event.

Owner:NETFLIX

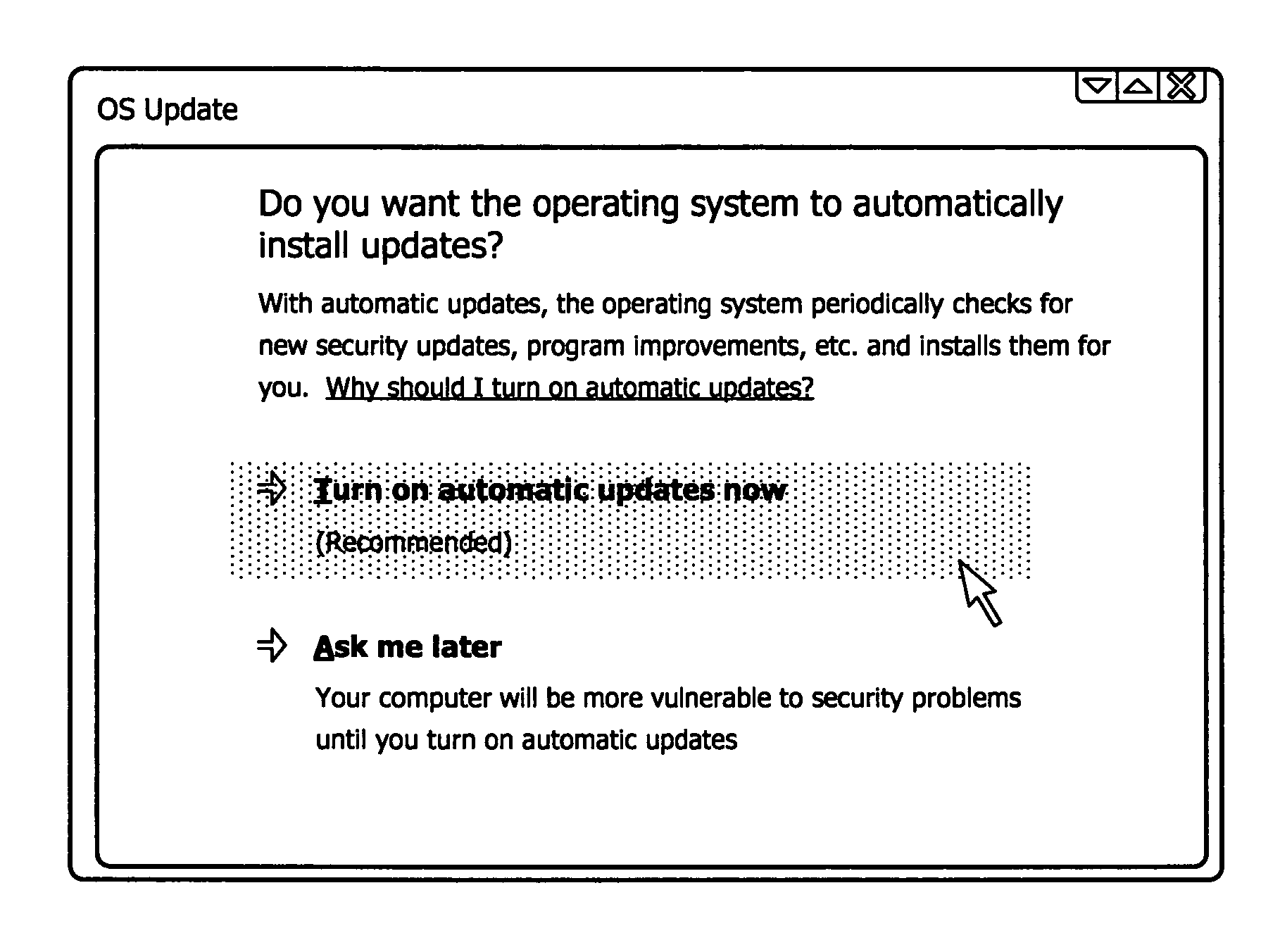

Command links

Inactive · US20060236244A1 · Sure easy · Special data processing applications · Input/output processes for data processing · Input control · Glyph

A command link input control has a main title portion describing a user input option corresponding to selection of that command link. Upon selection of the command link, a dialog containing that command link is completed without requiring a user to select additional input controls. The command link may optionally contain a subtitle portion for providing supplementary text further explaining or otherwise elaborating upon the option corresponding to the command link. The command link may also contain a glyph. Upon hovering a cursor over a command link or otherwise indicating potential selectability of the command link, the entire link is highlighted by, e.g., altering the background color of the display region containing the main title, subtitle and / or glyph.

Owner:MICROSOFT TECH LICENSING LLC

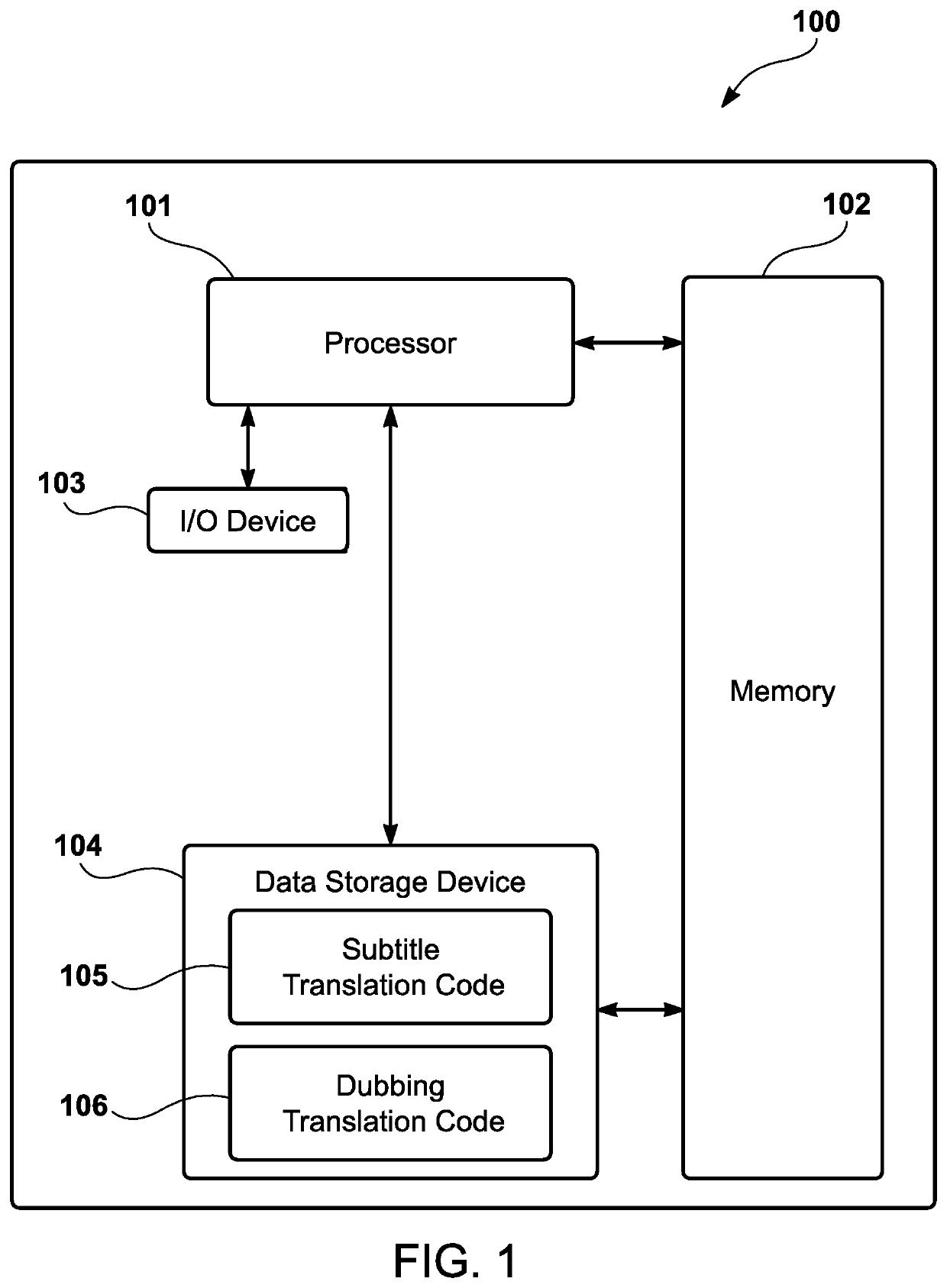

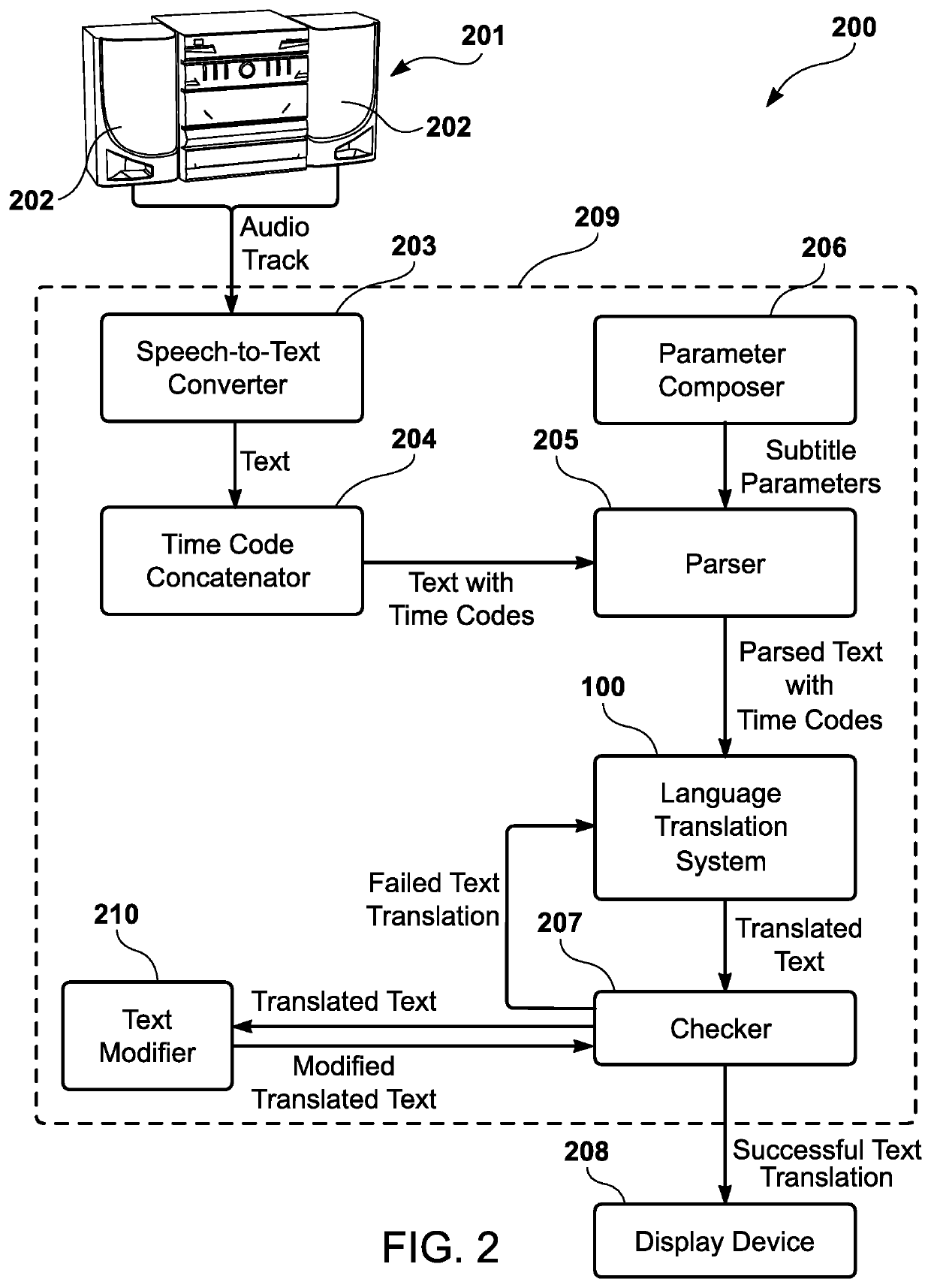

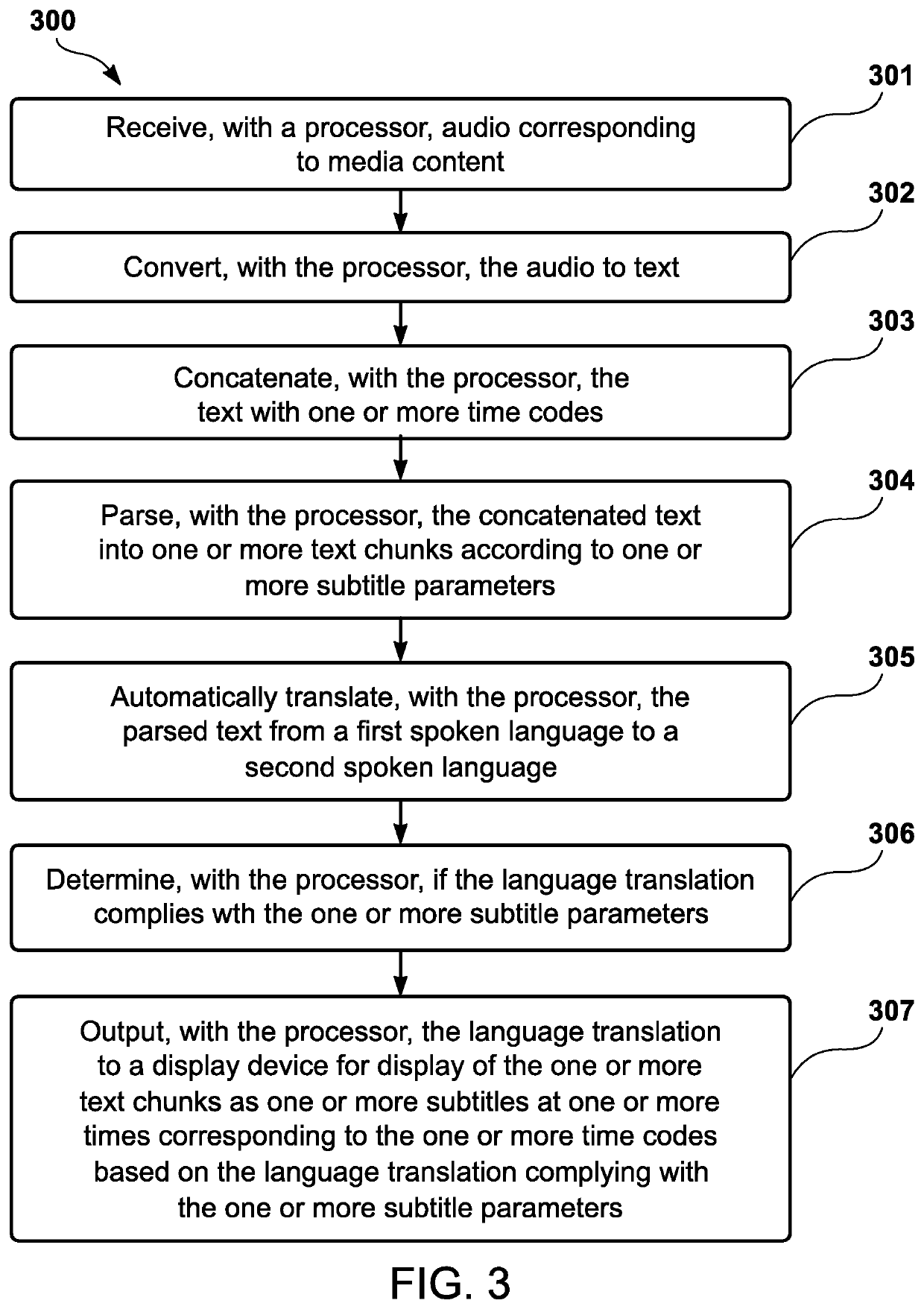

Machine translation system for entertainment and media

A process receives, with a processor, audio corresponding to media content. Further, the process converts, with the processor, the audio to text. In addition, the process concatenates, with the processor, the text with one or more time codes. The process also parses, with the processor, the concatenated text into one or more text chunks according to one or more subtitle parameters. Further, the process automatically translates, with the processor, the parsed text from a first spoken language to a second spoken language. Moreover, the process determines, with the processor, if the language translation complies with the one or more subtitle parameters. Additionally, the process outputs, with the processor, the language translation to a display device for display of the one or more text chunks as one or more subtitles at one or more times corresponding to the one or more time codes.

Owner:DISNEY ENTERPRISES INC
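
Two steps in the abstract above, parsing text into chunks according to subtitle parameters and checking whether a translation complies with those parameters, can be sketched as follows. The abstract does not name the parameters, so the maximum characters per line and maximum lines per subtitle used here are assumed examples (the defaults of 42 and 2 are illustrative values); speech recognition, time codes, and translation are omitted.

```python
import textwrap


def chunk_subtitles(text, max_chars=42, max_lines=2):
    """Wrap text into lines of at most max_chars, then group lines into subtitles."""
    lines = textwrap.wrap(text, width=max_chars)
    return ["\n".join(lines[i:i + max_lines])
            for i in range(0, len(lines), max_lines)]


def complies(chunk, max_chars=42, max_lines=2):
    """Check that a (possibly translated) chunk still fits the subtitle parameters."""
    lines = chunk.split("\n")
    return len(lines) <= max_lines and all(len(line) <= max_chars for line in lines)
```

Because a translation is often longer than its source, running `complies` on each translated chunk indicates which ones need to be re-wrapped or shortened before display.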

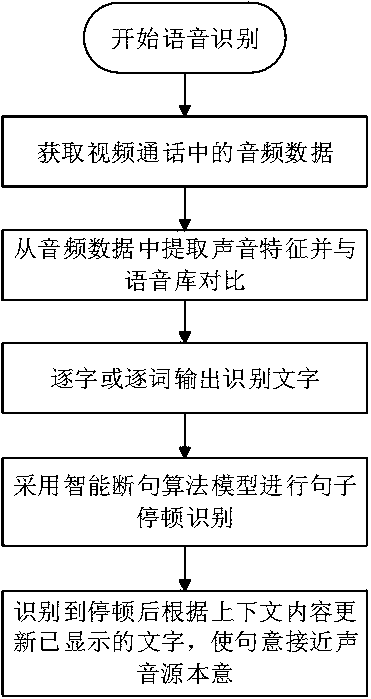

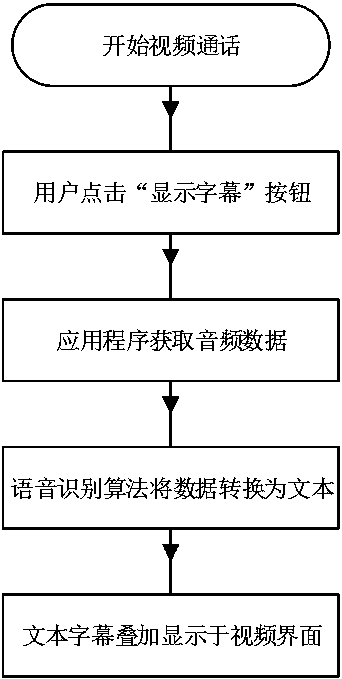

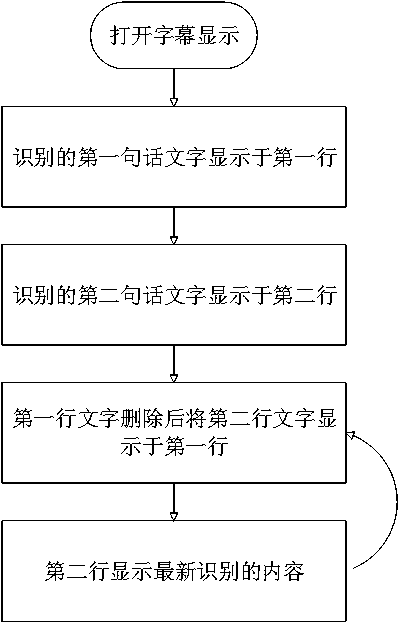

Technology for realizing real-time subtitle overlay during video call and applications of technology

Inactive · CN110415706A · Flexible display · Solve distress · Television system details · Color television details · Computer graphics (images) · Recognition algorithm

The invention discloses a technology for realizing real-time subtitle overlay during a video call and applications of the technology. The technology comprises subtitle software, and comprises the following steps: S1, a voice recognition algorithm, namely, through a machine learning algorithm, capturing audio data in a video in real time, and converting the audio data into language data with practical meaning; S2, a character converting algorithm, namely, carrying out algorithm processing on the acquired voice data so that character information is obtained through converting in real time; S3, a subtitle display algorithm, namely, carrying out real-time character-by-character or word-by-word display on the character information; S4, an automatic sentence punctuating algorithm, namely, analyzing an audio file, so that the starting and pausing points of one sentence are acquired; and S5, a character and audio / video overlay method, namely, directly displaying characters to a video interface in an overlay manner, so that video captions are formed, wherein the video interface does not assign the display positions of the character subtitles. Through the technology for realizing real-time subtitle overlay during a video call and the applications of the technology, after the complete sentence is acquired, all the displayed characters can be updated according to the meaning of the complete sentence.

Owner:BEE SMART INFORMATION TECH CO LTD

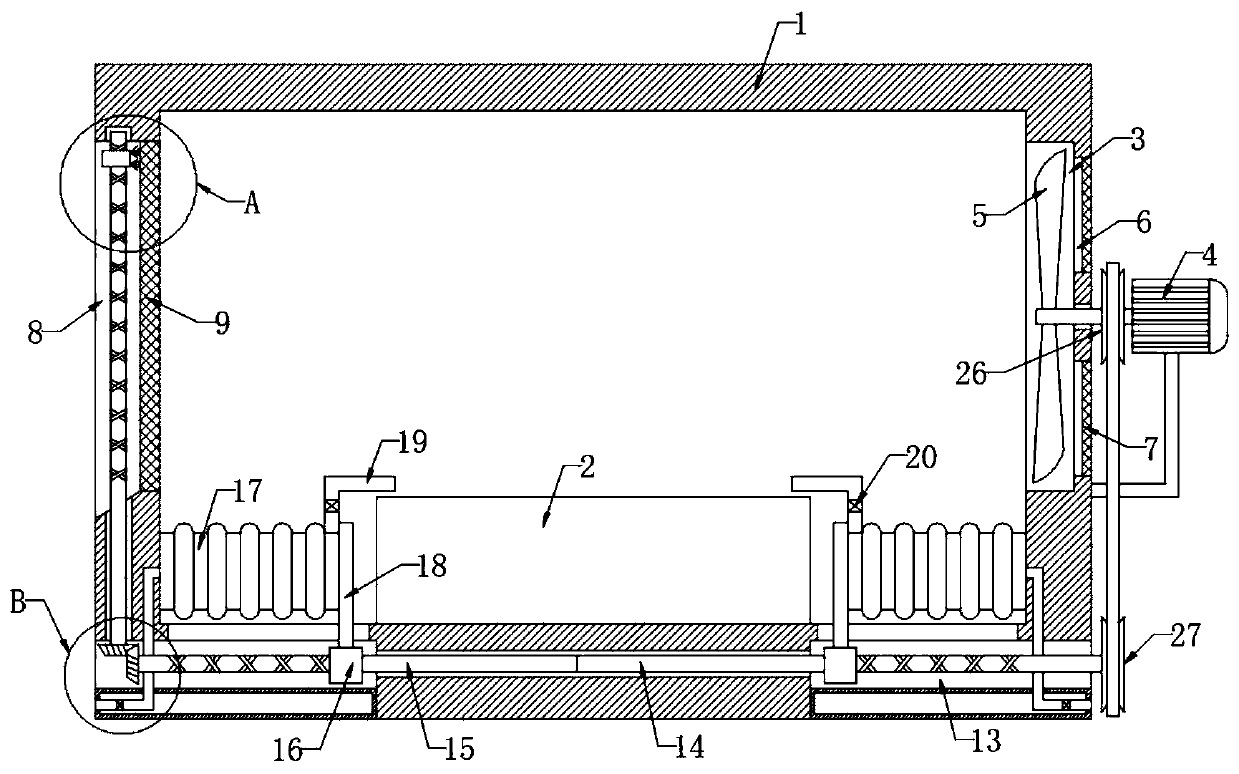

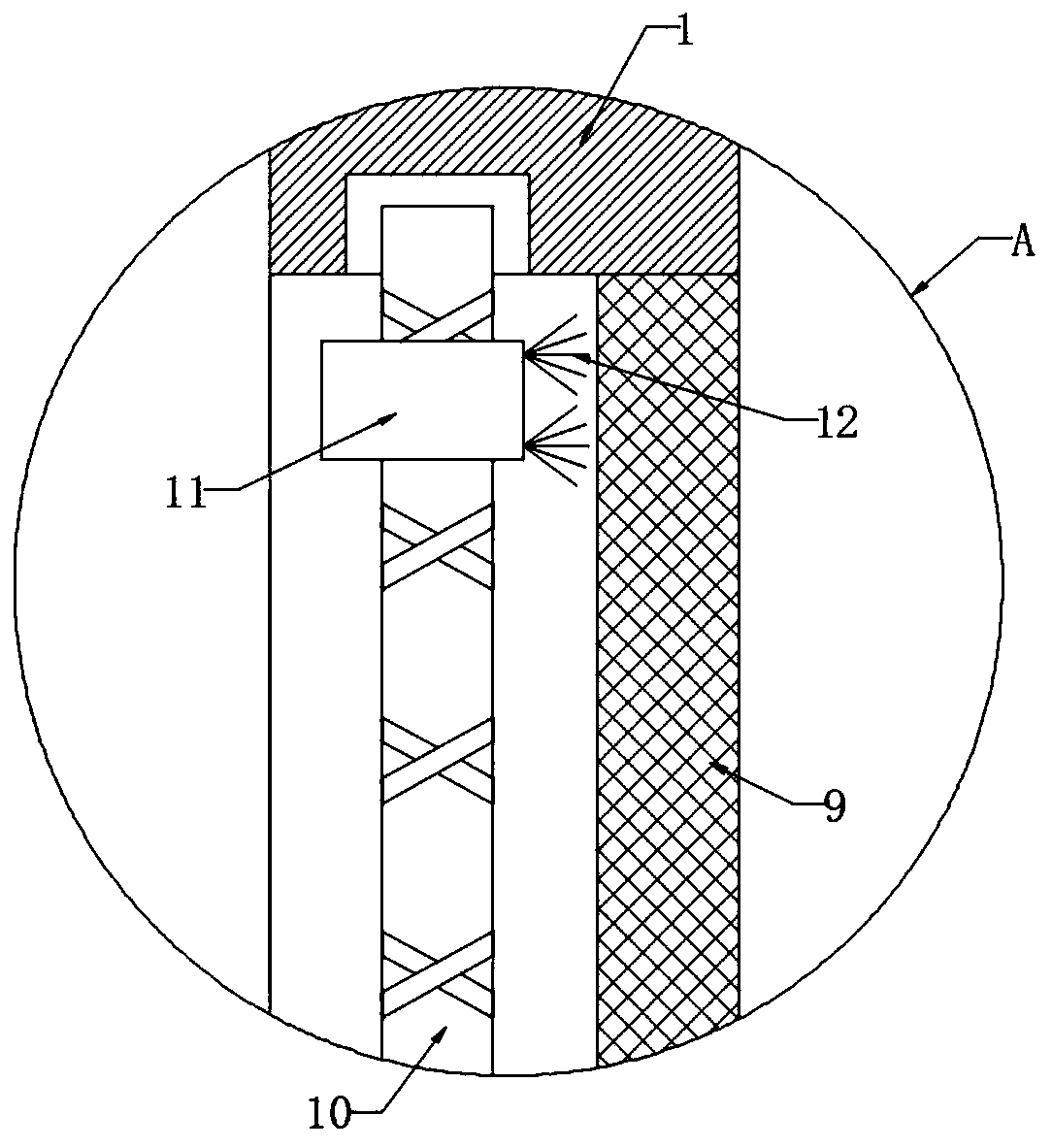

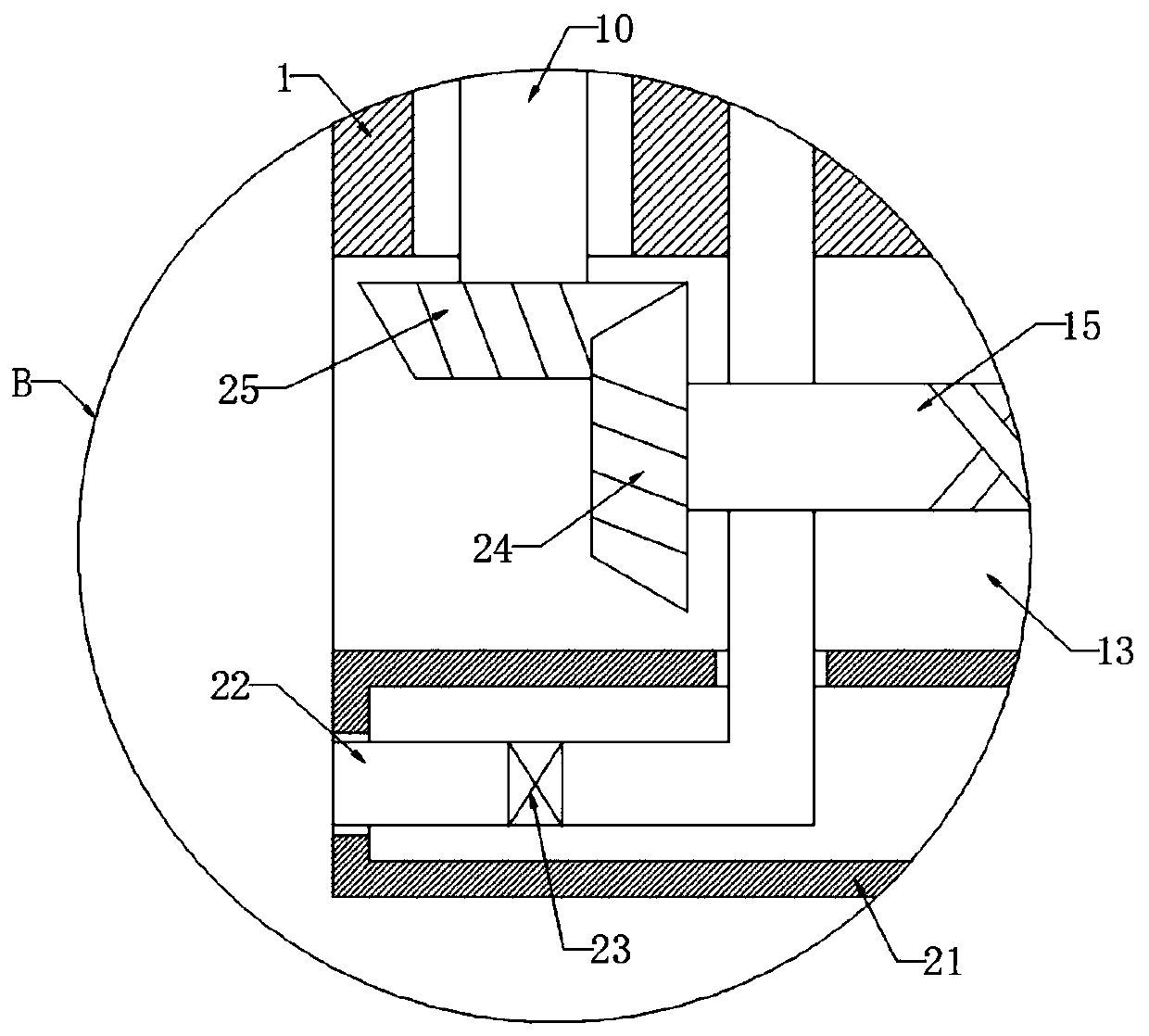

Calculating meter system for adding subtitles to foreign language audio image data in real time

Inactive · CN111601479A · Affects effective heat dissipation · Avoid affecting air convection · Casings/cabinets/drawers details · Cooling/ventilation/heating modifications · Cold air · Subtitle

The invention discloses a calculating meter system for adding subtitles to foreign language audio image data in real time. The calculating meter system comprises a machine body, wherein a platform used for installing computer hardware is fixedly connected to the bottom in the machine body, a circular groove is formed in the inner wall of the machine body, a motor is fixedly connected to the side wall of the machine body through a bracket, and the movable shaft of the motor penetrates through the side wall of the machine body and extends into the circular groove. According to the invention, through rotation of a first reciprocating lead screw, a vertical plate drives bristles to slide up and down on the side wall of a second filter screen, and dust on the side wall of the second filter screen is cleaned in real time, so that the condition that a large amount of dust accumulates after the second filter screen is used for a long time and affects the effective heat dissipation in the machine body is avoided; and a second reciprocating lead screw and a third reciprocating lead screw rotate to drive a sliding block to move back and forth, so that a fixing plate drives a telescopic air bag to continuously stretch out and draw back, and the cold air in the telescopic air bag is continuously exhausted to a platform through an air outlet pipe so as to accelerate the heat dissipation of electronic elements on the platform.

Owner:HEILONGJIANG UNIV OF TECH +3

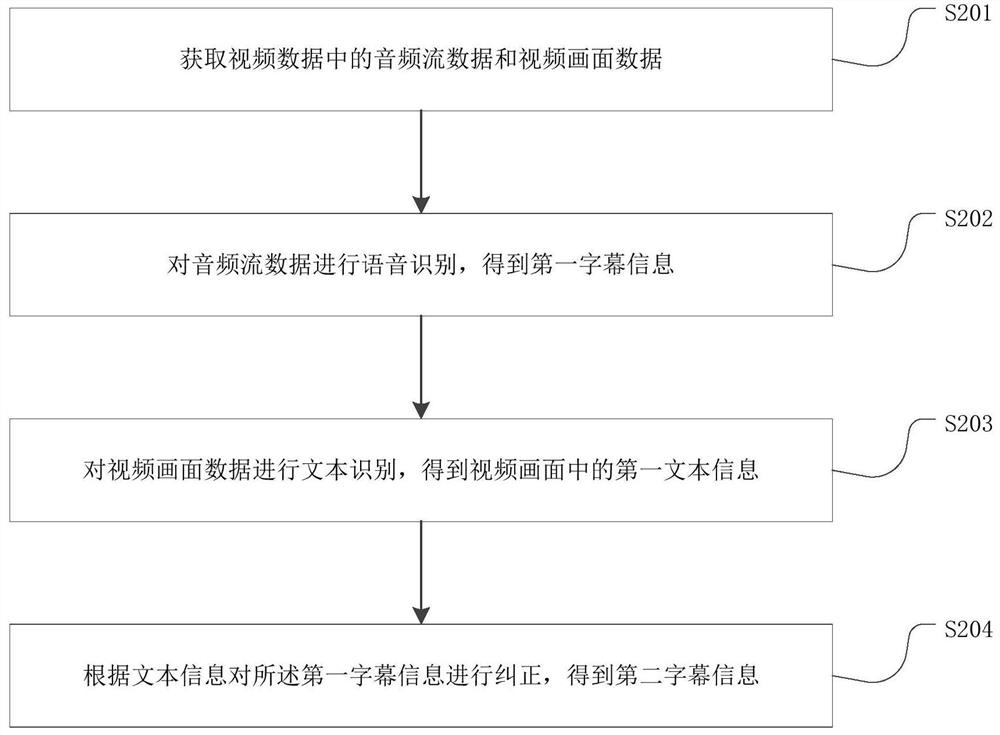

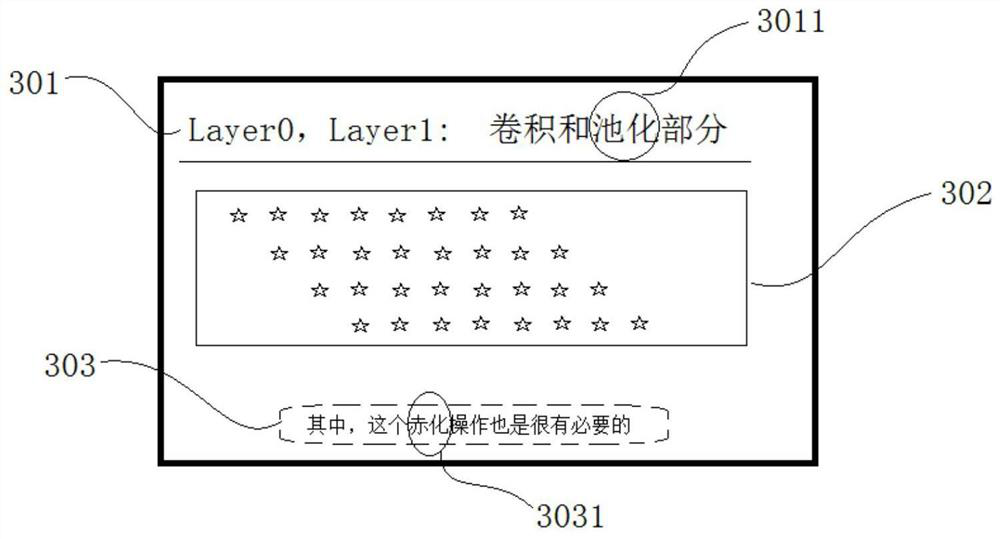

Subtitle correction method, subtitle display method, subtitle correction device, subtitle display device, equipment and medium

PendingCN111968649AImprove consistencyImprove viewing experienceSpeech recognitionSelective content distributionText recognitionComputer graphics (images)

The invention discloses a subtitle correction method, a subtitle display method, a subtitle correction device, a subtitle display device, equipment and medium. The subtitle correction method comprises the following steps: acquiring audio stream data and video picture data in video data; performing voice recognition on the audio stream data to obtain first subtitle information; performing text recognition on the video picture data; and correcting the first subtitle information according to the text recognition result to obtain second subtitle information. The subtitle display method comprises the following steps: acquiring video data and second subtitle information; and when the video data is played, displaying the second subtitle information. According to the invention, the subtitle information recognized by voice is corrected based on text recognition of the video picture content, the subtitle information related to the video picture content can be corrected, the consistency between the subtitles recognized by voice and the video content is improved, the accuracy of the subtitle content is improved, the watching experience of a user is improved, and the subtitle correction method, the subtitle display method, the subtitle correction device, the subtitle display device, the equipment and the medium can be widely applied to the technical field of the Internet.

Owner:TENCENT TECH (SHENZHEN) CO LTD
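
The correction step in the abstract above (fix speech-recognized subtitles using text recognized in the video picture) could look roughly like the sketch below. The abstract does not say how the two results are matched, so fuzzy word matching with Python's standard `difflib` is an assumption made here, as are the function name `correct_subtitle`, the `cutoff` value, and the sample strings.

```python
import difflib
import re


def correct_subtitle(asr_text, ocr_text, cutoff=0.75):
    """Replace speech-recognized words with near-identical on-screen words."""
    # Vocabulary of words recognized in the video picture (punctuation dropped).
    ocr_words = re.findall(r"\w+", ocr_text)
    corrected = []
    for word in asr_text.split():
        if word in ocr_words:
            corrected.append(word)  # already agrees with the on-screen text
            continue
        # Closest on-screen word, accepted only above the similarity cutoff.
        match = difflib.get_close_matches(word, ocr_words, n=1, cutoff=cutoff)
        corrected.append(match[0] if match else word)
    return " ".join(corrected)


first_subtitle = "our guest today is doctor Jon Smyth"   # from voice recognition
frame_text = "Dr. John Smith, Cardiology"                # from text recognition
second_subtitle = correct_subtitle(first_subtitle, frame_text)
```

In this example `second_subtitle` becomes `"our guest today is doctor John Smith"`: only the two misrecognized name words are close enough to on-screen words to be replaced. A high cutoff keeps unrelated words from being overwritten.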

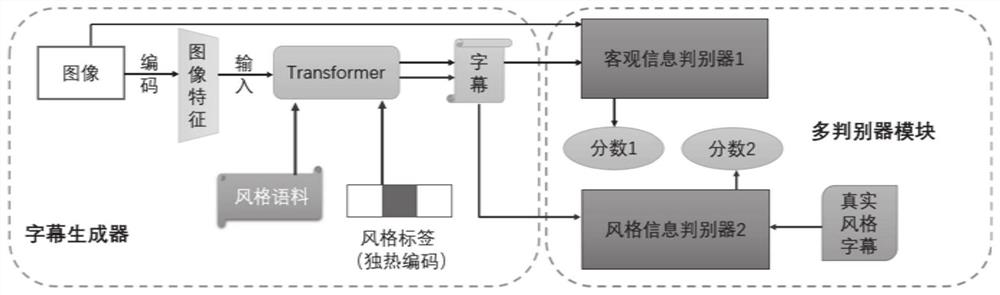

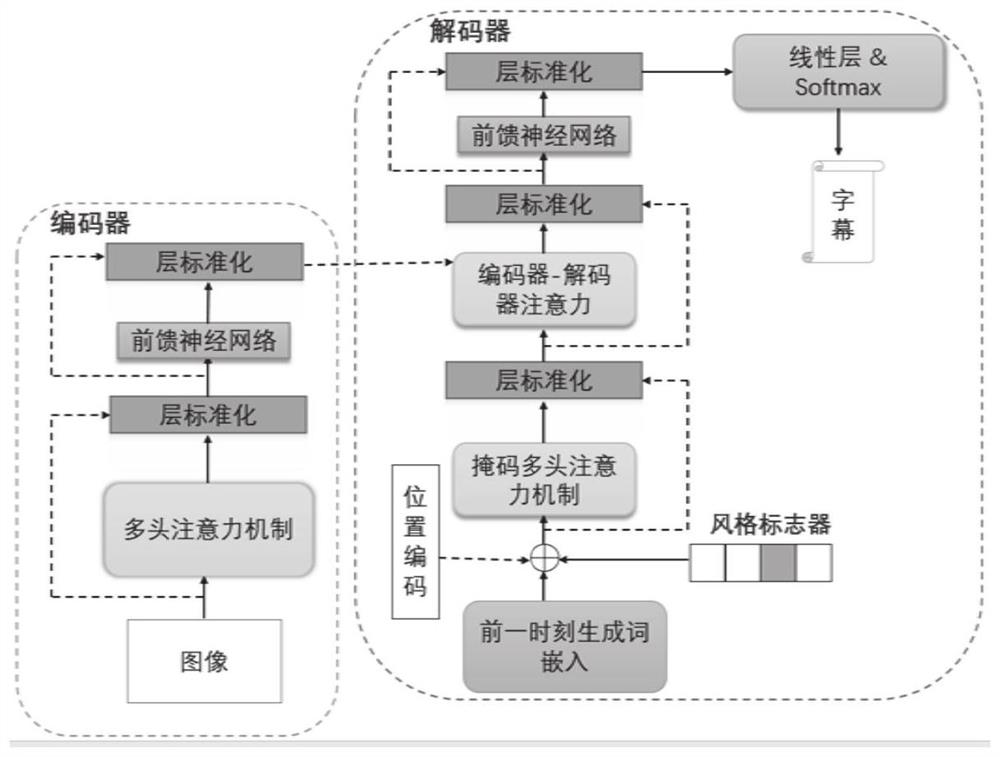

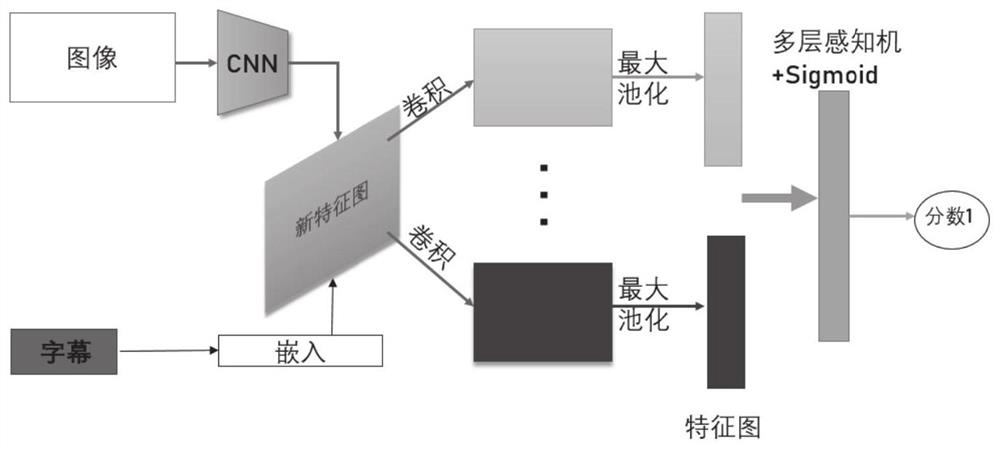

Cross-modal image multi-style subtitle generation method and system

ActiveCN112084841ACharacter and pattern recognitionNeural architecturesComputer graphics (images)Subtitle

The invention discloses a cross-modal image multi-style subtitle generation method and system. The method comprises the steps that an image for which subtitles are to be generated is acquired; the image is input into a pre-trained multi-style subtitle generation model, and multi-style subtitles of the image are output. The pre-trained multi-style subtitle generation model is obtained by training based on a generative adversarial network; the training step comprises first training the model's capability to express the objective information of the image, and then training its capability to generate stylized subtitles.

Owner:QILU UNIV OF TECH

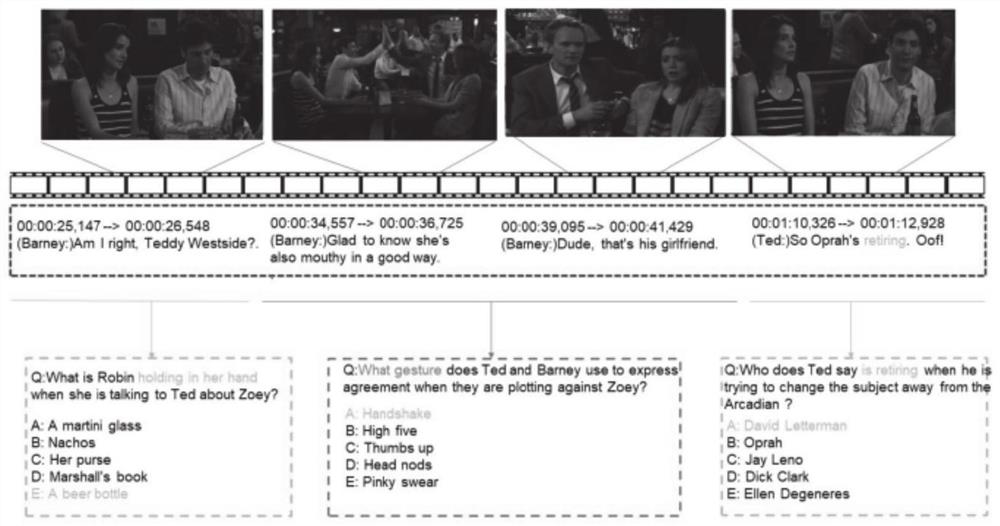

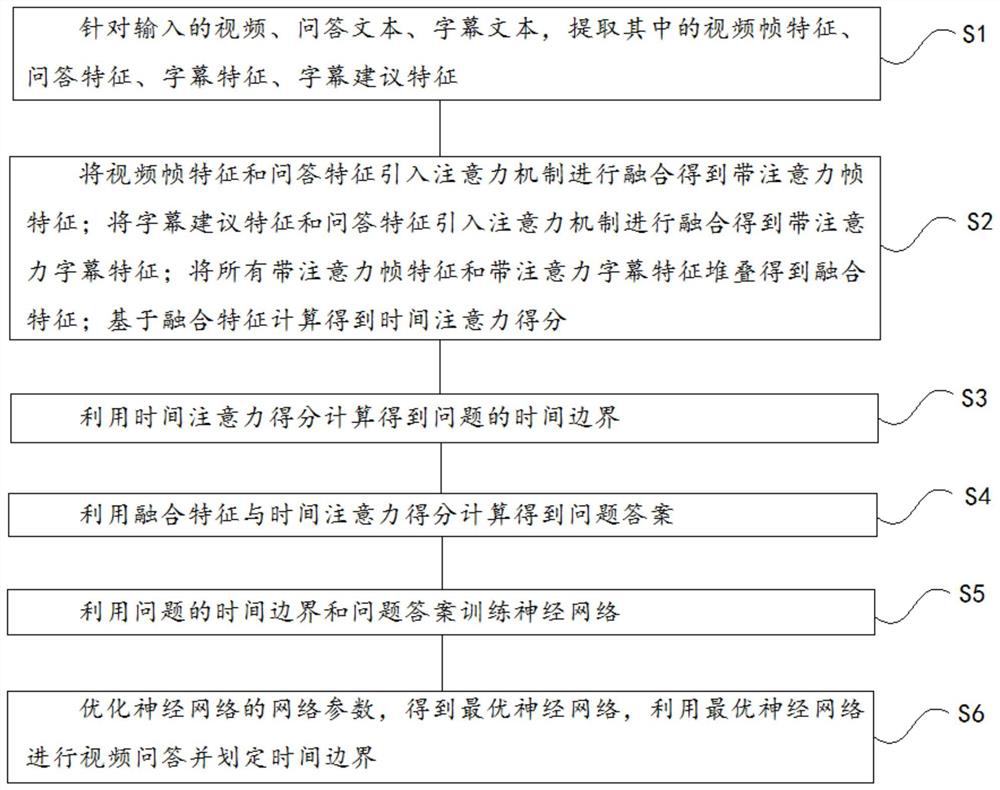

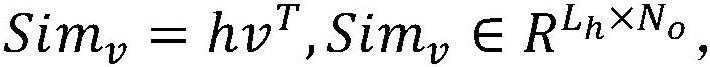

Method for performing multi-mode video question answering by using frame-subtitle self-supervision

ActiveCN112860945ACorrect answerDigital data information retrievalCharacter and pattern recognitionSubtitleQuestions and answers

The invention belongs to the field of video question answering, and particularly relates to a method for performing multi-mode video question answering by using frame-subtitle self-supervision. The method includes the following steps: extracting video frame features, question and answer features, subtitle features and subtitle suggestion features; obtaining frame features with attention and subtitle features with attention, and obtaining fusion features; calculating a time attention score based on the fusion features; calculating the time boundary of the question by using the time attention score; calculating answers to the questions by using the fusion features and the time attention scores; training a neural network by using the time boundary of the question and the answer to the question; and optimizing the network parameters of the neural network, performing video question answering by using the optimal neural network, and delimiting a time boundary. The time boundary related to the question is generated from the self-designed time attention score instead of from time annotations, which are costly to produce. In addition, more accurate answers are obtained by mining the relation between the subtitles and the corresponding video content.

Owner:STATE GRID ZHEJIANG ELECTRIC POWER +3
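
To make the step "calculating the time boundary of the question by using the time attention score" in the abstract above more concrete, here is a minimal sketch. The abstract does not give the actual rule, so the approach shown (softmax over per-frame scores, then growing a span around the peak while attention stays above a fraction of the peak) is purely an assumption, as are the names `softmax`, `time_boundary` and the `ratio` parameter.

```python
import math


def softmax(scores):
    """Normalize raw per-frame scores into attention weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def time_boundary(attention, ratio=0.5):
    """Return (start, end) frame indices of the span around the attention peak.

    The span is extended left and right while the neighbouring frame keeps
    at least `ratio` times the peak attention (a hypothetical rule).
    """
    peak = max(range(len(attention)), key=attention.__getitem__)
    threshold = ratio * attention[peak]
    start = end = peak
    while start > 0 and attention[start - 1] >= threshold:
        start -= 1
    while end < len(attention) - 1 and attention[end + 1] >= threshold:
        end += 1
    return start, end


raw_scores = [0.1, 0.2, 2.0, 2.5, 2.2, 0.3, 0.1]  # made-up per-frame scores
attention = softmax(raw_scores)
boundary = time_boundary(attention)
```

For these made-up scores the span covers frames 2 to 4, the three frames with clearly elevated attention. No time annotations are used, which matches the self-supervised idea in the abstract.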

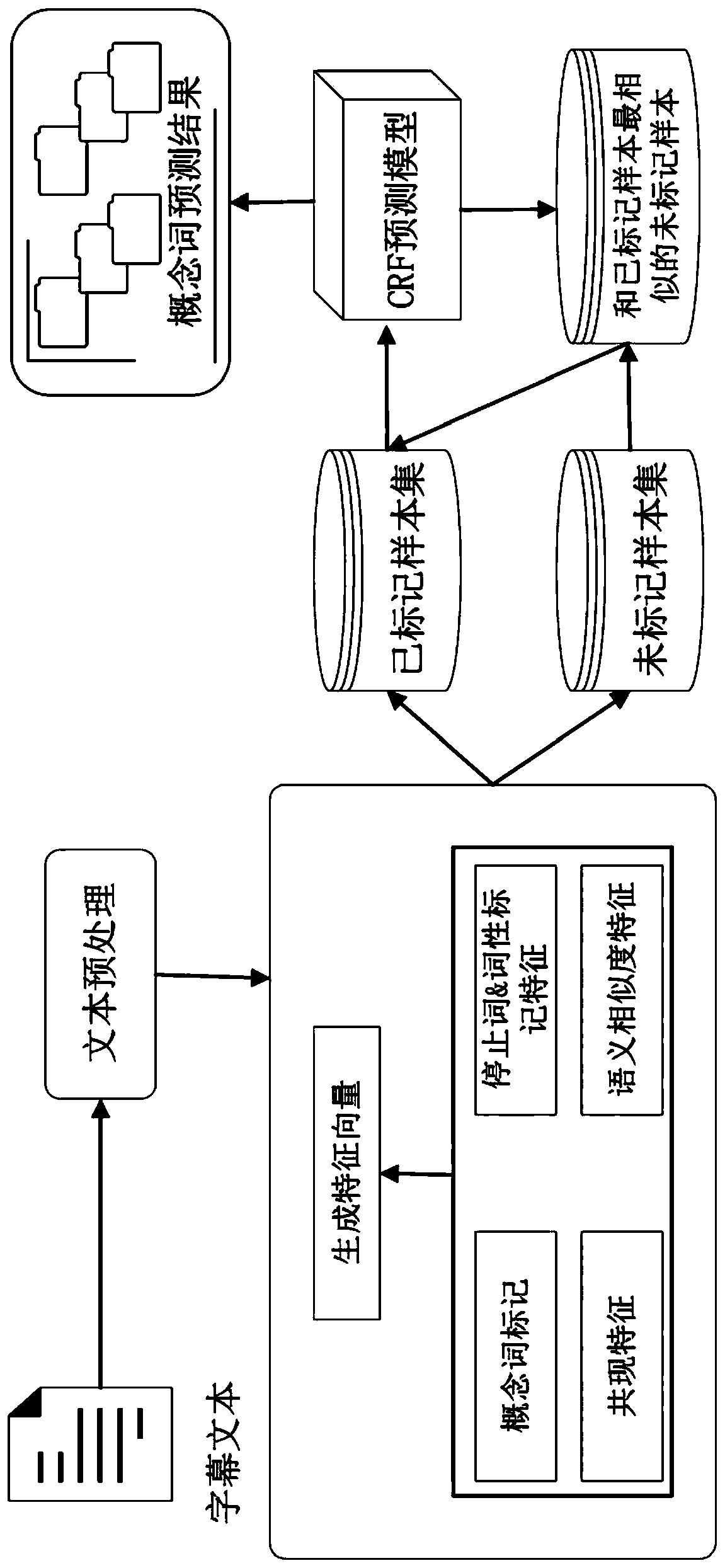

Method for extracting conceptual words from video subtitles

ActiveCN110175246AImprove forecast accuracyGood performanceDigital data information retrievalSemantic analysisPunctuationCo-occurrence

The invention discloses a method for extracting conceptual words from video subtitles, which comprises the following steps: carrying out word segmentation processing on a subtitle text and deleting punctuation marks; carrying out stop-word removal and part-of-speech tagging on the subtitle text after word segmentation; calculating co-occurrence characteristics of the target word and the adjacent word; calculating the semantic similarity between the target word and the adjacent word; performing concept word marking on a small number of segmented subtitle texts to serve as a training set; and training a pre-established semi-supervised learning framework based on a conditional random field according to the training set to obtain a conceptual word prediction model, and obtaining the conceptual word prediction result that the model outputs for the subtitle text. The method reduces the workload of manually labeling corpora, improves the accuracy of extracting conceptual words in the MOOC video subtitle scene, and meets practical requirements.

Owner:SHANDONG UNIV OF SCI & TECH
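
The step "calculating co-occurrence characteristics of the target word and the adjacent word" in the abstract above can be illustrated with a small sketch. The abstract does not name the statistic, so pointwise mutual information (PMI) over adjacent word pairs is swapped in here as one common choice; the function name `adjacent_pmi` and the toy sentences are hypothetical.

```python
import math
from collections import Counter


def adjacent_pmi(sentences):
    """Compute PMI for every pair of adjacent words in tokenized sentences."""
    unigrams = Counter()
    bigrams = Counter()
    for tokens in sentences:
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))  # (word, next word) pairs
    n_uni = sum(unigrams.values())
    n_bi = sum(bigrams.values())
    pmi = {}
    for (w1, w2), count in bigrams.items():
        p_pair = count / n_bi
        p_w1 = unigrams[w1] / n_uni
        p_w2 = unigrams[w2] / n_uni
        pmi[(w1, w2)] = math.log(p_pair / (p_w1 * p_w2))
    return pmi


# Toy subtitle text, already segmented with punctuation and stop words removed.
subtitles = [
    ["binary", "tree", "stores", "sorted", "keys"],
    ["binary", "tree", "supports", "fast", "search"],
    ["fast", "sorted", "search"],
]
scores = adjacent_pmi(subtitles)
```

Pairs that recur together, such as `("binary", "tree")`, score higher than incidental neighbours such as `("fast", "sorted")`. A score like this could then be passed to the conditional random field as one feature per token.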

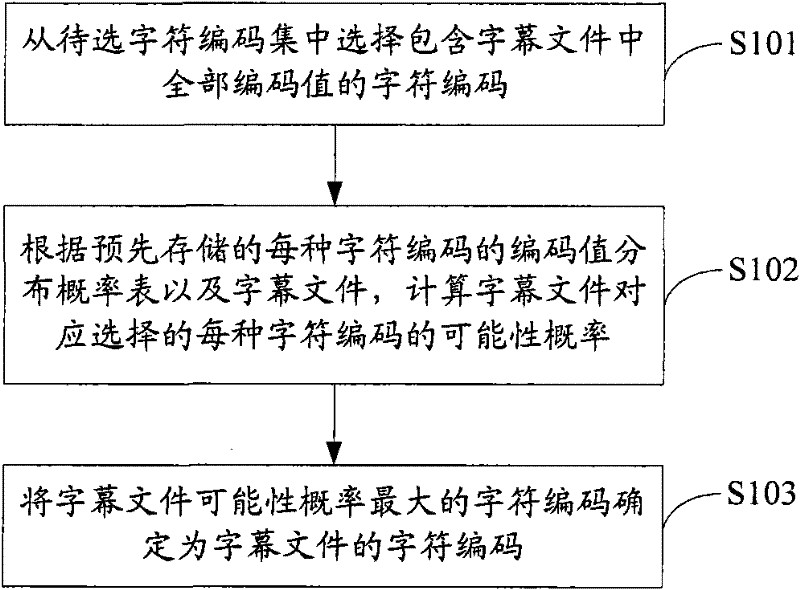

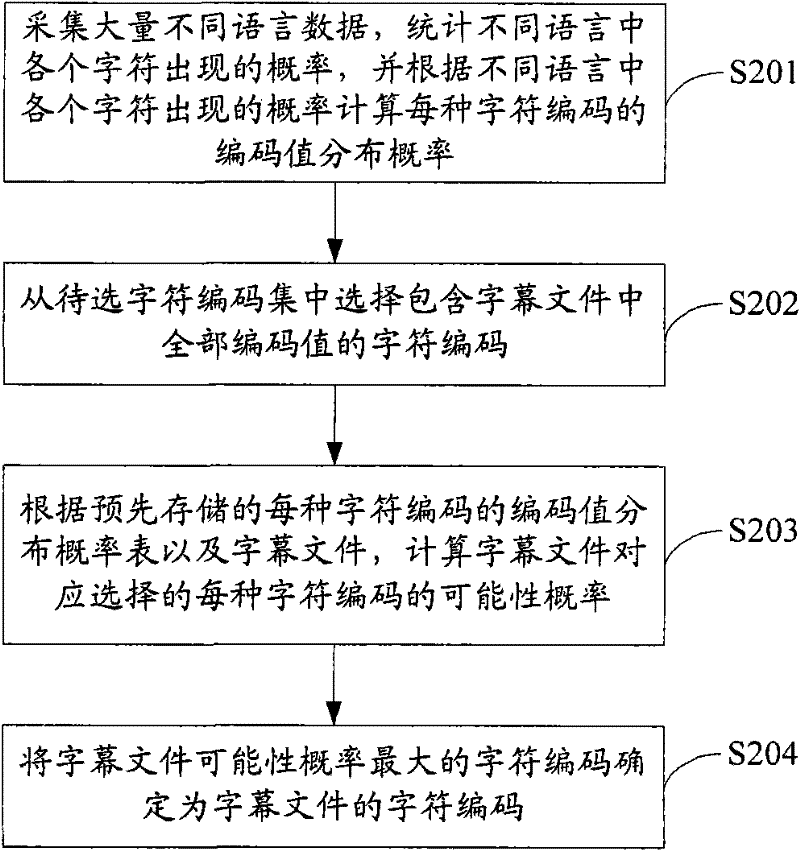

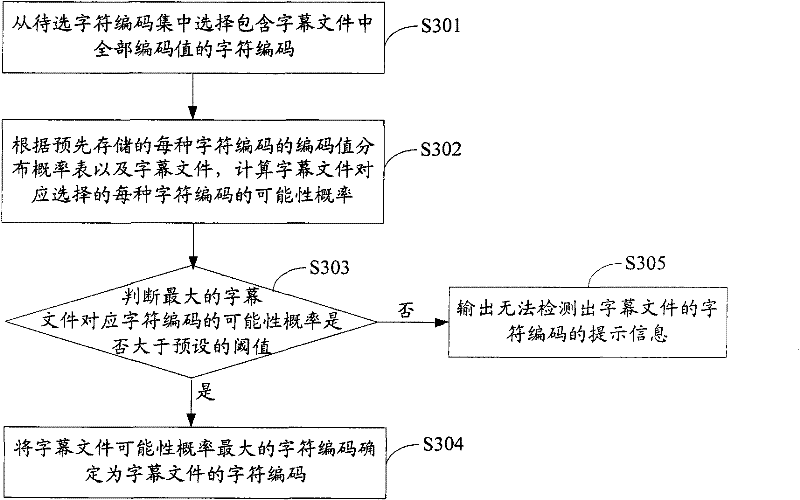

Player and character code detection method and device for subtitle file

ActiveCN102194503AAutomatic detectionQuick checkCarrier indexing/addressing/timing/synchronisingComputer hardwareComputer science

The invention is applicable to the field of multimedia processing, and provides a player and a character code detection method and device for a subtitle file. The method comprises the following steps: selecting, from a set of candidate character codes, the character codes that cover all code values in the subtitle file; calculating, for each selected character code, the probability that the subtitle file uses it, according to the subtitle file and a pre-stored code value distribution probability table for each kind of character code; and determining the character code with the maximum probability as the character code of the subtitle file. According to the embodiment provided by the invention, the character code of the subtitle file can be detected automatically, quickly and accurately. When a video file is played, the character code of the corresponding subtitle file is detected as the file is loaded, so the player can parse the subtitle file with the correct character code and display the subtitle content accurately.

Owner:TENCENT TECH (SHENZHEN) CO LTD
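
The three steps in the abstract above (filter candidate codes, score each with a probability table, pick the maximum) can be sketched in Python as follows. This is a simplified illustration, not the patented implementation: the tables here are tiny, invented, and keyed by decoded character rather than by raw code value, and the names `TABLES`, `detect_encoding` and `floor` are hypothetical.

```python
import math

# Hypothetical, tiny probability tables keyed by encoding name.
# Real tables would be estimated from large text corpora.
TABLES = {
    "utf-8": {"字": 0.01, "幕": 0.005},
    "gbk": {"字": 0.01, "幕": 0.005},
    "big5": {"的": 0.03, "是": 0.01},
}


def detect_encoding(data, tables, floor=1e-6):
    """Return the candidate encoding with the highest average log-probability."""
    best_name, best_score = None, -math.inf
    for name, probs in tables.items():
        # Step 1: keep only encodings that can represent every code value.
        try:
            text = data.decode(name)
        except UnicodeDecodeError:
            continue
        if not text:
            continue
        # Step 2: score the decoded text; unseen characters get a small floor.
        score = sum(math.log(probs.get(ch, floor)) for ch in text) / len(text)
        # Step 3: remember the encoding with the maximum score.
        if score > best_score:
            best_name, best_score = name, score
    return best_name


sample = "字幕".encode("gbk")  # bytes of a subtitle file with unknown encoding
detected = detect_encoding(sample, TABLES)
```

Averaging log-probabilities per character keeps the scores comparable when different encodings decode the same bytes into different numbers of characters.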

© 2025 PatSnap. All rights reserved.