35 results about "Cache compression" patented technology

Cache compression enables controlling and optimizing memory usage. The objects in cache are not compressed when the parameter is set to false. The objects in cache are compressed when the parameter is set to true.
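As a minimal sketch of the true/false behaviour described above, the toy cache below compresses stored objects only when a flag is set. The class name `ObjectCache`, the `compress` parameter, and the use of `pickle` with `zlib` are assumptions made for illustration; they do not come from any specific product.

```python
import pickle
import zlib


class ObjectCache:
    """Toy in-memory cache; `compress` is a hypothetical on/off parameter."""

    def __init__(self, compress: bool = False):
        self.compress = compress
        self._store = {}

    def put(self, key, obj) -> None:
        raw = pickle.dumps(obj)
        # Objects are compressed only when the parameter is true.
        self._store[key] = zlib.compress(raw) if self.compress else raw

    def get(self, key):
        blob = self._store[key]
        return pickle.loads(zlib.decompress(blob) if self.compress else blob)

    def stored_bytes(self) -> int:
        """Total size of the cached payloads, for comparing both modes."""
        return sum(len(blob) for blob in self._store.values())
```

Comparing `stored_bytes()` for the same objects in both modes shows the trade-off: less memory used in exchange for extra CPU work on every `put` and `get`.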

Memory control

Active; US20110093654A1; Improve scalability; Improve caching capacity; Memory architecture accessing/allocation; Energy efficient ICT; Parallel computing; Power control

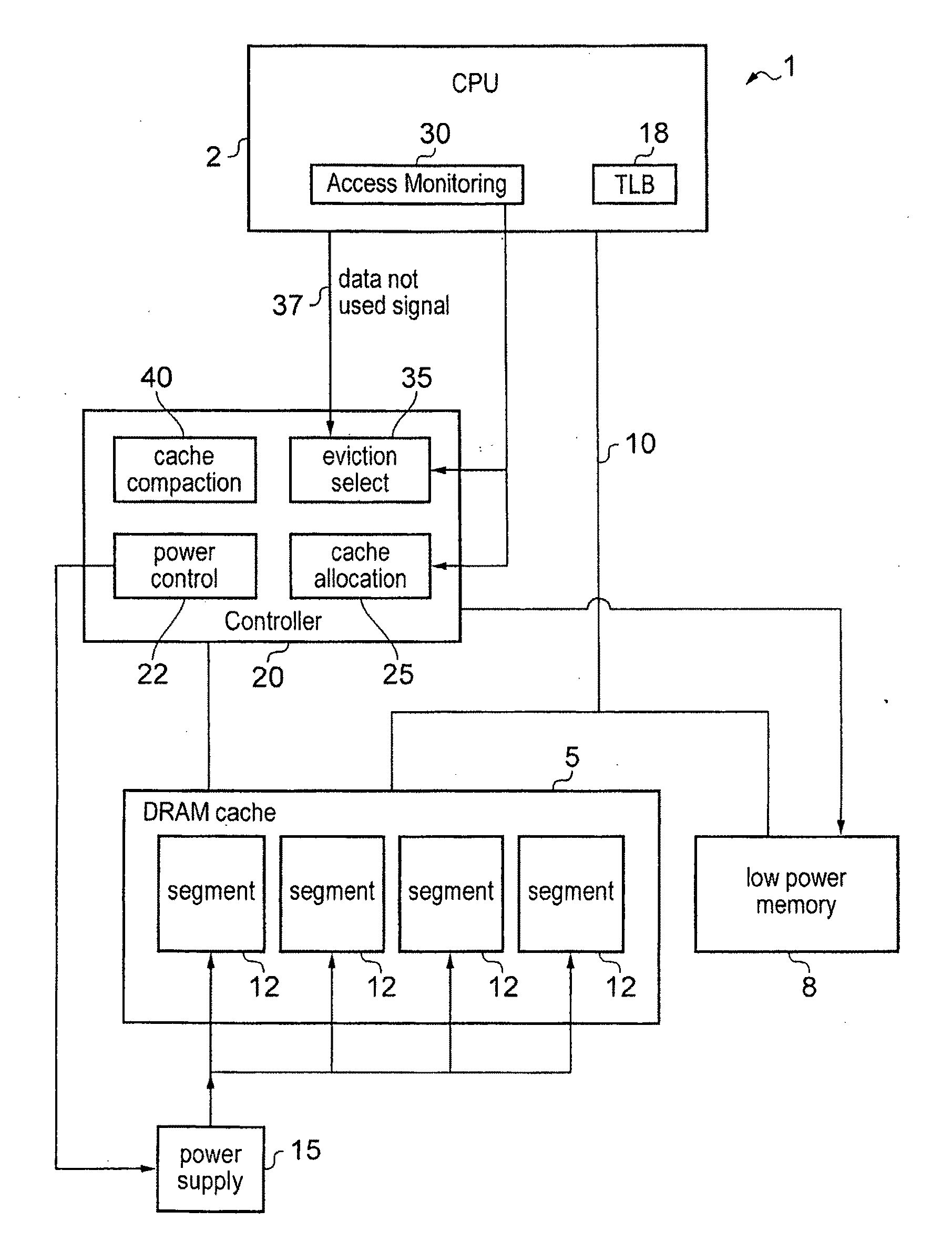

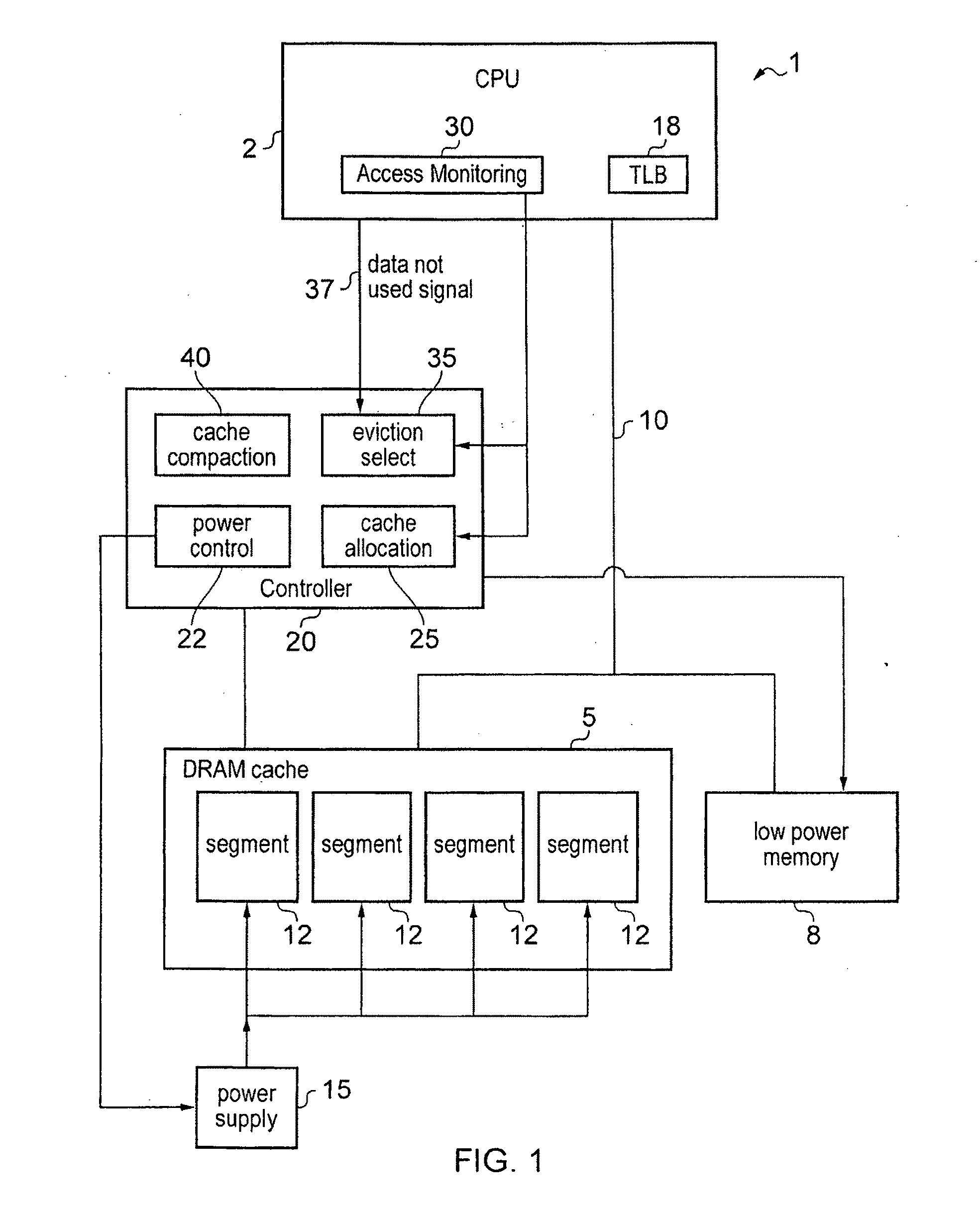

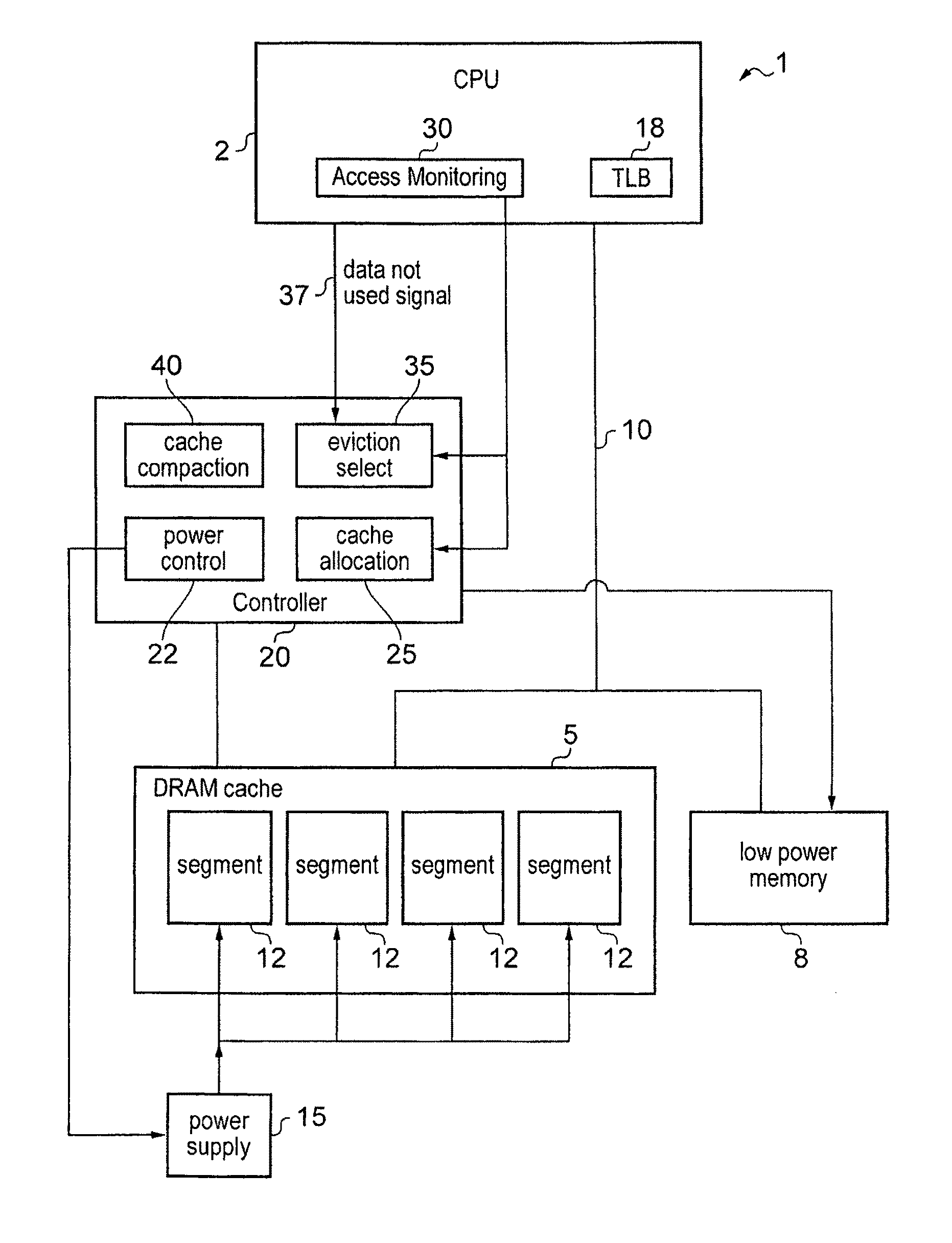

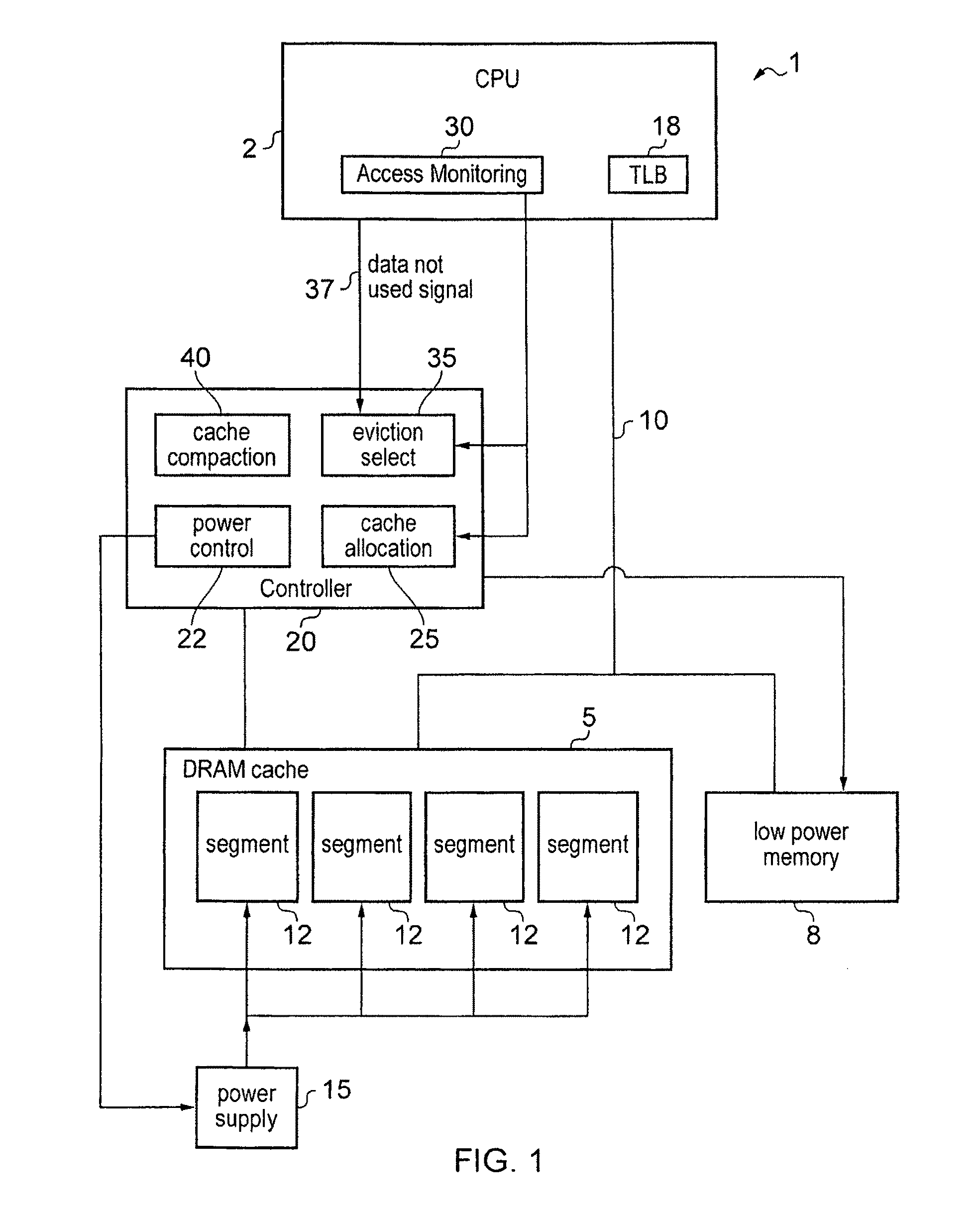

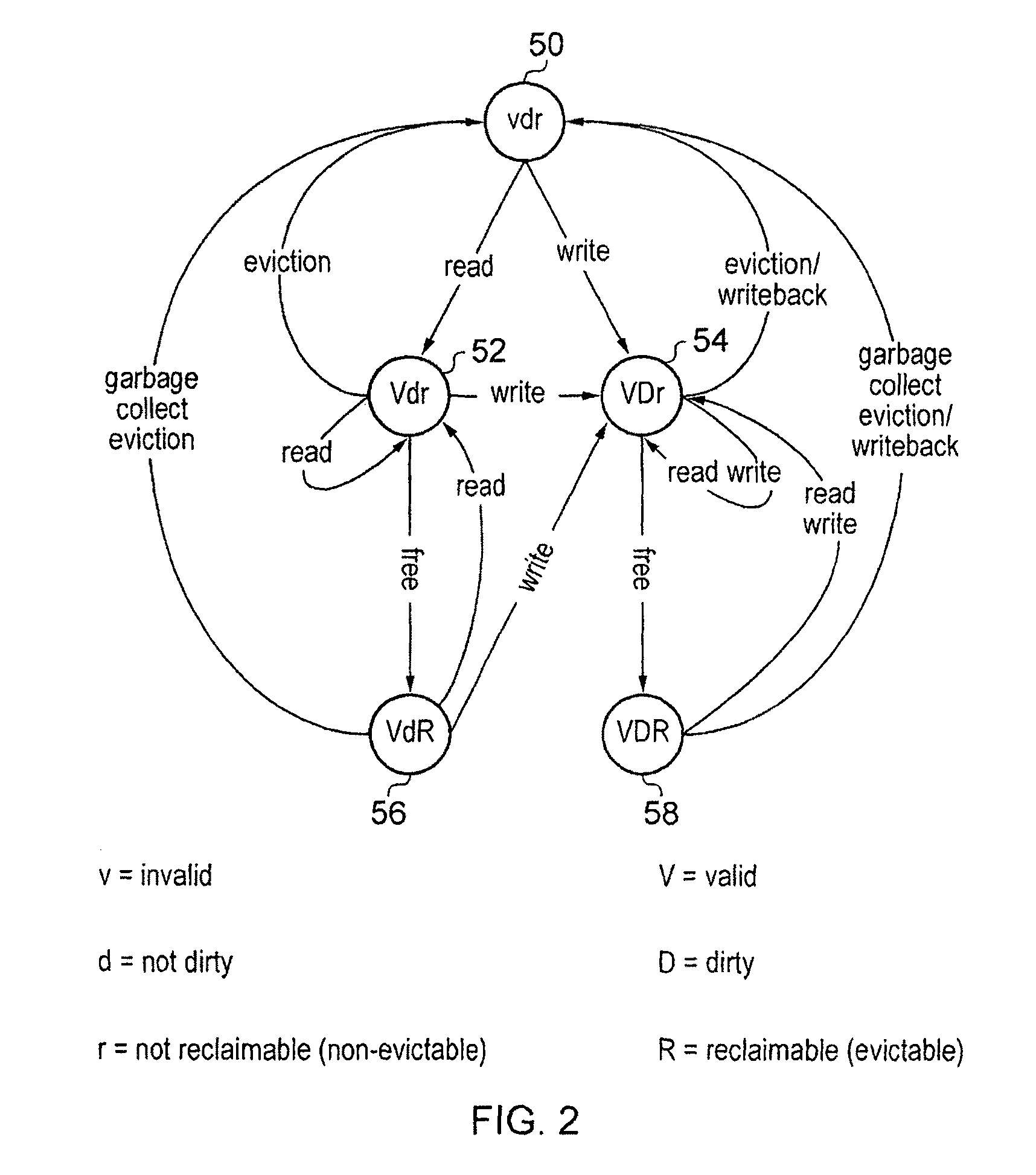

A data processing apparatus 1 comprises data processing circuitry 2, a memory 8 for storing data and a cache memory 5 for storing cached data from the memory 8. The cache memory 5 is partitioned into cache segments 12 which may be individually placed in a power saving state by power supply circuitry 15 under control of power control circuitry 22. The number of segments which are active at any time may be dynamically adjusted in dependence upon operating requirements of the processor 2. An eviction selection mechanism 35 is provided to select evictable cached data for eviction from the cache. A cache compacting mechanism 40 is provided to evict evictable cached data from the cache and to store non-evictable cached data in fewer cache segments than were used to store the cached data prior to eviction of the evictable cached data. Compacting the cache enables at least one cache segment that, following eviction of the evictable cached data, is no longer required to store cached data to be placed in the power saving state by the power supply circuitry.

Owner:RGT UNIV OF MICHIGAN

Adaptive cache compression system

Active; US20060101206A1; Simple method; Stable control; Memory architecture accessing/allocation; Memory systems; Parallel computing; Instruction cycle

Owner:WISCONSIN ALUMNI RES FOUND

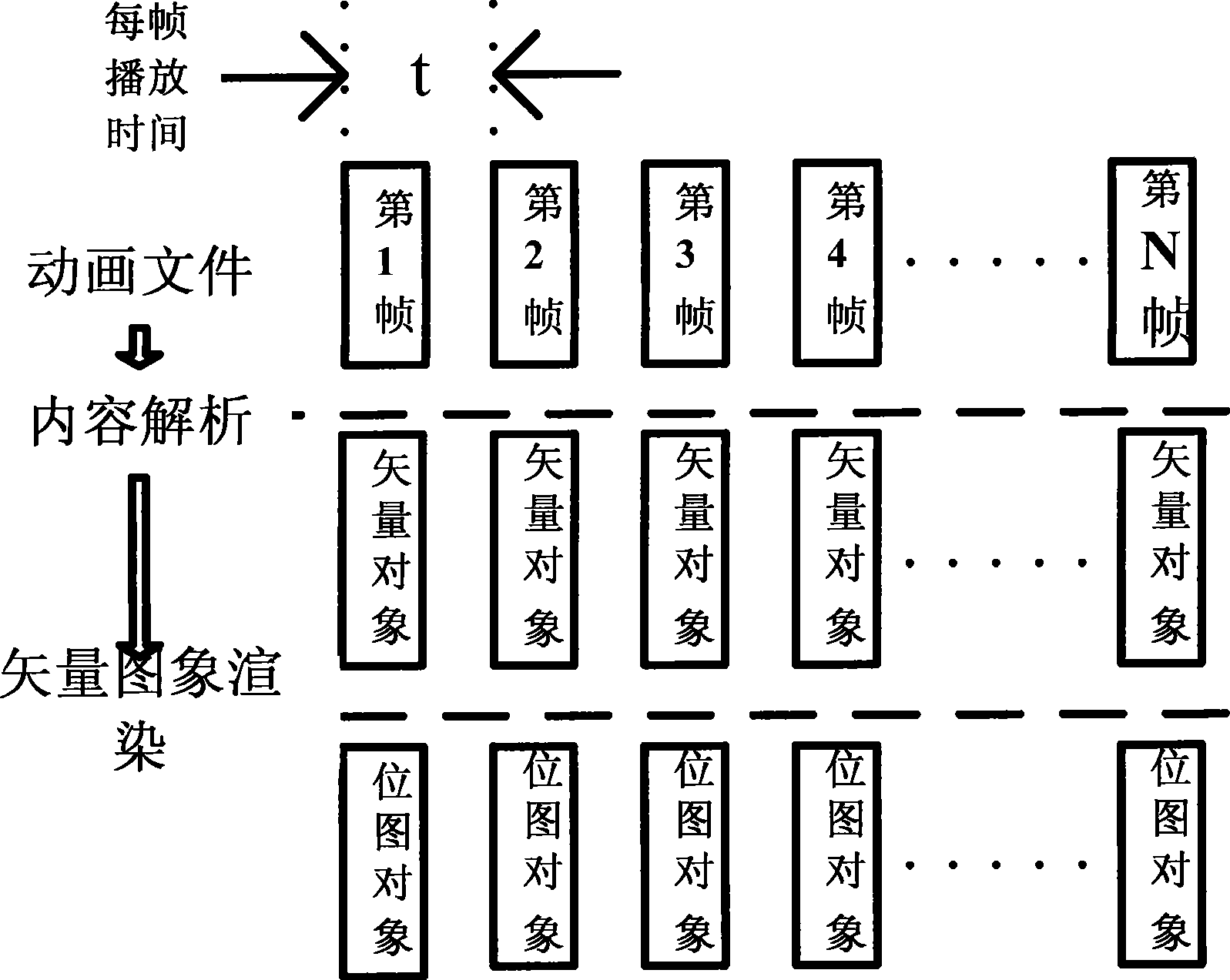

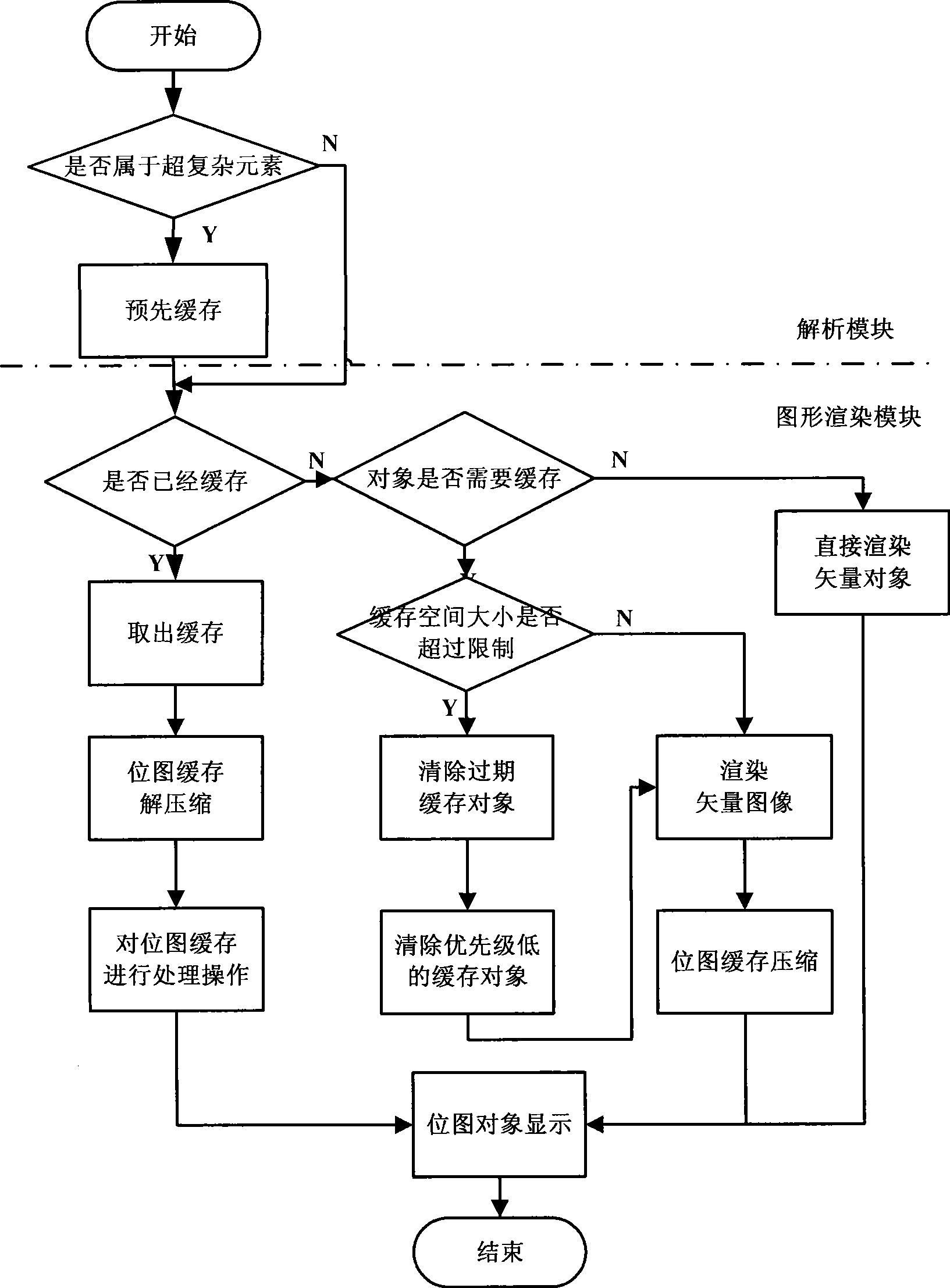

Vector graphic display acceleration method based on bitmap caching

Inactive; CN101470893A; Improve display speed; Reduce calculation; Memory addressing/allocation/relocation; Image memory management; Animation; Linked list

The invention relates to a method for accelerating vector graphic display based on bitmap caching. While a vector animation plays, the rendering result of a complex vector object that is displayed continuously is cached; when the object appears again, the corresponding cached bitmap is retrieved and drawn directly at the specified position through transformation operations. The method comprises a pre-caching strategy, a cache selection strategy, a cache usage strategy, a cache compression and preservation strategy, and a cache replacement strategy. It saves a large amount of computation and rendering time and so speeds up display. The cache capacity and the edge-curve threshold of a complex graphic can be adjusted to the memory size or processing speed of the embedded device to achieve the best effect. Operation is simple: only the vector object's ID number, length, width, bitmap data, priority and most recently used frame number are stored, which a single linked list can hold. The caching is transparent to the rendering step and the playback flow, so vector animation playback is not affected.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

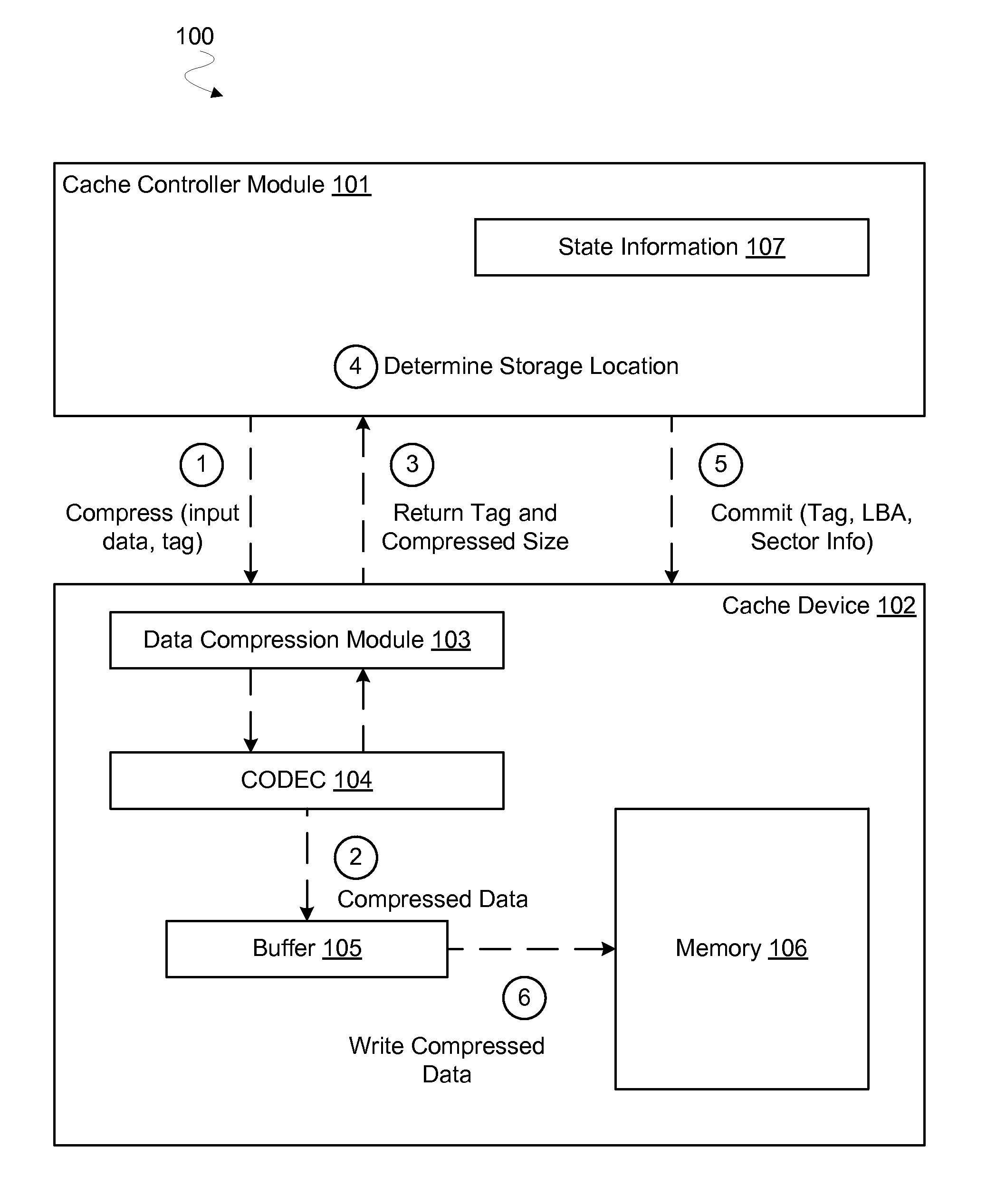

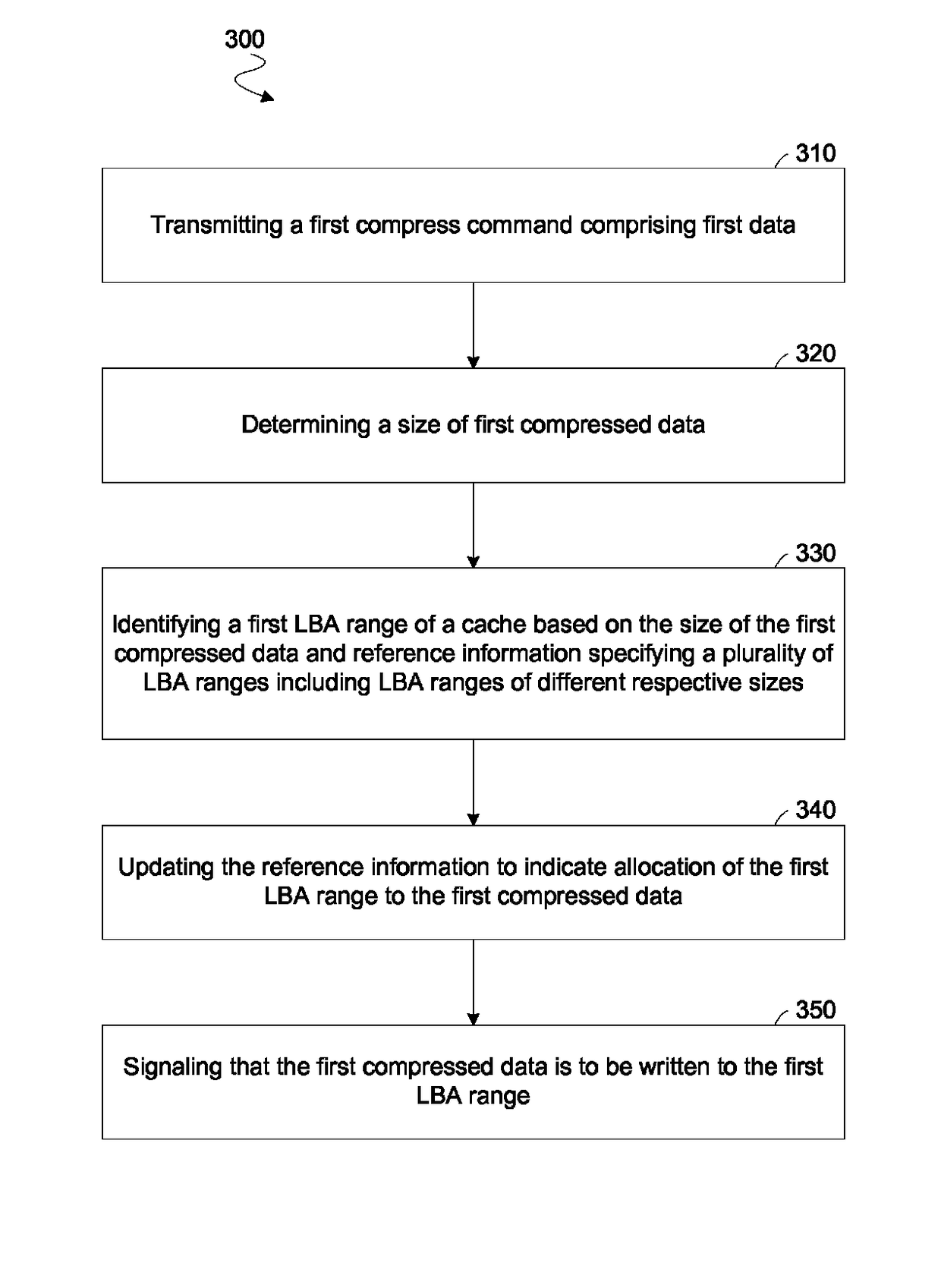

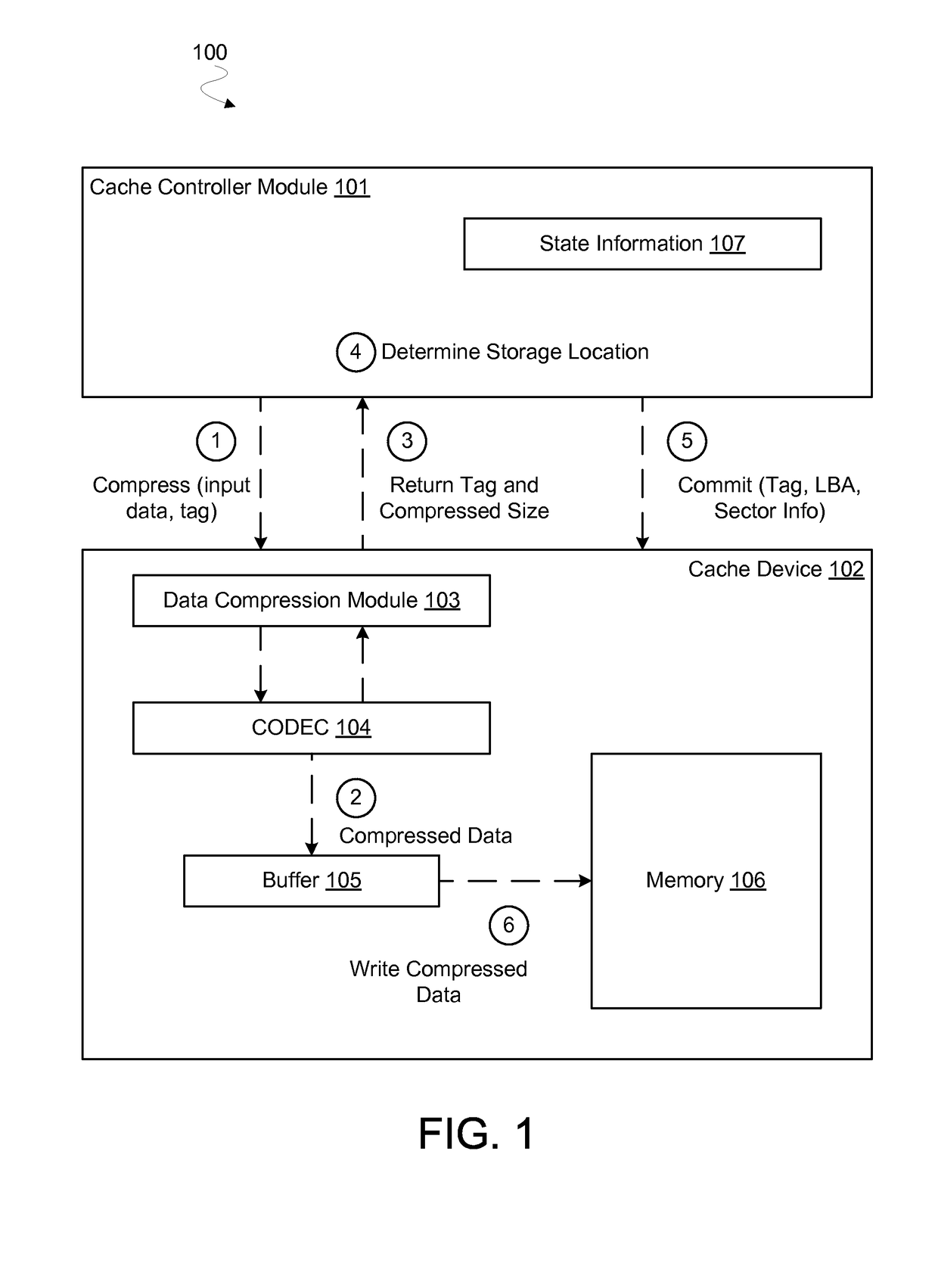

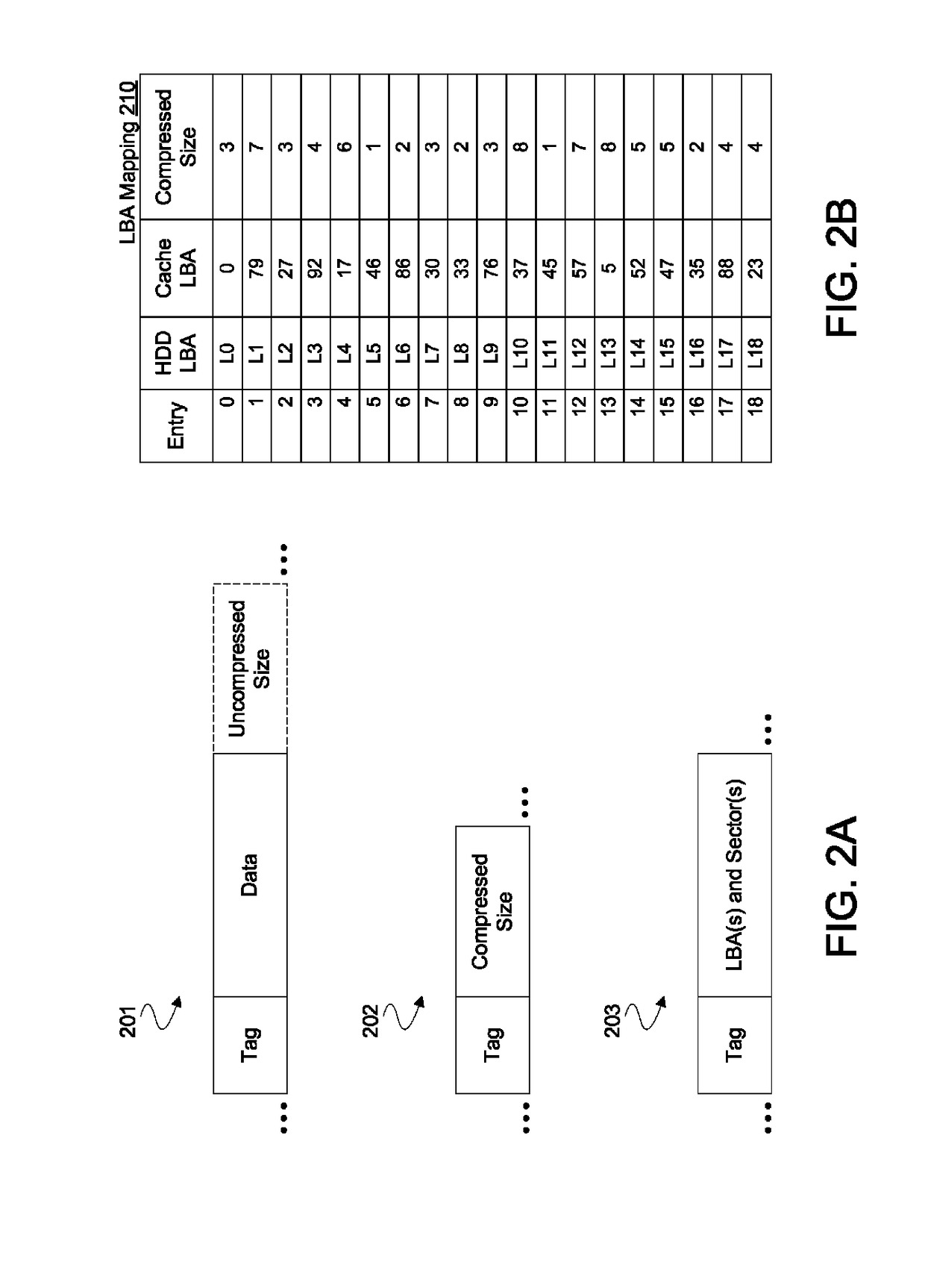

Apparatus, system and method for caching compressed data

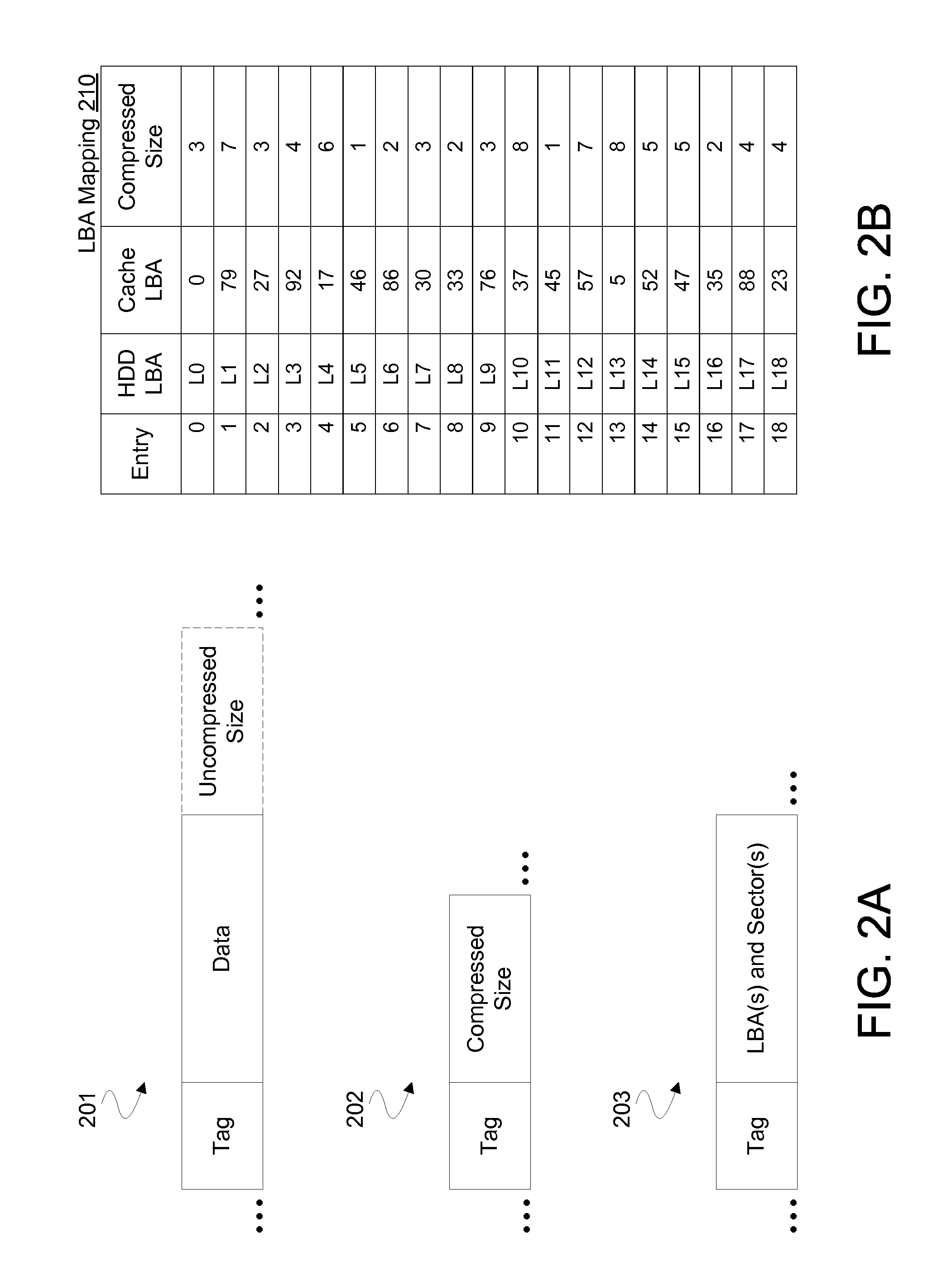

Active; US20160170878A1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Logical block addressing; Parallel computing

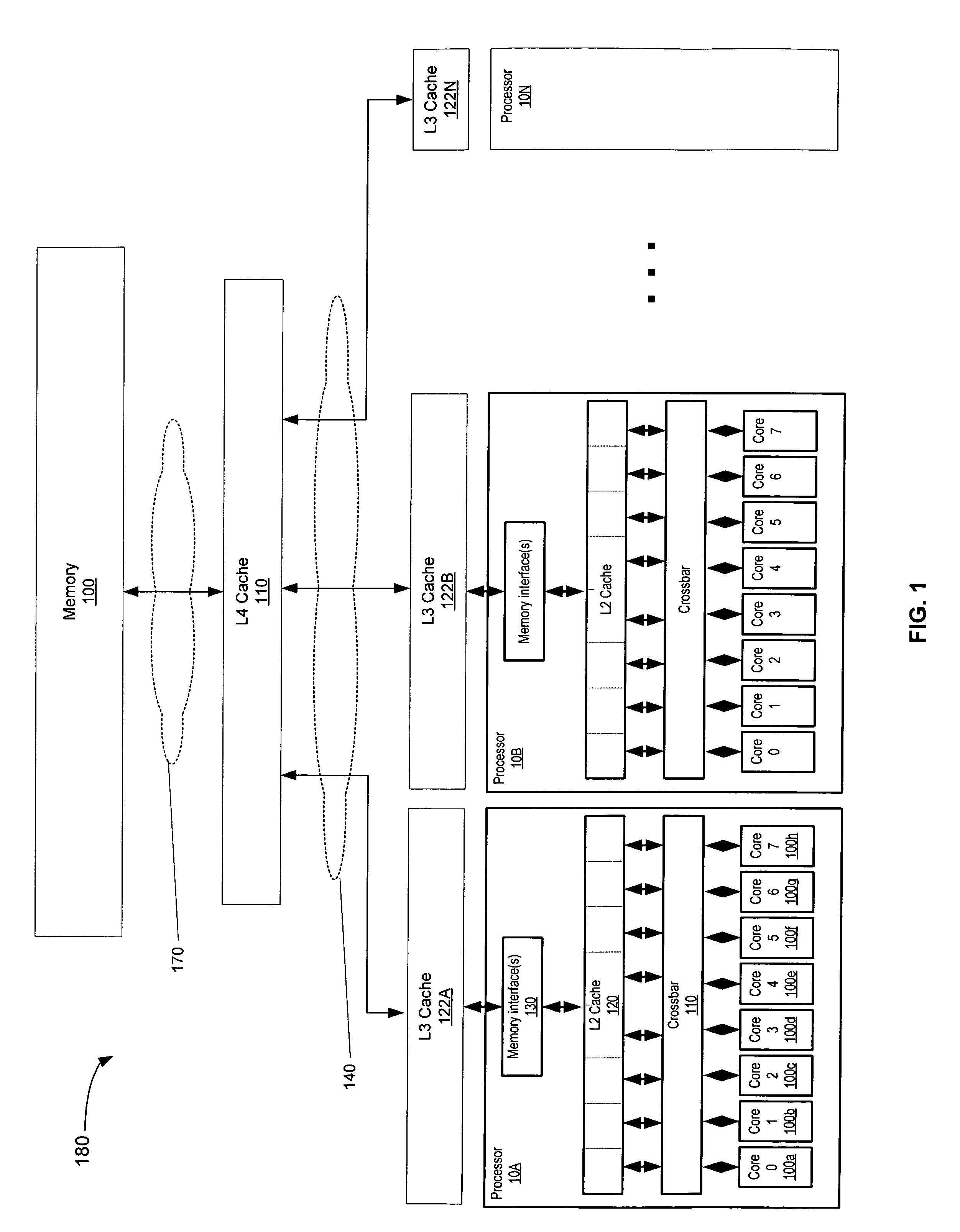

Techniques and mechanisms to efficiently cache data based on compression of such data. The technologies of the present disclosure include cache systems, methods, and computer readable media to support operations performed with data that is compressed prior to being written as a cache line in a cache memory. In some embodiments, a cache controller determines the size of compressed data to be stored as a cache line. The cache controller identifies a logical block address (LBA) range to cache the compressed data, where such identifying is based on the size of the compressed data and on reference information describing multiple LBA ranges of the cache memory. One or more such LBA ranges are of different respective sizes. In other embodiments, LBA ranges of the cache memory concurrently store respective compressed cache lines, wherein the LBA ranges are of different respective sizes.
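The selection of an LBA range from the compressed size can be sketched as follows. This is only an illustration under stated assumptions: the 512-byte block size, the `LbaRange` record, and the best-fit policy are hypothetical choices, not details taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Optional

SECTOR = 512  # assumed number of bytes per logical block


@dataclass
class LbaRange:
    start: int         # first logical block address of the range
    blocks: int        # length of the range in blocks
    free: bool = True  # whether the range currently holds no cache line


def pick_range(ranges: List[LbaRange], compressed_len: int) -> Optional[LbaRange]:
    """Best fit: claim the smallest free range that holds the compressed data."""
    needed = -(-compressed_len // SECTOR)  # ceiling division
    fits = [r for r in ranges if r.free and r.blocks >= needed]
    if not fits:
        return None
    best = min(fits, key=lambda r: r.blocks)
    best.free = False
    return best
```

The list of `LbaRange` records plays the role of the reference information describing ranges of different sizes; a real controller would also handle eviction when no range fits.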

Owner:TAHOE RES LTD

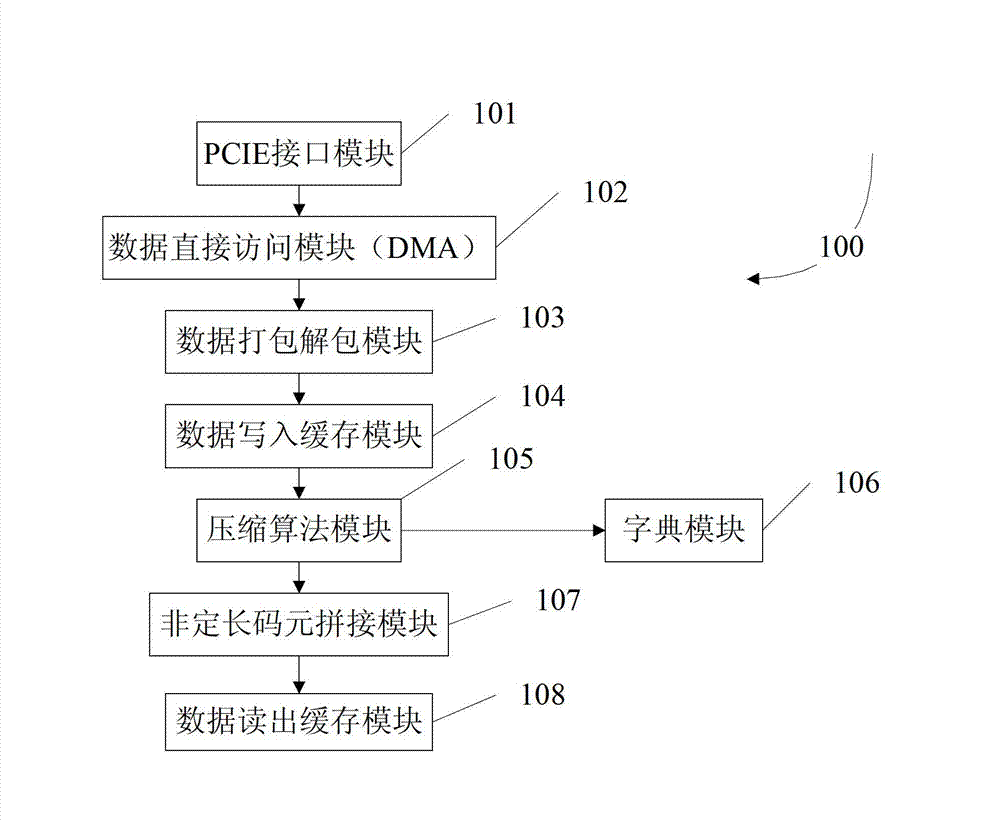

Hardware LZ77 compression implementation system and implementation method thereof

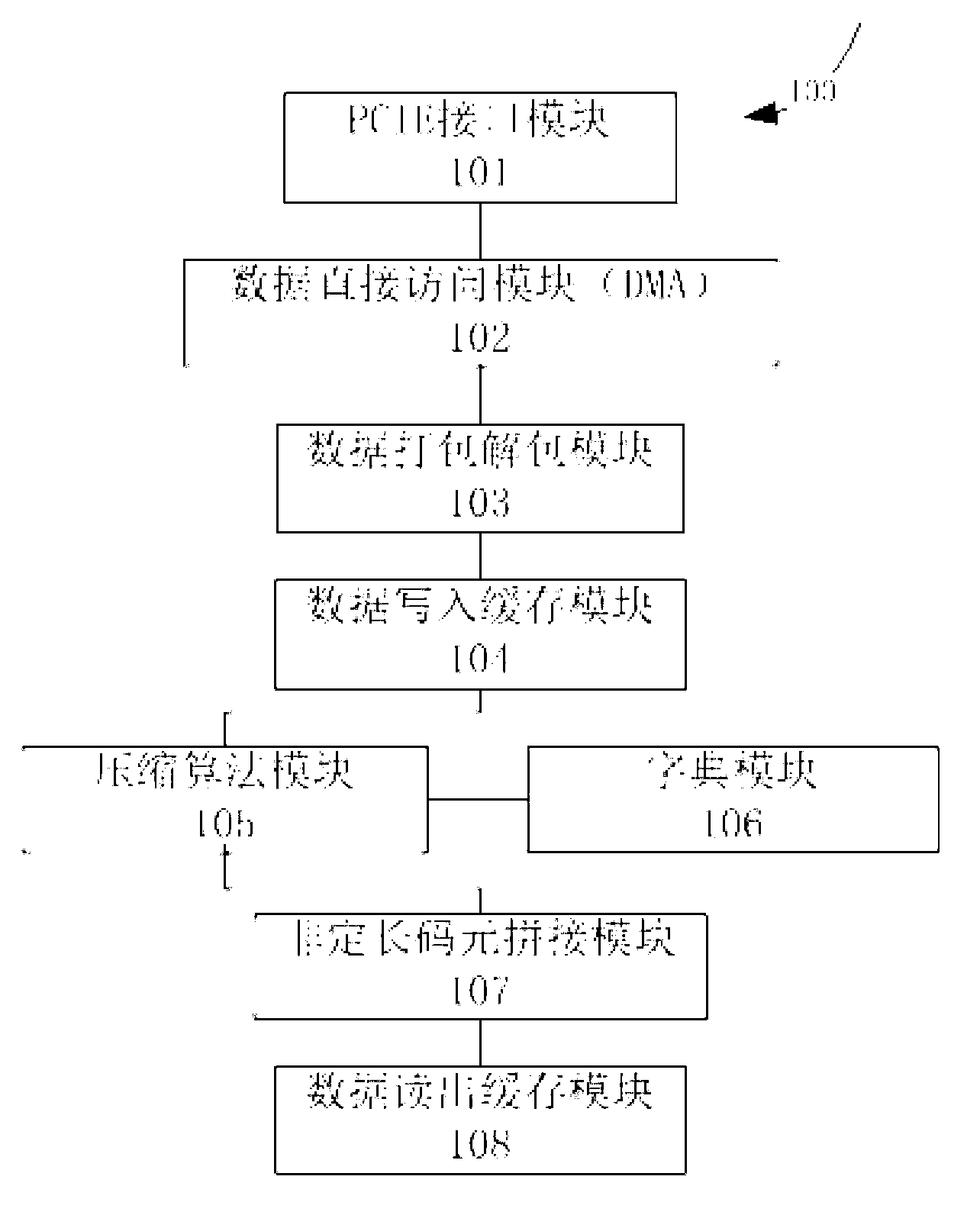

Inactive; CN103023509A; Improve compression efficiency; Improve efficiency; Code conversion; Coded element; Cache compression

The invention discloses a hardware LZ77 compression implementation system and a hardware LZ77 compression implementation method. The hardware LZ77 compression implementation system comprises a PCIE (Peripheral Component Interconnect Express) interface module, a data direct access module, a data packing / unpacking module, a data write-in buffer module, a compression algorithm module, a dictionary module, an unfixed length code element joining module and a data read-out buffer module. The hardware LZ77 compression implementation method comprises the following steps of: 1. caching data to be compressed; 2. performing compressed encoding on character string data; 3. splicing unfixed length data; and 4. caching the compressed data. According to the system and the method provided by the invention, LZ77 compression is realized through hardware; and therefore, the efficiency of the LZ77 compression algorithm can be effectively improved and CPU (Central Processing Unit) is released from mass data compression.
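For readers unfamiliar with LZ77 itself, here is a small software sketch of the algorithm. It is not the hardware pipeline of the patent: the triple format `(offset, length, next_byte)`, the window size and the maximum match length are assumptions chosen to keep the example short.

```python
def lz77_compress(data: bytes, window: int = 255, max_len: int = 15):
    """Encode data as a list of (offset, length, next_byte) triples."""
    i, out = 0, []
    while i < len(data):
        best_off, best_len = 0, 0
        # Search the sliding window behind position i for the longest match.
        for j in range(max(0, i - window), i):
            length = 0
            # Stop one byte early so a literal next_byte always remains.
            while (length < max_len and i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out


def lz77_decompress(tokens) -> bytes:
    """Rebuild the original bytes by replaying each triple."""
    buf = bytearray()
    for off, length, nxt in tokens:
        for _ in range(length):
            buf.append(buf[-off])  # byte-wise copy supports overlapping matches
        buf.append(nxt)
    return bytes(buf)
```

The inner search loop is the costly part in software, which is the kind of work a hardware implementation aims to take off the CPU.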

Owner:WUXI XINXIANG ELECTRONICS TECH

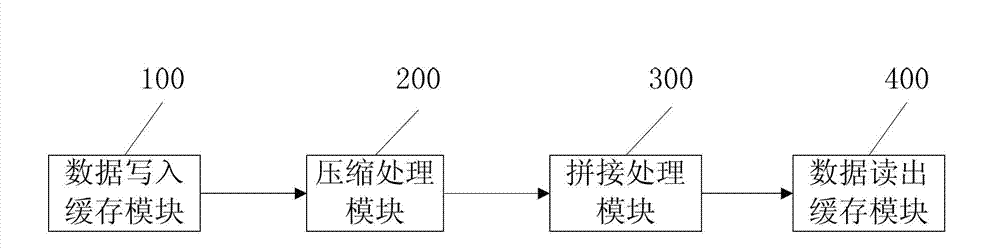

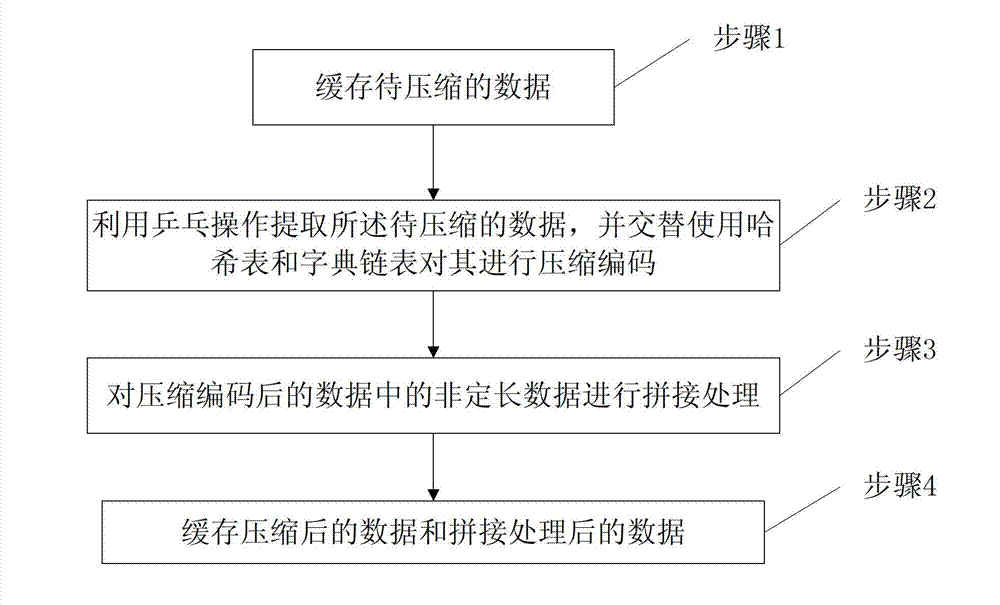

System and method for hardware LZ77 compression implementation

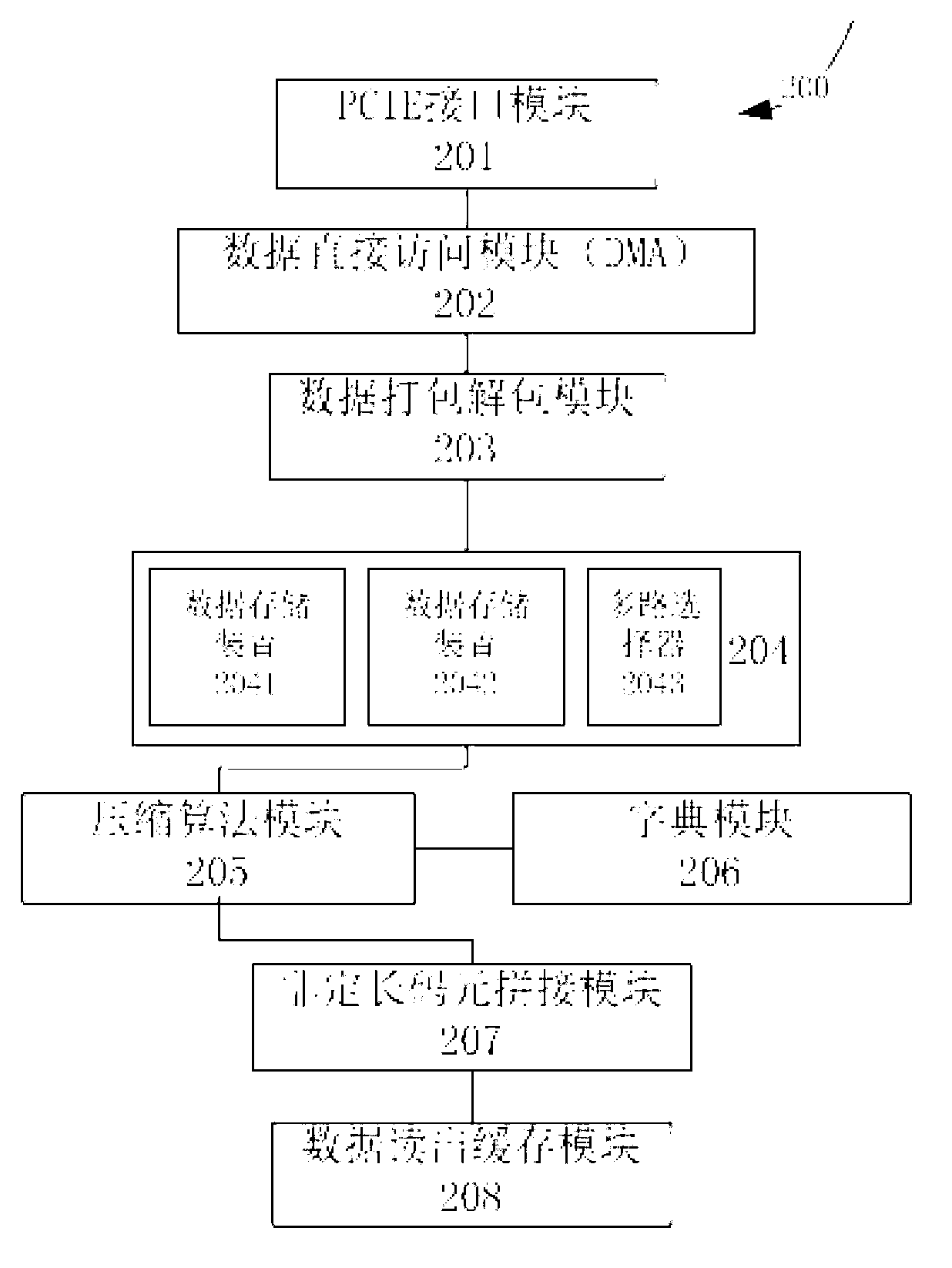

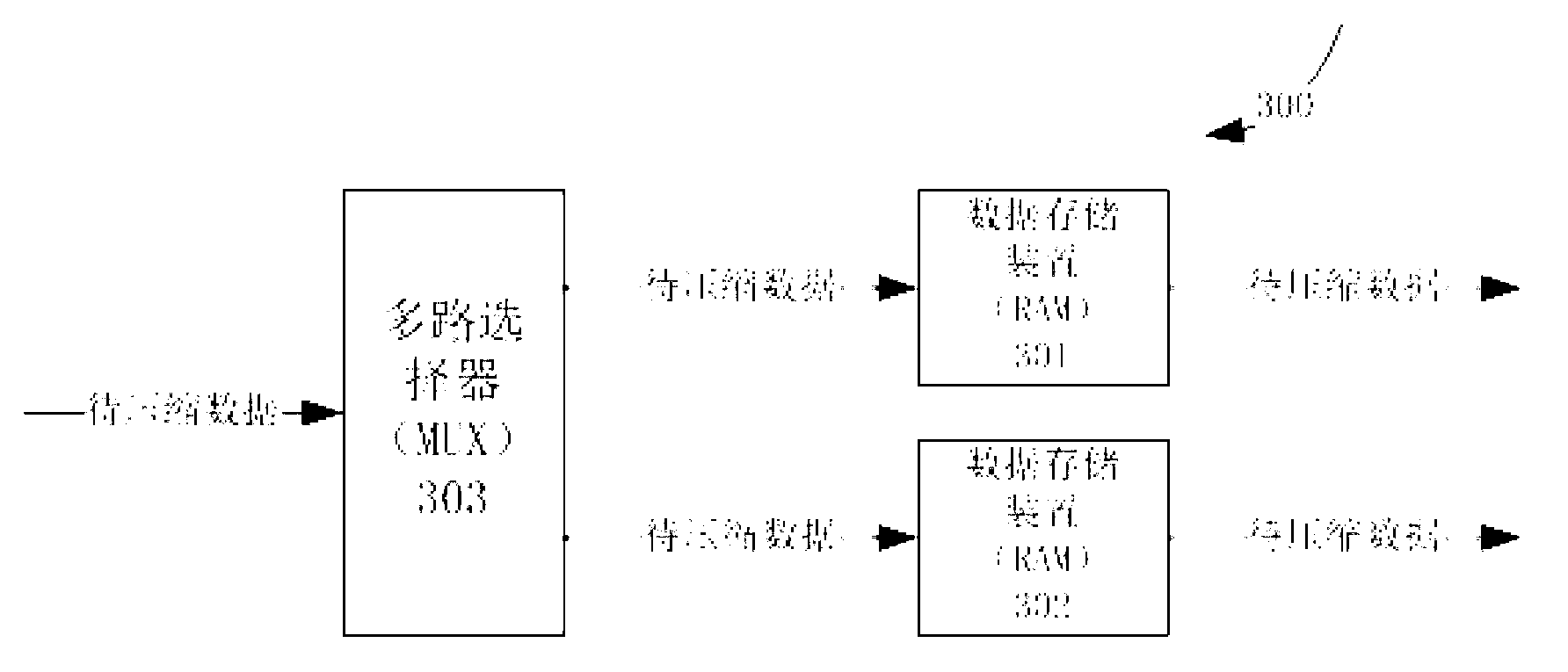

Inactive; CN103095305A; Improve compression efficiency; Guaranteed compression ratio; Code conversion; Process efficiency; Hash table

The invention provides a system and a method for hardware LZ77 compression implementation. The method comprises the following steps: step 1, caching the data to be compressed; step 2, extracting the data to be compressed by means of a ping-pong operation and alternately using a hash table and a dictionary linked list for compressed encoding; step 3, splicing the non-fixed-length data produced by compressed encoding; and step 4, caching the compressed data and the spliced data. The system and the method use a Field Programmable Gate Array (FPGA) to realize the LZ77 compression function. A data-writing caching module and a data write-out caching module provide ping-pong writing and ping-pong reading of data. A dictionary module allows the dictionary to be updated and used alternately, so that the LZ77 compression algorithm module reaches its highest efficiency. In addition, running the compression algorithm module and the non-fixed-length splicing module in parallel improves the processing efficiency of the existing LZ77 compression algorithm.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

Cache memory with power saving state

Active; US8285936B2; Efficient power; Easy access; Memory architecture accessing/allocation; Energy efficient ICT; Parallel computing; Power control

A data processing apparatus 1 comprises data processing circuitry 2, a memory 8 for storing data and a cache memory 5 for storing cached data from the memory 8. The cache memory 5 is partitioned into cache segments 12 which may be individually placed in a power saving state by power supply circuitry 15 under control of power control circuitry 22. The number of segments which are active at any time may be dynamically adjusted in dependence upon operating requirements of the processor 2. An eviction selection mechanism 35 is provided to select evictable cached data for eviction from the cache. A cache compacting mechanism 40 is provided to evict evictable cached data from the cache and to store non-evictable cached data in fewer cache segments than were used to store the cached data prior to eviction of the evictable cached data. Compacting the cache enables at least one cache segment that, following eviction of the evictable cached data, is no longer required to store cached data to be placed in the power saving state by the power supply circuitry.
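The compaction step can be illustrated with a short sketch. The data layout is an assumption: each cache line is modelled as a `(tag, evictable)` pair and each segment as a list of lines with a fixed capacity. The function and parameter names are hypothetical.

```python
def compact(segments, capacity):
    """Evict evictable lines and repack the rest into as few segments as possible.

    `segments` is a list of segments, each a list of (tag, evictable) pairs.
    Returns (active_segments, number_of_segments_that_can_be_powered_down).
    """
    # Keep only the lines that were not selected for eviction.
    survivors = [line for seg in segments for line in seg if not line[1]]
    # Fill segments densely so that trailing segments become empty.
    packed = [survivors[i:i + capacity] for i in range(0, len(survivors), capacity)]
    return packed, len(segments) - len(packed)
```

Every segment left empty after repacking is a candidate for the power saving state.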

Owner:RGT UNIV OF MICHIGAN

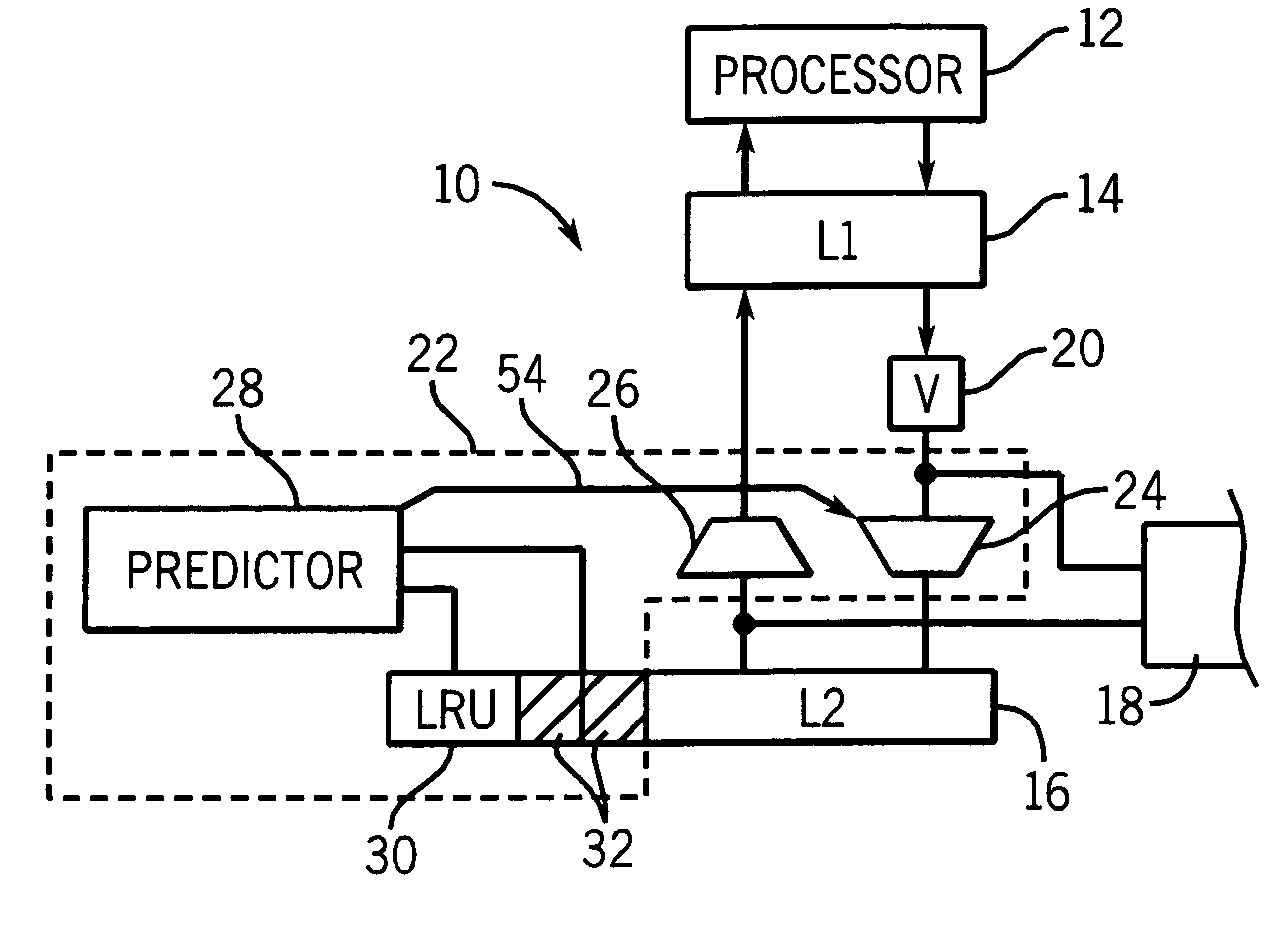

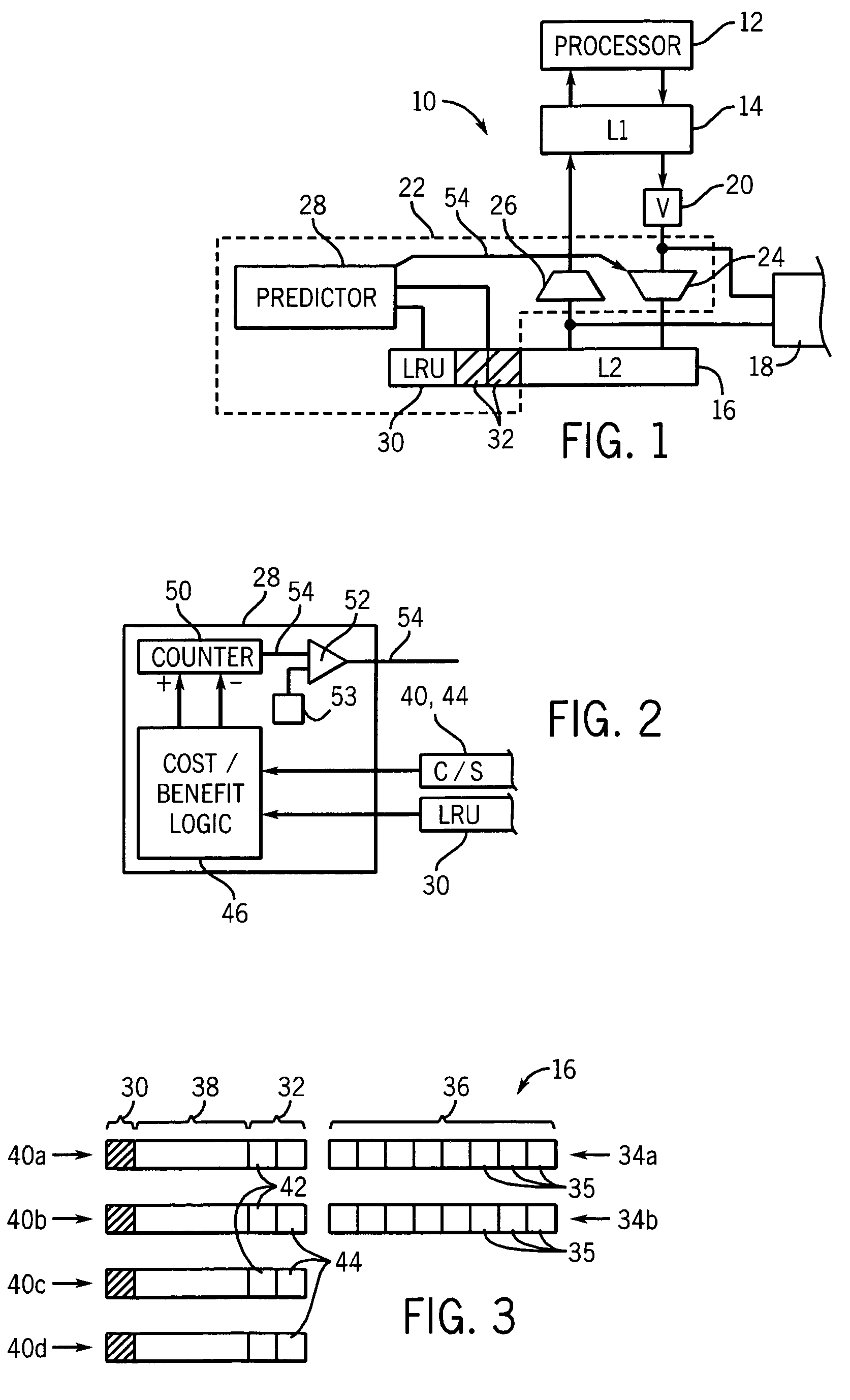

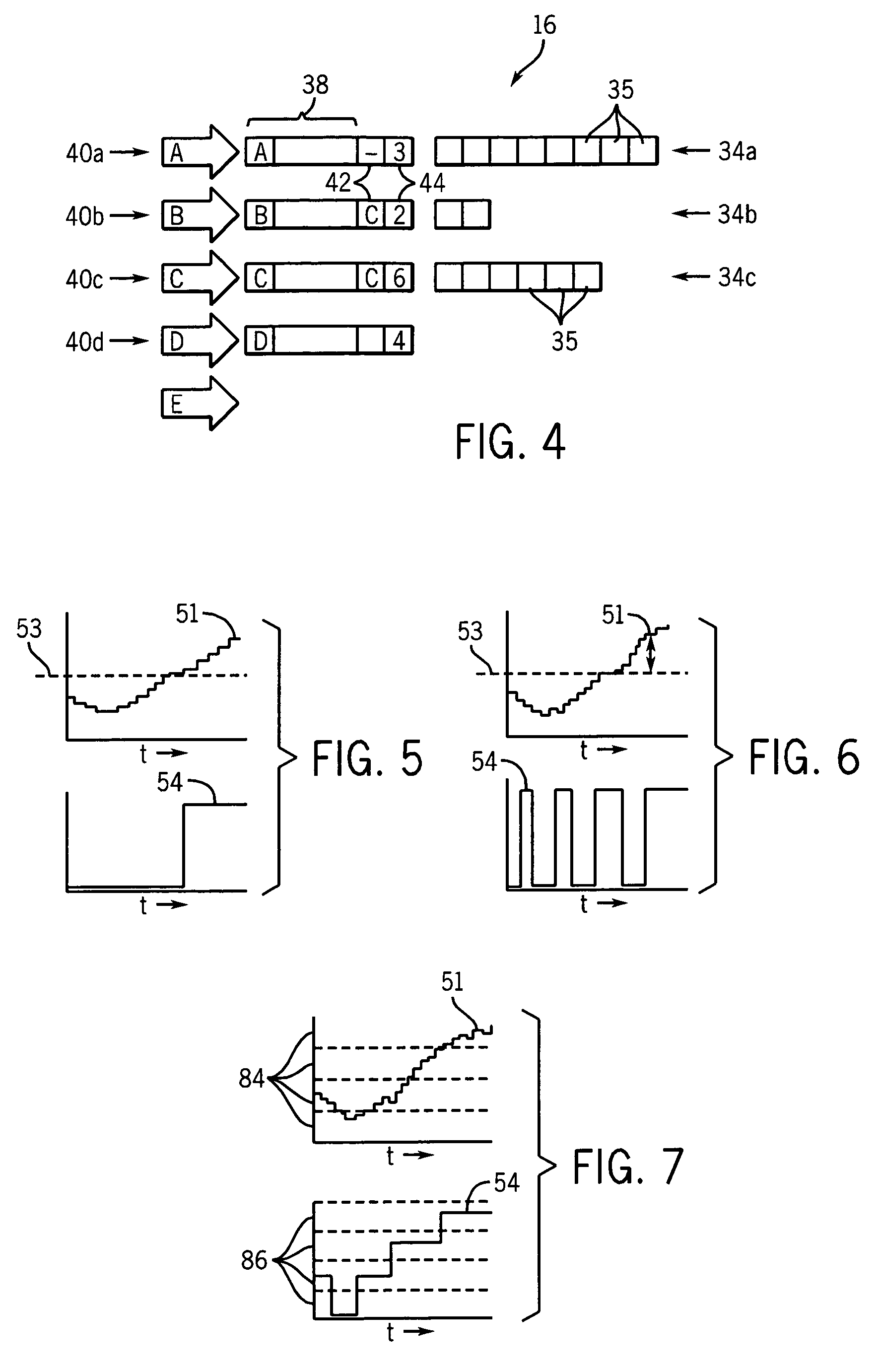

Method and mechanism for cache compaction and bandwidth reduction

Active; US8046538B1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Operating system; Cache compression

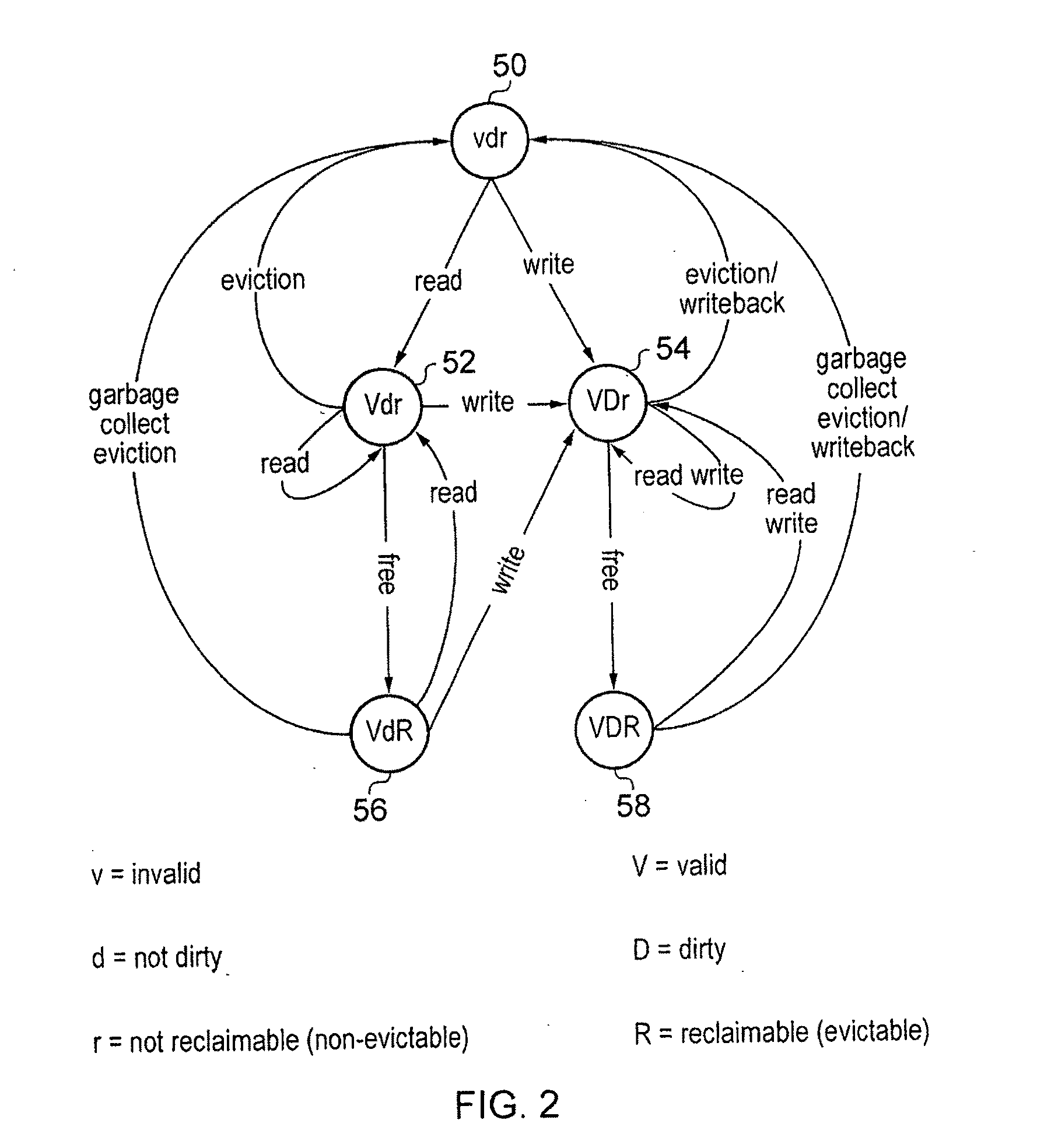

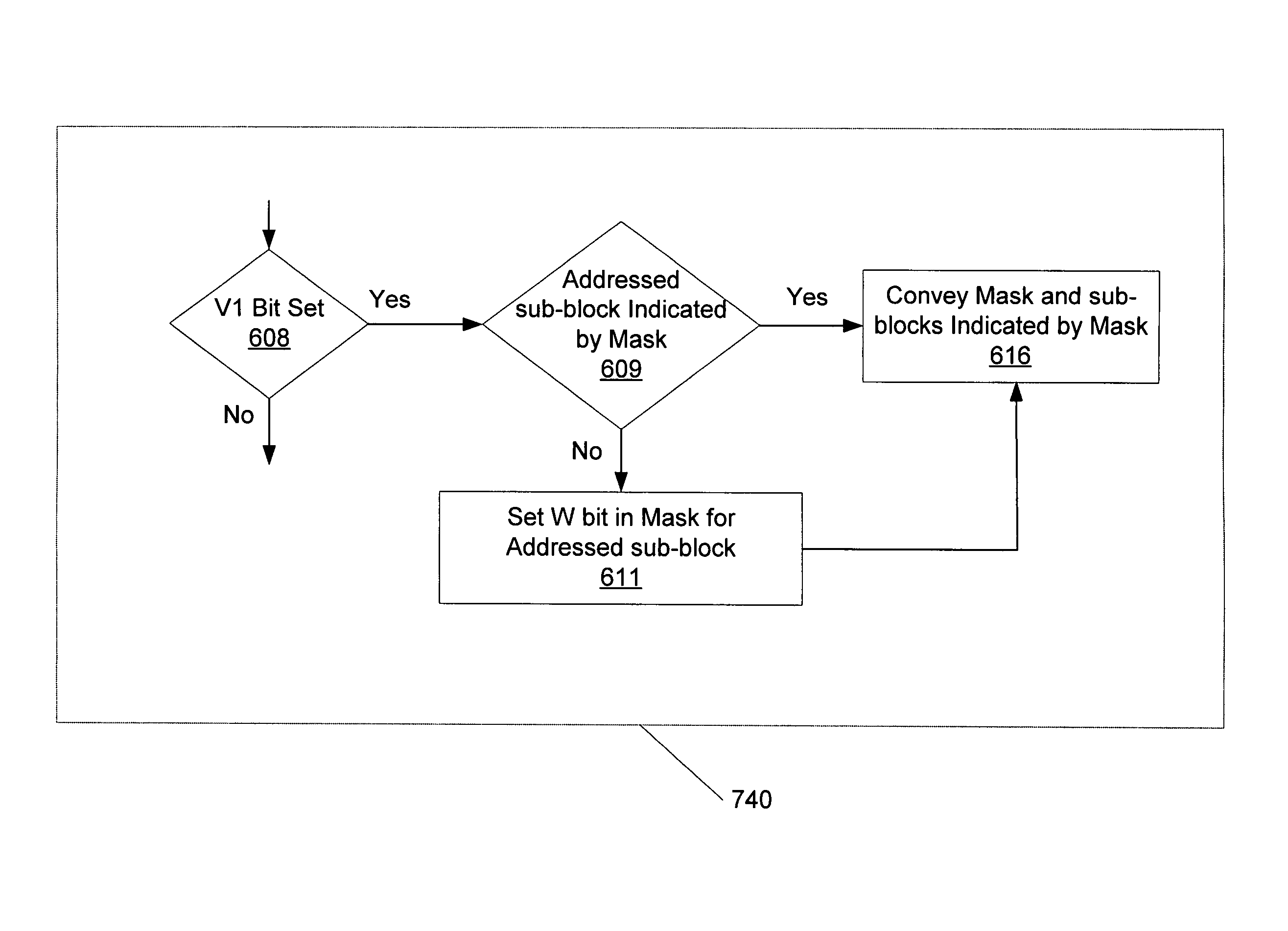

A method and mechanism for managing caches. A cache is configured to store blocks of data based upon predictions of future accesses. Each block is partitioned into sub-blocks, and if it is predicted a given sub-block is unlikely to be accessed, the sub-block may not be stored in the cache. Associated with each block is a mask which indicates whether sub-blocks of the block are likely to be accessed. When a block is first loaded into the cache, the corresponding mask is cleared and an indication is set for the block to indicate a training mode for the block. Access patterns of the block are then monitored and stored in the mask. If a given sub-block is accessed a predetermined number of times, a bit in the mask is set to indicate that the sub-block is likely to be accessed. When a block is evicted from the cache, the mask is also transferred for storage and only the sub-blocks identified by the mask as being likely to be accessed may be transferred for storage. If previously evicted data is restored to the cache, a previously stored mask is accessed to determine which of the sub-blocks are predicted likely to be accessed. The lower level storage may then transfer only those sub-blocks predicted likely to be accessed to the cache.
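A minimal sketch of the mask training described above, under stated assumptions: the class name `BlockPredictor`, the default of eight sub-blocks, and a threshold of two accesses are all hypothetical values picked for illustration.

```python
class BlockPredictor:
    """Per-block access mask trained by counting sub-block accesses."""

    def __init__(self, sub_blocks: int = 8, threshold: int = 2):
        self.threshold = threshold   # assumed "predetermined number" of accesses
        self.counts = [0] * sub_blocks
        self.mask = 0                # cleared when the block is first loaded
        self.training = True         # block starts in training mode

    def access(self, sub_block: int) -> None:
        self.counts[sub_block] += 1
        if self.counts[sub_block] >= self.threshold:
            self.mask |= 1 << sub_block   # mark as likely to be accessed

    def evict(self, data):
        """Return the mask plus only the sub-blocks predicted to be reused."""
        self.training = False
        kept = {i: d for i, d in enumerate(data) if (self.mask >> i) & 1}
        return self.mask, kept
```

On a later reload, the stored mask would tell the lower storage level which sub-blocks to send back, which is where the bandwidth saving comes from.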

Owner:ORACLE INT CORP

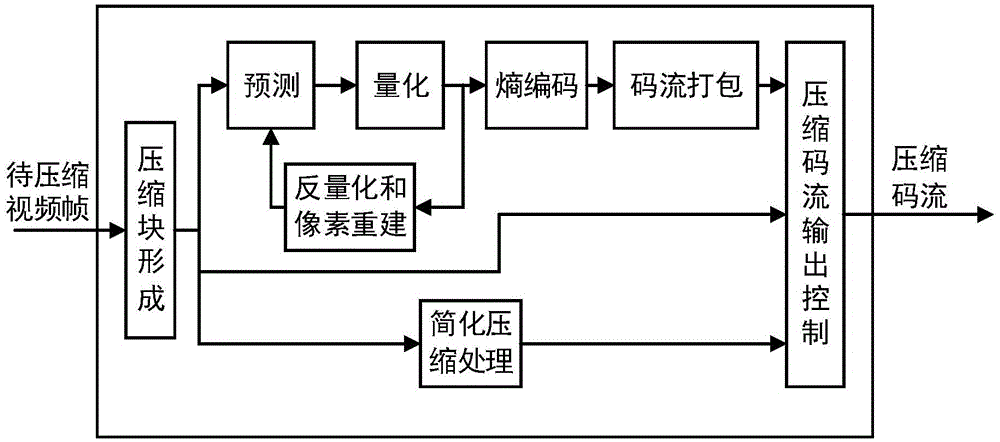

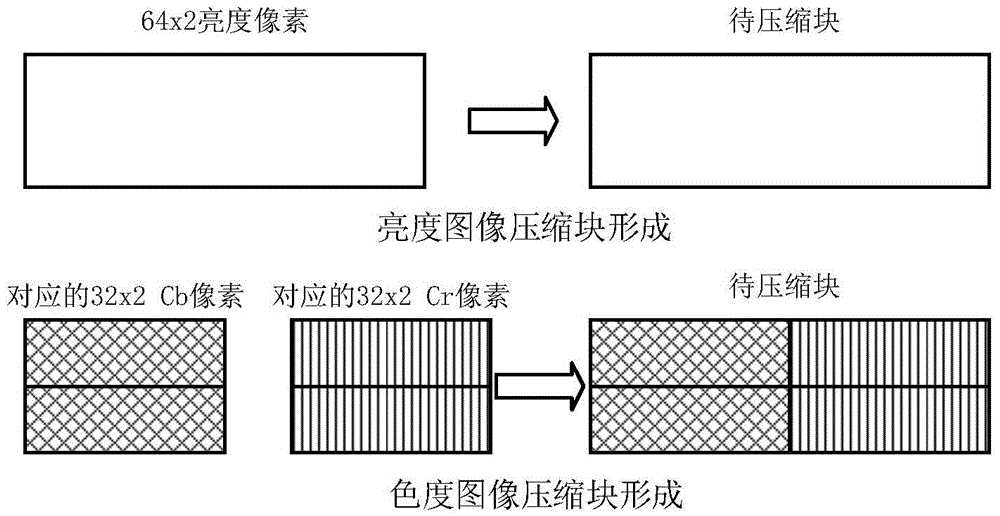

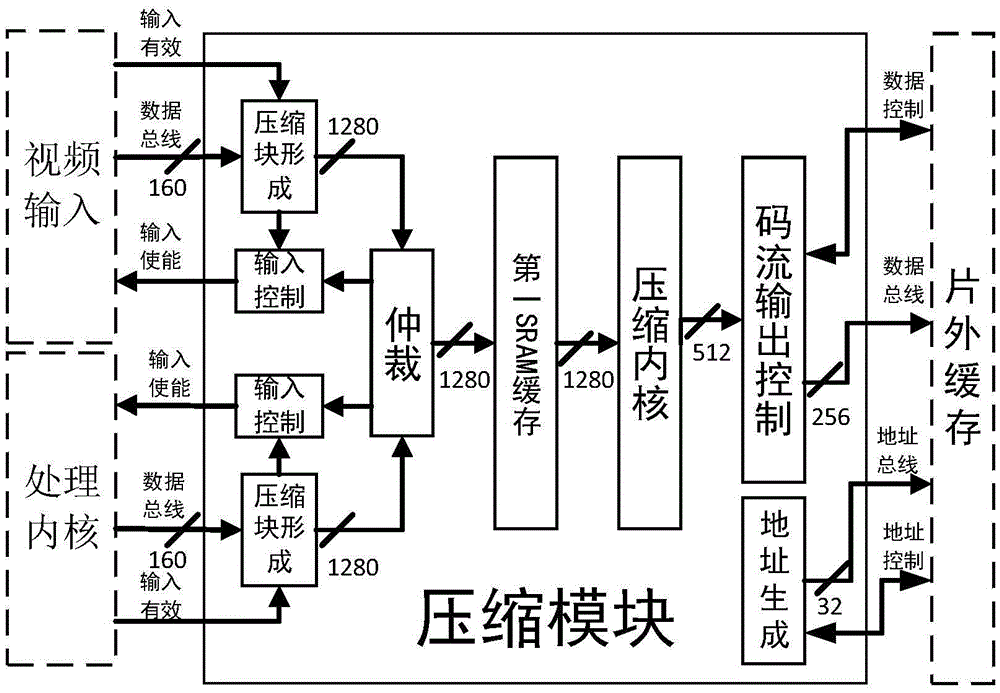

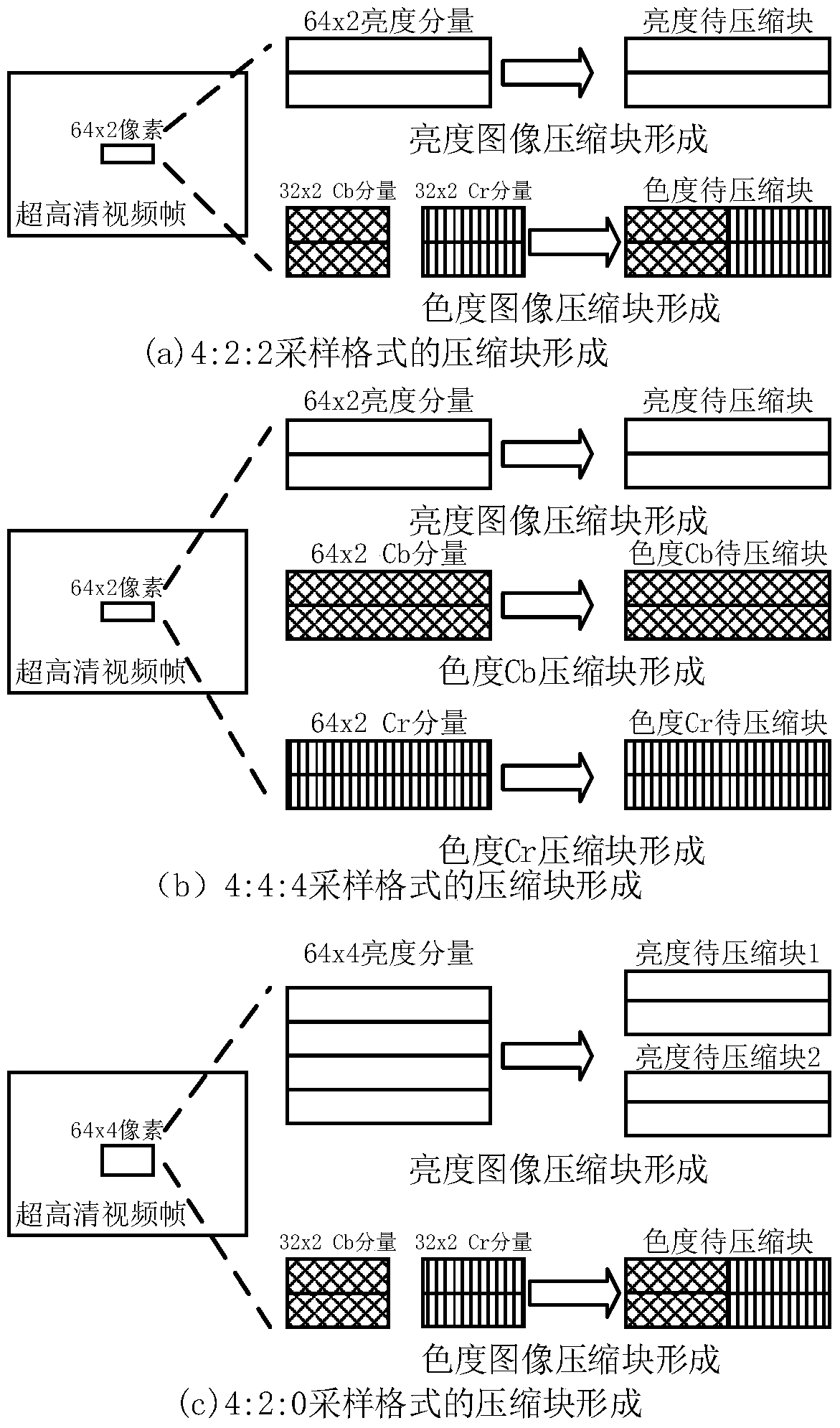

Out-chip buffer compression method for superhigh-definition processing system

Active; CN105472389A; Reduce write bandwidth; Reduce read bandwidth; Digital video signal modification; Selective content distribution; Processing core; Parallel computing

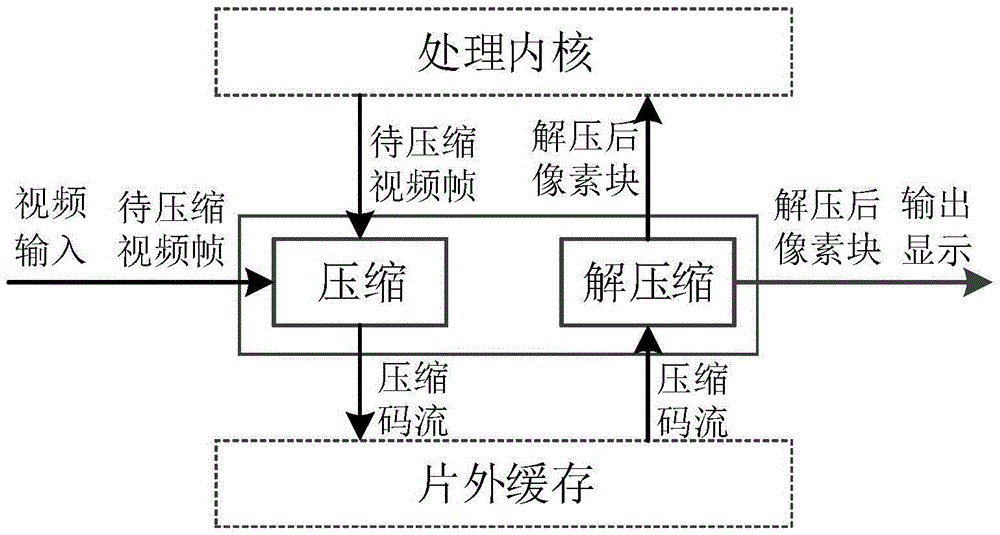

The invention provides an out-chip buffer compression method for a superhigh-definition processing system. Compression: video frame data from the original video input end and video frame data from the processing core, produced while the video processing function is carried out, are grouped into to-be-compressed blocks; each block undergoes in-block pixel grouping, prediction, quantization, inverse quantization, pixel reconstruction, entropy coding, code stream packaging, compression simplification and compressed code stream output control to obtain a compressed code stream, which is written into the out-chip buffer. Decompression: a compressed code stream is read from the out-chip buffer and decoded in real time through code stream analysis, entropy decoding, inverse quantization, pixel formation, simplified compression decoding and pixel block restoration to form a decompressed pixel block, which is output to the processing core and an output display module. The method can greatly reduce the bandwidth of the out-chip buffer and improve the data throughput rate of the system.

Owner:SHANGHAI JIAO TONG UNIV

Off-chip cache compression method for ultra-high-definition video processing system

Inactive; CN107026999A; Reduce write bandwidth; Reduce capacity; Digital video signal modification; High-definition television systems; Parallel computing; Video processing

The invention discloses an off-chip cache compression method for an ultra-high-definition video processing system. The off-chip cache compression method comprises the steps of: S1, grouping: grouping video frame data from an original video input end and video frame data completing a video processing function, so that blocks to be compressed are formed, and then, for each block to be compressed, performing pixel grouping in block; S2, reconstructing: arranging pixel groups in the blocks to be compressed according to pixel height, and then, performing pixel reconstruction through obtaining of an absolute value of the residual error, rate magnification, cut reduction, error compensation and calculation of a pixel reconstruction value sequentially; and S3, compressing: performing coding, code stream packaging, compression processing and compression code stream output control operation of the blocks to be compressed after the pixels are reconstructed, so that a compressed code stream is obtained, and then, writing the compressed code stream in an off-chip cache. By means of the off-chip cache compression method for the ultra-high-definition video processing system disclosed by the invention, the writing bandwidth of an off-chip storage can be effectively reduced; furthermore, the pixels can be improved; the capacity of the off-chip cache can be greatly reduced; and the method is simple, convenient to use and low in cost.

Owner:成都小娱网络科技有限公司

Adaptive cache compression system

Active; US7412564B2; Simple method; Stable control; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Instruction cycle

Owner:WISCONSIN ALUMNI RES FOUND

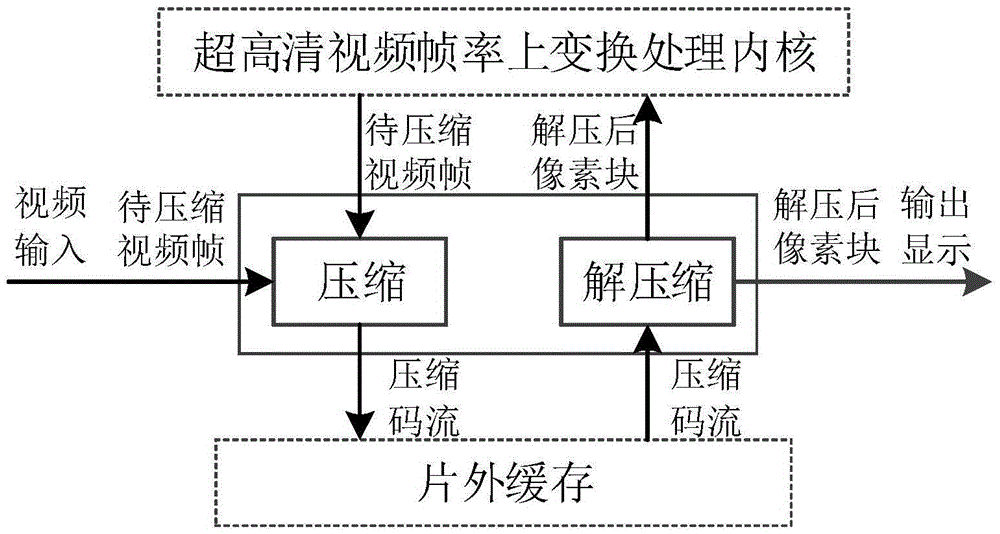

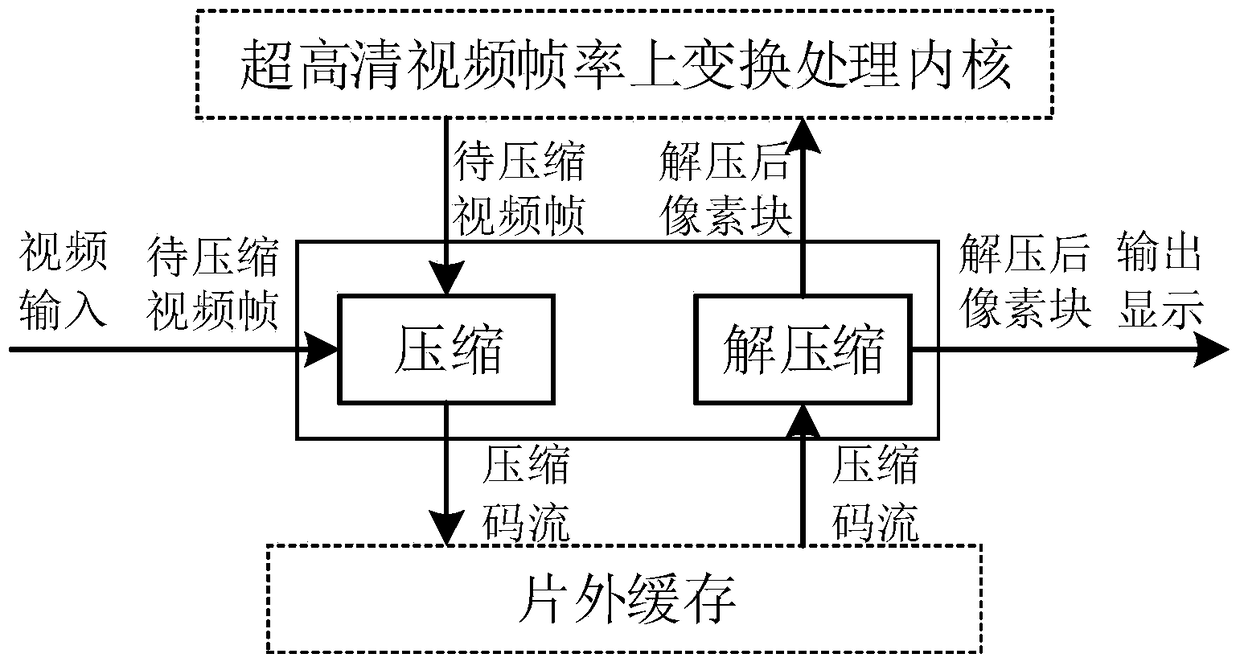

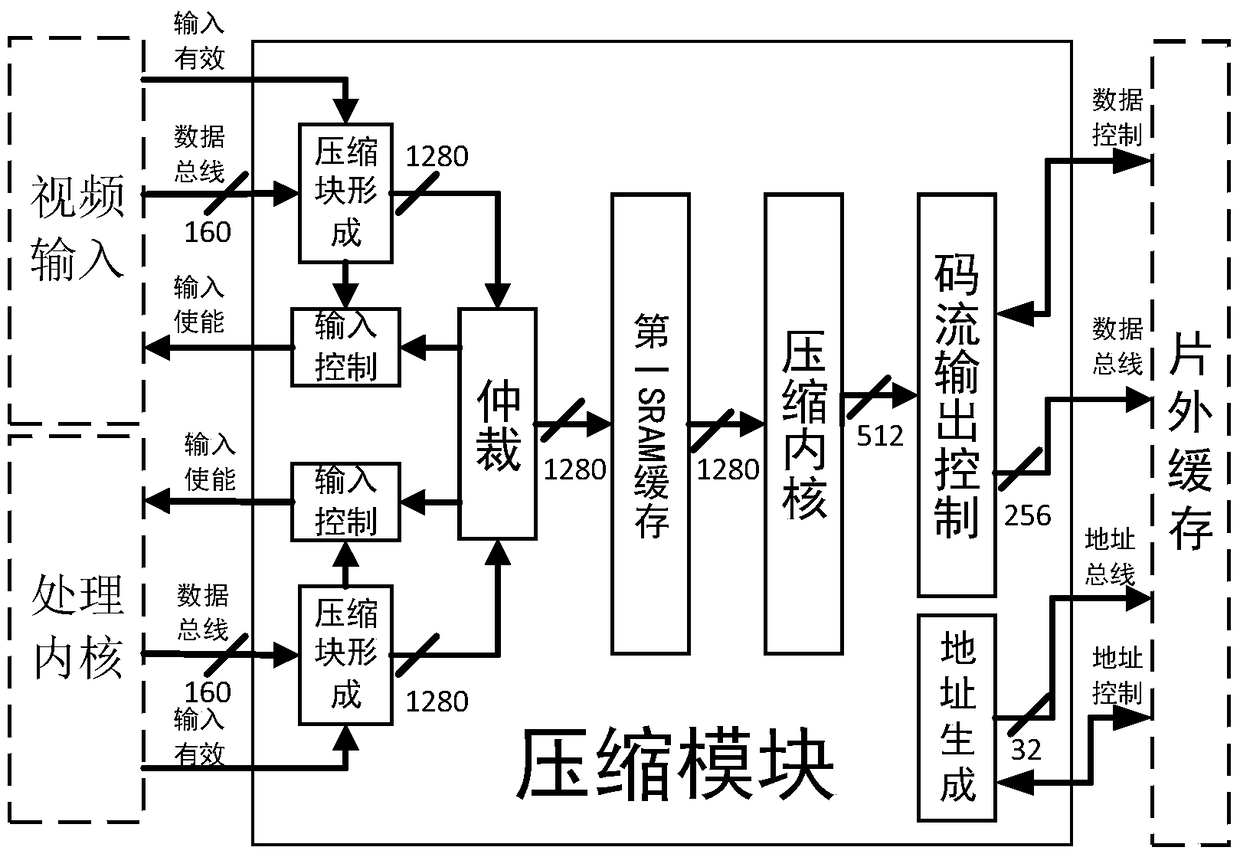

Out-chip buffer compression system for superhigh-definition frame rate up-conversion

Active; CN105472442A; Reduce power consumption; Reduce capacity; Digital video signal modification; Selective content distribution; Processing core; Parallel computing

The invention provides an out-chip buffer compression system for superhigh-definition frame rate up-conversion. The system comprises a compression module and a decompression module. The compression module compresses to-be-compressed video frame data from the video input and from the superhigh-definition frame rate up-conversion processing core to form a compressed code stream, and writes the compressed code stream into an out-chip buffer. The decompression module requests the compressed code stream from the out-chip buffer and receives it, decodes it in real time to form a decompressed pixel block, and outputs the decompressed pixel block to the superhigh-definition frame rate up-conversion processing core and an output display module. The system can greatly reduce the bandwidth of the out-chip buffer, improve the data throughput rate, and reduce the power consumption of a superhigh-definition frame rate up-conversion system.

Owner:SHANGHAI JIAO TONG UNIV

Apparatus, system and method for caching compressed data

Active; US9652384B2; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Logical block addressing; Parallel computing

Techniques and mechanisms to efficiently cache data based on compression of such data. The technologies of the present disclosure include cache systems, methods, and computer readable media to support operations performed with data that is compressed prior to being written as a cache line in a cache memory. In some embodiments, a cache controller determines the size of compressed data to be stored as a cache line. The cache controller identifies a logical block address (LBA) range to cache the compressed data, where such identifying is based on the size of the compressed data and on reference information describing multiple LBA ranges of the cache memory. One or more such LBA ranges are of different respective sizes. In other embodiments, LBA ranges of the cache memory concurrently store respective compressed cache lines, wherein the LBA ranges are of different respective sizes.

Owner:TAHOE RES LTD

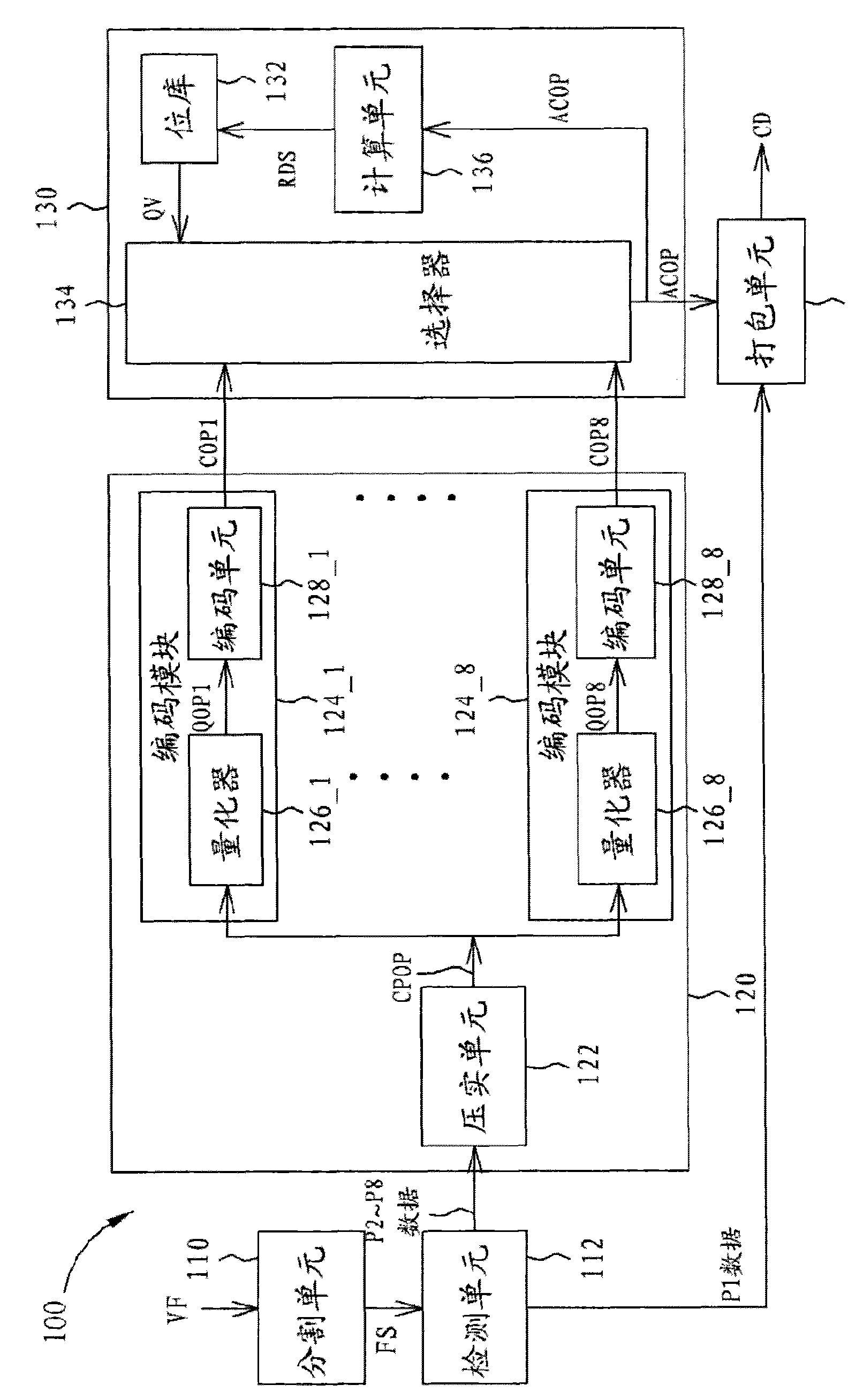

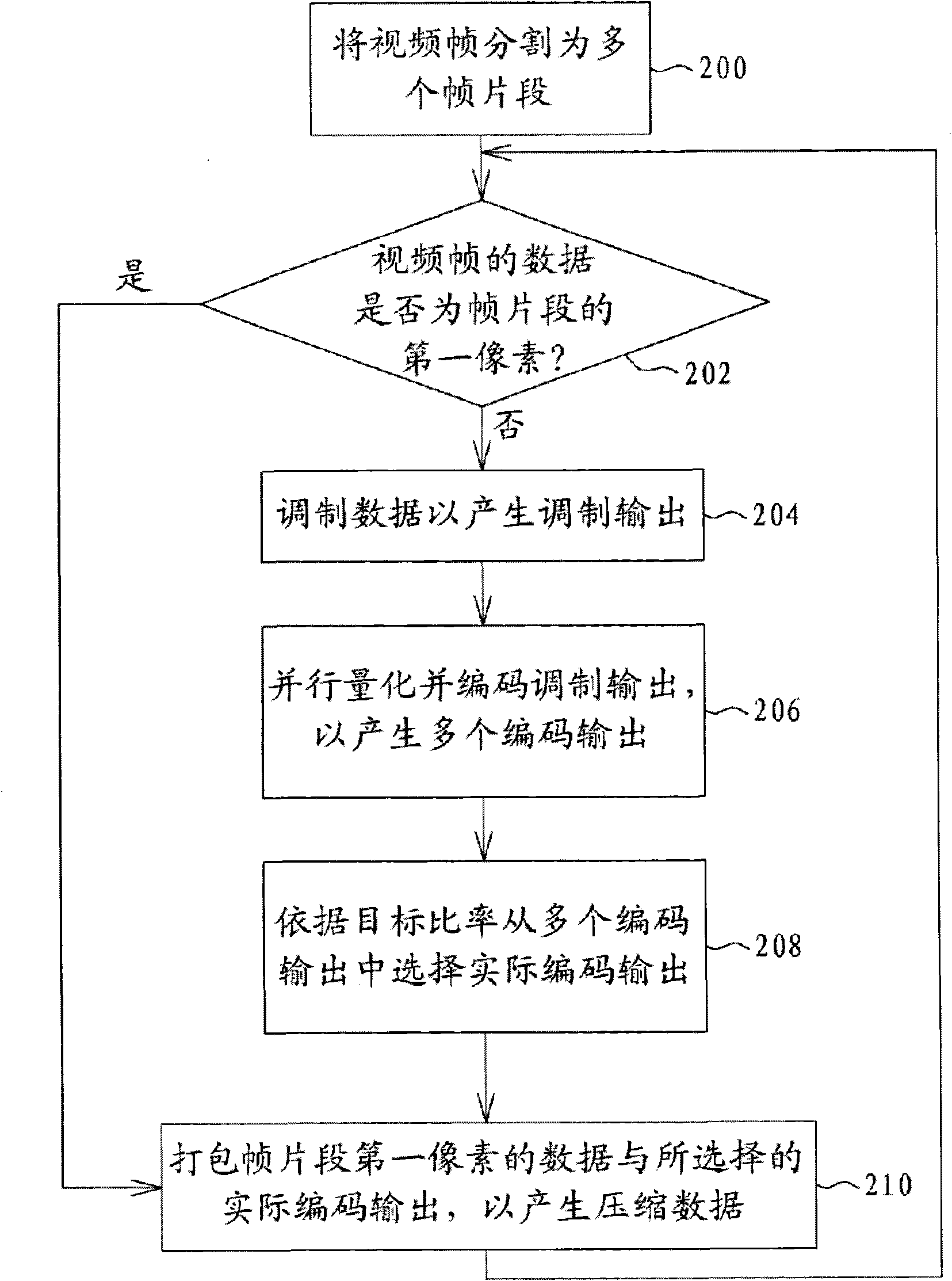

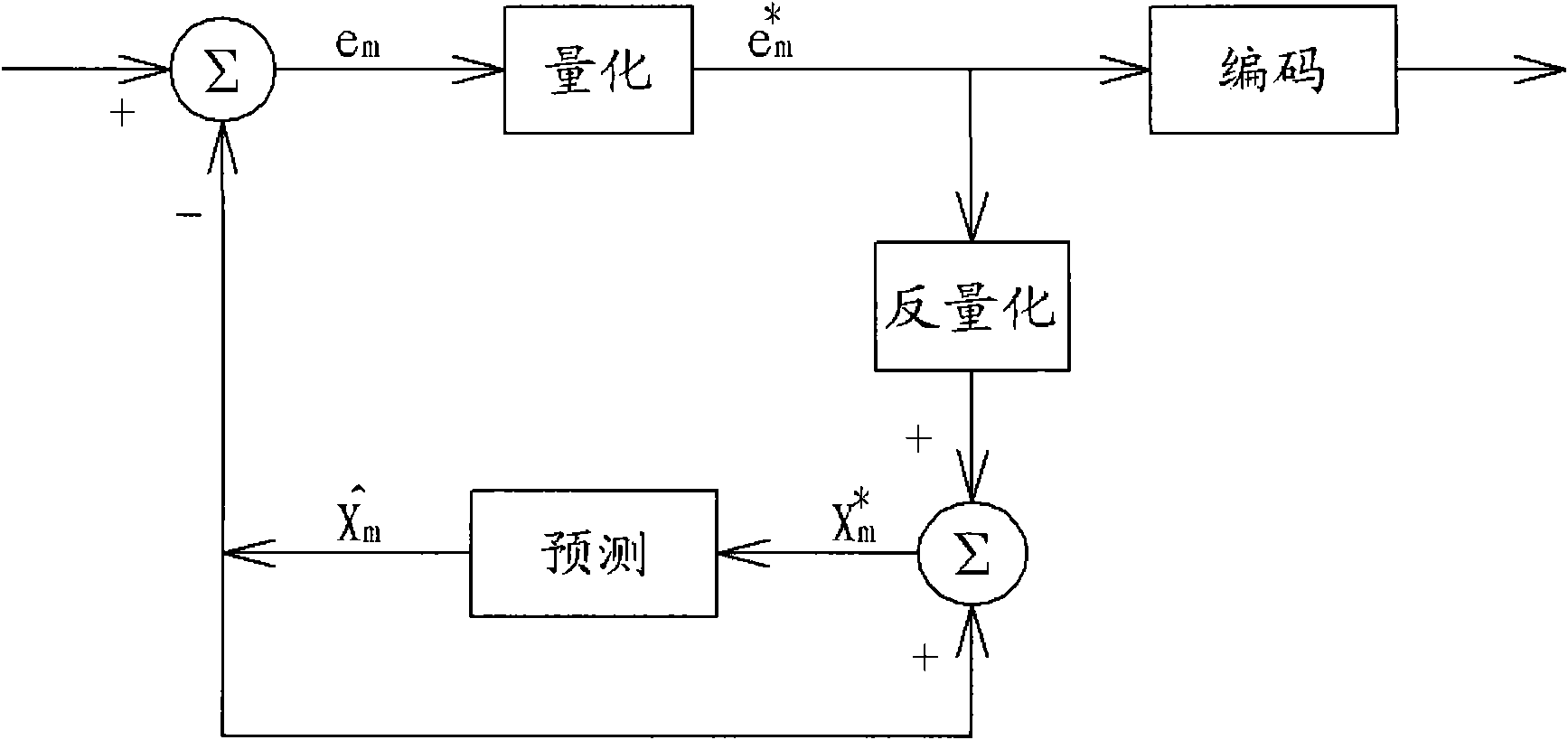

Method of rate control, video frame encoder and frame compression system thereof

Inactive; CN101540903A; Efficient use of; Pulse modulation television signal transmission; Digital video signal modification; Data compression; Cache compression

This invention provides a video frame encoder, comprising: a segment unit, segmenting a video frame into a plurality of frame segments; a data compressing module, coupled to the segment unit, compressing a frame segment according to a plurality of compression rates to generate a plurality of coded outputs respectively corresponding to the plurality of compression rates; a selecting module, coupled to the data compressing module, selecting an actual coded output from the plurality of coded outputs based on a target rate; and a packing unit, coupled to the selecting module, packing the actual coded output to generate compressed data. The video frame encoder provided by this invention controls the compression ratio to enable random access, thereby allowing partial updates of the video frame and effective utilization of the memory.
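The segment, compress, select and pack flow can be sketched roughly as below. The quantization by bit shifting, the use of `zlib` as the coder, and the byte budget `target_bytes` are assumptions standing in for the encoder's real compression rates and target rate.

```python
import zlib


def encode_segment(segment: bytes, target_bytes: int, shifts=(0, 2, 4)):
    """Code one segment at several rates; keep the least lossy output that fits."""
    candidates = []
    for shift in shifts:  # a larger shift means coarser pixel values
        quantized = bytes((p >> shift) << shift for p in segment)
        candidates.append((shift, zlib.compress(quantized)))
    for shift, coded in candidates:  # ordered from fine to coarse
        if len(coded) <= target_bytes:
            return shift, coded
    # Nothing met the budget: fall back to the smallest output.
    return min(candidates, key=lambda c: len(c[1]))


def encode_frame(frame: bytes, segment_len: int, target_bytes: int):
    """Split a frame into fixed-length segments and encode each independently."""
    segments = [frame[i:i + segment_len] for i in range(0, len(frame), segment_len)]
    return [encode_segment(s, target_bytes) for s in segments]
```

Because every segment is coded on its own against the same budget, a single segment can be decoded or updated without touching its neighbours, which is the random-access property the abstract refers to.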

Owner:MEDIATEK INC

Technique for Passive Cache Compaction Using A Least Recently Used Cache Algorithm

Active; US20150169470A1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Unique identifier; Least recently frequently used

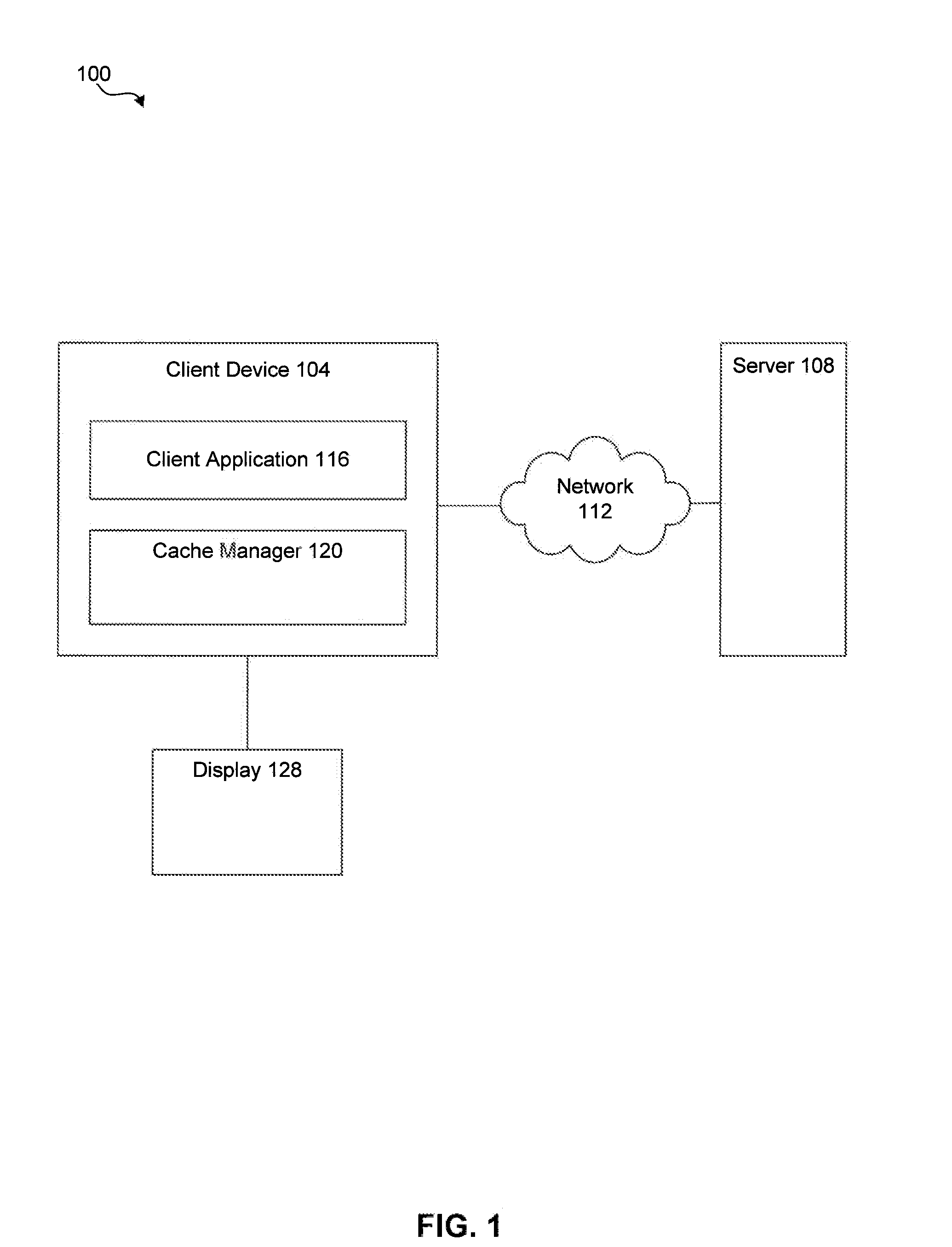

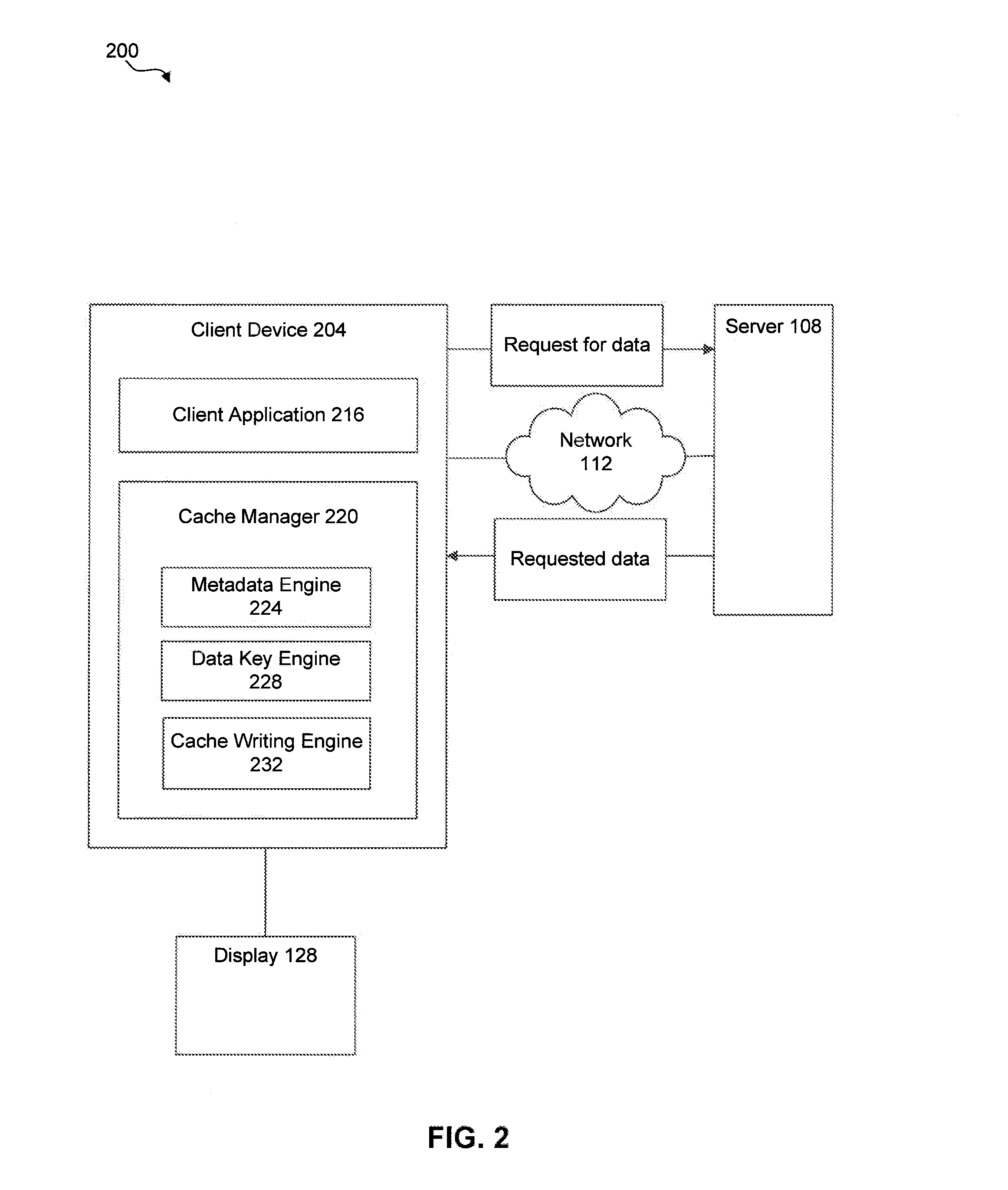

An example method for passive compaction of a cache includes determining first metadata associated with first data and second metadata associated with second data. The first metadata includes a first retrieval time, and the second metadata includes a second retrieval time. The example method further includes obtaining a first metadata key including a first unique identifier and obtaining a second metadata key including a second unique identifier. The example method also includes generating a first data key and generating a second data key. The example method further includes writing, at a client device, the first and second data to the cache. Each of the first and second data occupy one or more contiguous blocks of physical memory in the cache, and the first and second data are stored in the cache in an order based on the relative values of the first and second retrieval times.

Owner:GOOGLE LLC

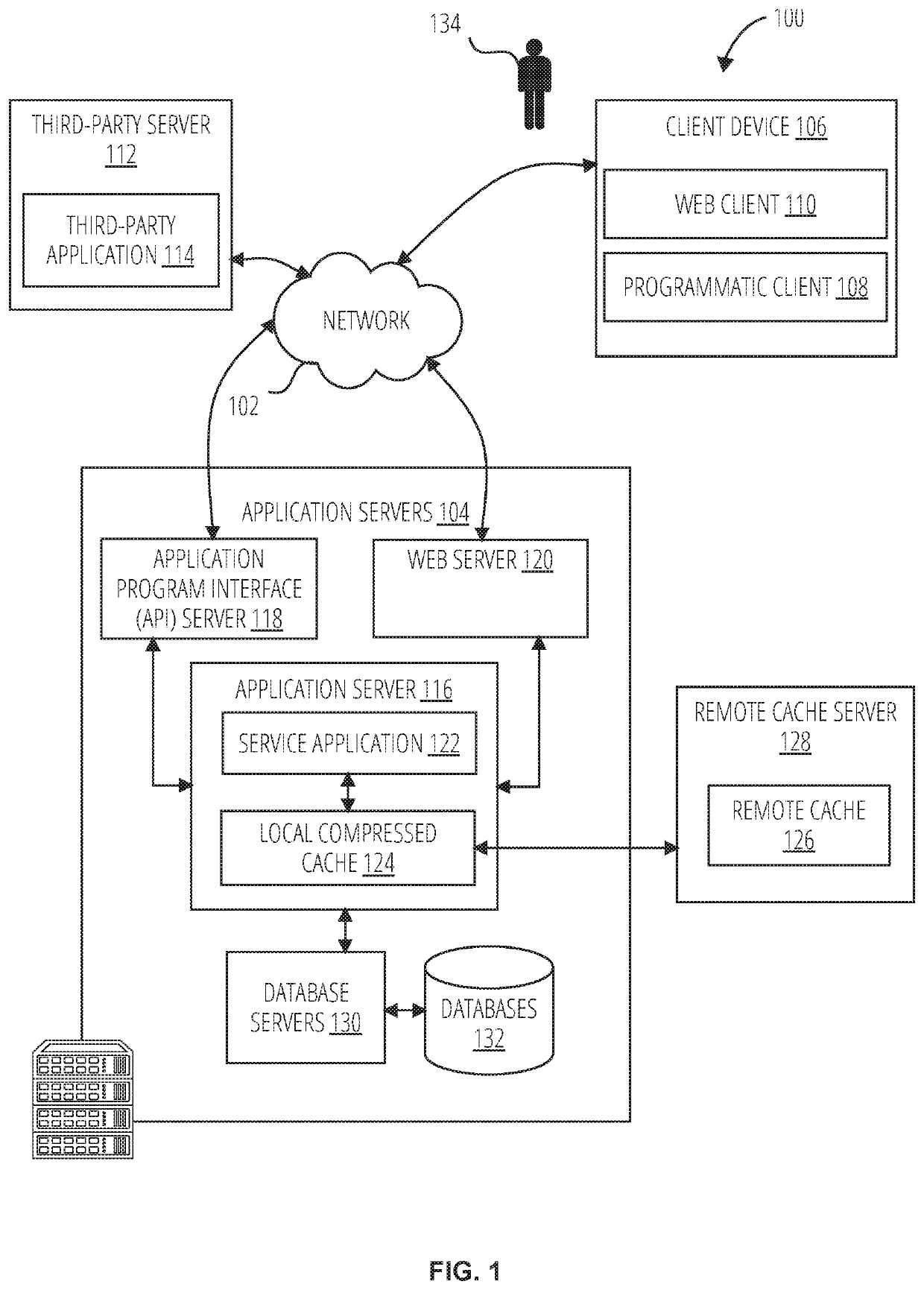

Compressed cache using dynamically stacked roaring bitmaps

Active; US20200201760A1; Memory architecture accessing/allocation; Code conversion; Computational science; Parallel computing

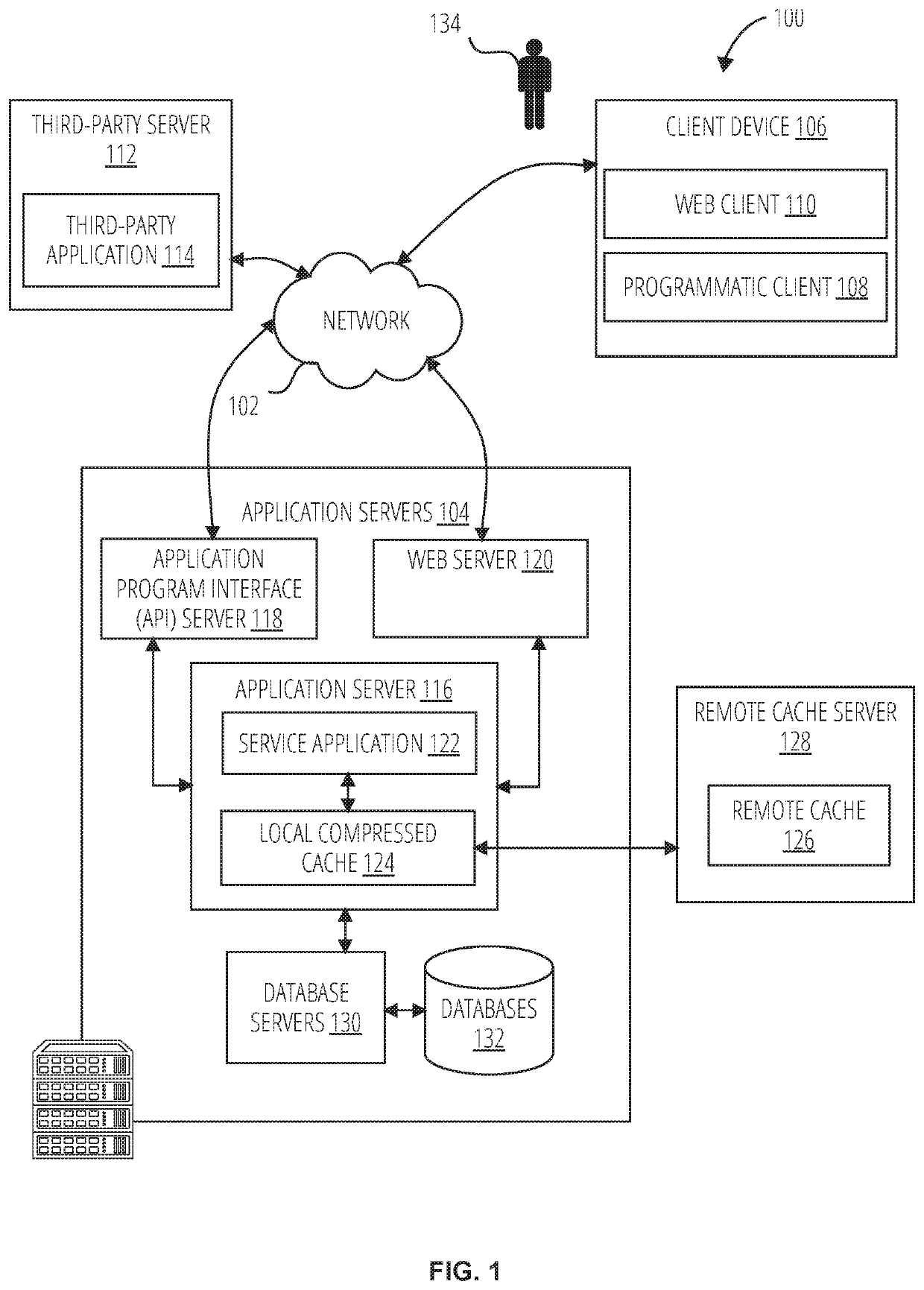

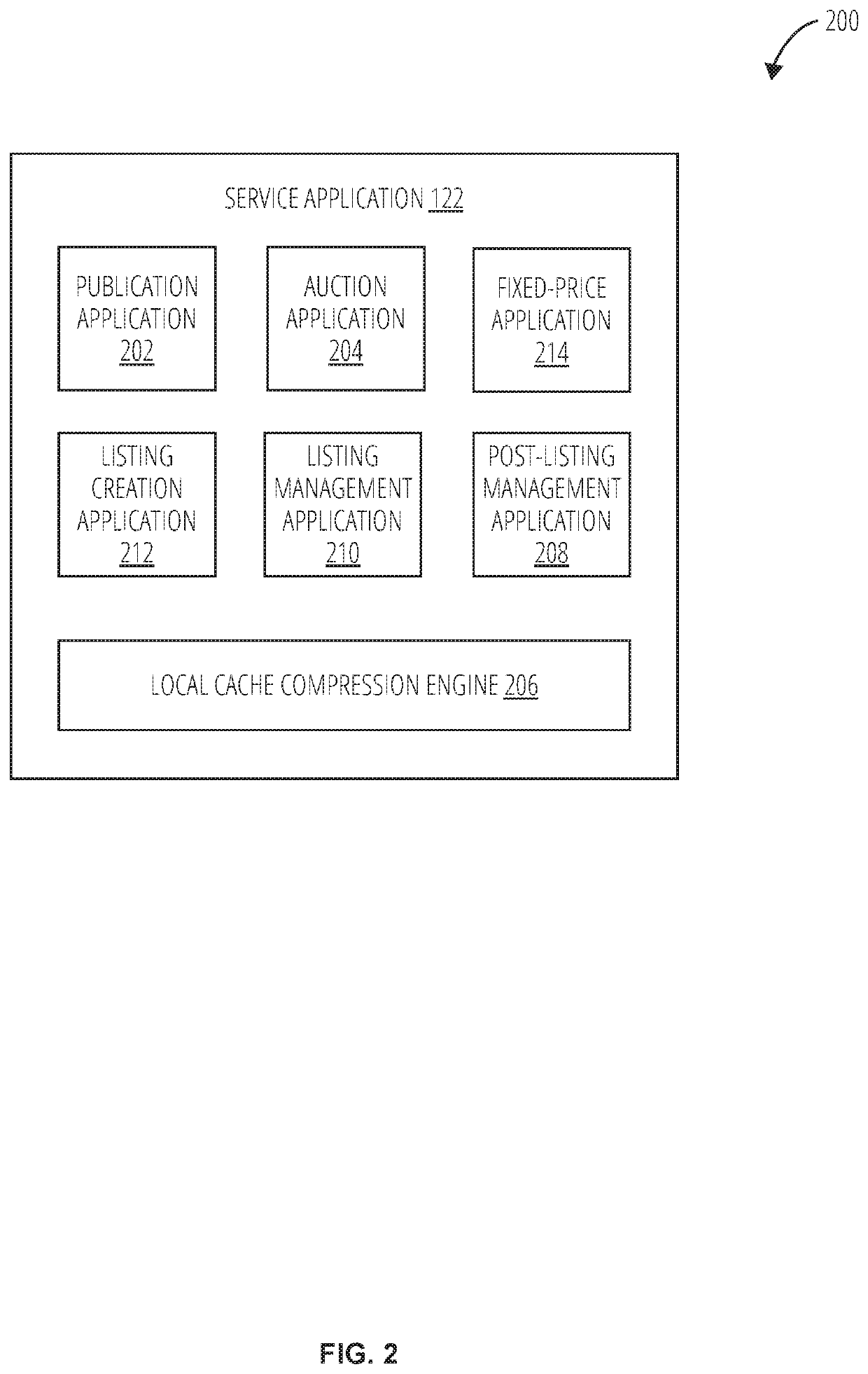

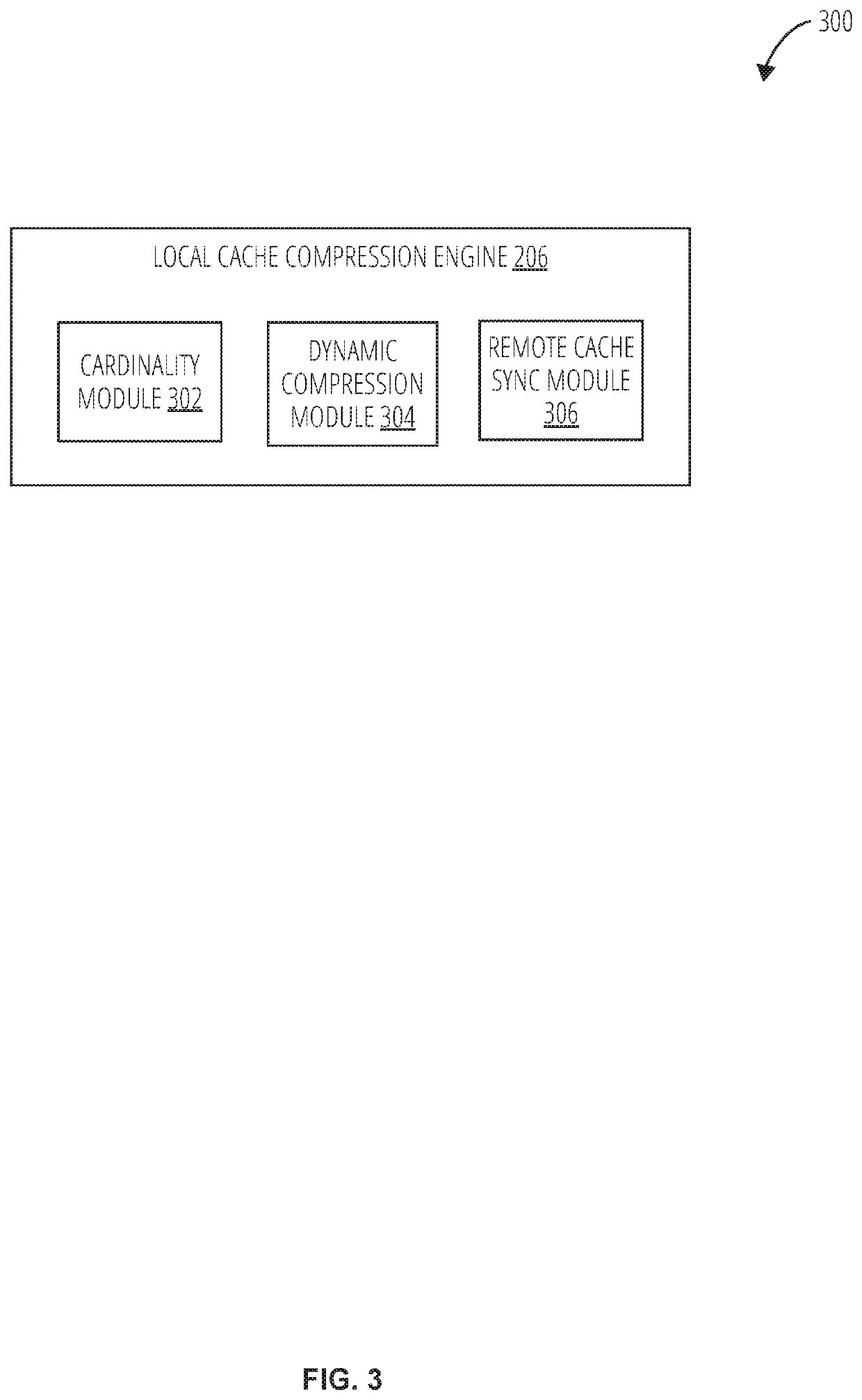

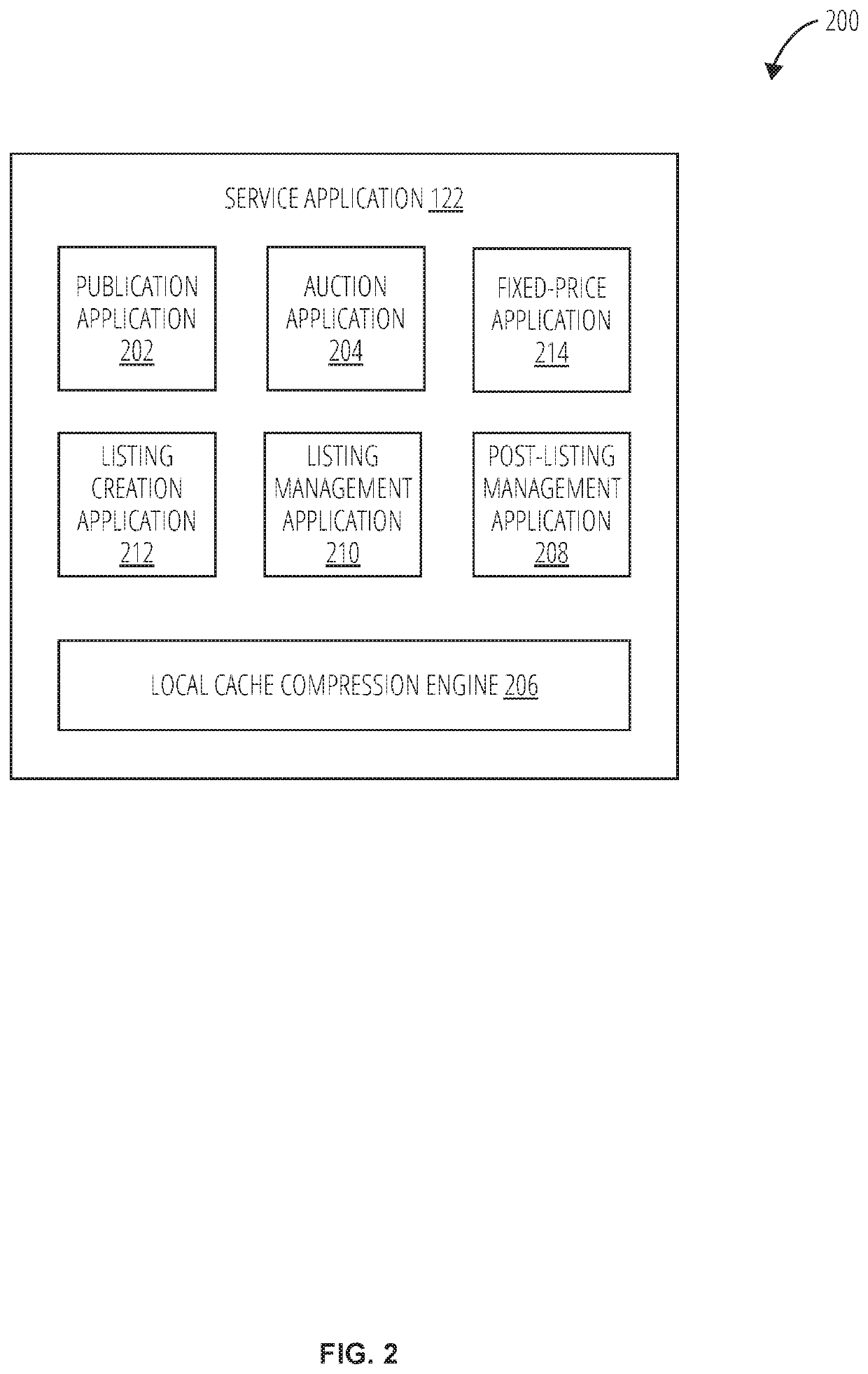

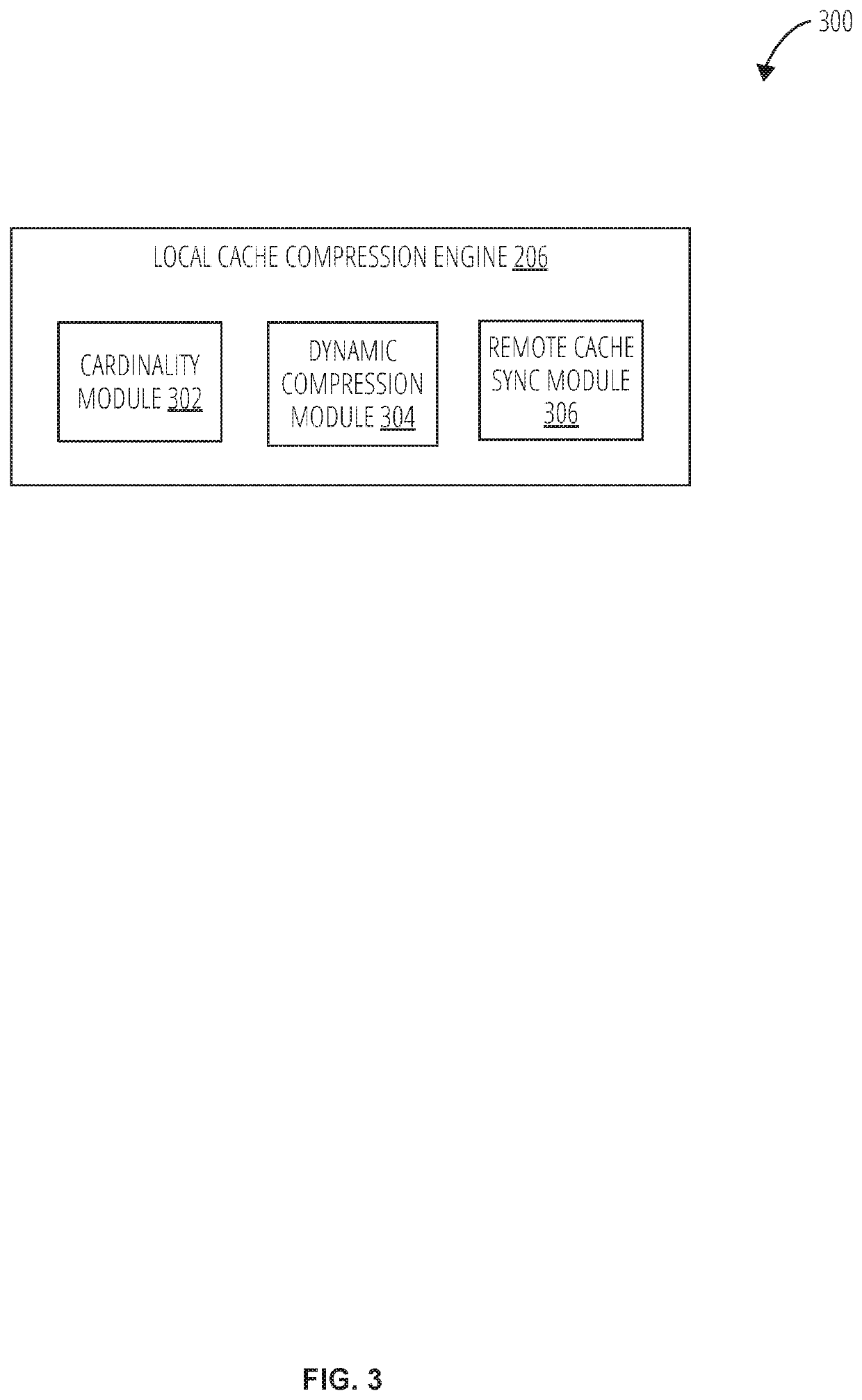

A method for compressing data in a local cache of a web server is described. A local cache compression engine accesses values in the local cache and determines a cardinality of the values of the local cache. The local cache compression engine determines a compression rate of a compression algorithm based on the cardinality of the values of the local cache. The compression algorithm is applied to the cache based on the compression rate to generate a compressed local cache.

Owner:EBAY INC

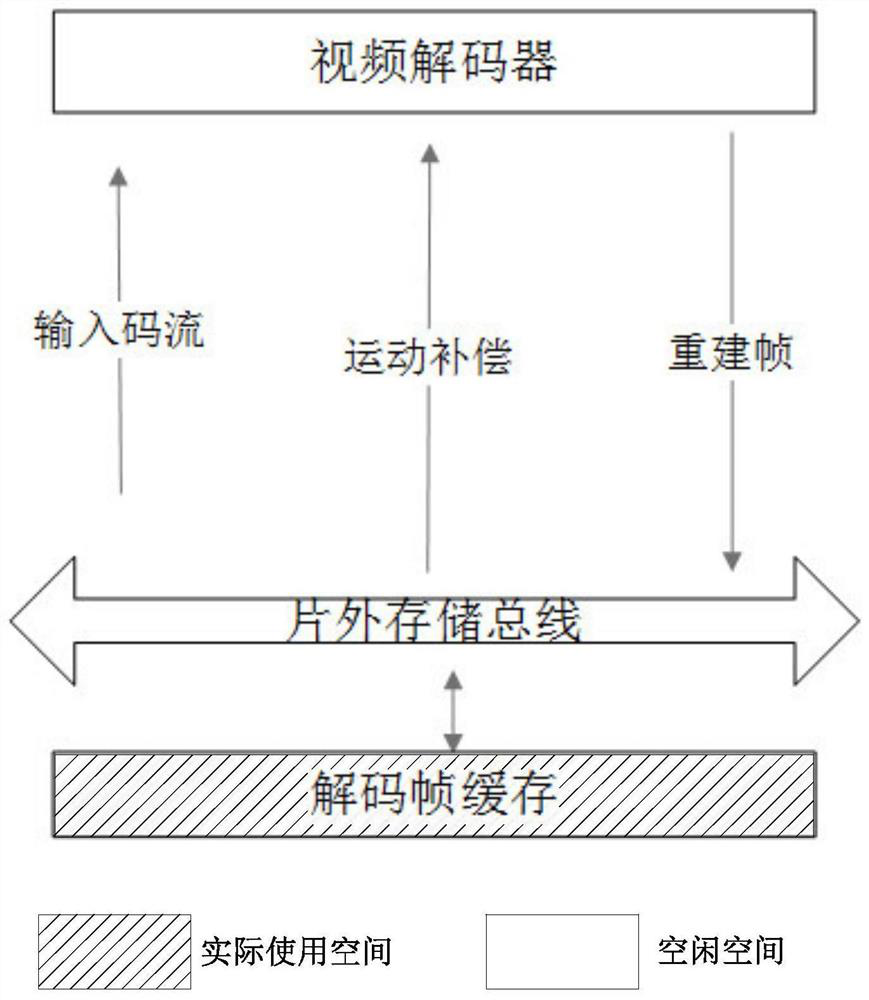

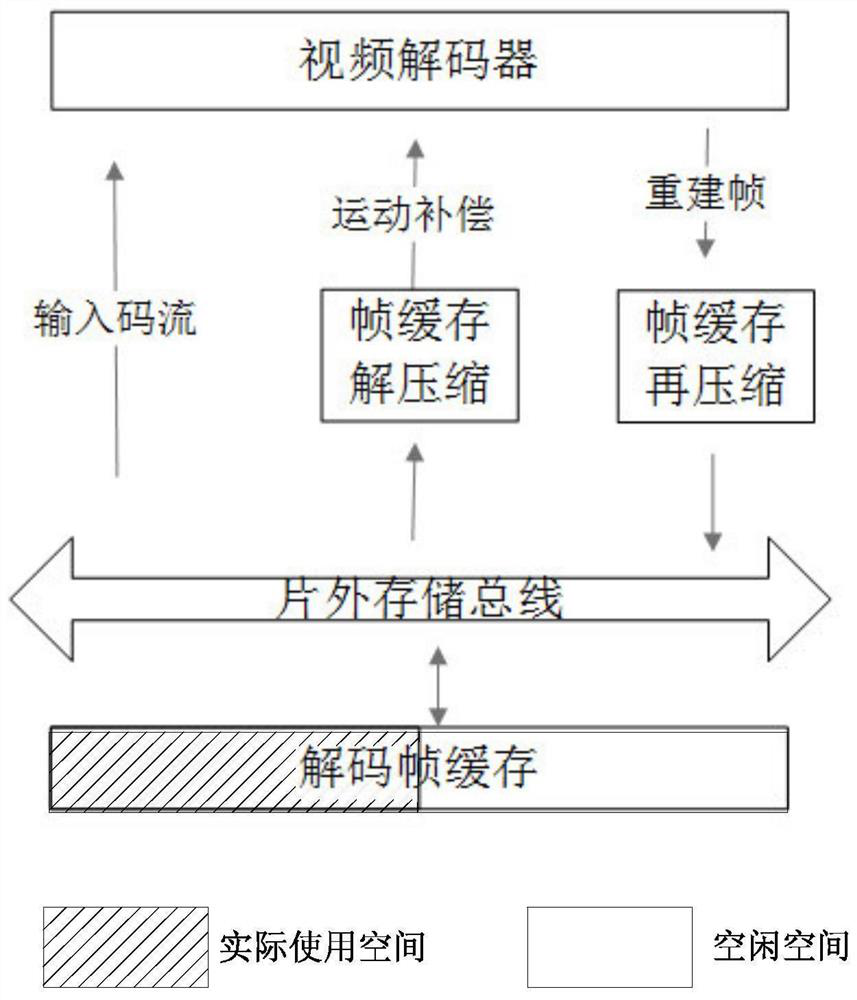

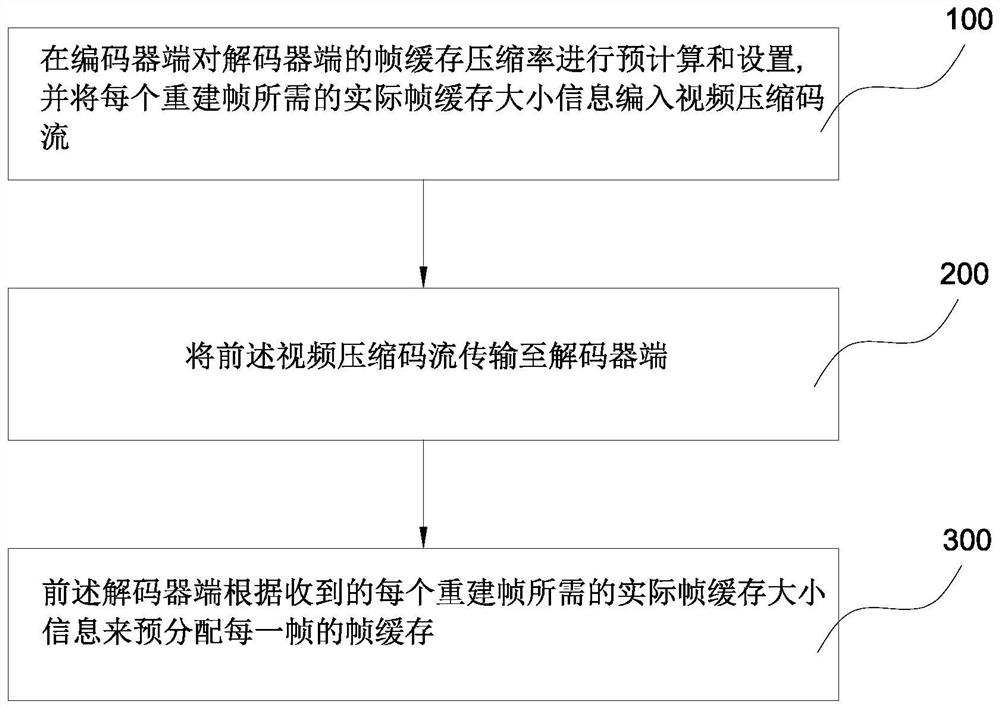

Encoding method supporting decoding compressed frame buffer self-adaptive allocation and application

Active; CN111787330A; Save storage space; Decoding delay; Closed circuit television systems; Digital video signal modification; Computer architecture; Parallel computing

Owner:MOLCHIP TECH (SHANGHAI) CO LTD

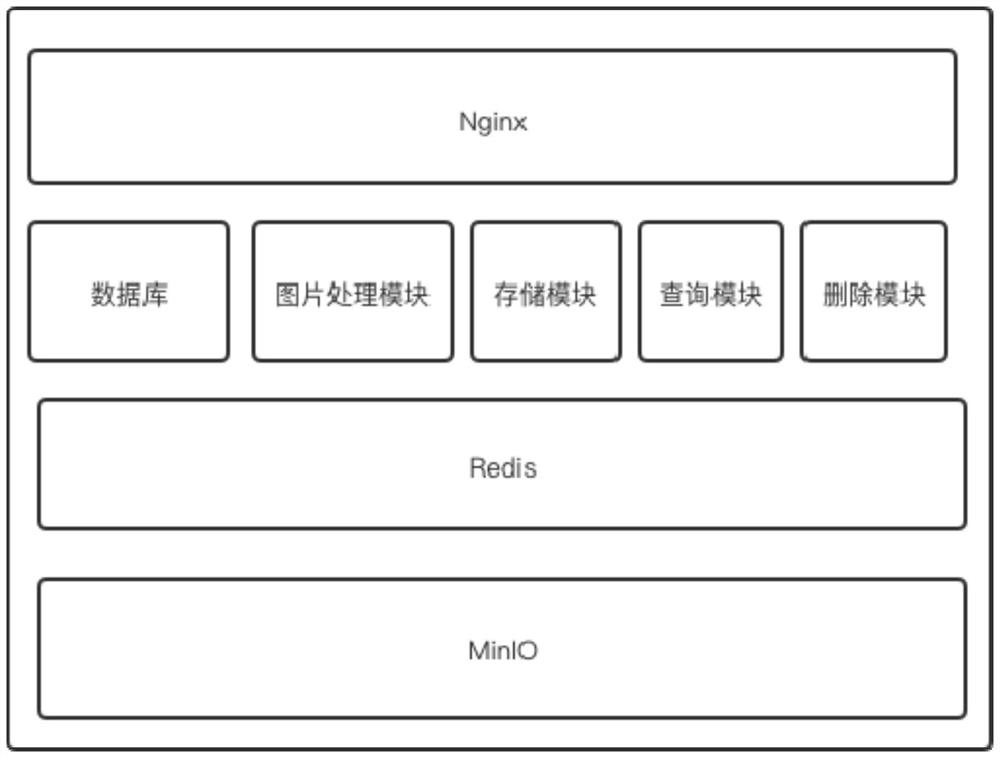

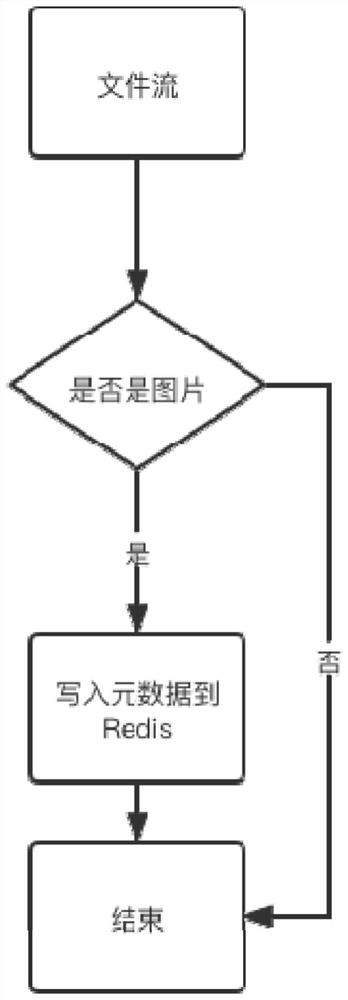

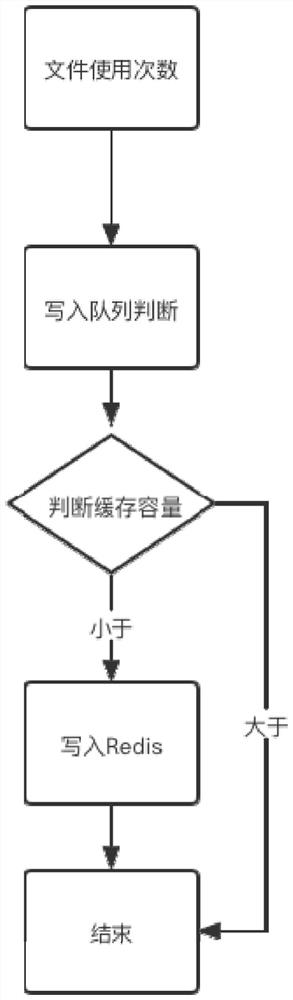

Distributed file storage system and method based on Nginx + MinIO + Redis

Pending; CN114817176A; Solve length; Solve space problems; Digital data information retrieval; Special data processing applications; Parallel computing; Distributed cache

The invention relates to the technical field of data storage, and particularly discloses a distributed file storage system and method based on Nginx + MinIO + Redis. The system comprises a database and middleware. The middleware comprises: a picture processing module, which performs thumbnail operations on a picture using the http-image-filter-module in Nginx, where a thumbnail operation includes scaling the picture to a specified width and height; a storage module, which stores file data in a distributed manner on a MinIO cluster and comprises a file uploading unit, a cache compression unit and a cache replacement unit; a query module, which executes file query operations on the distributed cached files through a Redis cluster; and a deletion module, which executes deletion operations on the distributed files. The invention provides a file compression caching strategy based on the Thumbnailator, Base64 and Gzip compression algorithms, which solves the problems of overly long strings and high memory usage when Redis caches files, and improves the utilization of memory space.
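To show why combining Gzip with Base64 shortens a cached string, here is a small sketch. The order of operations (Gzip first, then Base64) and the function names are assumptions; a plain dictionary stands in for the Redis key space, and the thumbnail step is left out.

```python
import base64
import gzip


def pack_for_cache(file_bytes: bytes) -> str:
    """Gzip the file, then Base64-encode it so it can be stored as a string."""
    return base64.b64encode(gzip.compress(file_bytes)).decode("ascii")


def unpack_from_cache(value: str) -> bytes:
    """Reverse of pack_for_cache: Base64-decode, then gunzip."""
    return gzip.decompress(base64.b64decode(value))


# Stand-in for a Redis string key space (hypothetical key name).
cache = {}
cache["file:demo"] = pack_for_cache(b"<svg></svg>" * 2000)
```

For repetitive content the packed string is much shorter than Base64 of the raw bytes, at the cost of decompression on every read.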

Owner:福建财通信息科技有限公司

Compressed cache using dynamically stacked roaring bitmaps

Active; US11016888B2; Memory architecture accessing/allocation; Code conversion; Computational science; Parallel computing

A method for compressing data in a local cache of a web server is described. A local cache compression engine accesses values in the local cache and determines a cardinality of the values of the local cache. The local cache compression engine determines a compression rate of a compression algorithm based on the cardinality of the values of the local cache. The compression algorithm is applied to the cache based on the compression rate to generate a compressed local cache.

Owner:EBAY INC

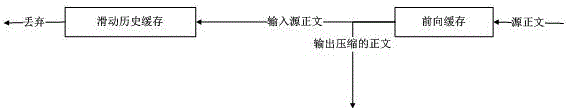

Physical information system data compression transmission method using sliding window cache

Inactive; CN106850785A; Improve compression transfer speed; Shorten the time to match compression; Transmission; Computer hardware; Data compression

The invention relates to a data transmission method for a physical information system, and in particular to a data compression transmission method using a sliding window cache. To address the large amount of data that a physical information system must transmit, a data compression transmission method based on sliding window cache compression is provided. When data is transmitted in the physical information system, the data flow is likely to contain repetitions. When a repetition occurs, a short code can take the place of the repeated sequence. The compression program scans for such repetitions and generates codes to replace the repeated sequences. Over time, the codes can be reused to capture new sequences. The decompression program can deduce the current mapping between the codes and the original data sequences. The method can greatly shorten data matching and compression time and thereby improve data compression transmission speed.

Owner:JINAN INSPUR HIGH TECH TECH DEV CO LTD

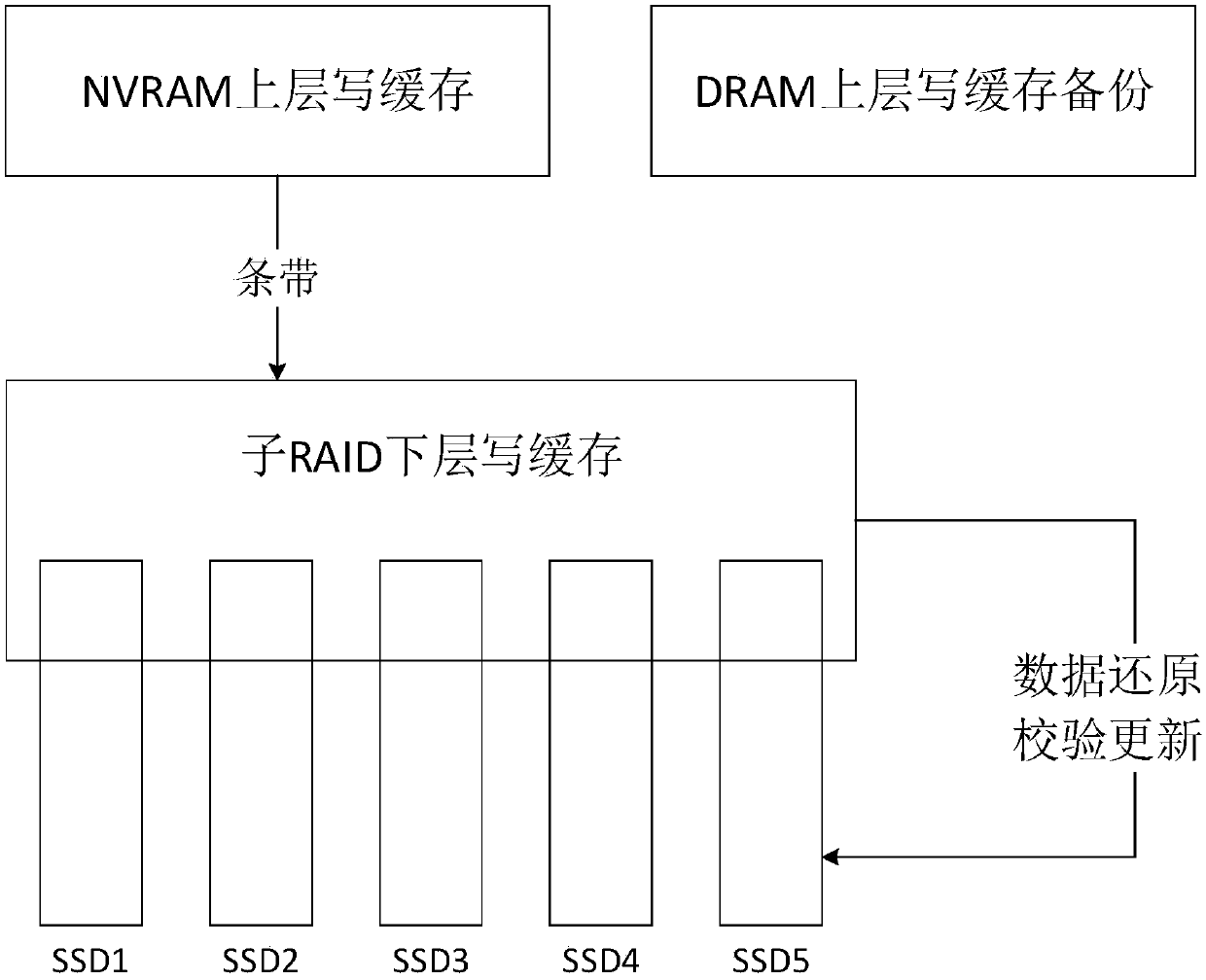

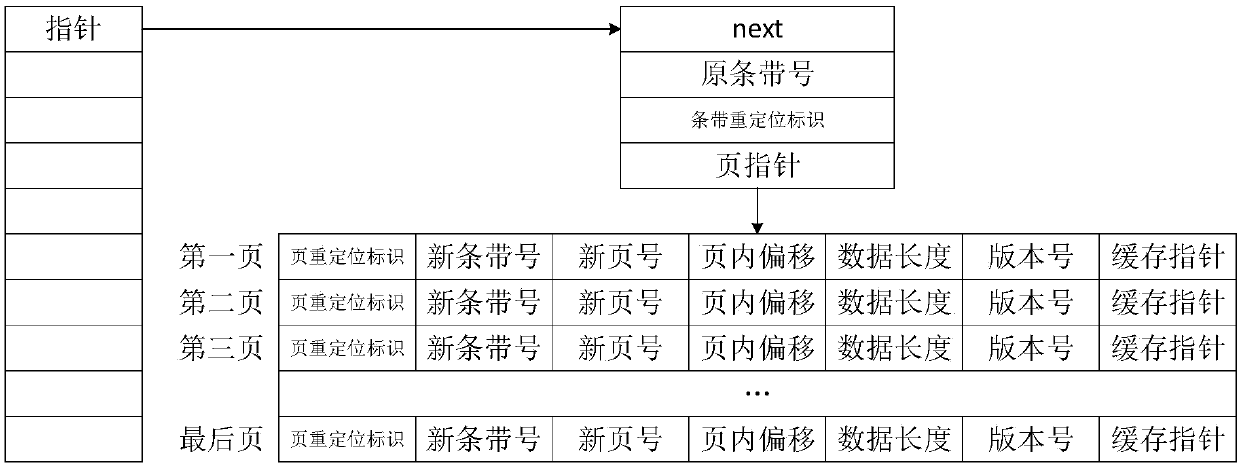

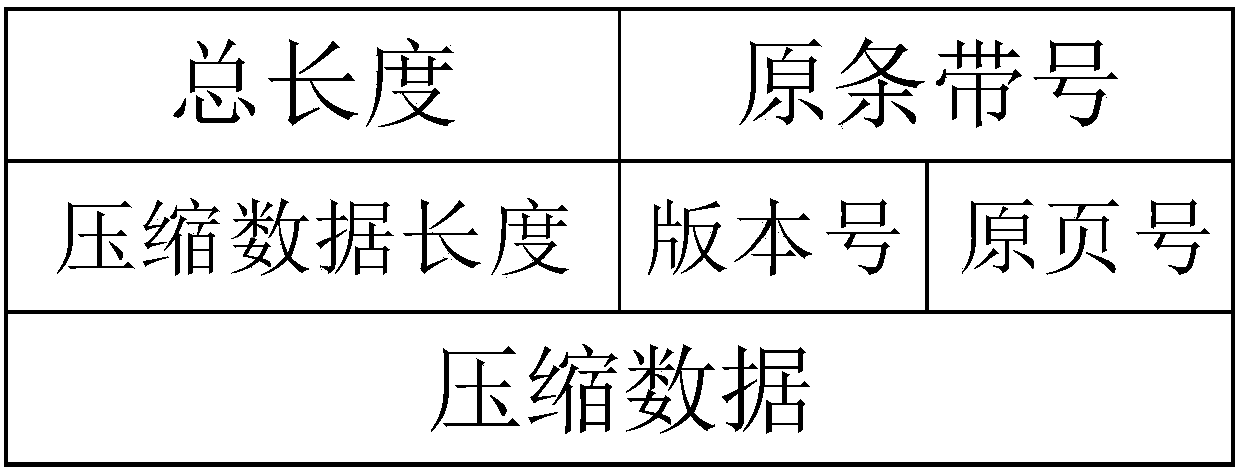

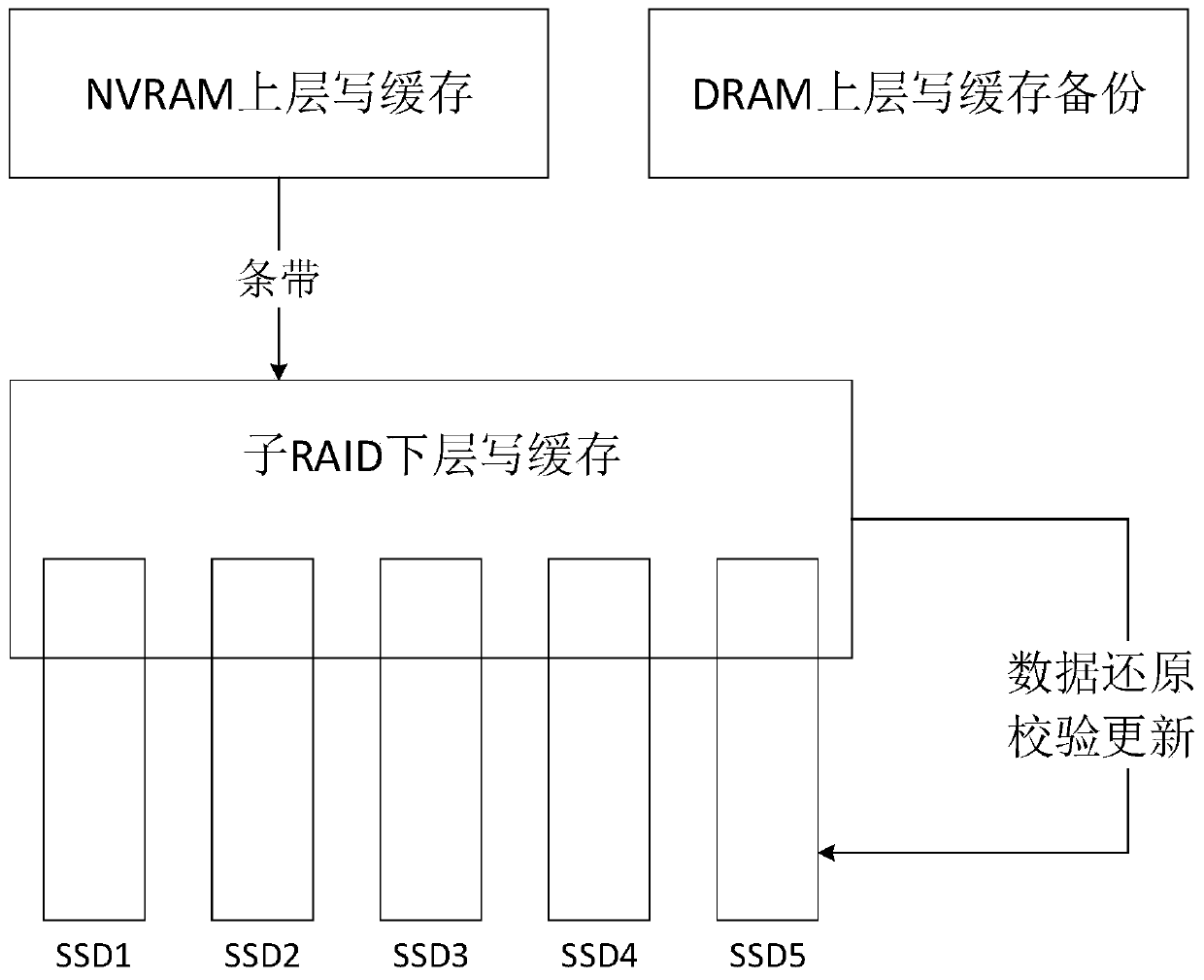

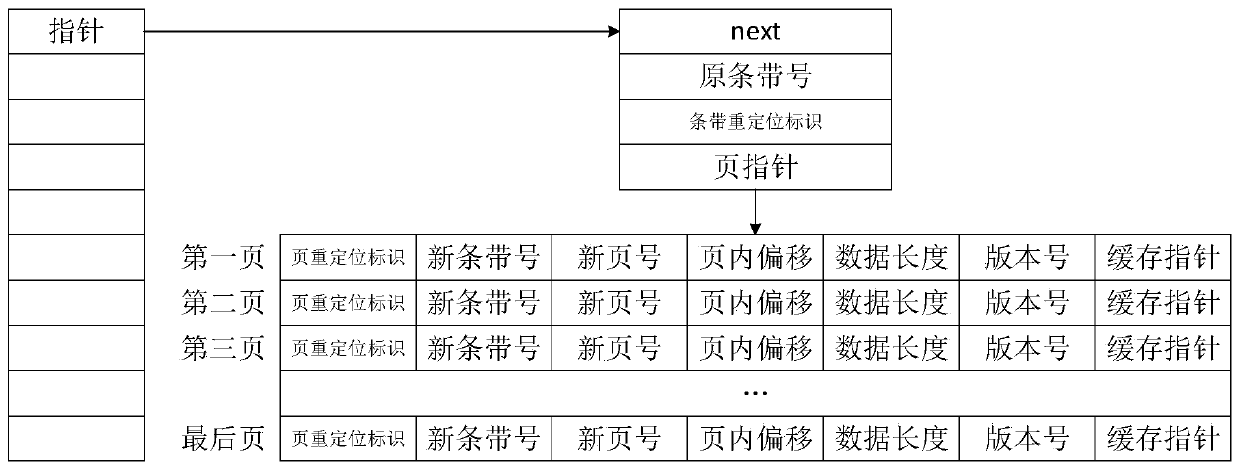

Multilevel cache based on SSD RAID array and caching method

Active; CN107608626A; Extend your life; Reduced cache capacity requirements; Input/output to record carriers; Memory systems; RAID; Electricity

The invention discloses a multilevel cache based on an SSD RAID array and a caching method, and belongs to the field of solid state disk storage technology. In the multilevel cache, an upper-layer cache is realized through a small-capacity NVRAM and is responsible for compressing data, backing up the data to a DRAM, integrating the compressed data and its metadata into a page for storage, and scheduling the data to a lower-layer cache in units of stripes. The lower-layer cache is realized by setting aside part of the RAID space and is responsible for caching the compressed data, maintaining a mapping table between the original data and the compressed data, restoring the compressed data to the RAID, and performing power-fail recovery on the mapping table. A multilevel caching method based on the SSD RAID array is also provided. Because redundancy between new data and old data is large, managing and storing compressed data in the multilevel cache reduces the demand for cache capacity and lowers the cost and the error rate. The multilevel cache design ensures system reliability: whether the DRAM, the NVRAM or the RAID is affected, timely recovery is possible in case of data loss or errors.

Owner:HUAZHONG UNIV OF SCI & TECH

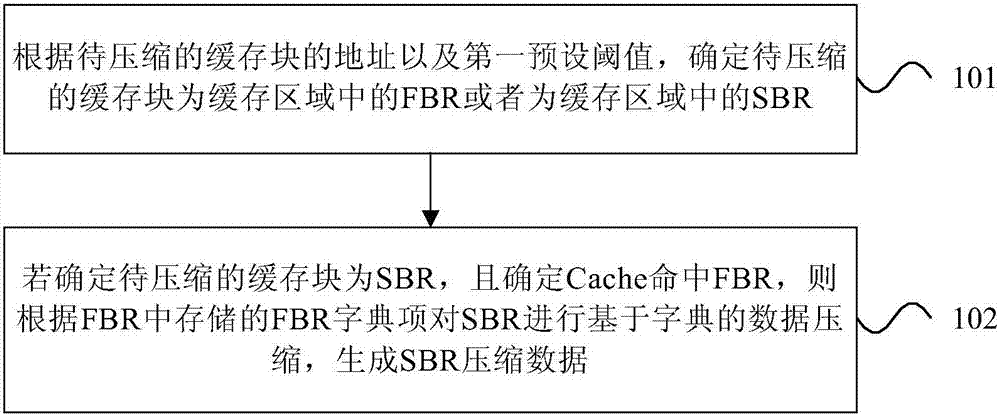

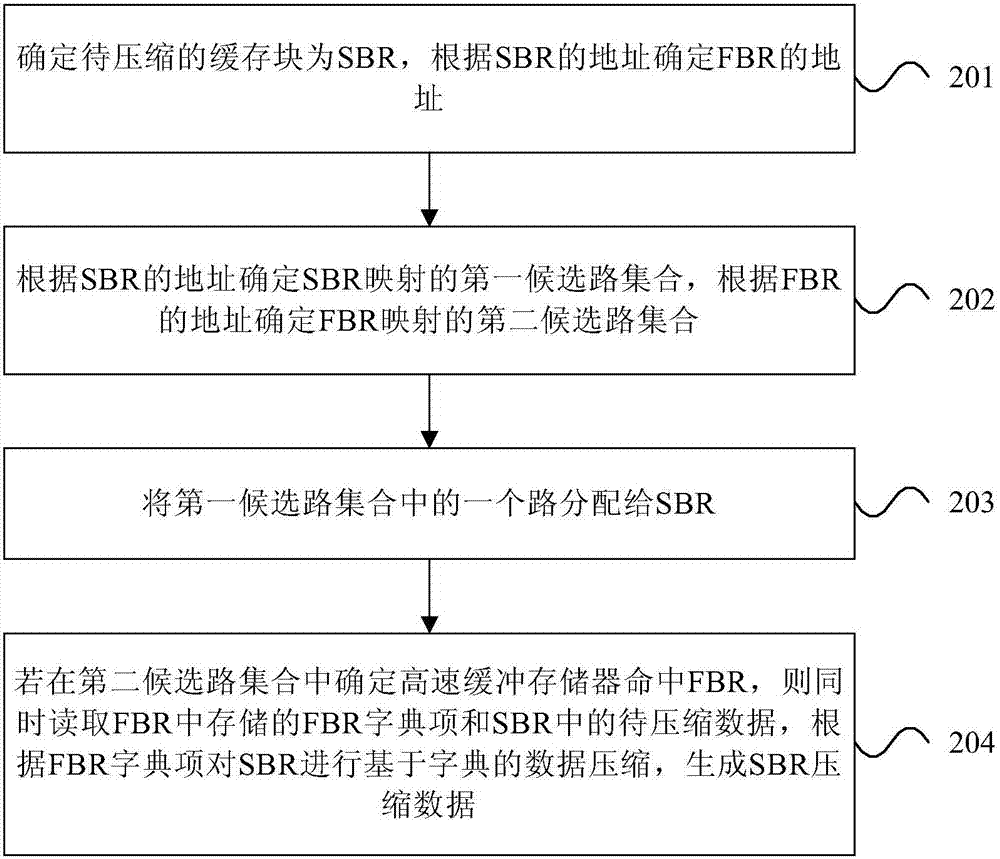

Cache memory access method and apparatus

Inactive; CN107515829A; Increase the compression ratio; Implement data compression; Memory systems; Data compression; Access method

The invention provides a cache memory access method and apparatus. The cache memory access method comprises the steps of determining a to-be-compressed cache block as a first block in region (FBR) in a cache region or a successive block in region (SBR) in the cache region according to an address of the to-be-compressed cache block and a first preset threshold, wherein the cache region is located in a cache memory and comprises a quantity of cache blocks with continuous addresses, and the quantity is equal to the first preset threshold; and if the to-be-compressed cache block is determined as the SBR and the cache memory is determined to hit the FBR, performing dictionary-based data compression on the SBR according to FBR dictionary items stored in the FBR to generate compressed SBR data. According to the cache memory access method provided by the invention, the whole cache region is subjected to the data compression by a compression delay of the single cache block, so that the Cache compression rate is increased.

Owner:LOONGSON TECH CORP

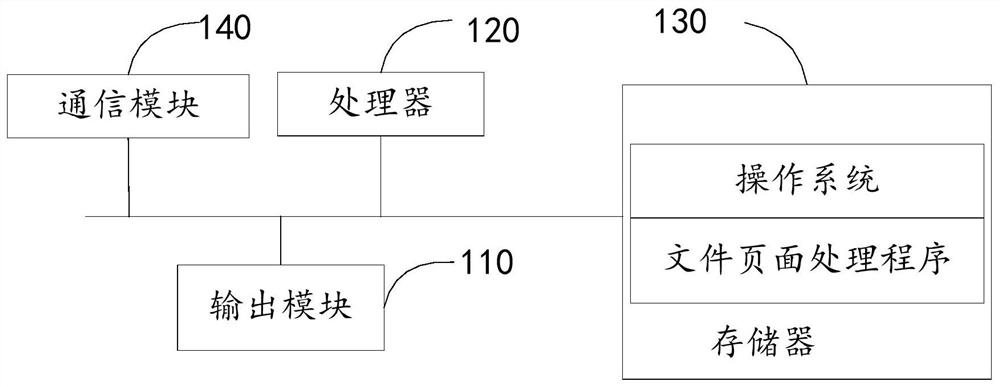

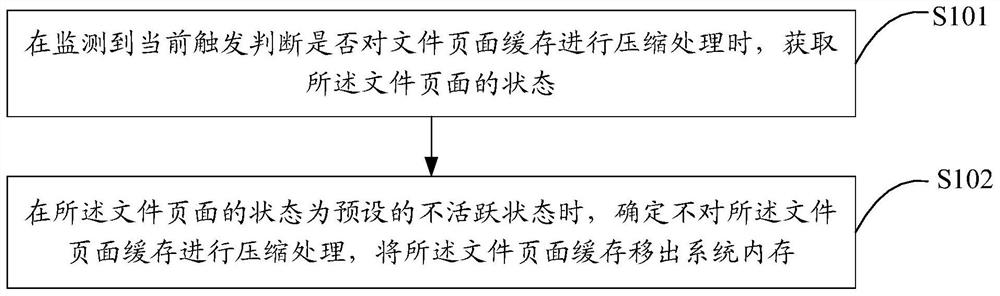

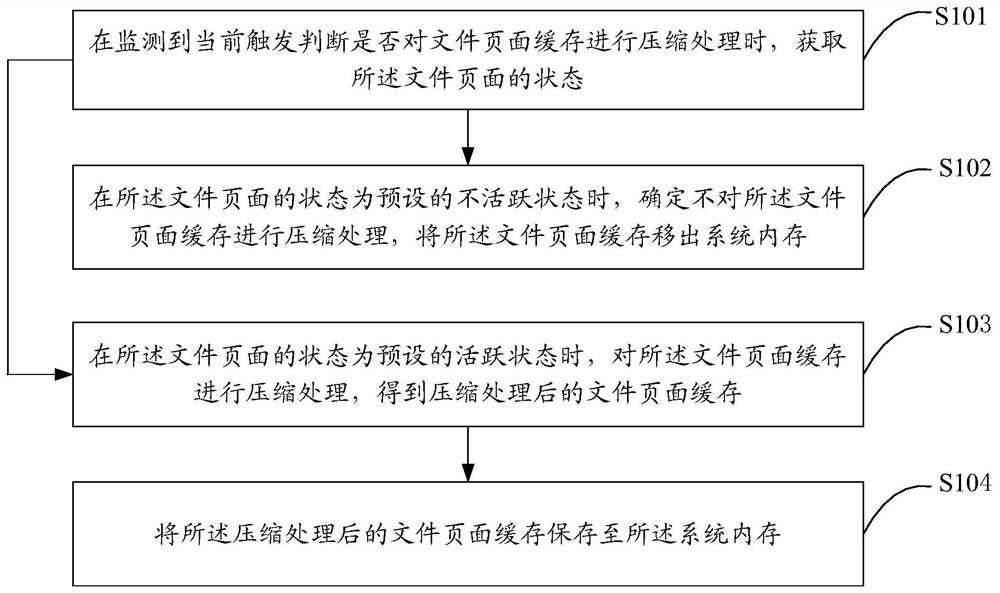

File page processing method and device, terminal equipment and storage medium

Pending; CN112069433A; Avoid affecting performance; Website content management; Special data processing applications; Terminal equipment; Term memory

The invention discloses a file page processing method and device, terminal equipment and a storage medium. The method comprises: obtaining the state of a file page when a trigger to judge whether to compress the file page cache is detected; and, when the state of the file page is a preset inactive state, determining that the file page cache is not to be compressed and moving the file page cache out of system memory. Thus, when system memory is short and file pages are compressed through the compression mechanism, file pages with a long life cycle and a low probability of use are excluded and are evicted from memory directly without cache compression. This prevents file page caches with a low probability of use from being compressed and kept in memory, which avoids wasting memory and degrading system performance.
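The decision rule can be sketched in a few lines. The `FilePage` record, its `inactive` flag and the `reclaim` function are hypothetical names used only to illustrate the idea of skipping compression for inactive pages.

```python
import zlib
from dataclasses import dataclass


@dataclass
class FilePage:
    data: bytes
    inactive: bool  # hypothetical flag for the "preset inactive state"


def reclaim(pages):
    """On memory pressure: drop inactive pages, compress the remaining ones.

    `pages` maps a page id to a FilePage; the result maps the ids of the
    pages that stay in memory to their compressed contents.
    """
    compressed = {}
    for page_id, page in pages.items():
        if page.inactive:
            continue  # evicted outright, never compressed
        compressed[page_id] = zlib.compress(page.data)
    return compressed
```

Skipping inactive pages saves both the CPU time of compressing them and the memory their compressed copies would otherwise occupy.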

Owner:OPPO CHONGQING INTELLIGENT TECH CO LTD

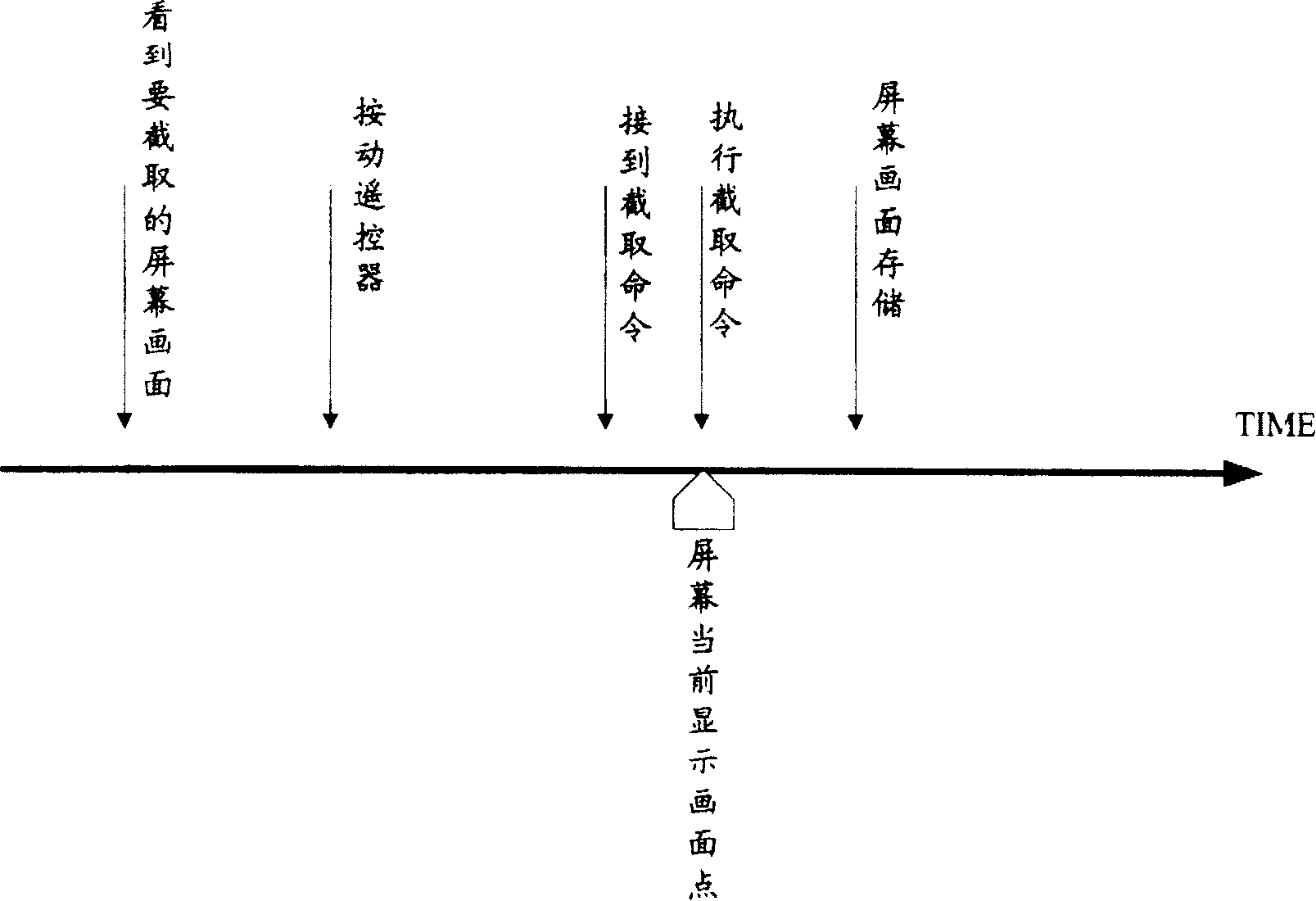

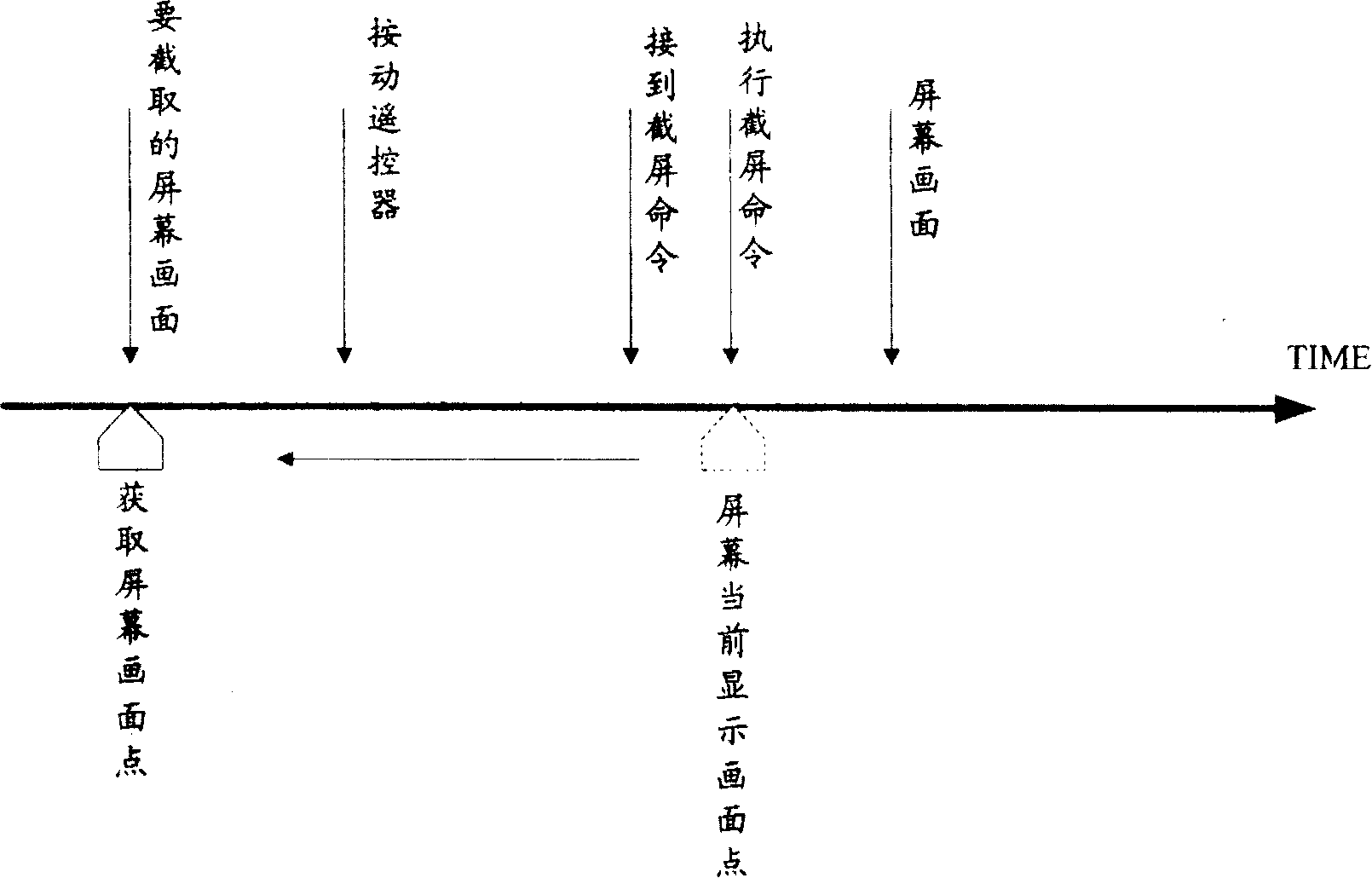

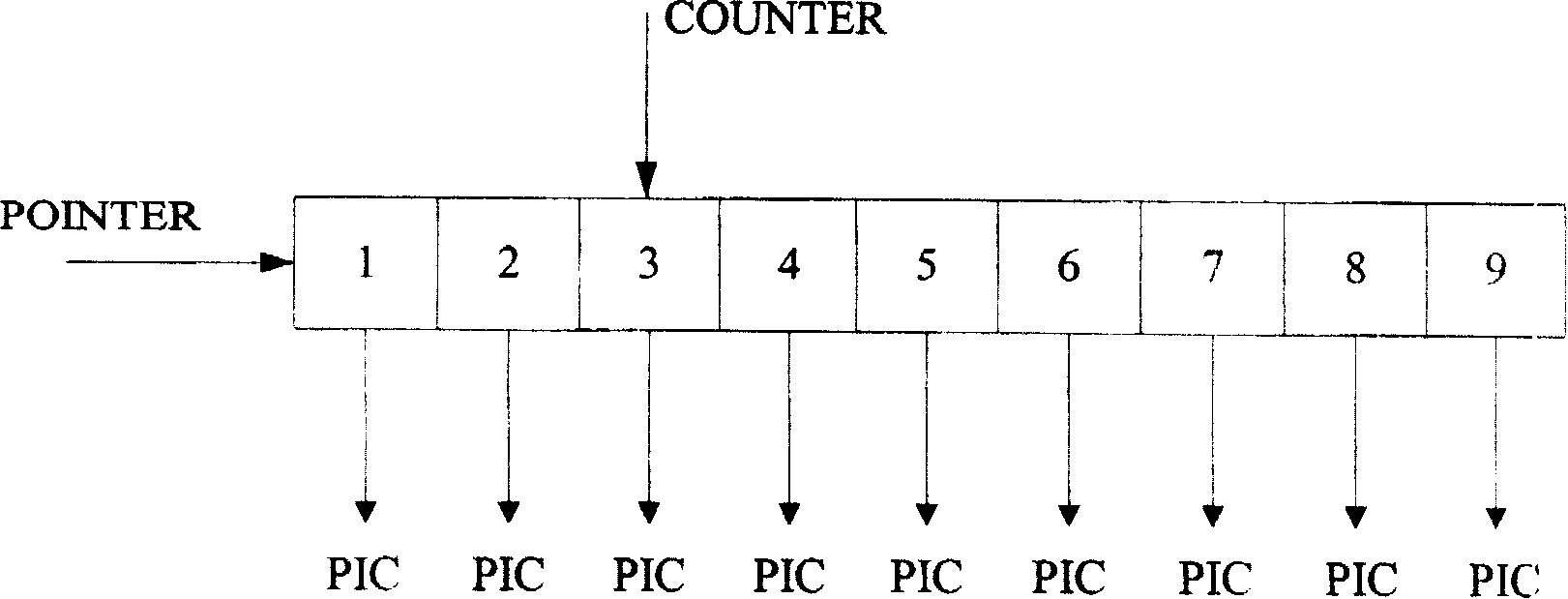

Screen printing method

Inactive; CN100384240C; Television system details; Color television details; Screen printing; Computer graphics (images)

This invention discloses a screen capture method. A buffer area for screen images is first set up in the capturing system, with its frame count determined by the number of screen images played per second. The method also comprises the following steps: (a) the system plays the screen images in order, and the system buffer area stores the compressed screen images in order; (b) when a capture command is executed, the system retrieves a previously buffered image from the buffer area and uses it as the image to be captured.

Owner:LENOVO (BEIJING) CO LTD

A multi-level cache and cache method based on SSD RAID array

Active; CN107608626B; Extend your life; Reduced cache capacity requirements; Input/output to record carriers; Memory systems; Computer architecture; Occurrence data

Owner:HUAZHONG UNIV OF SCI & TECH

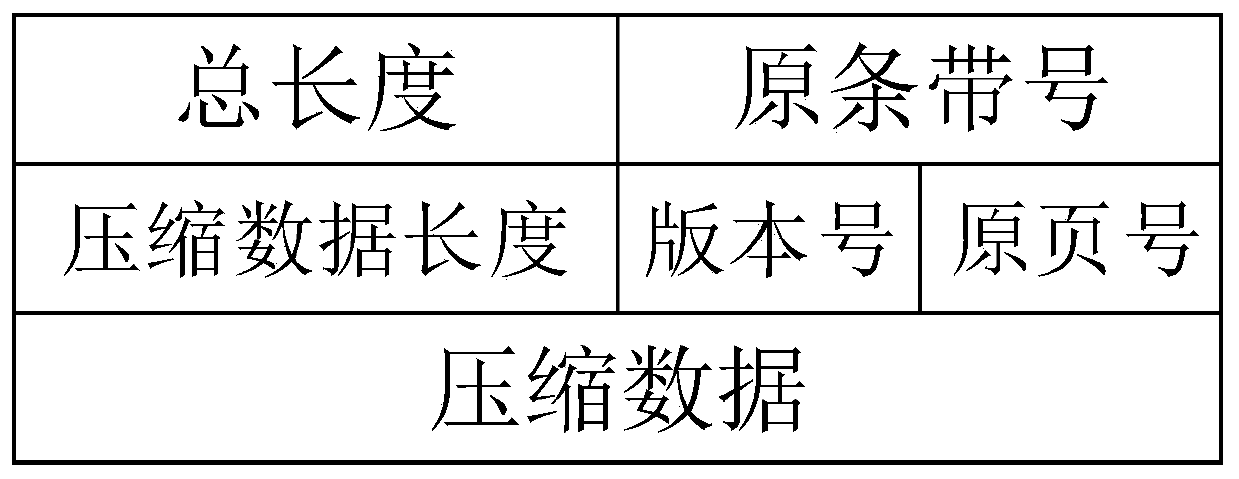

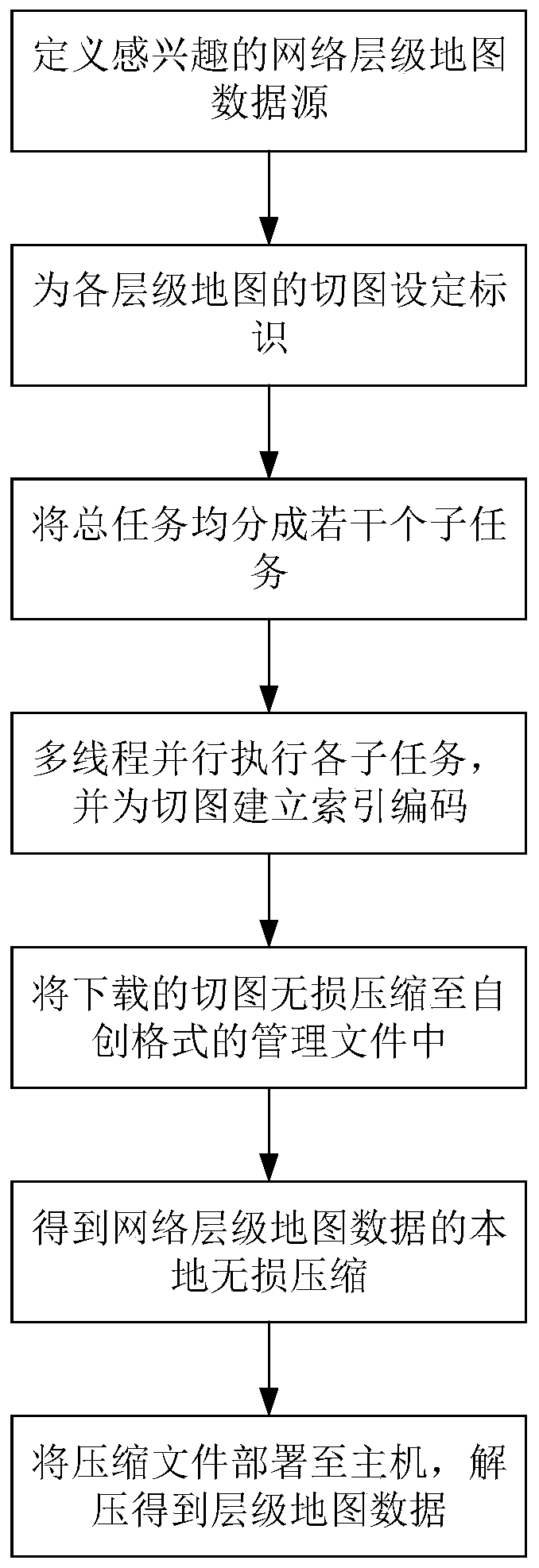

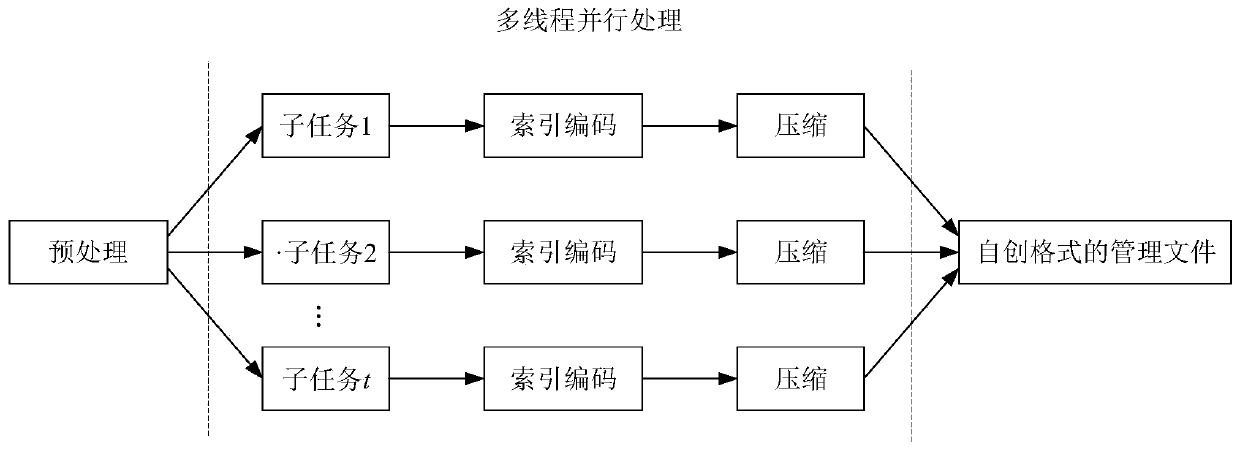

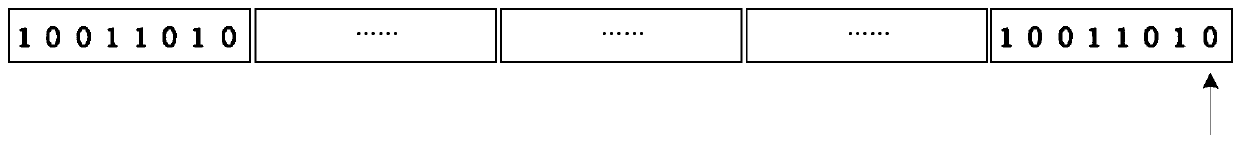

Rapid caching method for network level map data

Active; CN110427345A; Improve efficiency; Geographical information databases; Special data processing applications; Slide window; Byte

The invention discloses a rapid caching method for network level map data. The method comprises the following steps: calculating the total number of cut images for the map data of all layers; dividing that total into a plurality of subtasks according to the number of CPU cores of the computer, with each subtask corresponding one-to-one to a CPU core; establishing an index code for each cut image; setting the number of repeated bytes and the size of a sliding window; and having each CPU core read its corresponding cut images, traverse all rows of data of each cut image with the sliding window, and compress repeated byte segments. By adopting a multi-threaded cache compression method together with a purpose-built cut-image compression method and decompression method, the rapid caching method improves the efficiency of generating and reading the hierarchical map data.

Owner:武汉星源云意科技有限公司

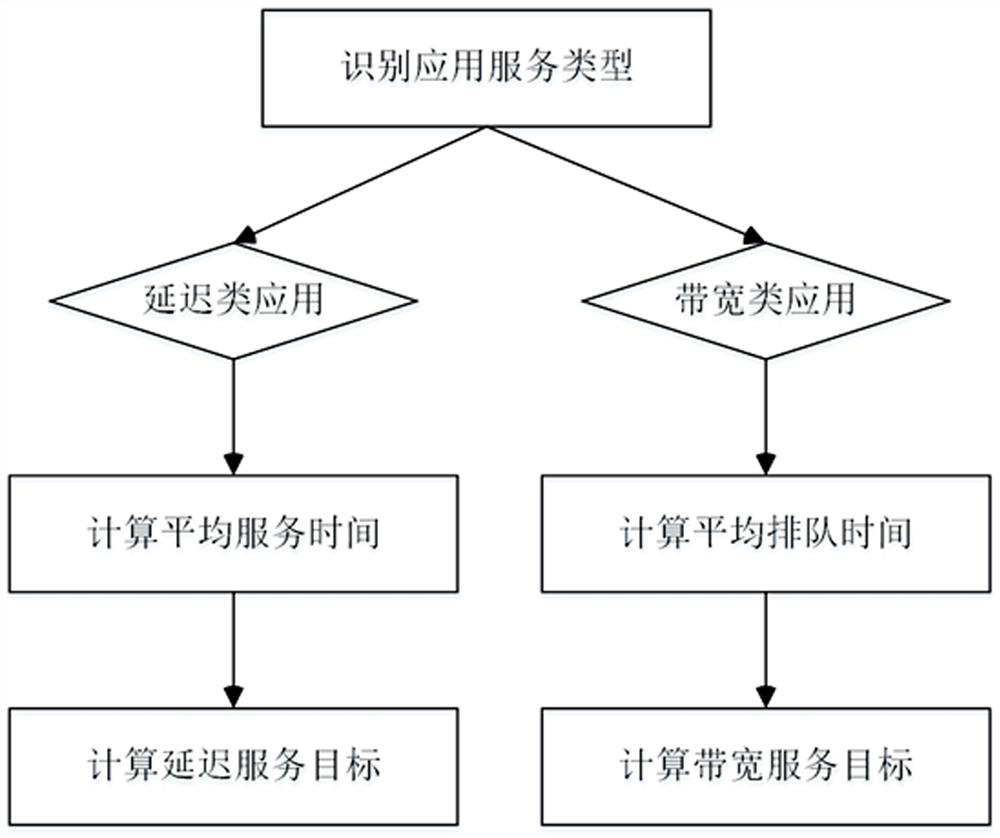

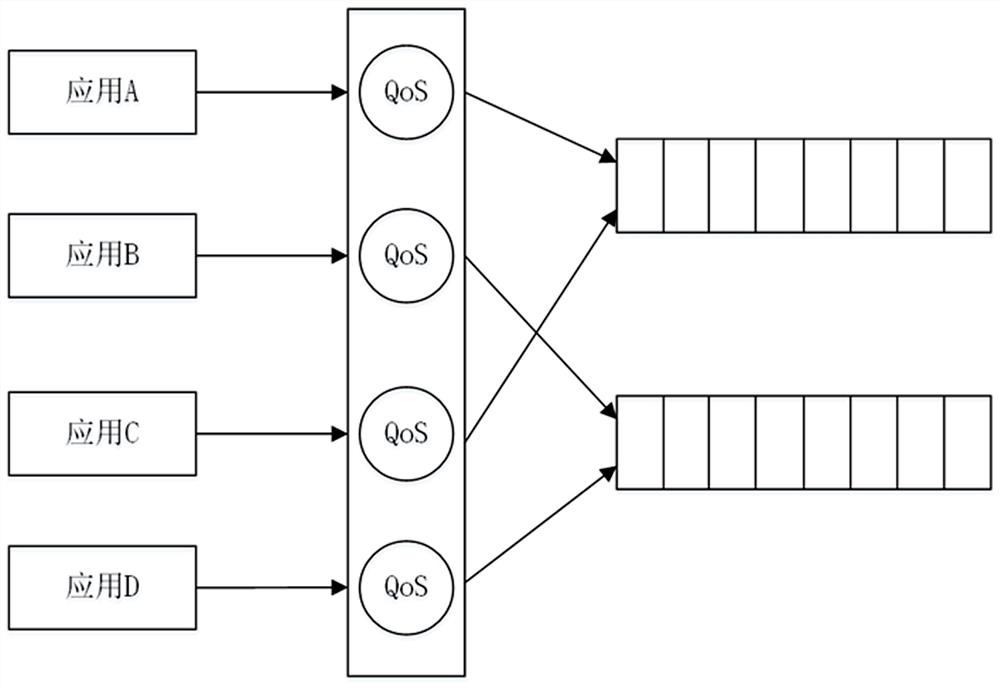

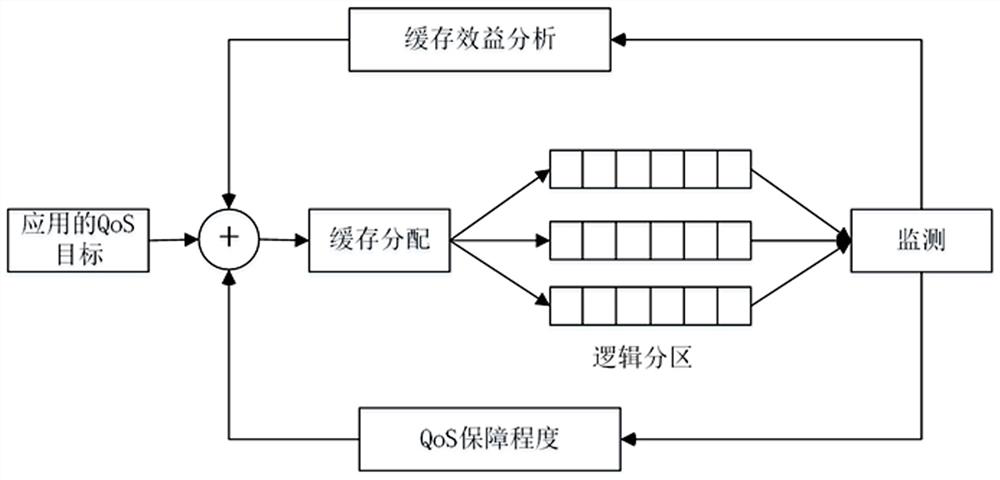

A quality-of-service-aware cache scheduling method based on feedback and fair queues

The present invention provides a quality-of-service-aware cache scheduling method based on feedback and fair queues. A quality-of-service measurement strategy indexes the quality of service of different kinds of applications, and start-time fair queues set different start service times to control the order in which requests from different applications are served. A feedback-based cache partition management module divides all logical partitions into two types, providing partitions and receiving partitions, and adjusts the cache allocation between the two types. A cache block allocation management module balances overall performance against guaranteed quality of service. A cache eviction strategy monitoring module monitors the efficiency of the current cache eviction strategy of each logical partition and adjusts it dynamically according to the load characteristics of the application. A cache compression monitoring module captures applications with poor locality, that is, applications that exhibit a long tail in cache hit rate. The invention can take into account both overall cache efficiency and quality-of-service guarantees between applications.

Owner:ZHEJIANG LAB
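
The request-ordering part of the abstract above relies on start-time fair queuing. The following is a minimal sketch of that general technique, not the patented scheduler: the class name, the per-application weights, and the unit request cost are assumptions for illustration, and the virtual-time update is simplified to the start tag of the request being served.

```python
import heapq
from itertools import count


class StartTimeFairQueue:
    """Serve requests in increasing order of their start tags."""

    def __init__(self, weights: dict):
        self.weights = weights                       # assumed per-application shares
        self.virtual_time = 0.0
        self.last_finish = {app: 0.0 for app in weights}
        self._heap = []
        self._seq = count()                          # tie-breaker for equal start tags

    def submit(self, app: str, request, cost: float = 1.0) -> None:
        # Start tag: no earlier than the current virtual time and no earlier
        # than the finish tag of this application's previous request.
        start = max(self.virtual_time, self.last_finish[app])
        # Finish tag: a larger weight means a smaller increment, so the
        # application's later requests are scheduled sooner.
        self.last_finish[app] = start + cost / self.weights[app]
        heapq.heappush(self._heap, (start, next(self._seq), app, request))

    def dispatch(self):
        start, _, app, request = heapq.heappop(self._heap)
        self.virtual_time = start                    # simplified virtual-time rule
        return app, request


queue = StartTimeFairQueue({"db": 2.0, "batch": 1.0})
for n in range(4):
    queue.submit("db", f"db-{n}")
for n in range(4):
    queue.submit("batch", f"batch-{n}")
first_six = [queue.dispatch()[0] for _ in range(6)]
```

With these hypothetical weights, the application weighted 2.0 receives four of the first six dispatches and the application weighted 1.0 receives two, which is the 2:1 share the weights describe.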

An Off-Chip Buffer Compression System for UHD Frame Rate Up-conversion

Active | CN105472442B | Reduce power consumption | Reduce capacity | Digital video signal modification | Selective content distribution | Processing core | Parallel computing

The invention provides an off-chip buffer compression system for ultra-high-definition (UHD) frame rate up-conversion. The system comprises a compression module and a decompression module. The compression module compresses the video frame data to be compressed, which comes from the video input and from the UHD frame rate up-conversion processing core, into a compressed code stream and writes that stream to the off-chip buffer. The decompression module requests the compressed code stream from the off-chip buffer, receives it, decodes it in real time into decompressed pixel blocks, and outputs those blocks to the UHD frame rate up-conversion processing core and to an output display module. The system can greatly reduce the bandwidth required of the off-chip buffer, improve data throughput, and reduce the power consumption of the UHD frame rate up-conversion system.

Owner:SHANGHAI JIAO TONG UNIV

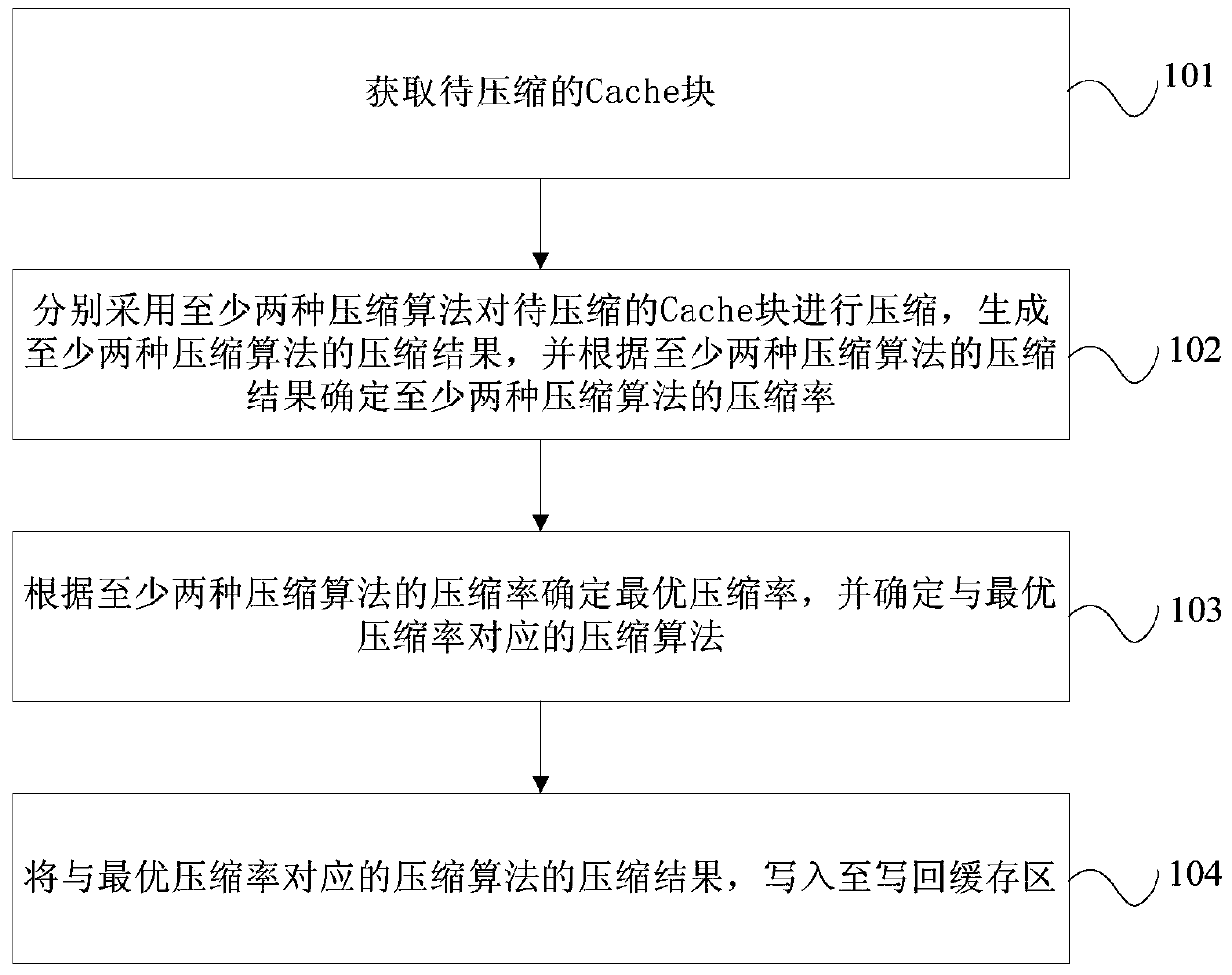

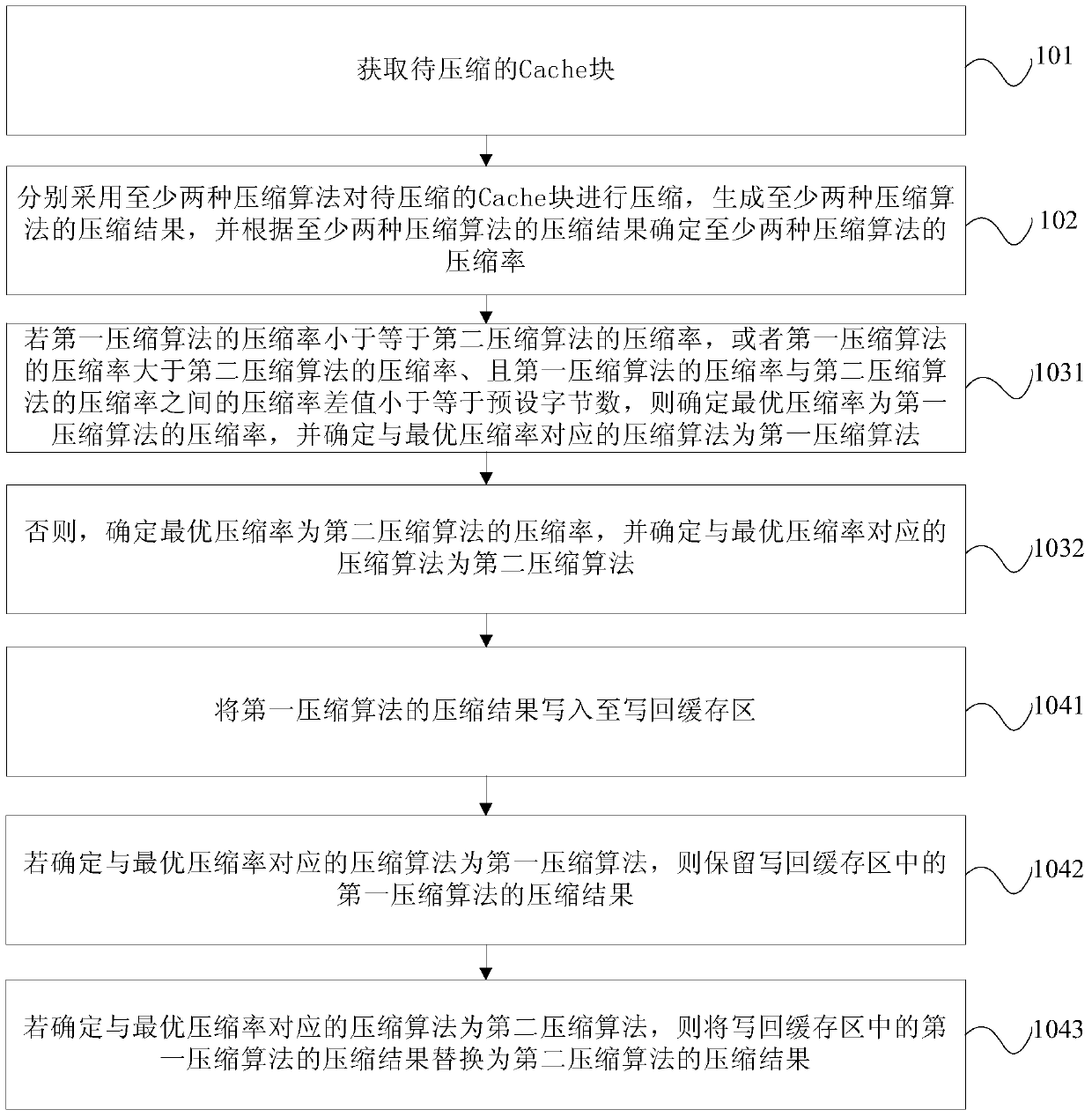

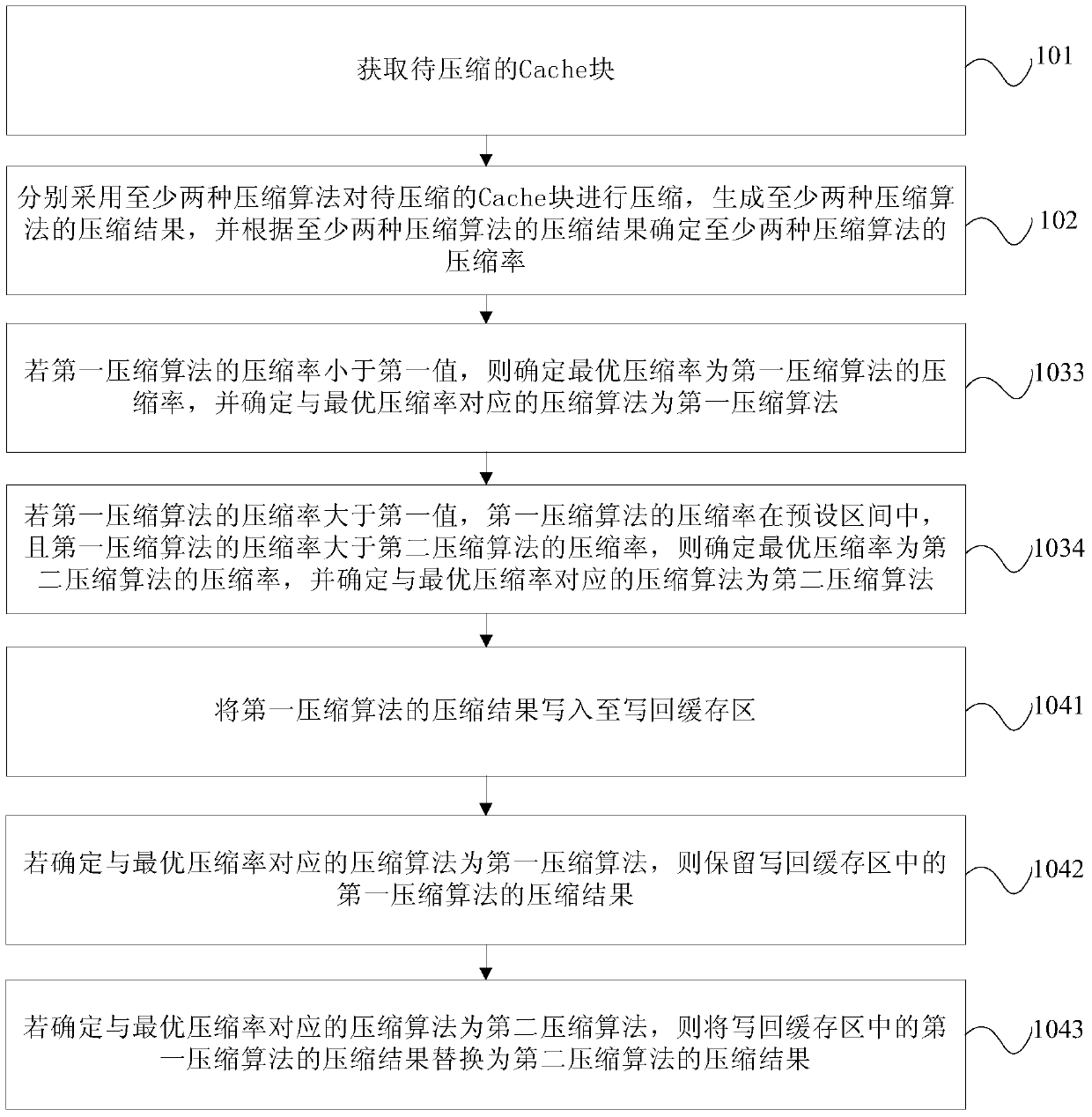

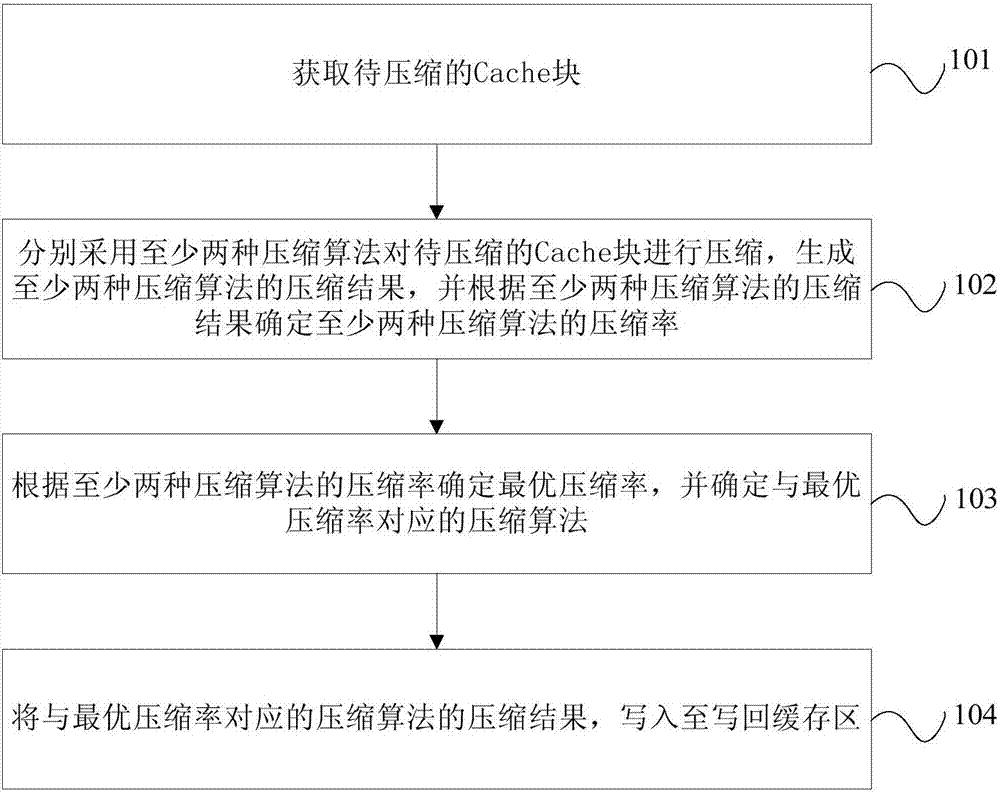

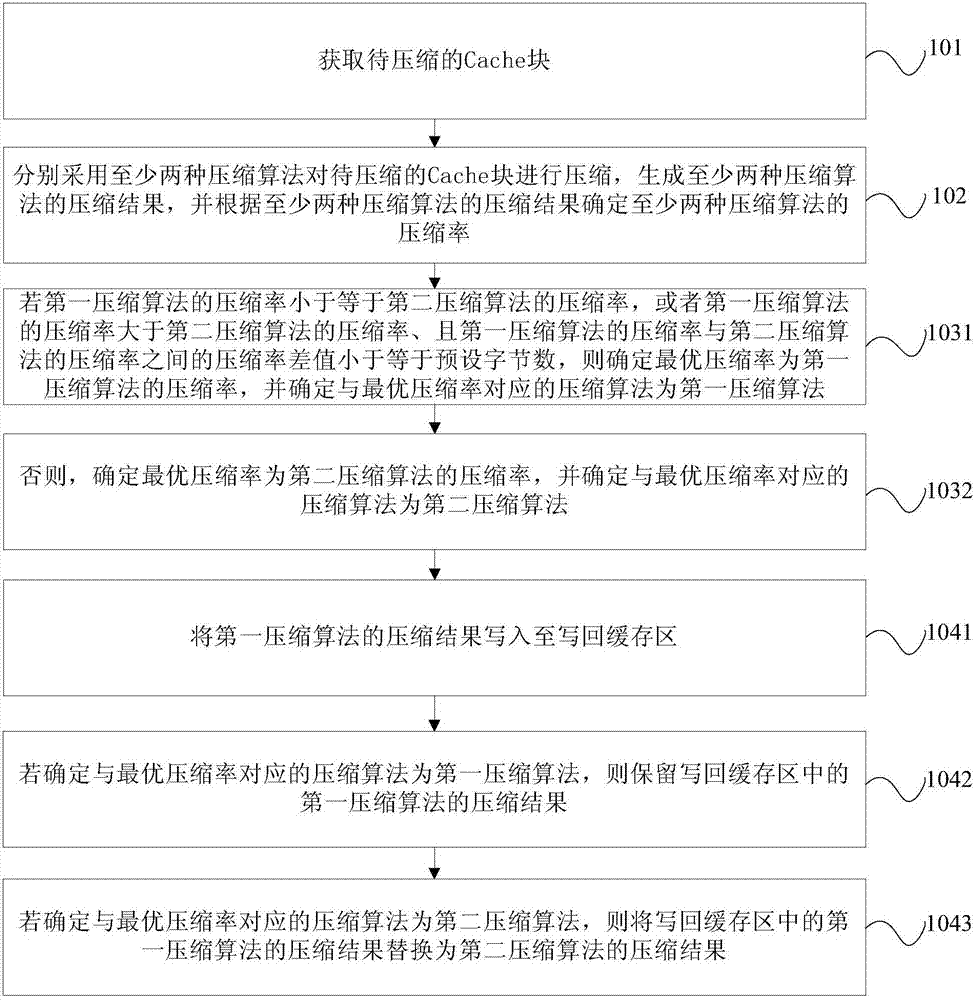

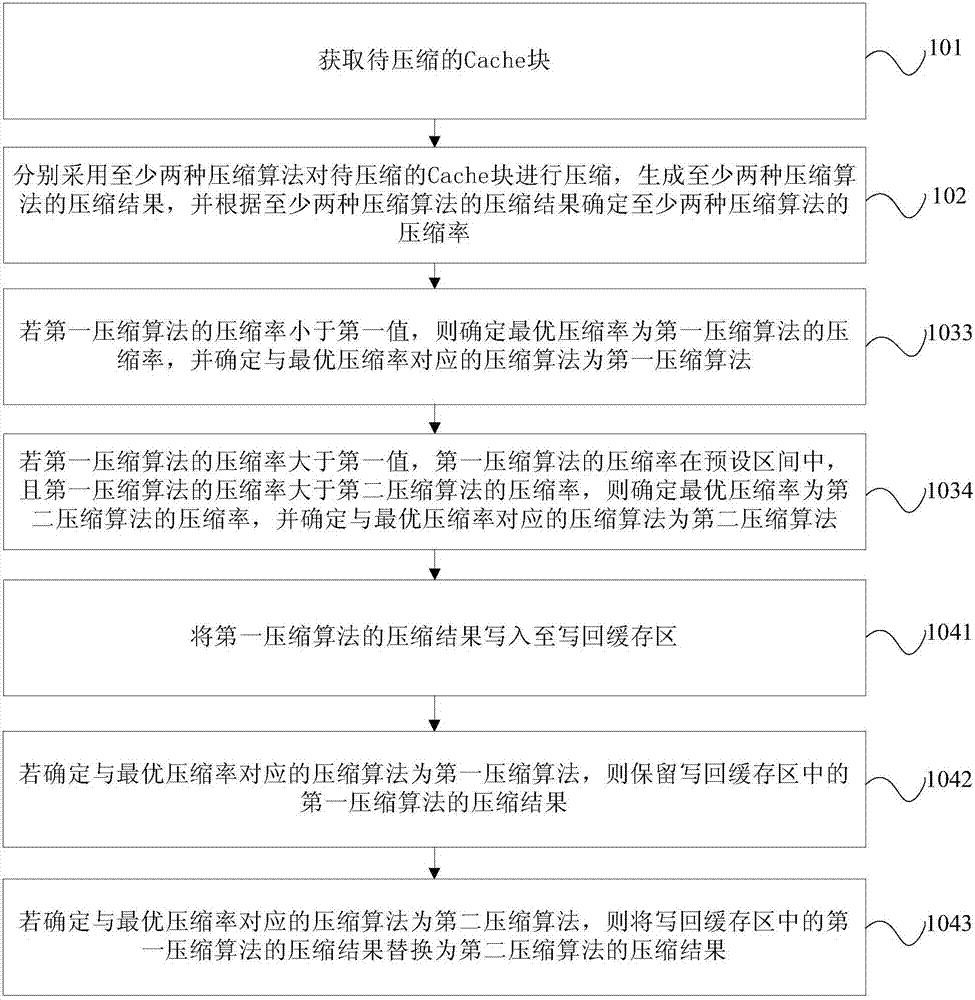

Cache compression method and device

The invention provides a cache compression method and device. The method comprises: acquiring a cache block to be compressed; compressing the block with at least two compression algorithms, generating a compression result for each algorithm and determining each algorithm's compression rate from its result; determining the optimal compression rate and the algorithm that achieved it; and writing that algorithm's compression result into a write-back cache region. By combining several compression algorithms and arbitrating among their compression rates and results, the method balances compression rate against decompression delay, providing a high compression rate with a short decompression delay.

Owner:LOONGSON TECH CORP
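
The selection step described above (compress with several algorithms, keep the best result) can be sketched as follows. This is only an illustration under stated assumptions: the Python standard-library compressors `zlib`, `bz2`, and `lzma` stand in for whatever hardware algorithms the patent uses, the write-back region is modeled as a plain dictionary, and all function names are hypothetical. The sketch ranks candidates by compression ratio alone and does not model decompression delay.

```python
import bz2
import lzma
import zlib

# Stand-in algorithms (an assumption): name -> (compress, decompress).
ALGORITHMS = {
    "zlib": (zlib.compress, zlib.decompress),
    "bz2": (bz2.compress, bz2.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}


def compress_block(block: bytes):
    """Compress one block with every algorithm and pick the best ratio."""
    results = {name: comp(block) for name, (comp, _) in ALGORITHMS.items()}
    # Ratio = original size / compressed size; higher is better.
    ratios = {name: len(block) / len(data) for name, data in results.items()}
    best = max(ratios, key=ratios.get)
    return best, results[best], ratios


def write_back(region: dict, address: int, block: bytes) -> None:
    """Store the winning result, tagged with the algorithm that produced it."""
    best, payload, _ = compress_block(block)
    region[address] = (best, payload)


def read_block(region: dict, address: int) -> bytes:
    """Decompress using the algorithm recorded alongside the payload."""
    name, payload = region[address]
    return ALGORITHMS[name][1](payload)
```

Storing the algorithm tag next to the payload is what lets the read path choose the matching decompressor without guessing.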



© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com