54 results about patented technology on how to "reduce the intra-class distance"

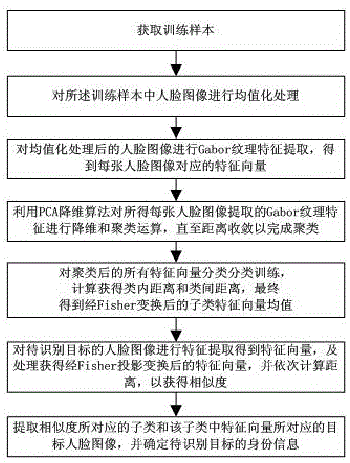

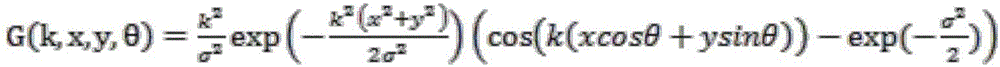

Clustering and reclassifying face recognition method

Inactive | CN106250821A | Reduce the intra-class distance | Reduce information loss | Character and pattern recognition | Dimensionality reduction | Feature extraction

The invention discloses a clustering and reclassifying face recognition method, which comprises the steps of: acquiring training samples; performing equalization on the training samples; extracting Gabor texture features from the face images to obtain a feature vector for each face image; reducing the dimensionality of the Gabor texture features of each face image; clustering the reduced feature vectors until the distances converge; dividing the clustered feature vectors into a plurality of subclasses, computing the mean vector of each subclass, and computing the within-class and between-class distances; applying the same feature extraction and preprocessing to the face image of the target to be recognized, obtaining its feature vector after projection transformation, and computing in turn the distance between this vector and the feature vectors in each subclass to obtain the similarity; and determining the identity of the target to be recognized. The method shortens the within-class distance, which reduces errors introduced during acquisition and improves face recognition accuracy.

Owner:NANJING UNIV OF POSTS & TELECOMM
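
The within-class and between-class distances in the clustering and reclassifying method above, and the final distance-based identity decision, can be sketched as follows. This is a minimal illustration, assuming Euclidean distance, per-subclass mean vectors, and a nearest-member matching rule; the abstract does not fix these choices, and the Gabor extraction, dimensionality reduction, and clustering steps are omitted. All function names are hypothetical.

```python
import math


def mean_vector(vectors):
    """Component-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def within_class_distance(subclasses):
    """Average distance from every vector to the mean of its own subclass."""
    total, count = 0.0, 0
    for vectors in subclasses.values():
        mu = mean_vector(vectors)
        total += sum(euclidean(v, mu) for v in vectors)
        count += len(vectors)
    return total / count


def between_class_distance(subclasses):
    """Average distance between the means of every pair of subclasses (needs >= 2)."""
    means = [mean_vector(v) for v in subclasses.values()]
    pairs = [(i, j) for i in range(len(means)) for j in range(i + 1, len(means))]
    return sum(euclidean(means[i], means[j]) for i, j in pairs) / len(pairs)


def identify(query, subclasses):
    """Label of the subclass whose closest member is nearest to the query vector."""
    return min(subclasses,
               key=lambda label: min(euclidean(query, v) for v in subclasses[label]))
```

A small within-class distance relative to the between-class distance indicates compact, well-separated subclasses, which is the property the method aims for before matching.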

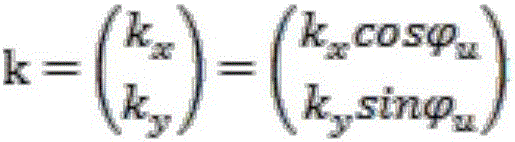

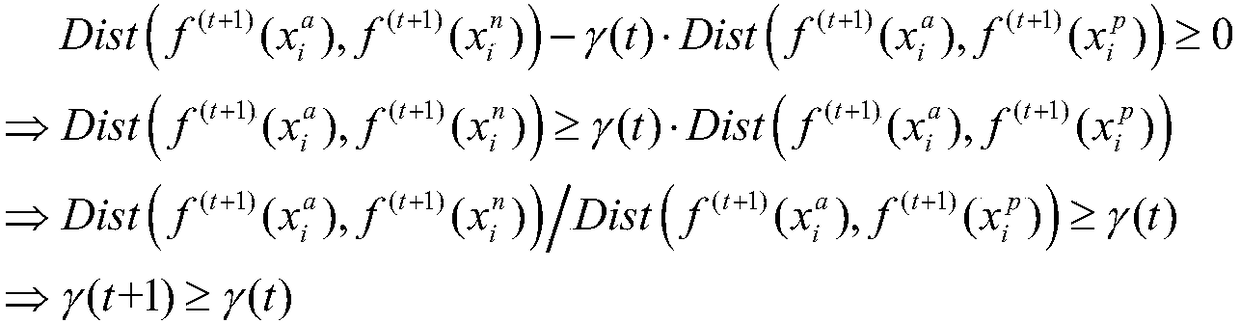

Trace ratio criterion-based triple loss function design method

Active | CN108399428A | To achieve the purpose of learning | Increase the distance between classes | Character and pattern recognition | Neural architectures | Data set | Selection criterion

The invention discloses a triplet loss function design method based on the trace ratio criterion. Building on a study of image feature extraction, the triplet loss function, and the trace ratio criterion, the method uses the trace ratio criterion both as the triplet selection criterion and as the loss calculation method. It mainly comprises the following steps: A, triplet construction: building triplet samples from the samples in a data set; B, triplet selection: screening the constructed triplets with an effective selection mechanism that increases training speed while avoiding loss of precision; C, loss function design: for the triplets obtained in step B, computing the distances between the anchor sample of each triplet and its positive and negative samples, and designing a loss function that measures the error between the model prediction and the ground truth; and D, deep network training: back-propagating the model error through a deep convolutional neural network, updating the network parameters, and training iteratively until the model converges.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
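
The trace ratio criterion itself is not spelled out in the abstract, so the sketch below shows only the standard margin-based triplet loss (as popularized by FaceNet) together with a semi-hard negative selection rule as a stand-in for step B. It is not the patented trace-ratio formulation; the margin value and the function names are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)


def select_semi_hard(anchor, positive, negatives, margin=0.2):
    """Keep negatives that are farther than the positive but still inside the margin.

    Such triplets still yield a non-zero loss without being the hardest
    (often noisy) negatives; this is one common selection heuristic.
    """
    d_ap = squared_distance(anchor, positive)
    return [n for n in negatives if d_ap < squared_distance(anchor, n) < d_ap + margin]
```

Triplets whose negative already lies beyond the margin contribute zero loss, which is why a selection step like B matters for training speed.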

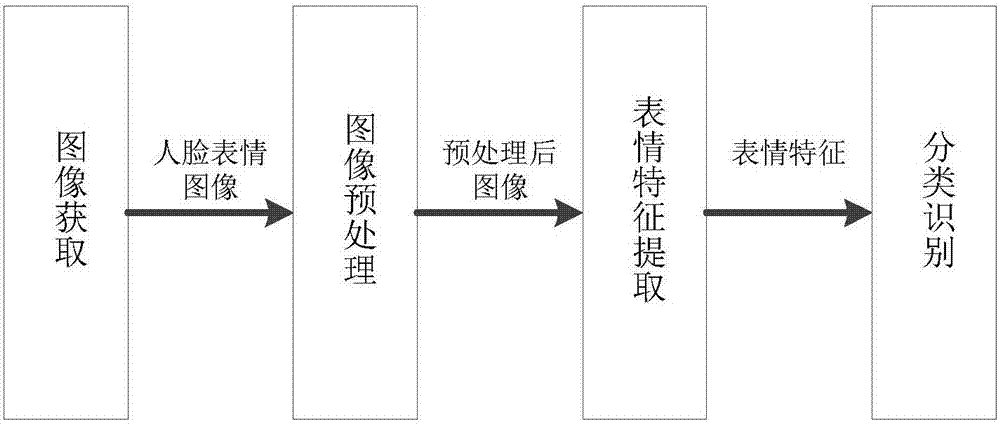

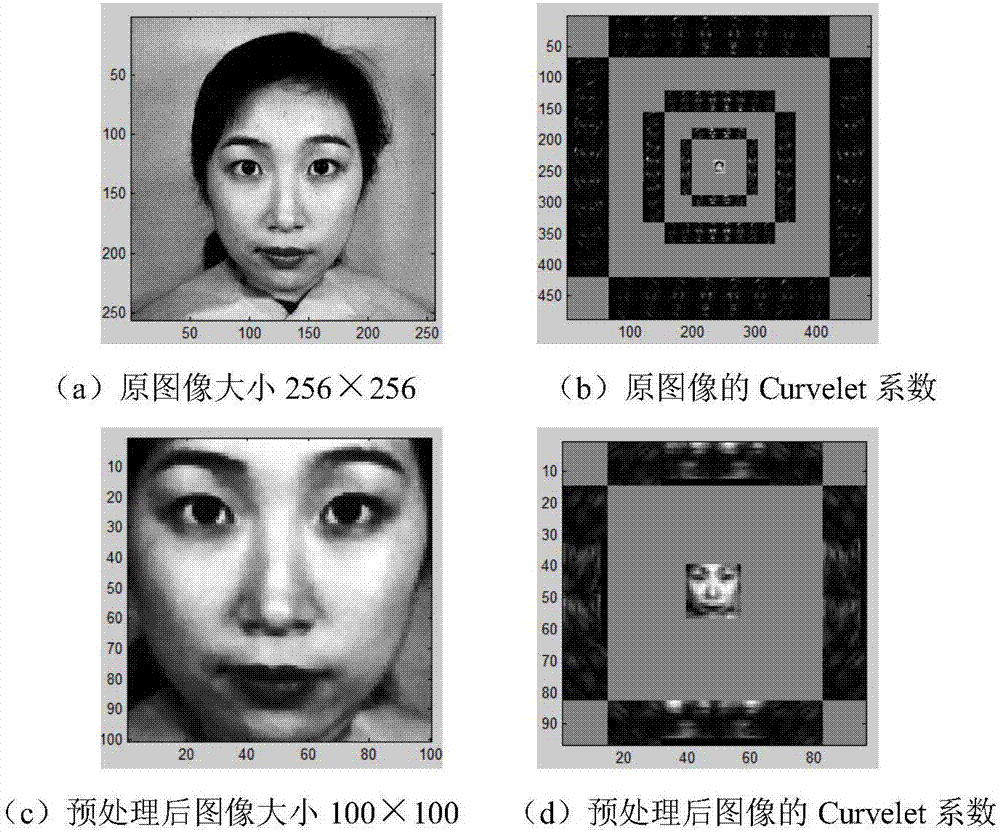

Human face expression recognition method based on Curvelet transform and sparse learning

Inactive | CN106980848A | Improve discrimination ability | Good refactoring ability | Acquiring/recognising facial features | Multiscale geometric analysis | Sparse learning

The invention discloses a facial expression recognition method based on the Curvelet transform and sparse learning. The method comprises the following steps: 1, inputting a facial expression image, preprocessing it, and cropping the eye region and the mouth region from the preprocessed image; 2, extracting facial expression features with the Curvelet transform: applying the Curvelet transform and feature extraction to the preprocessed expression image, the eye region, and the mouth region, and serially concatenating the three features to obtain fusion features; 3, performing classification based on sparse learning, using either sparse representation-based classification (SRC) or Fisher discrimination dictionary learning (FDDL) to classify the whole-face Curvelet features and the fusion features respectively. The Curvelet transform is a multi-scale geometric analysis tool that can extract multi-scale, multi-directional features. In addition, the local-region fusion gives the fused features better image representation and discrimination capability.

Owner:HANGZHOU DIANZI UNIV

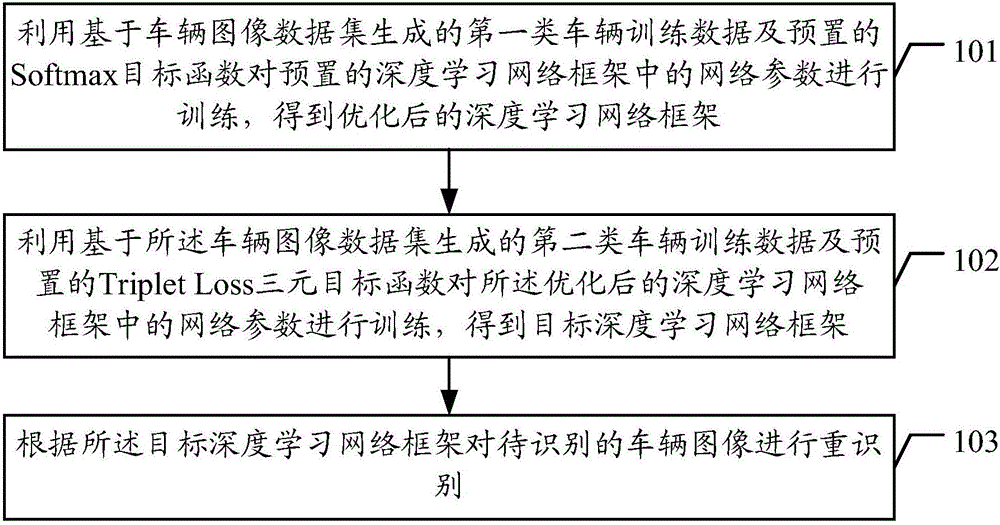

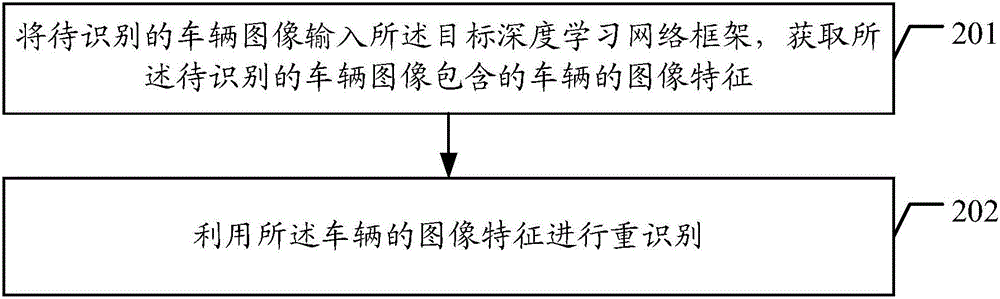

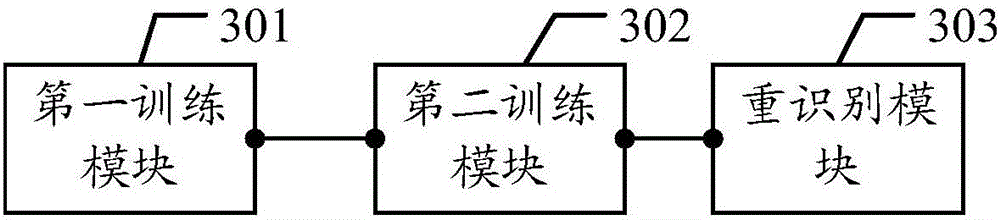

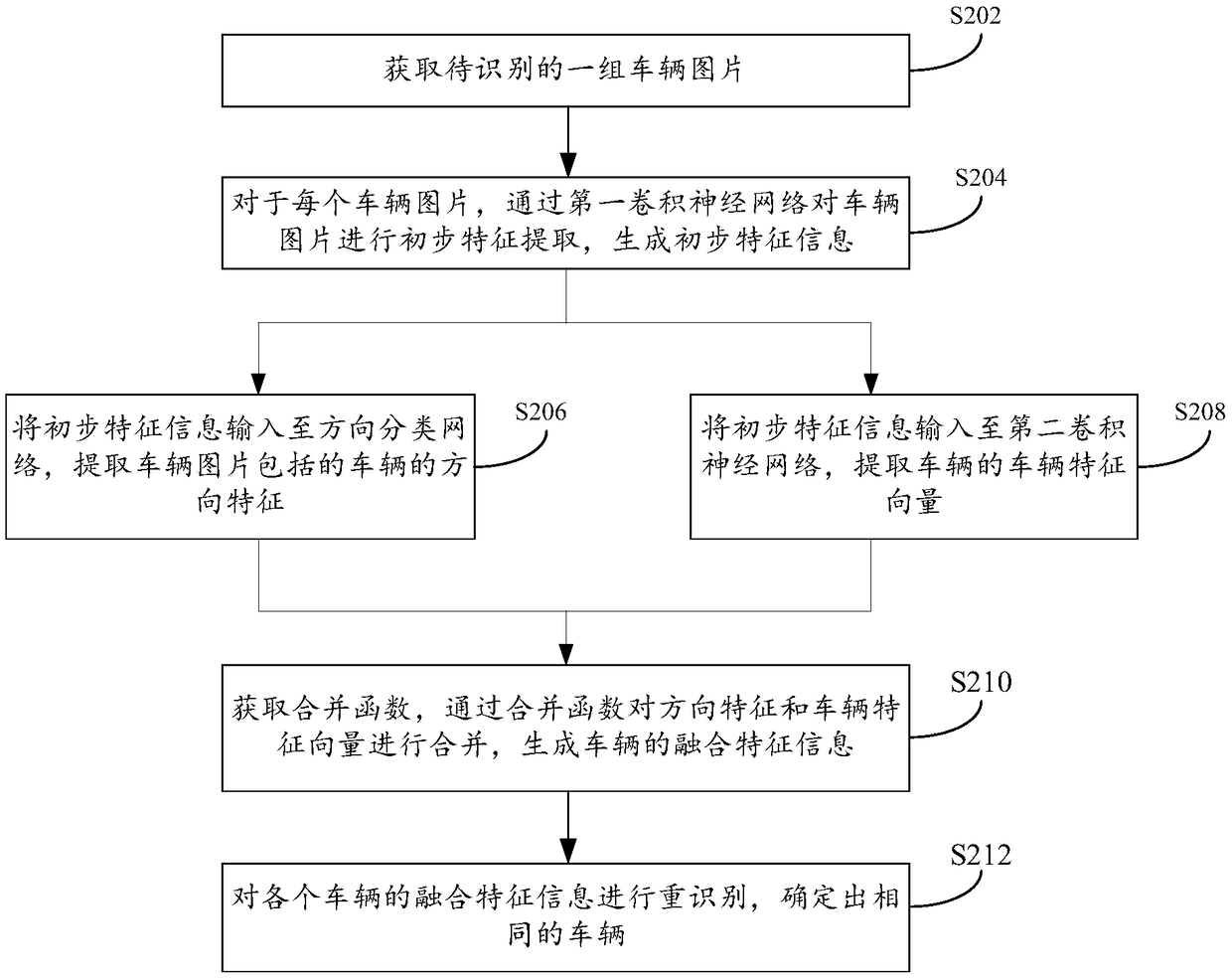

Method and device of vehicle reidentification based on multiple objective function deep learning

Inactive | CN106709528A | Easy to re-identify | Meet the needs of re-identification | Character and pattern recognition | Data set | Simulation

The present invention discloses a method and device for vehicle re-identification based on deep learning with multiple objective functions. The method comprises: training the preset network parameters of a deep learning network framework with first-class vehicle training data, generated from a vehicle image data set, and a preset Softmax objective function, to obtain an optimal deep learning network framework; training the optimized network parameters of that framework with second-class vehicle training data, generated from the vehicle image data, and a preset triplet loss objective function, to obtain a target deep learning network framework; and re-identifying the vehicle image to be identified with the target deep learning network framework. The target framework yields highly robust and reliable image features, which facilitates vehicle re-identification and meets the re-identification requirements of surveillance video.

Owner:SHENZHEN UNIV

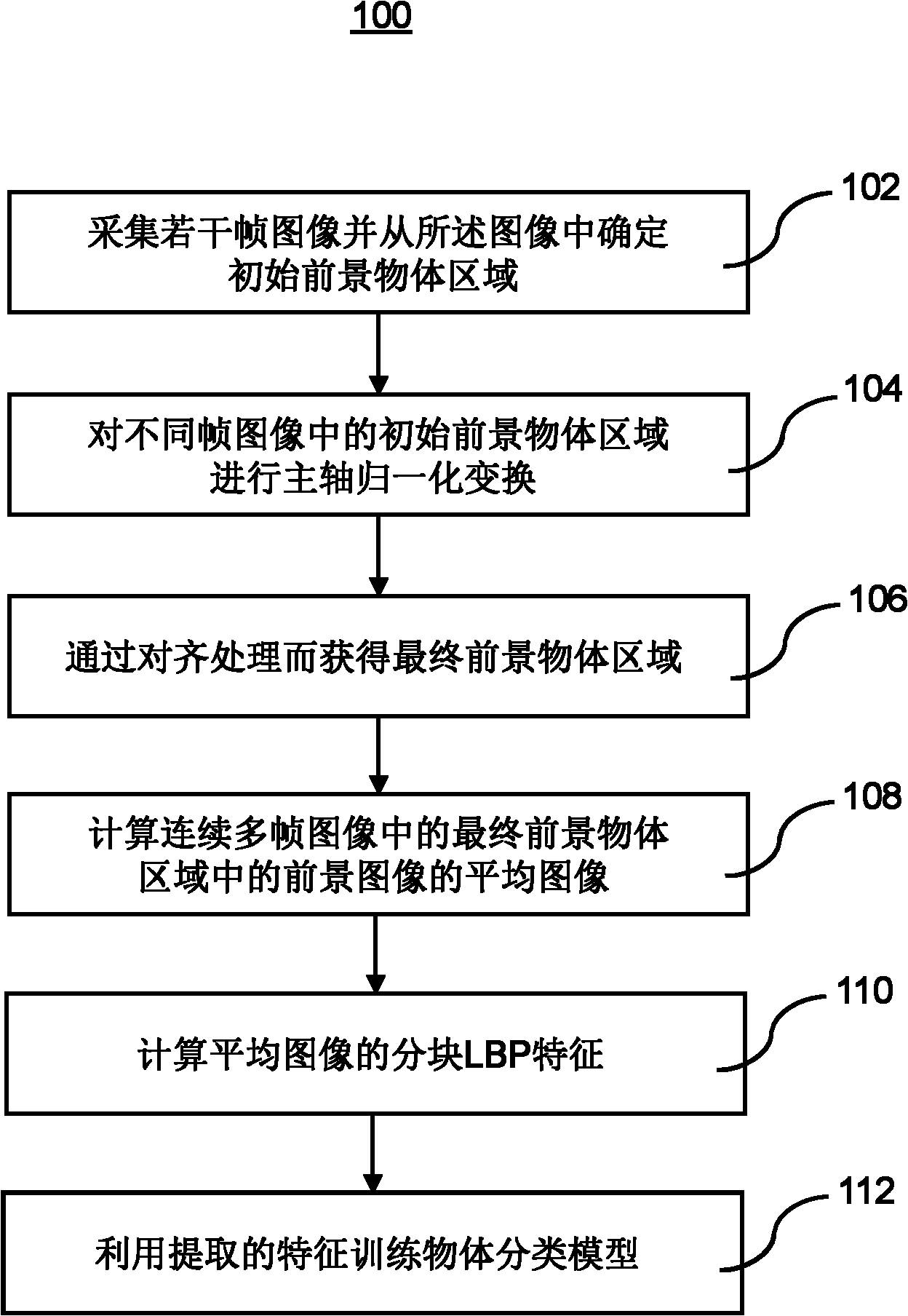

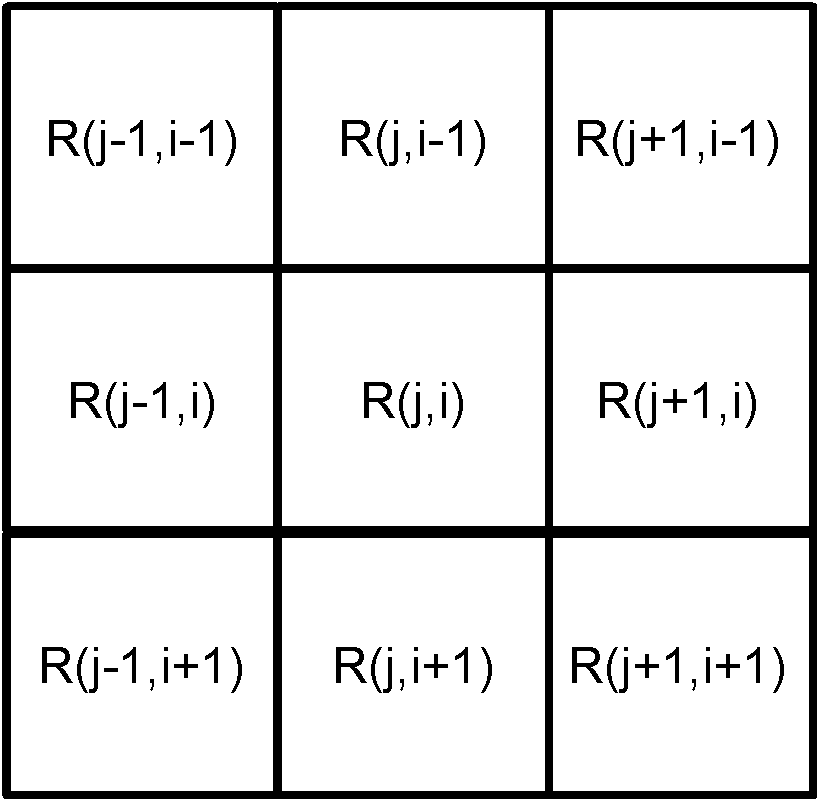

Method for training object classification model and identification method using object classification model

Active | CN102004925A | Reduce the intra-class distance | Improve stability | Character and pattern recognition | Pattern recognition | Visual angle

Owner:WUXI ZGMICRO ELECTRONICS CO LTD

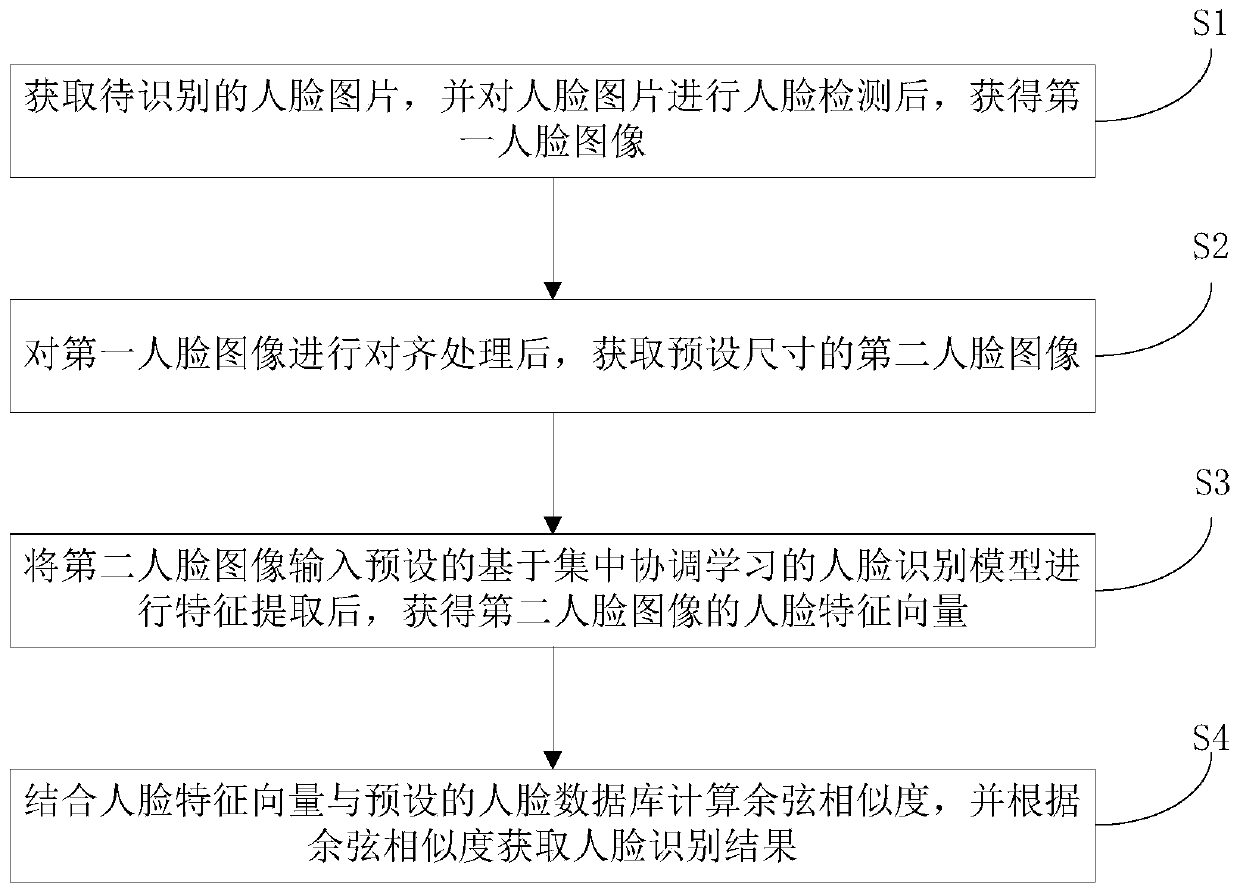

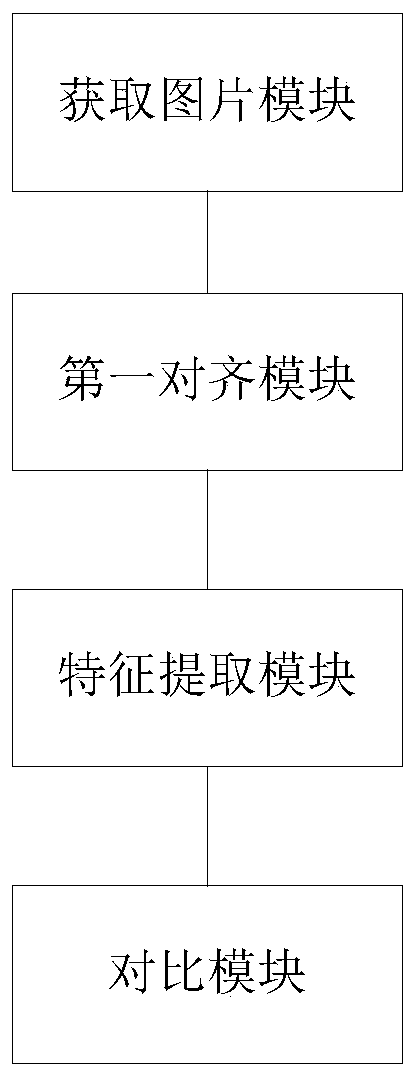

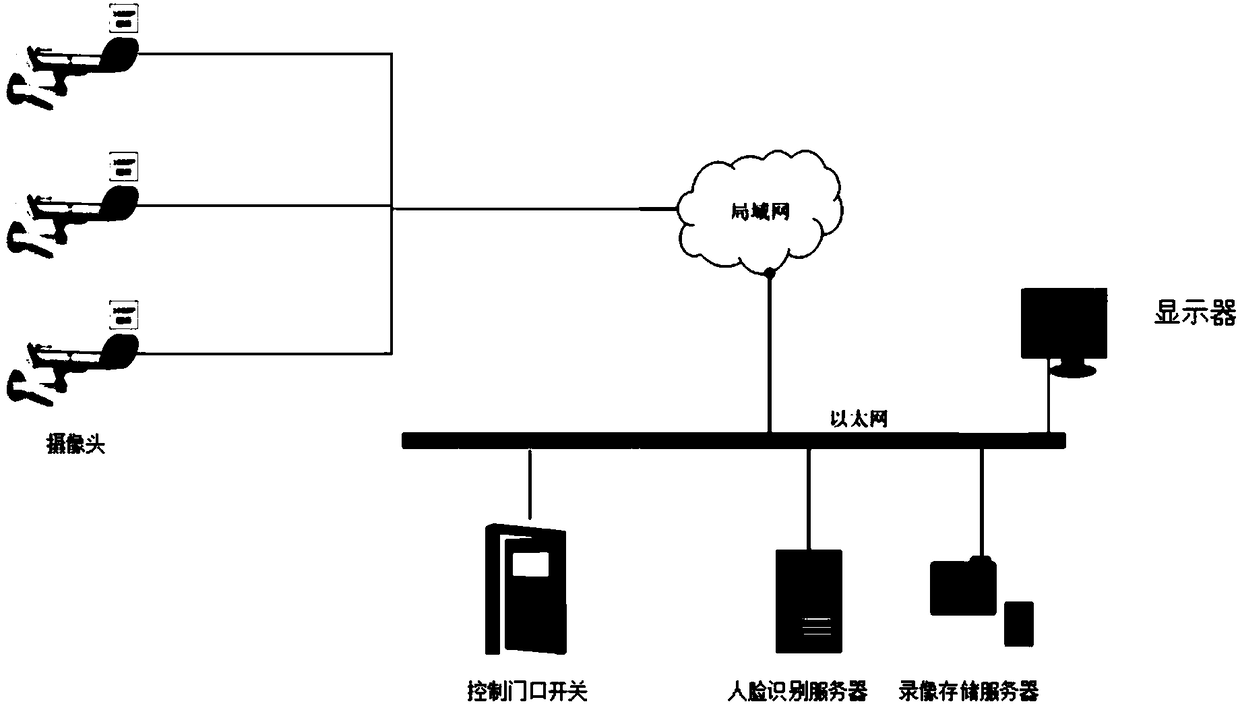

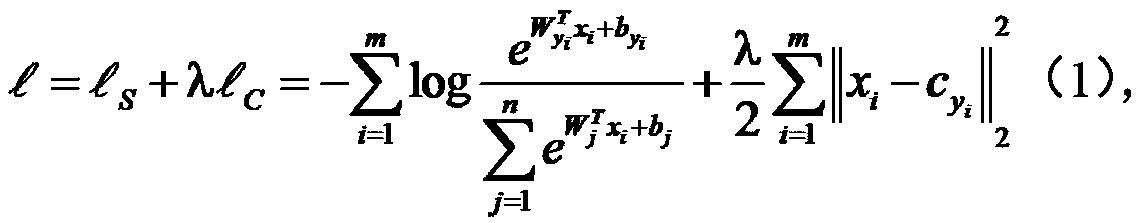

Face recognition method, system and device based on centralized coordination learning

Pending | CN109784219A | Improve classification efficiency | Improve recognition accuracy | Character and pattern recognition | Neural architectures | Face detection | Feature vector

The invention discloses a face recognition method, system and device based on centralized coordination learning. The method comprises the following steps: obtaining a face image to be recognized, performing face detection on it, and obtaining a first face image; aligning the first face image to obtain a second face image of a preset size; inputting the second face image into a preset face recognition model based on centralized coordination learning for feature extraction, and obtaining its face feature vector; and computing the cosine similarity between this face feature vector and the entries of a preset face database, and obtaining the face recognition result from the cosine similarity. Because the model is trained with centralized coordination learning, the features are pulled toward the origin and distributed across all quadrants, so the inter-class distance is larger; this improves face classification efficiency and recognition accuracy, and the method can be widely applied in the field of face recognition.

Owner:GUANGZHOU HISON COMP TECH
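
The final matching step (cosine similarity between the extracted face feature vector and a face database) can be sketched as a 1:N search. The rejection threshold and the dictionary-based database are assumptions for illustration; the abstract only states that the result is obtained from the cosine similarity, and the centralized coordination learning model itself is not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recognize(query, database, threshold=0.5):
    """Return (identity, score) of the most similar enrolled face.

    If even the best score is below the (hypothetical) threshold, the
    face is treated as unknown and the identity is None.
    """
    best_id, best_score = None, -1.0
    for identity, feature in database.items():
        score = cosine_similarity(query, feature)
        if score > best_score:
            best_id, best_score = identity, score
    if best_score < threshold:
        return None, best_score
    return best_id, best_score
```

Because cosine similarity depends only on direction, features spread across all quadrants around the origin give the widest angular separation between identities.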

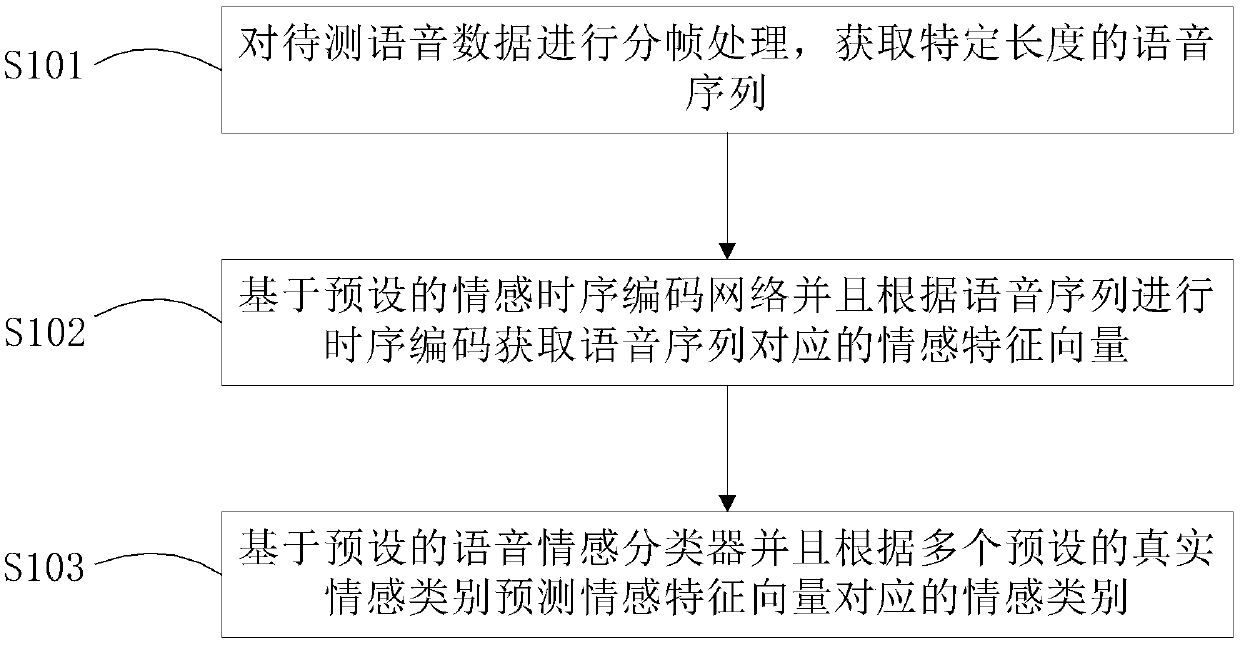

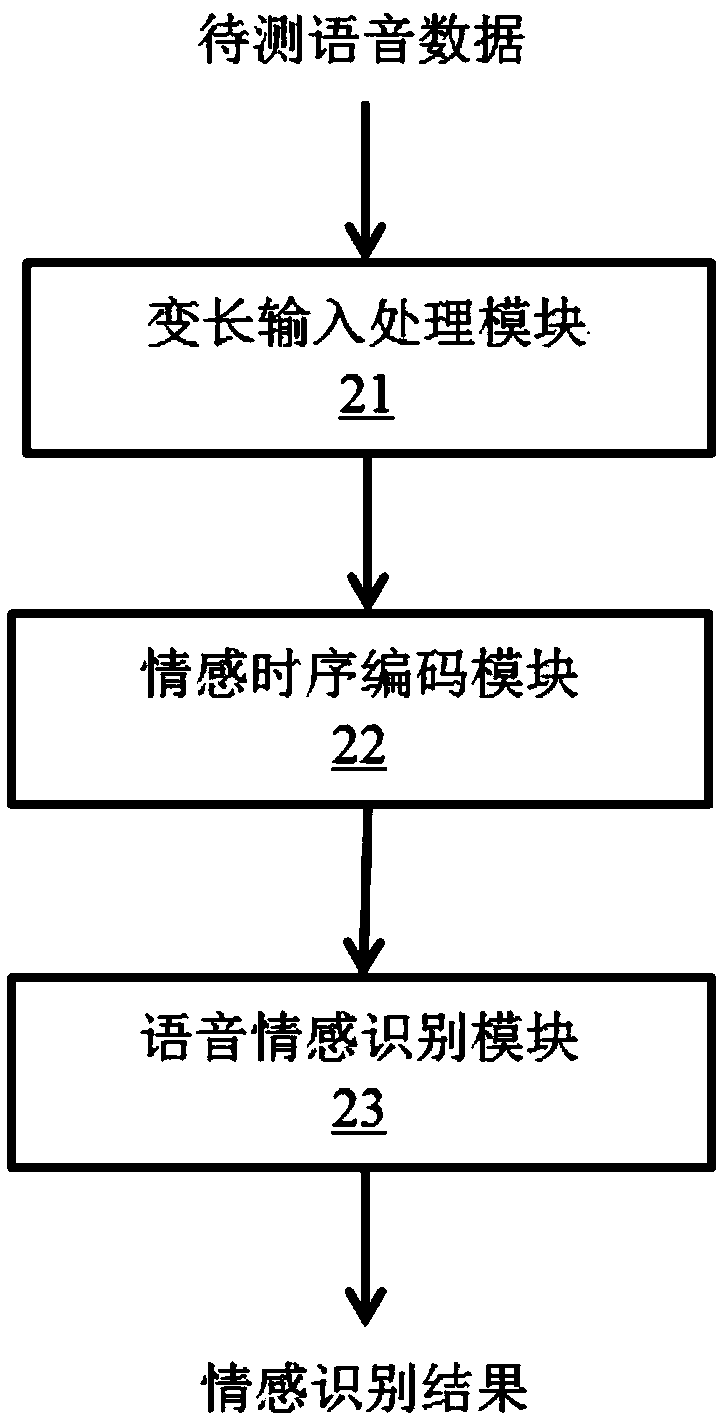

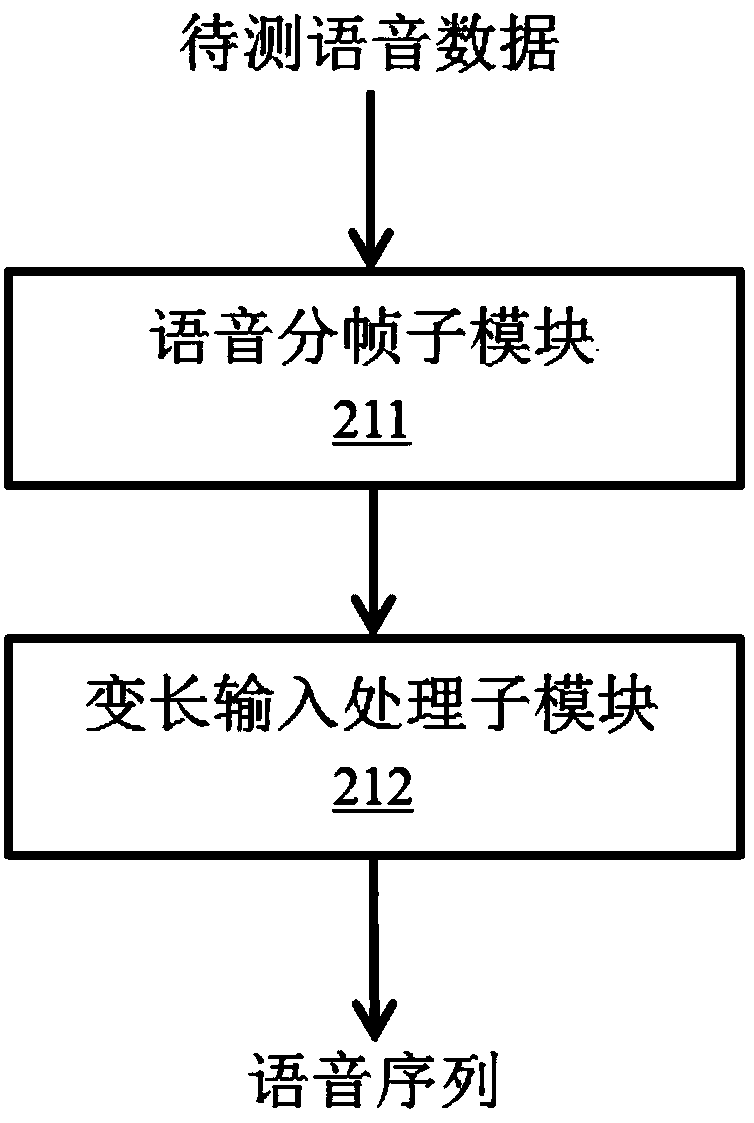

Speech emotion recognition method and system based on ternary loss

The invention belongs to the technical field of emotion recognition and specifically relates to a speech emotion recognition method and system based on triplet (ternary) loss, aiming to accurately recognize easily confused emotion categories. To this end, the method comprises the following steps: framing the speech data to be recognized to obtain a speech sequence of a specific length; temporally encoding the speech sequence with a preset emotional temporal coding network to obtain the corresponding emotional feature vectors; and predicting the emotion category of those feature vectors with a preset speech emotion classifier over multiple preset ground-truth emotion categories. The method markedly improves recognition of easily confused speech emotion categories and can be carried out by the speech emotion recognition system.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

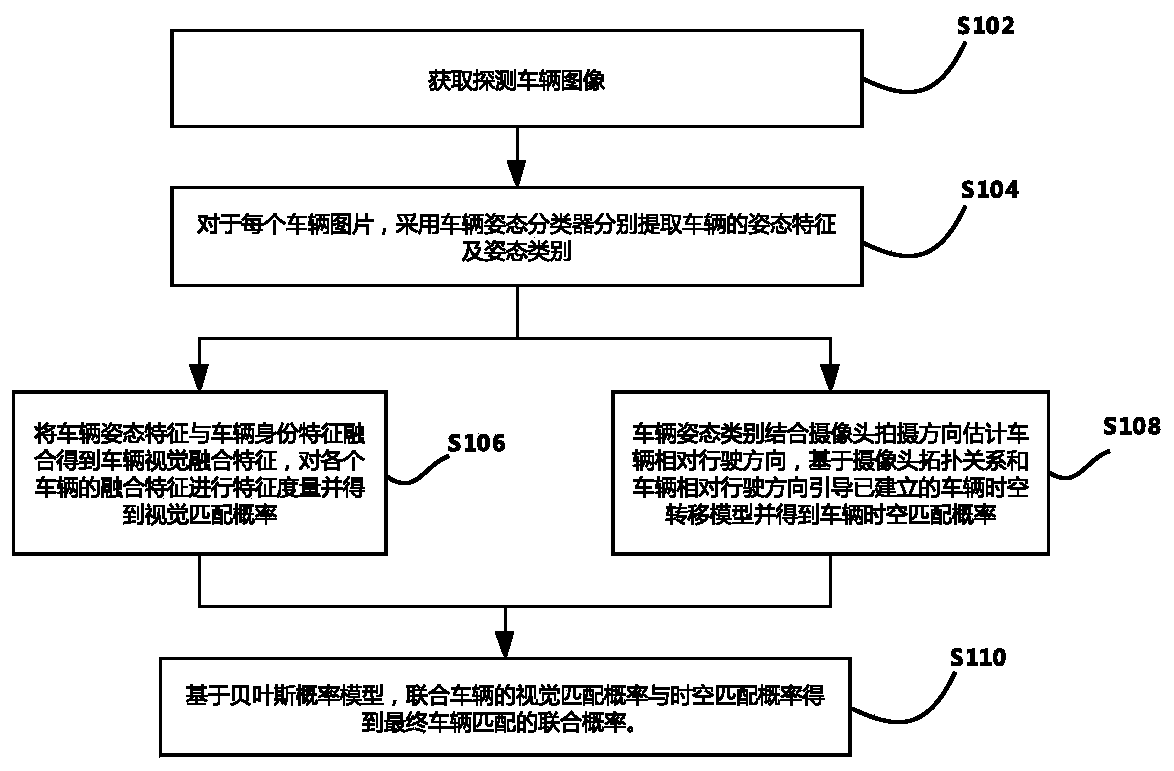

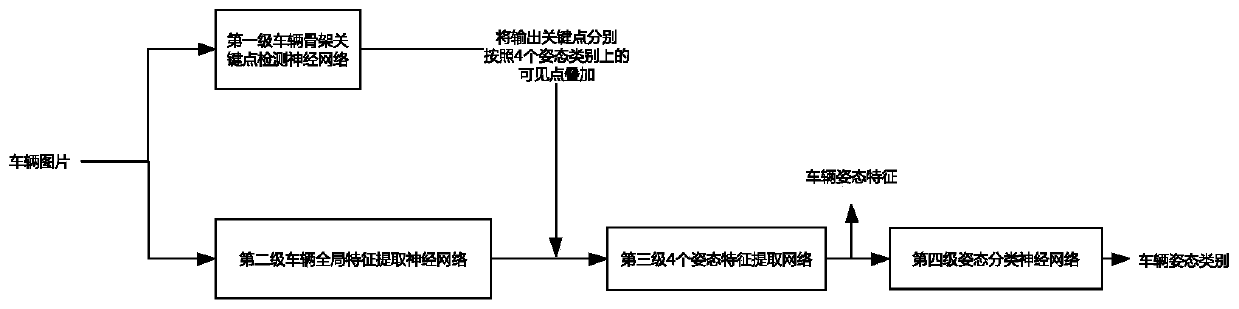

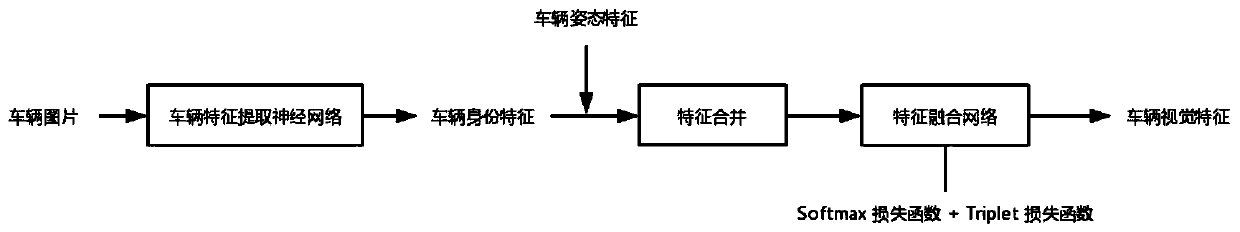

Vehicle re-recognition method based on space-time constraint model optimization

Pending | CN110795580A | Reduce the intra-class distance | Expand the distance between different ID classes | Internal combustion piston engines | Character and pattern recognition | Visual matching | Algorithm

The invention discloses a vehicle re-recognition method based on space-time constraint model optimization. The method comprises the following steps: 1) obtaining a vehicle image to be queried; 2) for a given vehicle query image and a plurality of candidate pictures, extracting vehicle attitude features through a vehicle attitude classifier and outputting a vehicle attitude category; 3) fusing the vehicle attitude feature and the fine-grained identity feature of the vehicle to obtain a fusion feature of the vehicle based on visual information, and obtaining a visual matching probability; 4) estimating the relative driving direction of the vehicle, and establishing a vehicle space-time transfer model; 5) obtaining a vehicle space-time matching probability; 6) based on a Bayesian probability model, combining the visual matching probability and the space-time matching probability of the vehicle to obtain a final vehicle matching joint probability; and 7) arranging the joint probabilities of the queried vehicle and all candidate vehicles in descending order to obtain a vehicle re-recognition ranking table. The method greatly reduces the false recognition rate of vehicles and improves the accuracy of the final recognition result.

Owner:WUHAN UNIV OF TECH
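
Steps 6 and 7 can be sketched as below, assuming the visual and space-time matching probabilities are conditionally independent so that the joint probability is simply their product. The abstract does not give the exact Bayesian model, and the candidate IDs and probabilities are placeholders.

```python
def joint_probability(p_visual, p_spatiotemporal):
    """Combine the two cues, assuming they are conditionally independent."""
    return p_visual * p_spatiotemporal


def rank_candidates(candidates):
    """Sort candidate IDs by joint matching probability, highest first.

    candidates: dict mapping candidate ID -> (visual probability,
    space-time probability).
    """
    scored = {cid: joint_probability(pv, pst) for cid, (pv, pst) in candidates.items()}
    return sorted(scored, key=scored.get, reverse=True)
```

For example, a candidate that looks very similar (visual 0.9) but is implausible in space and time (0.1) drops below a moderately similar candidate that fits the transfer model.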

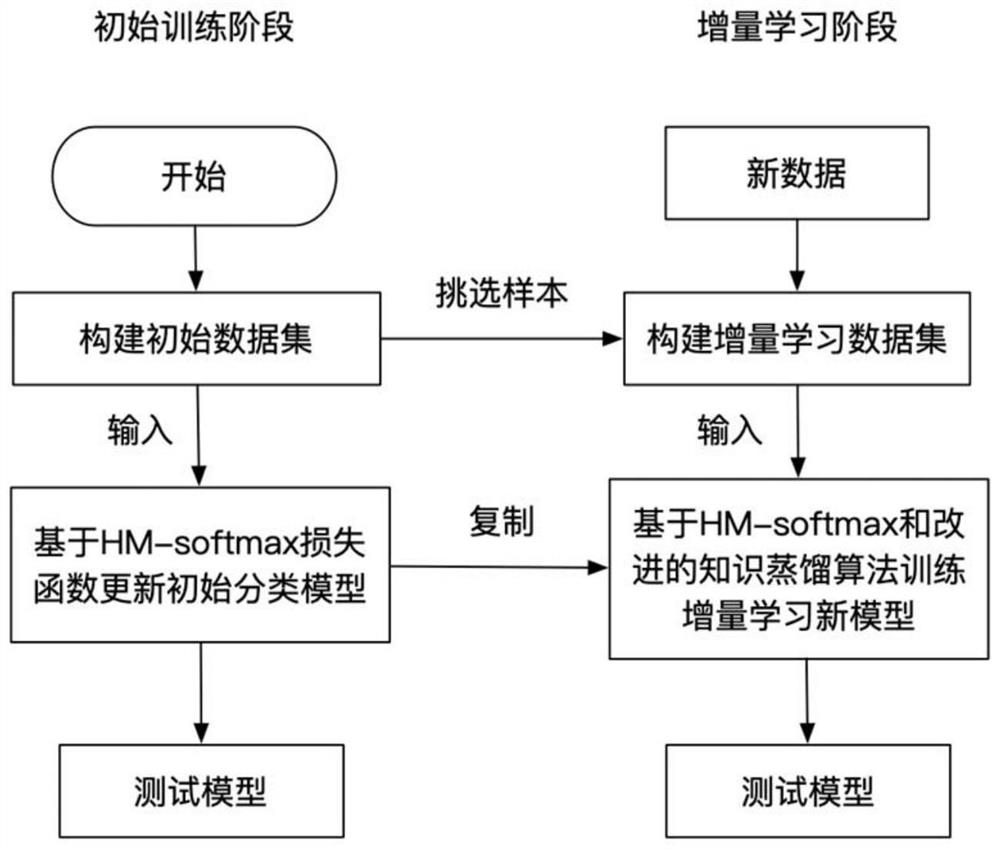

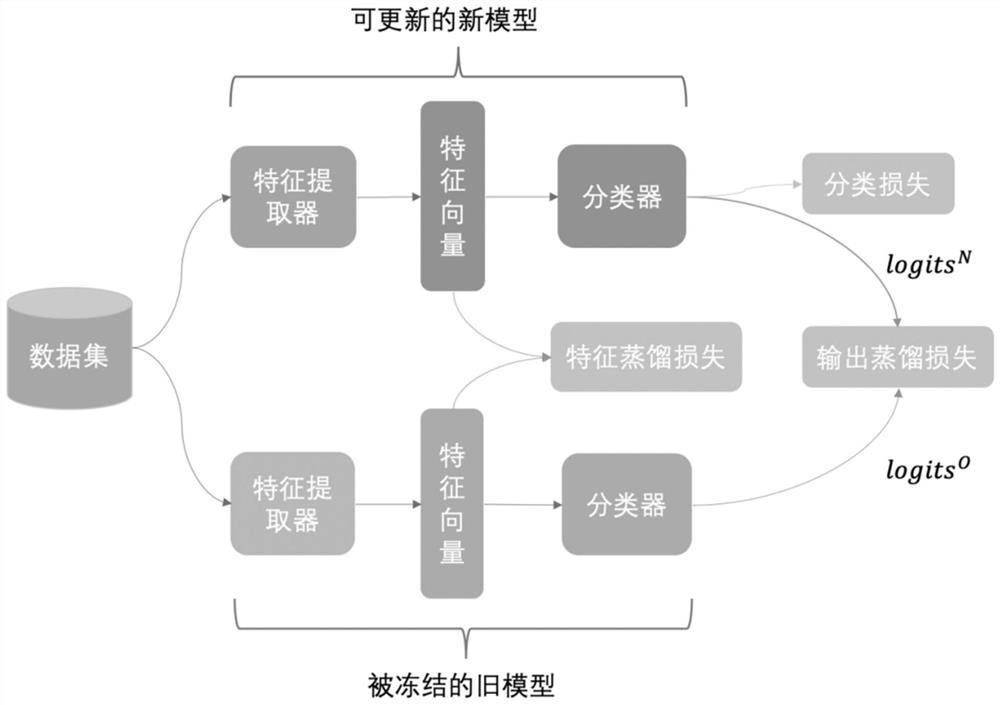

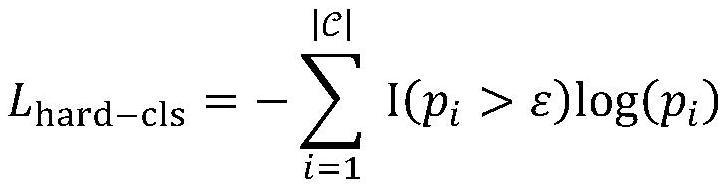

Image big data-oriented class increment classification method, system and device and medium

Active | CN112990280A | Increase the distance between classes | Easy to identify | Character and pattern recognition | Neural architectures | Data set | Learning data

The invention discloses an image big data-oriented class increment classification method, system and device and a medium. The method comprises an initialization training stage and an increment learning stage. The initialization training stage comprises the following steps: constructing an initial data set of an image; and training an initial classification model according to the initial data set. The incremental learning stage comprises the following steps: constructing an incremental learning data set according to the initial data set and new data of the image; obtaining a new incremental learning model according to the initial classification model, and training the new incremental learning model according to an incremental learning data set and a distillation algorithm to obtain a model capable of identifying new and old categories, wherein the distillation algorithm enables the inter-class distance of the model to be enlarged and the intra-class distance to be reduced. The incremental learning model is updated through the distillation algorithm, the inter-class distance of the model is enlarged, the intra-class distance of the model is reduced, the new and old data recognition performance of the model can be improved under limited storage space and computing resources, and the method, system and device can be widely applied to the field of big data application.

Owner:SOUTH CHINA UNIV OF TECH
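
The abstract does not specify its distillation algorithm. As a point of reference only, the sketch below shows the common logit-distillation loss (temperature-softened KL divergence between the old model's and the new model's outputs, scaled by T squared) added to cross-entropy, a standard way to preserve old-class knowledge during class-incremental training. The temperature, the weighting, and the function names are assumptions, and this generic loss does not by itself encode the inter-/intra-class distance behavior the patent describes.

```python
import math


def softmax_t(logits, temperature):
    """Softmax of logits divided by a temperature (numerically stabilized)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by T**2."""
    p_t = softmax_t(teacher_logits, temperature)
    p_s = softmax_t(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * kl


def incremental_loss(student_logits, teacher_logits, label, n_old, lam=1.0, temperature=2.0):
    """Cross-entropy over all classes plus distillation on the old-class logits only.

    teacher_logits come from the frozen old model, which knows only the
    first n_old classes.
    """
    m = max(student_logits)
    ce = m + math.log(sum(math.exp(z - m) for z in student_logits)) - student_logits[label]
    kd = distillation_loss(student_logits[:n_old], teacher_logits[:n_old], temperature)
    return ce + lam * kd
```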

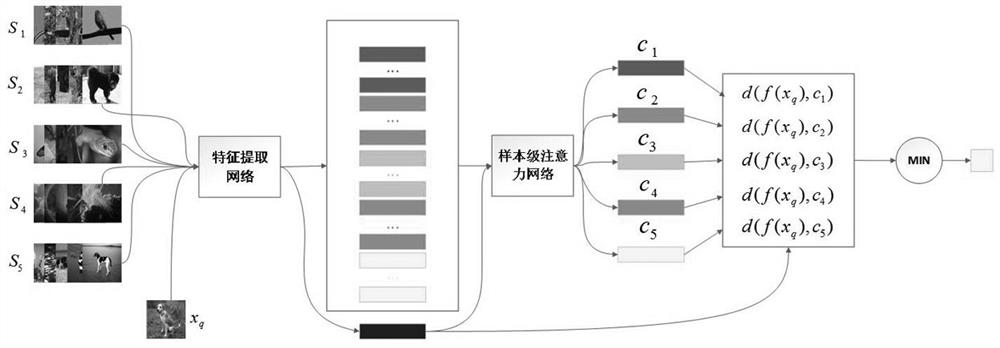

Few-sample learning method based on sample-level attention network

Active | CN111985581A | Easy access | Increase distance | Character and pattern recognition | Neural learning methods | Feature vector | Feature extraction

The invention relates to a few-shot learning method based on a sample-level attention network. The method comprises: feeding the support-set samples and the query-set samples into a feature extraction module to obtain the corresponding support-set and query-set feature vectors; feeding the support-set feature vectors of each class into a sample-level attention network module to obtain a prototype for each class; computing the distance between each query-set feature vector and each class prototype to obtain the probability distribution over the classes to which the query belongs; and jointly training the feature extraction module and the sample-level attention network module with the cross-entropy loss on the query set and the classification loss on the support set, updating the networks by back-propagating the gradients. By learning from a large number of similar tasks, the method can solve a new target task without any further model updates.

Owner:FUZHOU UNIV
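
The prototype and probability computation can be sketched as follows. The patent's sample-level attention network learns a weight for each support sample; here the attention scores are simply passed in as numbers (a hypothetical stand-in), normalized with softmax, and used to average the support features. Class probabilities come from a softmax over negative squared Euclidean distances, as in prototypical networks; the abstract does not name the distance it uses.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_prototype(support, scores):
    """Attention-weighted average of one class's support feature vectors.

    scores: one (hypothetical) attention score per support sample.
    """
    weights = softmax(scores)
    dim = len(support[0])
    return [sum(w * x[i] for w, x in zip(weights, support)) for i in range(dim)]


def class_probabilities(query, prototypes):
    """Softmax over negative squared Euclidean distances to each class prototype."""
    labels = list(prototypes)
    neg_d = [-sum((q - p) ** 2 for q, p in zip(query, prototypes[c])) for c in labels]
    return dict(zip(labels, softmax(neg_d)))
```

With equal scores the prototype reduces to the plain class mean; unequal scores let informative support samples dominate, which is the role the learned attention plays.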

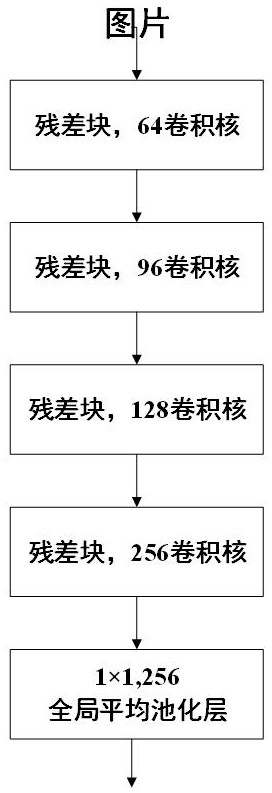

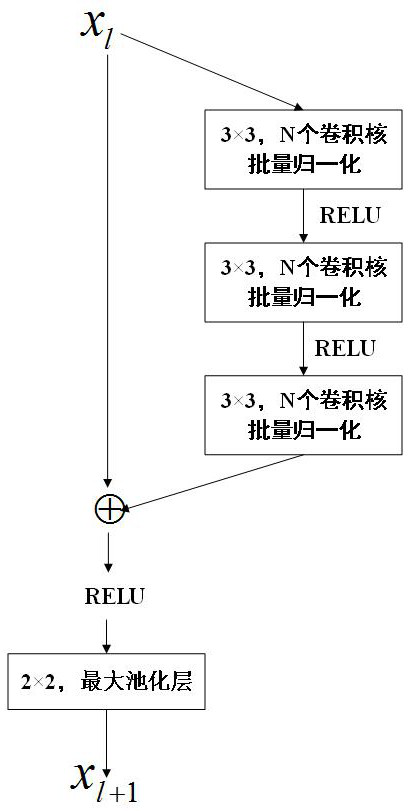

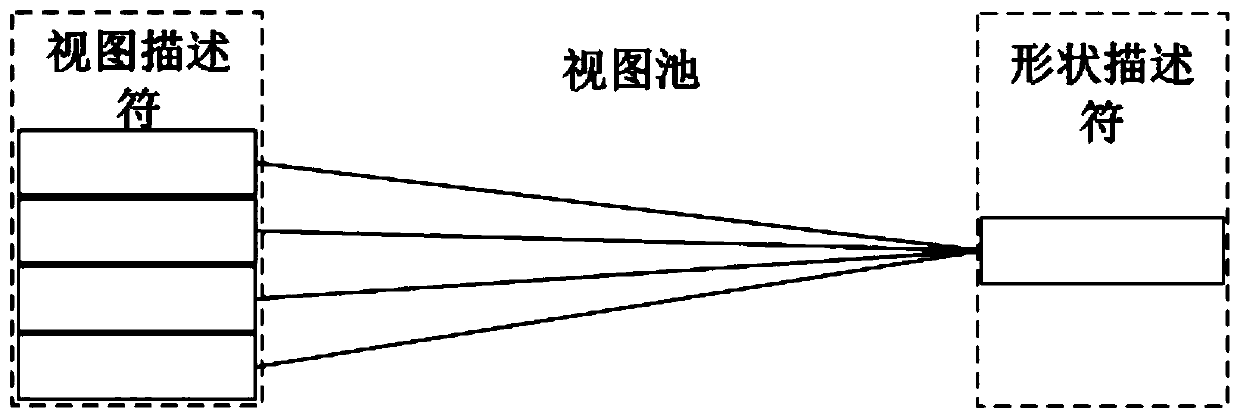

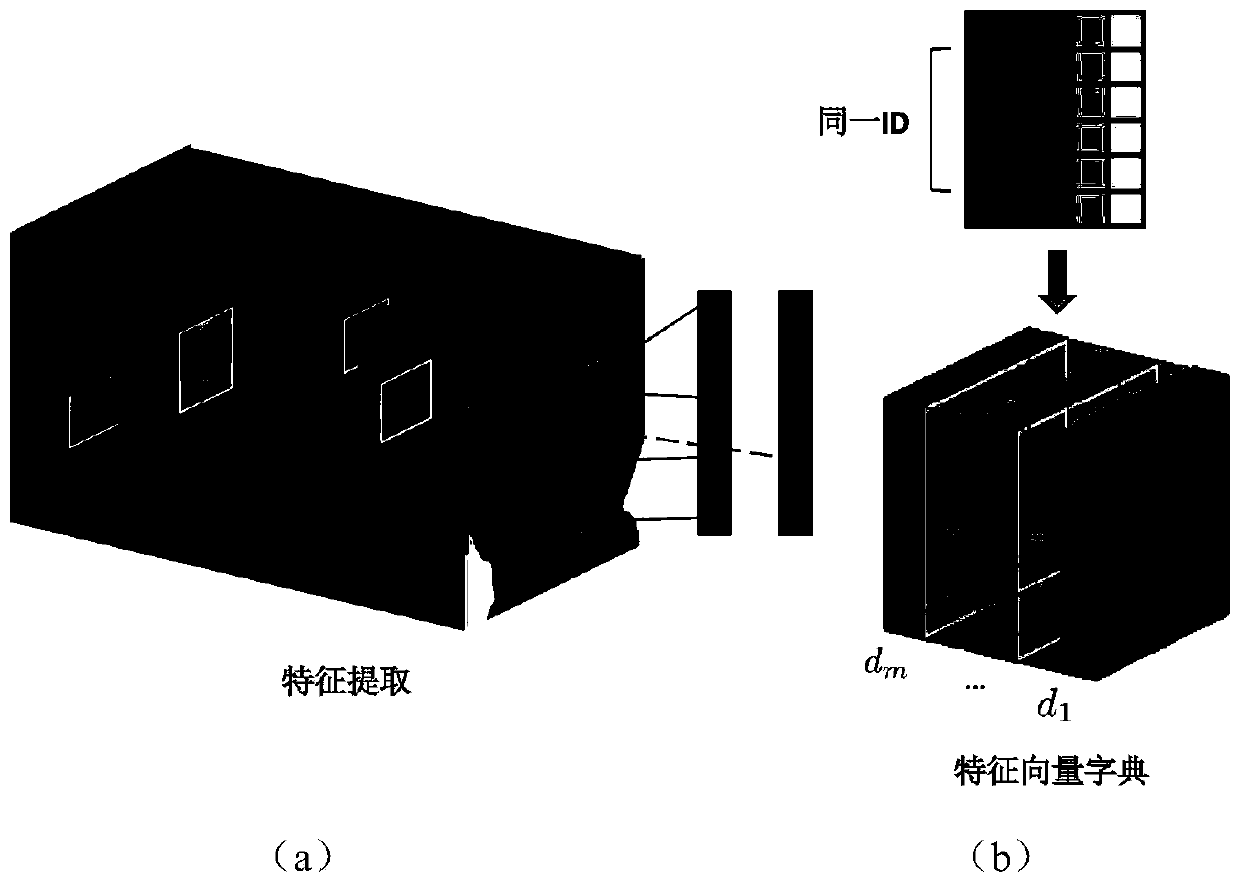

Multi-view three-dimensional model retrieval method and system based on pairing depth feature learning

Active | CN111382300A | Improve retrieval performance | Easy to identify | Digital data information retrieval | Special data processing applications | Si model | Feature learning

The invention discloses a multi-view three-dimensional model retrieval method and system based on paired deep feature learning. The retrieval method comprises the steps of: acquiring two-dimensional views of the three-dimensional model to be retrieved from different angles, and extracting an initial view descriptor for each two-dimensional view; aggregating the initial view descriptors into a final view descriptor; extracting latent features and category features from the final view descriptor; forming a shape descriptor as a weighted combination of the latent and category features; and computing the similarity between this shape descriptor and the shape descriptors of the three-dimensional models in a database to retrieve matching models. The invention provides a multi-view three-dimensional model retrieval framework, GPDFL, which fuses the latent and category features of a model to improve feature discrimination and retrieval performance.

Owner:SHANDONG NORMAL UNIV

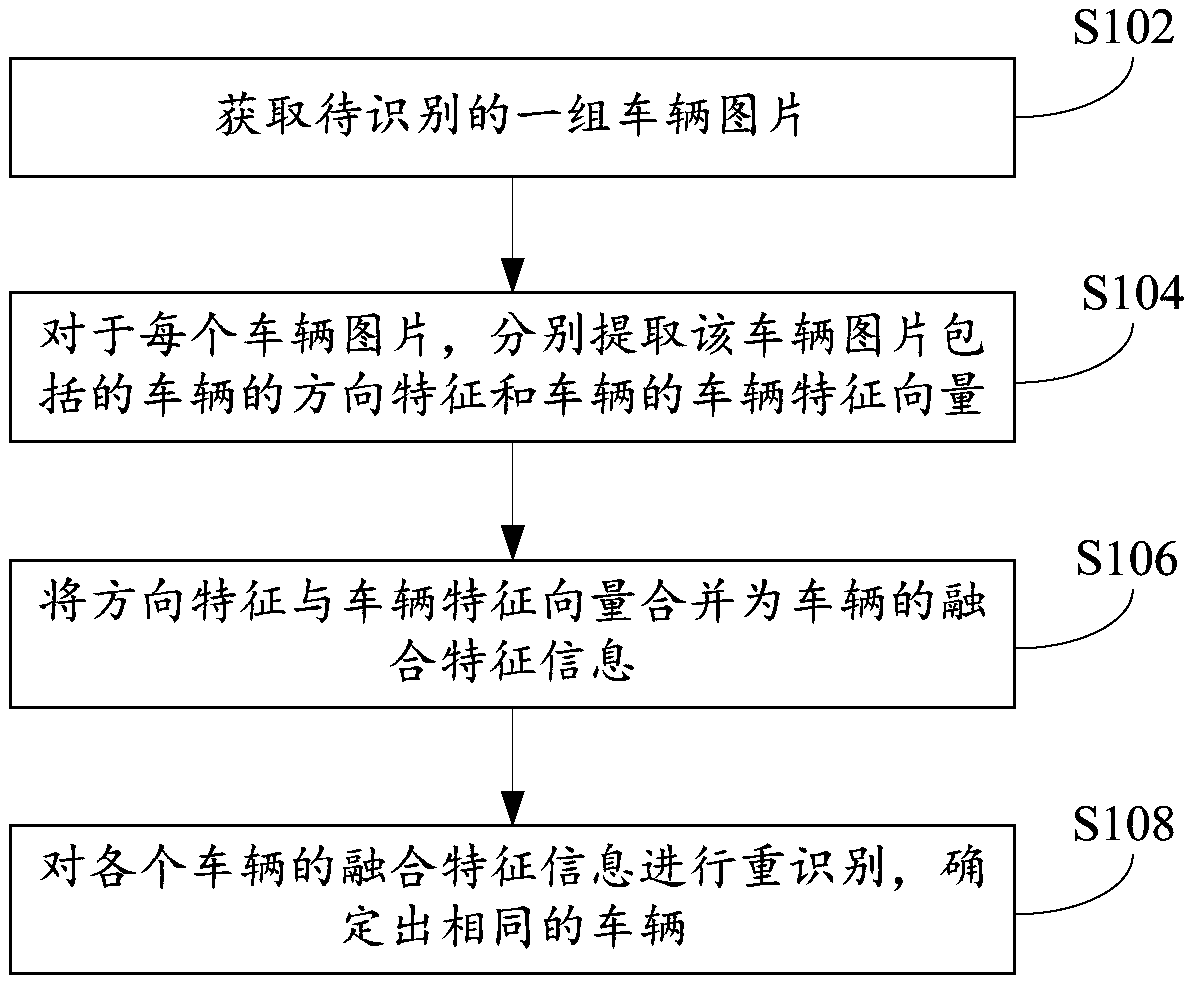

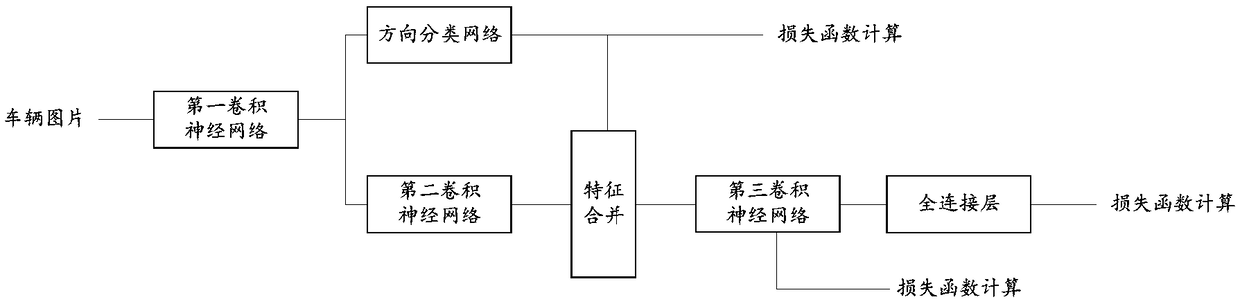

Vehicle re-identification method, device and system

Active | CN109034086A | Narrow down the comparison range | Improve match | Character and pattern recognition | Neural architectures | Feature vector | Re-identification

The invention provides a vehicle re-identification method, device and system in the technical field of vehicle identification. The method comprises the following steps: obtaining a group of vehicle pictures to be identified; extracting, from each vehicle picture, a vehicle direction feature and a vehicle feature vector; merging the direction feature and the vehicle feature vector into fused feature information for the vehicle; and performing re-identification on the fused feature information of each vehicle to determine which pictures show the same vehicle. By fusing the vehicle's direction features with its vehicle features, the method, device and system substantially improve the accuracy of vehicle re-identification and, in turn, identification efficiency.

Owner:BEIJING KUANGSHI TECH

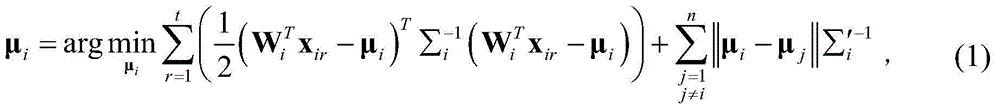

Three-dimensional face recognition method based on Bayesian multivariate distribution characteristic extraction

Active | CN104636729A | Solve the problem of insufficient training samples | Accelerated training | Three-dimensional object recognition | Acquired characteristic | Classification methods

The invention discloses a three-dimensional face recognition method based on Bayesian multivariate distribution feature extraction. The method comprises three-dimensional data preprocessing, feature extraction, and identification and classification. Its advantages are as follows: it overcomes the heavy computation of the prior art, since recognizing from a three-dimensional face depth map reduces computation and increases identification efficiency; it addresses the shortage of training samples in single-sample recognition by enlarging the training set through partitioning; on this basis, it provides a feature extraction method based on Bayesian analysis that gives the extracted features minimum intra-class distance and maximum inter-class distance, i.e., optimal separability; and it adopts a classifier based on the Mahalanobis distance to achieve optimal identification and classification. Experimental data show that the method achieves good three-dimensional face recognition results.

Owner:ZHEJIANG UNIV OF TECH
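
The Mahalanobis-distance classification step can be sketched as a minimum-distance classifier with one covariance matrix pooled over all classes. The pooled (shared) covariance and the small hand-written matrix inverse are assumptions made to keep the example dependency-free; the abstract does not say how the covariance is estimated, and the Bayesian feature extraction is not shown.

```python
import math


def mean(vectors):
    """Component-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def pooled_covariance(classes):
    """Shared covariance estimated from every class's mean-centred samples."""
    dim = len(next(iter(classes.values()))[0])
    cov = [[0.0] * dim for _ in range(dim)]
    n_total = 0
    for samples in classes.values():
        mu = mean(samples)
        for x in samples:
            d = [a - b for a, b in zip(x, mu)]
            for i in range(dim):
                for j in range(dim):
                    cov[i][j] += d[i] * d[j]
        n_total += len(samples)
    dof = n_total - len(classes)  # one mean estimated per class
    return [[c / dof for c in row] for row in cov]


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (matrix must be non-singular)."""
    n = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mahalanobis(x, mu, inv_cov):
    """sqrt((x - mu)^T  inv_cov  (x - mu))."""
    d = [a - b for a, b in zip(x, mu)]
    n = len(d)
    return math.sqrt(sum(d[i] * inv_cov[i][j] * d[j] for i in range(n) for j in range(n)))


def classify(x, classes):
    """Assign x to the class whose mean is nearest in Mahalanobis distance."""
    inv_cov = invert(pooled_covariance(classes))
    return min(classes, key=lambda c: mahalanobis(x, mean(classes[c]), inv_cov))
```

Unlike plain Euclidean distance, this rescales each direction by the spread of the features, so directions with large within-class variation count for less.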

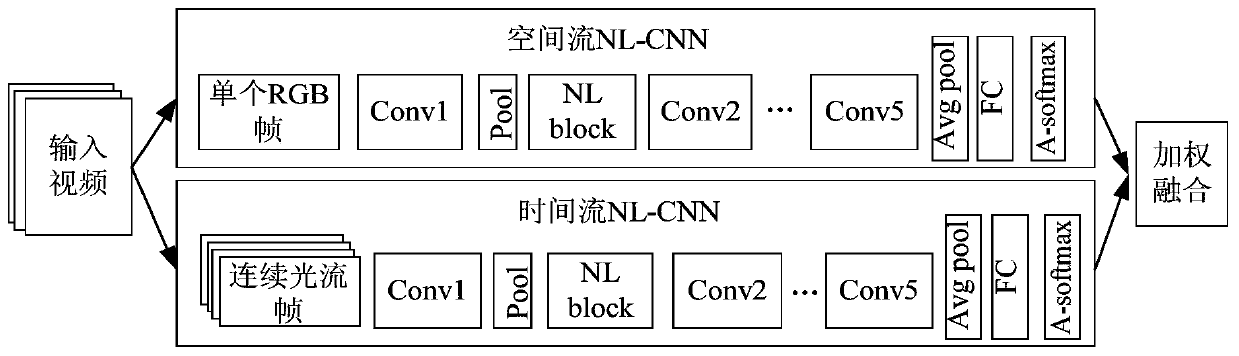

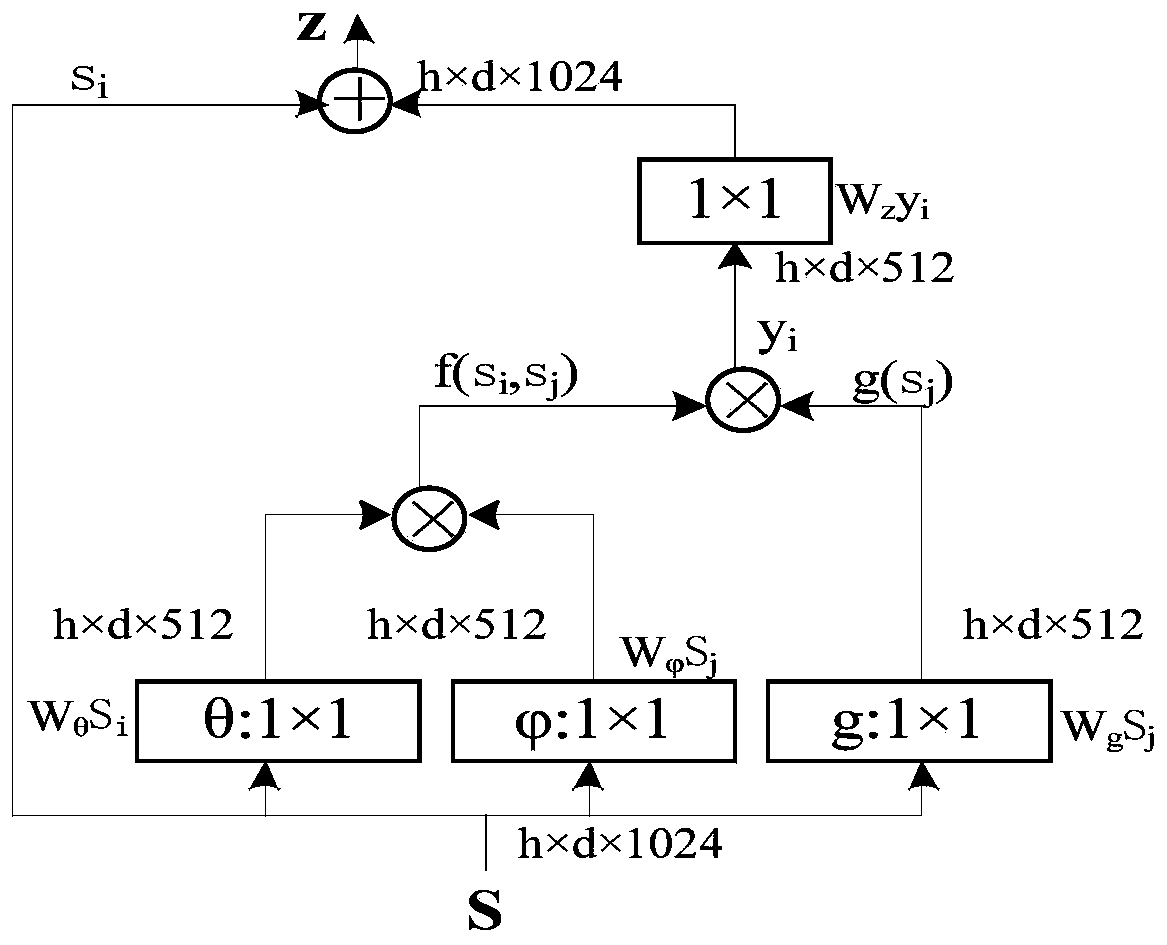

Human body behavior recognition method of non-local double-flow convolutional neural network model

Inactive | CN110826462A | Deepen the depth | Reduce overfitting | Character and pattern recognition | Neural architectures | Human body | Feature extraction

The invention relates to a human behavior recognition method based on a non-local two-stream convolutional neural network model. Both branch networks of a two-stream convolutional neural network are improved: a non-local feature extraction module is added to the spatial-stream CNN and to the temporal-stream CNN to extract more comprehensive and clearer feature maps. The method deepens the network to a certain extent, effectively mitigates overfitting, extracts non-local features of a sample, and denoises the input feature map, addressing the low recognition accuracy caused by complex backgrounds, diverse human behaviors, and highly similar actions in behavior videos. The loss layer is trained with the A-Softmax loss function: on top of softmax, the classification angle is constrained by an integer factor m, and the weight W and bias b of the fully connected layer are constrained, so that samples have larger inter-class distances and smaller intra-class distances. This yields better recognition precision and, ultimately, a deep learning model with stronger identification capability.

Owner:SHANGHAI MARITIME UNIVERSITY
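
The A-Softmax loss mentioned above (the angular-margin softmax introduced with SphereFace) can be sketched for a single sample: classifier weight vectors are normalized to unit length with no bias, and for the target class the angle θ is replaced by ψ(θ) = (-1)^k cos(mθ) - 2k, with k chosen so that θ lies in [kπ/m, (k+1)π/m]. This is an illustrative sketch, not the patent's implementation; the default m = 4 and the function names are assumptions, and training tricks such as gradually annealing the margin are omitted.

```python
import math


def a_softmax_logits(x, weights, target, m=4):
    """Logits for one sample under A-Softmax.

    Each weight row is normalized to unit length and no bias is used, so a
    logit is ||x|| * cos(theta_j); the target logit uses psi(theta) instead.
    """
    x_norm = math.sqrt(sum(v * v for v in x))
    logits = []
    for j, w in enumerate(weights):
        w_norm = math.sqrt(sum(v * v for v in w))
        cos_t = sum(a * b for a, b in zip(x, w)) / (x_norm * w_norm)
        cos_t = max(-1.0, min(1.0, cos_t))  # guard acos against rounding error
        if j == target:
            theta = math.acos(cos_t)
            k = min(m - 1, math.floor(m * theta / math.pi))
            psi = (-1) ** k * math.cos(m * theta) - 2 * k
            logits.append(x_norm * psi)
        else:
            logits.append(x_norm * cos_t)
    return logits


def a_softmax_loss(x, weights, target, m=4):
    """Cross-entropy over the A-Softmax logits (log-sum-exp minus target logit)."""
    logits = a_softmax_logits(x, weights, target, m)
    mx = max(logits)
    log_sum = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_sum - logits[target]
```

With m = 1 this reduces to a softmax with normalized weights; larger m shrinks the target logit for the same angle, so the network must make θ smaller, which is what tightens each class.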

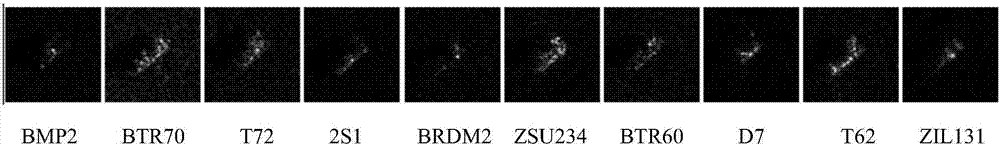

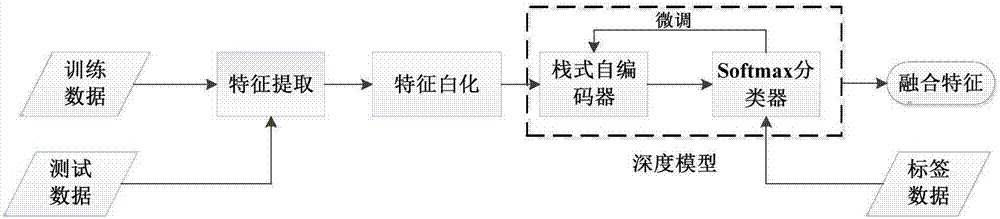

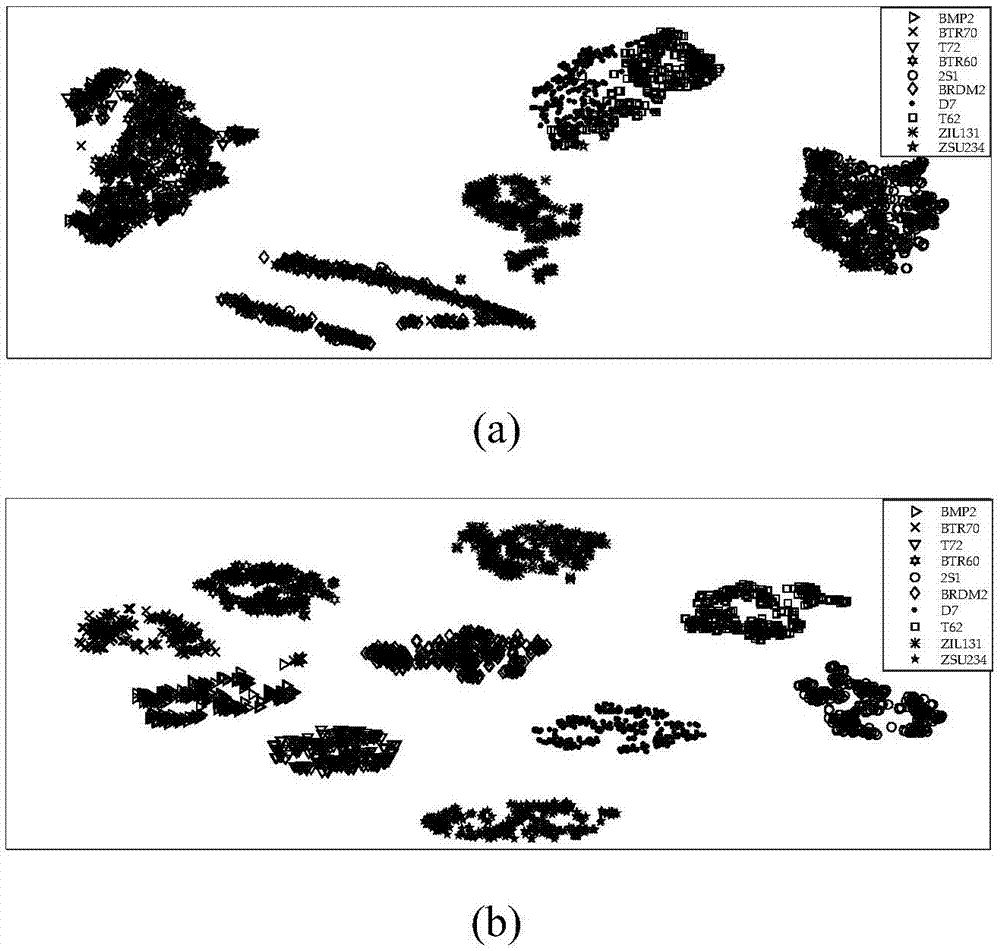

Feature fusion method based on stack-type self-encoder

Active | CN106874952A | Features are highly complementary | Reduce redundancy | Character and pattern recognition | Feature vector | Algorithm

The invention provides a feature fusion method based on a stacked autoencoder (SAE). The technical scheme comprises the following steps: first, extracting local binary pattern texture features from three local patches of an image, selecting and extracting several types of baseline features of the image through a feature selection method, and concatenating all the obtained features into a single vector; second, standardizing and whitening the concatenated vector; then feeding the whitened result into the SAE and training it layer by layer with a greedy training method; finally, fine-tuning the trained SAE together with a softmax classifier to minimize the loss function, the output of the SAE being a highly discriminative fused feature vector. The selected features have low redundancy and provide more information for feature fusion.

Owner:NAT UNIV OF DEFENSE TECH

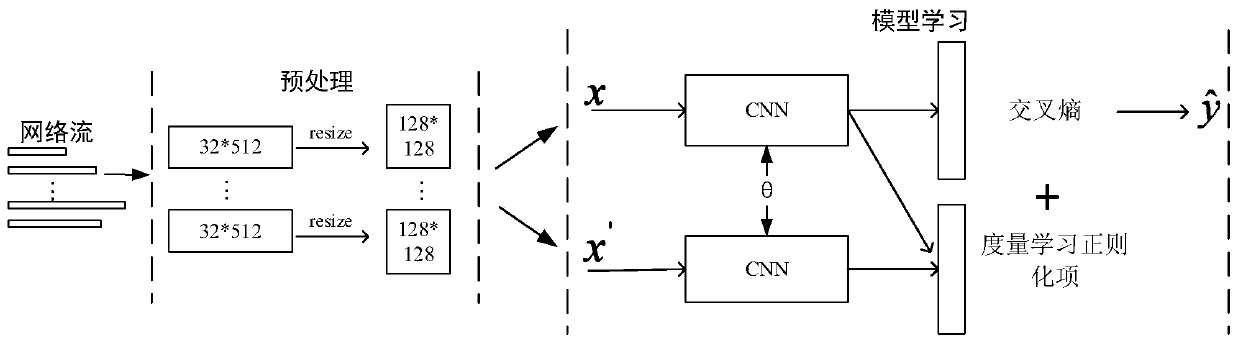

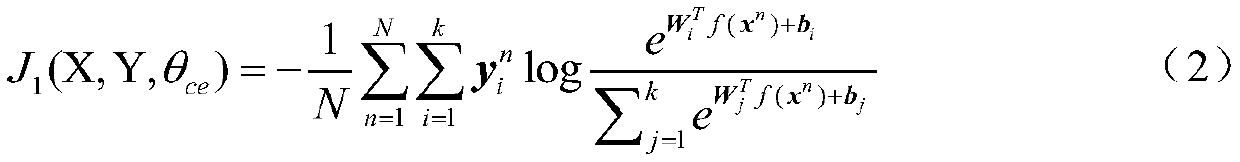

Network traffic classification system and method based on depth discrimination features

Active | CN110796196A | Distance between subclasses | Accurate classification | Character and pattern recognition | Neural architectures | Internet traffic | Model learning

The invention relates to a network traffic classification system and method based on deep discriminative features. The system comprises a preprocessing module and a model learning module. The preprocessing module takes as input network flows of different lengths generated by different applications and represents each flow as a fixed-size flow matrix, meeting the input format requirements of a convolutional neural network (CNN). The model learning module takes these flow matrices as input and trains a deep convolutional neural network under the supervision of an objective function composed of a metric learning regularization term and a cross-entropy loss term, so that the network learns more discriminative feature representations of the flow matrices and the classification result is more accurate.

Owner:INST OF INFORMATION ENG CAS

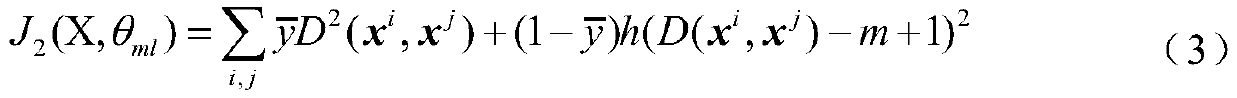

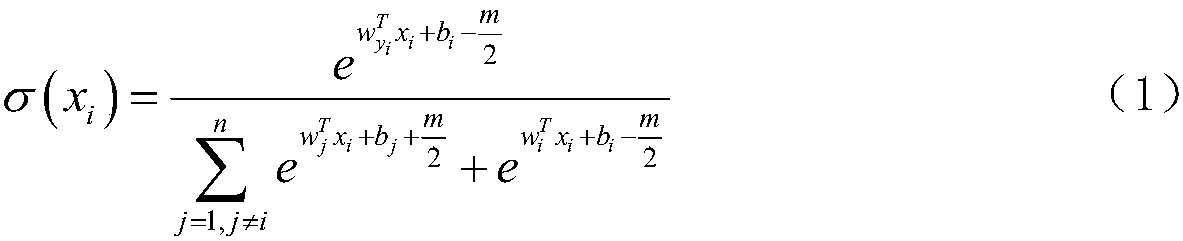

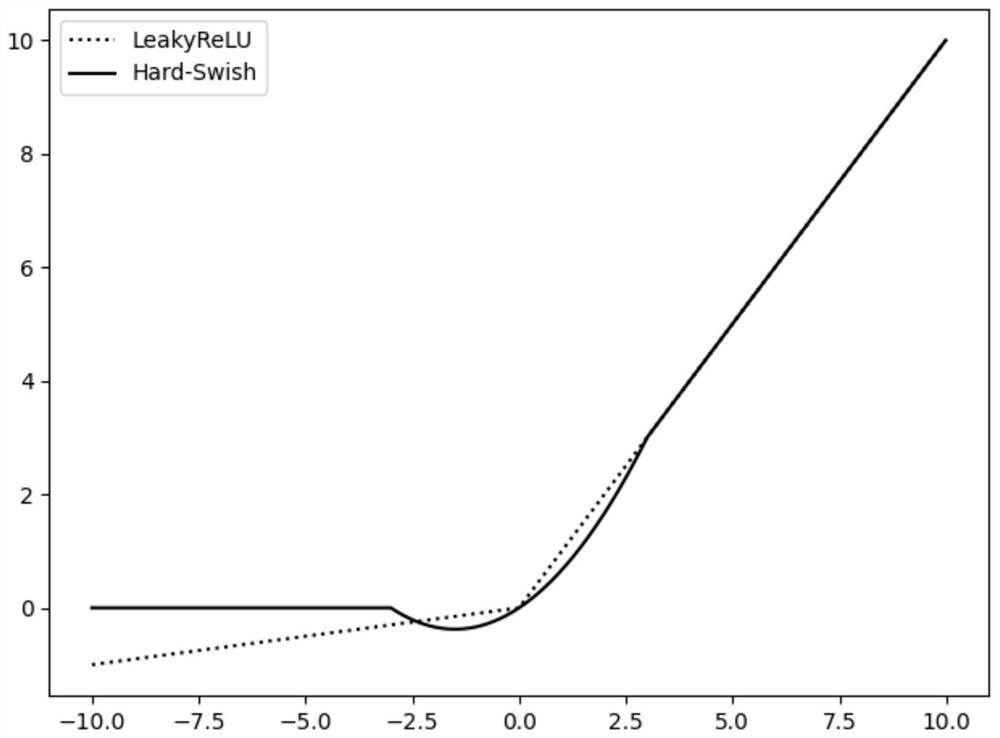

Image similarity calculation method based on improved SoftMax loss function

Active | CN108960342A | Strong image feature expression ability | Avoid low image recognition accuracy | Character and pattern recognition | Neural architectures | Test phase | Activation function

The invention discloses an image similarity calculation method based on an improved Softmax loss function. In the image identification network, the activation function of the Softmax layer is an improved Softmax activation function, and during back-propagation the improved Softmax loss function is used to update the network weights. Compared with the traditional Softmax loss function, the improved one enlarges the decision margin learned by the image identification network. In the test phase, the trained image identification model extracts feature vectors from the two test images, and the cosine similarity between the vectors is computed and compared with an image similarity threshold: if the cosine similarity is greater than or equal to the threshold, the two images are judged to belong to the same class; if it is lower than the threshold, they are judged to belong to different classes.

Owner:CHINA JILIANG UNIV
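
The test-phase decision rule described above reduces to a cosine-similarity threshold on two feature vectors. In this sketch the feature vectors are given directly (the trained network that extracts them is not shown), and the default threshold is a hypothetical placeholder that would in practice be tuned on validation pairs.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def same_class(feat_a, feat_b, threshold=0.8):
    """Same class if similarity >= threshold; different classes otherwise."""
    return cosine_similarity(feat_a, feat_b) >= threshold
```

Note the inclusive comparison: a similarity exactly equal to the threshold counts as a match, as in the abstract.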

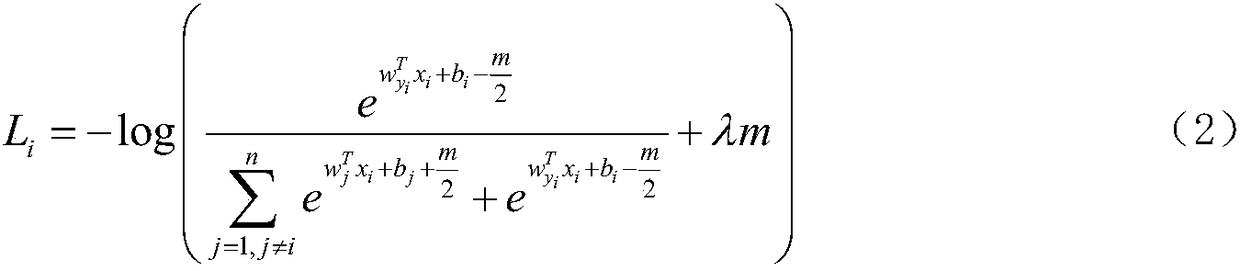

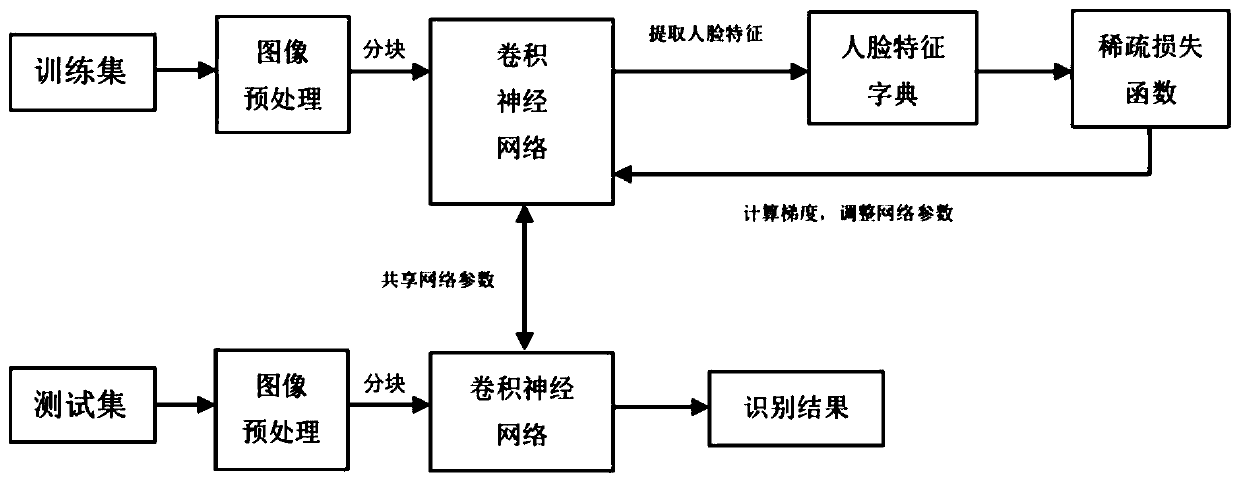

Small sample face recognition method combining sparse representation and neural network

Pending | CN111126307A | Achieve enhanced effect | Preserve features | Character and pattern recognition | Internal combustion piston engines | Cosine similarity | Neural network nn

The invention discloses a small-sample face recognition method combining sparse representation and a convolutional neural network. The method comprises the following steps: first, preprocessing the face image, aligning the face and locating the facial features from face key points, and cutting the face image into four local regions; extracting highly discriminative local and global features with a convolutional neural network, and building a block feature dictionary together with a sparse representation algorithm to achieve sample augmentation; redefining the loss function of the convolutional neural network by adding sparse representation constraints and cosine similarity, so as to reduce the intra-class distance between features and enlarge the inter-class distance; and finally, recognizing faces with enhanced sparse representation classification. The method achieves high recognition performance and is somewhat robust to occlusion in the small-sample setting.

Owner:SOUTHEAST UNIV

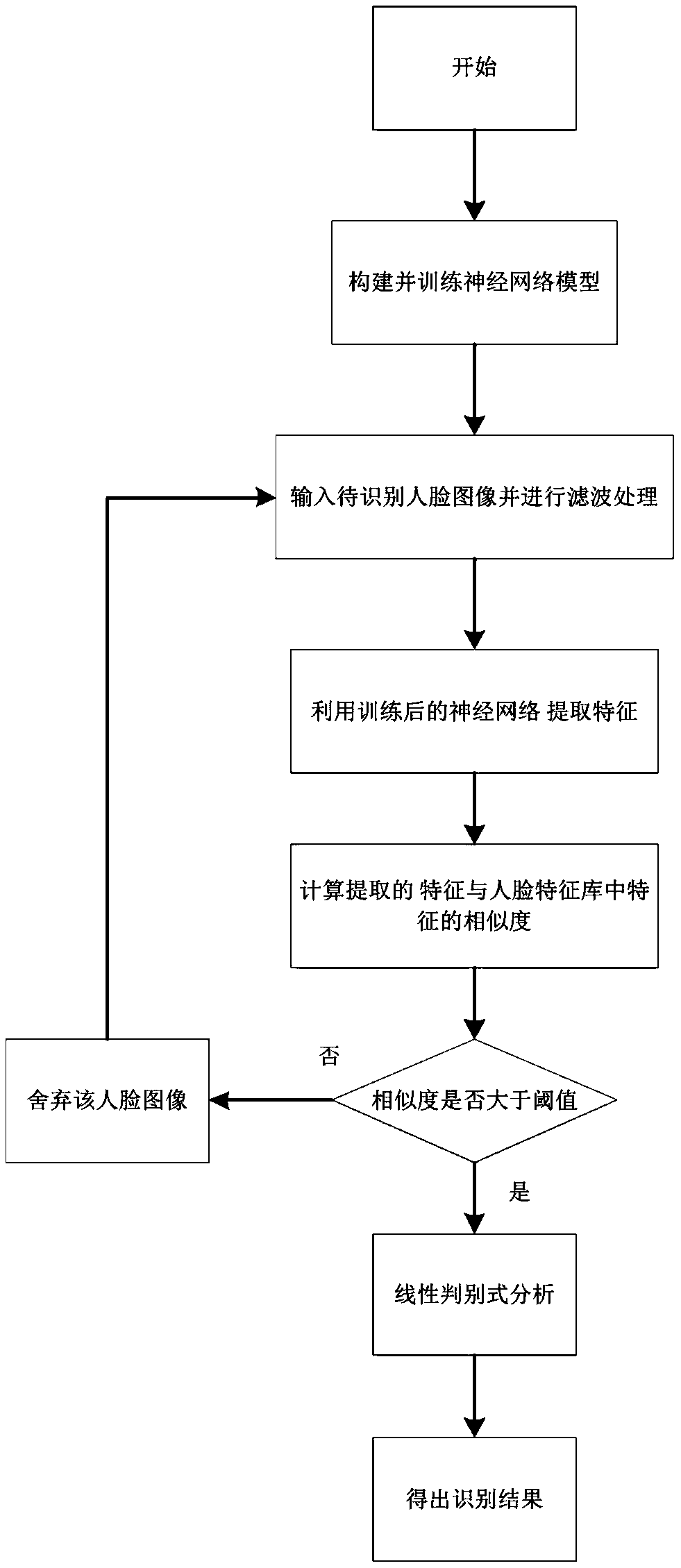

Face recognition method based on feature analysis

Inactive | CN108898123A | Improve performance | No loss of image detail | Character and pattern recognition | Discriminant | Data set

The invention discloses a face recognition method based on feature analysis, in the field of face recognition. A neural network model for feature extraction is constructed and trained on a face data set; a face feature library is constructed; the face image to be recognized is filtered, and the processed image is fed into the trained neural network model to extract features; the similarity between the extracted features and the features in the face feature library is then computed. If the similarity exceeds the threshold, a linear discriminant is applied to improve the matching accuracy of those features and the recognition result is obtained; otherwise, the face image corresponding to the feature is discarded. The method suits many real-world settings such as access control systems and banking systems, and compared with mainstream face recognition SDKs, the algorithm is robust to environmental changes.

Owner:CHENGDU KOALA URAN TECH CO LTD

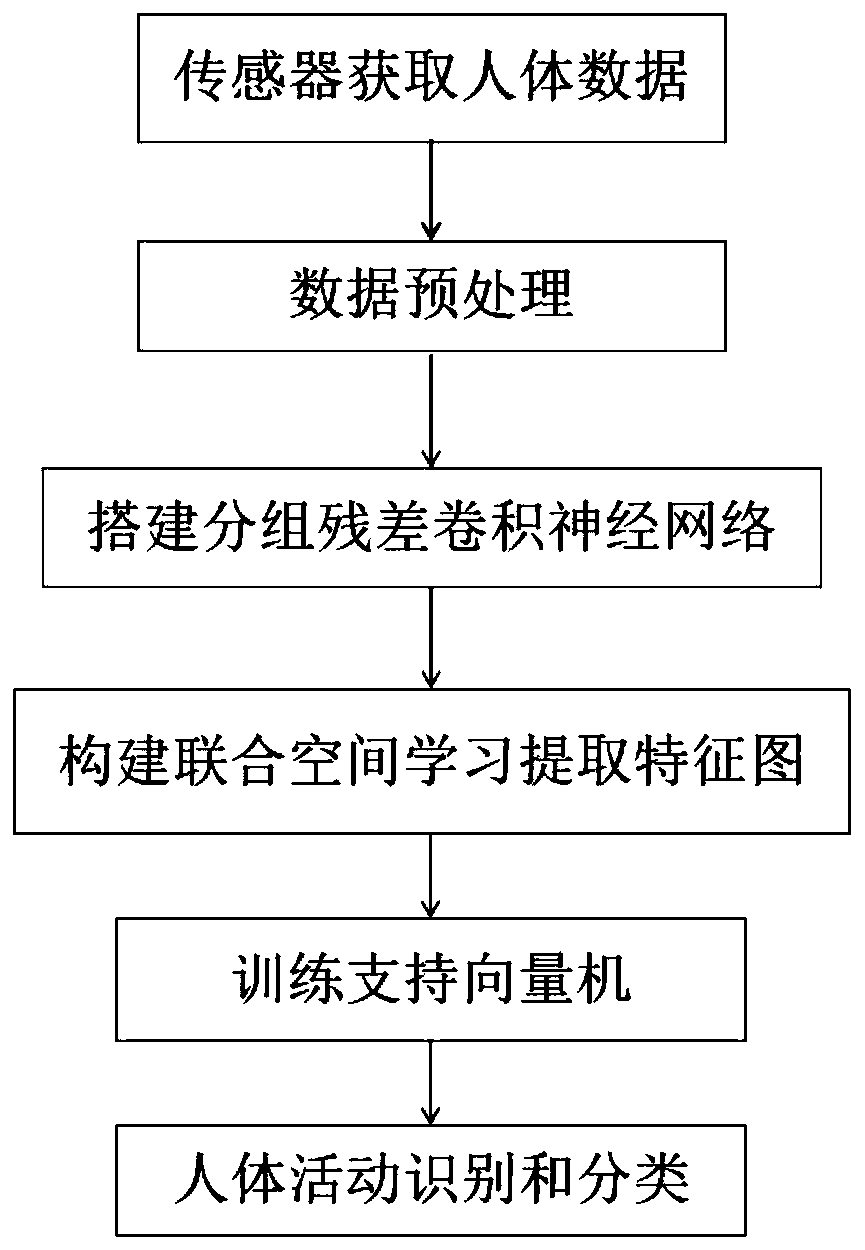

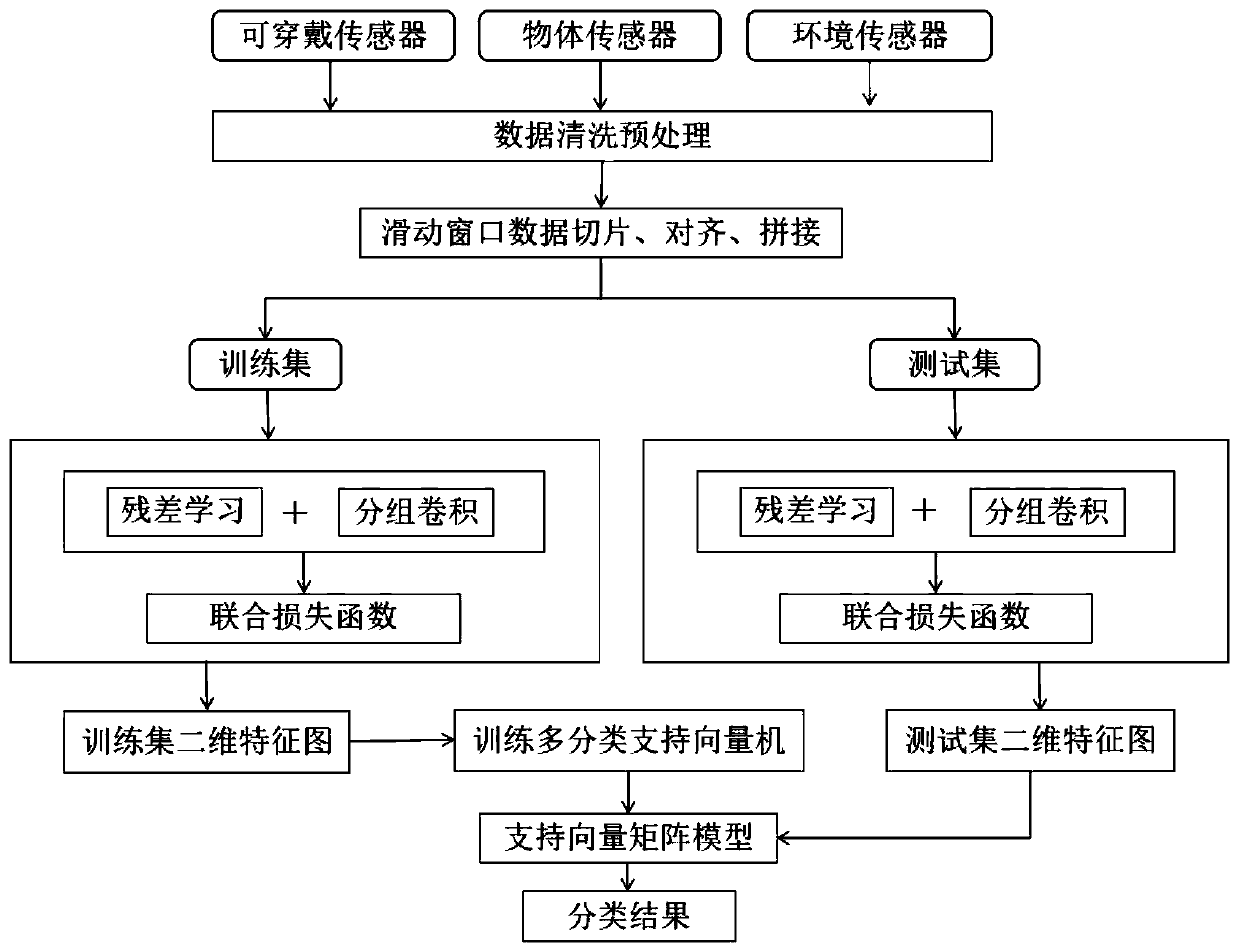

Human body activity recognition method based on grouping residual joint spatial learning

Pending | CN111597869A | Increase the distance between classes | Reduce the intra-class distance | Character and pattern recognition | Neural architectures | Human body | Activity classification

A human activity recognition method based on grouped residual joint spatial learning comprises the following steps: step 1, collecting human, object, and environment signals with various sensors, then grouping, aligning, and slicing the single-channel data with a sliding window to build two-dimensional activity data subsets; step 2, building a grouped residual convolutional neural network and optimizing the network model with a joint spatial loss function composed of a center loss and a cross-entropy loss, in order to extract feature maps of the two-dimensional activity data subsets; and step 3, training a multi-class support vector machine on the extracted two-dimensional features to perform human activity classification on the feature maps. The invention can recognize fine-grained human activities; the joint spatial loss function increases the inter-class distance and reduces the intra-class distance of the extracted spatial features; and combining the spatial feature maps of the activity data with a multi-class support vector machine for classification learning improves the accuracy of human activity classification.

Owner:ZHEJIANG UNIV OF TECH
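
The joint spatial loss in step 2 combines cross-entropy with a center loss. A minimal sketch, assuming the standard center-loss formulation (half the squared distance of each feature to its class center, here averaged over the batch) and its usual center-update rule; the weighting factor `lam`, the update rate `alpha`, and the function names are hypothetical, and the grouped residual network and the SVM stage are not shown.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample (log-sum-exp minus target logit)."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits)) - logits[label]


def center_loss(features, labels, centers):
    """0.5 * mean squared distance of each feature to its class centre."""
    total = 0.0
    for f, y in zip(features, labels):
        total += sum((a - b) ** 2 for a, b in zip(f, centers[y]))
    return 0.5 * total / len(features)


def joint_loss(logits_batch, features, labels, centers, lam=0.01):
    """Mean cross-entropy plus lam times the center loss."""
    ce = sum(cross_entropy(z, y) for z, y in zip(logits_batch, labels)) / len(labels)
    return ce + lam * center_loss(features, labels, centers)


def update_centers(features, labels, centers, alpha=0.5):
    """Center update: c_j -= alpha * sum(c_j - x_i) / (1 + n_j) over batch members of class j."""
    new_centers = dict(centers)
    for c in set(labels):
        members = [f for f, y in zip(features, labels) if y == c]
        delta = [sum(ctr - f[i] for f in members) / (1 + len(members))
                 for i, ctr in enumerate(centers[c])]
        new_centers[c] = [ctr - alpha * d for ctr, d in zip(centers[c], delta)]
    return new_centers
```

The cross-entropy term keeps classes apart while the center term pulls each feature toward its class center, which is how the joint loss both enlarges inter-class and shrinks intra-class distances.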

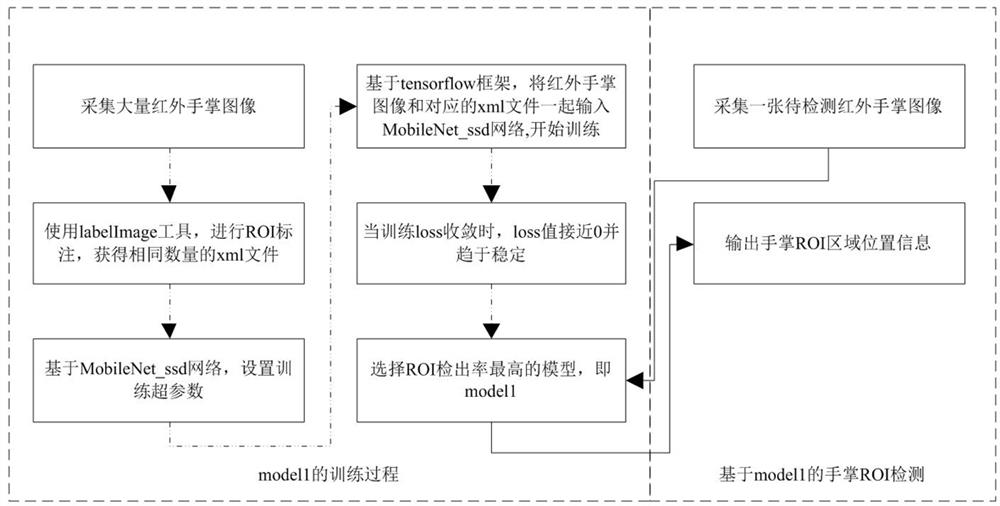

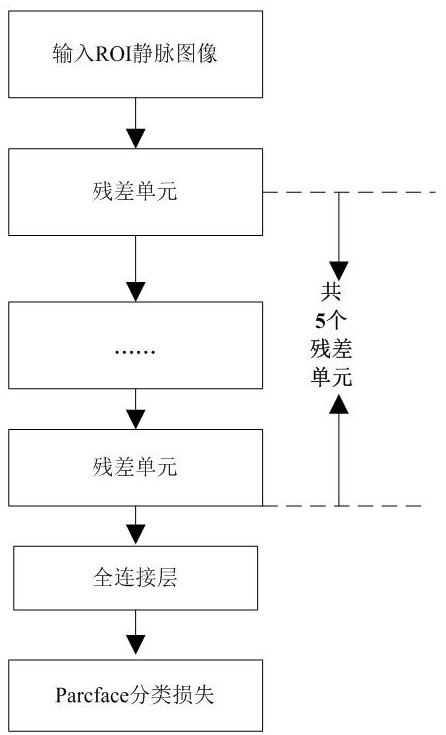

Non-contact palm vein recognition method based on improved residual network

Active | CN112200159A | Resolve scaling | Solve the problem of translation | Neural architectures | Subcutaneous biometric features | Feature vector | Radiology

The invention relates to a non-contact palm vein recognition method based on an improved residual network. The method comprises the following steps: 1) collecting two infrared images of the same palm; 2) locating the region of interest (ROI) of the palm; 3) palm vein registration; 4) palm vein verification; and 5) palm vein verification decision: setting an identification threshold T, computing the distance between the feature vector and the registration template, and judging verification successful if the distance is smaller than T and failed otherwise. The improved residual network structure extracts texture features of the input sample at different scales during training, so the trained model can capture multi-scale texture information and the extracted feature vector better represents the input sample; the method also copes, to a certain extent, with scaling and translation of the input sample.

Owner:TOP GLORY TECH INC CO LTD

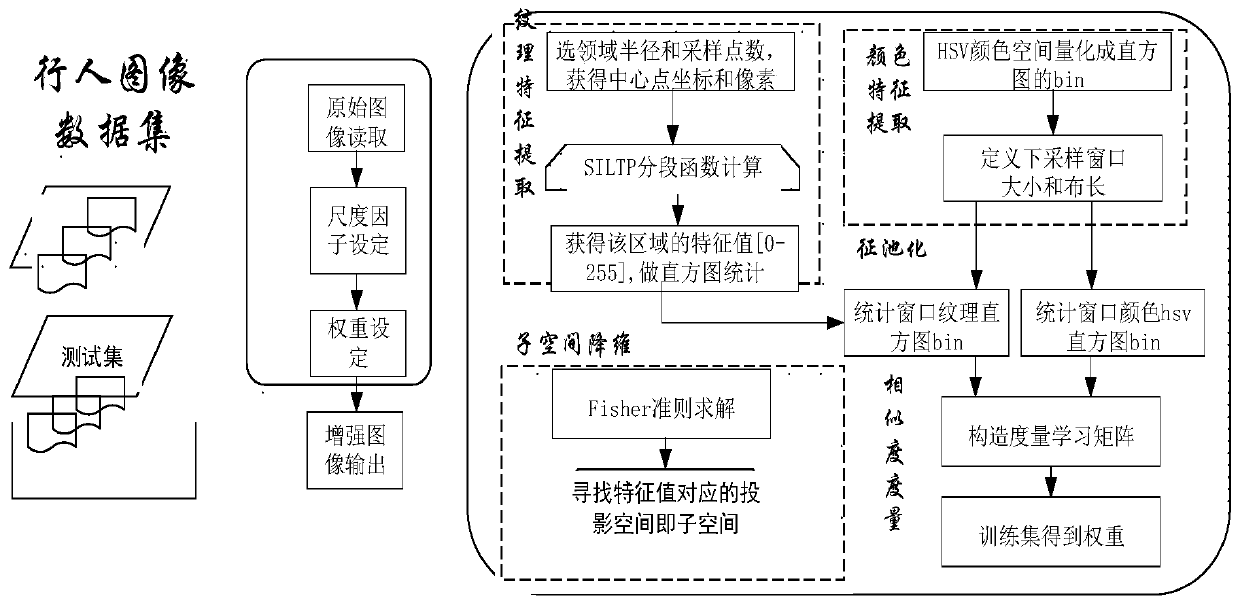

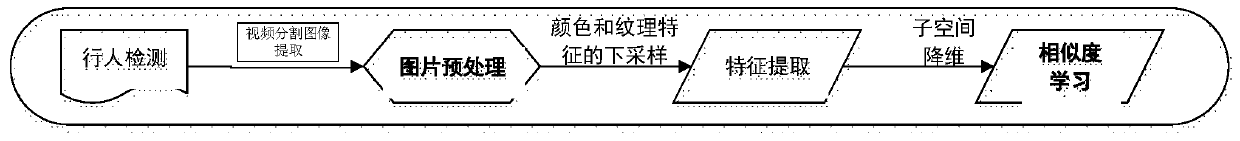

Pedestrian re-identification method based on local high-frequency features and hybrid metric learning

Pending | CN111259756A | Eliminate uneven lighting | Scale has | Biometric pattern recognition | Neural learning methods | Feature set | Smart surveillance

The invention discloses a pedestrian re-identification method based on local high-frequency features and hybrid metric learning. Using a local frequency feature representation, a color and texture feature set of the target image is extracted under changing illumination and viewing angles: a sliding window describes local details and extracts image features, the maximum of the features that occur locally with high frequency is taken as the feature value, and the multi-scale feature descriptors are concatenated. After subspace dimensionality reduction, the weight coefficients of a metric learning matrix and its hybrid metric learning matrix are obtained from the posterior probability of sample occurrence, and finally a similarity score is obtained as the basis for pedestrian re-identification. The invention uses pedestrian appearance features to identify images related to a given pedestrian in a multi-camera surveillance scene, and has application value in intelligent surveillance, intelligent security, criminal investigation, pedestrian retrieval, pedestrian tracking, behavior analysis, and similar fields.

Owner:XIAN PEIHUA UNIV

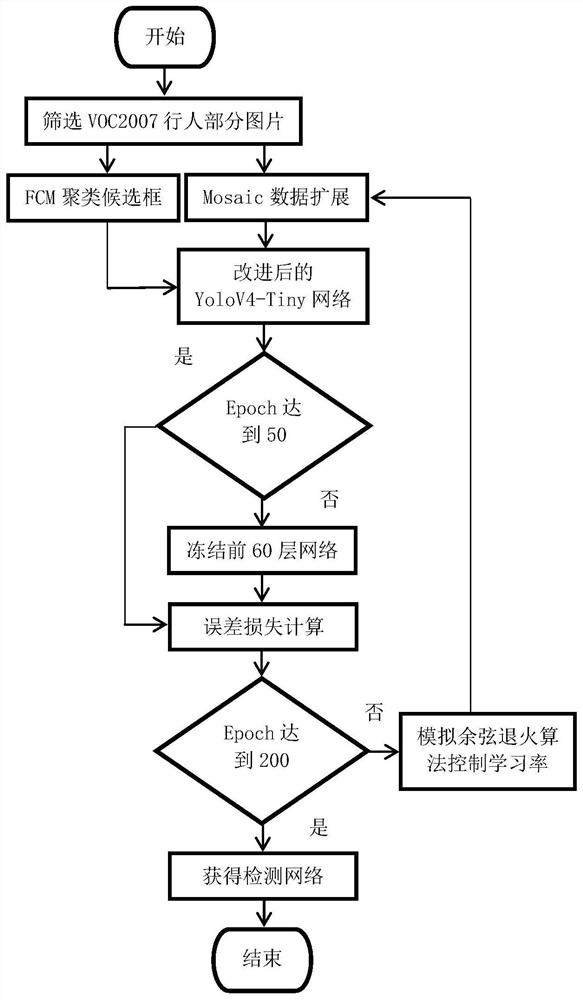

Single-camera multi-target pedestrian tracking method

Pending | CN112836640A | Resolve inconsistencies | High precision | Image enhancement | Image analysis | Correction algorithm | Computer graphics (images)

The invention relates to a single-camera multi-target pedestrian tracking method comprising the following steps: first, pedestrian video images are acquired with a camera installed in the monitoring area and resized accordingly; the resized images are input into a trained, improved YoloV4-Tiny pedestrian detection network; abnormal pedestrian detection boxes are removed from the detection results with a binning method; the filtered detections are input into the DeepSort algorithm to track pedestrians and record tracking information; finally, abnormally disappeared pedestrian targets are corrected with a correction algorithm based on each pedestrian's number of unmatched frames and predicted position. By combining the improved YoloV4-Tiny, the binning method, the improved DeepSort and this correction method, the approach largely achieves performance suitable for real-world scenes, with simultaneous multi-target localization, accurate positioning, high real-time performance and high stability.

Owner:ZHEJIANG UNIV OF TECH
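
The abstract does not define its binning method. One plausible reading, sketched here purely as an assumption, is to histogram a box statistic such as the height-to-width ratio and discard detections that fall into rarely populated bins. The box layout, `bin_width` and `min_fraction` are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

# (x, y, width, height) in pixels; width is assumed to be positive.
Box = Tuple[float, float, float, float]


def filter_boxes_by_binning(boxes: List[Box], bin_width: float = 0.5,
                            min_fraction: float = 0.2) -> List[Box]:
    """Drop detections whose height/width ratio lands in a sparsely populated bin.

    Hypothetical stand-in for the patent's binning step: ratios are bucketed into
    bins of `bin_width`, and any bin holding fewer than `min_fraction` of all
    boxes is treated as abnormal.
    """
    if not boxes:
        return []
    bin_ids = [int((h / w) // bin_width) for (_, _, w, h) in boxes]
    counts = Counter(bin_ids)
    min_count = min_fraction * len(boxes)
    return [box for box, b in zip(boxes, bin_ids) if counts[b] >= min_count]
```

Upright pedestrians share similar aspect ratios, so a lone wide, flat box is the kind of outlier such a filter would remove before the detections reach DeepSort.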

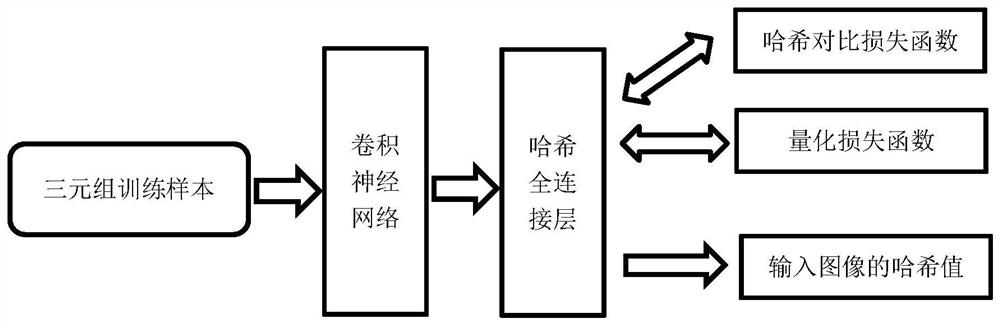

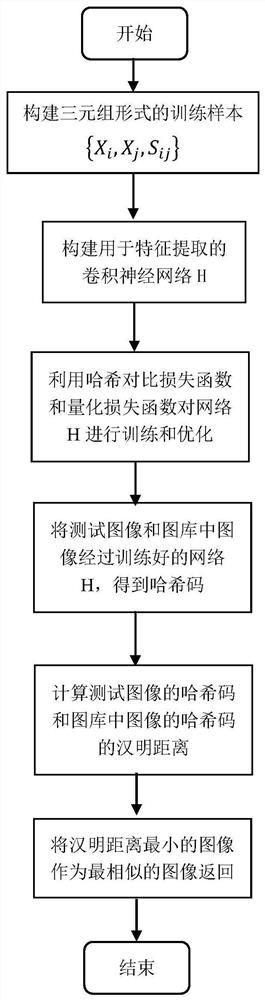

Deep hash method based on metric learning

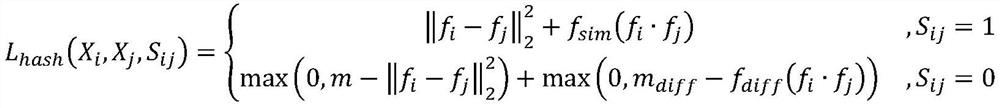

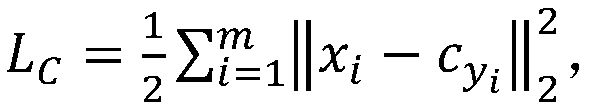

Active | CN111611413A | Reduce the intra-class distance | Fast and Accurate Image Retrieval | Still image data indexing | Still image data querying | Quantization (image processing) | Feature vector

The invention discloses a deep hash method based on metric learning, relating to computer vision and image processing. It addresses a weakness of the contrastive loss used by existing deep hash methods: the loss pulls the pre-quantization feature vectors of same-category images as close together as possible but does not encourage them to share the same signs, and pushes those of different-category images as far apart as possible but does not encourage opposite signs. As a result, the quantized hash codes discriminate poorly, causing misjudgments and other problems. The invention constructs a hash contrastive loss function that imposes a sign-bit constraint on the real-valued feature vectors before quantization, so that the hash codes obtained by quantizing them with the sign function represent the images more accurately. The signs are constrained through two control functions, f_sim(f_i·f_j) and f_diff(f_i·f_j), while the other terms of the expression pull the feature values of same-category images together and push those of different-category images apart. The method effectively improves classification precision and reduces the misjudgment rate.

Owner:BEIJING UNIV OF POSTS & TELECOMM
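
The idea of adding a sign constraint to a contrastive loss can be sketched as below. The exact forms of f_sim and f_diff are not given in the abstract, so the hinge-style penalties, the margin and the weight `alpha` are assumptions; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def sim_sign_penalty(fi: Sequence[float], fj: Sequence[float]) -> float:
    """Plays the role of f_sim: penalizes dimensions where a same-class pair disagrees in sign."""
    return sum(max(0.0, -a * b) for a, b in zip(fi, fj))


def diff_sign_penalty(fi: Sequence[float], fj: Sequence[float]) -> float:
    """Plays the role of f_diff: penalizes dimensions where a different-class pair agrees in sign."""
    return sum(max(0.0, a * b) for a, b in zip(fi, fj))


def hash_contrastive_loss(fi: Sequence[float], fj: Sequence[float], same_class: bool,
                          margin: float = 2.0, alpha: float = 1.0) -> float:
    """Contrastive loss on real-valued codes plus a sign constraint (hypothetical form)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(fi, fj))
    if same_class:
        # Pull same-class features together and toward matching signs.
        return sq_dist + alpha * sim_sign_penalty(fi, fj)
    # Push different-class features at least `margin` apart and toward opposite signs.
    return max(0.0, margin - math.sqrt(sq_dist)) ** 2 + alpha * diff_sign_penalty(fi, fj)


def to_hash_code(f: Sequence[float]) -> List[int]:
    """Sign quantization; zero is mapped to +1 here by convention."""
    return [1 if v >= 0 else -1 for v in f]
```

A plain contrastive loss would give the second same-class pair in the tests only its distance term; the sign penalty adds cost for the dimension whose sign would flip after quantization.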

Face recognition method

Inactive | CN111401299A | Improve effectiveness | Improve fitting ability | Character and pattern recognition | Neural architectures | Data set | Test set

The invention provides a face recognition method comprising the following steps: S1, obtaining face training and test resources from a public data set; S2, preprocessing the images; S3, inputting the processed face data set into a convolutional neural network with a novel network structure built on an improved NVM module, to obtain a trained lightweight convolutional neural network; S4, inputting the face images of the test set into the trained lightweight model and checking whether it classifies the face data set accurately and effectively.

Owner:SHANGHAI APPLIED TECHNOLOGIES COLLEGE

Internet encrypted traffic interaction feature extraction method based on graph structure

Active | CN112217834A | Reduce the intra-class distance | Increase the distance between classes | Character and pattern recognition | Neural architectures | Data pack | Feature extraction

The invention discloses a graph-structure-based method for extracting interaction features from Internet encrypted traffic. It belongs to the technical field of encrypted network traffic classification and is applied to fine-grained classification of TLS-encrypted network traffic. Graph-structured interaction features are extracted from the original packet sequence; they include the sequence, direction, length and burst information of the data packets, among others. Quantitative calculation shows that, compared with a packet length sequence, the graph structure features markedly reduce the intra-class distance and increase the inter-class distance. The method yields encrypted traffic features with richer dimensions and higher discrimination, which are then combined with deep neural networks such as a graph neural network for fine-grained classification and identification of encrypted traffic. Extensive experiments show that, compared with existing methods, combining the graph structure features with a graph neural network achieves higher accuracy and a lower false alarm rate.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
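
Turning a packet sequence into a graph that carries order, direction, length and burst information can be sketched as follows. The encoding (signed lengths), the definition of a burst as a run of same-direction packets, and the edge rule are all assumptions; the abstract does not give the patent's actual node and edge definitions.

```python
from typing import List, Tuple

Node = Tuple[int, int, int]  # (packet index, direction +1/-1, packet length)


def build_interaction_graph(packets: List[int]):
    """Build a simple interaction graph from a packet sequence (hypothetical sketch).

    `packets` holds signed, non-zero lengths: positive for client-to-server,
    negative for server-to-client. A burst is a maximal run of packets in the
    same direction. Edges link consecutive packets inside a burst and the first
    packets of adjacent bursts.
    """
    nodes: List[Node] = [(i, 1 if p > 0 else -1, abs(p)) for i, p in enumerate(packets)]
    bursts: List[List[int]] = []
    for i, (_, direction, _) in enumerate(nodes):
        if bursts and nodes[bursts[-1][-1]][1] == direction:
            bursts[-1].append(i)  # same direction: extend the current burst
        else:
            bursts.append([i])  # direction changed: start a new burst
    edges: List[Tuple[int, int]] = []
    for burst in bursts:
        edges.extend(zip(burst, burst[1:]))  # intra-burst sequence edges
    for prev, nxt in zip(bursts, bursts[1:]):
        edges.append((prev[0], nxt[0]))  # inter-burst interaction edges
    return nodes, bursts, edges
```

The node tuples and edge list are in a form that could be converted into the input format of a graph neural network library; that step is not shown.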

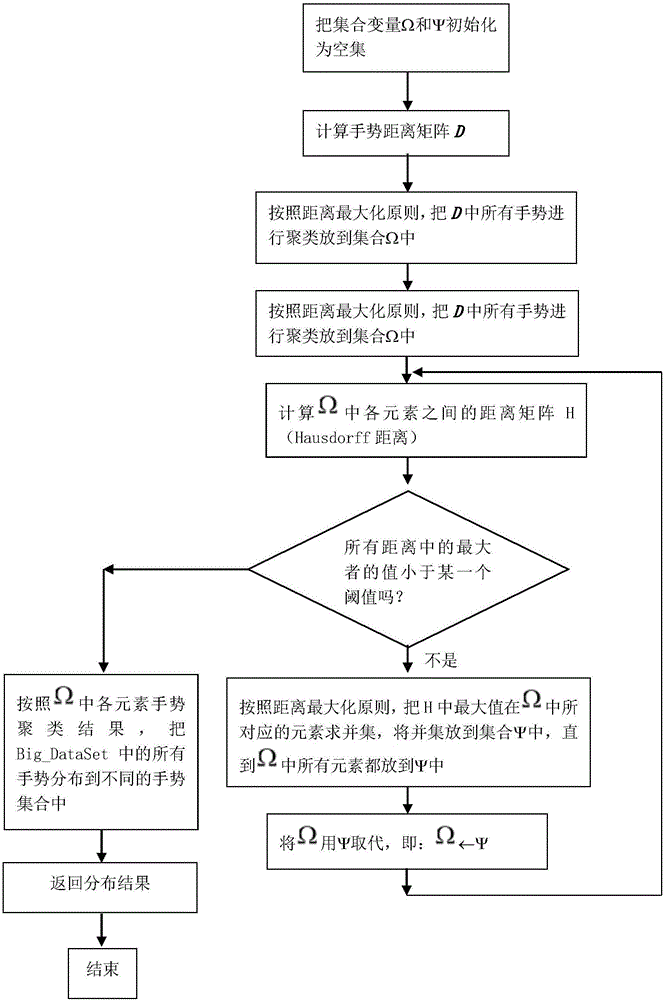

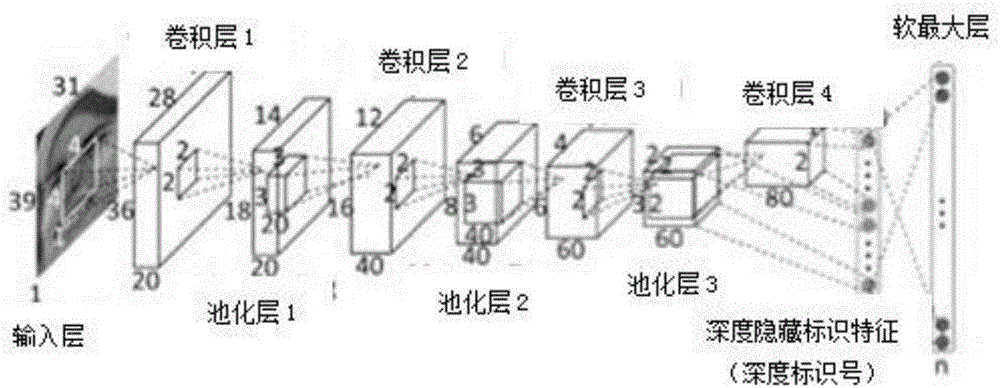

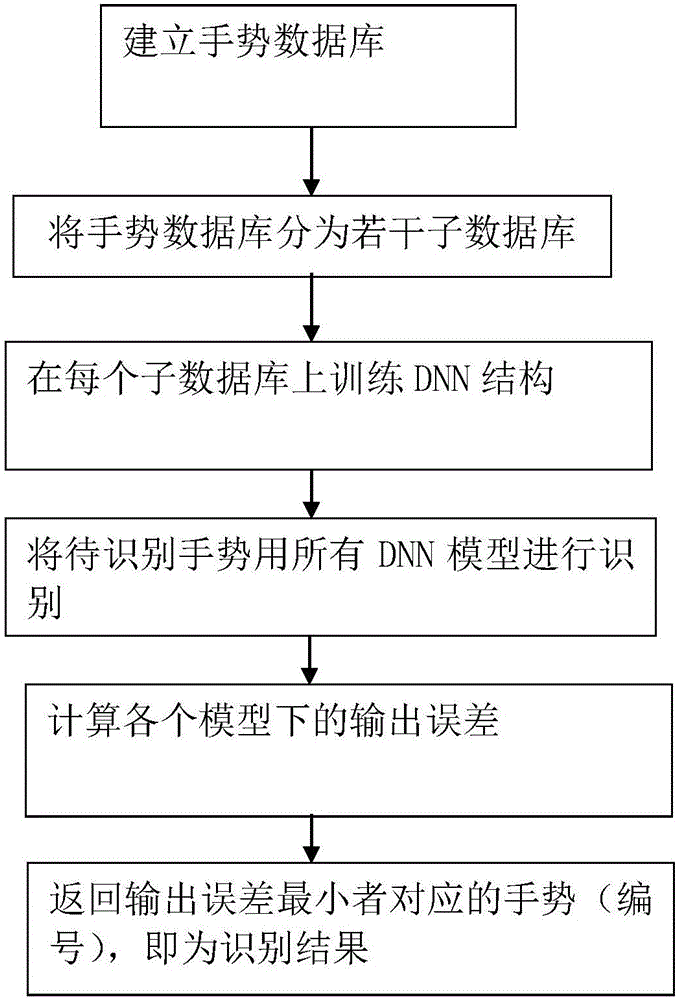

DNN group gesture identification method based on optimized gesture database distribution

Inactive | CN106529475A | Class spacing is large | Reduce the intra-class distance | Character and pattern recognition | Neural learning methods | Data set | Structure of Management Information

The invention provides a DNN group gesture identification method based on optimized gesture database distribution, in the computing field. The method comprises the steps of: (1) acquiring gestures to form a gesture data set; (2) re-classifying the gesture set using optimized gesture database distribution to obtain a plurality of sub-databases; (3) training a DNN model on each sub-database to obtain the DNN structures; (4) acquiring the gesture to be identified with a Kinect device and segmenting it with background subtraction to separate the hand from the background; (5) passing the gesture to be identified to each DNN structure and calculating the output error of each DNN's identification result with the formula E = (anticipated output) - (network response); and (6) returning the output result with the smallest output error E as the identified gesture.

Owner:UNIV OF JINAN
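
Steps (5) and (6) can be sketched as below. The abstract does not say how the vector difference E is reduced to a single number or what the anticipated output is, so this sketch assumes the anticipated output is the one-hot vector of the class each network predicts and measures E as an L2 norm. Model callables and labels are hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# A trained sub-database DNN: maps a feature vector to per-class responses in [0, 1].
Model = Callable[[Sequence[float]], List[float]]


def argmax(values: List[float]) -> int:
    return max(range(len(values)), key=values.__getitem__)


def output_error(response: List[float]) -> float:
    """E = anticipated output - network response, measured as an L2 norm (assumption)."""
    best = argmax(response)
    anticipated = [1.0 if k == best else 0.0 for k in range(len(response))]
    return sum((a - r) ** 2 for a, r in zip(anticipated, response)) ** 0.5


def identify_gesture(x: Sequence[float],
                     models: List[Tuple[List[str], Model]]) -> Optional[str]:
    """Run every sub-database DNN and return the label from the one with the smallest E."""
    best_label: Optional[str] = None
    best_err = float("inf")
    for labels, model in models:
        response = model(x)
        err = output_error(response)
        if err < best_err:
            best_err, best_label = err, labels[argmax(response)]
    return best_label
```

Because each sub-database has its own label set, every model is paired with the labels it was trained on.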

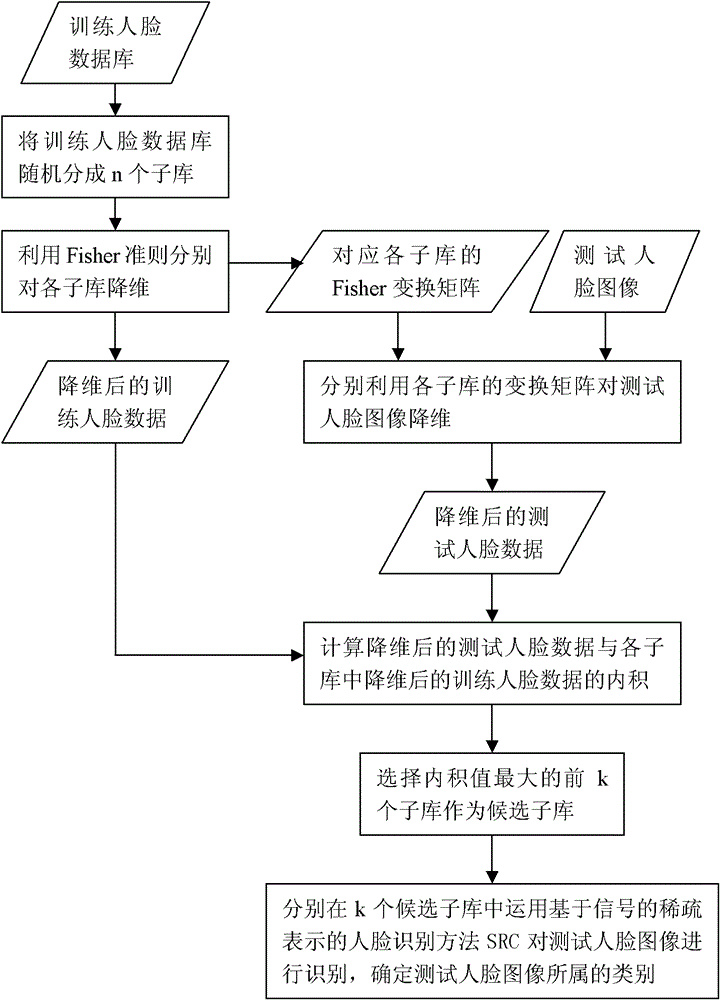

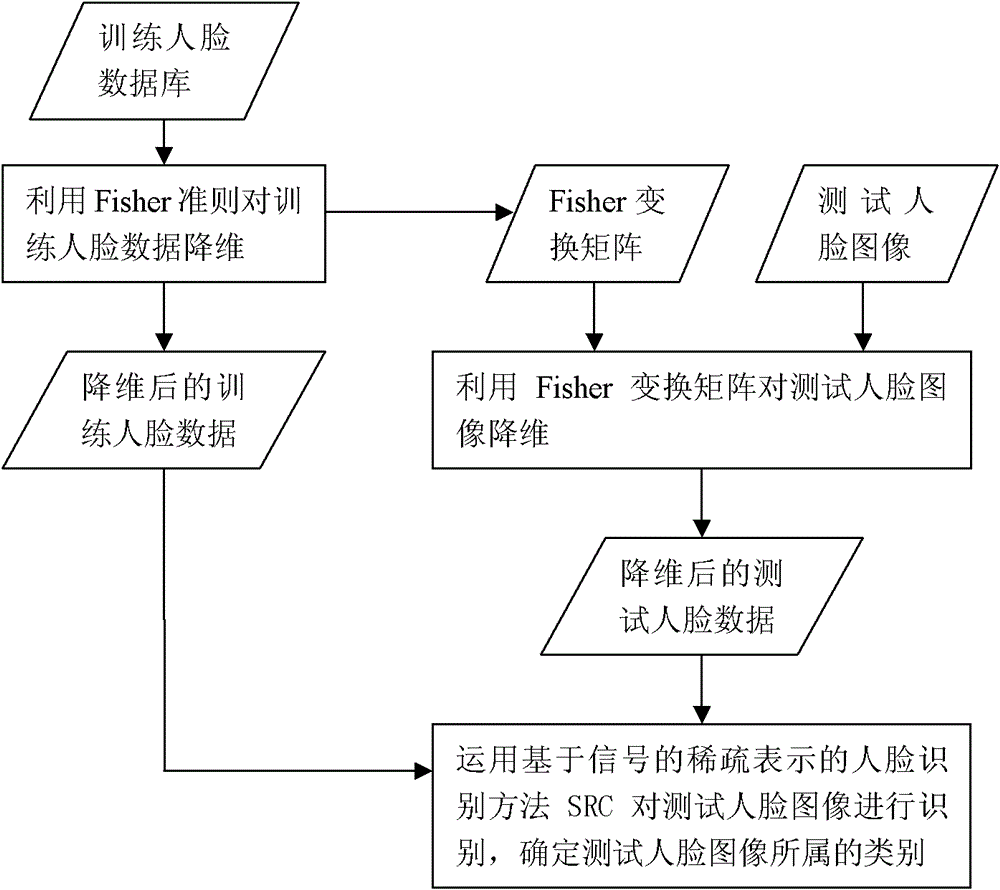

Sparse characteristic face recognition method based on multilevel classification

Inactive | CN101976360B | Efficient extraction | Reduce computational complexity | Character and pattern recognition | State of art | Computation complexity

The invention discloses a sparse characteristic face recognition method based on multilevel classification, which mainly addresses the shortcoming that traditional face recognition methods cannot be applied effectively to multi-class face recognition. The implementation comprises the following steps: (1) randomly dividing the training face database into n sub-bases, reducing the dimension of each sub-base separately, and retaining the dimension-reduced training face data and the transformation matrix of each sub-base; (2) inputting a test face image, reducing its dimension with the transformation matrix of each sub-base, and retaining the dimension-reduced test face data; (3) computing inner products between the dimension-reduced test face data and the training face data in each sub-base, taking the top k sub-bases with the largest inner products as candidate sub-bases, and thereby narrowing the search range to those k sub-bases; (4) recognizing the face within each of the k sub-bases to determine the class of the test face image. Compared with the prior art, the invention extracts face features effectively, reduces computational complexity, and is suited to multi-class face recognition.

Owner:XIDIAN UNIV
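
Steps (2) and (3), projecting the test face with each sub-base's transformation matrix and keeping the top k sub-bases by inner product, can be sketched as follows. The abstract does not say how inner products with many training faces are combined into one score per sub-base; taking the maximum is an assumption, and all names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]  # each row produces one reduced dimension


def project(transform: Matrix, x: Sequence[float]) -> Vector:
    """Dimension reduction y = W x with a sub-base's transformation matrix."""
    return [sum(w * v for w, v in zip(row, x)) for row in transform]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


def candidate_subbases(test_face: Sequence[float],
                       subbases: List[Tuple[Matrix, List[Vector]]], k: int) -> List[int]:
    """Return indices of the k sub-bases whose training faces give the largest inner
    product with the projected test face (max over faces is an assumption)."""
    scores = []
    for transform, train_faces in subbases:
        y = project(transform, test_face)
        scores.append(max(dot(y, t) for t in train_faces))
    # Python's sort is stable, so ties keep their original sub-base order.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]
```

Full recognition in step (4) then only searches the returned sub-bases, which is where the reduction in computational cost comes from.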

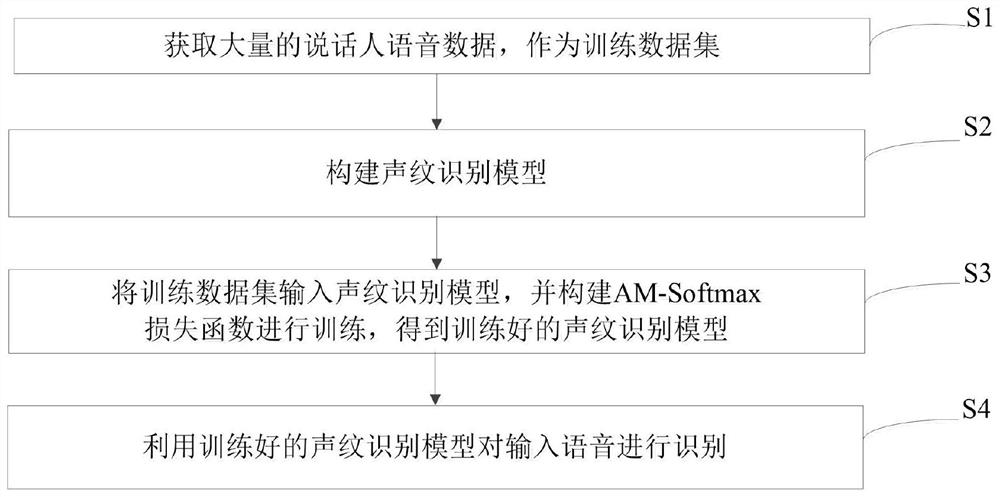

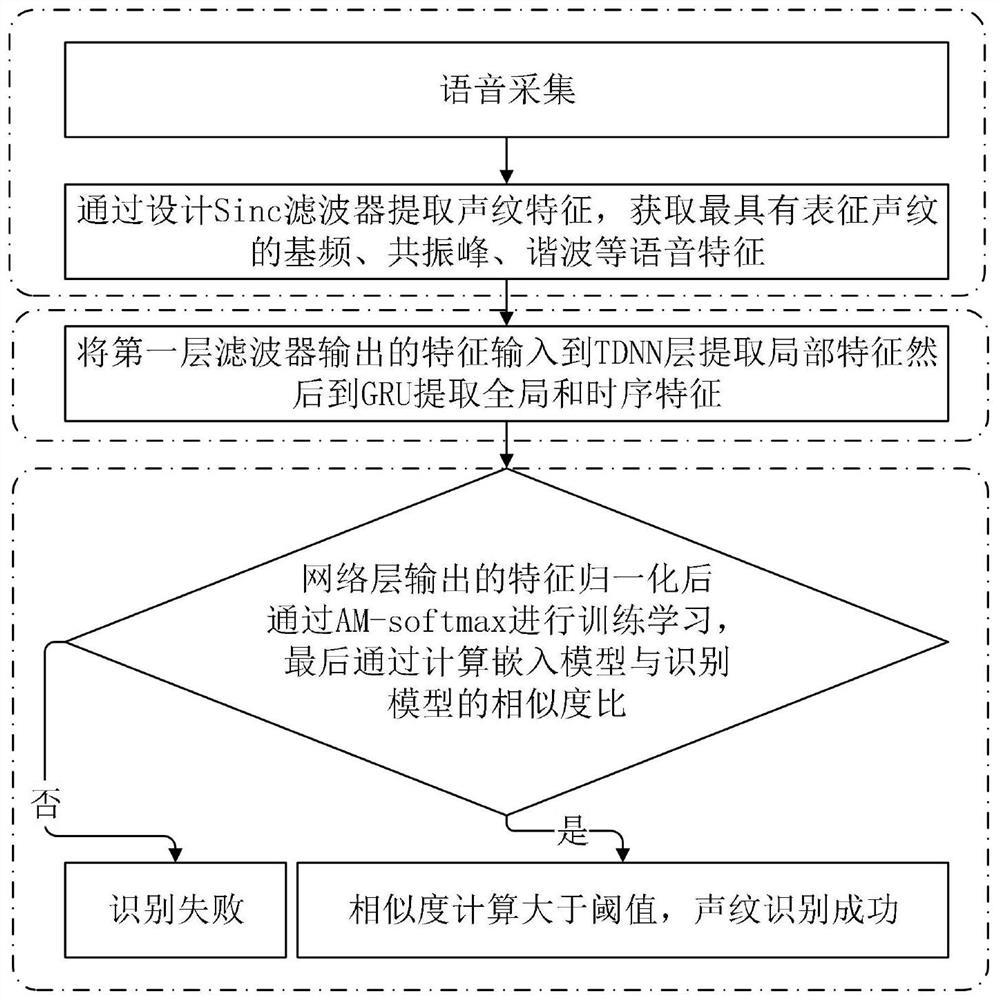

End-to-end text-independent voiceprint recognition method and system

The invention provides an end-to-end text-independent voiceprint recognition method and system. The method first captures important narrow-band speaker characteristics of the original voice sample with a filter designed on the Sinc function. A time delay neural network (TDNN) and a gated recurrent unit (GRU) are then combined into a hybrid neural network that generates complementary speaker information at different levels; a multi-level pooling strategy, with an attention mechanism added to the pooling layer, extracts from the TDNN and GRU layers the frame-level and utterance-level feature information most representative of the speaker; and regularization is applied to the speaker vector extraction layer. Training uses the AM-softmax loss function. Finally, end-to-end text-independent voiceprint recognition is performed by computing the similarity between the embedded model and the recognition model. This improves the accuracy and applicability of end-to-end text-independent voiceprint recognition.

Owner:WUHAN UNIV OF TECH
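
The AM-softmax (additive margin softmax) loss mentioned above can be sketched for a single embedding as follows. The scale s = 30 and margin m = 0.35 are illustrative values, not figures from the abstract, and the pure-Python form is for readability rather than training.

```python
import math
from typing import List, Sequence


def _unit(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def am_softmax_loss(embedding: Sequence[float], class_weights: List[Sequence[float]],
                    target: int, s: float = 30.0, m: float = 0.35) -> float:
    """Additive-margin softmax loss for a single utterance embedding.

    L = -log( exp(s*(cos_y - m)) / (exp(s*(cos_y - m)) + sum_{j != y} exp(s*cos_j)) ),
    where cos_j is the cosine between the embedding and class j's weight vector.
    """
    x = _unit(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos = sum(a * b for a, b in zip(x, _unit(w)))
        # The margin m is subtracted only from the target speaker's cosine.
        logits.append(s * (cos - m) if j == target else s * cos)
    # Numerically stable log-sum-exp.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[target]
```

Subtracting m from the target cosine forces the network to separate speakers by at least that margin in angle space, which tightens each speaker's cluster of embeddings.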

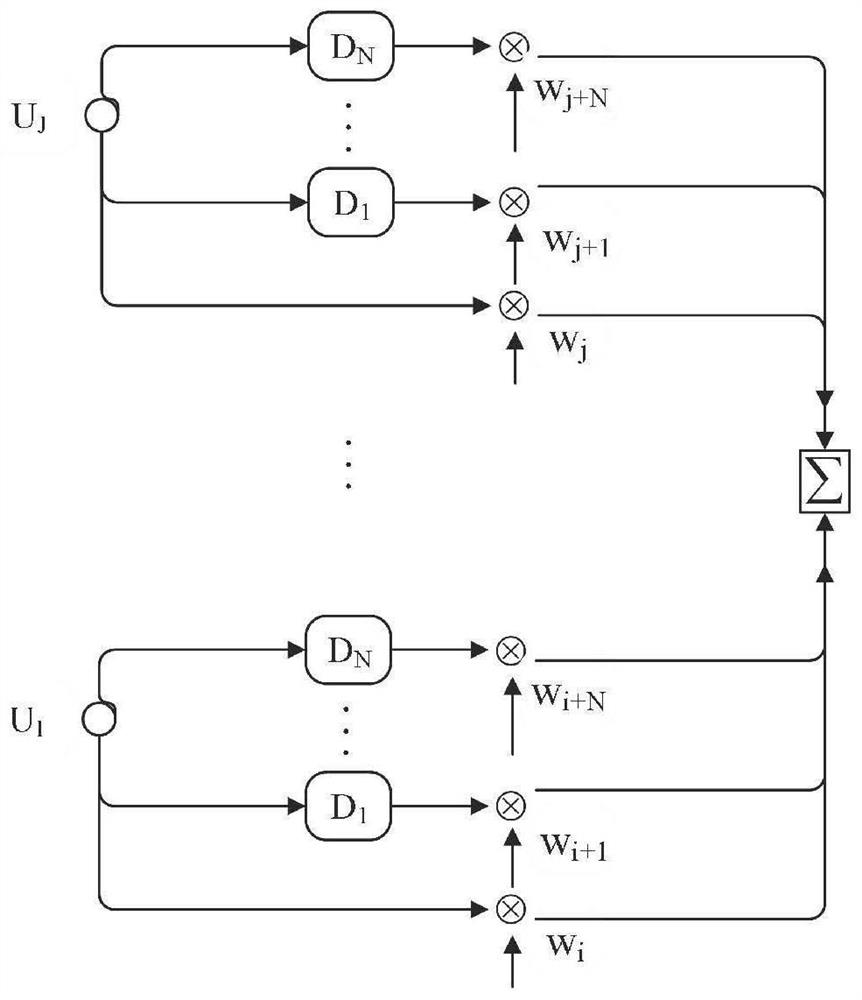

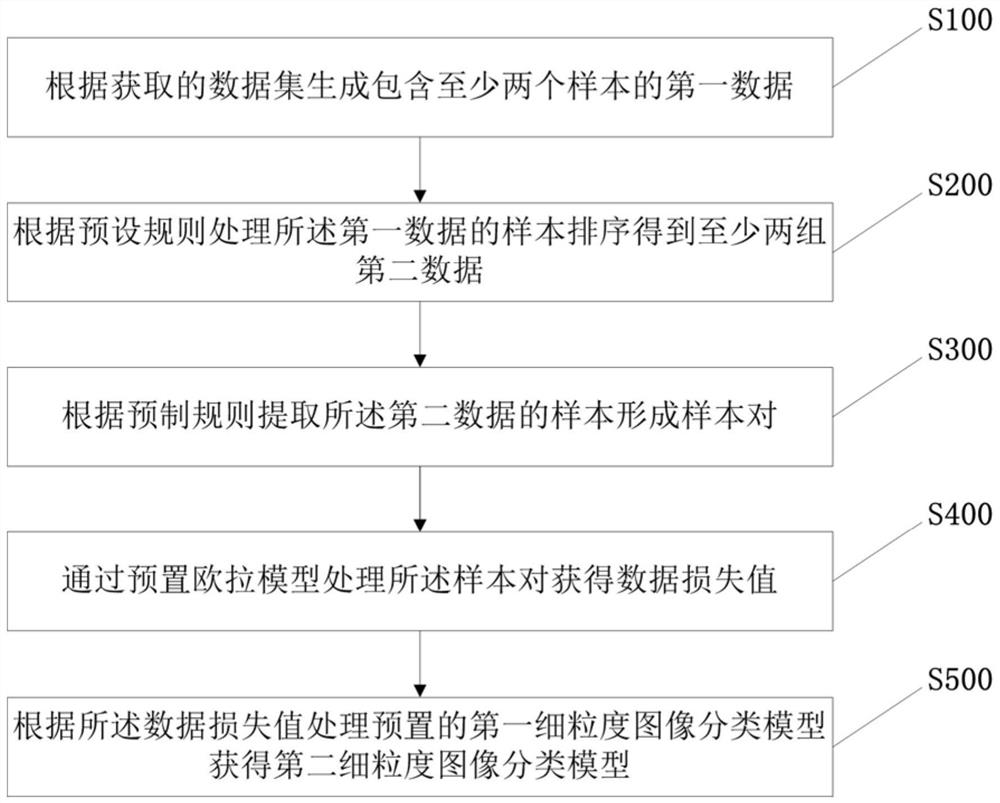

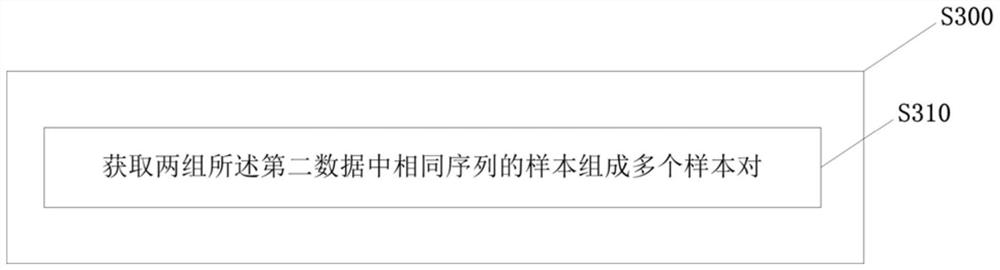

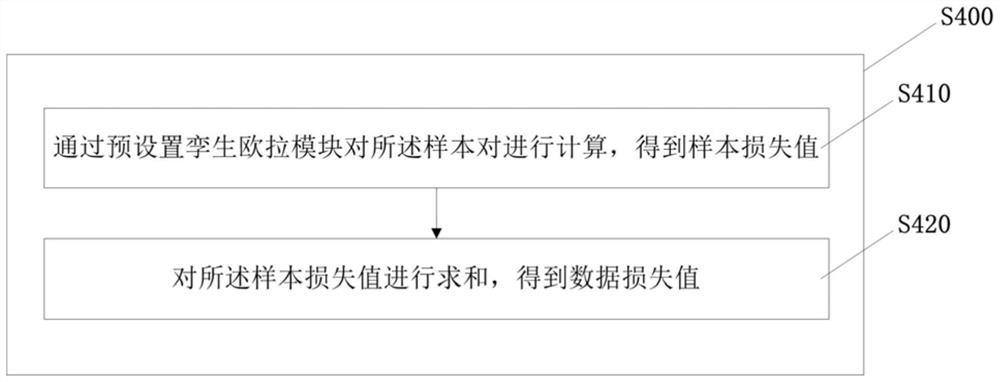

Fine-grained image classification model processing method and device

Pending | CN111931823A | Improve performance | Increase the distance between classes | Character and pattern recognition | Data set | Algorithm

The embodiment of the invention provides a fine-grained image classification model processing method in the technical field of image classification. The method comprises the steps of generating first data containing at least two samples from an obtained data set; sorting the samples of the first data according to a preset rule to obtain at least two groups of second data; drawing samples from the second data according to a preset rule to form sample pairs; processing the sample pairs with a preset Euler model to obtain a data loss value; and processing a preset first fine-grained image classification model according to the data loss value to obtain a second fine-grained image classification model. The invention increases the inter-class distance and reduces the intra-class distance, so the fine-grained image classification model achieves better fine-grained image classification performance.

Owner:PING AN TECH (SHENZHEN) CO LTD
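
Fine-grained classifiers are usually judged by how tightly samples cluster within a class and how far apart the classes sit. One common way to quantify both, shown here as a sketch and not as the patent's own measure, uses class centroids and Euclidean distance; the function name and input layout are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def class_distances(features: Dict[str, List[List[float]]]) -> Tuple[float, float]:
    """Return (mean intra-class distance, mean inter-class distance).

    Intra-class: average Euclidean distance from each sample to its class centroid.
    Inter-class: average Euclidean distance between every pair of class centroids.
    Requires at least two classes, each with at least one sample.
    """
    centroids: Dict[str, List[float]] = {}
    intra: List[float] = []
    for label, vectors in features.items():
        dim = len(vectors[0])
        centroid = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
        centroids[label] = centroid
        intra.extend(math.dist(v, centroid) for v in vectors)
    inter = [math.dist(centroids[a], centroids[b]) for a, b in combinations(centroids, 2)]
    return sum(intra) / len(intra), sum(inter) / len(inter)
```

A lower first value and a higher second value indicate more separable embeddings; `math.dist` requires Python 3.8 or later.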

© 2025 PatSnap. All rights reserved.