62 results for "Increase the distance between classes" patented technology

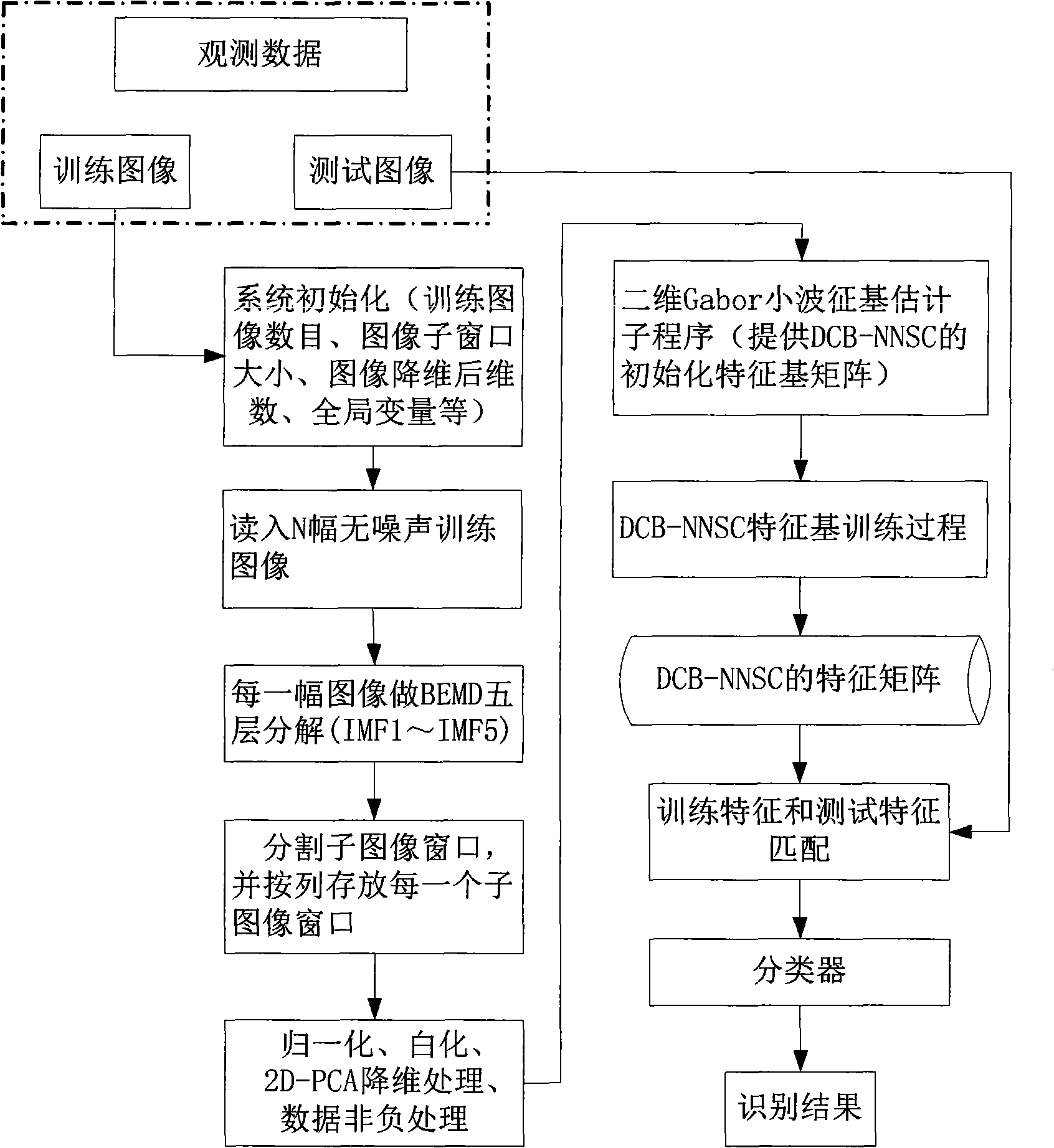

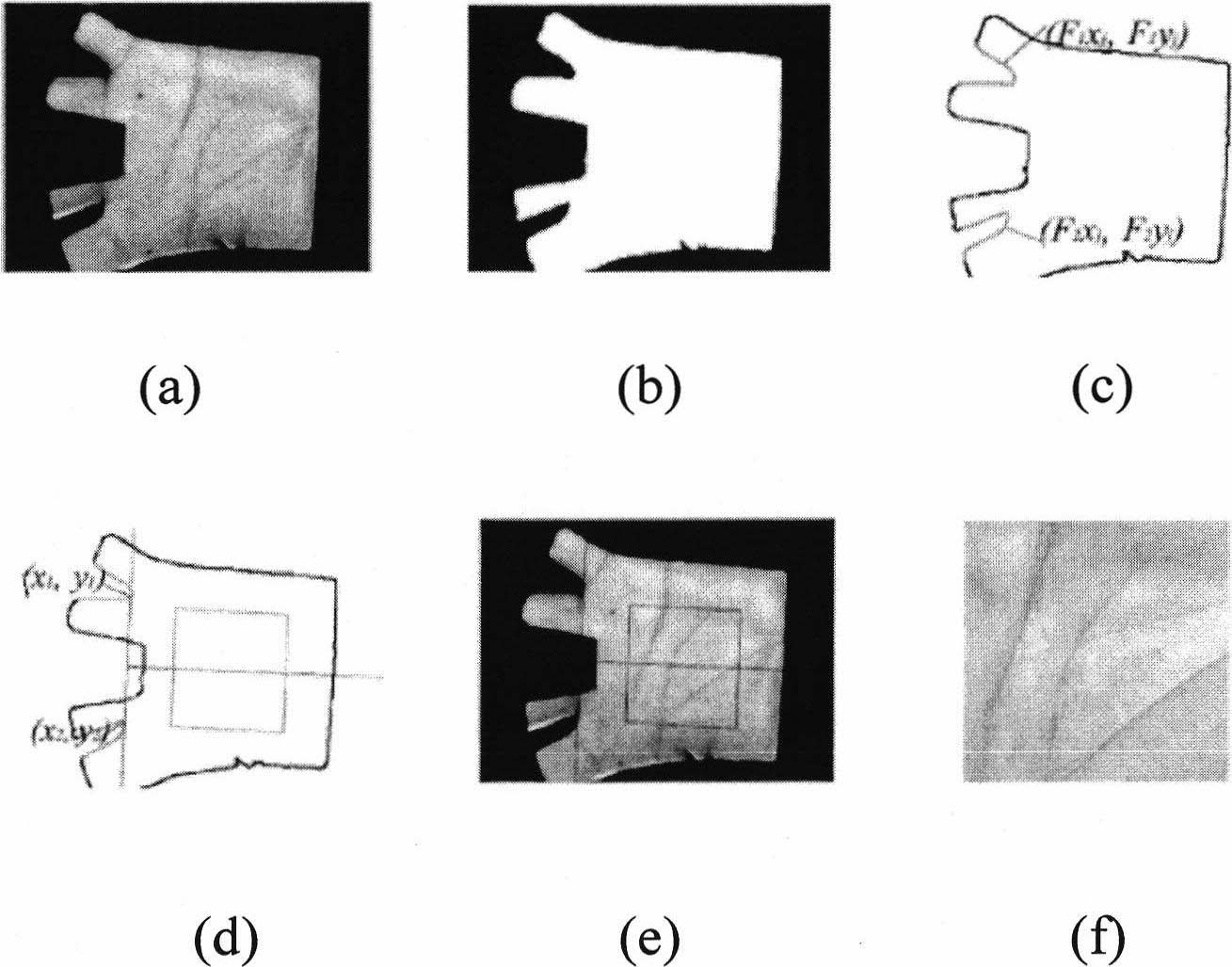

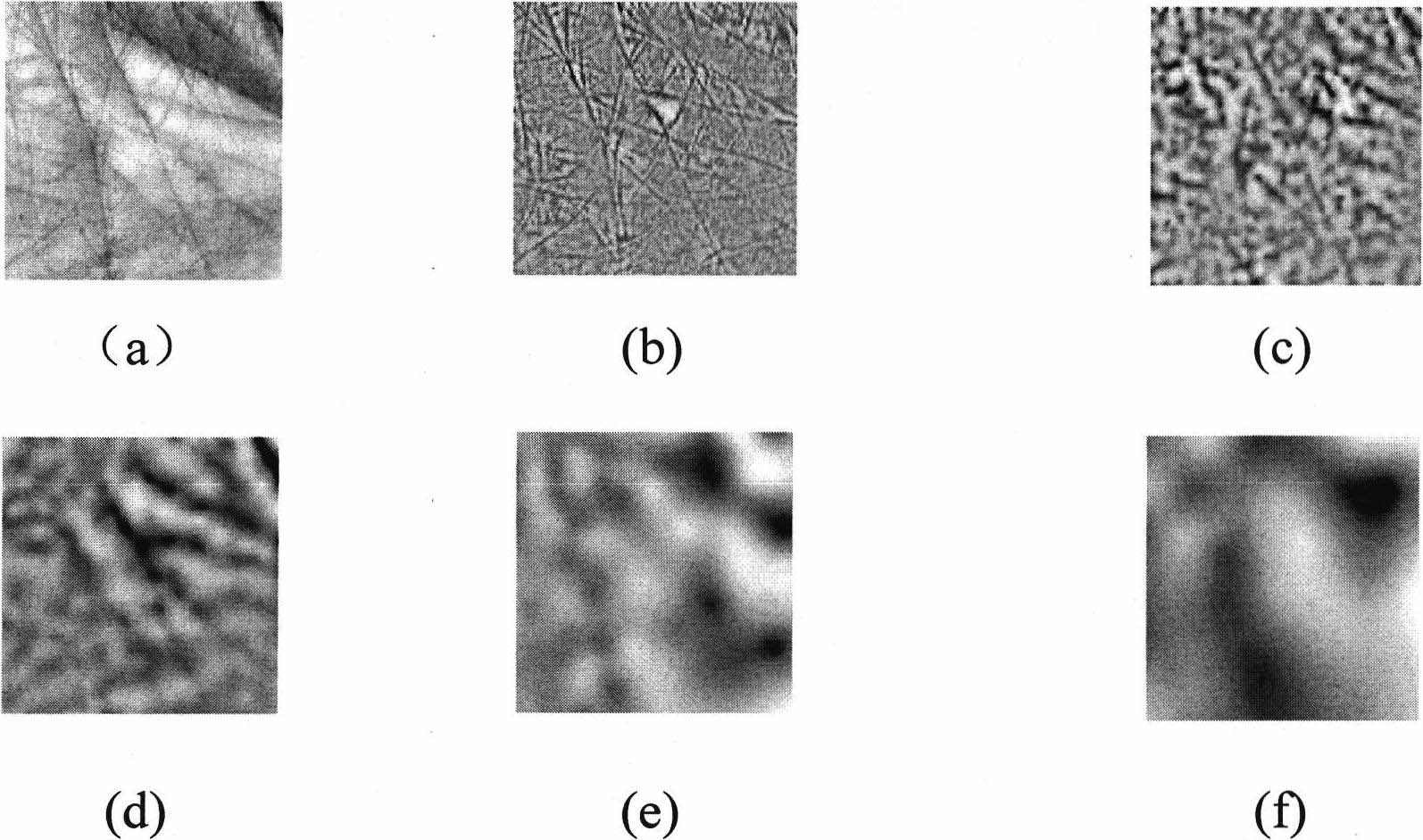

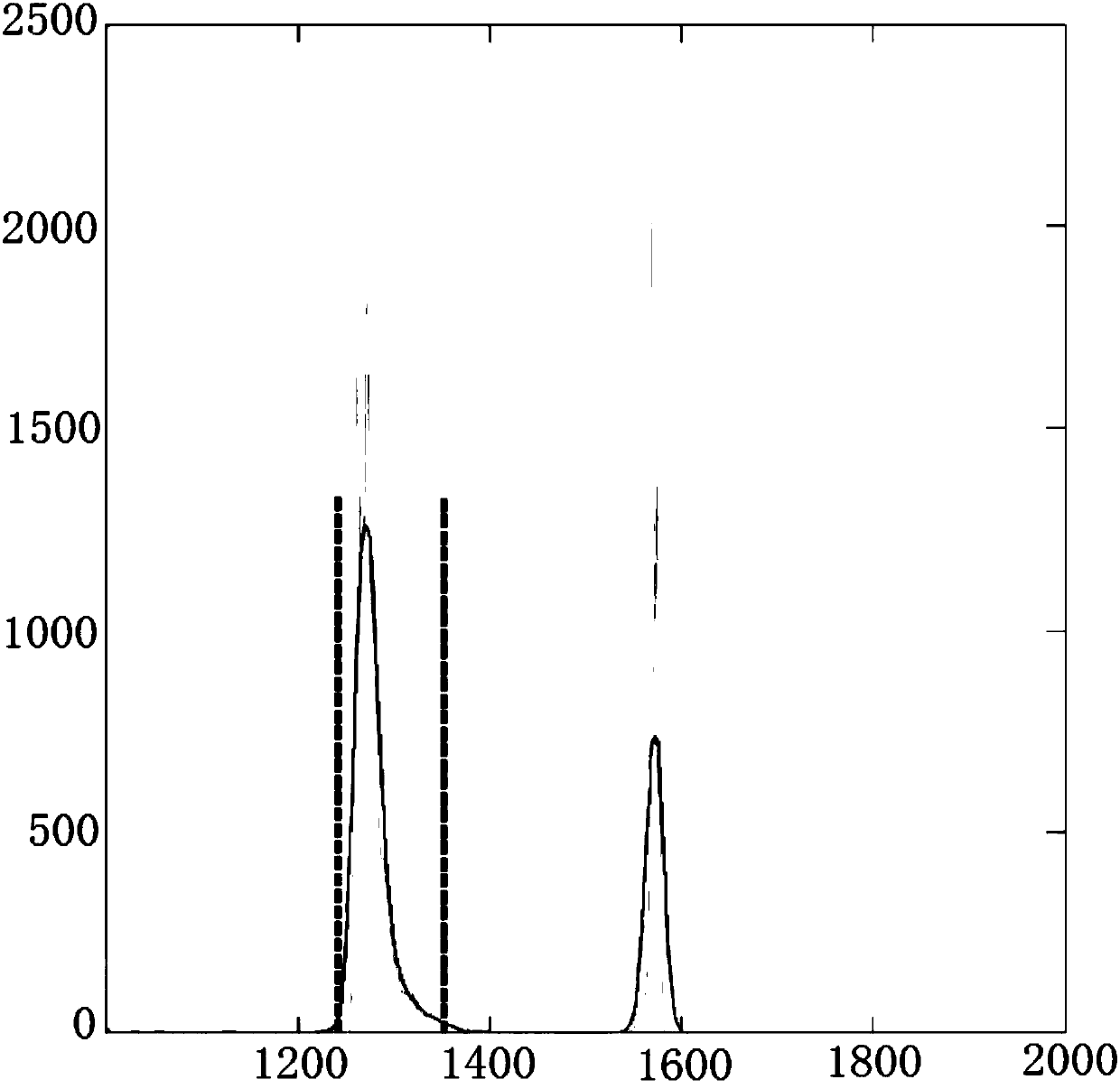

Method for extracting characteristic of natural image based on dispersion-constrained non-negative sparse coding

InactiveCN101866421AAggregation tightEfficient extractionCharacter and pattern recognitionFeature extractionNerve cells

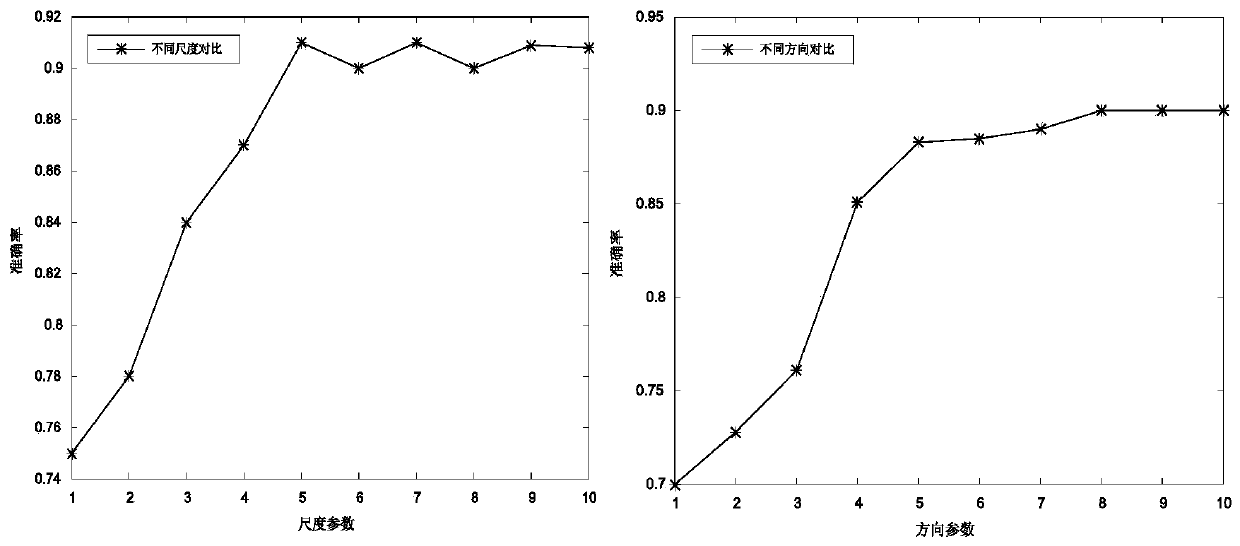

The invention discloses a method for extracting the characteristic of a natural image based on dispersion-constrained non-negative sparse coding, which comprises the following steps of: partitioning an image into blocks, reducing dimensions by means of 2D-PCA, non-negative processing image data, initializing a wavelet characteristic base based on 2D-Gabor, defining the specific value between intra-class dispersion and extra-class dispersion of a sparsity coefficient, training a DCB-NNSC characteristic base, and image identifying based on the DCB-NNSC characteristic base, etc. The method has the advantages of not only being capable of imitating the receptive field characteristic of a V1 region nerve cell of a human eye primary vision system to effectively extract the local characteristic of the image; but also being capable of extracting the characteristic of the image with clearer directionality and edge characteristic compared with a standard non-negative sparse coding arithmetic; leading the intra-class data of the characteristic coefficient to be more closely polymerized together to increase an extra-class distance as much as possible with the least constraint of specific valuebetween the intra-class dispersion and the extra-class dispersion of the sparsity coefficient; and being capable of improving the identification performance in the image identification.

Owner:SUZHOU VOCATIONAL UNIV
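The dispersion ratio named in the CN101866421A abstract can be illustrated with a small sketch. The abstract does not give the exact formula, so the definitions below (sum of squared distances to the class mean for intra-class dispersion, size-weighted squared distance of class means to the overall mean for extra-class dispersion) are assumptions, and `dispersion_ratio` is a hypothetical name.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def dispersion_ratio(coeffs, labels):
    """Intra-class dispersion divided by extra-class dispersion (assumed form).

    Needs at least two classes with distinct means; otherwise the
    denominator is zero.
    """
    dim = len(coeffs[0])
    overall = [sum(c[d] for c in coeffs) / len(coeffs) for d in range(dim)]
    groups = {}
    for c, y in zip(coeffs, labels):
        groups.setdefault(y, []).append(c)
    intra = extra = 0.0
    for members in groups.values():
        mean = [sum(m[d] for m in members) / len(members) for d in range(dim)]
        intra += sum(sq_dist(m, mean) for m in members)
        extra += len(members) * sq_dist(mean, overall)
    return intra / extra


labels = [0, 0, 1, 1]
tight = [[0.0, 0.0], [0.0, 0.2], [4.0, 4.0], [4.0, 4.2]]
loose = [[0.0, 0.0], [0.0, 2.0], [4.0, 4.0], [4.0, 6.0]]
# Tighter clusters with the same class means give a smaller ratio.
print(dispersion_ratio(tight, labels) < dispersion_ratio(loose, labels))
```

A smaller ratio corresponds to the behaviour the abstract describes: coefficients of one class sit close together while the classes stay far apart.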

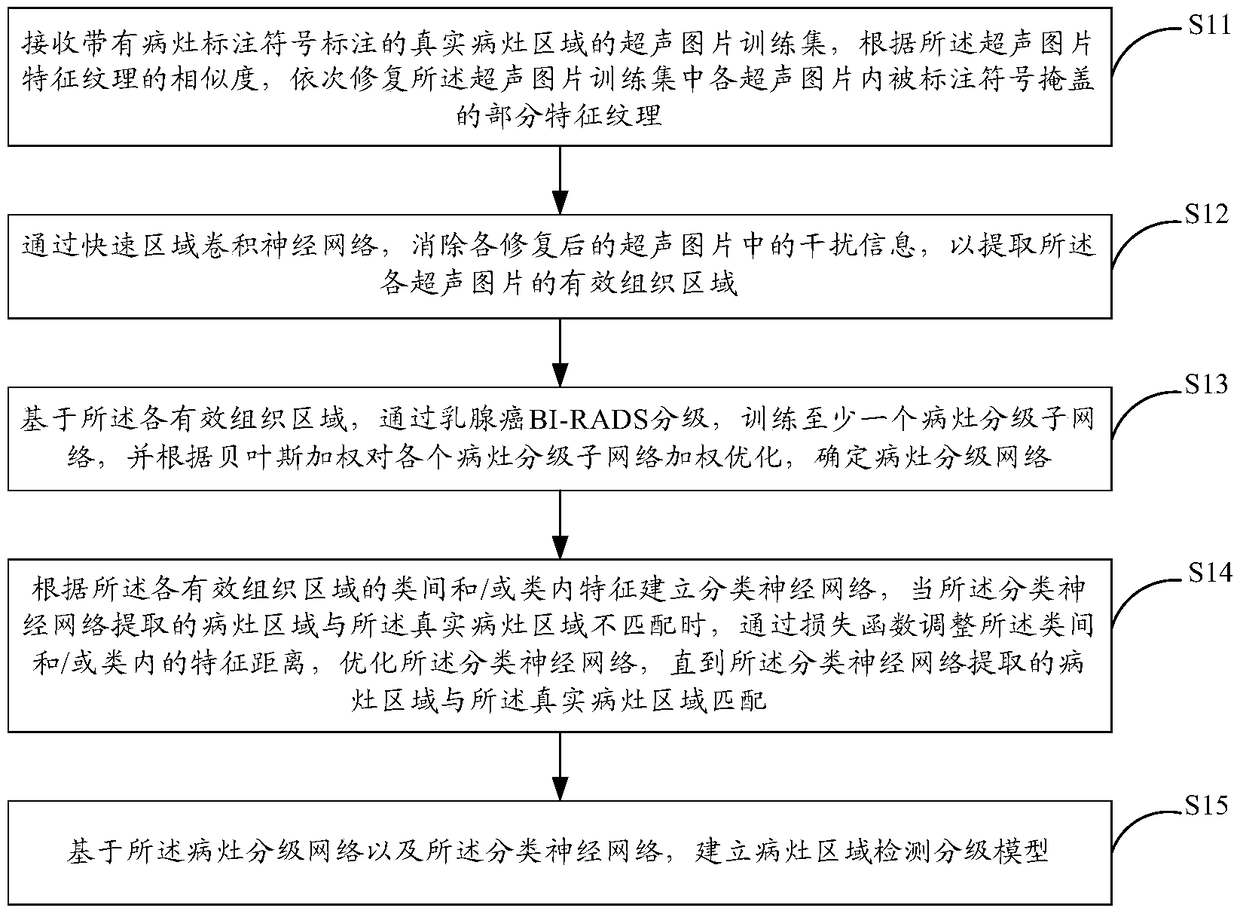

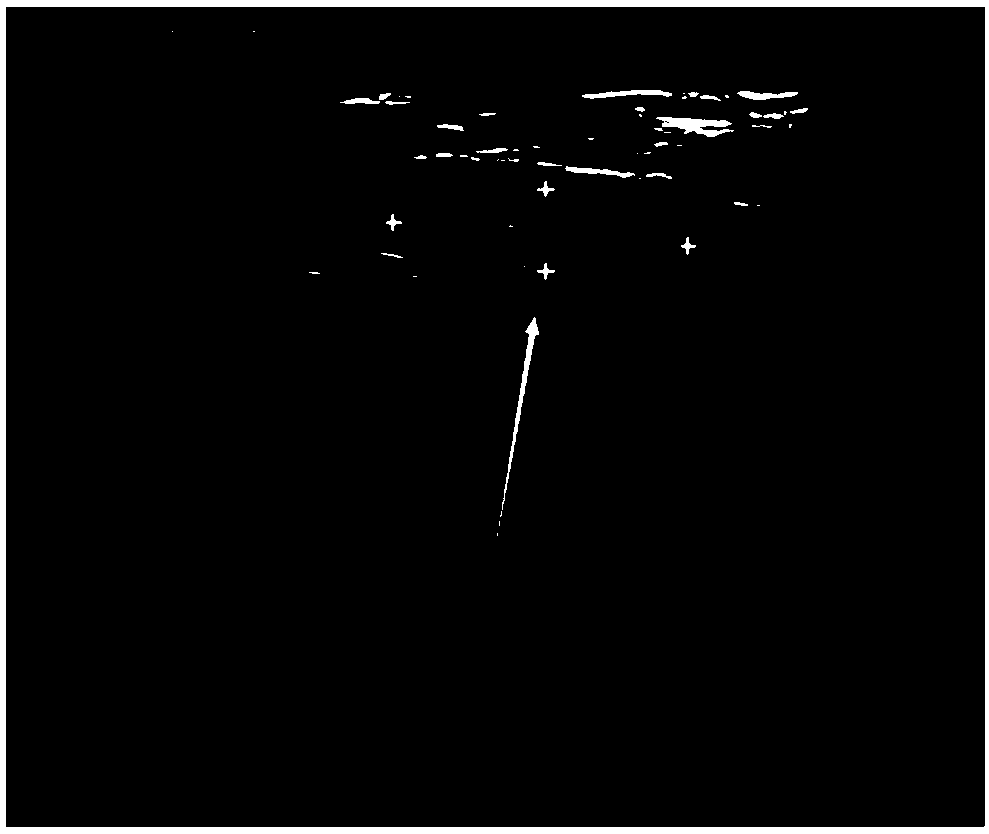

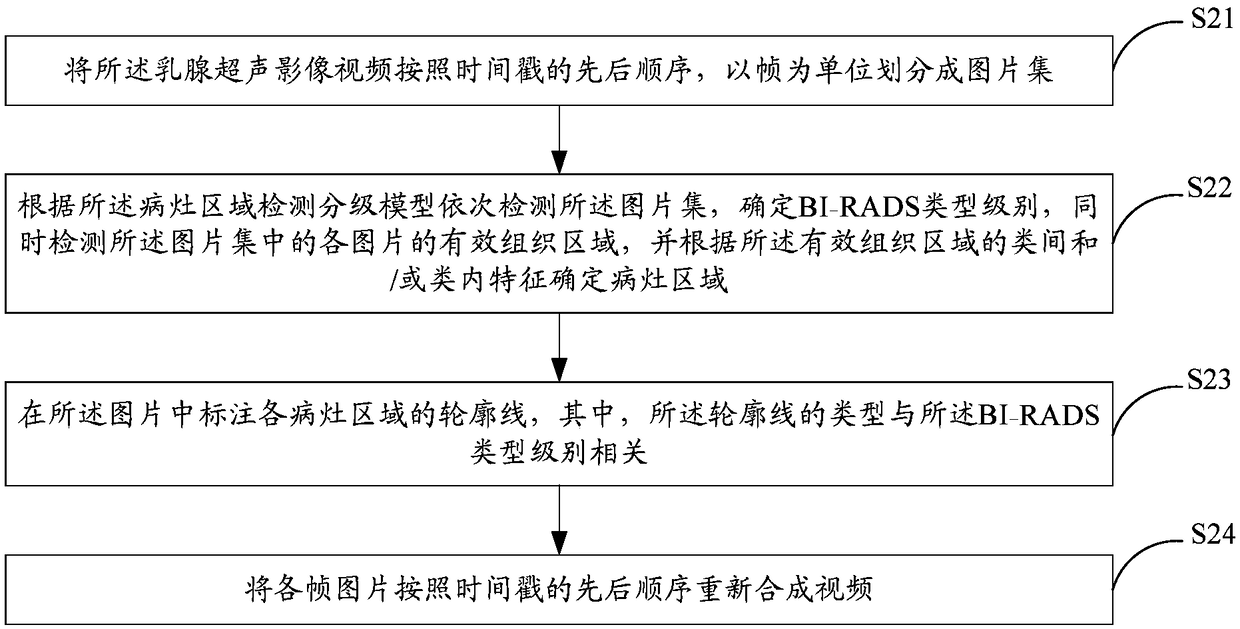

Real-time labeling method and system for breast ultrasonic focus areas based on artificial intelligence

Active | CN108665456A | Easy to identify | Improve work efficiency | Image enhancement | Image analysis | Pattern recognition | Timestamp

The embodiment of the invention provides a real-time labeling method for breast ultrasonic focus areas based on artificial intelligence. The method includes the following steps: a breast ultrasonic video is split frame by frame into a picture set ordered by timestamp; the pictures are detected in sequence by a focus-area detection classification model, which determines the BI-RADS type level and, at the same time, the focus areas; the contour lines of all focus areas are labeled in the pictures, with the type of each contour line tied to the BI-RADS type level; and all frame pictures are re-synthesized into a video in timestamp order. The embodiment also provides a method for establishing the focus-area detection classification model used in the labeling method, as well as a real-time labeling system for breast ultrasonic focus areas based on artificial intelligence. With the labeling method and system and the model-establishing method of the embodiment, focus recognition capability is enhanced, the misdiagnosis rate is reduced, and doctors are assisted in giving more accurate suggestions.

Owner: 广州尚医网信息技术有限公司

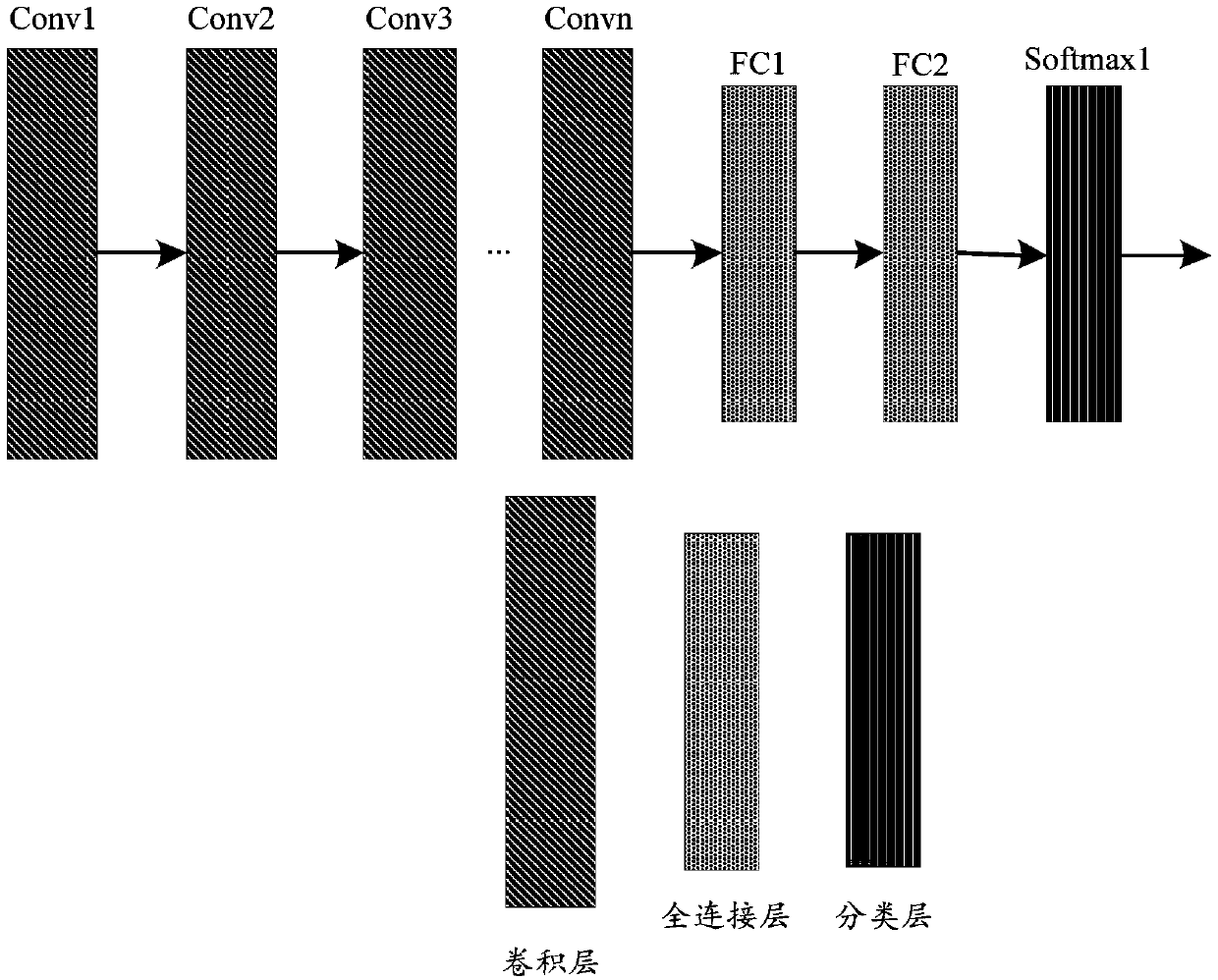

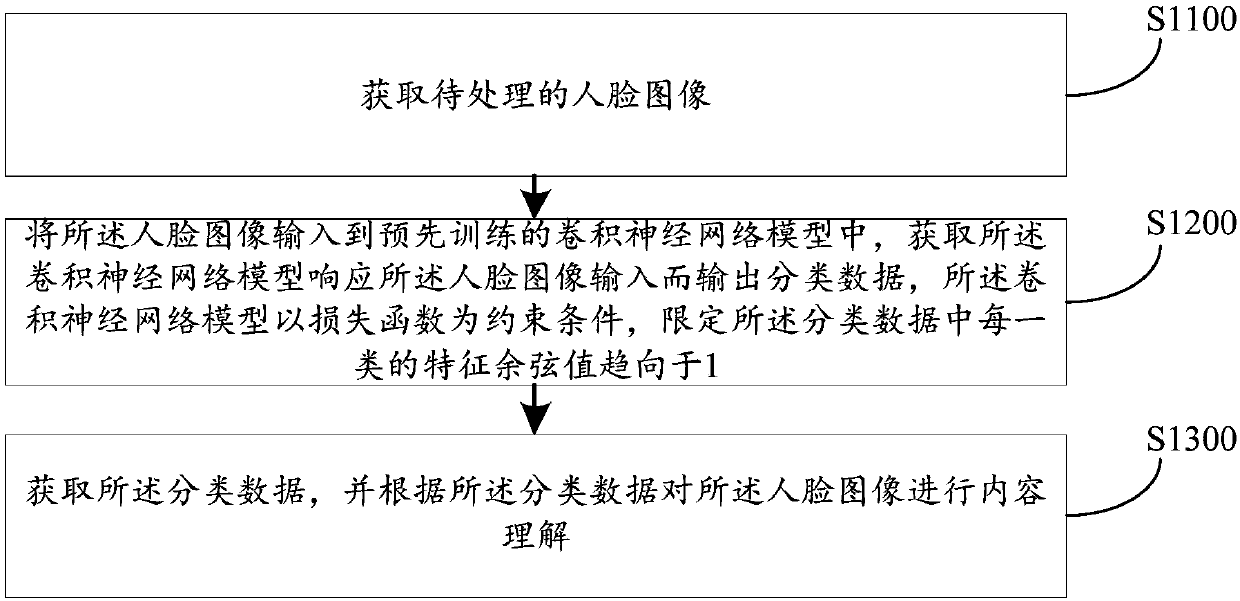

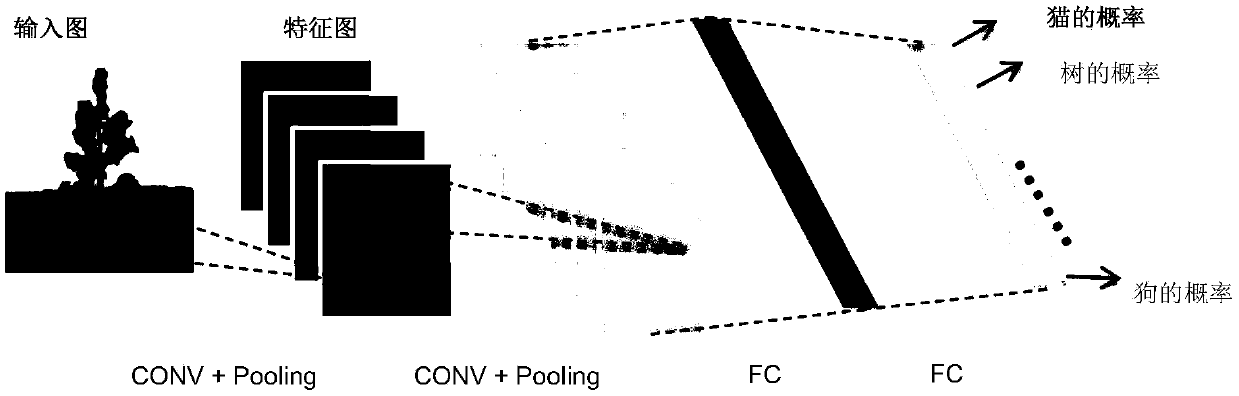

Face image processing method and device and server

Active | CN107818314A | Increase the distance between classes | Increase the difference | Character and pattern recognition | Weight value | Network model

The embodiment of the invention discloses a face image processing method and device and a server. The method comprises the following steps: obtaining a face image to be processed; inputting the face image into a pre-trained convolutional neural network model and obtaining the classification data that the model outputs in response, wherein the model takes a loss function as a constraint condition so that the feature cosine value of each class of the classification data approaches one; and performing content understanding of the face image according to the classification data. Driving the cosine value between a feature vector and a weight value of the loss function toward one makes the intra-class distance converge. This convergence increases the inter-class distance of the classification data, which makes the classes more clearly distinguishable. The resulting increase in data robustness also improves the accuracy of content understanding.

Owner: BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
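As a rough illustration of the cosine constraint in the CN107818314A abstract, the sketch below penalizes the gap between one and the cosine of a feature vector with the weight vector of its own class. The form `1 - cos` and the name `cosine_pull_loss` are assumptions for illustration; the abstract does not state the patent's actual loss.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_pull_loss(feature, class_weights, label):
    """Assumed loss: zero when the feature is aligned with its class weight."""
    return 1.0 - cosine(feature, class_weights[label])


weights = [[1.0, 0.0], [0.0, 1.0]]  # one hypothetical weight vector per class
aligned = cosine_pull_loss([2.0, 0.0], weights, 0)
diagonal = cosine_pull_loss([1.0, 1.0], weights, 0)
print(aligned < diagonal)
```

Minimizing such a term pulls every feature of a class toward the same direction, which is one way to read the "convergence of intra-class distance" in the abstract.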

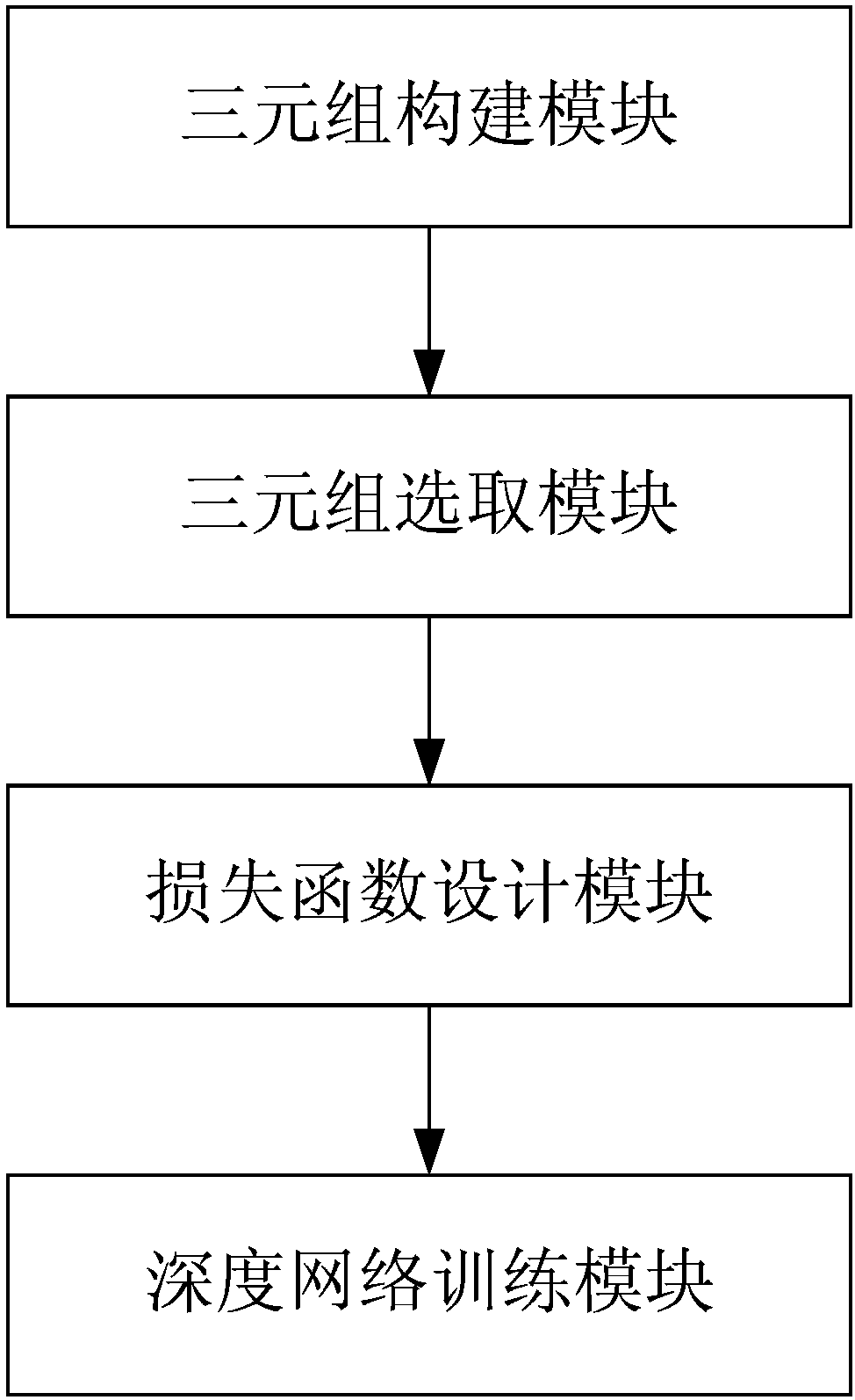

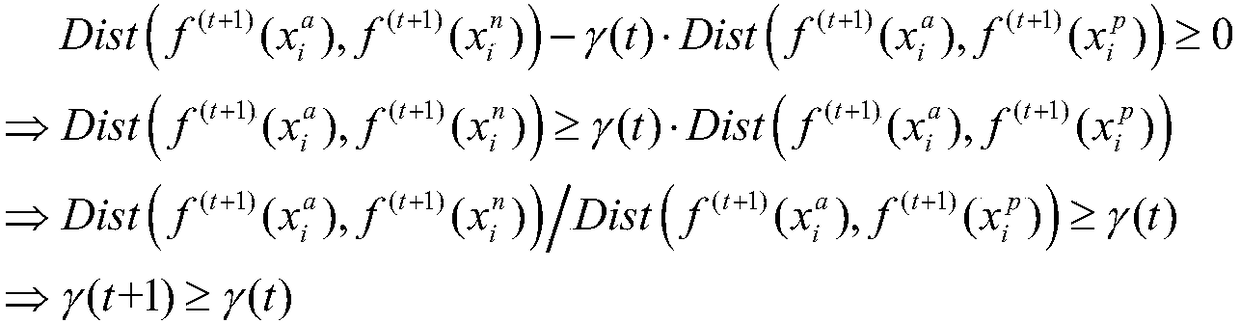

Trace ratio criterion-based triple loss function design method

Active | CN108399428A | To achieve the purpose of learning | Increase the distance between classes | Character and pattern recognition | Neural architectures | Data set | Selection criterion

The invention discloses a trace ratio criterion-based triple loss function design method. Building on a survey of image feature extraction, the triple loss function and the trace ratio criterion, the method uses the trace ratio criterion both as the triple selection criterion and as the loss calculation method. The method mainly comprises the following steps: A, triple sample establishment: establishing triple samples from the samples in a data set; B, triple sample selection: screening the established triple samples with an effective selection mechanism that increases the training speed while avoiding precision loss; C, loss function design: for the triple samples obtained in step B, calculating the distances between the current sample in each triple and its positive and negative samples, and designing the loss function that measures the error between the model prediction and the real result; and D, deep network training: propagating the model error to a deep convolutional neural network, updating the network parameters, and iteratively training the model until convergence.

Owner: HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
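Steps B and C of the CN108399428A abstract can be pictured with the standard margin-based triplet loss. This is a minimal sketch of that conventional loss, not the patent's trace ratio formulation, which the abstract does not spell out; the screening rule in `select_active` (keep triplets with non-zero loss) is likewise a stand-in assumption.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge on (anchor-positive distance) - (anchor-negative distance) + margin."""
    d_pos = sq_dist(anchor, positive)
    d_neg = sq_dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


def select_active(triplets, margin=1.0):
    """Keep only triplets that still produce a training signal."""
    return [t for t in triplets if triplet_loss(*t, margin=margin) > 0.0]


easy = ([0.0, 0.0], [0.0, 1.0], [3.0, 0.0])  # negative already far away
hard = ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0])  # negative as close as the positive
print(triplet_loss(*easy), triplet_loss(*hard))
print(select_active([easy, hard]) == [hard])
```

Dropping triplets whose loss is already zero is one common way to speed up training without changing the gradient, which matches the goal stated for step B.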

Multi-modal face recognition method performing through face depth prediction

Active | CN108197587A | Increase the distance between classes | Rich identity information | Character and pattern recognition | Test phase | Network model

The invention discloses a multi-modal face recognition method based on face depth prediction. The method comprises the steps of data extraction, in which face segmentation and scaling of the Depth modal data are performed, cascade network model training, face recognition network training, model merging, and a testing phase. With this method, the face Depth image predicted by the network model is close to the real face Depth image, and the cascaded classification network also increases the between-class distance of the predicted Depth images, so that the predicted face Depth image carries richer identification information. The method predicts the face Depth modality from the face RGB modality to diversify the face modal data, so multi-modal face recognition can be achieved without changing existing RGB camera hardware. By combining the RGB modality and the predicted Depth modality, multi-modal face recognition reaches higher accuracy than face recognition that uses RGB modal data alone.

Owner: SEETATECH BEIJING TECH CO LTD

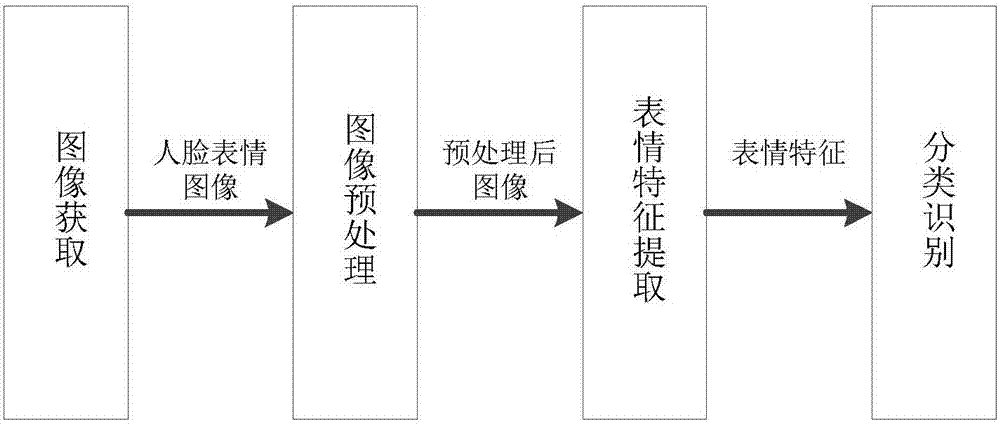

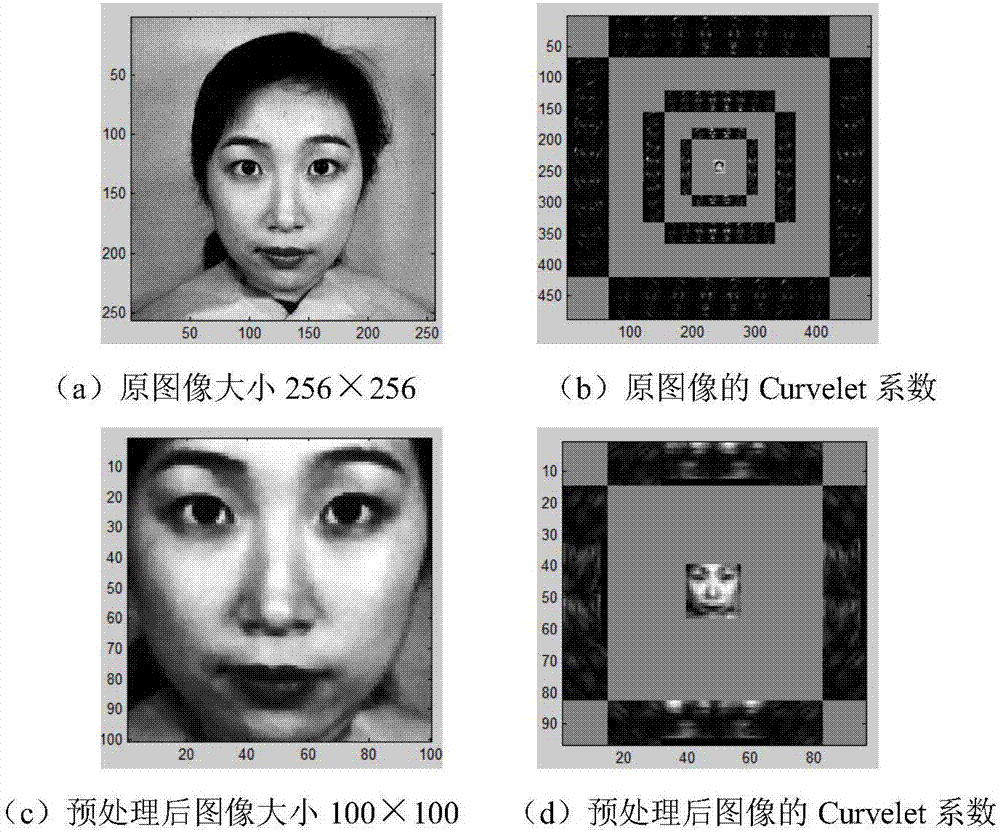

Human face expression recognition method based on Curvelet transform and sparse learning

Inactive | CN106980848A | Improve discrimination ability | Good refactoring ability | Acquiring/recognising facial features | Multiscale geometric analysis | Sparse learning

The invention discloses a human face expression recognition method based on Curvelet transform and sparse learning. The method comprises the following steps: 1, inputting a human face expression image, carrying out the preprocessing of the human face expression image, and cutting and obtaining an eye region and a mouth region from the human face expression image after processing; 2, extracting the human face expression features through Curvelet transform, carrying out the Curvelet transform and feature extraction of the human face expression image after preprocessing, the eye region and the mouth region, carrying out the serial fusion of the three features, and obtaining fusion features; 3, carrying out the classification recognition based on the sparse learning, and respectively employing SRC for classification and recognition of the human face Curvelet features and fusion features; or respectively employing FDDL for classification and recognition of the human face Curvelet features and fusion features. The Curvelet transform employed in the method is a multi-scale geometric analysis tool, and can extract the multi-scale and multi-direction features. Meanwhile, the method employs a local region fusion method, and enables the fusion features to be better in imaging representing capability and feature discrimination capability.

Owner: HANGZHOU DIANZI UNIV

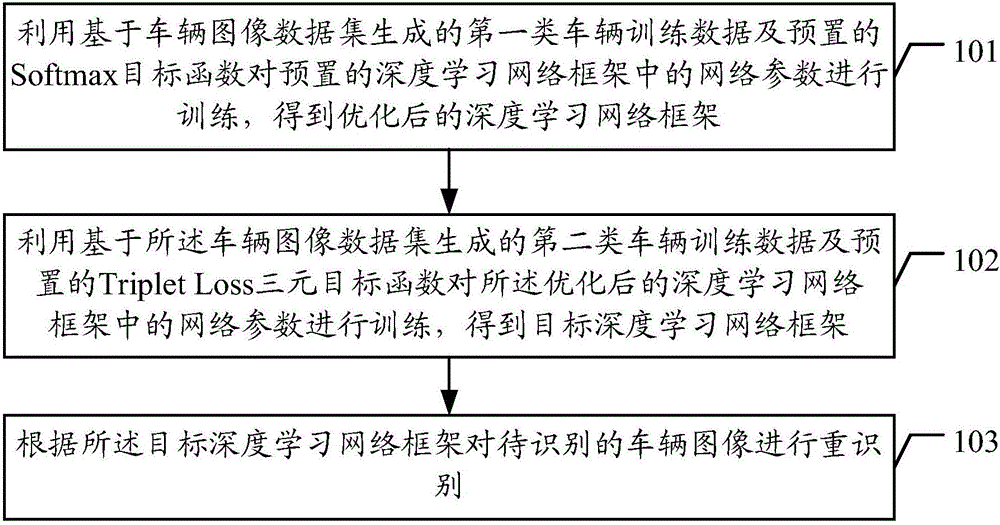

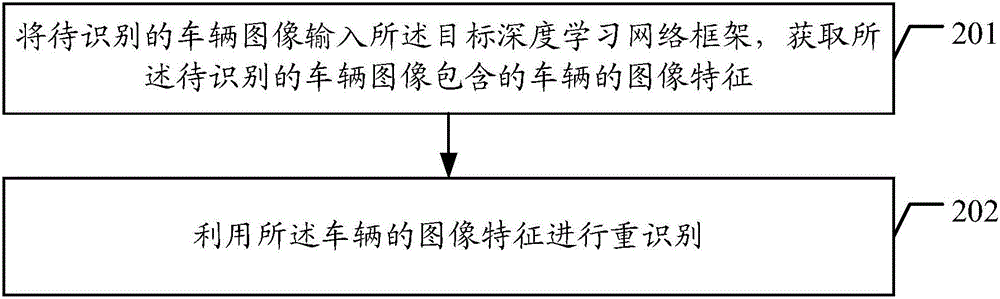

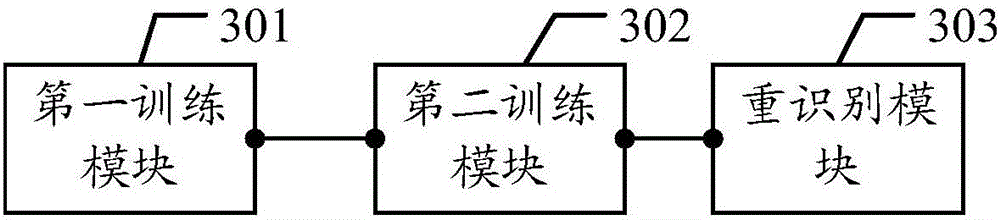

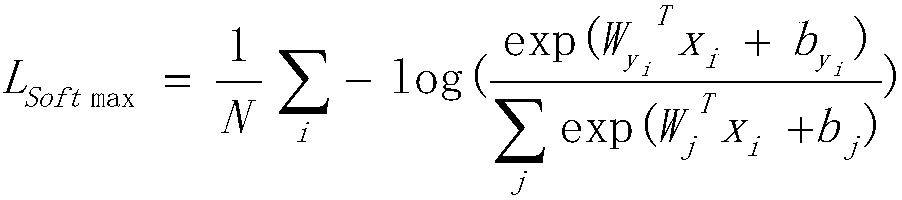

Method and device of vehicle reidentification based on multiple objective function deep learning

Inactive | CN106709528A | Easy to re-identify | Meet the needs of re-identification | Character and pattern recognition | Data set | Simulation

The present invention discloses a method and device for vehicle re-identification based on multiple-objective-function deep learning. The method comprises: using first-class vehicle training data generated from a vehicle image data set, together with a preset Softmax objective function, to train the preset network parameters of a deep learning network frame, obtaining an optimal deep learning network frame; using second-class vehicle training data generated from the vehicle image data set, together with a preset Triplet Loss objective function, to train the network parameters of the optimal deep learning network frame, obtaining a target deep learning network frame; and re-identifying the vehicle image to be identified with the target deep learning network frame. The target deep learning network frame yields image features with high robustness and high reliability, which facilitates vehicle re-identification and effectively satisfies the requirements of vehicle re-identification in surveillance video.

Owner: SHENZHEN UNIV

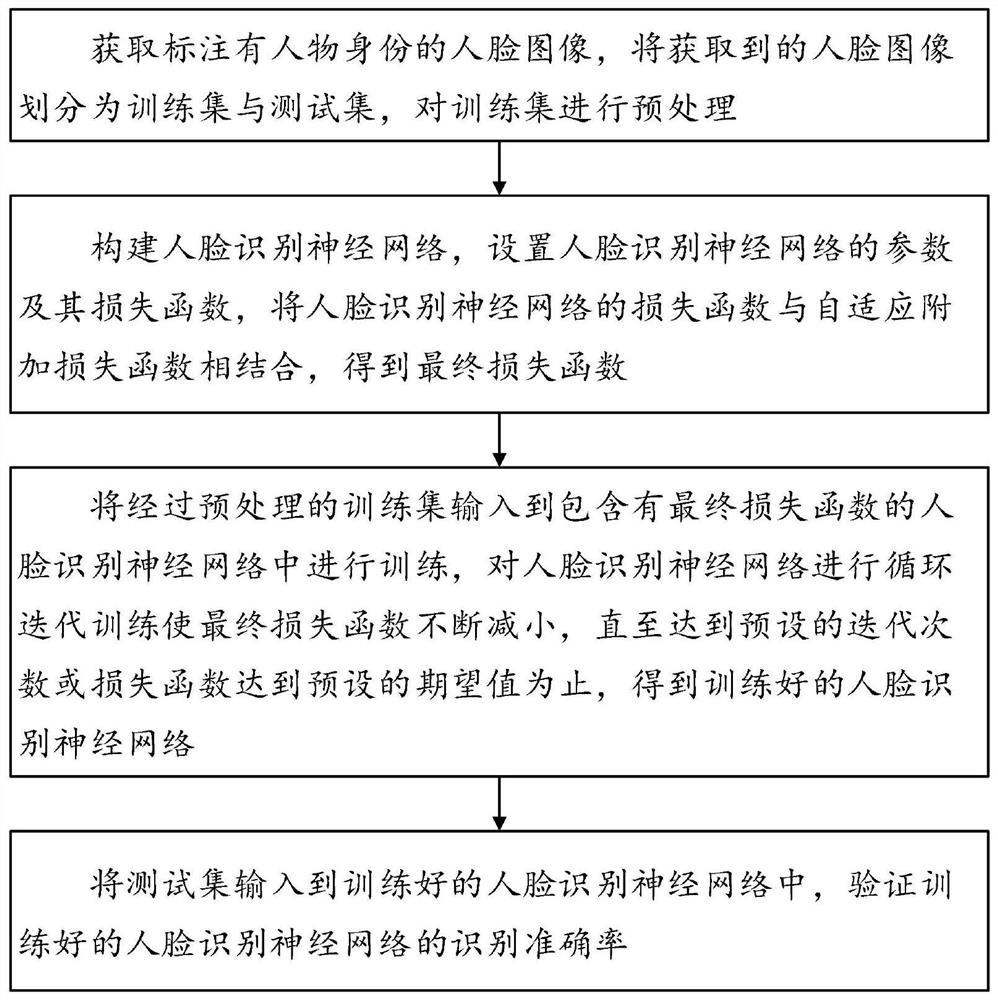

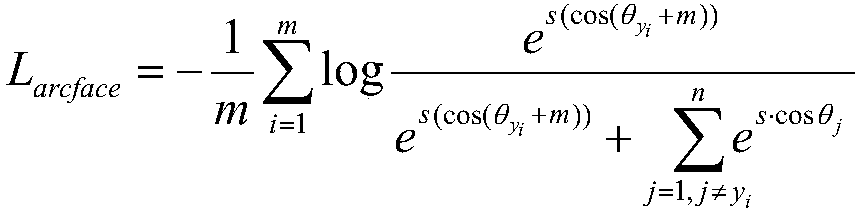

Face recognition neural network training method, system and device and storage medium

Inactive | CN111967392A | Guaranteed generalization ability | Improve accuracy | Character and pattern recognition | Neural architectures | Test set | Neural network nn

The invention discloses a face recognition neural network training method, system and device and a storage medium. The method comprises the following steps: obtaining face images as a training set and a test set, and combining the loss function of a face recognition neural network with an adaptive additional loss function; inputting the preprocessed training set into the face recognition neural network for training; and inputting the test set into the trained face recognition neural network to verify its recognition accuracy. When the face recognition neural network is trained, the loss function is combined with the adaptive additional loss function to obtain a final loss function. The final loss function shortens the intra-class distance and increases the inter-class distance when face images are classified, and it also balances classes with many samples against classes with few samples. When the sample distribution is unbalanced, the generalization performance of the face recognition neural network is therefore preserved, and the accuracy and reliability of face recognition are improved.

Owner: GUANGDONG ELECTRIC POWER SCI RES INST ENERGY TECH CO LTD

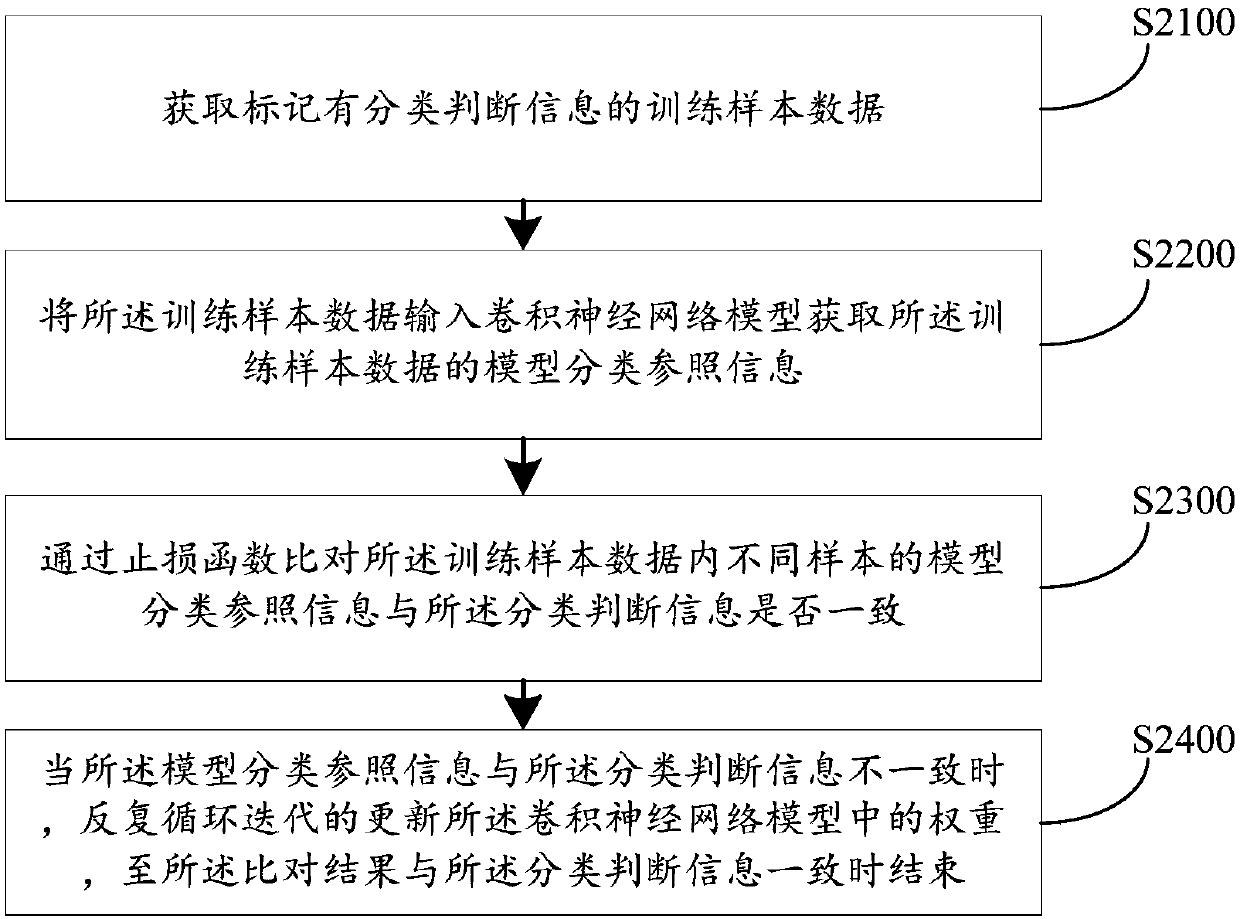

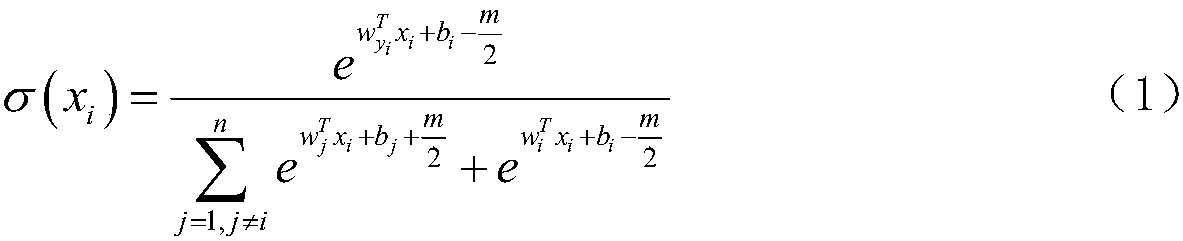

Image processing method and apparatus, and server

Active | CN107679513A | Increase the distance between classes | Ensure safety | Character and pattern recognition | Neural architectures | Imaging processing | Image identification

Embodiments of the invention disclose an image processing method and apparatus, and a server. The method comprises the following steps: obtaining a face image to be processed; inputting the face image into a convolutional neural network model with a loss function, wherein the loss function directionally screens and increases the between-class distance after image classification according to a preset expectation; and obtaining the classification data output by the convolutional neural network model and performing content understanding on the face image according to the classification data. The new loss function is established on the convolutional neural network model and has the effect of screening and increasing the between-class distance after image classification. The between-class distance of the classification data output by a model trained with this loss function is increased, so the between-class distance in the image identification process is increased, the differences between images become markedly more salient, image comparison accuracy is markedly improved, and the security of applications of the image processing method is effectively guaranteed.

Owner: BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

Method and system for realizing smoke detection by using deep learning classification model

Active | CN109598891A | Eliminate interfering targets | Reduce false detection rate | Fire alarm radiation actuation | Detection rate | False detection

The invention discloses a method for realizing smoke detection by using a deep learning classification model. The method comprises the following steps: taking a frame from the video stream as the smoke image to be de-smoked; processing that image with a Gaussian mixture model to obtain its motion region; processing the image with a dark channel smoke removal algorithm to obtain a smokeless image model; computing the difference image between the smoke image and the smokeless image model; binarizing the difference image to obtain a suspected smoke area; taking the intersection of the motion region and the suspected smoke area and feeding it into the trained deep learning classification model to get the final smoke recognition result; and marking the smoke area in the smoke image according to the recognition result. The method and system use a lightweight deep learning classification model to achieve higher accuracy and detection rate, reduce the false detection rate, and realize real-time detection.

Owner: SOUTH CENTRAL UNIVERSITY FOR NATIONALITIES
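The difference, binarization and intersection steps of the CN109598891A abstract can be sketched on tiny grayscale grids. The Gaussian mixture model and the dark channel algorithm are not reproduced here: `motion_mask` and `dehazed` are assumed to come from those stages, and the threshold value and function names are hypothetical.

```python
def binarize(image, threshold):
    """Turn a grayscale grid into a 0/1 mask."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def intersect(mask_a, mask_b):
    """Pixel-wise AND of two 0/1 masks of the same shape."""
    return [[a & b for a, b in zip(ra, rb)] for ra, rb in zip(mask_a, mask_b)]


def candidate_region(frame, dehazed, motion_mask, threshold=30):
    """Suspected smoke pixels that also lie inside the motion region."""
    diff = [[abs(f - d) for f, d in zip(rf, rd)] for rf, rd in zip(frame, dehazed)]
    return intersect(binarize(diff, threshold), motion_mask)


frame = [[100, 200], [50, 120]]    # original frame
dehazed = [[100, 120], [45, 60]]   # assumed output of the smoke-removal stage
motion_mask = [[1, 1], [0, 0]]     # assumed output of the motion-detection stage
print(candidate_region(frame, dehazed, motion_mask))
```

Only the pixels that survive both tests would be passed to the classifier, which is how the intersection removes static bright regions and moving non-smoke objects.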

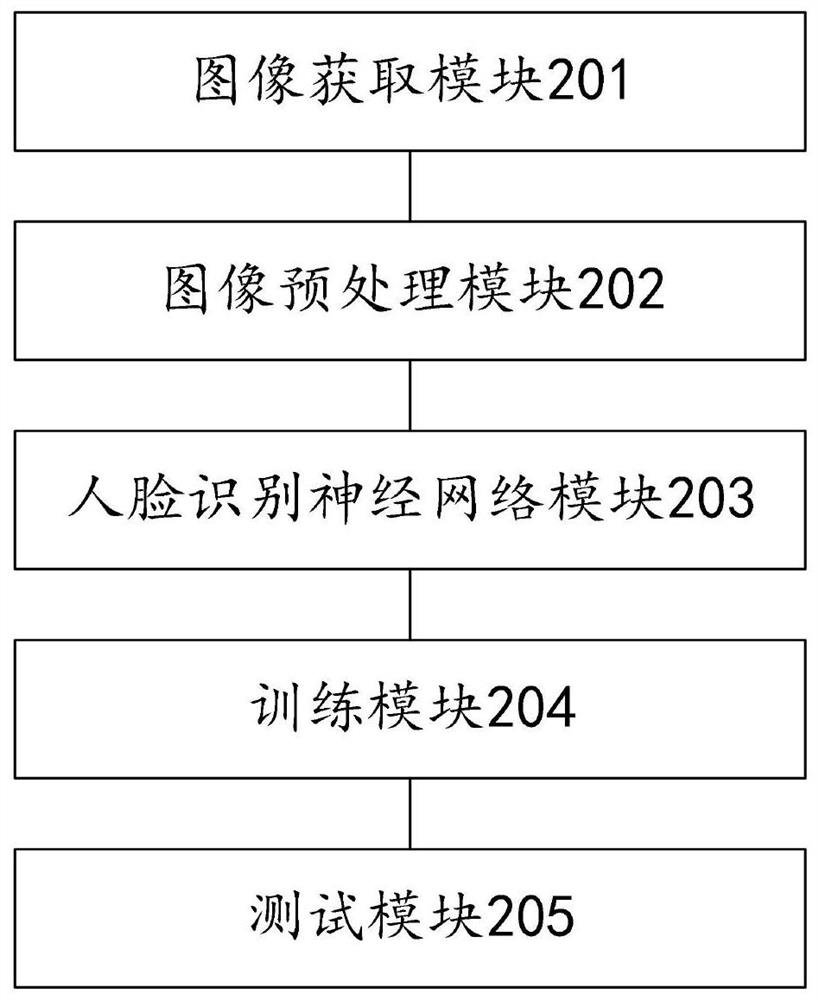

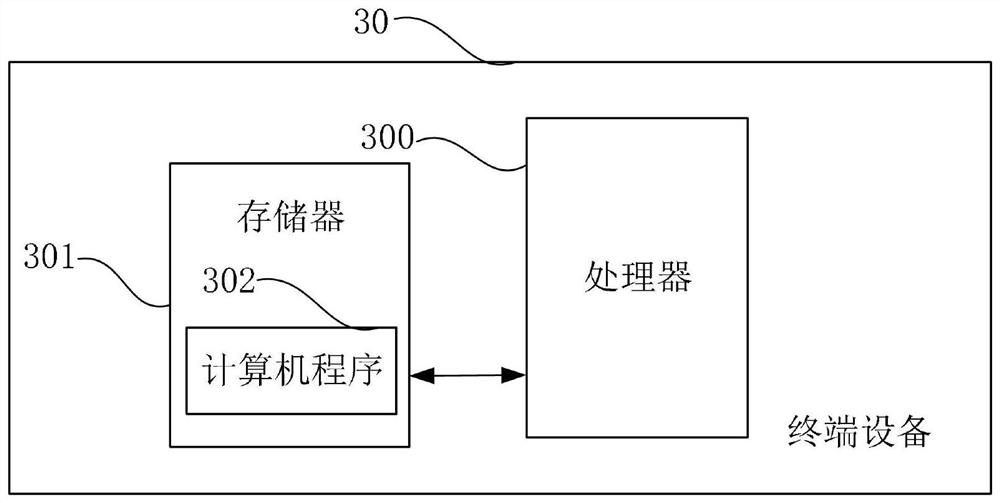

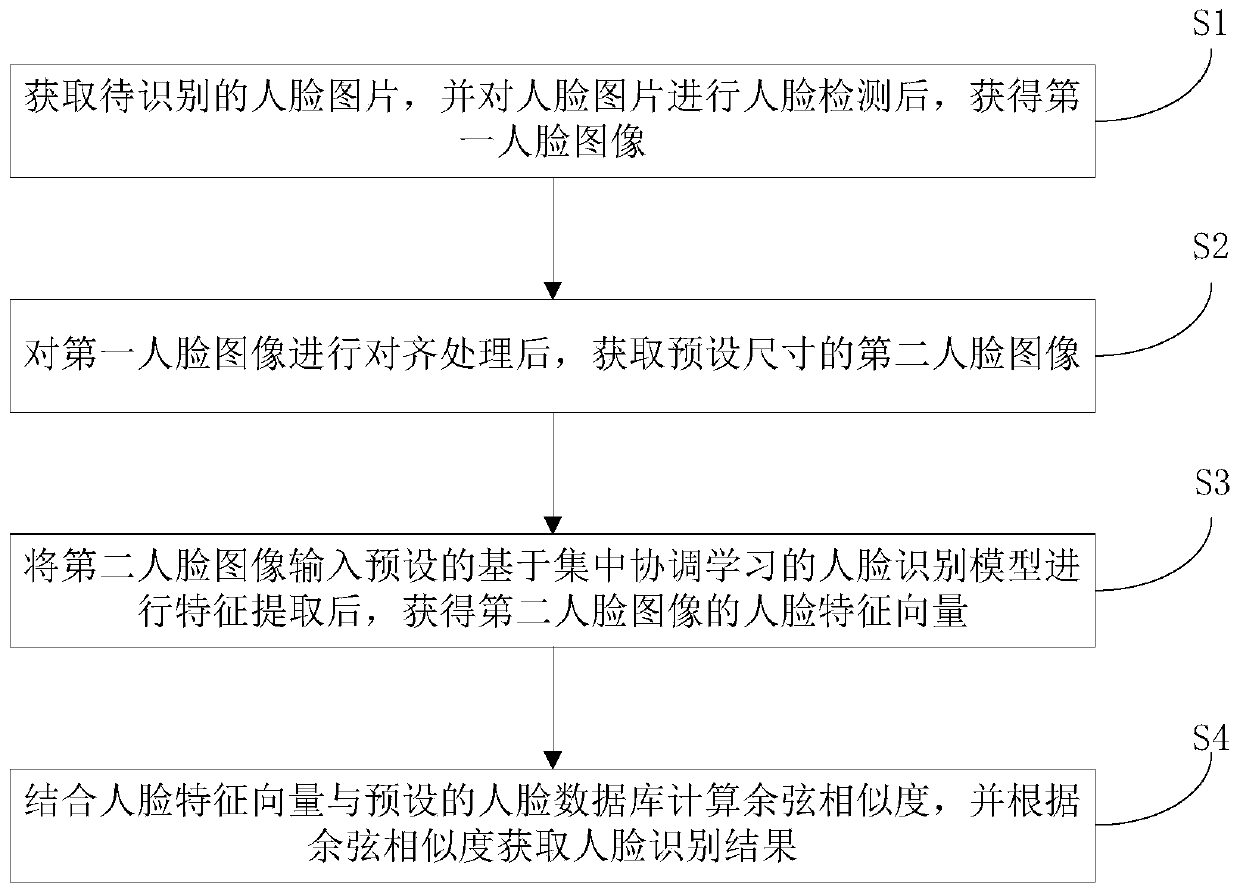

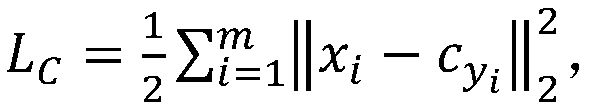

Face recognition method, system and device based on centralized coordination learning

Pending | CN109784219A | Improve classification efficiency | Improve recognition accuracy | Character and pattern recognition | Neural architectures | Face detection | Feature vector

The invention discloses a face recognition method, system and device based on centralized coordination learning. The method comprises the following steps: obtaining a face image to be recognized and performing face detection on it to obtain a first face image; aligning the first face image to obtain a second face image of a preset size; inputting the second face image into a preset face recognition model based on centralized coordination learning for feature extraction, obtaining the face feature vector of the second face image; and calculating the cosine similarity between the face feature vector and a preset face database and deriving the face recognition result from that similarity. Because feature extraction uses a face recognition model based on centralized coordination learning, each feature is pulled toward the origin and the features are distributed across all quadrants, so the inter-class distance is larger and the classification efficiency and recognition accuracy for faces are improved. The method can be widely applied in the technical field of face recognition.

Owner: GUANGZHOU HISON COMP TECH
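The final matching step of the CN109784219A abstract, cosine similarity against a face database, might look like the sketch below. The database layout (a name-to-vector dictionary), the threshold of 0.5 and the function `identify` are assumptions for illustration; the feature extractor itself is out of scope.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def identify(query, database, threshold=0.5):
    """Return (name, score) of the best match, or (None, score) below threshold."""
    best_name, best_score = None, -1.0
    for name, vector in database.items():
        score = cosine(query, vector)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


database = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}  # hypothetical entries
print(identify([0.9, 0.1, 0.0], database)[0])
print(identify([0.0, 0.0, 1.0], database)[0])
```

A larger inter-class distance in the feature space makes the gap between the best and second-best scores wider, so a fixed threshold rejects unknown faces more reliably.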

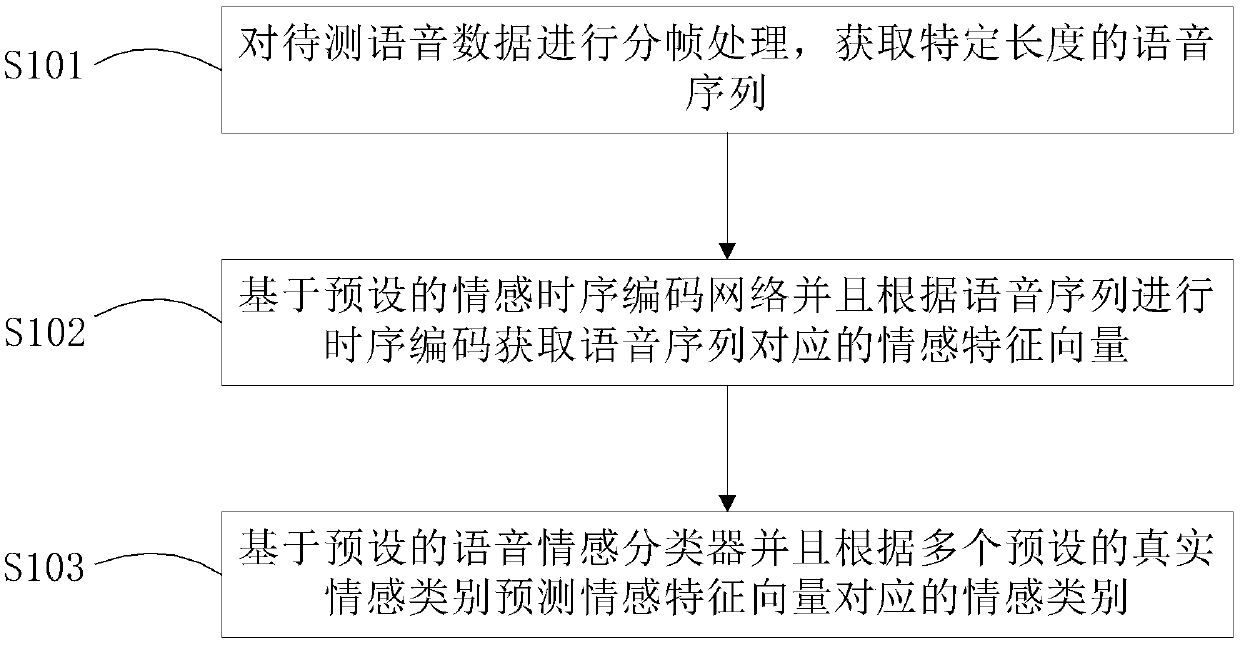

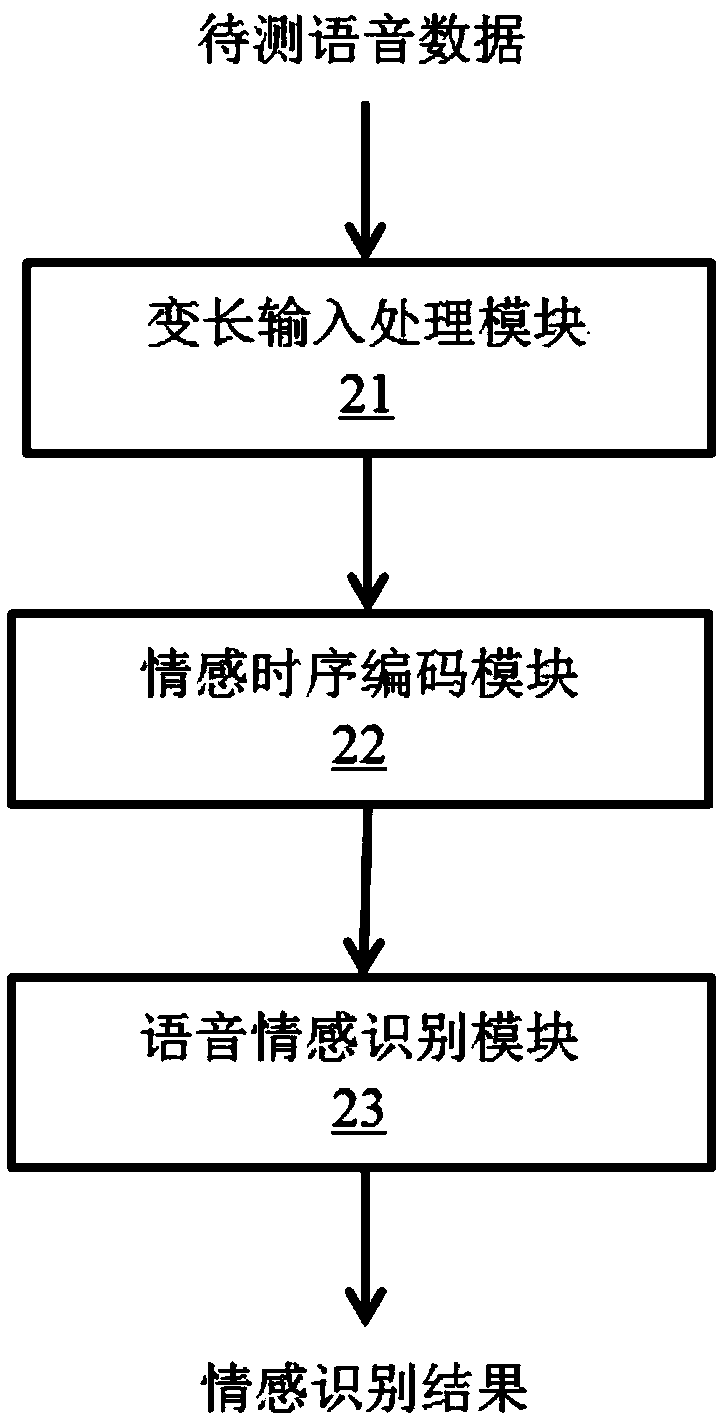

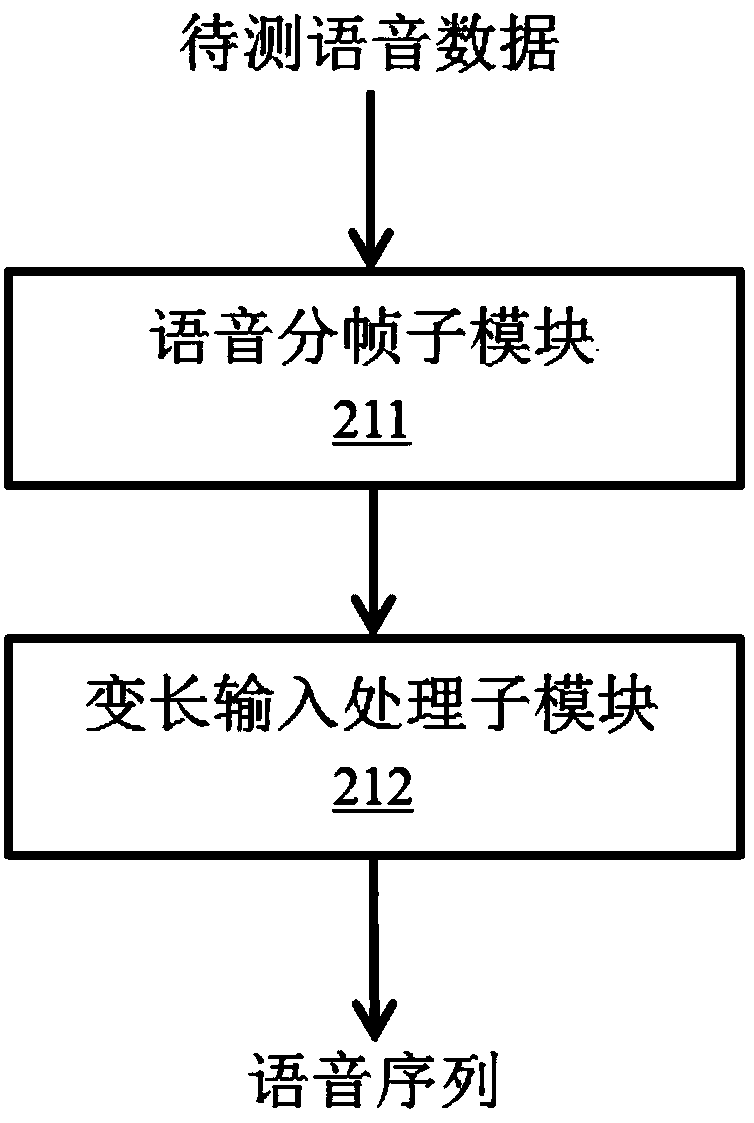

Speech emotion recognition method and system based on ternary loss

The invention belongs to the technical field of emotion recognition, particularly relates to a speech emotion recognition method and system based on ternary loss, and aims at solving the technical problem of accurately recognizing confusable emotion categories. For this purpose, the speech emotion recognition method comprises the following steps: framing the speech data to be measured so as to obtain a speech sequence of the specific length; temporal coding is performed based on the preset emotional temporal coding network and according to the speech sequence so as to obtain the emotional feature vectors corresponding to the speech sequence; and predicting the emotion category corresponding to the emotion feature vectors based on the preset speech emotion classifier and according to multiple preset real emotion categories. According to the speech emotion recognition method, the confusable speech emotion categories can be greatly recognized, and the method can be executed and implemented by the speech emotion recognition system.

Owner: INST OF AUTOMATION CHINESE ACAD OF SCI

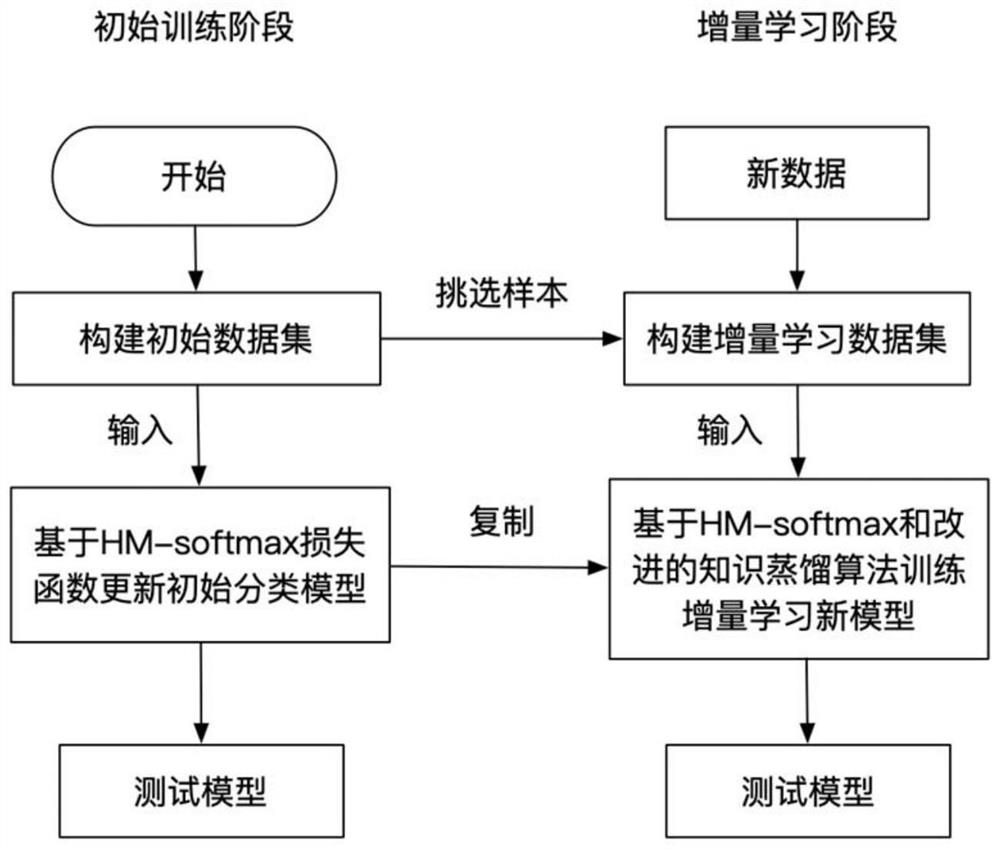

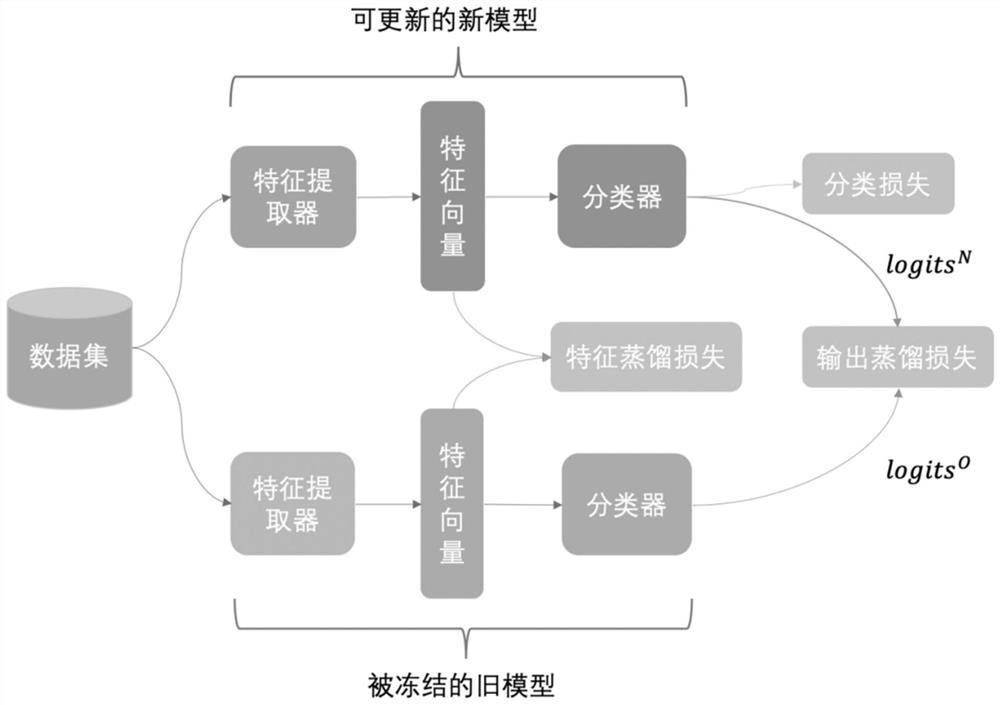

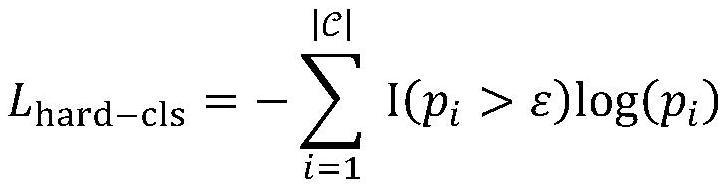

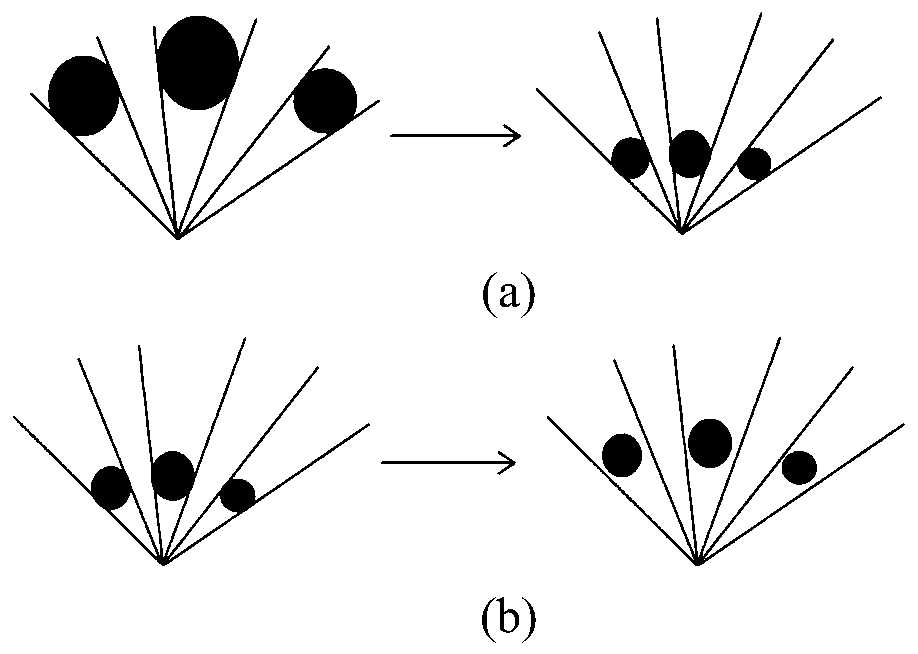

Image big data-oriented class increment classification method, system and device and medium

Active | CN112990280A | Increase the distance between classes | Easy to identify | Character and pattern recognition | Neural architectures | Data set | Learning data

The invention discloses an image big data-oriented class increment classification method, system and device and a medium. The method comprises an initialization training stage and an increment learning stage. The initialization training stage comprises the following steps: constructing an initial data set of an image; and training an initial classification model according to the initial data set. The incremental learning stage comprises the following steps: constructing an incremental learning data set according to the initial data set and new data of the image; obtaining a new incremental learning model according to the initial classification model, and training the new incremental learning model according to an incremental learning data set and a distillation algorithm to obtain a model capable of identifying new and old categories, wherein the distillation algorithm enables the inter-class distance of the model to be enlarged and the intra-class distance to be reduced. The incremental learning model is updated through the distillation algorithm, the inter-class distance of the model is enlarged, the intra-class distance of the model is reduced, the new and old data recognition performance of the model can be improved under limited storage space and computing resources, and the method, system and device can be widely applied to the field of big data application.

Owner: SOUTH CHINA UNIV OF TECH

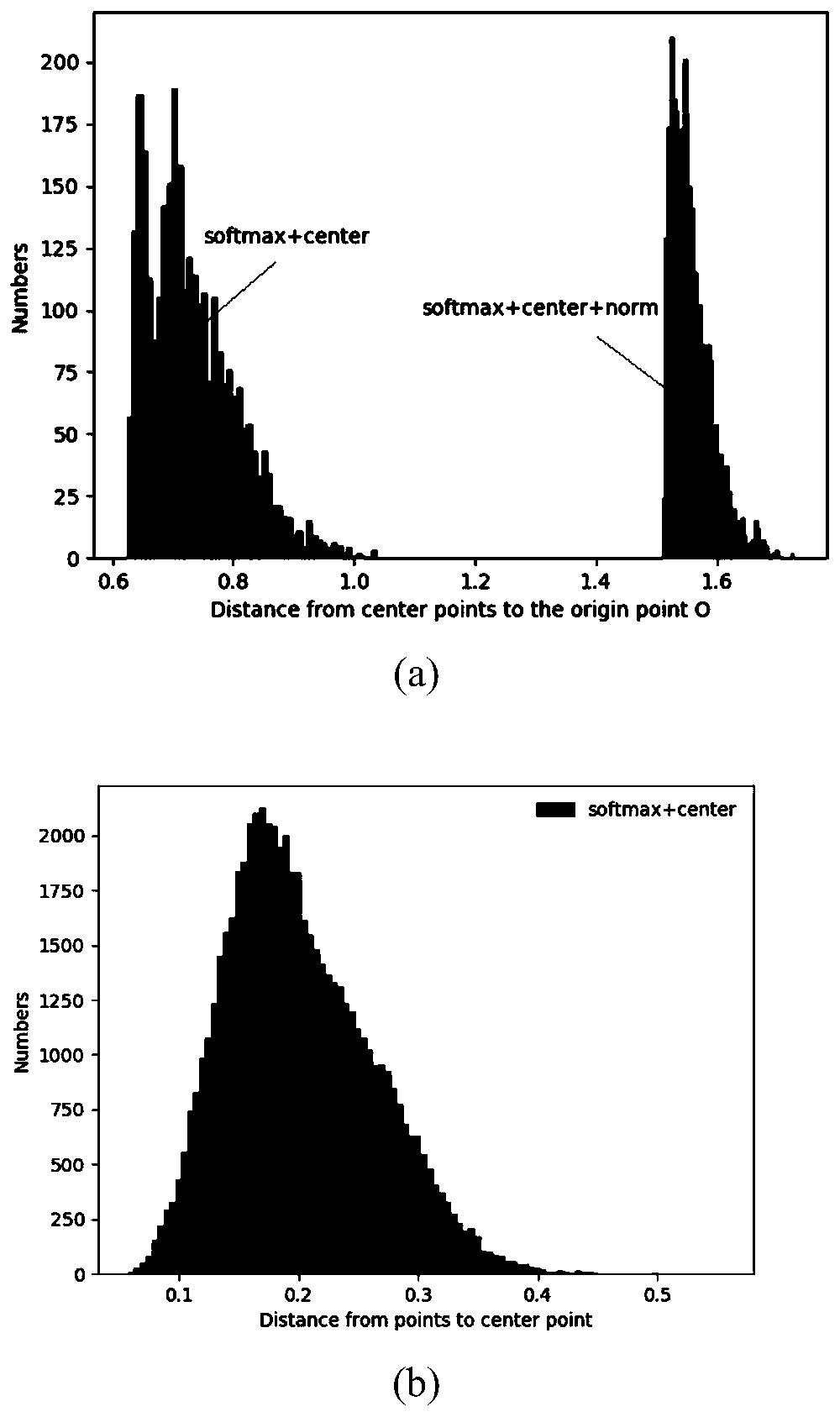

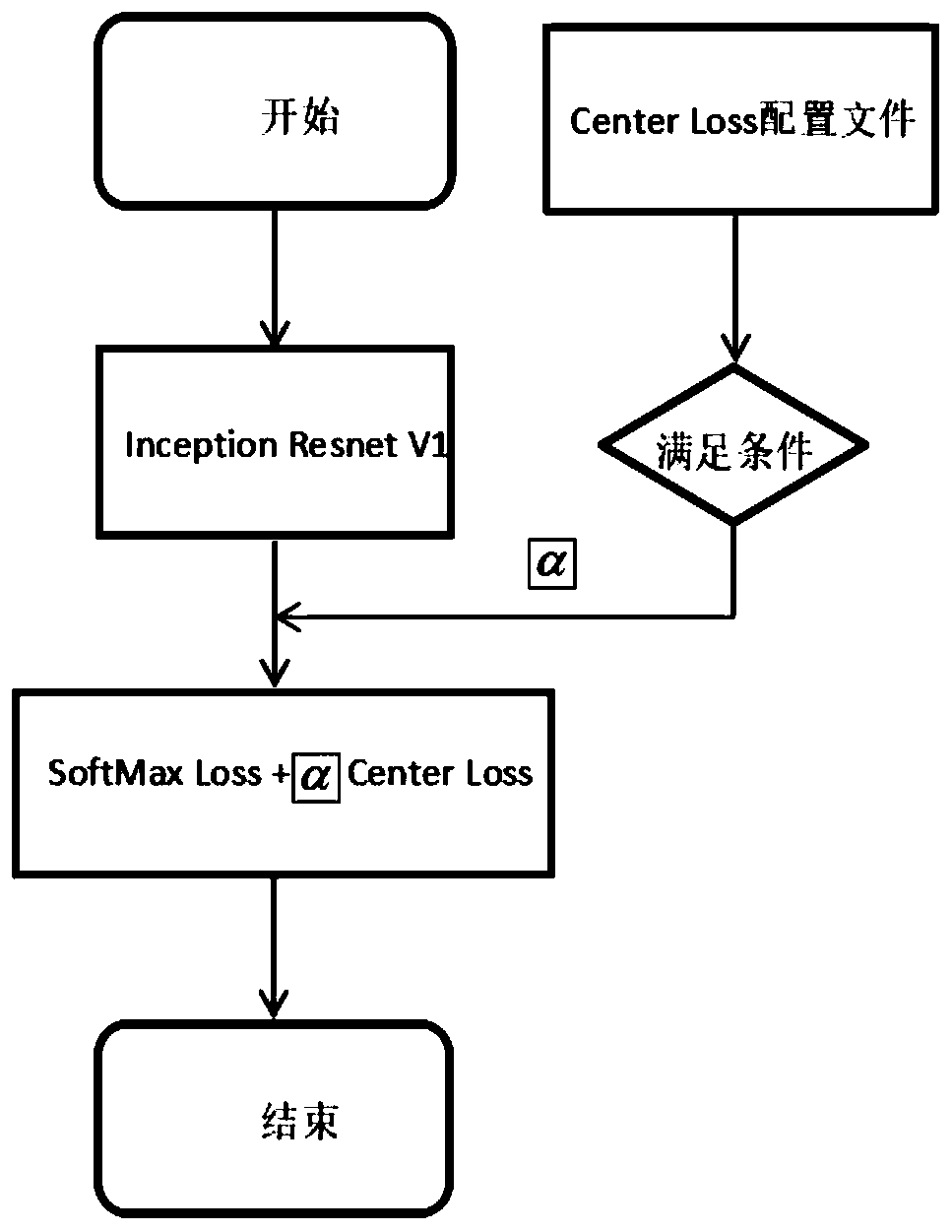

Face image feature extraction method and face recognition method based on modular constraint CentreFace

Active | CN111368683A | Improve accuracy | Increase distance | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention discloses a face image feature extraction method and a face recognition method based on modular constraint CentreFace. The method comprises the following steps: obtaining a low-resolution face data set and preprocessing it; selecting a suitable base convolutional neural network according to the task environment of the application; jointly supervising training on the training data set with the Softmax loss, center loss and modular loss functions to obtain a face recognition model; and extracting the feature representation vector of a face image with the face recognition model, then judging similarity against a threshold or giving the face recognition result by distance ranking. The invention adds a modular loss function for joint training on top of the loss function of the CentreFace algorithm, and obtains a better face recognition model under the supervision of a large number of low-resolution face images.

Owner: NANJING UNIV OF POSTS & TELECOMM

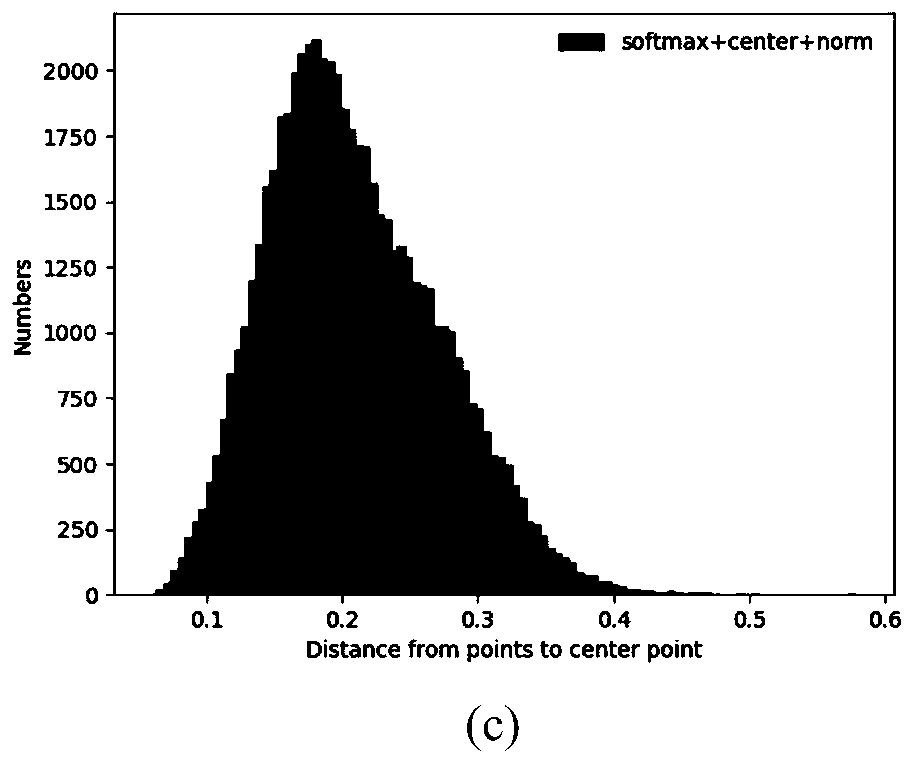

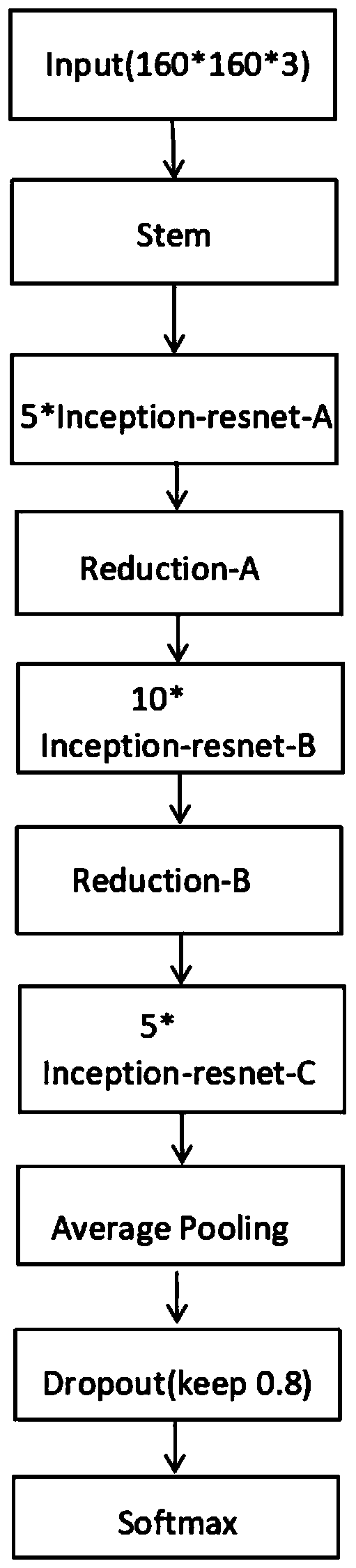

A face model training method based on Center Loss improvement

Active | CN109902757A | Improve accuracy | Improve robustness | Internal combustion piston engines | Character and pattern recognition | Data set | Network structure

The invention relates to a face model training method based on an improved Center Loss. The method comprises the following steps: (1) cropping and screening the face images in an original total data set and dividing them into a training set, a verification set and a test set; (2) preprocessing the face images in the training set; (3) building a network structure and optimizing the target loss function; (4) inputting the training set data into the network structure for training; (5) storing the face model; and (6) testing the face model with the test set. Starting from a face model that already has a certain classification capability, the method strengthens the intra-class aggregation capability of the model, effectively increases the inter-class distance while reducing the intra-class distance, and improves the accuracy and robustness of face recognition.

Owner: 山东领能电子科技有限公司
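For orientation, the sketch below shows the widely used baseline Center Loss that CN109902757A builds on: half the mean squared distance of each feature to its class center, with centers nudged toward their class members. The patent's improvement is not described in the abstract and is not reproduced here; the batch averaging and the `alpha` value are assumptions.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def center_loss(features, labels, centers):
    """Half the mean squared distance between features and their class centers."""
    total = sum(sq_dist(f, centers[y]) for f, y in zip(features, labels))
    return 0.5 * total / len(features)


def update_centers(features, labels, centers, alpha=0.5):
    """Move each center toward the features of its class (baseline update rule)."""
    updated = {}
    for cls, center in centers.items():
        members = [f for f, y in zip(features, labels) if y == cls]
        delta = [
            sum(center[d] - m[d] for m in members) / (1 + len(members))
            for d in range(len(center))
        ]
        updated[cls] = [c - alpha * dl for c, dl in zip(center, delta)]
    return updated


features = [[1.0, 0.0], [3.0, 0.0]]
labels = [0, 0]
centers = {0: [0.0, 0.0]}
before = center_loss(features, labels, centers)
after = center_loss(features, labels, update_centers(features, labels, centers))
print(after < before)
```

In practice this term is added to a classification loss such as softmax cross entropy, so the network keeps separating classes while the center term tightens each class.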

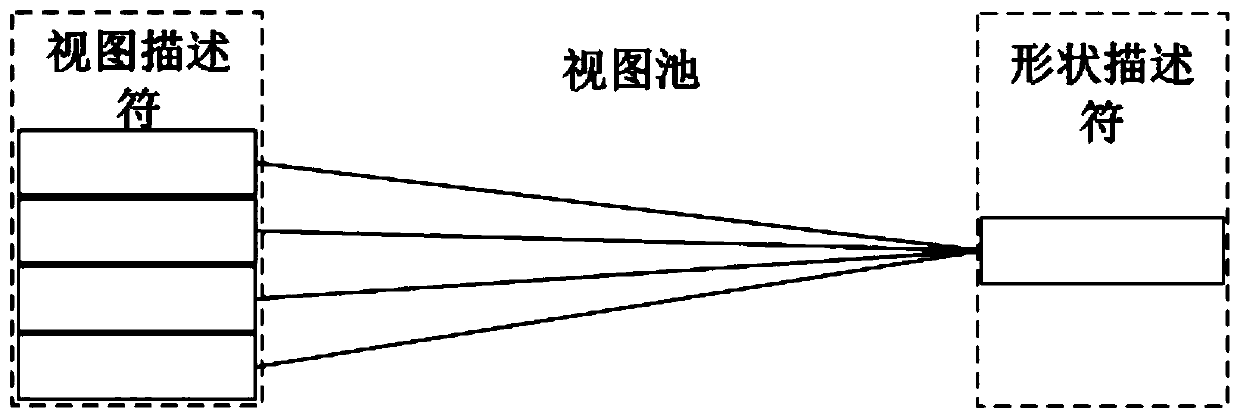

Multi-view three-dimensional model retrieval method and system based on pairing depth feature learning

Active | CN111382300A | Improve retrieval performance | Easy to identify | Digital data information retrieval | Special data processing applications | Si model | Feature learning

The invention discloses a multi-view three-dimensional model retrieval method and system based on pairing depth feature learning. The method comprises the following steps: acquiring two-dimensional views of the three-dimensional model to be retrieved at different angles and extracting an initial view descriptor of each two-dimensional view; aggregating the initial view descriptors to obtain a final view descriptor; extracting the potential features and the category features of the final view descriptor; combining the potential features and category features by weighting to form a shape descriptor; and calculating the similarity between this shape descriptor and the shape descriptors of the three-dimensional models in a database to retrieve the multi-view three-dimensional model. The method provides a multi-view three-dimensional model retrieval framework, GPDFL, that fuses the potential features and category features of a model and can improve feature recognition capability and model retrieval performance.

Owner: SHANDONG NORMAL UNIV

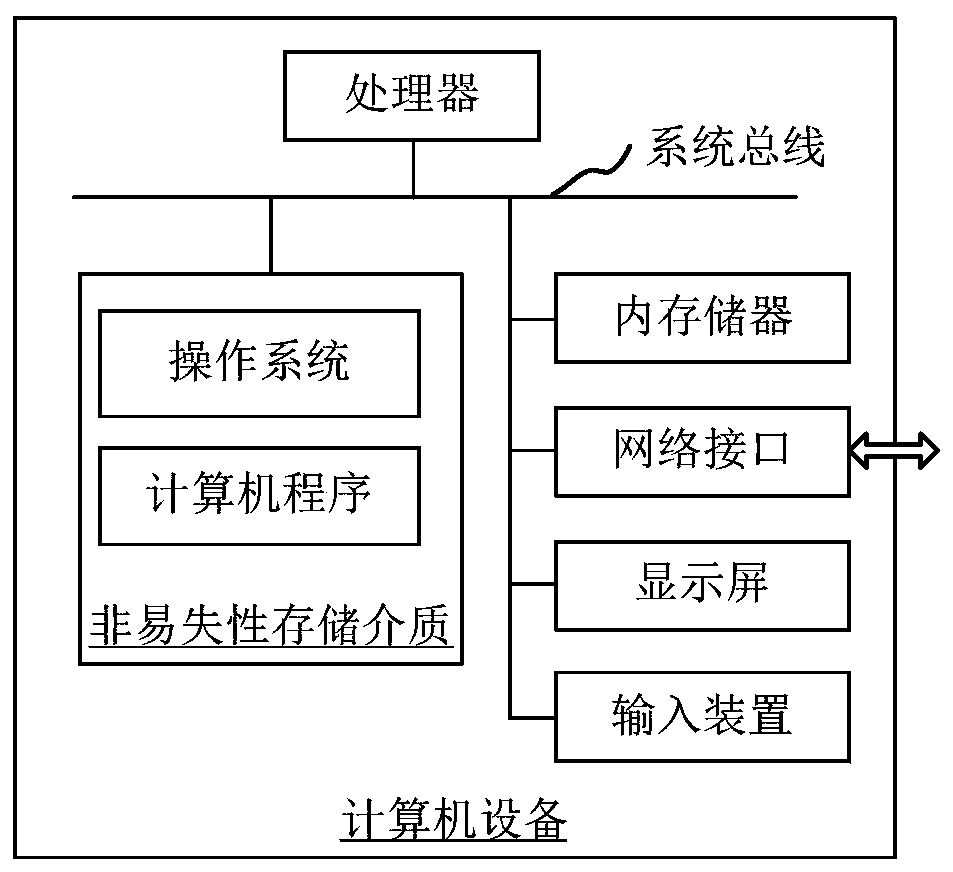

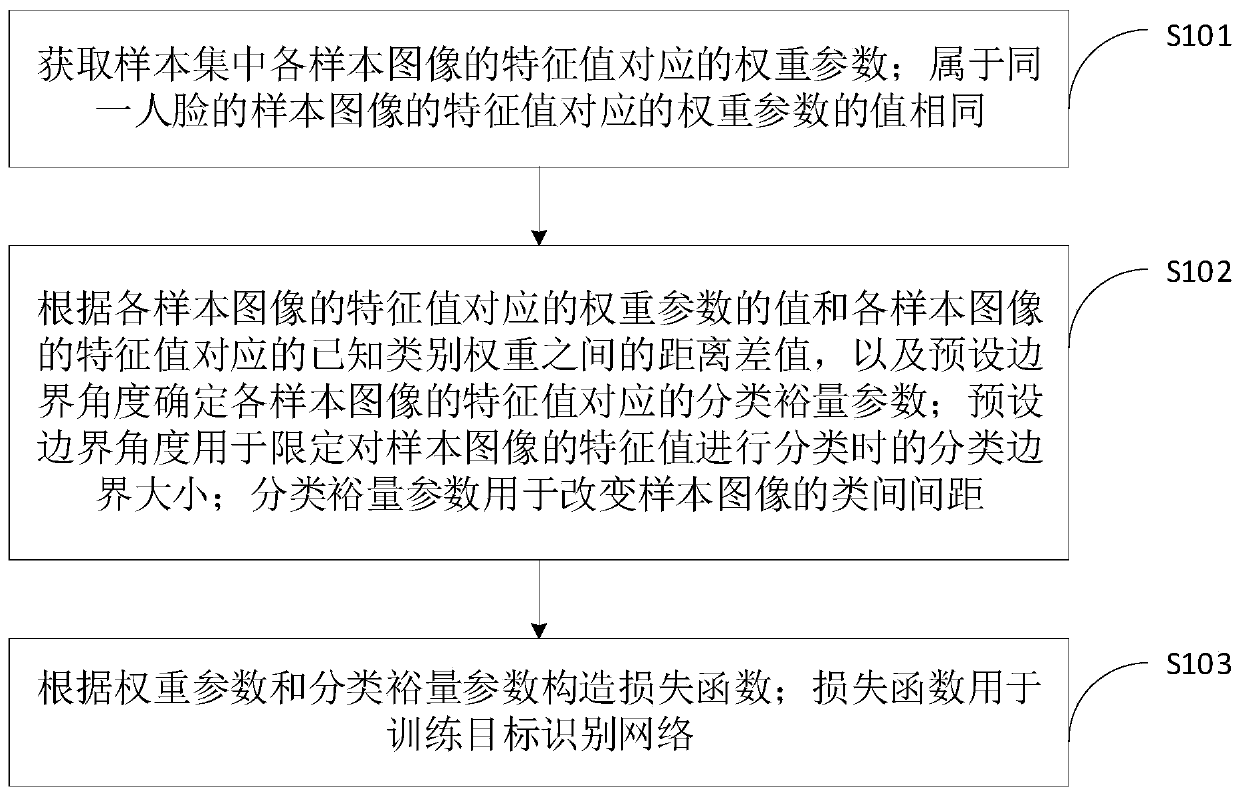

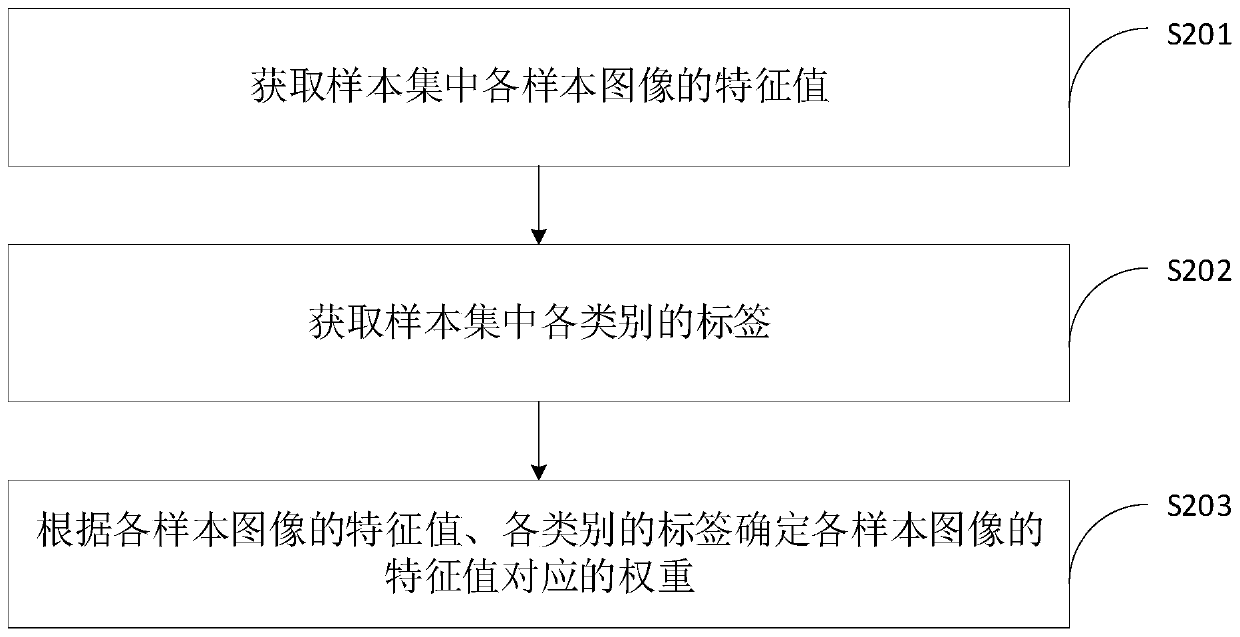

Target recognition network training method and device, computer equipment and storage medium

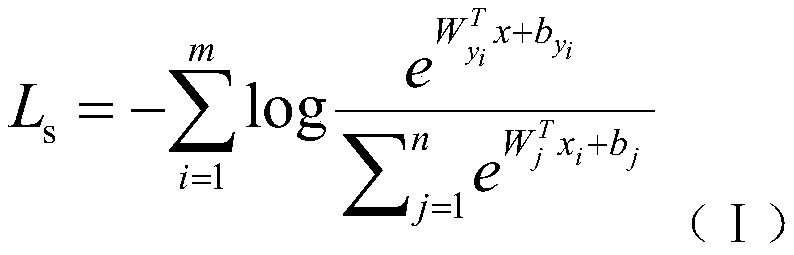

Active | CN110705489A | Classification results are compact | Reduce in quantity | Character and pattern recognition | Sample graph | Goal recognition

The invention relates to a target recognition network training method and device, computer equipment and a storage medium. The method comprises the following steps: obtaining the weight corresponding to the feature value of each sample image in a sample set; determining the classification margin parameter for the feature value of each sample image from a preset boundary angle and the distance difference between the unknown weight and the known weight corresponding to that feature value; constructing a loss function from the weight and the classification margin parameter; and training the target recognition network with the constructed loss function. A target recognition network trained with this loss function is of higher quality, so face recognition precision improves when the trained network is used for face recognition.

Owner: MEGVII BEIJINGTECH CO LTD

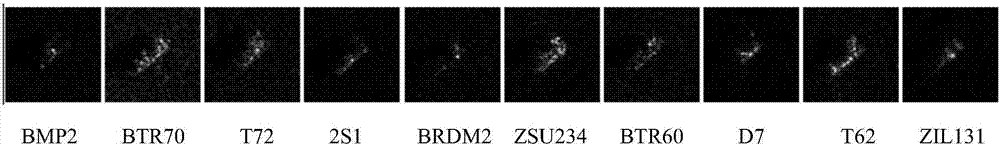

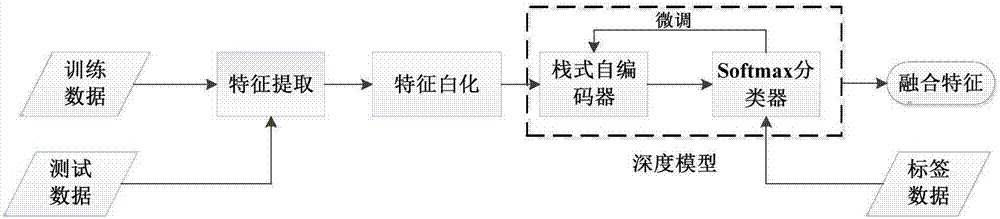

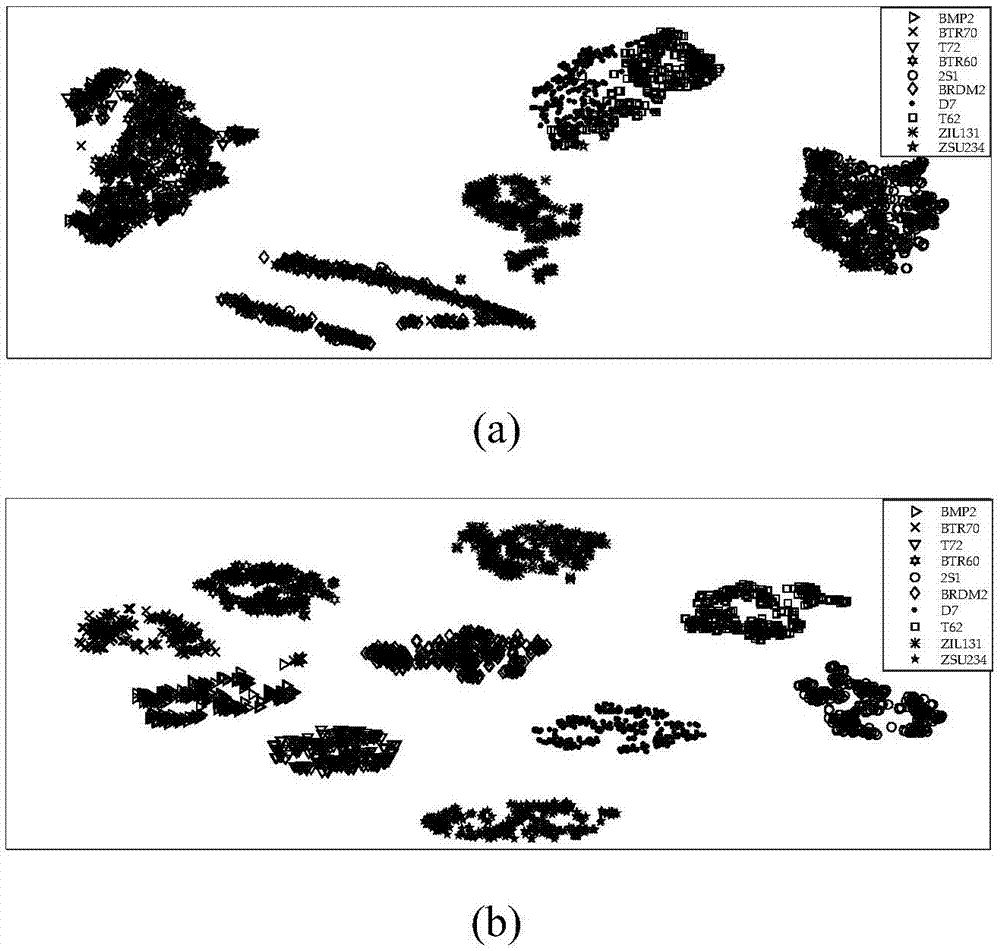

Feature fusion method based on stack-type self-encoder

Active | CN106874952A | Features are highly complementary | Reduce redundancy | Character and pattern recognition | Feature vector | Algorithm

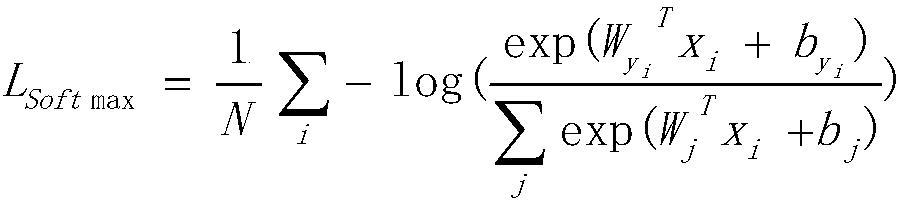

The invention provides a feature fusion method based on a stack-type self-encoder. The technical scheme of the invention comprises the following steps: firstly extracting binary mode texture features of three local spots of an image, selecting and extracting a plurality of types of baseline features of the image through a feature selection method, carrying out the series connection of all obtained features, and obtaining a series vector; secondly carrying out the standardization and whitening of the series vector; enabling a whitened result to serve as the input of SAE, and carrying out the training of the SAE through employing a layer-by-layer greedy training method; finally carrying out the fine tuning of the SAE through the trained SAE and a softmax filter, and enabling a loss function to be minimum, wherein the output of the SAE is a fusion feature vector which is high in discrimination performance. The selected feature redundancy is small, and more information is provided for feature fusion.

Owner: NAT UNIV OF DEFENSE TECH

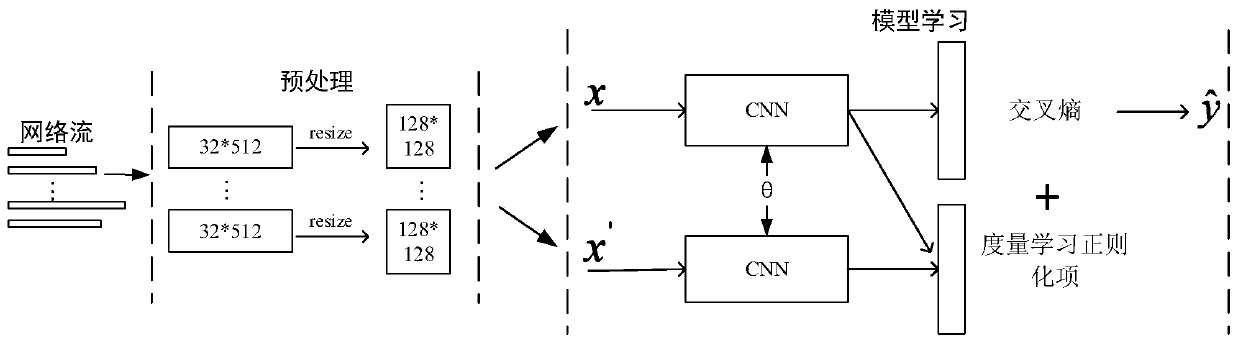

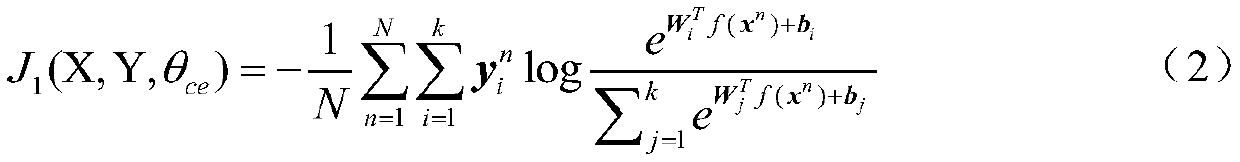

Network traffic classification system and method based on depth discrimination features

Active | CN110796196A | Distance between subclasses | Accurate classification | Character and pattern recognition | Neural architectures | Internet traffic | Model learning

The invention relates to a network traffic classification system and method based on depth discrimination features. The system comprises a preprocessing module and a model learning module. The preprocessing module takes network flows of different lengths generated by different applications as input and expresses each network flow as a flow matrix of fixed size, so as to meet the input format requirement of a convolutional neural network (CNN). The model learning module takes the flow matrices produced by the preprocessing module as input and trains the deep convolutional neural network under the supervision of an objective function formed by a metric learning regularization term and a cross entropy loss term, so that the network learns a more discriminative feature representation of the input flow matrix and the classification result is more accurate.

Owner: INST OF INFORMATION ENG CAS
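The preprocessing idea in the CN110796196A abstract, turning flows of different lengths into a fixed-size matrix, can be sketched as follows. Truncating long flows and zero-padding short ones is an assumption, as are the function name `flow_to_matrix` and the use of raw payload bytes; the abstract only states that the matrix has a fixed size.

```python
def flow_to_matrix(payload: bytes, side: int = 4):
    """Map a variable-length byte string to a side x side grid of ints (0-255)."""
    size = side * side
    # Truncate long flows, then right-pad short ones with zero bytes.
    padded = payload[:size].ljust(size, b"\x00")
    return [list(padded[r * side:(r + 1) * side]) for r in range(side)]


short_flow = b"\x01\x02\x03"
long_flow = bytes(range(10))
print(flow_to_matrix(short_flow, side=2))
print(flow_to_matrix(long_flow, side=2))
```

Every flow then has the same shape, so batches of flow matrices can be fed to a CNN in the same way as single-channel images.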

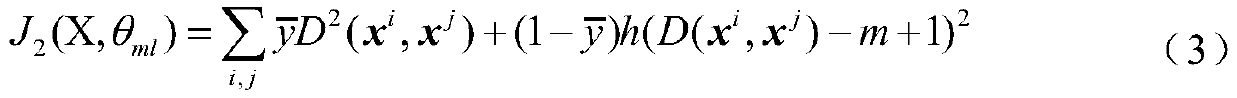

Image similarity calculation method based on improved SoftMax loss function

Active | CN108960342A | Strong image feature expression ability | Avoid image recognition accuracy is not high | Character and pattern recognition | Neural architectures | Test phase | Activation function

The invention discloses an image similarity calculation method based on an improved SoftMax loss function. The activation function of the improved SoftMax layer in an image identification network is an improved SoftMax activation function, and during back propagation the improved SoftMax loss function is used to update the network weights. Compared with the traditional SoftMax loss function, the improved one enlarges the decision margin learned by the image identification network. In the testing phase, the trained image identification model extracts feature vectors from two test images and the cosine similarity between the two feature vectors is calculated. This similarity is compared with an image similarity threshold: if it is greater than or equal to the threshold, the two images are judged to be of the same kind; if it is lower than the threshold, they are judged to be of different kinds.

Owner: CHINA JILIANG UNIV

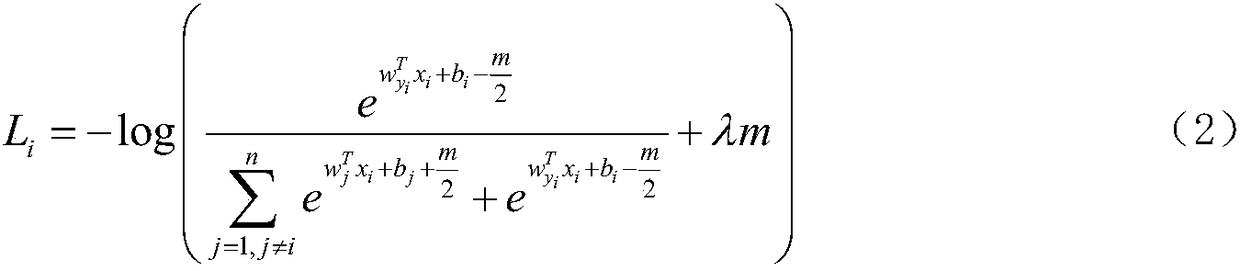

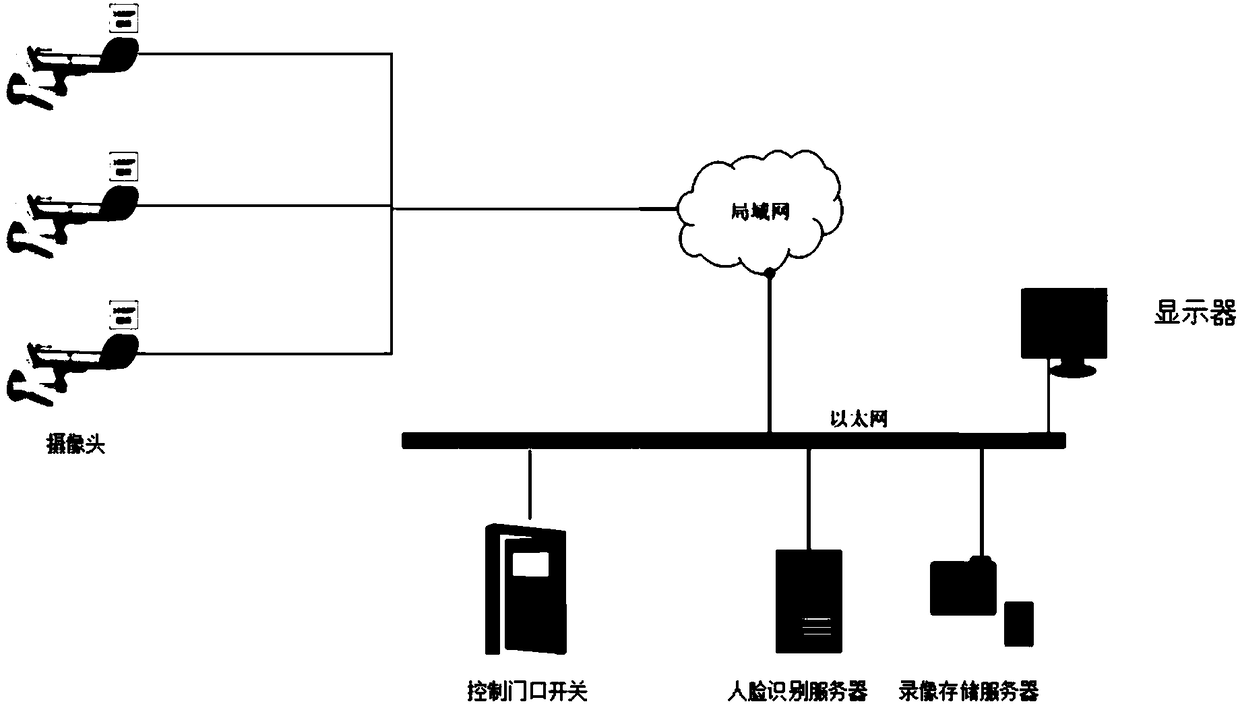

Face recognition method based on feature analysis

Inactive | CN108898123A | Improve performance | No loss of image detail | Character and pattern recognition | Discriminant | Data set

The invention discloses a face recognition method based on feature analysis and belongs to the field of face recognition. A neural network model for feature extraction is constructed and trained on a face data set to obtain the trained model; a face feature library is constructed; the face image to be recognized is filtered and the processed image is input into the trained neural network model to extract its features; and the similarity between the extracted features and the features in the face feature library is calculated. If the similarity is greater than the threshold, a linear discriminant is applied to improve the matching accuracy of those features and the recognition result is obtained; otherwise the face image corresponding to the feature is discarded. The method is suitable for many real environments such as access control systems and bank systems. Compared with mainstream face recognition SDKs, the algorithm is robust to changes in the environment.

Owner: CHENGDU KOALA URAN TECH CO LTD

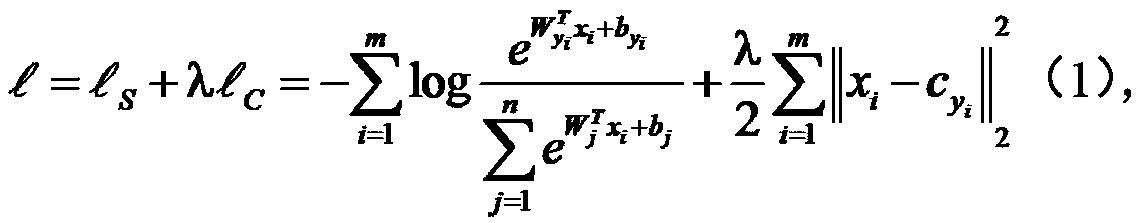

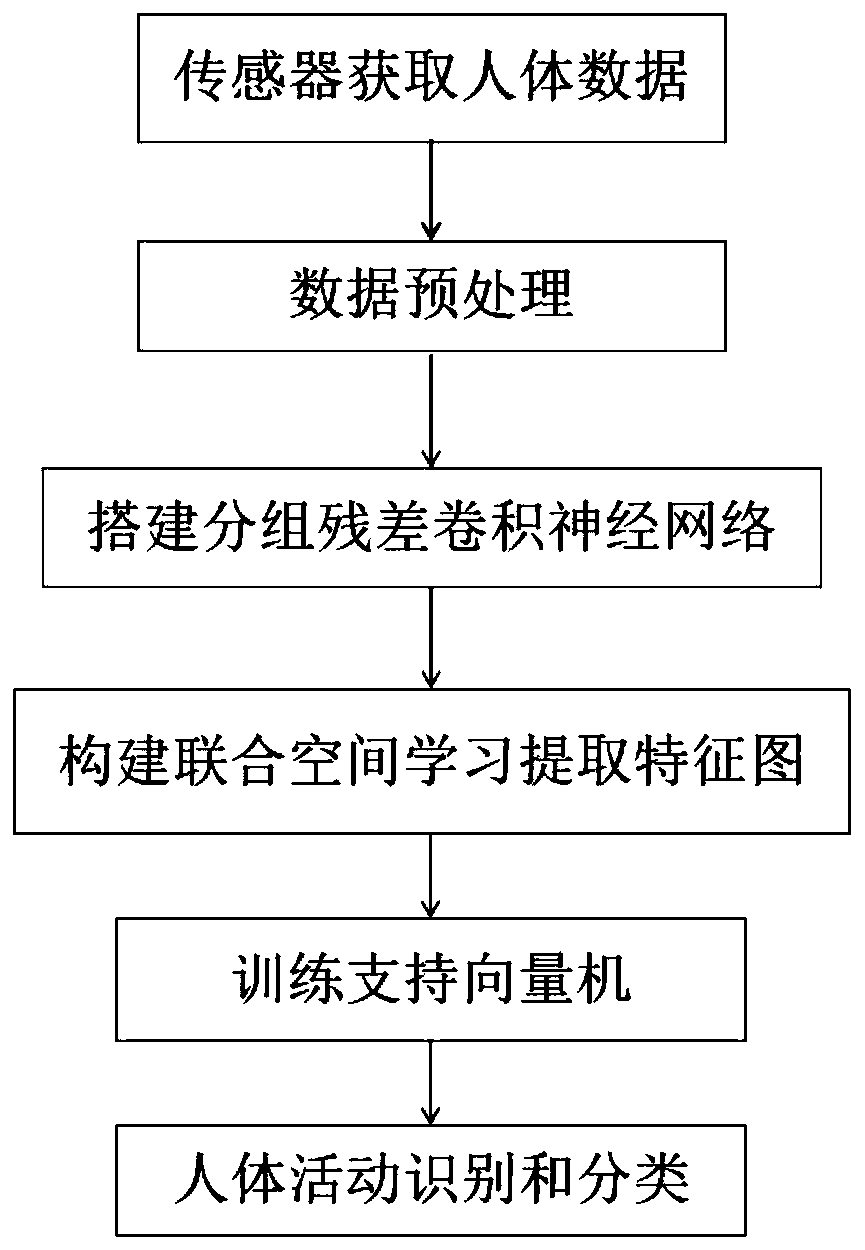

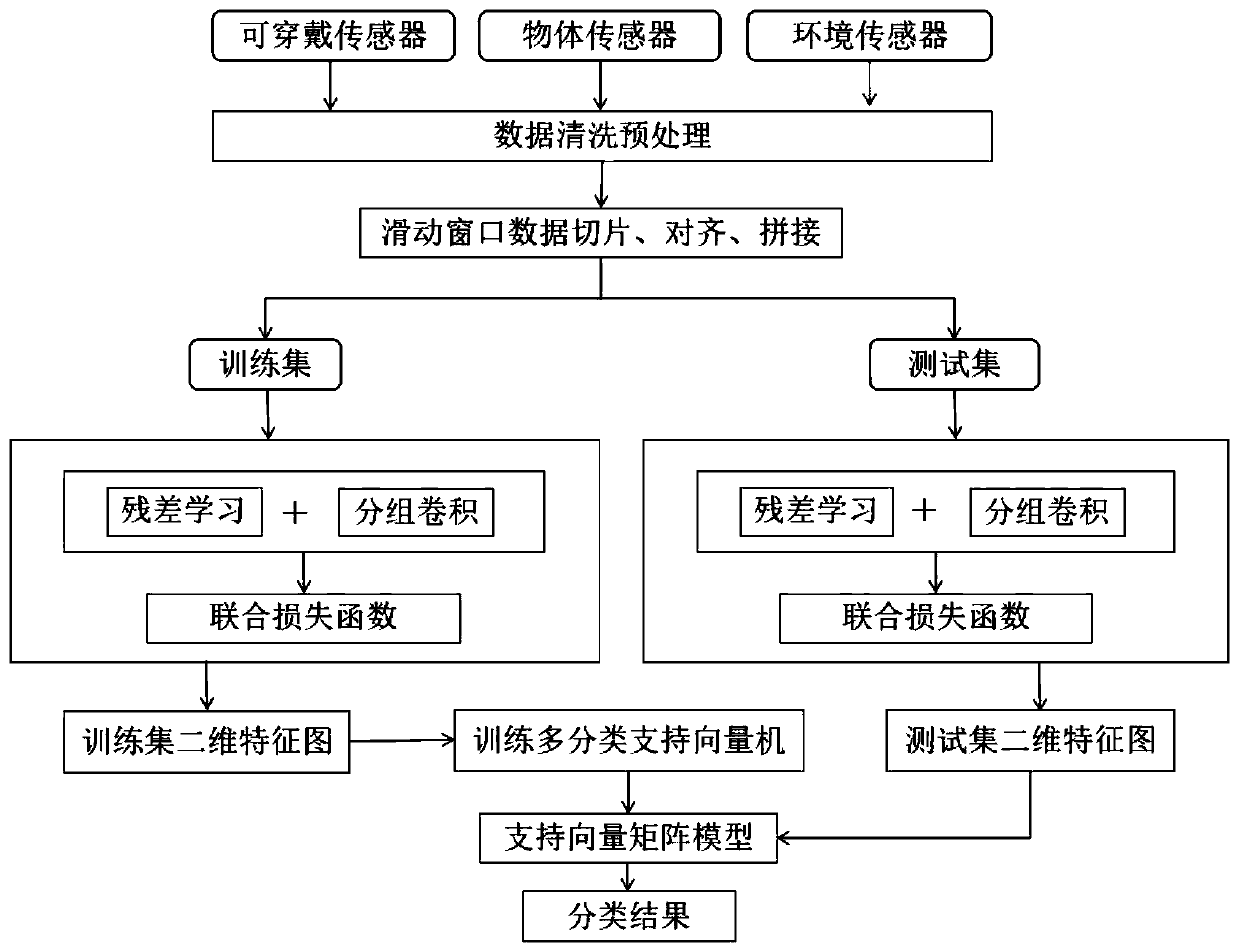

Human body activity recognition method based on grouping residual joint spatial learning

PendingCN111597869AIncrease the distance between classesReduce the intra-class distanceCharacter and pattern recognitionNeural architecturesHuman bodyActivity classification

A human body activity recognition method based on grouping residual joint spatial learning comprises the following steps: step 1, collecting human, object and environment signals by using various sensors, grouping, aligning and slicing single-channel data based on a sliding window, and constructing a two-dimensional activity data subset; step 2, building a grouping residual convolutional neural network, and constructing a joint space loss function optimization network model by utilizing a center loss function and a cross entropy loss function in order to extract a feature map of a two-dimensional activity data subset; and step 3, training a multi-classification support vector machine by utilizing the extracted two-dimensional features to realize a human body activity classification task based on the feature map. According to the invention, fine human body activities can be identified; the inter-class distance of the extracted spatial features is increased in combination with a joint spatial loss function, and the intra-class distance is reduced; based on the spatial feature map of the human body activity data, a multi-classification support vector machine is combined to carry outclassification learning on the feature map, and the accuracy of human body activity classification is improved.

Owner:ZHEJIANG UNIV OF TECH
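
A minimal sketch of a joint loss that combines cross entropy with a center loss, as step 2 of the abstract above describes. The weighting factor `lam`, the per-sample formulation, and all function names are assumptions for illustration; the abstract does not state how the two terms are weighted or how class centers are updated.

```python
import math

def cross_entropy(logits, label):
    """Softmax cross entropy for one sample, using a stable log-sum-exp."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]

def center_loss(feature, center):
    """Half the squared Euclidean distance between a feature and its class center."""
    return 0.5 * sum((f - c) ** 2 for f, c in zip(feature, center))

def joint_loss(logits, feature, label, centers, lam=0.01):
    """Cross entropy plus lam times center loss (lam is an assumed weight)."""
    return cross_entropy(logits, label) + lam * center_loss(feature, centers[label])

# Toy example with two classes and 2-D features (assumed values).
centers = {0: [0.0, 0.0], 1: [4.0, 4.0]}
loss = joint_loss([2.0, 0.0], [1.0, 1.0], 0, centers, lam=0.5)
```

The cross-entropy term separates classes, while the center term pulls each feature toward its own class center, which is the mechanism the abstract credits for the smaller intra-class distance.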

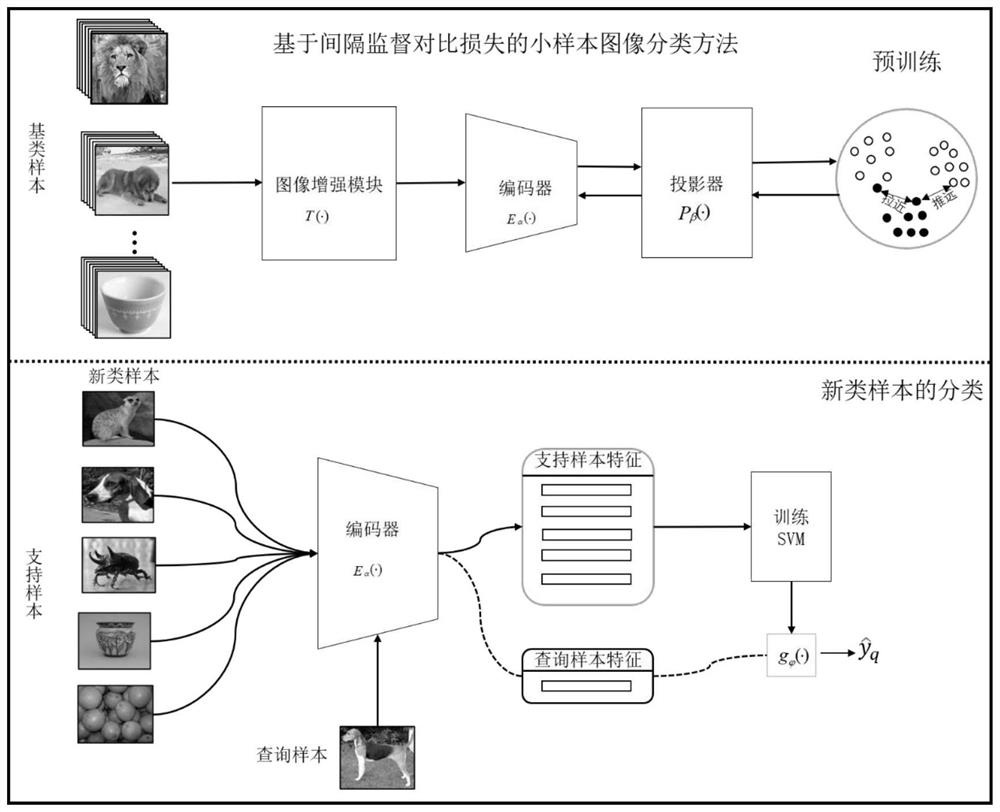

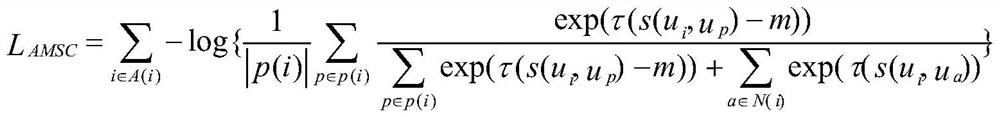

Small sample image classification method based on interval supervision contrast loss

Inactive | CN114580566A | Migratory | Reduce distance | Character and pattern recognition | Data set | Mathematical model

The invention relates to the technical field of small sample image classification, in particular to a small sample image classification method based on interval supervised contrast loss, which comprises the following steps: pre-training a model on a base class data set by using a novel interval supervised contrast loss function; fixing the parameters of the encoder in the pre-trained model, extracting features from the support image samples in the new-class data set, and training an SVM classifier; and finally, making the classification decision on the query samples by using the SVM. The interval supervised contrast loss function establishes a mathematical model of the contrast relationship between the base class samples instead of only paying attention to the class to which each base class sample belongs, so the pre-trained backbone network has better transferability. By increasing the interval parameter, the supervised contrast loss function in the invention can further reduce the distance between intra-class data and increase the inter-class distance, thereby further improving the classification performance.

Owner:NANTONG UNIVERSITY
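
The abstract above does not give the formula for its interval (margin) supervised contrast loss. The sketch below shows one plausible variant under stated assumptions: the margin is subtracted from each positive-pair similarity before a temperature-scaled softmax over that positive and all negatives. The function name, parameters, and this exact form are hypothetical and may differ from the patented loss.

```python
import math

def margin_supcon_loss(anchor_sims, labels, anchor_label, margin=0.1, temperature=0.1):
    """Per-anchor loss. anchor_sims[i] is the similarity between the anchor and
    sample i; labels[i] is the class of sample i (the anchor itself is excluded)."""
    pos = [s for s, lab in zip(anchor_sims, labels) if lab == anchor_label]
    neg = [s for s, lab in zip(anchor_sims, labels) if lab != anchor_label]
    if not pos:
        return 0.0  # no positive pair for this anchor
    neg_logits = [s / temperature for s in neg]
    total = 0.0
    for s in pos:
        pos_logit = (s - margin) / temperature  # margin penalizes the positive pair
        logits = [pos_logit] + neg_logits
        peak = max(logits)
        log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum - pos_logit
    return total / len(pos)

# Toy anchor of class "cat" compared with one positive and one negative sample.
loss_no_margin = margin_supcon_loss([0.9, 0.2], ["cat", "dog"], "cat", margin=0.0)
loss_margin = margin_supcon_loss([0.9, 0.2], ["cat", "dog"], "cat", margin=0.2)
```

In this variant a larger margin raises the loss for the same similarities, so training must push positives closer and negatives further apart to reach the same loss value.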

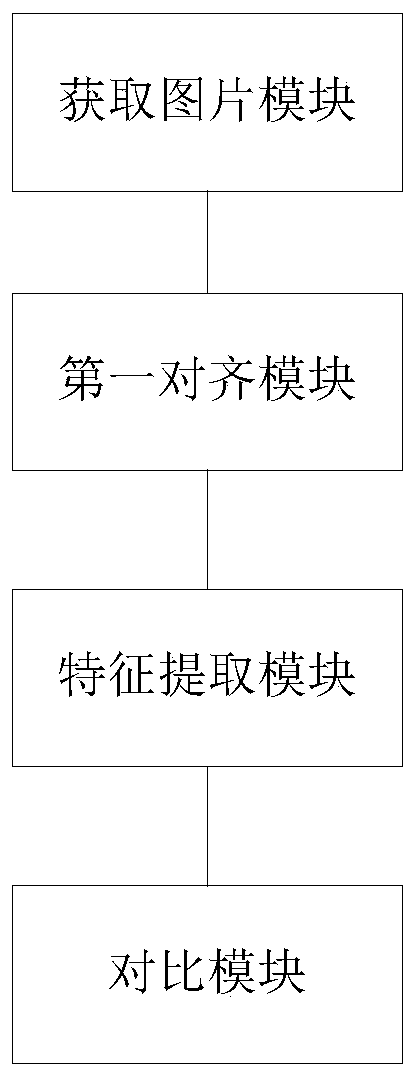

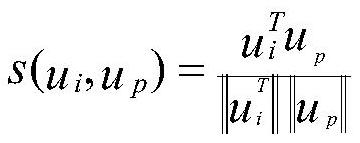

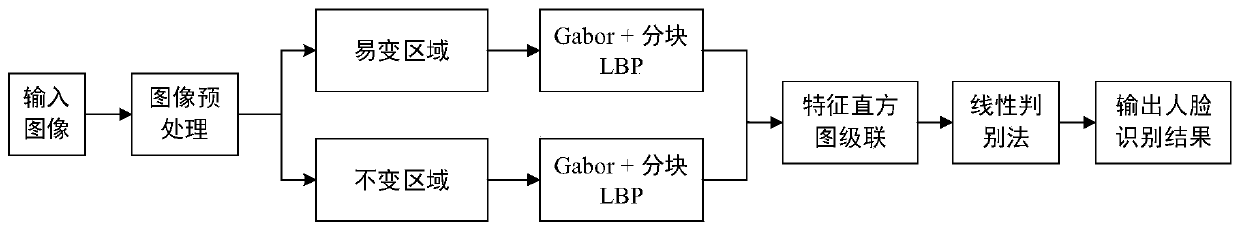

Regional feature extraction face recognition method

Active | CN110826408A | Improve recognition accuracy | Improve accuracy | Acquiring/recognising facial features | Feature extraction | Image pair

The invention discloses a regional feature extraction face recognition method. The method is implemented according to the following steps: 1, obtaining a face image to be recognized; 2, preprocessing the acquired face image by using a multi-task convolutional neural network, and marking the key points of the face; 3, according to the position information of the different key points, segmenting the face into an expression-variable region and an expression-invariant region; 4, inputting the images of the expression-variable region and the expression-invariant region into a Gabor & block LBP feature extraction channel to obtain a feature histogram containing face feature information; and 5, processing the feature histogram from step 4 by using a linear discrimination method, and matching the processed face feature information with the face features in a database to obtain a face recognition result. The regional feature extraction face recognition method solves the prior-art problem that the face recognition rate is low under the influence of unconstrained environments.

Owner:XI'AN POLYTECHNIC UNIVERSITY

Single-camera multi-target pedestrian tracking method

Pending | CN112836640A | Resolve inconsistencies | High precision | Image enhancement | Image analysis | Correction algorithm | Computer graphics (images)

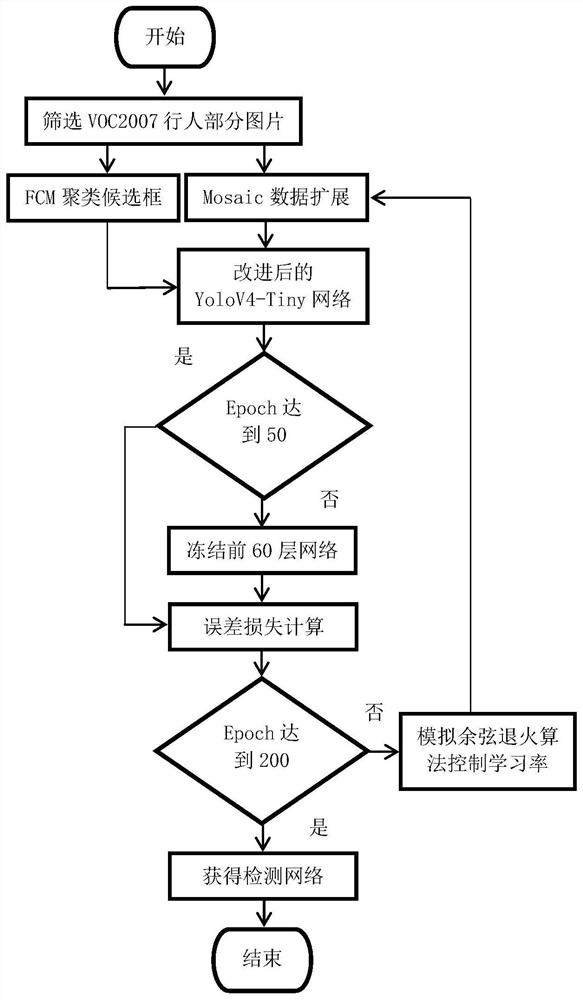

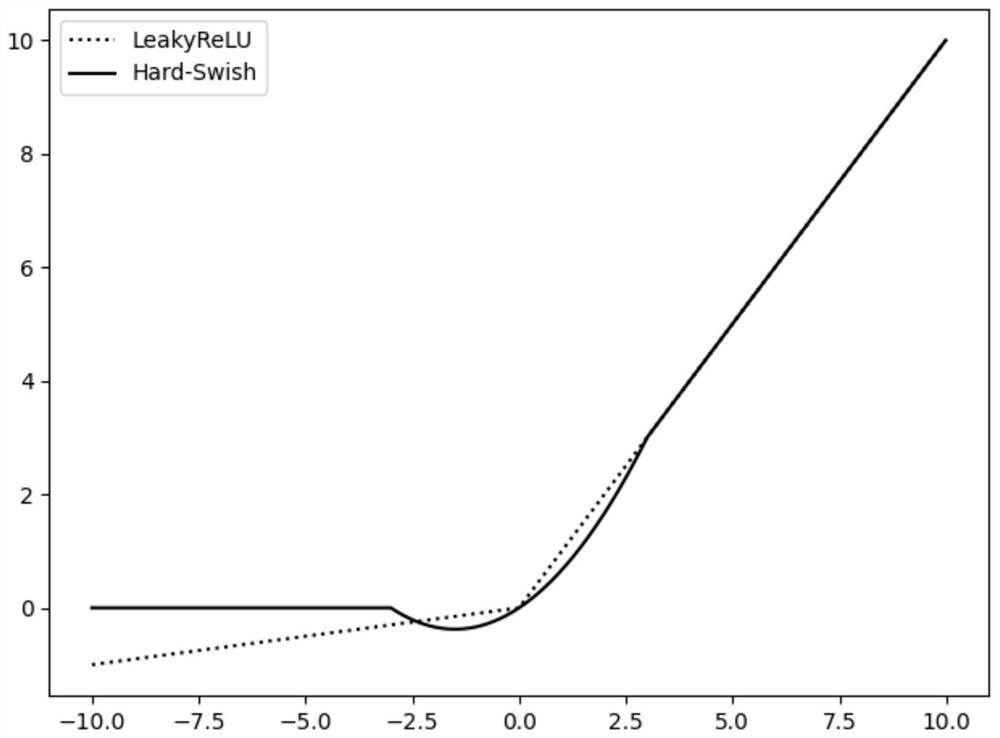

The invention relates to a single-camera multi-target pedestrian tracking method, which comprises the following steps: firstly, acquiring pedestrian video images by using a camera installed in a monitoring area, then correspondingly adjusting the size of the acquired images, inputting the adjusted images into a trained and improved YoloV4-Tiny pedestrian detection network, removing an abnormal pedestrian detection frame in a detection result by adopting a binning method, then inputting the screened detection result into a DeepSort algorithm to track pedestrians and record tracking information, and finally correcting an abnormally disappeared pedestrian target by adopting a correction algorithm based on a pedestrian unmatched frame number and a predicted position. Based on the improved YoloV4-Tiny, the binning method, the improved DeepSort and the correction method of the pedestrian unmatched frame number and the predicted position, high performance suitable for a real scene is basically achieved, and the method has the advantages of simultaneous multi-target positioning, accurate positioning, high real-time performance and high stability.

Owner:ZHEJIANG UNIV OF TECH

User recognition method for advertising machine based on human faces

Active | CN108960186A | Fast recognition | Affect experience | Character and pattern recognition | Neural architectures | Personalization | Pattern recognition

The invention relates to a user recognition method for an advertising machine based on human faces. The advertising machine plays full-screen advertisements continuously when no human face is present; when a human face appears in front of the screen, AR interaction is performed through the screen to capture an image of the face, and an eigenvalue of the face image is calculated; and the eigenvalue of the face image is matched with the eigenvalues in a face database to recognize the user. The method for calculating the eigenvalue of the face image is further improved, so that the recognition speed is increased while face recognition accuracy is maintained. Because the advertising machine uses the human face as the identity feature, it can push personalized advertisement content in a targeted manner, avoid the impact on user experience of the fingerprint recognition used in the prior art, and avoid the defect that two different users with similar fingerprints may both pass fingerprint verification.

Owner:NANJING KIWI NETWORK TECH CO LTD

Face recognition method

Inactive | CN111401299A | Improve effectiveness | Improve fitting ability | Character and pattern recognition | Neural architectures | Data set | Test set

The invention provides a face recognition method. The face recognition method comprises the following steps: S1, obtaining face training and test resources from a public data set; S2, preprocessing the images; S3, inputting the processed face data set into a convolutional neural network with a novel network structure based on an improved NVM module to obtain a trained lightweight convolutional neural network; S4, inputting the face images in the test set into the trained lightweight convolutional neural network model, and verifying whether the lightweight model can effectively and accurately classify the face data set.

Owner:SHANGHAI APPLIED TECHNOLOGIES COLLEGE

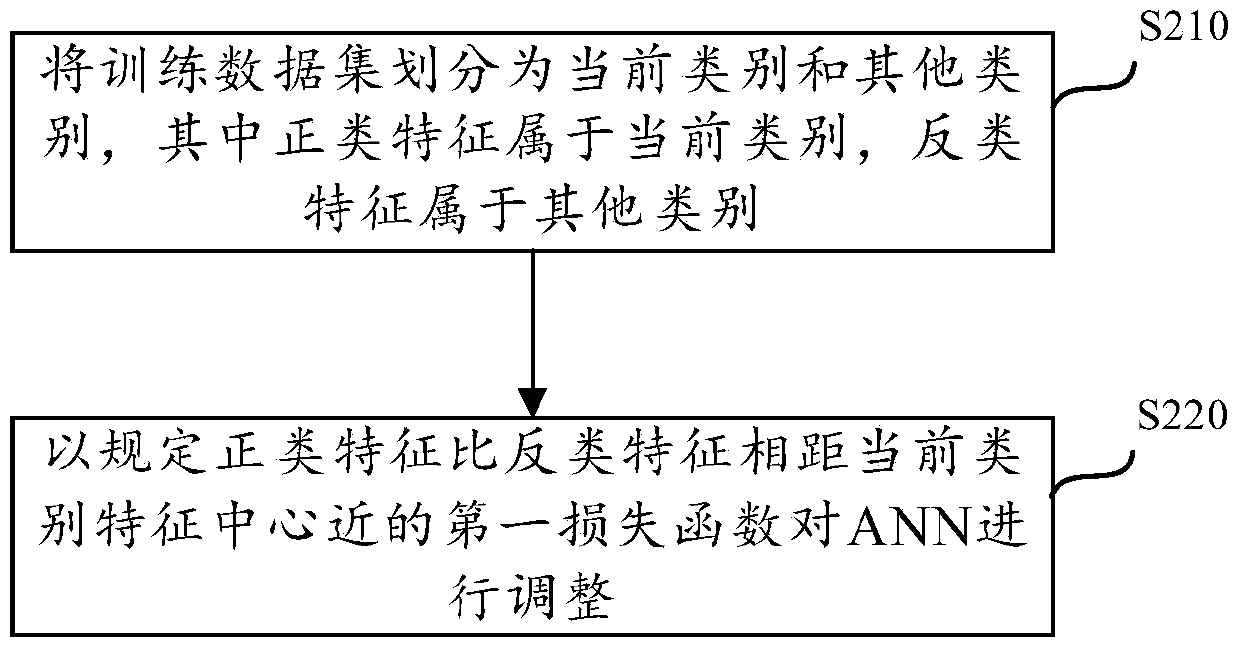

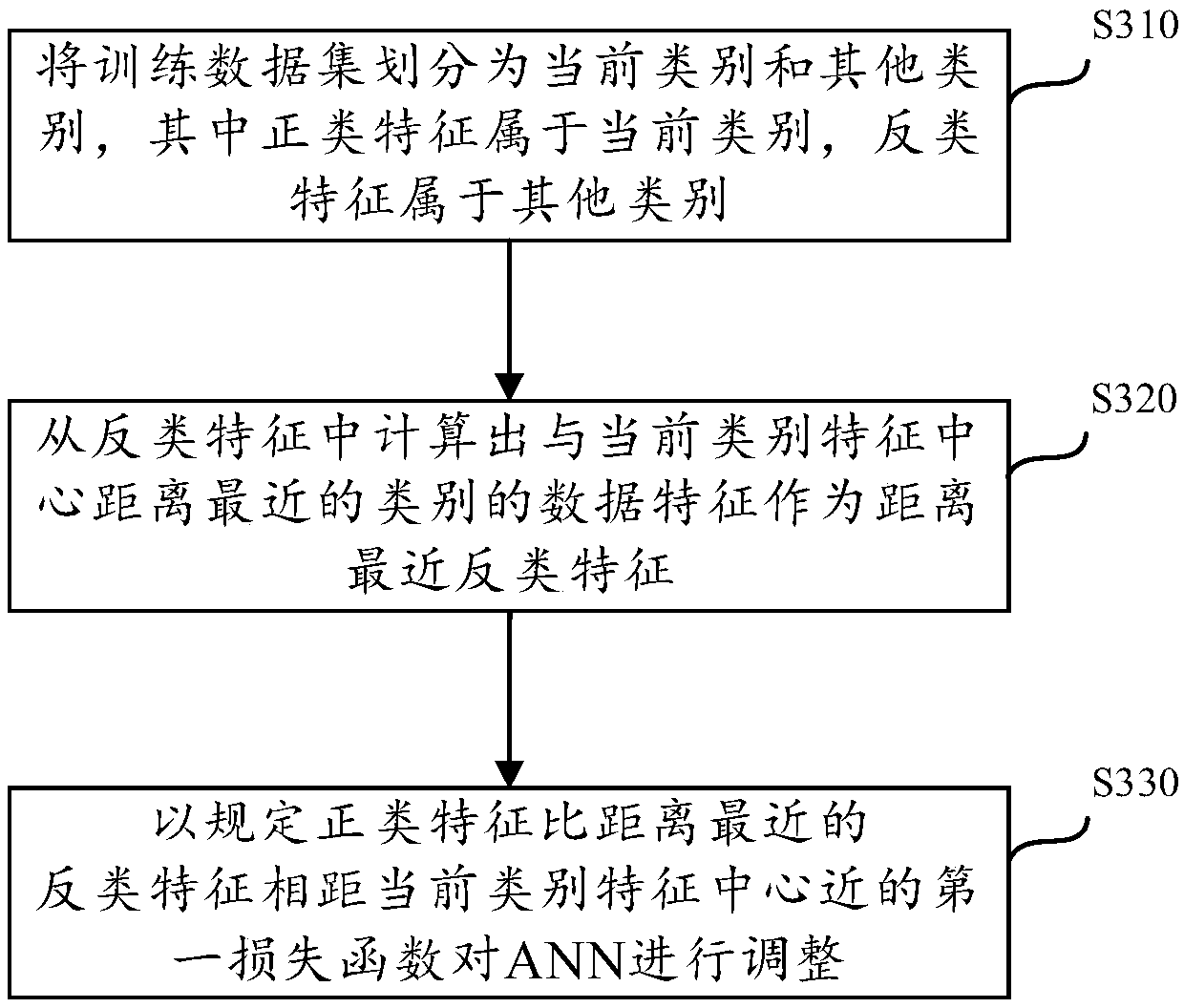

Artificial neural network adjustment method and device

Active | CN110633722A | Improve forecast accuracy | Increase the distance between classes | Character and pattern recognition | Pattern recognition | Nerve network

The invention provides an ANN (artificial neural network) adjustment method and device. The ANN includes a plurality of layers and is trained for classification inference. For example, the ANN may be a neural network trained for fine-grained image recognition. The method comprises the following steps: a training data set is divided into a current category and other categories according to the currently targeted category, where data features belonging to the current category are called positive class features and data features belonging to the other categories are called negative class features; and the ANN is adjusted with a first loss function that specifies that the positive class features are closer to the feature center of the current class than the negative class features. By designing an effective loss function, the method can shorten the intra-class distance, increase the inter-class distance, improve the classification effect, and improve the overall prediction accuracy of the artificial neural network.

Owner:XILINX TECH BEIJING LTD
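
The abstract above only states the constraint that a positive class feature should lie closer to the current class center than a negative class feature. One common way to express such a constraint is a hinge on the distance gap; the sketch below uses that form as an assumption. The margin, the squared Euclidean distance, and the function names are illustrative choices, not the patent's definition of its first loss function.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def center_ranking_loss(positive, negative, center, margin=1.0):
    """Zero when the positive feature is closer to the center than the
    negative feature by at least `margin`; grows linearly otherwise."""
    gap = squared_distance(positive, center) - squared_distance(negative, center)
    return max(0.0, gap + margin)

center = [0.0, 0.0]
satisfied = center_ranking_loss([0.1, 0.0], [3.0, 0.0], center)  # constraint met
violated = center_ranking_loss([2.0, 0.0], [1.0, 0.0], center)   # constraint broken
```

Minimizing this quantity over many positive and negative pairs pulls same-class features toward the center and pushes other-class features away from it.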

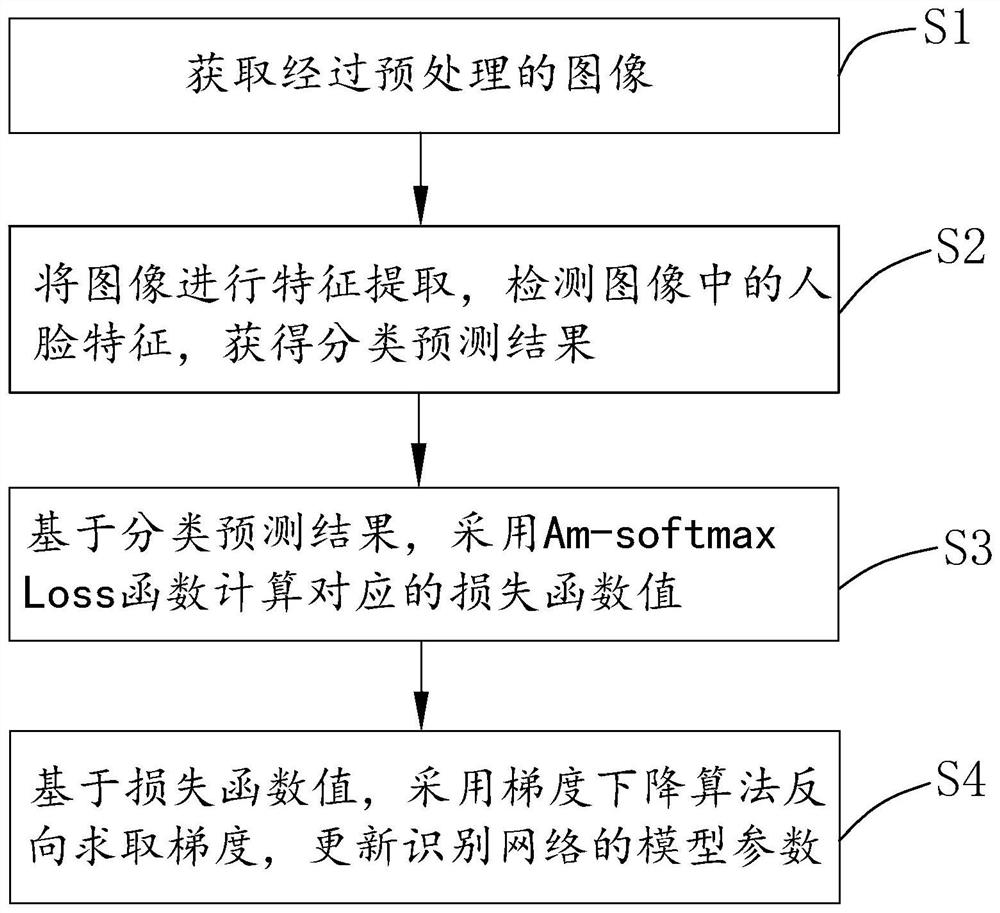

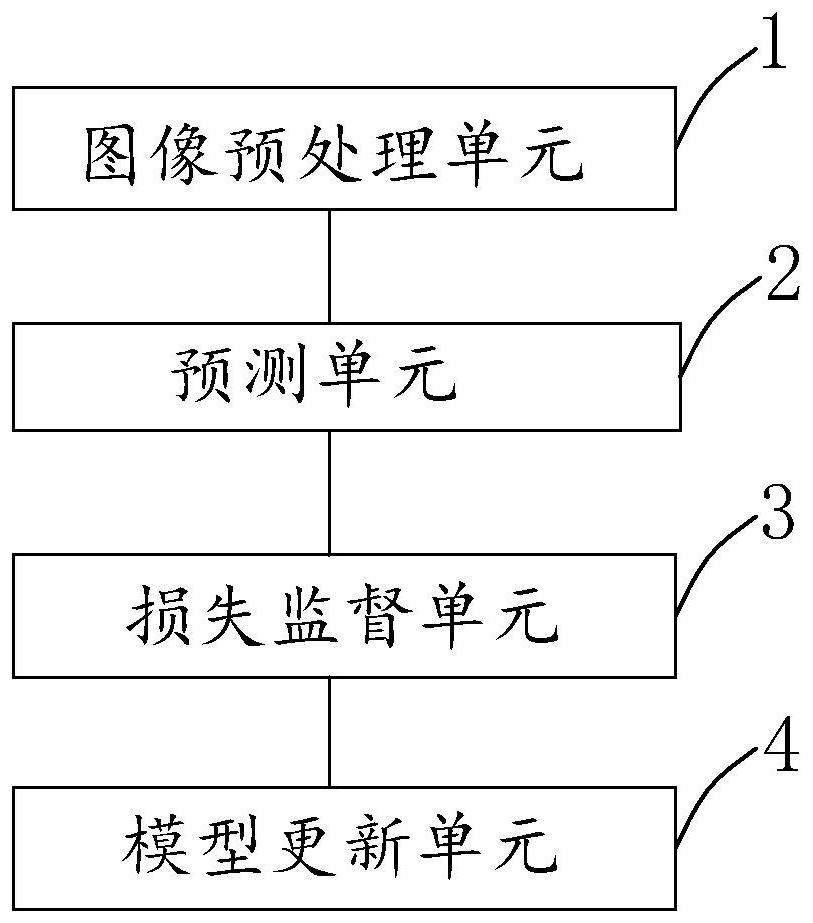

Face living body recognition network training method and system and electronic equipment

Pending | CN114445917A | Improve recognition accuracy | Accurate distinction | Neural architectures | Neural learning methods | Feature extraction | Descent algorithm

The invention provides a human face living body recognition network training method and system and electronic equipment. The method comprises the following steps: acquiring a preprocessed image; performing feature extraction on the image, detecting face features in the image, and obtaining a classification prediction result; calculating a corresponding loss function value by adopting an Am-softmax Loss function based on a classification prediction result; based on the loss function value, a gradient descent algorithm is adopted to reversely solve a gradient and update model parameters of the recognition network, so that the trained recognition network can establish an angle boundary between different samples, a large-angle interval supervision function is formed between the different samples, the difference between the samples can be greatly increased, and the recognition accuracy is improved. The inter-class distance is enhanced, so that the trained recognition network has higher recognition precision when facing various attack faces, the face living bodies are accurately distinguished, and the recognition accuracy is improved.

Owner:SHENZHEN VIRTUAL CLUSTERS INFORMATION TECH
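
For reference, the additive-margin softmax (AM-Softmax) loss named in the abstract above can be sketched per sample as follows. The inputs are assumed to be cosine similarities between a normalized feature and each normalized class weight; the scale `s=30.0` and margin `m=0.35` are illustrative values, not taken from the abstract.

```python
import math

def am_softmax_loss(cosines, label, s=30.0, m=0.35):
    """AM-Softmax loss for one sample: the margin m is subtracted from the
    target-class cosine before scaling by s and applying softmax cross entropy."""
    logits = [s * (c - m) if j == label else s * c for j, c in enumerate(cosines)]
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]

# Same cosines with and without the margin (toy values).
with_margin = am_softmax_loss([0.8, 0.2], label=0)
without_margin = am_softmax_loss([0.8, 0.2], label=0, m=0.0)
```

Because the margin lowers the target-class logit, a sample must be separated from the other classes by a wider angular gap before its loss becomes small, which is the boundary effect the abstract describes.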

Internet encrypted traffic interaction feature extraction method based on graph structure

Active | CN112217834A | Reduce the intra-class distance | Increase the distance between classes | Character and pattern recognition | Neural architectures | Data pack | Feature extraction

The invention discloses an Internet encrypted traffic interaction feature extraction method based on a graph structure, which belongs to the technical field of encrypted network traffic classification and is applied to fine-grained classification of network traffic after TLS encryption. Encrypted traffic interaction features based on the graph structure are extracted from the original packet sequence; the graph structure features include the sequence information, packet direction information, packet length information and burst traffic information of the data packets. Quantitative calculation shows that, compared with a packet length sequence, the intra-class distance is markedly reduced and the inter-class distance is increased when the graph structure features are used. The method obtains encrypted traffic features with richer dimensions and higher discrimination, which are then combined with deep neural networks such as a graph neural network to carry out fine-grained classification and identification of the encrypted traffic. Extensive experiments show that, compared with existing methods, combining the graph structure features with a graph neural network achieves higher accuracy and a lower false alarm rate.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
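
The abstract above reports intra-class and inter-class distances without stating how they are computed. As a rough sketch, assuming mean pairwise Euclidean distance over fixed-length feature vectors (the metric, function names, and toy data are assumptions), the two quantities could be measured like this:

```python
import math
from itertools import combinations

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def mean(values):
    """Arithmetic mean, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def class_distances(samples):
    """samples: list of (feature_vector, label).
    Returns (mean intra-class distance, mean inter-class distance)."""
    intra, inter = [], []
    for (feat_a, lab_a), (feat_b, lab_b) in combinations(samples, 2):
        bucket = intra if lab_a == lab_b else inter
        bucket.append(euclidean(feat_a, feat_b))
    return mean(intra), mean(inter)

# Toy features for two traffic classes (assumed values).
samples = [([0.0, 0.0], "a"), ([0.0, 1.0], "a"), ([5.0, 0.0], "b"), ([5.0, 1.0], "b")]
intra, inter = class_distances(samples)
```

Comparing the pair `(intra, inter)` for two feature sets on the same labeled traffic gives a simple check of which representation separates the classes better.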
