757 results about "Real-time rendering" patented technology

Real-time rendering is one of the interactive areas of computer graphics: it means generating synthetic images on a computer fast enough that the viewer can interact with a virtual environment. Video games are the most common application of real-time rendering. The rate at which images are displayed is measured in frames per second (fps) or hertz (Hz); the frame rate is how quickly an imaging device produces unique consecutive images.
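
Because the frame rate is a rate, a target rate also fixes a time budget for every frame: at 60 Hz each frame must be produced in about 16.7 ms. The Python sketch below makes that arithmetic explicit; the function names are illustrative and are not taken from any engine or patent listed here.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the target frame rate."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    return 1000.0 / fps


def meets_target(frame_time_ms: float, fps: float) -> bool:
    """True if a measured frame time fits inside the budget for the target rate."""
    return frame_time_ms <= frame_budget_ms(fps)


for rate in (30, 60, 144):
    print(f"{rate} Hz -> {frame_budget_ms(rate):.2f} ms per frame")
# 30 Hz -> 33.33 ms per frame
# 60 Hz -> 16.67 ms per frame
# 144 Hz -> 6.94 ms per frame
```

A renderer that regularly exceeds the budget drops below the target rate, which is why real-time techniques trade image quality for predictable per-frame cost.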

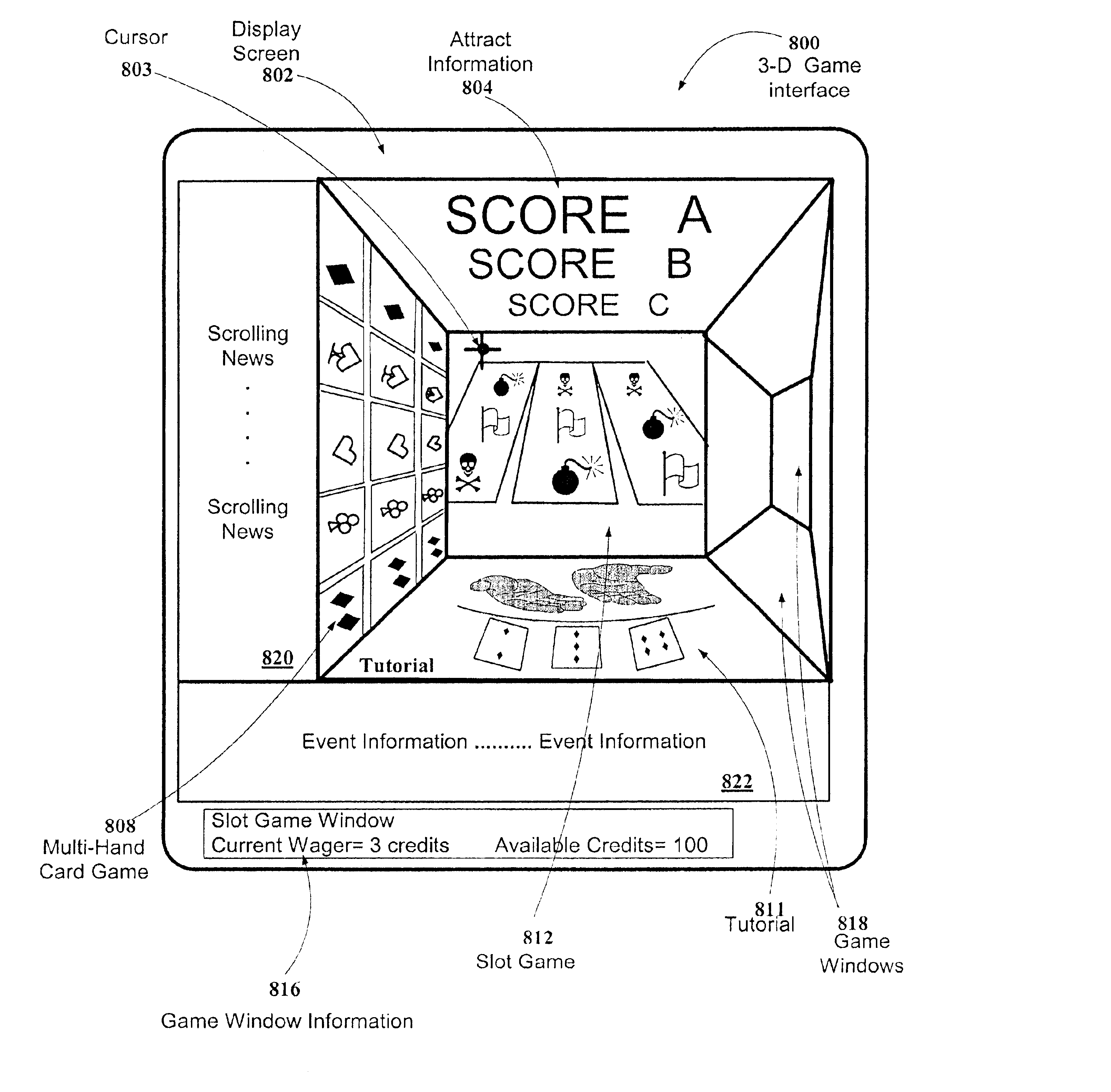

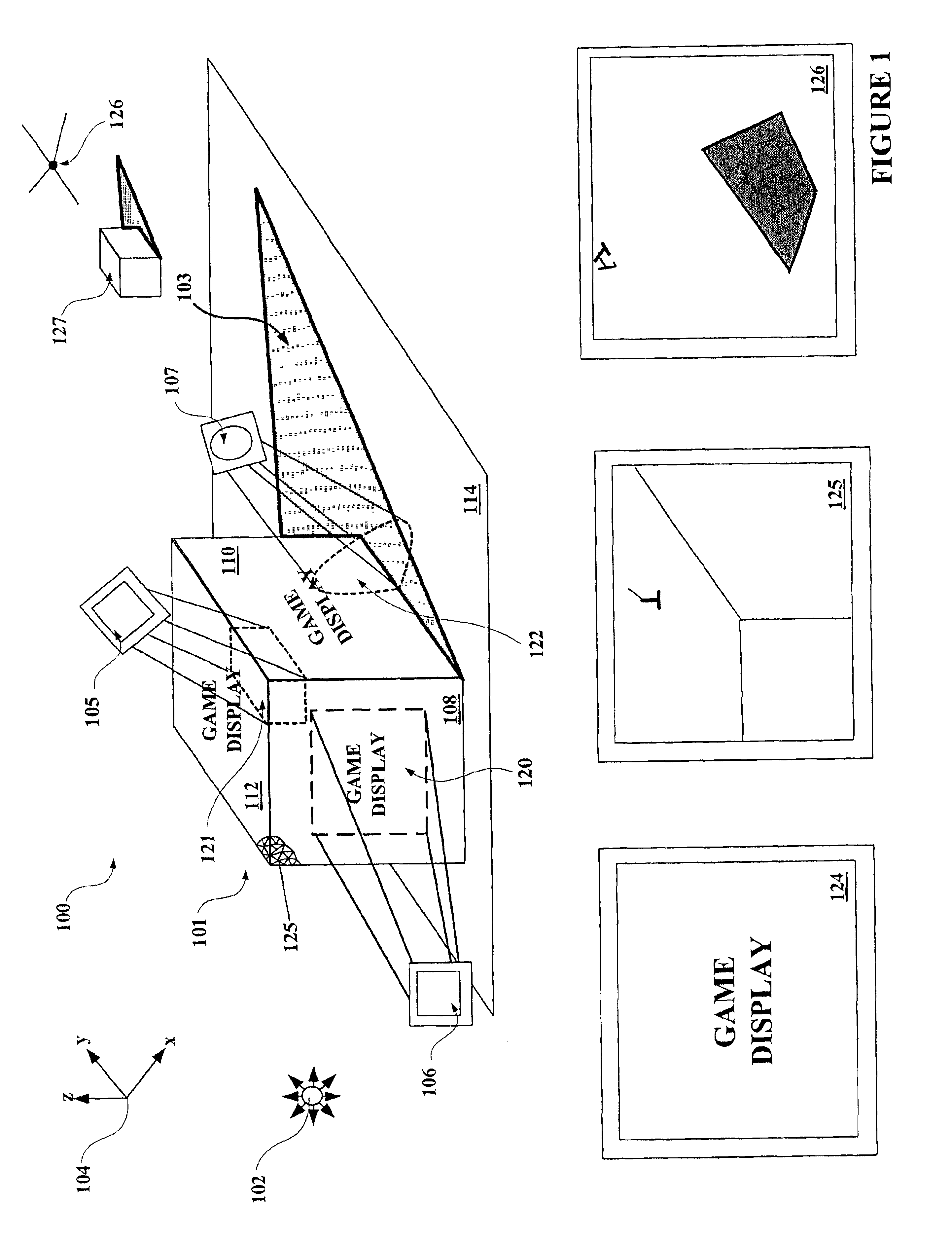

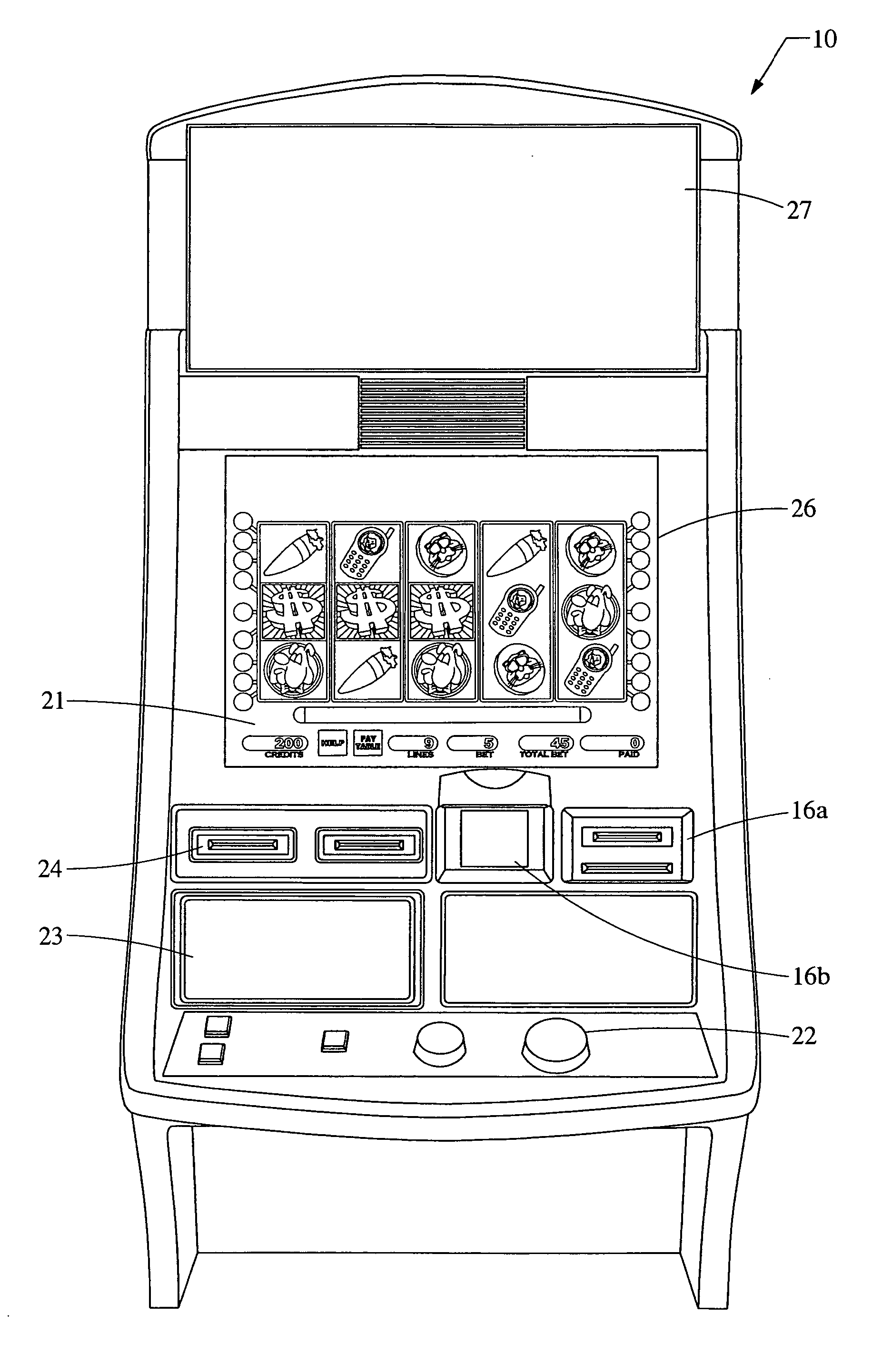

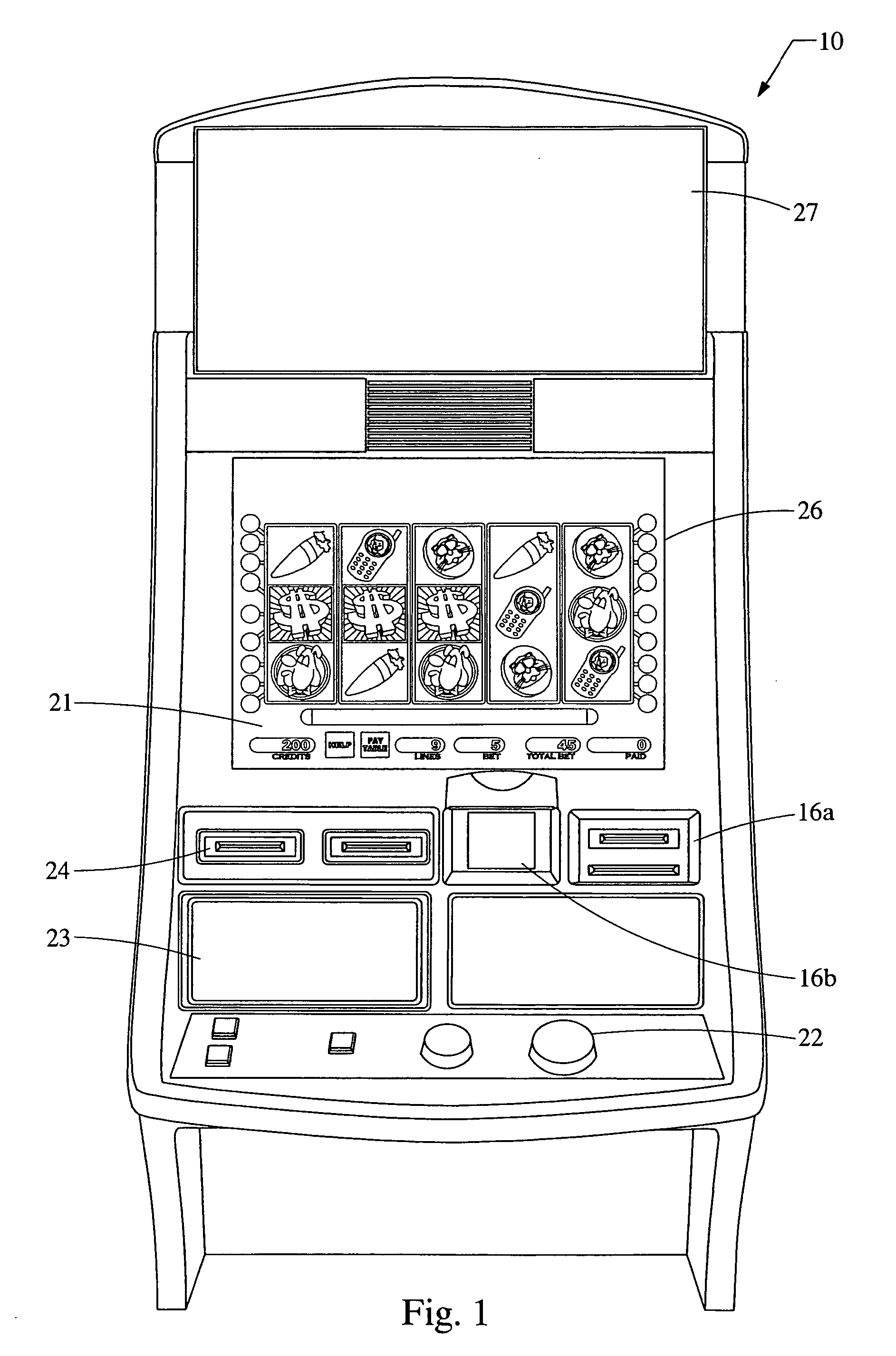

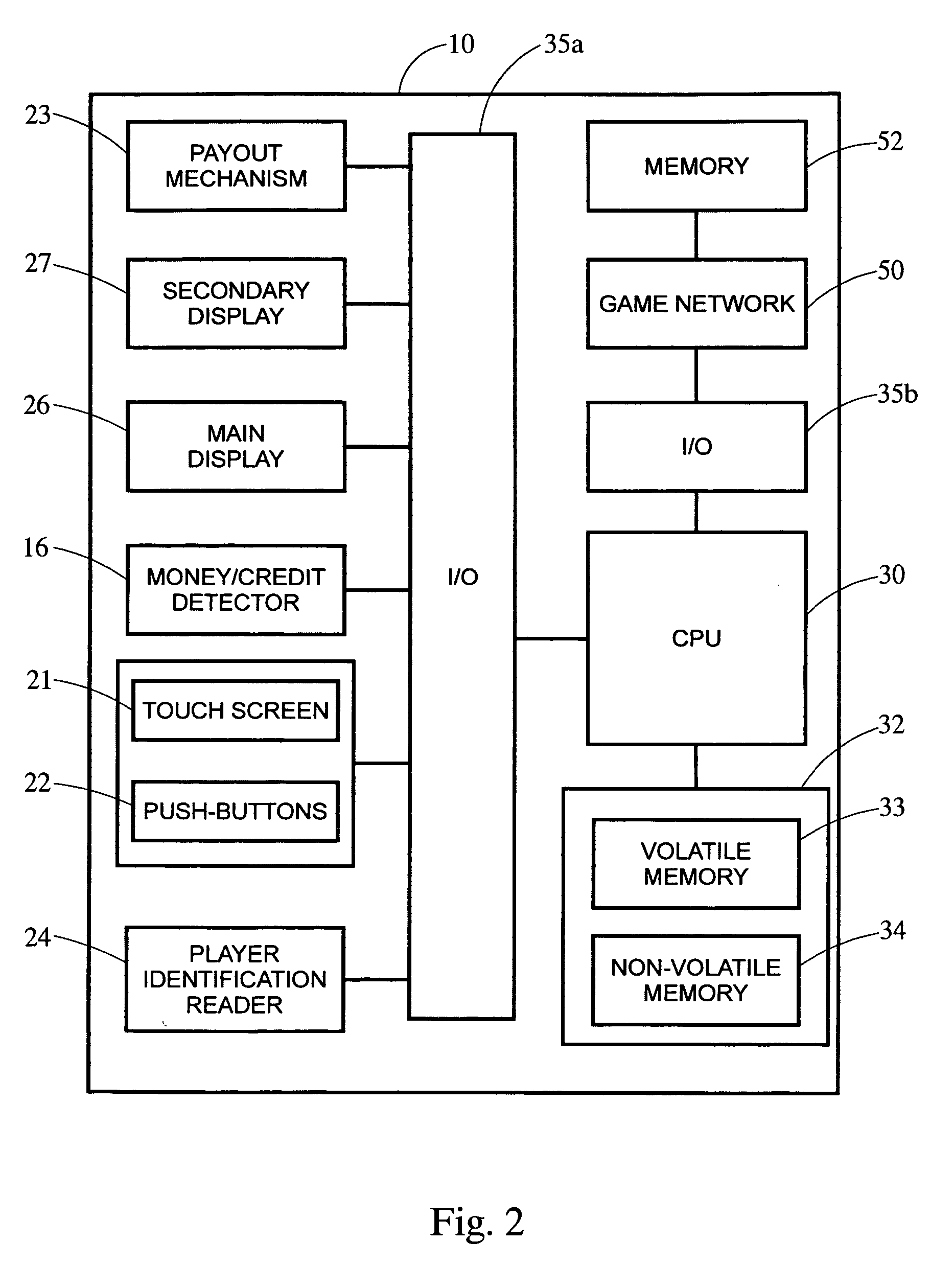

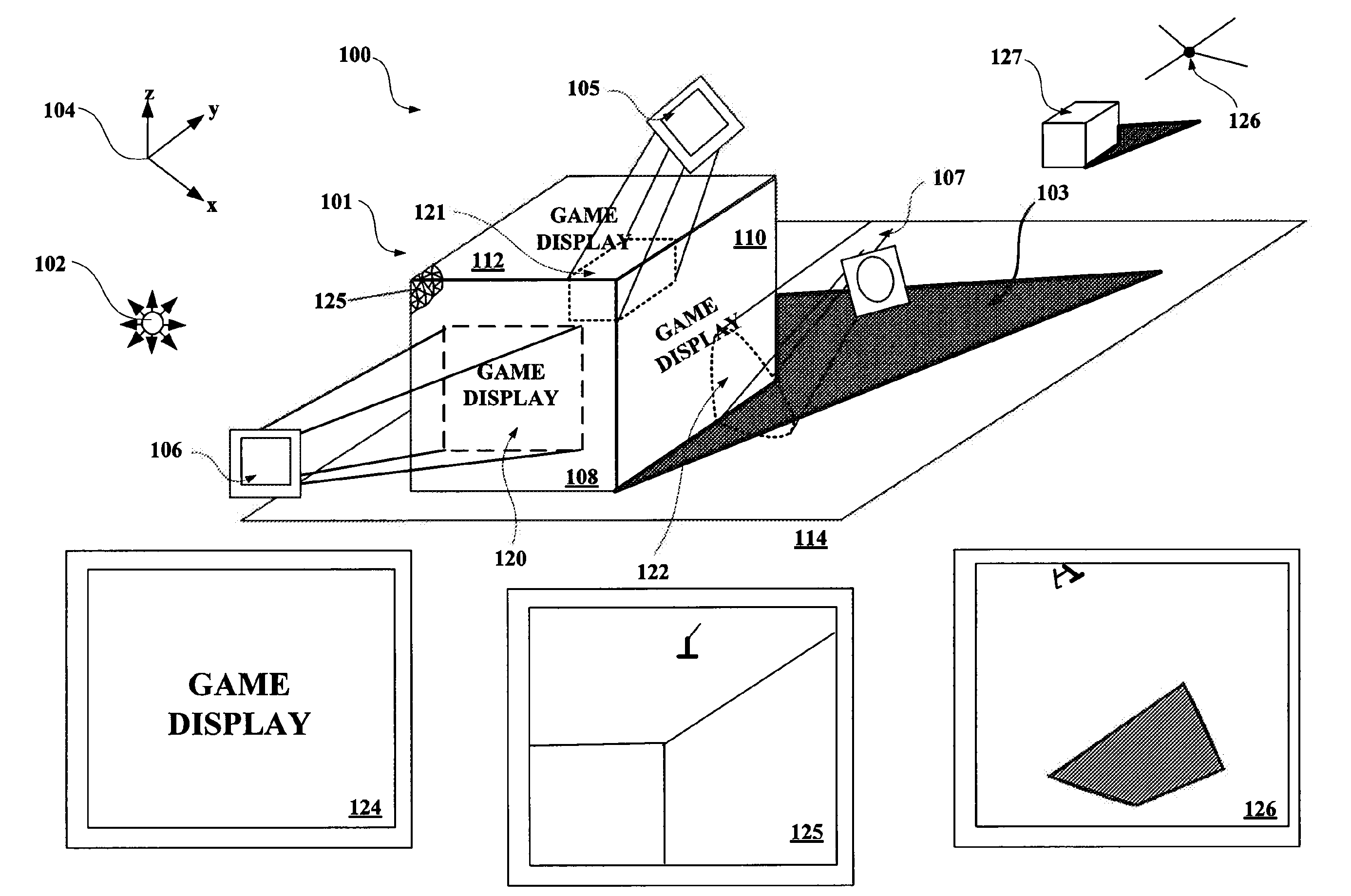

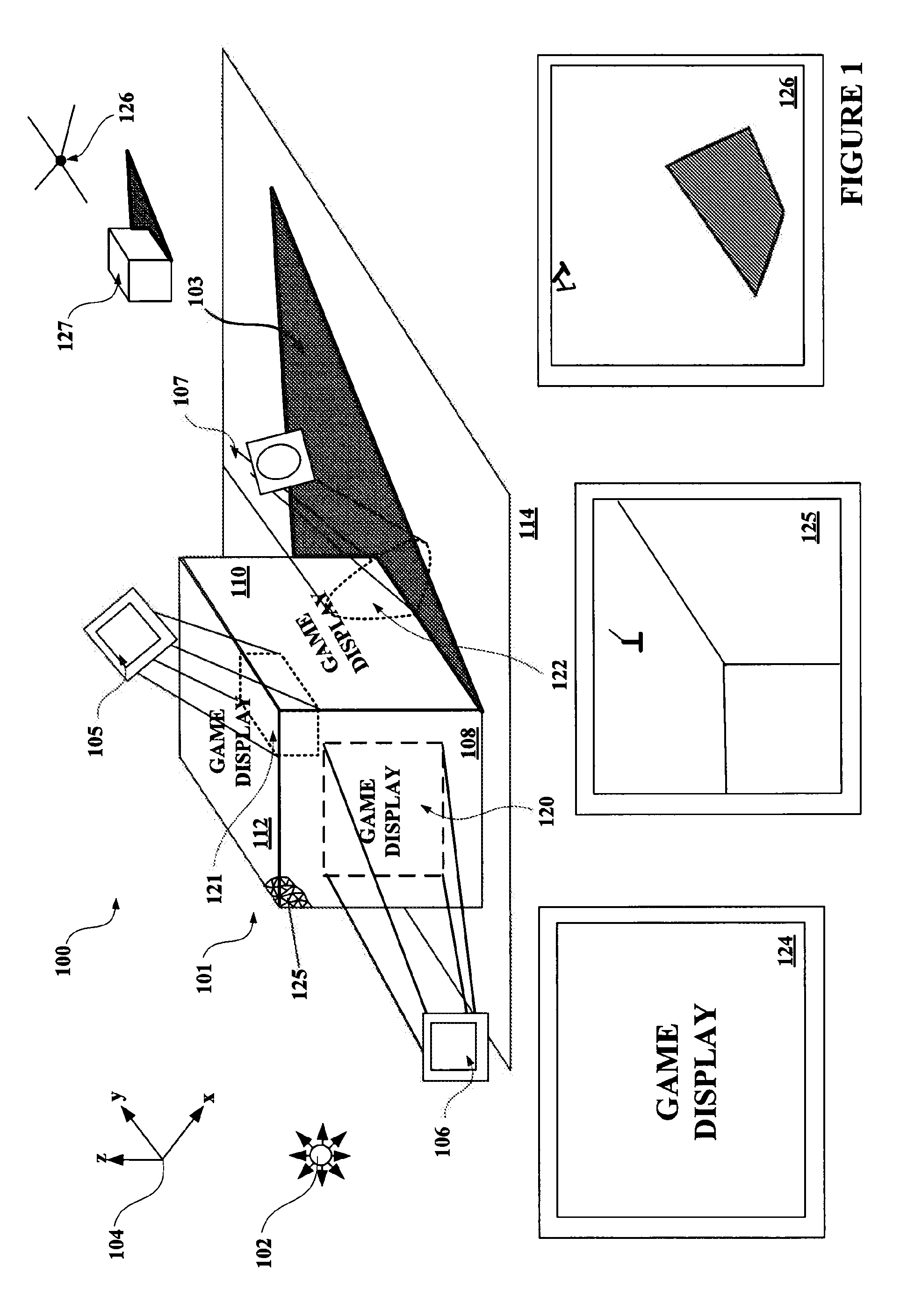

Virtual cameras and 3-D gaming environments in a gaming machine

Inactive | US6887157B2 | Increase excitement; Roulette games; Apparatus for meter-controlled dispensing; Game player; Virtual camera

A disclosed gaming machine provides a method and apparatus for presenting a plurality of game outcome presentations derived from one or more virtual 3-D gaming environments stored on the gaming machine. While a game of chance is being played on the gaming machine, two-dimensional images derived from a three-dimensional object in the 3-D gaming environment may be rendered to a display screen on the gaming machine in real-time as part of the game outcome presentation. To add excitement to the game, a 3-D position of the 3-D object and other features of the 3-D gaming environment may be controlled by a game player. A nearly unlimited variety of virtual objects, such as slot reels, gaming machines and casinos, may be modeled in the 3-D gaming environment.

Owner:IGT
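
Rendering "two-dimensional images derived from a three-dimensional object" through a virtual camera comes down, at its core, to a perspective projection. The sketch below is a generic pinhole projection, not IGT's implementation; it assumes an OpenGL-style camera space in which the camera looks down the negative z axis, and the function name and parameters are hypothetical.

```python
import math


def project_point(x, y, z, fov_y_deg, width, height):
    """Project a camera-space point to pixel coordinates (pinhole camera).

    Assumes the camera looks down -z; returns None for points at or behind it.
    """
    if z >= 0:
        return None  # on or behind the camera plane: not visible
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)  # focal scale from vertical FOV
    aspect = width / height
    ndc_x = (f / aspect) * x / -z  # normalized device coordinates in [-1, 1]
    ndc_y = f * y / -z
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height  # flip: pixel rows grow downward
    return px, py


print(project_point(0, 0, -5, 90, 640, 480))  # (320.0, 240.0): centre of the screen
```

Moving the virtual camera, as the player-controlled 3-D position in the abstract suggests, amounts to transforming world-space points into this camera space before projecting them.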

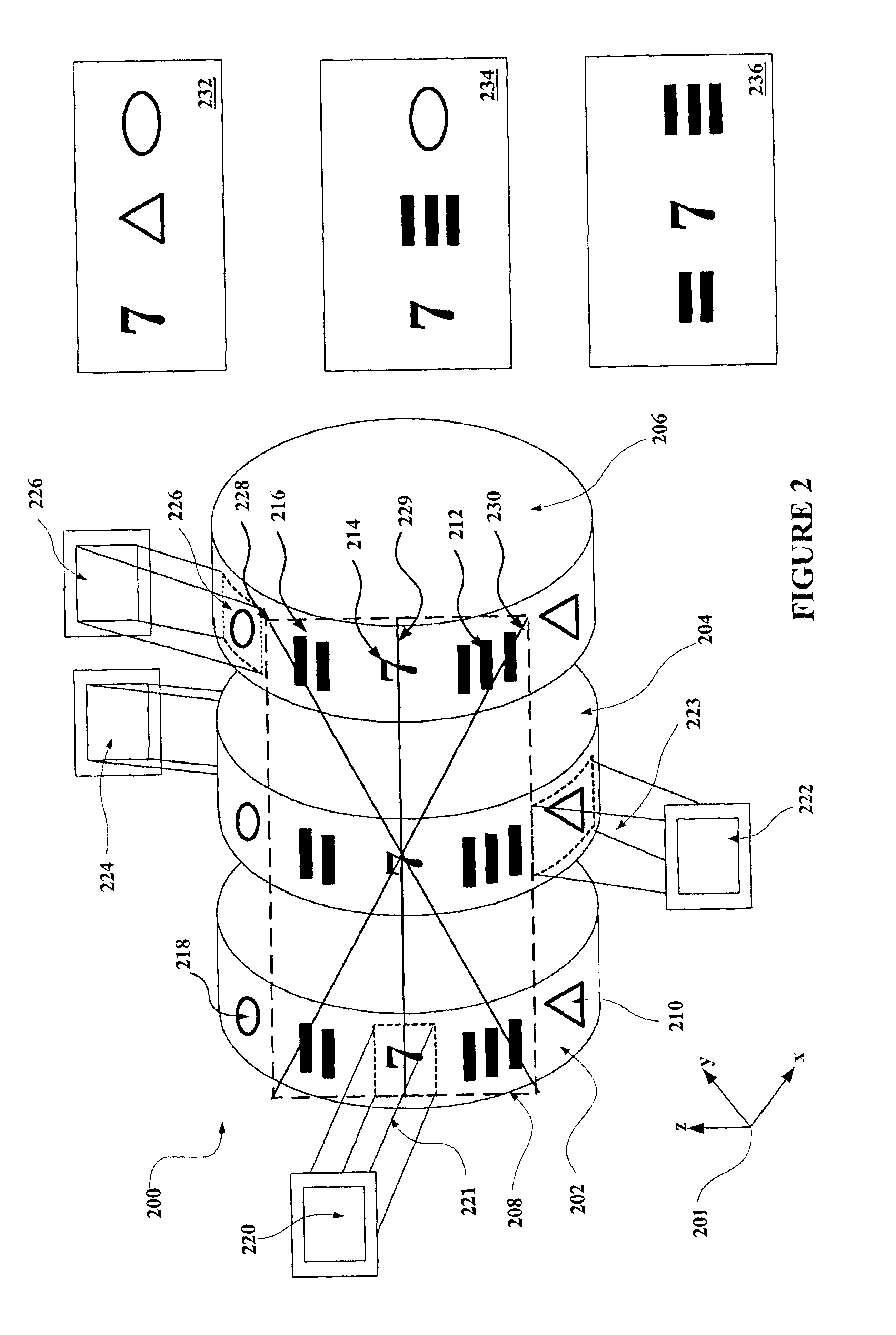

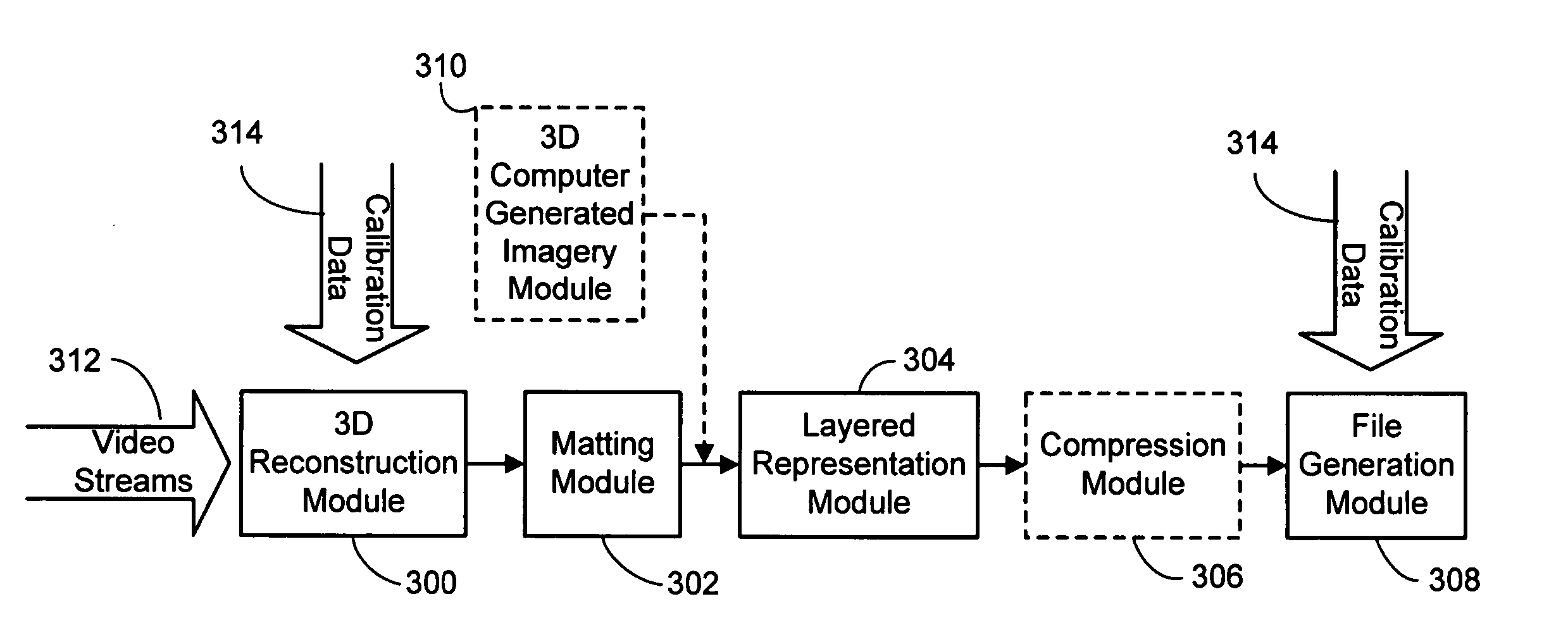

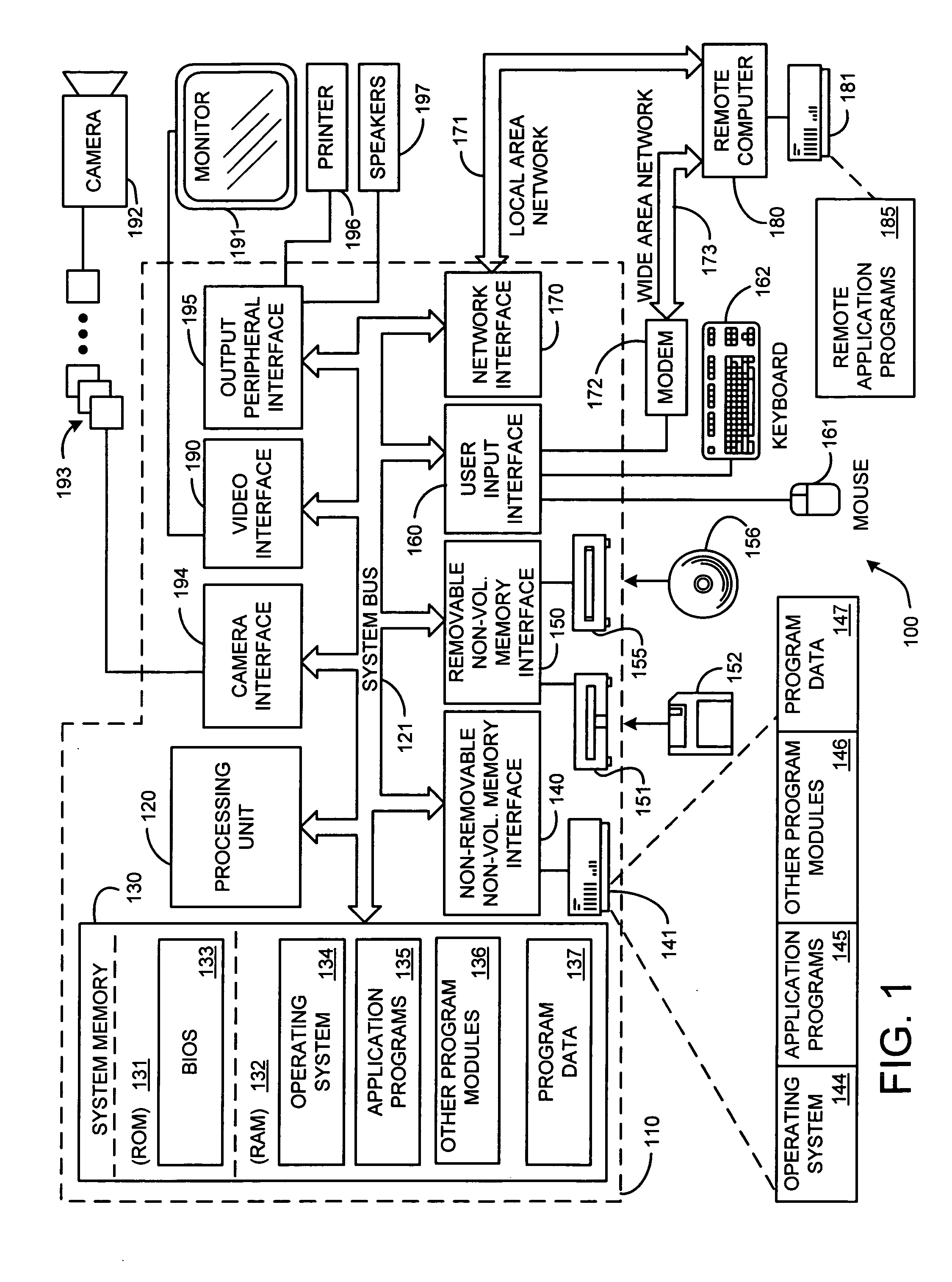

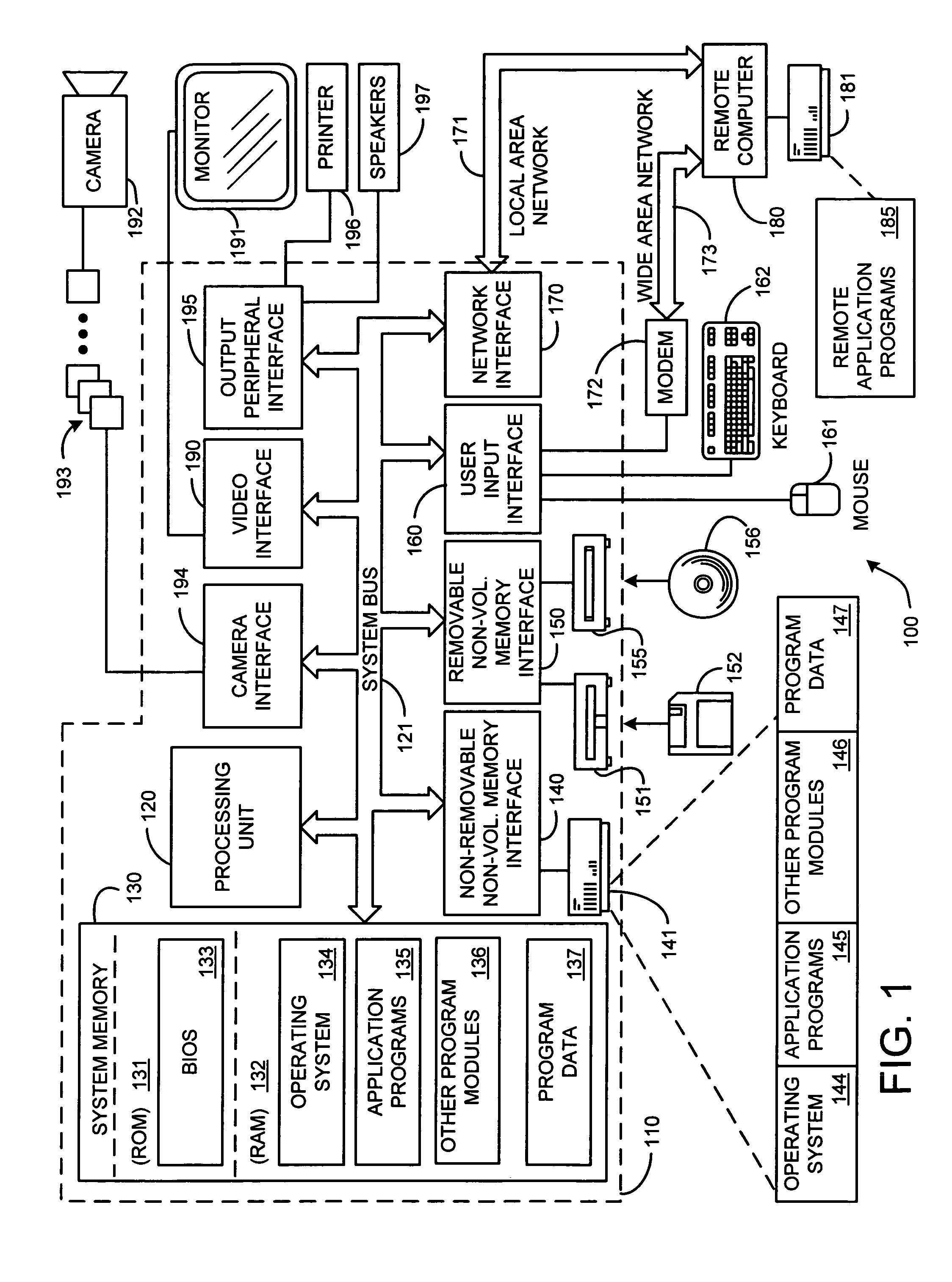

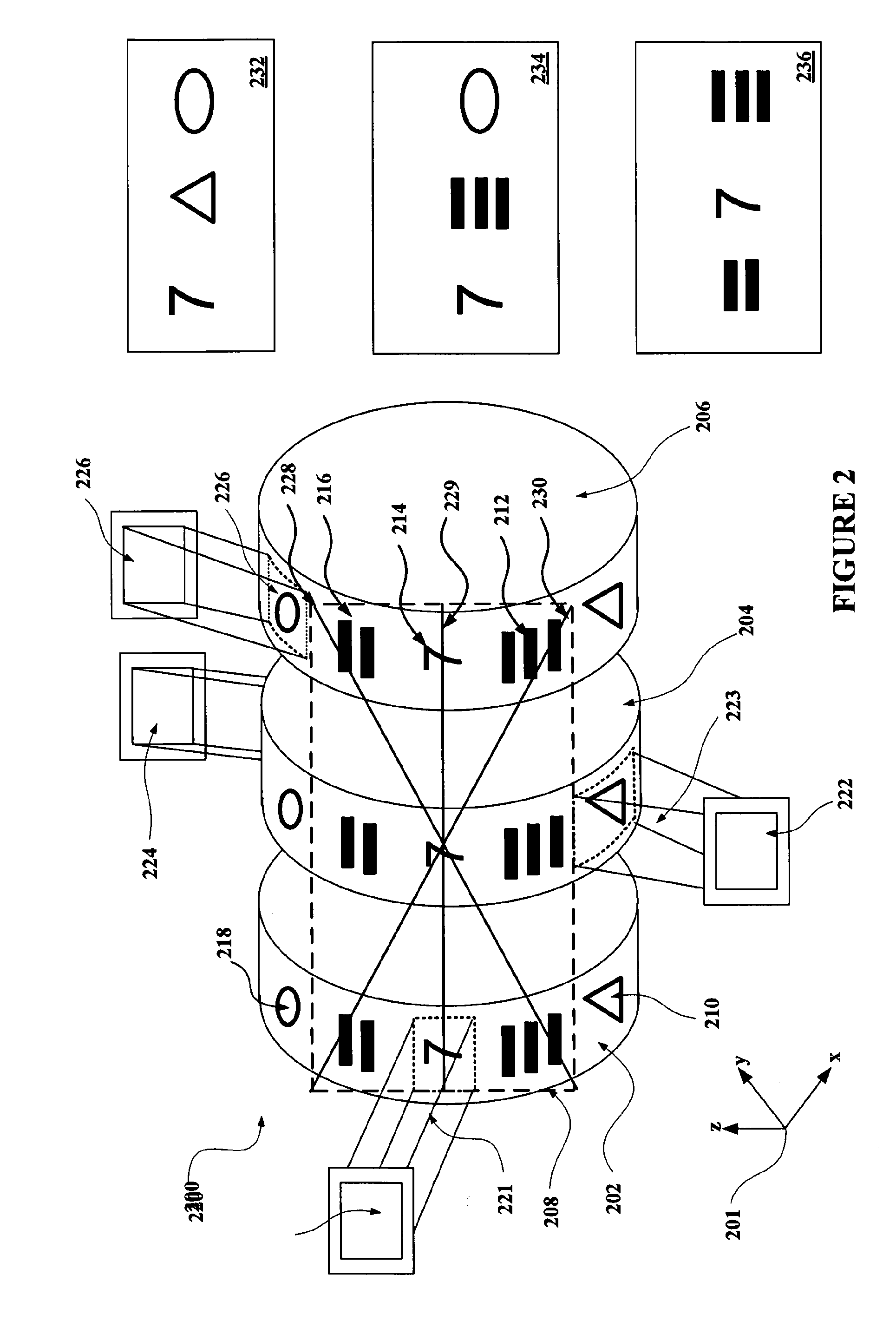

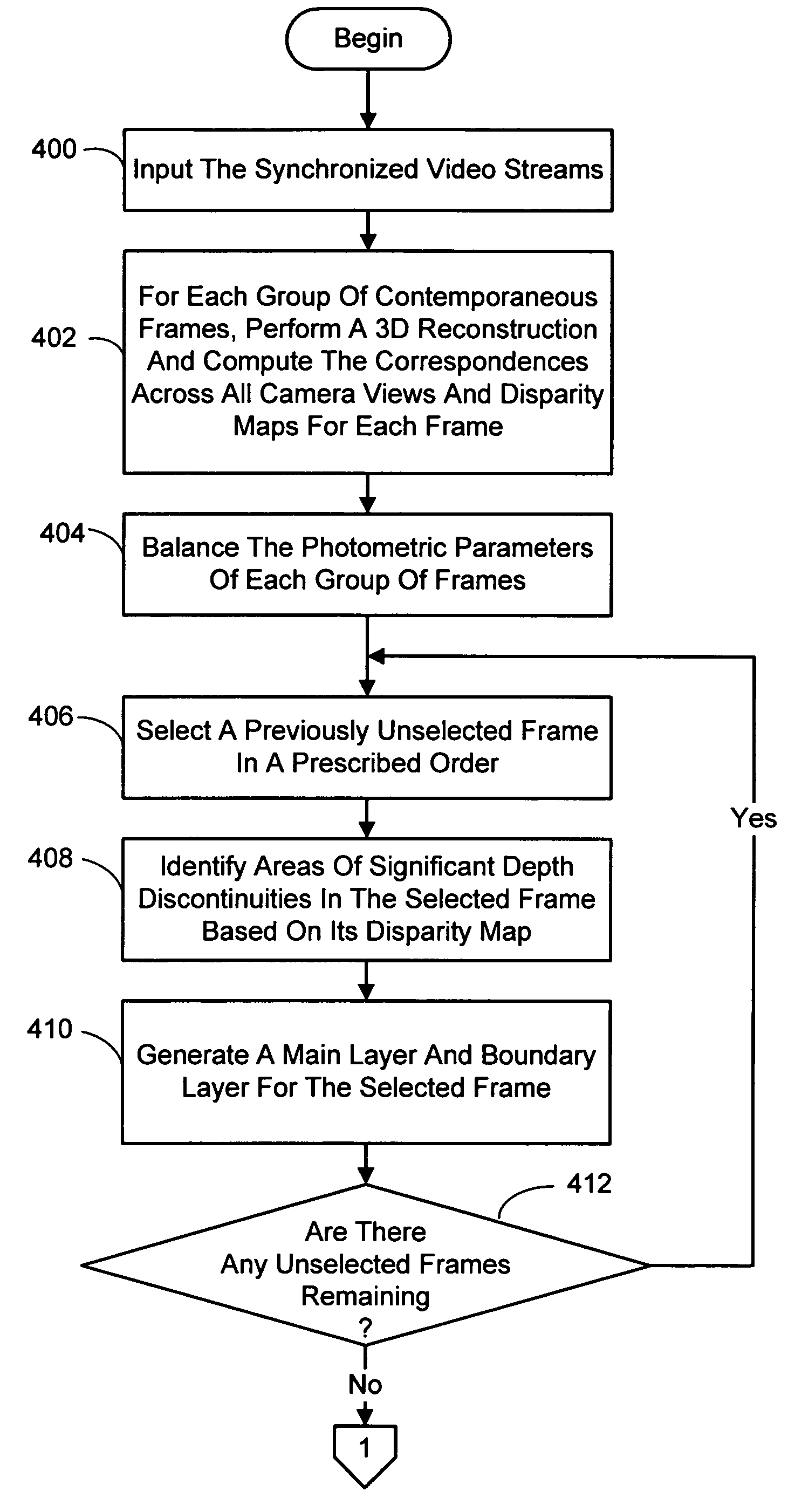

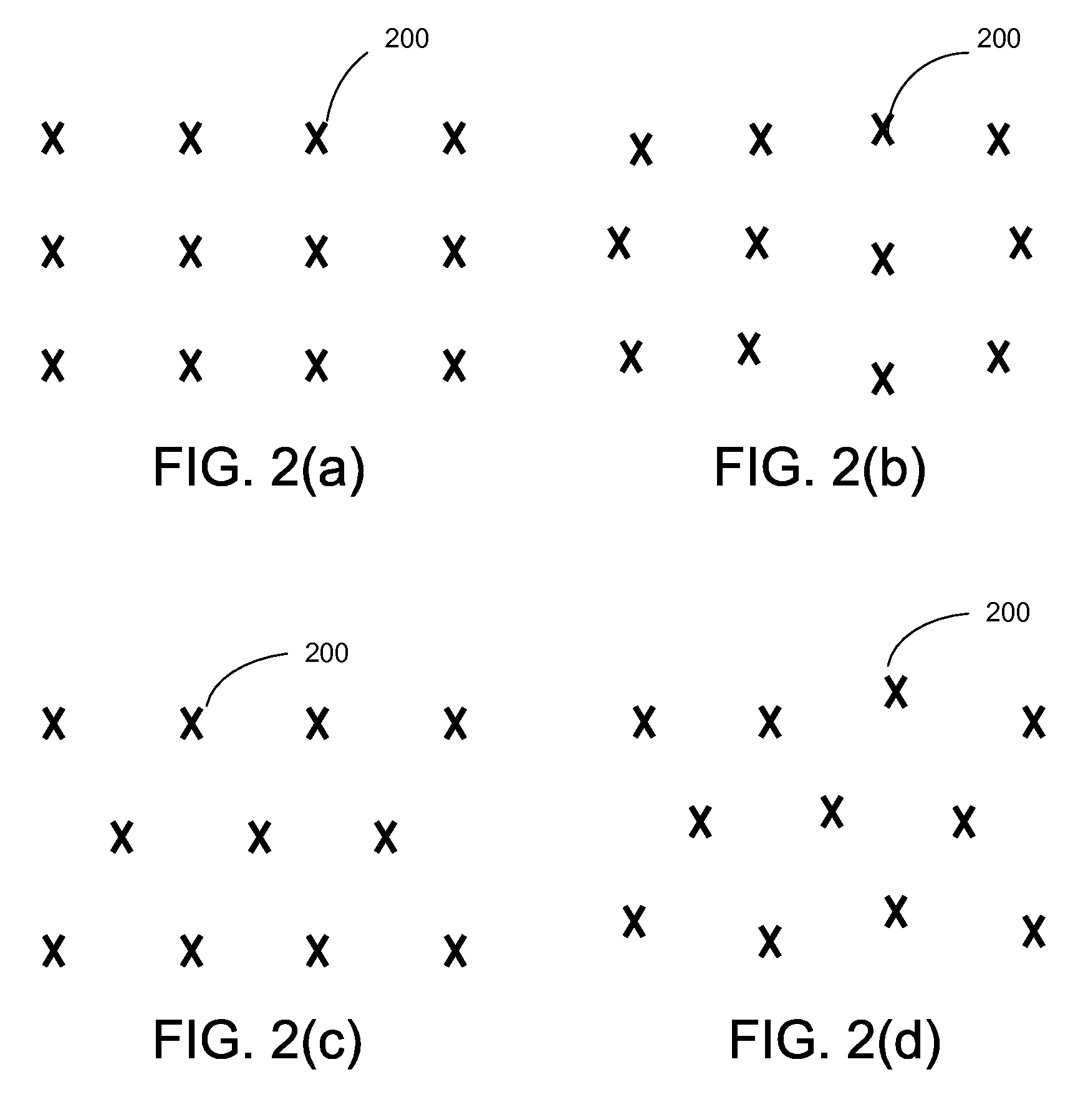

Interactive viewpoint video system and process employing overlapping images of a scene captured from viewpoints forming a grid

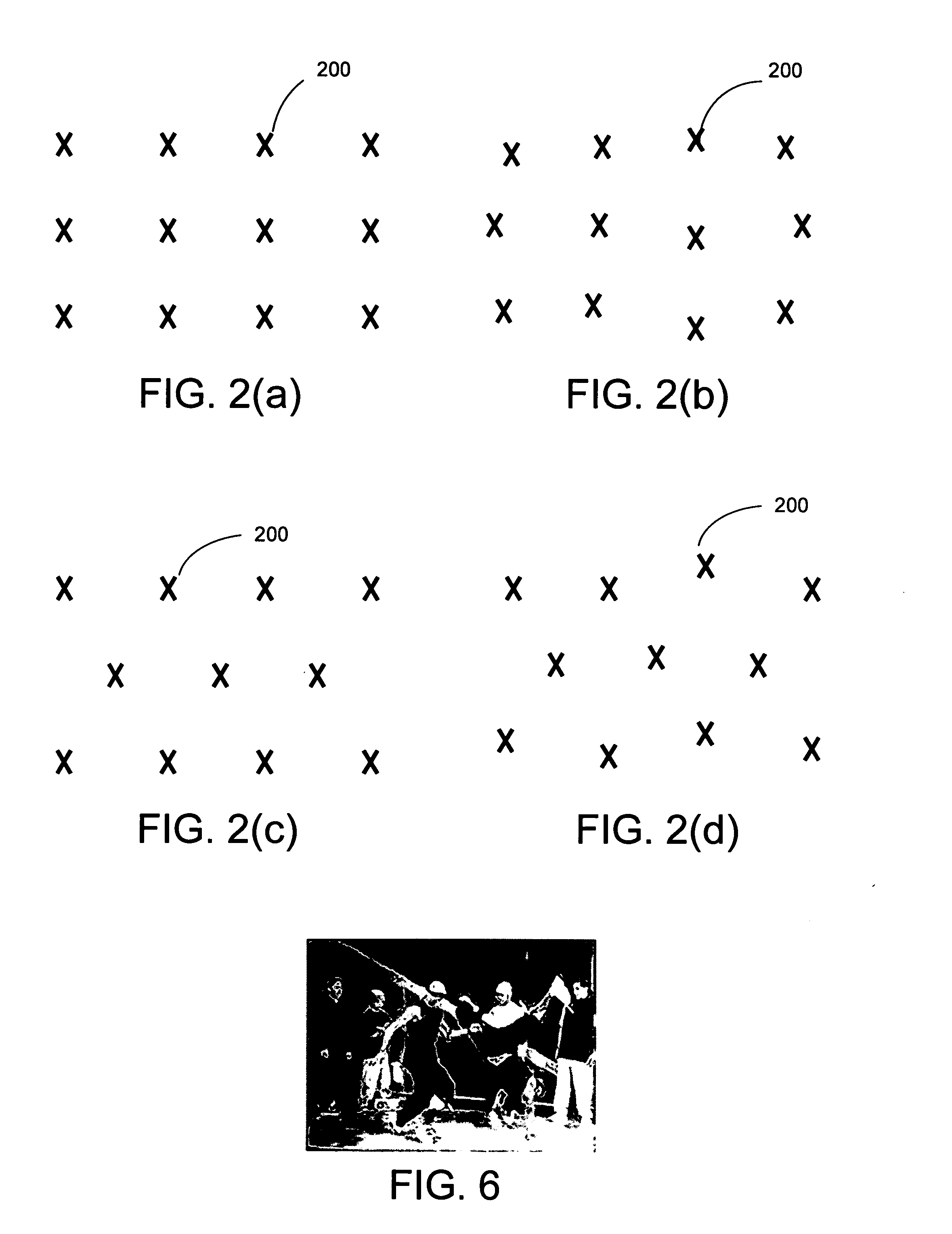

Inactive | US20050286759A1 | Low cost; Capture portable; Image enhancement; Television system details; Reverse time; Viewpoints

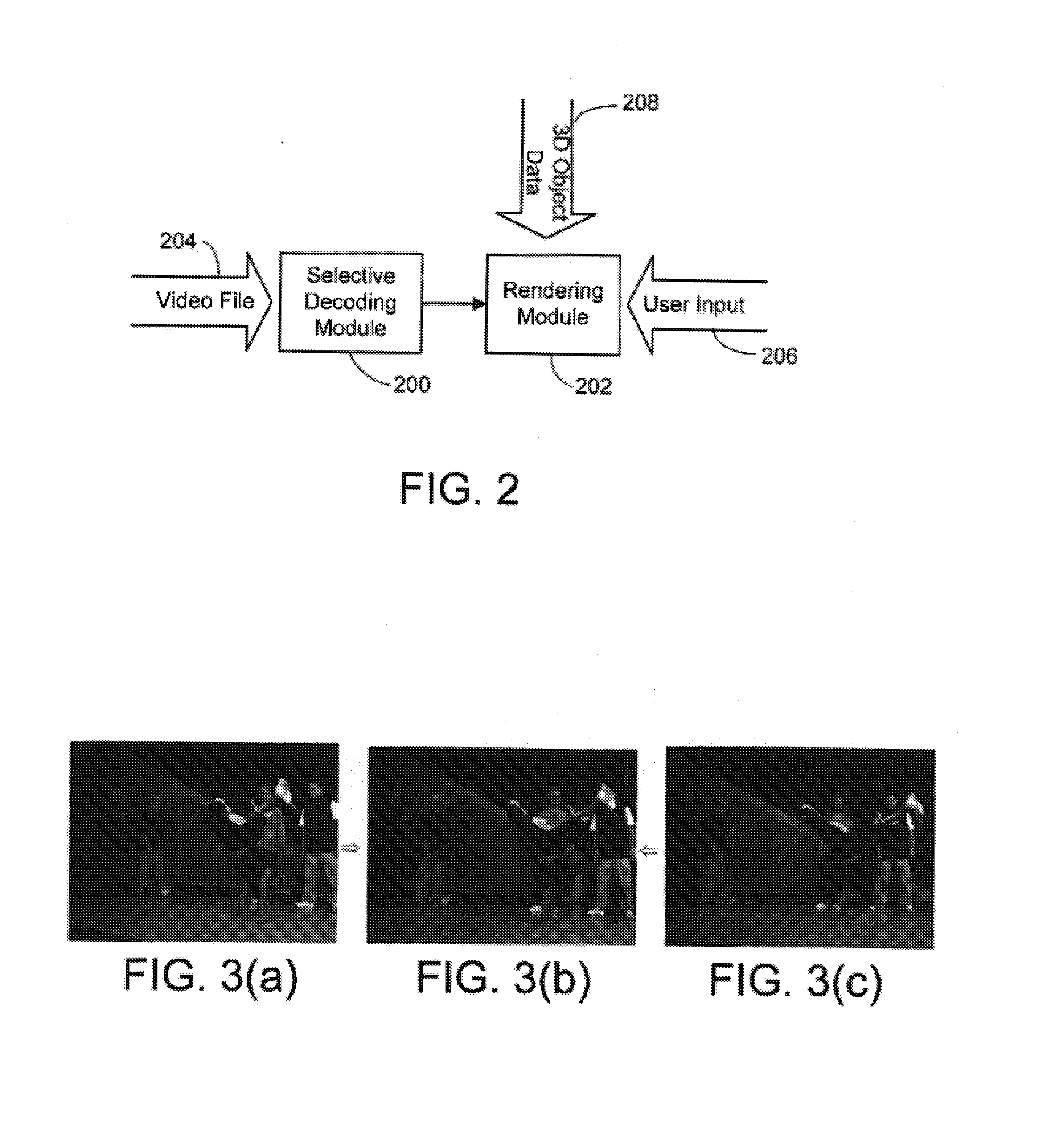

A system and process for generating, and then rendering and displaying, an interactive viewpoint video in which a user can watch a dynamic scene while manipulating (freezing, slowing down, or reversing) time and changing the viewpoint at will. In general, the interactive viewpoint video is generated using a small number of cameras to capture multiple video streams. A multi-view 3D reconstruction and matting technique is employed to create a layered representation of the video frames that enables both efficient compression and interactive playback of the captured dynamic scene, while at the same time allowing for real-time rendering.

Owner:MICROSOFT TECH LICENSING LLC

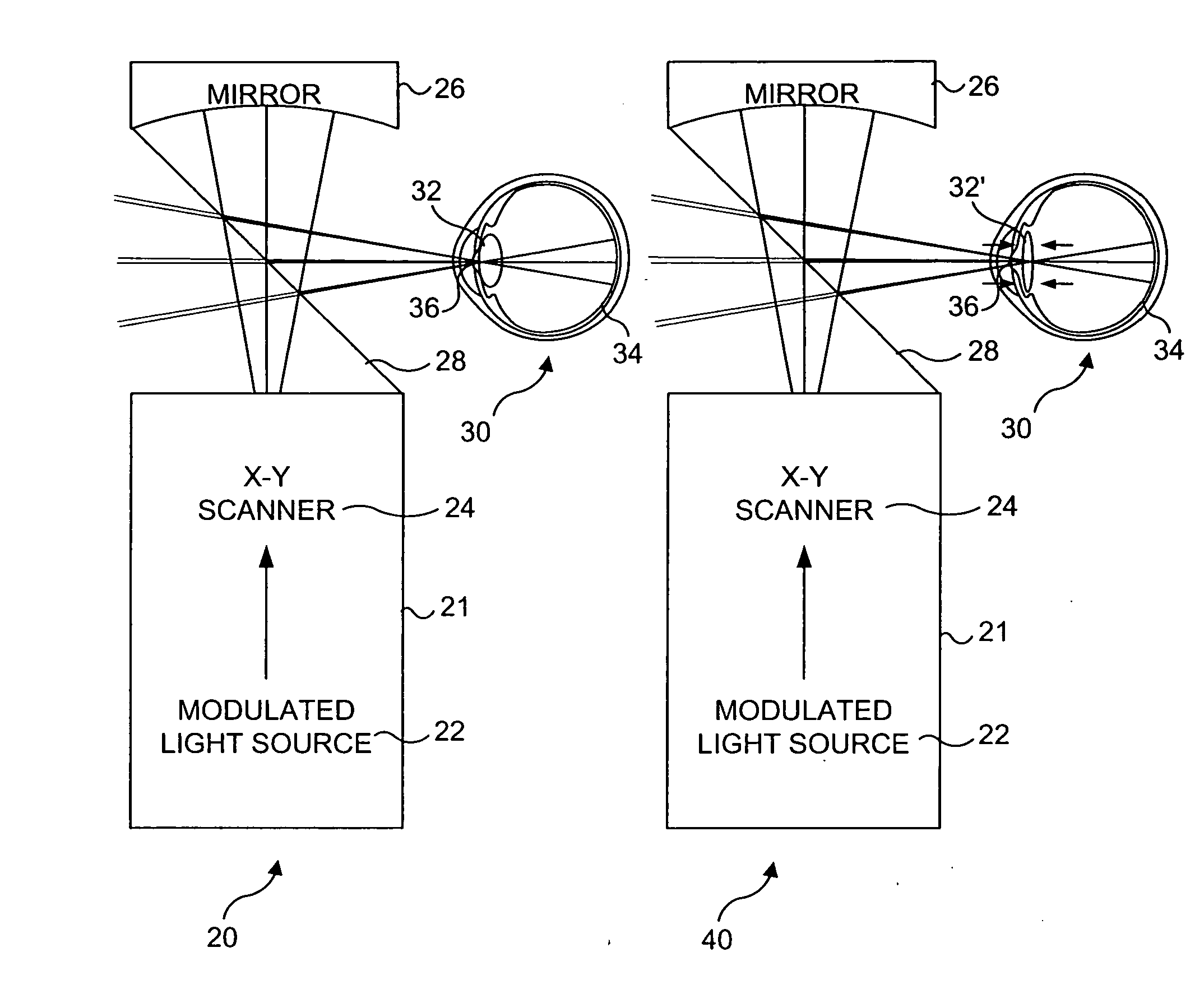

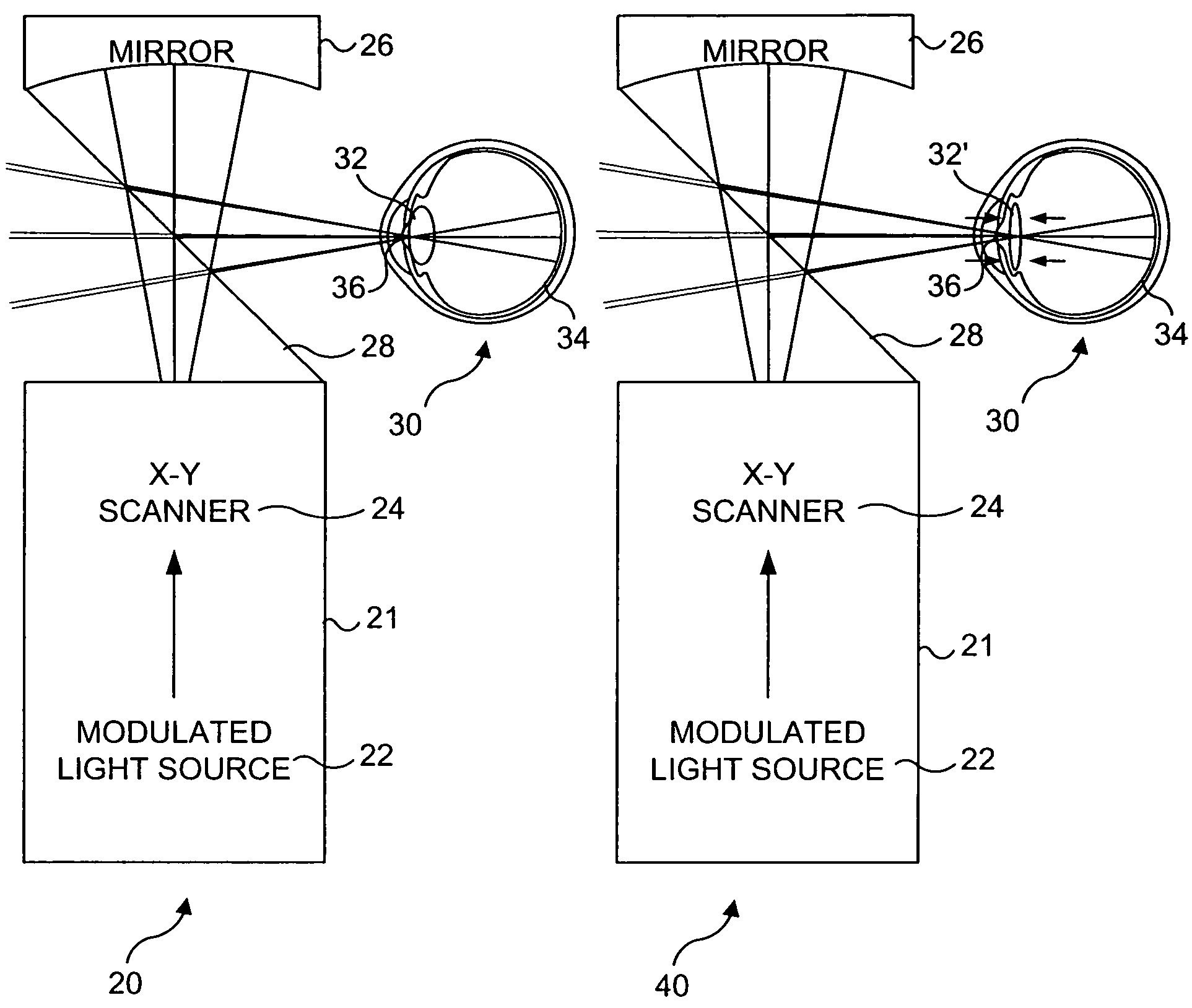

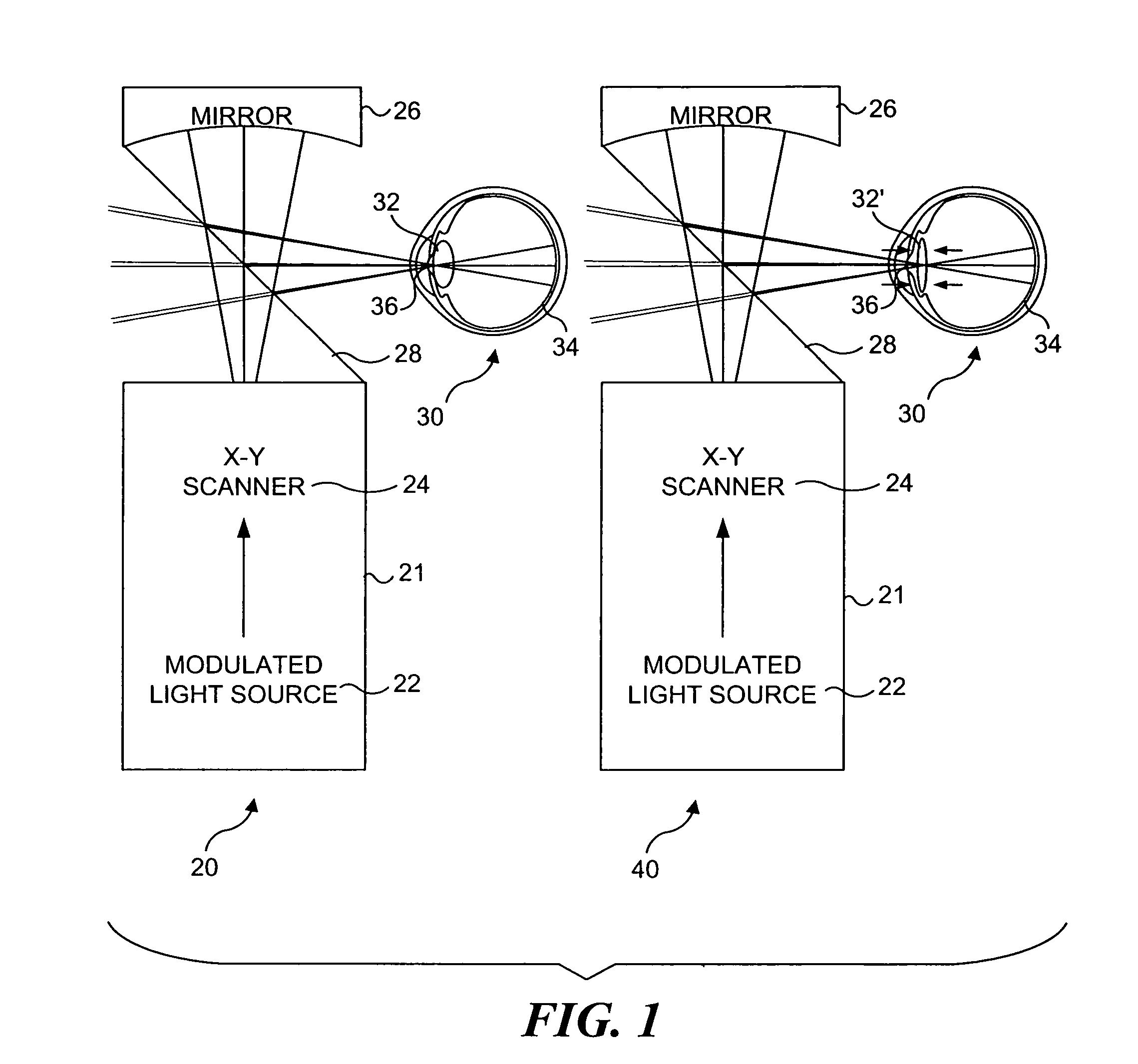

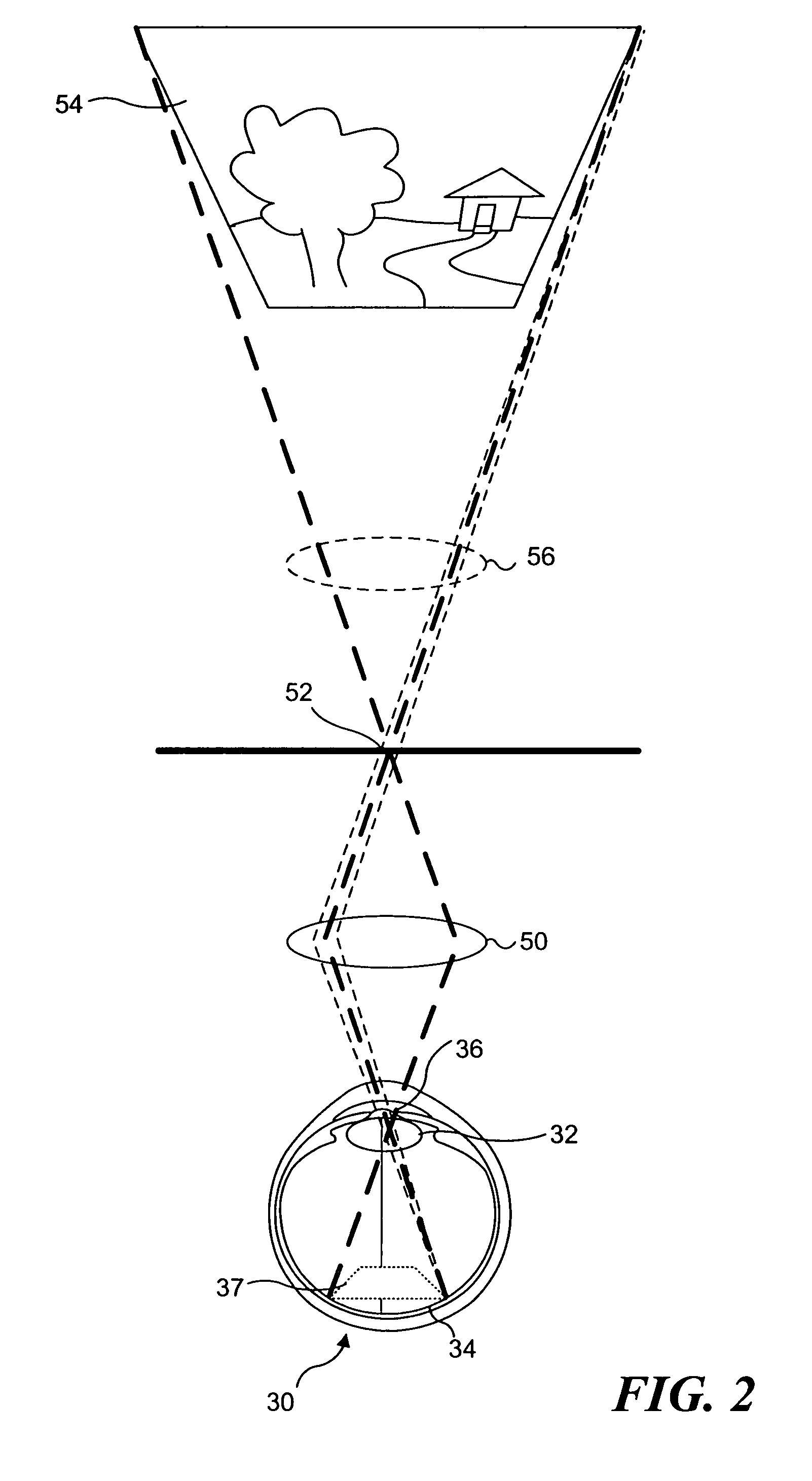

Materials and methods for simulating focal shifts in viewers using large depth of focus displays

Active | US20060232665A1 | Improve interactivity; Enhances perceived realism; Television system details; Stereoscopic systems; Display device; Depth of field

A large depth of focus (DOF) display provides an image in which the apparent focus plane is adjusted to track an accommodation (focus) of a viewer's eye(s) to more effectively convey depth in the image. A device is employed to repeatedly determine accommodation as a viewer's gaze within the image changes. In response, an image that includes an apparent focus plane corresponding to the level of accommodation of the viewer is provided on the large DOF display. Objects that are not at the apparent focus plane are made to appear blurred. The images can be rendered in real-time, or can be pre-rendered and stored in an array. The dimensions of the array can each correspond to a different variable. The images can alternatively be provided by a computer controlled, adjustable focus video camera in real-time.

Owner:UNIV OF WASHINGTON
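
How strongly an object off the apparent focus plane should be blurred can be estimated with the standard thin-lens circle-of-confusion formula, c = A * |d - d_f| / d * f / (d_f - f). This is a sketch under stated assumptions, not the University of Washington method: it assumes the accommodation measurement is expressed in diopters (so the focus distance is its reciprocal in meters), and the eye-like focal length and pupil diameter in the example are illustrative values only.

```python
def focus_distance_m(accommodation_diopters: float) -> float:
    """Accommodation in diopters -> distance of the focus plane in meters."""
    if accommodation_diopters <= 0:
        raise ValueError("accommodation must be positive")
    return 1.0 / accommodation_diopters


def coc_diameter(obj_dist, focus_dist, focal_len, aperture):
    """Thin-lens circle-of-confusion diameter: A * |d - d_f| / d * f / (d_f - f).

    Distances in meters; the result has the same unit as `aperture`.
    """
    if focus_dist <= focal_len:
        raise ValueError("focus distance must exceed the focal length")
    return aperture * abs(obj_dist - focus_dist) / obj_dist * focal_len / (focus_dist - focal_len)


# Illustrative eye-like values: 17 mm focal length, 4 mm pupil.
d_f = focus_distance_m(2.0)  # viewer accommodating at 2 D -> focus plane at 0.5 m
for d in (0.5, 1.0, 3.0):
    print(d, coc_diameter(d, d_f, 0.017, 0.004))
```

Objects on the focus plane get zero blur, and the blur grows as objects move away from it, which is the behaviour the display emulates each time the measured accommodation changes.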

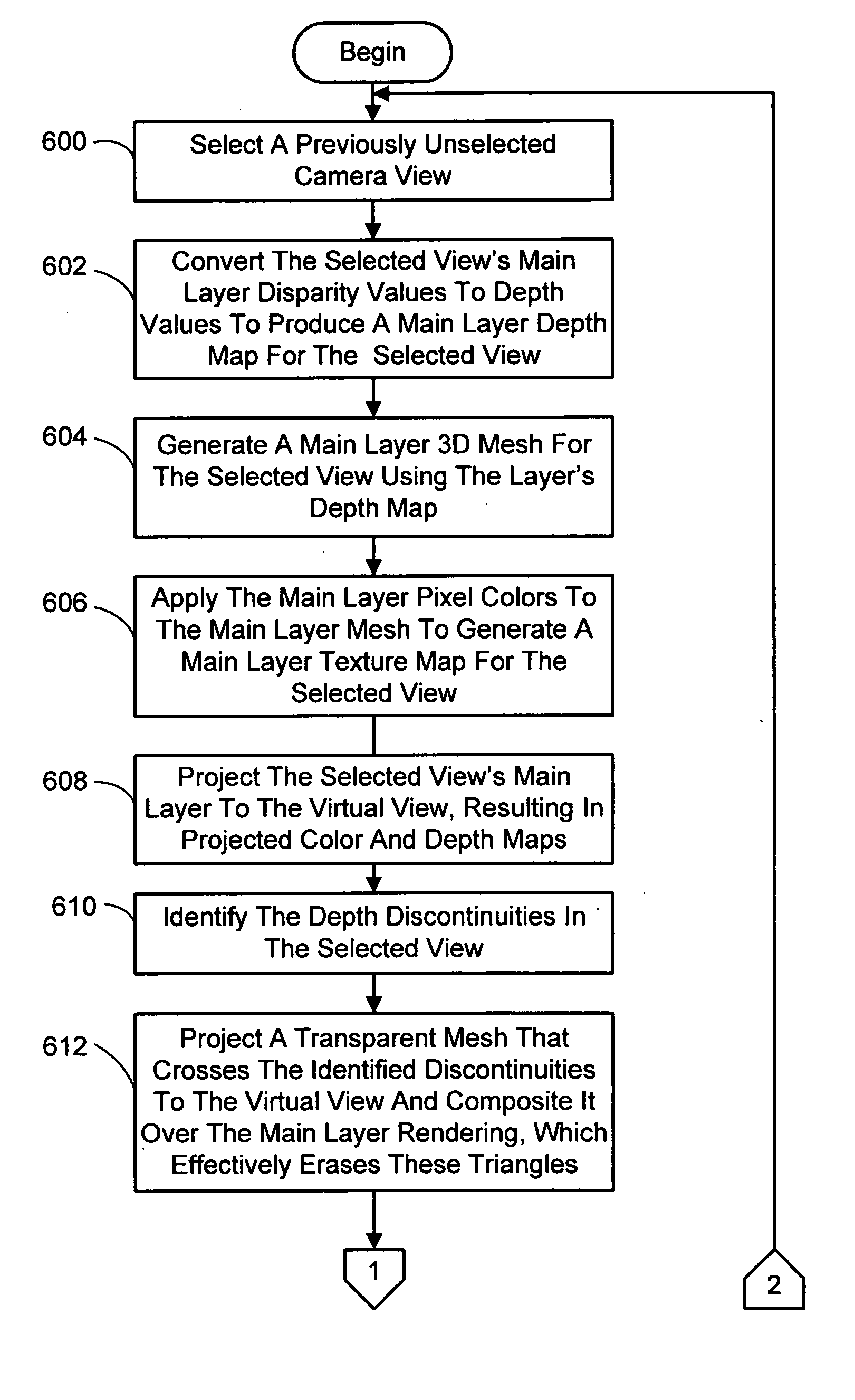

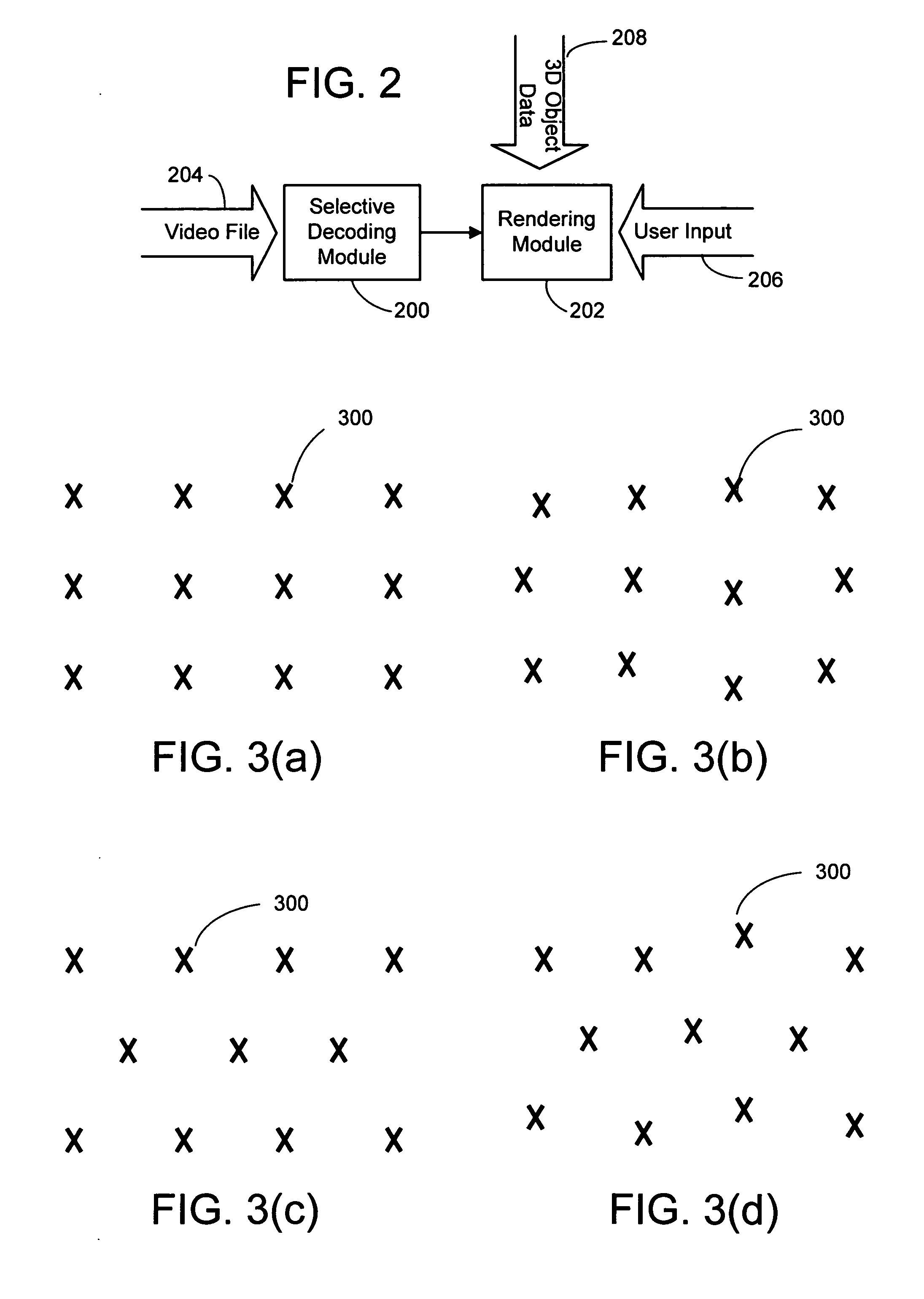

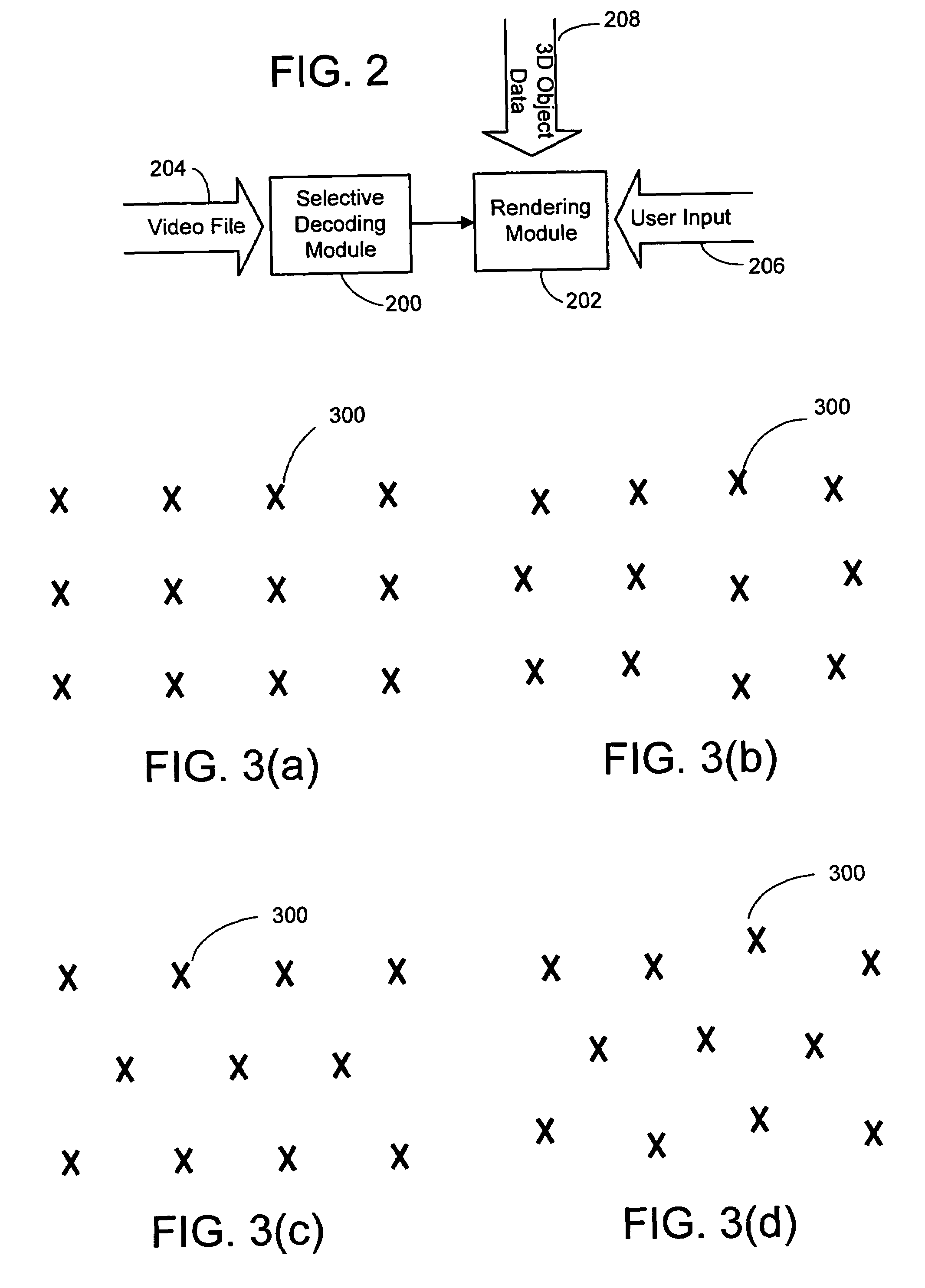

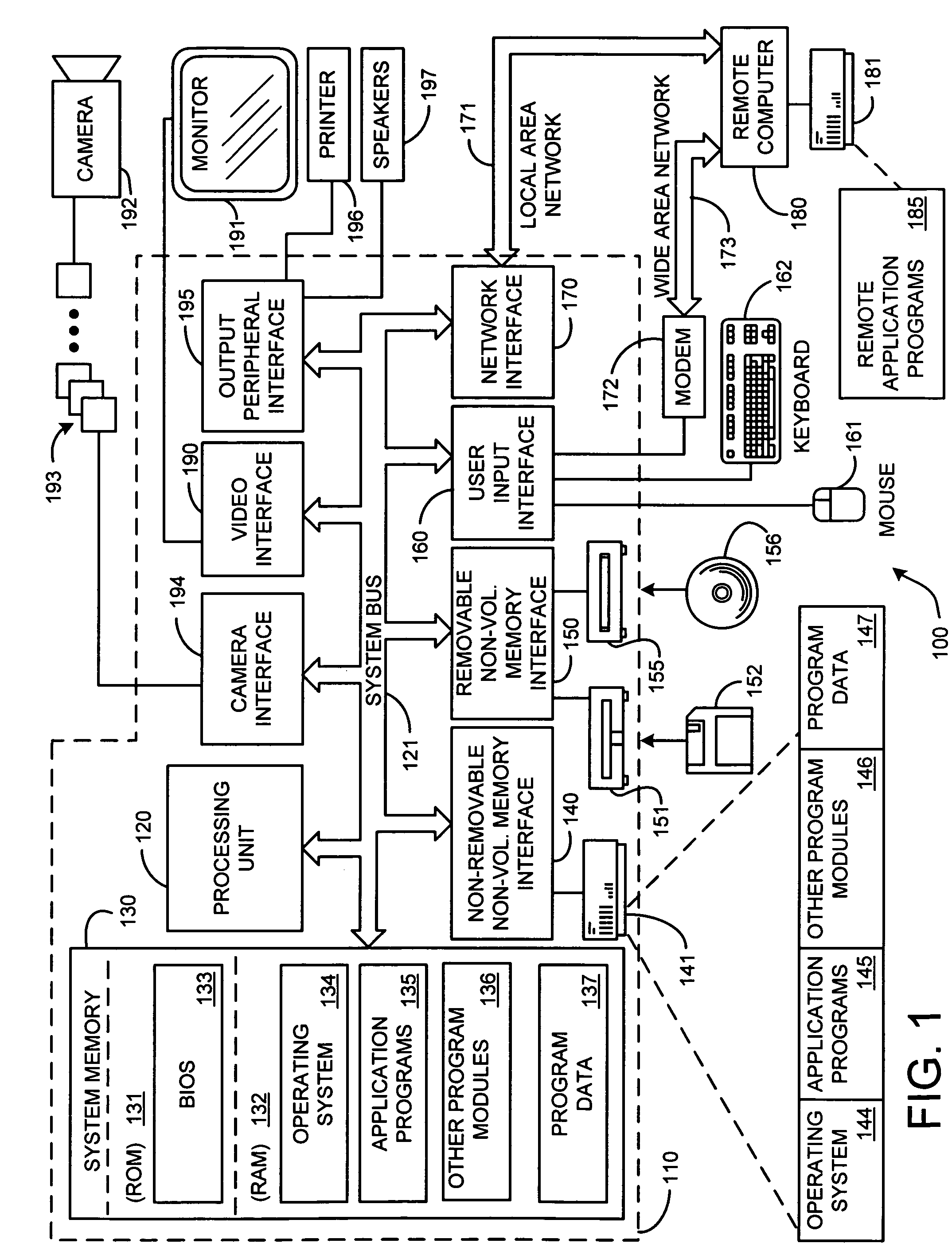

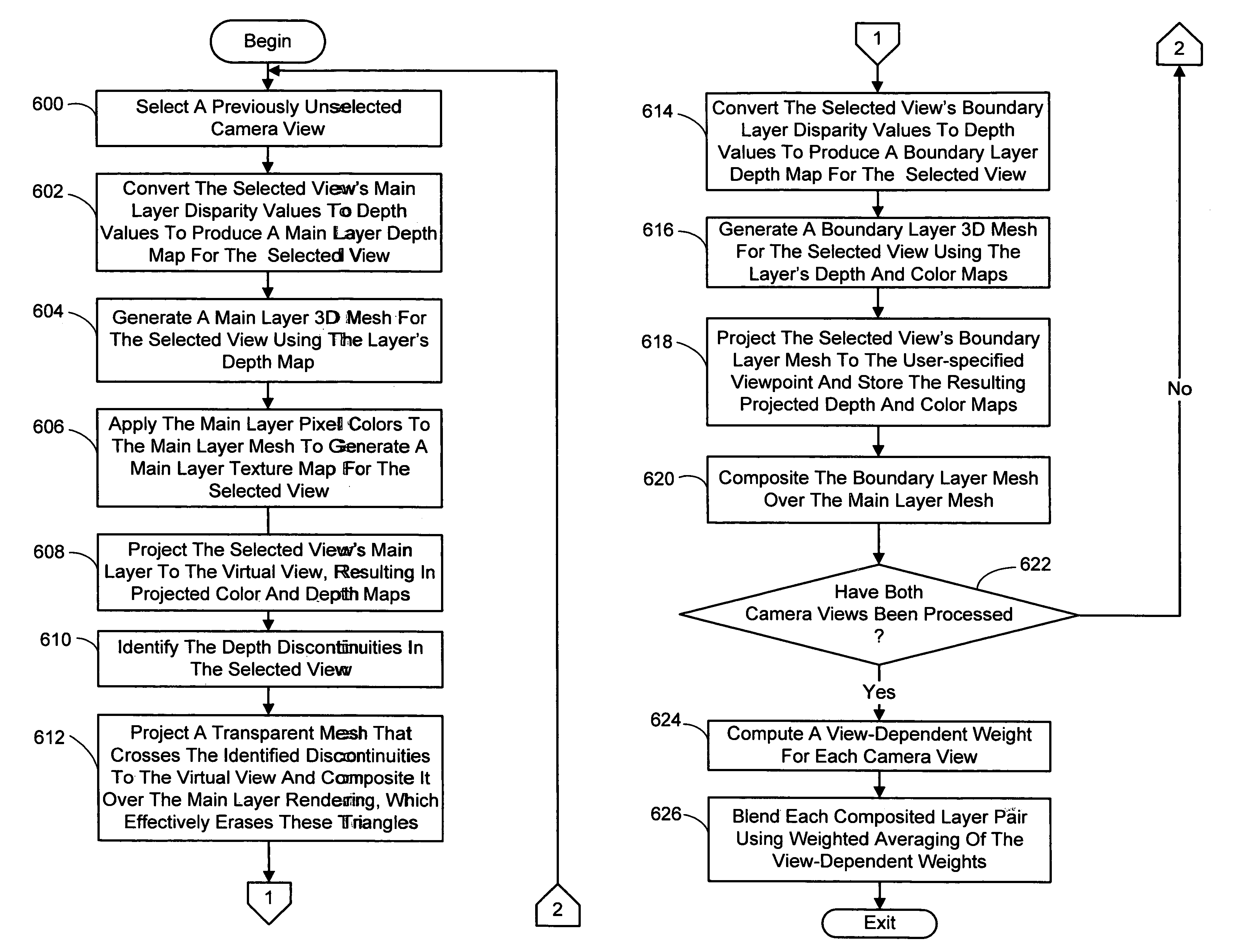

Real-time rendering system and process for interactive viewpoint video that was generated using overlapping images of a scene captured from viewpoints forming a grid

Inactive | US20060028489A1 | Cathode-ray tube indicators; Input/output processes for data processing; Viewpoints; Computer graphics (images)

A system and process for rendering and displaying an interactive viewpoint video is presented in which a user can watch a dynamic scene while manipulating (freezing, slowing down, or reversing) time and changing the viewpoint at will. The ability to interactively control viewpoint while watching a video is an exciting new application for image-based rendering. Because any intermediate view can be synthesized at any time, with the potential for space-time manipulation, this type of video has been dubbed interactive viewpoint video.

Owner:MICROSOFT TECH LICENSING LLC

Materials and methods for simulating focal shifts in viewers using large depth of focus displays

Inactive | US7428001B2 | Improve interactivity; Enhances perceived realism; Television system details; Cathode-ray tube indicators; Display device; Depth of focus

A large depth of focus (DOF) display provides an image in which the apparent focus plane is adjusted to track an accommodation (focus) of a viewer's eye(s) to more effectively convey depth in the image. A device is employed to repeatedly determine accommodation as a viewer's gaze within the image changes. In response, an image that includes an apparent focus plane corresponding to the level of accommodation of the viewer is provided on the large DOF display. Objects that are not at the apparent focus plane are made to appear blurred. The images can be rendered in real-time, or can be pre-rendered and stored in an array. The dimensions of the array can each correspond to a different variable. The images can alternatively be provided by a computer controlled, adjustable focus video camera in real-time.

Owner:UNIV OF WASHINGTON

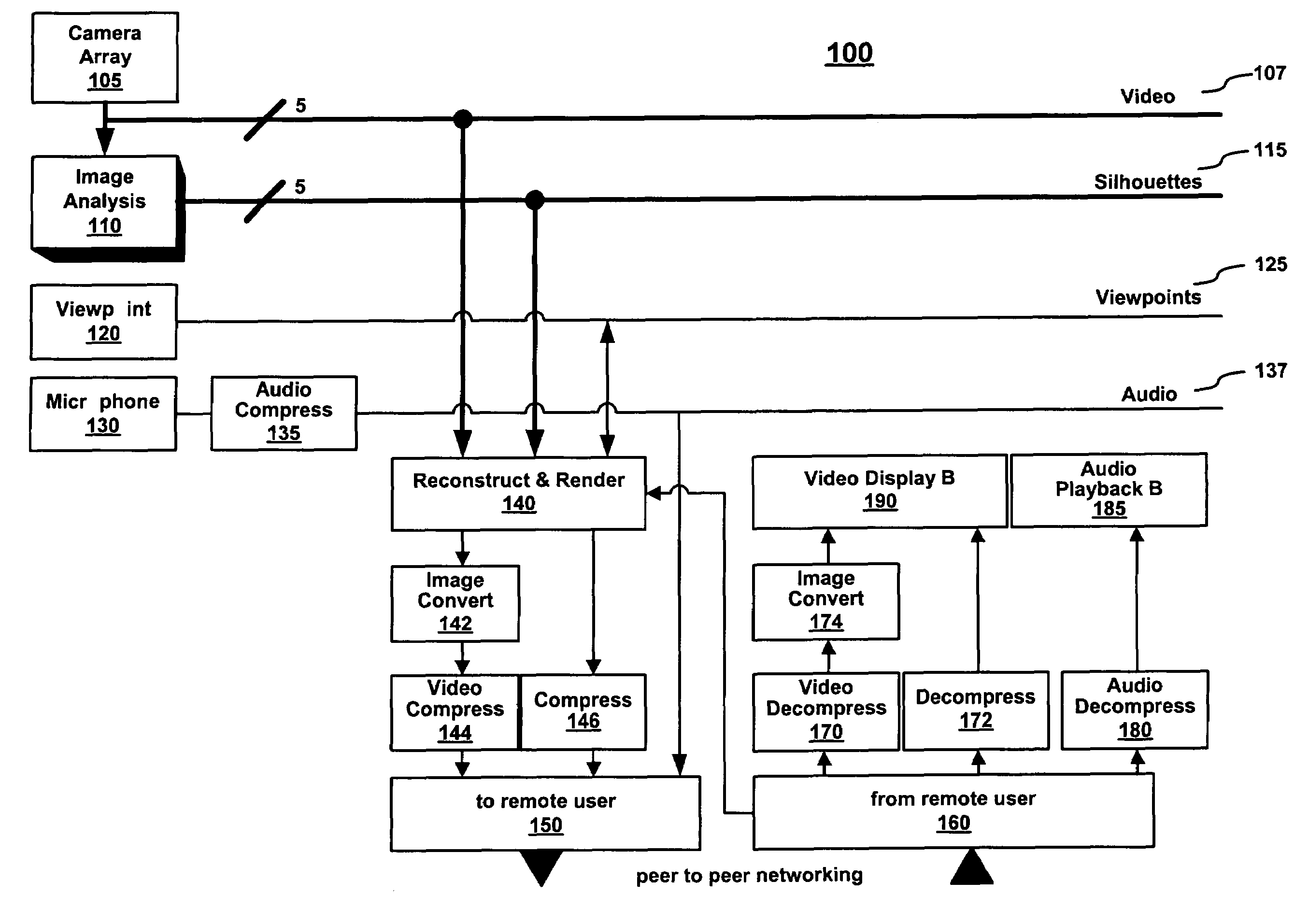

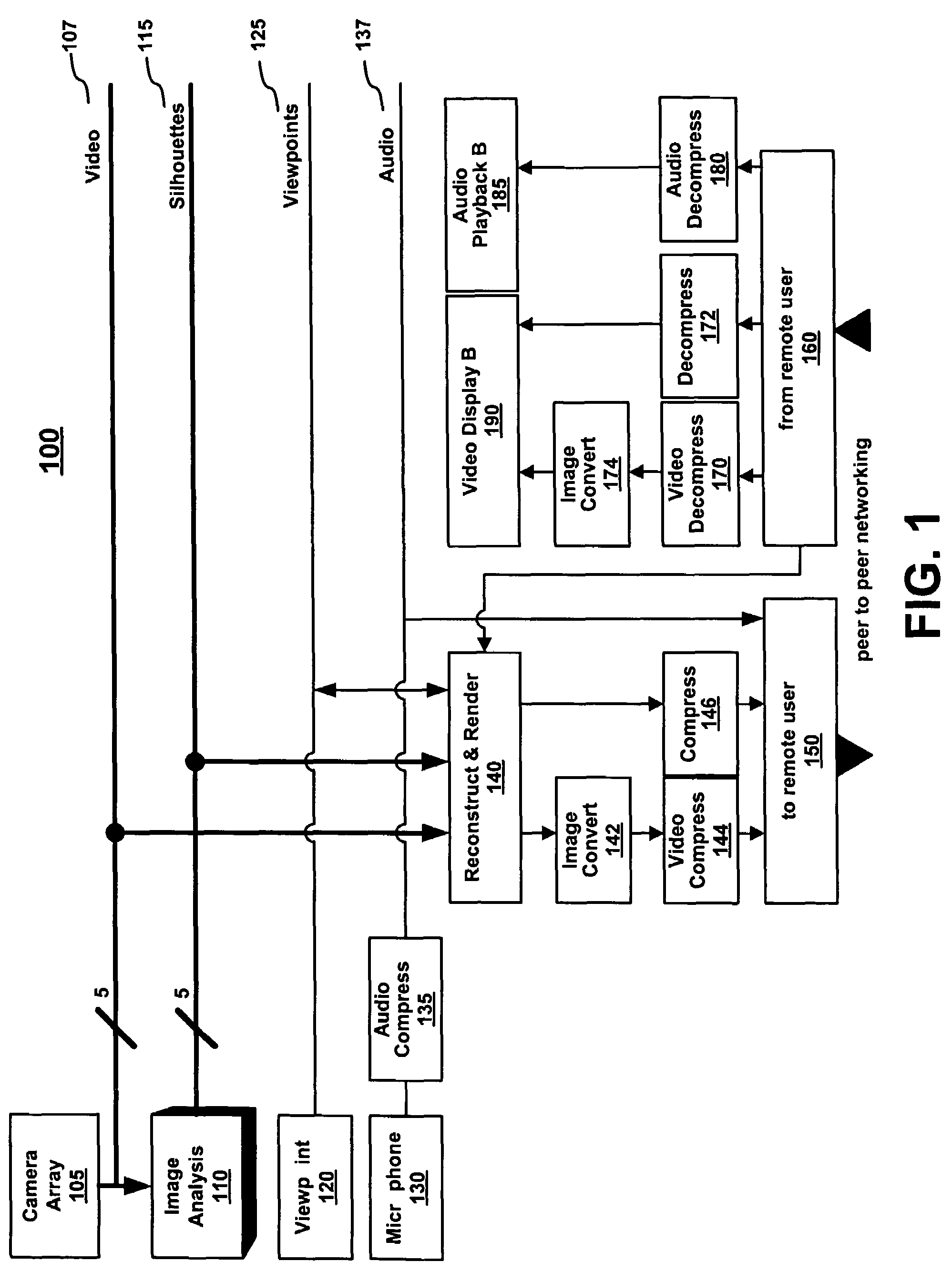

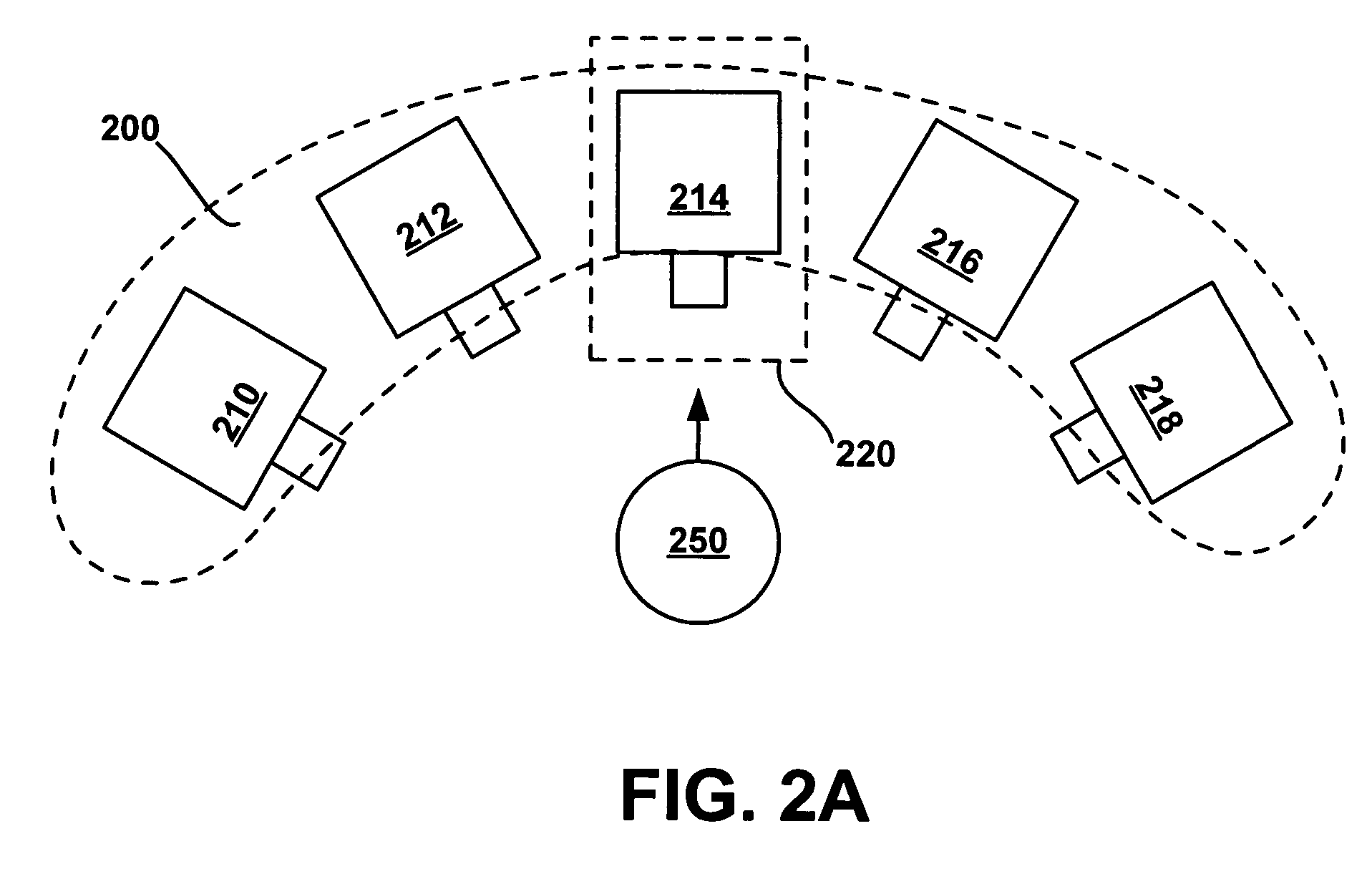

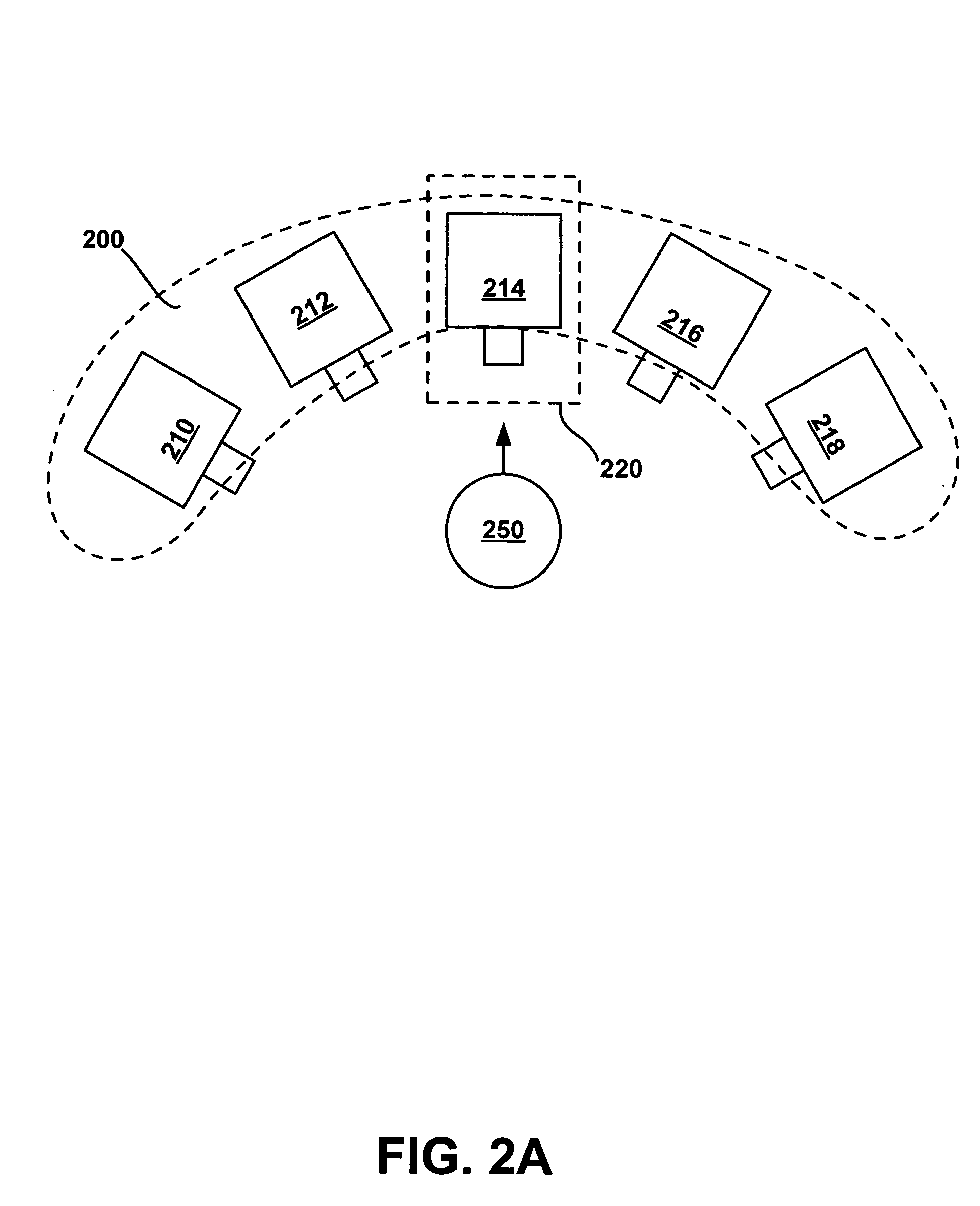

Method and system for real-time rendering within a gaming environment

A method and system for real-time rendering within a gaming environment. Specifically, one embodiment of the present invention discloses a method of rendering a local participant within an interactive gaming environment. The method begins by capturing a plurality of real-time video streams of a local participant from a plurality of camera viewpoints. From the plurality of video streams, a new view synthesis technique is applied to generate a rendering of the local participant. The rendering is generated from a perspective of a remote participant located remotely in the gaming environment. The rendering is then sent to the remote participant for viewing.

Owner:HEWLETT PACKARD DEV CO LP

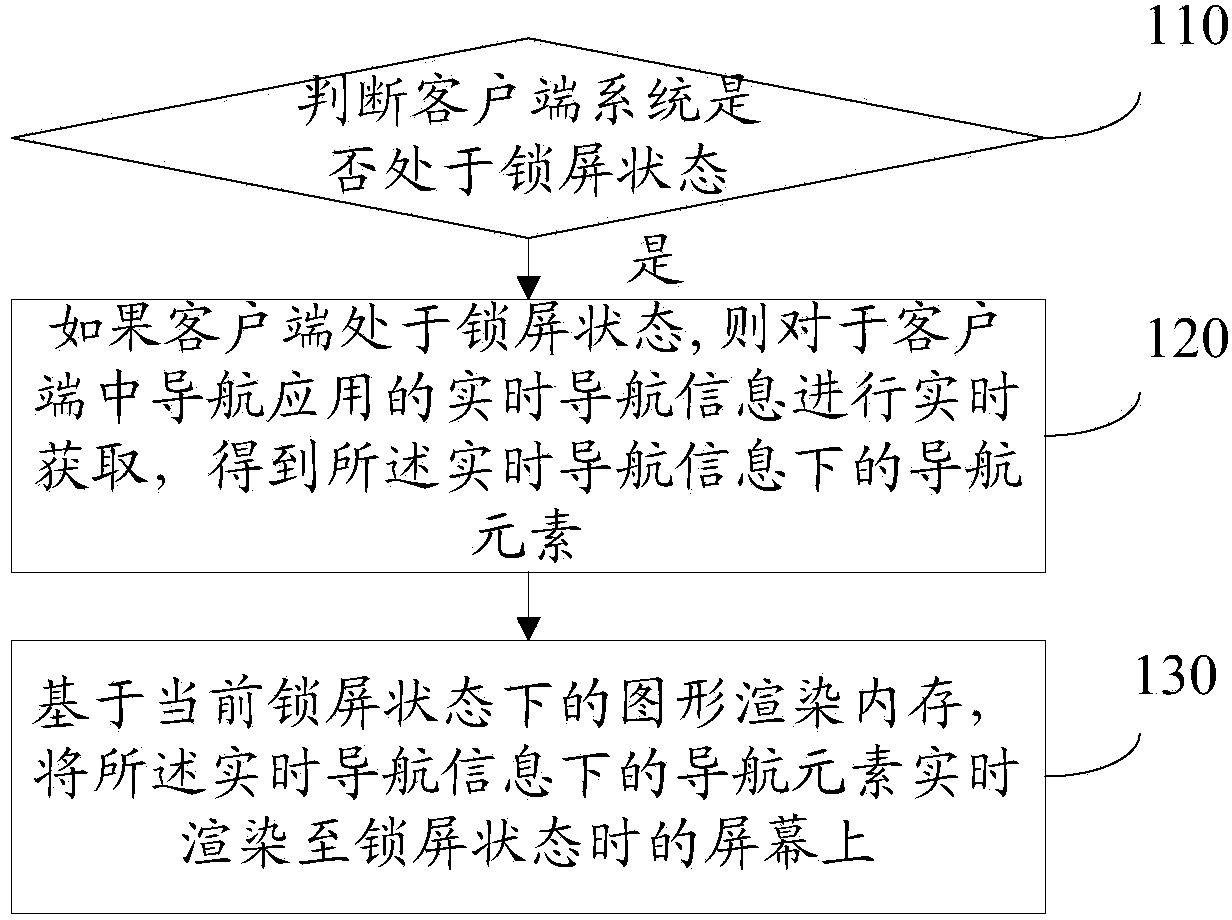

Method and device for display of navigation information

Inactive | CN104374399A | Improve transmission efficiency; Reduce the impact of normal driving; Instruments for road network navigation; Graphics; Real time navigation

The invention discloses a method and a device for displaying navigation information, in the technical field of map navigation. The method comprises the following steps: detecting whether a client is in a screen-locked state; if it is, acquiring in real time the navigation information of the client's navigation application to obtain the navigation elements in that information; and rendering those navigation elements in real time to the screen in the screen-locked state, based on a pattern held in memory for the current screen-locked state. Because the navigation information is presented to the user as an image, it can be displayed dynamically on the lock-screen interface. The display is highly intuitive and reduces the effect that the surrounding environment has on voice prompts, and the user can see the navigation elements directly, without unlocking the screen, finding and switching to the navigation application, and bringing it to the foreground. This reduces the impact on the user's driving.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

Wagering game with 3D rendering of a mechanical device

A stand-alone or server-linked gaming terminal that displays on a plasma display in the top-box area a 3D-rendered mechanical device that is pre-rendered or rendered in real time using a 3D-graphics processor or the like. The 3D-rendered mechanical device depicts the game outcome or a bonus game displayed in the top box display. The images or animation representing the 3D-rendered mechanical device may be stored on a digital video recorder (DVR) within the gaming terminal or downloaded remotely from a storage device coupled to the gaming terminal. The DVR outputs the mechanical device images as analog video, and is capable of receiving analog video input, converting the analog video to a digital format such as MPEG, and storing the converted video on a storage medium. Additional structural elements such as a frame may be arranged about the top-box display to add depth or dimensionality to the 3D-rendered images displayed thereon.

Owner:BALLY GAMING INC

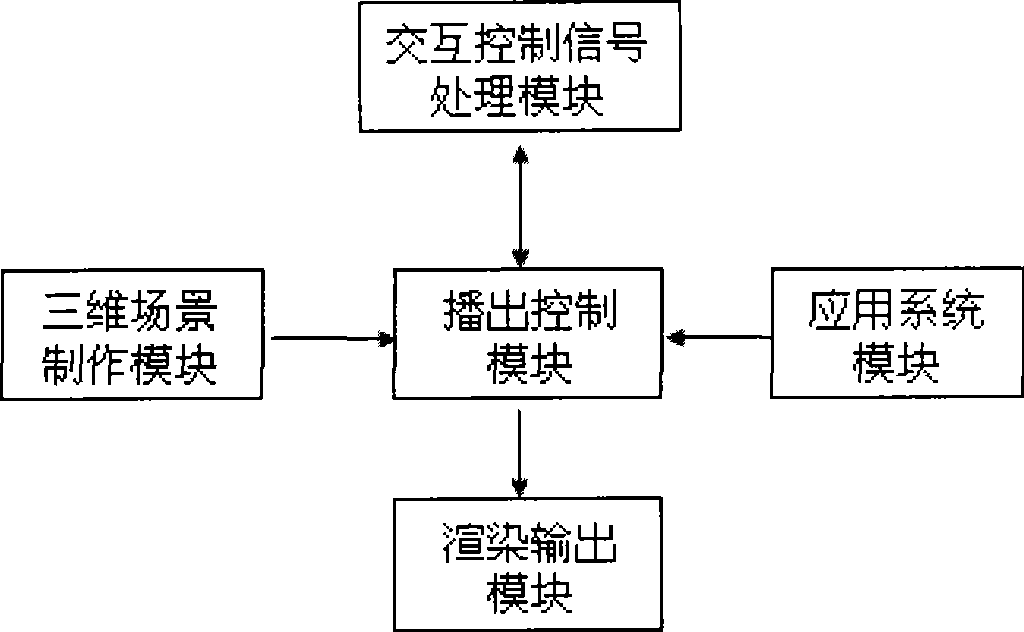

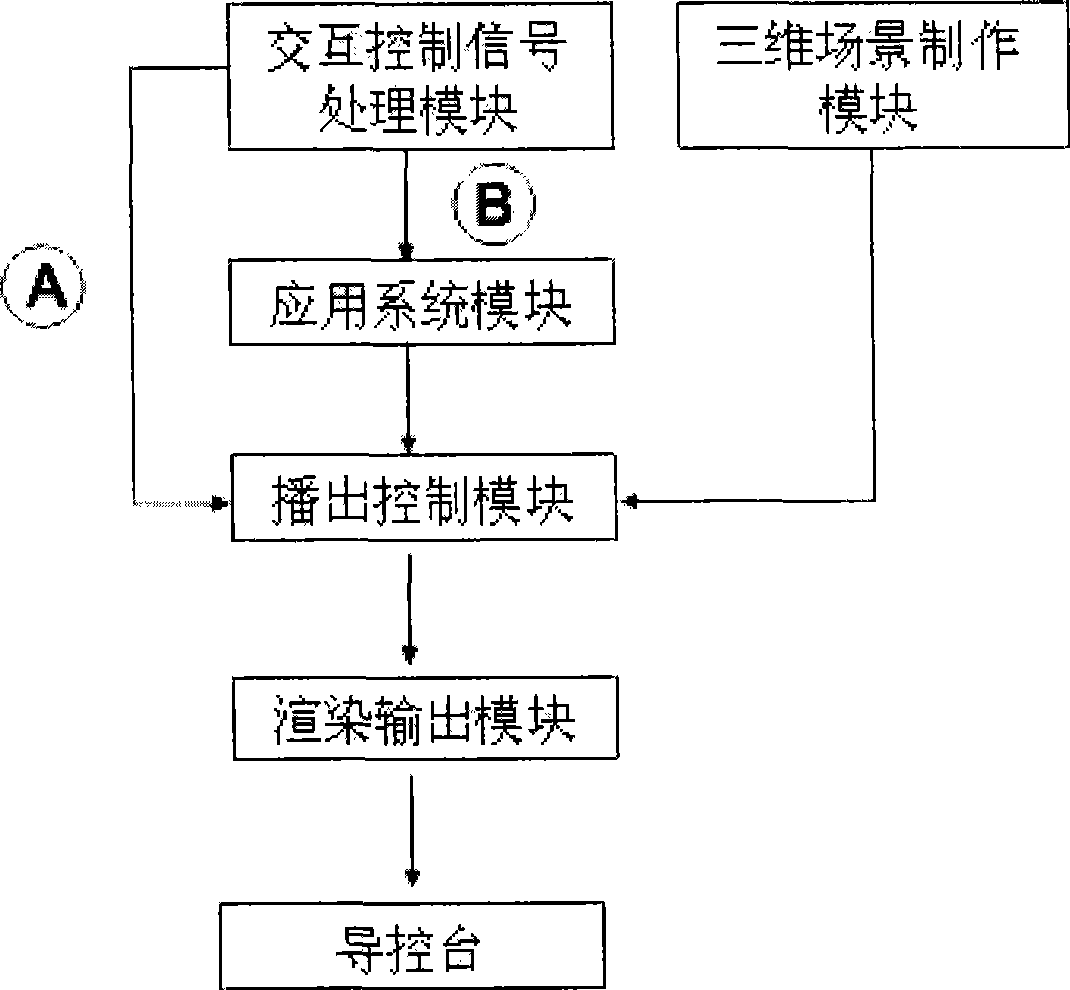

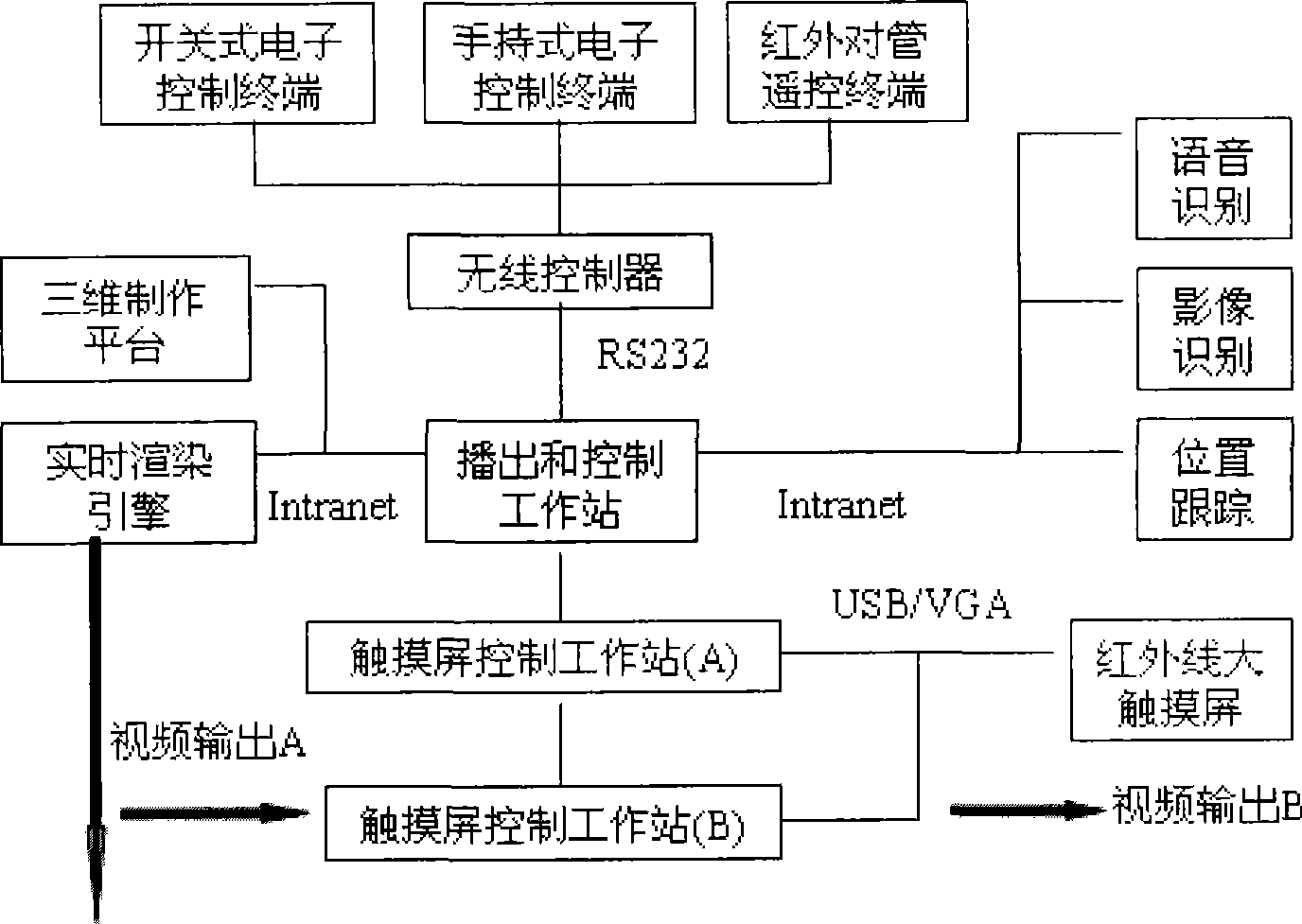

System for implementing remote control interaction in virtual three-dimensional scene

Inactive | CN101465957A | To achieve the effect of random selection; Improve the problem that the interaction cannot be realized; Television system details; Color television details; Television station; Human–computer interaction

The invention relates to a system and a control method for remote-control interaction in a virtual three-dimensional scene. The interactive control system addresses how an operator can interact with and control a virtual studio scene, its animation, and the objects in it. The system mainly comprises three modules: a three-dimensional production and real-time rendering module; a playback and control module; and an interaction module. The interaction module includes subsystems such as a wireless remote-control subsystem, a speech-recognition subsystem, an image-recognition subsystem, a position-tracking (action-recognition) subsystem, and a touch-screen subsystem (which provides a visual multimedia playback and control interface and a multi-touch control method). Through these interaction methods, the operator drives the three-dimensional real-time rendering engine via the playback and control module, enabling selective, instant playback of the virtual scene and control of the virtual objects. The system can be applied to virtual studios at television stations and to other interactive virtual demonstrations.

Owner:应旭峰 +1

Method and apparatus for automatic camera calibration using one or more images of a checkerboard pattern

Active | US20140285676A1 | Accurate imaging; Practical implementation; Television system details; Image enhancement; Imaging quality; Checkerboard pattern

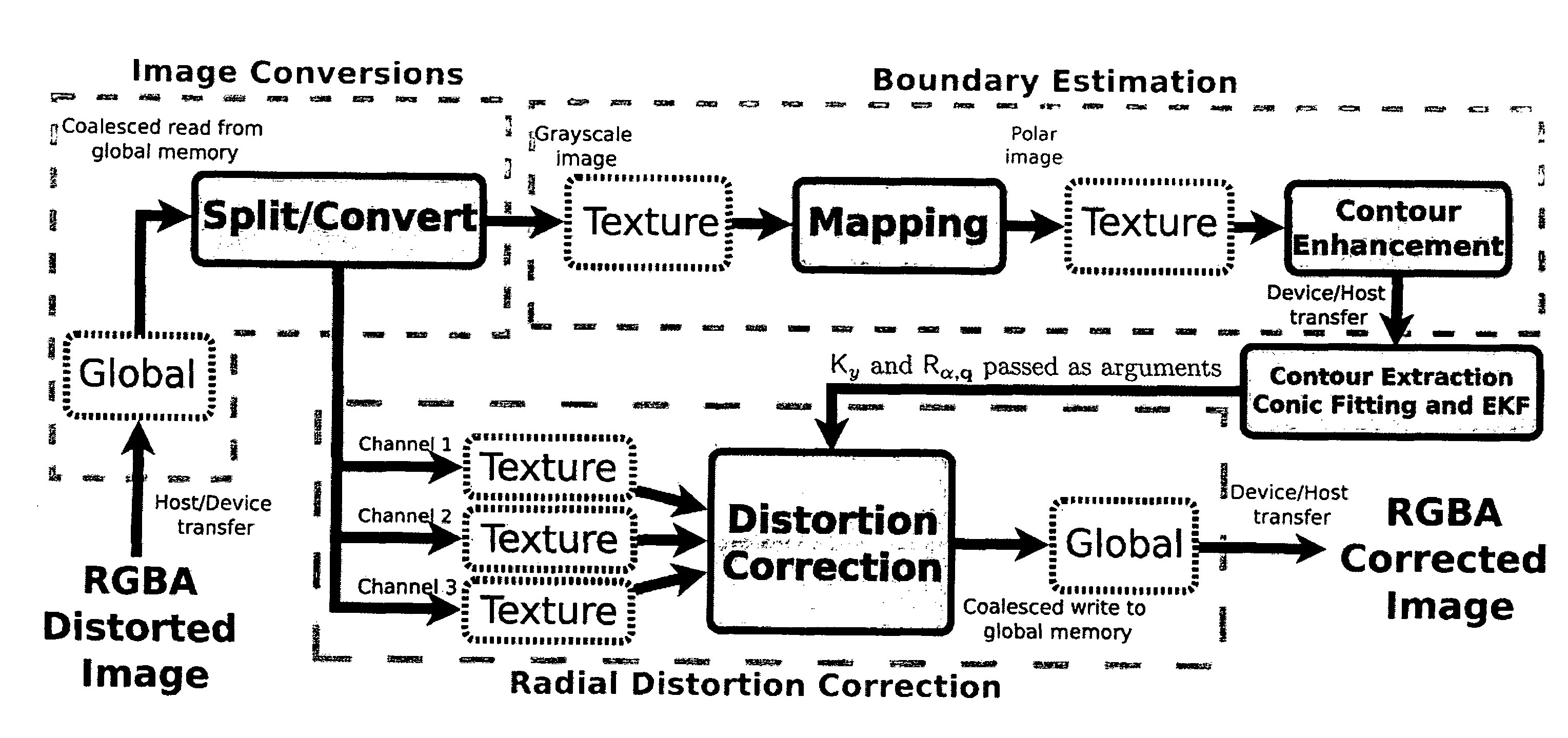

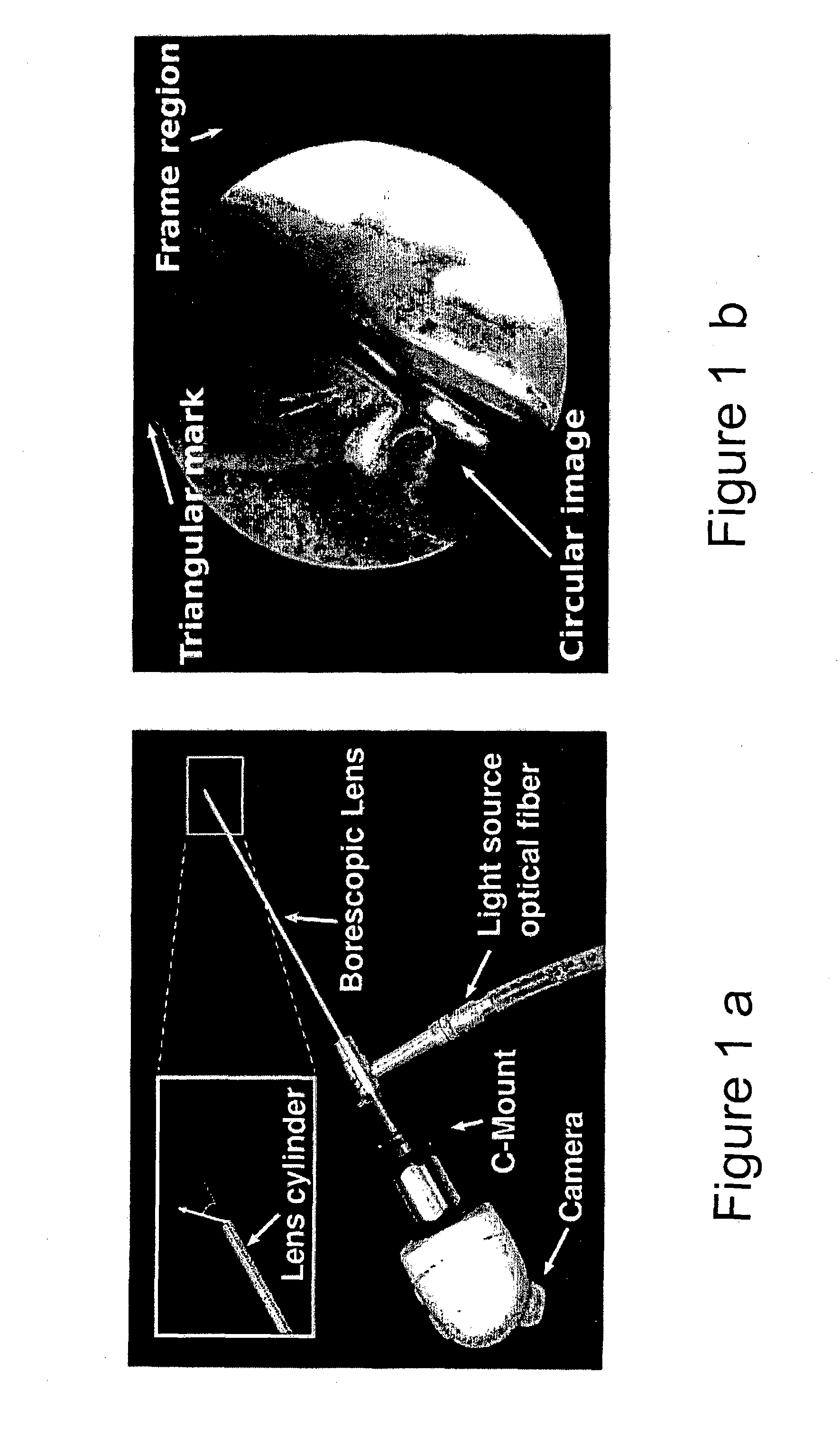

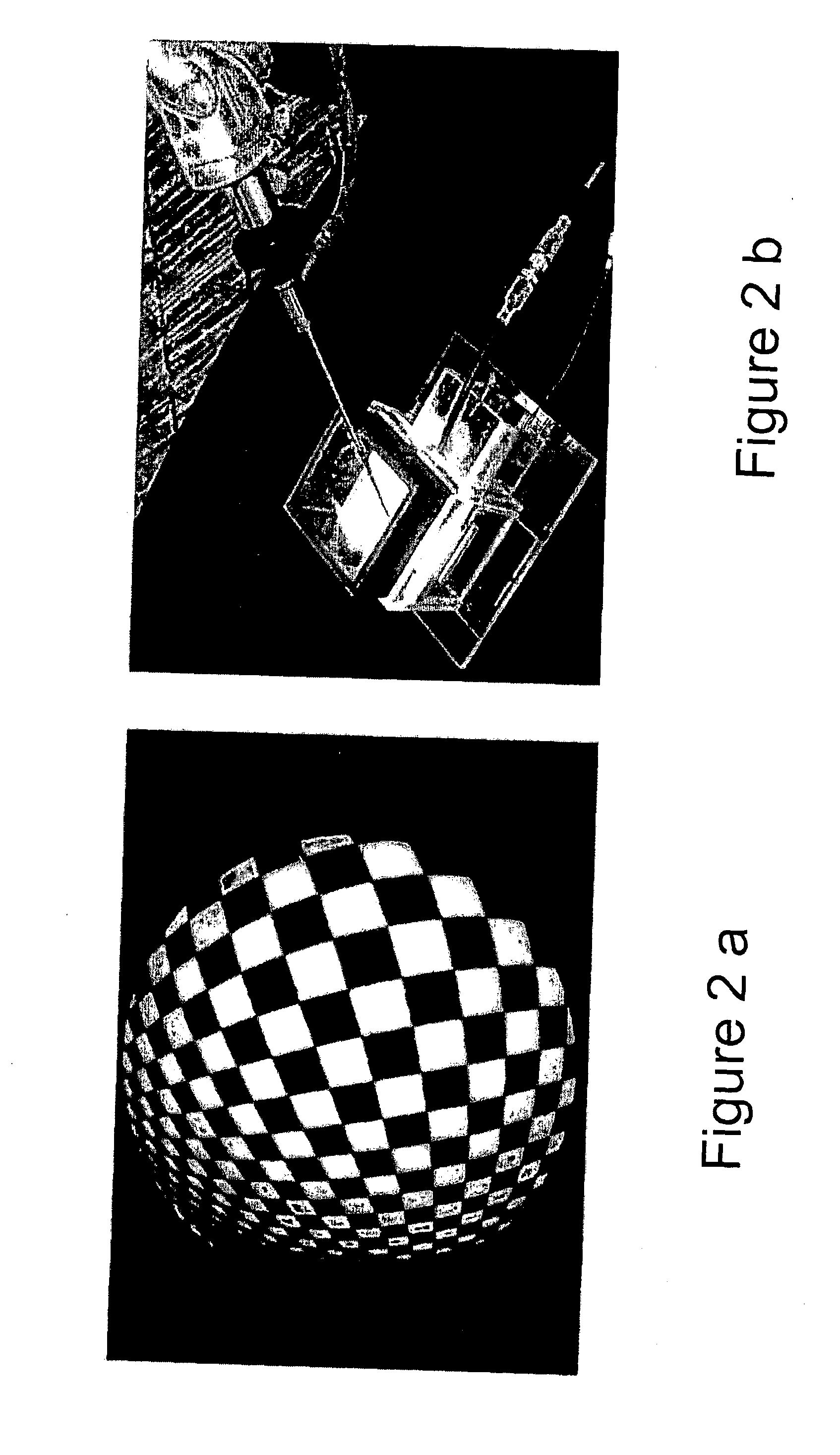

The present invention relates to a high-precision method, model, and apparatus for calibrating a camera, determining the rotation of the lens scope around its symmetry axis, updating the projection model accordingly, and correcting radial image distortion in real time using parallel processing for the best image quality. The solution relies on a complete geometric calibration of optical devices, such as cameras commonly used in medicine and in industry in general, followed by real-time rendering of perspective-correct images. The calibration consists of determining the parameters of a suitable mapping function that assigns each pixel to the 3D direction of the corresponding incident light. The practical implementation is very straightforward: the camera needs to capture only a single view of a readily available calibration target, which may be assembled inside a specially designed calibration apparatus, and a computer-implemented processing pipeline runs in real time using the parallel execution capabilities of the computational platform.

Owner:UNIVE DE COIMBRA
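
Per-pixel distortion correction parallelizes naturally because every pixel is remapped independently. The listing does not say which distortion model the Coimbra method uses; purely as an illustration, the sketch below uses the one-parameter division model, p_u = c + (p_d - c) / (1 + lam * r_d^2), with the distortion centre (cx, cy) and coefficient lam assumed to come from a prior calibration step. The function names are hypothetical.

```python
def undistort_point(xd, yd, cx, cy, lam):
    """Division-model correction of one distorted pixel position.

    (cx, cy) is the distortion centre and lam the distortion coefficient,
    both assumed to be known from calibration.
    """
    dx, dy = xd - cx, yd - cy
    r2 = dx * dx + dy * dy            # squared radius in the distorted image
    scale = 1.0 / (1.0 + lam * r2)
    return cx + dx * scale, cy + dy * scale


def undistort_grid(width, height, cx, cy, lam):
    """Corrected position for every pixel. Each entry is independent of the
    others, so this loop is what a GPU or multi-core pipeline would parallelize."""
    return [[undistort_point(x, y, cx, cy, lam) for x in range(width)]
            for y in range(height)]
```

With lam < 0 the correction pushes points away from the centre, undoing barrel distortion; the centre itself never moves.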

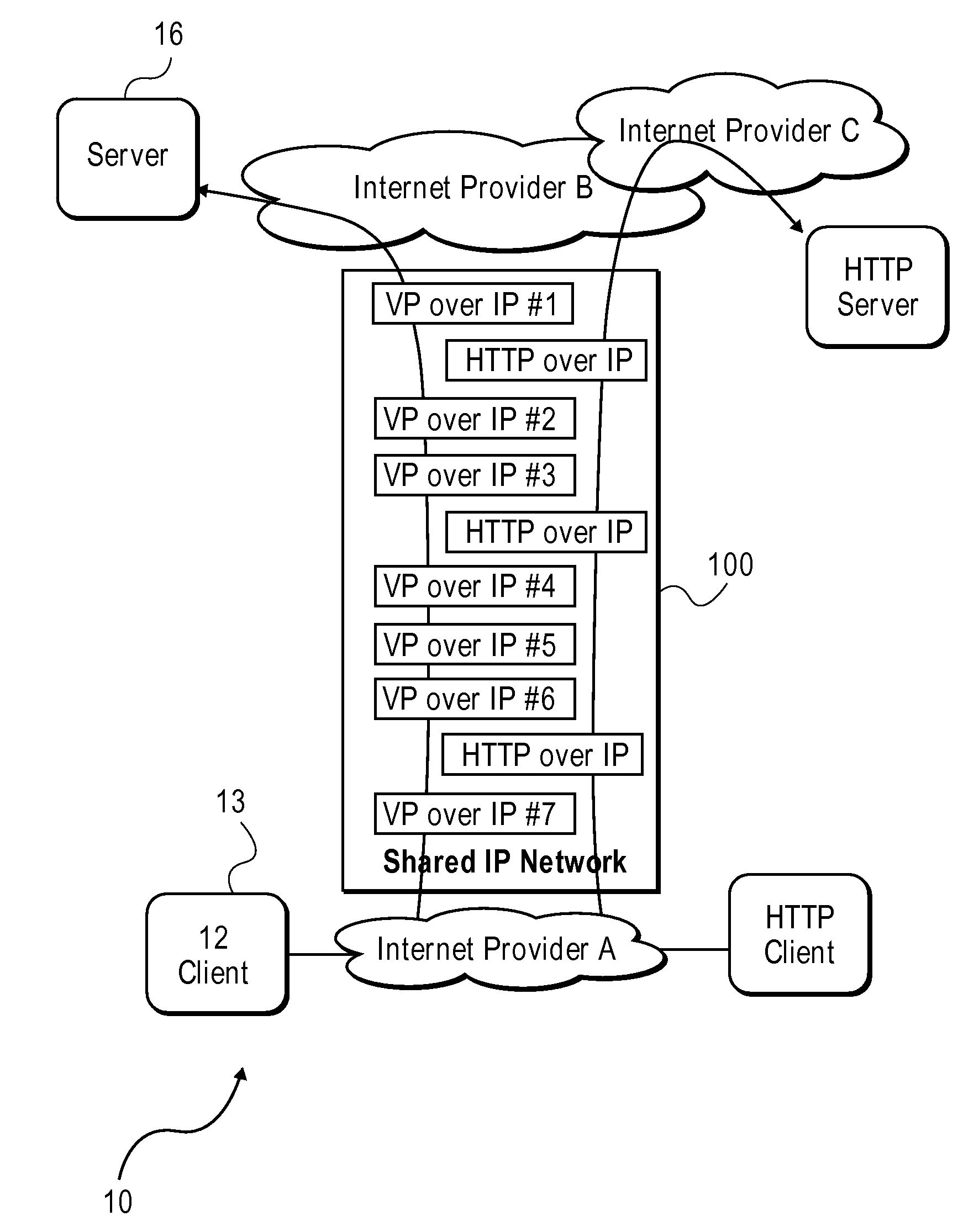

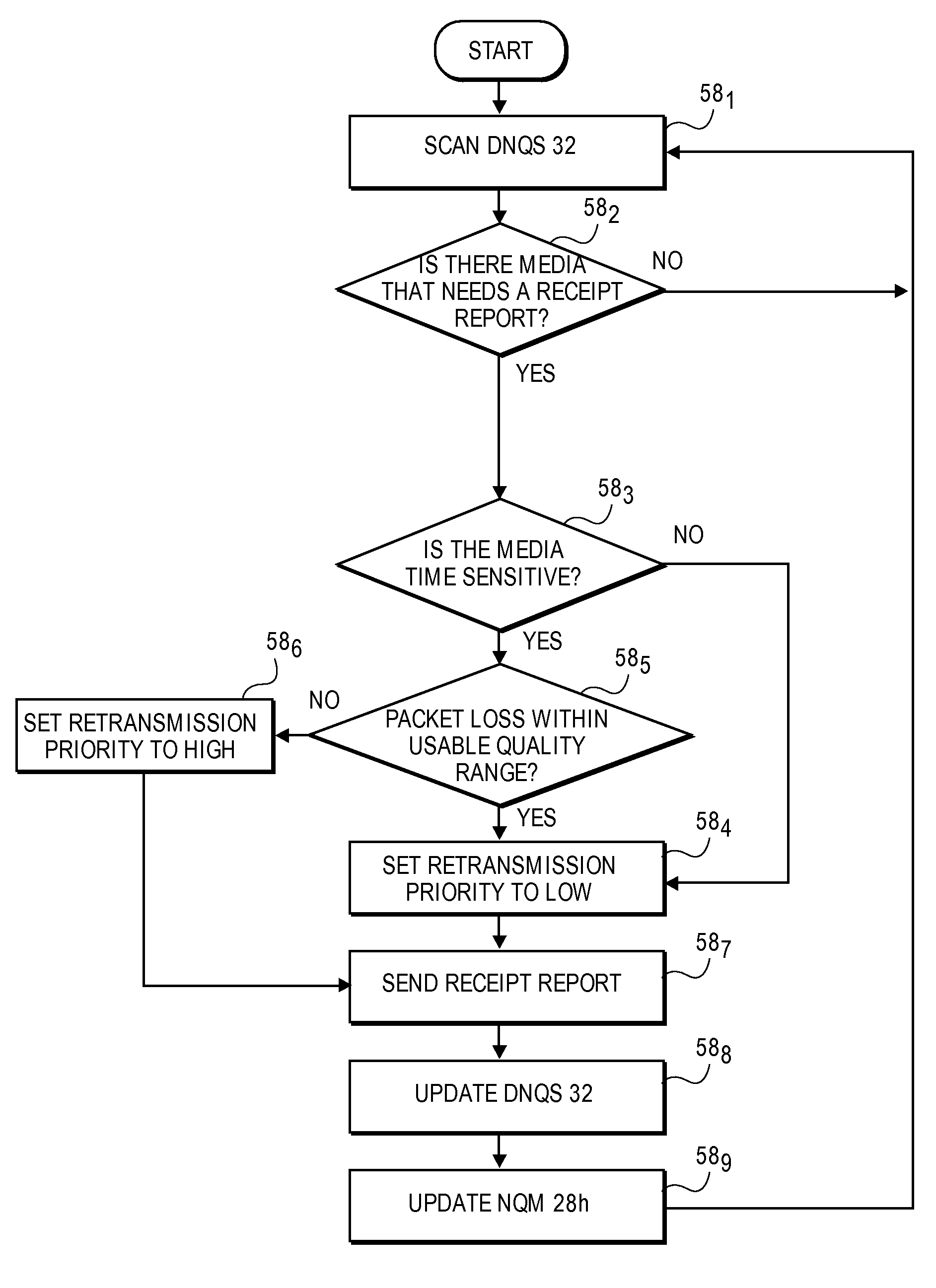

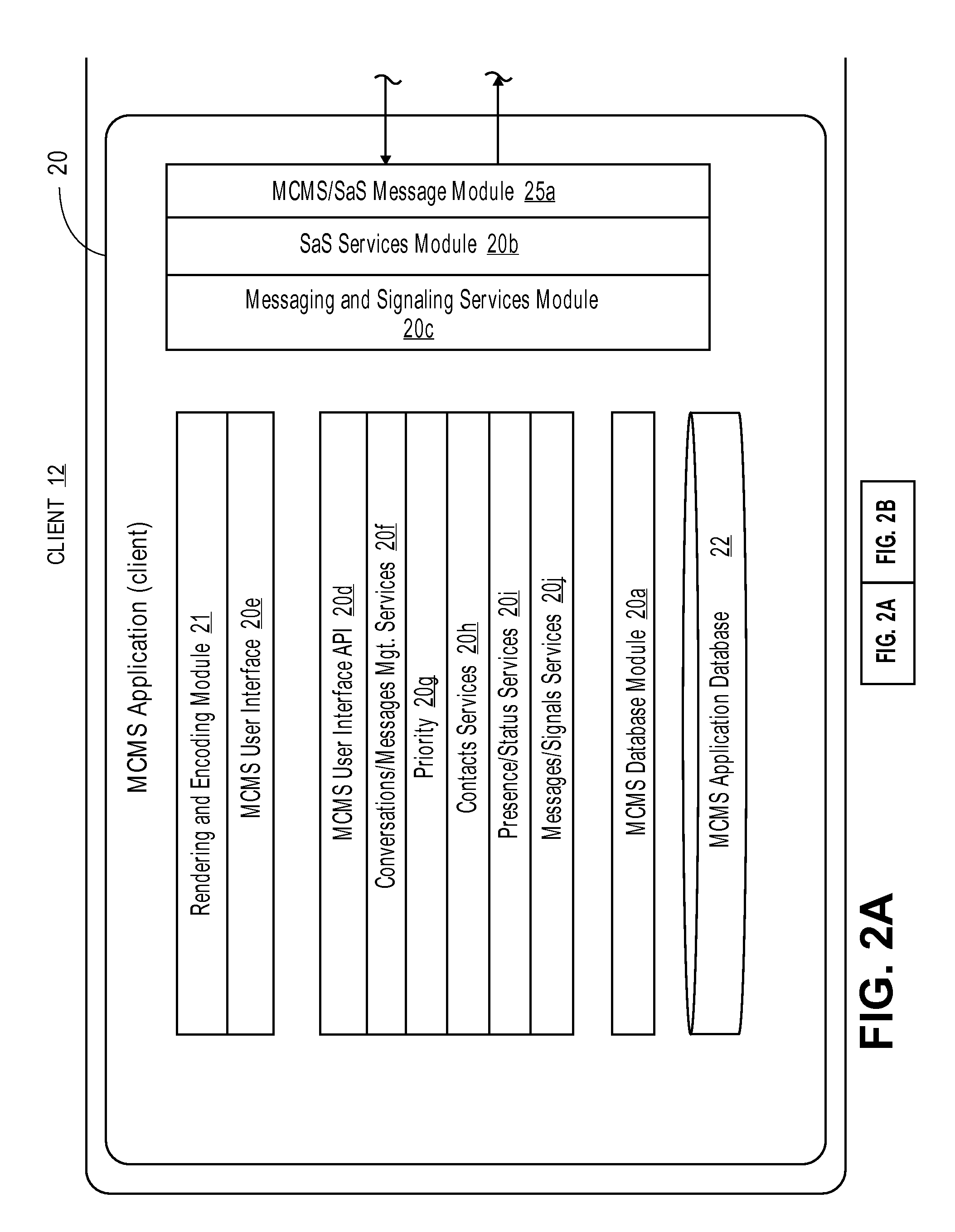

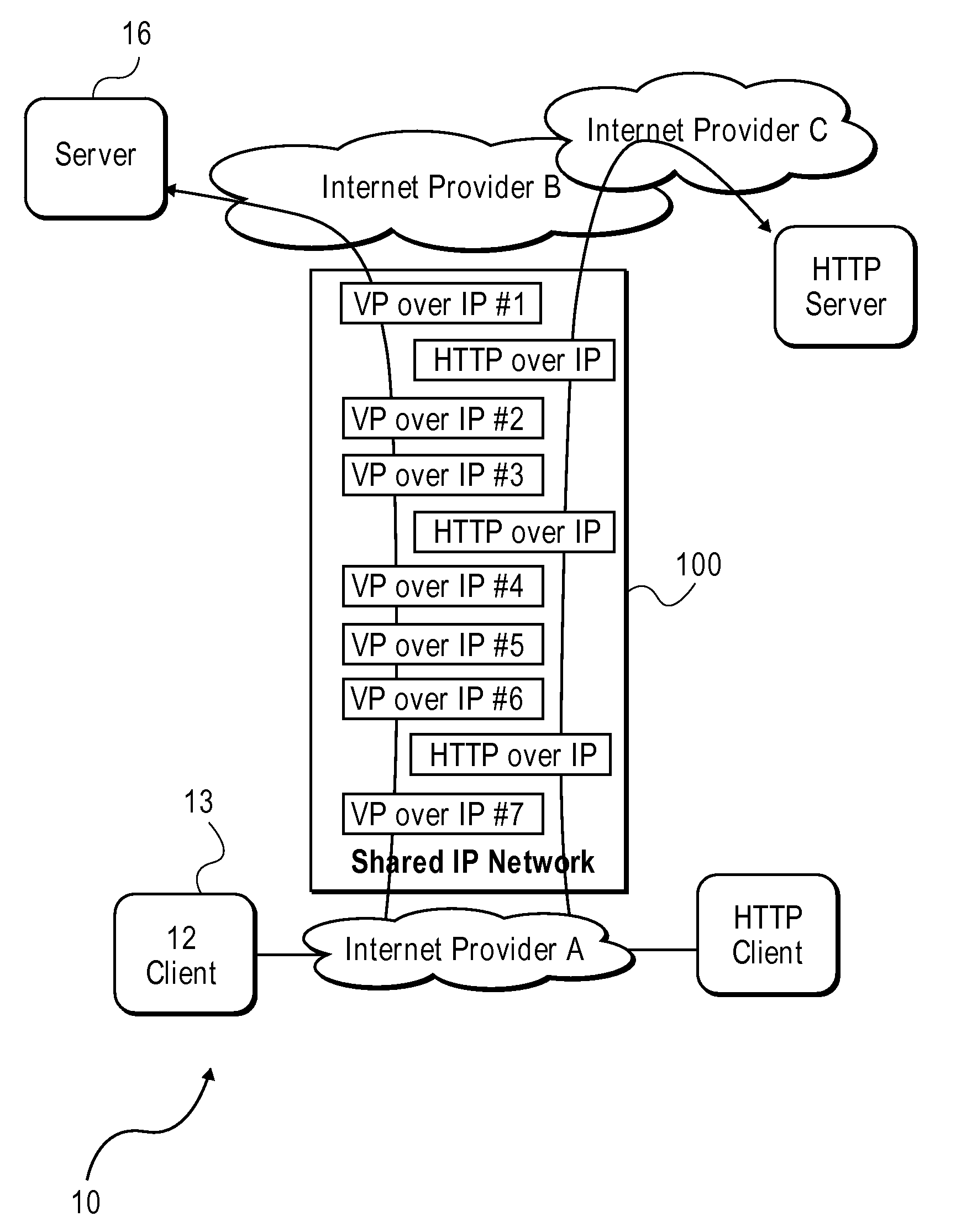

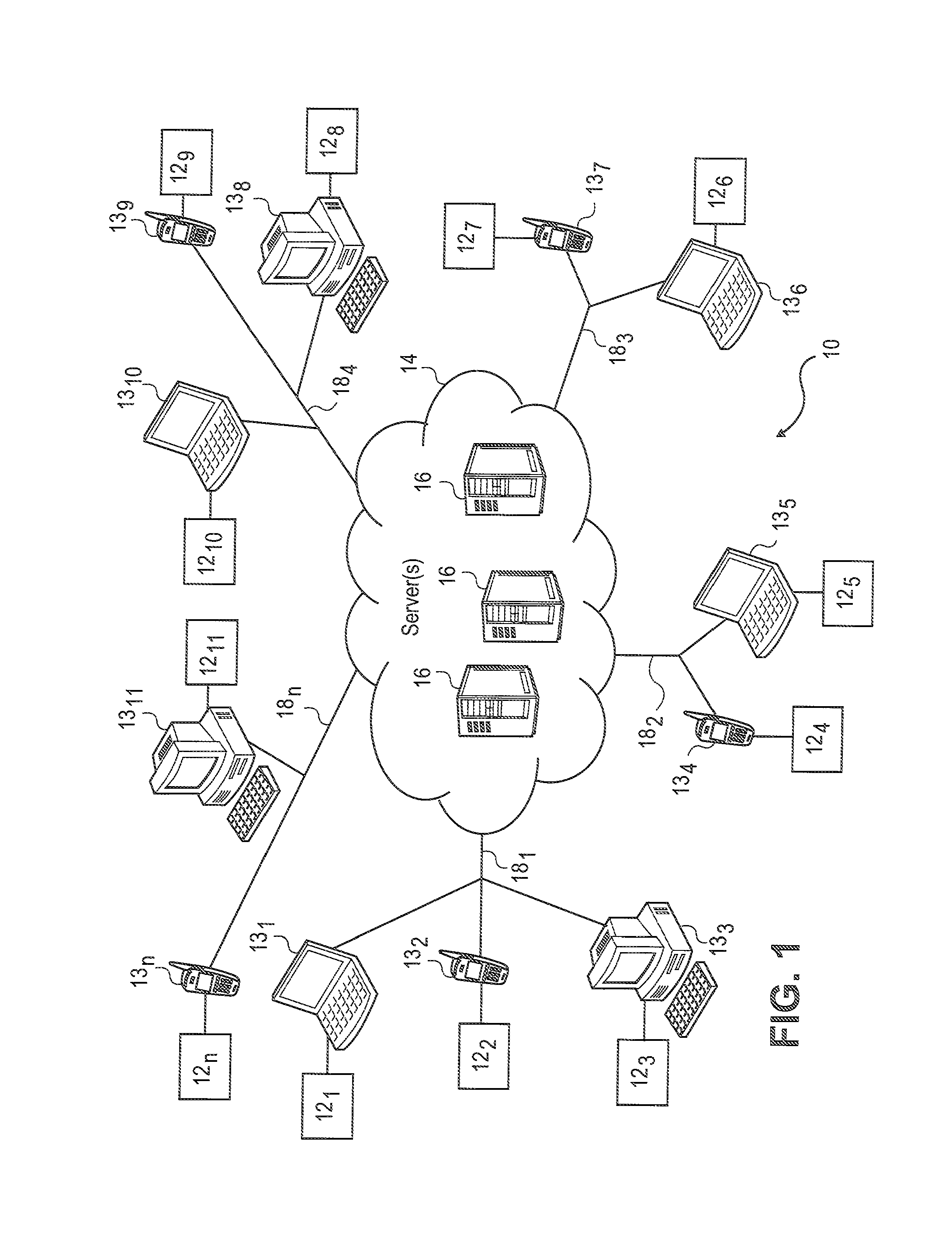

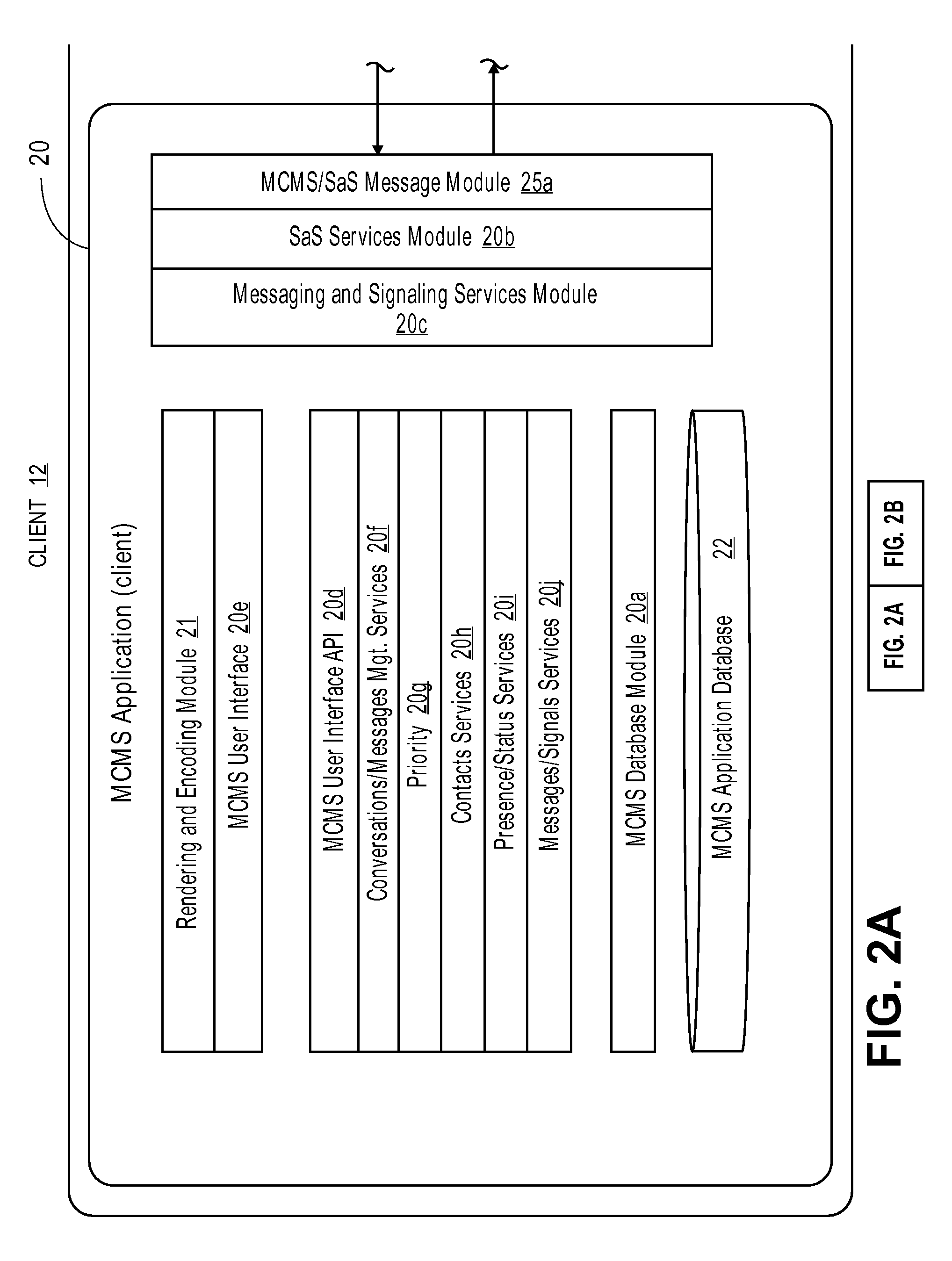

Method and system for real-time synchronization across a distributed services communication network

Active | US20090168760A1 | Time-division multiplex; Network connections; Data synchronization; Distributed services

A method for progressively synchronizing stored copies of indexed media transmitted between nodes on a network. The method includes progressively transmitting available indexed media from a sending node to a receiving node with a packet size and packetization interval sufficient to enable the near real-time rendering of the indexed media, wherein the near real-time rendering of the indexed media provides a recipient with an experience of reviewing the transmitted media live. At the receiving node, the transmitted indexed media is progressively received and any indexed media that is not already locally stored at the receiving node is noted. The receiving node further continually generates and transmits to the sending node requests as needed for the noted indexed media. In response, the sending node transmits the noted indexed media to the receiving node. Both the sending node and the receiving node store the indexed media. As a result, both the sending node and the receiving node each have synchronized copies of the indexed media.

Owner:VOXER IP
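
The gap-filling loop in this abstract can be sketched with plain dictionaries. The assumptions are labeled in the comments: media indices are consecutive integers starting at 0, the sender advertises its latest available index, and a lost packet is modeled simply as an index missing from `delivered`. None of these names come from the Voxer patents.

```python
def find_gaps(stored, latest_index):
    """Indices 0..latest_index that are not yet in local storage."""
    return [i for i in range(latest_index + 1) if i not in stored]


def sync_round(sender_store, receiver_store, delivered):
    """One progressive-sync round between two nodes.

    delivered: indices that actually arrived during live transmission
    (anything else is treated as lost). Returns the indices the receiver
    had to request.
    """
    for i in delivered:                       # live, possibly lossy, delivery
        receiver_store[i] = sender_store[i]
    latest = max(sender_store)                # assumed: sender advertises its latest index
    requests = find_gaps(receiver_store, latest)
    for i in requests:                        # sender answers each request
        receiver_store[i] = sender_store[i]
    return requests


sender = {i: f"chunk-{i}" for i in range(5)}
receiver = {}
print(sync_round(sender, receiver, [0, 1, 3]))  # [2, 4]
```

After the round both stores hold identical copies, which is the end state the abstract describes; the live path stays fast because repairs happen through separate requests rather than by stalling playback.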

Method and system for real-time synchronization across a distributed services communication network

Active | US8099512B2 | Multiple digital computer combinations; Data switching networks; Data synchronization; Distributed services

A method for progressively synchronizing stored copies of indexed media transmitted between nodes on a network. The method includes progressively transmitting available indexed media from a sending node to a receiving node with a packet size and packetization interval sufficient to enable the near real-time rendering of the indexed media, wherein the near real-time rendering of the indexed media provides a recipient with an experience of reviewing the transmitted media live. At the receiving node, the transmitted indexed media is progressively received and any indexed media that is not already locally stored at the receiving node is noted. The receiving node further continually generates and transmits to the sending node requests as needed for the noted indexed media. In response, the sending node transmits the noted indexed media to the receiving node. Both the sending node and the receiving node store the indexed media. As a result, both the sending node and the receiving node each have synchronized copies of the indexed media.

Owner:VOXER IP

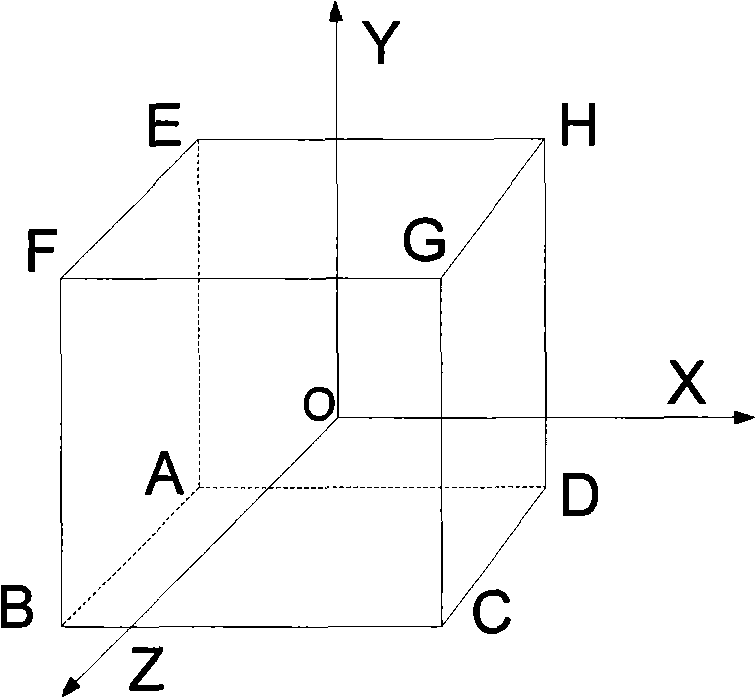

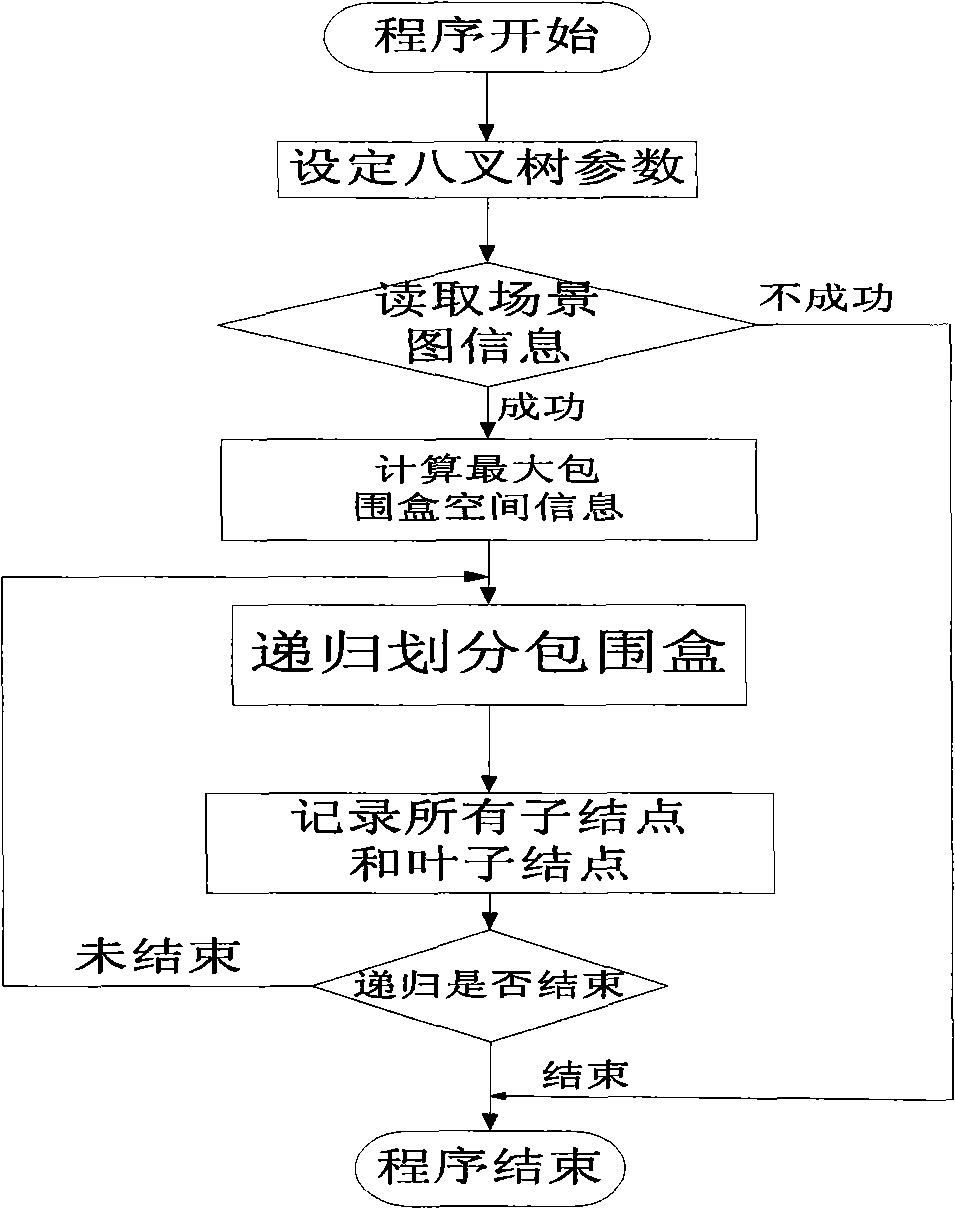

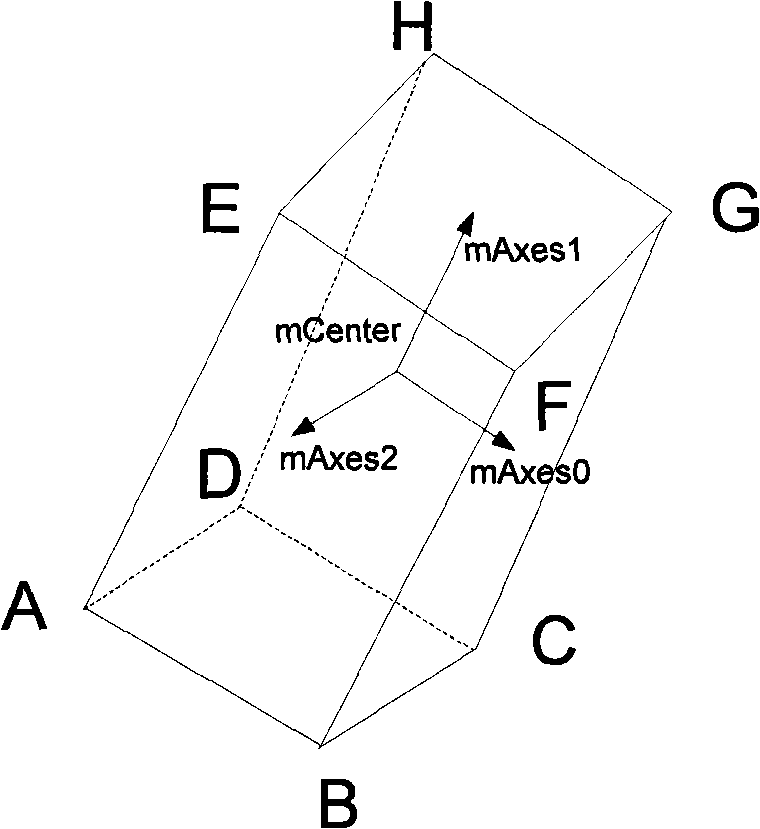

Method for processing large-scale complex three-dimensional scenes based on an octree

Inactive | CN101281654A | Improve organization; Improve good performance; Image coding; 3D-image rendering; Graphics; Computer graphics

The invention relates to an octree-based method for processing large-scale complex three-dimensional scenes, in the fields of computer graphics and virtual reality. The steps are: (1) loading the large-scale three-dimensional scene and organizing all elements in the scene with a scene graph; (2) building the octree structure of the scene and recording the related information; (3) using the octree to perform fast view-frustum culling through an intersection test between the view frustum and bounding boxes; (4) rendering the objects inside the view frustum. The invention addresses the shortcomings of existing graphics rendering engines in rendering large-scale complex three-dimensional scenes: it organizes the scene with an octree, uses spatial information and the tree structure to cull geometry nodes outside the view frustum, quickly computes the sequence of nodes that must be rendered, and reduces the number of triangles sent to the rendering pipeline, thereby effectively increasing rendering speed and meeting real-time requirements.

Owner:SHANGHAI UNIV
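
Steps (2) to (4) amount to hierarchical view-frustum culling. A minimal sketch, assuming the frustum is given as six planes (a, b, c, d) whose normals point inward, so a point is inside when a*x + b*y + c*z + d >= 0; the class and function names are hypothetical. The box test checks only the corner farthest along each plane normal, which is conservative: it never discards a visible box but may keep a few that lie just outside a frustum corner.

```python
from dataclasses import dataclass, field


def box_outside_plane(bmin, bmax, plane):
    """True if the axis-aligned box lies entirely on the outer side of the plane."""
    a, b, c, d = plane
    # Corner of the box farthest along the plane normal (the "positive vertex").
    px = bmax[0] if a >= 0 else bmin[0]
    py = bmax[1] if b >= 0 else bmin[1]
    pz = bmax[2] if c >= 0 else bmin[2]
    return a * px + b * py + c * pz + d < 0


def box_in_frustum(bmin, bmax, planes):
    """Conservative test: False only if the box is outside at least one plane."""
    return not any(box_outside_plane(bmin, bmax, p) for p in planes)


@dataclass
class OctreeNode:
    bmin: tuple
    bmax: tuple
    objects: list = field(default_factory=list)
    children: list = field(default_factory=list)


def collect_visible(node, planes, out=None):
    """Depth-first traversal that skips whole subtrees outside the frustum."""
    if out is None:
        out = []
    if box_in_frustum(node.bmin, node.bmax, planes):
        out.extend(node.objects)
        for child in node.children:
            collect_visible(child, planes, out)
    return out
```

Pruning at an interior node discards every object beneath it with a single test, which is where the reduction in triangles sent to the pipeline comes from.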

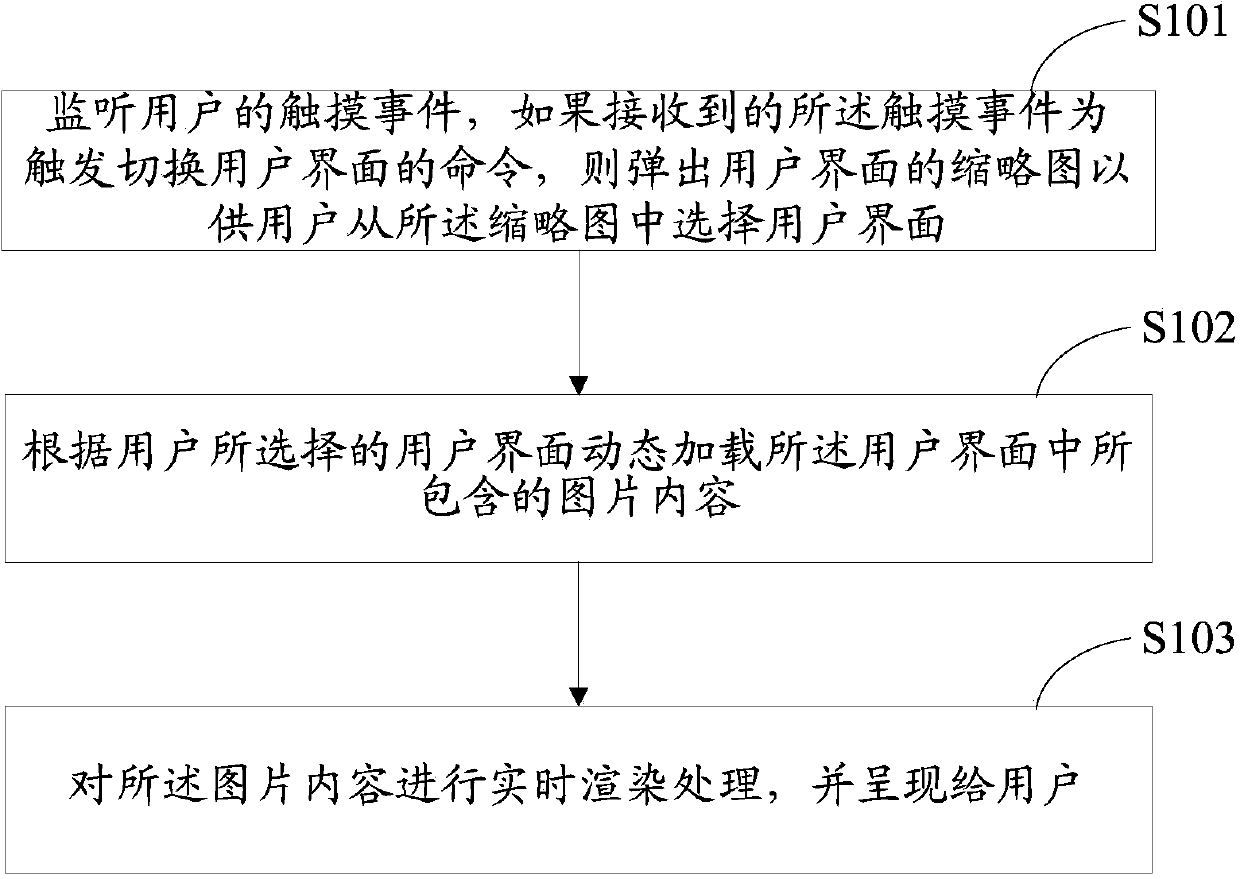

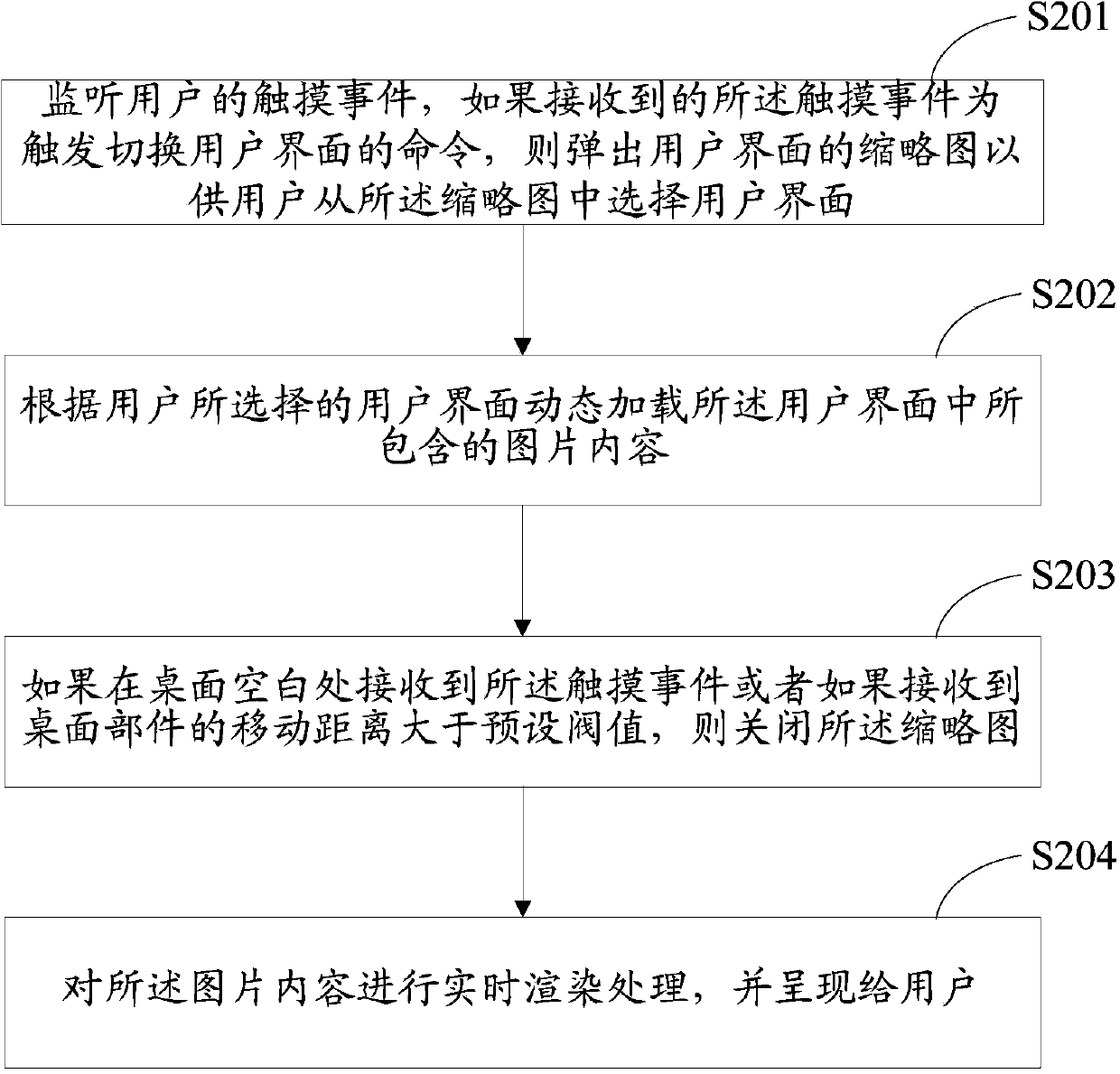

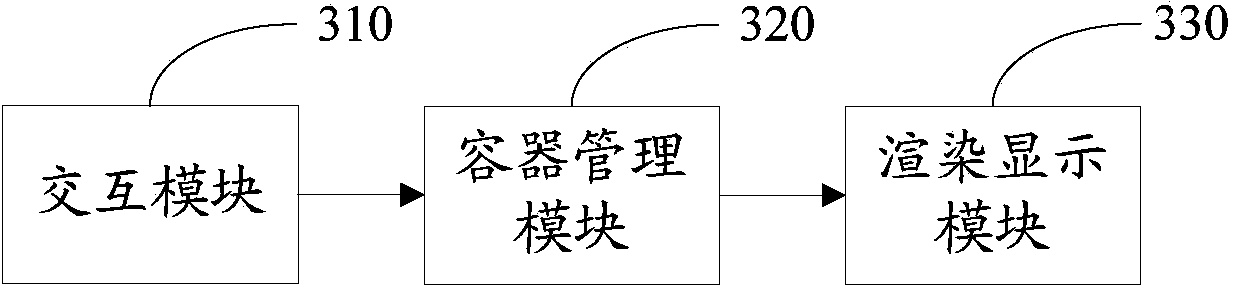

Touch screen terminal and multi-interface switching method thereof

Inactive | CN103793134A | Real-time rendering processing implementation; Input/output processes for data processing; Thumbnail; Computer terminal

The invention discloses a touch-screen terminal and a multi-interface switching method for it. The method includes: monitoring touch events from a user; if a touch event that triggers a command to switch the user interface is received, popping up thumbnails of the user interfaces so that the user can select one; dynamically loading the image contents of the selected user interface; and rendering the image contents in real time and displaying them to the user. The image contents include icons of desktop widgets such as a clock widget and a weather widget; the rendering operations include window setup, projection-matrix transformation, lighting, rotation, and zooming. Because the user interface is rendered and displayed in real time, users can change it dynamically as needed.

Owner:SHENZHEN TINNO WIRELESS TECH

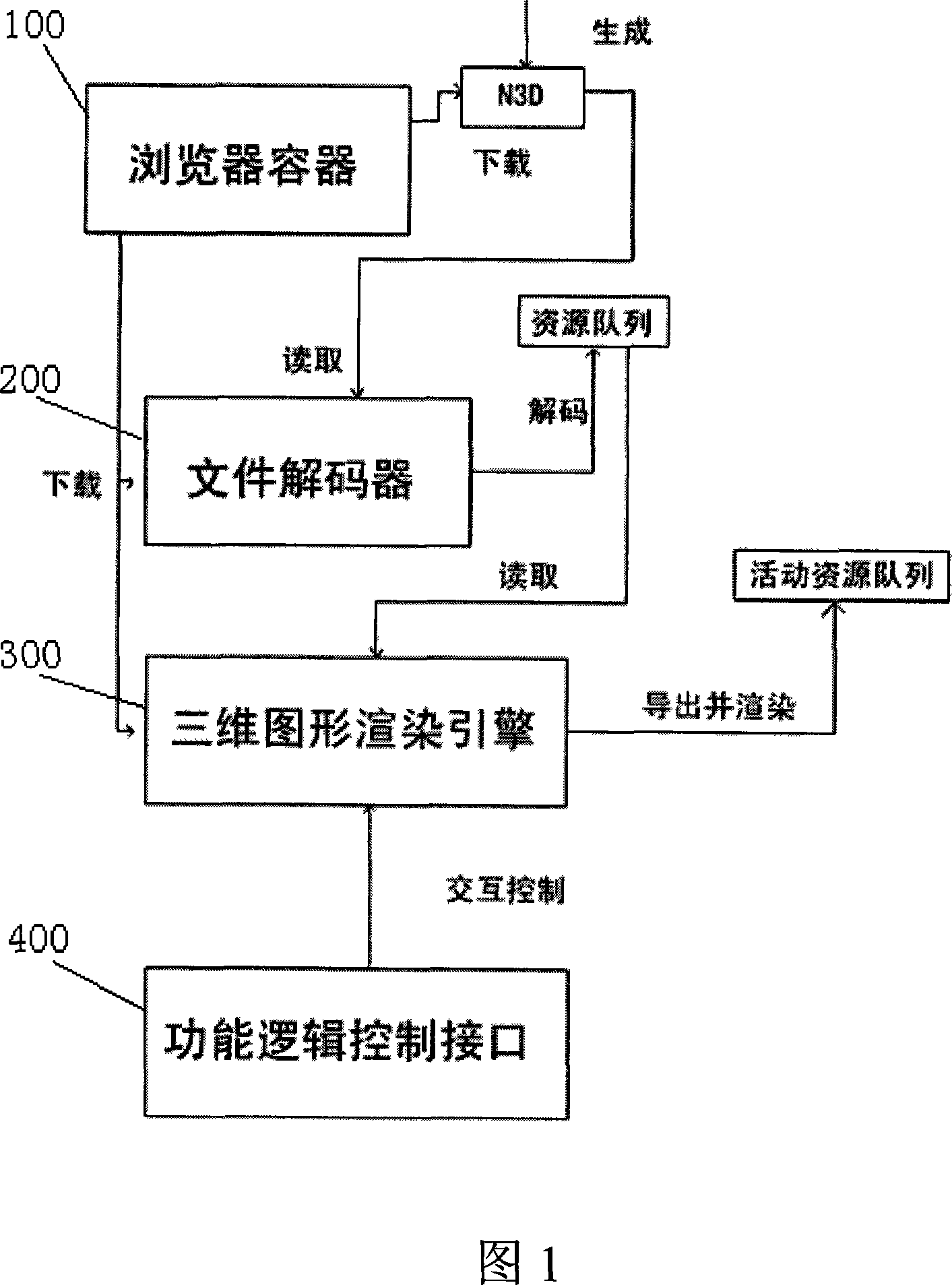

Browser-based method for implementing three-dimensional web pages

Inactive | CN101067827A | Reduce file size; Improve interactivity; Special data processing applications; 3D-image rendering; Graphics; Web page

This invention provides a method for implementing 3-D web pages in a browser using four components: a browser container, a file decoder, a 3-D graphics rendering engine, and a functional logic control interface. The basic flow is: (a) collecting and converting model data and storing it in a specific format; (b) using the browser to dynamically load the file decoder, the 3-D graphics rendering engine, and the 3-D web file; (c) decoding the file on the fly with the file decoder and placing it in a scene resource queue; (d) starting the engine, which reads the resource queue, generates an active resource queue, and performs real-time rendering; (e) interacting with the browser through the functional logic control interface.

Owner:上海创图网络科技股份有限公司

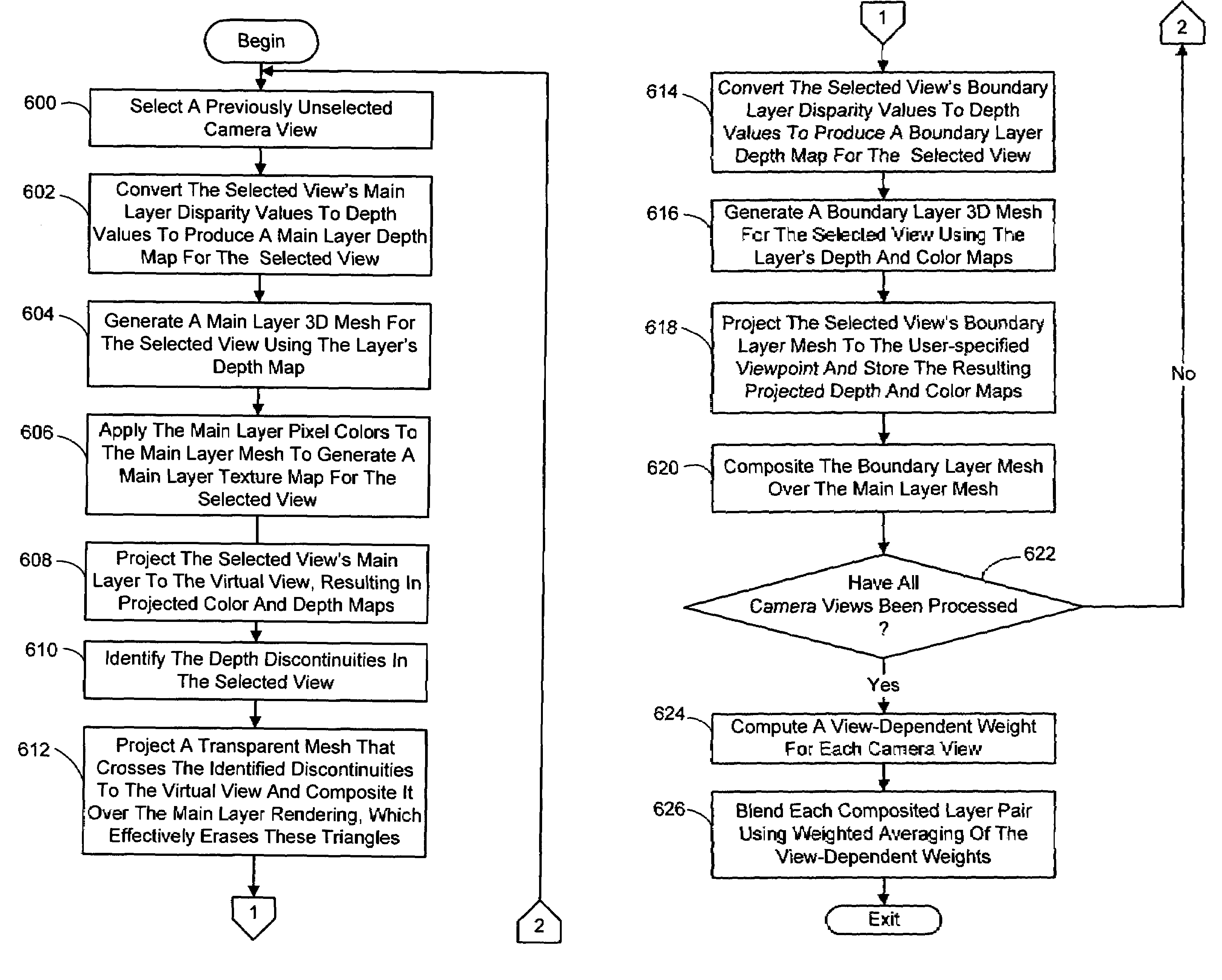

Real-time rendering system and process for interactive viewpoint video that was generated using overlapping images of a scene captured from viewpoints forming a grid

Inactive | US7142209B2 | Cathode-ray tube indicators; Input/output processes for data processing; Viewpoints; Computer graphics (images)

A system and process for rendering and displaying an interactive viewpoint video is presented in which a user can watch a dynamic scene while manipulating (freezing, slowing down, or reversing) time and changing the viewpoint at will. The ability to interactively control viewpoint while watching a video is an exciting new application for image-based rendering. Because any intermediate view can be synthesized at any time, with the potential for space-time manipulation, this type of video has been dubbed interactive viewpoint video.

Owner:MICROSOFT TECH LICENSING LLC

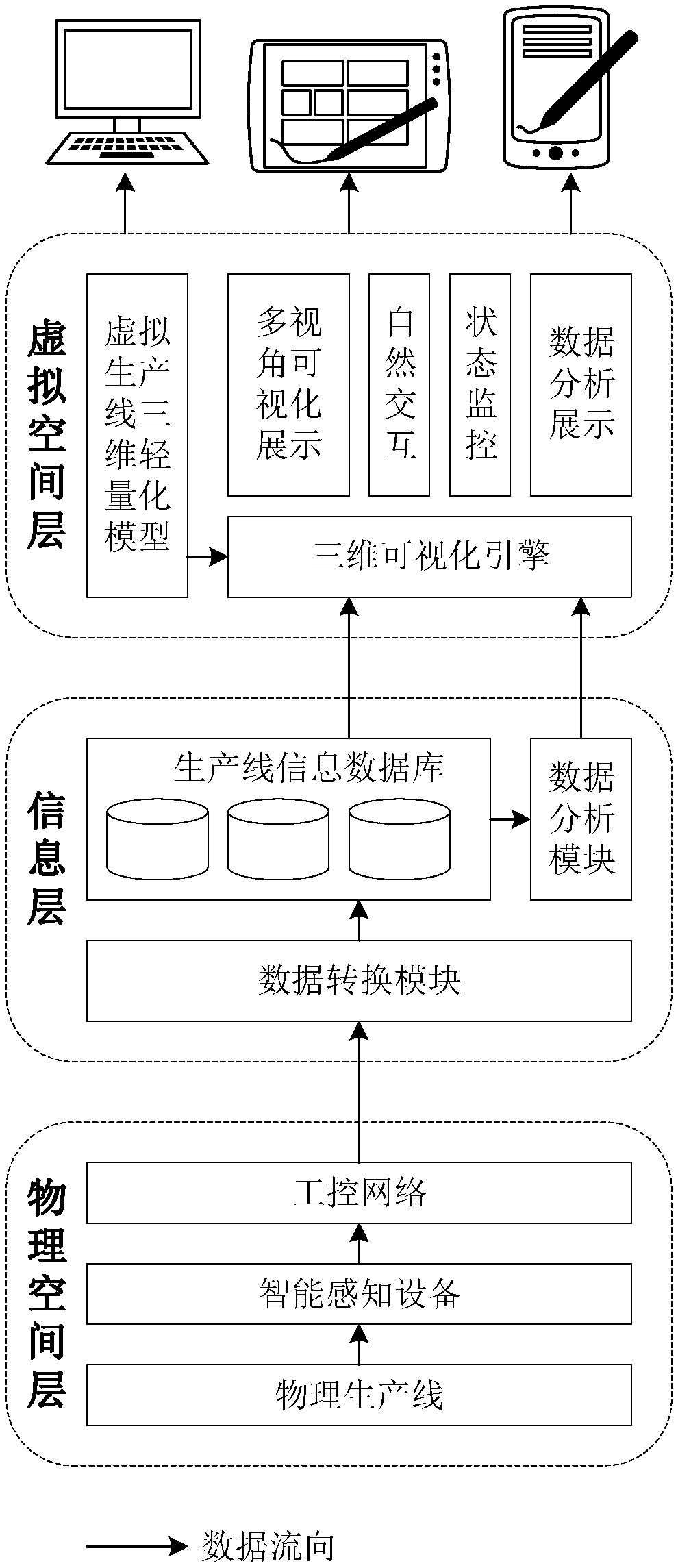

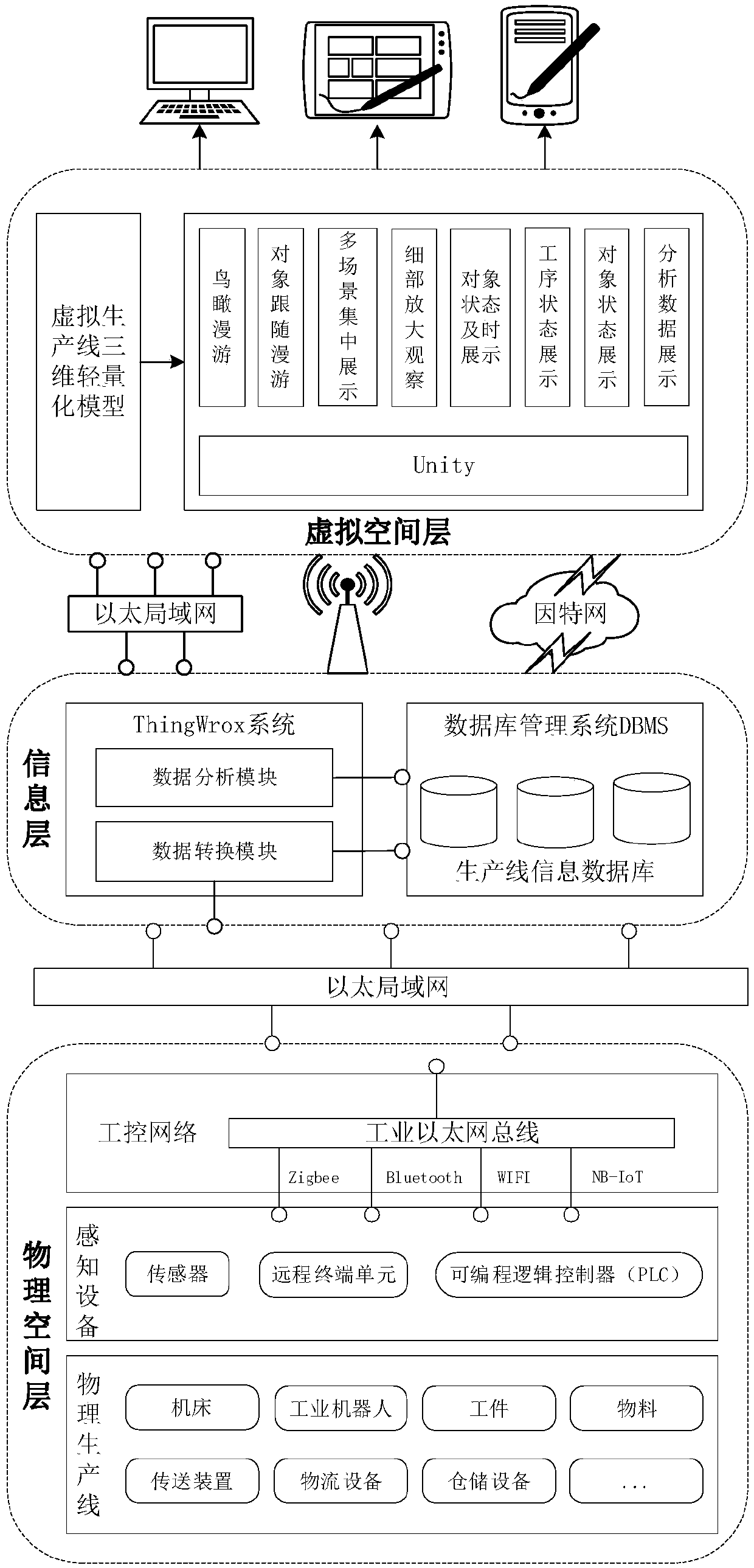

Digital twin system of an intelligent production line

Inactive | CN109613895A | Implement twin mirroring; Data processing applications; Total factory control; Time information; Information layer

The invention relates to a digital twin system of an intelligent production line, which comprises a physical space layer, an information layer and a virtual space layer. The physical space layer is composed of a physical production line, intelligent sensing devices and an industrial control network. The information layer includes a data conversion module, a data analysis module and a production line information database. The virtual space layer can adapt to a variety of platforms and environments, including personal computers and handheld devices. Driven by the production line information database of the information layer, it uses a 3D visual engine to generate a virtual production line consistent with the physical one through online real-time and offline non-real-time rendering, and it provides multi-view visual display, natural interaction, state monitoring, and so on. The real-time state information of the physical production line is collected by various intelligent sensing devices; based on this information, a three-dimensional visual engine is driven to render a virtual production line model consistent with the physical production line, thereby realizing the twin mirror image of the virtual and physical production lines.

Owner:CHINA ELECTRONIC TECH GRP CORP NO 38 RES INST

3-D text in a gaming machine

Inactive | US20080188304A1 | Quality improvement; Improve text quality; Roulette games; Apparatus for meter-controlled dispensing; Graphics; Human–computer interaction

Methods and apparatus on a gaming machine for presenting a plurality of game outcome presentations derived from one or more virtual 3-D gaming environments stored on the gaming machine are described. While a game of chance is being played on the gaming machine, two-dimensional images derived from a 3-D object in the 3-D gaming environment may be rendered to a display screen on the gaming machine in real-time as part of a game outcome presentation. The 3-D objects in the 3-D gaming environment may include 3-D text objects that are used to display text to the display screen of the gaming machine as part of the game outcome presentation. Apparatus and methods are described for generating and displaying information in a textual format that is compatible with a 3-D graphical rendering system. In particular, font generation and typesetting methods that are applicable in a 3-D gaming environment are described.

Owner:IGT

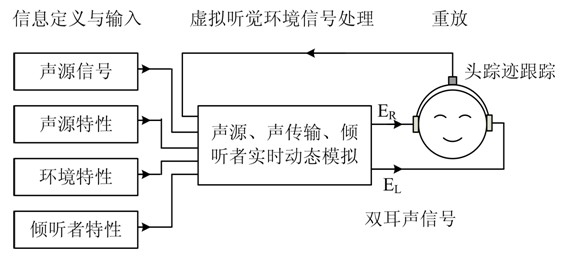

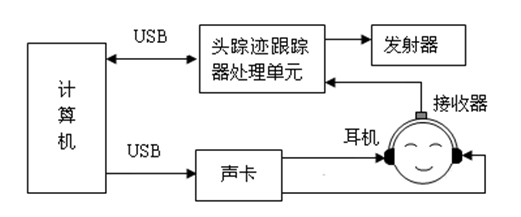

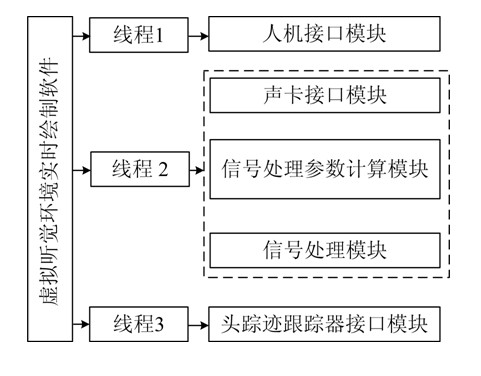

Real-time rendering method for virtual auditory environment

Active | CN102572676A | Implement dynamic processing; Reduce computation; Stereophonic systems; Sound sources; Headphones

The invention discloses a real-time rendering method for a virtual auditory environment. The initial information of the virtual auditory environment is set, and a head tracker detects in real time the dynamic spatial position of the listener's head in six degrees of freedom; based on these data, the sound sources, sound transmission, environmental reflections, and the receiver's radiation and binaural signal conversion are simulated dynamically in real time. In simulating the binaural signal conversion, a shared filter is used to jointly process multiple virtual sound sources in different directions and at different distances, which improves signal-processing efficiency. The binaural signal is equalized for the headphone-to-ear-canal transfer characteristic and then fed to headphones for playback, so that a realistic spatial auditory event or perception can be produced.

Owner:SOUTH CHINA UNIV OF TECH
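
Two quantities that such a simulation must refresh whenever the head tracker reports a new pose are the distance cues of each source and the interaural time difference. The sketch below covers only those, using the inverse-distance law, a nominal speed of sound, and Woodworth's spherical-head ITD formula; it does not model reflections, HRTF filtering, or the shared-filter scheme the patent describes, and the head radius and function names are assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C


def distance_cues(listener, source, sample_rate=48000, ref_dist=1.0):
    """Inverse-distance gain and propagation delay (in samples) for one source."""
    r = max(math.dist(listener, source), ref_dist)  # clamp very close sources
    gain = ref_dist / r
    delay = round(r / SPEED_OF_SOUND * sample_rate)
    return gain, delay


def woodworth_itd(azimuth_rad, head_radius=0.0875):
    """Far-field interaural time difference in seconds (spherical-head model),
    valid for azimuths between -pi/2 and pi/2; head_radius is an assumed typical value."""
    return head_radius / SPEED_OF_SOUND * (azimuth_rad + math.sin(azimuth_rad))
```

In a real-time loop these values would be recomputed for every source whenever the tracked head position or orientation changes, then applied before the binaural filtering stage.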

Interactive viewpoint video employing viewpoints forming an array

Inactive | US7286143B2 | Low cost; Capture portable; Television system details; Image enhancement; Viewpoints; Computer graphics (images)

Owner:MICROSOFT TECH LICENSING LLC

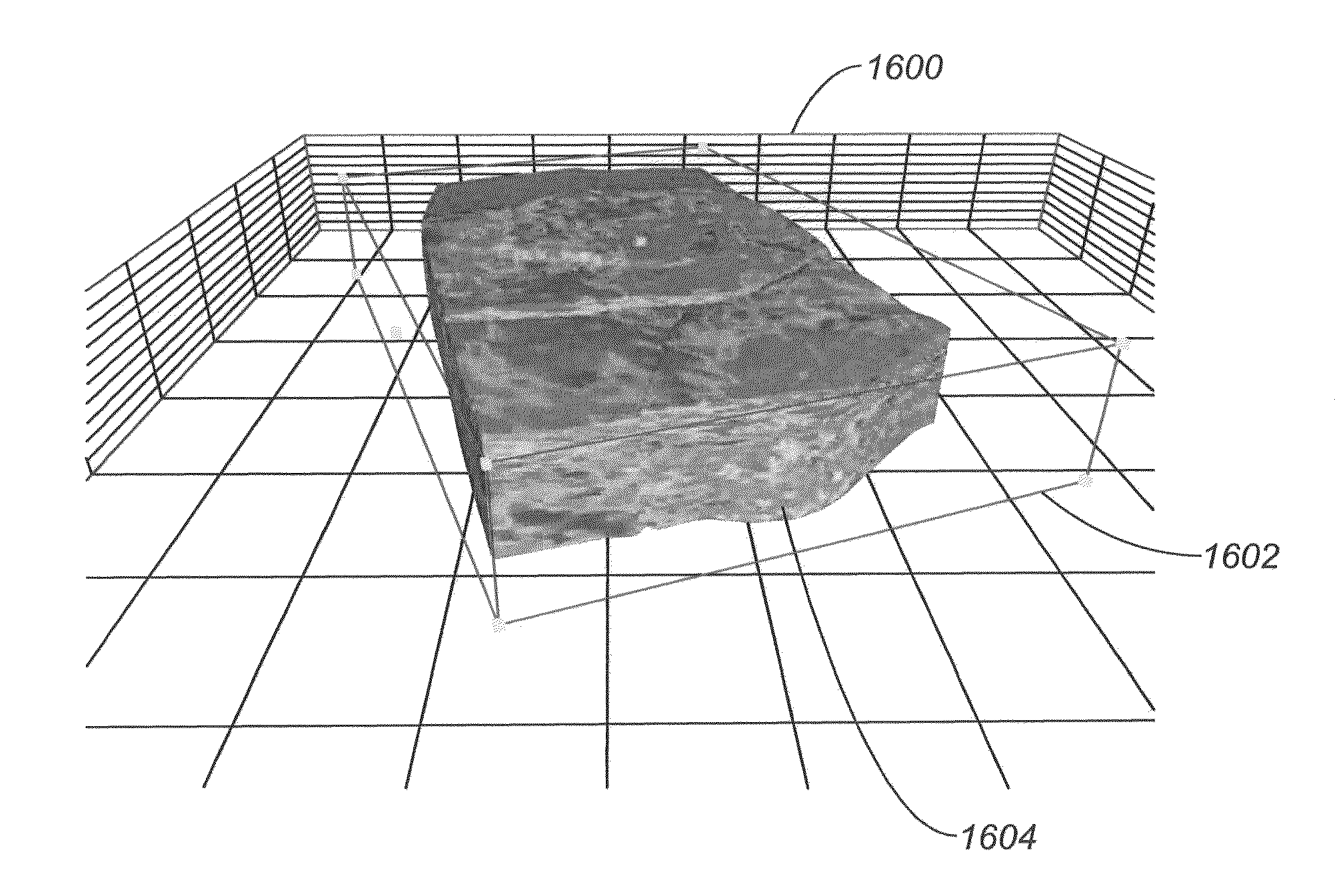

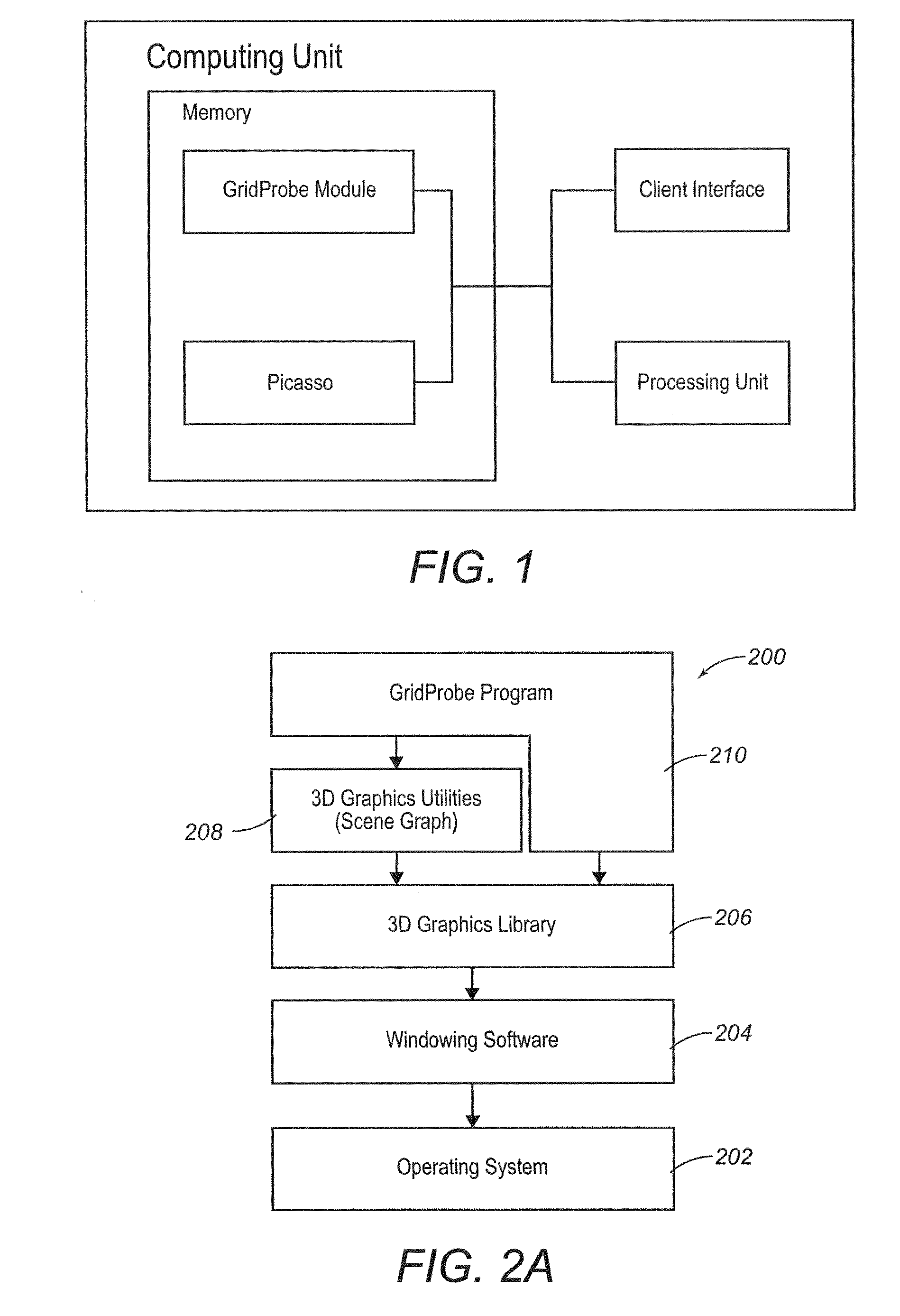

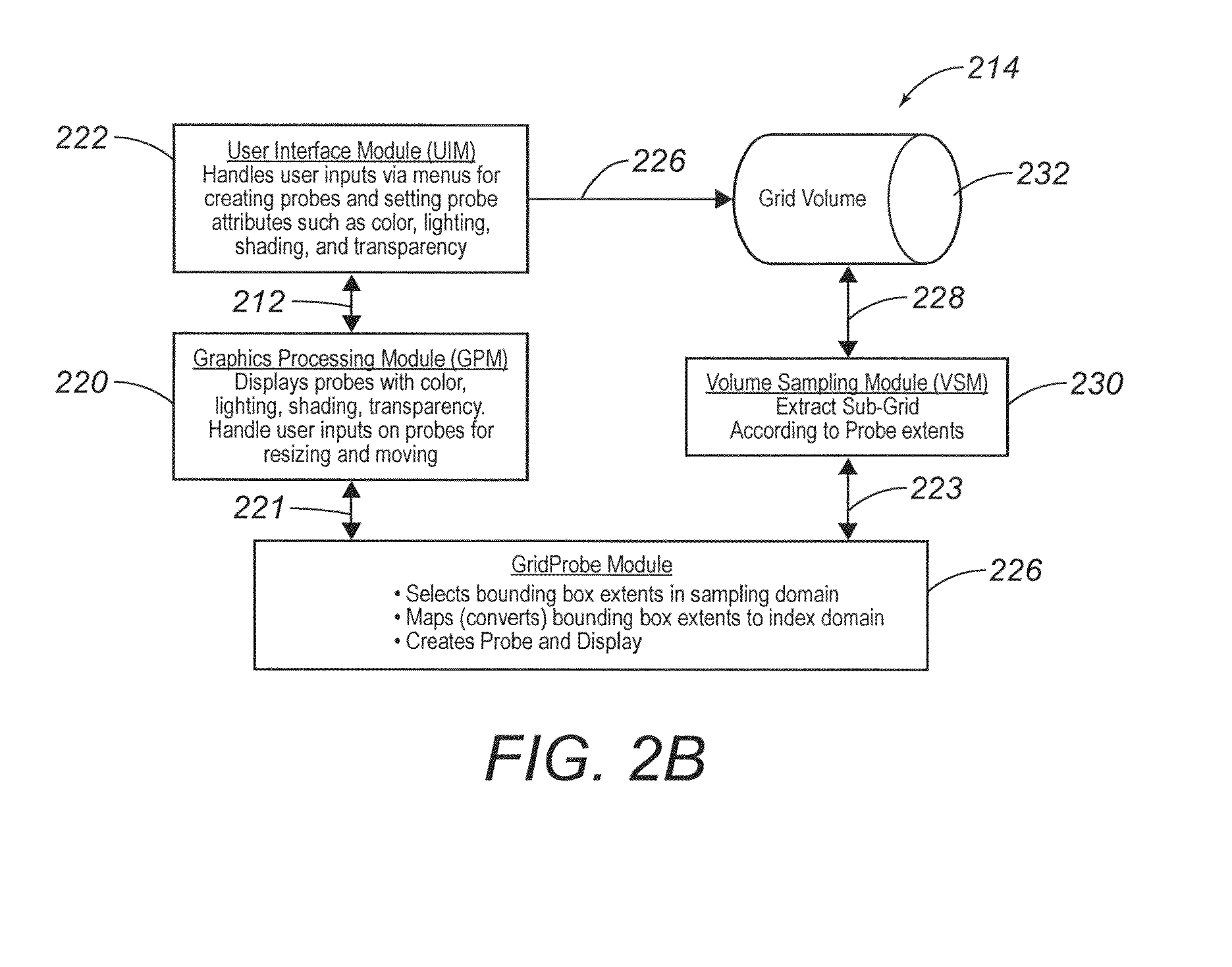

Systems and Methods for Imaging a Three-Dimensional Volume of Geometrically Irregular Grid Data Representing a Grid Volume

Owner:LANDMARK GRAPHICS

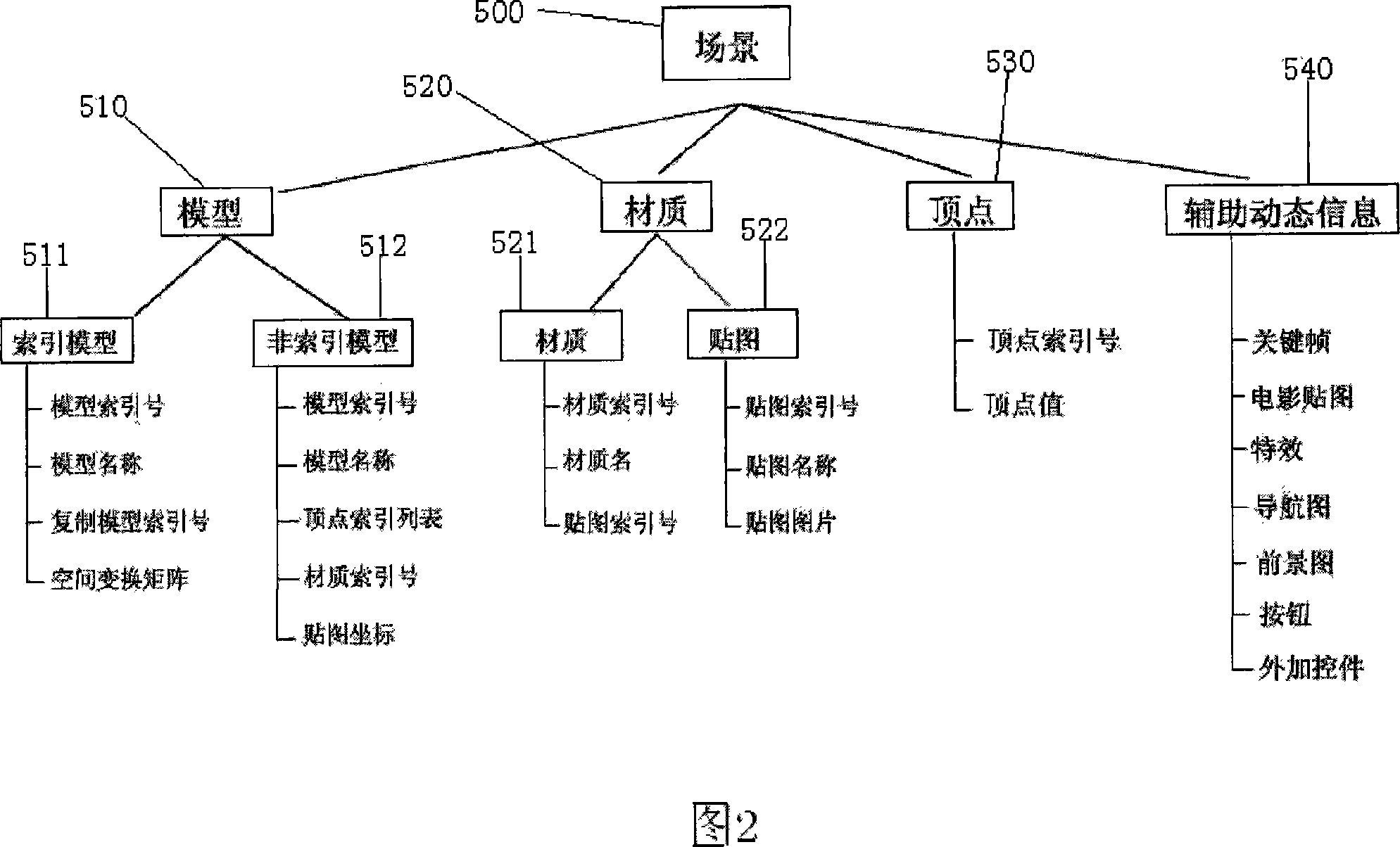

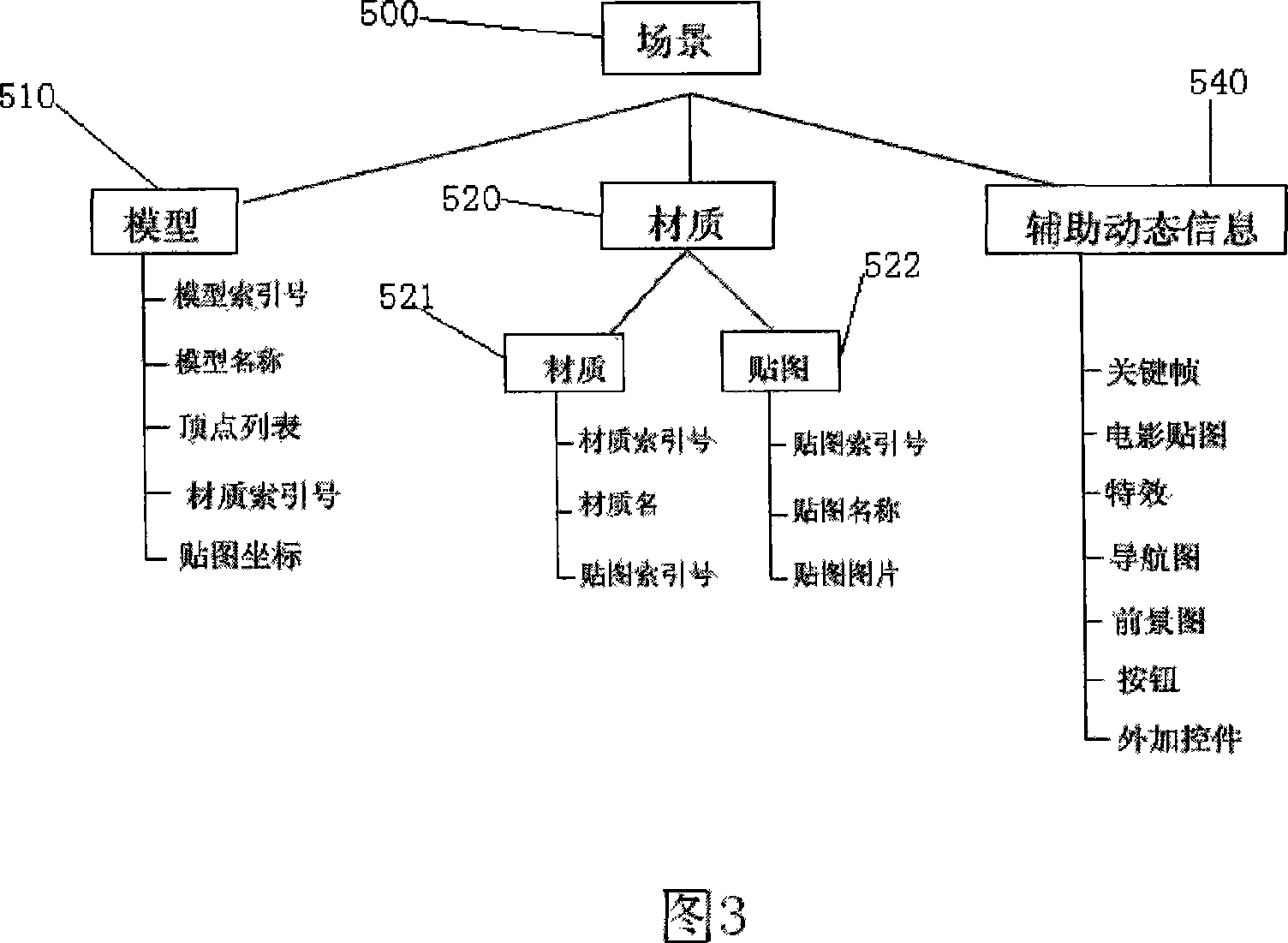

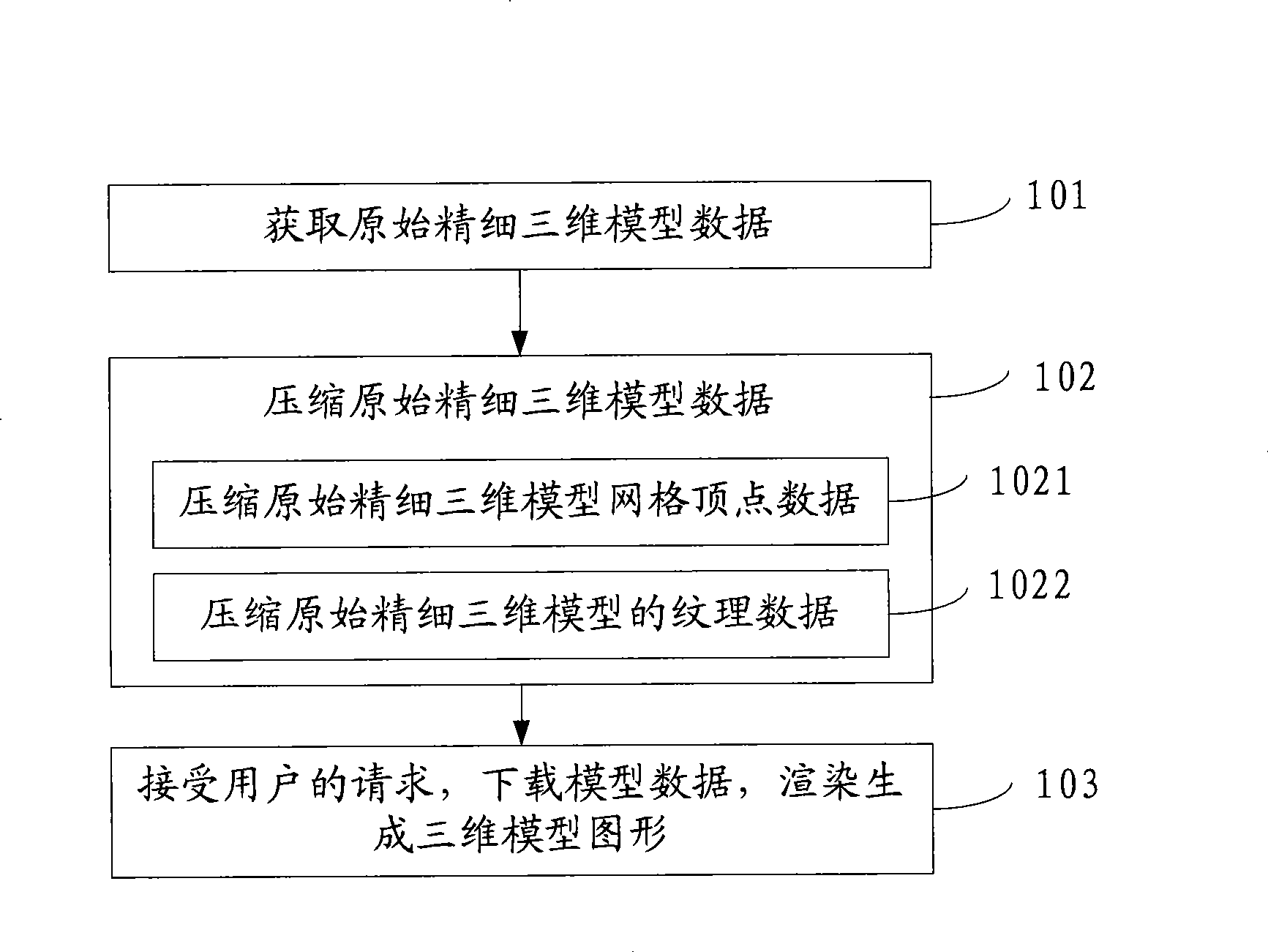

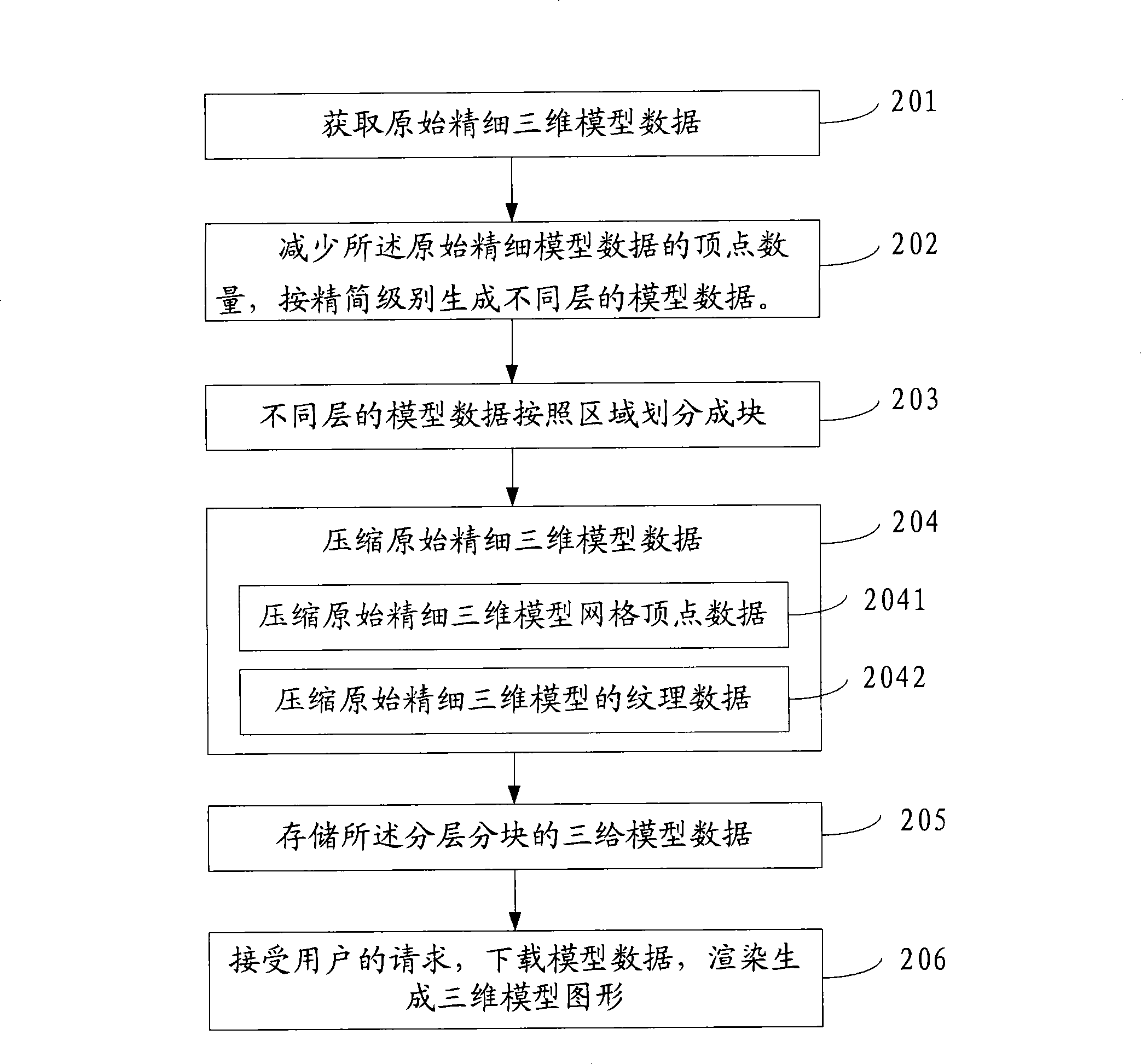

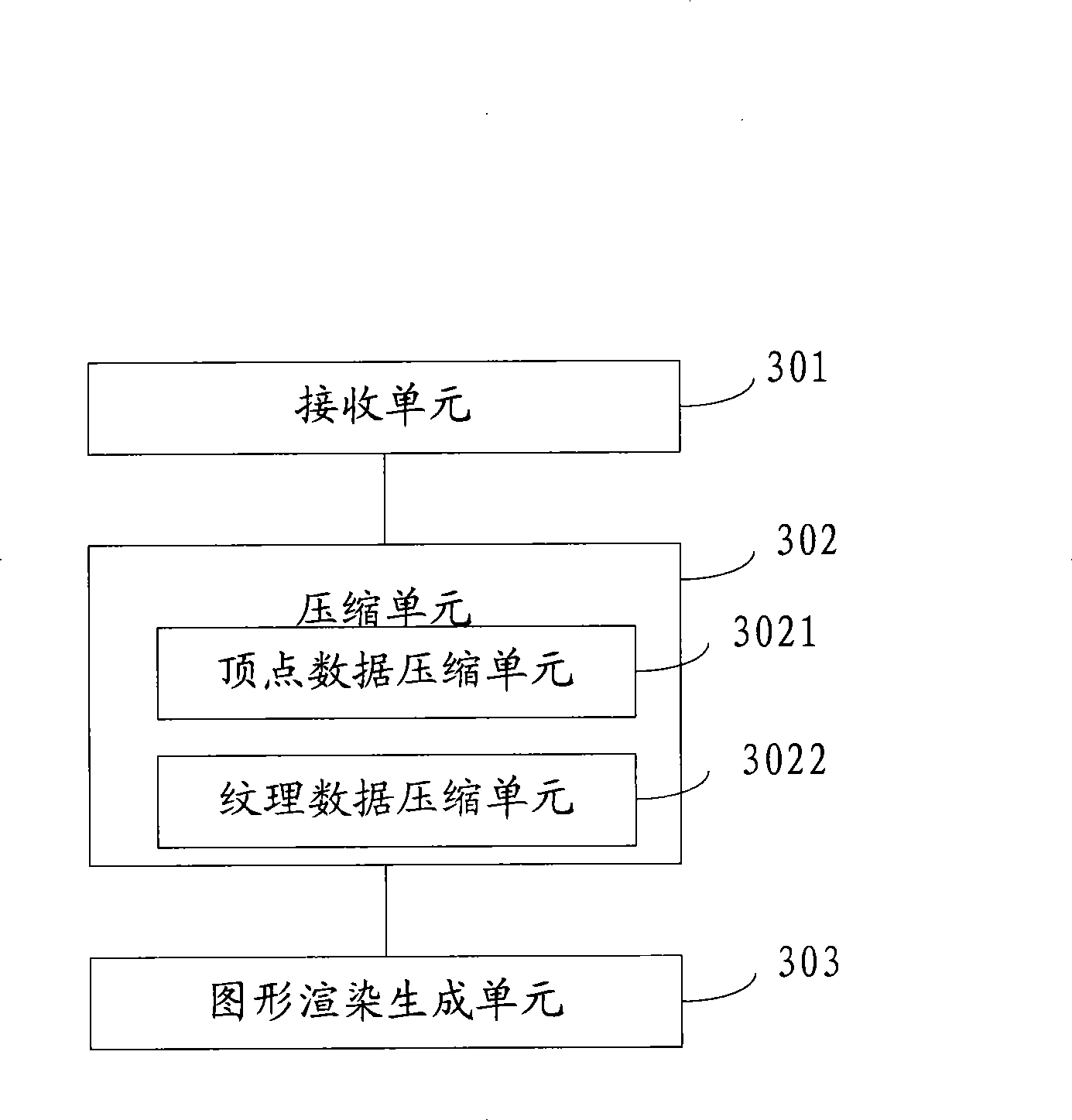

Three-dimensional model image generating method and apparatus

Active | CN101364310A | Reduce the number of vertices; Reduce complexity; 3D-image rendering; 3D modelling; Graphics; Graphic system

The invention provides a graphics generation method for three-dimensional models, comprising the following steps: obtaining the original, precise three-dimensional model data, which includes polygon-mesh vertex data representing a three-dimensional object; compressing the model data to reduce its redundancy; and, upon receiving a user request, downloading the compressed model data and generating a graphic of the three-dimensional model by rendering. The method reduces the model complexity, the number of polygons the graphics system must process, and the amount of data to be transmitted, in particular the data needed for rendering-based graphics generation; this increases rendering speed so that the user's real-time rendering requests can be satisfied. A graphics generation device for three-dimensional models is also provided.

Owner:BEIJING LINGTU SOFTWARE TECH CO LTD
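
The abstract does not say how the model data are compressed. One common way to cut vertex and polygon counts, shown here only as an illustrative stand-in, is vertex clustering: snap vertices to a uniform grid, merge vertices that land in the same cell, and drop triangles that collapse. The cell size is an assumed tuning parameter and the function name is hypothetical.

```python
def cluster_vertices(vertices, faces, cell):
    """Vertex-clustering simplification on a uniform grid of spacing `cell`.

    vertices: list of (x, y, z); faces: list of vertex-index triples.
    Returns (new_vertices, new_faces) with degenerate triangles removed.
    """
    cell_to_new = {}      # grid cell -> index in new_vertices
    new_vertices = []
    remap = []            # old vertex index -> new vertex index
    for x, y, z in vertices:
        key = (round(x / cell), round(y / cell), round(z / cell))
        if key not in cell_to_new:
            cell_to_new[key] = len(new_vertices)
            # Representative vertex: the nearest grid point (a centroid also works).
            new_vertices.append((key[0] * cell, key[1] * cell, key[2] * cell))
        remap.append(cell_to_new[key])
    new_faces = []
    for a, b, c in faces:
        fa, fb, fc = remap[a], remap[b], remap[c]
        if fa != fb and fb != fc and fa != fc:   # drop collapsed triangles
            new_faces.append((fa, fb, fc))
    return new_vertices, new_faces
```

A larger cell removes more vertices and triangles at the cost of geometric detail, which is the size-versus-fidelity trade-off behind serving a lighter model for real-time rendering.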

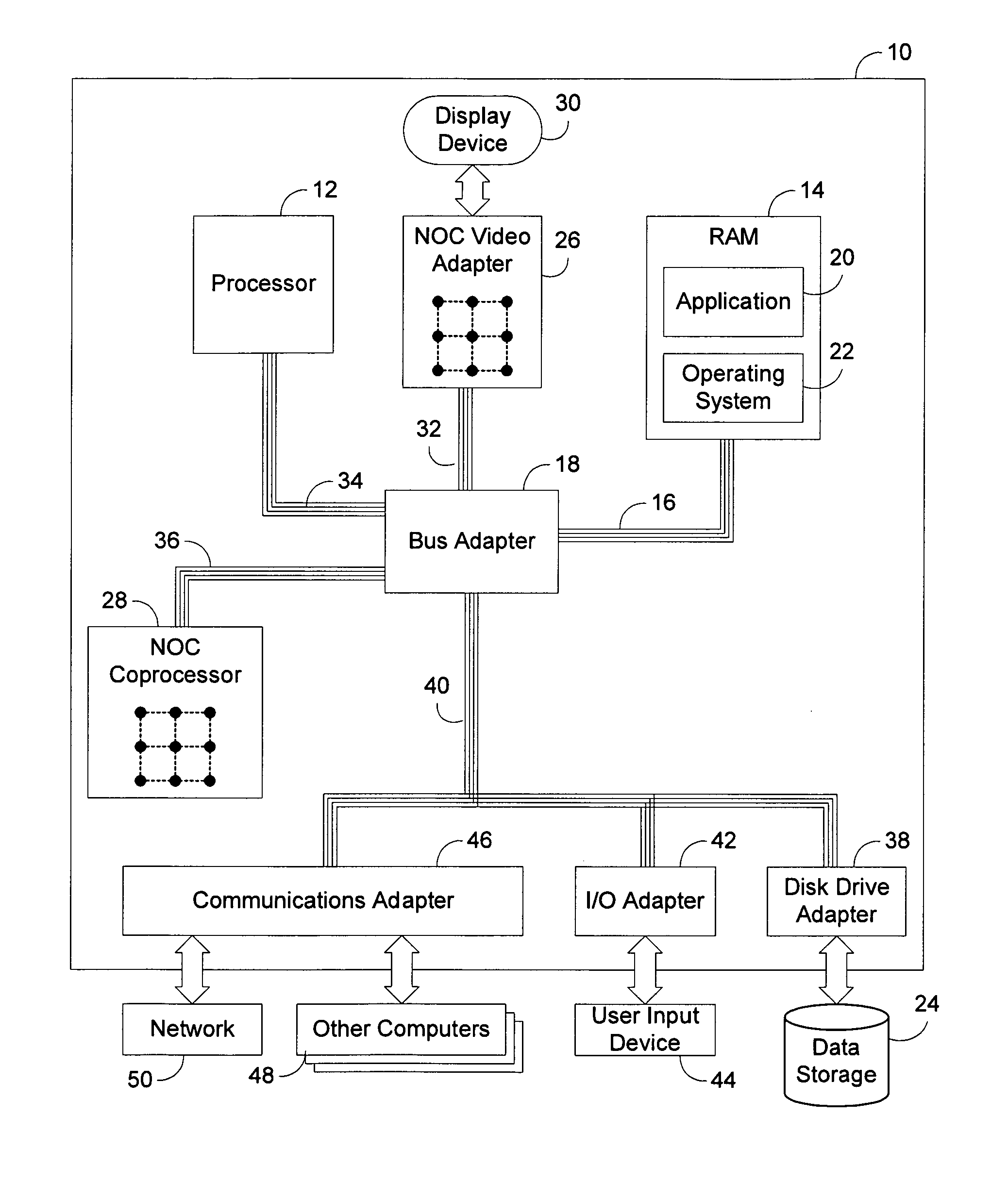

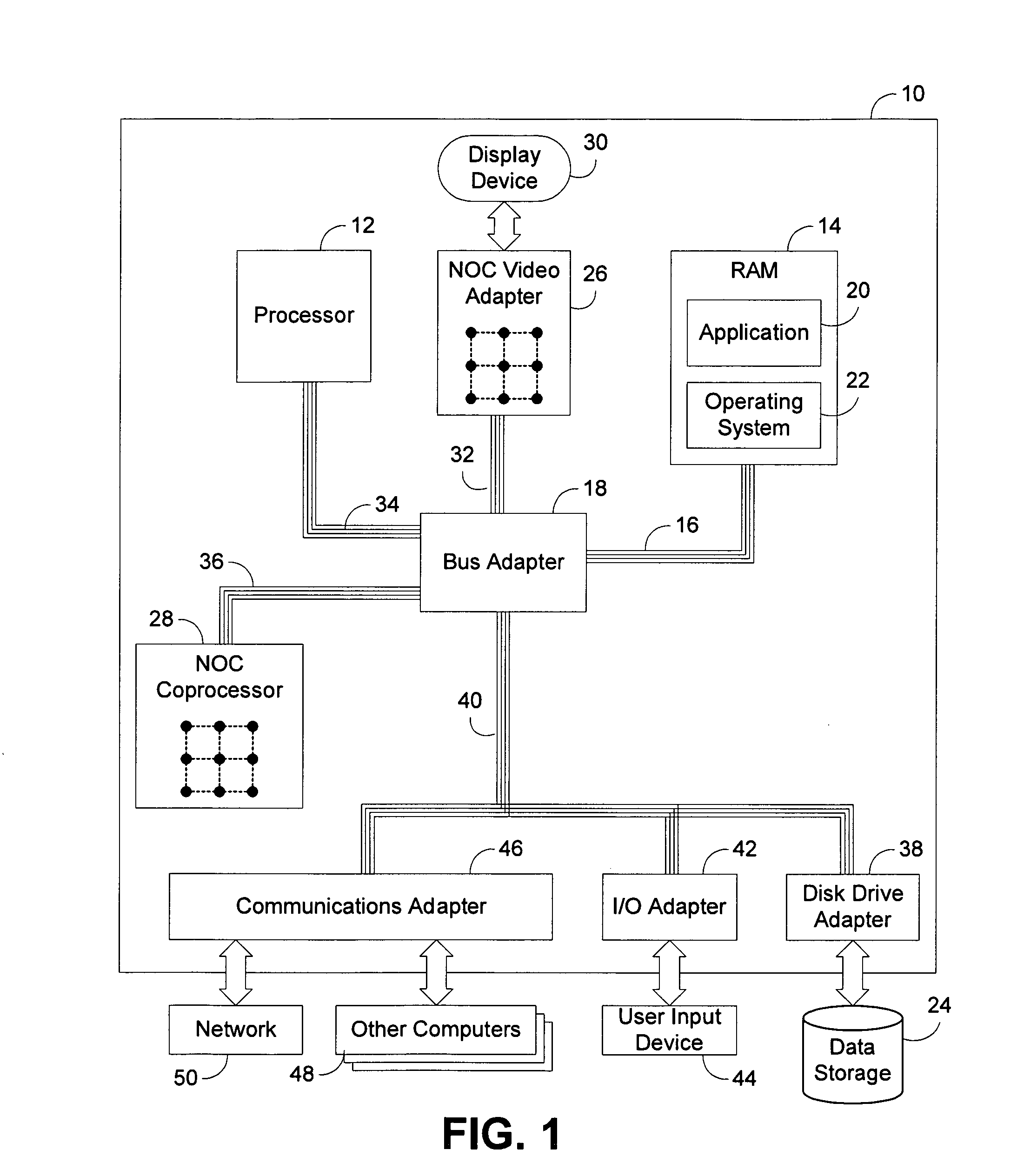

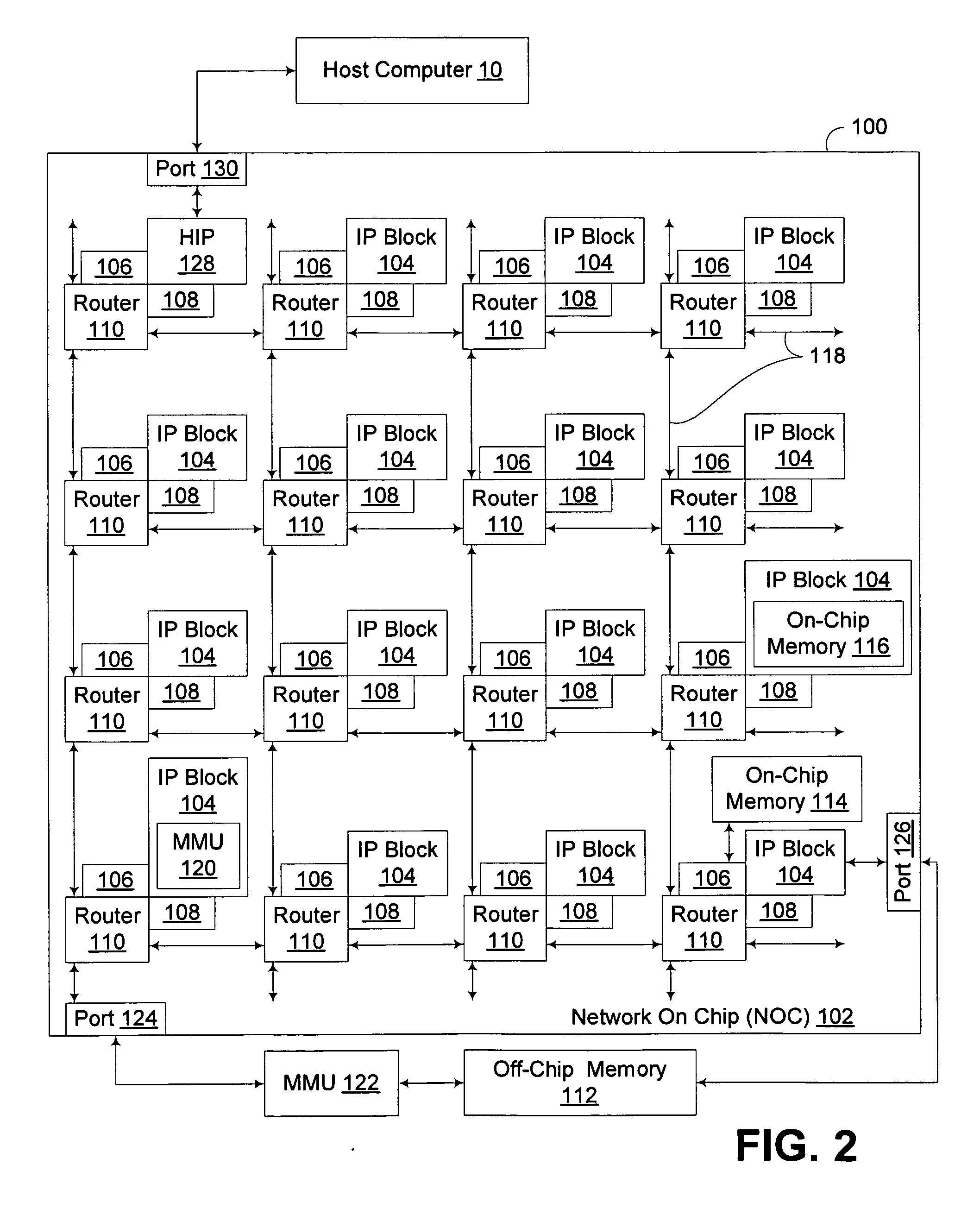

Hybrid rendering of image data utilizing streaming geometry frontend interconnected to physical rendering backend through dynamic accelerated data structure generator

Inactive | US20090256836A1 | Generate efficiently; Easy to adapt; Cathode-ray tube indicators; Details involving image processing hardware; Computer graphics (images); DEVS

A circuit arrangement and method provide a hybrid rendering architecture capable of interfacing a streaming geometry frontend with a physical rendering backend using a dynamic accelerated data structure (ADS) generator. The dynamic ADS generator effectively parallelizes the generation of the ADS, such that an ADS may be built using a plurality of parallel threads of execution. By doing so, both the frontend and backend rendering processes are amenable to parallelization, enabling, if desired, real-time rendering using physical rendering techniques such as ray tracing and photon mapping. Furthermore, conventional streaming geometry frontends such as OpenGL- and DirectX-compatible frontends can readily be adapted for use with physical rendering backends, thereby enabling developers to continue developing with known APIs while still obtaining the benefits of physical rendering techniques.

Owner:IBM CORP

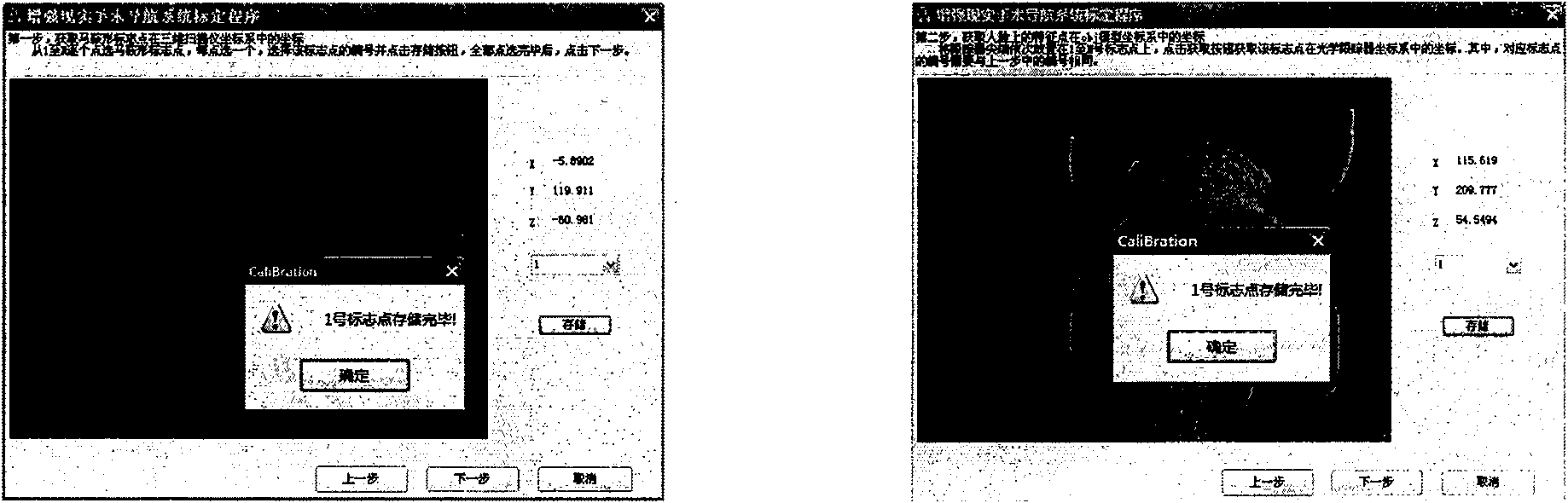

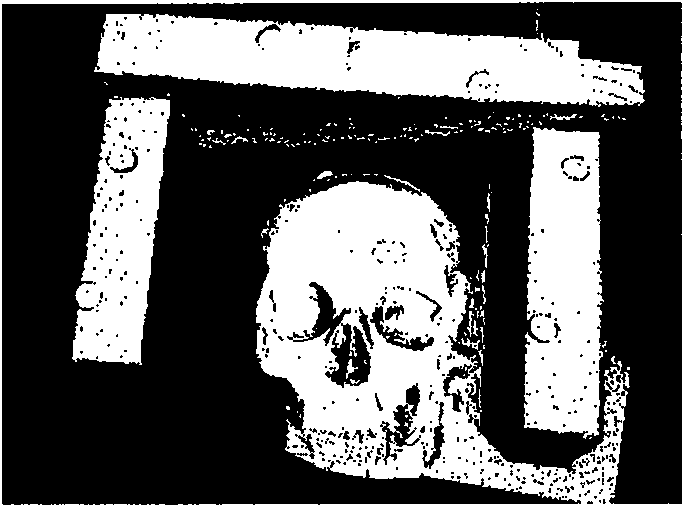

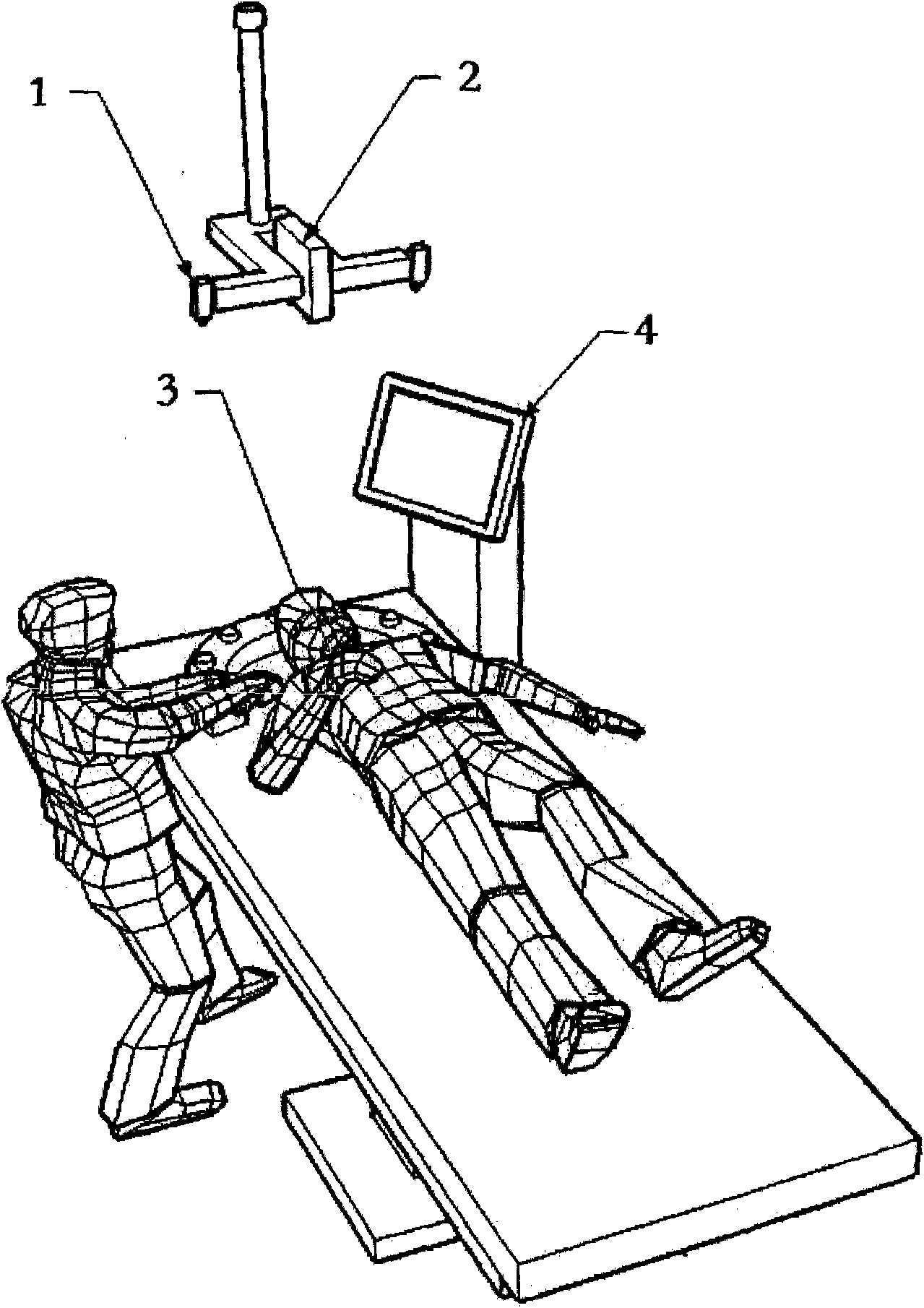

Nasal endoscope minimally invasive operation navigating system based on augmented reality technique

The invention relates to a navigation system for minimally invasive nasal endoscopic surgery based on augmented reality. It comprises an infrared tracking camera, a three-dimensional scanner, and a three-dimensional stereoscopic display, each connected to a computer, plus a nasal endoscope connected to the stereoscopic display. The computer reconstructs three-dimensional models of the patient's intracranial tissue, blood vessels, and facial skin. A first transformation matrix (between the scanner and infrared-tracking-camera coordinate systems) is multiplied by a second transformation matrix (between the scanner coordinate system and the coordinate system of the facial-skin model), and the result is multiplied by the real-time position and orientation of the nasal endoscope obtained by the infrared tracking camera. Using the resulting data, the computer renders in real time the three-dimensional model image corresponding to the image captured by the nasal endoscope; this rendering is overlaid in real time on the endoscope image and shown on the stereoscopic display, fusing the real and virtual images.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
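
The registration chain in this abstract is a product of homogeneous 4x4 transforms. The sketch below assumes the convention that T_a_b maps coordinates in frame b into frame a, so transforms compose right to left; the frame names and translation-only example matrices are hypothetical (real registrations also contain rotations), and the abstract does not pin down the exact order or convention of its products.

```python
def matmul4(a, b):
    """Product of two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    """Homogeneous 4x4 translation matrix."""
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]


def transform_point(m, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = [p[0], p[1], p[2], 1.0]
    x, y, z, w = (sum(m[i][k] * v[k] for k in range(4)) for i in range(4))
    return x / w, y / w, z / w


# Hypothetical frames: model <- scanner <- tracker <- endoscope.
T_model_scanner = translation(0, 0, -10)     # from scanner-to-model registration
T_scanner_tracker = translation(5, 0, 0)     # from scanner-to-tracker calibration
T_tracker_endoscope = translation(0, 2, 0)   # endoscope pose, updated every frame

T_model_endoscope = matmul4(matmul4(T_model_scanner, T_scanner_tracker),
                            T_tracker_endoscope)
print(transform_point(T_model_endoscope, (0, 0, 0)))  # (5.0, 2.0, -10.0)
```

Only the last factor changes from frame to frame, so the two fixed registration matrices can be multiplied once and reused, leaving one matrix product per tracking update.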

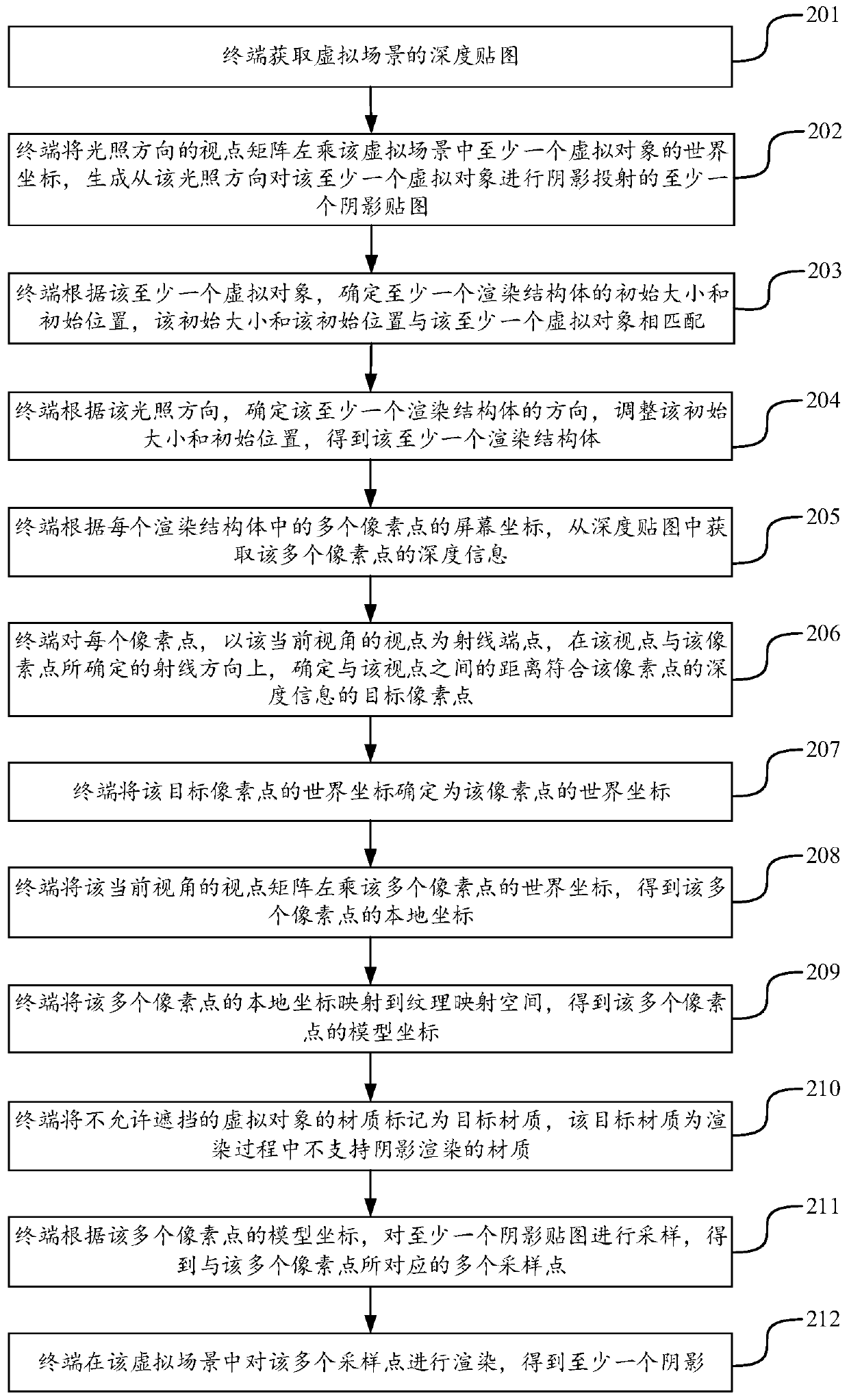

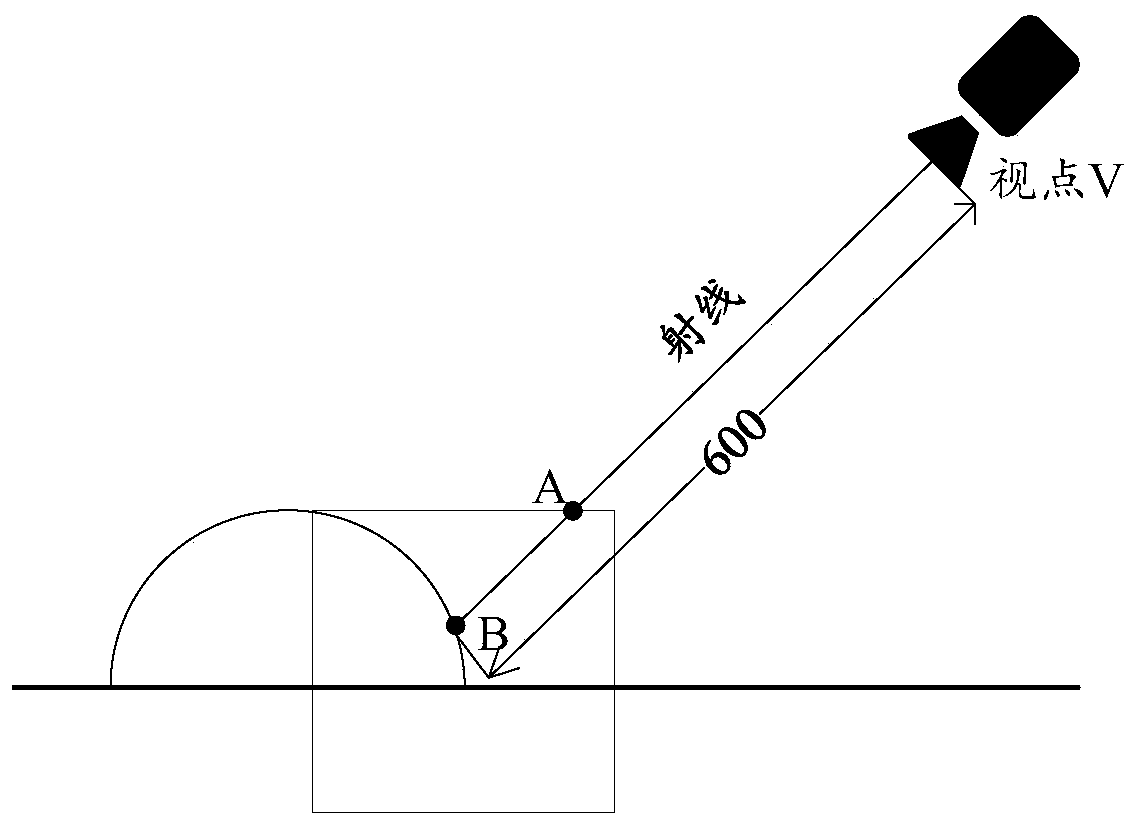

Shadow rendering method and device, terminal and storage medium

Active | CN109993823A | Achieve real-time rendering; Save memory resources; Image rendering; 3D-image rendering; Third party; Shadow mapping

The invention discloses a shadow rendering method and device, a terminal, and a storage medium, in the technical field of image rendering. The method comprises: obtaining at least one rendering structure in a virtual scene according to the illumination direction in the virtual scene; obtaining model coordinates of a plurality of pixel points according to the current viewing angle and the depth information of those pixel points; sampling at least one shadow map according to the model coordinates to obtain a plurality of sampling points corresponding to the pixel points; and rendering the sampling points in the virtual scene to obtain the at least one shadow. Without using a third-party plug-in, real-time shadow rendering is achieved with the rendering engine's built-in functions, which saves the terminal's memory resources and improves the processing efficiency of its CPU.

Owner:TENCENT TECH (SHENZHEN) CO LTD
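
The core of shadow mapping, shown generically here rather than as Tencent's method, is a depth comparison: a fragment is in shadow if the shadow map records something nearer to the light at the same texel. The sketch assumes the fragment has already been transformed into the light's texture space (u, v in [0, 1)) with its depth in the same range as the map; the bias value is an arbitrary example used to suppress self-shadowing, and the 3x3 average is standard percentage-closer filtering (PCF). Function names are hypothetical.

```python
def _texel(shadow_map, u, v):
    """Nearest texel indices for texture coordinates u, v in [0, 1)."""
    h, w = len(shadow_map), len(shadow_map[0])
    return min(int(u * w), w - 1), min(int(v * h), h - 1)


def shadow_factor(shadow_map, u, v, frag_depth, bias=0.005):
    """1.0 if the fragment is lit, 0.0 if an occluder is closer to the light."""
    x, y = _texel(shadow_map, u, v)
    return 0.0 if frag_depth - bias > shadow_map[y][x] else 1.0


def pcf_shadow_factor(shadow_map, u, v, frag_depth, bias=0.005):
    """Percentage-closer filtering: average of the depth test over a 3x3 texel
    neighbourhood, clamped at the map edges, giving softer shadow boundaries."""
    h, w = len(shadow_map), len(shadow_map[0])
    cx, cy = _texel(shadow_map, u, v)
    lit = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            x = min(max(cx + dx, 0), w - 1)
            y = min(max(cy + dy, 0), h - 1)
            lit += 0.0 if frag_depth - bias > shadow_map[y][x] else 1.0
    return lit / 9.0
```

The returned factor scales the fragment's direct lighting; PCF costs nine lookups instead of one but avoids hard, aliased shadow edges.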

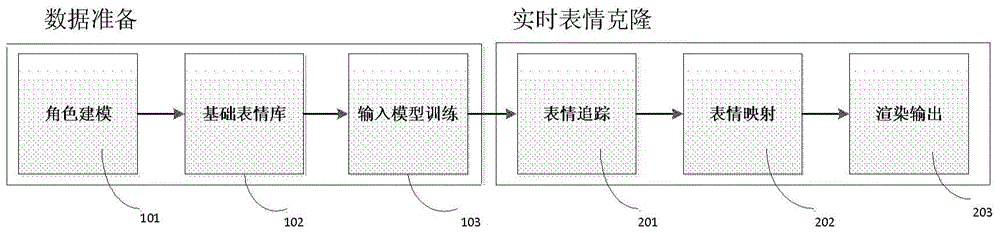

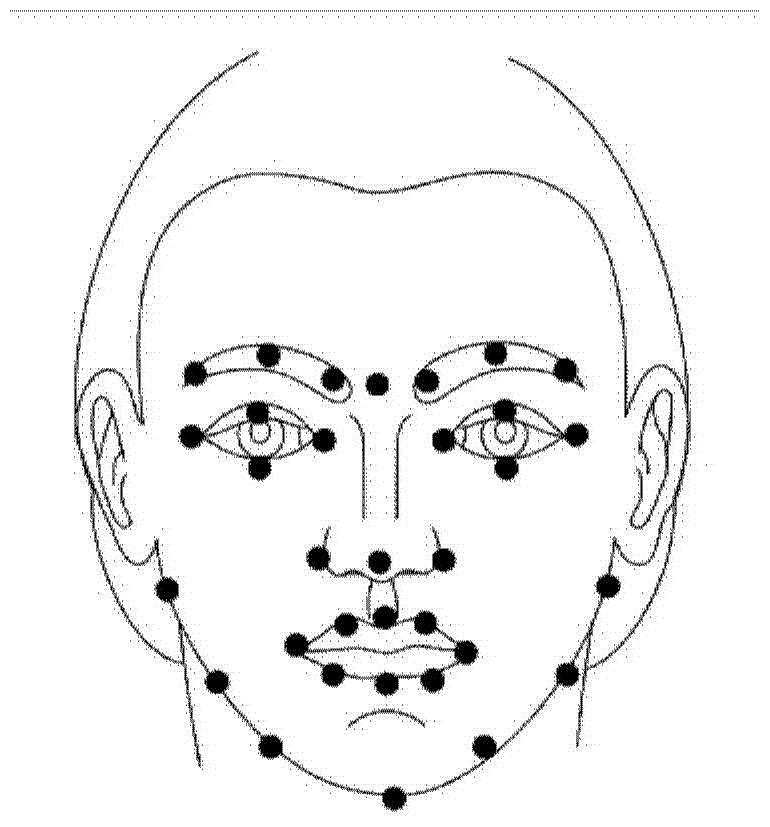

Expression cloning method and device capable of realizing real-time interaction with virtual character

The invention discloses an expression cloning method and device that enable real-time interaction with a virtual character, in fields such as computer graphics and virtual reality. The method includes the following steps: 1, modeling the virtual character and binding it to a skeleton; 2, establishing the virtual character's set of basic expressions; 3, expression input training: recording the maximum displacement of the facial feature points under each basic expression; 4, expression tracking: recording the facial expression changes of a real person with motion-capture equipment and computing the weights of the basic expressions; 5, expression mapping: transferring the computed weights to the virtual character in real time and performing rotation interpolation on the corresponding skeleton joints; and finally rendering and outputting the virtual character's expression in real time. With this method, the virtual character's expressions can be synthesized quickly, stably, and vividly, so that the character can interact expressively with a real person, stably and in real time.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
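
Steps 3 to 5 can be sketched as follows. The weight rule (tracked displacement divided by the maximum displacement recorded in training, clamped to [0, 1]) is an assumption about how the weights are "obtained through calculation", and the linear blend of a single joint angle stands in for the skeleton rotation interpolation; production rigs typically interpolate quaternions instead. The expression names and functions are hypothetical.

```python
def expression_weights(displacements, max_displacements):
    """Weight per basic expression: tracked feature displacement divided by the
    maximum displacement recorded for that expression in training, clamped to [0, 1]."""
    weights = {}
    for name, d in displacements.items():
        m = max_displacements[name]
        weights[name] = 0.0 if m == 0 else min(max(d / m, 0.0), 1.0)
    return weights


def blend_joint_angle(neutral_deg, expression_deg, weights):
    """Linearly blend one joint's rotation angle toward each expression's pose."""
    return neutral_deg + sum(w * (expression_deg[name] - neutral_deg)
                             for name, w in weights.items())


w = expression_weights({"smile": 3.0, "jaw_open": 12.0},
                       {"smile": 6.0, "jaw_open": 10.0})
print(w)  # {'smile': 0.5, 'jaw_open': 1.0}
print(blend_joint_angle(0.0, {"smile": 10.0, "jaw_open": 20.0}, w))  # 25.0
```

Because only a handful of weights change per frame, this mapping is cheap enough to run at capture rate before the character is rendered.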

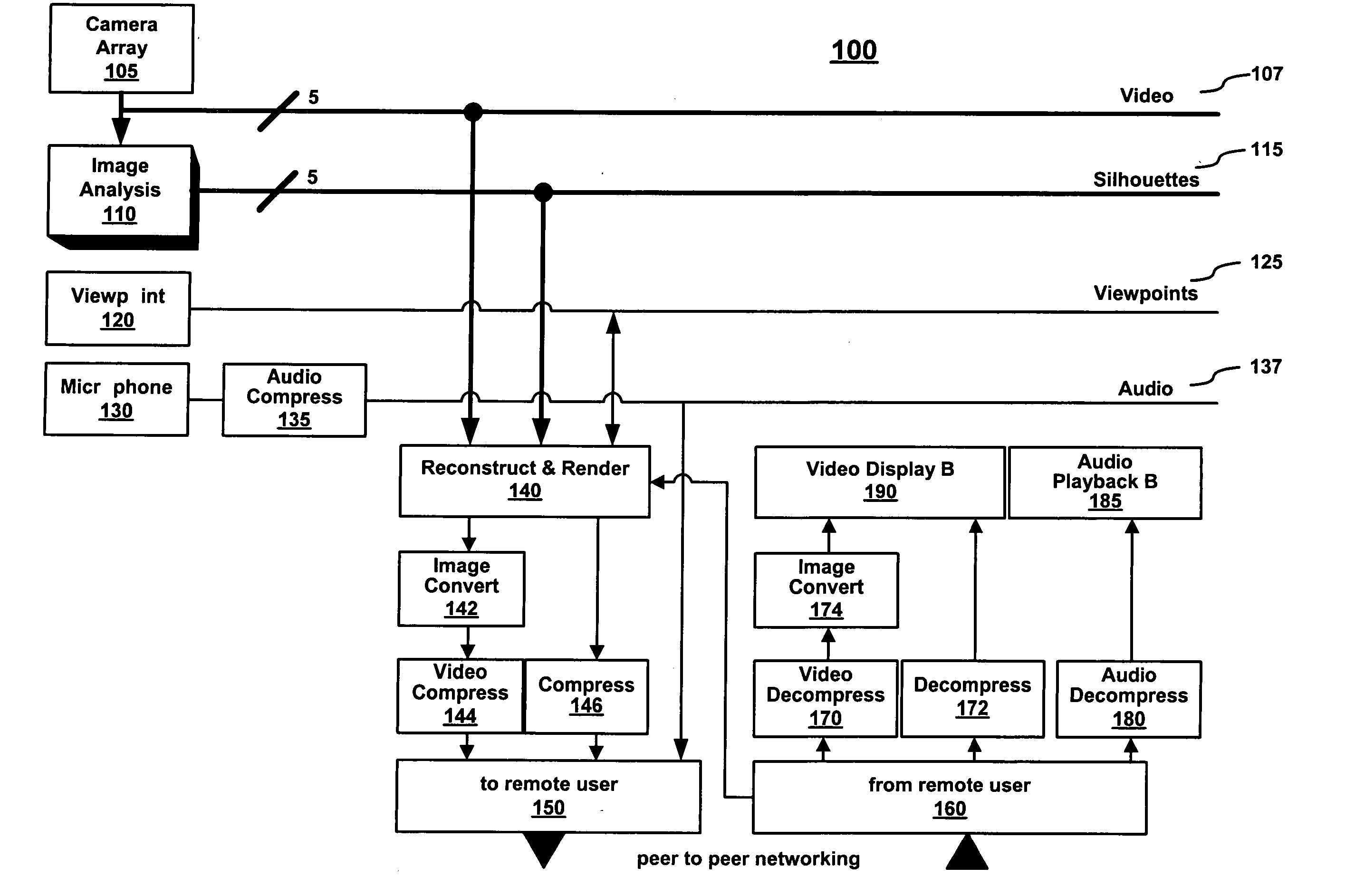

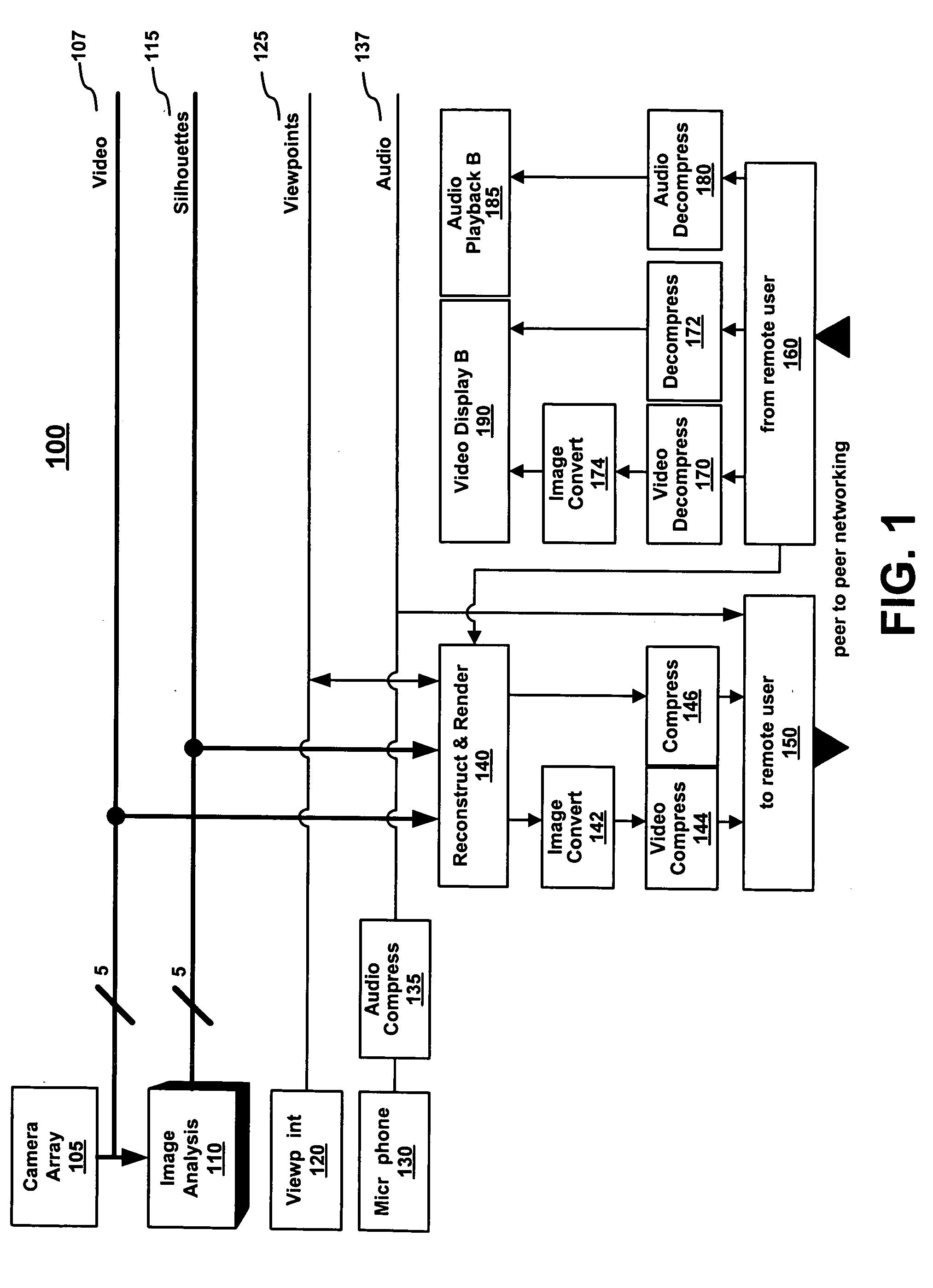

Method and system for real-time rendering within a gaming environment

Inactive | US20050085296A1 | Television conference systems, Two-way working systems, Viewpoints, Computer graphics (images)

A method and system for real-time rendering within a gaming environment. Specifically, one embodiment of the present invention discloses a method of rendering a local participant within an interactive gaming environment. The method begins by capturing a plurality of real-time video streams of a local participant from a plurality of camera viewpoints. From the plurality of video streams, a new view synthesis technique is applied to generate a rendering of the local participant. The rendering is generated from a perspective of a remote participant located remotely in the gaming environment. The rendering is then sent to the remote participant for viewing.

Owner:HEWLETT PACKARD DEV CO LP
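The abstract does not name its view-synthesis technique. One common ingredient of multi-camera view synthesis is view-dependent blending, in which each real camera's contribution is weighted by how closely its viewing direction matches the virtual viewpoint (here, the remote participant's). The sketch below computes such weights under assumed inputs: camera and eye positions as 3-D points, and inverse-angle weighting over the `k` nearest cameras. It omits the image warping itself, and `blend_weights` is a hypothetical name.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _direction(frm: Vec3, to: Vec3) -> Vec3:
    """Unit vector pointing from `frm` to `to`."""
    d = tuple(b - a for a, b in zip(frm, to))
    n = math.sqrt(sum(c * c for c in d))
    return tuple(c / n for c in d)


def blend_weights(cameras: Sequence[Vec3], subject: Vec3, virtual_eye: Vec3,
                  k: int = 2, eps: float = 1e-6) -> Dict[int, float]:
    """Weight the k cameras whose view of `subject` is angularly closest to the
    virtual eye's view; weights are inversely proportional to angle and sum to 1."""
    target = _direction(subject, virtual_eye)
    scored = []
    for i, cam in enumerate(cameras):
        d = _direction(subject, cam)
        cos_a = max(-1.0, min(1.0, sum(a * b for a, b in zip(d, target))))
        scored.append((math.acos(cos_a), i))
    scored.sort()
    raw = {i: 1.0 / (angle + eps) for angle, i in scored[:k]}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}
```

A synthesized pixel would then be the weighted sum of the corresponding, already warped, pixels from the selected cameras.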

Method and system for real-time synchronization across a distributed services communication network

Inactive | US8559319B2 | Easy data transfer, Quality improvement, Multiplex system selection arrangements, Special service provision for substation, Data synchronization, Distributed services

A system for progressively synchronizing stored copies of indexed media transmitted between nodes on a network. The system includes a transmitter at the sending node configured to progressively transmit available indexed media to a receiving node with a packet size and packetization interval sufficient to enable the near real-time rendering of the indexed media, wherein the near real-time rendering of the indexed media provides a recipient with an experience of reviewing the transmitted media live. The system also includes a receiver at the receiving node that progressively receives the transmitted indexed media and continually notes any indexed media that is not already locally stored at the receiving node. The receiver also continually generates and transmits to the sending node requests as needed for the noted indexed media. In response, the transmitter at the sending node transmits the noted indexed media to the receiving node. Both the sending node and the receiving node have storage elements configured to store the indexed media respectively. As a result, both the sending node and the receiving node each have synchronized copies of the indexed media.

Owner:VOXER IP
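The receiver's bookkeeping described above (note indexed media not yet stored locally, request it, store the retransmission) can be sketched as a gap detector over integer media indices. This is a minimal in-memory model under stated assumptions, not Voxer's implementation: indices are consecutive integers, a gap is any index below the highest one received that is not yet stored, and `Receiver`, `Transmitter`, and their methods are hypothetical names.

```python
from typing import Dict, Iterable, List, Set, Tuple


class Transmitter:
    """Sending node: holds the stored copy of the indexed media."""

    def __init__(self, store: Dict[int, bytes]):
        self.store = store

    def send(self, indices: Iterable[int]) -> List[Tuple[int, bytes]]:
        return [(i, self.store[i]) for i in indices]


class Receiver:
    """Receiving node: stores what arrives and tracks which indices are missing."""

    def __init__(self) -> None:
        self.store: Dict[int, bytes] = {}
        self.missing: Set[int] = set()
        self.highest_seen = -1

    def on_packet(self, index: int, payload: bytes) -> None:
        self.store[index] = payload
        self.missing.discard(index)
        # Any index skipped between the previous high-water mark and this one is a gap.
        for i in range(self.highest_seen + 1, index):
            if i not in self.store:
                self.missing.add(i)
        self.highest_seen = max(self.highest_seen, index)

    def pending_requests(self) -> List[int]:
        return sorted(self.missing)


sender = Transmitter({i: f"chunk-{i}".encode() for i in range(5)})
receiver = Receiver()
for index, payload in sender.send([0, 2, 4]):  # packets 1 and 3 are lost in transit
    receiver.on_packet(index, payload)
for index, payload in sender.send(receiver.pending_requests()):  # requests [1, 3]
    receiver.on_packet(index, payload)
```

After the second exchange both nodes hold identical copies; a networked version would also have to handle requests and retransmissions that are themselves lost.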

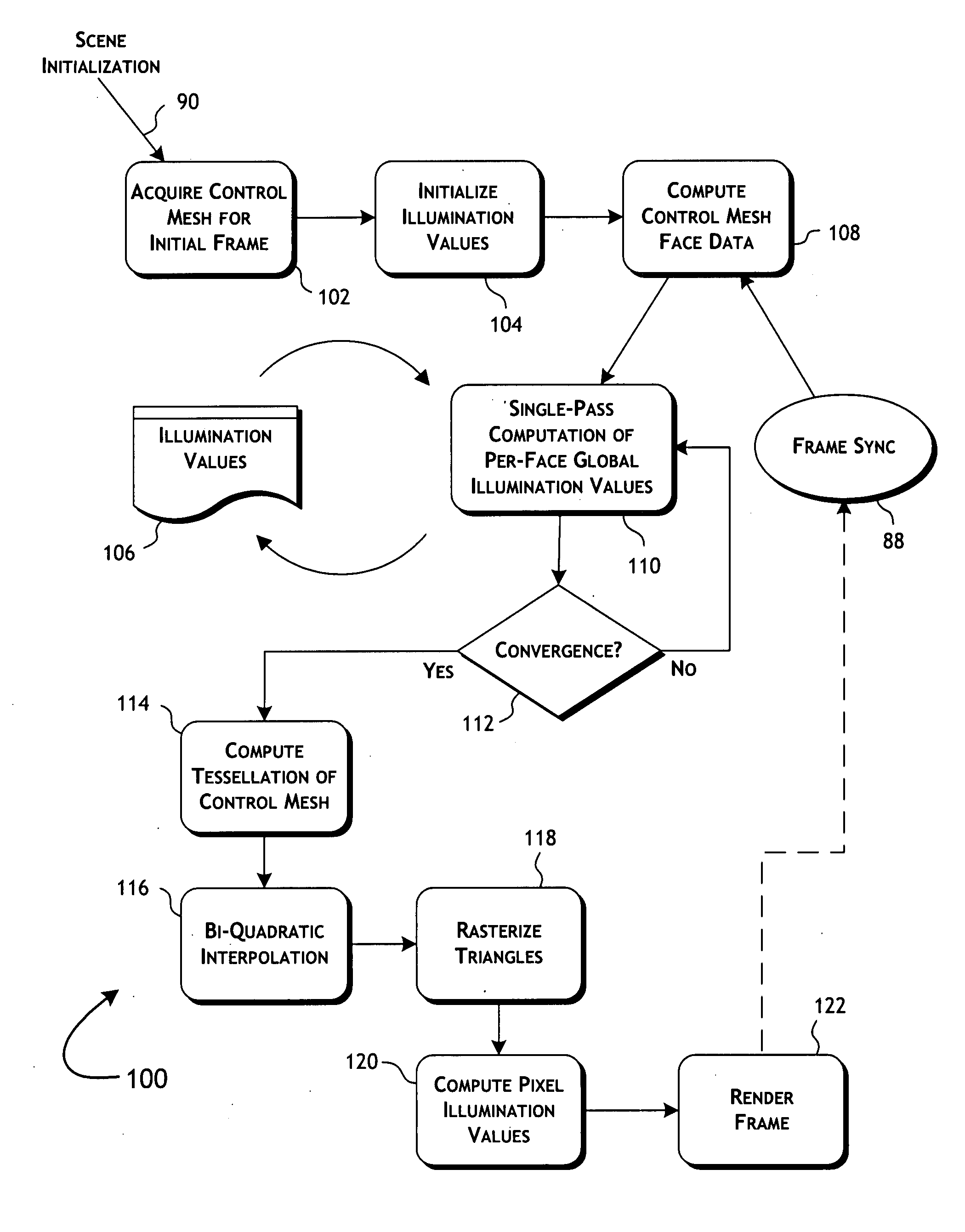

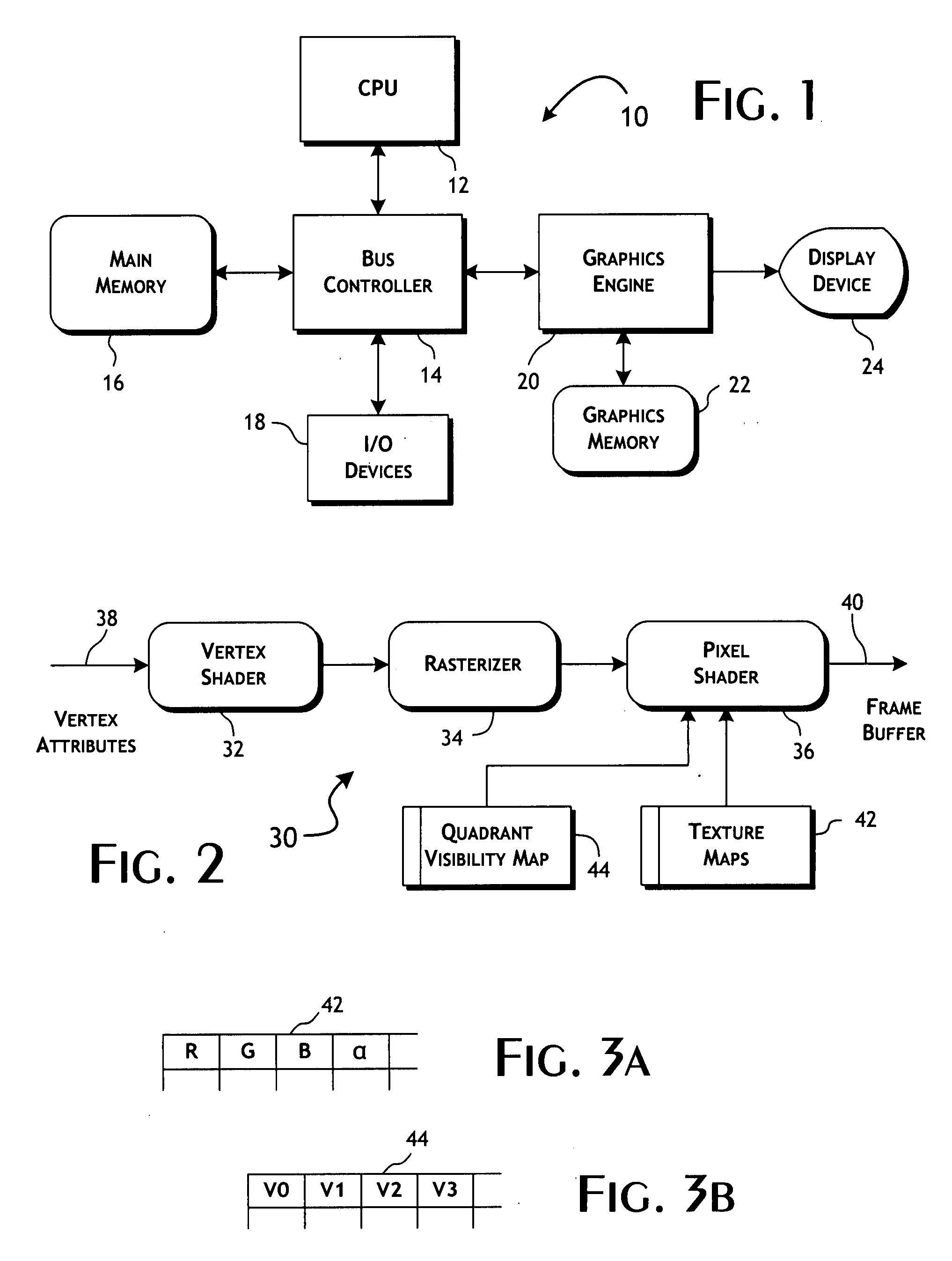

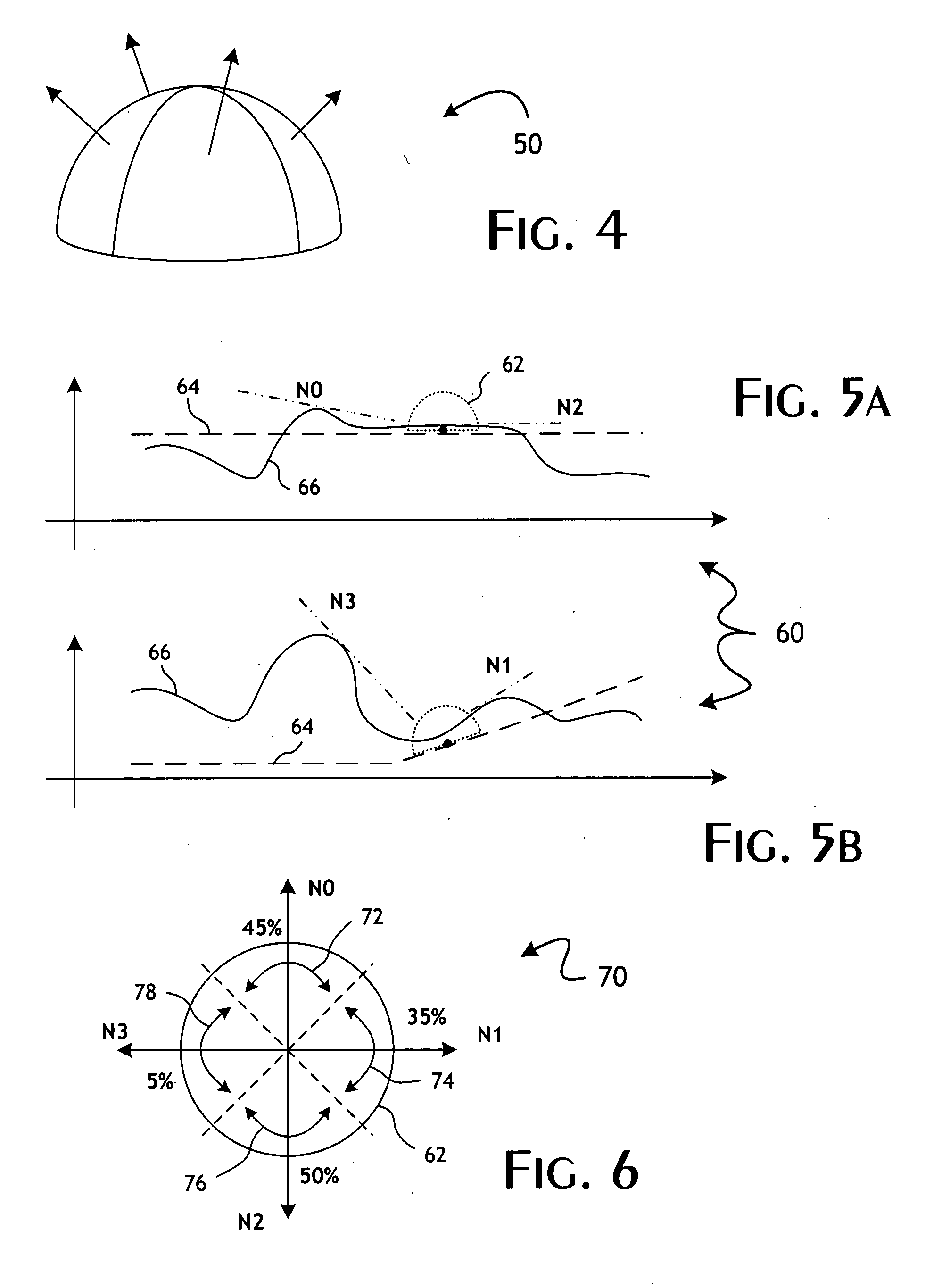

System and methods for real-time rendering of deformable geometry with global illumination

Inactive | US20080018647A1 | Increase the amount of calculation, Optimally balanced use, Cathode-ray tube indicators, Animation, Graphics

Frames of image data representative of a scene containing deformable geometry subject to global illumination are computed in real-time. Frame animation data is computed for a series of frames representative, in animated sequence, of a scene containing deformable geometry. The frame animation data includes vertex attributes of a control mesh descriptive of the three dimensional surface of graphical elements occurring within the scene. Based on the frame animation data, respective illumination values are computed for the individual polygons of the control mesh to determine the global illumination of the scene. The computation is performed iteratively with respect to each frame set of frame animation data until a qualified convergence of global illumination is achieved. Within each iteration, the respective illumination values are determined based on the frame-to-frame coherent polygon illumination values determined in a prior iteration. Qualified convergence is achieved within the frame-to-frame interval defined by a real-time frame rate.

Owner:INTEL CORP
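The abstract does not give its illumination equations. Assuming a classic radiosity formulation, B_i = E_i + rho_i * sum_j F_ij * B_j, the frame-to-frame coherence it describes amounts to warm-starting an iterative solver with the previous frame's per-polygon values and stopping at convergence or when the per-frame time budget runs out. In the sketch below, `max_iters` stands in for that budget; the function name, tolerance, and two-patch scene are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def solve_illumination(emission: Sequence[float], reflectance: Sequence[float],
                       form_factors: Sequence[Sequence[float]], initial: Sequence[float],
                       tol: float = 1e-4, max_iters: int = 50) -> Tuple[List[float], int]:
    """Jacobi iteration for per-polygon radiosity; returns (values, iterations used).
    Each iteration uses only the values computed in the previous iteration."""
    n = len(emission)
    b = list(initial)
    for it in range(1, max_iters + 1):
        new_b = [emission[i] + reflectance[i] * sum(form_factors[i][j] * b[j] for j in range(n))
                 for i in range(n)]
        delta = max(abs(x - y) for x, y in zip(new_b, b))
        b = new_b
        if delta < tol:  # converged within the budget
            return b, it
    return b, max_iters  # budget exhausted: keep the best estimate so far


# Two patches that see each other; patch 0 emits light.
E, RHO, F = [1.0, 0.0], [0.5, 0.5], [[0.0, 0.5], [0.5, 0.0]]
cold, cold_iters = solve_illumination(E, RHO, F, initial=[0.0, 0.0])
# Next frame with (nearly) unchanged geometry: start from the previous frame's solution.
warm, warm_iters = solve_illumination(E, RHO, F, initial=cold)
```

With deforming geometry the form factors change every frame, but a small change keeps the previous solution close, which is why the warm start needs far fewer iterations than a cold start.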

Real-time rendering system and process for interactive viewpoint video

A system and process for rendering and displaying an interactive viewpoint video is presented in which a user can watch a dynamic scene while manipulating (freezing, slowing down, or reversing) time and changing the viewpoint at will. The ability to interactively control viewpoint while watching a video is an exciting new application for image-based rendering. Because any intermediate view can be synthesized at any time, with the potential for space-time manipulation, this type of video has been dubbed interactive viewpoint video.

Owner:MICROSOFT TECH LICENSING LLC
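The two controls described above, manipulating time and changing the viewpoint, can be sketched independently. The code below assumes a playback clock whose rate is 1 for normal speed, 0 to freeze, between 0 and 1 for slow motion, and negative to reverse, and cameras placed along a one-dimensional arc so that an intermediate view can be synthesized from the two cameras bracketing the requested position. These are illustrative assumptions; `PlaybackClock` and `neighbor_cameras` are hypothetical names, and the view synthesis itself is omitted.

```python
import bisect
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class PlaybackClock:
    duration: float          # length of the captured video, in seconds
    video_time: float = 0.0  # current position in the video, in seconds
    rate: float = 1.0        # 1 = normal, 0 = frozen, 0..1 = slow motion, < 0 = reverse

    def advance(self, wall_dt: float) -> float:
        """Move video time by rate * elapsed wall-clock time, clamped to the clip."""
        self.video_time = min(max(self.video_time + self.rate * wall_dt, 0.0), self.duration)
        return self.video_time

    def frame_index(self, fps: float) -> int:
        last = max(int(self.duration * fps) - 1, 0)
        return min(int(self.video_time * fps), last)


def neighbor_cameras(view_pos: float, camera_positions: Sequence[float]) -> Tuple[int, int, float]:
    """Return the indices of the two cameras bracketing `view_pos` (positions sorted
    ascending) and the interpolation fraction t between them."""
    if view_pos <= camera_positions[0]:
        return 0, 0, 0.0
    last = len(camera_positions) - 1
    if view_pos >= camera_positions[last]:
        return last, last, 0.0
    j = bisect.bisect_right(camera_positions, view_pos)
    i = j - 1
    t = (view_pos - camera_positions[i]) / (camera_positions[j] - camera_positions[i])
    return i, j, t
```

Keeping time and viewpoint separate means a frozen frame can still be orbited: with `rate = 0` the frame index stays fixed while `neighbor_cameras` tracks the user's viewpoint.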

© 2025 PatSnap. All rights reserved.