Method for processing large-scale complex three-dimensional scenes based on an octree

A large-scale three-dimensional scene processing technology, applied in the fields of image data processing, 3D image processing, instruments, etc., that makes the scene easy to organize and extend and improves rendering speed

Embodiment Construction

[0031] A preferred embodiment of the present invention is described below with reference to the accompanying drawings:

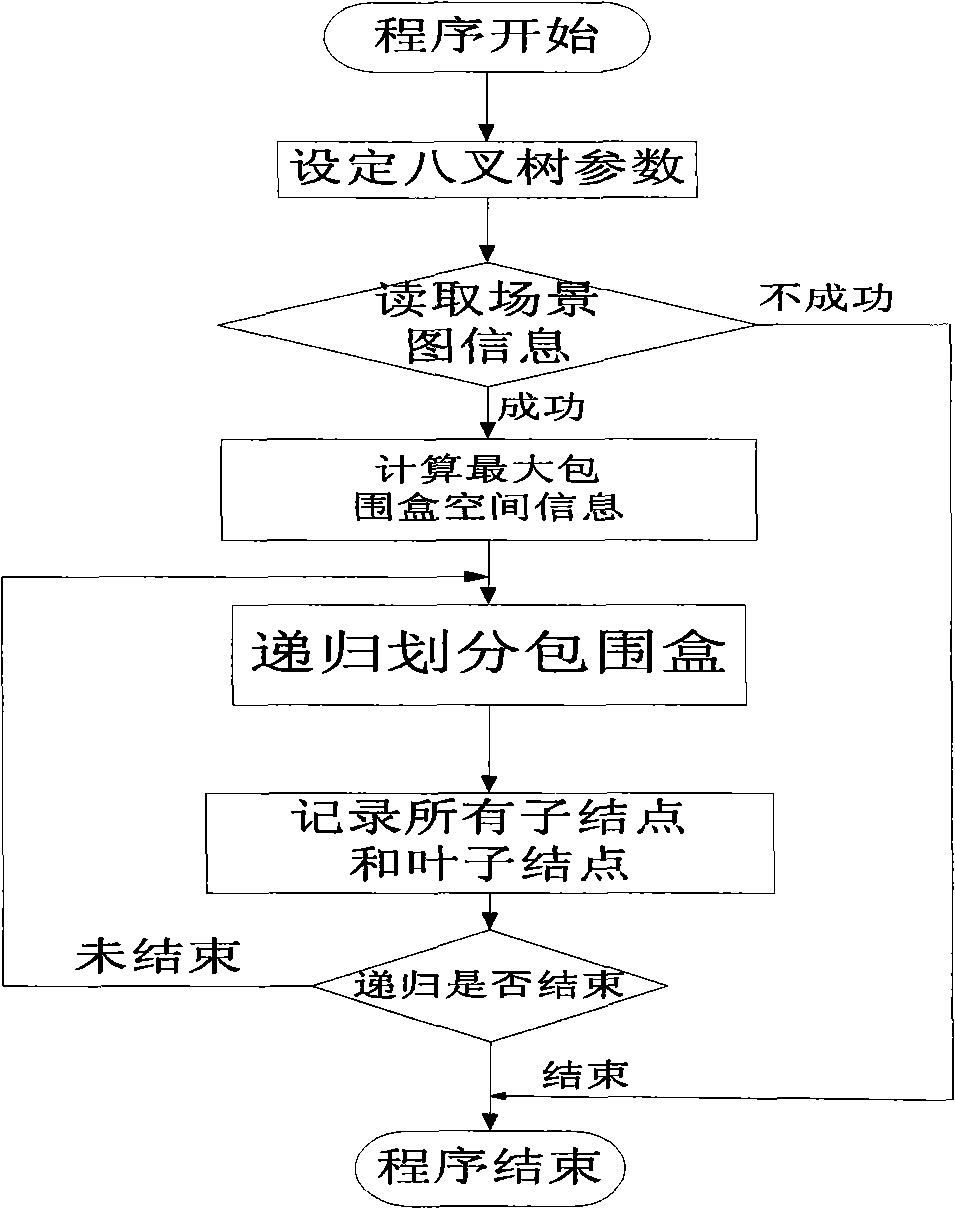

[0032] The octree-based method for processing large-scale complex 3D scenes comprises four steps:

[0033] Step 1: Load a large-scale 3D scene, and use a scene graph to organize all elements in the scene.

[0034] For a graphics rendering engine, how the elements of a scene are organized is a crucial issue. A good scene organization scheme not only aids memory management but can also speed up rendering. The present invention organizes the scene with a scene graph, which reduces memory overhead, accelerates scene rendering, and facilitates real-time interaction.
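As an illustration of the scene-graph organization described in Step 1, the following is a minimal sketch, not the structure used by the invention. The names `SceneNode`, `offset`, and `walk` are hypothetical, and each node carries only a translation relative to its parent, whereas a real engine would typically store a full transform and the renderable geometry.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneNode:
    """Hypothetical scene-graph node: a name, a local offset, and children."""
    name: str
    offset: Vec3 = (0.0, 0.0, 0.0)  # translation relative to the parent node
    children: List["SceneNode"] = field(default_factory=list)

    def add(self, child: "SceneNode") -> "SceneNode":
        """Attach a child node and return it so calls can be chained."""
        self.children.append(child)
        return child


def walk(node: SceneNode, parent_pos: Vec3 = (0.0, 0.0, 0.0)) -> Iterator[Tuple[str, Vec3]]:
    """Depth-first traversal that accumulates offsets into world positions."""
    pos = tuple(p + o for p, o in zip(parent_pos, node.offset))
    yield node.name, pos
    for child in node.children:
        yield from walk(child, pos)


# Build a small scene: a building with a door, plus a separate tree.
root = SceneNode("scene")
building = root.add(SceneNode("building", (10.0, 0.0, 5.0)))
building.add(SceneNode("door", (1.0, 0.0, 0.0)))
root.add(SceneNode("tree", (-3.0, 0.0, 2.0)))

world = dict(walk(root))
```

Because every element hangs off a parent, moving the building moves its door with it, and a whole subtree can be skipped or unloaded in one operation.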

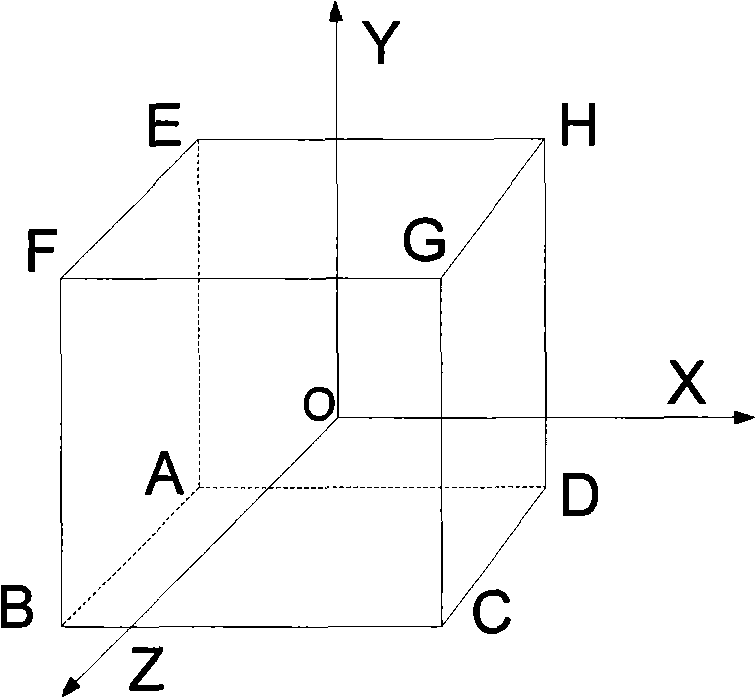

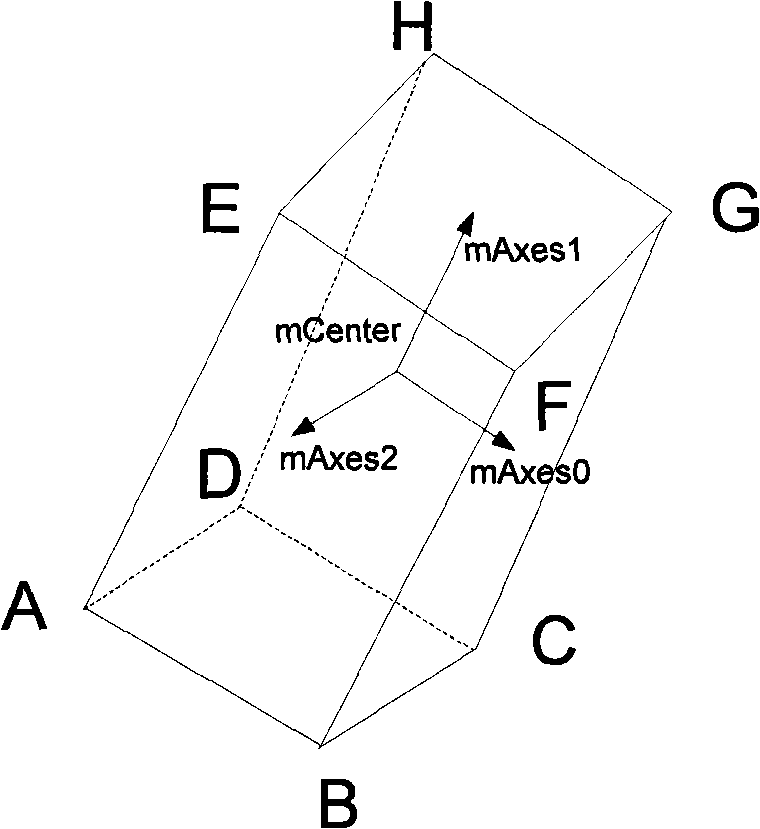

[0035] Step 2: Build the octree structure of the scene and record the relevant information.

[0036] 1. Establish the basic data ...
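The octree construction named in Step 2 can be sketched generically as follows. This is a textbook point octree under stated assumptions, not the basic data structure defined by the invention, whose details are not reproduced here. The names `OctreeNode`, `MAX_POINTS`, `MAX_DEPTH`, `child_index`, `subdivide`, `insert`, and `count` are all hypothetical, and scene elements are reduced to single points for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

MAX_POINTS = 4  # assumed leaf capacity before a node is split
MAX_DEPTH = 8   # assumed depth limit so coincident points cannot split forever


@dataclass
class OctreeNode:
    """Hypothetical octree node covering a cube of half-width `half` around `center`."""
    center: Vec3
    half: float
    depth: int = 0
    points: List[Vec3] = field(default_factory=list)
    children: Optional[List["OctreeNode"]] = None  # None marks a leaf


def child_index(node: OctreeNode, p: Vec3) -> int:
    """Pick one of eight octants: bit 0 = +x side, bit 1 = +y side, bit 2 = +z side."""
    ix = int(p[0] >= node.center[0])
    iy = int(p[1] >= node.center[1])
    iz = int(p[2] >= node.center[2])
    return ix | (iy << 1) | (iz << 2)


def subdivide(node: OctreeNode) -> None:
    """Split a leaf into eight children and push its points down one level."""
    q = node.half / 2
    cx, cy, cz = node.center
    node.children = [
        OctreeNode(
            (cx + (q if i & 1 else -q),
             cy + (q if i & 2 else -q),
             cz + (q if i & 4 else -q)),
            q,
            node.depth + 1,
        )
        for i in range(8)
    ]
    for p in node.points:
        insert(node.children[child_index(node, p)], p)
    node.points = []


def insert(node: OctreeNode, p: Vec3) -> None:
    """Insert a point, splitting the leaf once it exceeds MAX_POINTS."""
    if node.children is not None:
        insert(node.children[child_index(node, p)], p)
        return
    node.points.append(p)
    if len(node.points) > MAX_POINTS and node.depth < MAX_DEPTH:
        subdivide(node)


def count(node: OctreeNode) -> int:
    """Total number of points stored in the subtree."""
    if node.children is None:
        return len(node.points)
    return sum(count(c) for c in node.children)


root = OctreeNode((0.0, 0.0, 0.0), 8.0)
for pt in [(1, 1, 1), (-1, 1, 1), (1, -1, 1), (1, 1, -1), (-1, -1, -1), (2, 2, 2)]:
    insert(root, pt)
```

The fifth insertion exceeds the assumed capacity, so the root splits and its points are redistributed by octant. Information recorded per node in this sketch is limited to the center, half-width, and depth.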
