81 patent results for "Memory compaction"

Memory compaction is the process of relocating active pages in memory to create larger, physically contiguous free regions; in other words, memory defragmentation. It is useful in a number of ways, not least because it makes huge pages available.
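The relocation idea can be illustrated with a toy model loosely in the spirit of the Linux kernel's compaction, which scans for in-use pages from one end of a memory zone and for free frames from the other. This is a minimal sketch, assuming physical memory is a Python list of frames where `None` marks a free frame; `compact` and `largest_free_run` are hypothetical helpers, not a kernel API.

```python
from typing import List, Optional

Frame = Optional[str]  # None marks a free physical frame; otherwise a page id


def compact(frames: List[Frame]) -> List[Frame]:
    """Toy two-scanner compaction over a list of physical frames.

    A migrate scanner walks up from the bottom looking for in-use pages, and a
    free scanner walks down from the top looking for free frames. Each in-use
    page found low is moved into a free frame found high, so free frames
    accumulate into one contiguous run at the bottom.
    """
    frames = list(frames)  # work on a copy
    migrate, free = 0, len(frames) - 1
    while True:
        while migrate < free and frames[migrate] is None:
            migrate += 1
        while free > migrate and frames[free] is not None:
            free -= 1
        if migrate >= free:
            break  # the scanners met: nothing left to move
        frames[free], frames[migrate] = frames[migrate], None
    return frames


def largest_free_run(frames: List[Frame]) -> int:
    """Length of the longest physically contiguous run of free frames."""
    best = run = 0
    for f in frames:
        run = run + 1 if f is None else 0
        best = max(best, run)
    return best
```

For example, `["a", None, "b", None, None, "c", None, "d"]` has at most two contiguous free frames before compaction and four afterwards, which is the kind of larger contiguous region a huge-page allocation needs.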

Managing a codec engine for memory compression/decompression operations using a data movement engine

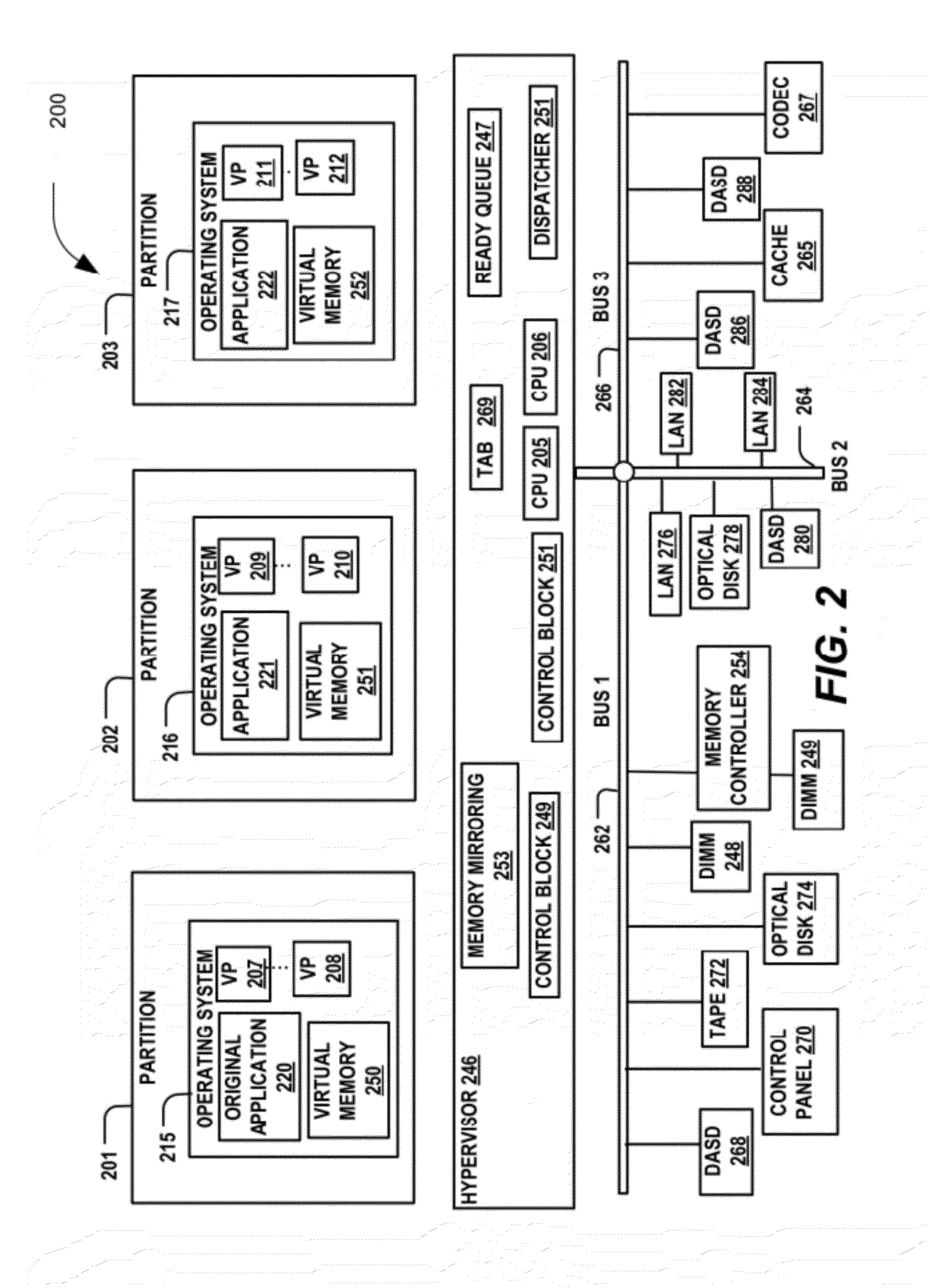

Inactive | US7089391B2 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computerized system; Memory controller

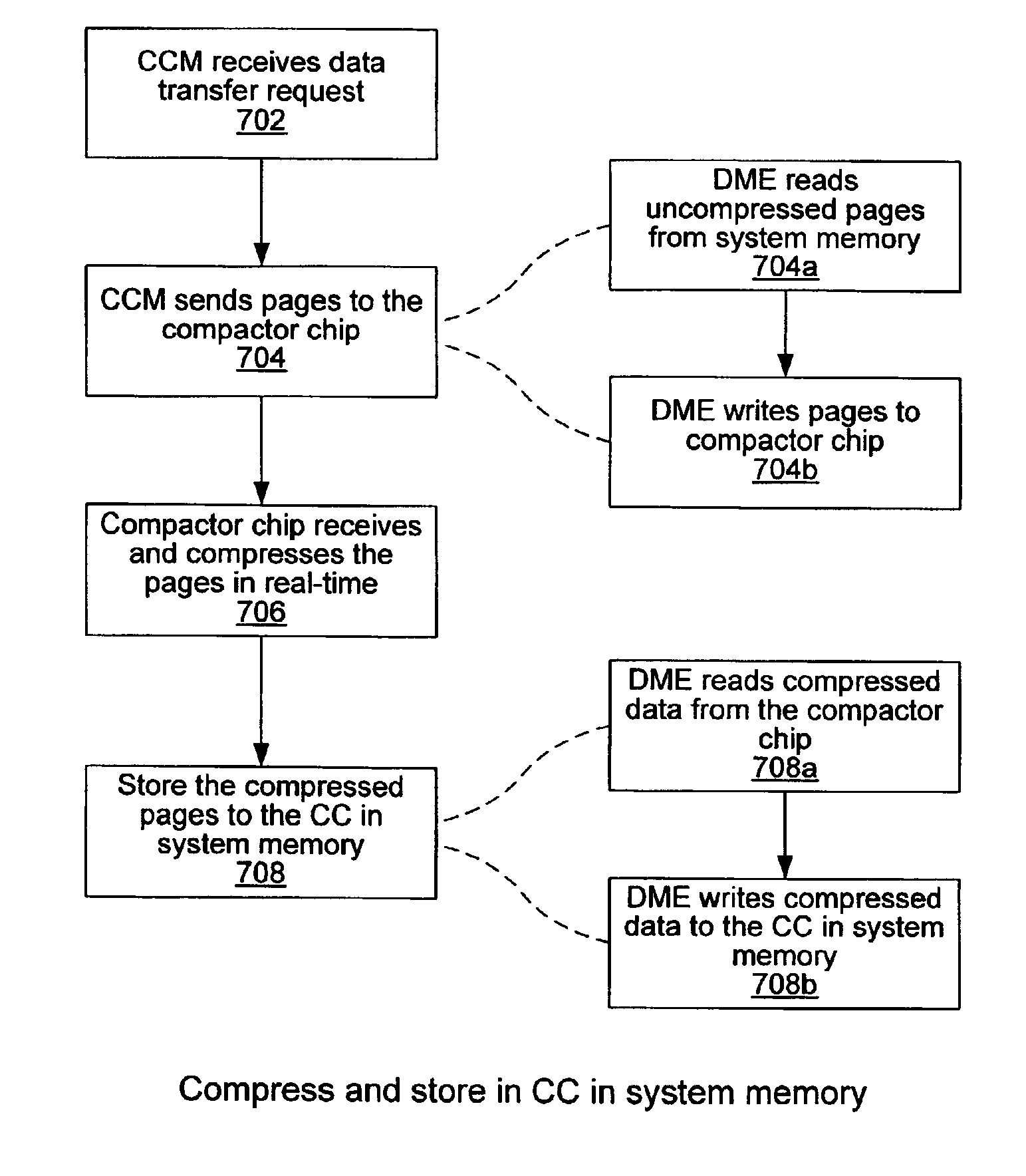

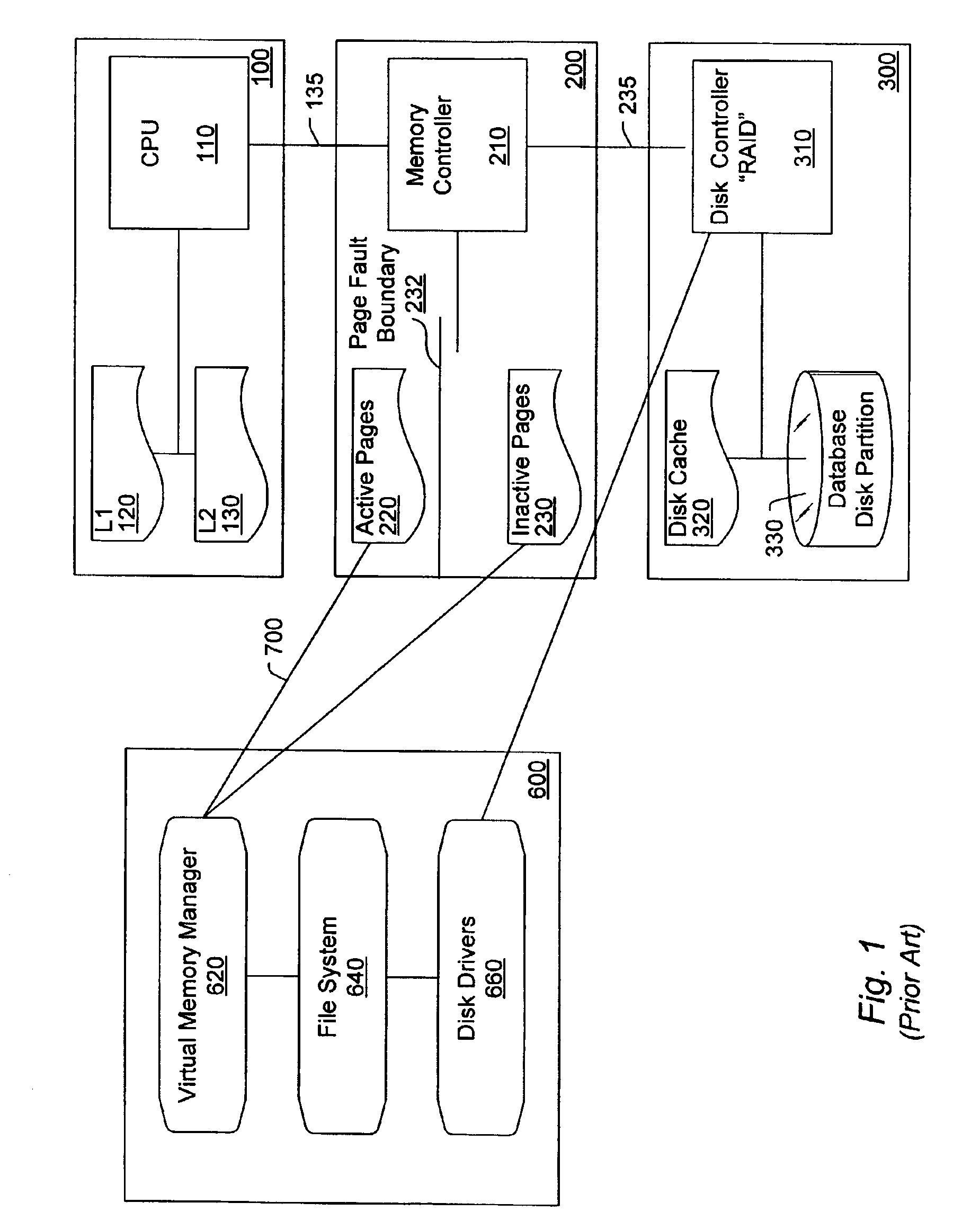

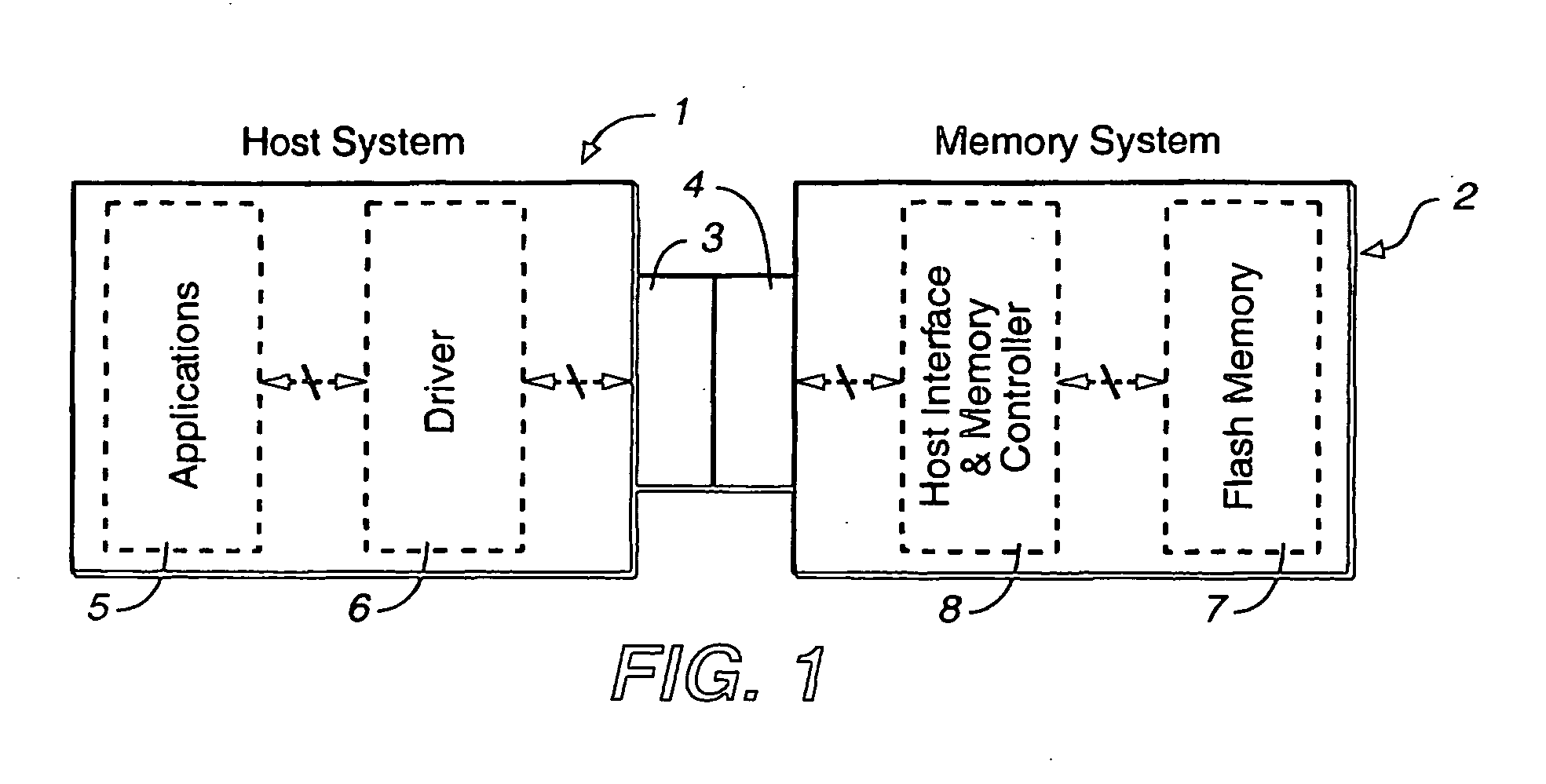

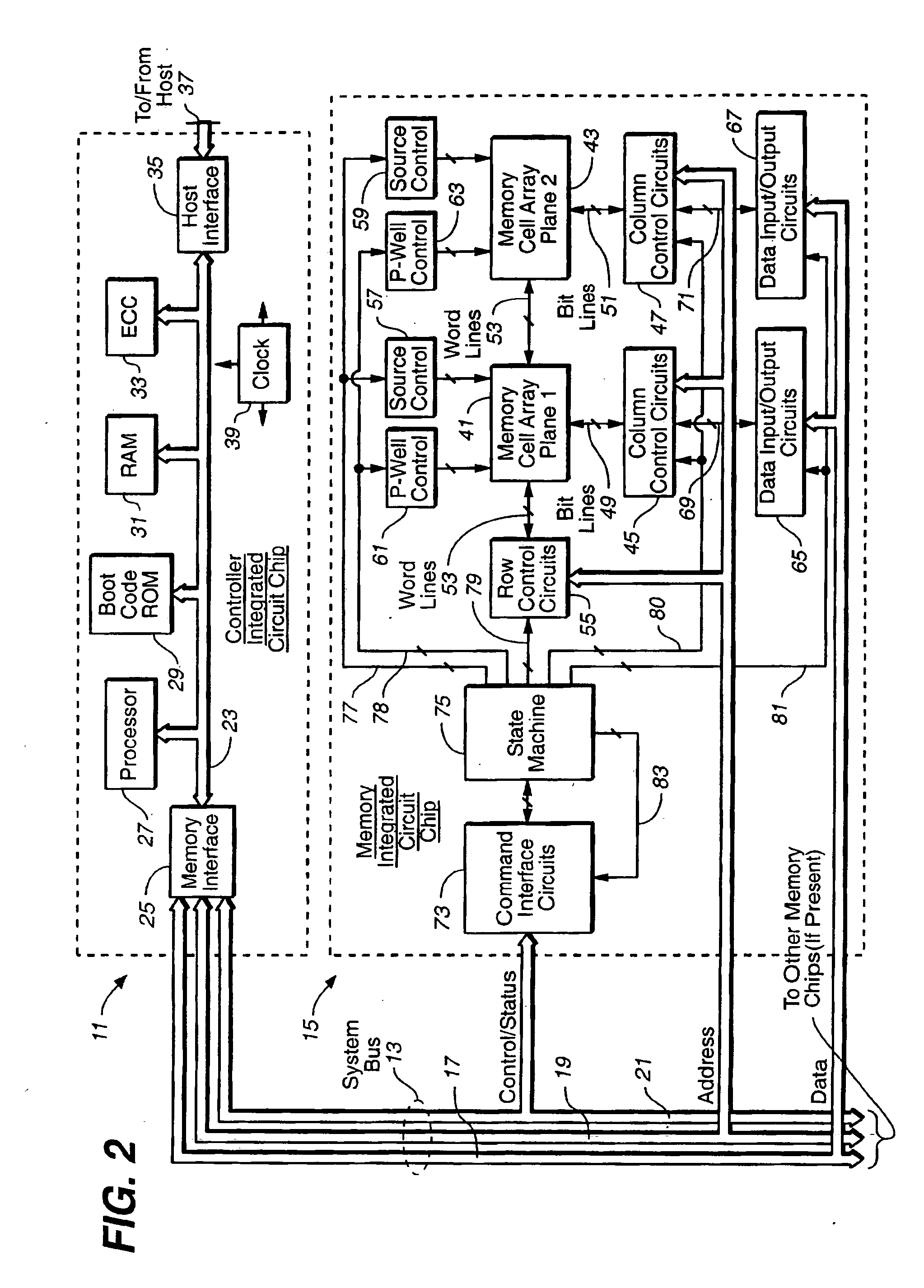

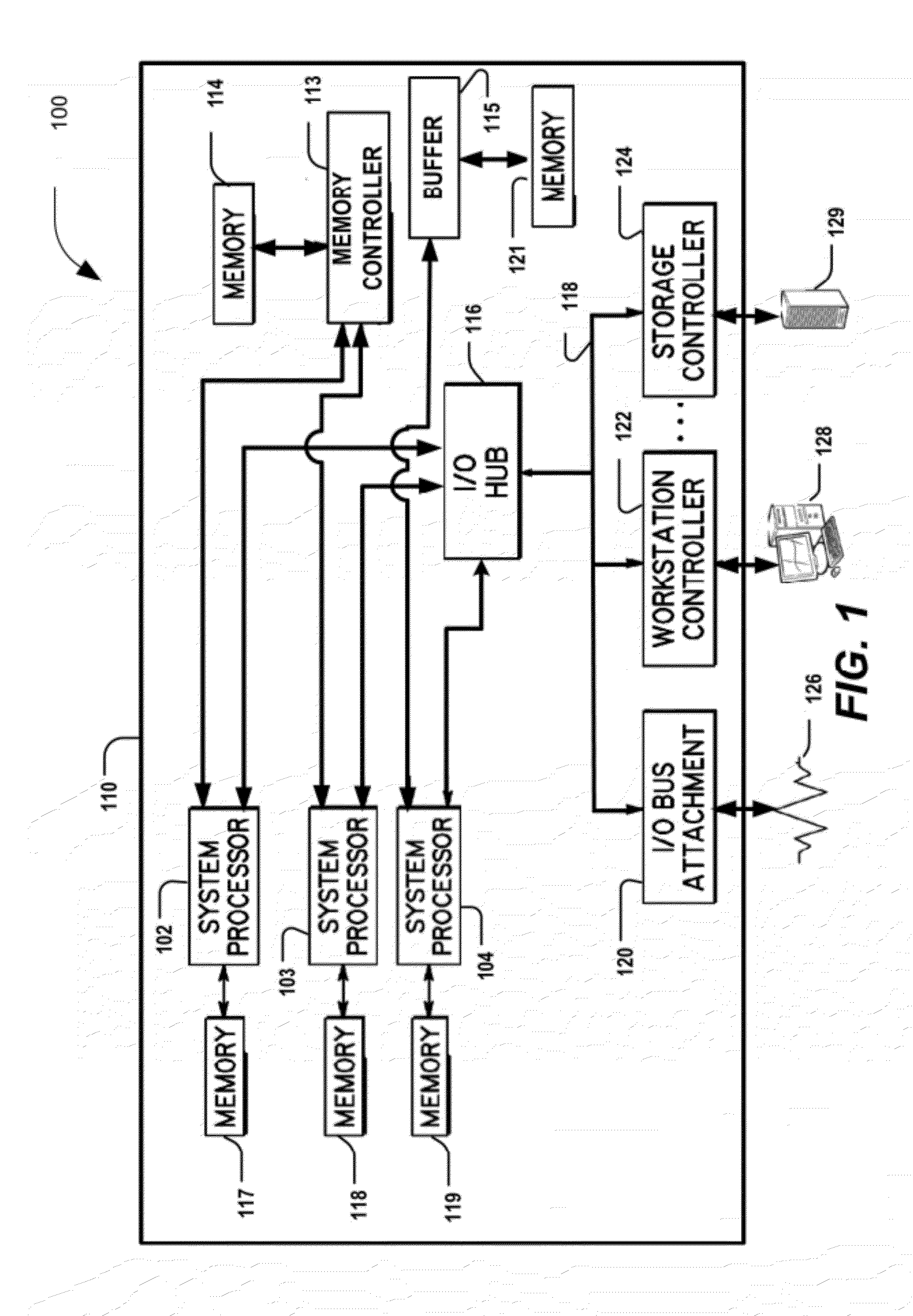

A system and method for managing a functional unit in a system using a data movement engine. An exemplary system may comprise a CPU coupled to a memory controller. The memory controller may include or couple to a data movement engine (DME). The memory controller may in turn couple to a system memory or other device which includes at least one functional unit. The DME may operate to transfer data to/from the system memory and/or the functional unit, as described herein. In one embodiment, the DME may also include multiple DME channels or multiple DME contexts. The DME may operate to direct the functional unit to perform operations on data in the system memory. For example, the DME may read source data from the system memory, the DME may then write the source data to the functional unit, the functional unit may operate on the data to produce modified data, the DME may then read the modified data from the functional unit, and the DME may then write the modified data to a destination in the system memory. Thus the DME may direct the functional unit to perform an operation on data in system memory using four data movement operations. The DME may also perform various other data movement operations in the computer system, e.g., data movement operations that are not involved with operation of the functional unit.

Owner:INTELLECTUAL VENTURES I LLC
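The four-move sequence in the abstract above can be sketched as follows. This is an illustration under stated assumptions: `DataMovementEngine` and `FunctionalUnit` are hypothetical classes, system memory is modeled as a dict from addresses to bytes, and `zlib.compress` stands in for the codec engine named in the title.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class FunctionalUnit:
    """Hypothetical device (e.g. a codec) that transforms data written into it."""
    op: Callable[[bytes], bytes]
    _buf: bytes = b""

    def write(self, data: bytes) -> None:
        self._buf = self.op(data)  # the unit processes data as it arrives

    def read(self) -> bytes:
        return self._buf


@dataclass
class DataMovementEngine:
    memory: Dict[int, bytes]  # toy system memory: address -> contents
    log: List[Tuple[str, str]] = field(default_factory=list)

    def run_on_unit(self, unit: FunctionalUnit, src_addr: int, dst_addr: int) -> None:
        data = self.memory[src_addr]        # 1. read source data from system memory
        self.log.append(("memory", "dme"))
        unit.write(data)                    # 2. write source data to the functional unit
        self.log.append(("dme", "unit"))
        result = unit.read()                # 3. read modified data from the unit
        self.log.append(("unit", "dme"))
        self.memory[dst_addr] = result      # 4. write modified data to the destination
        self.log.append(("dme", "memory"))
```

With `unit = FunctionalUnit(zlib.compress)`, a call such as `dme.run_on_unit(unit, 0x1000, 0x2000)` leaves a compressed copy of the source at the destination and records exactly four data movements, while the CPU only issues the request.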

Methods for adaptive file data handling in non-volatile memories with a directly mapped file storage system

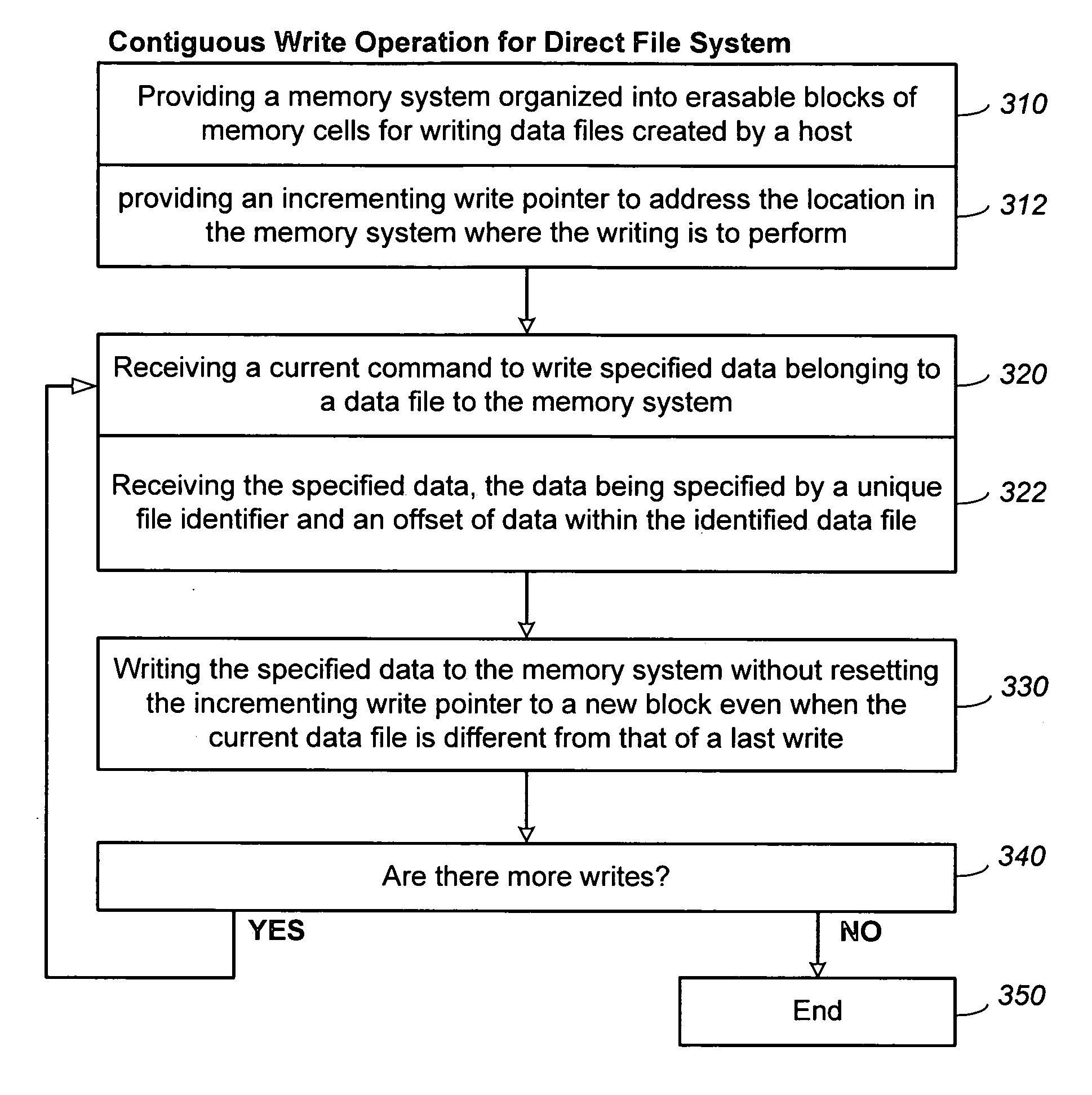

Inactive | US20070143561A1 | Promote high performance; Improve performance; Memory systems; Data compression; Waste collection

In a memory system with a file storage system, an optimal file handling scheme is adaptively selected from a group of schemes based on the attributes of the file being handled. The file attributes may be obtained from a host or derived from the history the file has had with the memory system. In one embodiment, a scheme for allocating memory locations for a write operation is dependent on an estimated size of the file to be written. In another embodiment, a scheme for allocating memory locations for a relocation operation, such as for garbage collection or data compaction, is dependent on an estimated access frequency of the file in question. In this way, the optimal handling scheme can be used for the particular file at any time.

Owner:SANDISK TECH LLC
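The two embodiments above amount to choosing a scheme from file attributes at write time and at relocation time. A minimal sketch, assuming hypothetical scheme names, a hypothetical 256 KiB block size, and a hypothetical access-frequency threshold (none of these values come from the patent):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FileAttributes:
    estimated_size: Optional[int] = None  # bytes, if the host supplies it
    bytes_written_so_far: int = 0         # history observed by the memory system
    accesses_per_hour: float = 0.0        # estimated access frequency

# Hypothetical tuning constants, chosen only for illustration.
BLOCK_SIZE = 256 * 1024
HOT_THRESHOLD = 10.0


def write_allocation_scheme(attrs: FileAttributes) -> str:
    """Pick a write-allocation scheme from the estimated file size."""
    size = attrs.estimated_size
    if size is None:
        size = attrs.bytes_written_so_far  # fall back to the file's history
    if size >= BLOCK_SIZE:
        return "dedicated-blocks"  # large file: give it whole blocks
    return "shared-block"          # small file: pack it with other small files


def relocation_destination(attrs: FileAttributes) -> str:
    """Pick where garbage collection or compaction relocates the file's data."""
    if attrs.accesses_per_hour >= HOT_THRESHOLD:
        return "hot-pool"   # keep frequently accessed data together
    return "cold-pool"      # rarely accessed data can be packed densely
```

Keeping the attribute source (host hint versus observed history) separate from the decision rule means either can change without touching the other.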

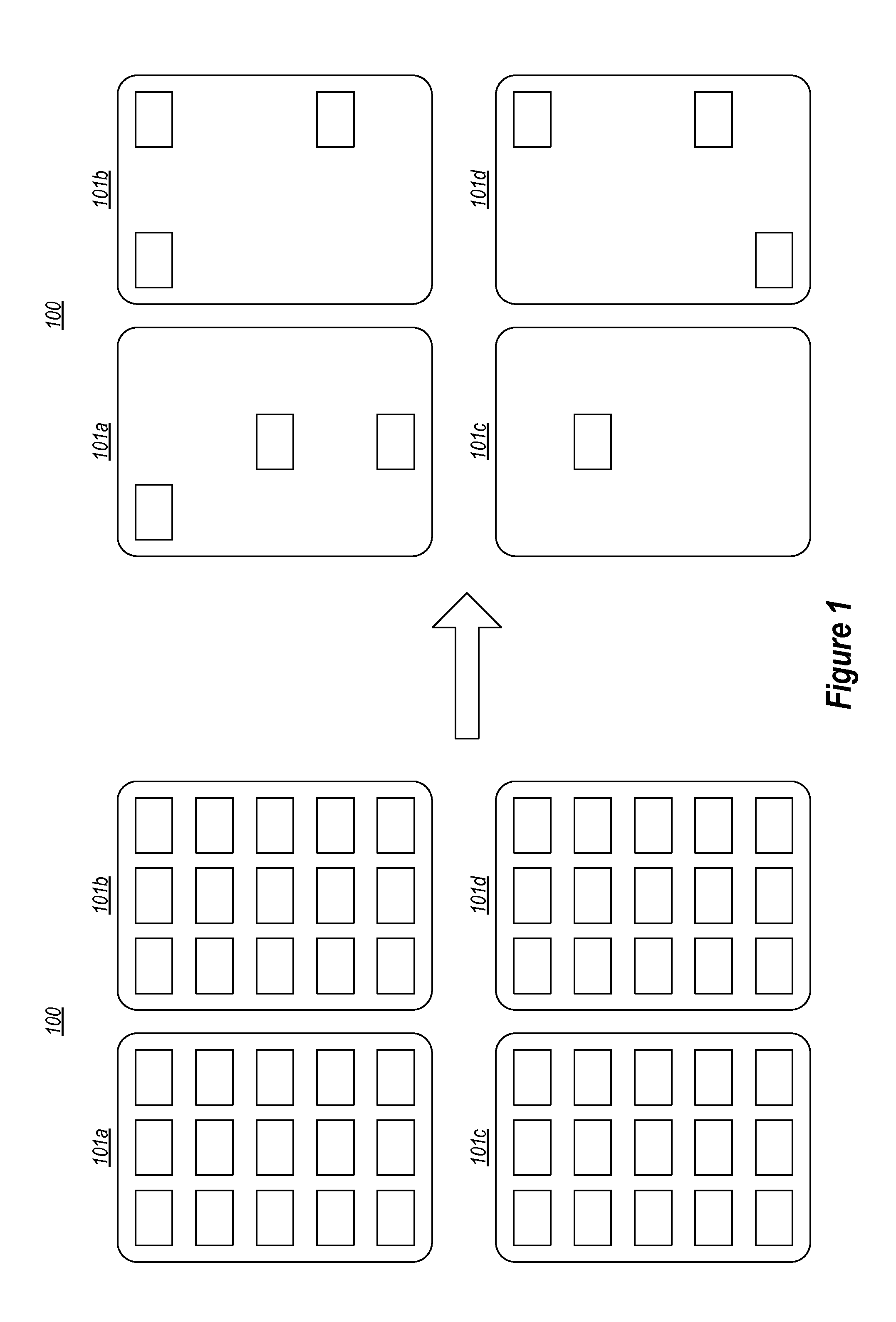

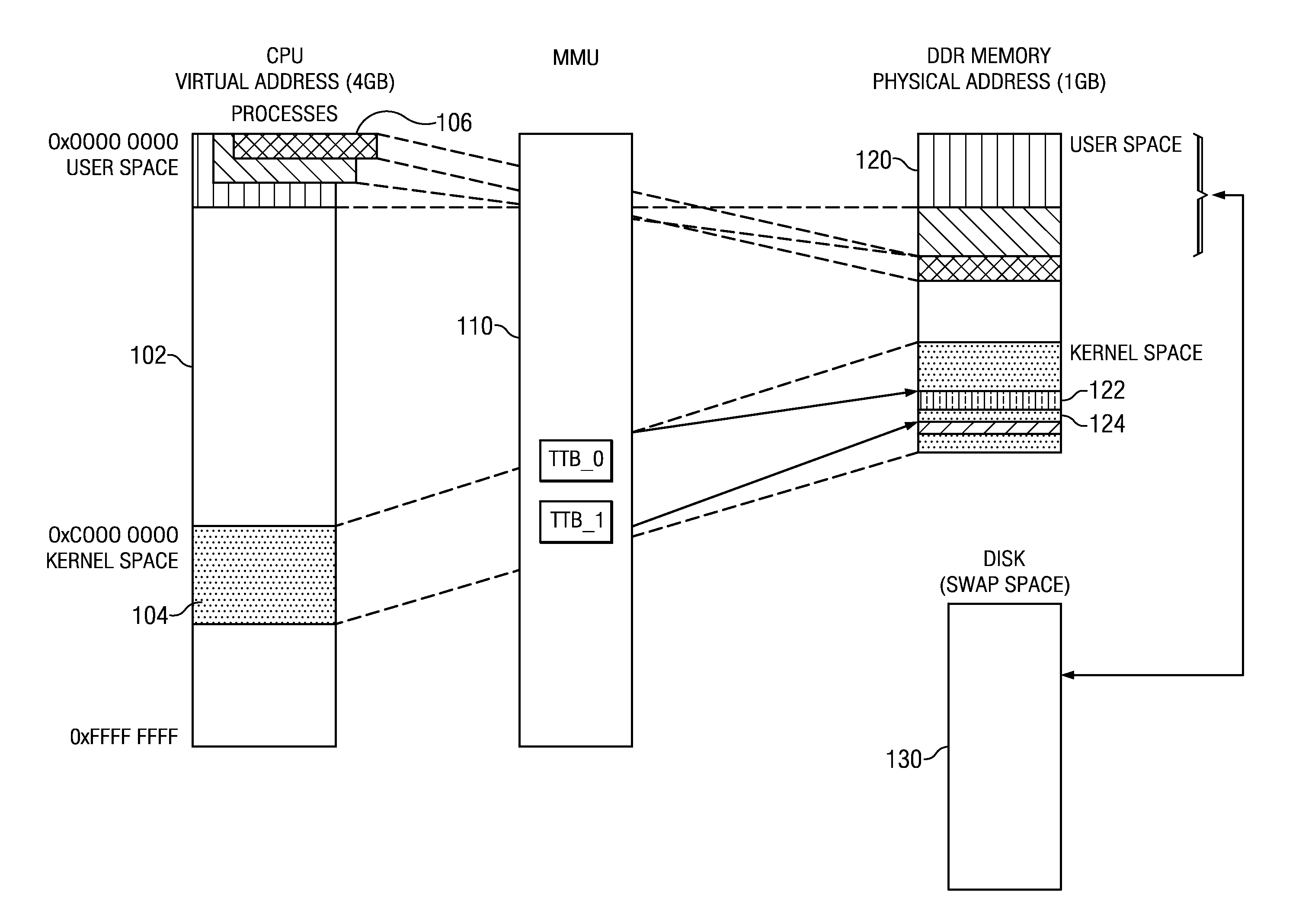

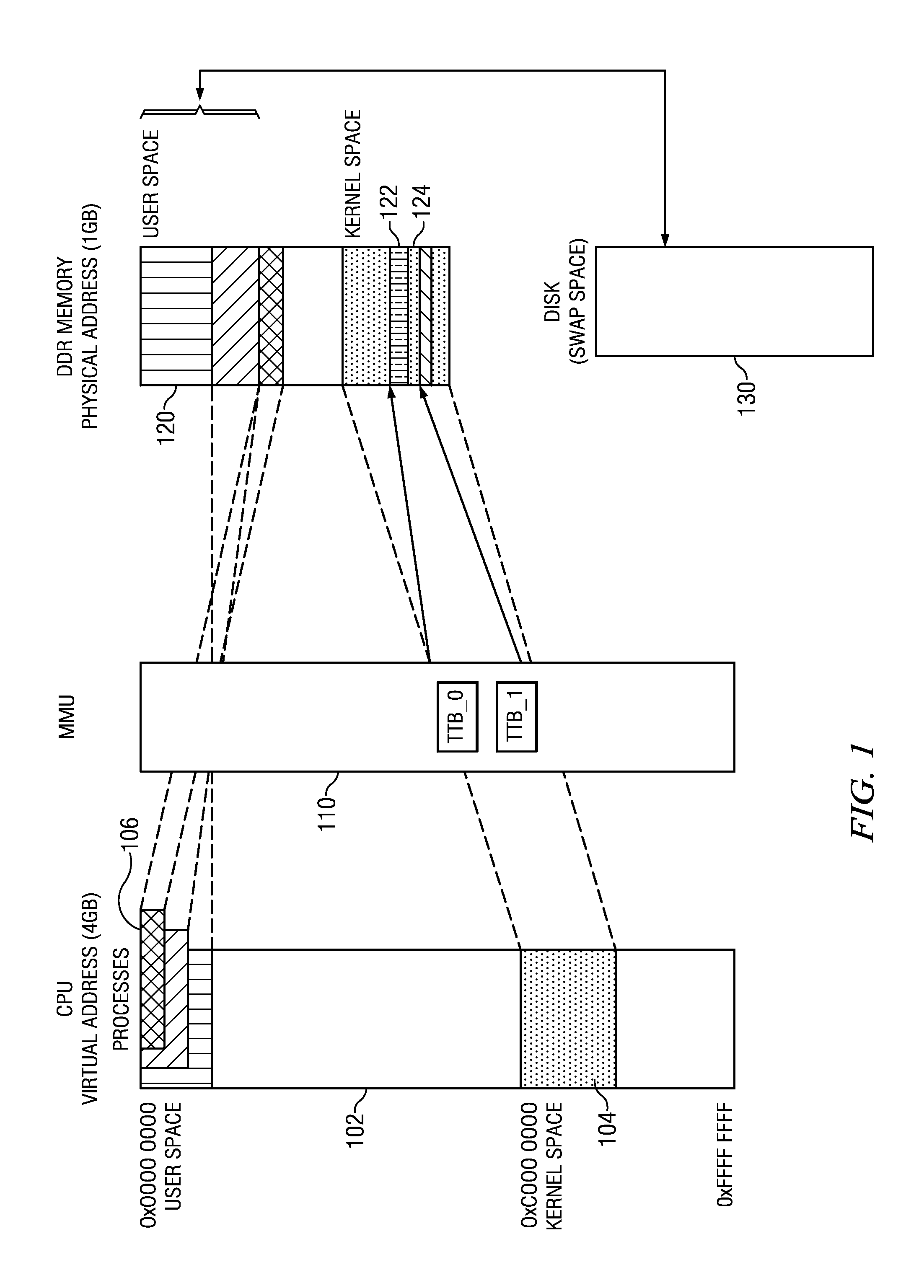

Dynamically Configurable Memory System

Active | US20110283071A1 | Memory architecture accessing/allocation; Energy efficient ICT; Power mode; Operational system

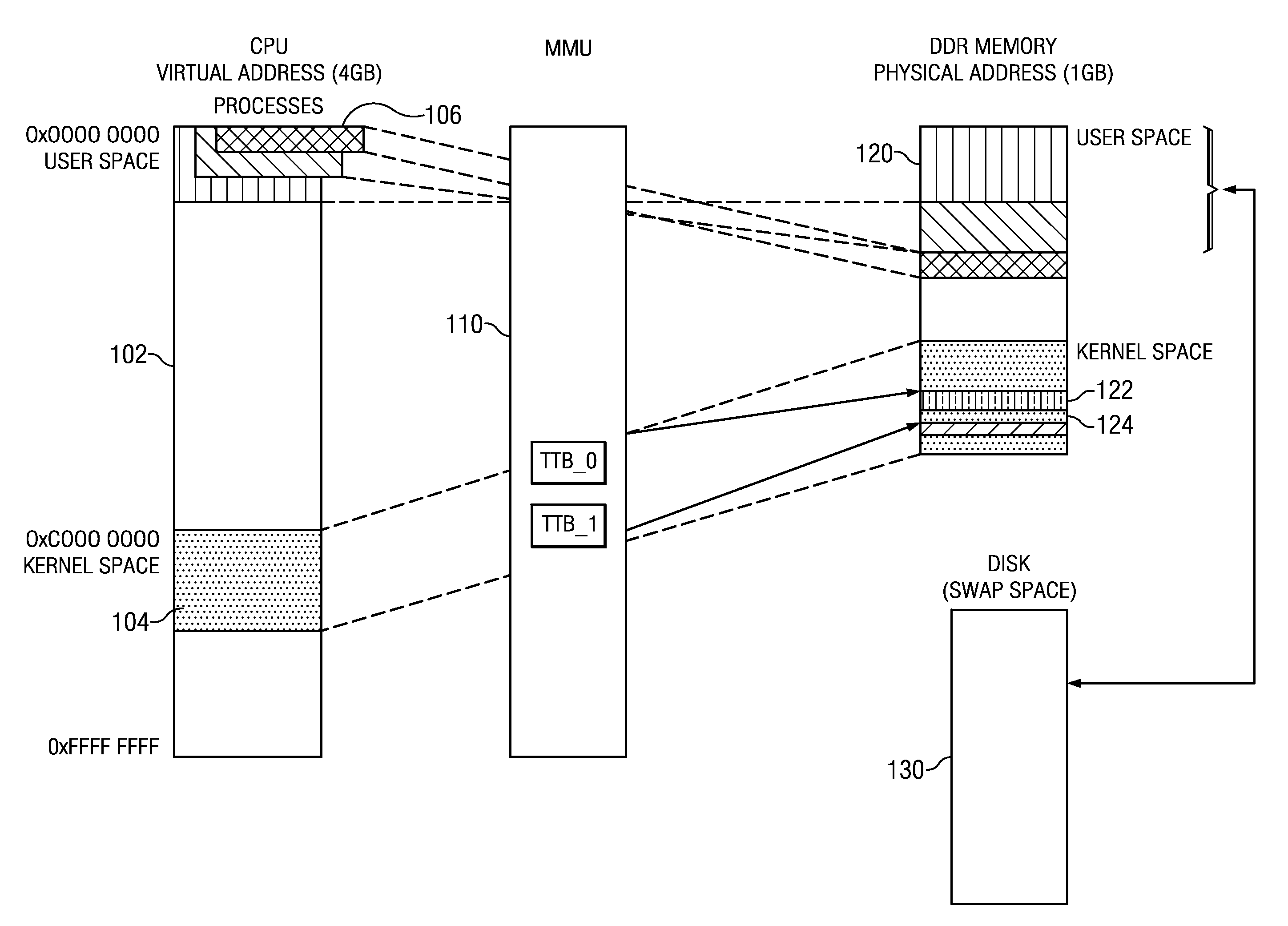

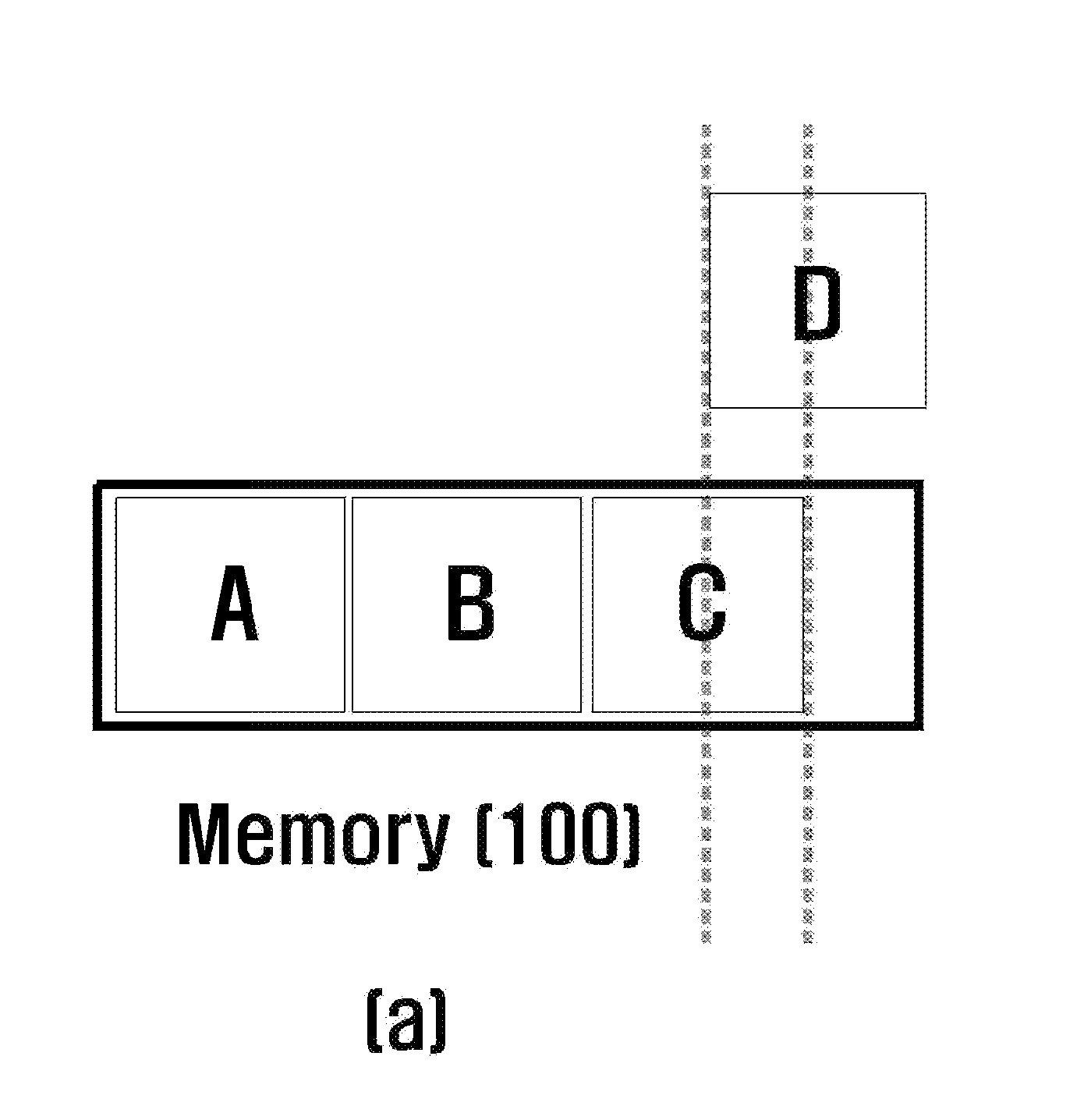

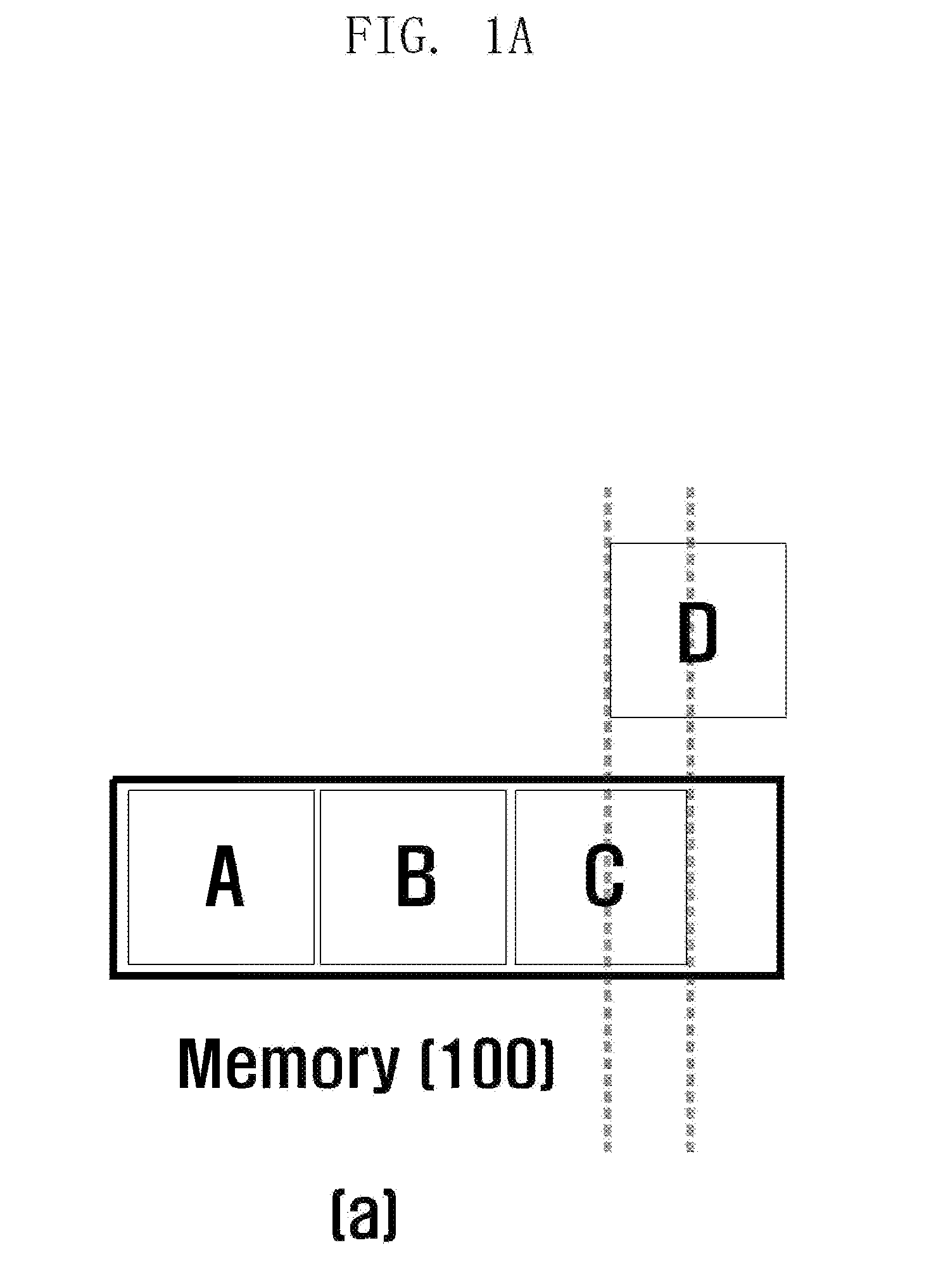

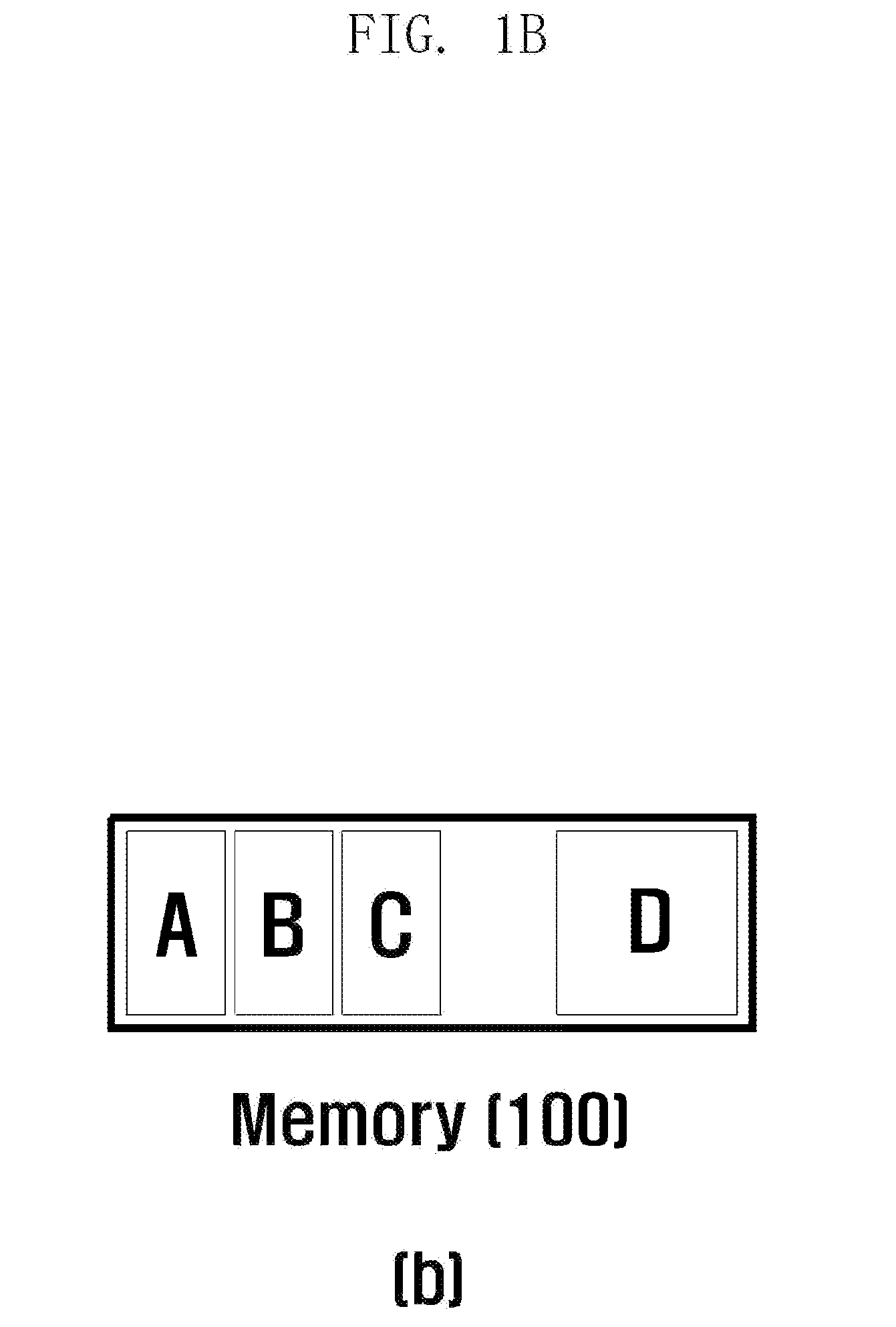

In a digital system with a processor coupled to a paged memory system, the memory system may be dynamically configured using a memory compaction manager in order to allow portions of the memory to be placed in a low power mode. As applications are executed by the processor, program instructions are copied from a non-volatile memory coupled to the processor into pages of the paged memory system under control of an operating system. Pages in the paged memory system that are not being used by the processor are periodically identified. The paged memory system is compacted by copying pages that are being used by the processor from a second region of the paged memory into a first region of the paged memory. The second region may be placed in a low power mode when it contains no pages that are being used by the processor.

Owner:TEXAS INSTR INC
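The region-based compaction described above can be sketched with a toy page-frame array split into a first and second region at `boundary`. Assumptions, labeled as such: pages the processor no longer uses are simply dropped (they could be re-copied from non-volatile memory), and `compact_into_first_region` is a hypothetical helper, not Texas Instruments' implementation.

```python
from typing import List, Optional, Set


def compact_into_first_region(
    frames: List[Optional[str]],
    boundary: int,
    in_use: Set[str],
) -> bool:
    """Copy in-use pages from frames[boundary:] (second region) into free frames
    of frames[:boundary] (first region). Returns True when the second region
    holds no in-use pages and could be placed in a low power mode."""
    # Assumption: pages not used by the processor are discarded; program
    # instructions can be copied again from non-volatile memory later.
    for i, page in enumerate(frames):
        if page is not None and page not in in_use:
            frames[i] = None
    free_slots = [i for i in range(boundary) if frames[i] is None]
    for i in range(boundary, len(frames)):
        if frames[i] is None:
            continue
        if not free_slots:
            return False  # first region is full: second region must stay powered
        dst = free_slots.pop(0)
        frames[dst], frames[i] = frames[i], None
    return True
```

A memory compaction manager could run this periodically and then power down the second region only when the function returns `True`.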

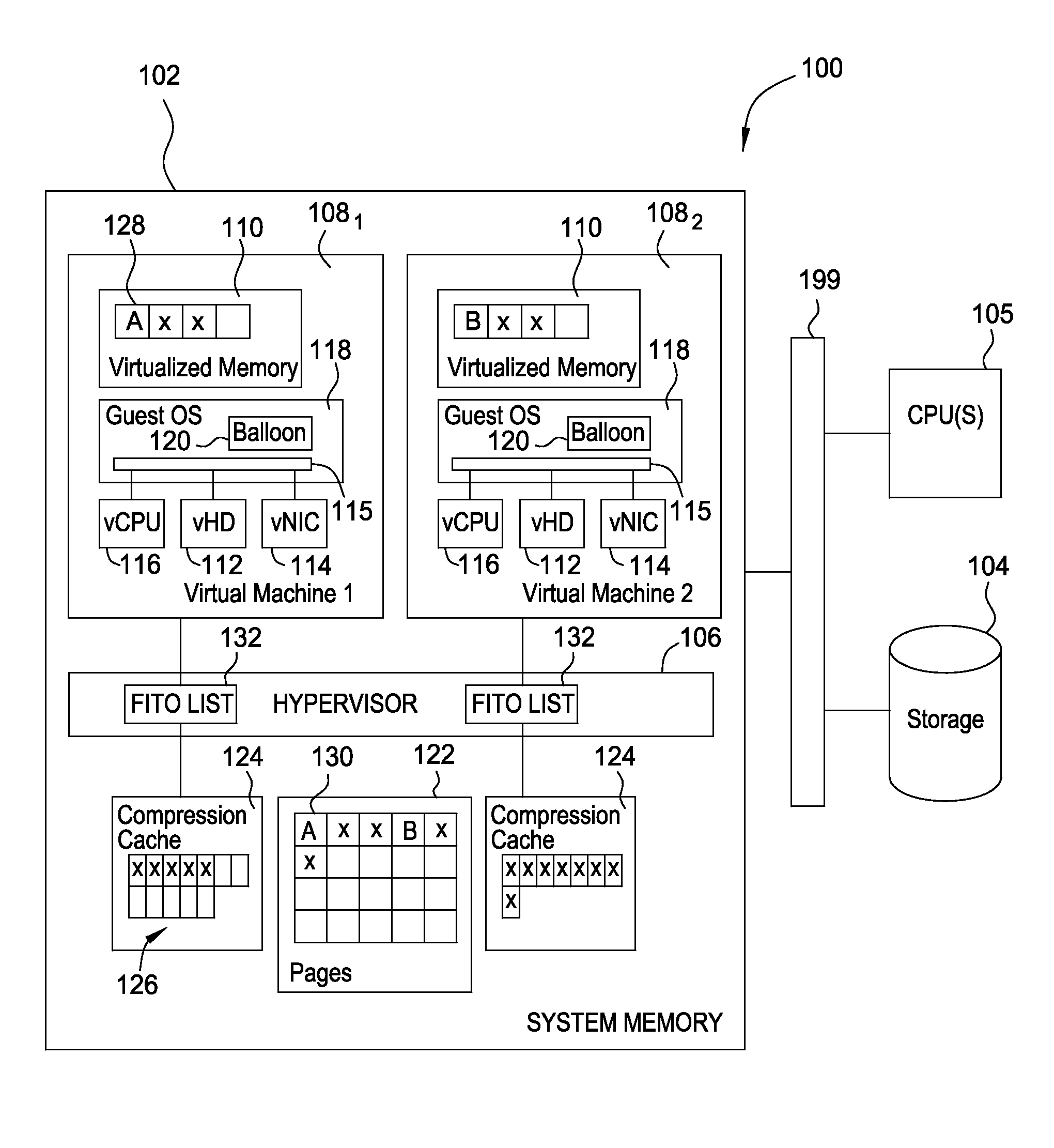

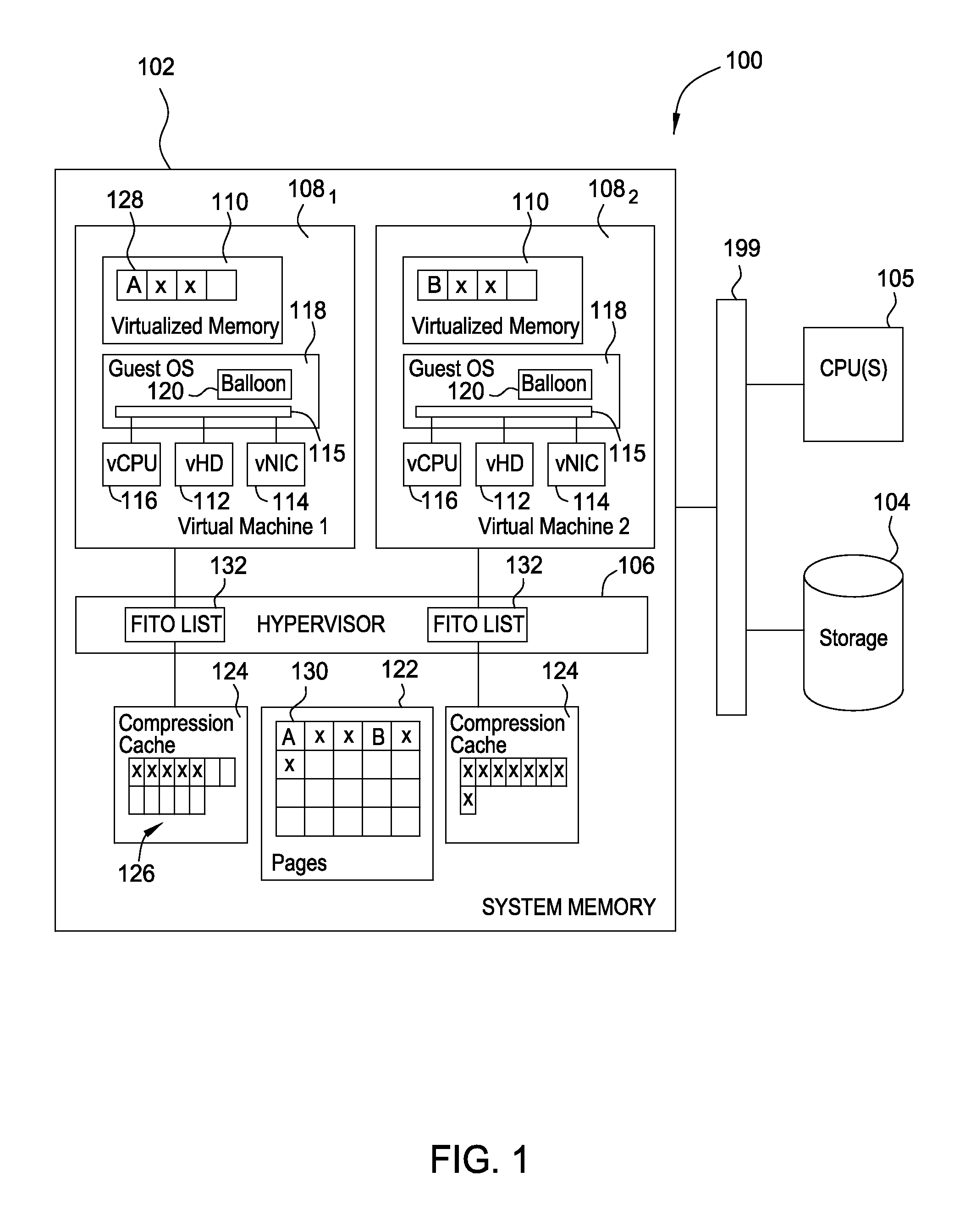

Memory compression policies

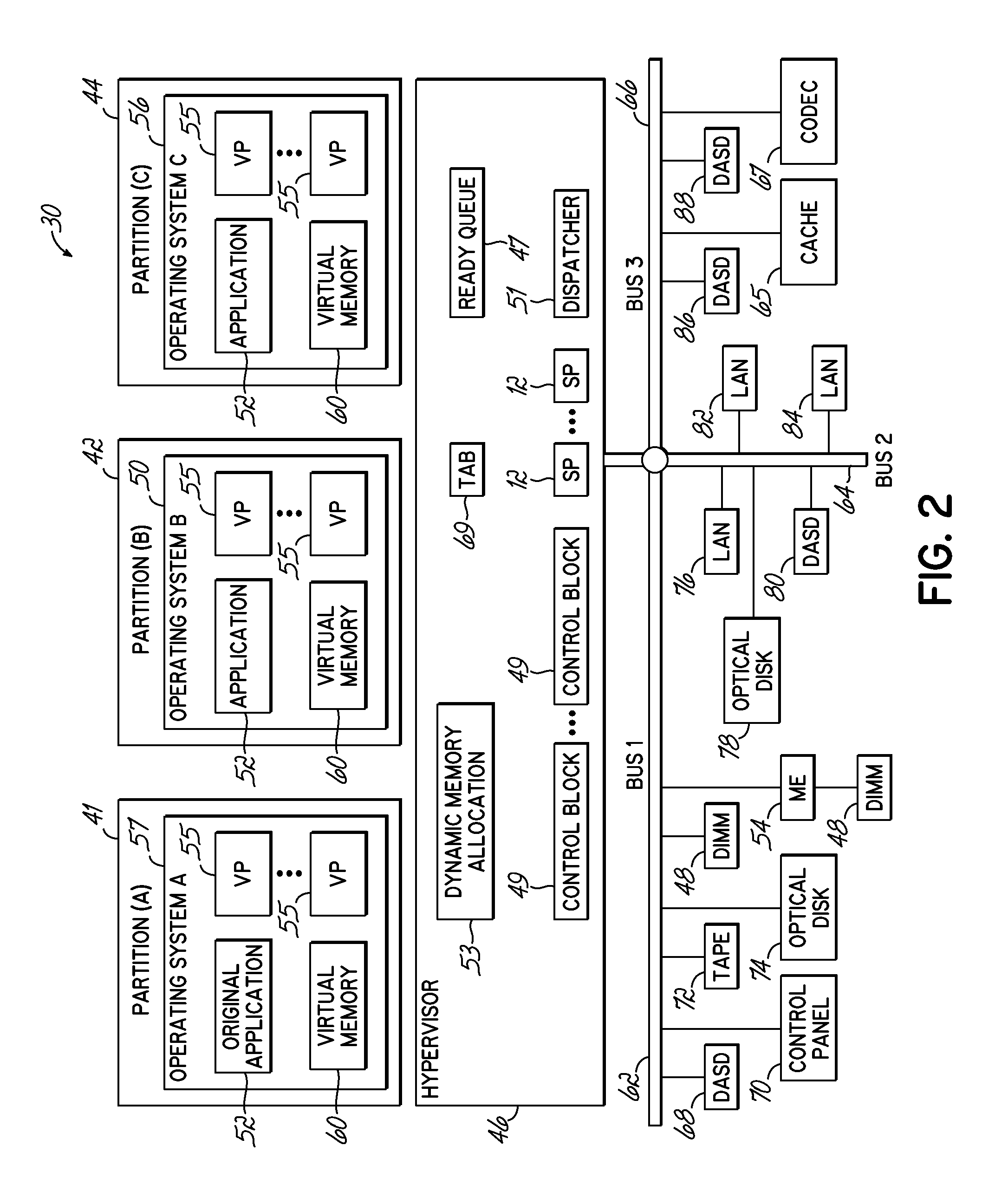

Active | US20120036325A1 | Reduce capacity; Avoid placing; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Memory compaction

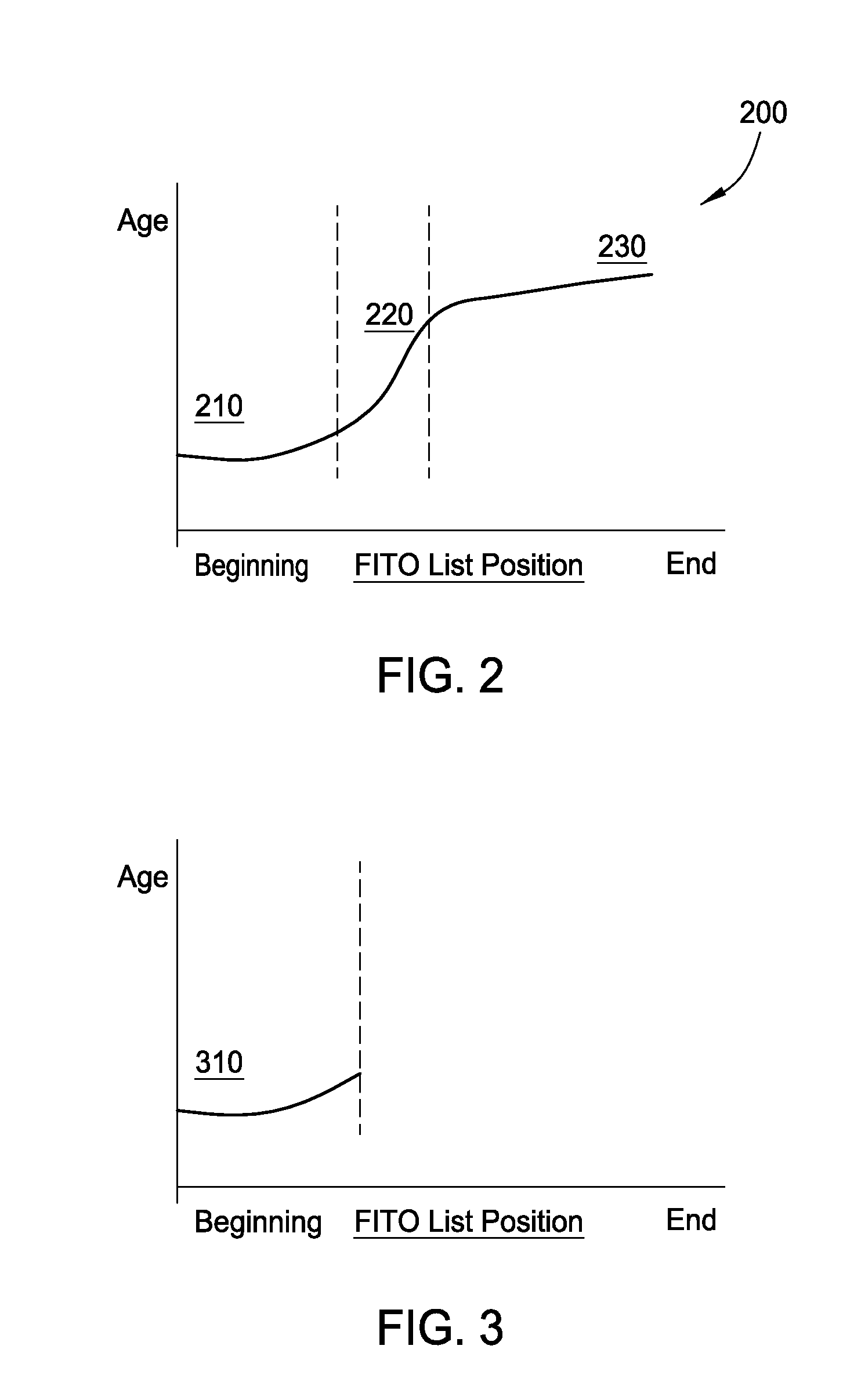

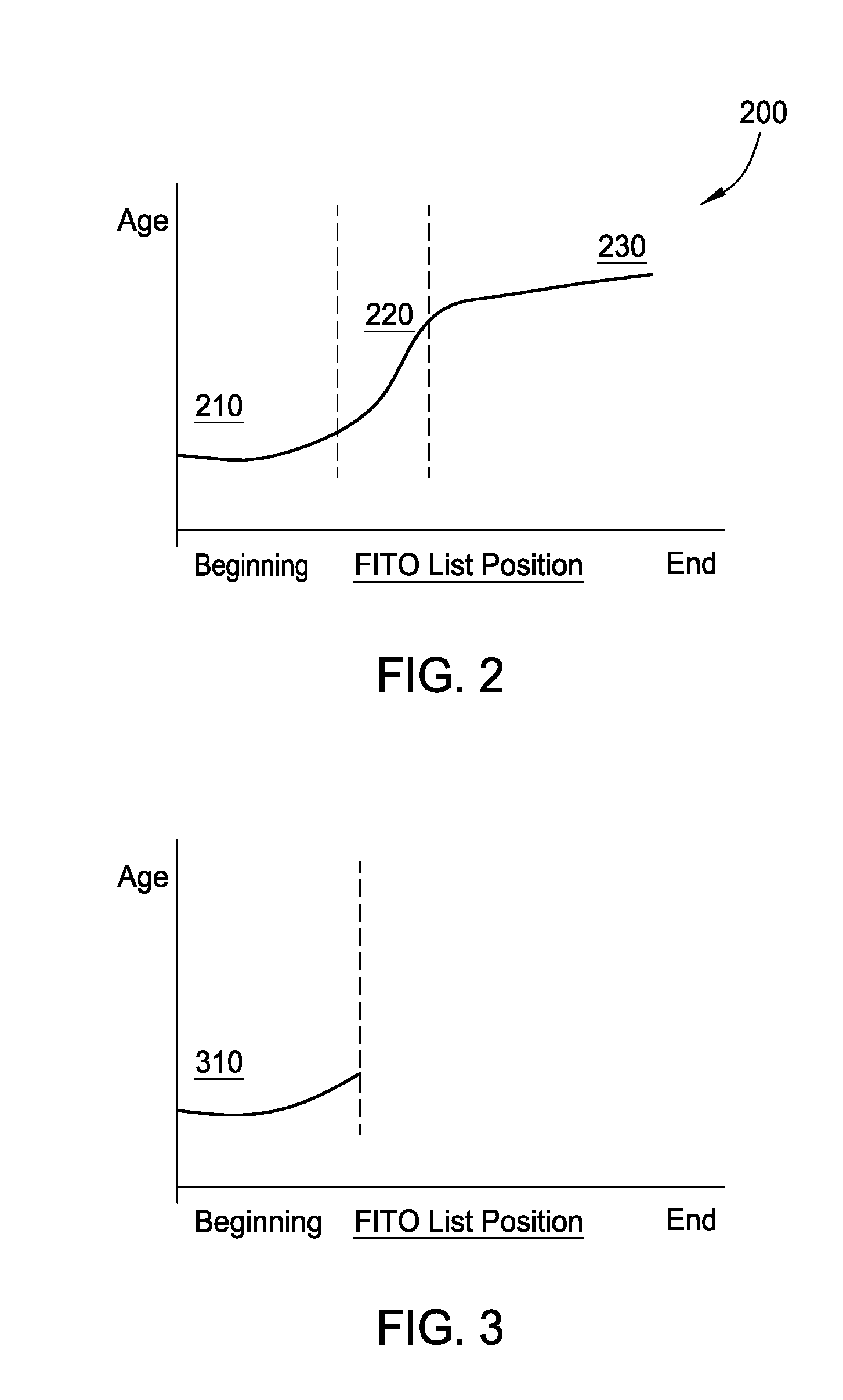

Techniques are disclosed for managing memory within a virtualized system that includes a memory compression cache. Generally, the virtualized system may include a hypervisor configured to use a compression cache to temporarily store memory pages that have been compressed to conserve memory space. A “first-in touch-out” (FITO) list may be used to manage the size of the compression cache by monitoring the compressed memory pages in the compression cache. Each element in the FITO list corresponds to a compressed page in the compression cache. Each element in the FITO list records the time at which the corresponding compressed page was stored in the compression cache (i.e., its age). The size of the compression cache may be adjusted based on the ages of the pages in the compression cache.

Owner:VMWARE INC
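A FITO list can be modeled as an insertion-ordered map from page to store time: pages enter at the tail and leave when touched. This is a minimal sketch; `FitoCompressionCache` is hypothetical, and the resize rule (shrink when the oldest entry has gone untouched longer than `stale_age`, otherwise grow) is an assumed policy for illustration, not VMware's.

```python
from collections import OrderedDict
from typing import Dict


class FitoCompressionCache:
    """First-in touch-out list: an entry is added when a compressed page enters
    the cache and removed when the page is touched (decompressed back out)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity  # size budget, in pages
        self.fito: "OrderedDict[int, float]" = OrderedDict()  # page -> store time

    def store(self, page: int, now: float) -> None:
        self.fito[page] = now  # newest entries go to the tail

    def touch(self, page: int) -> None:
        self.fito.pop(page, None)  # touched pages leave the compression cache

    def ages(self, now: float) -> Dict[int, float]:
        return {p: now - t for p, t in self.fito.items()}

    def resize(self, now: float, stale_age: float, step: int) -> int:
        """Assumed policy: a long-untouched head entry means the cache holds cold
        data and can shrink; otherwise pages are being touched out quickly, so
        the cache is allowed to grow."""
        if self.fito:
            oldest_age = now - next(iter(self.fito.values()))
            if oldest_age > stale_age:
                self.capacity = max(step, self.capacity - step)
            else:
                self.capacity += step
        return self.capacity
```

Because entries are only ever appended at the tail, the head of the list is always the oldest untouched page, so the age check costs O(1).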

Modeling memory compression

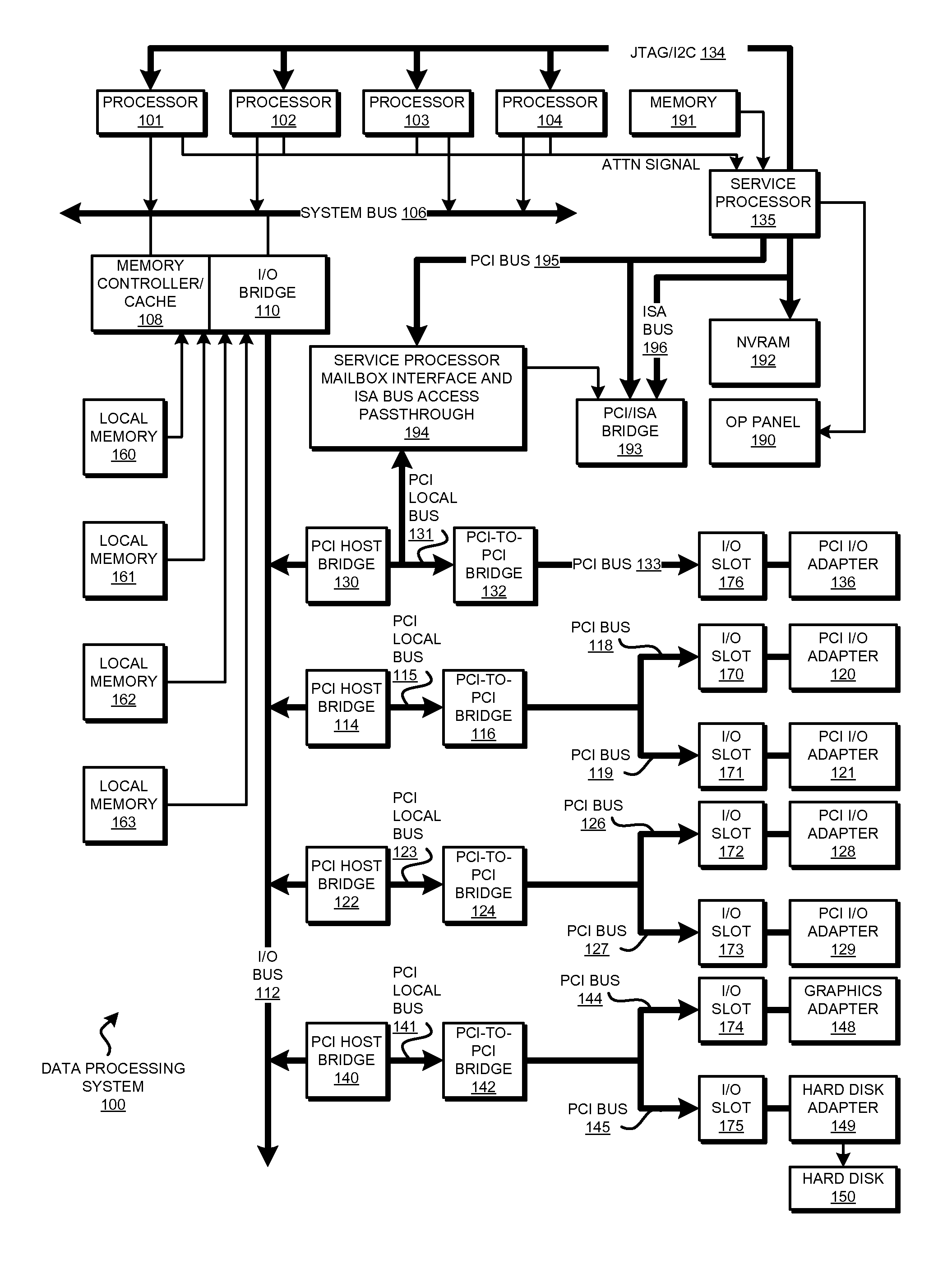

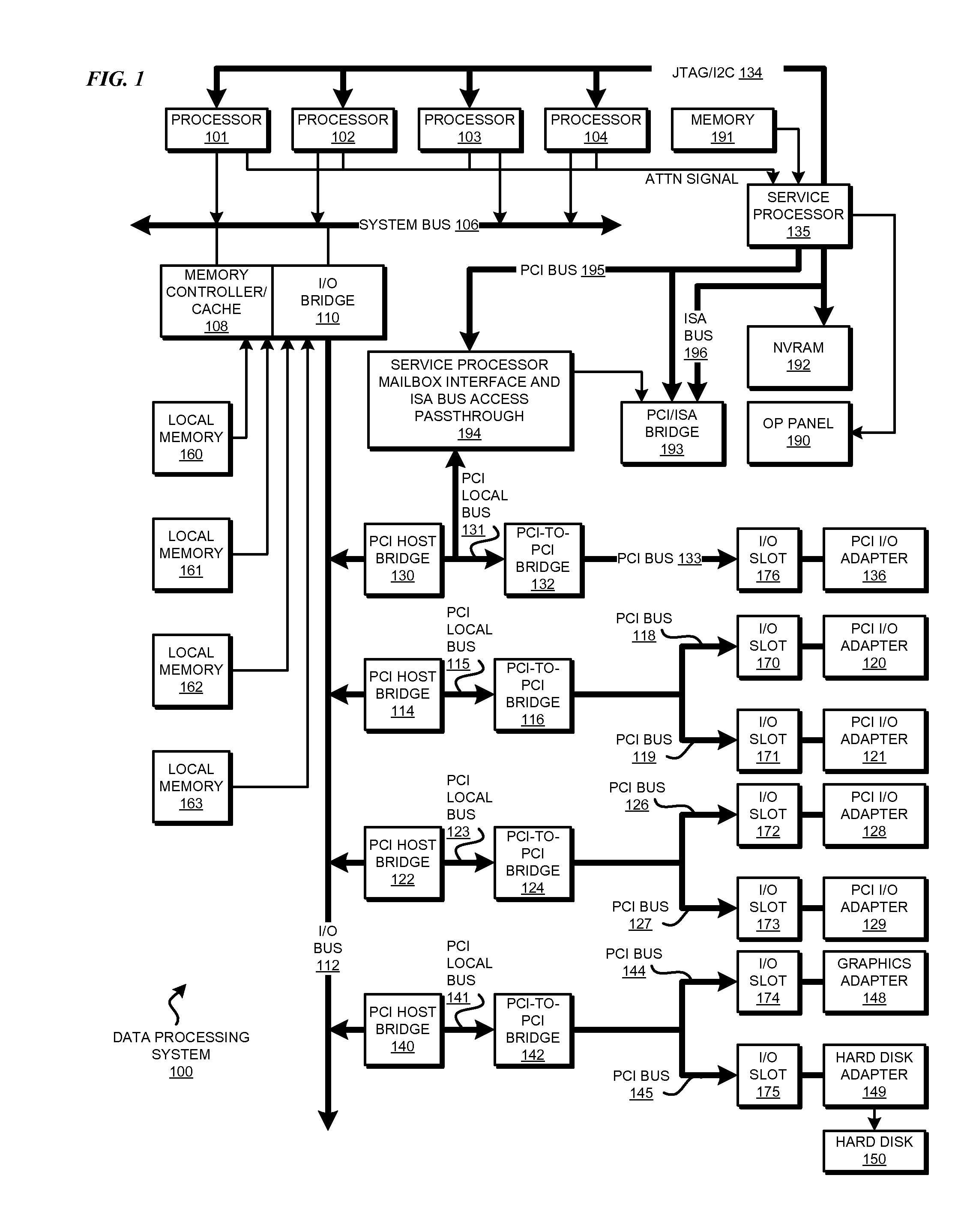

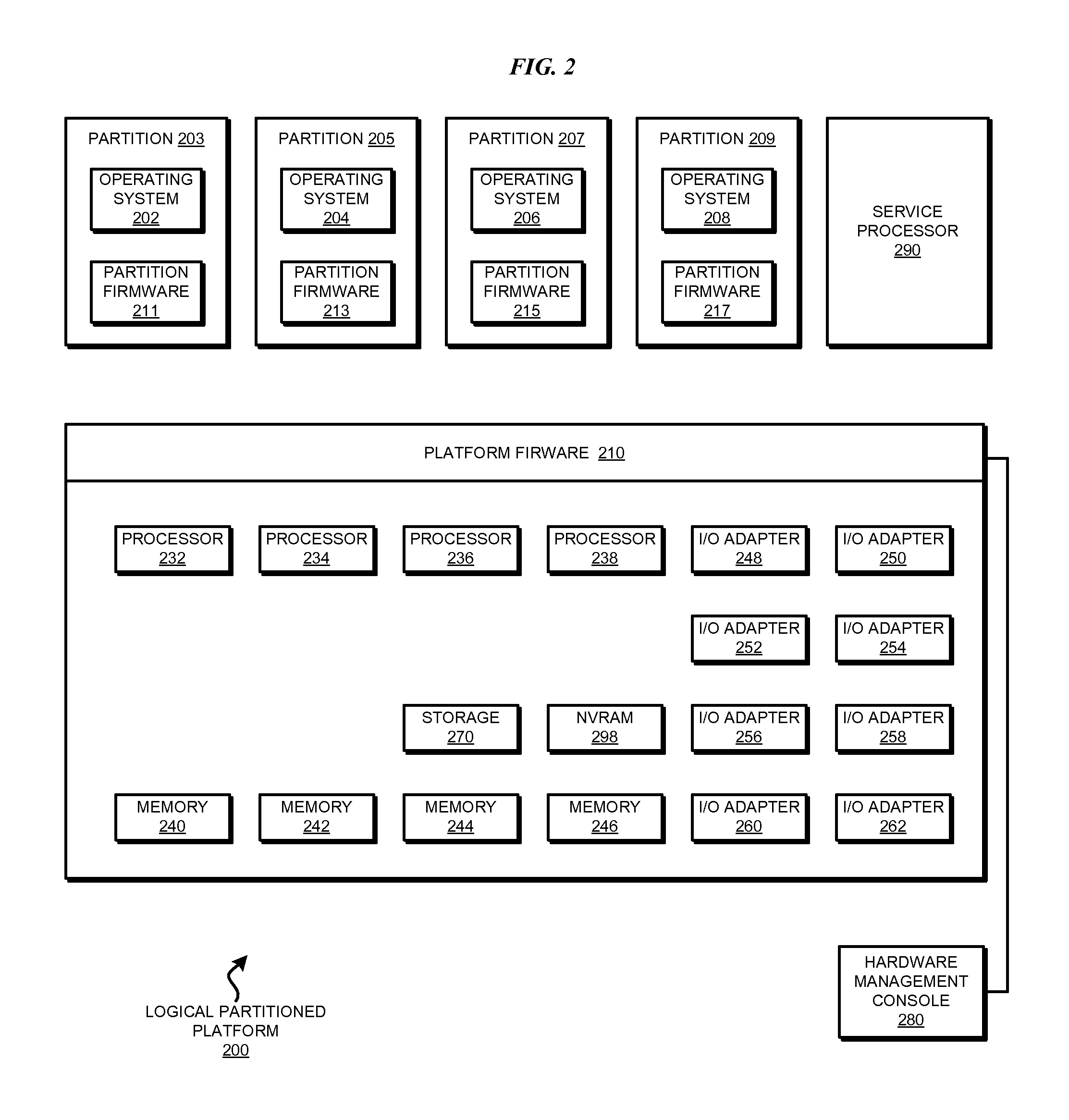

Inactive | US20110238943A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data processing system; Reference rate

A method, system, and computer usable program product for modeling memory compression are provided in the illustrative embodiments. A subset of candidate pages is received. The subset of candidate pages is a subset of a set of candidate pages used in executing a workload in a data processing system. A candidate page is compressible uncompressed data in a memory associated with the data processing system. The subset of candidate pages is compressed in a scratch space. A compressibility of the workload is computed based on the compression of the subset of candidate pages. Page reference information of the subset of candidate pages is received. A memory reference rate of the workload is determined. A recommendation is presented about a memory compression model for the workload in the data processing system.

Owner:IBM CORP
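The modeling steps above (compress a sample in scratch space, estimate compressibility, measure the reference rate, recommend) can be sketched as follows. Assumptions: zlib as the compressor, a 4 KiB page size, and the `min_ratio` and `max_ref_rate` thresholds are illustrative values, not IBM's model.

```python
import zlib
from typing import Dict, List

PAGE_SIZE = 4096  # assumed page size


def model_compression(
    sample_pages: List[bytes],
    referenced_pages: int,
    interval_s: float,
    min_ratio: float = 1.5,
    max_ref_rate: float = 1000.0,
) -> Dict[str, object]:
    """Estimate a workload's compressibility and reference rate from a sample
    of candidate pages, then recommend whether to compress."""
    compressed = sum(len(zlib.compress(p)) for p in sample_pages)  # scratch space
    ratio = sum(len(p) for p in sample_pages) / compressed
    ref_rate = referenced_pages / interval_s  # pages referenced per second
    if ratio >= min_ratio and ref_rate <= max_ref_rate:
        advice = "enable memory compression"
    else:
        advice = "do not compress"  # poor ratio or hot pages: overhead dominates
    return {"ratio": ratio, "ref_rate": ref_rate, "advice": advice}
```

Compressing only a subset of pages keeps the modeling cost small; the reference rate matters because frequently referenced pages would be decompressed again almost immediately.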

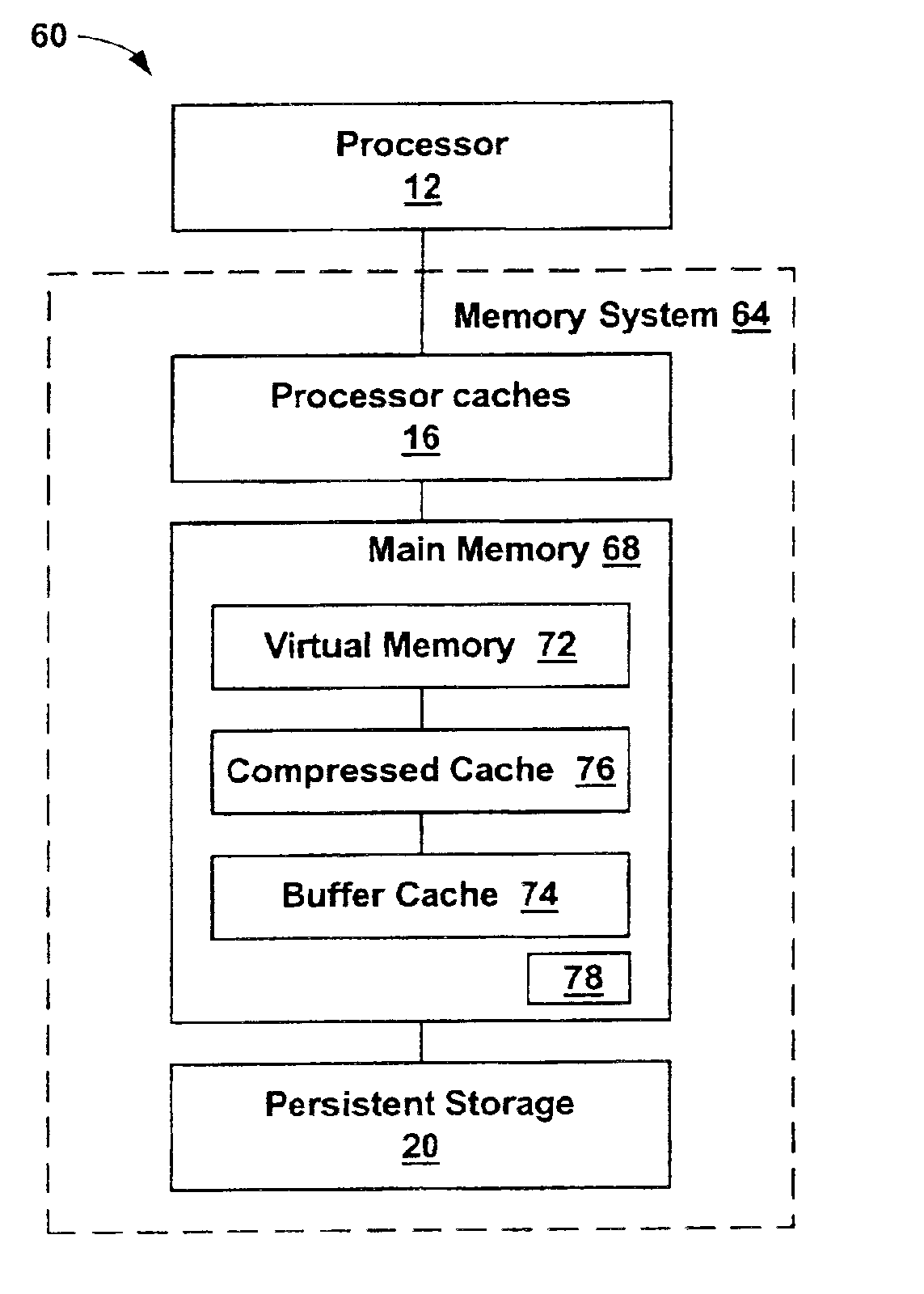

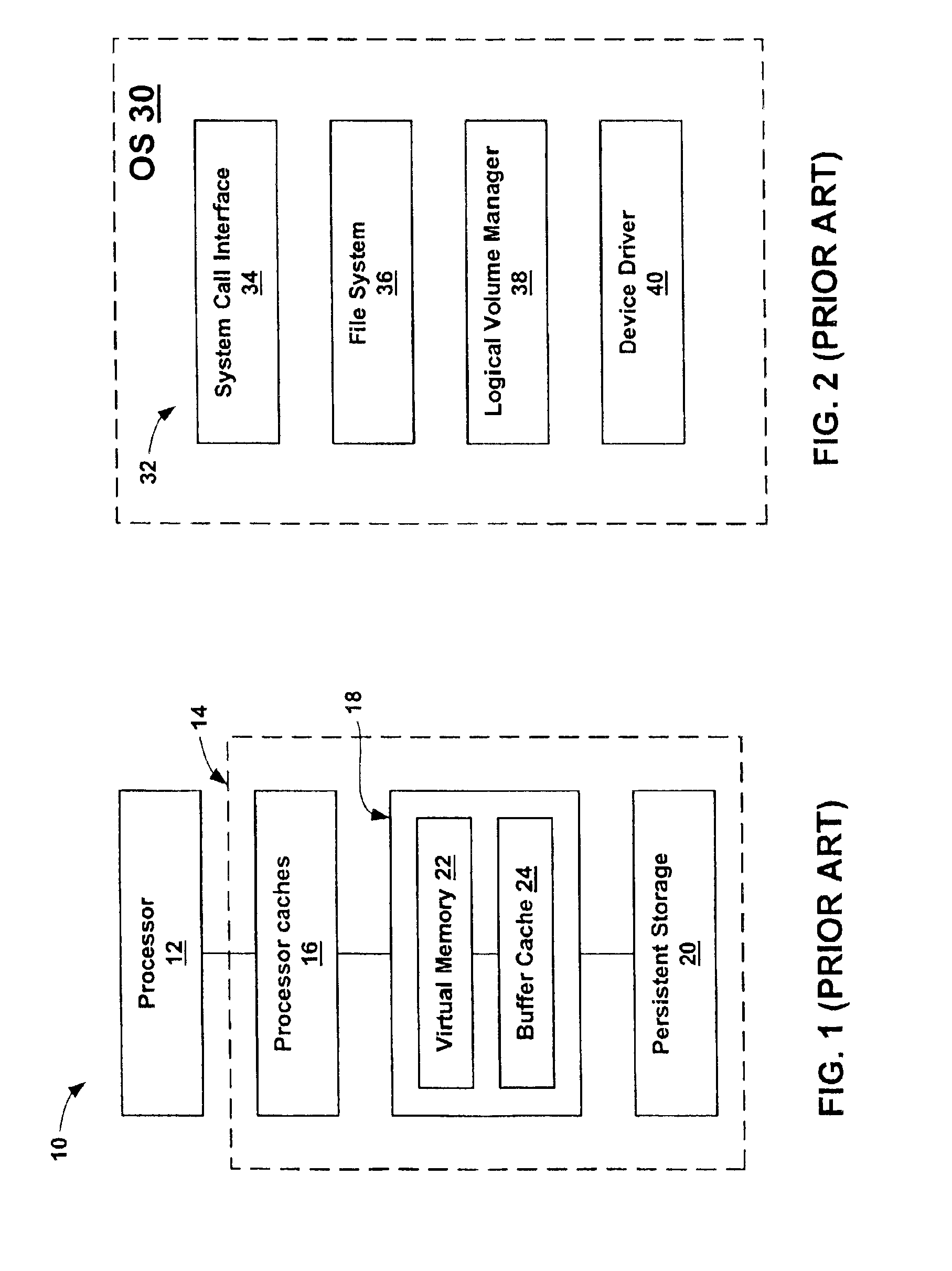

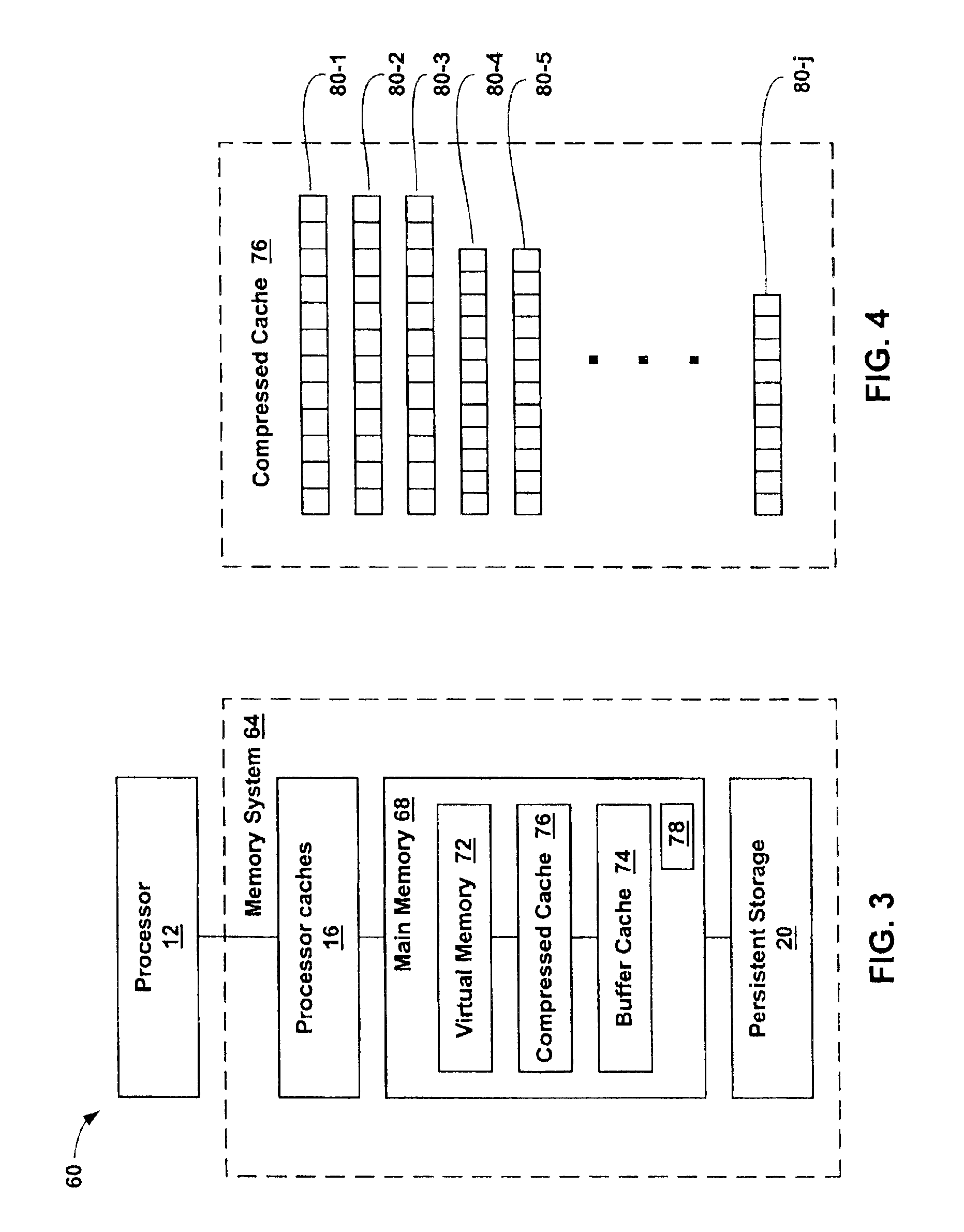

Memory compression for computer systems

Inactive | US20030229761A1 | Memory architecture accessing/allocation; Data processing applications; Operational system; Computerized system

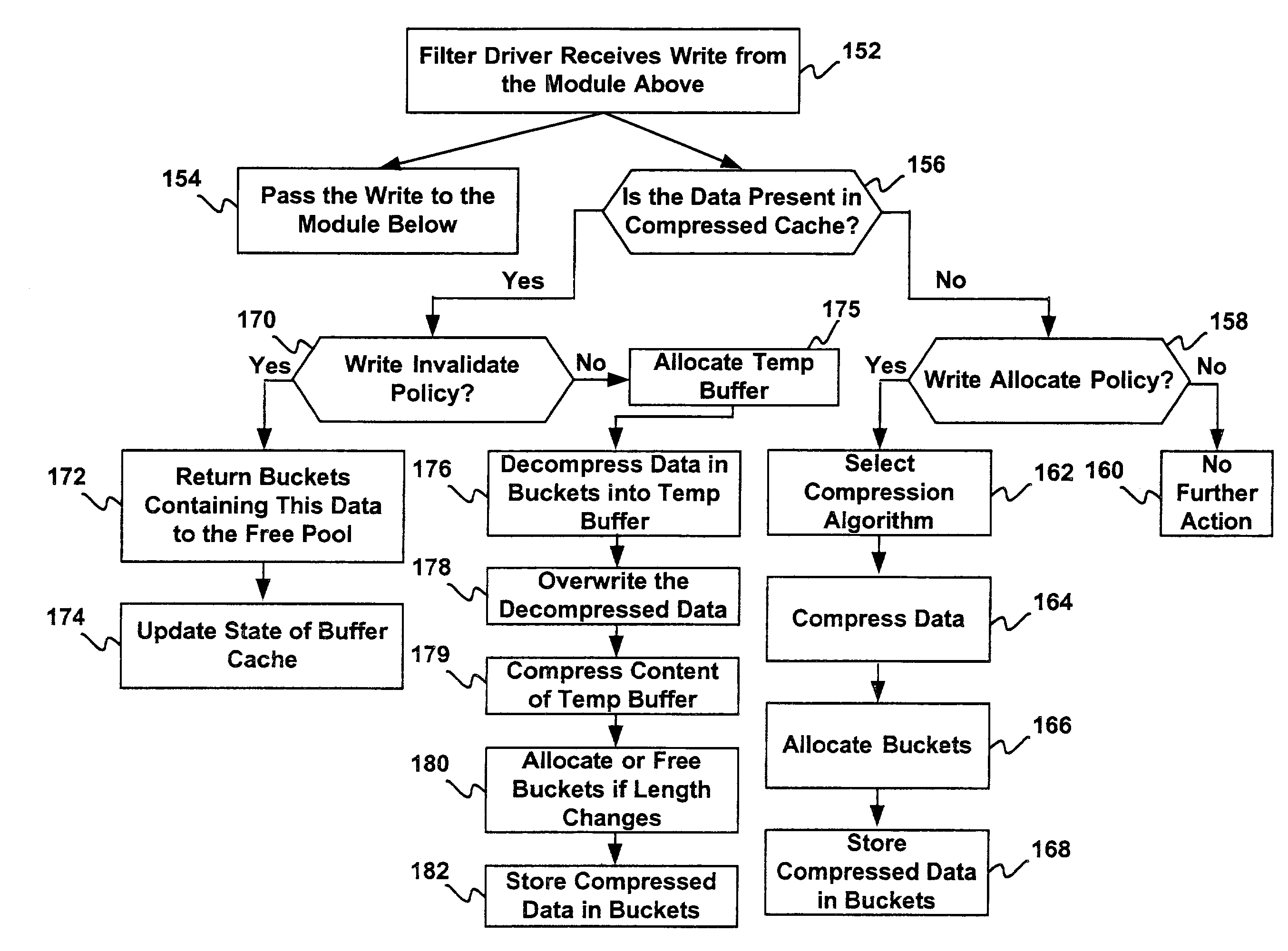

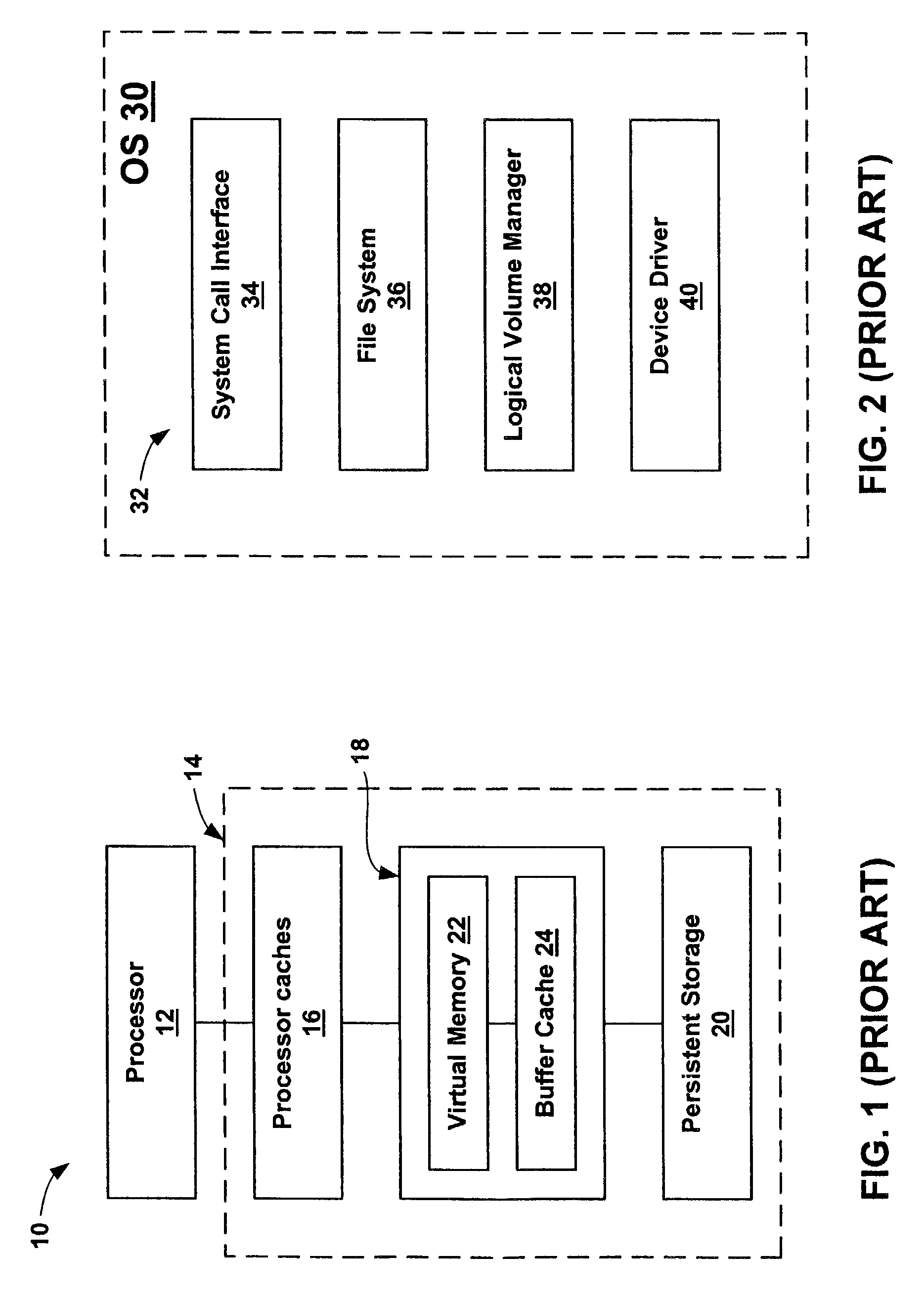

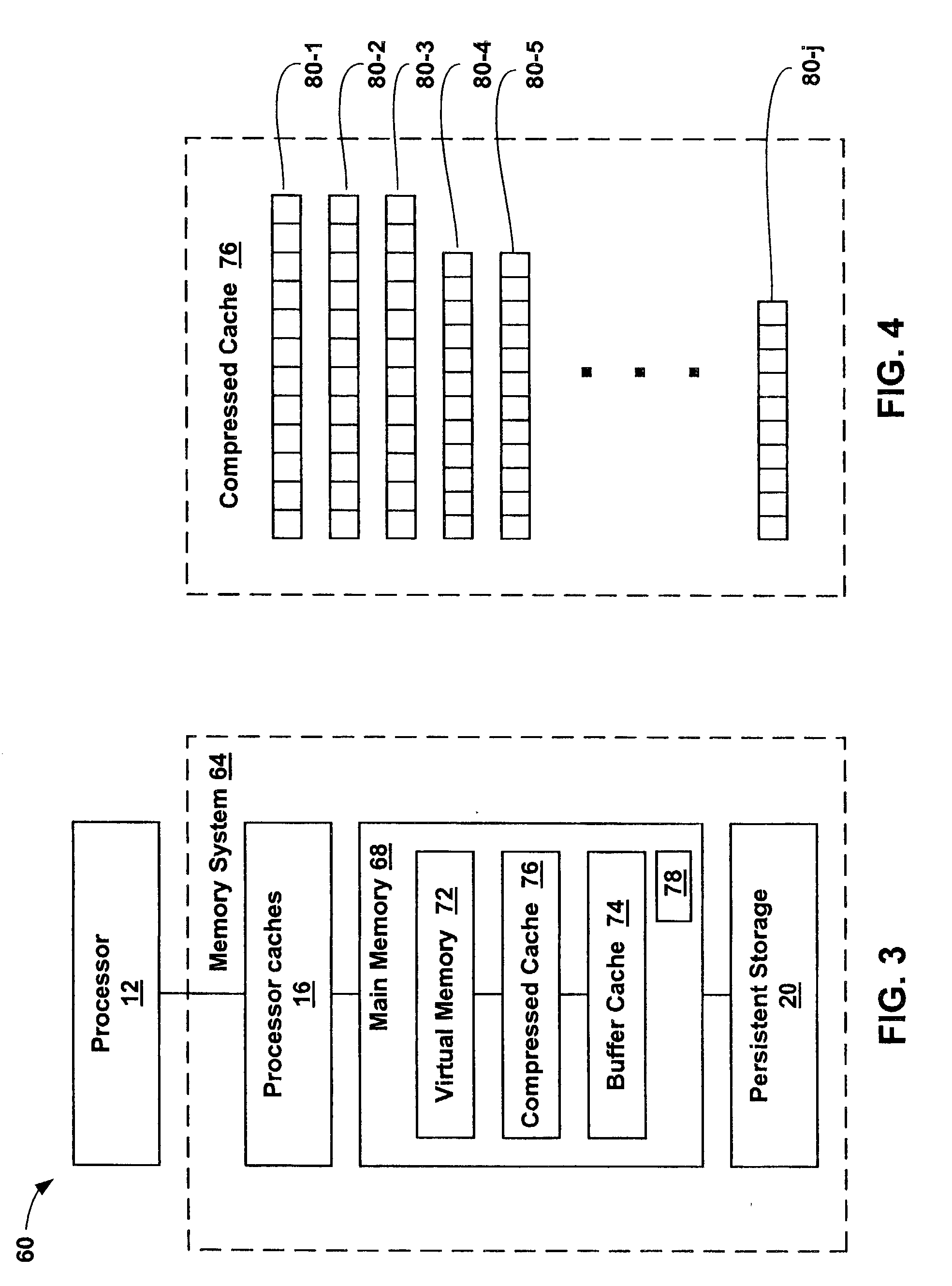

A computer system is provided including a processor, a persistent storage device, and a main memory connected to the processor and the persistent storage device. The main memory includes an operating system and a compressed cache for storing data retrieved from the persistent storage device after compression. The operating system includes a plurality of interconnected software modules for accessing the persistent storage device and a filter driver interconnected between two of the plurality of software modules for managing memory capacity of the compressed cache and the buffer cache.

Owner:VALTRUS INNOVATIONS LTD +1

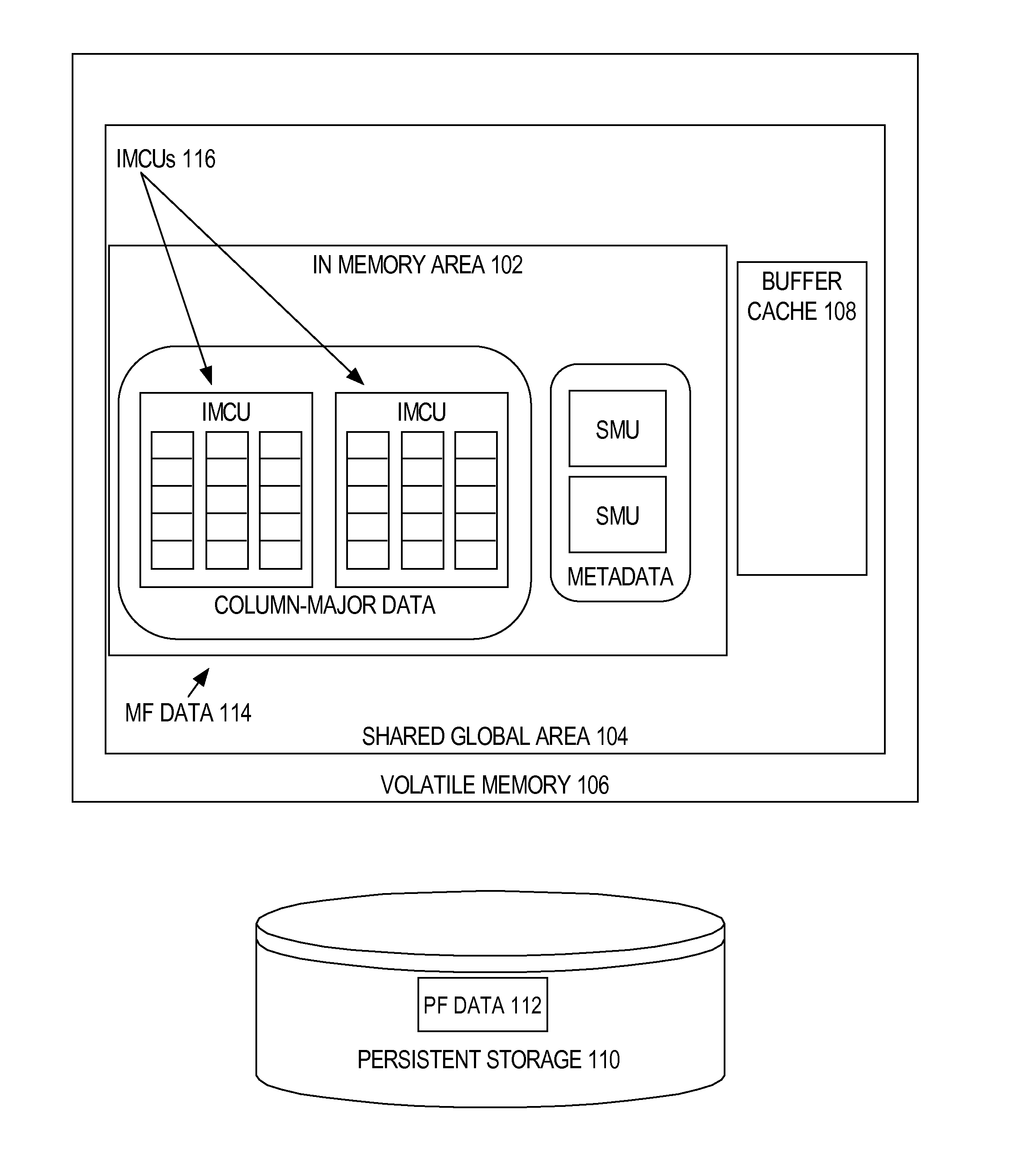

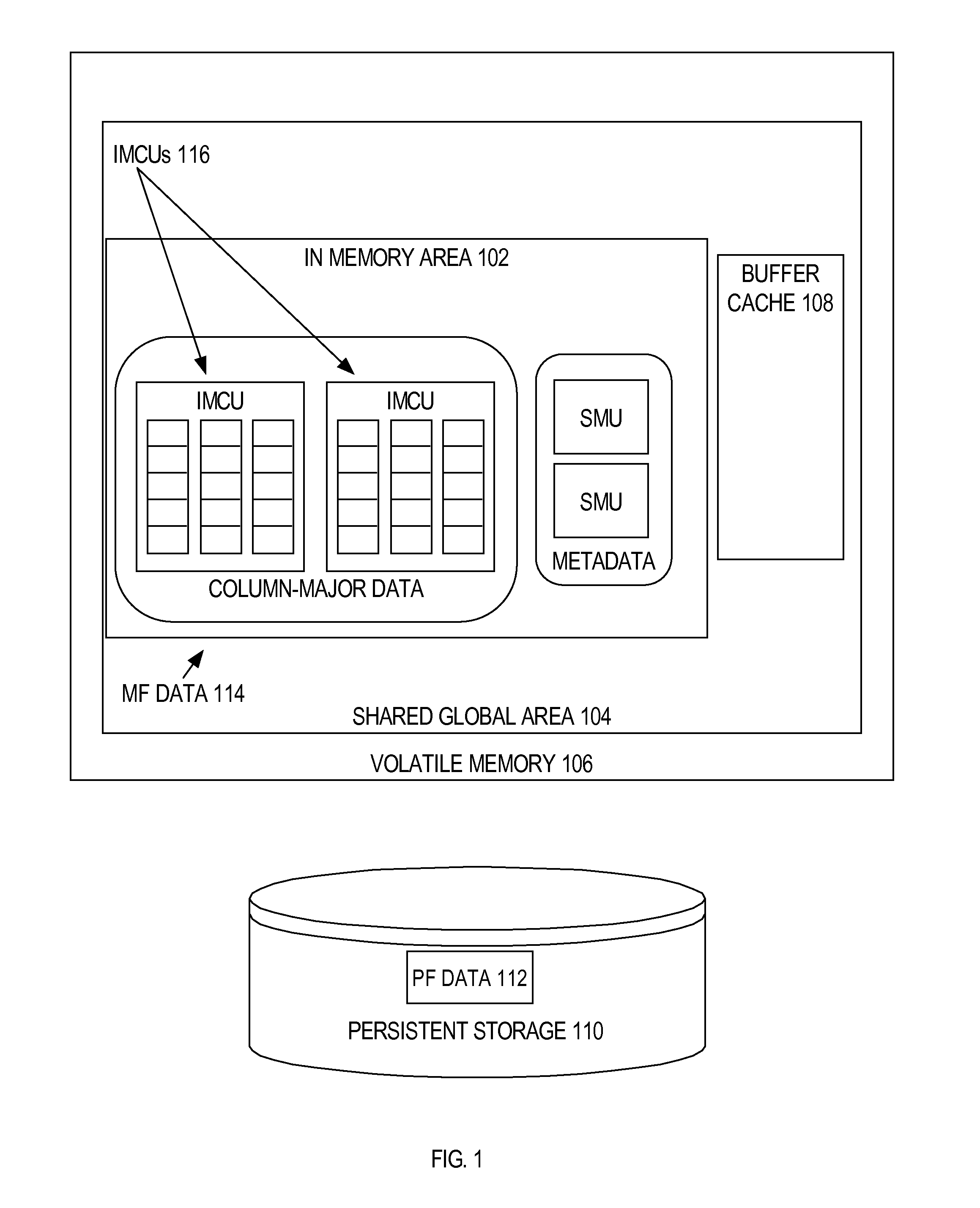

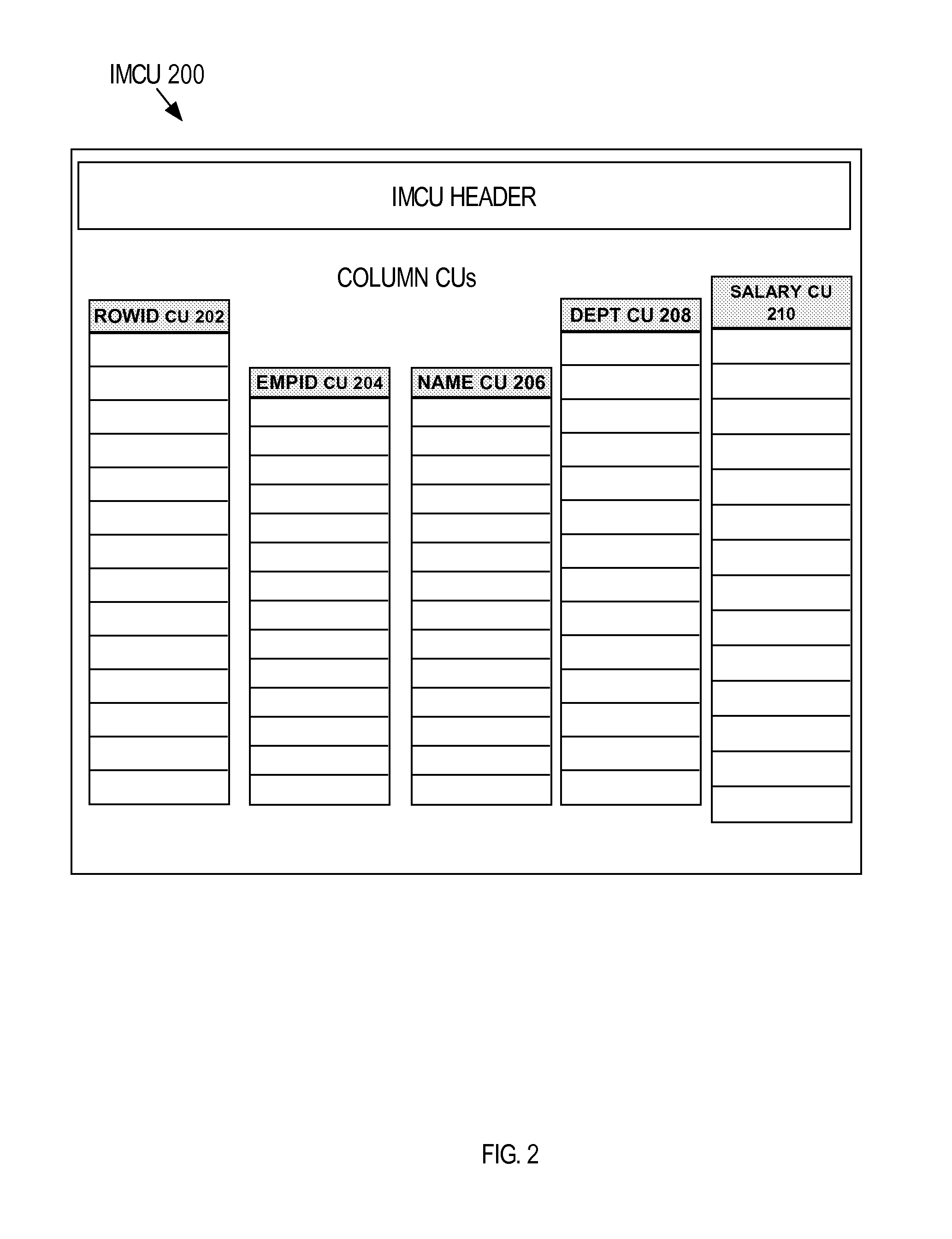

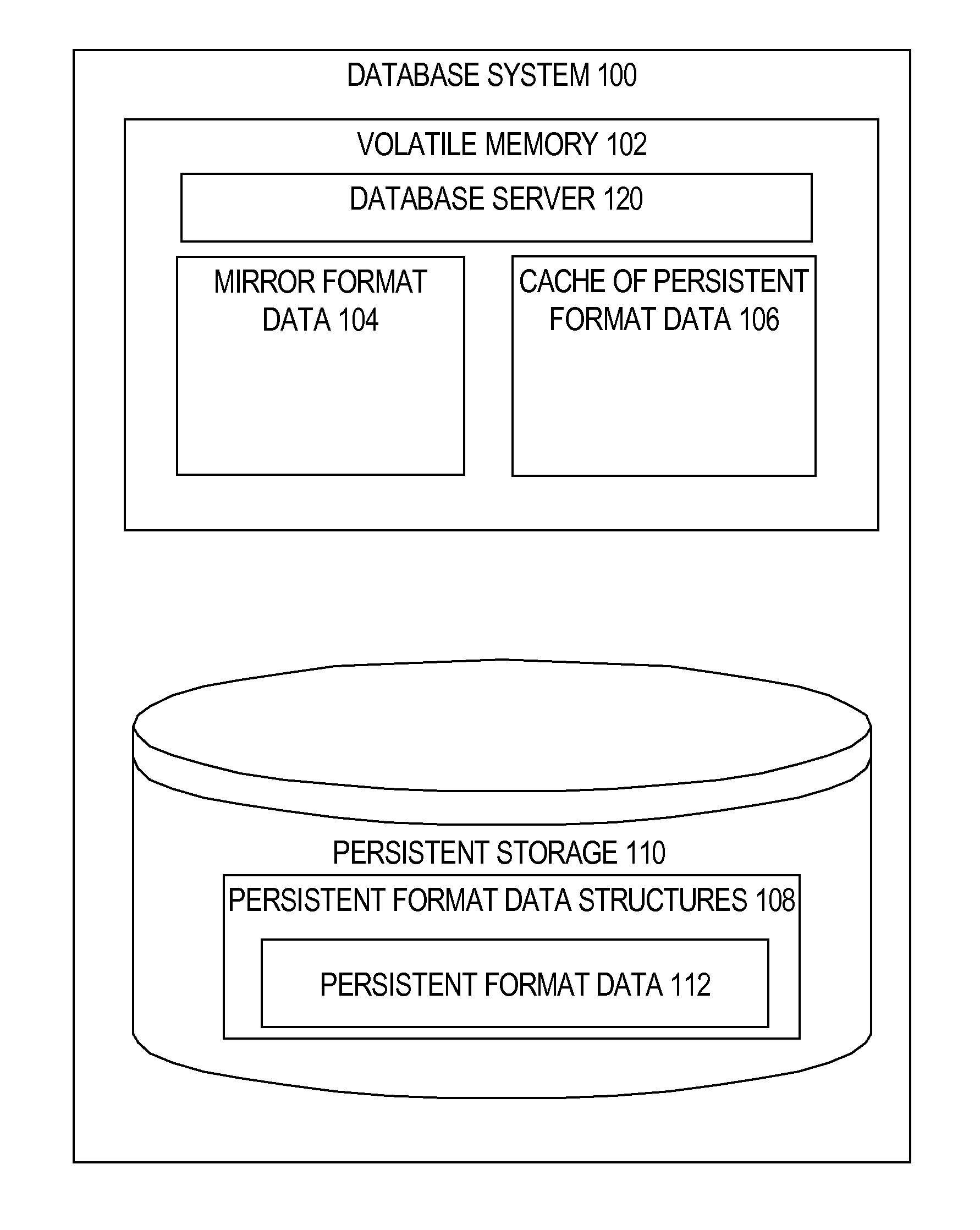

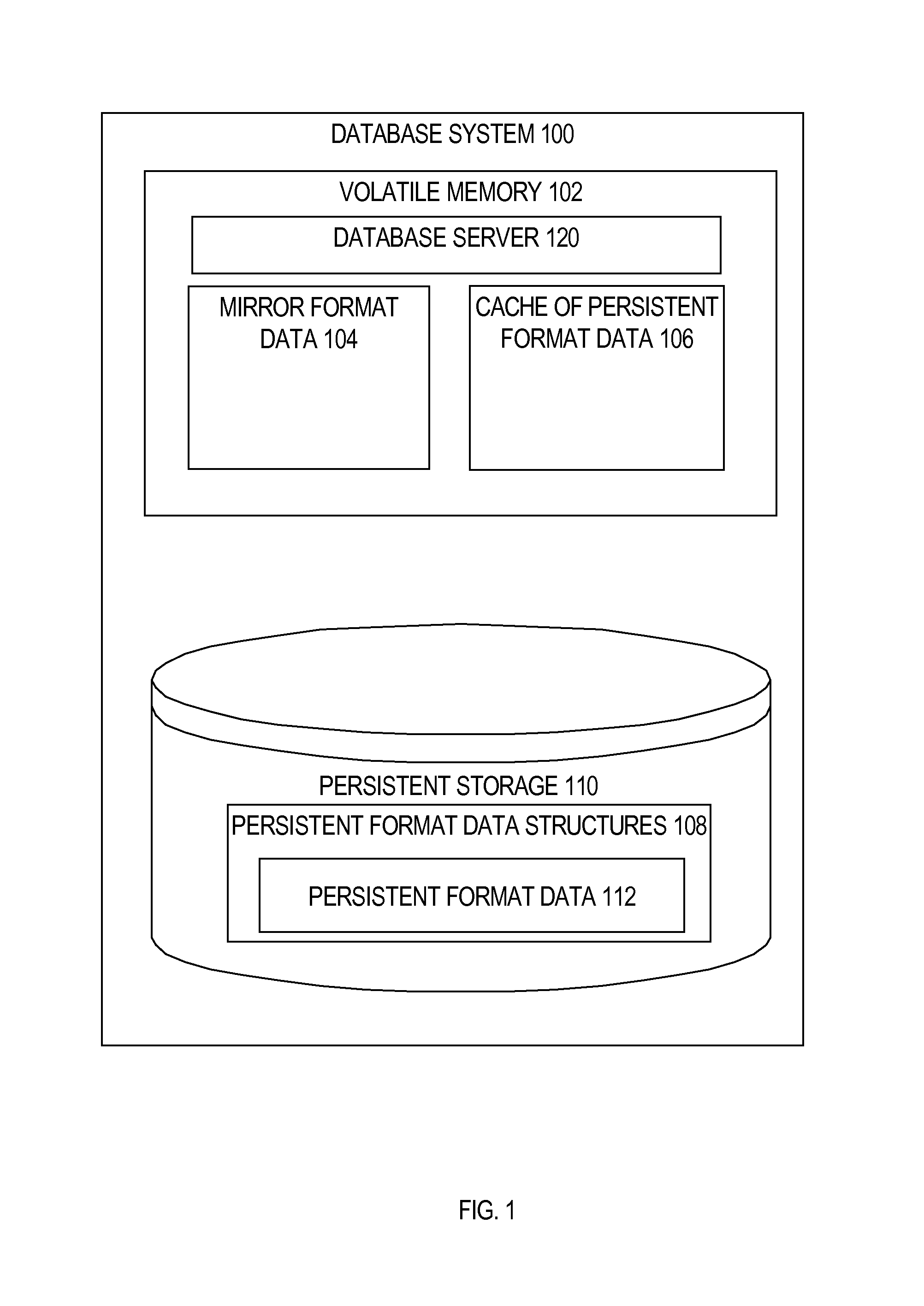

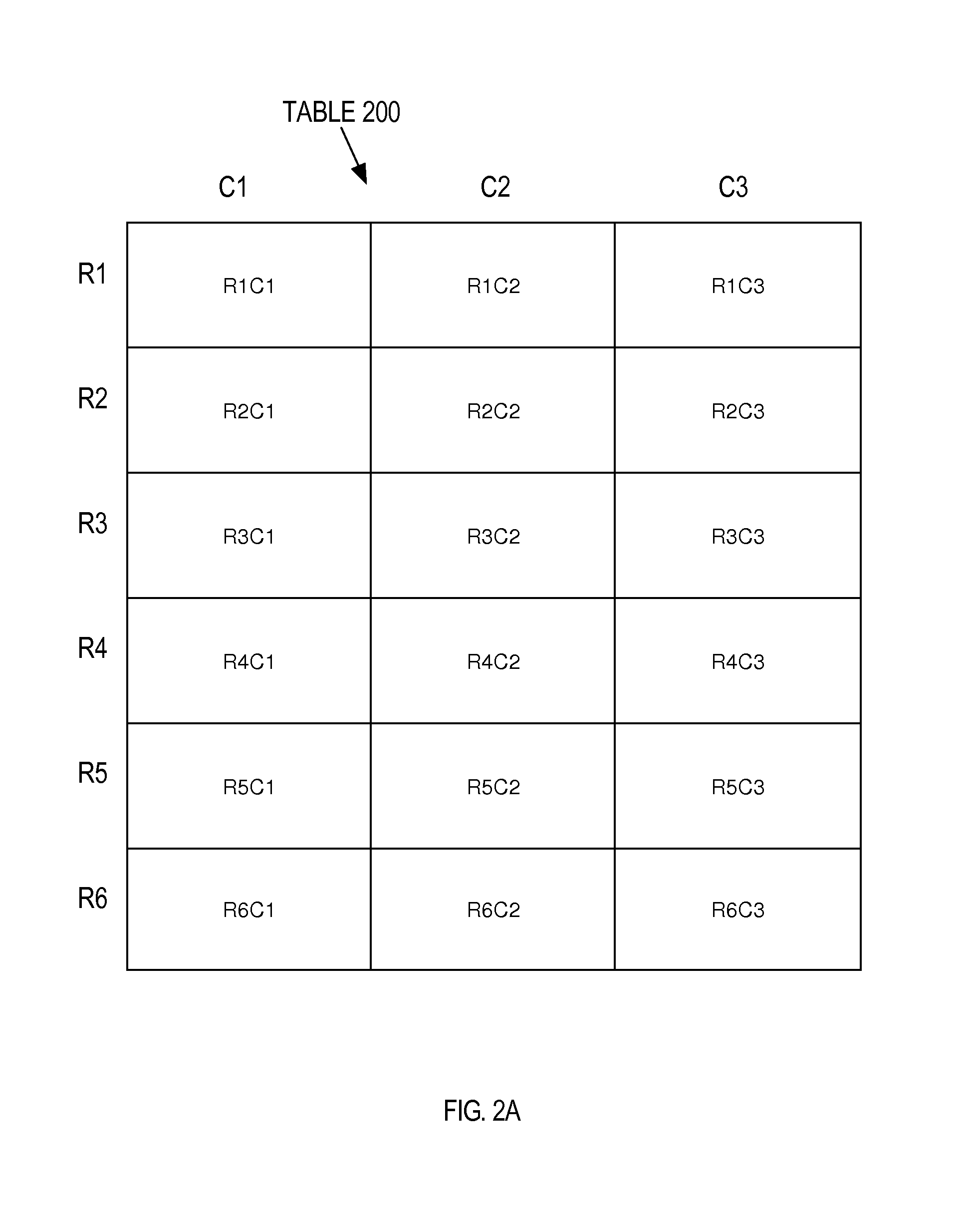

Optimizer statistics and cost model for in-memory tables

Active | US20160350371A1 | Memory architecture accessing/allocation; Digital data information retrieval; Execution plan; Image compression

Techniques are provided for determining costs for alternative execution plans for a query, where at least a portion of the data items required by the query are in in-memory compression units within volatile memory. The techniques involve maintaining in-memory statistics, such as statistics that indicate what fraction of a table is currently present in in-memory compression units, and the cost of decompressing in-memory compression units. Those statistics are used to determine, for example, the cost of a table scan that retrieves some or all of the necessary data items from the in-memory compression units.

Owner:ORACLE INT CORP
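The way such statistics feed a cost estimate can be illustrated with a simple blended formula: rows present in in-memory compression units pay a decompression cost, and the rest pay a disk or buffer-cache cost. This is a simplified, hypothetical cost model for illustration only; `InMemoryStats`, `table_scan_cost`, and `choose_plan` are not Oracle's optimizer API.

```python
from dataclasses import dataclass


@dataclass
class InMemoryStats:
    table_rows: int
    imcu_fraction: float            # share of the table present in IMCUs (0..1)
    decompress_cost_per_row: float  # cost of decompressing an in-memory row
    disk_cost_per_row: float        # cost for rows that must come from disk/buffers


def table_scan_cost(stats: InMemoryStats) -> float:
    """Blend the in-memory and on-disk portions of a full table scan."""
    in_mem_rows = stats.table_rows * stats.imcu_fraction
    disk_rows = stats.table_rows - in_mem_rows
    return (in_mem_rows * stats.decompress_cost_per_row
            + disk_rows * stats.disk_cost_per_row)


def choose_plan(scan_cost: float, index_cost: float) -> str:
    return "table scan" if scan_cost <= index_cost else "index access"
```

For 1,000 rows with 75% in memory, a decompression cost of 0.01 and a disk cost of 1.0 per row, the scan costs 7.5 + 250 = 257.5 units, so it would beat an index plan estimated at 300.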

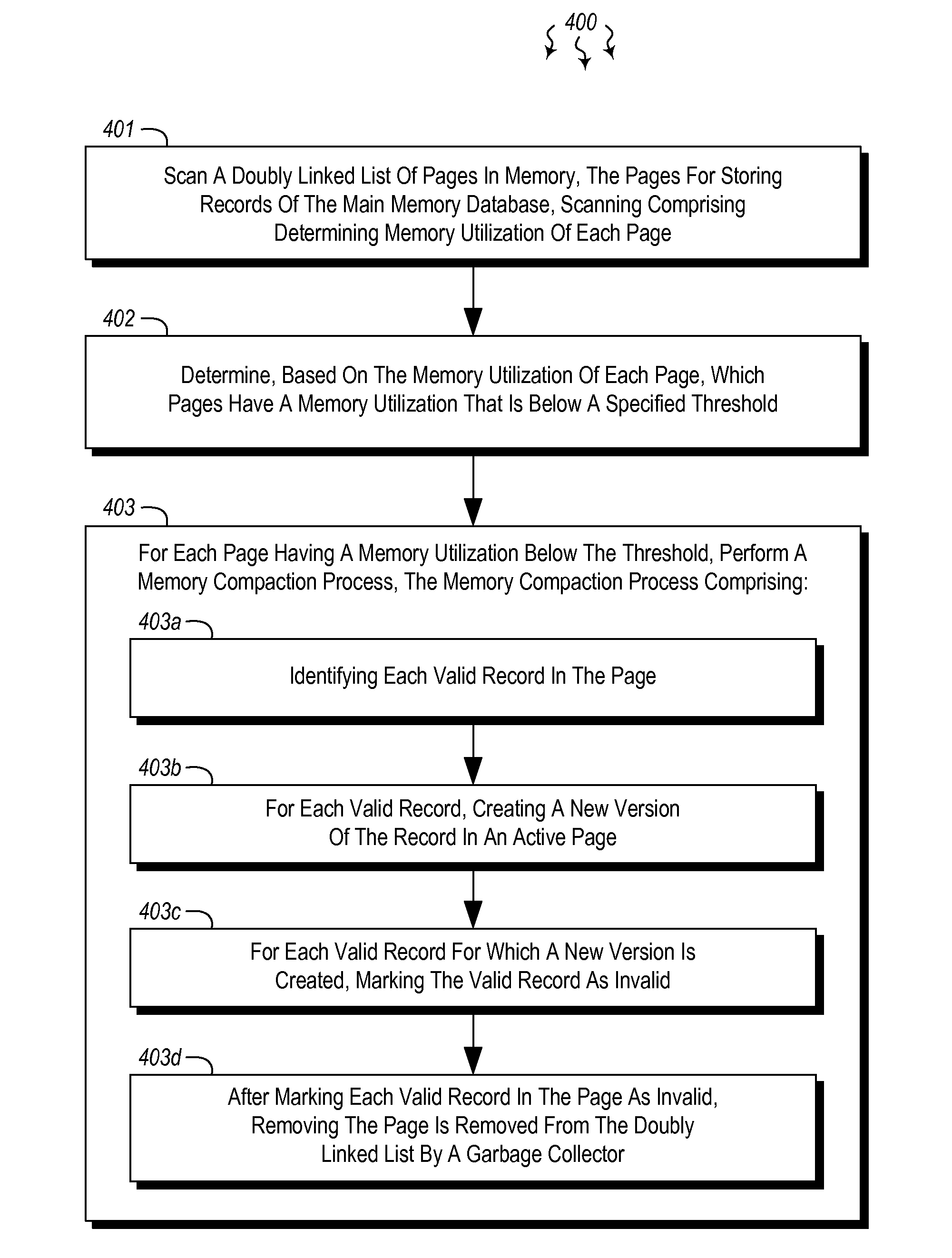

Memory compaction mechanism for main memory databases

Inactive | US20130346378A1 | Memory architecture accessing/allocation; Digital data processing details; In-memory database; Data content

The present invention extends to methods, systems, and computer program products for performing memory compaction in a main memory database. The main memory database stores records within pages, which are organized in doubly linked lists within partition heaps. The memory compaction process uses quasi-updates to move records from a page to be emptied to an active page in a partition heap. The quasi-updates create a new version of the record in the active page, the new version having the same data contents as the old version of the record. The creation of the new version can be performed using a transaction that employs wait-for dependencies to allow the old version of the record to be read while the transaction is creating the new version, thereby minimizing the effect of the memory compaction process on other transactions in the main memory database.

Owner:MICROSOFT TECH LICENSING LLC
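The quasi-update idea relies on multi-version visibility: the copy gets a new begin timestamp, the old version gets a matching end timestamp, and readers with older snapshots keep seeing the old copy. This is a minimal sketch with hypothetical `Heap`, `Page`, and `Version` classes; it does not model the wait-for dependencies or doubly linked page lists from the abstract.

```python
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

INF = float("inf")


@dataclass
class Version:
    key: str
    data: str
    begin: float
    end: float = INF  # visible to readers with begin <= ts < end


@dataclass
class Page:
    versions: List[Version] = field(default_factory=list)


class Heap:
    def __init__(self) -> None:
        self.clock = itertools.count(1)  # monotonically increasing timestamps
        self.pages: List[Page] = []

    def new_page(self) -> Page:
        page = Page()
        self.pages.append(page)
        return page

    def insert(self, page: Page, key: str, data: str) -> None:
        page.versions.append(Version(key, data, begin=next(self.clock)))

    def read(self, key: str, ts: float) -> Optional[str]:
        for page in self.pages:
            for v in page.versions:
                if v.key == key and v.begin <= ts < v.end:
                    return v.data
        return None

    def compact(self, victim: Page, active: Page) -> None:
        """Quasi-update each live record on the victim page: write an identical
        new version into the active page, then end the old version."""
        for old in victim.versions:
            if old.end != INF:
                continue  # already superseded; nothing to move
            commit_ts = next(self.clock)
            active.versions.append(Version(old.key, old.data, begin=commit_ts))
            old.end = commit_ts  # snapshots older than commit_ts still see `old`

    def reclaimable(self, victim: Page, oldest_active_ts: float) -> bool:
        """The victim page can be freed once no active reader can see it."""
        return all(v.end <= oldest_active_ts for v in victim.versions)
```

Because the content never changes, concurrent readers get the same answer from either version; only the physical location moves.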

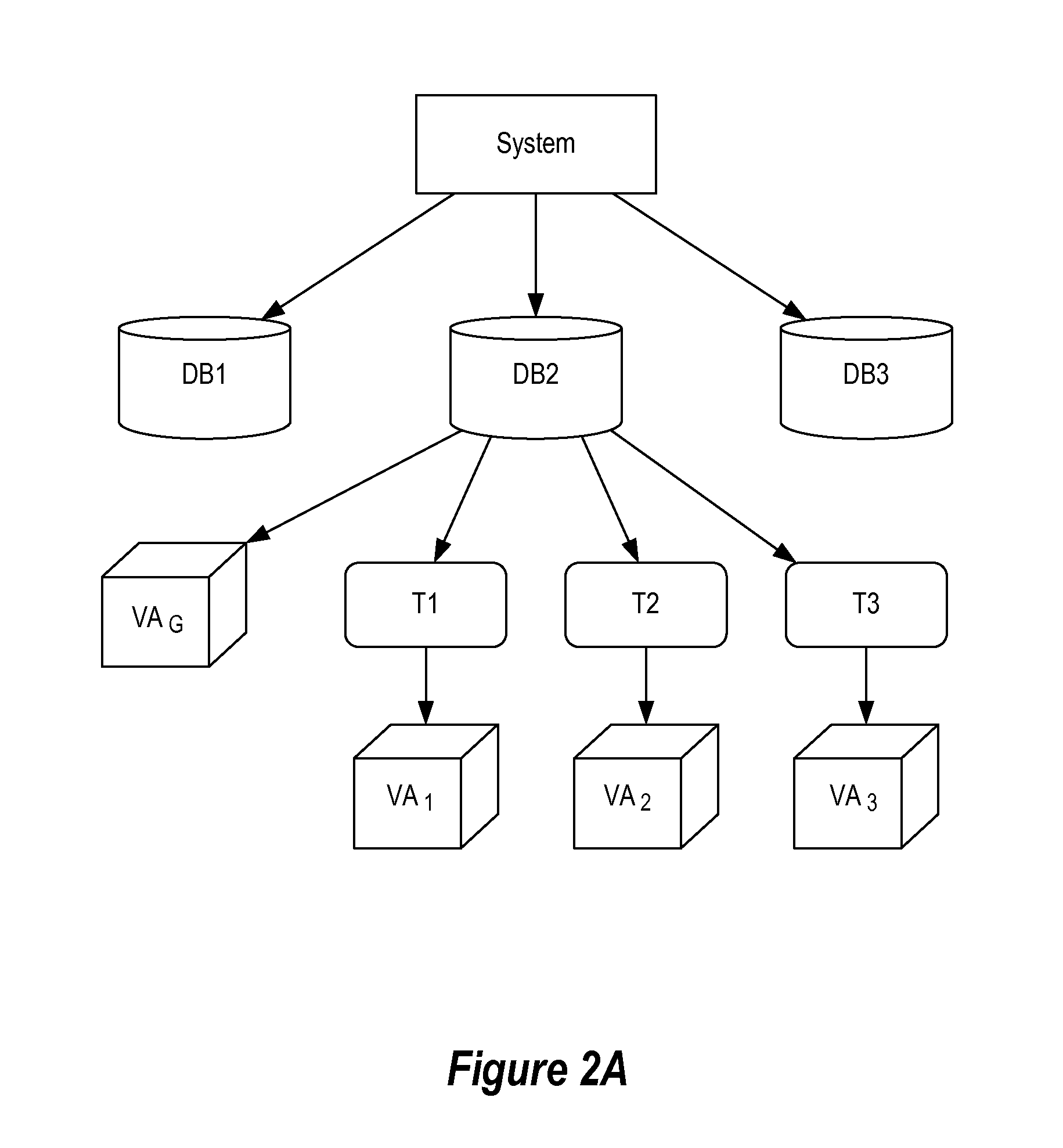

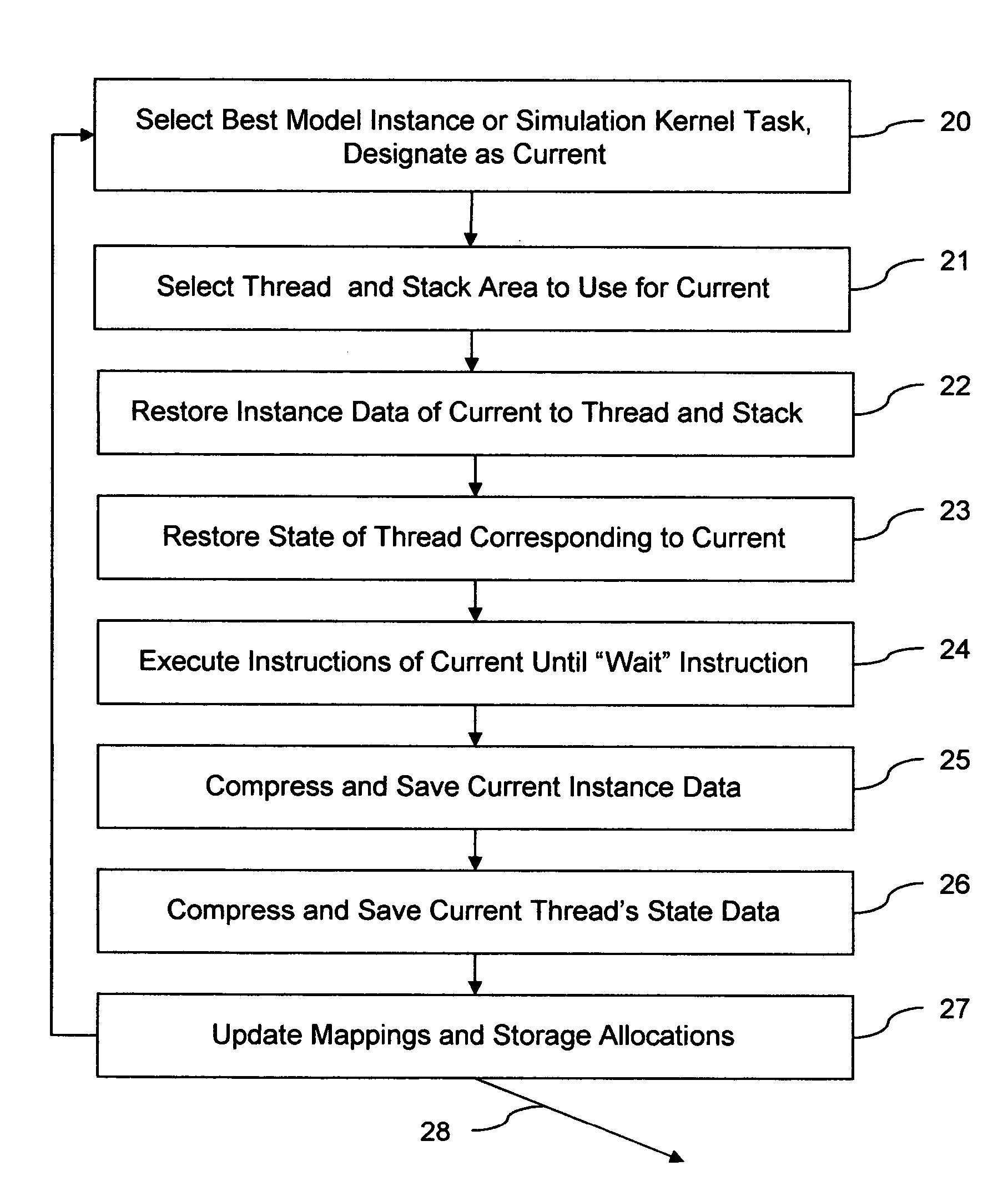

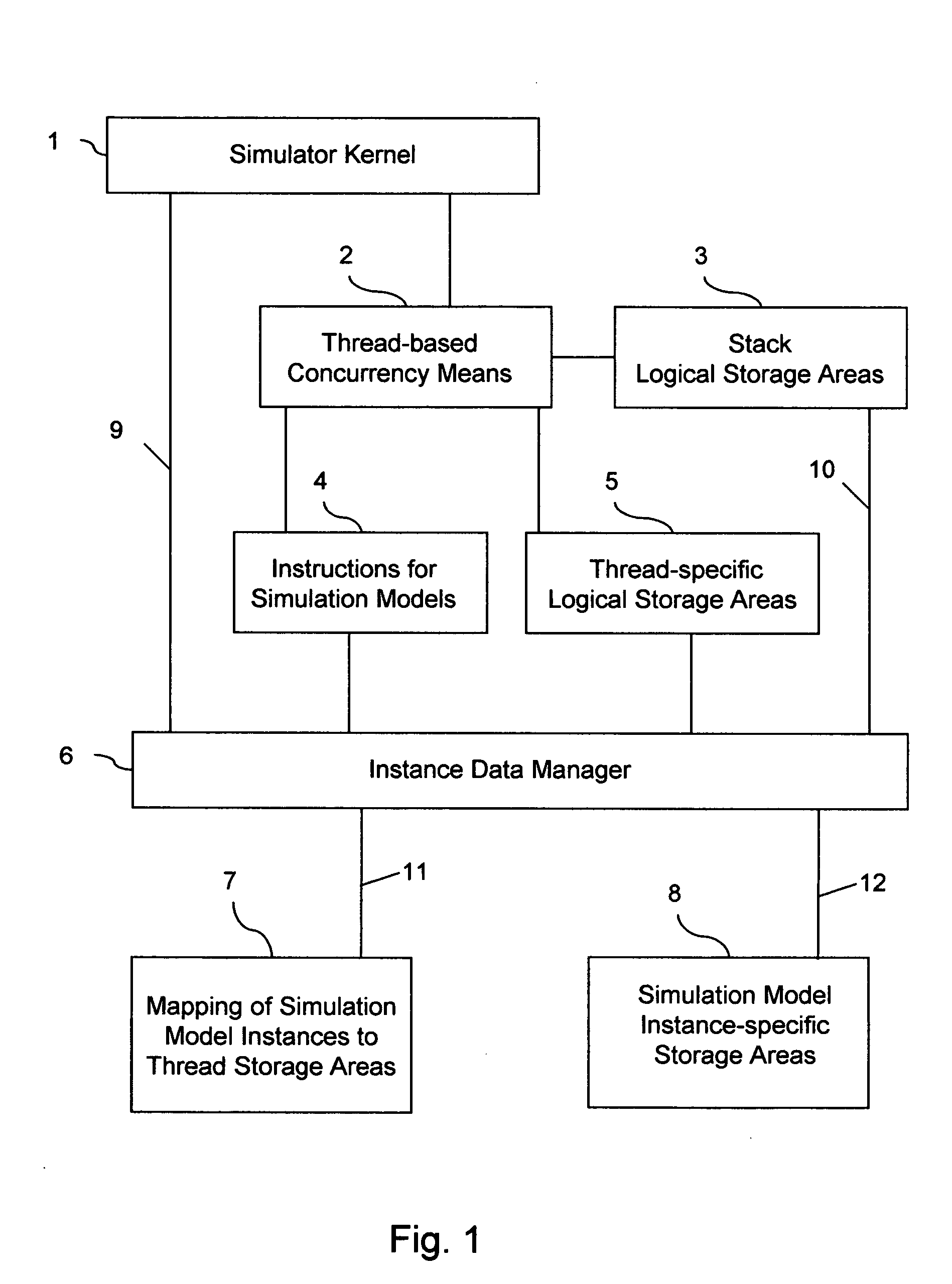

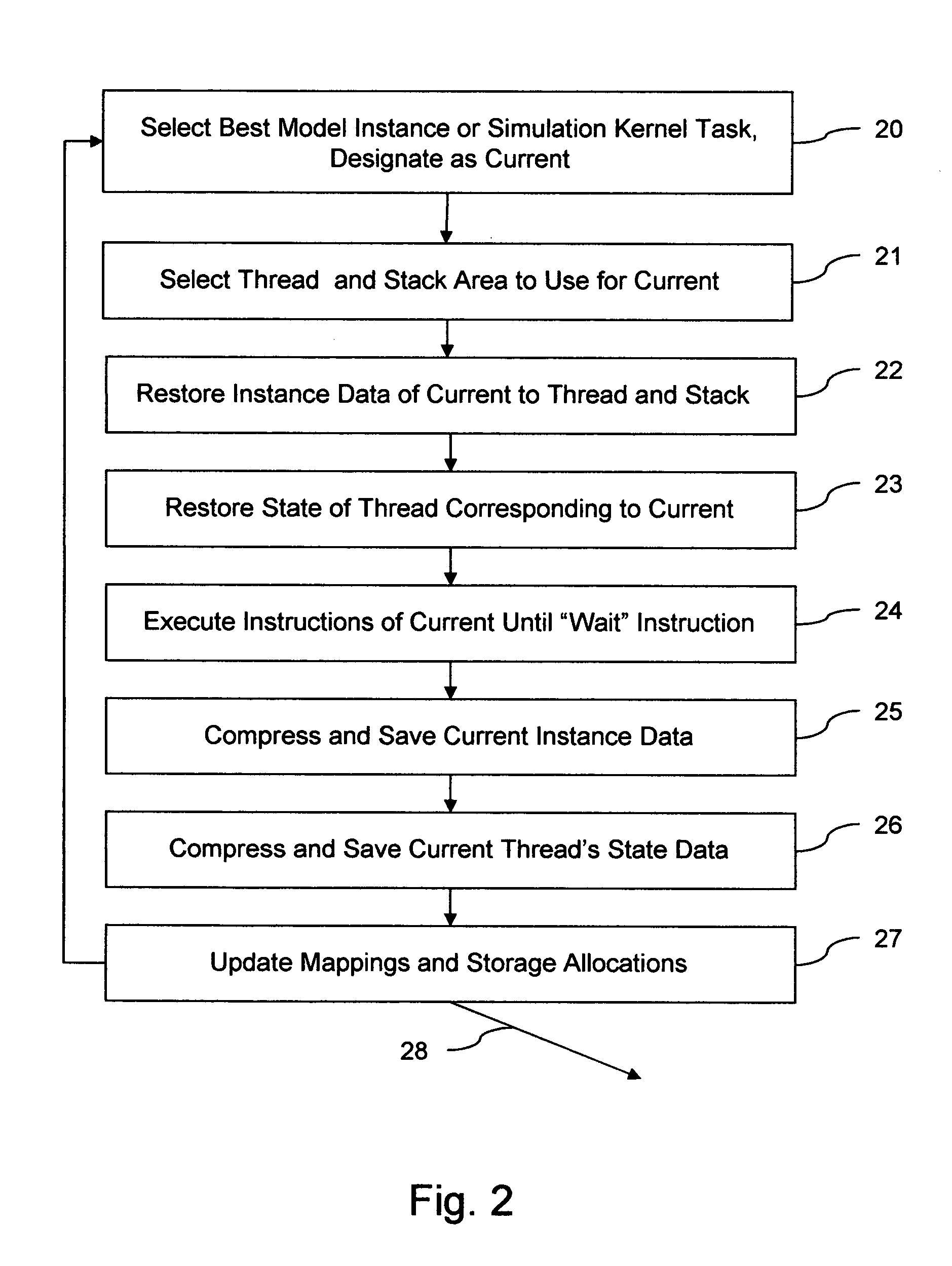

Method and machine for efficient simulation of digital hardware within a software development environment

Inactive | US20050066305A1 | Reducing CPU branch mis-prediction; Easy to use; CAD circuit design; Special data processing applications; Software development process; Parallel computing

The invention provides run-time support for efficient simulation of digital hardware in a software development environment, facilitating combined hardware/software co-simulation. The run-time support includes threads of execution that minimize stack storage requirements and reduce memory-related run-time processing requirements. The invention implements shared processor stack areas, including the sharing of a stack storage area among multiple threads, storing each thread's stack data in a designated area in compressed form while the thread is suspended. The thread's stack data is uncompressed and copied back onto a processor stack area when the thread is reactivated. A mapping of simulation model instances to stack storage is determined so as to minimize a cost function of memory and CPU run-time, to reduce the risk of stack overflow, and to reduce the impact of blocking system calls on simulation model execution. The invention also employs further memory compaction and a method for reducing CPU branch mis-prediction.

Owner:LISANKE ROBERT JOHN

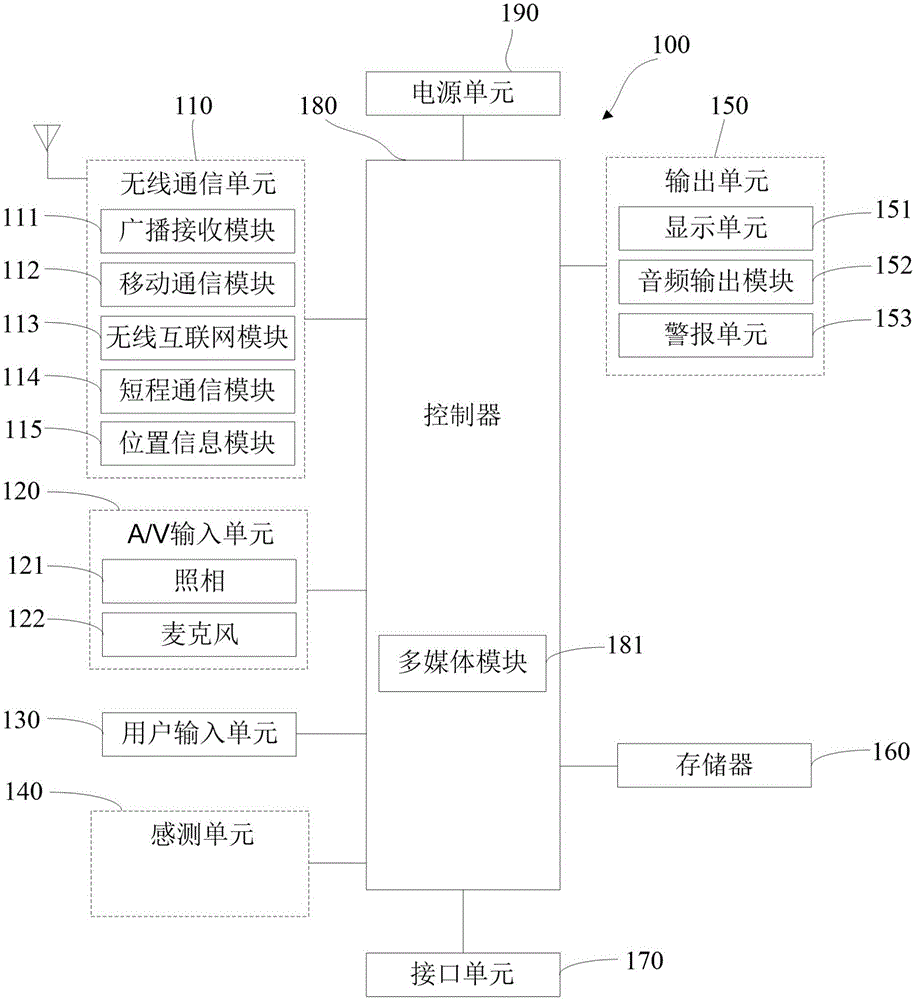

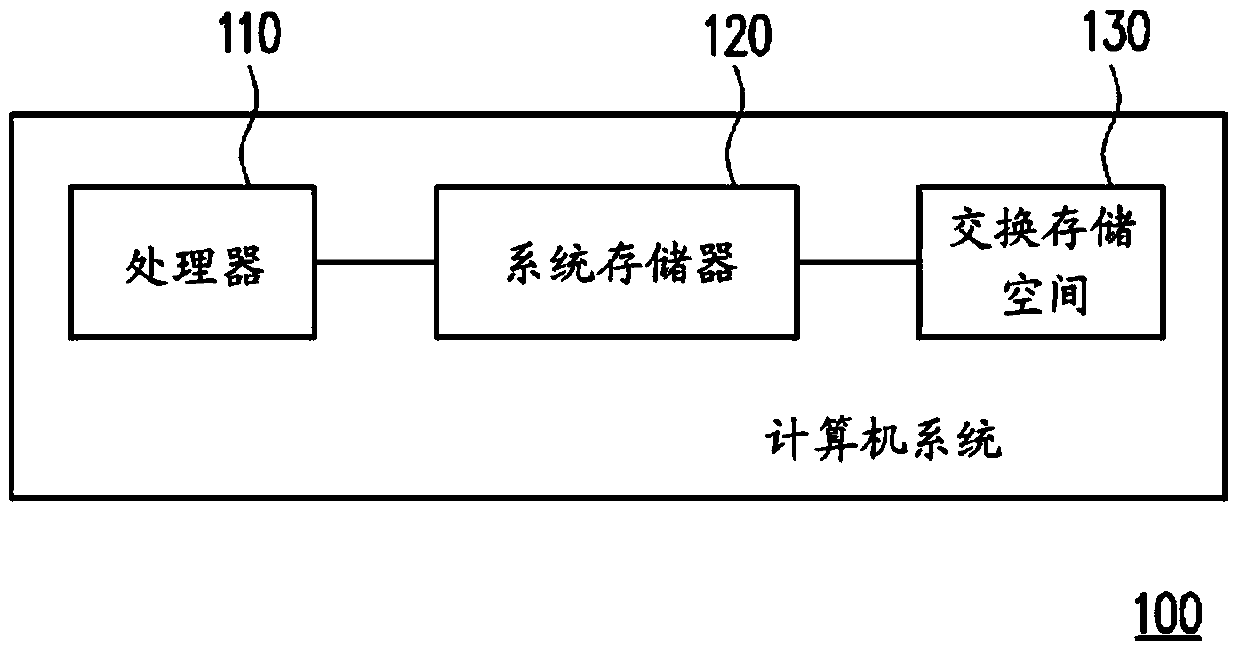

Memory compression method of electronic device and apparatus thereof

Active | US20150339059A1 | Reduce manufacturing cost; Improve data loading speed; Memory architecture accessing/allocation; Input/output to record carriers; Computer hardware; Term memory

Disclosed are a memory compression method of an electronic device and an apparatus thereof. The method for compressing memory in an electronic device may include: detecting a request for executing a first application; determining whether memory compression is required for the execution of the first application; when memory compression is required, compressing the memory corresponding to an application running in the background of the electronic device; and executing the first application.

Owner:SAMSUNG ELECTRONICS CO LTD
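The launch flow above can be sketched as "compress background apps only if the new app would not otherwise fit". Assumptions, all hypothetical: memory is tracked in KiB, compression shrinks an app to about one third of its size, and the largest background apps are compressed first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class App:
    name: str
    resident_kb: int
    compressed: bool = False

# Assumption for illustration: compression shrinks an app's memory to ~1/3.
COMPRESSION_RATIO = 3


def launch(app_kb: int, free_kb: int, background: List[App]) -> int:
    """Compress background apps only when the requested app would not fit.
    Returns the free memory left after launching the foreground app."""
    if free_kb < app_kb:  # is memory compression required?
        for bg in sorted(background, key=lambda a: -a.resident_kb):
            if free_kb >= app_kb:
                break
            if bg.compressed:
                continue
            shrunk = bg.resident_kb // COMPRESSION_RATIO
            free_kb += bg.resident_kb - shrunk  # memory reclaimed by compression
            bg.resident_kb, bg.compressed = shrunk, True
    if free_kb < app_kb:
        raise MemoryError("not enough memory even after compression")
    return free_kb - app_kb
```

Compressing only on demand avoids paying compression cost when there is already enough free memory for the requested application.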

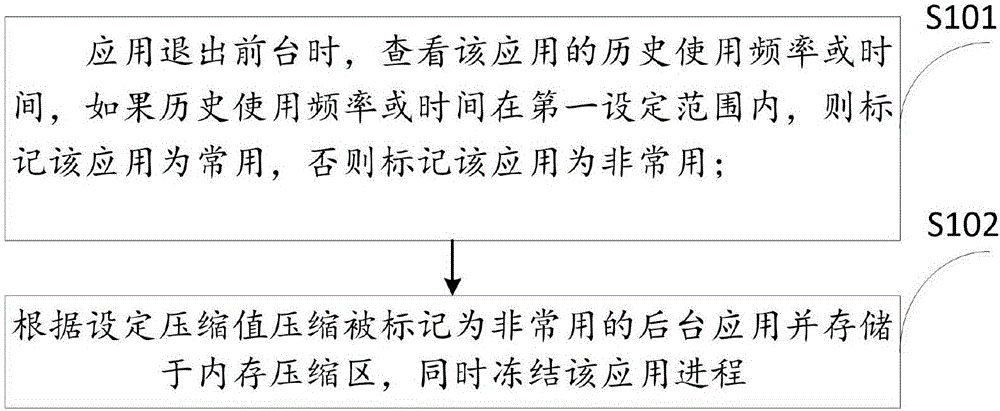

Terminal application storage processing method and device

Inactive | CN106843450A | Efficient use of; Reduce power consumption; Power management; Power supply for data processing; Computer terminal; Memory compaction

The invention discloses a terminal application storage processing method and device. The method comprises: when an application exits the foreground, checking the application's historical usage frequency or time; when the historical usage frequency or time is within a first set range, marking the application as a frequently used application, and otherwise as a non-frequently used application; and, according to set compression values, compressing and storing the non-frequently used applications into a memory compression zone and freezing their processes. The method and device can release the memory occupied by non-frequently used applications while freezing them, increasing available memory space and reducing the power those applications consume in the background. When a non-frequently used application is recalled, it is decompressed and unfrozen so that it starts quickly.

Owner:NUBIA TECHNOLOGY CO LTD
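The classify, compress, freeze, and recall cycle above can be sketched as follows. Assumptions: `AppStore` and `AppState` are hypothetical, "launches in the last week" stands in for the historical frequency, the `FREQUENT_MIN_LAUNCHES` threshold stands in for the "first set range", and the zlib level plays the role of the set compression value.

```python
import zlib
from dataclasses import dataclass
from typing import Dict


@dataclass
class AppState:
    name: str
    memory: bytes
    launches_last_week: int
    frozen: bool = False

# Hypothetical "first set range": apps launched at least this often are frequent.
FREQUENT_MIN_LAUNCHES = 5


class AppStore:
    def __init__(self, level: int = 6) -> None:
        self.level = level                # the "set compression value"
        self.zone: Dict[str, bytes] = {}  # memory compression zone

    def on_exit_foreground(self, app: AppState) -> str:
        if app.launches_last_week >= FREQUENT_MIN_LAUNCHES:
            return "frequent"  # leave it resident for quick switching
        self.zone[app.name] = zlib.compress(app.memory, self.level)
        app.memory, app.frozen = b"", True  # release its memory and freeze it
        return "infrequent"

    def on_recall(self, app: AppState) -> None:
        if app.name in self.zone:
            app.memory = zlib.decompress(self.zone.pop(app.name))
            app.frozen = False  # unfreeze for a fast restart
```

Freezing alongside compression matters: a compressed app that kept running in the background would keep touching its pages and undo the savings.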

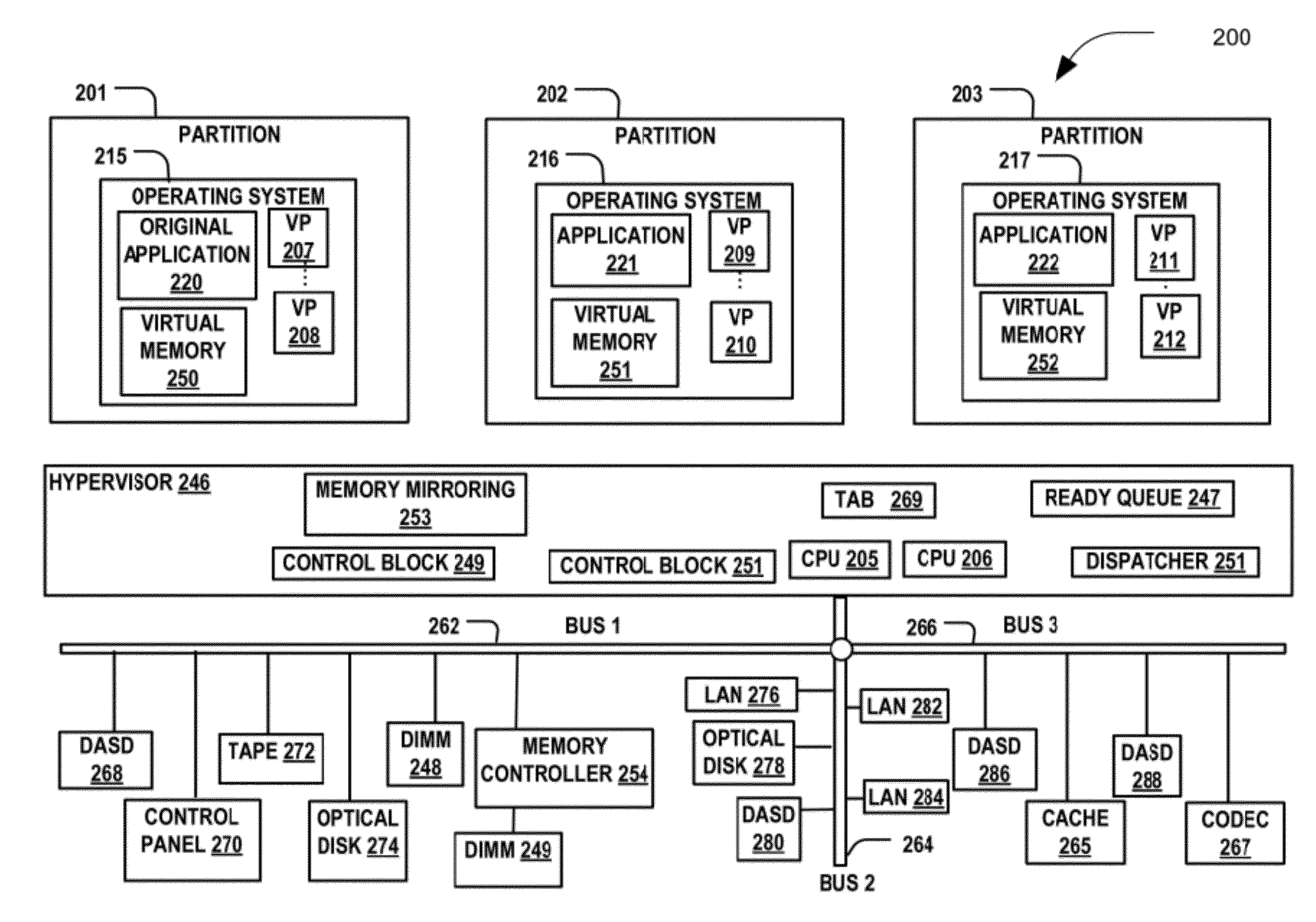

Memory mirroring with memory compression

Active | US20120124415A1 | Increase volume; Low cost; Redundant hardware error correction; Data store; Memory compaction

Owner:LENOVO GLOBAL TECH INT LTD

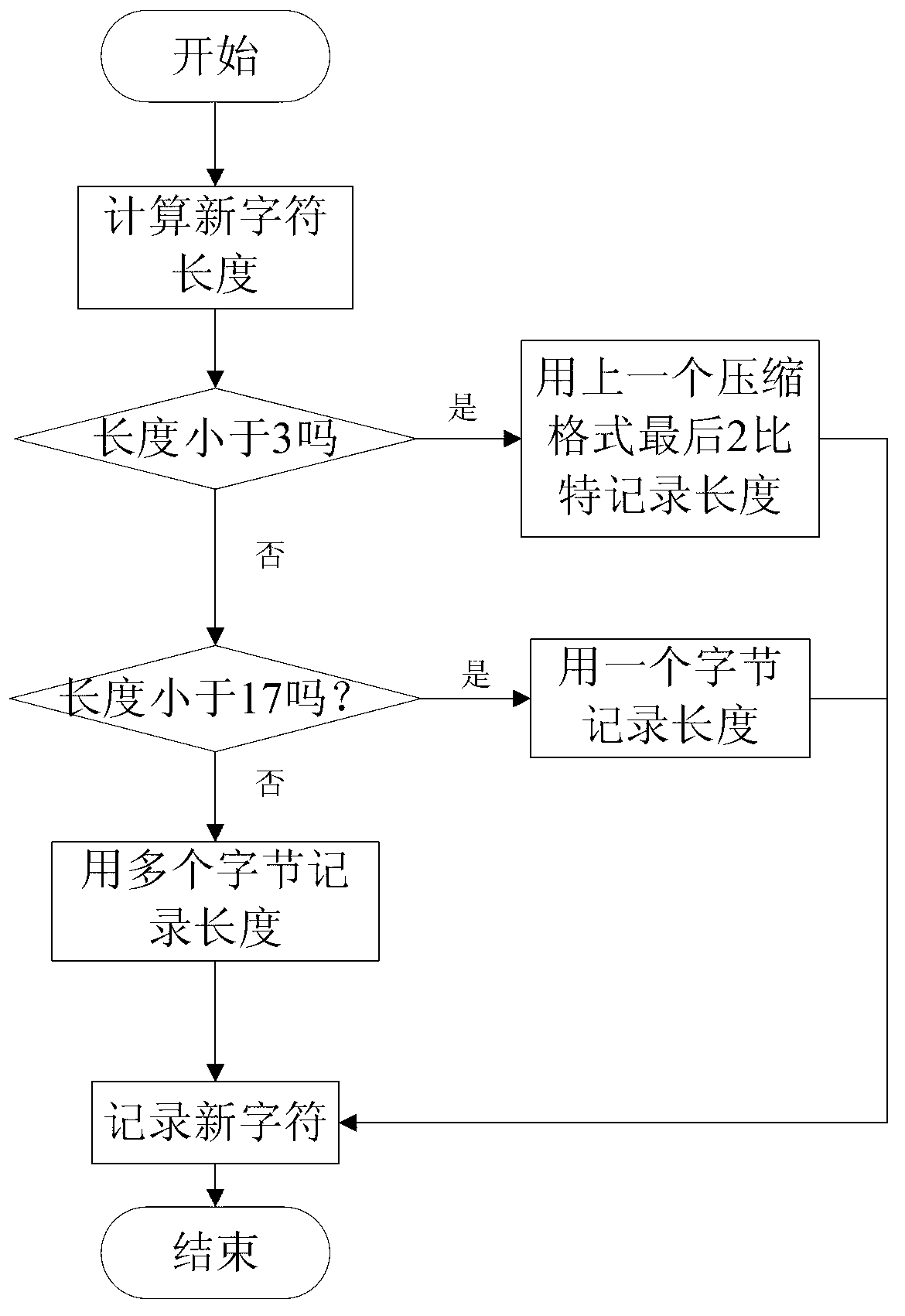

Mobile device memory compression method based on dictionary encoding and run-length encoding

Inactive | CN103258030A | Improve operational efficiency; Increase the compression ratio; Code conversion; Special data processing applications; Image compression; Compression method

The invention discloses a mobile device memory compression method based on dictionary encoding and run-length encoding. It mainly addresses the low compression ratio that existing dictionary encoding and run-length encoding methods achieve on memory data. The method mainly comprises the following steps: (1) reading in the memory data and its length; (2) judging whether the memory data are compressible: if not, directly recording the data and its length; if so, compressing runs of consecutive identical characters with a run-length encoding format; (3) compressing the remaining ordinary memory data with a dictionary compression format; (4) judging whether the end of the memory data has been reached: if so, stopping; otherwise, continuing to read in memory data. Compared with other existing storage compression methods, this method achieves a higher compression ratio, so more space can be freed in the internal storage of a mobile device and the device's operating efficiency can be improved. It can be used in mobile devices that need memory compression.

Owner:XIDIAN UNIV +1
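Steps (2) and (3) above combine run-length encoding for runs of identical bytes with dictionary encoding for everything else. This is a minimal sketch, assuming an LZ77-style sliding window as the "dictionary format" and hypothetical `MIN_RUN`, `MIN_MATCH`, and `WINDOW` parameters; it emits a token list rather than a packed bit format and omits the raw fallback for incompressible data.

```python
from typing import List, Tuple, Union

Token = Union[Tuple[str, int, int], Tuple[str, bytes]]
MIN_RUN = 4     # assumption: shortest run worth run-length encoding
MIN_MATCH = 4   # assumption: shortest dictionary match worth a back-reference
WINDOW = 4096   # assumption: sliding-window (dictionary) size in bytes


def encode(data: bytes) -> List[Token]:
    out: List[Token] = []
    i = 0
    while i < len(data):
        # Run-length step: count identical bytes starting at i.
        run = 1
        while i + run < len(data) and data[i + run] == data[i]:
            run += 1
        if run >= MIN_RUN:
            out.append(("run", data[i], run))
            i += run
            continue
        # Dictionary step: longest earlier (non-overlapping) match in the window.
        best_len, best_dist = 0, 0
        for j in range(max(0, i - WINDOW), i):
            k = 0
            while i + k < len(data) and j + k < i and data[j + k] == data[i + k]:
                k += 1
            if k > best_len:
                best_len, best_dist = k, i - j
        if best_len >= MIN_MATCH:
            out.append(("ref", best_dist, best_len))
            i += best_len
        else:
            out.append(("lit", data[i:i + 1]))  # no useful match: literal byte
            i += 1
    return out


def decode(tokens: List[Token]) -> bytes:
    buf = bytearray()
    for tok in tokens:
        if tok[0] == "run":
            buf += bytes([tok[1]]) * tok[2]
        elif tok[0] == "ref":
            start = len(buf) - tok[1]
            buf += buf[start:start + tok[2]]
        else:
            buf += tok[1]
    return bytes(buf)
```

Zero-filled and repeated-byte regions, which are common in memory images, collapse into single run tokens, while repeated structures elsewhere become back-references.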

Memory compression policies

Active | US8484405B2 | Low utilization; Induce guest level swapping; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Memory compaction

Techniques are disclosed for managing memory within a virtualized system that includes a memory compression cache. Generally, the virtualized system may include a hypervisor configured to use a compression cache to temporarily store memory pages that have been compressed to conserve memory space. A “first-in touch-out” (FITO) list may be used to manage the size of the compression cache by monitoring the compressed memory pages in the compression cache. Each element in the FITO list corresponds to a compressed page in the compression cache. Each element in the FITO list records the time at which the corresponding compressed page was stored in the compression cache (i.e., its age). The size of the compression cache may be adjusted based on the ages of the pages in the compression cache.

Owner:VMWARE INC

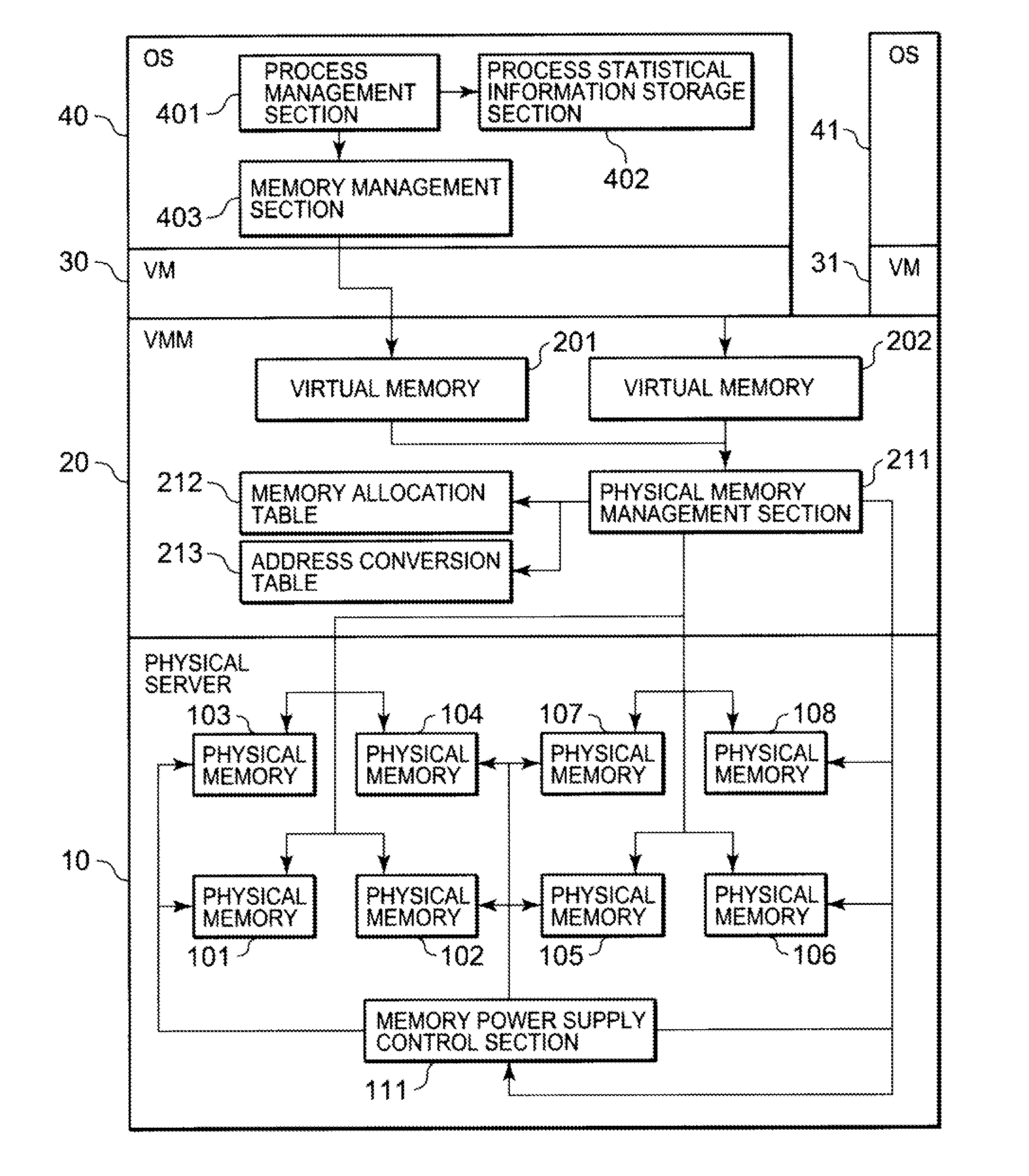

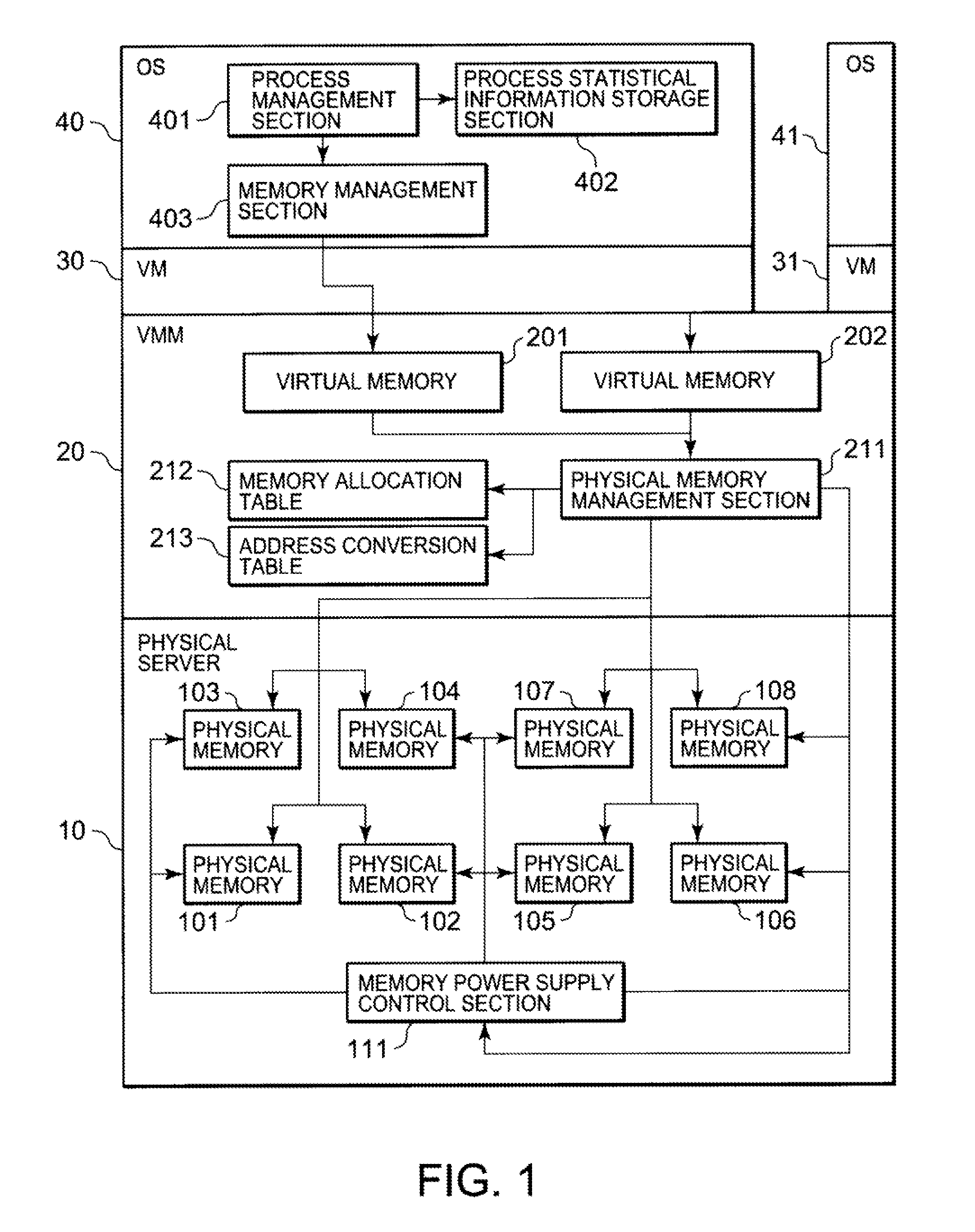

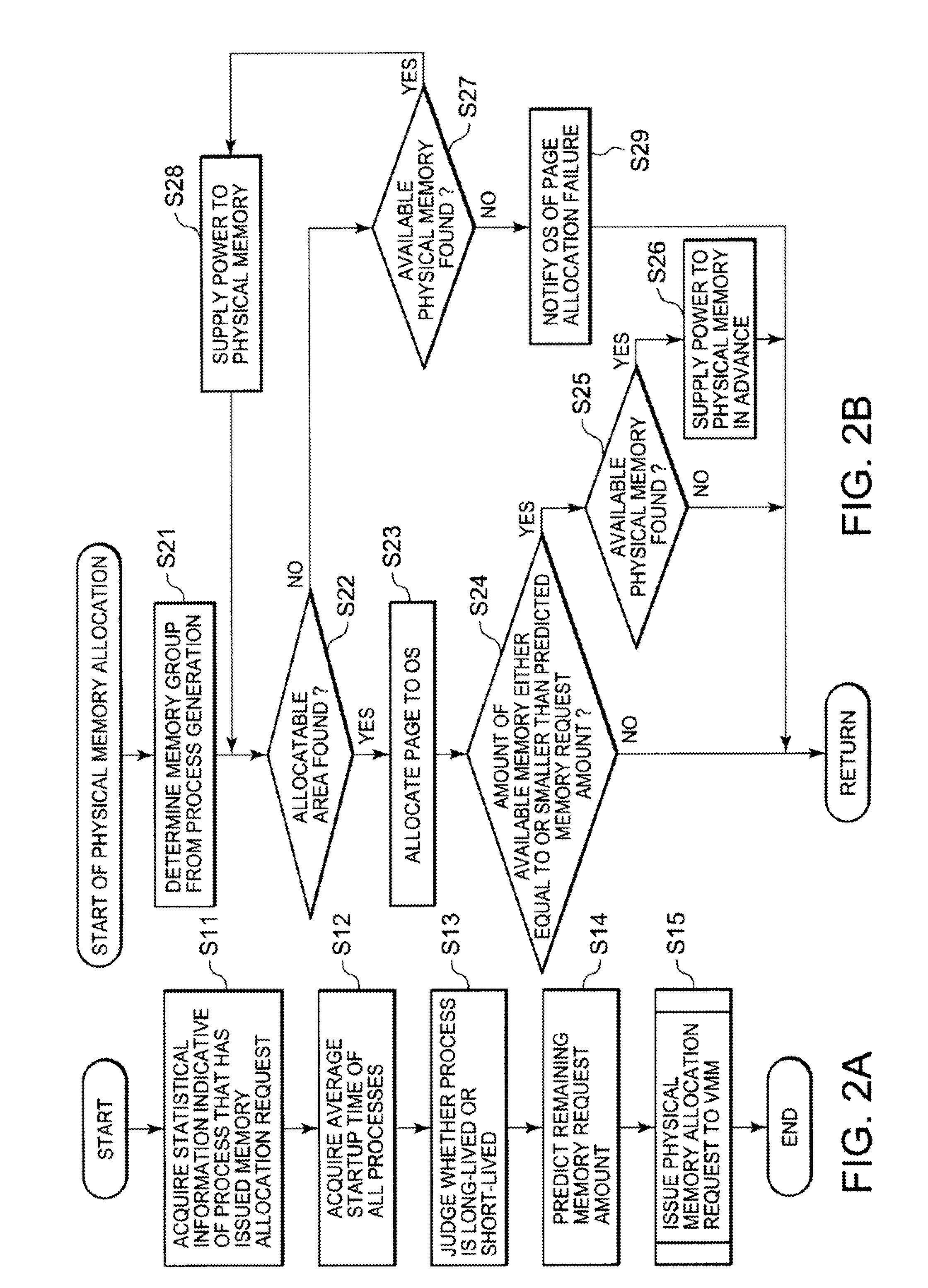

Memory power consumption reduction system, and method and program therefor

Inactive | US20100235669A1 | Reduce power consumption; Memory addressing/allocation/relocation; Power supply for data processing; Parallel computing; Execution control

Disclosed is a memory power consumption reduction system that includes a memory allocation section, which allocates an area of a plurality of physical memories included in a physical information processing device to a process of a virtual machine when the virtual machine is to be controlled with a VMM; a memory compaction section, which performs memory compaction when the physical memory area allocated to the process by the memory allocation section is deallocated upon termination of the process; and a power supply control section, which exercises control so as to shut off the power supply to a physical memory that is unused as a result of memory compaction by the memory compaction section.

Owner:NEC CORP

Prioritizing repopulation of in-memory compression units

Active | US20160085834A1 | Digital data processing details; Database distribution/replication; Database server; Data mining

To prioritize repopulation of in-memory compression units (IMCU), a database server compresses, into an IMCU, a plurality of data units from a database table. In response to changes to any of the plurality of data units within the database table, the database server performs the steps of: (a) invalidating corresponding data units in the IMCU; (b) incrementing an invalidity counter of the IMCU that reflects how many data units within the IMCU have been invalidated; (c) receiving a data request that targets one or more of the plurality of data units of the database table; (d) in response to receiving the data request, incrementing an access counter of the IMCU; and (e) determining a priority for repopulating the IMCU based, at least in part, on the invalidity counter and the access counter.

Owner:ORACLE INT CORP
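Steps (a) through (e) above map naturally onto two counters per IMCU. This is a minimal sketch: the `IMCU` class is hypothetical, and the scoring rule in step (e), the invalid fraction weighted by access count, is an assumption, since the abstract only says the priority is based at least in part on both counters.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set


@dataclass
class IMCU:
    name: str
    rows: Set[int]  # row ids compressed into this unit
    invalid: Set[int] = field(default_factory=set)
    invalidity_counter: int = 0
    access_counter: int = 0

    def on_row_changed(self, row: int) -> None:
        if row in self.rows and row not in self.invalid:
            self.invalid.add(row)         # (a) invalidate the stale copy
            self.invalidity_counter += 1  # (b) count invalidated rows

    def on_request(self, rows: Iterable[int]) -> None:
        if self.rows.intersection(rows):
            self.access_counter += 1      # (c)/(d) count requests hitting this unit


def repopulation_order(units: List[IMCU]) -> List[IMCU]:
    """(e) Assumed scoring: invalid fraction weighted by how often it is accessed."""
    def score(u: IMCU) -> float:
        return (u.invalidity_counter / len(u.rows)) * (1 + u.access_counter)
    return sorted(units, key=score, reverse=True)
```

Weighting by access count means a heavily invalidated but never-queried unit does not crowd out a moderately stale unit that queries keep hitting.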

Method and computer system for memory management on virtual machine system

Active | CN103729305A | Memory addressing/allocation/relocation; Software simulation/interpretation/emulation; Access time; Computerized system

A method and a computer system for memory management on a virtual machine system are provided. The memory management method includes the following steps. A least recently used (LRU) list is maintained by at least one processor according to a last access time, wherein the LRU list includes a plurality of memory pages. A first portion of the memory pages are stored in a virtual memory, a second portion of the memory pages are stored in a zram driver, and a third portion of the memory pages are stored in at least one swap disk. A space in the zram driver is set by the at least one processor. The space in the zram driver is adjusted by the processor according to a plurality of access probabilities of the memory pages in the zram driver, an overhead of a pseudo page fault, and an overhead of a true page fault.

Owner:IND TECH RES INST

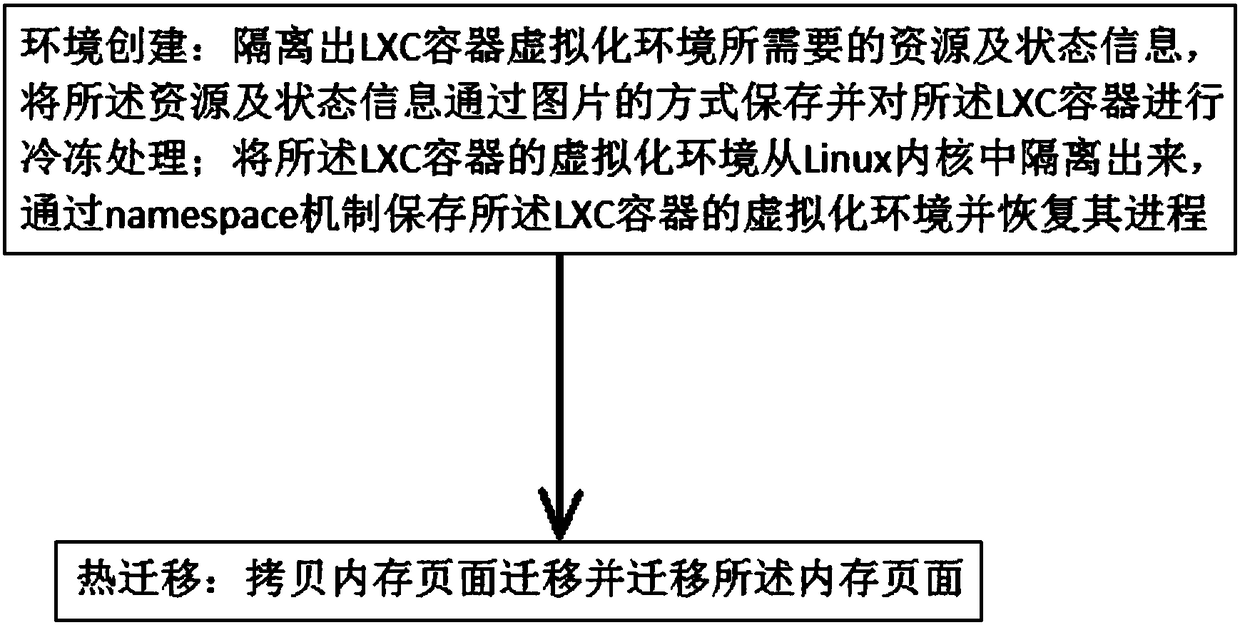

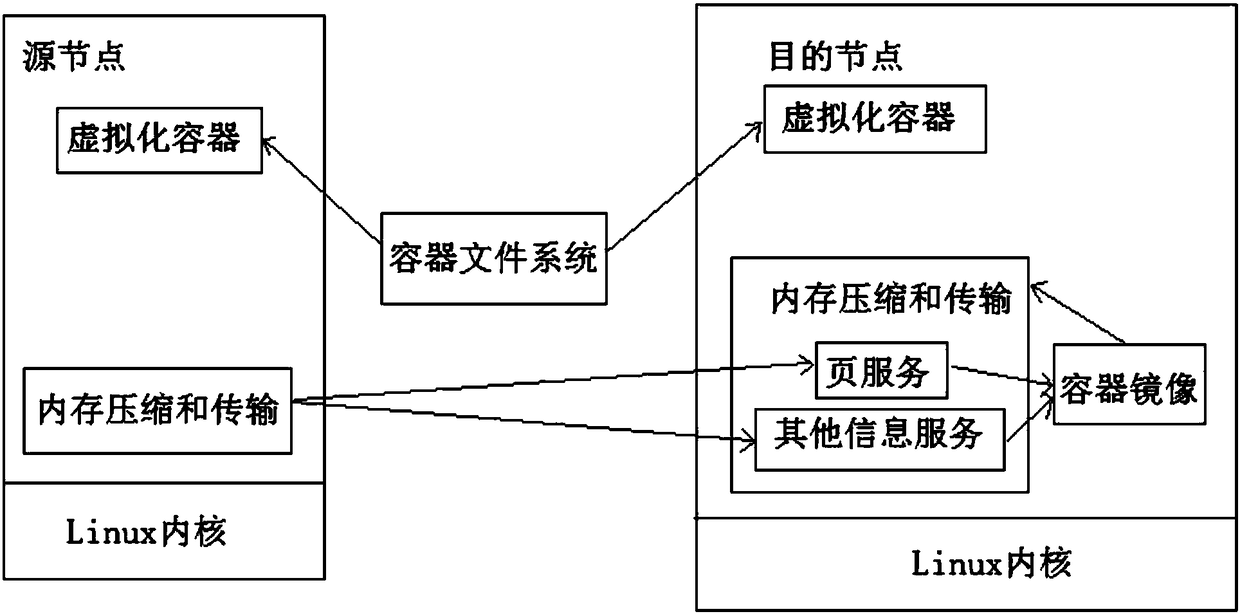

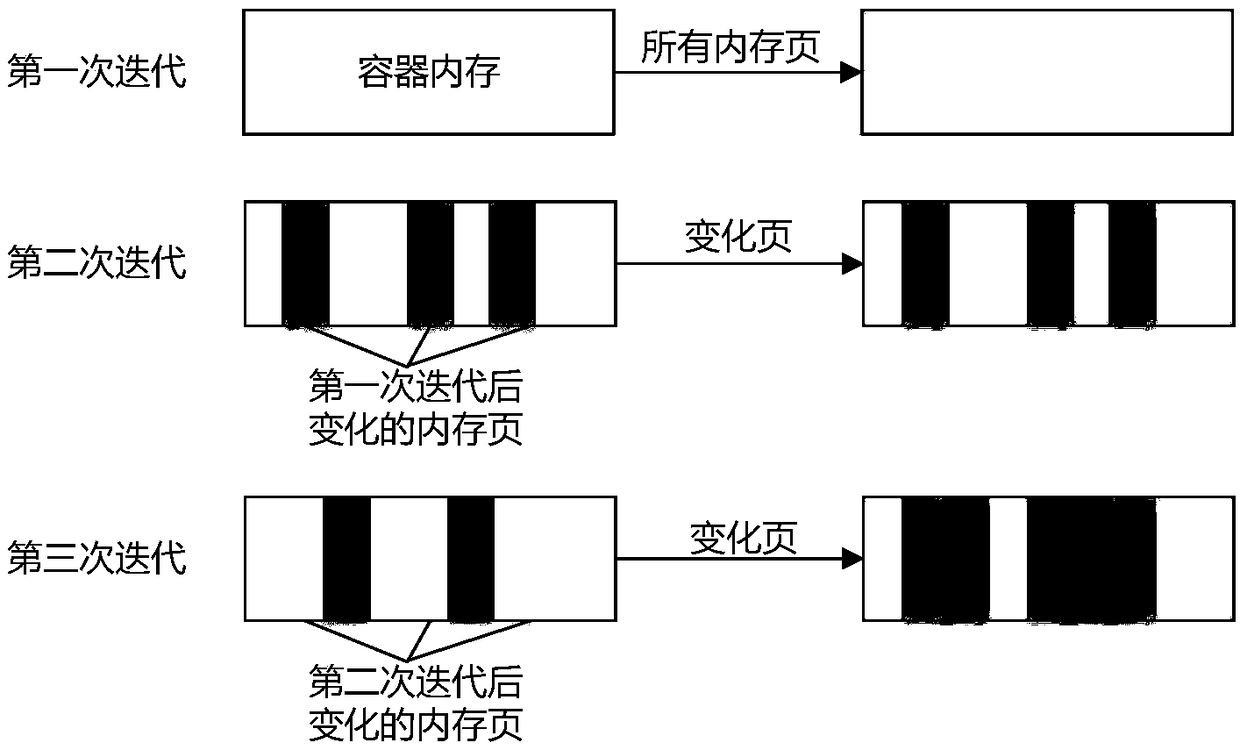

Stateful service container live migration method based on memory compaction transmission

Inactive | CN108279969A | Increase usage; Reduce transmission; Software simulation/interpretation/emulation; Virtualization; Linux kernel

The invention relates to a stateful service container live migration method based on memory compaction transmission. The method comprises the following steps: 1, environment creation, wherein the resources and status information needed by the LXC container virtualization environment are isolated and saved as an image, and the LXC container is frozen; the LXC container virtualization environment is isolated from the Linux kernel and saved through the namespace mechanism, and the process is restored; 2, live migration, wherein memory pages are copied and migrated. The method reduces redundant data transmission, improves live migration efficiency, simplifies the steps, and is convenient to operate.

Owner:中科边缘智慧信息科技(苏州)有限公司 (Zhongke Edge Intelligence Information Technology (Suzhou) Co., Ltd.)

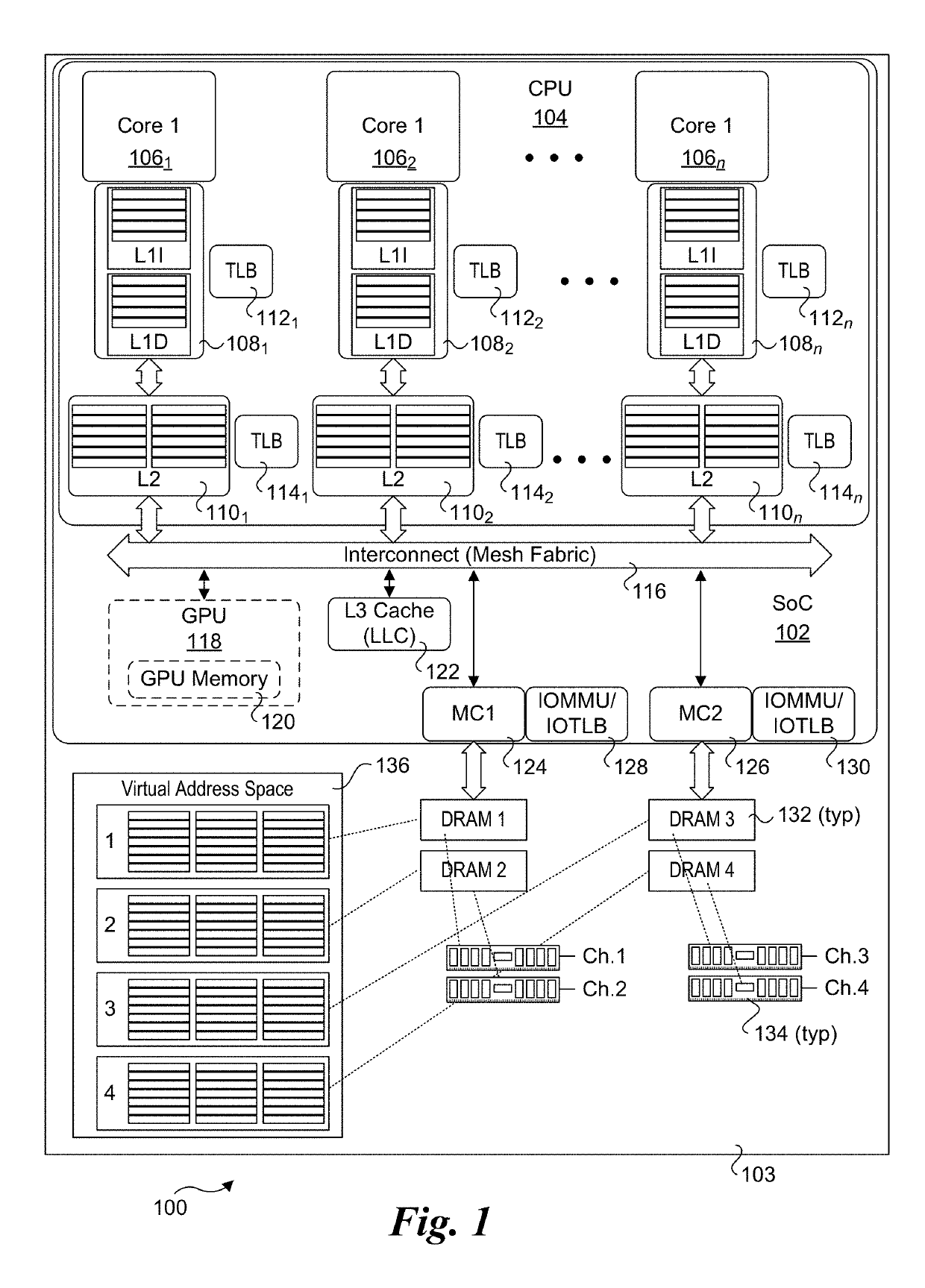

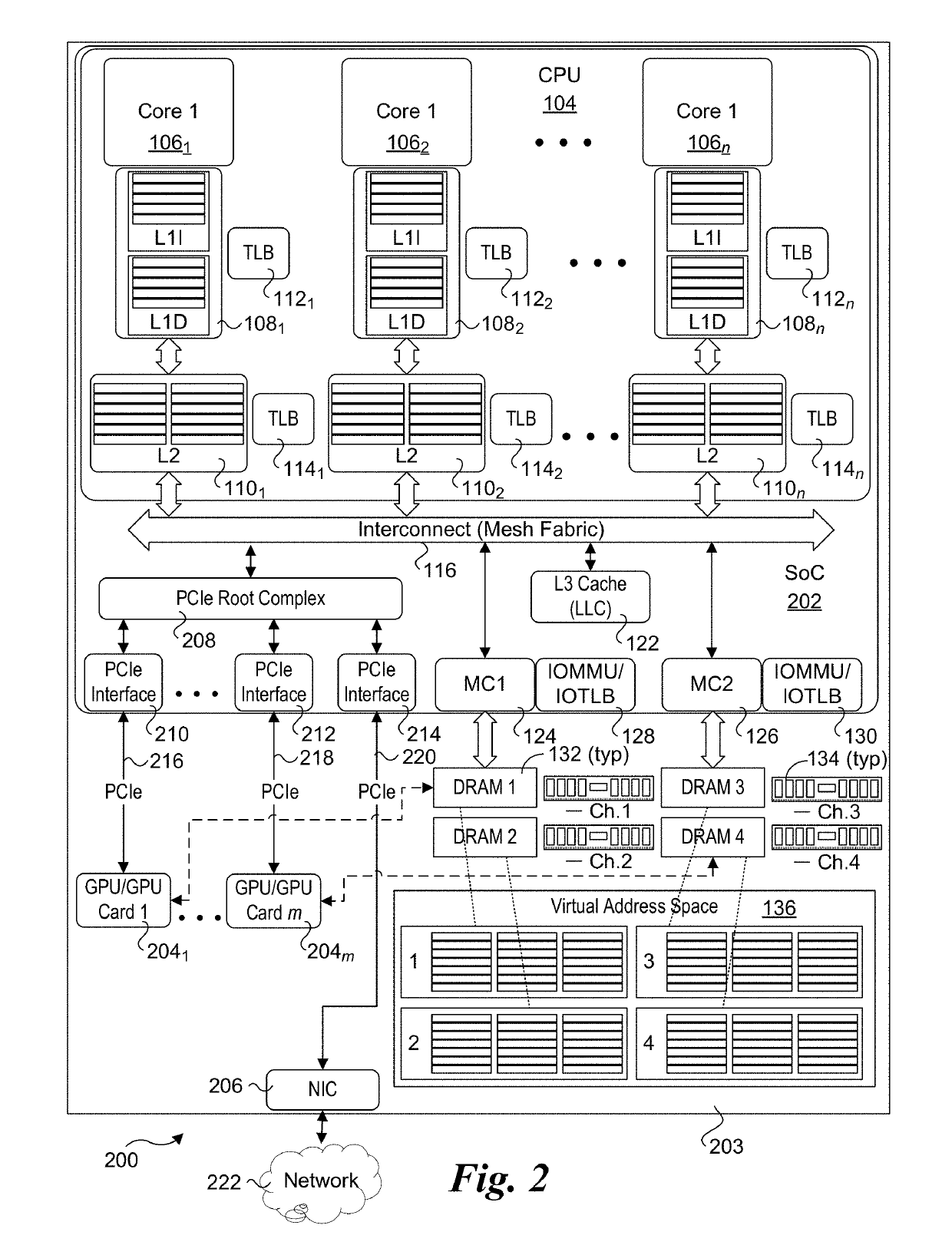

Scalable application-customized memory compression

Inactive | US20190243780A1 | Memory architecture accessing/allocation; Memory systems; Parallel computing; Image compression

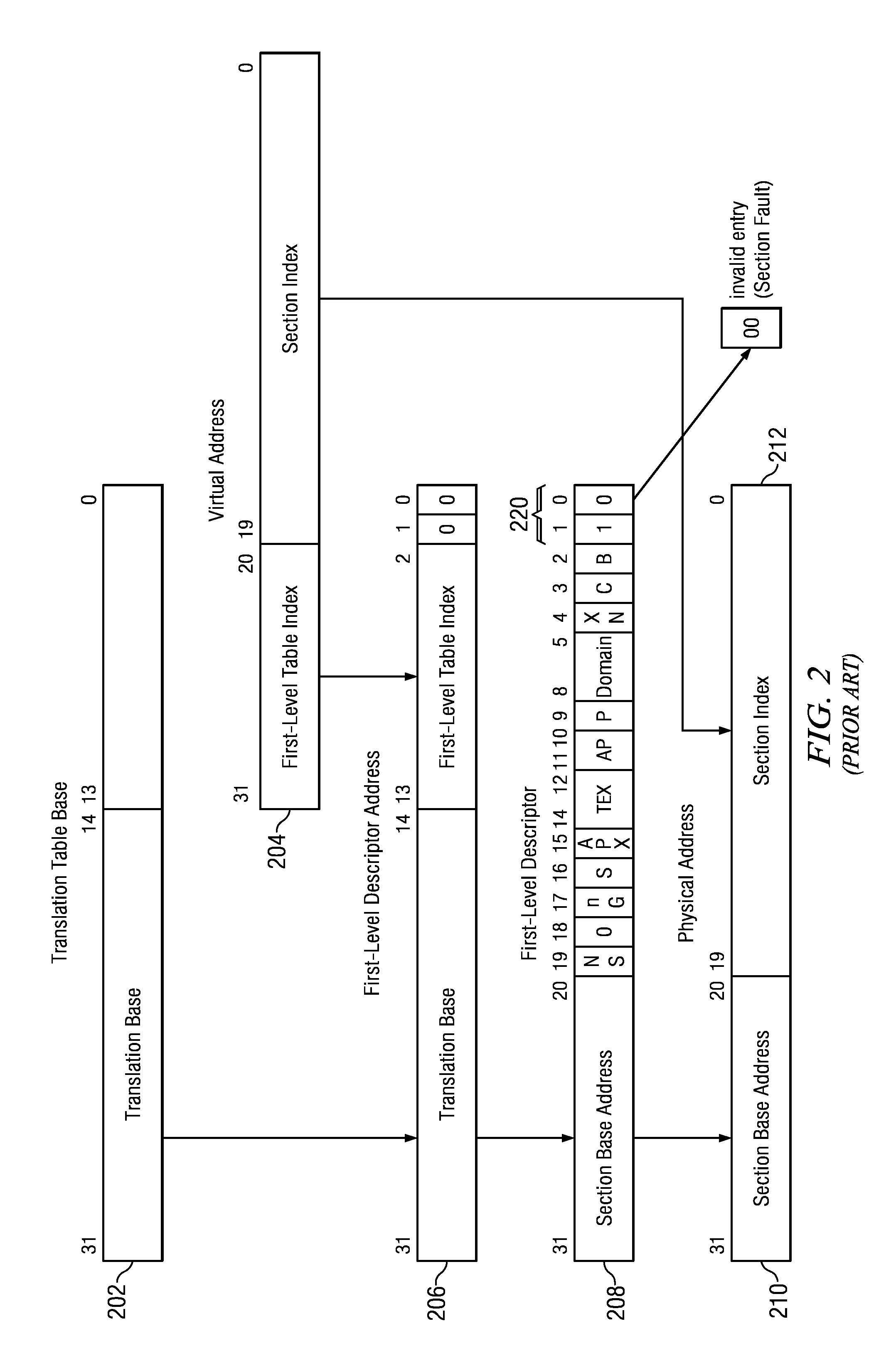

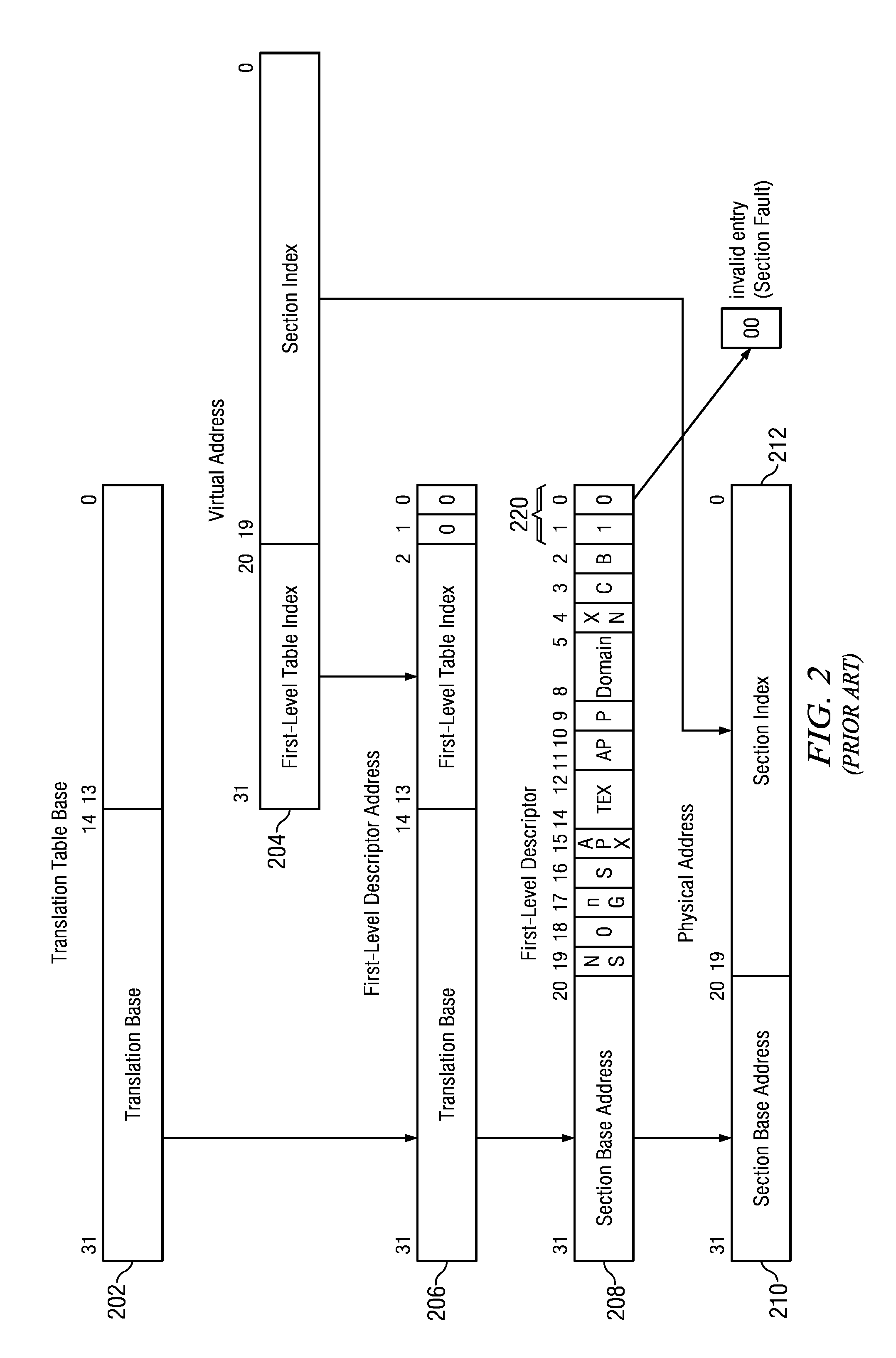

Methods and apparatus for scalable application-customized memory compression. Data is selectively stored in system memory in compressed or uncompressed formats using a plurality of compression schemes. A compression ID is used to identify the compression scheme (or no compression) to be used and is included with read and write requests submitted to a memory controller. For memory writes, the memory controller dynamically compresses data written to memory cache lines using the compression algorithms (or no compression) identified by the compression ID. For memory reads, the memory controller dynamically decompresses data stored in memory cache lines in compressed formats using the decompression algorithms identified by the compression ID. Page tables and TLB entries are augmented to include a compression ID field. The format of memory cache lines includes a compression metabit indicating whether the data in the cache line is compressed. Support for DMA reads and writes from IO devices such as GPUs using selective memory compression is also provided.

Owner:INTEL CORP
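The request path above can be sketched in software: the compression ID comes from a page-table entry, travels with each read or write, and selects the codec, while a per-line metabit records whether the stored data is compressed. Assumptions, all hypothetical: the ID values, the toy `PAGE_TABLE`, 4 KiB pages, and zlib levels standing in for hardware compression schemes.

```python
import zlib
from typing import Callable, Dict, Tuple

Codec = Tuple[Callable[[bytes], bytes], Callable[[bytes], bytes]]

# Hypothetical compression IDs; 0 means "store uncompressed".
CODECS: Dict[int, Codec] = {
    0: (lambda b: b, lambda b: b),
    1: (lambda b: zlib.compress(b, 1), zlib.decompress),  # fast
    2: (lambda b: zlib.compress(b, 9), zlib.decompress),  # dense
}

# Hypothetical page table augmented with a compression ID per page.
PAGE_TABLE: Dict[int, int] = {0x0: 0, 0x1: 2}


def compression_id_for(addr: int, page_shift: int = 12) -> int:
    return PAGE_TABLE[addr >> page_shift]  # as if looked up via the TLB


class MemoryController:
    def __init__(self) -> None:
        # address -> (compression metabit, stored bytes)
        self.lines: Dict[int, Tuple[bool, bytes]] = {}

    def write(self, addr: int, data: bytes, compression_id: int) -> None:
        compress, _ = CODECS[compression_id]
        self.lines[addr] = (compression_id != 0, compress(data))

    def read(self, addr: int, compression_id: int) -> bytes:
        metabit, stored = self.lines[addr]
        if not metabit:
            return stored  # line was stored uncompressed
        _, decompress = CODECS[compression_id]
        return decompress(stored)
```

Carrying the ID in the page table lets each application pick a scheme per page without the memory controller keeping its own mapping.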

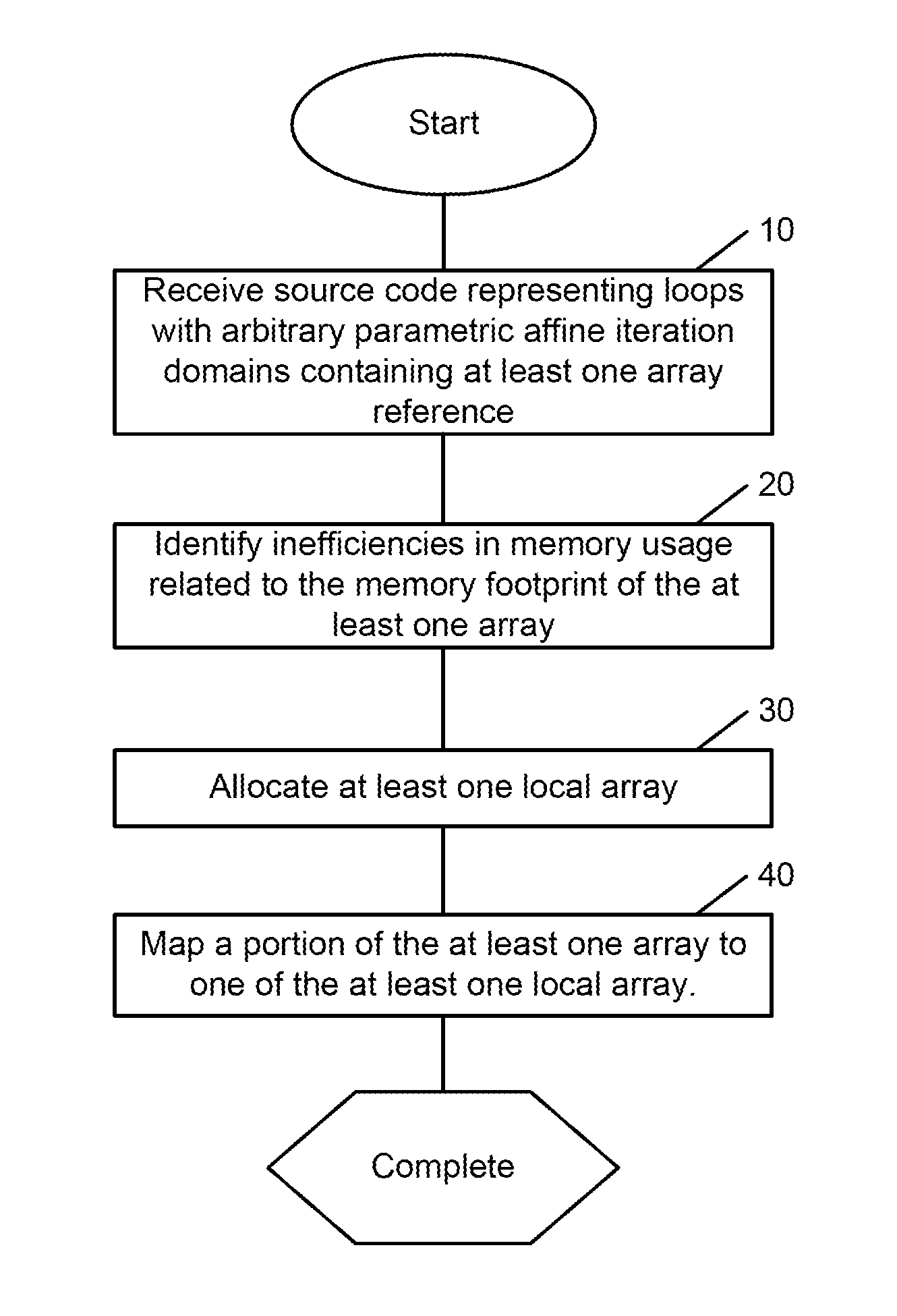

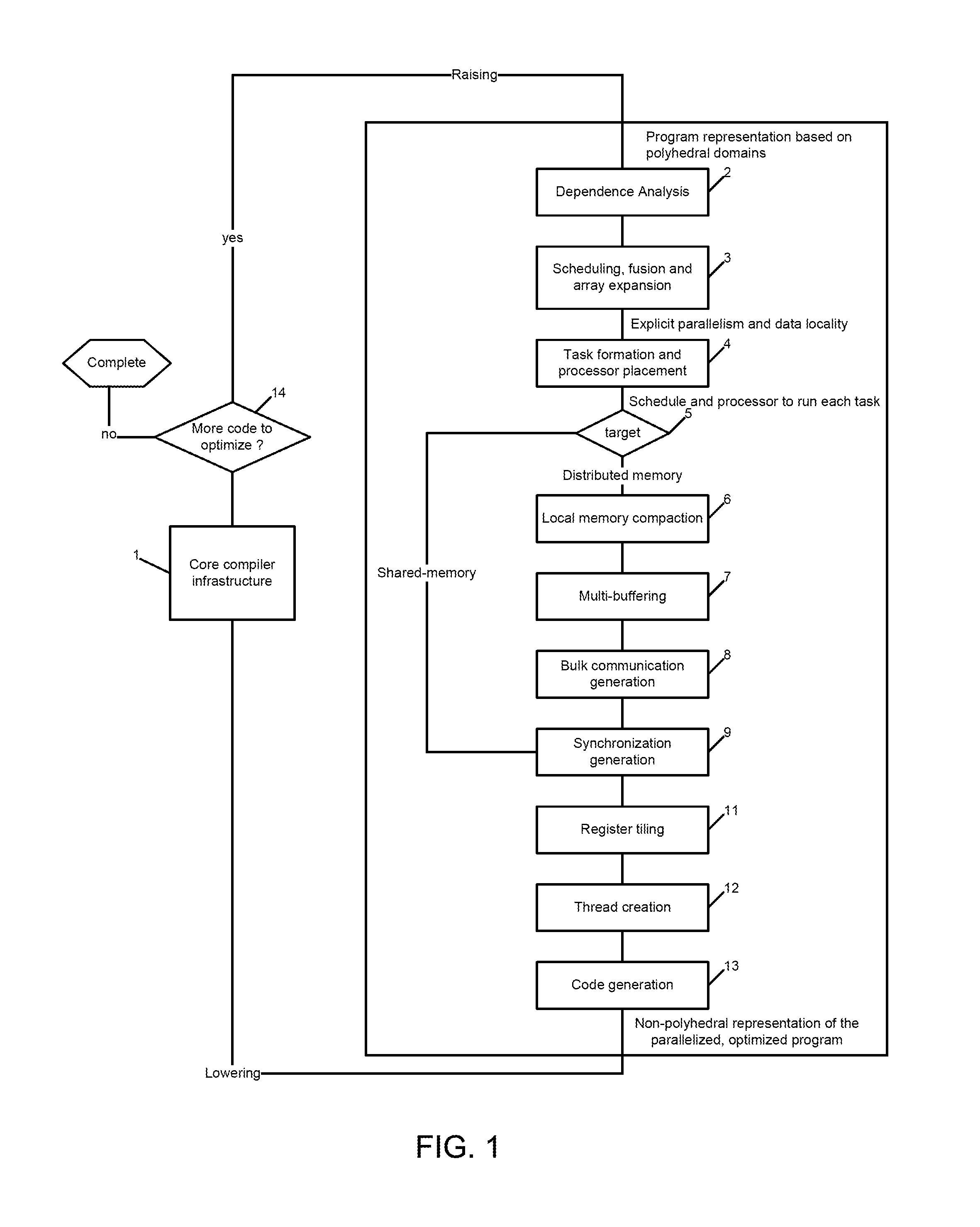

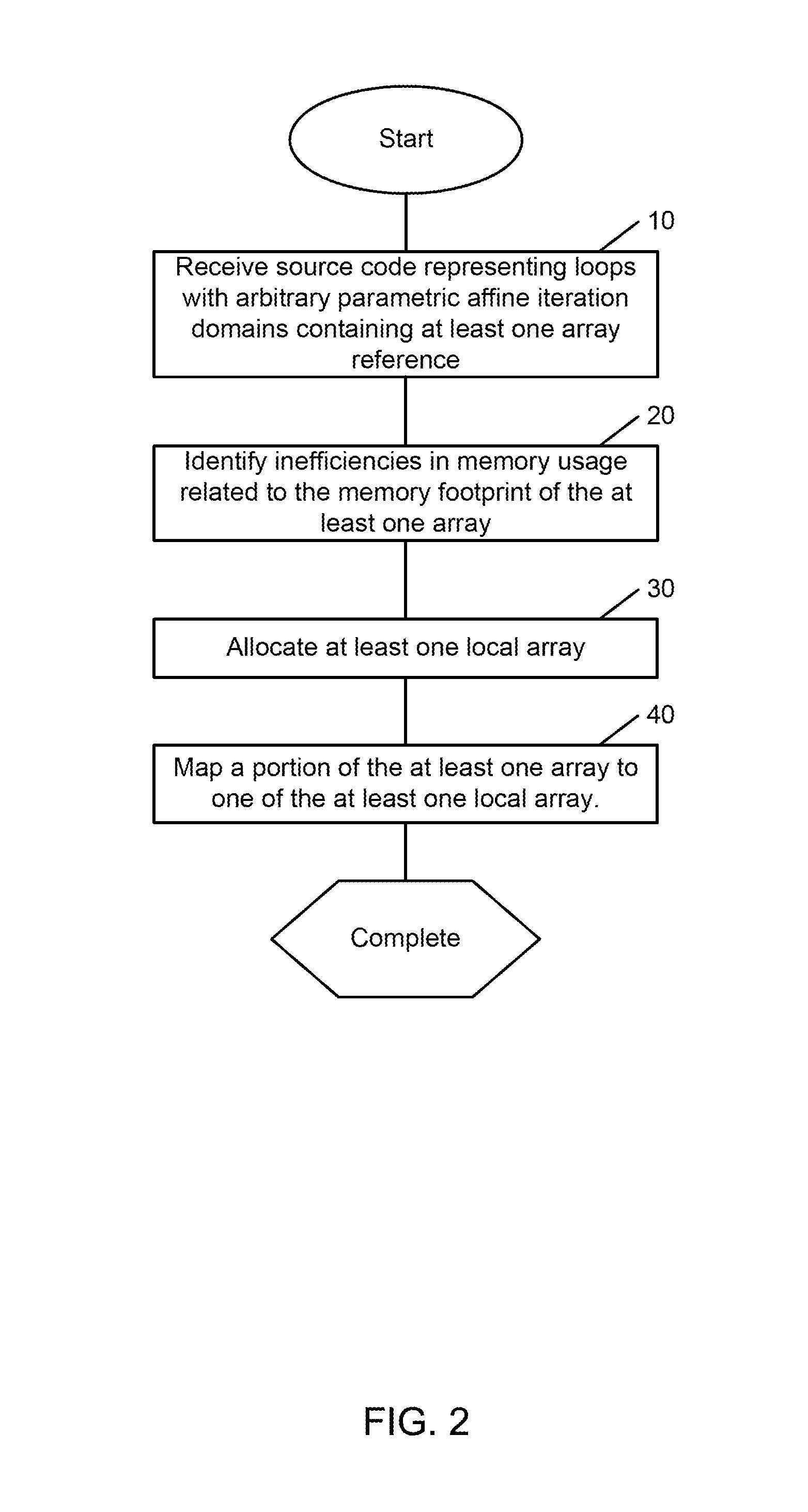

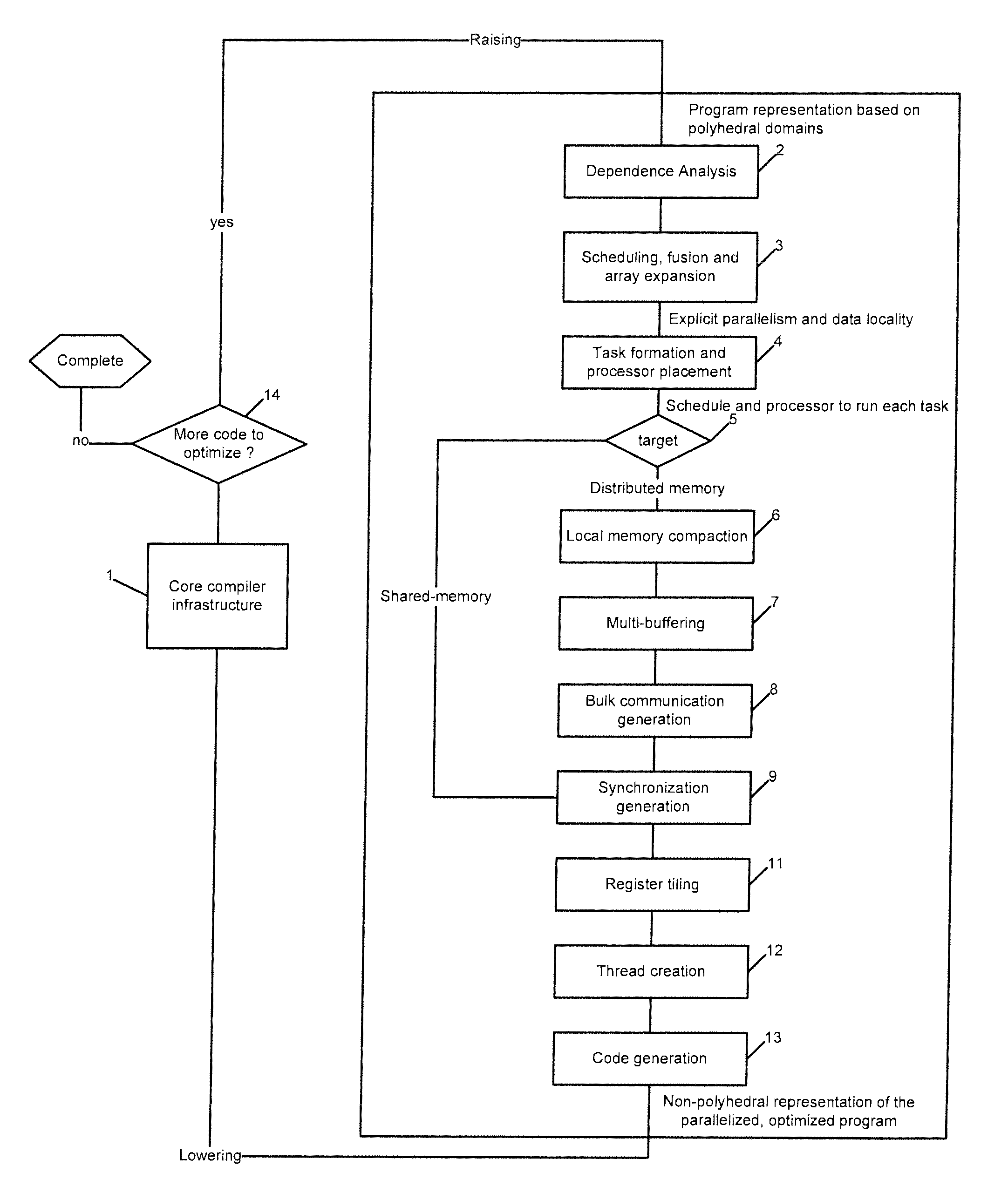

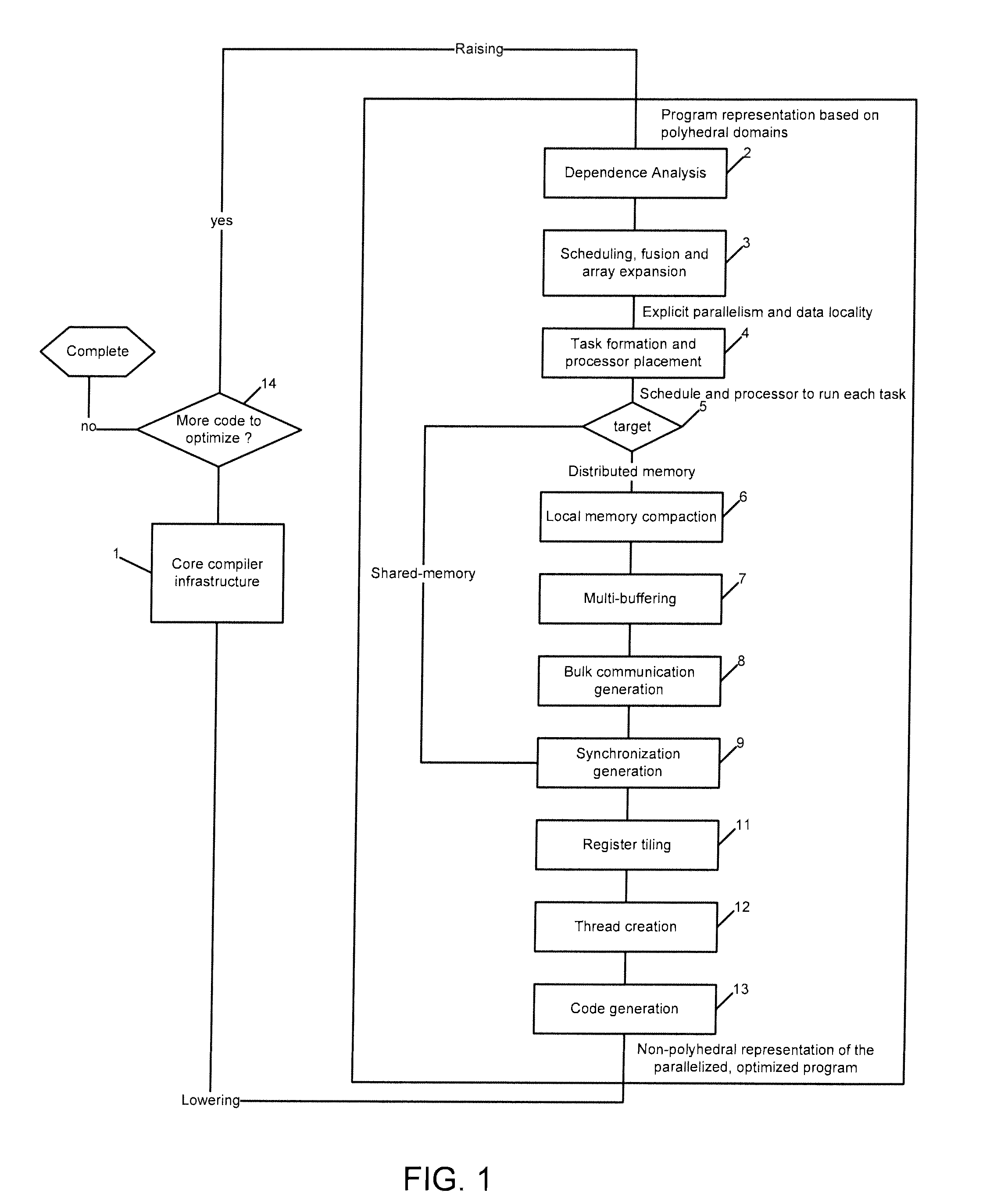

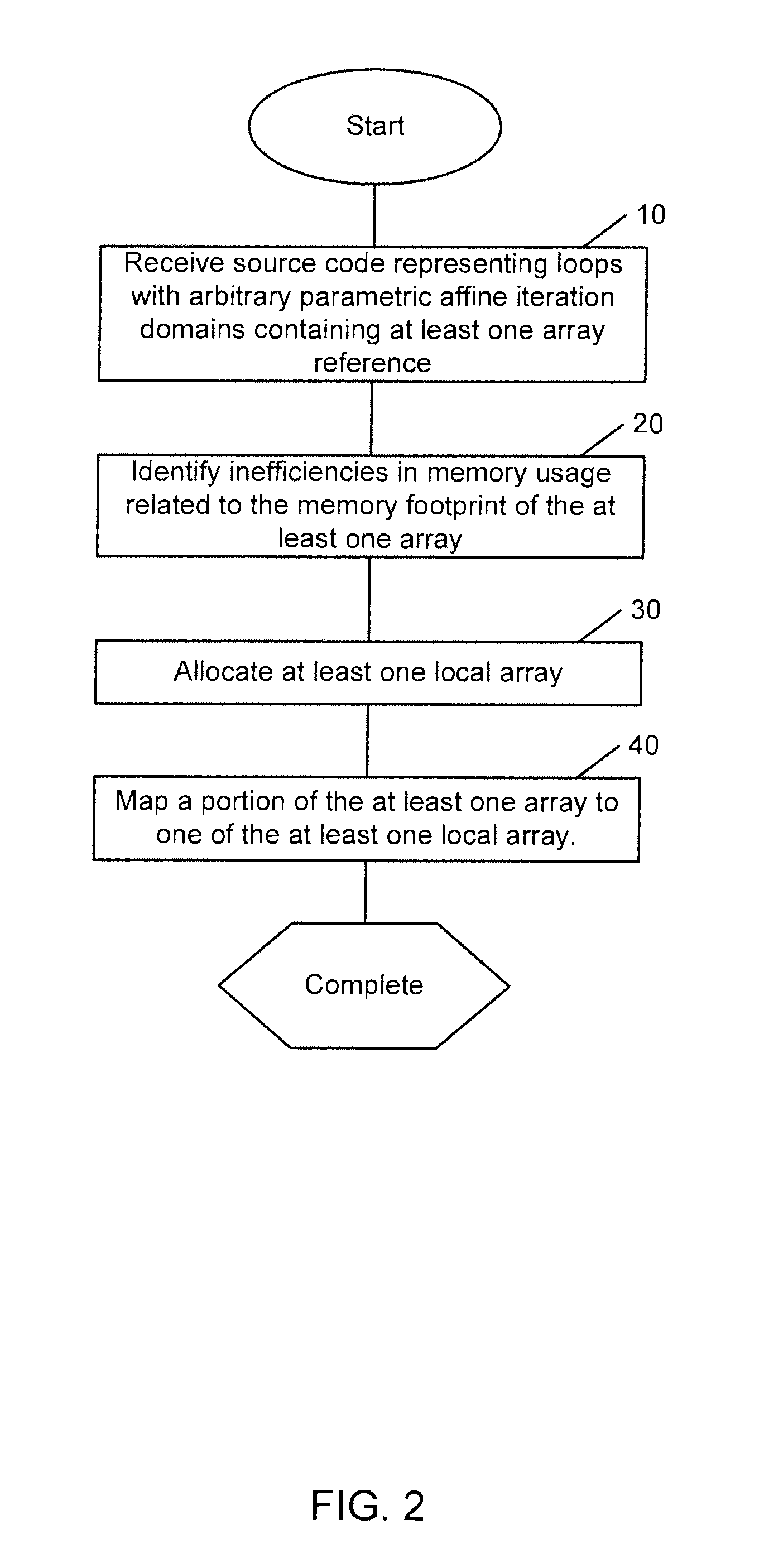

Methods and apparatus for local memory compaction

Active | US8661422B2 | Reduces memory size requirement and memory footprint; Software engineering; Program control; Term memory; Source code

Owner:QUALCOMM INC

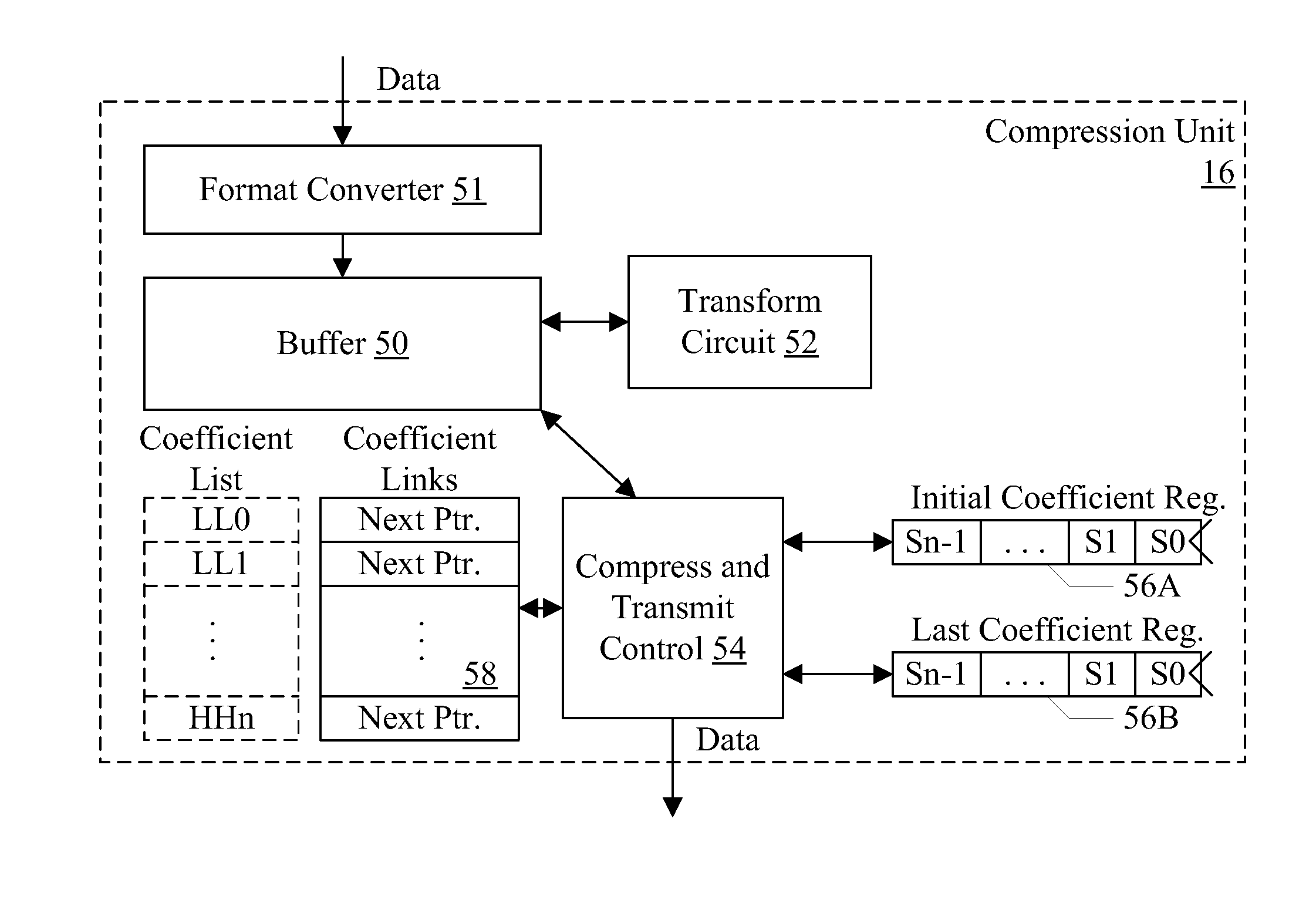

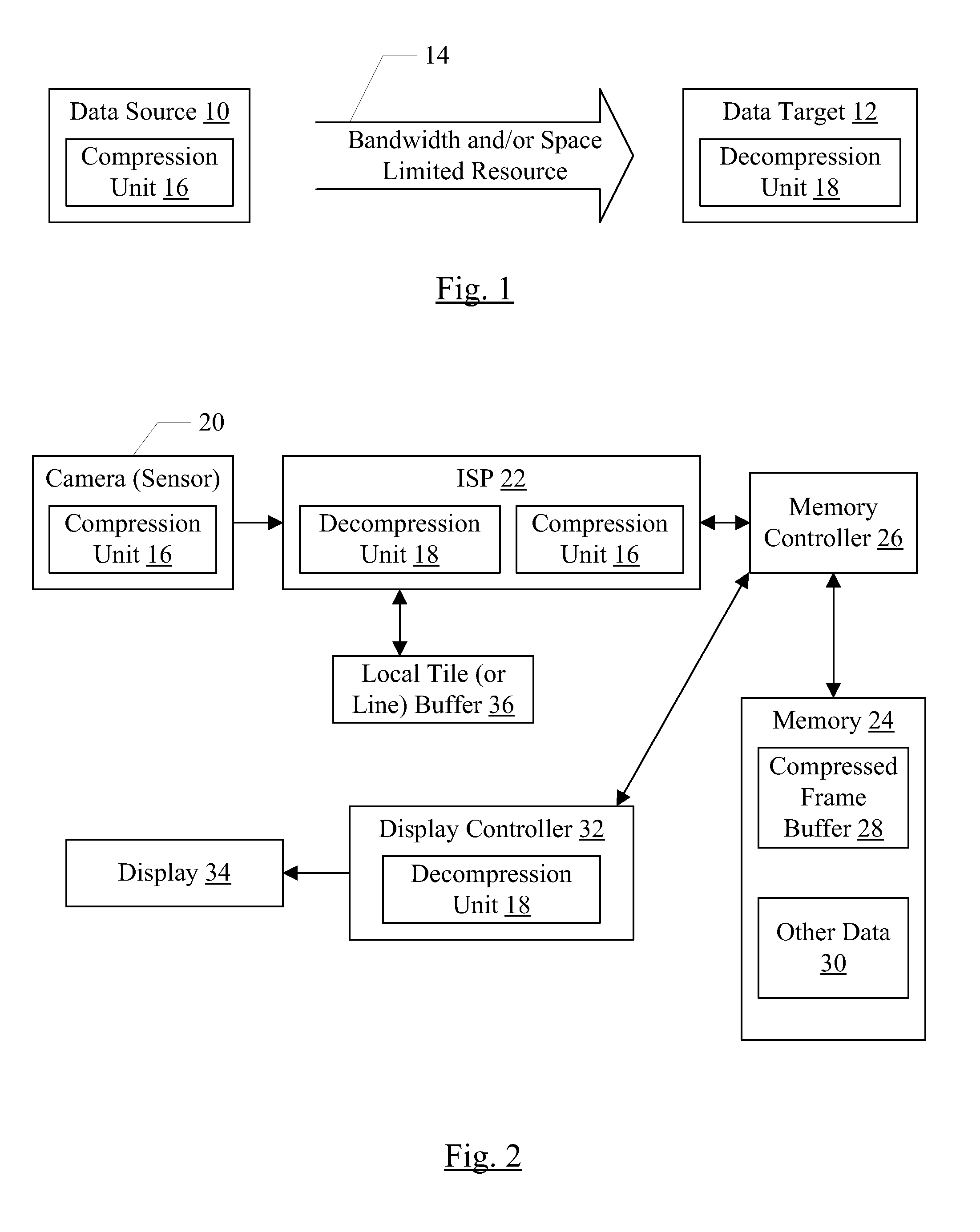

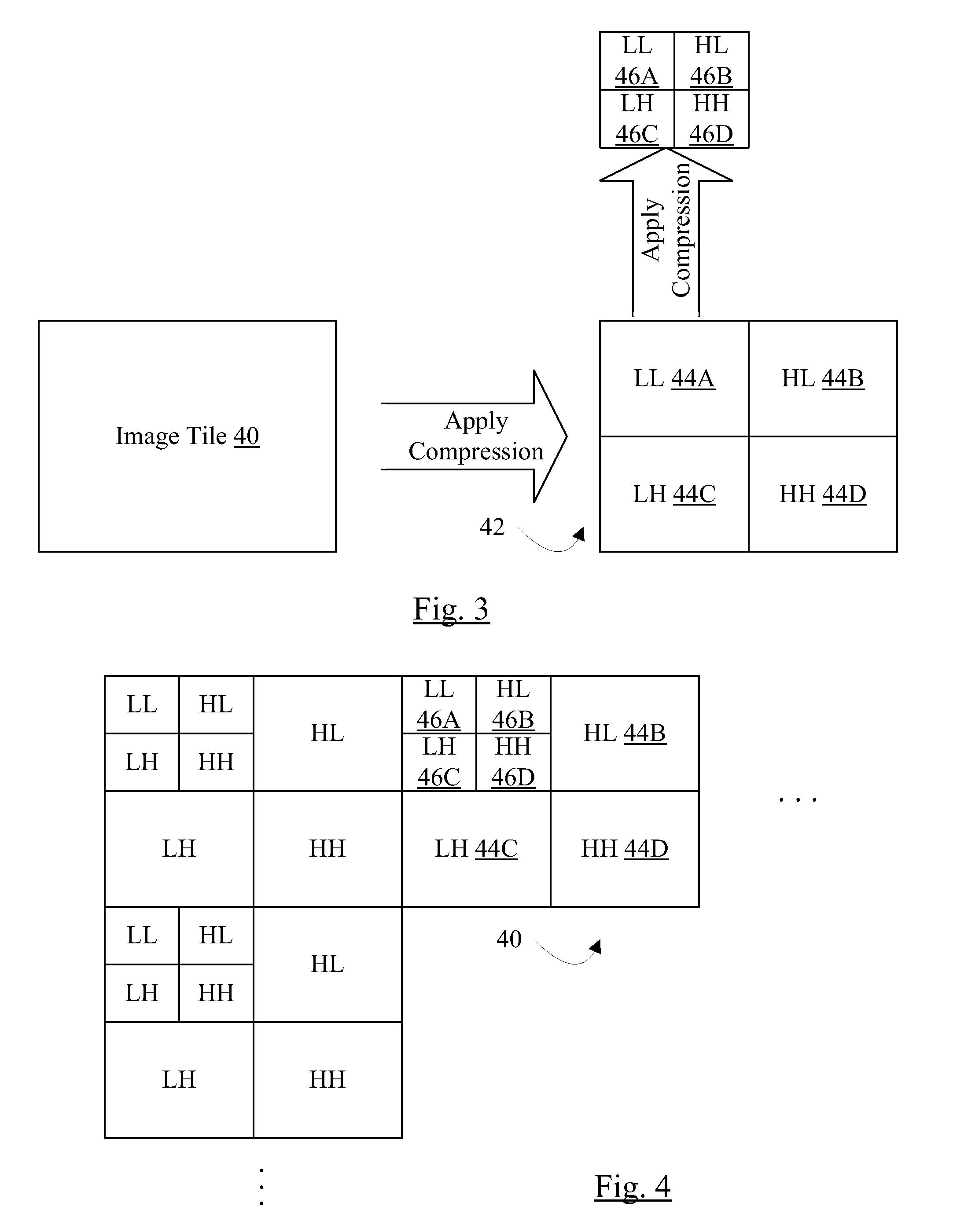

Memory compression technique with low latency per pixel

Active | US8378859B2 | Lower latency; Little hardware; Memory architecture accessing/allocation; Television system details; Latency (engineering); Computer science

In an embodiment, a compression unit is provided which may perform compression of images with low latency and relatively little hardware. Similarly, a decompression unit may be provided which may decompress the images with low latency and little hardware. In an embodiment, the transmission of compressed coefficients may be performed using less than two passes through the list of coefficients. During the first pass, the most significant coefficients may be transmitted and other significance groups may be identified as linked lists. The linked lists may then be traversed to send the other significance groups. In an embodiment, a color space conversion may be made to permit filtering of fewer color components than might be possible in the source color space.

Owner:APPLE INC

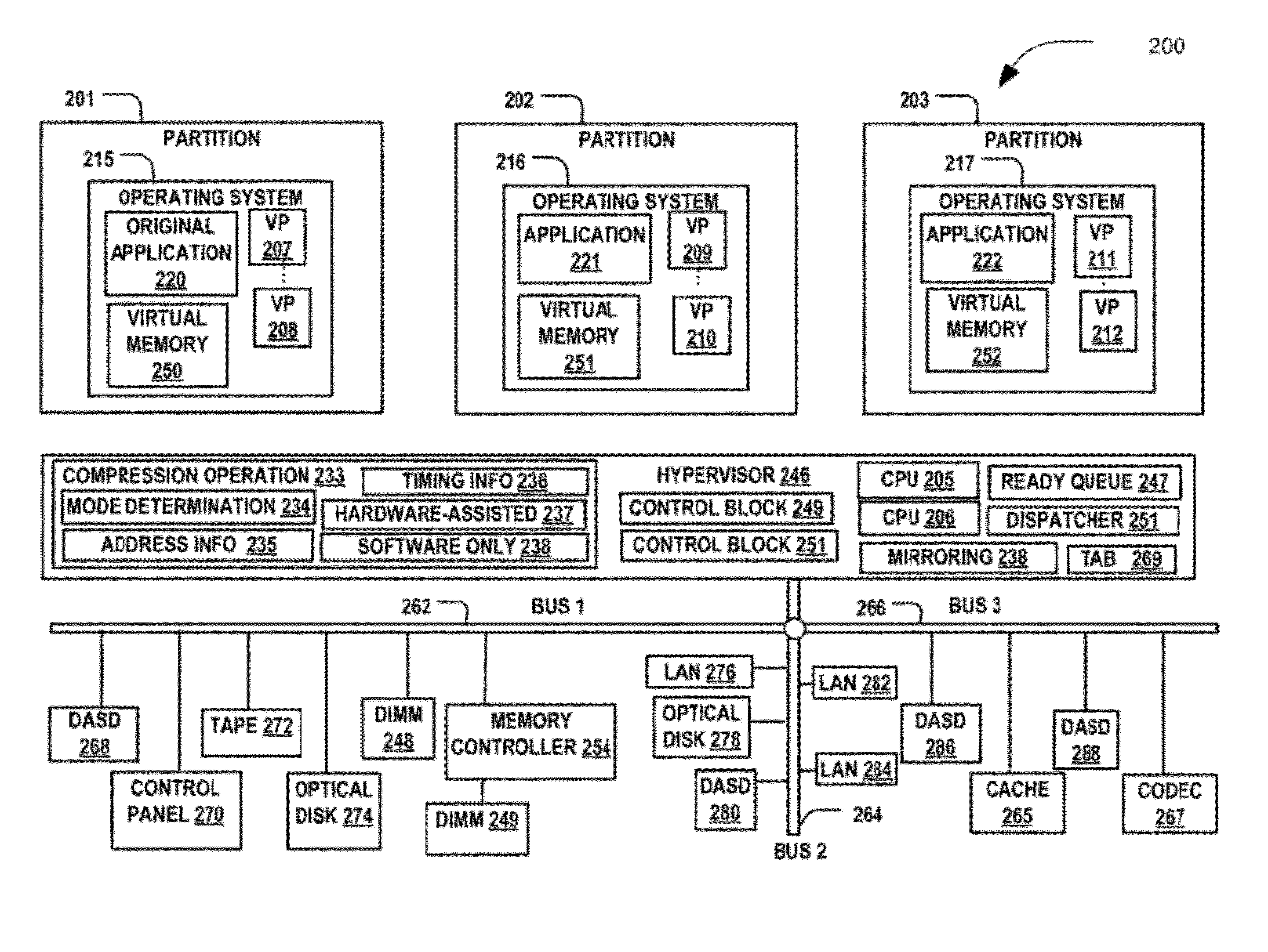

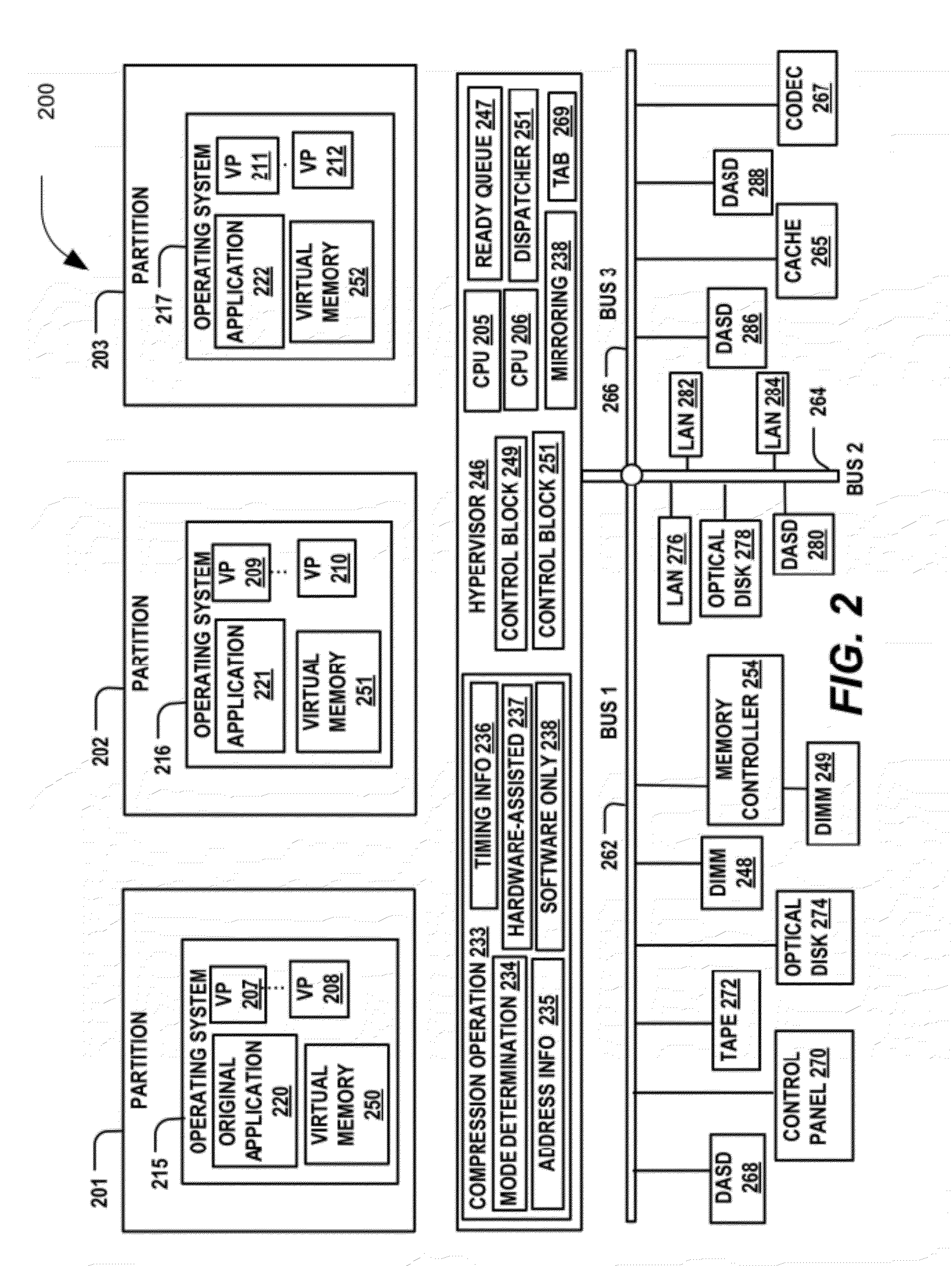

Memory management using both full hardware compression and hardware-assisted software compression

Inactive | US20120144146A1 | Improve stress resistance; Improve performance; Memory architecture accessing/allocation; Memory systems; Computer architecture; Engineering

Systems and methods to manage memory are provided. A particular method may include selecting one of a plurality of compression modes to perform memory compression operations at a server computer. The plurality of compression modes may include a first memory compression mode configured to perform a first memory compression operation using a compression engine, and a second compression mode configured to perform a second memory compression operation using the compression engine. At least one of the first compression operation and the second compression operation may be performed according to the selected compression mode.

Owner:LENOVO ENTERPRISE SOLUTIONS SINGAPORE

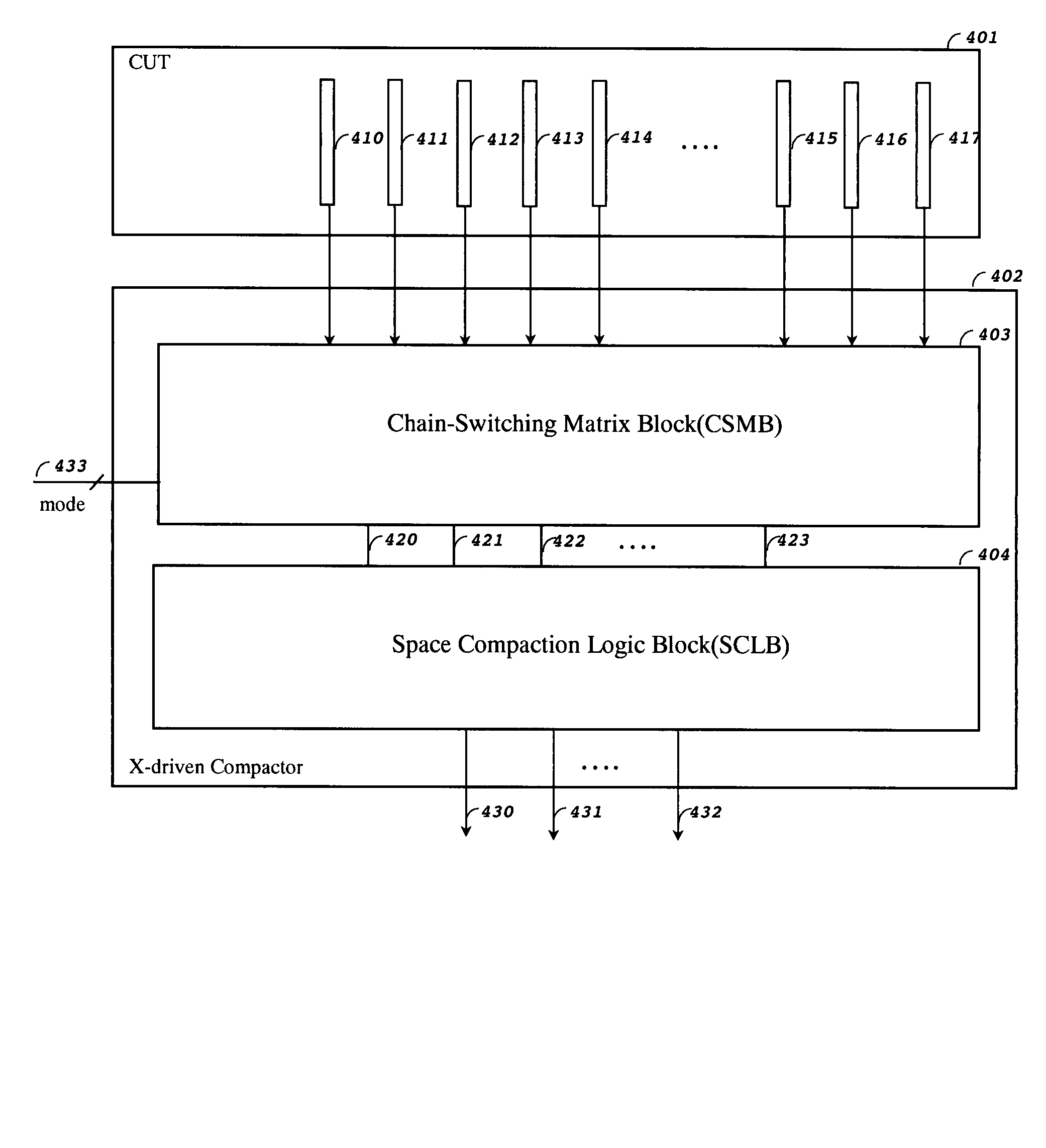

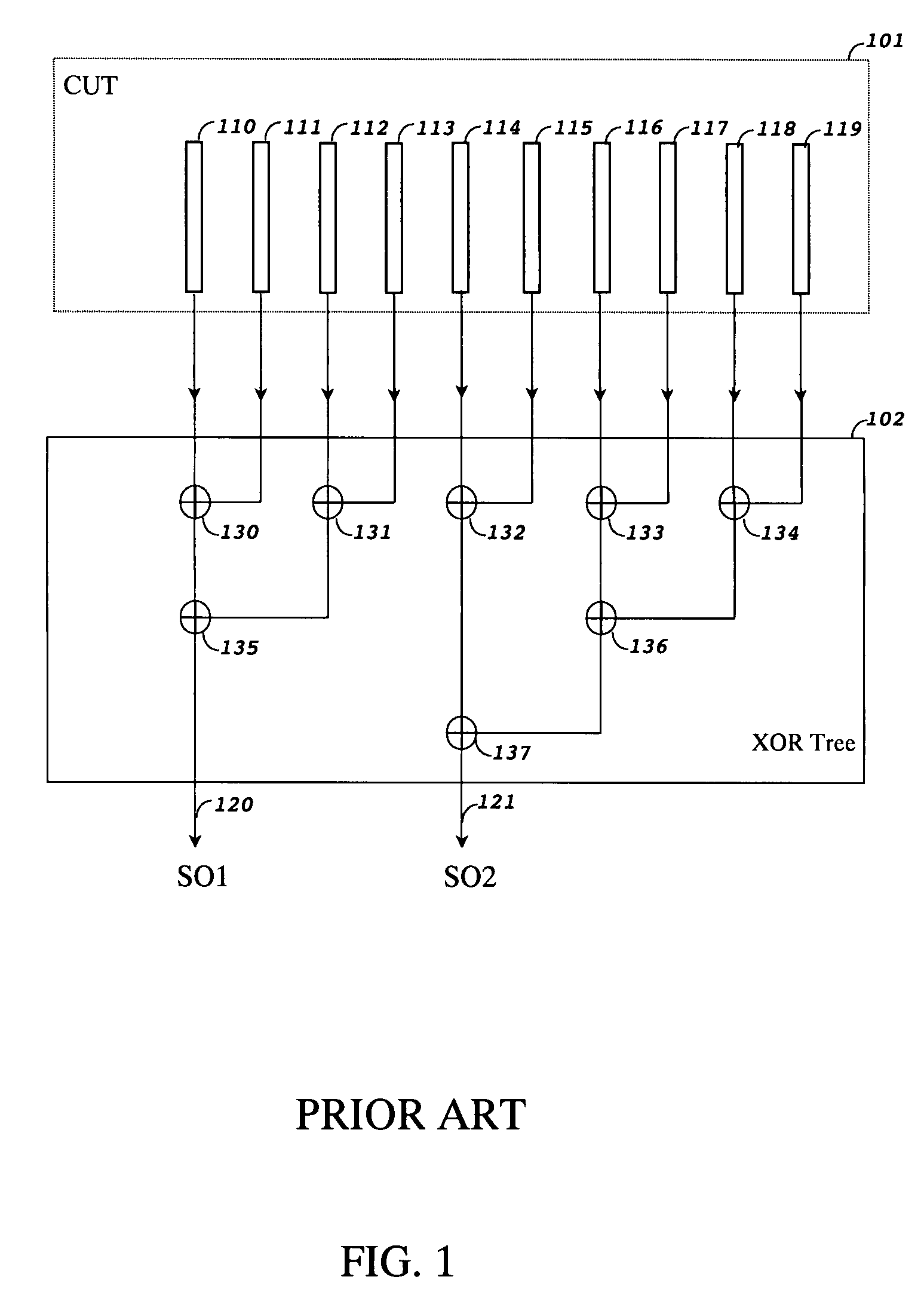

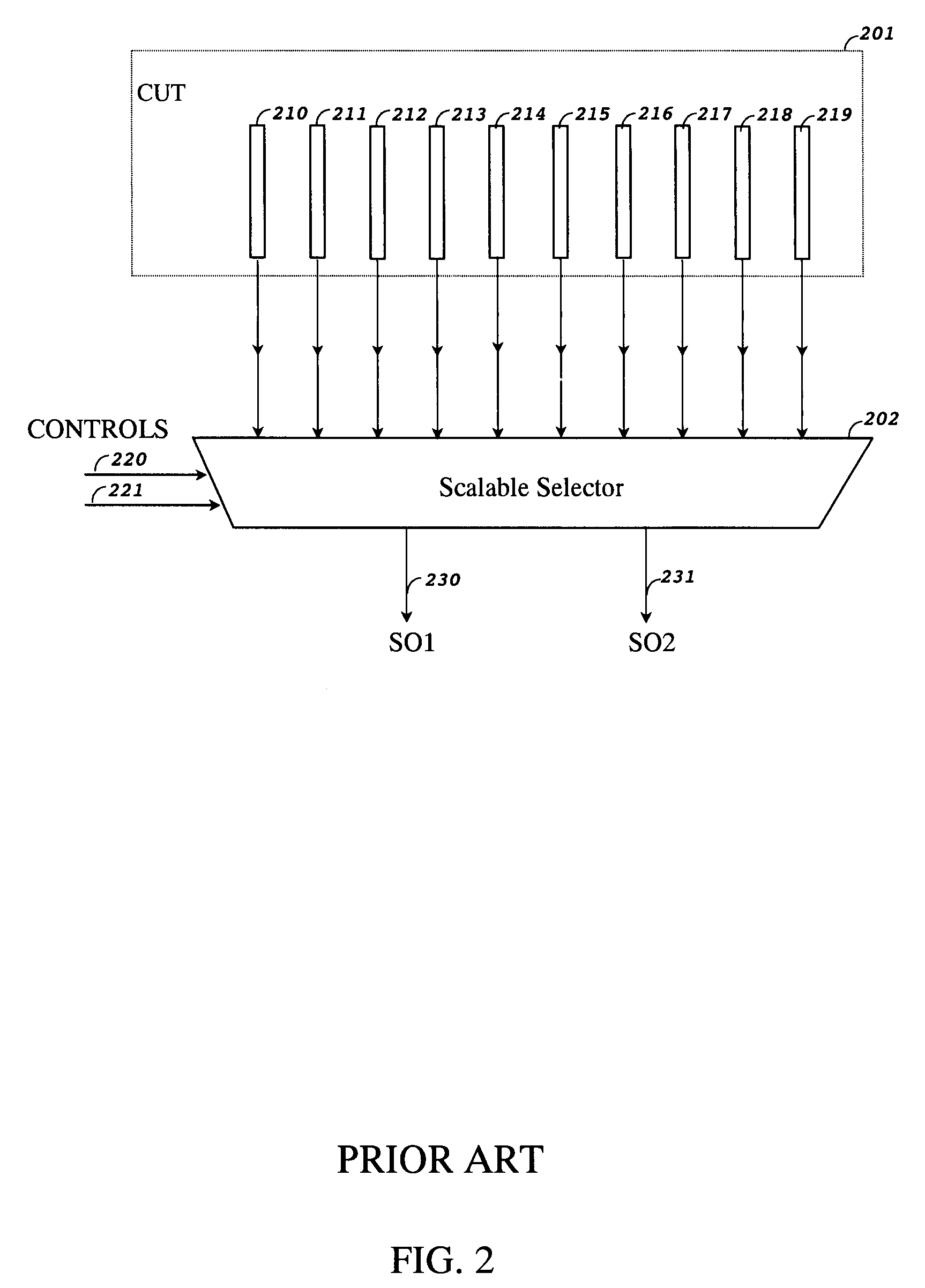

Compacting test responses using X-driven compactor

Inactive | US7779322B1 | Minimizes masking error; Improve fault coverage; Electronic circuit testing; Compression test; Test response

A method and apparatus for compacting test responses containing unknown values in a scan-based integrated circuit. The proposed X-driven compactor comprises a chain-switching matrix block and a space compaction logic block. The chain-switching matrix block switches the internal scan chain outputs before feeding them to the space compaction logic block for compaction so as to minimize X-induced masking and error masking. The X-driven compactor further selectively includes a finite-memory compaction logic block to further compact the outputs of the space compaction logic block.

Owner:SYNTEST TECH

Dynamically configurable memory system

Active | US8589650B2 | Memory architecture accessing/allocation; Energy efficient ICT; Power mode; Computer architecture

In a digital system with a processor coupled to a paged memory system, the memory system may be dynamically configured using a memory compaction manager in order to allow portions of the memory to be placed in a low power mode. As applications are executed by the processor, program instructions are copied from a non-volatile memory coupled to the processor into pages of the paged memory system under control of an operating system. Pages in the paged memory system that are not being used by the processor are periodically identified. The paged memory system is compacted by copying pages that are being used by the processor from a second region of the paged memory into a first region of the paged memory. The second region may be placed in a low power mode when it contains no pages that are being used by the processor.

Owner:TEXAS INSTR INC

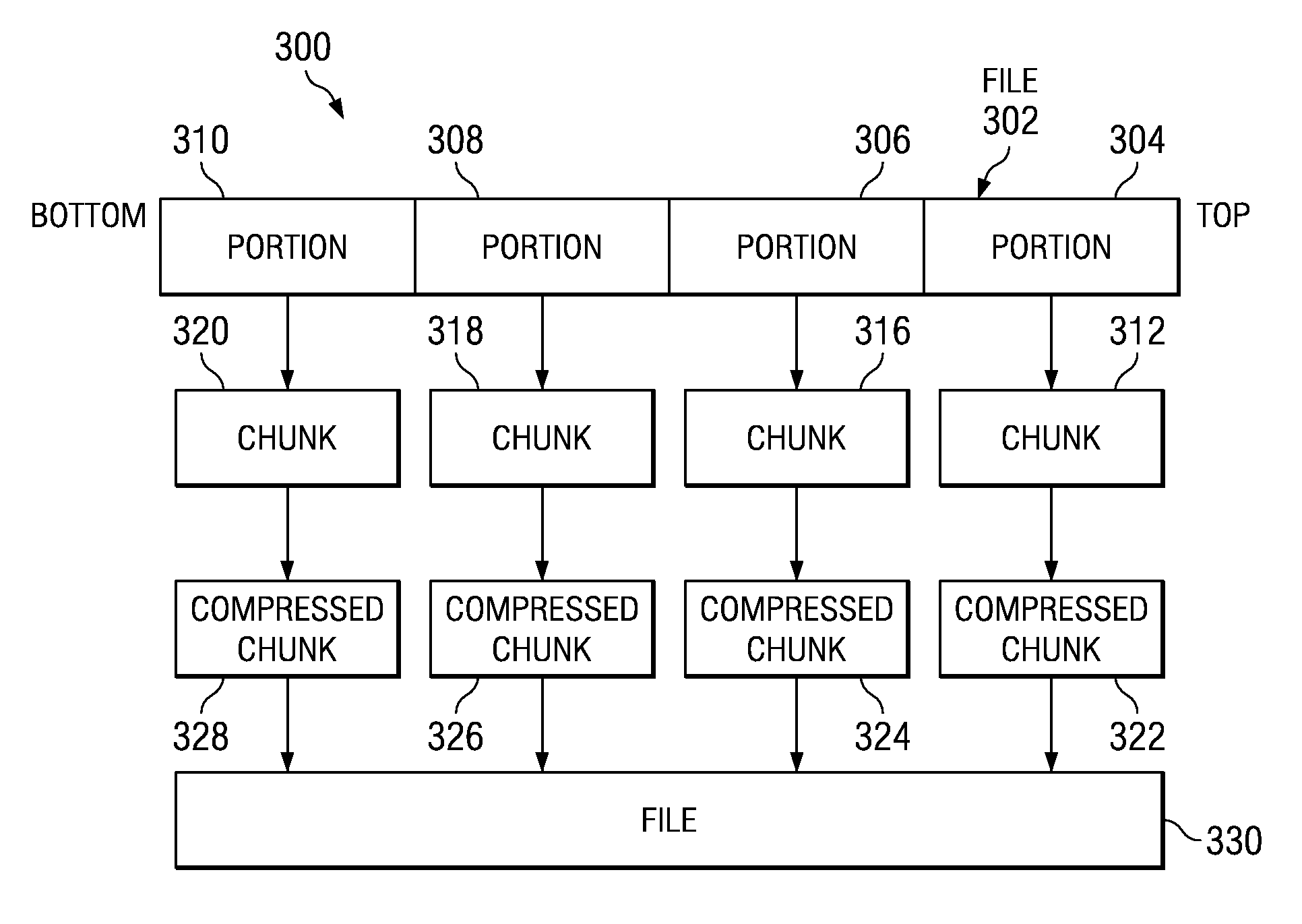

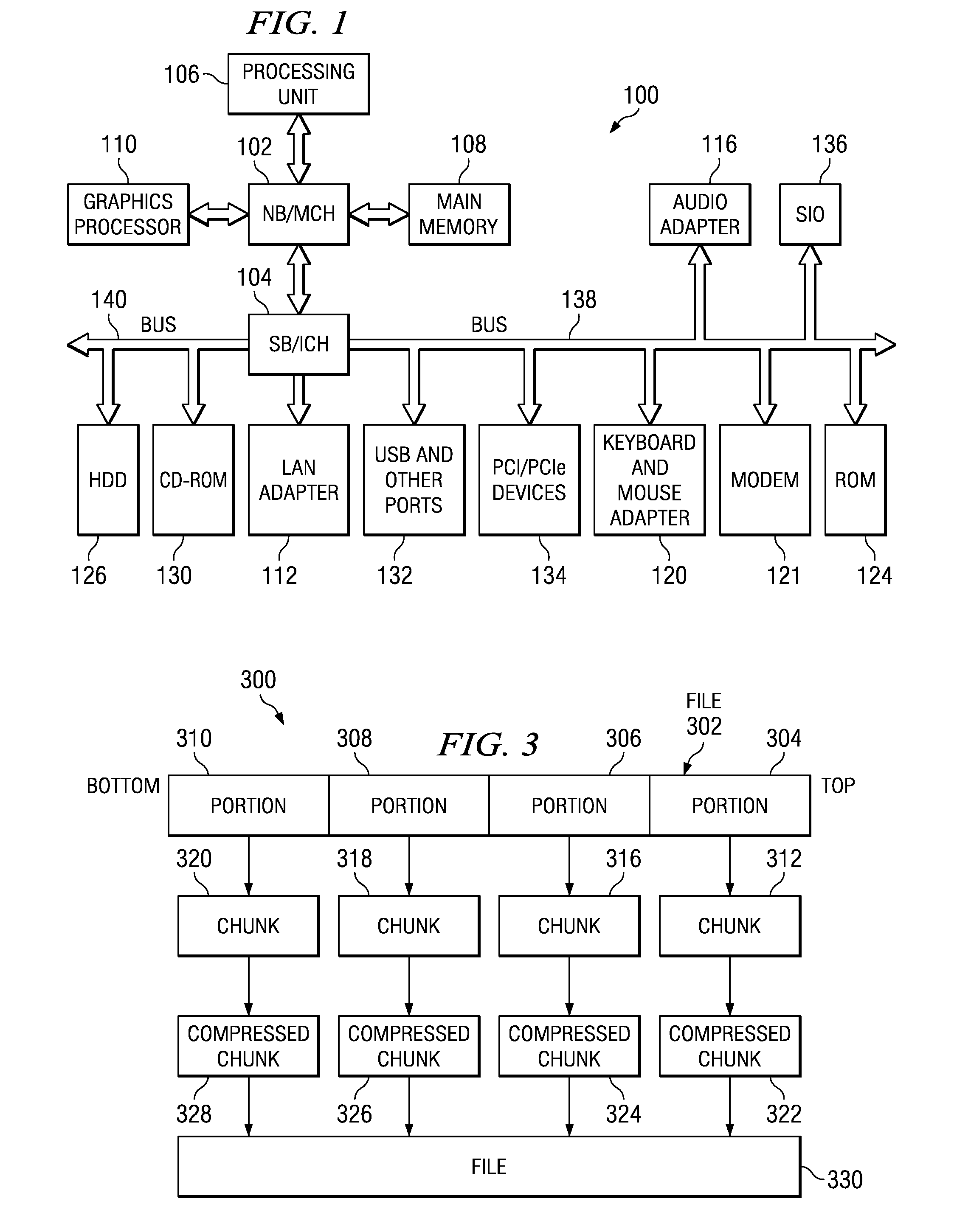

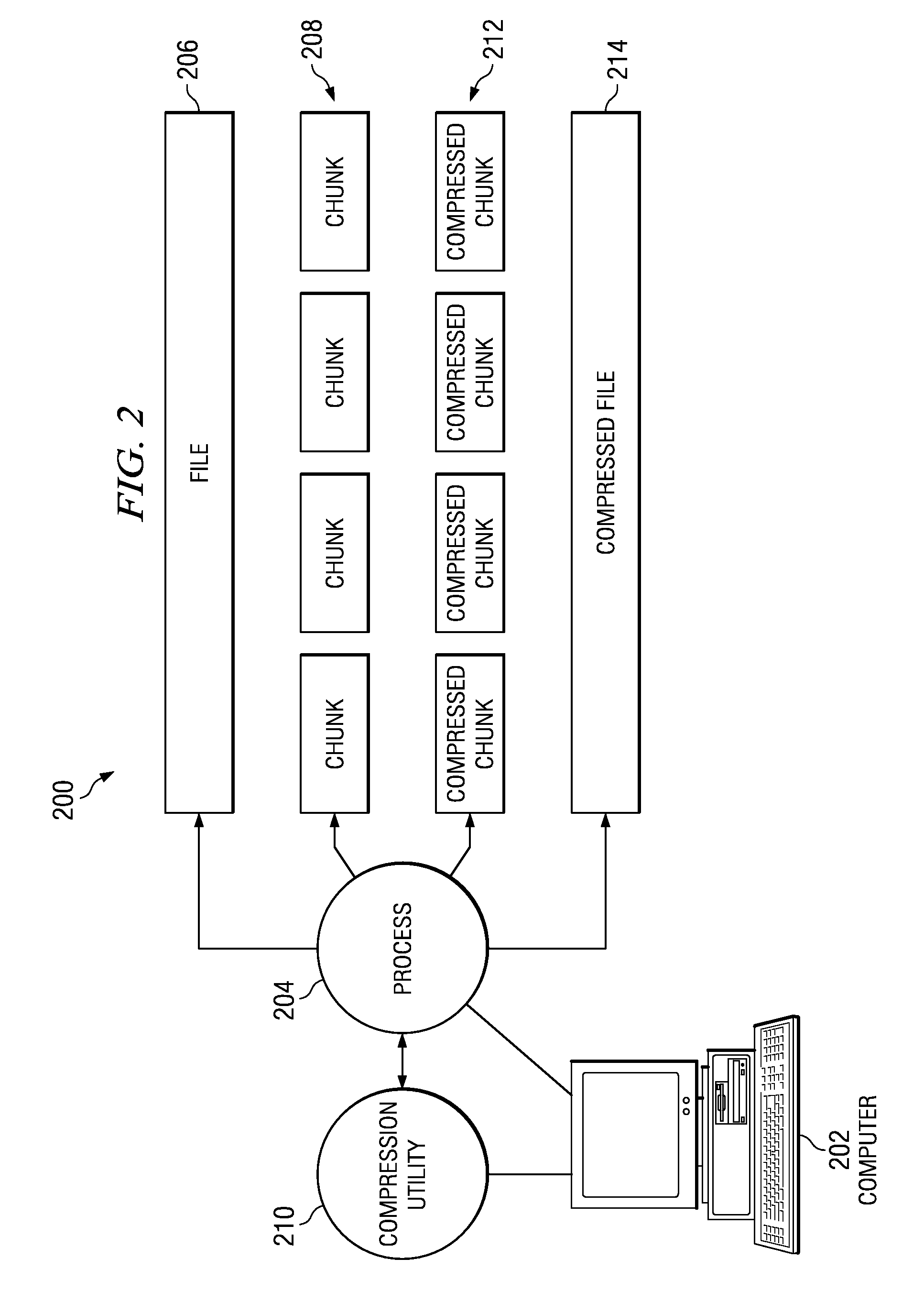

Compressing files using a minimal amount of memory

Inactive | US20080307014A1 | Code conversion; Special data processing applications; Computer program; Memory compaction

A computer implemented method, apparatus, and computer program code for compressing a file in a computer. An amount of memory available for use in the computer is determined. A size of the file is determined. A chunk size is determined based on the size of the file and the amount of memory available for use. A set of chunks are created by obtaining a chunk of chunk size from the file, and truncating the file an amount equal to the chunk size, until the file is completely truncated. A new file containing compressed chunks is created by repeatedly selecting a chunk from the set of chunks, compressing the chunk to form a compressed chunk, writing the compressed chunk to the new file, and deleting the chunk from the set of chunks, until each chunk in the set of chunks is deleted. The new file containing the compressed chunks is saved.

Owner:IBM CORP
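The chunking procedure above can be sketched with ordinary file operations: chunks are peeled off the end of the file and the file is truncated, so the original shrinks as the chunk files grow; the chunks are then compressed in original order into a new file and deleted one by one. Assumptions: the chunk size rule (half the available memory, capped at the file size), the `.chunkN` and `.z` file names, and the 4-byte length prefix are all hypothetical choices, and zlib stands in for the compressor.

```python
import os
import struct
import zlib
from typing import List


def chunk_size_for(file_size: int, available_memory: int) -> int:
    """Assumption: use half the available memory, leaving room for the
    compressor's output, but never more than the file itself."""
    return max(1, min(file_size, available_memory // 2))


def compress_in_place(path: str, available_memory: int) -> str:
    size = os.path.getsize(path)
    chunk = chunk_size_for(size, available_memory)
    chunk_paths: List[str] = []
    # Peel chunks off the end of the file and truncate it after each one.
    with open(path, "r+b") as f:
        remaining = size
        while remaining > 0:
            start = max(0, remaining - chunk)
            f.seek(start)
            data = f.read(remaining - start)
            chunk_path = f"{path}.chunk{len(chunk_paths)}"
            with open(chunk_path, "wb") as c:
                c.write(data)
            chunk_paths.append(chunk_path)
            f.truncate(start)
            remaining = start
    os.remove(path)  # the original file is now empty
    out_path = path + ".z"
    with open(out_path, "wb") as out:
        # Compress chunks in original order (reverse of creation order),
        # deleting each chunk as soon as it has been written.
        for chunk_path in reversed(chunk_paths):
            with open(chunk_path, "rb") as c:
                blob = zlib.compress(c.read())
            out.write(struct.pack(">I", len(blob)) + blob)  # length-prefixed
            os.remove(chunk_path)
    return out_path


def decompress_file(path: str) -> bytes:
    parts = []
    with open(path, "rb") as f:
        while header := f.read(4):
            (n,) = struct.unpack(">I", header)
            parts.append(zlib.decompress(f.read(n)))
    return b"".join(parts)
```

At no point does the program hold more than one chunk in memory, and disk usage stays close to the original file size because each step frees roughly what it writes.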

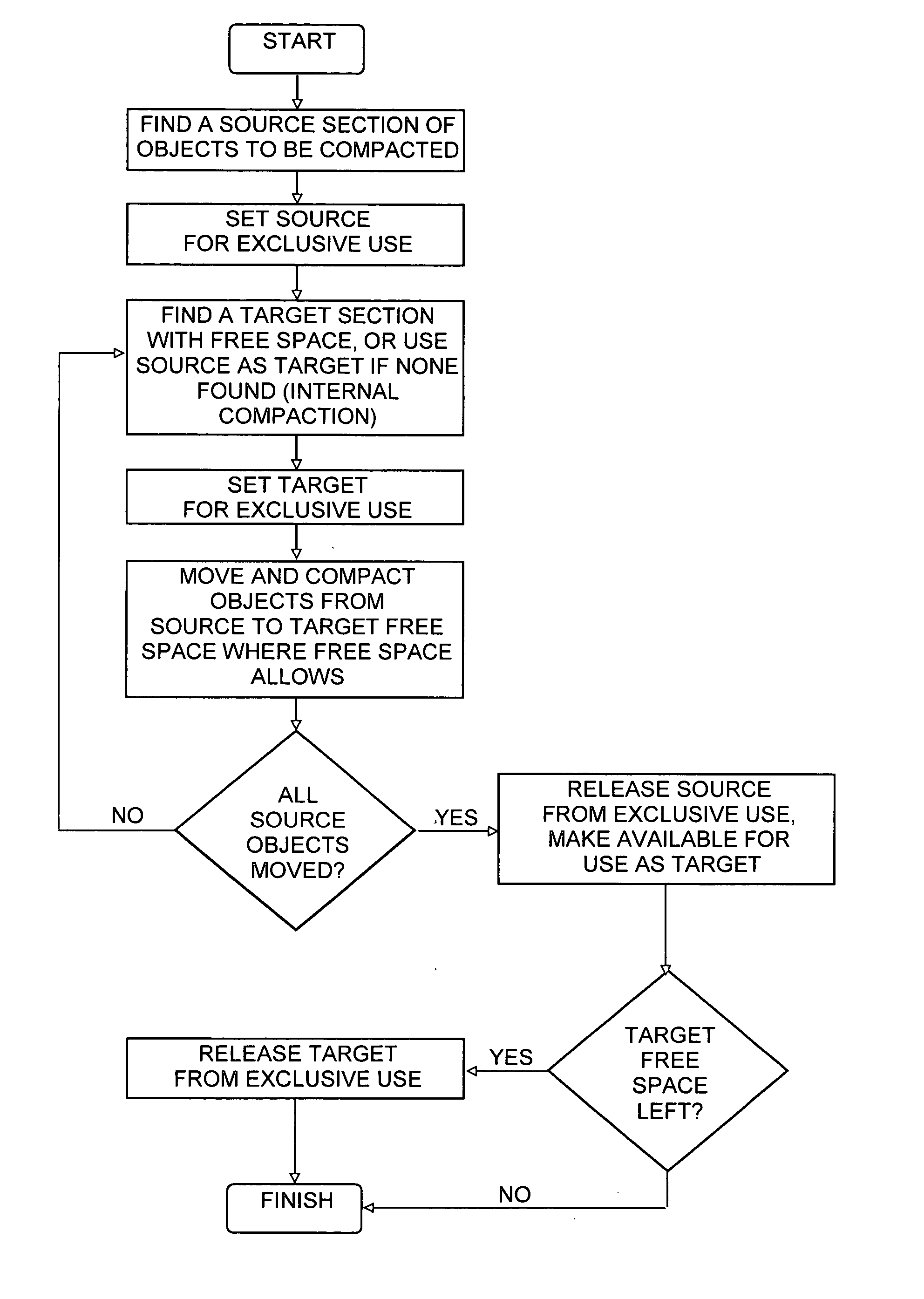

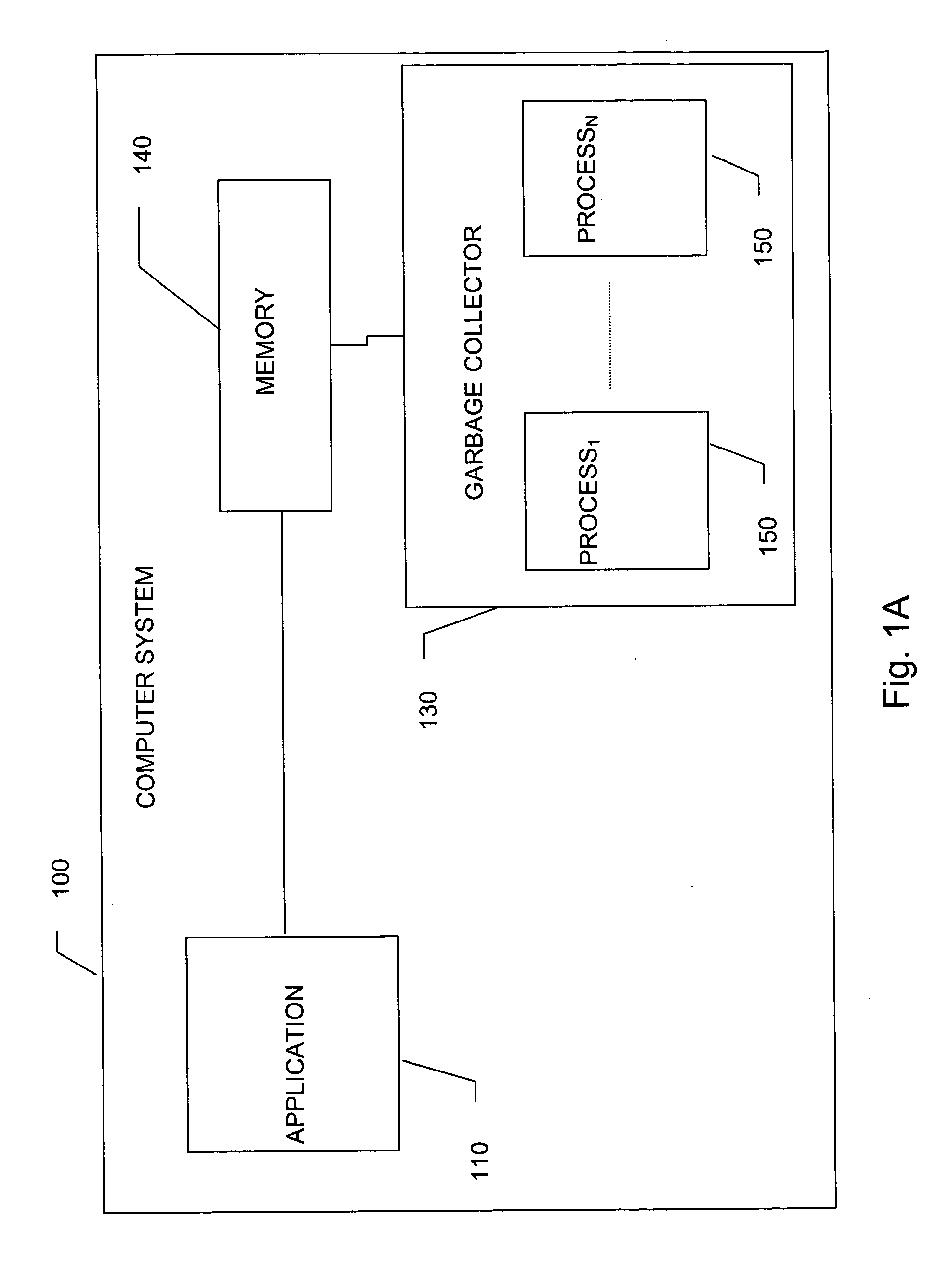

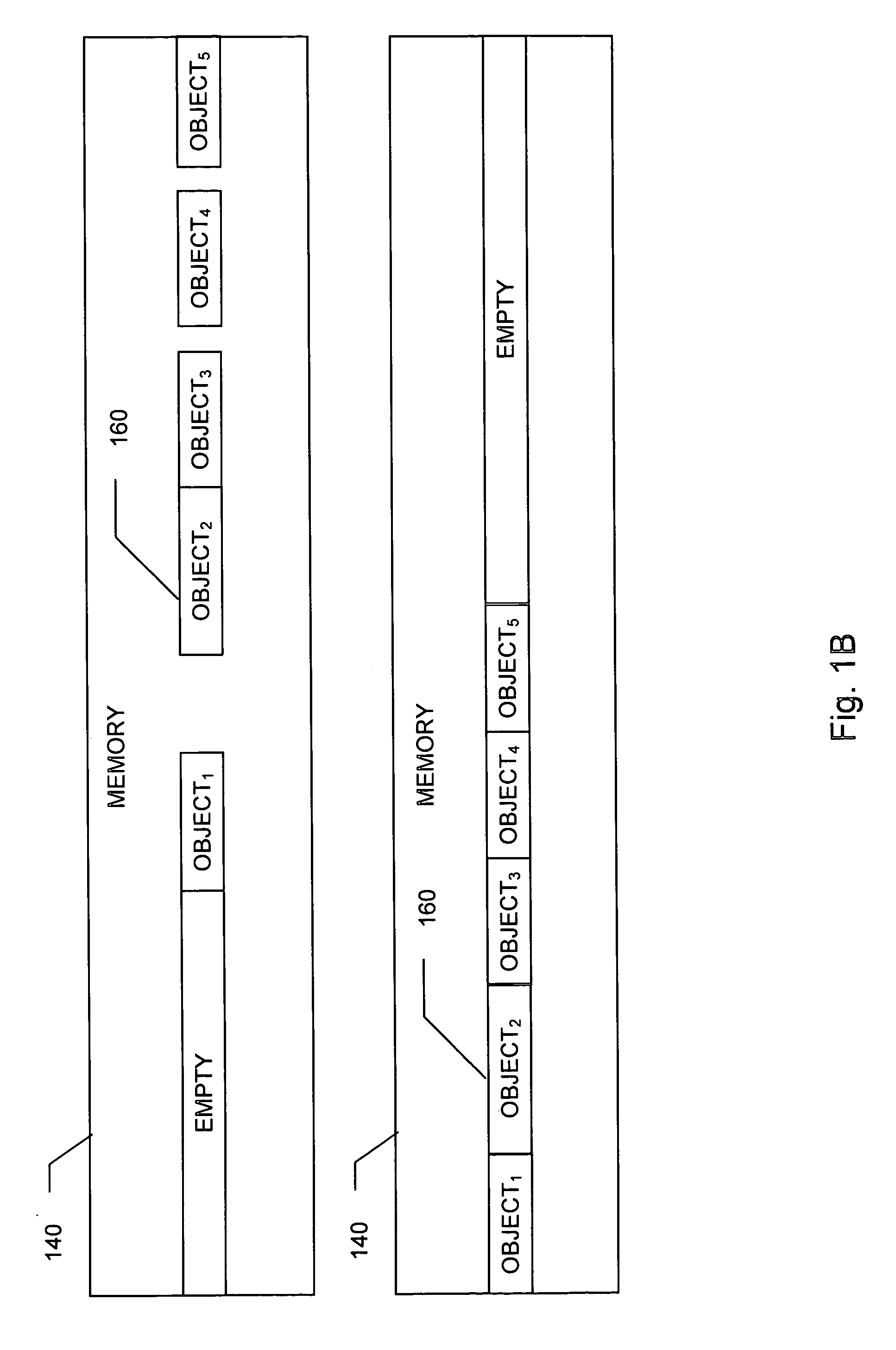

Parallel memory compaction

Inactive | US20050138319A1 | Improve compaction; Data processing applications; Memory addressing/allocation/relocation; Parallel computing; Term memory

A method for compaction of objects within a computer memory, the method including dividing a memory space into a plurality of non-overlapping sections, selecting a plurality of source sections from among the sections, each containing at least one object, selecting a plurality of target sections from among the sections, and moving any of the objects from the source section to the target section, where each of a plurality of pairs of the source and target sections is exclusively available to a different process from among a plurality of processes operative to perform any of the steps with a predefined degree of concurrency.

Owner:IBM CORP
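The key property in the claim above is that each (source, target) pair of sections belongs to exactly one worker, so objects can be moved without locking. This is a minimal sketch with hypothetical helpers; the pairing rule (sparsest sections become sources, densest become targets) is an assumption, since the abstract does not say how sections are selected.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Tuple

Section = List[Optional[str]]  # a slot holds an object id, or None when free


def split(heap: List[Optional[str]], section_size: int) -> List[Section]:
    """Divide the memory space into non-overlapping sections."""
    return [heap[i:i + section_size] for i in range(0, len(heap), section_size)]


def pair_sections(sections: List[Section]) -> List[Tuple[int, int]]:
    """Assumed rule: the sparsest half are sources, the densest half are
    targets; every section appears in at most one pair."""
    order = sorted(range(len(sections)),
                   key=lambda i: sum(s is not None for s in sections[i]))
    sources = order[: len(order) // 2]
    targets = list(reversed(order[len(order) // 2:]))
    return list(zip(sources, targets))


def move_objects(src: Section, dst: Section) -> None:
    """Runs in one worker that exclusively owns both sections."""
    for i, obj in enumerate(src):
        if obj is None:
            continue
        try:
            j = dst.index(None)
        except ValueError:
            return  # target section is full
        dst[j], src[i] = obj, None


def parallel_compact(heap: List[Optional[str]], section_size: int,
                     workers: int = 4) -> List[Section]:
    sections = split(heap, section_size)
    pairs = pair_sections(sections)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() forces completion and re-raises any worker exception.
        list(pool.map(lambda p: move_objects(sections[p[0]], sections[p[1]]), pairs))
    return sections
```

Because no two pairs share a section, the degree of concurrency is bounded only by the number of pairs, which is what makes the compaction scale across processors.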

Memory compression for computer systems

Inactive | US6857047B2 | Improve performance; Memory architecture accessing/allocation; Data processing applications; Operational system; Computerized system

A computer system is provided including a processor, a persistent storage device, and a main memory connected to the processor and the persistent storage device. The main memory includes an operating system and a compressed cache for storing data retrieved from the persistent storage device after compression. The operating system includes a plurality of interconnected software modules for accessing the persistent storage device and a filter driver interconnected between two of the plurality of software modules for managing memory capacity of the compressed cache and the buffer cache.

Owner:VALTRUS INNOVATIONS LTD +1

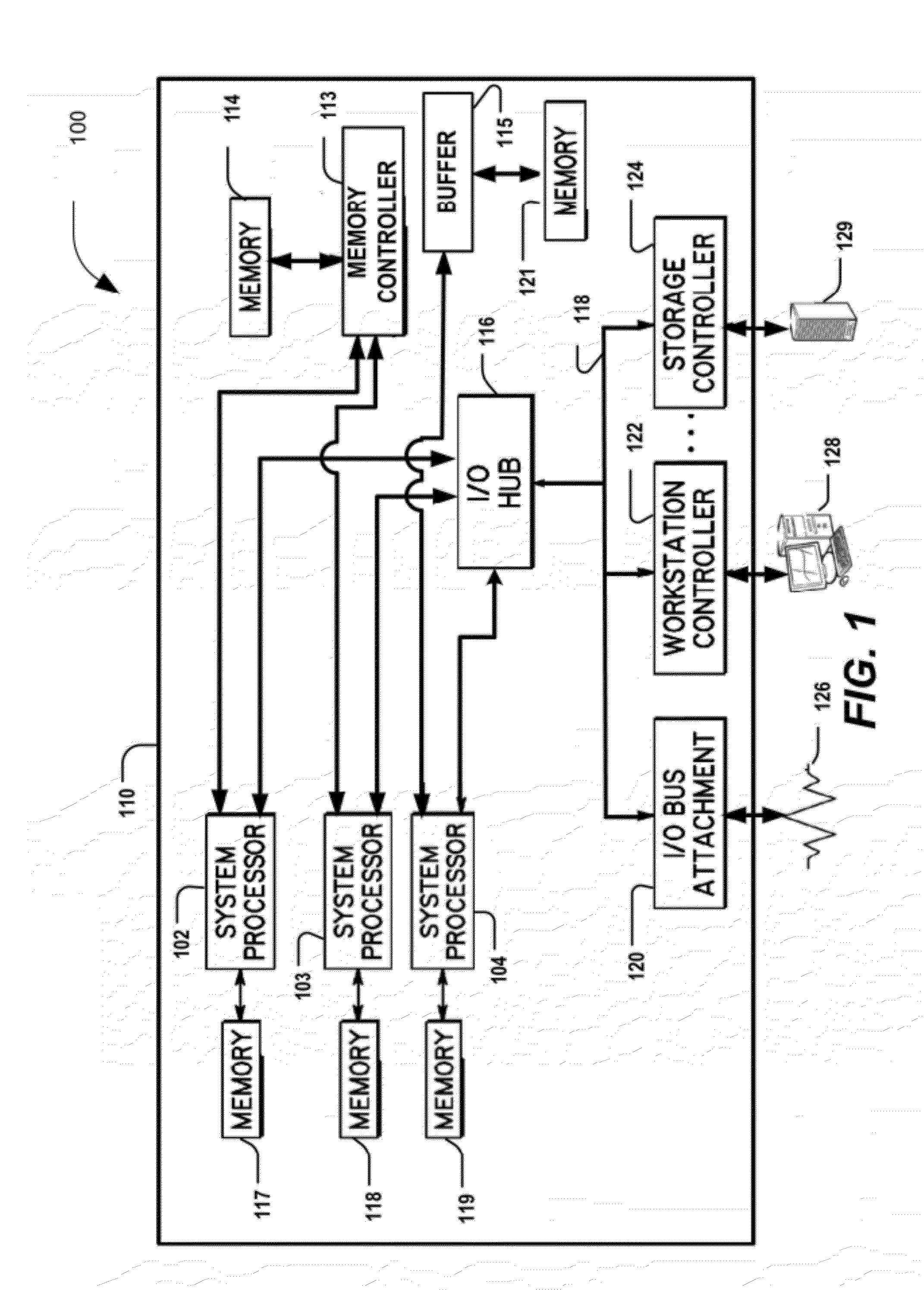

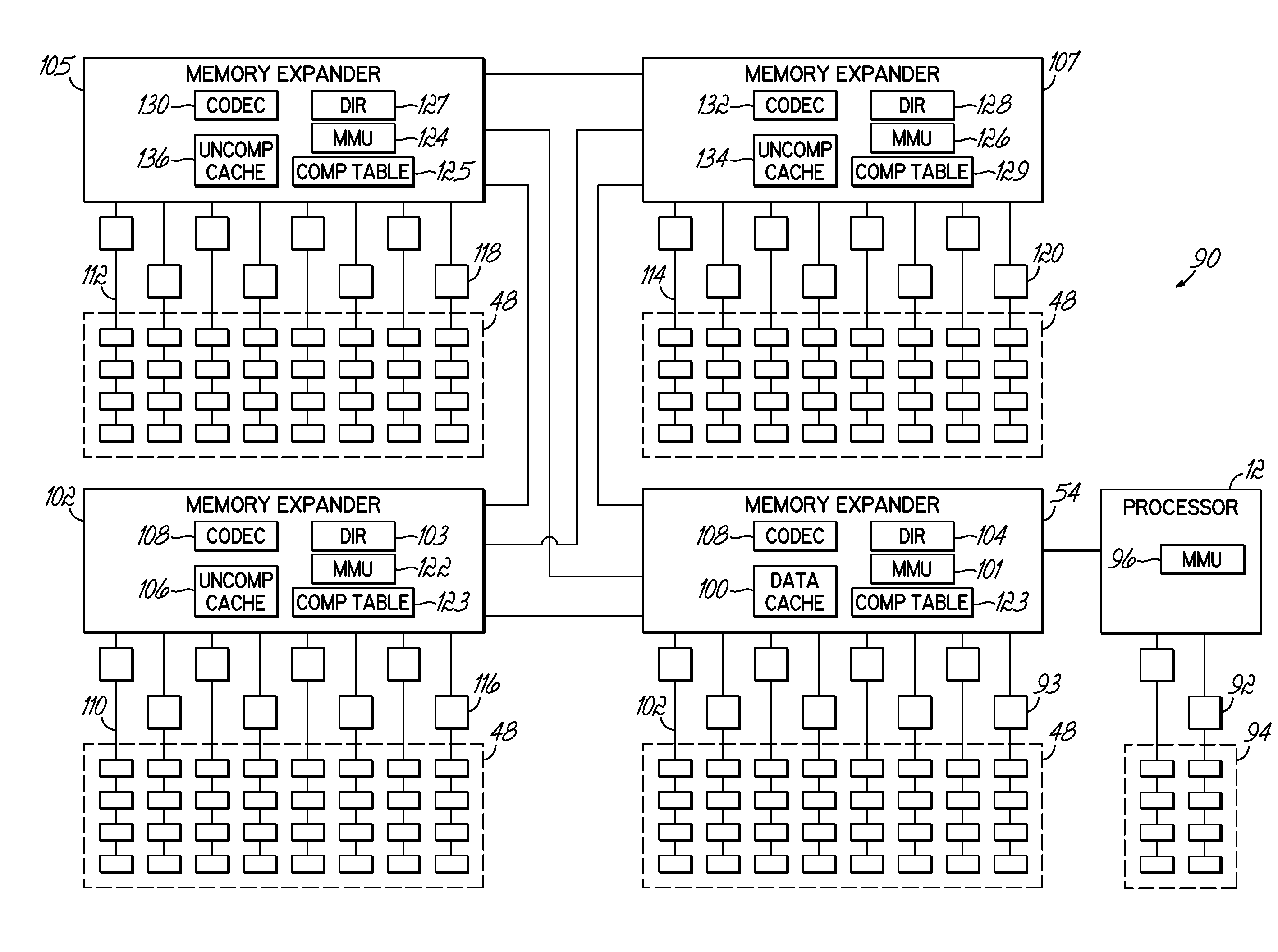

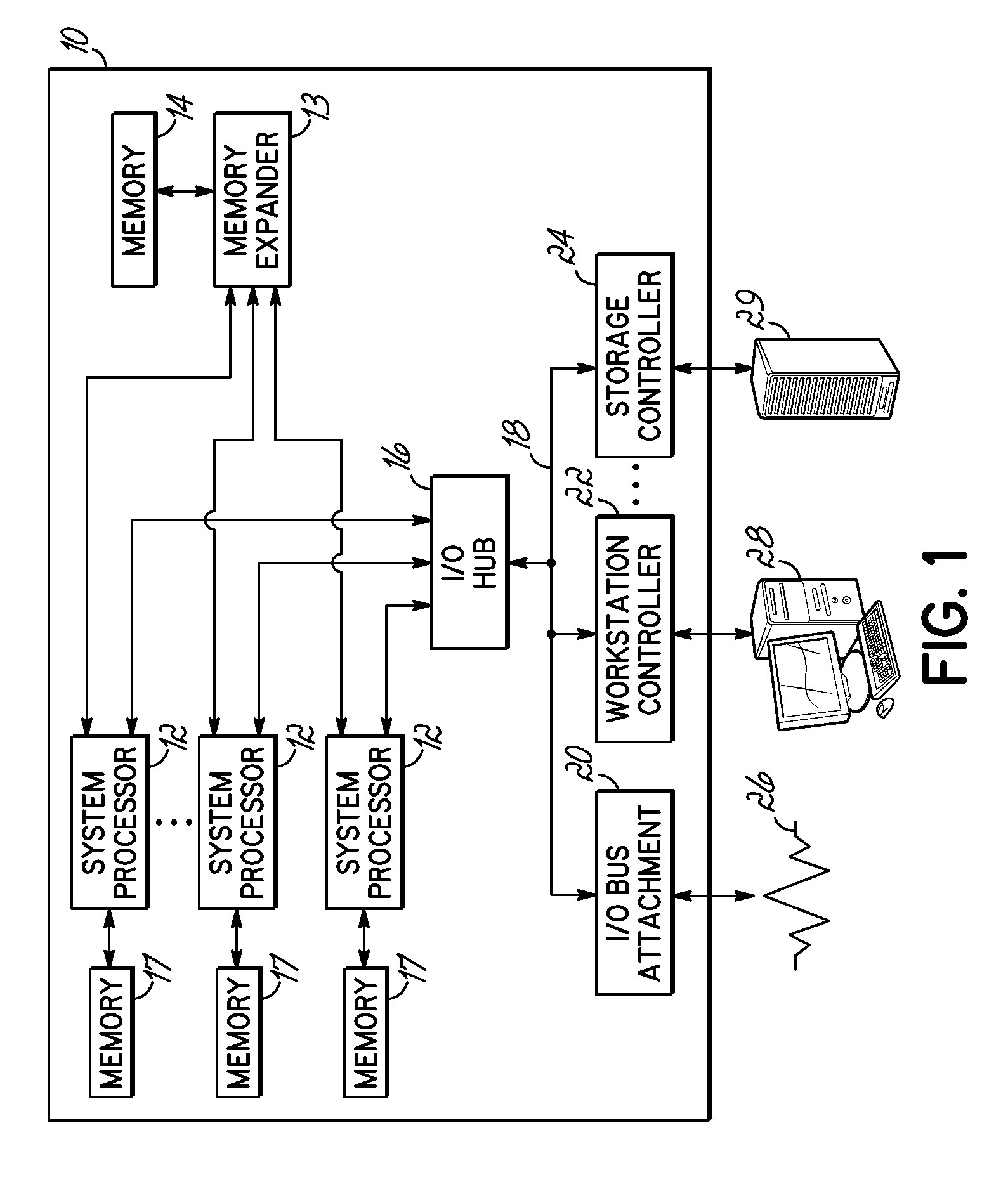

Memory compression implementation in a multi-node server system with directly attached processor memory

Active | US7966455B2 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Direct communication

A method, apparatus and program product enable scalable bandwidth and memory for a system having a processor with directly attached memory. Multiple memory expander microchips provide the additional bandwidth and memory while in communication with the processor. Lower latency data may be stored in the memory expander microchip node in the most direct communication with the processor. Memory and bandwidth allocation between nodes may be dynamically adjusted.

Owner:LENOVO GLOBAL TECH INT LTD

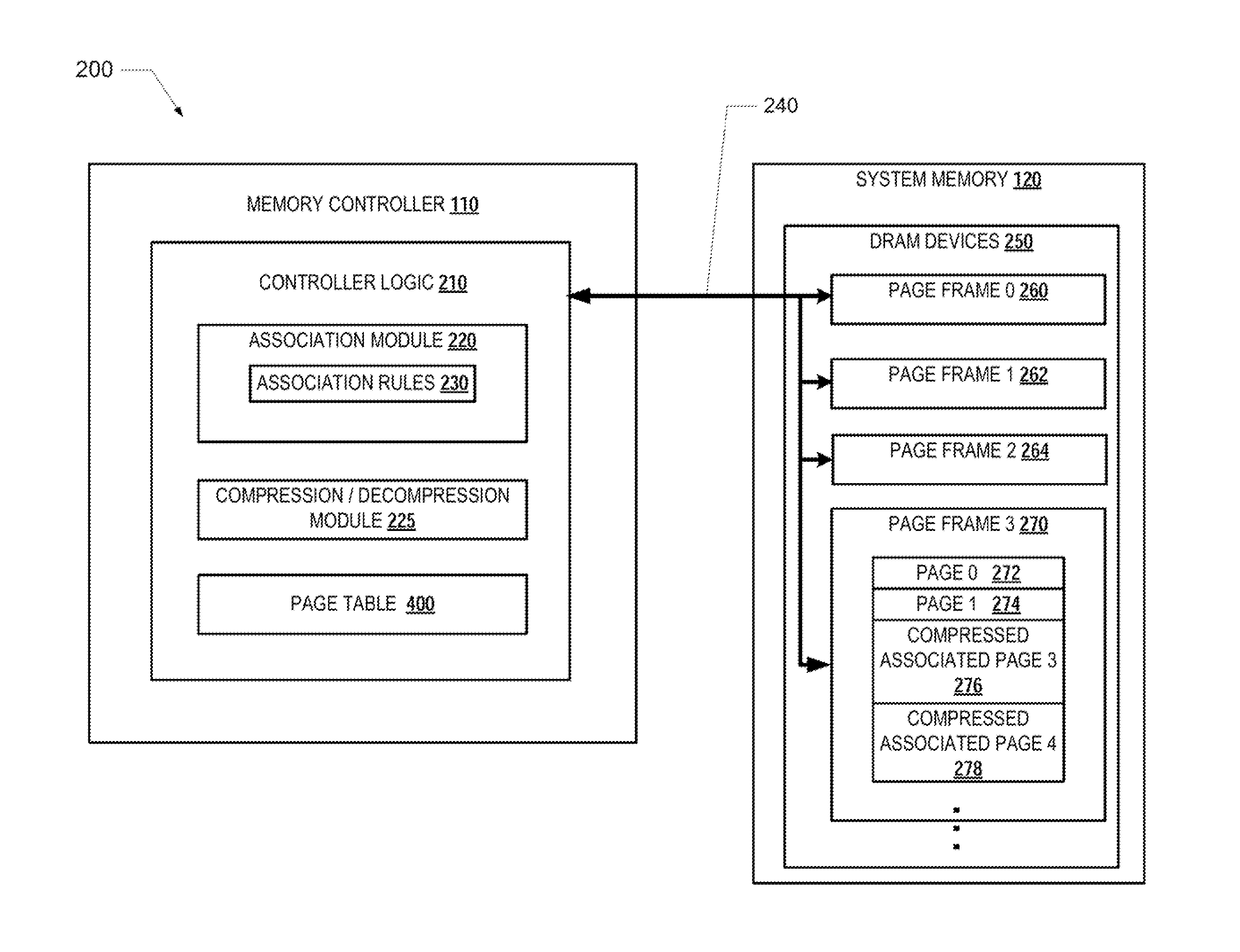

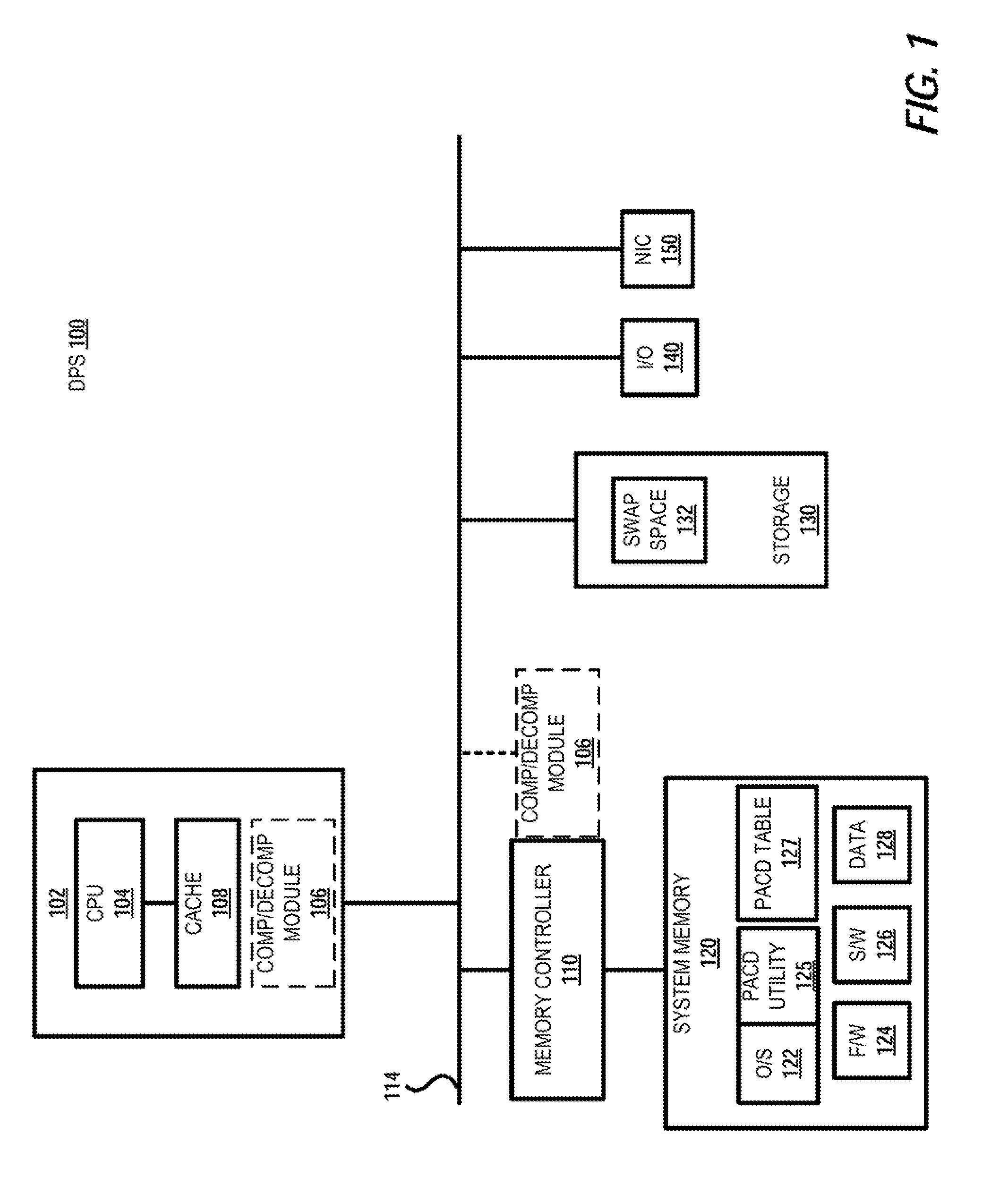

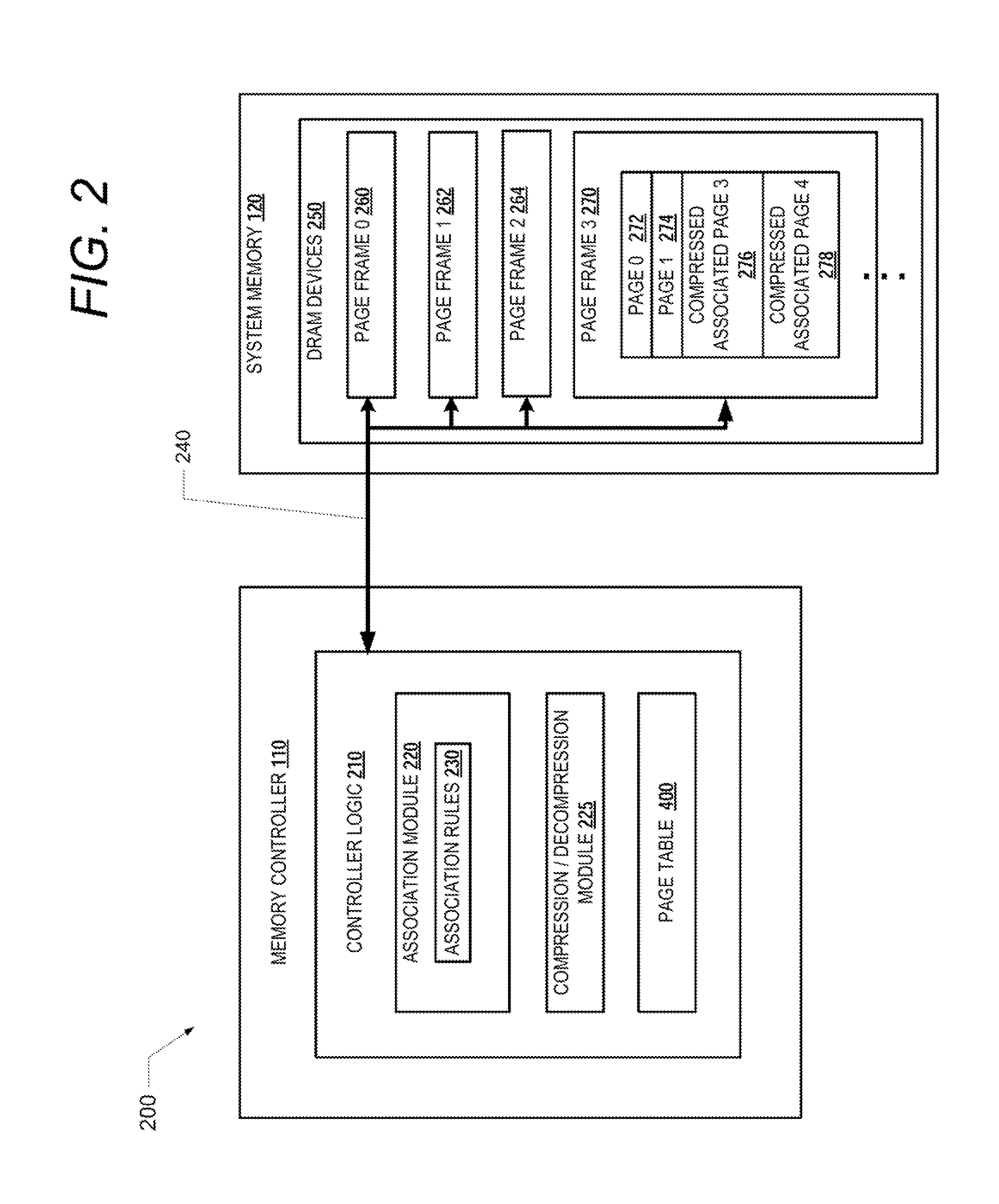

Efficient management of computer memory using memory page associations and memory compression

Inactive | US20140173243A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer memory; Operating system

A method for managing memory operations includes reading a first memory page from a storage device responsive to a request for the first memory page. The first memory page is stored to a system memory. Based on a pre-established set of association rules, one or more associated memory pages are identified that are related to the first memory page. The associated memory pages are read from the storage device and compressed to generate corresponding compressed associated memory pages. The compressed associated memory pages are also stored to the system memory to enable faster access to the associated memory pages during processing of the first memory page. The compressed associated memory pages are individually decompressed in response to the particular page being required for use during processing.

Owner:IBM CORP
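The flow above (read the requested page, read its associated pages, keep those compressed, and decompress each one individually on first use) can be sketched as follows. Assumptions: `AssociativeMemory` is hypothetical, storage is a dict of page number to bytes, zlib is the compressor, and the association rule ("page n implies n+1 and n+2") is a made-up example of a pre-established rule.

```python
import zlib
from typing import Callable, Dict, List


def sequential_rule(page: int) -> List[int]:
    """Hypothetical association rule: page n suggests pages n+1 and n+2."""
    return [page + 1, page + 2]


class AssociativeMemory:
    def __init__(self, storage: Dict[int, bytes],
                 rule: Callable[[int], List[int]] = sequential_rule) -> None:
        self.storage = storage
        self.rule = rule
        self.resident: Dict[int, bytes] = {}    # uncompressed pages in memory
        self.compressed: Dict[int, bytes] = {}  # compressed associated pages
        self.storage_reads = 0

    def _read_storage(self, page: int) -> bytes:
        self.storage_reads += 1
        return self.storage[page]

    def get(self, page: int) -> bytes:
        if page in self.resident:
            return self.resident[page]
        if page in self.compressed:
            # Decompress individually, only when this page is actually needed.
            data = zlib.decompress(self.compressed.pop(page))
        else:
            data = self._read_storage(page)
            for assoc in self.rule(page):
                if (assoc in self.storage and assoc not in self.resident
                        and assoc not in self.compressed):
                    self.compressed[assoc] = zlib.compress(self._read_storage(assoc))
        self.resident[page] = data
        return data
```

Keeping the associated pages compressed trades a little CPU at first use for a smaller footprint than prefetching them uncompressed, and avoids a storage read if they are needed later.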

Methods And Apparatus For Local Memory Compaction

Active | US20100192138A1 | Reduces memory size requirement and memory footprint; Software engineering; Memory addressing/allocation/relocation; Computer software; Source code

Methods, apparatus and computer software product for local memory compaction are provided. In an exemplary embodiment, a processor in connection with a memory compaction module identifies inefficiencies in array references contained within received source code, allocates a local array and maps the data from the inefficient array reference to the local array in a manner which improves the memory size requirements for storing and accessing the data. In another embodiment, a computer software product implementing a local memory compaction module is provided. In a further embodiment a computing apparatus is provided. The computing apparatus is configured to improve the efficiency of data storage in array references. This Abstract is provided for the sole purpose of complying with the Abstract requirement rules. This Abstract is submitted with the explicit understanding that it will not be used to interpret or to limit the scope or the meaning of the claims.

Owner:QUALCOMM INC
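One simple instance of the transformation described above: a loop that touches `A[stride*i + offset]` spans a large region of `A` but uses only `trip_count` elements, so those elements can be mapped into a dense local array. This is a minimal sketch covering a single affine reference; `compaction_map` and `run_with_local_array` are hypothetical, and the doubling loop body is a placeholder computation.

```python
from typing import List, Tuple


def compaction_map(stride: int, offset: int, trip_count: int) -> Tuple[int, int]:
    """For a loop reference A[stride*i + offset], i in [0, trip_count), return
    (elements of A spanned by the loop, elements needed in a local array)."""
    footprint = stride * (trip_count - 1) + 1  # span of A the loop touches
    return footprint, trip_count               # one local slot per iteration


def run_with_local_array(A: List[float], stride: int, offset: int,
                         n: int) -> List[float]:
    # Copy in: local[i] holds A[stride*i + offset].
    local = [A[stride * i + offset] for i in range(n)]
    for i in range(n):  # the loop body now works on the dense local array
        local[i] = local[i] * 2.0
    for i in range(n):  # copy results back out to the original layout
        A[stride * i + offset] = local[i]
    return A
```

For `stride=4, offset=10, trip_count=8`, the loop spans 29 elements of `A` but needs only 8 local slots, which is the memory-size reduction the abstract refers to.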

© 2025 PatSnap. All rights reserved.