87 results about How to "Discriminating" patented technology

Track and convolutional neural network feature extraction-based behavior identification method

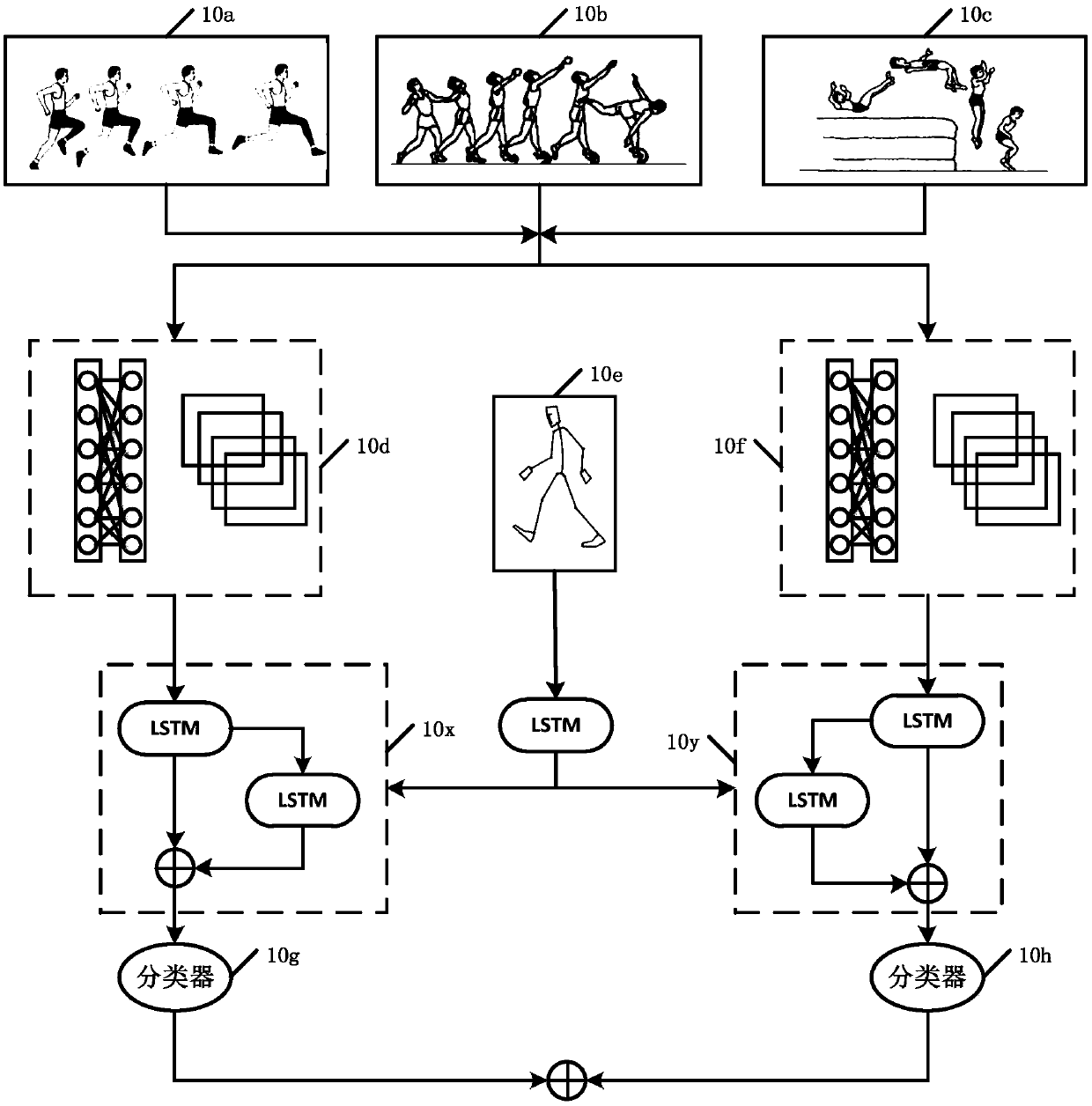

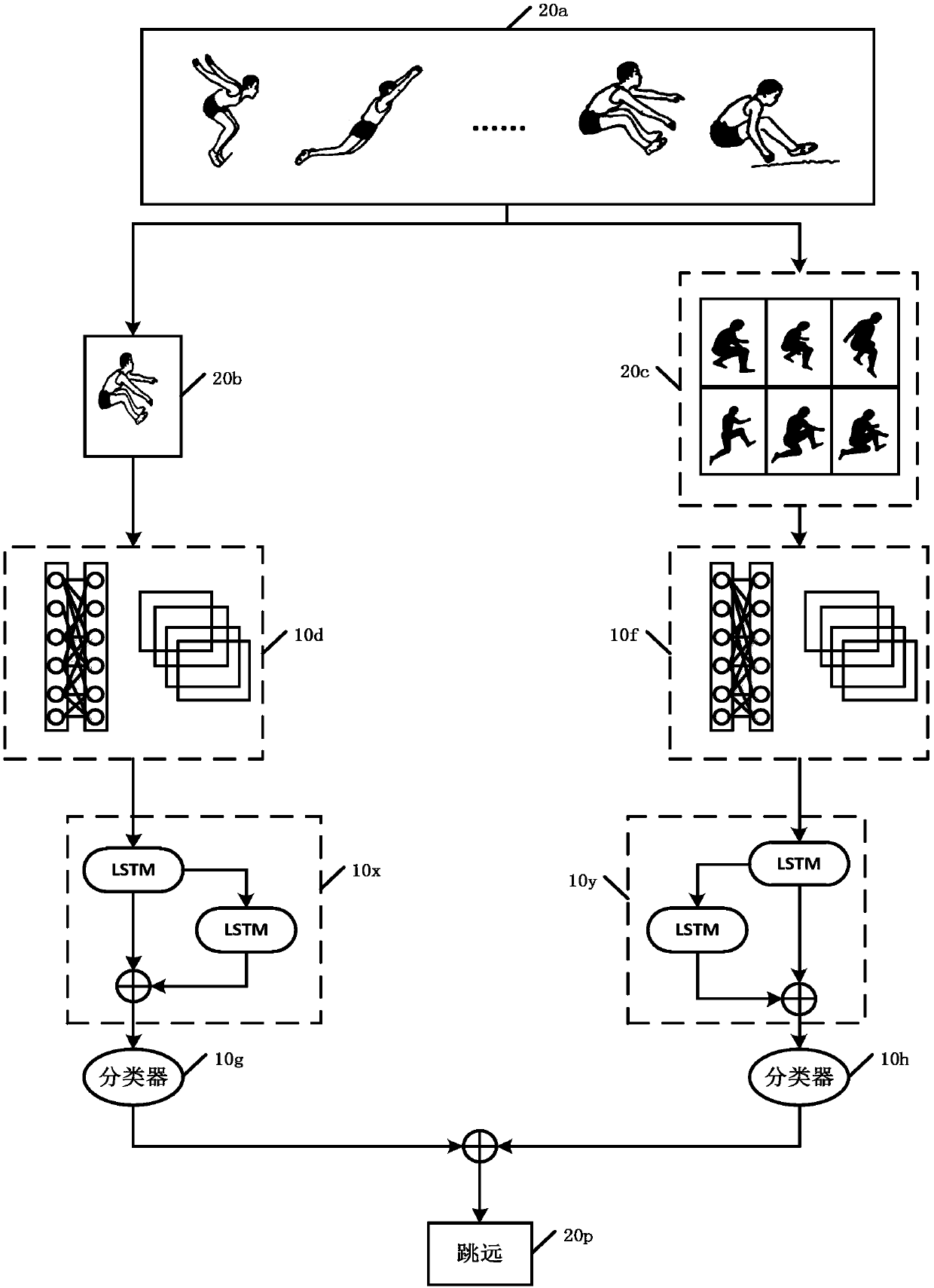

Active | CN106778854A | Reduce computational complexity | Reduced characteristics | Character and pattern recognition | Neural architectures | Video monitoring | Human behavior

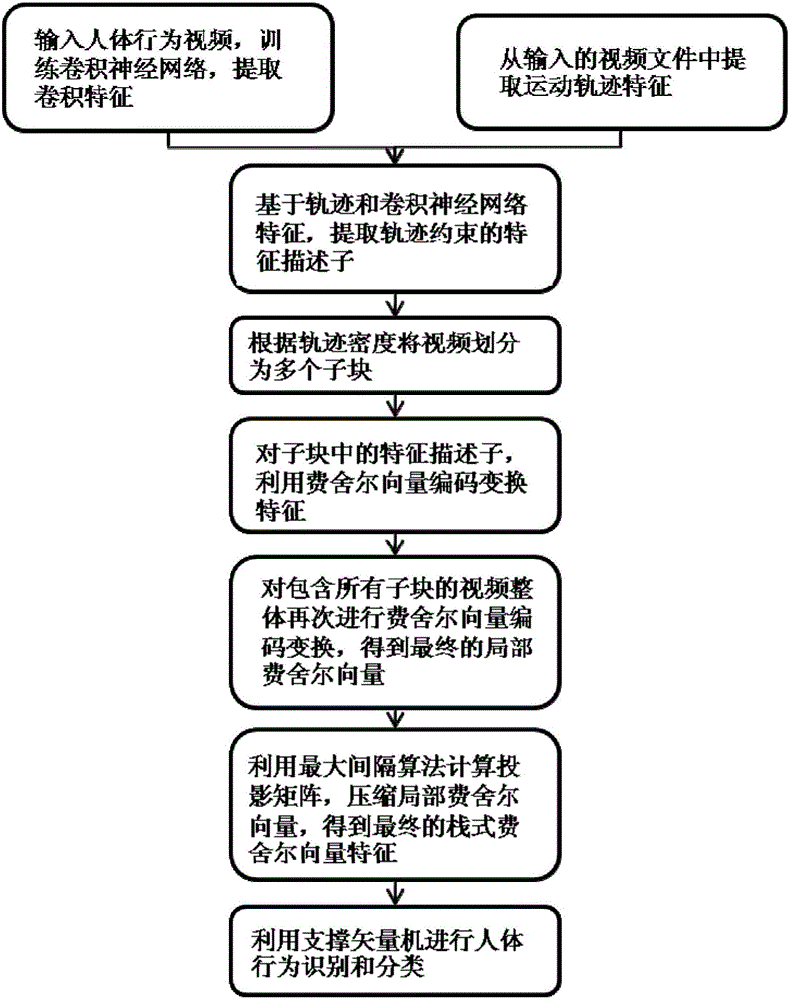

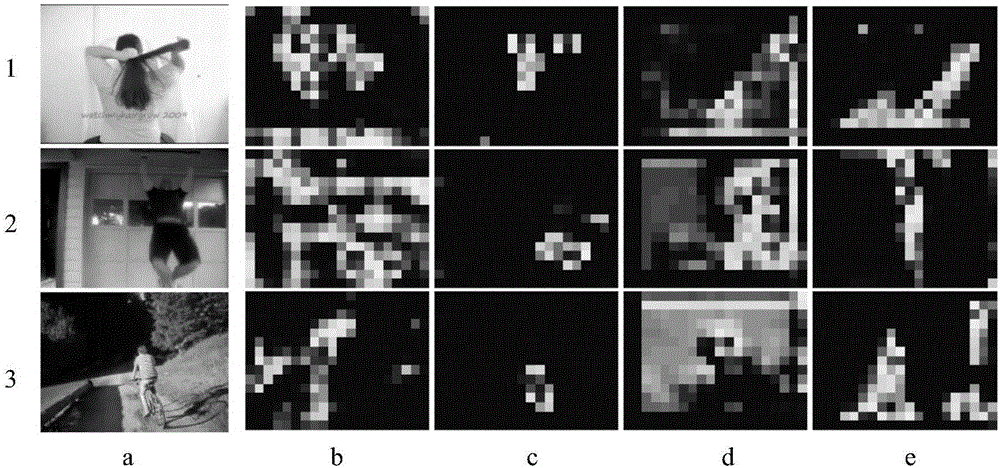

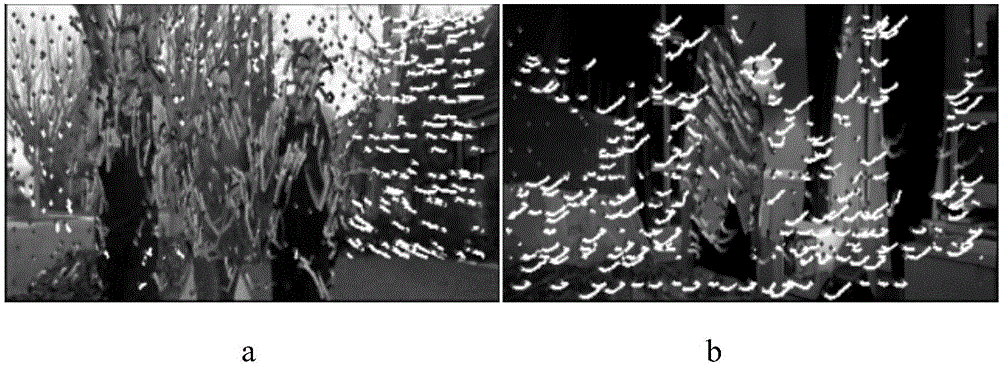

The invention discloses a track and convolutional neural network feature extraction-based behavior identification method, and mainly solves the problems of computing redundancy and low classification accuracy caused by complex human behavior video contents and sparse features. The method comprises the steps of inputting image video data; down-sampling pixel points in a video frame; deleting uniform region sampling points; extracting a track; extracting convolutional layer features by utilizing a convolutional neural network; extracting track constraint-based convolutional features in combination with the track and the convolutional layer features; extracting stack type local Fisher vector features according to the track constraint-based convolutional features; performing compression transformation on the stack type local Fisher vector features; training a support vector machine model by utilizing final stack type local Fisher vector features; and performing human behavior identification and classification. According to the method, relatively high and stable classification accuracy can be obtained by adopting a method for combining multilevel Fisher vectors with convolutional track feature descriptors; and the method can be widely applied to the fields of man-machine interaction, virtual reality, video monitoring and the like.

Owner:XIDIAN UNIV
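
The first steps in the abstract above (down-sampling pixel points and deleting sampling points in uniform regions) can be illustrated with a small sketch. It is not the patented procedure: the function name `sample_points`, the grid `stride`, and the use of local intensity variance as the uniformity test are all assumptions made for illustration.

```python
from statistics import pvariance

def sample_points(frame, stride=2, window=1, min_variance=1.0):
    """Sample a grayscale frame on a regular grid and drop points whose
    local neighbourhood is nearly uniform (low intensity variance).
    The variance test is an assumed stand-in for the uniformity check."""
    height, width = len(frame), len(frame[0])
    kept = []
    for y in range(0, height, stride):
        for x in range(0, width, stride):
            # Pixel values in a (2*window+1)^2 patch, clipped at the borders.
            patch = [
                frame[j][i]
                for j in range(max(0, y - window), min(height, y + window + 1))
                for i in range(max(0, x - window), min(width, x + window + 1))
            ]
            if pvariance(patch) >= min_variance:
                kept.append((x, y))
    return kept

# A flat 6x6 frame with a single bright pixel at (2, 2).
frame = [[10] * 6 for _ in range(6)]
frame[2][2] = 200
print(sample_points(frame))
```

Only grid points whose patch contains some texture survive, which is the reason such a filter reduces the number of trajectories that must be tracked later.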

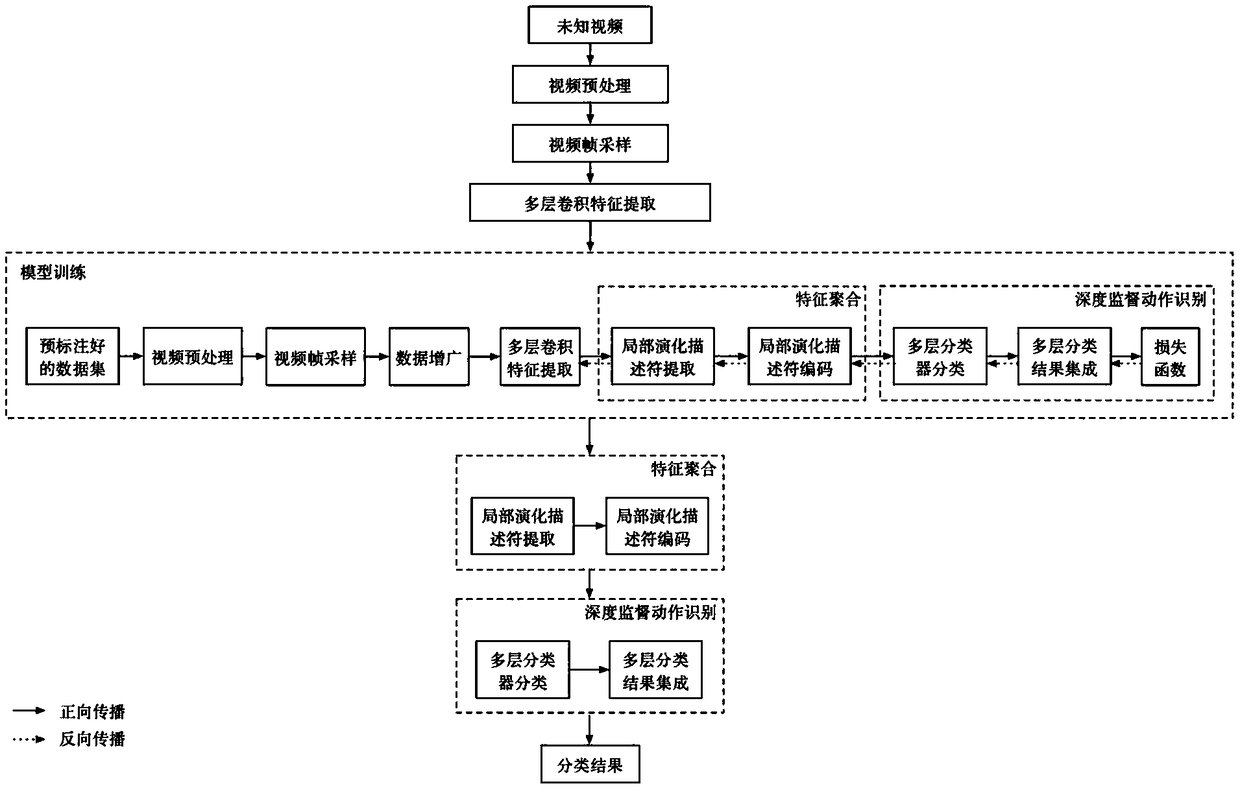

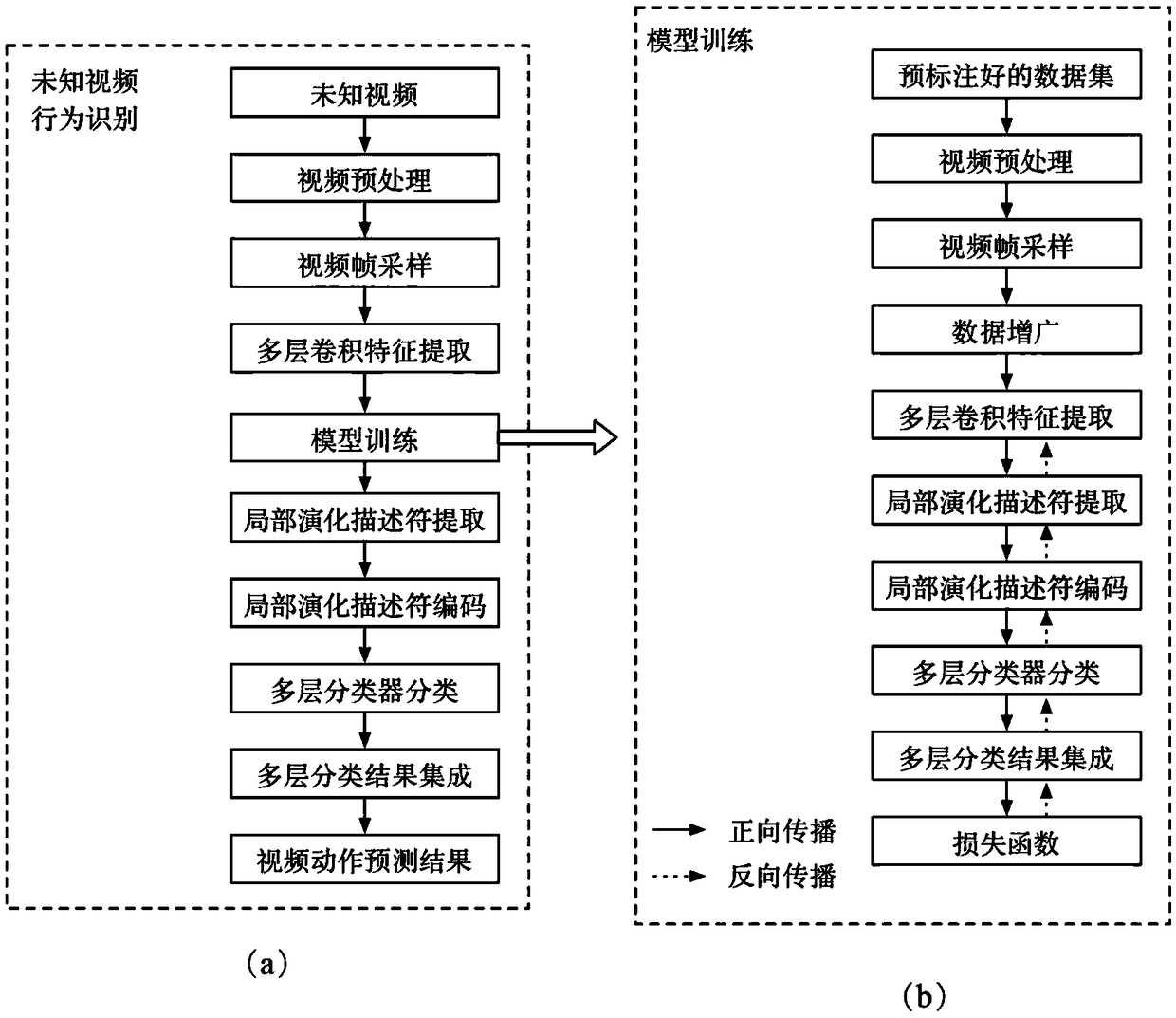

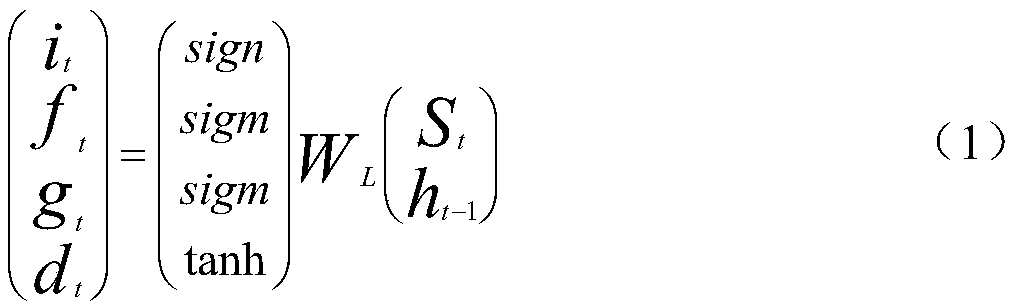

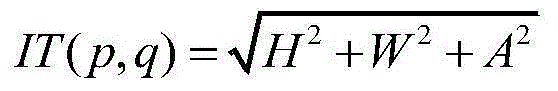

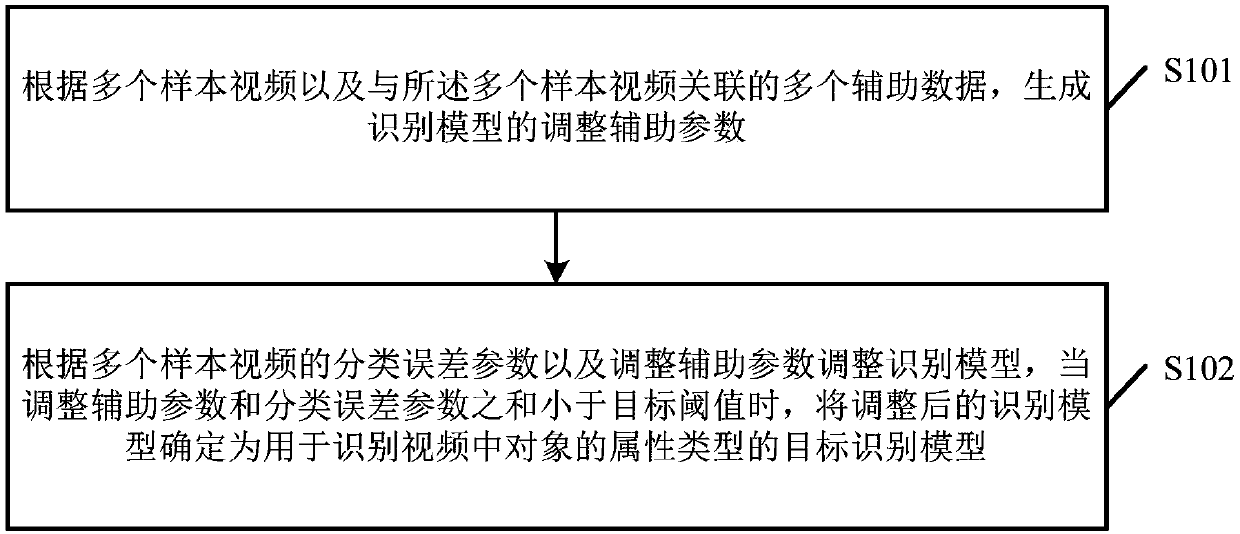

A behavior recognition method of depth supervised convolution neural network based on training feature fusion

Active | CN109446923A | Easy to implement | Discriminating | Character and pattern recognition | Neural architectures | Temporal information | Visual perception

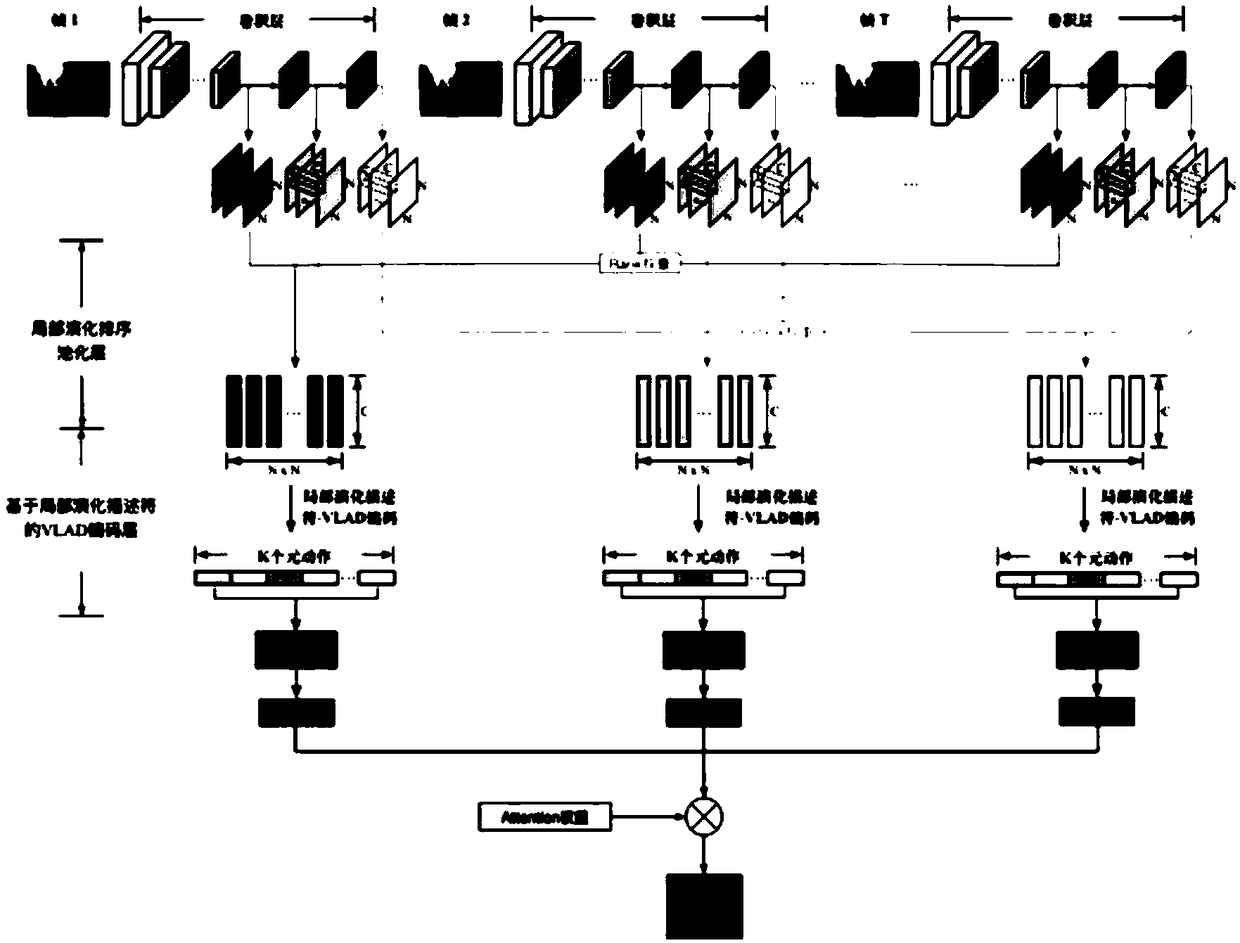

The invention provides a behavior recognition method of depth supervised convolution neural network based on training feature fusion, belonging to the field of artificial intelligence and computer vision. The method extracts multi-layer convolution features of a target video, designs a local evolutionary pooling layer, and uses that layer to map the video convolution features to a vector containing time information, thereby extracting local evolutionary descriptors of the target video. Using the VLAD coding method, multiple local evolutionary descriptors are coded into meta-action based video-level representations. Based on the complementarity of the information among the multiple levels of the convolution network, the final classification results are obtained by integrating the results of those levels. The invention fully utilizes the time information to construct the video-level representation and effectively improves the accuracy of video behavior recognition. At the same time, integrating the multi-level prediction results improves the discriminability of the middle layers of the network and thus the performance of the whole network.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
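
The VLAD coding step mentioned in the abstract above can be sketched generically as follows. This is textbook VLAD (nearest-centre assignment, summed residuals, L2 normalisation), not the patent's exact variant; the function name `vlad_encode` and the toy codebook are assumptions.

```python
import math

def vlad_encode(descriptors, centers):
    """Encode local descriptors as a VLAD vector: per-centre sums of
    residuals, concatenated and L2-normalised."""
    dim = len(centers[0])
    residual_sums = [[0.0] * dim for _ in centers]
    for d in descriptors:
        # Assign the descriptor to its nearest centre (squared Euclidean distance).
        nearest = min(
            range(len(centers)),
            key=lambda k: sum((a - b) ** 2 for a, b in zip(d, centers[k])),
        )
        for i in range(dim):
            residual_sums[nearest][i] += d[i] - centers[nearest][i]
    flat = [v for row in residual_sums for v in row]
    norm = math.sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat

# Hypothetical 2-D descriptors and a two-word codebook.
code = vlad_encode([[1, 0], [0, 1], [10, 12]], [[0, 0], [10, 10]])
print(code)
```

The output length is (number of centres) x (descriptor dimension), so any number of local descriptors maps to one fixed-size video-level vector.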

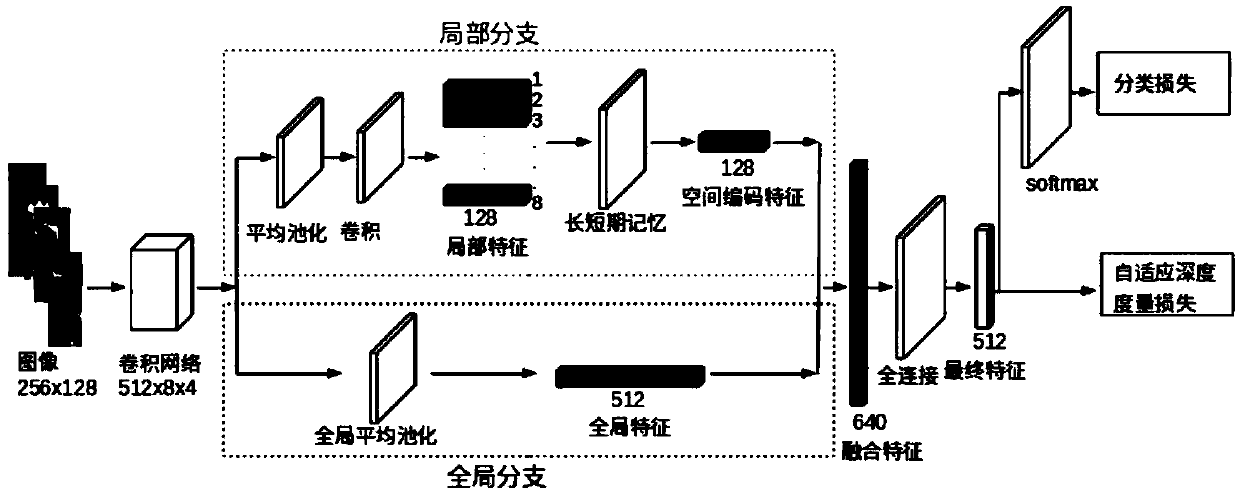

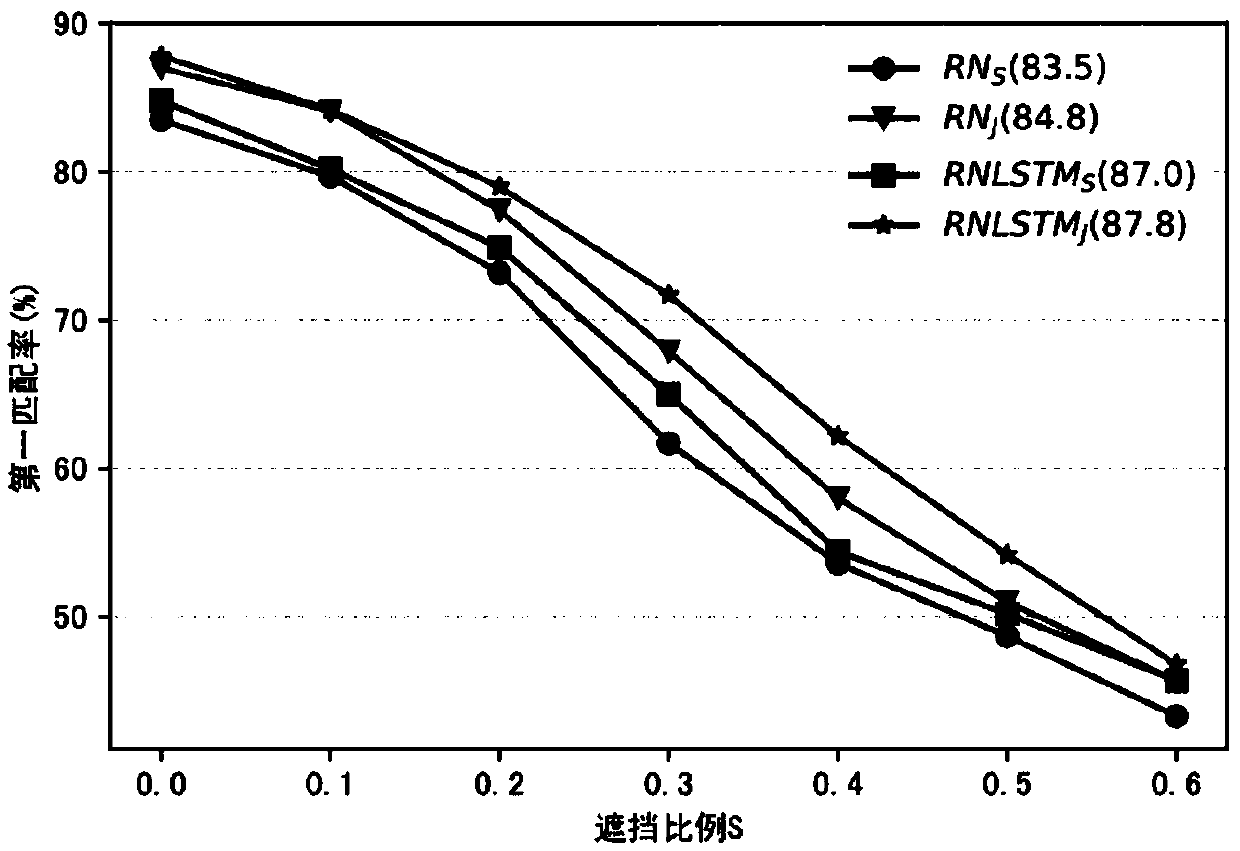

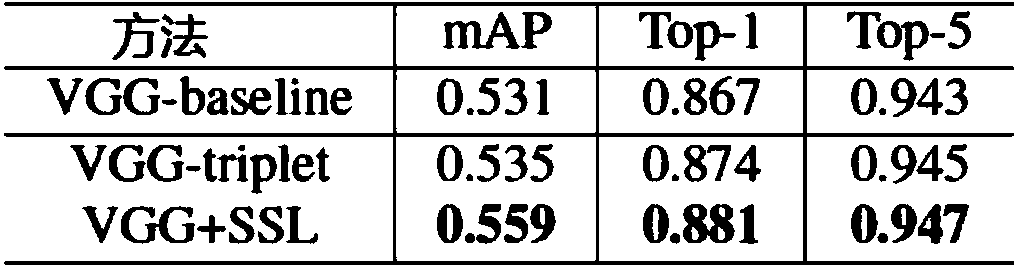

Covered pedestrian re-identification method based on adaptive deep metric learning

Active | CN108960127A | Improve robustness | Improve training efficiency | Biometric pattern recognition | Semantics | Network model

The invention provides a covered pedestrian re-identification method based on adaptive deep metric learning and relates to computer vision technology. The method first designs a convolutional neural network structure that is robust to coverage (occlusion) and extracts middle- and low-level semantic features of a pedestrian image in the network. It then extracts local features robust to coverage, combines them with global features, and learns high-level semantic features; features that are sufficiently discriminating for pedestrian identity changes are learned through an adaptive neighbor deep metric loss which, combined with a classification loss, completes update learning of the whole network quickly and stably. Finally, using the trained network model, the output of the first fully connected layer is extracted from a test image as the feature representation, and subsequent feature similarity comparison and sorting yield the final pedestrian re-identification result. The method effectively improves the robustness of features to coverage.

Owner:XIAMEN UNIV
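
The final step in the abstract above, feature similarity comparison and sorting, might look like the following sketch. Cosine similarity is an assumed choice (the abstract does not name the measure), and `rank_gallery` and the gallery ids are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank_gallery(query, gallery):
    """Return gallery ids sorted from most to least similar to the query."""
    scores = {gid: cosine_similarity(query, feat) for gid, feat in gallery.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical 2-D features standing in for fully connected layer outputs.
gallery = {"a": [0.0, 1.0], "b": [1.0, 0.1], "c": [1.0, 1.0]}
print(rank_gallery([1.0, 0.0], gallery))
```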

Video human action recognition method based on sparse subspace clustering

Inactive | CN104732208A | Improve performance | Increased overall recognition accuracy | Character and pattern recognition | Feature extraction | Three-dimensional space

The invention belongs to computer visual pattern recognition and video picture processing. The method comprises the steps of establishing a three-dimensional space-time sub-frame cube in a video human action recognition model, establishing a human action feature space, conducting clustering, updating labels, extracting the three-dimensional space-time sub-frame cube in the video human action recognition model and performing human action recognition on monitoring video, extracting human action features, confirming the category of the human sub-action in each video, and classifying and merging videos with sub-category labels. According to the abstract, the highest recognition accuracy on the international Hollywood2 human action database is improved by 16.5%. The video human action recognition method therefore has the advantages that human action features with higher distinguishing ability, adaptability, universality and invariance can be extracted automatically, that overfitting and the gradient diffusion problem in the neural network are reduced, and that the accuracy of human action recognition in a complex environment is improved effectively. The method can be widely applied to on-site video surveillance and video content retrieval.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

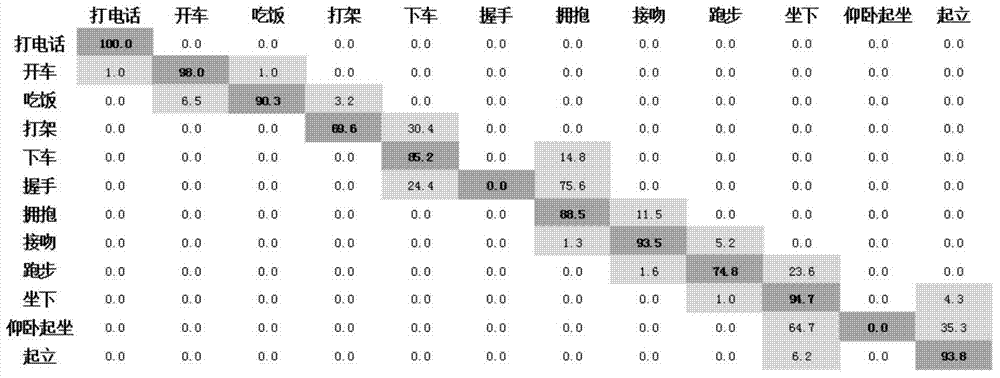

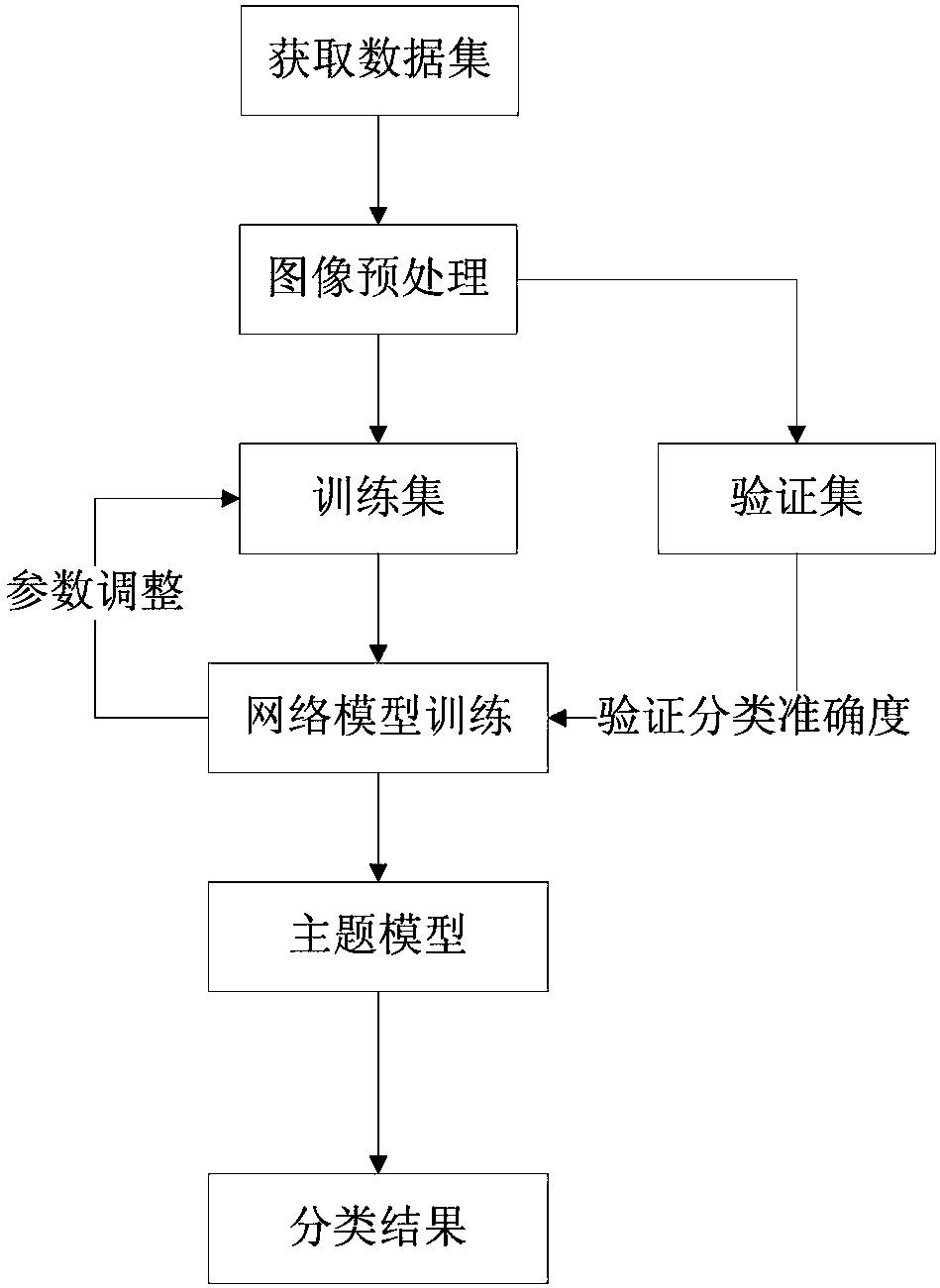

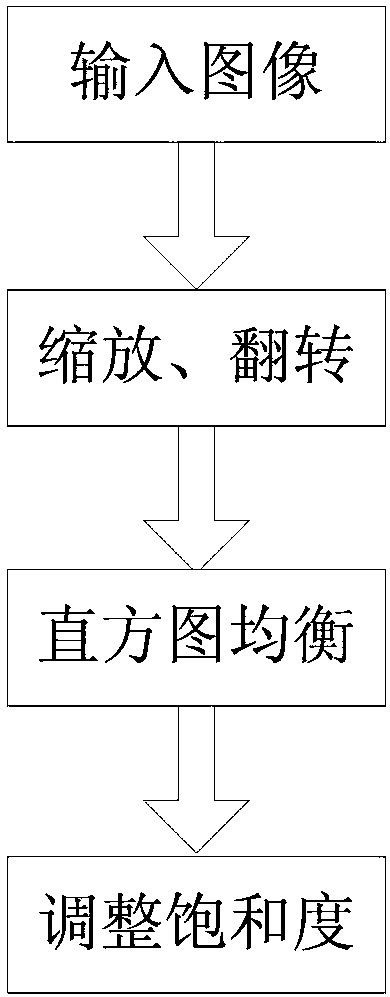

Topic model-fused scene image classification method

Inactive | CN107808132A | Extended dataset | Increase the number of | Character and pattern recognition | Subject specific | Imaging Feature

The invention discloses a topic model-fused scene image classification method, and relates to the field of deep learning and image classification. The method comprises the following steps of: preprocessing data sets, and expanding the quantity of obtained data sets so as to obtain an image data format according with deep learning model processing; constructing a convolutional neural network model according with scene image classification, and pre-training the processed image data sets by using a convolutional neural network; and carrying out end-to-end iterative training on the constructed convolutional neural network by using training sets, adjusting parameters in the network, verifying the trained model by using verification sets, modeling extracted scene image features with discrimination ability, extracting hidden topic variables between the features and images so as to obtain image topic distribution represented by a k-dimensional vector, wherein k represents a topic quantity, each image can be considered as a probability distribution diagram formed by a plurality of topics, and scene image classification is realized by utilizing a classifier.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

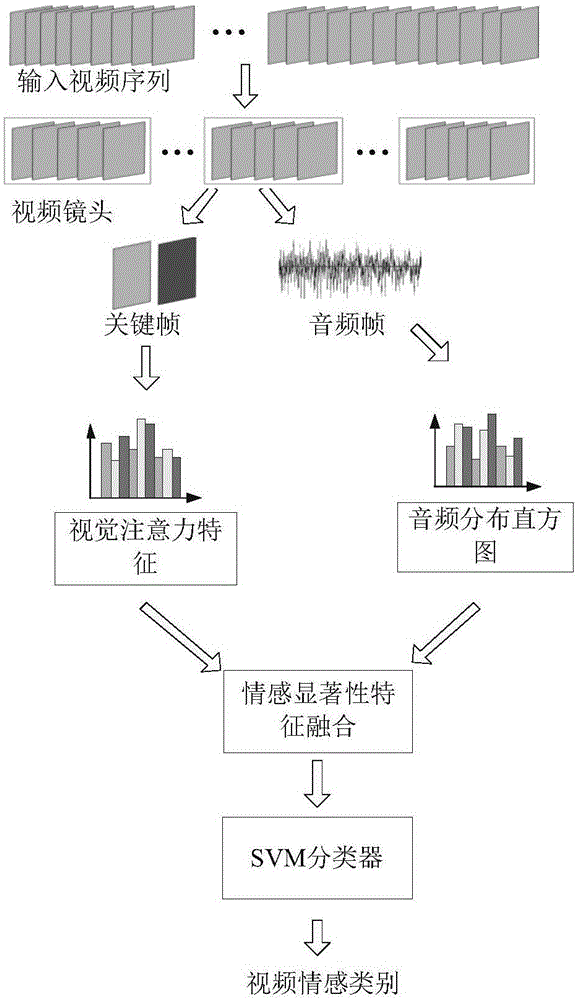

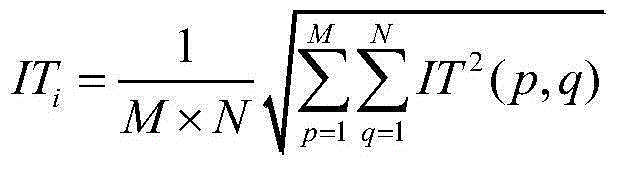

Video emotion identification method based on emotion significant feature integration

Active | CN105138991A | Discriminating | Easy to implement | Character and pattern recognition | Integration algorithm | Vision based

The invention discloses a video emotion identification method based on emotion significant feature integration. A training video set is acquired, and video shots are extracted from each video. An emotion key frame is selected for each video shot. The audio feature and the visual emotion feature of each video shot in the training video set are extracted. The audio feature is based on a bag-of-words model and forms an emotion distribution histogram feature. The visual emotion feature is based on a visual dictionary and forms an emotion attention feature. The emotion attention feature and the emotion distribution histogram feature are integrated from top to bottom to form a video feature with emotion significance. The video features with emotion significance formed on the training video set are sent into an SVM classifier for training, and the parameters of the trained model are acquired. The trained model is used for predicting the emotion category of a test video. The integration algorithm provided by the invention is simple to implement, uses a mature and reliable trainer, predicts quickly, and can efficiently complete a video emotion identification process.

Owner:SHANDONG INST OF BUSINESS & TECH

Induction motor fault diagnosis method based on discriminant convolutional feature learning

Active | CN105910827A | Simple model | Few connection parameters | Engine testing | Motor vibration | Feature learning

The invention discloses an induction motor fault diagnosis method based on discriminant convolutional feature learning. The induction motor fault diagnosis method comprises the following steps that induction motor vibration signals of the known fault category are acquired and the motor vibration signals are marked according to the category so that training samples are acquired; discriminant convolutional feature learning is performed on the training samples, and all the training samples are represented by utilizing feature vectors obtained through learning; and tags are added on the feature vectors obtained through learning and an SVM classifier is trained by utilizing the feature vectors, and the optimal classification parameters of the SVM classifier are determined. The effective fault features of the motor vibration signals are learned in an unsupervised manner. Compared with the existing fault diagnosis technology and the machine learning method, the discriminant convolutional feature learning method is more intelligent, simple in model and less in connection parameters so that stability and practicality of an intelligent feature extraction method can be enhanced.

Owner:SOUTHEAST UNIV

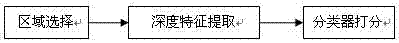

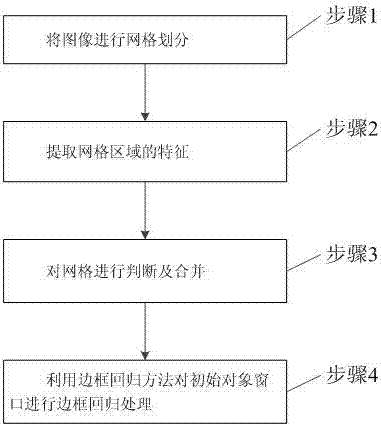

Fast target detection method based on grid judgment

Active | CN107247956A | Reduce losses | Fast and accurate target detection effect | Character and pattern recognition | Grid based | Machine learning

Owner:成都快眼科技有限公司

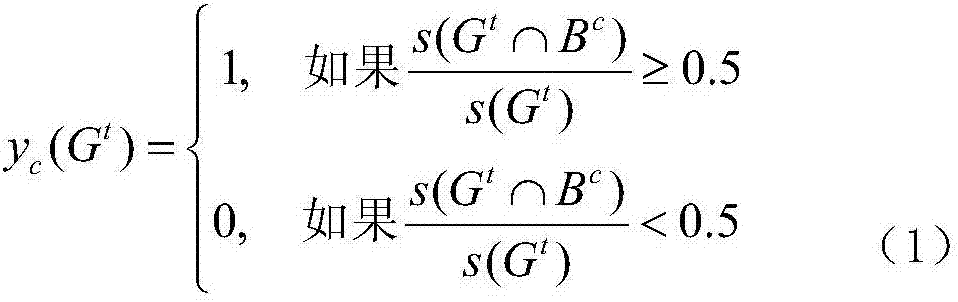

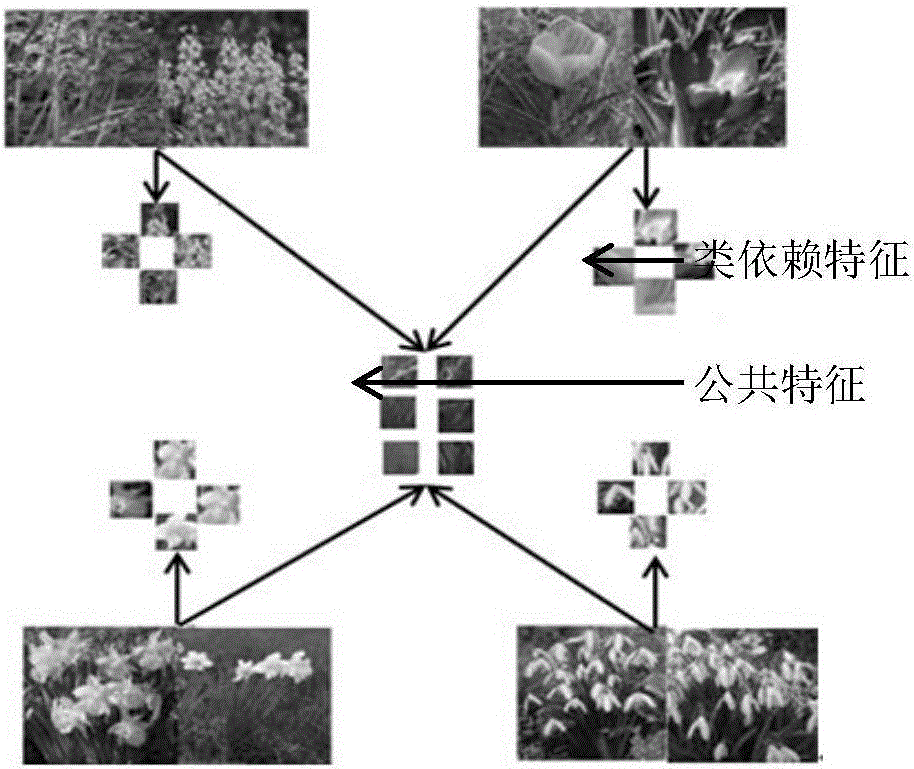

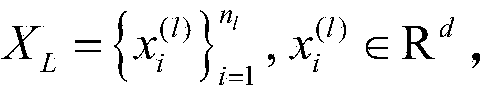

Fine grain image classification method based on common dictionary pair and class-specific dictionary pair

Inactive | CN106778807A | Solve the blockage | Reduce computational complexity | Character and pattern recognition | Singular value decomposition | Imaging processing

The invention belongs to the field of digital image processing. It provides a dictionary with better discrimination properties, avoids solving the standard sparse coding problem, greatly shortens classification time, and gives the coefficients a certain discrimination property. The invention provides a fine grain image classification method based on a common dictionary pair and class-specific dictionary pairs, comprising the following steps: 1, extracting a SIFT feature matrix of the image database training samples and using the K-singular value decomposition method (K-SVD) to obtain an initialization dictionary; 2, building a dictionary learning model based on the common dictionary pair and the class-specific dictionary pairs; 3, using an iterative method to solve the dictionary model built in step 2, thus obtaining a synthesis dictionary D and an analysis dictionary P, using the analysis dictionary to obtain the sparse representation matrix of the test samples, and using minimum reconstruction error to determine the class to which each image belongs. The method is mainly applied to digital image processing.

Owner:TIANJIN UNIV
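
The classification rule at the end of step 3 above (code a test sample with the analysis dictionary, reconstruct it with the synthesis dictionary, pick the class with the smallest reconstruction error) can be sketched as below. This toy version uses class-specific pairs only and omits the common dictionary pair; the names `classify` and `matvec` and the 2-D dictionaries are assumptions, and real dictionaries would come from the training procedure.

```python
def matvec(matrix, vector):
    """Multiply a row-major matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def classify(x, dictionaries):
    """Pick the class whose dictionary pair (D, P) reconstructs x best.
    dictionaries maps label -> (D, P); the code is P @ x, the
    reconstruction is D @ (P @ x)."""
    errors = {}
    for label, (D, P) in dictionaries.items():
        code = matvec(P, x)       # analysis dictionary yields the code directly
        recon = matvec(D, code)   # synthesis dictionary maps the code back
        errors[label] = sum((a - b) ** 2 for a, b in zip(x, recon))
    return min(errors, key=errors.get), errors

# Hypothetical pairs: class "a" models the first axis, class "b" the second.
pairs = {
    "a": ([[1.0], [0.0]], [[1.0, 0.0]]),
    "b": ([[0.0], [1.0]], [[0.0, 1.0]]),
}
label, errors = classify([3.0, 0.5], pairs)
print(label, errors)
```

Because the code is a single matrix product rather than the solution of a sparse coding problem, classification time stays low, which matches the speed claim in the abstract.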

Hyperspectral image classification method based on semi-supervised dictionary learning

Active | CN104392251A | Discriminating | Strong generalization | Climate change adaptation | Character and pattern recognition | Small sample | Supervised learning

The invention discloses a hyperspectral image classification method based on semi-supervised dictionary learning, mainly solving the problems of the high dimensionality of hyperspectral images and low classification precision when few samples are available. The method includes: representing each pixel of the hyperspectral image by its spectral feature vector; selecting a labelled sample set, an unlabelled sample set and a test sample set; forming a class label matrix from the labelled samples; forming a Laplacian matrix from the unlabelled samples; using an alternating optimization strategy and the gradient descent method to solve the semi-supervised dictionary learning model; using the learned dictionary to code the labelled, unlabelled and test samples; and using the learned sparse codes as the features for classifying the hyperspectral image. By adopting the semi-supervised approach, the method obtains higher classification accuracy than supervised learning methods and is applicable to precision agriculture, vegetation investigation and military reconnaissance.

Owner:XIDIAN UNIV
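
The abstract above does not say how its Laplacian matrix is built. One common construction, shown here purely as an assumption, is the unnormalised graph Laplacian L = D - W of a symmetric k-nearest-neighbour graph over the samples; `graph_laplacian` and the 1-D toy samples are hypothetical.

```python
def graph_laplacian(samples, k=1):
    """Unnormalised Laplacian L = D - W of a symmetric k-NN graph.
    W[i][j] = 1 if j is among the k nearest neighbours of i, or vice versa."""
    n = len(samples)
    dist = [
        [sum((a - b) ** 2 for a, b in zip(samples[i], samples[j])) for j in range(n)]
        for i in range(n)
    ]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        neighbours = sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
        for j in neighbours:
            W[i][j] = W[j][i] = 1.0
    # Diagonal entries hold the node degree; off-diagonal entries are -W.
    return [
        [(sum(W[i]) if i == j else 0.0) - W[i][j] for j in range(n)]
        for i in range(n)
    ]

# Three hypothetical 1-D spectral features.
for row in graph_laplacian([[0.0], [1.0], [10.0]]):
    print(row)
```

Every row of such a Laplacian sums to zero, which is what makes it usable as a smoothness penalty on the codes of neighbouring samples.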

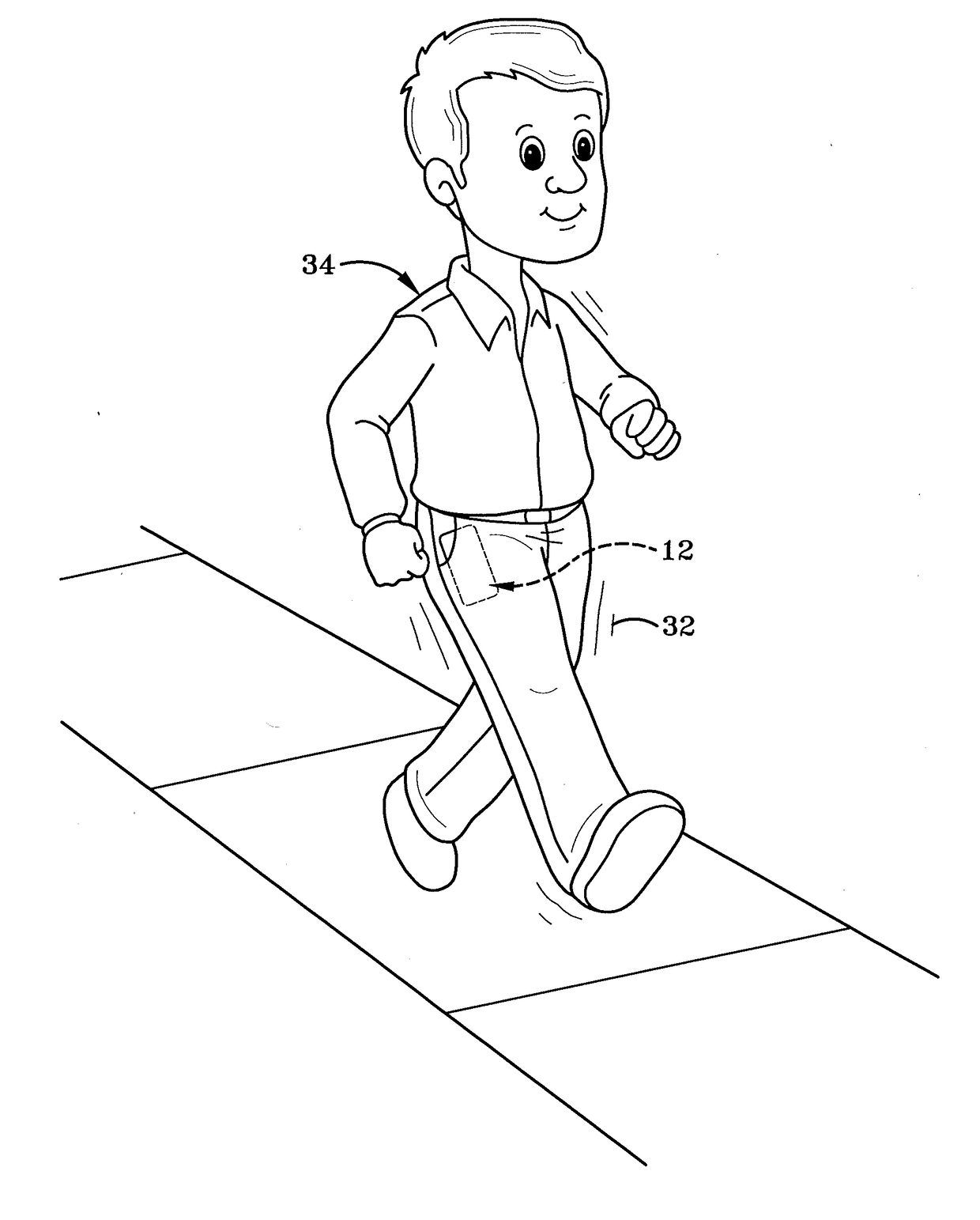

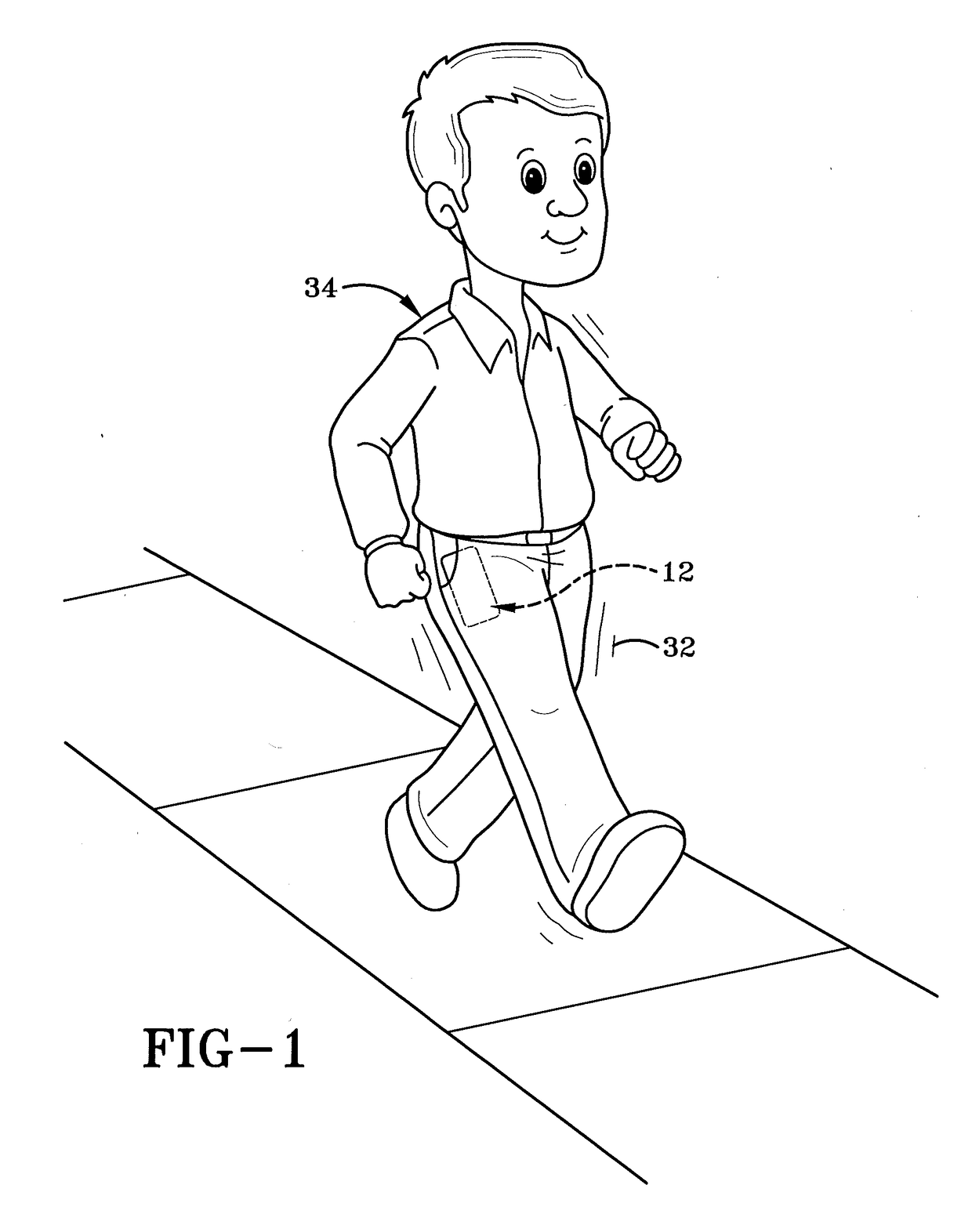

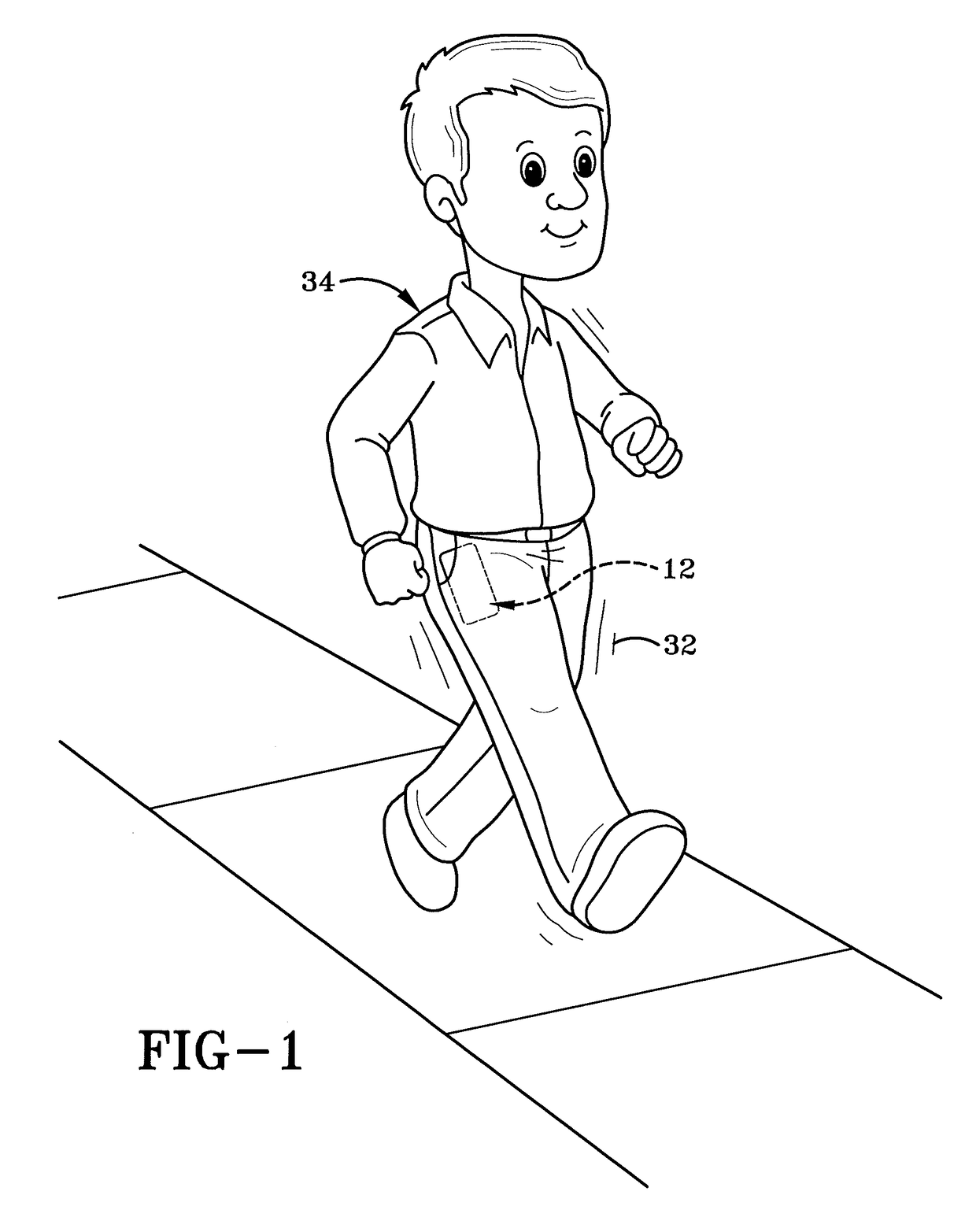

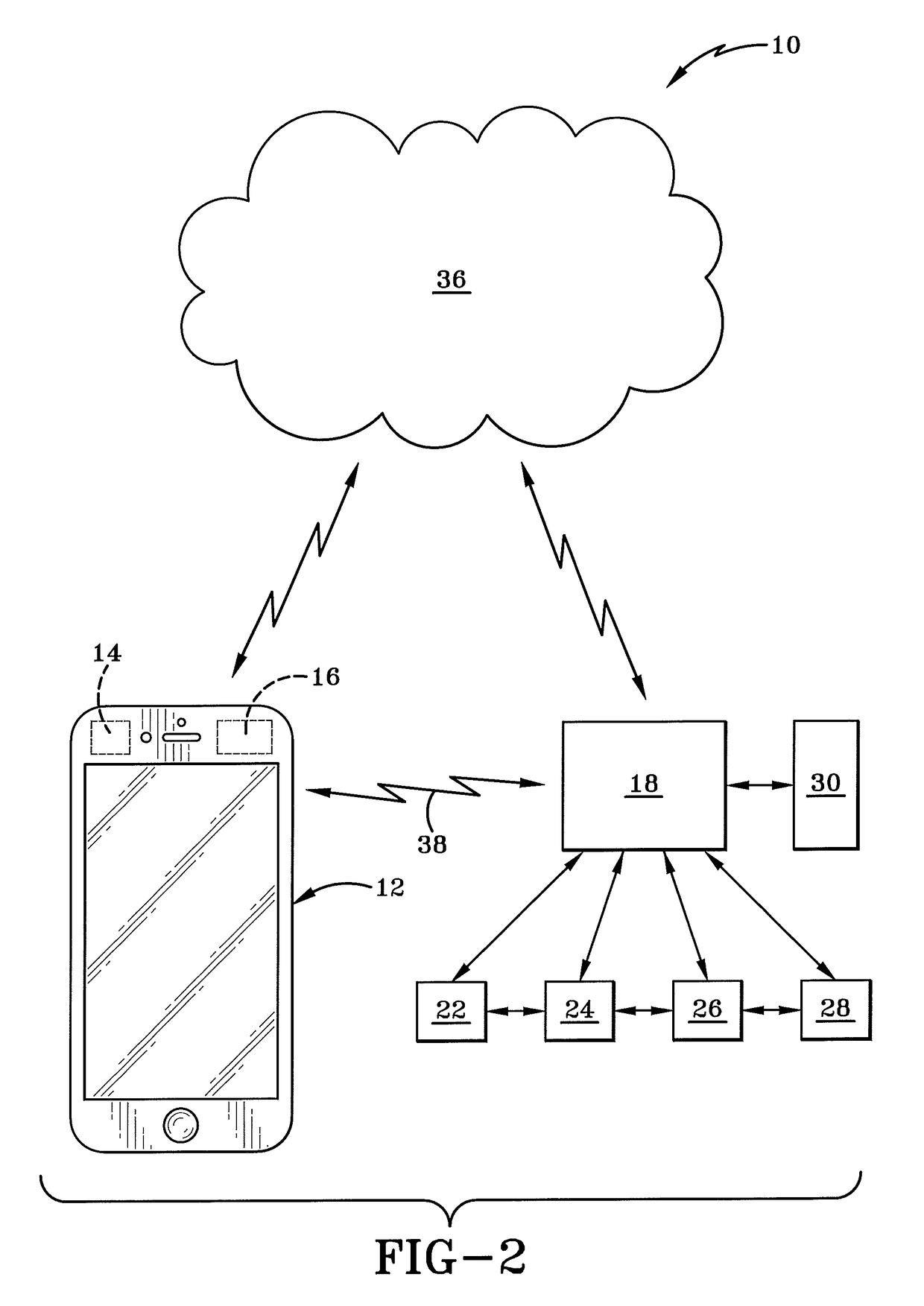

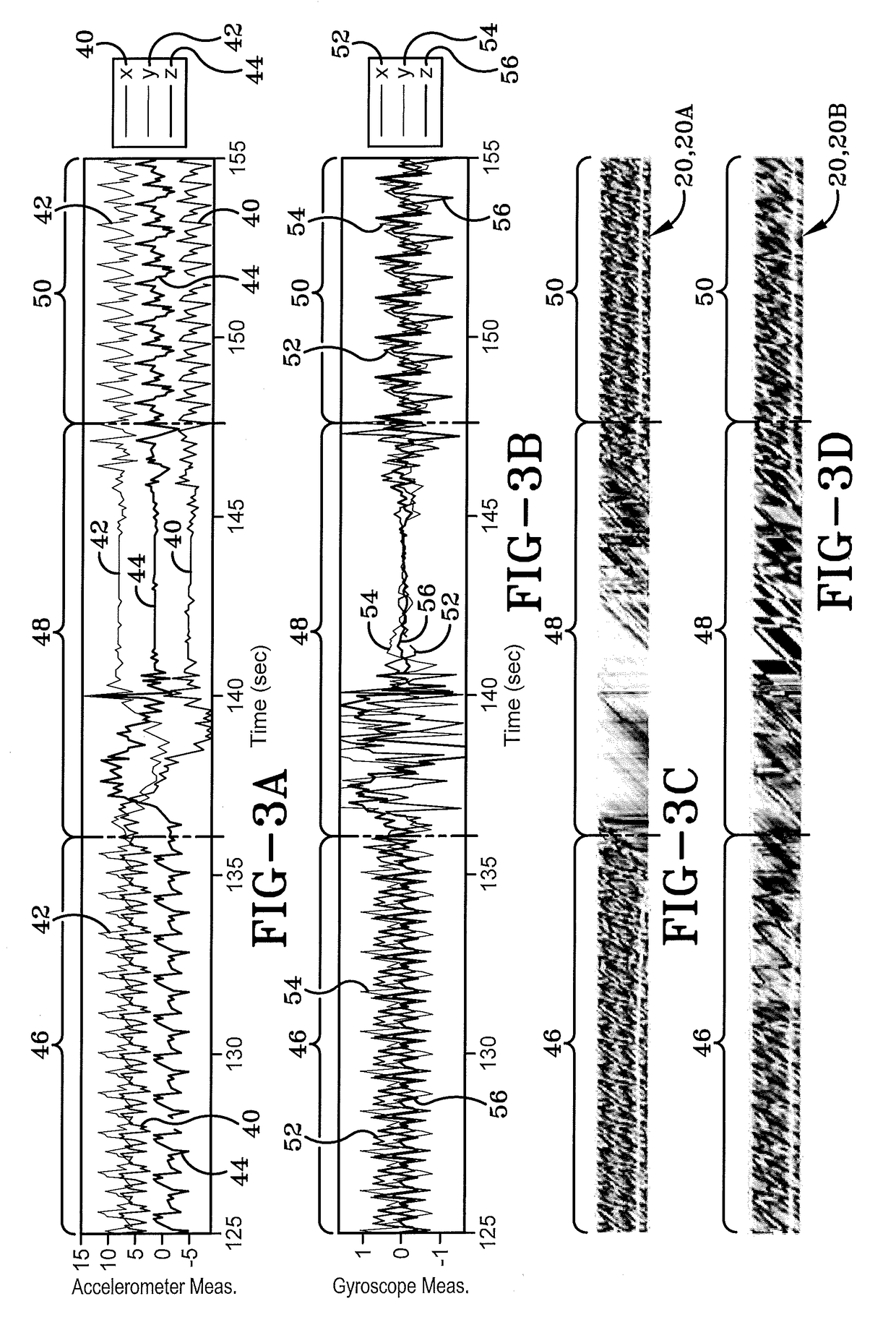

Gait authentication system and method thereof

Active | US20180078179A1 | Boost gait biometrics accuracy | Improve discrimination | Person identification | Inertial sensors | Feature vector | Gyroscope

A gait authentication system and method is provided. The system includes a mobile computing device configured to be carried by a person while moving with a unique gait. A first sensor (e.g. an accelerometer) is carried by the mobile computing device and generates a first signal. A second sensor (e.g. a gyroscope) is carried by the mobile computing device and generates a second signal. A gait dynamics logic implements an identity vector (i-vector) approach to learn feature representations from a sequence of arbitrary feature vectors carried by the first and second signals. In the method for real time gait authentication, the computed invariant gait representations are robust to sensor placement while preserving highly discriminative temporal and spatial gait dynamics and context.

Owner:BAE SYST INFORMATION & ELECTRONICS SYST INTEGRATION INC

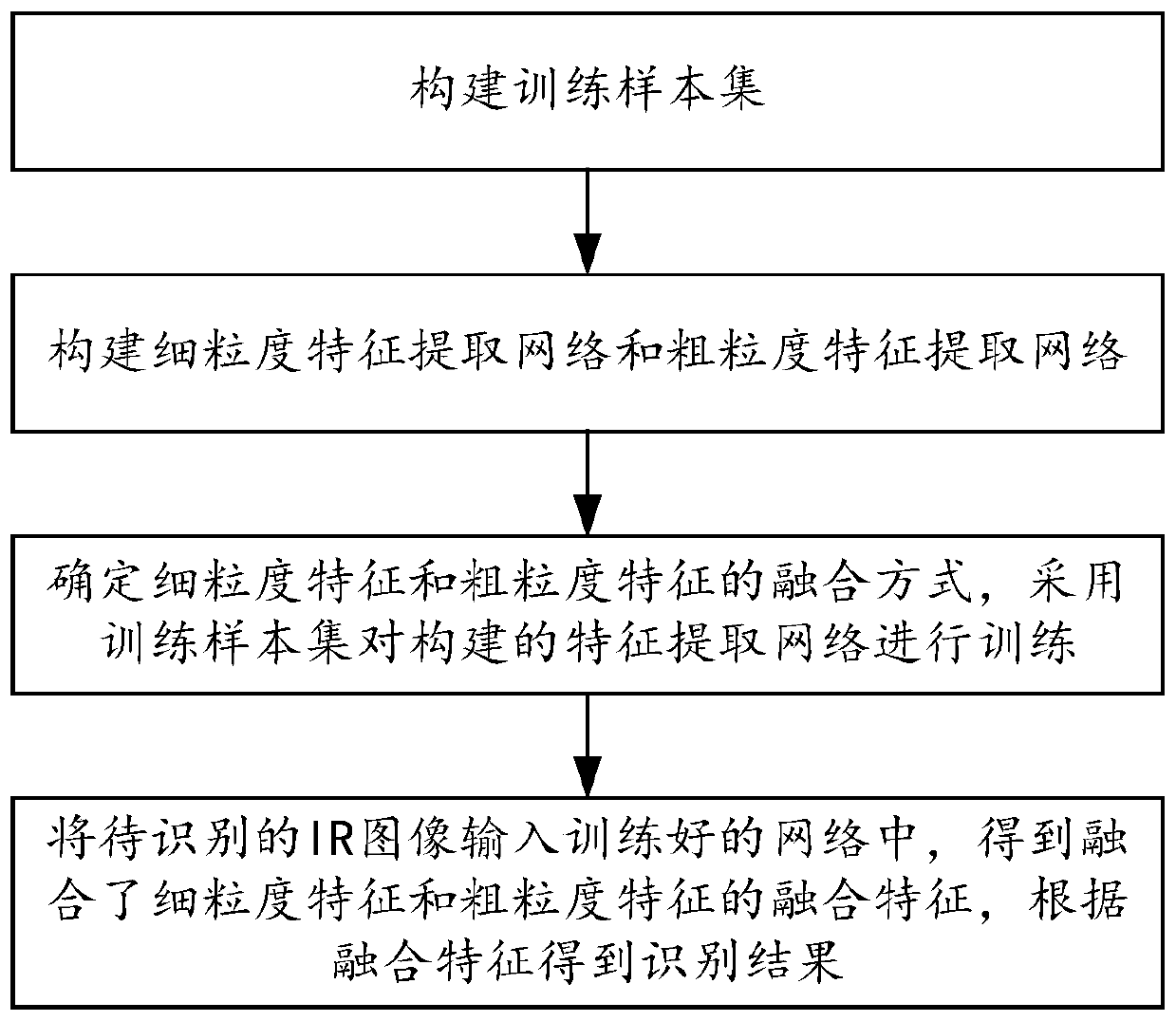

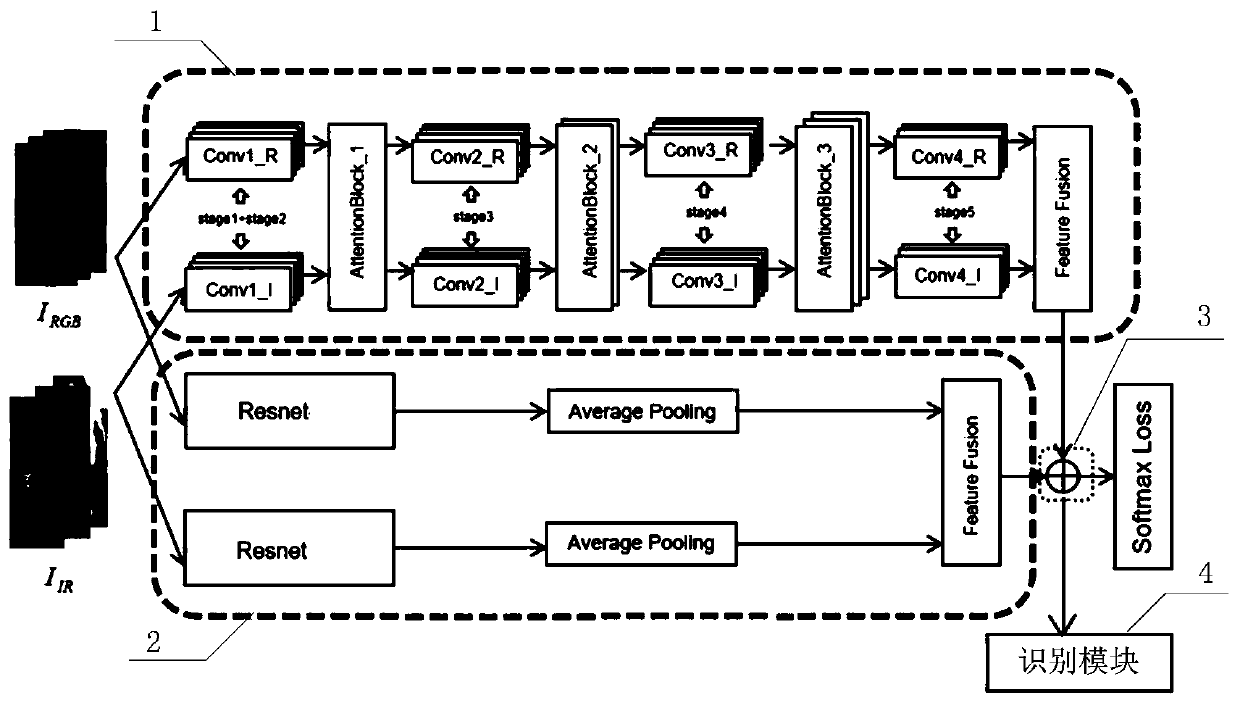

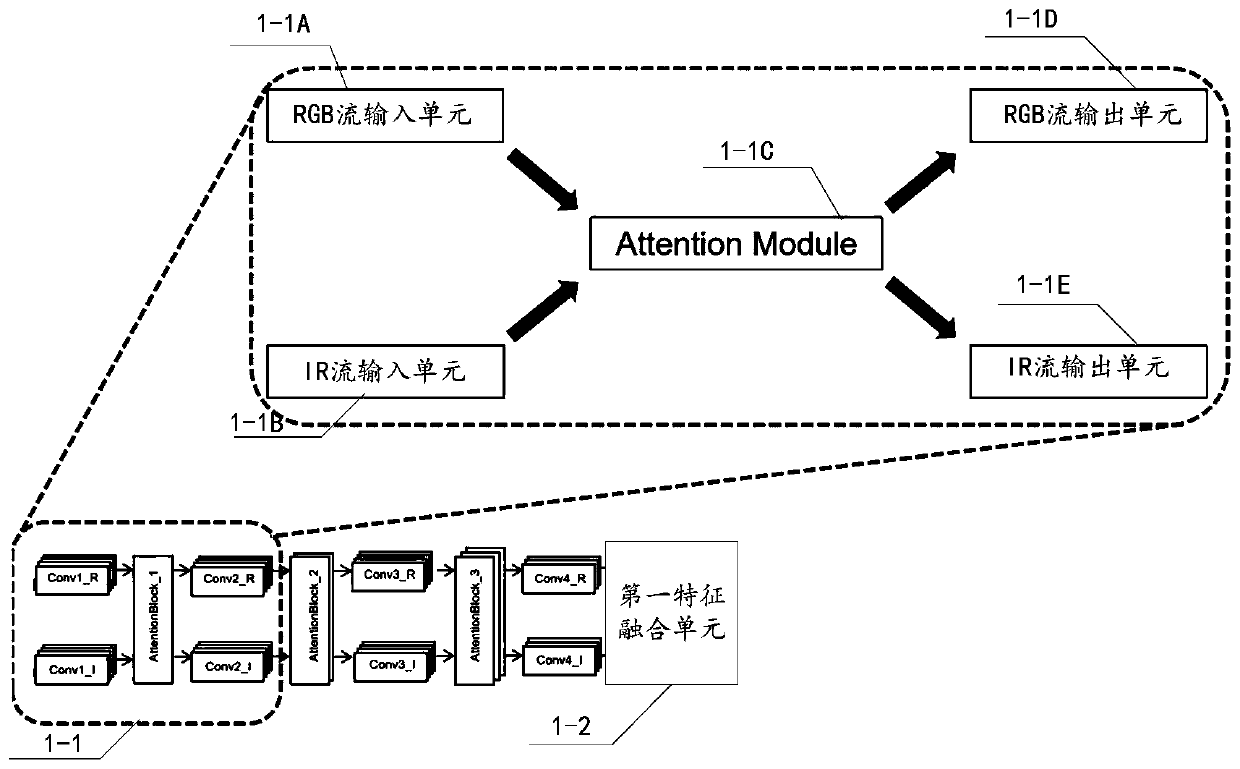

Multi-granularity cross modal feature fusion pedestrian re-identification method and re-identification system

Active | CN110598654A | Improve network recognition ability | Guaranteed robustness | Character and pattern recognition | Re identification | Ir image

The invention discloses a multi-granularity cross modal feature fusion pedestrian re-identification method and a re-identification system. The pedestrian re-identification method comprises the steps: 1, constructing a training sample set; 2, constructing a fine-grained feature extraction network and a coarse-grained feature extraction network; 3, training the fine-grained feature extraction network and the coarse-grained feature extraction network by adopting the training sample set to obtain a trained network; 4, respectively inputting a to-be-identified IR image into the fine-grained feature extraction network and the coarse-grained feature extraction network; and extracting fine-grained features and coarse-grained features of the to-be-identified image, fusing the extracted features to obtain a fused feature Ftest, obtaining the probability that pedestrians in the to-be-identified image belong to each category, and selecting the pedestrian category with the maximum probability value as an identification result. According to the method, fine-grained features of small regions of an image and global coarse-grained features are combined to obtain more discriminative fusion features for pedestrian classification and recognition.

Owner:HEFEI UNIV OF TECH

Gait authentication system and method thereof

Active | US10231651B2 | Improve discrimination | Improve accuracy | Programme control | Computer control | Feature vector | Accelerometer

A gait authentication system and method is provided. The system includes a mobile computing device configured to be carried by a person while moving with a unique gait. A first sensor (e.g. an accelerometer) is carried by the mobile computing device and generates a first signal. A second sensor (e.g. a gyroscope) is carried by the mobile computing device and generates a second signal. A gait dynamics logic implements an identity vector (i-vector) approach to learn feature representations from a sequence of arbitrary feature vectors carried by the first and second signals. In the method for real time gait authentication, the computed invariant gait representations are robust to sensor placement while preserving highly discriminative temporal and spatial gait dynamics and context.

Owner:BAE SYST INFORMATION & ELECTRONICS SYST INTEGRATION INC

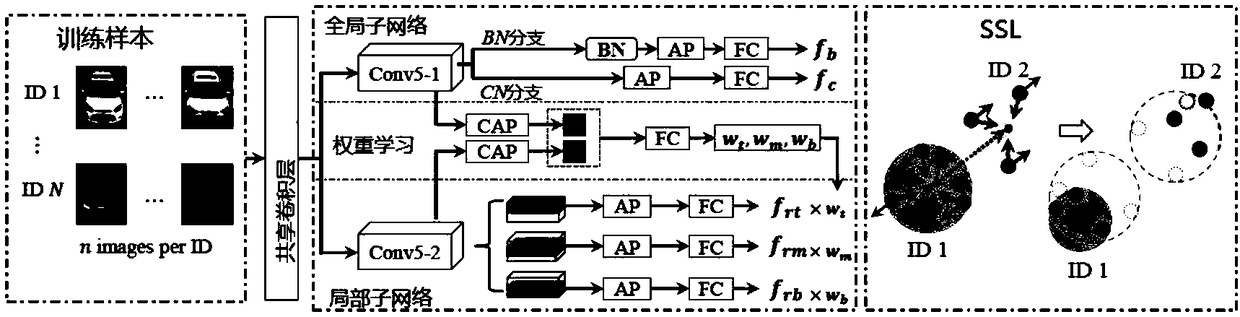

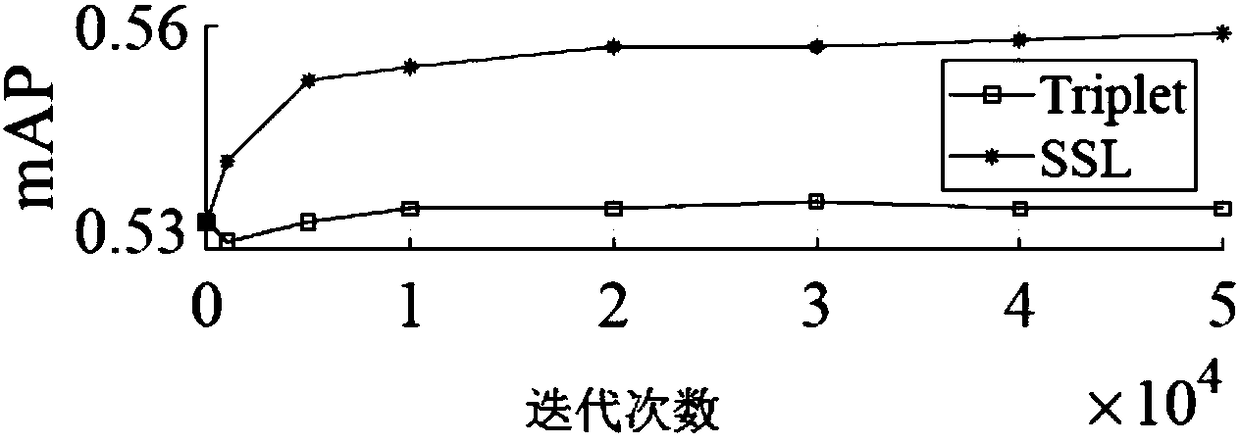

Vehicle re-identification method and system

Active | CN108171247A | Accurate re-identification | Reduce computational complexity | Character and pattern recognition | Computation complexity | Feature learning

The invention provides a vehicle re-identification method and system based on vehicle component features and set distance measurement learning. The method comprises the steps that global features and local features of vehicles are extracted, and the weight of each local feature is determined based on the quality of the local features; and the vehicle re-identification process is completed through set distance measurement learning. According to the vehicle re-identification method and system, set distance measurement learning is used to accelerate the feature learning process; according to set distance measurement learning, first, different pictures of the same vehicle are regarded as a set, and the feature learning process is optimized by shortening the picture distance inside each set and meanwhile prolonging the distance between different sets; and calculation complexity of training is effectively lowered, meanwhile, the features with higher distinction can be obtained, and vehicle re-identification can be performed more accurately.

Owner:PEKING UNIV
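
The idea in the abstract above (treat all pictures of one vehicle as a set, shorten distances inside each set, lengthen distances between sets) can be expressed as a toy objective. The specific form below, mean distance to the set centroid plus a hinge on centroid-to-centroid distance, is an assumption and not the patent's loss; `set_distance_loss` and `margin` are hypothetical names. It relies on `math.dist`, available from Python 3.8.

```python
import math
from itertools import combinations

def centroid(features):
    """Component-wise mean of a list of feature vectors."""
    return [sum(col) / len(features) for col in zip(*features)]

def set_distance_loss(sets, margin=1.0):
    """Toy set-based metric objective: small when each set is compact and
    different sets' centroids are at least `margin` apart."""
    centroids = {sid: centroid(feats) for sid, feats in sets.items()}
    # Intra-set term: distance of every picture's feature to its set centroid.
    intra = [
        math.dist(f, centroids[sid]) for sid, feats in sets.items() for f in feats
    ]
    # Inter-set term: hinge penalty for centroids closer than the margin.
    inter = [
        max(0.0, margin - math.dist(centroids[a], centroids[b]))
        for a, b in combinations(centroids, 2)
    ]
    intra_term = sum(intra) / len(intra)
    inter_term = sum(inter) / len(inter) if inter else 0.0
    return intra_term + inter_term

# Two hypothetical vehicles, two pictures each.
print(set_distance_loss({"car1": [[0, 0], [0, 2]], "car2": [[10, 0], [10, 2]]}))
```

Working with one centroid per set means the number of inter-set comparisons grows with the number of vehicles rather than the number of picture pairs, which is one plausible reading of the reduced training complexity claimed above.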

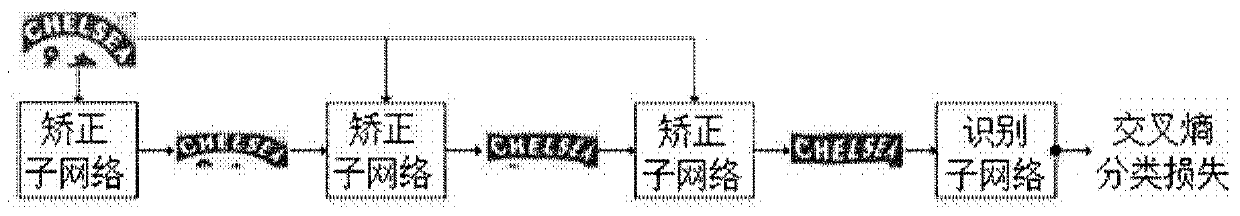

Irregular character recognition device and method based on deep learning

Pending | CN110427938A | Excellent correction result | Take advantage of | Character recognition | Network model | Store instruction

An irregular character recognition device based on deep learning comprises a memory for storing instructions and character images, and a processor which is configured to execute the instructions so as to input an original character image containing irregular characters to a neural network model and to identify and output the characters in the character image, wherein the neural network model comprises a correction sub-network used for correcting the original character image containing irregular characters and outputting the corrected character image, and an identification sub-network which is connected with the output end of the correction sub-network and is used for receiving the character image output by the correction sub-network and identifying characters in the character image.

Owner:OBJECTEYE (BEIJING) TECH CO LTD

Method for extracting robust textural features

Active | CN103258202A | Expressive | Discriminating | Image analysis | Character and pattern recognition | Feature set | Imaging processing

The invention discloses a method for extracting robust textural features, and belongs to the technical field of image processing. The method comprises the following steps: pre-processing an input image and generating a feature set F; binarizing the feature set F based on a threshold value for each feature; carrying out binary encoding to generate a particular pixel label; carrying out rotation-invariant uniform local binary pattern (LBP) encoding on the input image to generate an LBP label for each pixel point; constructing a 2-D co-occurrence histogram from the particular pixel label and the LBP label of each pixel point; and vectorizing the co-occurrence histogram for use as the texture representation. The method reduces the binary quantization loss of the existing LBP mode while keeping the extracted features robust to changes in illumination, rotation, scale and viewing angle.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
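
The rotation-invariant uniform LBP encoding named in the abstract above can be sketched for the simplest case of 8 neighbours at radius 1. This is the standard "riu2" labelling, not the patent's full pipeline (the feature set F and the co-occurrence histogram are left out); the function name `lbp_riu2` is an assumption.

```python
def lbp_riu2(image, y, x):
    """Rotation-invariant uniform LBP label for the 8 neighbours of (y, x).
    Uniform patterns (at most 2 bit transitions around the circle) are
    labelled by their number of 1 bits (0..8); all others get label 9."""
    center = image[y][x]
    # Neighbours in circular order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    bits = [1 if image[y + dy][x + dx] >= center else 0 for dy, dx in offsets]
    transitions = sum(bits[i] != bits[(i + 1) % 8] for i in range(8))
    return sum(bits) if transitions <= 2 else 9

edge = [[9, 9, 9], [1, 5, 1], [1, 1, 1]]           # bright top row
edge_rotated = [[1, 1, 9], [1, 5, 9], [1, 1, 9]]   # same edge, rotated 90 degrees
checker = [[9, 1, 9], [1, 5, 1], [9, 1, 9]]        # non-uniform pattern
print(lbp_riu2(edge, 1, 1), lbp_riu2(edge_rotated, 1, 1), lbp_riu2(checker, 1, 1))
```

The two edge patches receive the same label even though one is a rotation of the other, which is the rotation robustness the abstract refers to.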

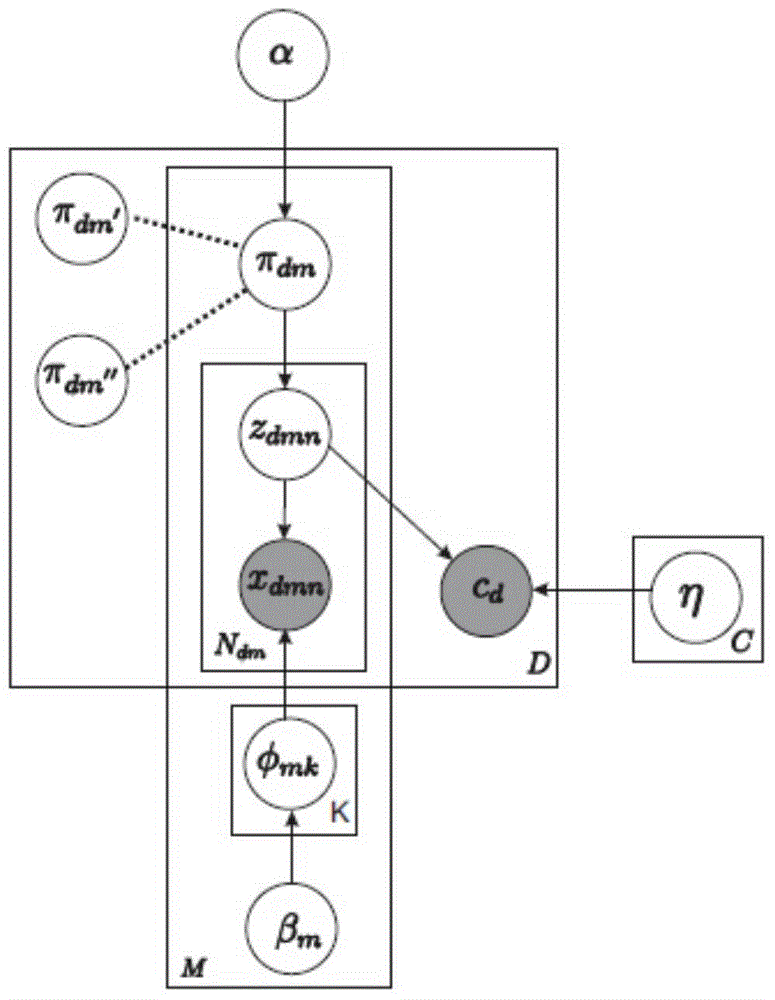

Cross-modal searching method based on topic model

Inactive | CN104317837A | Cross-modal retrieval implementation | Discriminating | Web data retrieval | Special data processing applications | Modal data | Algorithm

The invention discloses a cross-modal searching method based on a topic model. The method comprises the following steps: (1) feature extraction and label recording are conducted on all types of modal data in a database; (2) a cross-modal searching image model based on the topic model is built; (3) the cross-modal searching image model based on the topic model is solved by using a Gibbs sampling method; (4) a user submits data of one modality, and after feature extraction the related data of another modality are returned by using the cross-modal searching model; and (5) the real corresponding information and the label information of the cross-modal data are used to assess the cross-modal searching model from two aspects, correspondence and differentiation. The method introduces the concepts of a cross-modal topic and of different-modality topics and uses the label information, which improves the interpretability and flexibility of the topic modeling and gives the method good expandability and discrimination performance.

Owner:ZHEJIANG UNIV

Pedestrian re-identification model training method and device and pedestrian re-identification method and device

Pending | CN111738090A | Easy to identify | Discriminating | Character and pattern recognition | Neural architectures | Feature extraction | Nerve network

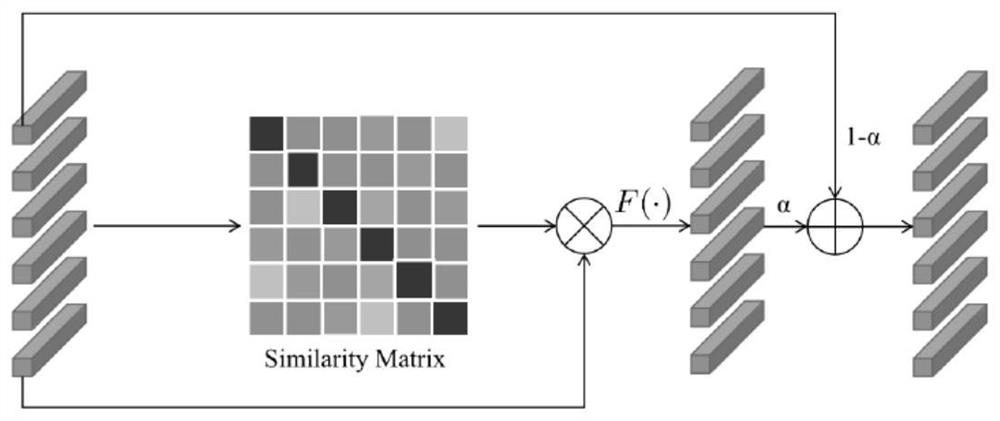

The invention discloses a pedestrian re-identification model training method and device and a pedestrian re-identification method and device, and the pedestrian re-identification model training method comprises the steps: carrying out the feature extraction of a pedestrian image through a convolution network of a pedestrian re-identification model, and obtaining the original features of the pedestrian image; processing the original features by using an attention module of the pedestrian re-identification model to obtain a plurality of pedestrian local features; determining a similarity matrix among the pedestrian local features by using a graph neural network of the pedestrian re-identification model, and adjusting the pedestrian local features according to the similarity matrix; and determining a pedestrian recognition result and training loss of a pedestrian re-recognition model based on the adjusted pedestrian local features, and optimizing model parameters according to the training loss. According to the invention, the important pedestrian local features in the image can be automatically extracted without introducing extra annotation information, so that the final pedestrian local features have higher discrimination capability, and the model recognition performance is also improved.

Owner:BEIJING SANKUAI ONLINE TECH CO LTD
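
The step "determining a similarity matrix among the pedestrian local features ... and adjusting the pedestrian local features according to the similarity matrix" can be illustrated with a single hand-written message-passing step. The cosine similarity, the row-wise softmax, and the name `refine_local_features` are assumptions; the patent's graph neural network would have learned parameters that this sketch omits.

```python
import math

def refine_local_features(features):
    """Return (similarity matrix, adjusted features). Each adjusted feature
    is a softmax-weighted average of all local features, weighted by its
    cosine similarity to them."""
    def cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        na = math.sqrt(sum(x * x for x in a))
        nb = math.sqrt(sum(y * y for y in b))
        return dot / (na * nb) if na and nb else 0.0

    n, dim = len(features), len(features[0])
    sim = [[cosine(features[i], features[j]) for j in range(n)] for i in range(n)]
    refined = []
    for i in range(n):
        # Softmax over row i turns similarities into positive weights summing to 1.
        exps = [math.exp(s) for s in sim[i]]
        total = sum(exps)
        weights = [e / total for e in exps]
        refined.append(
            [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
        )
    return sim, refined

# Three hypothetical local features; the first two describe similar parts.
sim, refined = refine_local_features([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(sim)
print(refined)
```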

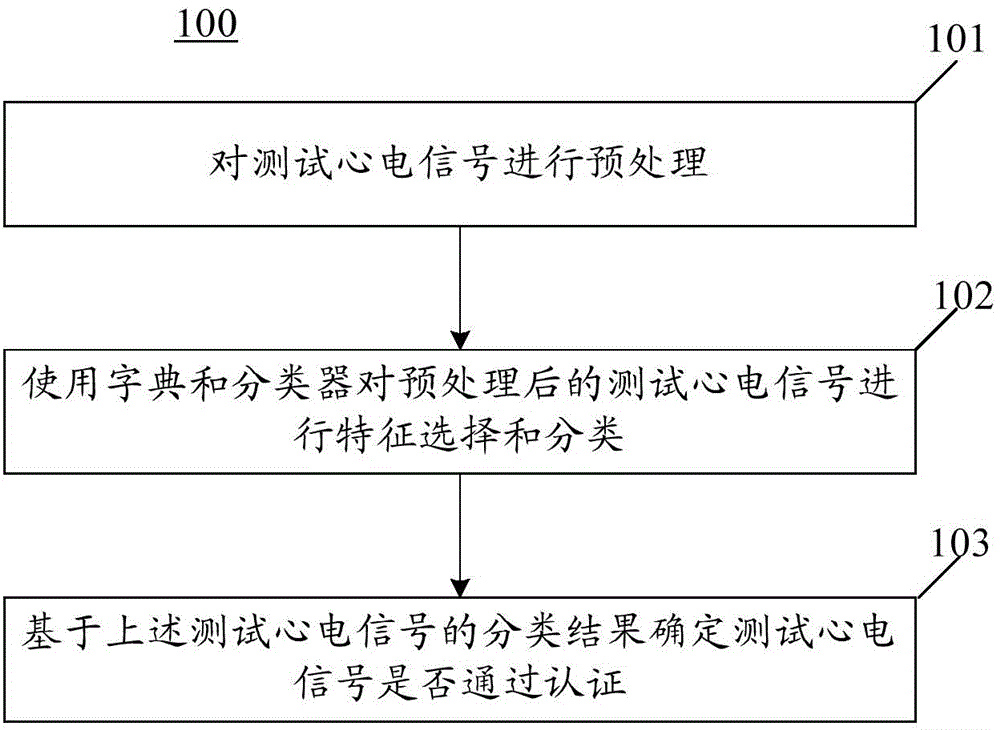

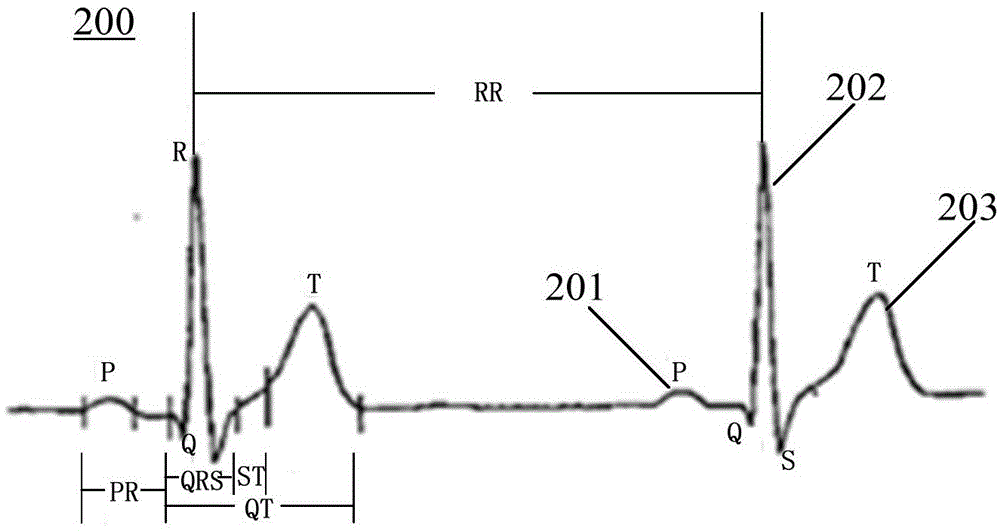

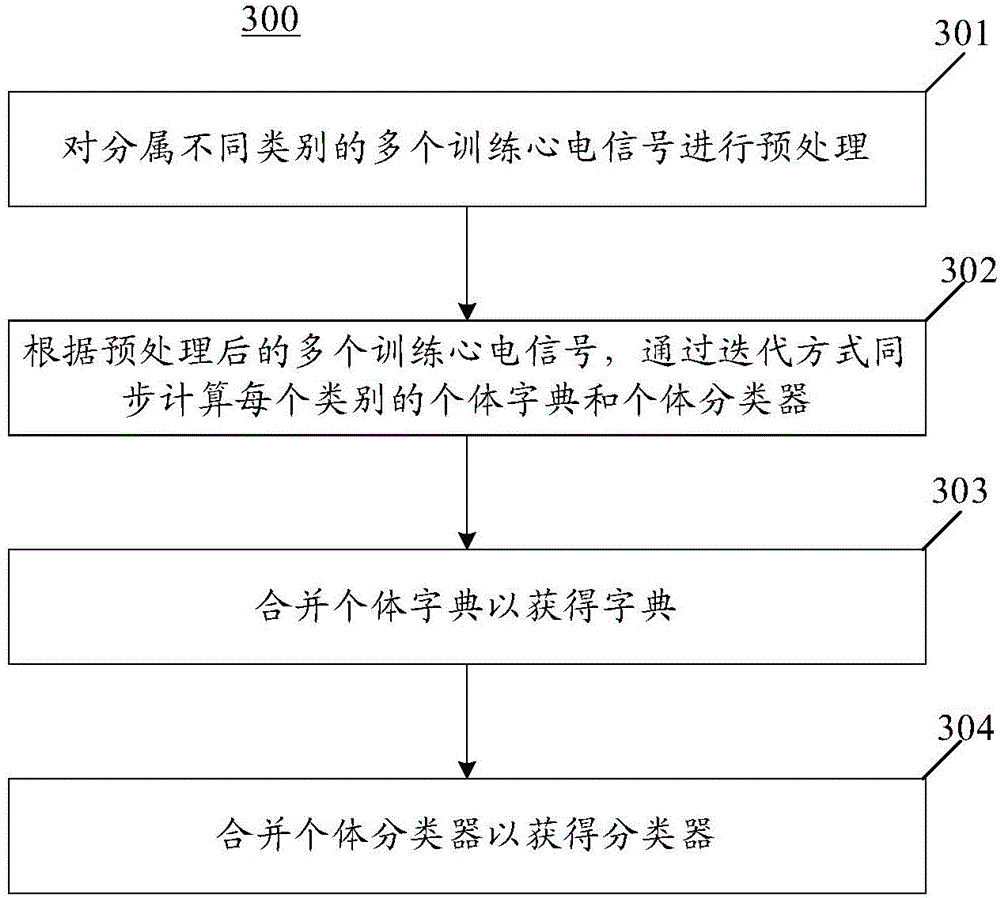

Electrocardiosignal-based authentication method, apparatus and system

Active | CN105989266A | Avoid negative effects | Discriminating | Character and pattern recognition | Digital data authentication | Ecg signal | Feature selection

The invention discloses an electrocardiosignal-based authentication method, apparatus and system. An embodiment of the method comprises the steps of preprocessing test electrocardiosignals; performing characteristic selection and classification on the preprocessed test electrocardiosignals by using a dictionary and a classifier, wherein the dictionary and the classifier are obtained through synchronous learning; and determining whether the test electrocardiosignals pass authentication or not based on a classification result of the test electrocardiosignals. According to the embodiment, the robust classifier is obtained through the synchronous learning dictionary and classifier, and the precision and efficiency of authentication are improved.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1

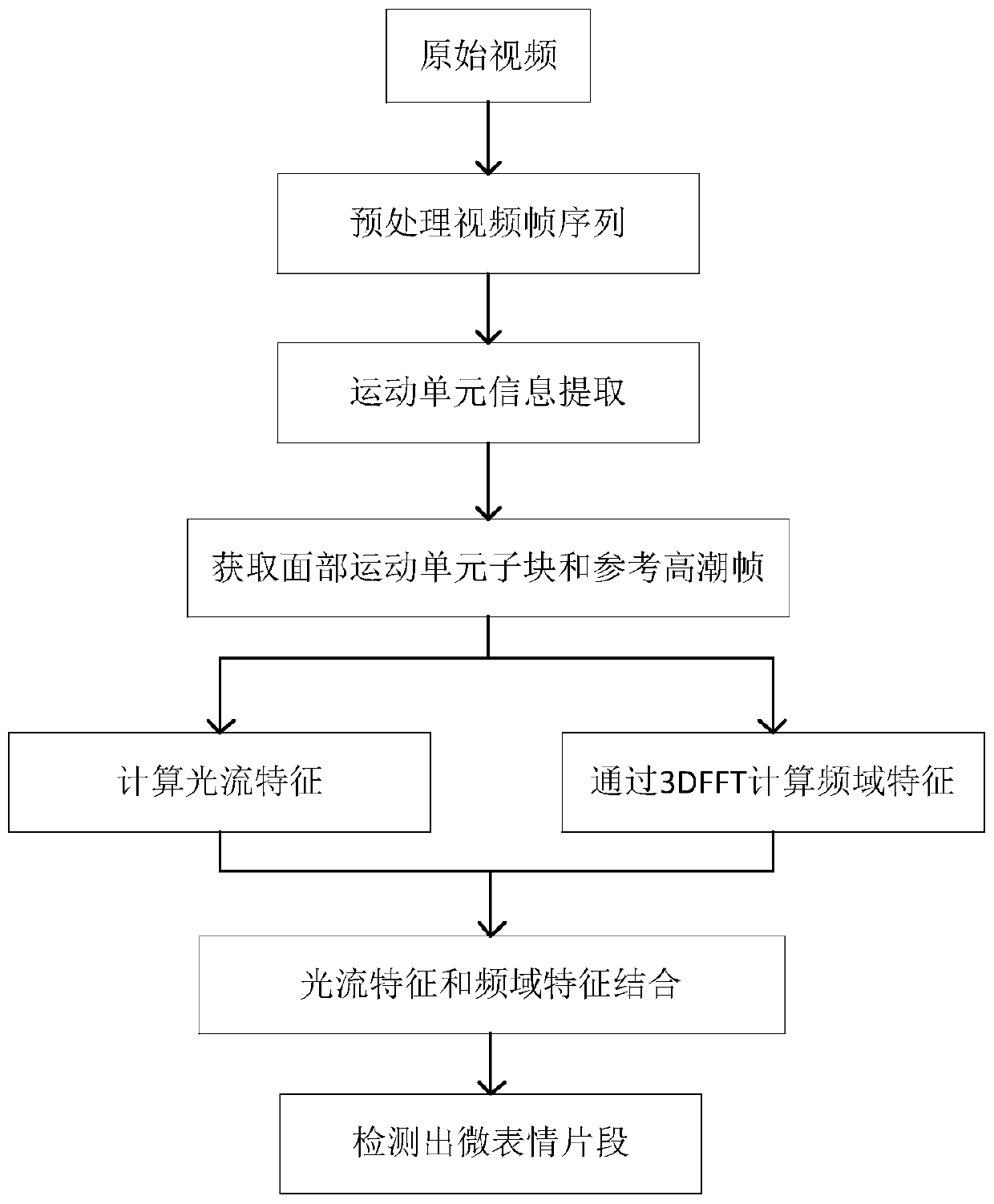

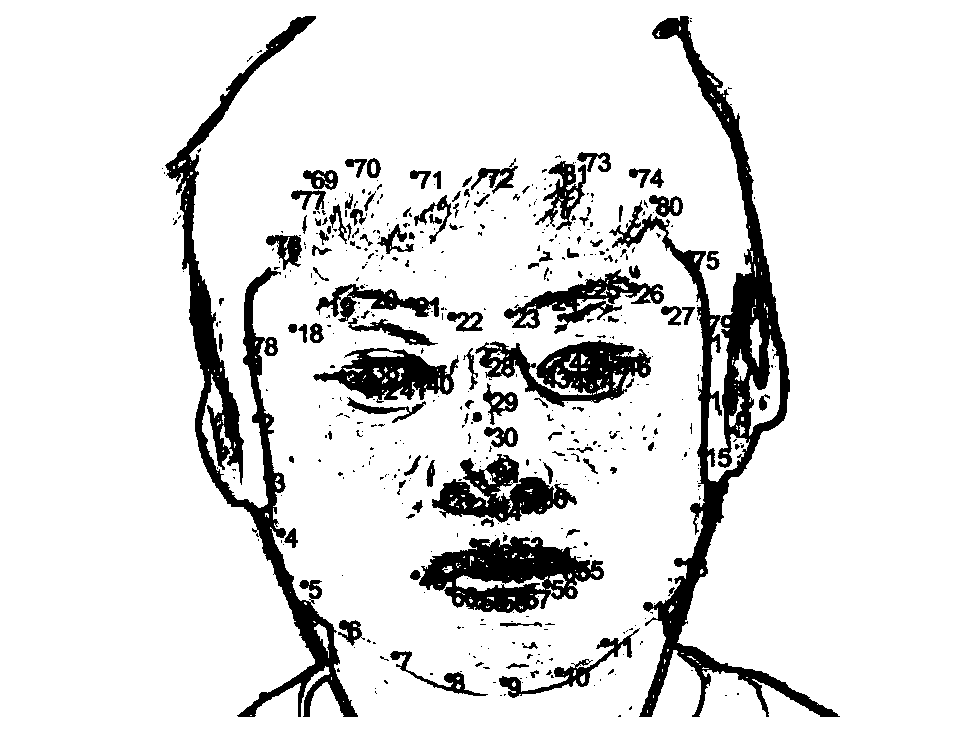

Multi-domain fusion micro-expression detection method based on motion unit

Active | CN111582212A | Precise positioning | Small amount of calculation | Acquiring/recognising facial features | Face detection | Frame sequence

The invention relates to a multi-domain fusion micro-expression detection method based on a motion unit, and the method comprises the steps: (1) carrying out the preprocessing of a micro-expression video: obtaining a video frame sequence, carrying out the face detection and positioning, and carrying out the face alignment; (2) performing motion unit detection on the video frame sequence to obtain motion unit information of the video frame sequence; (3) according to the motion unit information, finding out a facial motion unit sub-block containing the maximum micro-expression motion unit information amount ME as a micro-expression detection area through a semi-decision algorithm, and meanwhile, extracting a plurality of peak frames of the micro-expression motion unit information amount ME as reference climax frames of micro-expression detection by setting a dynamic threshold value; and (4) realizing micro-expression detection through a multi-domain fusion micro-expression detection method. According to the method, the influence of redundant information on micro-expression detection is reduced, the calculated amount is reduced, and the micro-expression detection has higher comprehensive discrimination capability. The calculation speed is high, and the micro-expression detection precision is high.

Owner:SHANDONG UNIV
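
Step (3) above extracts peak frames of the information amount ME "by setting a dynamic threshold value", without giving the formula. The sketch below assumes one plausible rule, threshold = mean + ratio x (max - mean), and keeps local maxima above it; `find_peak_frames` and `ratio` are hypothetical.

```python
def find_peak_frames(me_values, ratio=0.5):
    """Indices of local maxima of the ME sequence that exceed a dynamic
    threshold derived from the sequence itself (assumed formula)."""
    mean = sum(me_values) / len(me_values)
    threshold = mean + ratio * (max(me_values) - mean)
    peaks = []
    for i in range(1, len(me_values) - 1):
        v = me_values[i]
        # Strictly above the left neighbour so a flat plateau yields one peak.
        if v > threshold and v > me_values[i - 1] and v >= me_values[i + 1]:
            peaks.append(i)
    return peaks

# Hypothetical per-frame ME values with one small and two large bumps.
print(find_peak_frames([0, 1, 0, 0, 5, 0, 0, 4, 0]))
```

Because the threshold is recomputed from each sequence, the same code adapts to videos with different overall motion levels.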

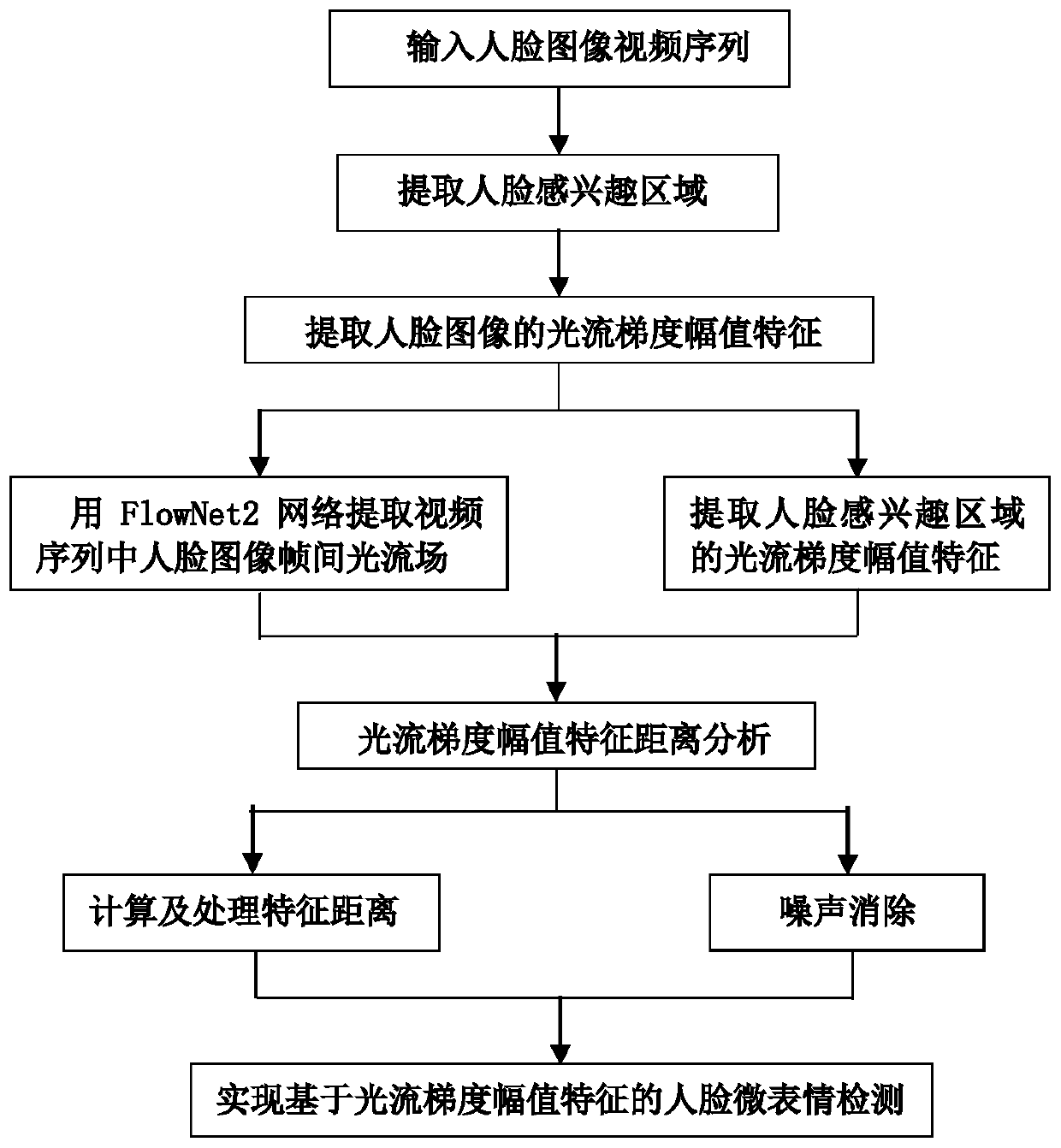

Optical flow gradient amplitude characteristic-based subtle facial expression detection method

Active | CN110991348A | Avoid Cumulative Errors | Reduce detection impact | Acquiring/recognising facial features | Graphics | Distance analysis

The invention discloses an optical flow gradient amplitude characteristic-based subtle facial expression detection method and relates to processing for identifying a graphic recording carrier. According to the method, face edges are obtained by means of fitting according to face key points, and a face region of interest is extracted; an optical flow field between face image frames in a video sequence is extracted by using a FlowNet2 network; the optical flow gradient amplitude characteristics of the face region of interest are extracted; characteristic distances are calculated and processed, and noise elimination is carried out; and therefore, subtle facial expression detection based on the optical flow gradient amplitude characteristics is completed. According to subtle facial expression detection in the prior art, subtle facial expression motion cannot be captured in extracted face image motion features; the features contain excessive interference information, and as a result, the subtle facial expression detection is susceptible to the influence of head deviation, blinking motion, accumulated noise and single-frame noise in feature distance analysis. However, with the method adopted, the above defects in the prior art can be eliminated.

Owner:HEBEI UNIV OF TECH

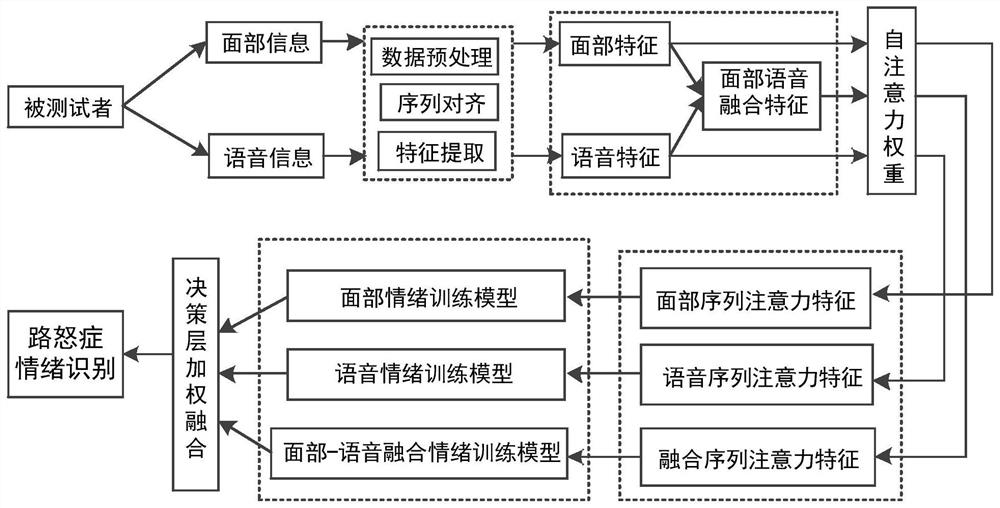

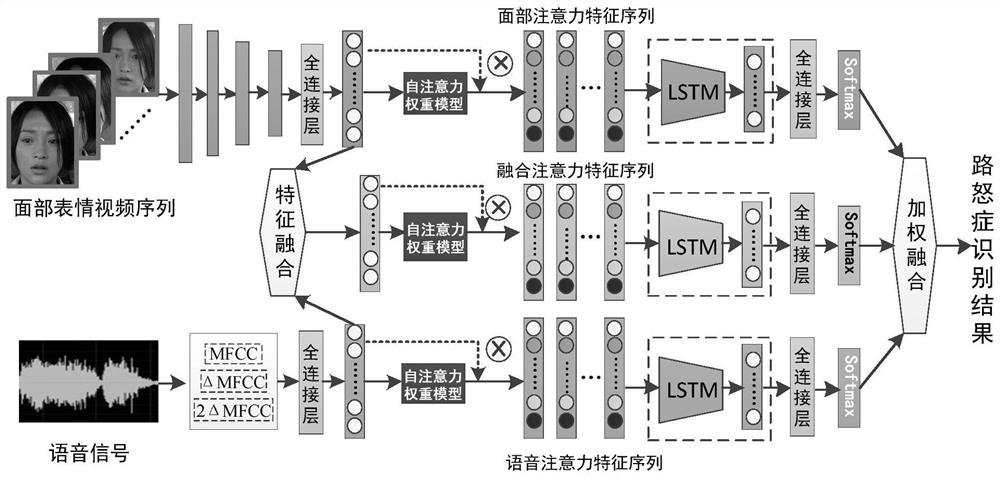

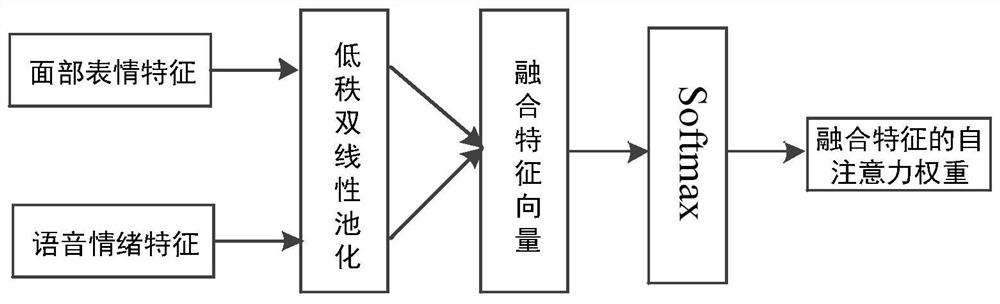

Driver road rage identification method based on deep fusion of facial expressions and voice

Pending | CN113591525A | Achieve alignment | Implement features | Speech analysis | Character and pattern recognition | Nerve network | Driver/operator

The invention discloses a driver road rage identification method based on deep fusion of facial expressions and voice. The method comprises the following steps: extracting facial image information and voice information of a driver from facial video image information of the driver; preprocessing the facial image frame information and inputting the facial image frame information into a multilayer convolutional neural network to obtain facial expression features; firstly, extracting Mel-frequency cepstral coefficients and first-order and second-order coefficient values of voice information for initial feature extraction, and splicing initial features of two sections of voice segments and inputting the splicing initial features into a full connection layer network to obtain discriminative voice frame features corresponding to facial expression frames; performing low-rank bilinear pooling fusion on the obtained facial frame expression features and the voice frame features to obtain fusion features; and performing decision fusion on the facial expression features, the voice features and the fusion features to obtain a final road rage recognition result. In a complex driving environment, the driver anger identification result can still be output in a high-precision mode, and then safe driving early warning is effectively carried out.

Owner:LANHAI FUJIAN INFORMATION TECH CO LTD
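
The "first-order and second-order coefficient values" of the Mel-frequency cepstral coefficients in the abstract above are time derivatives of each coefficient track. A minimal sketch, assuming a simple central difference with edge padding (production toolkits usually use a wider regression window), is given below; the names `delta` and `with_deltas` are assumptions.

```python
def delta(track):
    """Central-difference derivative of one coefficient track,
    repeating the first and last values at the edges."""
    padded = [track[0]] + list(track) + [track[-1]]
    return [(padded[t + 2] - padded[t]) / 2 for t in range(len(track))]

def with_deltas(track):
    """Per-frame tuples (static, first-order, second-order)."""
    d1 = delta(track)
    d2 = delta(d1)
    return list(zip(track, d1, d2))

# Hypothetical values of a single MFCC coefficient over four frames.
for frame in with_deltas([1.0, 2.0, 4.0, 7.0]):
    print(frame)
```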

Image recognition method and device, and related equipment

Pending | CN110147699A | Improve accuracy | Discriminating | Character and pattern recognition | Image identification | Pattern recognition

Owner:PEKING UNIV +1

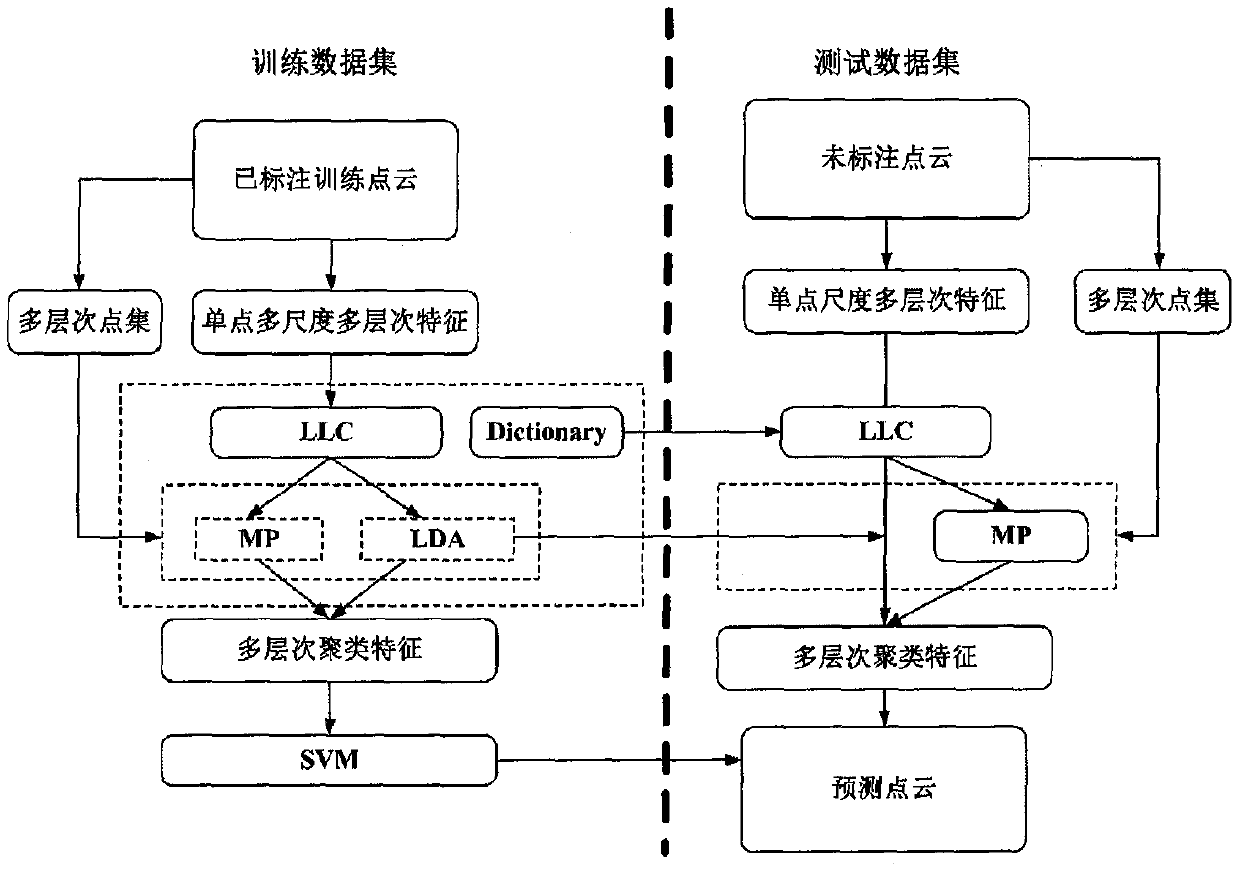

Point cloud classification method based on multi-level aggregation feature extraction and fusion

Inactive | CN111275052A | Fully segmented | Efficient construction | Character and pattern recognition | Point cloud | Algorithm

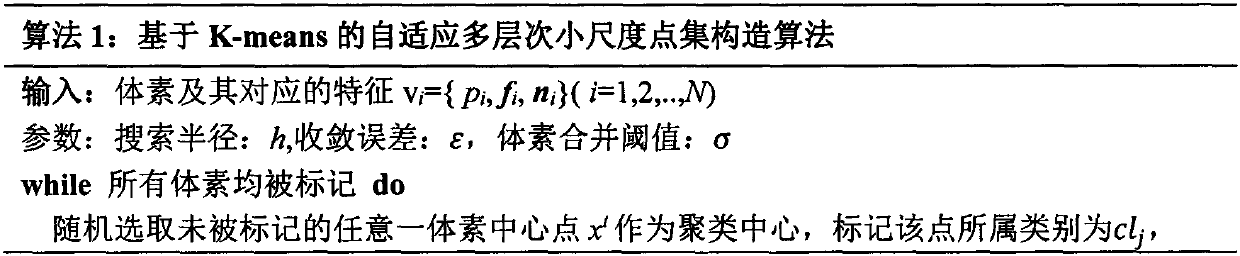

The invention provides a point cloud classification method based on multi-level aggregation feature extraction and fusion. The method comprises the following steps: (1) constructing a multi-level point set; (2) point set feature extraction based on LLC-LDA; (3) point set feature extraction based on multi-scale maximum pooling (LLC-MP); and (4) point cloud classification based on multi-level point set feature fusion. The invention provides a multi-level point set aggregation feature extraction and fusion method based on multi-scale maximum pooling and local Discriminant alteration (LDA), and point cloud classification is realized based on the fused aggregation features. In the algorithm, multi-level clustering is carried out to adaptively acquire a multi-level, multi-scale target point set; point cloud single-point features are expressed and extracted by using local linear constraint sparse coding (LLC); a scale pyramid is constructed by using point coordinates, and features capable of representing the local distribution of a point set are constructed based on a maximum pooling method; these features are then fused with the LLC-LDA model to extract global features of the point set; and finally point cloud classification is realized by using the multi-level aggregation features of the fused point set.

Owner:NANJING FORESTRY UNIV

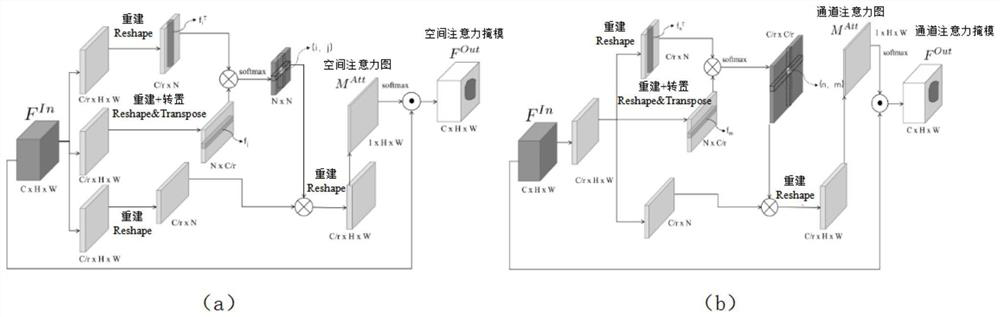

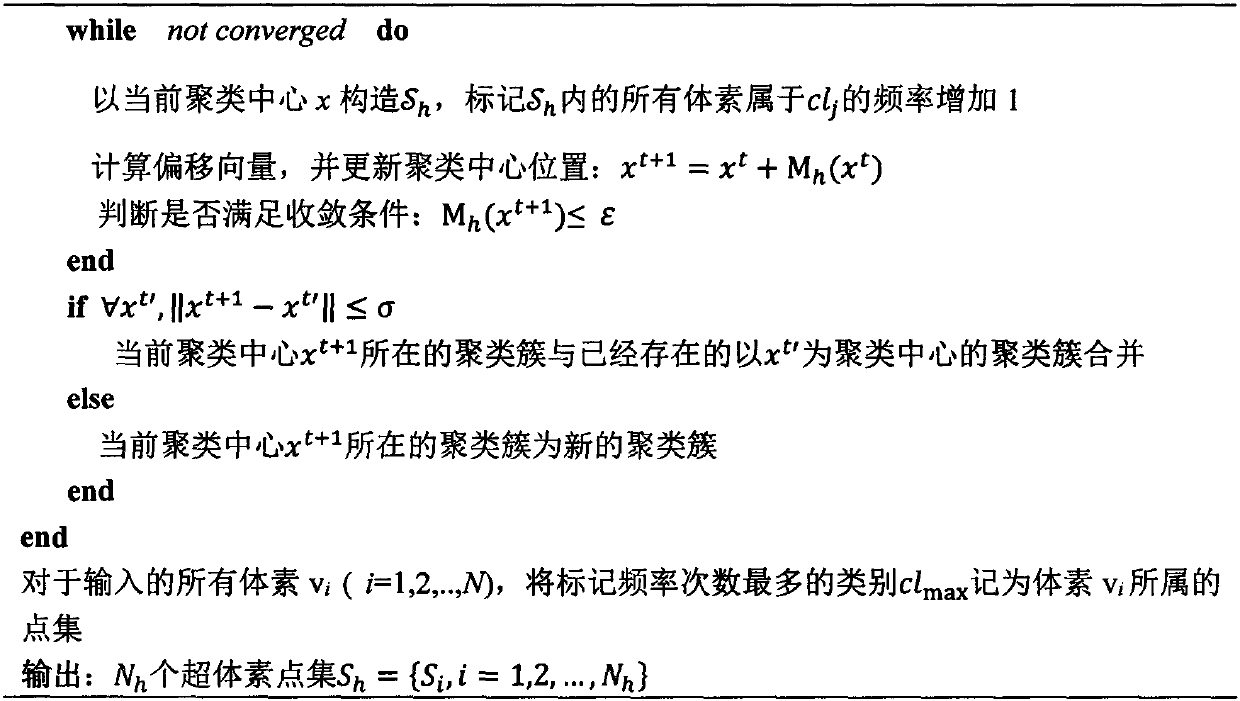

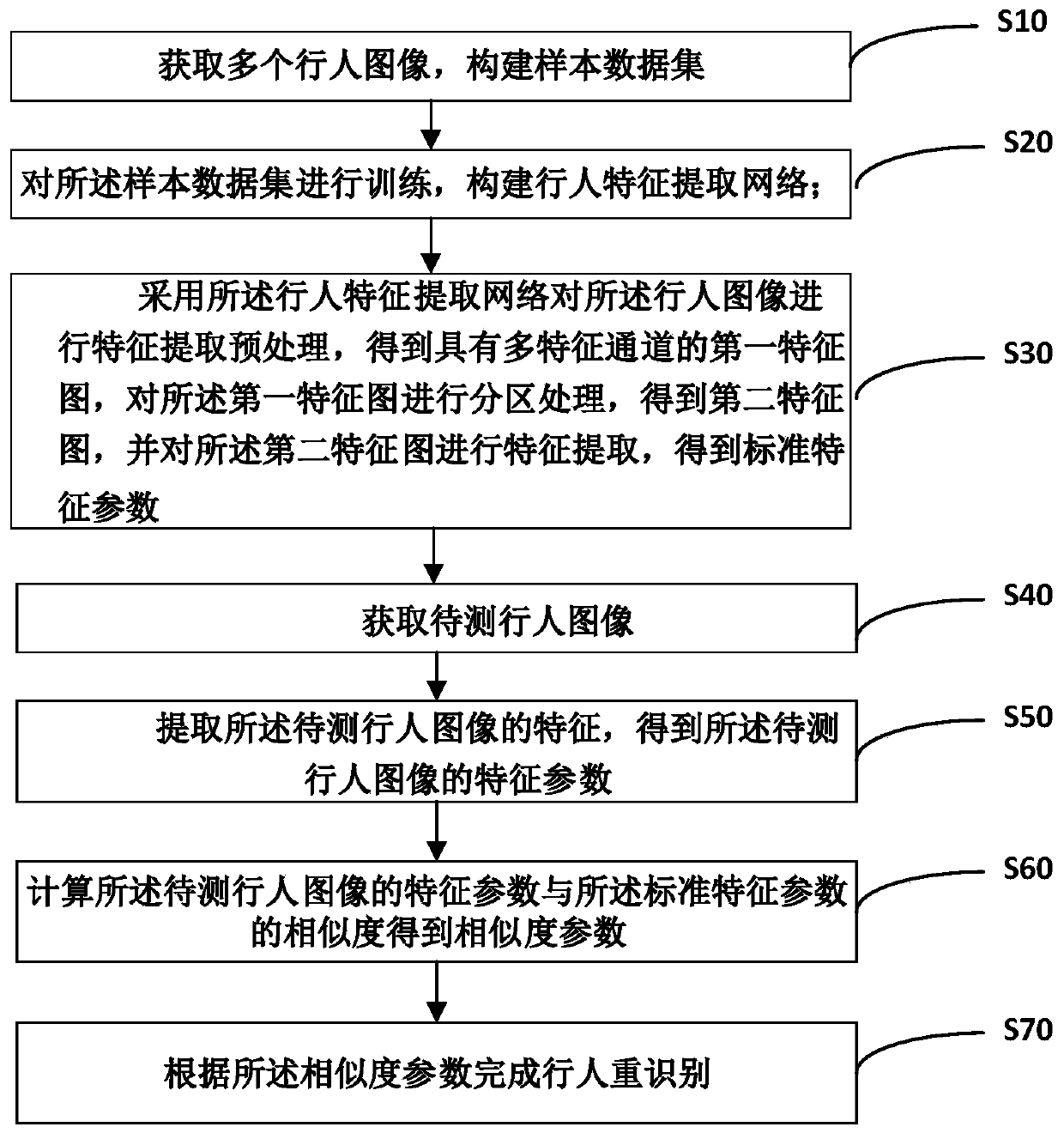

A pedestrian re-identification method and system

Pending | CN109886242A | Enhanced regional features | Enhance the more prominent regional features in the input pedestrian image | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention provides a pedestrian re-identification method and system. The method comprises the steps of: obtaining a plurality of pedestrian images and building a sample data set; training on the sample data set to build a pedestrian feature extraction network; carrying out feature extraction preprocessing on the pedestrian image using the pedestrian feature extraction network to obtain a first feature map with multiple feature channels; partitioning the first feature map to obtain a second feature map; carrying out feature extraction on the second feature map to obtain standard feature parameters; obtaining a to-be-detected pedestrian image; extracting features of the to-be-detected pedestrian image to obtain its feature parameters; calculating the similarity between the feature parameters of the to-be-detected pedestrian image and the standard feature parameters to obtain similarity parameters; and completing pedestrian re-identification according to the similarity parameters. The features extracted by this weighted partition feature extraction mode are therefore more discriminative, which improves the discrimination capability of the deep model.
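The final matching step, comparing the feature parameters of a query image against stored standard feature parameters, might look like the sketch below. The abstract does not name the similarity measure, so cosine similarity is an assumption here, and `rank_gallery` and the gallery layout are hypothetical names used only for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_gallery(query: Sequence[float],
                 gallery: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Sort gallery identities by similarity to the query, best match first."""
    scores = [(name, cosine_similarity(query, feat))
              for name, feat in gallery.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical 2-D features; real feature parameters would be much longer.
gallery = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
for name, score in rank_gallery([1.0, 0.1], gallery):
    print(name, round(score, 3))
```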

Owner:CHONGQING INST OF GREEN & INTELLIGENT TECH CHINESE ACADEMY OF SCI

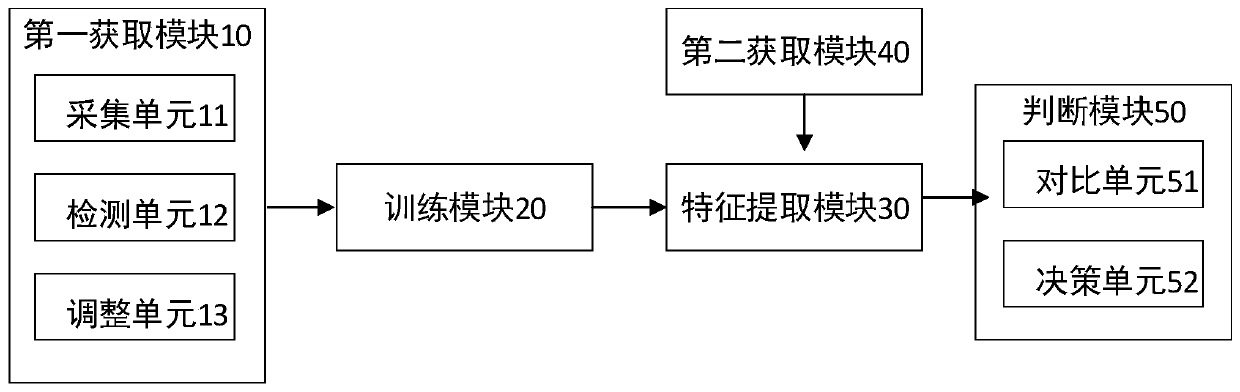

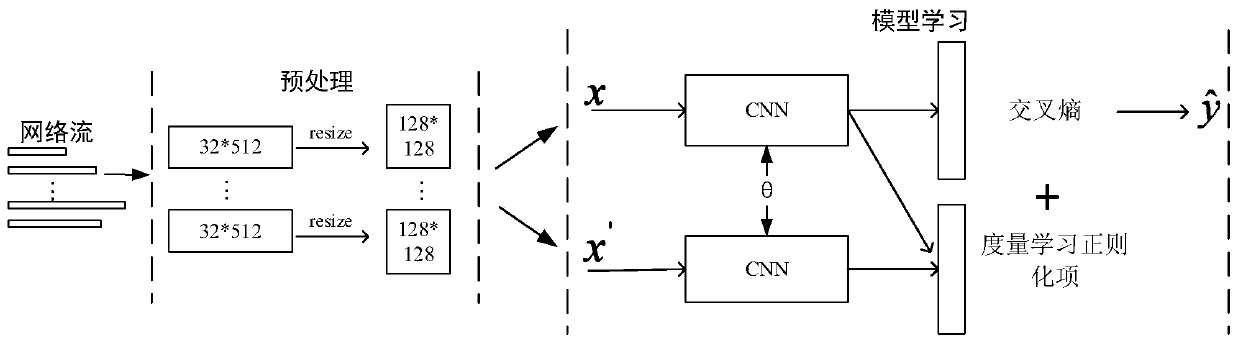

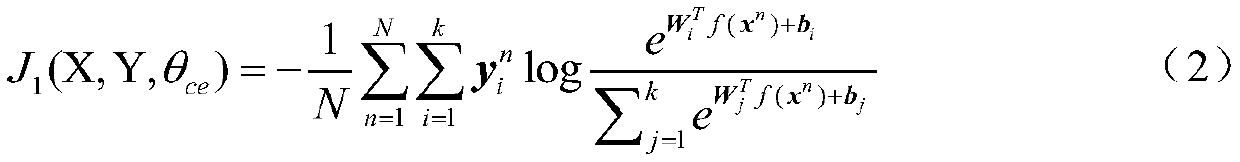

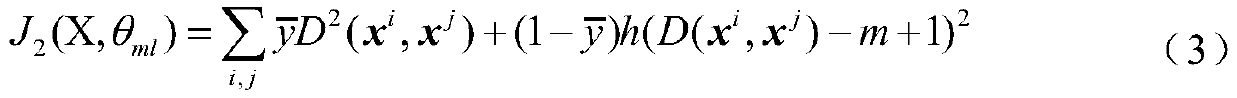

Network traffic classification system and method based on depth discrimination features

Active | CN110796196A | Distance between subclasses | Accurate classification | Character and pattern recognition | Neural architectures | Internet traffic | Model learning

The invention relates to a network traffic classification system and method based on depth discrimination features. The network traffic classification system comprises a preprocessing module and a model learning module. The preprocessing module takes network flows of different lengths generated by different applications as input and expresses each network flow as a flow matrix of fixed size, so as to meet the input format requirement of a convolutional neural network (CNN). The model learning module trains the deep convolutional neural network on the flow matrices produced by the preprocessing module, under the supervision of an objective function formed by a metric learning regularization term and a cross-entropy loss term, so that the network learns a more discriminative feature representation of the input flow matrix and the classification result is more accurate.
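A rough sketch of the two ideas in this abstract follows: mapping a variable-length flow to a fixed-size matrix, and summing a cross-entropy term with a weighted metric-learning penalty. Truncation plus zero padding, the `side` parameter, and the `weight` coefficient are assumptions made for illustration; the abstract does not specify how the matrix is filled or how the two loss terms are balanced.

```python
import math
from typing import List, Sequence


def flow_to_matrix(payload: bytes, side: int = 4) -> List[List[int]]:
    """Truncate or zero-pad a flow's bytes to side*side, then reshape row by row."""
    size = side * side
    padded = payload[:size].ljust(size, b"\x00")
    return [list(padded[r * side:(r + 1) * side]) for r in range(side)]


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[label])


def total_loss(probs: Sequence[float], label: int,
               metric_penalty: float, weight: float = 0.1) -> float:
    """Cross-entropy plus a weighted metric-learning regularization term.

    metric_penalty is assumed to be computed elsewhere (hypothetical input).
    """
    return cross_entropy(probs, label) + weight * metric_penalty


print(flow_to_matrix(b"\x01\x02\x03", side=2))
print(total_loss([0.5, 0.5], label=0, metric_penalty=2.0, weight=0.5))
```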

Owner:INST OF INFORMATION ENG CAS

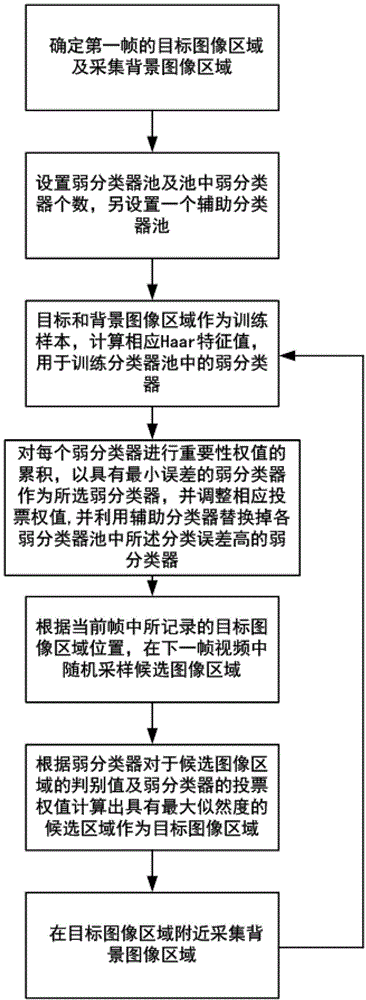

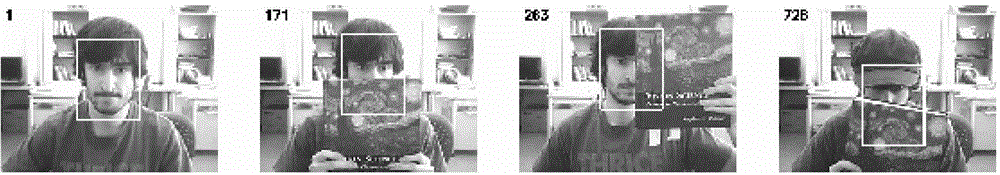

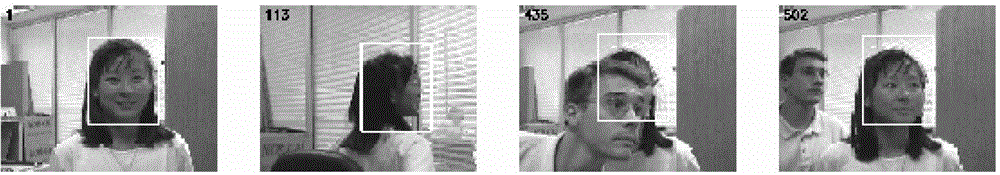

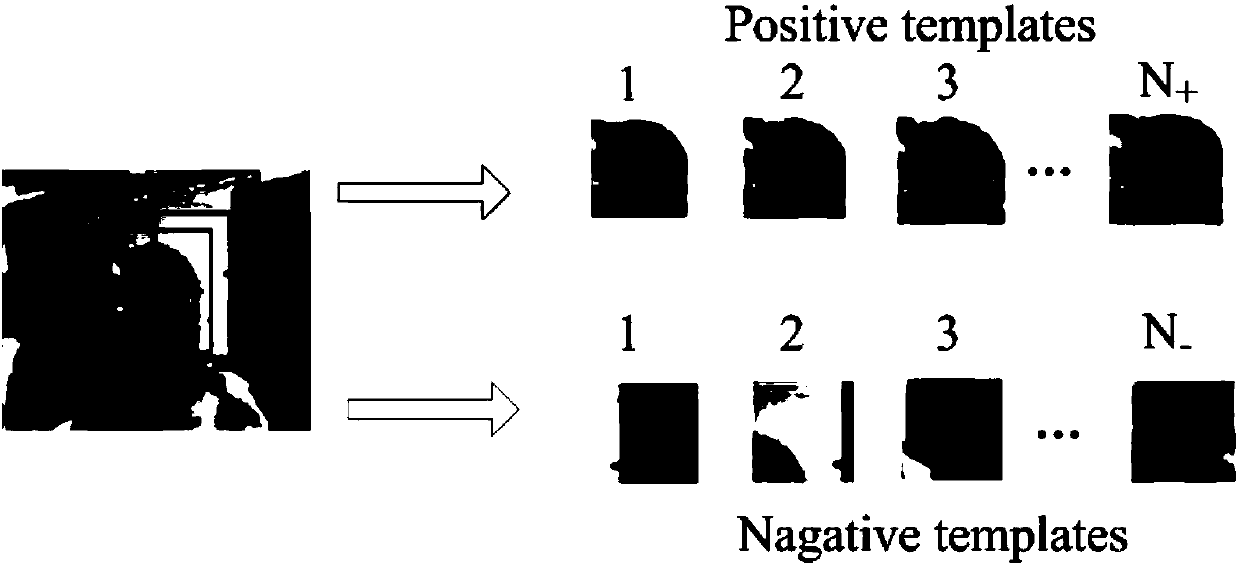

Online adaptive adjustment tracking method for target image regions

Inactive | CN102915450A | Improve accuracy | Improve stability | Character and pattern recognition | Pattern recognition | Errors and residuals

The invention relates to an online adaptive adjustment tracking method for target image regions. The method includes the steps of acquiring Haar features from different estimated positions in a newly input video frame, calculating the likelihood of the image regions at those positions with a Boosting structured classifier, and using the image region with the highest likelihood as the target image region in the current frame. The classifier is updated online with the aid of a weak classifier pool and an auxiliary classifier pool, which further improves its adaptivity to appearance changes of the target. Because the probabilities of occurrence of background and target image samples are asymmetrical, the distribution weights of the samples are adjusted according to the classification errors of the weak classifiers, so that the classifier is highly sensitive to the occurrence of targets in the video frames. The targets in video can therefore be tracked more stably by the method.
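The idea of adjusting sample weights according to a weak classifier's error can be sketched with the standard discrete AdaBoost update. This is a stand-in technique: the patent's own update rule, including how it handles the asymmetry between background and target samples, is not given in the abstract. The sketch assumes the input weights sum to 1 and labels are +1 or -1.

```python
import math
from typing import List, Sequence, Tuple


def reweight(weights: Sequence[float],
             labels: Sequence[int],
             predictions: Sequence[int]) -> Tuple[List[float], float]:
    """One discrete AdaBoost round: raise weights of misclassified samples.

    Returns the normalized weights and the weak classifier's vote alpha.
    """
    # Weighted error of the weak classifier, clamped away from 0 and 1.
    err = sum(w for w, y, p in zip(weights, labels, predictions) if y != p)
    err = min(max(err, 1e-10), 1.0 - 1e-10)
    alpha = 0.5 * math.log((1.0 - err) / err)
    updated = [w * math.exp(-alpha if y == p else alpha)
               for w, y, p in zip(weights, labels, predictions)]
    total = sum(updated)
    return [w / total for w in updated], alpha


new_weights, alpha = reweight([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print(new_weights, alpha)
```

After the update, the single misclassified sample carries half of the total weight, so the next weak classifier is pushed to get it right.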

Owner:CHANGZHOU INST OF TECH

Adversarial network texture surface defect detection method based on abnormal feature editing

Pending | CN112164033A | Reduce detection accuracy | Improve detection accuracy | Image enhancement | Image analysis | Pattern recognition | Data set

The invention belongs to the technical field of image processing, and particularly discloses an adversarial network texture surface defect detection method based on abnormal feature editing. The method comprises the following steps: acquiring defect-free good product images and corresponding defect images, which jointly form an image data set; constructing an adversarial network comprising a generator and a discriminator, wherein the generator extracts image features, detects abnormal features and then edits the abnormal features using normal features to obtain a reconstructed image, and the discriminator discriminates between the good product images and the reconstructed image; training the adversarial network on the image data set according to a pre-constructed optimization target to obtain a reconstructed image generation model; and inputting an image to be detected into the reconstructed image generation model to obtain its corresponding reconstructed image, and then acquiring the texture surface defects from the image to be detected and the corresponding reconstructed image. The method has high detection precision for defects of different shapes, sizes and contrast ratios on different texture surfaces.
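The last step, deriving defects from the image to be detected and its reconstruction, could be as simple as a thresholded pixel-wise residual. The abstract does not say how the two images are compared, so the absolute difference, the `threshold` value, and the `defect_mask` name are all assumptions for illustration; the reconstruction is taken as given rather than produced by a trained generator.

```python
from typing import List, Sequence


def defect_mask(image: Sequence[Sequence[int]],
                reconstruction: Sequence[Sequence[int]],
                threshold: int = 30) -> List[List[int]]:
    """Mark pixels whose absolute residual exceeds the threshold as defective (1)."""
    return [[1 if abs(p - q) > threshold else 0
             for p, q in zip(row_img, row_rec)]
            for row_img, row_rec in zip(image, reconstruction)]


# Hypothetical 2x2 grayscale patch with one bright defect pixel.
image = [[100, 100], [100, 200]]
reconstruction = [[100, 98], [101, 100]]
print(defect_mask(image, reconstruction))
```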

Owner:HUAZHONG UNIV OF SCI & TECH

Accurate target tracking method on condition of severe shielding

Inactive | CN108549905A | Improve robustness | Increase the prior probability | Character and pattern recognition | Cooperation model | Reconstruction error

The invention provides a Gaussian sparse representation cooperation model for target tracking under severe shielding. The accurate target tracking method performs sparse representation of candidate samples by combining sparse coding with LLC coding. With this method, a sparse solution is easily acquired and a high-precision reconstruction error is obtained. In addition, a prior probability is added to the model so that samples around the target in the next frame are more easily selected as the final tracking result. Extensive experiments comparing the method with other methods show that it achieves a better target tracking effect under severe shielding.

Owner:SHANGHAI FERLY DIGITAL TECH

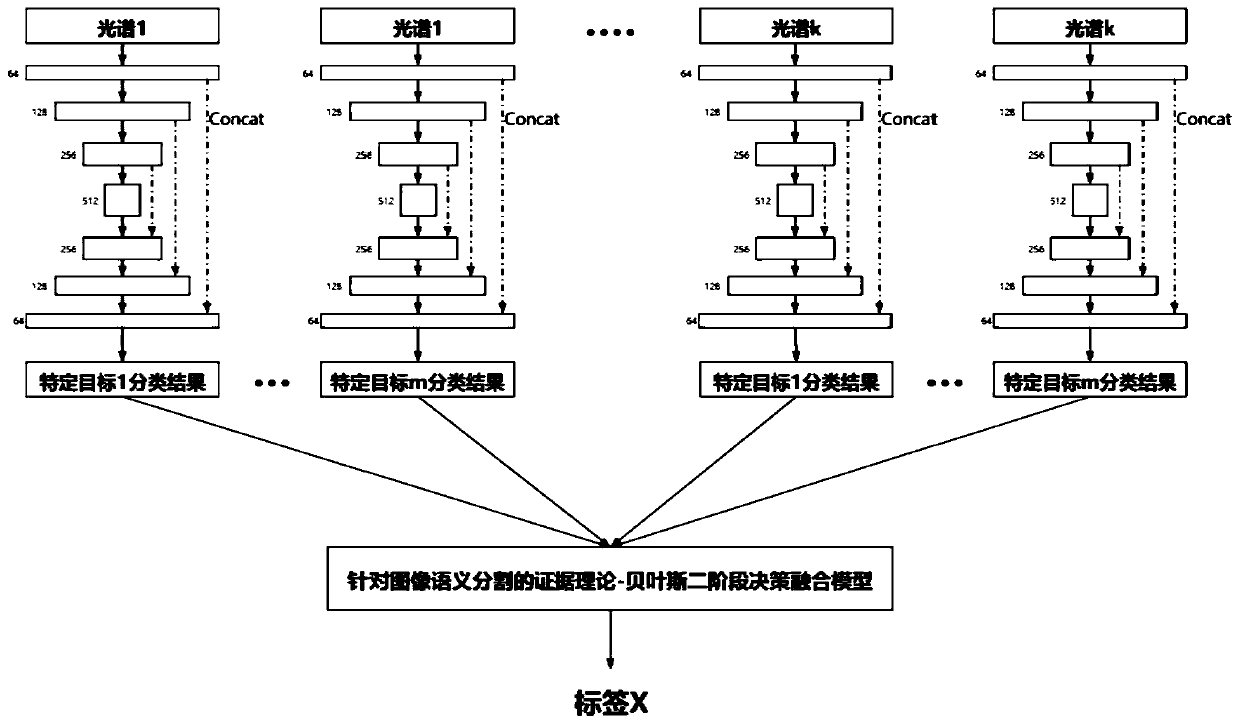

Data deep fusion image segmentation method for multispectral rescue robot

Active | CN111582280A | Discriminating | Improve accuracy | Character and pattern recognition | Neural architectures | Pattern recognition | Rescue robot

The invention provides a data deep fusion image segmentation method for a multispectral rescue robot. It aims to further improve the precision of the rescue robot's image semantic segmentation, to improve the accuracy of its troubleshooting and analysis at a disaster site, and to let it perform autonomous detection work without manual command and control. The method comprises the following steps: generating a target segmentation training data set; constructing a U-shaped network for single-spectrum, single-target image segmentation; establishing an evidence theory-Bayesian two-stage decision fusion model for image semantic segmentation; and training to obtain a semantic segmentation model of multispectral data fusion. The evidence theory-Bayesian two-stage decision fusion model shows clear improvement in accuracy, recall rate and the like. The robot can autonomously check a disaster site in a complex disaster relief environment, which improves detection accuracy and efficiency, reduces labor cost, shortens disaster relief time, and reduces casualties.
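As an illustration of the evidence-theory stage, the sketch below applies Dempster's rule of combination to two mass functions, as might come from two spectral channels judging one pixel. It assumes that "evidence theory" here means Dempster-Shafer theory; the class labels, the mass values, and the omission of the Bayesian second stage are illustrative choices, not details from the patent.

```python
from itertools import product
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def dempster_combine(m1: Mass, m2: Mass) -> Mass:
    """Combine two mass functions with Dempster's rule, renormalizing conflict."""
    combined: Mass = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass assigned to contradictory hypotheses
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


# Hypothetical per-pixel evidence from two spectra.
T = frozenset({"target"})
B = frozenset({"background"})
TB = T | B  # ignorance: either class
fused = dempster_combine({T: 0.6, TB: 0.4}, {T: 0.5, B: 0.3, TB: 0.2})
for hypothesis, mass in fused.items():
    print(sorted(hypothesis), round(mass, 4))
```

In this example both sources lean toward "target", so the fused mass on "target" is higher than either source gave on its own.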

Owner:吉林省森祥科技有限公司


© 2025 PatSnap. All rights reserved.