A behavior recognition method using a deeply supervised convolutional neural network based on training feature fusion

Convolutional neural network and feature fusion technology, applied in the field of artificial intelligence and computer vision, that addresses the problem of missing local information in the spatial domain of video.


Embodiment Construction

[0087] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

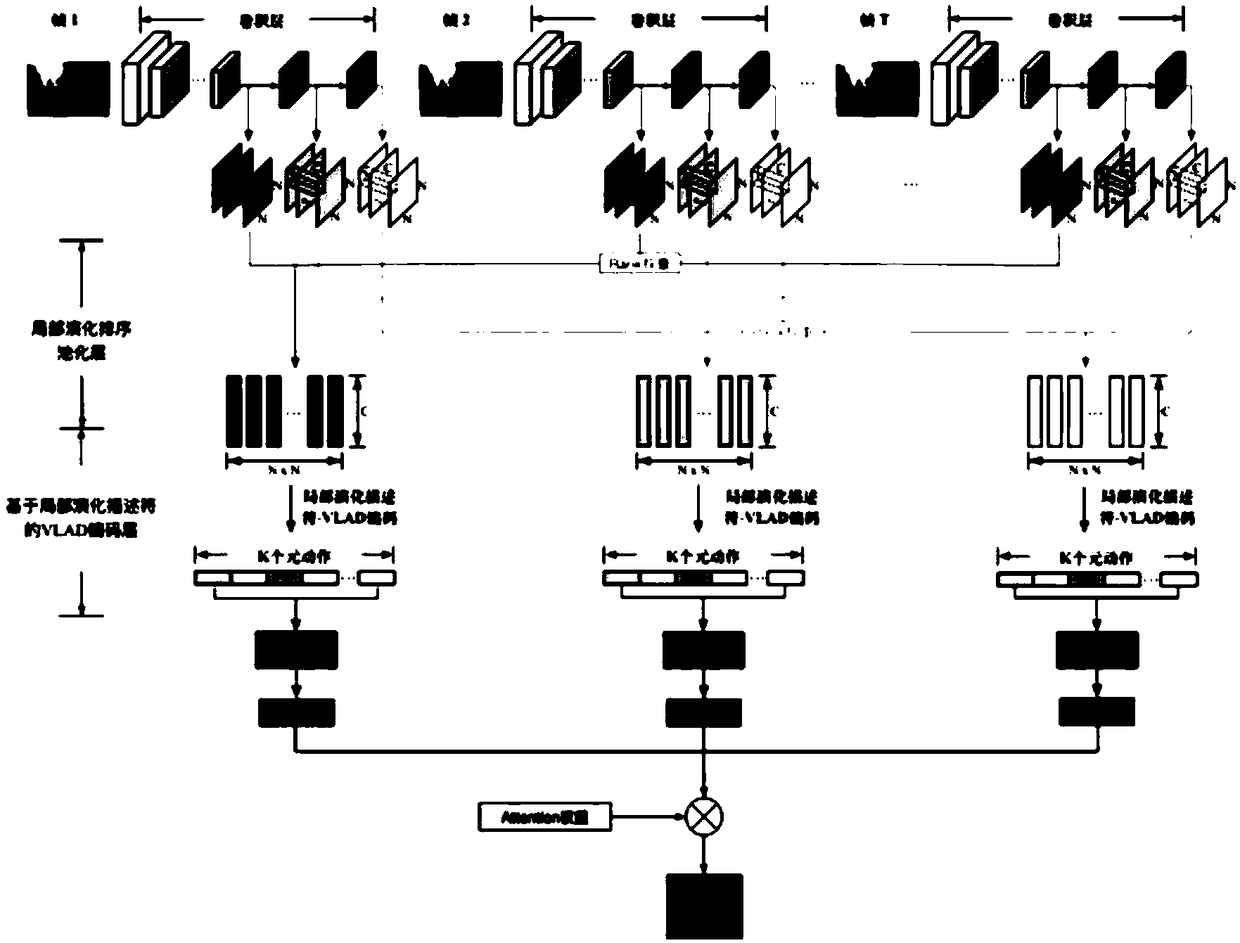

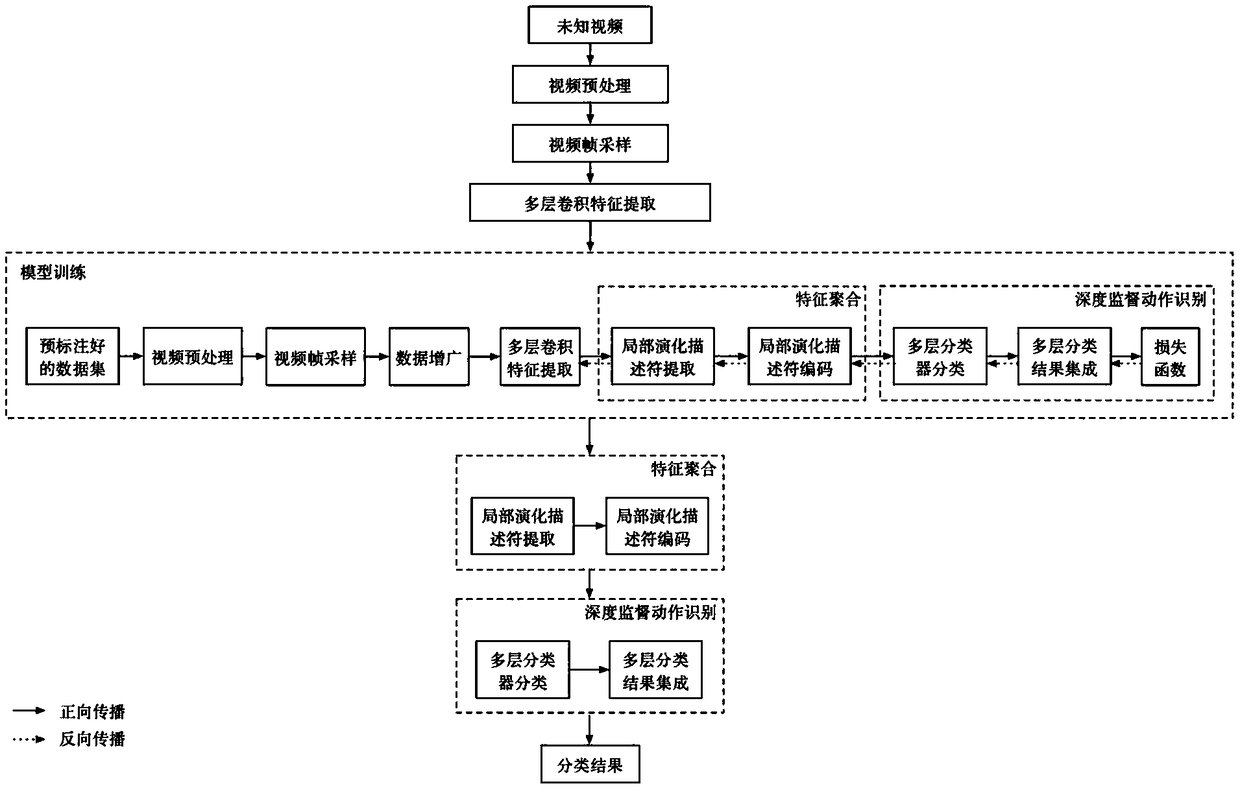

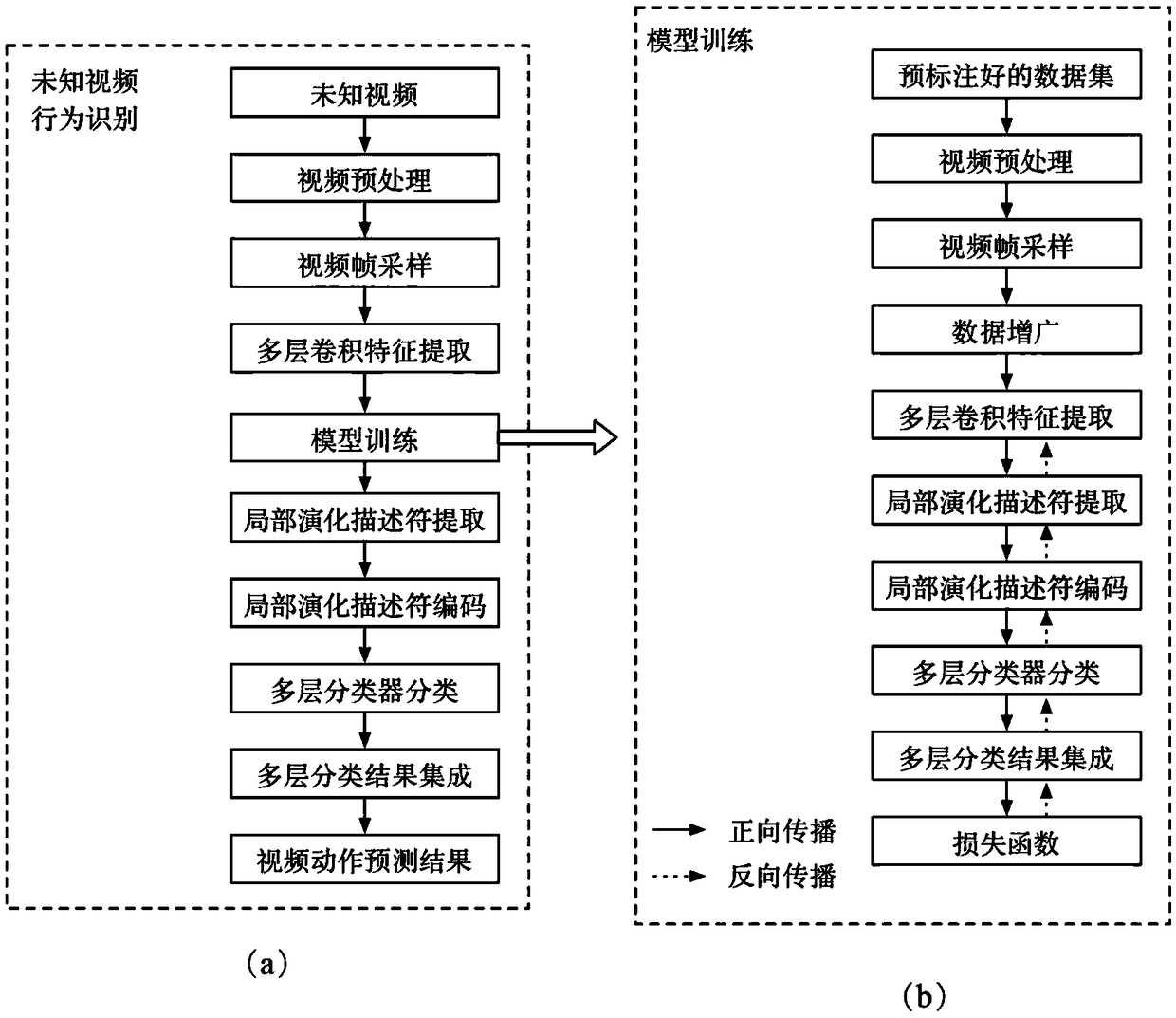

[0088] The present invention is executed on a computer that implements the following three main functions. (1) Multi-layer convolutional feature extraction, which extracts multi-layer feature maps from each frame of the video. (2) Feature aggregation, which comprises two layers: a local evolution descriptor pooling layer, which encodes the multi-frame feature maps obtained at each layer into local evolution descriptors; and a VLAD encoding layer based on the local evolution descriptors, which encodes those descriptors into a meta-action-based video-level representation. (3) Deeply supervised action recognition, which uses the multi-layer video-level representations obtained above to identify the act...
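The feature aggregation function described above can be illustrated with a minimal, dependency-free Python sketch for a single convolutional layer. This is not the patented implementation. The function names (`local_evolution_pool`, `vlad_encode`), the temporal weights `2t - T - 1`, the soft-assignment sharpness `alpha`, and the fixed cluster centers are all assumptions introduced for illustration, since the excerpt does not give the pooling or encoding formulas. The deeply supervised classifier of function (3) is omitted.

```python
import math


def local_evolution_pool(frames):
    """Collapse T frames of per-position features into one descriptor per position.

    frames[t][p] is a D-dimensional feature at spatial position p of frame t.
    The weights 2t - T - 1 (t = 1..T) are an assumed temporal-order weighting,
    used as a stand-in because the source does not specify the pooling formula.
    """
    T, P, D = len(frames), len(frames[0]), len(frames[0][0])
    pooled = [[0.0] * D for _ in range(P)]
    for t, frame in enumerate(frames, start=1):
        w = 2 * t - T - 1  # later frames get larger weights
        for p in range(P):
            for d in range(D):
                pooled[p][d] += w * frame[p][d]
    return pooled


def l2_normalize(vec, eps=1e-12):
    """Scale vec to unit L2 norm; eps avoids division by zero."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def vlad_encode(descriptors, centers, alpha=1.0):
    """Soft-assignment VLAD: sum weighted residuals to each of K centers.

    Returns a flat vector of length K * D. In a trainable layer the centers
    would be learned; here they are fixed, hypothetical values.
    """
    K, D = len(centers), len(centers[0])
    residual_sums = [[0.0] * D for _ in range(K)]
    for x in descriptors:
        # Softmax over negative scaled squared distances to each center.
        logits = [-alpha * sum((xi - ci) ** 2 for xi, ci in zip(x, c))
                  for c in centers]
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]
        z = sum(exps)
        for k in range(K):
            a = exps[k] / z
            for d in range(D):
                residual_sums[k][d] += a * (x[d] - centers[k][d])
    # Normalize each center's block, then the concatenated vector.
    flat = [v for block in residual_sums for v in l2_normalize(block)]
    return l2_normalize(flat)


# Toy input: T = 3 frames, P = 2 spatial positions, D = 2 channels.
frames = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[1.0, 0.0], [1.0, 2.0]],
    [[2.0, 0.0], [1.0, 3.0]],
]
centers = [[0.0, 0.0], [2.0, 2.0]]  # K = 2 hypothetical "meta-action" centers

descriptors = local_evolution_pool(frames)
video_vector = vlad_encode(descriptors, centers)
print(len(video_vector))  # K * D = 4
```

Under the same assumptions, running this once per convolutional layer would yield one video-level vector per layer, which is the input the deeply supervised recognition step is described as consuming.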
