Video emotion identification method based on emotion significant feature integration

A feature fusion and emotion recognition technology, applied in character and pattern recognition, instruments, computer components, etc. It addresses the problem that video emotional features are not sufficiently discriminative, which reduces the accuracy of video classification and recognition.


Embodiment Construction

[0043] The present invention will be described in detail below in conjunction with the drawings:

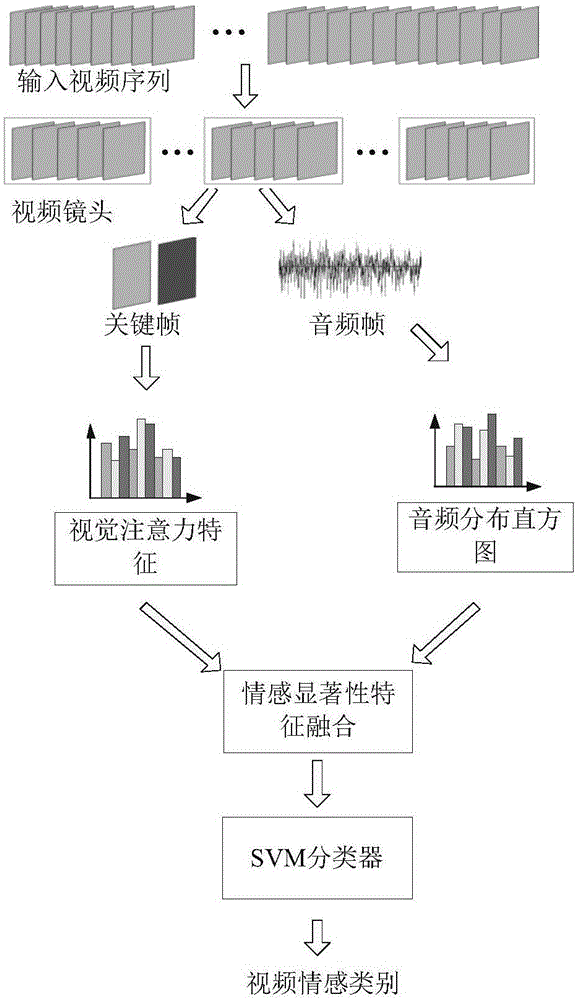

[0044] Figure 1 shows the video emotion recognition method based on the fusion of emotional salient features provided by the present invention. As shown in Figure 1, the method specifically includes the following steps:

[0045] Step 1: Perform structural analysis on the video: use mutual information entropy from information theory to detect shot boundaries and extract the video shots, and then select emotional key frames for each shot. The specific extraction steps include:

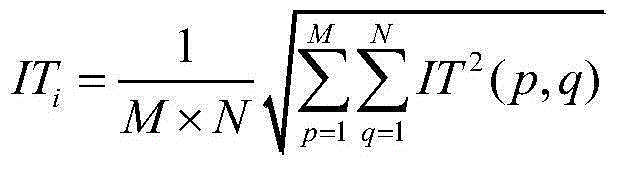

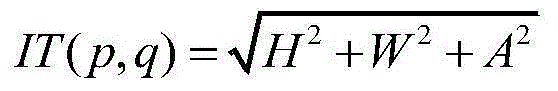

[0046] Step 1.1: Taking the shot as the unit, calculate the color emotion intensity value of each video frame. Plot time on the horizontal axis and the color emotion intensity value on the vertical axis to obtain the shot emotion fluctuation curve. The color emotion intensity value is calculated as follows:

[0047] $IT_i = \frac{1}{M \times N} \sum_{p=1}^{M} \ldots$
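The shot boundary detection named in Step 1 can be illustrated with a minimal sketch. The text only states that mutual information entropy is used; the rest here is an assumption made for illustration: frames are treated as 8-bit grayscale arrays, mutual information is estimated from the joint gray-level histogram of consecutive frames, and a boundary is declared when it falls below a fixed `threshold`. The function names `mutual_information` and `detect_shot_boundaries` are hypothetical and do not come from the patent.

```python
import numpy as np


def mutual_information(frame_a, frame_b, bins=256):
    """Estimate mutual information (in bits) between the gray levels of two frames.

    Assumption: both frames are 8-bit grayscale arrays of the same shape.
    """
    joint, _, _ = np.histogram2d(
        frame_a.ravel(), frame_b.ravel(), bins=bins, range=[[0, 256], [0, 256]]
    )
    p_ab = joint / joint.sum()              # joint gray-level distribution
    p_a = p_ab.sum(axis=1, keepdims=True)   # marginal of frame_a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)   # marginal of frame_b, shape (1, bins)
    nonzero = p_ab > 0                      # skip empty cells to avoid log(0)
    ratio = p_ab[nonzero] / (p_a @ p_b)[nonzero]
    return float(np.sum(p_ab[nonzero] * np.log2(ratio)))


def detect_shot_boundaries(frames, threshold):
    """Return each index i where frame i is assumed to start a new shot.

    Assumption: a simple fixed threshold on the mutual information between
    consecutive frames; the patent text does not specify the decision rule.
    """
    boundaries = []
    for i in range(1, len(frames)):
        if mutual_information(frames[i - 1], frames[i]) < threshold:
            boundaries.append(i)
    return boundaries


# Synthetic usage: two "shots" built from unrelated random frames.
rng = np.random.default_rng(0)
shot_1 = (rng.integers(0, 4, size=(64, 64)) * 64).astype(np.uint8)
shot_2 = (rng.integers(0, 4, size=(64, 64)) * 64).astype(np.uint8)
frames = [shot_1, shot_1, shot_1, shot_2, shot_2]
print(detect_shot_boundaries(frames, threshold=0.5))
```

Consecutive frames within a shot share most of their content, so their mutual information stays high, while frames on either side of a cut are nearly independent. In practice the threshold would likely need tuning per video, or replacing with an adaptive rule.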
