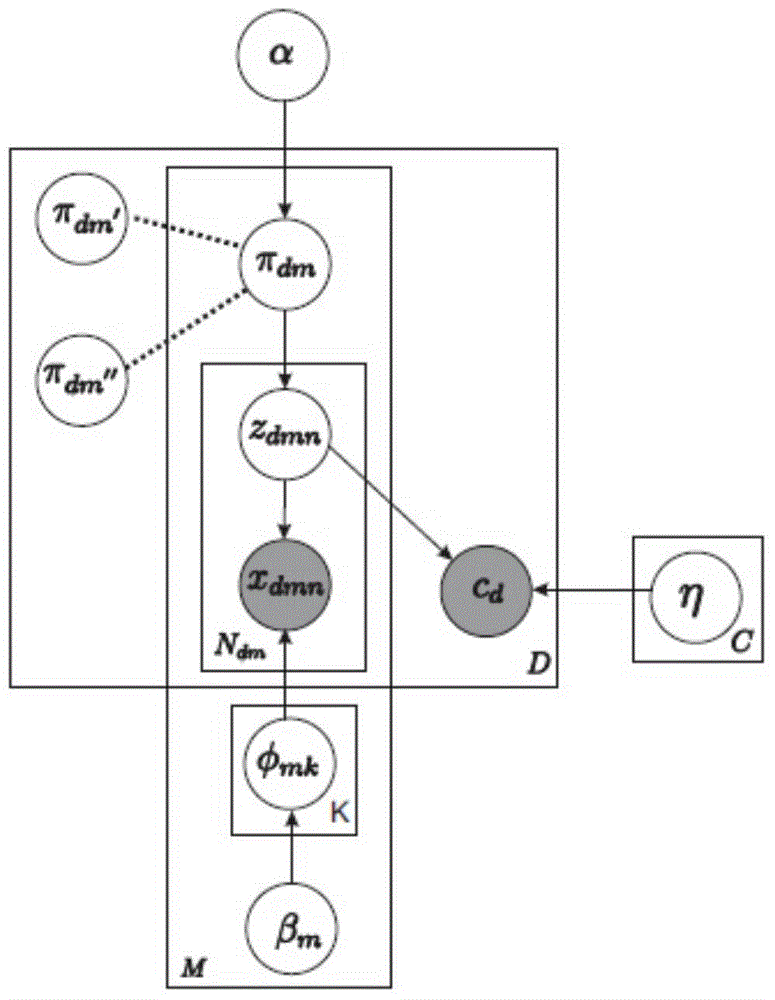

Cross-modal searching method based on topic model

The method combines a topic model with cross-modal techniques and is applied in the field of cross-modal retrieval. It addresses shortcomings of existing approaches, such as the lack of analysis of the internal mechanisms underlying cross-modal data and latent spaces that cannot be interpreted.

Embodiment

[0100] To verify the effectiveness of the present invention, web pages of Wikipedia featured articles are used. Each page contains one image and several sections describing the content of that image, and together they form a cross-modal document. These cross-modal documents serve as the experimental dataset of the present invention (see attached Figure 2). The dataset contains two modalities, text and image. The size of the text vocabulary (thesaurus dictionary) is set to 5000 dimensions, and the number of image cluster centers is set to 1000. The dataset is divided into 10 categories and contains 2866 cross-modal documents in total; 1/5 of them are randomly selected for testing, and the remaining documents are used as training data. Following the steps described in the specific embodiment, the experimental results are as follows:
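The data preparation described in [0100] can be sketched as follows. This is a minimal illustration under stated assumptions, not the invention's implementation. The text side is assumed to be a bag-of-words count vector over the 5000-word vocabulary. The image side is assumed to be a histogram over 1000 visual words, obtained by assigning local descriptors to their nearest cluster center; the descriptor type and clustering algorithm are not stated in the text. The 1/5 test fraction is assumed to be rounded down. The function names `text_histogram`, `visual_word_histogram` and `split_train_test` are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

TEXT_VOCAB_SIZE = 5000   # text vocabulary size stated in [0100]
NUM_VISUAL_WORDS = 1000  # number of image cluster centers stated in [0100]
NUM_DOCUMENTS = 2866     # total cross-modal documents stated in [0100]
TEST_DENOMINATOR = 5     # 1/5 of the documents are held out for testing


def text_histogram(tokens: Sequence[str], vocab: Dict[str, int]) -> List[int]:
    """Bag-of-words counts over a fixed vocabulary (assumed representation).

    Tokens that are not in the vocabulary are simply ignored.
    """
    counts = [0] * len(vocab)
    for tok in tokens:
        idx = vocab.get(tok)
        if idx is not None:
            counts[idx] += 1
    return counts


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def visual_word_histogram(
    descriptors: Sequence[Sequence[float]],
    centers: Sequence[Sequence[float]],
) -> List[int]:
    """Hypothetical bag-of-visual-words step: each local descriptor votes for
    its nearest cluster center under squared Euclidean distance."""
    counts = [0] * len(centers)
    for d in descriptors:
        nearest = min(range(len(centers)), key=lambda k: squared_distance(d, centers[k]))
        counts[nearest] += 1
    return counts


def split_train_test(
    n_docs: int, test_denominator: int, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Randomly hold out n_docs // test_denominator document ids for testing
    (rounding down is an assumption) and return (train_ids, test_ids)."""
    ids = list(range(n_docs))
    random.Random(seed).shuffle(ids)
    n_test = n_docs // test_denominator
    return sorted(ids[n_test:]), sorted(ids[:n_test])


if __name__ == "__main__":
    train_ids, test_ids = split_train_test(NUM_DOCUMENTS, TEST_DENOMINATOR)
    print(len(train_ids), len(test_ids))
```

With 2866 documents and integer division, 573 documents are held out for testing and 2293 are used for training. The nearest-center assignment is written as a plain loop for readability; a pipeline working with 1000 centers and many descriptors per image would normally vectorize it.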

[0101] Table 1. Results on the Wikipedia dataset

[0102]

[0103] At...
