48 results about "Software cache" patented technology

Cache is a software development tool from InterSystems. It provides an advanced database management system and rapid application development environment. It's considered part of a new generation of database technology that provides multiple modes of data access. The data is only described once in a single integrated dictionary...

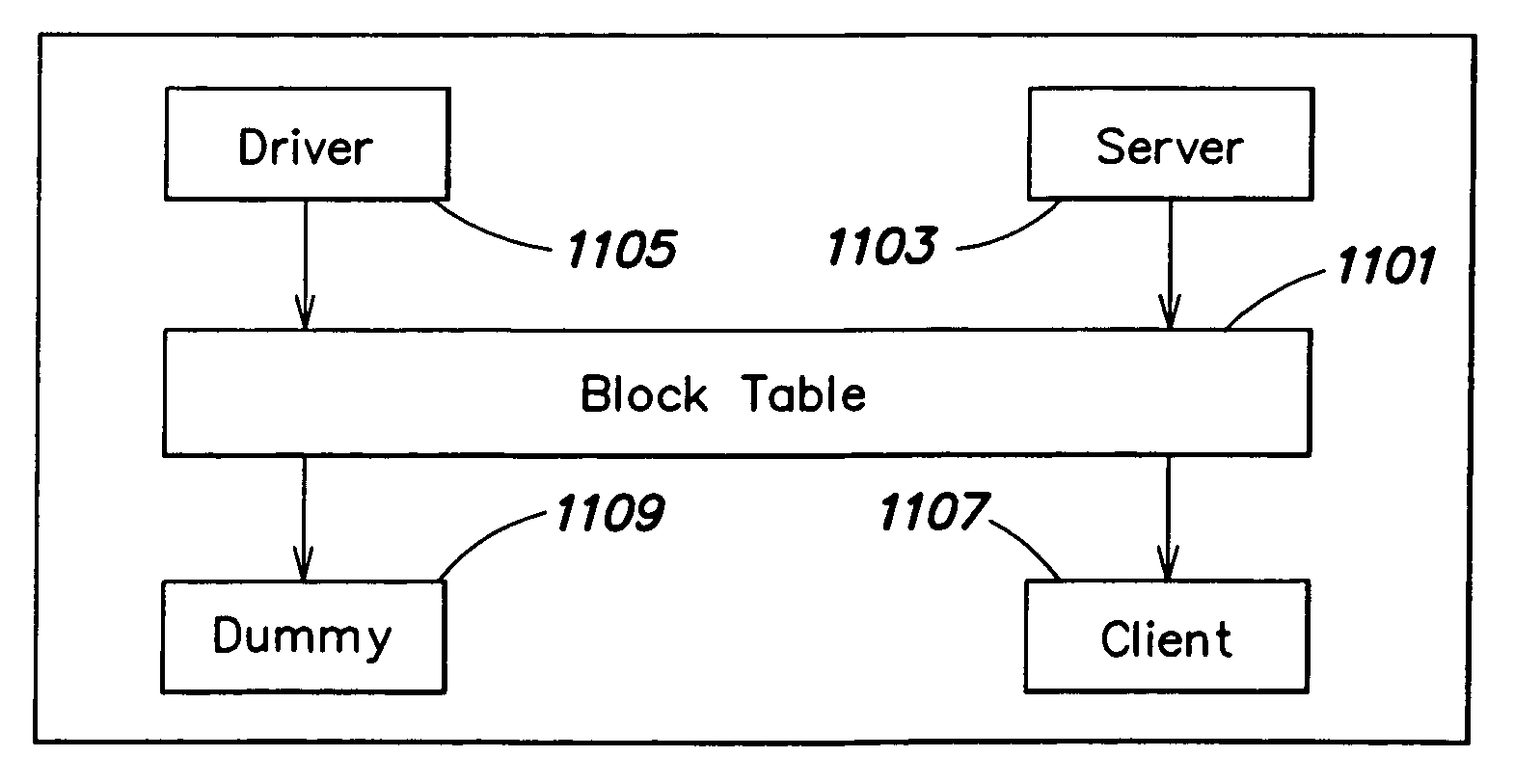

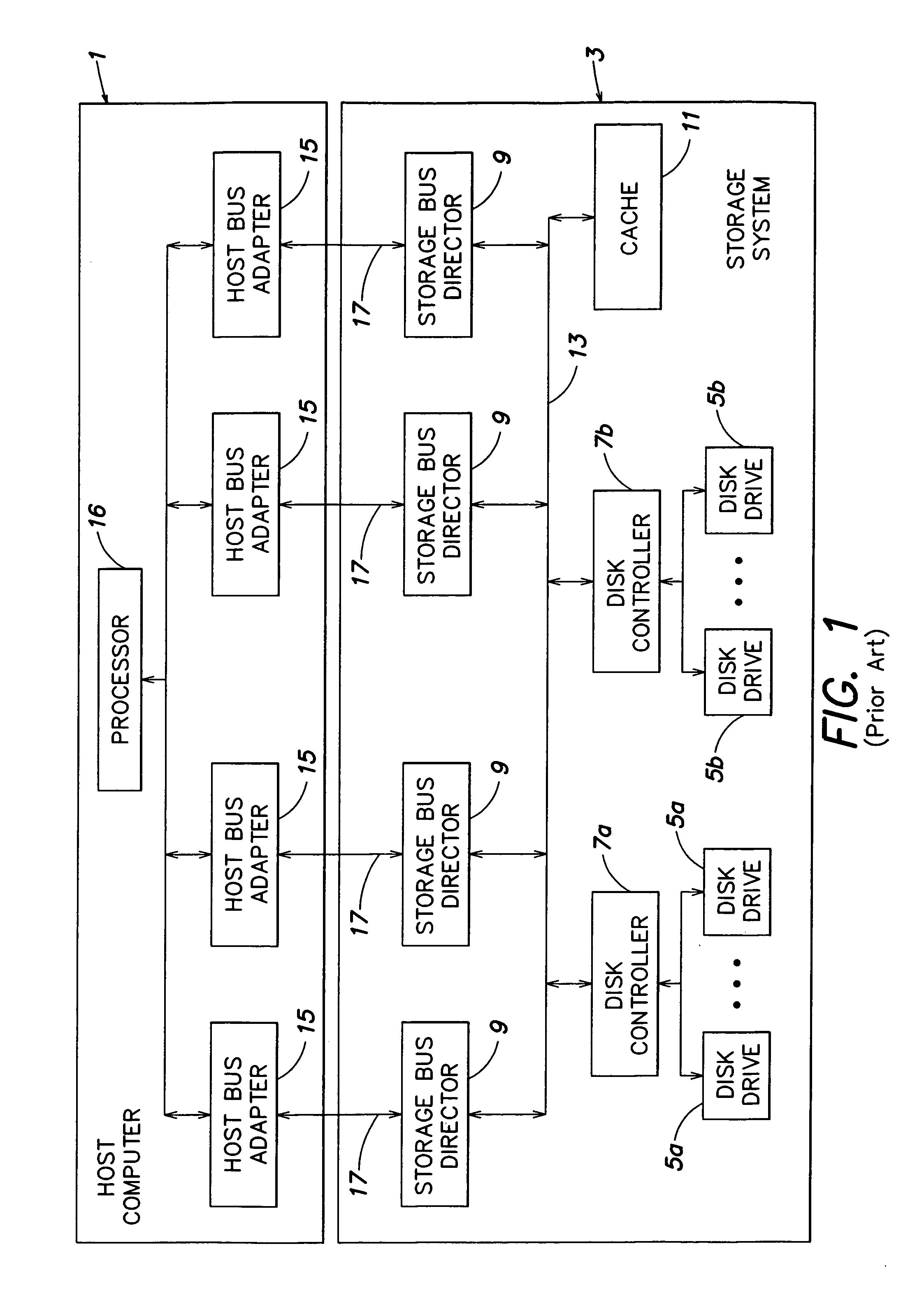

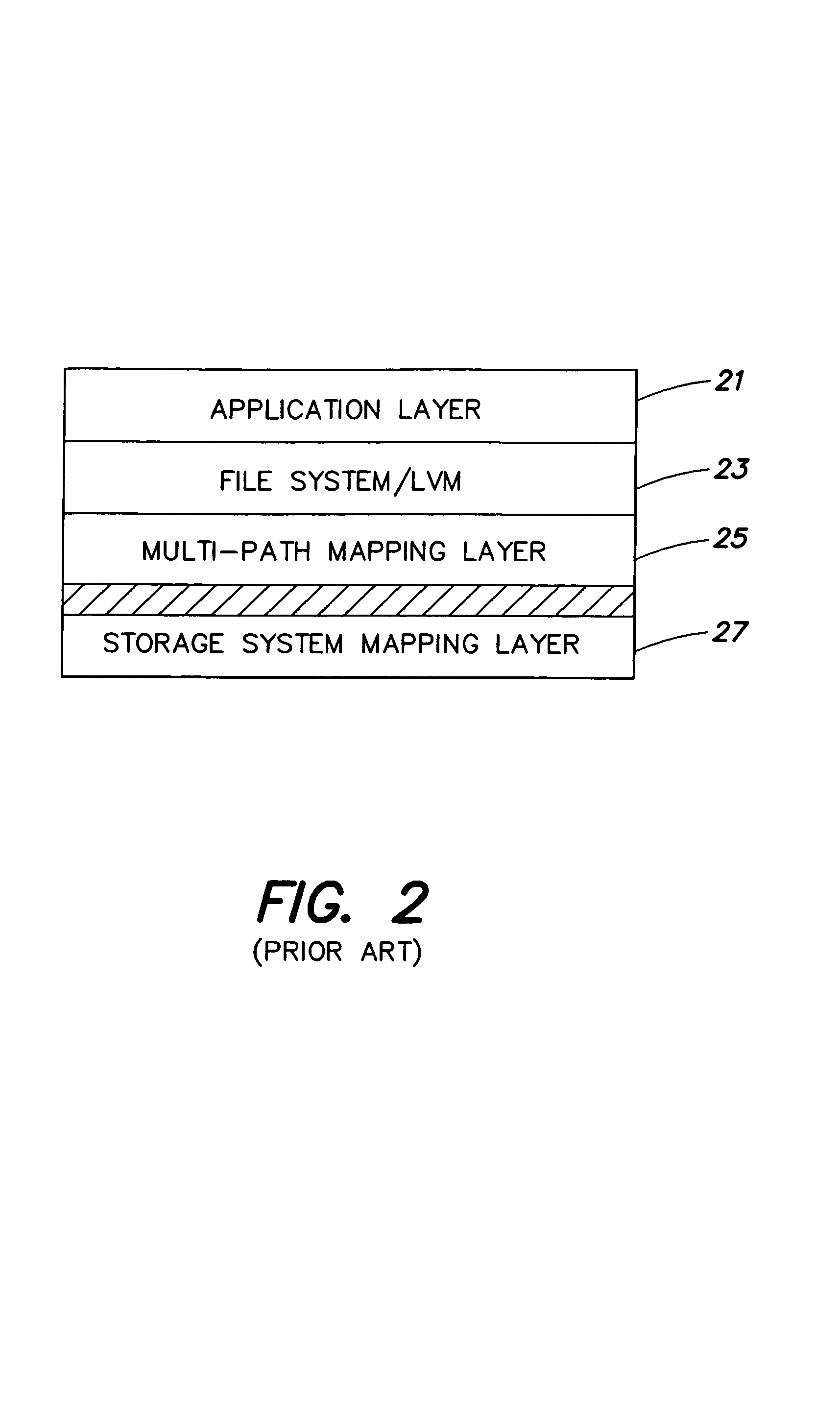

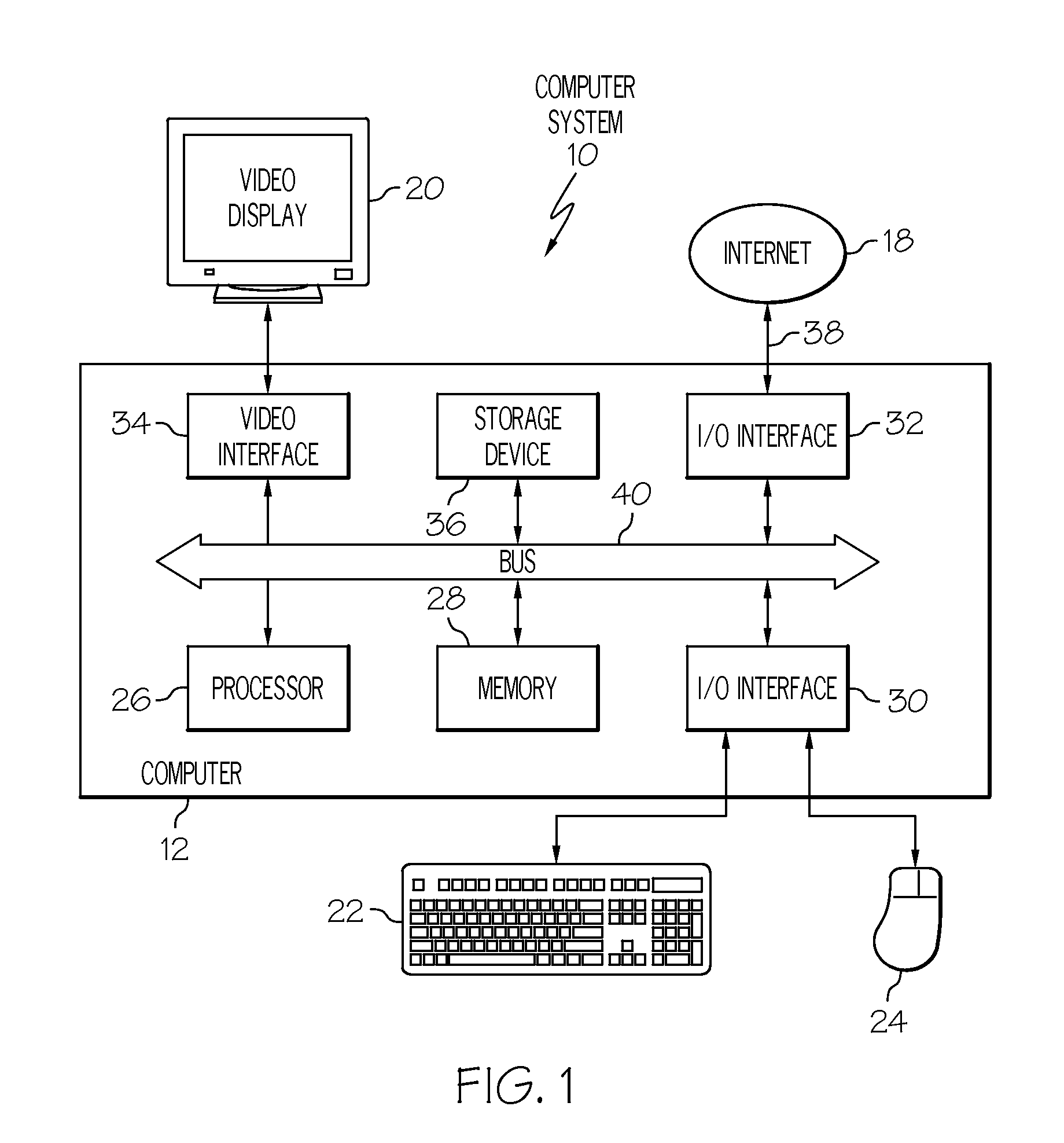

Method and apparatus for implementing a software cache

Active | US7124249B1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Computerized system | Software cache

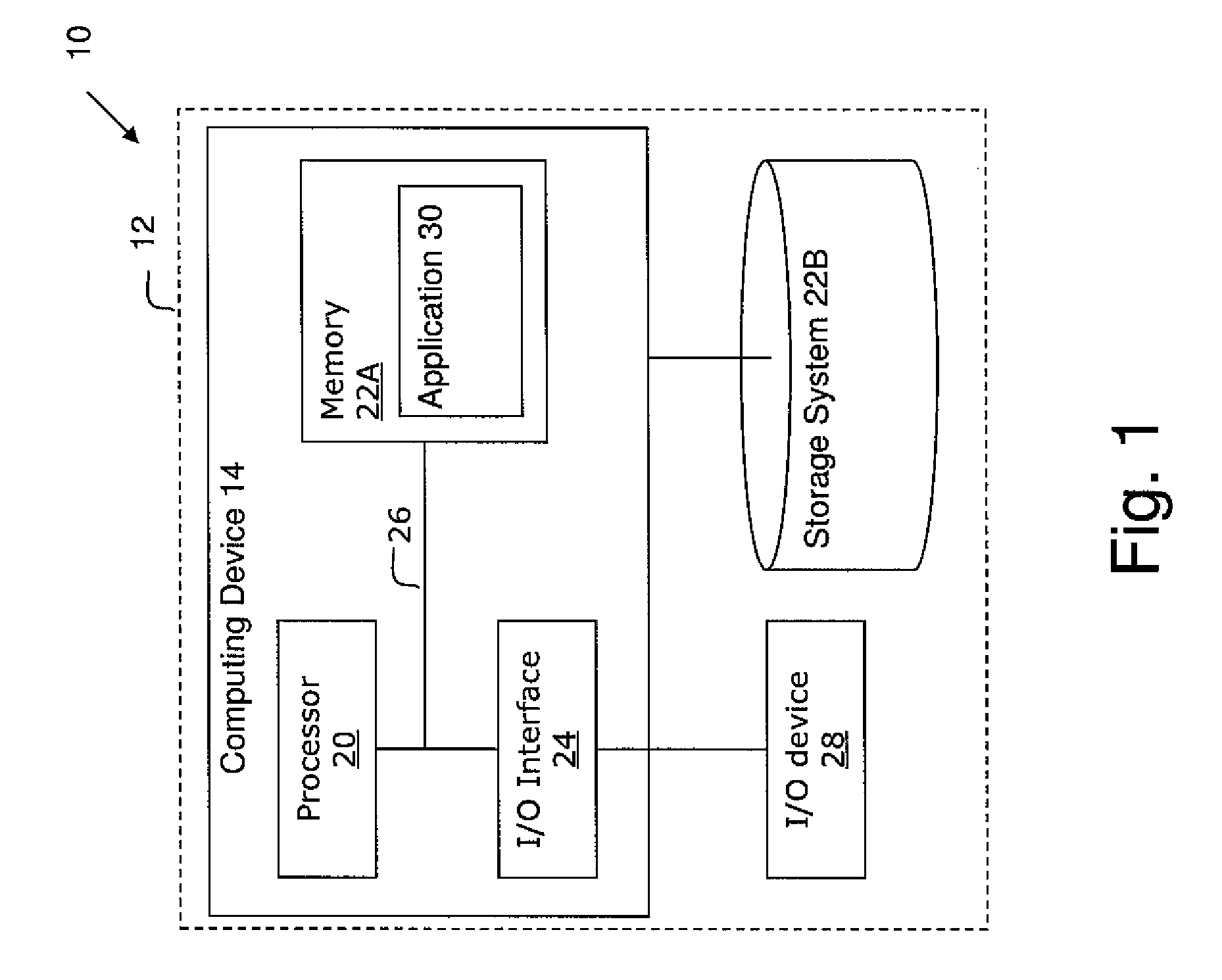

A method and apparatus for use in a computer system including a plurality of host computers including a root host computer and at least one child host computer. The root host computer exports at least a portion of the volume of storage to the at least one child host computer so they can share access to the volume of storage. In one embodiment, the volume of storage is stored on at least one non-volatile storage device. In another aspect, the volume of storage is made available to the root from a storage system. In a further aspect, at least a second portion of the volume of storage is exported from a child host computer to at least one grandchild host computer.

Owner:EMC IP HLDG CO LLC

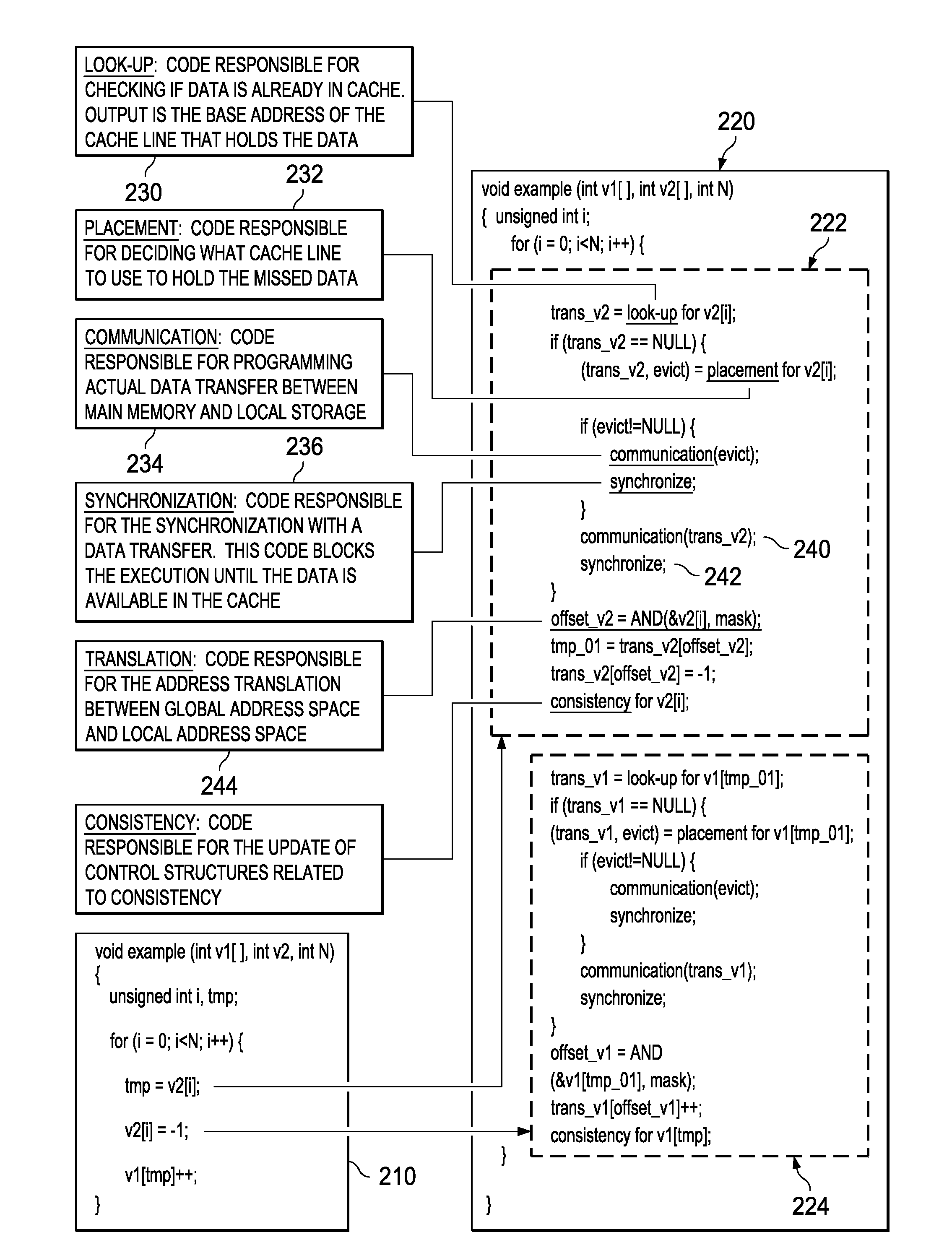

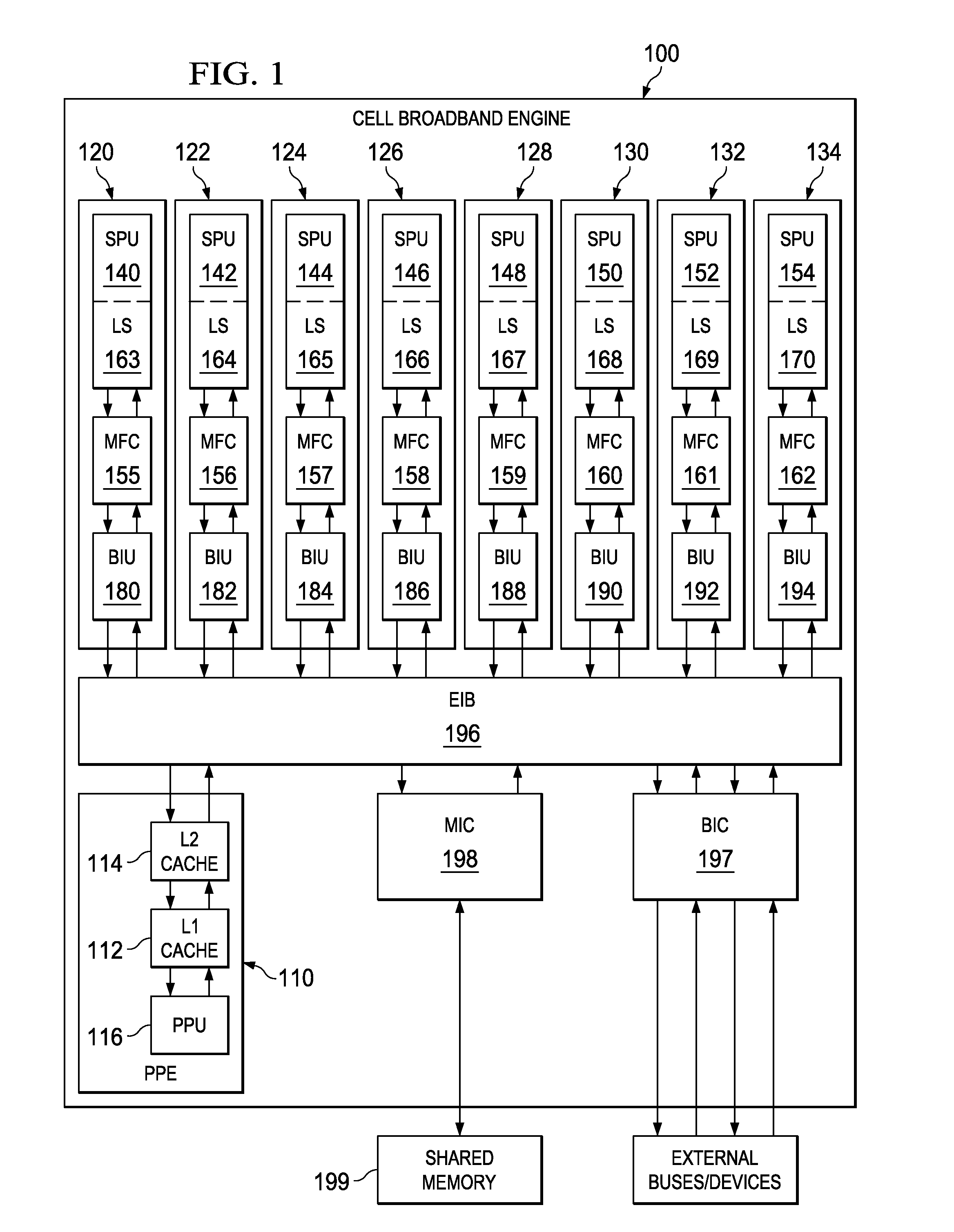

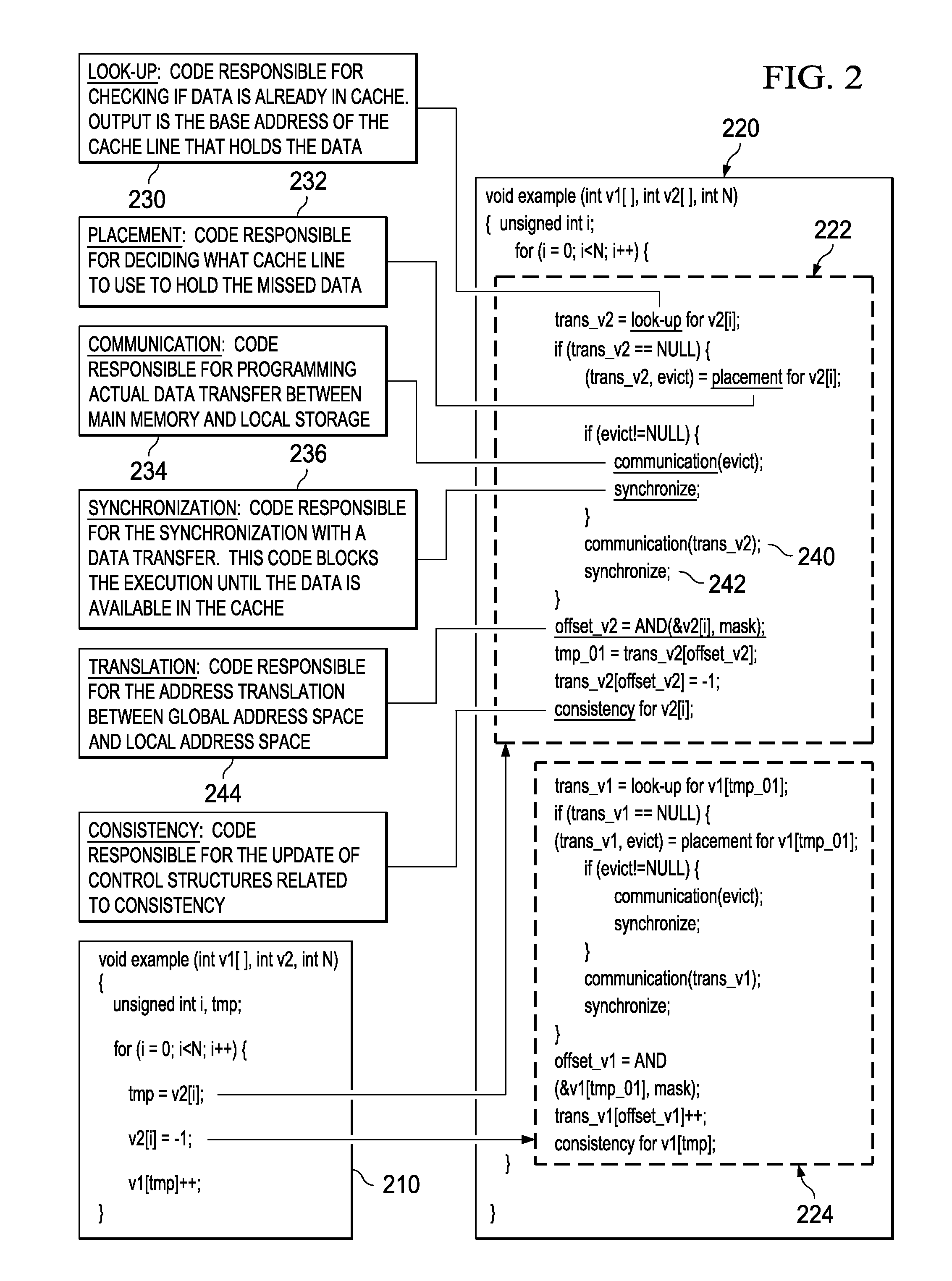

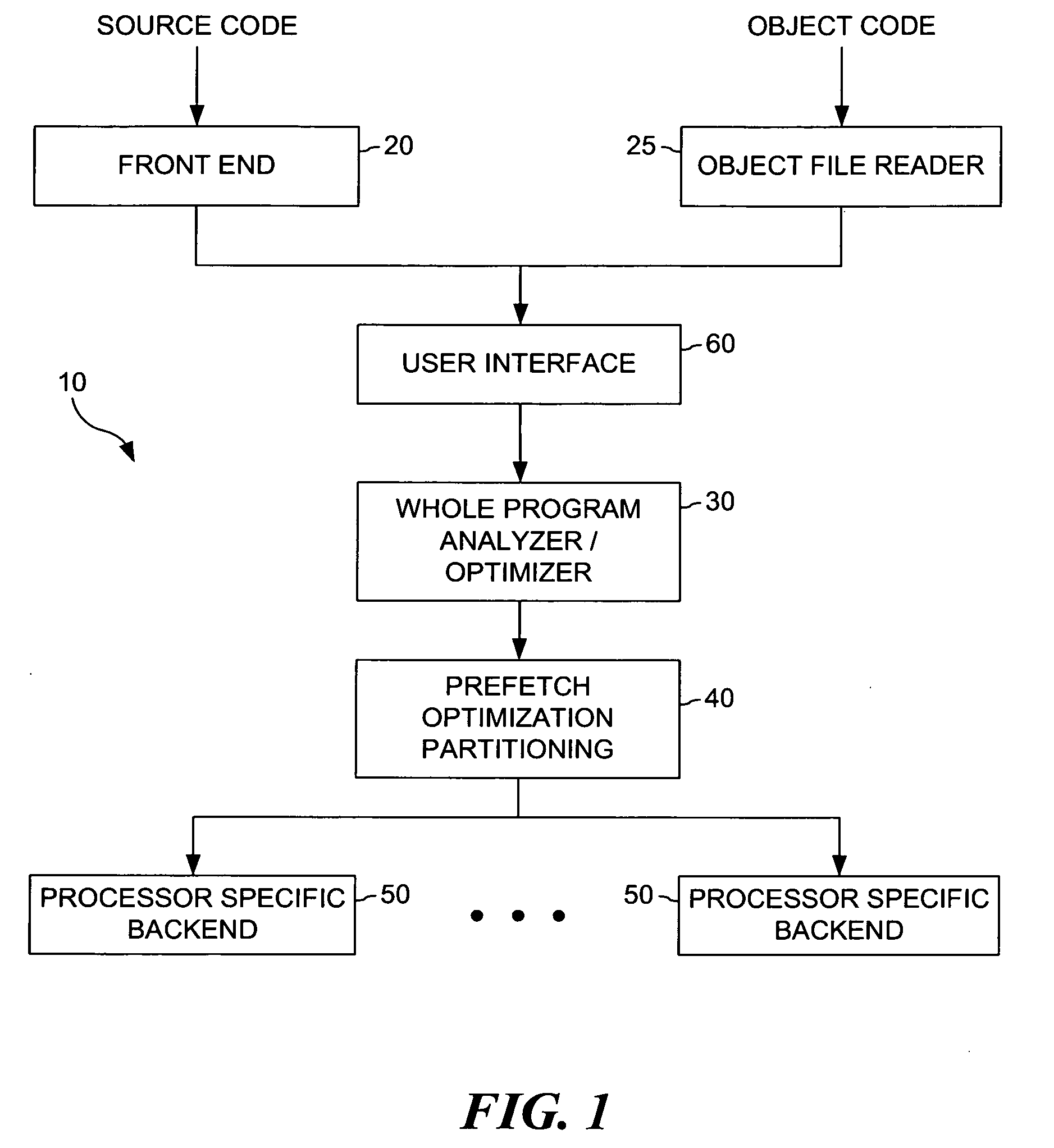

Optimized Code Generation Targeting a High Locality Software Cache

Inactive | US20100088673A1 | Error prevention | Error detection/correction | Conventional memory | Parallel computing

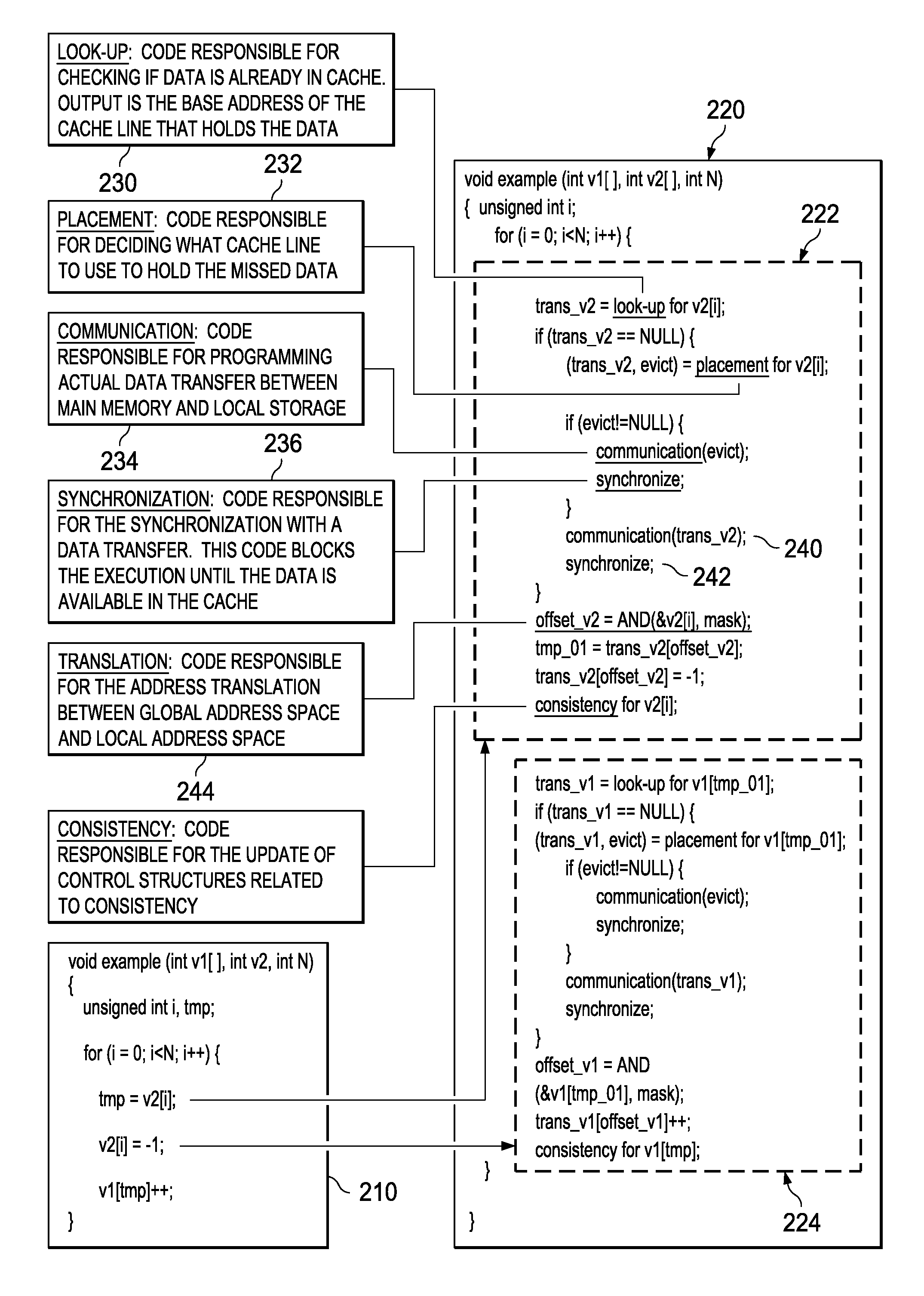

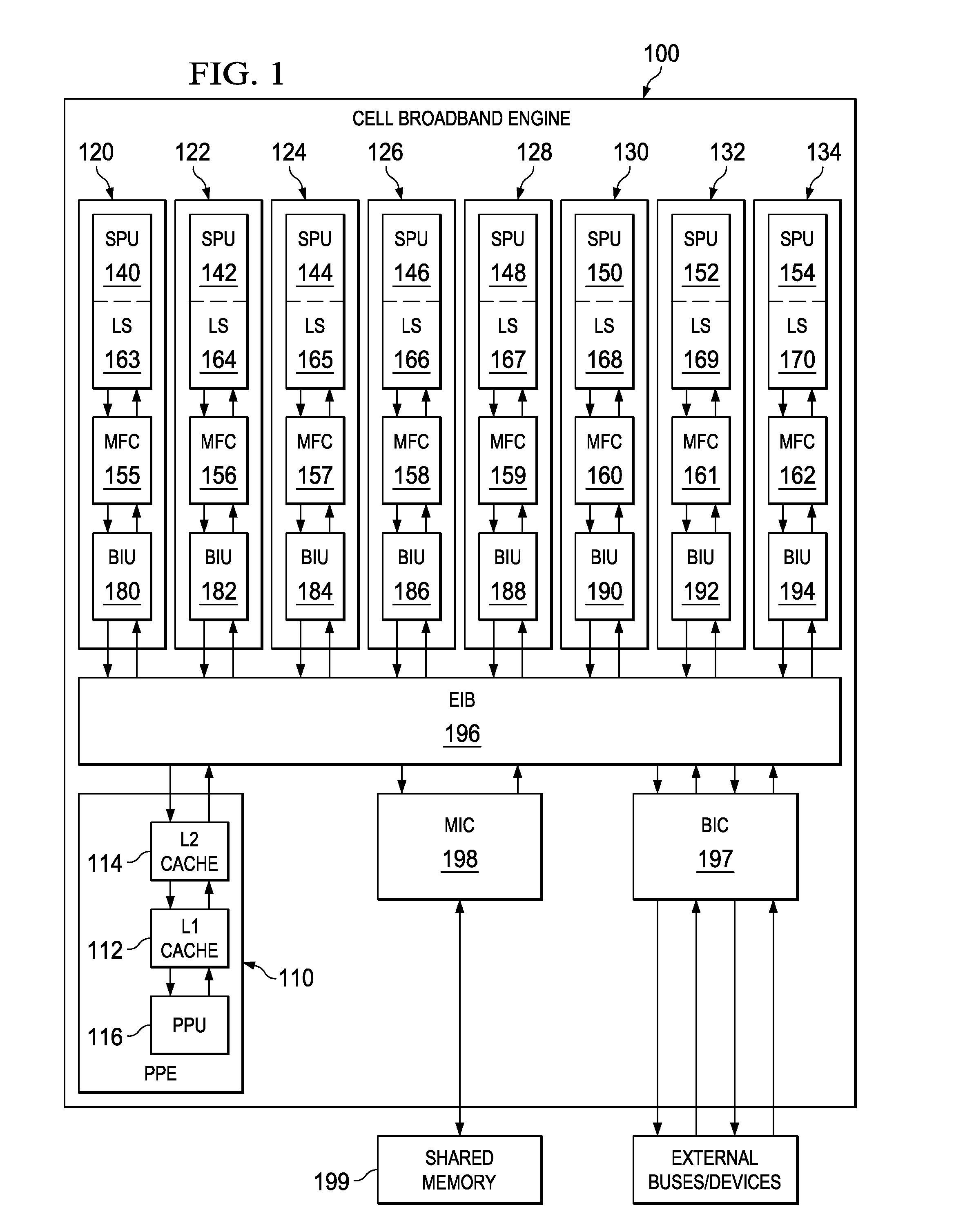

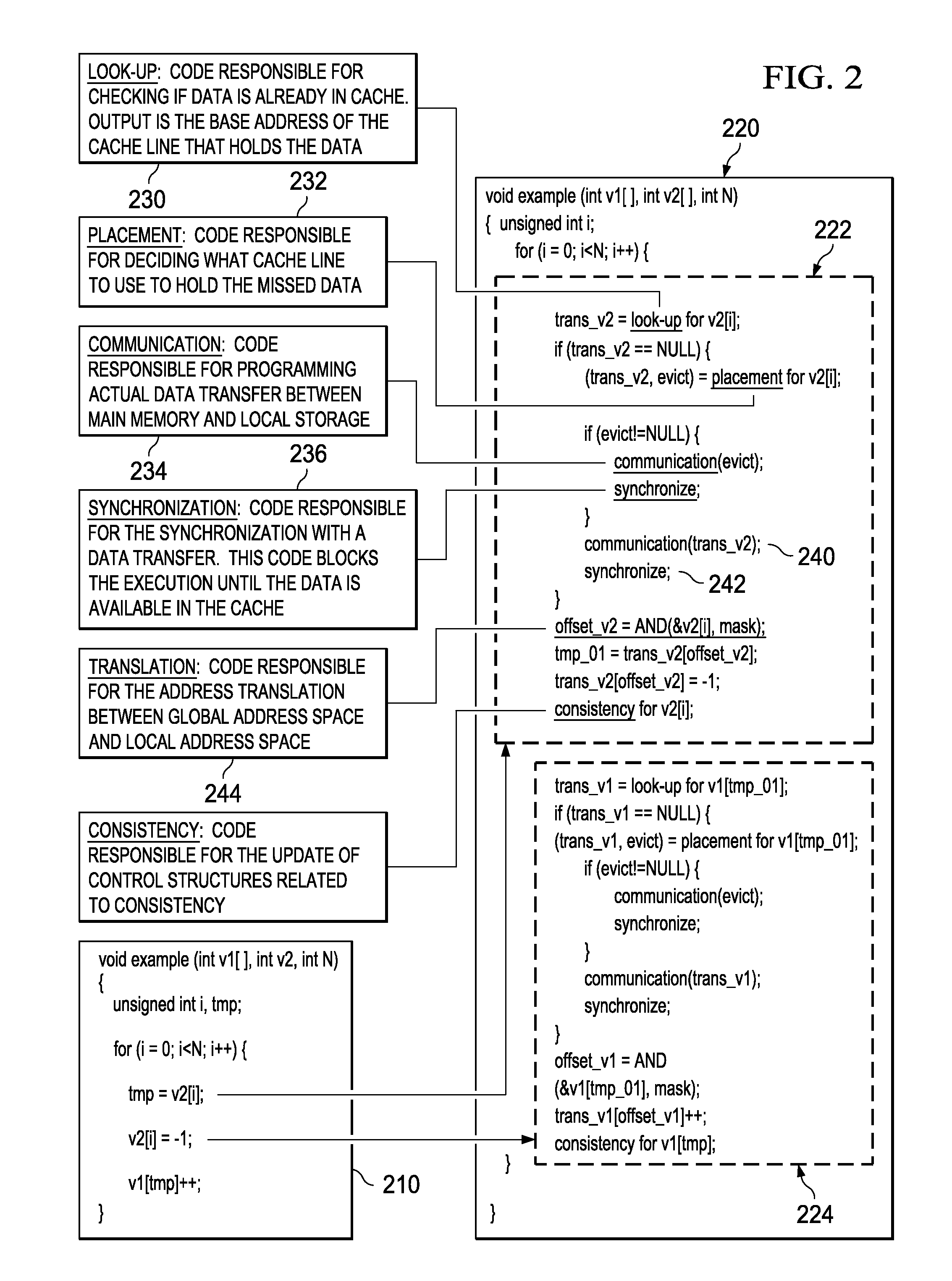

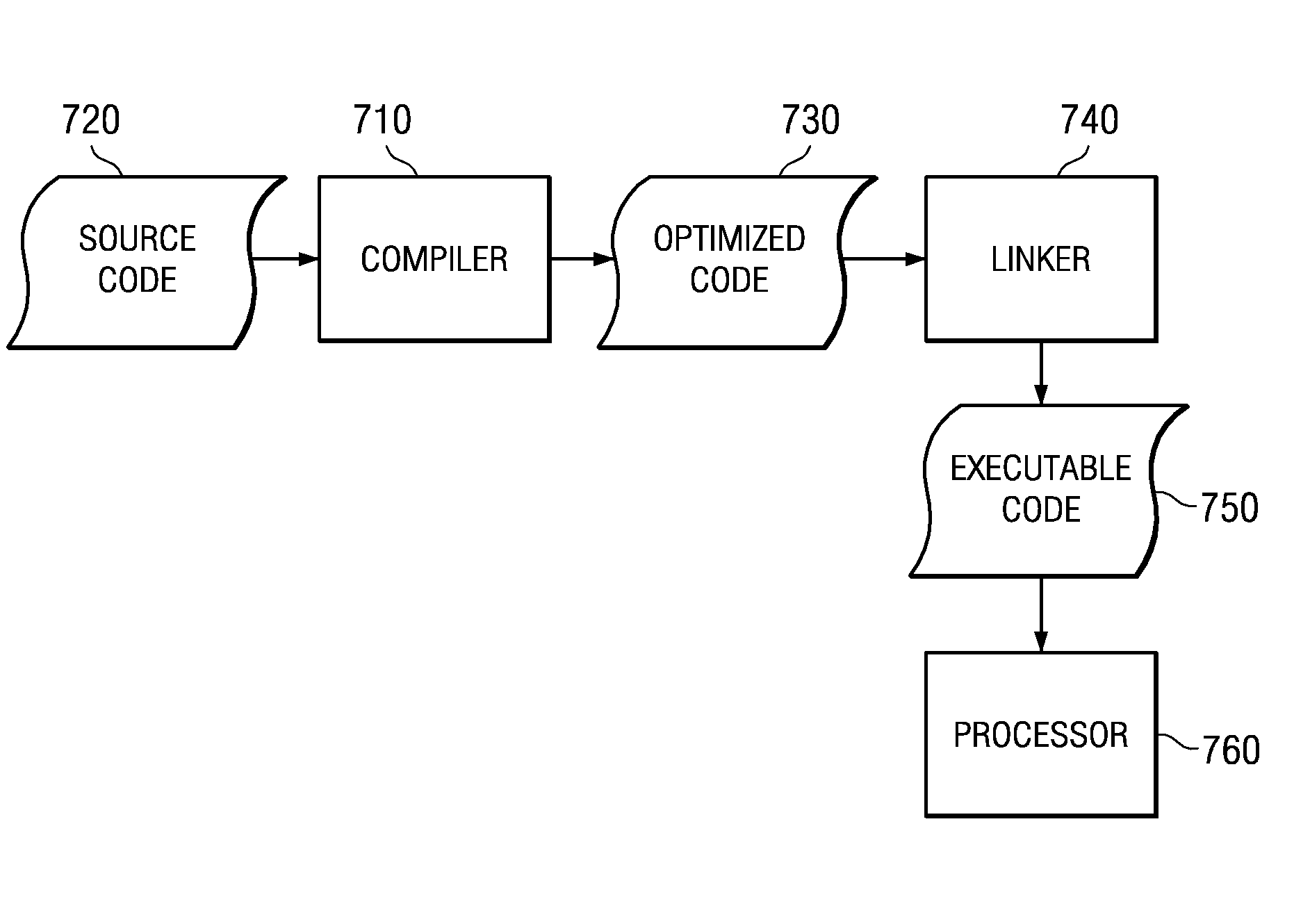

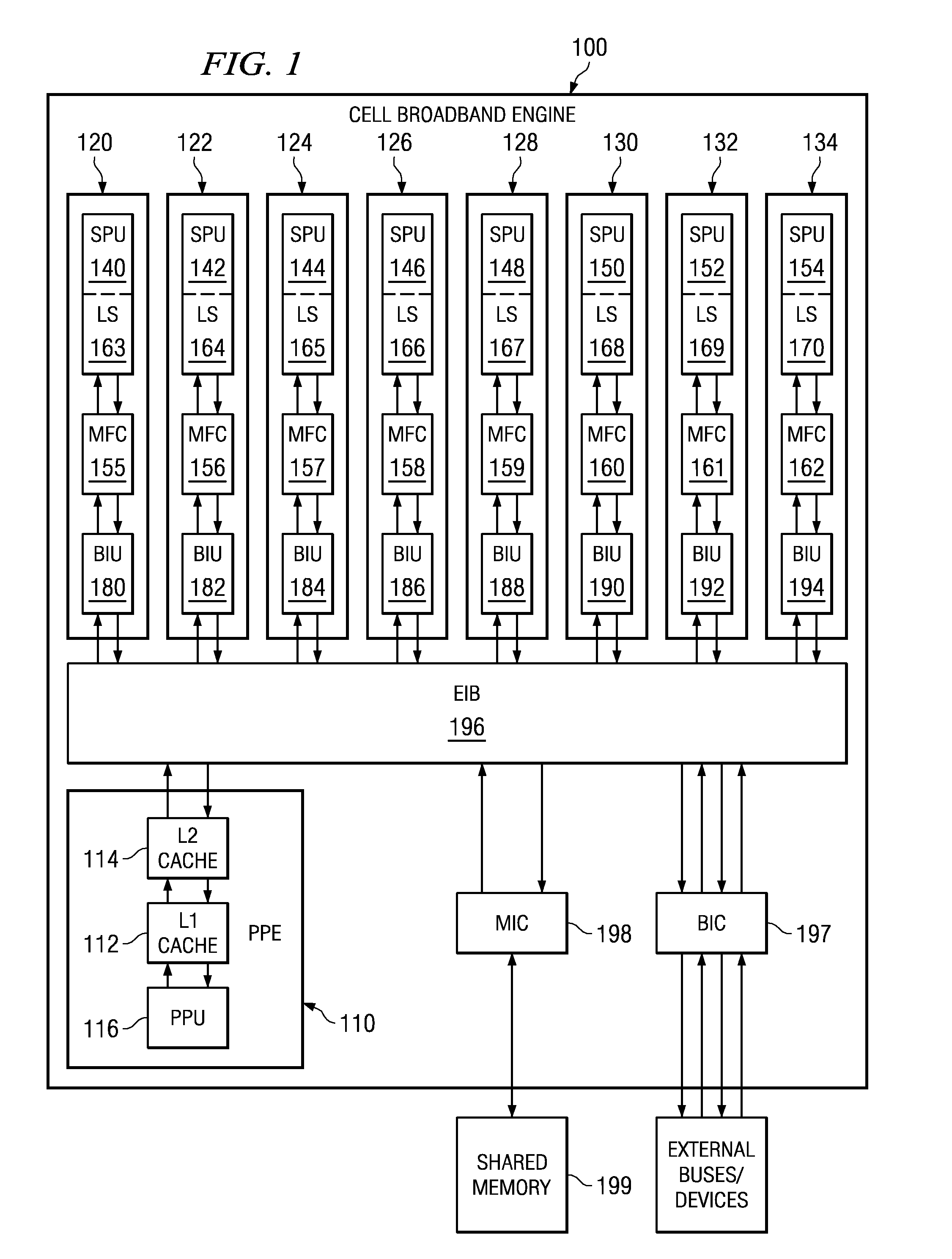

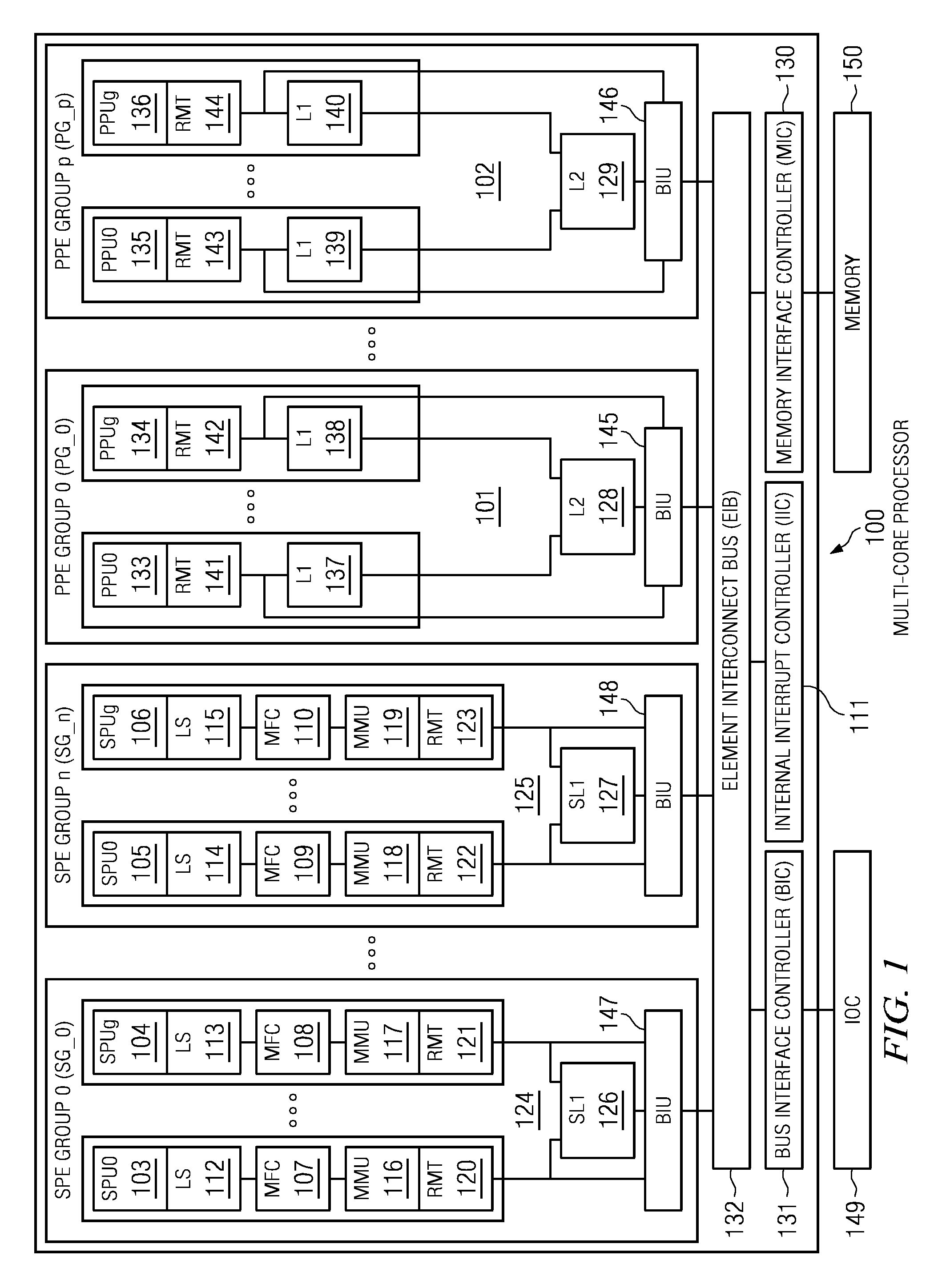

Mechanisms for optimized code generation targeting a high locality software cache are provided. Original computer code is parsed to identify memory references in the original computer code. Memory references are classified as either regular memory references or irregular memory references. Regular memory references are controlled by a high locality cache mechanism. Original computer code is transformed, by a compiler, to generate transformed computer code in which the regular memory references are grouped into one or more memory reference streams, each memory reference stream having a leading memory reference, a trailing memory reference, and one or more middle memory references. Transforming of the original computer code comprises inserting, into the original computer code, instructions to execute initialization, lookup, and cleanup operations associated with the leading memory reference and trailing memory reference in a different manner from initialization, lookup, and cleanup operations for the one or more middle memory references.

Owner:GLOBALFOUNDRIES INC

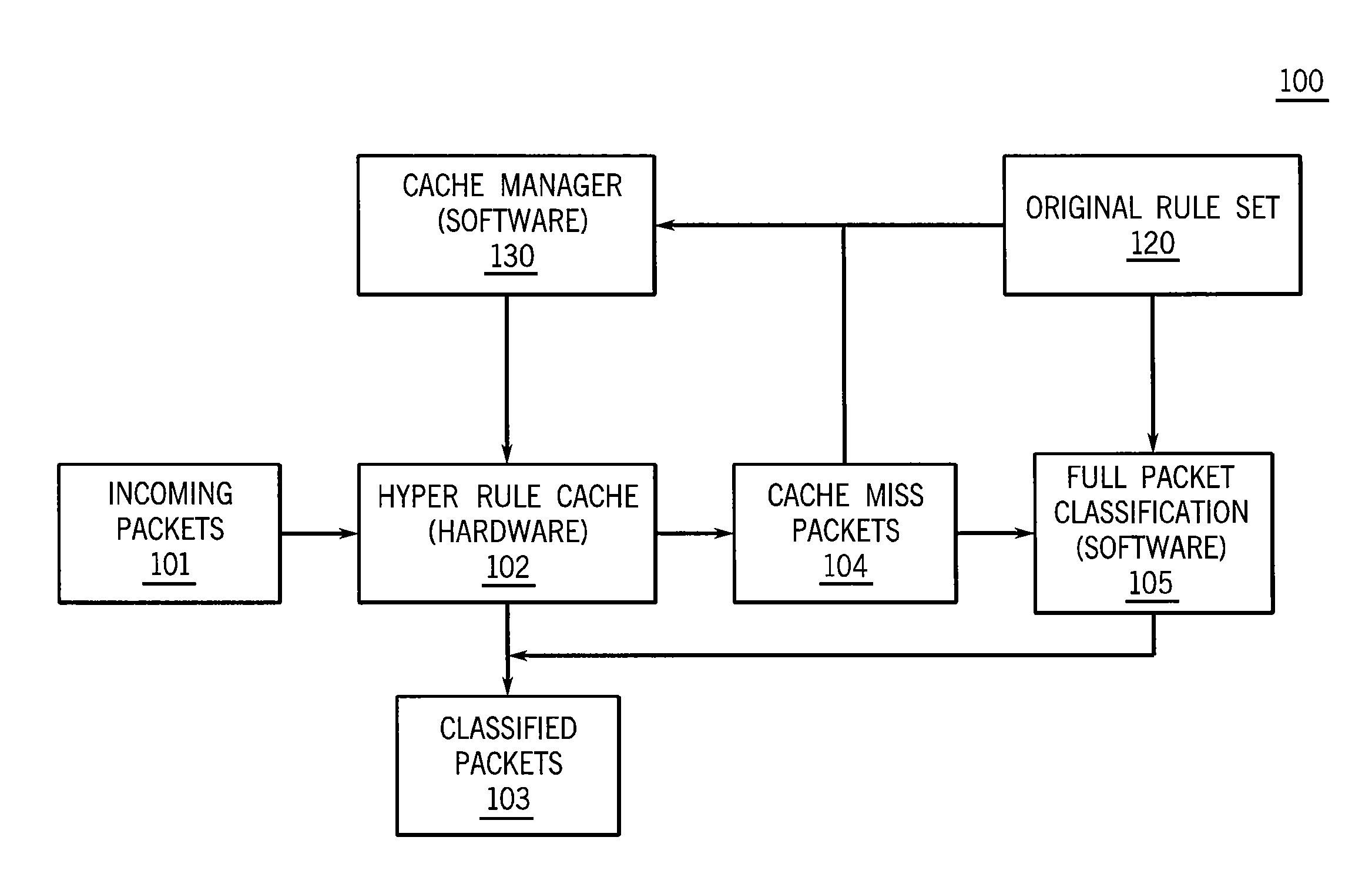

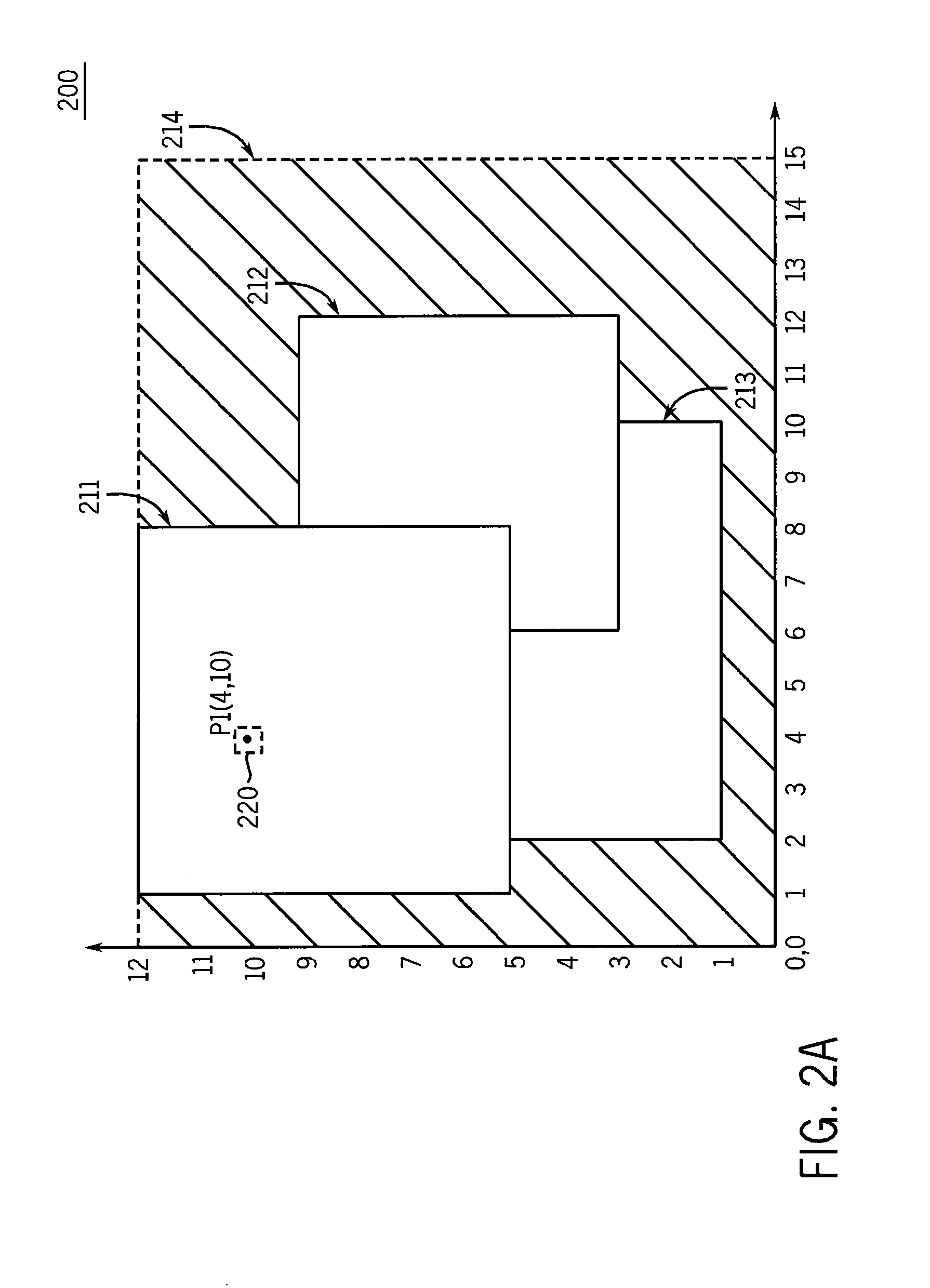

Packet Router Having Improved Packet Classification

Active | US20100067535A1 | Faster searching algorithm | Faster searching algorithms | Energy efficient ICT | Data switching by path configuration | Software cache | Rule sets

A computer-implemented method for classifying received packets using a hardware cache of evolving rules and a software cache having an original rule set. The method includes receiving a packet, processing the received packet through a hardware-based packet classifier having at least one evolving rule to identify at least one cache miss packet, and processing the cache miss packet through a software-based packet classifier including an original rule set. Processing the cache miss packet includes determining whether to expand at least one of the evolving rules in the hardware-based packet classifier based on the cache miss packet. The determination includes determining whether an evolving rule both has the same action as, and lies entirely within, one of the rules of the original rule set.

Owner:WISCONSIN ALUMNI RES FOUND
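
The expansion test described in this abstract (same action, and containment within an original rule) can be sketched as follows. This is only an illustration under stated assumptions: the `Rule` type, the inclusive integer ranges, and the requirement that both rules name the same fields are hypothetical choices, not details taken from the patent.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Range = Tuple[int, int]  # inclusive (low, high); an assumed representation


@dataclass
class Rule:
    fields: Dict[str, Range]  # e.g. {"dst_port": (0, 1023)}
    action: str               # e.g. "accept" or "drop"


def lies_within(inner: Rule, outer: Rule) -> bool:
    """True if every field range of `inner` is contained in `outer`.

    Assumes both rules constrain the same set of field names.
    """
    for name, (outer_lo, outer_hi) in outer.fields.items():
        inner_lo, inner_hi = inner.fields[name]
        if inner_lo < outer_lo or inner_hi > outer_hi:
            return False
    return True


def expansion_is_safe(evolving: Rule, original: Rule) -> bool:
    """An expanded evolving rule is kept only if it agrees with the original."""
    return evolving.action == original.action and lies_within(evolving, original)
```

A candidate expansion would be built first and then passed to `expansion_is_safe`; if the check fails, the hardware rule stays as it was.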

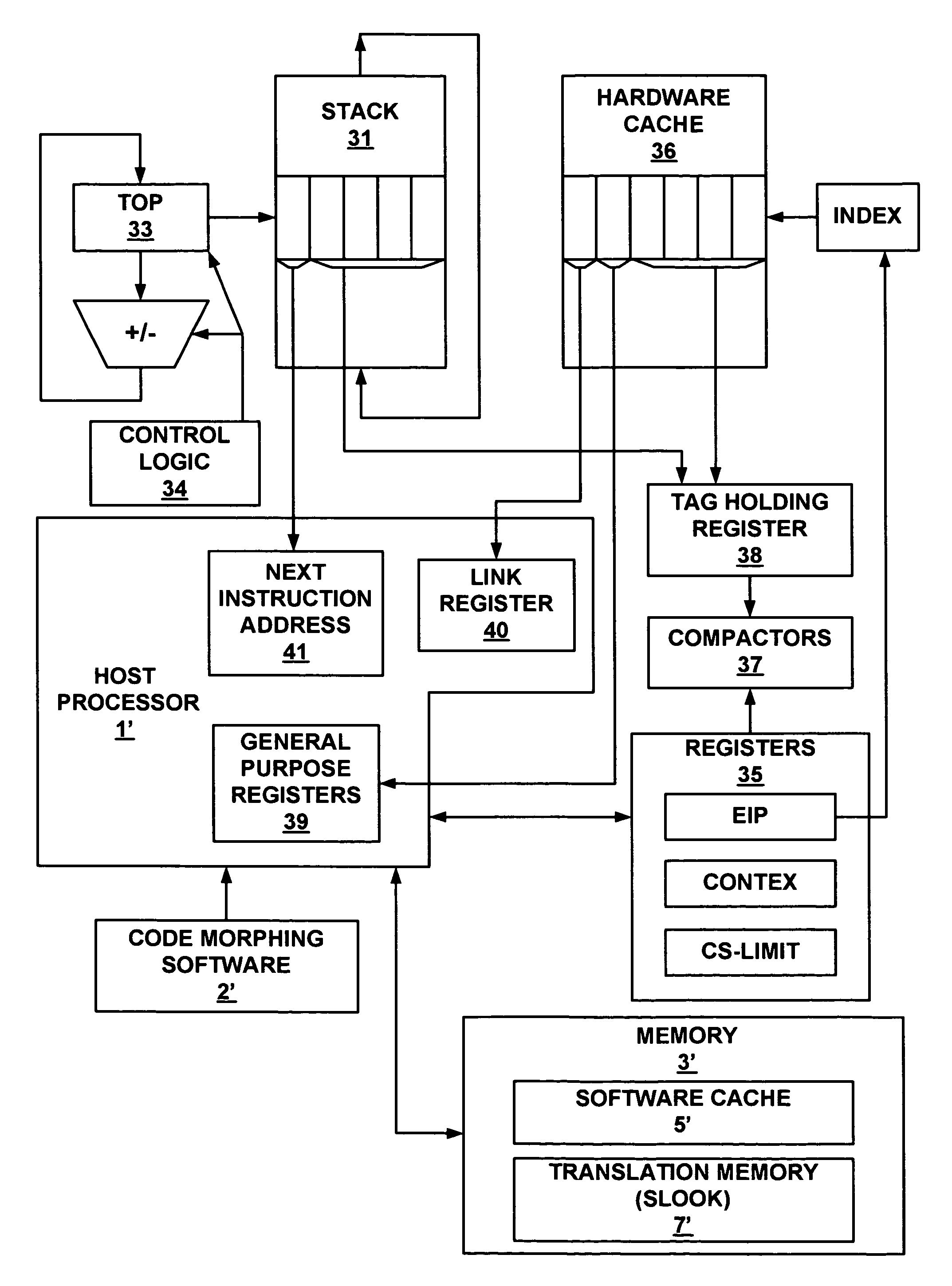

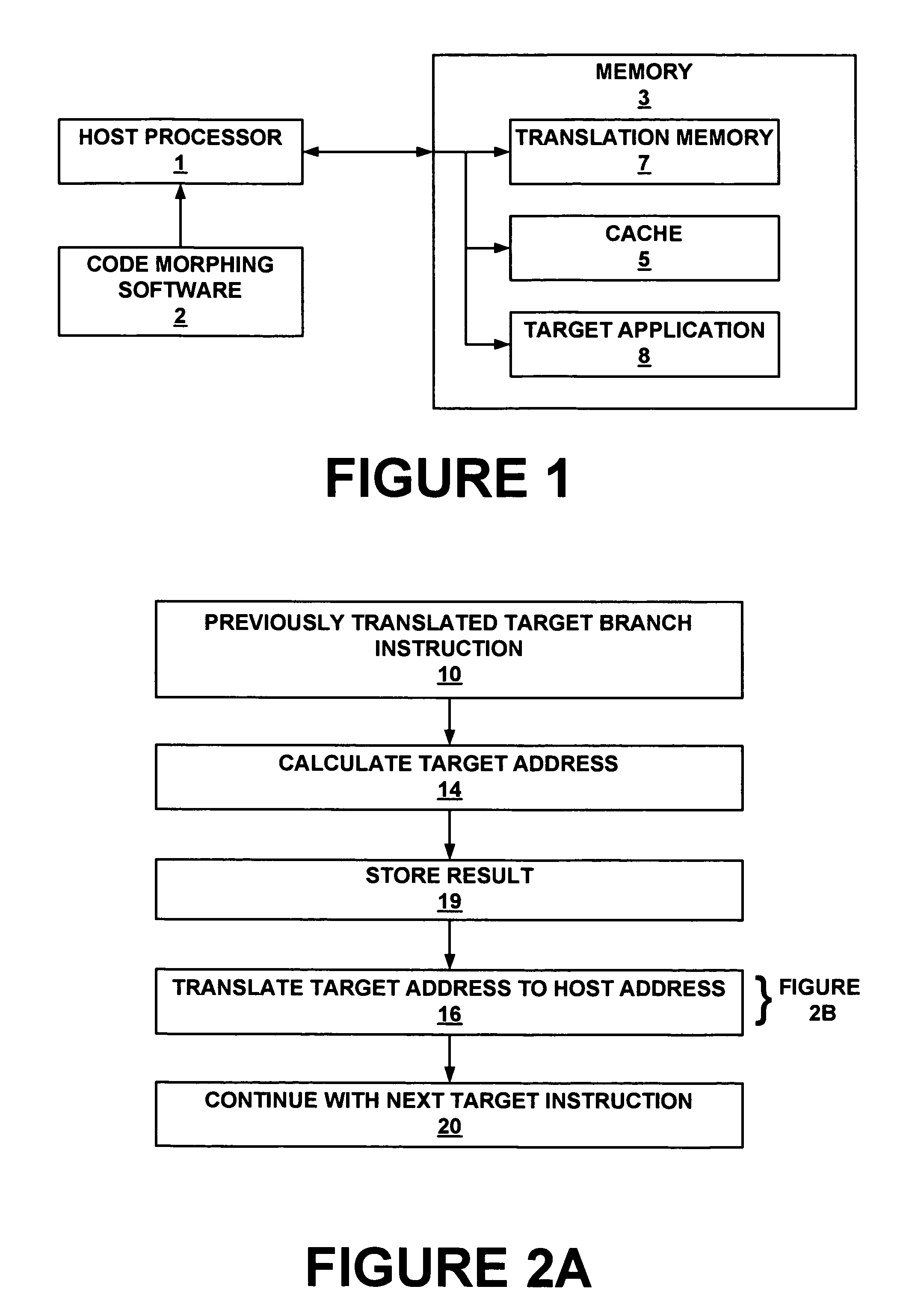

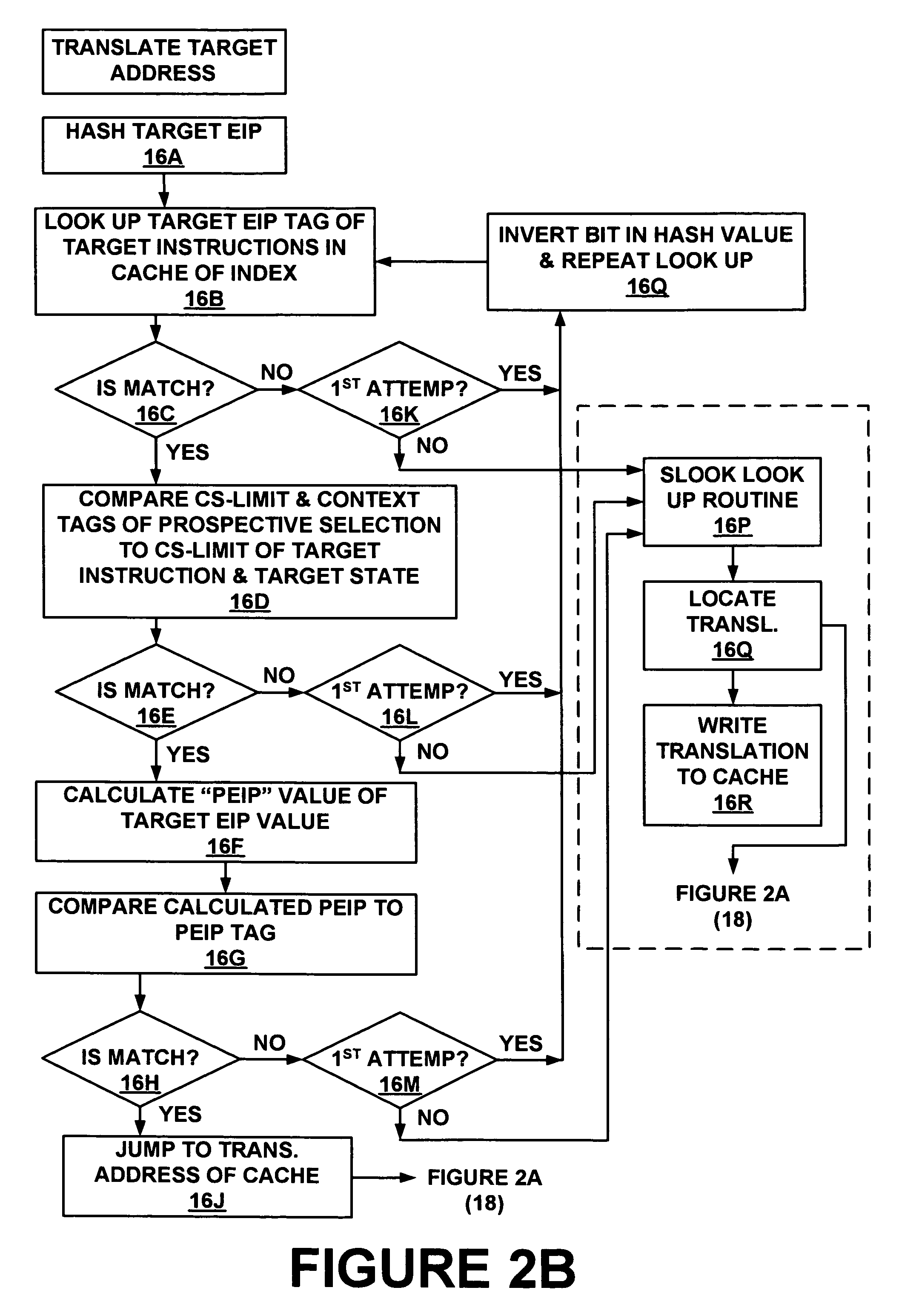

Method and system for storing and retrieving a translation of target program instruction from a host processor using fast look-up of indirect branch destination in a dynamic translation system

Inactive | US7644210B1 | Fast execution | Program control using stored programs | Digital computer details | Program instruction | Parallel computing

Dynamic translation of indirect branch instructions of a target application by a host processor is enhanced by including a cache to provide access to the addresses of the most frequently used translations of a host computer, minimizing the need to access the translation buffer. Entries in the cache have a host instruction address and tags that may include a logical address of the instruction of the target application, the physical address of that instruction, the code segment limit to the instruction, and the context value of the host processor associated with that instruction. The cache may be a software cache apportioned by software from the main processor memory or a hardware cache separate from main memory.

Owner:INTELLECTUAL VENTURES HOLDING 81 LLC
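
A minimal sketch of such a tagged lookup, assuming a direct-mapped table indexed by the target's logical address. The class name, the table size, and the indexing scheme are assumptions made for illustration; the abstract only lists which tags an entry may carry.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Entry:
    logical: int    # logical address of the target instruction
    physical: int   # physical address of that instruction
    cs_limit: int   # code segment limit
    context: int    # host processor context value
    host_addr: int  # address of the translated host code


class BranchTargetCache:
    """Direct-mapped software cache in front of a translation buffer."""

    def __init__(self, size: int = 256):
        self.size = size
        self.slots: List[Optional[Entry]] = [None] * size

    def insert(self, entry: Entry) -> None:
        self.slots[entry.logical % self.size] = entry

    def lookup(self, logical: int, physical: int,
               cs_limit: int, context: int) -> Optional[int]:
        """Return the host address on a full tag match, else None."""
        entry = self.slots[logical % self.size]
        if entry is not None and (
            (entry.logical, entry.physical, entry.cs_limit, entry.context)
            == (logical, physical, cs_limit, context)
        ):
            return entry.host_addr
        return None  # caller falls back to the translation buffer
```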

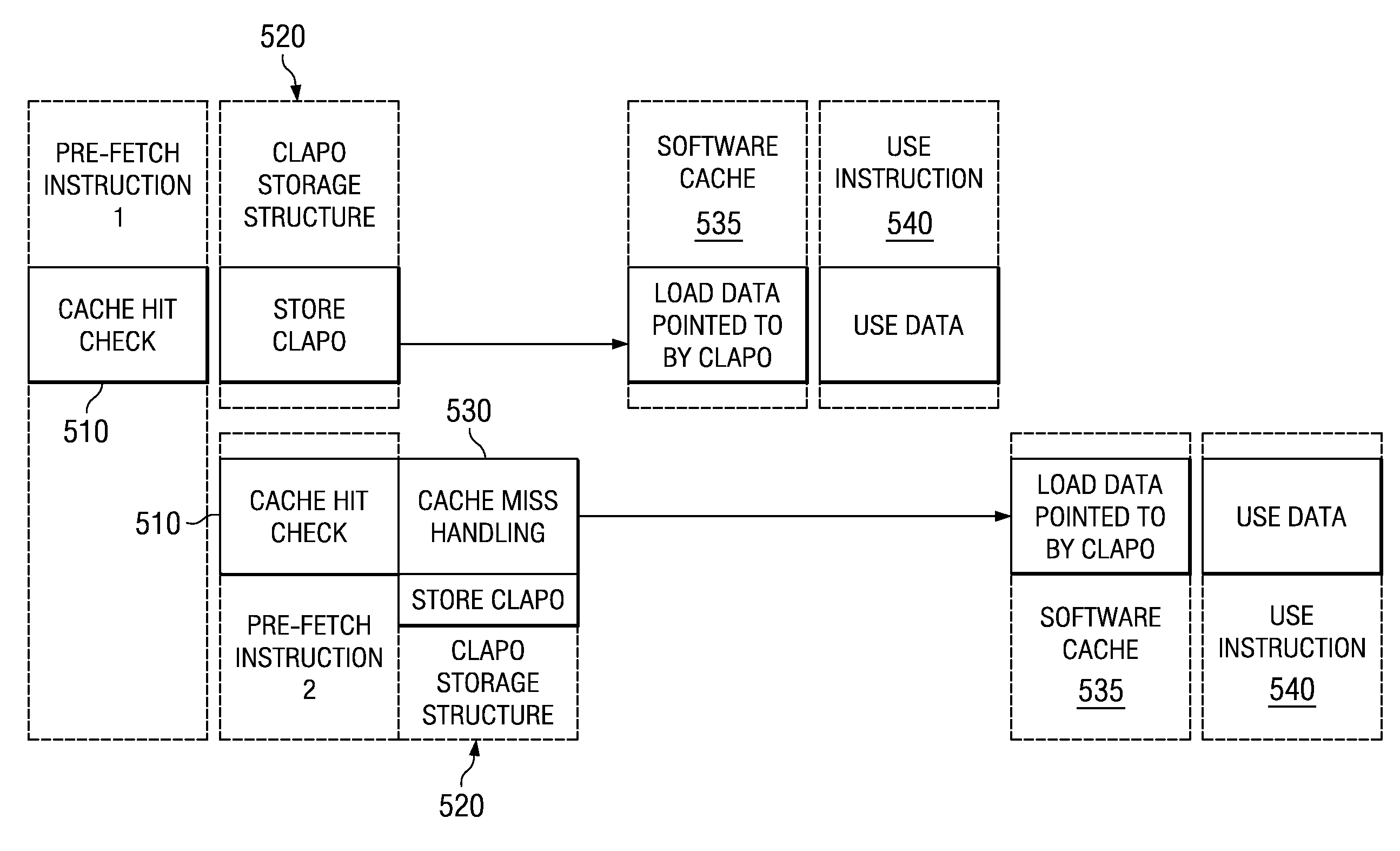

Method to efficiently prefetch and batch compiler-assisted software cache accesses

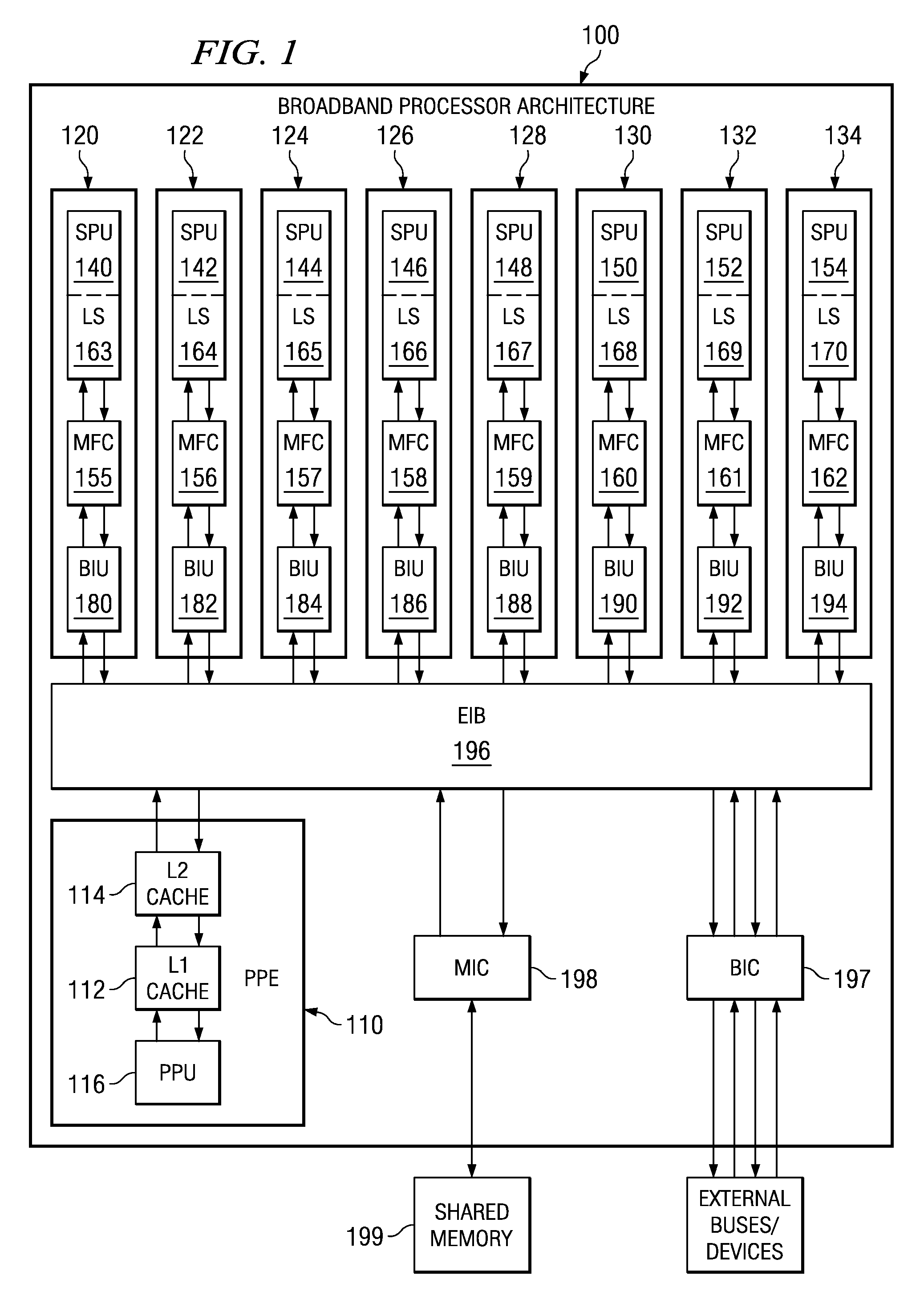

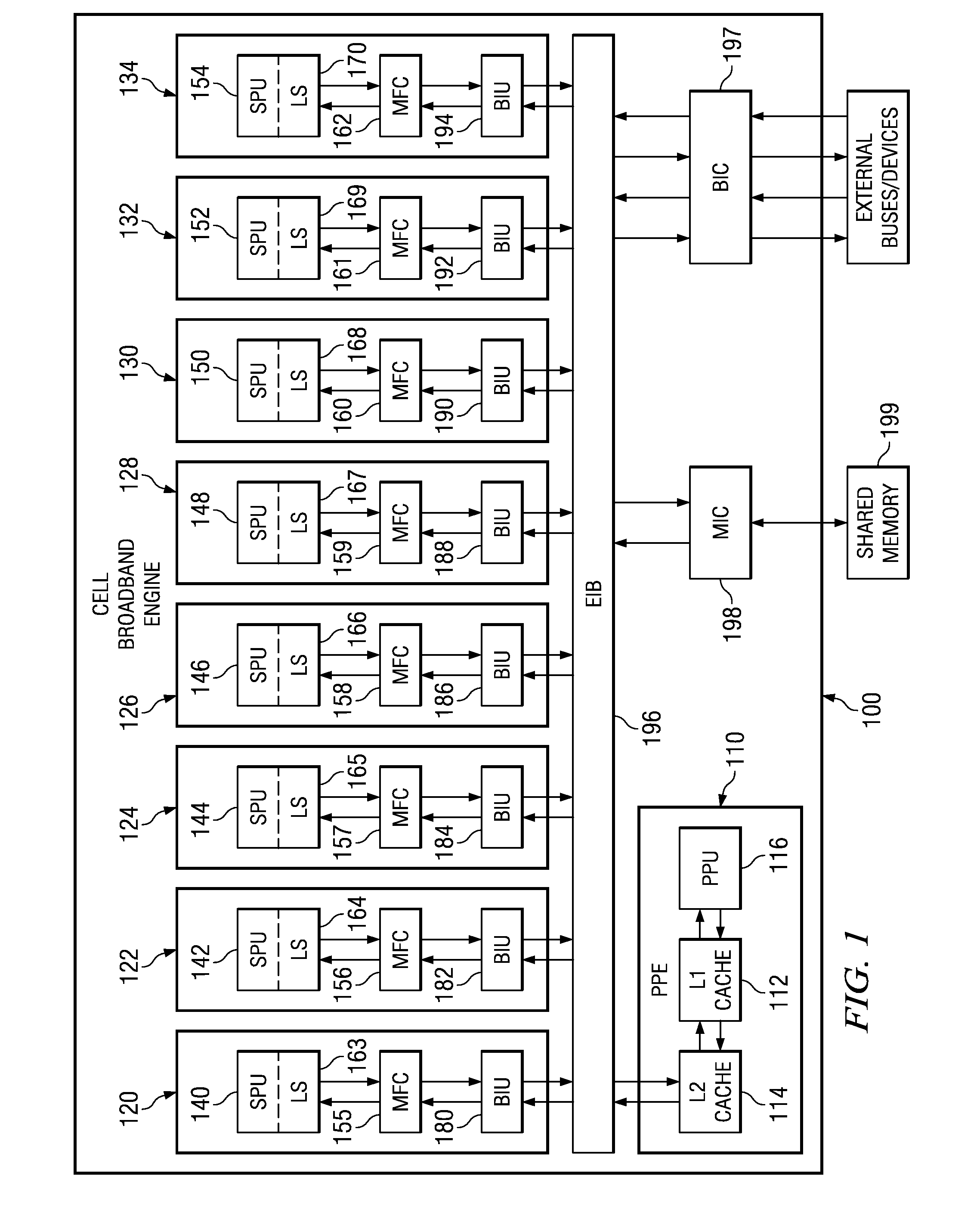

Inactive | US7493452B2 | Reduce overhead | Efficient access | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory address | Processor register

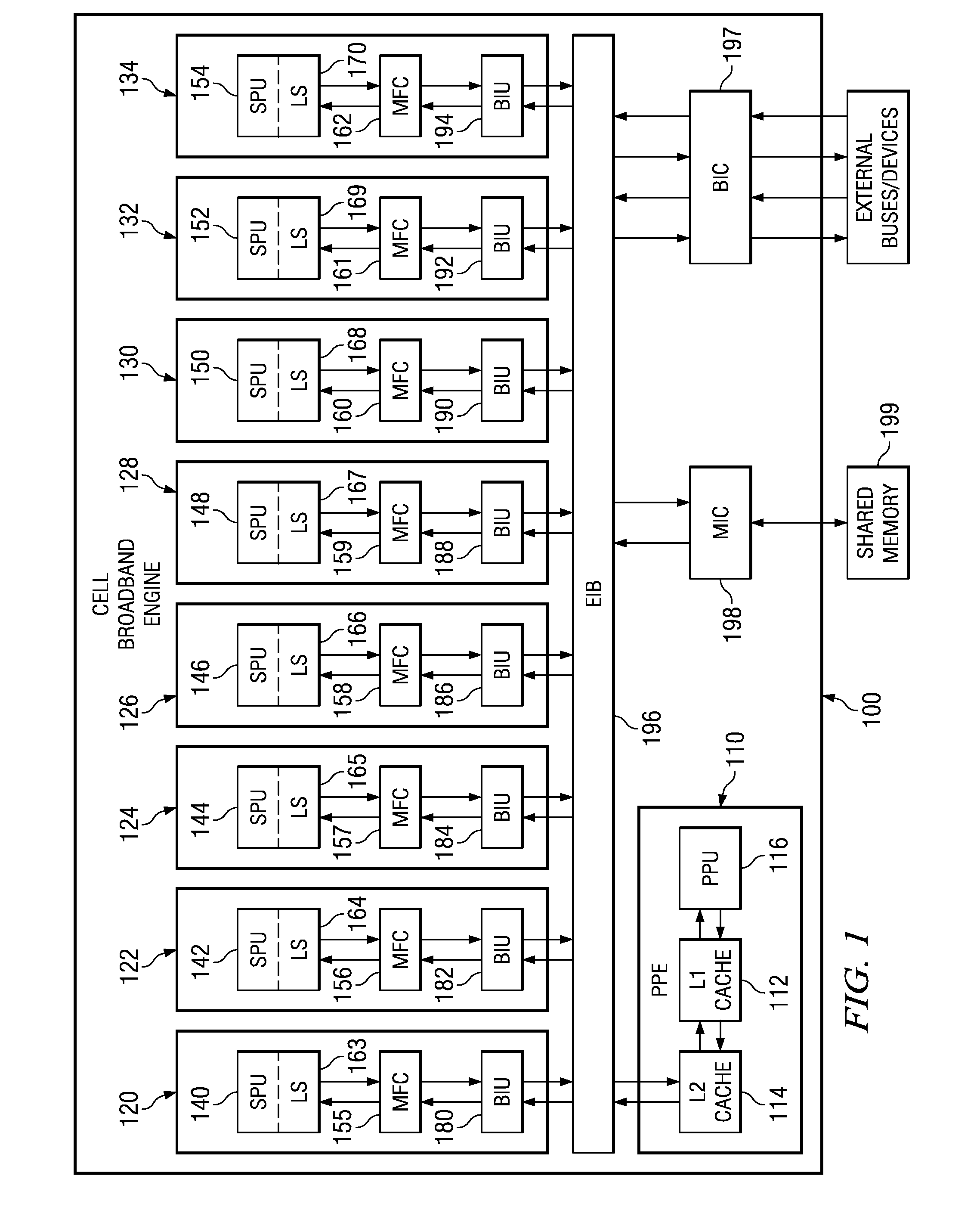

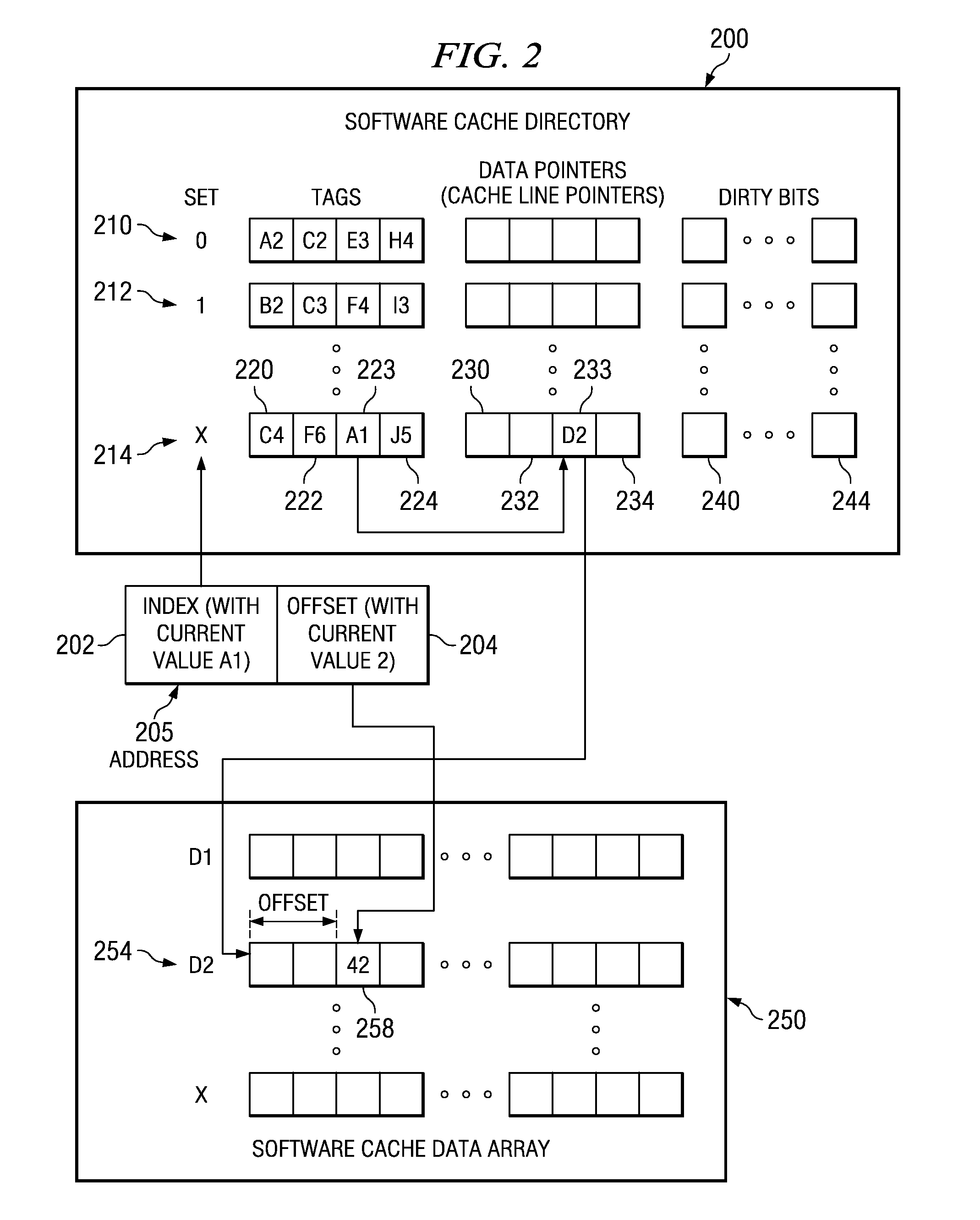

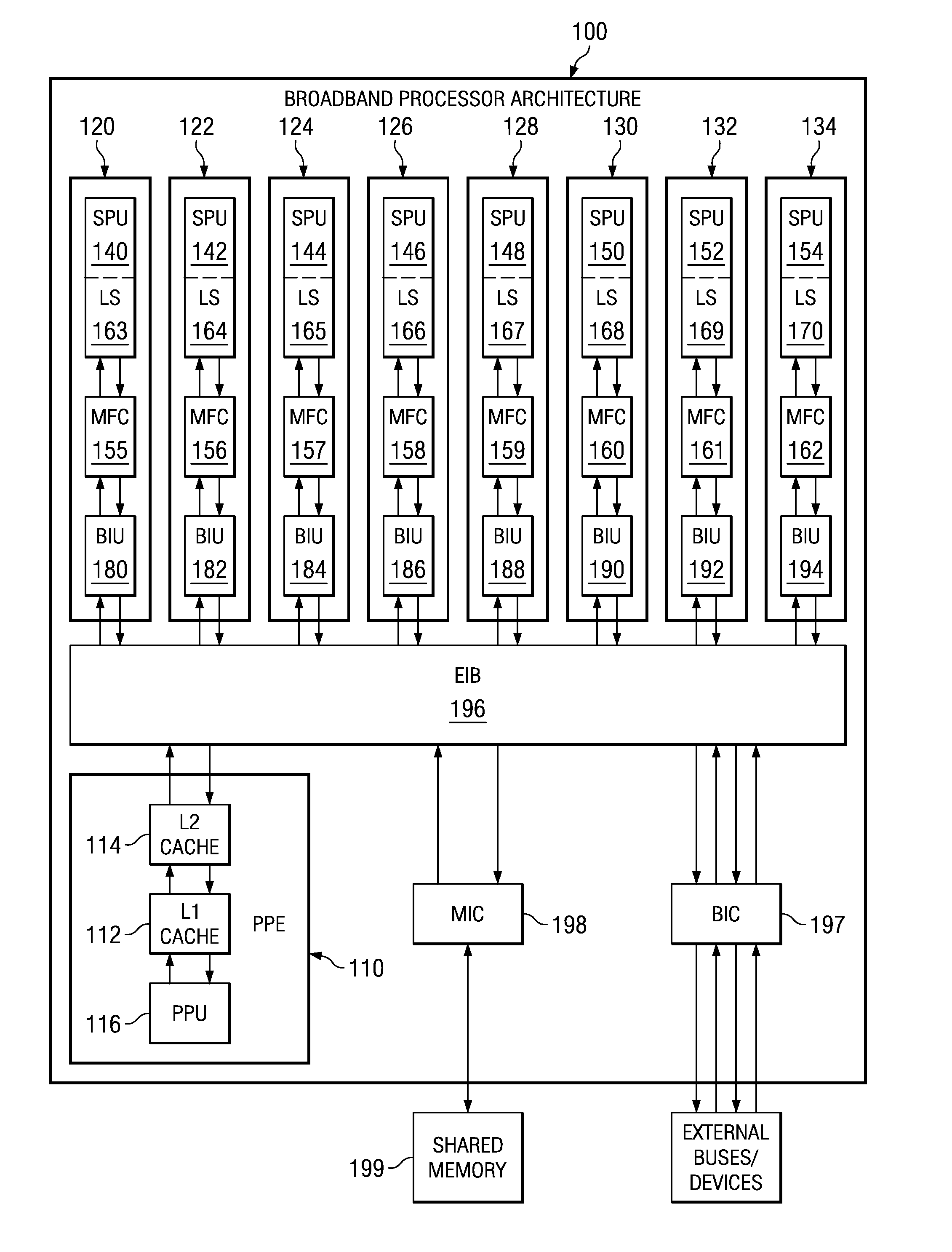

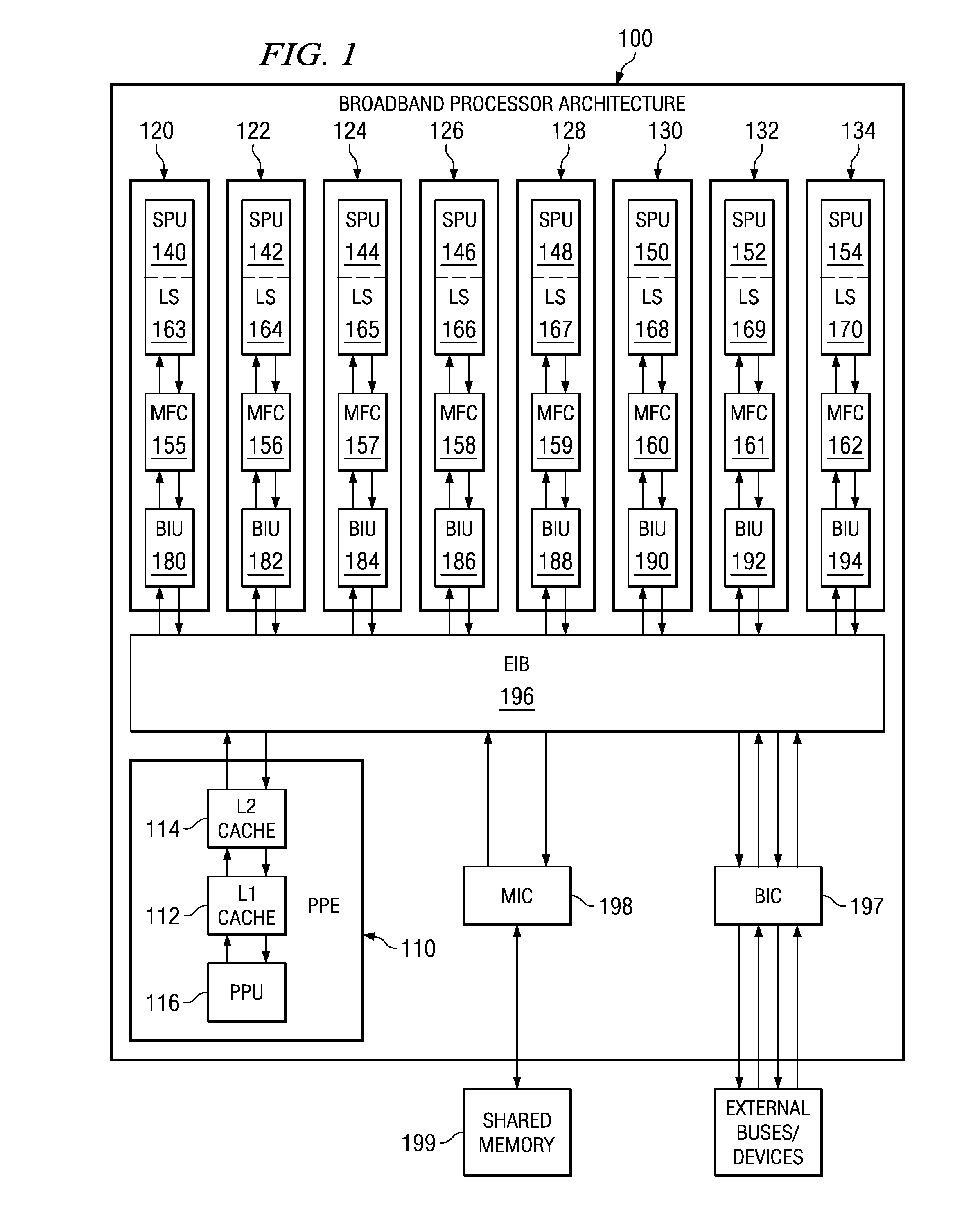

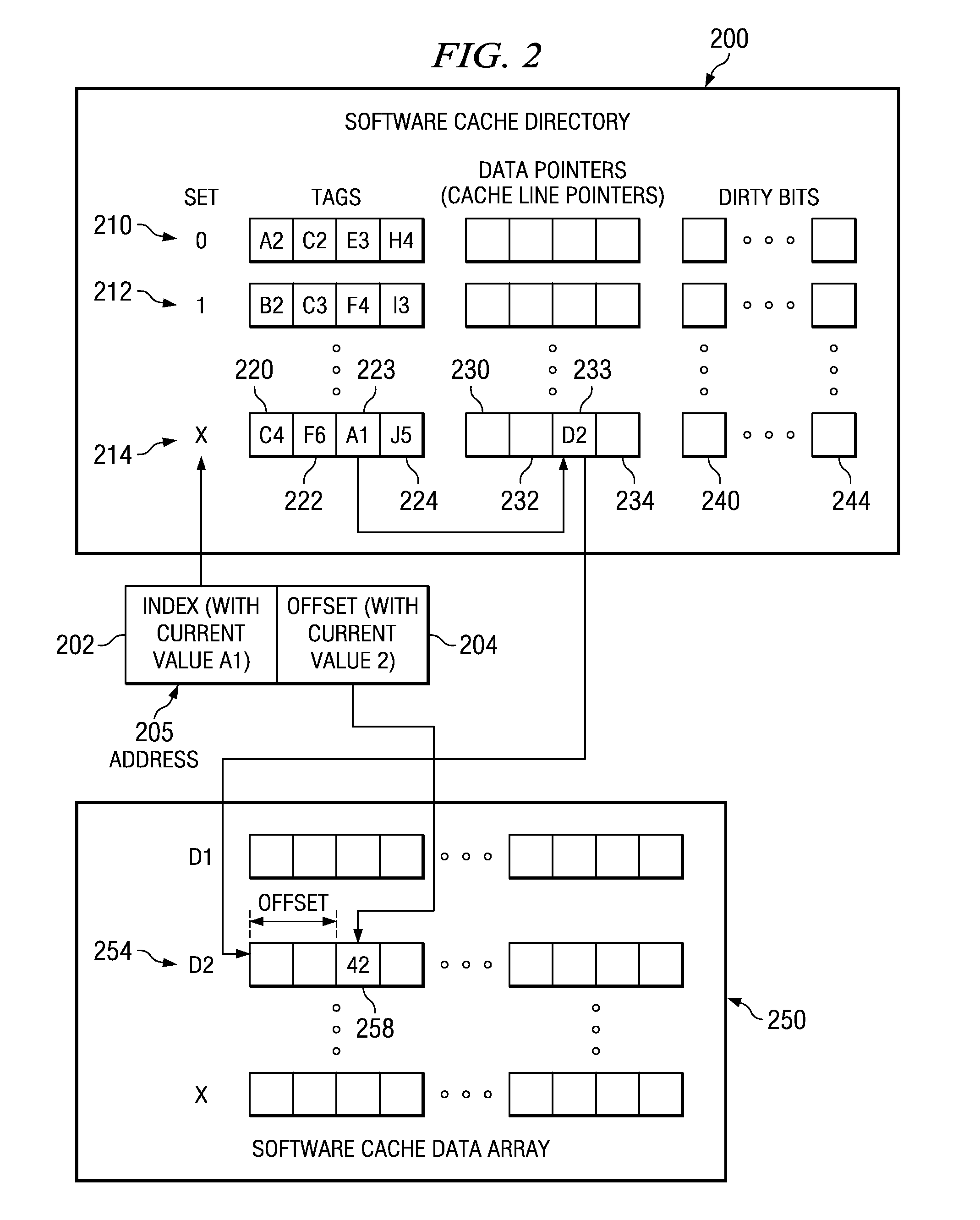

A method to efficiently pre-fetch and batch compiler-assisted software cache accesses is provided. The method reduces the overhead associated with software cache directory accesses. With the method, the local memory address of the cache line that stores the pre-fetched data is itself cached, such as in a register or a well-known location in local memory, so that a later data access does not need to perform address translation and software cache operations and can instead access the data directly from the software cache using the cached local memory address. This saves the processor cycles that would otherwise be required to perform the address translation a second time when the data is used. Moreover, the method directly enables software cache accesses to be effectively decoupled from address translation in order to increase the overlap between computation and communication.

Owner:INT BUSINESS MASCH CORP
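
The idea of caching the local address returned by a pre-fetch can be sketched like this. The class, the `(slot, offset)` handle, the line size, and the absence of eviction are all simplifying assumptions; a Python list stands in for global memory.

```python
LINE_SIZE = 4  # elements per cache line (assumed for the sketch)


class SoftwareCache:
    def __init__(self, backing):
        self.backing = backing       # list simulating global memory
        self.directory = {}          # line number -> local slot index
        self.lines = []              # local storage, one list per line
        self.directory_lookups = 0   # counts address translations

    def prefetch(self, addr):
        """Translate once, fill the line if needed, return a local handle."""
        self.directory_lookups += 1
        line_no, offset = divmod(addr, LINE_SIZE)
        slot = self.directory.get(line_no)
        if slot is None:
            start = line_no * LINE_SIZE
            self.lines.append(self.backing[start:start + LINE_SIZE])
            slot = len(self.lines) - 1
            self.directory[line_no] = slot
        return (slot, offset)        # the "cached local memory address"

    def read_direct(self, handle):
        """Access data through the handle, with no directory lookup."""
        slot, offset = handle
        return self.lines[slot][offset]
```

Keeping the handle in a local variable plays the role of the register in the abstract: later reads go through `read_direct` and leave `directory_lookups` unchanged.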

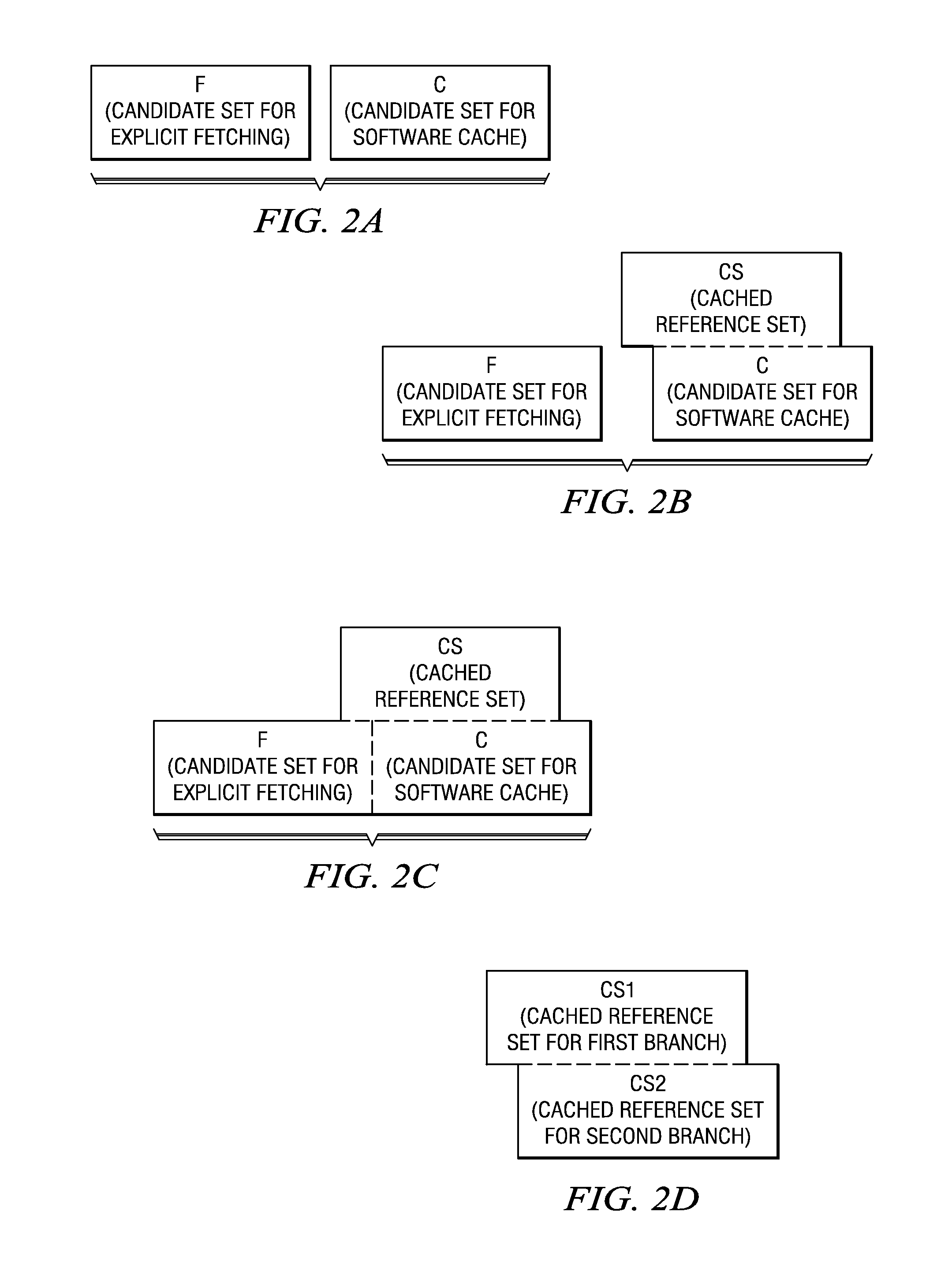

Compiler implemented software cache apparatus and method in which non-aliased explicitly fetched data are excluded

Inactive | US20070261042A1 | Keep properly | Reduce in quantity | Software engineering | Program control | Parallel computing | Software cache

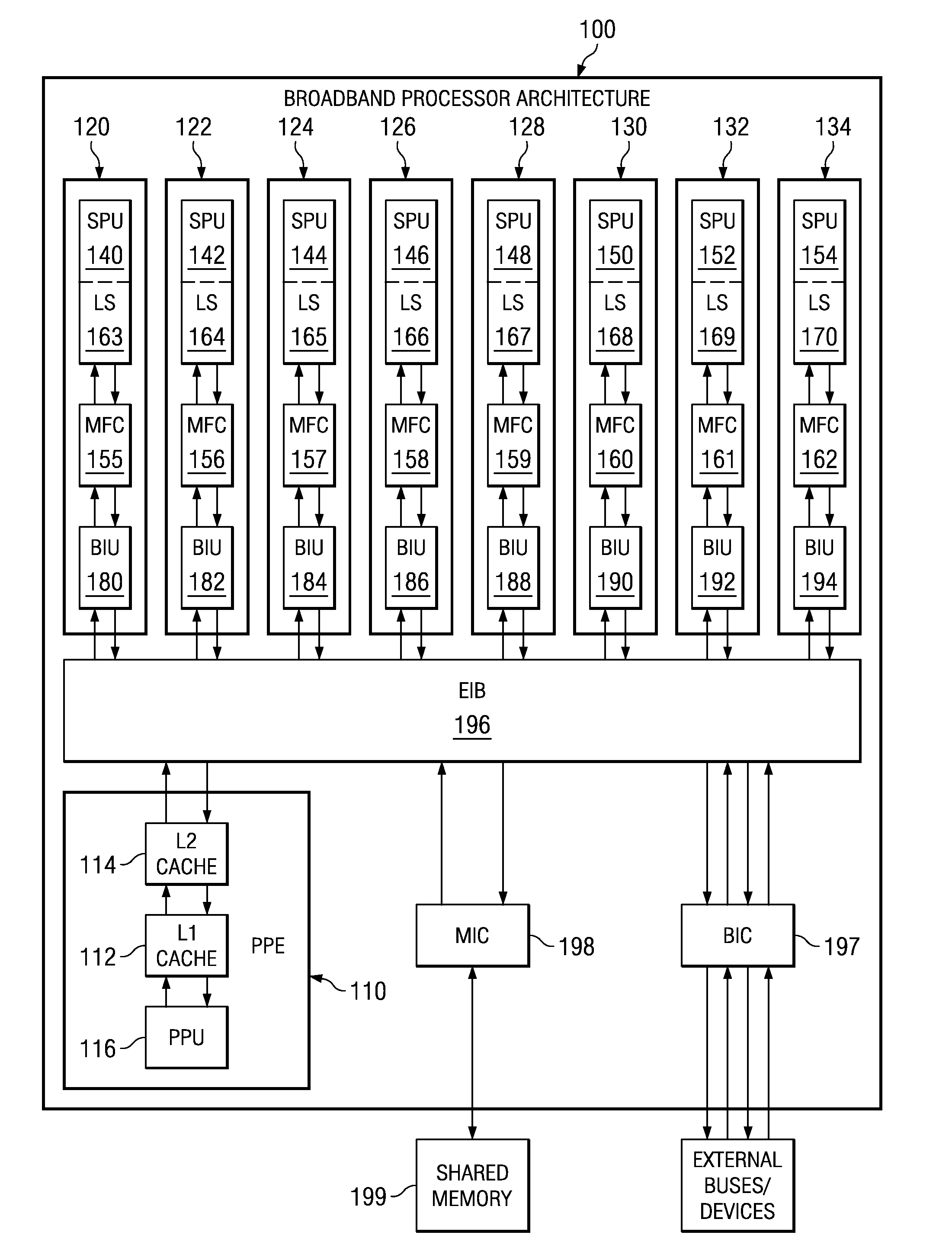

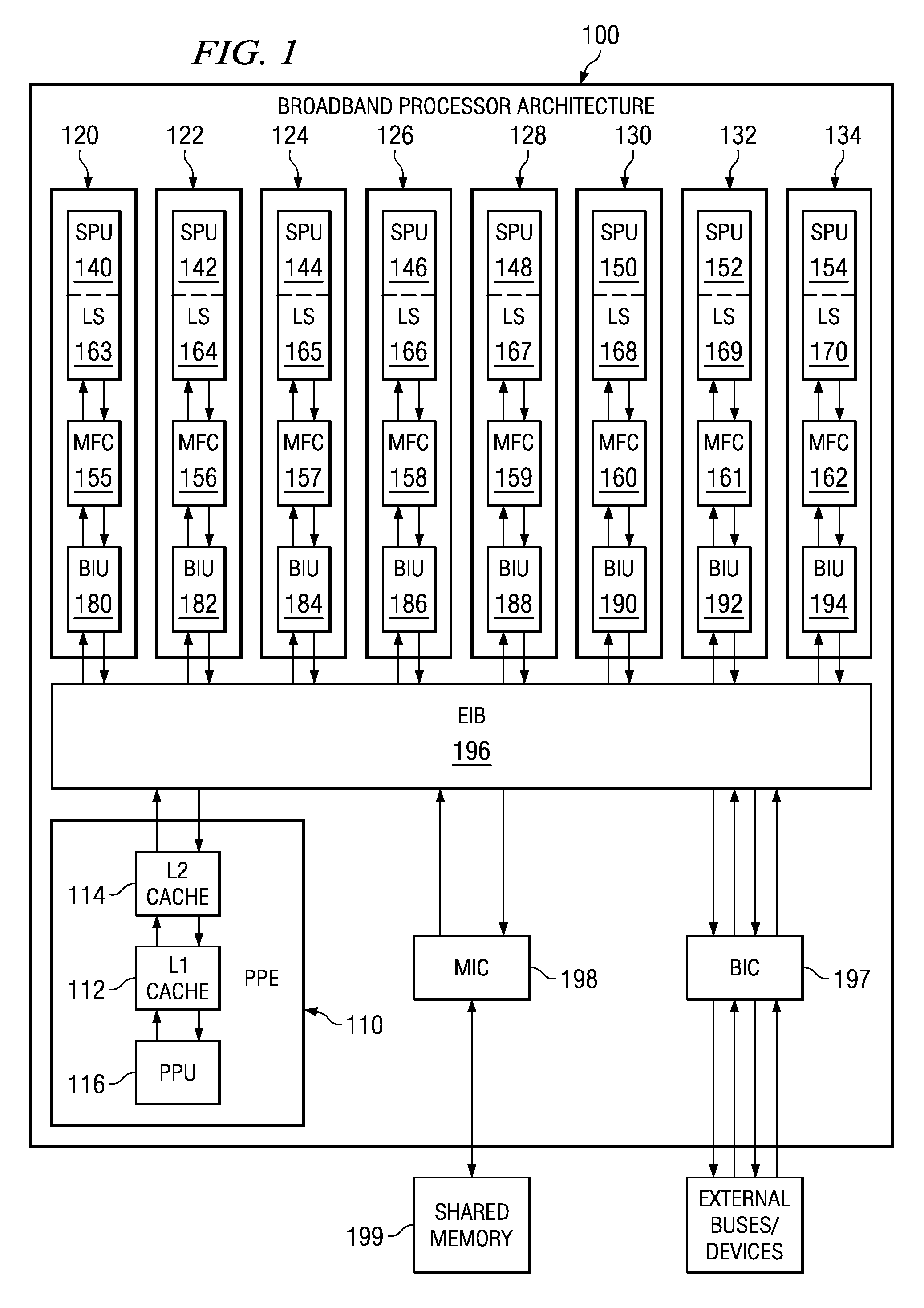

A compiler implemented software cache apparatus and method in which non-aliased explicitly fetched data are excluded are provided. With the mechanisms of the illustrative embodiments, a compiler uses a forward data flow analysis to prove that there is no alias between the cached data and explicitly fetched data. Explicitly fetched data that has no alias in the cached data are excluded from the software cache. Explicitly fetched data that has aliases in the cached data are allowed to be stored in the software cache. In this way, there is no runtime overhead to maintain the correctness of the two copies of data. Moreover, the number of lines of the software cache that must be protected from eviction is decreased. This leads to a decrease in the amount of computation cycles required by the cache miss handler when evicting cache lines during cache miss handling.

Owner:IBM CORP

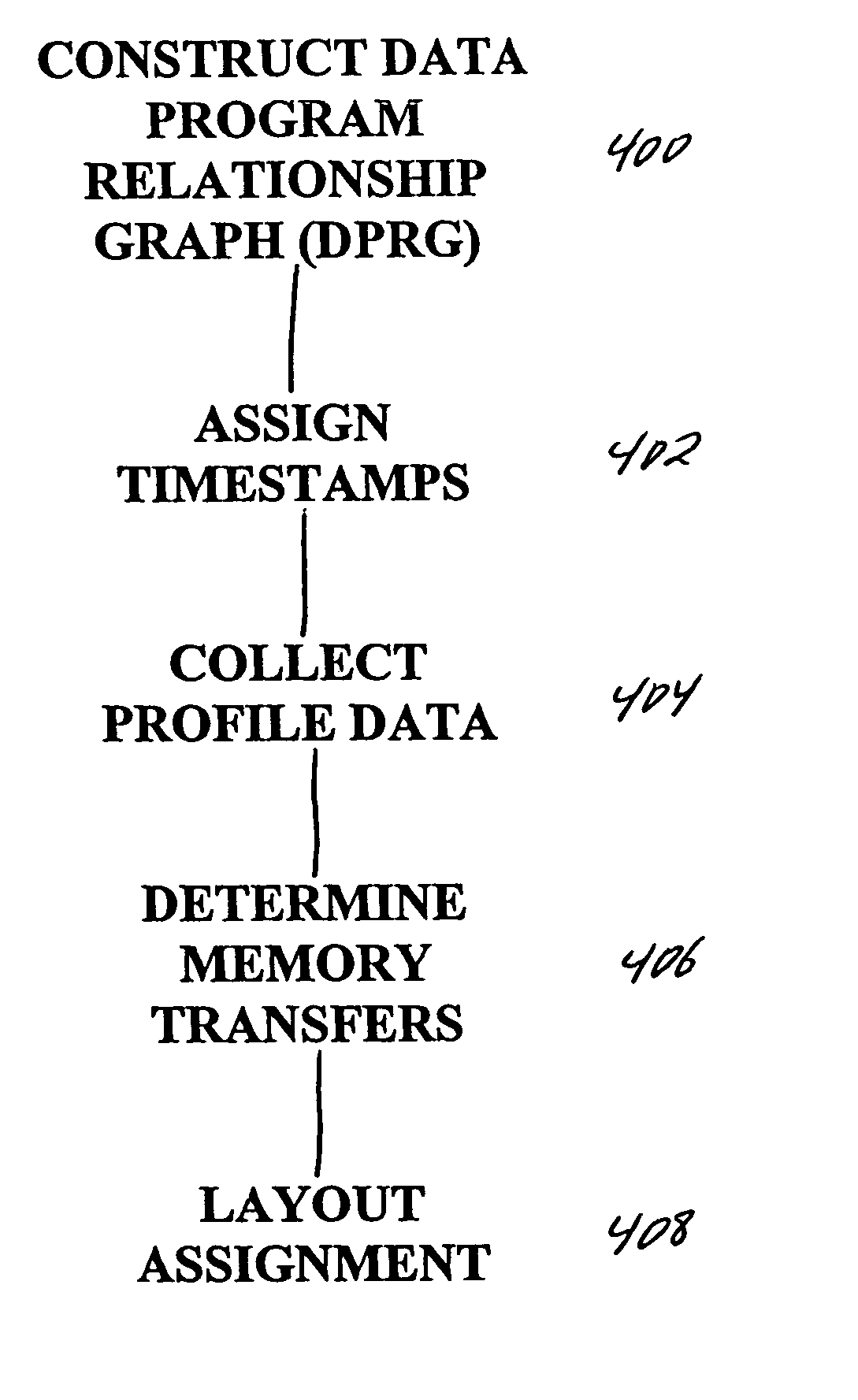

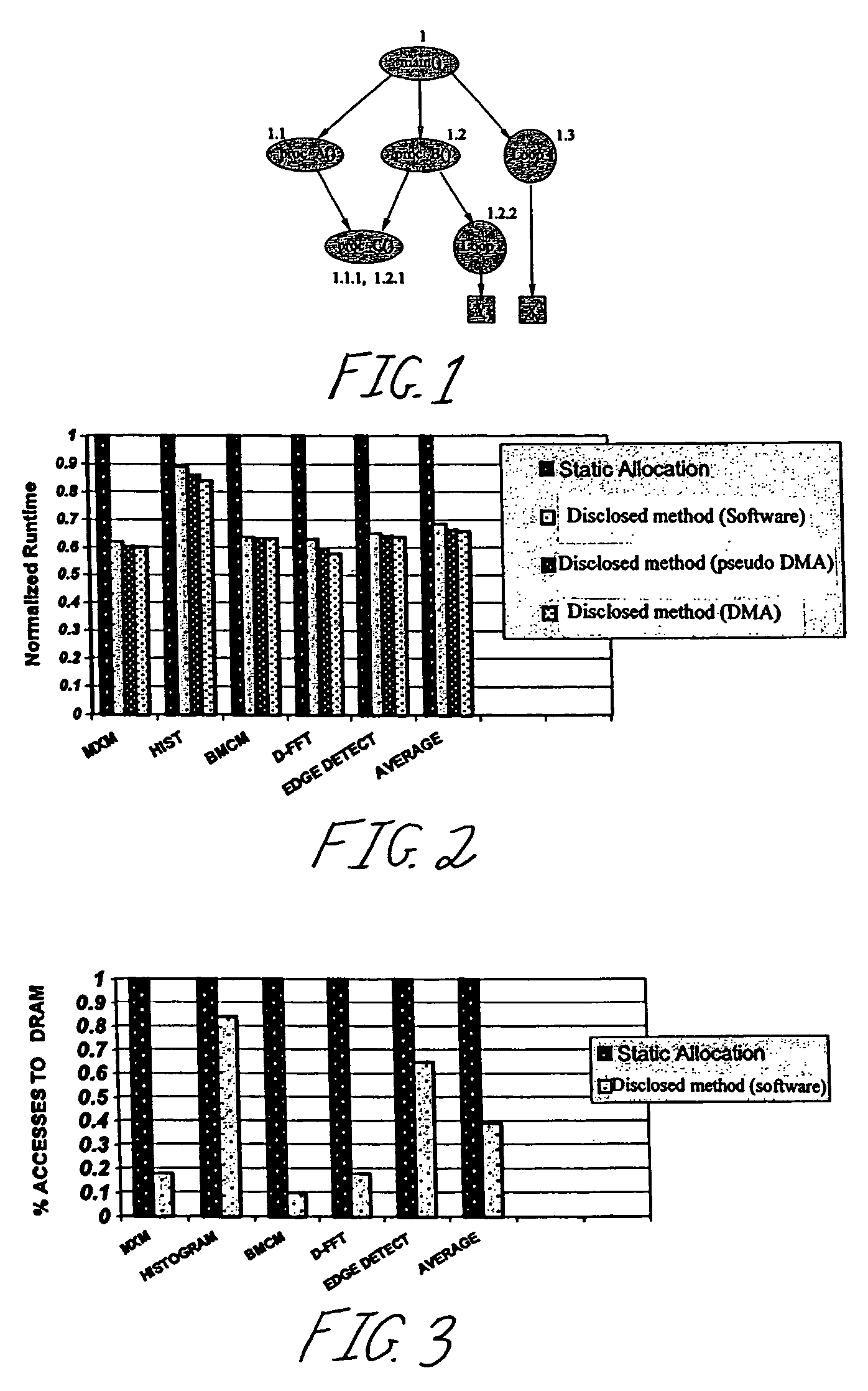

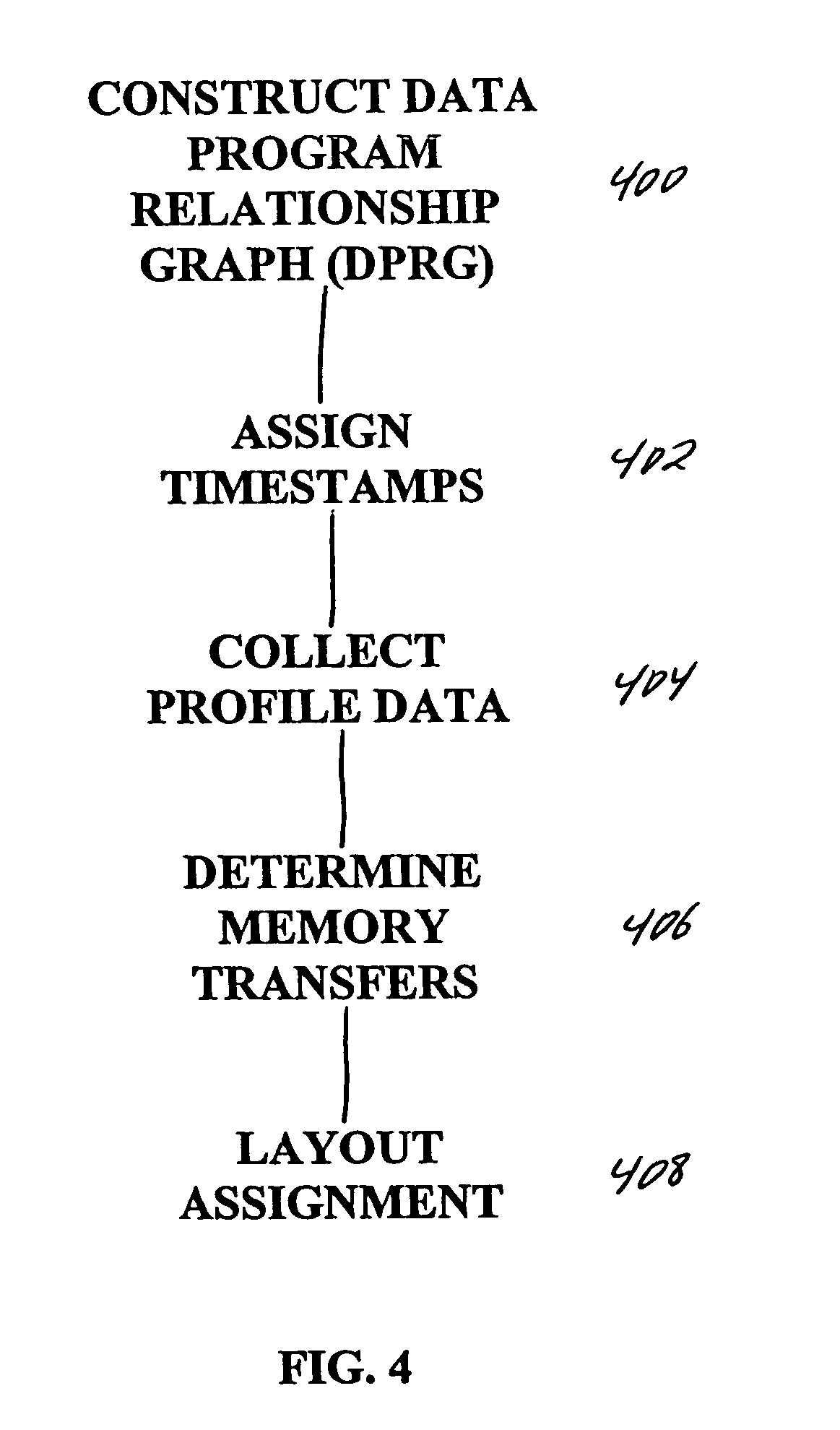

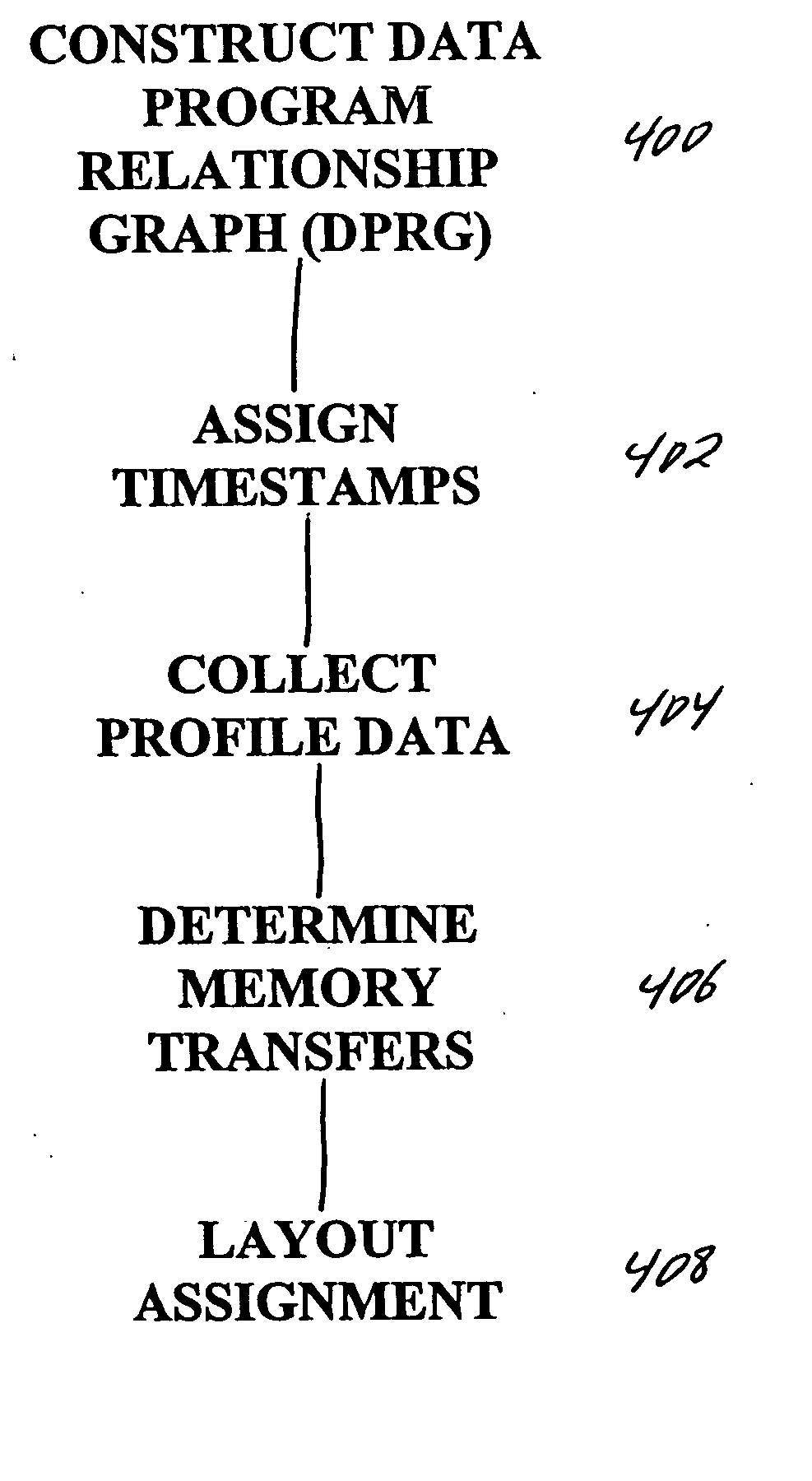

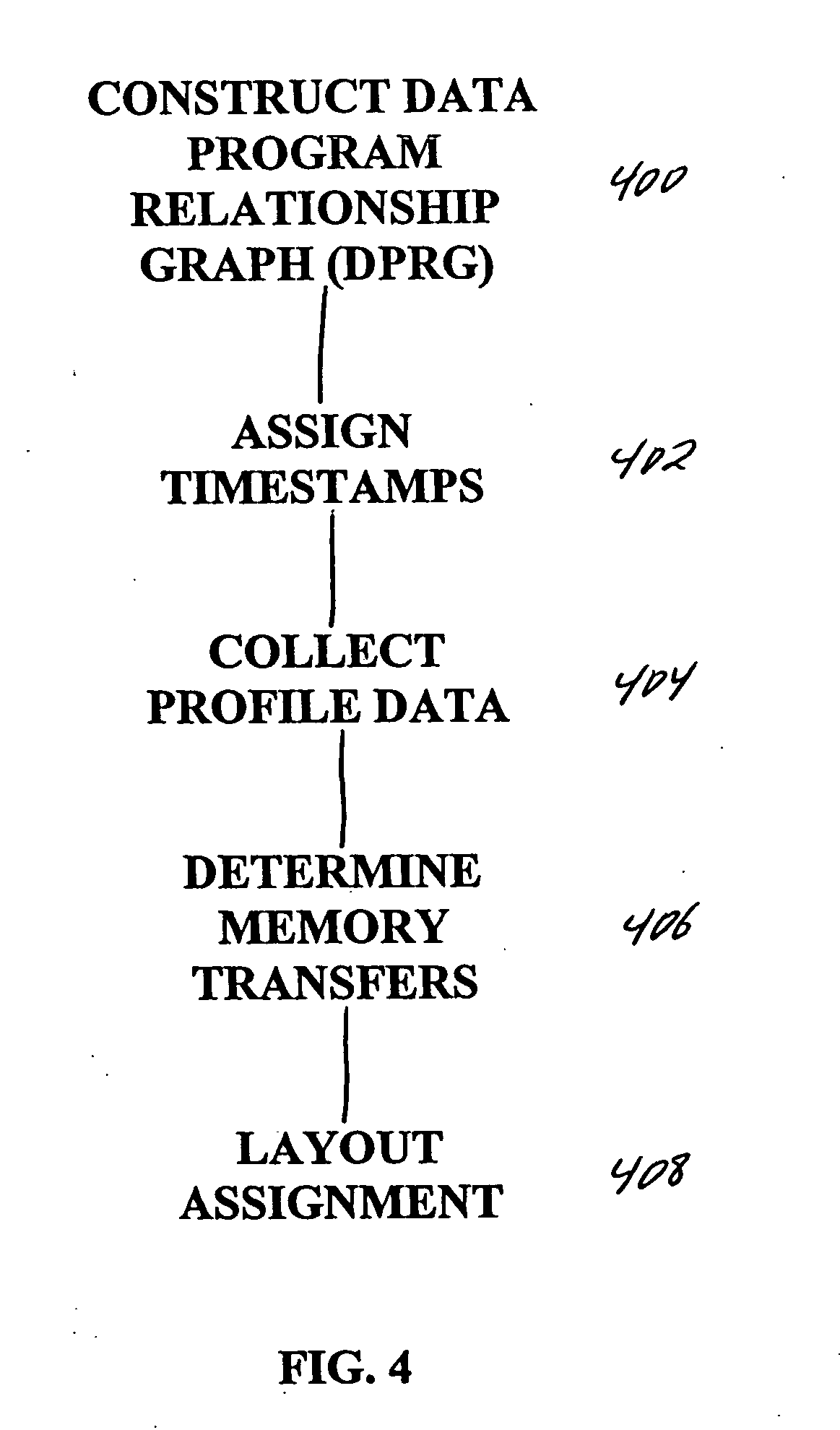

Compiler-driven dynamic memory allocation methodology for scratch-pad based embedded systems

Inactive | US7367024B2 | Avoid overhead | Reduce overhead | Energy efficient ICT | Program control | Access time | Parallel computing

A highly predictable, low-overhead, yet dynamic memory allocation methodology for embedded systems with scratch-pad memory is presented. The dynamic memory allocation methodology for global and stack data (i) accounts for changing program requirements at runtime; (ii) has no software-caching tags; (iii) requires no run-time checks; (iv) has extremely low overheads; and (v) yields 100% predictable memory access times. Under the methodology, data that is about to be accessed frequently is copied into the SRAM by compiler-inserted code at fixed and infrequent points in the program. Earlier data is evicted if necessary.

Owner:UNIV OF MARYLAND

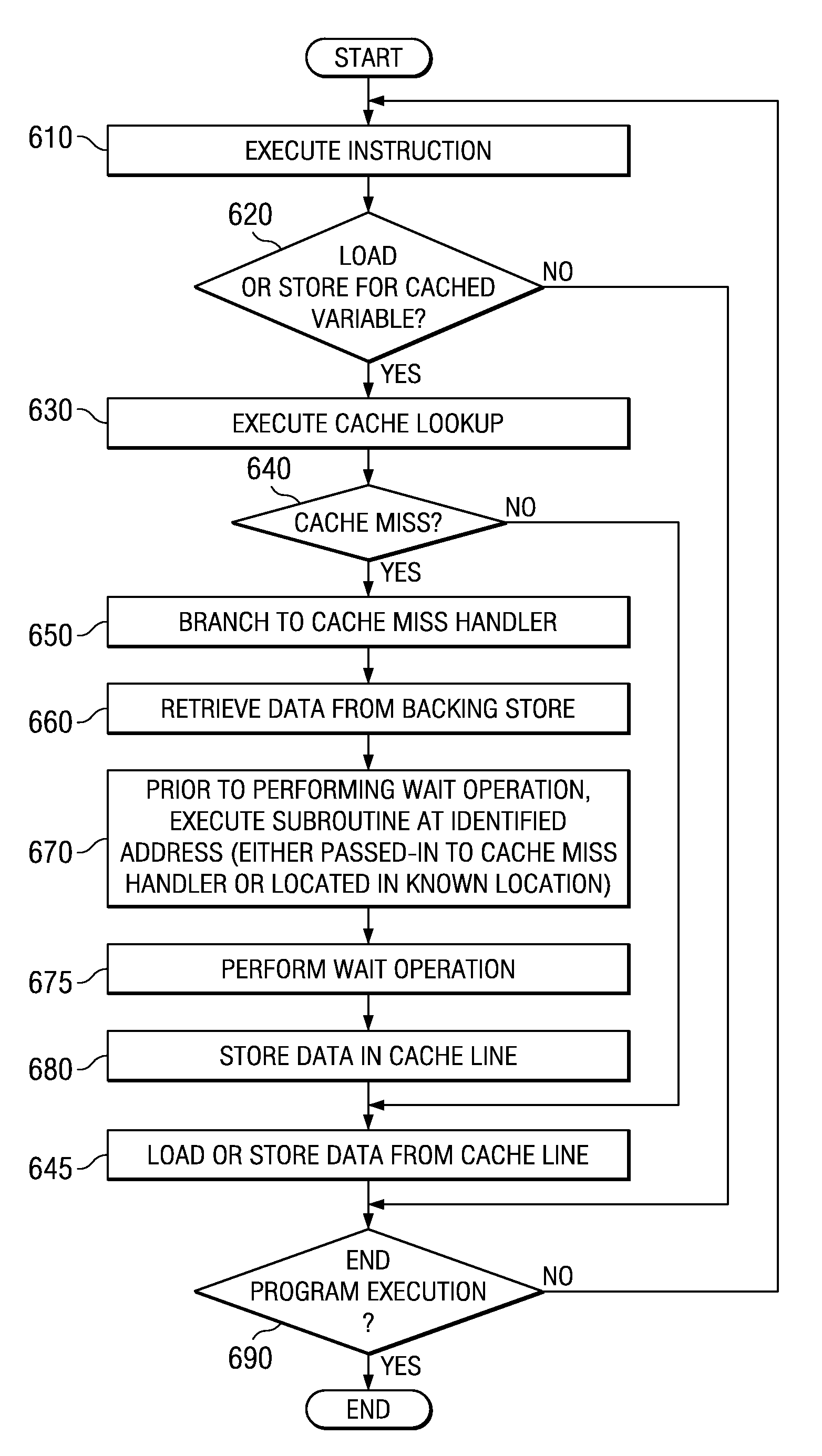

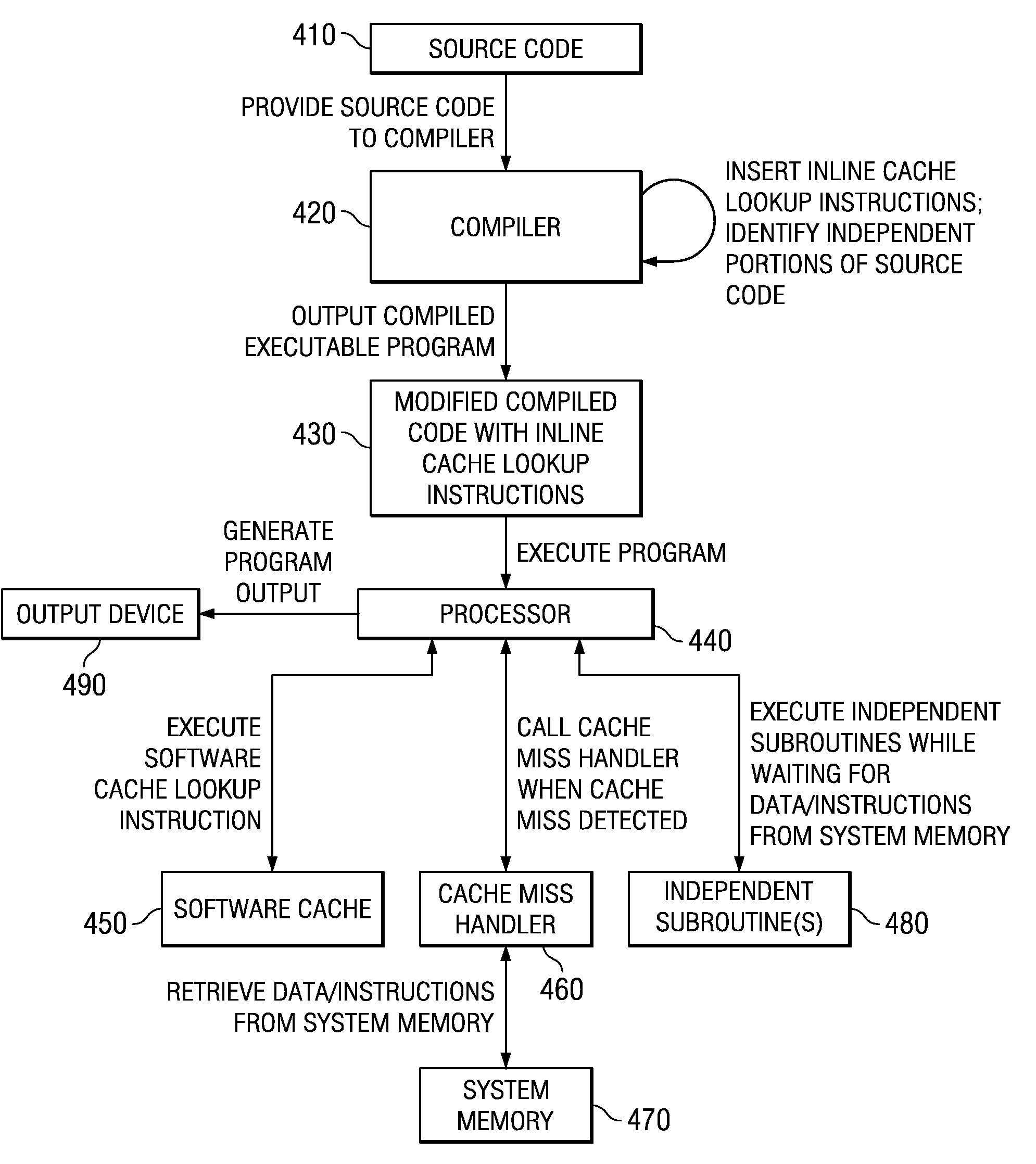

Apparatus and method for performing useful computations while waiting for a line in a system with a software implemented cache

Inactive | US20070283098A1 | Memory systems | Micro-instruction address formation | Parallel computing | Software cache

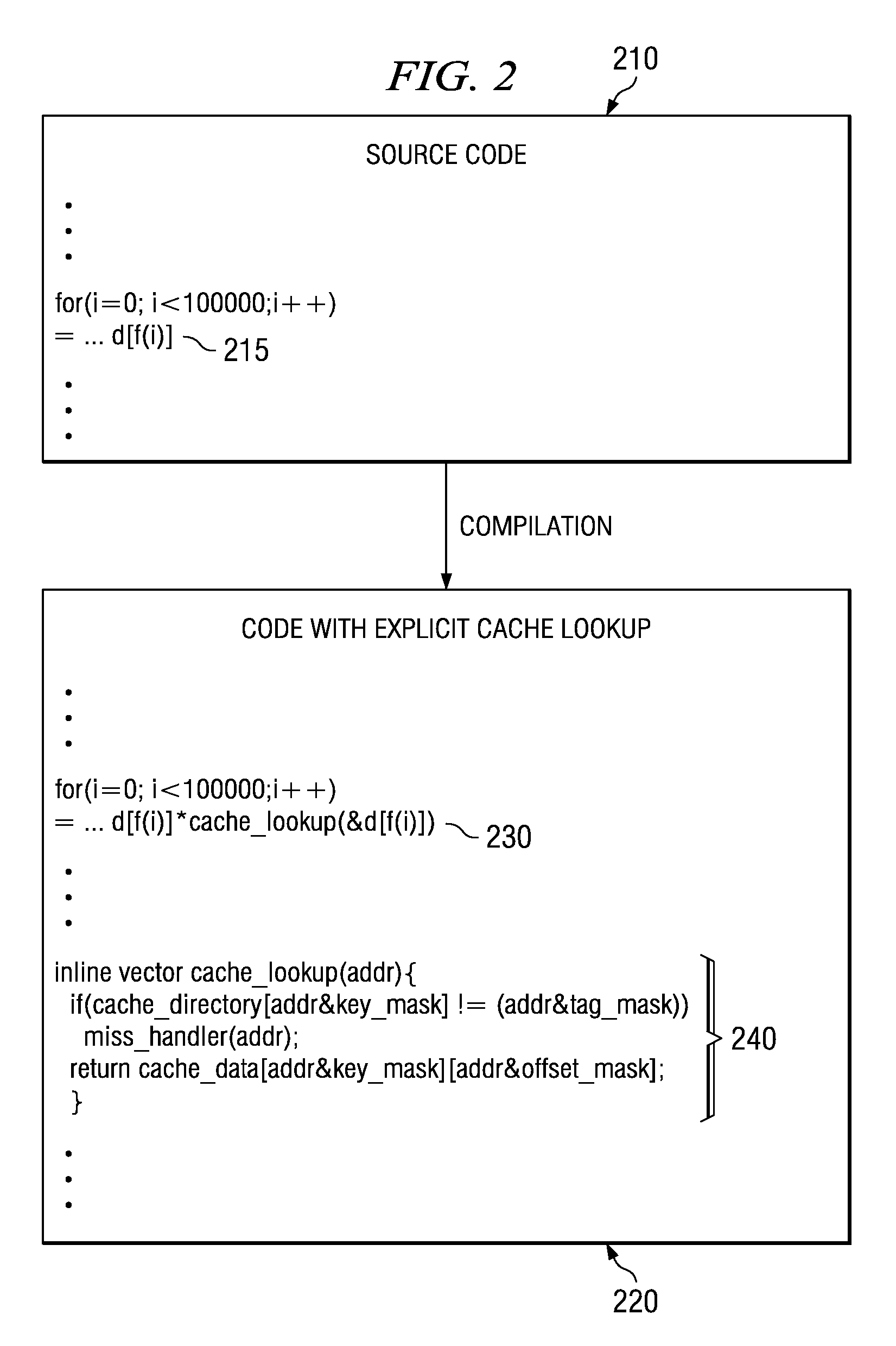

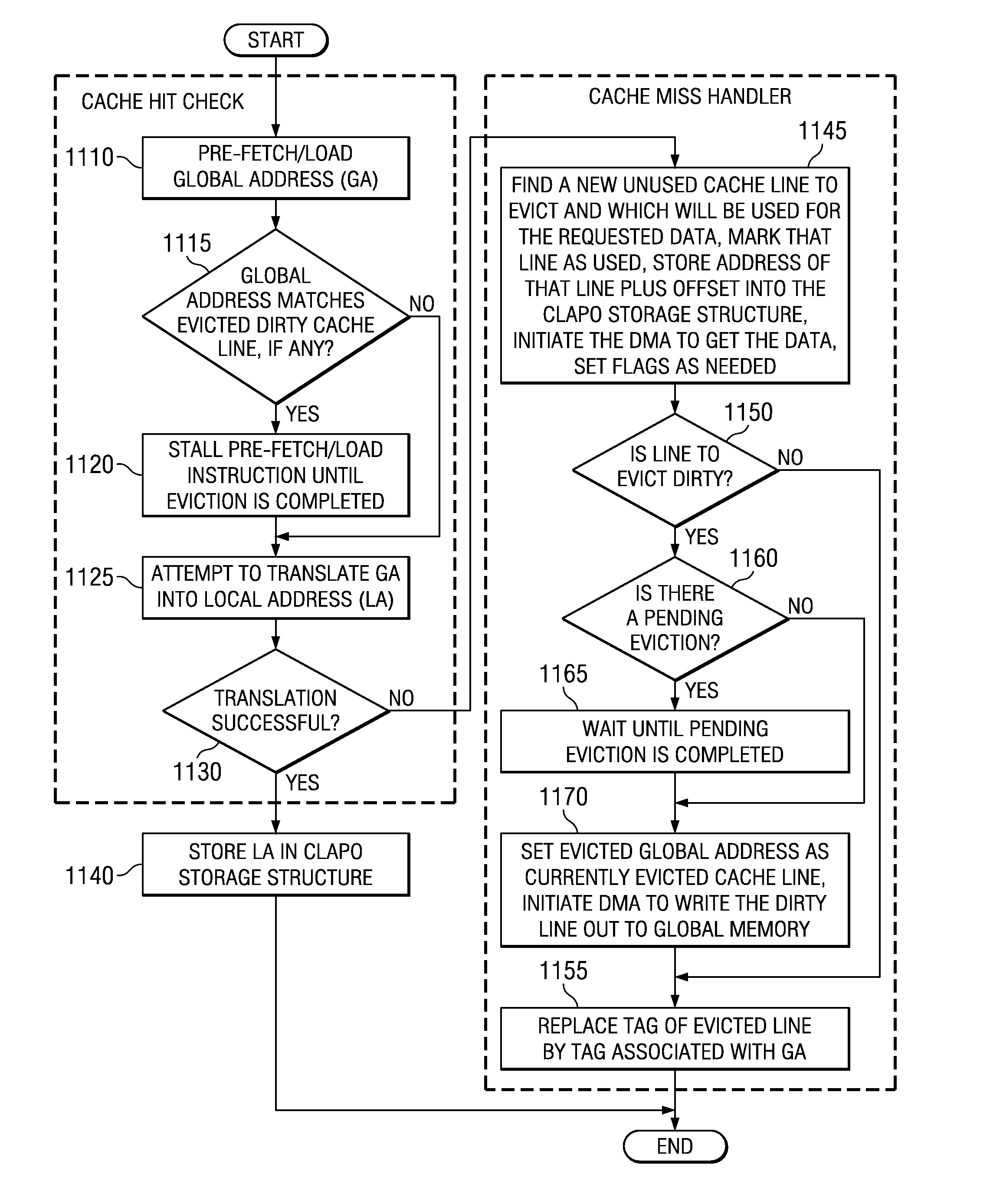

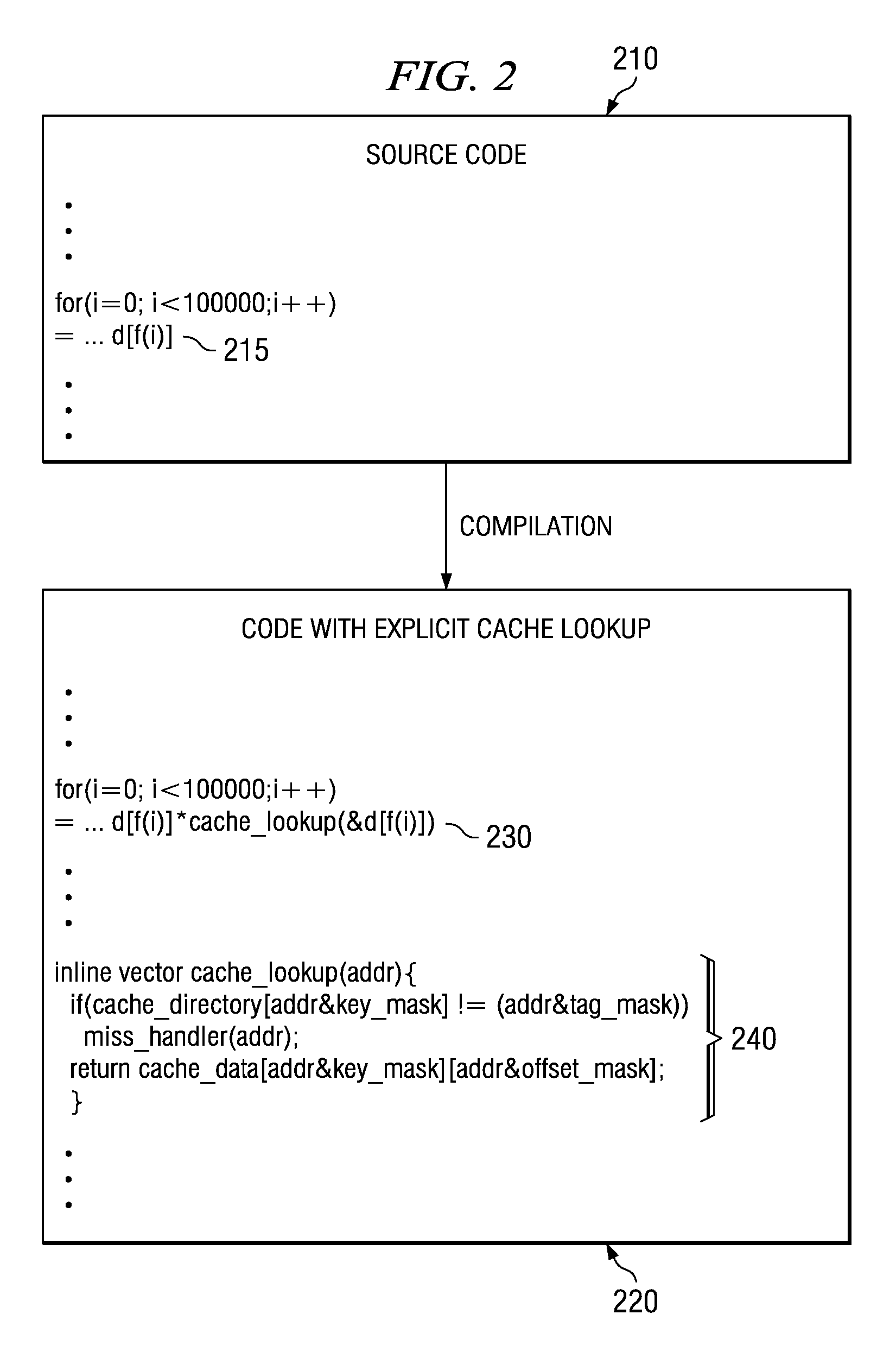

An apparatus and method for performing useful computations during a software cache reload operation is provided. With the illustrative embodiments, in order to perform software caching, a compiler takes original source code, and while compiling the source code, inserts explicit cache lookup instructions into appropriate portions of the source code where cacheable variables are referenced. In addition, the compiler inserts a cache miss handler routine that is used to branch execution of the code to a cache miss handler if the cache lookup instructions result in a cache miss. The cache miss handler, prior to performing a wait operation for waiting for the data to be retrieved from the backing store, branches execution to an independent subroutine identified by a compiler. The independent subroutine is executed while the data is being retrieved from the backing store such that useful work is performed.

Owner:IBM CORP
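
As a rough illustration of overlapping independent work with a cache reload, the sketch below uses a thread pool to stand in for the asynchronous transfer from the backing store. The function name and its parameters are hypothetical, and in the patent the independent subroutine is chosen by the compiler rather than passed in by the caller.

```python
from concurrent.futures import ThreadPoolExecutor


def load_with_overlap(cache, key, fetch, independent_work, executor):
    """Return (value, side_result); side_result is None on a cache hit."""
    if key in cache:
        return cache[key], None
    pending = executor.submit(fetch, key)  # start the reload
    side_result = independent_work()       # useful work while it is in flight
    cache[key] = pending.result()          # only now wait for the data
    return cache[key], side_result


with ThreadPoolExecutor(max_workers=1) as pool:
    demo_cache = {}
    first = load_with_overlap(demo_cache, 3, lambda k: k * 10, lambda: "done", pool)
    second = load_with_overlap(demo_cache, 3, lambda k: k * 10, lambda: "done", pool)
```

The first call misses and runs the independent work before waiting; the second call hits and returns immediately.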

System and Method to Efficiently Prefetch and Batch Compiler-Assisted Software Cache Accesses

Inactive | US20080046657A1 | Reduce overhead | Efficient access | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory address | Data access

A system and method to efficiently pre-fetch and batch compiler-assisted software cache accesses are provided. The system and method reduce the overhead associated with software cache directory accesses. With the system and method, the local memory address of the cache line that stores the pre-fetched data is itself cached, such as in a register or a well-known location in local memory, so that a later data access does not need to perform address translation and software cache operations and can instead access the data directly from the software cache using the cached local memory address. This saves the processor cycles that would otherwise be required to perform the address translation a second time when the data is used. Moreover, the system and method directly enable software cache accesses to be effectively decoupled from address translation in order to increase the overlap between computation and communication.

Owner:IBM CORP

Compiler-driven dynamic memory allocation methodology for scratch-pad based embedded systems

Inactive | US20060080372A1 | Avoid overhead | Reduce overhead | Energy efficient ICT | Program control | Distribution method | Access time

A highly predictable, low-overhead, yet dynamic memory allocation methodology for embedded systems with scratch-pad memory is presented. The dynamic memory allocation methodology for global and stack data (i) accounts for changing program requirements at runtime; (ii) has no software-caching tags; (iii) requires no run-time checks; (iv) has extremely low overheads; and (v) yields 100% predictable memory access times. Under the methodology, data that is about to be accessed frequently is copied into the SRAM by compiler-inserted code at fixed and infrequent points in the program. Earlier data is evicted if necessary.

Owner:UNIV OF MARYLAND

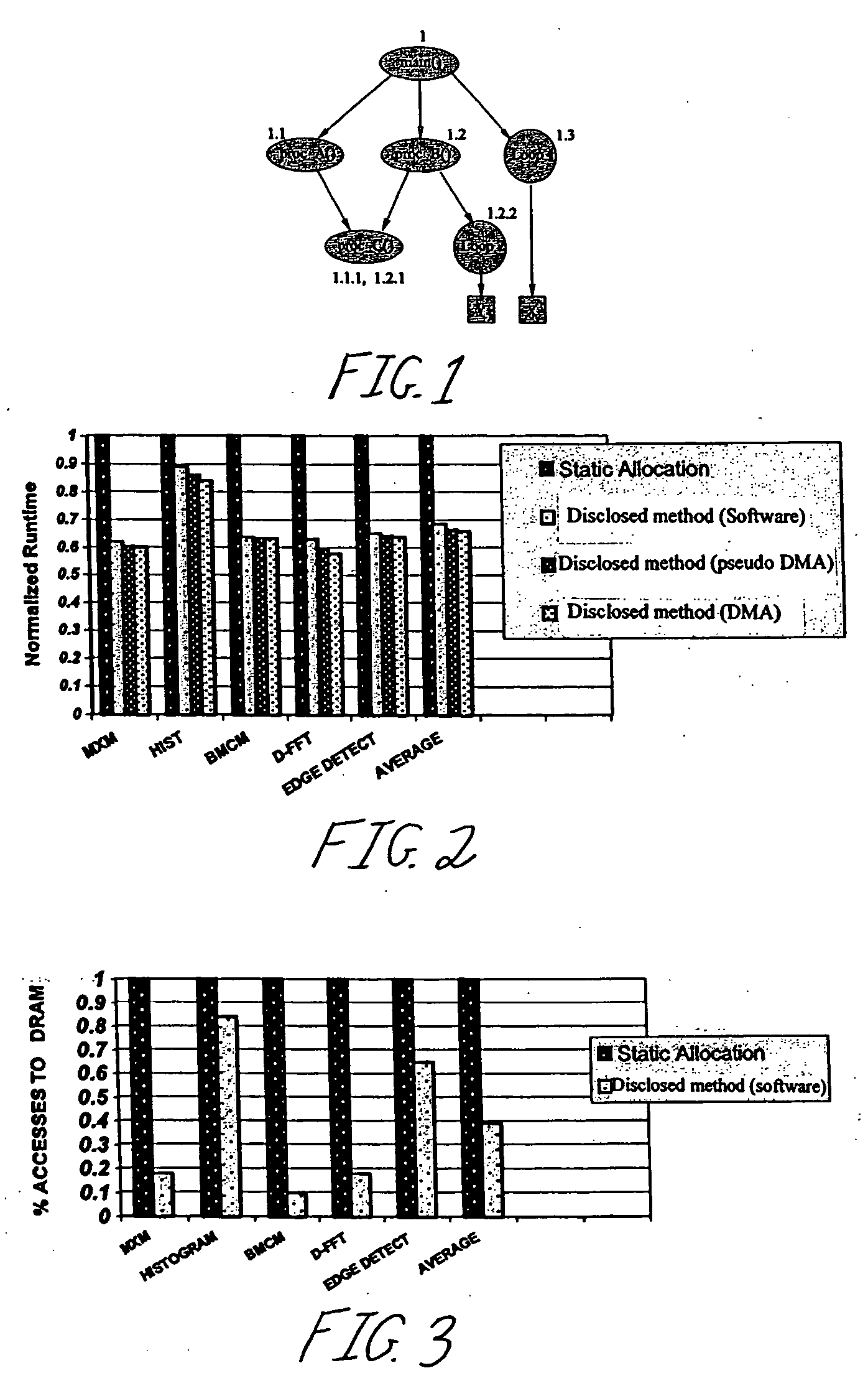

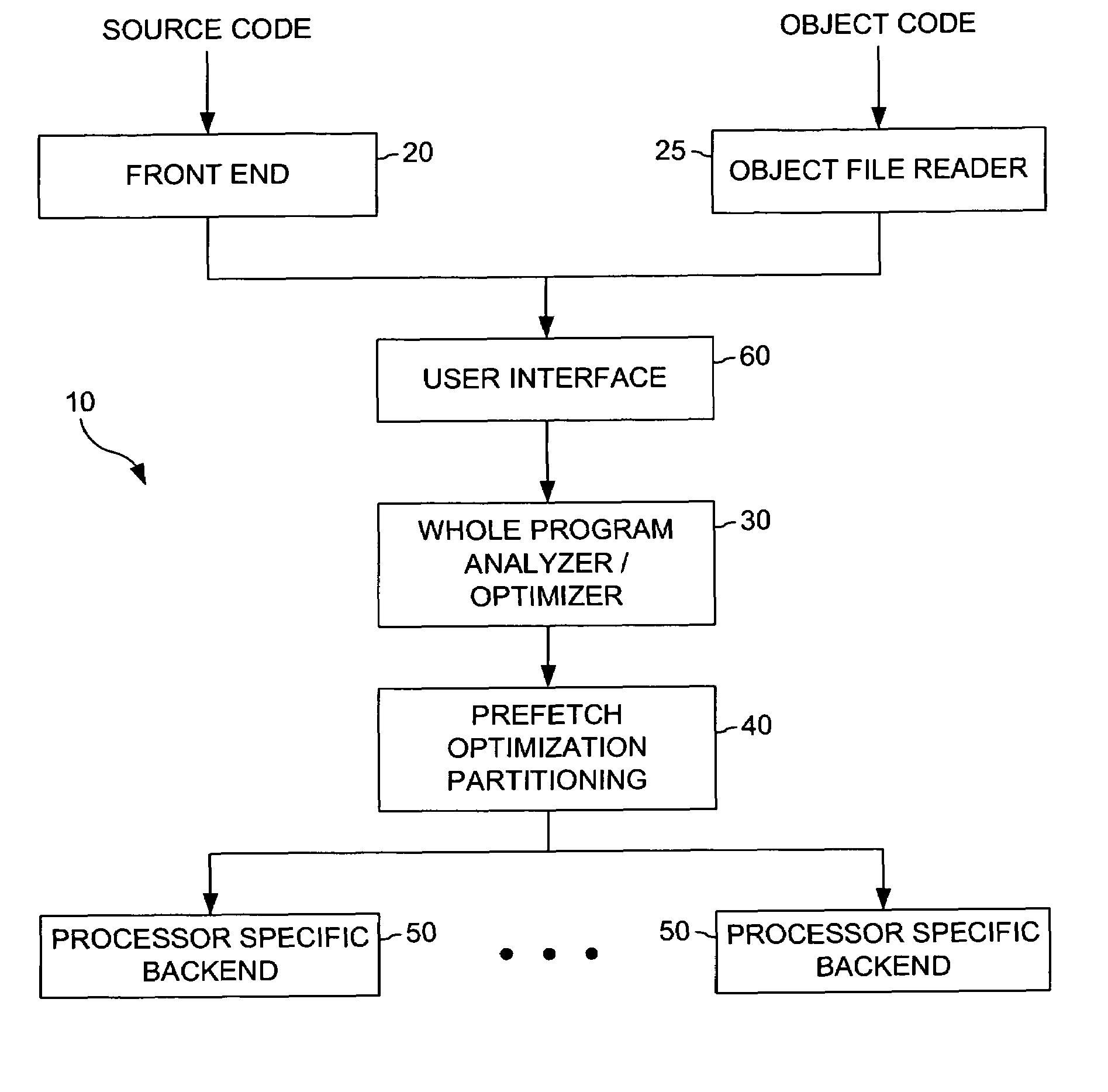

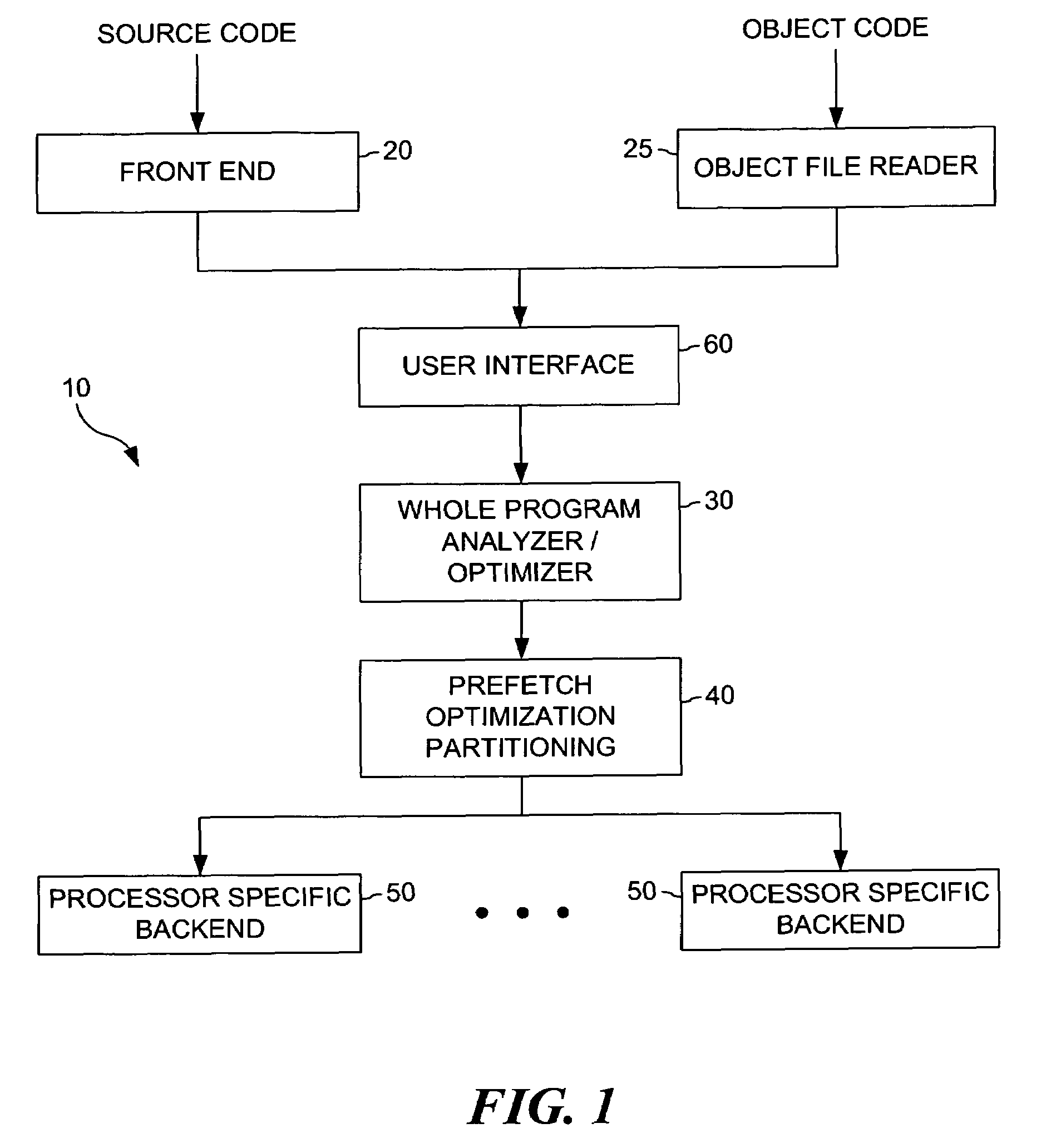

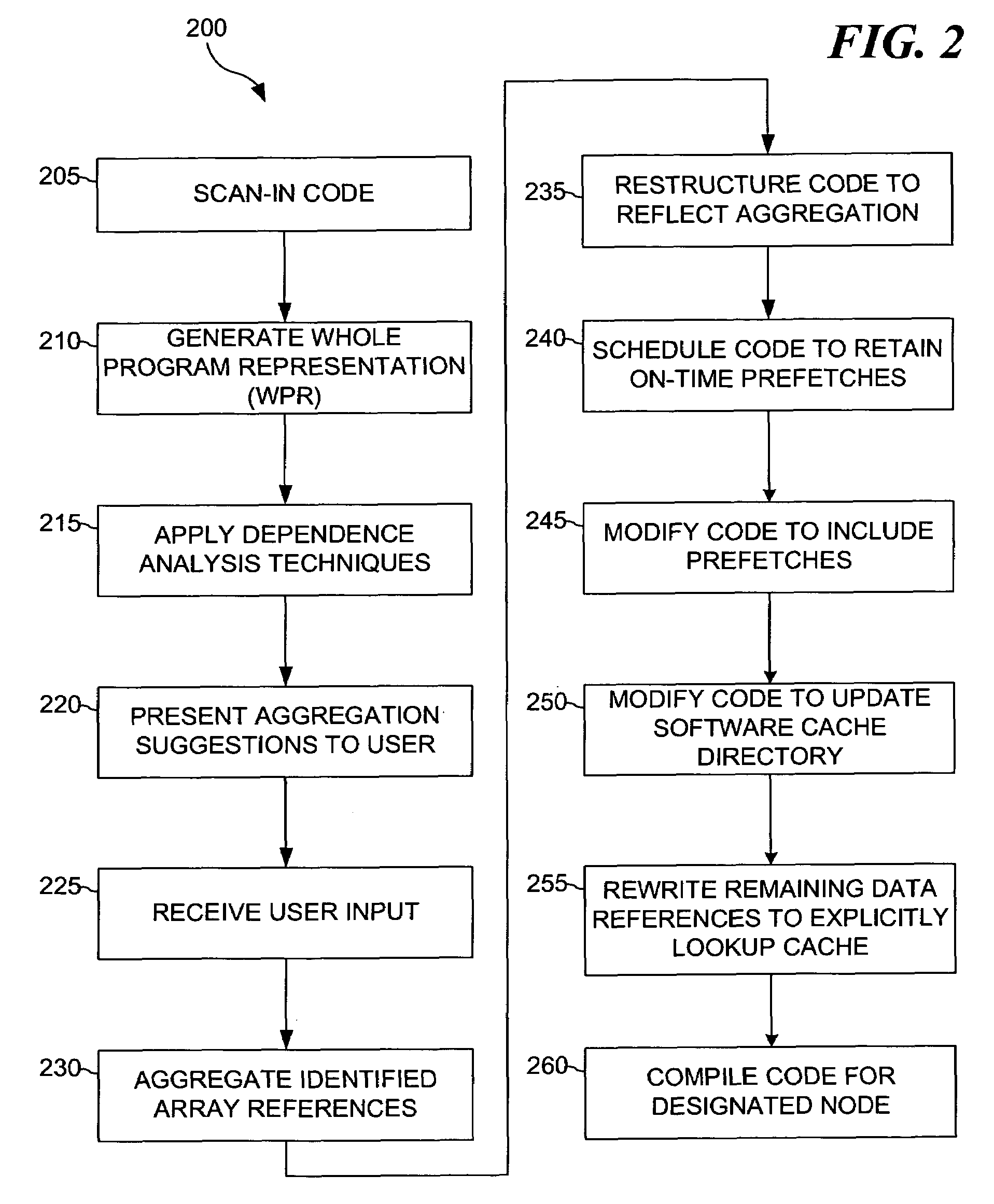

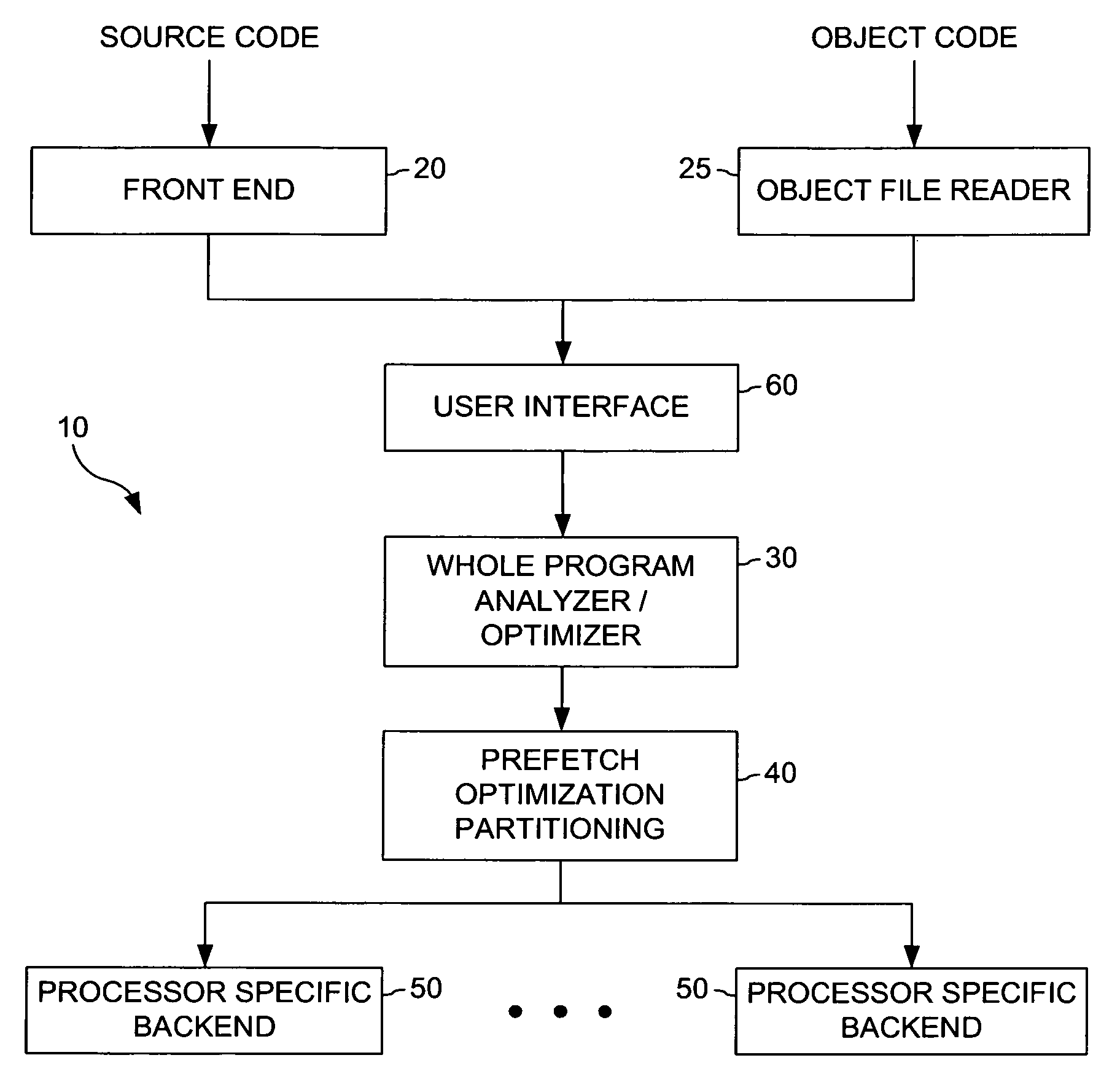

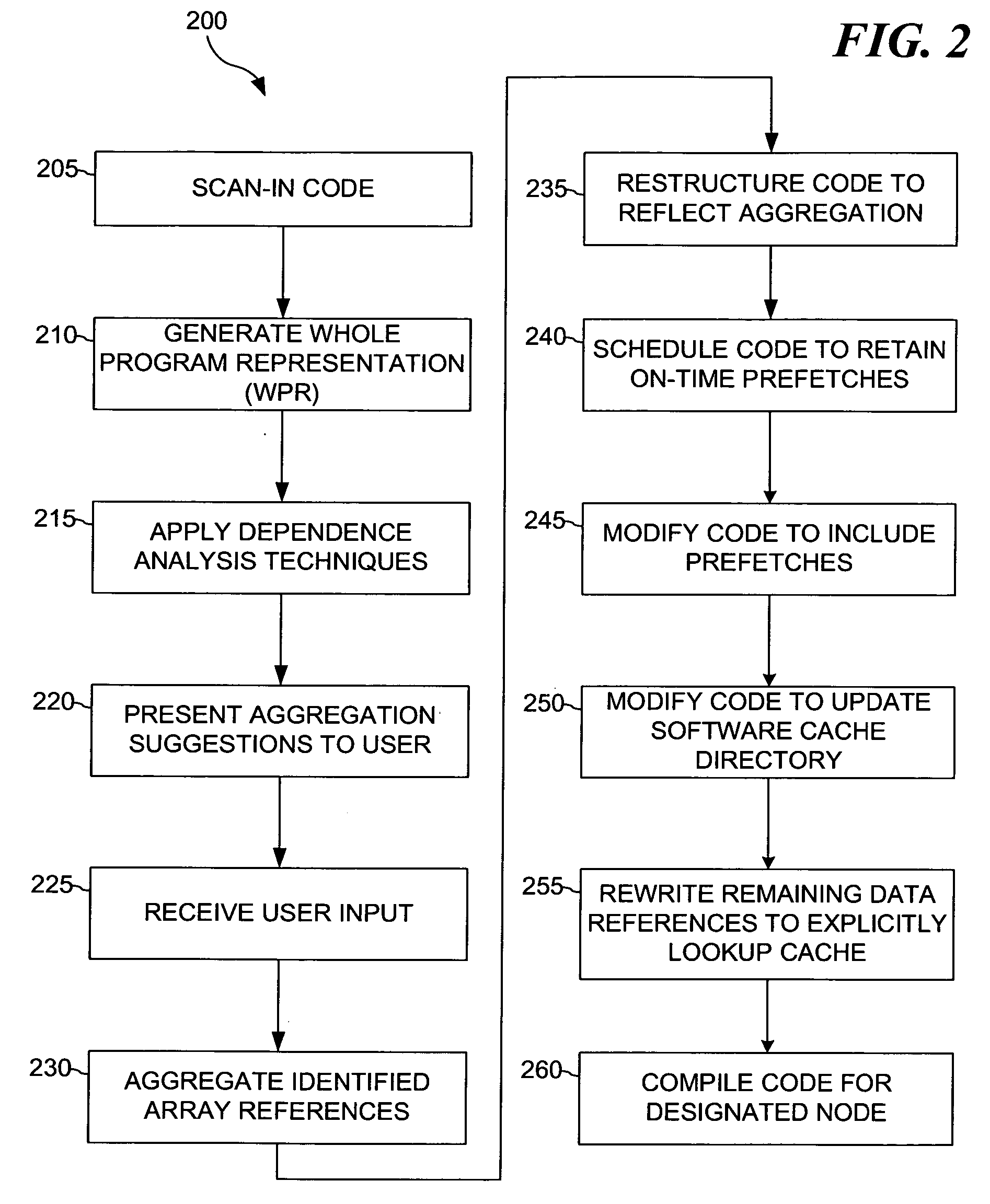

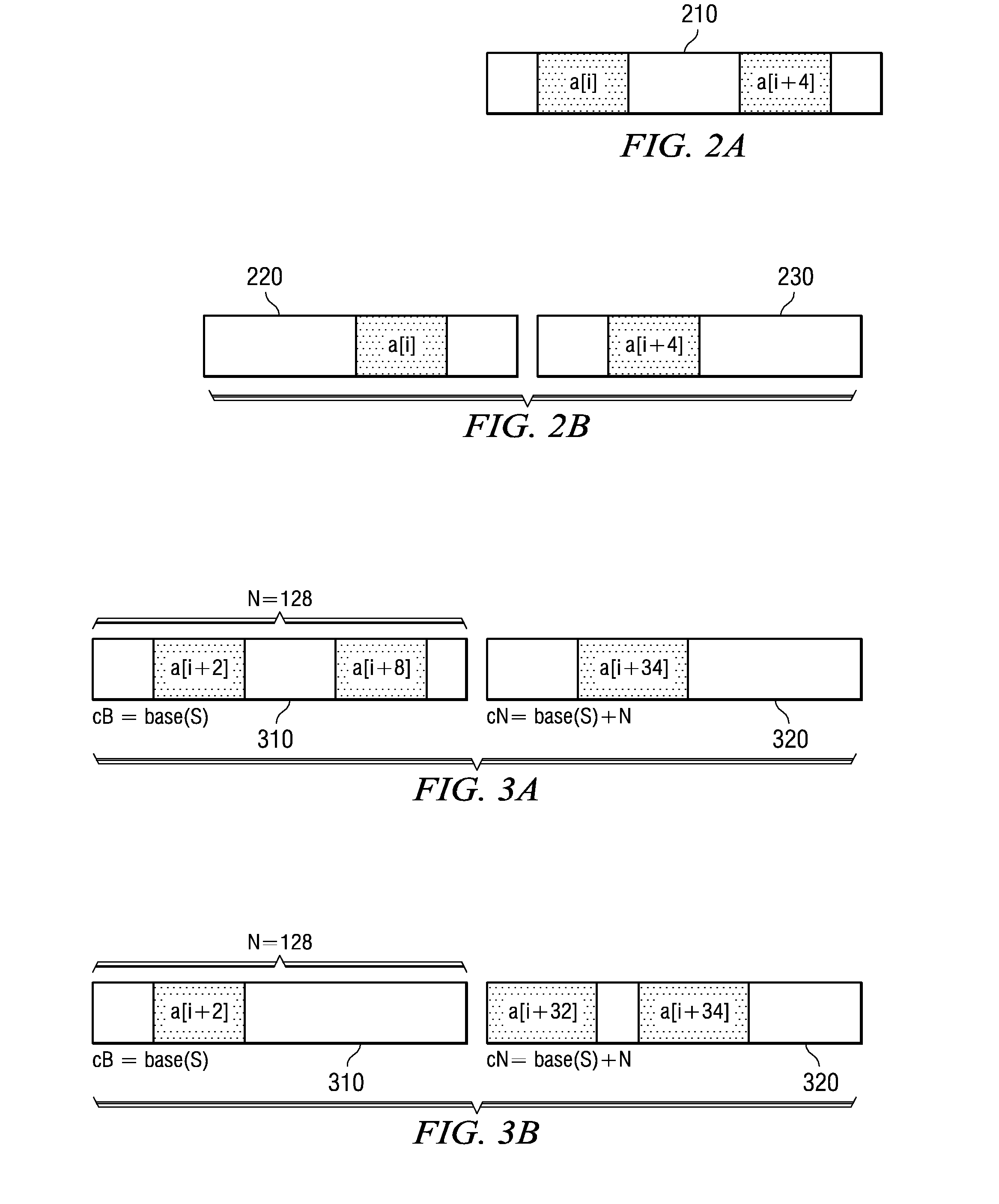

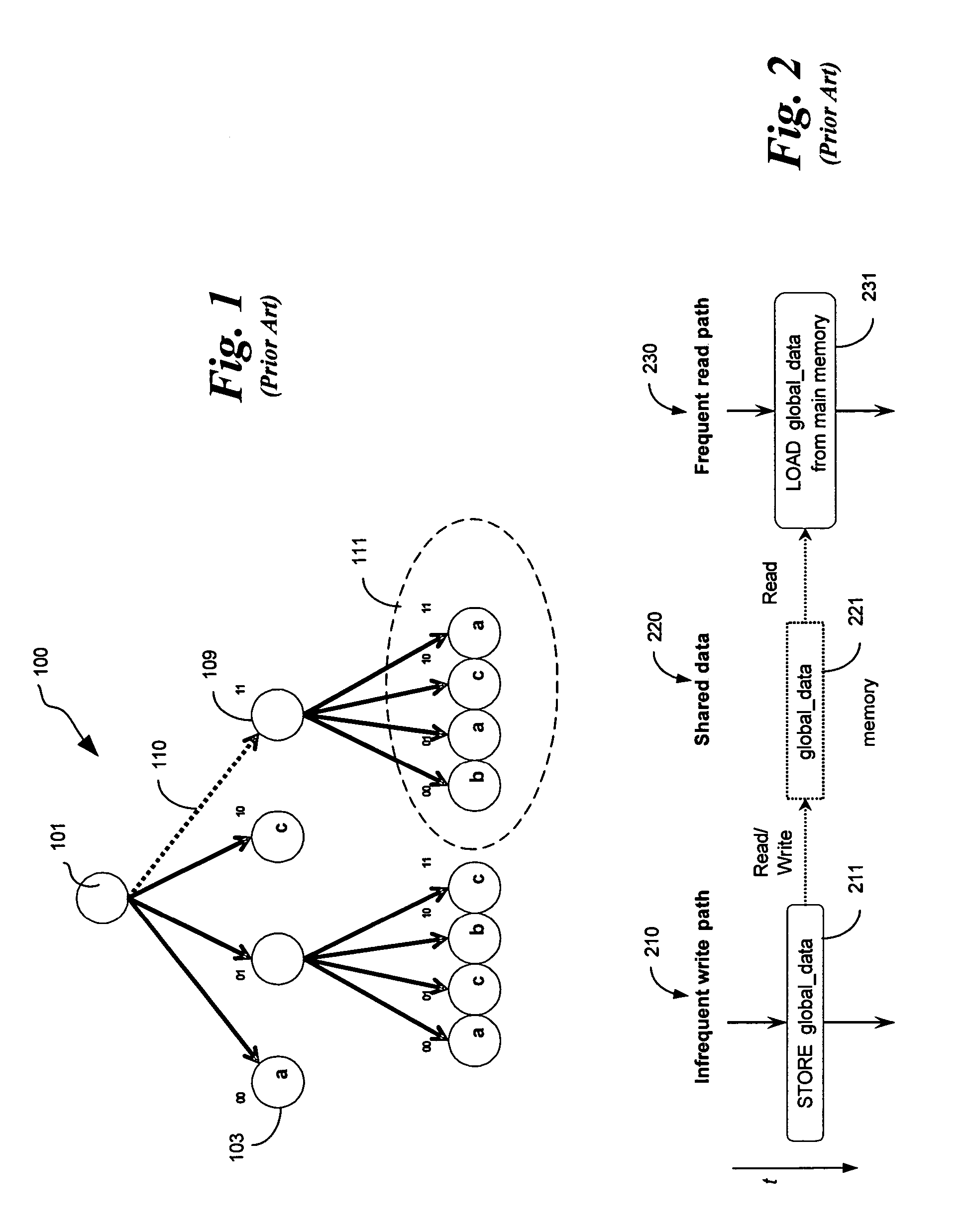

Software managed cache optimization system and method for multi-processing systems

The present invention provides for a method for computer program code optimization for a software managed cache in either a uni-processor or a multi-processor system. A single source file comprising a plurality of array references is received. The plurality of array references is analyzed to identify predictable accesses. The plurality of array references is analyzed to identify secondary predictable accesses. One or more of the plurality of array references is aggregated based on identified predictable accesses and identified secondary predictable accesses to generate aggregated references. The single source file is restructured based on the aggregated references to generate restructured code. Prefetch code is inserted in the restructured code based on the aggregated references. Software cache update code is inserted in the restructured code based on the aggregated references. Explicit cache lookup code is inserted for the remaining unpredictable accesses. Calls to a miss handler for misses in the explicit cache lookup code are inserted. A miss handler is included in the generated code for the program. In the miss handler, a line to evict is chosen based on recent usage and predictability. In the miss handler, appropriate DMA commands are issued for the evicted line and the missing line.

Owner:INT BUSINESS MASCH CORP
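
The miss-handler portion of this abstract can be sketched as below, assuming eviction by recent usage only (least recently used) and ignoring the predictability criterion. The class name, the `dma_log` list that stands in for real DMA commands, and the write-back of every evicted line are assumptions for illustration.

```python
from collections import OrderedDict


class LruSoftwareCache:
    def __init__(self, backing, capacity):
        self.backing = backing      # dict: line id -> data (global memory)
        self.capacity = capacity    # number of lines held locally
        self.lines = OrderedDict()  # line id -> data, least recent first
        self.dma_log = []           # records the simulated DMA commands

    def lookup(self, line_id):
        """Explicit cache lookup; calls the miss handler on a miss."""
        if line_id in self.lines:
            self.lines.move_to_end(line_id)  # mark as most recently used
            return self.lines[line_id]
        return self._miss_handler(line_id)

    def _miss_handler(self, line_id):
        if len(self.lines) >= self.capacity:
            victim, data = self.lines.popitem(last=False)  # least recent
            self.backing[victim] = data                    # write back
            self.dma_log.append(("put", victim))
        self.dma_log.append(("get", line_id))
        self.lines[line_id] = self.backing[line_id]
        return self.lines[line_id]
```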

Software managed cache optimization system and method for multi-processing systems

The present invention provides for a method for computer program code optimization for a software managed cache in either a uni-processor or a multi-processor system. A single source file comprising a plurality of array references is received. The plurality of array references is analyzed to identify predictable accesses. The plurality of array references is analyzed to identify secondary predictable accesses. One or more of the plurality of array references is aggregated based on identified predictable accesses and identified secondary predictable accesses to generate aggregated references. The single source file is restructured based on the aggregated references to generate restructured code. Prefetch code is inserted in the restructured code based on the aggregated references. Software cache update code is inserted in the restructured code based on the aggregated references. Explicit cache lookup code is inserted for the remaining unpredictable accesses. Calls to a miss handler for misses in the explicit cache lookup code are inserted. A miss handler is included in the generated code for the program. In the miss handler, a line to evict is chosen based on recent usage and predictability. In the miss handler, appropriate DMA commands are issued for the evicted line and the missing line.

Owner:IBM CORP

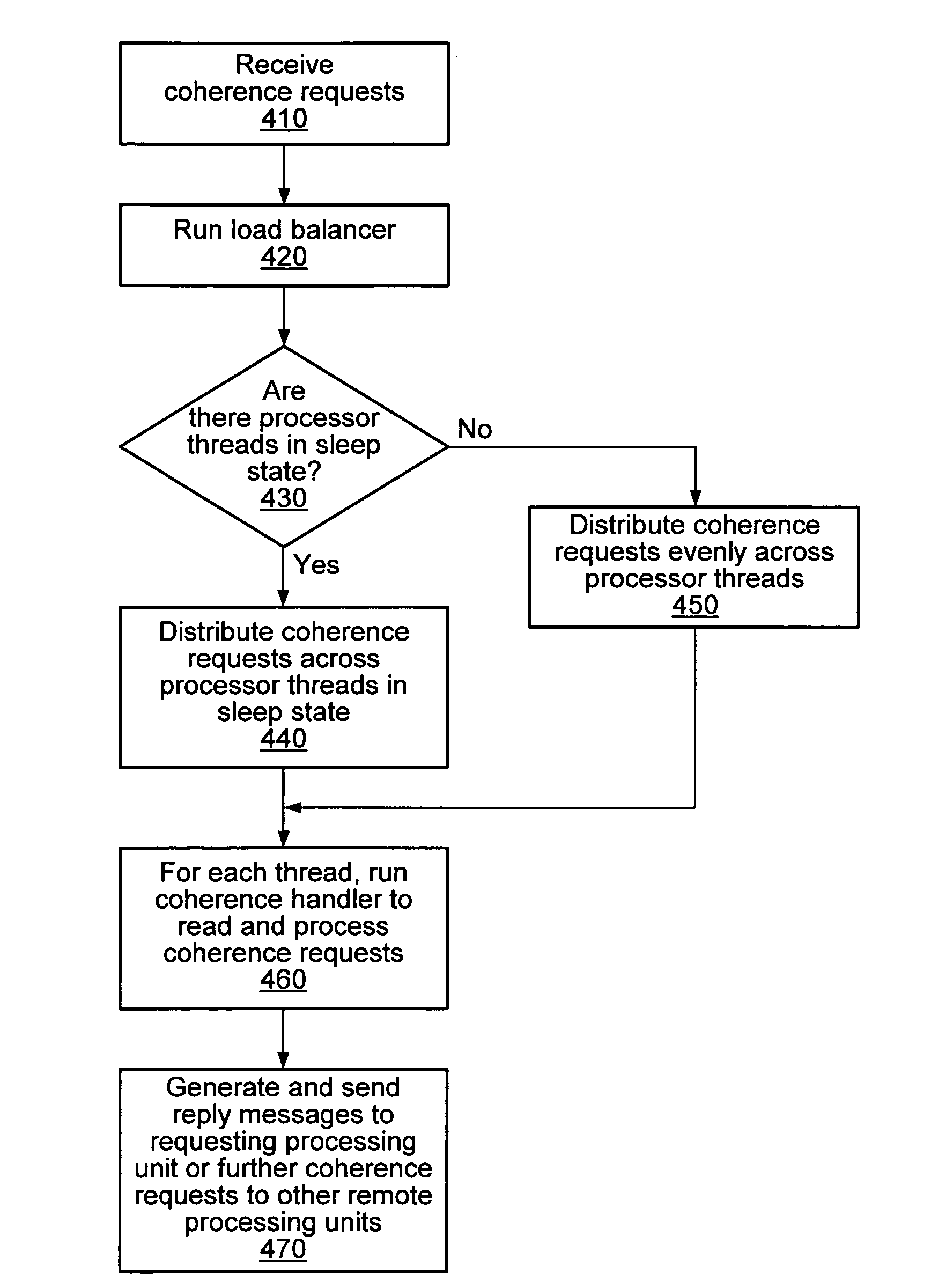

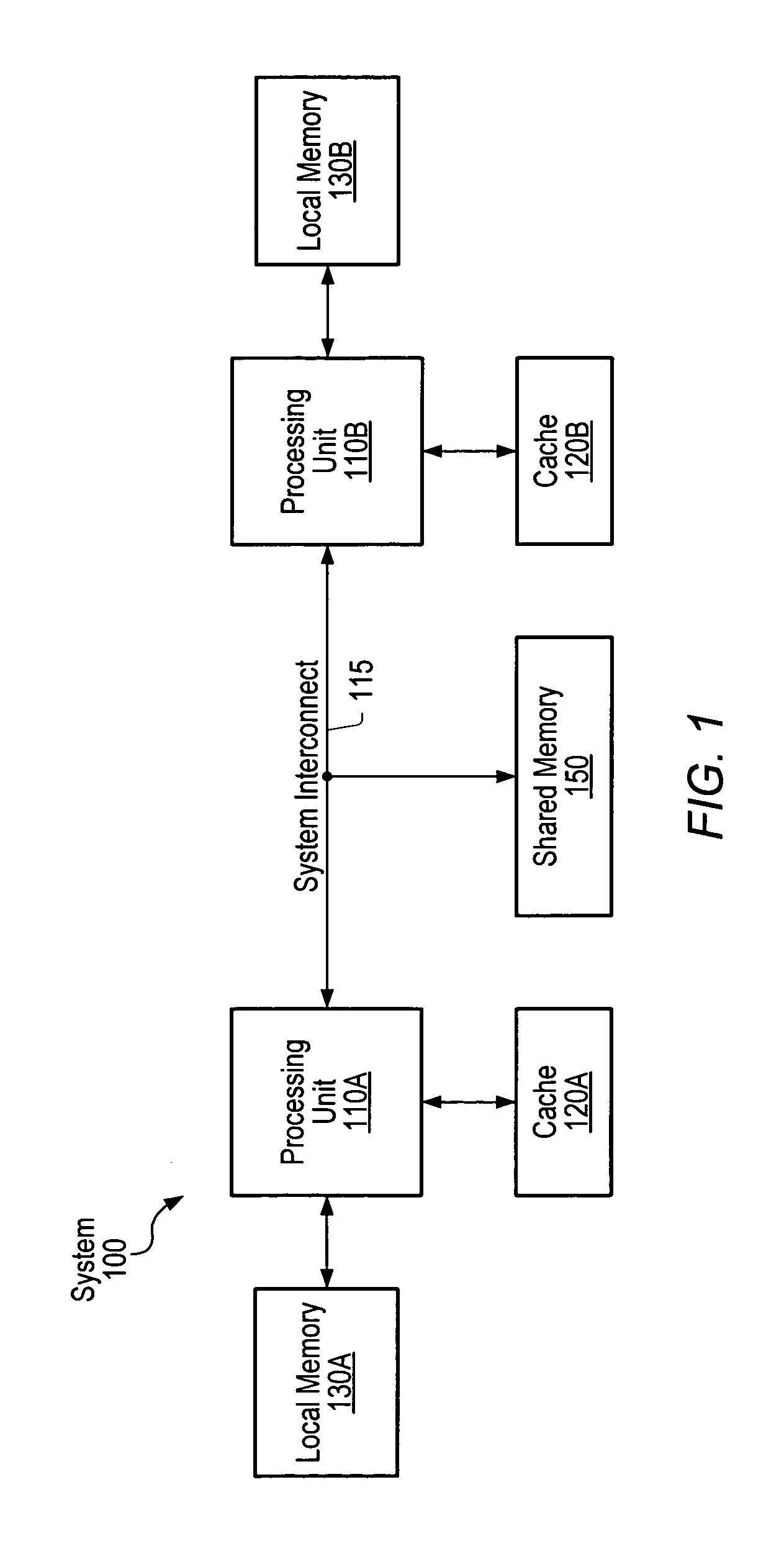

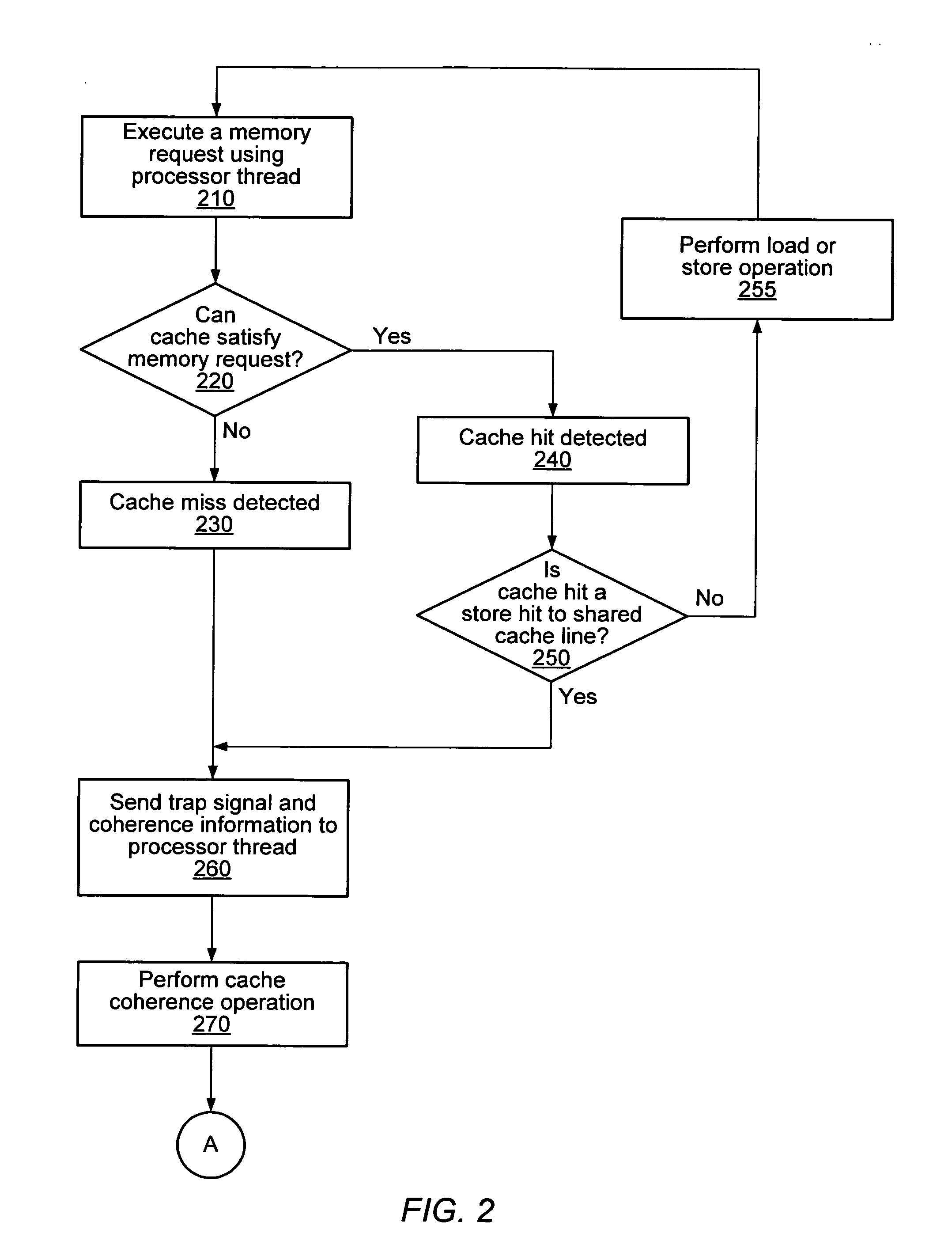

System and method for efficient software cache coherence

Software-based cache coherence protocol. A processing unit may execute a memory request using a processor thread. In response to detecting a cache hit to shared or a cache miss associated with the memory request, a cache may provide both a trap signal and coherence information to the processor thread of the processing unit. After receiving the trap signal and the coherence information, the processor thread may perform a cache coherence operation for the memory request using at least the received coherence information. The processing unit may include a plurality of processor threads and a load balancer. The load balancer may receive coherence requests from one or more remote processing units and distribute the received coherence requests across the plurality of processor threads. The load balancer may preferentially distribute the received coherence requests across the plurality of processor threads based on the operation state of the processor threads.

Owner:ORACLE INT CORP

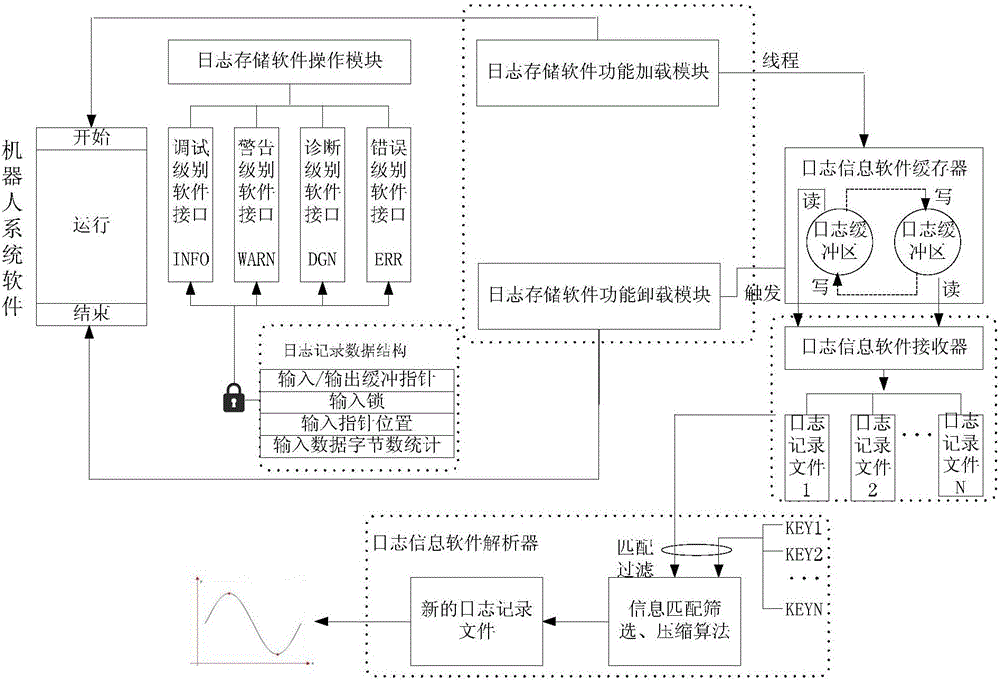

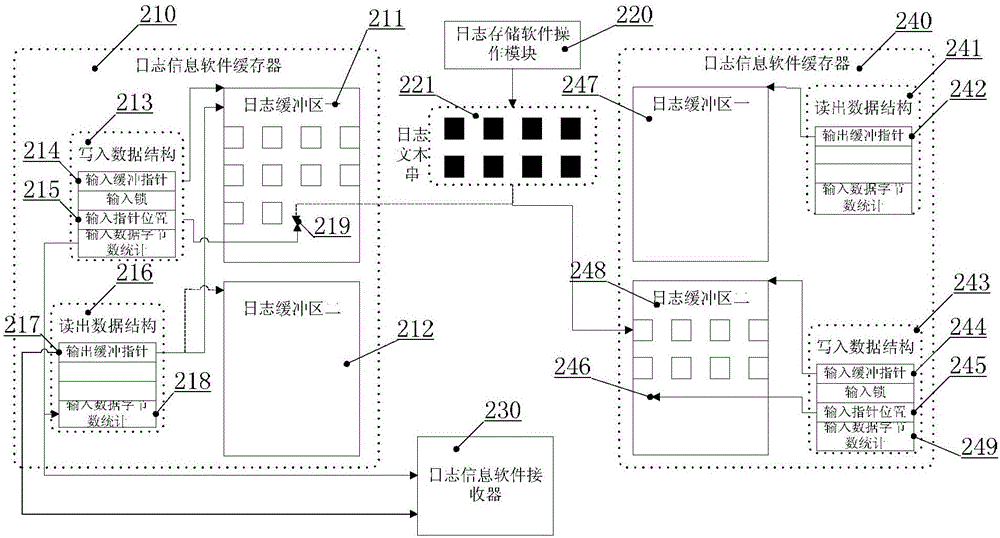

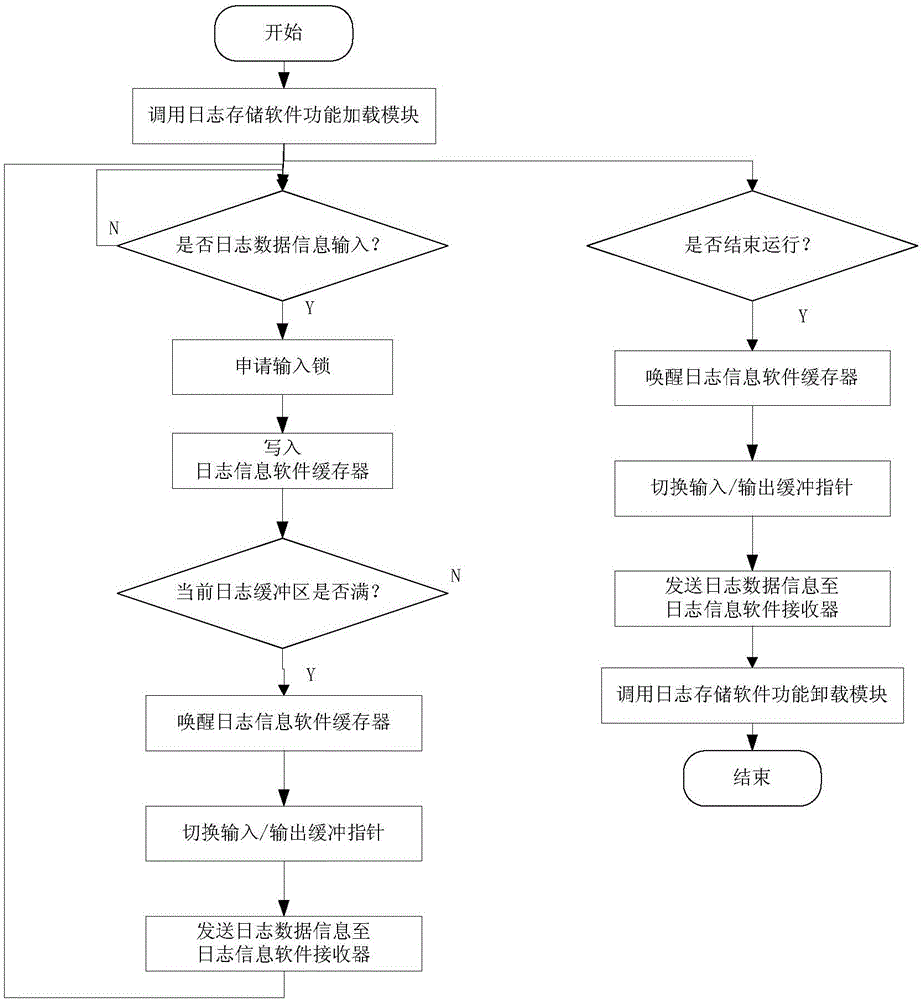

Double-buffering type robot software log storage method

The invention provides a double-buffering type robot software log storage method. The method comprises the following steps: setting up a log storage software function loading module, a log storage software function unloading module, a log storage software operation module, a log information software buffer, a log information software receiver and a log information software analyzer, wherein the log information software buffer comprises two log buffer areas with the same storage space; when one log buffer area is full of data, switching the input pointer to the other log buffer area so that data input is not delayed; and pointing the output pointer to the full log buffer area, outputting the log data in it, and then setting that log buffer area to an idle state. With this method, log data can be stored while the overall operating load of the robot system software is greatly reduced, which helps improve the efficiency of software defect checking.

Owner:FOSHAN INST OF INTELLIGENT EQUIP TECH +1
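
The buffer-switching step can be sketched as follows. The class name and the `sink` callable are hypothetical, and the full buffer is drained synchronously here for simplicity, whereas a real system would presumably output it concurrently with new input.

```python
class DoubleBufferedLog:
    def __init__(self, capacity, sink):
        self.capacity = capacity  # records per buffer area
        self.sink = sink          # callable that receives a list of records
        self.buffers = [[], []]   # two buffer areas of equal size
        self.active = 0           # index the input pointer refers to

    def write(self, record):
        buf = self.buffers[self.active]
        buf.append(record)
        if len(buf) == self.capacity:
            full = self.active
            self.active = 1 - self.active  # switch input to the other area
            self._drain(full)

    def _drain(self, index):
        self.sink(list(self.buffers[index]))  # output the full area
        self.buffers[index].clear()           # mark the area idle
```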

Optimized code generation targeting a high locality software cache

Mechanisms for optimized code generation targeting a high locality software cache are provided. Original computer code is parsed to identify memory references in the original computer code. Memory references are classified as either regular memory references or irregular memory references. Regular memory references are controlled by a high locality cache mechanism. Original computer code is transformed, by a compiler, to generate transformed computer code in which the regular memory references are grouped into one or more memory reference streams, each memory reference stream having a leading memory reference, a trailing memory reference, and one or more middle memory references. Transforming of the original computer code comprises inserting, into the original computer code, instructions to execute initialization, lookup, and cleanup operations associated with the leading memory reference and trailing memory reference in a different manner from initialization, lookup, and cleanup operations for the one or more middle memory references.

Owner:GLOBALFOUNDRIES INC

Efficient Software Cache Accessing With Handle Reuse

A mechanism for efficient software cache accessing with handle reuse is provided. The mechanism groups references in source code into a reference stream with the reference stream having a size equal to or less than a size of a software cache line. The source code is transformed into optimized code by modifying the source code to include code for performing at most two cache lookup operations for the reference stream to obtain two cache line handles. Moreover, the transformation involves inserting code to resolve references in the reference stream based on the two cache line handles. The optimized code may be output for generation of executable code.

Owner:IBM CORP
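
Because a reference stream no larger than one cache line can touch at most two lines, two lookups suffice. The sketch below shows that idea under assumed names (`lookup`, `read_stream`) with a plain dict as the cache and a one-element list as a lookup counter; it is not the transformation the compiler in the patent performs.

```python
LINE_SIZE = 8  # elements per software cache line (assumed)


def lookup(cache, memory, line_no, counter):
    """Cache lookup returning a handle (here, the line's local list)."""
    counter[0] += 1
    if line_no not in cache:
        start = line_no * LINE_SIZE
        cache[line_no] = memory[start:start + LINE_SIZE]
    return cache[line_no]


def read_stream(cache, memory, base, length, counter):
    """Read `length` consecutive elements using at most two lookups."""
    if not 0 < length <= LINE_SIZE:
        raise ValueError("stream must fit within one cache line size")
    first_line = base // LINE_SIZE
    last_line = (base + length - 1) // LINE_SIZE
    h0 = lookup(cache, memory, first_line, counter)
    h1 = h0 if last_line == first_line else lookup(cache, memory, last_line, counter)
    values = []
    for addr in range(base, base + length):
        handle = h0 if addr // LINE_SIZE == first_line else h1
        values.append(handle[addr % LINE_SIZE])  # resolved via a handle
    return values
```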

Reducing Runtime Coherency Checking with Global Data Flow Analysis

Active | US20090293047A1 | Reducing runtime coherency checking | Software engineering | Program control | Data stream | Parallel computing

Reducing runtime coherency checking using global data flow analysis is provided. A determination is made as to whether a call is for at least one of a DMA get operation or a DMA put operation in response to the call being issued during execution of a compiled and optimized code. A determination is made as to whether a software cache write operation has been issued since a last flush operation in response to the call being the DMA get operation. A DMA get runtime coherency check is then performed in response to the software cache write operation being issued since the last flush operation.

Owner:IBM CORP
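
The DMA get condition in this abstract amounts to a flag that is set by software cache writes and cleared by a flush. A minimal sketch, with a hypothetical `CoherencyTracker` class and only the get case modelled:

```python
class CoherencyTracker:
    def __init__(self):
        self.write_since_flush = False
        self.checks_performed = 0

    def cache_write(self):
        """A software cache write makes later DMA gets suspect."""
        self.write_since_flush = True

    def flush(self):
        """After a flush, memory and the software cache agree again."""
        self.write_since_flush = False

    def dma_get(self):
        """Return True if a runtime coherency check had to be performed."""
        if self.write_since_flush:
            self.checks_performed += 1
            return True
        return False  # the check is skipped
```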

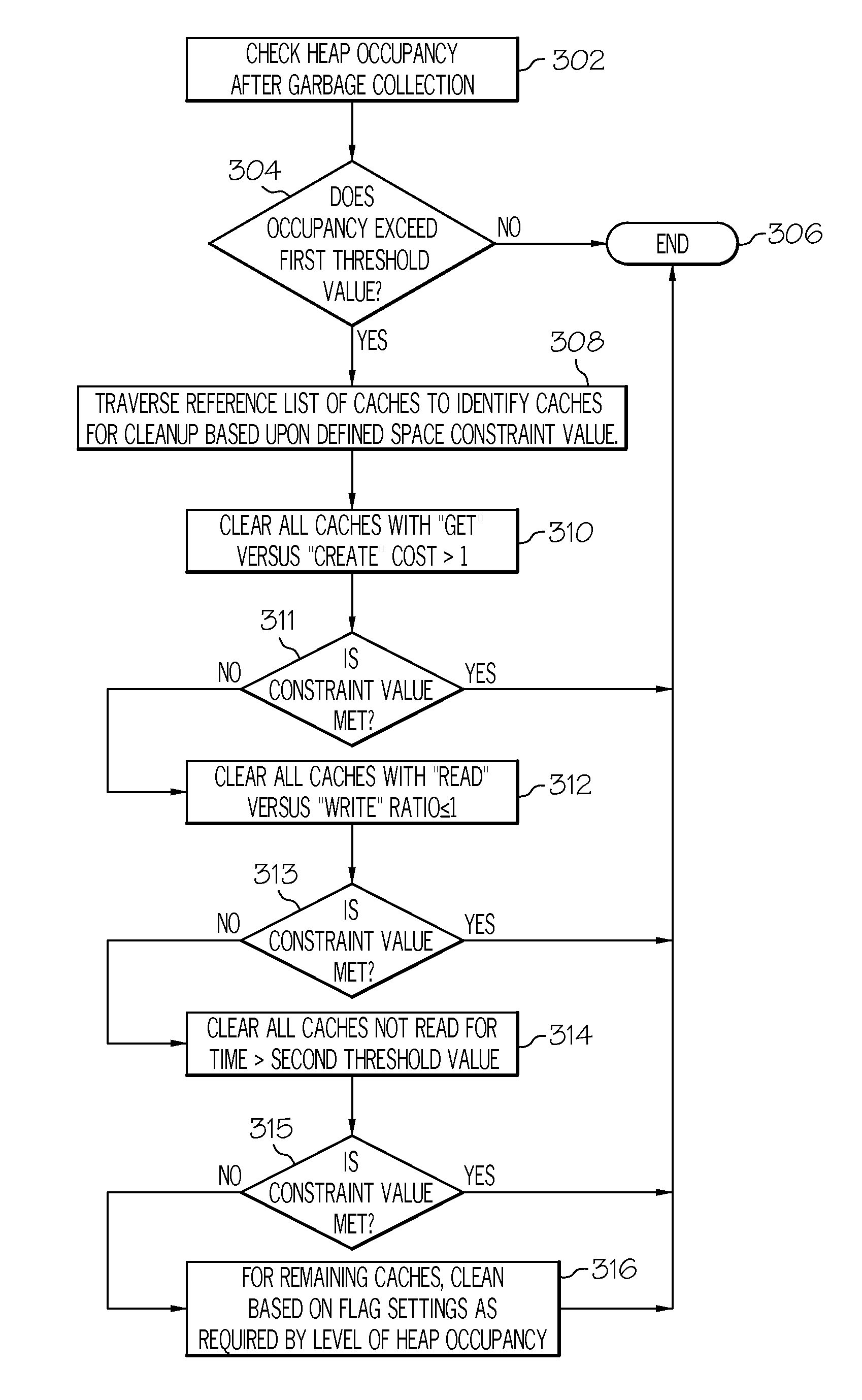

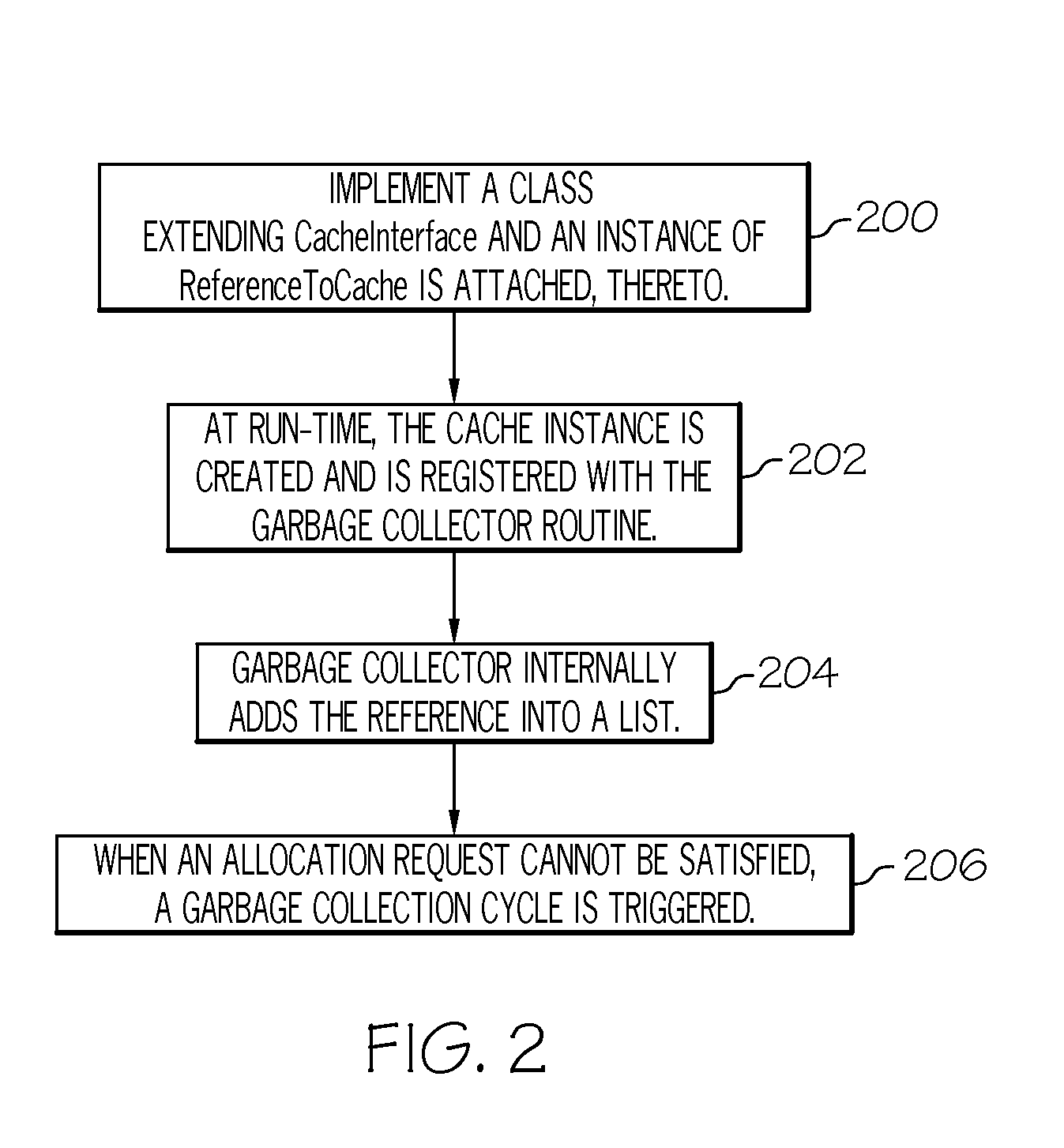

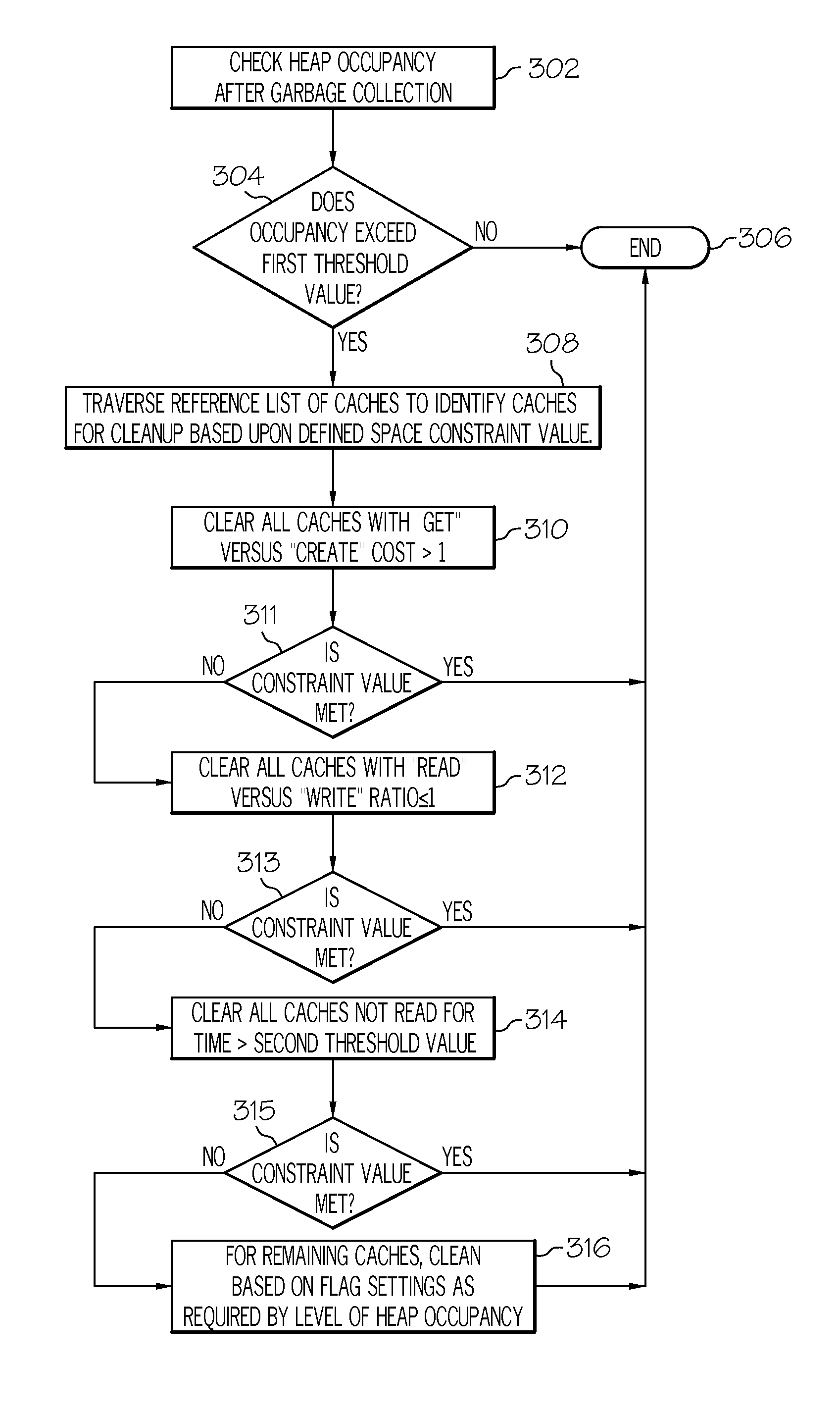

Efficient memory management in software caches

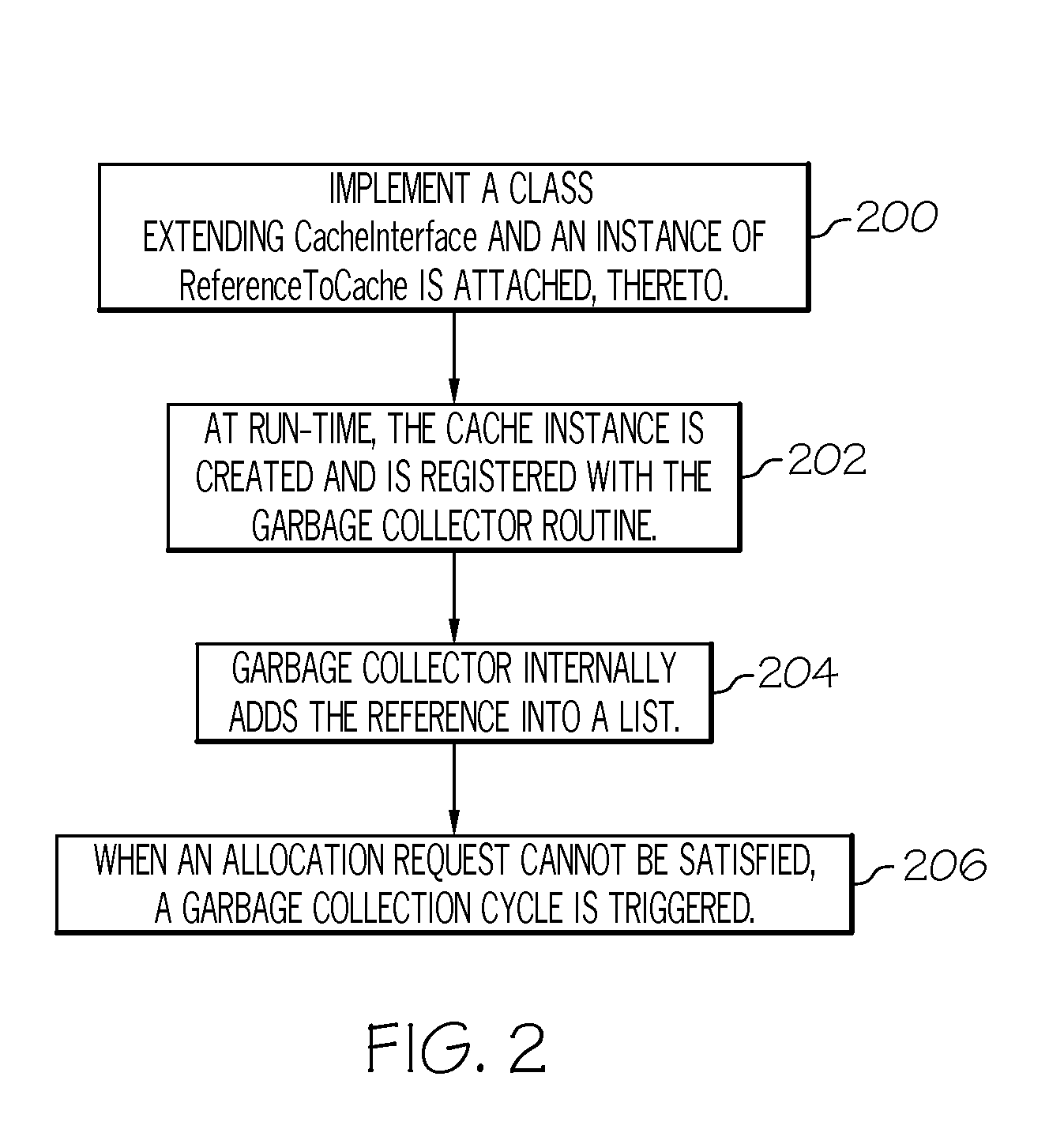

The use of heap memory is optimized by extending a cache implementation with a CacheInterface base class. An instance of a ReferenceToCache is attached to the CacheInterface base class. The cache implementation is registered to a garbage collector application. The registration is stored as a reference list in a memory. In response to an unsatisfied cache allocation request, a garbage collection cycle is triggered to check heap occupancy. In response to exceeding a threshold value, the reference list is traversed for caches to be cleaned based upon a defined space constraint value. The caches are cleaned in accordance with the defined space constraint value.

Owner:IBM CORP

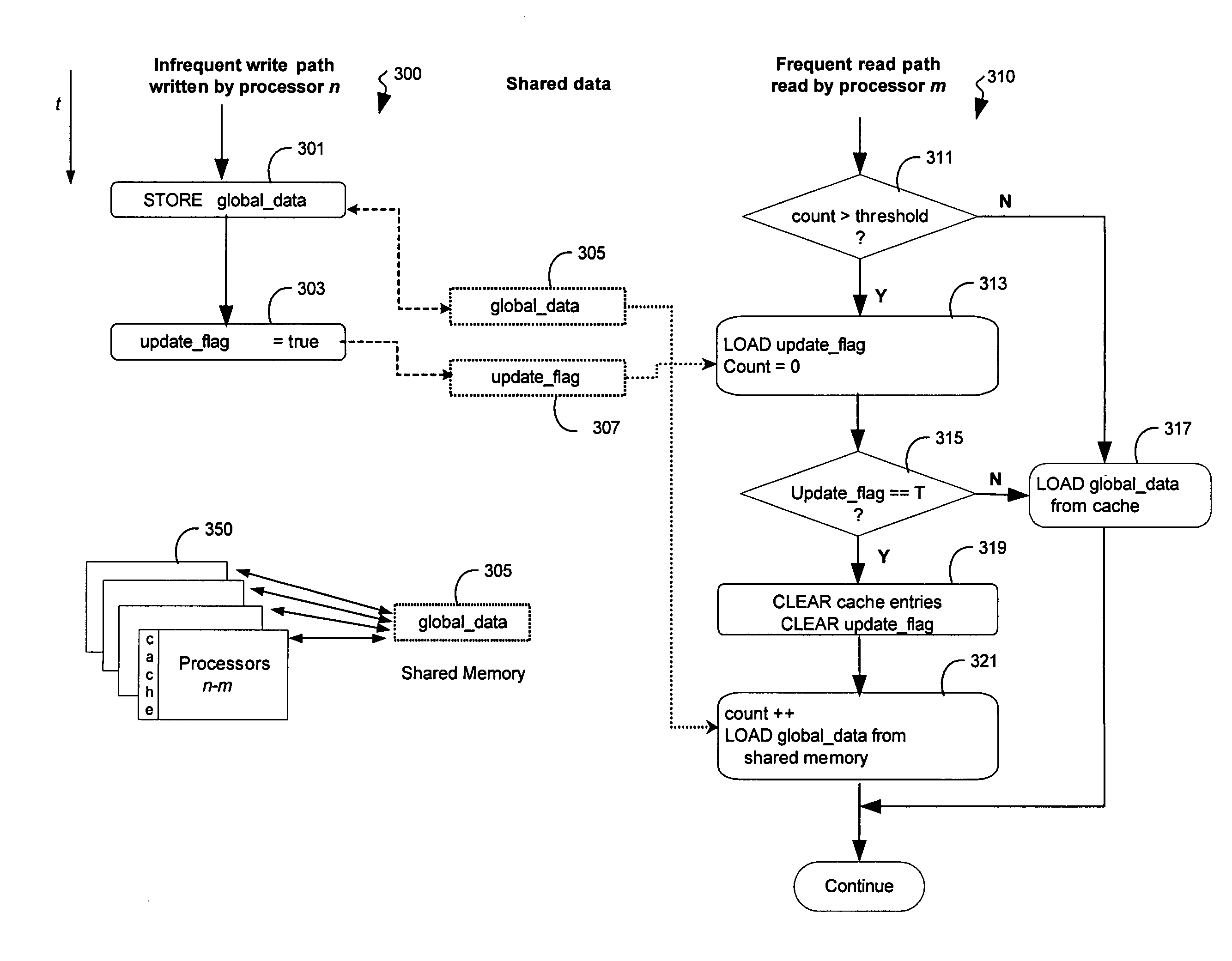

Software caching with bounded-error delayed update

In some embodiments, the invention involves a system and method relating to software caching with bounded-error delayed updates. Embodiments of the present invention describe a delayed-update software-controlled cache, which may be used to reduce memory access latencies and improve throughput for domain specific applications that are tolerant of errors caused by delayed updates of cached values. In at least one embodiment of the present invention, software caching may be implemented by using a compiler to automatically generate caching code in application programs that must access and / or update memory. Cache is accessed for a period of time, even if global data has been updated, to delay costly memory accesses. Other embodiments are described and claimed.

Owner:INTEL CORP
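
One way to picture a delayed-update cache is to let a cached value be served for a bounded number of reads before it is refreshed from global data. The read-count bound and the class below are assumptions chosen for the sketch; the abstract speaks only of "a period of time".

```python
class DelayedUpdateCache:
    def __init__(self, global_store, max_stale_reads):
        self.global_store = global_store      # dict standing in for memory
        self.max_stale_reads = max_stale_reads
        self.entries = {}                     # key -> [value, reads served]

    def read(self, key):
        entry = self.entries.get(key)
        if entry is None or entry[1] >= self.max_stale_reads:
            # Costly access to global data, done only occasionally.
            entry = [self.global_store[key], 0]
            self.entries[key] = entry
        entry[1] += 1
        return entry[0]  # may be stale, by a bounded number of reads
```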

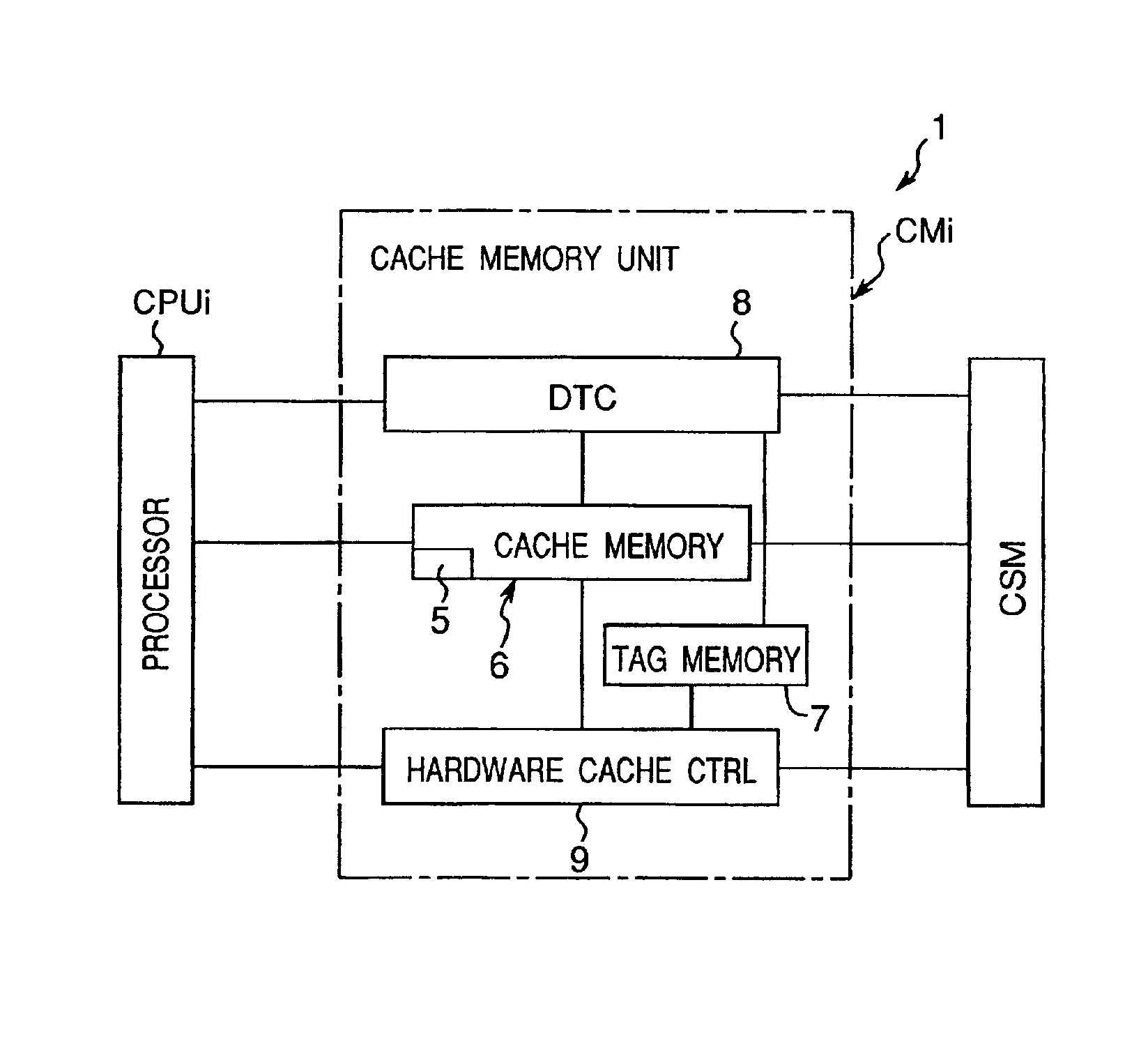

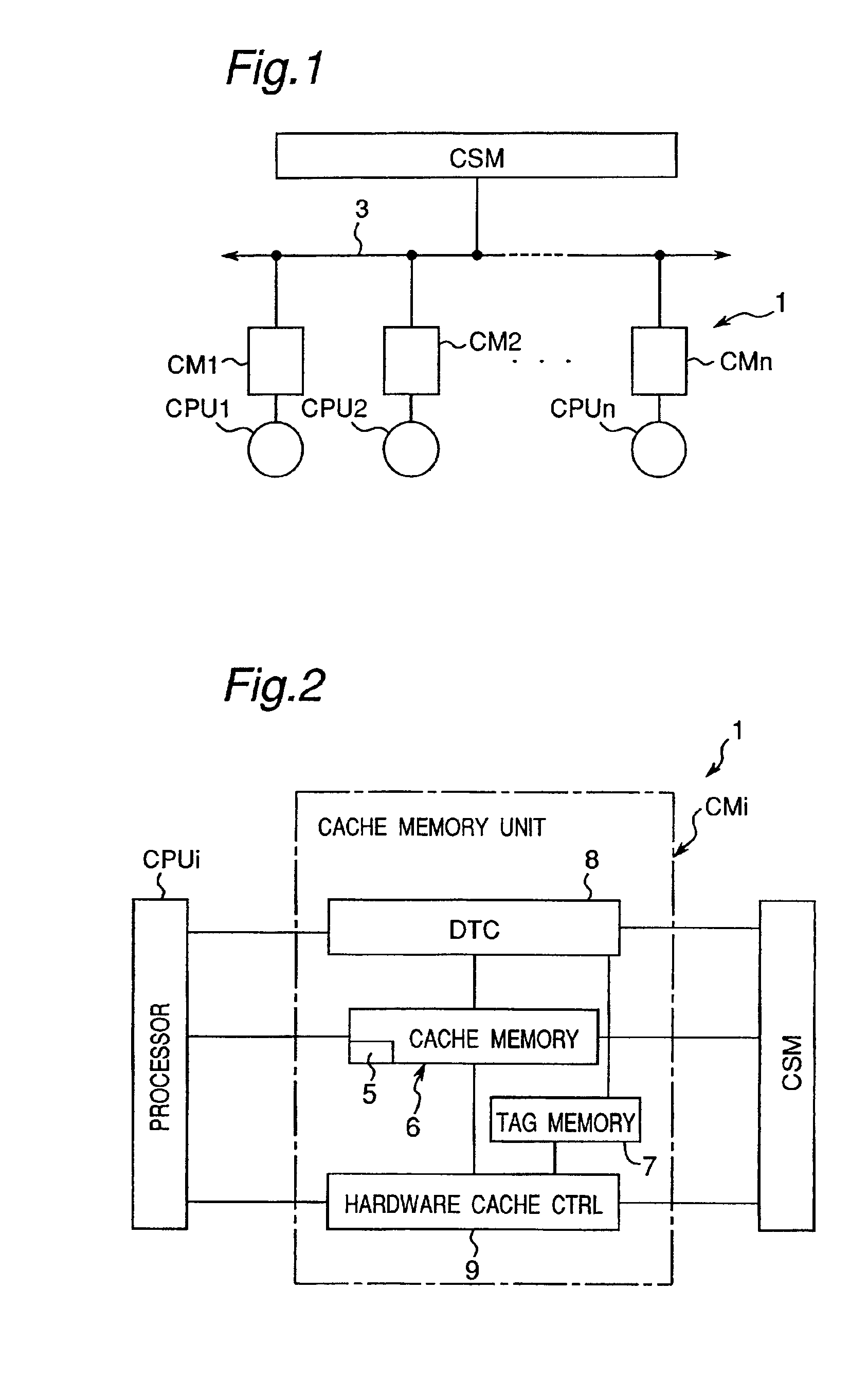

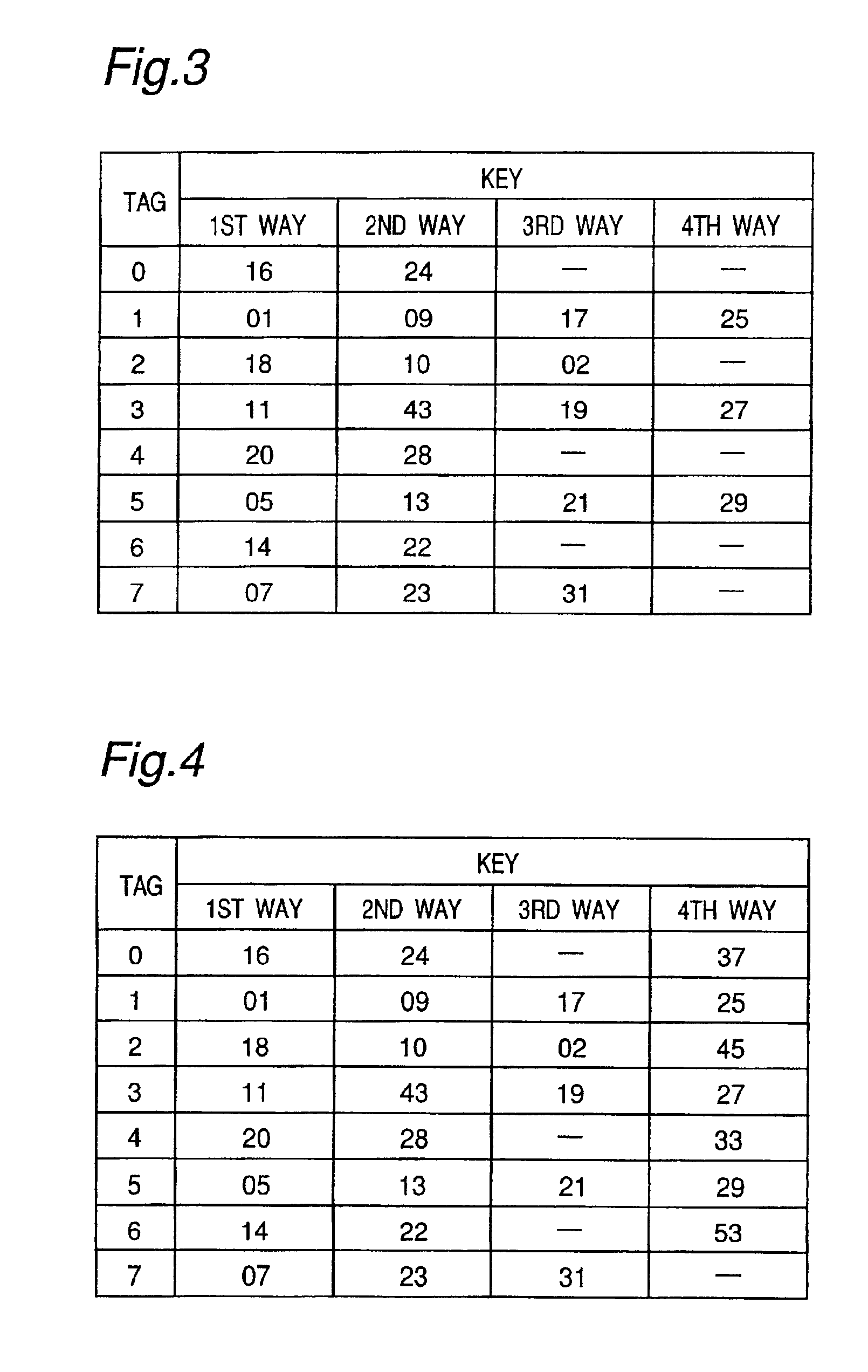

Cache memory system

Inactive | US6950902B2 | Memory architecture accessing/allocation | Program synchronisation | Software cache | Data transmission

A cache memory system having a small-capacity and high-speed access cache memory provided between a processor and a main memory, including a software cache controller for performing software control for controlling data transfer to the cache memory in accordance with a preliminarily programmed software and a hardware cache controller for performing hardware control for controlling data transfer to the cache memory by using a predetermined hardware such that the processor causes the software cache controller to perform the software control but causes the hardware cache controller to perform the hardware control when it becomes impossible to perform the software control.

Owner:SEMICON TECH ACADEMIC RES CENT

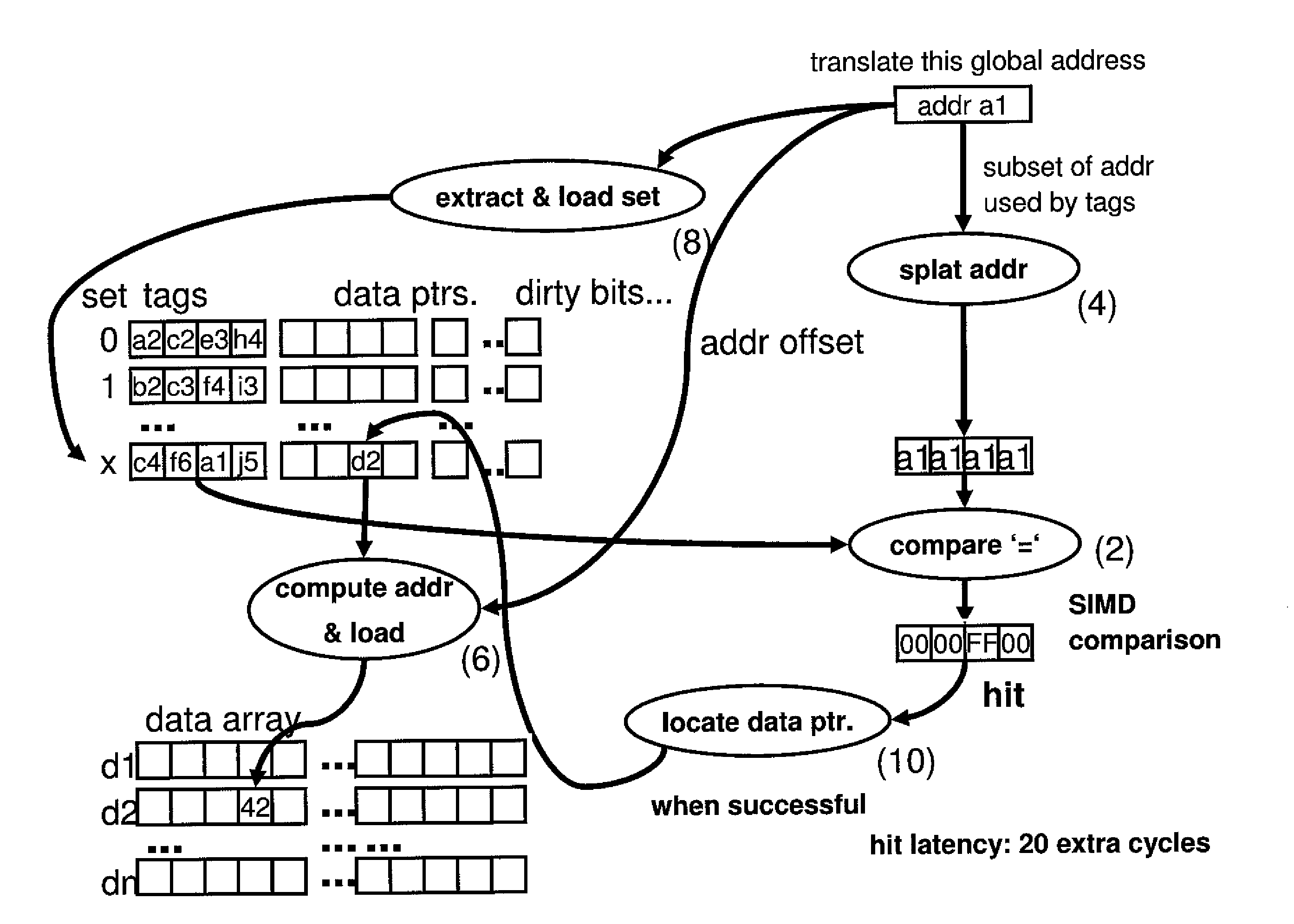

Optimized software cache lookup for SIMD architectures

Inactive | US8370575B2 | Improve performance | Big amount of data | Memory addressing/allocation/relocation | Micro-instruction address formation | Position dependent | Software cache

Process, cache memory, computer product and system for loading data associated with a requested address in a software cache. The process includes loading address tags associated with a set in a cache directory using a Single Instruction Multiple Data (SIMD) operation, determining a position of the requested address in the set using a SIMD comparison, and determining an actual data value associated with the position of the requested address in the set.

Owner:IBM CORP

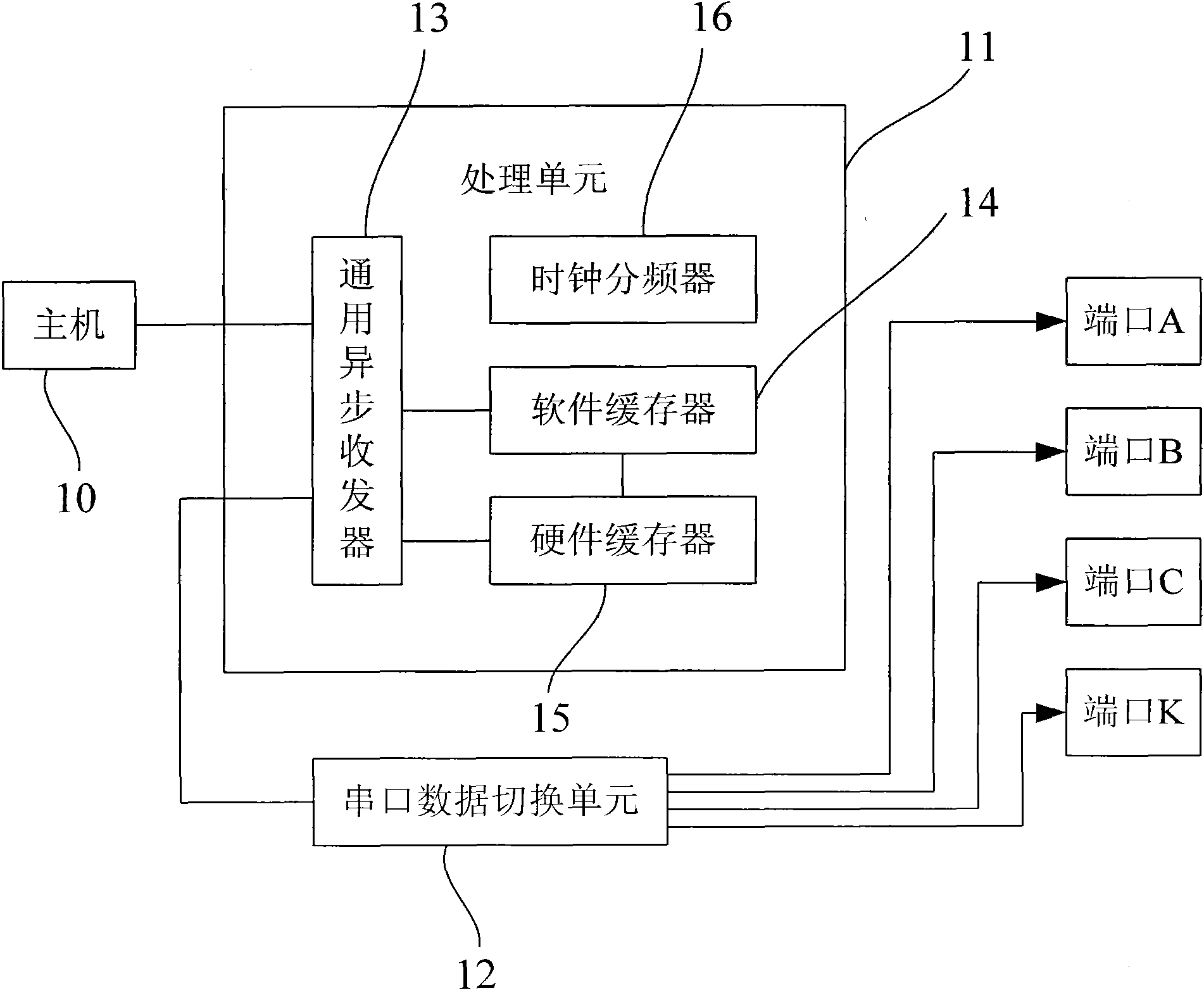

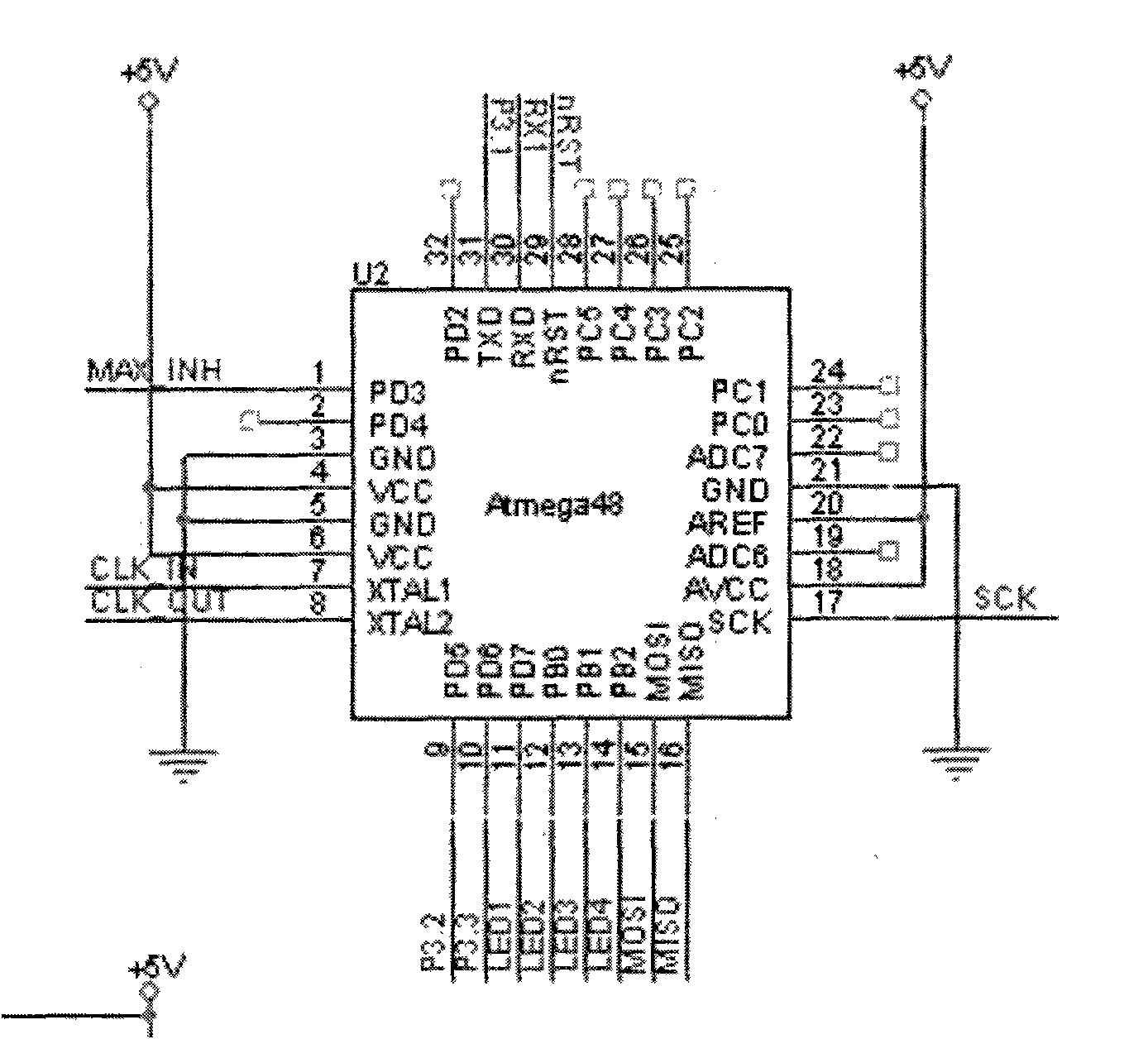

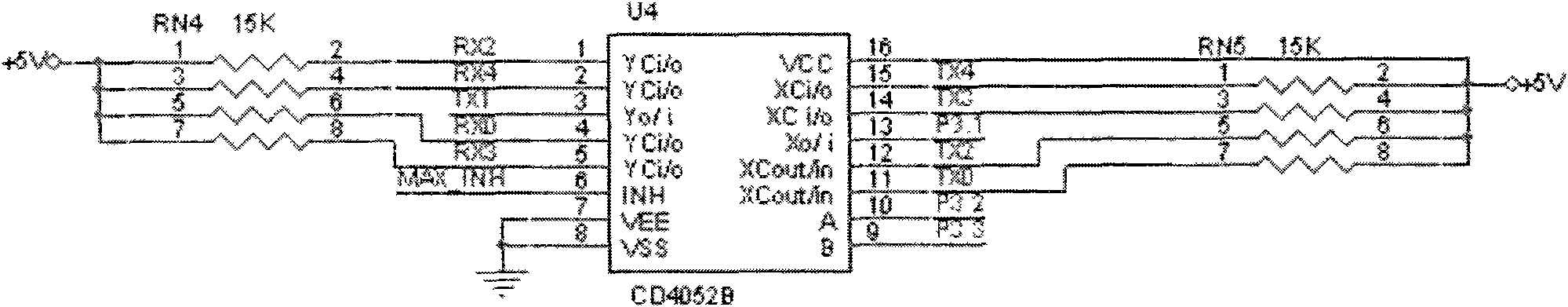

Serial hub and multi-serial high-speed communication method

Inactive | CN101894086A | Reduce bit error rate | Guaranteed full speed transmission | Electric digital data processing | Communication interface | Transceiver

The invention provides a serial hub and a multi-serial high-speed communication method. The serial hub comprises a processing unit and a serial data switching unit, wherein the processing unit is connected with a host and is used for processing data and command signals transmitted from the host; the serial data switching unit is connected with the processing unit and a plurality of serial ports and is used for switching communication links or setting communication interface parameters according to control signals of the processing unit to ensure that a host port is connected with the corresponding serial port; the processing unit further comprises a universal asynchronous transceiver, a software buffer and a hardware buffer, wherein the universal asynchronous transceiver is connected with the host and is used for realizing the communication with the host, the software buffer is connected with the universal asynchronous transceiver and is used for storing overflow data, and the hardware buffer is connected with the software buffer and is used for overcoming time delay caused by the hardware buffer. The serial hub has the advantages of high communication speed, low error rate and wide application range.

Owner:昆山三泰新电子科技有限公司

Compiler Implemented Software Cache Apparatus and Method in which Non-Aliased Explicitly Fetched Data are Excluded

Inactive | US20080229291A1 | Reduce the amount required | Reduce in quantity | Software engineering | Program control | Parallel computing | Software cache

A compiler implemented software cache apparatus and method in which non-aliased explicitly fetched data are excluded are provided. With the mechanisms of the illustrative embodiments, a compiler uses a forward data flow analysis to prove that there is no alias between the cached data and explicitly fetched data. Explicitly fetched data that has no alias in the cached data are excluded from the software cache. Explicitly fetched data that has aliases in the cached data are allowed to be stored in the software cache. In this way, there is no runtime overhead to maintain the correctness of the two copies of data. Moreover, the number of lines of the software cache that must be protected from eviction is decreased. This leads to a decrease in the amount of computation cycles required by the cache miss handler when evicting cache lines during cache miss handling.

Owner:INT BUSINESS MASCH CORP

Performing useful computations while waiting for a line in a system with a software implemented cache

Inactive | US7461205B2 | Program control using stored programs | Memory systems | Parallel computing | Software cache

Mechanisms for performing useful computations during a software cache reload operation are provided. With the illustrative embodiments, in order to perform software caching, a compiler takes original source code, and while compiling the source code, inserts explicit cache lookup instructions into appropriate portions of the source code where cacheable variables are referenced. In addition, the compiler inserts a cache miss handler routine that is used to branch execution of the code to a cache miss handler if the cache lookup instructions result in a cache miss. The cache miss handler, prior to performing a wait operation for waiting for the data to be retrieved from the backing store, branches execution to an independent subroutine identified by a compiler. The independent subroutine is executed while the data is being retrieved from the backing store such that useful work is performed.

Owner:INT BUSINESS MASCH CORP

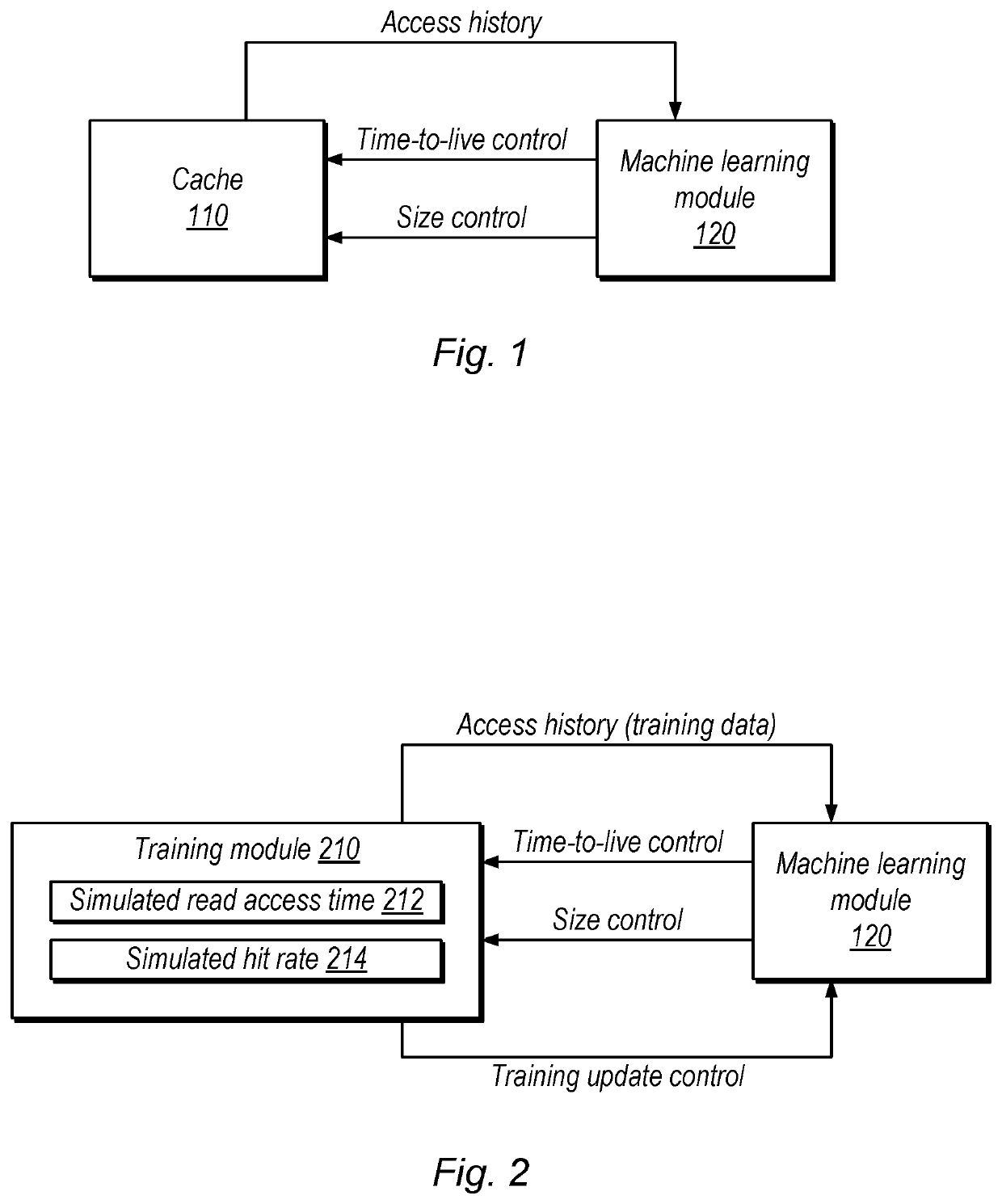

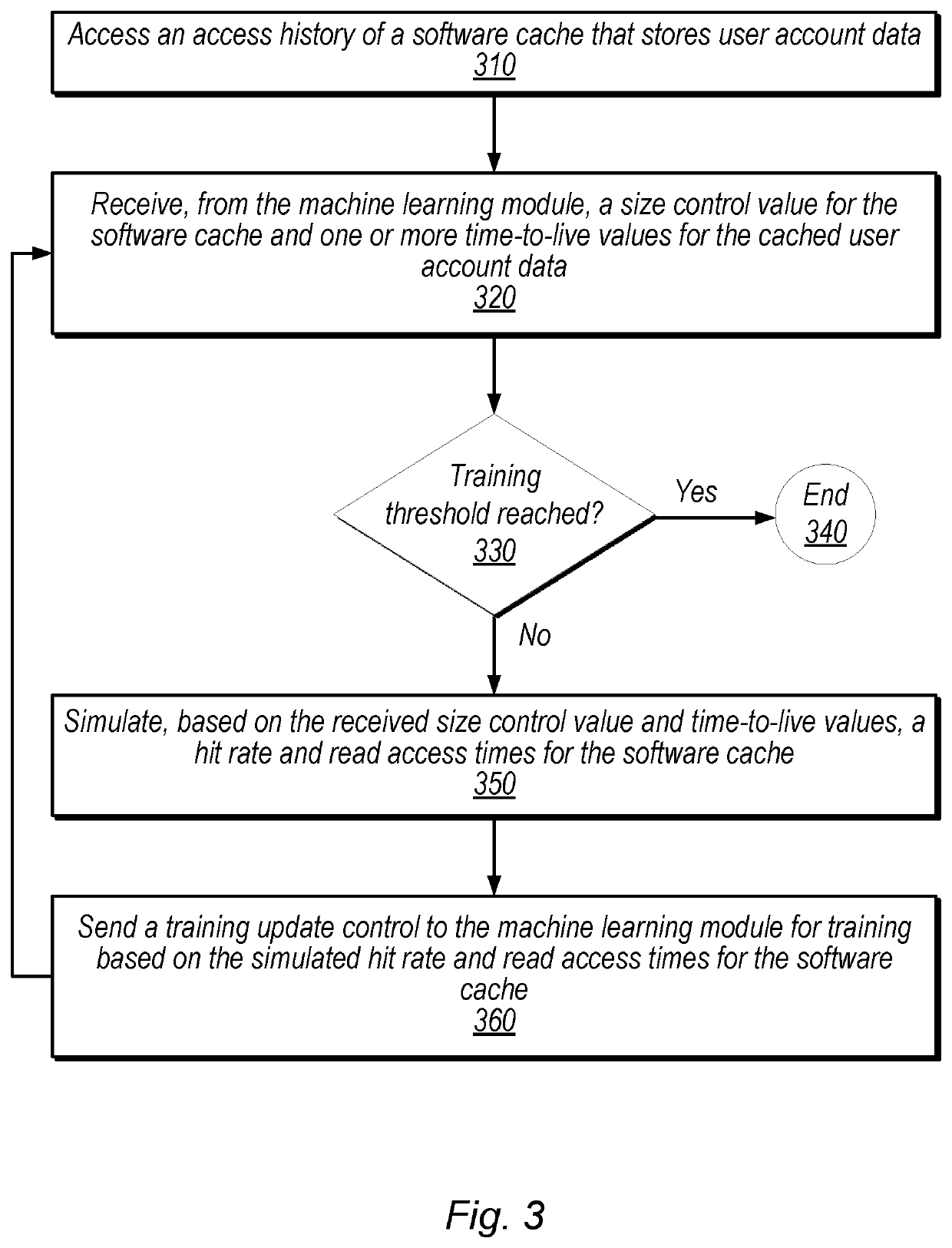

Controlling Cache Size and Priority Using Machine Learning Techniques

Active | US20200201773A1 | Memory architecture accessing/allocation | Machine learning | Cache access | Parallel computing

Techniques are disclosed relating to controlling cache size and priority of data stored in the cache using machine learning techniques. A software cache may store data for a plurality of different user accounts using one or more hardware storage elements. In some embodiments, a machine learning module generates, based on access patterns to the software cache, a control value that specifies a size of the cache and generates time-to-live values for entries in the cache. In some embodiments, the system evicts data based on the time-to-live values. The disclosed techniques may reduce cache access times and / or improve cache hit rate.

Owner:PAYPAL INC
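
The eviction side of this scheme can be sketched with a cache whose size limit and per-entry time-to-live values are supplied from outside. In the sketch the caller passes `max_entries` and `ttl` directly, standing in for the machine learning module, which is not modelled; the class name and the injected `clock` are likewise assumptions.

```python
from typing import Callable, Dict, Hashable, Tuple


class TtlCache:
    def __init__(self, max_entries: int, clock: Callable[[], float]):
        self.max_entries = max_entries  # assumed to be at least 1
        self.clock = clock              # injected so time can be simulated
        self.entries: Dict[Hashable, Tuple[object, float]] = {}

    def put(self, key, value, ttl):
        self._evict_expired()
        if key not in self.entries and len(self.entries) >= self.max_entries:
            # Still full: drop the entry that would expire soonest.
            victim = min(self.entries, key=lambda k: self.entries[k][1])
            del self.entries[victim]
        self.entries[key] = (value, self.clock() + ttl)

    def get(self, key):
        item = self.entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[key]       # expired: evict on access
            return None
        return value

    def _evict_expired(self):
        now = self.clock()
        expired = [k for k, (_, t) in self.entries.items() if now >= t]
        for k in expired:
            del self.entries[k]
```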

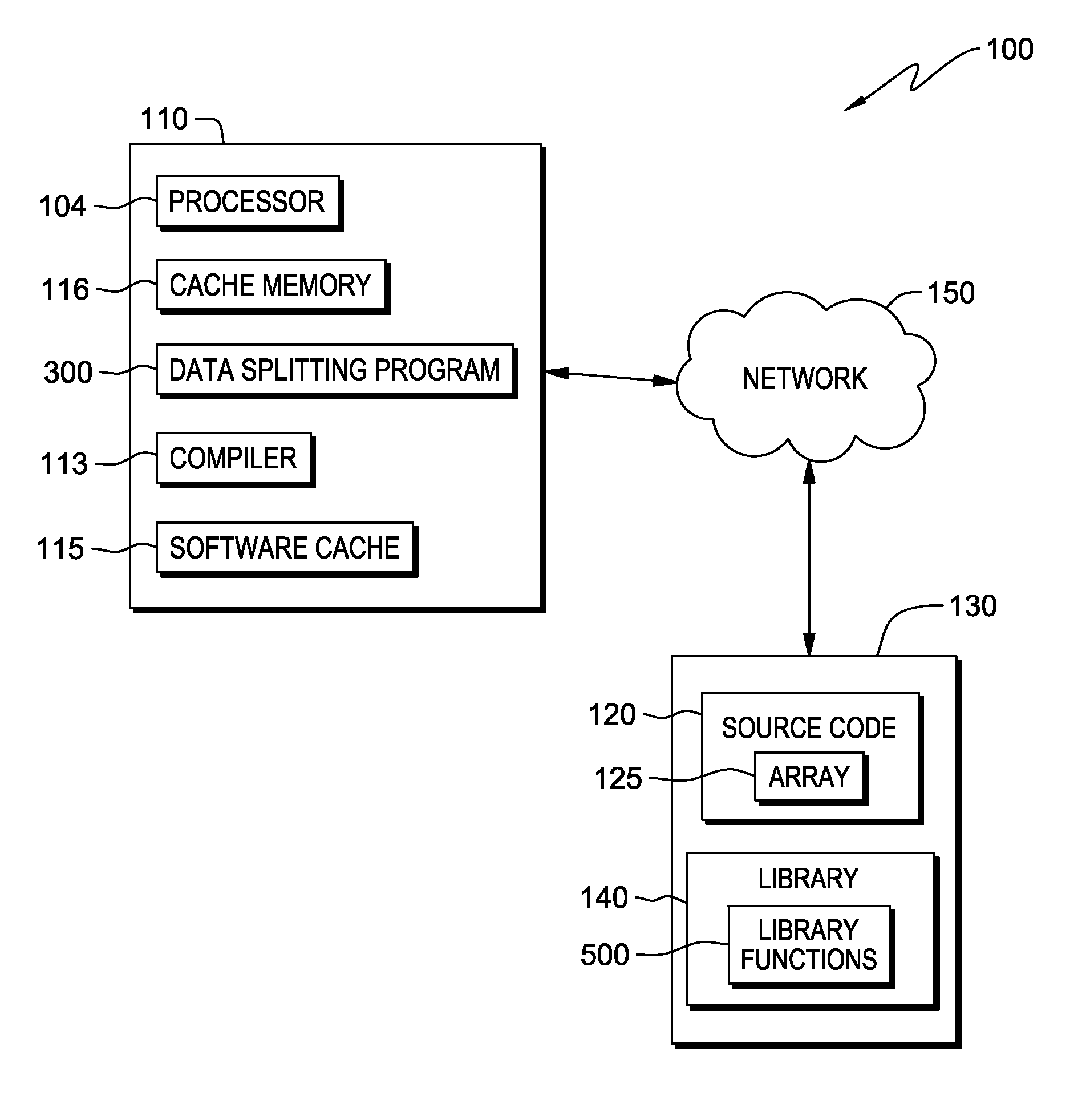

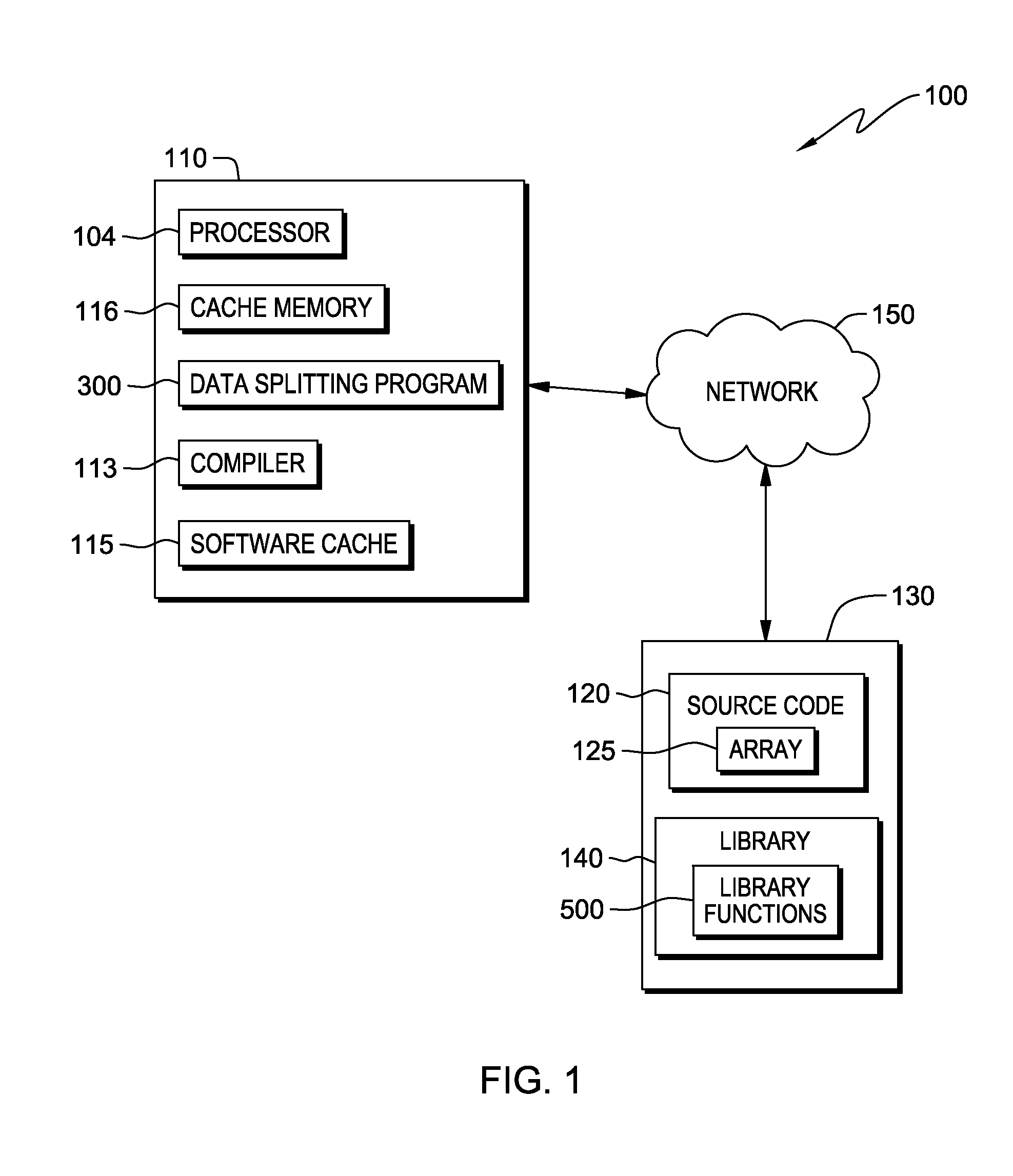

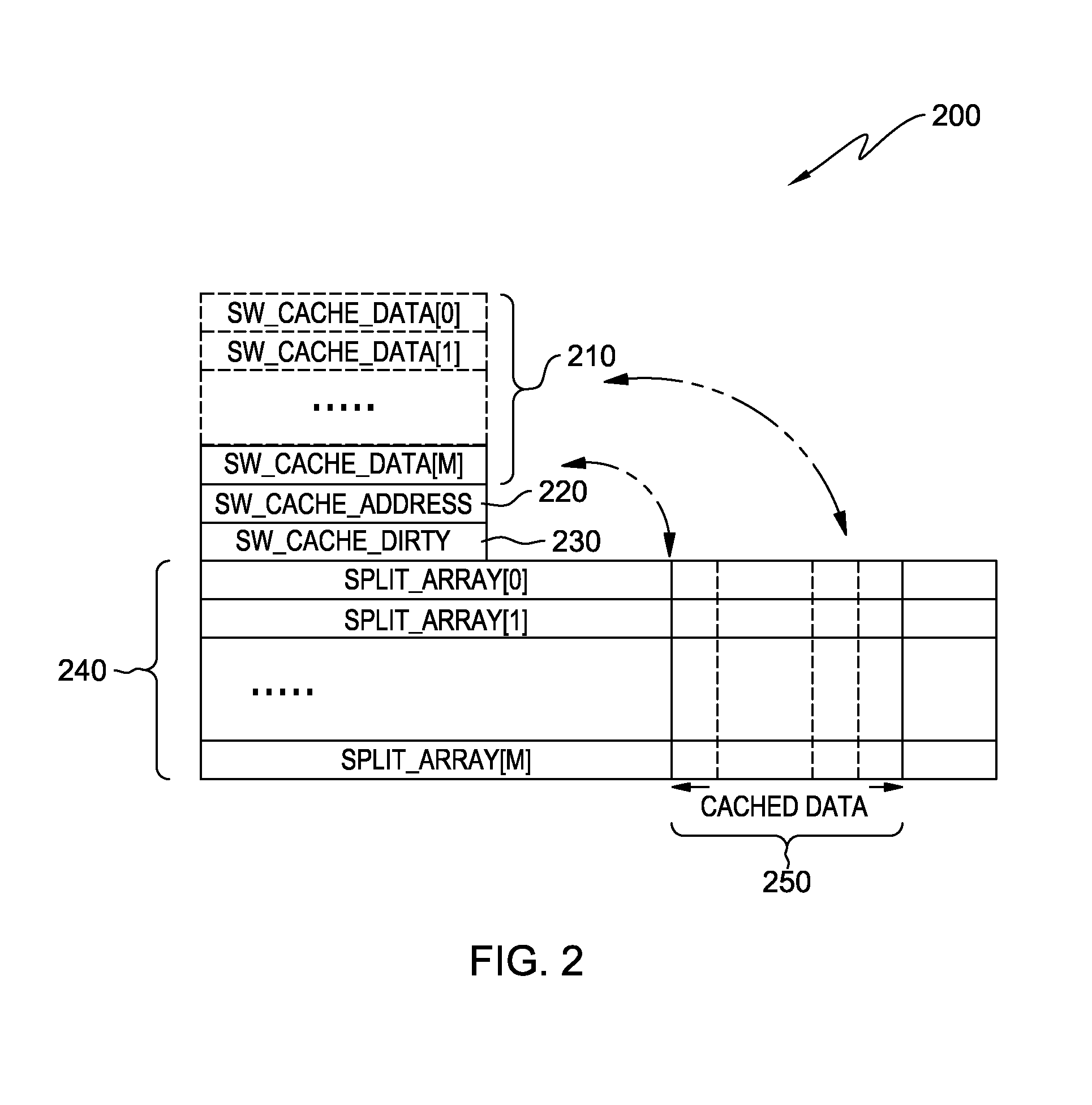

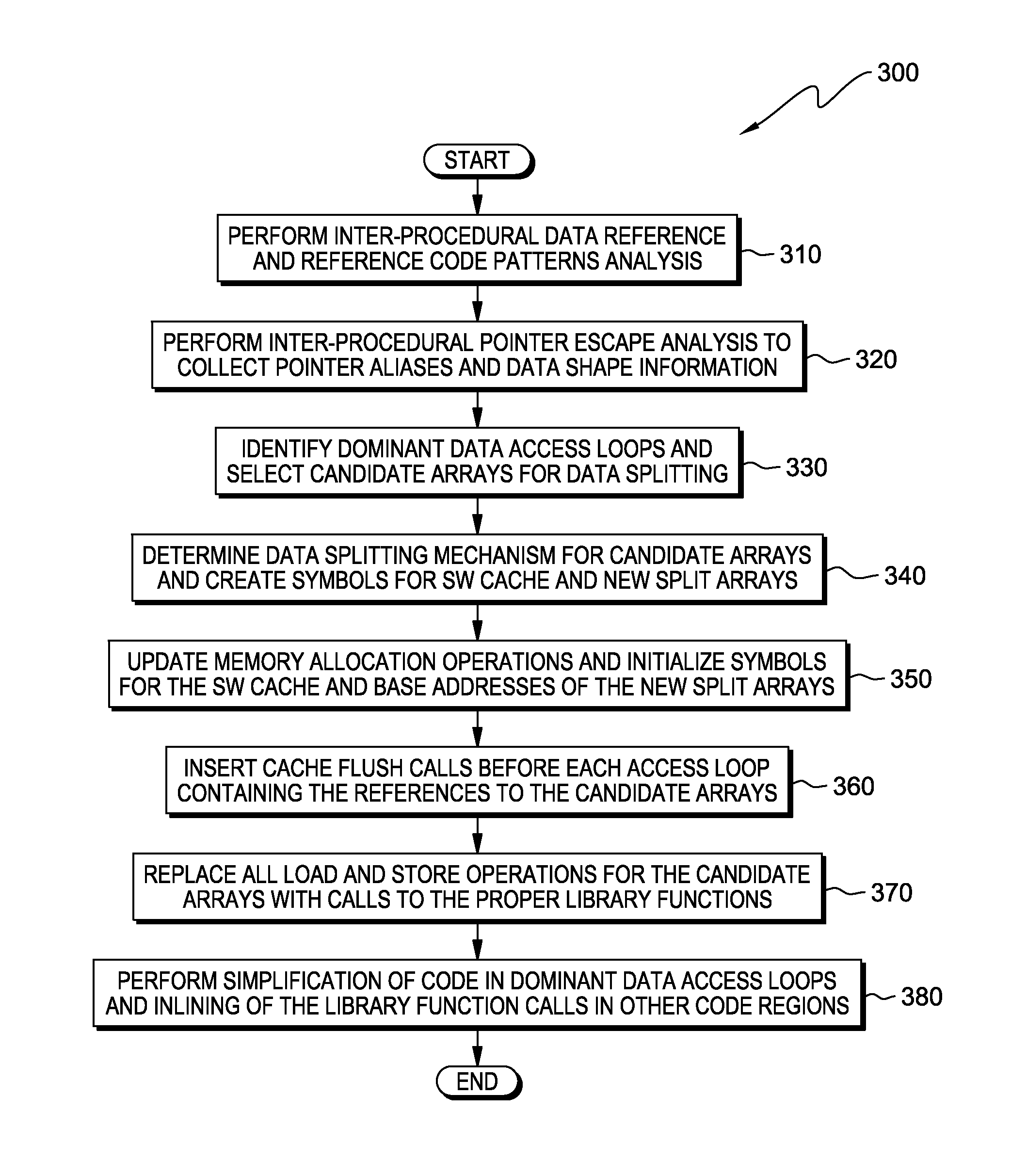

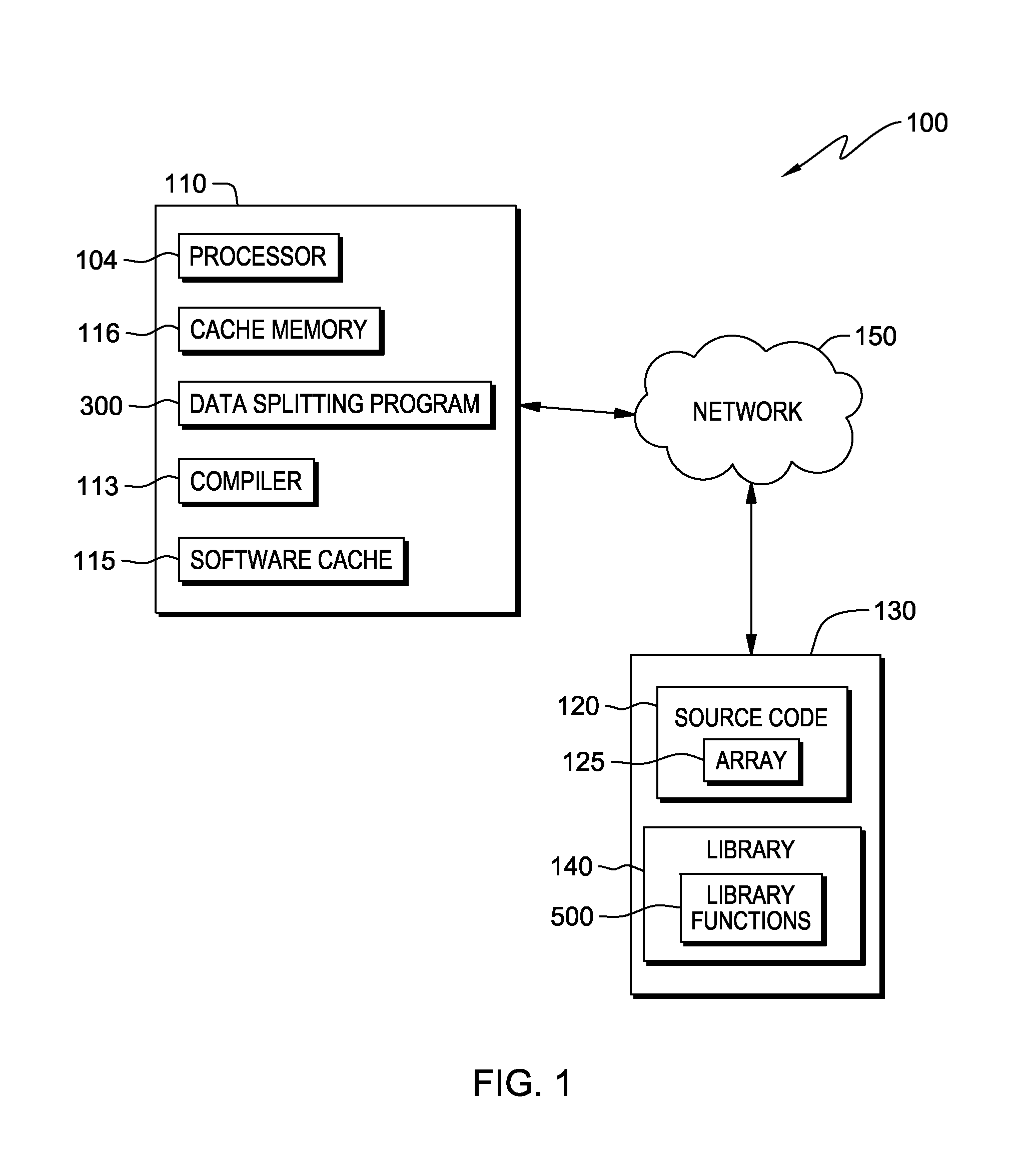

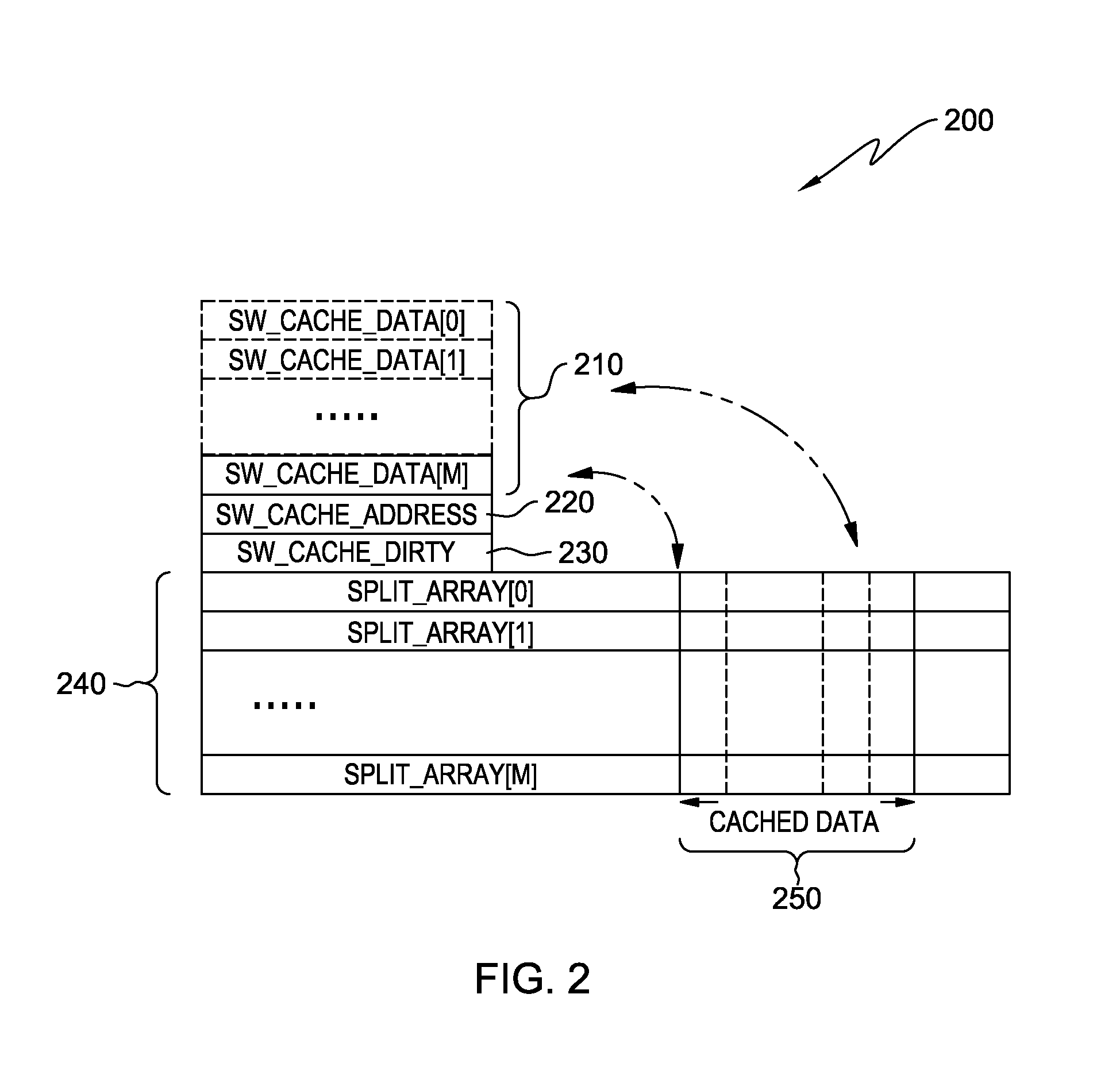

Optimizing memory bandwidth consumption using data splitting with software caching

Inactive | US20150067268A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Datum reference | Escape analysis

A computer processor collects information for a dominant data access loop and reference code patterns based on data reference pattern analysis, and for pointer aliasing and data shape based on pointer escape analysis. The computer processor selects a candidate array for data splitting wherein the candidate array is referenced by a dominant data access loop. The computer processor determines a data splitting mode by which to split the data of the candidate array, based on the reference code patterns, the pointer aliasing, and the data shape information, and splits the data into two or more split arrays. The computer processor creates a software cache that includes a portion of the data of the two or more split arrays in a transposed format, and maintains the portion of the transposed data within the software cache and consults the software cache during an access of the split arrays.

Owner:GLOBALFOUNDRIES INC

Efficient memory management in software caches

Owner:INT BUSINESS MASCH CORP

Optimizing memory bandwidth consumption using data splitting with software caching

Inactive | US9104577B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Circular reference | Datum reference

A computer processor collects information for a dominant data access loop and reference code patterns based on data reference pattern analysis, and for pointer aliasing and data shape based on pointer escape analysis. The computer processor selects a candidate array for data splitting wherein the candidate array is referenced by a dominant data access loop. The computer processor determines a data splitting mode by which to split the data of the candidate array, based on the reference code patterns, the pointer aliasing, and the data shape information, and splits the data into two or more split arrays. The computer processor creates a software cache that includes a portion of the data of the two or more split arrays in a transposed format, and maintains the portion of the transposed data within the software cache and consults the software cache during an access of the split arrays.

Owner:GLOBALFOUNDRIES INC

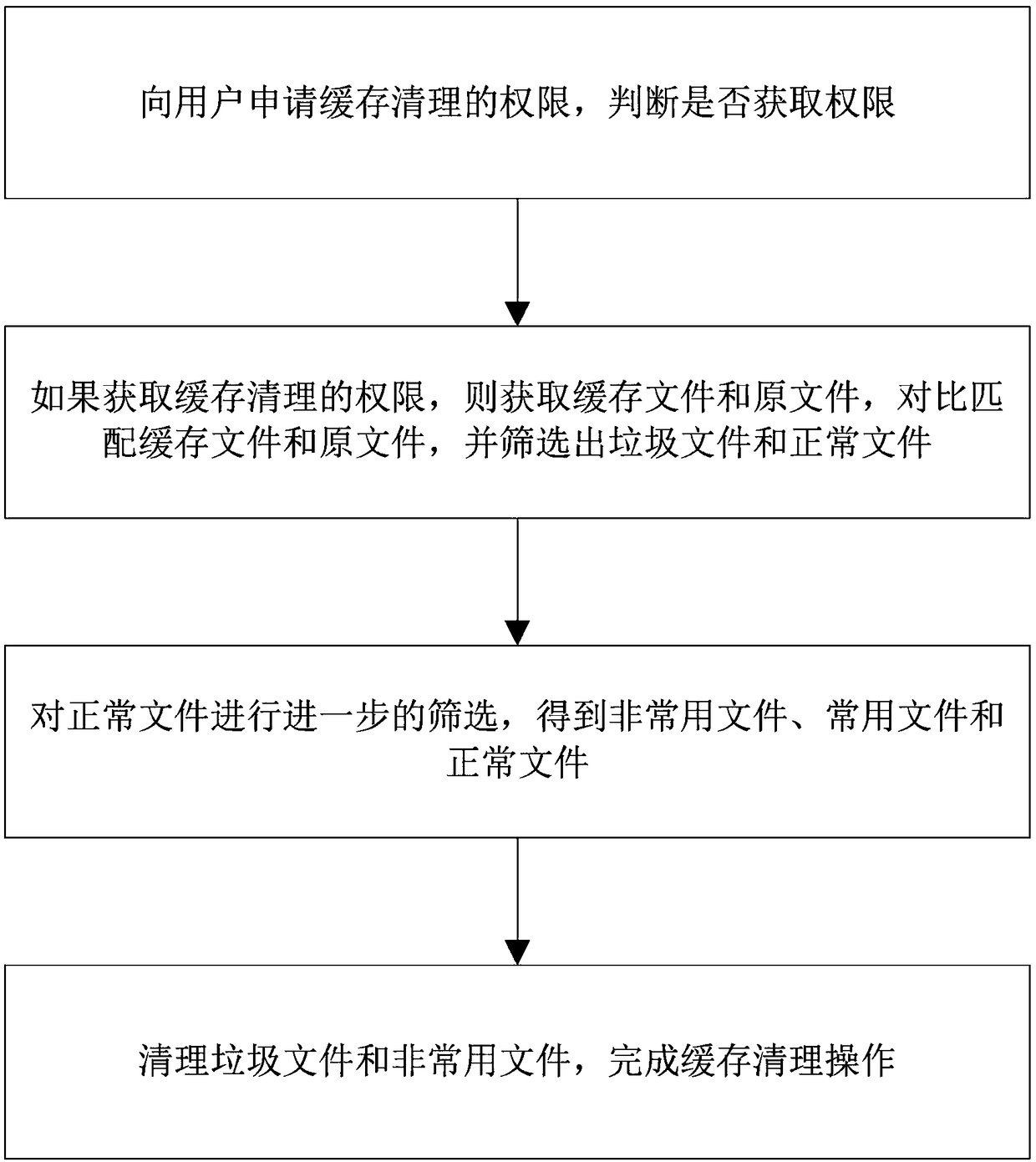

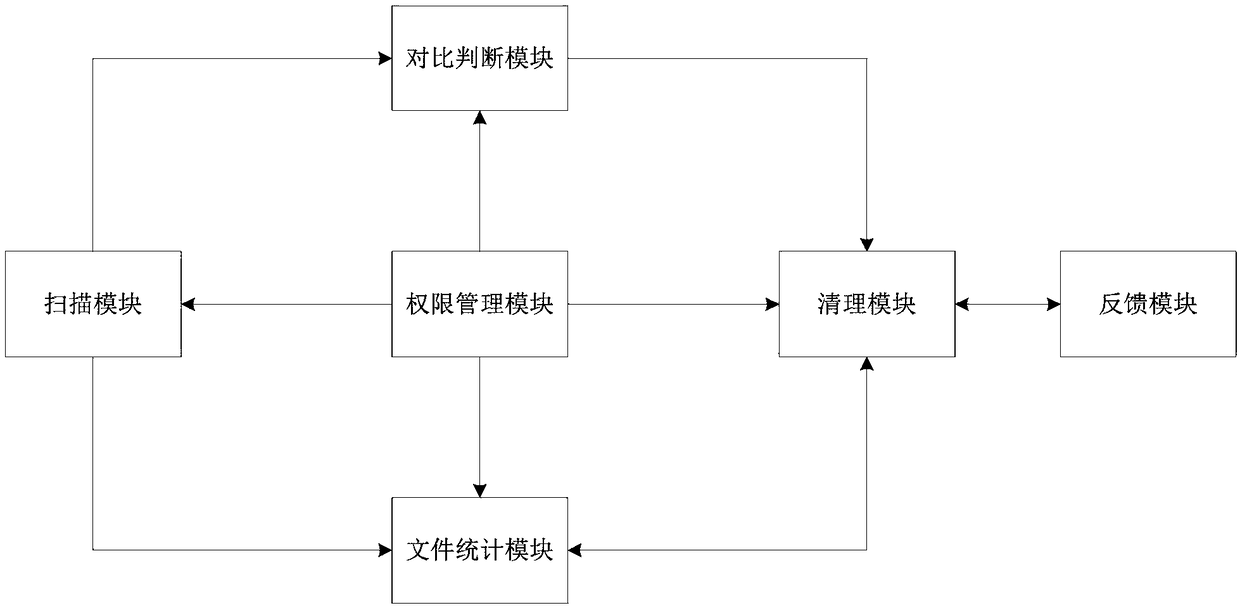

A method and system for cleaning software cache

Inactive | CN109408414A | Intelligent cleaning method | Void cleaning | Memory systems | Terminal equipment | Rights management

The invention proposes a method and system for cleaning software cache. A rights management module first asks the user for permission to clean the cache. If the user grants permission, a scanning module scans the storage space of the terminal device and locates software cache files through the software cache directory. A comparison and judgment module compares each cache file with its original file; if the corresponding original file does not exist, the cache file is marked as junk. Unmarked cache files are normal files, and infrequently used software is marked by counting the usage of those normal files. A cleaning module then cleans the junk files and the infrequently used software, so that cache files are cleaned more flexibly and intelligently, and the accuracy and efficiency of file cleaning are improved.

Owner:湖北华联博远科技有限公司
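
The marking step can be sketched as a pure function over file paths. The function name, the mapping from cache file to original file, and the boolean permission flag are assumptions for illustration; no file system is touched and the usage-counting step is left out.

```python
from typing import Dict, List, Set


def mark_junk(cache_to_original: Dict[str, str],
              existing_files: Set[str],
              permission_granted: bool) -> List[str]:
    """Return cache files whose original file no longer exists.

    Nothing is marked unless the user has granted cleaning permission.
    """
    if not permission_granted:
        return []
    return sorted(
        cache_path
        for cache_path, original in cache_to_original.items()
        if original not in existing_files
    )
```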

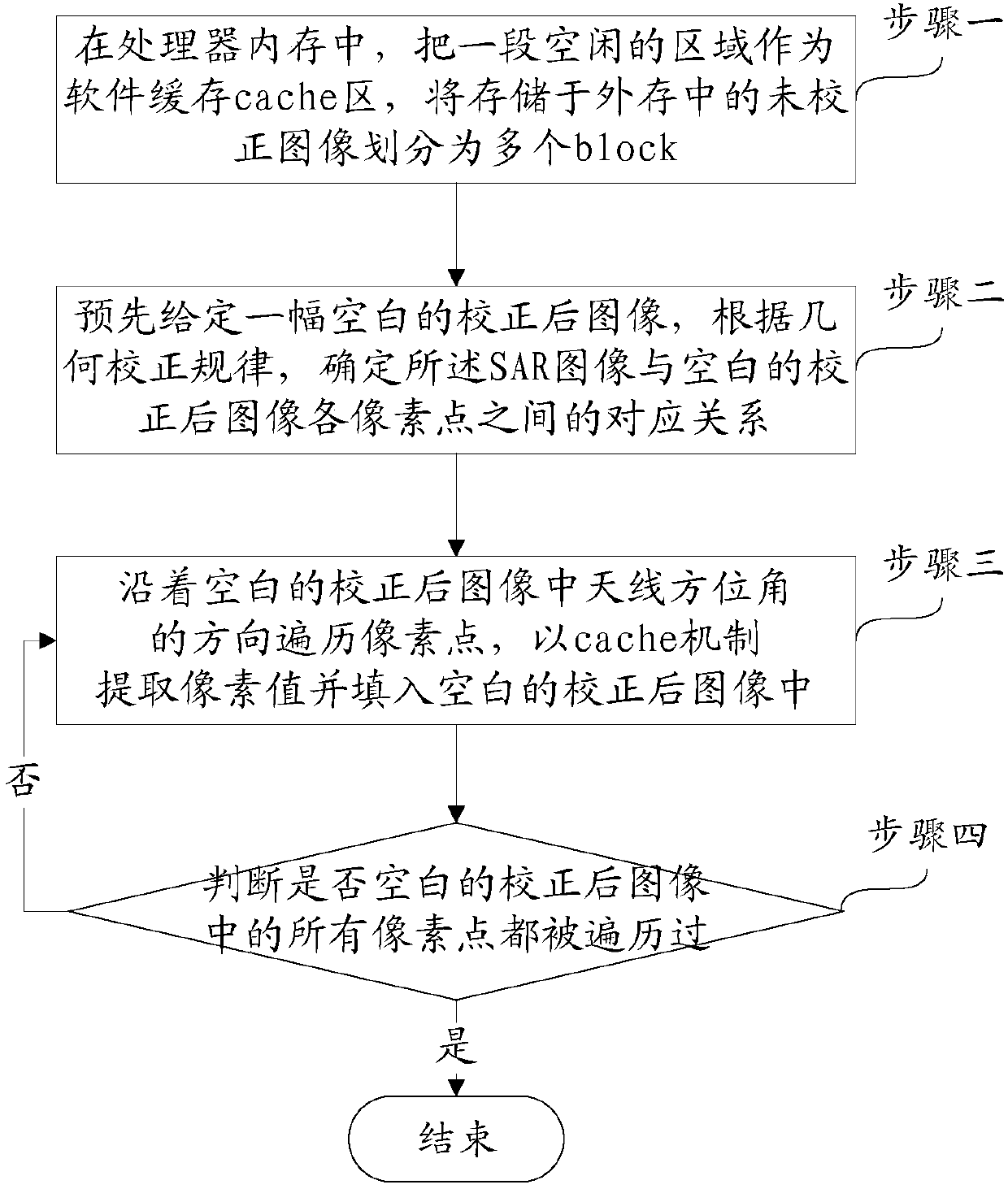

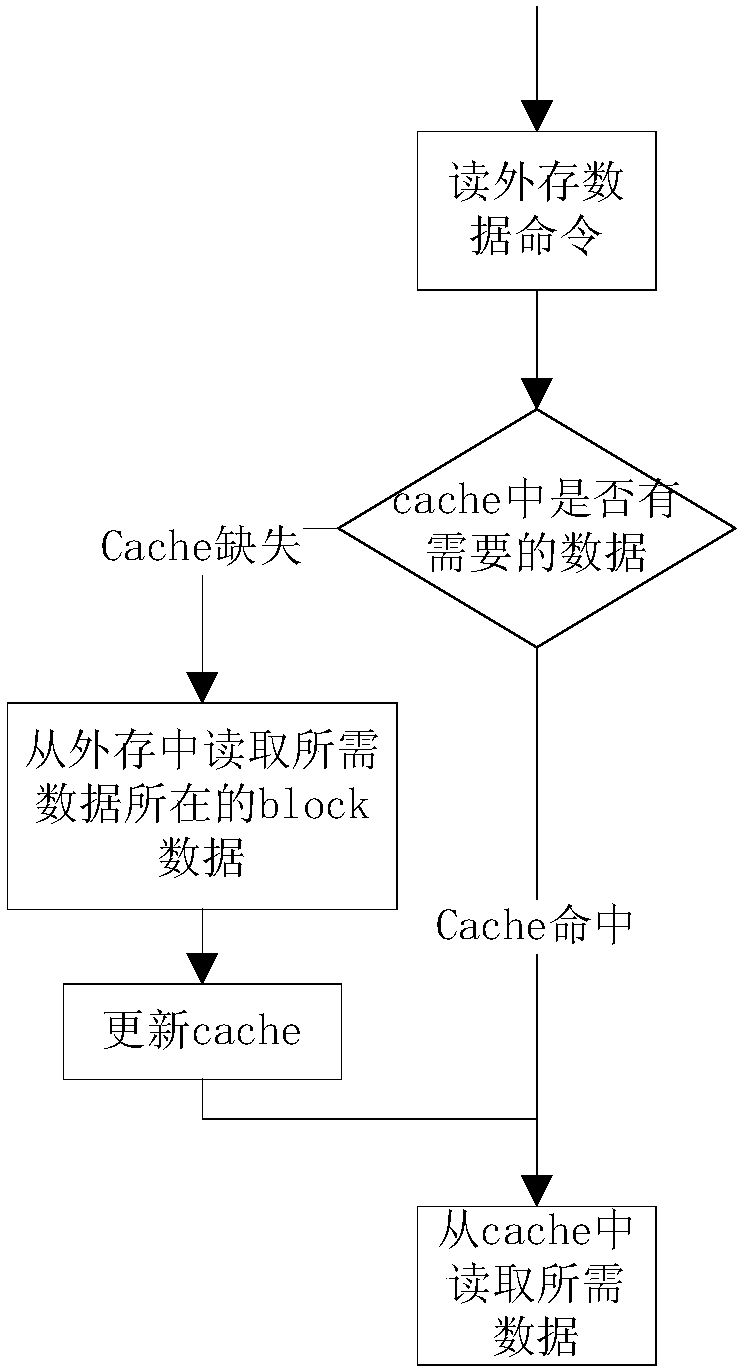

Method for applying software cache technology to geometric correction of SAR images

Active | CN108038830A | Improve interaction efficiency | Image enhancement | Image analysis | External storage | Software cache

The invention discloses a method for applying a software cache technology to geometric correction of SAR images. The method comprises the following steps: in processor memory, taking an idle area as a software cache area and storing uncorrected SAR images in a plurality of blocks, wherein the addresses of the data stored in each block are continuous; determining the correspondence between the uncorrected SAR images and each pixel of pre-given blank corrected images according to a geometric correction rule; and traversing the pixel points on the blank corrected images along an inclined line in the antenna azimuth angle direction, extracting pixel values via the cache mechanism and filling them into the blank corrected images until all pixel points on the blank corrected images have been traversed. The method breaks through the bottleneck caused by the low efficiency of processor access to external memory and improves data interaction efficiency.

Owner:北京理工雷科电子信息技术有限公司
