
44 results for "Visual modality" patented technology


Visual modality: describes a visual learner, who learns by seeing and by watching demonstrations; likes visual stimuli such as pictures, slides, graphs, and demonstrations; conjures up the image of a form by seeing it in the “mind’s eye”; and often has a vivid imagination.

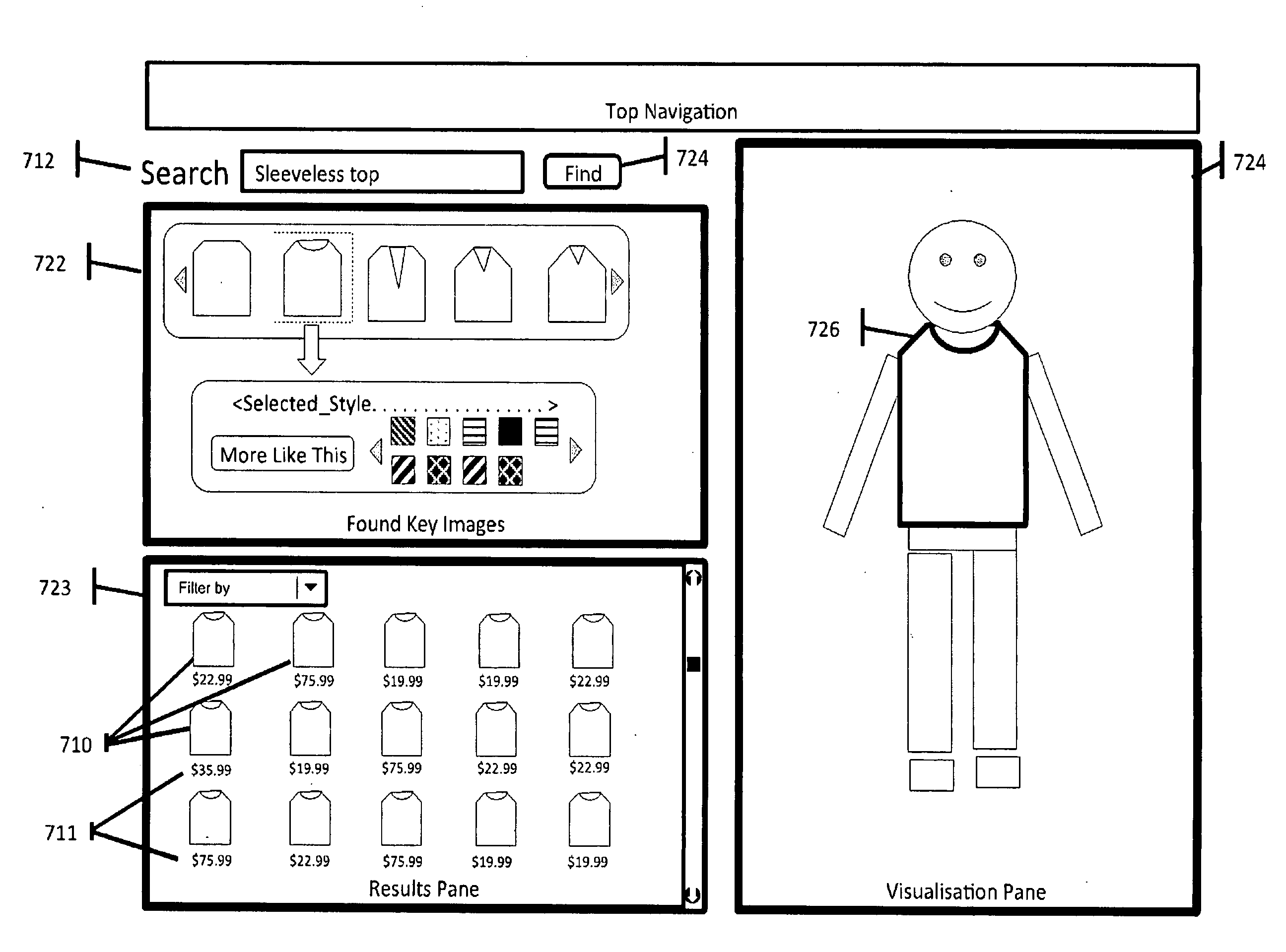

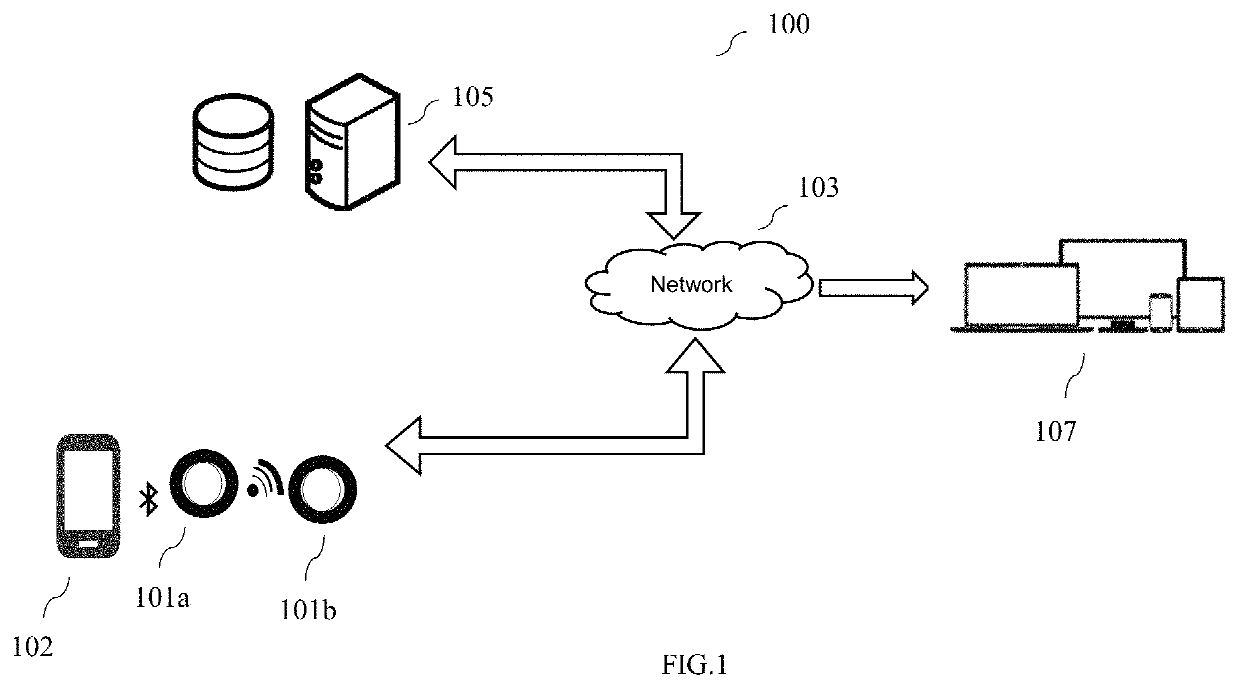

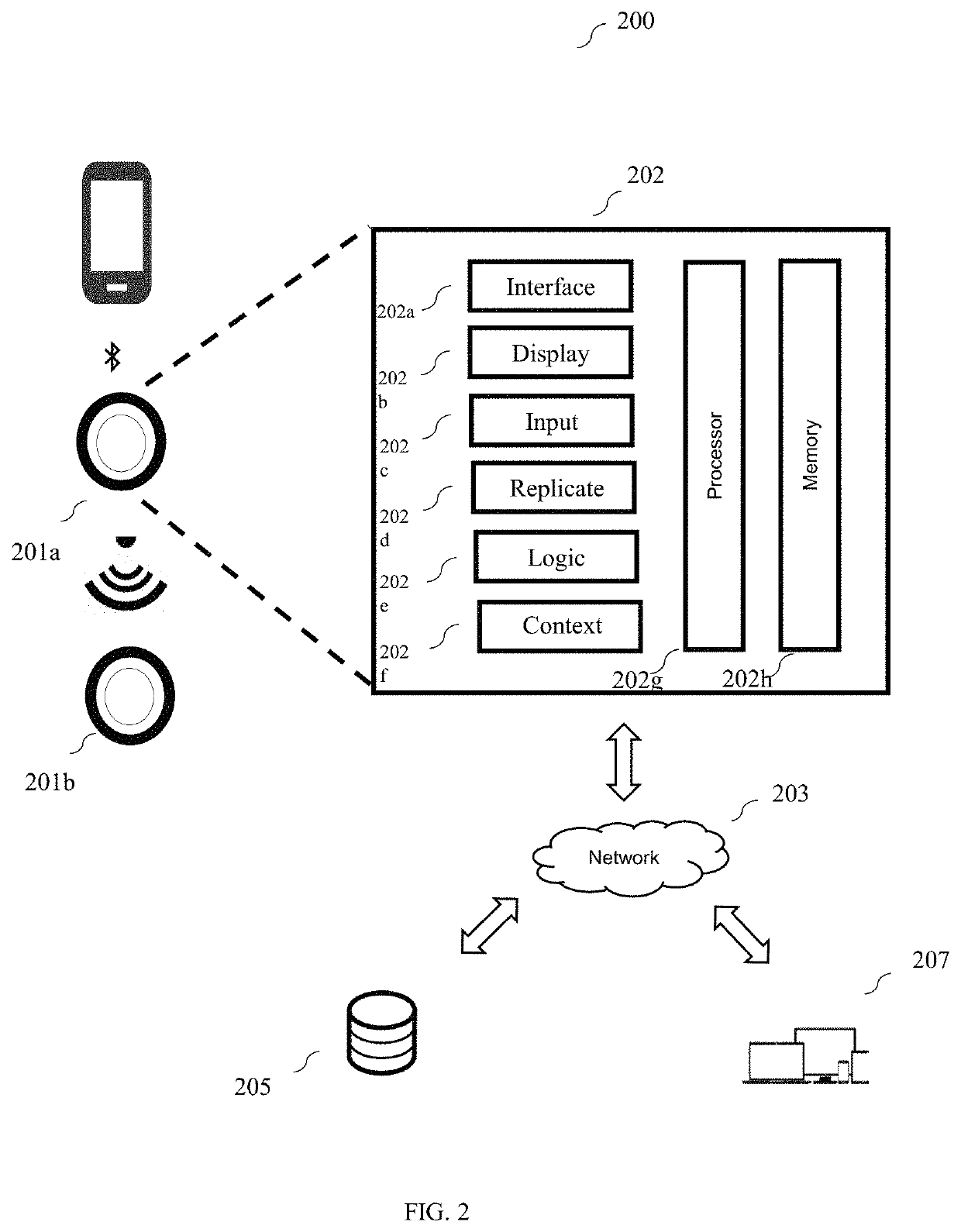

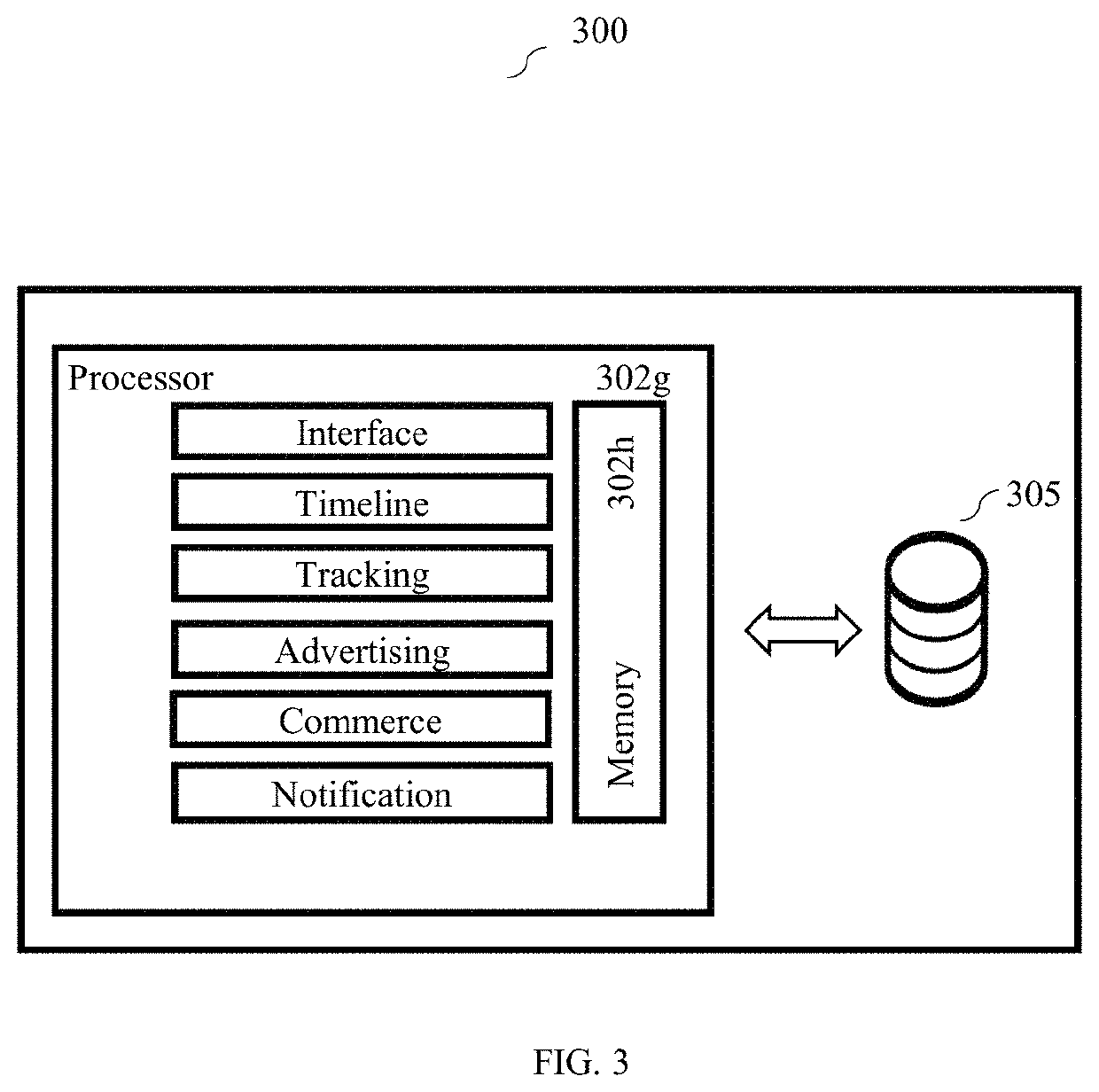

Methods and systems for facilitating selecting and/or purchasing of items

Methods and systems for facilitating the selection and/or purchase of items are provided. The items to be purchased may be clothing items. A visualization pane comprising an avatar may be used to facilitate item selection. The avatar may represent a person, such as the user, and clothing items may be displayed on the avatar to preview how they would look on the user. Searching for purchasable items may be done textually, visually using key images, or by a combination of both. Key images selected from a dictionary of key images may be used to represent search criteria. Coupon offers, redeemable instantly or later at a store location, may be presented to the user.

Owner:FARIBAULT CLAUDE +4

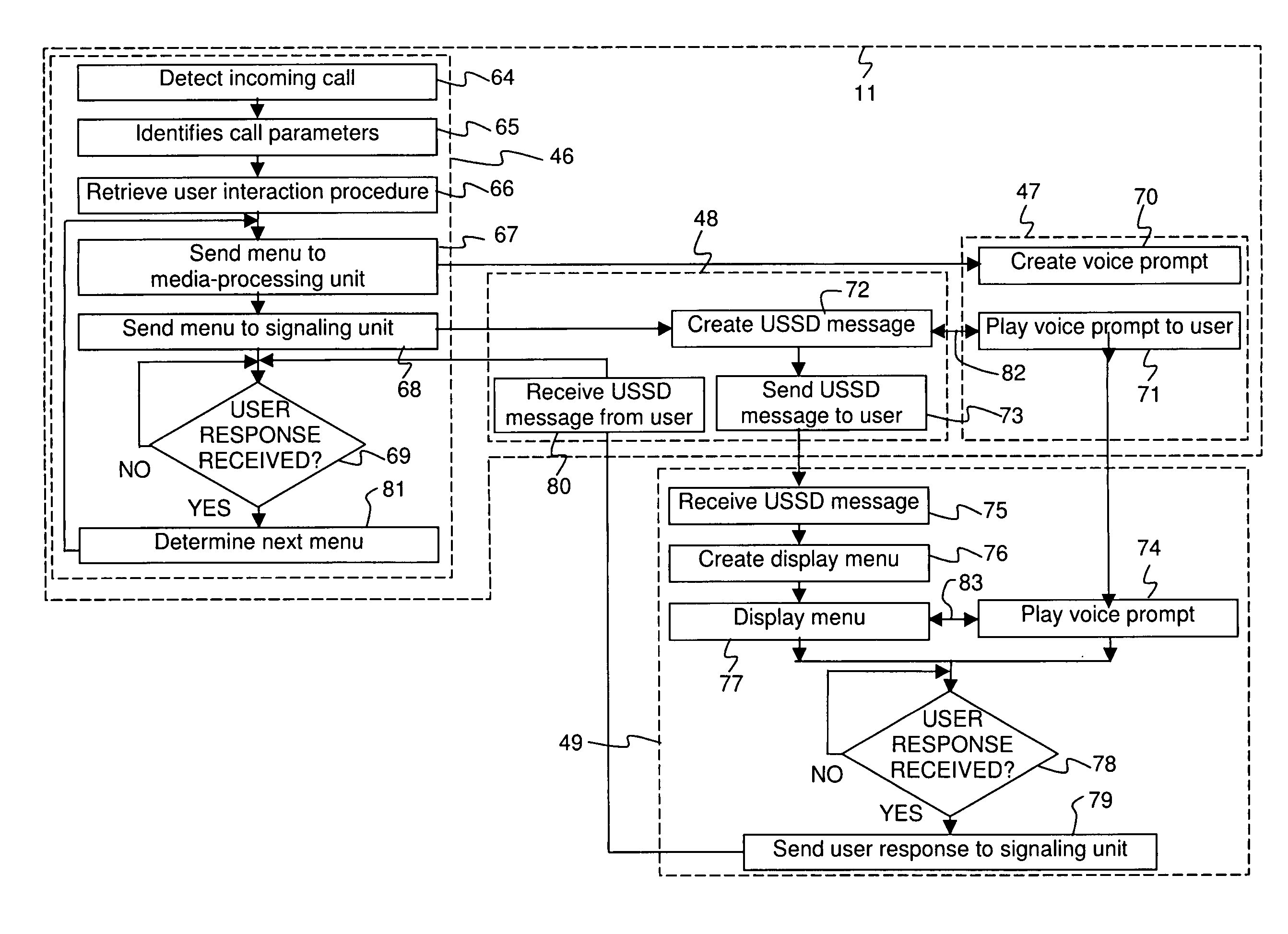

Enhanced visual IVR capabilities

Inactive · US20070135101A1 · Special service for subscribers · Radio/inductive link selection arrangements · Data connection · Computer science

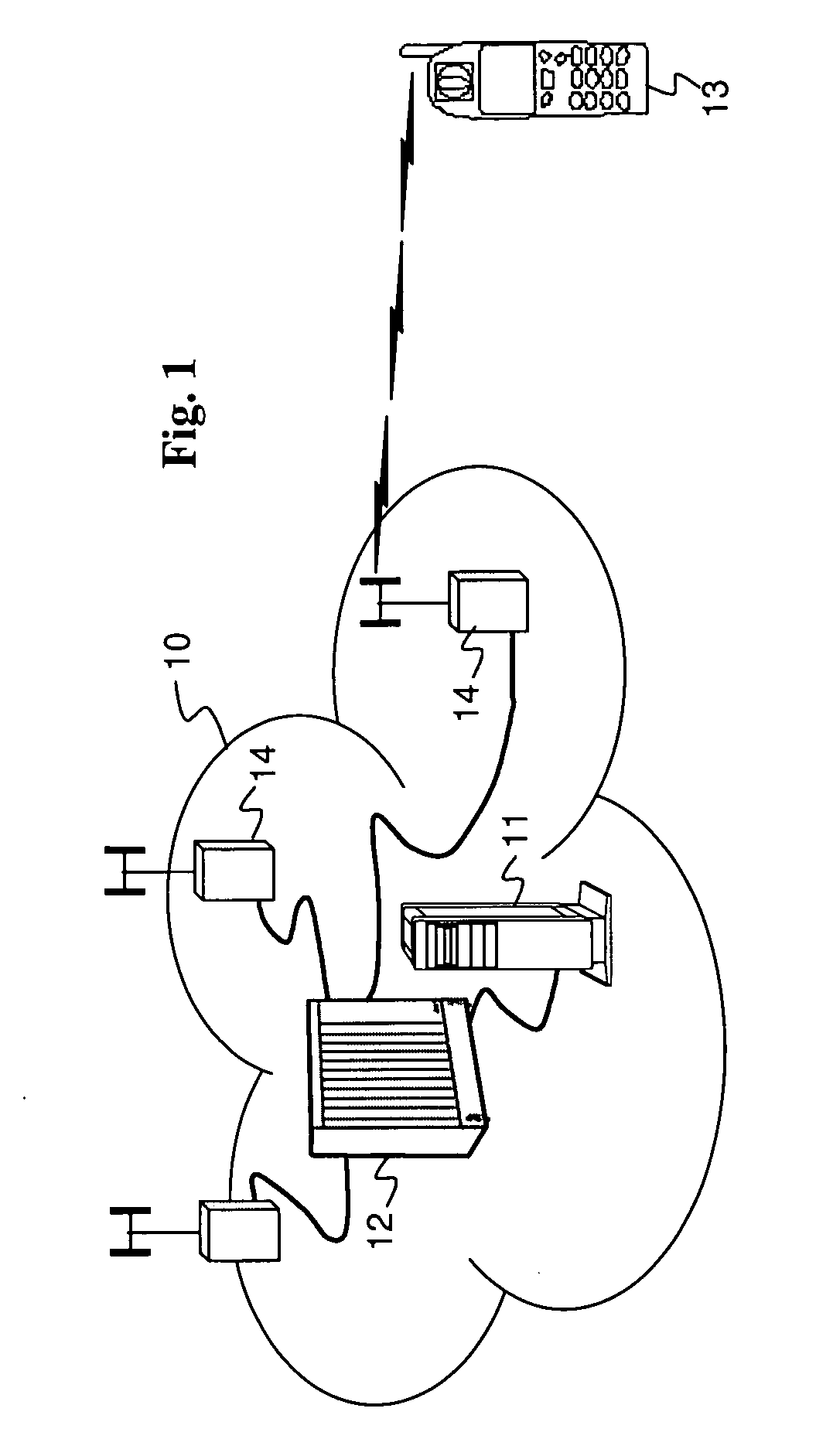

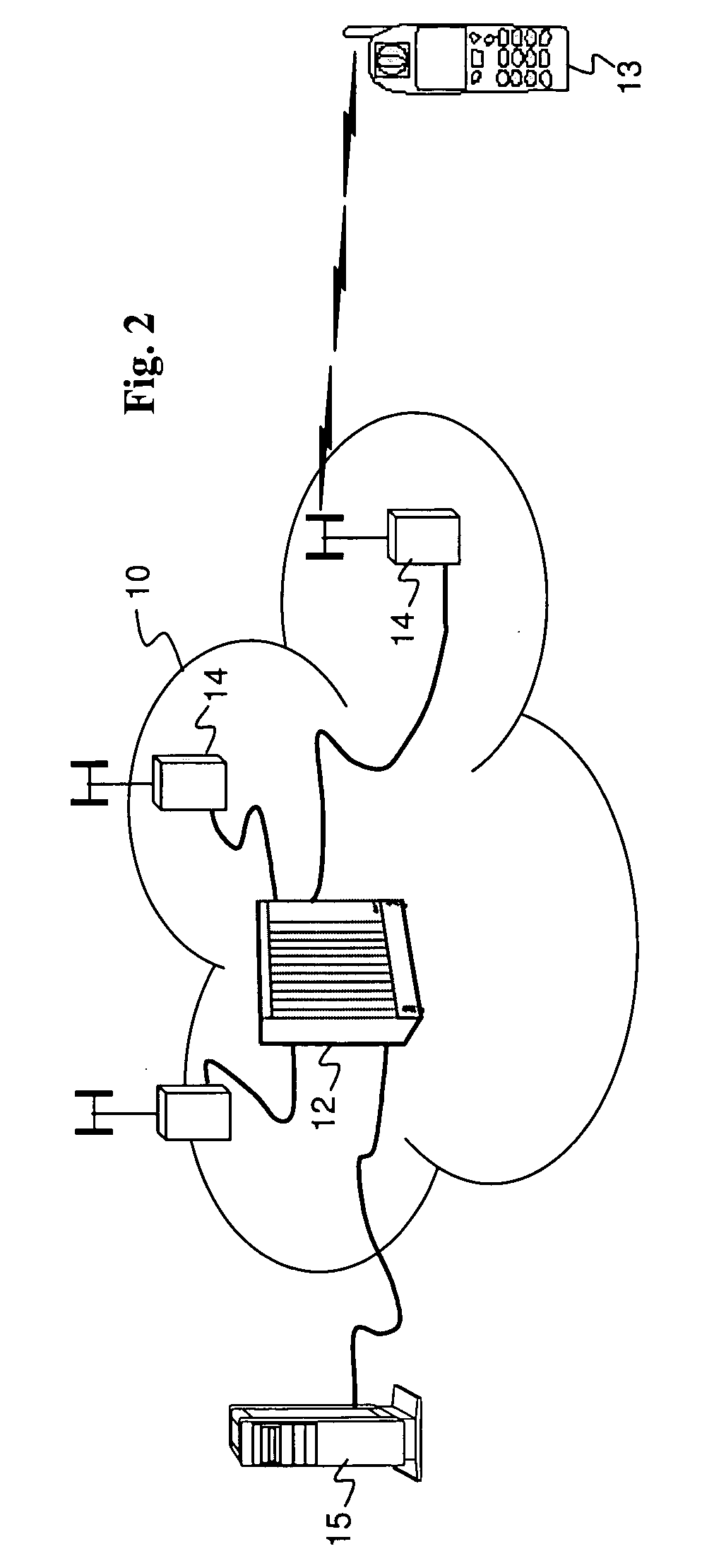

A multi-modal user interface method and system that enables the user of a cellular telephone, connected to a cellular network via both a voice connection and a data connection, to receive a menu both audibly and visually, substantially simultaneously, during the course of the call.

Owner:COMVERSE

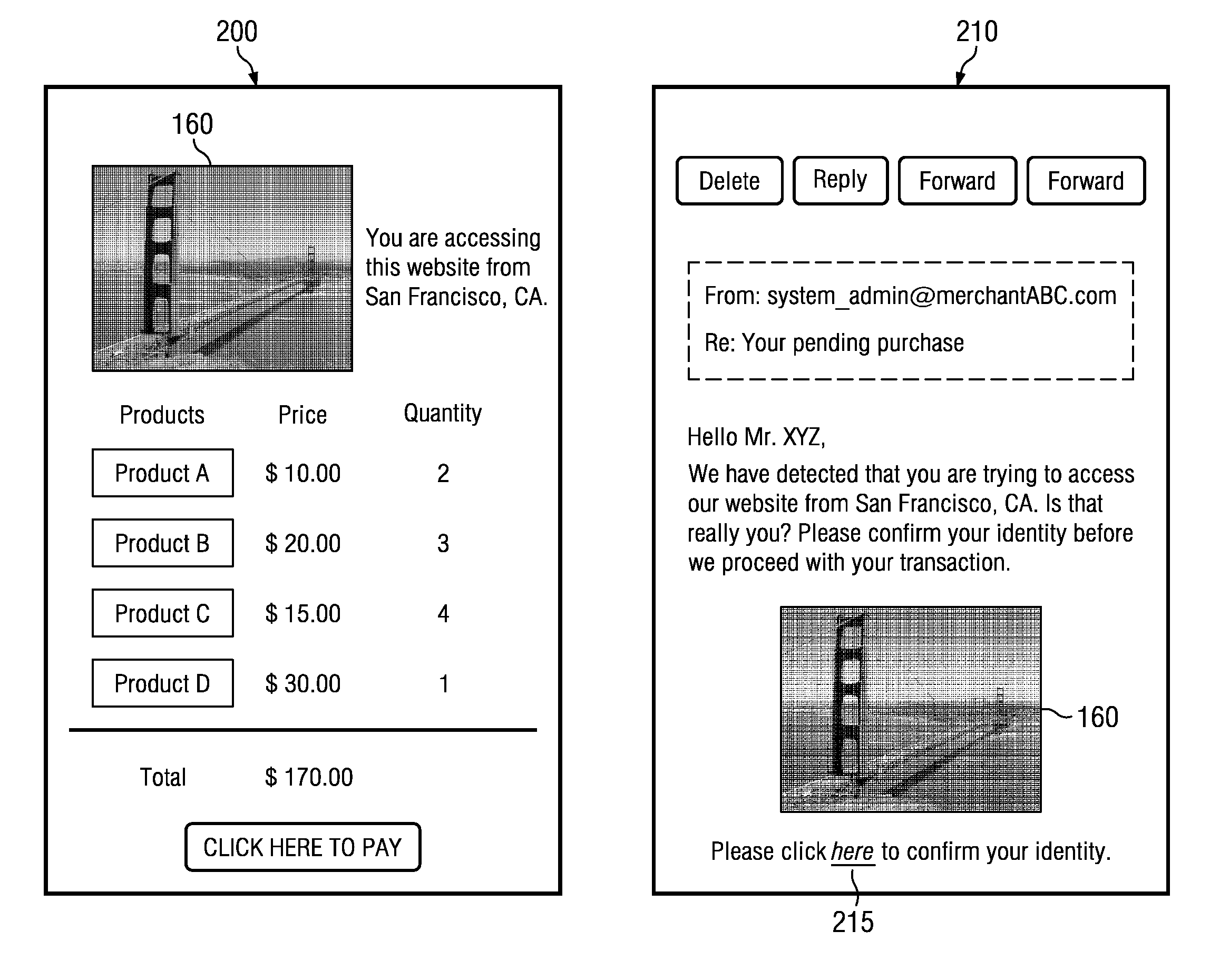

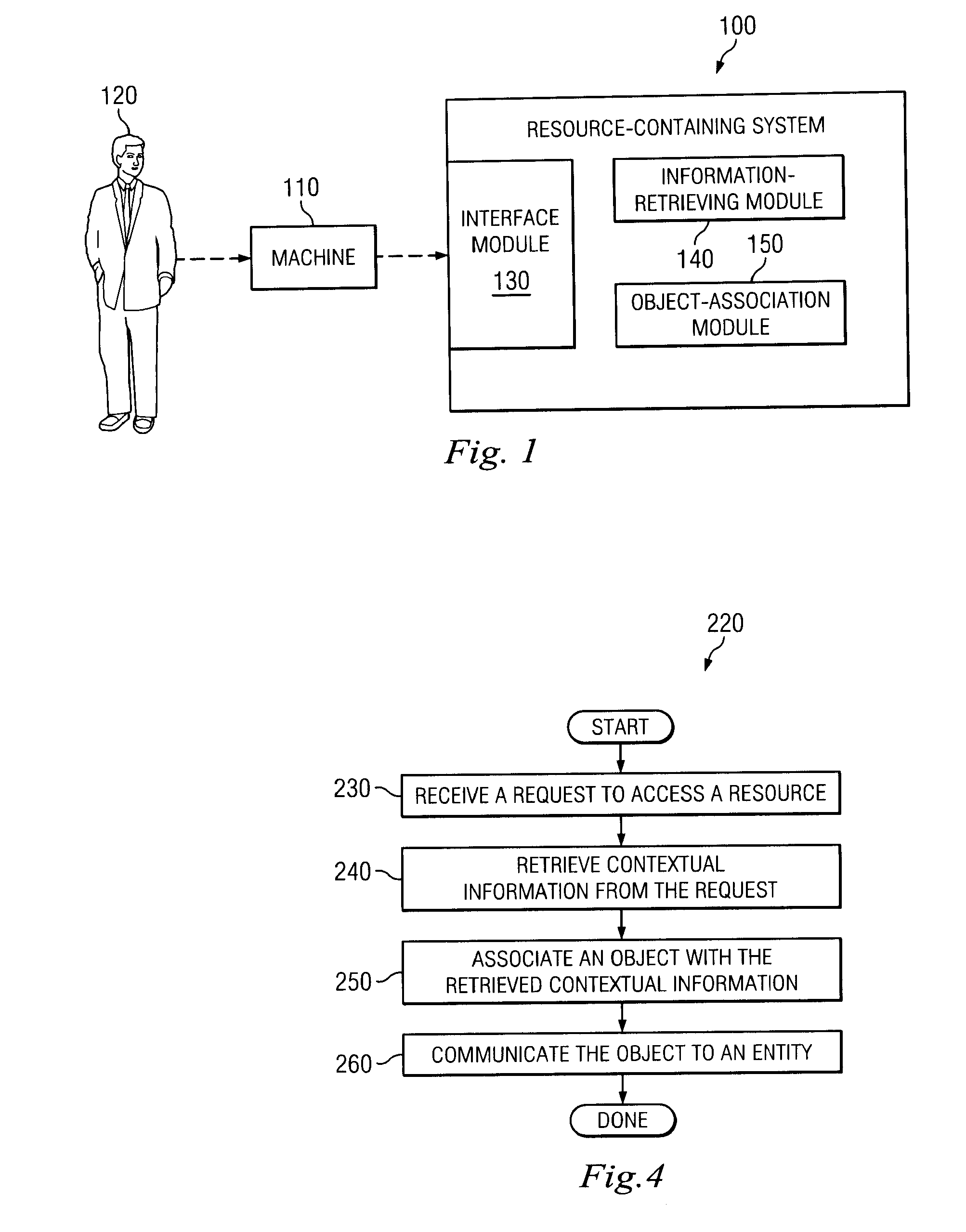

Visualization of Access Information

The present disclosure involves a method. The method involves: receiving a request to access a resource; retrieving contextual information from the request; and dynamically associating an object with the retrieved contextual information, the object having at least one of: an audio component and a visual component that represent the contextual information in an auditory manner and a visual manner, respectively. The present disclosure also involves a system. The system involves: means for receiving a request to access a resource; means for retrieving contextual information from the request; means for associating an object with the retrieved contextual information, the object having at least one of: an audio component and a visual component that convey the contextual information in an auditory manner and a visual manner, respectively; and means for communicating the object to an entity, wherein the entity is selected from the group consisting of: a machine that is making the request, a user whose account is to be accessed by the request, and a representative of the user.

Owner:PAYPAL INC

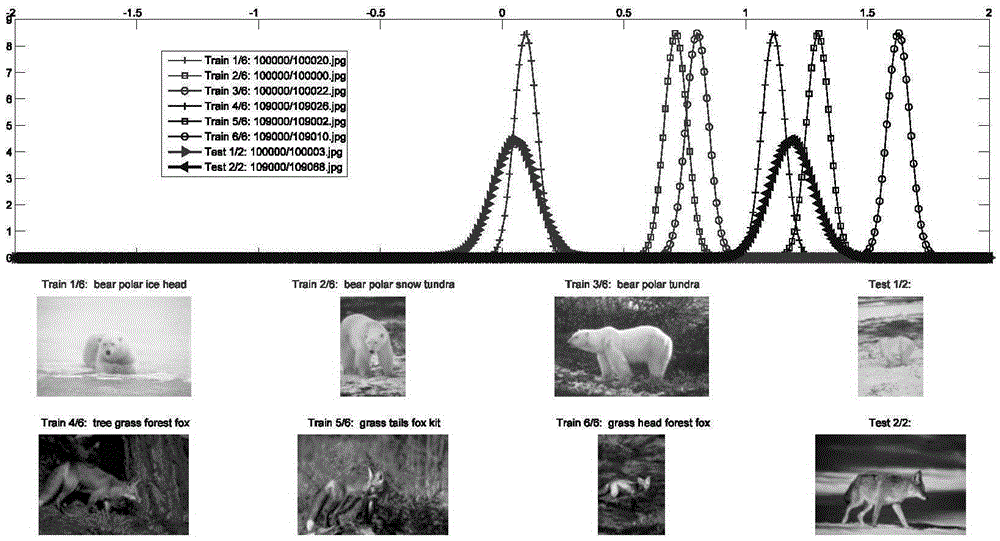

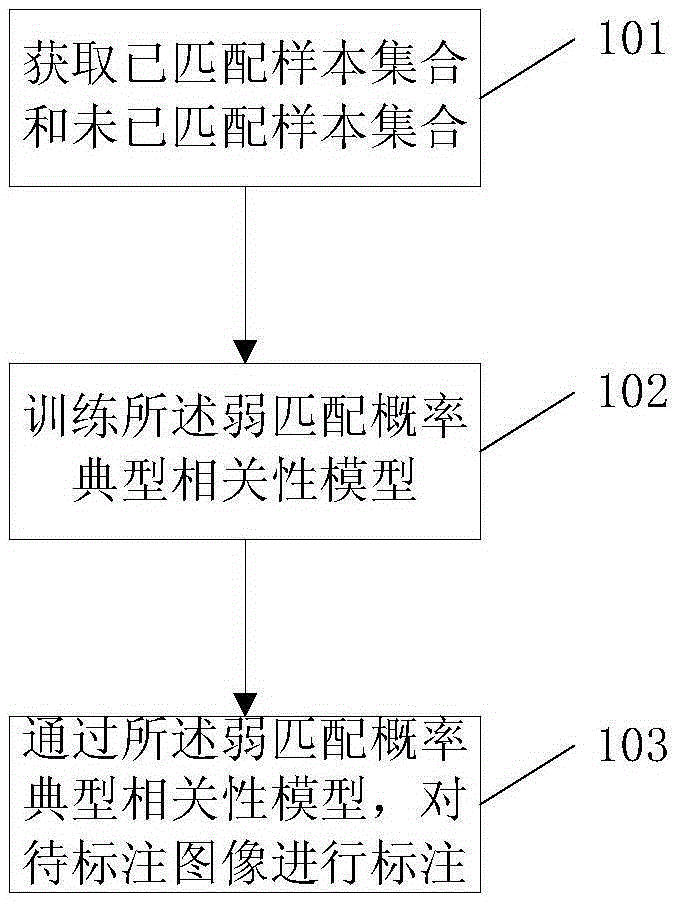

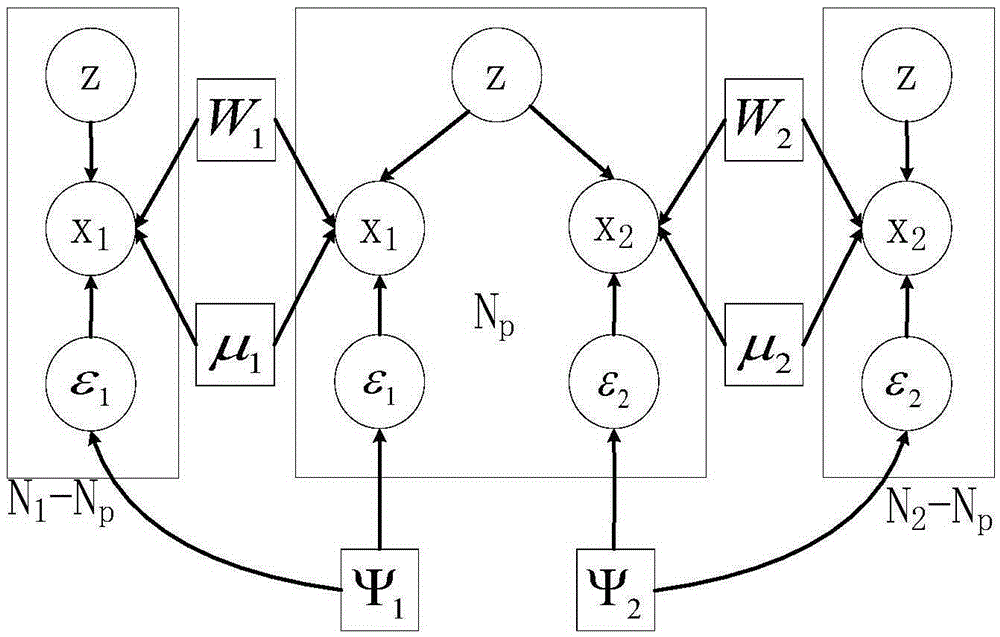

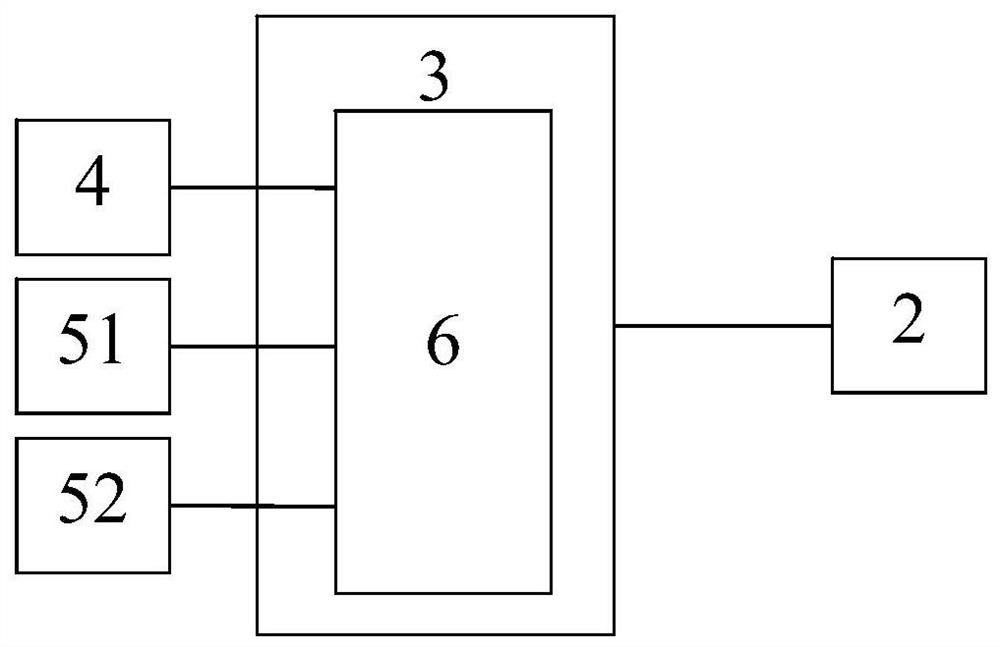

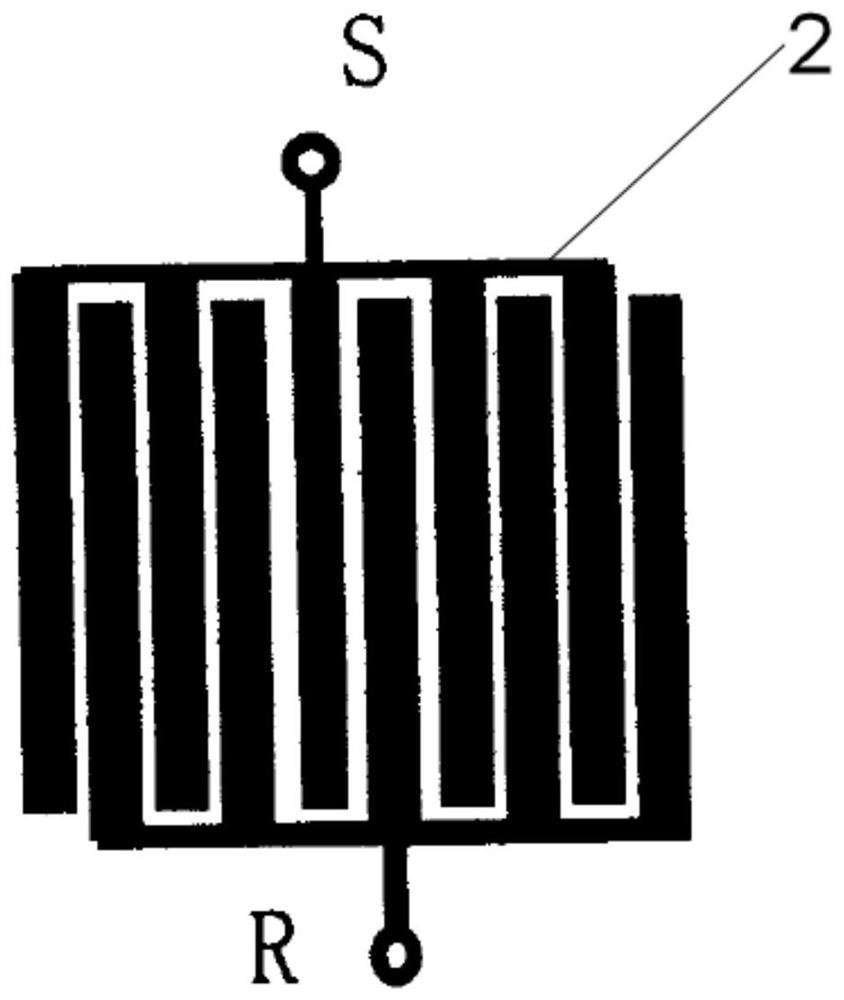

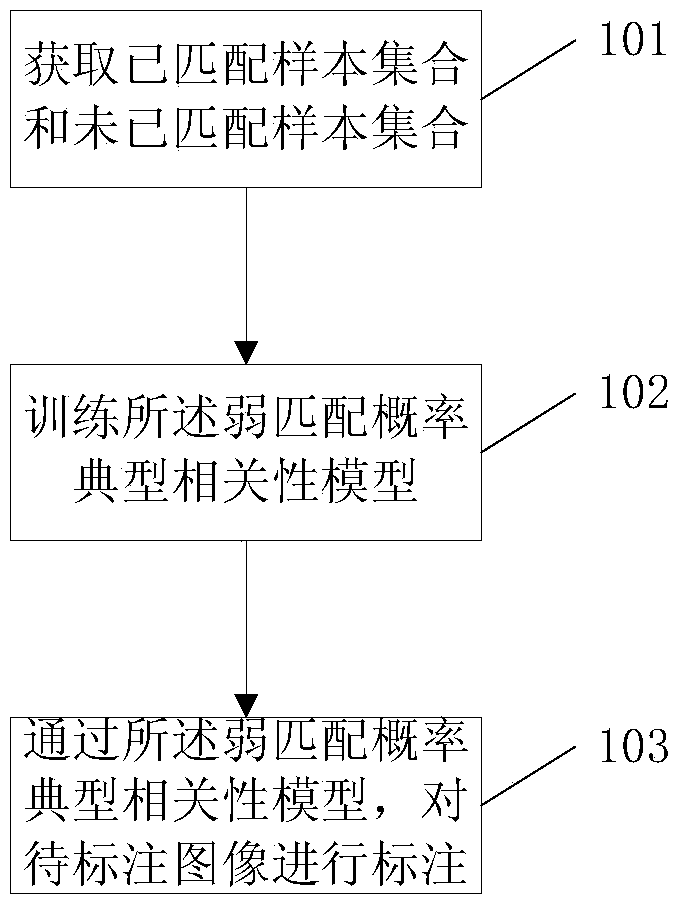

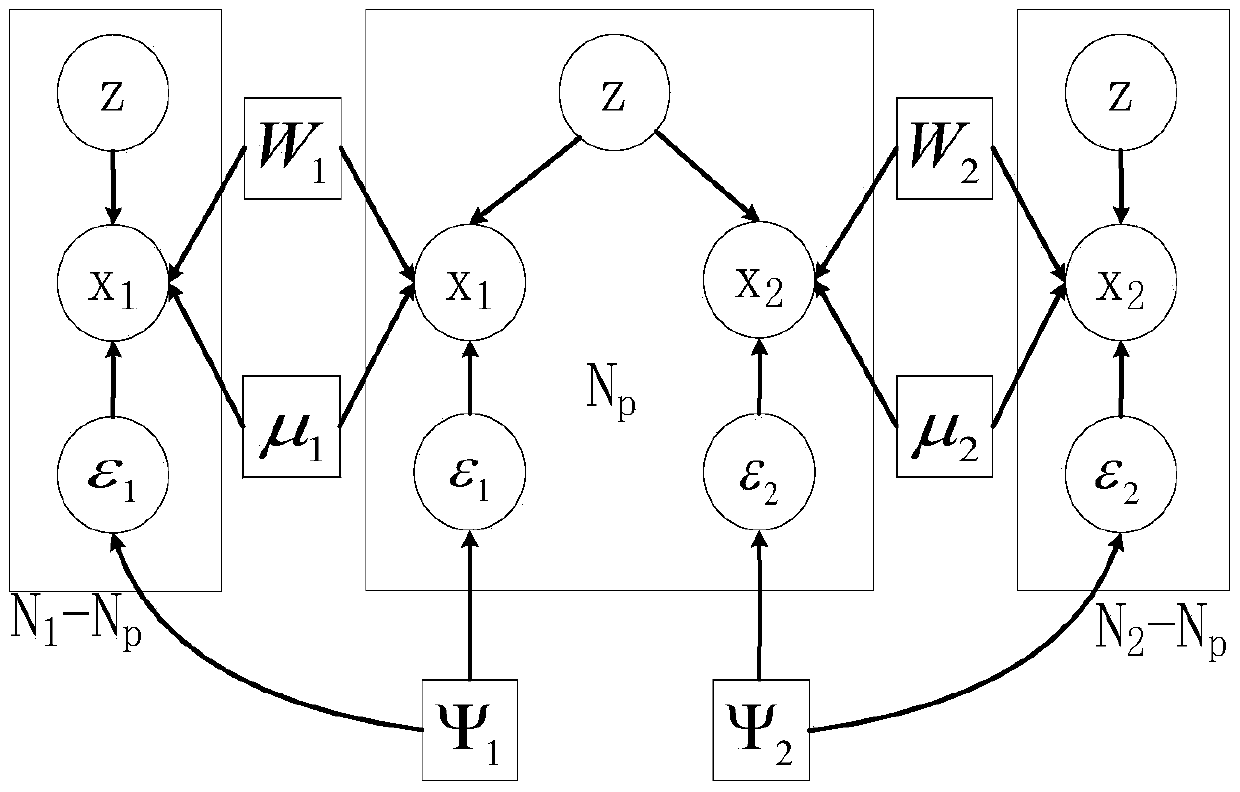

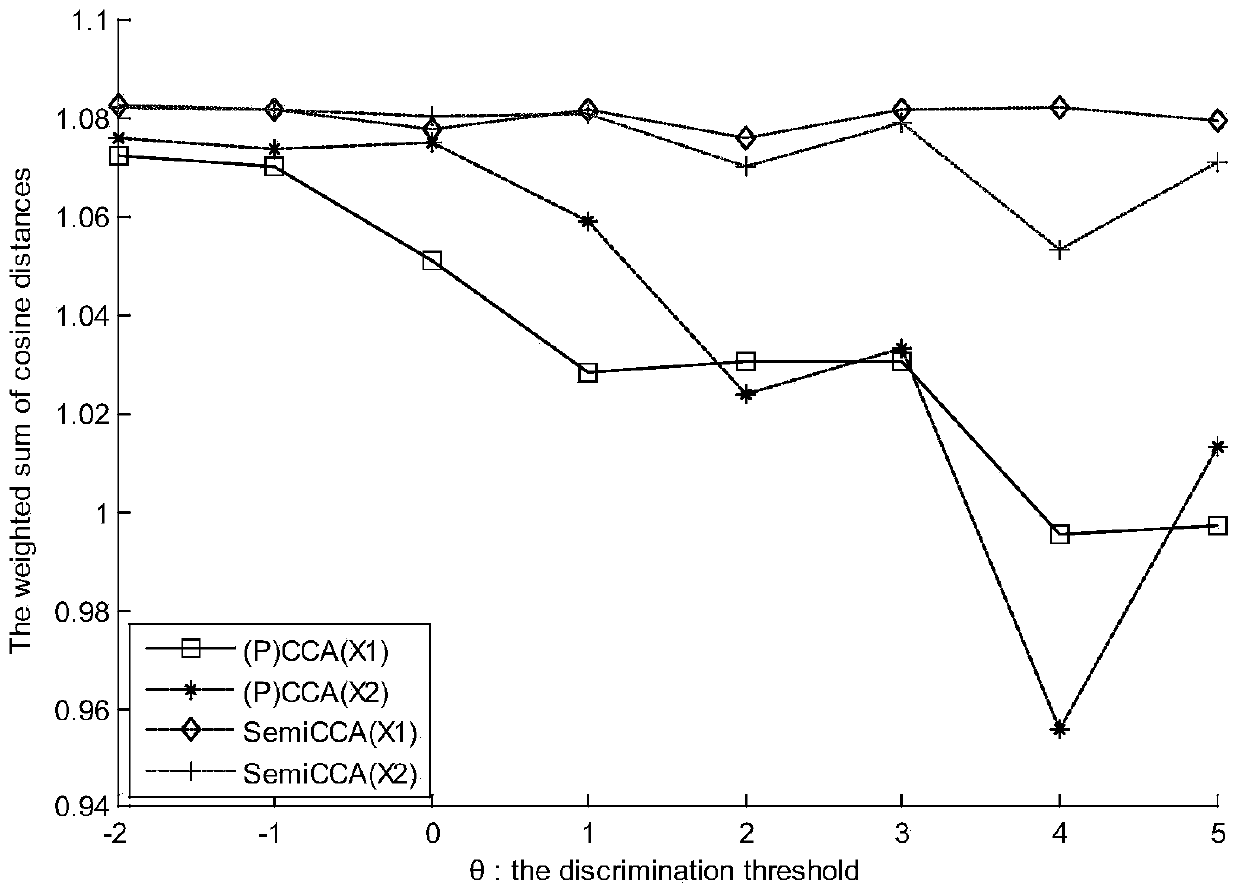

Image annotation method based on weak matching probability canonical correlation model

Active · CN105389326A · Prevent overfitting · Good effect · Character and pattern recognition · Special data processing applications · Feature set · Imaging Feature

The invention discloses an image annotation method and system based on a weak matching probability canonical correlation model, relating to the technical field of processing of network cross-media information. The image annotation method comprises the following steps: obtaining an annotated image and a non-annotated image in an image database, respectively extracting image features and textual features of the annotated image and the non-annotated image, and generating a matched sample set and an unmatched sample set, wherein the matched sample set contains an annotated image feature set and an annotated textual feature set; and the unmatched sample set contains a non-annotated image feature set and a non-annotated textual feature set; training the weak matching probability canonical correlation model according to the matched sample set and the unmatched sample set; and annotating an image to be annotated through the weak matching probability canonical correlation model. According to the invention, correlation between a visual modality and a textual modality is learned by using the annotated image, keywords of the annotated image and the non-annotated image simultaneously; and an unknown image can be accurately annotated.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

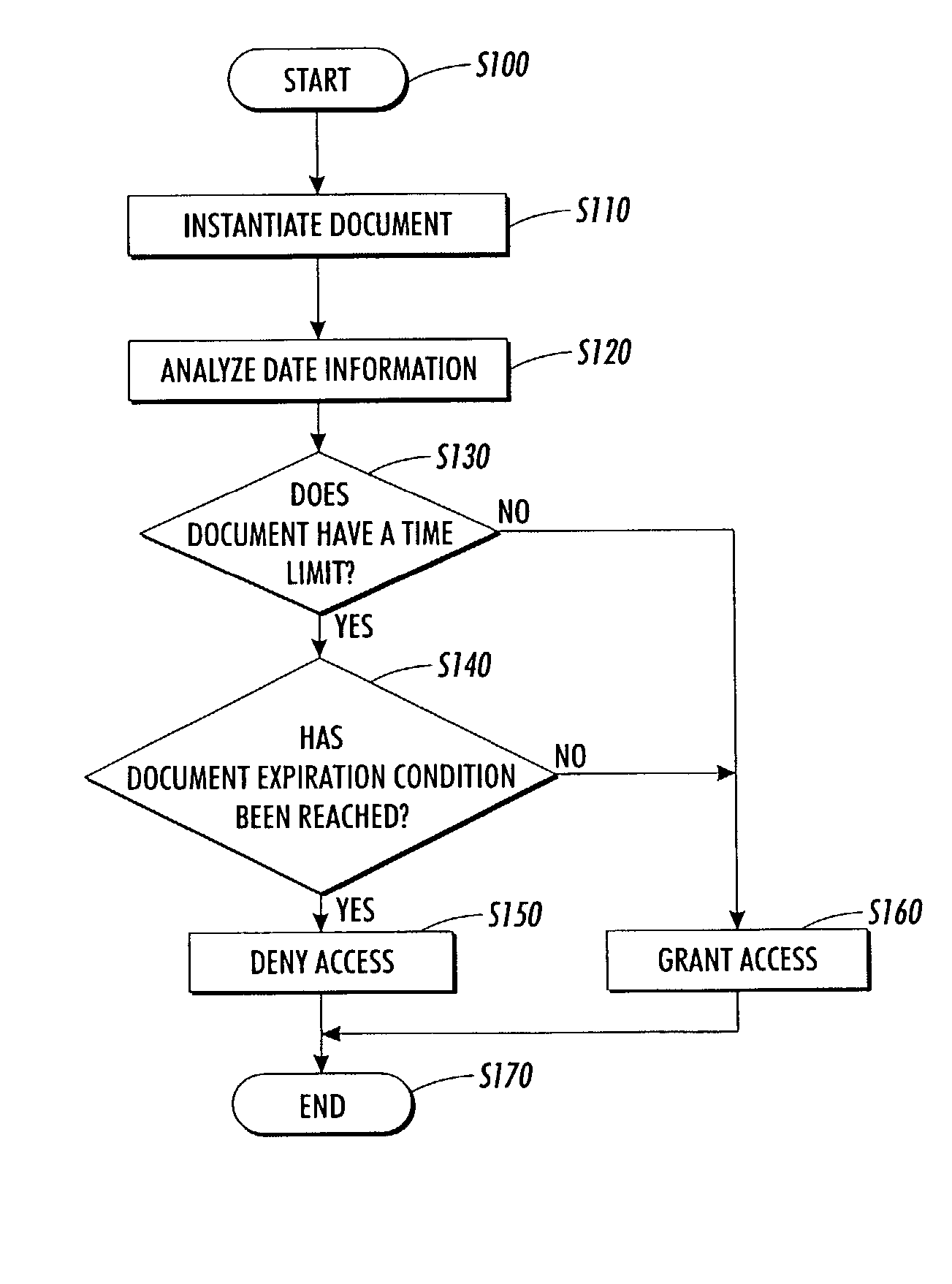

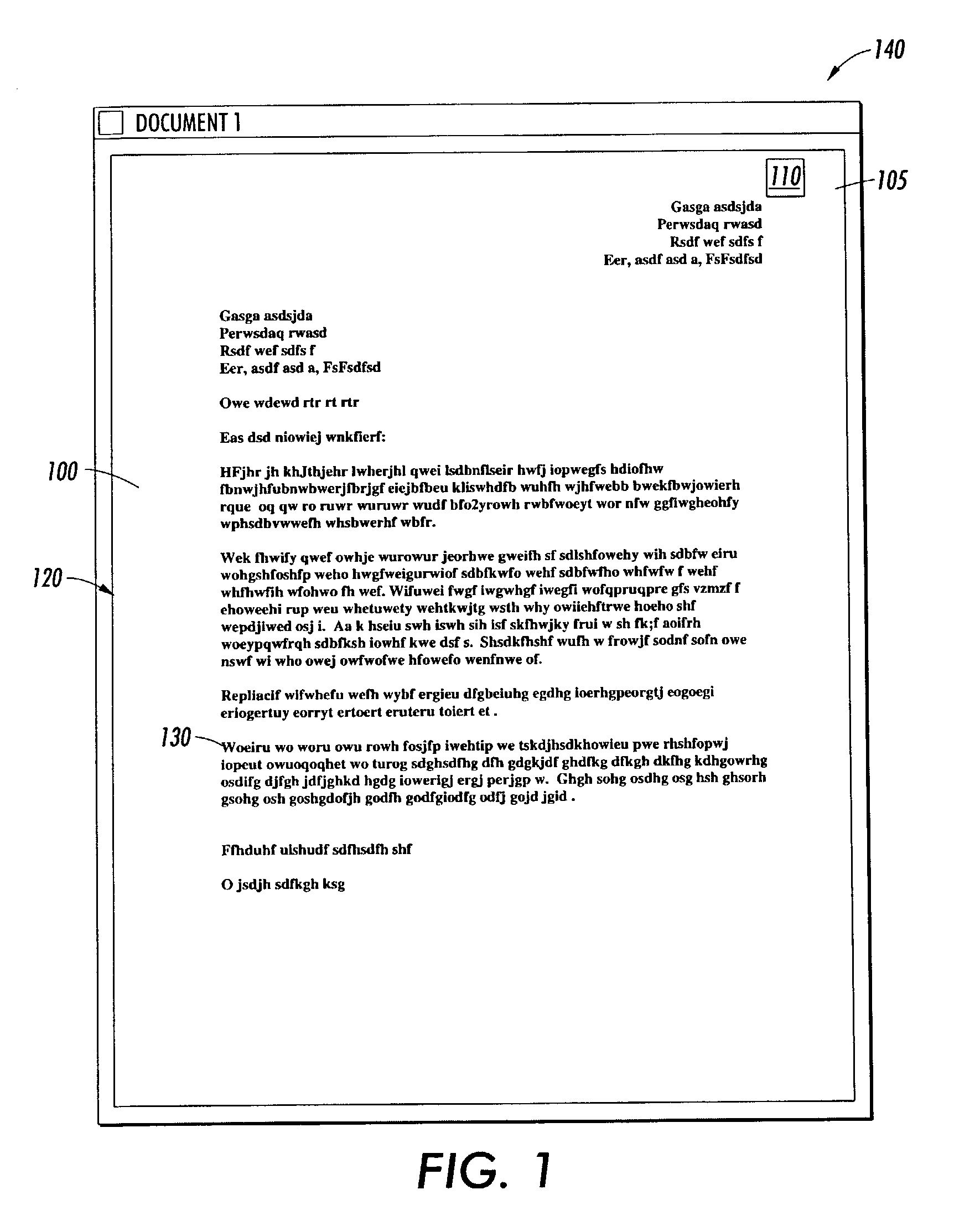

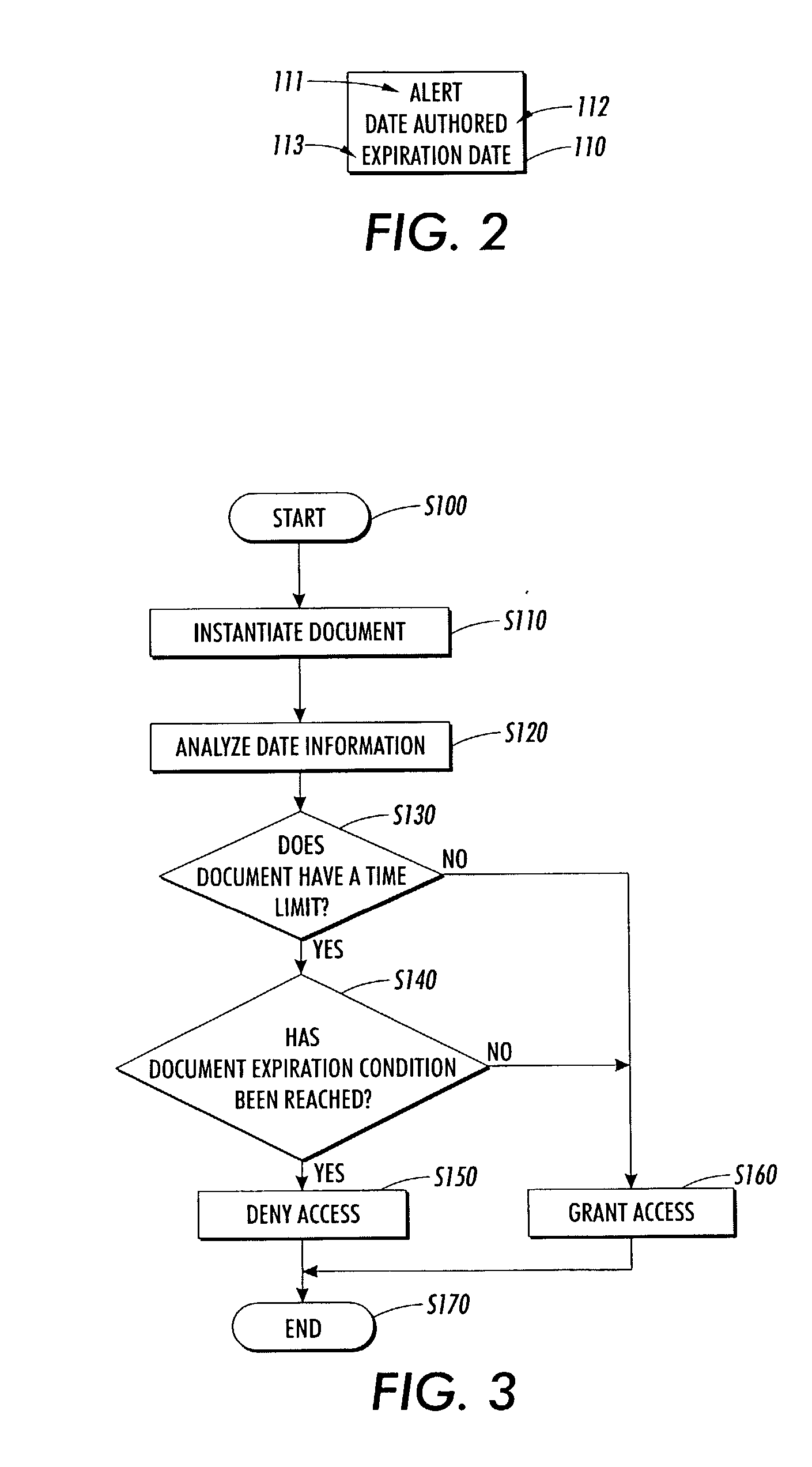

Systems and methods for visually representing the aging and/or expiration of electronic documents

Active · US20040025113A1 · Special data processing applications · Document management systems · Electronic document · Milestone

Systems and methods enforce an expiration date for an electronic document and represent the expiration visually. In various exemplary embodiments, the author specifies an expiration date for an electronic document. The expiration date is either a date stamp or an integer representing a period of time from the creation date. In one embodiment, access to the document is denied to a user after the expiration date. In various exemplary embodiments, the visual representation of the document ages after a milestone date is reached. In one embodiment, random pixels are added to the visual representation until the document expires. In another embodiment, an algorithm applies visual metaphor bitmaps to the document's visual representation. In one embodiment, the bitmaps are templates. In one embodiment, the document is mapped to the applied bitmaps. The document may be rendered illegible after the expiration date is reached.

Owner:III HLDG 6
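The random-pixel embodiment above can be illustrated with a short sketch. It assumes, purely for illustration, that the share of corrupted pixels grows linearly from 0 at the milestone date to 1 at the expiration date, and that the document's visual representation is a grayscale image stored as a list of rows; `aging_fraction` and `age_image` are hypothetical names, not taken from the patent.

```python
import random
from datetime import date


def aging_fraction(today, milestone, expiry):
    """Fraction of pixels to corrupt: 0 up to the milestone, 1 from expiry on.

    Assumption: the fraction grows linearly between the two dates.
    """
    if today <= milestone:
        return 0.0
    if today >= expiry:
        return 1.0
    return (today - milestone).days / (expiry - milestone).days


def age_image(pixels, fraction, seed=0):
    """Return a copy of a grayscale image (list of rows, values 0-255) in which
    round(fraction * pixel_count) randomly chosen pixels get random values."""
    rng = random.Random(seed)  # seeded so a given date renders the same way
    height, width = len(pixels), len(pixels[0])
    aged = [row[:] for row in pixels]
    for idx in rng.sample(range(height * width), round(fraction * height * width)):
        r, c = divmod(idx, width)
        aged[r][c] = rng.randrange(256)
    return aged


page = [[255] * 4 for _ in range(4)]  # a blank white 4x4 "document"
f = aging_fraction(date(2024, 1, 11), date(2024, 1, 1), date(2024, 1, 21))
print(f)  # 0.5
aged_page = age_image(page, f)
```

Halfway between the milestone and the expiration date, half of the pixels are selected for noise; at expiry every pixel is, which approximates the "rendered illegible" state.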

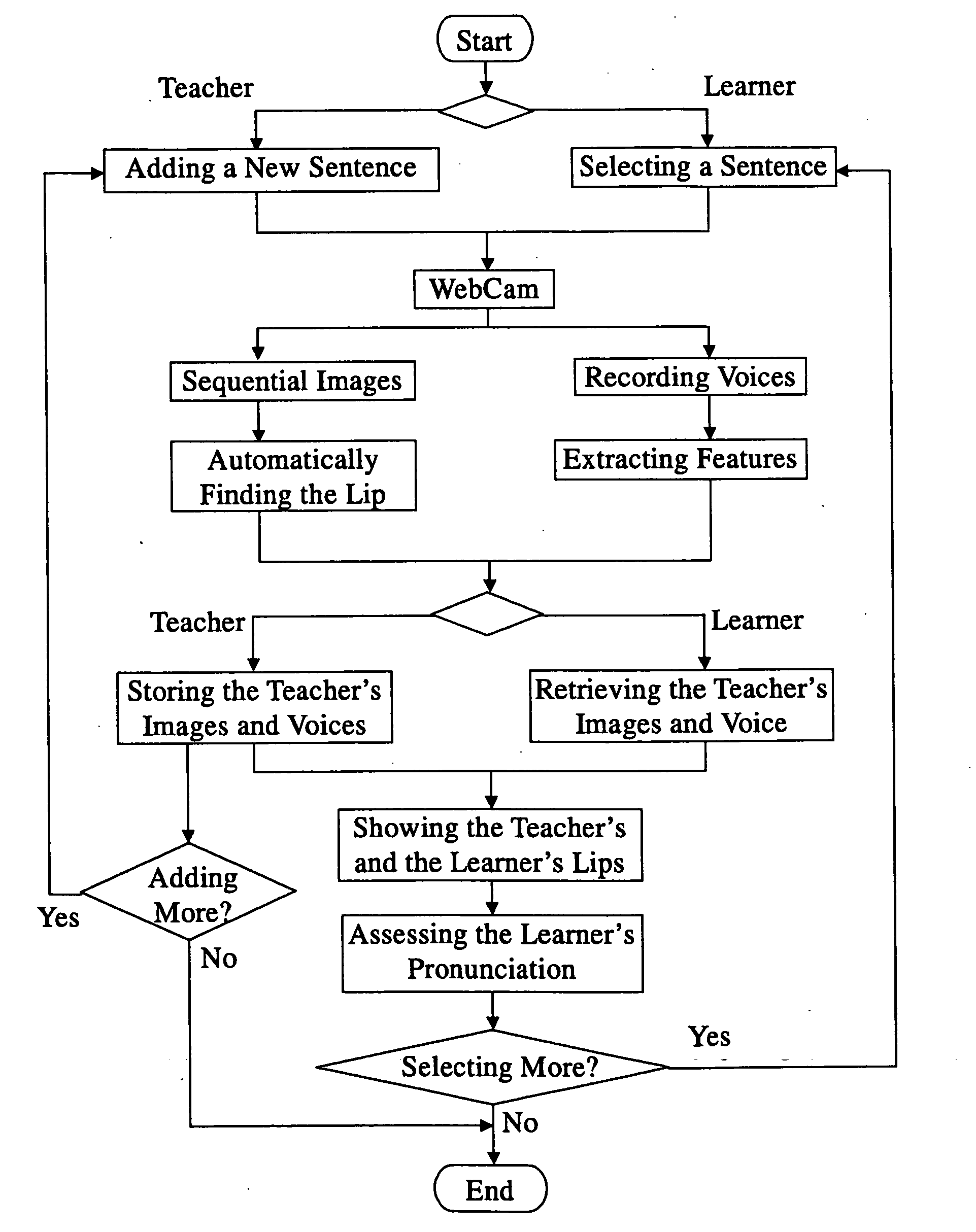

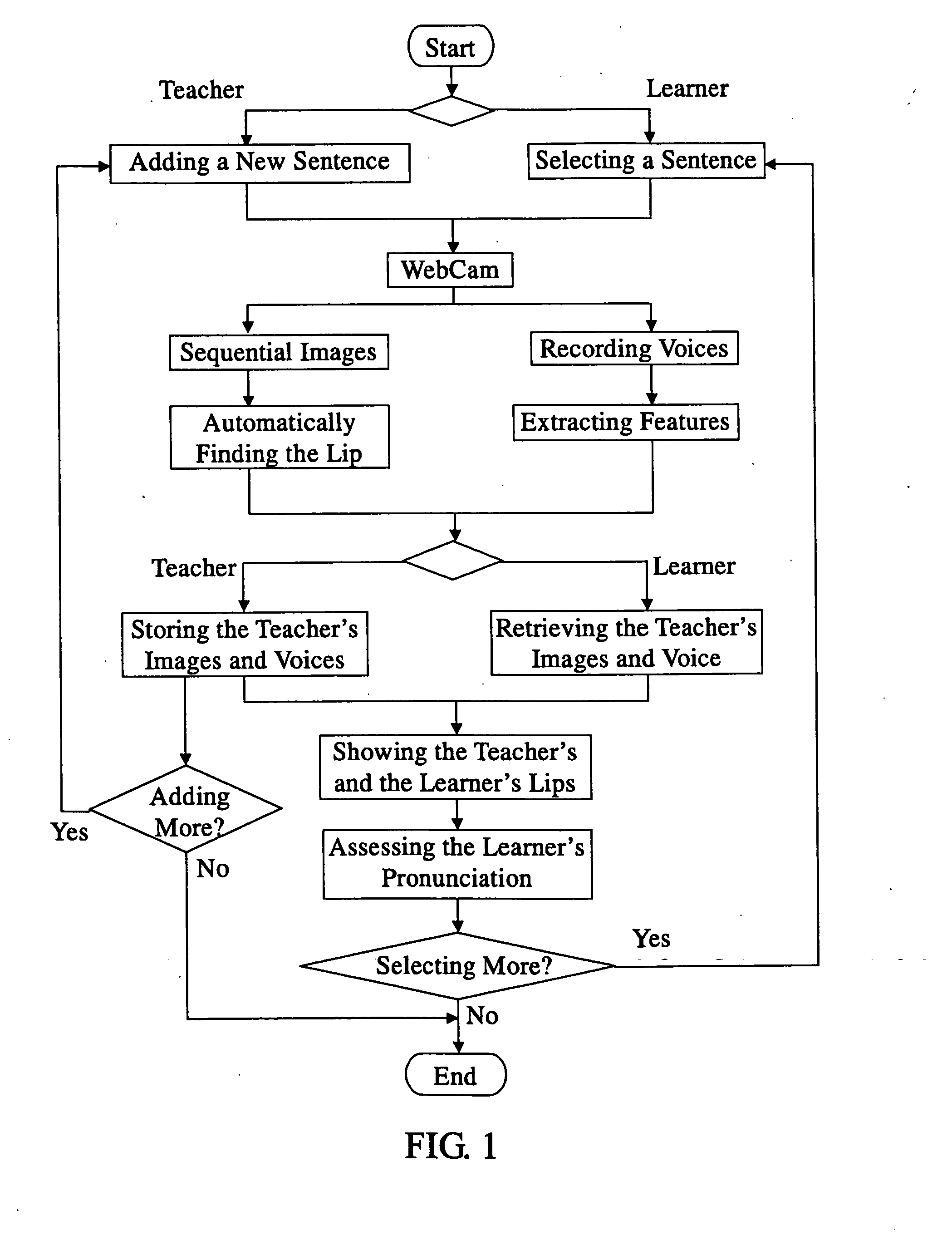

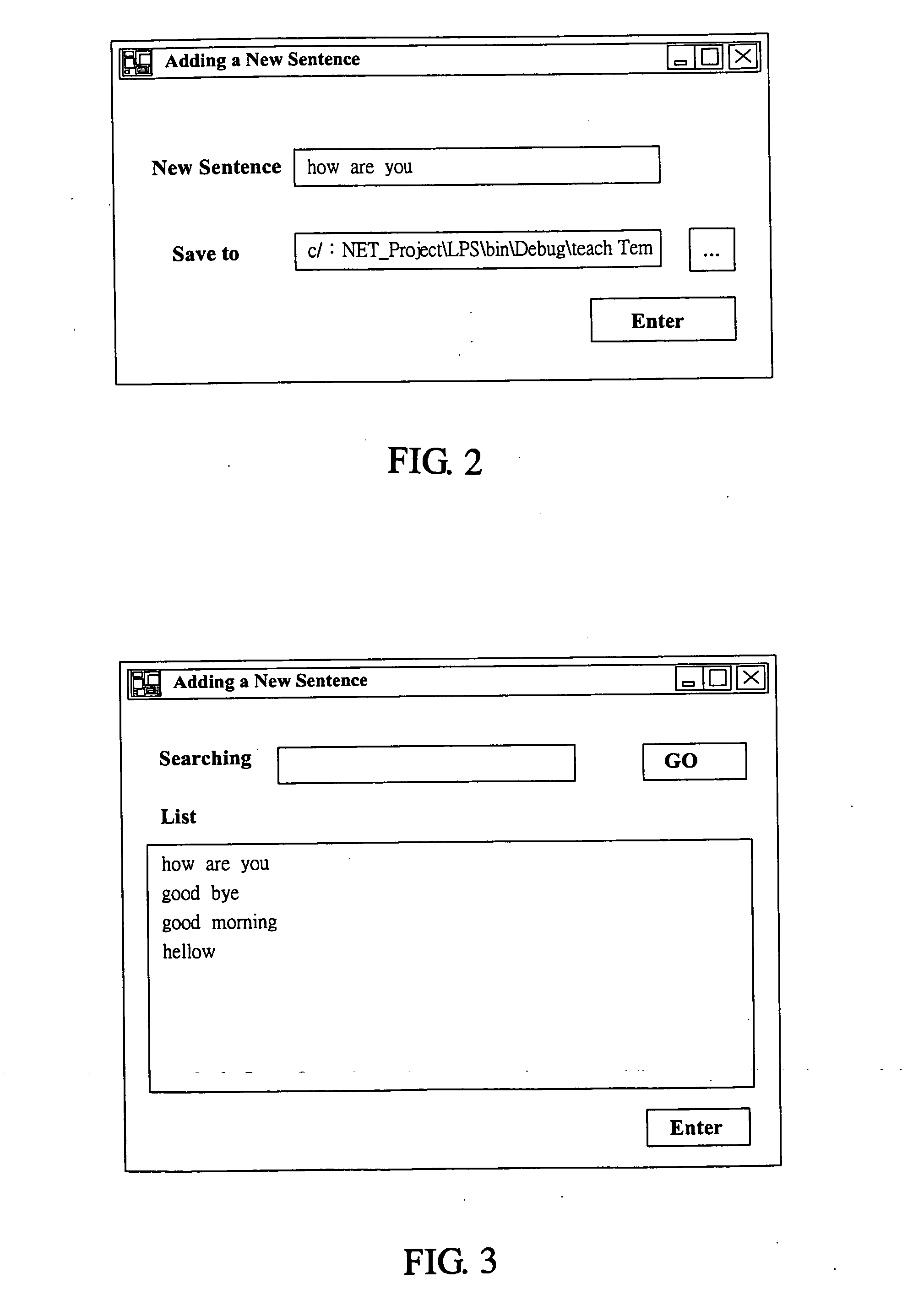

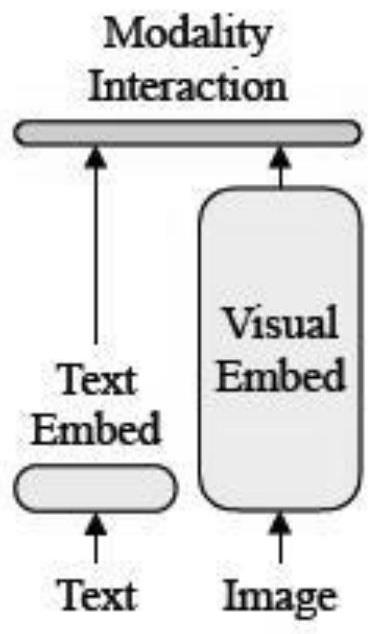

Method for assessing learner's pronunciation through voice and image

Inactive · US20080004879A1 · Rectify it · Easy to practice · Speech recognition · Teaching apparatus · Image evaluation · Visual perception

Owner:NAT KAOHSIUNG UNIV OF SCI & TECH

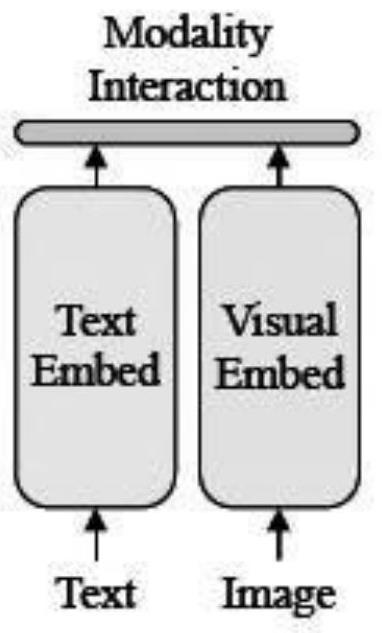

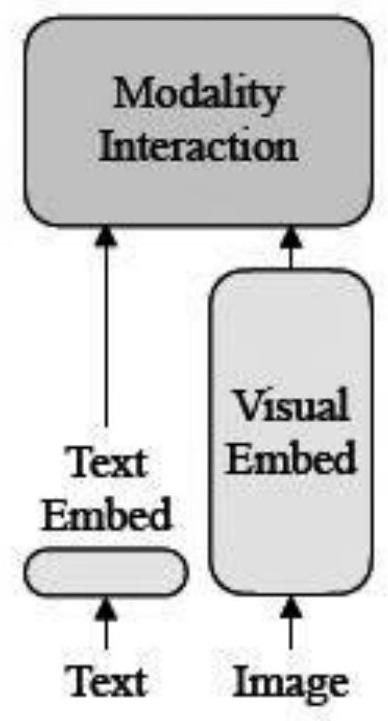

Multi-modal pre-training method based on image-text linear combination

Pending · CN114298158A · Processing speed · Short time · Character and pattern recognition · Natural language data processing · Feature vector · Linguistic model

A multi-modal pre-training method based on image-text linear combination, belonging to the technical field of image-text multi-modal retrieval, comprises the following steps: S1, extracting features from the text and the image separately; S2, establishing the relationship between the text and image modalities in an interaction layer, in which S2.1 jointly inputs the visual-modality and language-modality feature vectors obtained in S1 into the interaction layer of a multi-modal pre-training model, and S2.2 uses the attention mechanism of the Transformer to connect the two modalities; S3, training the model until it is usable, with image-text matching or masked language modeling as the pre-training objective; and S4, fine-tuning the pre-trained model with a specific application scenario and downstream task as the training target, so that the model performs optimally in that scenario. The training method addresses the bottleneck in model running time and the performance of the improved pre-trained model after fine-tuning, and is stated to have considerable scientific and practical value.

Owner:HUNAN UNIV OF TECH
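Steps S2.1 and S2.2 (jointly feeding both modalities into one interaction layer and linking them with Transformer attention) can be sketched as single-stream scaled dot-product self-attention over the concatenated text and image tokens. This is a minimal illustration, not the patent's model: the learned query/key/value projections, multiple heads, residual connections, and feed-forward sublayers are all omitted, and the feature values are toy numbers.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, on plain lists."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


def joint_interaction_layer(text_feats, image_feats):
    """Concatenate language- and visual-modality tokens and let every token
    attend to every other, so text tokens can draw on image features and
    vice versa. Learned W_Q/W_K/W_V projections are omitted (identity)."""
    tokens = text_feats + image_feats
    return attention(tokens, tokens, tokens)


text = [[1.0, 0.0]]               # one word embedding (toy values)
image = [[1.0, 0.0], [0.0, 1.0]]  # two image-region features (toy values)
fused = joint_interaction_layer(text, image)
print(fused)
```

Each output row is an attention-weighted mixture of all text and image tokens, which is the sense in which the two modalities become "connected" in the interaction layer.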

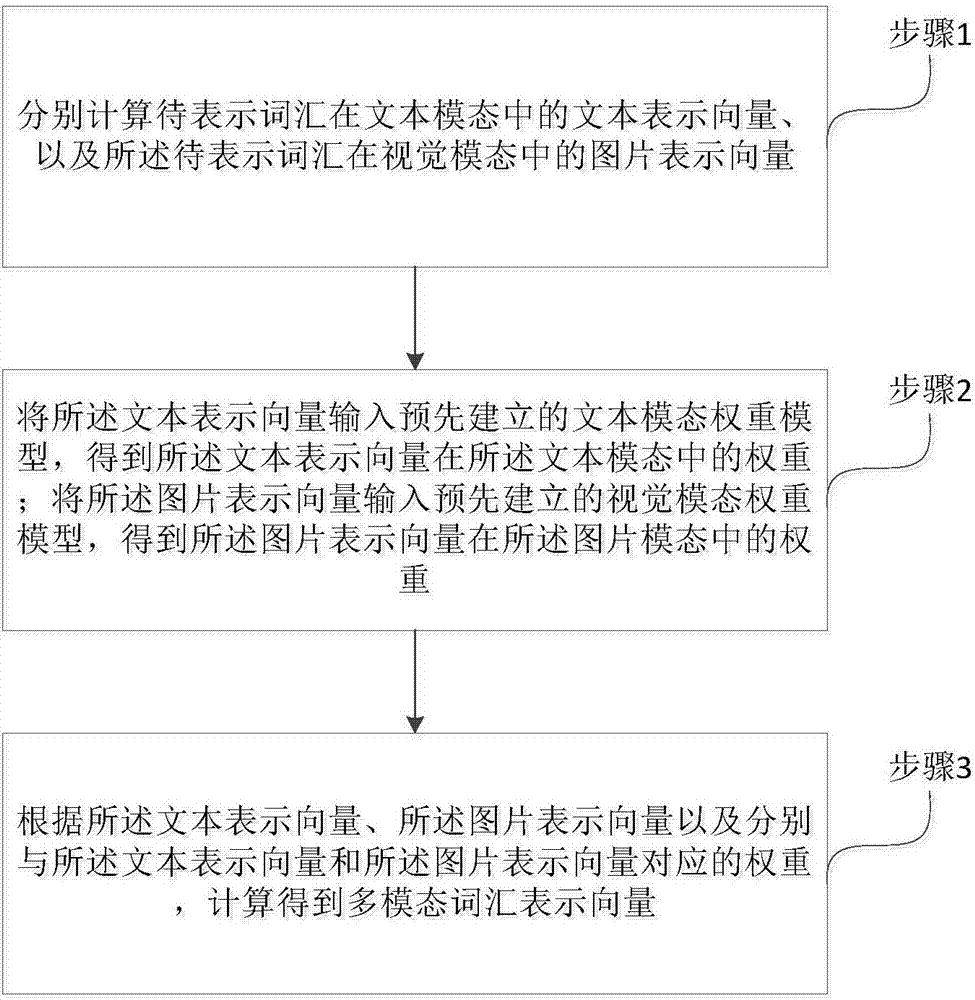

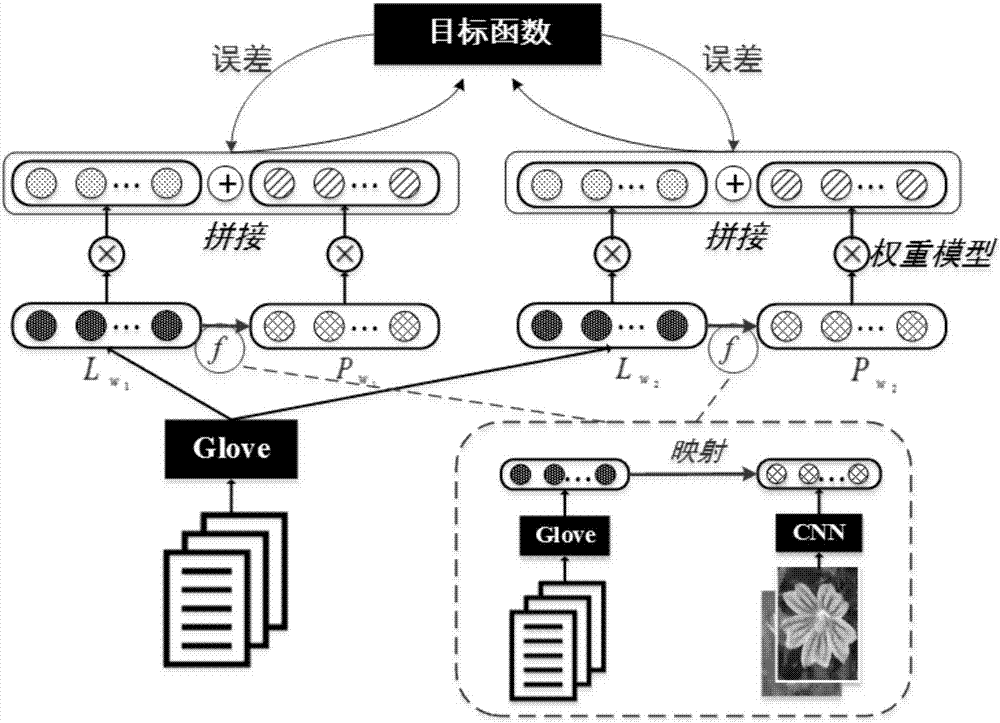

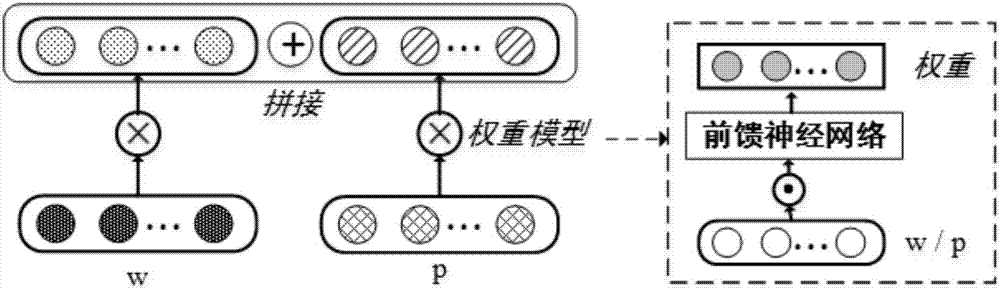

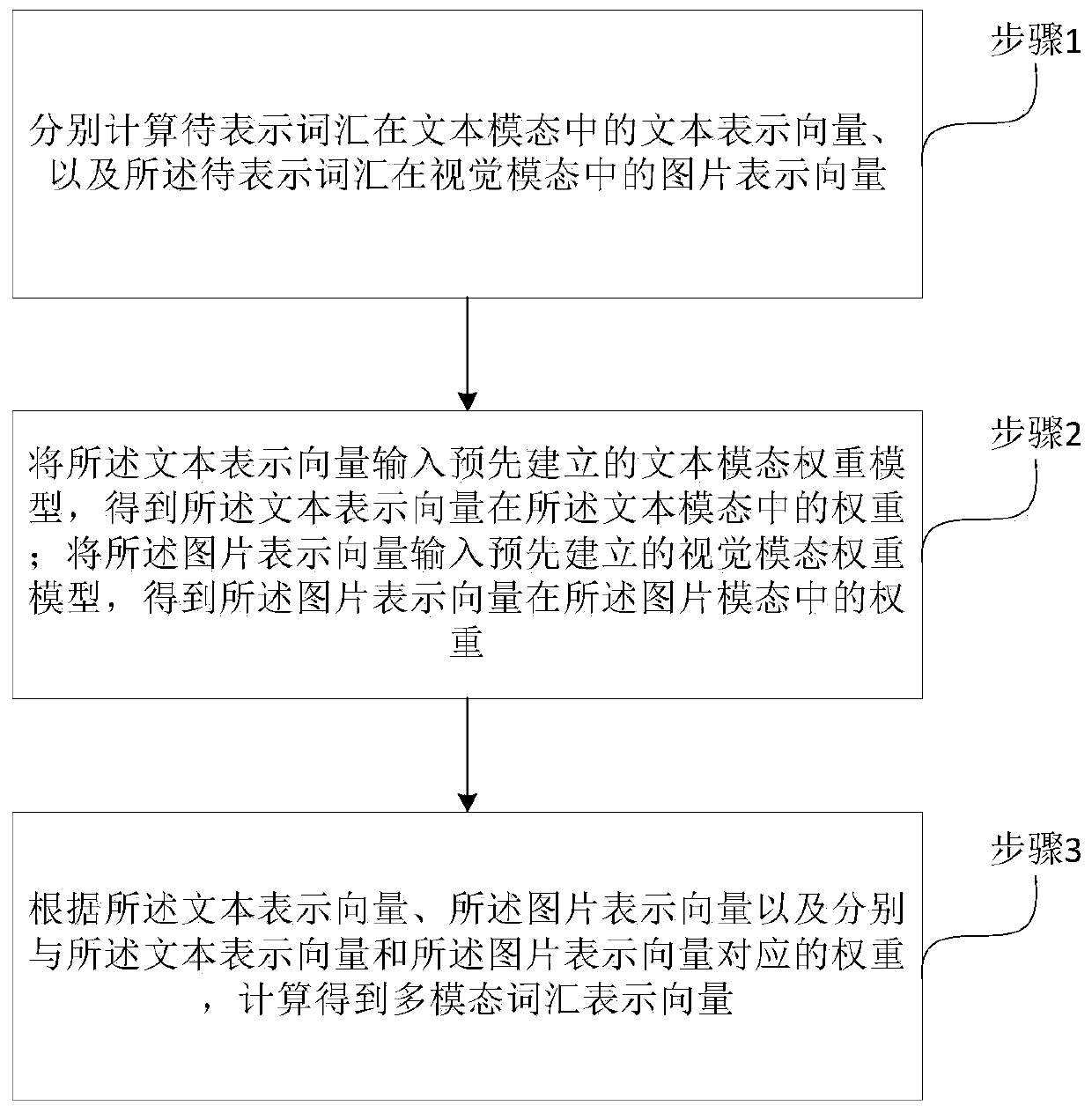

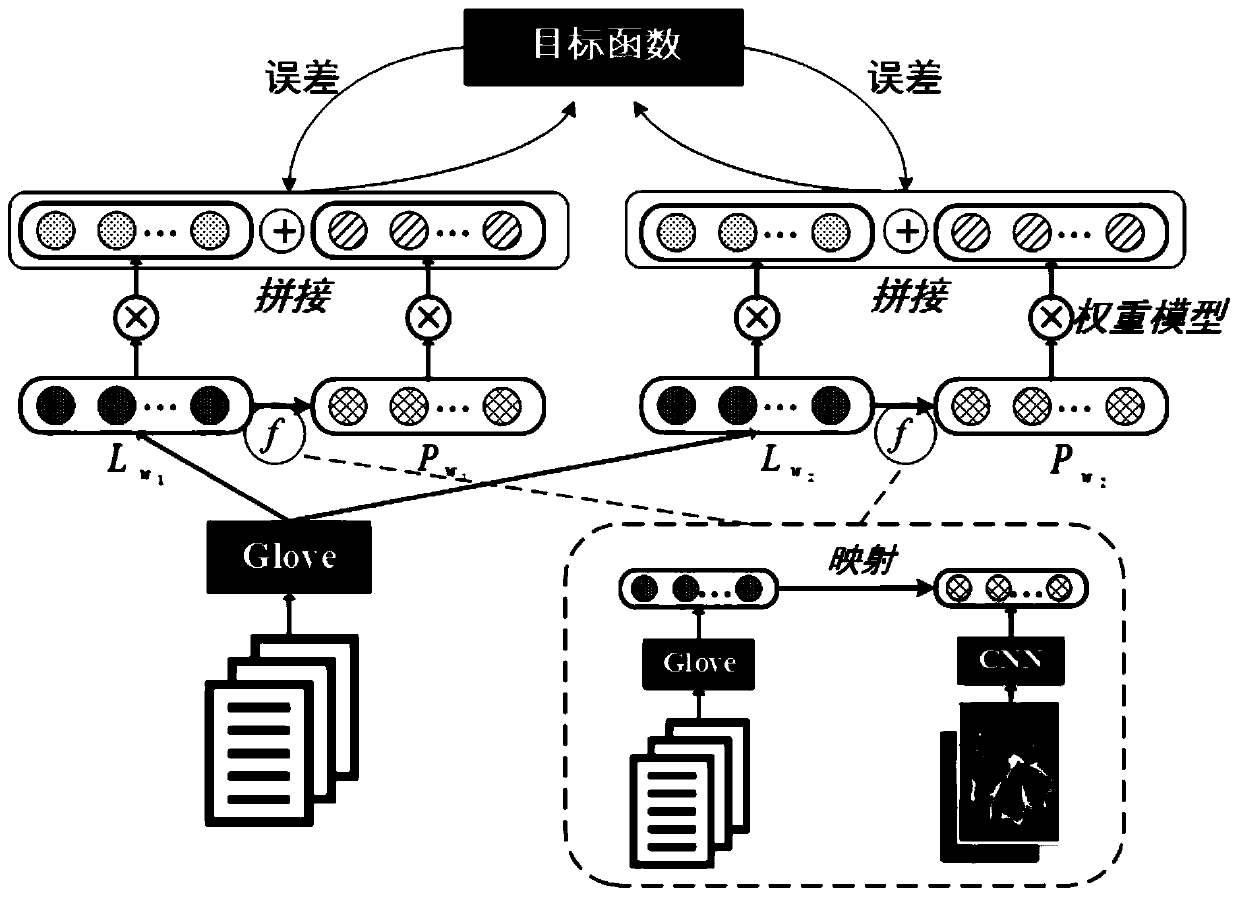

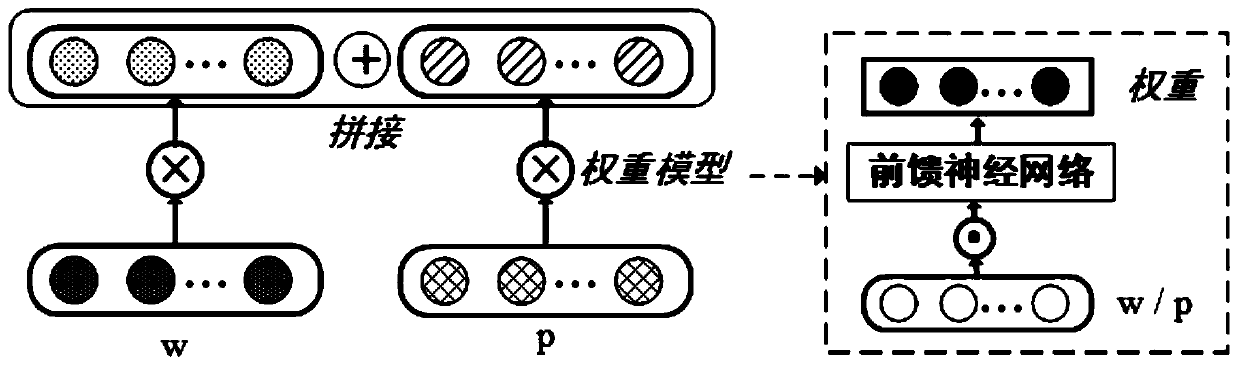

Multi-modality vocabulary representing method based on dynamic fusing mechanism

Active · CN107480196A · Enhance expressive ability · Explanation of validity · Natural language analysis · Special data processing applications · Pattern recognition · Network model

The invention provides a multi-modal vocabulary representation method comprising the steps of: computing a text representation vector of the word to be represented in the text modality and a picture representation vector of the word in the visual modality; inputting the text representation vector into a pre-established text-modality weight model to obtain its weight in the text modality; inputting the picture representation vector into a pre-established visual-modality weight model to obtain its weight in the visual modality; and computing a multi-modal vocabulary representation vector from the text representation vector, the picture representation vector, and their respective weights. The text-modality weight model is a neural network whose input is the text representation vector and whose output is that vector's weight in the text modality; the visual-modality weight model is a neural network whose input is the picture representation vector and whose output is that vector's weight in the visual modality.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
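A minimal sketch of the dynamic fusion step follows. Two assumptions are made that the abstract does not settle: each weight model is reduced to a single sigmoid unit (the patent only says "neural network"), and the fused vector is the concatenation of the two weighted vectors. `modality_weight` and `fuse` are hypothetical names, and the parameters are toy values.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def modality_weight(vec, weights, bias):
    """Hypothetical one-layer weight model: maps a representation vector to a
    scalar weight in (0, 1). The weight depends on the input itself, which is
    what makes the fusion 'dynamic'."""
    return sigmoid(sum(w * x for w, x in zip(weights, vec)) + bias)


def fuse(text_vec, picture_vec, text_params, visual_params):
    """Scale each modality's vector by its own predicted weight, then concatenate."""
    w_text = modality_weight(text_vec, *text_params)
    w_visual = modality_weight(picture_vec, *visual_params)
    return [w_text * x for x in text_vec] + [w_visual * x for x in picture_vec]


# Toy parameters: zero weights and bias give sigmoid(0) = 0.5 for both modalities.
word = fuse([1.0, 2.0], [3.0], ([0.0, 0.0], 0.0), ([0.0], 0.0))
print(word)  # [0.5, 1.0, 1.5]
```

With trained parameters, a concrete word such as "apple" could receive a high visual weight while an abstract word receives a low one; a weighted sum instead of concatenation would also be possible if both vectors share a dimension.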

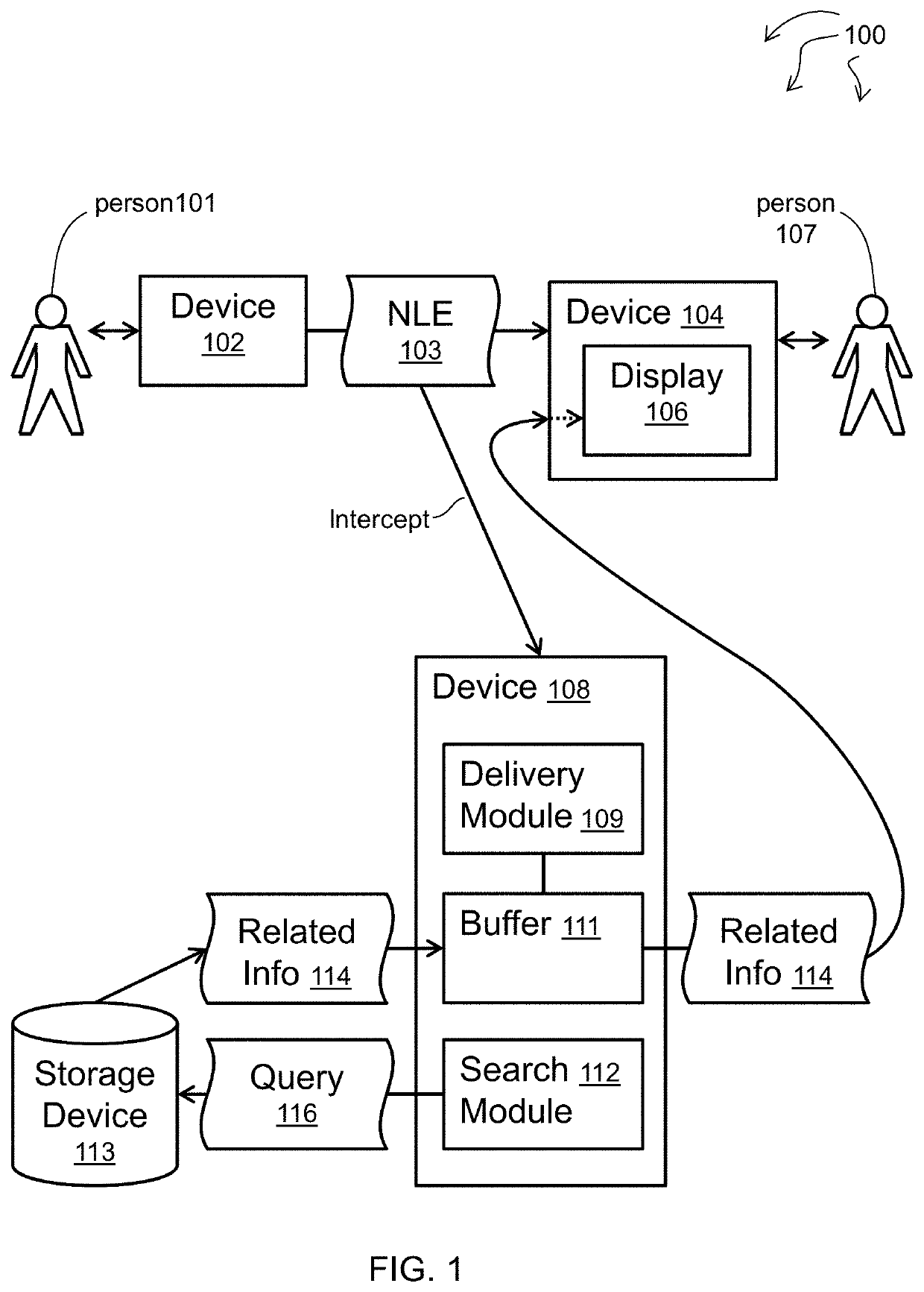

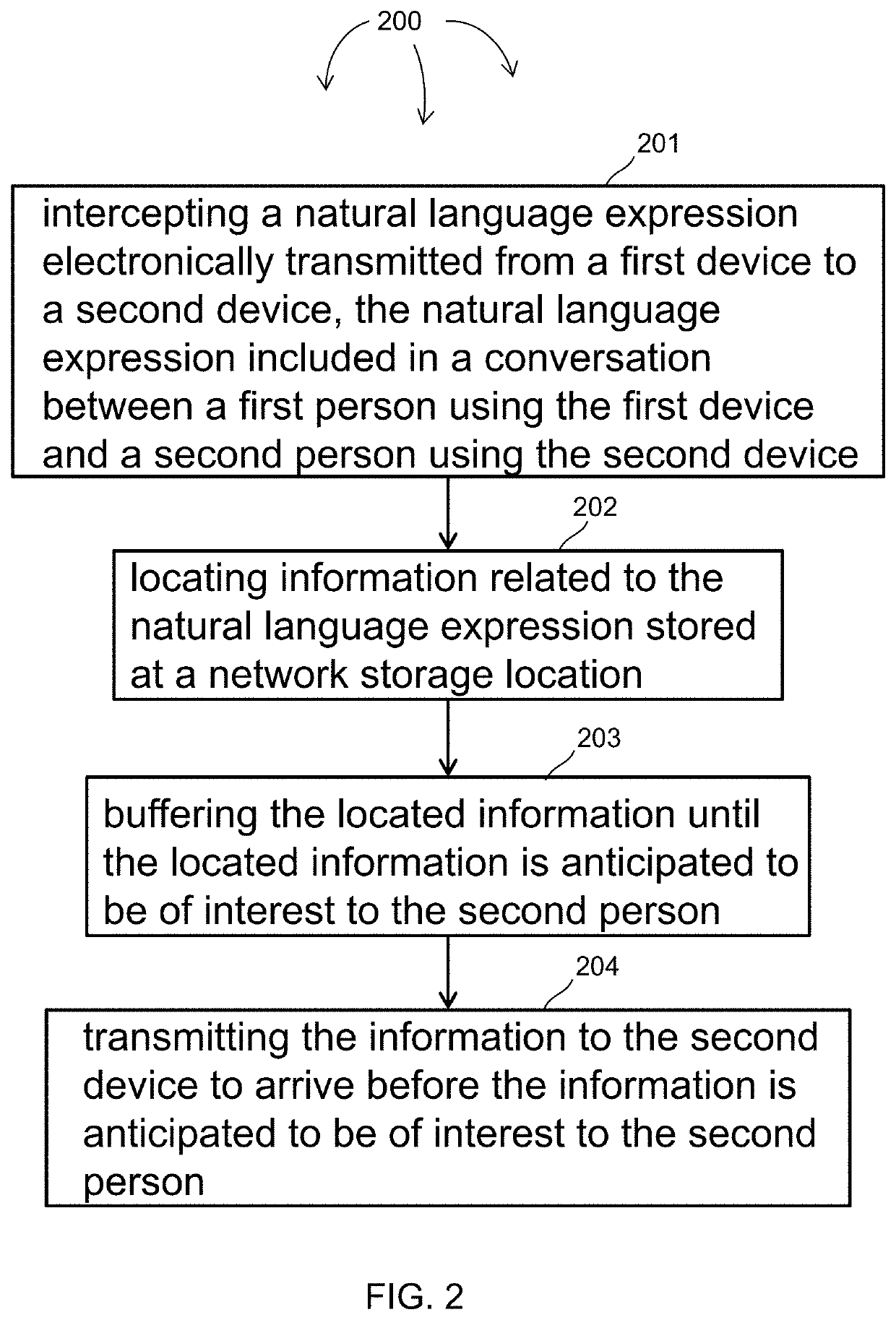

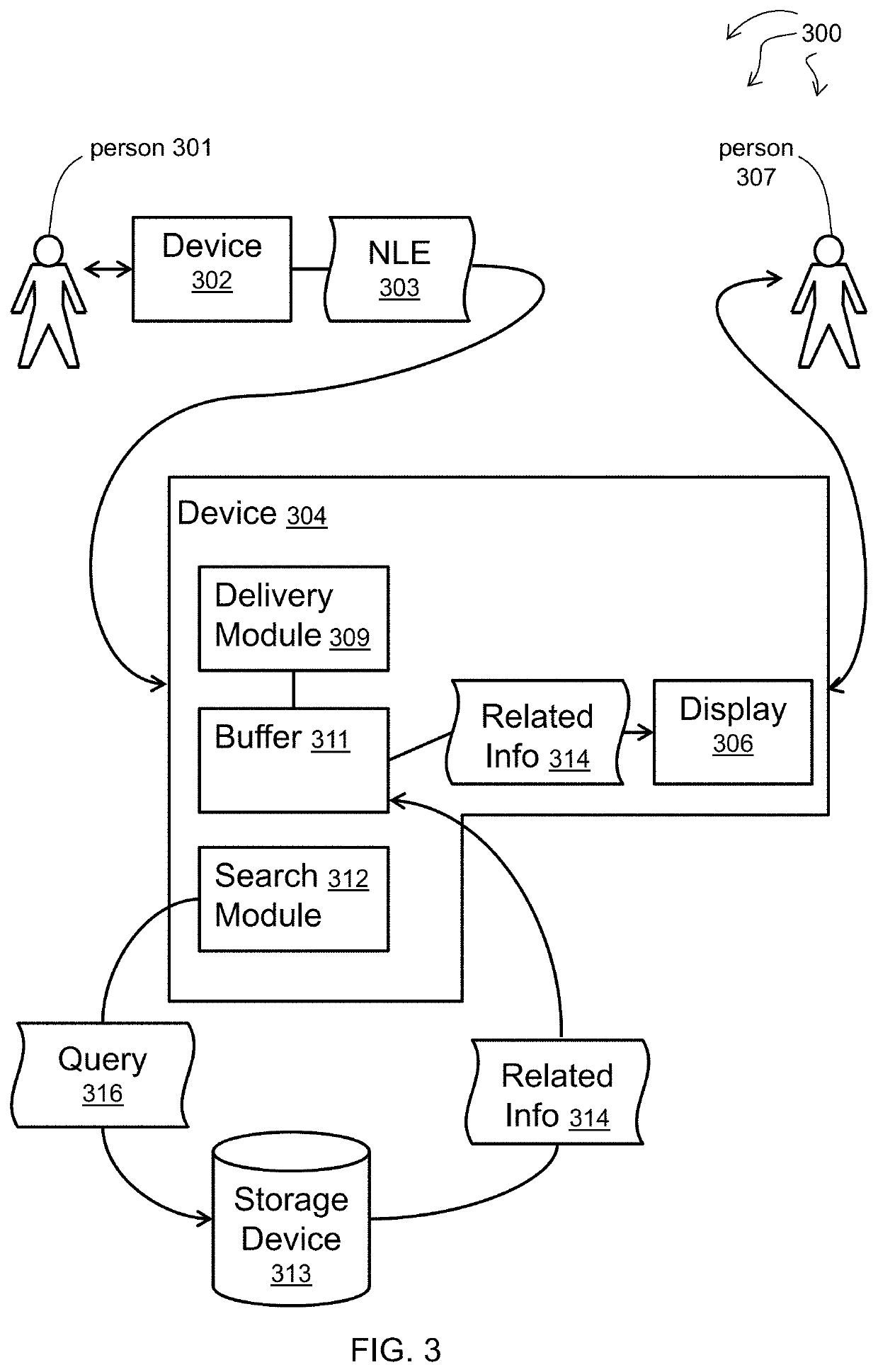

Visually presenting information relevant to a natural language conversation

Inactive · US20200043479A1 · Natural language translation · Semantic analysis · Visual presentation · Concept search

The present invention extends to methods, systems, and computer program products for automatically and visually presenting information relevant to an utterance. Natural language expressions from conversation participants are received and processed to determine a topic and concepts; a search finds relevant information, which is displayed visually to an assisted user. Applications can include video conferencing, wearable devices, augmented reality, and heads-up vehicle displays. Topics, concepts, and search results are analyzed for relevance and non-repetition. Relevance can depend on a user profile, conversation history, and environmental information. Further information can be requested through non-verbal modes. Searched and displayed information can be in languages other than the one spoken in the conversation. Conversations among many parties can be processed.

Owner:SOUNDHOUND AI IP LLC

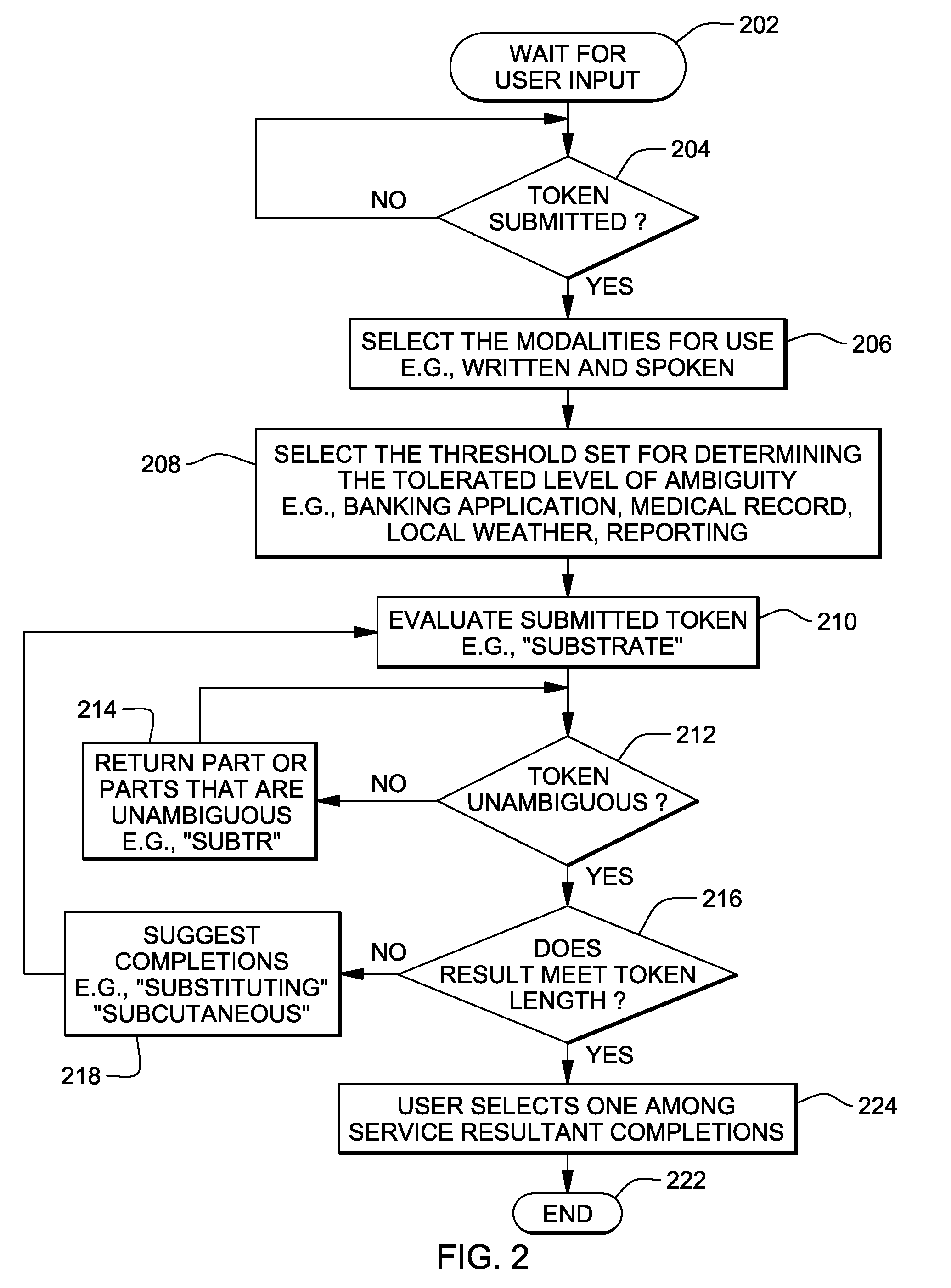

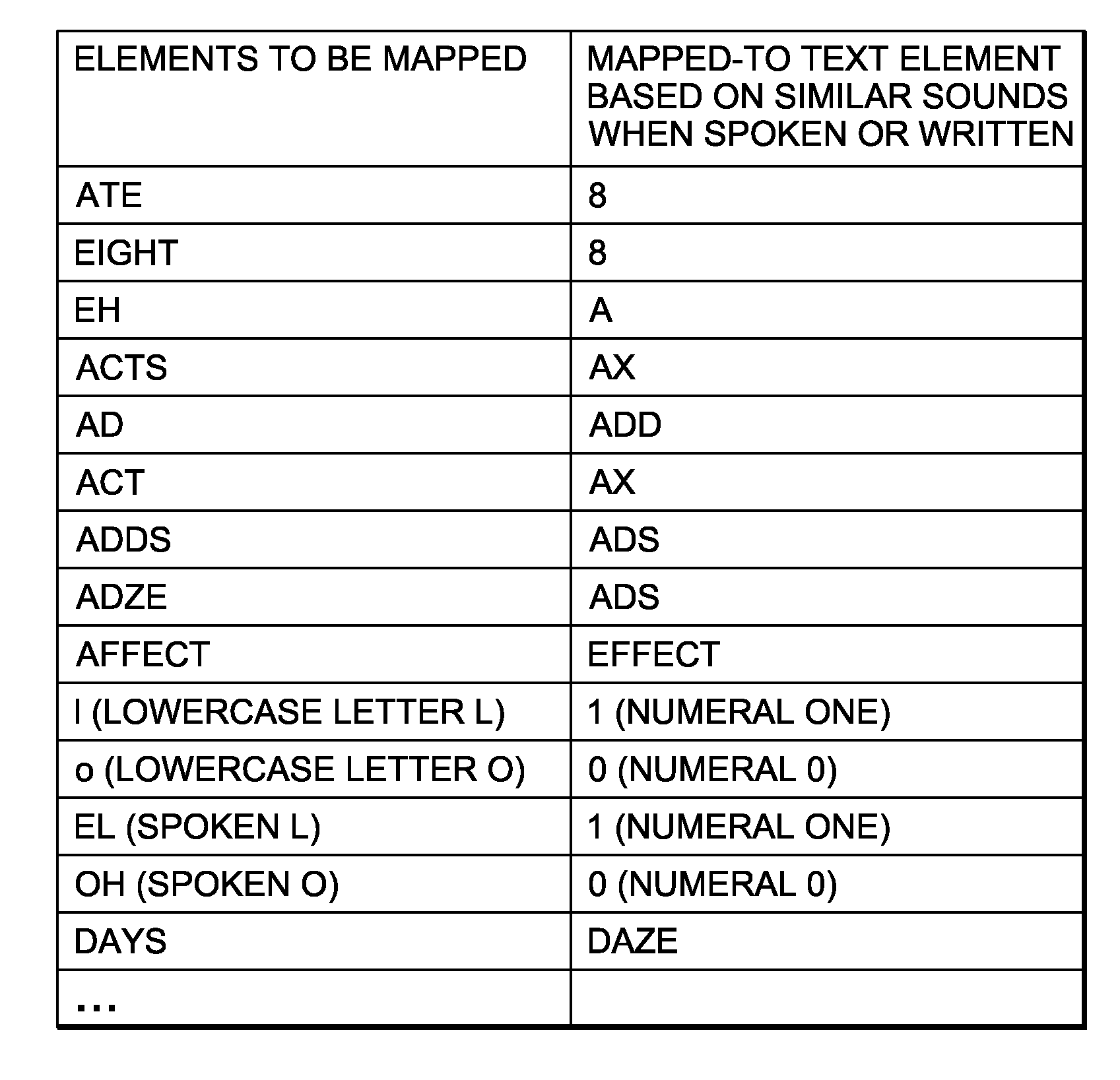

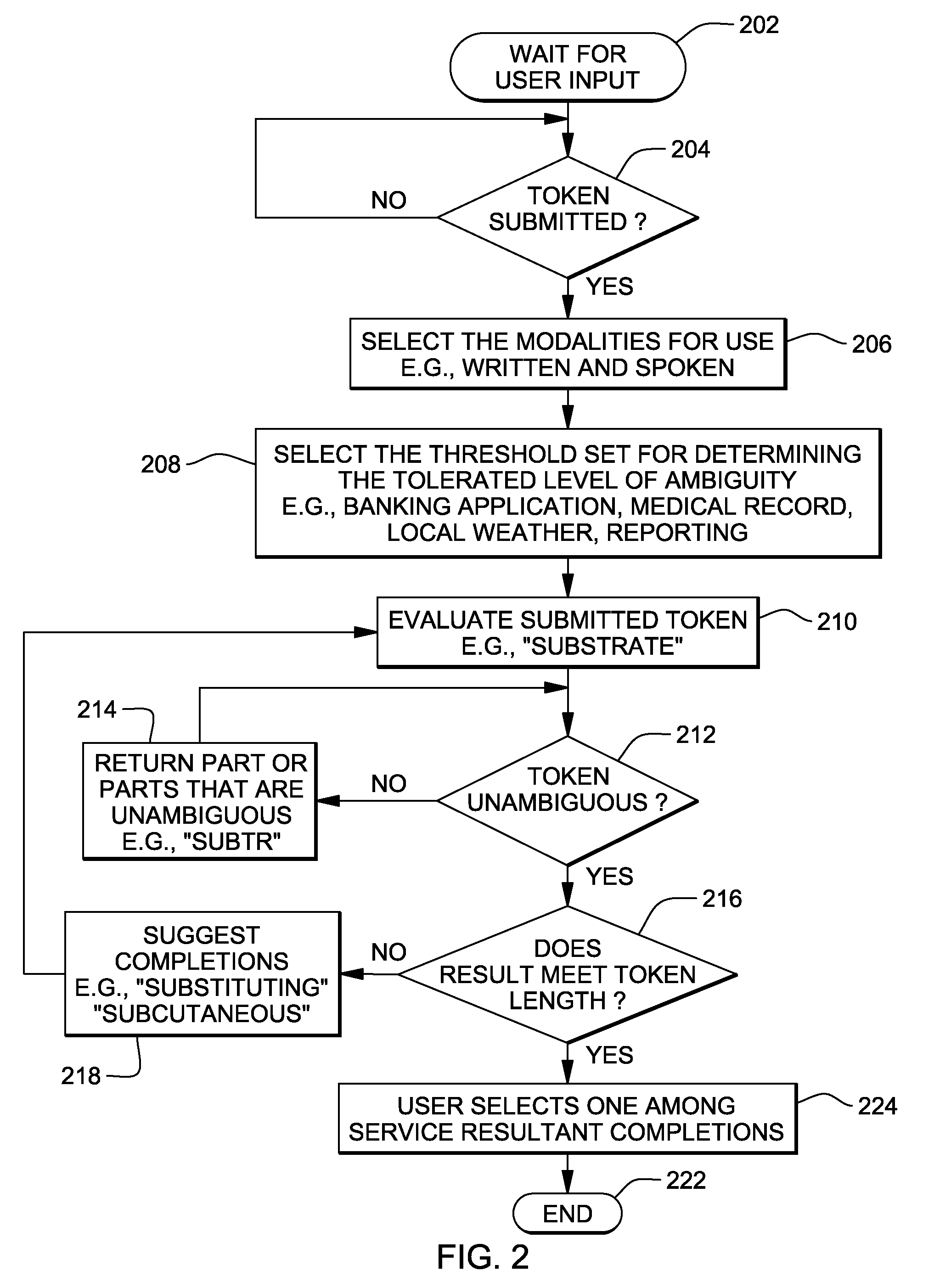

Method for reducing errors while transferring tokens to and from people

Inactive · US20100290482A1 · Minimize miscommunication · Minimize transformation · Loop networks · Diagnostic Radiology Modality · Algorithm

A system, method, and computer program product for handling and minimizing miscommunication and transformation of tokens that are processed by humans, either verbally or in writing, during some part of a usage scenario. This is accomplished by filtering out confusing tokens, as determined by calculating a distance metric for each token. The distance metric may be calculated along a print modality, a visual modality, or a verbal modality.

Owner:IBM CORP
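The filtering idea can be sketched for the visual modality as follows, under stated assumptions: Levenshtein edit distance stands in for the distance metric, a small hypothetical table of look-alike glyphs (`CONFUSABLE`) approximates visual similarity, and any token closer than `min_distance` to another token is treated as confusing. None of these choices come from the patent; a verbal-modality metric would compare pronunciations instead.

```python
from itertools import combinations

# Hypothetical look-alike glyph classes, folded together before comparison.
CONFUSABLE = str.maketrans({"O": "0", "o": "0", "l": "1", "I": "1", "S": "5", "B": "8"})


def levenshtein(a, b):
    """Classic edit distance with a rolling row (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def visual_distance(a, b):
    """Edit distance after mapping visually confusable glyphs to one form."""
    return levenshtein(a.translate(CONFUSABLE), b.translate(CONFUSABLE))


def filter_confusing(tokens, min_distance=2, metric=visual_distance):
    """Drop every token that lies closer than min_distance to some other token."""
    confusing = set()
    for a, b in combinations(tokens, 2):
        if metric(a, b) < min_distance:
            confusing.update((a, b))
    return [t for t in tokens if t not in confusing]


print(filter_confusing(["B0X7", "8OX7", "K4PZ"]))  # ['K4PZ']
```

"B0X7" and "8OX7" differ only in look-alike glyphs, so both are discarded; a variant could keep one member of each confusable pair instead.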

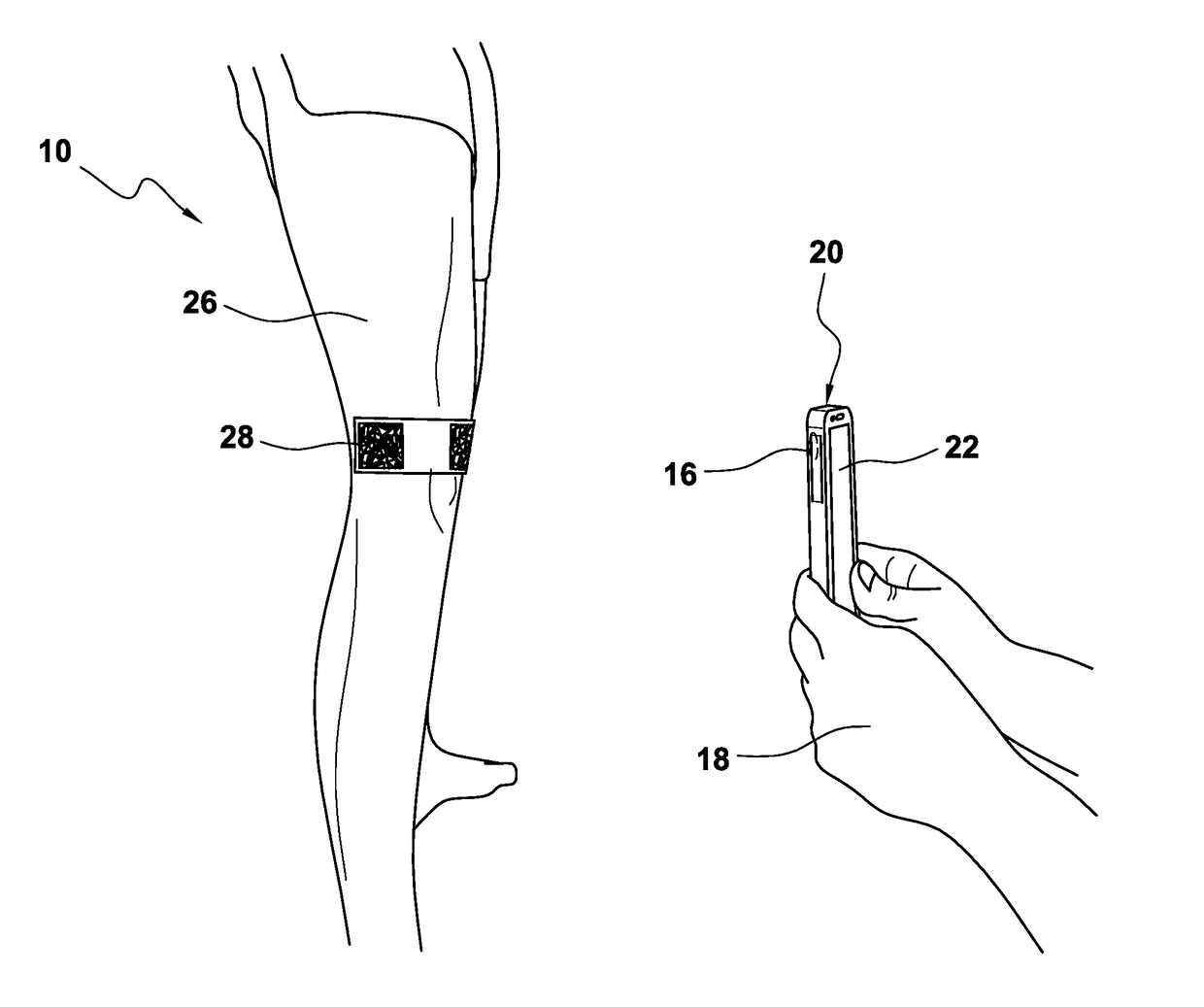

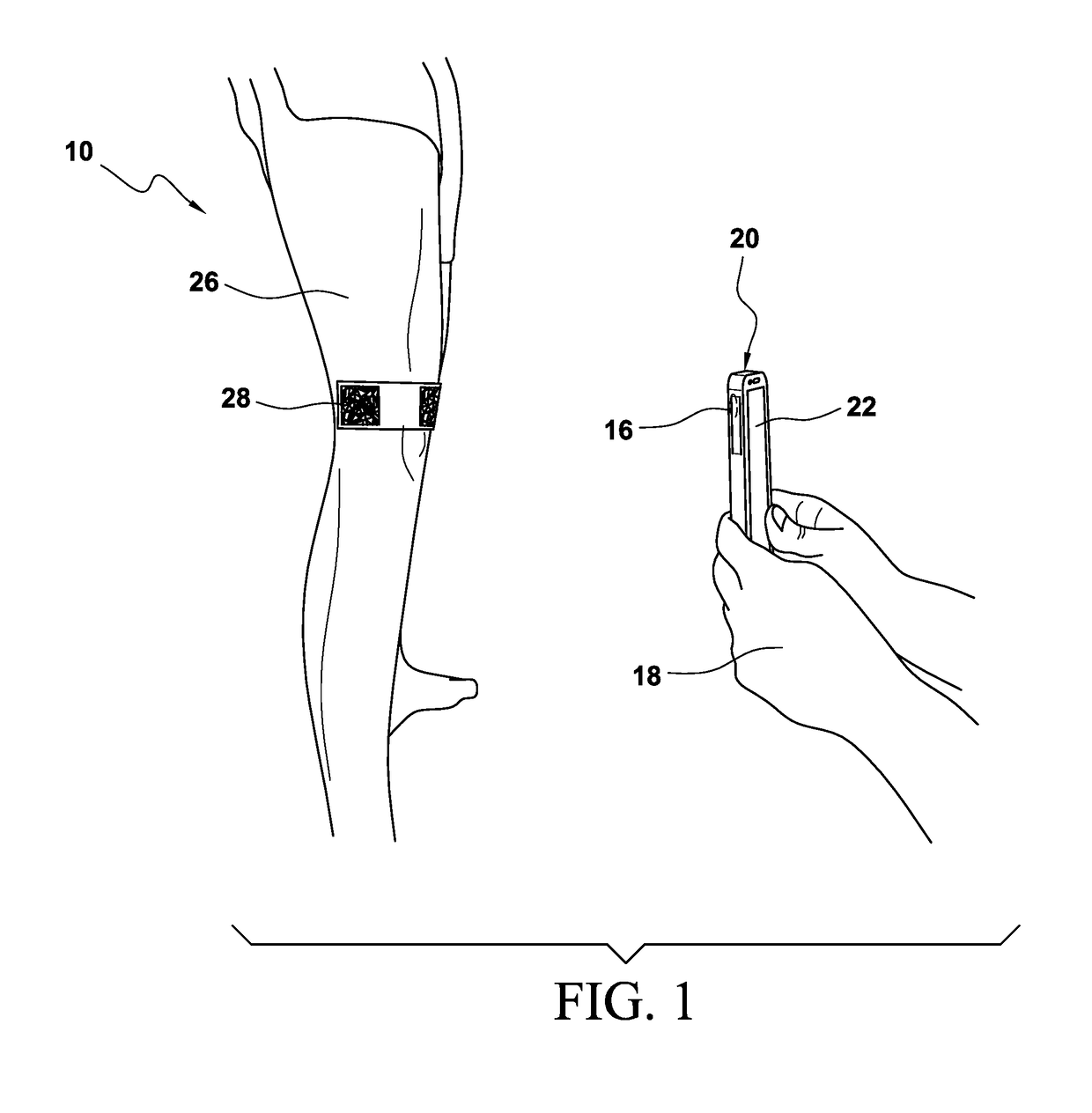

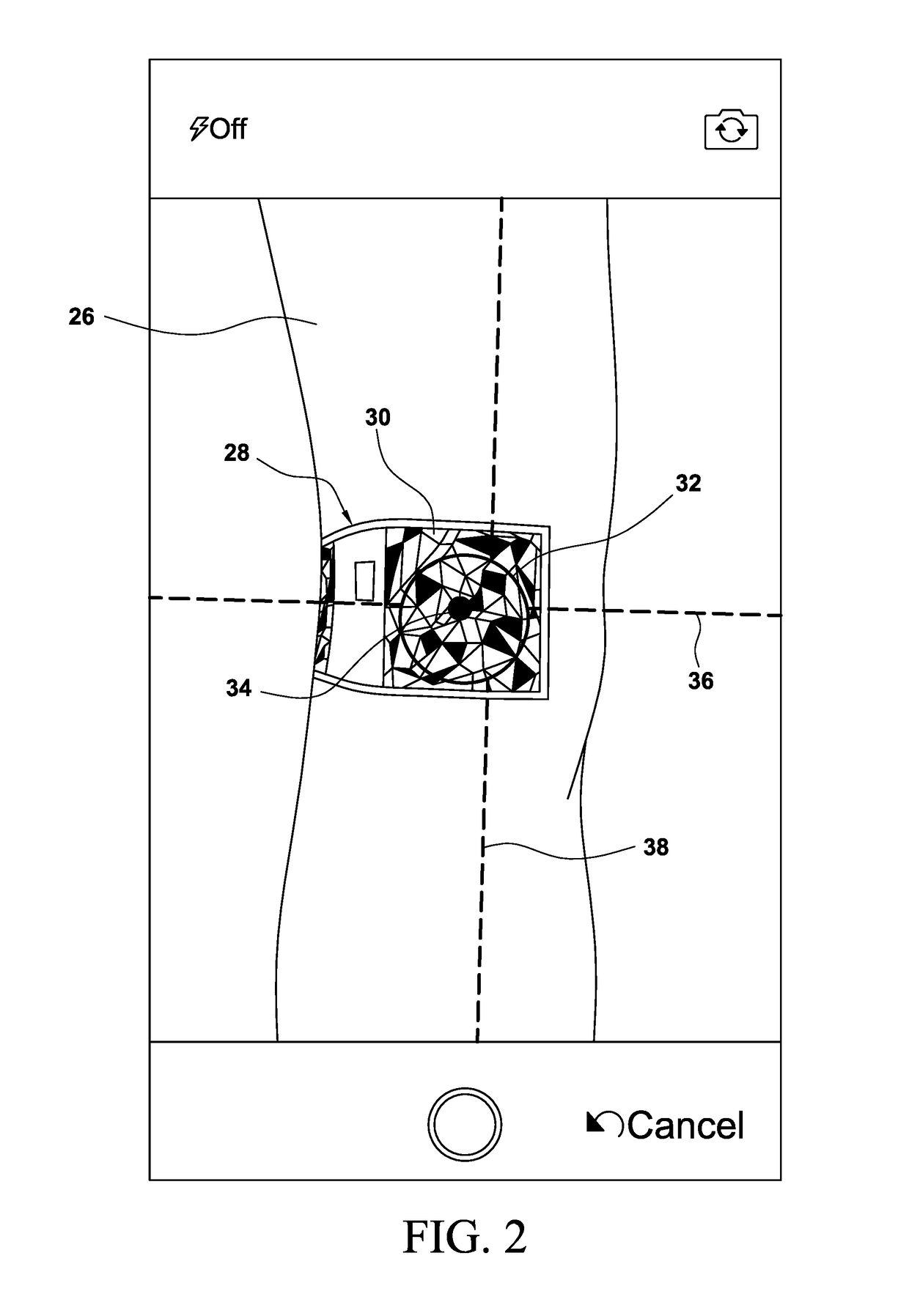

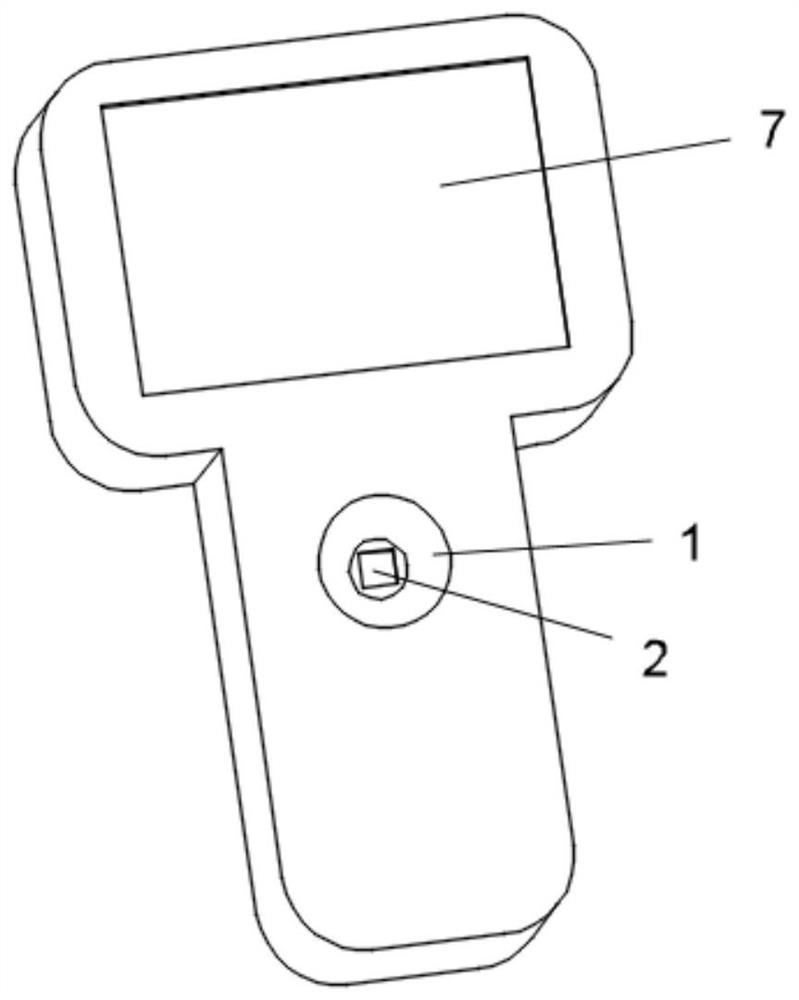

Measurement and ordering system for orthotic devices

Active · US20180000613A1 · Easy and accurate measurement · Medical simulation · Physical therapies and activities · Computer terminal · Orthotic device

A system and method are provided for electronically capturing a subject's anatomy, comprising an electronic device with a camera, a display screen, and an end-user software program that interfaces with the user. The software program tracks a target placed in the view area and gives the user visual feedback based on the target tracking. The software program includes criteria, conveyed to the user visually or through audio or haptic feedback, indicating how to position the camera relative to the anatomy. The end-user software program may have means to automatically capture the anatomy on the electronic device once the criteria are met. The end-user software program may have means to electronically transmit the anatomical information to a remote location where it can be used to build a custom orthotic device. The system may also have means to detect and distinguish anatomic contours from other objects in the view area.

Owner:VISION QUEST IND VQ ORTHOCARE

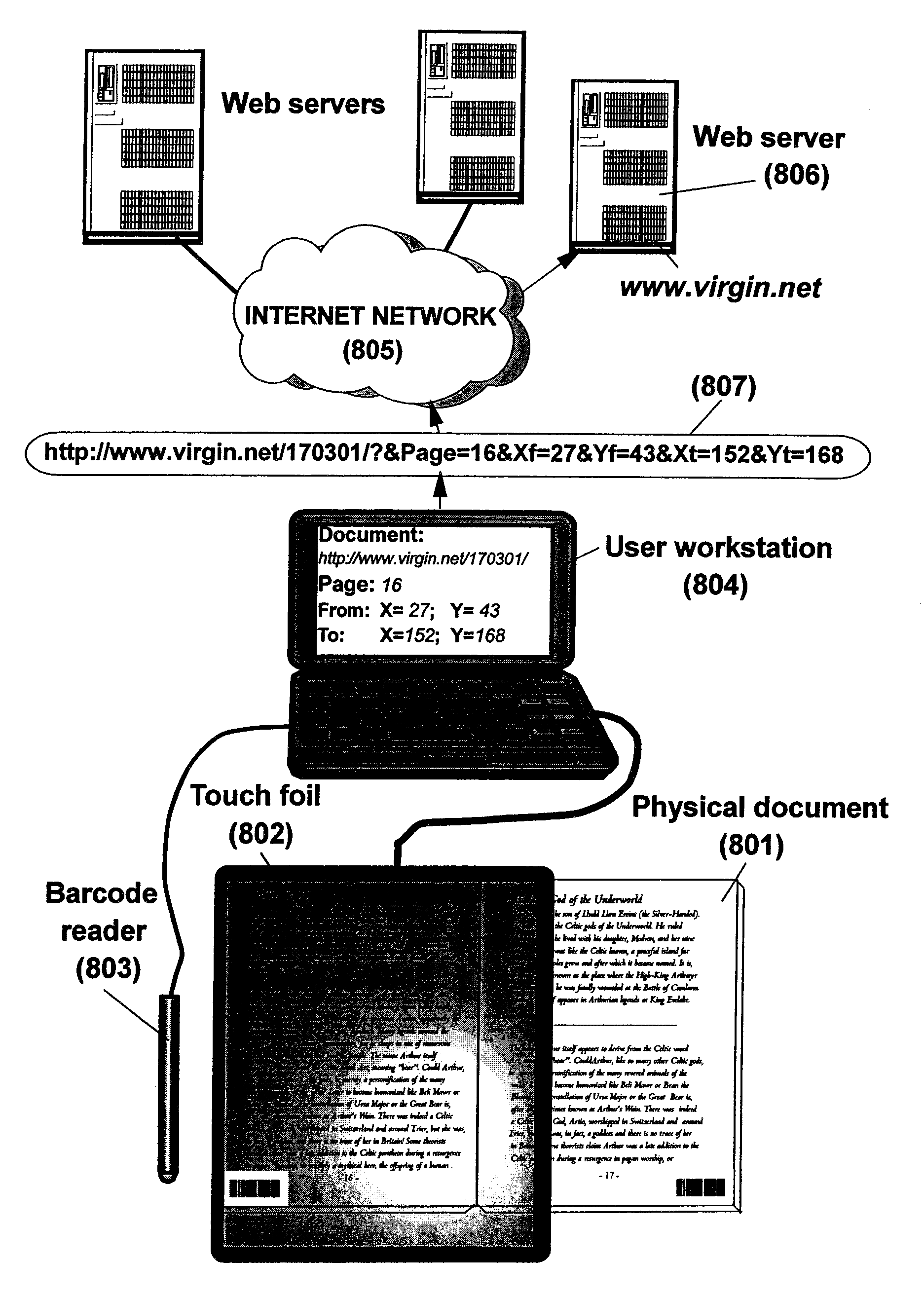

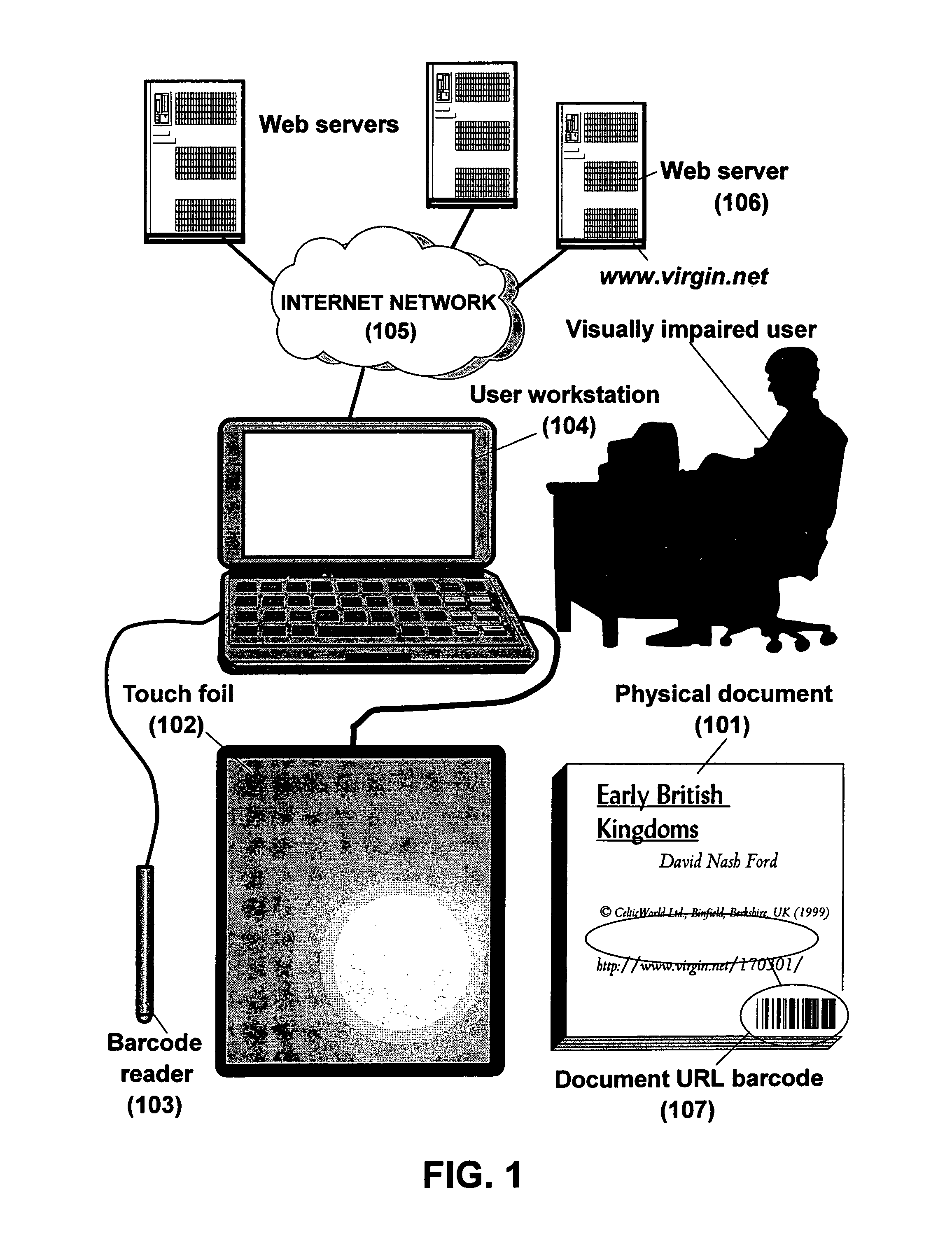

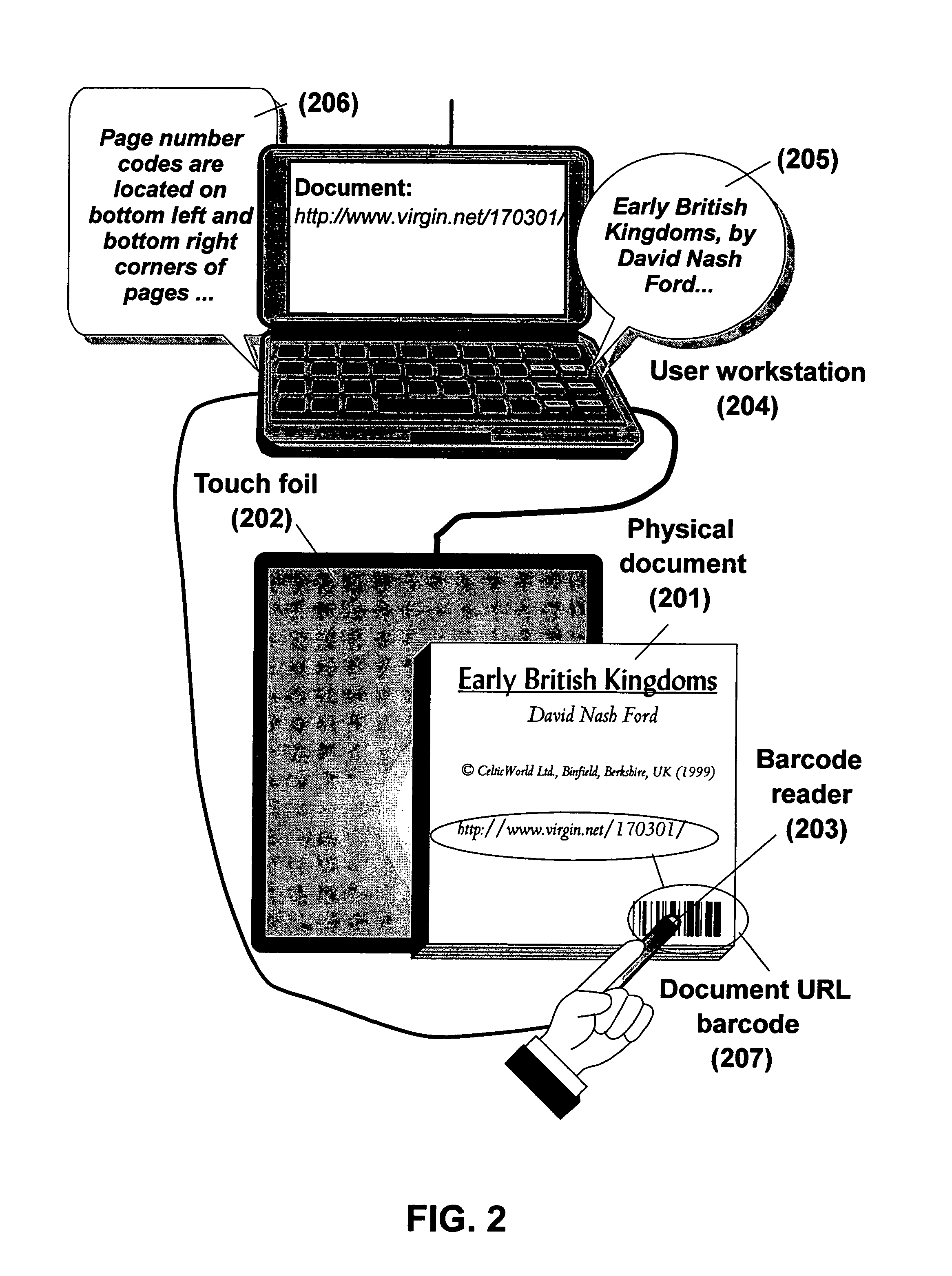

System and method to enable blind people to have access to information printed on a physical document

A method and system for use in a user system, for accessing information related to a physical document. An electronic copy of an existing physical document is identified and located. The electronic copy of the physical document is an exact replica of the physical document. One or more pages of the physical document are identified. A selected part of the physical document is identified using the position of points on the identified one or more pages of the physical document and in response, data related to the selected part of the physical document is retrieved from the electronic copy of the physical document. The retrieved data is presented visually to a visually impaired person or orally to a blind person on the user system, which enables the visually impaired person to see or hear, respectively, the retrieved data.

Owner:KYNDRYL INC

Color and symbol coded display on a digital badge for communicating permission to approach and activate further digital content interaction

Active · US10783546B2 · Facilitate communication · Advertisements · Static indicating devices · Social media · Electronic communication

The present invention claims and discloses a color- and/or symbol-coded display on a digital interactive badge for communicating permission to approach and to activate further digital content interaction. It defines a non-verbal, person-to-person ‘line of sight’ digital or electronic communication protocol standard (NVP) that allows one person to communicate with another using a direct line-of-sight signal from one interactive badge or screen to another. NVP allows users to communicate and display, visually and within a new and comprehensive language, their feelings, emotions, states of mind and consciousness, their like-mindedness with others, their social media activity, images, favorite brands, videos, purchased items, and advertising. NVP also allows users to send invitations to approach, talk, and interact, and to send digital information to an NVP-capable interactive badge, screen, or static receiver. NVP is designed to run on hardware that can be programmed from the NVP interface and is aimed specifically at the wearable technology market.

Owner:BLUE STORM MEDIA

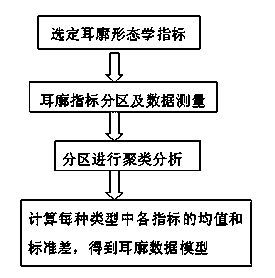

Personalized auricle data model building method based on normal auricle morphological classification

Inactive · CN104077805A · Smooth connection · Closely · Character and pattern recognition · Prosthesis · Personalization · Ear Cartilage

The invention discloses a personalized auricle data model building method based on normal auricle morphological classification. It includes classification indexes that describe the precise auricle structure and building indexes of the personalized data model that describe the basic auricle shape. On the one hand, the auricle indexes are measured and a partitioned clustering analysis, using the position of the crus of the helix as the boundary, yields the auricle data model; on the other hand, the personalized auricle data model is obtained from personalized index data on the basis of the data model derived from normal auricle classification. Compared with traditional descriptions and classifications of the auricle's visual modality, the data model in this method is obtained by clustering analysis of quantitative auricle morphological indexes, so the classification is more accurate, standardized, and objective, and better reflects real auricle information. Because personalized index values are introduced into the auricle data model, the resulting personalized model is of significant value for manufacturing personalized ear cartilage supports and reconstructing personalized auricles.

Owner:THE THIRD XIANGYA HOSPITAL OF CENT SOUTH UNIV

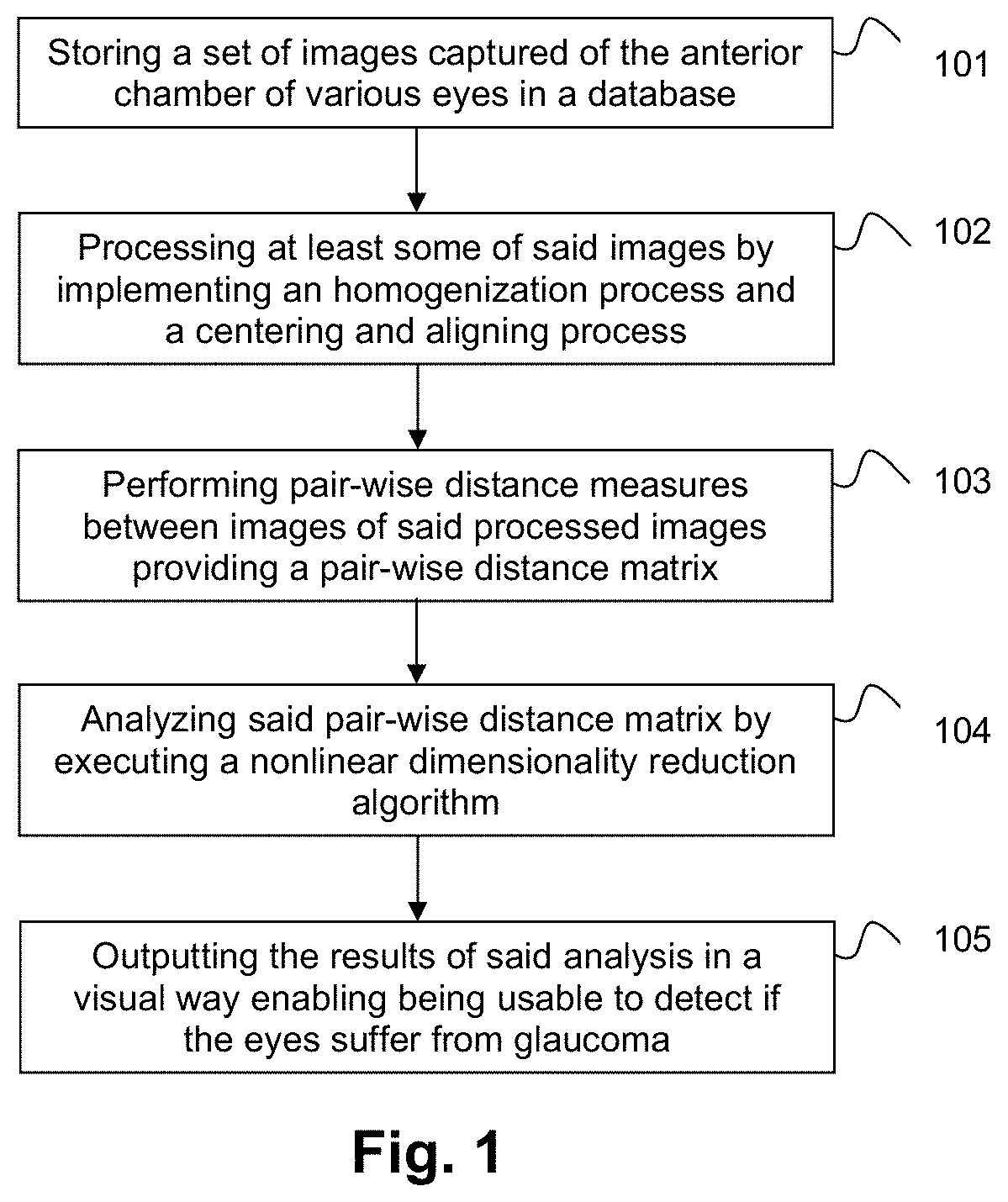

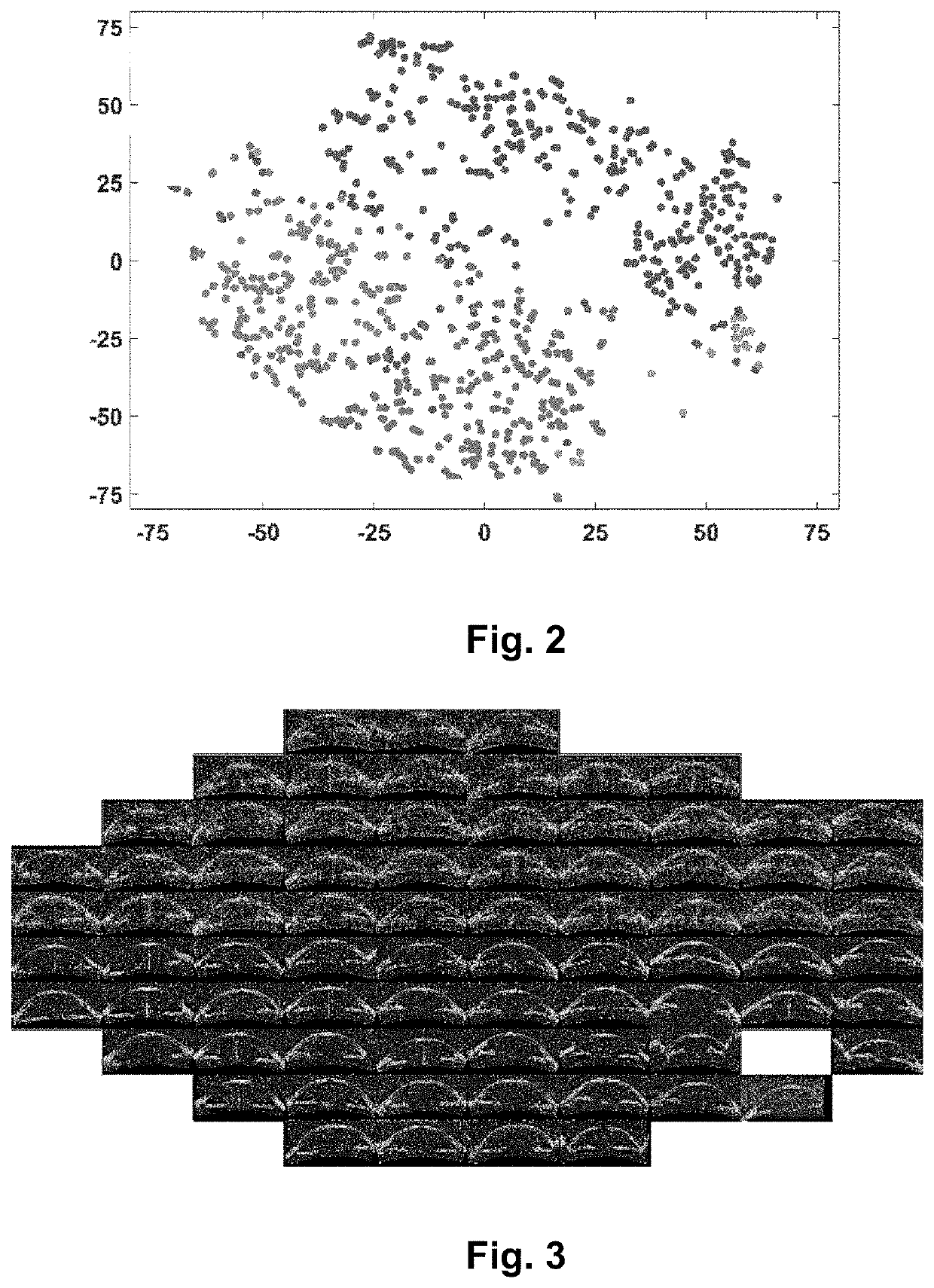

Image processing method for glaucoma detection and computer program products thereof

Active · US20200305706A1 · Quantity minimization · Minimizes amount of information · Image enhancement · Medical data mining · Image manipulation · Engineering

The method comprises storing a set of images captured of the anterior chamber of various eyes, and using a processor for: a) processing some of the stored images by implementing a homogenization process that makes the horizontal and vertical spatial resolution of each image in the set the same, and a centering and aligning process that computes statistical properties of the images and uses them to compute a centroid and a covariance matrix for each image; b) performing pair-wise distance measurements between the processed images to obtain a pair-wise distance matrix; c) analyzing the pair-wise distance matrix by executing a nonlinear dimensionality reduction algorithm that assigns each analyzed image a point in an n-dimensional space; and d) outputting the results of the analysis visually so that they can be used to detect whether the eyes suffer from glaucoma.

Owner:UNIV POLITECNICA DE CATALUNYA +1
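Steps a) and b) can be sketched with the standard library. The sketch assumes that homogenization has already produced images of equal size, treats pixel intensities as weights when computing each image's centroid and covariance (one common reading of "statistical properties"), and uses plain Euclidean distance for the pair-wise matrix. The nonlinear dimensionality reduction of step c) is not shown, and the function names are hypothetical.

```python
import math


def centroid_and_covariance(img):
    """Treat the intensities of a 2D image (list of rows) as weights and return
    the intensity-weighted centroid (cy, cx) and the 2x2 covariance matrix."""
    total = sum(sum(row) for row in img)
    cy = sum(y * v for y, row in enumerate(img) for v in row) / total
    cx = sum(x * v for row in img for x, v in enumerate(row)) / total
    syy = sum((y - cy) ** 2 * v for y, row in enumerate(img) for v in row) / total
    sxx = sum((x - cx) ** 2 * v for row in img for x, v in enumerate(row)) / total
    sxy = sum((y - cy) * (x - cx) * v
              for y, row in enumerate(img) for x, v in enumerate(row)) / total
    return (cy, cx), [[syy, sxy], [sxy, sxx]]


def pairwise_distances(images):
    """Symmetric matrix of Euclidean distances between equally sized images."""
    flat = [[v for row in img for v in row] for img in images]
    n = len(flat)
    return [[math.dist(flat[i], flat[j]) for j in range(n)] for i in range(n)]


spot = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
print(centroid_and_covariance(spot))  # ((1.0, 1.0), [[0.0, 0.0], [0.0, 0.0]])
print(pairwise_distances([[[0, 0], [0, 0]], [[3, 4], [0, 0]]]))  # [[0.0, 5.0], [5.0, 0.0]]
```

The centroid and covariance can then be used to translate and rotate each image into a common frame before the distances are computed, which is the purpose of the centering and aligning step.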

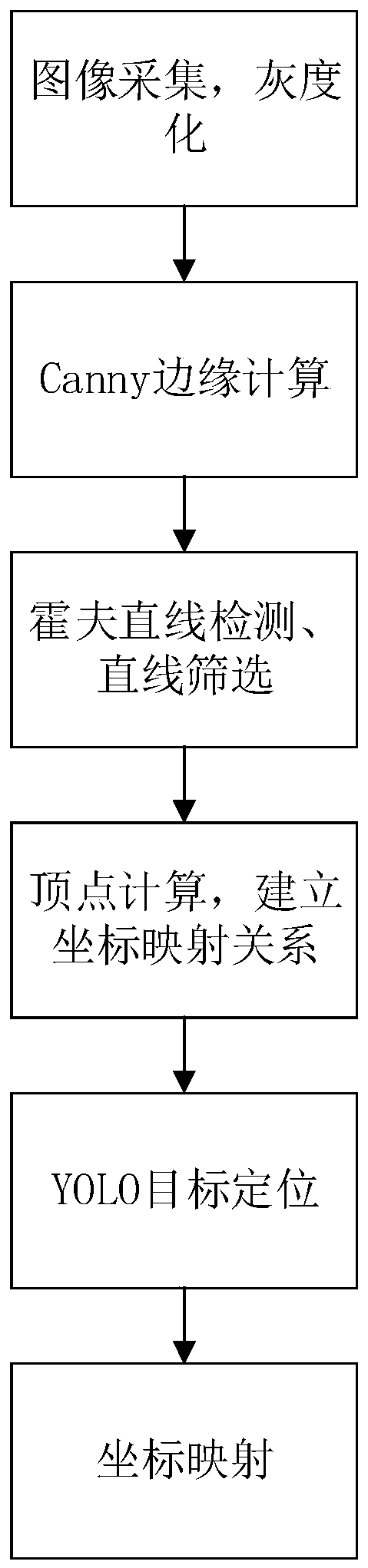

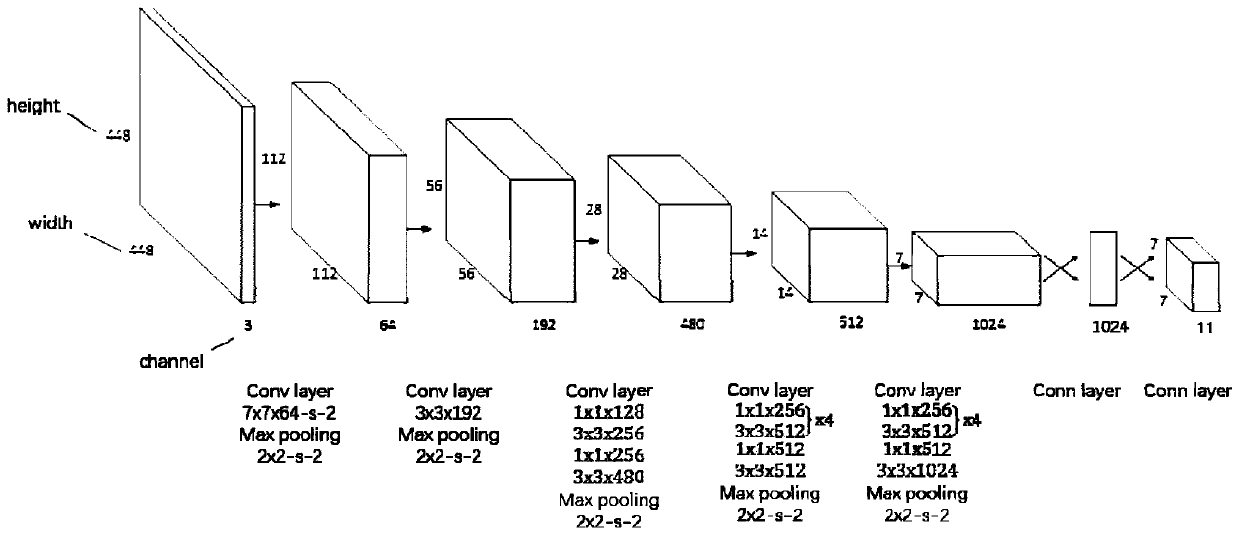

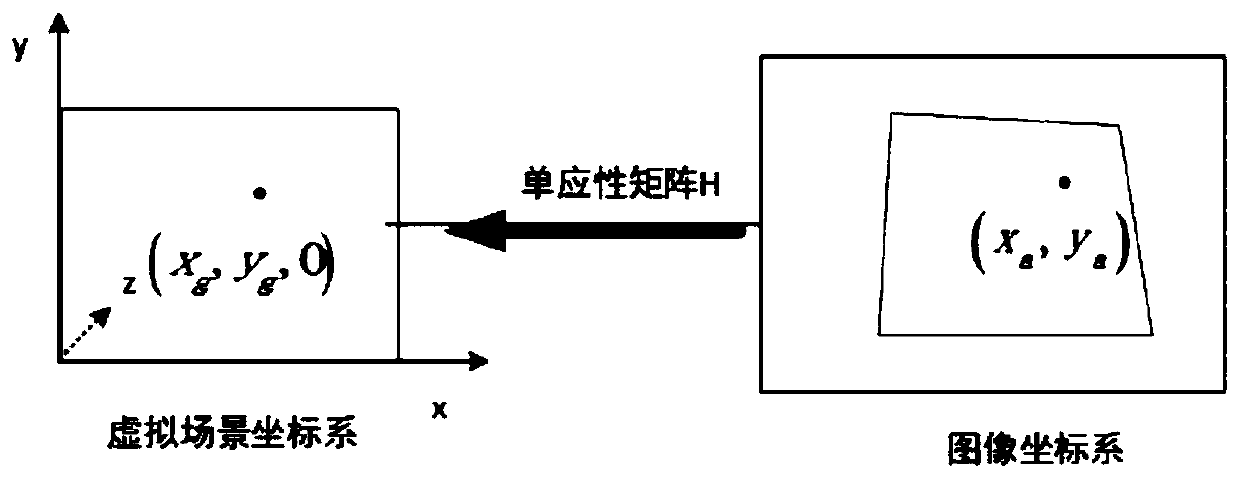

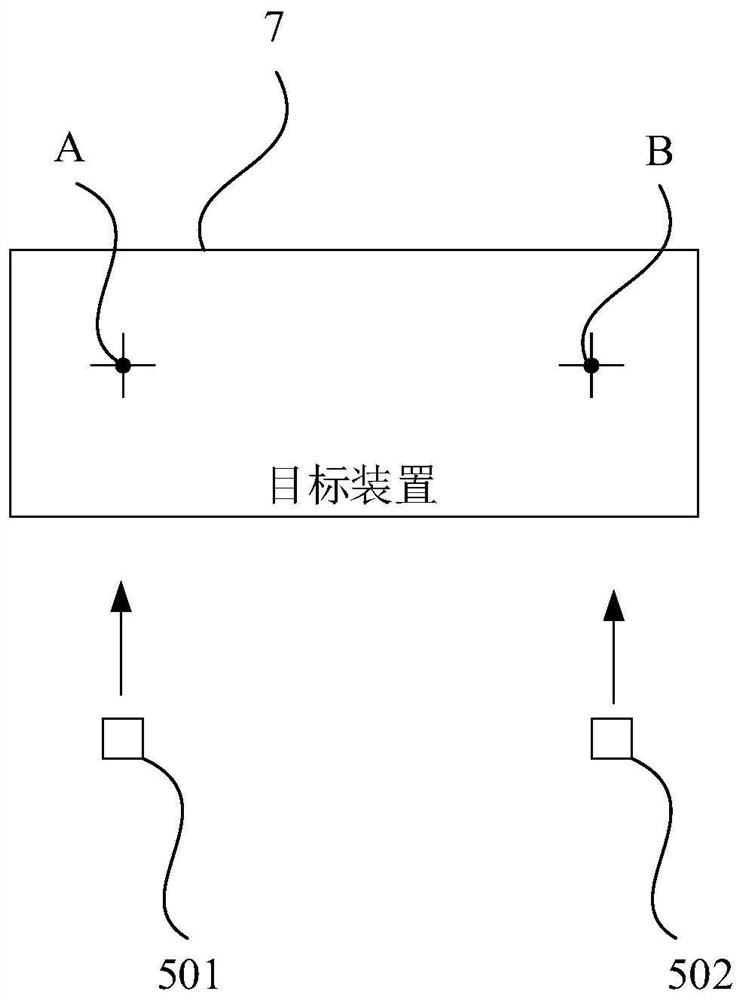

Projection interaction method based on pure machine vision positioning

The invention discloses a projection interaction method based on pure machine vision positioning, comprising the following steps: S1, converting a source image collected by a vision sensor to grayscale and locating the boundary and four vertices of the projection region in the image; S2, establishing a coordinate mapping from the source image coordinate system to the projection scene coordinate system and solving for the coordinate transformation matrix H; S3, detecting the contact position of the interactive carrier on the projection plane in the source image with a deep-learning target detection algorithm; and S4, mapping the contact into the projection scene coordinate system through the coordinate transformation established in S2 to complete the human-computer interaction. To overcome the dependence of current infrared-positioning projection interaction schemes on infrared equipment, the projection plane is located by straight-line detection, contacts are detected and located purely by vision, and the interactive carrier's coordinates are mapped into the projection scene coordinate system through the coordinate mapping, achieving accurate interaction.

Owner:SOUTH CHINA UNIV OF TECH
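Steps S2 and S4 amount to estimating a planar homography from the four detected vertices and applying it to each detected contact. The sketch below assumes H is normalized so that its bottom-right entry is 1 and solves the resulting 8x8 linear system with plain Gaussian elimination; the corner coordinates are made-up values, and the line detection (S1) and deep-learning contact detection (S3) are not shown. In practice a library routine such as OpenCV's `cv2.getPerspectiveTransform` performs the same four-point computation.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate configuration (e.g. three collinear corners)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src, dst):
    """3x3 matrix H (with H[2][2] == 1) mapping 4 source points onto 4 destinations."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(H, point):
    """Map an image point into projection-scene coordinates (divide by w)."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Hypothetical corners of the projection region found in the camera image (S1)
corners_in_camera = [(102.0, 48.0), (498.0, 61.0), (521.0, 402.0), (88.0, 379.0)]
scene_corners = [(0.0, 0.0), (1920.0, 0.0), (1920.0, 1080.0), (0.0, 1080.0)]
H = homography(corners_in_camera, scene_corners)
print(apply_homography(H, (300.0, 220.0)))  # a detected contact mapped into the scene (S4)
```

With raw pixel coordinates, the linear system can become poorly conditioned; normalizing the points before solving is a common refinement.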

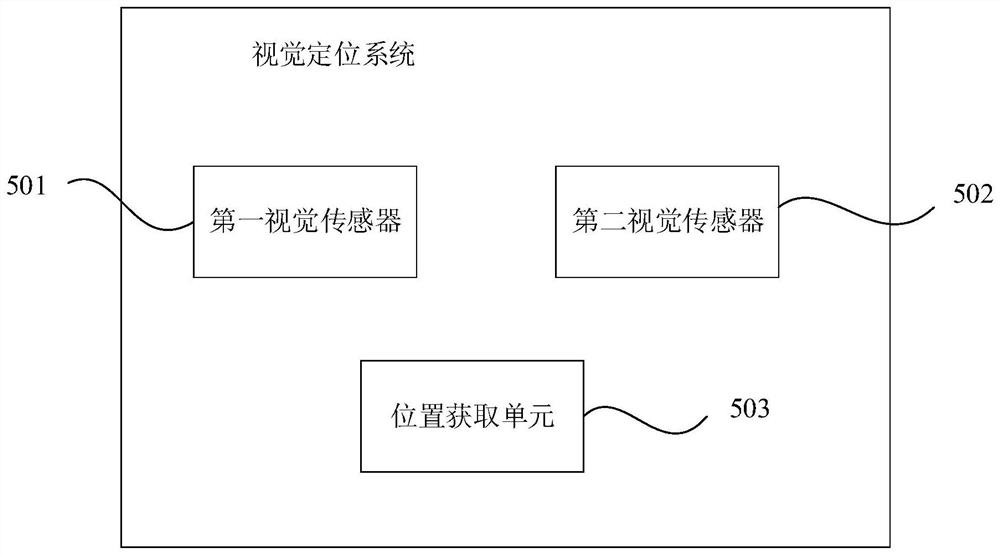

Visual positioning system, battery replacement equipment and battery replacement control method

Pending · CN113670192A · Achieve precise positioning · High positioning accuracy · Charging stations · Using optical means · Computer hardware · Control engineering

The invention discloses a visual positioning system, battery replacement equipment and a battery replacement control method. The visual positioning system comprises a first visual sensor, a second visual sensor and a position acquisition unit, wherein the first visual sensor is used for acquiring a first image of a first position of the target device; the second visual sensor is used for acquiring a second image of a second position of the target device; the position acquisition unit is used for acquiring position information of the target device according to the first image and the second image. High positioning precision is obtained through a visual mode, and accurate positioning between the battery replacement equipment and the vehicle to be subjected to battery replacement is realized.

Owner:AULTON NEW ENERGY AUTOMOTIVE TECHNOLOGY GROUP

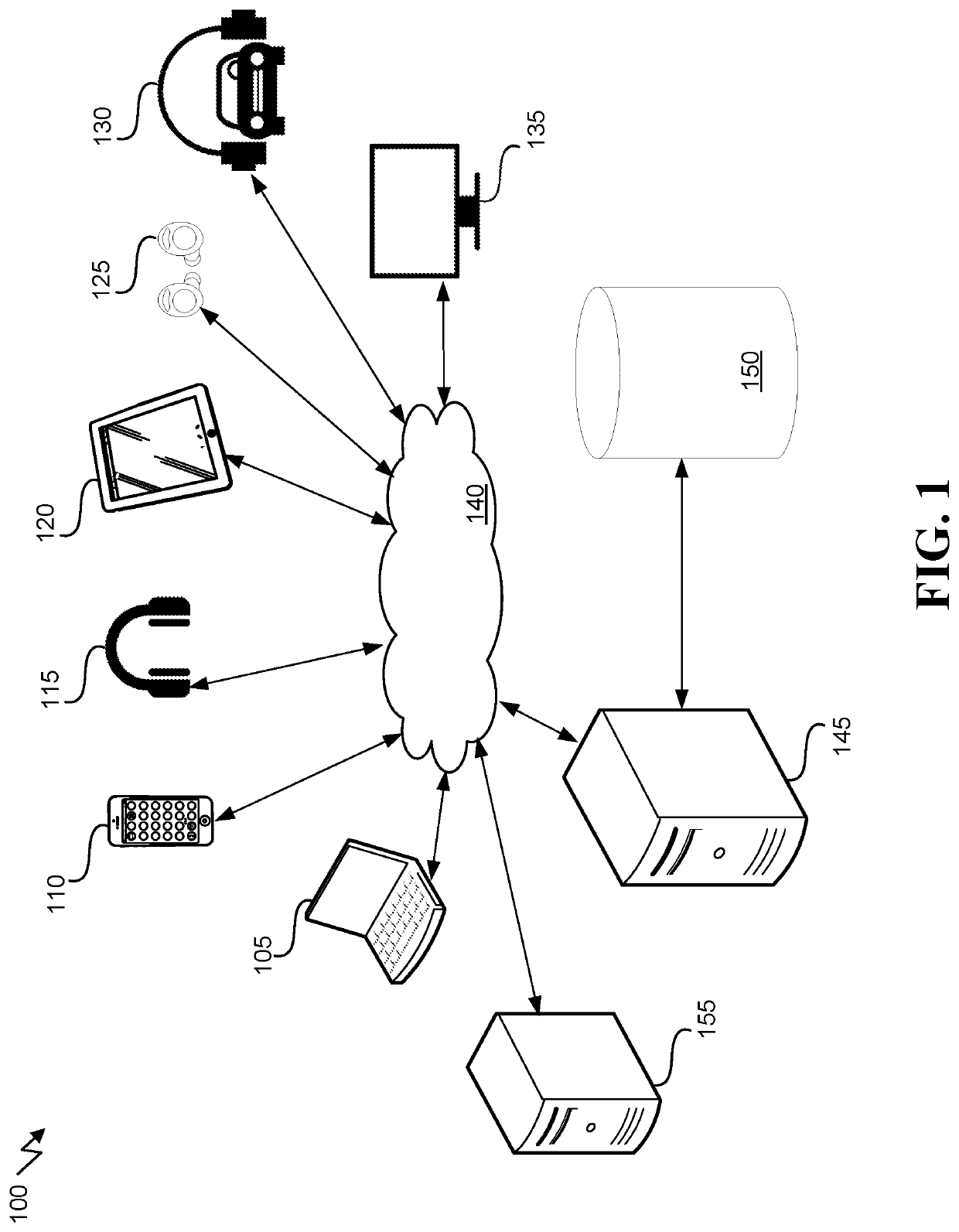

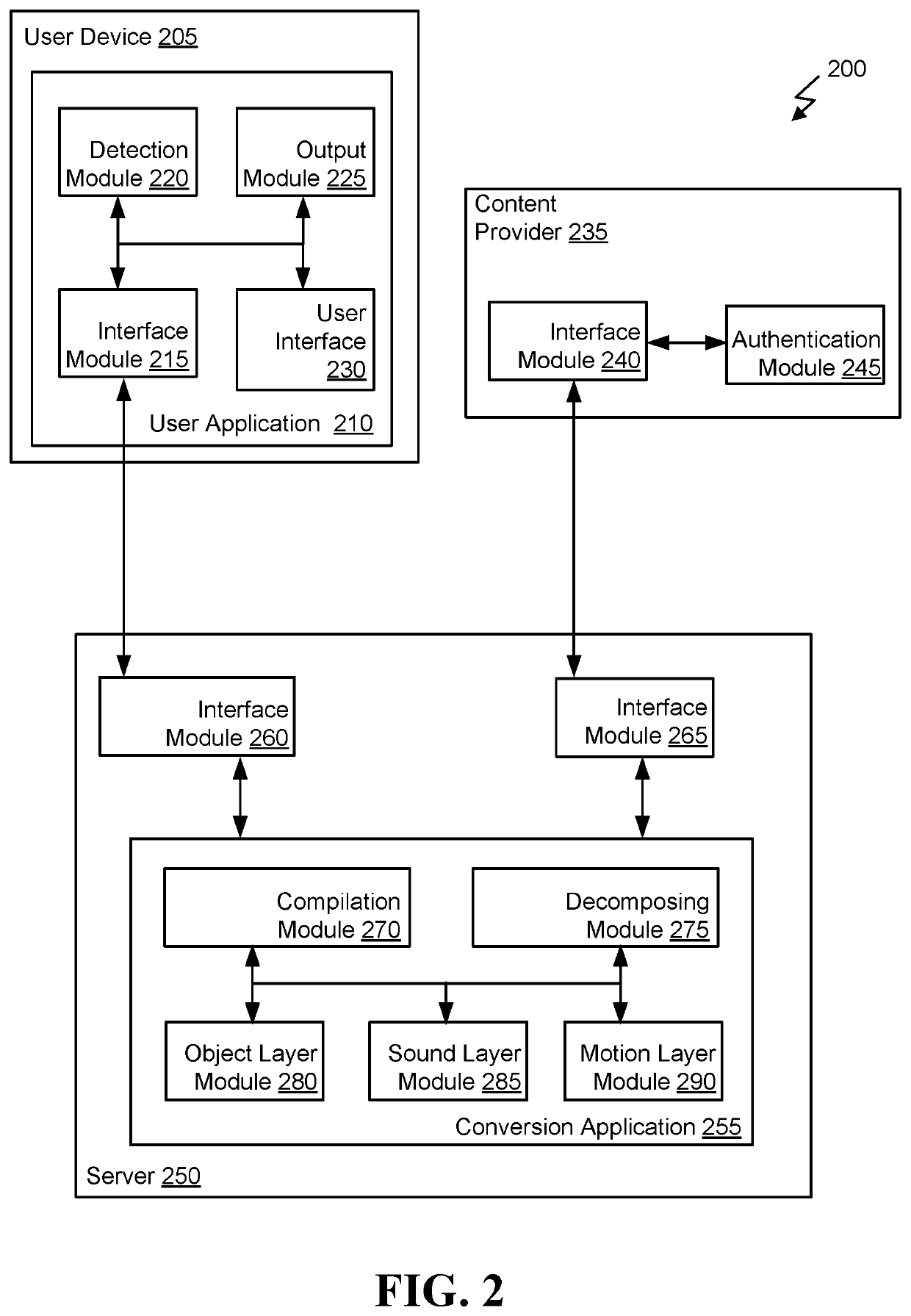

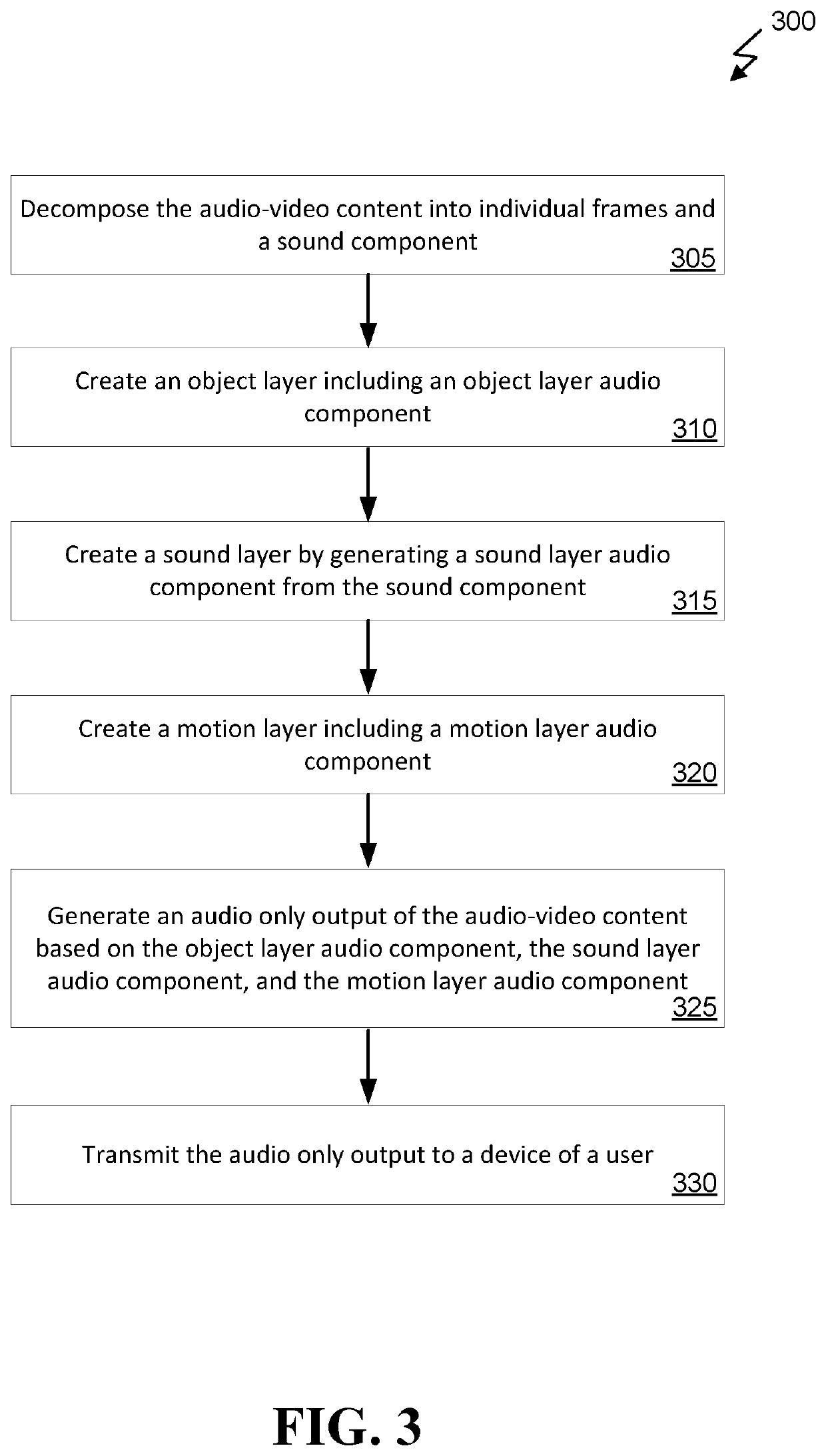

Eyes free entertainment

Active · US10645464B2 · Natural language translation · Television system details · Visual perception · Audio frequency

Owner:DISH NETWORK LLC

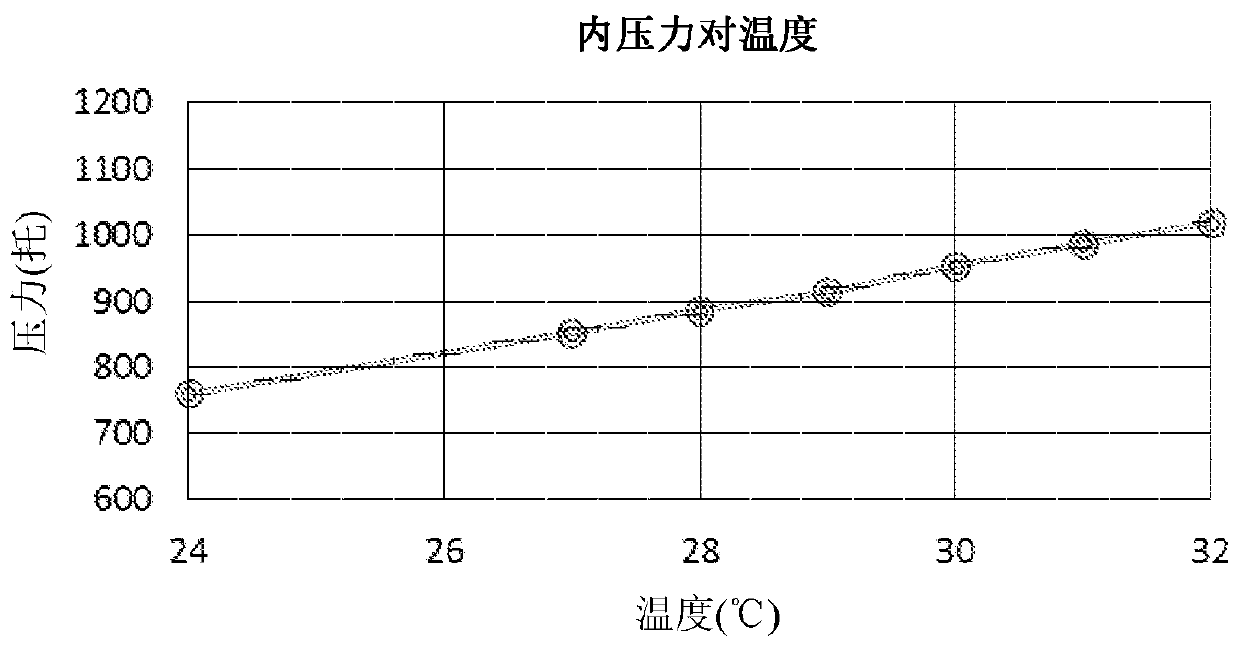

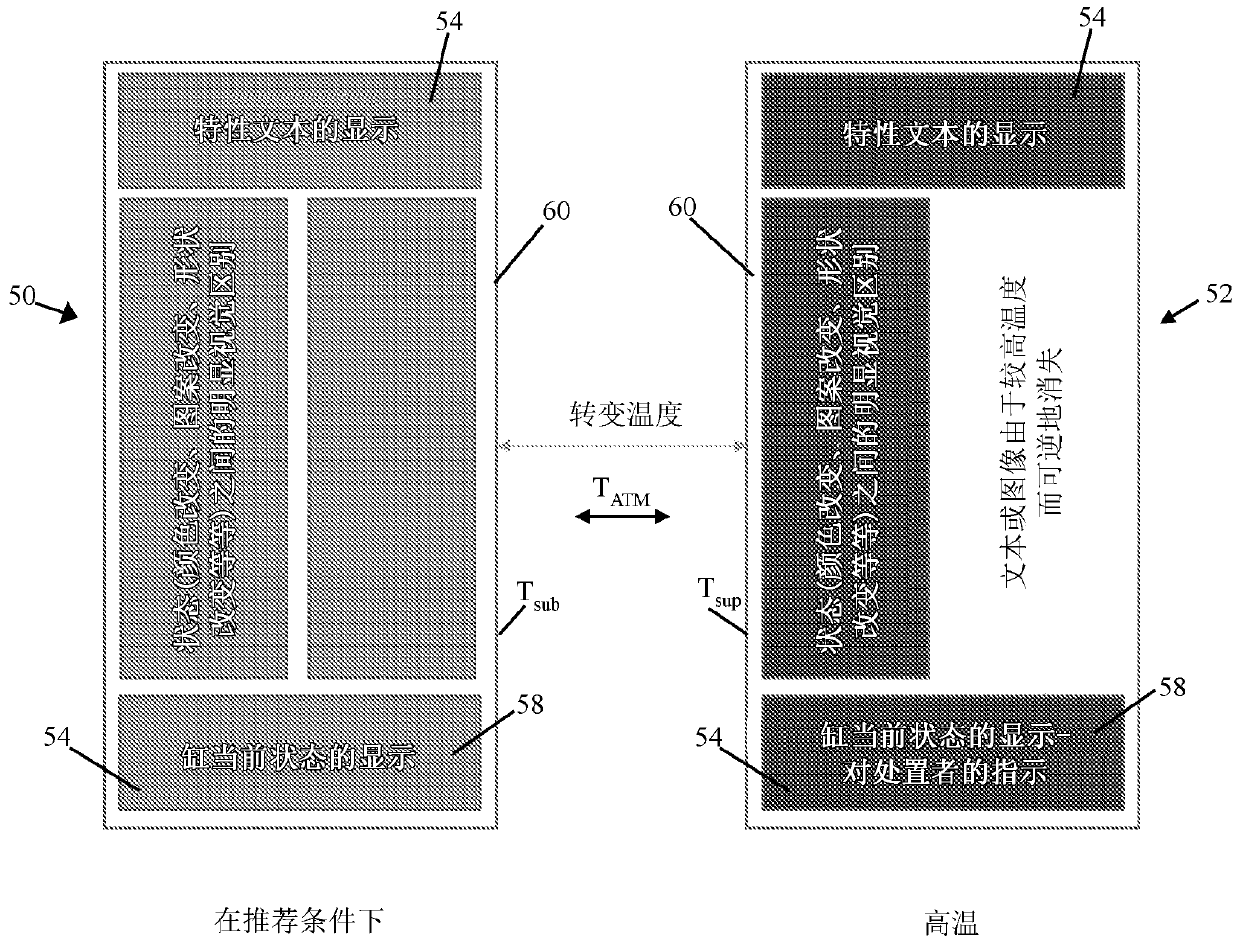

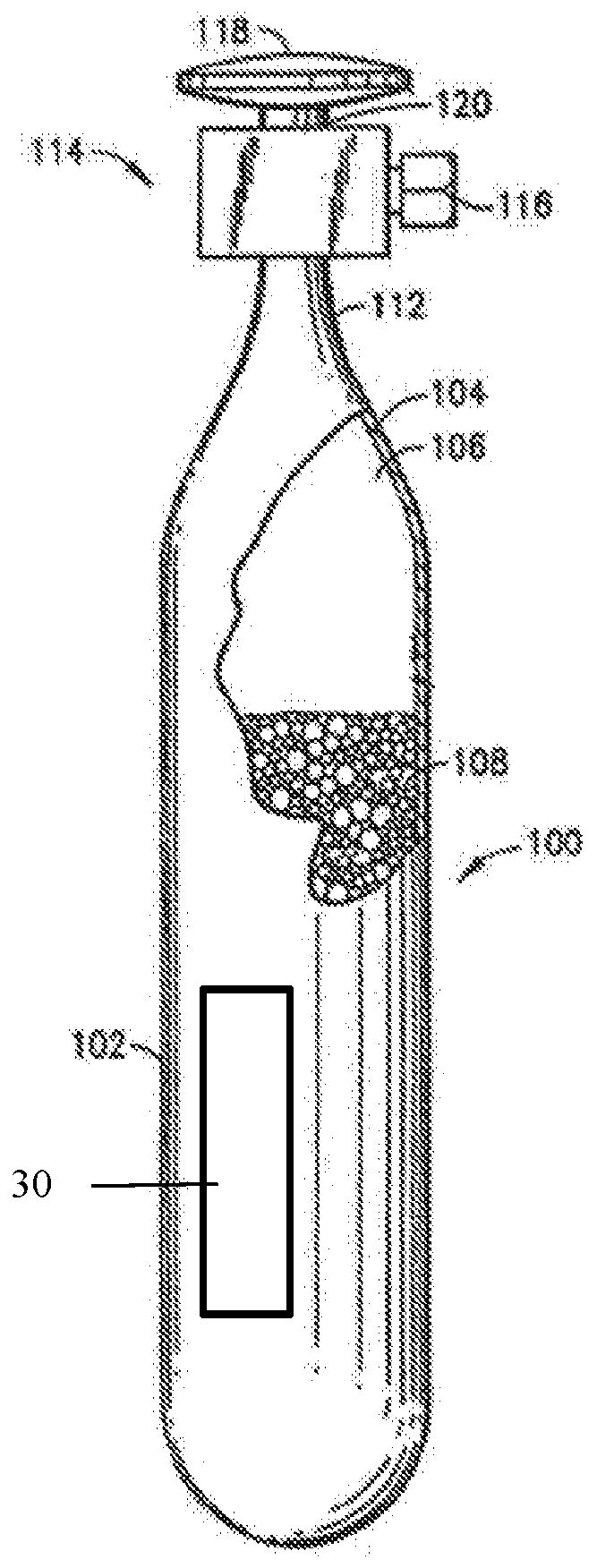

Thermochromic indicator for reagent gas vessel

Pending · CN110770498A · Relieve pressure · Reduce the temperature · Vessel mounting details · Vessel geometry/arrangement/size · Sorbent · Process engineering

A dispensing and storage vessel for housing a gas, such as a toxic or hazardous gas, in combination with a temperature-sensitive label is described. Example dispensing and storage vessels include an adsorbent and a reagent gas within the internal volume of the vessel. A thermochromic label is adhered to the vessel and communicates temperature-sensitive information visually to a user.

Owner:ENTEGRIS INC

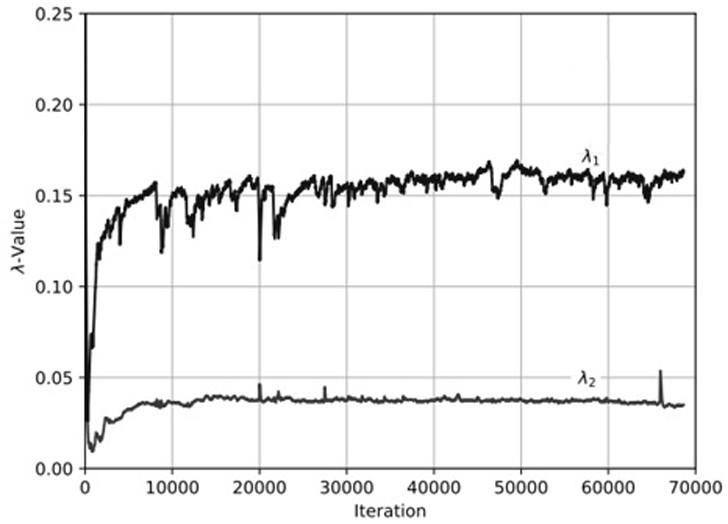

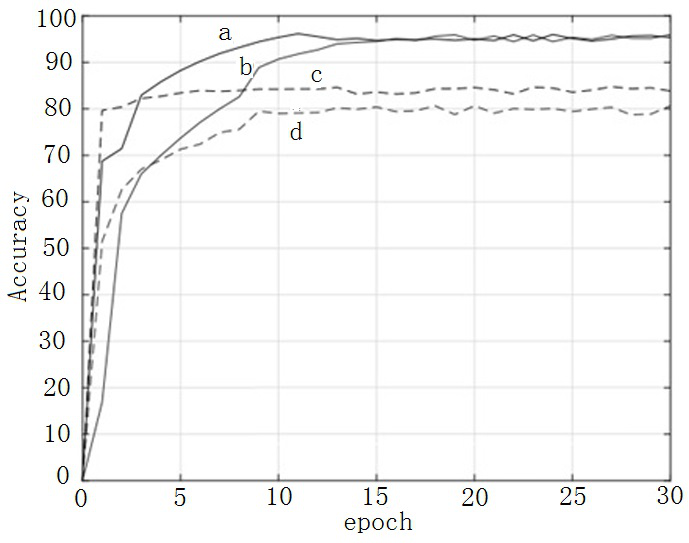

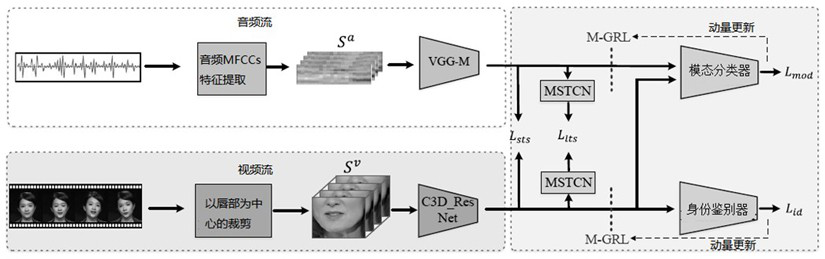

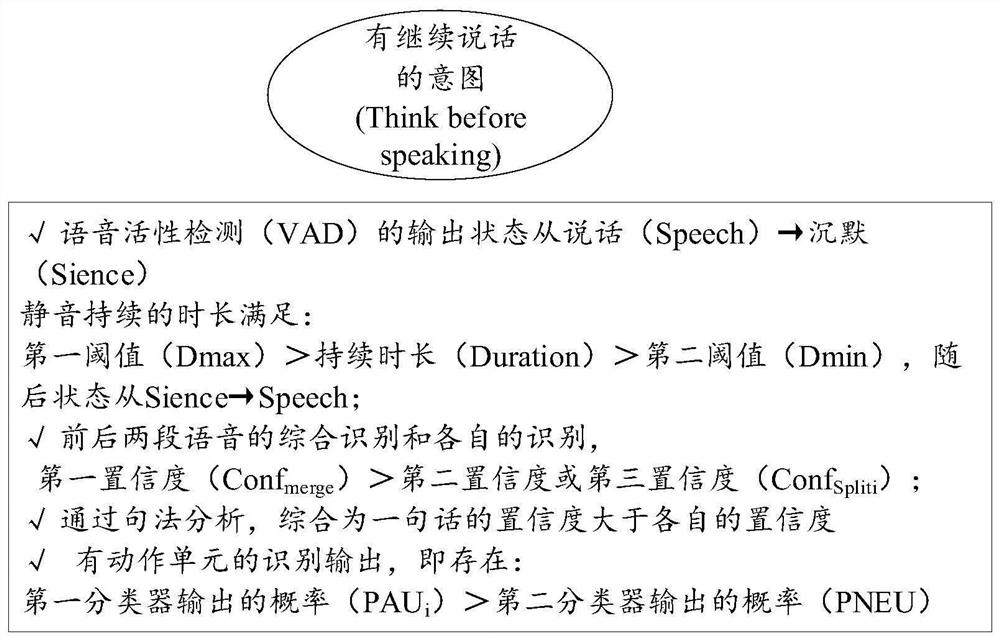

An adversarial double-contrast self-supervised learning method for cross-modal lip reading

Active · CN113239903B · Optimal Representation Learning · Character and pattern recognition · Speech recognition · Medicine · Noise

The present invention proposes an adversarial double-contrast self-supervised learning method for cross-modal lip reading, whose model includes a visual encoder, an audio encoder, two multi-scale temporal convolutional networks with average pooling, an identity discriminator, and a modality classifier. The method learns effective visual representations by combining dual contrastive learning based on audio-visual synchrony, identity adversarial training, and modality adversarial training. In the dual contrastive learning, noise-contrastive estimation is used as the training objective to distinguish real samples from noise samples. In the adversarial training, an identity discriminator and a modality classifier are applied to the audio-visual representations: the identity discriminator determines whether the input visual features share a common identity, and the modality classifier predicts whether the input features belong to the visual modality. Adversarial training against the audio modality is then performed using a momentum gradient reversal layer.

Owner:NAT UNIV OF DEFENSE TECH
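The contrastive part of the objective can be sketched with an InfoNCE-style loss, a widely used form of noise-contrastive estimation. The sketch assumes that the synchronized audio clip is the positive for each visual clip and that the other clips in the batch serve as noise samples, and it reads "dual" as applying the loss in both directions (video to audio and audio to video). The encoders, adversarial discriminators, and gradient reversal layer are not shown, and the temperature of 0.1 is an arbitrary example value.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchors, candidates, temperature=0.1):
    """Mean InfoNCE loss: anchors[i] should match candidates[i]; all other
    candidates in the batch act as noise samples."""
    loss = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, c) / temperature for c in candidates]
        peak = max(logits)  # log-sum-exp computed stably
        log_denom = peak + math.log(sum(math.exp(z - peak) for z in logits))
        loss += log_denom - logits[i]  # -log softmax probability of the true pair
    return loss / len(anchors)


def dual_contrastive_loss(visual, audio, temperature=0.1):
    """Symmetric objective: video-to-audio plus audio-to-video."""
    return info_nce(visual, audio, temperature) + info_nce(audio, visual, temperature)


visual = [[1.0, 0.0], [0.0, 1.0]]  # toy embeddings of two lip-movement clips
audio_synced = [[1.0, 0.0], [0.0, 1.0]]
audio_shuffled = [[0.0, 1.0], [1.0, 0.0]]
print(dual_contrastive_loss(visual, audio_synced))    # close to 0
print(dual_contrastive_loss(visual, audio_shuffled))  # close to 20
```

Minimizing this loss pulls each visual embedding toward its synchronized audio embedding and away from the mismatched ones.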

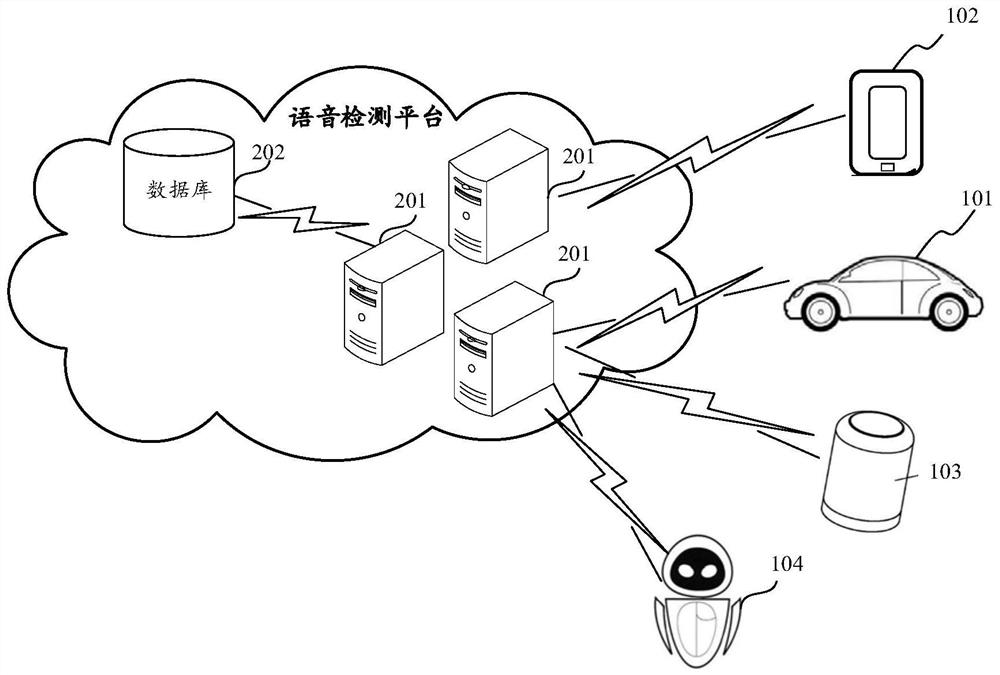

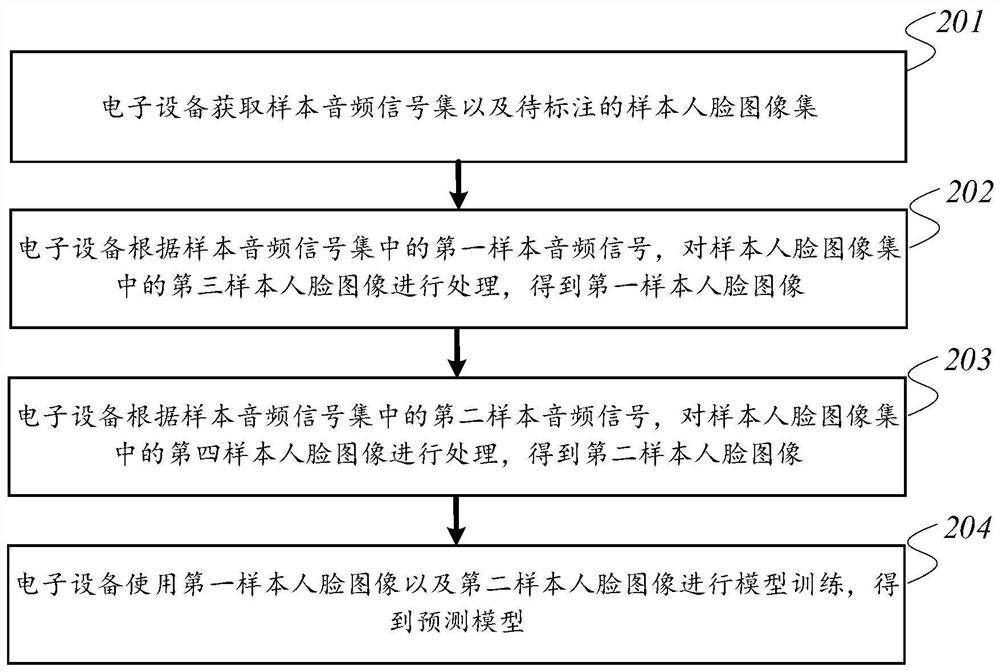

Speech detection method, training method of prediction model, device, equipment and medium

Active · CN112567457B · Speech recognition · Acquiring/recognising facial features · Background noise · Visual perception

Owner:HUAWEI TECH CO LTD

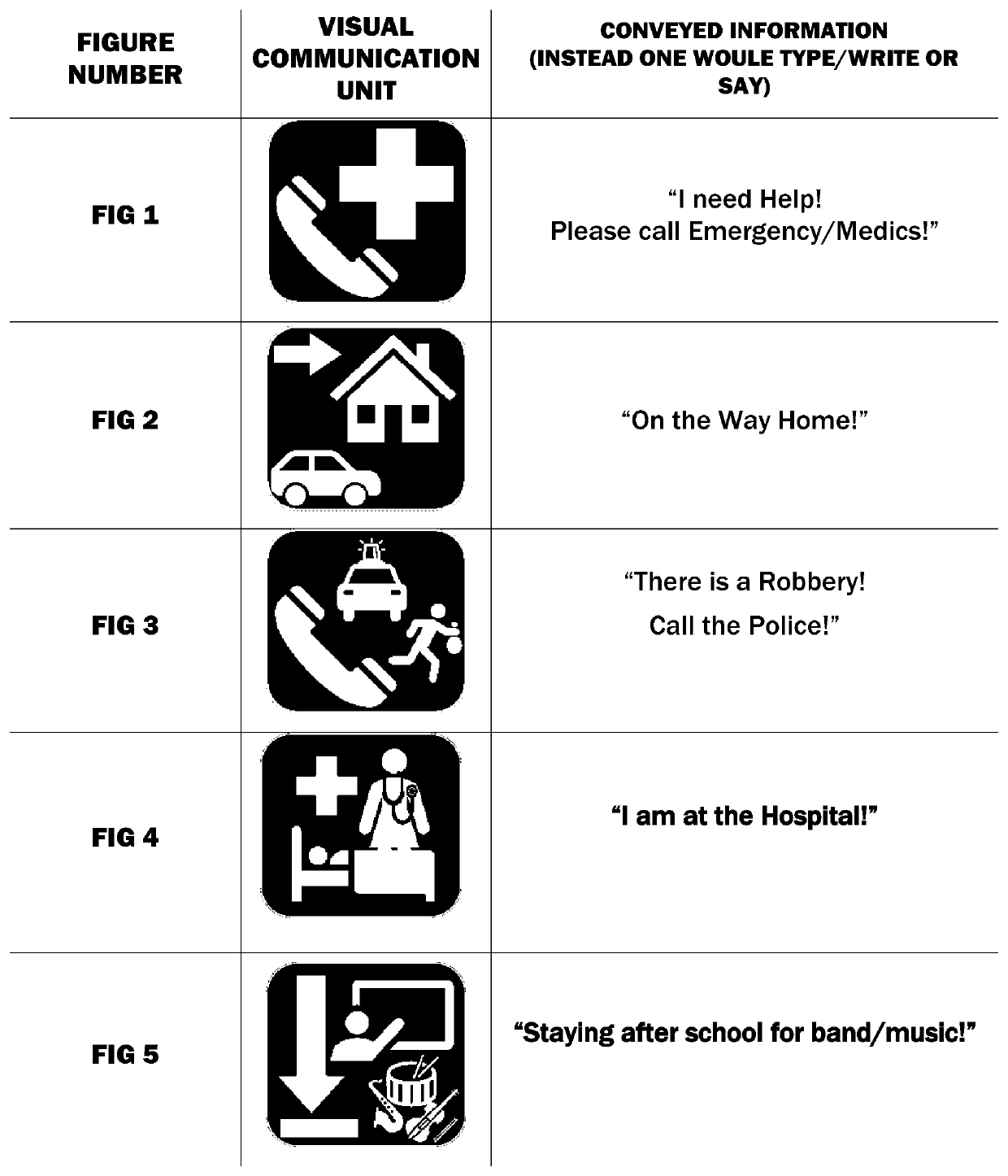

Display of a single or plurality of picture(s) or visual element(s) as a set or group to visually convey information that otherwise would be typed or written or read or sounded out as words or sentences.

Inactive · US20200118302A1 · Communication delay · Shorten the time · 2D-image generation · Animation · Telecommunications

A method for creating, using, or re-using one or more visual communication units to portray and convey information visually. This visual communication can replace or accompany written, spoken, or machine language, voice/audible media, and/or any other communication method. It is achieved by creating or using one or more visual elements, pictures, geometric objects, paintings, drawings, videos, movies, clips, artworks, or animations (a non-exhaustive list), by re-using existing visual communication units, or by a combination of these, to portray and convey the information visually. The visual communication units are then communicated through the channels, tools, formats, and media of choice.

Owner:SCHLAKE FARIMEHR

Lubricating oil film level measuring instrument and measuring method thereof

The invention relates to a lubricating oil film level tester and a test method thereof. The tester includes a capacitance sensor arranged in an oil tank and a measurement module connected to the capacitance sensor. The measurement module includes a processor configured to: obtain a first measured value ε_min for a first medium representing the lowest oil film level; obtain a second measured value ε_max for a second medium representing the highest oil film level; obtain a third measured value ε_x of a third medium measured by the capacitance sensor; and calculate the oil film level of the third medium and feed it back to the user by visual and/or auditory means. The measured values ε_min, ε_max, and ε_x are proportional to the dielectric coefficients of the first, second, and third media, respectively, with the same proportionality ratio. The third medium is unused lubricating oil.

Owner:北京雅士科莱恩石油化工有限公司
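The abstract does not give the level formula, so the sketch below assumes linear interpolation between the two reference readings, level = (ε_x − ε_min) / (ε_max − ε_min). Under that assumption the common proportionality ratio k stated in the abstract cancels out (each reading is k times a dielectric coefficient), so the tester does not need to know k. The text bar stands in for the visual feedback; `oil_film_level` and `render_bar` are hypothetical names.

```python
def oil_film_level(eps_min, eps_max, eps_x):
    """Position of eps_x between the two reference readings, as a fraction
    in [0, 1]. Assumes the level scales linearly with the capacitance reading."""
    if eps_max == eps_min:
        raise ValueError("reference readings must differ")
    level = (eps_x - eps_min) / (eps_max - eps_min)
    return min(1.0, max(0.0, level))  # clamp readings outside the calibrated range


def render_bar(level, width=10):
    """Simple text gauge as a stand-in for the visual feedback to the user."""
    filled = round(level * width)
    return "[" + "#" * filled + "-" * (width - filled) + f"] {level:.0%}"


print(render_bar(oil_film_level(2.0, 4.0, 3.0)))  # [#####-----] 50%
```

Scaling all three readings by the same factor (for example, 6.0, 12.0, 9.0 instead of 2.0, 4.0, 3.0) gives the same level, which illustrates why the abstract stresses that the proportionality ratios are identical.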

An Image Annotation Method Based on Weak Matching Probability Canonical Correlation Model

Active · CN105389326B · Prevent overfitting · Good effect · Character and pattern recognition · Special data processing applications · Feature set · Imaging Feature

The invention discloses an image annotation method and system based on a weak matching probability canonical correlation model, relating to the technical field of network cross-media information processing. The method includes: acquiring annotated images and unannotated images from an image database and extracting the image features and text features of each; generating a matched sample set, containing an annotated image feature set and an annotated text feature set, and an unmatched sample set, containing an unannotated image feature set and an unannotated text feature set; training the weak matching probability canonical correlation model on the matched and unmatched sample sets; and annotating the images to be annotated with the trained model. The invention simultaneously uses annotated images, their keywords, and unannotated images to learn the association between the visual modality and the text modality, and accurately annotates unknown images.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

Method for reducing errors while transferring tokens to and from people

Inactive · US8116338B2 · Minimize miscommunication and transformation · Minimize miscommunication · Loop networks · Diagnostic Radiology Modality · Algorithm

A system, method, and computer program product for handling and minimizing miscommunication and transformation of tokens that are processed by humans, either verbally or in writing, during some part of a usage scenario. This is accomplished by filtering out confusing tokens, as determined by calculating a distance metric for each token. The distance metric may be calculated along a print modality, a visual modality, or a verbal modality.

Owner:IBM CORP

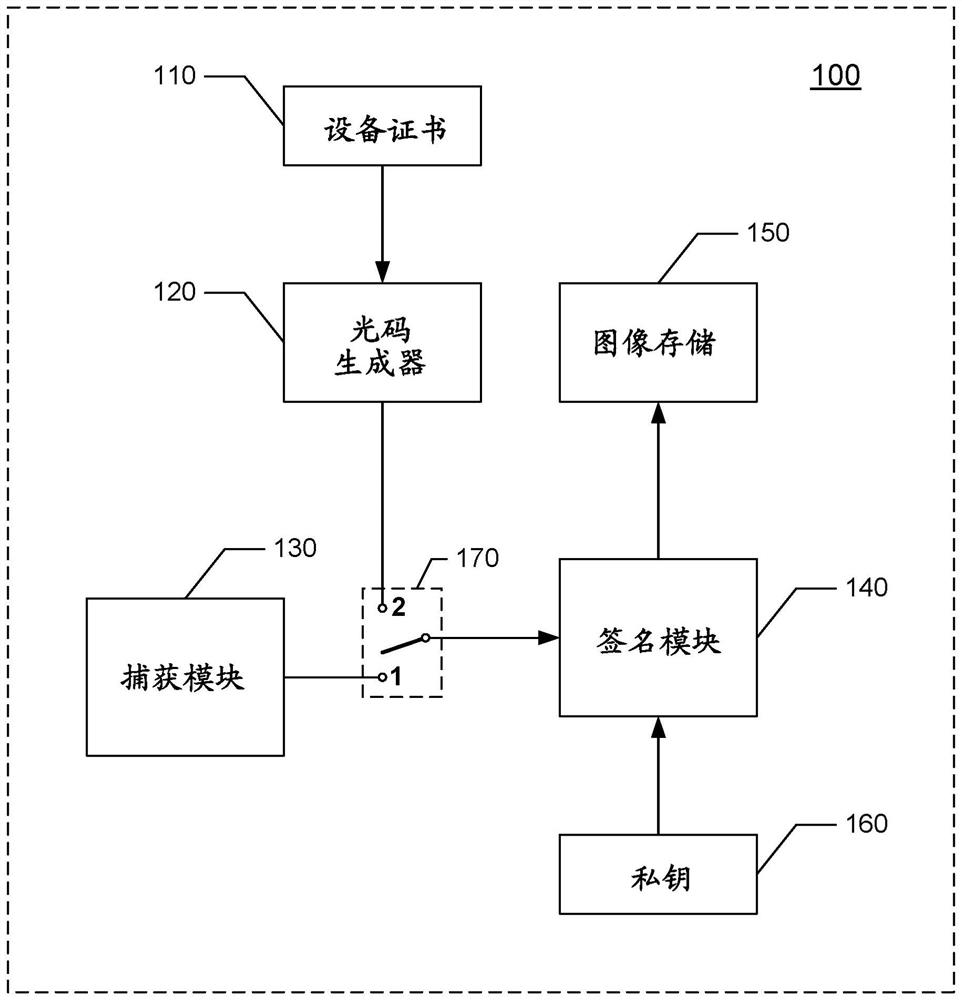

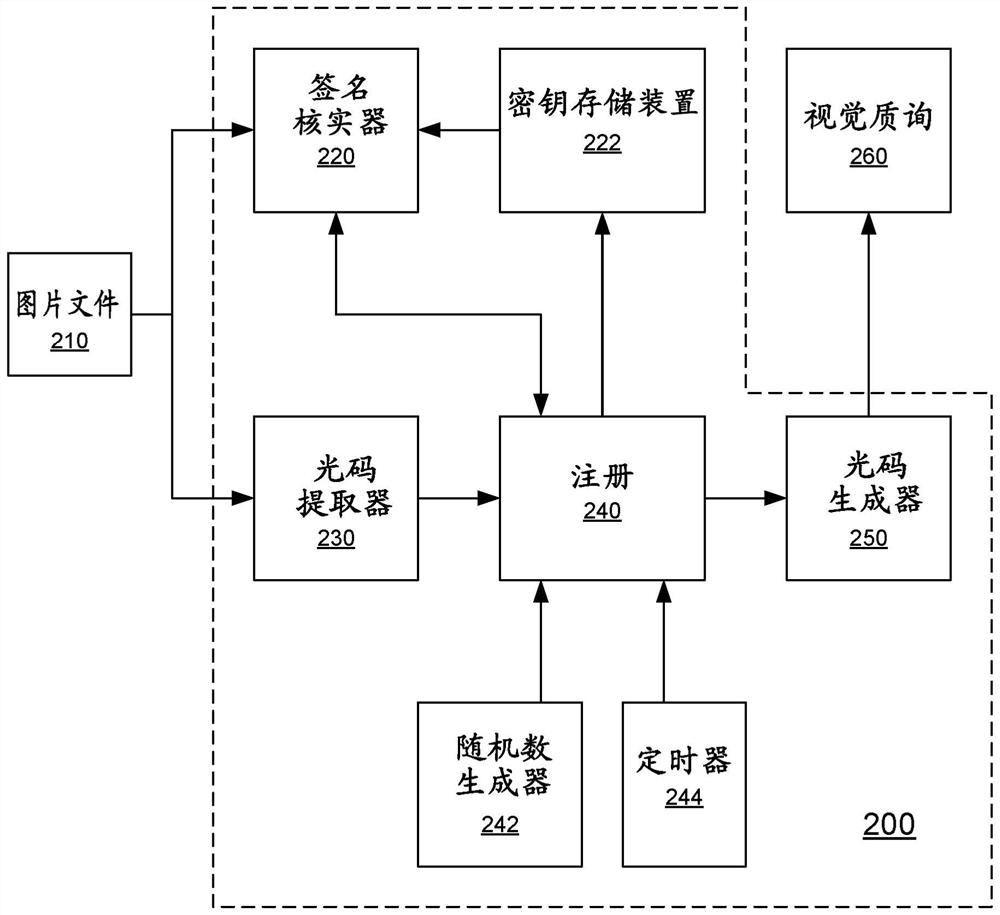

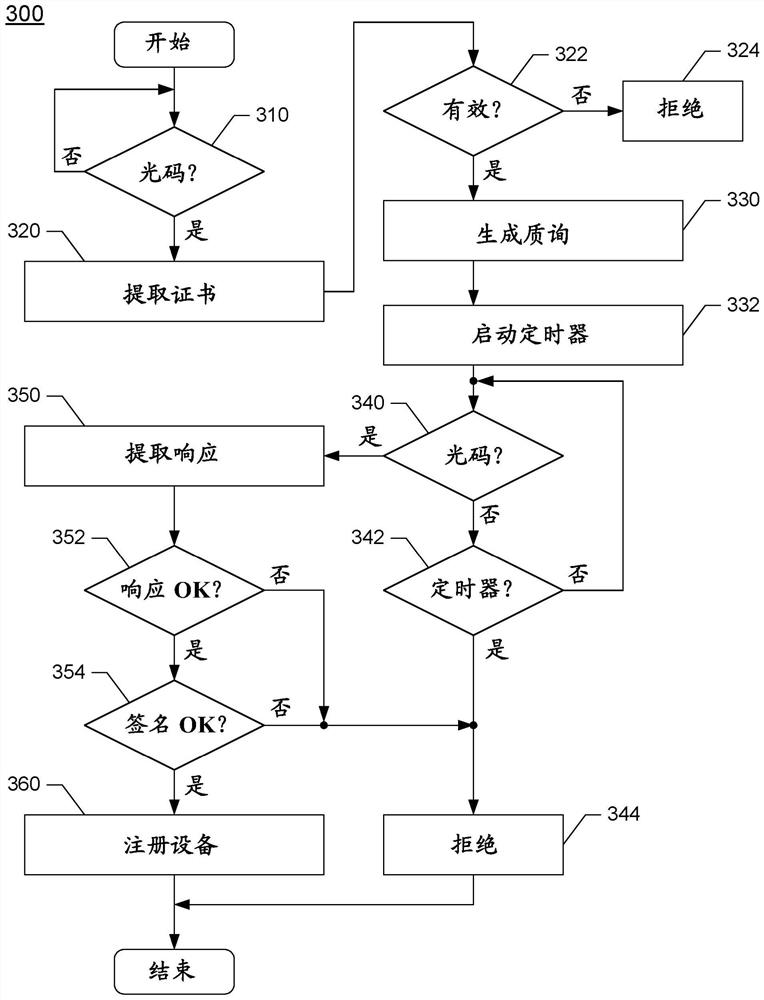

Visual registration of camera

Pending · CN114731275A · Key distribution for secure communication · Public key for secure communication · Pattern recognition · Computer graphics (images)

Visually registering a camera using an optical code and a picture file, including: receiving the picture file and the optical code from the camera, where the optical code includes the camera's public key; generating a visual challenge using a random number created by a random number generator; transmitting the visual challenge to the user of the camera so that the camera captures it; receiving the captured visual challenge from the camera; extracting a response from the captured visual challenge; comparing the response with the random number, verifying the signature of the captured visual challenge using the camera's public key, and converting the optical code received from the camera into a valid certificate; and registering the camera and adding the valid certificate to the key store.

Owner:SONY CORP +1

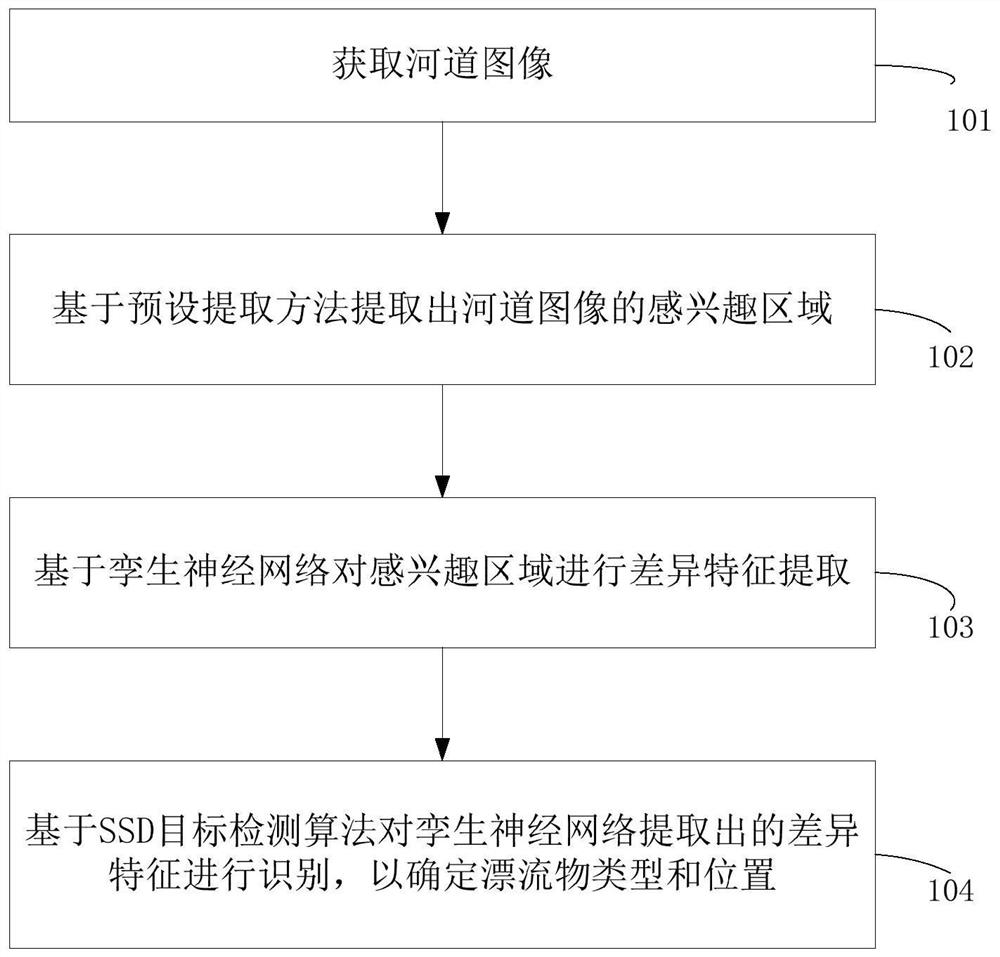

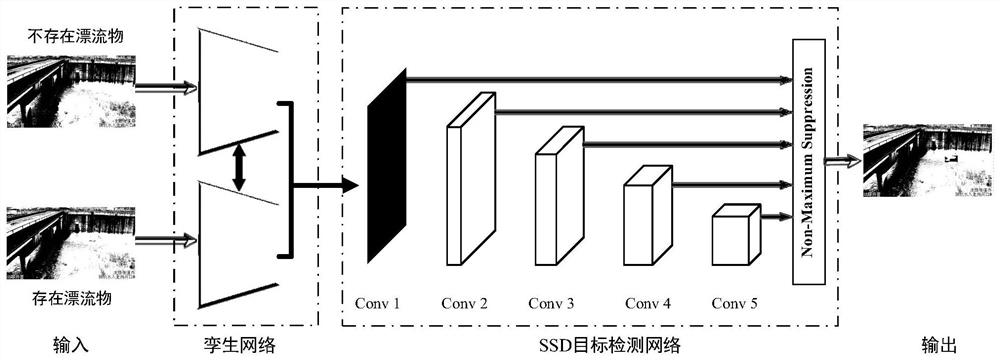

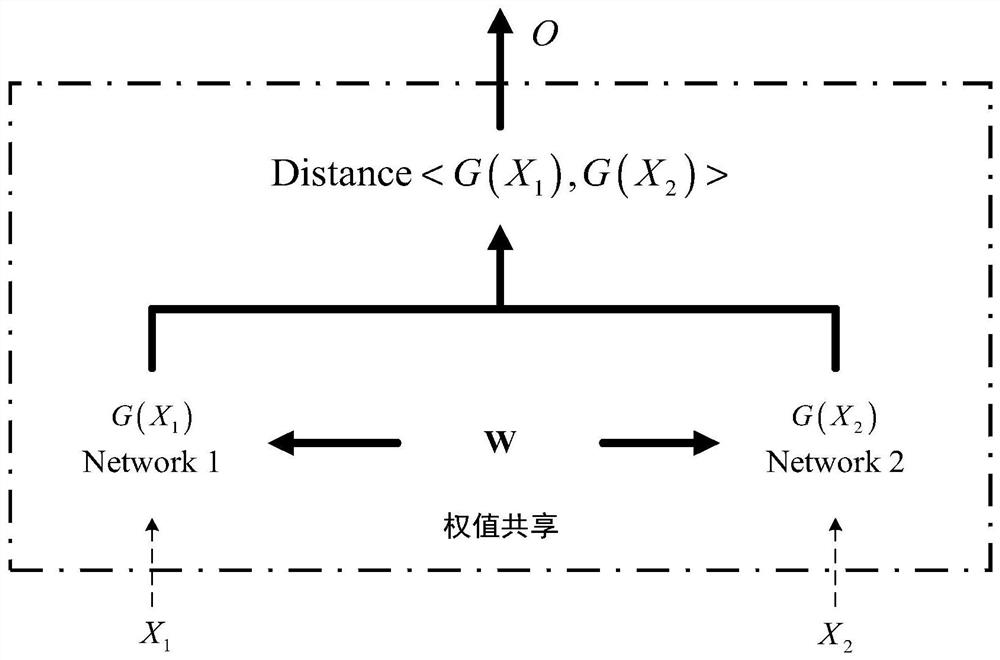

Visual detection method and device for riverway drifts

Pending · CN113297918A · Improve recognition accuracy · Improve accuracy · Character and pattern recognition · Neural architectures · Feature extraction · River routing

The invention relates to a visual detection method and device for riverway drifts (floating objects). The method comprises: acquiring a riverway image; extracting a region of interest from the riverway image with a preset extraction method; extracting difference features from the region of interest with a twin (Siamese) neural network; and identifying the difference features with an SSD target detection algorithm to determine the type and position of each drifting object. From a computer-vision perspective, a target detection model based on the twin network is constructed in which the appearance of floating objects on the river surface is treated as a feature difference, so detecting the drifts becomes identifying and locating difference features; this enables accurate classification, localization, and calculation of the area and flow velocity of the drifts. Because detection is performed visually, labor cost is low, dependence on hardware is weak, maintenance is convenient, and detection precision is high.

Owner:SHENZHEN POLYTECHNIC

A multimodal vocabulary representation method based on dynamic fusion mechanism

Active · CN107480196B · Enhance expressive ability · Improve relevance · Natural language analysis · Special data processing applications · Pattern recognition · Network model

The invention provides a multi-modal vocabulary representation method comprising the steps of: computing a text representation vector of the word to be represented in the text modality and a picture representation vector of the word in the visual modality; inputting the text representation vector into a pre-established text-modality weight model to obtain its weight in the text modality; inputting the picture representation vector into a pre-established visual-modality weight model to obtain its weight in the visual modality; and computing a multi-modal vocabulary representation vector from the text representation vector, the picture representation vector, and their respective weights. The text-modality weight model is a neural network whose input is the text representation vector and whose output is that vector's weight in the text modality; the visual-modality weight model is a neural network whose input is the picture representation vector and whose output is that vector's weight in the visual modality.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

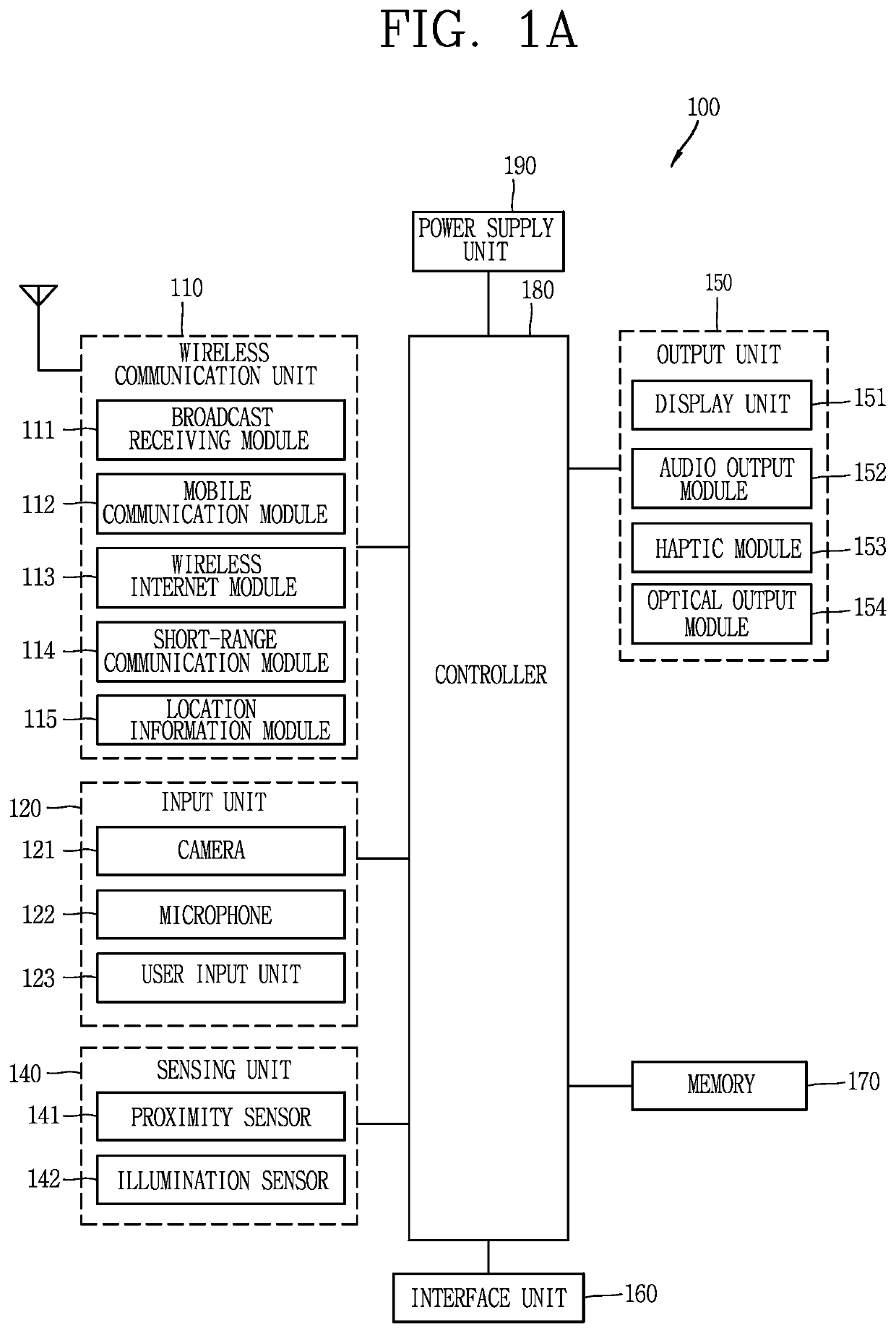

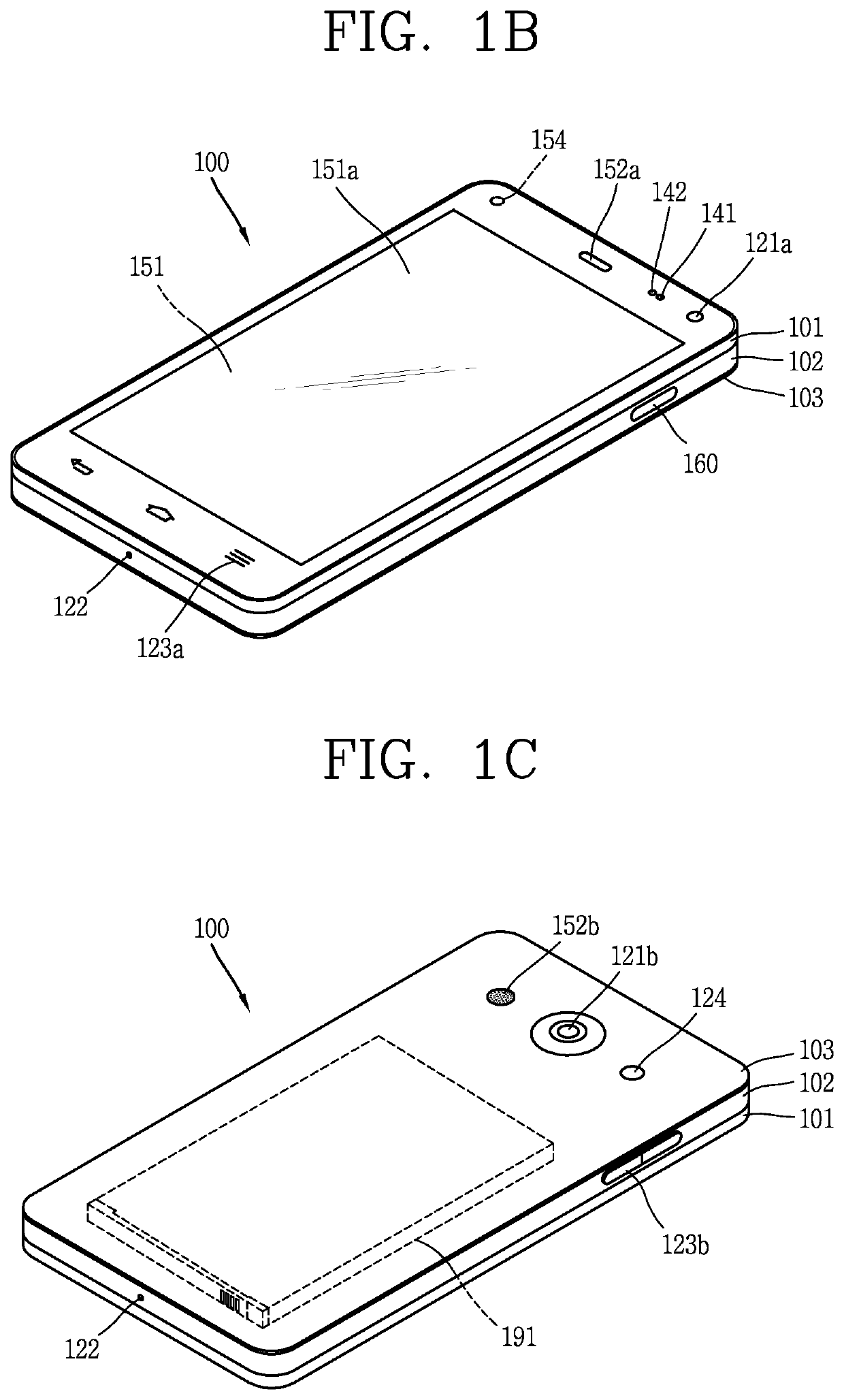

Terminal detecting and displaying object position

Active · US10621749B2 · Reduce delays · Avoid rapid degradation · Image analysis · Pictorial communication · Personal memory · Physical medicine and rehabilitation

The present invention is to provide a terminal for performing a secretarial function for assisting a person's memory at home or in a company, and a system comprising the same. The terminal comprises: a camera module configured to capture an image of a predetermined space when a person's motion in the predetermined space is detected; and a memory for storing the image captured by the camera module, wherein when a user request is received, the position of an object corresponding to the user request is detected using the image stored in the memory, and guide information can be outputted in at least one manner of a visual manner, an auditory manner and a tactile manner so that the detected position is guided.

Owner:LG ELECTRONICS INC

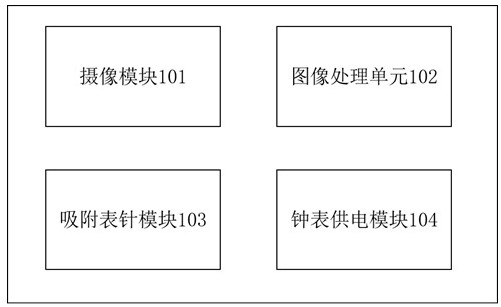

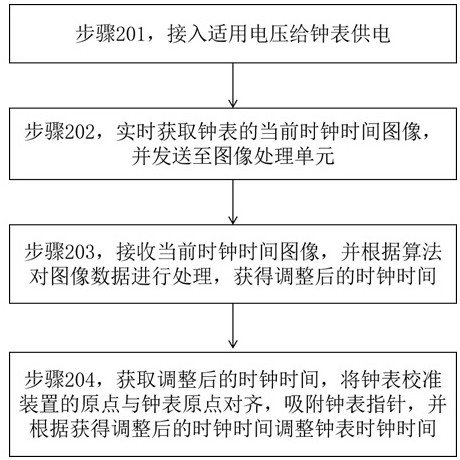

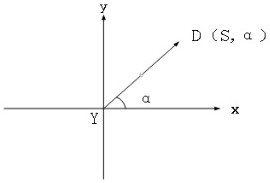

A device and method for calibrating a clock

Active · CN112947032B · Improve efficiency · High degree of intelligence · Image enhancement · Image analysis · Clock time · Imaging processing

The present invention proposes a device and method for clock calibration. A camera module photographs the clock's current time in real time; an image processing unit receives the image of the current clock time and processes the image data with an algorithm to obtain the adjusted clock time. The origin of the calibration device is then aligned with the clock's origin, the device attaches to the clock hands, and the clock is set according to the adjusted time. By using the cyclic data processing of the image processing unit to read the watch's current time for precise calibration, the invention is more convenient, more efficient, and more intelligent.

Owner:深圳市越芯电子有限责任公司
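Once the hands have been located in the photographed image, the "adjusted clock time" can be derived by reading the dial and comparing it with a reference time. The sketch below assumes that a separate (not shown) detector supplies the hand angles in degrees, measured clockwise from 12 o'clock, and that the correction is the shortest signed adjustment on a 12-hour dial; both functions are hypothetical illustrations rather than the patent's algorithm.

```python
def time_from_hands(hour_angle, minute_angle):
    """Read (hour, minute) on a 12-hour dial from hand angles in degrees,
    measured clockwise from 12 o'clock."""
    minute = round(minute_angle / 6) % 60  # 360 deg / 60 min
    # The hour hand advances 0.5 deg per minute; removing that offset before
    # rounding avoids misreading the hour when the hand sits near a mark.
    hour = round((hour_angle - minute * 0.5) / 30) % 12
    return (hour or 12, minute)


def correction_minutes(shown, reference):
    """Signed shortest adjustment in minutes (positive = advance the hands)."""
    def to_minutes(hm):
        return (hm[0] % 12) * 60 + hm[1]

    delta = (to_minutes(reference) - to_minutes(shown)) % 720
    return delta - 720 if delta > 360 else delta


shown = time_from_hands(75.0, 180.0)  # hour hand halfway between 2 and 3
print(shown)                           # (2, 30)
print(correction_minutes(shown, (3, 0)))  # 30
```

Taking the shortest signed adjustment keeps the mechanical movement of the hands to at most six hours in either direction.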

© 2025 PatSnap. All rights reserved.