Projection interaction method based on pure machine vision positioning

A projection interaction technology based on machine vision, applicable to color TV components, image reproducers for projection devices, instruments, and similar equipment. It addresses the high construction cost, susceptibility to interference, and immovability of existing interactive systems, and achieves strong mobility, good stability, and low equipment cost.



Embodiment Construction

[0049] The present invention will be described in further detail below in conjunction with the accompanying drawings.

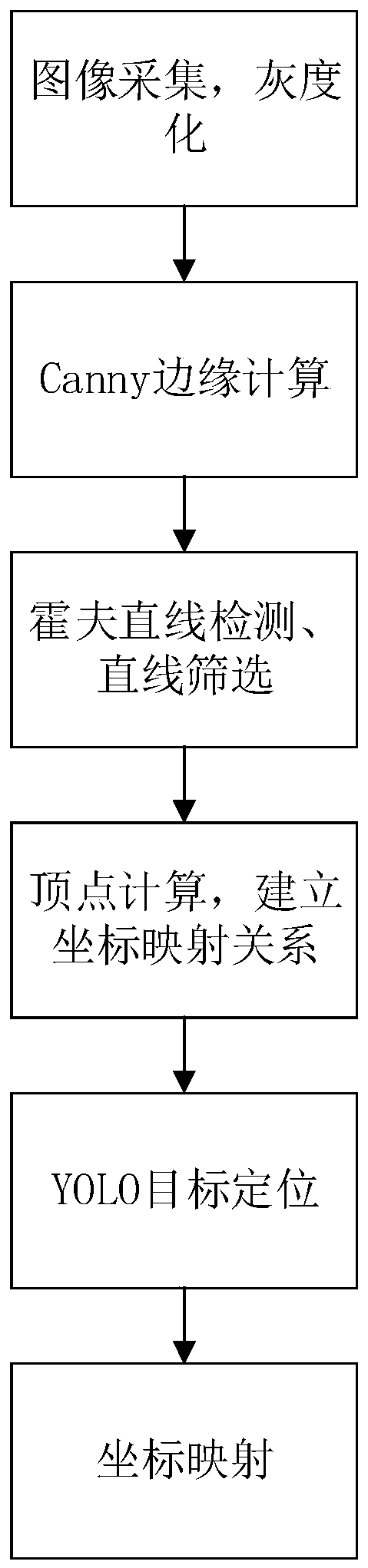

[0050] As shown in Figure 1, a projection interaction method based on pure machine vision positioning includes the following steps:

[0051] S1. Perform grayscale processing on the source image collected by the visual sensor, and locate the boundary and four vertices of the projection area in the image, specifically including the following steps:

[0052] 1.1. The present invention places no special requirements on the visual sensor; a conventional USB camera is sufficient. In this embodiment, a HID TTQI camera is used, and the OpenCV image processing library is used to obtain an RGB source image.

[0053] Since the human eye has the highest sensitivity to green and the lowest sensitivity to blue, the source image is grayscaled according to the following formula:

[0054] Gray = R*0.299 + G*0.587 + B*0.114

[0055] Among them, R, G, and...
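The weighted grayscale conversion in [0054] can be illustrated with a minimal sketch. It is written in plain Python rather than with the OpenCV calls used in the embodiment, and the function names `to_gray` and `grayscale_image` are hypothetical, chosen only for this illustration. It assumes each pixel is an (R, G, B) tuple of 8-bit values; note that OpenCV itself loads images in B, G, R channel order, so the channels would need to be reordered before applying the same weights.

```python
def to_gray(pixel):
    """Weighted gray value for one (R, G, B) pixel:
    Gray = R*0.299 + G*0.587 + B*0.114 (formula from [0054])."""
    r, g, b = pixel
    return r * 0.299 + g * 0.587 + b * 0.114


def grayscale_image(rgb_rows):
    """Convert a nested list of (R, G, B) tuples to rounded integer gray levels.

    The nested-list image layout is an assumption made for this sketch,
    not the representation used in the patent.
    """
    return [[int(round(to_gray(p))) for p in row] for row in rgb_rows]


if __name__ == "__main__":
    # A 2x2 test image: pure red, pure green, pure blue, and white.
    sample = [[(255, 0, 0), (0, 255, 0)],
              [(0, 0, 255), (255, 255, 255)]]
    print(grayscale_image(sample))
```

Because the green weight is the largest and the blue weight the smallest, a pure green pixel maps to a much brighter gray level than a pure blue one, which matches the sensitivity argument in [0053].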
