A multimodal vocabulary representation method based on dynamic fusion mechanism

A lexical representation method using multimodal technology, applicable to unstructured text data retrieval, semantic tool creation, natural language analysis, and similar tasks. It addresses problems in existing methods such as inaccurate representation results, inaccurate lexical weights, and failure to take the differences between words into account.


Detailed Description of the Embodiments

[0040] Preferred embodiments of the present invention are described below with reference to the accompanying drawings. Those skilled in the art should understand that these embodiments are only used to explain the technical principles of the present invention, and are not intended to limit the protection scope of the present invention.

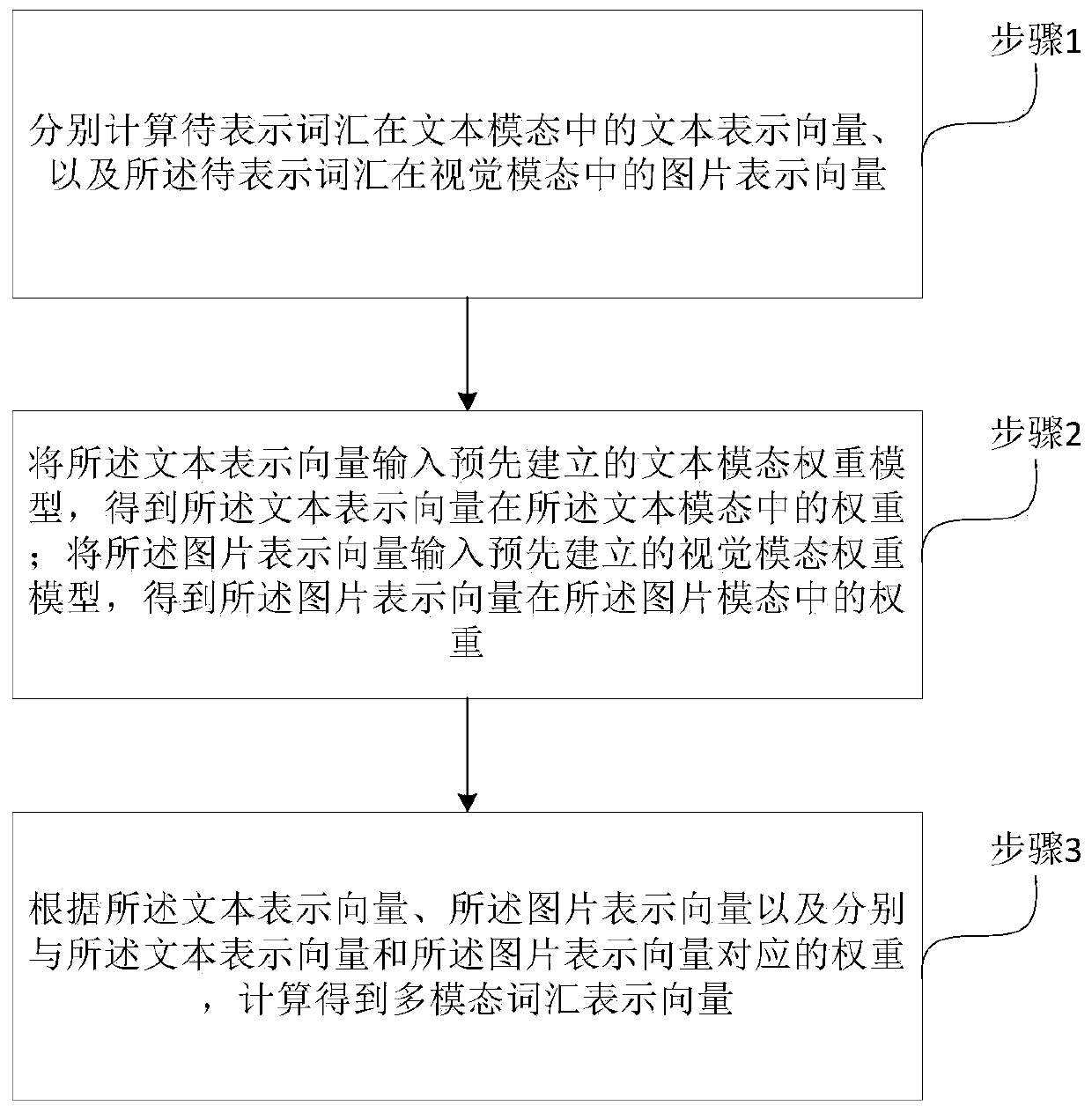

[0041] As shown in Figure 1, which is a flowchart of the multimodal vocabulary representation method based on a dynamic fusion mechanism provided by the present invention, the method comprises Step 1, Step 2 and Step 3, wherein:

[0042] Step 1: Calculate the text representation vector of the word to be represented in the text modality and its image representation vector in the visual modality;

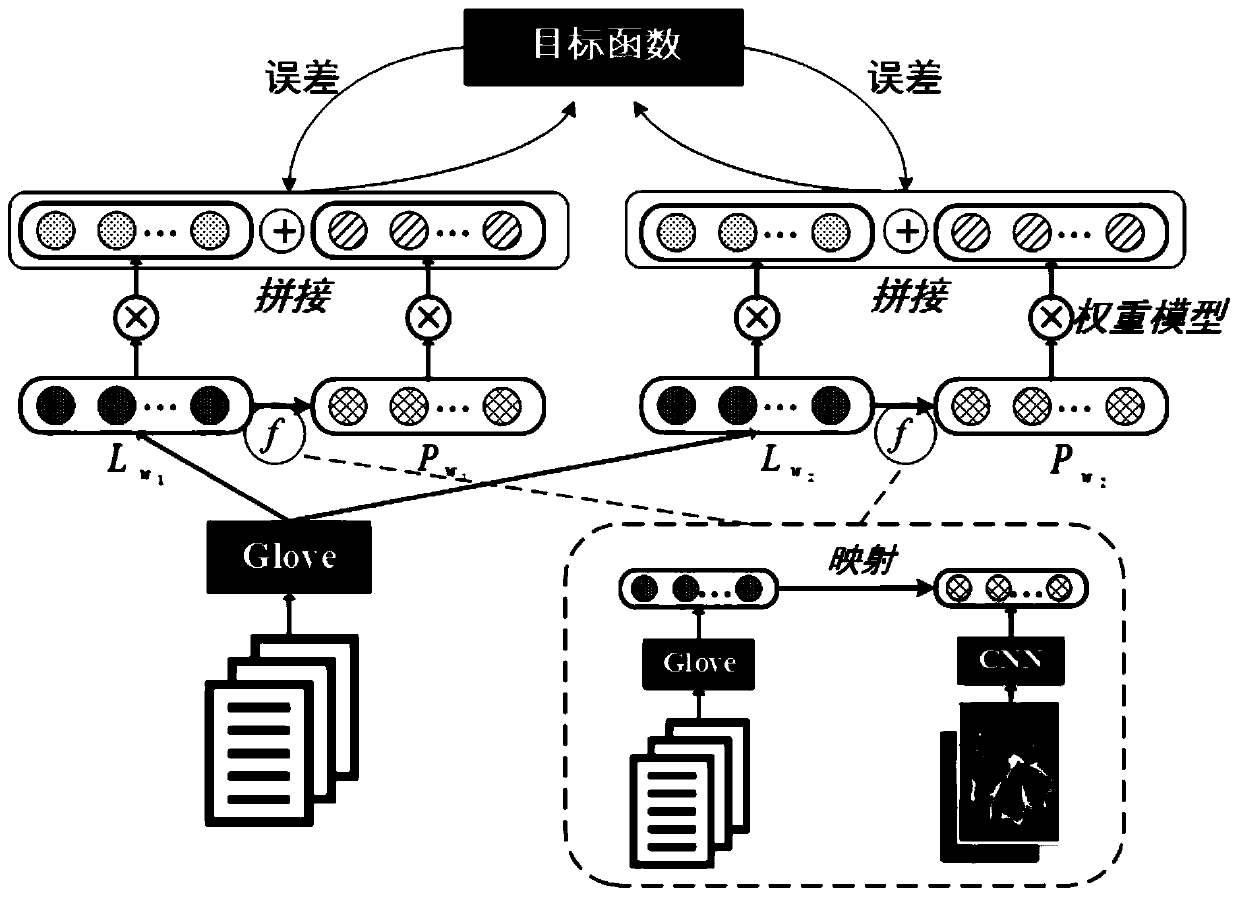

[0043] The purpose of calculating the text representation vector and the image representation vector of a word is to convert the word into a form that a computer can process. In practical applications, ...
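Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions: the text representation vector is taken from a pretrained word-embedding lookup table, and the image representation vector is the element-wise mean of feature vectors extracted from images associated with the word (for example, by a CNN). The text does not say how either vector is computed, and the names `text_vector` and `image_vector` are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def text_vector(word: str, embeddings: Dict[str, Vector]) -> Vector:
    """Text-modality vector: look the word up in a (hypothetical) pretrained embedding table."""
    if word not in embeddings:
        raise KeyError(f"no text embedding for {word!r}")
    return list(embeddings[word])


def image_vector(image_features: Sequence[Vector]) -> Vector:
    """Visual-modality vector: element-wise mean of the word's image feature vectors.

    Averaging is an assumption; any pooling of per-image features could be used.
    """
    if not image_features:
        raise ValueError("at least one image feature vector is required")
    dim = len(image_features[0])
    if any(len(f) != dim for f in image_features):
        raise ValueError("all image feature vectors must have the same length")
    n = len(image_features)
    return [sum(f[i] for f in image_features) / n for i in range(dim)]
```

For example, with `embeddings = {"apple": [0.2, 0.4, 0.1]}`, `text_vector("apple", embeddings)` returns `[0.2, 0.4, 0.1]`, and `image_vector([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])` returns `[0.5, 0.5]`. The two vectors need not have the same dimension; reconciling them is left to the later fusion steps.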
