42 results about "Language modelling" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

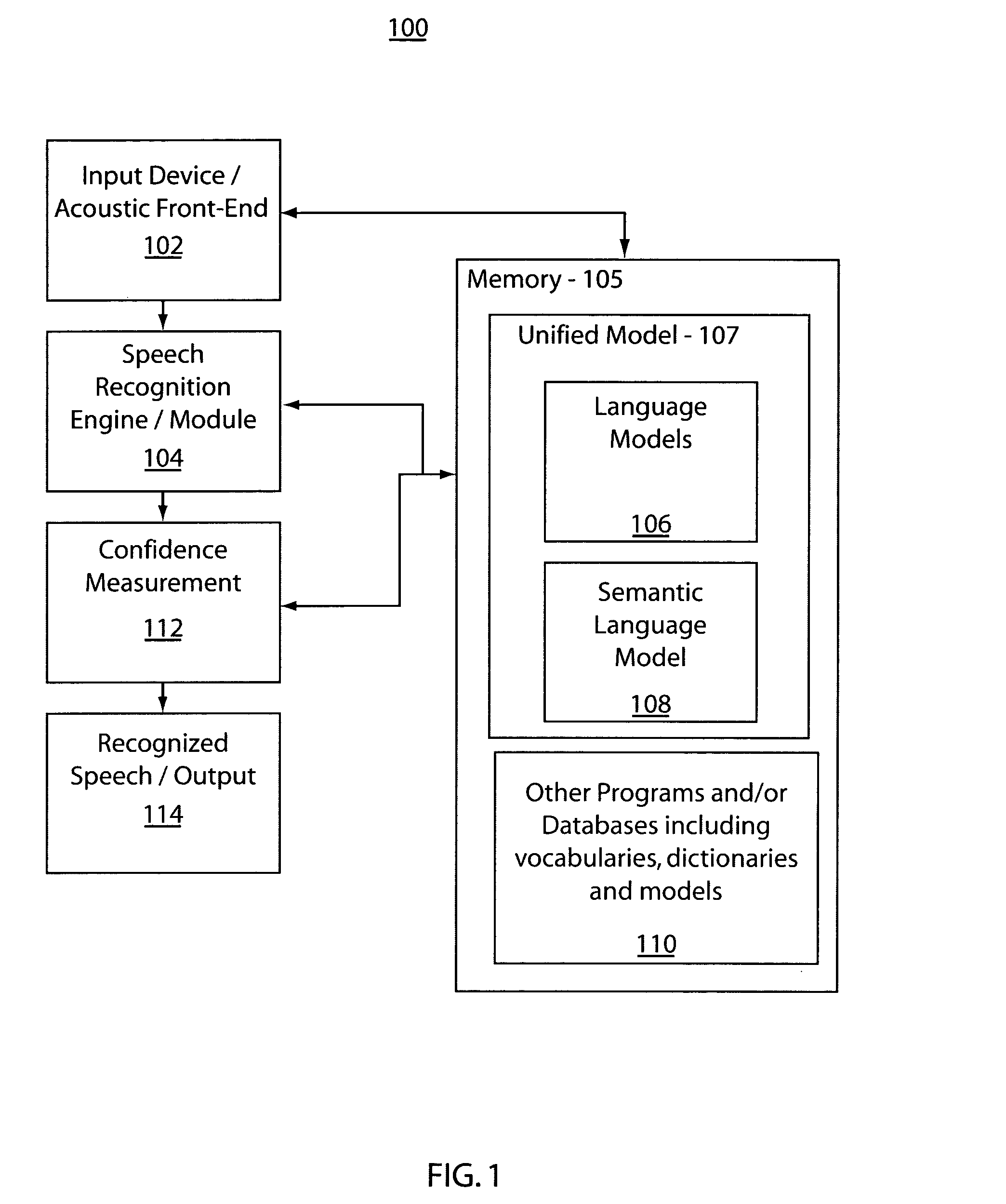

Semantic language modeling and confidence measurement

Active | US7475015B2 | Speech recognition | Special data processing applications | Hypothesis | Speech identification

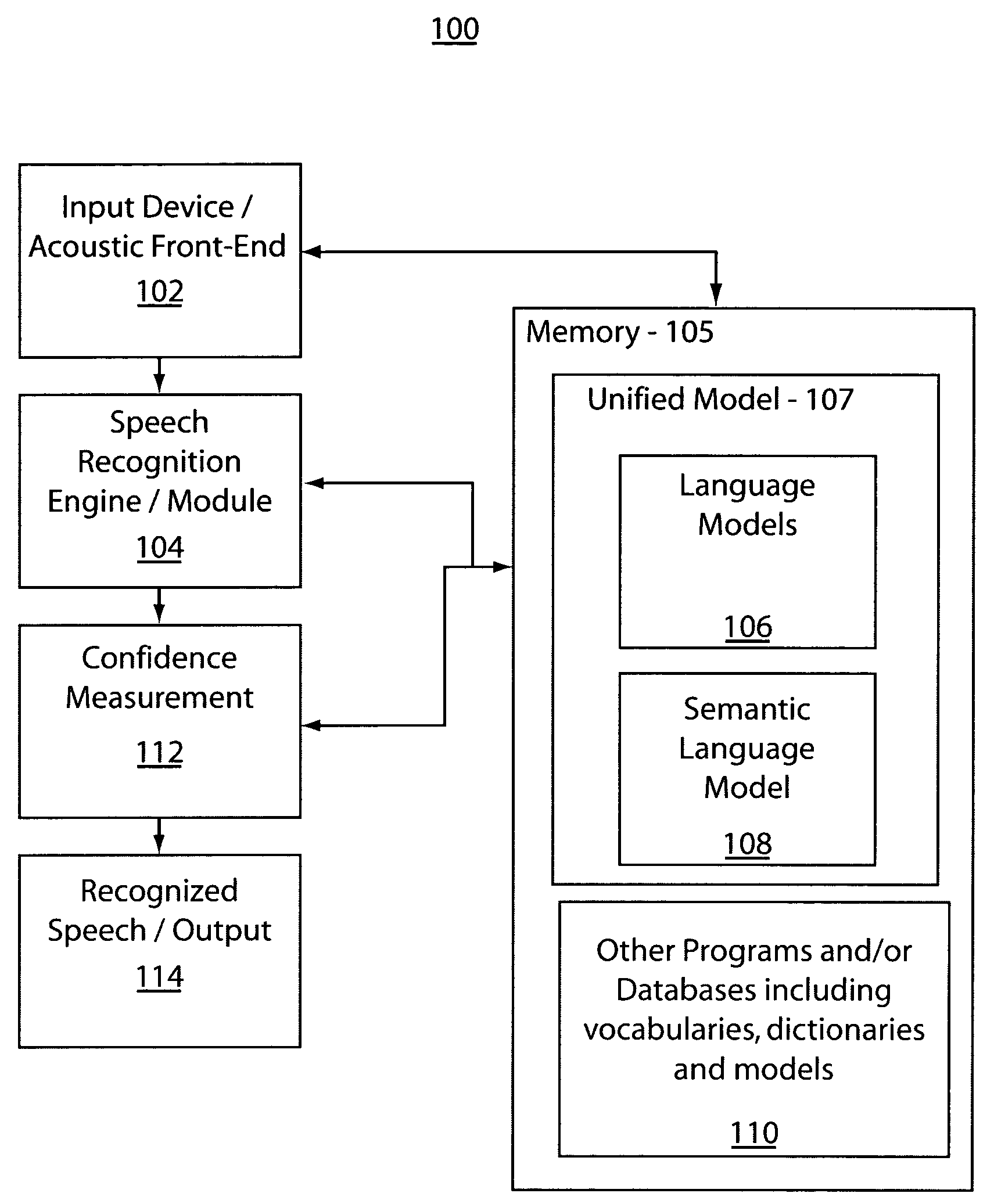

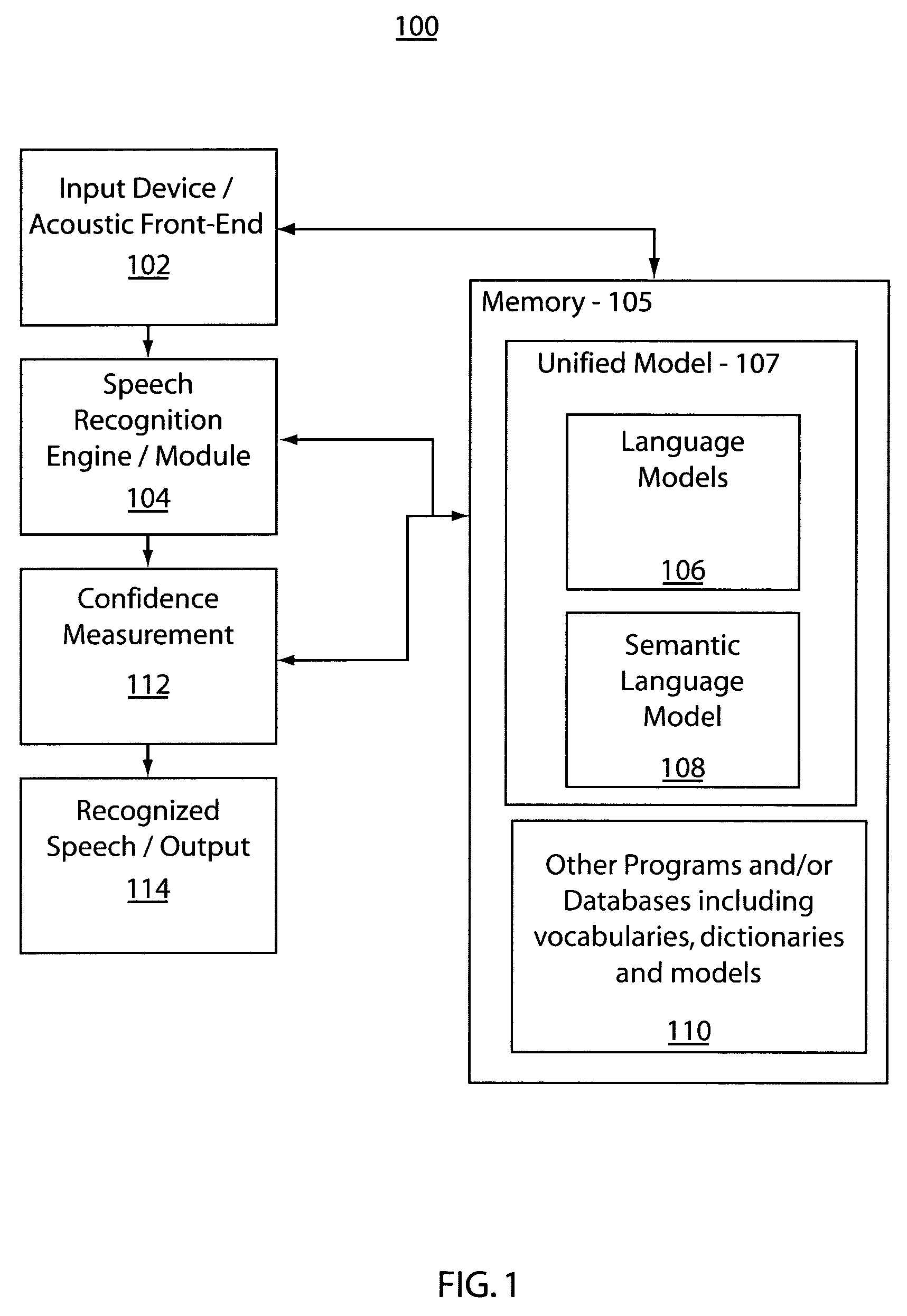

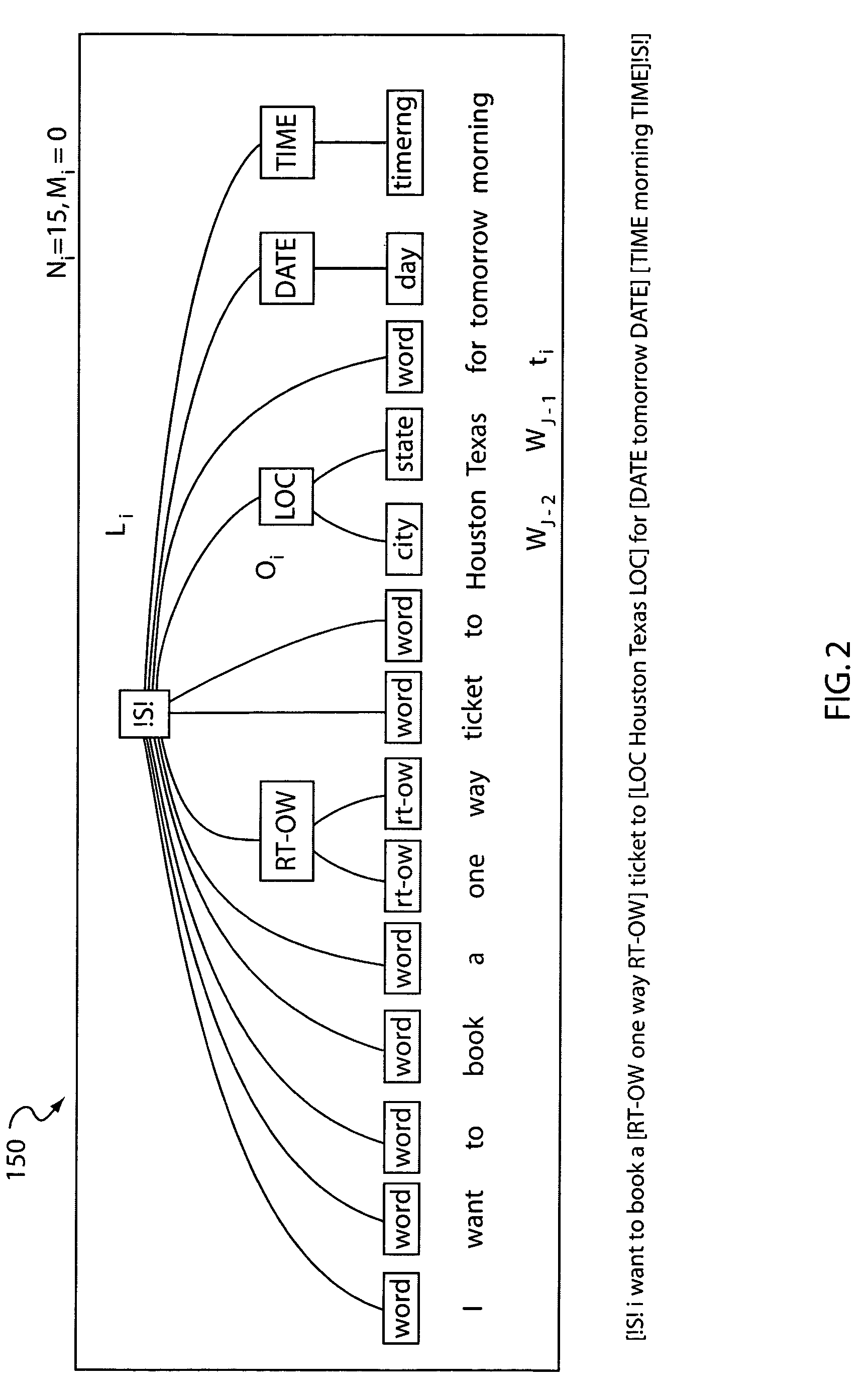

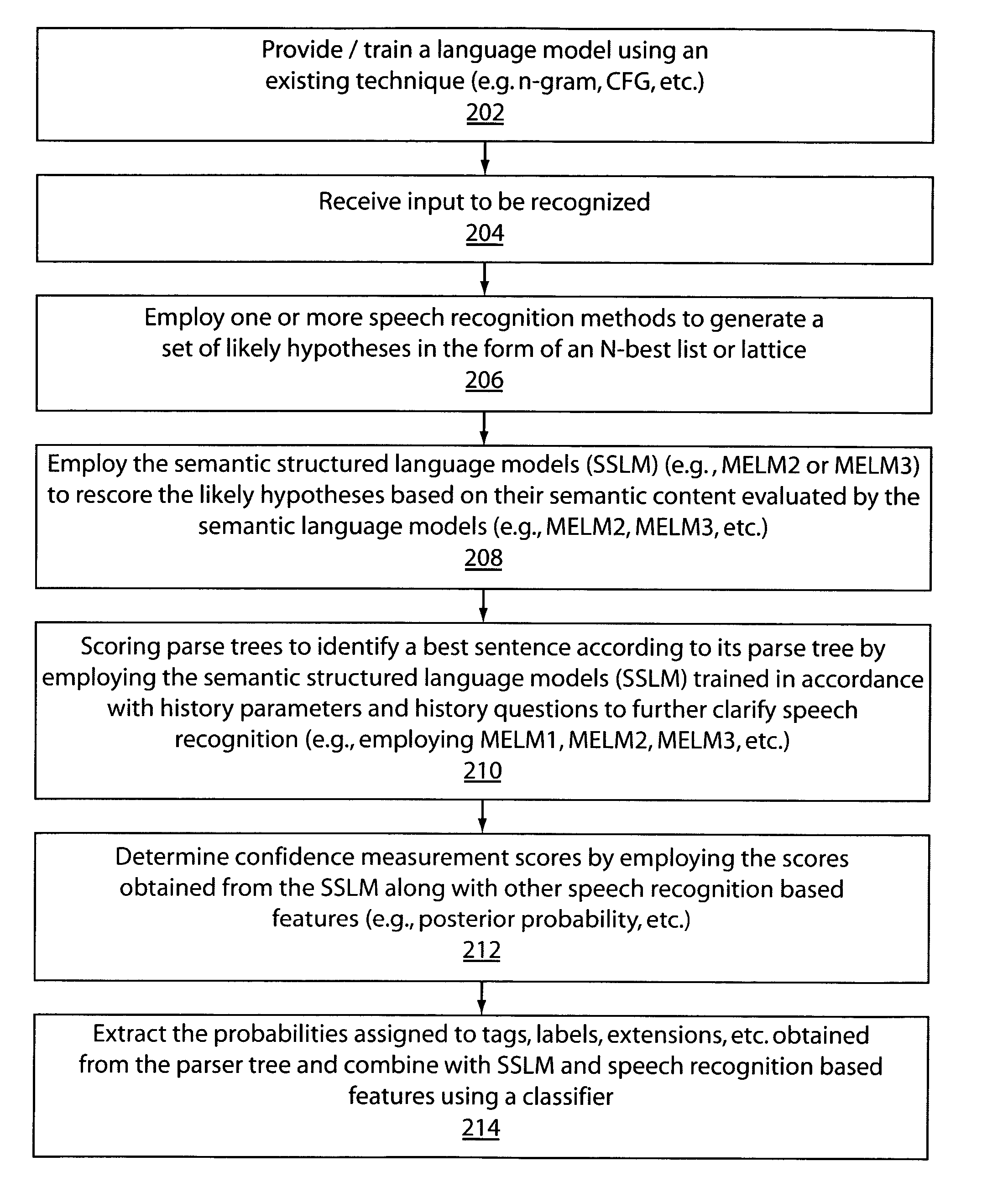

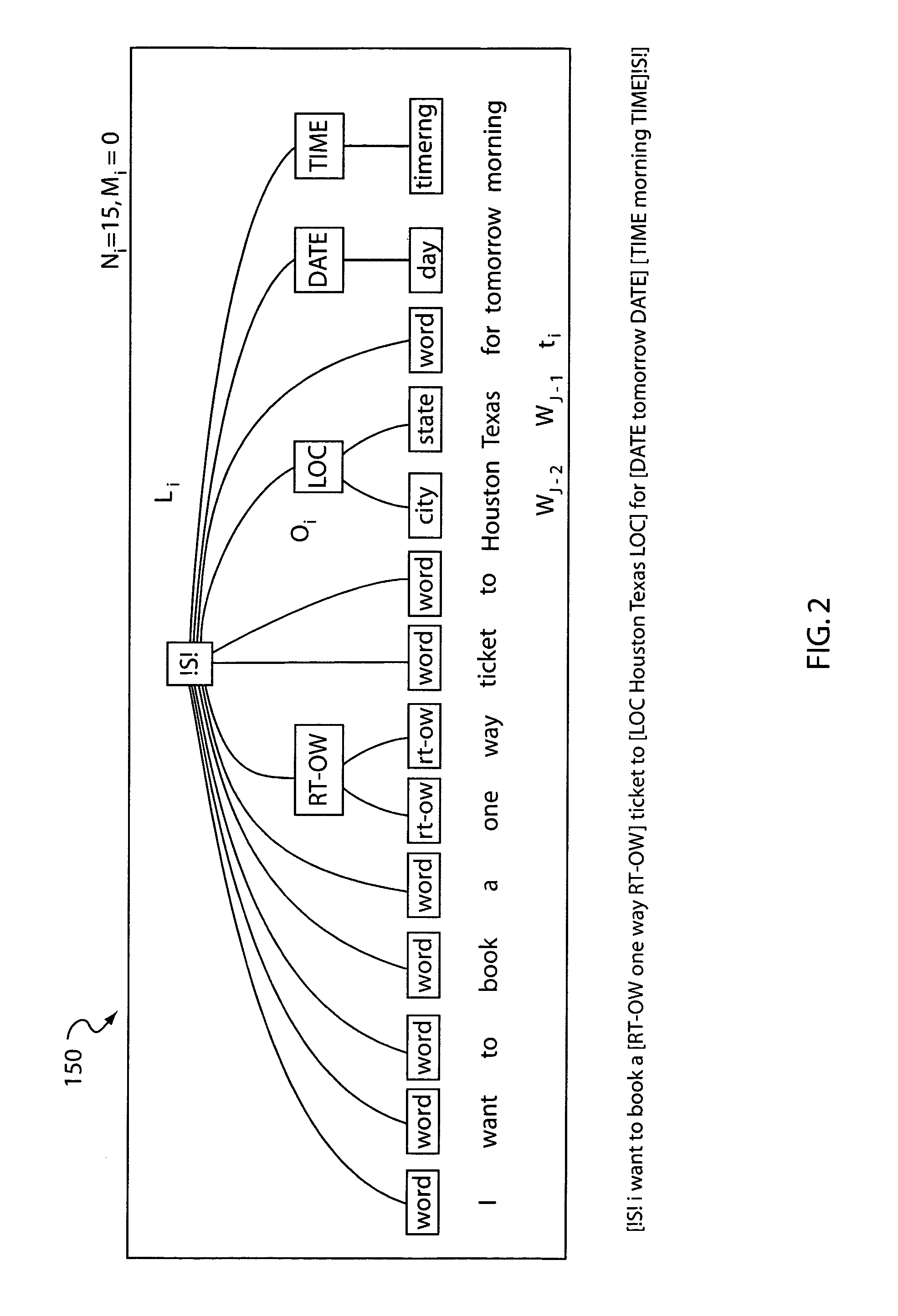

A system and method for speech recognition includes generating a set of likely hypotheses in recognizing speech, rescoring the likely hypotheses by using semantic content by employing semantic structured language models, and scoring parse trees to identify a best sentence according to the sentence's parse tree by employing the semantic structured language models to clarify the recognized speech.

Owner:MICROSOFT TECH LICENSING LLC
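
The rescoring step in the abstract above can be illustrated with a minimal sketch. It assumes a first-pass recognizer that returns scored hypotheses; `semantic_score`, the toy lexicon, and the interpolation `weight` are hypothetical stand-ins for the semantic structured language model, which the abstract does not specify in detail.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    recognizer_score: float  # log-score from the first-pass recognizer


def semantic_score(text: str) -> float:
    """Hypothetical stand-in for a semantic structured language model.

    Counts words from a toy travel-domain lexicon; a real system would
    score the parse tree of the sentence instead.
    """
    domain_words = {"book", "flight", "to", "boston"}
    return sum(1.0 for word in text.lower().split() if word in domain_words)


def rescore(hypotheses, weight=0.5):
    """Sort hypotheses by first-pass score plus weighted semantic score."""
    return sorted(
        hypotheses,
        key=lambda h: h.recognizer_score + weight * semantic_score(h.text),
        reverse=True,
    )


n_best = [
    Hypothesis("book a fright to boston", -4.0),
    Hypothesis("book a flight to boston", -4.2),
]
best = rescore(n_best)[0]
```

Here the second hypothesis starts with a lower recognizer score but wins after rescoring, because the semantic term rewards the in-domain word "flight".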

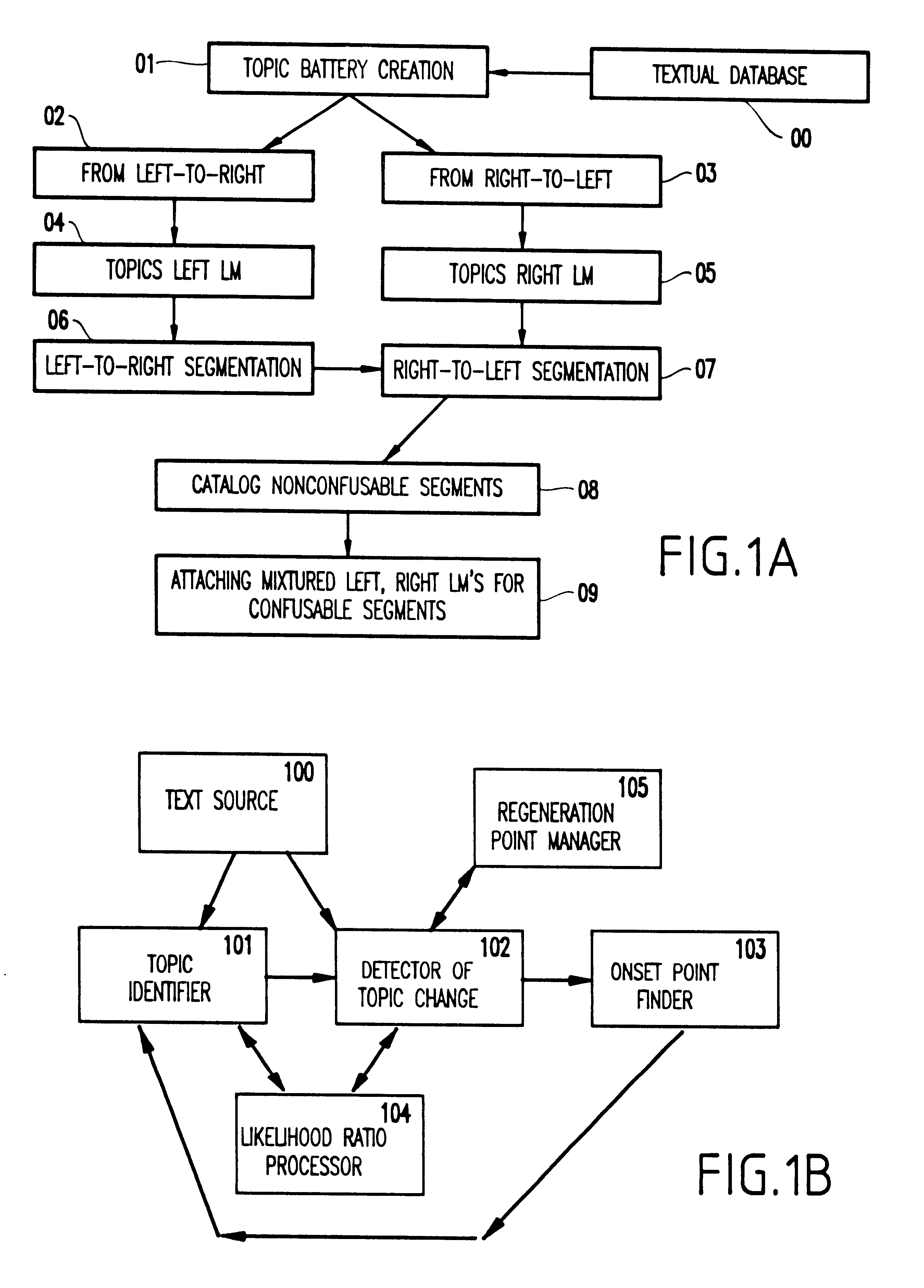

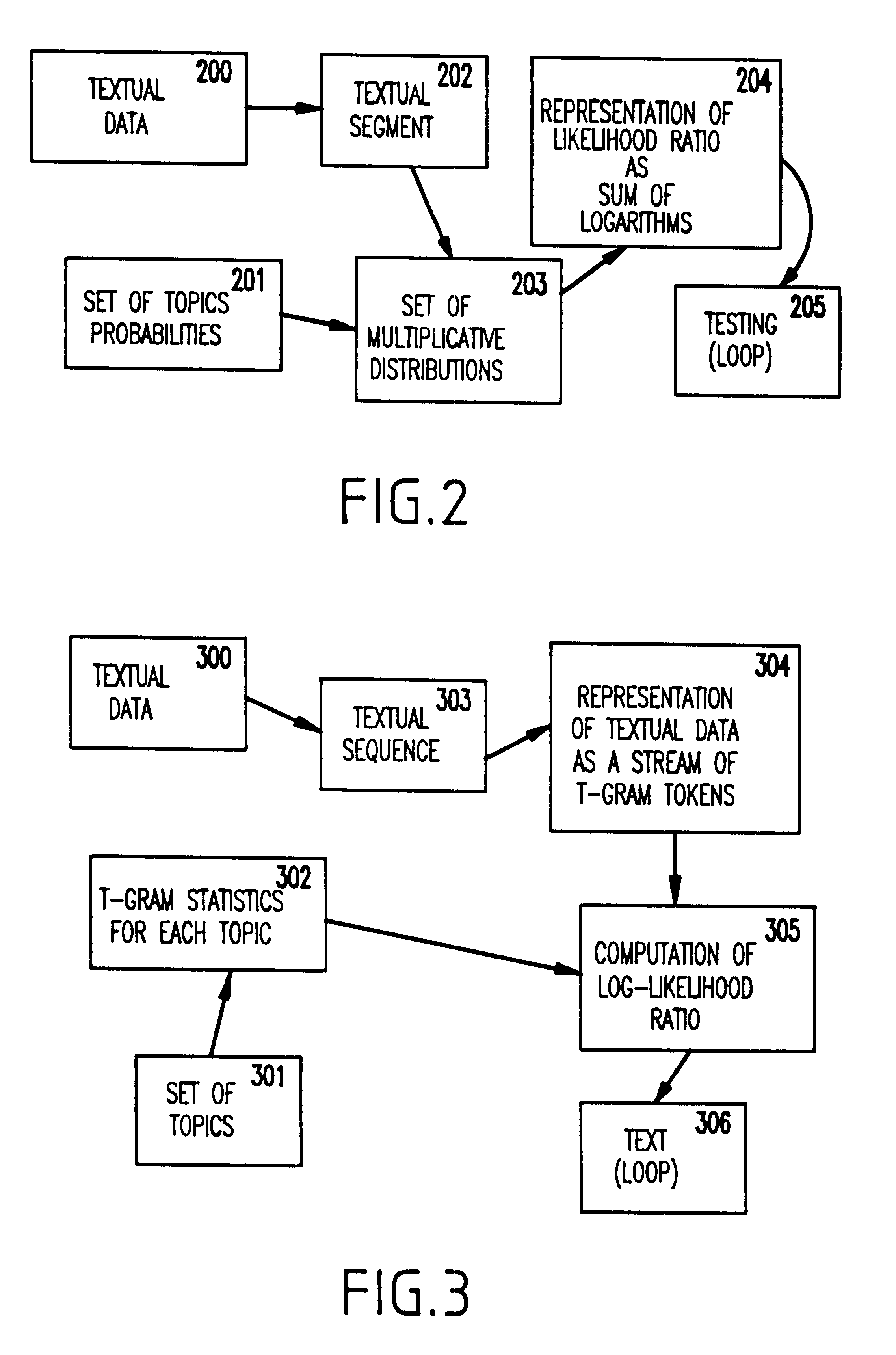

Method and system for off-line detection of textual topical changes and topic identification via likelihood based methods for improved language modeling

Inactive | US6529902B1 | Improved language modeling | Easy to use | Digital data information retrieval | Data processing applications | Data set | Data mining

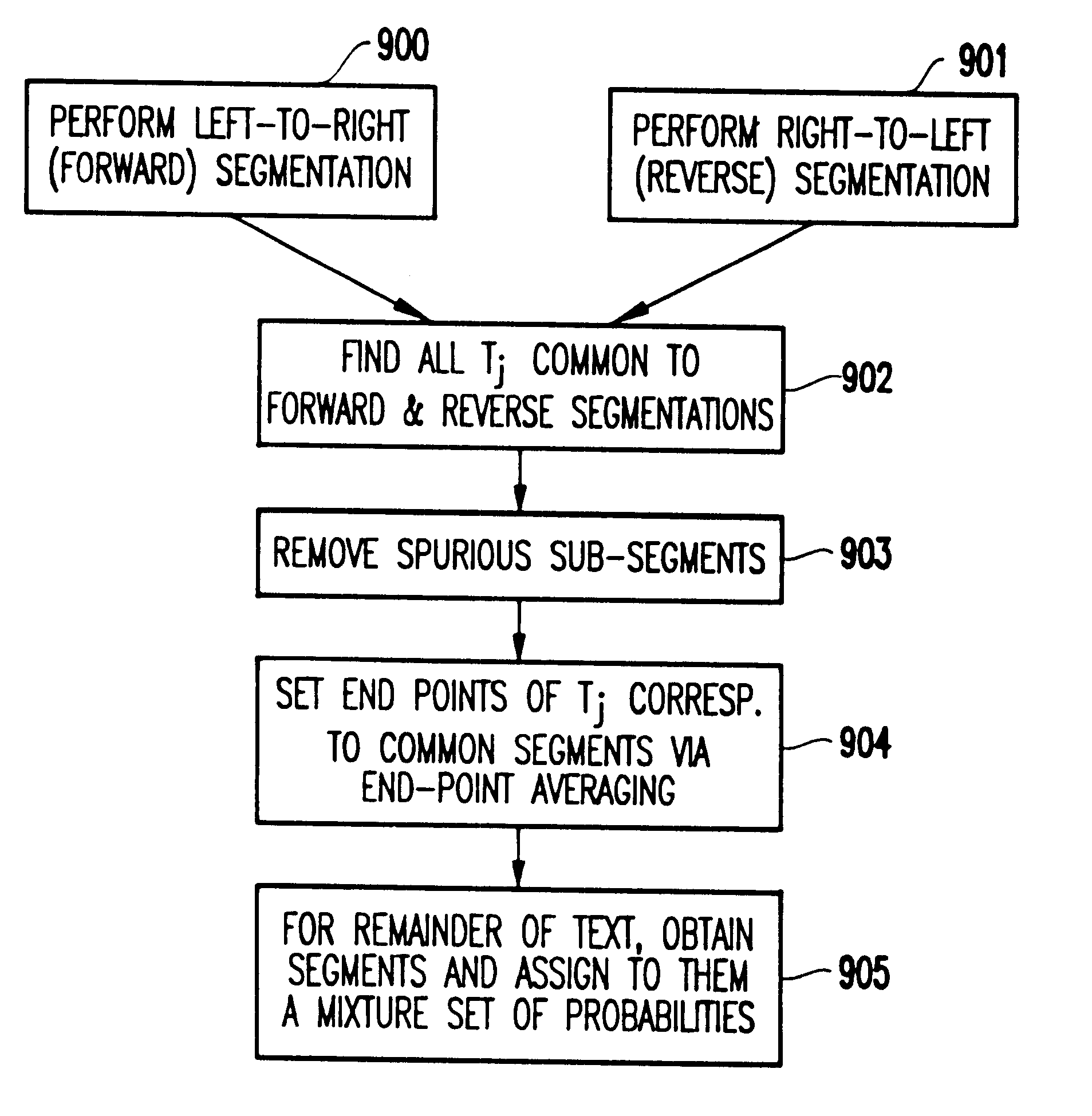

A system (and method) for off-line detection of textual topical changes includes at least one central processing unit (CPU), at least one memory coupled to the at least one CPU, a network connectable to the at least one CPU, and a database, stored on the at least one memory, containing a plurality of textual data sets of topics. The CPU executes first and second processes in first and second directions, respectively, for extracting a segment having a predetermined size from a text, computing likelihood scores of a text in the segment for each topic, computing likelihood ratios, comparing them to a threshold, and defining whether there is a change point at the current last word in a window.

Owner:IBM CORP
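
The windowed likelihood-ratio test described above can be sketched as follows. This is a simplified, single-direction illustration, not the patented procedure: the unigram topic models, the probability `floor` for unseen words, and the `window` and `threshold` values are all assumptions made for the example.

```python
import math


def log_likelihood(words, topic_model, floor=1e-6):
    """Log-likelihood of a segment under a unigram topic model."""
    return sum(math.log(topic_model.get(w, floor)) for w in words)


def detect_changes(words, topics, window=4, threshold=2.0):
    """Return (index, new_topic) pairs where the topic appears to change.

    At each position the last `window` words are scored under every topic.
    A change point is declared at the current last word when the best topic
    beats the current one by more than `threshold` in log-likelihood ratio.
    """
    current = None
    changes = []
    for end in range(window, len(words) + 1):
        segment = words[end - window:end]
        scores = {name: log_likelihood(segment, model)
                  for name, model in topics.items()}
        best = max(scores, key=scores.get)
        if current is None:
            current = best  # first window just fixes the starting topic
            continue
        if best != current and scores[best] - scores[current] > threshold:
            changes.append((end - 1, best))
            current = best
    return changes


topics = {
    "sports": {"goal": 0.3, "team": 0.3, "match": 0.3},
    "finance": {"stock": 0.3, "market": 0.3, "bank": 0.3},
}
text = ["goal", "team", "match", "team", "stock", "market", "bank", "stock"]
changes = detect_changes(text, topics)
```

With these toy models the change is flagged once finance words dominate the window, at the word "bank".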

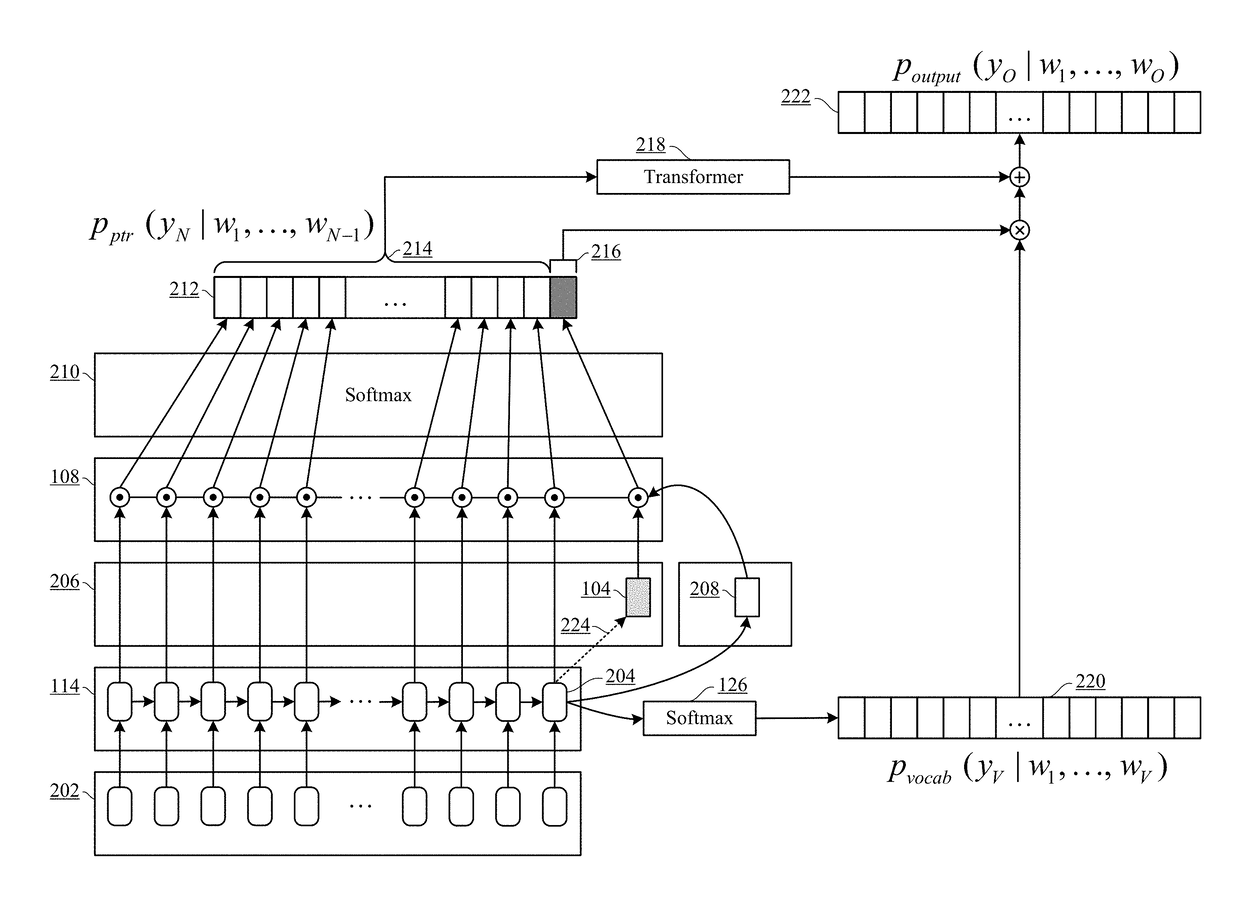

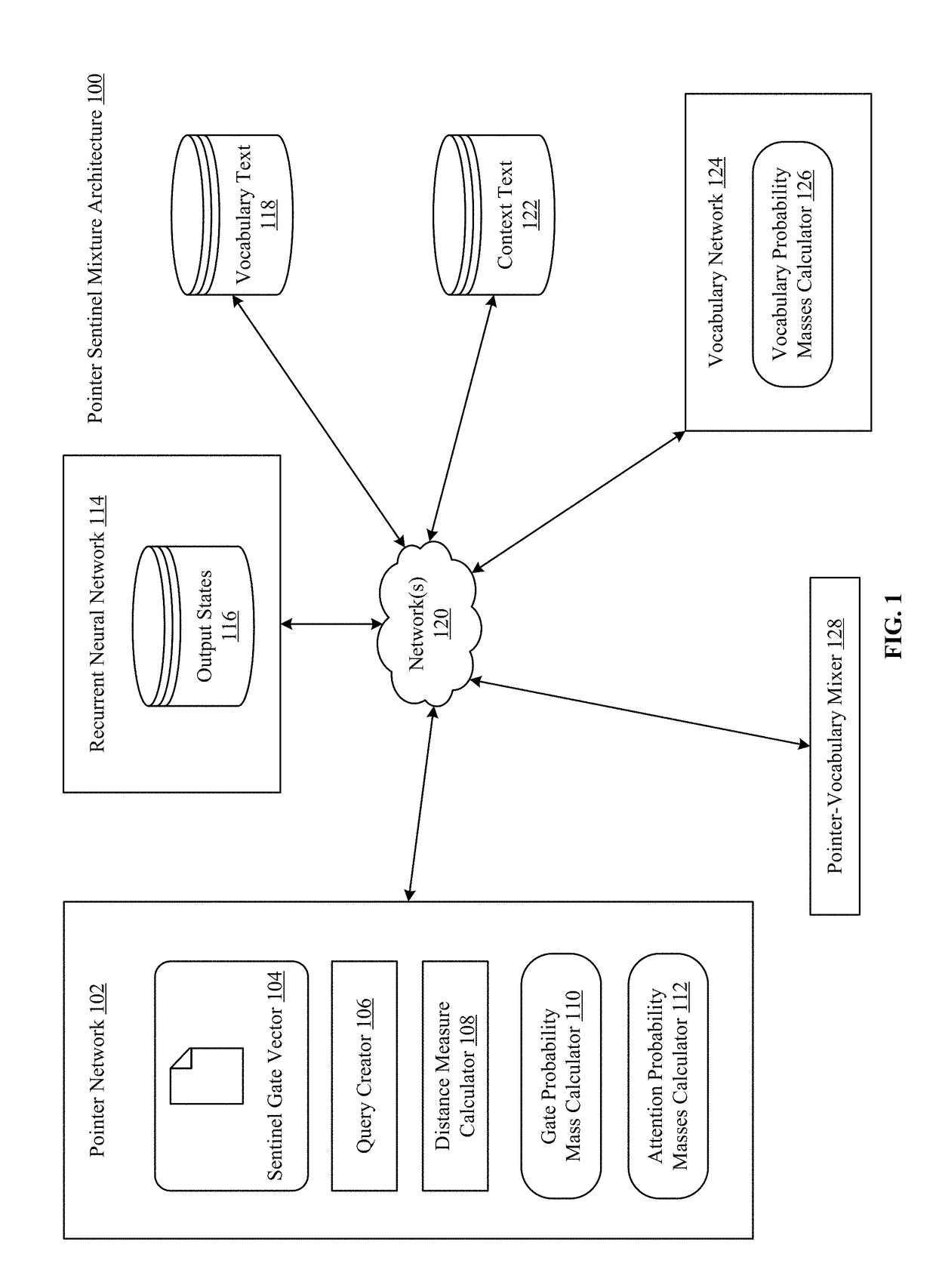

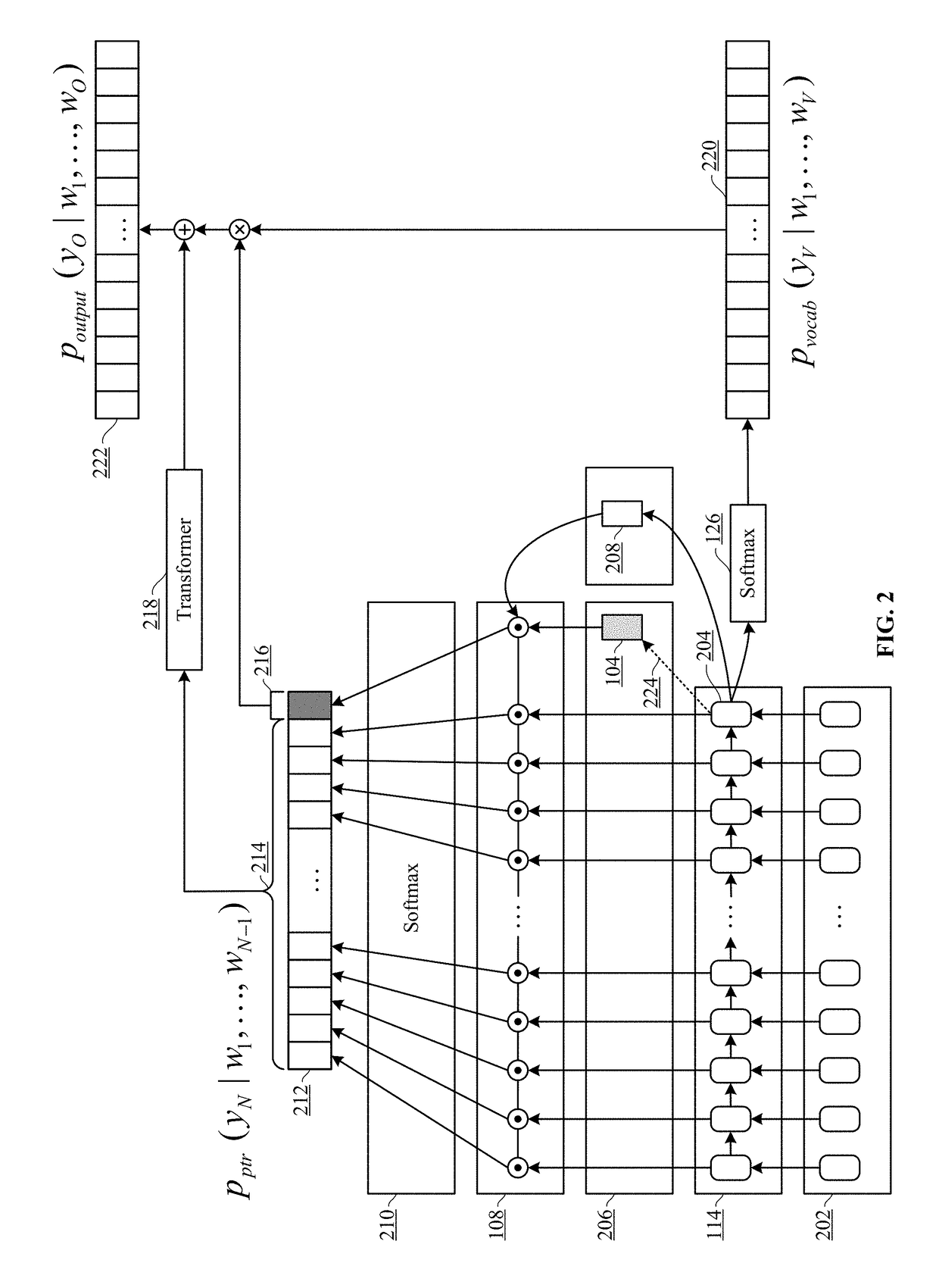

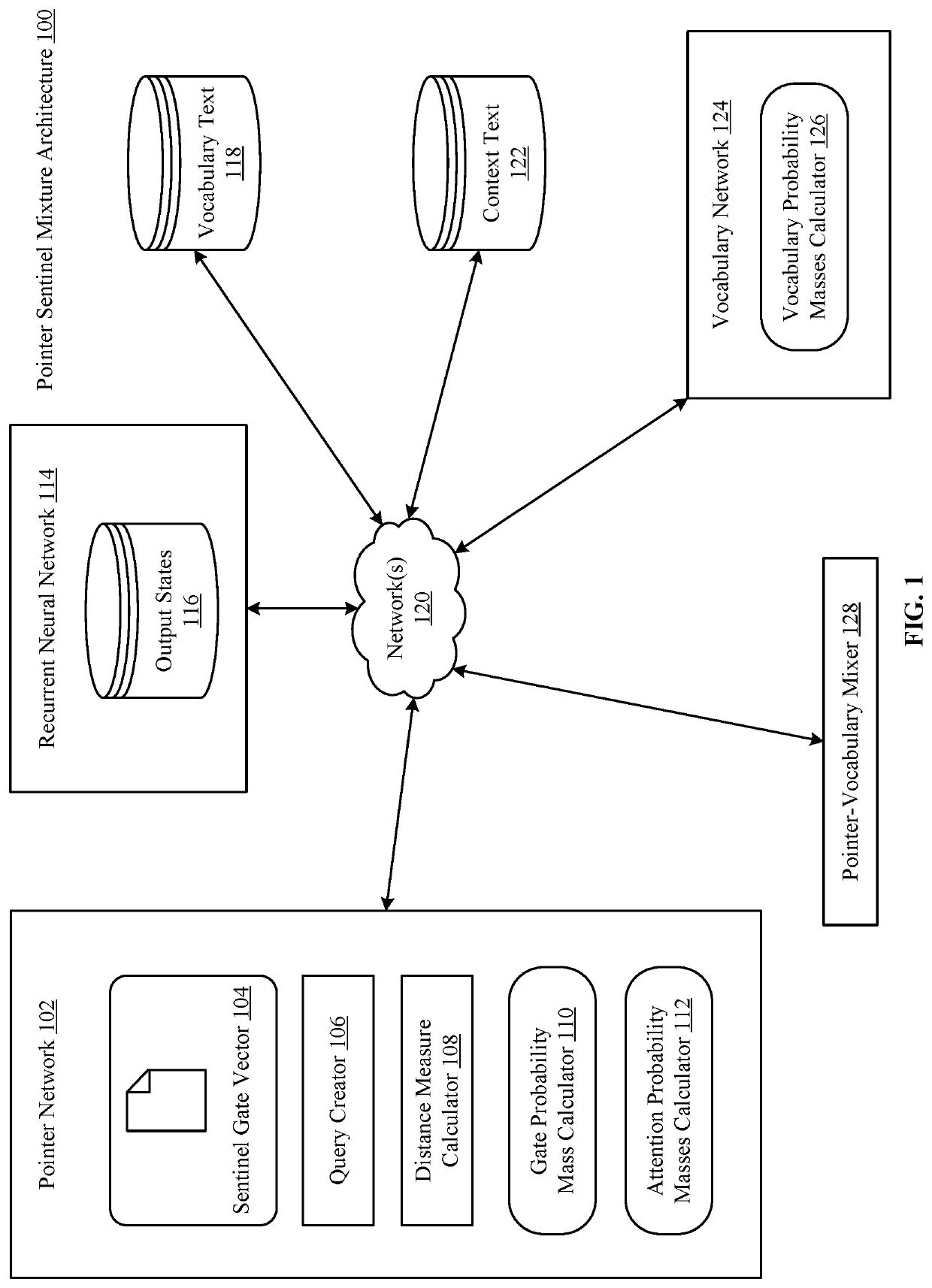

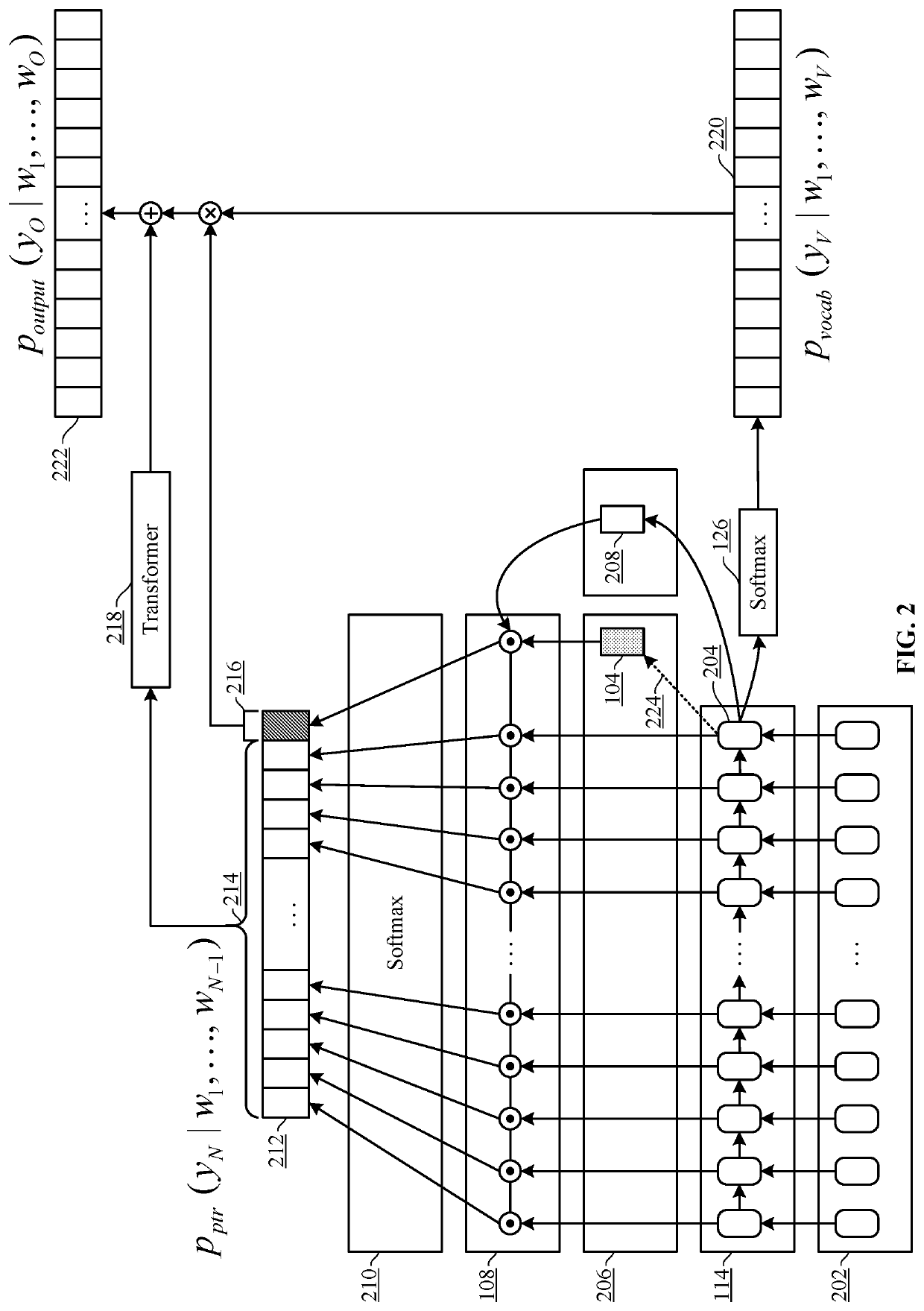

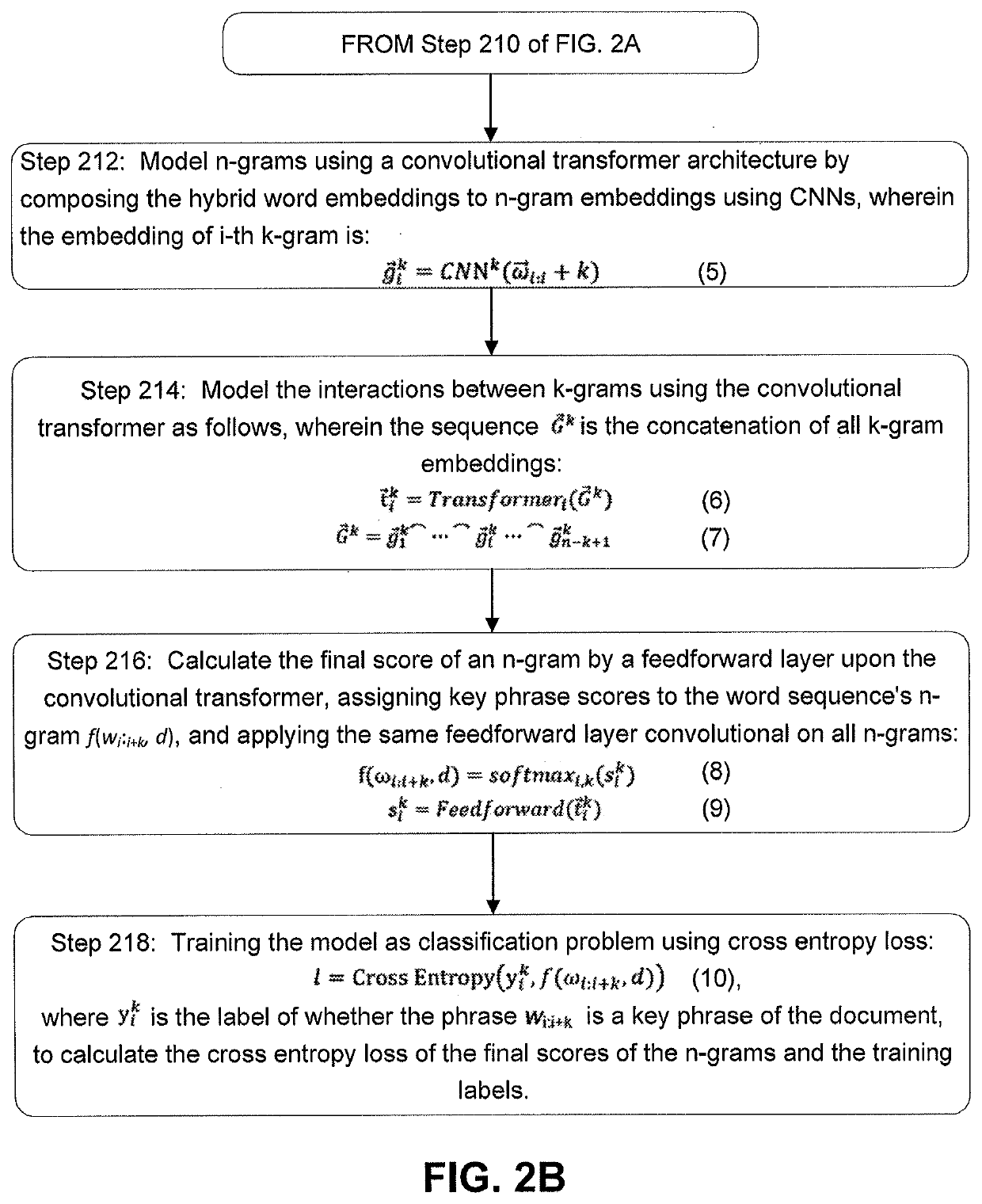

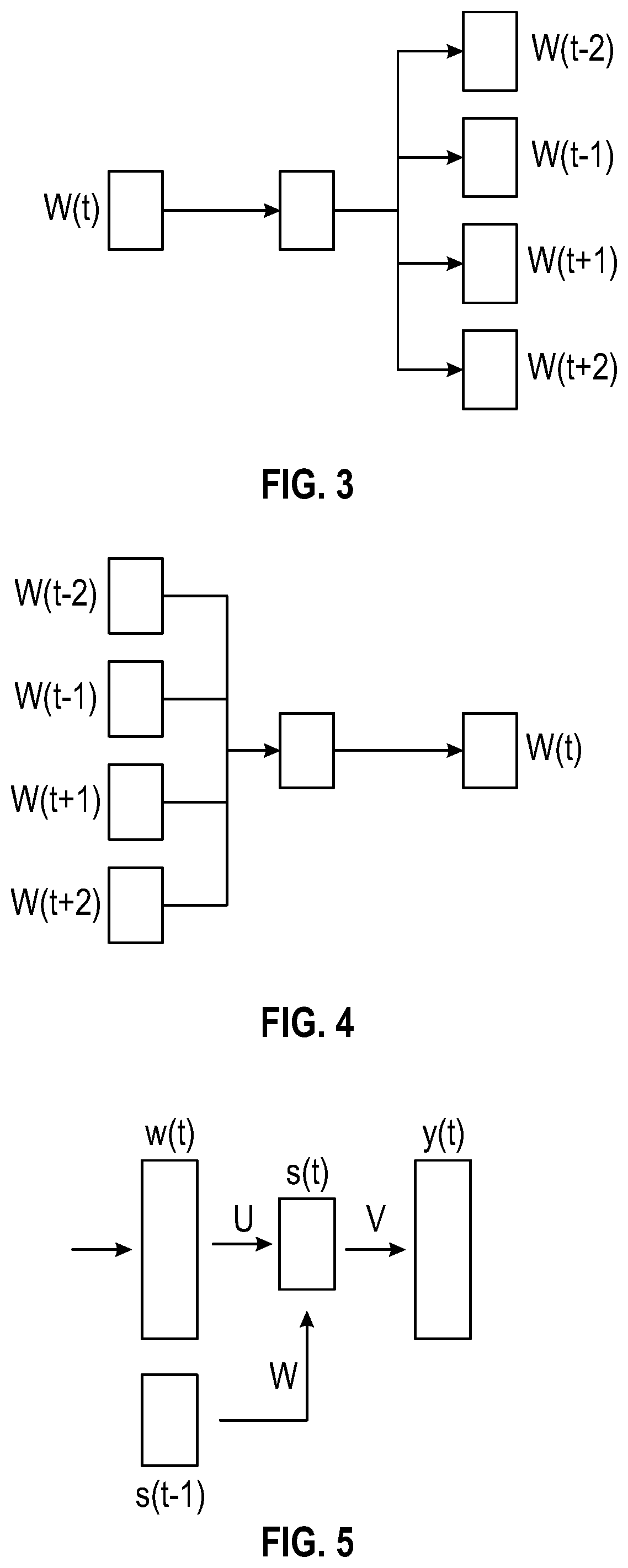

Pointer sentinel mixture architecture

The technology disclosed provides a so-called “pointer sentinel mixture architecture” for neural network sequence models that has the ability to either reproduce a token from a recent context or produce a token from a predefined vocabulary. In one implementation, a pointer sentinel-LSTM architecture achieves state of the art language modeling performance of 70.9 perplexity on the Penn Treebank dataset, while using far fewer parameters than a standard softmax LSTM.

Owner:SALESFORCE COM INC
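
A minimal sketch of how such a mixture can combine the two sources of tokens. The abstract does not give equations, so the formulation below is an assumption: a sentinel score is appended to the pointer attention scores, one softmax is taken over both, and the mass that lands on the sentinel acts as the gate for the vocabulary distribution. All function and parameter names are illustrative.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_distribution(vocab_probs, context_tokens, pointer_scores,
                         sentinel_score):
    """Blend a vocabulary softmax with a pointer over recent context.

    vocab_probs: dict token -> probability from the ordinary softmax.
    context_tokens: recent tokens, aligned with pointer_scores.
    sentinel_score: score of the sentinel; its softmax mass is the gate.
    """
    weights = softmax(list(pointer_scores) + [sentinel_score])
    gate = weights[-1]
    # Vocabulary probabilities are scaled by the gate ...
    mixed = {token: gate * p for token, p in vocab_probs.items()}
    # ... and pointer mass is added to whichever tokens occur in context.
    for token, weight in zip(context_tokens, weights[:-1]):
        mixed[token] = mixed.get(token, 0.0) + weight
    return mixed, gate


vocab_probs = {"the": 0.5, "cat": 0.3, "<unk>": 0.2}
dist, gate = mixture_distribution(
    vocab_probs,
    context_tokens=["zyzzyva", "the"],
    pointer_scores=[2.0, 0.0],
    sentinel_score=0.0,
)
```

The rare word "zyzzyva" is absent from the vocabulary yet still receives probability through the pointer, which is the behavior the abstract describes as reproducing a token from recent context.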

Semantic language modeling and confidence measurement

Active | US20050055209A1 | Speech recognition | Special data processing applications | Language modelling | Parse tree

A system and method for speech recognition includes generating a set of likely hypotheses in recognizing speech, rescoring the likely hypotheses by using semantic content by employing semantic structured language models, and scoring parse trees to identify a best sentence according to the sentence's parse tree by employing the semantic structured language models to clarify the recognized speech.

Owner:NUANCE COMM INC

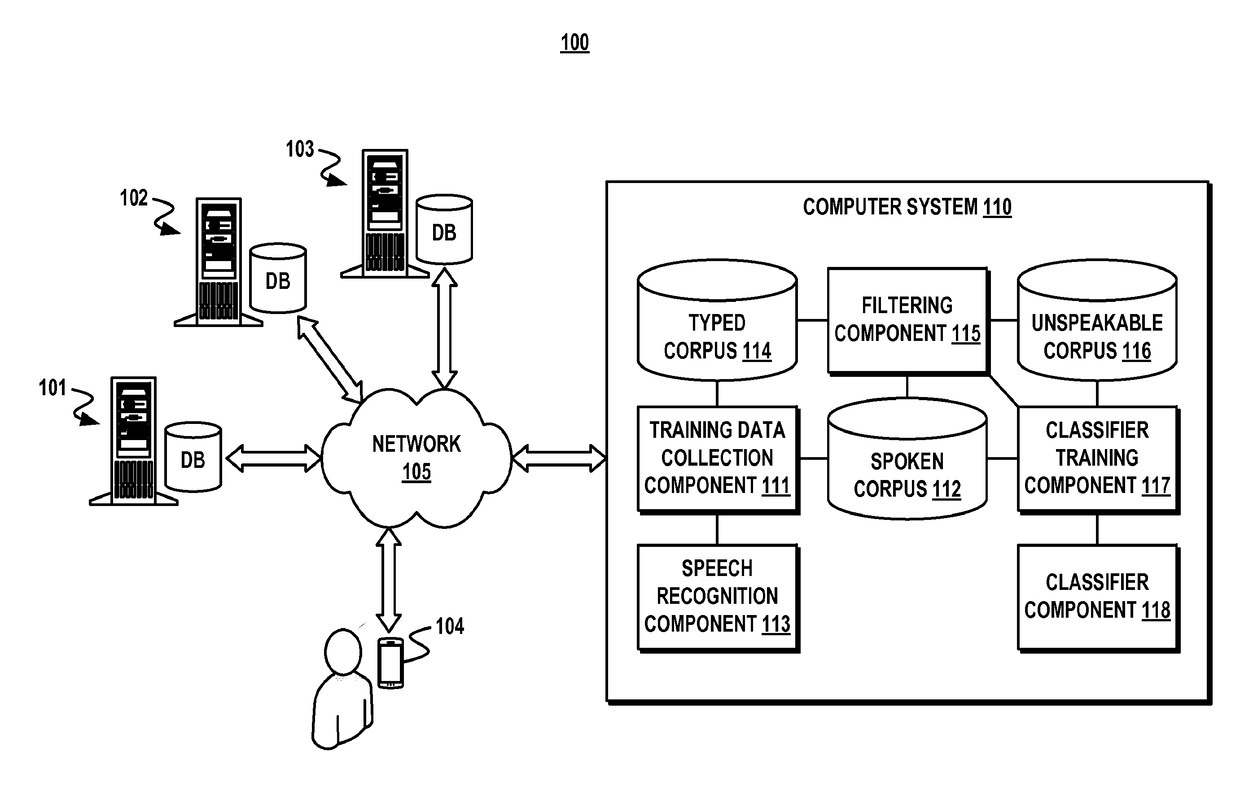

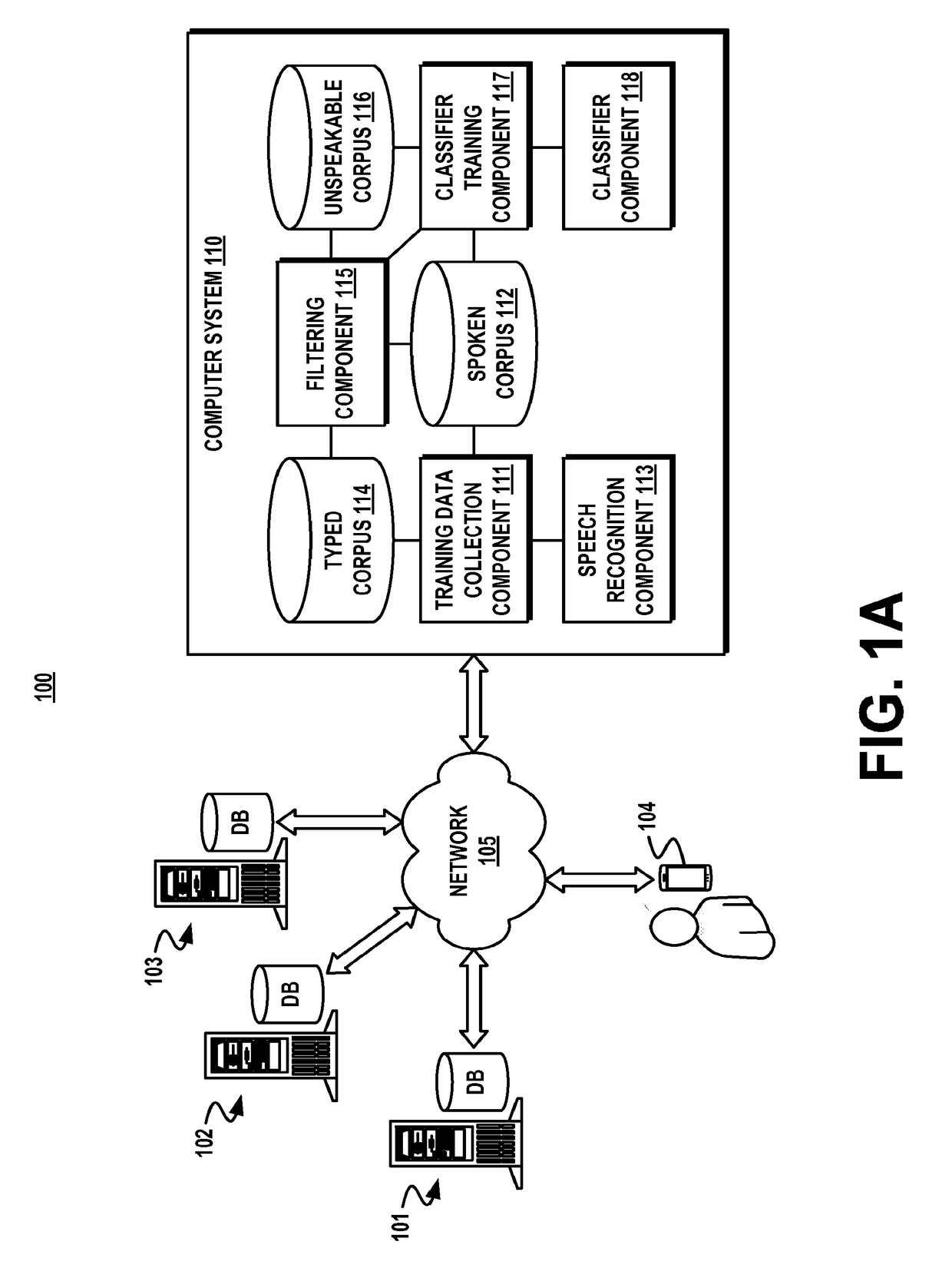

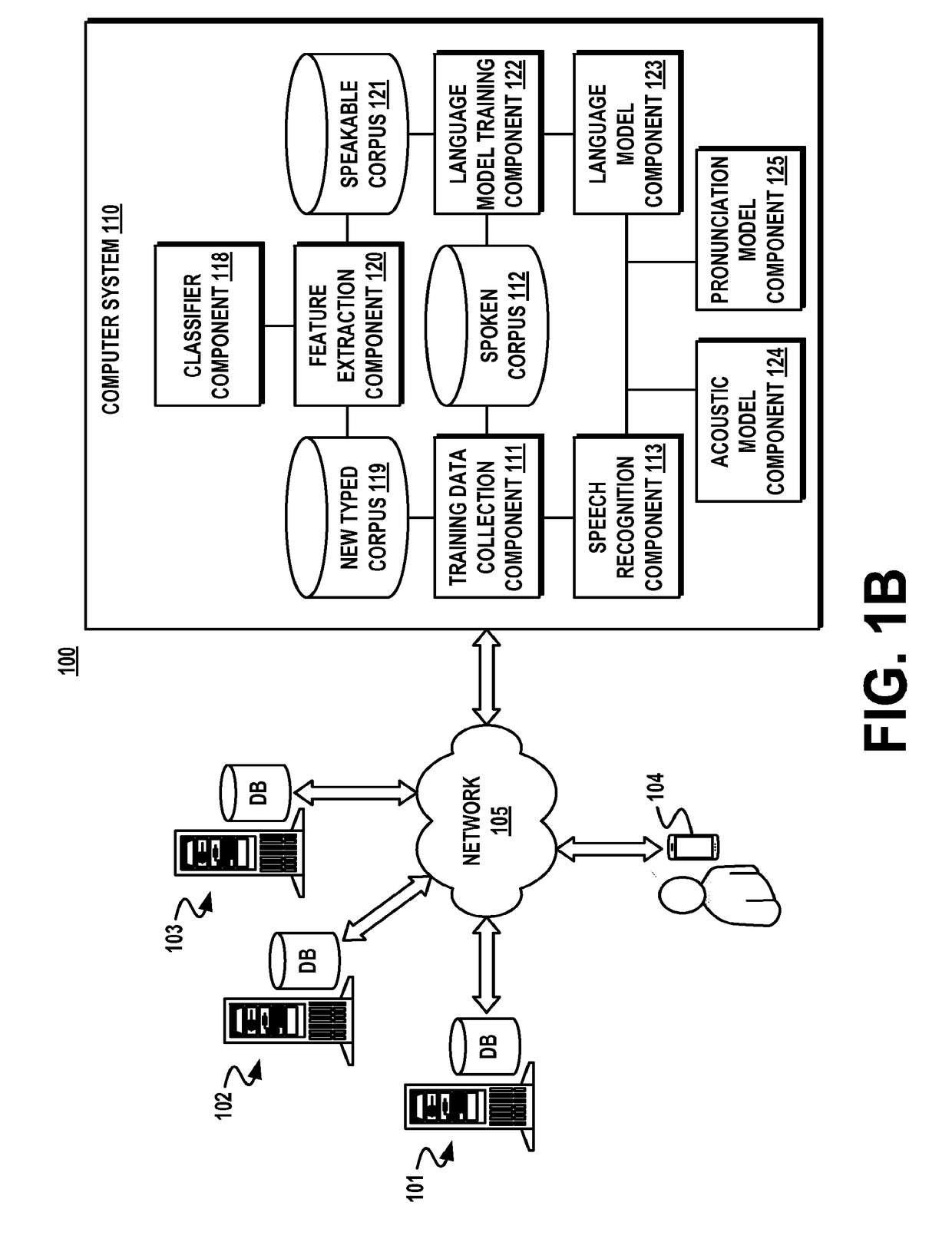

Language modeling based on spoken and unspeakable corpuses

A computer system for language modeling may collect training data from one or more information sources, generate a spoken corpus containing text of transcribed speech, and generate a typed corpus containing typed text. The computer system may derive feature vectors from the spoken corpus, analyze the typed corpus to determine feature vectors representing items of typed text, and generate an unspeakable corpus by filtering the typed corpus to remove each item of typed text represented by a feature vector that is within a similarity threshold of a feature vector derived from the spoken corpus. The computer system may derive feature vectors from the unspeakable corpus and train a classifier to perform discriminative data selection for language modeling based on the feature vectors derived from the spoken corpus and the feature vectors derived from the unspeakable corpus.

Owner:MICROSOFT TECH LICENSING LLC
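
The filtering step that produces the unspeakable corpus can be sketched as below. The abstract does not say which features or similarity measure are used, so bag-of-words counts and cosine similarity are assumptions here, as are the function names and the threshold value; classifier training is left out.

```python
import math
from collections import Counter


def features(text):
    """Bag-of-words feature vector (an assumed, simplistic feature choice)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_unspeakable(typed_corpus, spoken_corpus, threshold=0.5):
    """Keep typed items that are not similar to any spoken item."""
    spoken_vectors = [features(s) for s in spoken_corpus]
    unspeakable = []
    for item in typed_corpus:
        vector = features(item)
        if all(cosine(vector, s) < threshold for s in spoken_vectors):
            unspeakable.append(item)
    return unspeakable


spoken = ["call my mom", "what is the weather today"]
typed = ["call my mom now", "x86_64-linux-gnu.tar.gz", "weather today"]
unspeakable = build_unspeakable(typed, spoken)
```

Typed items that resemble transcribed speech are dropped, leaving only text that people are unlikely to say aloud.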

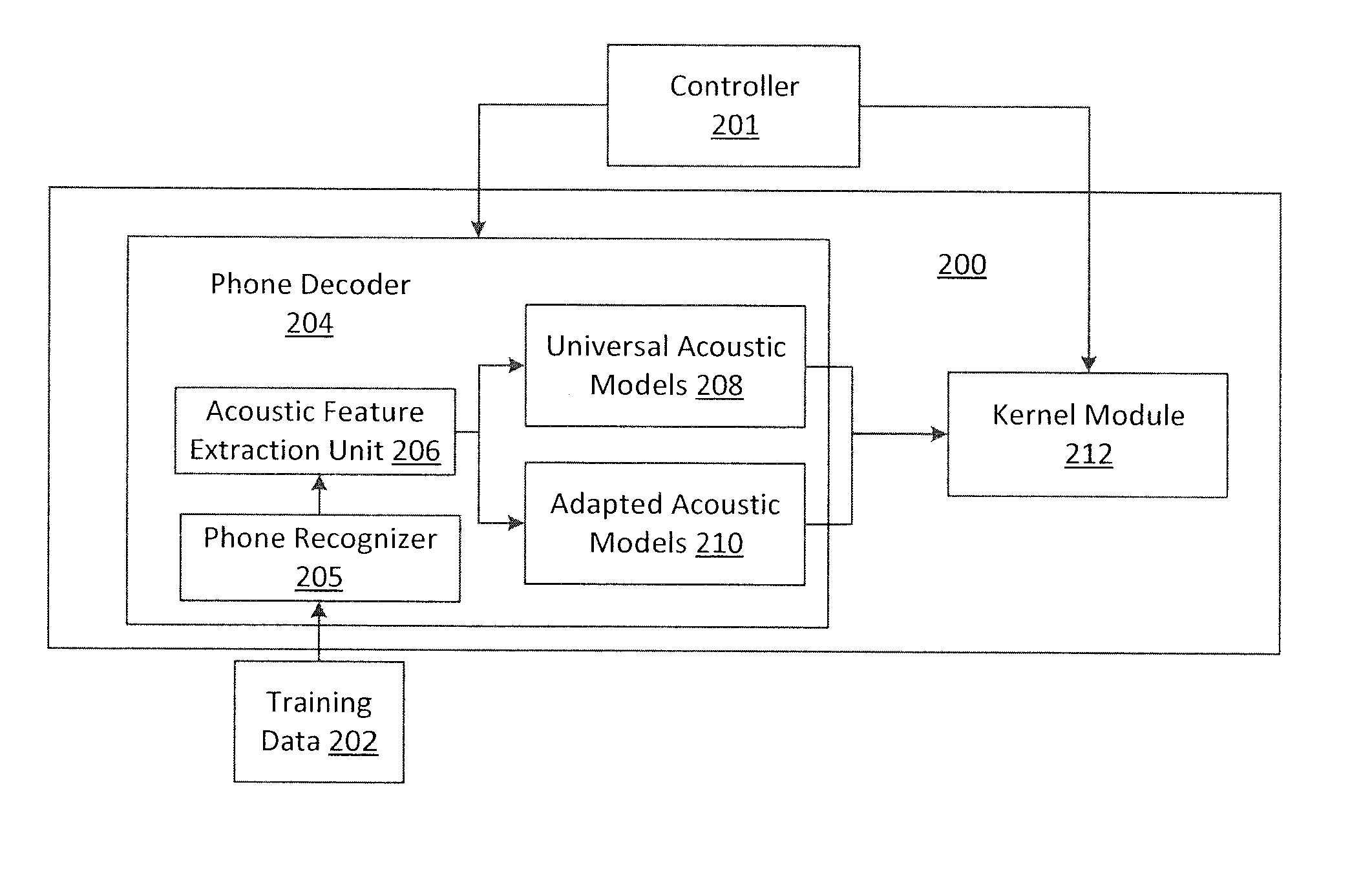

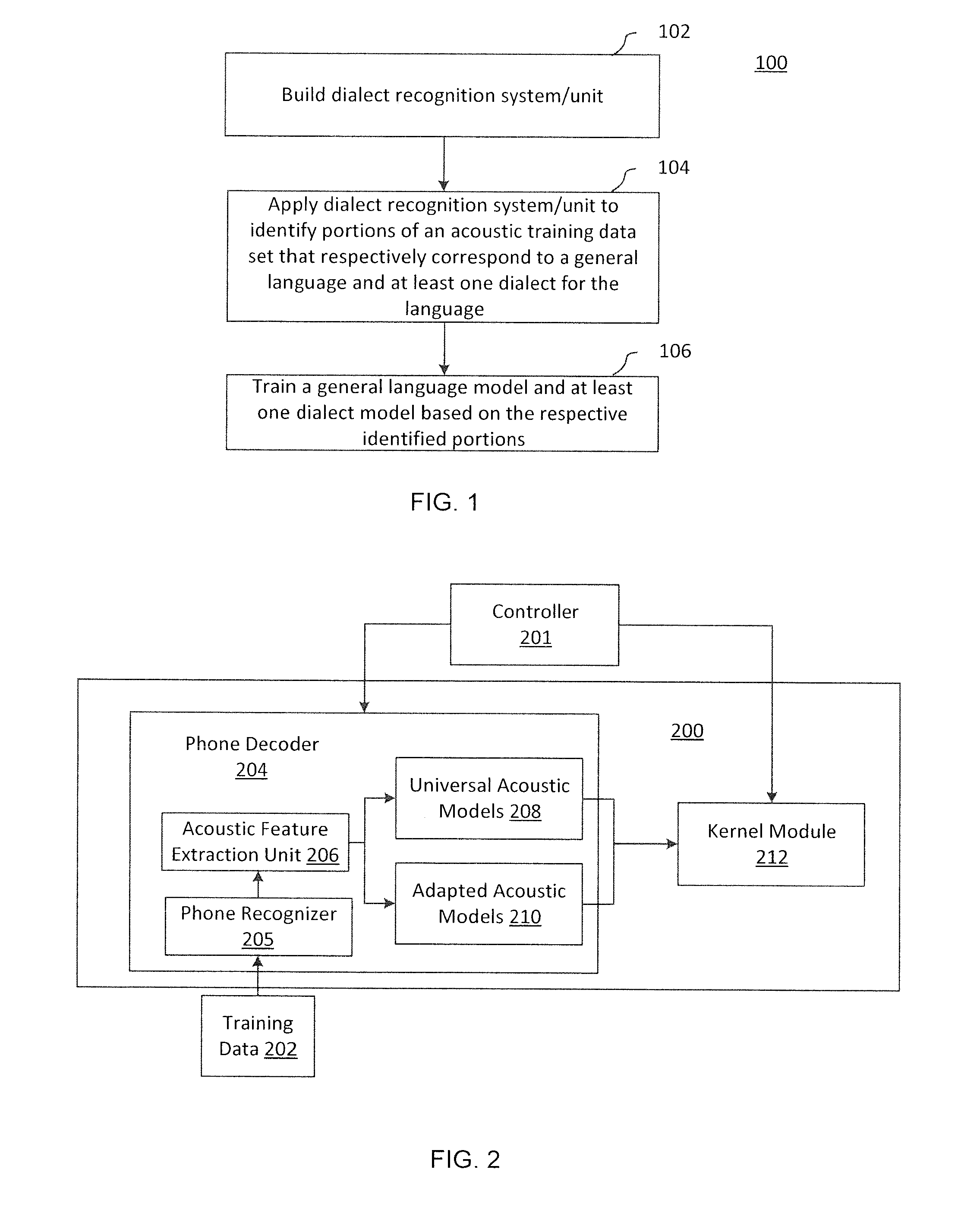

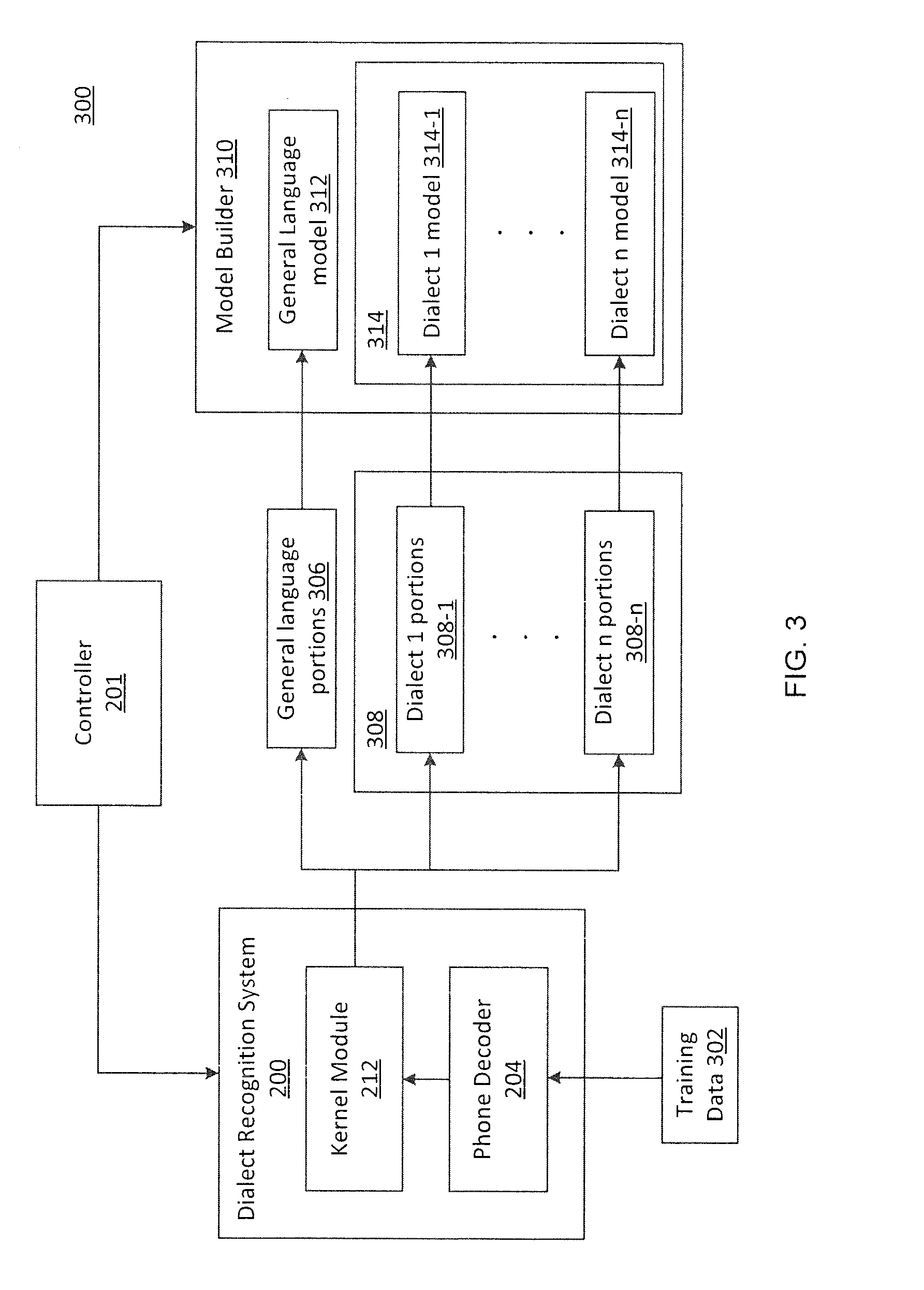

Dialect-specific acoustic language modeling and speech recognition

Methods and systems for automatic speech recognition and methods and systems for training acoustic language models are disclosed. In accordance with one automatic speech recognition method, an acoustic input data set is analyzed to identify portions of the input data set that conform to a general language and to identify portions of the input data set that conform to at least one dialect of the general language. In addition, a general language model and at least one dialect language model are applied to the input data set to perform speech recognition by dynamically selecting between the models in accordance with each of the identified portions. Further, speech recognition results obtained in accordance with the application of the models are output.

Owner:IBM CORP

Pointer sentinel mixture architecture

Owner:SALESFORCE COM INC

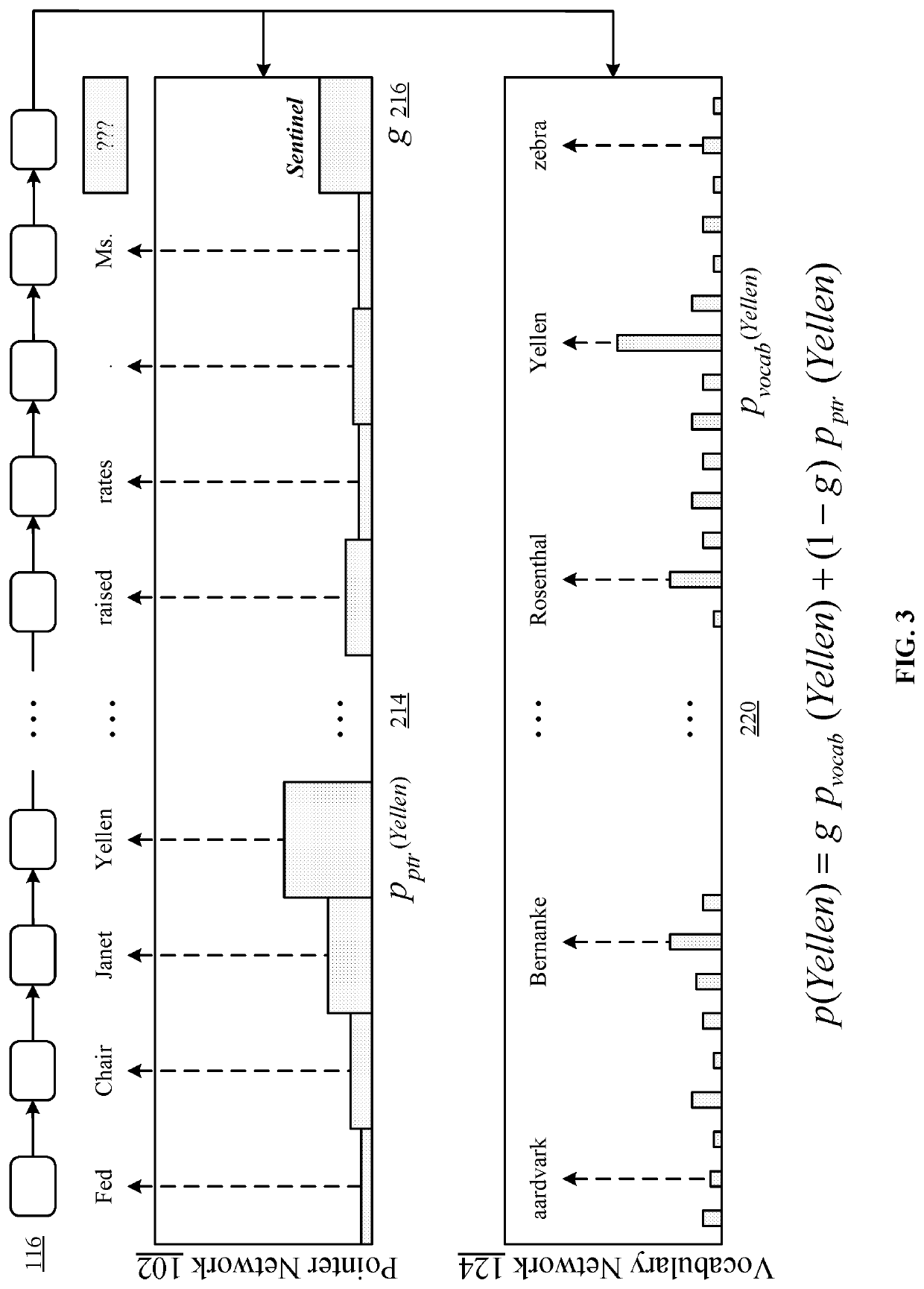

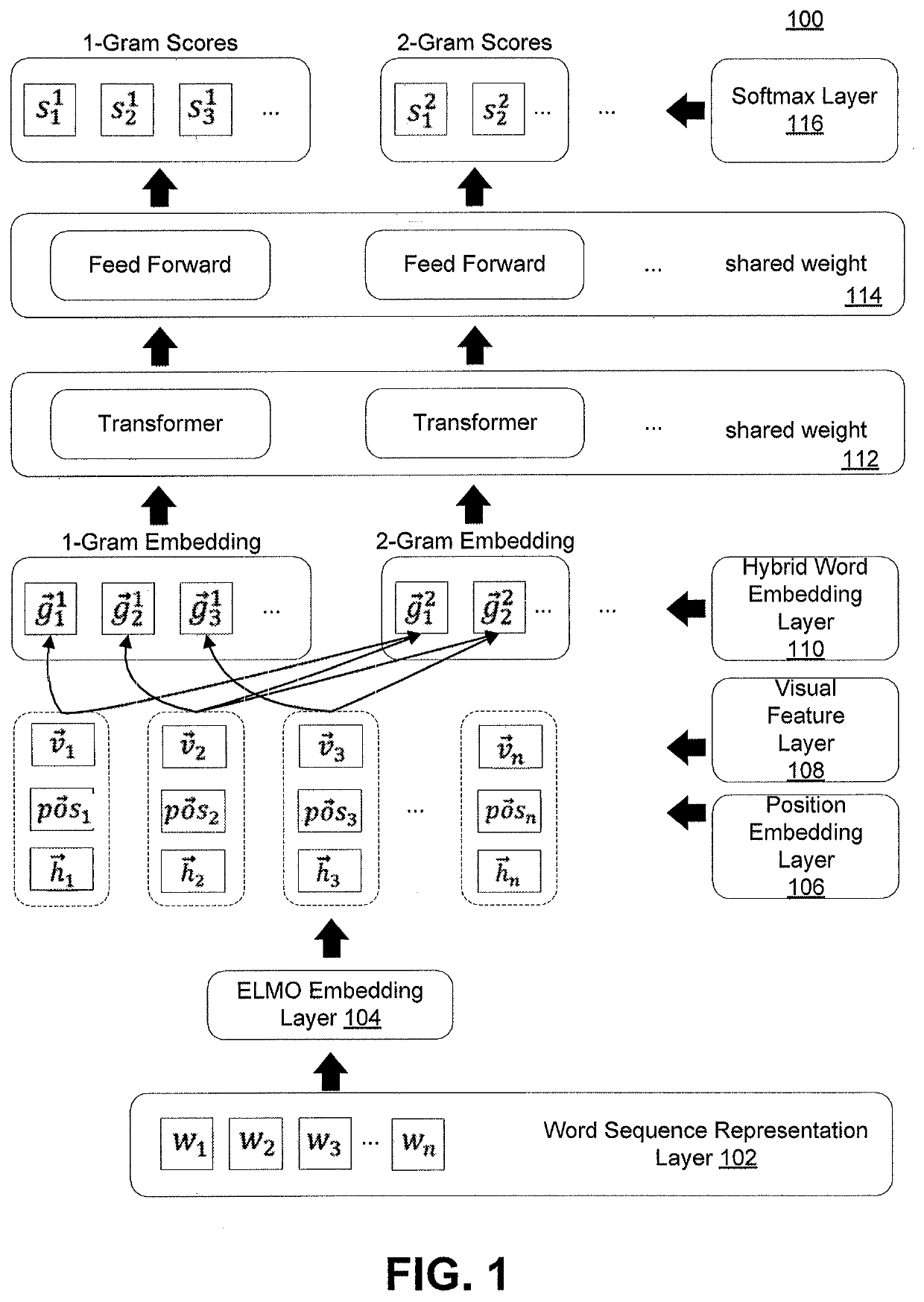

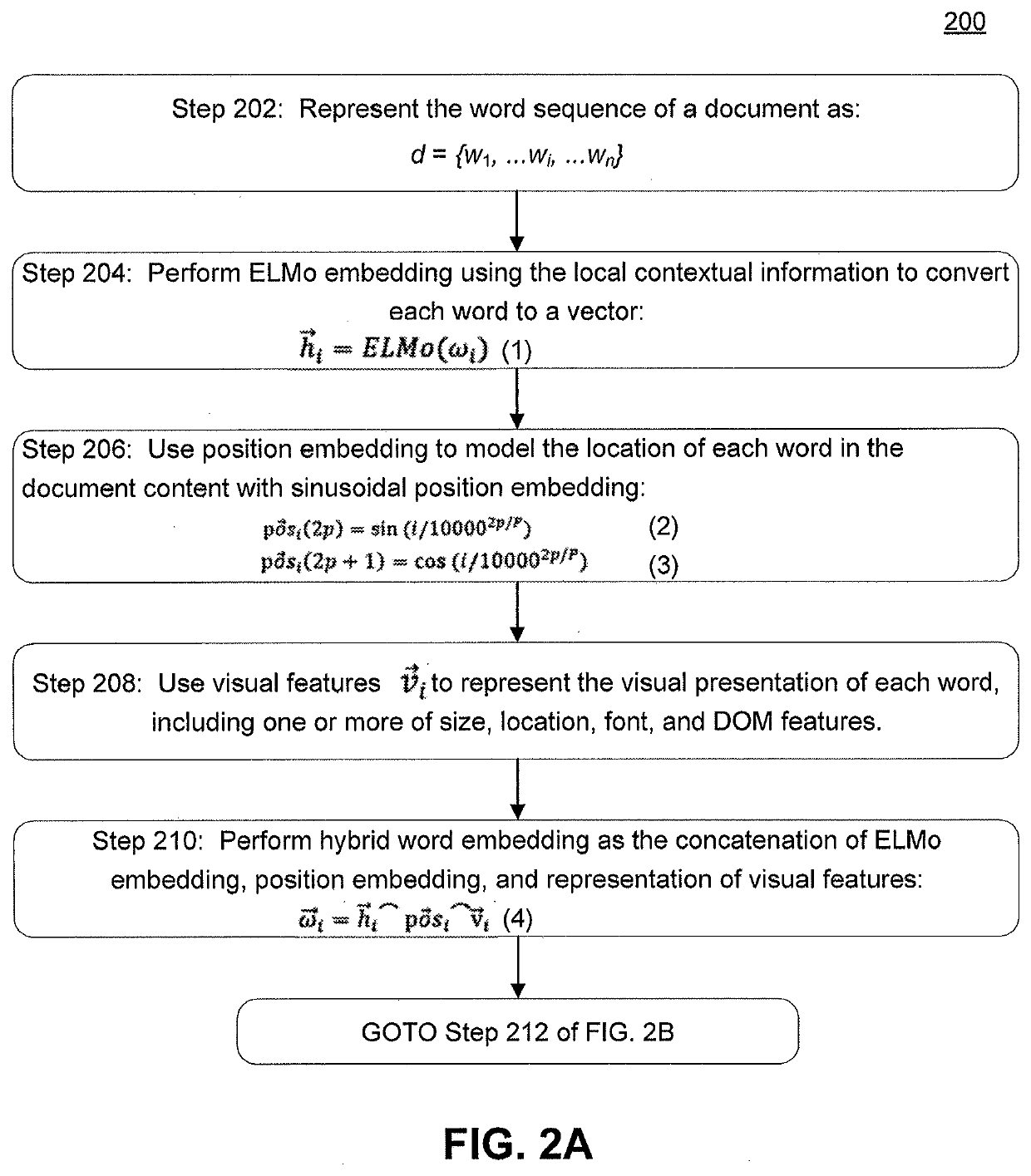

Keyphrase extraction beyond language modeling

Active | US20210004439A1 | Well formed | Semantic analysis | Character and pattern recognition | Pattern recognition | Language modelling

A system for extracting a key phrase from a document includes a neural key phrase extraction model (“BLING-KPE”) having a first layer to extract a word sequence from the document, a second layer to represent each word in the word sequence by ELMo embedding, position embedding, and visual features, and a third layer to concatenate the ELMo embedding, the position embedding, and the visual features to produce hybrid word embeddings. A convolutional transformer models the hybrid word embeddings to n-gram embeddings, and a feedforward layer converts the n-gram embeddings into a probability distribution over a set of n-grams and calculates a key phrase score of each n-gram. The neural key phrase extraction model is trained on annotated data based on a labeled loss function to compute cross entropy loss of the key phrase score of each n-gram as compared with a label from the annotated dataset.

Owner:MICROSOFT TECH LICENSING LLC

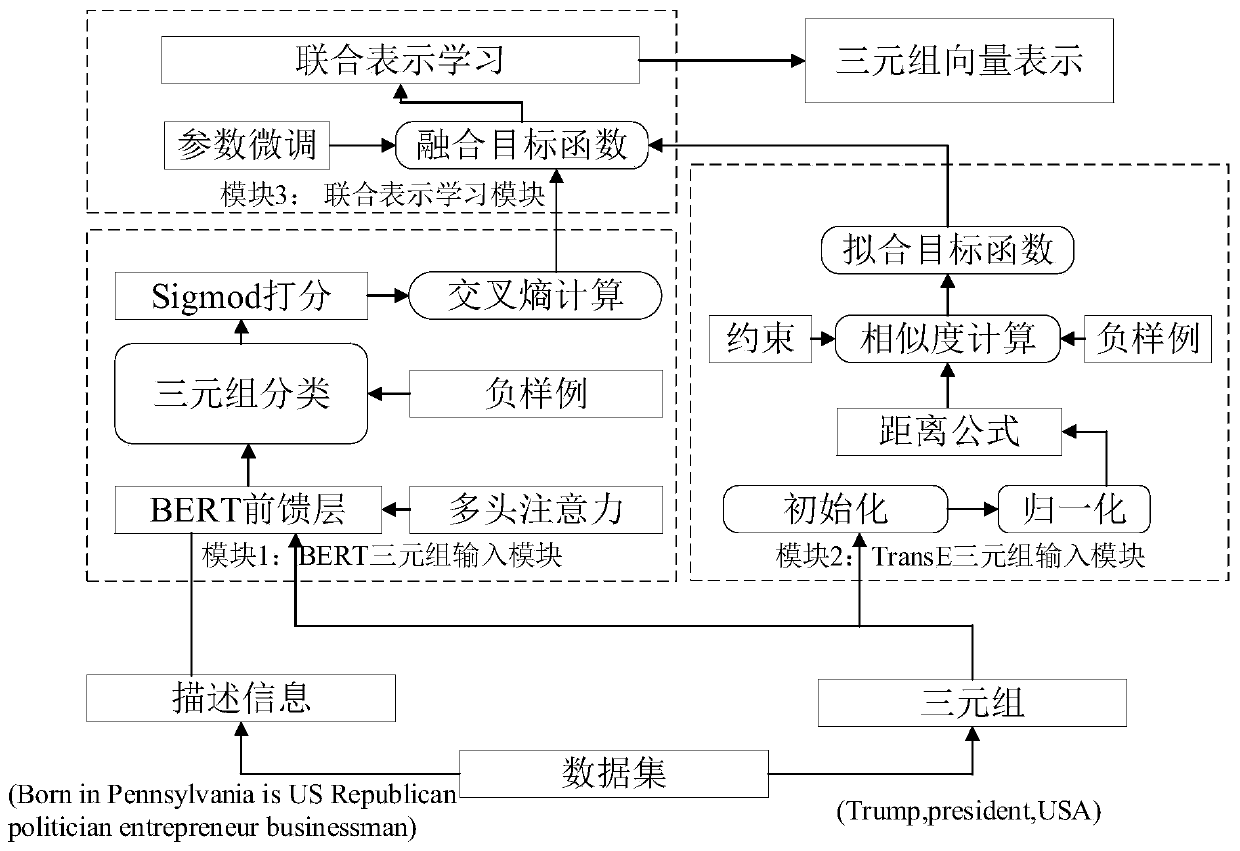

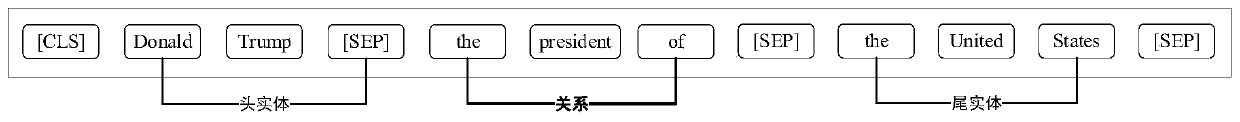

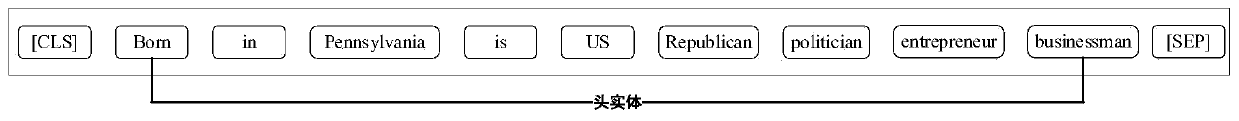

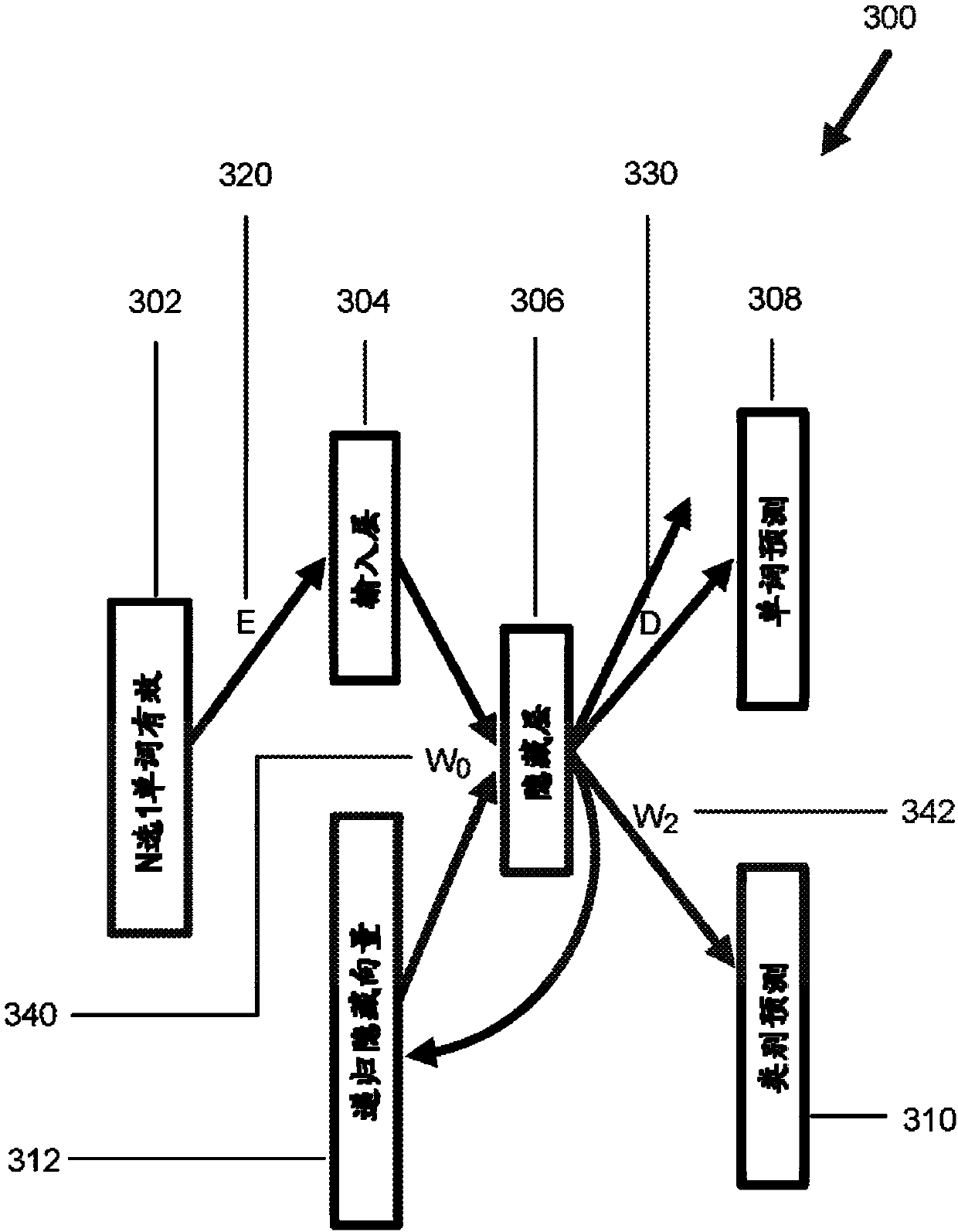

Model fusion triad representation learning system and method based on deep learning

Active | CN111581395A | Reduce complexity | Solve problems with different semantics | Natural language data processing | Machine learning | Linguistic model | Round complexity

The invention discloses a model fusion triad representation learning system and method based on deep learning. The method comprises the following steps: carrying out the embedded representation of a word through a pre-trained BERT language model, and obtaining a more contextualized representation of the word; meanwhile, the masked language modeling task of the BERT structure takes a triple as its sequence input; the method is used for solving the problem of multiple semantics of the same entity; the mapping entity relationship can be represented differently in different fields by using a projection or conversion matrix; however, the transformed BERT can take the triad or the description information thereof as text input and train the triad and the description information together; the mechanism of BERT itself yields different word vectors for the entity relationship in different sentences, and the problem of different semantics of the entity relationship is effectively solved, so that the selection of TransE is not limited by the model itself. On the contrary, the model is simple enough to truly reflect the corresponding relationship among the triples. Meanwhile, the complexity of the model is reduced.

Owner:XI AN JIAOTONG UNIV

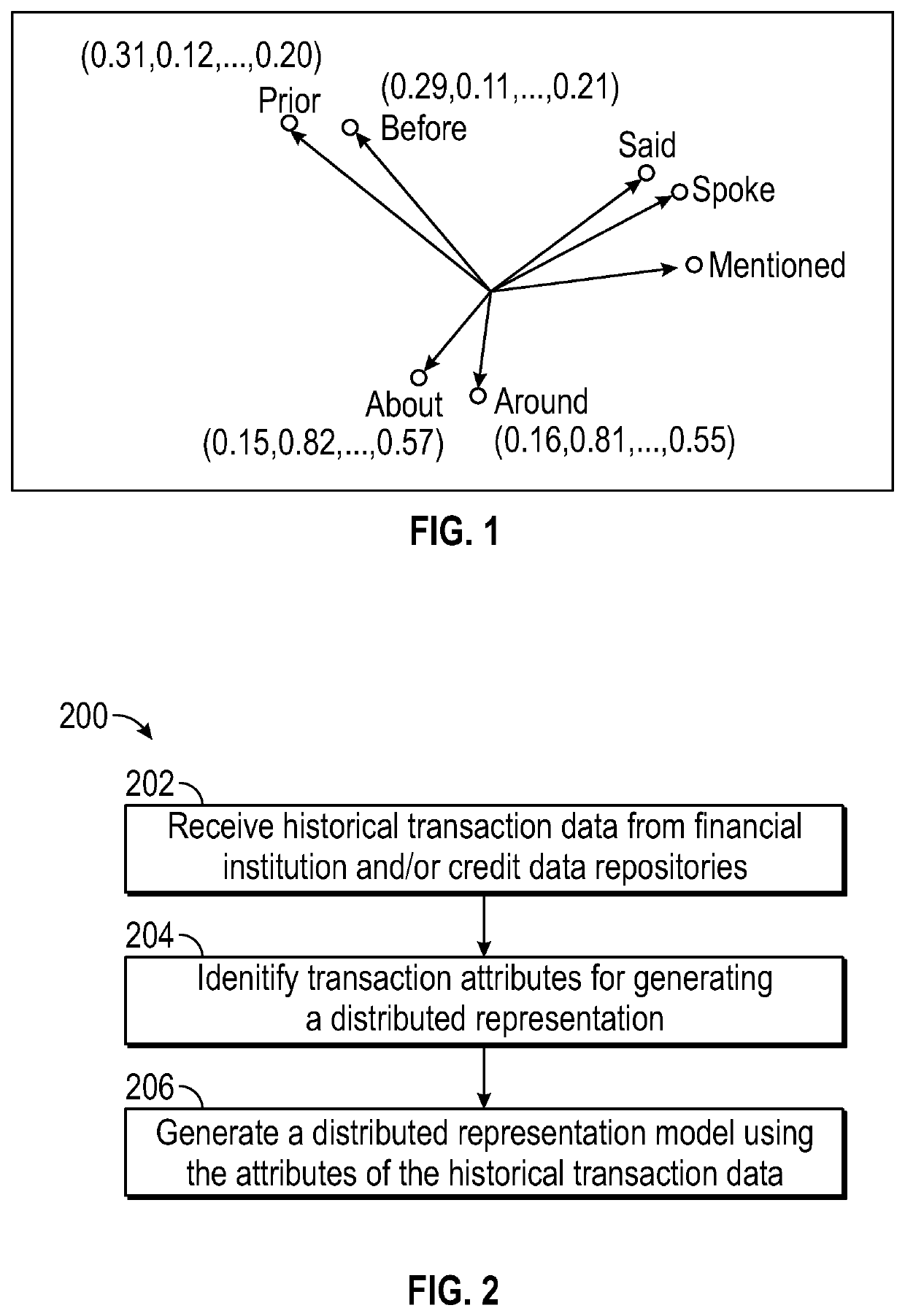

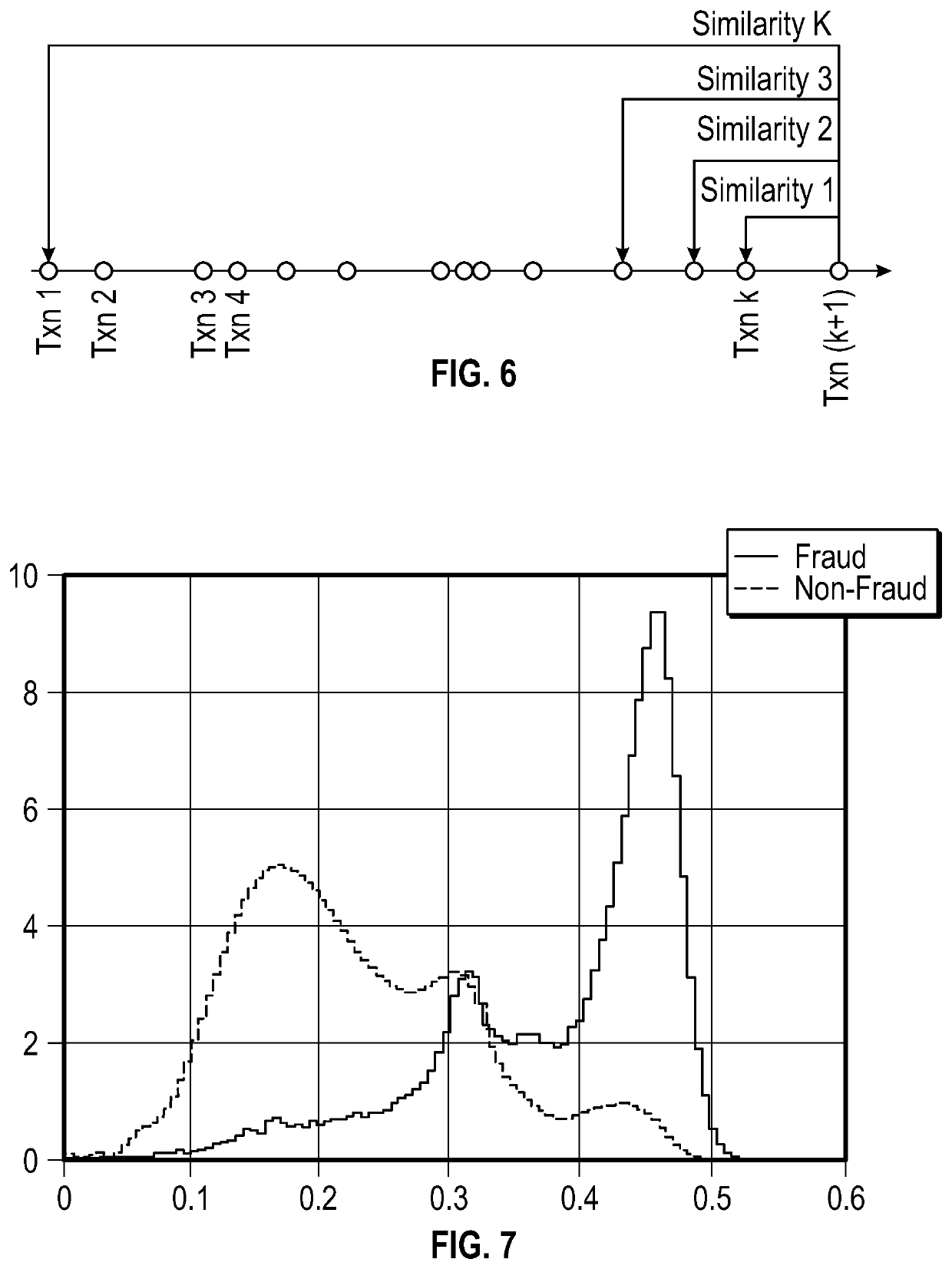

Behavior analysis using distributed representations of event data

Active | US11151468B1 | Reduce data volume | Probabilistic networks | Special data processing applications | Linguistic model | Event data

The features relate to artificial intelligence directed detection of user behavior based on complex analysis of user event data including language modeling to generate distributed representations of user behavior. Further features are described for reducing the amount of data needed to represent relationships between events such as transaction events received from card readers or point of sale systems. Machine learning features for dynamically determining an optimal set of attributes to use as the language model as well as for comparing current event data to historical event data are also included.

Owner:EXPERIAN INFORMATION SOLUTIONS

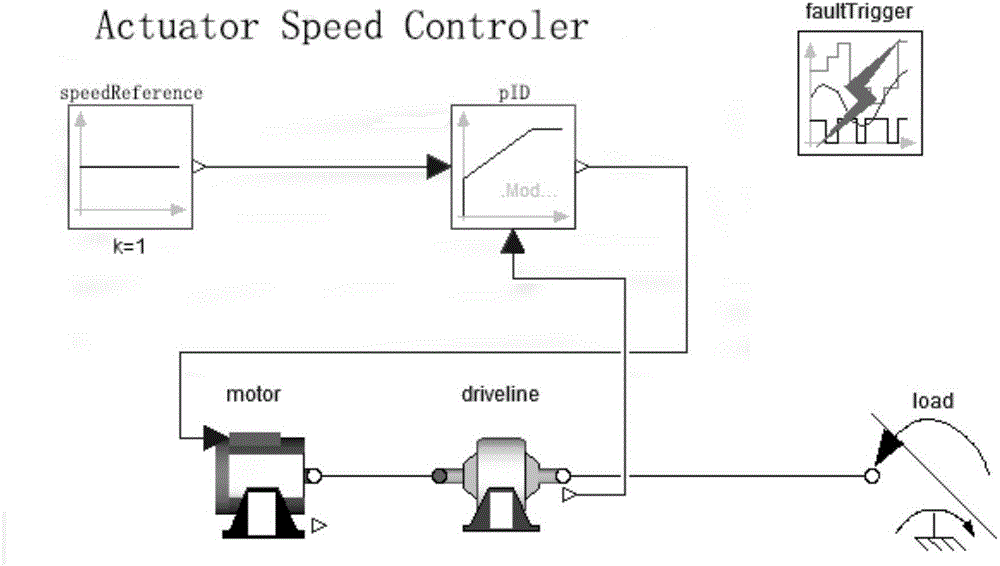

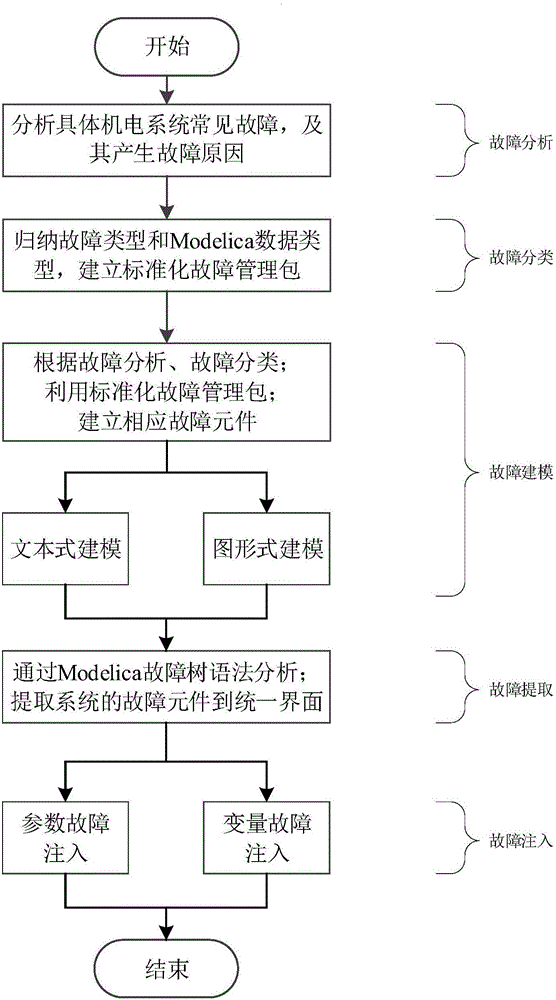

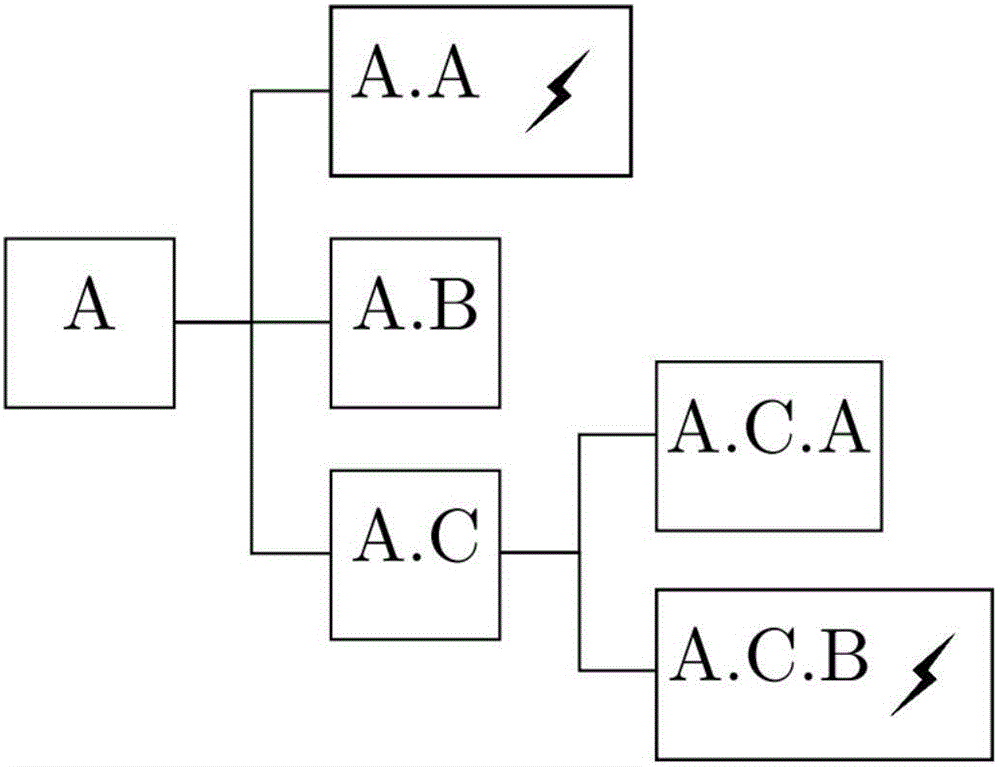

Fault management method based on Modelica model

Active | CN106250608A | Short construction period | Intuitive display | Geometric CAD | Machine gearing/transmission testing | Modelica | Coupling

The invention provides a fault management method based on a Modelica model. The method comprises the following specific steps: (1) fault analysis; (2) fault classification; (3) fault modelling; (4) fault extraction; and (5) fault injection for realizing modification of parameters of a fault component in the Modelica model, wherein the fault injection includes parameter type fault injection and variable type fault injection. Through these steps, fault management based on the Modelica model is realized, flexible modelling of the fault component and a unified fault management interface are provided, and a unified fault injection mode is adopted to be convenient for application of subsequent search methods related to fault management. The method has the characteristics of simple Modelica language modelling, visual display, strong applicability and the like, so that it can adapt well to complex systems with strong electromechanical-hydraulic coupling; a unified and reliable fault management method is provided, the construction period of subsequent fault diagnosis platforms is reduced, and the method has popularization and application value.

Owner:BEIHANG UNIV

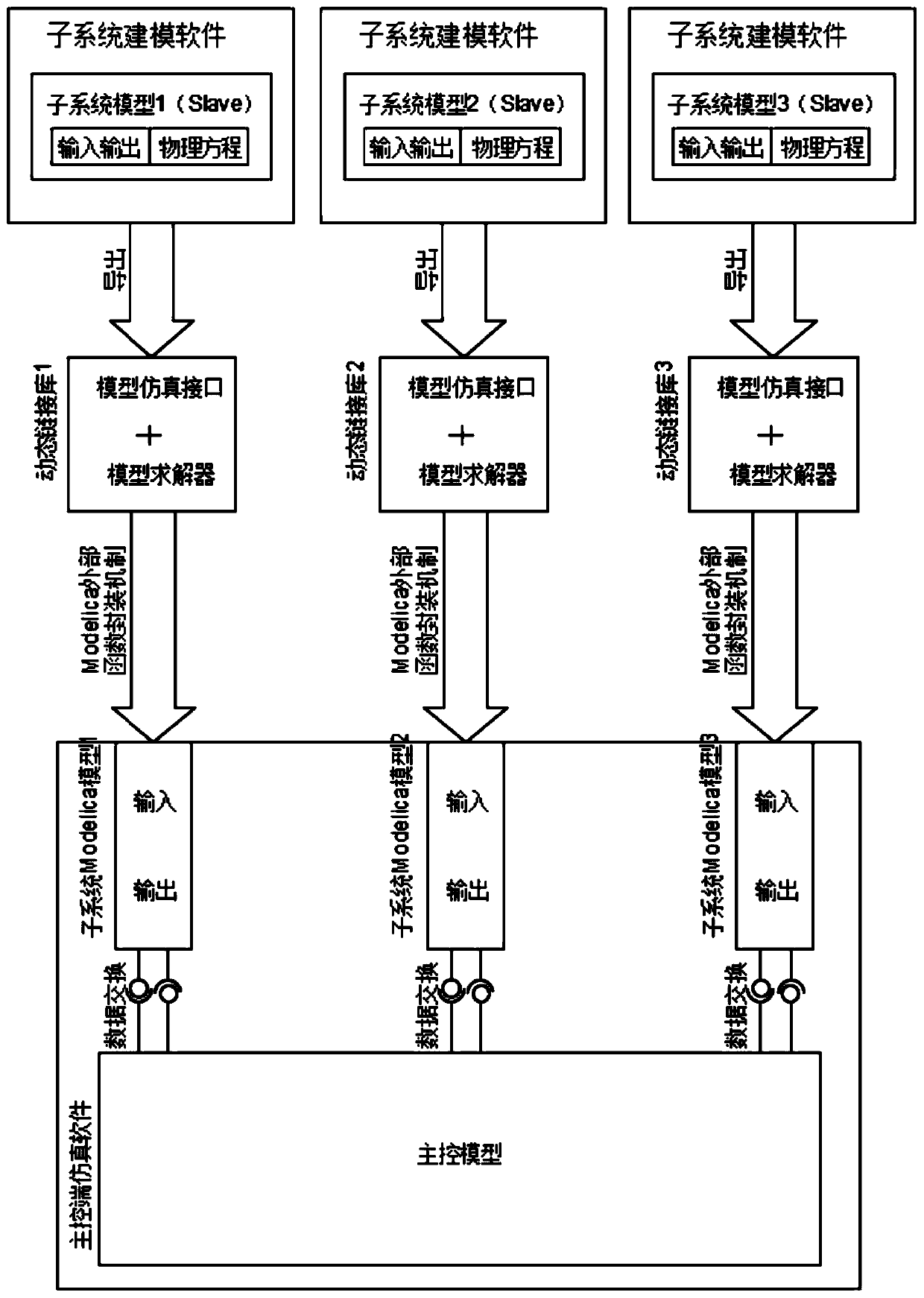

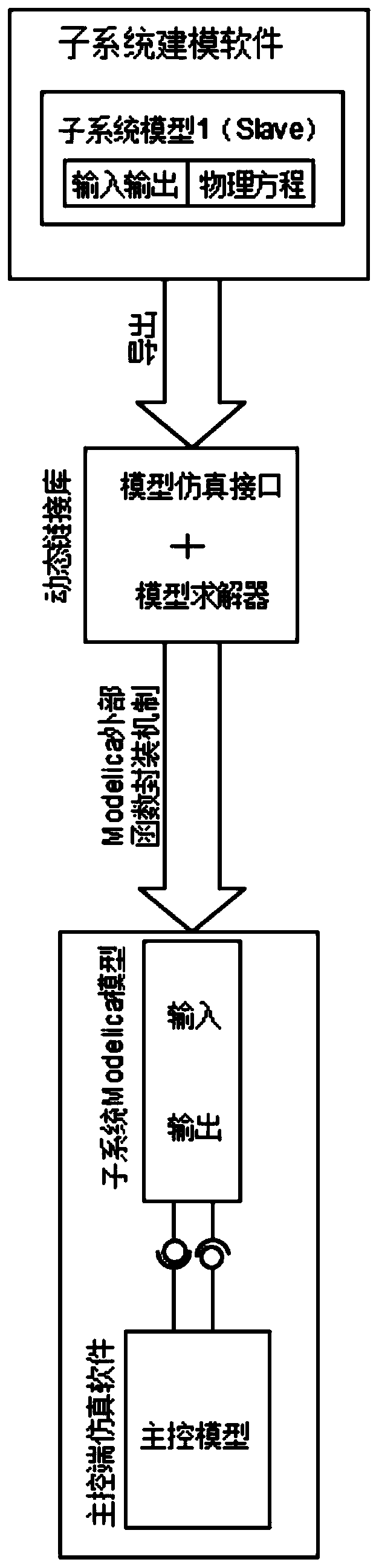

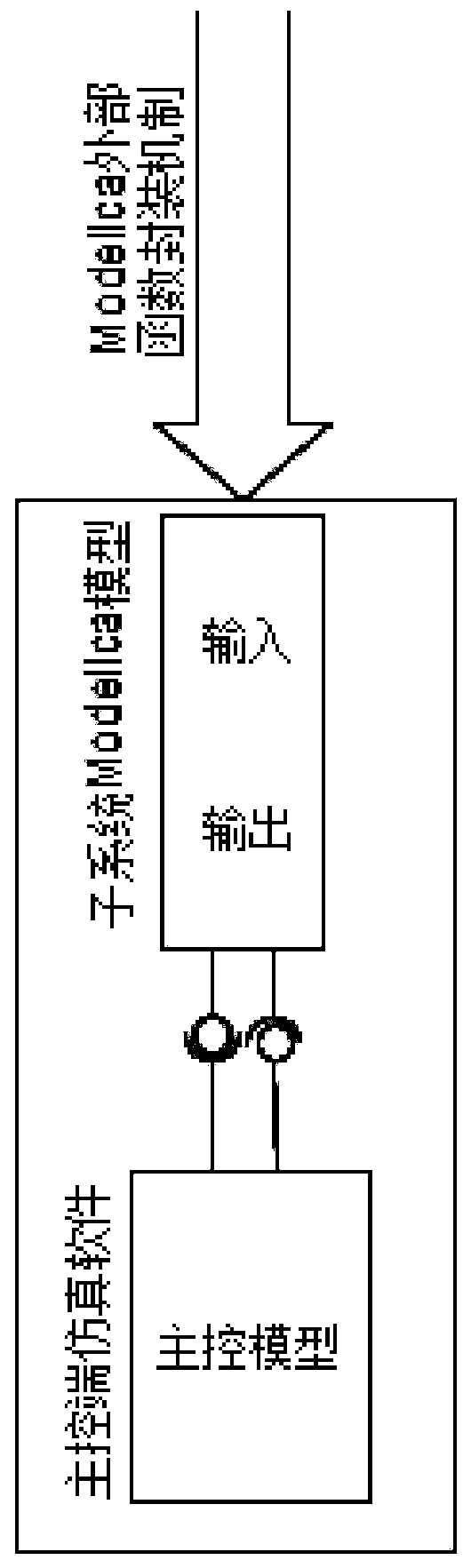

Joint simulation system based on Modelica and construction method thereof

Pending | CN111414695A | Fast solution | Reduce coupling | Design optimisation/simulation | Data synchronization | Linguistic model

The invention discloses a joint simulation system based on Modelica and a construction method thereof. The method comprises the steps of: establishing a subsystem model in each modeling software, using various modeling software and languages (for example, a C language model is established in Visual Studio, a Simulink model is established in Simulink, an AMESim model is established in AMESim, a Fortran language model is established in Visual Studio, and the like); compiling each subsystem model to generate a dynamic link library, wherein the dynamic link library comprises a model simulation interface and a model solver; packaging each sub-calculation model into a subsystem Modelica model by utilizing the external function mechanism of Modelica; and finally, writing a master control model in the Modelica language, defining a sampling step length for each subsystem module, and scheduling each subsystem by using a solver at the master control end to realize data synchronization of the system. According to the technology, multiple modeling software packages and multi-language modeling can be supported, system simulation is divided into system scheduling and subsystem calculation, all subsystems are placed in independent processes to be solved, and the solving speed of the system is increased.

Owner:苏州同元软控信息技术有限公司 +1
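
The master-control scheduling idea, where each subsystem has its own sampling step and the master synchronizes shared data, can be illustrated with a small single-process sketch. It is written in Python rather than Modelica, does not model the dynamic link libraries or separate processes mentioned in the abstract, and the class, field, and function names as well as the placeholder dynamics are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Subsystem:
    name: str
    step_ms: int                 # sampling step of this subsystem
    state: float = 0.0
    log: list = field(default_factory=list)

    def advance(self, now_ms, inputs):
        # Placeholder dynamics: integrate the sum of the shared signals.
        self.state += sum(inputs.values()) * self.step_ms / 1000.0
        self.log.append(now_ms)  # record when this subsystem was stepped
        return self.state


def run_master(subsystems, end_ms, tick_ms=1):
    """Step each subsystem whenever its own sampling step is due."""
    shared = {s.name: s.state for s in subsystems}
    for now in range(0, end_ms, tick_ms):
        due = [s for s in subsystems if now % s.step_ms == 0]
        snapshot = dict(shared)  # all due subsystems read the same inputs
        for s in due:
            shared[s.name] = s.advance(now, snapshot)
    return shared


fast = Subsystem("fast", step_ms=2, state=1.0)
slow = Subsystem("slow", step_ms=5)
outputs = run_master([fast, slow], end_ms=10)
```

Taking a snapshot before stepping keeps the result independent of the order in which due subsystems are called, which is one simple way to get consistent data exchange at each synchronization point.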

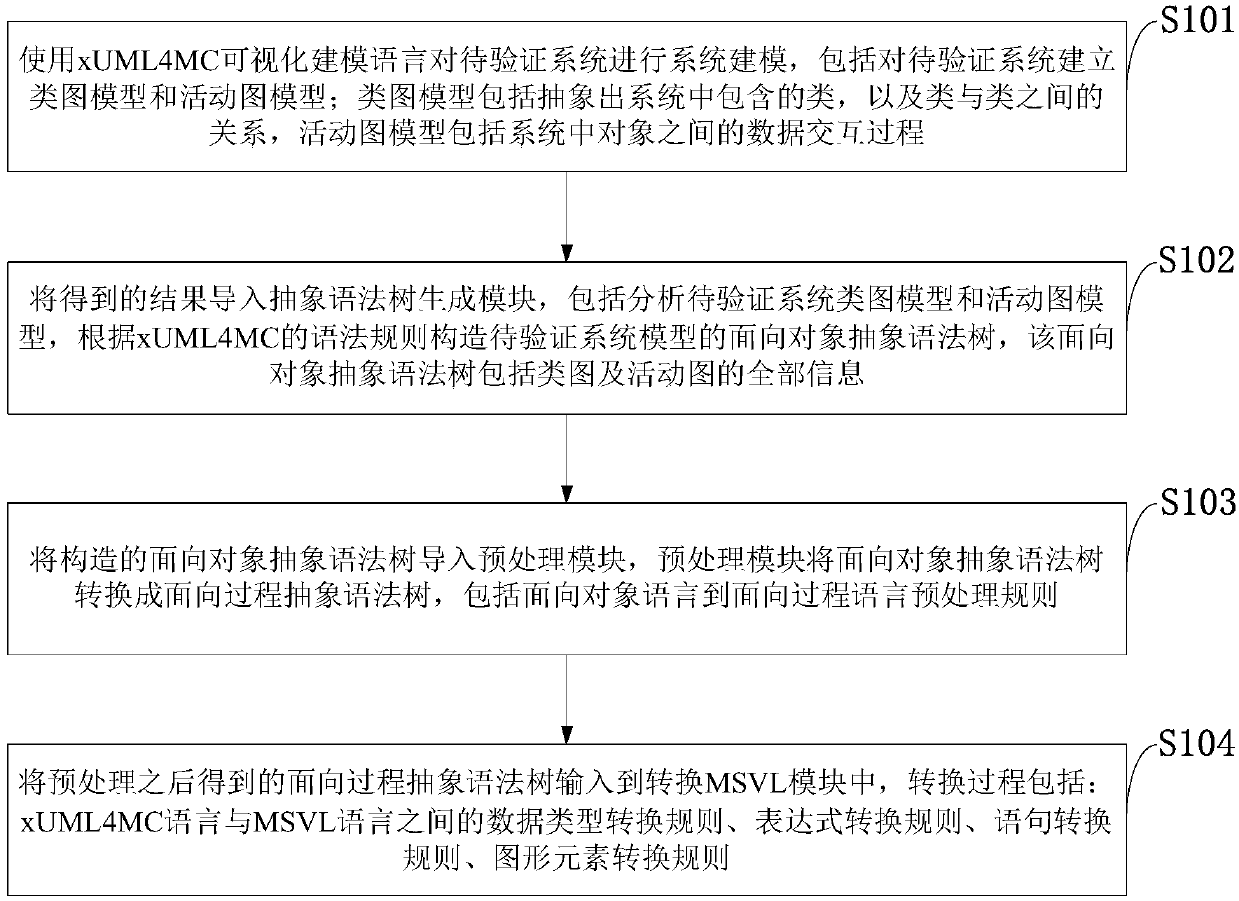

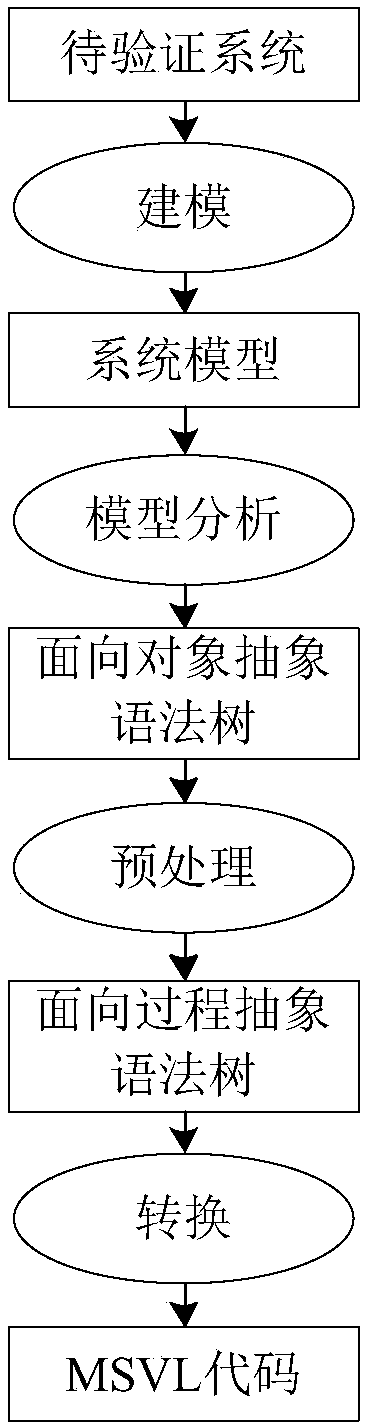

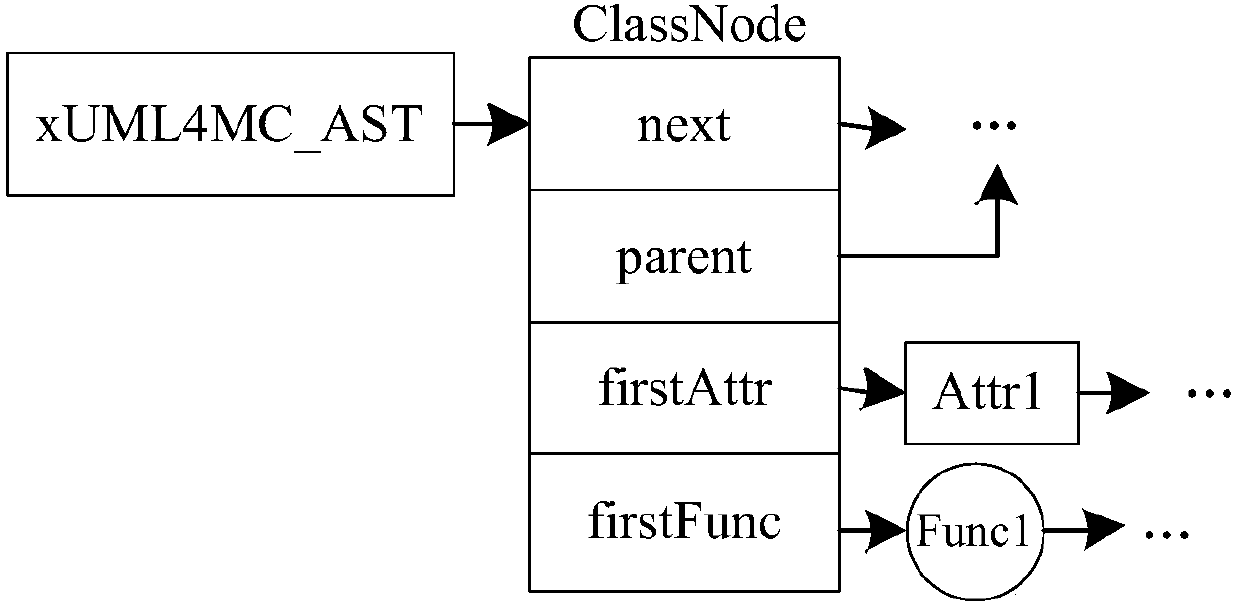

Method for converting xUML4MC model into MSVL program, and computer program

Active | CN108037913A | Achieve unification | Improve development efficiency | Transformation of program code | Software design | Model description | Process oriented

The invention belongs to the technical field of computer software and discloses a method for converting an xUML4MC model into an MSVL program, and a computer program. The method for converting the xUML4MC model into the MSVL program comprises the steps of establishing a class diagram model and activity diagram model description system; converting a class diagram and an activity diagram into an object-oriented abstract grammar tree; preprocessing and converting the object-oriented abstract grammar tree into a process-oriented abstract grammar tree; and converting the process-oriented abstract grammar tree into the MSVL program. The method is suitable for model detection system modeling; a UML-based visual modeling mode is intuitive and easy to master, and is more convenient and accurate than a text language-based modeling mode; and the MSVL program generated by conversion can be directly used for model detection. The unification of software design modeling and model detection system modeling is realized, and the popularization of model detection technology in industry is facilitated.

Owner:XIAN UNIV OF POSTS & TELECOMM

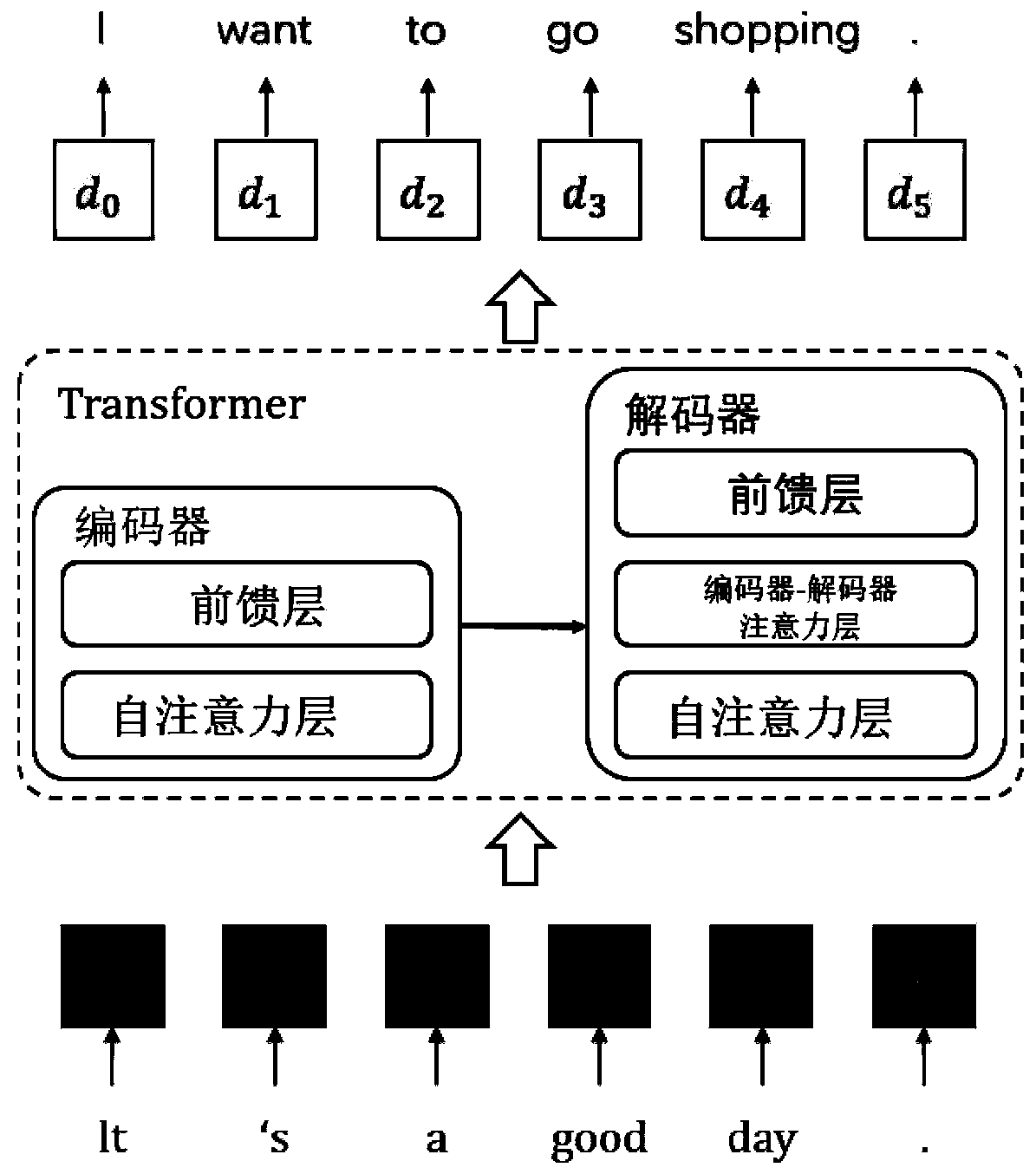

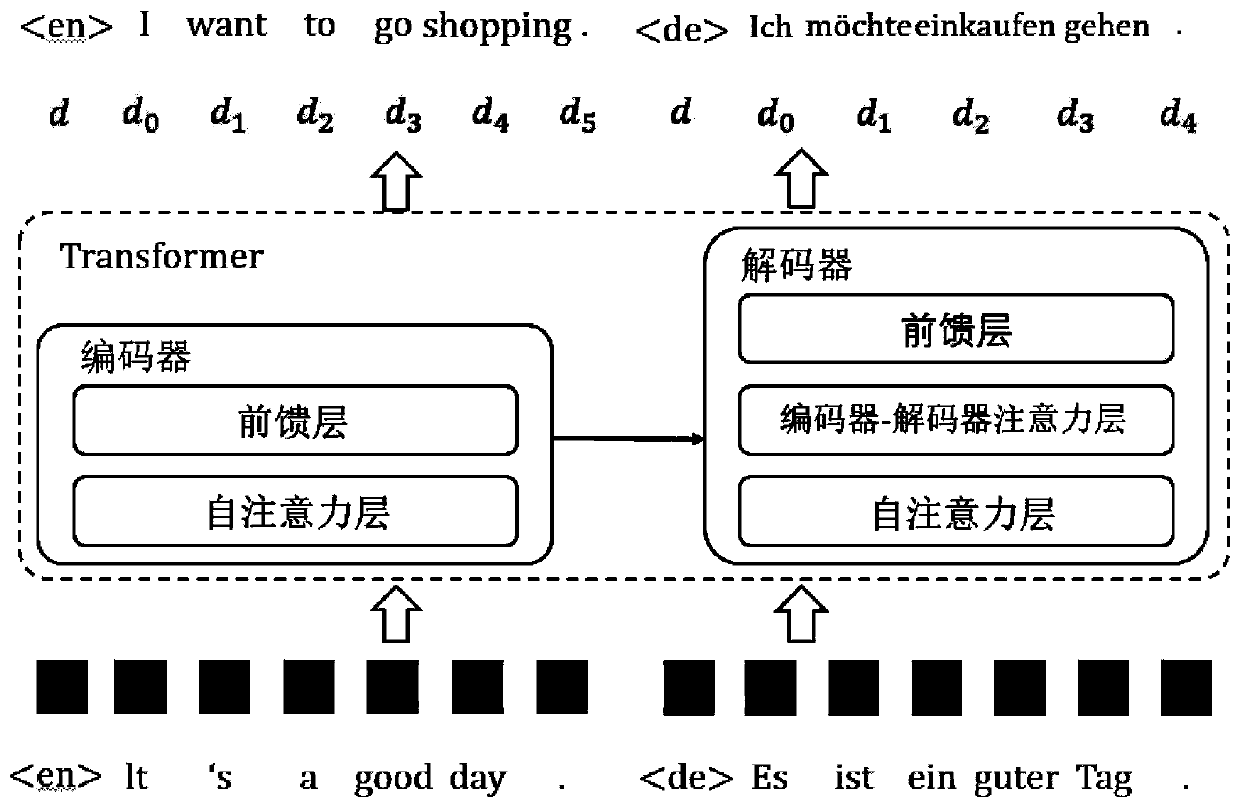

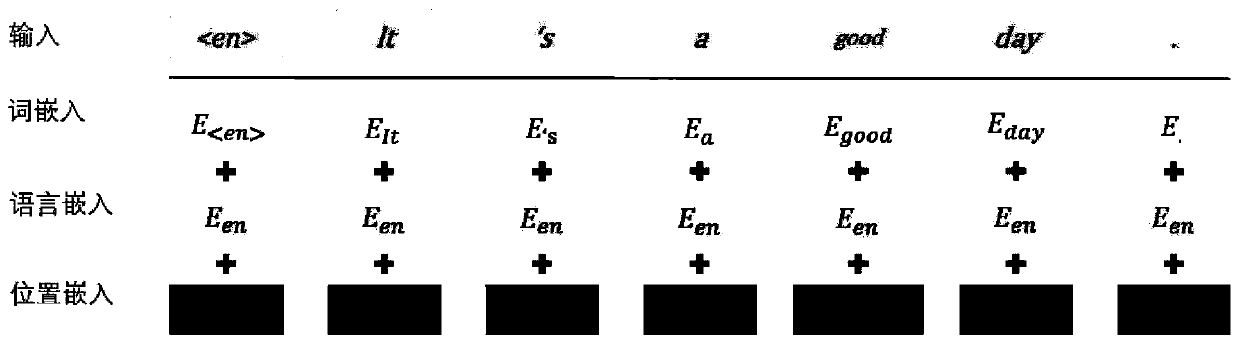

Encoder-decoder framework pre-training method for neural machine translation

Active | CN111382580A | Fast convergence | Improve robustness | Natural language translation | Energy efficient computing | Pattern recognition | Encoder decoder

The invention discloses an encoder-decoder framework pre-training method for neural machine translation. The encoder-decoder framework pre-training method comprises the steps of: constructing a large number of multi-language document-level monolingual corpora, and adding a special identifier in front of each sentence to represent the language type of the sentence; processing sentence pairs to obtain training data; training on monolingual data of different languages to obtain converged pre-training model parameters; constructing parallel corpora, and initializing parameters of a neural machine translation model by using the pre-training model parameters; fine-tuning the model parameters of the initialized neural machine translation model through parallel corpora to finish the training process; and in a decoding stage, encoding a source language sentence by using the encoder of the trained neural machine translation model, and decoding by using the decoder to generate a target language sentence. According to the encoder-decoder framework pre-training method, the model has language modeling capability and language generation capability, the pre-training model is applied to the neural machine translation model, the convergence rate of the model can be increased, and the robustness of the model is improved.

Owner:沈阳雅译网络技术有限公司

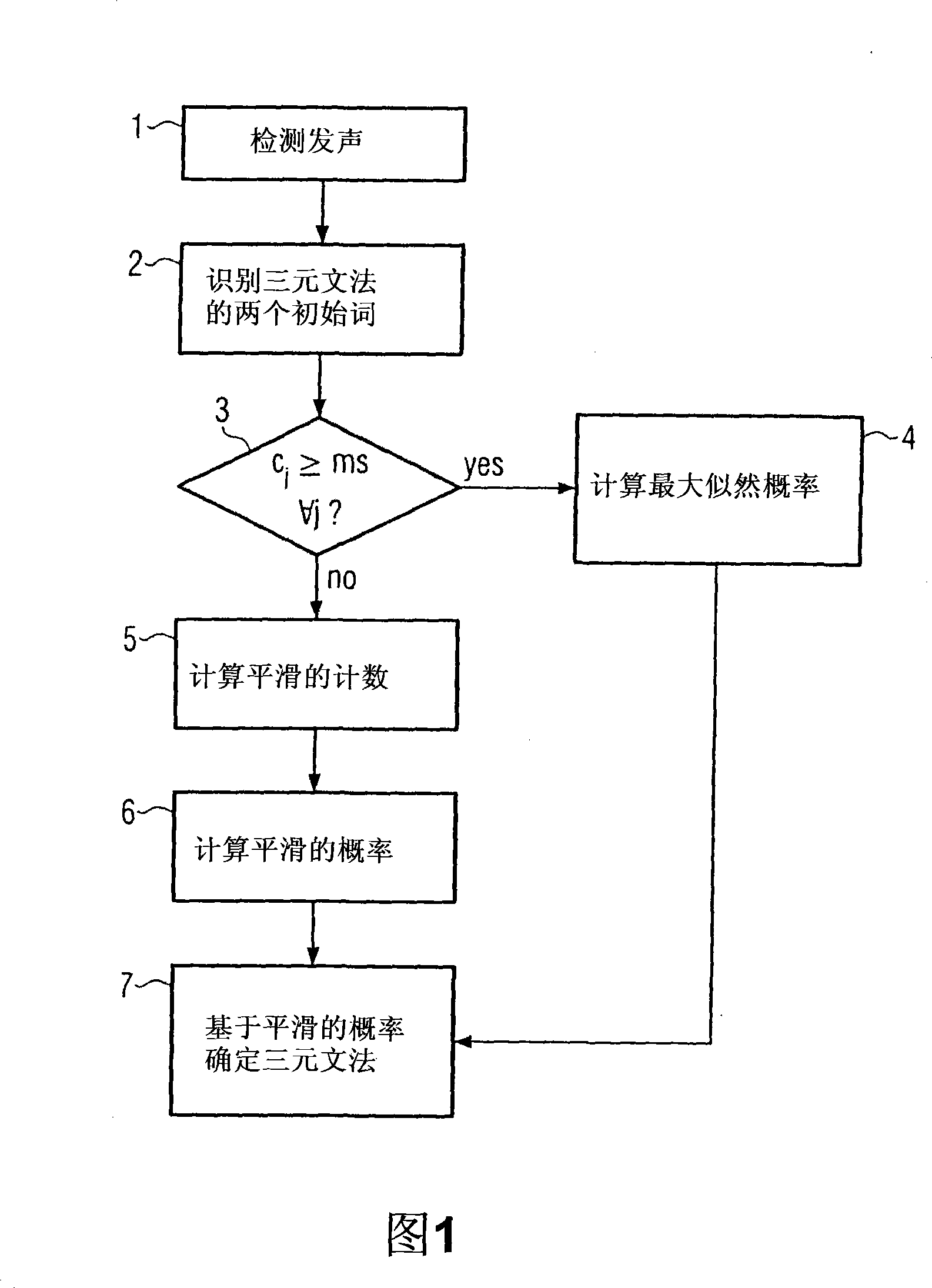

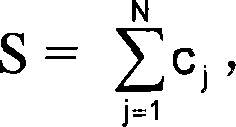

Speech recognition by statistical language using square-root discounting

Inactive | CN101123090A | Robust Statistical Language Modeling | Speech recognition | Algorithm | Language modelling

The present invention relates to a method for statistical language modeling and speech recognition providing a predetermined number of words in a predetermined order, providing a training corpus comprising a predetermined number of sequences of words, wherein each of the sequences consist of the provided predetermined number of words in the predetermined order followed by at least one additional word, providing word candidates and for each word candidate calculating on the basis of the training corpus the probability that the word candidate follows the provided predetermined number of words, determining at least one word candidate for which the calculated probability exceeds a predetermined threshold, wherein the probabilities of the word candidate are calculated based on smoothed maximum-likelihood probabilities calculated for the sequences of words of the training corpus, wherein the smoothed maximum-likelihood probabilities are larger than or equal to a predetermined real positive number that is less than or equal to the inverse of the predetermined number of sequences of words of the training corpus.

Owner:NUANCE COMM INC

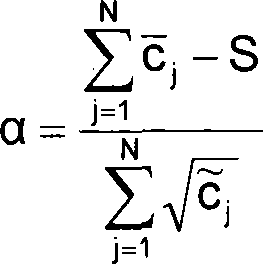

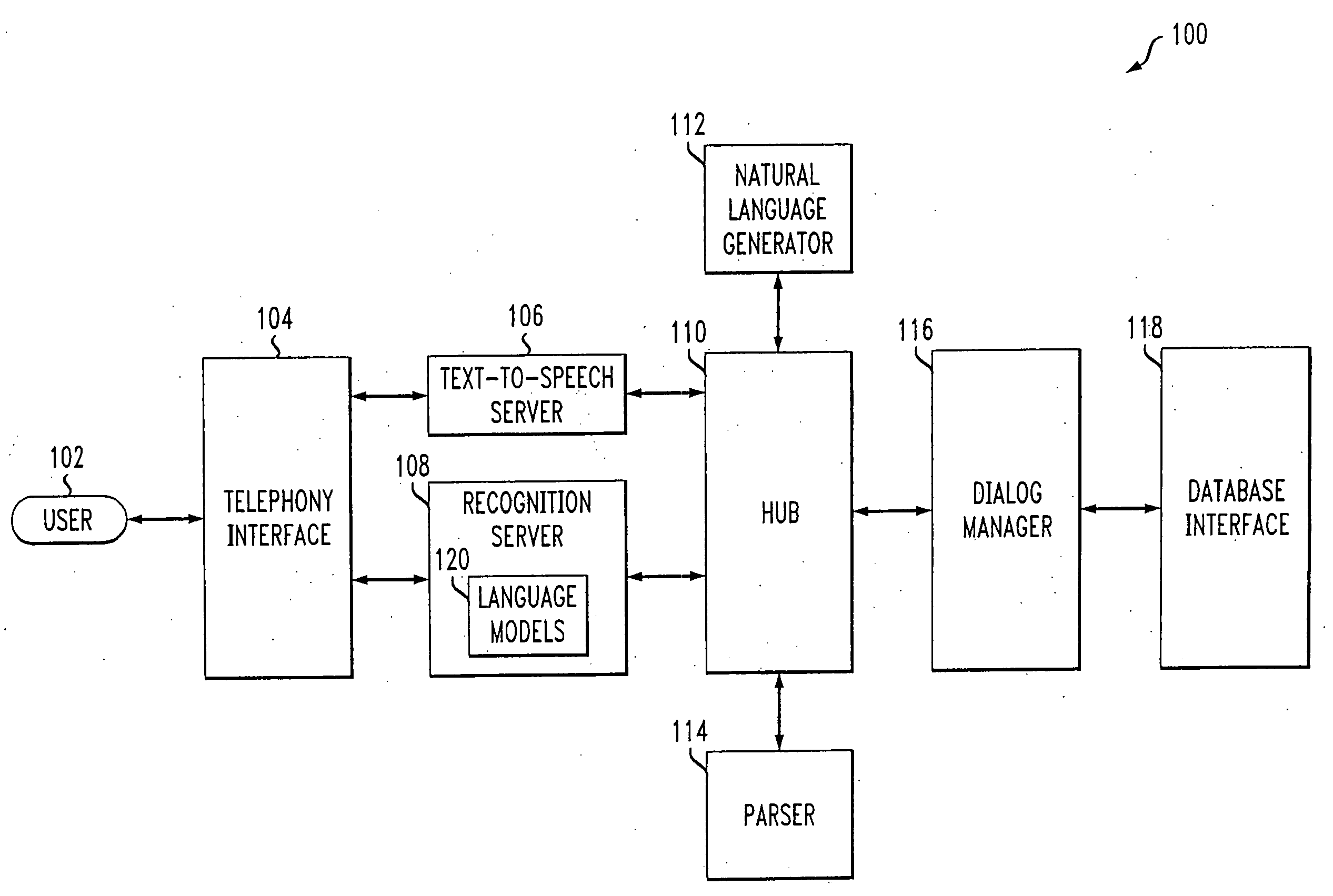

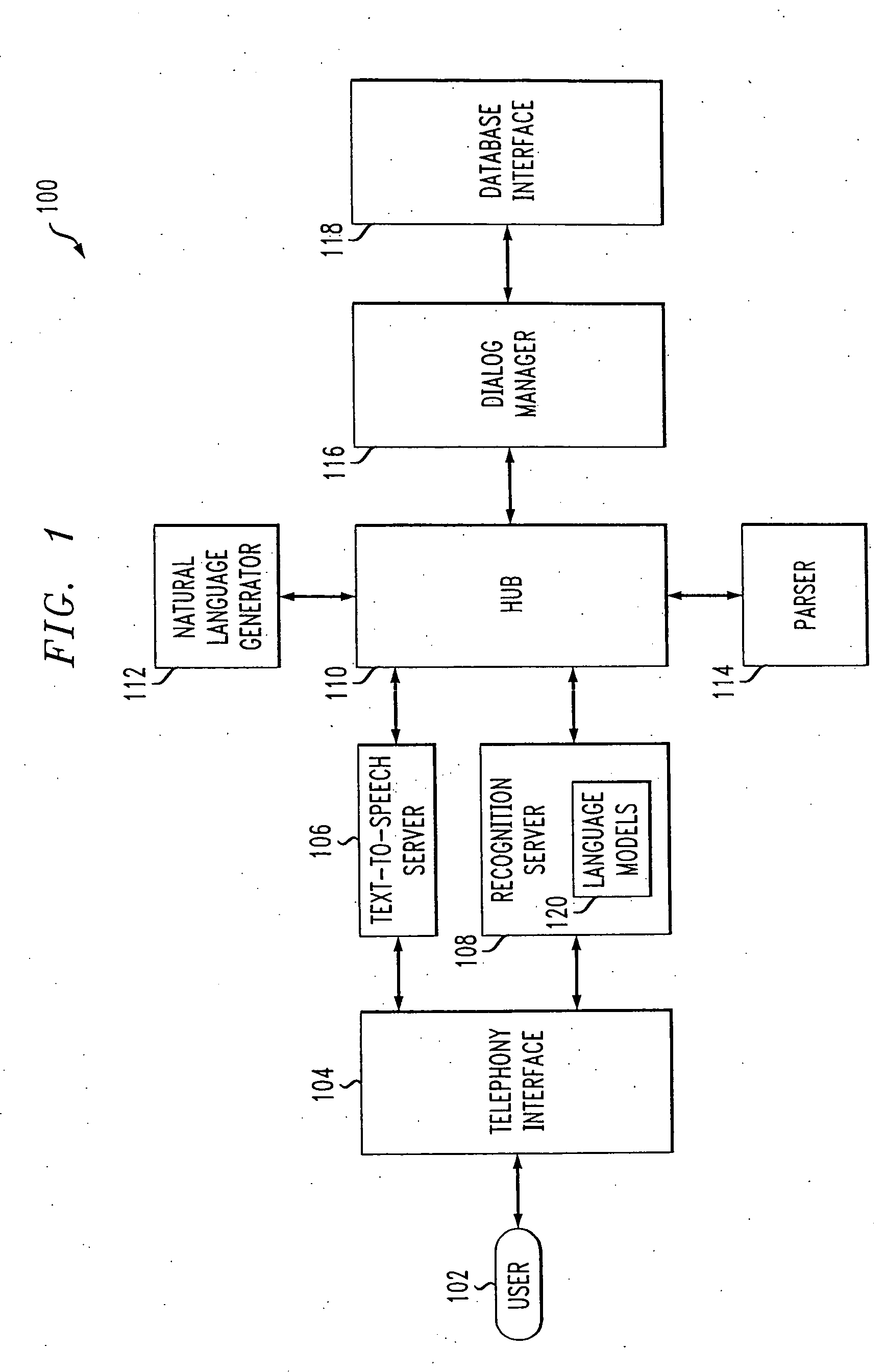

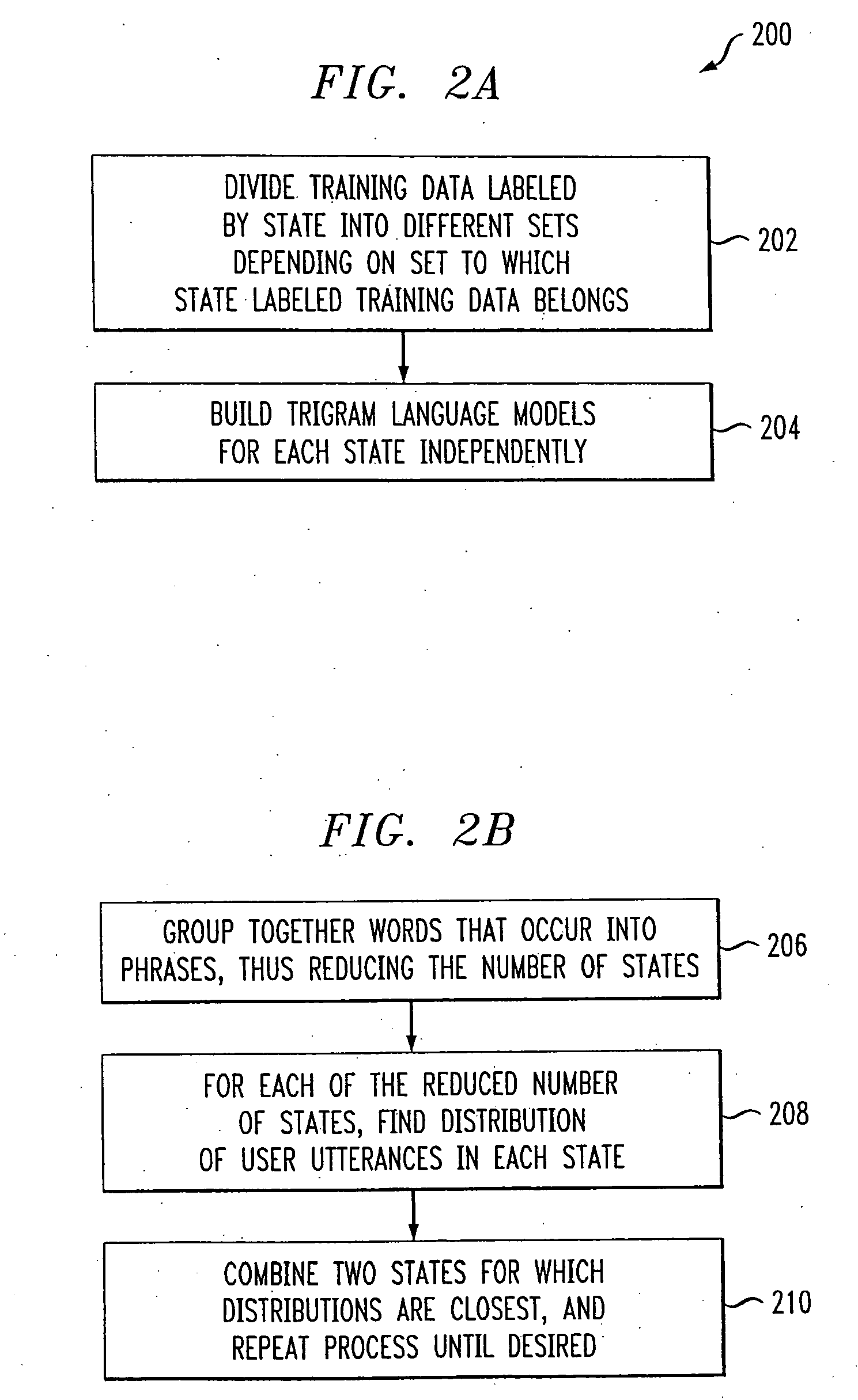

Methods and apparatus for generating dialog state conditioned language models

Inactive | US20060293901A1 | Simple model | Improve usability | Speech recognition | Natural language understanding | Dialog system

Techniques are provided for generating improved language modeling. Such improved modeling is achieved by conditioning a language model on a state of a dialog for which the language model is employed. For example, the techniques of the invention may improve modeling of language for use in a speech recognizer of an automatic natural language based dialog system. Improved usability of the dialog system arises from better recognition of a user's utterances by a speech recognizer, associated with the dialog system, using the dialog state-conditioned language models. By way of example, the state of the dialog may be quantified as: (i) the internal state of the natural language understanding part of the dialog system; or (ii) words in the prompt that the dialog system played to the user.

Owner:NUANCE COMM INC
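
Conditioning a language model on dialog state can be illustrated with a toy example. The sketch below keeps one add-alpha smoothed unigram distribution per state; the unigram model, the smoothing, the class name, and the state labels are assumptions chosen for brevity and are not taken from the abstract.

```python
from collections import Counter, defaultdict


class DialogStateLM:
    """Toy unigram model with one word distribution per dialog state."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, state, utterance):
        """Add the words of one user utterance observed in `state`."""
        self.counts[state].update(utterance.lower().split())

    def prob(self, state, word, alpha=1.0):
        """Add-alpha smoothed estimate of P(word | dialog state)."""
        vocab = {w for counter in self.counts.values() for w in counter}
        state_counts = self.counts.get(state, Counter())
        total = sum(state_counts.values())
        return (state_counts[word] + alpha) / (total + alpha * max(len(vocab), 1))


lm = DialogStateLM()
lm.train("ask_date", "tomorrow morning")
lm.train("ask_date", "next monday")
lm.train("ask_city", "boston")
lm.train("ask_city", "new york")

p_city = lm.prob("ask_city", "boston")
p_date = lm.prob("ask_date", "boston")
```

The same word gets a higher probability in the state where the system has just asked for a city, which is the effect a recognizer would exploit when it selects the state-conditioned model.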

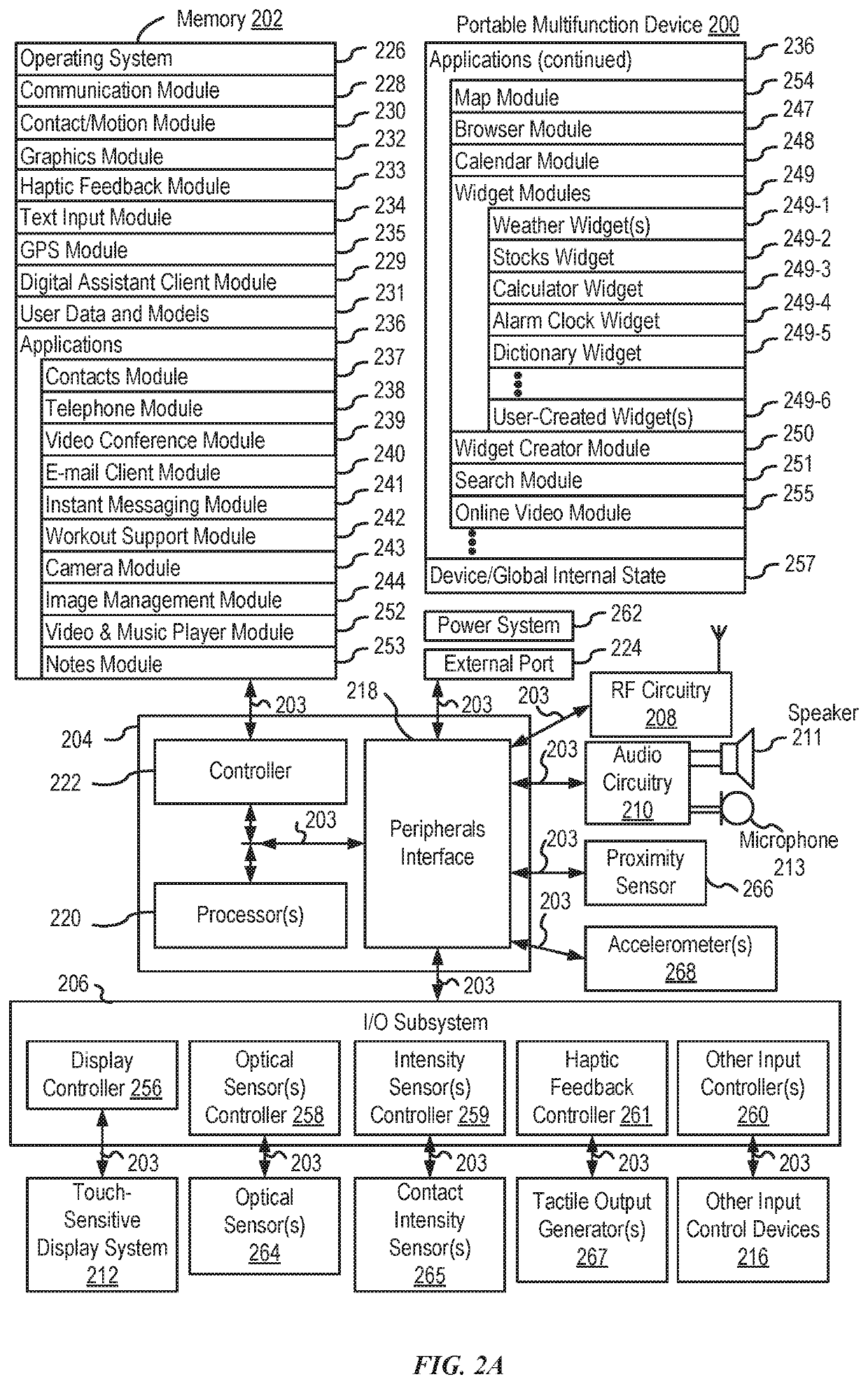

Unified language modeling framework for word prediction, auto-completion and auto-correction

Systems and processes for unified language modeling are provided. In accordance with one example, a method includes, at an electronic device with one or more processors and memory, receiving a character of a sequence of characters and determining a current character context based on the received character of the sequence of characters and a previous character context. The method further includes determining a current word representation based on the current character context and determining a current word context based on the current word representation and a previous word context. The method further includes determining a next word representation based on the current word context and providing the next word representation.

Owner:APPLE INC

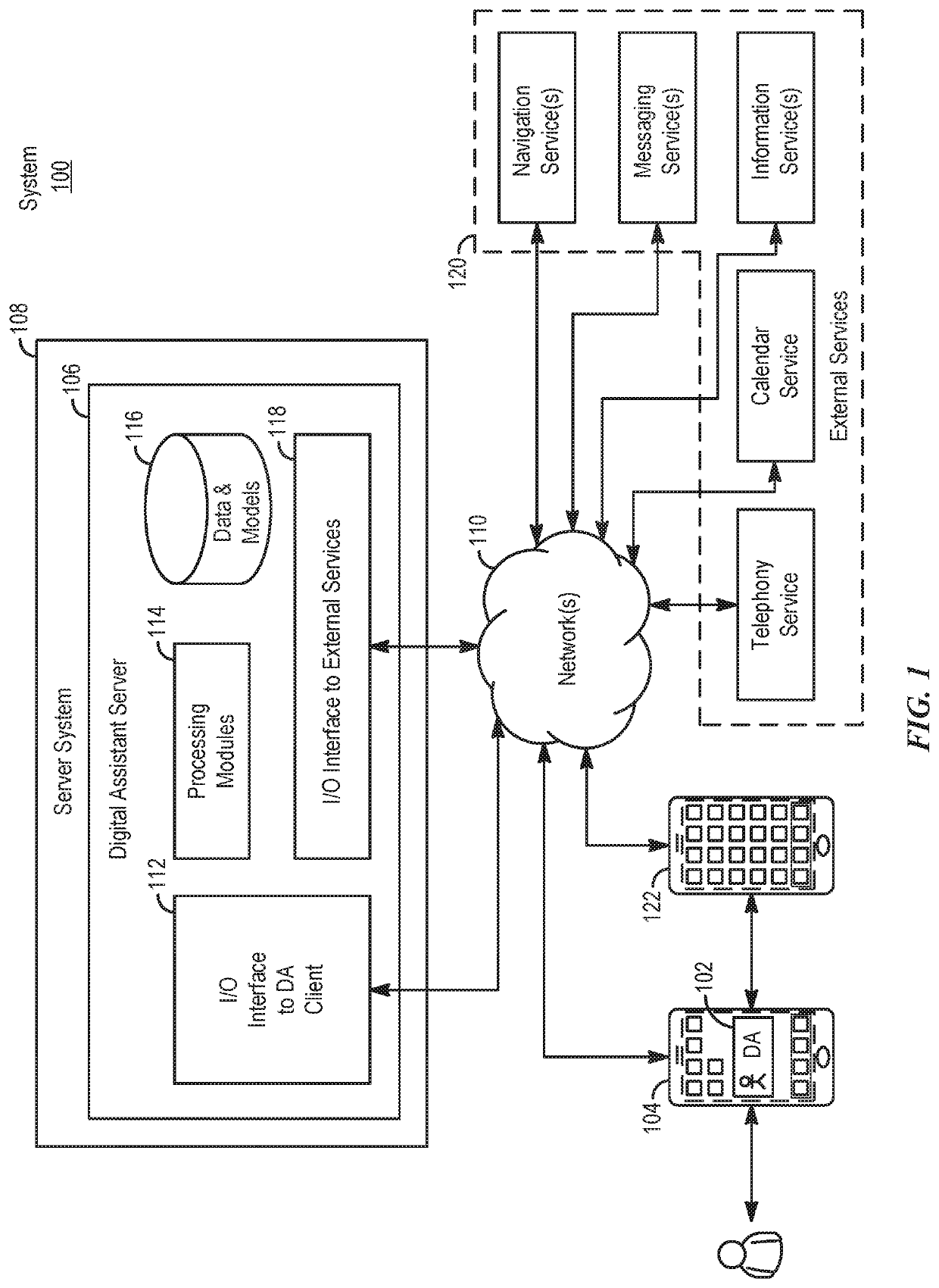

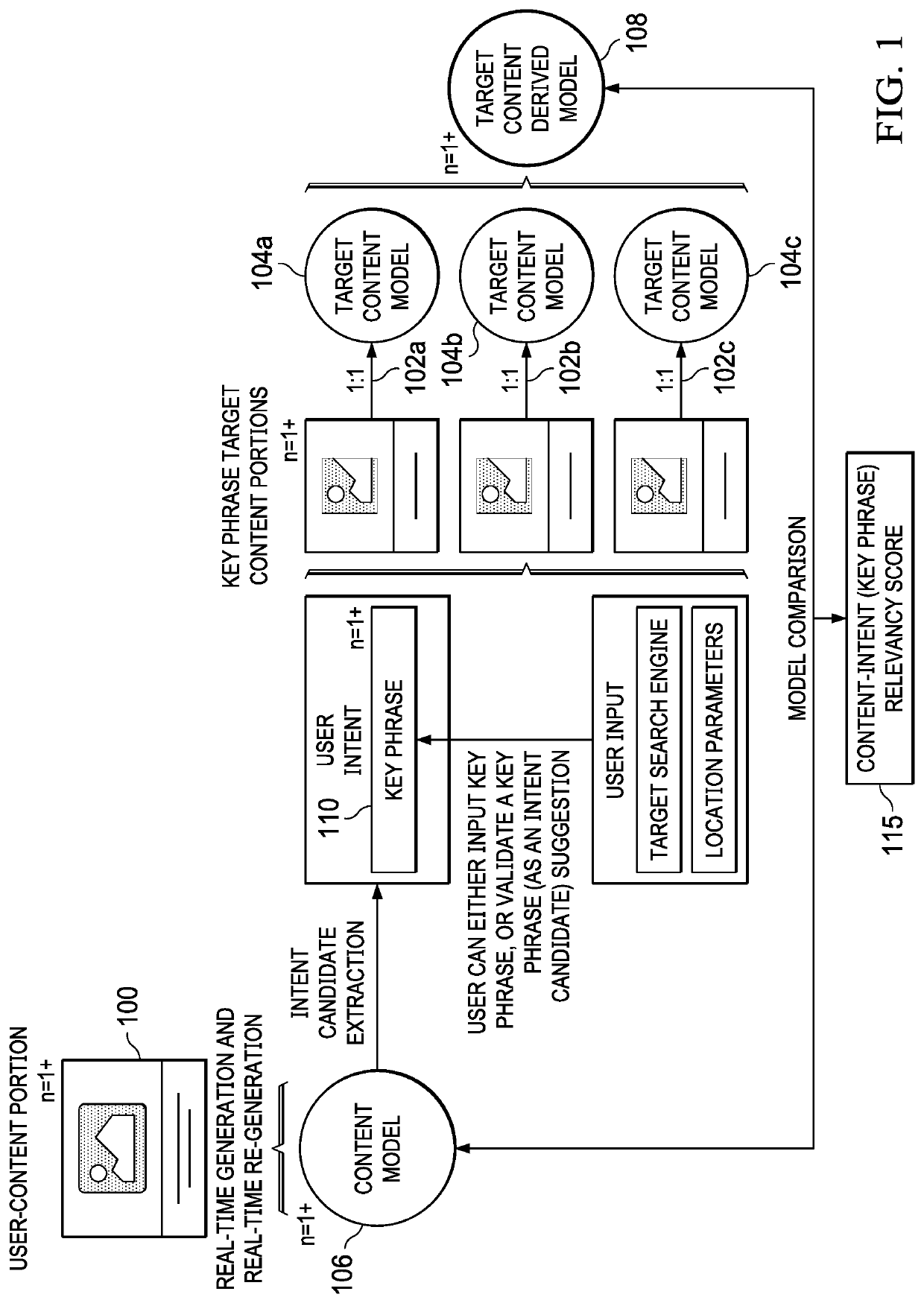

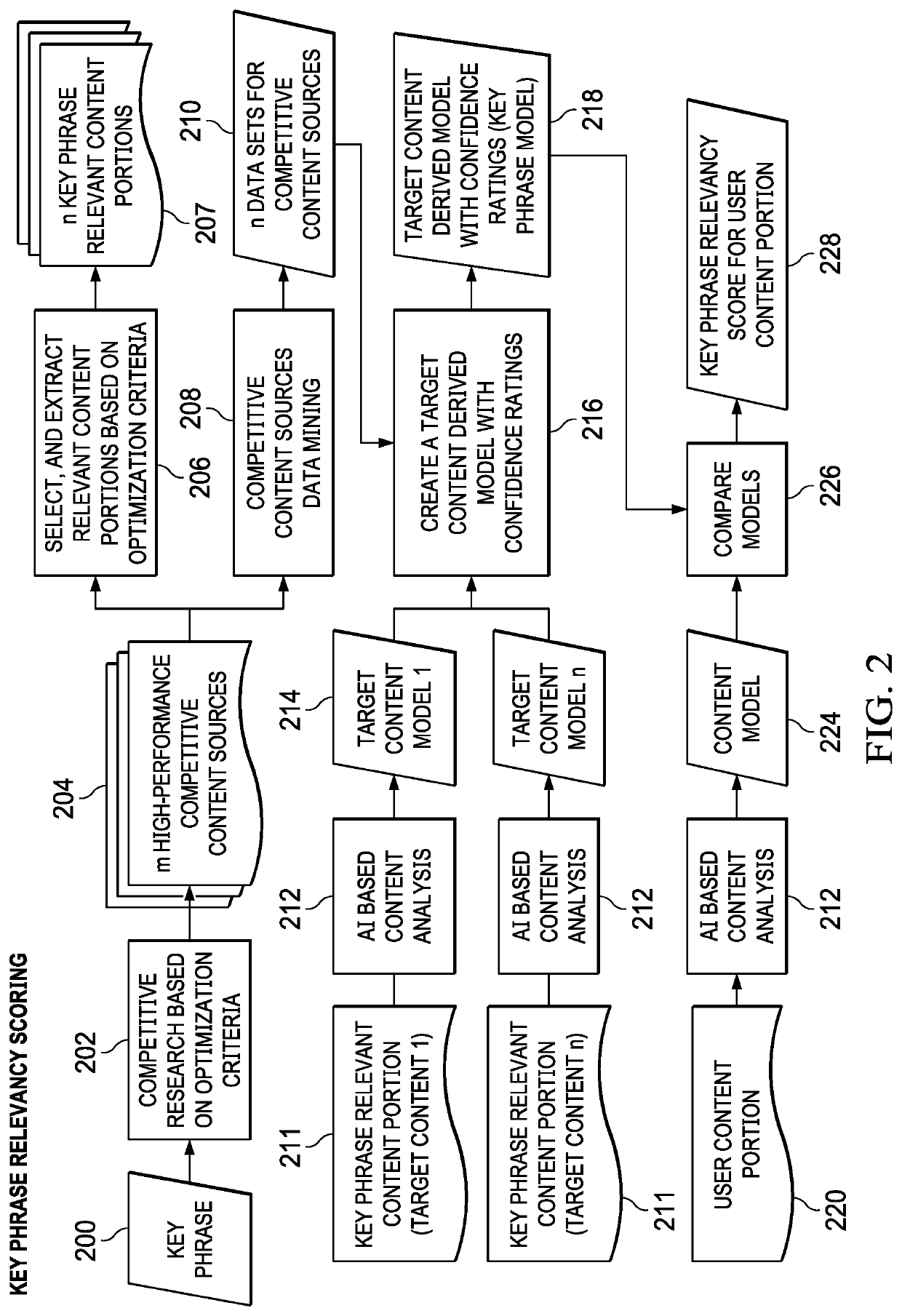

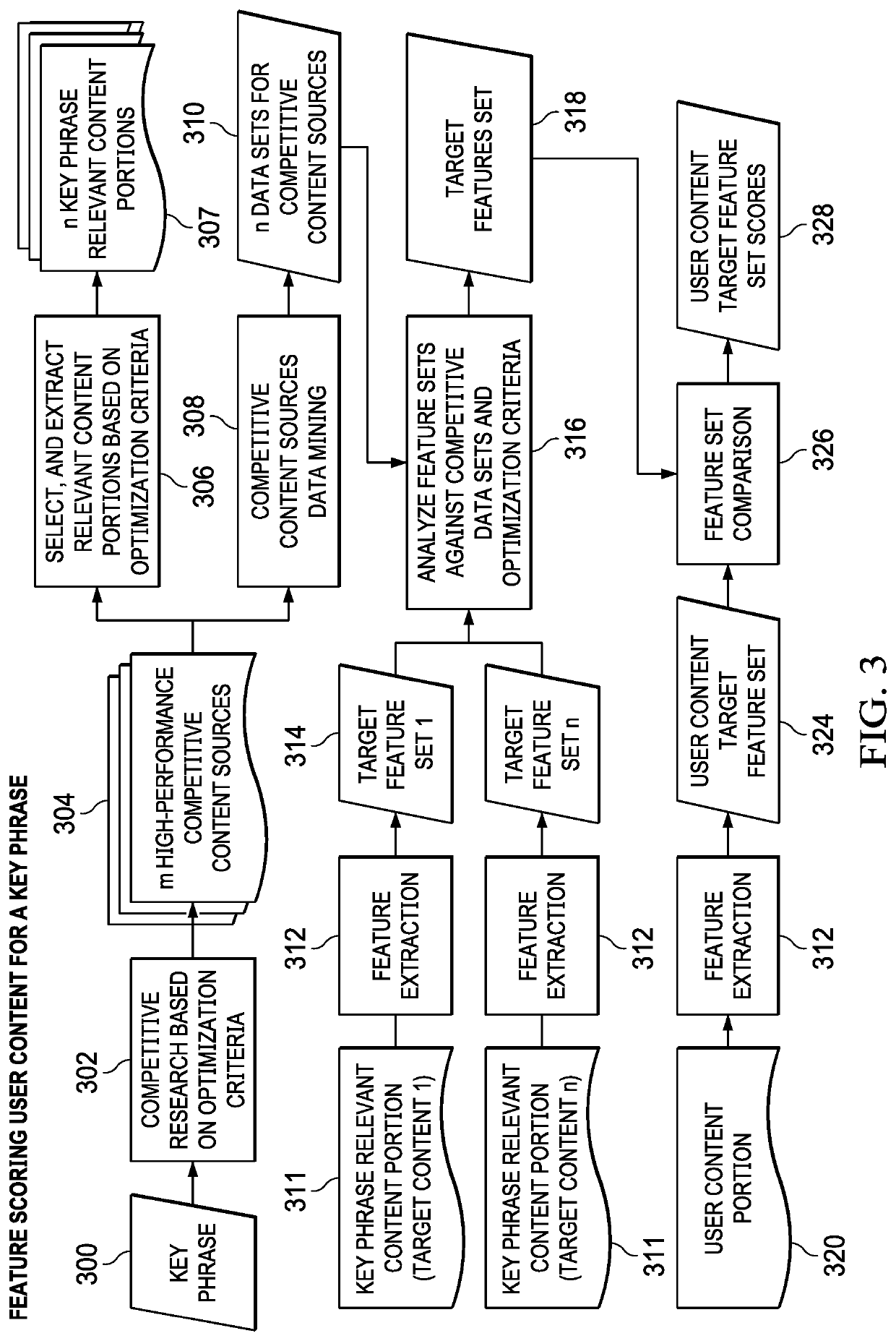

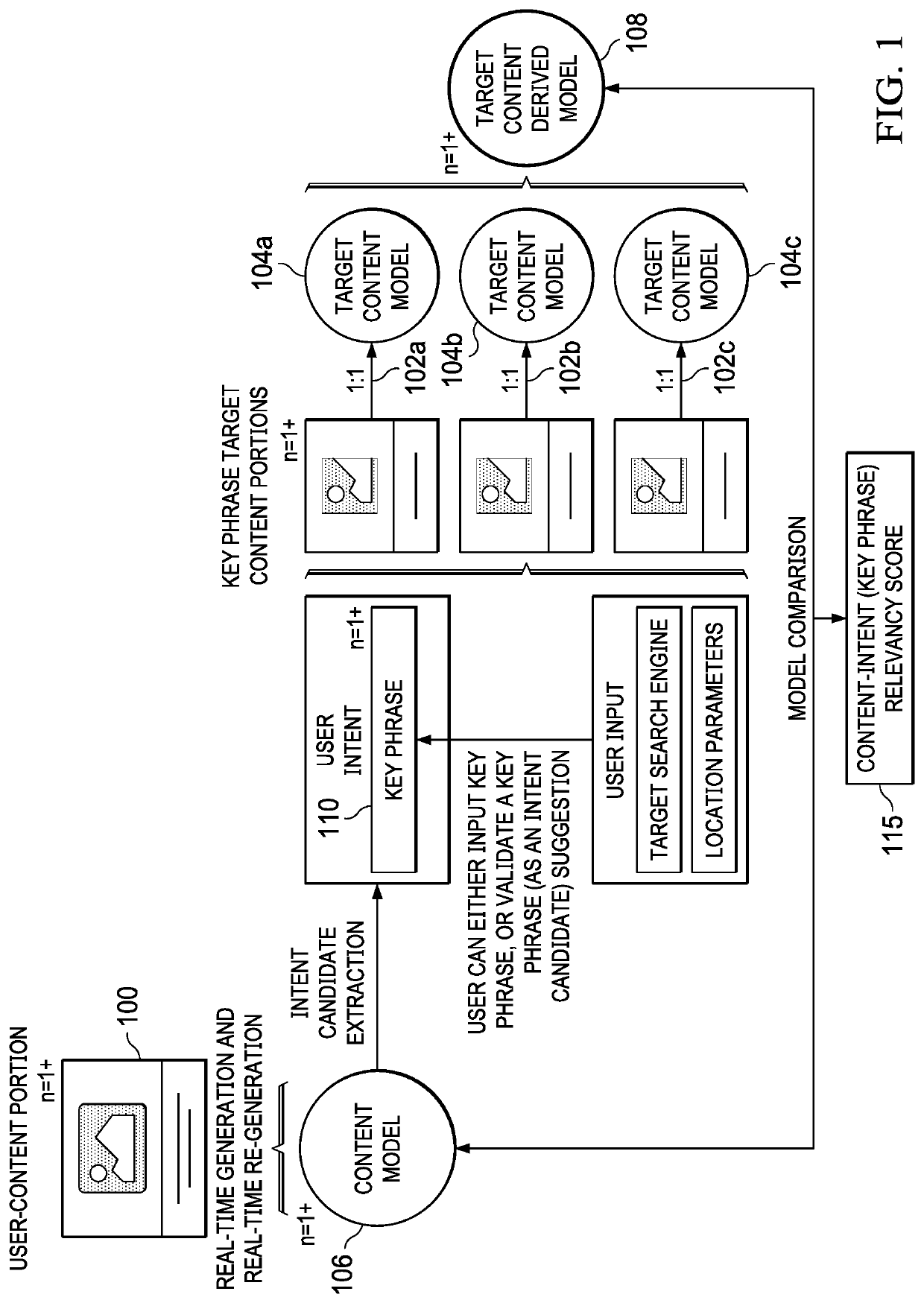

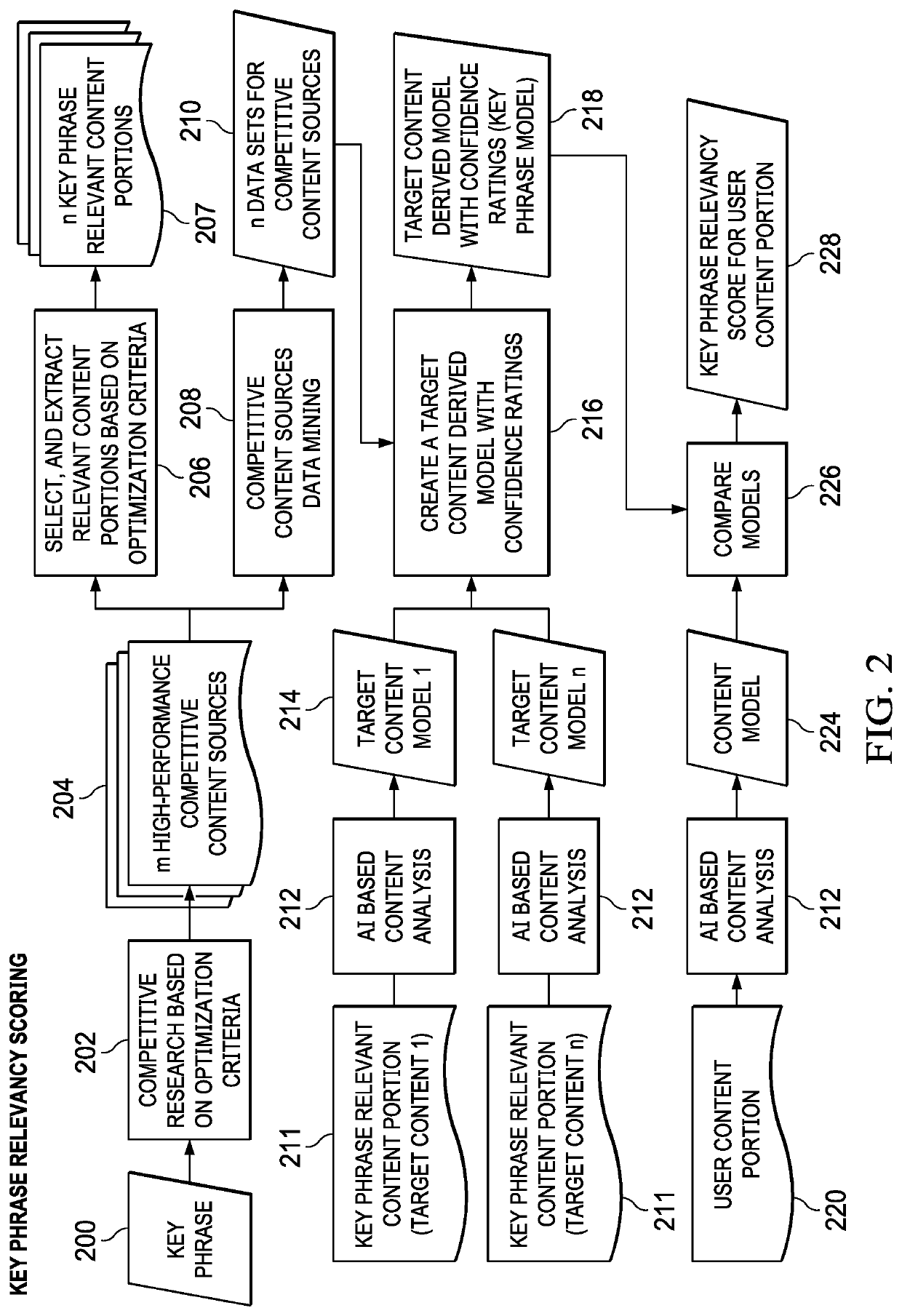

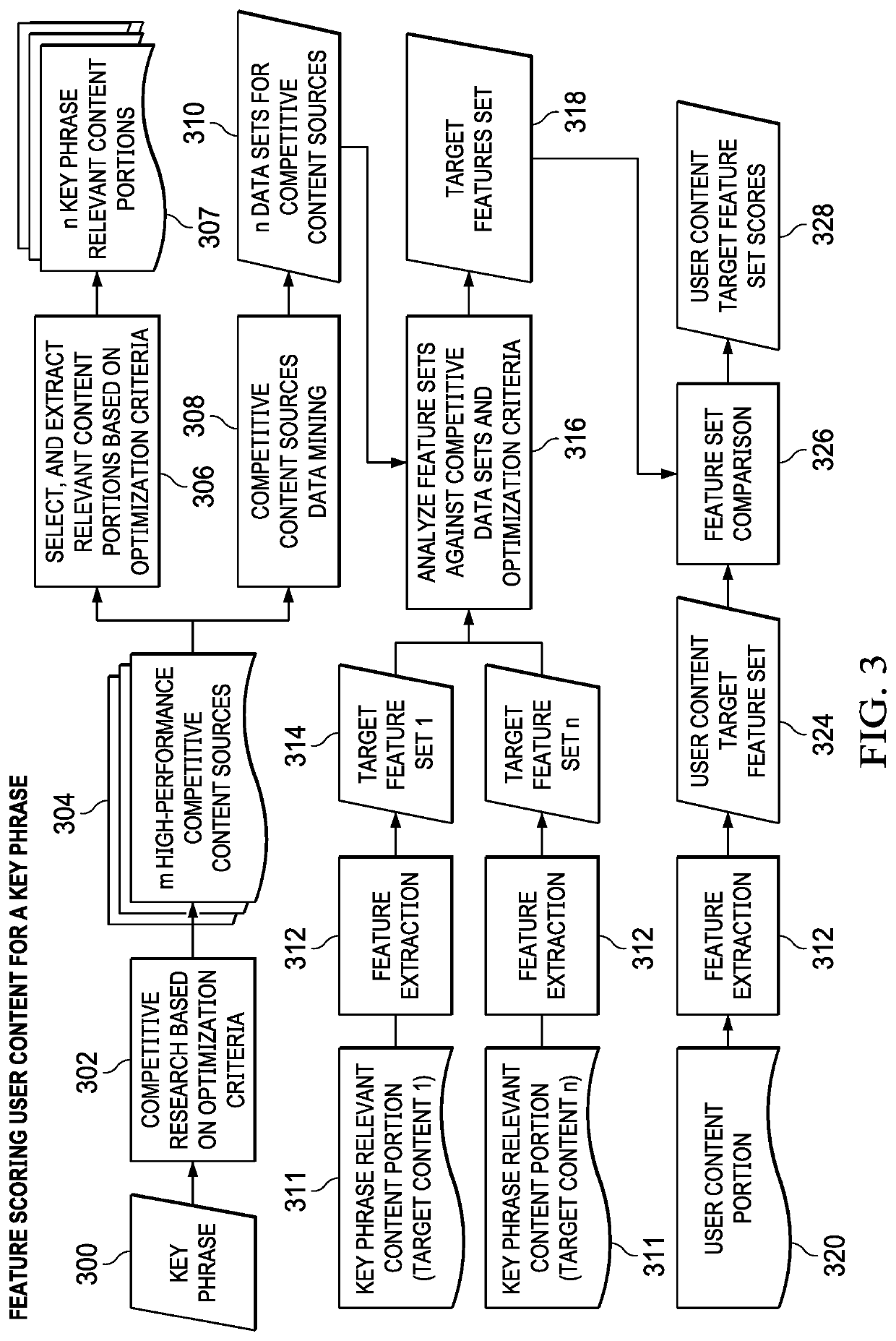

Content generation using target content derived modeling and unsupervised language modeling

Content generation leverages an unsupervised, generative pre-trained language model (e.g., a generative-AI). In this approach, a model derived by applying to given content relevant competitive content and one or more optimization targets is received. Based on optimization criteria encoded as embedding signals in the model, a determination is made regarding whether a template suitable for use as an input to the generative-AI exists in a set of templates. If so, the model embedding signals are merged into the template, or the template itself is transformed using the embedding signals, in either case creating a modified template. If, however, no template suitable as the input exists, the model and other information are input to a natural language processor to generate a generative-AI input. Either the modified template or the generative-AI input, as the case may be, is then applied through the generative-AI to generate an output competitively-optimized with respect to the optimization targets.

Owner:INK CONTENT INC

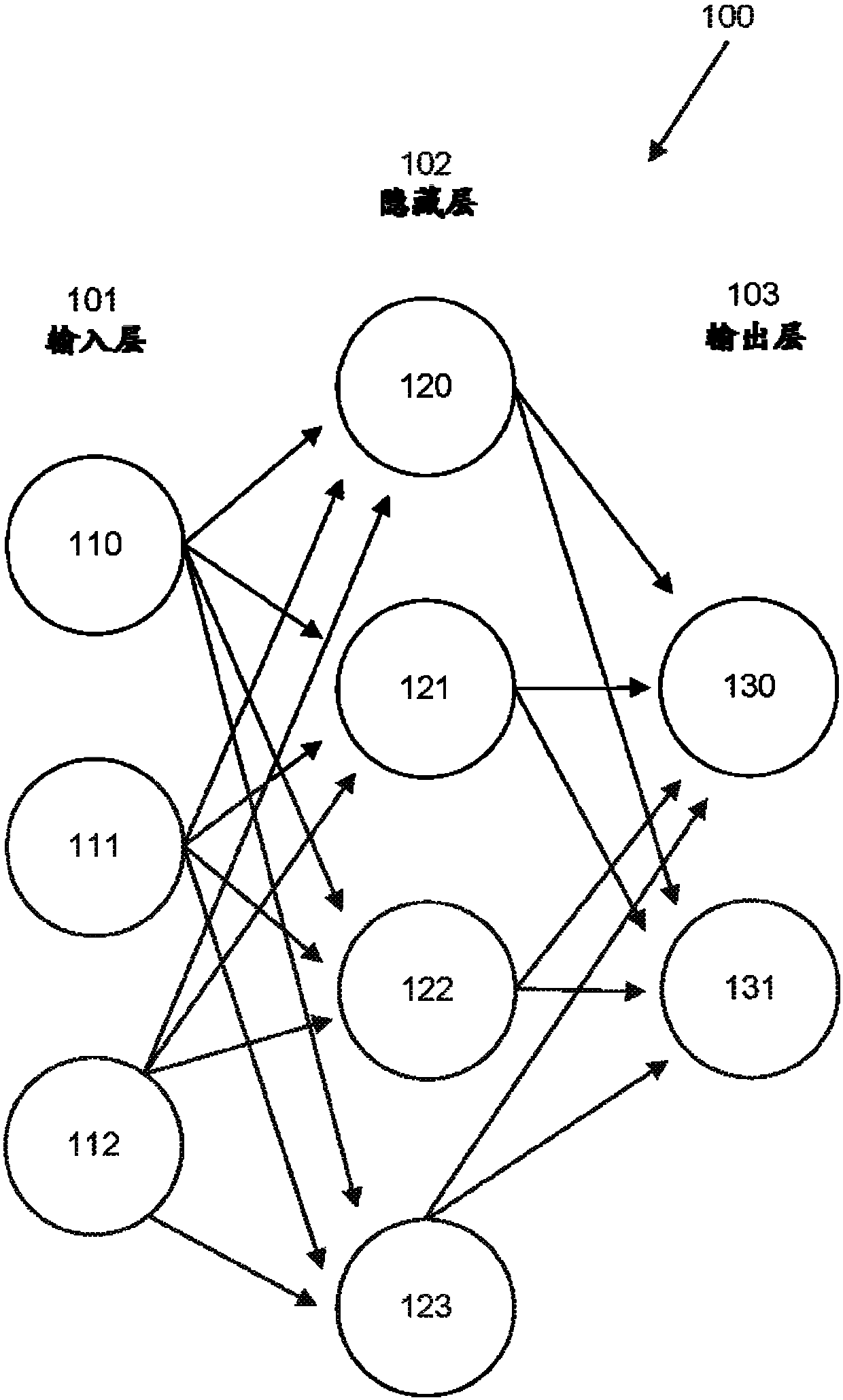

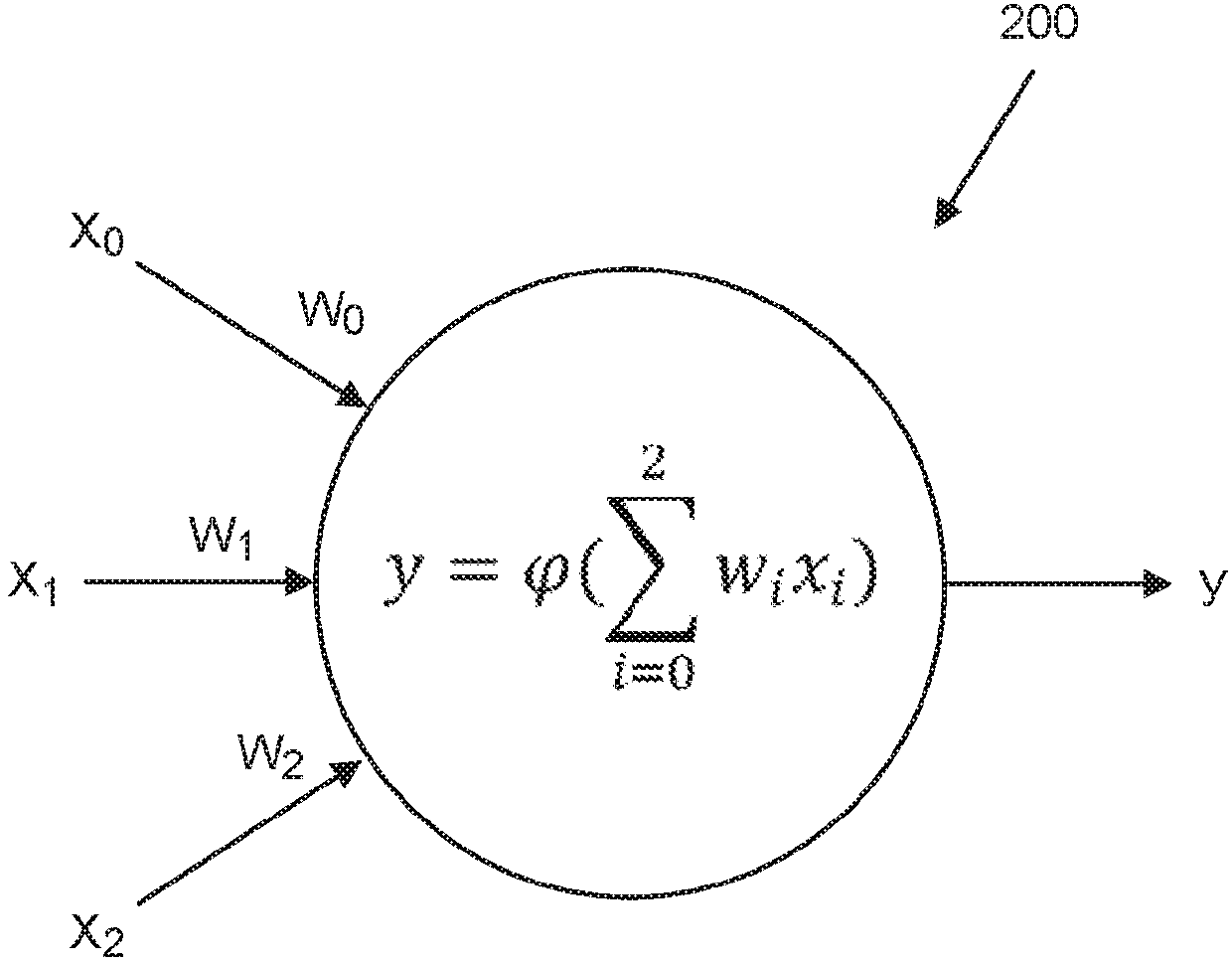

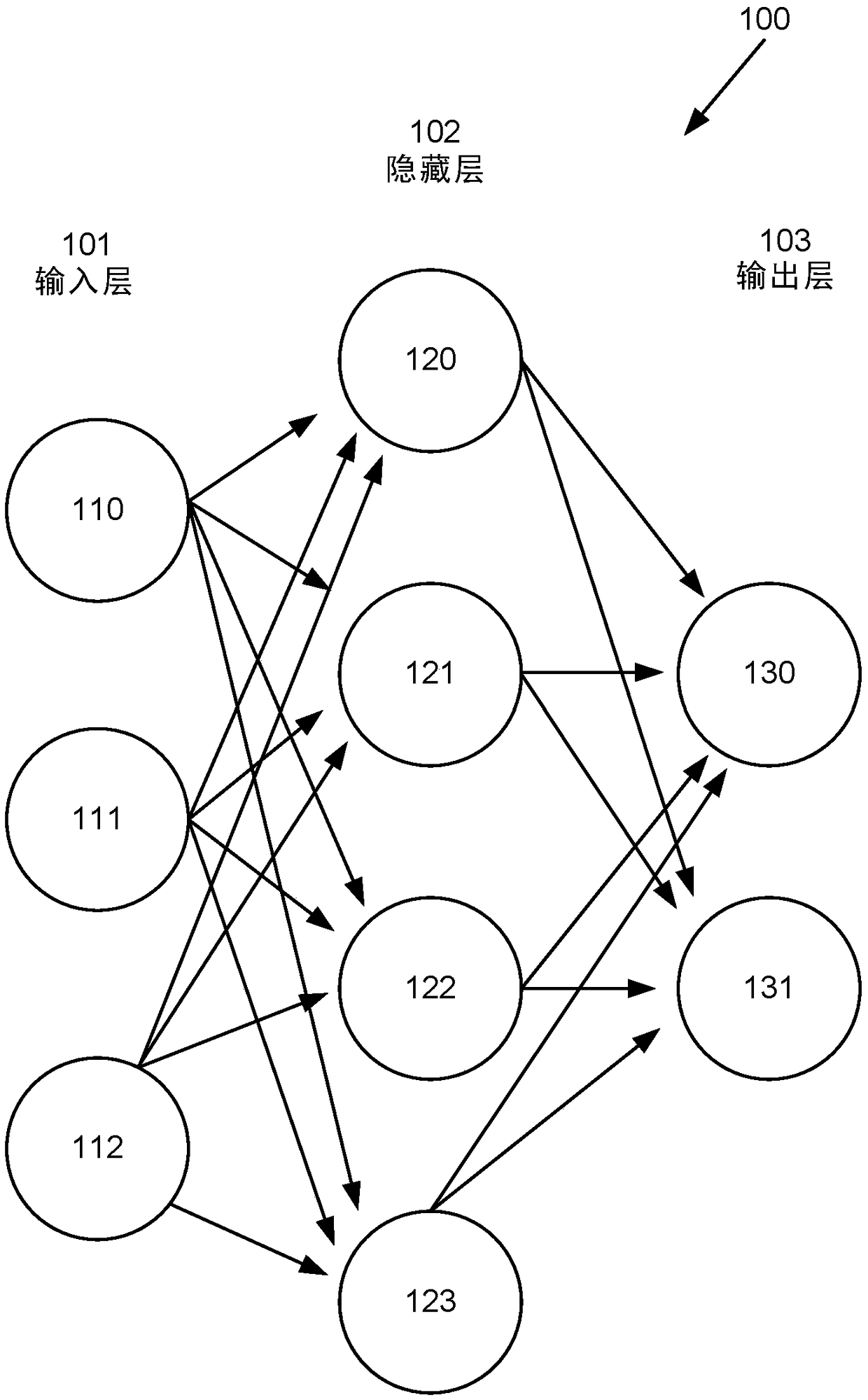

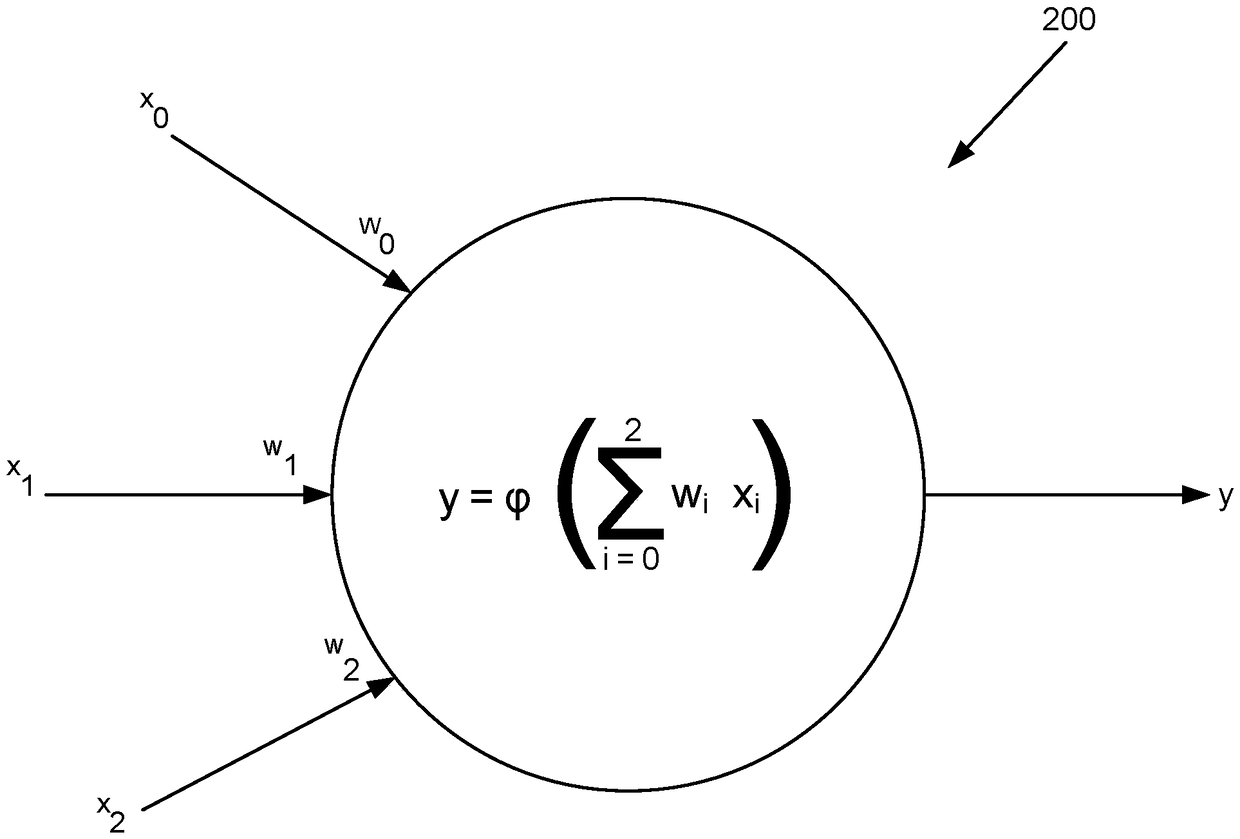

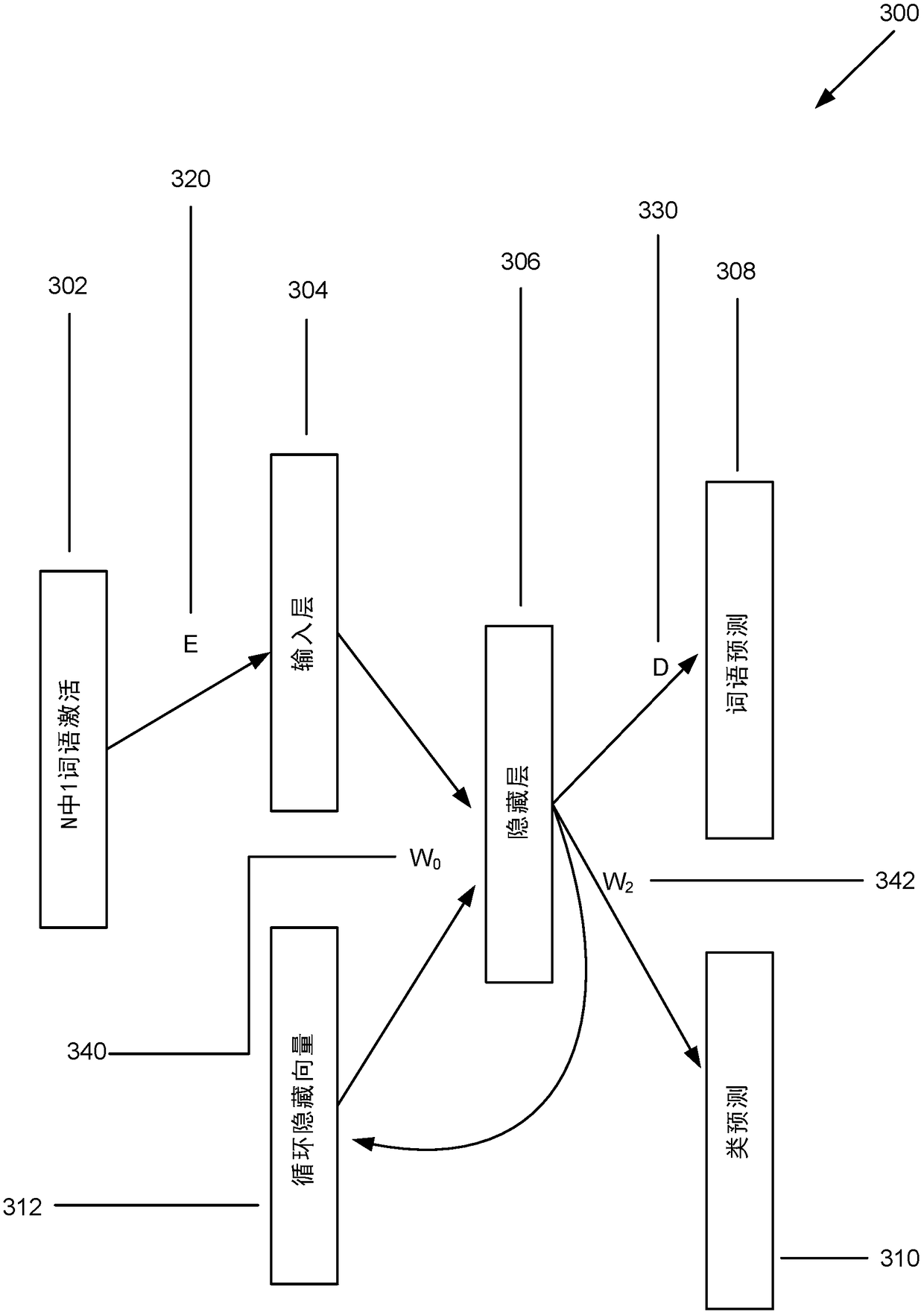

Improved artificial neural network for language modelling and prediction

The present invention relates to an improved artificial neural network for predicting one or more next items in a sequence of items based on an input sequence item. The improved artificial neural network has greatly reduced memory requirements, making it suitable for use on electronic devices such as mobile phones and tablets. The invention includes an electronic device on which the improved artificial neural network operates, and methods of predicting the one or more next items in the sequence using the improved artificial neural network.

Owner:MICROSOFT TECH LICENSING LLC

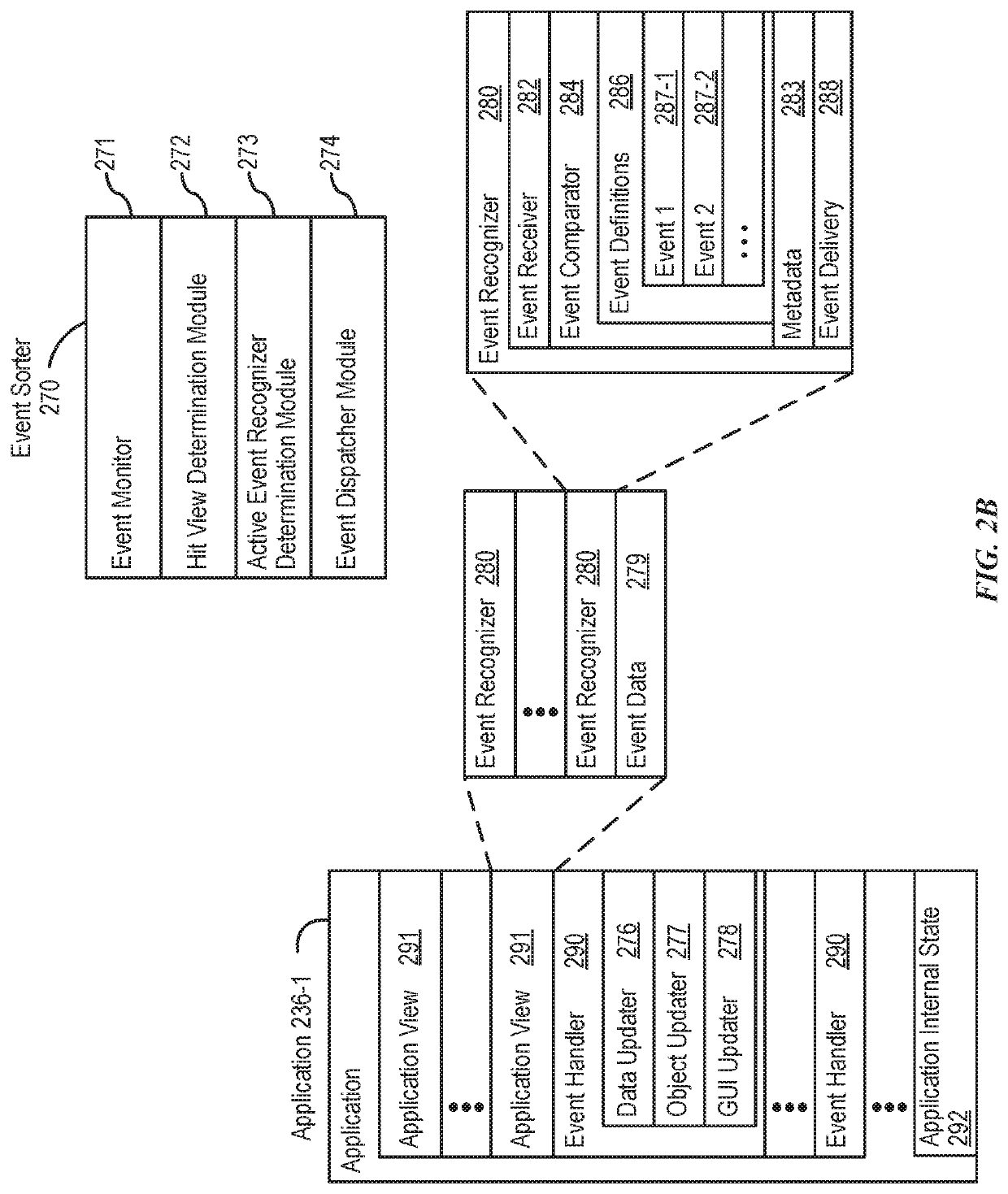

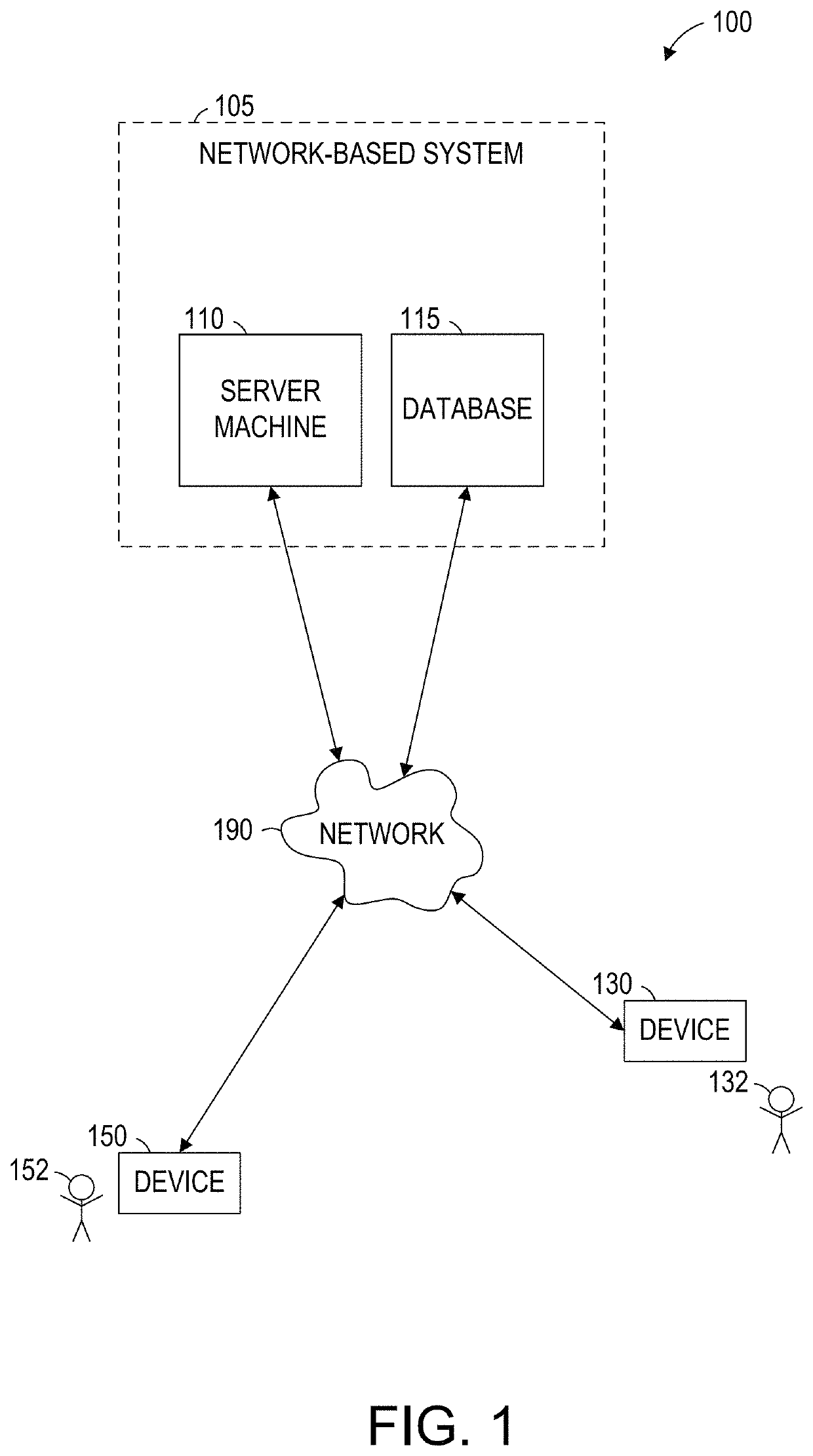

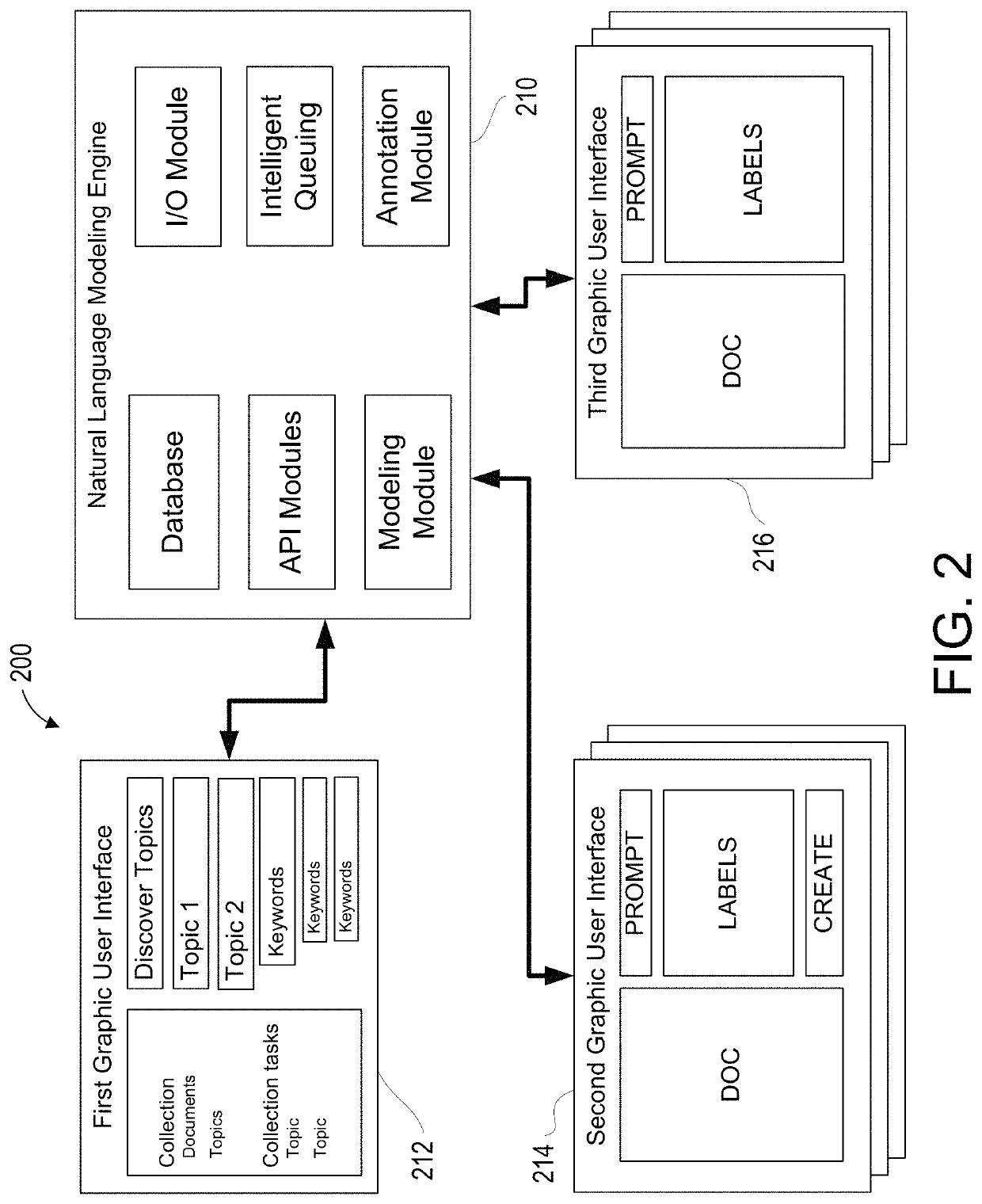

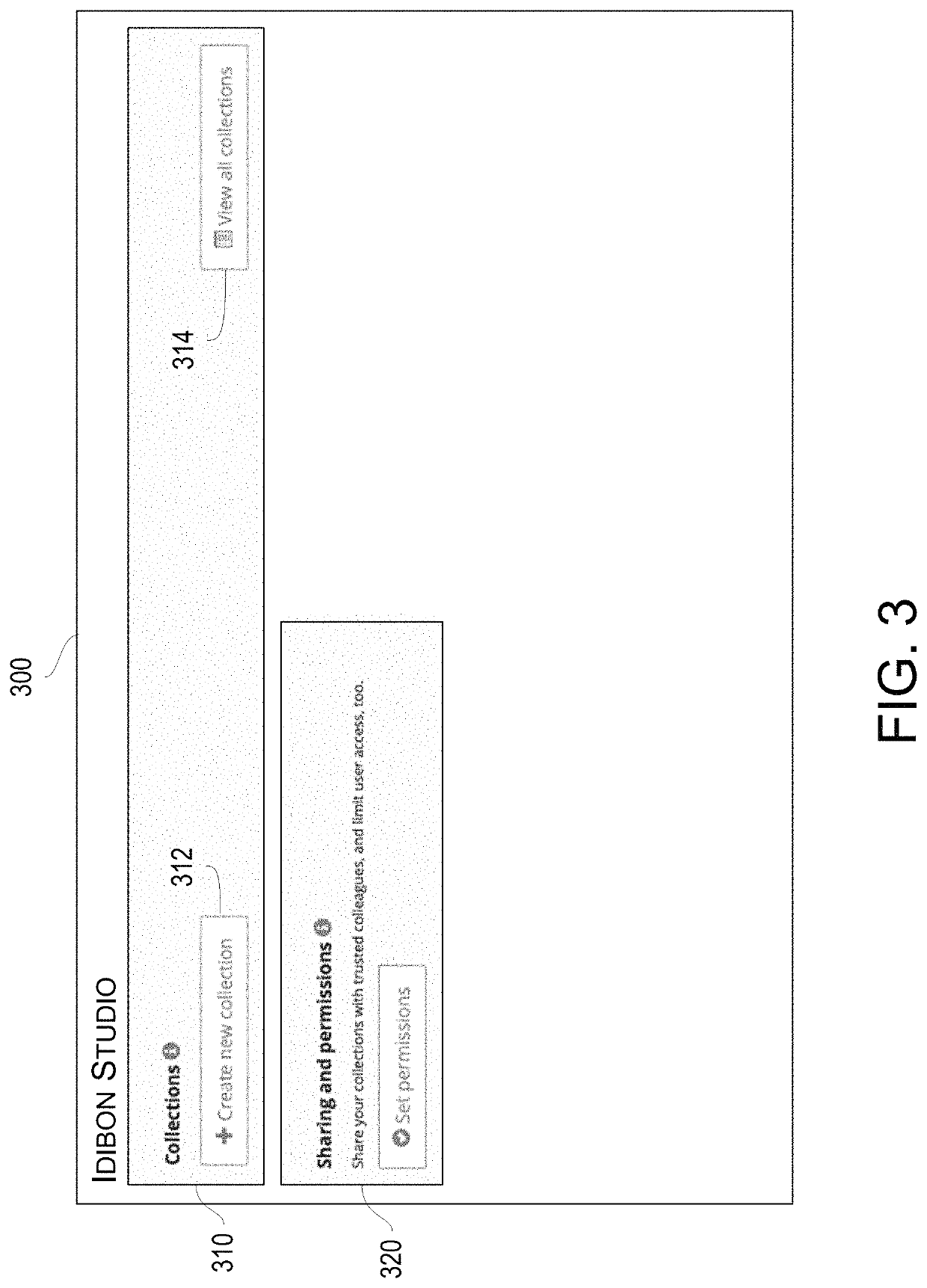

Graphical systems and methods for human-in-the-loop machine intelligence

Active | US11295071B2 | Facilitates annotation | Efficiently verifying the accuracy of the natural language modeling engine's ontology | Natural language translation | Data processing applications | Graphic system | ModelSim

Methods and systems are disclosed for creating and linking a series of interfaces configured to display information and receive confirmation of classifications made by a natural language modeling engine to improve organization of a collection of documents into a hierarchical structure. In some embodiments, the interfaces may display to an annotator a plurality of labels of potential classifications for a document as identified by a natural language modeling engine, collect annotated responses from the annotator, aggregate the annotated responses across other annotators, analyze the accuracy of the natural language modeling engine based on the aggregated annotated responses, and predict accuracies of the natural language modeling engine's classifications of the documents.

Owner:100 CO GLOBAL HLDG LLC
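
The aggregate-then-evaluate step can be sketched in a few lines. Majority voting is an assumed aggregation rule (the abstract does not name one), and the function names and sample data are hypothetical.

```python
from collections import Counter


def majority_label(responses):
    """Aggregate annotator responses for one document by majority vote."""
    return Counter(responses).most_common(1)[0][0]


def engine_accuracy(predictions, annotations):
    """Share of documents where the engine agrees with the aggregated label."""
    agreed = sum(
        1 for doc, label in predictions.items()
        if majority_label(annotations[doc]) == label
    )
    return agreed / len(predictions)


predictions = {"doc1": "sports", "doc2": "finance"}
annotations = {
    "doc1": ["sports", "sports", "finance"],
    "doc2": ["sports", "sports", "finance"],
}
accuracy = engine_accuracy(predictions, annotations)
```

In this toy data the annotators confirm the engine's label for one of two documents, so the measured accuracy is 0.5.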

System and method for natural language processing

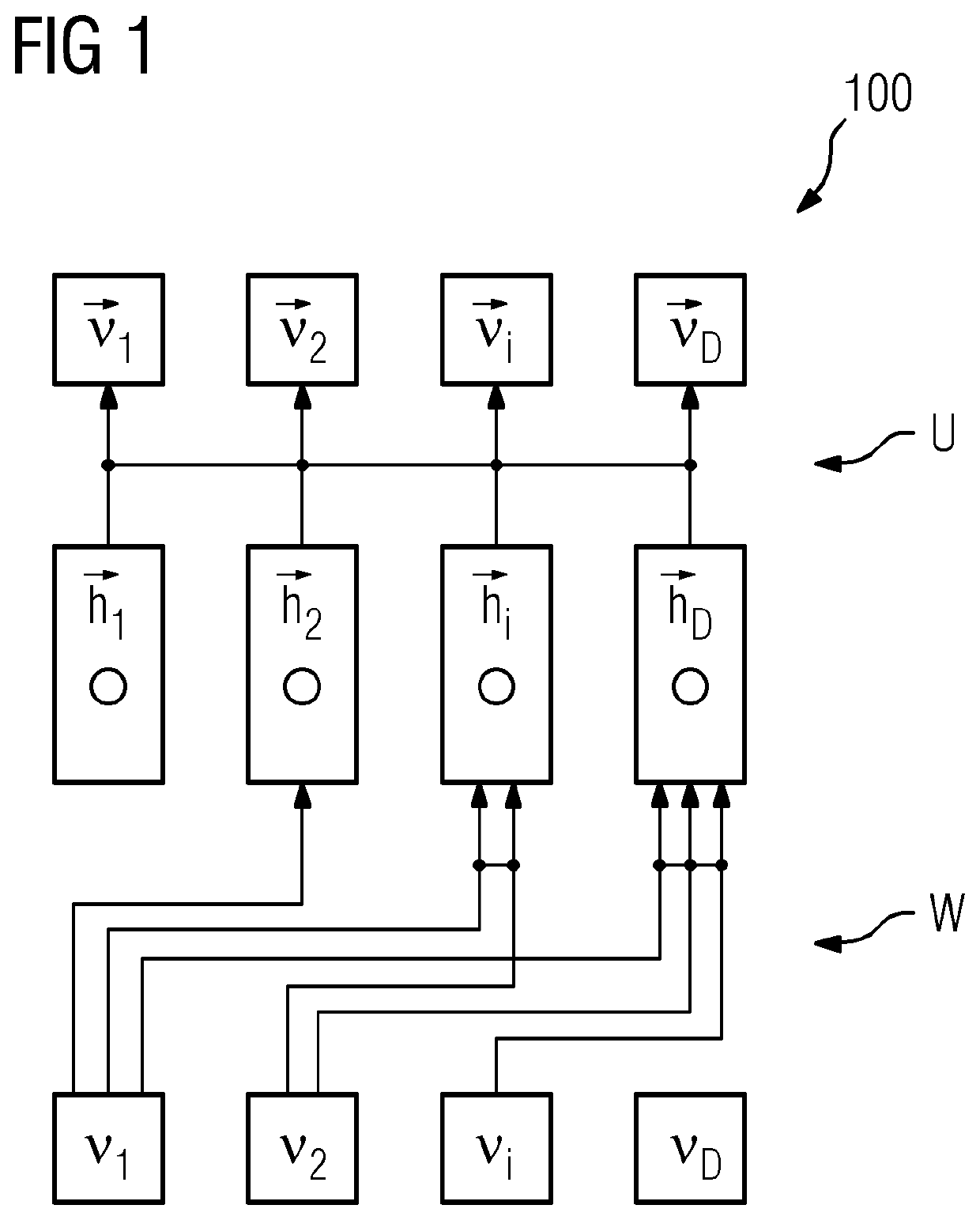

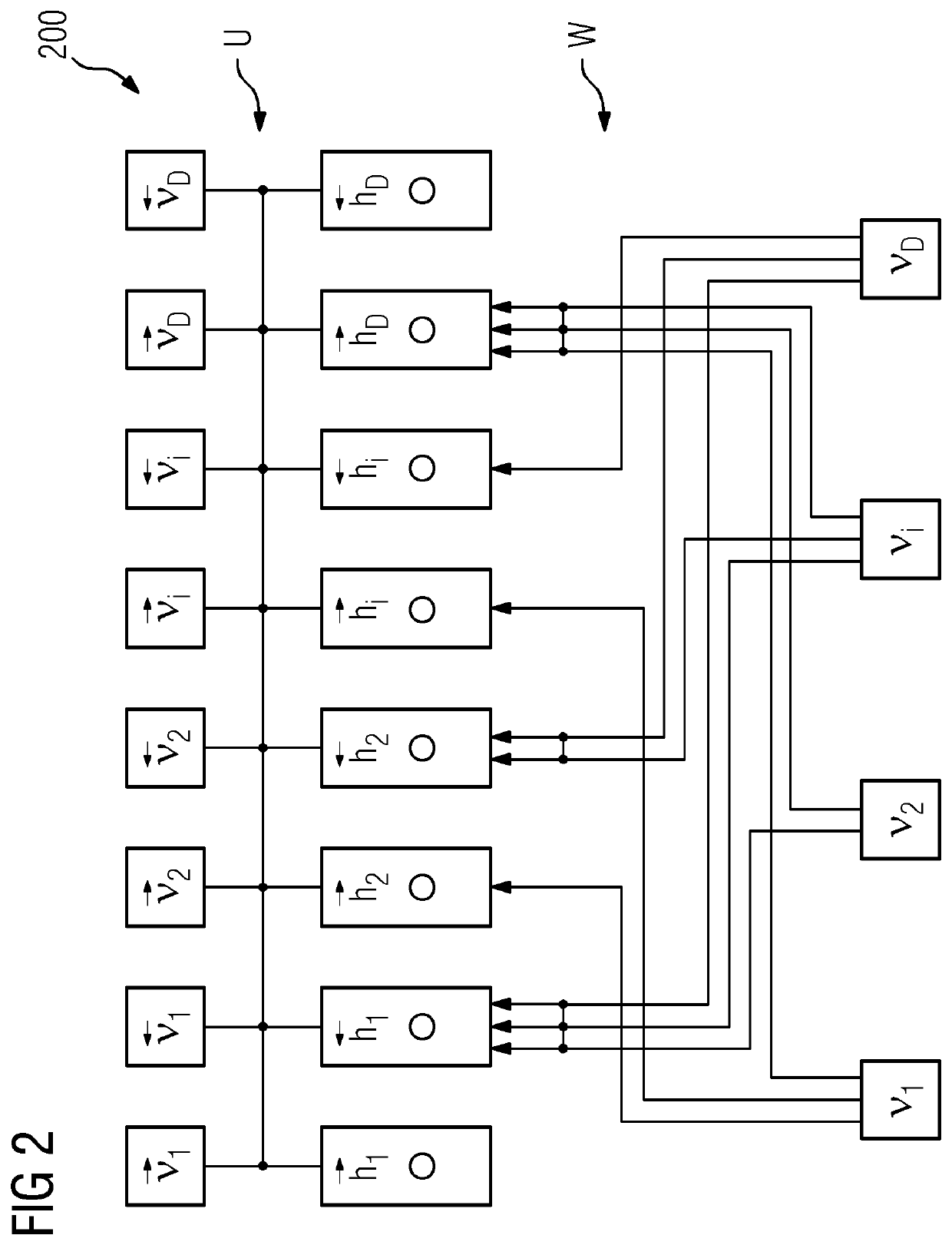

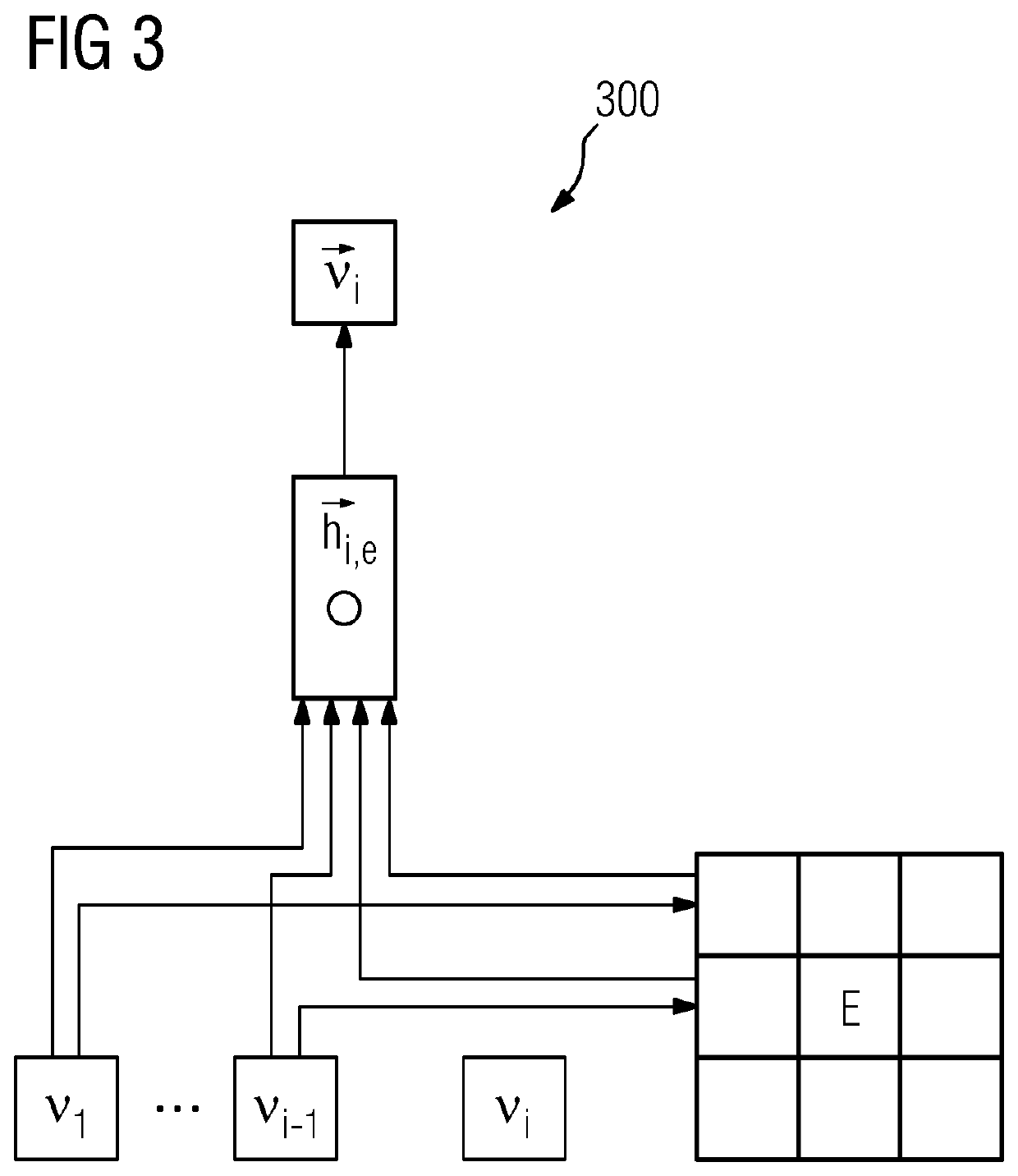

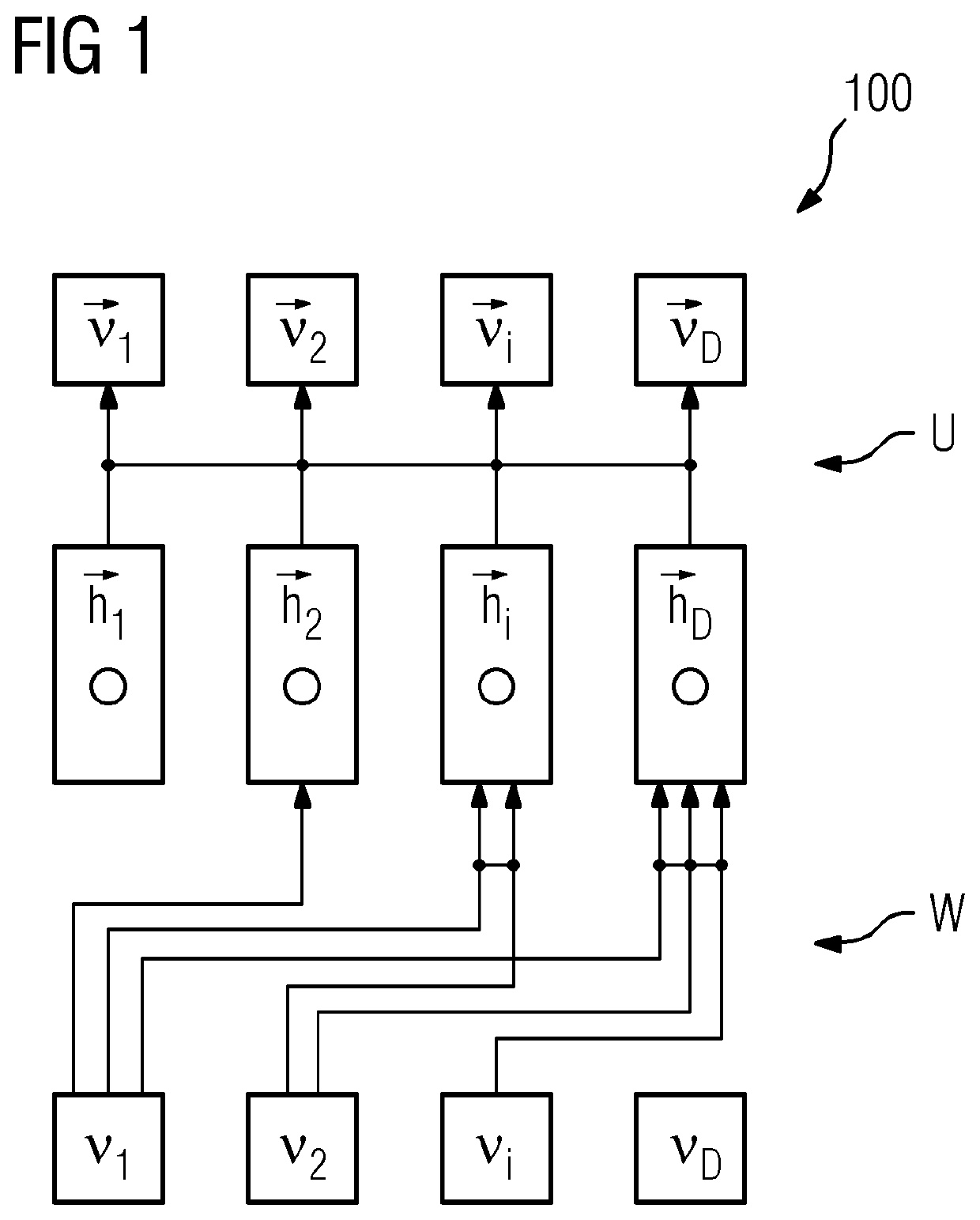

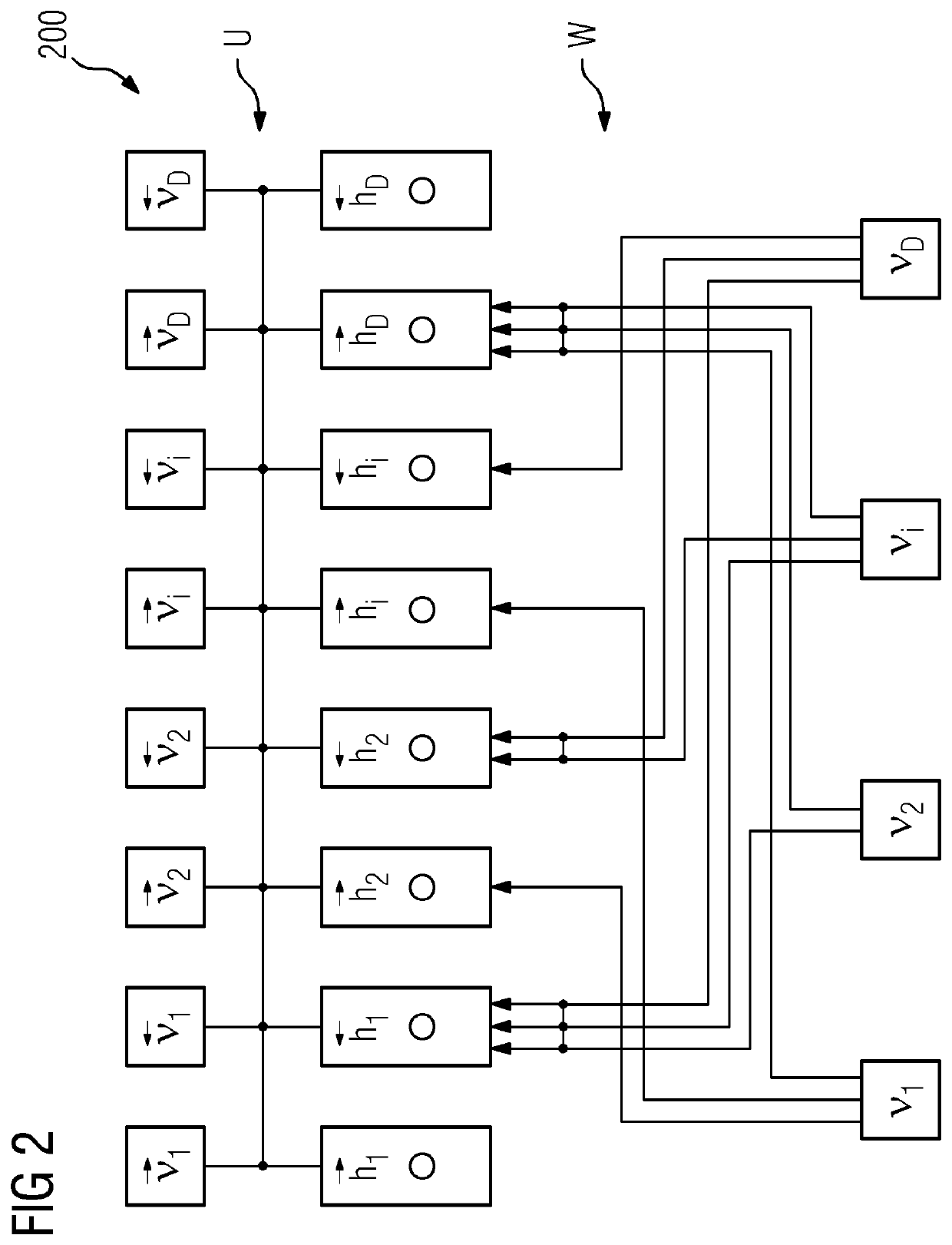

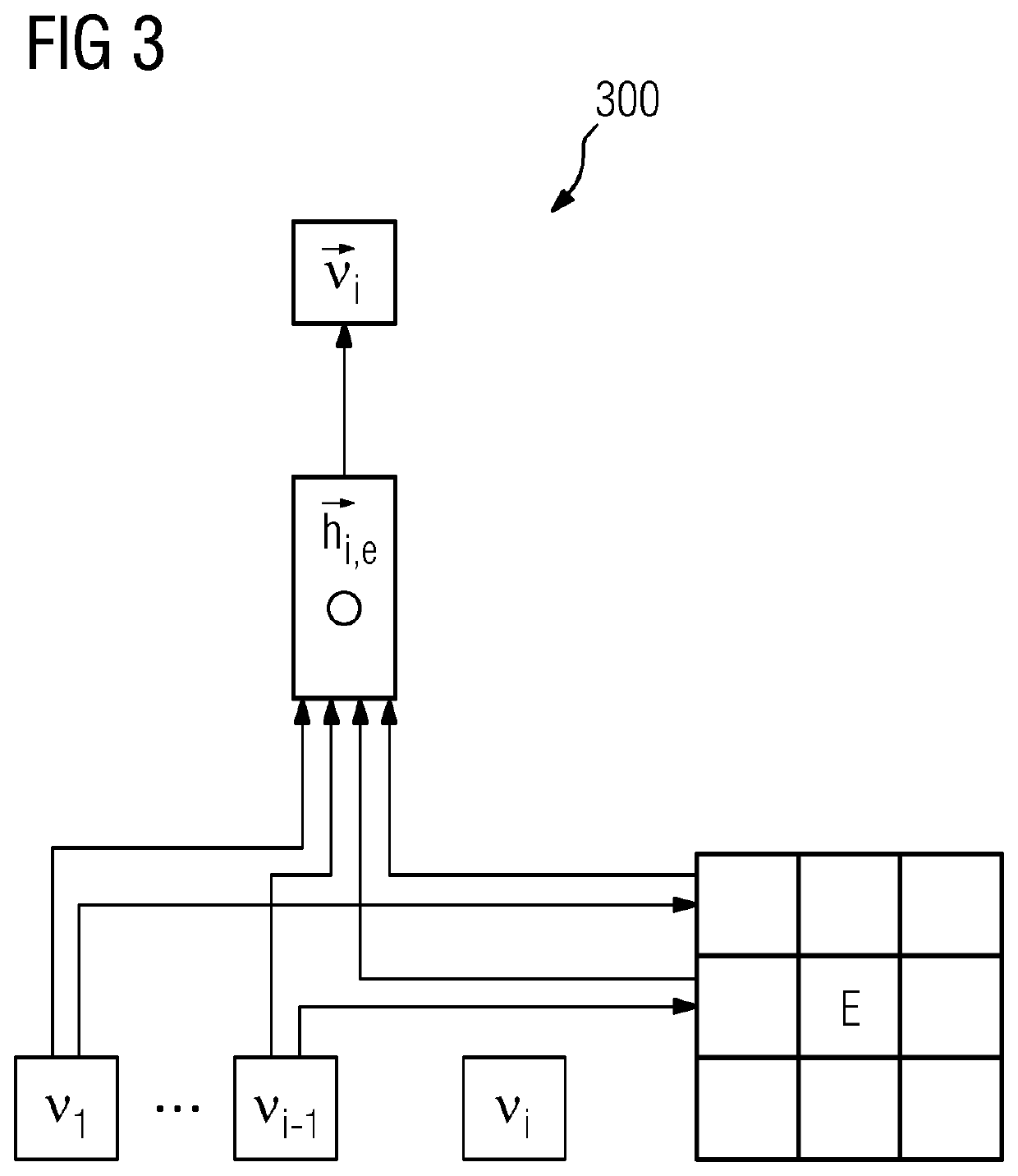

Active | US20200311205A1 | Improve topic modelling | Increase probability | Semantic analysis | Special data processing applications | Hidden layer | Language modelling

The invention refers to a natural language processing system configured for receiving an input sequence $c_i$ of input words representing a first sequence of words in a natural language of a first text and generating an output sequence of output words representing a second sequence of words in a natural language of a second text and modeled by a multinomial topic model, wherein the multinomial topic model is extended by an incorporation of full contextual information around each word $v_i$, wherein both preceding words $v_{<i}$ and following words $v_{>i}$ around each word $v_i$ are captured by using bi-directional language modelling in a feed-forward fashion, wherein position-dependent forward hidden layers $\overrightarrow{h}_i$ and backward hidden layers $\overleftarrow{h}_i$ for each word $v_i$ are computed.

Owner:SIEMENS AG

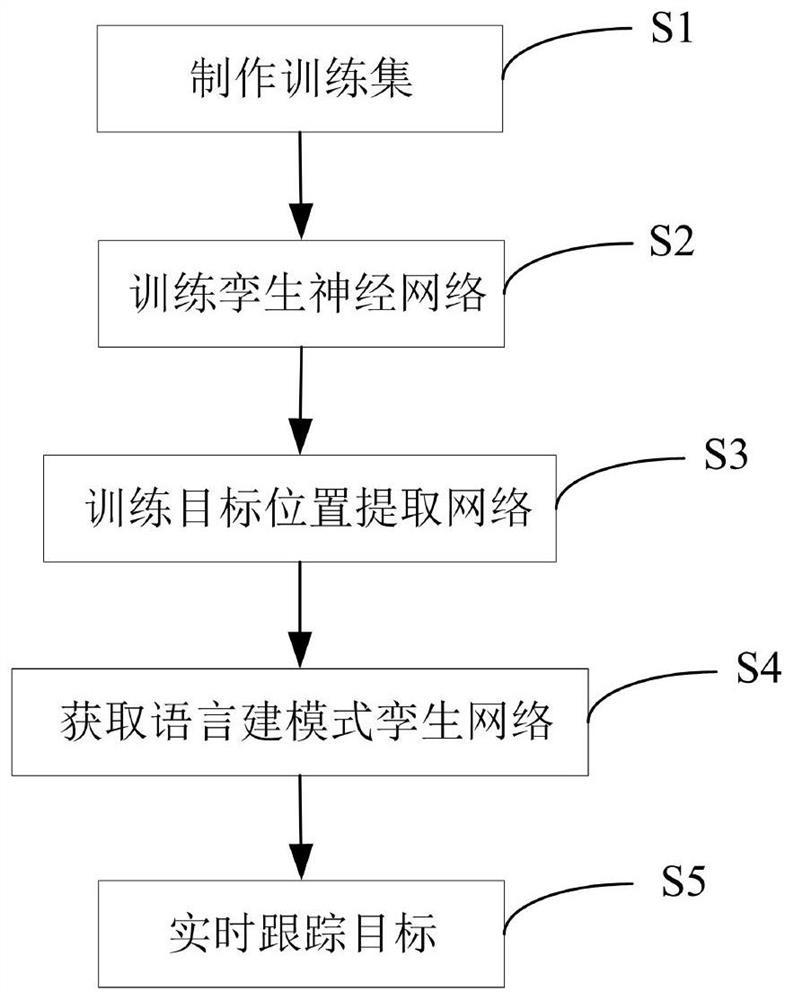

Target tracking method based on language modeling twin network

Pending | CN114581485A | Easy to implement | Improve scalability | Image enhancement | Image analysis | Data set | Engineering

The invention relates to a target tracking method based on a language modeling twin network, and the method comprises the steps: S1, obtaining a video containing the continuous motion of a target, and making a training data set according to the video; S2, training a twin neural network according to the training data set; S3, keeping parameters of the twin neural network unchanged, and training a target position extraction network; S4, performing joint training on the trained twin neural network and the trained target position extraction network to obtain a language modeling twin network; and S5, acquiring a real-time image of the target to be tracked, and performing real-time tracking on the target to be tracked by using the language modeling twin network. Expert experience knowledge does not need to be integrated into the algorithm, and the implementation process is simpler and more convenient. In addition, the system is highly extensible and can be connected to a more general intelligent system.

Owner:上海瀚所信息技术有限公司

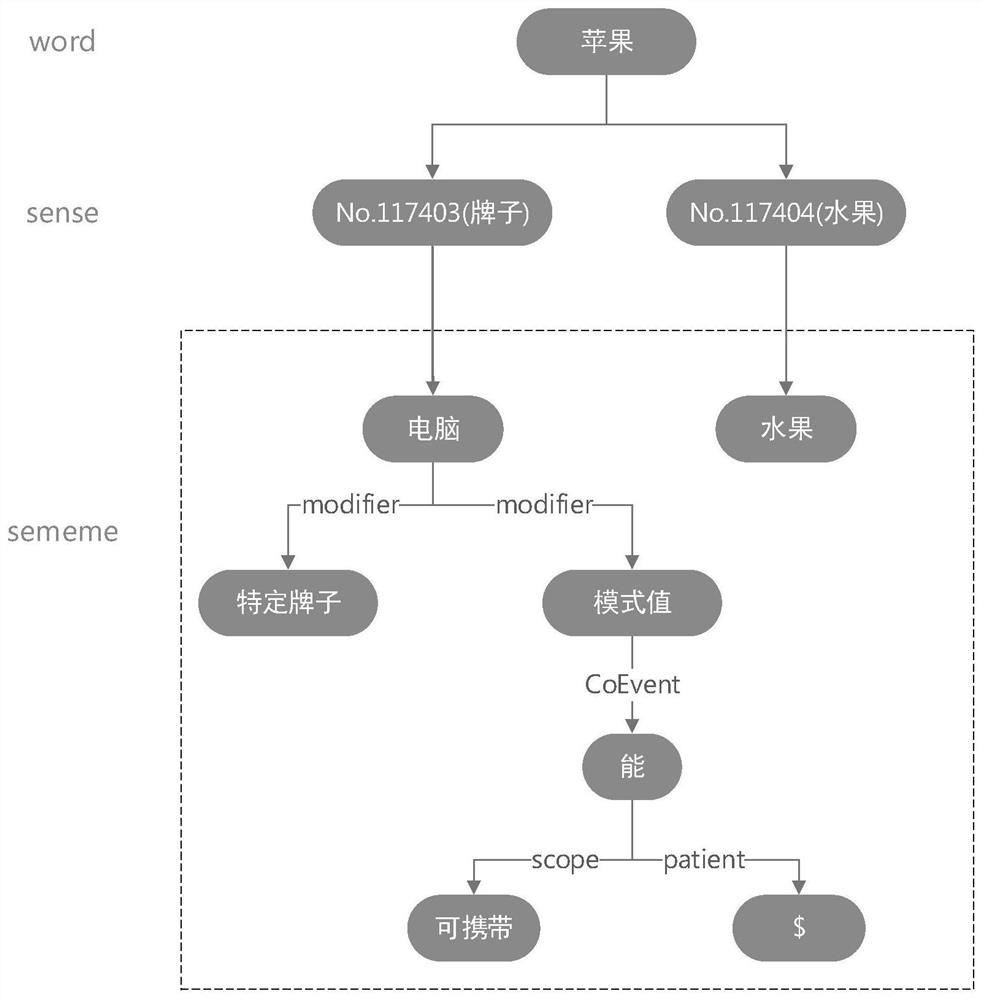

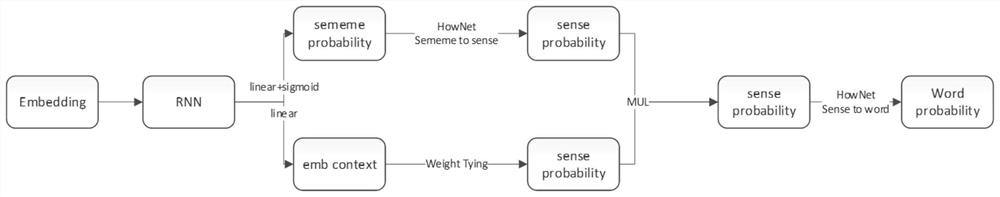

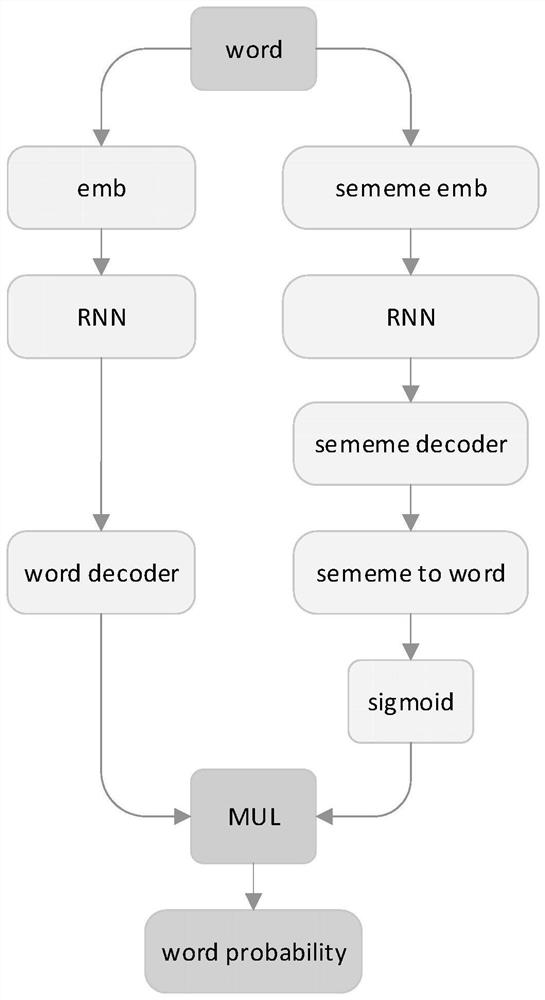

Language meaning understanding method fusing semantic information

Active | CN112464673A | Improve robustness | Proof of validity | Semantic analysis | Energy efficient computing | Programming language | Information processing

The invention discloses a language meaning understanding method fusing semantic information, and belongs to the technical field of language information processing. It addresses the problem that existing language modeling methods are highly complex yet still fall short in effectiveness. The method comprises the following steps: first, processing the language word by word along two paths, wherein the left path is a word encoder + RNN + word decoder, and the output of the left path is recorded as wl; the right path comprises a synonym encoder + RNN + synonym decoder + word decoder + sigmoid, and the output of the right path is recorded as wr; and then fusing the outputs of the two paths. The method is mainly intended for language meaning understanding.

Owner:HARBIN ENG UNIV

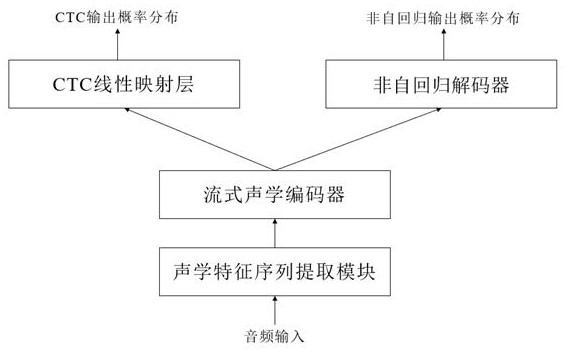

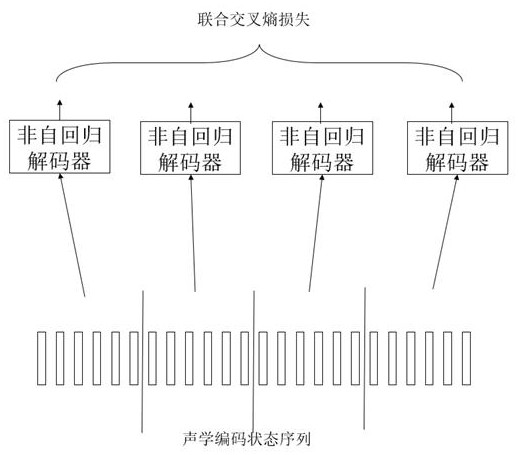

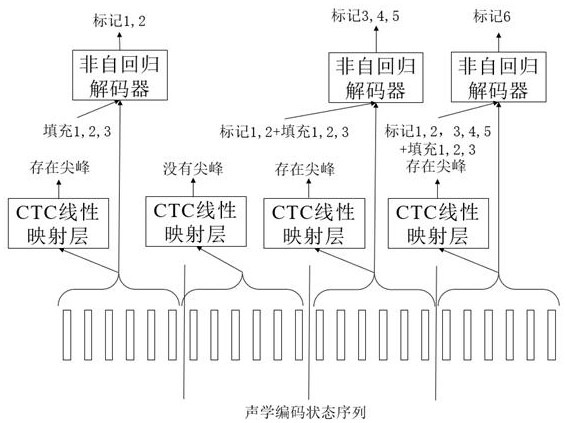

Streaming speech recognition system and method based on non-autoregression model

Pending | CN114203170A | Avoid lost | Streaming inference speed improvement | Speech recognition | Language modelling | Acoustics

The invention discloses a streaming speech recognition system and method based on a non-autoregression model. The method comprises the following steps: S11, extracting an acoustic feature sequence; S12, generating an acoustic coding state sequence; S13, generating an acoustic coding state sequence; S14, calculating the CTC output probability distribution and the connectionist temporal classification (CTC) loss; S15, performing alignment using the Viterbi algorithm; S16, inputting segment by segment and calculating the joint cross-entropy loss; S17, calculating a gradient from the joint loss of the CTC loss and the joint cross-entropy loss, and performing back propagation; S18, repeating steps S12 to S17 until training is completed. The system comprises an acoustic feature sequence extraction module, a streaming acoustic encoder, a CTC linear transformation layer and a non-autoregressive decoder which are connected in sequence. According to the invention, non-autoregressive decoding is carried out on the input audio segment by segment, so that streaming inference speed is improved and loss of language modeling capability is avoided.

Owner:董立波

Artificial neural network with side input for language modelling and prediction

The present invention relates to an improved artificial neural network for predicting one or more next items in a sequence of items based on an input sequence item. The artificial neural network is implemented on an electronic device comprising a processor, and at least one input interface configured to receive one or more input sequence items, wherein the processor is configured to implement the artificial neural network and generate one or more predicted next items in a sequence of items using the artificial neural network by providing an input sequence item received at the at least one input interface and a side input as inputs to the artificial neural network, wherein the side input is configured to maintain a record of input sequence items received at the input interface.

Owner:MICROSOFT TECH LICENSING LLC

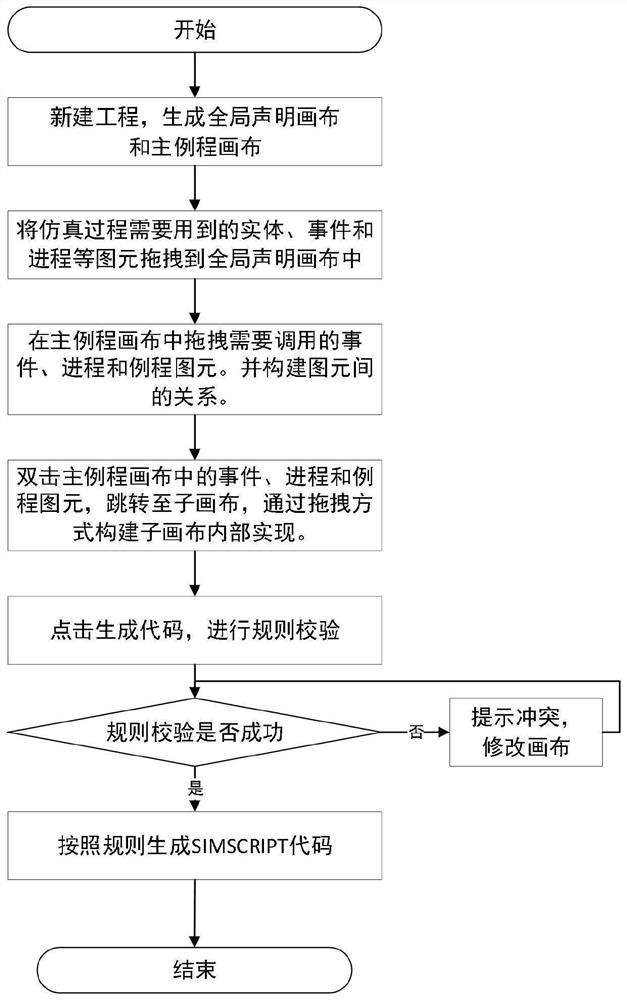

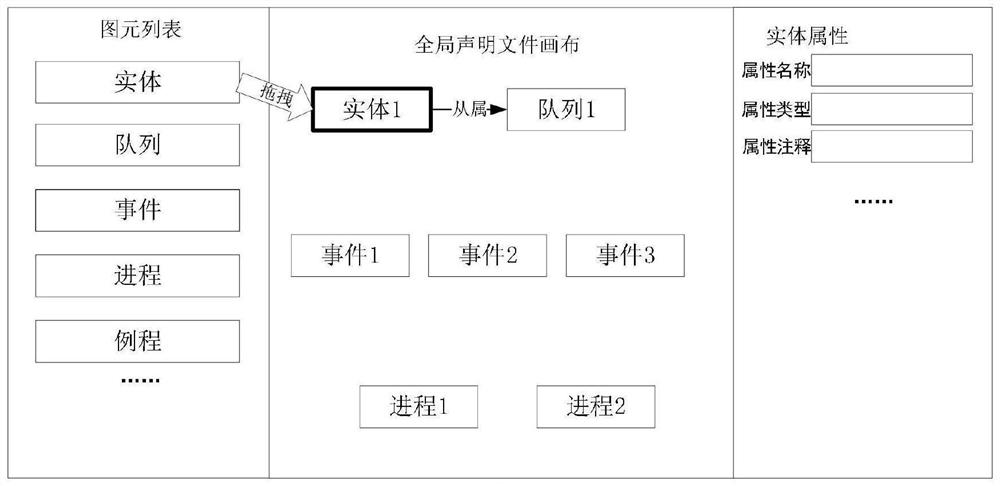

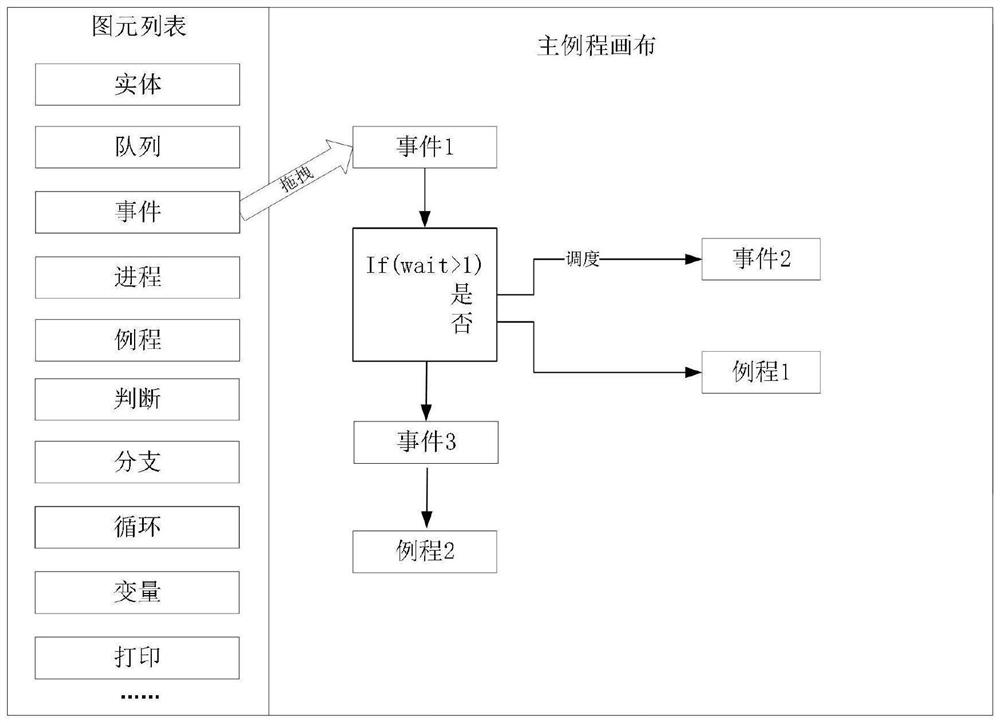

SIMSCRIPT language-oriented discrete event simulation graphical modeling method

Active | CN111880784A | Easy to reuse | Facilitate communication | Design optimisation/simulation | Visual/graphical programming | Simulation language | ModelSim

The invention provides a SIMSCRIPT language-oriented discrete event simulation graphical modeling method, which comprises the following steps: adding entities, routines, events and other primitives, by dragging, into a canvas that serves as a primitive-bearing container, according to entity flow graphs, activity cycle graphs and other modeling techniques, and representing the interaction relationships among the entities, the routines and the events through connecting lines. According to the method, primitives can be drawn and managed in the canvases, a model can be divided into a plurality of canvases according to calling relations and hierarchical relations, all the canvases can be stored as project files in a specific format, and SIMSCRIPT simulation code can be generated automatically from the project files according to mapping rules. The discrete event simulation program is built on the SIMSCRIPT simulation language in a graphical drag-and-drop mode, which removes the need to be familiar with SIMSCRIPT grammar and to write code manually when modeling in SIMSCRIPT. The modeling process is clear and visual, model reuse is simpler, the learning cost is lower, the user base is wider, and communication between domain experts and modeling personnel is facilitated.

Owner:中国人民解放军国防大学联合作战学院

System and method for natural language processing

Active | US11182559B2 | Improve topic modelling | Increase probability | Natural language translation | Semantic analysis | Hidden layer | Language modelling

The invention refers to a natural language processing system configured for receiving an input sequence $c_i$ of input words representing a first sequence of words in a natural language of a first text and generating an output sequence of output words representing a second sequence of words in a natural language of a second text and modeled by a multinomial topic model, wherein the multinomial topic model is extended by an incorporation of full contextual information around each word $v_i$, wherein both preceding words $v_{<i}$ and following words $v_{>i}$ around each word $v_i$ are captured by using bi-directional language modelling in a feed-forward fashion, wherein position-dependent forward hidden layers $\overrightarrow{h}_i$ and backward hidden layers $\overleftarrow{h}_i$ for each word $v_i$ are computed.

Owner:SIEMENS AG

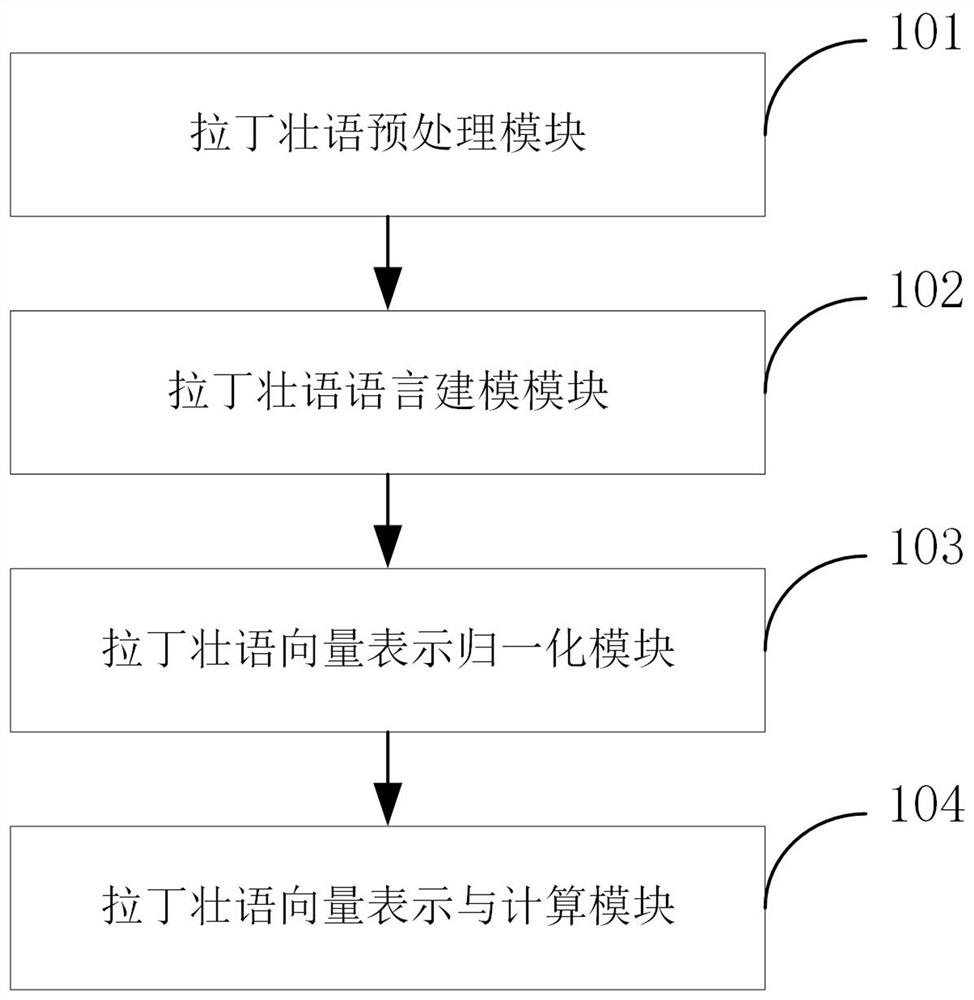

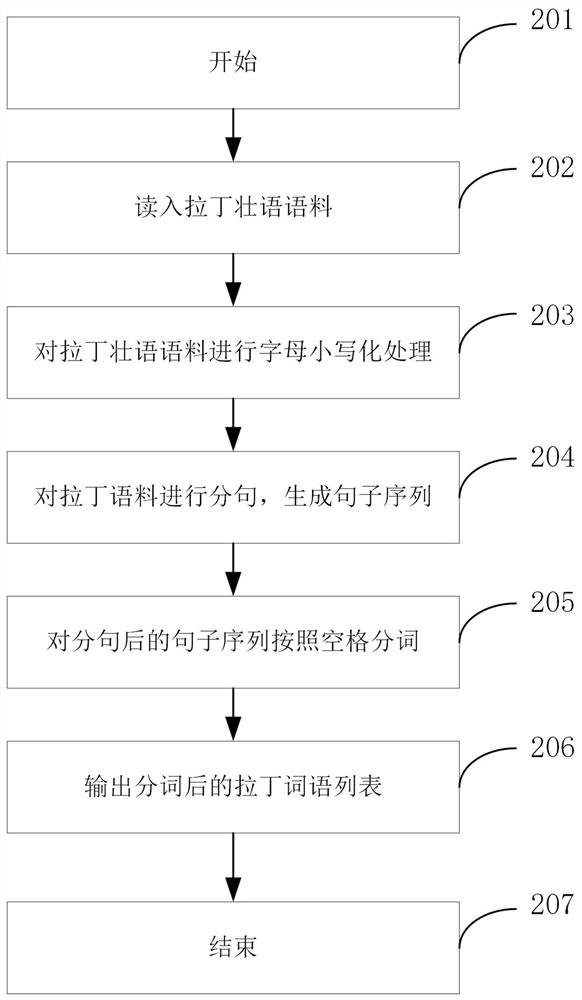

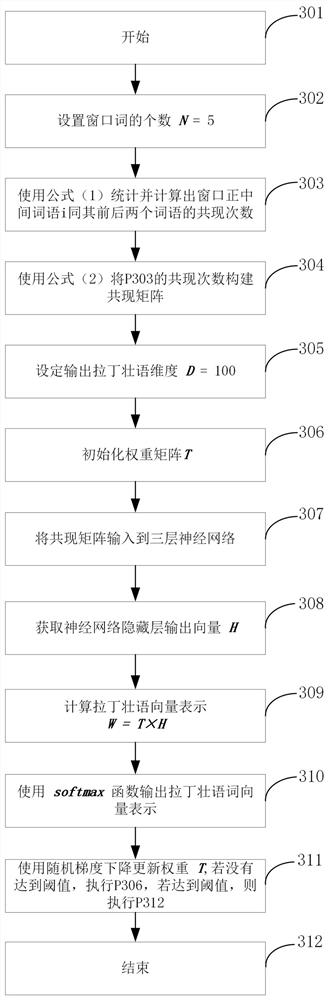

Latin Zhuang language vector representation and calculation method

Pending | CN114091453A | Solve computing problems | Natural language data processing | Neural architectures | Computational problem | Theoretical computer science

The invention provides a Latin Zhuang language vector representation and calculation method. A representation and calculation model composed of a Latin Zhuang language preprocessing module, a Latin Zhuang language modeling module, a Latin Zhuang language vector representation normalization module and a Latin Zhuang language vector representation and calculation module which are sequentially connected is included. After a text written by the Latin Zhuang language is processed through the representation and calculation model, the vector representation of the Latin Zhuang language text can be finally obtained. The method solves the problem of vector representation and calculation of the Latin Zhuang language, and can be used for automatic processing and application of the Latin Zhuang language.

Owner:GUILIN UNIV OF ELECTRONIC TECH

Content generation using target content derived modeling and unsupervised language modeling

Owner:INK CONTENT INC

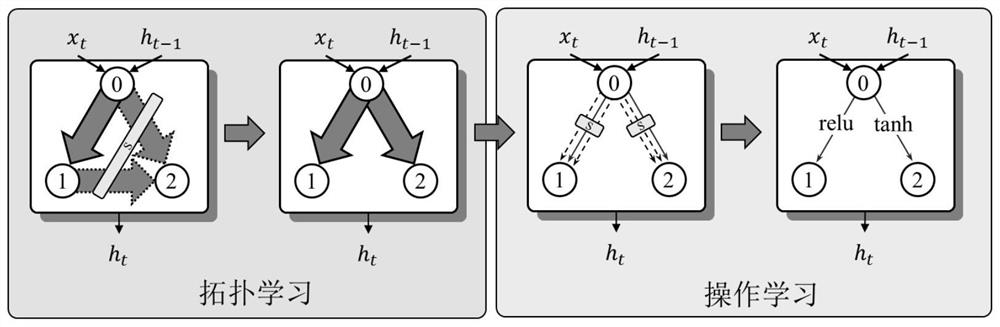

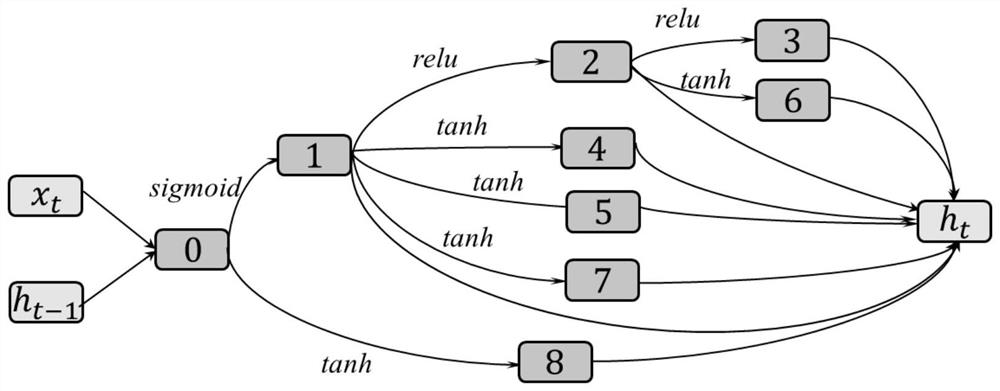

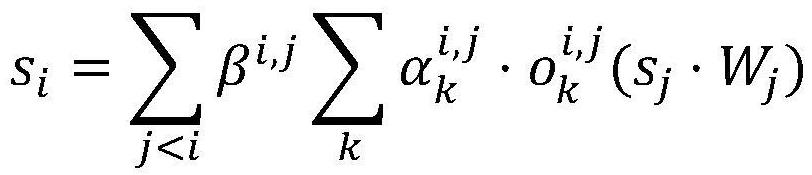

Language modeling system structure searching method for translation tasks

Inactive | CN113111668A | Guaranteed coupling effect | Improve the optimal solution | Natural language translation | Digital data information retrieval | Algorithm | ModelSim

The invention discloses a language modeling system structure search method for translation tasks, and the method comprises the following steps: obtaining and processing training data through the Internet, and modeling and training a network structure representation space; carrying out normalization operation on structure parameter values of meta-structure topology and operation in the training process; optimizing structure parameters and model parameters of the used model, and adjusting and optimizing a network structure and target parameters; further obtaining a discretized final structure according to the weight difference of different topologies and operations obtained after tuning, wherein the search result comprises the topological structure of the meta-structure and the operation used between the nodes; and circularly unfolding the searched meta-structures by using a connection mode between the meta-structures to obtain an integral model, performing parameter tuning on the model again by using training data, and finally training until convergence. The method greatly improves the possibility that the optimal solution of the model structure falls into the representation space of the search structure, thereby improving the effectiveness of the network structure search method.

Owner:沈阳雅译网络技术有限公司

© 2025 PatSnap. All rights reserved.