50 results about "Victim cache" patented technology

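A victim cache is a small, usually fully associative cache placed in the refill path of a CPU cache. It holds the blocks evicted from that cache level, so a block that is requested again shortly after eviction can be returned without going to the next level of the memory hierarchy.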
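To make the definition concrete, here is a minimal Python sketch of a direct-mapped cache backed by a small fully associative victim cache. The class name `VictimCachedL1`, the default sizes, and the LRU replacement inside the victim cache are assumptions chosen for illustration; none of the patents listed below is implemented here.

```python
from collections import OrderedDict


class VictimCachedL1:
    """Direct-mapped cache backed by a small fully associative victim cache."""

    def __init__(self, num_sets=4, victim_entries=2):
        self.num_sets = num_sets
        self.lines = [None] * num_sets      # one block address per set
        self.victim = OrderedDict()         # block address -> True, oldest first
        self.victim_entries = victim_entries

    def _evict_to_victim(self, block):
        """Place an evicted block in the victim cache, dropping the oldest entry if full."""
        if block is None:
            return
        self.victim[block] = True
        self.victim.move_to_end(block)
        if len(self.victim) > self.victim_entries:
            self.victim.popitem(last=False)

    def access(self, block):
        """Return 'hit', 'victim_hit', or 'miss' for a block address."""
        idx = block % self.num_sets
        if self.lines[idx] == block:
            return "hit"
        if block in self.victim:
            del self.victim[block]          # block moves back into the main cache
            result = "victim_hit"
        else:
            result = "miss"
        self._evict_to_victim(self.lines[idx])
        self.lines[idx] = block
        return result


cache = VictimCachedL1(num_sets=4, victim_entries=2)
# Blocks 0 and 4 map to the same set, so they keep evicting each other.
print([cache.access(b) for b in [0, 4, 0, 4, 0]])
# ['miss', 'miss', 'victim_hit', 'victim_hit', 'victim_hit']
```

Without the victim cache, every access after the first two in this conflict pattern would be a full miss, which is the situation a victim cache is meant to absorb.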

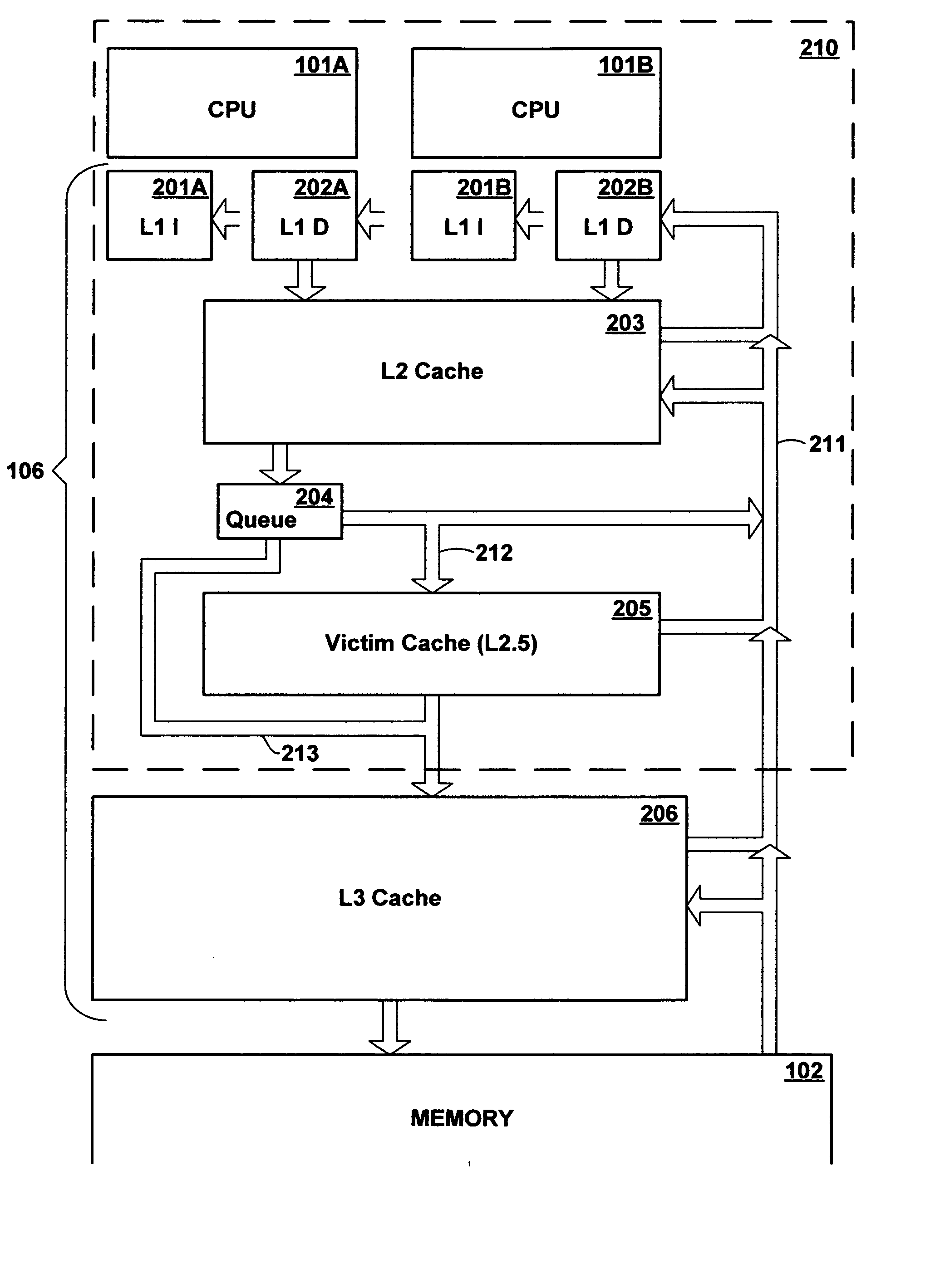

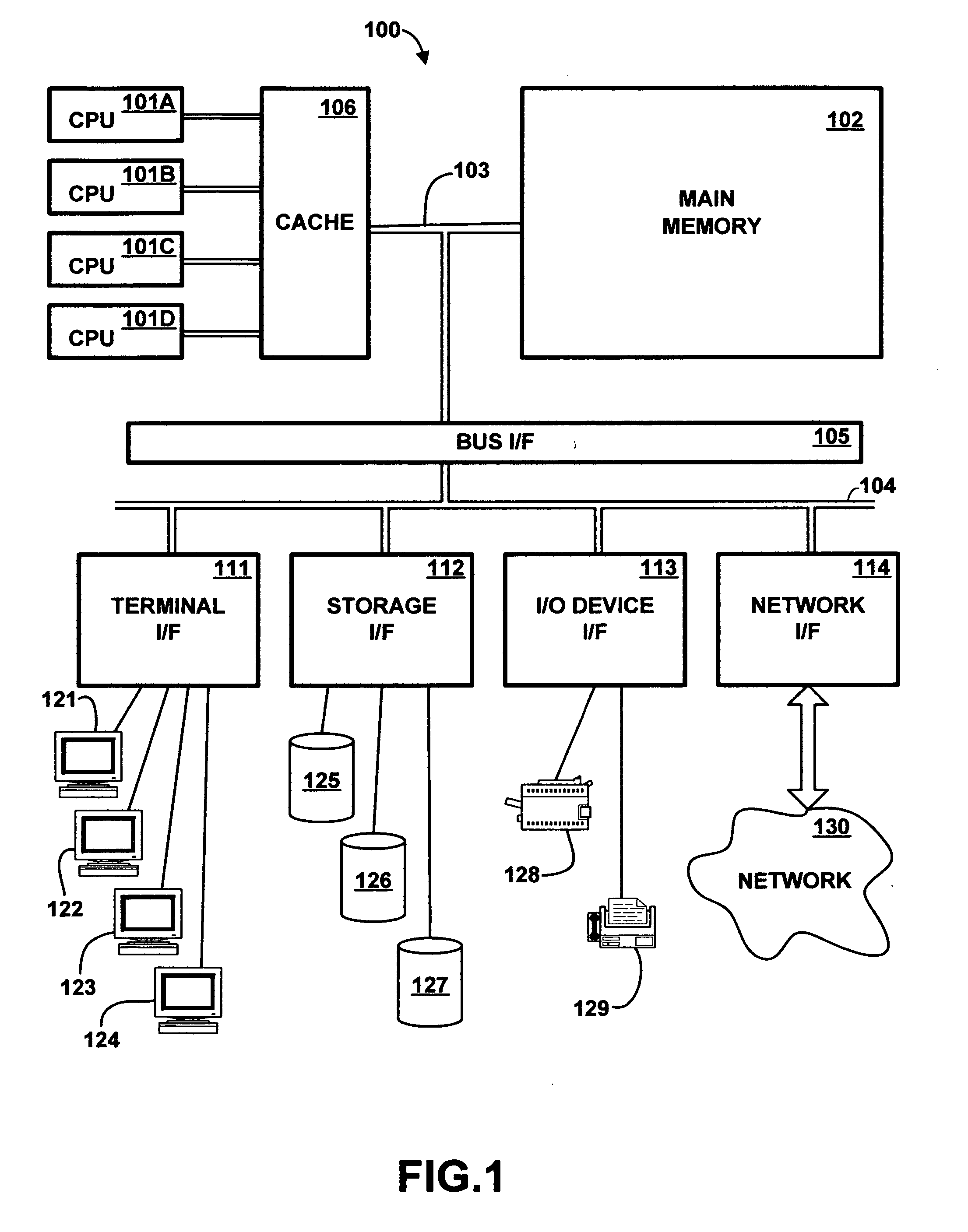

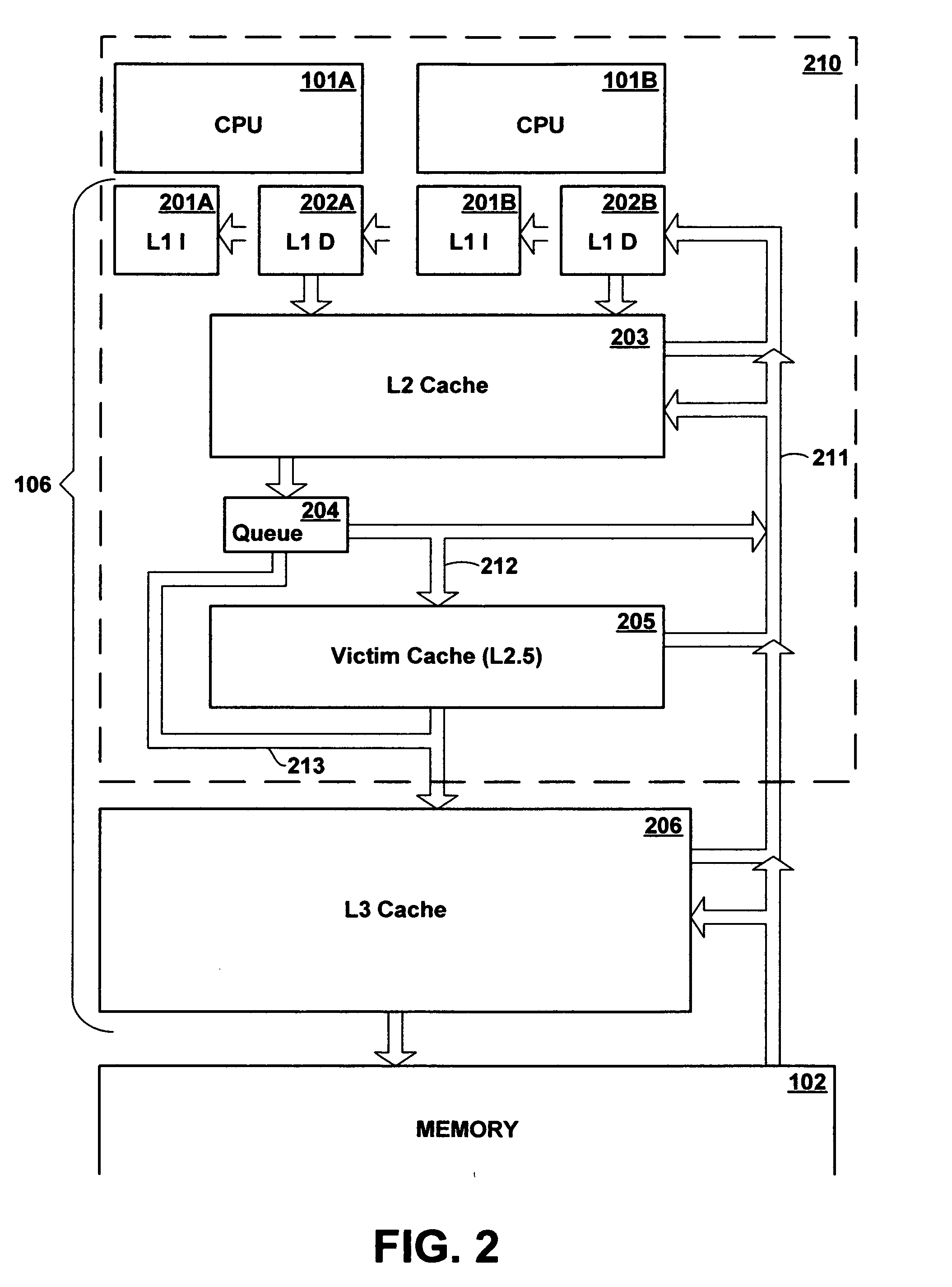

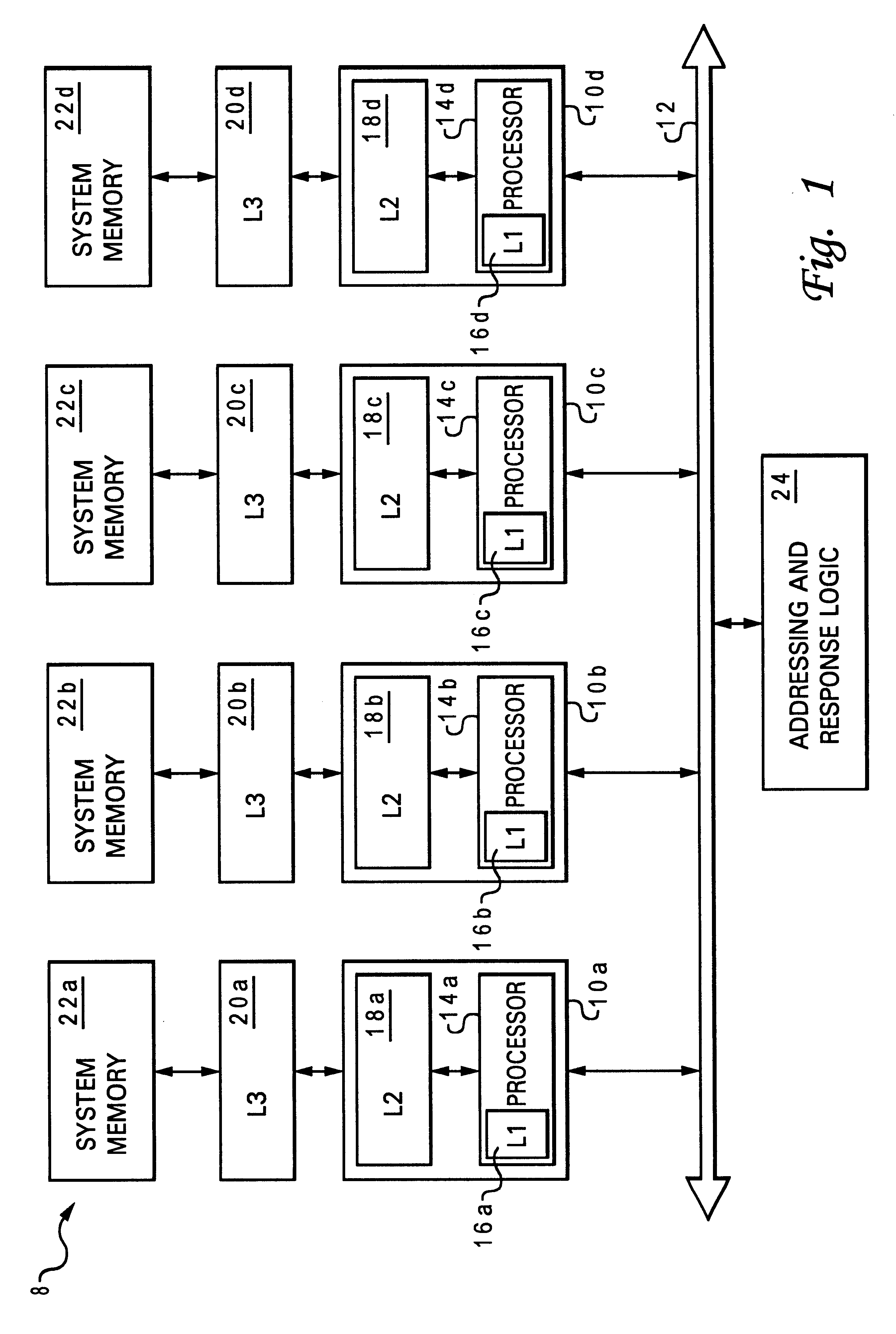

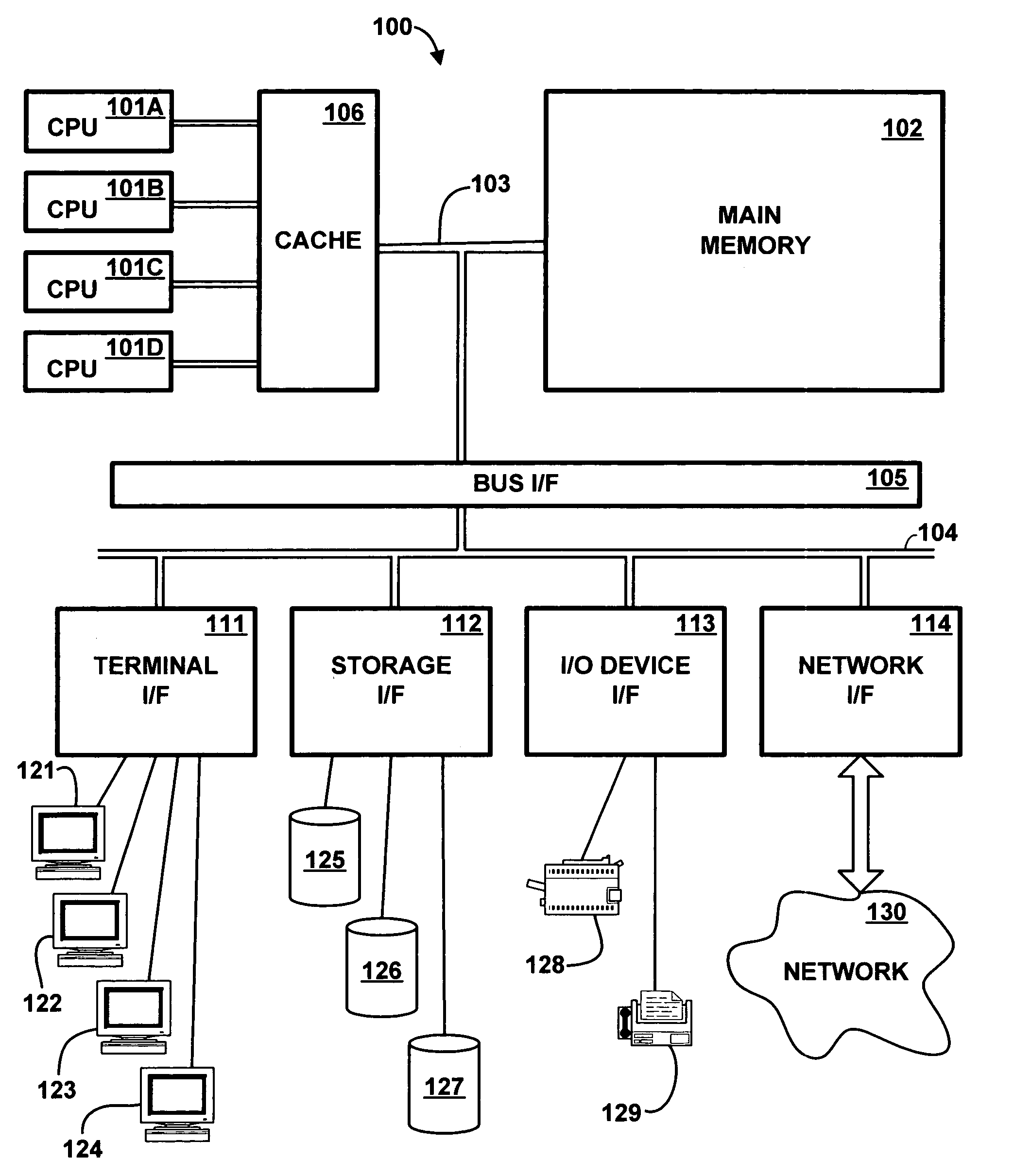

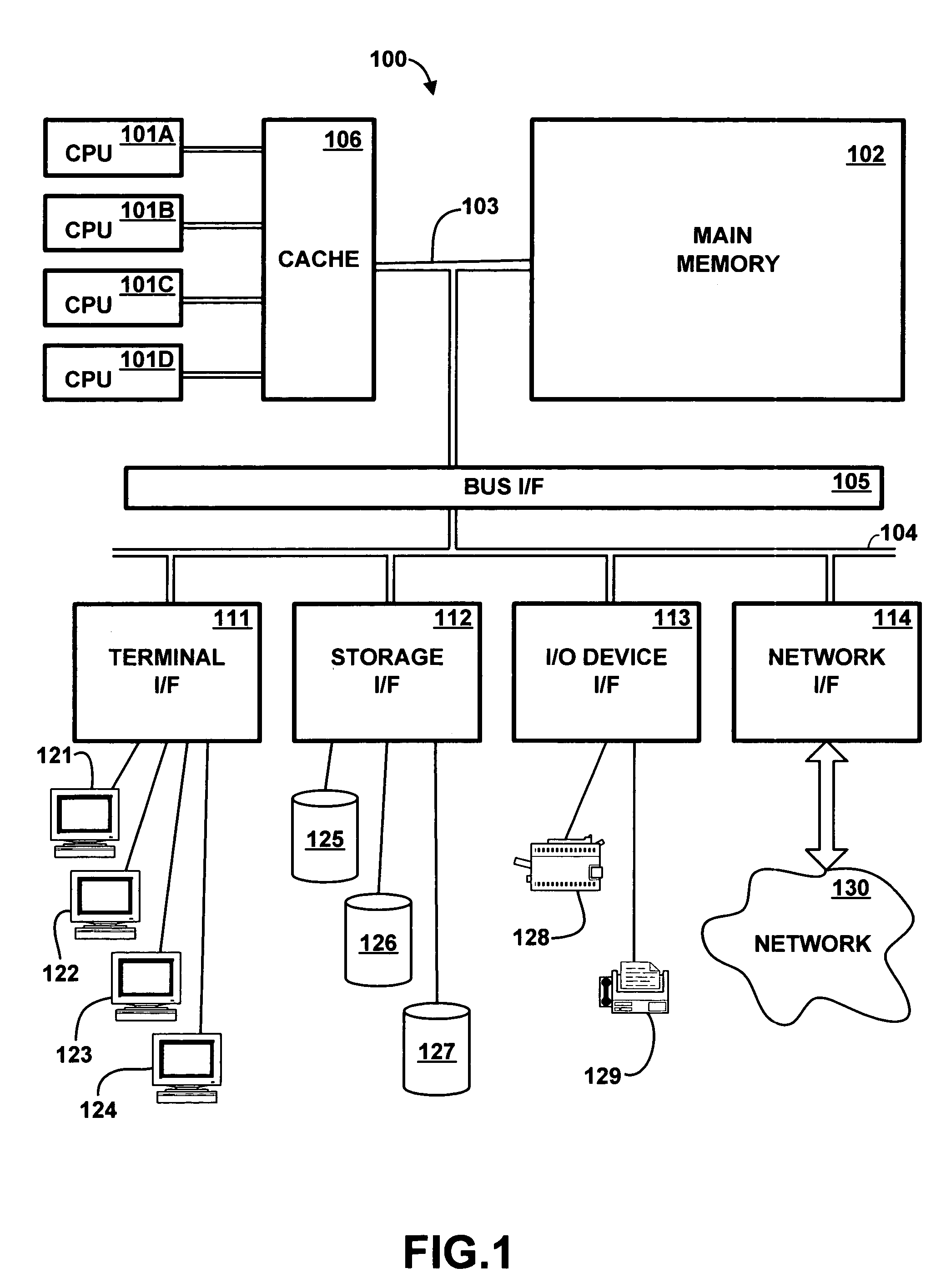

Multi-level cache architecture having a selective victim cache

A computer system cache memory contains at least two levels. A lower level selective victim cache receives cache lines evicted from a higher level cache. A selection mechanism selects lines evicted from the higher level cache for storage in the victim cache, only some of the evicted lines being selected for the victim. Preferably, two priority bits associated with each cache line are used to select lines for the victim. The priority bits indicate whether the line has been re-referenced while in the higher level cache, and whether it has been reloaded after eviction from the higher level cache.

Owner:IBM CORP
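The selection mechanism in the abstract above can be sketched as a predicate over the two priority bits. The abstract does not say how the bits are combined, so the rule below (admit a line if either bit is set) is a hypothetical policy, and the names `LineState` and `admit_to_victim` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class LineState:
    rereferenced: bool = False  # touched again while resident in the higher-level cache
    reloaded: bool = False      # brought back in after an earlier eviction


def admit_to_victim(state: LineState) -> bool:
    """Hypothetical policy: keep only evicted lines that showed some sign of reuse."""
    return state.rereferenced or state.reloaded


evicted = {
    "A": LineState(rereferenced=True),
    "B": LineState(),                    # never reused, so it is filtered out
    "C": LineState(reloaded=True),
}
selected = [tag for tag, state in evicted.items() if admit_to_victim(state)]
print(selected)  # ['A', 'C']
```

The point of the sketch is only that some evicted lines bypass the victim cache; a real design could weight the two bits differently.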

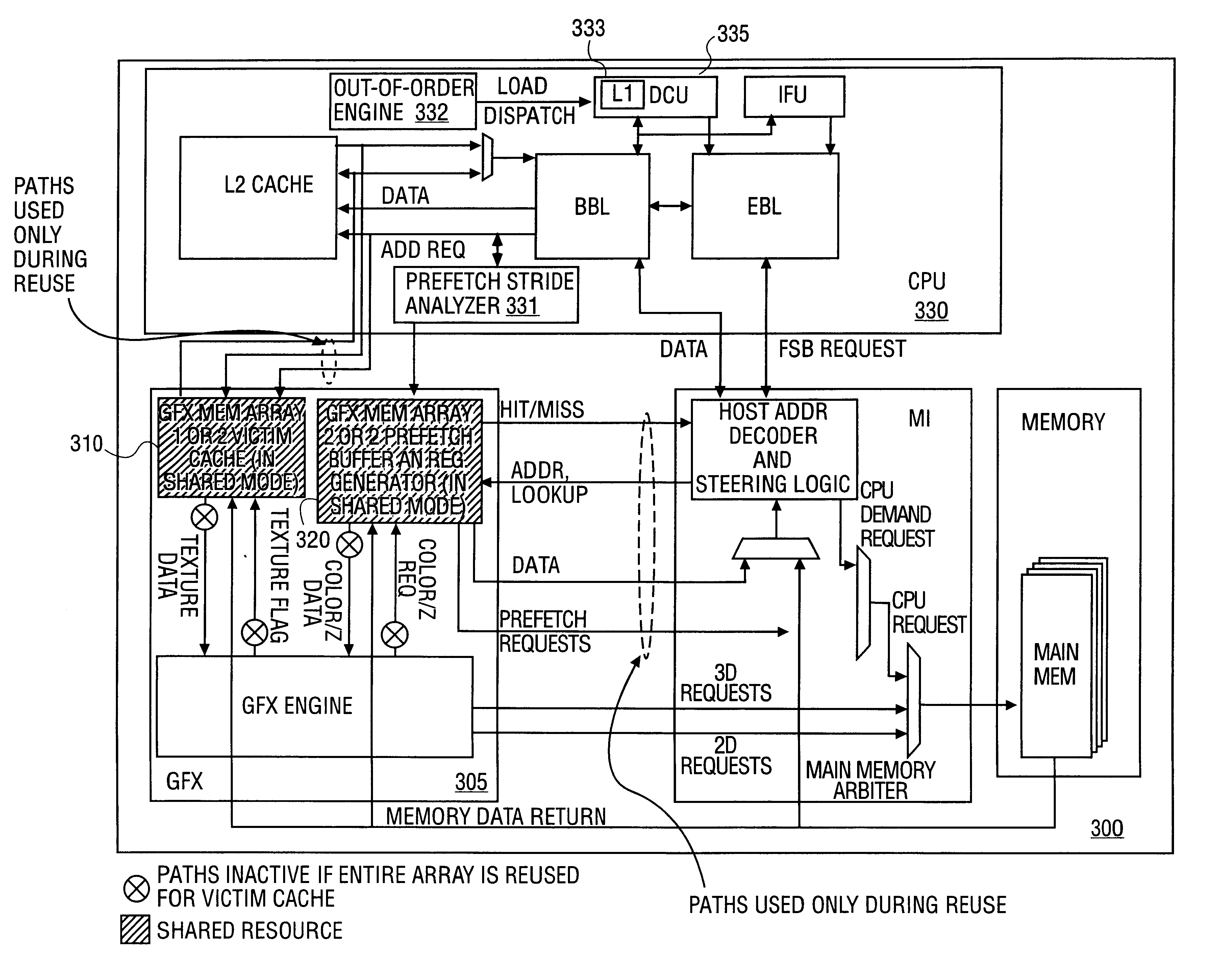

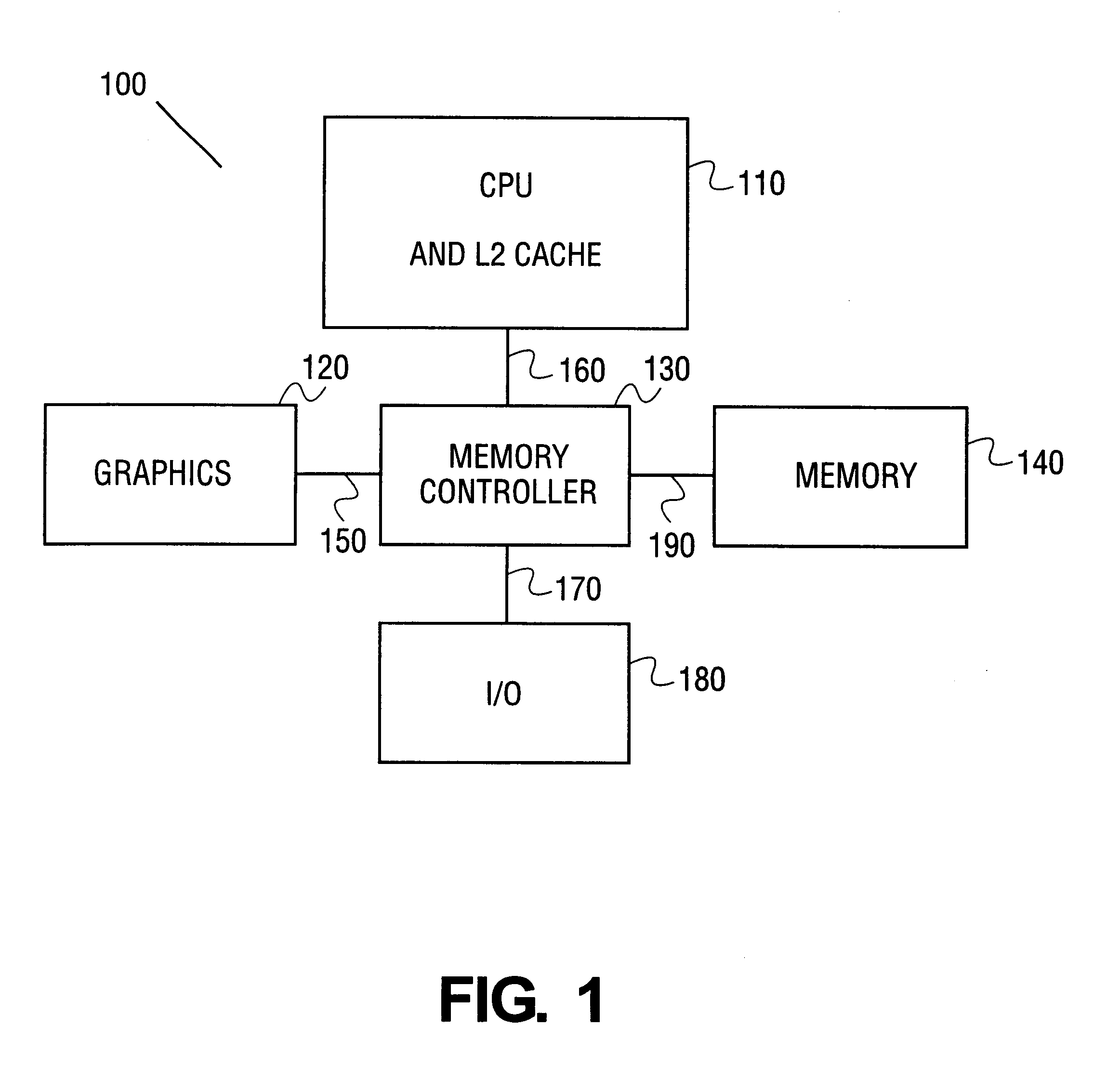

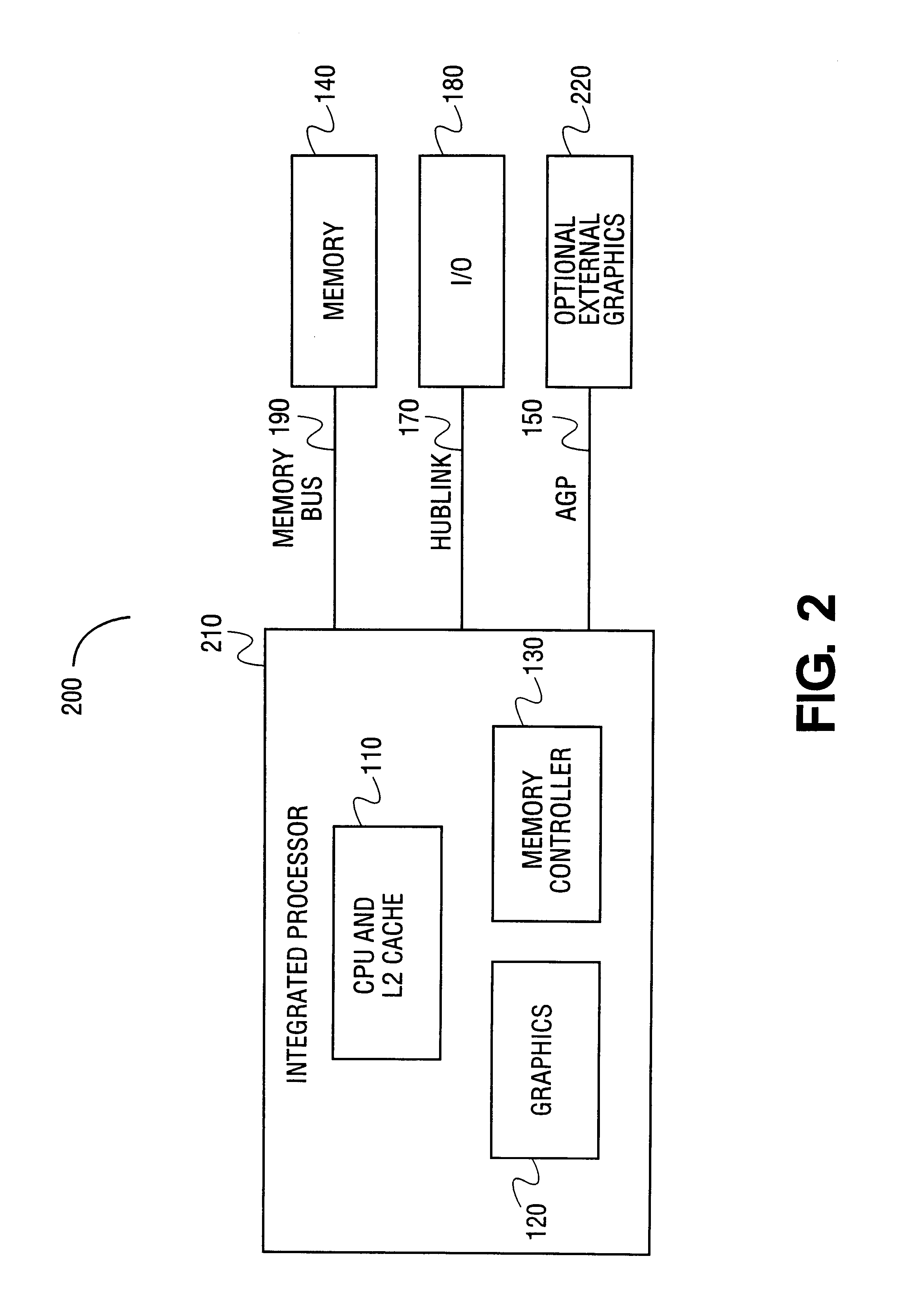

Opportunistic sharing of graphics resources to enhance CPU performance in an integrated microprocessor

Inactive | US6842180B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Graphics | Address decoder

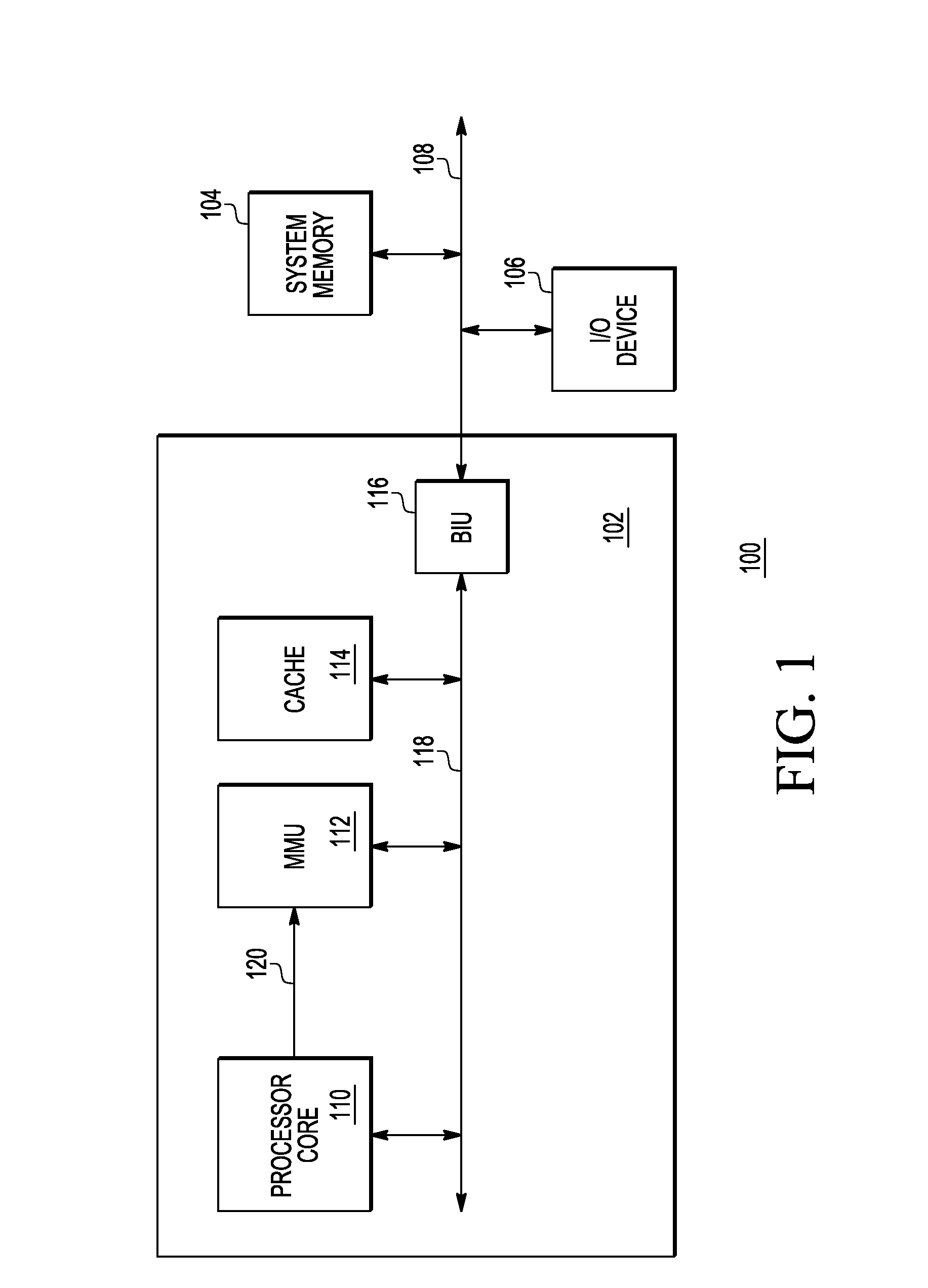

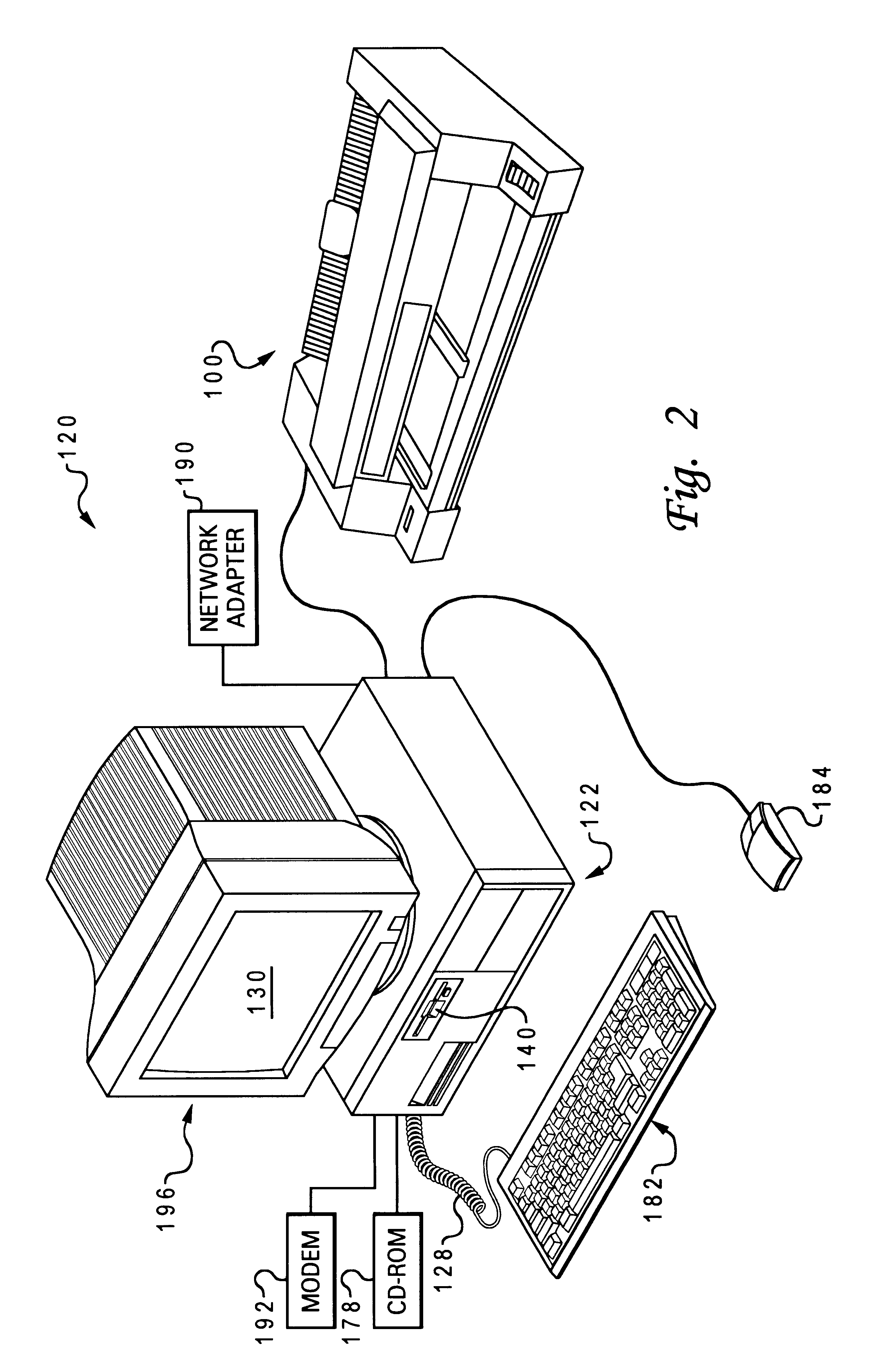

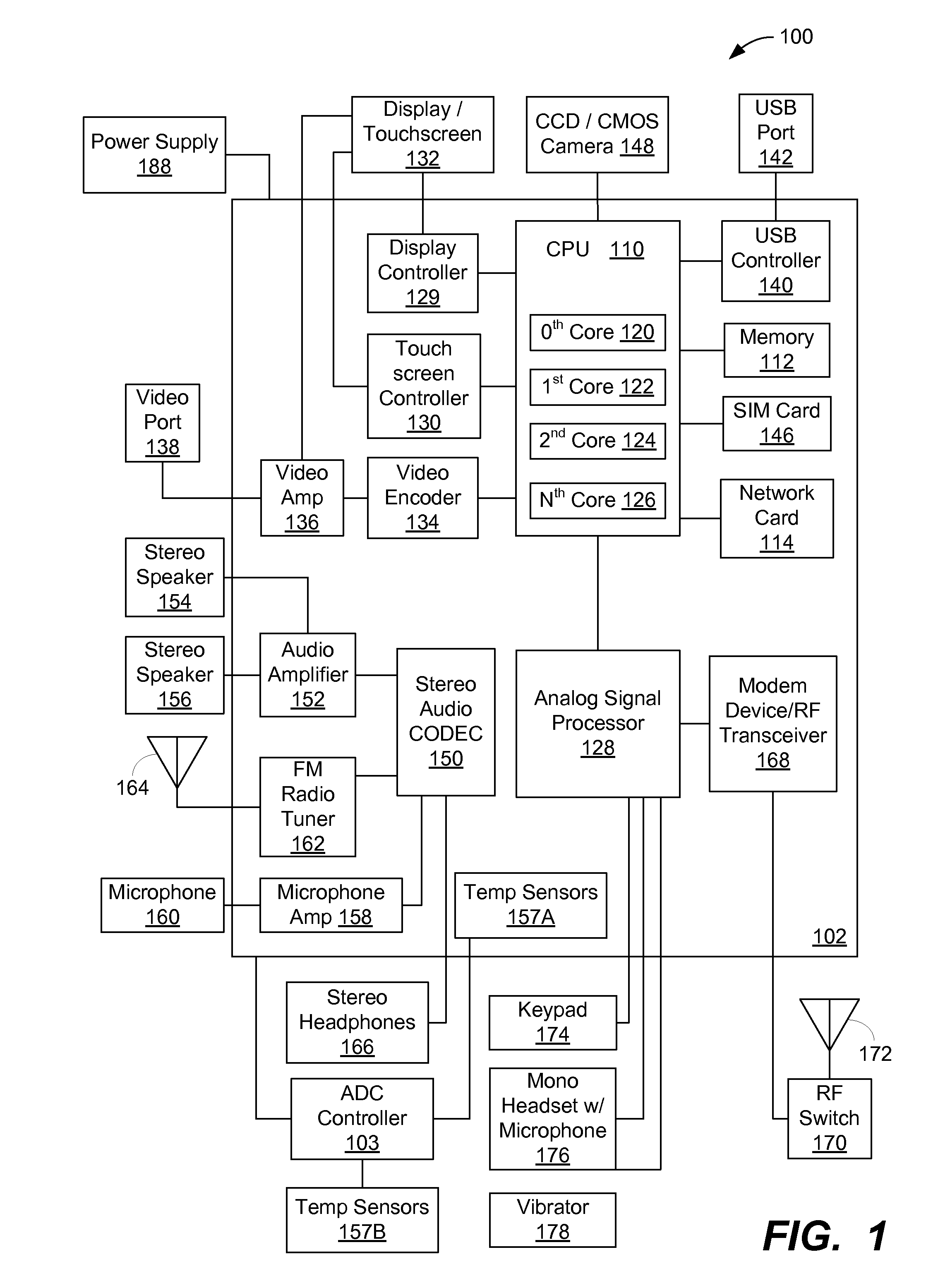

An electronic device that has an integrated central processing unit (CPU) including a pre-fetch stride analyzer and an out-of-order engine is provided. The electronic device also has a graphics engine, having graphics memory, that is coupled to the integrated CPU. A main memory that is coupled to a memory controller is provided. The memory controller is also coupled to the CPU and the graphics engine. The device has a host address decoder coupled to the integrated CPU. A front side bus (FSB) is provided that is coupled to the integrated CPU and the host address decoder. Also provided is a plurality of memory components. Accordingly, either the plurality of memory components or the graphics memory can be shared to perform alternate memory functions. Additionally, a method is provided that determines allocation availability between memory components in an integrated computer processing unit. The method also shares an available memory component as a pre-fetch buffer and another available memory component as a victim cache.

Owner:INTEL CORP

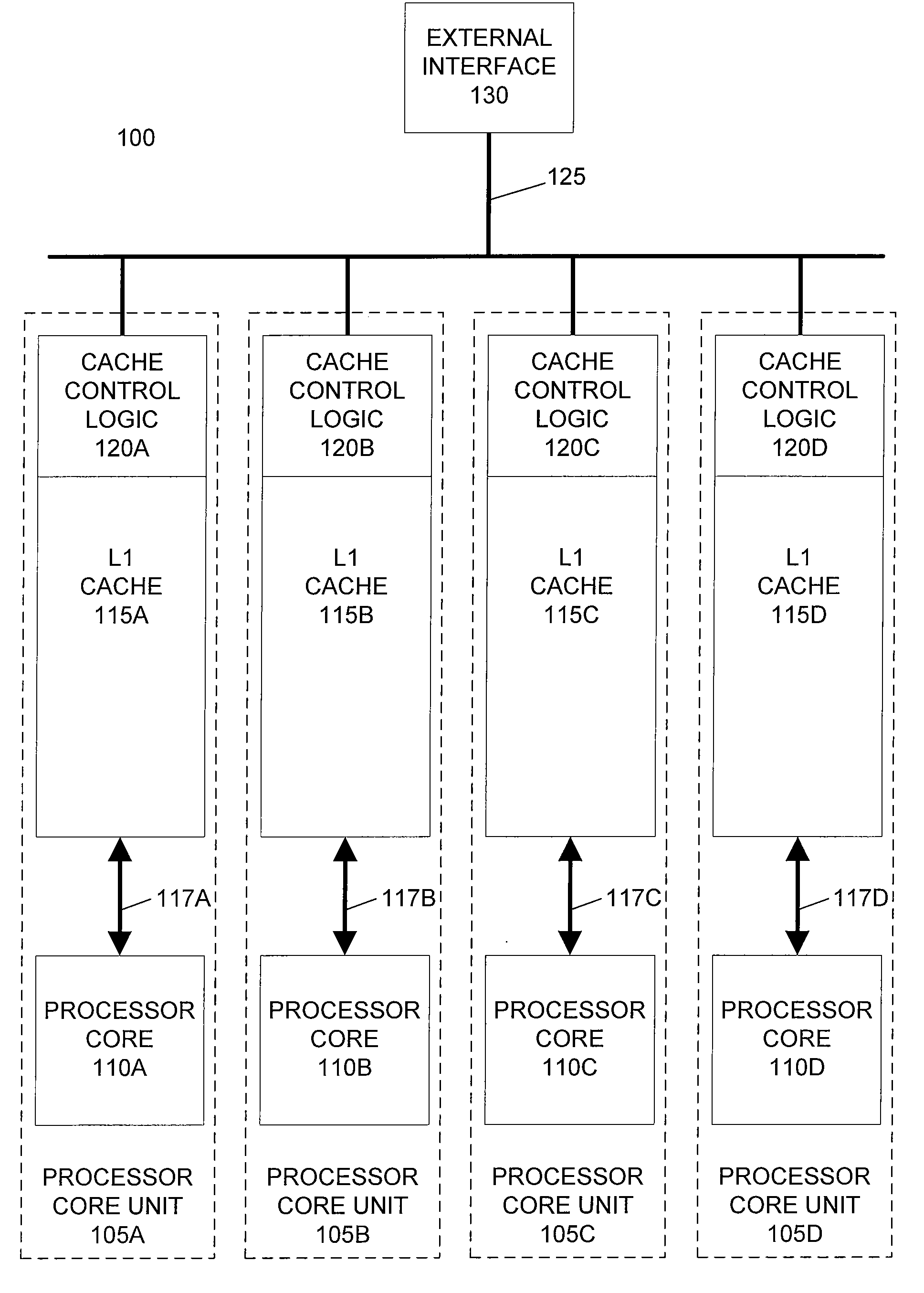

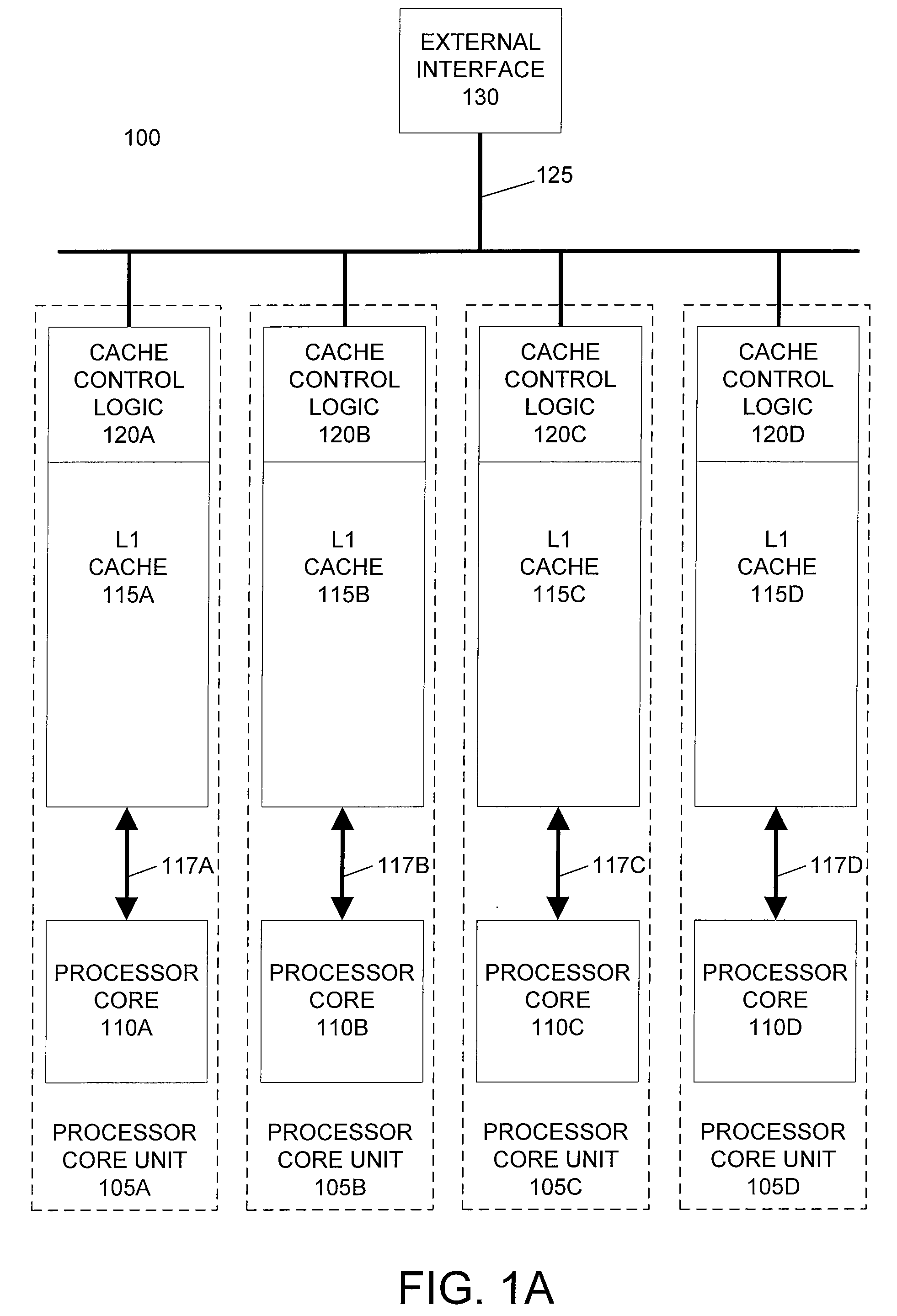

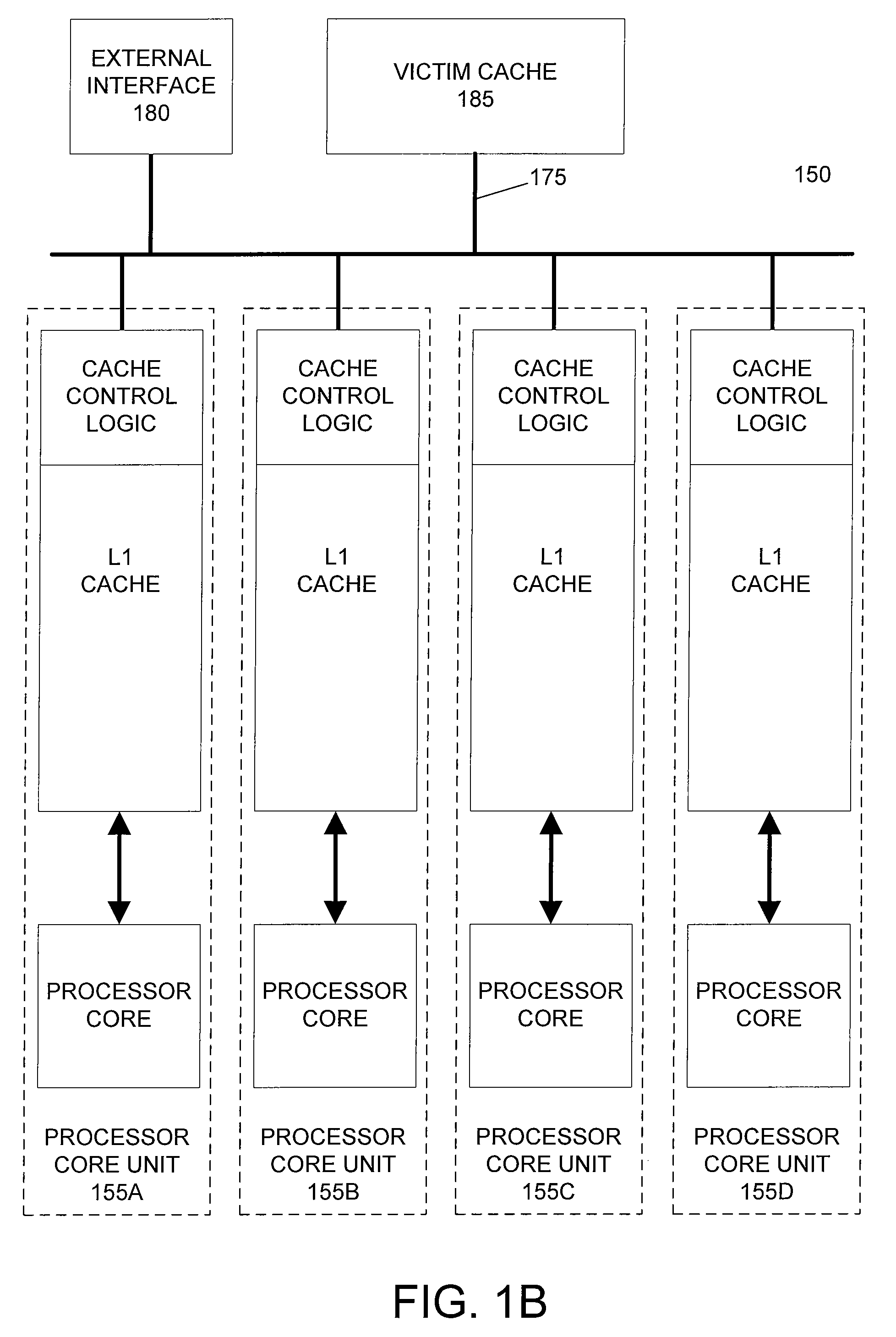

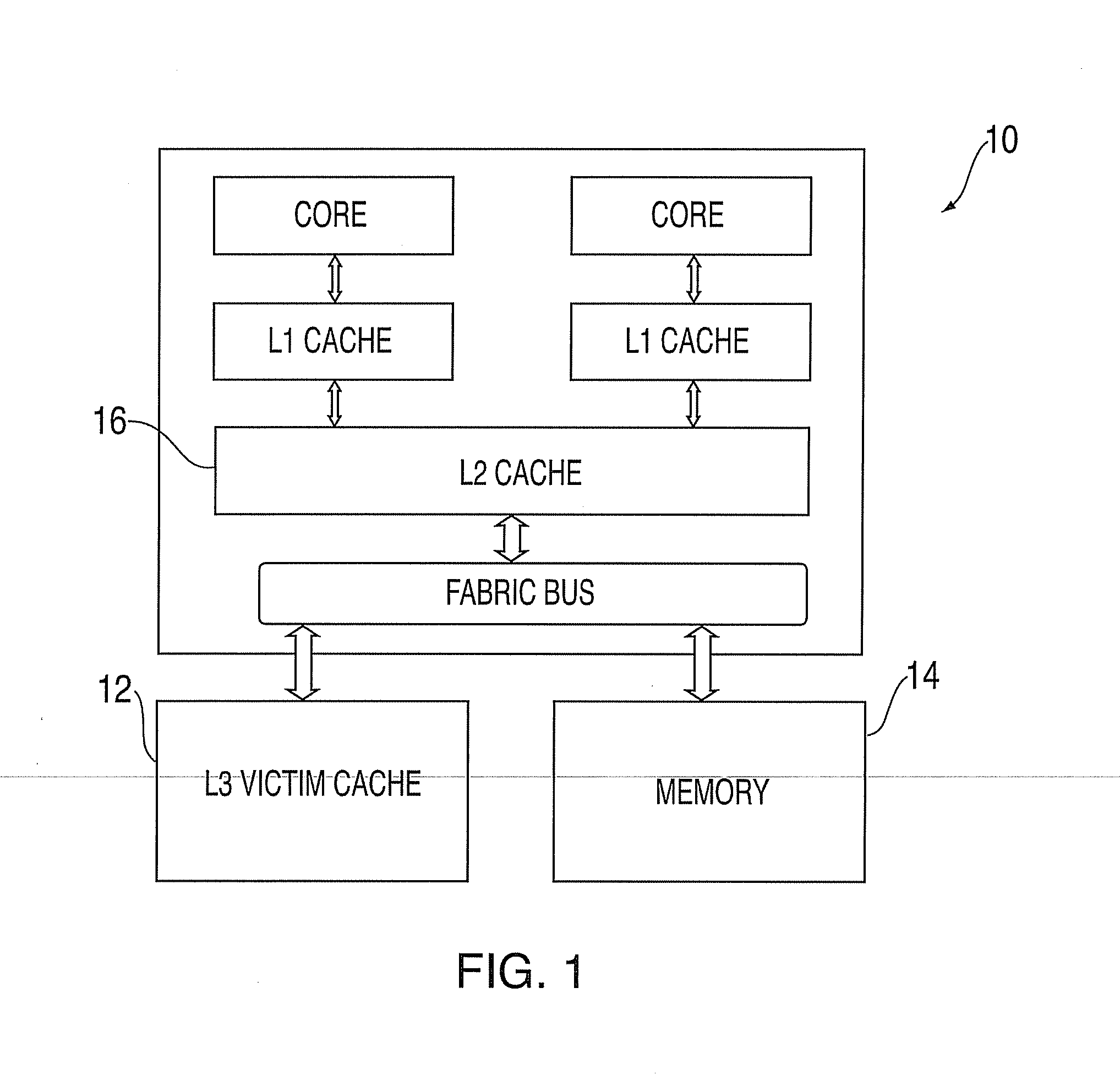

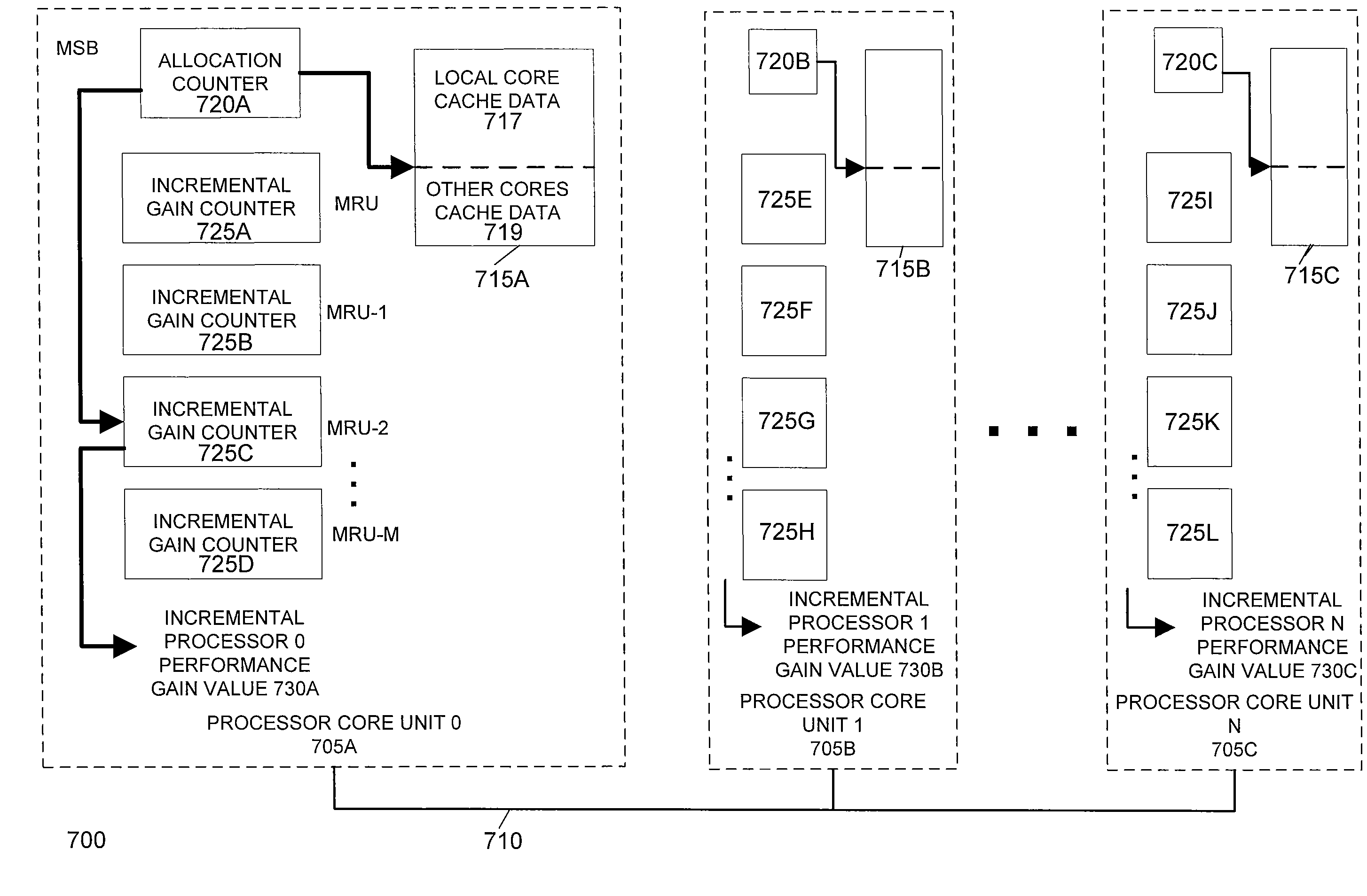

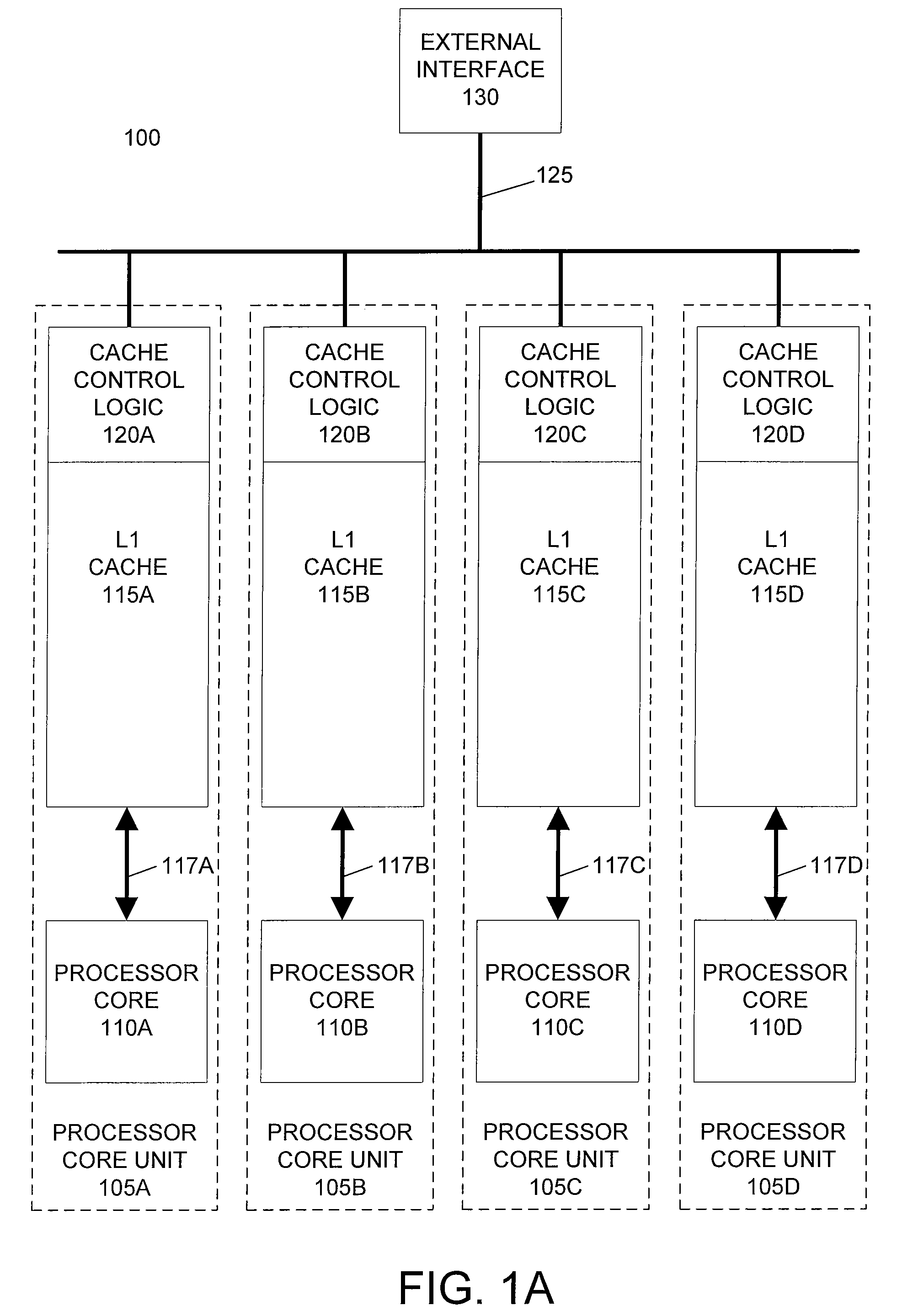

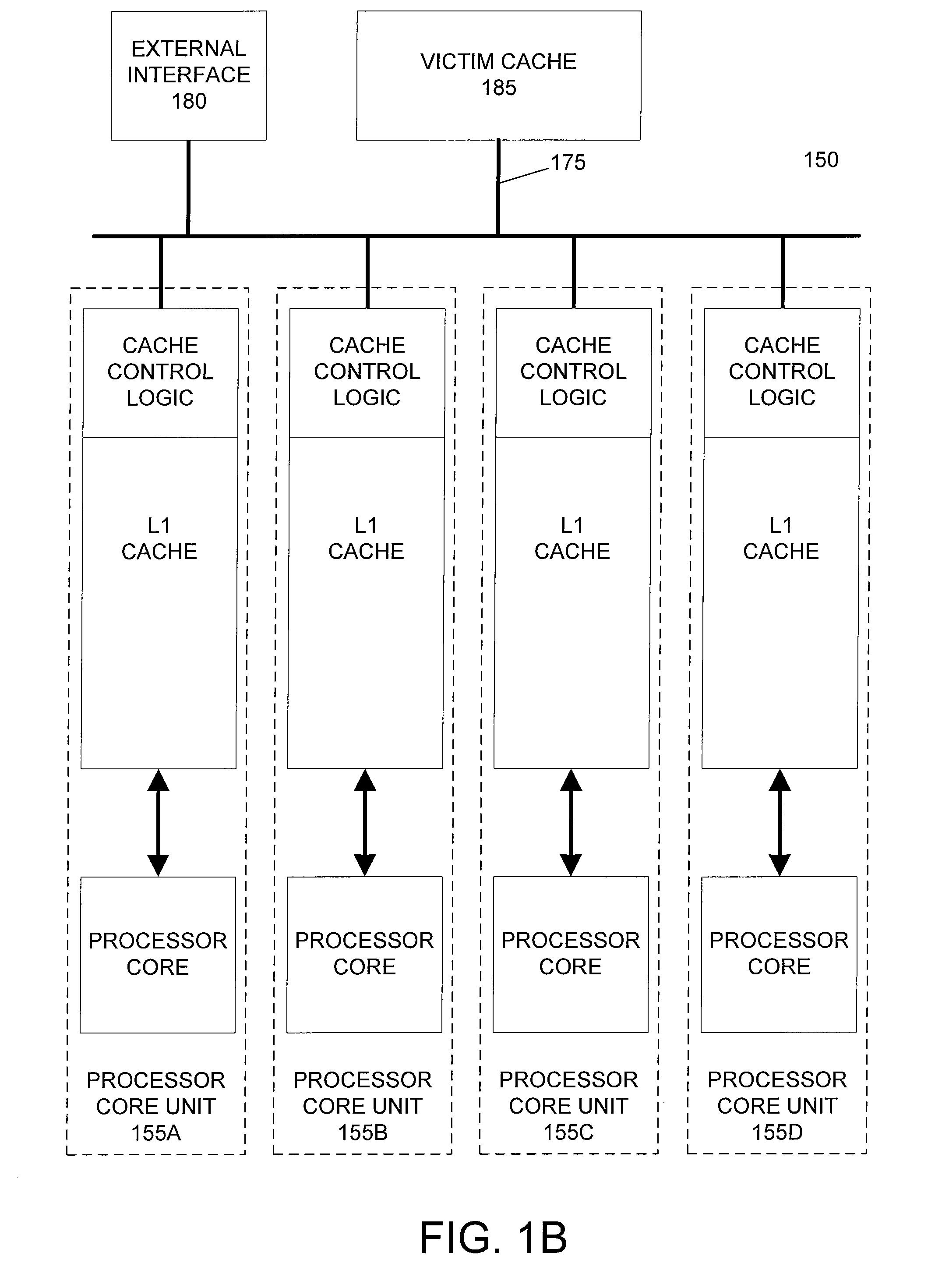

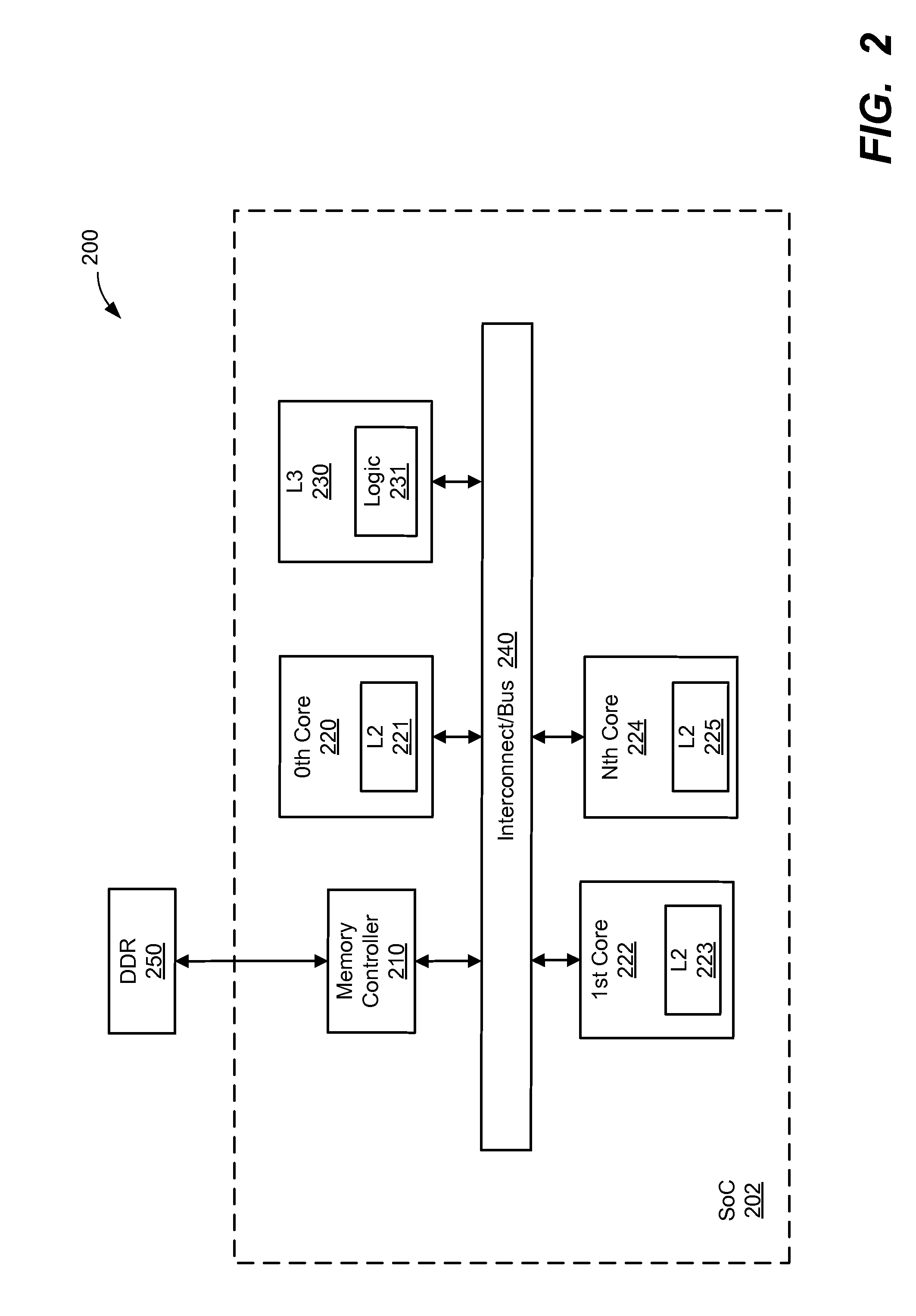

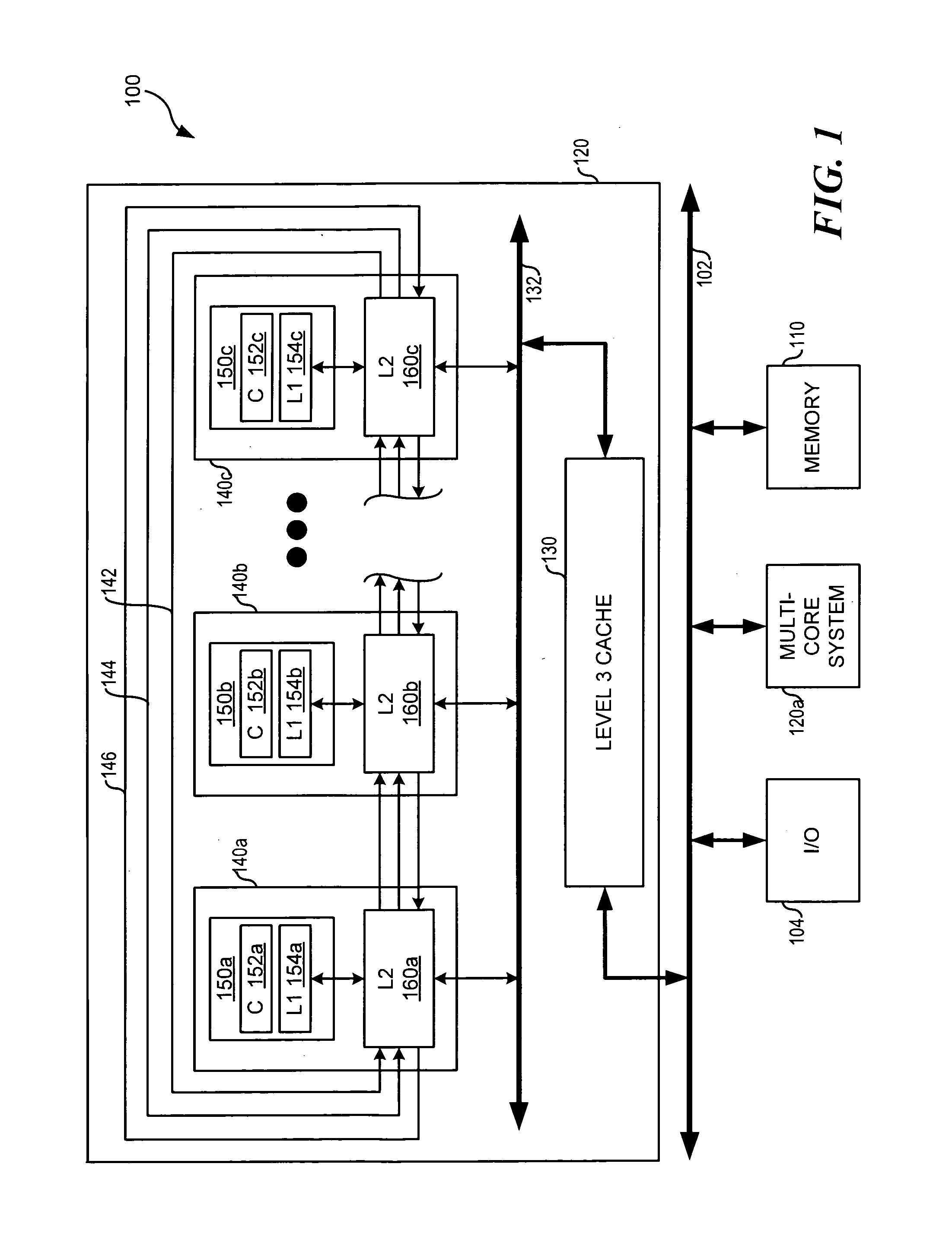

Horizontally-shared cache victims in multiple core processors

Active | US20080091880A1 | Lower latency | Improve performance | Energy efficient ICT | Memory addressing/allocation/relocation | Latency (engineering) | Multi-core processor

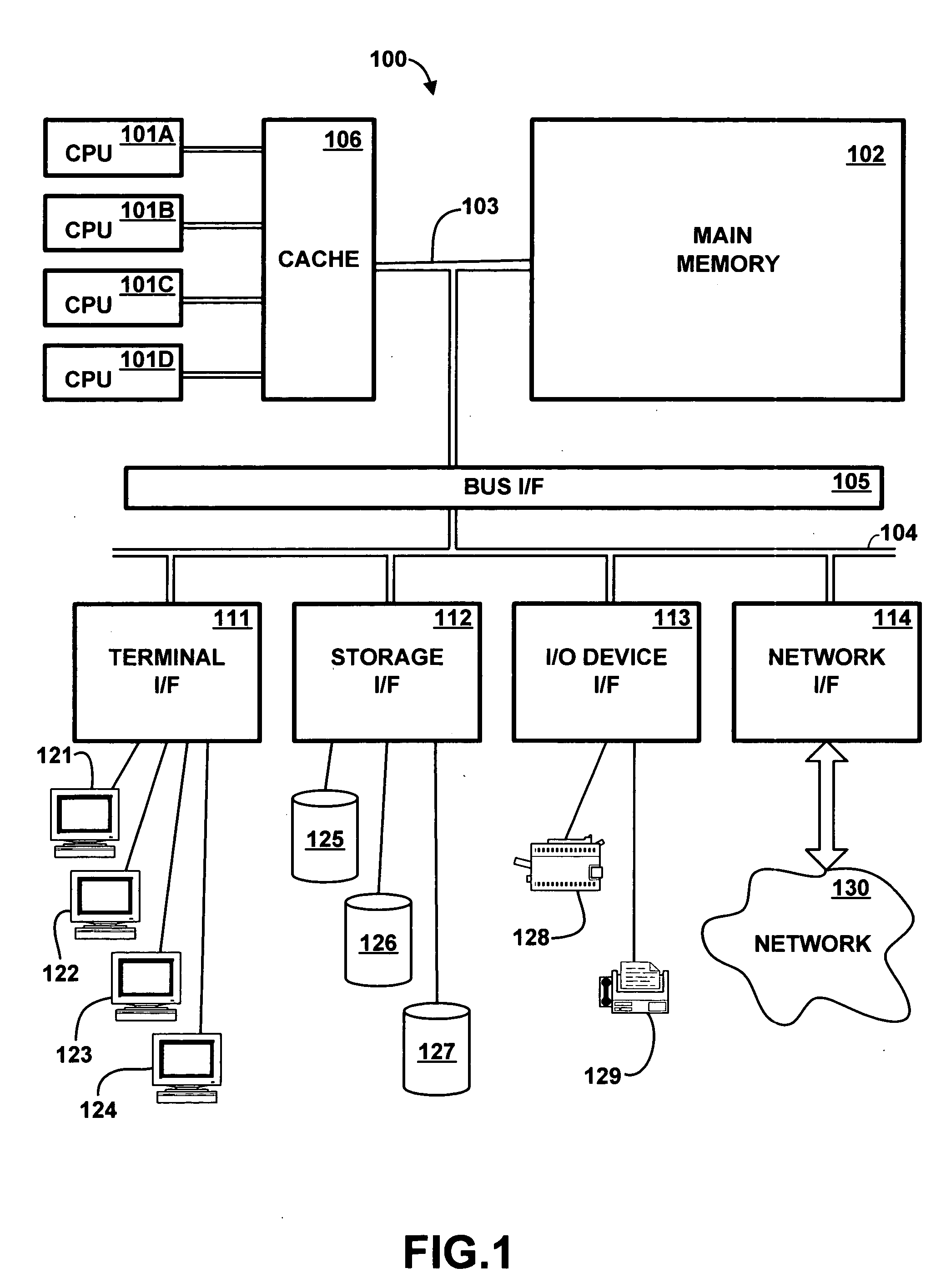

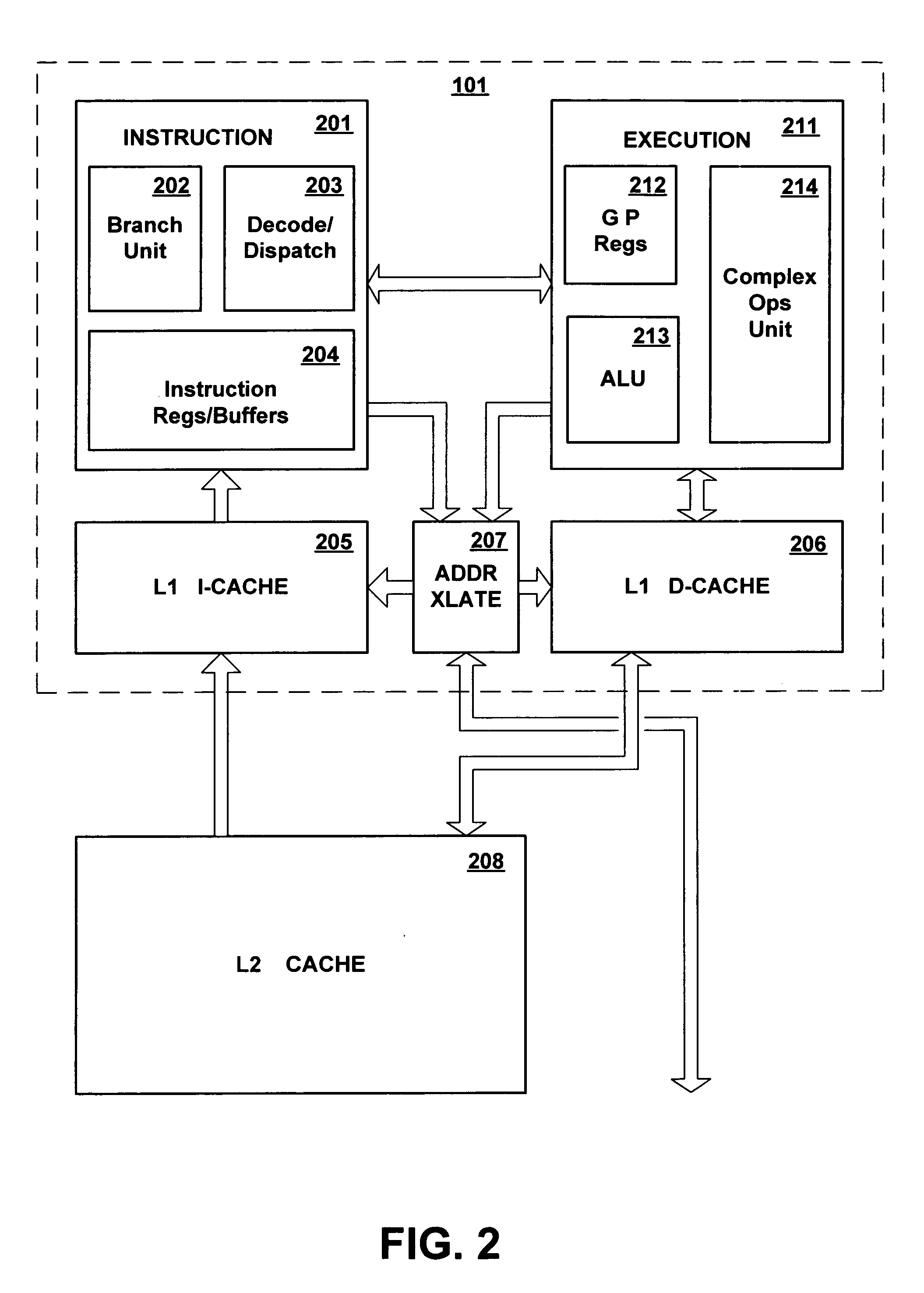

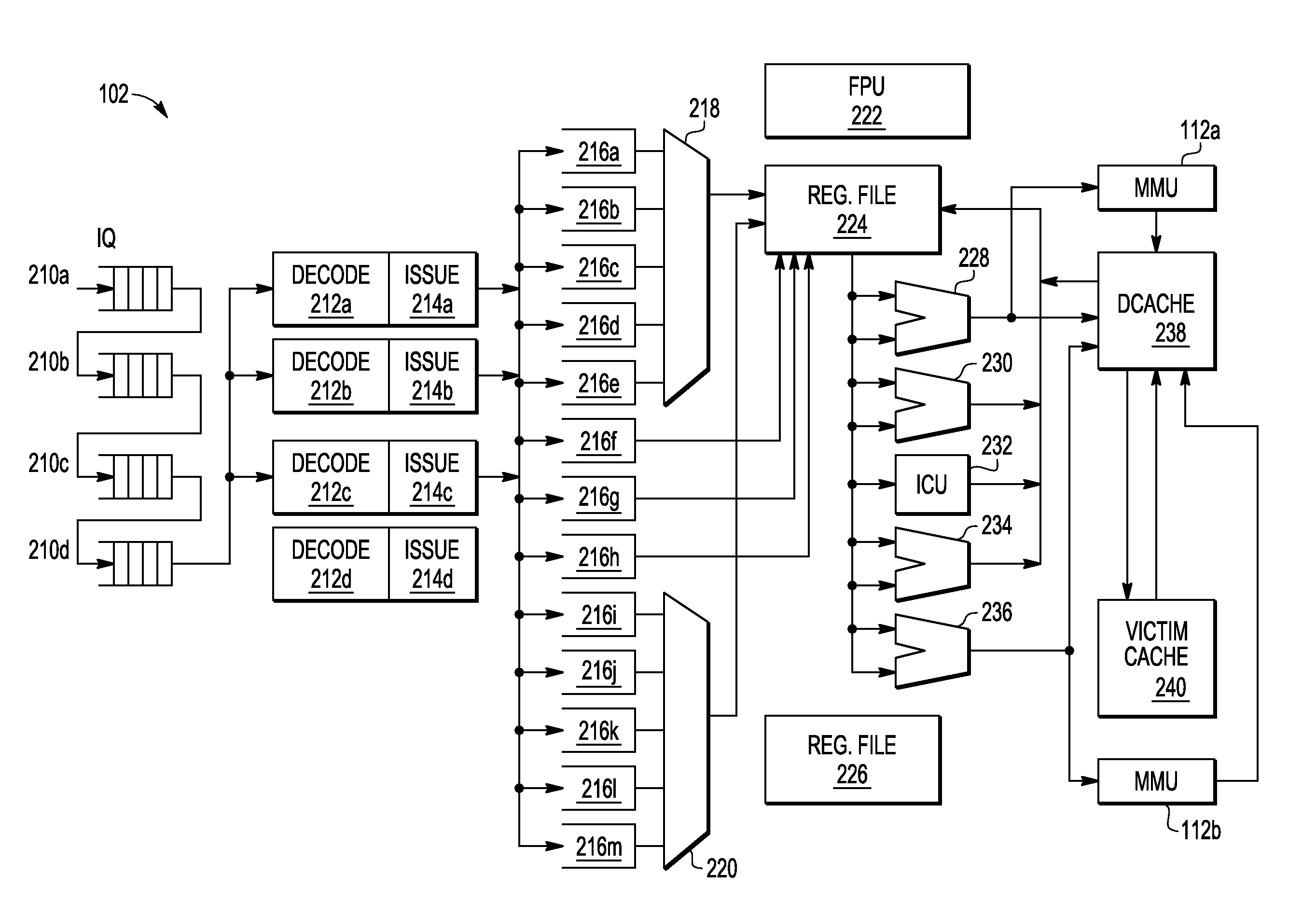

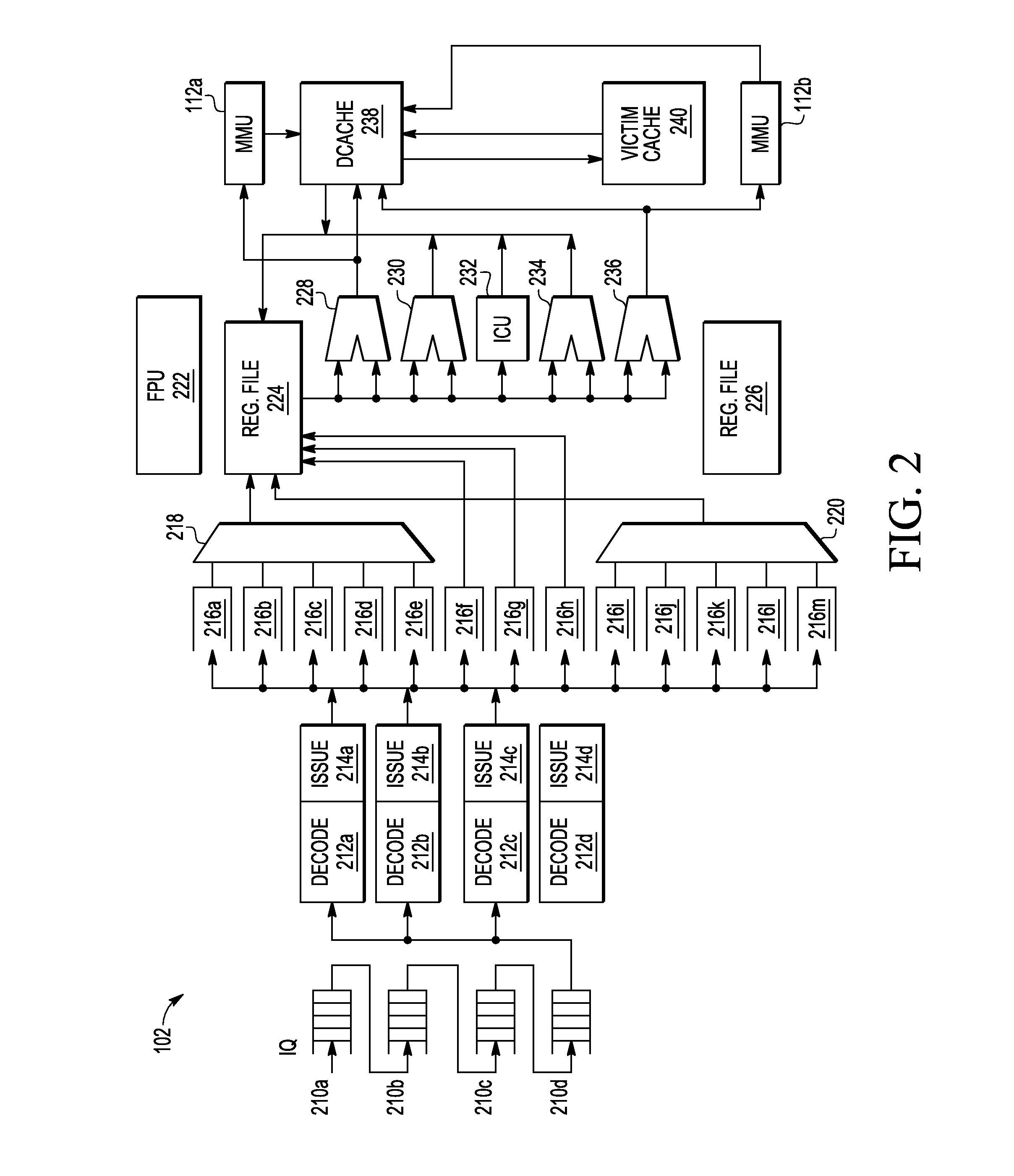

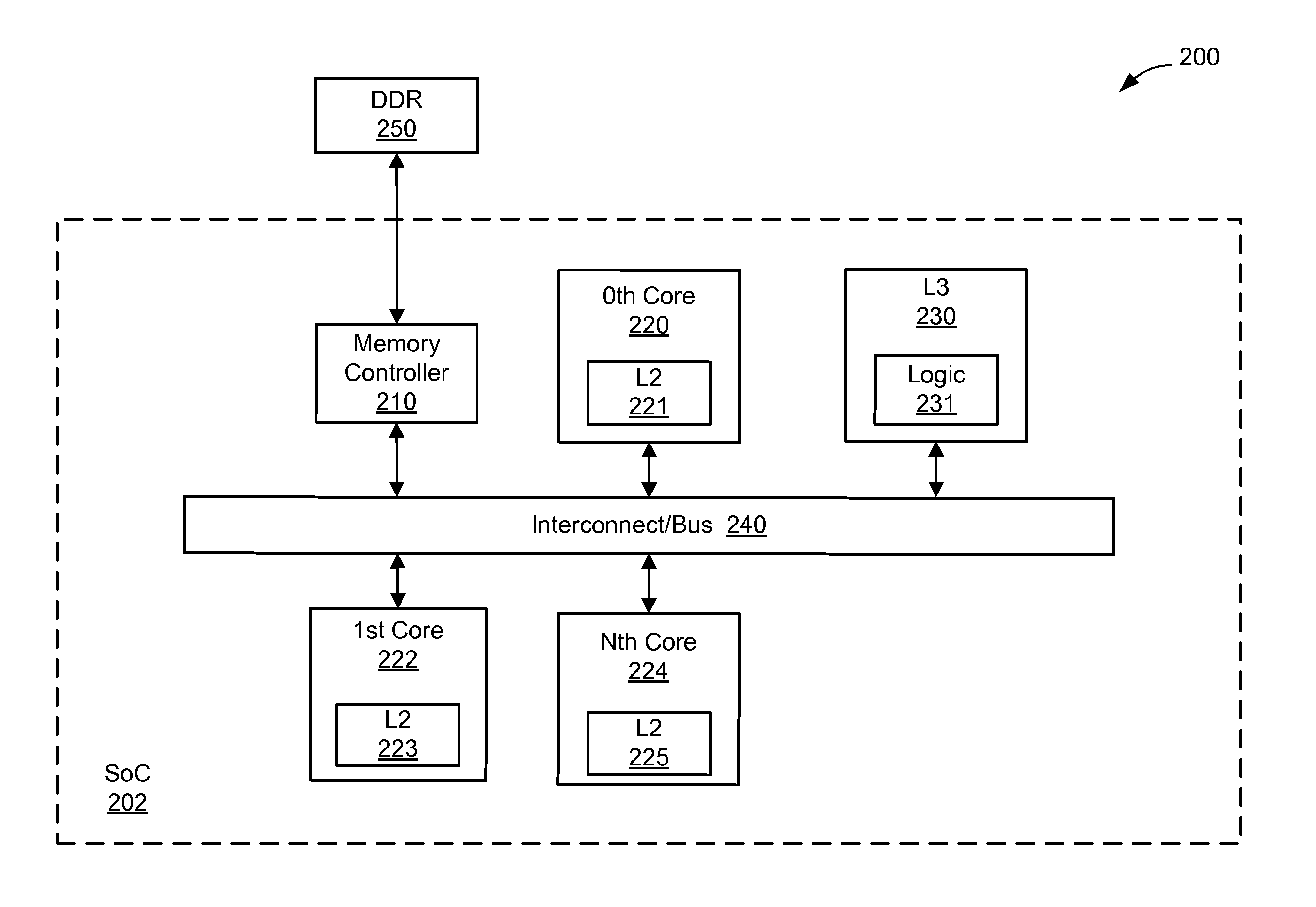

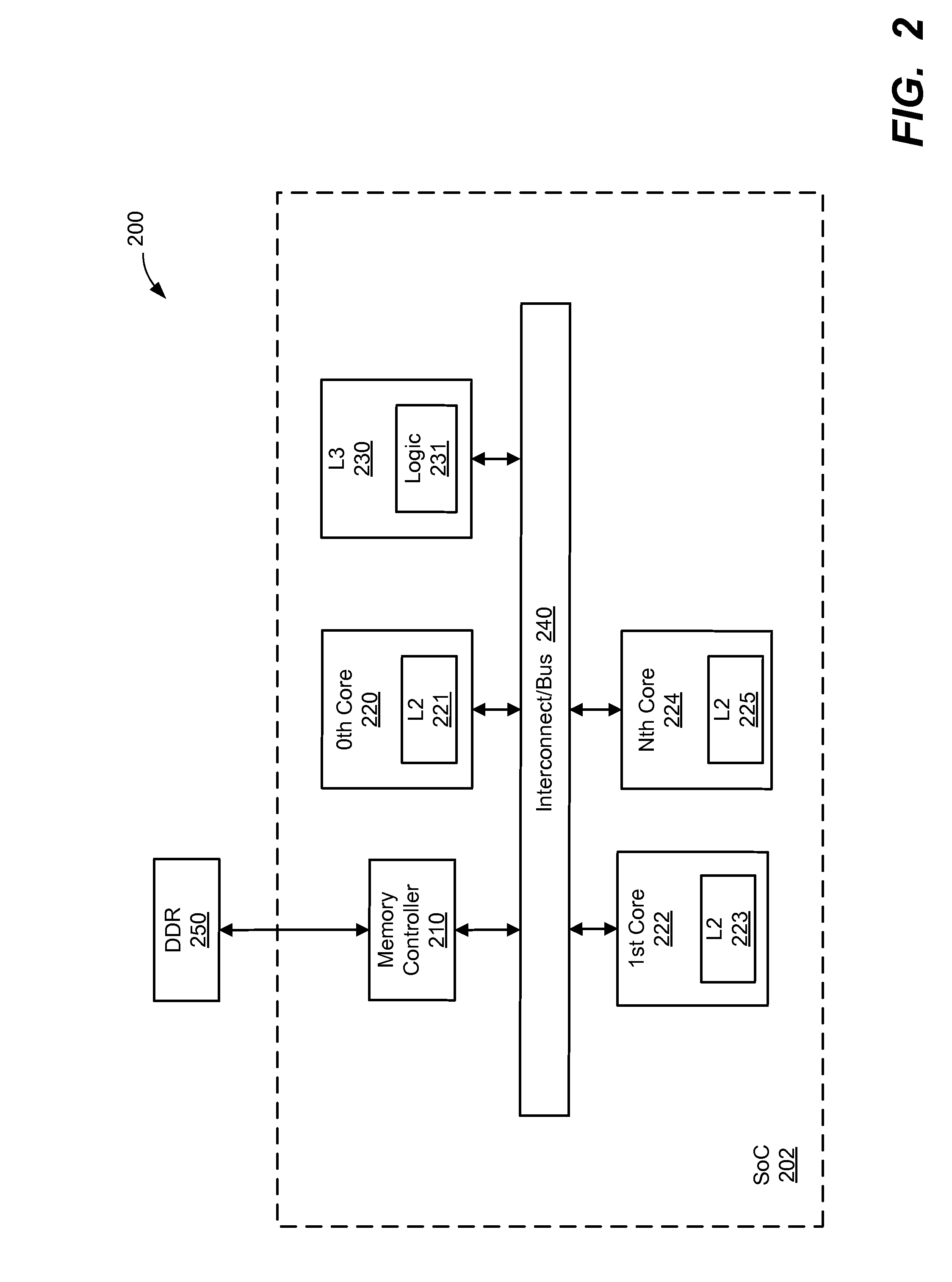

A processor includes multiple processor core units, each including a processor core and a cache memory. Victim lines evicted from a first processor core unit's cache may be stored in another processor core unit's cache, rather than written back to system memory. If the victim line is later requested by the first processor core unit, the victim line is retrieved from the other processor core unit's cache. The processor has low latency data transfers between processor core units. The processor transfers victim lines directly between processor core units' caches or utilizes a victim cache to temporarily store victim lines while searching for their destinations. The processor evaluates cache priority rules to determine whether victim lines are discarded, written back to system memory, or stored in other processor core units' caches. Cache priority rules can be based on cache coherency data, load balancing schemes, and architectural characteristics of the processor.

Owner:MIPS TECH INC

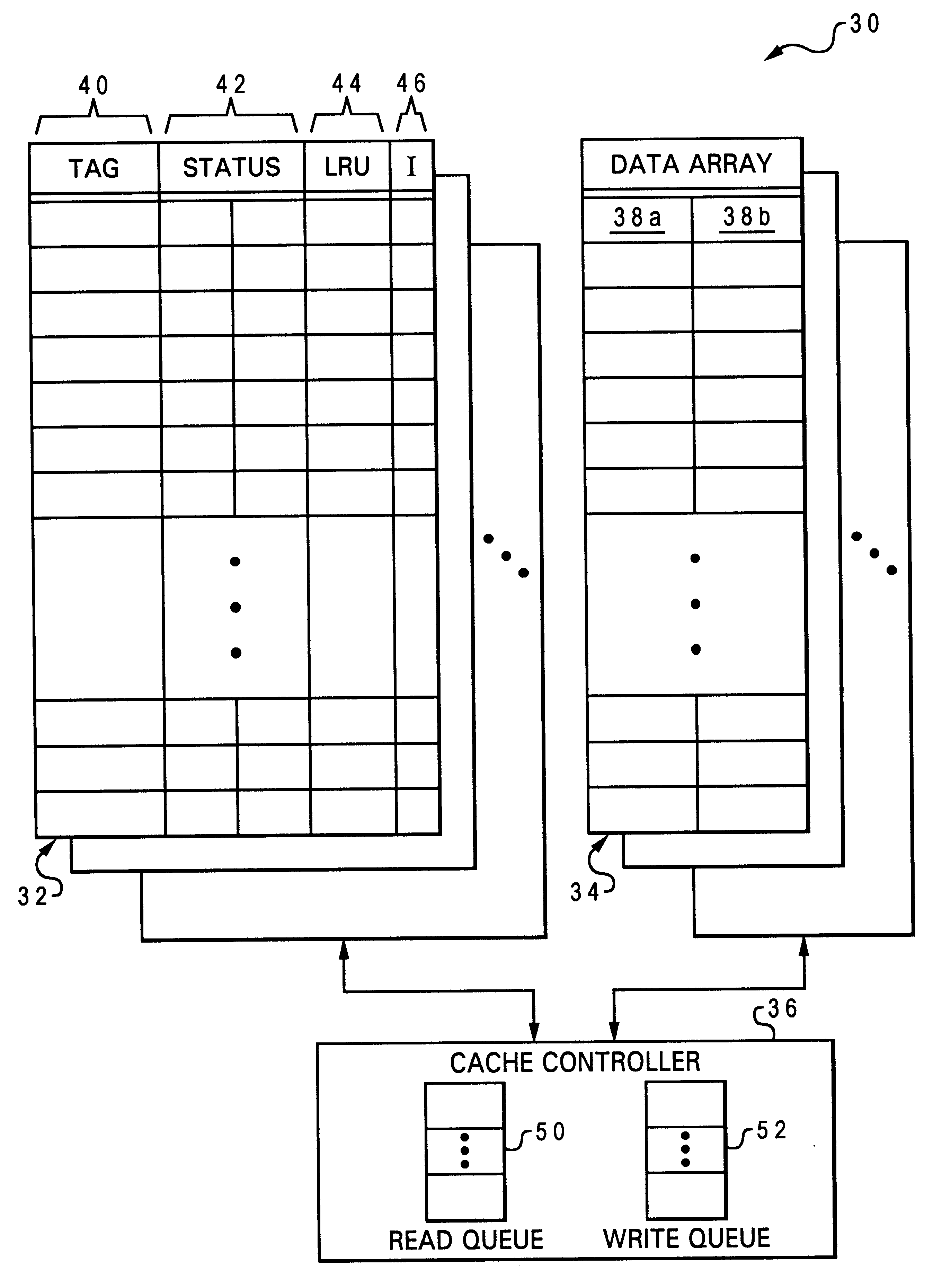

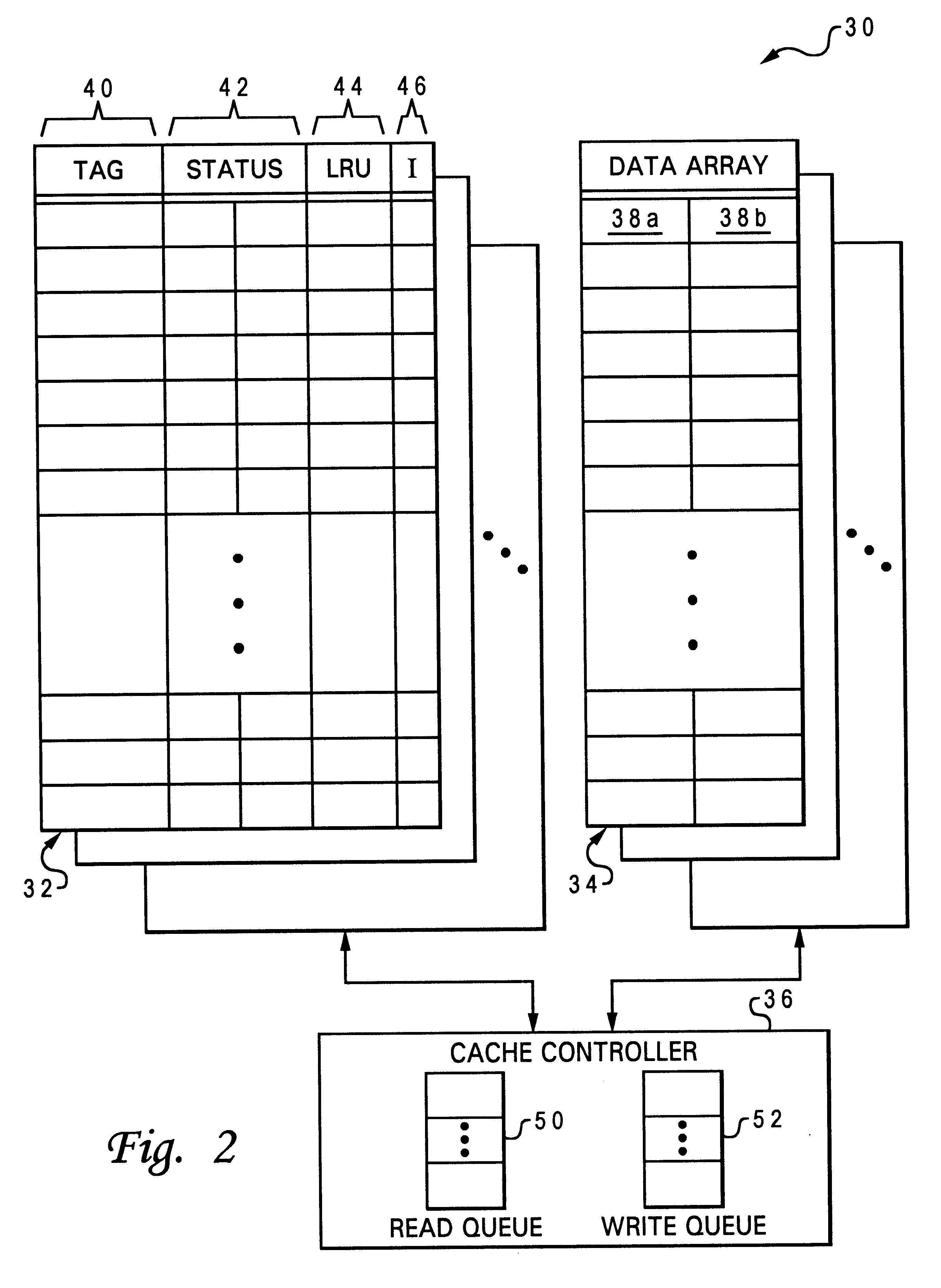

Data processing system, cache, and method that select a castout victim in response to the latencies of memory copies of cached data

A data processing system includes a processing unit, a distributed memory including a local memory and a remote memory having differing access latencies, and a cache coupled to the processing unit and to the distributed memory. The cache includes a congruence class containing a plurality of cache lines and a plurality of latency indicators that each indicate an access latency to the distributed memory for a respective one of the cache lines. The cache further includes a cache controller that selects a cache line in the congruence class as a castout victim in response to the access latencies indicated by the plurality of latency indicators. In one preferred embodiment, the cache controller preferentially selects as castout victims cache lines having relatively short access latencies.

Owner:IBM CORP
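The latency-aware castout selection described above can be illustrated with a short sketch. The field names, the cycle counts, and the tie-break on LRU age are assumptions made for the example; the abstract only states that lines with relatively short access latencies are preferred as castout victims.

```python
from typing import NamedTuple


class CacheLine(NamedTuple):
    tag: str
    latency_cycles: int  # cost of re-fetching this line from its memory copy
    lru_age: int         # larger means less recently used (assumed tie-break)


def select_castout(congruence_class):
    """Pick the line that is cheapest to re-fetch; break ties by evicting the oldest."""
    return min(congruence_class, key=lambda line: (line.latency_cycles, -line.lru_age))


lines = [
    CacheLine("A", latency_cycles=300, lru_age=5),  # backed by remote memory
    CacheLine("B", latency_cycles=80, lru_age=1),   # backed by local memory
    CacheLine("C", latency_cycles=80, lru_age=3),   # backed by local memory, older
]
print(select_castout(lines).tag)  # C
```

Line A is the least recently used, yet it stays in the cache because losing it would cost a remote-memory access.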

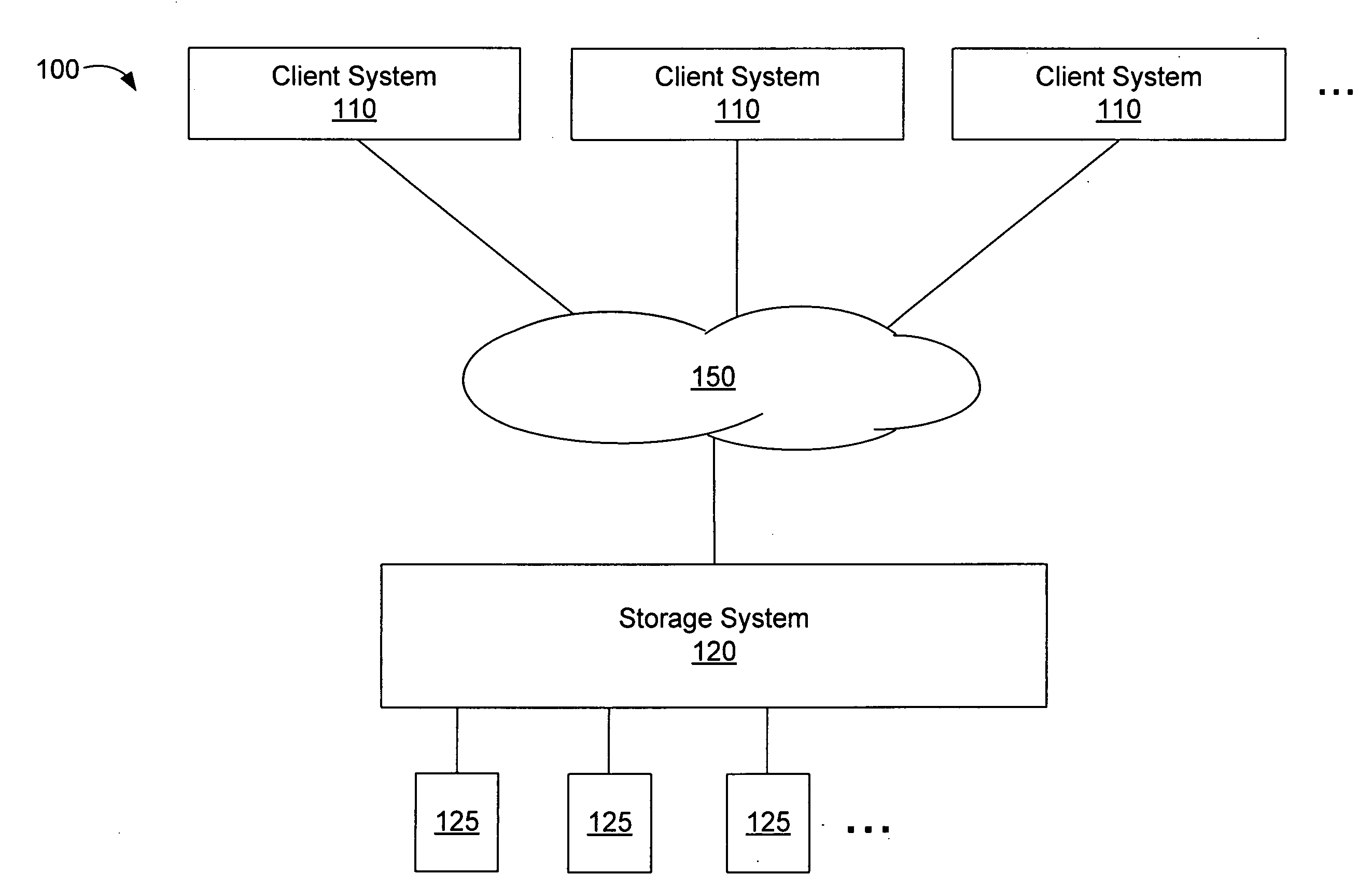

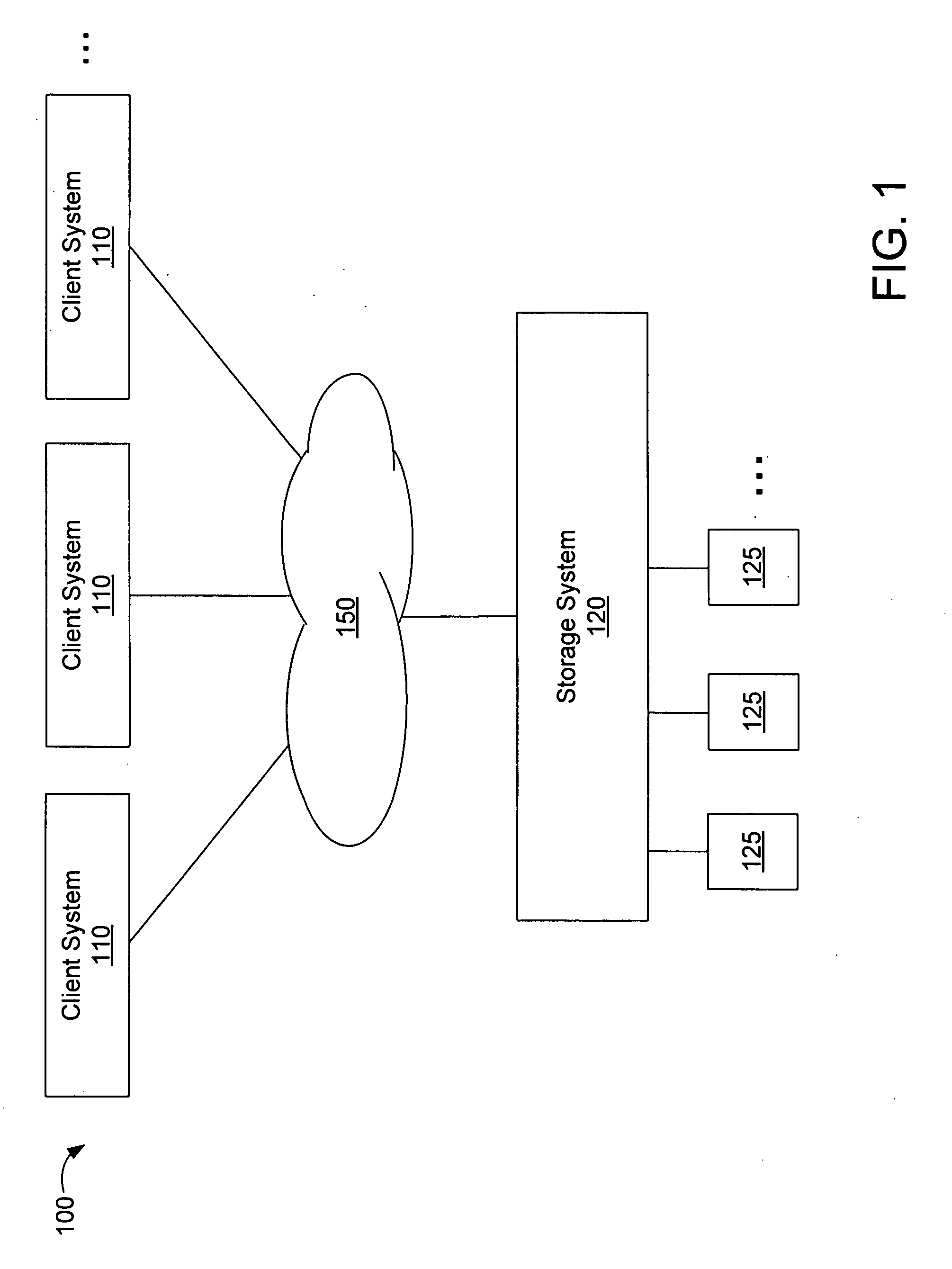

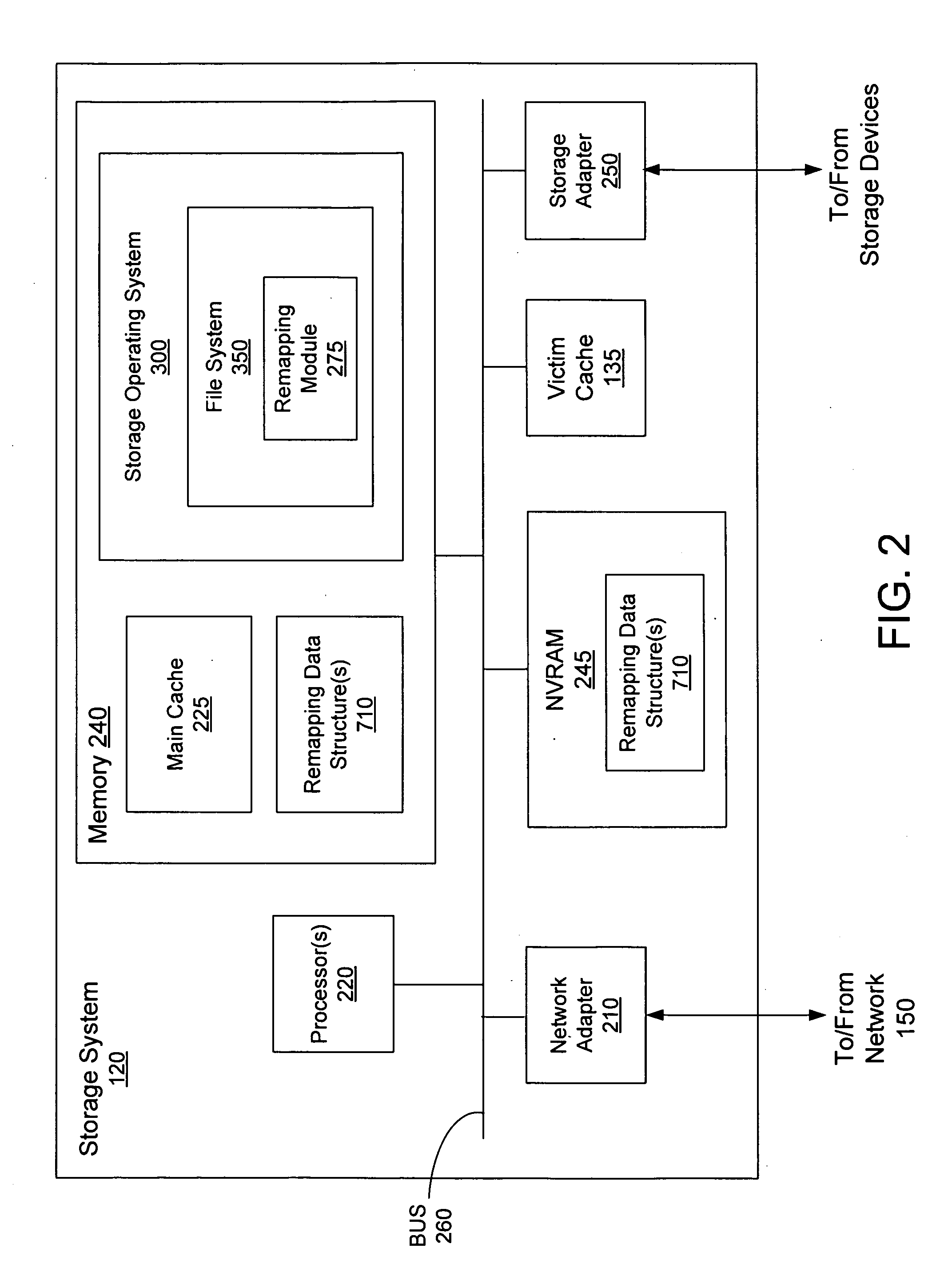

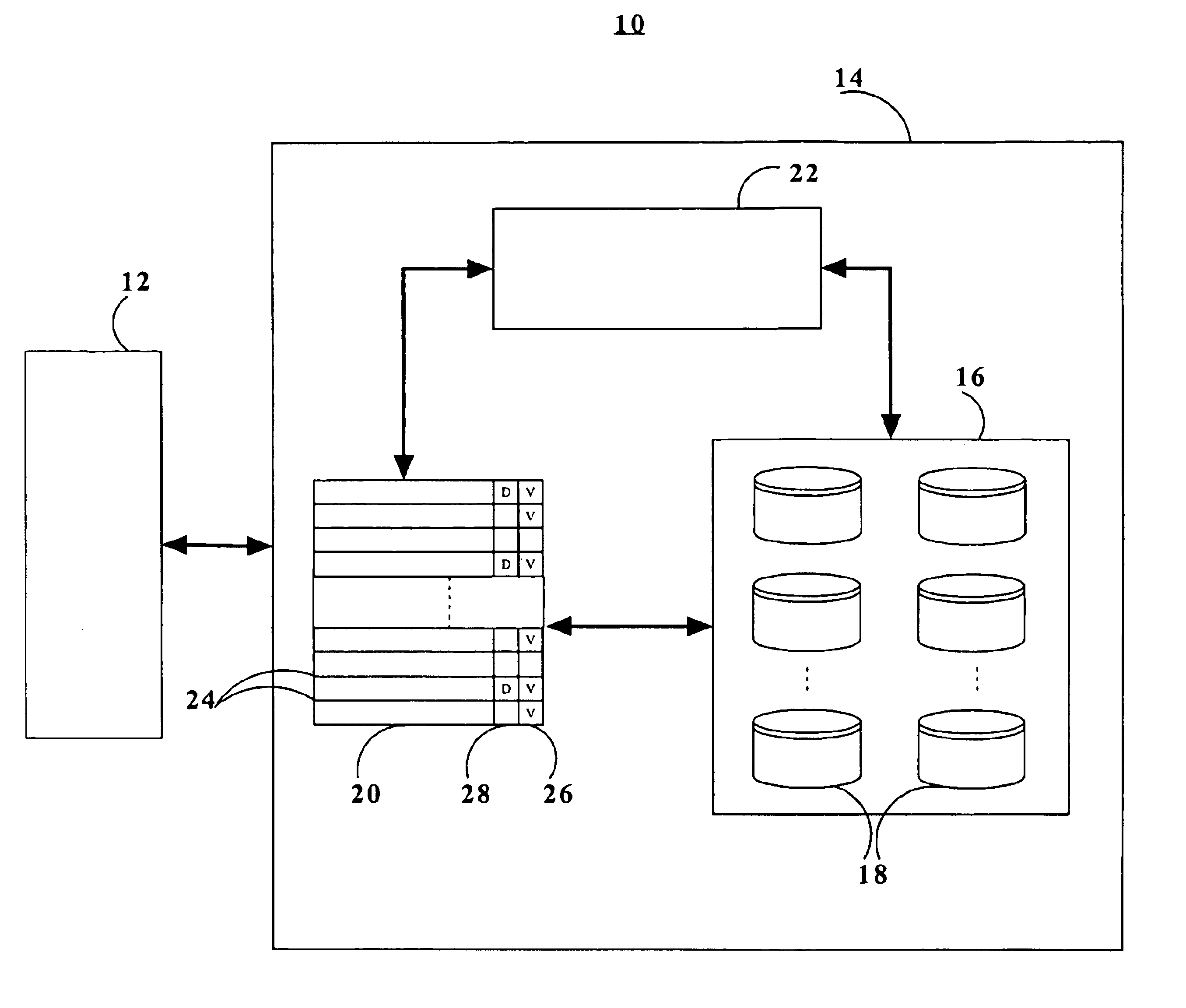

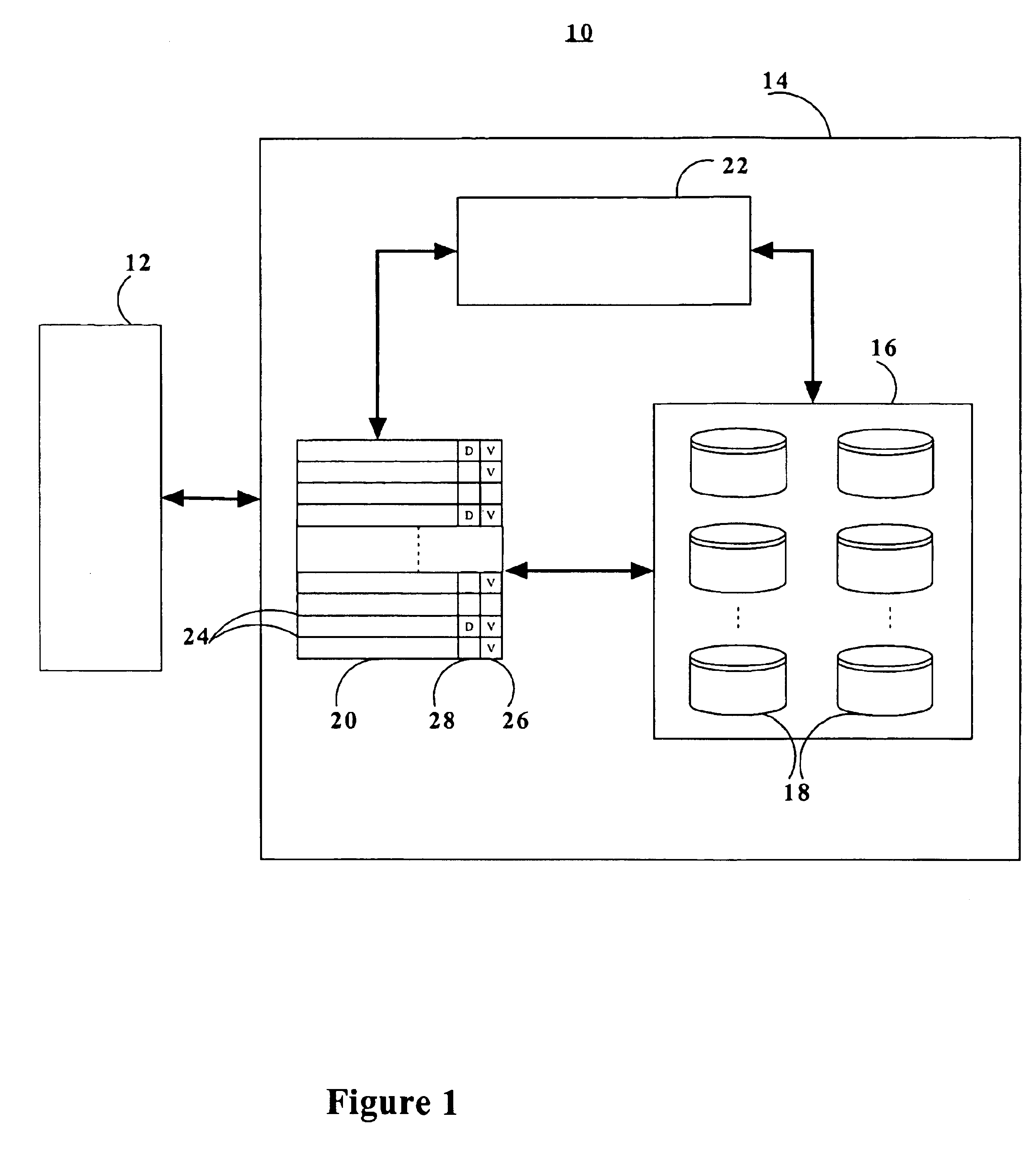

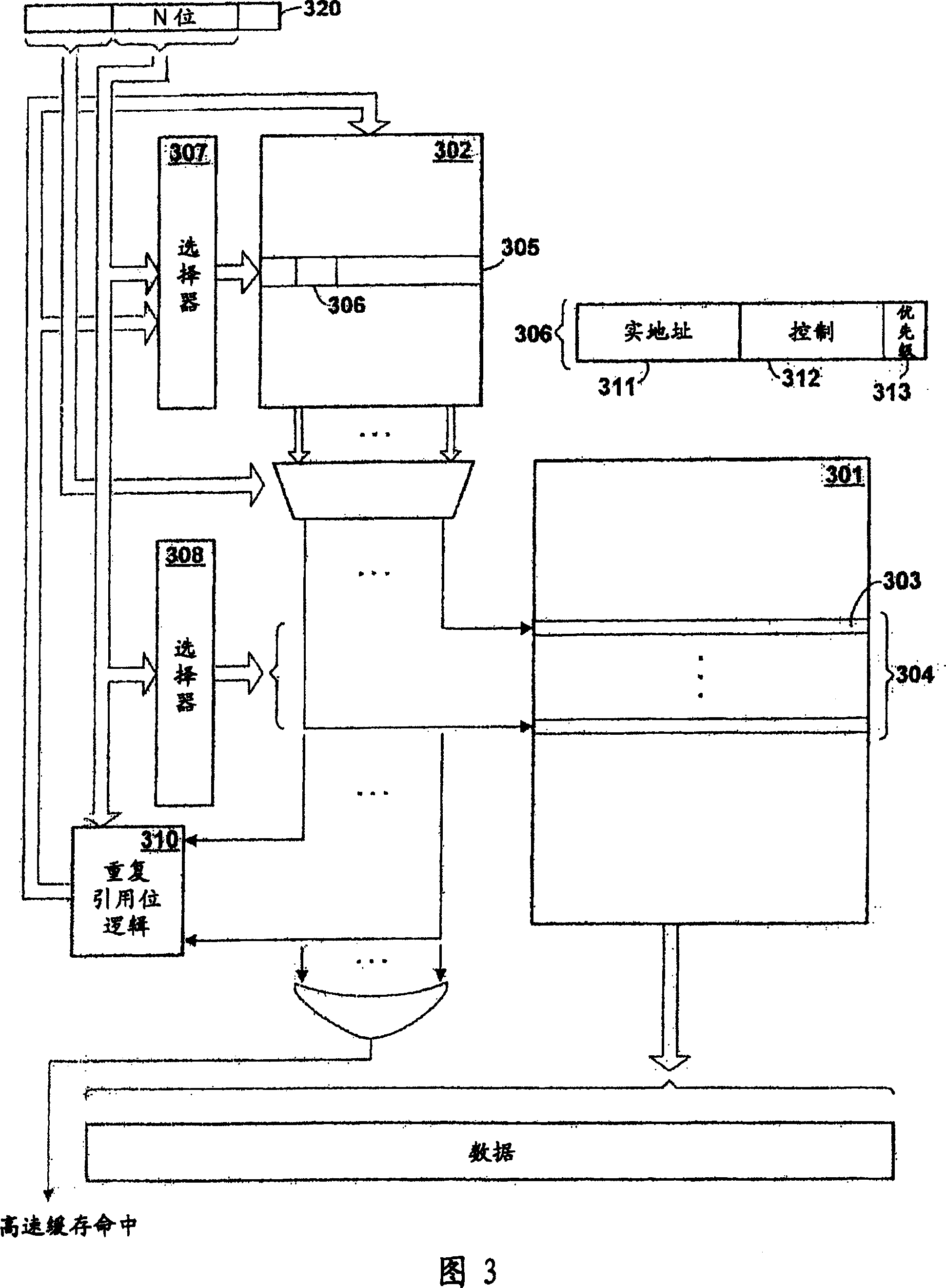

Remapping of Data Addresses for a Large Capacity Victim Cache

Inactive | US20100217952A1 | Reduce storage space | Accurate access | Memory addressing/allocation/relocation | Micro-instruction address formation | Parallel computing | Large capacity

Method and apparatus for remapping addresses for a victim cache used in a storage system is provided. The storage system may store data blocks having associated storage system addresses. Blocks may be stored to a main cache and blocks evicted from main cache may be stored in the victim cache, each evicted block having a storage system address and a victim cache address where it is stored in the victim cache. Remapping data for remapping between storage system addresses to victim cache addresses may be stored in remapping data structures. The victim cache may be sub-divided into two or more sub-sections, each sub-section having an associated remapping data structure for storing its remapping data. By sub-dividing the victim cache, the bit size of victim cache addresses stored in the remapping data structures may be reduced, thus reducing the overall storage size of the remapping data for the victim cache.

Owner:NETWORK APPLIANCE INC
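The storage saving from sub-dividing the victim cache is simple arithmetic, sketched below. The sketch assumes one remapping entry per victim cache block, a block count that divides evenly among the sub-sections, and counts only the victim cache address bits; the function name `remap_bits` and the example sizes are hypothetical.

```python
def remap_bits(total_blocks, subsections):
    """Return (address bits per entry, total address bits) for the remapping data."""
    if total_blocks % subsections != 0:
        raise ValueError("sketch assumes an even split across sub-sections")
    blocks_per_section = total_blocks // subsections
    # Bits needed to address any block inside a single sub-section.
    bits_per_entry = (blocks_per_section - 1).bit_length()
    return bits_per_entry, bits_per_entry * total_blocks


whole = remap_bits(1 << 20, 1)    # one undivided victim cache of 2**20 blocks
split = remap_bits(1 << 20, 16)   # the same capacity in 16 sub-sections
print(whole[0], split[0])         # 20 16
print((whole[1] - split[1]) // 8, "bytes saved")
```

Each entry needs fewer bits because the sub-section is implied by which remapping data structure holds the entry, so only the offset within the sub-section has to be stored.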

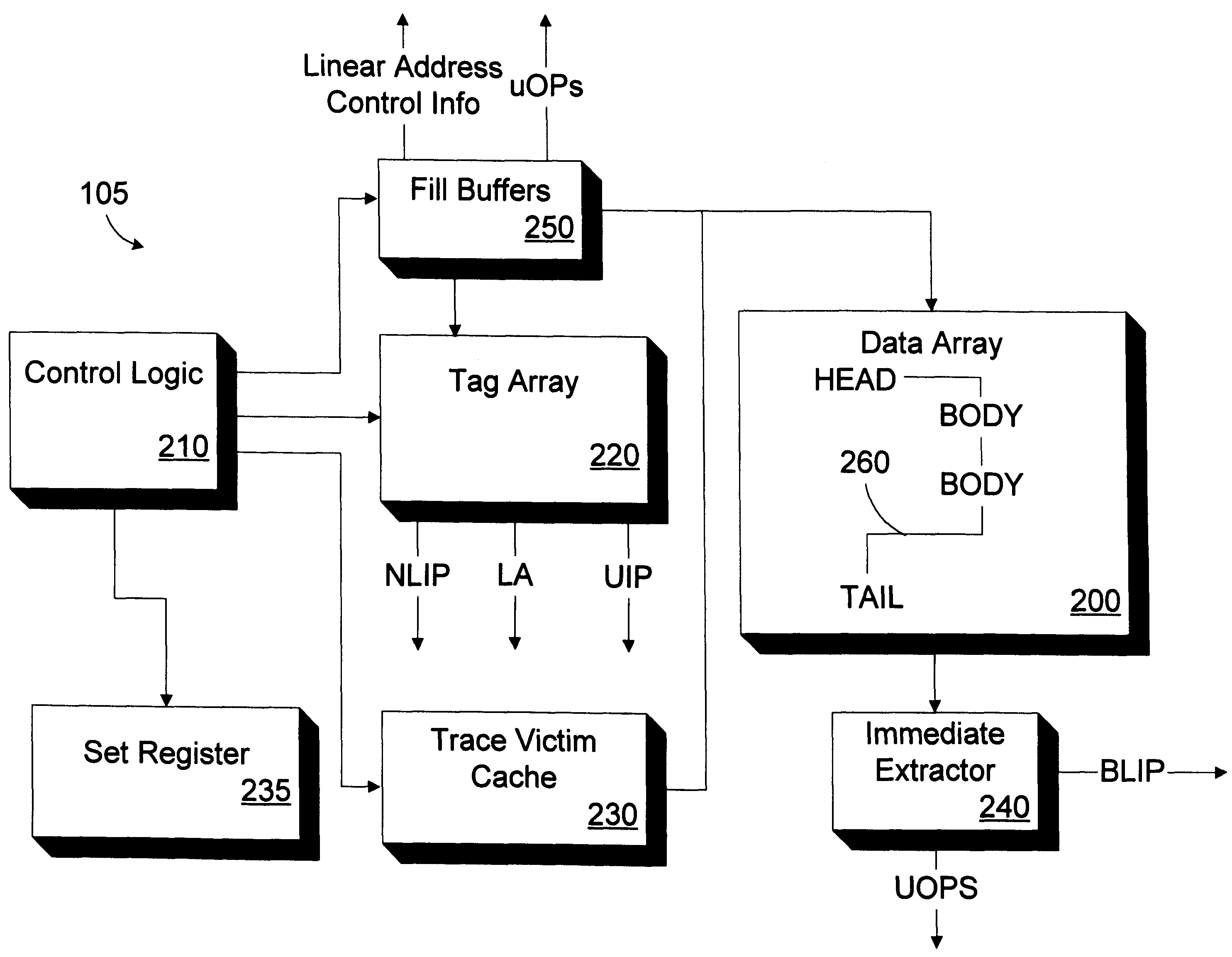

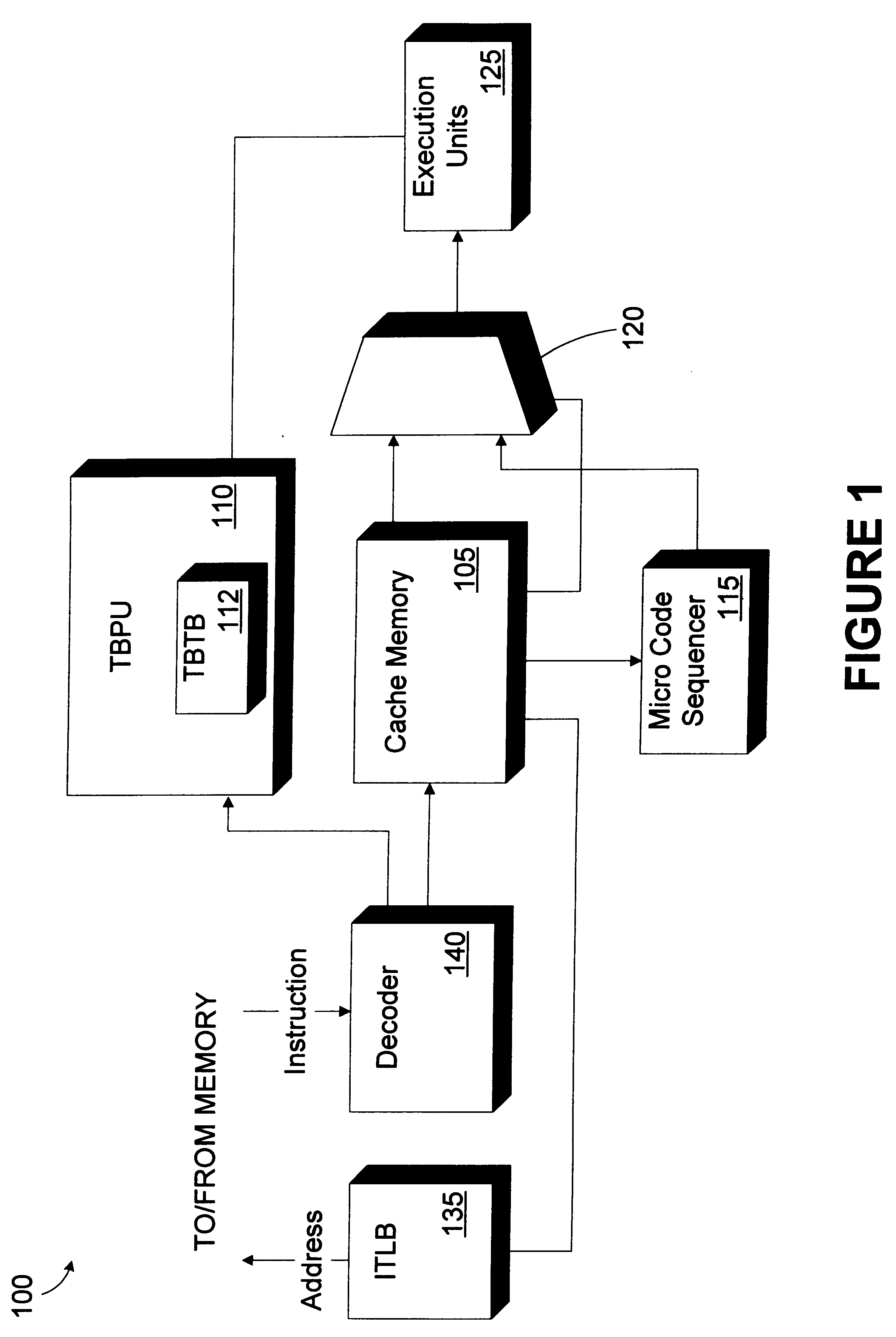

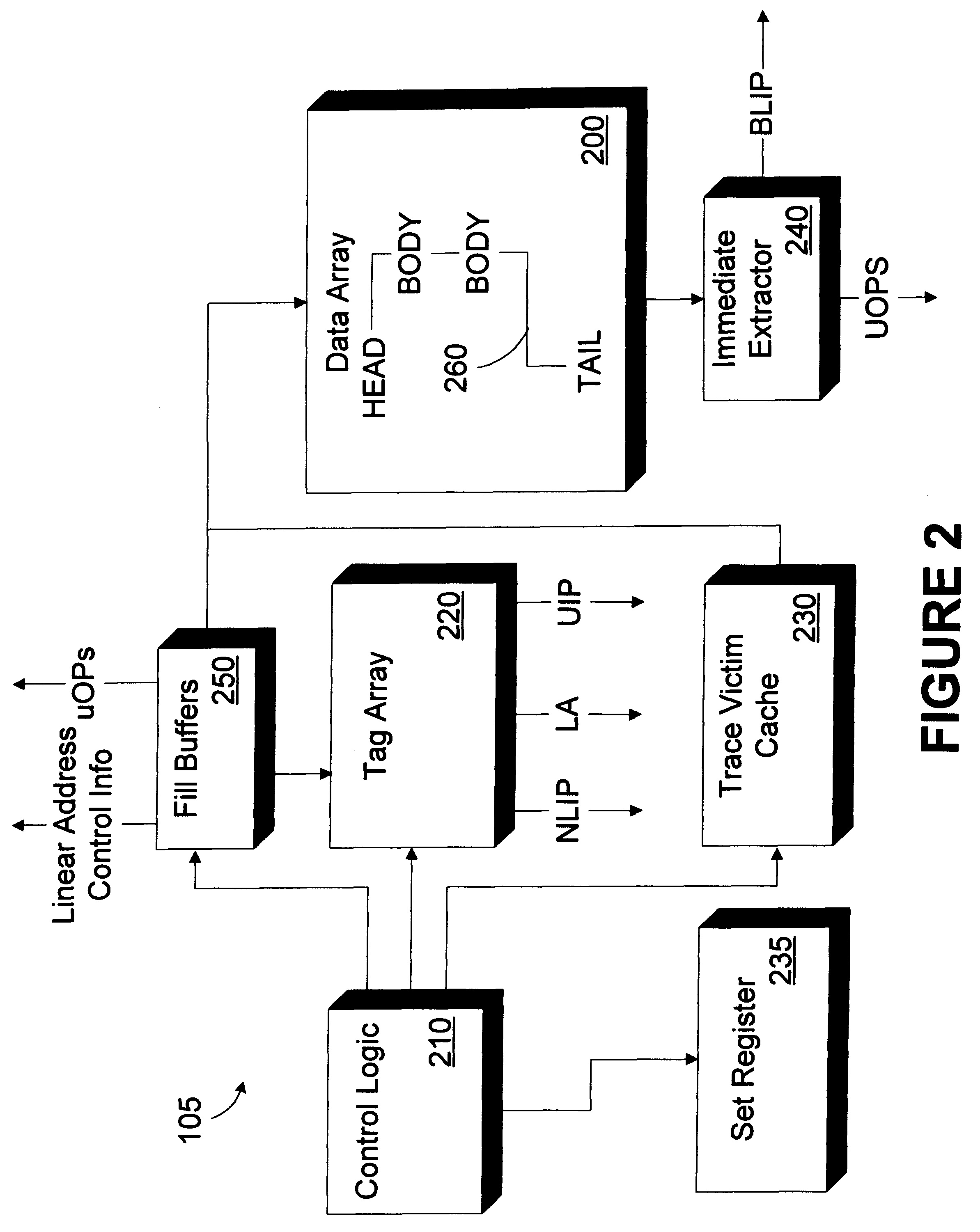

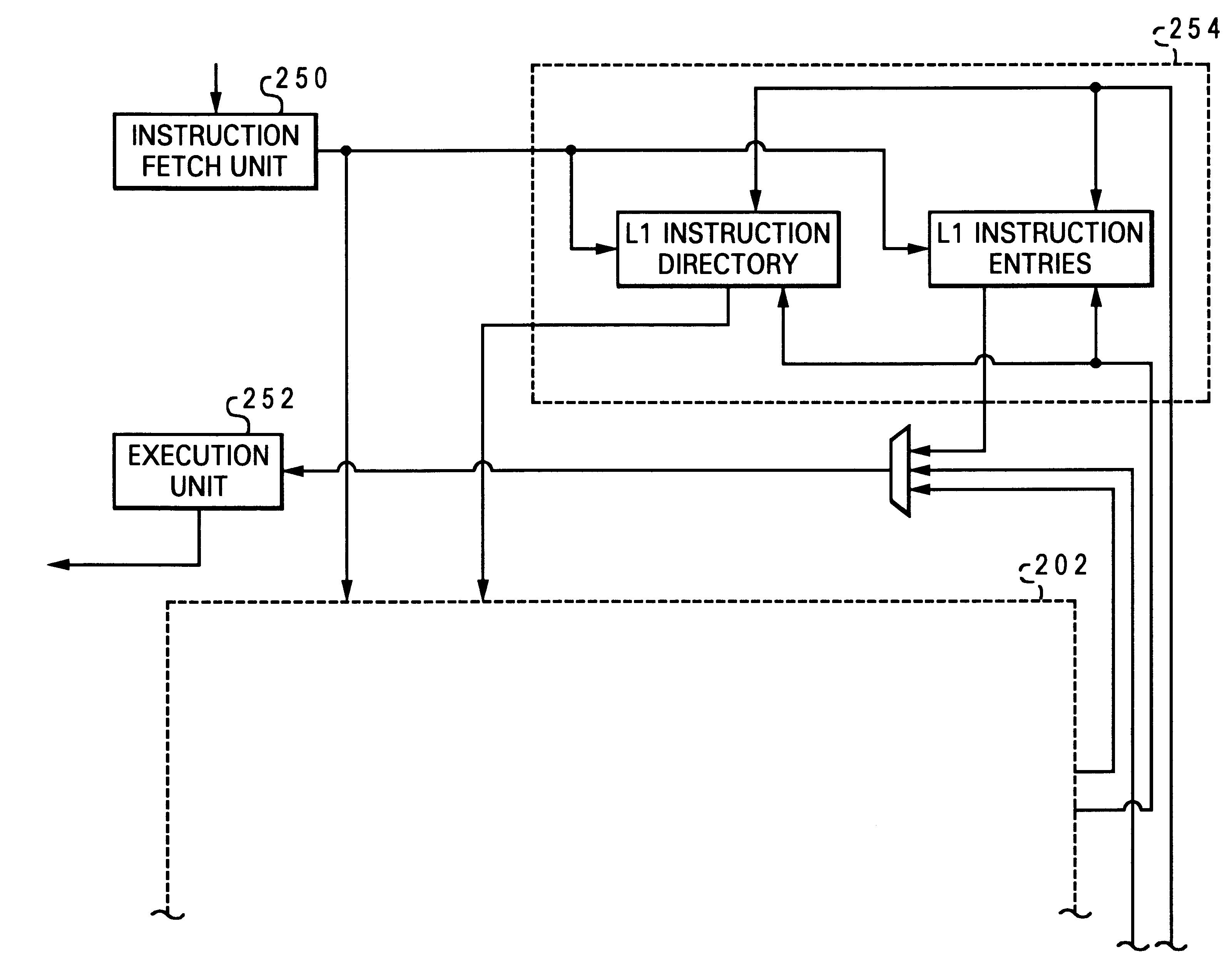

Trace victim cache

Inactive | US6216206B1 | Memory addressing/allocation/relocation | Digital computer details | Parallel computing | Line segment

A cache memory includes a data array and a trace victim cache. The data array is adapted to store a plurality of trace segments. Each trace segment includes at least one trace segment member. The trace victim cache is adapted to store a plurality of entries. Each entry includes a replaced trace segment member selected for replacement from one of the plurality of trace segments. A method for accessing cached instructions, the cached instructions being stored in a data array, the cached instructions being organized in trace segments, the trace segment having a plurality of trace segment members, includes retrieving a first trace segment member of a first trace segment; identifying an expected location within the data array of at least one subsequent trace segment member of the first trace segment; determining if the subsequent trace segment member is stored in the data array at the expected location; and determining if the subsequent trace segment member is stored in a trace victim cache if the subsequent trace segment member is not stored in the data array at the expected location.

Owner:INTEL CORP

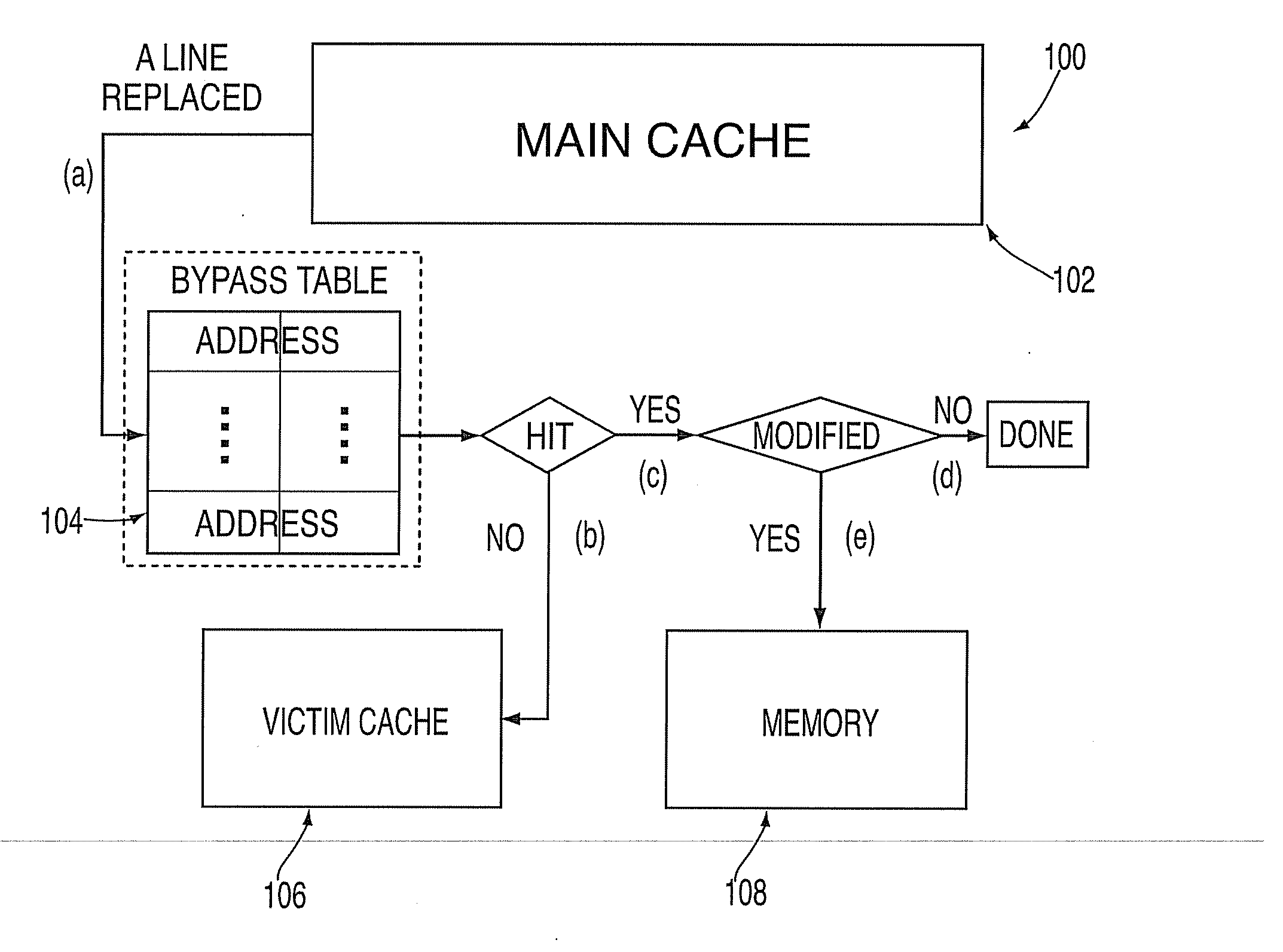

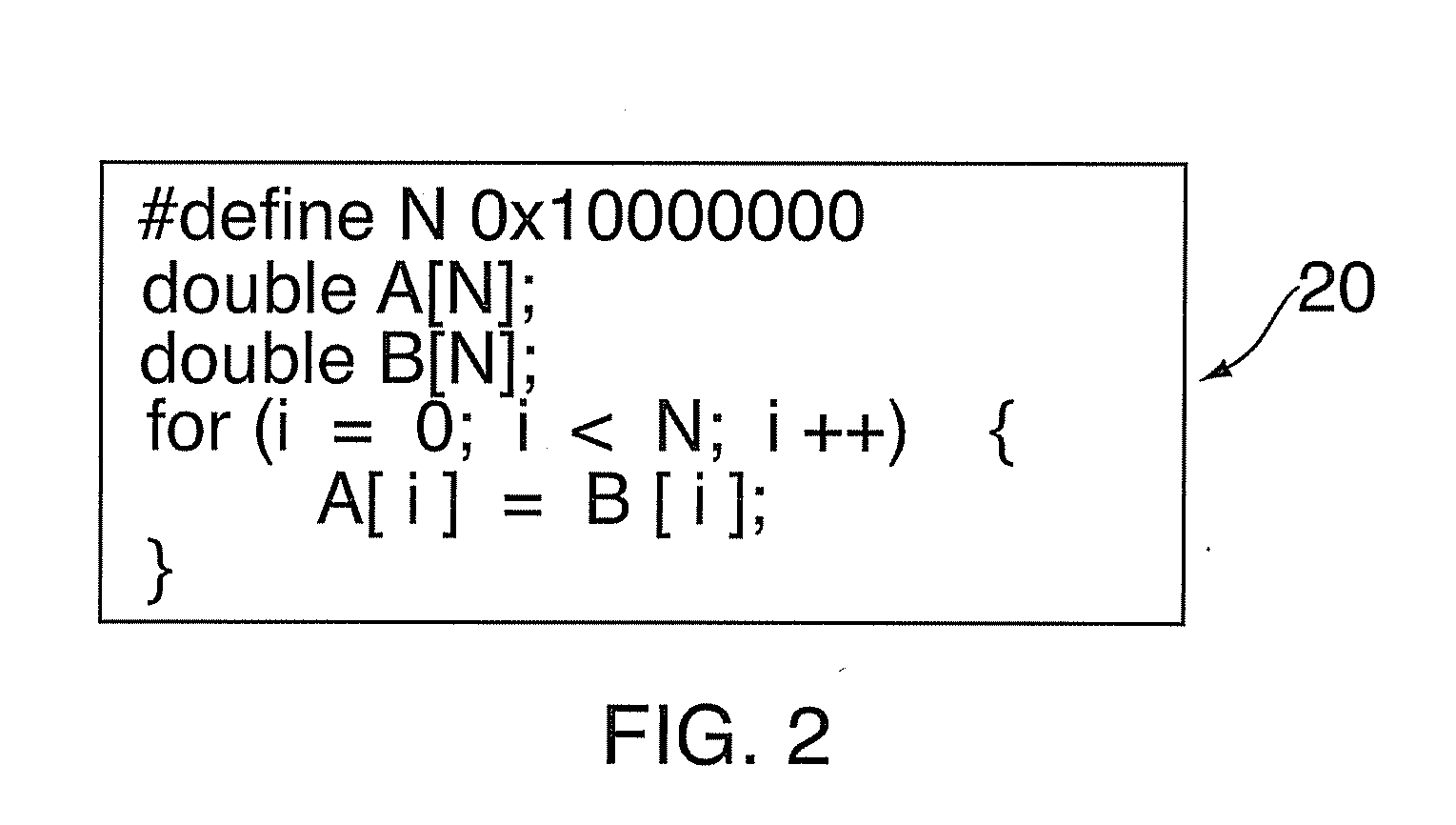

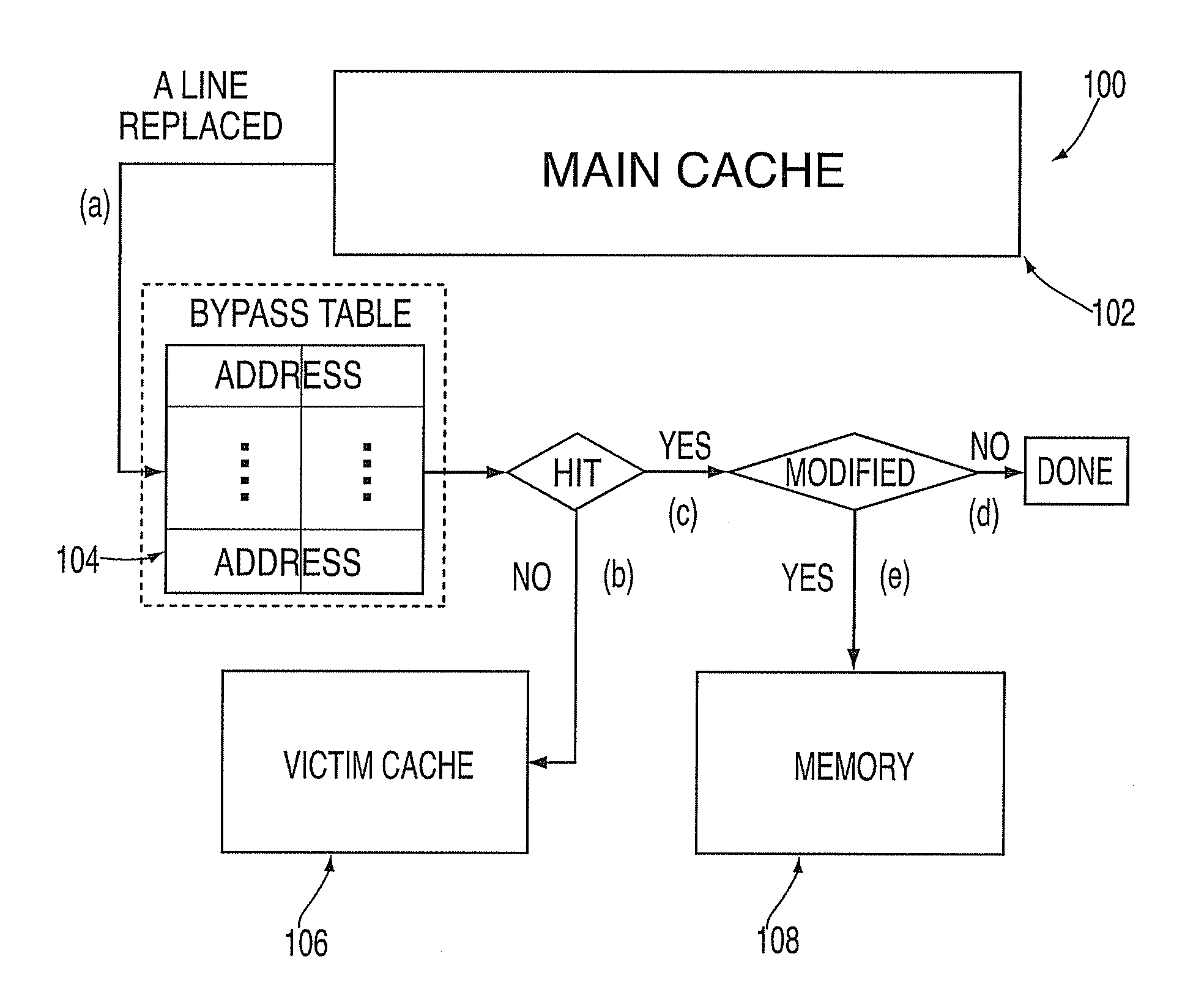

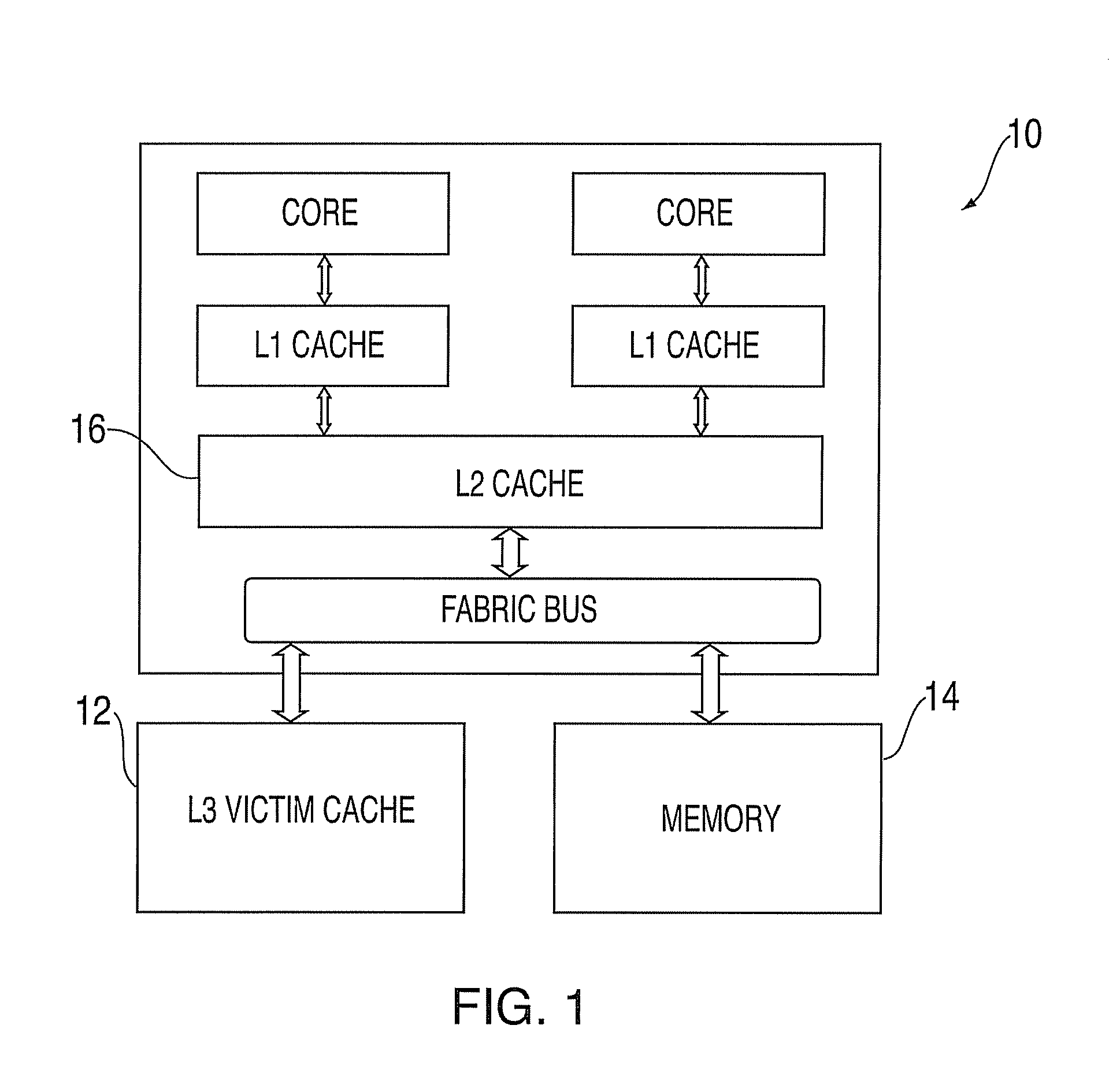

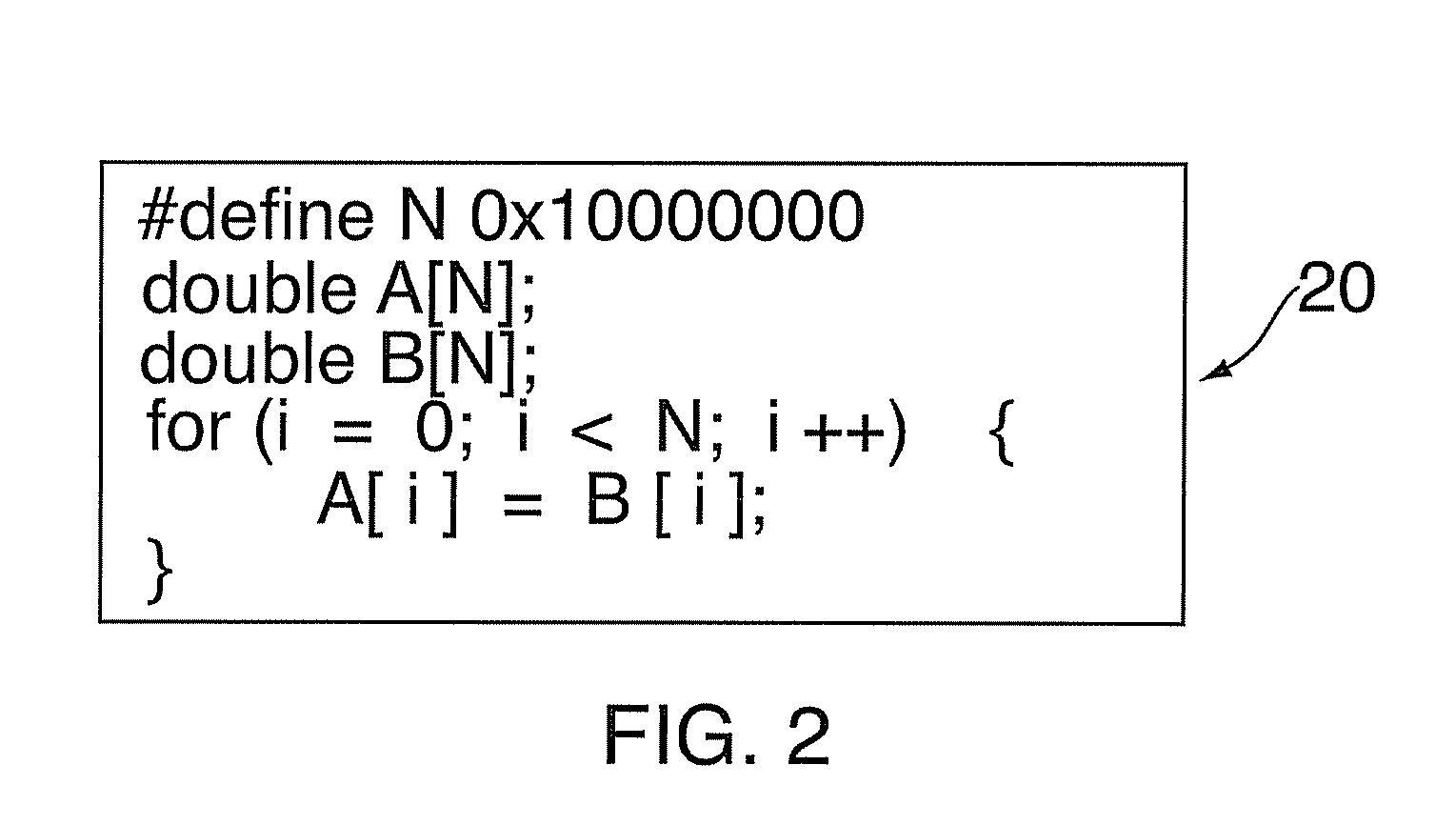

Compiler assisted victim cache bypassing

Inactive | US20070260819A1 | Avoid pollution | Reduce in quantity | Energy efficient ICT | Energy efficient computing | Parallel computing | Victim cache

A method for compiler assisted victim cache bypassing including: identifying a cache line as a candidate for victim cache bypassing; conveying bypassing-the-victim-cache information to hardware; and checking a state of the cache line to determine a modified state of the cache line, wherein the cache line is identified for cache bypassing if the cache line has no reuse within a loop or loop nest and there is no immediate loop reuse or there is a substantial across loop reuse distance so that it will be replaced from both main and victim cache before being reused.

Owner:IBM CORP

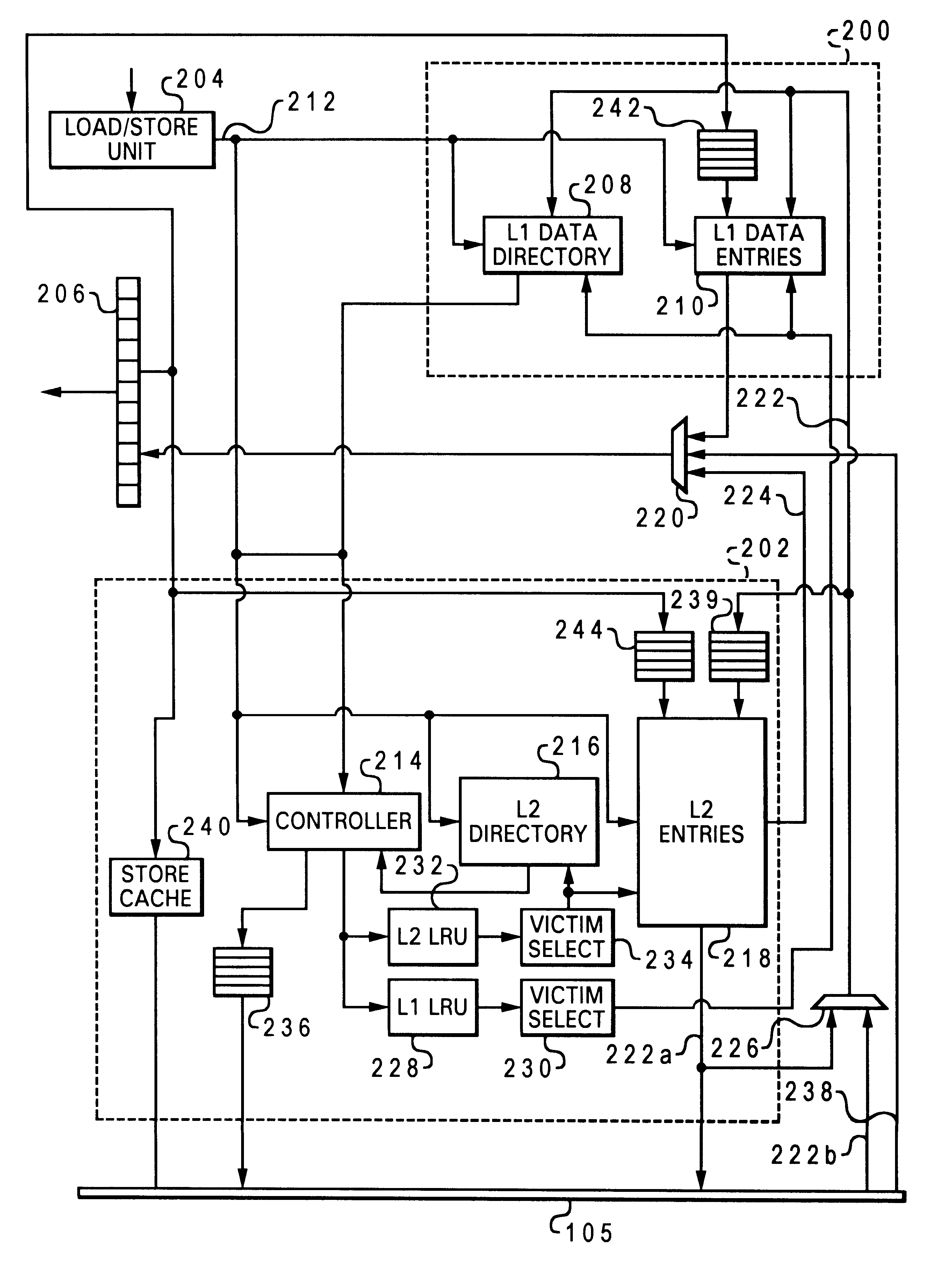

Layered local cache with lower level cache updating upper and lower level cache directories

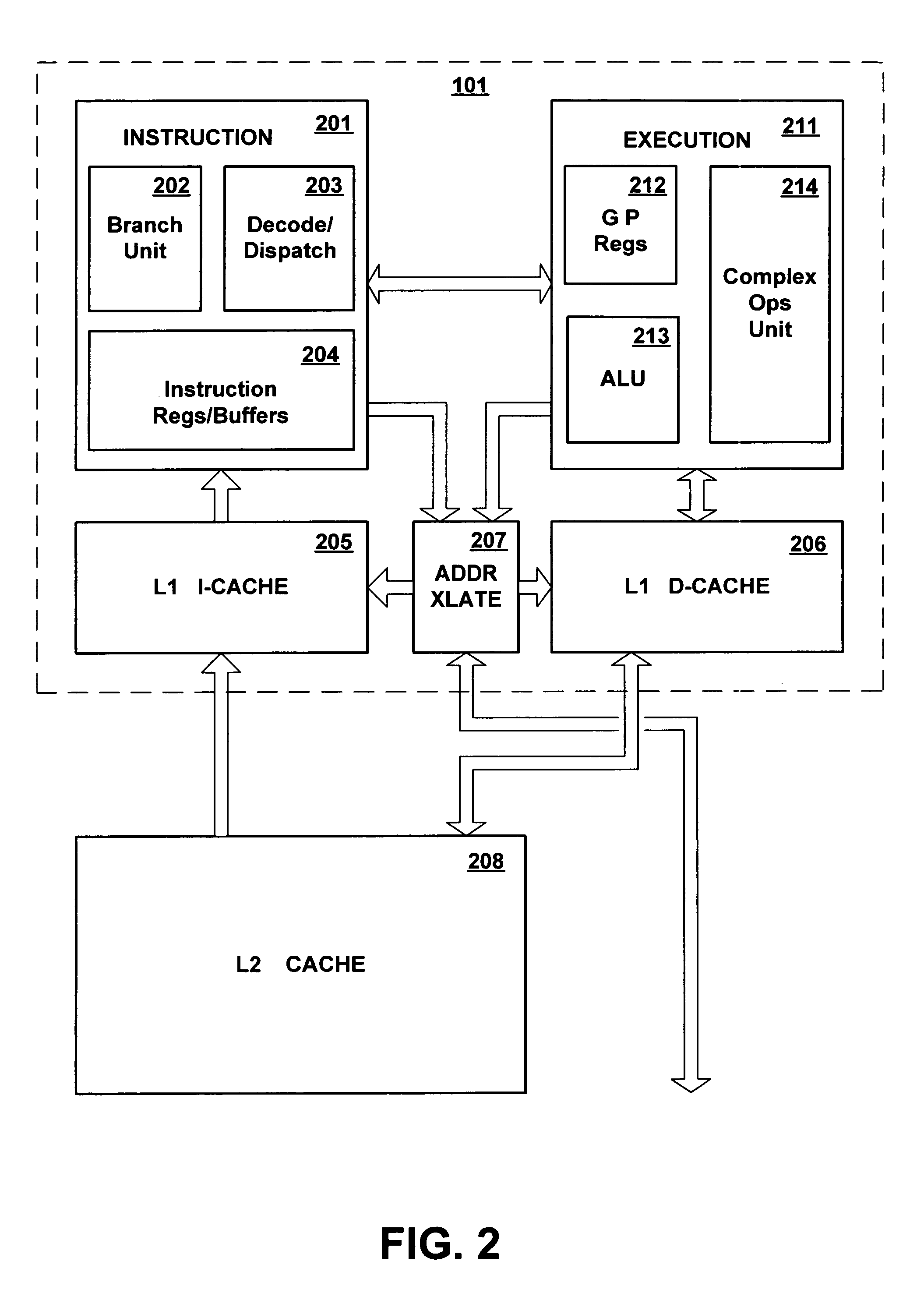

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

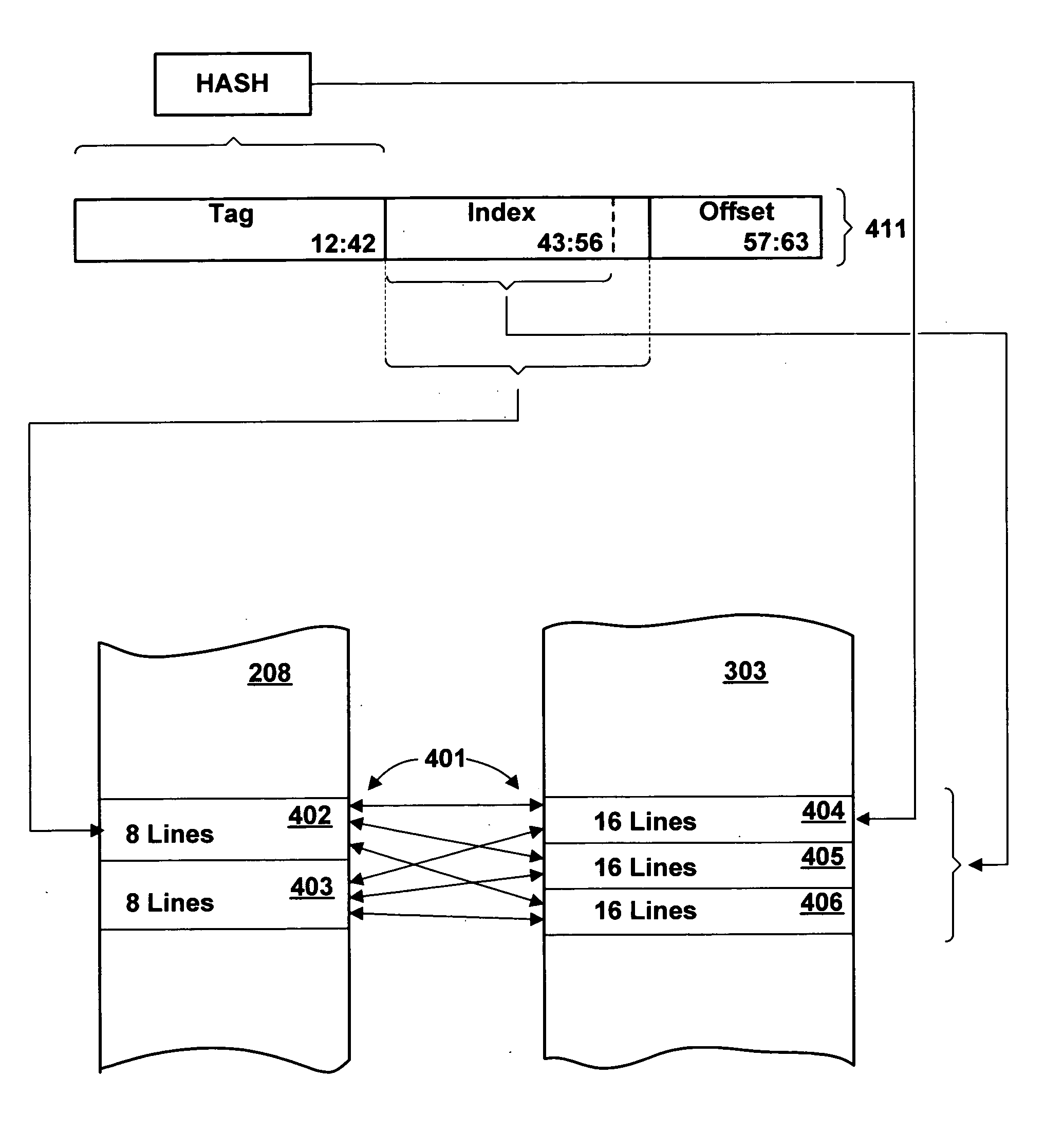

Multi-level cache having overlapping congruence groups of associativity sets in different cache levels

Active | US7136967B2 | Long cache life | Increase available associativity | Memory addressing/allocation/relocation | Parallel computing | Victim cache

A computer cache memory having at least two levels includes associativity sets allocated to congruence groups, each congruence group having multiple associativity sets (preferably two) in the higher level cache and multiple associativity sets (preferably three) in the lower level cache. The address range of an associativity set in the higher level cache is distributed among all the associativity sets in the lower level cache within the same congruence group, so that these lower level associativity sets are effectively shared by all associativity sets in the same congruence group in the higher level. The lower level cache is preferably a victim cache of the higher level cache. This sharing of lower level associativity sets by different associativity sets in the higher level effectively increases the associativity of the lower level to hold cast-outs of a hot associativity set in the upper level.

Owner:INT BUSINESS MASCH CORP

Layered local cache mechanism with split register load bus and cache load bus

Inactive | US6405285B1 | Memory addressing/allocation/relocation | Concurrent instruction execution | Load bus | Relevant information

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:INTEL CORP

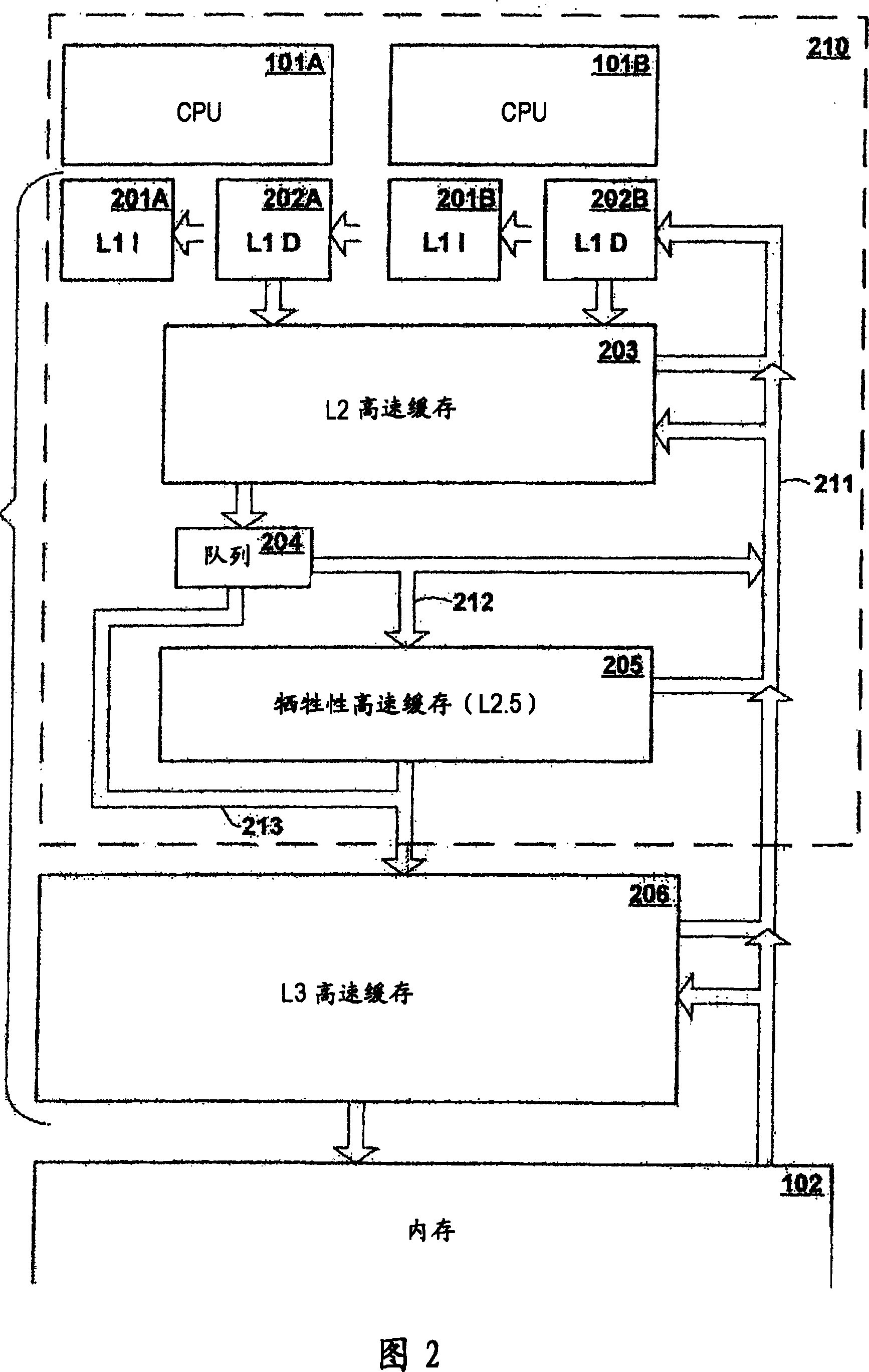

Queue-less and state-less layered local data cache mechanism

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

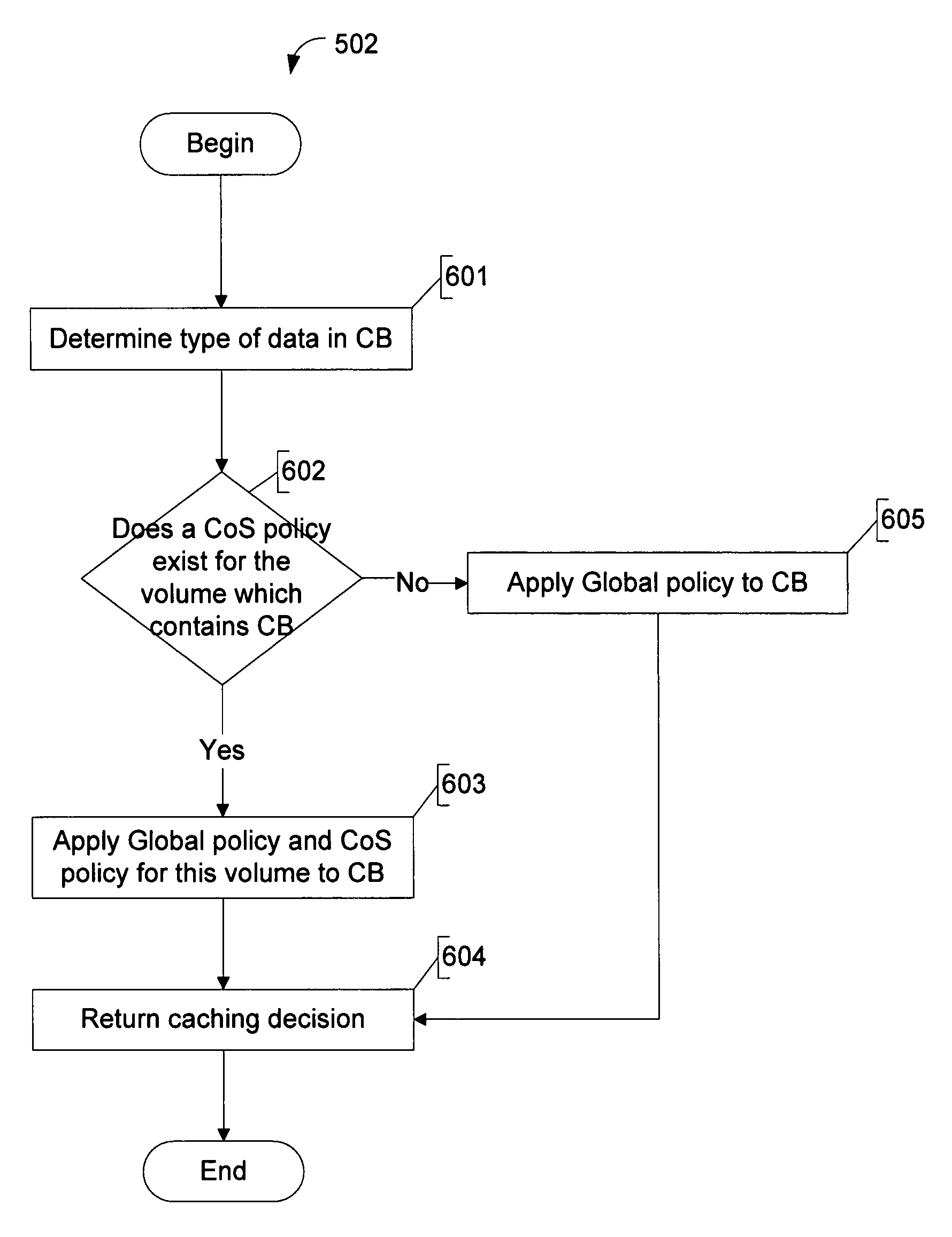

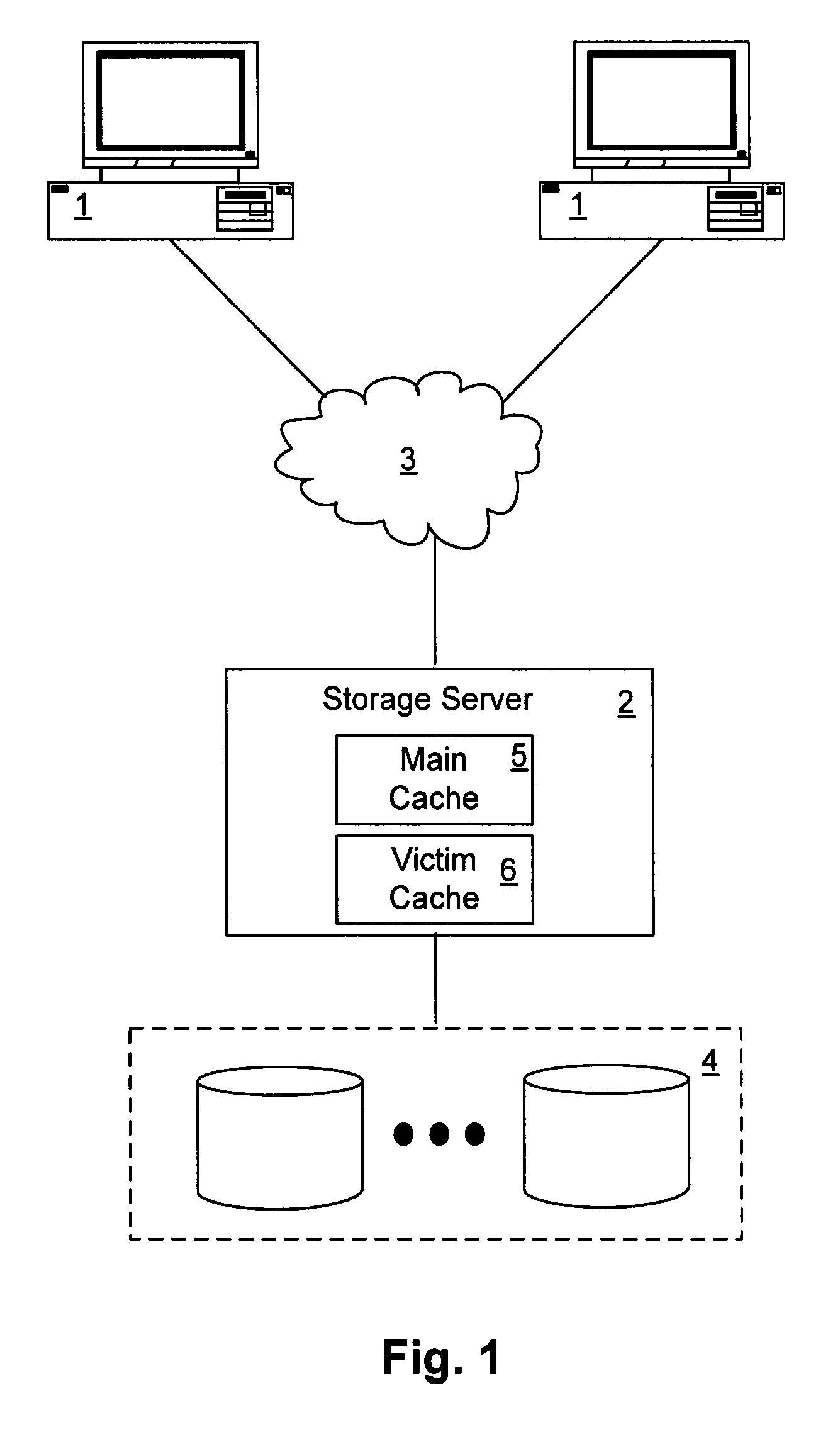

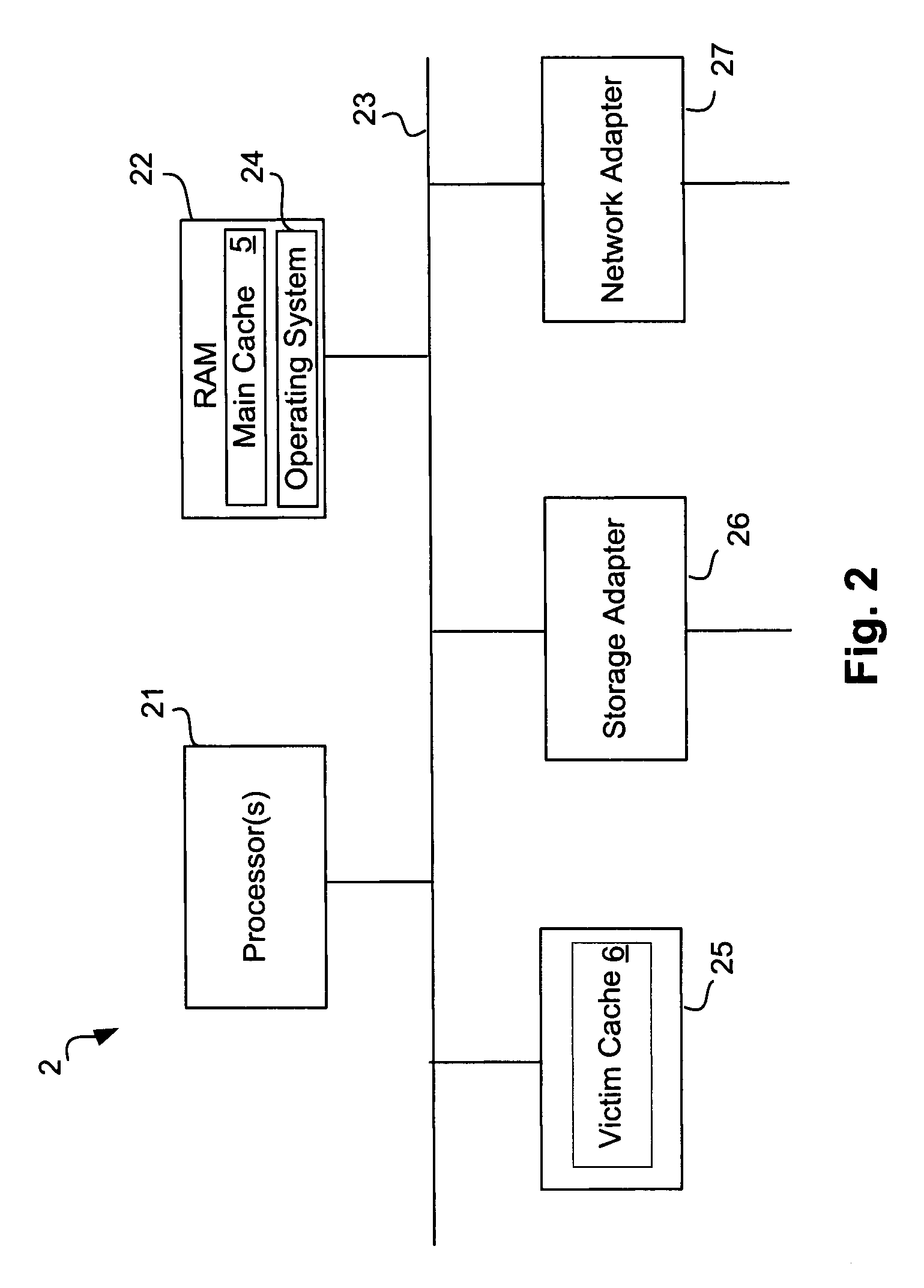

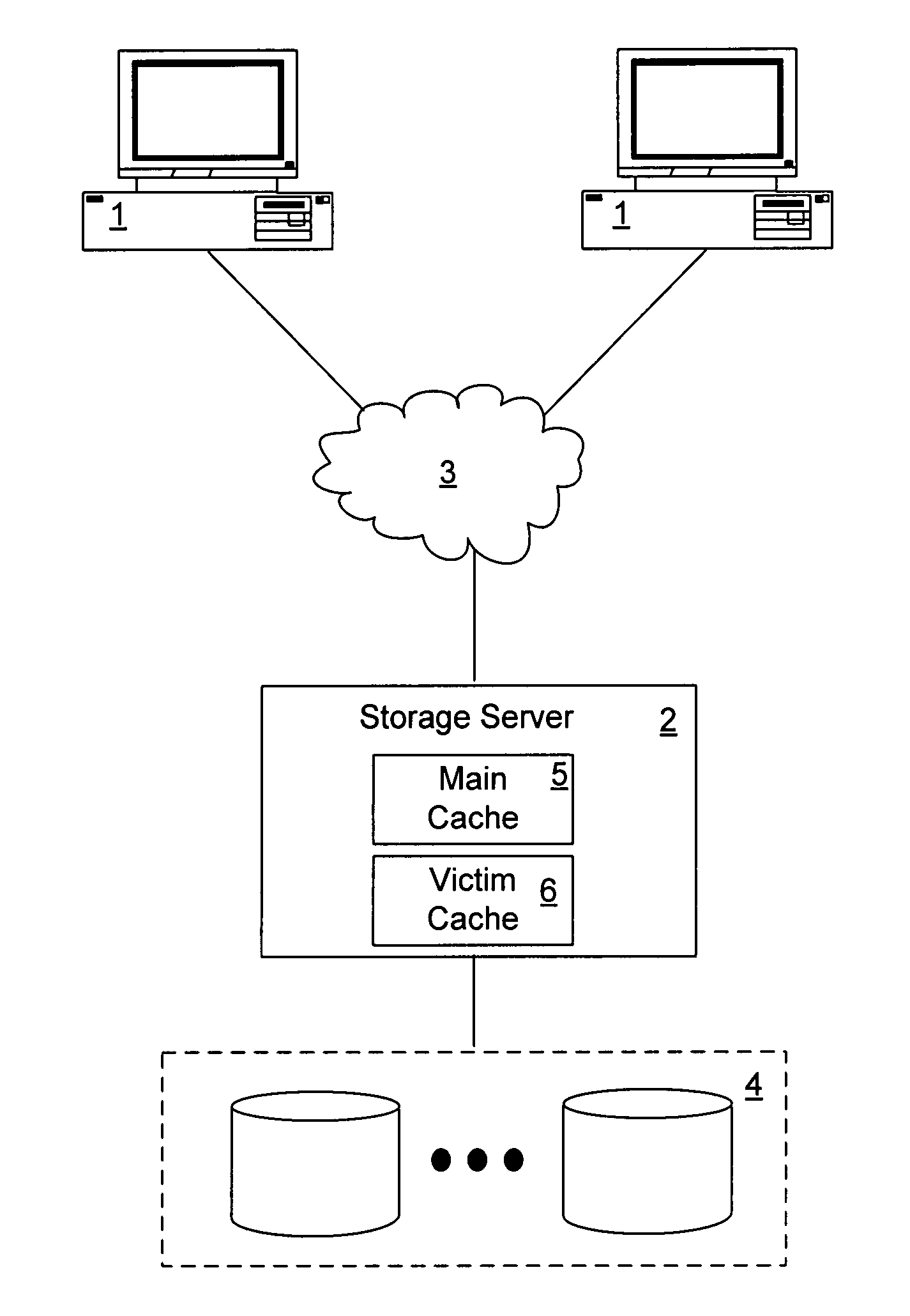

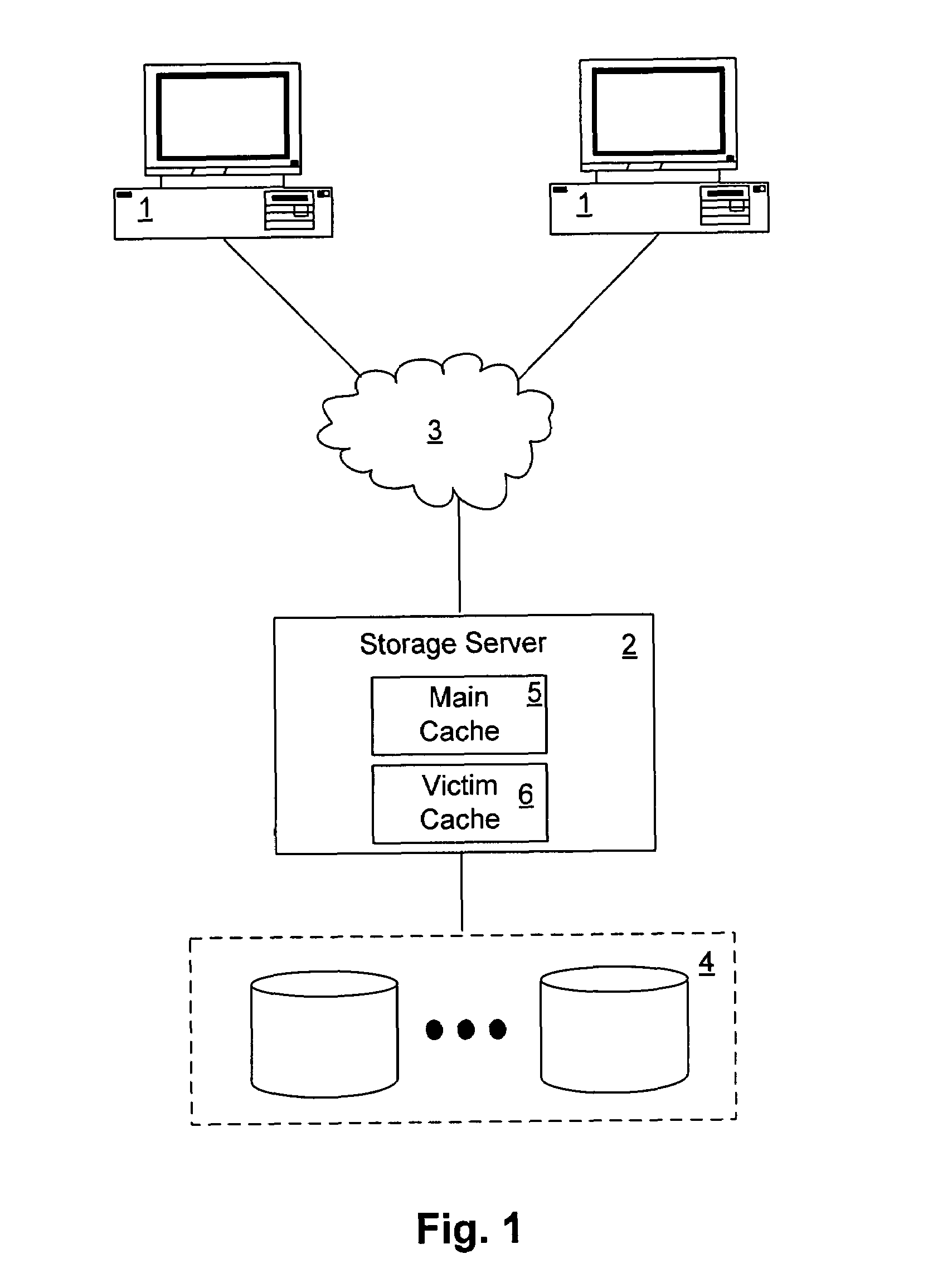

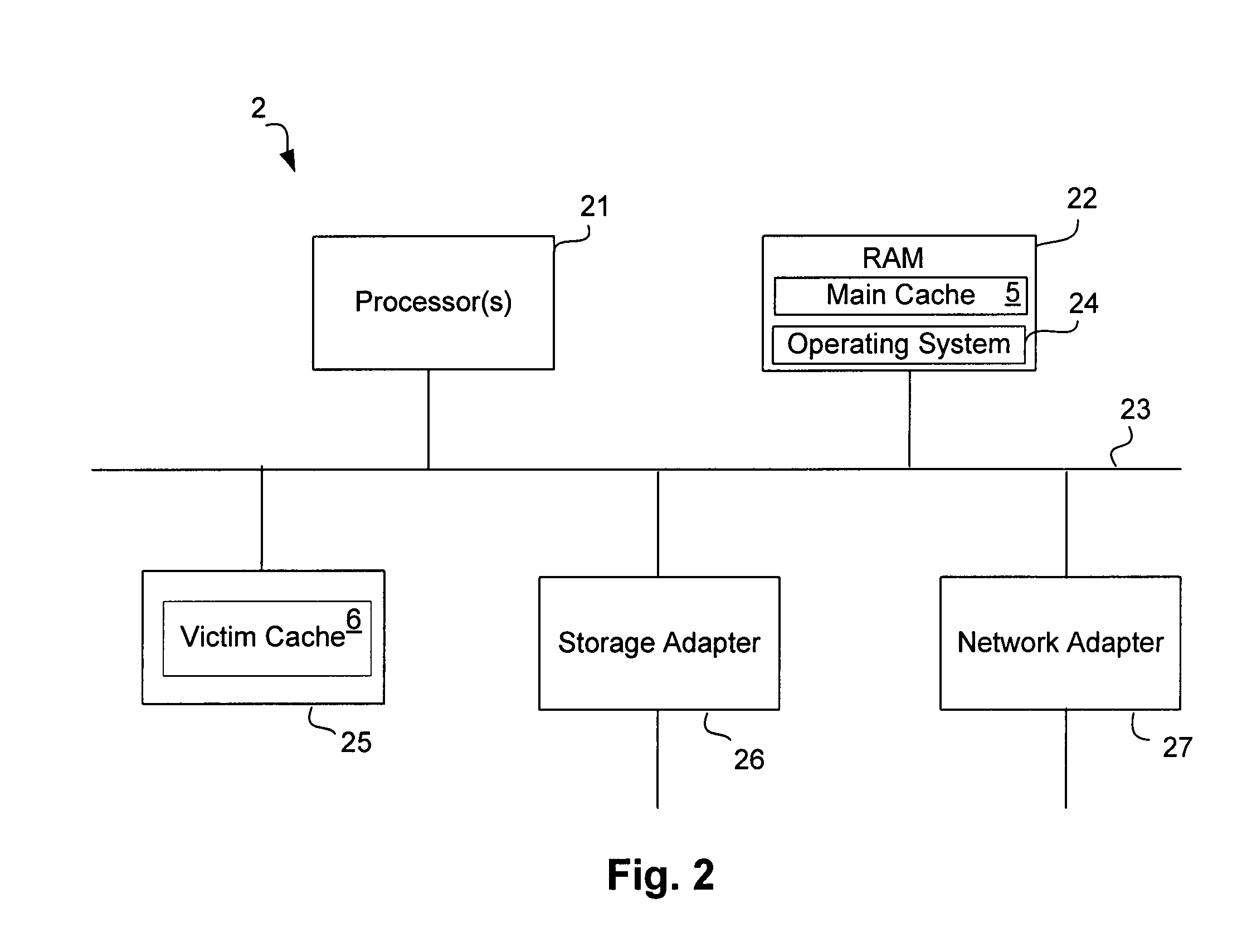

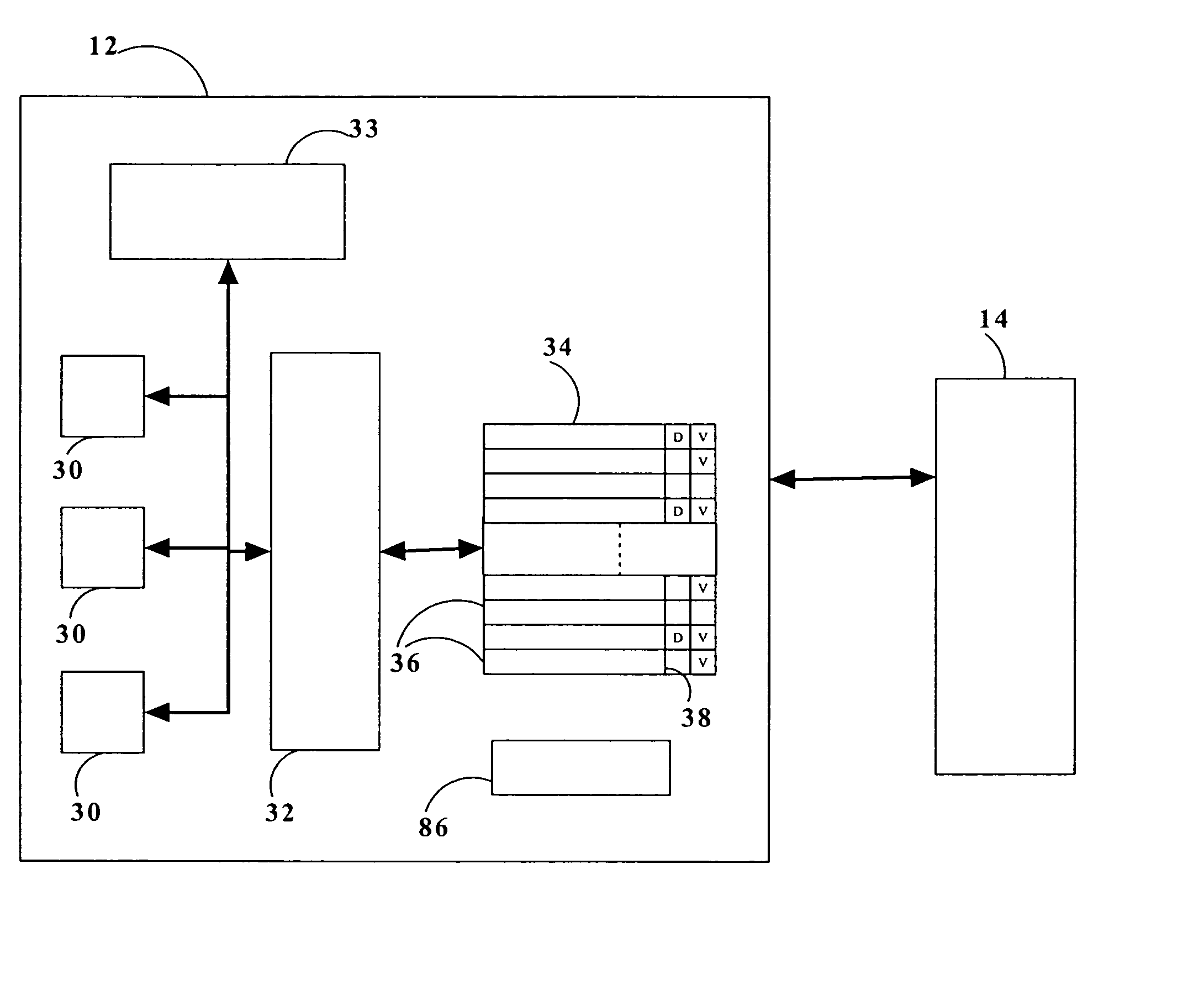

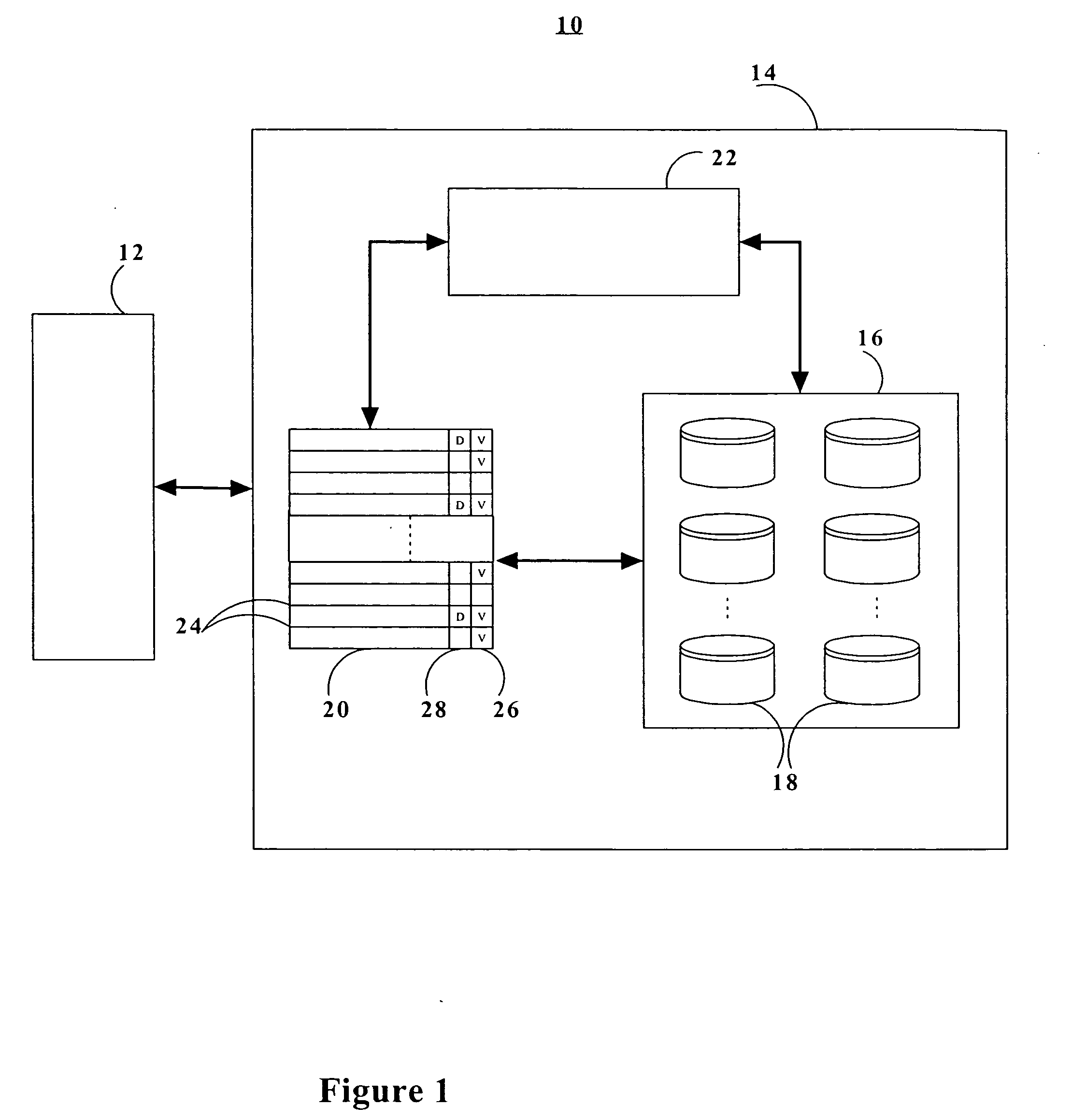

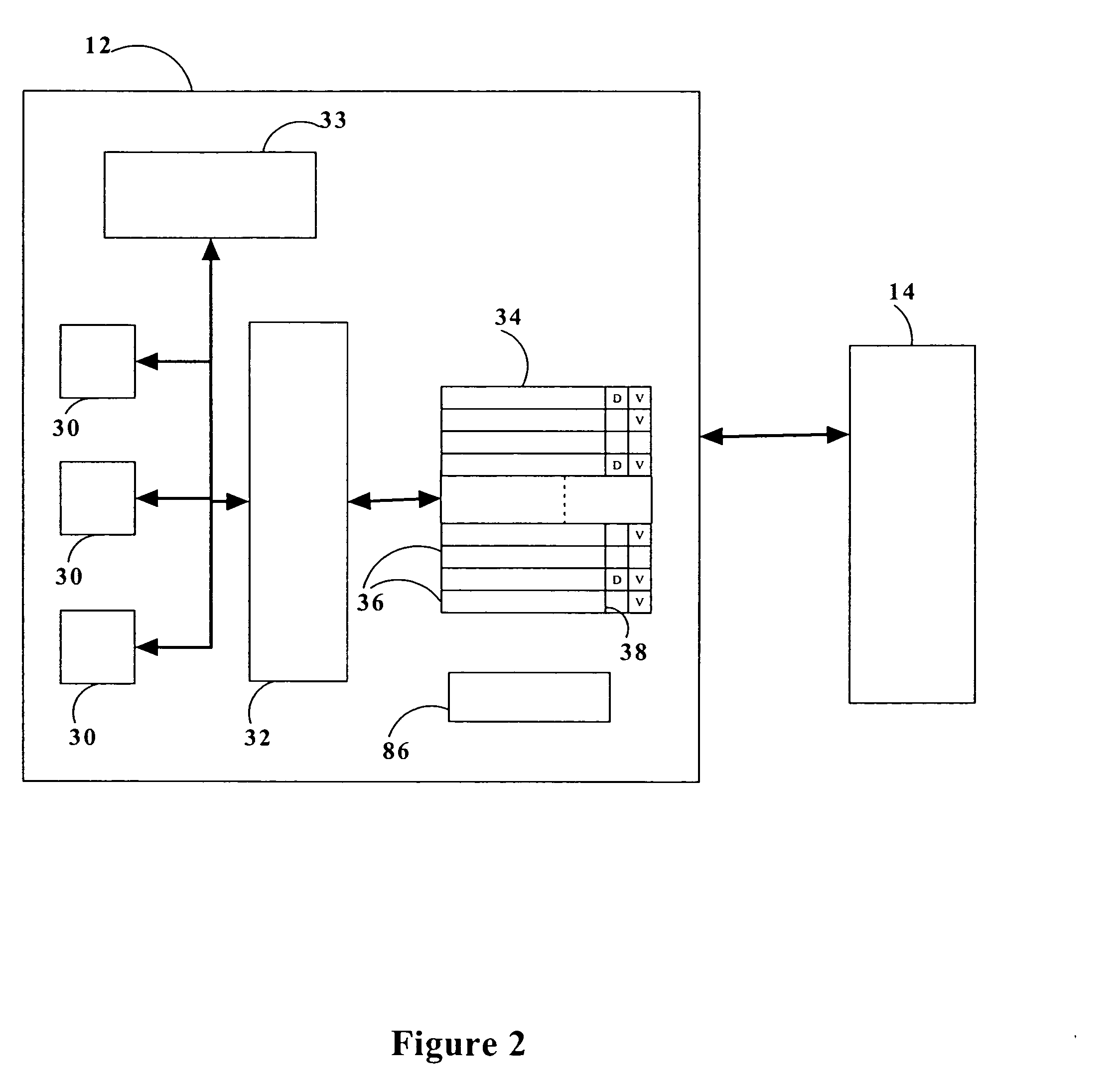

Intelligent caching of data in a storage server victim cache

Active | US7752395B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Mass storage | Service control

A network storage server has a non-volatile mass storage facility, a main cache and a victim cache. A technique of intelligently determining whether to cache a data block in the victim cache includes determining whether to store the data block in the victim cache based on a first caching policy and the type of data contained within the data block. The first caching policy may be a global policy. The determination of whether to store the data block in the victim cache further may be based on a second caching policy, which may be a volume-specific control of service (CoS) policy.

Owner:NETWORK APPLIANCE INC

Multi-level cache having overlapping congruence groups of associativity sets in different cache levels

Active | US20050125592A1 | Increase available associativity | Deficient in low level | Memory addressing/allocation/relocation | Parallel computing | Victim cache

A computer cache memory having at least two levels includes associativity sets allocated to congruence groups, each congruence group having multiple associativity sets (preferably two) in the higher level cache and multiple associativity sets (preferably three) in the lower level cache. The address range of an associativity set in the higher level cache is distributed among all the associativity sets in the lower level cache within the same congruence group, so that these lower level associativity sets are effectively shared by all associativity sets in the same congruence group in the higher level. The lower level cache is preferably a victim cache of the higher level cache. This sharing of lower level associativity sets by different associativity sets in the higher level effectively increases the associativity of the lower level to hold cast-outs of a hot associativity set in the upper level.

Owner:IBM CORP

Systems and methods for reconfiguring cache memory

Active | US20120221793A1 | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Program Thread

A microprocessor system is disclosed that includes a first data cache that is shared by a first group of one or more program threads in a multi-thread mode and used by one program thread in a single-thread mode. A second data cache is shared by a second group of one or more program threads in the multi-thread mode and is used as a victim cache for the first data cache in the single-thread mode.

Owner:NXP USA INC

Partial tag offloading for storage server victim cache

Active | US7698506B1 | Memory architecture accessing/allocation | Memory systems | Parallel computing | Victim cache

A technique for partially offloading, from a main cache in a storage server, the storage of cache tags for data blocks in a victim cache of the storage server, is described. The technique includes storing, in the main cache, a first subset of the cache tag information for each of the data blocks, and storing, in a victim cache of the storage server, a second subset of the cache tag information for each of the data blocks. This technique avoids the need to store the second subset of the cache tag information in the main cache.

Owner:NETWORK APPLIANCE INC

Provision of a victim cache within a storage cache hierarchy

Inactive | US20050138292A1 | Conveniently reside in computer system | Improve performance | Memory addressing/allocation/relocation | Parallel computing | Data storing

Owner:EMC IP HLDG CO LLC

Horizontally-shared cache victims in multiple core processors

Active | US7774549B2 | Lower latency | Reduce the amount required | Energy efficient ICT | Memory addressing/allocation/relocation | Latency (engineering) | Multi-core processor

A processor includes multiple processor core units, each including a processor core and a cache memory. Victim lines evicted from a first processor core unit's cache may be stored in another processor core unit's cache, rather than written back to system memory. If the victim line is later requested by the first processor core unit, the victim line is retrieved from the other processor core unit's cache. The processor has low latency data transfers between processor core units. The processor transfers victim lines directly between processor core units' caches or utilizes a victim cache to temporarily store victim lines while searching for their destinations. The processor evaluates cache priority rules to determine whether victim lines are discarded, written back to system memory, or stored in other processor core units' caches. Cache priority rules can be based on cache coherency data, load balancing schemes, and architectural characteristics of the processor.

Owner:MIPS TECH INC

Method for upper level cache victim selection management by a lower level cache

Inactive | US6446166B1 | Memory addressing/allocation/relocation | Unauthorized memory use protection | Load bus | Relevant information

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

Provision of a victim cache within a storage cache hierarchy

Inactive | US6901477B2 | Conveniently reside in computer system | Improve performance | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

Owner:EMC IP HLDG CO LLC

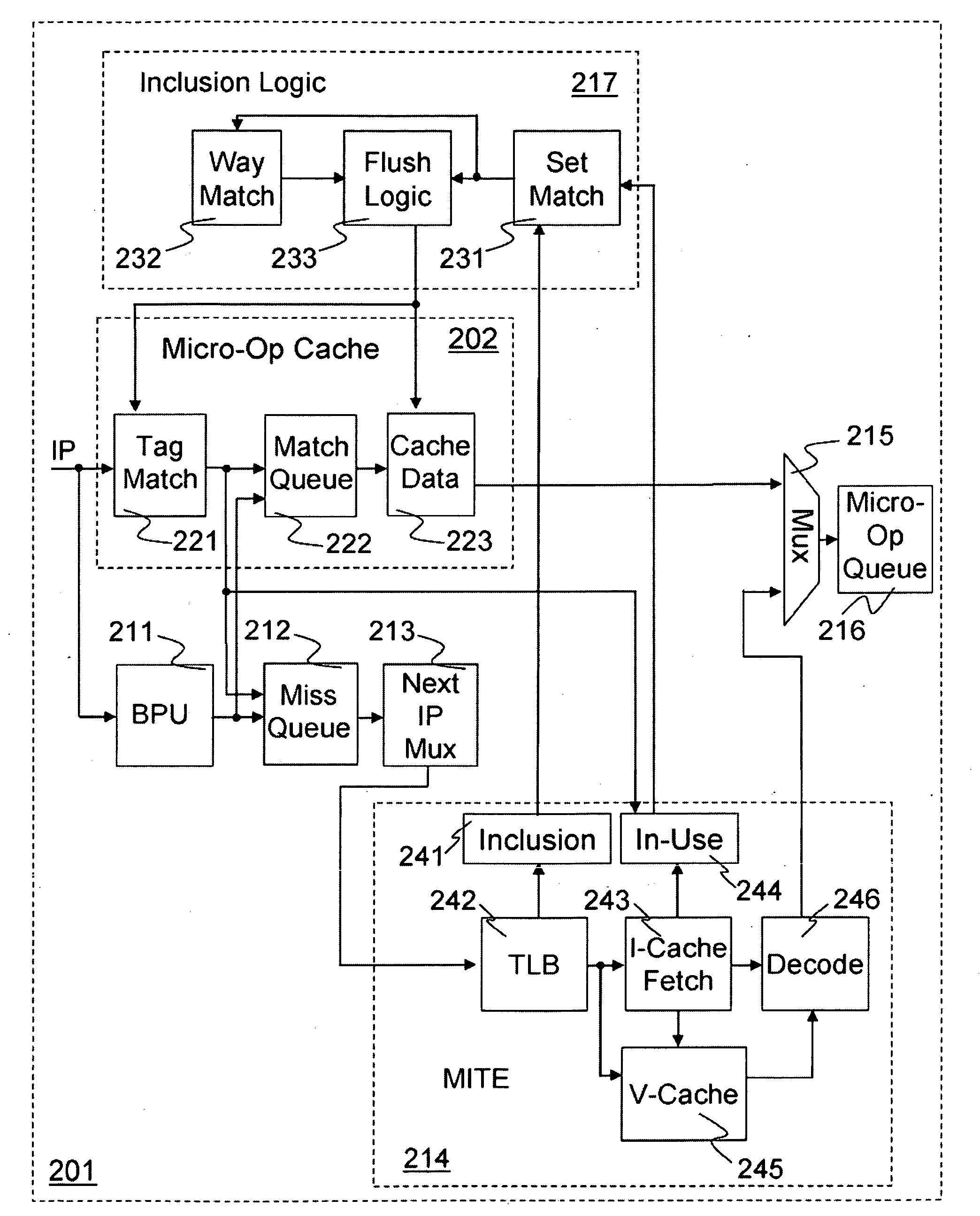

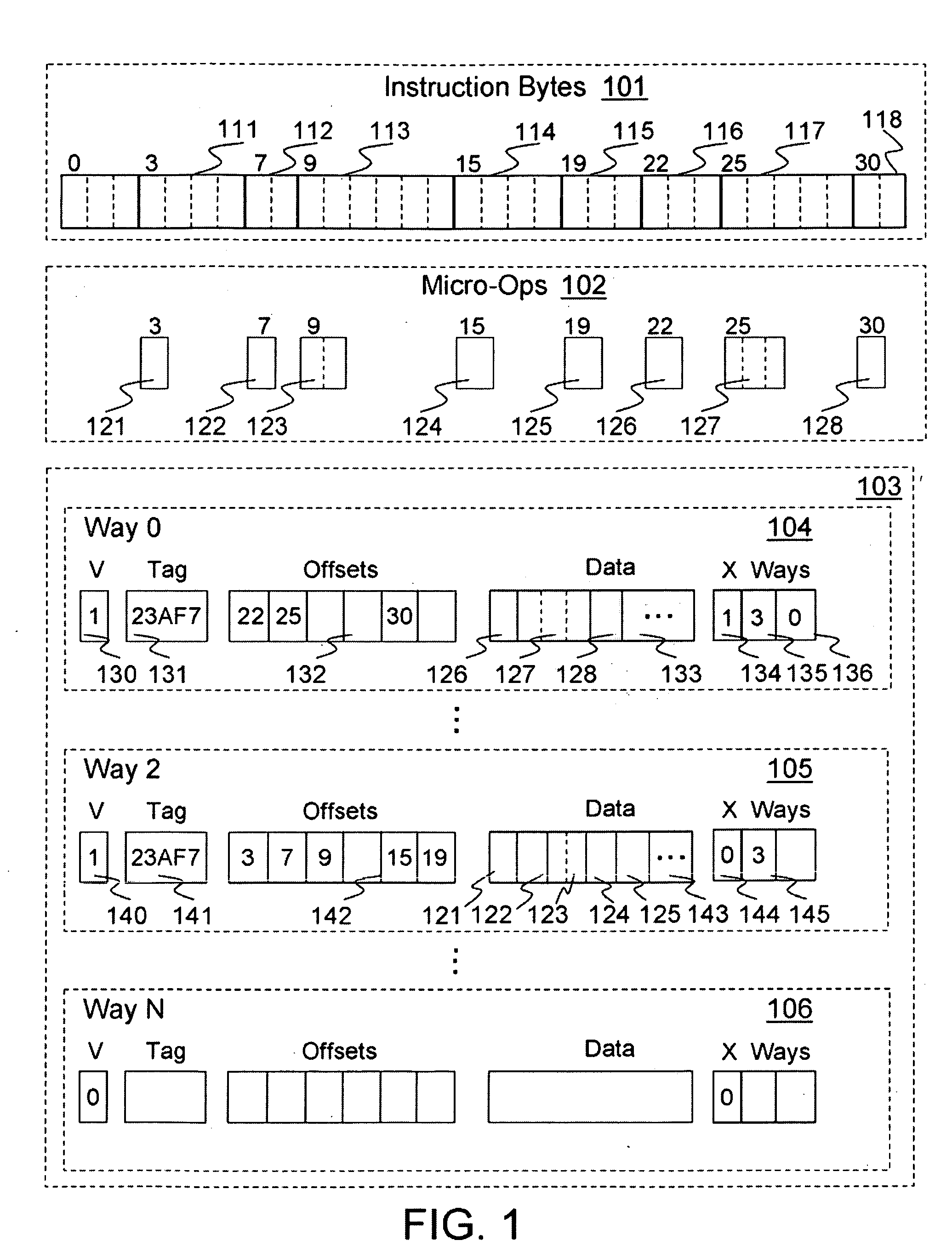

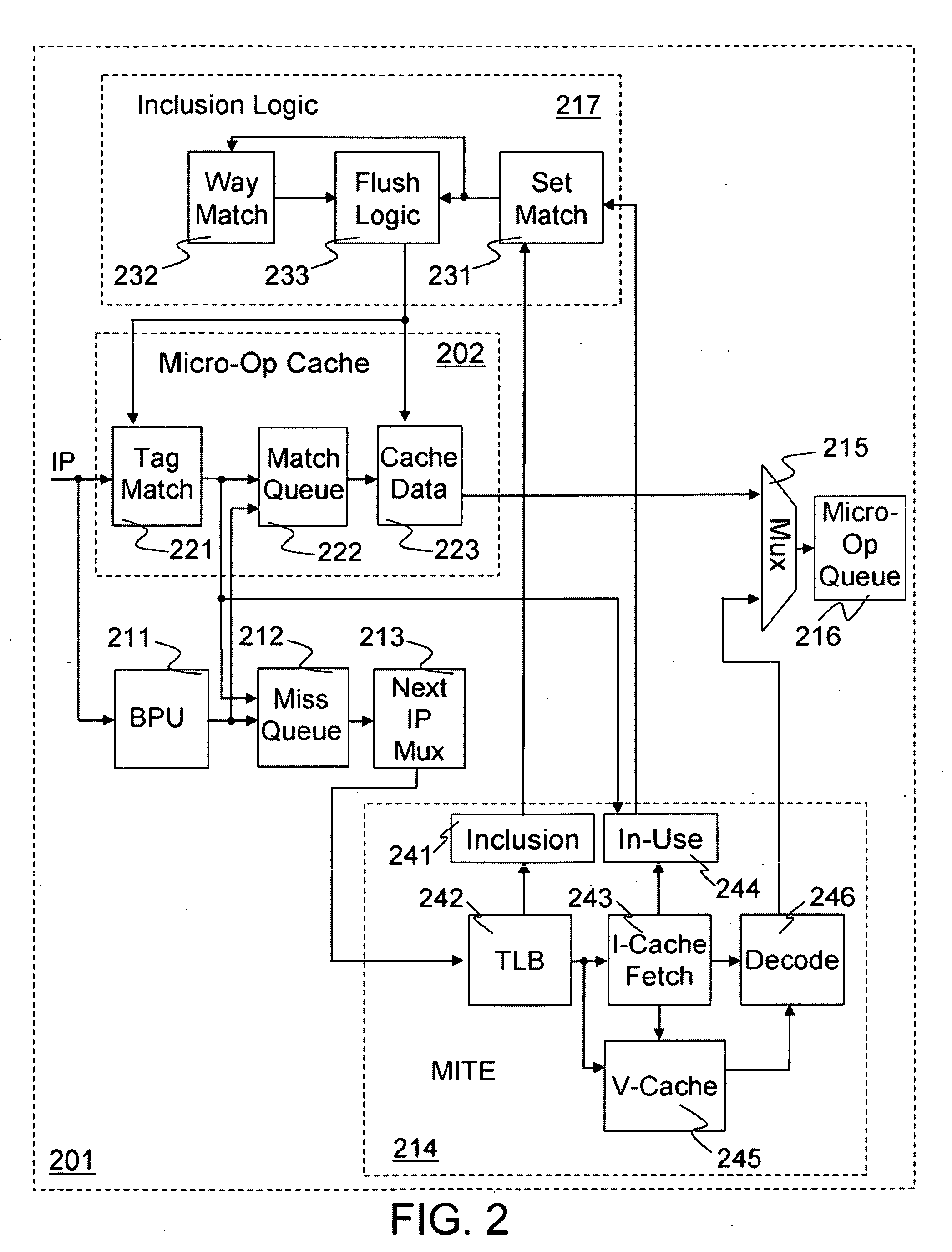

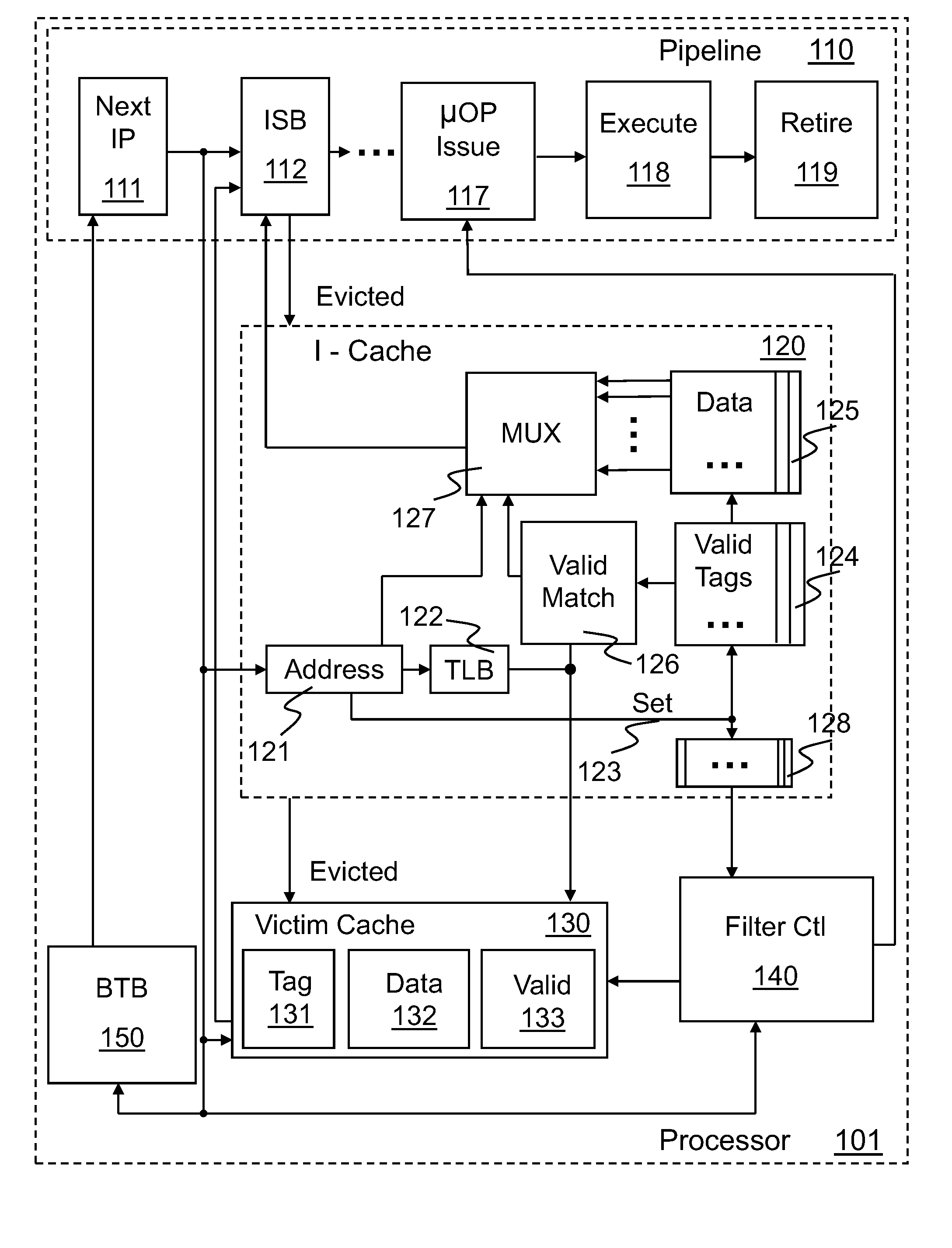

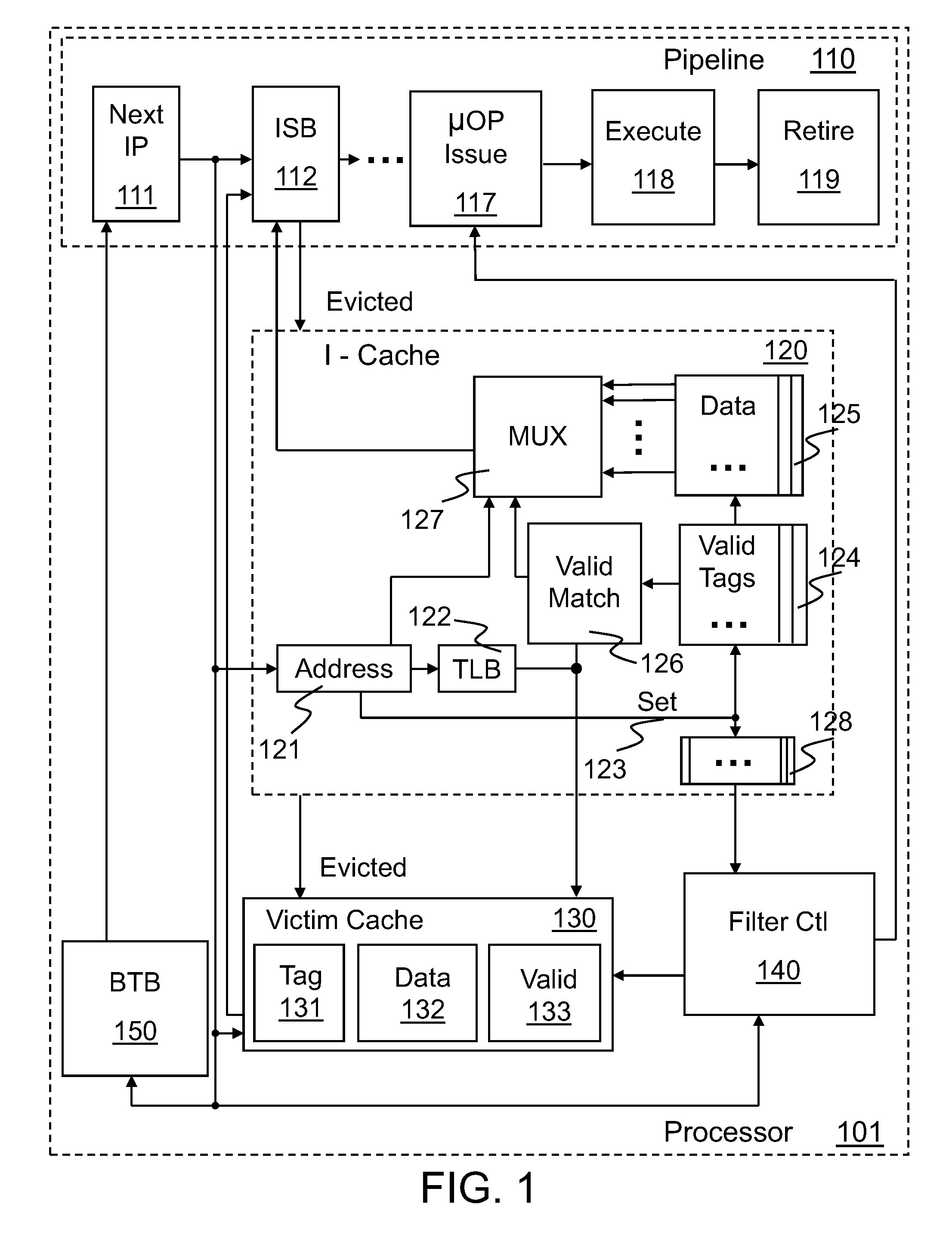

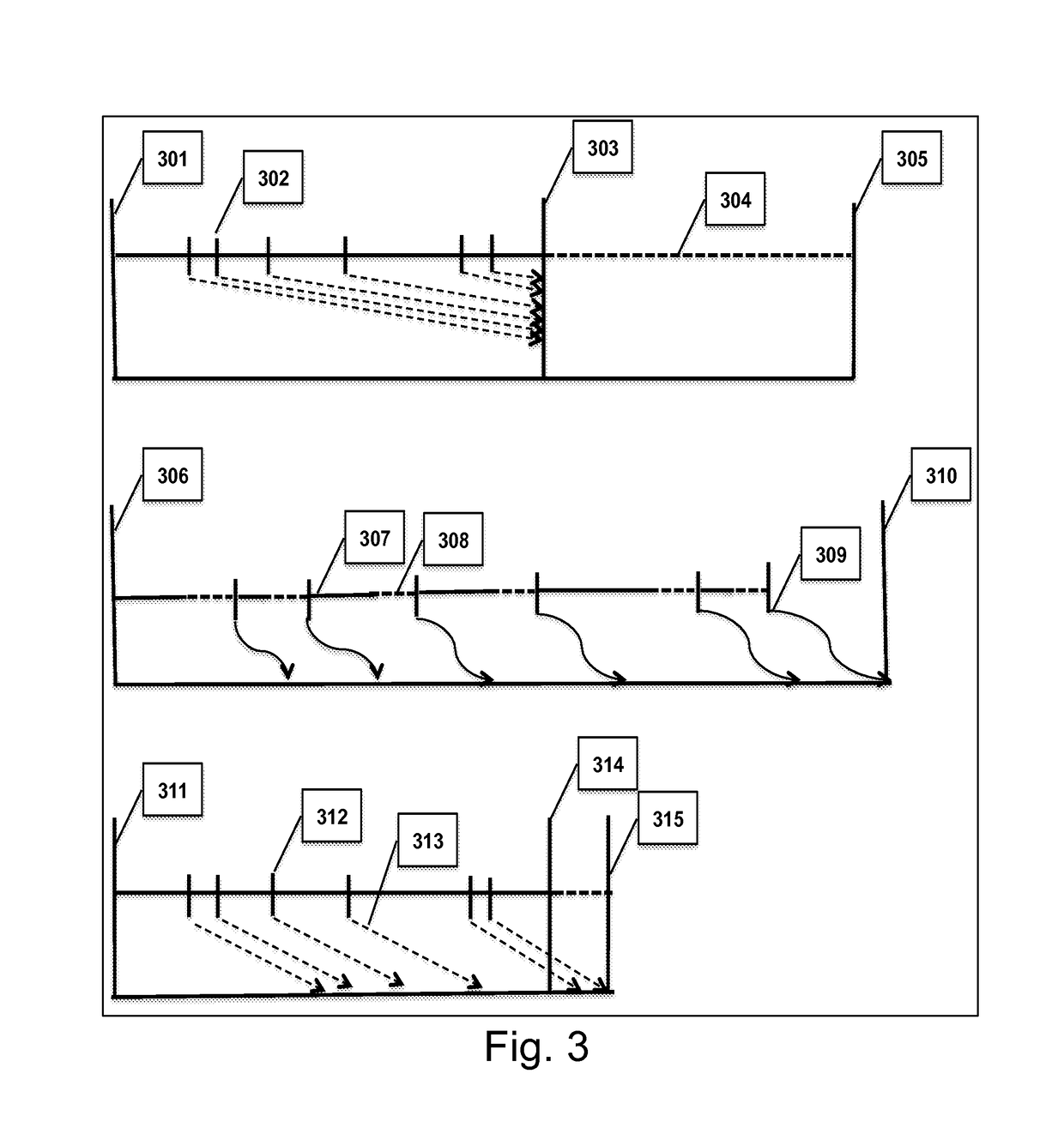

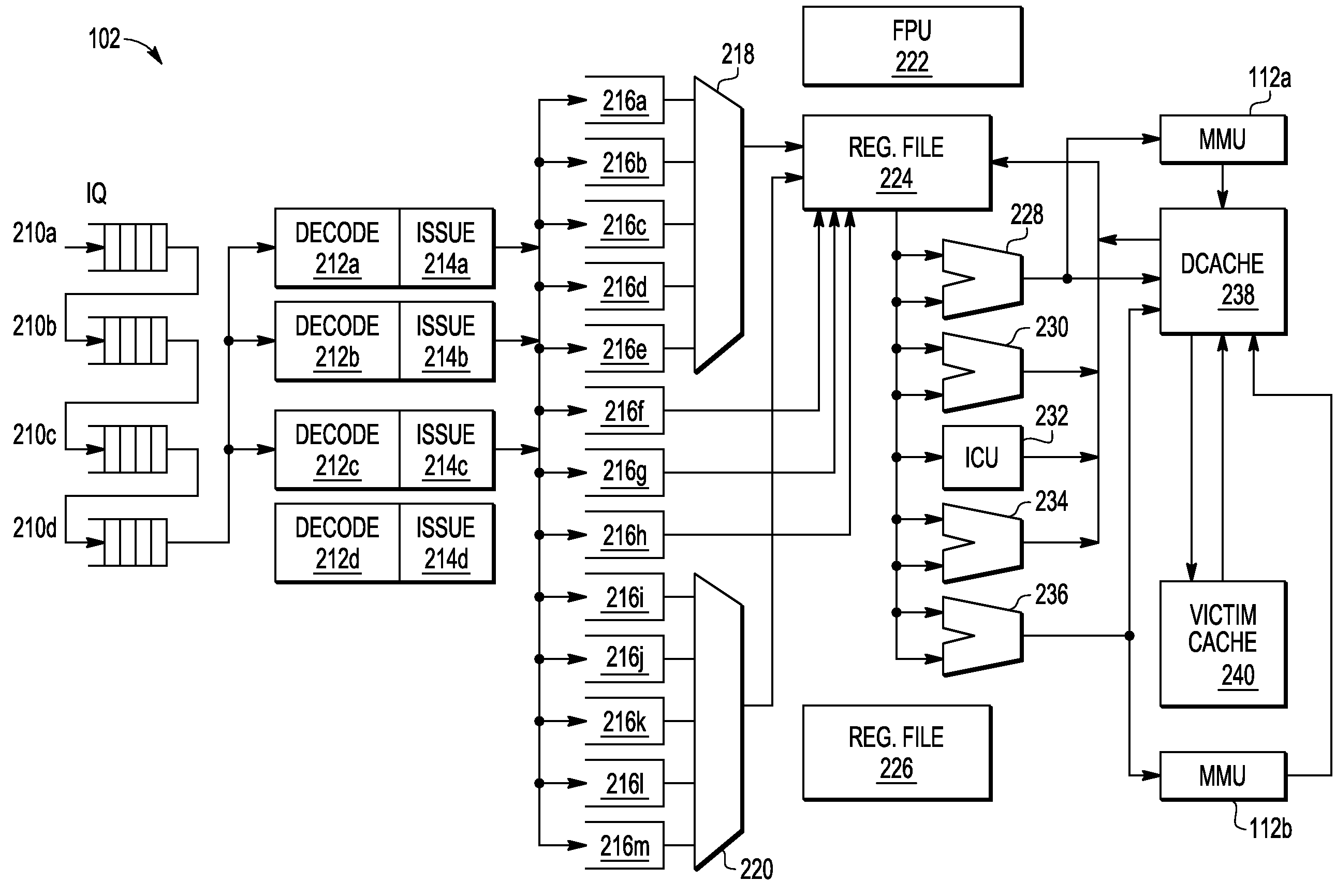

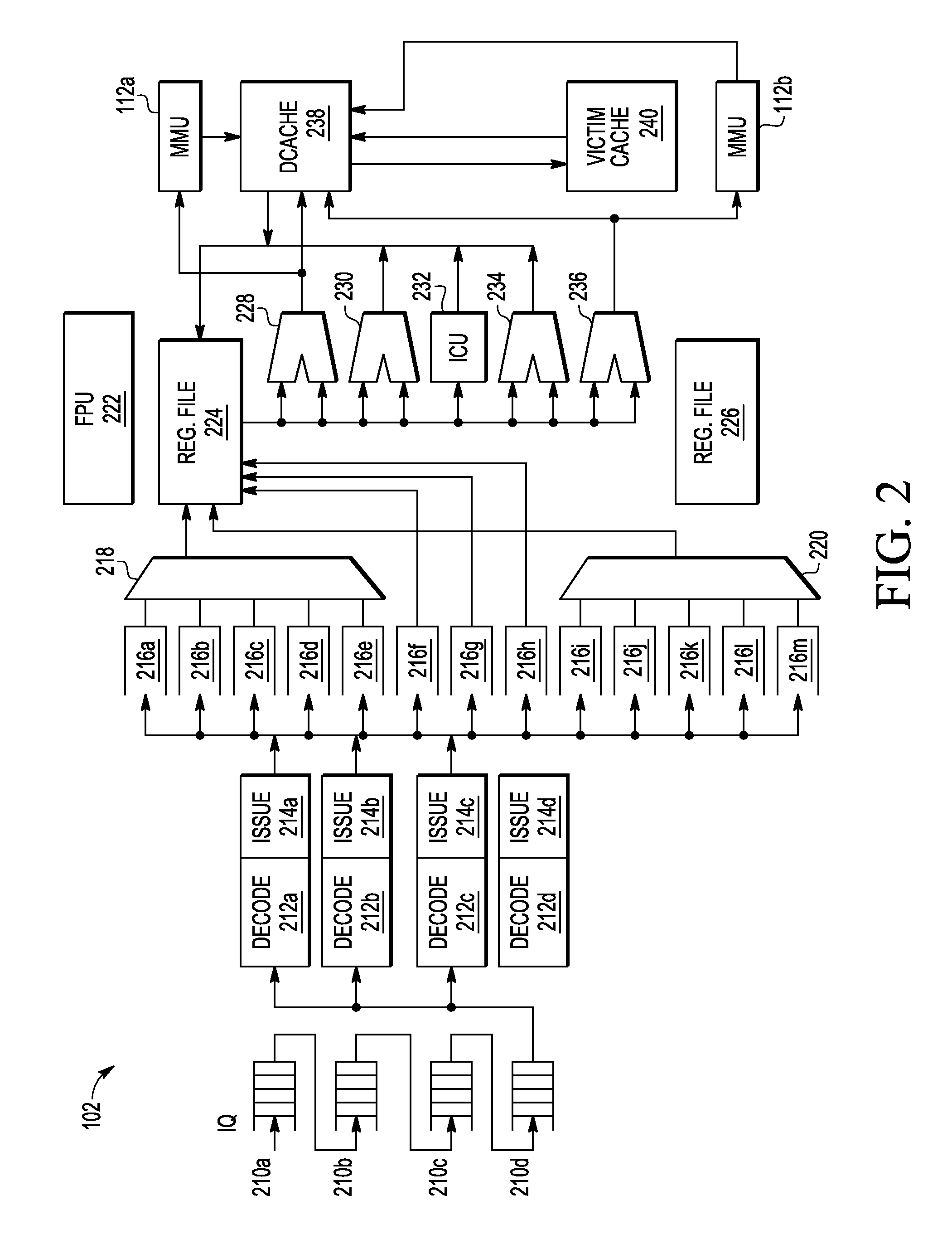

Method and apparatus for pipeline inclusion and instruction restarts in a micro-op cache of a processor

Methods and apparatus for instruction restarts and inclusion in processor micro-op caches are disclosed. Embodiments of micro-op caches have way storage fields to record the instruction-cache ways storing corresponding macroinstructions. Instruction-cache in-use indications associated with the instruction-cache lines storing the instructions are updated upon micro-op cache hits. In-use indications can be located using the recorded instruction-cache ways in micro-op cache lines. Victim-cache deallocation micro-ops are enqueued in a micro-op queue after micro-op cache miss synchronizations, responsive to evictions from the instruction-cache into a victim-cache. Inclusion logic also locates and evicts micro-op cache lines corresponding to the recorded instruction-cache ways, responsive to evictions from the instruction-cache.

Owner:INTEL CORP

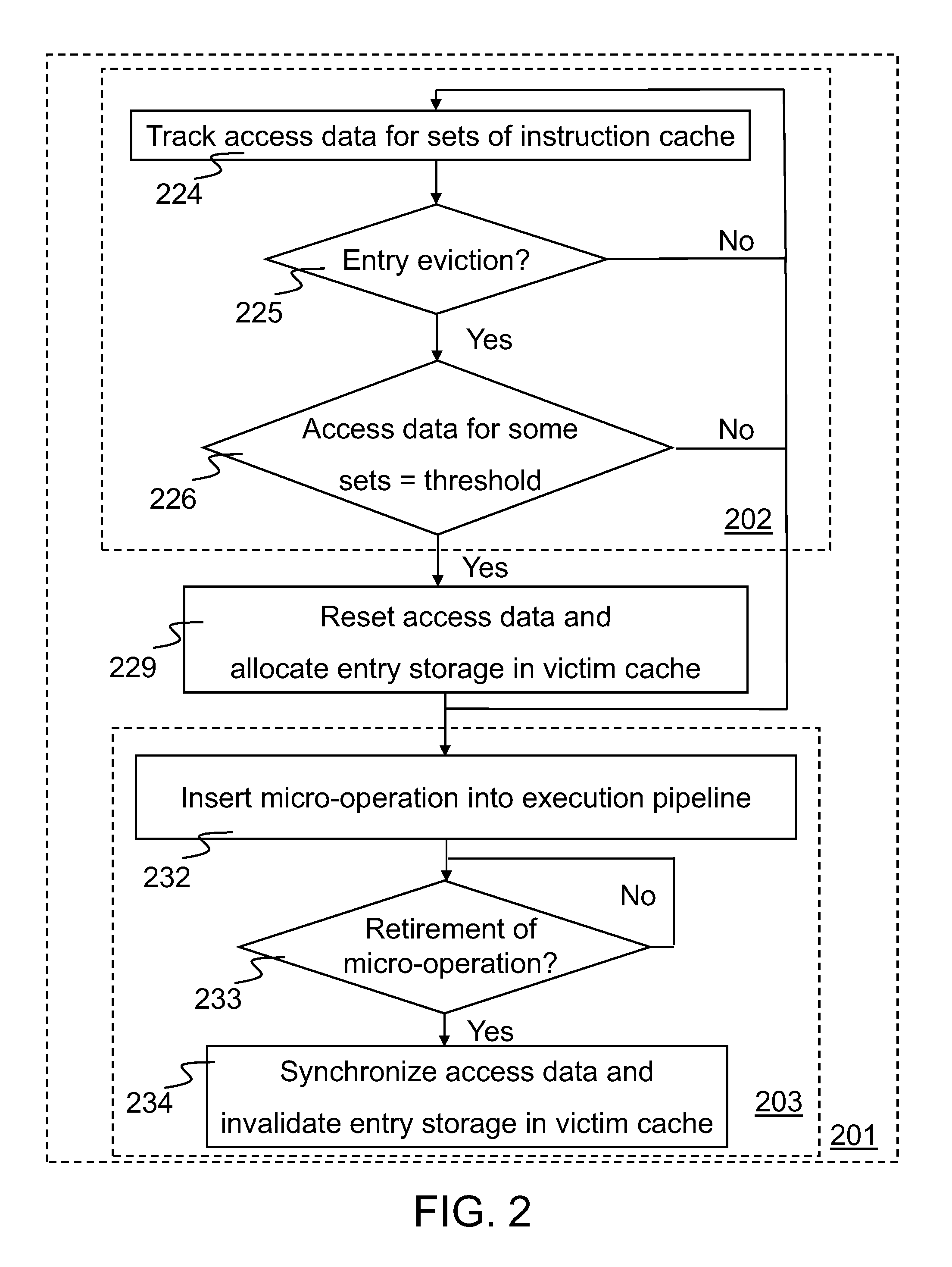

Tracking temporal use associated with cache evictions

A method and apparatus for tracking temporal use associated with cache evictions to reduce allocations in a victim cache is disclosed. Access data for a number of sets of instructions in an instruction cache is tracked at least until the data for one or more of the sets reach a predetermined threshold condition. Determinations whether to allocate entry storage in the victim cache may be made responsive in part to the access data for sets reaching the predetermined threshold condition. A micro-operation can be inserted into the execution pipeline in part to synchronize the access data for all the sets. Upon retirement of the micro-operation from the execution pipeline, access data for the sets can be synchronized and / or any previously allocated entry storage in the victim cache can be invalidated.

Owner:INTEL CORP

High performance store instruction management via imprecise local cache update mechanism

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

System and method for atomic persistence in storage class memory

Active | US10163510B1 | Atomicity of operation | Durability of operation | Memory architecture accessing/allocation | Input/output to record carriers | Fast path | Memory hierarchy

Emerging byte-addressable persistent memory technologies, generically referred to as Storage Class Memory, offer performance advantages and access similar to Dynamic Random Access Memory while having the persistence of disk. Unifying storage and memory into a memory tier that can be accessed directly requires additional burden to ensure that groups of memory operations to persistent or nonvolatile memory locations are performed sequentially, atomically, and not caught in the cache hierarchy. The present invention provides a lightweight solution for the atomicity and durability of write operations to nonvolatile memory, while simultaneously supporting fast paths through the cache hierarchy to memory. The invention includes a hardware-supported solution with modifications to the memory hierarchy comprising a victim cache and additional memory controller logic. The invention also includes a software only method and system that provides atomic persistence to nonvolatile memory using a software alias in DRAM and log in nonvolatile memory.

Owner:GILES ELLIS ROBINSON +1

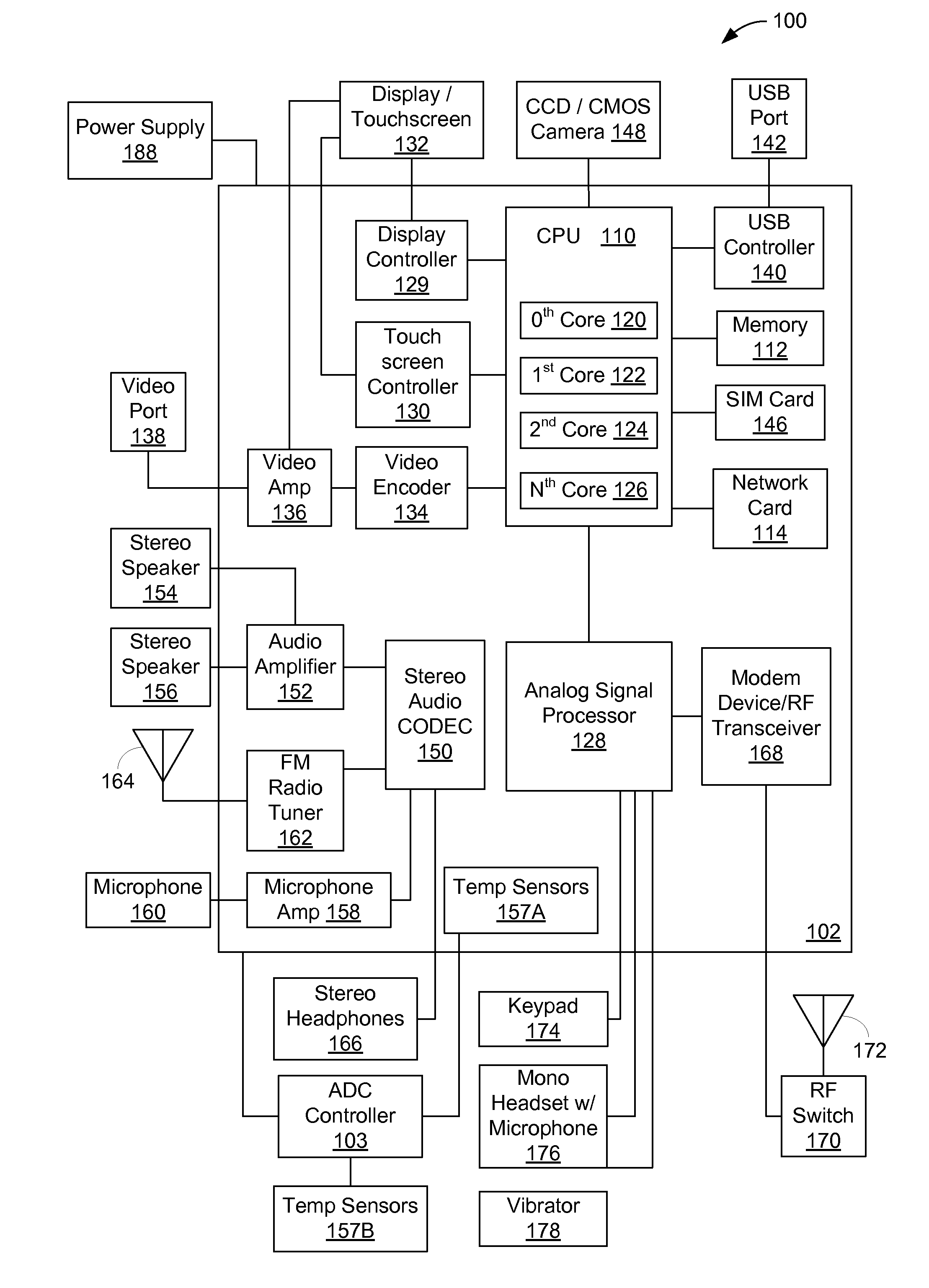

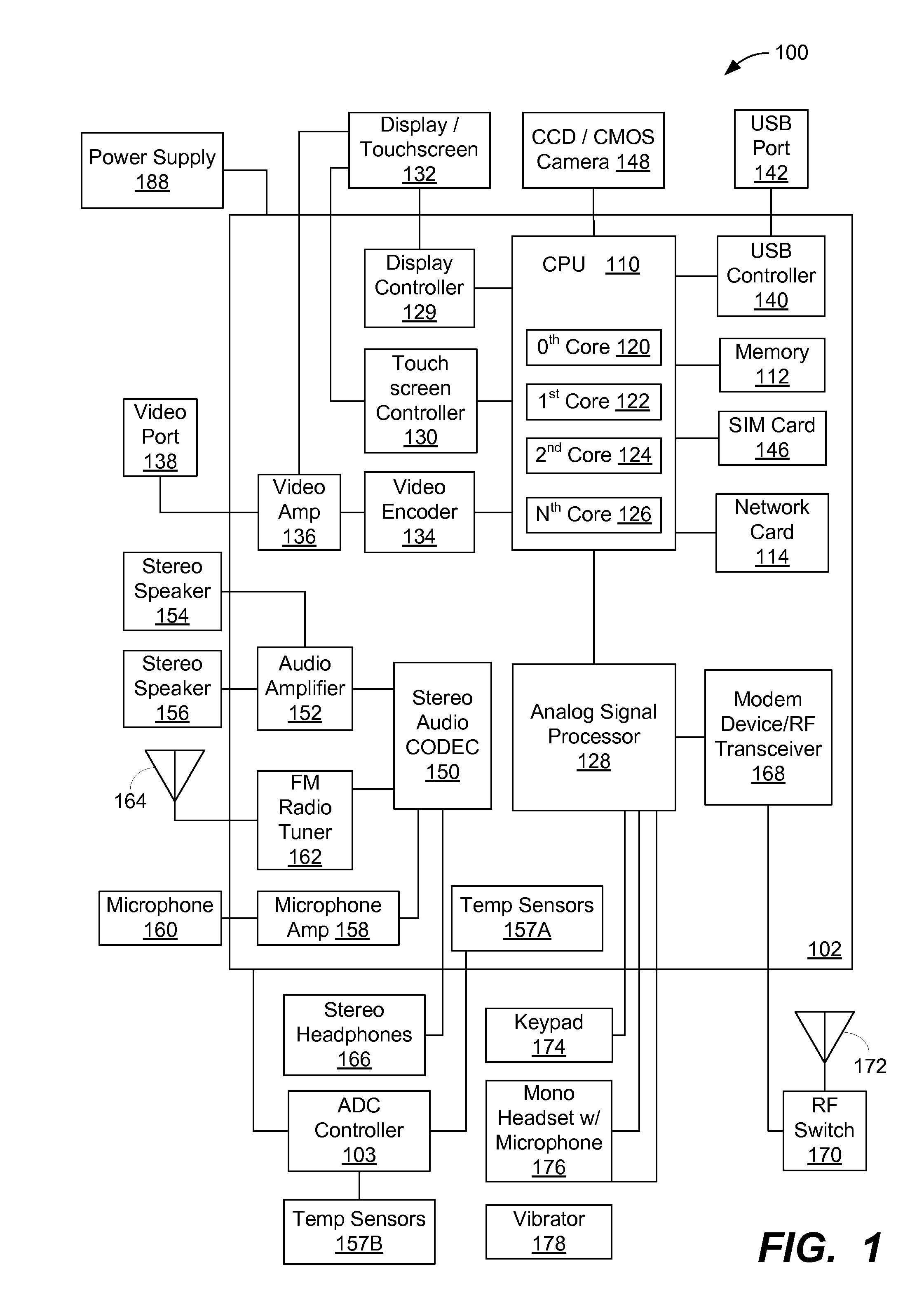

System and method for improving a victim cache mode in a portable computing device

Inactive | US20160210239A1 | Easy to operate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Memory controller

Systems and methods for improved operation of a victim cache in a portable computing device (PCD) are presented. A lower level cache is operated as a victim to an upper level cache, the lower level cache containing a plurality of cache lines. A filter is operated in association with the lower level victim cache, and reflects the cache lines contained in the victim cache. For a miss at the upper level cache, the filter is checked to determine if the requested cache line is in the victim cache. If checking the filter determines that the requested cache line is in the victim cache the requested cache line is retrieved from the victim cache. If checking the filter determines that the request cache line is not in the victim cache, the victim cache is bypassed and the cache line is requested from a memory controller.

Owner:QUALCOMM INC
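The filter-then-bypass flow in the abstract above can be sketched as follows. This is a simplified model under stated assumptions: the filter is an exact set of addresses (hardware might use a more compact structure), a line is removed from the victim cache when it is returned to the upper level, and the names `FilteredVictimCache` and `fetch_from_memory` are hypothetical.

```python
class FilteredVictimCache:
    """Victim cache with a filter that mirrors which lines it currently holds."""

    def __init__(self):
        self.lines = {}      # address -> data held in the victim cache
        self.filter = set()  # addresses present in self.lines

    def insert(self, addr, data):
        """Store a line evicted from the upper-level cache and record it in the filter."""
        self.lines[addr] = data
        self.filter.add(addr)

    def lookup_on_upper_miss(self, addr, fetch_from_memory):
        """Serve an upper-level miss from the victim cache, or bypass it entirely."""
        if addr in self.filter:
            self.filter.discard(addr)
            return self.lines.pop(addr), "victim"
        # The filter says the line is absent, so the victim cache is not searched.
        return fetch_from_memory(addr), "memory"


vc = FilteredVictimCache()
vc.insert(0x40, "evicted line")
print(vc.lookup_on_upper_miss(0x40, lambda a: "from memory"))  # ('evicted line', 'victim')
print(vc.lookup_on_upper_miss(0x80, lambda a: "from memory"))  # ('from memory', 'memory')
```

The benefit modeled here is that a miss in the upper-level cache does not pay for a victim cache lookup when the filter already shows the line is not there.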

Digital data processing device and method for managing cache data

A computer system cache memory contains at least two levels. A lower level selective victim cache receives cache lines evicted from a higher level cache. A selection mechanism selects lines evicted from the higher level cache for storage in the victim cache, only some of the evicted lines being selected for the victim. Preferably, two priority bits associated with each cache line are used to select lines for the victim. The priority bits indicate whether the line has been re-referenced while in the higher level cache, and whether it has been reloaded after eviction from the higher level cache.

Owner:IBM CN

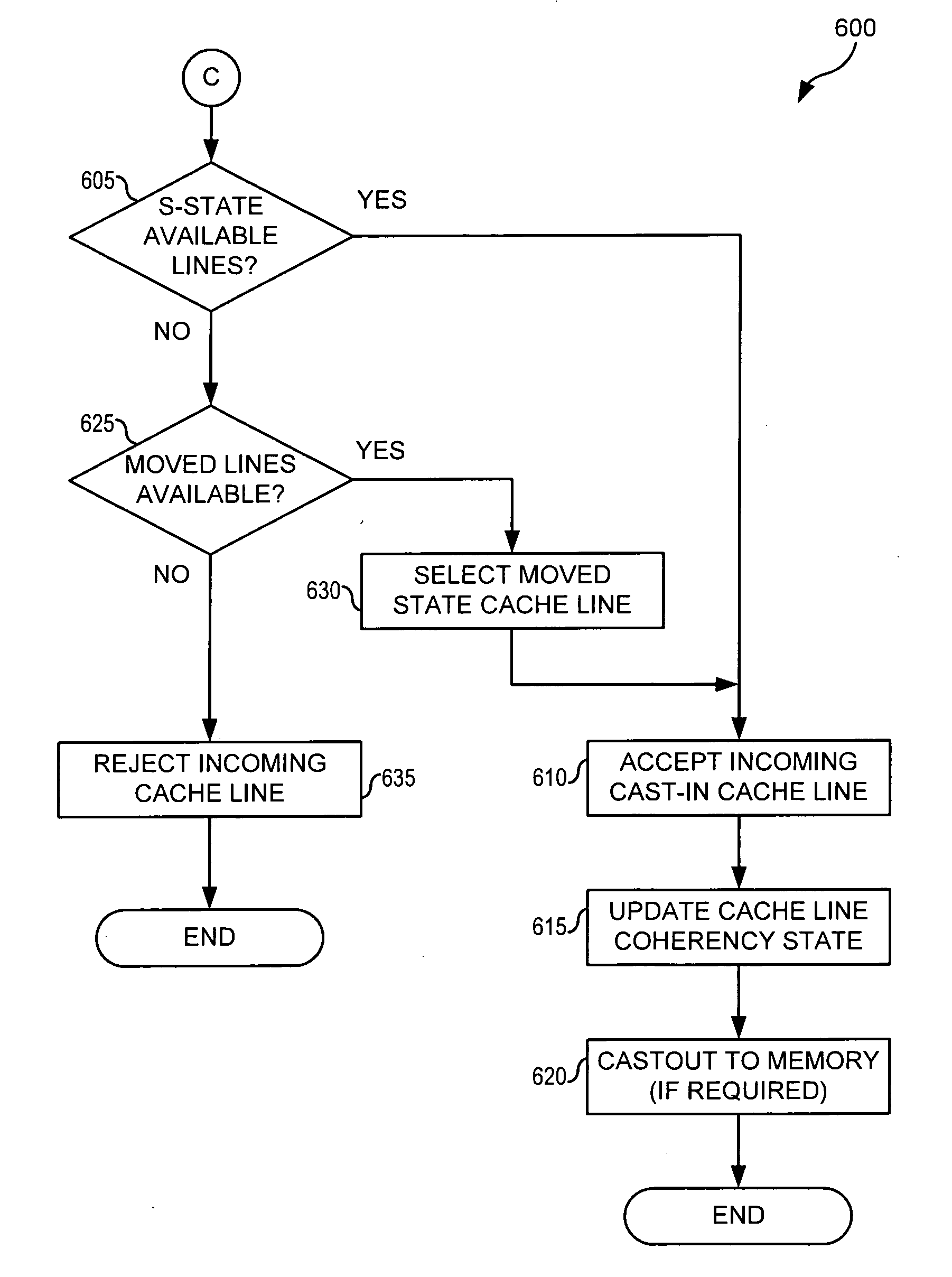

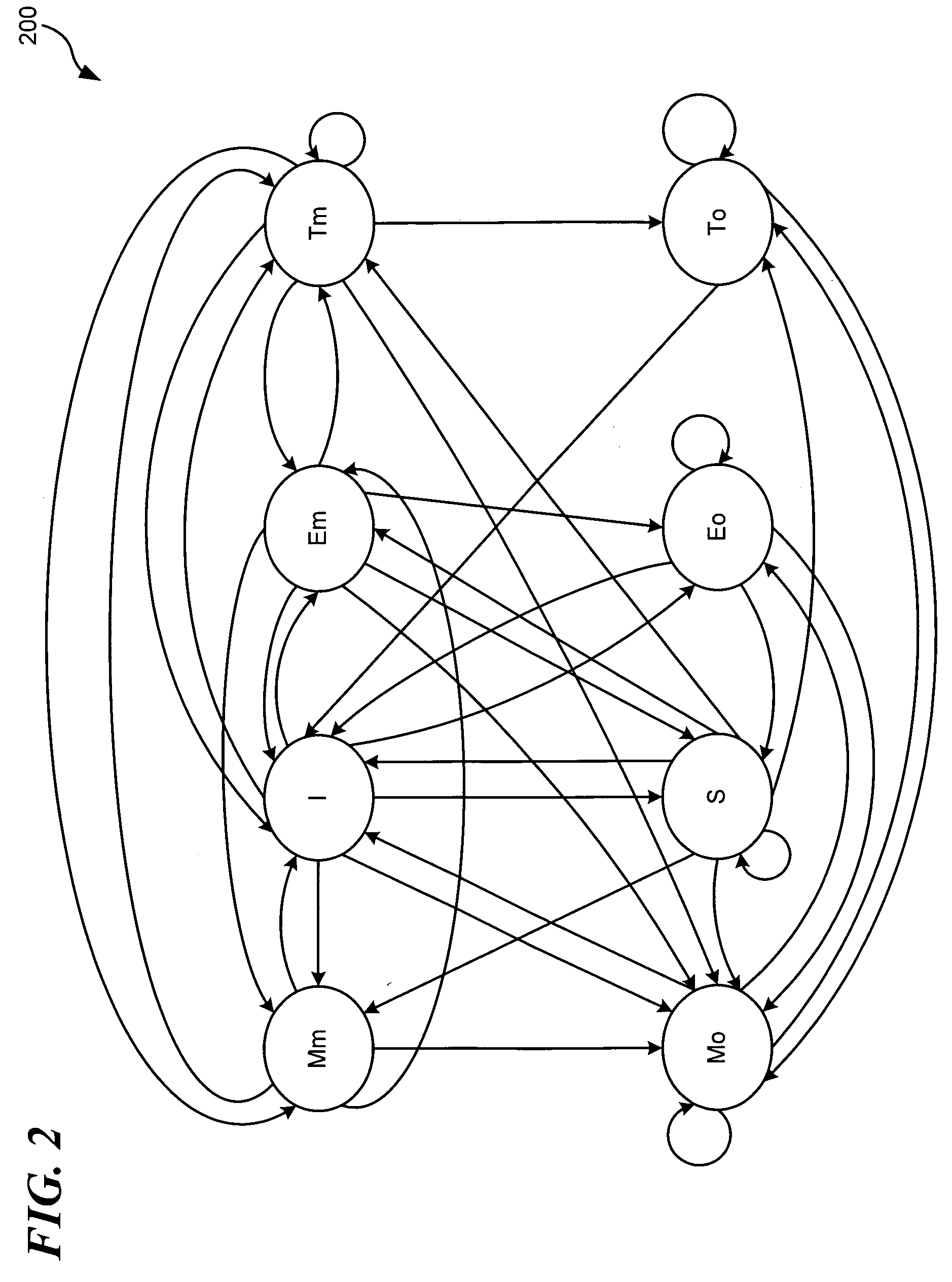

System and Method for Cache Line Replacement Selection in a Multiprocessor Environment

A method for managing a cache operates in a data processing system with a system memory and a plurality of processing units (PUs). A first PU determines that one of a plurality of cache lines in a first cache of the first PU must be replaced with a first data block, and determines whether the first data block is a victim cache line from another one of the plurality of PUs. In the event the first data block is not a victim cache line from another one of the plurality of PUs, the first cache does not contain a cache line in coherency state invalid, and the first cache contains a cache line in coherency state moved, the first PU selects a cache line in coherency state moved, stores the first data block in the selected cache line and updates the coherency state of the first data block.

Owner:IBM CORP

Compiler assisted victim cache bypassing

Inactive | US7506119B2 | Energy efficient ICT | Memory addressing/allocation/relocation | Parallel computing | Victim cache

A method for compiler assisted victim cache bypassing including: identifying a cache line as a candidate for victim cache bypassing; conveying bypassing-the-victim-cache information to hardware; and checking a state of the cache line to determine a modified state of the cache line, wherein the cache line is identified for cache bypassing if the cache line has no reuse within a loop or loop nest and there is no immediate loop reuse or there is a substantial across loop reuse distance so that it will be replaced from both main and victim cache before being reused.

Owner:INT BUSINESS MASCH CORP

High performance load instruction management via system bus with explicit register load and/or cache reload protocols

A method of improving memory access for a computer system, by sending load requests to a lower level storage subsystem along with associated information pertaining to intended use of the requested information by the requesting processor, without using a high level load queue. Returning the requested information to the processor along with the associated use information allows the information to be placed immediately without using reload buffers. A register load bus separate from the cache load bus (and having a smaller granularity) is used to return the information. An upper level (L1) cache may then be imprecisely reloaded (the upper level cache can also be imprecisely reloaded with store instructions). The lower level (L2) cache can monitor L1 and L2 cache activity, which can be used to select a victim cache block in the L1 cache (based on the additional L2 information), or to select a victim cache block in the L2 cache (based on the additional L1 information). L2 control of the L1 directory also allows certain snoop requests to be resolved without waiting for L1 acknowledgement. The invention can be applied to, e.g., instruction, operand data and translation caches.

Owner:IBM CORP

System and method for adaptive implementation of victim cache mode in a portable computing device

Active | US20160210230A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data set | Parallel computing

Systems and methods for adaptive implementation of victim cache modes in a portable computing device (PCD) are presented. In operation, an upper level cache is partitioned into a main portion and a sample portion; and a lower level cache is partitioned into a corresponding main portion and sample portion in communication with the main portion and sample portion of the upper level cache. A victim mode sample data set and a normal mode sample data set are obtained from the lower level cache. Based on the victim mode and a normal mode sample data sets, a determination is made whether to operate the lower level cache as a victim to the upper level cache. The main portion of lower level cache is caused to operate either as a victim or non-victim to the main portion of the upper level cache in accordance with the determination.

Owner:QUALCOMM INC
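The mode decision described above compares a victim mode sample data set with a normal mode sample data set. The abstract does not name the metric, so the sketch below assumes the samples are hit and access counts and that the mode with the higher hit rate wins; the function name, the `margin` parameter, and the default of staying in normal mode without data are all hypothetical.

```python
def should_use_victim_mode(victim_hits, victim_accesses,
                           normal_hits, normal_accesses, margin=0.0):
    """Return True if the victim-mode sample shows the better hit rate."""
    if victim_accesses == 0 or normal_accesses == 0:
        return False  # assumed default: stay in normal mode until both samples have data
    victim_rate = victim_hits / victim_accesses
    normal_rate = normal_hits / normal_accesses
    # margin adds hysteresis so the main portion does not flip modes on noise.
    return victim_rate > normal_rate + margin


# Counters gathered from the two sample portions of the lower level cache.
print(should_use_victim_mode(60, 100, 40, 100))               # True
print(should_use_victim_mode(45, 100, 40, 100, margin=0.1))   # False
```

The main portion of the lower level cache would then be switched to whichever mode the sample portions favor.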
