349 results about "Graph spectra" patented technology

A graph whose spectrum consists entirely of integers is known as an integral graph. The maximum vertex degree Δ of a connected graph G is an eigenvalue of its adjacency matrix if and only if G is a regular graph. Two nonisomorphic graphs can share the same spectrum; such graphs are called cospectral.
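These properties can be checked on the smallest classic cospectral pair: the star K_{1,4} and the 4-cycle plus an isolated vertex (C_4 ∪ K_1), whose adjacency spectra are both {2, 0, 0, 0, -2}. The sketch below is an illustration, not taken from any listed patent. It assumes graphs are given as edge lists and computes the characteristic polynomial exactly with the Faddeev-LeVerrier recursion over `fractions.Fraction`, so equal polynomials mean equal spectra. Integrality is tested by dividing out integer roots in [-Δ, Δ] (no eigenvalue exceeds Δ in absolute value), and the regularity criterion by evaluating the polynomial at Δ. Because C_4 ∪ K_1 is disconnected, the criterion is checked on C_4 alone.

```python
from fractions import Fraction


def adjacency(n, edges):
    """Symmetric 0/1 adjacency matrix (list of lists) of a simple undirected graph."""
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        A[u][v] = A[v][u] = 1
    return A


def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def char_poly(A):
    """Coefficients of det(xI - A), highest degree first, via Faddeev-LeVerrier."""
    n = len(A)
    M = [[Fraction(0)] * n for _ in range(n)]
    coeffs = [Fraction(1)]  # leading coefficient c_n = 1
    for k in range(1, n + 1):
        AM = matmul(A, M)
        # M_k = A M_{k-1} + c_{n-k+1} I, then c_{n-k} = -tr(A M_k) / k
        M = [[AM[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)] for i in range(n)]
        AM = matmul(A, M)
        coeffs.append(-Fraction(sum(AM[i][i] for i in range(n))) / k)
    return coeffs


def evaluate(coeffs, x):
    """Horner evaluation of a highest-degree-first polynomial at x."""
    value = Fraction(0)
    for c in coeffs:
        value = value * x + c
    return value


def integer_roots(coeffs, bound):
    """Divide out integer roots in [-bound, bound], with multiplicity.

    Returns (roots, leftover); leftover == [1] means every root was an integer."""
    roots, poly = [], list(coeffs)
    for r in range(-bound, bound + 1):
        while len(poly) > 1:
            q = [poly[0]]                 # synthetic division by (x - r)
            for c in poly[1:]:
                q.append(c + r * q[-1])
            if q[-1] != 0:                # nonzero remainder: r is not a root
                break
            poly, roots = q[:-1], roots + [r]
    return roots, poly


def max_degree(A):
    return max(sum(row) for row in A)


star = adjacency(5, [(0, 1), (0, 2), (0, 3), (0, 4)])       # K_{1,4}
c4_plus_k1 = adjacency(5, [(0, 1), (1, 2), (2, 3), (3, 0)])  # C_4 plus isolated vertex 4
c4 = adjacency(4, [(0, 1), (1, 2), (2, 3), (3, 0)])          # connected and 2-regular

p_star = char_poly(star)
print(p_star == char_poly(c4_plus_k1))                # True: the two graphs are cospectral
print(sorted(map(sum, star)), sorted(map(sum, c4_plus_k1)))  # different degree sequences
roots, rest = integer_roots(p_star, max_degree(star))
print(roots, rest == [1])                             # [-2, 0, 0, 0, 2] True: integral
print(evaluate(p_star, max_degree(star)) == 0)        # False: Delta = 4 is not an eigenvalue
print(evaluate(char_poly(c4), max_degree(c4)) == 0)   # True: Delta = 2 is an eigenvalue
```

Differing degree sequences are enough to show the two cospectral graphs are nonisomorphic.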

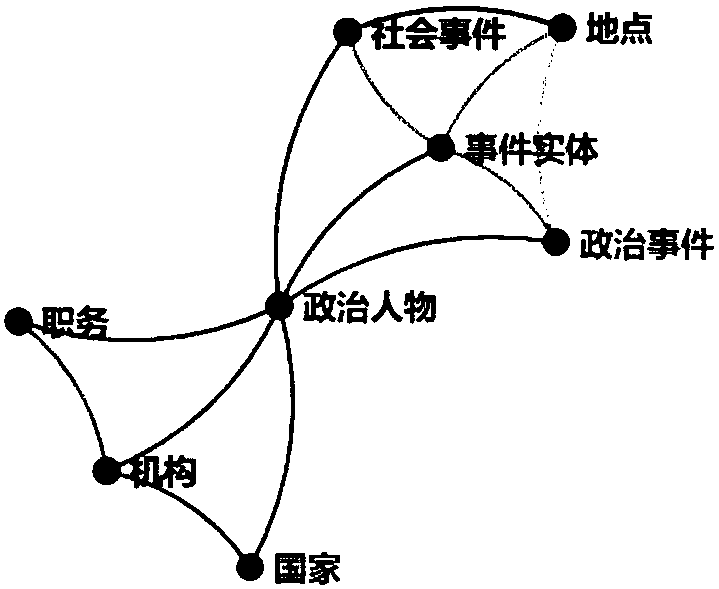

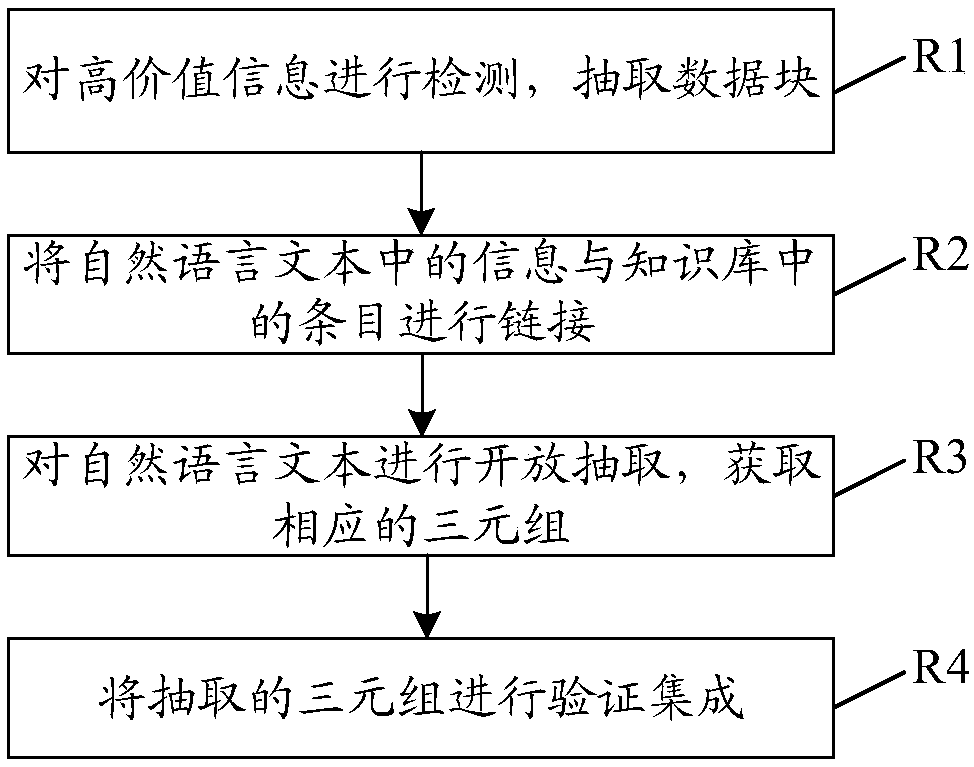

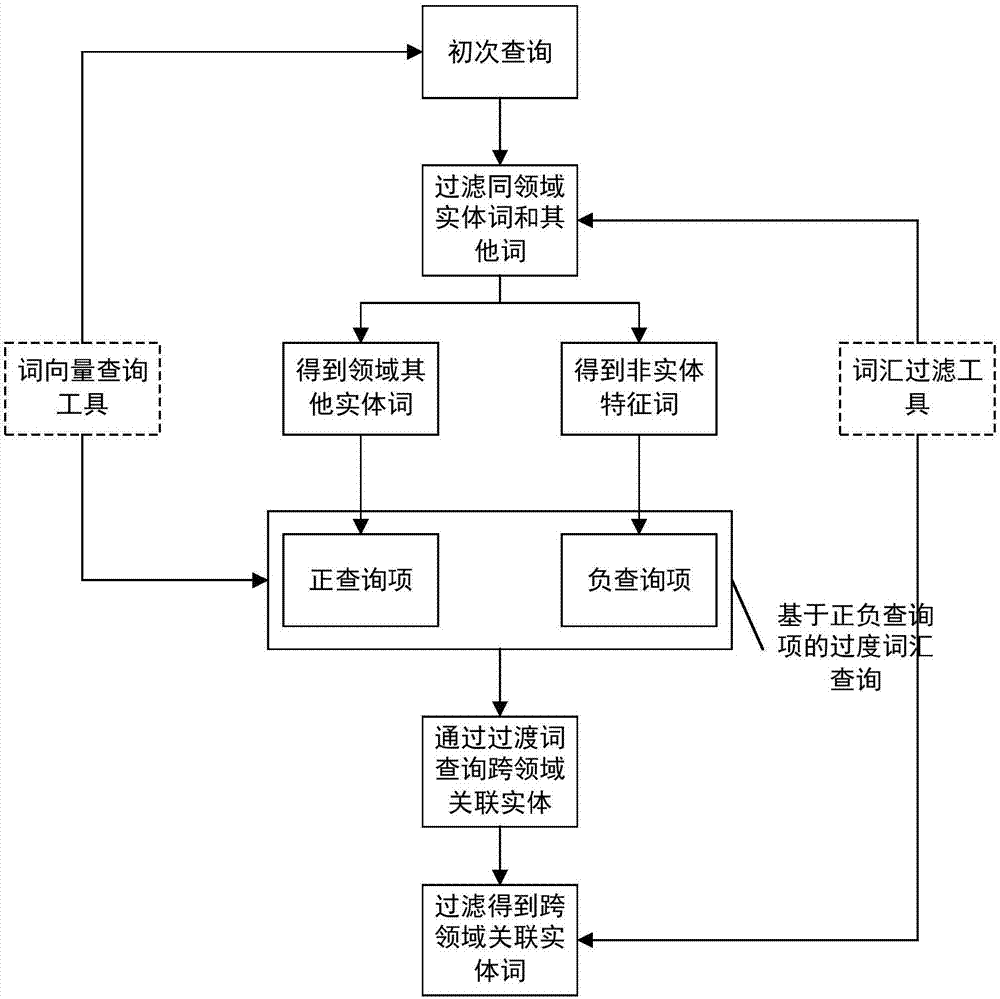

Abnormal information text classification method based on knowledge graph

Inactive · CN108595708A · Improve reliability · Special data processing applications · Entity linking · Graph spectra

The invention provides an abnormal information text classification method based on a knowledge graph. First, a domain knowledge graph is constructed, together with an entity recognizer and an entity linker based on that graph; second, text feature representation vectors v<text> and entity feature representation vectors v<ent> are constructed; finally, the text and entity feature vectors are merged into new text representation vectors v<merge> that fuse knowledge features, a classifier is trained on these merged vectors, and the final classification result is obtained.

Owner:BEIHANG UNIV +1
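A minimal sketch of the fuse-then-classify step in CN108595708A. It assumes that "merged" means concatenation and uses plain logistic regression as a stand-in classifier; the abstract specifies neither. The vectors and labels are hypothetical toy data, not output of the patent's entity linker.

```python
import math


def merge(v_text, v_ent):
    """Fuse text and entity features into v_merge by concatenation (assumed fusion rule)."""
    return list(v_text) + list(v_ent)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=200):
    """Batch gradient descent for binary logistic regression on the fused vectors."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, target in zip(X, y):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - target
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(X) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(w, b, x):
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


# Hypothetical toy data: text features are uninformative, while the entity feature
# (first slot = "links to a known abnormal entity") separates the classes.
v_text = [[0.2, 0.1], [0.1, 0.2], [0.2, 0.2], [0.1, 0.1]]
v_ent = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
labels = [1, 0, 1, 0]

X = [merge(t, e) for t, e in zip(v_text, v_ent)]
w, b = train_logistic(X, labels)
print([predict(w, b, x) for x in X])   # reproduces labels: [1, 0, 1, 0]
```

In this toy case the entity features carry the whole signal, which is the situation where fusing knowledge features is meant to help.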

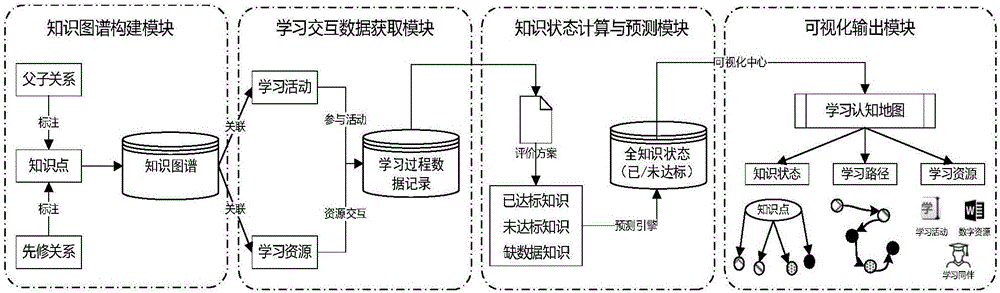

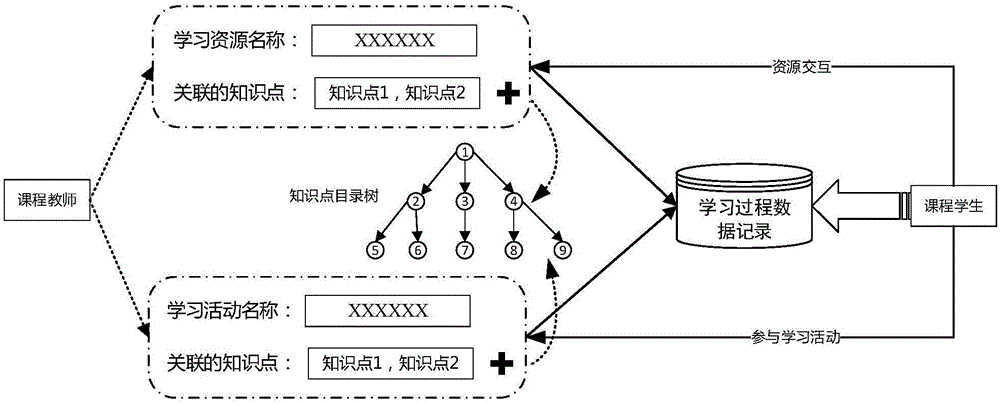

Online learning cognitive map generation system and method for representing knowledge learning mastering state of learner in specific field

The invention discloses an online learning cognitive map generation system and method for representing a learner's mastery of knowledge in a specific field. The system comprises an intelligent terminal and a server. The intelligent terminal is used for online learning interaction. The cognitive map generation system runs on the server and comprises a knowledge graph construction module, a learning interaction data acquisition module, a knowledge state calculation and prediction module, and a visual output module. The system and method are suitable for common online learning platforms; they can model the learner's cognitive structure and diagnose learning deficiencies in order to provide personalized learning resources and social network services, thereby reducing learning confusion and cognitive load and making learning more targeted and accurate.

Owner:BEIJING NORMAL UNIVERSITY

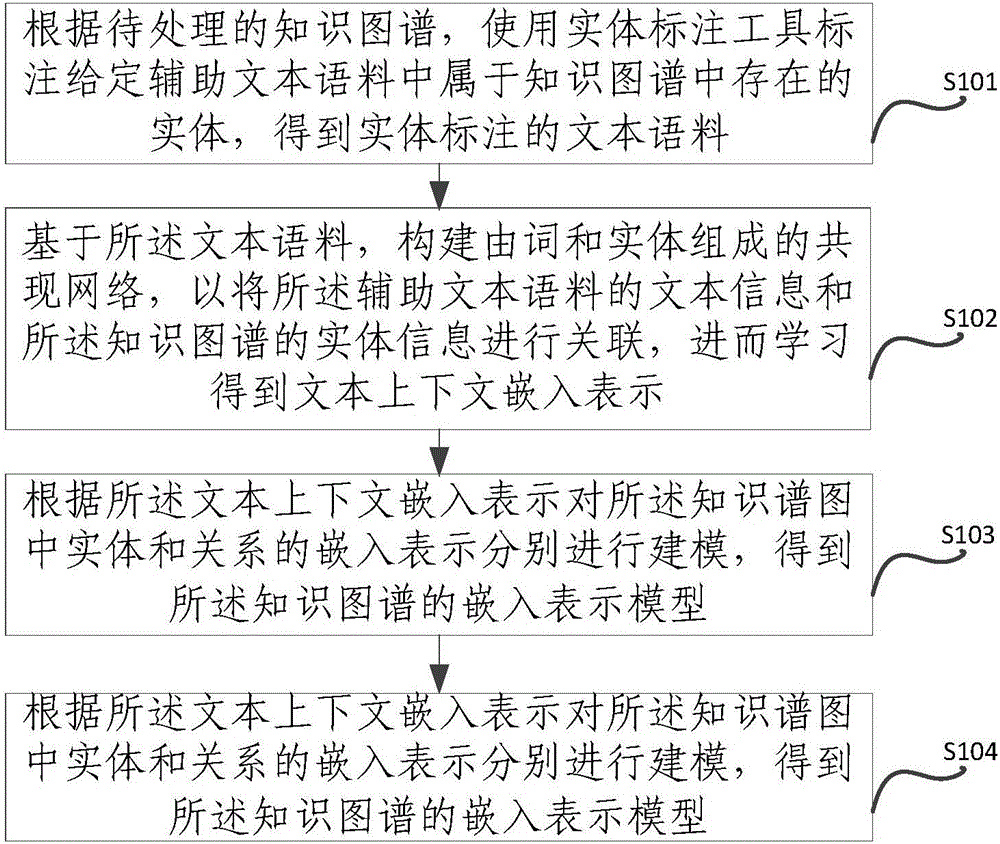

Method and device for acquiring knowledge graph vectoring expression

Active · CN105824802A · Rich relevant information · Solve the problem of insufficient representation effect caused by sparsity · Natural language data processing · Special data processing applications · Stochastic gradient descent · Graph spectra

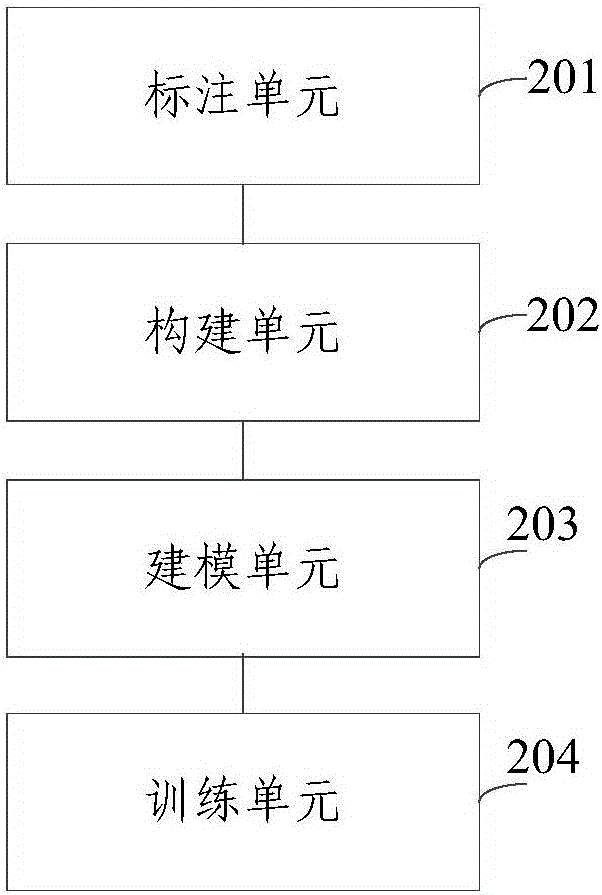

The invention discloses a method and a device for acquiring a vectorized representation of a knowledge graph. The method comprises the following steps: using an entity labeling tool, labeling the mentions of entities that belong to the to-be-processed knowledge graph in a given auxiliary text corpus, obtaining an entity-labeled text corpus; constructing a co-occurrence network of words and entities from this corpus, which relates the text information of the auxiliary corpus to the entity information of the knowledge graph, and learning text-context embeddings from it; modeling the embeddings of the entities and relations in the knowledge graph on the basis of these text-context embeddings, obtaining an embedding model of the knowledge graph; and training the embedding model with stochastic gradient descent to obtain the embeddings of the entities and relations. The method and device improve the expressiveness of relation representations and effectively mitigate the poor representation quality caused by the sparseness of the knowledge graph.

Owner:TSINGHUA UNIV
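The word-entity co-occurrence network in CN105824802A could be built roughly as follows. This sketch assumes entity mentions have already been replaced by `[E:...]` tokens in the labeling step and counts co-occurrences inside a sliding window; the window size and corpus are hypothetical, and the embedding and SGD training stages are not shown.

```python
from collections import Counter


def edge(u, v):
    """Undirected edge key: the two endpoints in sorted order."""
    return tuple(sorted((u, v)))


def cooccurrence_network(sentences, window=2):
    """Weighted word/entity co-occurrence graph from tokenized, entity-labeled sentences.

    Two tokens are linked when they appear within `window` positions of each other;
    the edge weight counts how often that happens across the corpus."""
    weights = Counter()
    for tokens in sentences:
        for i, u in enumerate(tokens):
            for v in tokens[i + 1 : i + 1 + window]:
                if u != v:
                    weights[edge(u, v)] += 1
    return weights


# Hypothetical entity-labeled corpus: knowledge-graph entities appear as "[E:...]" tokens.
corpus = [
    ["[E:Tsinghua]", "is", "in", "[E:Beijing]"],
    ["[E:Beijing]", "is", "a", "city"],
]
net = cooccurrence_network(corpus, window=3)
# Edges joining exactly one entity and one ordinary word tie text to the knowledge graph.
word_entity_edges = {e: w for e, w in net.items() if sum(t.startswith("[E:") for t in e) == 1}
print(net[edge("[E:Beijing]", "is")])   # 2
```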

Knowledge graph representation learning method

Inactive · CN105630901A · Evaluation function optimization · Relational databases · Special data processing applications · Feature vector · Graph spectra

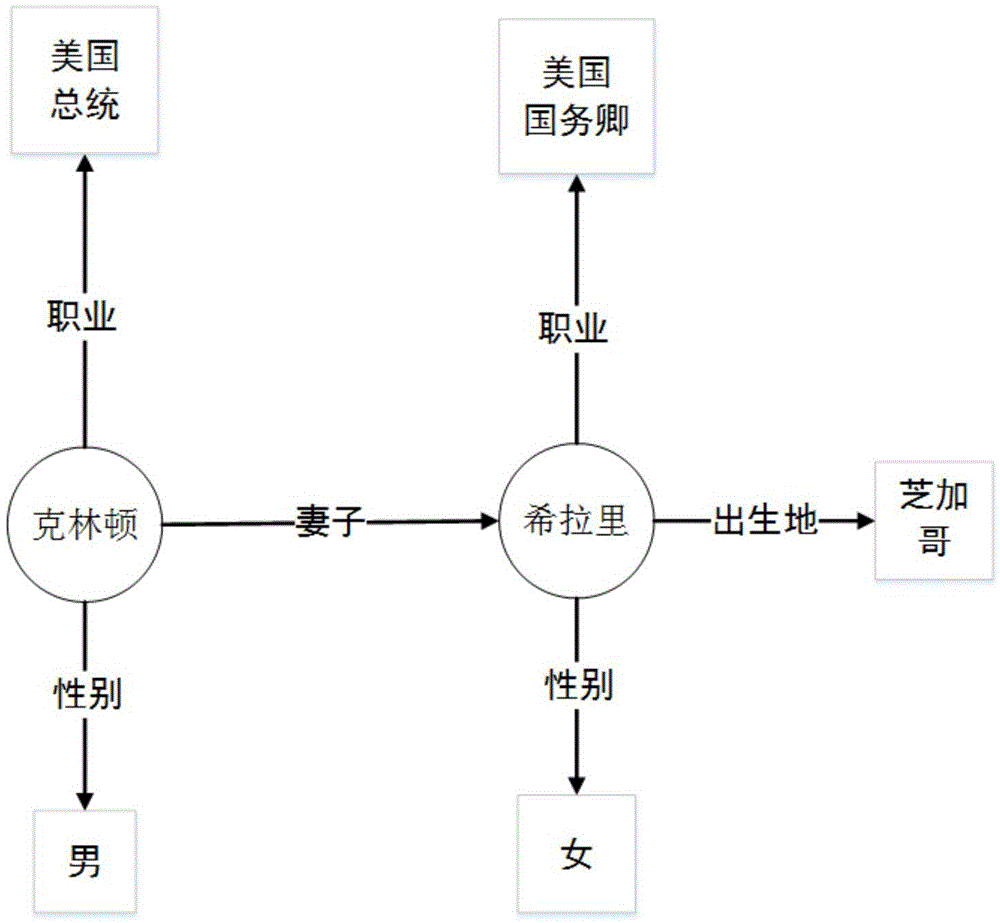

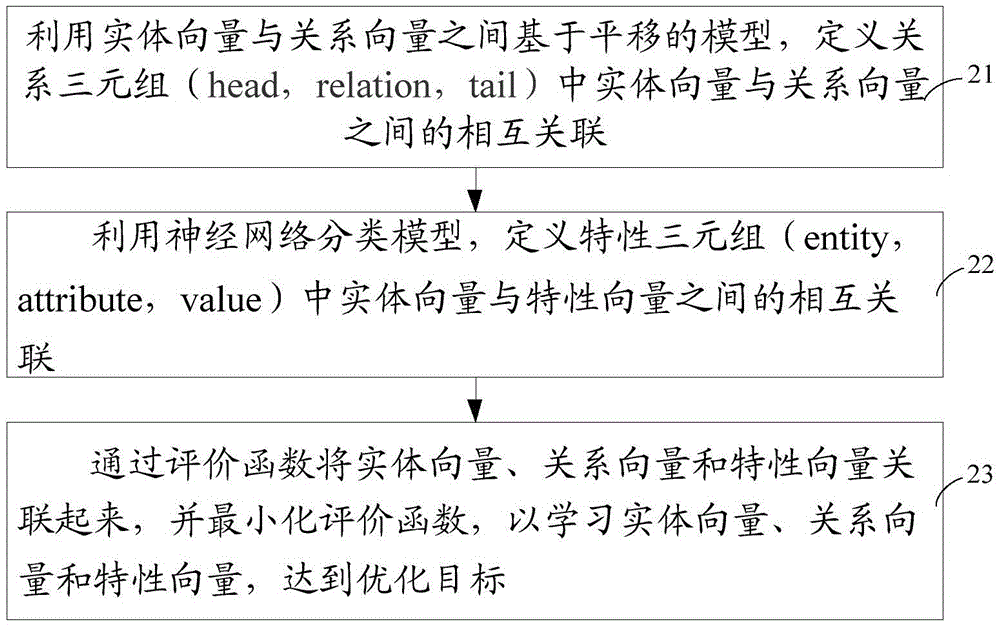

The invention discloses a knowledge graph representation learning method. The method comprises the following steps: defining the correlation between entity vectors and relation vectors in a relation triple (head, relation, tail) with a translation-based model; defining the correlation between entity vectors and feature vectors in a feature triple (entity, attribute, value) with a neural network classification model; and linking the entity vectors, relation vectors and feature vectors through an evaluation function, which is minimized to learn all three sets of vectors. With this method, the relationships among entities, relations and features can be represented accurately.

Owner:TSINGHUA UNIV
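The abstract says only "translation-based model". A common instance is the TransE score ||h + r - t|| with a margin ranking loss against corrupted triples; the sketch below assumes that form and covers only the relation-triple part, not the neural classifier for feature triples or the joint evaluation function. The embeddings are hypothetical.

```python
import math


def transe_distance(h, r, t):
    """TransE-style score ||h + r - t||_2: small for a plausible (head, relation, tail)."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def margin_loss(positive, negative, margin=1.0):
    """Hinge loss pushing a true triple at least `margin` closer than a corrupted one."""
    return max(0.0, margin + transe_distance(*positive) - transe_distance(*negative))


# Hypothetical 2-d embeddings.
head, rel, tail, wrong_tail = [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]
print(transe_distance(head, rel, tail))                                         # 0.0
print(margin_loss((head, rel, tail), (head, rel, wrong_tail)))                  # 0.0
print(round(margin_loss((head, rel, tail), (head, rel, wrong_tail), margin=2.0), 3))  # 0.586
```

With margin 1.0 the corrupted triple is already far enough away, so the loss is zero; raising the margin makes it positive again and would drive further updates.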

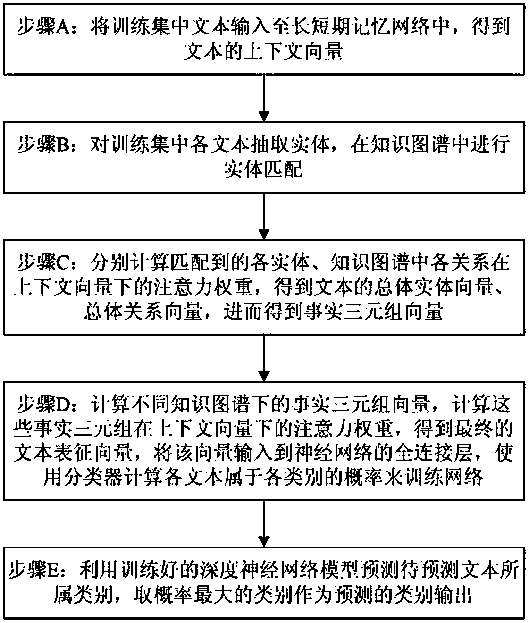

A neural network text classification method based on a multi-knowledge map

Active · CN108984745A · Improve understanding · Reliable classification · Character and pattern recognition · Special data processing applications · Neural network · Graph spectra

The invention relates to a neural network text classification method based on multiple knowledge maps. The method comprises the following steps: inputting each text in a training set into a long short-term memory (LSTM) network to obtain a context vector of the text; extracting entities from each text in the training set and matching them in the knowledge map; calculating, under the context vector, the attention weights of the matched entities and relationships in the knowledge map to obtain the overall entity vector, the overall relationship vector and the fact triple vector of the text; computing the fact triple vectors under different knowledge maps and the attention weights of these fact triples, obtaining the text representation vector, feeding it to the fully connected layer of the neural network, and using a classifier to calculate the probability that each text belongs to each category in order to train the network; and using the trained deep neural network model to predict the category of a text. The method improves the model's understanding of text semantics and classifies text content more reliably, accurately and robustly.

Owner:FUZHOU UNIV
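The attention step of CN108984745A can be sketched as dot-product attention of the matched entity vectors under the LSTM context vector, with the weighted sum serving as the overall entity vector. Dot-product scoring is an assumption (the abstract does not give the scoring function), and the embeddings are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(context, vectors):
    """Dot-product attention of candidate vectors under a context vector.

    Returns the attention weights and the weighted sum (e.g. an 'overall entity vector')."""
    weights = softmax([sum(c * v for c, v in zip(context, vec)) for vec in vectors])
    pooled = [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(len(vectors[0]))]
    return weights, pooled


# Hypothetical LSTM context vector and two matched entity embeddings.
context = [1.0, 0.0]
entities = [[2.0, 0.0], [0.0, 2.0]]
weights, overall_entity = attend(context, entities)
print([round(w, 3) for w in weights])   # [0.881, 0.119]
```

The same function could weight relation vectors or fact triple vectors by swapping in those embeddings.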

Building method of knowledge map based on vertical field

Inactive · CN105956052A · Realize self-learning · Achieve scalability · Special data processing applications · Text database clustering/classification · Knowledge classification · Graph spectra

The invention provides a method for building a knowledge map in a vertical field. The method comprises the following steps: (1) extracting the lexical realizations of the classes of an online encyclopedia and the hyponymy relations between classes; (2) merging domain knowledge, defining the data attributes and relationship attributes of the field, and constraining the domains and ranges of these attributes; (3) building the entity layer, i.e., extracting entities and filling in their attribute values: structured and semi-structured data are batch-processed with D2R or data collection tools, while for unstructured text data the classes and attributes of the upper-level ontology and the relationships between them are defined, and instances are recognized according to those relationships. With this method, the knowledge classification of the resulting vertical-field knowledge map is clear, the knowledge map can learn and expand automatically, and it plays a key role in information retrieval and semantic analysis for the vertical field.

Owner:QINGDAO PENGHAI SOFT CO LTD

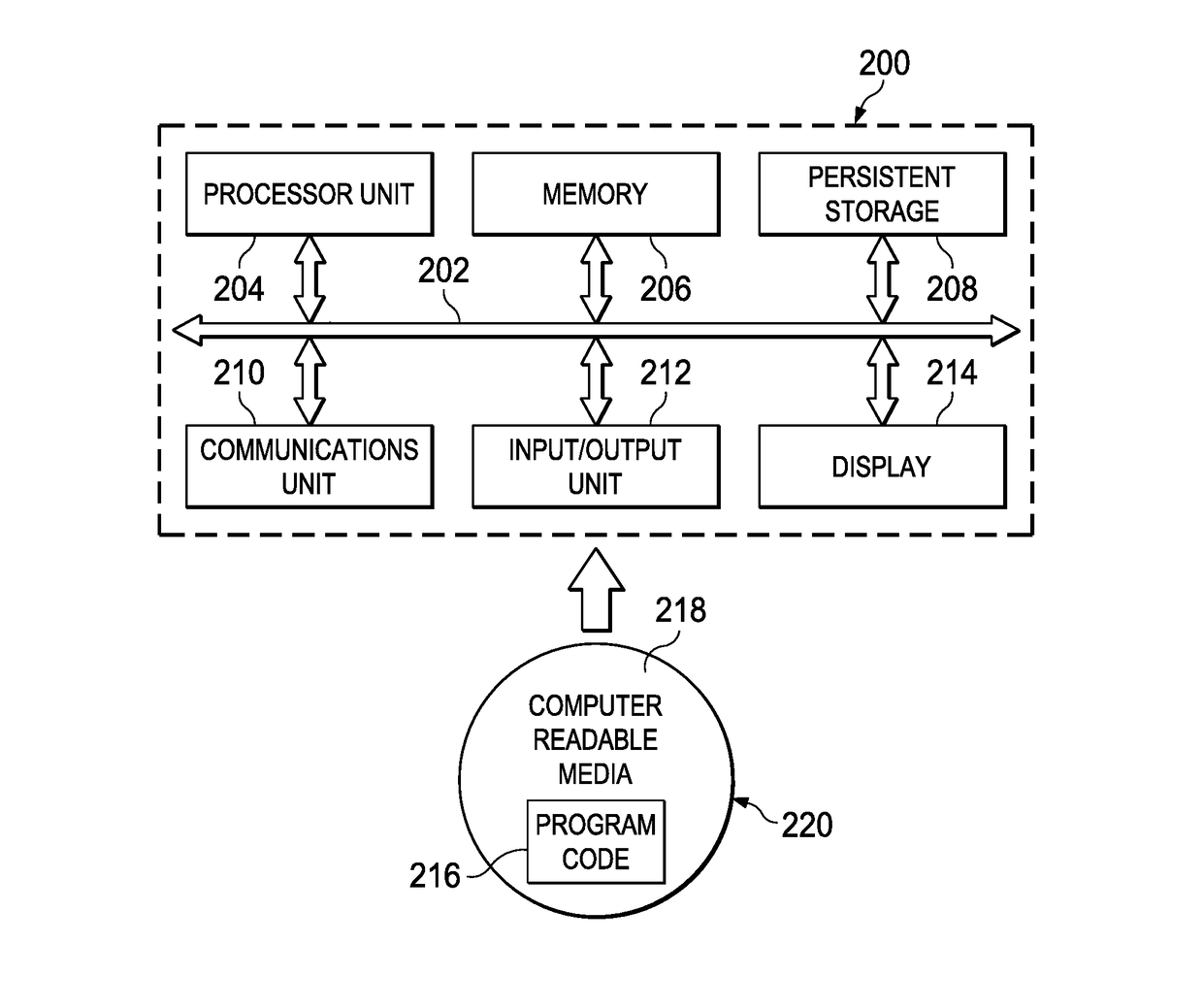

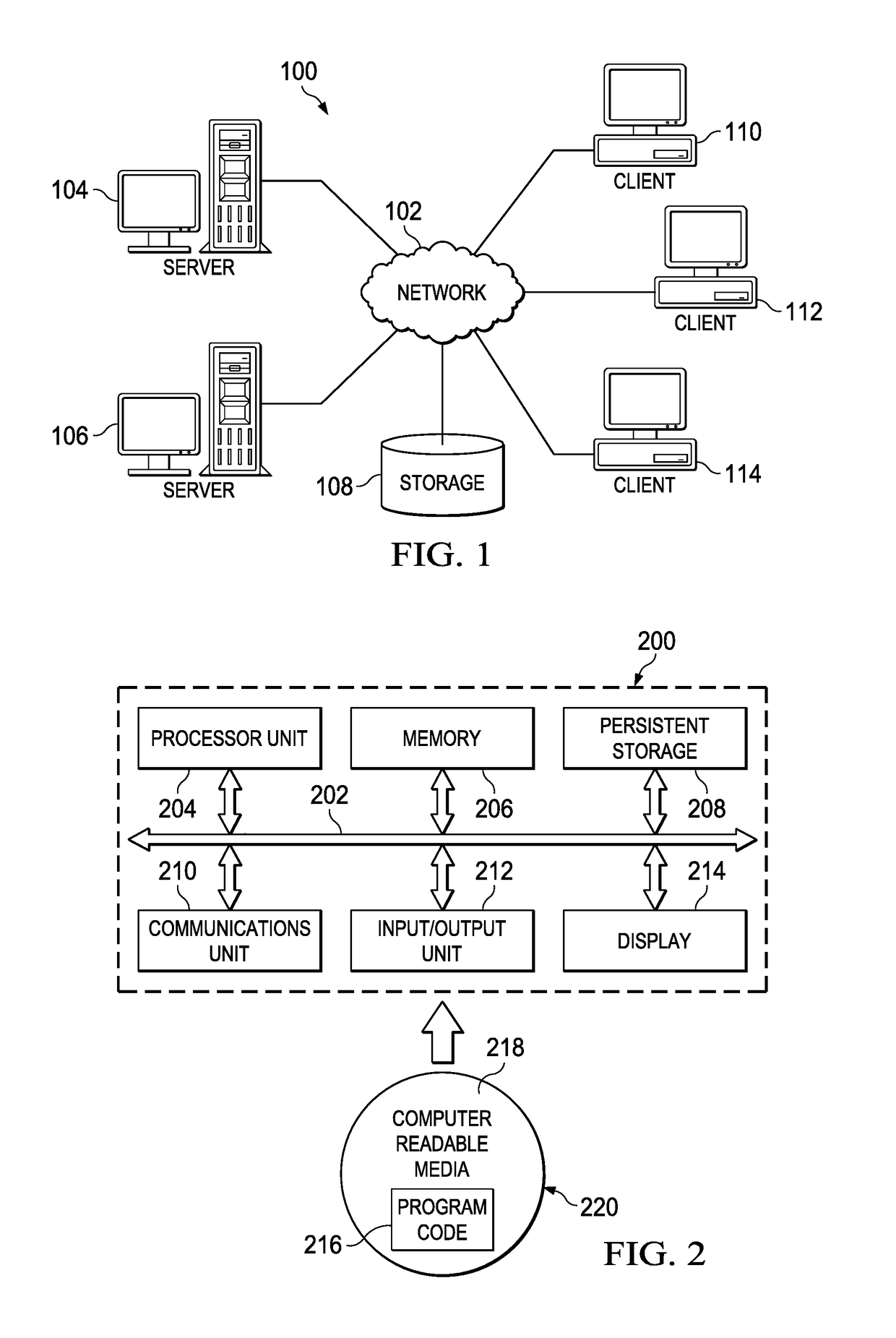

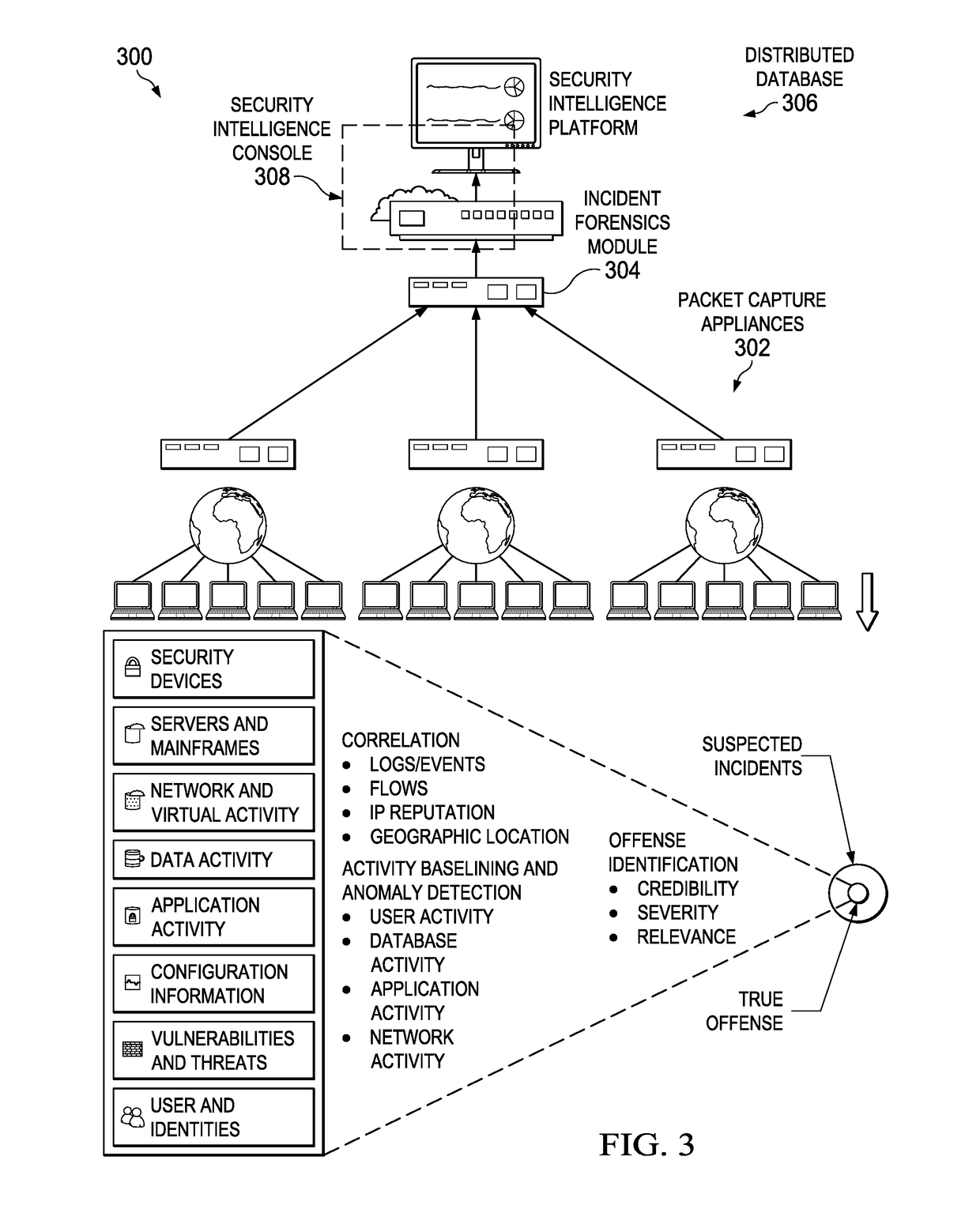

Cognitive offense analysis using contextual data and knowledge graphs

Active · US20180048661A1 · Simplifies further analysis · Simplifies corrective task · Digital data information retrieval · Platform integrity maintenance · Graph spectra · Data source

An automated method for processing security events in association with a cybersecurity knowledge graph. The method begins upon receipt of information from a security system representing an offense. An initial offense context graph is built based in part on context data about the offense. The graph also includes activity nodes connected to a root node; at least one activity node includes an observable. The root node and its one or more activity nodes represent a context for the offense. The knowledge graph, and potentially other data sources, are then explored to refine the initial graph into a refined graph, which is then provided to an analyst for further review and analysis. Knowledge graph exploration involves locating the observables and their connections in the knowledge graph, determining that they are associated with known malicious entities, and then building subgraphs that are merged into the initial graph.

Owner:IBM CORP
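A rough sketch of the knowledge graph exploration in US20180048661A1. It assumes the knowledge graph is an adjacency dict and that "associated with known malicious entities" means reachable within a fixed number of hops; node names, `max_hops`, and the data layout are hypothetical, and the patent's actual exploration logic is not reproduced here.

```python
from collections import deque


def explore(kg, observable, malicious, max_hops=2):
    """Breadth-first search from an observable; return the edges of shortest paths
    that reach known malicious entities within `max_hops`."""
    parent, depth = {observable: None}, {observable: 0}
    queue, hits = deque([observable]), []
    while queue:
        node = queue.popleft()
        if node in malicious:
            hits.append(node)
        if depth[node] == max_hops:
            continue
        for nbr in kg.get(node, ()):
            if nbr not in parent:
                parent[nbr], depth[nbr] = node, depth[node] + 1
                queue.append(nbr)
    subgraph = set()
    for node in hits:                     # walk each hit back to the observable
        while parent[node] is not None:
            subgraph.add((parent[node], node))
            node = parent[node]
    return subgraph


def refine(context_graph, kg, malicious):
    """Merge the subgraph found for every observable into the initial offense graph."""
    edges = set(context_graph["edges"])
    for obs in context_graph["observables"]:
        edges |= explore(kg, obs, malicious)
    return edges


# Hypothetical initial offense context graph: root -> activity node -> observable.
initial = {
    "edges": {("offense#1", "activity:login"), ("activity:login", "10.0.0.5")},
    "observables": ["10.0.0.5"],
}
kg = {"10.0.0.5": ["evil.example.com"], "evil.example.com": ["malware-X"]}
refined = refine(initial, kg, malicious={"malware-X"})
print(sorted(refined))
```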

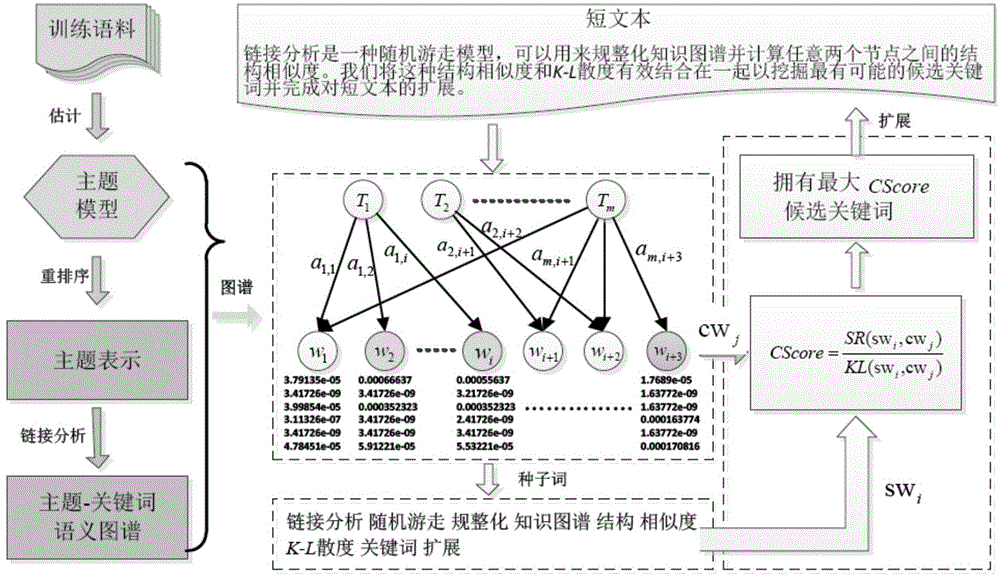

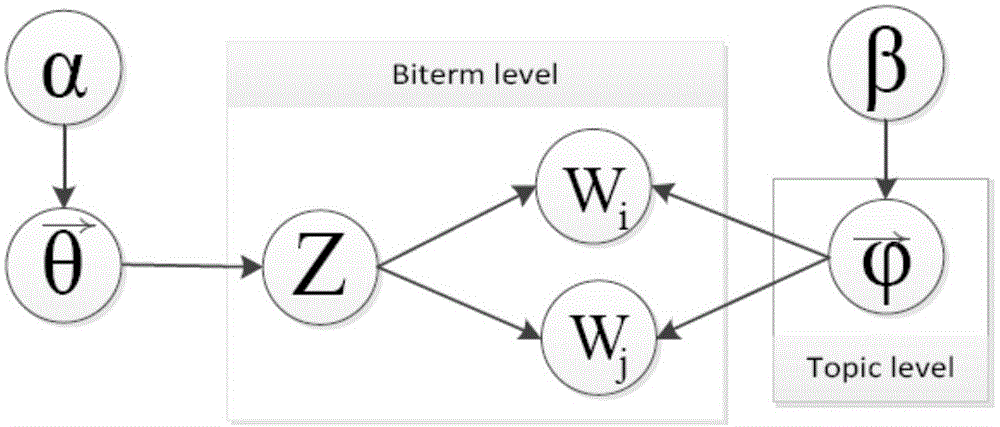

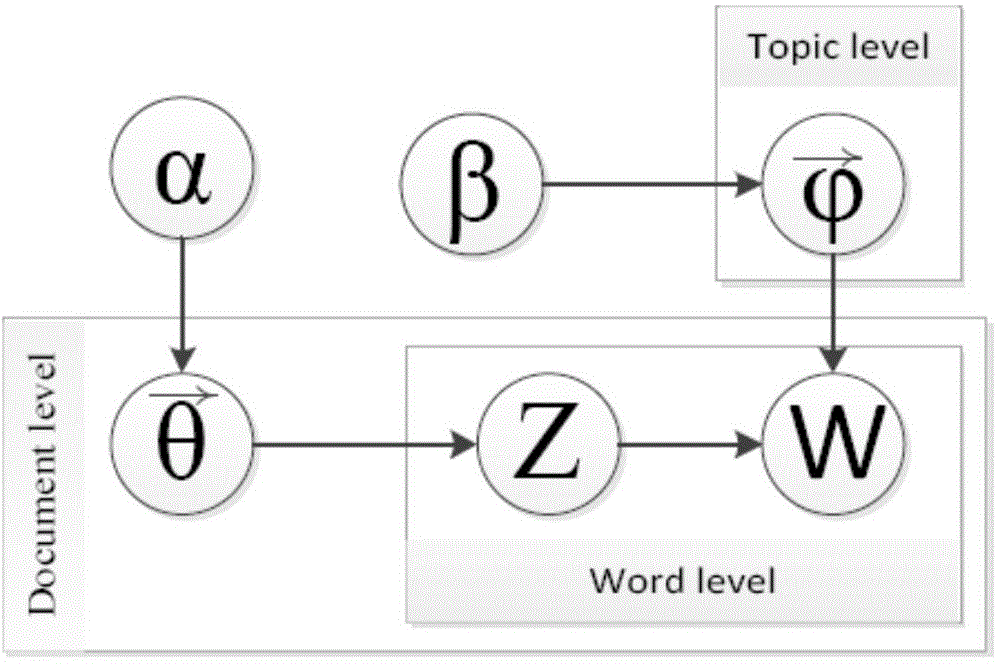

Short text characteristic expanding method based on semantic atlas

Active · CN104391942A · Improve classification performance · Solve the sparsity problem · Semantic analysis · Special data processing applications · Graph spectra · Data set

The invention discloses a short text feature expansion method based on a semantic atlas. The method comprises the following steps: performing topic modeling on a training data set of short texts and extracting the topic-word distribution; reordering the topic-word distribution; building a candidate keyword dictionary and a topic-keyword semantic atlas; computing a comprehensive similarity score between candidate keywords and seed keywords with a link analysis method; and selecting the most similar candidate keywords to expand the short text. Compared with short text feature representation methods based on language models, the method is simple to operate and efficient to execute, and it makes full use of the semantic correlation between keywords. Compared with traditional bag-of-words representations of short texts, it effectively alleviates data sparseness and semantic sensitivity, and it does not depend on large external auxiliary training corpora or search engines.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Intelligent question and answer method and system

Inactive · CN107818164A · Targeted optimization · Improve accuracy · Speech recognition · Neural architectures · Feature vector · Graph spectra

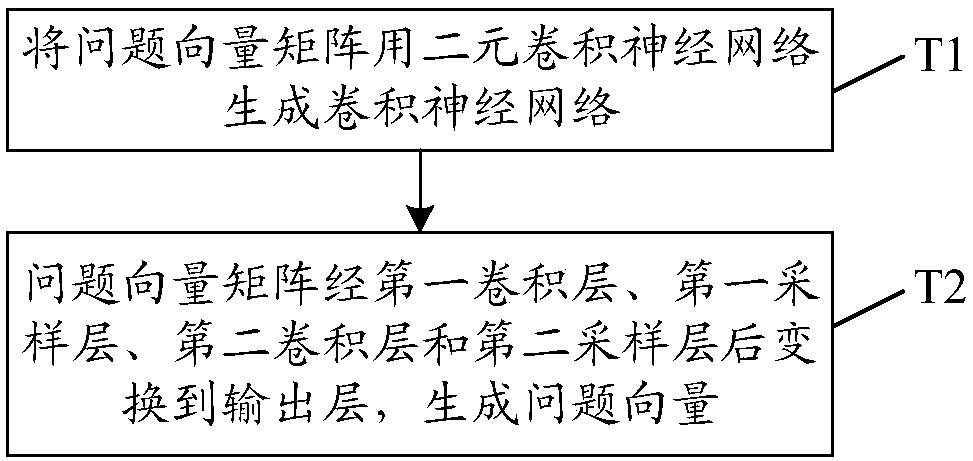

The invention provides an intelligent question-and-answer method and system that addresses the poor pertinence and low answer accuracy of existing intelligent question-answering methods. The method comprises the following steps: 1, a knowledge graph of the relevant field is established; 2, the user's spoken question is converted into text; 3, the text is vectorized with a skip-gram model to generate a question vector matrix; 4, a convolutional neural network generates a question feature vector from the question vector matrix; 5, the similarity between the user's question feature vector and each candidate answer feature vector is calculated; 6, answers are ranked with learning to rank and returned to the user.

Owner:NORTHEAST NORMAL UNIVERSITY
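Steps 5 and 6 of CN107818164A can be approximated by sorting candidates by cosine similarity. Plain sorting is a simplification standing in for the learning-to-rank step, and the feature vectors are hypothetical stand-ins for the CNN outputs.

```python
import math


def cosine(u, v):
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0 or nv == 0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def rank_answers(question_vec, candidates):
    """Order (answer_id, feature_vector) pairs by cosine similarity to the question."""
    scored = sorted(candidates, key=lambda c: cosine(question_vec, c[1]), reverse=True)
    return [answer_id for answer_id, _ in scored]


# Hypothetical question and candidate-answer feature vectors (e.g. CNN outputs).
question = [1.0, 0.0, 1.0]
candidates = [("b", [0.0, 1.0, 0.0]), ("c", [1.0, 1.0, 0.0]), ("a", [1.0, 0.0, 1.0])]
print(rank_answers(question, candidates))   # ['a', 'c', 'b']
```

A learned ranker would replace the fixed cosine key with a trained scoring function over the same pairs.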

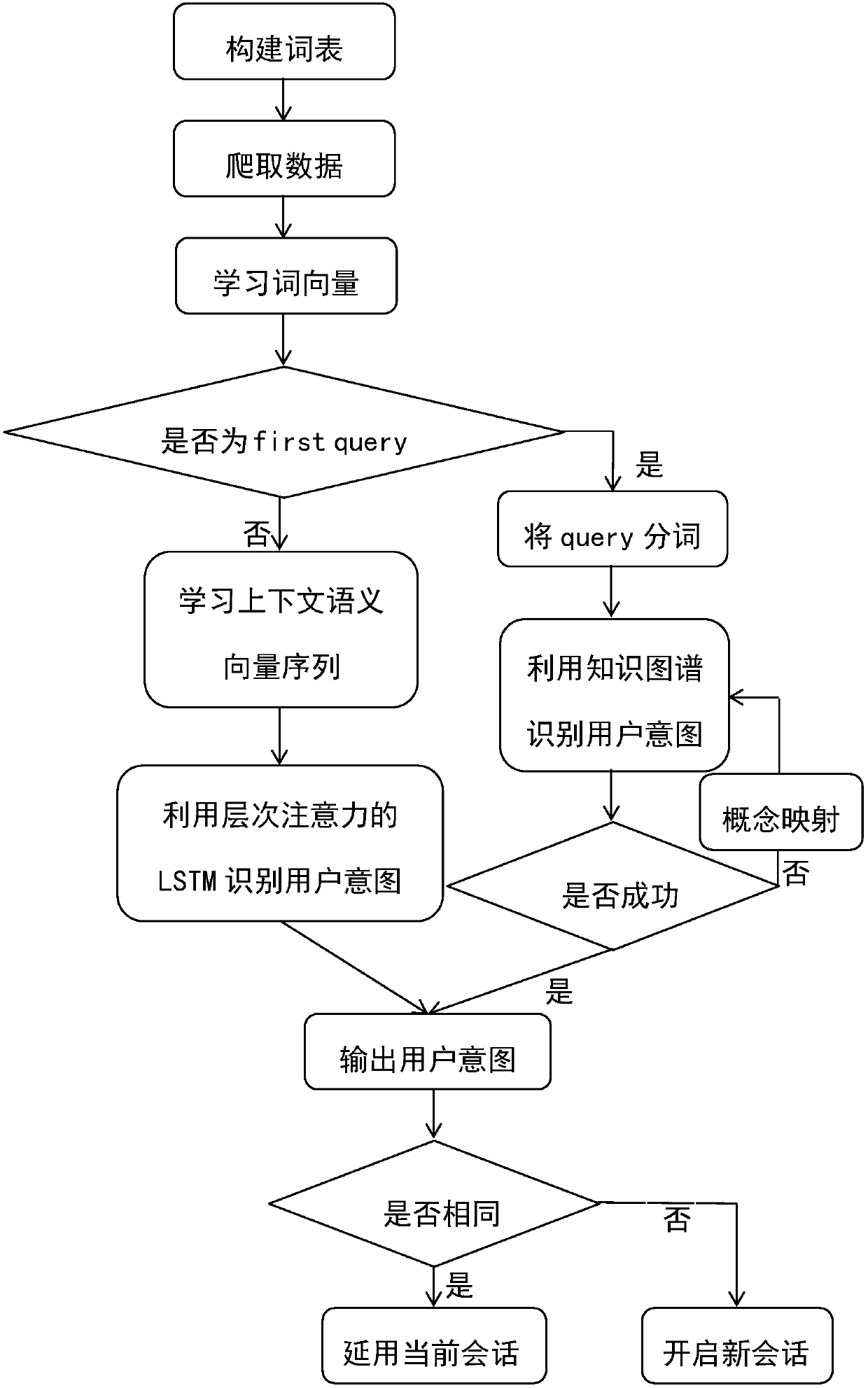

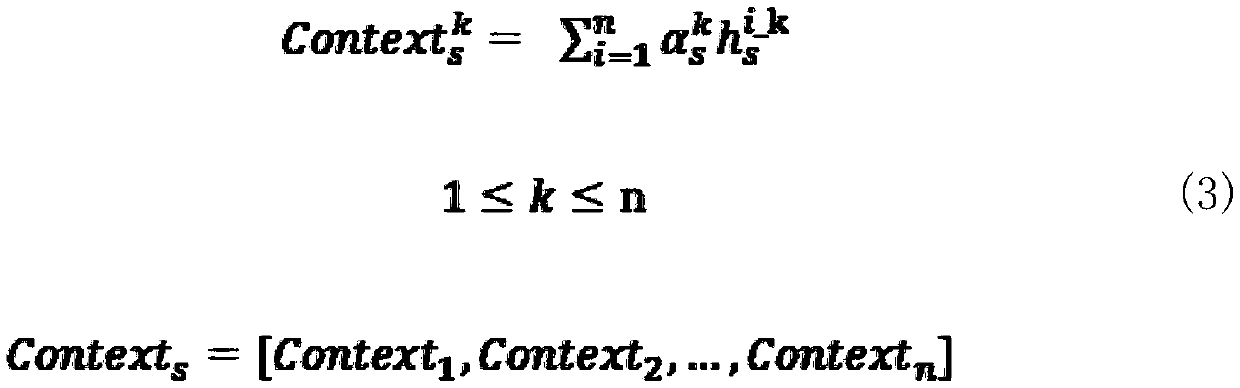

Multi-turn dialogue management method for hierarchical attention LSTM and knowledge graph

Active · CN108874782A · Improve accuracy · Full context semantics · Semantic analysis · Neural architectures · Time information · Graph spectra

The invention discloses a multi-turn dialog management method based on hierarchical attention LSTM and a knowledge graph, in the field of natural language processing. The core idea is to treat the conversation between the user and the system as context and to extract its deep semantics from important and temporal information at the word and sentence levels, in two steps: first, a word-level attention LSTM extracts sentence semantics; then a sentence-level attention LSTM extracts context semantics. The attention mechanism, which uses the knowledge graph as external knowledge, retains important information, while the LSTM retains temporal information; together they identify the user intent, and the recognition result determines whether to open the next session. By learning deep contextual semantics with the knowledge graph and LSTM and filtering out useless information with attention, the method improves the efficiency and accuracy of user intent recognition.

Owner:北京寻领科技有限公司 (Beijing Xunling Technology Co., Ltd.)

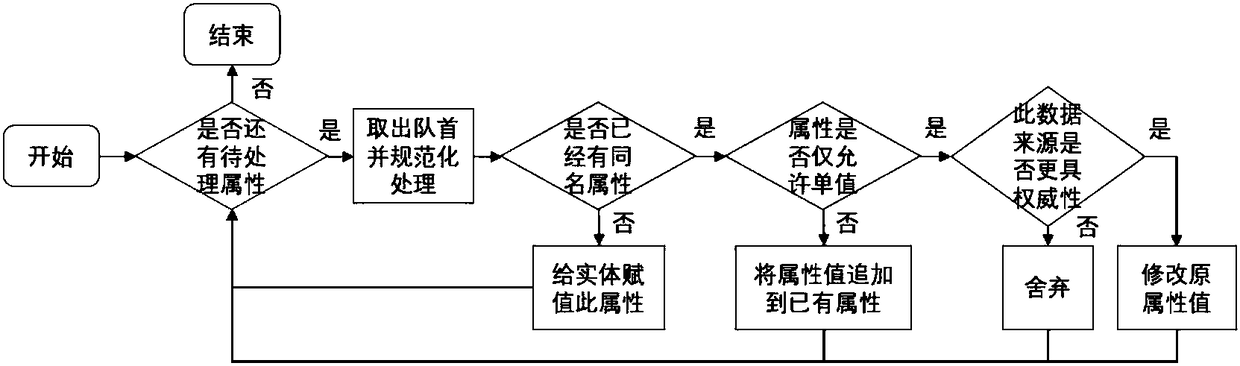

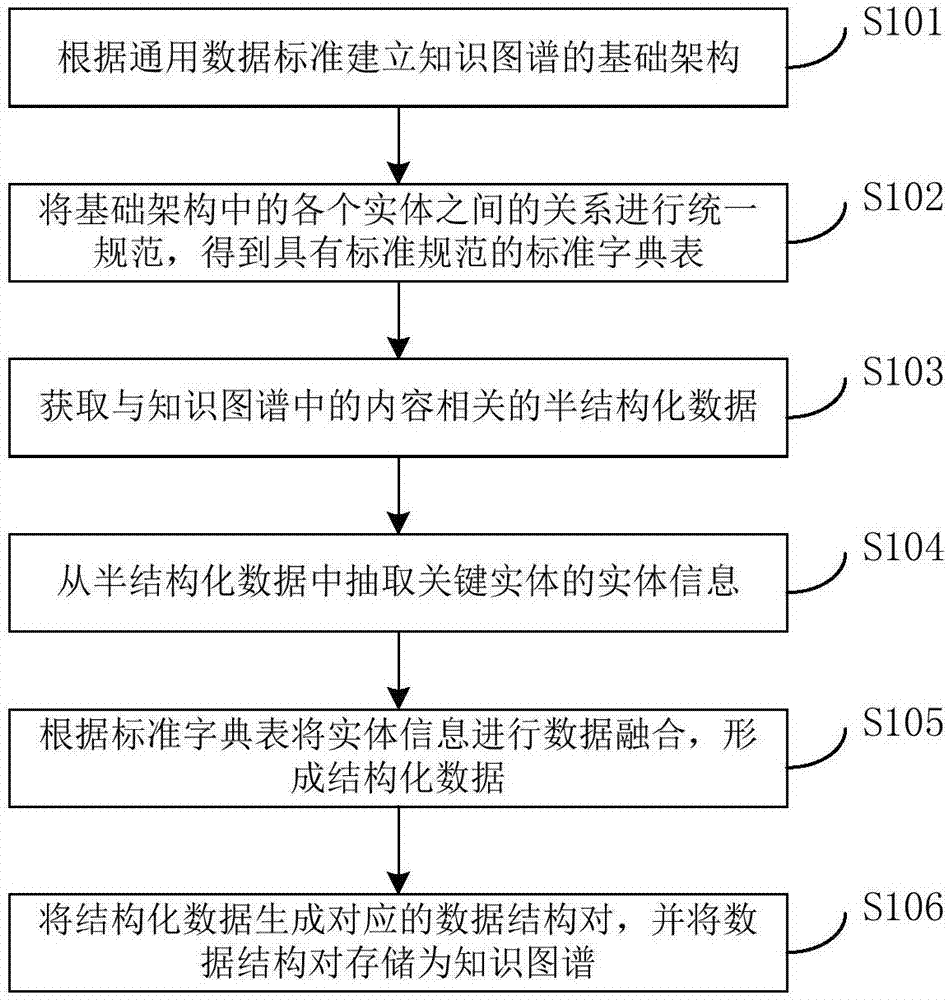

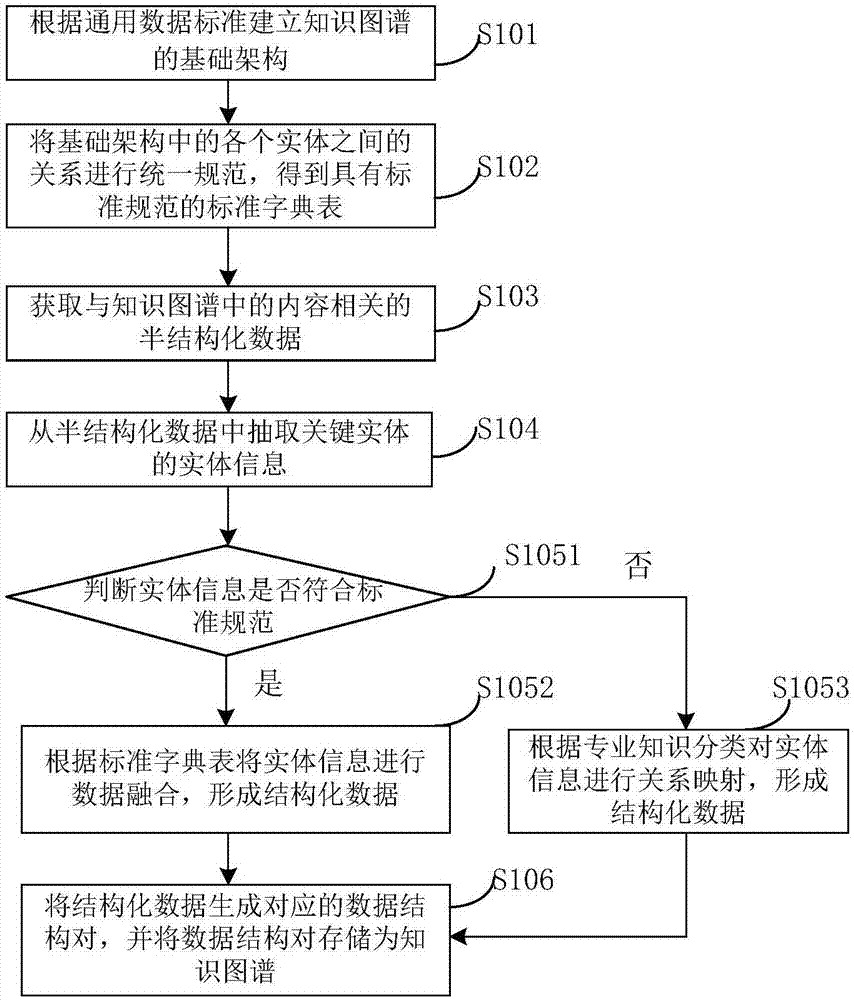

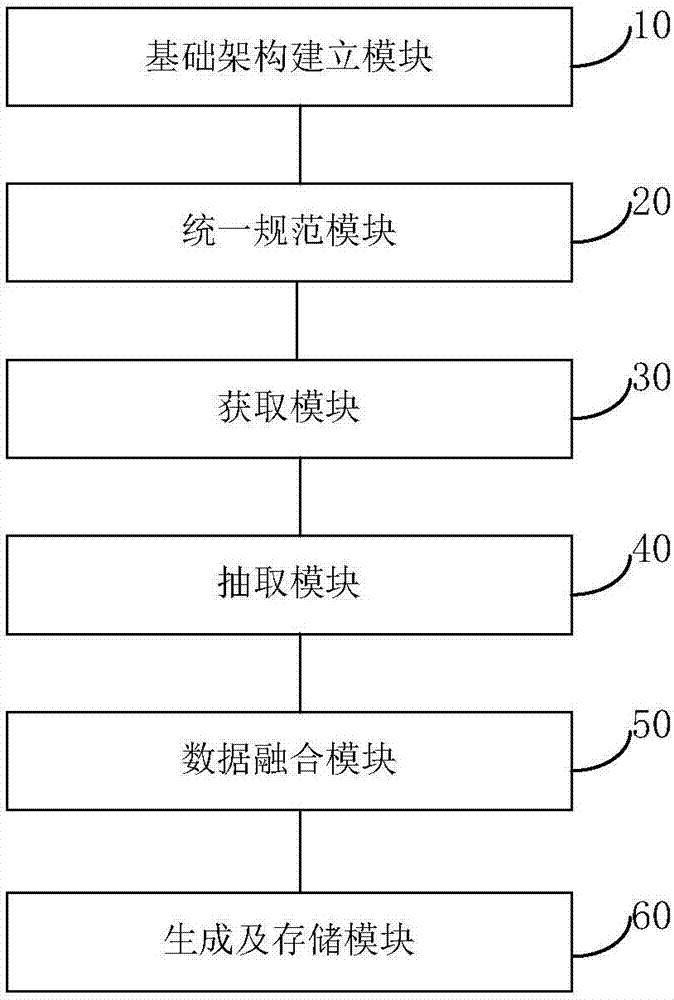

Knowledge graph establishment method and system

Active · CN107491555A · Semi-structured data mapping/conversion · Special data processing applications · Graph spectra · Semi-structured data

The invention provides a knowledge graph establishment method and system. The method comprises the following steps: establishing the basic architecture of a knowledge graph according to general data standards; normalizing the relations of entities in the basic architecture in a unified way to obtain a standard dictionary table with standard specifications; obtaining semi-structured data associated with the contents of the knowledge graph; extracting entity information of key entities from the semi-structured data; fusing the entity information according to the standard dictionary table to form structured data; and forming corresponding data structure pairs from the structured data and storing them as the knowledge graph. By establishing the basic architecture of the knowledge graph and acquiring and fusing data through multiple network channels, the method converts semi-structured data into structured data and lays a foundation for further development of artificial intelligence technologies.

Owner:法玛门多(常州)生物科技有限公司 (Famamenduo (Changzhou) Biotechnology Co., Ltd.)
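The dictionary-driven fusion of semi-structured data into structured triples in CN107491555A might look like the sketch below. The standard dictionary table, field names, and records are hypothetical; the patent does not publish its schema.

```python
# Hypothetical standard dictionary table: raw field name -> standard relation name.
STANDARD_RELATIONS = {
    "maker": "manufacturer",
    "mfr": "manufacturer",
    "manufacturer": "manufacturer",
    "dose": "dosage",
    "dosage": "dosage",
}


def to_triples(records, key_field="name", standard=STANDARD_RELATIONS):
    """Fuse semi-structured records (dicts) into (entity, relation, value) triples.

    Field names are normalized through the standard dictionary table; unknown fields
    are dropped, and duplicate facts from different sources collapse into one triple."""
    triples = set()
    for record in records:
        entity = record.get(key_field)
        if entity is None:
            continue
        for field, value in record.items():
            relation = standard.get(field.strip().lower())
            if relation is not None and value not in (None, ""):
                triples.add((entity, relation, str(value).strip()))
    return triples


records = [
    {"name": "DrugA", "Maker": "ACME ", "dose": "10 mg", "color": "white"},
    {"name": "DrugA", "mfr": "ACME"},
]
print(sorted(to_triples(records)))
# [('DrugA', 'dosage', '10 mg'), ('DrugA', 'manufacturer', 'ACME')]
```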

Vector representation method of knowledge mapping domain and inference method and system of knowledge mapping domain

Inactive · CN106909622A · High precision · Improve relational reasoning · Relational databases · Special data processing applications · Pattern recognition · Graph spectra

The invention provides a vector representation method for a knowledge mapping domain. In the method, the entity pairs in the knowledge mapping domain, the relations between them, and the multi-step relation paths connecting them are expressed as initial low-dimensional vectors, and a model over these low-dimensional vectors is trained using a loss function with variable margins. The learned model is used for relation inference, improving inference accuracy across different knowledge mapping domains.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

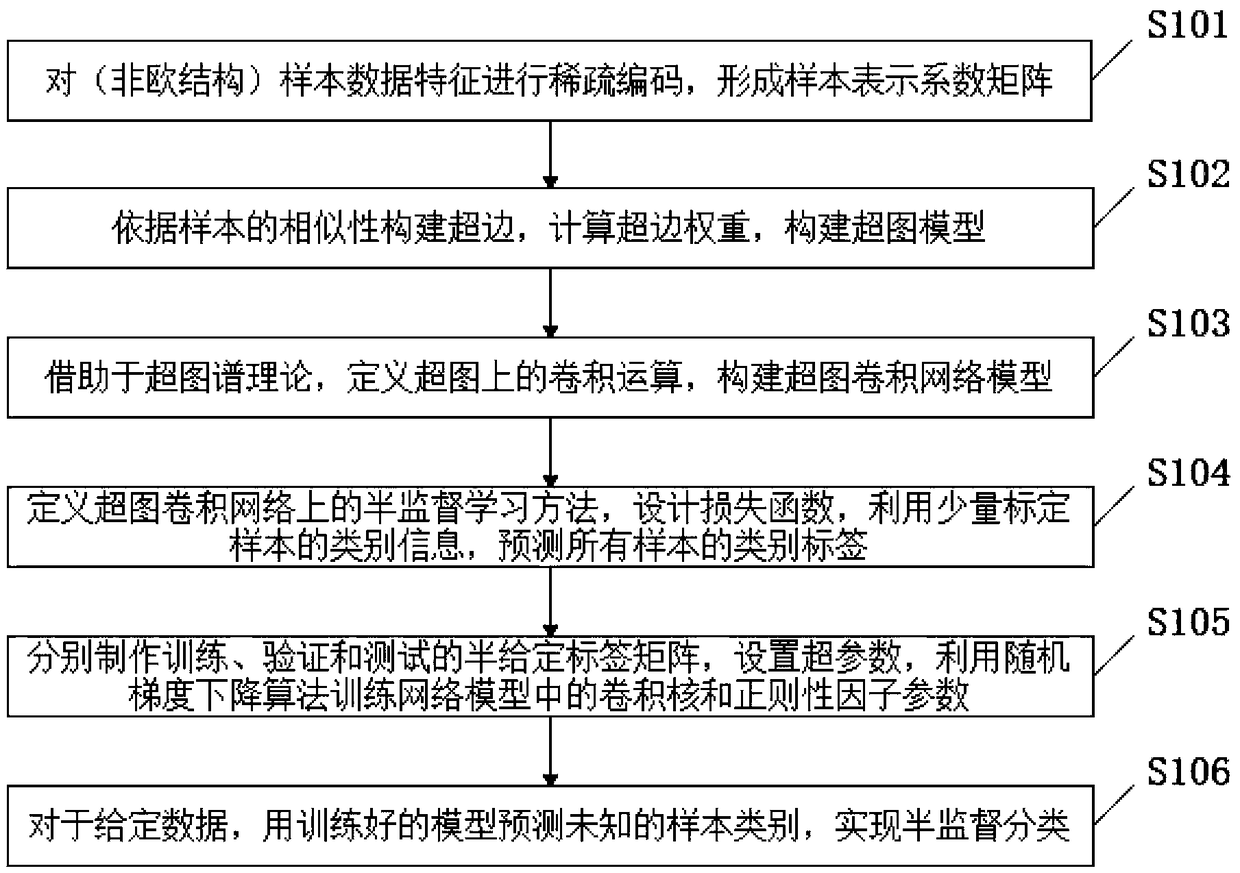

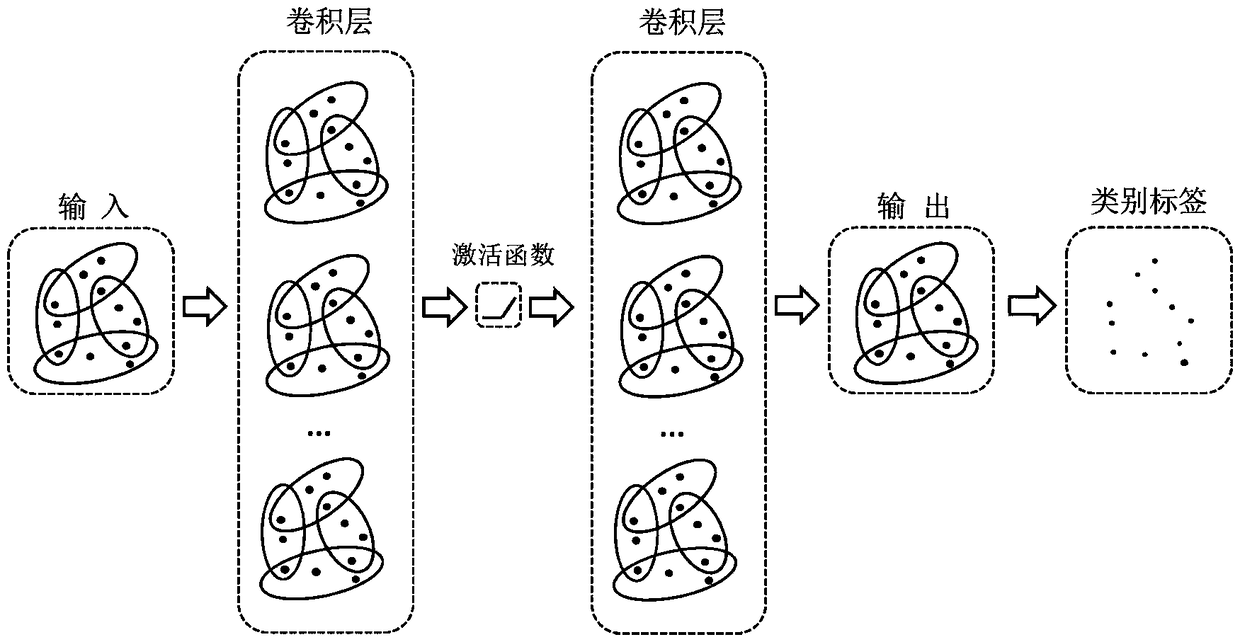

Hypergraph convolutional network model and semi-supervised classification method thereof

The invention provides a hypergraph convolutional network model and a semi-supervised classification method based on it. The method comprises the following steps: 1, sparse-coding the features of non-Euclidean sample data to form a sample representation coefficient matrix; 2, constructing hyperedges according to the similarity of the samples, calculating the hyperedge weights, and constructing a hypergraph model; 3, defining a convolution operation on the hypergraph using hypergraph theory and constructing a hypergraph convolutional network model; 4, defining a semi-supervised learning method on the hypergraph convolutional network, designing a loss function, and predicting the category labels of all samples from the category information of a small number of labeled samples; 5, preparing partially labeled label matrices for training, validation and testing, setting the network hyper-parameters, training the network model, and learning the convolution kernels and the regularization factor of the network with stochastic gradient descent; and 6, for given data, predicting the categories of unknown samples with the trained model to realize semi-supervised classification.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
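The abstract does not state its hypergraph convolution operator. The sketch below assumes the widely used HGNN propagation rule ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Θ), where H is the vertex-hyperedge incidence matrix, W holds the hyperedge weights, and Dv and De are the vertex and hyperedge degree matrices. Every vertex must belong to at least one hyperedge, otherwise its degree is zero. Sparse coding and training are not shown, and the small hypergraph is hypothetical.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def hypergraph_conv(X, H, w, Theta):
    """One HGNN-style layer: ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Theta).

    X: n x d vertex features, H: n x m incidence matrix (H[v][e] = 1 if v is in e),
    w: m hyperedge weights, Theta: d x d' learnable weights."""
    n, m = len(H), len(H[0])
    dv = [sum(w[e] * H[v][e] for e in range(m)) for v in range(n)]   # vertex degrees
    de = [sum(H[v][e] for v in range(n)) for e in range(m)]          # hyperedge degrees
    G = [[sum(H[i][e] * w[e] / de[e] * H[j][e] for e in range(m)) / math.sqrt(dv[i] * dv[j])
          for j in range(n)] for i in range(n)]                       # n x n propagation
    Z = matmul(matmul(G, X), Theta)
    return [[max(0.0, z) for z in row] for row in Z]


# Three samples, two hyperedges: e0 = {0, 1, 2}, e1 = {1, 2}.
H = [[1, 0], [1, 1], [1, 1]]
out = hypergraph_conv([[1.0], [1.0], [1.0]], H, w=[1.0, 1.0], Theta=[[1.0]])
print([round(row[0], 4) for row in out])
```

Vertices 1 and 2 share both hyperedges, so they end up with identical outputs, which is the smoothing effect the convolution is meant to provide.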

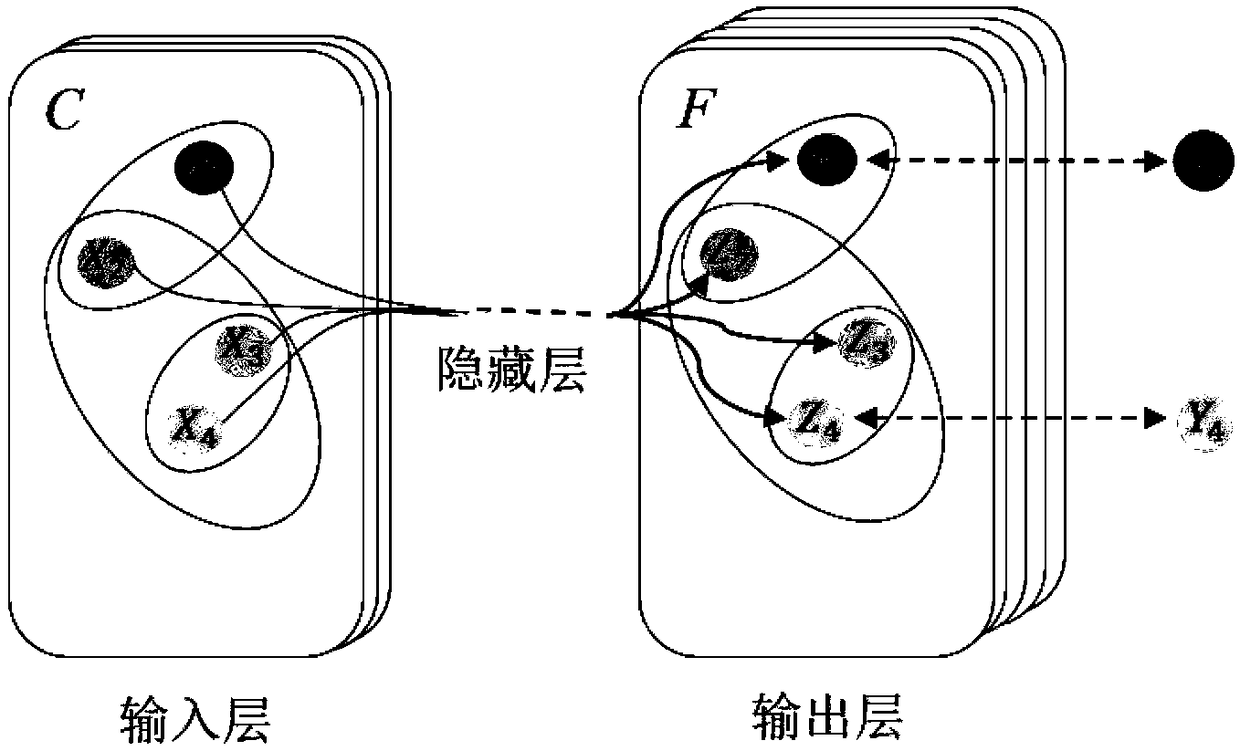

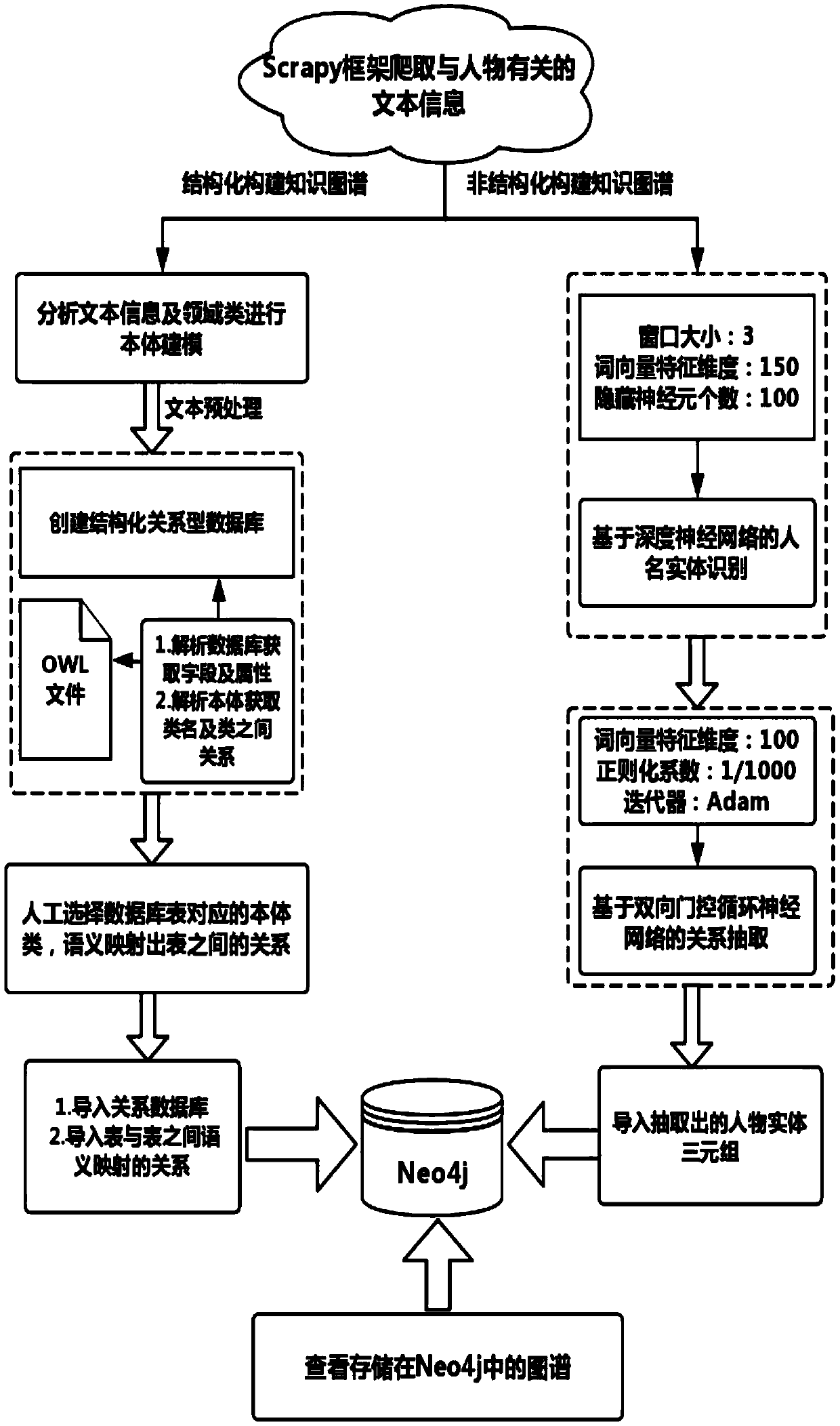

Character relationship graph construction method based on integration of ontology and multiple neural networks

Pending · CN110222199A · To achieve the purpose of entity identification · Improve query efficiency · Web data indexing · Visual data mining · Graph spectra · The Internet

The invention relates to a character relationship graph construction method based on the integration of an ontology and multiple neural networks. The method comprises the following steps: crawling data related to characters (people) in a given domain from the Internet; establishing a domain character ontology; extracting data from a structured data table that contains multiple entity types and repeated entities to construct a standardized entity table; matching the two class names of the character ontology model with the two entity table names through a semantic mapping algorithm, automatically obtaining all entity relationships, and storing them in a Neo4j database as a graph structure; for text data in the structured table, performing character entity recognition and relationship extraction using a sliding window, entity position features and a bidirectional gated recurrent neural network; and updating the current graph structure with the newly added relationships to form a domain character relationship knowledge graph. High-level features of character relationships can be extracted from the original relational data and text data without manual feature design, which improves recognition and makes constructing character relationship graphs from complex web page text more efficient.

Owner:QINGDAO UNIV

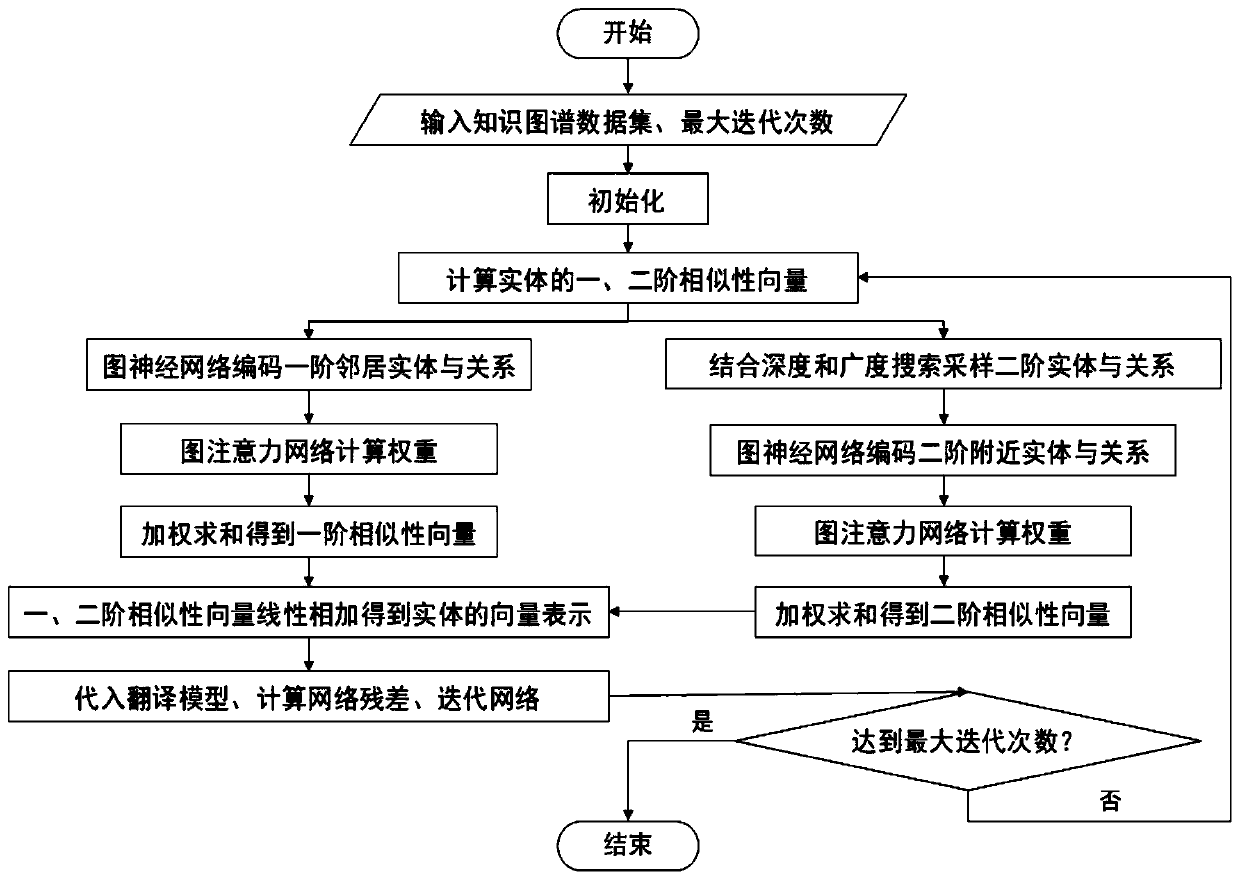

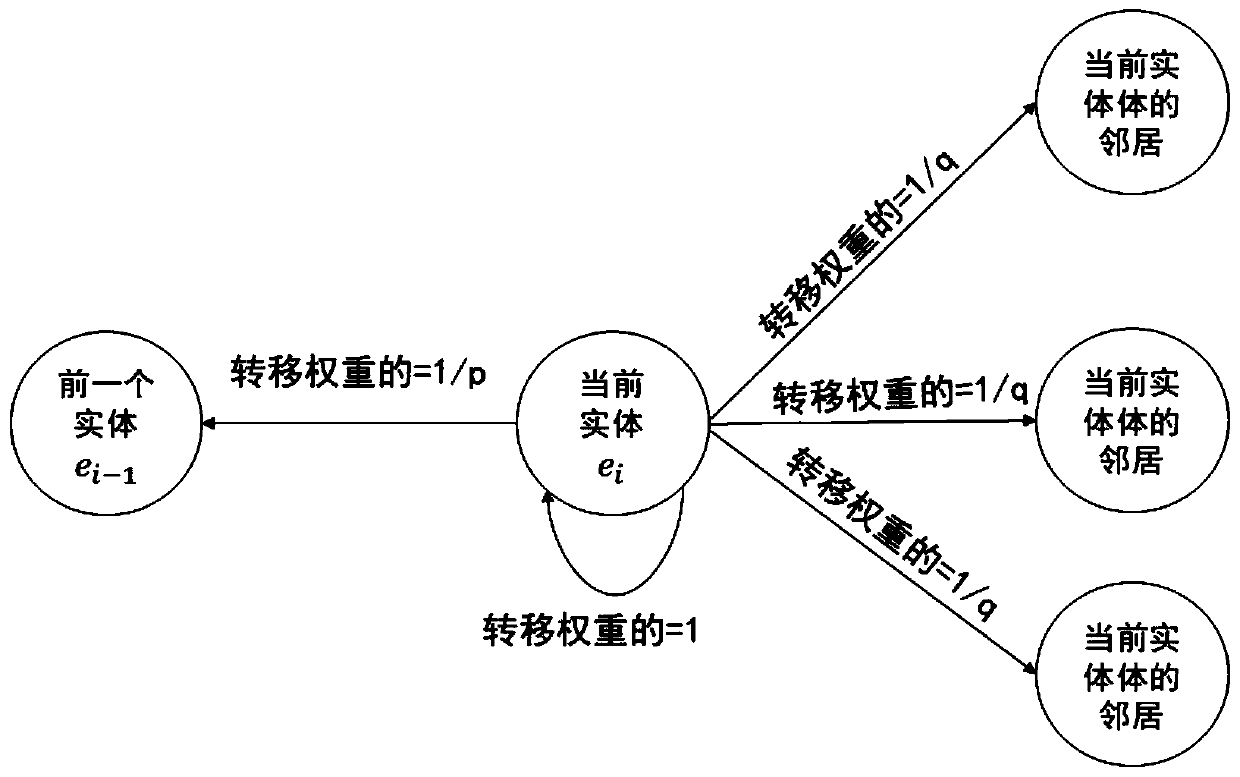

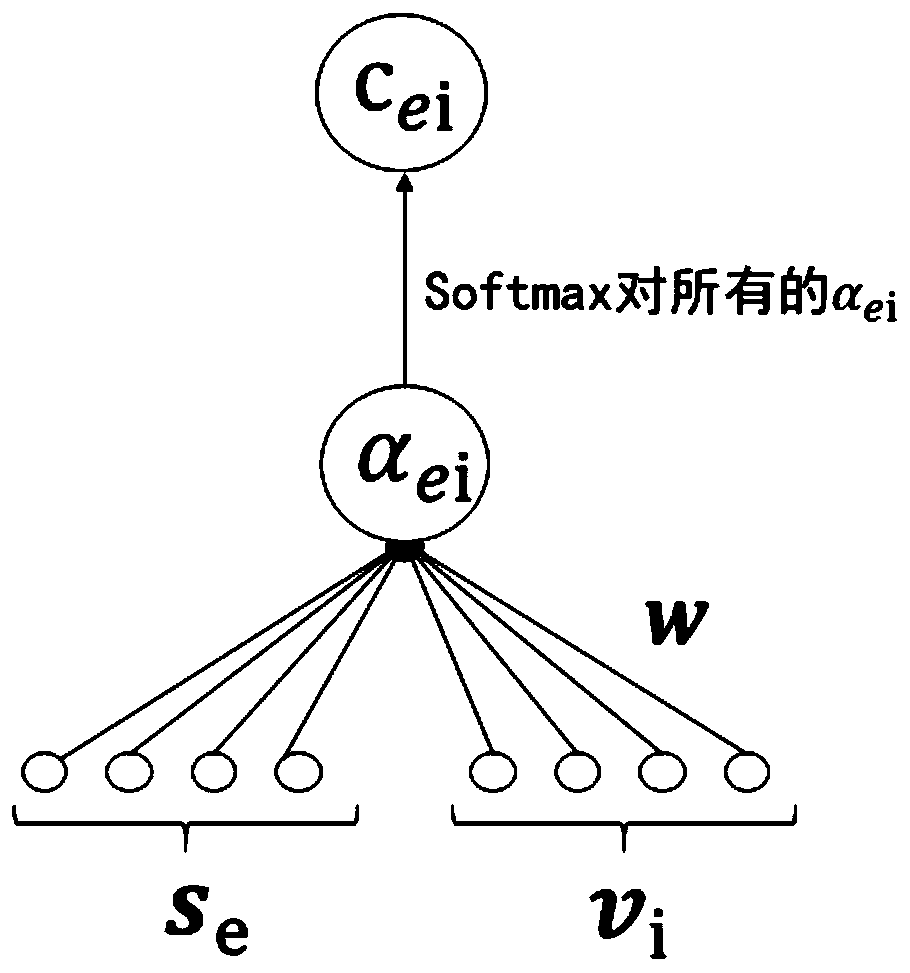

Knowledge graph entity semantic space embedding method based on graph second-order similarity

Active · CN109829057A · Vector representation good · Solving the Semantic Space Embedding Problem · Neural learning methods · Semantic tool creation · Data set · Graph spectra

The invention discloses a knowledge graph entity semantic space embedding method based on graph second-order similarity. The method comprises the following steps: (1) inputting a knowledge graph data set and a maximum number of iterations; (2) using a graph attention mechanism that accounts for the relations between entities, performing first-order and second-order similarity feature embedding to obtain first-order and second-order similarity semantic space embedding representations; (3) computing a weighted sum of each entity's final first-order and second-order similarity vectors to obtain its final vector representation, feeding it into a translation model to calculate a loss value, obtaining the residuals of the graph attention network and the graph neural network, and iterating the network model; and (4) performing link prediction and classification tests on the network model. The method uses a graph attention mechanism to mine the relations between entities for the first time, and it performs relatively well in knowledge graph applications such as link prediction and classification.

Owner:SUN YAT SEN UNIV

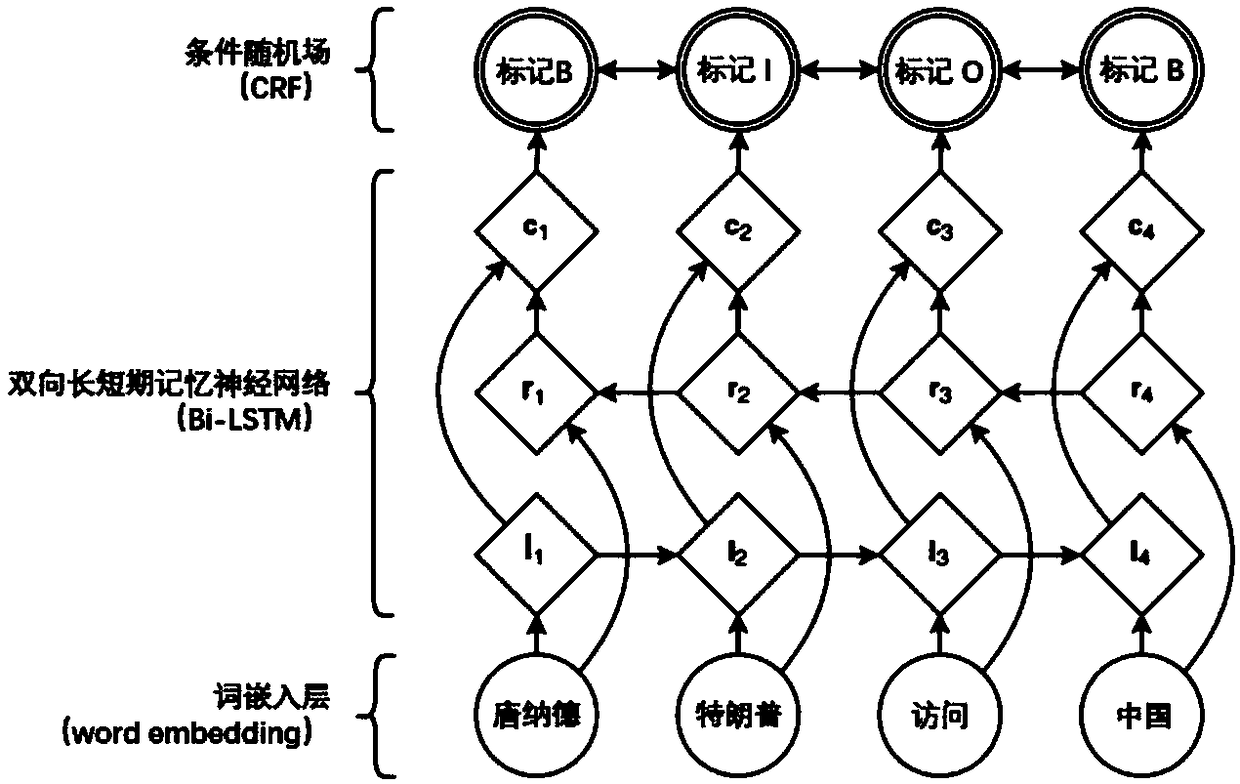

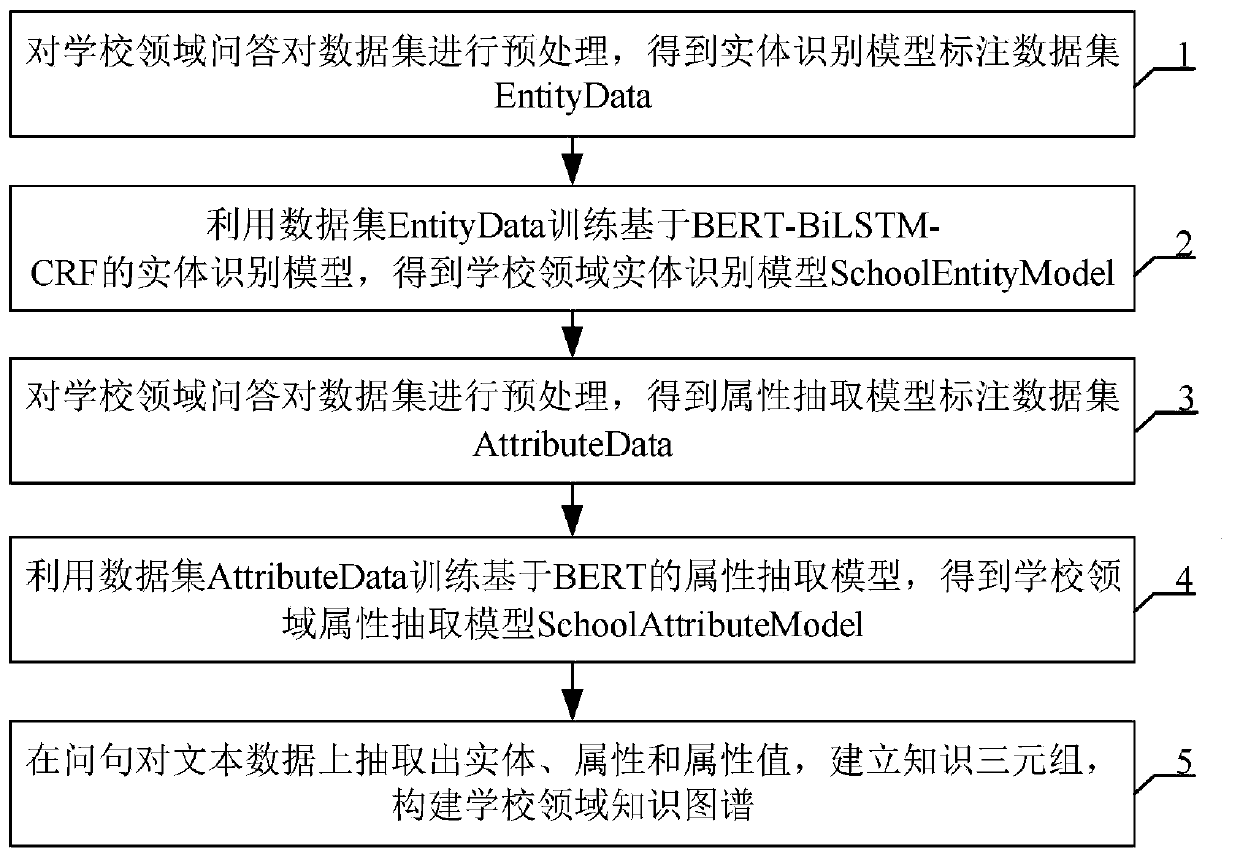

School domain knowledge graph construction method based on entity recognition and attribute extraction model

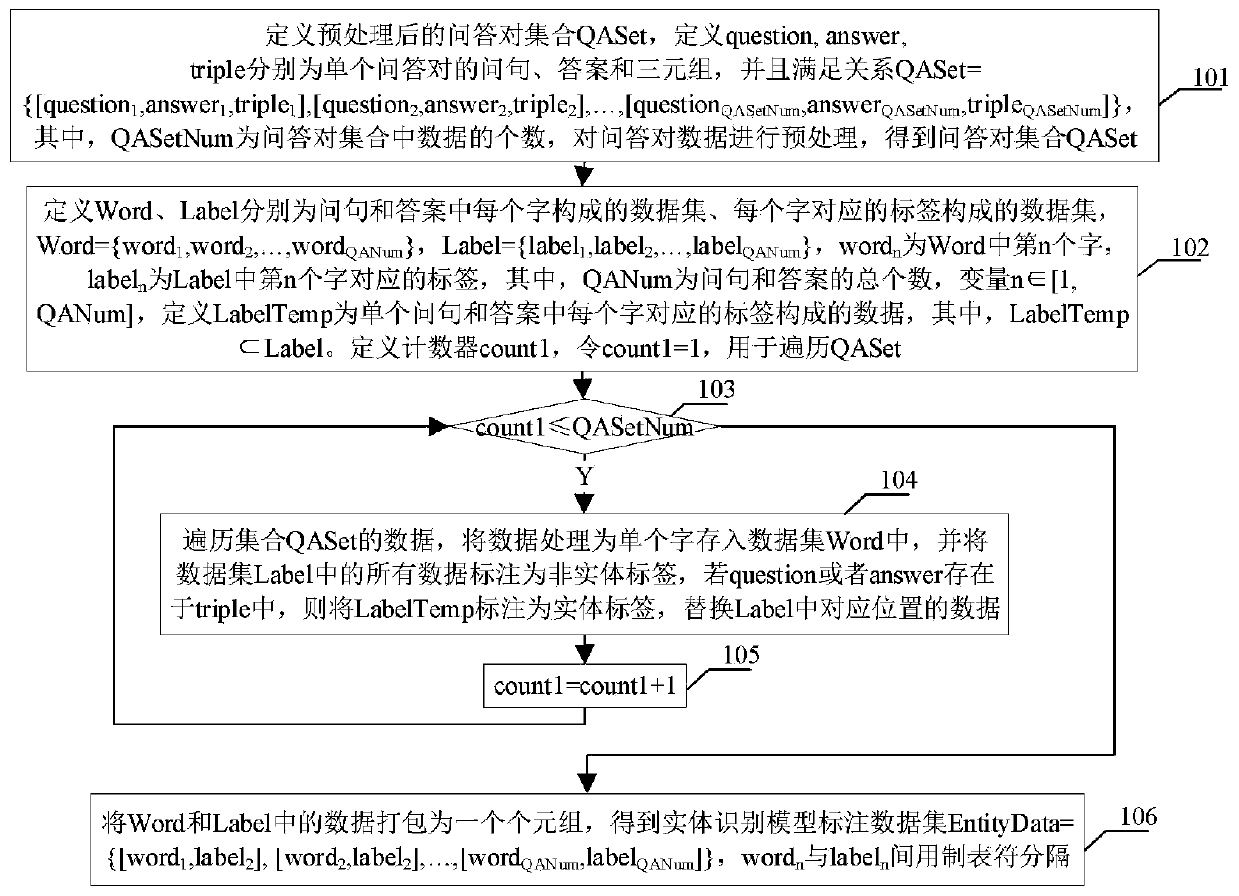

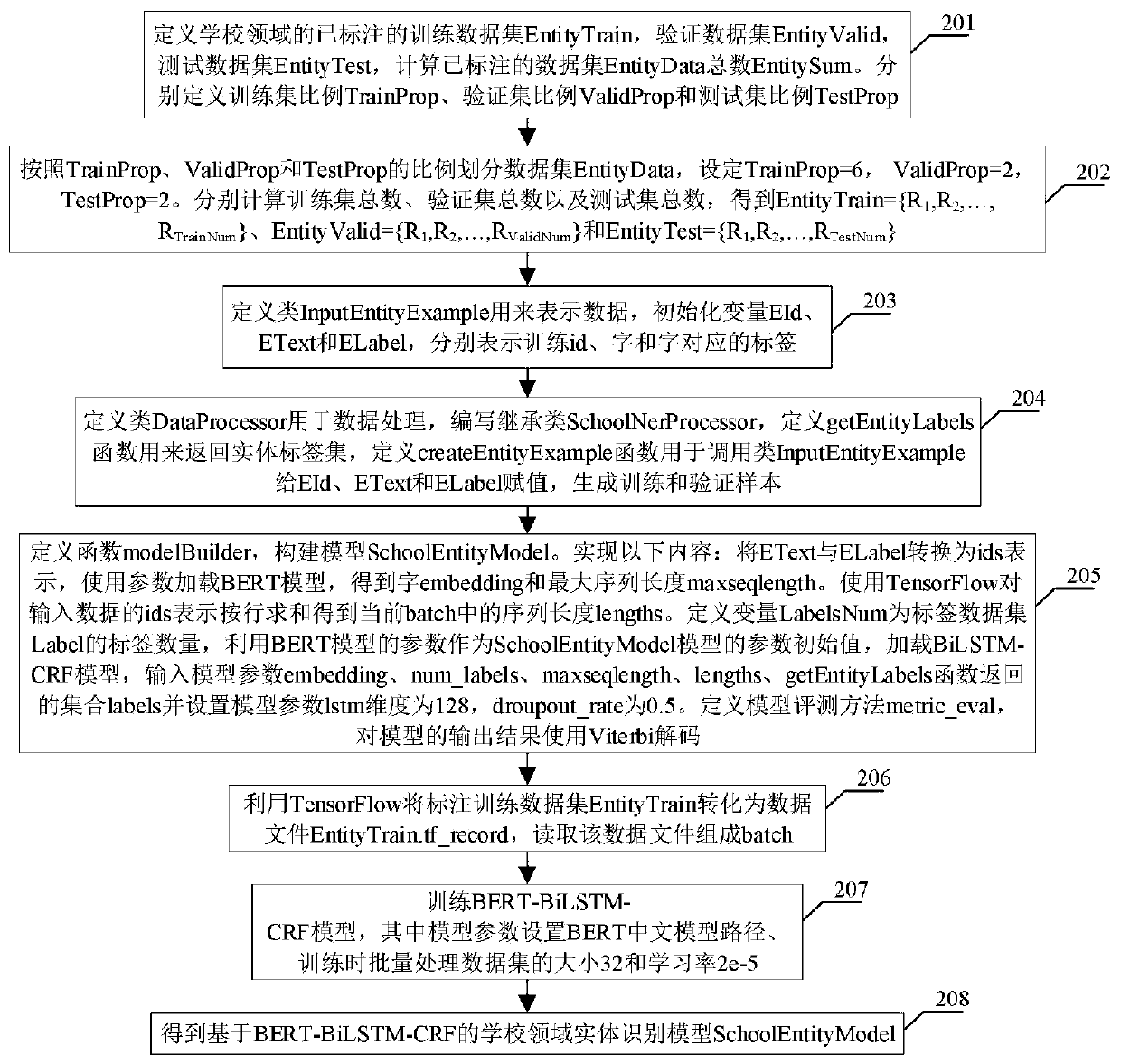

Pending · CN110287334A · Efficient construction · Improve generalization ability · Data processing applications · Special data processing applications · Paired Data · Graph spectra

The invention discloses a school domain knowledge graph construction method based on an entity recognition model and an attribute extraction model. The method comprises the following steps: first, preprocessing a school domain question-answer pair data set to obtain an entity recognition annotation data set EntityData; training a BERT-BiLSTM-CRF entity recognition model on EntityData to obtain a school domain entity recognition model SchioolEntityModel; preprocessing the same question-answer pair data set to obtain an attribute extraction annotation data set AttributeData; training a BERT-based attribute extraction model on AttributeData to obtain a school domain attribute extraction model SchioolAttributeModel; and finally, extracting the entities, attributes and attribute values in the question-answer pair data set with SchioolEntityModel and SchioolAttributeModel respectively, so that knowledge triples are established and a school domain knowledge graph is constructed. The method constructs school domain knowledge graphs effectively.

Owner:HUAIYIN INSTITUTE OF TECHNOLOGY

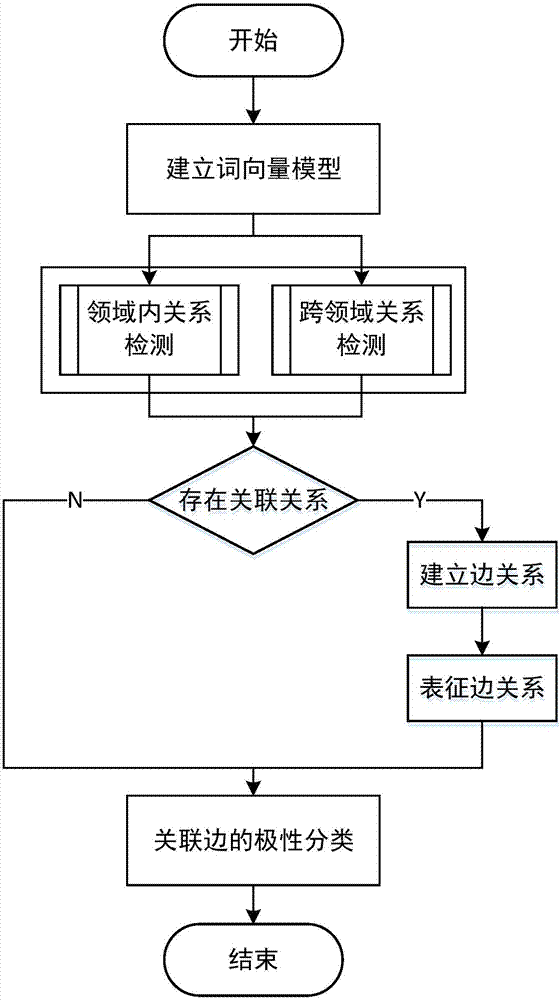

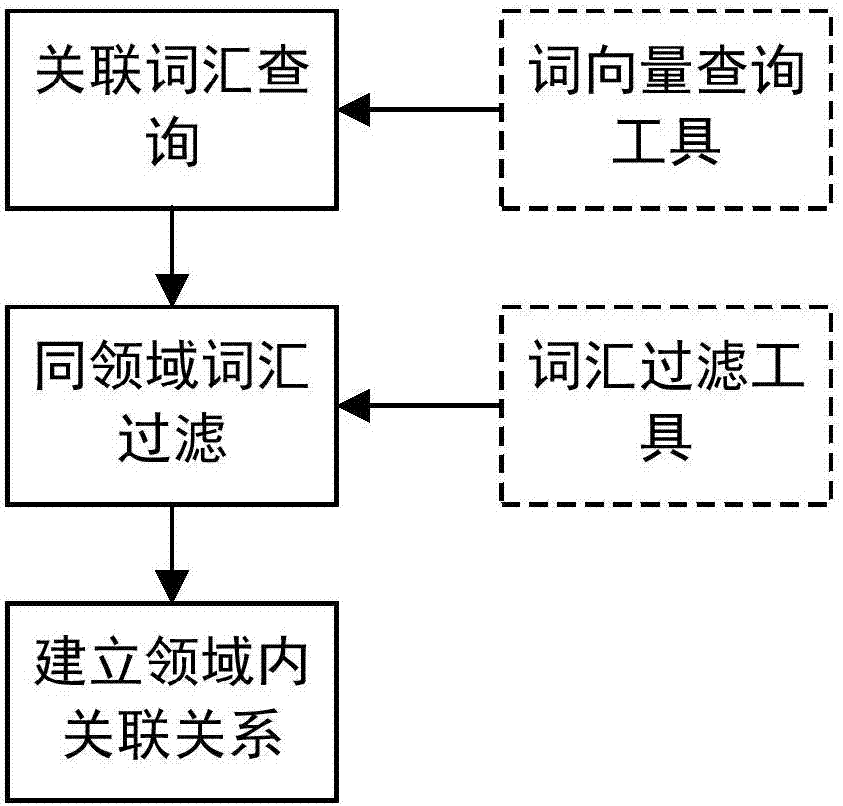

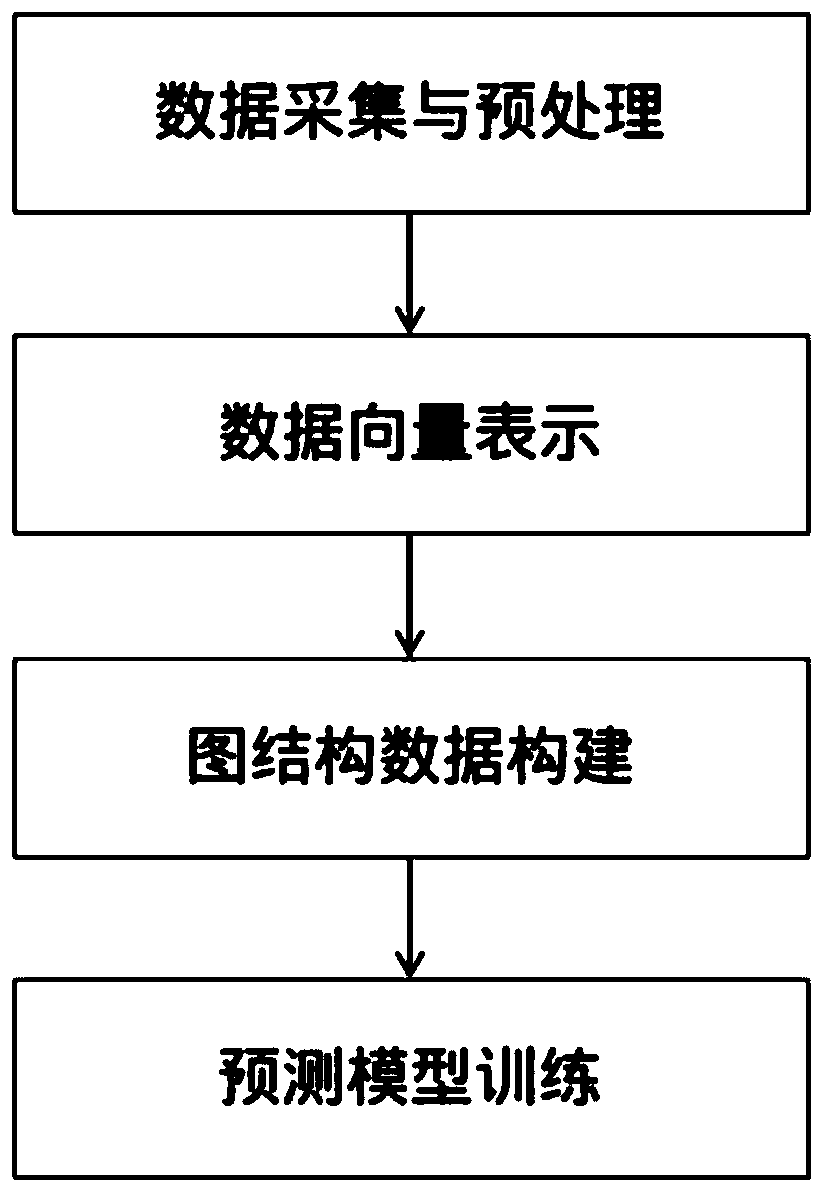

Healthy diet knowledge network construction method based on neural network and graph structure

Inactive · CN107391906A · Close to daily life · Natural language data processing · Neural architectures · Healthy diet · NODAL

The invention discloses a healthy diet knowledge network construction method based on a neural network and a graph structure. The method comprises the following steps: word vector modeling is performed on a text corpus so that each non-stop word corresponds to a fixed-length word vector; the cosine similarity between two word vectors measures the degree of relatedness between the corresponding entities; food material entity nodes and symptom entity nodes are extracted and treated as the nodes of a topological structure, edges between them are constructed to form the graph structure, and each edge relation is described by a group of representative words; the vectors of the representative words are arranged into a representative matrix for each edge relation; and a classification framework based on a deep neural network takes the representative matrix as input and classifies the polarity of each edge relation. The method addresses the low degree of automation and the obvious domain limitations of traditional healthy diet knowledge bases.

Owner:SOUTH CHINA UNIV OF TECH
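The similarity-based graph construction in CN107391906A can be sketched as thresholded cosine similarity between food-material and symptom word vectors, keeping the similarity as the edge weight. The vectors and the 0.5 cutoff are hypothetical, and the deep-network polarity classifier is not shown.

```python
import math


def cosine(u, v):
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    return sum(a * b for a, b in zip(u, v)) / (nu * nv) if nu and nv else 0.0


def build_edges(foods, symptoms, threshold=0.5):
    """Link food-material nodes to symptom nodes whose word vectors are similar enough.

    The cosine similarity is kept as the edge weight; `threshold` is a hypothetical cutoff."""
    edges = {}
    for food, fv in foods.items():
        for symptom, sv in symptoms.items():
            sim = cosine(fv, sv)
            if sim >= threshold:
                edges[(food, symptom)] = sim
    return edges


# Hypothetical 2-d word vectors.
foods = {"ginger": [1.0, 0.2], "ice cream": [0.0, 1.0]}
symptoms = {"common cold": [0.9, 0.1]}
print(sorted(build_edges(foods, symptoms)))    # [('ginger', 'common cold')]
```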

Vector constraint embedded transformation knowledge graph inference method

Inactive · CN106528609A · Reduce calculation · Add constraints · Relational databases · Special data processing applications · Graph spectra · Algorithm

The invention discloses a vector constraint embedded transformation knowledge graph inference method. The method comprises the steps of: step 1, acquiring a semantic type of each relation and each entity in a knowledge graph; step 2, embedding an entity set and a relation set into a low-dimensional continuous vector space, and normalizing the entity set and the relation set; step 3, mapping the normalized entity set and relation set into a corresponding vector matrix according to an original triple corresponding relation; step 4, calculating a scoring loss function value of each triple in the knowledge graph in the low-dimensional continuous space to construct a training model; step 5, optimizing the training model for the triples which satisfy a relation semantic type; step 6, repeating step 5 until a loop end condition is satisfied; and step 7, calculating the next triple, repeating steps 4 to 6 until all triples are calculated, and outputting the entity set and the relation set of the training model. The inference method can improve the inference accuracy of knowledge discovery and enhance the prediction precision.

Owner:XIAMEN UNIV OF TECH

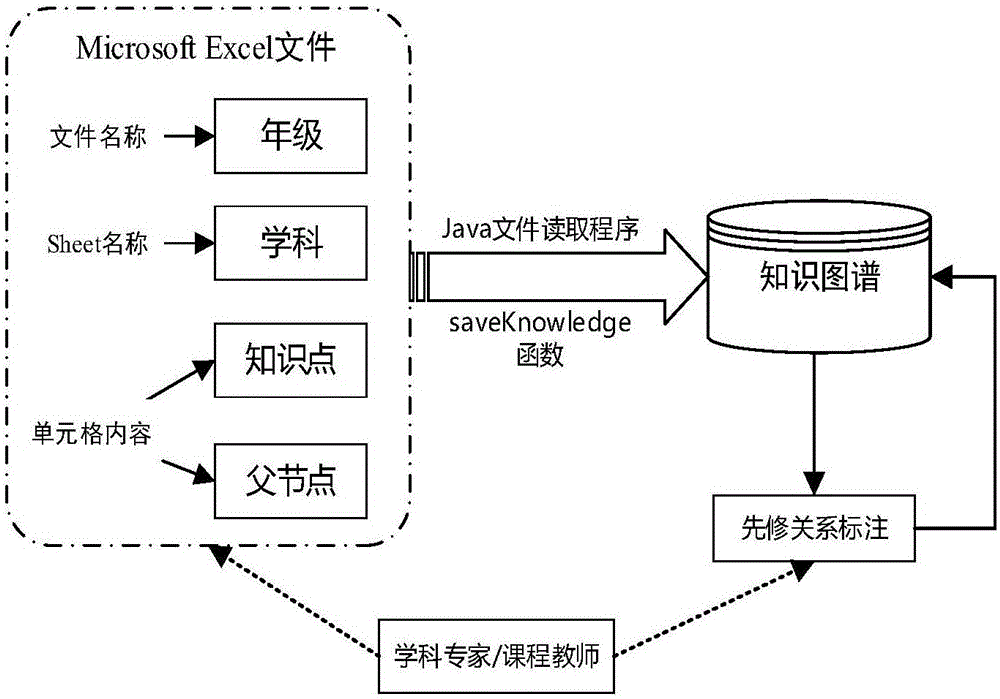

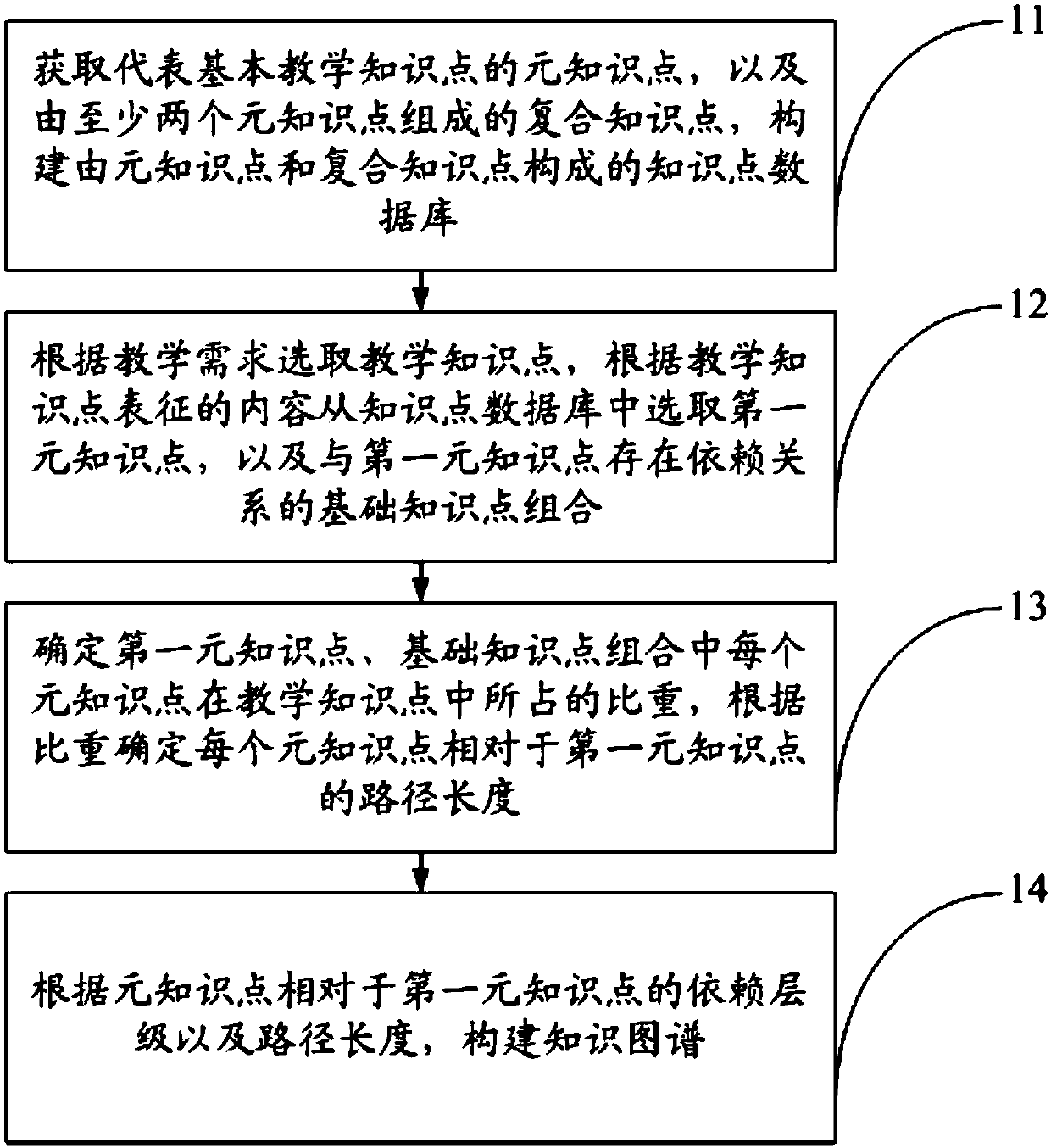

Knowledge graph construction method based on knowledge point connection relation

Pending · CN107784088A · Realize leak checking and filling · Realize the effect of drawing inferences about other cases from one instance · Special data processing applications · Basic knowledge · Path length

The invention provides a knowledge graph construction method based on the connection relations between knowledge points, in the field of education and learning. The method comprises the following steps: acquiring meta-knowledge points and establishing a knowledge point database containing them; selecting, according to the content that characterizes a teaching knowledge point, the meta-knowledge points and a basic knowledge point combination that has dependence relationships with them; determining the path length of each meta-knowledge point in the basic knowledge point combination relative to a first meta-knowledge point; and constructing the knowledge graph according to the dependence levels and path lengths. On this basis, a net-like relationship graph containing the dependence relationships and their corresponding path length values is drawn to obtain the knowledge graph. The meta-knowledge point most closely correlated with the first meta-knowledge point can then be determined at any time during learning, which helps learners fill gaps and draw inferences from one case to others, reduces the chance of learning blind spots, and ultimately makes learning more effective.

Owner:杭州博世数据网络有限公司 (Hangzhou Boshi Data Network Co., Ltd.)
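One way to compute the path length of each meta-knowledge point relative to the first one in CN107784088A is a breadth-first search over the dependency graph, treating the closest point as the most correlated. Shortest-path length is an assumption about what "path length" means here, and the dependency data is hypothetical.

```python
from collections import deque


def path_lengths(depends_on, start):
    """Shortest dependency-path length from `start` to every reachable knowledge point.

    `depends_on[k]` lists the knowledge points that k directly depends on."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        point = queue.popleft()
        for prereq in depends_on.get(point, ()):
            if prereq not in dist:
                dist[prereq] = dist[point] + 1
                queue.append(prereq)
    return dist


def most_related(dist):
    """Closest other knowledge point (ties broken alphabetically)."""
    return min((d, k) for k, d in dist.items() if d > 0)[1]


# Hypothetical dependency graph of meta-knowledge points.
depends_on = {
    "quadratic equations": ["factoring", "square roots"],
    "factoring": ["multiplication"],
    "square roots": ["multiplication"],
    "multiplication": ["addition"],
}
dist = path_lengths(depends_on, "quadratic equations")
print(dist["addition"], most_related(dist))   # 3 factoring
```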

Systems and Methods for Graph-Based AI Training

Active · US20200081445A1 · Inhibit progress · Big amount of data · Road vehicles traffic control · Character and pattern recognition · Graph spectra · Theoretical computer science

Graphs are powerful structures made of nodes and edges. Information can be encoded in the nodes and edges themselves, as well as in the connections between them. Graphs can be used to create manifolds, which in turn can be used to efficiently train more robust AI systems. Systems and methods for graph-based AI training in accordance with embodiments of the invention are illustrated. In one embodiment, a graph interface system includes a processor and a memory configured to store a graph interface application, where the graph interface application directs the processor to obtain a set of training data describing a plurality of scenarios, encode the set of training data into a first knowledge graph, generate a manifold based on the first knowledge graph, and train an AI model by traversing the manifold.

Owner:DRISK INC

Knowledge graph representation learning method based on path tensor decomposition

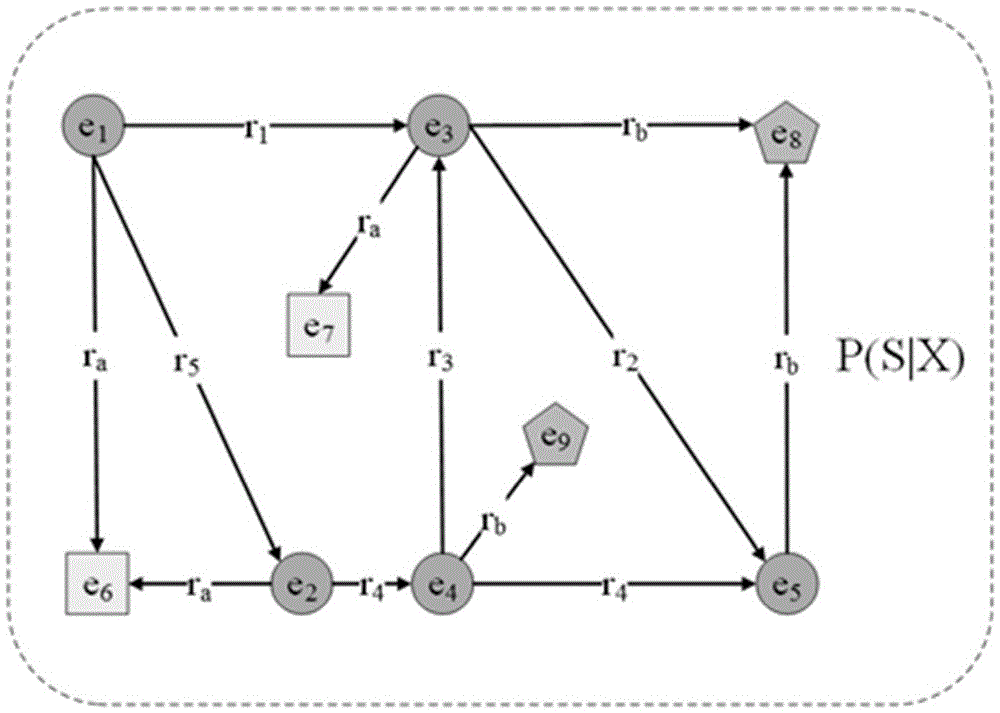

Inactive · CN106528610A · Efficient mining of multipath relationships · Relational databases · Special data processing applications · Graph spectra · Decomposition

The invention discloses a knowledge graph representation learning method based on path tensor decomposition, comprising the following steps: step 1, extracting an entity set, a relation set and a triple set from a knowledge graph, and embedding the entity set and relation set that satisfy the triples into a low-dimensional continuous vector space; step 2, acquiring the paths between entities through a PRA (Progressive Refinement Approach) algorithm; step 3, performing tensor decomposition on all possible paths between the entities and calculating the decomposition loss function value; step 4, repeating step 3 until the loss converges to a preset value or the maximum number of iterations is reached; step 5, once either condition is met, calculating the paths related to the next triple and repeating steps 2 to 4 until all triples in the training set have been processed; and step 6, outputting the corresponding entity set and relation set of the training model. The representation learning method can improve the inference accuracy of knowledge discovery and enhance prediction precision.

Owner:XIAMEN UNIV OF TECH
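The abstract obtains paths with "PRA"; as a simpler stand-in, the sketch below enumerates all simple relation paths of bounded length between two entities from a triple set, which is the kind of input a path-based method consumes. The tensor decomposition step is not shown, and the triples are hypothetical.

```python
def relation_paths(triples, source, target, max_len=2):
    """All simple relation paths (no repeated entity) of length <= max_len from
    source to target, as tuples of relation names."""
    outgoing = {}
    for head, rel, tail in triples:
        outgoing.setdefault(head, []).append((rel, tail))
    paths = []

    def dfs(node, rels, visited):
        if node == target and rels:
            paths.append(tuple(rels))
            return
        if len(rels) == max_len:
            return
        for rel, nxt in outgoing.get(node, ()):
            if nxt not in visited:
                dfs(nxt, rels + [rel], visited | {nxt})

    dfs(source, [], {source})
    return sorted(paths)


triples = [
    ("Alice", "born_in", "Paris"),
    ("Paris", "capital_of", "France"),
    ("Alice", "nationality", "France"),
]
print(relation_paths(triples, "Alice", "France"))
# [('born_in', 'capital_of'), ('nationality',)]
```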

Time series data event prediction method and system based on graph convolutional neural network and application thereof

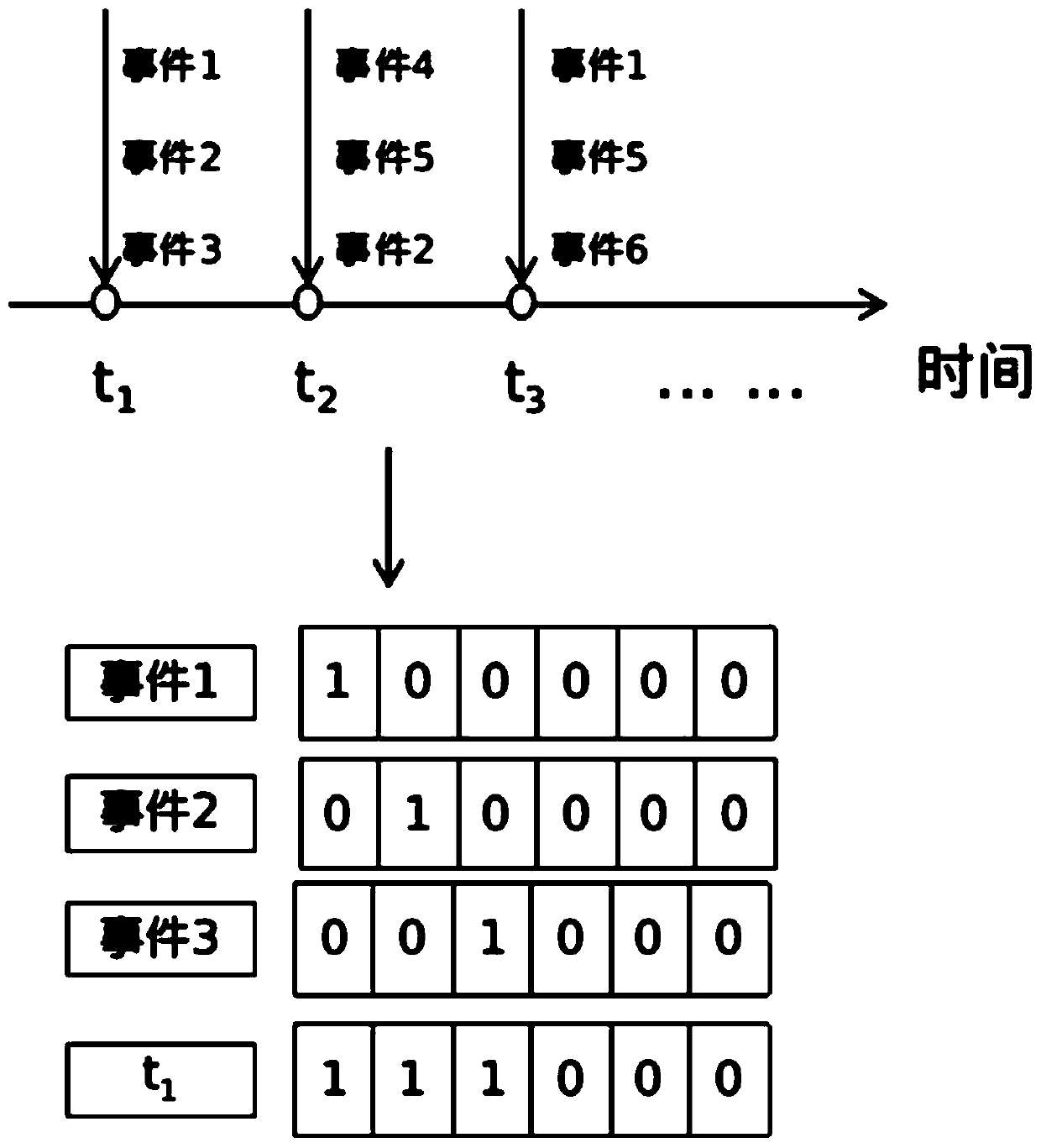

Active · CN111367961A · Improve robustness · Prediction is accurate · Digital data information retrieval · Neural architectures · Graph spectra · Engineering

The invention discloses a time series data event prediction method and system based on a graph convolutional neural network, and an application thereof. The method comprises the following steps: converting cleaned time series data into event sequence data at a preset time interval, and obtaining vector representations of events and event sets; taking the event set at the last moment of each sequence sample in the event sequence data as the prediction target and using it as that sample's label, which yields labeled event sequence data; and, once the graph convolutional neural network model has been trained to meet a preset convergence condition, testing its prediction performance on the test set and taking the tested model as the final event prediction model. The method compensates for the shortcomings of traditional methods, which place high demands on data quantity and quality and cannot make full use of the knowledge graph.

Owner:XI AN JIAOTONG UNIV
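The preprocessing in CN111367961A (time series to event sequences at a preset interval, with the last event set as each sample's label) could look like this. Defining an event as "value above a threshold" is an assumption made for illustration; the readings are hypothetical and the graph convolutional model is not shown.

```python
def to_events(readings, interval, threshold):
    """Bucket (timestamp, sensor, value) readings into fixed time intervals and emit,
    per interval, the set of sensors whose value exceeded `threshold`.
    Intervals with no readings at all are skipped."""
    buckets = {}
    for ts, sensor, value in readings:
        events = buckets.setdefault(int(ts // interval), set())
        if value > threshold:
            events.add(f"{sensor}_high")
    return [buckets[slot] for slot in sorted(buckets)]


def make_samples(event_seq, length):
    """Sliding windows of `length` event sets; the last set in each window is its label."""
    return [(event_seq[i:i + length - 1], event_seq[i + length - 1])
            for i in range(len(event_seq) - length + 1)]


# Hypothetical cleaned time series: (seconds, sensor, value).
readings = [(0, "temp", 20), (5, "temp", 95), (12, "pressure", 80),
            (15, "temp", 99), (25, "pressure", 10)]
events = to_events(readings, interval=10, threshold=50)
samples = make_samples(events, length=2)
print(len(samples), sorted(samples[0][1]))   # 2 ['pressure_high', 'temp_high']
```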

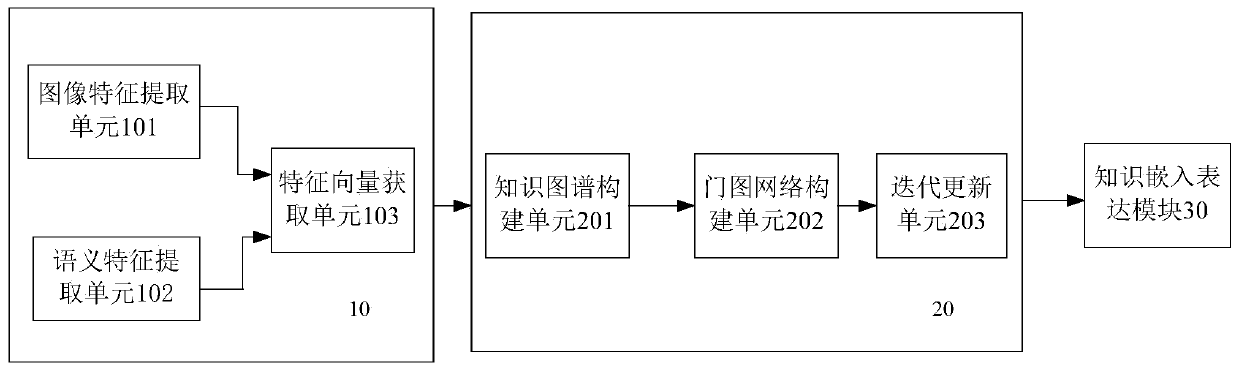

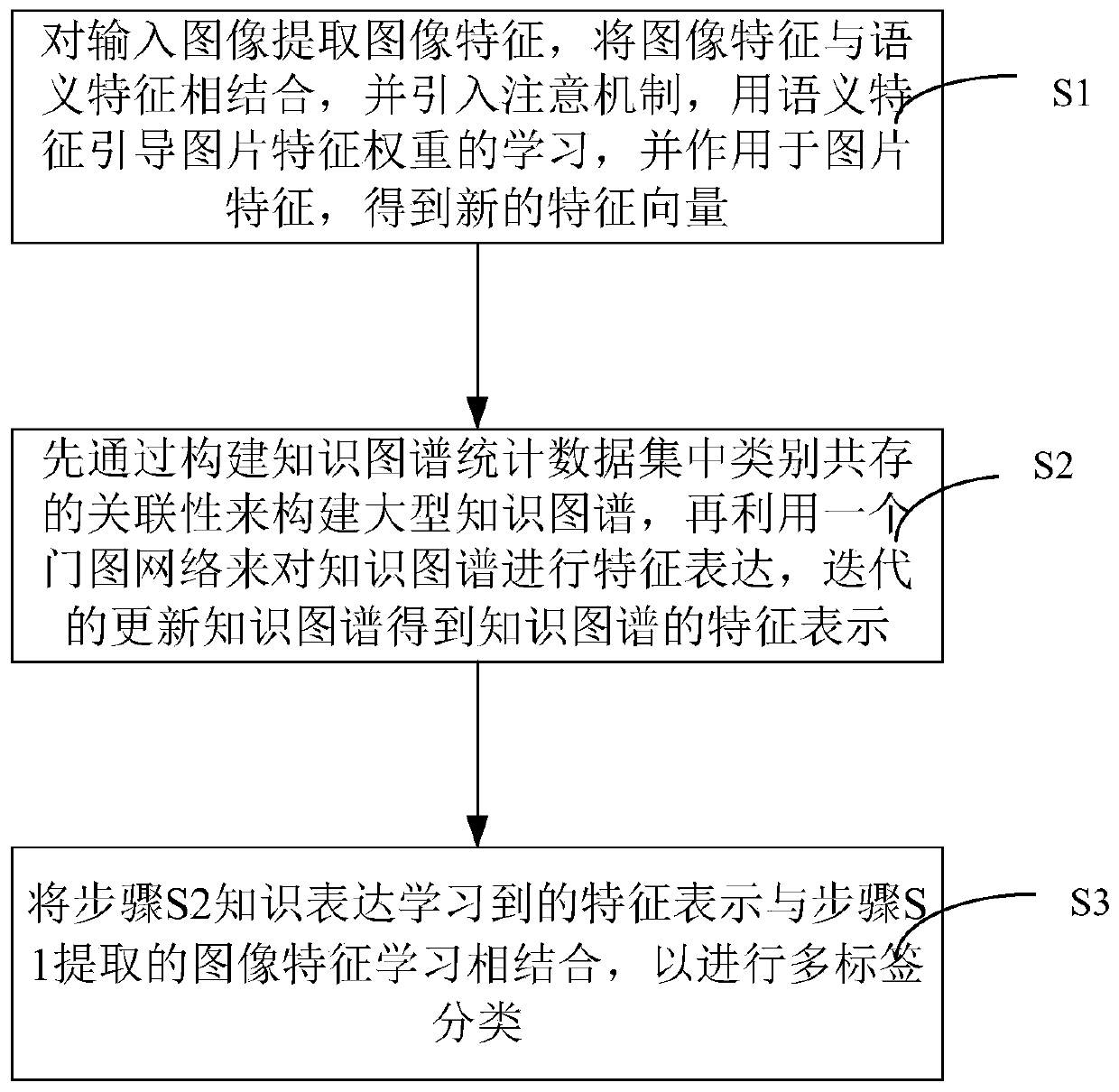

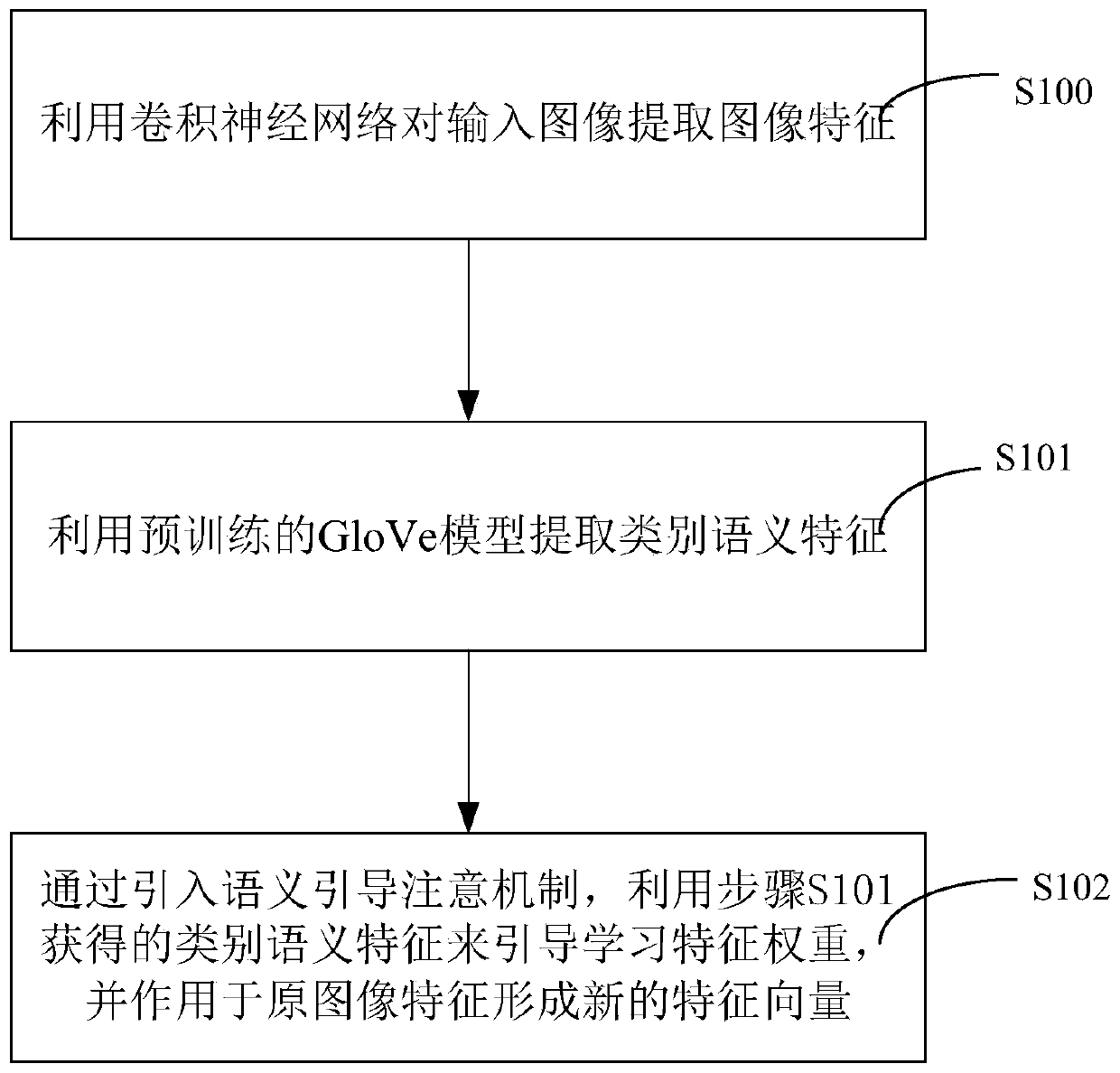

Graph representation learning framework based on specific semantics and multi-label classification method thereof

Active · CN110084296A · Good effect · Improve classification · Character and pattern recognition · Energy efficient computing · Feature vector · Image extraction

The invention discloses a graph representation learning framework based on specific semantics and a multi-label classification method using it. The framework comprises: a semantic coupling module, which extracts image features from an input image with a convolutional neural network, combines them with semantic features, and introduces an attention mechanism in which the semantic features guide the learning of image feature weights, yielding new feature vectors; a semantic interaction module, which constructs a large knowledge graph from category co-occurrence statistics of the data set, then expresses features on the knowledge graph with a gated graph neural network and updates it iteratively to obtain its feature representation; and a knowledge embedding expression module, which combines the knowledge graph representation learned by the semantic interaction module with the image features extracted by the semantic coupling module to realize multi-label classification.

Owner:SUN YAT SEN UNIV
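The category co-occurrence statistics that seed the semantic interaction module's graph can be estimated as conditional probabilities P(j | i) over the training labels. The sketch below assumes integer label ids and a dense nested-list matrix. The function name `cooccurrence_probabilities` is hypothetical, and any thresholding or reweighting the framework may apply afterwards is not shown.

```python
from itertools import permutations
from typing import Iterable, List, Set


def cooccurrence_probabilities(label_sets: Iterable[Set[int]], num_labels: int) -> List[List[float]]:
    """Estimate P(label j present | label i present) from multi-label annotations.

    Row i holds P(j | i) for every j; the diagonal is left at 0.
    Labels that never occur get an all-zero row.
    """
    counts = [0] * num_labels
    pair_counts = [[0] * num_labels for _ in range(num_labels)]
    for labels in label_sets:
        for i in labels:
            counts[i] += 1
        for i, j in permutations(labels, 2):  # ordered pairs of distinct labels
            pair_counts[i][j] += 1
    return [
        [pair_counts[i][j] / counts[i] if counts[i] else 0.0 for j in range(num_labels)]
        for i in range(num_labels)
    ]
```

The resulting matrix is asymmetric by design. For instance, "label 2 implies label 1" can be strong while "label 1 implies label 2" is weak.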

Knowledge graph inference method based on relation detection and reinforcement learning

Active · CN108256065A · Improve robustness · No human intervention required · Special data processing applications · Neural learning methods · Nerve network · Graph spectra

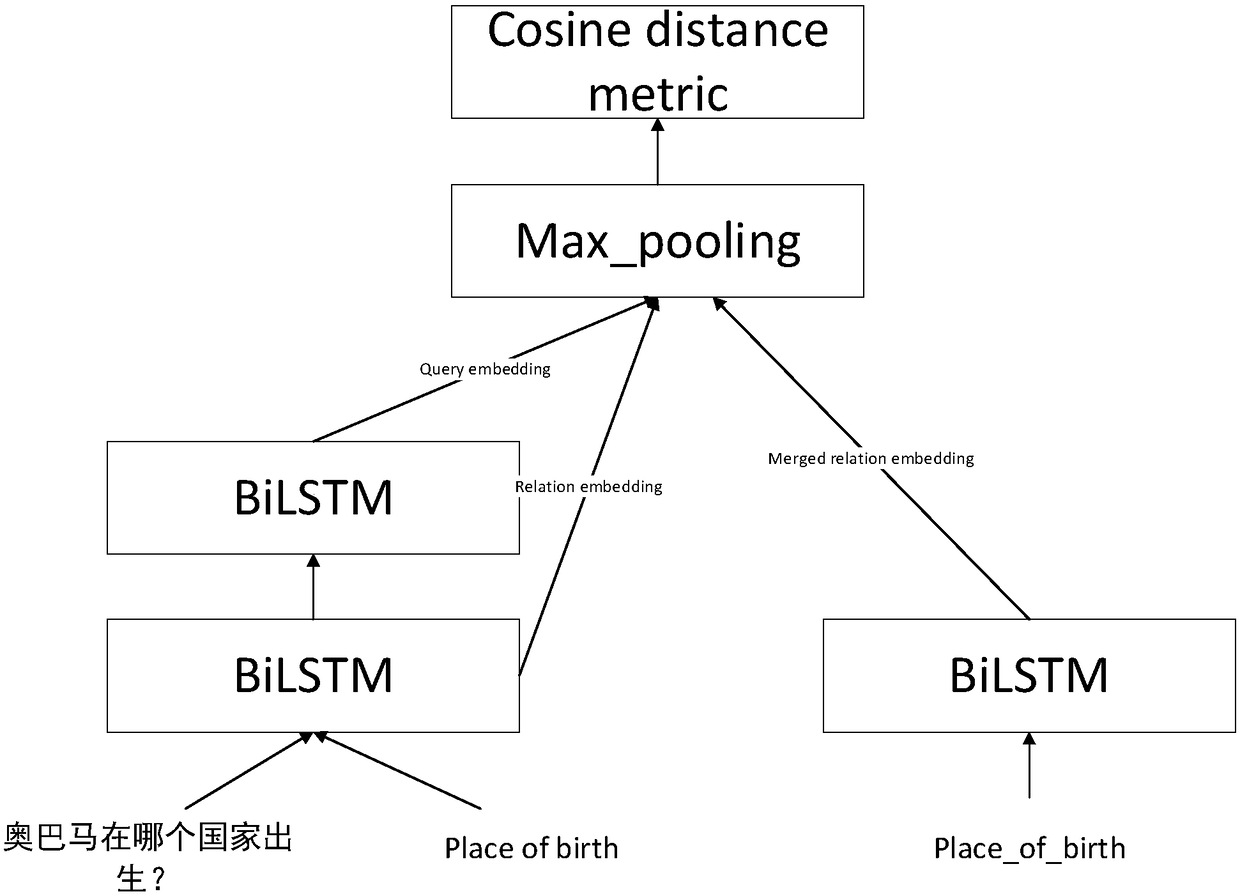

The invention discloses a knowledge graph inference method based on relation detection and reinforcement learning. The method has three stages.

- **Entity detection.** Entities in a user's question are detected by combining fuzzy string matching between a domain knowledge graph and an entity dictionary with a CNN-LSTM-CRF entity recognition model.
- **Relation detection.** A neural semantic matching model maps three inputs to low-dimensional manifold representations: the question, the relations related to it, and unrelated relations. The model parameters are then optimized with a ranking loss, so that the relation most semantically similar to the question can be retrieved from the relation set.
- **Reinforcement-learning inference.** At each time step, a policy function $\pi_\theta$ selects an outgoing relation $r_{t+1}$ from the current entity $e_t$ and moves to the next entity $e_{t+1}$. This sequential decision process continues up to a preset maximum inference path length $T$. The final entity $e_T$ is output as the answer to the question.

Owner:智言科技(深圳)有限公司 (Zhiyan Technology (Shenzhen) Co., Ltd.)
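The inference loop (from $e_t$, pick an outgoing relation, move to $e_{t+1}$, stop at $T$ steps) can be sketched as a walk over a toy graph. This is a simplification. The learned stochastic policy $\pi_\theta$ is replaced by a caller-supplied scoring function with greedy argmax selection, the question embedding is not modeled, and the names `infer_path` and `Graph` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Toy knowledge graph: entity -> list of outgoing (relation, next_entity) edges.
Graph = Dict[str, List[Tuple[str, str]]]


def infer_path(graph: Graph, start: str,
               policy: Callable[[str, str, str], float],
               max_len: int) -> Tuple[str, List[str]]:
    """Walk at most max_len steps, taking the outgoing edge the policy scores highest.

    Returns the entity reached (the answer e_T) and the relations followed.
    Greedy selection stands in for sampling from a trained policy.
    """
    entity, relations = start, []
    for _ in range(max_len):
        edges = graph.get(entity, [])
        if not edges:  # dead end: stop before reaching max_len
            break
        relation, nxt = max(edges, key=lambda e: policy(entity, e[0], e[1]))
        relations.append(relation)
        entity = nxt
    return entity, relations
```

In a trained system, `policy` would score edges conditioned on the question, and actions would be sampled during training to encourage exploration.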

Question-answering system based on domain knowledge graph and construction method thereof

Inactive · CN110555153A · Accurate expression of intent · The result is accurate · Web data indexing · Special data processing applications · Graph spectra · The Internet

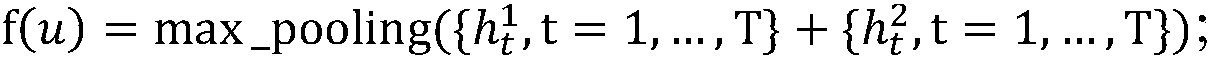

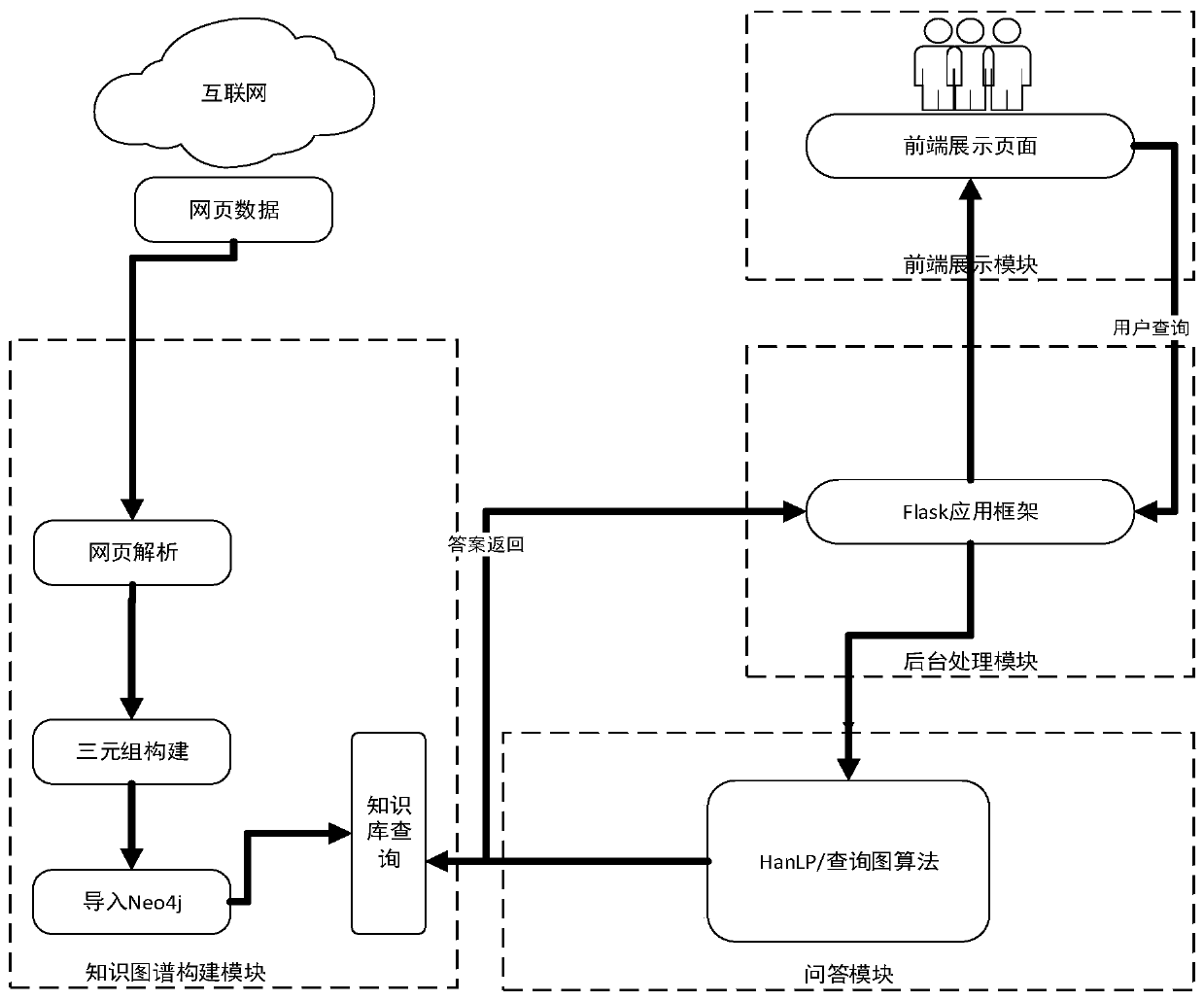

The invention discloses a question-answering system based on a domain knowledge graph and a method for constructing it. The method comprises four steps:

1. Crawl information about a given domain from the Internet, extract triples from it, and store the triples in a graph database.
2. Perform word segmentation and syntactic analysis on the user's question to obtain a dependency relationship table, which records the dependency relations among the words of the sentence.
3. Generate a query semantic graph by traversing the dependency relationship table, and convert it into a query statement for the graph database.
4. Query the graph database with that statement to obtain the answer.

The invention further provides a question-answering system that adopts this method. The system comprises a knowledge graph construction module, a question-answering module, a background processing module and a front-end display module. Because it is oriented to a given domain, the system crawls useful information from the Internet and extracts triples to build a knowledge graph database. It then queries that database to return accurate and concise answers.

Owner:JINAN UNIVERSITY
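Answering a question from a query semantic graph amounts to matching a set of triple patterns against the stored triples. The sketch below uses an in-memory list as a stand-in for a real graph database and its query language. The `?`-prefixed variable convention, the function name `match` and the toy triples are assumptions for illustration.

```python
from typing import Dict, Iterator, List, Optional, Tuple

Triple = Tuple[str, str, str]


def match(triples: List[Triple], patterns: List[Triple],
          binding: Optional[Dict[str, str]] = None) -> Iterator[Dict[str, str]]:
    """Yield variable bindings that satisfy every (subject, predicate, object) pattern.

    Terms starting with '?' are variables; any other term must match exactly.
    """
    binding = binding or {}
    if not patterns:
        yield dict(binding)
        return
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        new = dict(binding)
        ok = True
        for term, value in zip(first, triple):
            if term.startswith("?"):
                # Bind a fresh variable, or check consistency with an earlier binding.
                if new.setdefault(term, value) != value:
                    ok = False
                    break
            elif term != value:
                ok = False
                break
        if ok:
            yield from match(triples, rest, new)
```

A question such as "Where is Alice's employer located?" would become the two patterns `("Alice", "works_at", "?org")` and `("?org", "located_in", "?city")`. The binding for `?city` is the answer.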

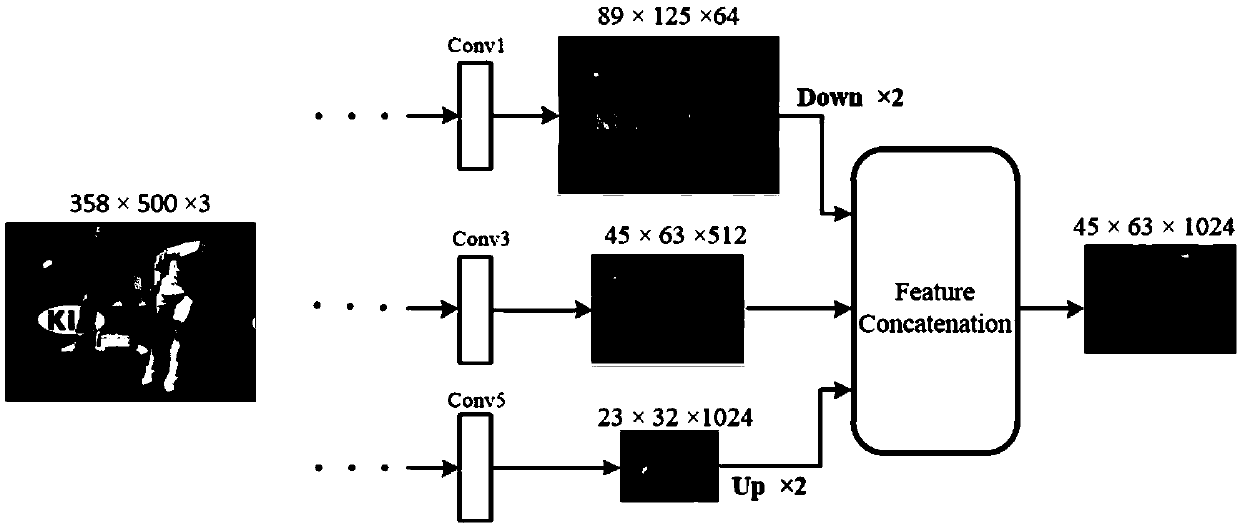

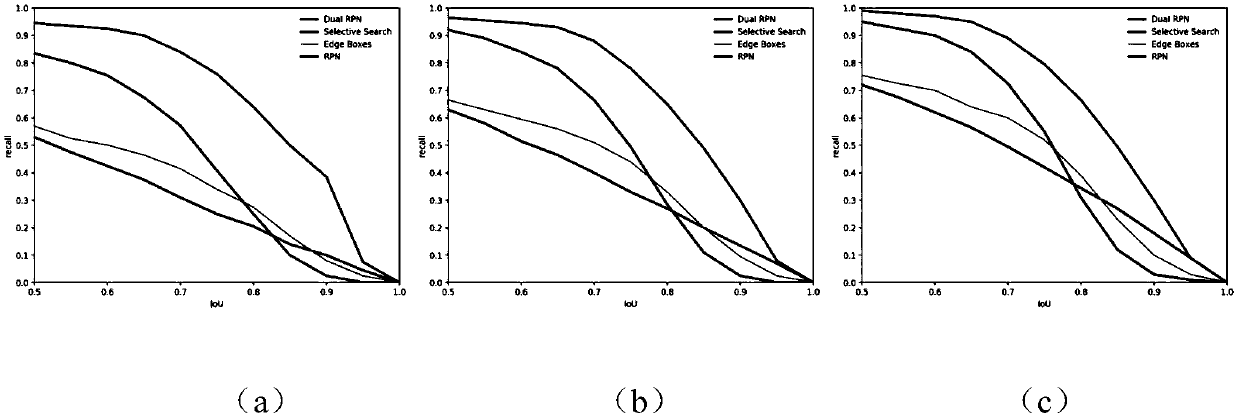

Enhanced fully convolutional instance semantic segmentation algorithm suitable for small target detection

Inactive · CN109583517A · Easy to integrate · Achieve recall · Character and pattern recognition · Neural architectures · Pattern recognition · Imaging processing

The invention belongs to the technical field of image processing. It discloses an enhanced fully convolutional instance semantic segmentation (EFCIS) algorithm for small target detection, built on the fully convolutional instance-aware semantic segmentation (FCIS) algorithm. The algorithm comprises shared feature map extraction, region proposal extraction, generation of position-sensitive score maps, and classification and regression.

- During shared feature map extraction, the conv1, conv3 and conv5 feature maps are fused. This lets the shared feature map retain both high-level semantic information and fine detail.
- During region proposal extraction, a dual RPN algorithm is proposed to address the poor proposal quality of the original network. Its average recall is 7% higher than that of the RPN algorithm.

The mAP of EFCIS is 3.5% higher than that of FCIS overall and 2.9% higher for small targets. Experiments show that the algorithm readily improves small target detection capability.

Owner:EAST CHINA JIAOTONG UNIVERSITY
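The 7% figure refers to the average recall of region proposals. A common way to compute it is to find, at each IoU threshold, the fraction of ground-truth boxes matched by some proposal, and then average those fractions across thresholds. The abstract does not state which thresholds were used, so the range 0.50 to 0.95 in steps of 0.05 below is an assumption, as are the function names.

```python
from typing import Iterable, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

# Assumed IoU thresholds: 0.50, 0.55, ..., 0.95.
DEFAULT_THRESHOLDS = tuple(0.5 + 0.05 * k for k in range(10))


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at(gt_boxes: Sequence[Box], proposals: Sequence[Box], threshold: float) -> float:
    """Fraction of ground-truth boxes covered by at least one proposal with IoU >= threshold."""
    if not gt_boxes:
        return 0.0
    hits = sum(1 for g in gt_boxes if any(iou(g, p) >= threshold for p in proposals))
    return hits / len(gt_boxes)


def average_recall(gt_boxes: Sequence[Box], proposals: Sequence[Box],
                   thresholds: Iterable[float] = DEFAULT_THRESHOLDS) -> float:
    """Mean recall over a range of IoU thresholds."""
    ts = list(thresholds)
    return sum(recall_at(gt_boxes, proposals, t) for t in ts) / len(ts)
```

A proposal that covers half of a ground-truth box counts as a hit only at the 0.5 threshold, so it contributes just one tenth of the maximum average recall.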

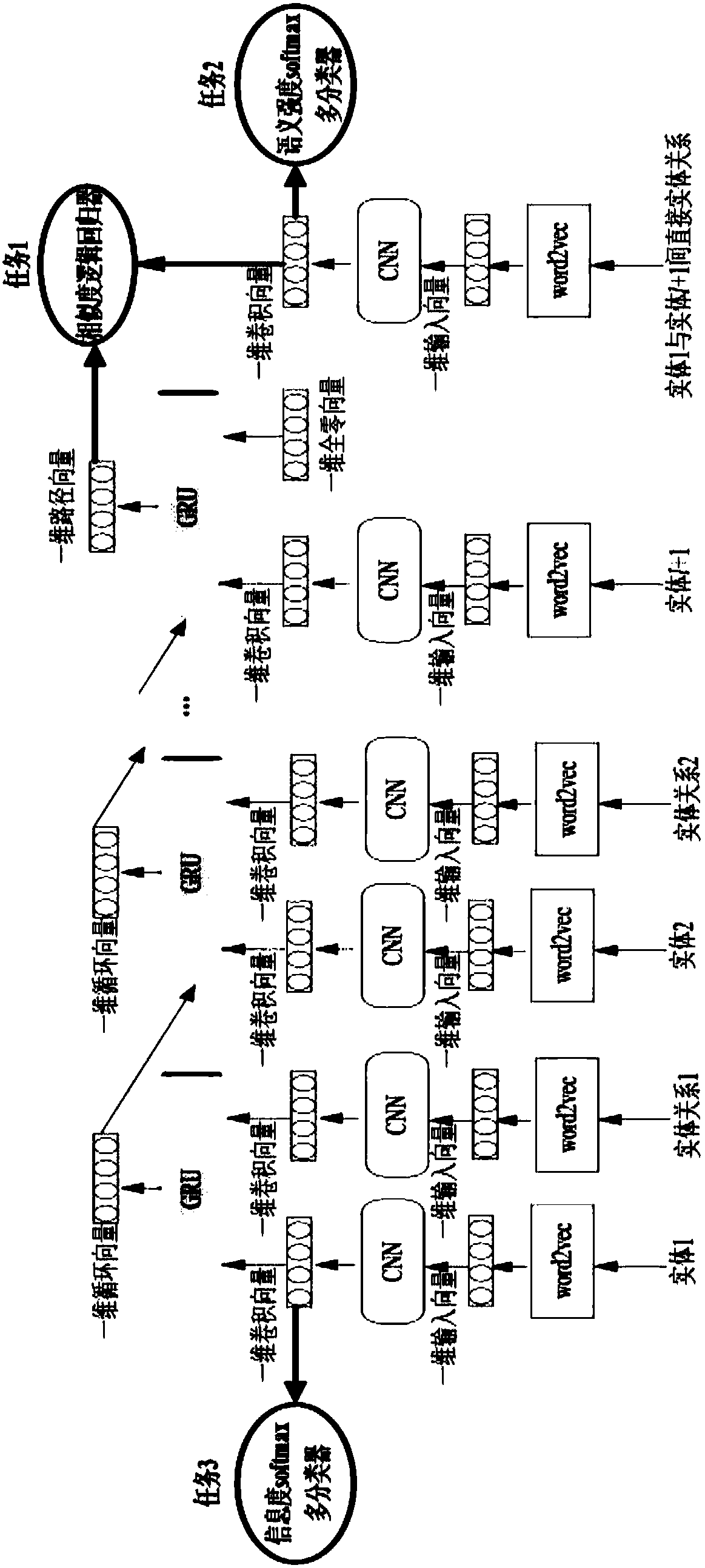

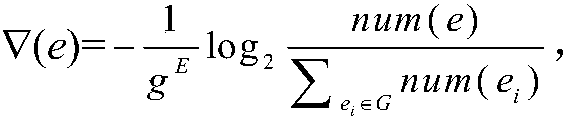

Word embedding deep learning method based on knowledge graph

Active · CN107729497A · Improve accuracy · Improve generalization ability · Neural architectures · Special data processing applications · Graph spectra · Path length

The invention discloses a word embedding deep learning method based on a knowledge graph. The method has two stages.

- **Training sample construction.** The entity relations in the knowledge graph are first divided into groups according to their semantic strength. Training samples with different path lengths are then generated from the grouped entity relations.
- **Word embedding deep learning.** A three-task deep neural network is built from components that include a word2vec encoder, a convolutional neural network, a gated recurrent unit (GRU) network, a softmax classifier and a logistic regression unit. Its parameters are optimized iteratively, using the training sample set from the previous stage as input.

After training, the word embedding encoder, consisting of the word2vec encoder and the convolutional neural network, is retained. Compared with the prior art, the method offers high word embedding accuracy, strong generalization ability and ease of implementation. It can be applied in fields such as big data analysis, e-commerce, intelligent transportation, medical health and intelligent manufacturing.

Owner:TONGJI UNIV
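Generating training samples with different path lengths from grouped relations can be sketched as a bounded depth-first enumeration of relation paths. The abstract does not say how paths are enumerated or how cycles are handled. The `allowed` filter (standing in for one semantic-strength group), the no-revisit rule and the function name `path_samples` are therefore assumptions.

```python
from typing import Dict, List, Optional, Set, Tuple

Graph = Dict[str, List[Tuple[str, str]]]   # head -> [(relation, tail), ...]
Sample = Tuple[str, Tuple[str, ...], str]  # (head, relation path, tail)


def path_samples(graph: Graph, max_len: int,
                 allowed: Optional[Set[str]] = None) -> List[Sample]:
    """Enumerate (head, relation_path, tail) samples with path lengths 1..max_len.

    `allowed` restricts the walk to one relation group; entities are not
    revisited within a single path, so cycles are skipped.
    """
    samples: List[Sample] = []

    def dfs(head: str, entity: str, path: Tuple[str, ...], visited: Set[str]) -> None:
        for relation, tail in graph.get(entity, []):
            if tail in visited or (allowed is not None and relation not in allowed):
                continue
            new_path = path + (relation,)
            samples.append((head, new_path, tail))
            if len(new_path) < max_len:
                dfs(head, tail, new_path, visited | {tail})

    for head in graph:
        dfs(head, head, (), {head})
    return samples
```

Calling the function once per relation group, with that group's relations in `allowed`, yields separate sample sets per semantic-strength group.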

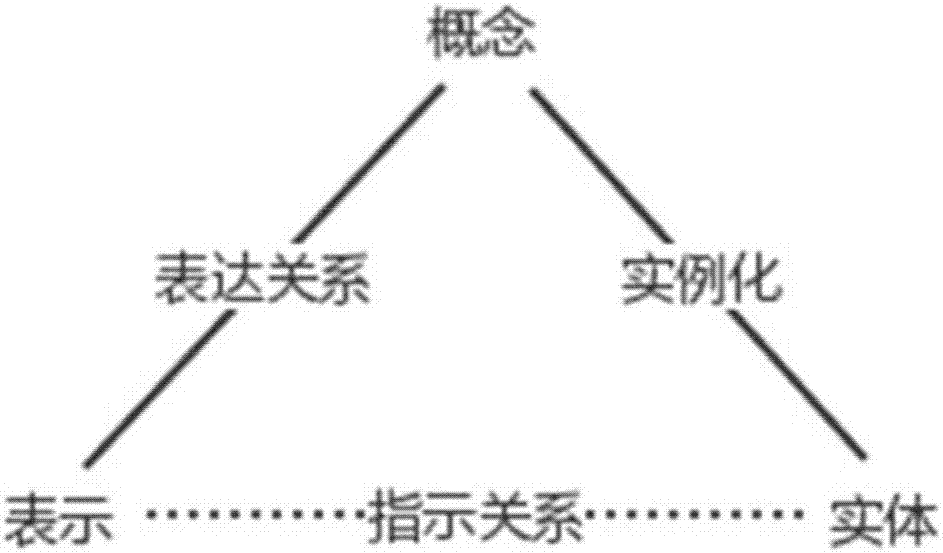

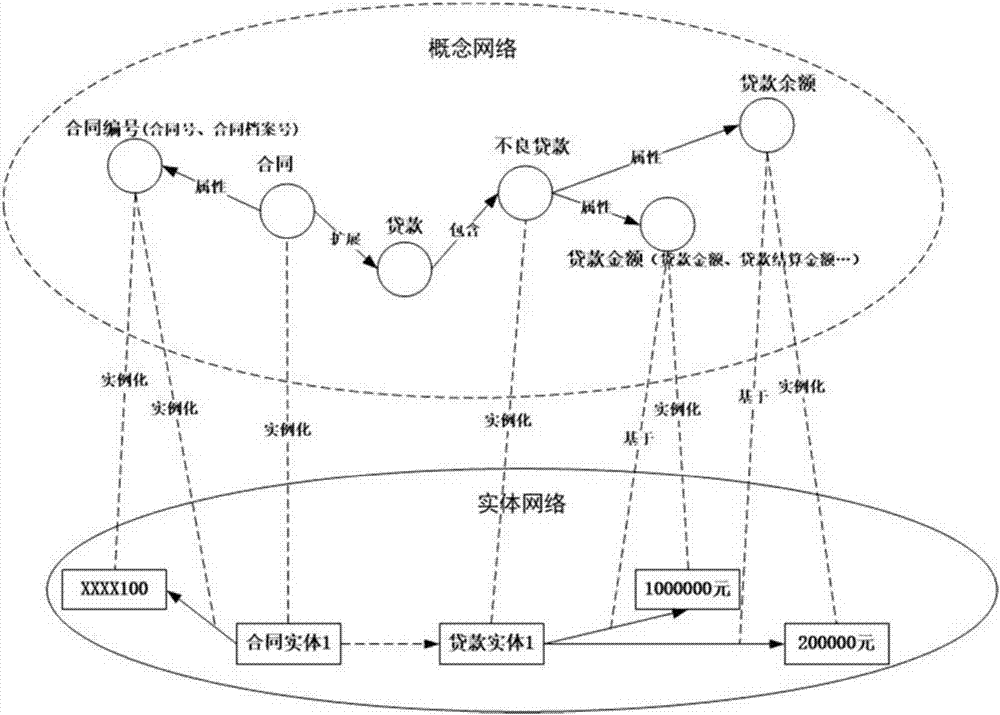

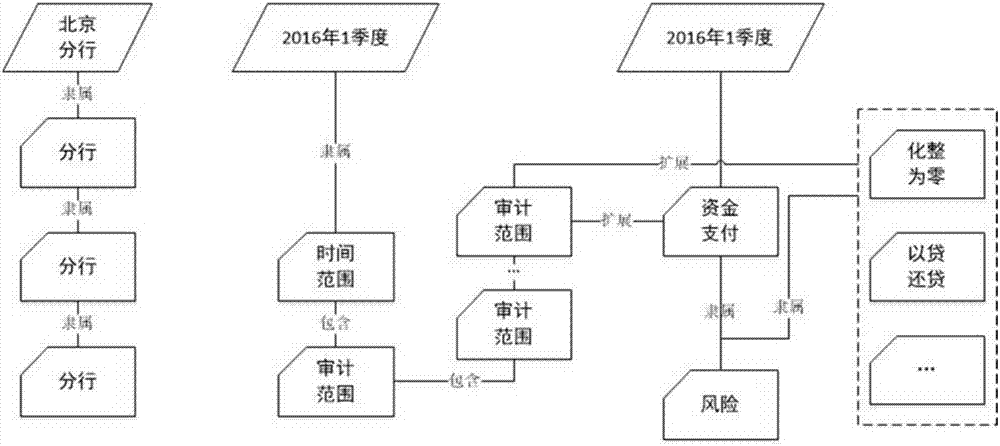

Domain knowledge graph based on semantic triangle and query method

Inactive · CN106874261A · Easy to comb · Easy to find · Semantic analysis · Text database querying · Graph spectra · Knowledge graph

The invention discloses a domain knowledge graph based on the semantic triangle, together with a query method. The graph comprises a concept layer and an entity layer.

- **Concept layer.** It consists of a set of concepts. Each concept has a unique identifier and is represented by a representative term and a set of candidate terms.
- **Entity layer.** It is formed by instantiating multiple entities for each concept, based on domain knowledge and the concept set of the concept layer. Entities are extensions of concepts. Each entity likewise has a unique identifier and is represented by a representative term and a set of candidate terms.

Association relationships derived from domain knowledge are established between related concepts, between the concept layer and the entity layer, and between entities. Separating concepts from entities makes knowledge easier to organize. It also distinguishes the different roles that concepts and entities play in understanding and applying knowledge, and it improves query efficiency.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
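The two-layer structure described above can be sketched as a small data structure. In this sketch, each node carries a unique identifier, a representative term and a set of candidate terms, and a term index supports lookup by any of those terms. Several details are assumptions: the class names `Node` and `DomainGraph`, the undirected association edges and the term index. The patent's actual query method is not described in enough detail to reproduce.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Node:
    uid: str                 # unique identifier
    layer: str               # "concept" or "entity"
    representative: str      # representative term
    candidates: Set[str] = field(default_factory=set)  # candidate terms


class DomainGraph:
    """Concepts and entities in separate layers, joined by undirected associations."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.term_index: Dict[str, Set[str]] = {}  # term -> node ids
        self.edges: Dict[str, Set[str]] = {}       # node id -> associated node ids

    def add(self, node: Node) -> None:
        self.nodes[node.uid] = node
        for term in {node.representative} | node.candidates:
            self.term_index.setdefault(term, set()).add(node.uid)

    def relate(self, a: str, b: str) -> None:
        # Used for concept-concept, concept-entity and entity-entity links alike.
        self.edges.setdefault(a, set()).add(b)
        self.edges.setdefault(b, set()).add(a)

    def lookup(self, term: str, layer: Optional[str] = None) -> List[Node]:
        uids = sorted(self.term_index.get(term, set()))
        return [self.nodes[u] for u in uids if layer is None or self.nodes[u].layer == layer]

    def neighbors(self, uid: str, layer: Optional[str] = None) -> List[Node]:
        uids = sorted(self.edges.get(uid, set()))
        return [self.nodes[u] for u in uids if layer is None or self.nodes[u].layer == layer]
```

Filtering by `layer` mirrors the concept/entity separation. For example, `neighbors(concept_id, "entity")` lists the entities that instantiate a concept.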

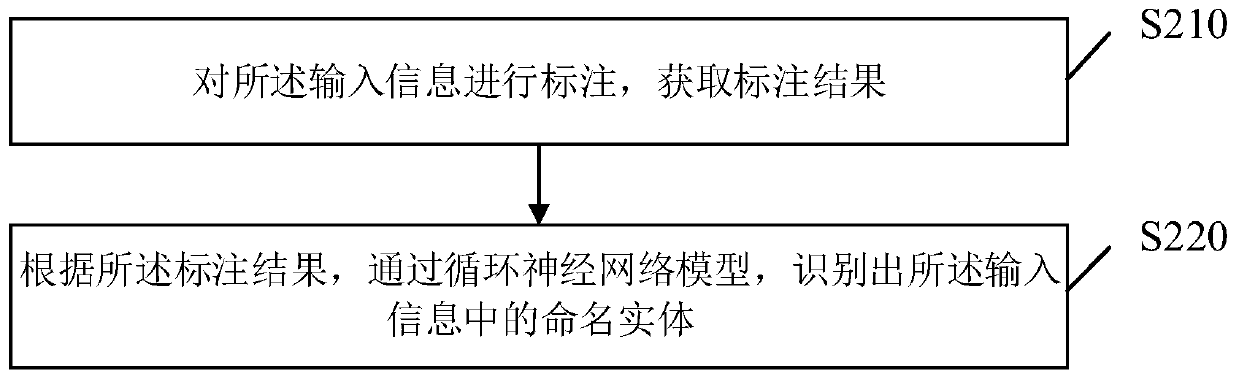

Question answering method, question answering device, computer equipment and storage medium

Active · CN110502621A · Efficient use of · Reduce data volume · Text database querying · Special data processing applications · Text mining · Graph spectra

The invention discloses a question answering method and device, computer equipment and a storage medium. The question answering method comprises the following steps:

1. Obtain the user's input information.
2. Recognize named entities in the input and link each one to its corresponding candidate entities in a Chinese knowledge graph. This forms entity pairs, each consisting of a named entity and a candidate entity.
3. Match candidate relations of the candidate entities in the Chinese knowledge graph through a relation model.
4. Form candidate triples from the entity pairs and candidate relations. Each triple consists of a named entity, a candidate entity and a candidate relation.
5. Obtain a ranking for each candidate triple with a learning-to-rank model.
6. Query the Chinese knowledge graph according to the ranking to obtain the answer.

The method makes effective use of external resources and supplies a large amount of context information through text mining. Because it uses a learning-to-rank model, it can produce good answers even when question-answer corpus data are scarce.

Owner:PING AN TECH (SHENZHEN) CO LTD
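Forming candidate triples from entity links and candidate relations, and then ordering them, can be sketched as follows. The trained learning-to-rank model is replaced by an arbitrary scoring callable. The function names, the dictionary inputs and the toy weights are hypothetical, and entity linking and relation matching are assumed to have already run.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[str, str, str]  # (named entity mention, candidate entity, candidate relation)


def candidate_triples(entity_links: Dict[str, List[str]],
                      relations_of: Dict[str, List[str]]) -> List[Triple]:
    """Pair every linked candidate entity with each of its candidate relations."""
    return [
        (mention, entity, relation)
        for mention, entities in entity_links.items()
        for entity in entities
        for relation in relations_of.get(entity, [])
    ]


def rank(triples: List[Triple], score: Callable[[Triple], float]) -> List[Triple]:
    """Sort candidate triples best-first; `score` stands in for a trained ranking model."""
    return sorted(triples, key=score, reverse=True)
```

The top-ranked triple's entity and relation would then be used to query the knowledge graph for the answer.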

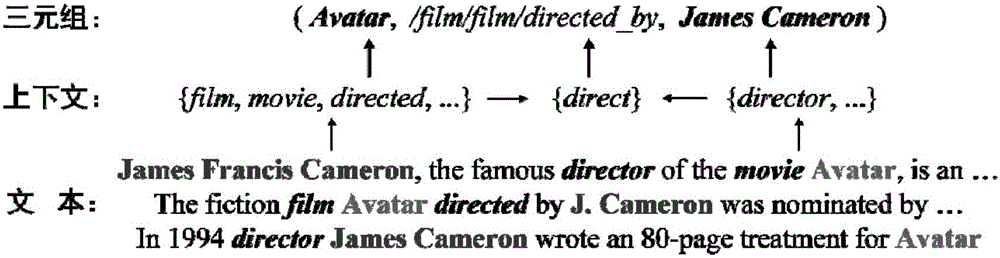

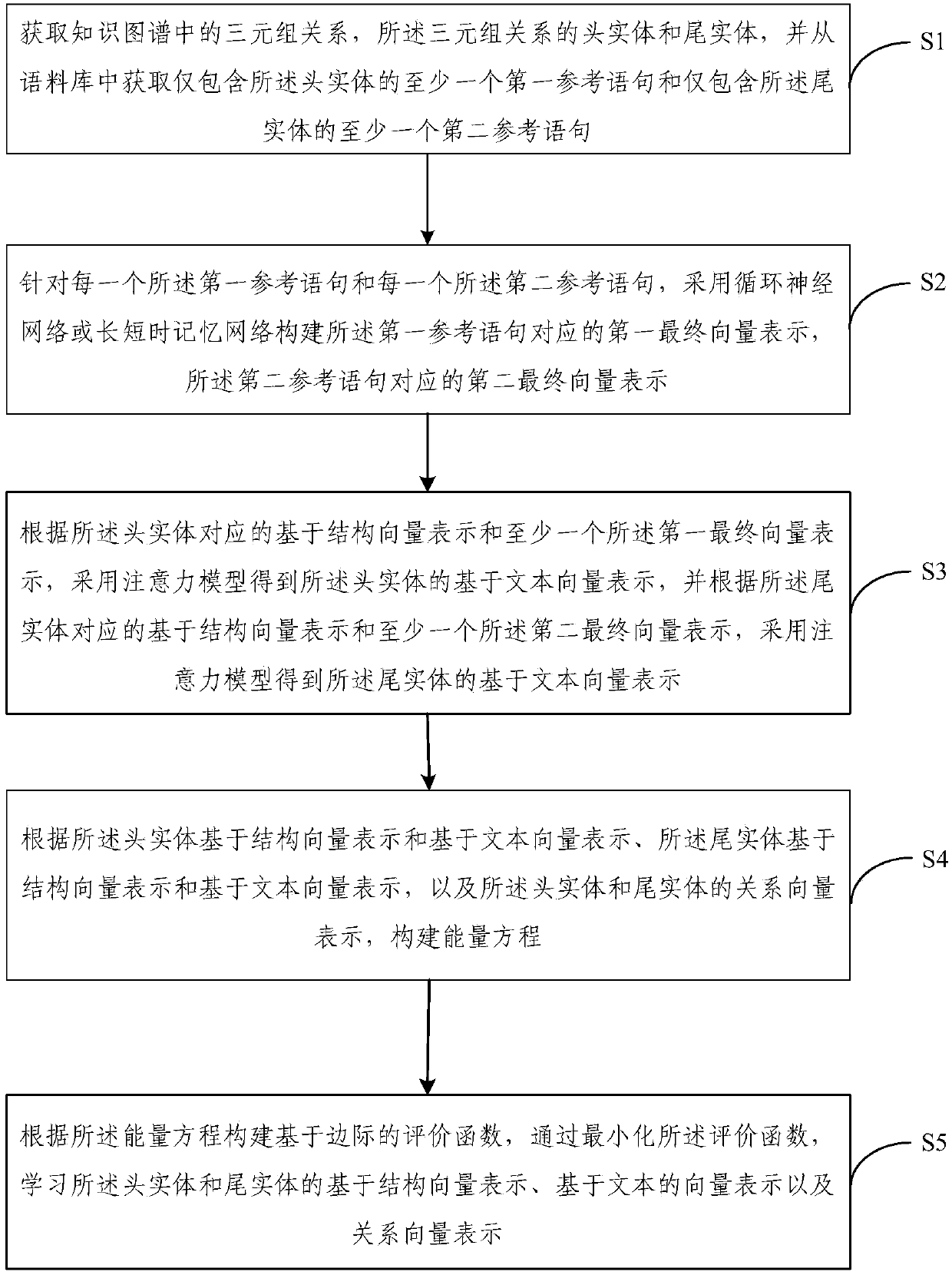

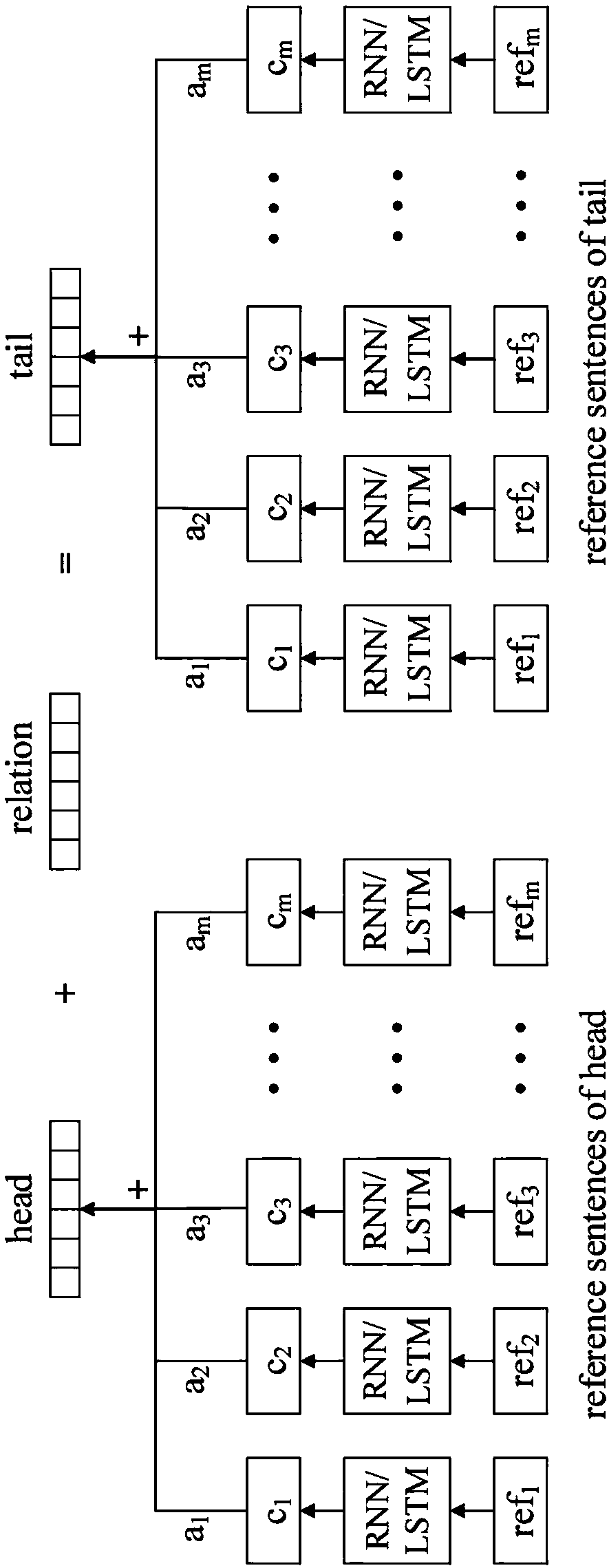

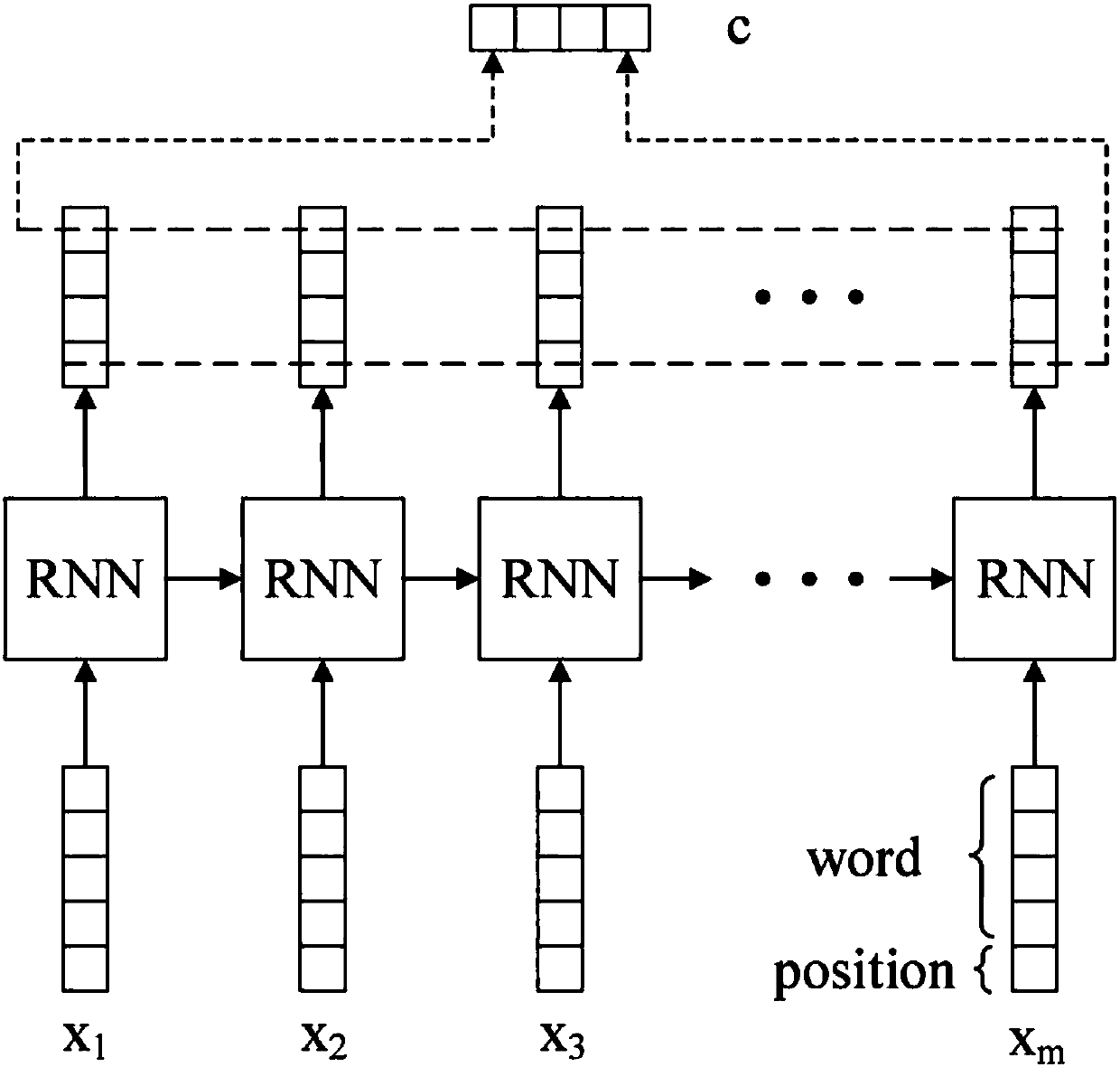

Knowledge graph representation learning method and device combining sequential text information

Inactive · CN107871158A · Enhance representation learning ability · Enhance expressive ability · Semantic analysis · Neural architectures · Relation classification · Graph spectra

The invention provides a knowledge graph representation learning method and device that combine sequential text information. The method uses not only the triple information between entities but also the sequential text that contains those entities in a designated corpus. An energy function is constructed so that each entity has different representation vectors in the structured triple information and in the unstructured text. A margin-based evaluation function is then minimized to learn structure-based entity vectors, text-based entity vectors and relation vectors, which markedly improves knowledge graph representation learning. Because the learned representations make full use of the sequential text of entities in the corpus, they achieve higher accuracy in tasks such as triple relation classification and head/tail entity prediction. The method is practical and improves the expressive power of the knowledge graph representation.

Owner:TSINGHUA UNIV
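The abstract names an energy function over structure-based and text-based entity vectors and a margin-based objective, but it does not give their form. The sketch below assumes two things: a translation-style energy $\lVert h + r - t \rVert_2$, and a sum over all four pairings of structure-based (`_s`) and text-based (`_t`) head and tail vectors. Both are illustrative choices rather than the patent's stated formulas, and how text-based vectors are encoded from the sequences is not shown.

```python
import math
from typing import Sequence

Vec = Sequence[float]


def energy(h: Vec, r: Vec, t: Vec) -> float:
    """Translation-style energy ||h + r - t||_2; lower means a more plausible triple."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def joint_energy(h_s: Vec, h_t: Vec, r: Vec, t_s: Vec, t_t: Vec) -> float:
    """Assumed combination of structure-based (_s) and text-based (_t) entity vectors."""
    return (energy(h_s, r, t_s) + energy(h_t, r, t_t)
            + energy(h_s, r, t_t) + energy(h_t, r, t_s))


def margin_loss(e_pos: float, e_neg: float, margin: float = 1.0) -> float:
    """Hinge loss max(0, margin + E(positive triple) - E(corrupted triple))."""
    return max(0.0, margin + e_pos - e_neg)
```

During training, `e_neg` would come from a corrupted triple, typically one with its head or tail entity replaced. The loss is then minimized over many such positive/negative pairs.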

© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com