134 results about "View model" patented technology

A view model or viewpoints framework in systems engineering, software engineering, and enterprise engineering is a framework which defines a coherent set of views to be used in the construction of a system architecture, software architecture, or enterprise architecture. A view is a representation of a whole system from the perspective of a related set of concerns.

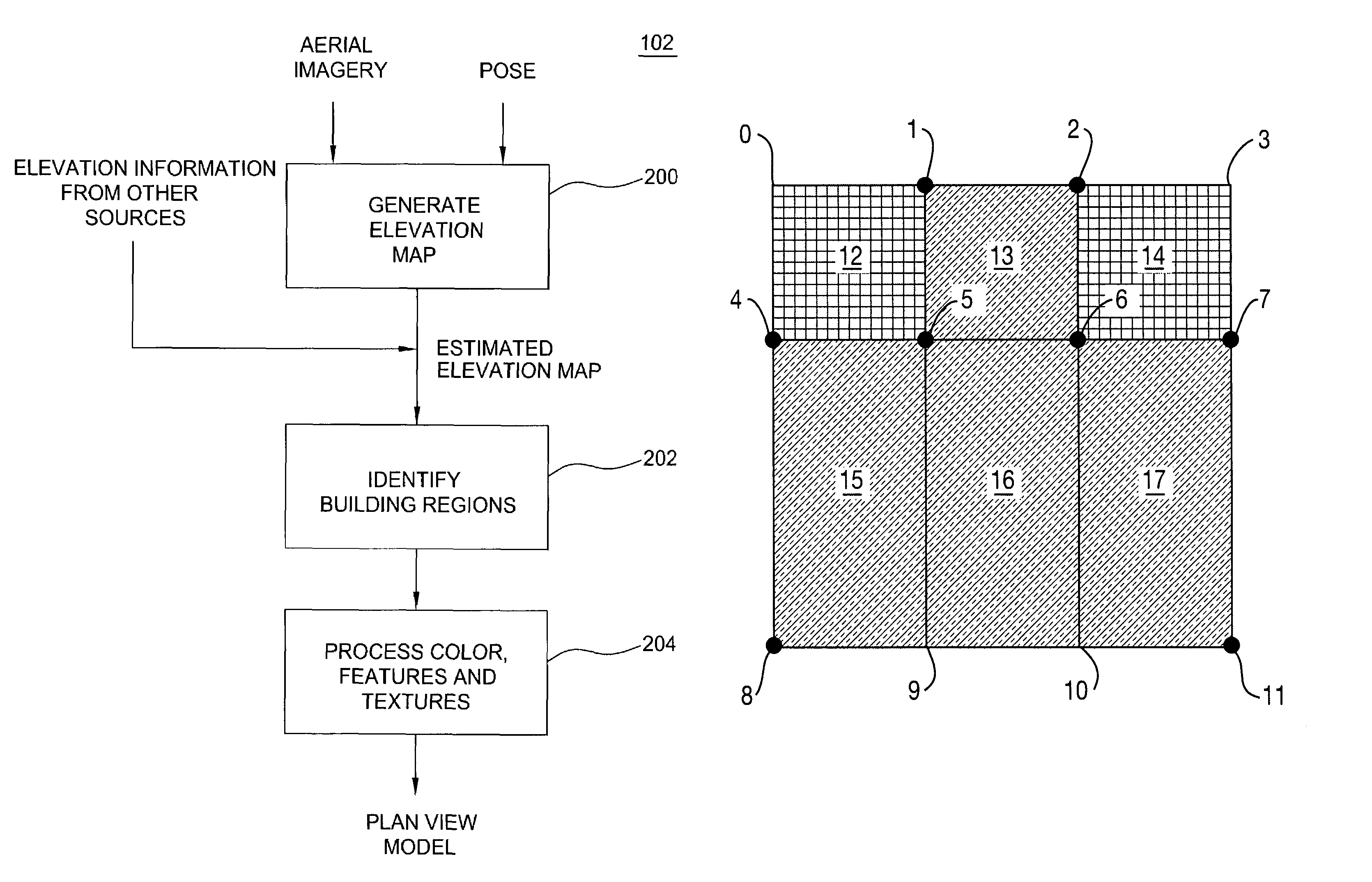

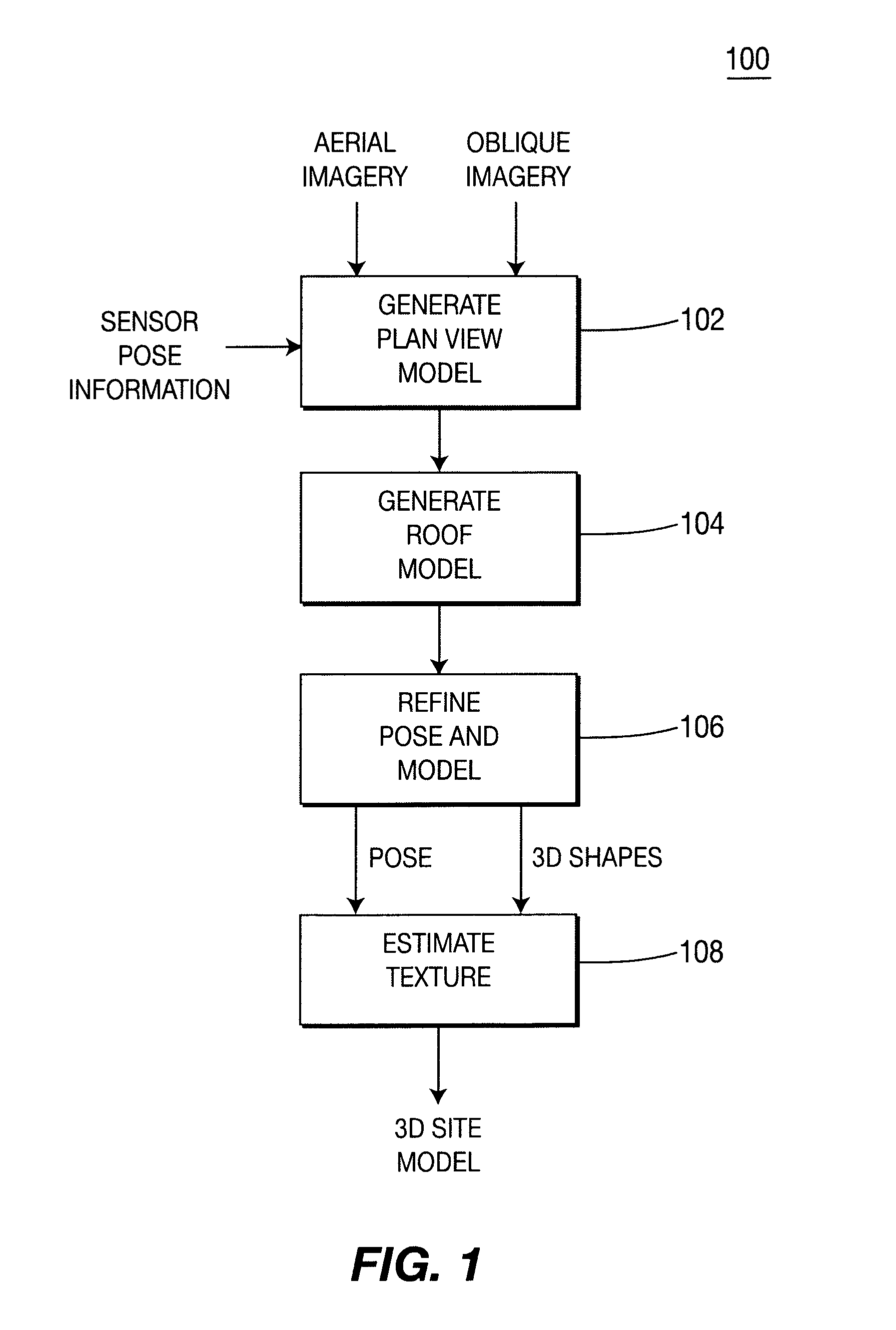

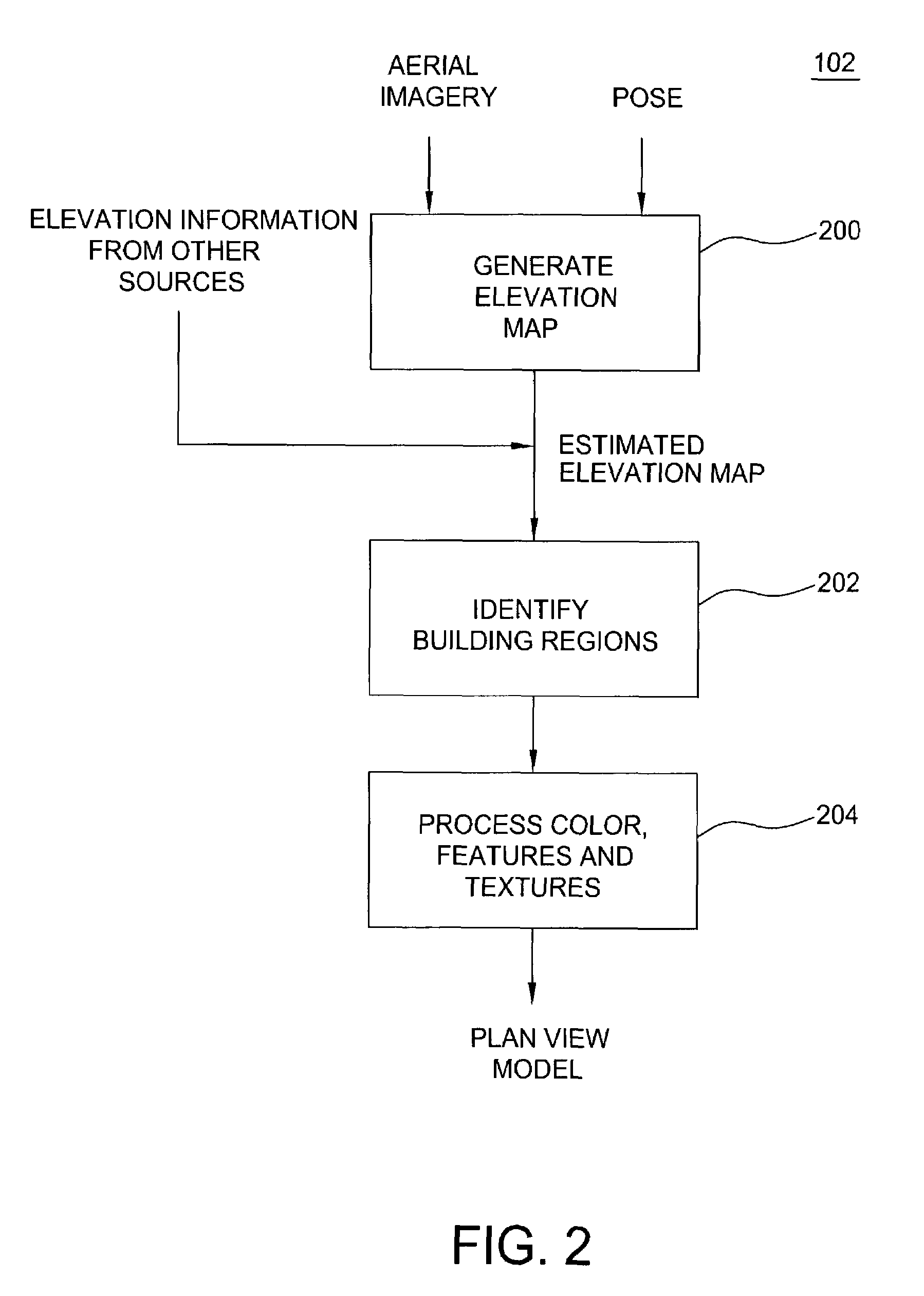

Method and apparatus for automatically generating a site model

Active | US7509241B2 | Tags: Overcome disadvantages; Precise definition; Geometric CAD; Details involving processing steps; Site model; View model

A method and apparatus for automatically combining aerial images and oblique images to form a three-dimensional (3D) site model. The apparatus or method is supplied with aerial and oblique imagery. The imagery is processed to identify building boundaries and outlines as well as to produce a depth map. The building boundaries and the depth map may be combined to form a 3D plan view model or used separately as a 2D plan view model. The imagery and plan view model are further processed to determine roof models for the buildings in the scene. The result is a 3D site model in which buildings are represented as rectangular boxes with accurately defined roof shapes.

Owner:SRI INTERNATIONAL
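
A minimal sketch of the box-extrusion idea described above, under stated assumptions: each building outline is simplified to an axis-aligned pixel rectangle, the depth map has already been converted to a per-pixel height above ground, and a building's box height is taken as the median height inside its footprint. The names `Box`, `extrude_footprint` and `build_site_model` are hypothetical, and roof-shape modeling is omitted.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Box:
    """Axis-aligned building box: footprint in pixel coordinates plus a height."""
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive
    height: float


def extrude_footprint(height_map, footprint):
    """Turn a footprint (x0, y0, x1, y1) into a Box using the median height inside it."""
    x0, y0, x1, y1 = footprint
    samples = [height_map[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    if not samples:
        raise ValueError("footprint contains no pixels")
    return Box(x0, y0, x1, y1, float(median(samples)))


def build_site_model(height_map, footprints):
    """Hypothetical plan-view-to-3D step: one box per detected building footprint."""
    return [extrude_footprint(height_map, fp) for fp in footprints]


# Toy 4x4 height map (metres above ground) with one building in the middle.
heights = [
    [0, 0, 0, 0],
    [0, 10, 12, 0],
    [0, 11, 10, 0],
    [0, 0, 0, 0],
]
site = build_site_model(heights, [(1, 1, 3, 3)])
print(site[0].height)  # 10.5
```

The median is used rather than the mean so that a few noisy depth samples along an outline do not distort the box height.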

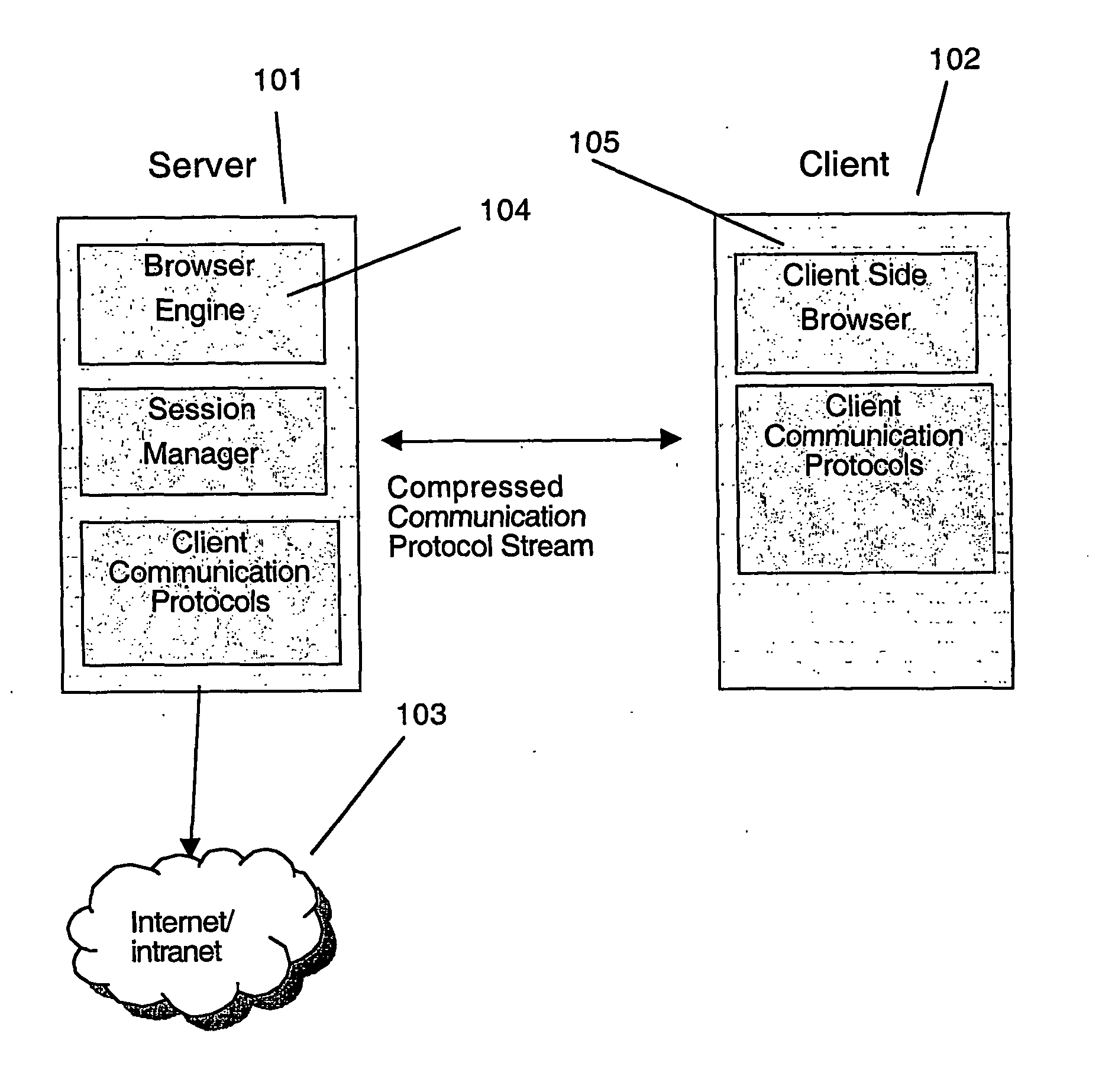

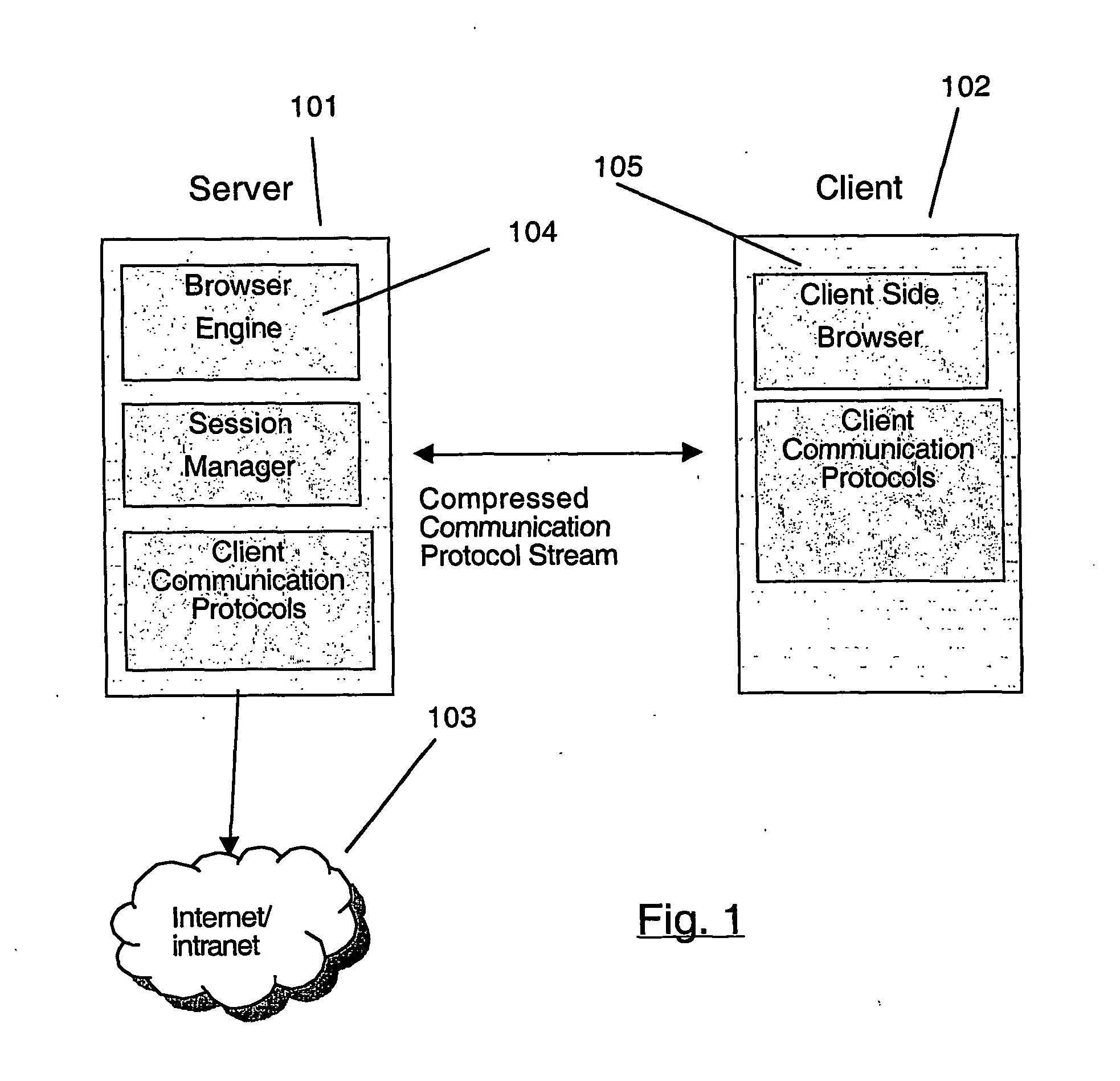

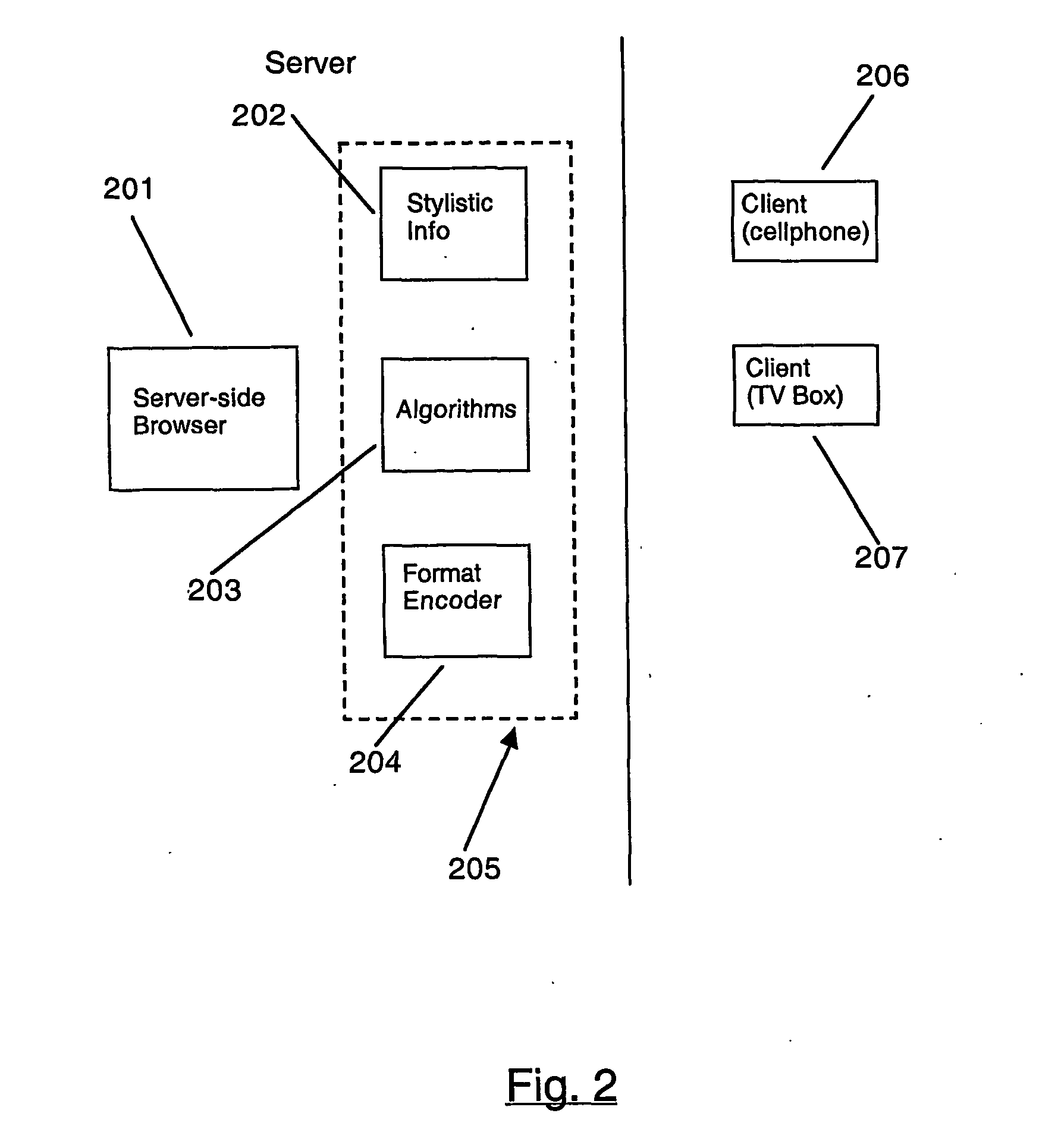

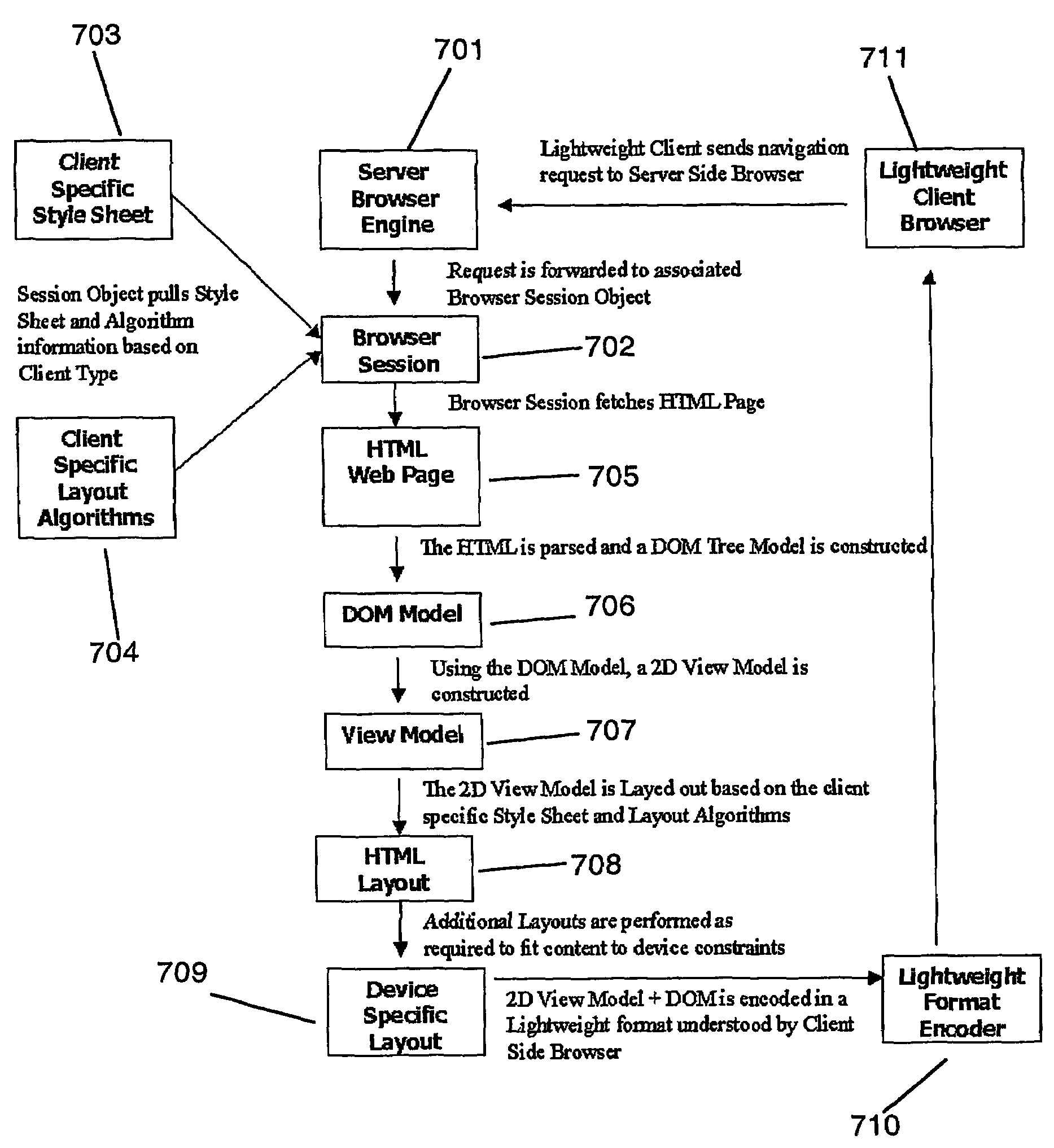

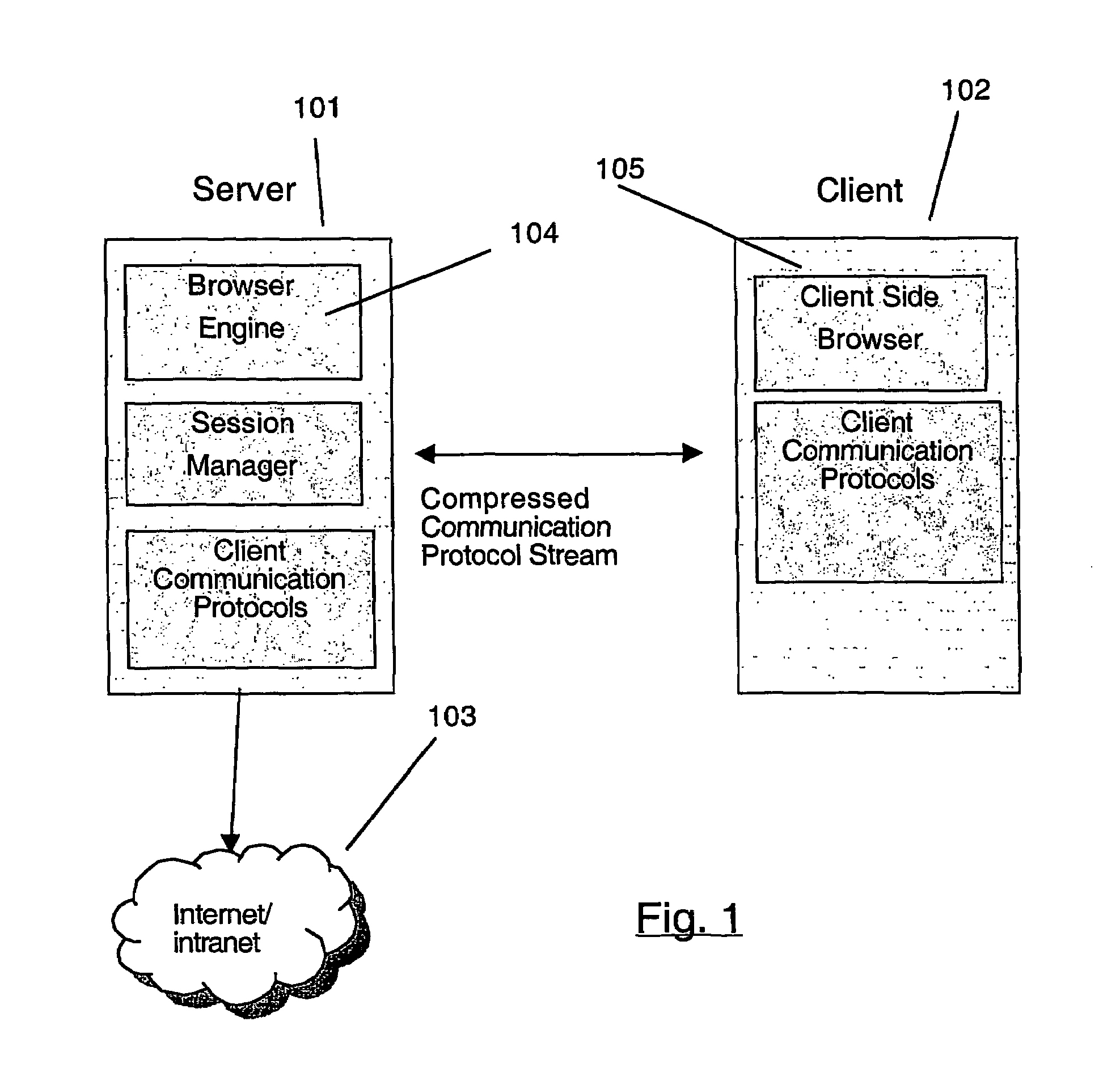

Server-based browser system

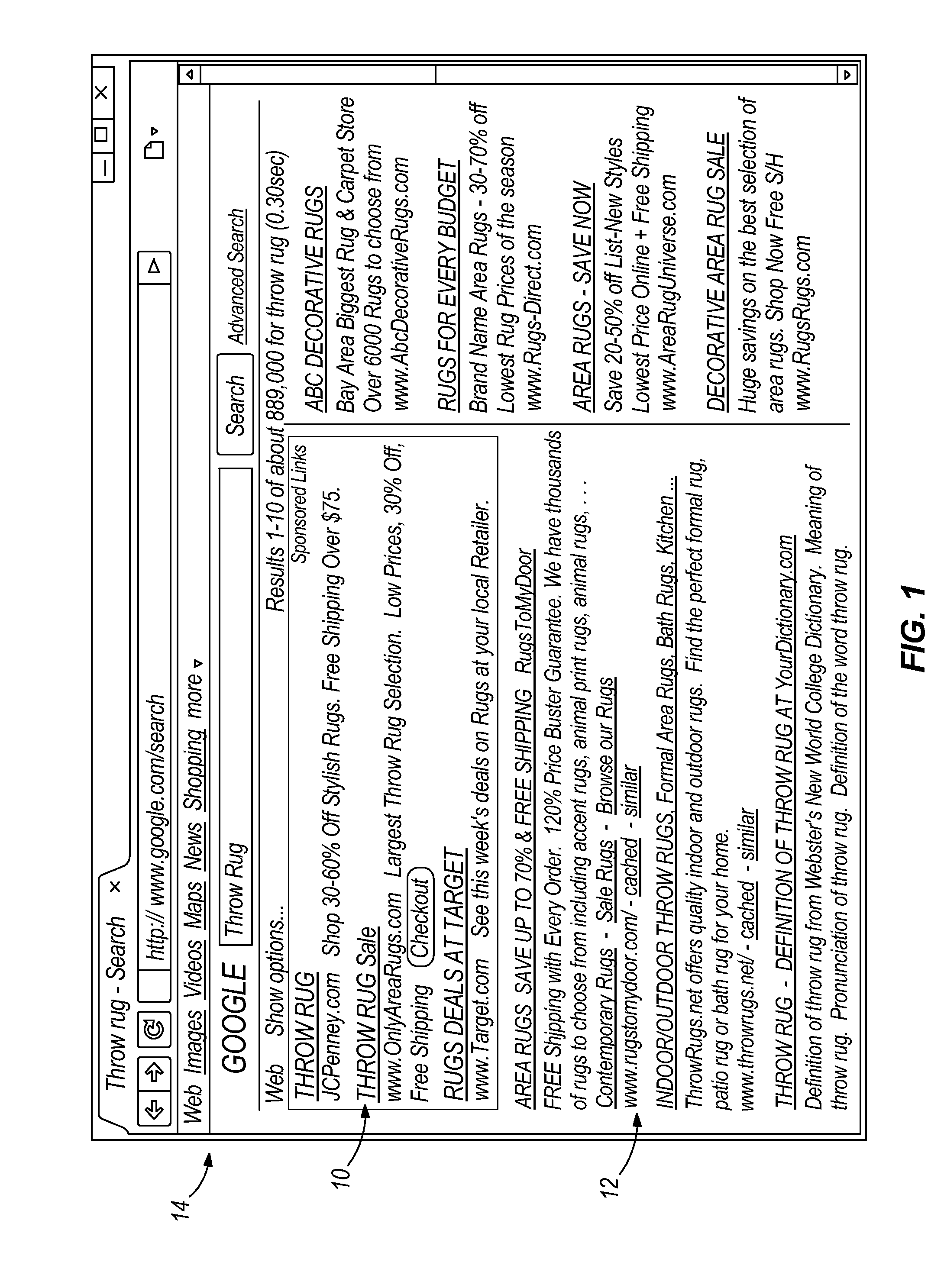

Active | US20050027823A1 | Tags: Natural language data processing; Multiple digital computer combinations; View model; Uniform resource locator

A server-based browser system provides a server-based browser and a client system browser. The client browser reports the position of a user click on its display screen, a hotspot ID, or a URL to the server-side browser, which retrieves a Document Object Model (DOM) and view tree for the client and finds the location on the Web page that the user clicked on using the coordinates or hotspot ID received from the client. If there is a script associated with the location, it is executed and the resulting page location is requested from the appropriate server. If there is a URL associated with the location, it is requested from the appropriate server. The HTML definition of the response Web page is parsed and a DOM tree model is created, which is used to create a view tree model. The server-side browser retrieves a style sheet, layout algorithms, and device constraints for the client device, uses them to lay out the view model onto a virtual page, and determines the visual content. Textual and positional information is highly compressed, formatted into a stream, and sent to the client browser, which decodes the stream and displays the page to the user using the textual and positional information.

Owner:MERCURY KINGDOM ASSETS
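
The final step, sending textual and positional information to the client as a compressed stream, can be sketched as follows. The record layout (three big-endian 16-bit integers followed by UTF-8 text), the naive one-line-per-row layout and zlib compression are all assumptions for illustration, not the patented encoding.

```python
import struct
import zlib

# Hypothetical record layout: x, y and text length as big-endian uint16, then UTF-8 text.
_RECORD = struct.Struct(">HHH")


def layout(lines, margin=4, line_height=16):
    """Naive virtual-page layout: one text line per row, left-aligned."""
    return [(margin, margin + i * line_height, text) for i, text in enumerate(lines)]


def encode_page(items):
    """Server side: pack (x, y, text) items into one compressed stream."""
    raw = bytearray()
    for x, y, text in items:
        data = text.encode("utf-8")
        raw += _RECORD.pack(x, y, len(data))
        raw += data
    return zlib.compress(bytes(raw), 9)


def decode_page(stream):
    """Client side: inverse of encode_page."""
    raw = zlib.decompress(stream)
    items, offset = [], 0
    while offset < len(raw):
        x, y, n = _RECORD.unpack_from(raw, offset)
        offset += _RECORD.size
        items.append((x, y, raw[offset:offset + n].decode("utf-8")))
        offset += n
    return items


page = layout(["Server-based browser", "Hello, client"])
print(decode_page(encode_page(page))[1])  # (4, 20, 'Hello, client')
```

Keeping only positioned text on the wire is what lets a thin client render the page without its own layout engine; the server carries the DOM, style sheet and layout work.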

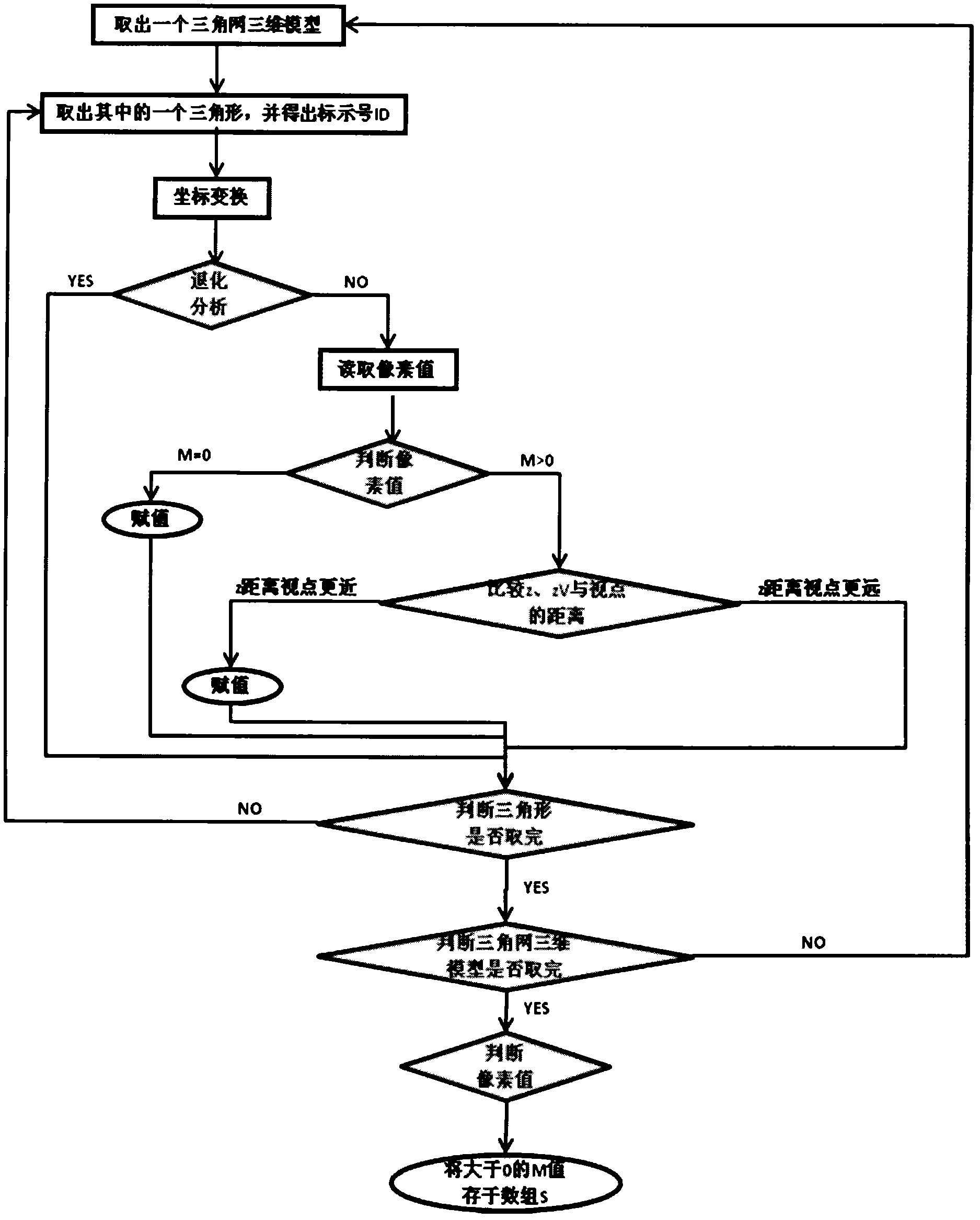

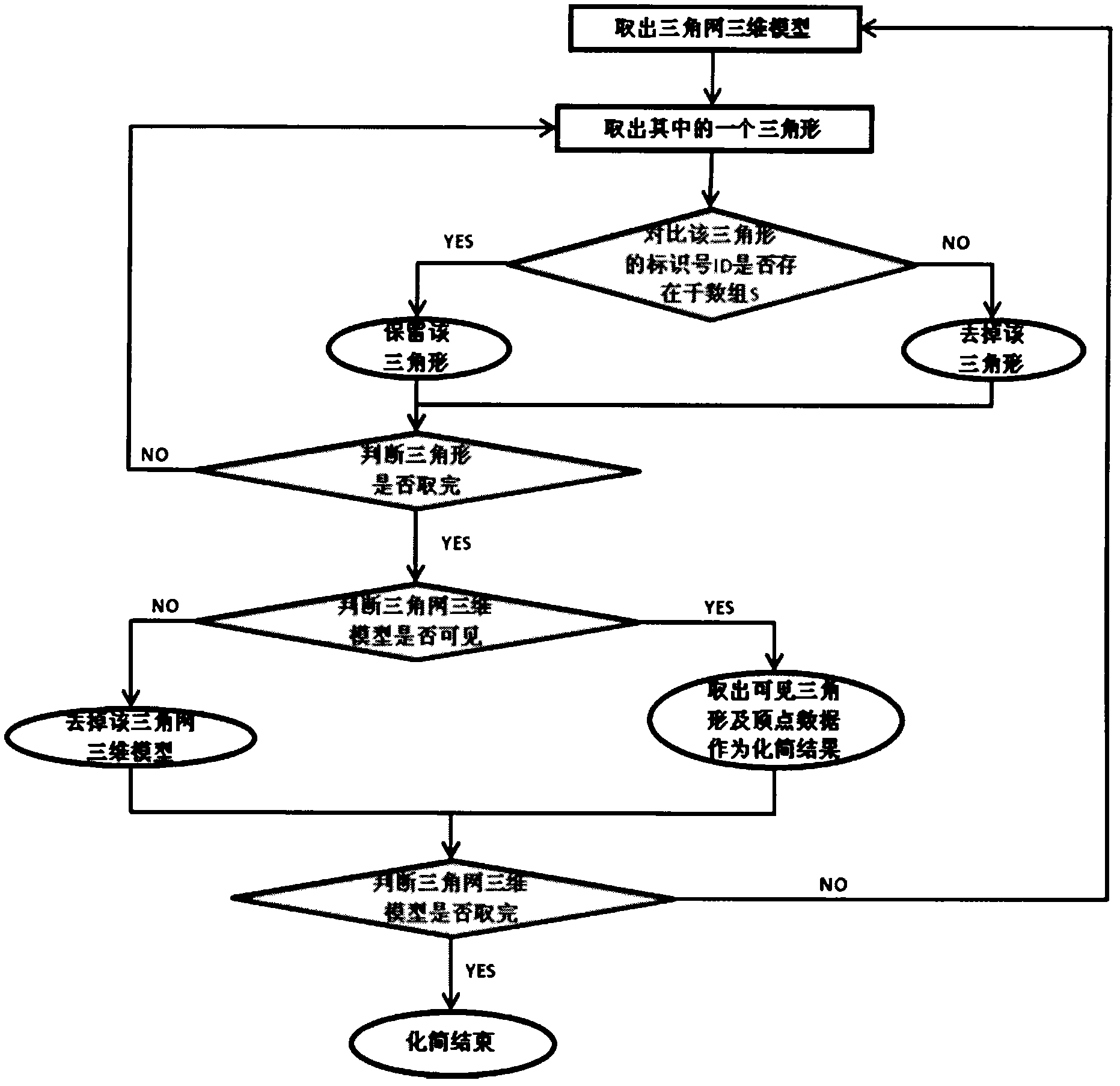

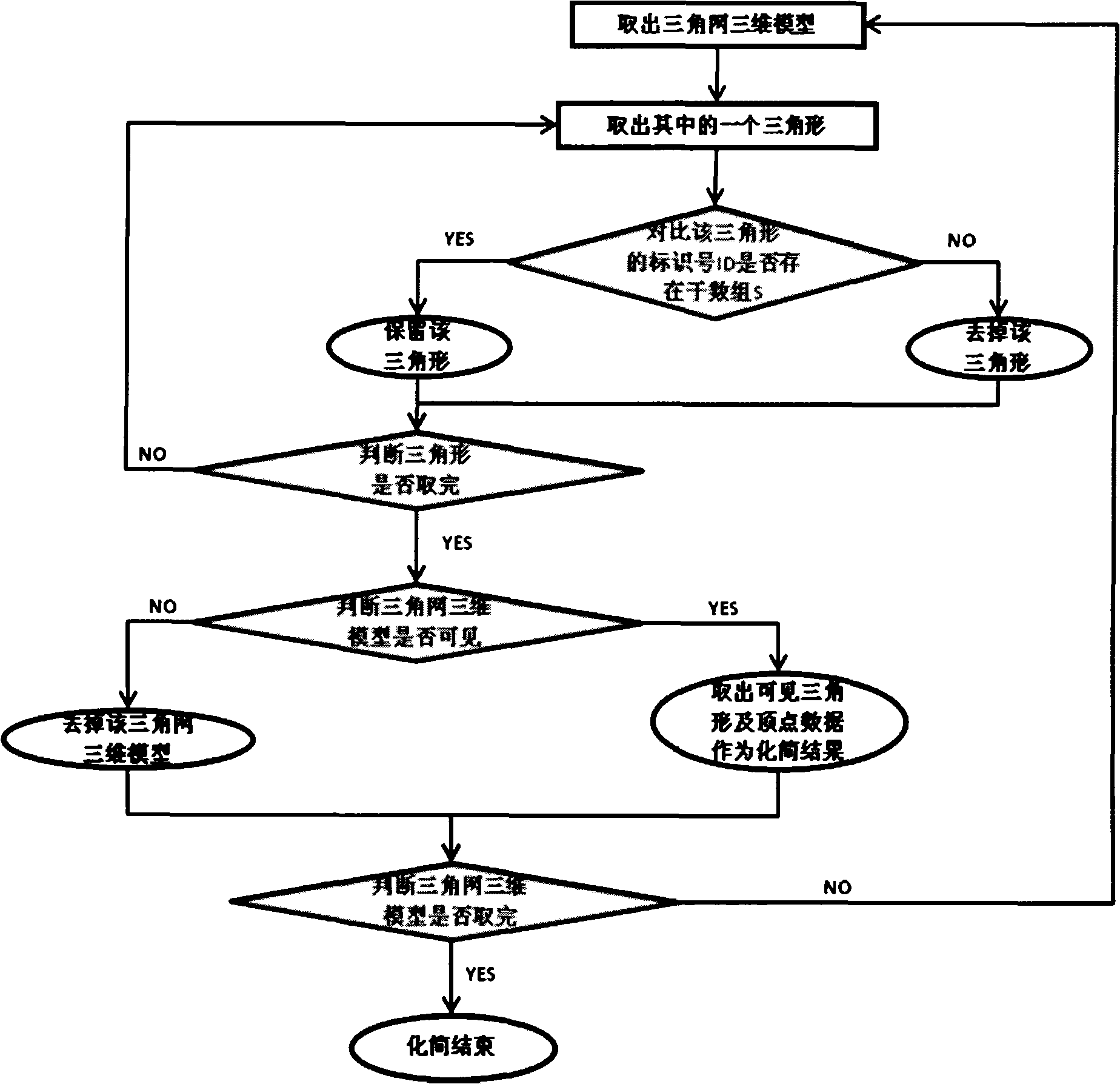

Method for carrying out self-adaption simplification, gradual transmission and rapid charting on three-dimensional model

Inactive | CN102044089A | Tags: Display lossless; Guaranteed correctness; 3D-image rendering; 3D modelling; Residence; View model

The invention provides a method for carrying out self-adaptive simplification, progressive transmission and rapid charting of a three-dimensional model using a spatial entity view model. The spatial entity view model is used to simulate and analyze the display process of the three-dimensional model, which enables self-adaptive simplification, progressive transmission and rapid charting of the model and thereby solves the problems of transmitting and displaying massive three-dimensional model data over a network. The main beneficial effects of the method are: lossless display and self-adaptive simplification of the three-dimensional model are guaranteed; the display effect after simplification is consistent with that before simplification; progressive transmission designed on the basis of the simplification method resolves the conflict between the explosive growth of massive spatial data and transmission over limited network bandwidth; the simplified model has a small data volume; and the charting speed of the model is markedly increased by using resident memory when a view window is refreshed.

Owner:Dong Futian (董福田)

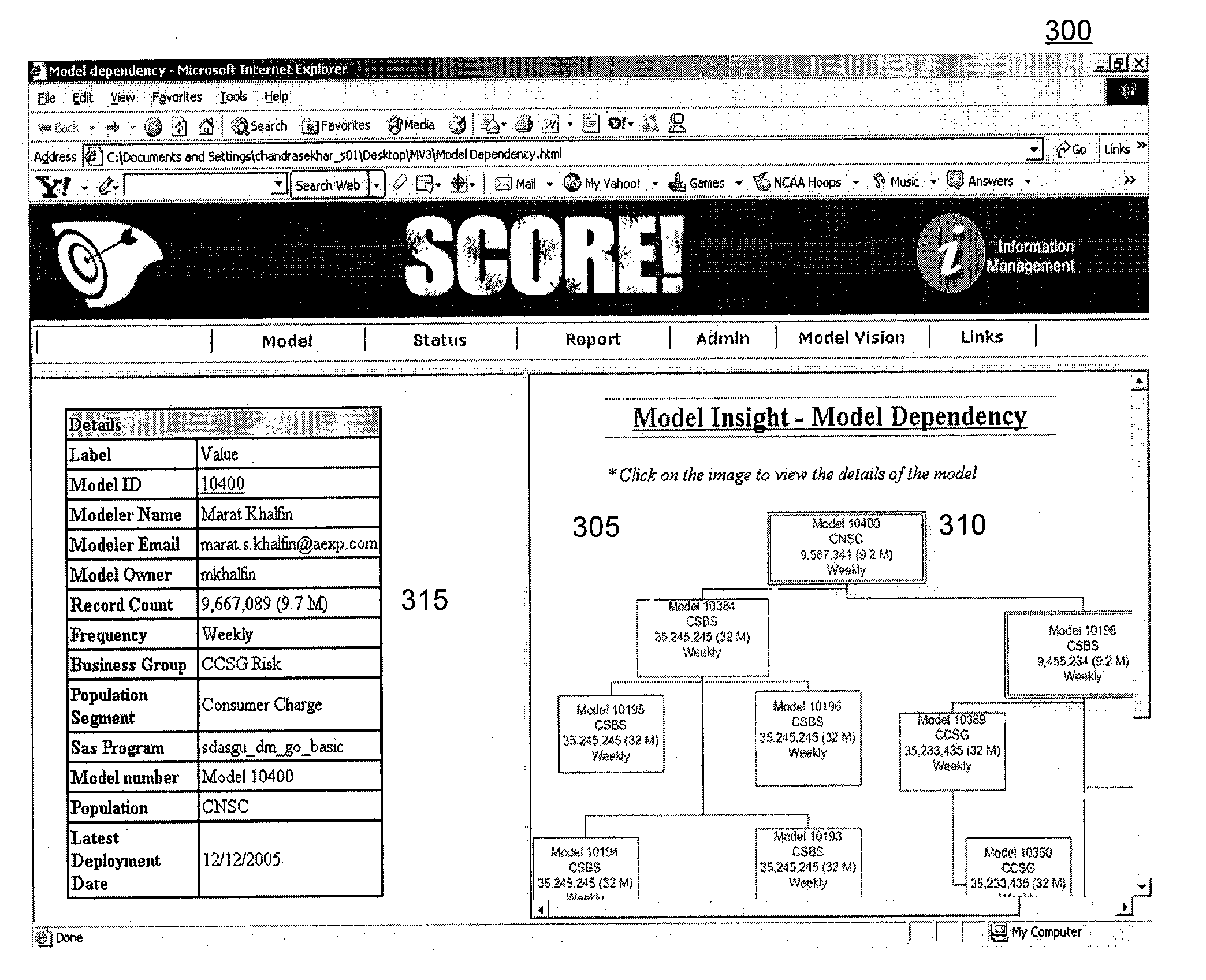

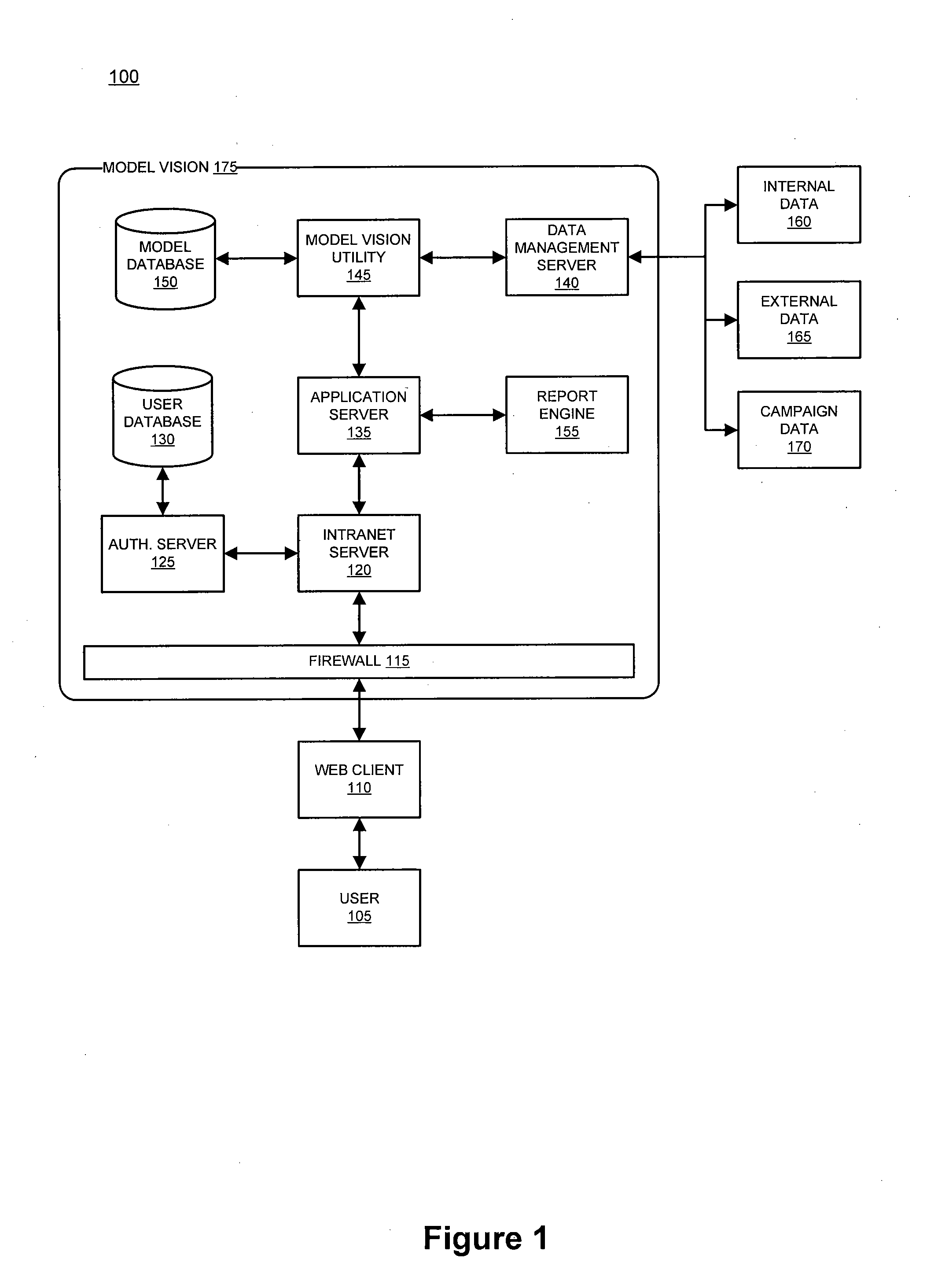

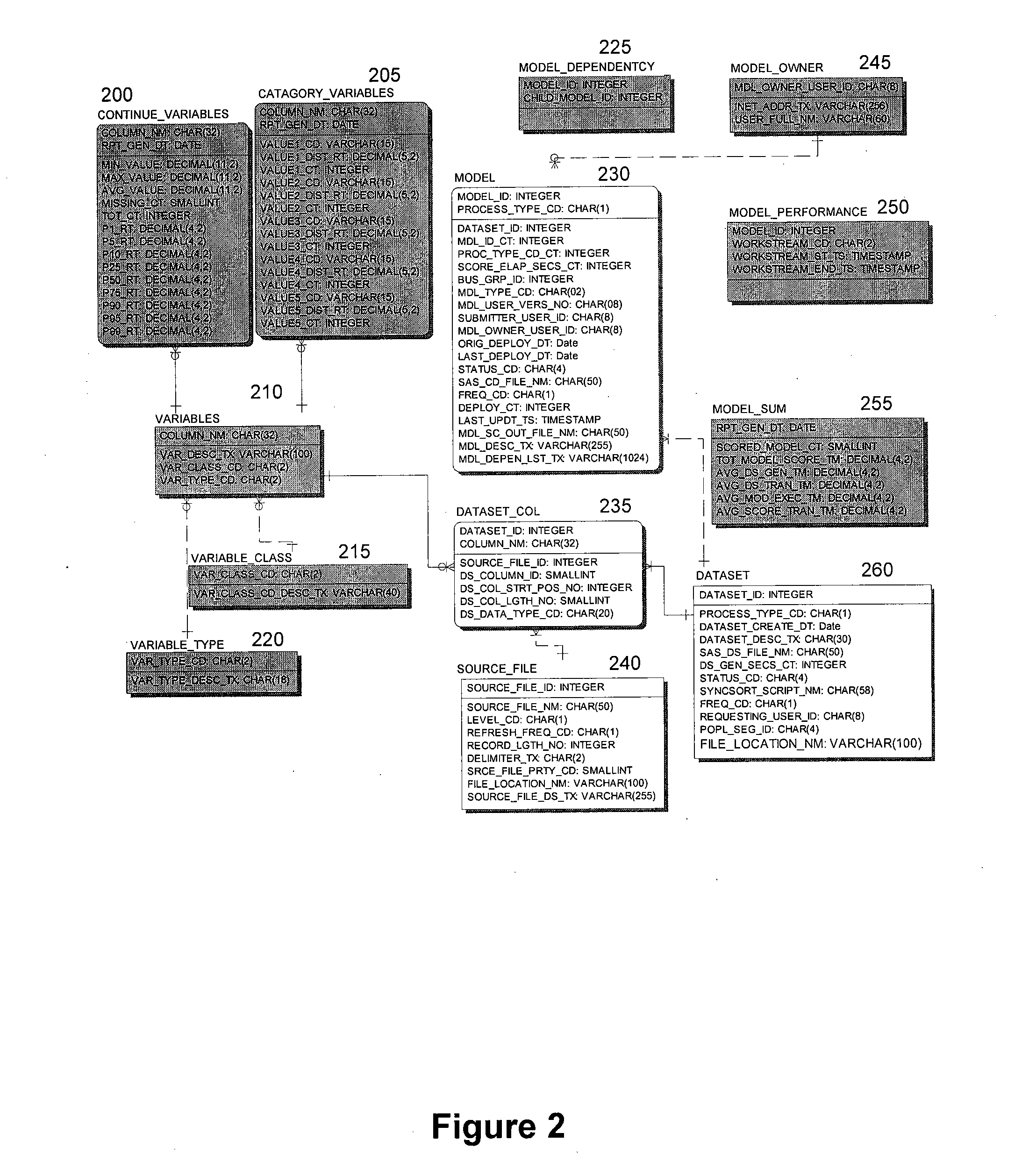

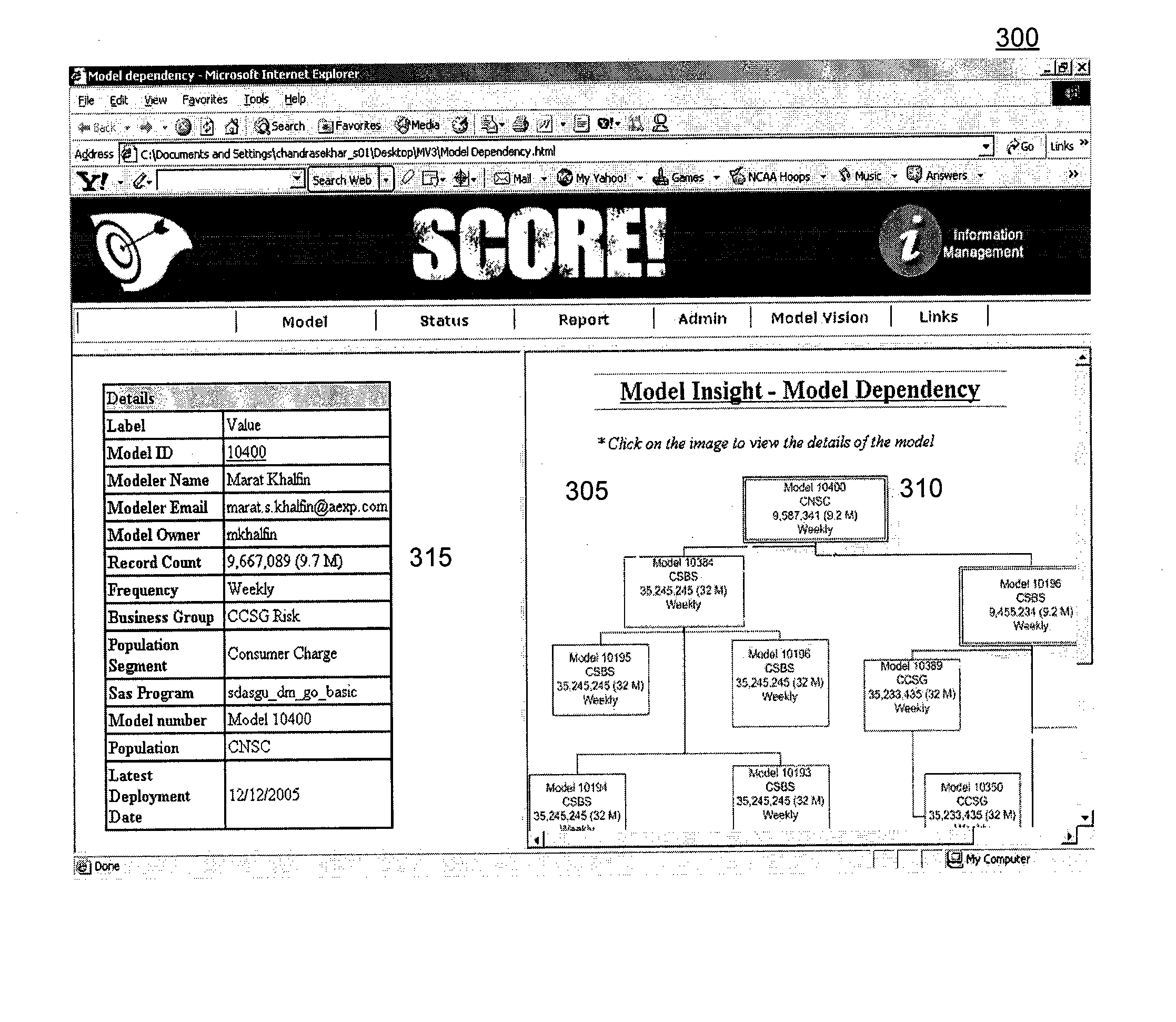

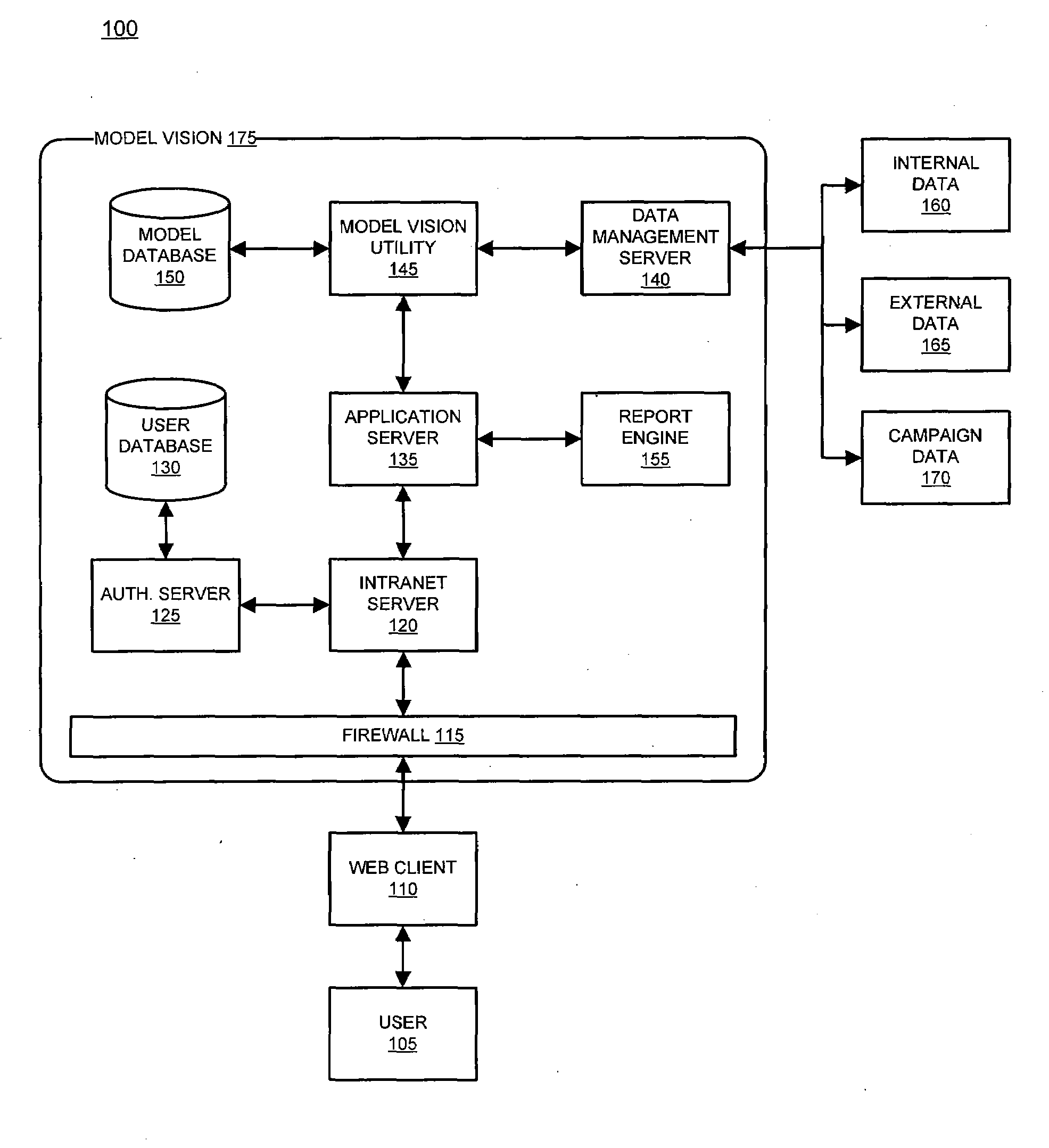

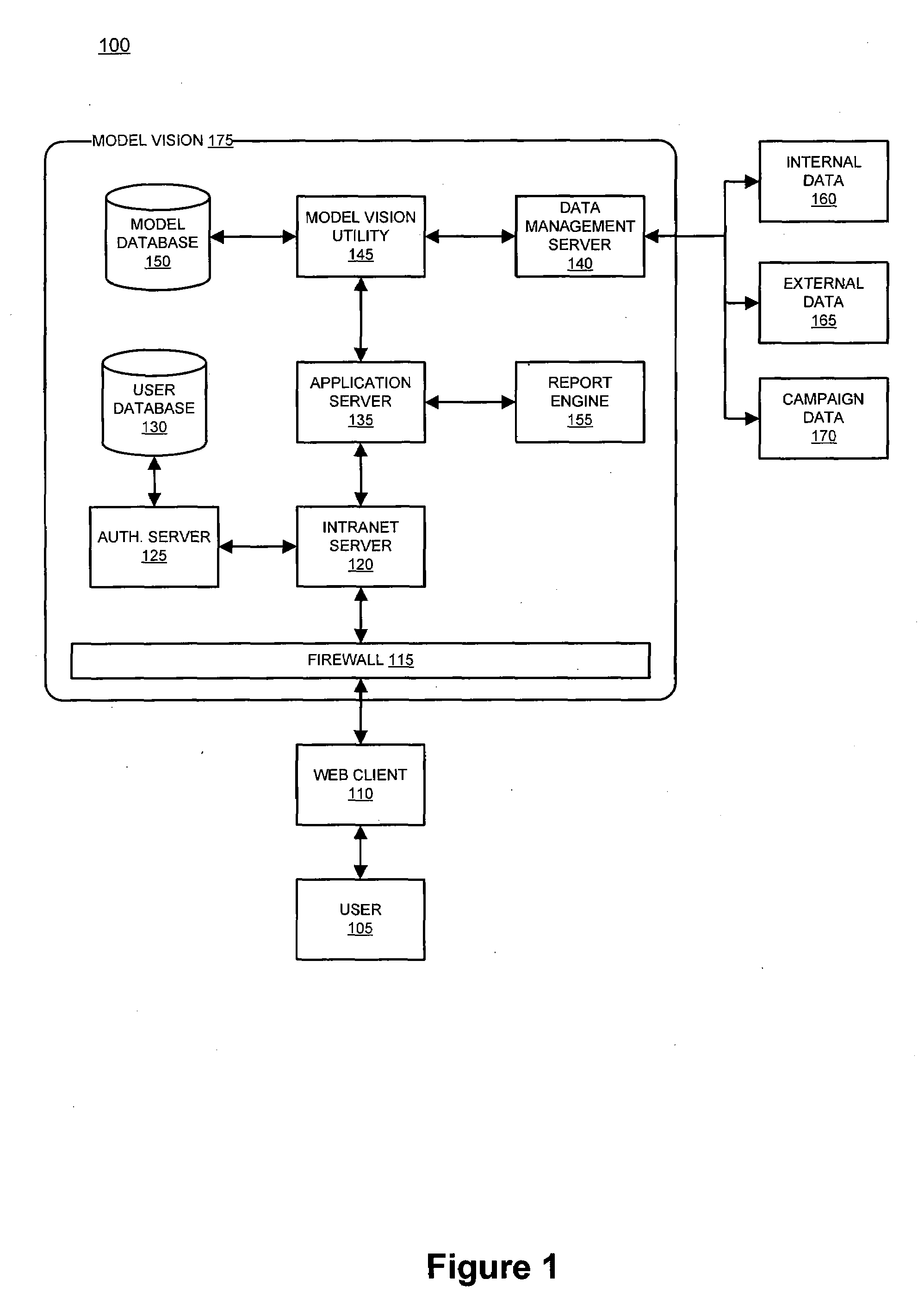

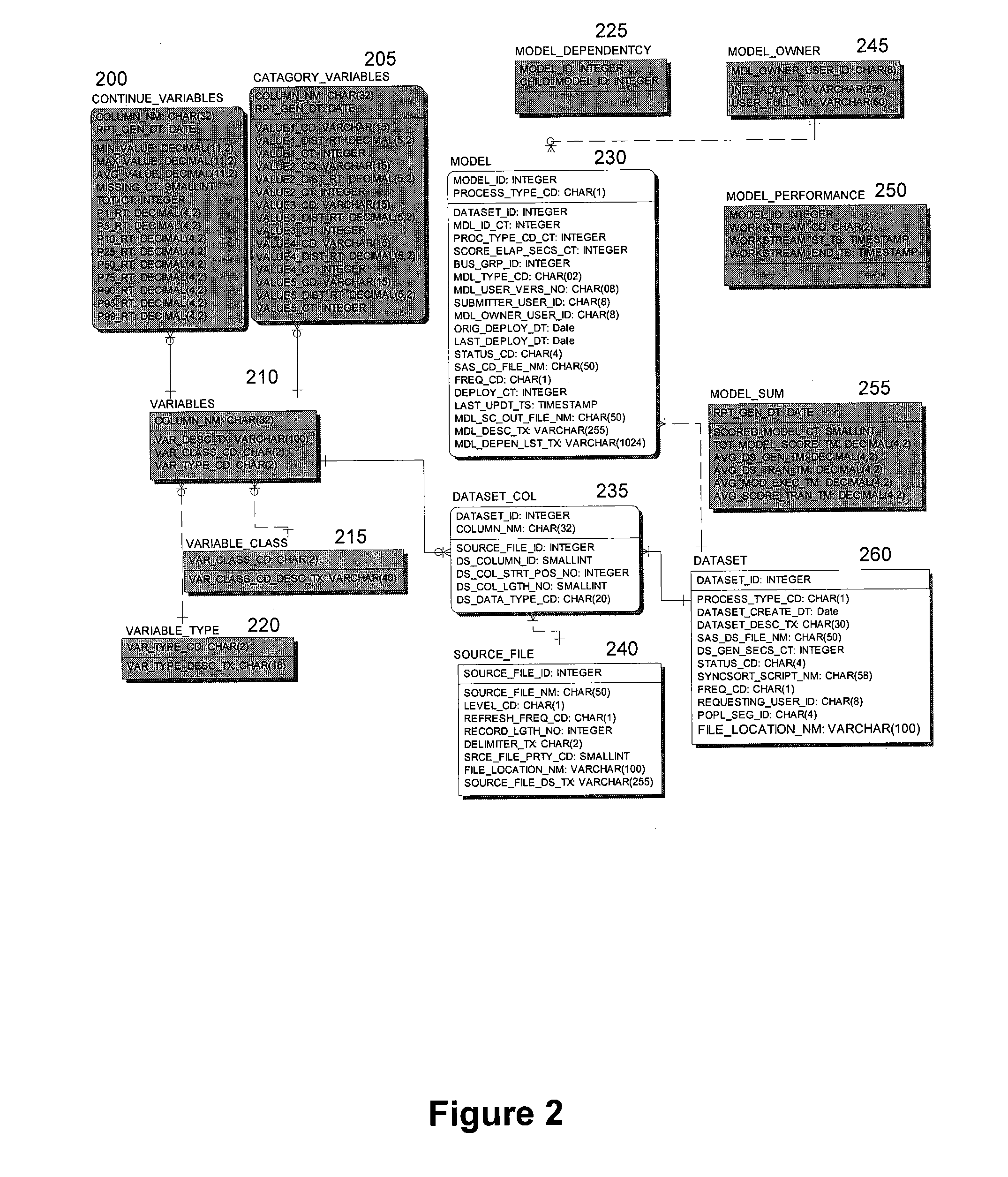

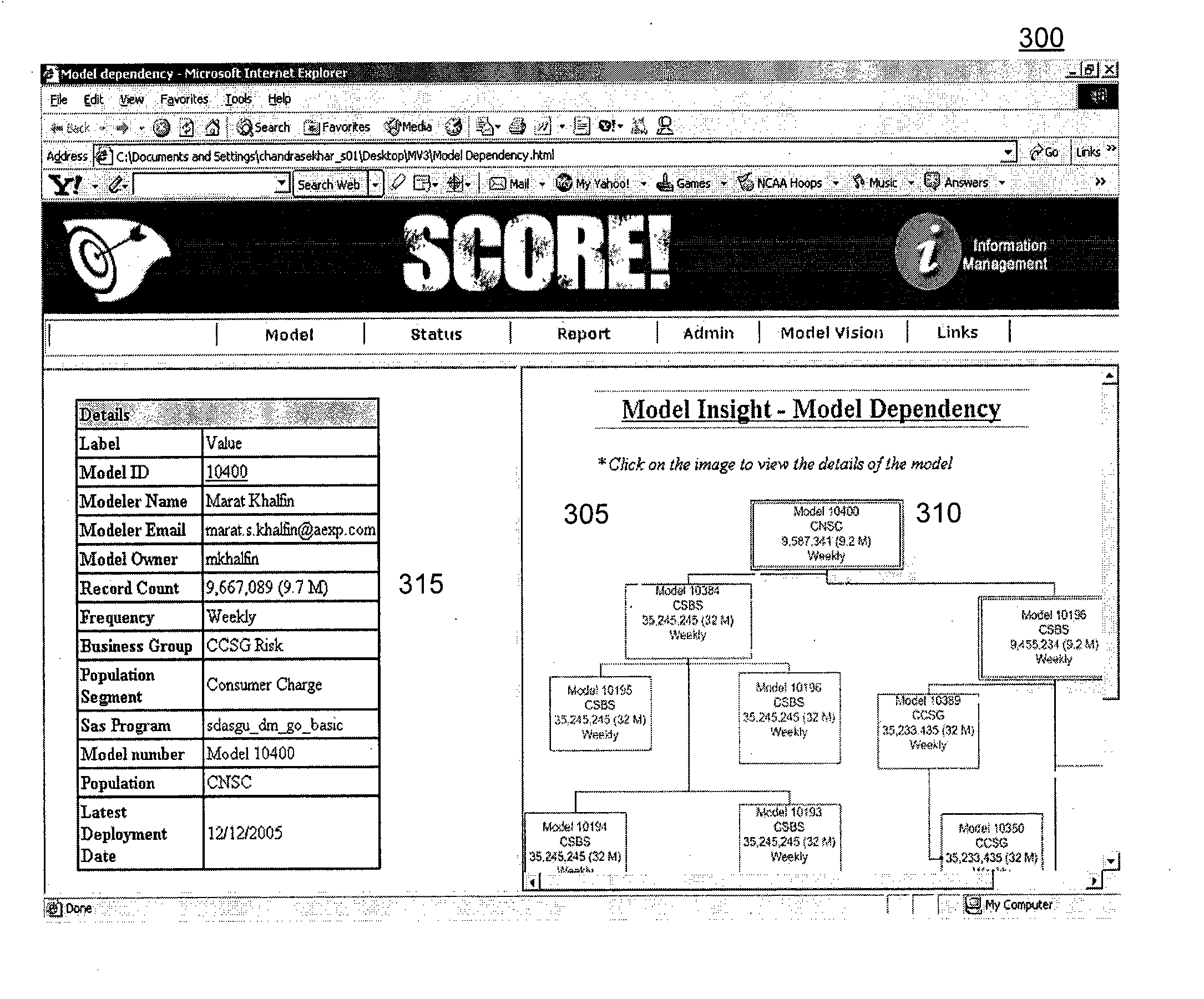

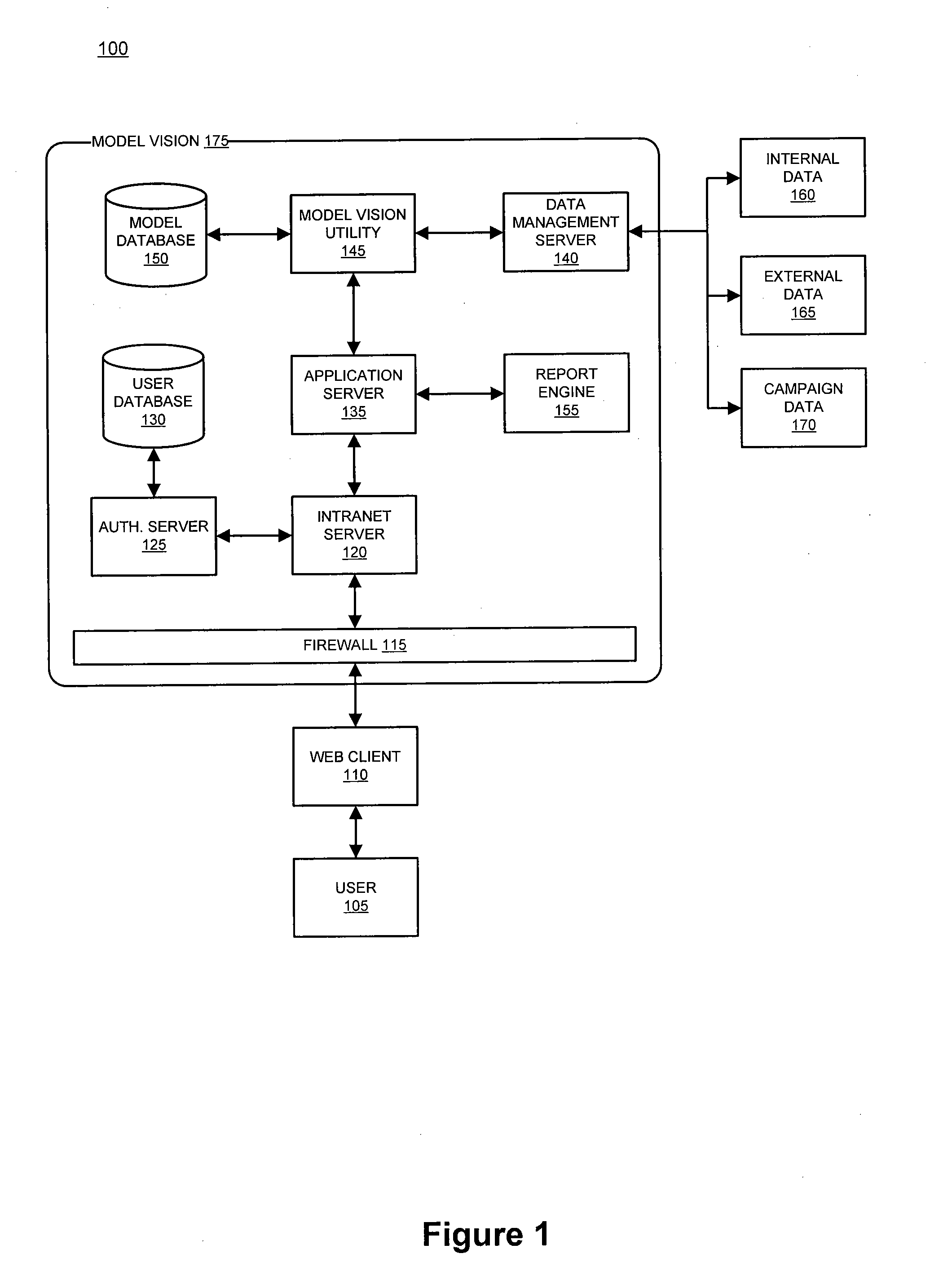

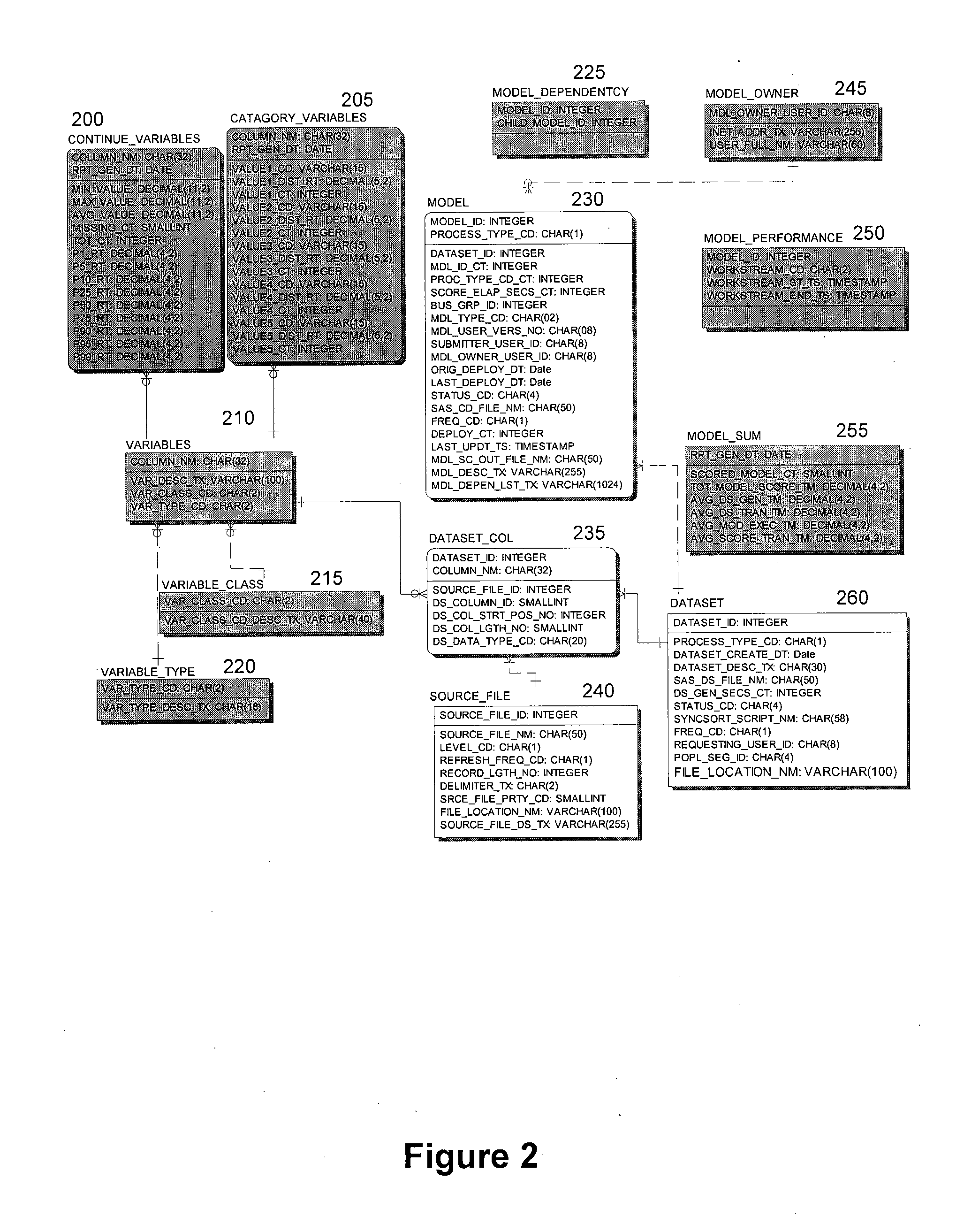

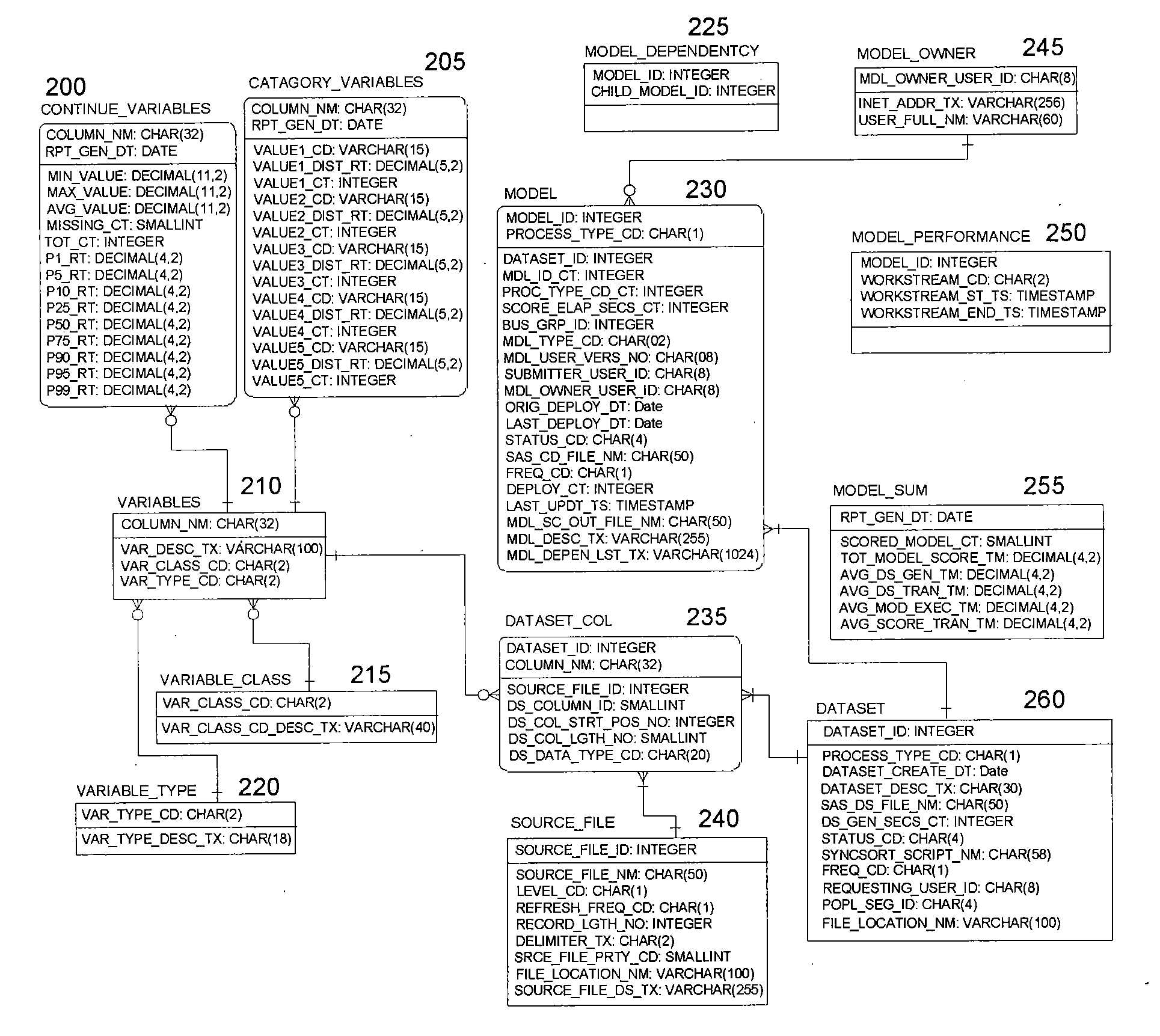

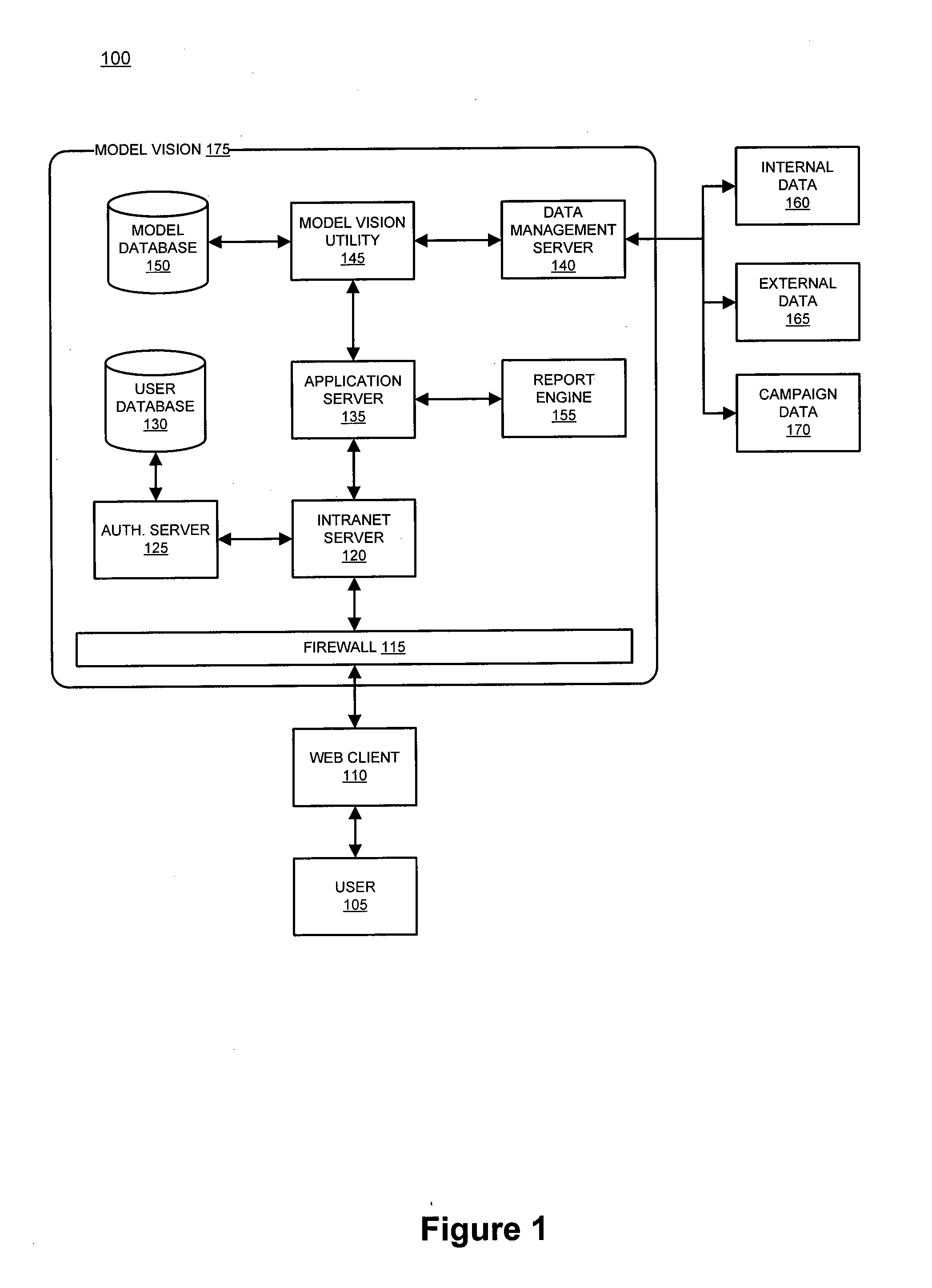

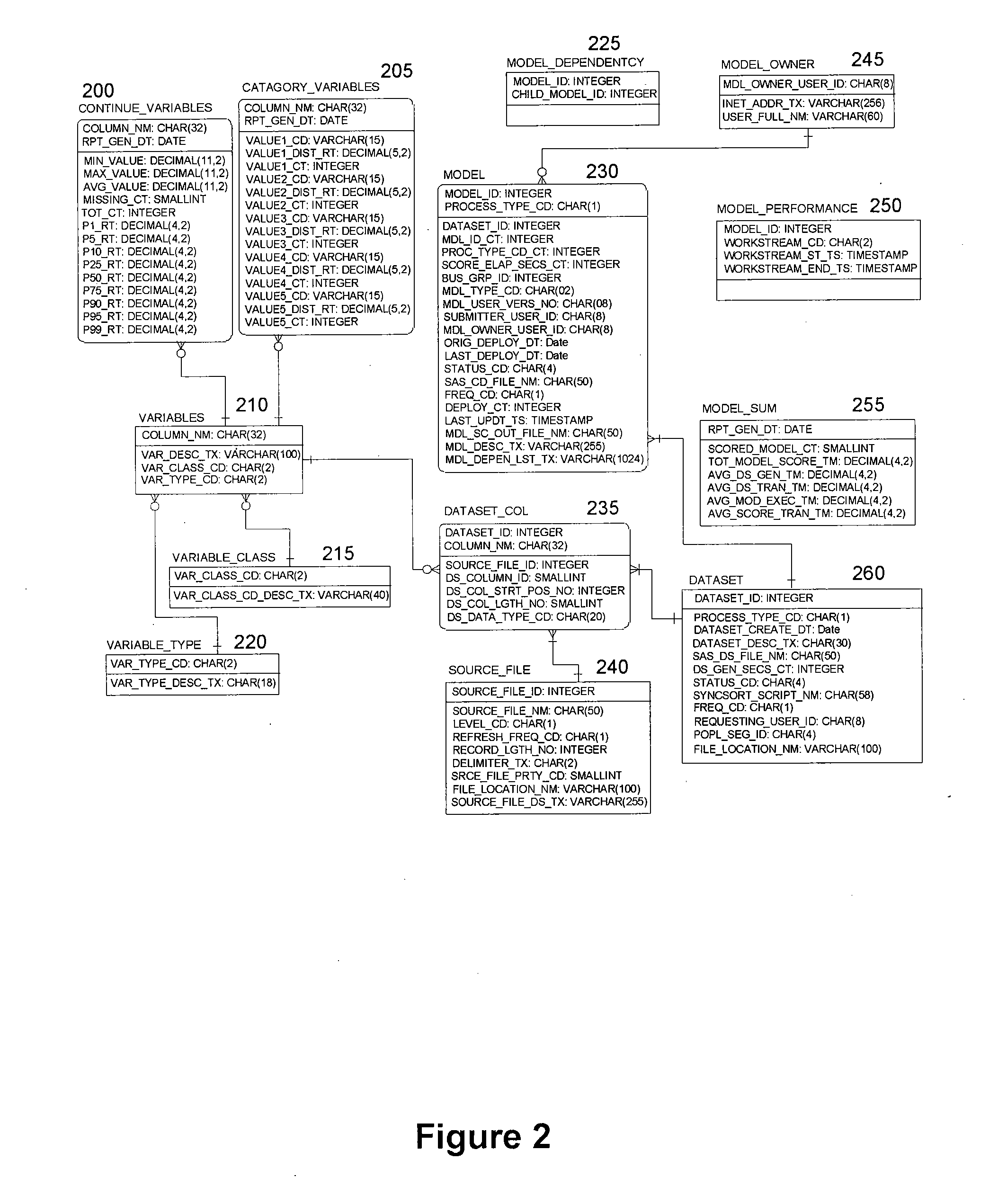

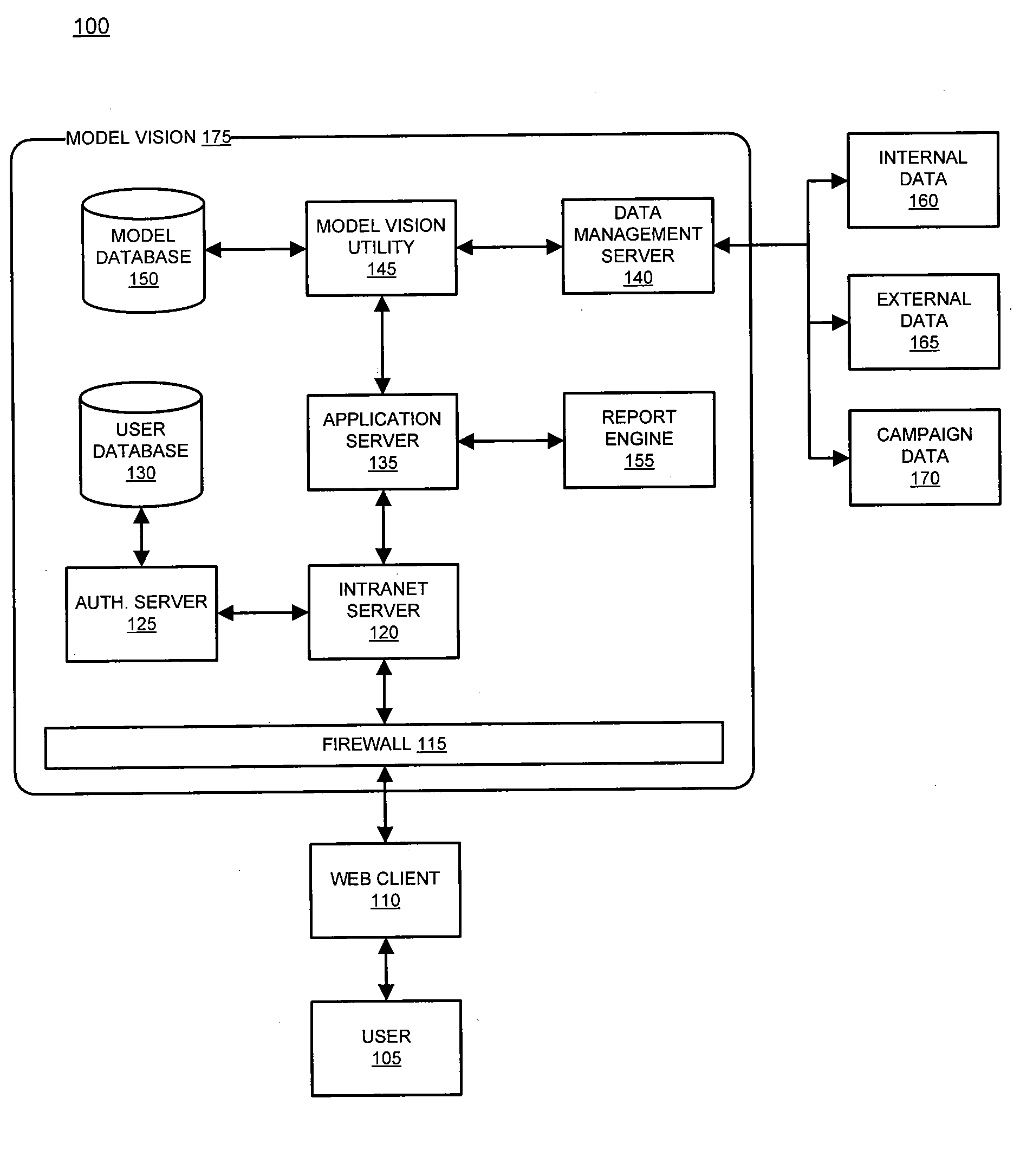

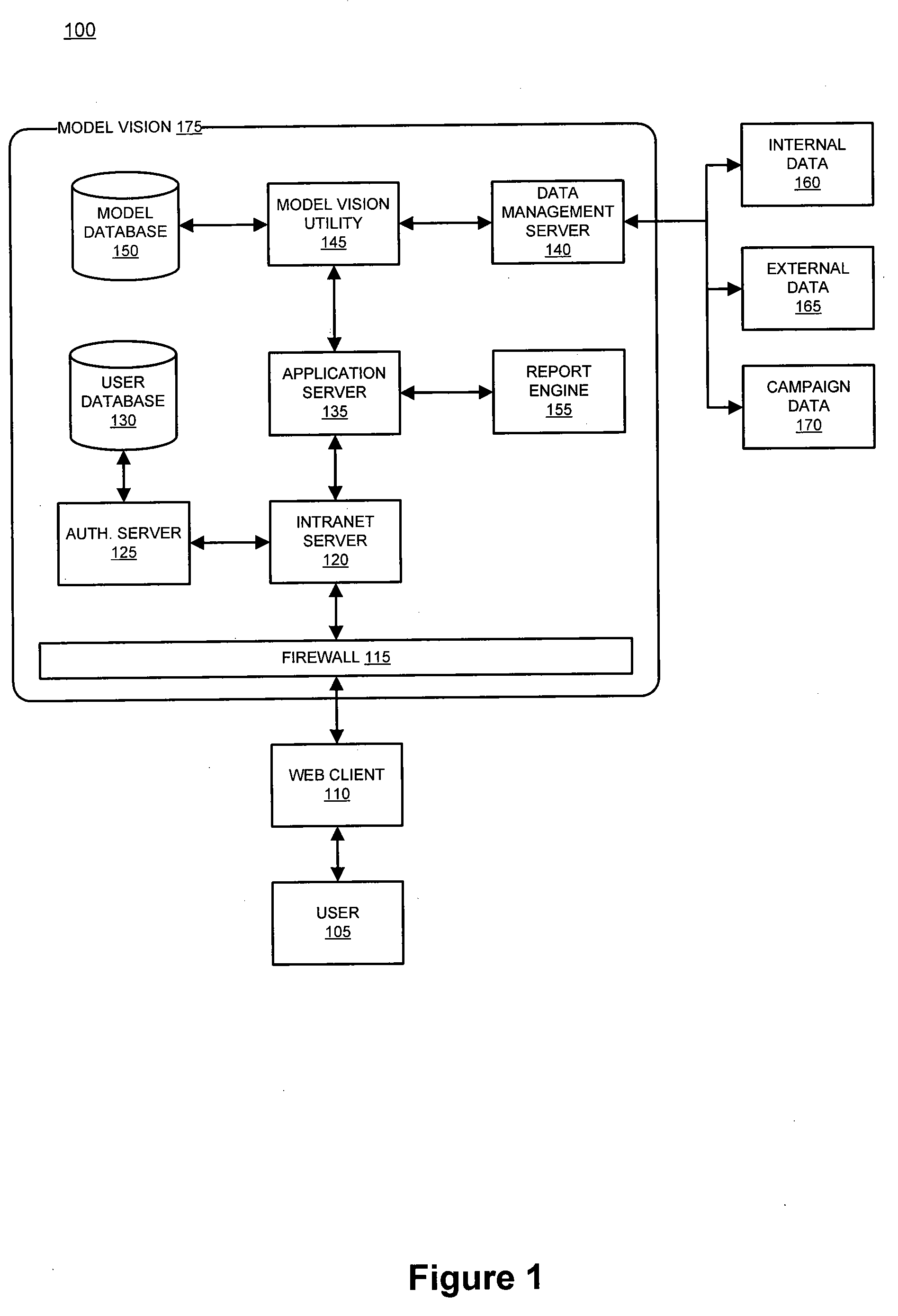

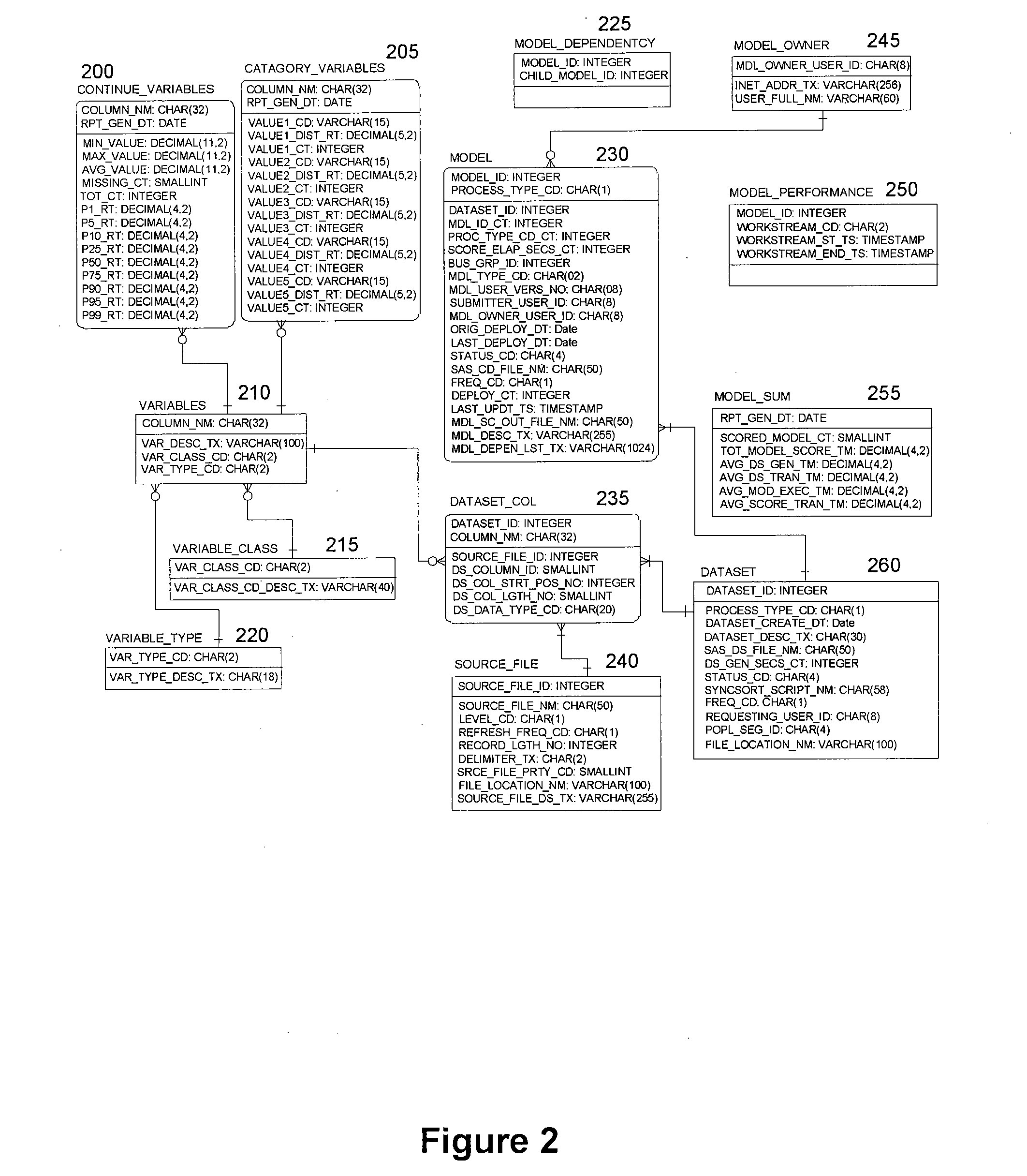

System and method for managing simulation models

Active | US20080126394A1 | Tags: Extension of time; Shorten the time; Digital data processing details; Resources; Relational database; View model

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC
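
The request flow in this abstract (store models and variables relationally, extract search criteria from a web request, query, format the result as a page) can be sketched with Python's standard `sqlite3`, `urllib.parse` and `html` modules. The schema, the `q` query parameter and the function names are hypothetical.

```python
import html
import sqlite3
from urllib.parse import parse_qs


def make_db():
    """In-memory store for models and their variables (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE model (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE variable (
            id INTEGER PRIMARY KEY,
            model_id INTEGER NOT NULL REFERENCES model(id),
            name TEXT NOT NULL
        );
        """
    )
    return conn


def find_variables(conn, fragment):
    """Query step: variables whose name contains the search fragment, with their model."""
    return conn.execute(
        "SELECT m.name, v.name FROM variable AS v JOIN model AS m ON m.id = v.model_id "
        "WHERE v.name LIKE ? ORDER BY m.name, v.name",
        (f"%{fragment}%",),
    ).fetchall()


def handle_request(conn, query_string):
    """Extract criteria from the request, run the query and format an HTML page."""
    fragment = parse_qs(query_string).get("q", [""])[0]
    rows = find_variables(conn, fragment)
    body = "".join(
        f"<tr><td>{html.escape(m)}</td><td>{html.escape(v)}</td></tr>" for m, v in rows
    )
    return f"<html><body><table><tr><th>Model</th><th>Variable</th></tr>{body}</table></body></html>"


conn = make_db()
conn.execute("INSERT INTO model VALUES (1, 'credit_risk')")
conn.executemany(
    "INSERT INTO variable VALUES (?, 1, ?)", [(1, "interest_rate"), (2, "balance")]
)
page = handle_request(conn, "q=rate")
print("<td>interest_rate</td>" in page, "<td>balance</td>" in page)  # True False
```

The parameterized `LIKE ?` query keeps user-supplied criteria out of the SQL text, and `html.escape` keeps stored names from being interpreted as markup in the returned page.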

System and method for managing simulation models

Active | US20080126034A1 | Tags: Extension of time; Shorten the time; Computation using non-denominational number representation; Resources; Relational database; View model

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

System and method for managing simulation models

Active | US20080126313A1 | Tags: Extension of time; Shorten the time; Digital data processing details; Resources; View model; Relational database

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

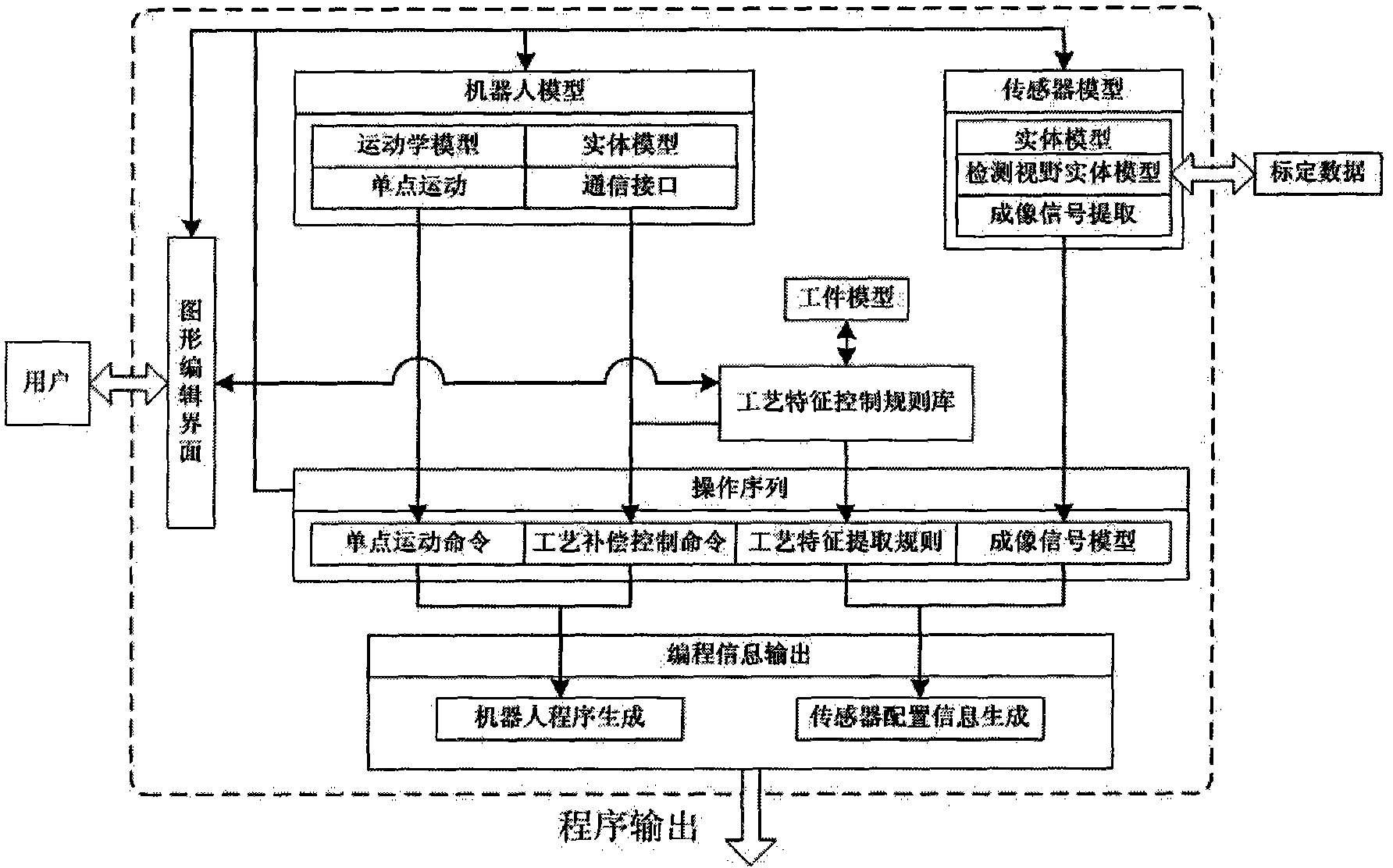

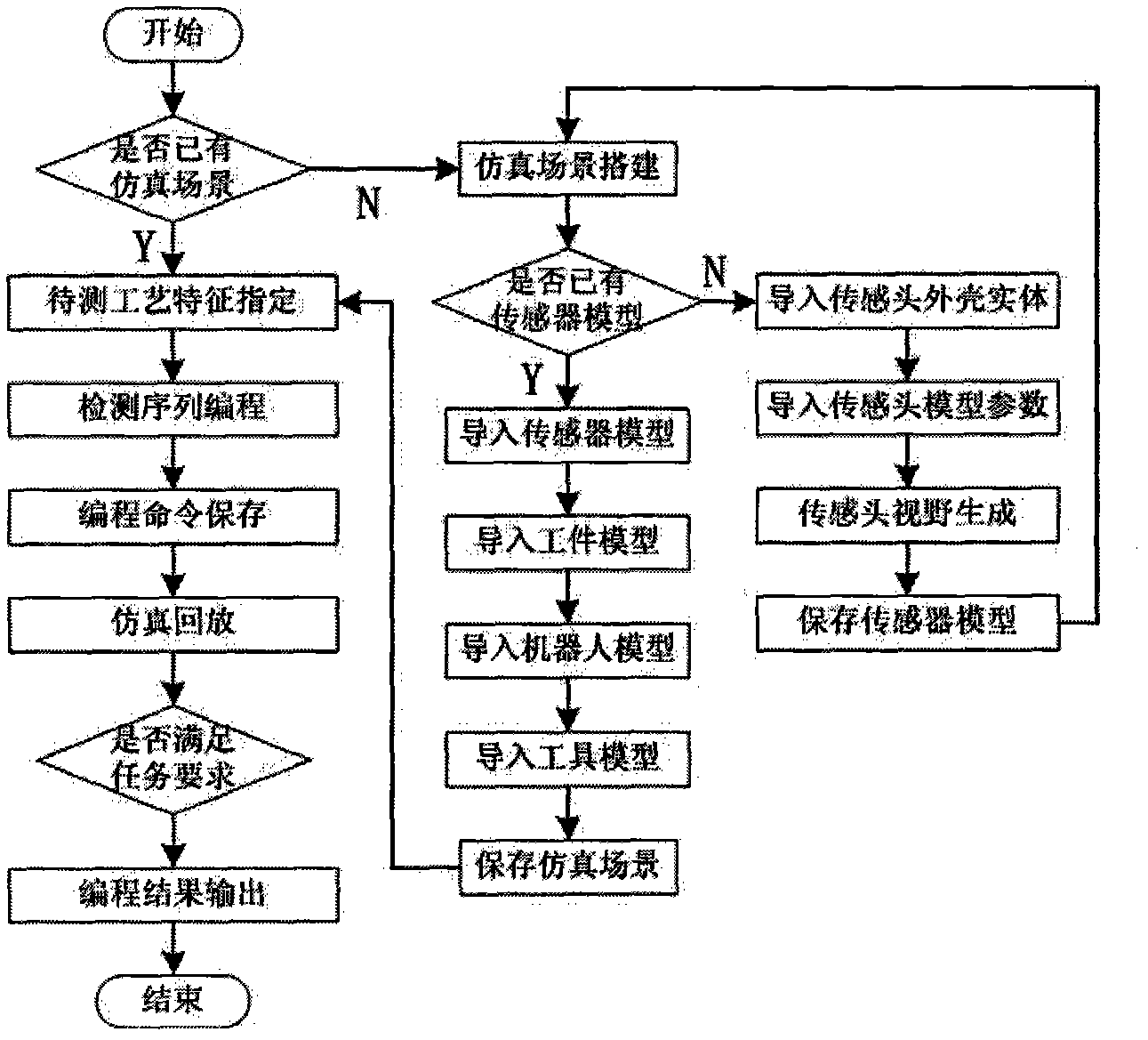

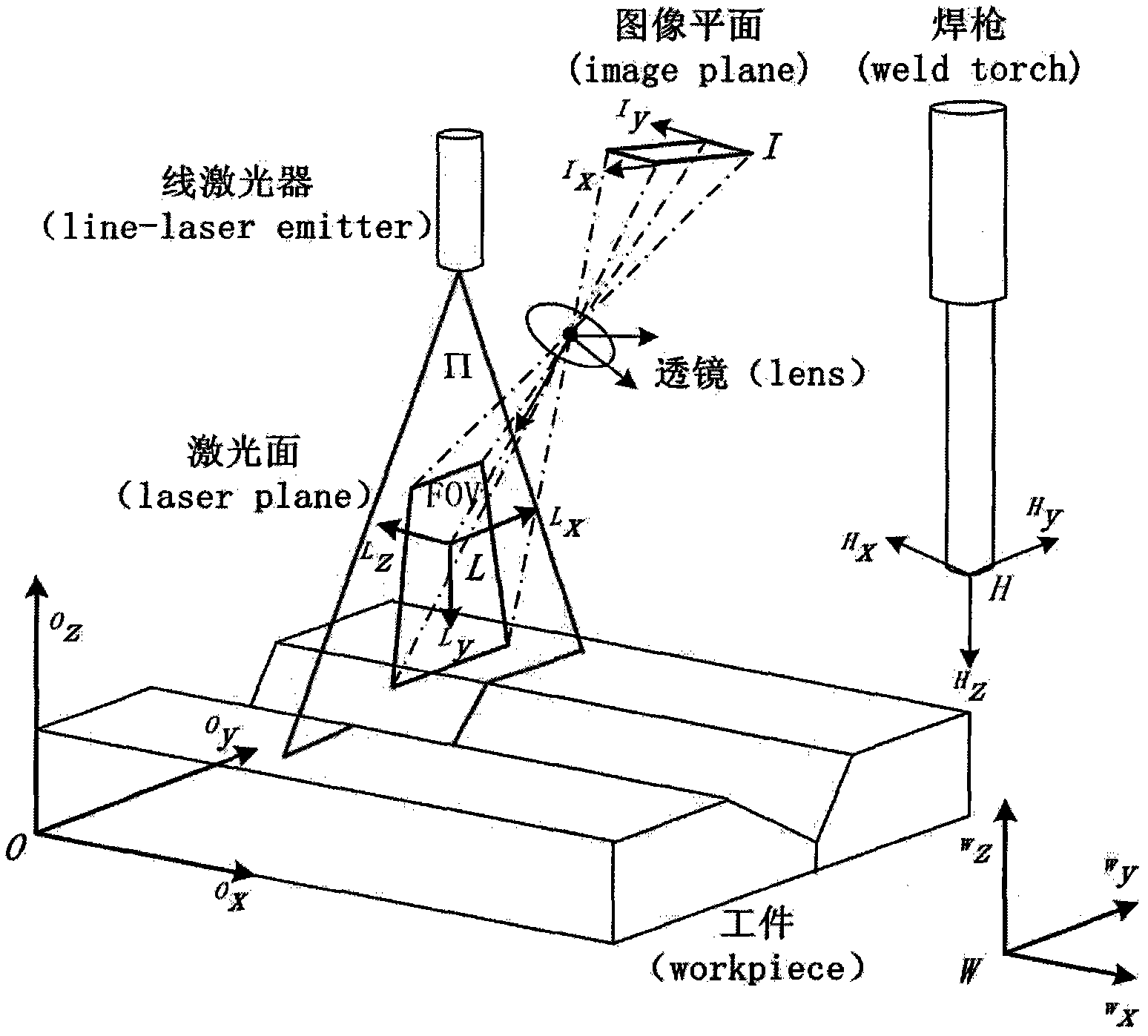

Off-line programming system and method of optical visual sensor with linear structure for welding robot

Inactive | CN101973032A | Tags: Intuitive detection status; Intuitive display of detection status; Manipulator; Interactive graphics; Simulation

The invention relates to an off-line programming system and method for an optical visual sensor with a linear structure used on a welding robot. The system comprises a sensor model, a robot model, a process control rule base, a graphic editing interface, an operation sequence module and a programming information output module. The sensor model simulates the sensor imaging process to obtain a view model and at the same time instantiates the detection view, which makes graphical programming convenient for the user; the robot model simulates the single-point motion of the robot and provides communication interface information for connecting sensor input signals; the process control rule base provides process feature extraction rules for different welding tasks and process-related control command information; the graphic editing interface supports interactive graphical programming between the user and the system; the operation sequence module stores information about a series of detection points, including single-point motion commands, process compensation control commands, process feature extraction rules and imaging signal model information; and the programming information output module outputs the program text of the robot and the configuration text of the sensor system.

Owner:SOUTHEAST UNIV

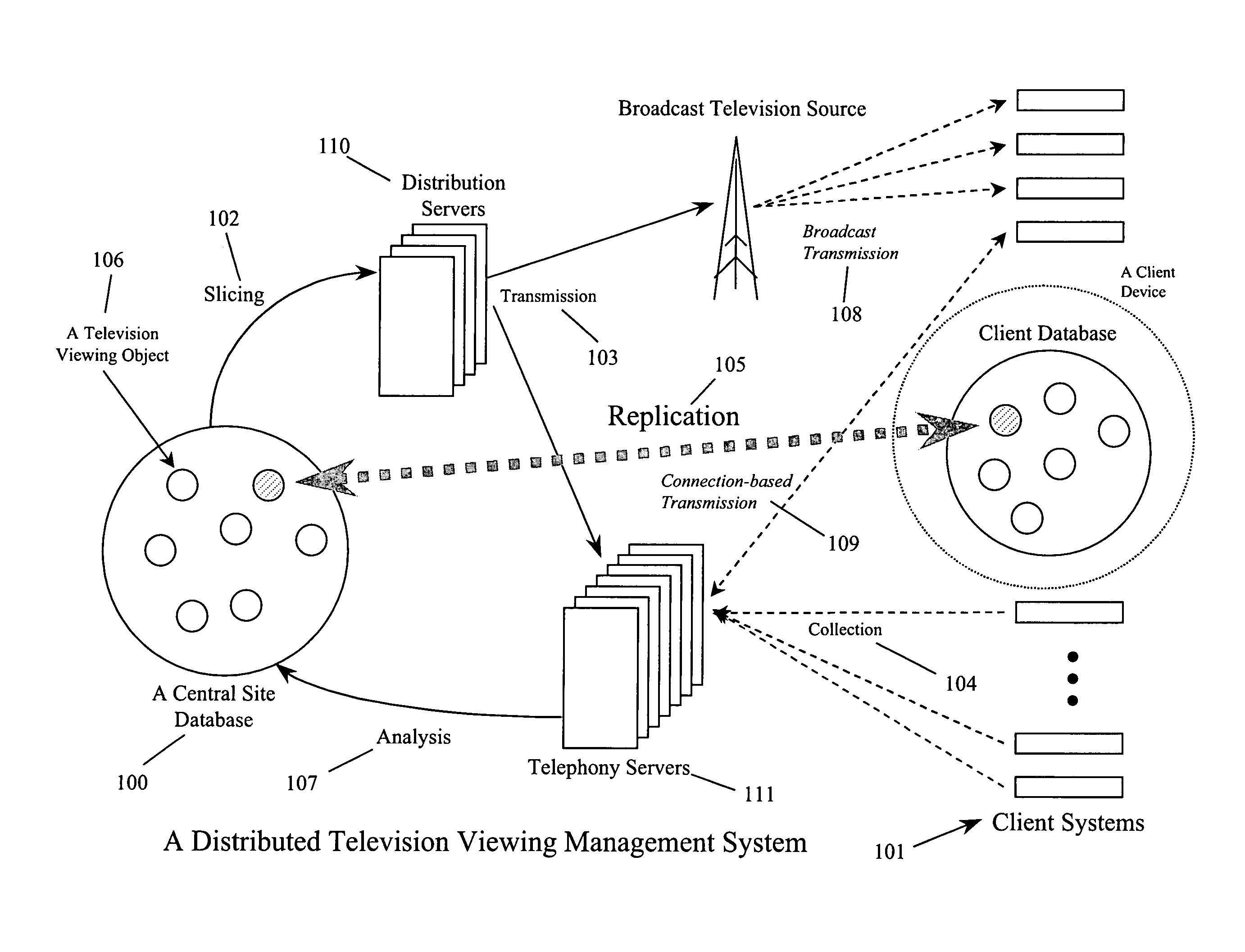

Distributed database management system

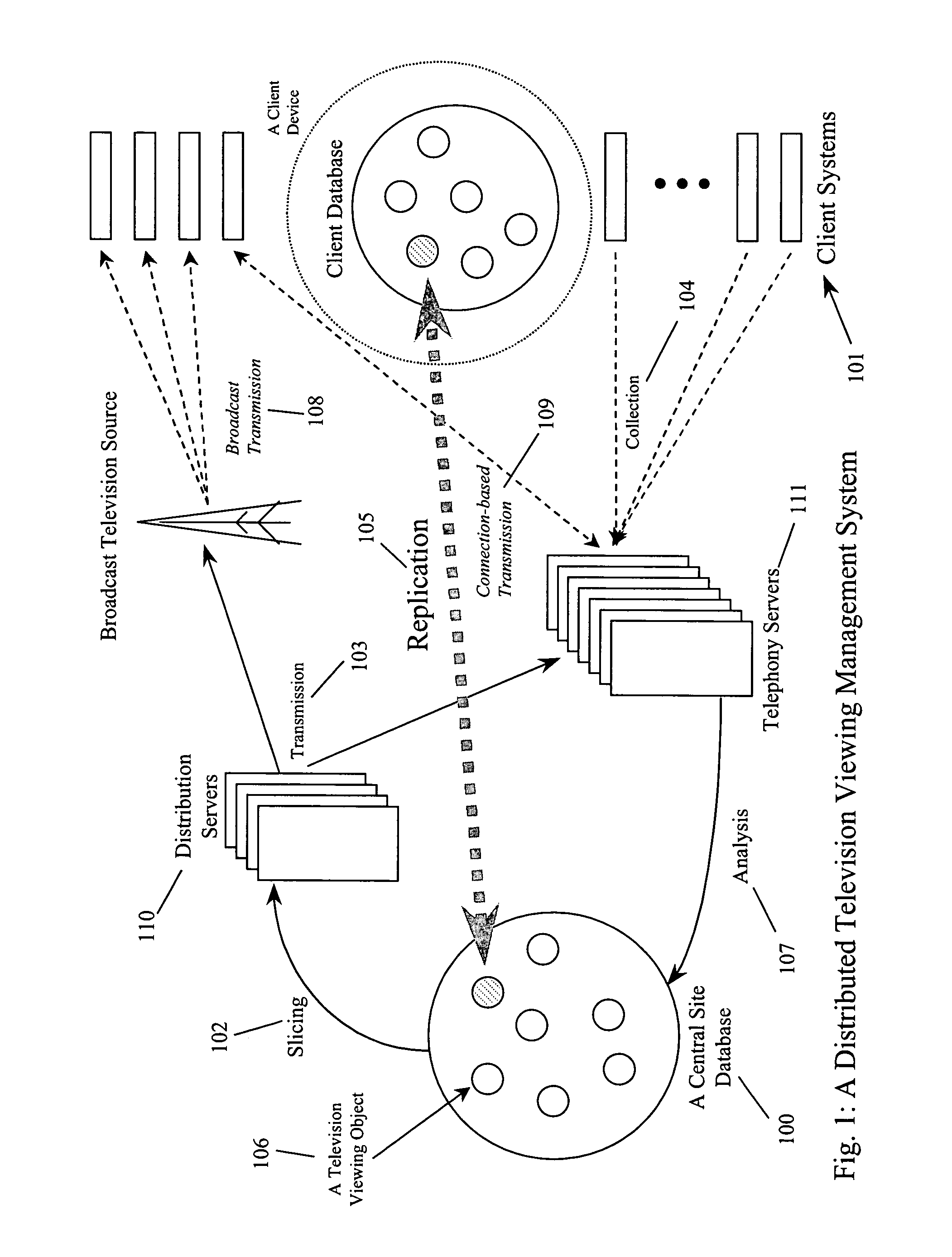

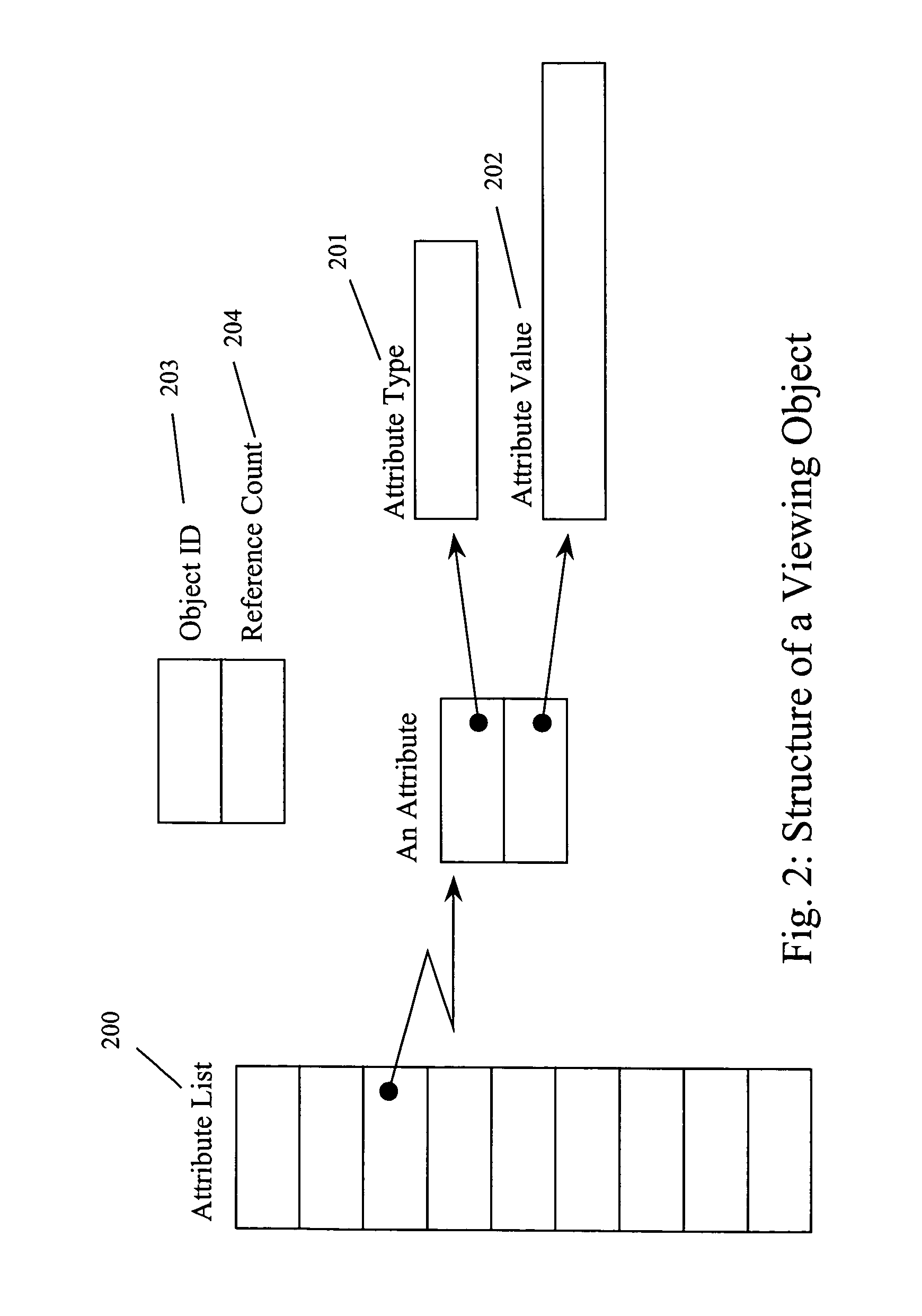

Active | US8478114B1 | Tags: Good service; Improving statistical models; Television system details; Record information storage; Geographic regions; Web content

A distributed database management system provides a central database resident on a server that contains database objects. Objects, e.g., program guide data, to be replicated are gathered together into distribution packages called “slices,” that are transmitted to client devices. A slice is a subset of the central database which is relevant to clients within a specific domain, such as a geographic region, or under the footprint of a satellite transmitter. The viewer selects television programs and Web content from displayed sections of the program guide data which are recorded to a storage device. The program guide data are used to determine when to start and end recordings. Client devices periodically connect to the server using a phone line and upload information of interest which is combined with information uploaded from other client devices for statistical, operational, or viewing models.

Owner:TIVO SOLUTIONS INC
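
A minimal sketch of grouping central-database objects into per-domain "slices", assuming the domain is a geographic region and each program guide entry carries a region tag. `GuideEntry`, `build_slices` and `slice_for_client` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class GuideEntry:
    """One hypothetical program-guide object in the central database."""
    program: str
    start: str
    region: str


def build_slices(central_db):
    """Group central-database objects into per-domain slices (domain = region here)."""
    slices = defaultdict(list)
    for entry in central_db:
        slices[entry.region].append(entry)
    return dict(slices)


def slice_for_client(slices, client_region):
    """The subset that would be transmitted to a client in the given region."""
    return slices.get(client_region, [])


central = [
    GuideEntry("Evening News", "18:00", "west"),
    GuideEntry("Late Movie", "23:00", "east"),
    GuideEntry("Morning Show", "07:00", "west"),
]
slices = build_slices(central)
print([e.program for e in slice_for_client(slices, "west")])  # ['Evening News', 'Morning Show']
```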

System and method for managing simulation models

Active | US20080126030A1 | Tags: Extension of time; Shorten the time; Computation using non-denominational number representation; Resources; View model; Relational database

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

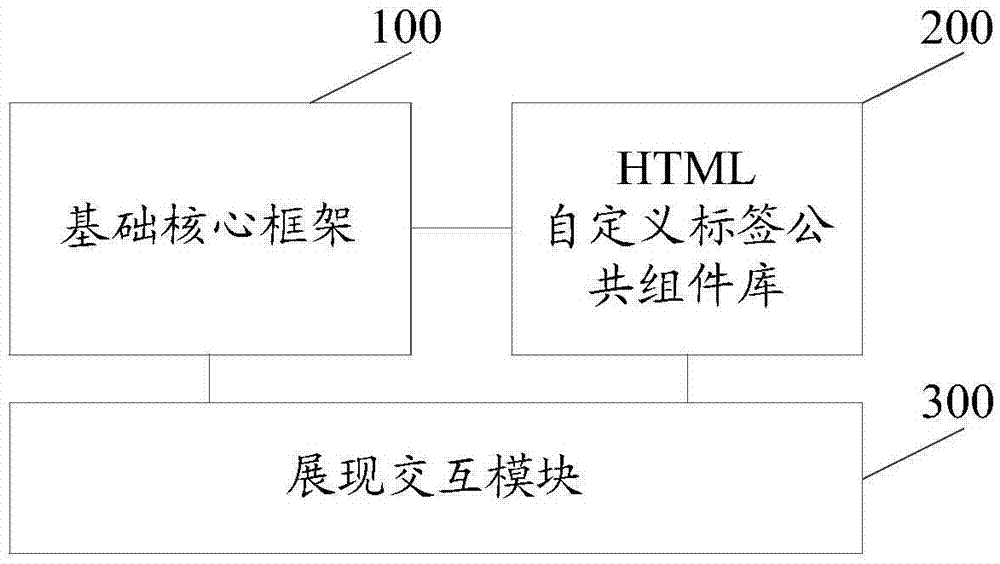

MVVM (Model-View-View Model) architecture based Web front-end presentation system

Inactive | CN105446740A | Tags: Quick build; Improve development; Programming languages/paradigms; Software design; Core function; General purpose

The present invention discloses an MVVM (Model-View-View Model) architecture based Web front-end presentation system. The system comprises a basic core framework, an HTML custom tag public component library and a presentation interactive module. The basic core framework encapsulates the API (Application Programming Interface) of Knockout and provides its basic functions, so as to implement the core functions of the MVVM architecture pattern. The HTML custom tag public component library is built on the basic core framework and implements general-purpose components in the form of custom front-end HTML tags by combining the Bootstrap UI framework and the Highcharts UI framework. The presentation interactive module comprises a presentation view unit and a user-defined presentation interactive unit. Through the basic core framework, the HTML custom tag public component library and the presentation interactive module, the disclosed system improves both the richness of the interface display and development efficiency.

Owner:STATE GRID INFORMATION & TELECOMM GRP +1
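
Knockout's core idea is the observable: a value that notifies bound views when it changes. The Python sketch below imitates that mechanism to show how a view model sits between data and view; it is not Knockout's API, and `Observable`, `LoadViewModel` and `LabelView` are hypothetical names.

```python
class Observable:
    """A value that notifies subscribers on change (loosely modeled on Knockout's observables)."""

    def __init__(self, value):
        self._value = value
        self._subscribers = []

    def get(self):
        return self._value

    def set(self, value):
        if value != self._value:
            self._value = value
            for callback in list(self._subscribers):
                callback(value)

    def subscribe(self, callback):
        self._subscribers.append(callback)


class LoadViewModel:
    """Hypothetical view model for a chart panel: exposes observables that views bind to."""

    def __init__(self, title, readings):
        self.title = Observable(title)
        self.readings = Observable(list(readings))
        self.peak = Observable(max(readings, default=None))
        # Keep the derived value in sync with its source observable.
        self.readings.subscribe(lambda r: self.peak.set(max(r, default=None)))


class LabelView:
    """Stand-in for a bound HTML element: re-renders when the bound observable changes."""

    def __init__(self, observable, template):
        self._template = template
        self.text = template.format(observable.get())
        observable.subscribe(self._render)

    def _render(self, value):
        self.text = self._template.format(value)


vm = LoadViewModel("Grid load", [3, 7, 5])
label = LabelView(vm.peak, "Peak: {}")
vm.readings.set([3, 7, 5, 9])
print(label.text)  # Peak: 9
```

Because the label subscribes to the derived `peak` observable, replacing the readings re-renders the label without the data-handling code touching the view.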

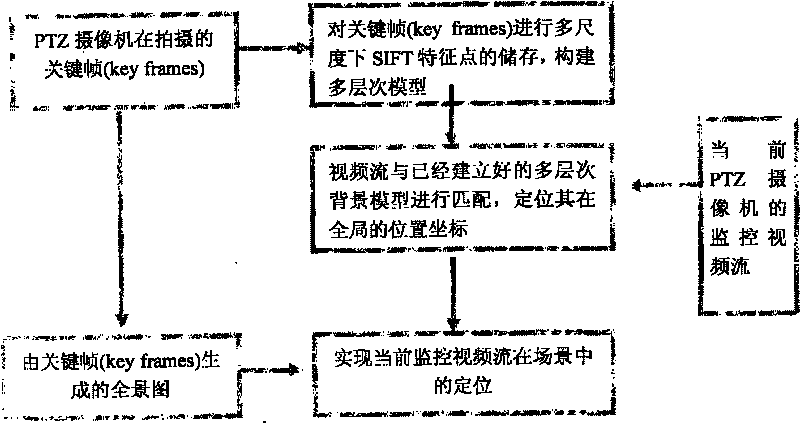

PTZ tracking method and system based on multi-layered full-view modeling

Inactive | CN101719986A | Tags: Realize monitoring; Television system details; Color television details; Pattern recognition; Computer graphics (images)

The invention discloses a PTZ tracking method based on multi-layered full-view modeling, which comprises the steps of: 1, establishing a multi-layered view model using SIFT feature points; and 2, determining the position of the current monitoring view within the whole view by matching SIFT feature points. With these algorithms, the invention effectively extends the scale adaptability of a SIFT feature point set in an image, solves the problem that a PTZ camera cannot effectively match the view model during large zoom changes, and enables a single PTZ camera to locate its current monitoring range within the whole view, thereby improving PTZ tracking and obtaining the motion trajectory of a monitored target across the whole view.

Owner:Hubei Lotus Hill Institute for Computer Vision and Information Science (湖北莲花山计算机视觉和信息科学研究院)

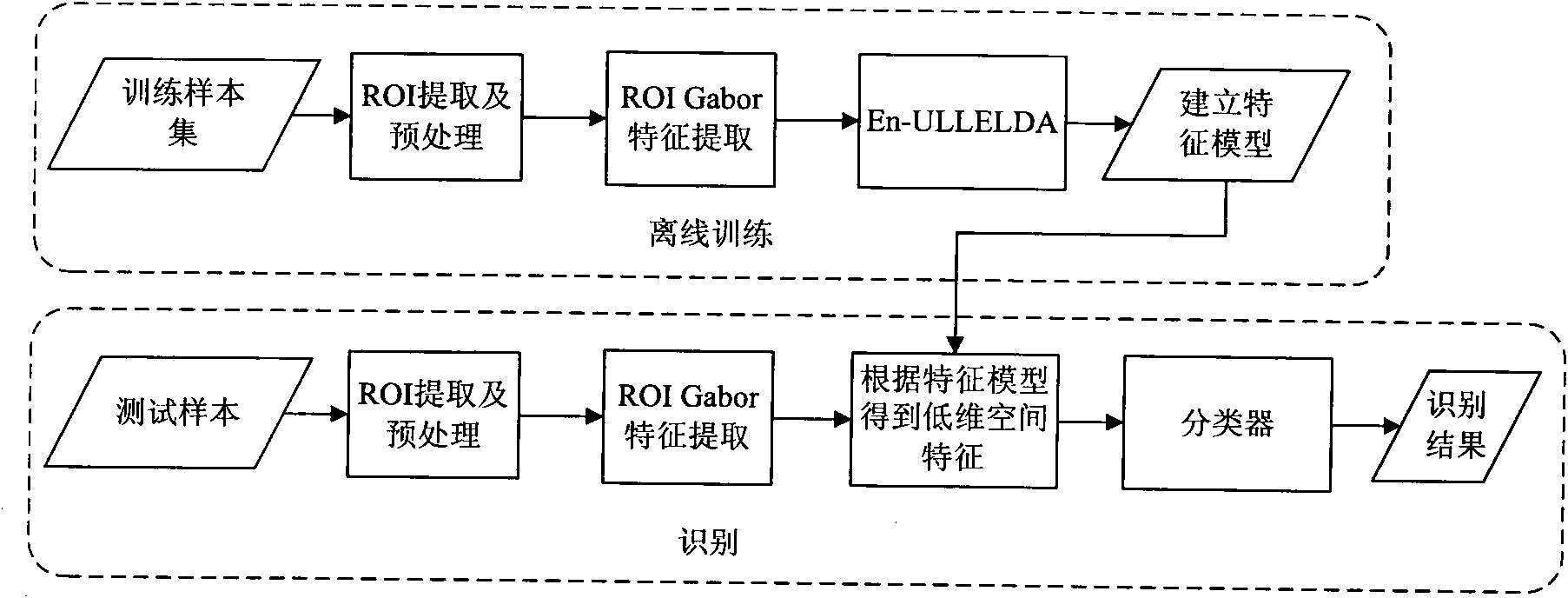

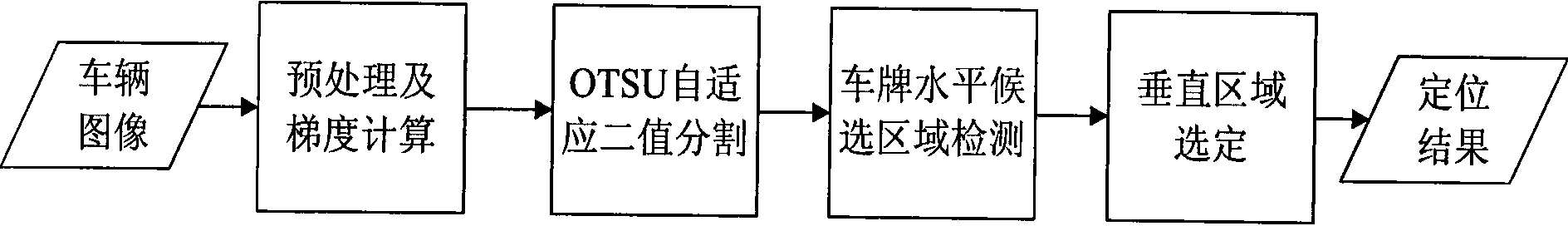

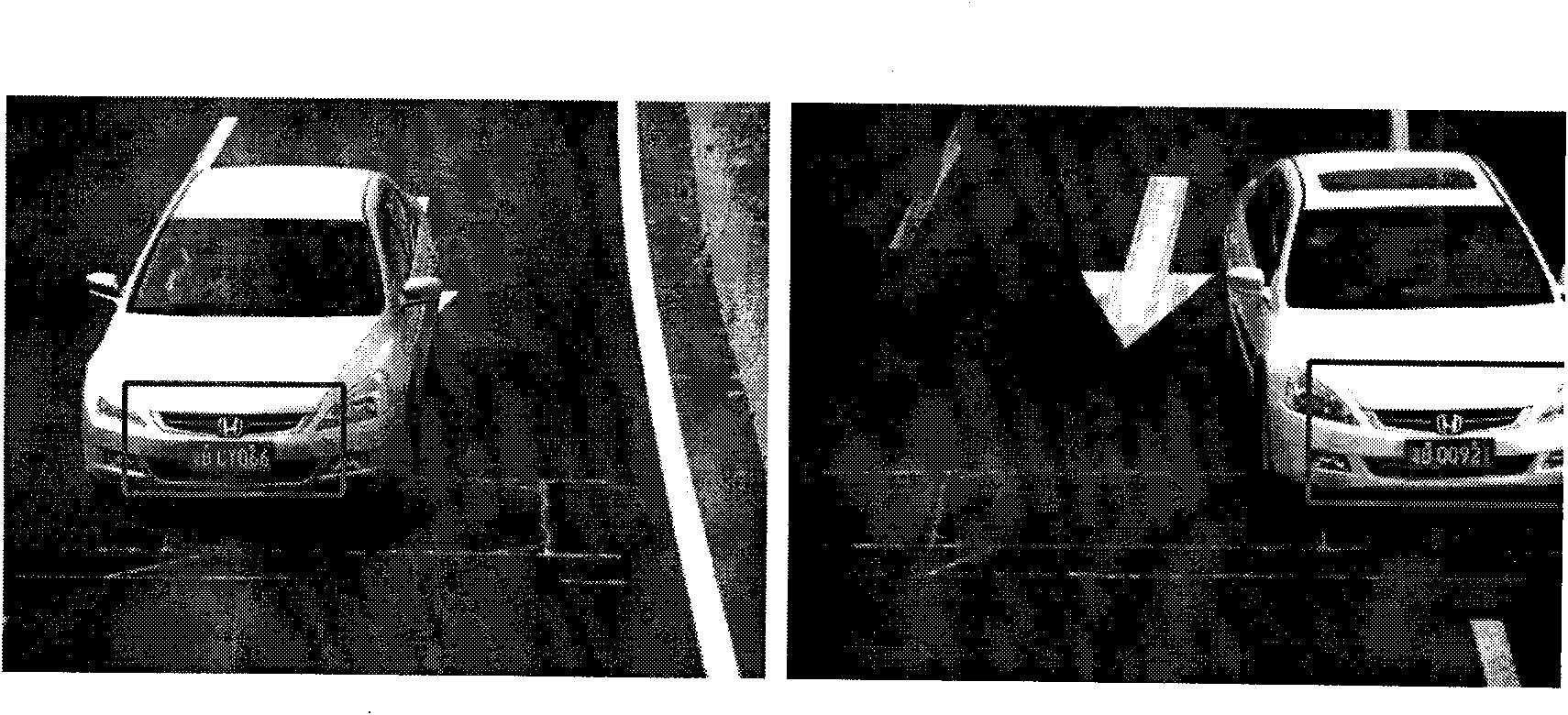

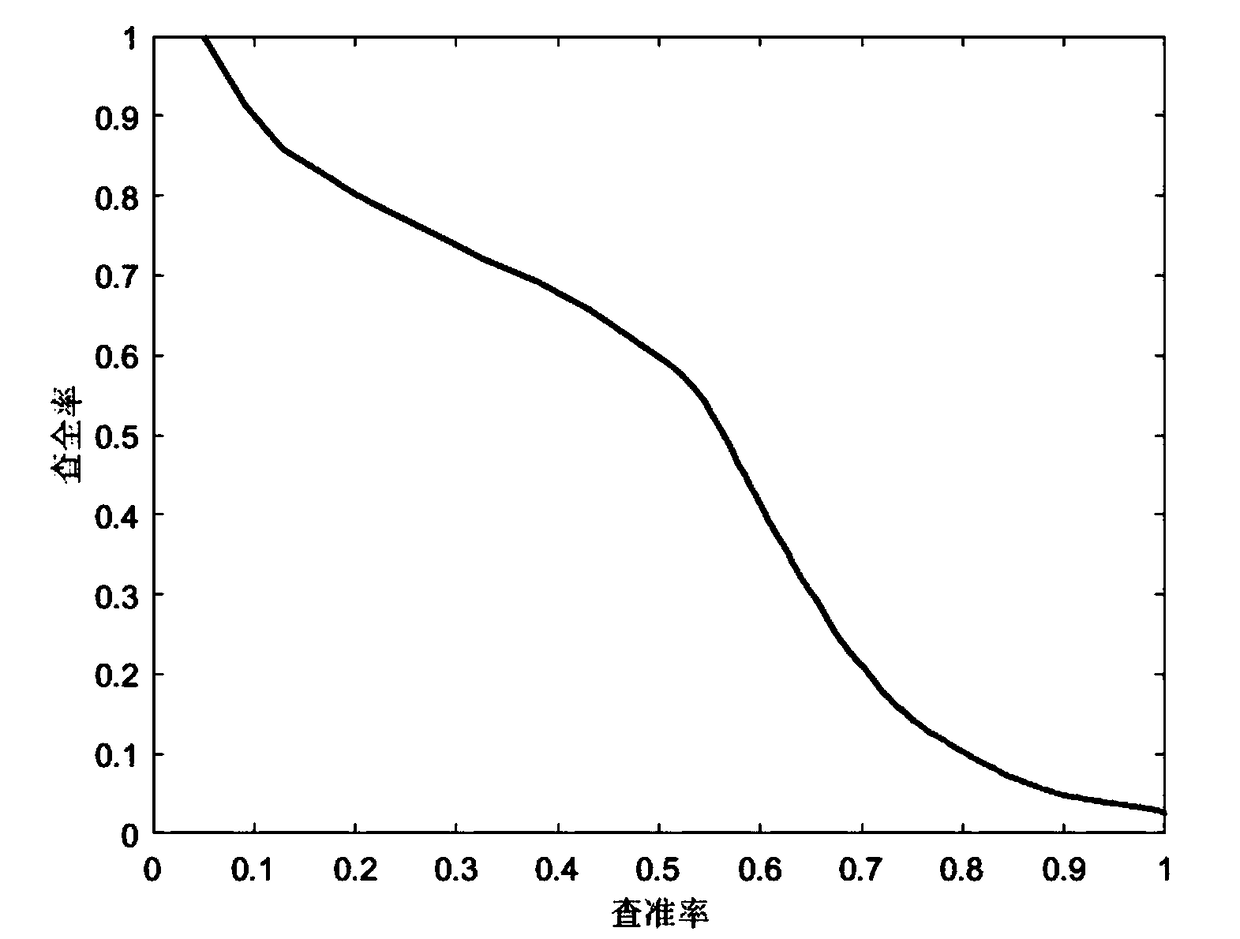

En-ULLELDA-based method of multi-view model recognition

Inactive | CN101635027A | Tags: Improve recognition rate; Character and pattern recognition; Gabor wavelet transform; Test sample

The invention provides an En-ULLELDA-based (ensemble unified locally linear embedding and linear discriminant analysis) method of multi-view model recognition. The method comprises the following steps: first, selecting a vehicle ROI (region of interest); second, extracting high-level information that reflects the model as features through the Gabor wavelet transform; then, reducing the dimensionality of the features with a novel manifold learning algorithm, En-ULLELDA, so as to transform them into a low-dimensional space; and, during recognition, obtaining the corresponding model from the features of a test sample with a classifier. According to test results, the invention can effectively handle multi-view model recognition on urban roads, and by using an ensemble technique it eliminates the effect of parameter variation on the recognition results, which makes it more robust.

Owner:Shenzhen Linjing Technology Co., Ltd. (深圳市麟静科技有限公司)

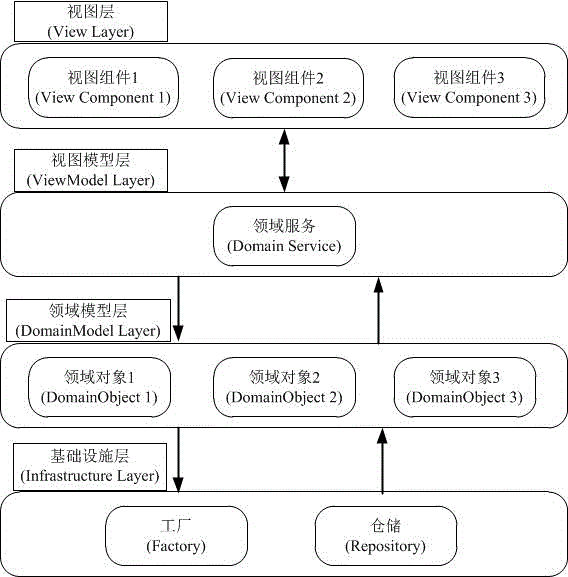

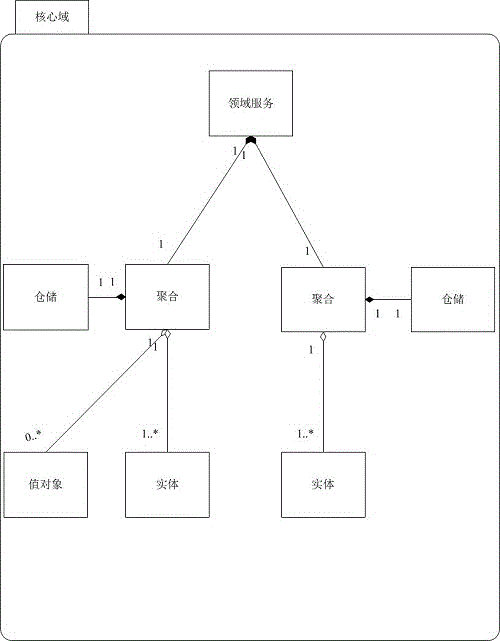

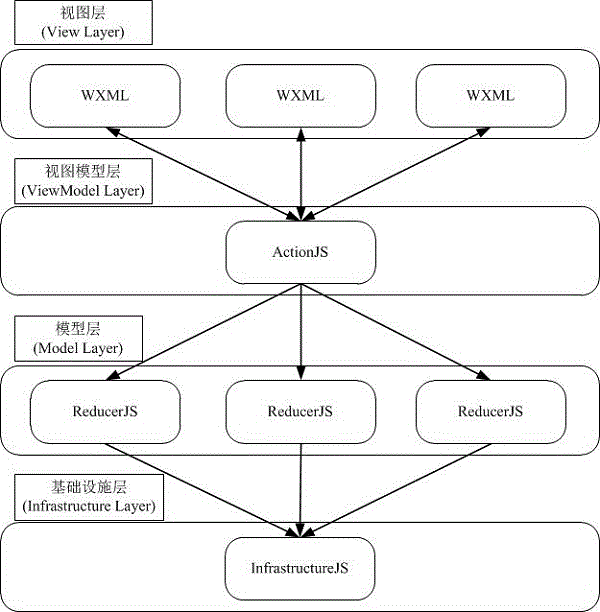

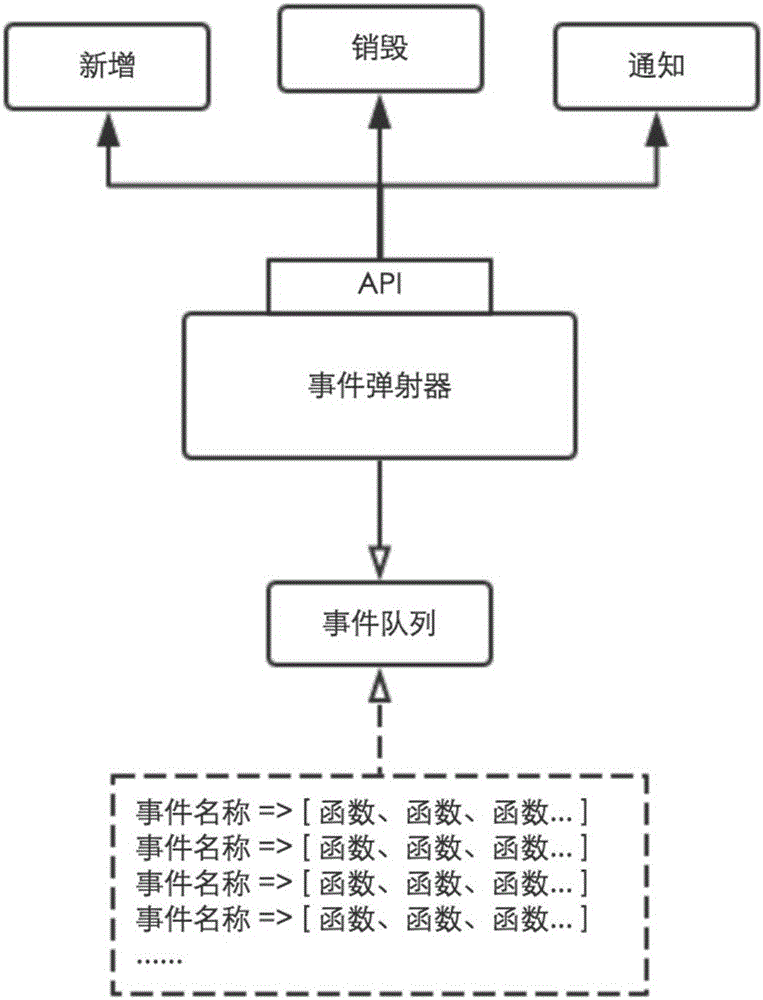

Domain-driven design-based MVVM design model

Inactive | CN106815016A | Tags: Reduce complexity; Improve maintainability; Software design; Model driven code; Extensibility; Software system

The invention provides a domain-driven design-based MVVM design model (DDMVVM). Its overall structure is divided into four layers: a view layer, a view model layer, a domain model layer and an infrastructure layer. The method specifically comprises the following steps: designing from two aspects, strategic design and tactical design; developing a framework on the basis of the domain-driven design-based MVVM model; and implementing each layer of the model on the WeChat Mini Program development platform. The model is suited to software engineering and software architecture for graphical user interface programs. It can effectively guide and standardize software developers to focus on the core business domain of the system, effectively reduce the complexity of the software system, and effectively improve quality attributes such as maintainability and extensibility.

Owner:SICHUAN UNIV

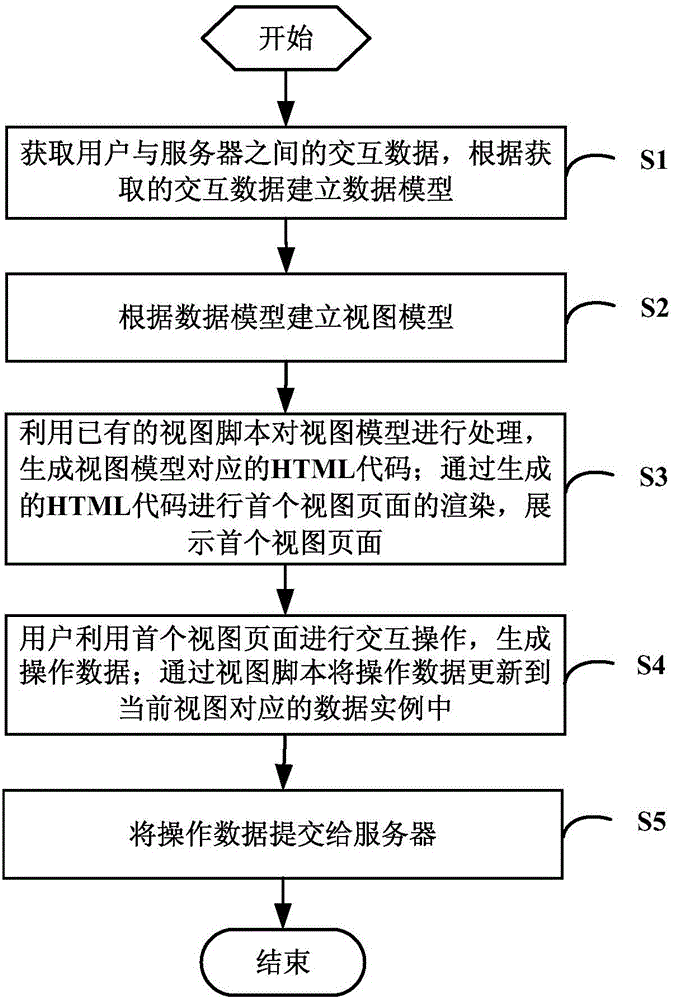

A method and a system realizing behavior, data and view linkage at a front end

Active | CN106126249A | Tags: Reduce complexity; Improve good performance; Software design; Specific program execution arrangements; View model; Computer graphics (images)

The invention provides a method and a system for realizing behavior, data and view linkage at a front end, and relates to the technical field of front-end development. The method comprises: establishing a data model; establishing a view model according to the data model; when a first view page that allows users to perform interactive operations needs to be displayed, processing the view model with an existing view script to generate HTML code corresponding to the view model, then rendering and displaying the first view page using the generated HTML code; allowing users to perform interactive operations on the displayed first view page, which generates operation data, and updating the data instances corresponding to the current view with that operation data via the view script; and finally submitting the operation data to a server. The method and system let front ends achieve behavior, data and view linkage, reduce the complexity of front-end code development and debugging, and facilitate extension and maintenance.

Owner:Xiamen Zhonglian Century Co., Ltd. (厦门众联世纪股份有限公司)
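
The sequence in this abstract (data model, view model derived from it, HTML generated by a view script, user edits written back to the data instance, final submission) can be sketched in Python as below. The `Customer` fields, the one-input-per-field view model and JSON as the submission format are assumptions.

```python
import html
import json
from dataclasses import asdict, dataclass


@dataclass
class Customer:
    """Data model (hypothetical fields)."""
    name: str
    email: str


def make_view_model(customer):
    """View model derived from the data model: one editable field per attribute."""
    return [
        {"field": key, "label": key.capitalize(), "value": value}
        for key, value in asdict(customer).items()
    ]


def render(view_model):
    """Stand-in for the view script: turn the view model into HTML."""
    parts = []
    for item in view_model:
        parts.append(
            f'<label>{html.escape(item["label"])} '
            f'<input name="{html.escape(item["field"])}" value="{html.escape(str(item["value"]))}">'
            "</label>"
        )
    return "<form>" + "".join(parts) + "</form>"


def apply_user_edit(customer, field, value):
    """Write operation data from the page back into the current data instance."""
    if field not in asdict(customer):
        raise KeyError(field)
    setattr(customer, field, value)


def submission_payload(customer):
    """Serialize the updated data instance for submission to the server."""
    return json.dumps(asdict(customer))


customer = Customer("Ann", "ann@example.com")
page = render(make_view_model(customer))
apply_user_edit(customer, "email", "ann@example.org")
print(submission_payload(customer))  # {"name": "Ann", "email": "ann@example.org"}
```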

System and method for managing simulation models

Active | US20080126156A1 | Tags: Extension of time; Shorten the time; Digital data processing details; Resources; Relational database; View model

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

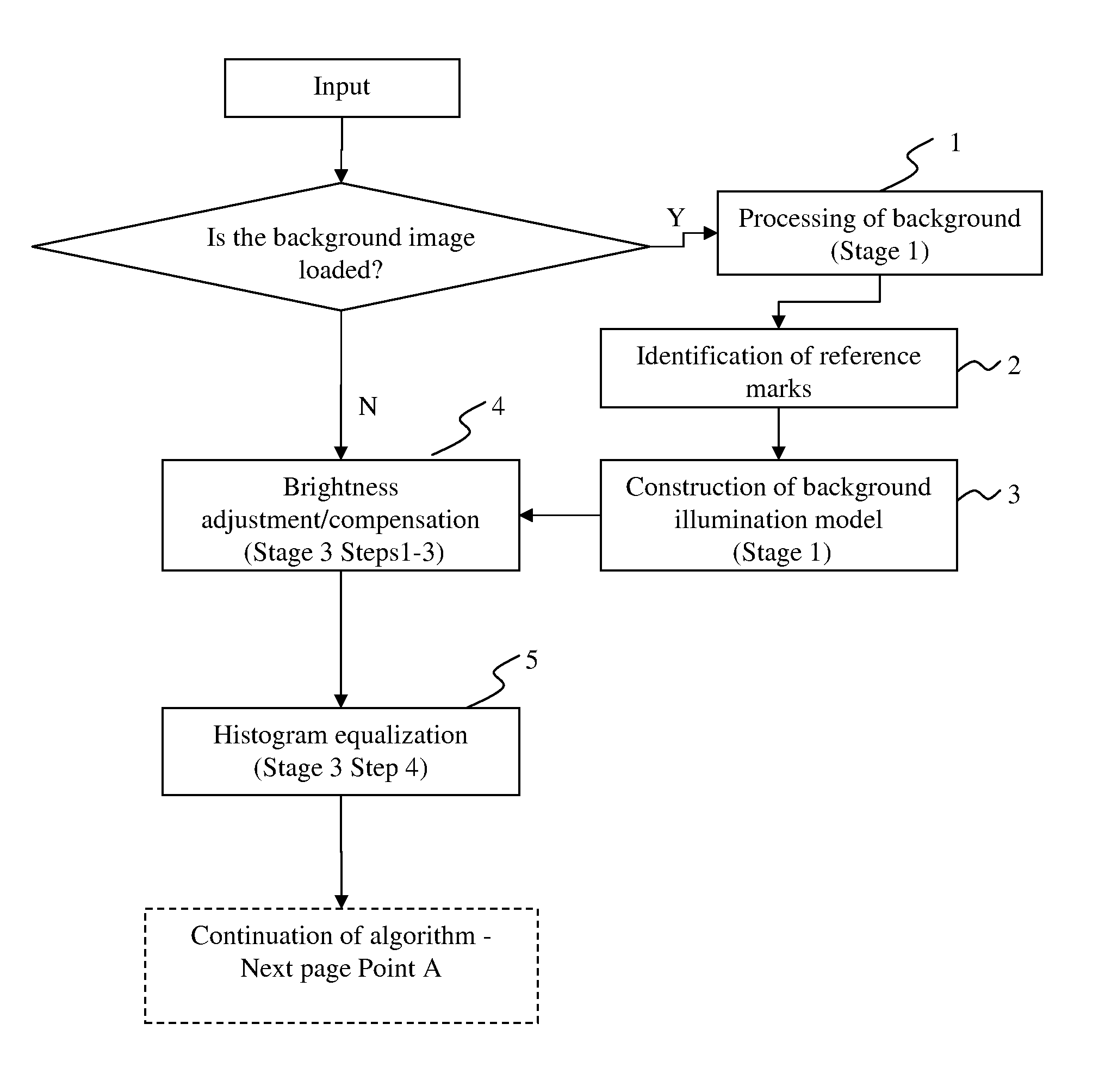

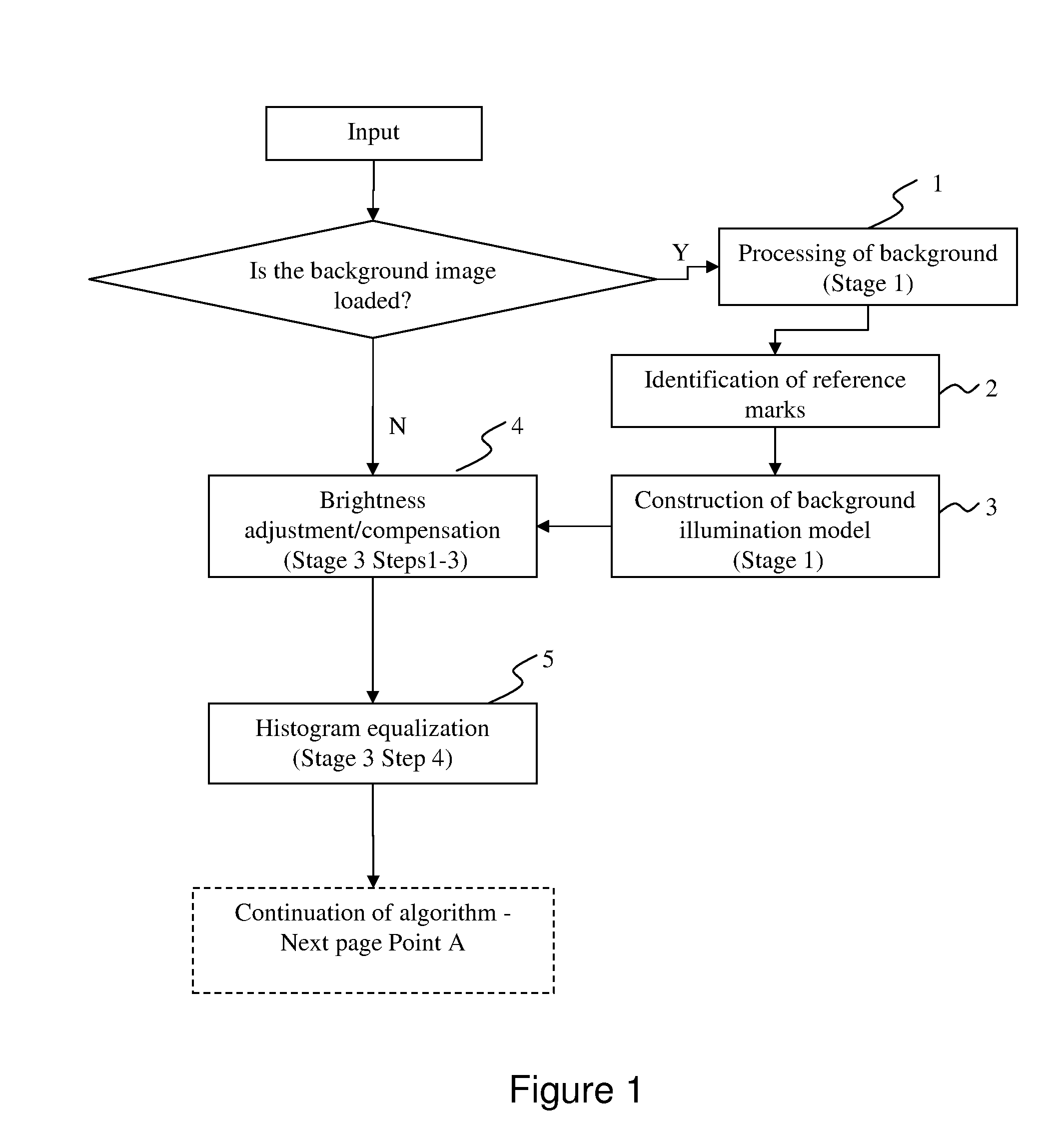

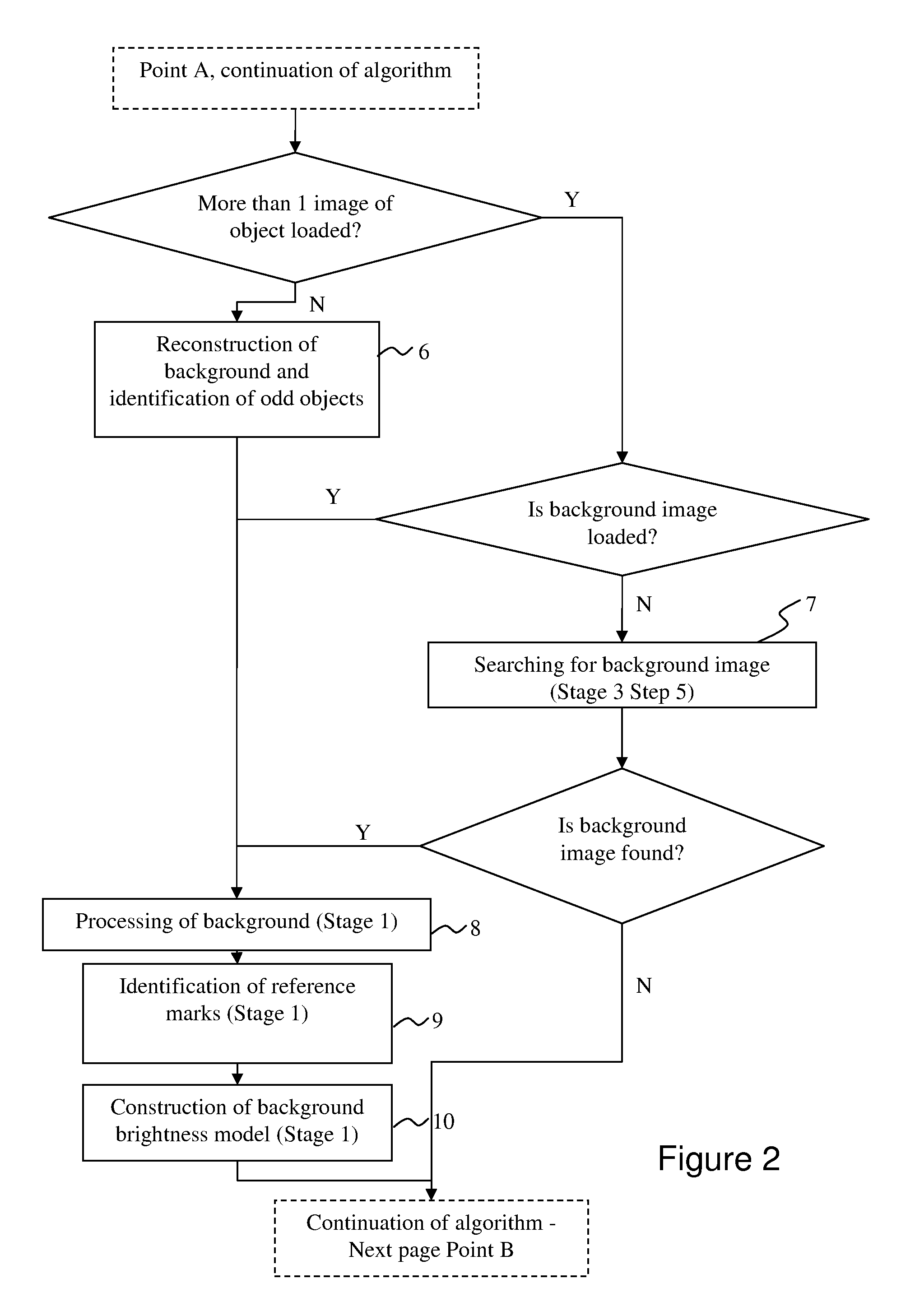

System and method for computer-aided image processing for generation of a 360 degree view model

Inactive | US8503826B2 | Tags: Same brightness; Same contrast; Image enhancement; Image analysis; Imaging processing; View model

Owner:3DBIN

System and method for managing simulation models

Active | US20080126057A1 | Tags: Extension of time; Shorten the time; Digital data processing details; Analogue computers for electric apparatus; View model; Relational database

A system and method for viewing models and model variables within a sophisticated modeling environment is disclosed. The system provides varying levels of insight into a modeling infrastructure to help the user understand model and model variable dependencies, usage, distribution, and/or the like. The method includes storing model and model variable data within a relational database system, receiving a request from a user interfacing with the system via a web interface, extracting search criteria and presentation preferences from the request, formulating and executing one or more queries on the database to retrieve the required data, formatting the data in accordance with the request, and returning the data to the requesting user in the form of a web page.

Owner:AMERICAN EXPRESS TRAVEL RELATED SERVICES CO INC

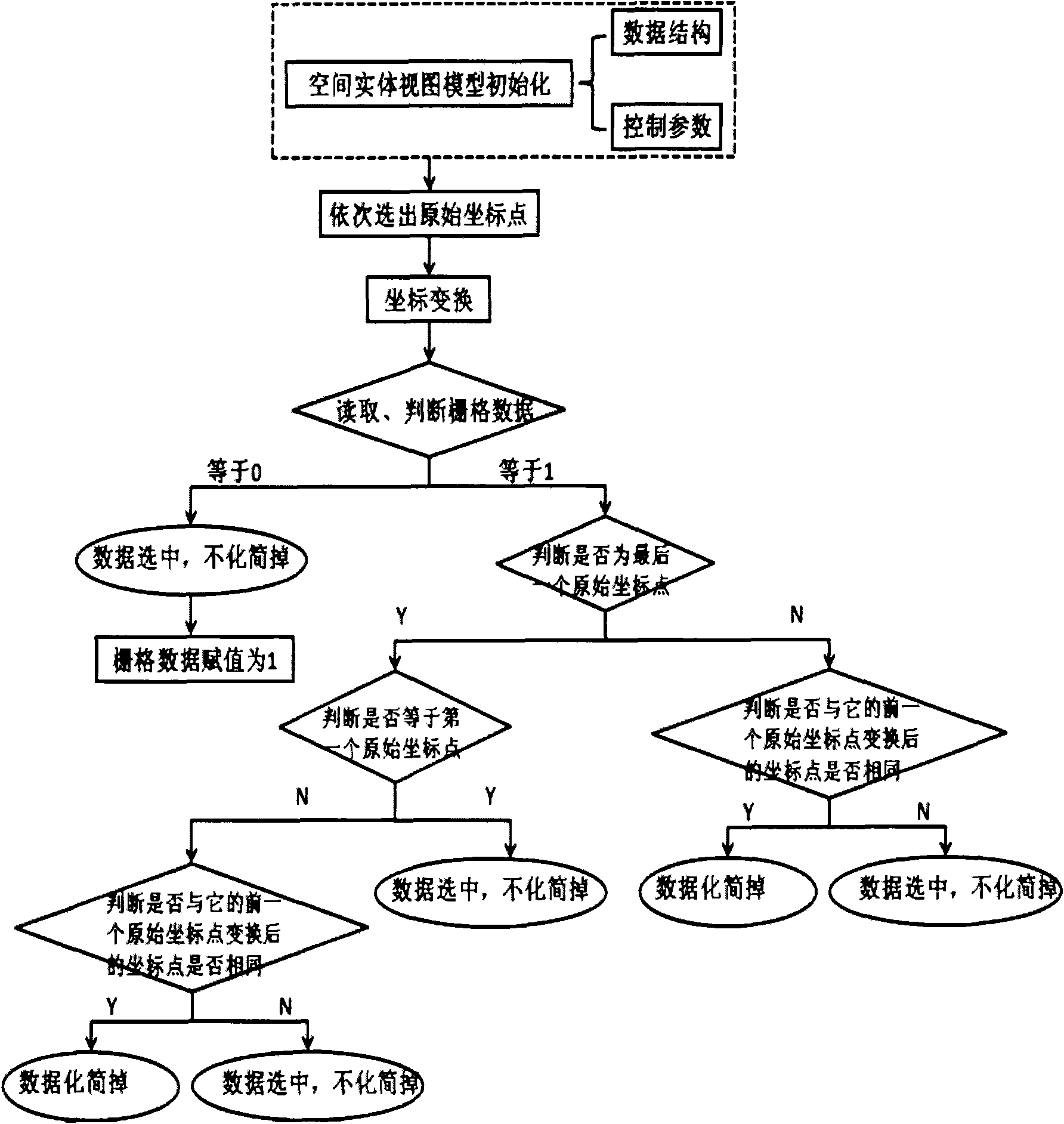

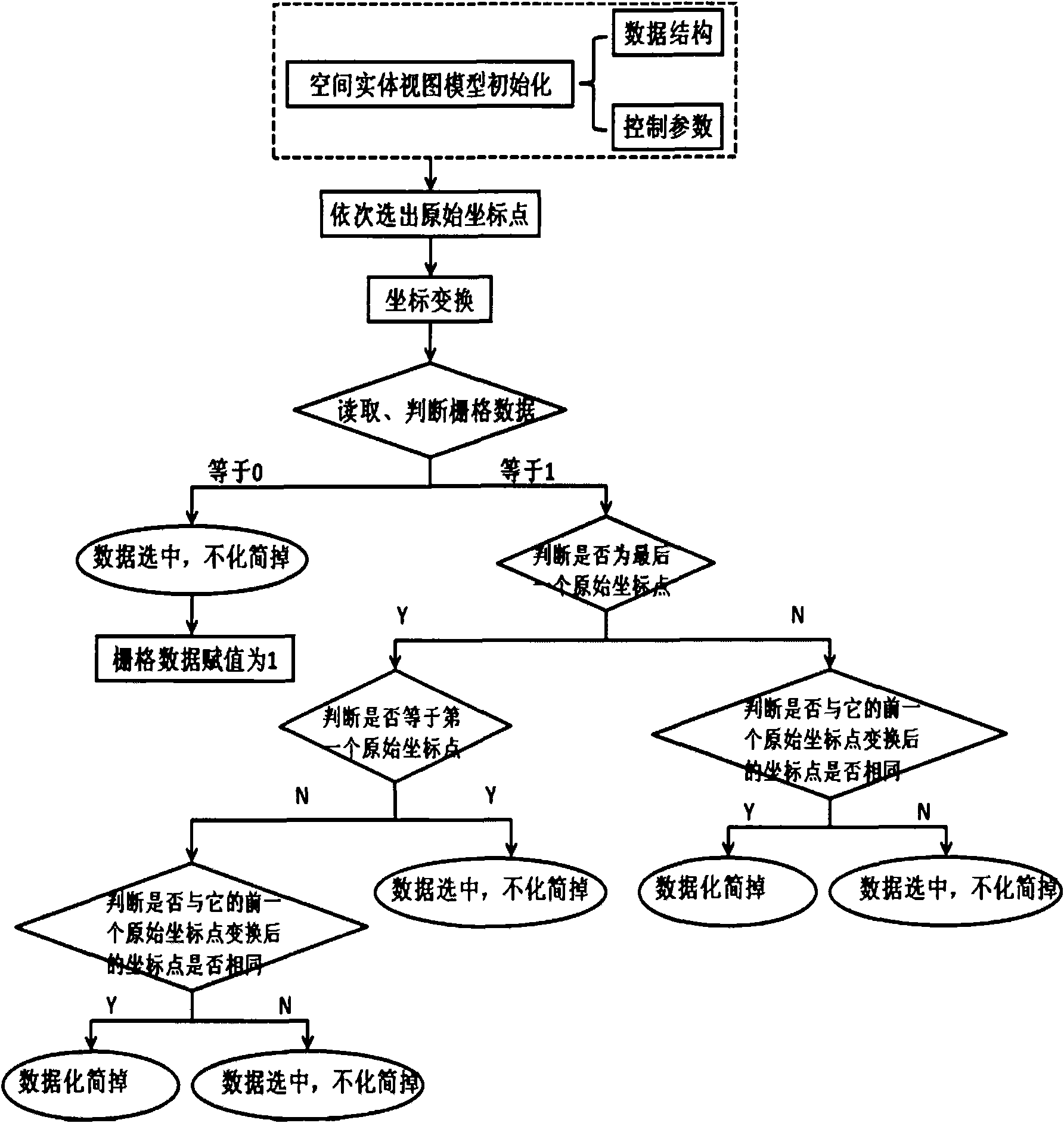

Vector data self-adaptive simplification method based on a spatial entity view model

Inactive | CN101819590A | Tags: Improve transmission efficiency; Improve display efficiency; Special data processing applications; View model; Computer vision

The invention provides a self-adaptive simplification method for vector data based on a spatial entity view model. The method reads and checks the pixel values of coordinate points in the coordinate system of the model's view window through the model's pixel operation module, in order to determine whether each original coordinate point of the vector data needs to be simplified. The invention improves the transmission and display efficiency of vector data, and ensures that spatial relations are displayed correctly both within any simplified complex vector data and among all simplified vector data.

Owner:Dong Futian (董福田)
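
One common way to simplify vector data against a view window, and a plausible reading of the pixel check described above, is to map each vertex to the pixel it falls on and drop vertices that land on the same pixel as the previously kept one. The sketch below assumes a rectangular world-coordinate window mapped onto a `width` by `height` pixel grid and points lying inside that window; the function names are hypothetical, and it does not implement the patent's guarantees about preserving spatial relations between features.

```python
def to_pixel(x, y, window, width, height):
    """Map a world coordinate (assumed inside the window) to integer pixel indices."""
    xmin, ymin, xmax, ymax = window
    px = int((x - xmin) / (xmax - xmin) * width)
    py = int((ymax - y) / (ymax - ymin) * height)  # screen rows grow downward
    return px, py


def simplify_polyline(points, window, width, height):
    """Keep a vertex only if it falls on a different pixel from the last kept vertex."""
    if len(points) <= 2:
        return list(points)
    kept = [points[0]]
    last_pixel = to_pixel(*points[0], window, width, height)
    for pt in points[1:-1]:
        pixel = to_pixel(*pt, window, width, height)
        if pixel != last_pixel:
            kept.append(pt)
            last_pixel = pixel
    kept.append(points[-1])  # always keep the end point
    return kept


# A 100 x 100 world window drawn on a 10 x 10 pixel view: each pixel covers 10 x 10 units.
line = [(5, 5), (6, 6), (7, 7), (25, 25), (26, 26), (50, 50)]
print(simplify_polyline(line, (0, 0, 100, 100), 10, 10))  # [(5, 5), (25, 25), (50, 50)]
```

Because the tolerance is one screen pixel, zooming in (a smaller window) automatically keeps more vertices, which is what makes the simplification adaptive to the current view.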

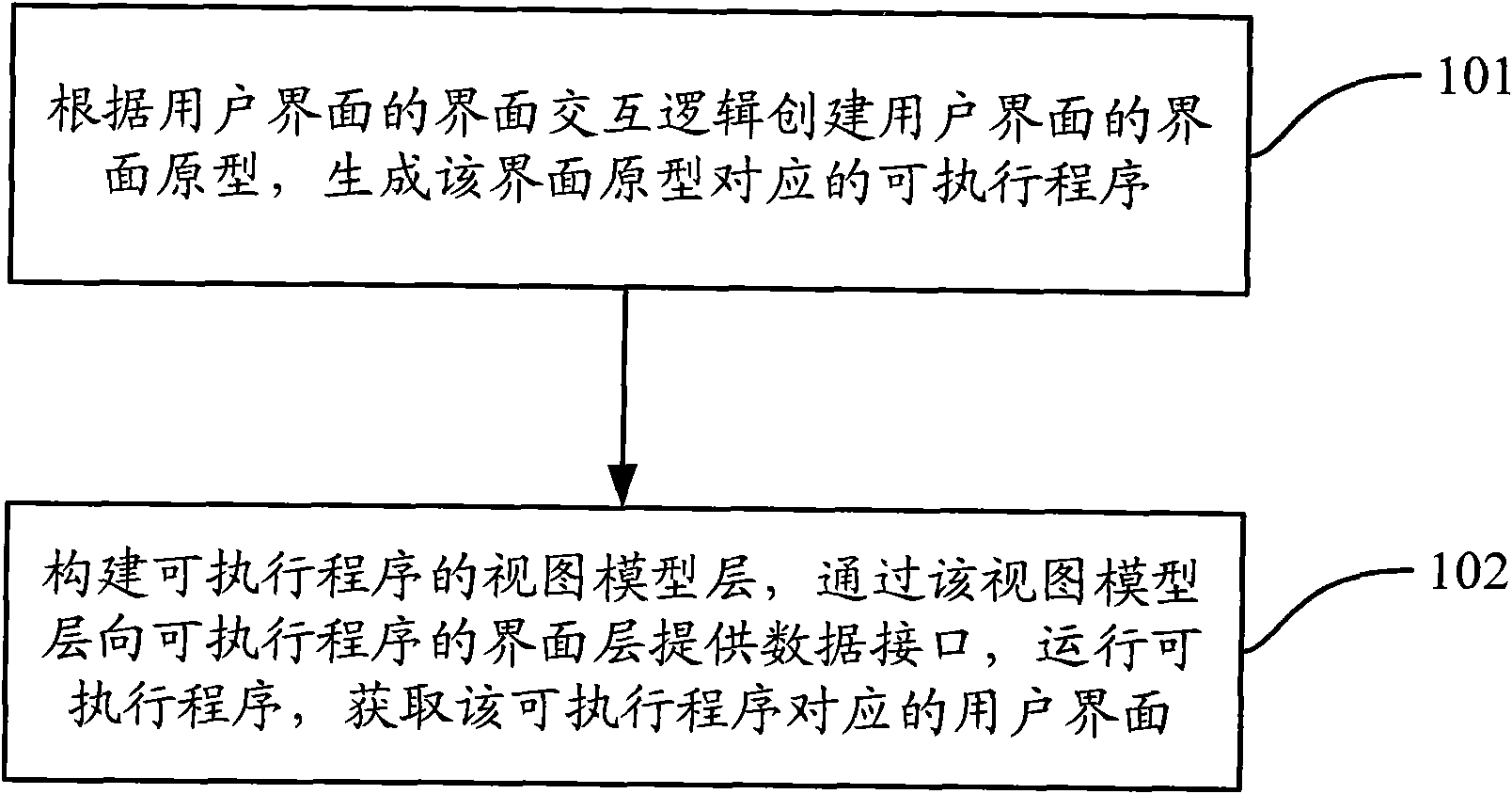

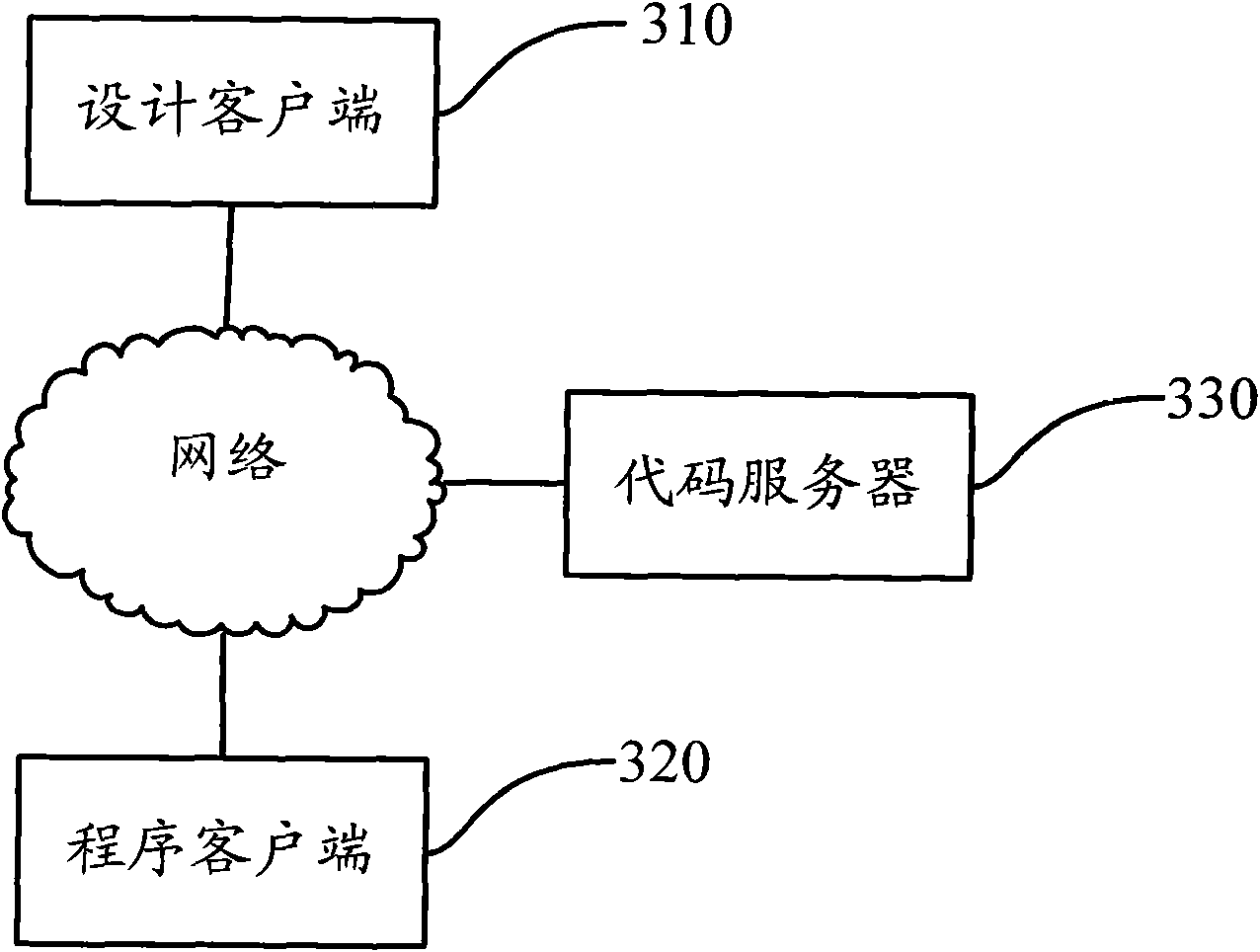

Method and device for creating user interface

Active | CN102135873A | Tags: Improve collaboration; Reduce duplication of effort; Specific program execution arrangements; View model; Interface layer

The invention discloses a method for creating a user interface, comprising: creating an interface prototype of the user interface according to the interface interaction logic of the user interface; generating an executable program corresponding to the interface prototype; creating a view model layer of the executable program, which provides data interfaces to the interface layer of the executable program; and running the executable program to obtain the corresponding user interface. The method increases the efficiency of creating user interfaces. The invention also discloses a device applying the method.

Owner:TENCENT TECH (SHENZHEN) CO LTD

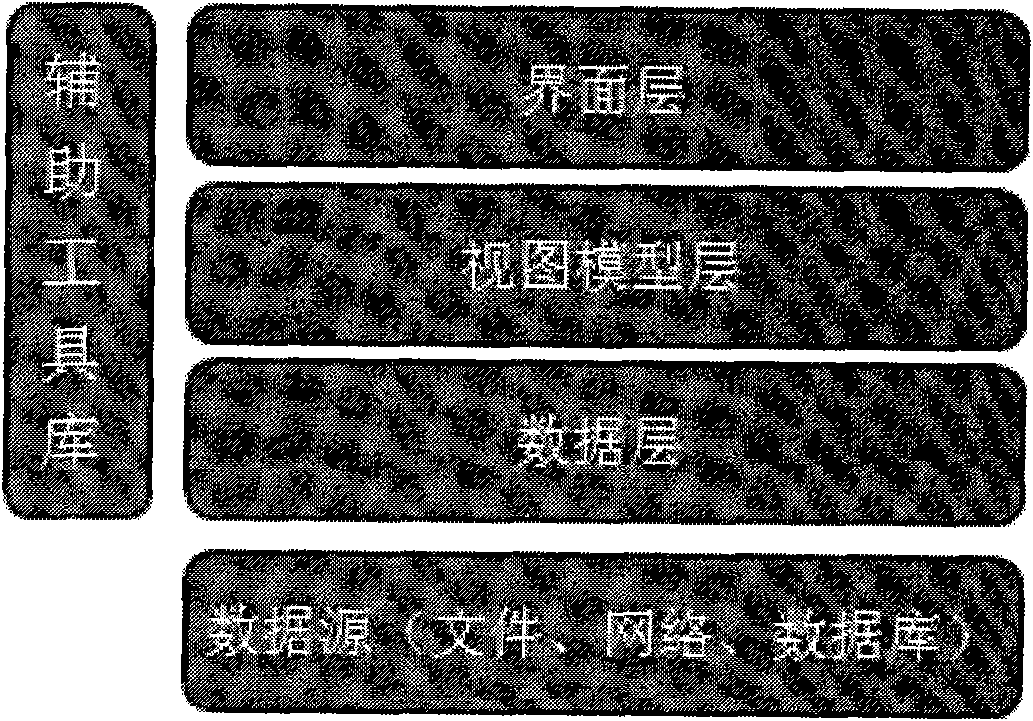

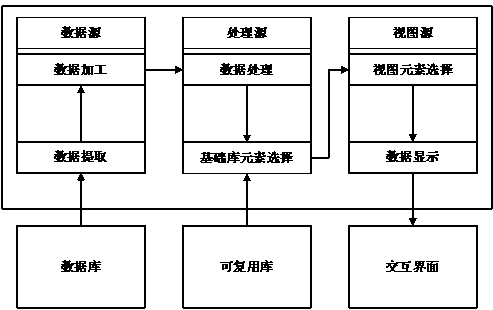

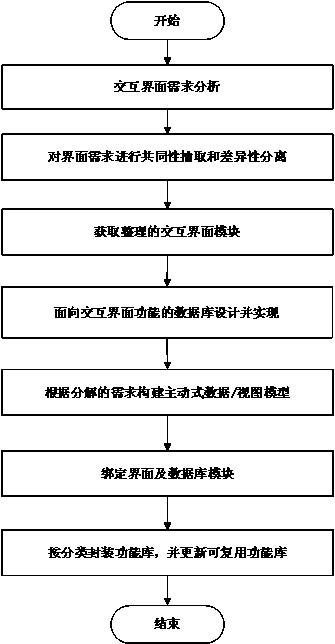

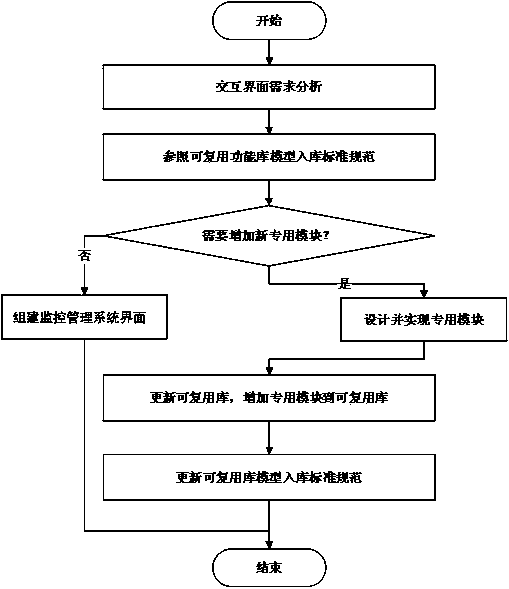

Interactive-interface fast implementation method based on reusable library

Inactive | CN103914307A | Tags: Improve effectiveness; Improve usability; Specific program execution arrangements; View model; Software engineering

The invention provides a fast implementation method for interactive interfaces based on a reusable library. The method comprises: associating data with interface elements through an active data/view model design; building a reusable basic element library, a universal function library and a special function library according to the characteristics of interactive interfaces, which together form the reusable library; and finally formulating standard specifications for adding models to the library, thereby providing a unified standard interface, configuration path, database table format and data/view model binding specification. The method suits development platforms based on remote WEB access as well as local interactive interfaces built with tools such as QT and VS. It can implement various types of interactive interfaces from the reusable library, and features simple function library design rules, high reusability and fast interface construction; in addition, the effectiveness of the reusable library and the usability of the interactive interfaces can be further improved by refining and optimizing the library's standard specifications.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

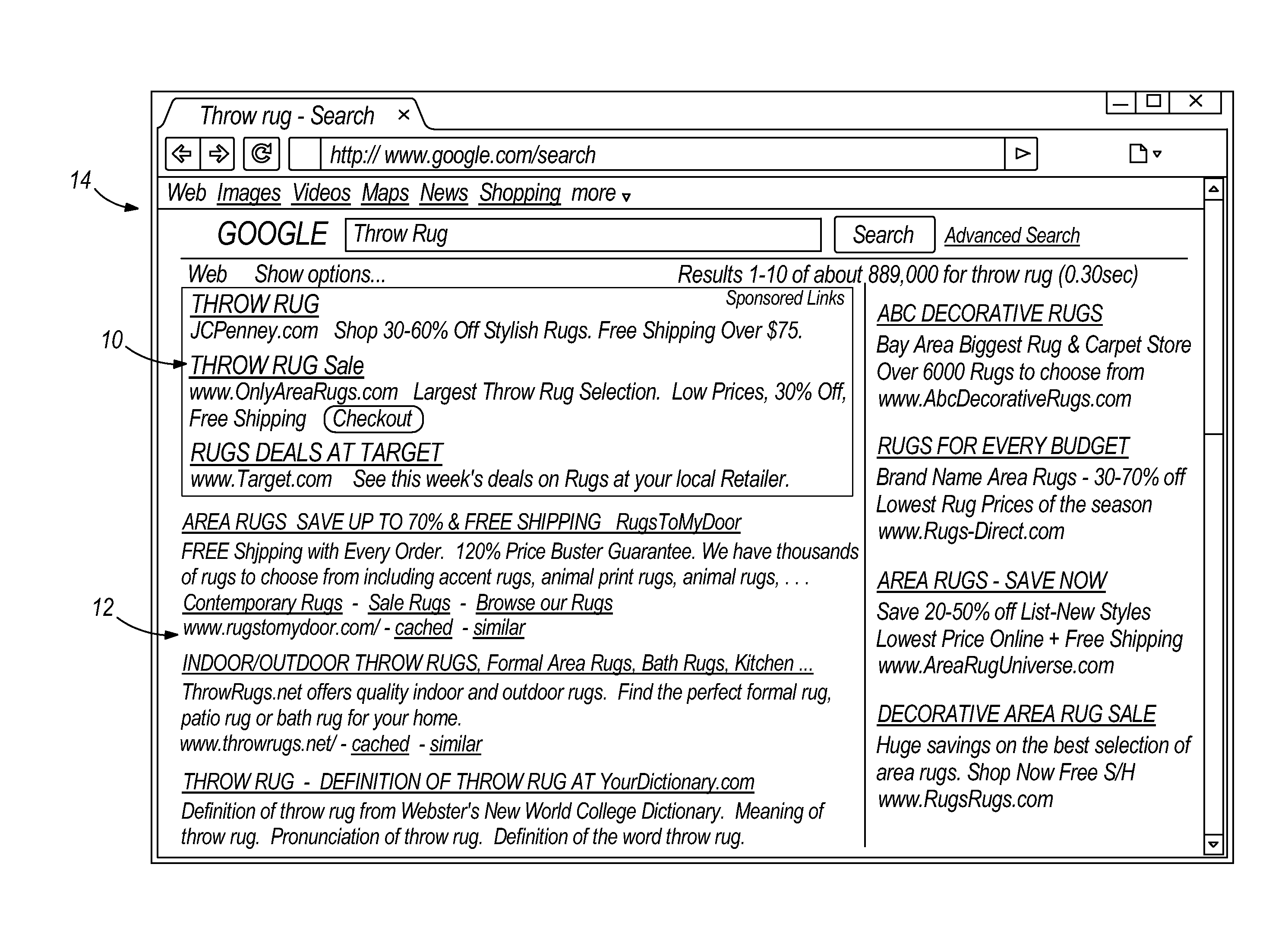

Server-based browser system

Active | US7587669B2 | Tags: Natural language data processing; Multiple digital computer combinations; View model; Client-side

A server-based browser system provides a server-based browser and a client system browser. The client browser reports the position of a user click on its display screen, a hotspot ID, or a URL to the server-side browser, which retrieves a Document Object Model (DOM) and view tree for the client and finds the location on the Web page that the user clicked on using the coordinates or hotspot ID received from the client. If there is a script associated with the location, it is executed and the resulting page location is requested from the appropriate server. If there is a URL associated with the location, it is requested from the appropriate server. The HTML definition of the response Web page is parsed and a DOM tree model is created, which is used to create a view tree model. The server-side browser retrieves a style sheet, layout algorithms, and device constraints for the client device, uses them to lay out the view model onto a virtual page, and determines the visual content. Textual and positional information is highly compressed, formatted into a stream, and sent to the client browser, which decodes the stream and displays the page to the user using the textual and positional information.

Owner:MERCURY KINGDOM ASSETS

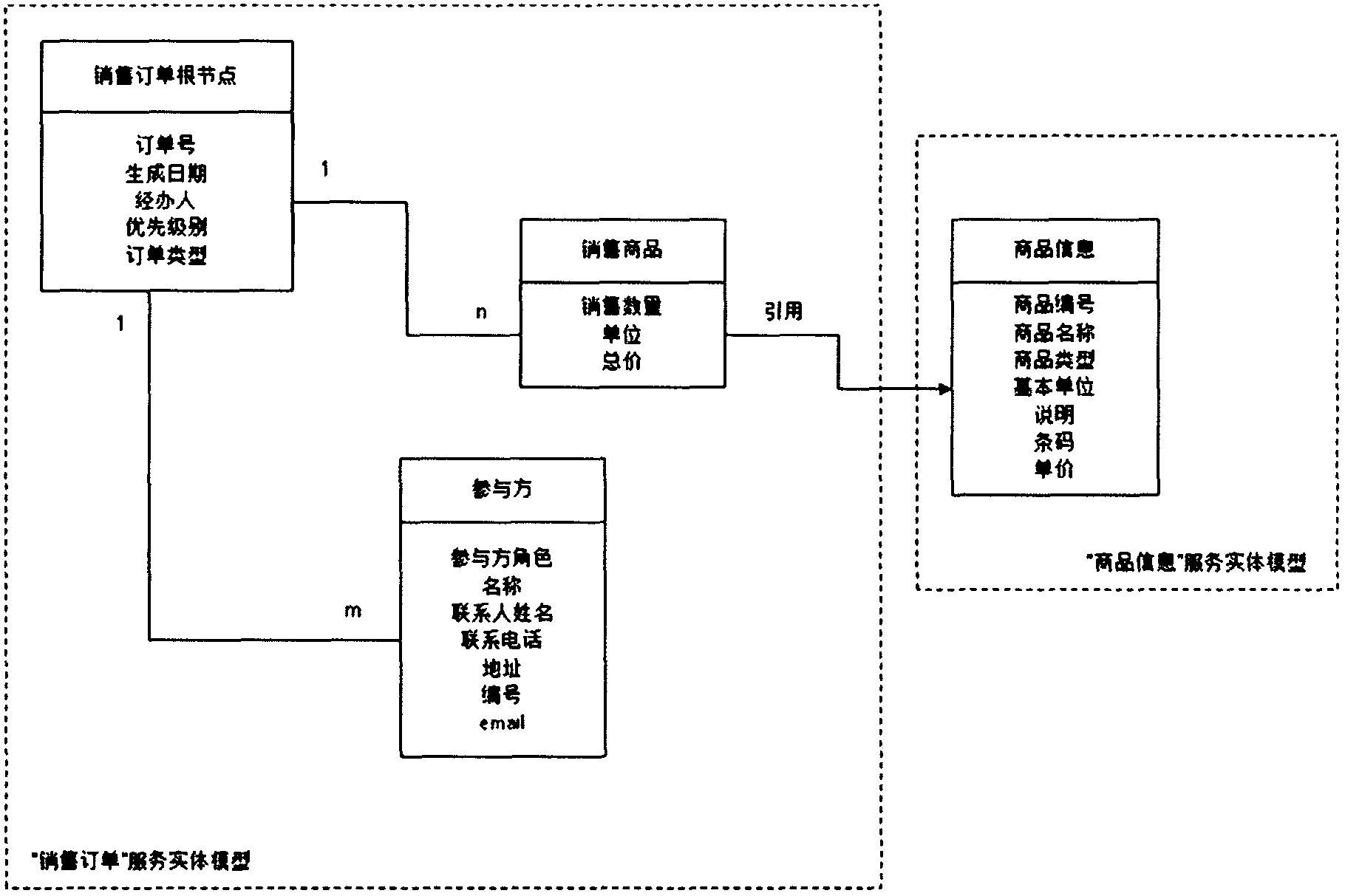

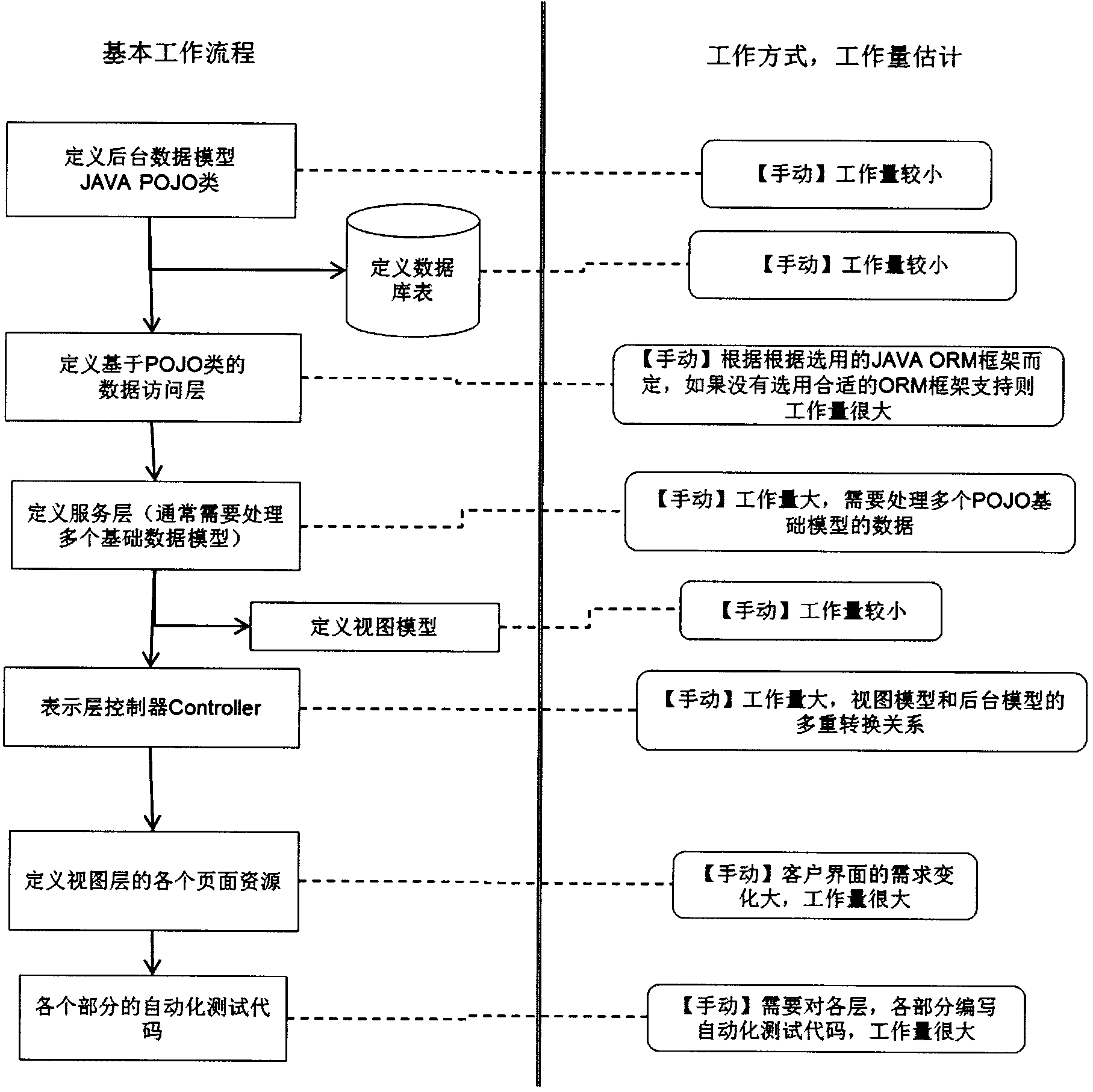

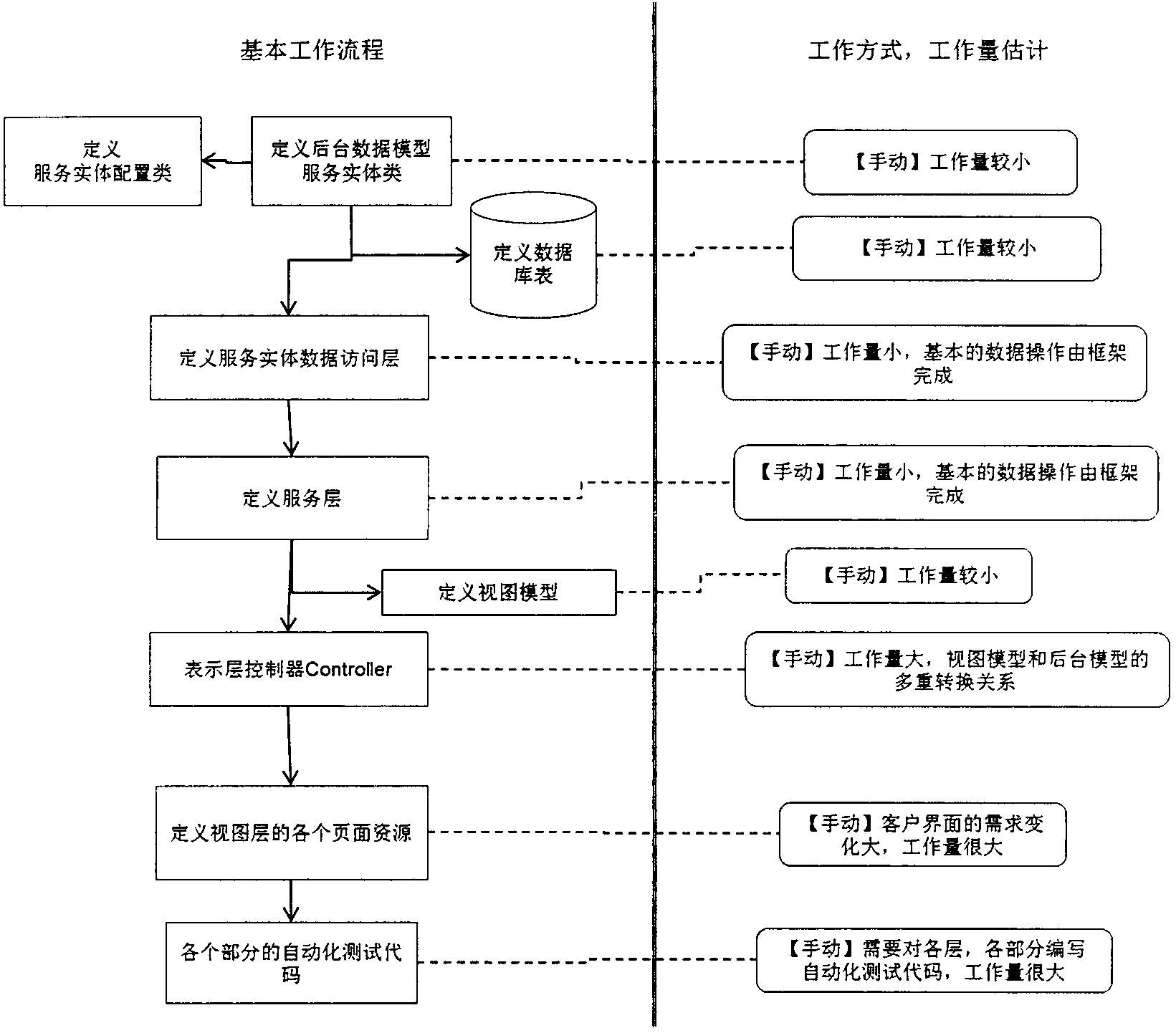

Rapid modeling framework of general business model based on star structure

Inactive | CN104049957A | Tags: Realize business needs; Improve modeling efficiency; Specific program execution arrangements; Supporting system; View model

The invention designs a rapid modeling framework for general business models based on a star structure. The basic idea is that, after the framework performs semantic analysis of the information provided by a back-end business model and a front-end view model, it generates a series of code resources that give users basic application functions: create, delete, query, update and display. These code resources include back-end code for business data processing, the database persistence layer service, database tables and the like; list views and edit/query views with a default front-end style; logic that generates front-end control styles to complete the conversion between front end and back end; and multilingual resource files and automated test code for the related support system. Because code resources that were traditionally written by hand are generated by the framework, labor cost is reduced and development efficiency is improved.

Owner:CHENGDU TJUT TECH CO LTD

Model sequencing for managing advertising pricing

Owner:OPTIMINE SOFTWARE

Method and system for access and modification of formatted text

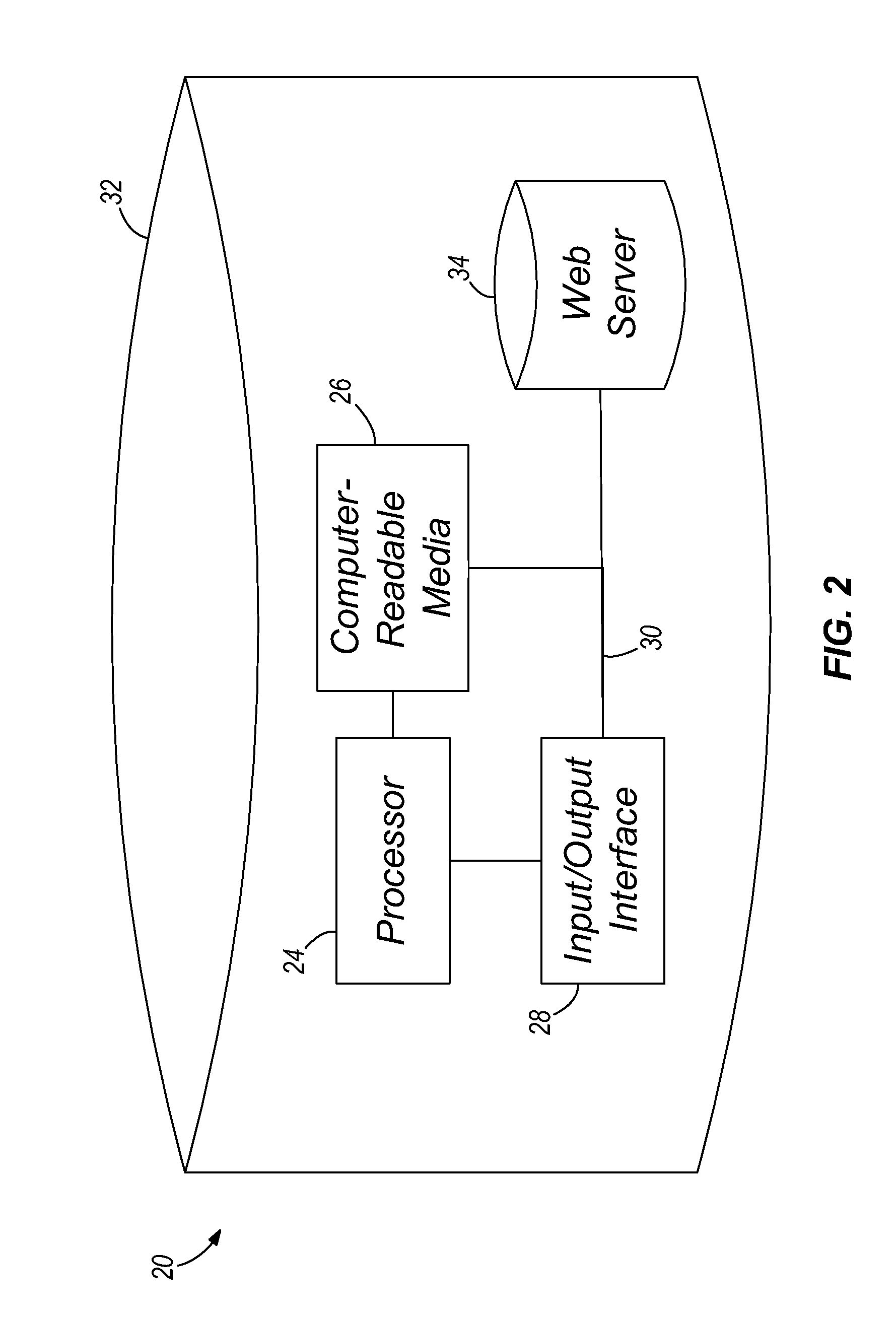

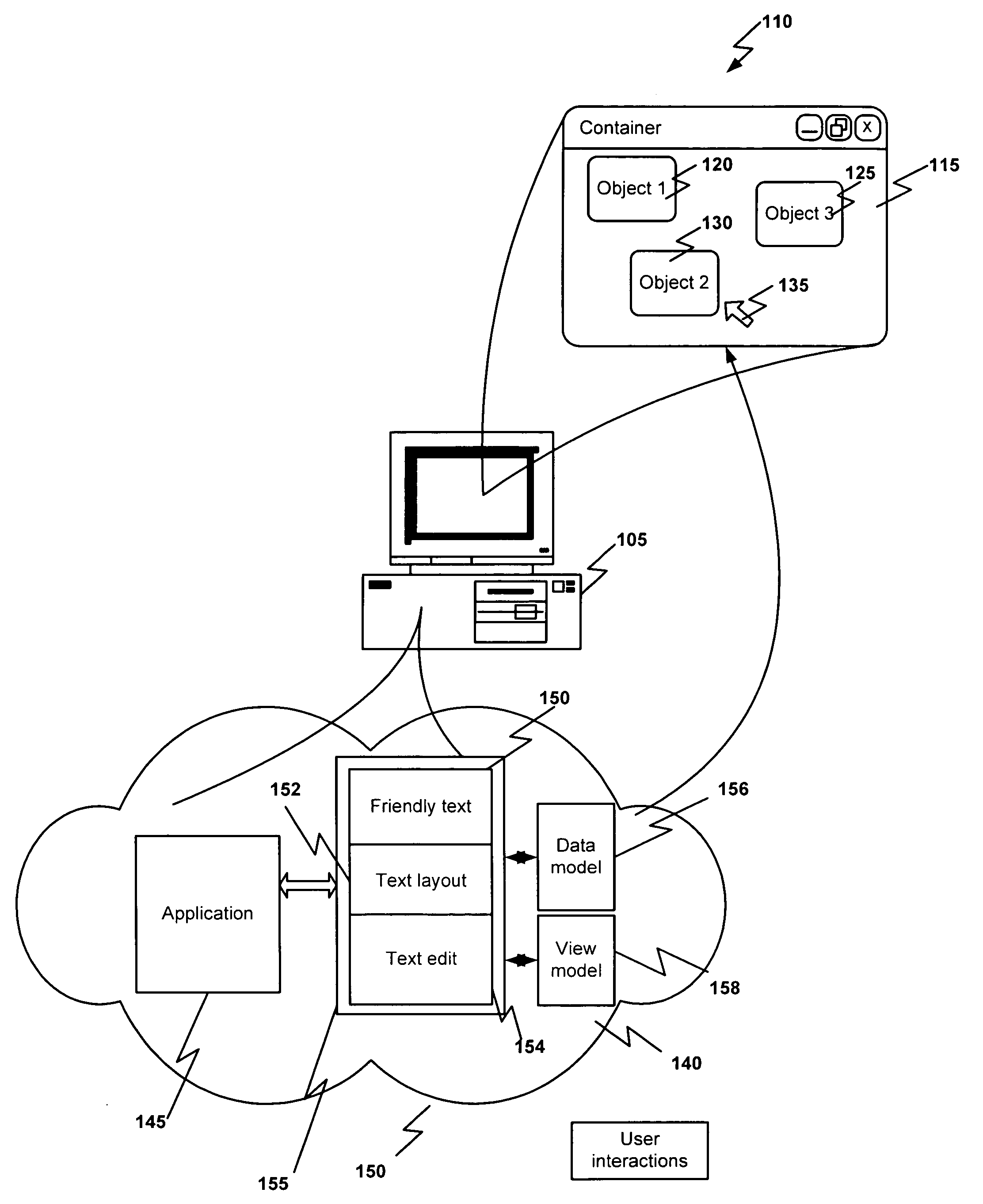

Inactive | US20050216922A1 | Tags: Natural language data processing; Special data processing applications; Abstraction layer; View model

Embodiments of the present invention relate to methods, systems, and computer-readable media for editing an object displayed by a computer system. Editing comprises detecting an edit operation for an object displayed on a video display of a computer system. An edit operation request is then sent to an abstraction layer via an application program interface provided by the abstraction layer to initiate editing of the object by the abstraction layer. The abstraction layer is a text object model with two models: a data model for accessing the persistent content of text, and a view model for accessing the presentation and interaction appearance of text. The text object model has several abstract classes; it receives the edit operation request, determines the type of container in which the object is displayed based on properties related to the object to be edited, and determines the operations required, which incorporate all traditional text manipulation operations, including actual editing, layout manipulation and text formatting. The abstraction layer reads a set of properties related to the object and the container in which the object is displayed in accordance with user instructional interactions.

Owner:MICROSOFT TECH LICENSING LLC

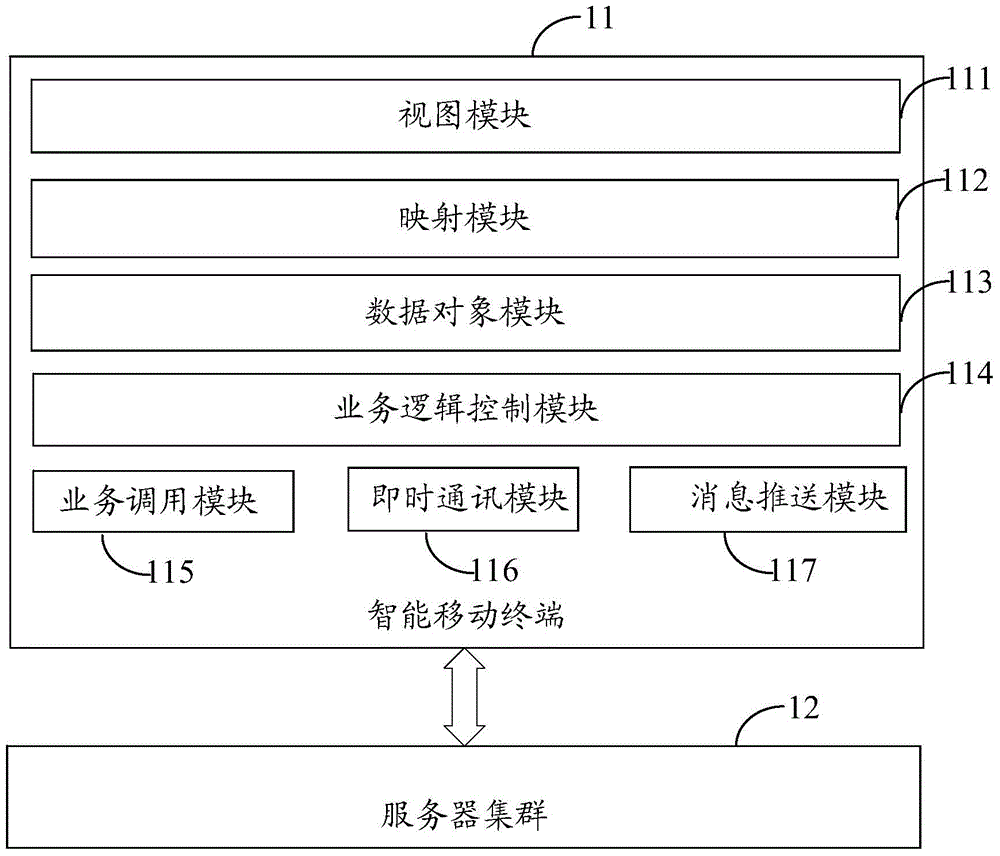

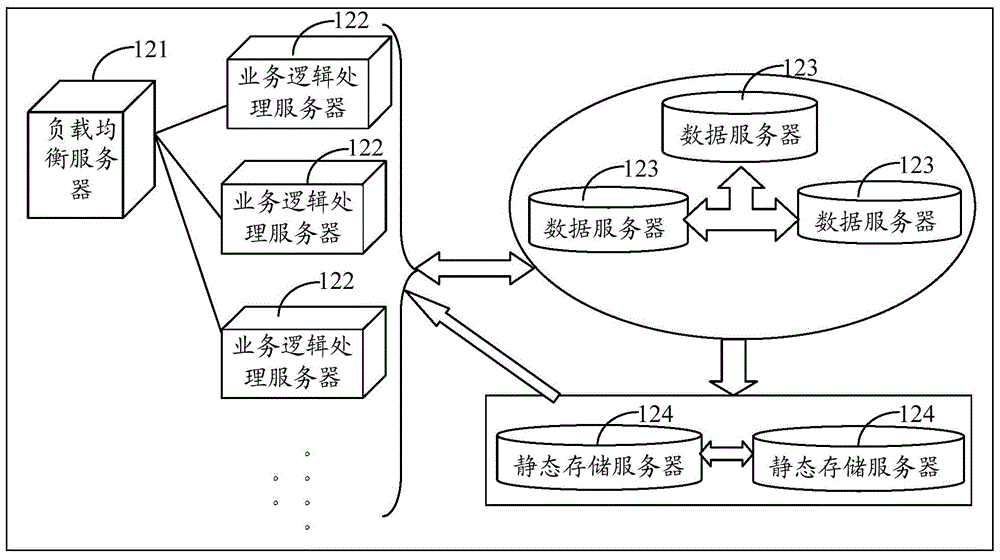

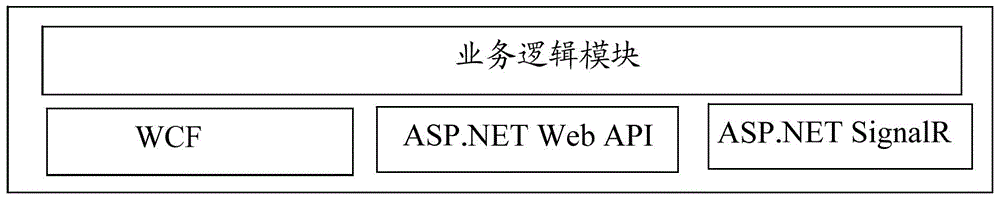

Cross-platform application system

The invention provides a cross-platform application system comprising an intelligent mobile terminal and a server cluster. The server cluster processes service logic, feeds the processed result back to the intelligent mobile terminal, and stores the data used to process the service logic. The intelligent mobile terminal comprises a view module, a mapping module, a data object module, a service-logic control module, a service calling module, an instant messaging module and a message pushing module. The view module, the mapping module and the data object module form an MVVM (Model-View-View Model) structure that effectively separates the data layer and the interface layer of each service, so that any language can be used to develop the data layer. This reduces development cost and the likelihood of divergent code branches, benefits unified management of source code, and ensures that the same service is completed across platforms at the same time.

Owner:AGRICULTURAL BANK OF CHINA

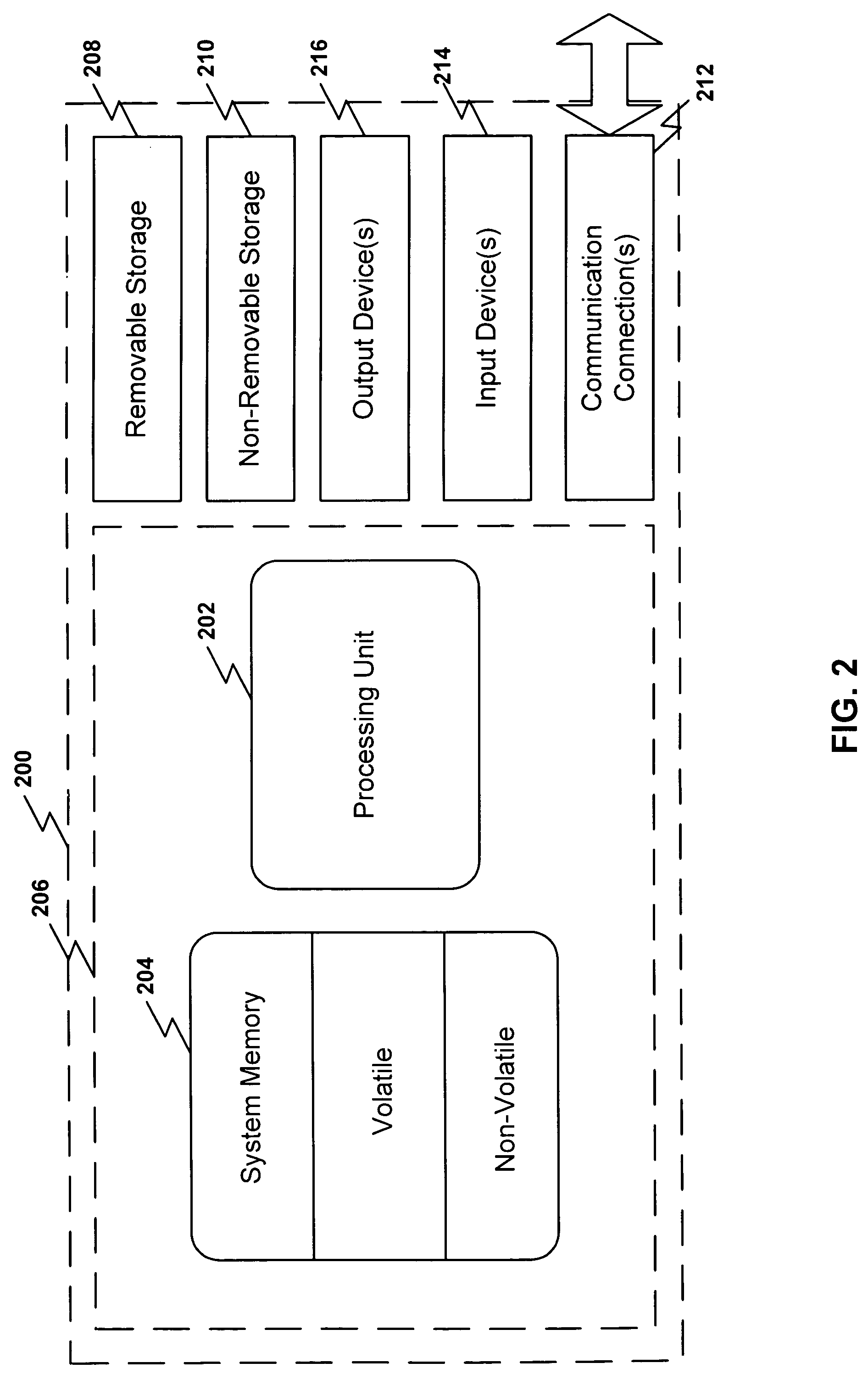

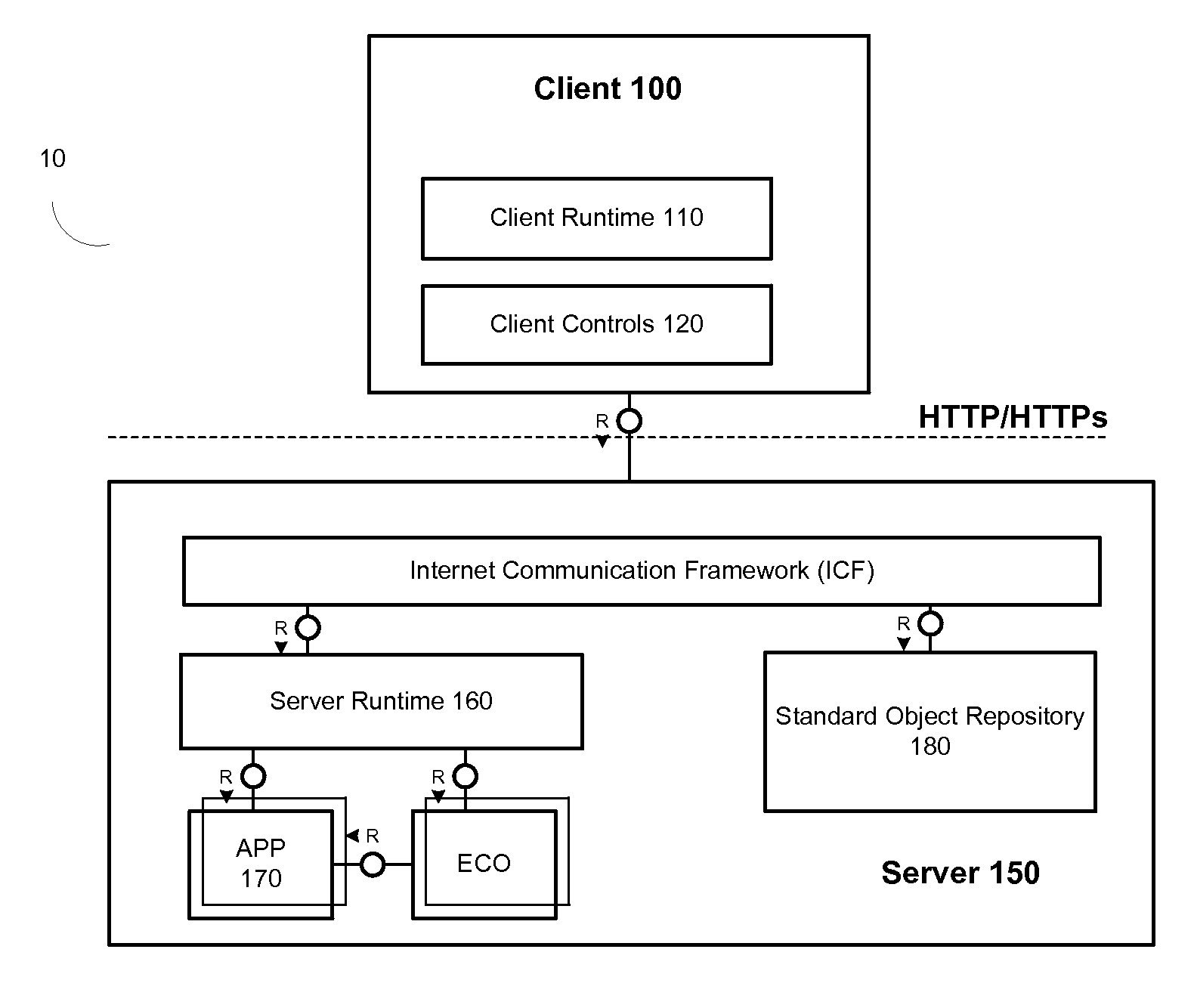

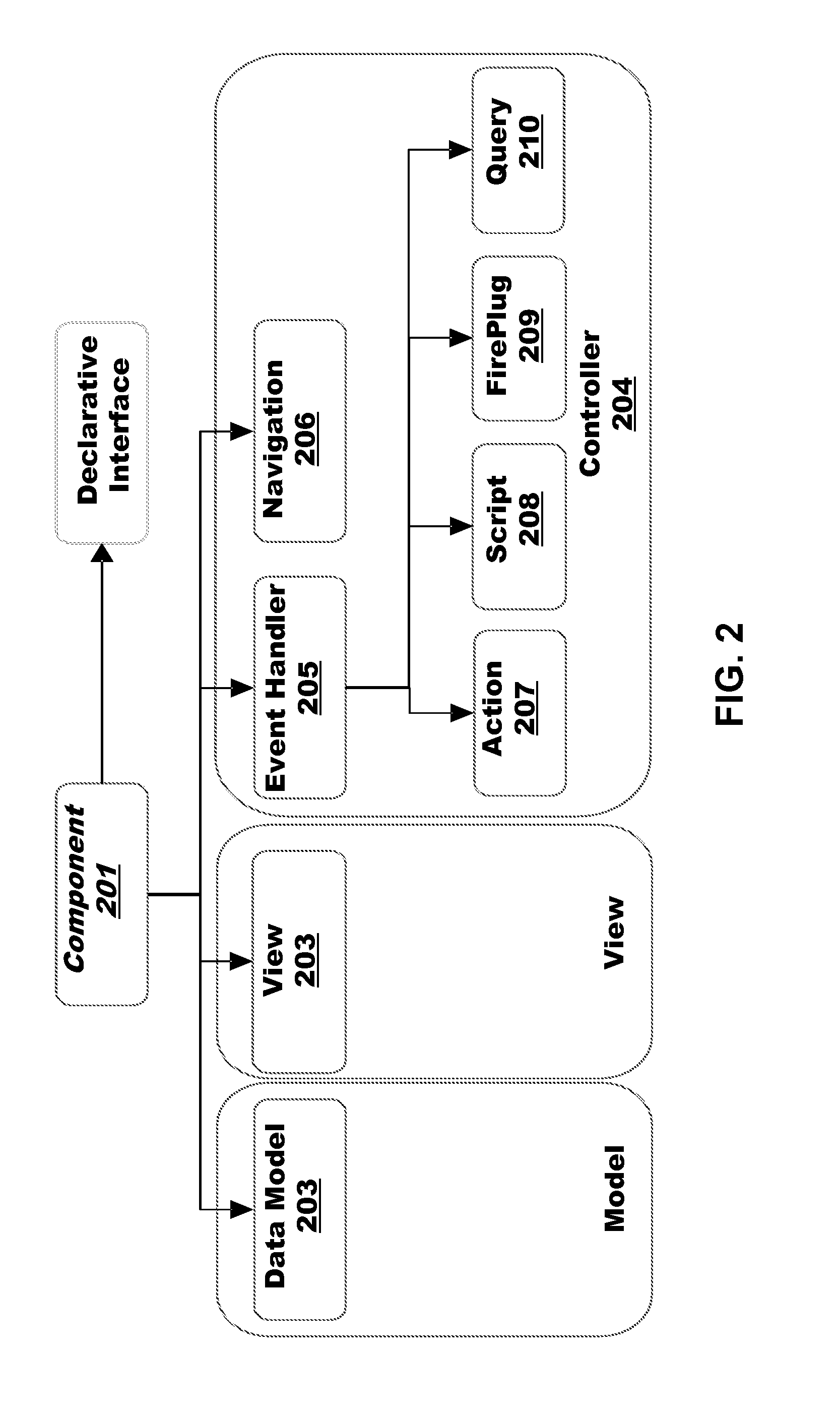

Logical data model abstraction in a physically distributed environment

Active | US20120030591A1 | Tags: Not visible; Multiple digital computer combinations; Execution for user interfaces; Graphics; Graphical user interface

A component object binds business-driven services to a graphical user interface (GUI). The object includes a data model, a view model and a controller. The view model graphically presents, and the controller manipulates, data used by the object. The controller includes an event handler that responds to events generated within the GUI and binds data used by the object to a data source, which can be another component object or a remotely located source. The event handler can call a local script to calculate the value of a data element within the object. The component object can be instantiated on a client and can be configured to communicate with a corresponding component object on a server. The client and server component objects can exchange only the data that needs to be exchanged to maintain the current state of the user interface on the client computer.

Owner:SAP AG
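
The last sentence of the abstract, exchanging only the data needed to keep the client's UI state current, amounts to sending a delta. A minimal sketch, assuming the component's data model is a flat dictionary and that a hypothetical "local script" derives `total` from `price` and `quantity`; `ComponentObject` and its methods are hypothetical names.

```python
_MISSING = object()


class ComponentObject:
    """Hypothetical client-side component: a flat data model plus a controller-style event handler."""

    def __init__(self, data):
        self.data = dict(data)
        self._synced = dict(data)  # what the server-side component last saw

    def on_event(self, field, value):
        """Event handler: update the data model in response to a GUI event."""
        self.data[field] = value
        if field in ("price", "quantity"):
            # Stand-in for a local script that computes a derived data element.
            self.data["total"] = self.data.get("price", 0) * self.data.get("quantity", 0)

    def pending_changes(self):
        """Only the fields the server needs: changed/added values and removed keys."""
        changed = {k: v for k, v in self.data.items() if self._synced.get(k, _MISSING) != v}
        removed = [k for k in self._synced if k not in self.data]
        return changed, removed

    def mark_synced(self):
        self._synced = dict(self.data)


order = ComponentObject({"customer": "Ann", "price": 2, "quantity": 1, "total": 2})
order.on_event("quantity", 3)
print(order.pending_changes())  # ({'quantity': 3, 'total': 6}, [])
```

The unchanged `customer` and `price` fields never leave the client, which is the bandwidth saving the abstract describes.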

Multi-source image fusion and feature extraction algorithm based on deep learning

Inactive | CN109308486A | Tags: High precision; Improve efficiency; Character and pattern recognition; Viewpoints; Dodecahedron

The invention discloses a multi-source image fusion and feature extraction algorithm based on deep learning, which comprises placing each three-dimensional model in a virtual dodecahedron, arranging a virtual camera on each of the twenty vertices of the dodecahedron, taking virtual photos of the original object from these viewpoints in three-dimensional space, and obtaining twenty views of each object to form a multi-view model database. The multi-view model database is divided into a training set, a test set and a validation set in the ratio 7:2:1. The loss function is redefined using the view-pose label as a hidden variable and is minimized with the back-propagation algorithm. After the loss function is minimized, the last layer of the neural network outputs, through a softmax cascade over the multiple views of a single object, the category scores under the constraint of the candidate view-pose labels. The invention avoids dependence on the space in which the features lie and improves the accuracy of target classification.

Owner:TIANJIN UNIV +1
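The data-collection geometry (one camera per vertex of a regular dodecahedron, 20 views per object) and the 7:2:1 split can be sketched in Python as below. The vertex set is the standard one for a dodecahedron centred at the origin; the camera `radius`, the look-at convention and the choice to split by object rather than by individual view are assumptions of this sketch, not details from the abstract, and no network training is shown.

```python
import itertools
import math
import random

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def dodecahedron_vertices():
    """The 20 vertices of a regular dodecahedron centred at the origin."""
    verts = list(itertools.product((-1.0, 1.0), repeat=3))  # 8 cube vertices
    for a in (-1.0, 1.0):
        for b in (-1.0, 1.0):
            verts.append((0.0, a / PHI, b * PHI))
            verts.append((a / PHI, b * PHI, 0.0))
            verts.append((a * PHI, 0.0, b / PHI))
    return verts  # every vertex lies at distance sqrt(3) from the origin


def camera_poses(radius=2.0):
    """One virtual camera per vertex, placed at `radius` and aimed at the origin."""
    poses = []
    for v in dodecahedron_vertices():
        norm = math.sqrt(sum(c * c for c in v))
        position = tuple(radius * c / norm for c in v)
        view_dir = tuple(-c / norm for c in v)  # unit vector pointing at the object
        poses.append((position, view_dir))
    return poses


def split_7_2_1(items, seed=0):
    """Shuffle, then split into training, test and validation sets at 7:2:1."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 7 // 10
    n_test = len(items) * 2 // 10
    return items[:n_train], items[n_train:n_train + n_test], items[n_train + n_test:]


# Usage: 10 hypothetical objects, each photographed from 20 viewpoints.
objects = [f"object_{i}" for i in range(10)]
database = {obj: camera_poses() for obj in objects}
train, test, val = split_7_2_1(objects)
```

Splitting by object keeps all twenty views of one object in the same subset, so the test and validation sets never contain an object seen during training.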

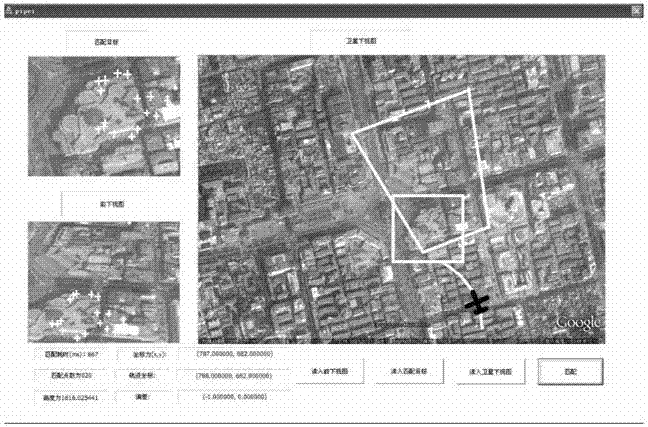

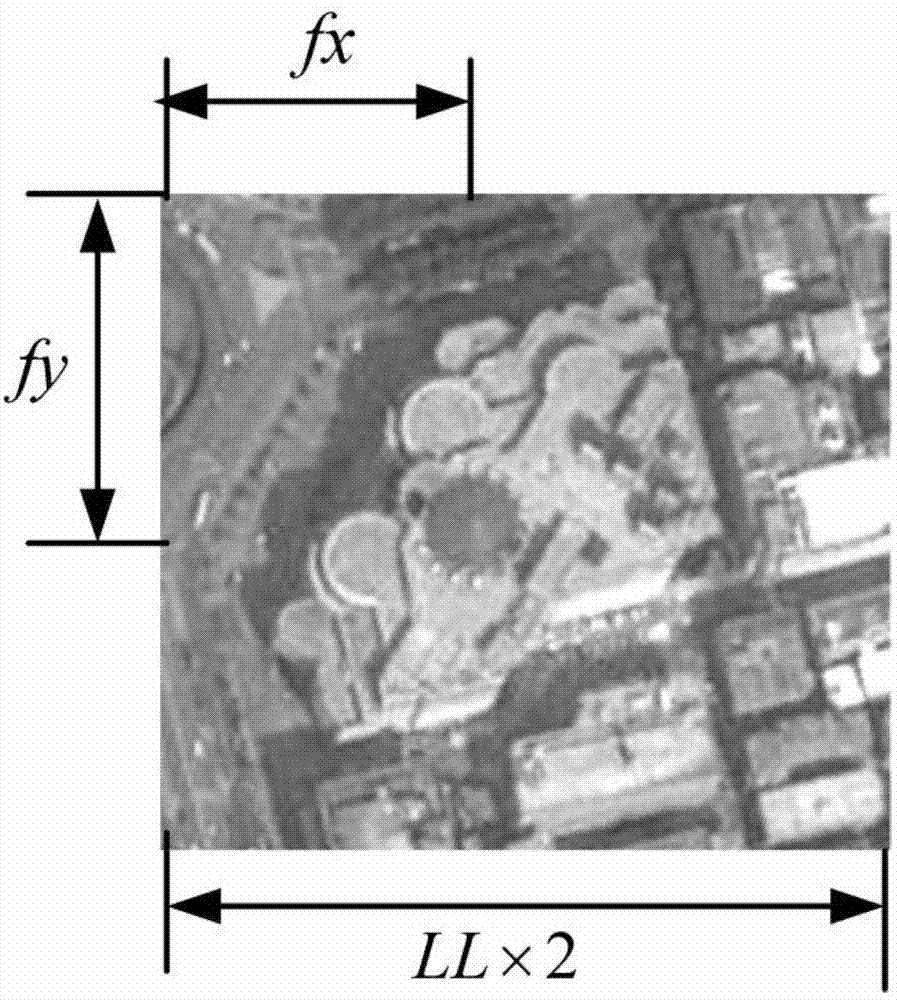

Scale invariant feature transform-based unmanned aerial vehicle scene matching positioning method

Active · CN102853835A · High positioning accuracy · High speed · Image analysis · Navigational calculation instruments · Scale-invariant feature transform · Scene matching

The invention relates to an unmanned aerial vehicle (UAV) scene matching positioning method based on the scale-invariant feature transform (SIFT). The method comprises the following steps: 1, extracting, with the SIFT algorithm, feature description vectors from the image of a matching target and from the UAV's front-lower view; 2, determining whether the front-lower view in the current frame matches the image of the matching target; and 3, if they match, recording the coordinates of the matching points both in the satellite map that contains the matching target and in the UAV's front-lower view, calculating the UAV's current position coordinates in the satellite map from these matching-point coordinates, and thereby positioning the UAV; if they do not match, reading the UAV's front-lower view from the next frame and repeating the matching. The method achieves accurate matching between the UAV's front-lower view and a matching target in a satellite map, determines the UAV's current position coordinates from a constructed front-lower view model, and thereby positions the UAV.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
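The frame-by-frame loop in steps 1 to 3 can be sketched in Python. The abstract does not say how "matching" is decided or how position is computed, so this sketch makes its own assumptions: descriptors are plain vectors (in practice they would come from a SIFT implementation such as OpenCV's), matches are accepted with a nearest-neighbour ratio test, a frame counts as a match once `min_matches` pairs are found, and the image-to-map relation is modelled as a pure translation with a known `scale`, with the UAV's ground position projecting to the image point `centre`. All function names and parameters are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Feature = Tuple[Point, Sequence[float]]  # (keypoint (x, y), descriptor vector)


def _dist(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_features(frame: List[Feature], target: List[Feature], ratio: float = 0.8):
    """Nearest-neighbour matching with a ratio test (an assumed acceptance criterion)."""
    pairs = []
    for pt, desc in frame:
        ranked = sorted(target, key=lambda f: _dist(desc, f[1]))
        if len(ranked) < 2:
            continue
        best, second = ranked[0], ranked[1]
        if _dist(desc, best[1]) < ratio * _dist(desc, second[1]):
            pairs.append((pt, best[0]))  # (front-lower view point, satellite map point)
    return pairs


def locate(frames, target, scale: float, centre: Point, min_matches: int = 3) -> Optional[Point]:
    """Try frames in order; return the UAV's map position from the first matching frame."""
    for frame in frames:
        pairs = match_features(frame, target)
        if len(pairs) < min_matches:
            continue  # no match: read the front-lower view of the next frame
        # Assumed model: map = offset + scale * image, so each pair estimates the offset.
        ox = sum(m[0] - scale * f[0] for f, m in pairs) / len(pairs)
        oy = sum(m[1] - scale * f[1] for f, m in pairs) / len(pairs)
        return (ox + scale * centre[0], oy + scale * centre[1])
    return None


# Usage: target keypoints are given in satellite-map coordinates.
target = [((100.0, 200.0), [1.0, 0.0, 0.0]), ((110.0, 200.0), [0.0, 1.0, 0.0]),
          ((100.0, 220.0), [0.0, 0.0, 1.0]), ((130.0, 230.0), [1.0, 1.0, 1.0])]
frame1 = [((0.0, 0.0), [5.0, 5.0, 5.0])]  # ambiguous descriptor, so the frame is rejected
frame2 = [((10.0, 10.0), [1.0, 0.0, 0.0]), ((15.0, 10.0), [0.0, 1.0, 0.0]),
          ((10.0, 20.0), [0.0, 0.0, 1.0])]
position = locate([frame1, frame2], target, scale=2.0, centre=(20.0, 15.0))
```

A real system would replace the translation model with a homography or camera model estimated robustly from the matched points; the translation keeps the sketch short and makes the arithmetic easy to check.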

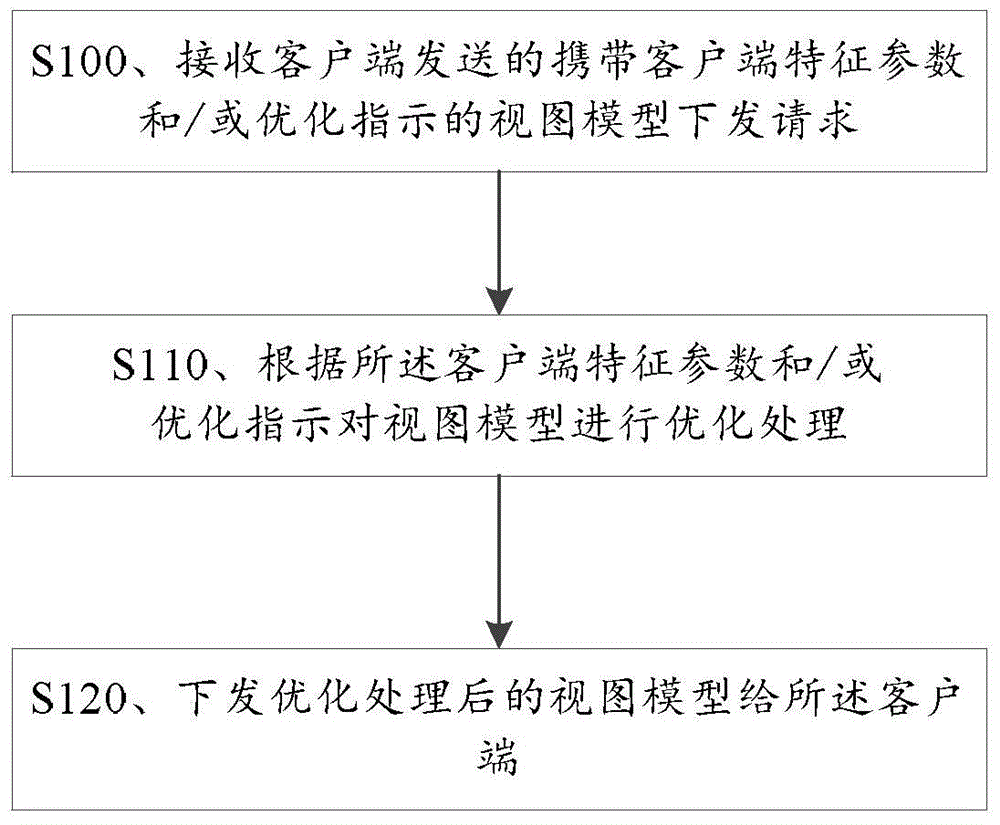

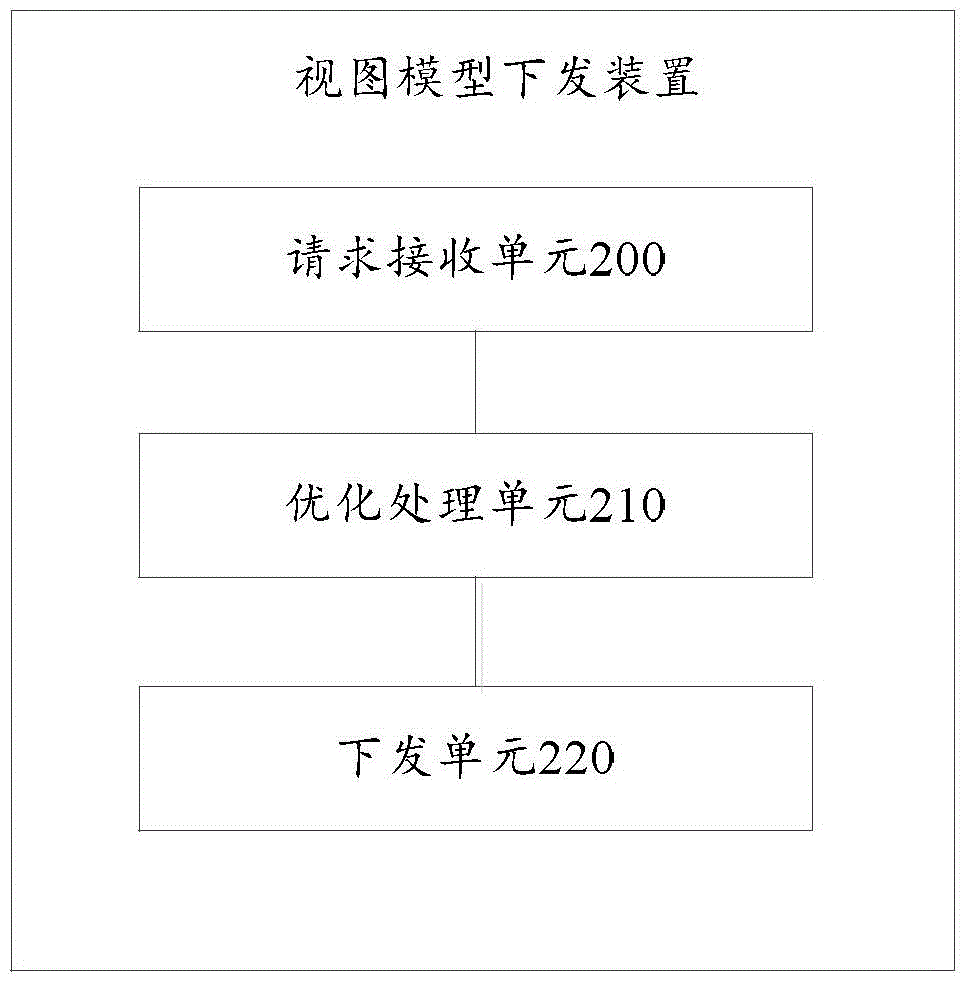

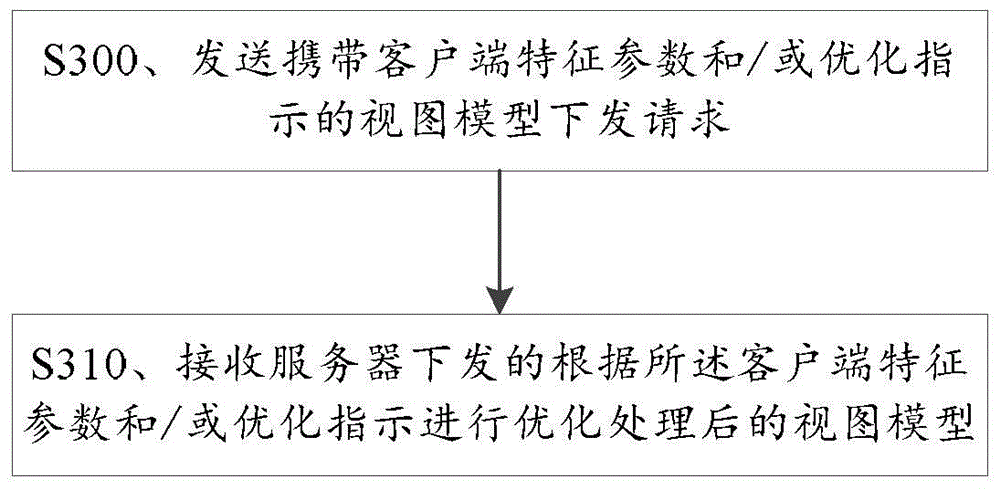

View model requesting and issuing method and device

Active · CN104615647A · Improving the efficiency of displaying view models · Avoid stuttering · Transmission · Special data processing applications · Computer graphics (images) · View model

The invention provides a view model requesting and issuing method and device. The view model issuing method comprises: receiving a view model issuing request sent by a client, the request carrying the client's characteristic parameters and/or optimization hints; optimizing the view model according to those characteristic parameters and/or optimization hints; and issuing the optimized view model to the client. Because the issued view model has already been optimized for the specific client, the client can load it directly without performing operations such as parsing, view tree generation and rendering. This greatly improves the efficiency with which the client displays the view model and effectively prevents the view model from stuttering during display.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
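The request/issue flow can be pictured with a short Python sketch. The abstract does not define the characteristic parameters, the hints or the optimizations, so `screen_width`, `pixel_density`, the `"drop_hidden"` hint and the `ready_to_load` flag are hypothetical stand-ins. The point shown is only that the server does per-client work (filtering and layout) so the client can use the result as-is.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class IssueRequest:
    """Hypothetical request: client characteristic parameters plus optimization hints."""
    screen_width: int            # characteristic parameter (logical pixels)
    pixel_density: float = 1.0   # characteristic parameter
    hints: List[str] = field(default_factory=list)


def issue_view_model(view_model: Dict[str, Any], req: IssueRequest) -> Dict[str, Any]:
    """Server side: optimize the view model for this client, then issue it."""
    nodes = []
    for node in view_model["nodes"]:
        if "drop_hidden" in req.hints and node.get("hidden"):
            continue  # hint: the client never shows hidden nodes, so do not send them
        width = min(node["width"], req.screen_width)  # lay out for this screen
        nodes.append({**node, "width": width, "device_px": round(width * req.pixel_density)})
    # Flag the result as pre-laid-out so the client can skip parsing and layout.
    return {"nodes": nodes, "ready_to_load": True}


def client_load(issued: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Client side: an optimized model is used directly; otherwise more work is needed."""
    if issued.get("ready_to_load"):
        return issued["nodes"]
    raise ValueError("unoptimized model: client must parse, build a view tree and render")


# Usage with a hypothetical two-node view model.
view_model = {"nodes": [{"id": "title", "width": 1200},
                        {"id": "banner", "width": 300, "hidden": True}]}
request = IssueRequest(screen_width=720, pixel_density=2.0, hints=["drop_hidden"])
loaded = client_load(issue_view_model(view_model, request))
```

Moving this work to the server trades server CPU for a lighter client, which matters most on low-powered devices where parsing and layout cause visible stutter.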

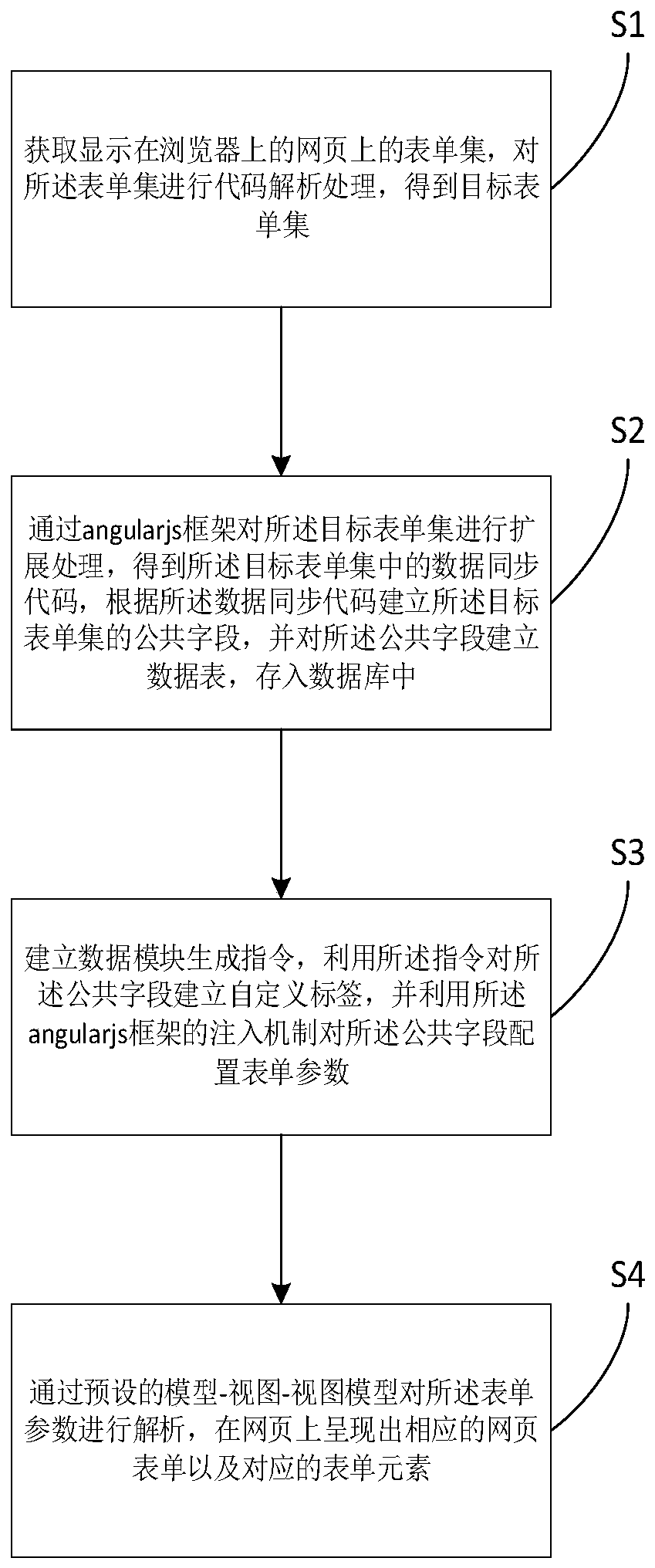

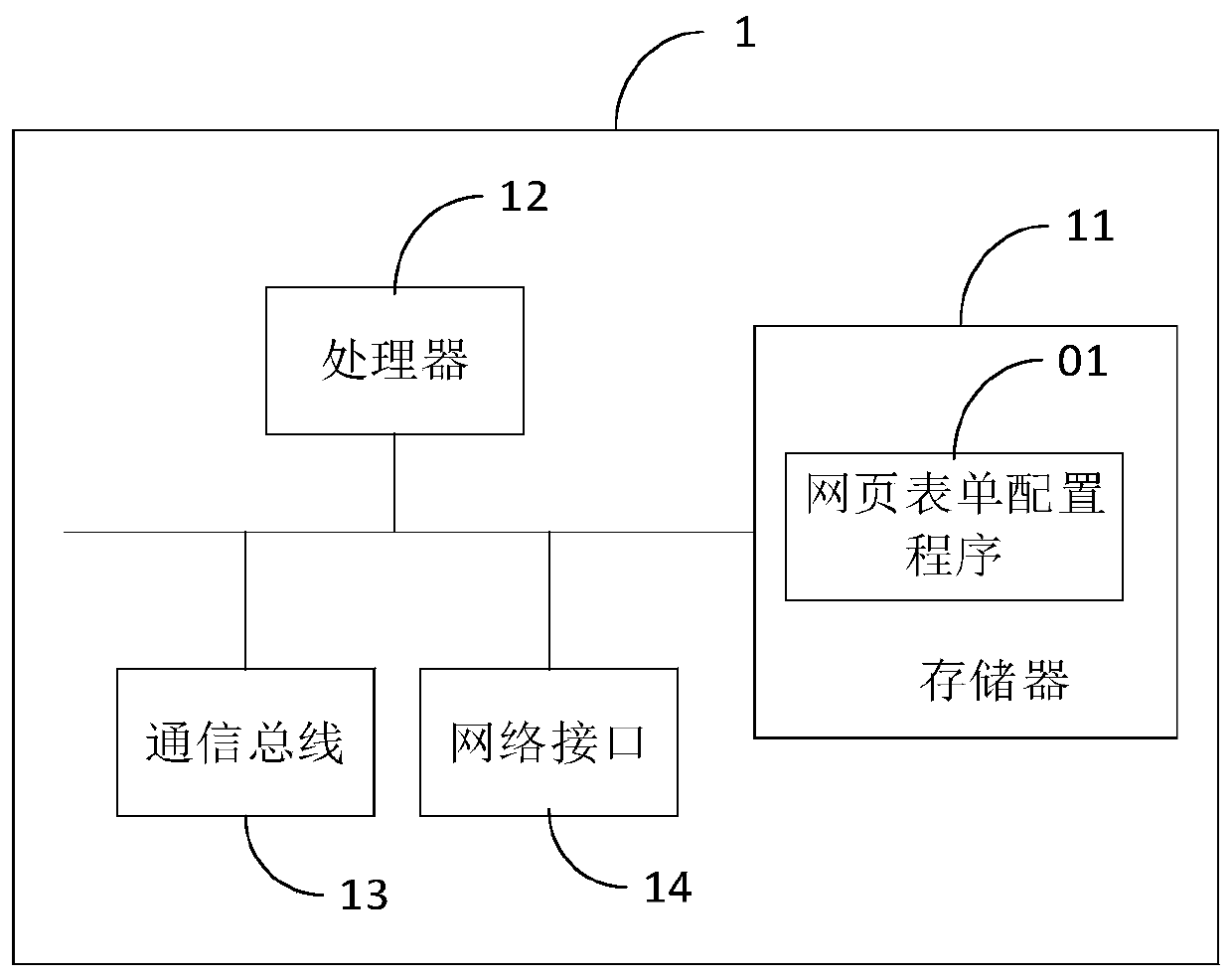

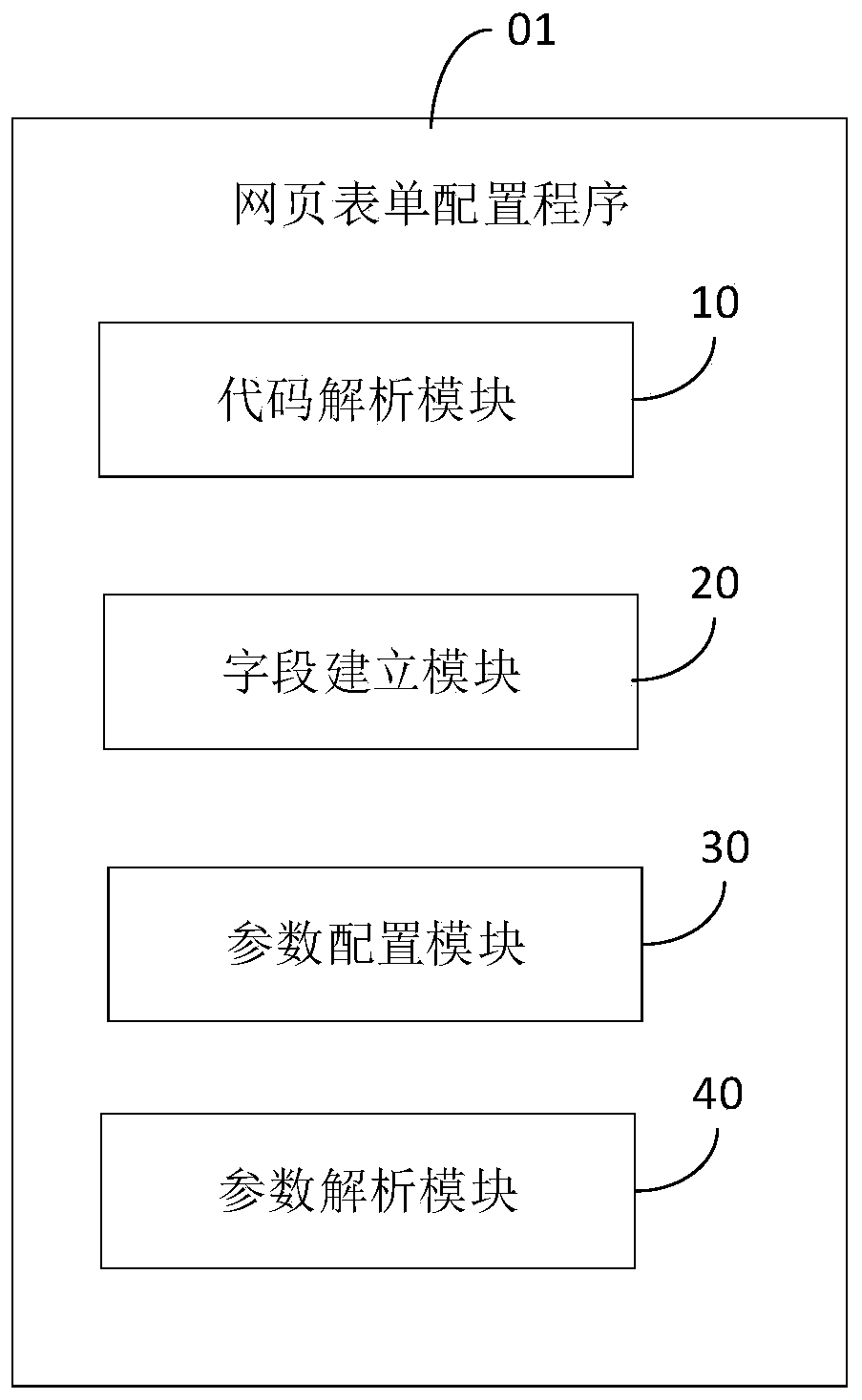

Webpage form configuration method and device and computer readable storage medium

Pending · CN110442816A · Website content management · Special data processing applications · Data synchronization · View model

The invention relates to big data technology and discloses a webpage form configuration method comprising the following steps: obtaining the set of forms on webpages displayed in a browser and performing code analysis on it to obtain a target form set; extending the target form set with the AngularJS framework to obtain data synchronization code for the target form set; establishing a public field according to the data synchronization code, creating a data table for the public field and storing the data table in a database; establishing a data module to generate a directive, using the directive to create a custom tag for the public field, and configuring form parameters for the public field through the AngularJS injection mechanism; and parsing the form parameters with a preset model-view-viewmodel (MVVM) pattern to present the corresponding webpage form and form elements on a webpage. The invention further provides a webpage form configuration device and a computer-readable storage medium. The invention enables efficient configuration of webpage forms.

Owner:PING AN TECH (SHENZHEN) CO LTD
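The final step, parsing per-field form parameters through an MVVM layer to produce form elements, can be illustrated with a small Python sketch. It does not reproduce AngularJS directives, injection or two-way binding internals. `FieldConfig`, `FormViewModel` and its `bind`/`set`/`render` methods are hypothetical names that only mirror the idea: field configuration drives the rendered form, and changes to the model are pushed to bound views.

```python
from dataclasses import dataclass
from html import escape
from typing import Callable, Dict, List


@dataclass
class FieldConfig:
    """Form parameters for one public field (hypothetical schema)."""
    name: str
    label: str
    input_type: str = "text"
    required: bool = False


class FormViewModel:
    """Minimal MVVM-style view model: holds field values and notifies bound views."""

    def __init__(self, fields: List[FieldConfig], model: Dict[str, str]):
        self.fields = fields
        self.model = model  # model: e.g. one row of the public-field data table
        self._listeners: List[Callable[[str, str], None]] = []

    def bind(self, listener: Callable[[str, str], None]) -> None:
        self._listeners.append(listener)

    def set(self, name: str, value: str) -> None:
        # View -> model: user input updates the model, then every bound view is notified.
        self.model[name] = value
        for listener in self._listeners:
            listener(name, value)

    def render(self) -> str:
        # Model -> view: emit one form element per configured public field.
        rows = []
        for f in self.fields:
            req = " required" if f.required else ""
            value = escape(self.model.get(f.name, ""), quote=True)
            rows.append(f'<label>{escape(f.label)}<input name="{escape(f.name, quote=True)}" '
                        f'type="{f.input_type}" value="{value}"{req}></label>')
        return "<form>" + "".join(rows) + "</form>"


# Usage: two configured fields, one bound listener standing in for a view.
fields = [FieldConfig("email", "Email", "email", required=True), FieldConfig("city", "City")]
vm = FormViewModel(fields, model={})
updates = []
vm.bind(lambda name, value: updates.append((name, value)))
vm.set("email", "a@example.com")
html_form = vm.render()
```

Keeping the form definition in data (`FieldConfig`) rather than in hand-written markup is what makes the configuration reusable: changing a field's type or required flag changes every form rendered from it.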


© 2025 PatSnap. All rights reserved.