1351 results for "Textural feature" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

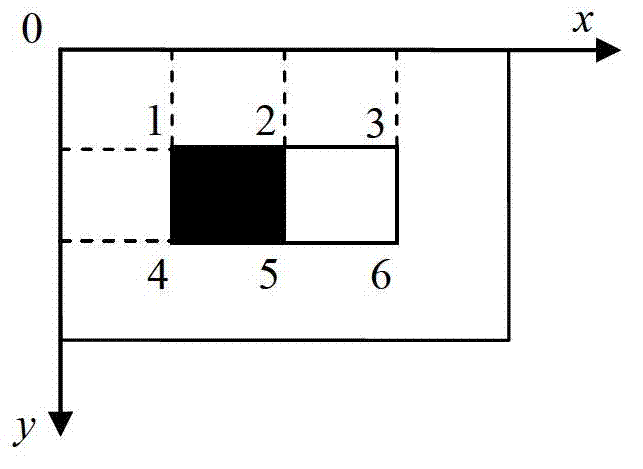

Textural Features for Image Classification. Abstract: Texture is one of the important characteristics used in identifying objects or regions of interest in an image, whether the image be a photomicrograph, an aerial photograph, or a satellite image.
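Haralick-style textural features are computed from a gray-level co-occurrence matrix (GLCM), which records how often pairs of gray levels occur at a fixed pixel offset. Below is a minimal pure-Python sketch, assuming the image is already quantized to integers in `[0, levels)` and contains at least one pixel pair at the chosen offset. The function names are illustrative, and only two statistics are shown: contrast and the angular second moment (energy).

```python
def glcm(image, dx, dy, levels):
    """Symmetric, normalized GLCM of a quantized image for pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the reverse pair too, so the matrix is symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """Contrast: sum of p(i, j) * (i - j)^2."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def energy(p):
    """Angular second moment (energy): sum of p(i, j)^2."""
    return sum(v * v for row in p for v in row)


# 0-degree neighbour (dx=1, dy=0) on a small 4-level image
img = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
p = glcm(img, 1, 0, 4)
print(contrast(p), energy(p))
```

Other directions use other offsets, for example `(1, -1)` for 45 degrees when row indices grow downward.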

Image intelligent mode recognition and searching method

Inactive | CN101211341A | Improve hit rate; Short response time; Character and pattern recognition; Special data processing applications; The Internet; Uniform resource locator

The invention proposes an intelligent image pattern recognition and search method. The method builds an image sample training set database and combines basic text search engine technology with basic content-based image query technology, so that a web crawler can search for images on the Internet and parse URL information, fetching image URLs and related information into a local primary database. The images are pre-processed (preliminary filtering, decompression, image pre-classification, etc.); color, texture and shape features are then extracted from the images to obtain the corresponding feature vector sets, which are combined with the image URL information before the images are saved into the basic image database and indexed. Feature vector similarity is computed between the images in the basic image database and the sample training sets, and the classified images are saved into an image classification database. When the user enters keywords or an image description, an index vector is created, its similarity to the image feature vectors in the image classification database is computed, and the search results are returned to the user.

Owner:SHANGHAI XINSHENG ELECTRONICS TECH
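The query step in the entry above ranks stored images by the similarity between their feature vectors and a query vector. The abstract does not name the similarity measure, so this sketch assumes cosine similarity over plain lists (for example, concatenated color, texture and shape features); `cosine_similarity` and `search` are hypothetical names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, index, top_k=3):
    """Return the URLs of the top_k (url, feature_vector) entries most similar to query."""
    scored = [(cosine_similarity(query, vec), url) for url, vec in index]
    scored.sort(reverse=True)  # highest similarity first
    return [url for _, url in scored[:top_k]]


index = [("a", [1, 0, 0]), ("b", [0, 1, 0]), ("c", [1, 1, 0])]
print(search([1, 0.1, 0], index, top_k=2))
```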

Apparatus and method for statistical image analysis

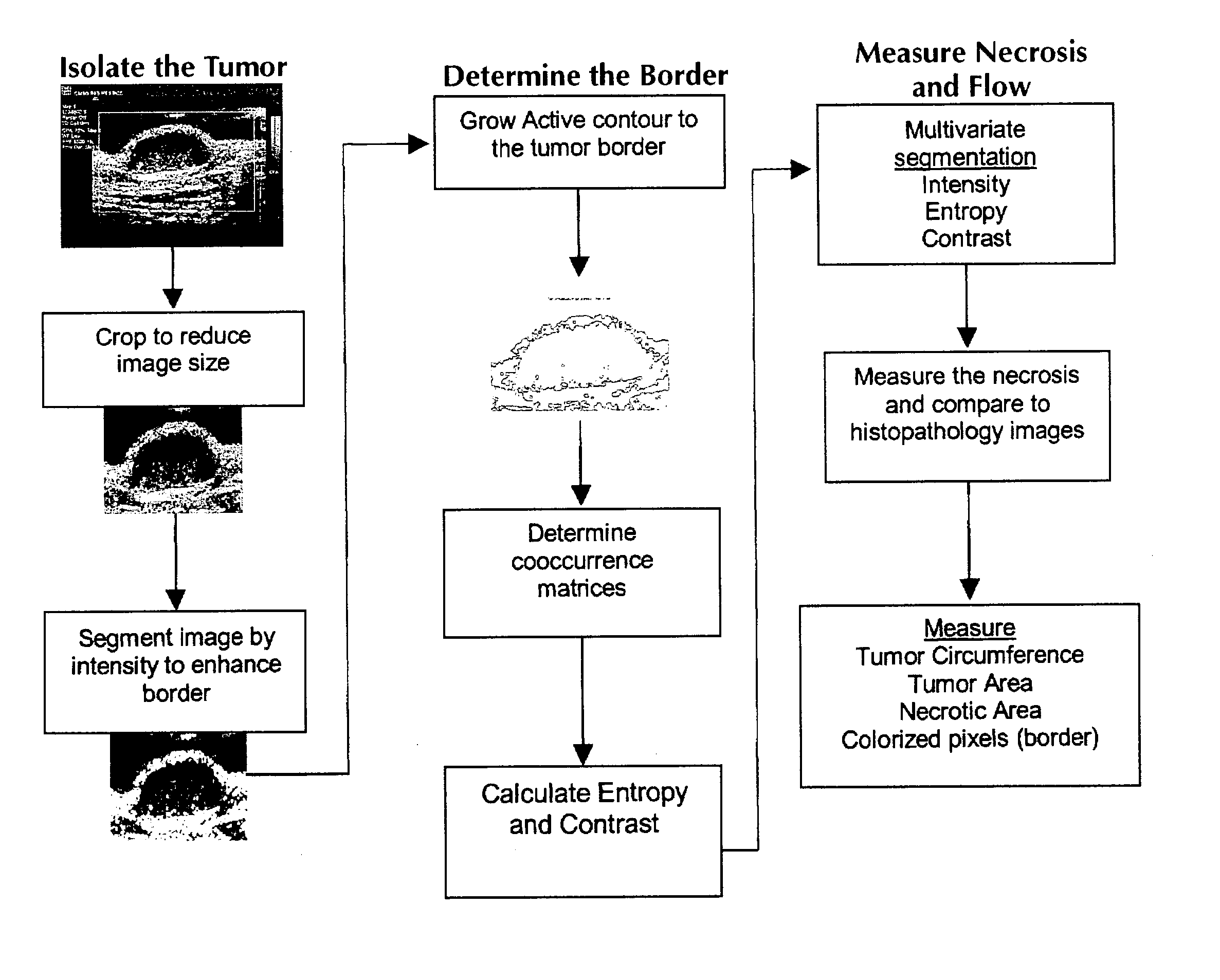

An apparatus, system, method, and computer-readable medium containing computer-executable code for image analysis use multivariate statistical analysis of sample images to segment an image into different groups or classes according to its correlation with one or more sample textures or sample surface features. In one embodiment, the invention performs multivariate statistical analysis of ultrasound images, in which a tumor may be characterized by segmenting viable tissue from necrotic tissue, allowing a more detailed in vivo analysis of tumor growth than simple dimensional measurements or univariate statistical analysis. The apparatus and method may also be used to characterize other types of samples having textured features, for example tumor angiogenesis biomarkers from Power Doppler.

Owner:PFIZER INC

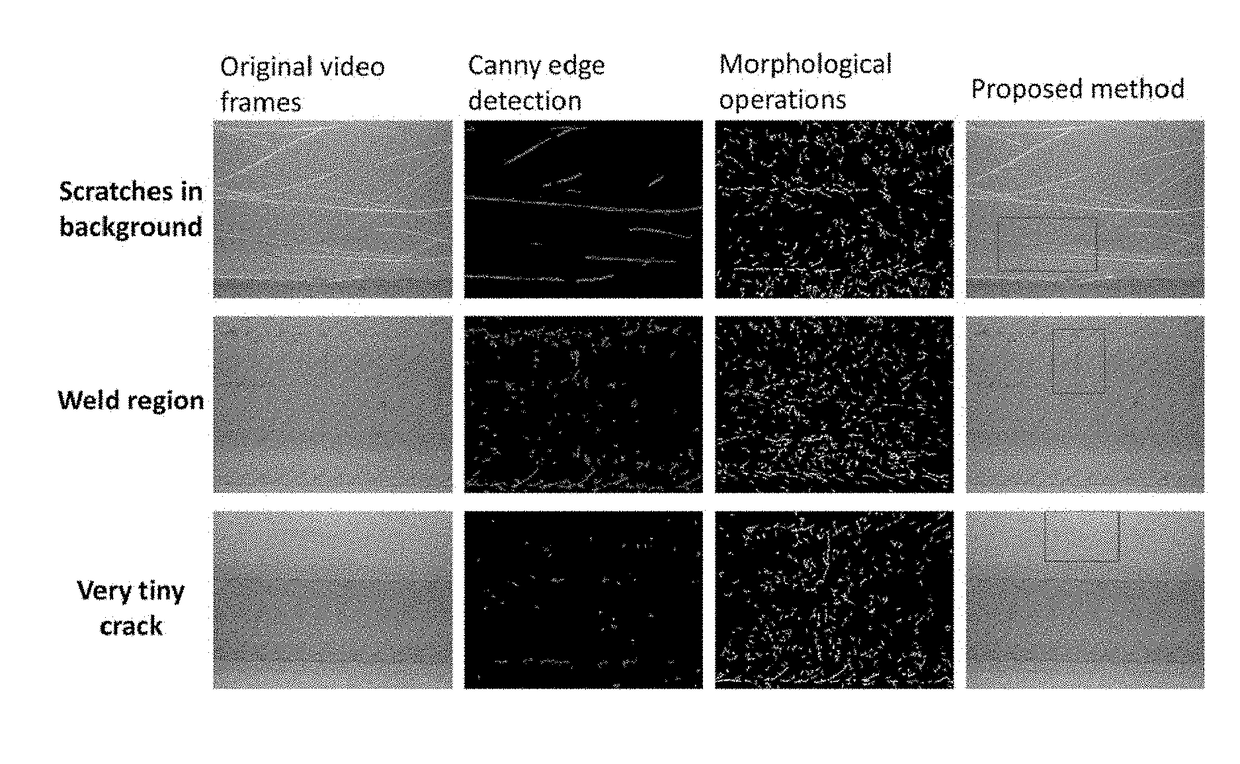

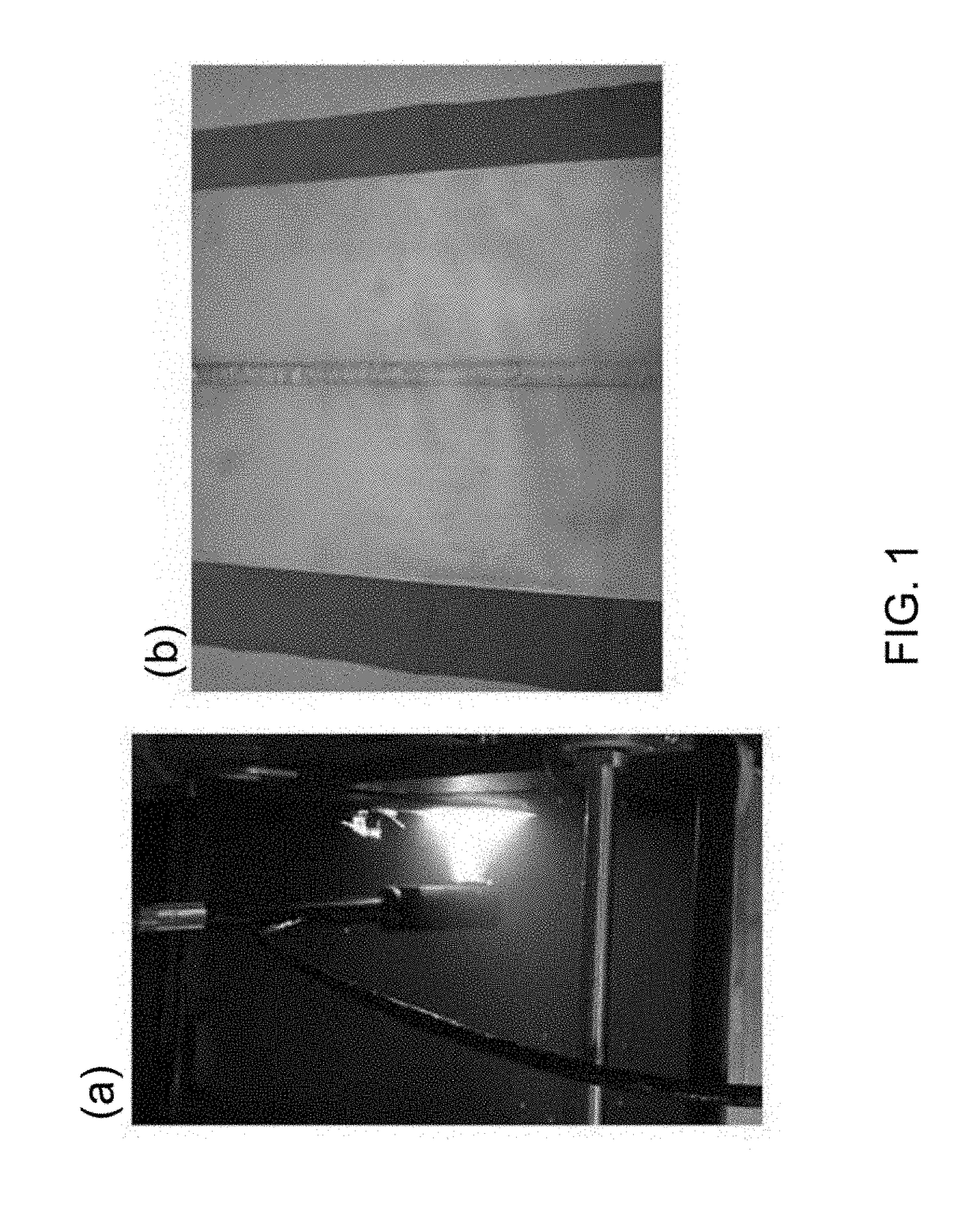

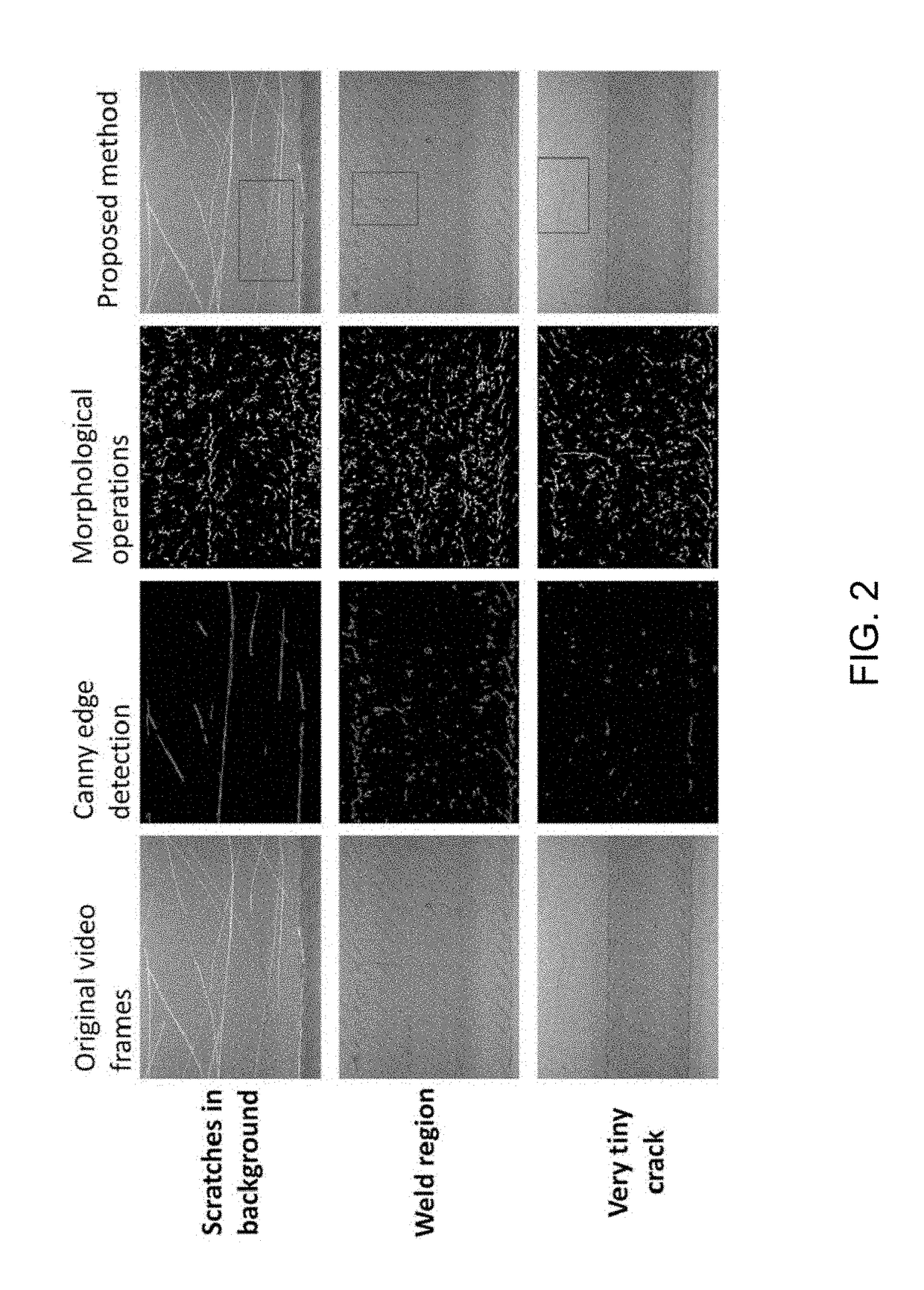

Methods and systems for crack detection

Systems and methods capable of autonomous crack detection in surfaces by analyzing video of the surface. The systems and methods can produce a video of the surfaces; analyze individual frames of the video to obtain surface texture feature data for the areas of the surfaces depicted in each frame; analyze that data to detect surface texture features in those areas; track the motion of the detected surface texture features across the individual frames to produce tracking data; and use the tracking data to filter non-crack surface texture features out of the detected surface texture features in the individual frames.

Owner:PURDUE RES FOUND INC

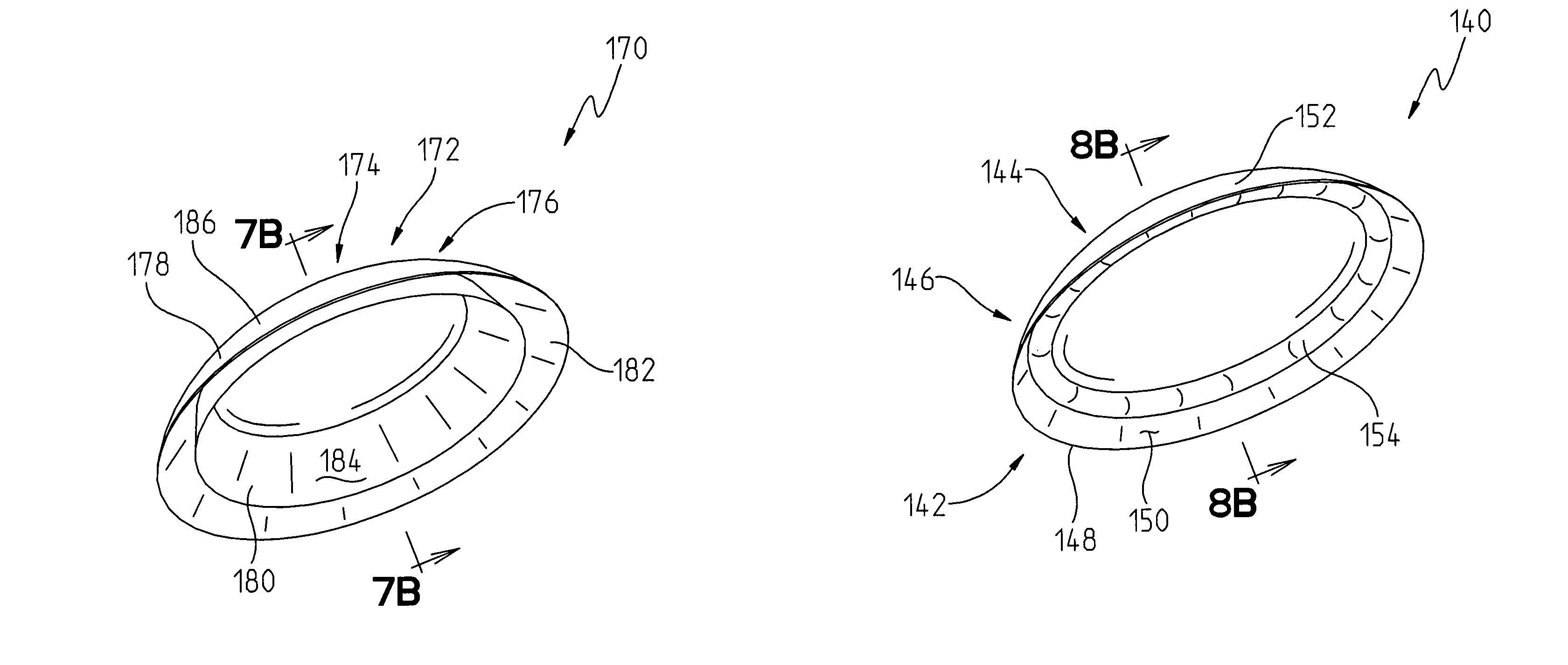

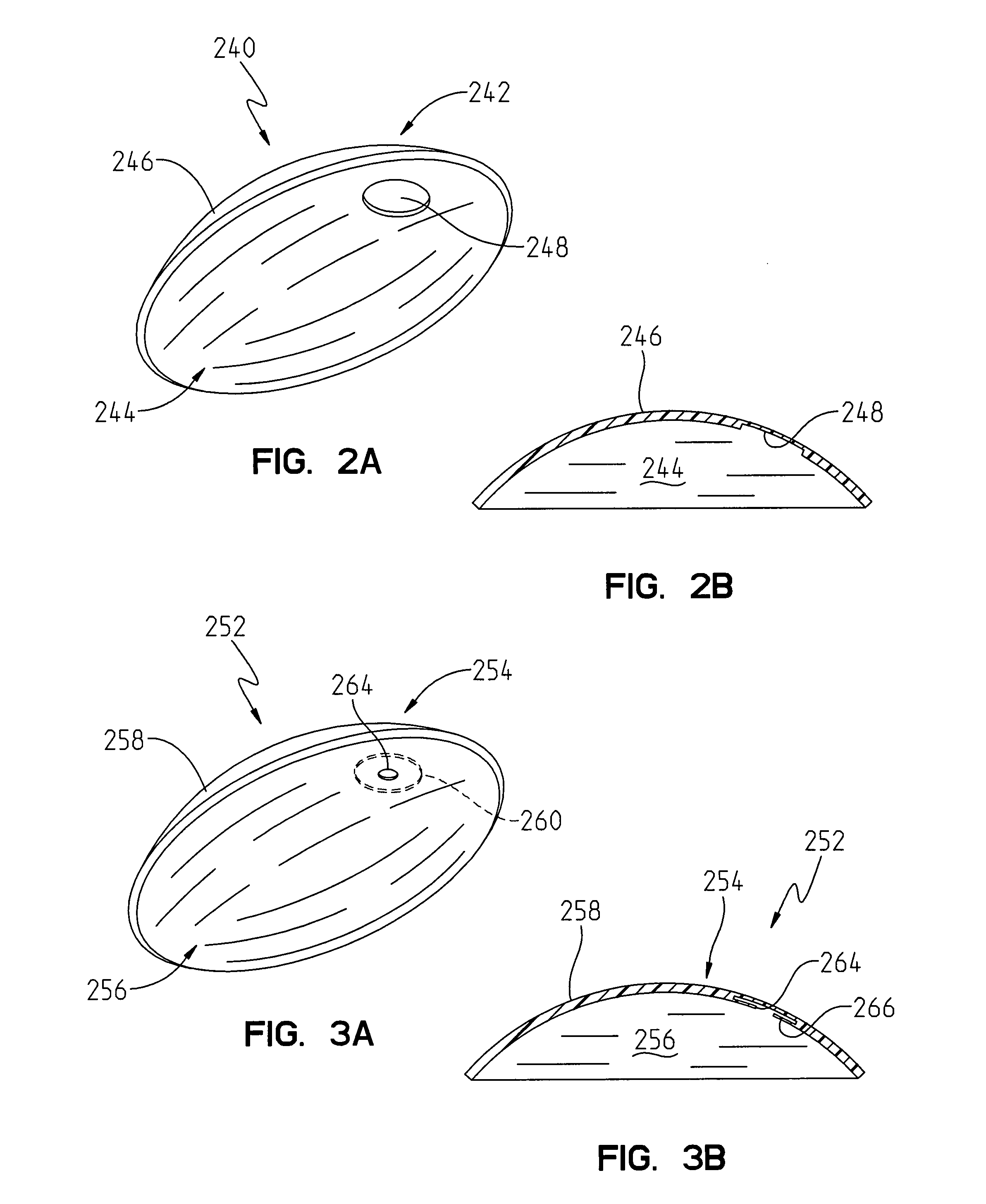

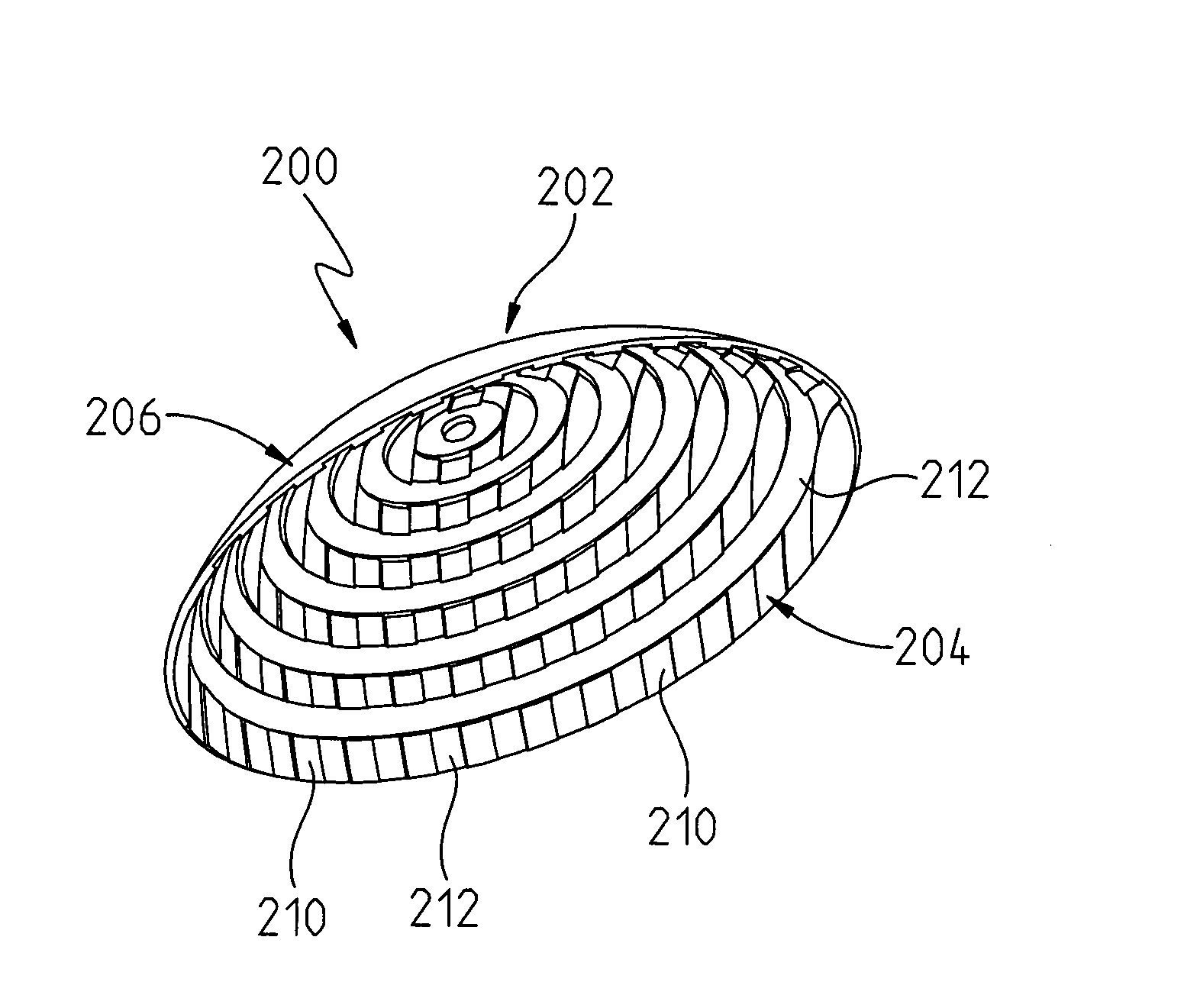

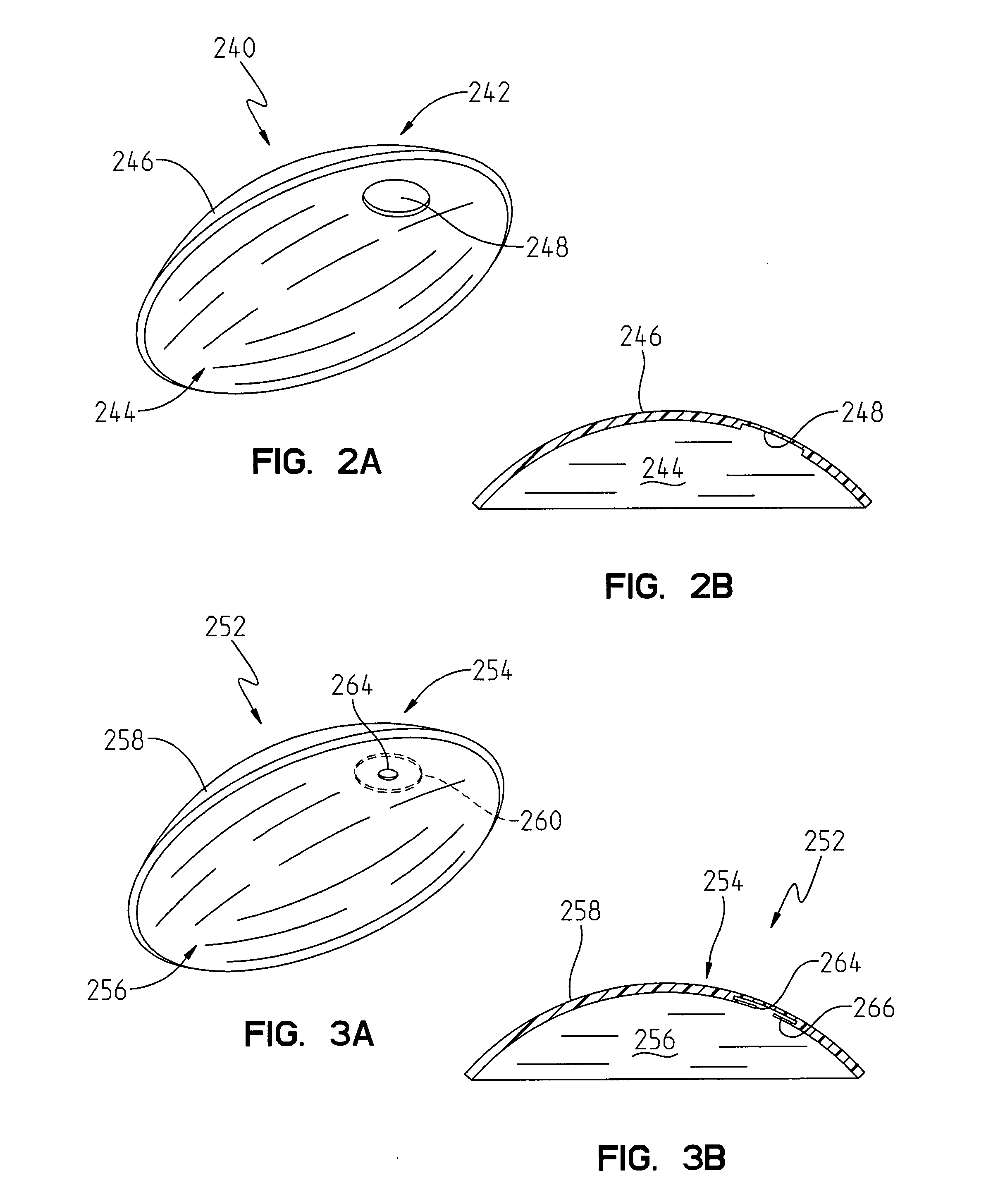

Contact lens materials, designs, substances, and methods

Inactive | US7878650B2 | Improve performance; Reduce the possibility; Optical parts; Performance enhancement; Reduced size

A contact lens is provided that is capable of being worn by a user. The lens includes a contact lens body having an eye-engaging surface placeable against a surface of an eye and an outer surface. Microphobic features are provided in the lens for eliminating microbes from the eye-engaging surface. Preferably, these microphobic features are chosen from a group consisting of electrical charge inducing agents, magnetic field inducing agents, chemical agents and textural features. In another embodiment of the present invention, a contact lens is provided that can include a reservoir portion capable of holding a performance enhancement agent for enhancing the performance of the lens. The performance enhancement agent can include such things as sealant solutions, protective agents, therapeutic agents, anti-microbial agents, medications and reduced size transparent portions. In other embodiments, a wide variety of designs, materials and substances are disclosed for use with contact lenses.

Owner:DOMESTIC ASSET LLP

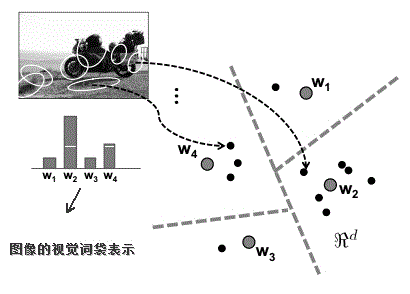

Remote sensing image classification method based on multi-feature fusion

Active | CN102622607A | Improve classification accuracy; Enhanced Feature Representation; Character and pattern recognition; Synthesis methods; Classification methods

The invention discloses a remote sensing image classification method based on multi-feature fusion, comprising the following steps: A, extracting bag-of-visual-words features, color histogram features and textural features from the training-set remote sensing images; B, training a support vector machine separately on each of the three feature types of the training images, obtaining three different support vector machine classifiers; and C, extracting the same three feature types from unknown test samples, predicting their categories with the corresponding classifiers obtained in step B to obtain three groups of category predictions, and combining the three groups by weighted synthesis to obtain the final classification result. The method further adopts an improved bag-of-words model to extract the bag-of-visual-words features. Compared with the prior art, the method can obtain more accurate classification results.

Owner:HOHAI UNIV
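Step C of the entry above combines the three classifiers' predictions by a "weighting synthesis method" whose details the abstract does not give. A minimal sketch, assuming a weighted vote over each classifier's predicted label; the weights and the function name `fuse_predictions` are hypothetical.

```python
from collections import defaultdict


def fuse_predictions(predictions, weights):
    """Weighted vote: each classifier adds its weight to the label it predicted."""
    scores = defaultdict(float)
    for label, weight in zip(predictions, weights):
        scores[label] += weight
    return max(scores, key=scores.get)  # label with the largest total weight


# One prediction each from the bag-of-visual-words, color-histogram and texture SVMs
print(fuse_predictions(["forest", "water", "forest"], [0.3, 0.5, 0.3]))
```

A soft-voting variant would sum weighted class probabilities instead of hard labels; which one the patent uses is not stated.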

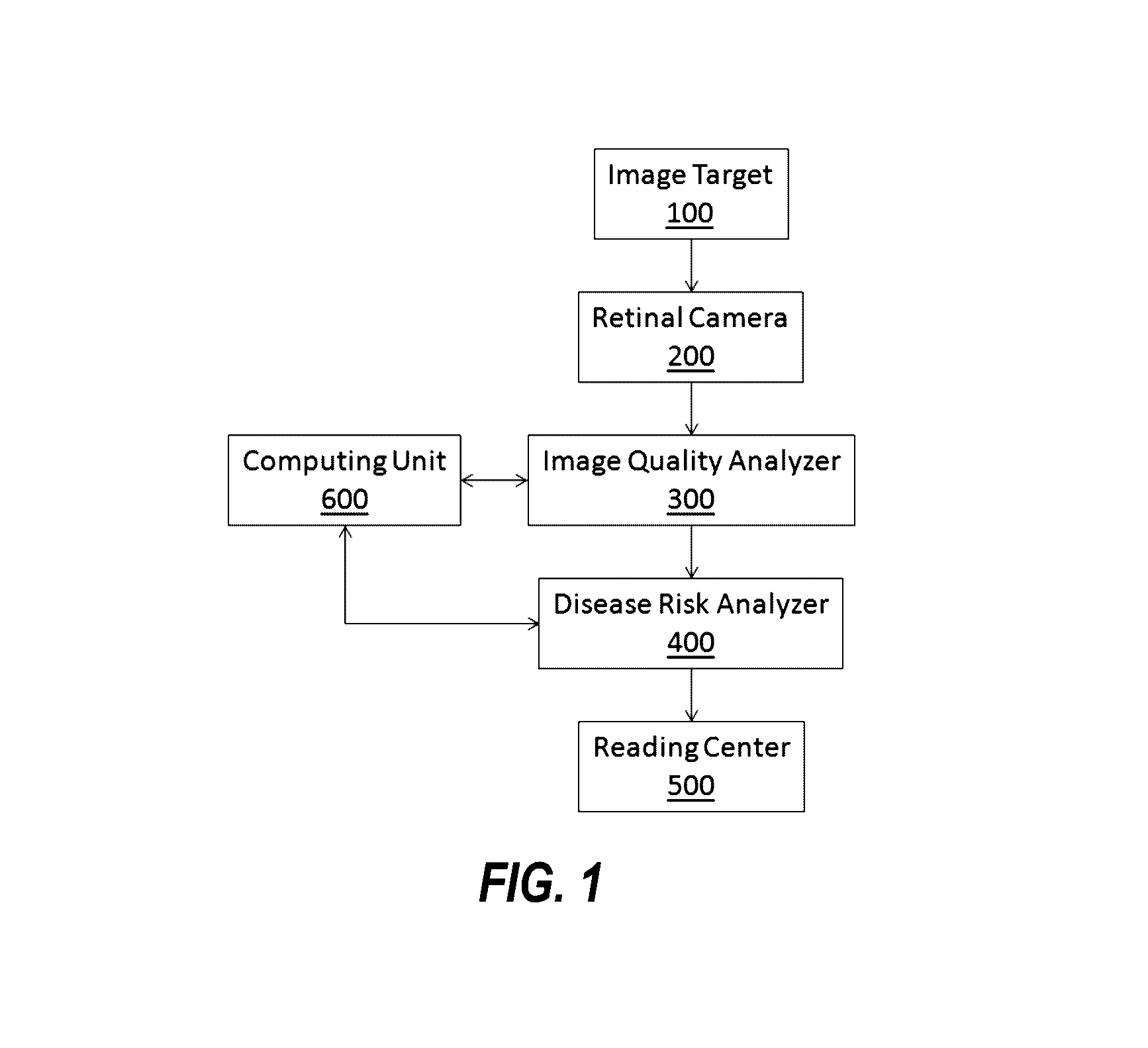

System and methods for automatic processing of digital retinal images in conjunction with an imaging device

Systems and methods for obtaining and recording fundus images by minimally trained persons, comprising a camera for obtaining images of the fundus of a subject's eye combined with mathematical methods that assign a real-time image quality classification to the obtained images based on a set of criteria. Images of sufficient quality for clinical interpretation are processed further by machine-coded and/or human-based methods. Such systems and methods can thus automatically determine whether a retinal image is of sufficient quality for computer-based eye disease screening. The system integrates global histogram features, textural features and vessel density, as well as a local no-reference perceptual sharpness metric. A partial least squares (PLS) classifier is trained to distinguish low-quality images from normal-quality images.

Owner:VISIONQUEST BIOMEDICAL

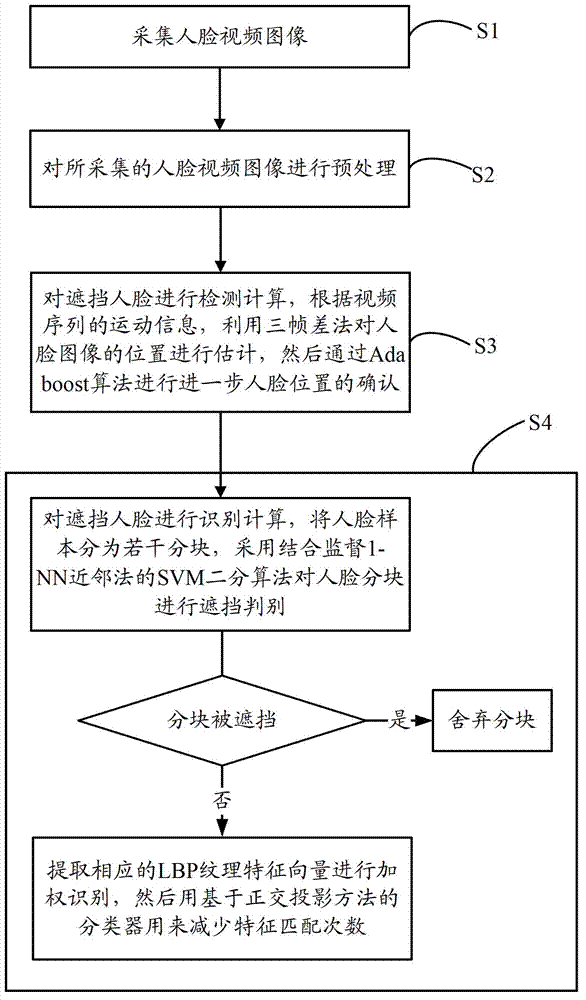

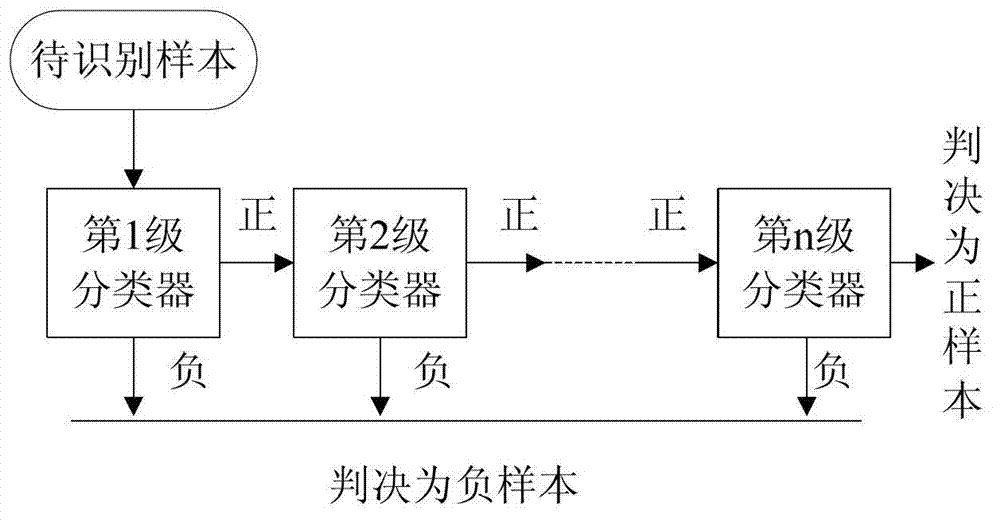

Method and system for authenticating shielded face

Inactive | CN102855496A | Reduce training time; Easy to detect; Character and pattern recognition; Face detection; Support vector machine

The invention discloses a method and a system for authenticating an occluded ("shielded") face. The method comprises the following steps: S1) collecting face video images; S2) preprocessing the collected images; S3) detecting the occluded face: the position of the face is estimated with a three-frame difference method using the motion information of the video sequence and then confirmed with the AdaBoost algorithm; and S4) authenticating the occluded face: the face sample is divided into a number of sub-blocks, and each sub-block is classified as occluded or not with an SVM (Support Vector Machine) binary classifier combined with a supervised 1-NN (nearest neighbor) method. Occluded sub-blocks are discarded directly; for unoccluded sub-blocks, an LBP (Local Binary Pattern) textural feature vector is extracted for weighted identification, and a classifier based on a rectangular projection method is then used to reduce the number of feature matches. The method effectively increases the detection rate and detection speed for partially occluded faces.

Owner:SUZHOU UNIV
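The per-block LBP texture feature in the entry above can be illustrated with the basic 8-neighbour, radius-1 LBP operator and a 256-bin histogram per sub-block. The abstract does not state the LBP radius, neighbour count or any uniform-pattern mapping, so these are assumptions, and `lbp_code` and `lbp_histogram` are illustrative names; the occlusion test and weighting are not shown.

```python
# Neighbour offsets (row, col), clockwise from the top-left; bit k is set for offset k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """8-bit LBP code: bit k is 1 if neighbour k is >= the centre pixel."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(block):
    """Normalized 256-bin histogram of LBP codes over the block's interior pixels."""
    hist = [0] * 256
    for r in range(1, len(block) - 1):
        for c in range(1, len(block[0]) - 1):
            hist[lbp_code(block, r, c)] += 1
    n = sum(hist)
    return [h / n for h in hist] if n else hist


block = [[10, 20, 30], [40, 25, 10], [5, 25, 60]]
print(lbp_code(block, 1, 1))
```

A face descriptor would concatenate the histograms of the unoccluded sub-blocks.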

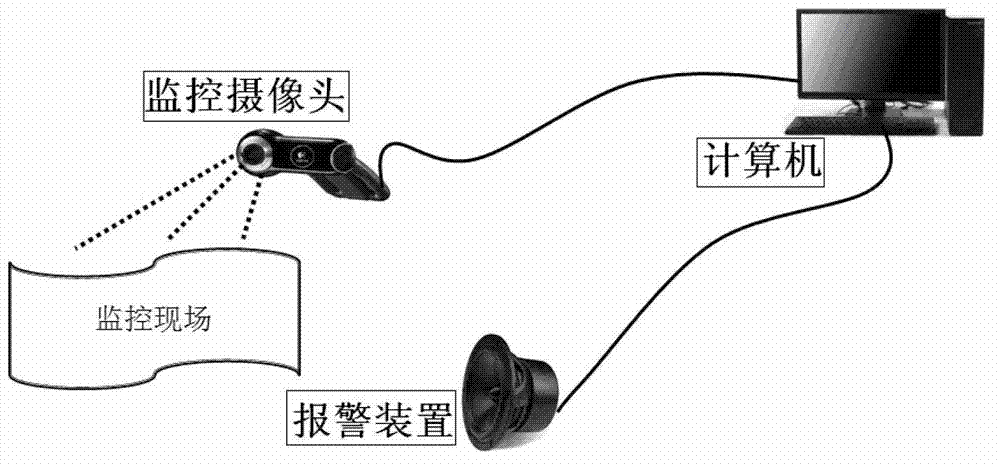

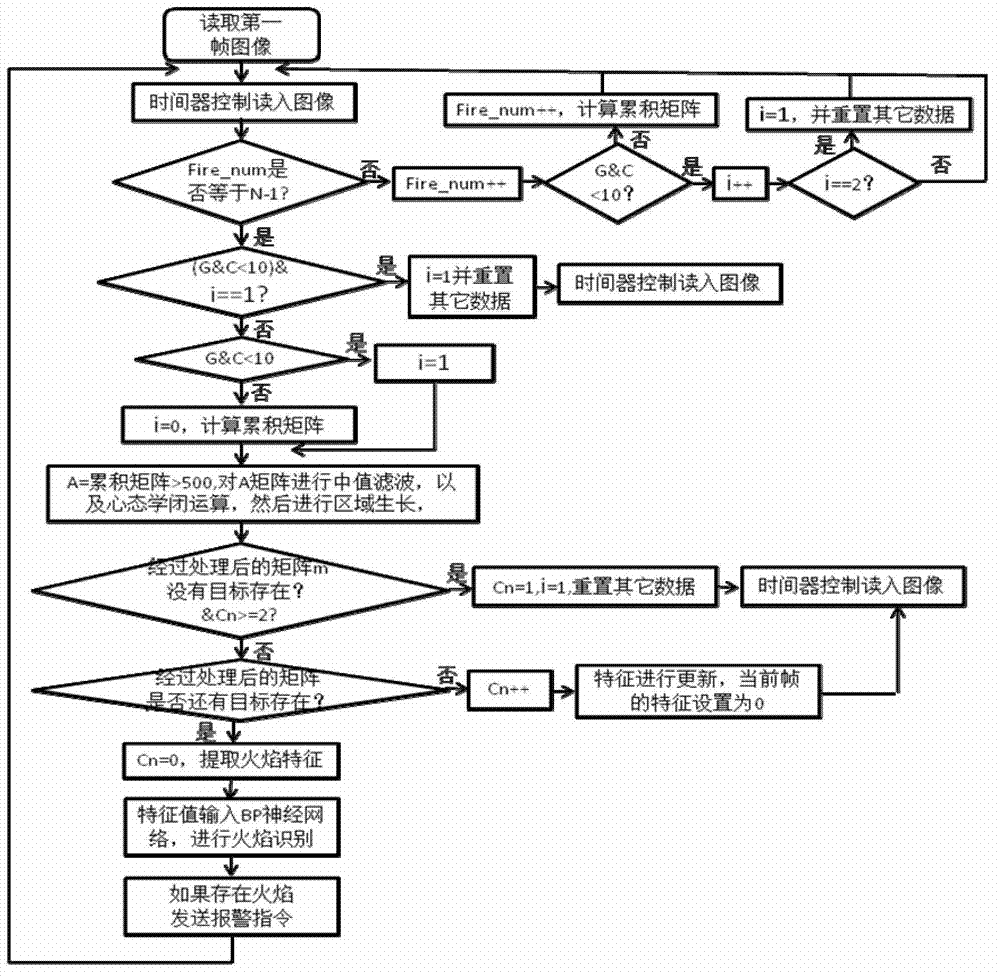

Video flame detecting method based on multi-feature fusion technology

Inactive | CN103116746A | Excellent capture quality; Choose from a wide range of applications; Character and pattern recognition; Decision model; Multi target tracking

The invention provides a video flame detection method based on multi-feature fusion. The method first uses a cumulative geometrical independent component analysis (C-GICA) method, combined with a flame color decision model, to capture moving targets; it then tracks the moving targets in current and historical frames with a multi-target tracking technique based on moving-target regions, extracts the color features, edge features, circularity and textural features of the targets, and feeds these features into a back-propagation (BP) neural network, whose decision determines whether a flame is present. By jointly using the spatial-temporal features of the moving targets, their color features, textural features and the like, the method overcomes shortcomings of existing video flame detection algorithms and effectively improves detection reliability and applicability.

Owner:UNIV OF SCI & TECH OF CHINA
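One of the shape cues listed in the entry above is circularity. The abstract does not define it; a common definition, assumed here, is $4\pi A / P^2$, which equals 1 for a circle and decreases for irregular outlines such as flickering flame regions. This sketch computes it for a target outline given as polygon vertices; the function names are illustrative.

```python
import math


def polygon_area_perimeter(points):
    """Area (shoelace formula) and perimeter of a closed polygon given as (x, y) vertices."""
    twice_area = 0.0
    perimeter = 0.0
    n = len(points)
    for k in range(n):
        x1, y1 = points[k]
        x2, y2 = points[(k + 1) % n]  # wrap around to close the outline
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2, perimeter


def circularity(points):
    """4*pi*A / P^2: 1.0 for a circle, smaller for elongated or ragged shapes."""
    area, perimeter = polygon_area_perimeter(points)
    return 4 * math.pi * area / perimeter ** 2


print(circularity([(0, 0), (1, 0), (1, 1), (0, 1)]))  # unit square, pi/4
```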

Fuzzy clustering steel plate surface defect detection method based on pre classification

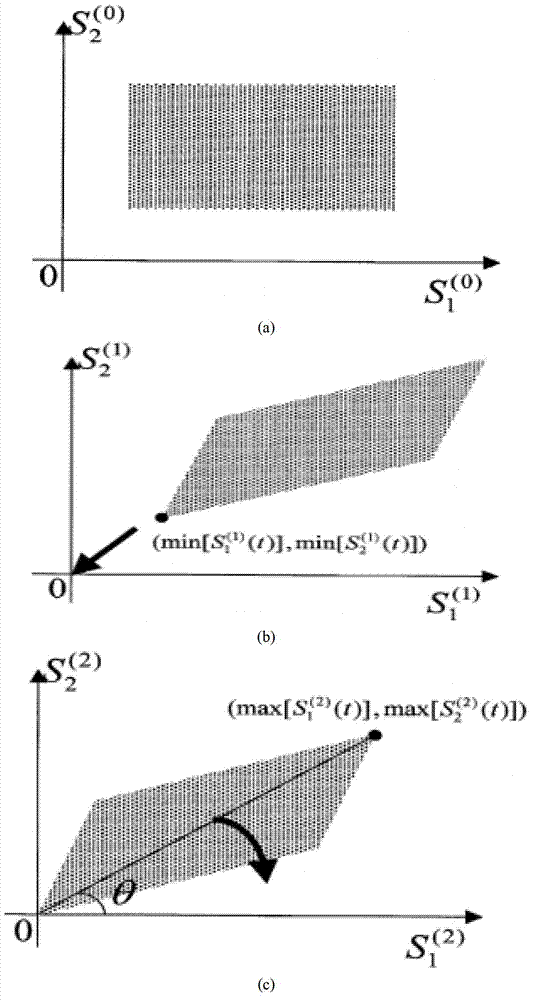

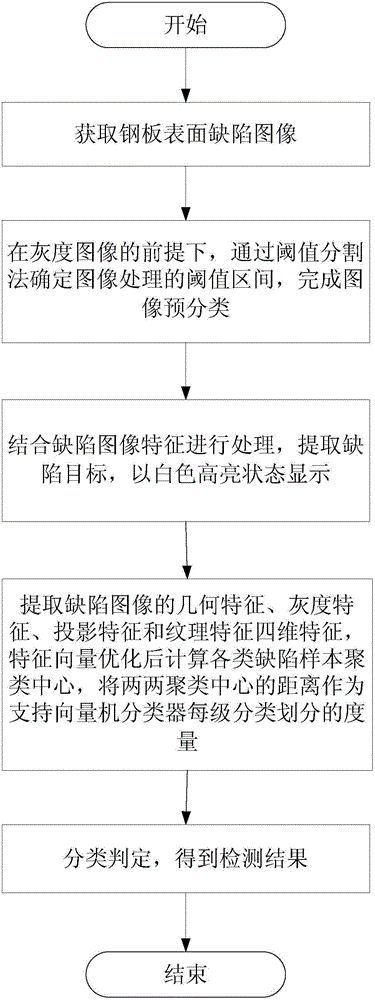

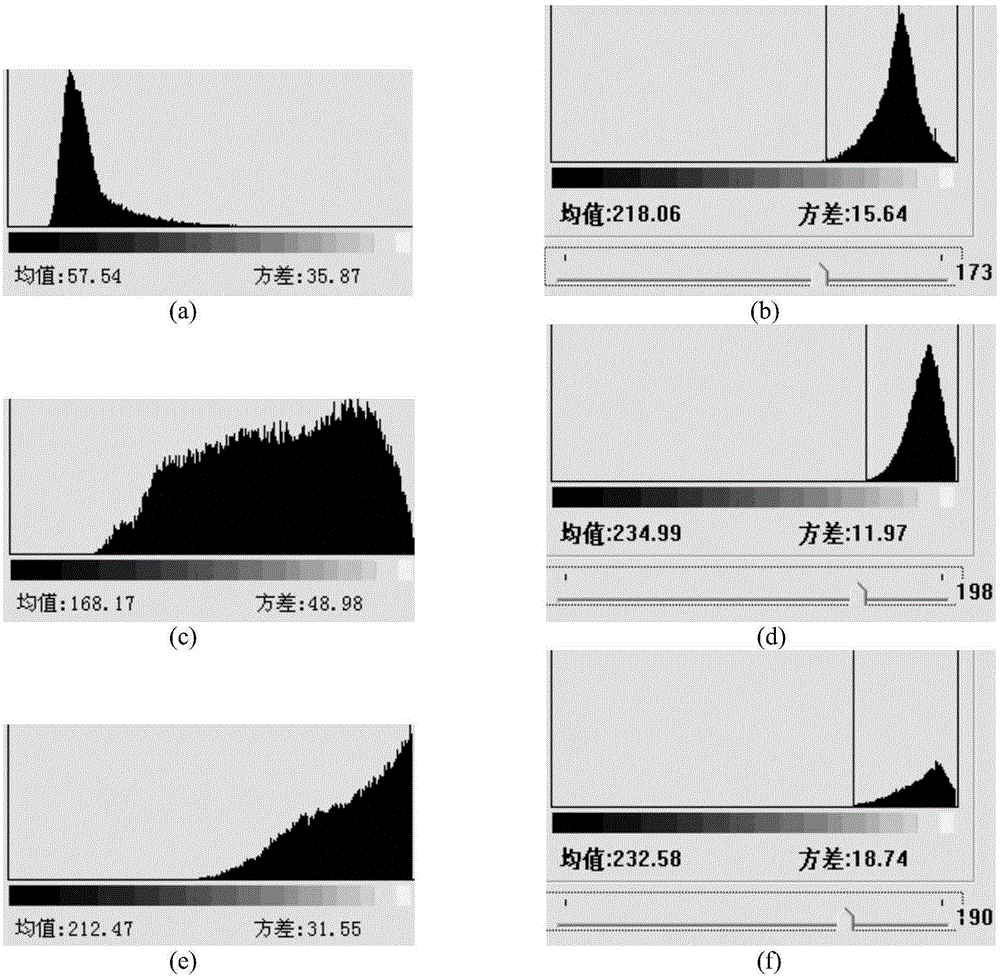

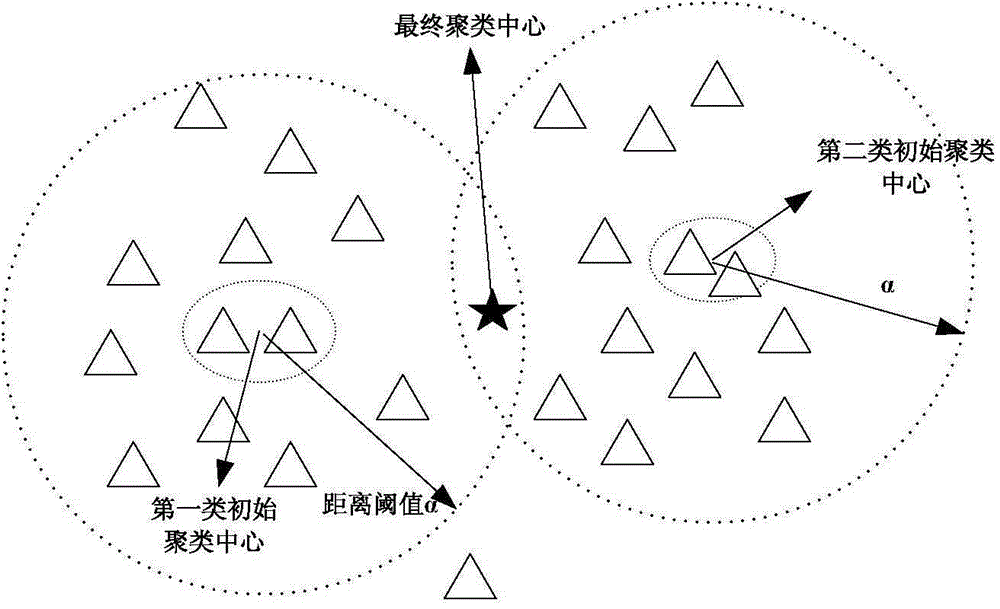

Inactive | CN104794491A | Fast classification; Improve accuracy; Image analysis; Character and pattern recognition; Gray level; Dimensionality reduction

The invention relates to the technical field of digital image processing and pattern recognition and discloses a fuzzy clustering steel plate surface defect detection method based on pre-classification. It aims to overcome the missed and false judgments of existing steel plate surface inspection methods and to effectively improve the accuracy of online, real-time detection of steel plate surface defects. The method includes the steps of: 1, acquiring steel plate surface defect images; 2, pre-classifying the images acquired in step 1 and determining the threshold intervals for image classification; 3, classifying the images in the threshold intervals of step 2 and generating highlighted white defect targets; 4, extracting the geometric, gray-level, projection and texture features of the defect images, determining the input vectors of a support vector machine classifier through feature dimensionality reduction, calculating the cluster centers of the various samples with a fuzzy clustering algorithm, and using the distance between two cluster centers as the scale for support vector machine classification; 5, determining the class and acquiring the defect detection results.

Owner:CHONGQING UNIV
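Step 4 of the entry above computes cluster centers with "the fuzzy clustering algorithm". Assuming this is standard fuzzy c-means (the abstract does not say, and gives no fuzzifier `m` or stopping rule; `m = 2` is a common default), a pure-Python sketch of the alternating center and membership updates looks like this. The distance between the returned centers is what the patent then uses as an SVM scale, which is not shown.

```python
import math
import random


def fuzzy_c_means(points, c, m=2.0, max_iter=200, tol=1e-6, seed=0):
    """Standard fuzzy c-means; returns (centers, memberships u[k][i])."""
    rng = random.Random(seed)
    n, d = len(points), len(points[0])
    # Random initial memberships; each row sums to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])
    centers = []
    for _ in range(max_iter):
        # Each center is the mean of all points weighted by membership ** m.
        centers = []
        for i in range(c):
            w = [u[k][i] ** m for k in range(n)]
            sw = sum(w)
            centers.append([sum(w[k] * points[k][j] for k in range(n)) / sw for j in range(d)])
        # Memberships follow from relative distances to every center.
        change = 0.0
        for k in range(n):
            dist = [math.dist(points[k], ctr) for ctr in centers]
            if 0.0 in dist:
                # Point coincides with a center: crisp membership there.
                hits = dist.count(0.0)
                new = [1.0 / hits if dd == 0.0 else 0.0 for dd in dist]
            else:
                exp = 2.0 / (m - 1.0)
                new = [1.0 / sum((dist[i] / dist[j]) ** exp for j in range(c)) for i in range(c)]
            change = max(change, max(abs(a - b) for a, b in zip(new, u[k])))
            u[k] = new
        if change < tol:
            break
    return centers, u


pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers, u = fuzzy_c_means(pts, 2)
print(sorted(centers))
```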

Contact lens materials, designs, substances, and methods

Inactive | US20080002149A1 | Improve performance; Reduce the possibility; Optical parts; Performance enhancement; Reduced size

A contact lens is provided that is capable of being worn by a user. The lens includes a contact lens body having an eye-engaging surface placeable against a surface of an eye and an outer surface. Microphobic features are provided in the lens for eliminating microbes from the eye-engaging surface. Preferably, these microphobic features are chosen from a group consisting of electrical charge inducing agents, magnetic field inducing agents, chemical agents and textural features. In another embodiment of the present invention, a contact lens is provided that can include a reservoir portion capable of holding a performance enhancement agent for enhancing the performance of the lens. The performance enhancement agent can include such things as sealant solutions, protective agents, therapeutic agents, anti-microbial agents, medications and reduced size transparent portions. In other embodiments, a wide variety of designs, materials and substances are disclosed for use with contact lenses.

Owner:DOMESTIC ASSET LLP

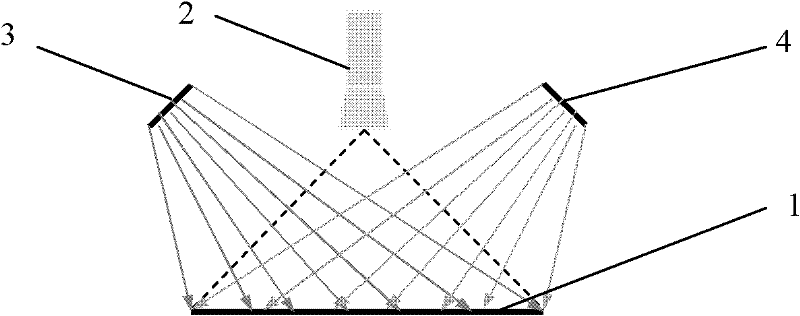

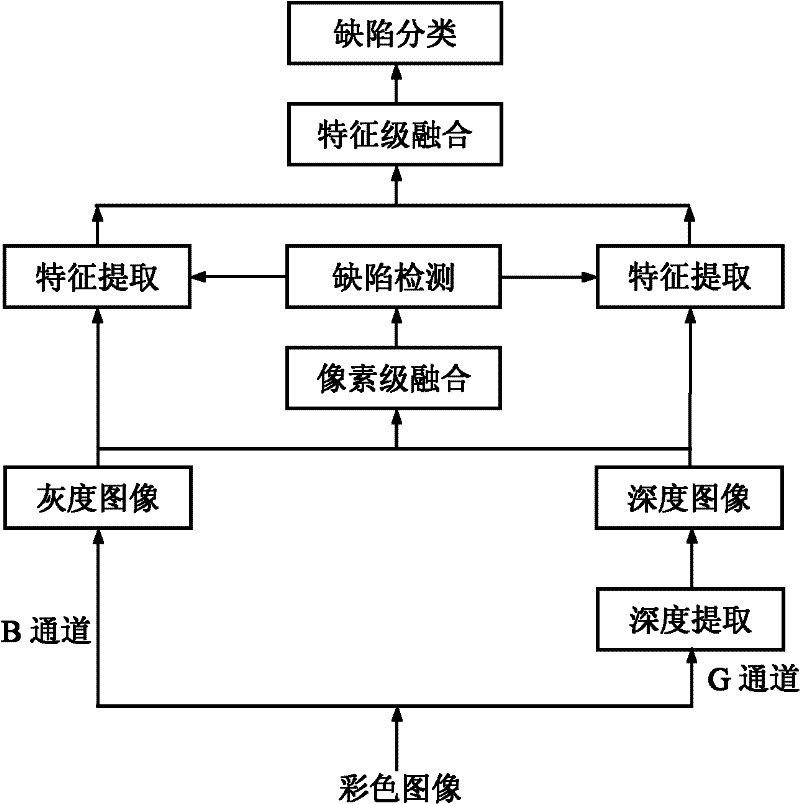

A Surface Defect Detection Method Based on Fusion of Gray Level and Depth Information

Active | CN102288613A | Improve accuracy; Accurate detection; Image enhancement; Optically investigating flaws/contamination; Edge extraction; Surface structure

The invention relates to an online detection method for surface defects of an object and a device for implementing the method. Fusing gray-level and depth information improves the accuracy of defect detection and discrimination, and the method and device can be applied to objects with complex shapes and complex surfaces. A gray-level image and a depth image of the object surface are captured by combining a single color area-array CCD (charge-coupled device) camera with several light sources of different colors; the depth information is obtained by surface structured light. Image segmentation and defect edge extraction are performed by pixel-level fusion of the depth image and the gray-level image, so that the regions containing defects are located more accurately. For each detected defect region, the gray-level, texture and two-dimensional geometric features of the defect are extracted from the gray-level image, and its three-dimensional geometric features are extracted from the depth image; these are then fused at the feature level, and the fused feature vector is used as the input of a classifier that classifies, and thereby distinguishes, the defects.

Owner:UNIV OF SCI & TECH BEIJING

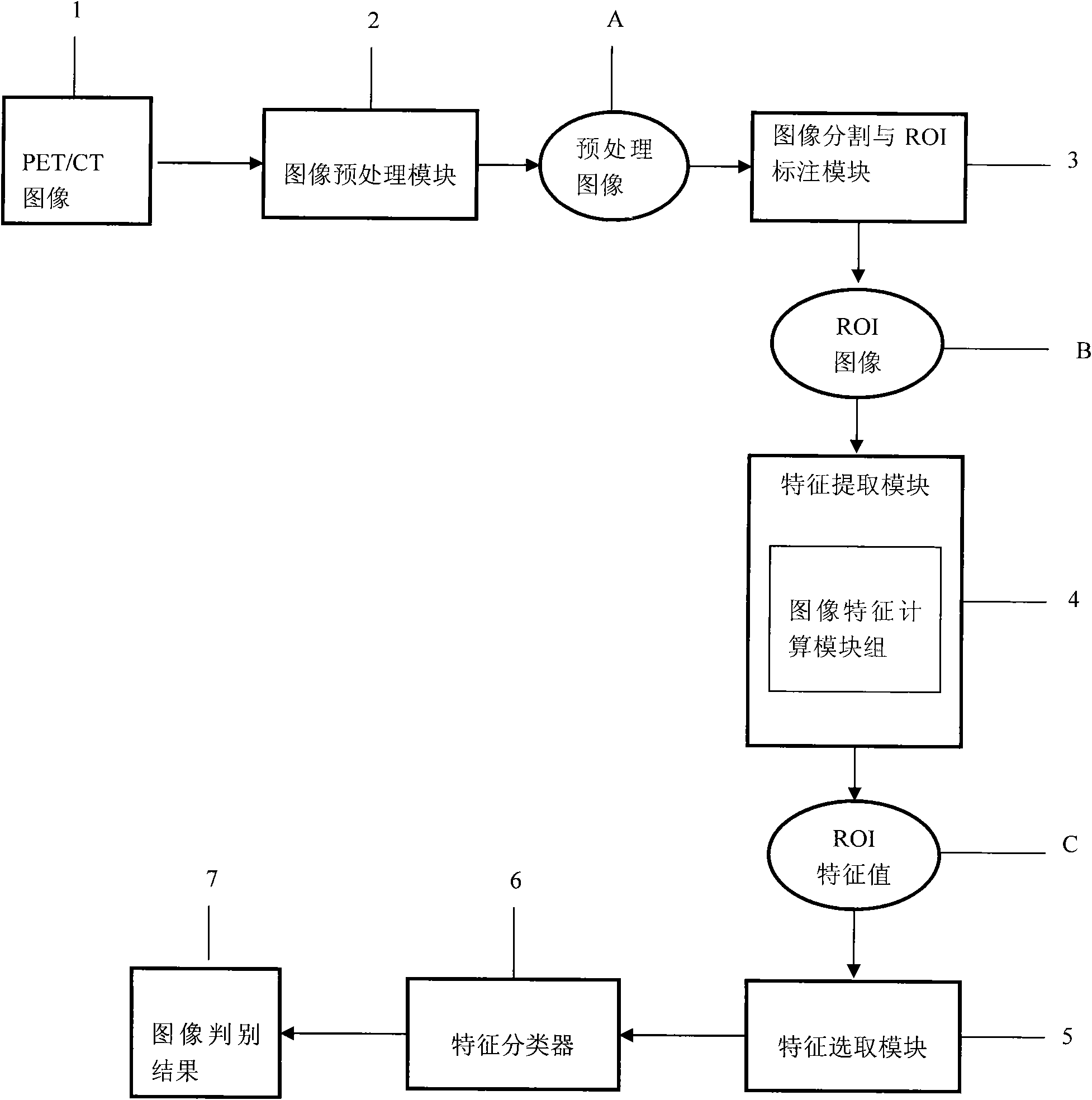

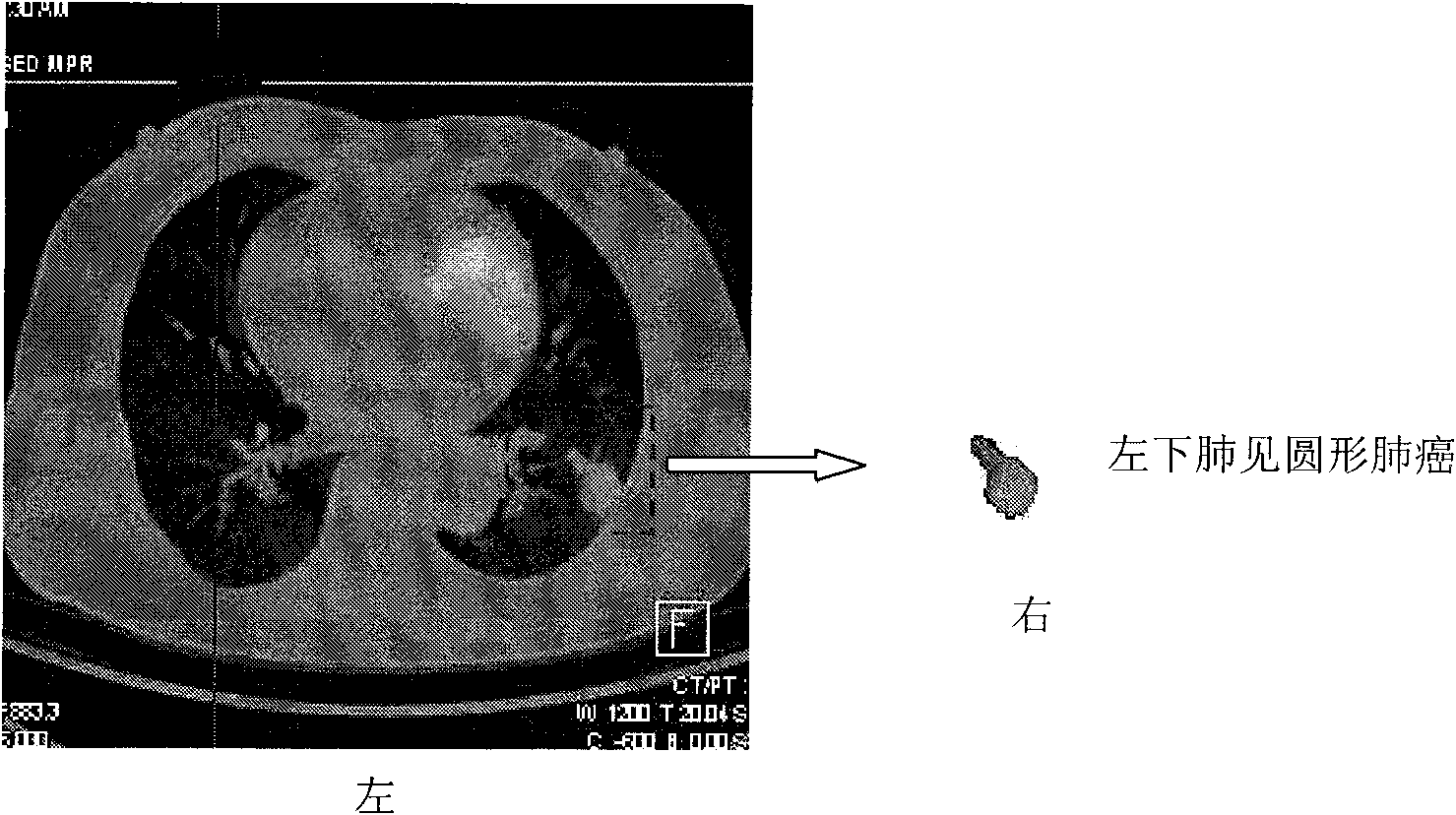

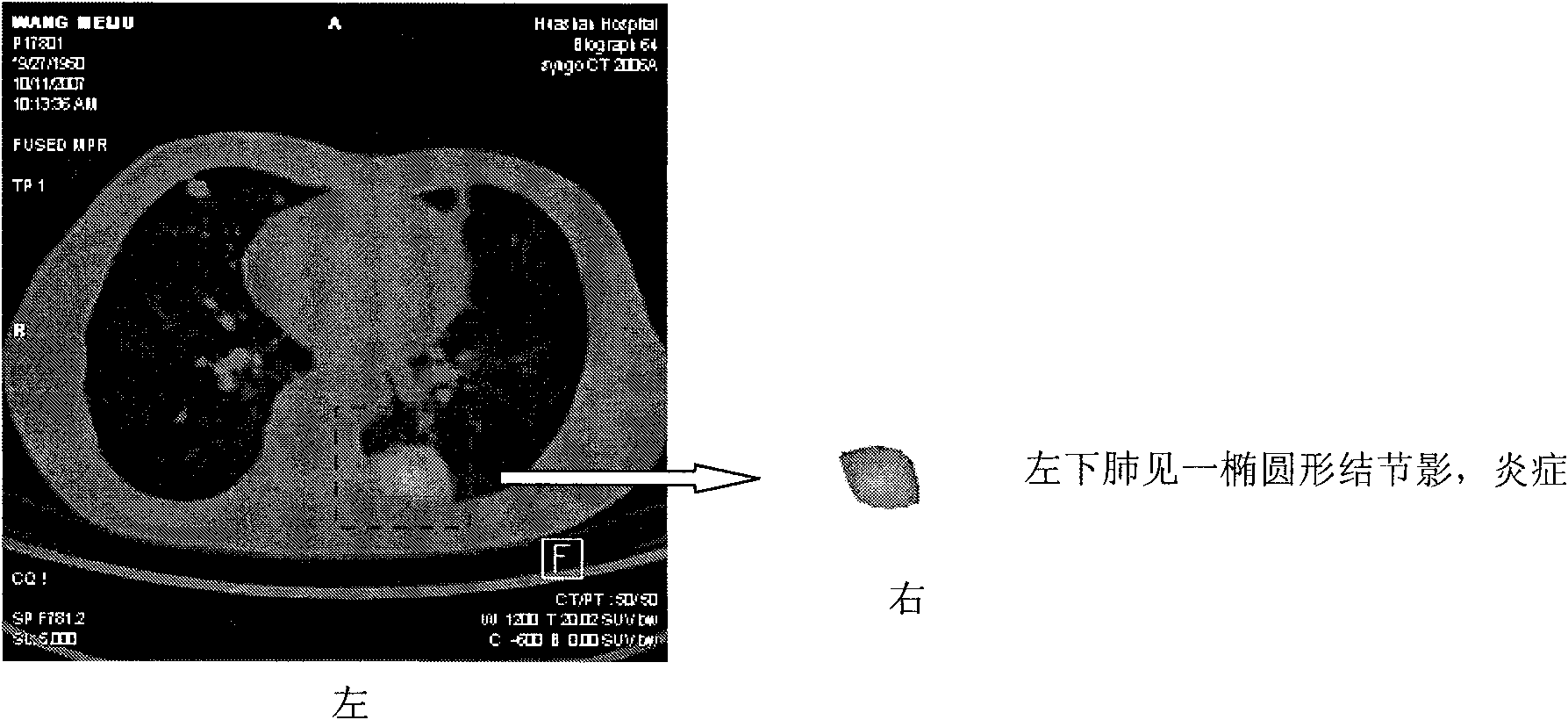

System for detecting pulmonary malignant tumour and benign protuberance based on PET/CT image texture characteristics

Inactive | CN101669828A | Improve processing efficiency; Improve user experience; Image analysis; Computerised tomographs; Tumour tissue; Fungating tumour

The invention relates to a system for detecting a pulmonary malignant tumour and a benign protuberance based on PET/CT image texture features, in the technical field of medical digital image processing. Its main function is to find texture features in PET/CT images that better distinguish pulmonary tumour tissue from benign tissue. The system works as follows: first, a region of interest (ROI) is segmented from the PET/CT image, and five texture features of the ROI are extracted: coarseness, contrast, busyness, complexity and strength; the features are then classified using distance calculation and a feature classifier, and the combined data of these features are used to distinguish the malignant tumour from the benign protuberance effectively.

Owner:FUDAN UNIV

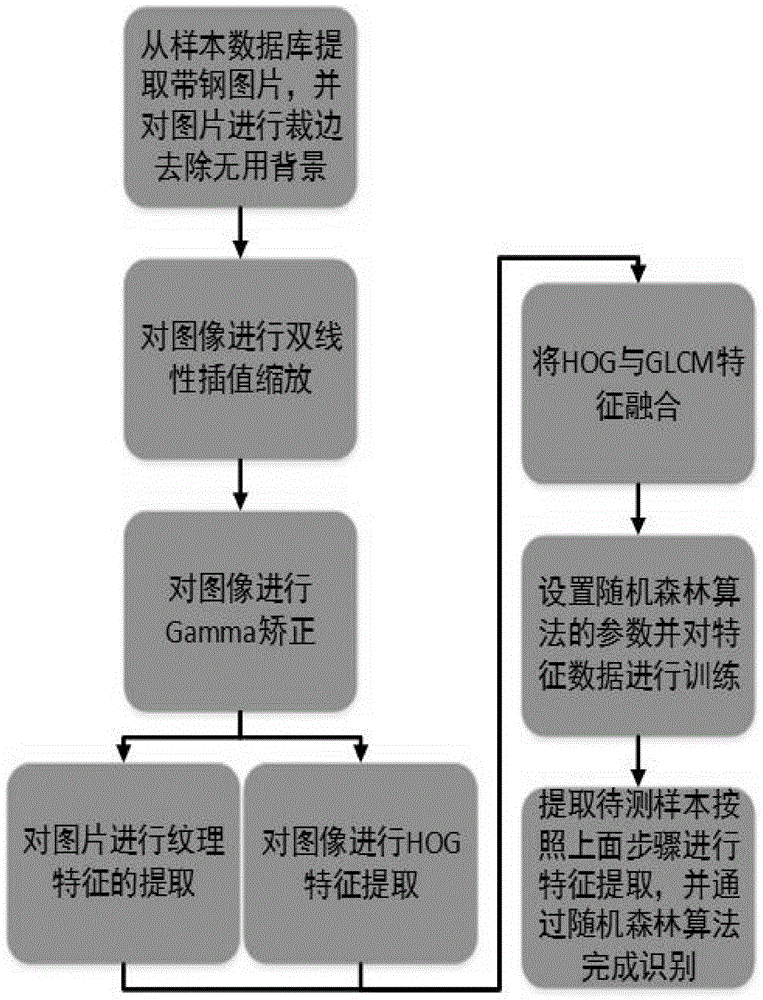

Strip steel surface area type defect identification and classification method

Active | CN104866862A | Easy to handle; Accurate identification; Character and pattern recognition; Feature set; Histogram of oriented gradients

The invention discloses a method for identifying and classifying area-type defects on strip steel surfaces, comprising the following steps: extracting strip steel surface pictures from a training sample database, removing useless background, and recording each picture's category in a corresponding label matrix; scaling the pictures with bilinear interpolation; normalizing the color space of the scaled pictures with Gamma correction; extracting histogram of oriented gradients (HOG) features from the corrected pictures; extracting textural features from the corrected pictures with a gray-level co-occurrence matrix; combining the HOG features and the textural features into a feature set containing these two main kinds of features, which serves as the training database; training on the feature data with an improved random forest classification algorithm; applying bilinear interpolation scaling, Gamma correction, HOG feature extraction and textural feature extraction in sequence to the strip steel defect pictures to be identified; and finally feeding the feature data into the improved random forest classifier to complete identification.

Owner:CENT SOUTH UNIV
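The first two preprocessing steps in the entry above, bilinear scaling and Gamma correction, can be sketched on a grayscale image stored as a list of rows. The abstract gives neither the target size nor the gamma value; gamma = 0.5 (square-root compression) and corner-aligned resampling are assumptions here, and the function names are illustrative.

```python
def bilinear_sample(img, y, x):
    """Interpolate img at fractional (y, x) from its four nearest pixels."""
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, len(img) - 1), min(x0 + 1, len(img[0]) - 1)
    fy, fx = y - y0, x - x0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def resize_bilinear(img, out_h, out_w):
    """Corner-aligned bilinear resize to out_h x out_w."""
    in_h, in_w = len(img), len(img[0])
    sy = (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
    sx = (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
    return [[bilinear_sample(img, r * sy, c * sx) for c in range(out_w)] for r in range(out_h)]


def gamma_correct(img, gamma=0.5, max_value=255):
    """Power-law normalization: out = max_value * (in / max_value) ** gamma, rounded."""
    return [[round(max_value * (v / max_value) ** gamma) for v in row] for row in img]


small = [[0, 10], [20, 30]]
print(gamma_correct(resize_bilinear(small, 3, 3)))
```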

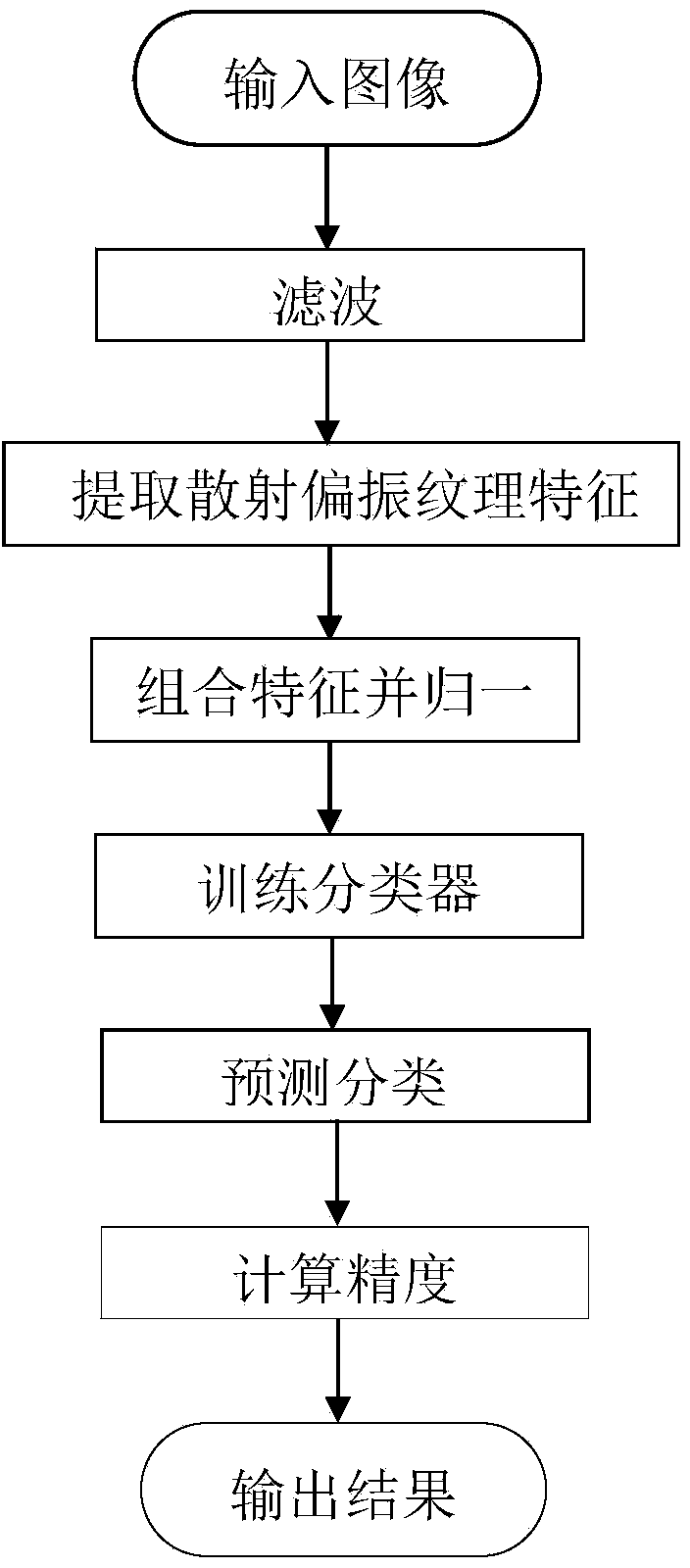

Polarimetric SAR (Synthetic Aperture Radar) image classification method based on SDIT and SVM (Support Vector Machine)

Inactive | CN103824084A | Low risk; Avoid the curse of dimensionality; Character and pattern recognition; Support vector machine; Imaging quality

The invention discloses a polarimetric SAR (Synthetic Aperture Radar) image classification method based on SDIT and an SVM (Support Vector Machine). The implementation steps are: (1) inputting an image; (2) filtering; (3) extracting scattering and polarization textural features; (4) combining and normalizing the features; (5) training a classifier; (6) predicting classes; (7) calculating precision; and (8) outputting the result. Compared with existing methods, the method minimizes the empirical risk and the expected risk simultaneously, has high generalization capability and low classification complexity, describes image characteristics comprehensively and in detail, and improves classification precision. It also has a good denoising effect, making the outlines and edges of polarimetric SAR images clearer, improving image quality and enhancing polarimetric SAR image classification performance.

Owner:XIDIAN UNIV

Automatic vehicle body color recognition method of intelligent vehicle monitoring system

Active | CN102184413A | Overcome the effects of color recognition; Improve recognition accuracy; Character and pattern recognition; Template matching; Feature Dimension

The invention discloses an automatic vehicle body color recognition method for an intelligent vehicle monitoring system. The method comprises the following steps: first, a feature region representative of the vehicle body color is detected from the position of the license plate and the textural features of the vehicle body; next, the pixels of this feature region undergo color space conversion and vector space quantization, and normalized fuzzy-histogram bin features are extracted from the quantized vector space; the resulting high-dimensional features are reduced with LDA (Linear Discriminant Analysis); the vehicle body color is then analyzed in several subspaces, and the color in each subspace is recognized with the parameters of an offline-trained classifier, using multi-feature template matching or an SVM (Support Vector Machine); finally, easily confused colors with low reliability are corrected according to the initial recognition reliability and prior knowledge of colors, giving the final vehicle body color recognition result. The method is applicable in daylight, at night and in sunshine, with fast recognition speed and high recognition accuracy.

Owner:ZHEJIANG DAHUA TECH CO LTD

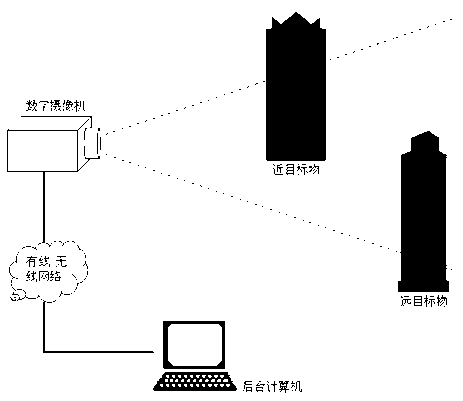

Haze monitoring method based on computer vision

Inactive | CN103218622A | Low cost; Easy to implement; Character and pattern recognition; Feature vector; Grey level

A haze monitoring method based on computer vision is provided. The method collects data on preset far and near target regions that are dark in color within a scene, computes the visual features of the target objects, and gives haze monitoring results by comparing them with sample images taken under different haze conditions. The visual features describing an image comprise color features (mean pixel color saturation and mean blue component), shape features (number of feature points and number of edge pixels), textural features (gray-level co-occurrence matrix features and wavelet transform sub-band coefficients), and feature vectors expressing the differences between the far and near target objects. The method puts forward a direct measurement approach based on visual features that is, in principle, closely related to manual haze observation; it makes comprehensive monitoring of haze conditions over an entire region easy to achieve, and high-precision monitoring results can be guaranteed with enough sample data.

Owner:WUHAN UNIV

Method for estimating aboveground biomass of rice based on multi-spectral images of unmanned aerial vehicle

Active | US20200141877A1 | Low input data requirement; Improve estimation accuracy; Image enhancement; Investigation of vegetal material; Multivariate linear model; Vegetation Index

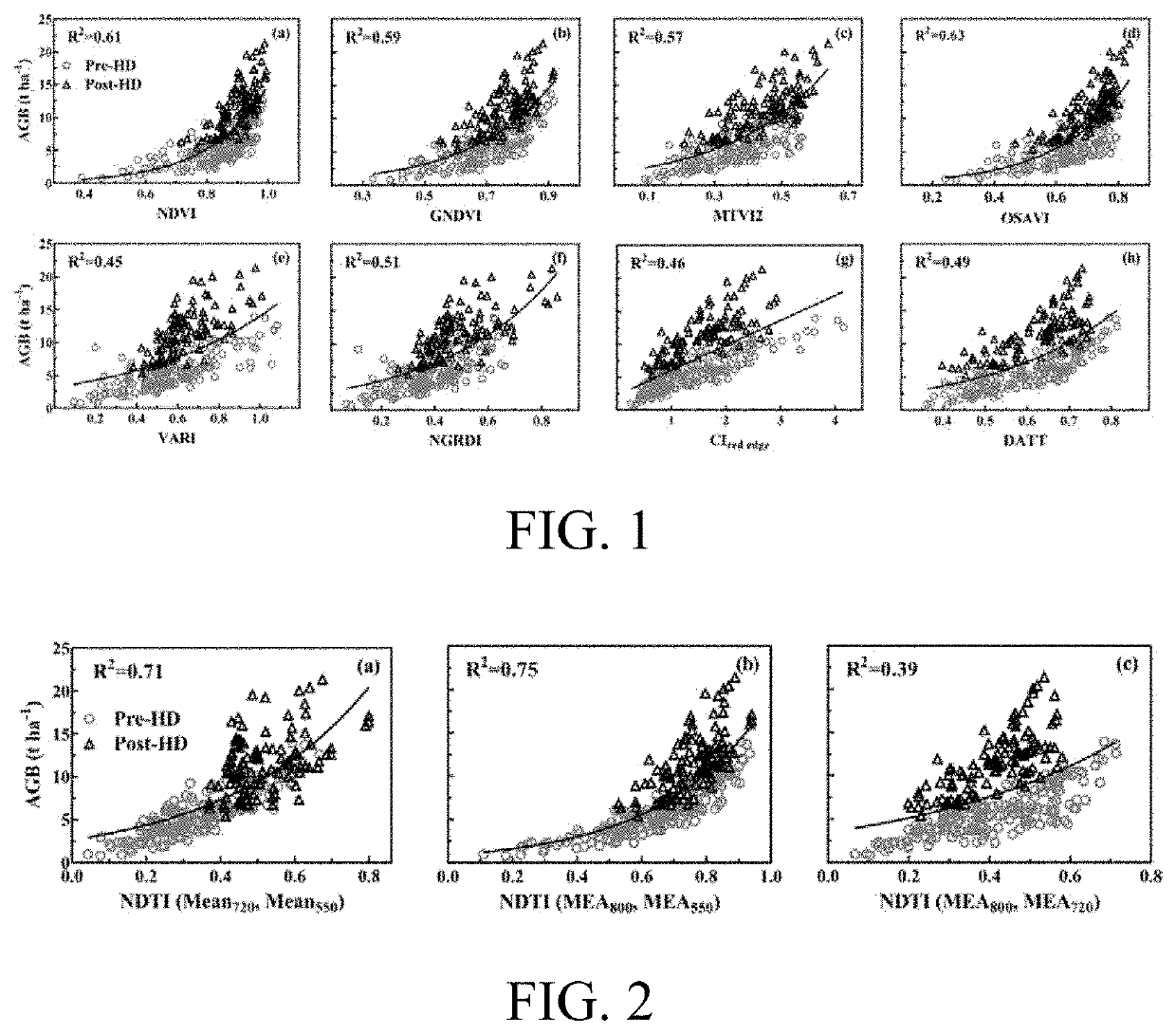

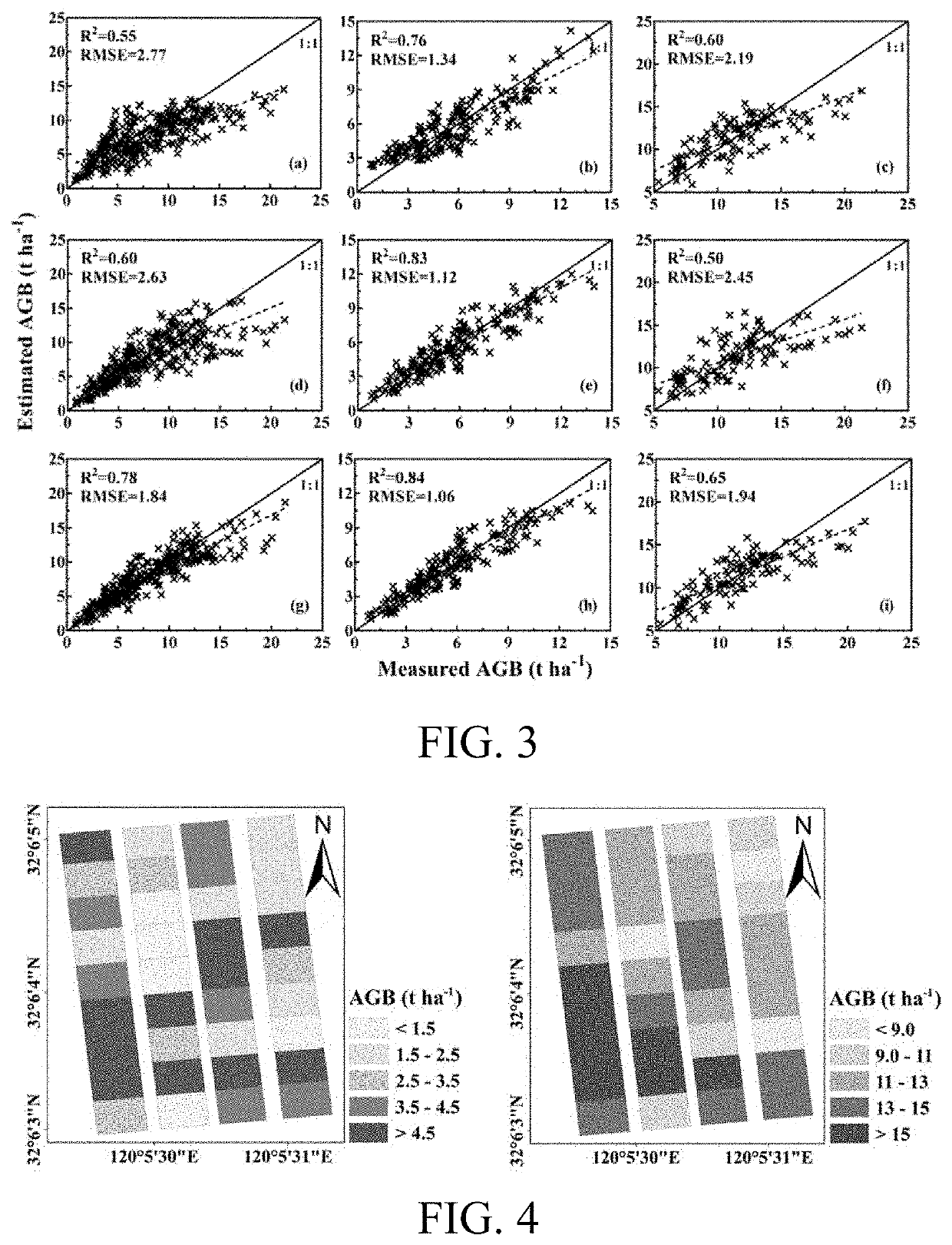

A method for estimating the aboveground biomass of rice based on multi-spectral images from an unmanned aerial vehicle (UAV), including: collecting, according to a standardized procedure, UAV multi-spectral image data of the rice canopy together with ground-measured biomass data; after collection, preprocessing the images, extracting reflectance and texture feature parameters, calculating a vegetation index, and constructing a new texture index; and integrating the vegetation index and the texture index by stepwise multiple regression analysis to estimate rice biomass, establishing a multivariate linear model for biomass estimation. The accuracy of the new estimation model is verified by cross-validation. The method has high estimation accuracy, requires less input data, and is suitable for the whole growth period of rice. Estimating rice biomass by integrating UAV spectral and texture information is proposed for the first time, and can be widely used for monitoring crop growth by UAV remote sensing.

Owner:NANJING AGRICULTURAL UNIVERSITY
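The estimation model in the entry above is a multivariate linear regression on a vegetation index and a texture index. A minimal sketch, assuming NDVI as the vegetation index and, hypothetically, a normalized difference of two texture measures as the texture index (the abstract does not define either). It fits ordinary least squares with an intercept on fixed predictors, which is simpler than the stepwise selection the patent describes.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def normalized_difference(t1, t2):
    """Hypothetical texture index: normalized difference of two texture measures."""
    return (t1 - t2) / (t1 + t2)


def fit_ols(X, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    rows = [[1.0] + list(x) for x in X]  # prepend intercept column
    p = len(rows[0])
    # Augmented matrix [X^T X | X^T y]
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(p)]
    for col in range(p):  # Gauss-Jordan elimination with partial pivoting
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for k in range(p):
            if k != col:
                f = A[k][col] / A[col][col]
                A[k] = [a - f * b for a, b in zip(A[k], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def predict(coef, x):
    return coef[0] + sum(b * v for b, v in zip(coef[1:], x))


# Each row: (vegetation index, texture index); y: measured biomass (illustrative numbers)
X = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
y = [2 + 3 * a - b for a, b in X]
print(fit_ols(X, y))
```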

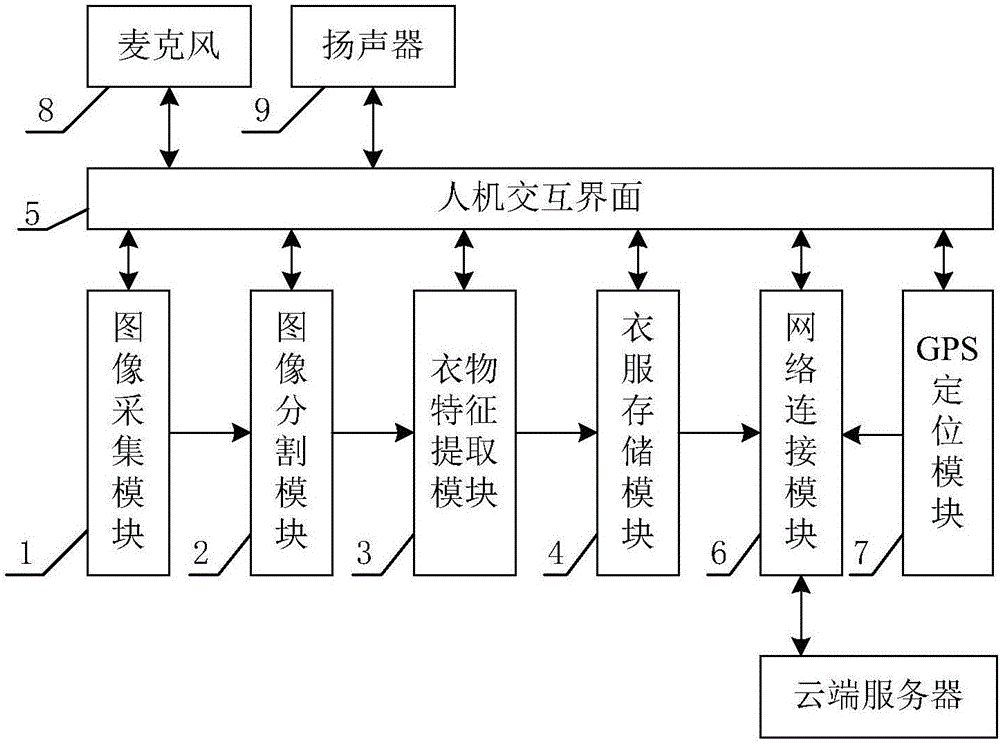

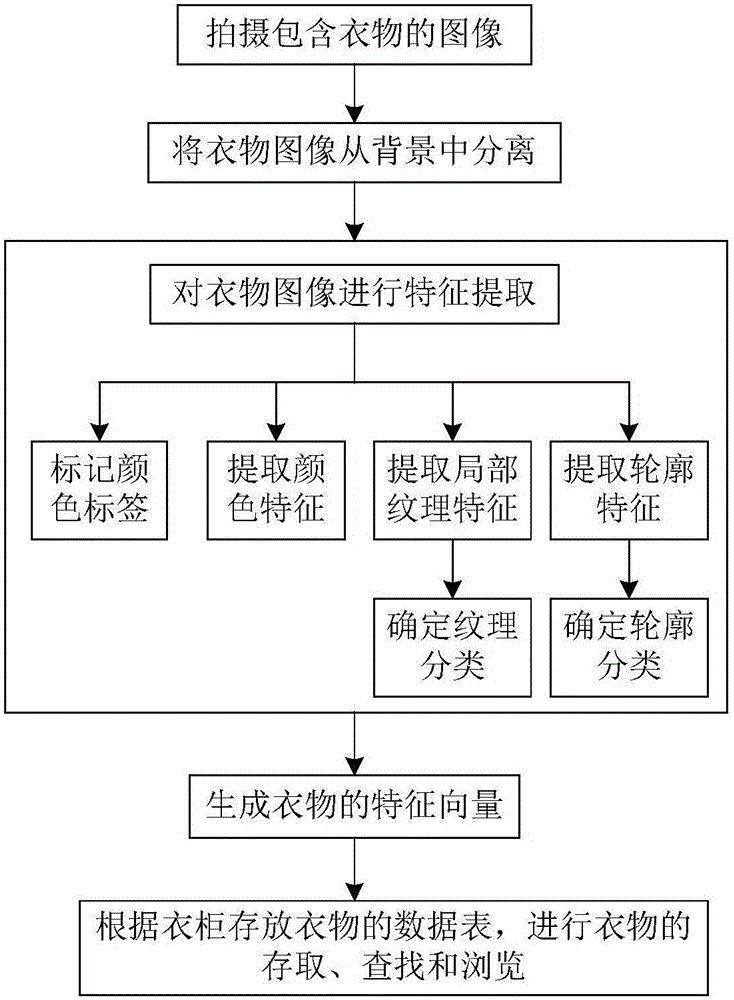

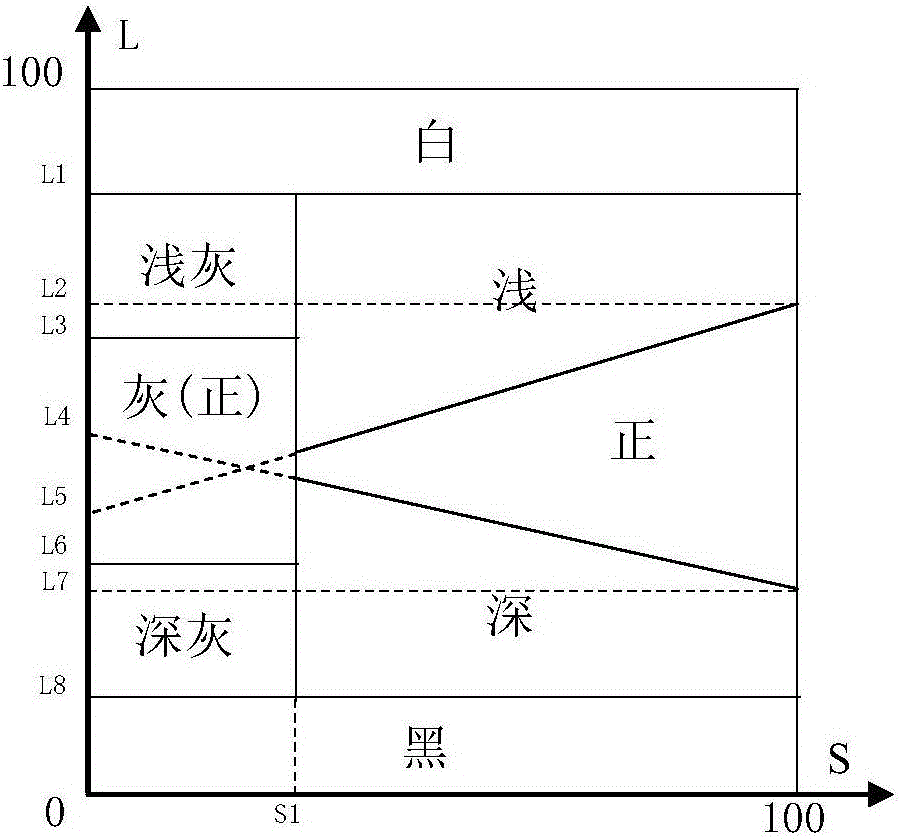

Wardrobe intelligent management apparatus and method

Inactive | CN105046280A | Humanized management; Improve experience; Character and pattern recognition; Feature vector; Feature extraction

The present invention provides an intelligent wardrobe management apparatus and method for the fields of the Internet of Things and smart home technology. The apparatus comprises an image collecting module, an image partitioning module, a clothing feature extracting module, a clothing storage module, and a human-computer interaction interface. The image collecting module captures an image of the clothing; the image partitioning module extracts the foreground (clothing) image; the clothing feature extracting module generates a feature vector for the clothing, comprising the clothing image, a color label, a color feature, a local texture feature, a texture category, a contour feature, and a contour category; and the clothing storage module records which clothing is stored in the wardrobe. The management method is based on this apparatus: using a data table of the clothing stored in the wardrobe, it performs clothing storage, searching, and browsing. The invention can accurately recognize different clothing items without adding an extra recognizer or changing the existing wardrobe structure, so the structure is simple and the cost is low.

Owner:BEIJING XIAOBAO SCI & TECH CO LTD

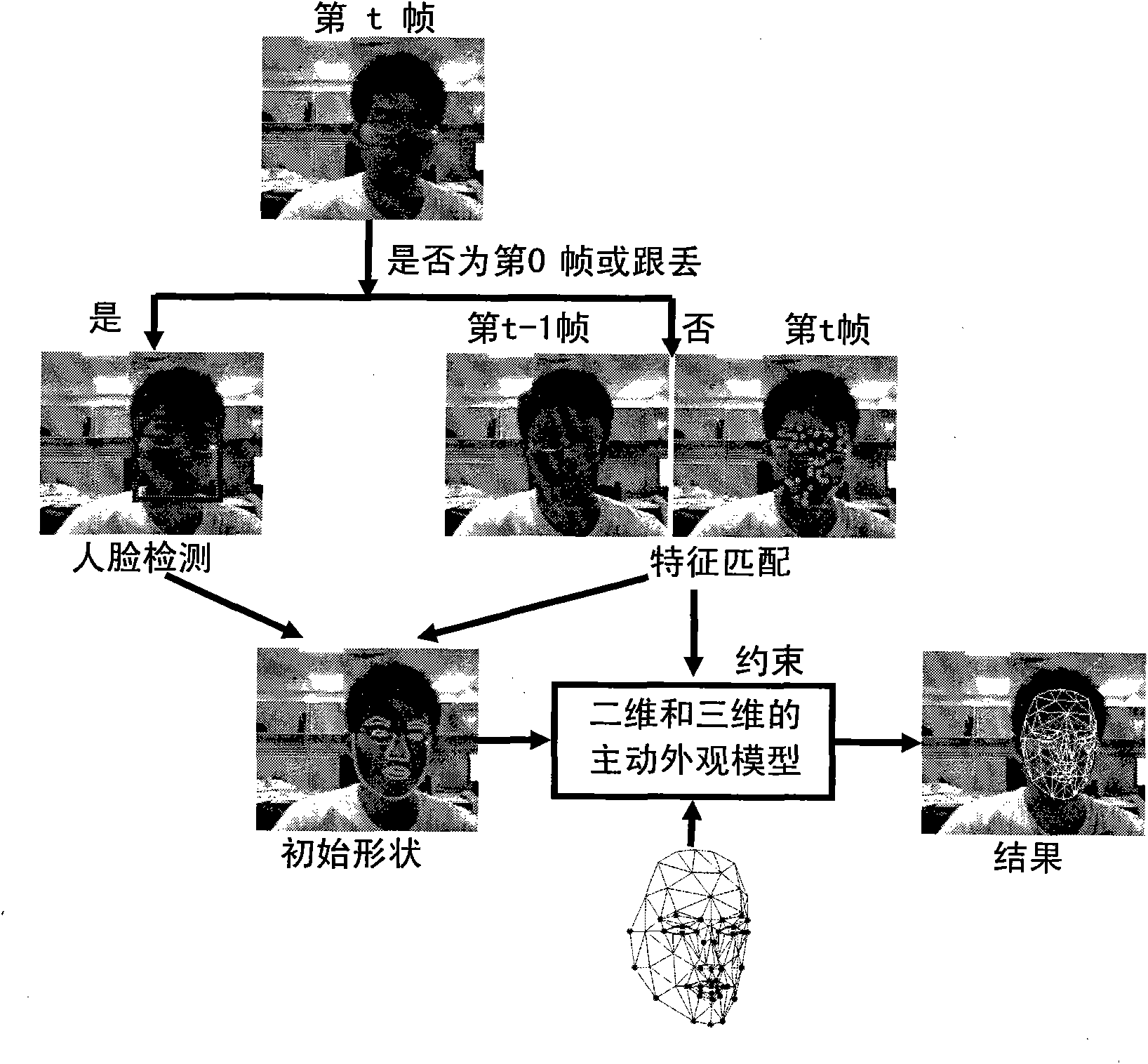

Method for tracking gestures and actions of human face

Inactive | CN102402691A | No human intervention required; Fast tracking; Image analysis; Character and pattern recognition; Face detection; Feature point matching

The invention discloses a method for tracking the pose ("gestures") and actions of a human face, comprising the following steps: S1, extracting frame-by-frame images from a video stream and, for the first frame of the input video or whenever tracking fails, performing face detection to obtain a face bounding box; S2, during normal tracking, after iterative convergence on the previous frame, matching the most salient feature points of the textural features of the face region in the previous frame with the corresponding feature points found in the current frame, to obtain feature point matching results; S3, initializing the shape of an active appearance model from the face bounding box or the feature point matching results, to obtain an initial value of the face shape in the current frame; and S4, fitting the active appearance model with an inverse compositional algorithm to obtain the three-dimensional face pose and facial action parameters. With this method, online tracking can be performed fully automatically in real time under ordinary illumination.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

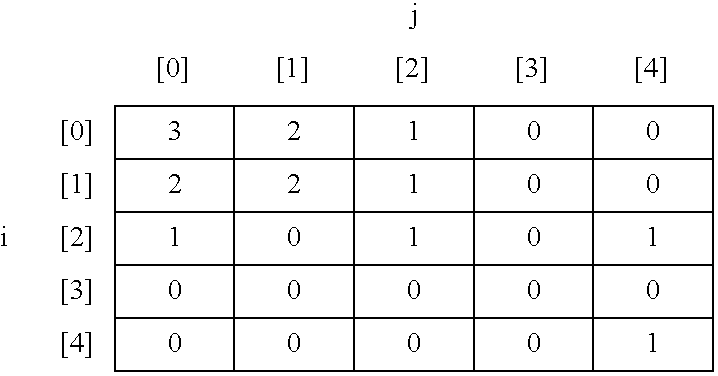

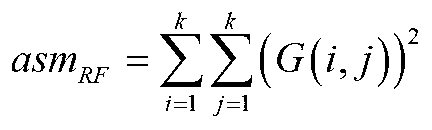

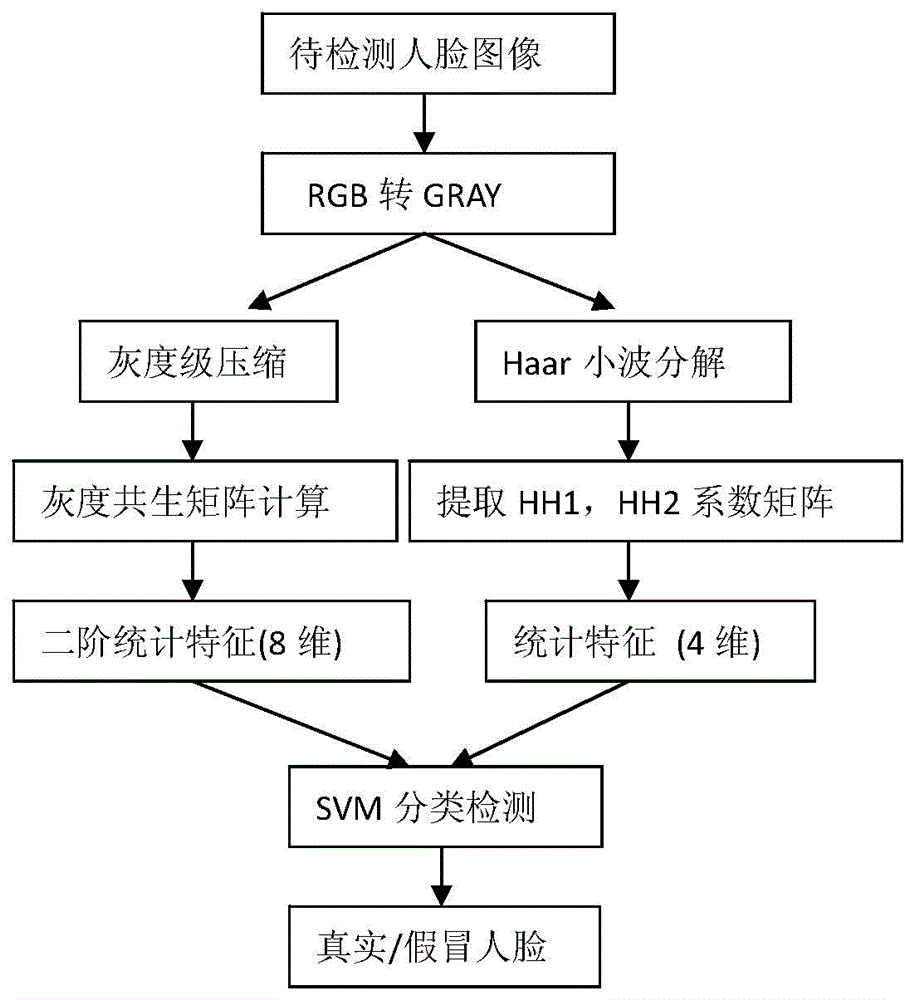

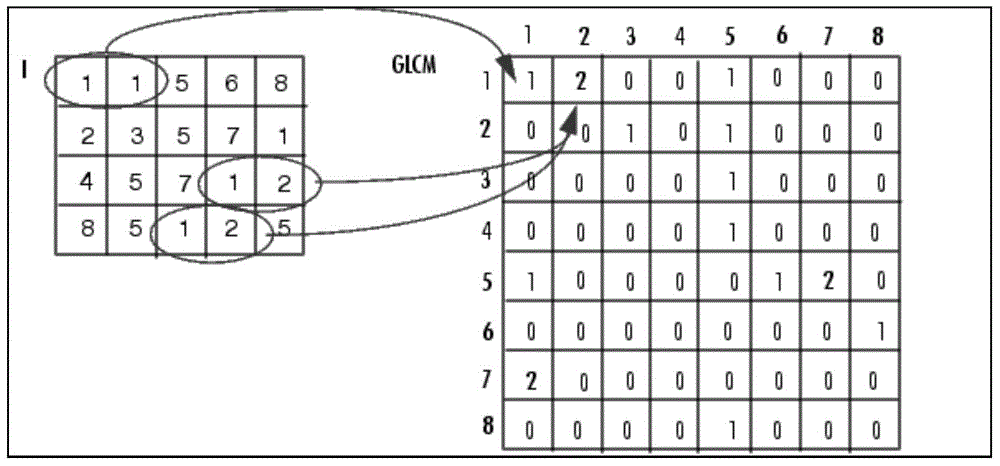

Living body human face detection method based on gray-level co-occurrence matrices and wavelet analysis

The invention discloses a living body human face detection method based on gray-level co-occurrence matrices and wavelet analysis. The method comprises: first, converting an RGB image containing a face region, obtained from a camera, into a grayscale image and compressing the gray scale to 16 levels; then computing four gray-level co-occurrence matrices (distance 1; angles of 0, 45, 90 and 135 degrees); then extracting four texture features from each matrix, namely energy, entropy, moment of inertia and correlation, and computing the mean and variance of each feature over the four matrices; at the same time, performing a two-level decomposition of the original image with the Haar wavelet basis, extracting the coefficient matrices of the HH1 and HH2 sub-bands, and computing their mean and variance; and finally feeding all feature values, as the sample to be tested, into a trained support vector machine, which classifies the face image as real or counterfeit. The method reduces computational complexity and improves detection accuracy.

Owner:BEIJING UNIV OF TECH
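The GLCM part of the entry above (16 gray levels, distance 1, four angles, four features, then the mean and variance of each feature over the angles) can be sketched as follows. The angle-to-offset mapping assumes row indices grow downward, entropy uses the natural logarithm, and correlation is set to 0 when the matrix has zero variance; these are choices made here, not stated in the abstract. The Haar wavelet features are not shown, and all function names are illustrative.

```python
import math

# (dx, dy) offsets at distance 1; dy = -1 points one row up.
ANGLE_OFFSETS = {0: (1, 0), 45: (1, -1), 90: (0, -1), 135: (-1, -1)}


def quantize(gray, levels=16):
    """Map 8-bit gray values 0..255 to 0..levels-1."""
    return [[v * levels // 256 for v in row] for row in gray]


def glcm(img, dx, dy, levels):
    """Symmetric, normalized co-occurrence matrix for one offset."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Energy, entropy, moment of inertia (contrast) and correlation of one GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n) if p[i][j] > 0]
    energy = sum(v * v for _, _, v in cells)
    entropy = -sum(v * math.log(v) for _, _, v in cells)
    inertia = sum(v * (i - j) ** 2 for i, j, v in cells)
    mu = sum(i * v for i, _, v in cells)  # row mean equals column mean (symmetric GLCM)
    var = sum(v * (i - mu) ** 2 for i, _, v in cells)
    corr = sum(v * (i - mu) * (j - mu) for i, j, v in cells) / var if var > 0 else 0.0
    return {"energy": energy, "entropy": entropy, "inertia": inertia, "correlation": corr}


def glcm_descriptor(gray, levels=16):
    """Mean and variance of each feature over the four angles."""
    q = quantize(gray, levels)
    per_angle = [texture_features(glcm(q, dx, dy, levels)) for dx, dy in ANGLE_OFFSETS.values()]
    out = {}
    for name in per_angle[0]:
        vals = [f[name] for f in per_angle]
        mean = sum(vals) / len(vals)
        out[name + "_mean"] = mean
        out[name + "_var"] = sum((v - mean) ** 2 for v in vals) / len(vals)
    return out


print(glcm_descriptor([[0, 255], [255, 0]]))
```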

Texture-based insulator fault diagnostic method

Inactive | CN102508110A | Outstanding Features; Prominence; Image analysis; Fault location; High pressure; Feature fusion

The invention relates to a texture-based insulator fault diagnosis method. Visible light images collected by helicopter during inspection of high-voltage transmission lines are the objects processed, and insulator faults are diagnosed from these images. The method comprises the following steps: inputting an insulator image; converting it to grayscale; obtaining the bounding rectangle and rotating the image; applying the GLCM (gray-level co-occurrence matrix) method, dividing into blocks and obtaining textural features; applying Gabor filtering, dividing into blocks and calculating the mean and variance of each block; fusing the features; and determining from a threshold whether a string-drop phenomenon is present. The method diagnoses the string-drop characteristic of insulators from texture, combining the most classic texture analysis method, GLCM, with Gabor filter texture analysis, a recent research focus; by adjusting the parameter settings of the GLCM and the Gabor filter, it finds string-drop insulators efficiently and accurately. The method can effectively improve the efficiency of thermal defect detection on power transmission lines and can be applied effectively to vehicle-mounted or helicopter inspection of power transmission lines.

Owner:SHANGHAI UNIV
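The Gabor branch of the entry above (filter, divide into blocks, compute block mean and variance) can be sketched with the real part of a Gabor kernel. The kernel size, `sigma`, `theta`, wavelength `lambd` and aspect ratio `gamma` below are assumed values, since the abstract only says the parameters were tuned. `filter_valid` computes a correlation without padding, which equals convolution for this kernel when `psi = 0` because the kernel is point-symmetric.

```python
import math


def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a Gabor kernel on a size x size grid (size should be odd)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)   # rotated coordinates
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + gamma ** 2 * yr ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / lambd + psi))
        kernel.append(row)
    return kernel


def filter_valid(img, k):
    """Slide kernel k over img without padding ('valid' region only)."""
    kh, kw = len(k), len(k[0])
    h, w = len(img), len(img[0])
    return [[sum(img[r + i][c + j] * k[i][j] for i in range(kh) for j in range(kw))
             for c in range(w - kw + 1)] for r in range(h - kh + 1)]


def block_stats(resp, block):
    """(mean, variance) of each non-overlapping block x block tile of a filter response."""
    stats = []
    for r0 in range(0, len(resp) - block + 1, block):
        for c0 in range(0, len(resp[0]) - block + 1, block):
            vals = [resp[r][c] for r in range(r0, r0 + block) for c in range(c0, c0 + block)]
            mean = sum(vals) / len(vals)
            stats.append((mean, sum((v - mean) ** 2 for v in vals) / len(vals)))
    return stats


stripes = [[255 if c % 4 < 2 else 0 for c in range(12)] for _ in range(12)]
resp = filter_valid(stripes, gabor_kernel(5, sigma=2.0, theta=0.0, lambd=4.0))
print(block_stats(resp, 4))
```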

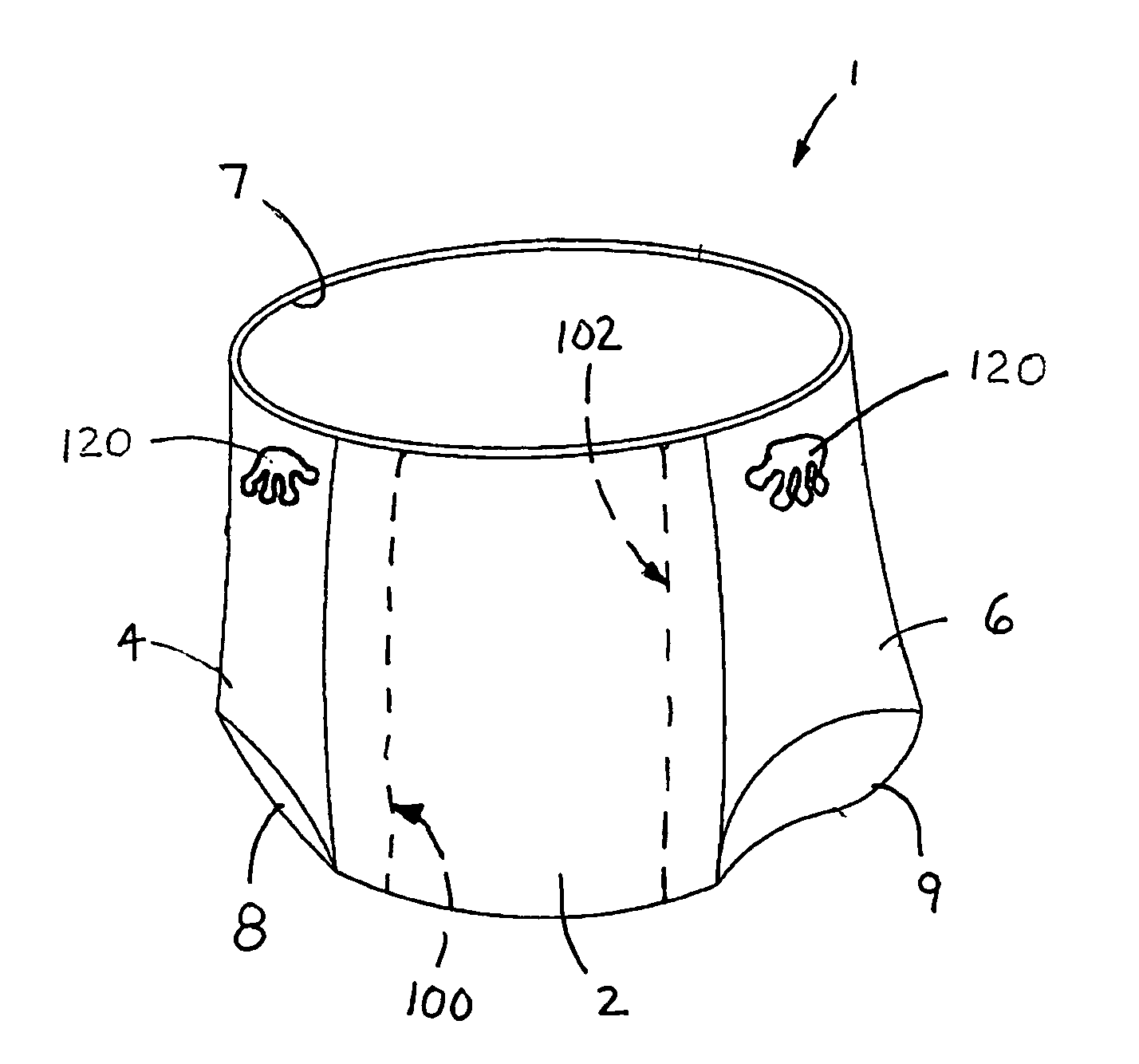

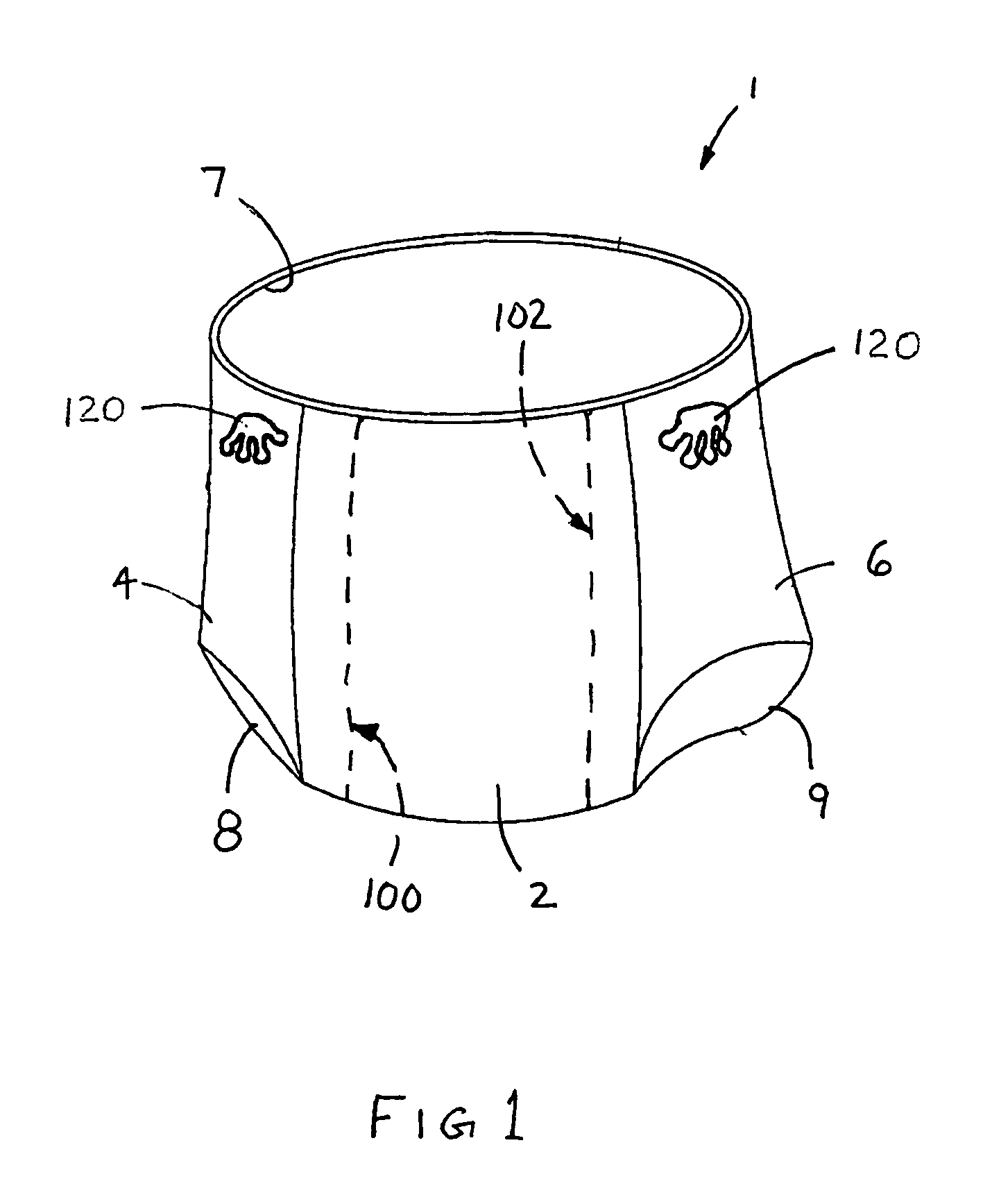

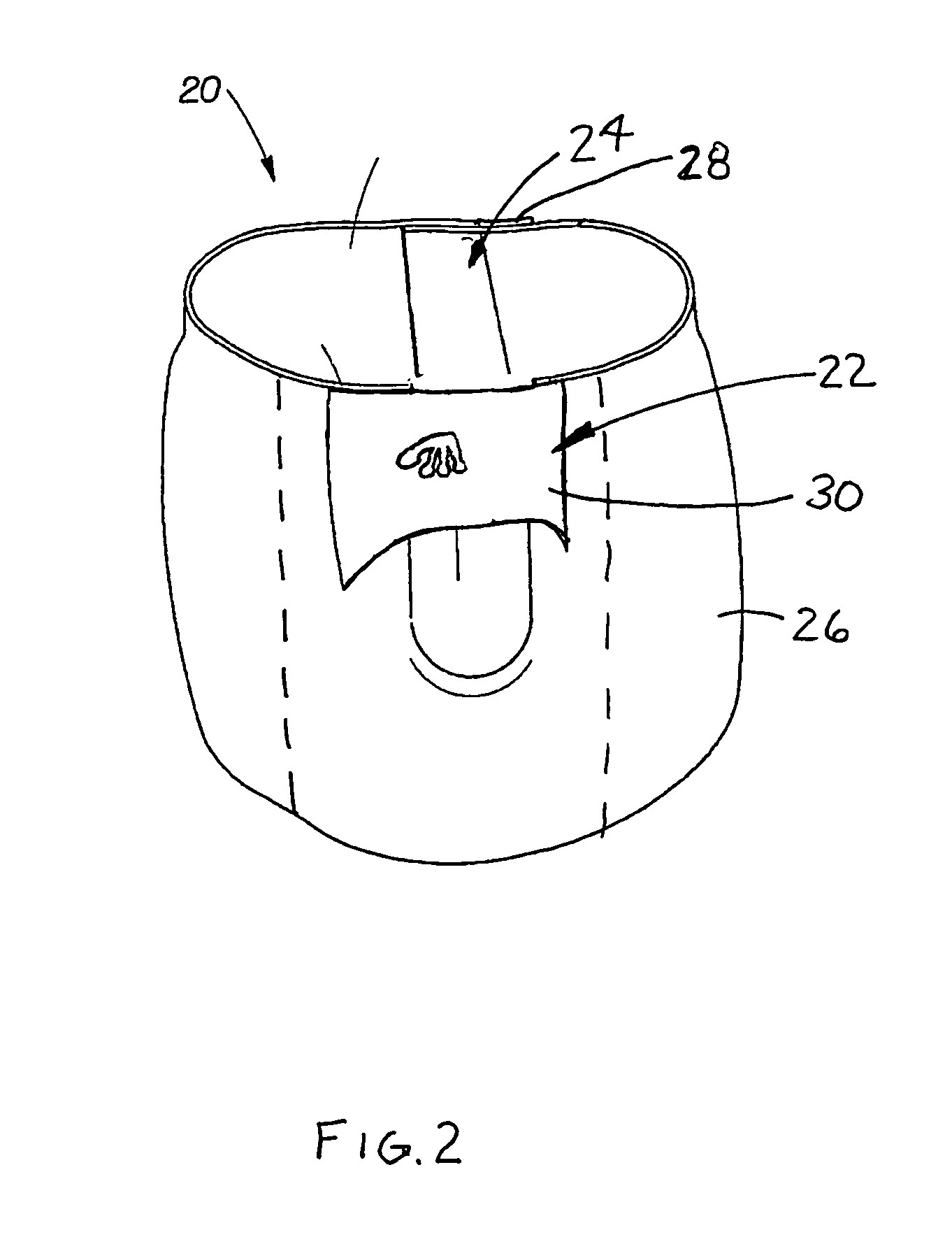

Pull-on wearable article with informational image

A pull-on wearable article includes a main portion including an outer cover, the main portion defining a front waist region, a rear waist region, and a crotch region extending between and connecting the front and rear waist regions. First and second extendable side panels extend between and connect the main portion front waist region and the main portion rear waist region to form the article in a closed waist configuration, at least a portion of each side panel being extendable between a relaxed state and an extended state. The article defines first and second side regions, each side region including one of the first and second side panels and a transverse region of the main portion bordering the respective side panel. An informational image is disposed in at least the first side region. The article may further include a texture feature formed in an outer surface of the article and positioned proximate to the informational image to form a composite image. Alternatively or additionally, the image includes a cognitively functional graphic.

Owner:PROCTER & GAMBLE CO

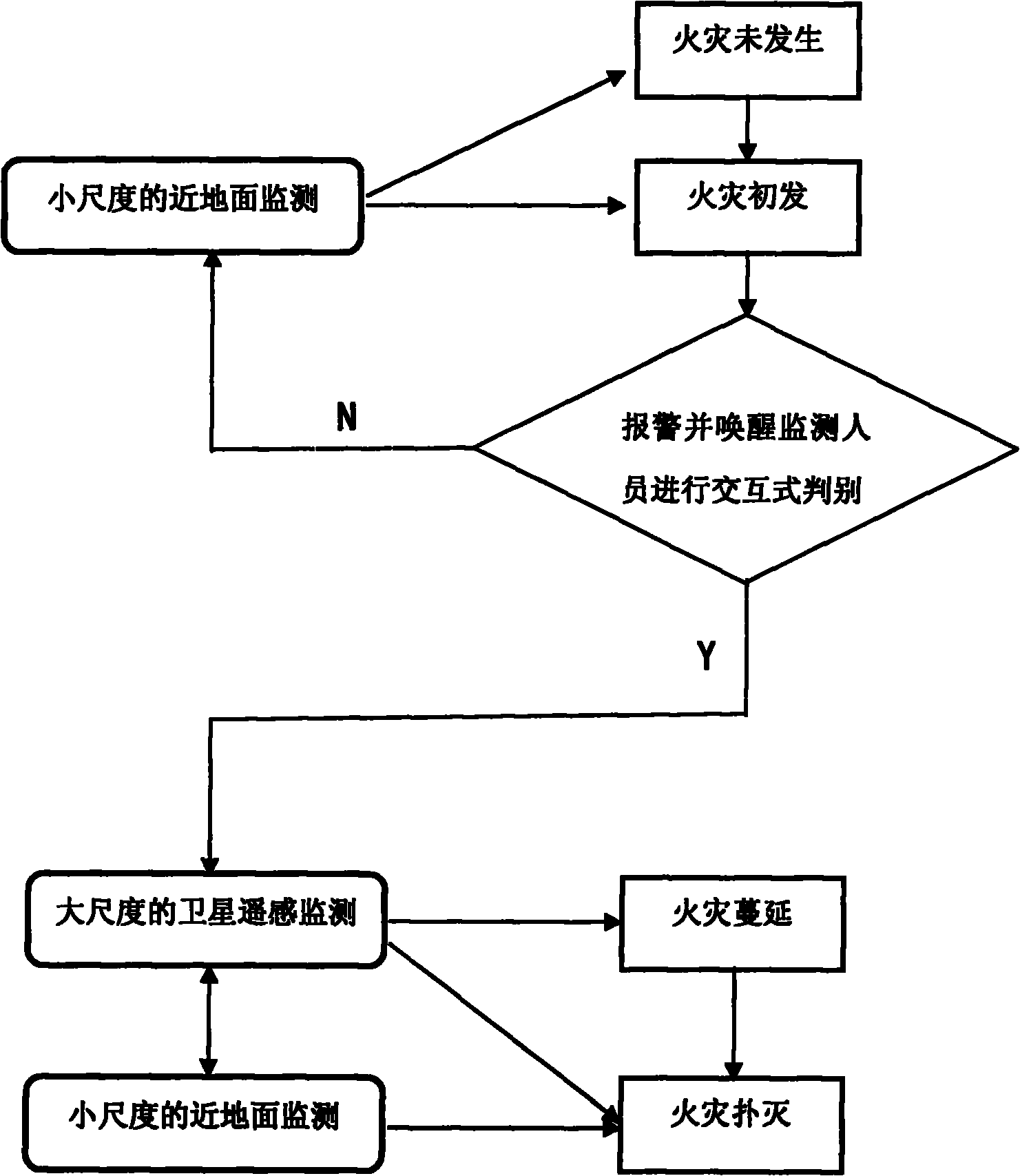

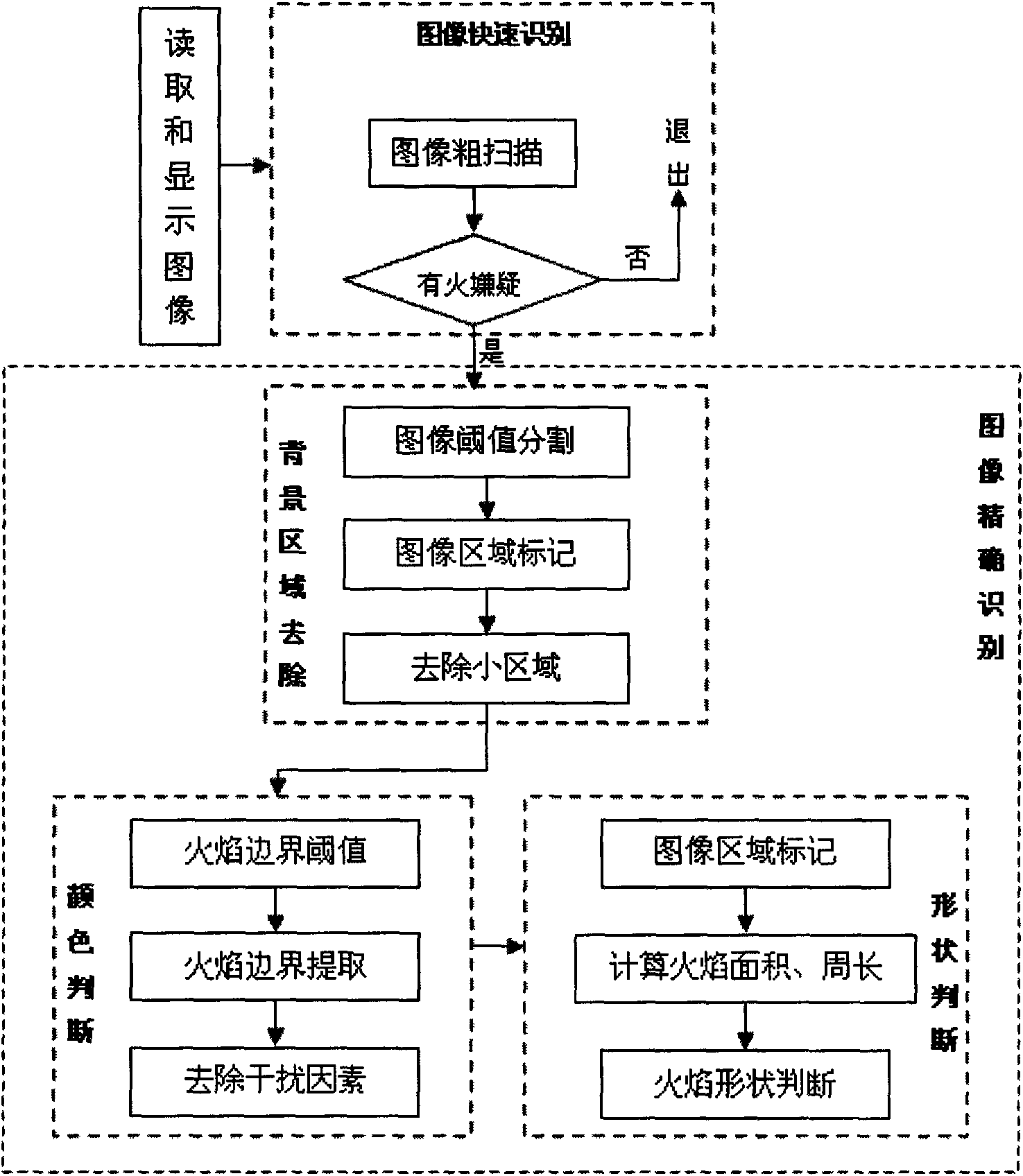

Visible light-thermal infrared based multispectral multi-scale forest fire monitoring method

Inactive | CN101989373A | Reach the automatic identification function; Improve accuracy; Character and pattern recognition; Fire alarm radiation actuation; Combustion; Recognition algorithm

The invention relates to a fire monitoring method, specifically a visible light-thermal infrared based multispectral, multi-scale forest fire monitoring method. It combines the advantages of large-scale satellite remote sensing and small-scale near-ground monitoring through reasonable configuration and mutual coordination of the two. The spectral, image-pattern and textural features of forest fire points are studied, and the fire recognition algorithms for visible light images and thermal infrared images are organically combined. This eliminates interference with the recognition of forest combustion smoke and open fire caused by clouds, fog, lamplight, red objects and the like in forest areas. As a result, fires can be recognized and alarmed automatically, the labor cost of 24-hour manual monitoring is greatly reduced, and the accuracy of forest fire monitoring is effectively improved.

Owner:INST OF GEOGRAPHICAL SCI & NATURAL RESOURCE RES CAS
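The core idea of letting the two bands veto each other's false alarms can be illustrated with a per-pixel rule. The sketch below is a deliberate simplification resting on several assumptions:

- it does not reproduce the patent's recognition algorithms, its satellite/ground coordination or its texture analysis;
- it assumes the visible and thermal images are already co-registered;
- the thresholds `t_fire`, `red_ratio` and `min_red` are hypothetical values chosen only for illustration.

```python
import numpy as np


def fire_mask(rgb, thermal_k, t_fire=330.0, red_ratio=1.2, min_red=120):
    """Per-pixel fire candidates from co-registered visible (RGB) and thermal IR images.

    A pixel is kept only when BOTH bands agree (hypothetical thresholds):
      * thermal: brightness temperature above t_fire kelvin, which rejects cool
        lookalikes such as lamplight, red objects, cloud and fog;
      * visible: bright, red-dominant color (R > min_red, R > red_ratio * G and
        G >= B), which rejects hot pixels that do not look like flame.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    hot = np.asarray(thermal_k, dtype=np.float64) > t_fire
    flame_colored = (r > min_red) & (r > red_ratio * g) & (g >= b)
    return hot & flame_colored
```

Requiring agreement between the bands is what suppresses the interference sources listed in the abstract. A red roof or a lamp passes the visible test but fails the thermal one, while a hot gray surface fails the color test.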

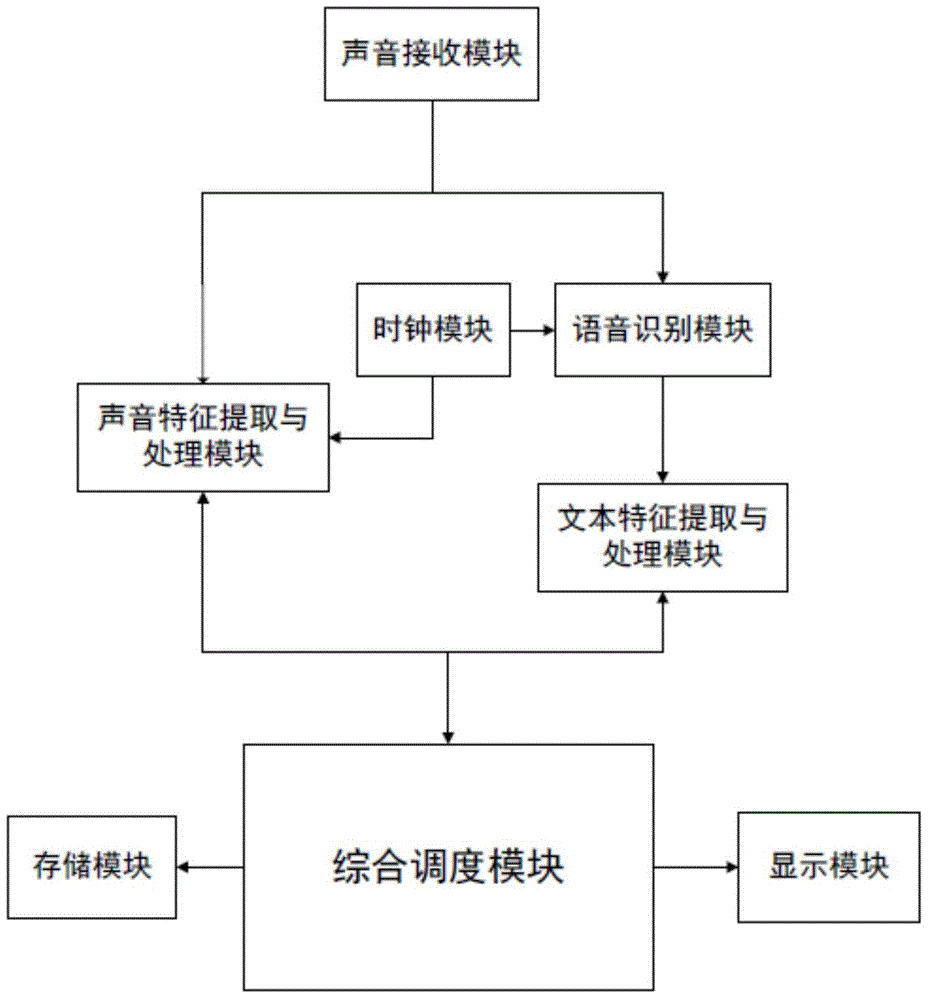

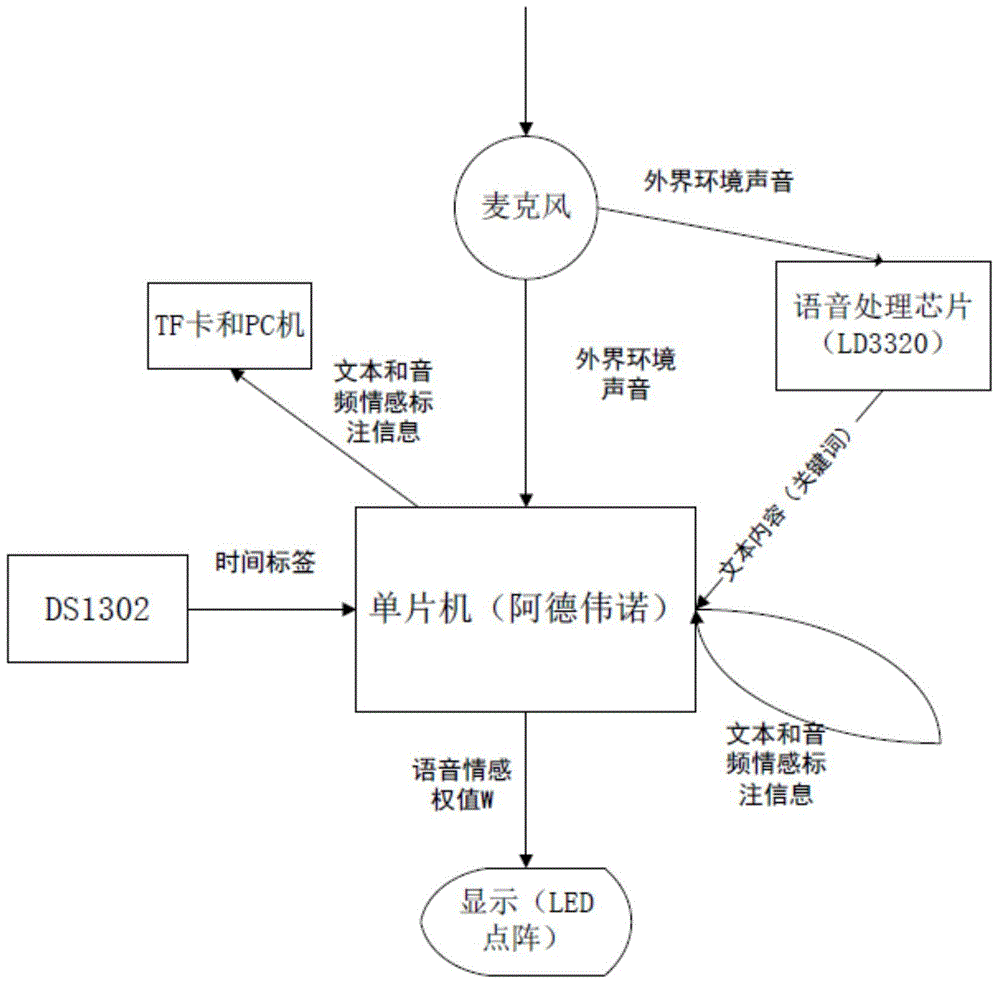

Multi-mode non-contact emotion analyzing and recording system

Active · CN104102627A · Reduce feature dimension · Improve operational efficiency · Speech analysis · Special data processing applications · Feature extraction · Speech sound

The invention discloses a multi-mode non-contact emotion analysis and recording system. The system consists of the following modules:

- a voice receiving module, which receives speech from the external environment;
- a voice feature extraction and processing module, which obtains audio emotion labels for the speech;
- a speech recognition module, which converts speech content into text;
- a text feature extraction and processing module, which obtains text emotion labels for the speech;
- a comprehensive scheduling module, which processes, stores and schedules all data;
- a display module, which displays the detected speech emotion state;
- a clock module, which records time and provides time labeling.

By integrating a text modality and an audio modality to recognize speech emotion, the system improves recognition accuracy.

Owner:山东心法科技有限公司 (Shandong Xinfa Technology Co., Ltd.)
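The abstract says only that the text and audio modes are "integrated". One common way to do that is weighted late fusion of the two branches' per-emotion probabilities, time-stamped as the clock module would do. The sketch assumes each branch already outputs one probability per emotion. The label set, the weight `w_audio` and the `EmotionRecord` structure are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime

EMOTIONS = ("neutral", "happy", "angry", "sad")  # hypothetical label set


@dataclass
class EmotionRecord:
    label: str
    scores: dict
    timestamp: datetime  # the role the clock module plays in the system


def fuse_emotions(p_audio, p_text, w_audio=0.5, timestamp=None):
    """Weighted late fusion of per-class probabilities from the audio and text branches."""
    if len(p_audio) != len(EMOTIONS) or len(p_text) != len(EMOTIONS):
        raise ValueError("both inputs need one probability per emotion")
    fused = [w_audio * a + (1.0 - w_audio) * t for a, t in zip(p_audio, p_text)]
    total = sum(fused)
    scores = {e: s / total for e, s in zip(EMOTIONS, fused)}  # renormalize to sum to 1
    label = max(scores, key=scores.get)
    return EmotionRecord(label, scores, timestamp or datetime.now())
```

For example, `fuse_emotions([0.1, 0.6, 0.2, 0.1], [0.1, 0.2, 0.6, 0.1], w_audio=0.6)` leans on the audio branch and labels the utterance "happy".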

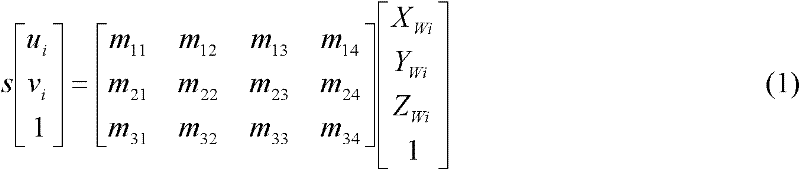

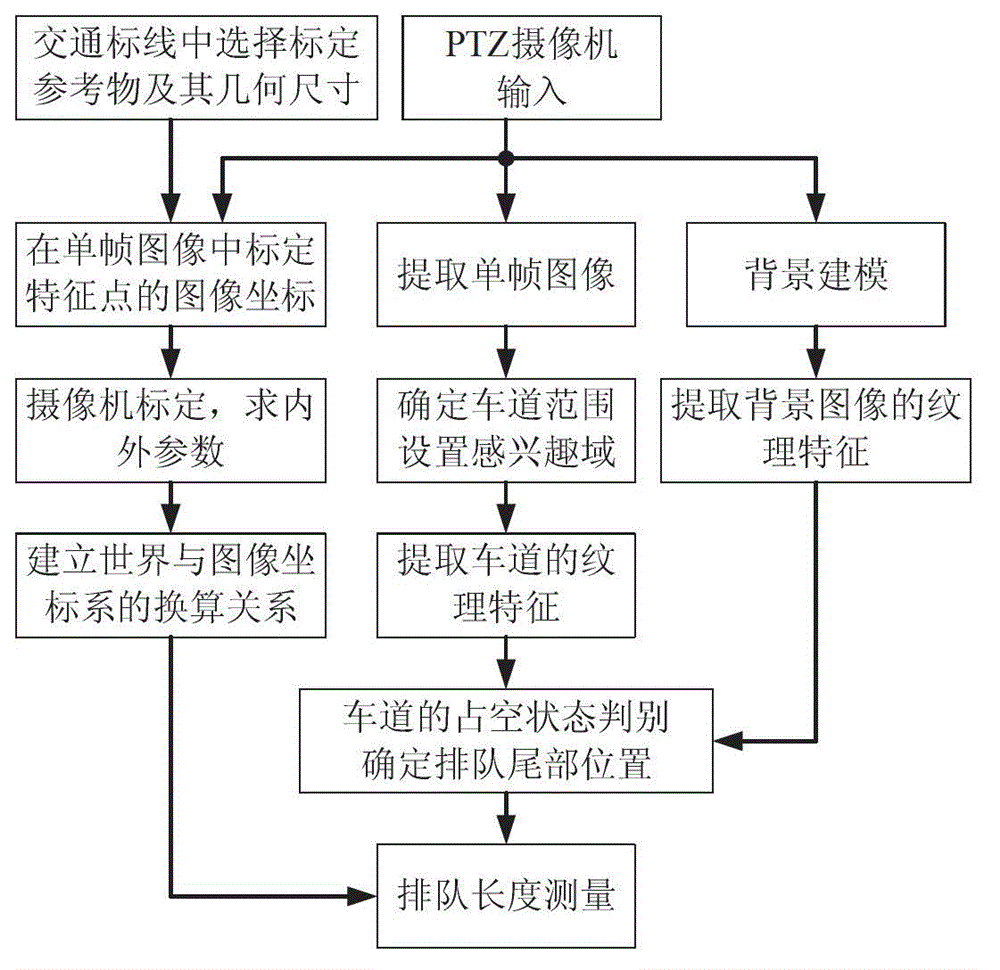

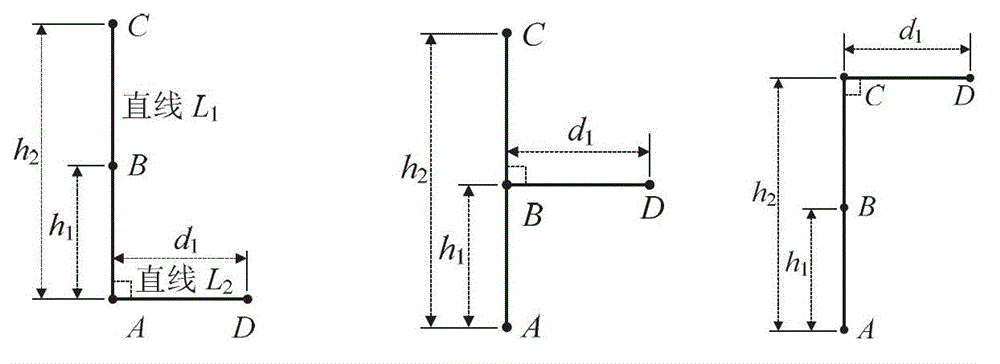

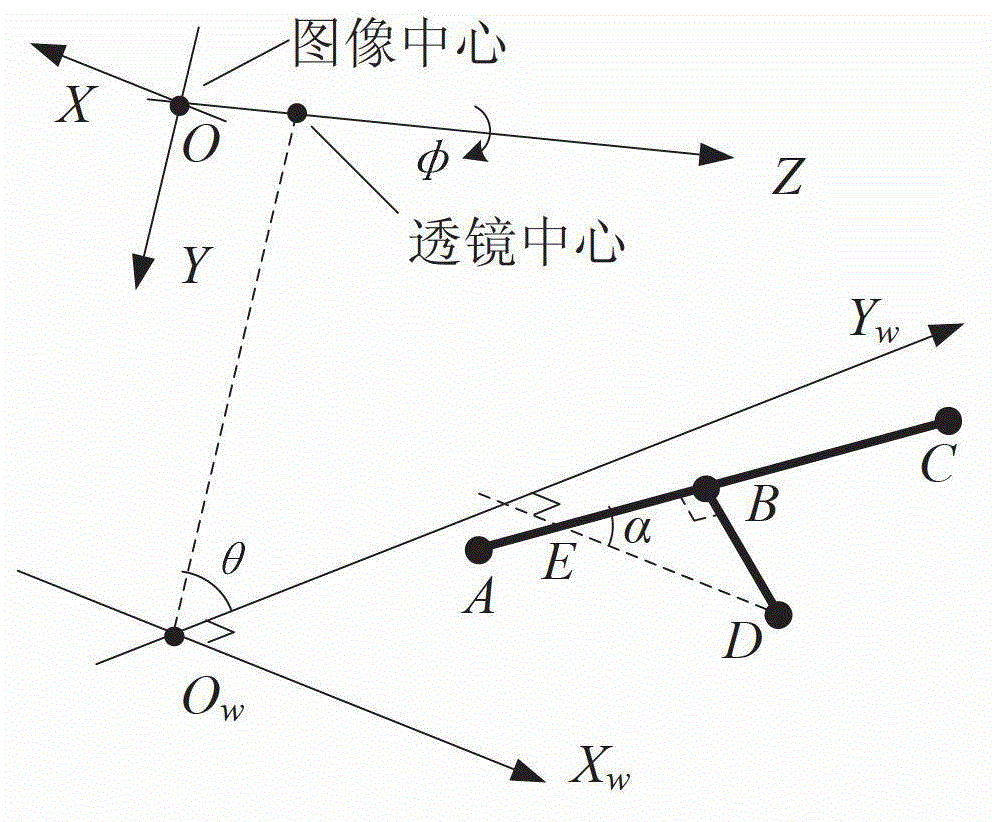

Vehicle queue length measurement method based on PTZ (Pan/Tilt/Zoom) camera fast calibration

Inactive · CN102867414A · Realize visual measurement · Easy to choose · Image analysis · Detection of traffic movement · Camera auto-calibration · Length measurement

The invention discloses a vehicle queue length measurement method based on fast calibration of a PTZ (Pan/Tilt/Zoom) camera. The method comprises the following steps:

1. Choose two perpendicularly crossing traffic markings to form a T-shaped calibration reference. Using the defined models of the image and world coordinate systems, establish the conversion between pixel coordinates in the image and the world coordinates of the corresponding roadway points.
2. Acquire video of the traffic monitoring scene with a PTZ camera and set an ROI (Region of Interest) for each lane. Detect the vehicle queue state in the ROI using an adaptive background update algorithm and textural features, and obtain the pixels and pixel coordinates of the tail of each vehicle queue.
3. Convert the detected tail pixel coordinates into world coordinates and compute the queue length.

The tail position of each queue is determined from textural features, and the length is measured with the calibrated camera. The method therefore offers advantages such as low cost and ease of embedding.

Owner:HUNAN UNIV +1
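The pixel-to-road conversion and the final length computation can be sketched with a planar homography. This swaps in a standard technique, the direct linear transform with h33 fixed to 1, and is not the patent's own calibration model. It assumes the following:

- the road surface is flat;
- four image points, no three of them collinear, have known road-plane coordinates;
- the point coordinates in the usage are hypothetical.

```python
import numpy as np


def homography(img_pts, road_pts):
    """Image->road-plane homography from 4 point pairs (no three collinear), h33 = 1."""
    rows, rhs = [], []
    for (u, v), (x, y) in zip(img_pts, road_pts):
        # x = (h11 u + h12 v + h13) / (h31 u + h32 v + 1), rearranged to be linear in h
        rows.append([u, v, 1, 0, 0, 0, -u * x, -v * x])
        rhs.append(x)
        # y = (h21 u + h22 v + h23) / (h31 u + h32 v + 1), rearranged likewise
        rows.append([0, 0, 0, u, v, 1, -u * y, -v * y])
        rhs.append(y)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def to_road(H, u, v):
    """Project pixel (u, v) onto the road plane (same units as road_pts, e.g. meters)."""
    x, y, w = H @ np.array([u, v, 1.0])
    return x / w, y / w


def queue_length(H, stop_line_px, tail_px):
    """Road-plane distance between the stop line and the detected queue tail."""
    x0, y0 = to_road(H, *stop_line_px)
    x1, y1 = to_road(H, *tail_px)
    return float(np.hypot(x1 - x0, y1 - y0))
```

After a PTZ move the homography must be re-estimated from the markings, which is why a fast calibration step matters for this kind of camera.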

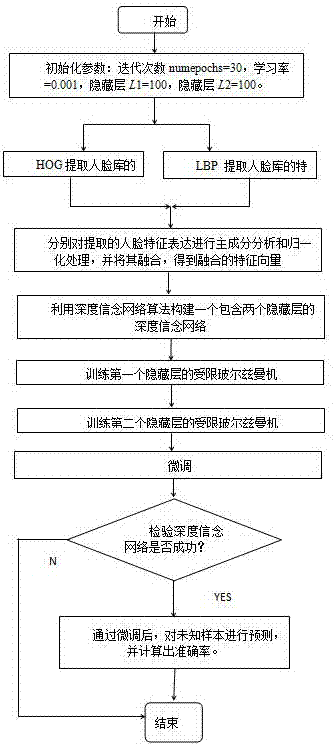

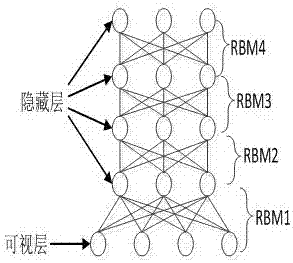

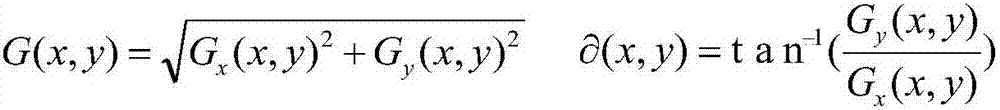

Multi-feature fusion-based deep learning face recognition method

Inactive · CN107578007A · Improve accuracy · Reduce operation · Character and pattern recognition · Neural architectures · Deep belief network · Feature vector

The invention discloses a multi-feature fusion-based deep learning face recognition method. The method comprises the following steps:

1. Extract features from images in the ORL (Olivetti Research Laboratory) face database using the local binary pattern (LBP) and histogram of oriented gradients (HOG) algorithms.
2. Fuse the resulting textural and gradient features by concatenating the two feature vectors into one.
3. Recognize the fused vector with a deep belief network. The fused features serve as the network's input, and the network is trained layer by layer to complete face recognition.

By fusing multiple features, the method can improve accuracy, algorithm stability and applicability to complex scenes.

Owner:HANGZHOU DIANZI UNIV
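The feature-level fusion step, concatenating an LBP texture descriptor with a gradient-orientation descriptor, can be sketched with NumPy. Both descriptors are simplified assumptions:

- a single global 8-neighbour LBP histogram;
- a single-cell, 9-bin, magnitude-weighted orientation histogram in place of full cell/block HOG.

The deep belief network that consumes the fused vector is not shown.

```python
import numpy as np


def lbp_histogram(gray):
    """Global histogram (256 bins, sums to 1) of basic 3x3 local binary pattern codes."""
    g = np.asarray(gray, dtype=np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        nb = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]          # neighbour plane
        codes |= (nb >= center).astype(np.int64) << bit       # set bit if nb >= center
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


def hog_histogram(gray, bins=9):
    """Simplified HOG: one magnitude-weighted histogram of unsigned gradient angles."""
    g = np.asarray(gray, dtype=np.float64)
    gy, gx = np.gradient(g)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0              # unsigned, in [0, 180)
    idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)
    hist = np.bincount(idx.ravel(), weights=mag.ravel(), minlength=bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist


def fused_features(gray):
    """Concatenate texture (LBP) and gradient (HOG-like) vectors into one input vector."""
    return np.concatenate([lbp_histogram(gray), hog_histogram(gray)])
```

Normalizing each part before concatenation keeps one descriptor from dominating the other purely because of scale.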

Feature fusion-based high-resolution landform classification method for low-altitude drone remote sensing images

Active · CN107292339A · Eliminate distractions · Easy to dig · Character and pattern recognition · Classification methods · Landform

The invention discloses a feature fusion-based high-resolution landform classification method for low-altitude drone remote sensing images. The method comprises the following steps:

1. Select common, representative landforms from the remote sensing images to be processed as training samples.
2. Extract color and texture features from the training samples of each landform, and fuse them.
3. Learn from the fused features with a classification method to obtain a classification model for each landform.
4. Extract and fuse the color and texture features of the drone low-altitude images to be classified.
5. Use the classifiers to assign each object to a landform, based on its fused features and the trained model of each landform.

This achieves classification of low-altitude drone remote sensing images. The method extracts discriminative features more effectively and quickly, making the classification results more accurate.

Owner:CHONGQING UNIV
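The color/texture fusion and per-landform model can be sketched as follows. The abstract names neither the features nor the classifier, so every choice here is an assumption:

- color moments (per-channel mean and standard deviation);
- two simple texture statistics (gray-level standard deviation and mean gradient magnitude);
- z-score normalization of the fused vector;
- a nearest-centroid classifier as a stand-in for the unspecified classification method.

```python
import numpy as np


def color_features(rgb):
    """Color moments: per-channel mean and standard deviation (scaled to 0..1)."""
    px = np.asarray(rgb, dtype=np.float64).reshape(-1, 3) / 255.0
    return np.concatenate([px.mean(axis=0), px.std(axis=0)])


def texture_features(rgb):
    """Two simple texture cues from the gray image: contrast and edge density."""
    gray = np.asarray(rgb, dtype=np.float64).mean(axis=2) / 255.0
    gy, gx = np.gradient(gray)
    return np.array([gray.std(), np.hypot(gx, gy).mean()])


def fused_features(rgb):
    return np.concatenate([color_features(rgb), texture_features(rgb)])


class NearestCentroid:
    """One centroid per landform in the normalized fused-feature space."""

    def fit(self, patches, labels):
        feats = np.array([fused_features(p) for p in patches])
        labels = np.asarray(labels)
        # z-score each fused dimension so color and texture cues weigh comparably
        self.mu = feats.mean(axis=0)
        self.sd = feats.std(axis=0) + 1e-9
        z = (feats - self.mu) / self.sd
        self.classes = sorted(set(labels.tolist()))
        self.centroids = np.array([z[labels == c].mean(axis=0) for c in self.classes])
        return self

    def predict(self, patch):
        z = (fused_features(patch) - self.mu) / self.sd
        dist = np.linalg.norm(self.centroids - z, axis=1)
        return self.classes[int(np.argmin(dist))]
```

Any classifier with a fit/predict interface could replace the nearest-centroid model. The fusion itself is simply the concatenation in `fused_features`.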

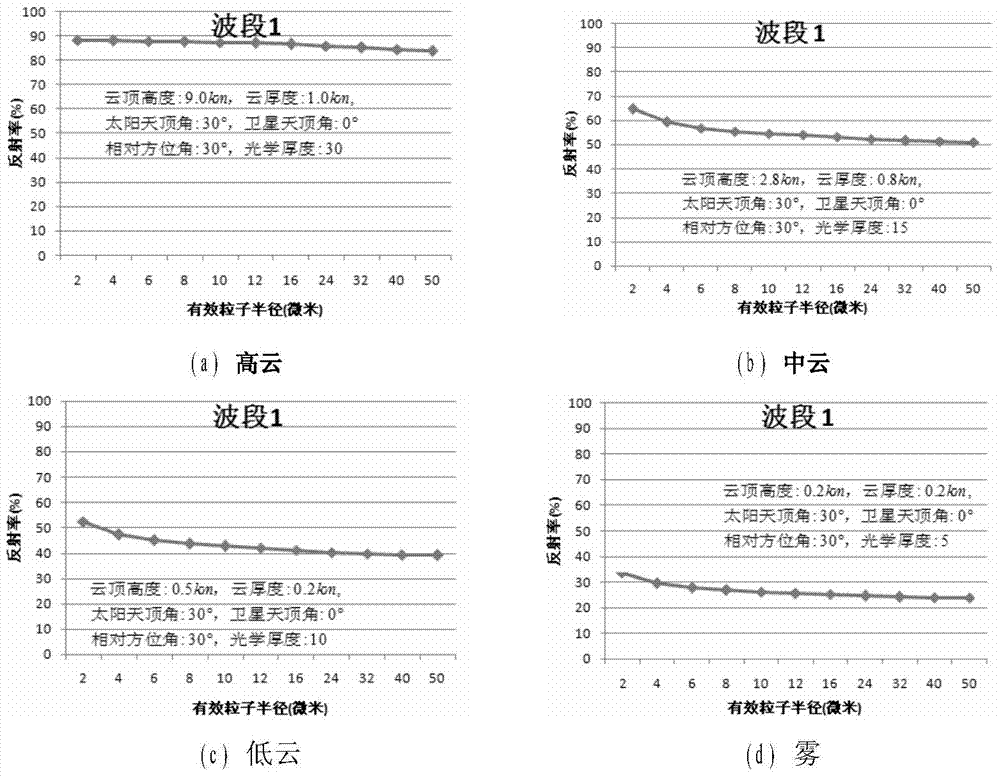

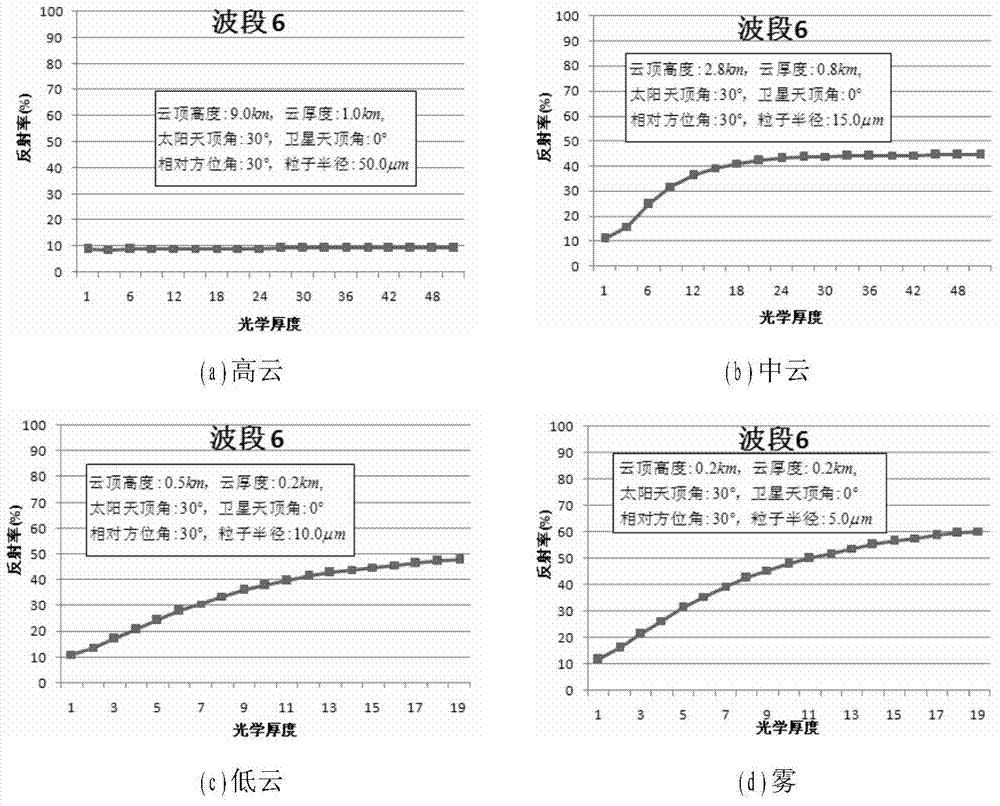

Daytime land radiation fog remote sensing monitoring method based on object-oriented classification

Inactive · CN103926634A · Avoid the status quo that is difficult to detect · Play a supporting role · Instruments · Feature parameter · Spectral signature

The invention provides a daytime land radiation fog remote sensing monitoring method based on object-oriented classification. The method comprises the following steps:

1. Select EOS/MODIS satellite remote sensing data, whose highest spatial resolution is 250 m.
2. Construct cloud and fog feature parameters by combining atmospheric radiative transfer model simulation with statistics from a large volume of EOS/MODIS data.
3. Segment the cloud and fog feature parameter images with a suitable remote sensing image segmentation algorithm.
4. For each homogeneous unit obtained by segmentation, calculate its spectral signatures, textural features, geometric features and cloud/fog feature parameter values.
5. Train on the attributes of these units with a decision tree classification algorithm, using ground meteorological observation data, to construct the daytime land radiation fog detection method.

The method effectively avoids the difficulty of distinguishing low clouds from fog caused by their similar spectra and textures.

Owner:CHANGJIANG RIVER SCI RES INST CHANGJIANG WATER RESOURCES COMMISSION
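The object-oriented step, computing statistics for each segmented homogeneous unit and then classifying the unit, can be sketched as below. The sketch rests on these assumptions:

- the segmentation label image already exists;
- a single reflectance-like band is used;
- the hand-set thresholds in `classify_segment` are hypothetical placeholders for the decision tree that the patent trains on ground observations;
- the rule that fog tops are smoother than cloud tops is an illustrative assumption.

```python
import numpy as np


def segment_features(band, labels):
    """Per-segment spectral (mean), textural (std) and geometric (area) features."""
    band = np.asarray(band, dtype=np.float64)
    labels = np.asarray(labels)
    feats = {}
    for seg in np.unique(labels):
        vals = band[labels == seg]
        feats[int(seg)] = {"mean": float(vals.mean()),
                           "std": float(vals.std()),
                           "area": int(vals.size)}
    return feats


def classify_segment(f, bright_min=0.3, min_area=4, fog_max_std=0.02):
    """Hypothetical hand-set tree standing in for the trained decision tree."""
    if f["mean"] < bright_min:          # dark unit: treated as clear land surface
        return "clear"
    if f["area"] < min_area:            # too small to judge reliably
        return "unclassified"
    return "fog" if f["std"] <= fog_max_std else "cloud"  # smooth top -> fog
```

Classifying whole units rather than single pixels lets texture and shape statistics contribute. Per-pixel spectra alone cannot separate low cloud from fog.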

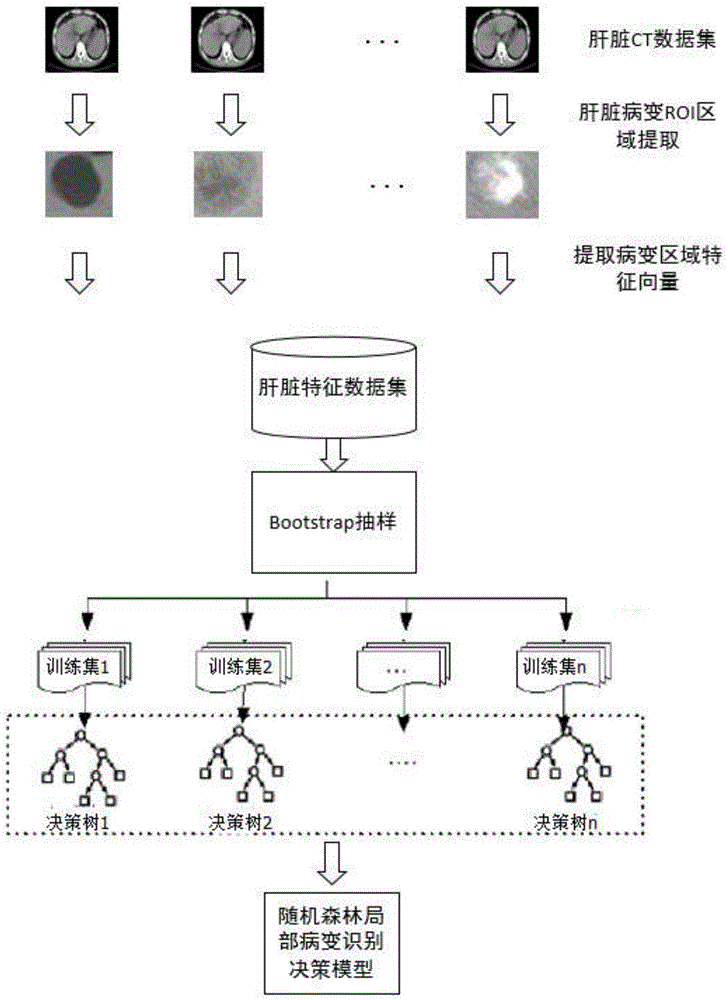

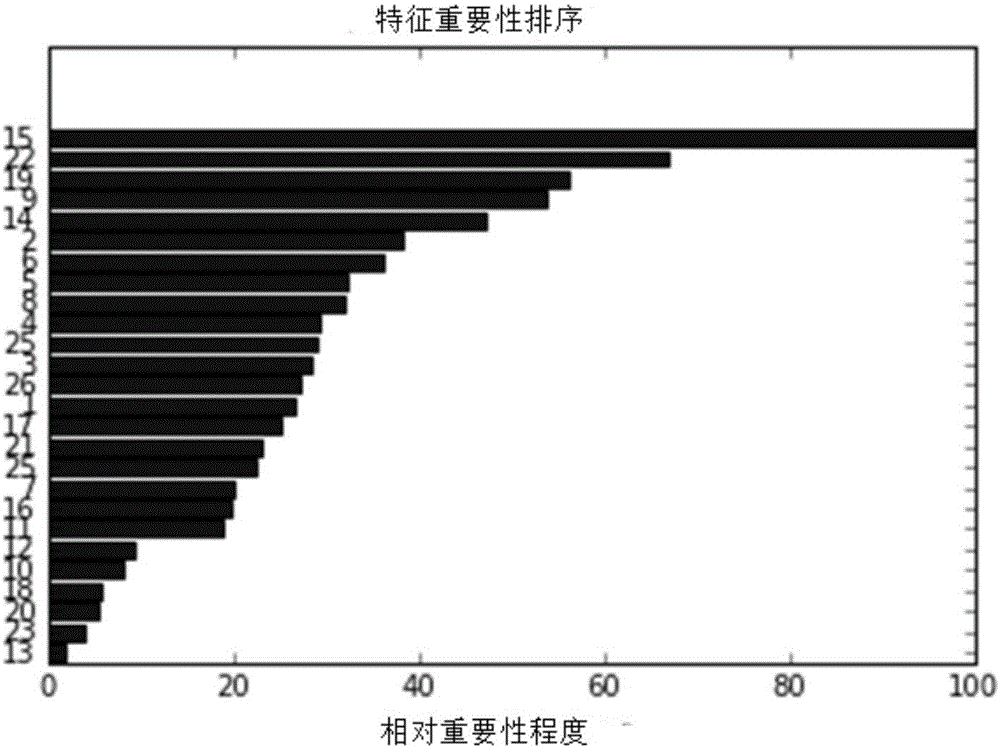

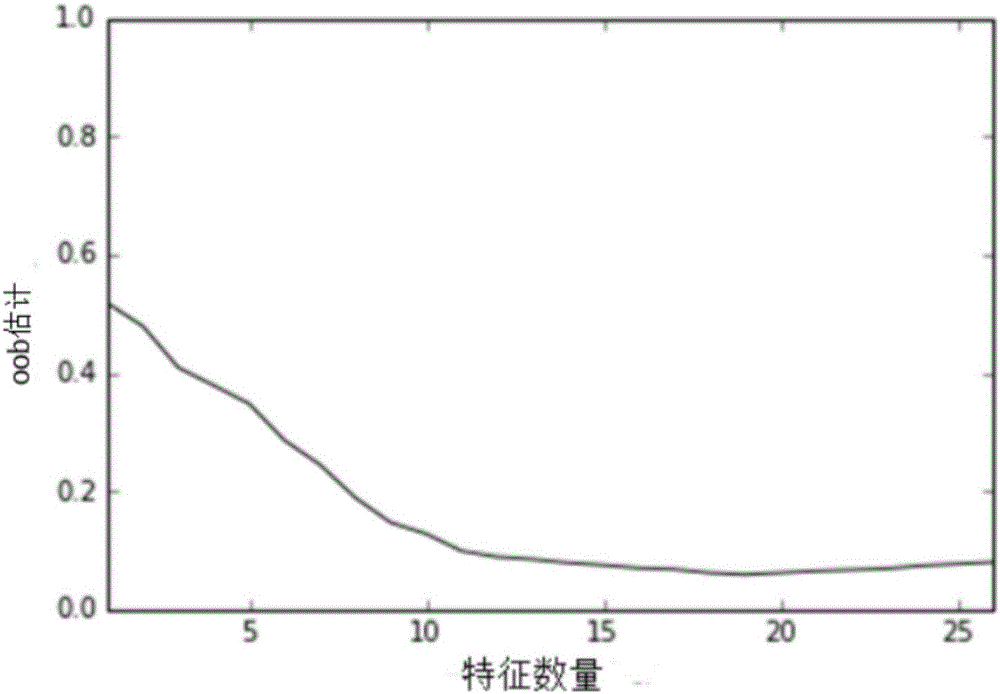

Pathology identification method for routine scan CT image of liver based on random forests

Inactive · CN105931224A · Great medical value · Diagnostic science · Image enhancement · Image analysis · Feature vector · Data set

The invention discloses a random forest-based pathology identification method for routine liver CT scan images. Gray-level texture features are extracted from the lesion region of the routine liver CT image and used as the image feature vector. A random forest then selects the most effective combination of features from this vector. The resulting feature data set is used for training and learning, the identification capability of the random forest's decision trees is balanced and optimized, and the final pathology identification model is obtained.

Owner:ZHEJIANG UNIV
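The two-stage use of random forests can be sketched with scikit-learn's `RandomForestClassifier` and its `feature_importances_` attribute. The stages are: rank the texture features by importance, keep the most effective subset, and retrain on it. The number of trees, the subset size `k` and the impurity-based importance criterion are assumptions, since the abstract does not say how the feature combination is chosen.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def select_and_train(X, y, k=5, n_trees=200, seed=0):
    """Rank features with one forest, keep the top-k, retrain a forest on them."""
    ranker = RandomForestClassifier(n_estimators=n_trees, random_state=seed).fit(X, y)
    top = np.argsort(ranker.feature_importances_)[::-1][:k]  # most important first
    model = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    model.fit(X[:, top], y)
    return top, model
```

In practice the subset size would be tuned on held-out data. Impurity-based importances can favor high-cardinality features, so permutation importance is a common alternative.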

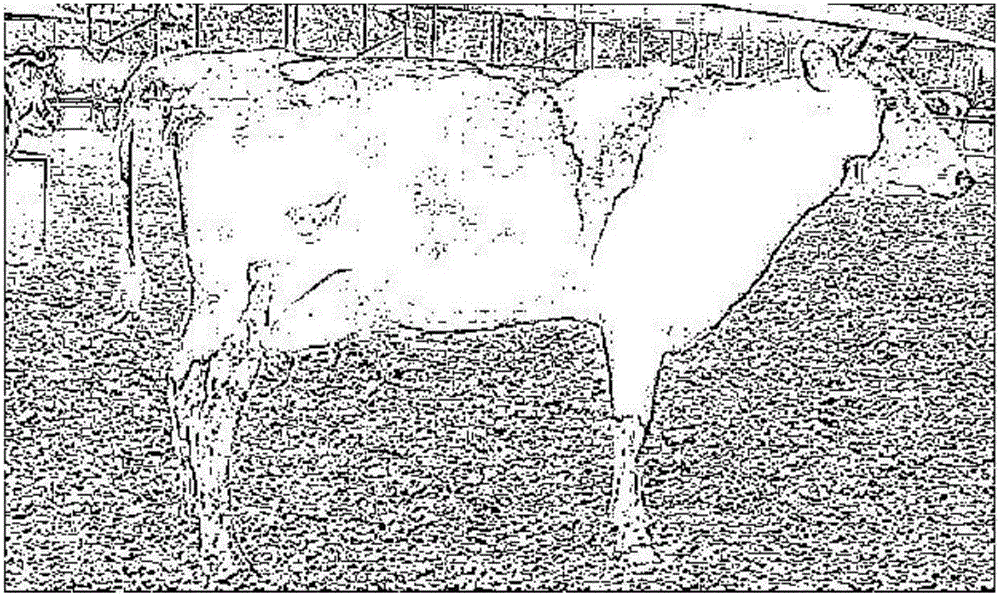

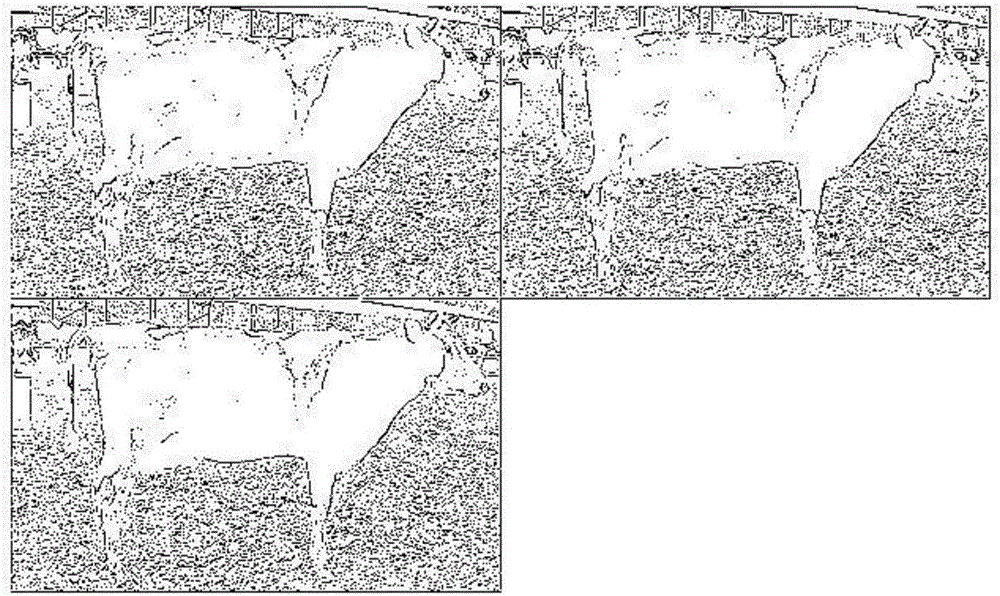

Dairy cow individual recognition method based on deep convolutional neural network

Inactive · CN106778902A · Highlight substantive · Highlight substantive features · Character and pattern recognition · Neural learning methods · Imaging processing · Data treatment

The invention provides a dairy cow individual recognition method based on a deep convolutional neural network, and relates to image recognition in image data processing. Features are extracted with a deep-learning convolutional neural network and combined with the textural features of dairy cows, so that individual cows can be recognized effectively. The method comprises the following steps: collecting dairy cow data; preprocessing the training and test sets; designing the convolutional neural network; training it; generating a recognition model; and recognizing individual cows with the model. The method overcomes two shortcomings of existing algorithms. They process dairy cow images with a single image-processing technique, and they do not adequately combine the cows' stripe features with image processing and pattern recognition techniques. Together these shortcomings cause a low recognition rate for dairy cows.

Owner:HEBEI UNIV OF TECH


© 2025 PatSnap. All rights reserved.