71 results about "Pincushion" patented technology

A pincushion (or pin cushion) is a small, stuffed cushion, typically 3–5 cm (1.2–2.0 in) across, used in sewing to hold pins or needles with their heads protruding so that they are easy to grasp, collect, and keep organized.

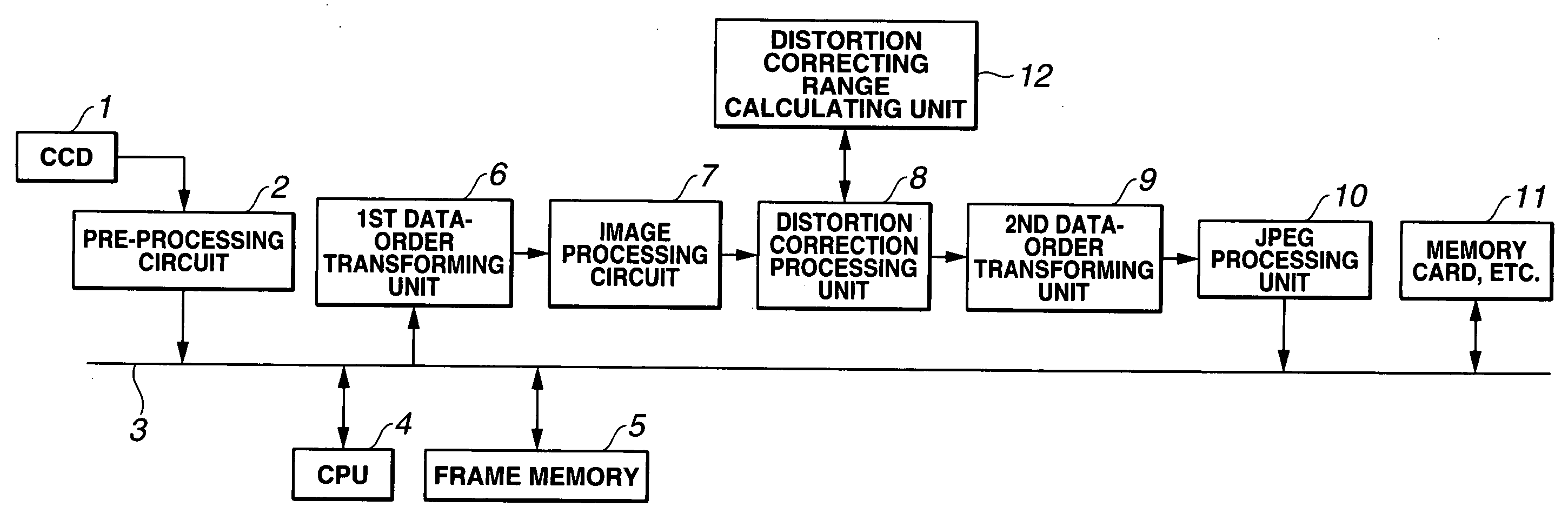

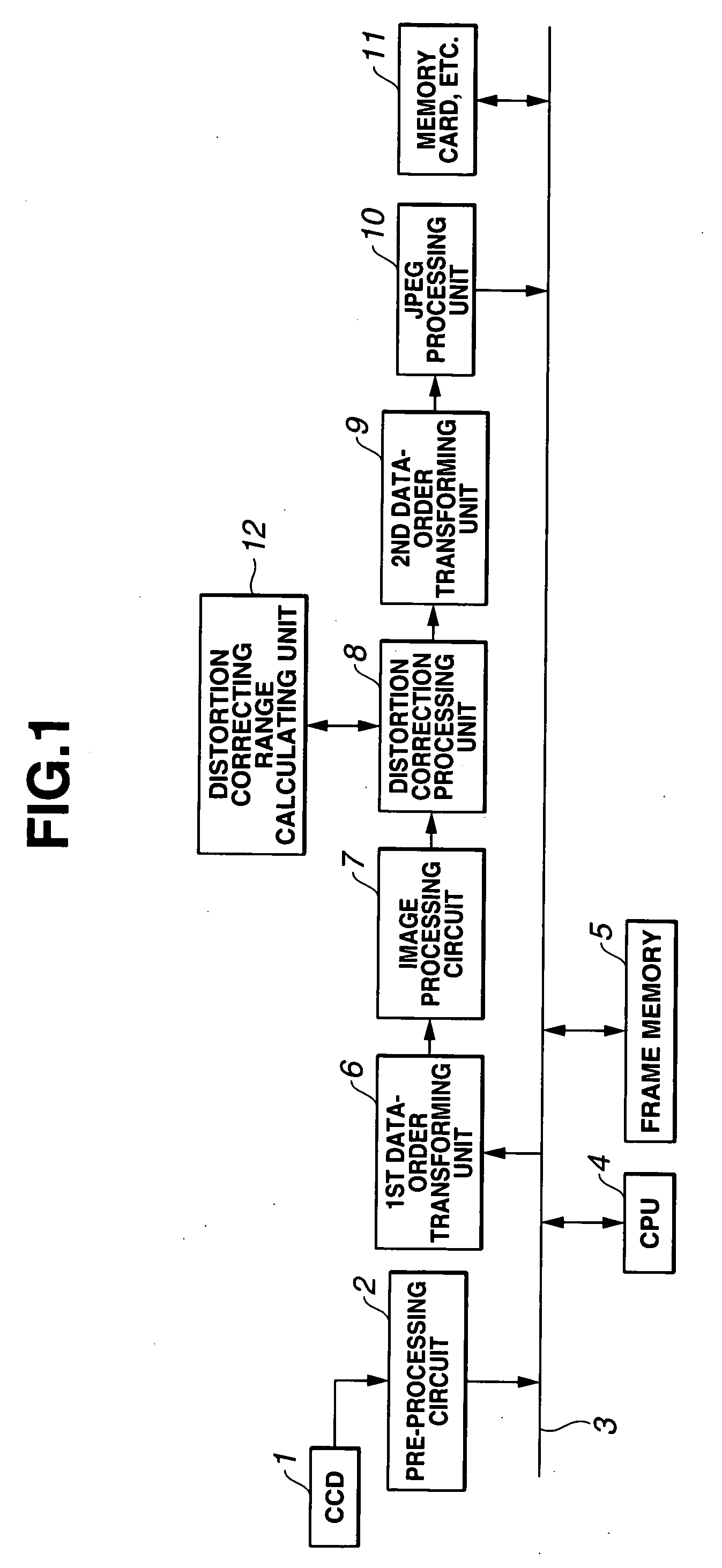

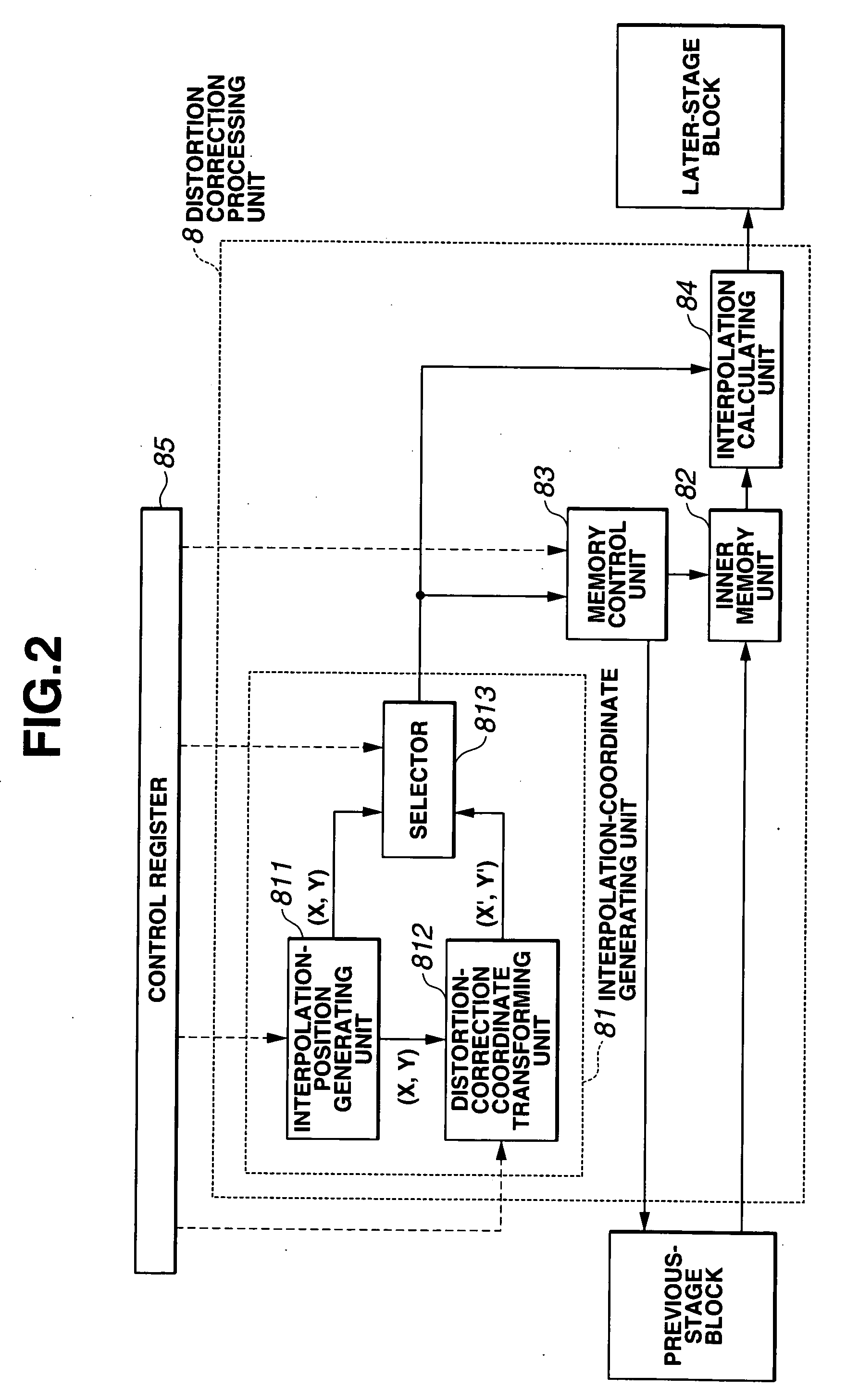

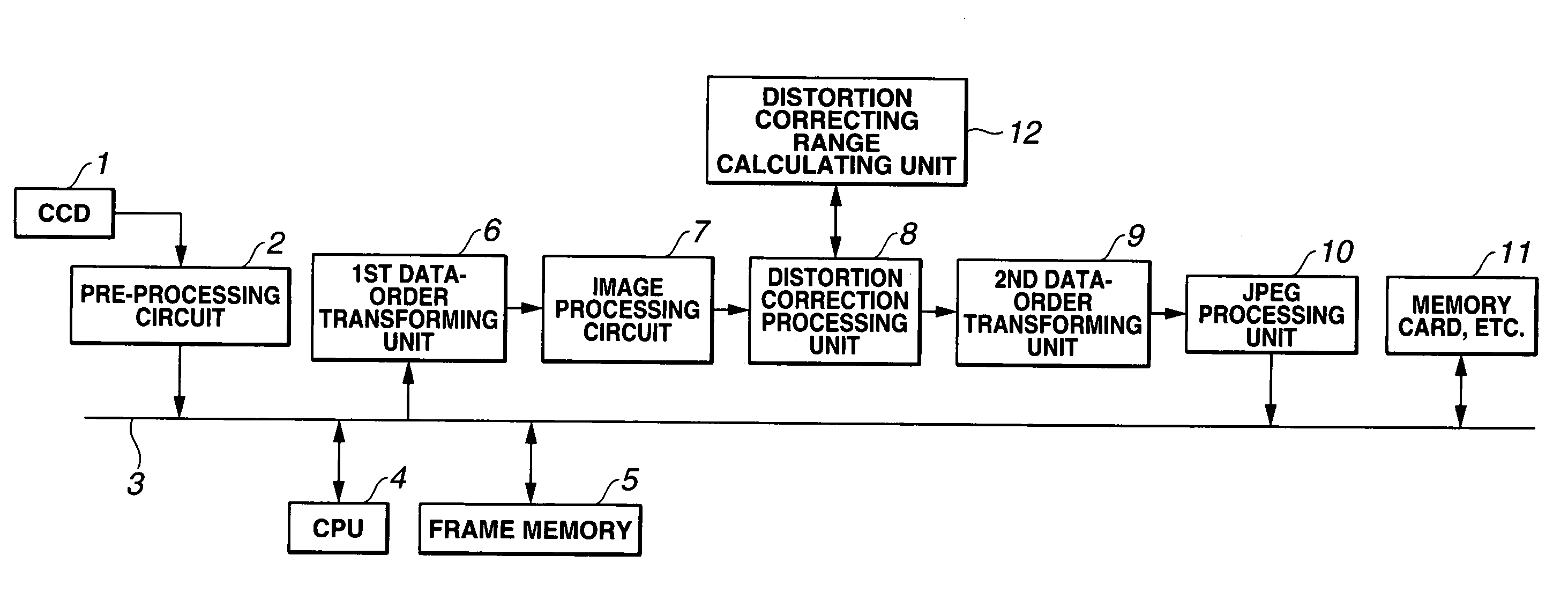

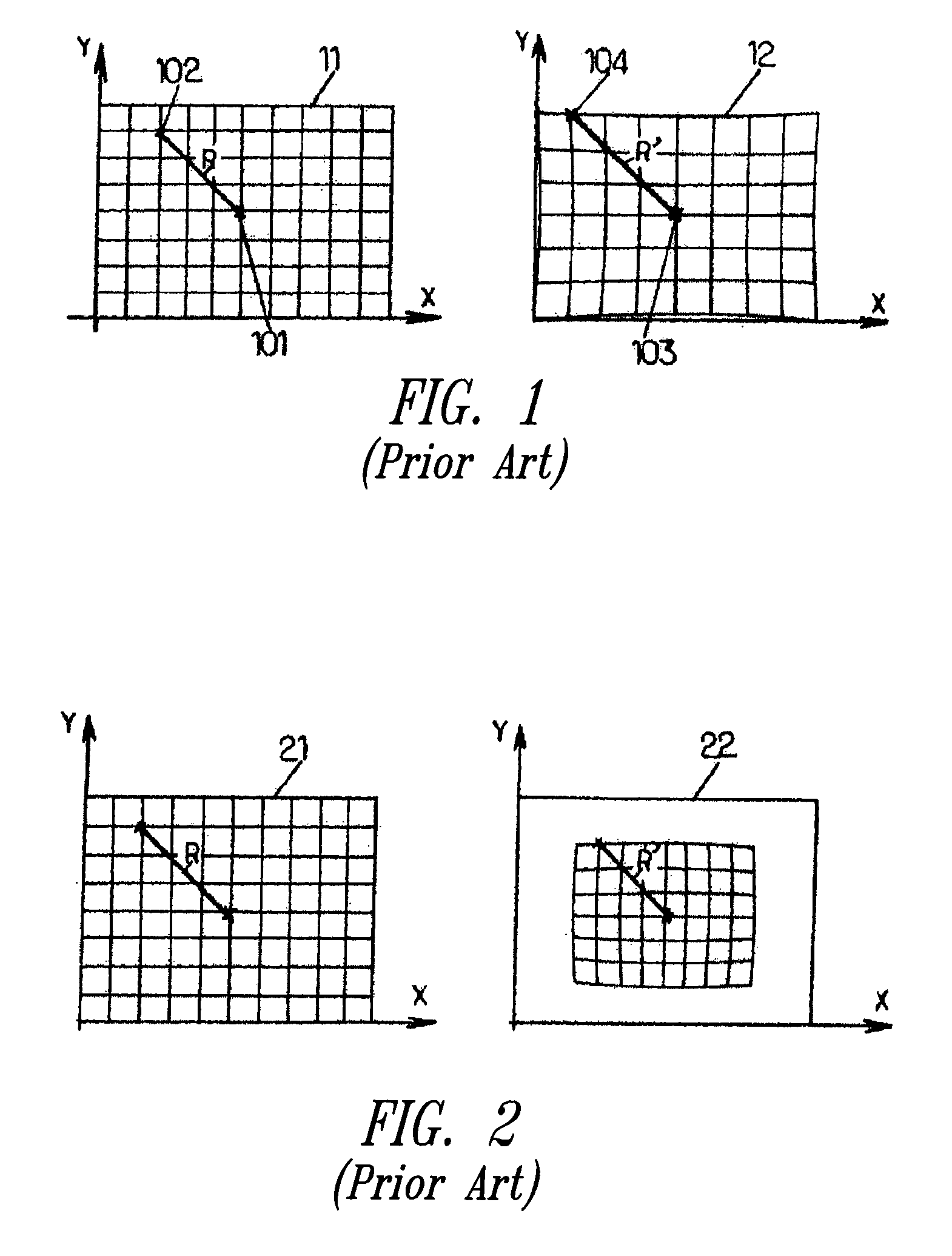

Image processing apparatus, image processing method, and distortion correcting method

Active | US20060188172A1 | Distortion | Correct distortion | Image enhancement | Television system details | Imaging processing | Original data

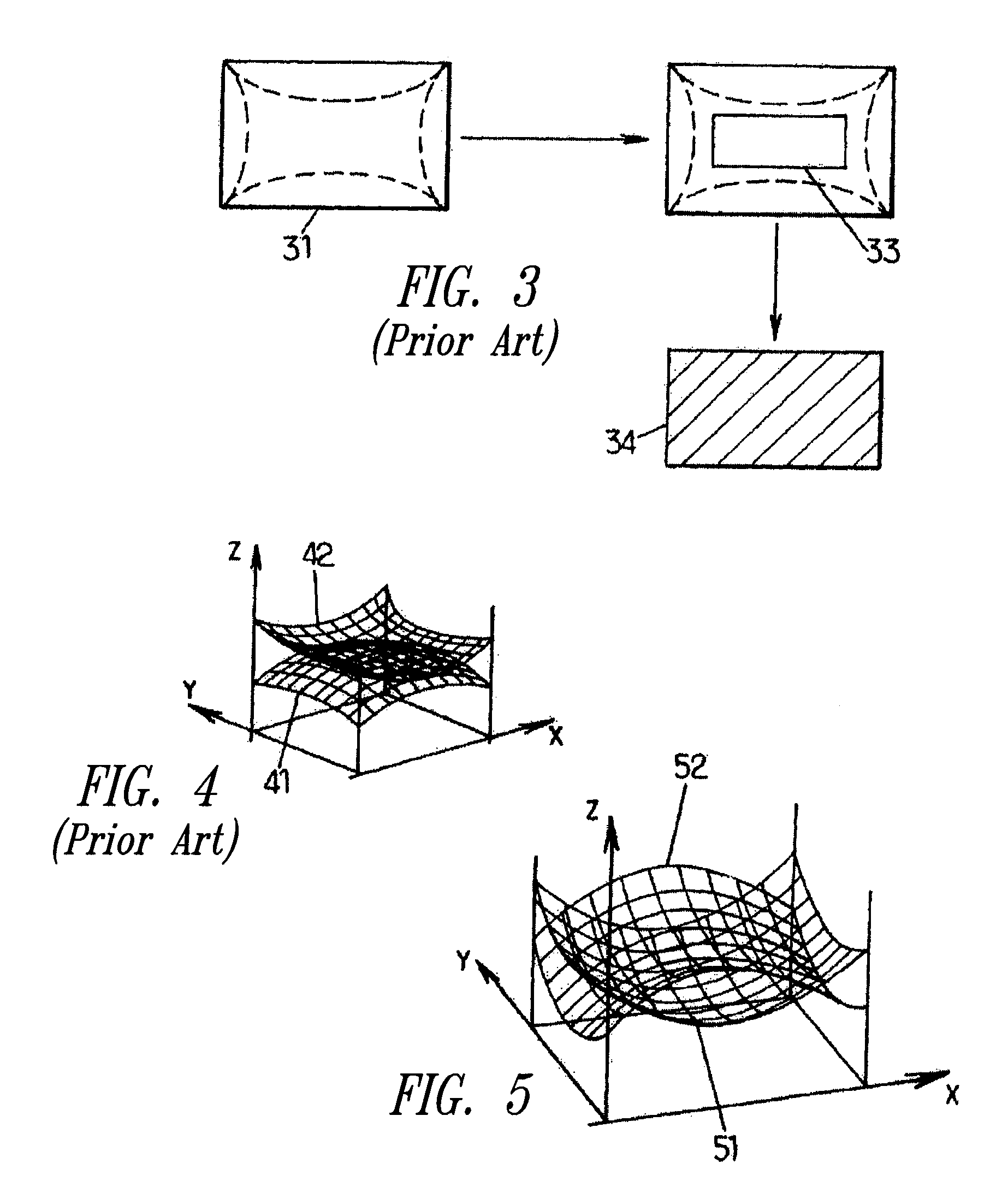

An image processing apparatus has a distortion correction processing unit and a distortion correcting range calculating unit that calculates the input image range necessary for the distortion correction processing. Thus, the corrected image (output image) obtained by the distortion correction processing can be output with neither excess nor shortfall relative to the image output range. The distortion is corrected by effectively using the input (picked-up) image data as the original data. Further, distortion including pincushion distortion, barrel distortion, and curvilinear distortion is effectively corrected.

Owner:OLYMPUS CORP
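
The range calculation described in the abstract above can be illustrated with a minimal sketch. It is not the patented method: it assumes a single-coefficient radial model in normalized coordinates, and the names `distort`, `input_range`, and the coefficient `k` are hypothetical. The idea shown is only that the input range needed for a given output rectangle can be estimated by tracing the rectangle's border through the distortion model and taking the bounding box, which holds as long as the mapping is monotonic over the rectangle.

```python
def distort(x, y, k):
    """Map an undistorted output point to its position in the distorted input.

    Assumed model (not from the patent): scale by (1 + k * r^2) about the
    image centre, with k > 0 for pincushion and k < 0 for barrel distortion.
    """
    scale = 1.0 + k * (x * x + y * y)
    return x * scale, y * scale


def input_range(x0, y0, x1, y1, k, samples=33):
    """Return (xmin, ymin, xmax, ymax) of the input region needed to fill the
    output rectangle [x0, x1] x [y0, y1], found by tracing its border."""
    points = []
    for i in range(samples):
        t = i / (samples - 1)
        x = x0 + t * (x1 - x0)
        y = y0 + t * (y1 - y0)
        # One point on each of the four edges per step.
        points.append(distort(x, y0, k))
        points.append(distort(x, y1, k))
        points.append(distort(x0, y, k))
        points.append(distort(x1, y, k))
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    print(input_range(-1.0, -1.0, 1.0, 1.0, k=0.1))   # pincushion-like case
    print(input_range(-1.0, -1.0, 1.0, 1.0, k=-0.1))  # barrel-like case
```

In this toy model the extreme input coordinates come from the corners when `k` is positive and from the edge midpoints when `k` is negative, which is why the whole border is traced rather than the corners alone.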

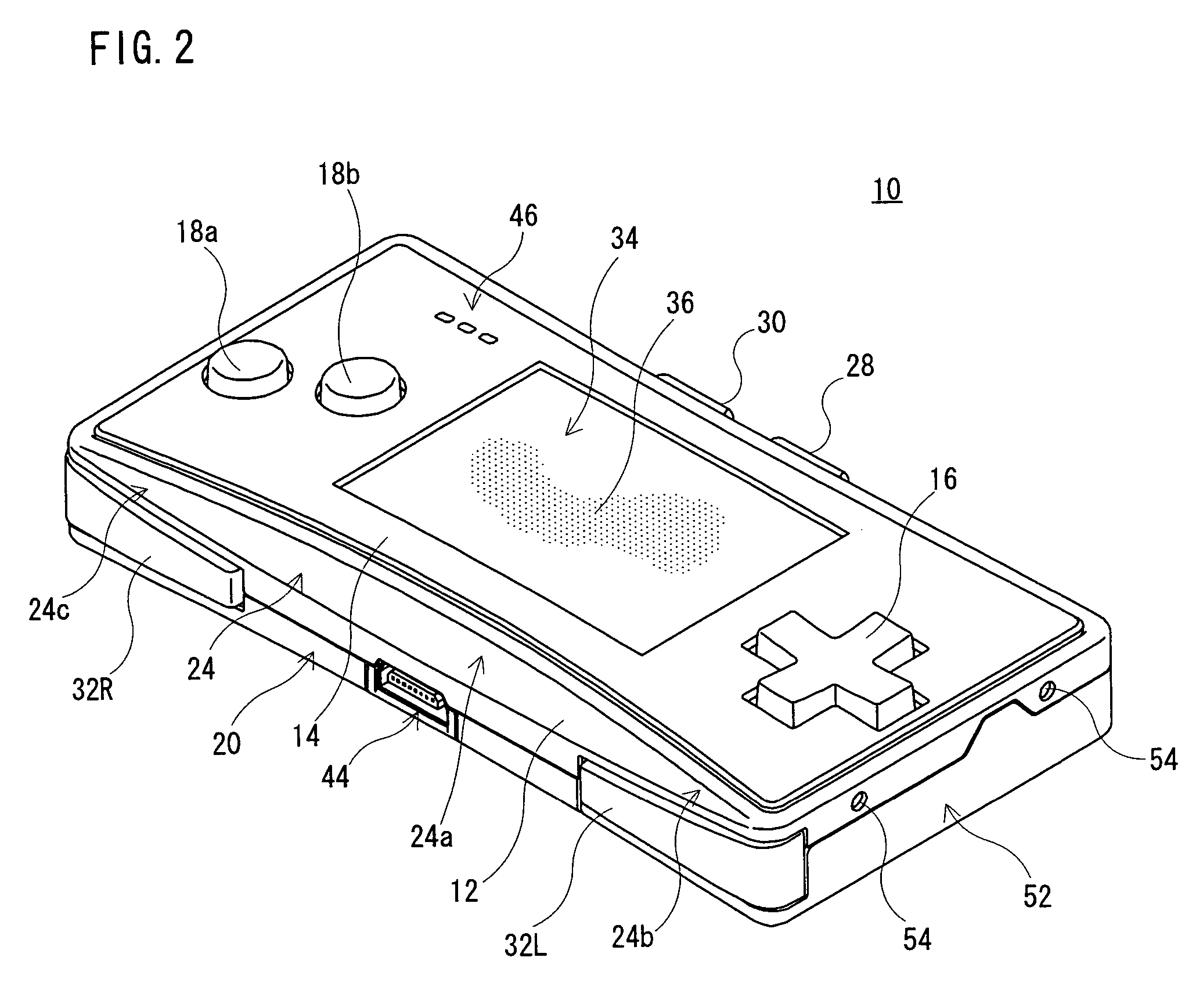

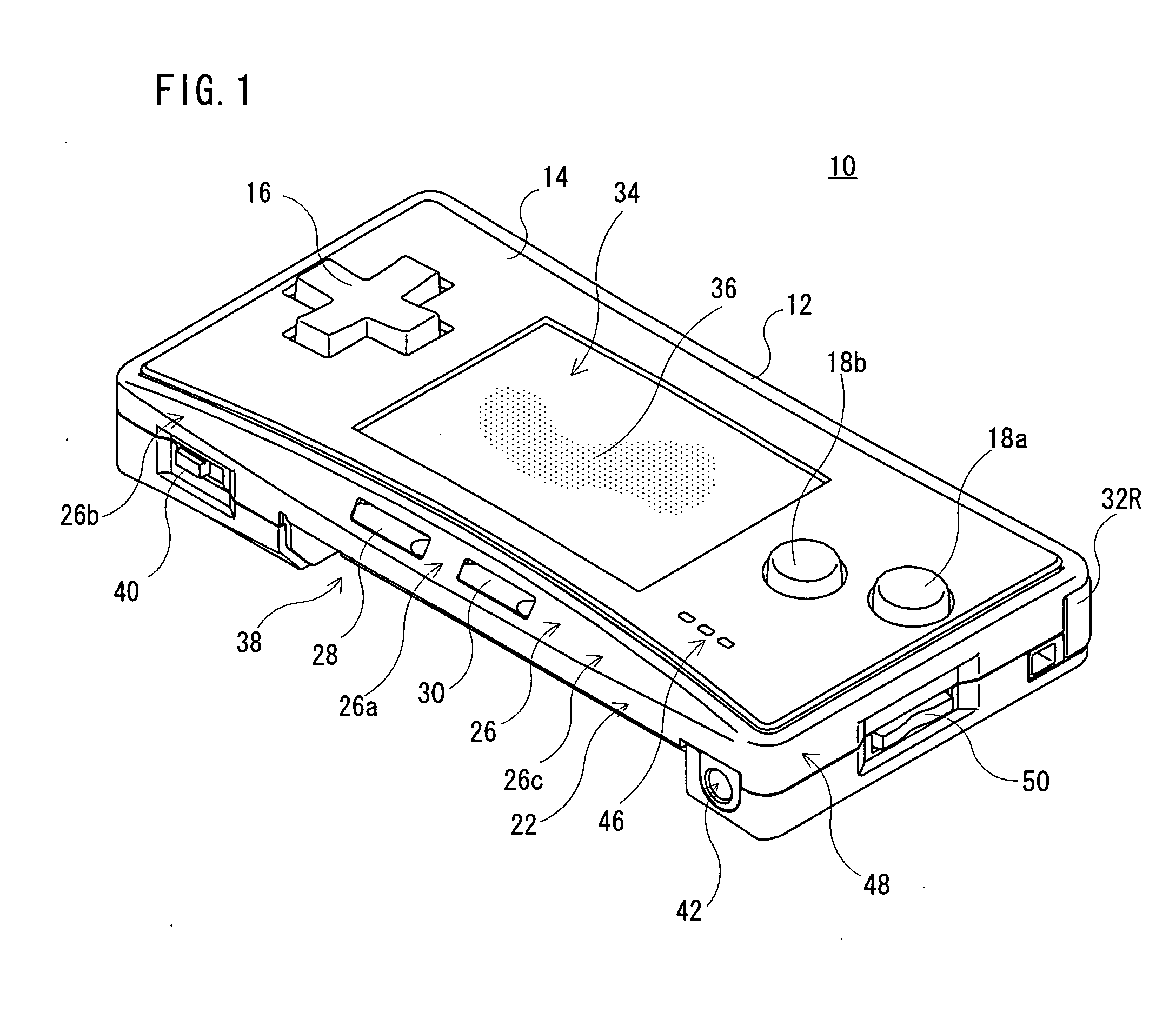

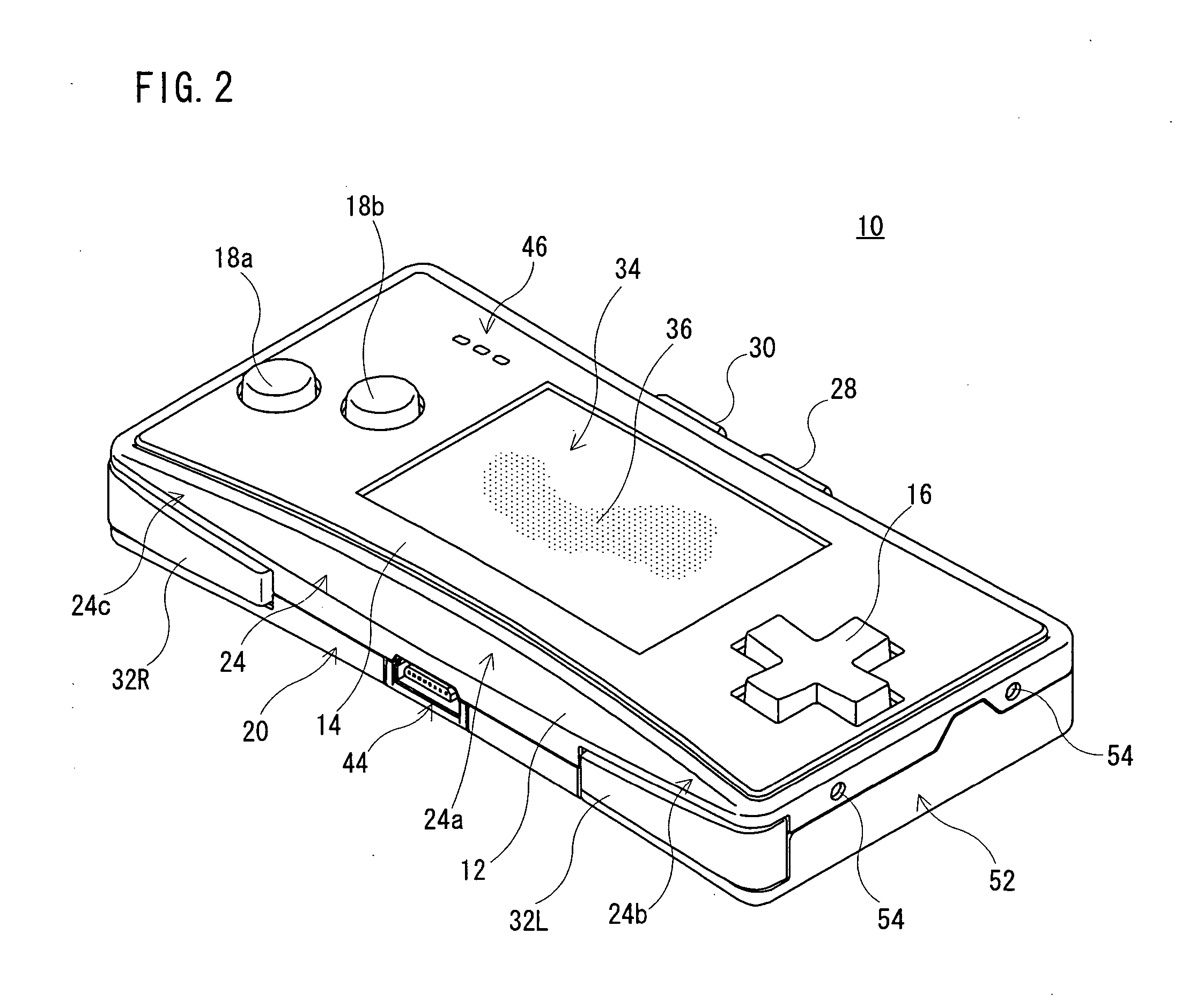

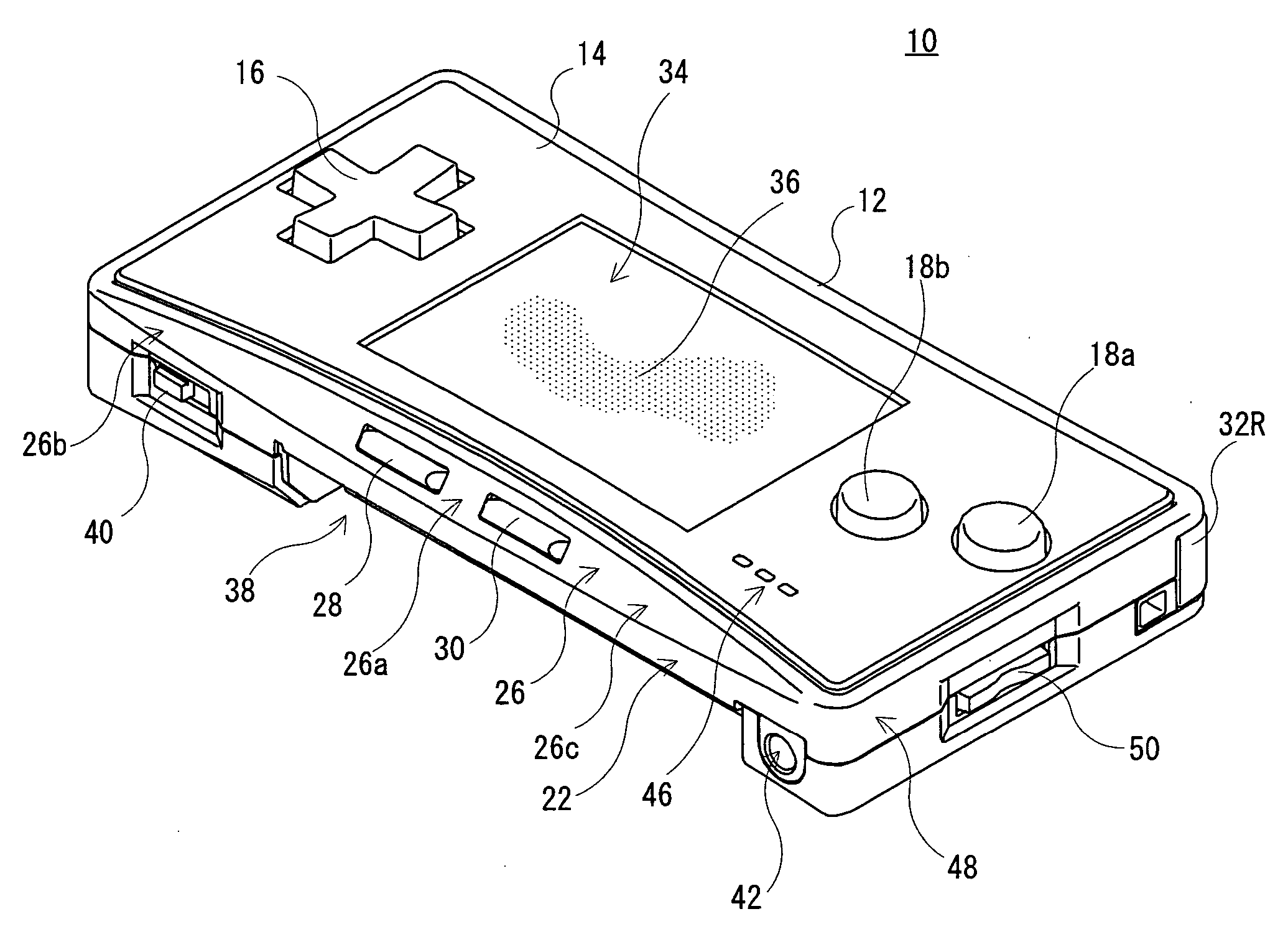

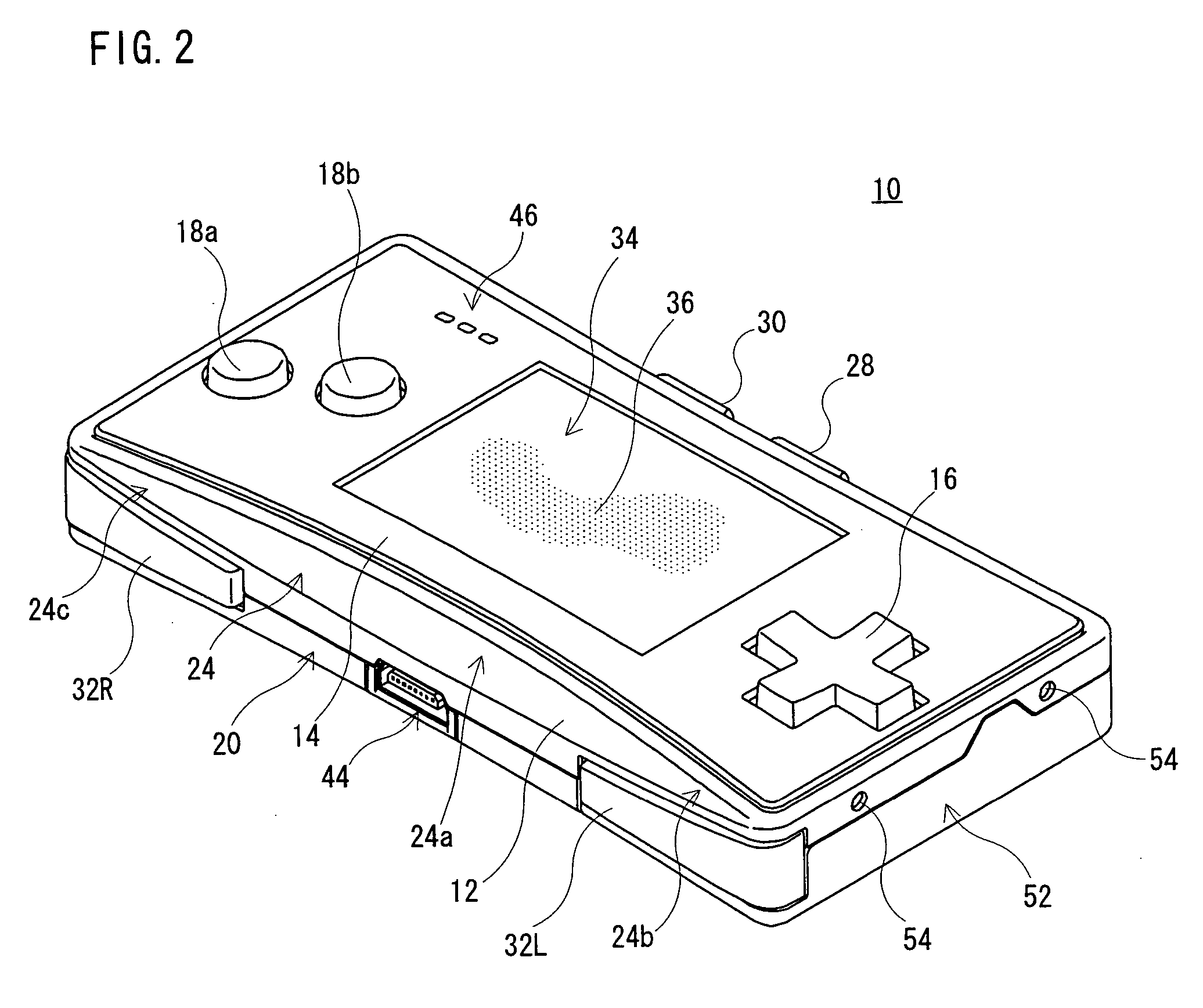

Operation device for game machine and hand-held game machine

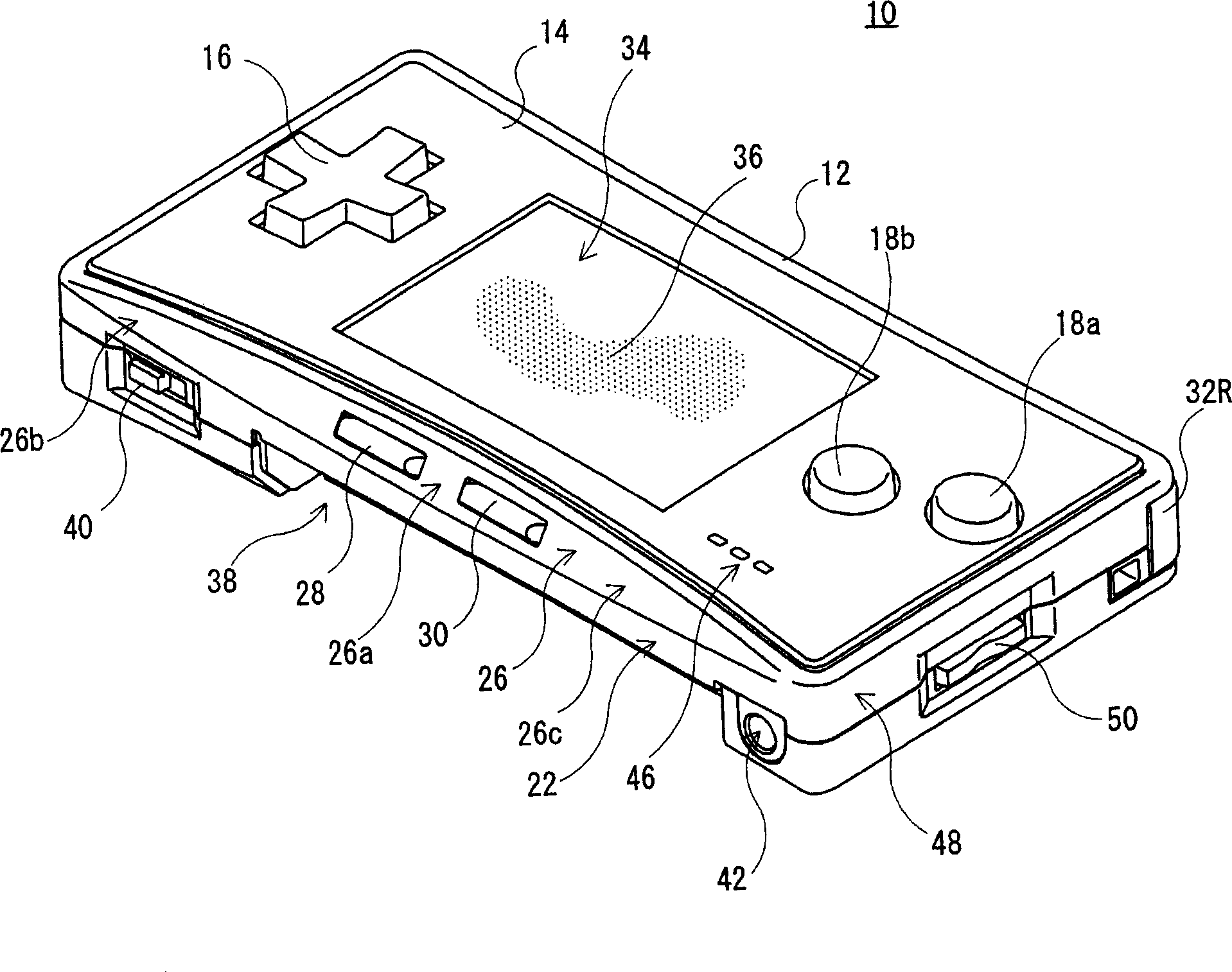

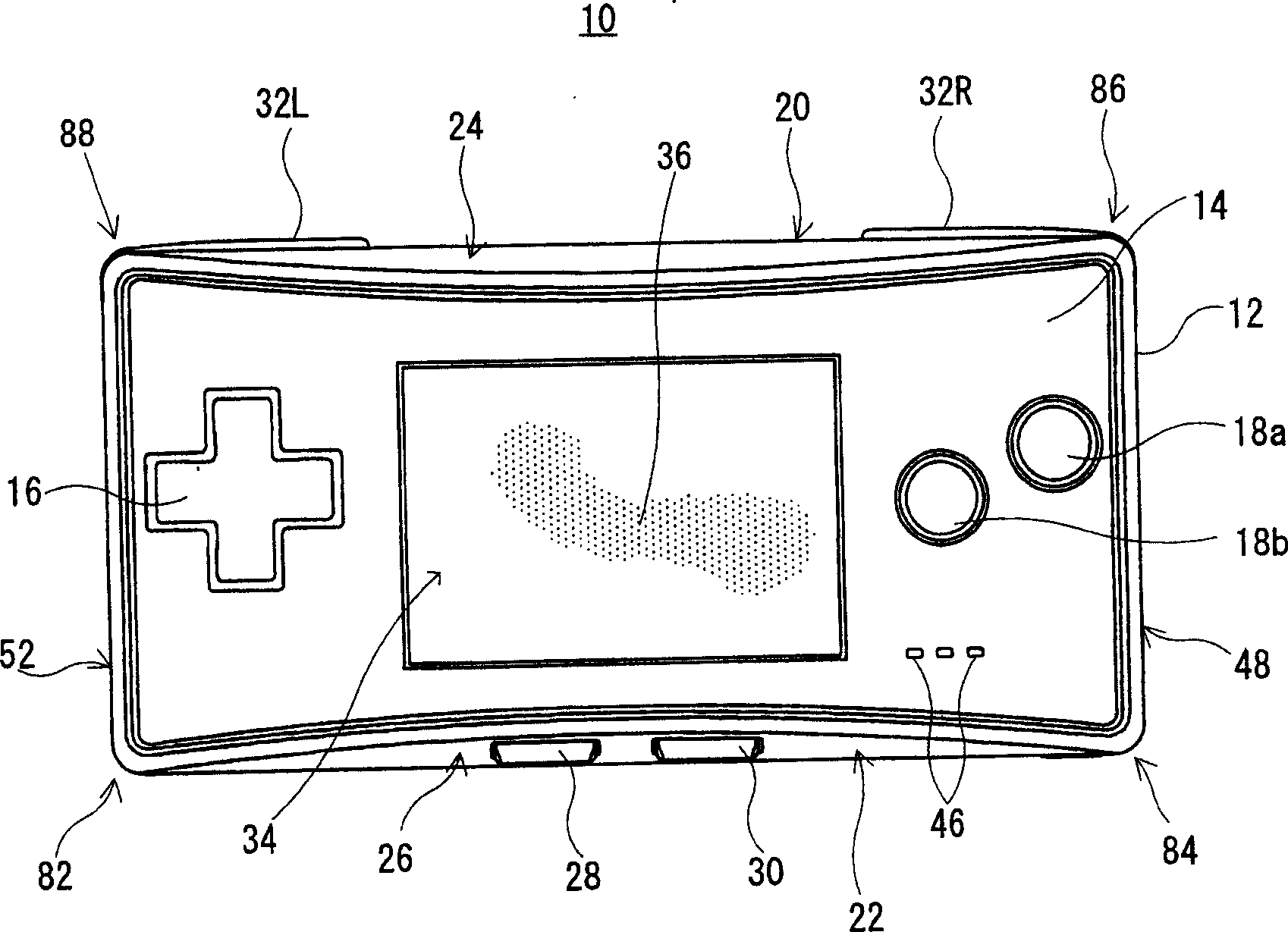

Active | US7193165B2 | Easy to operate | Input/output for user-computer interaction | Emergency actuators | Hand held | Engineering

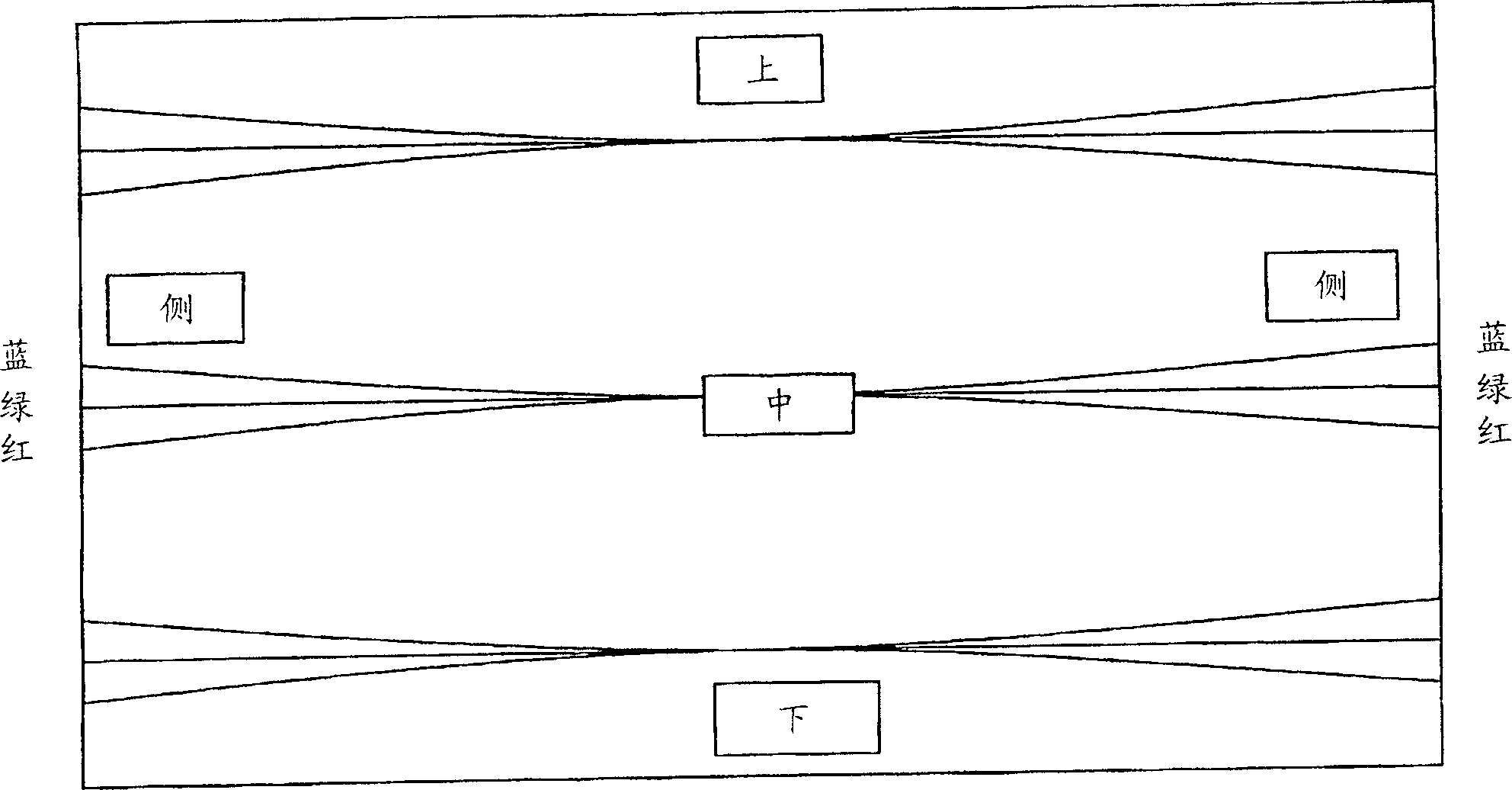

A hand-held game machine (10) includes a horizontally-long rectangular housing (12), and the housing is almost entirely covered with a cover (14). The top main surface of each of the housing and the cover has a pincushion planar shape, and thus the top main surface of the housing forms a lower inclined surface (26) connecting a lower side surface (22) and the top main surface of the housing. On the top main surface of the housing, a cross key (16), an A button (18a), and a B button (18b) are provided, and on the lower inclined surface (26), a start switch (28) and a select switch (30) are arranged. On the left and right edges of an upper side surface (20) of the housing, a left switch (32L) and a right switch (32R) are arranged. In each of the left switch and the right switch, a switch portion is formed at the end nearer to the center of the housing in the horizontal direction, and a pin is provided at the opposite end. The pin is inserted into a bearing formed on the housing and functions as a pivot at that end of each switch.

Owner:NINTENDO CO LTD

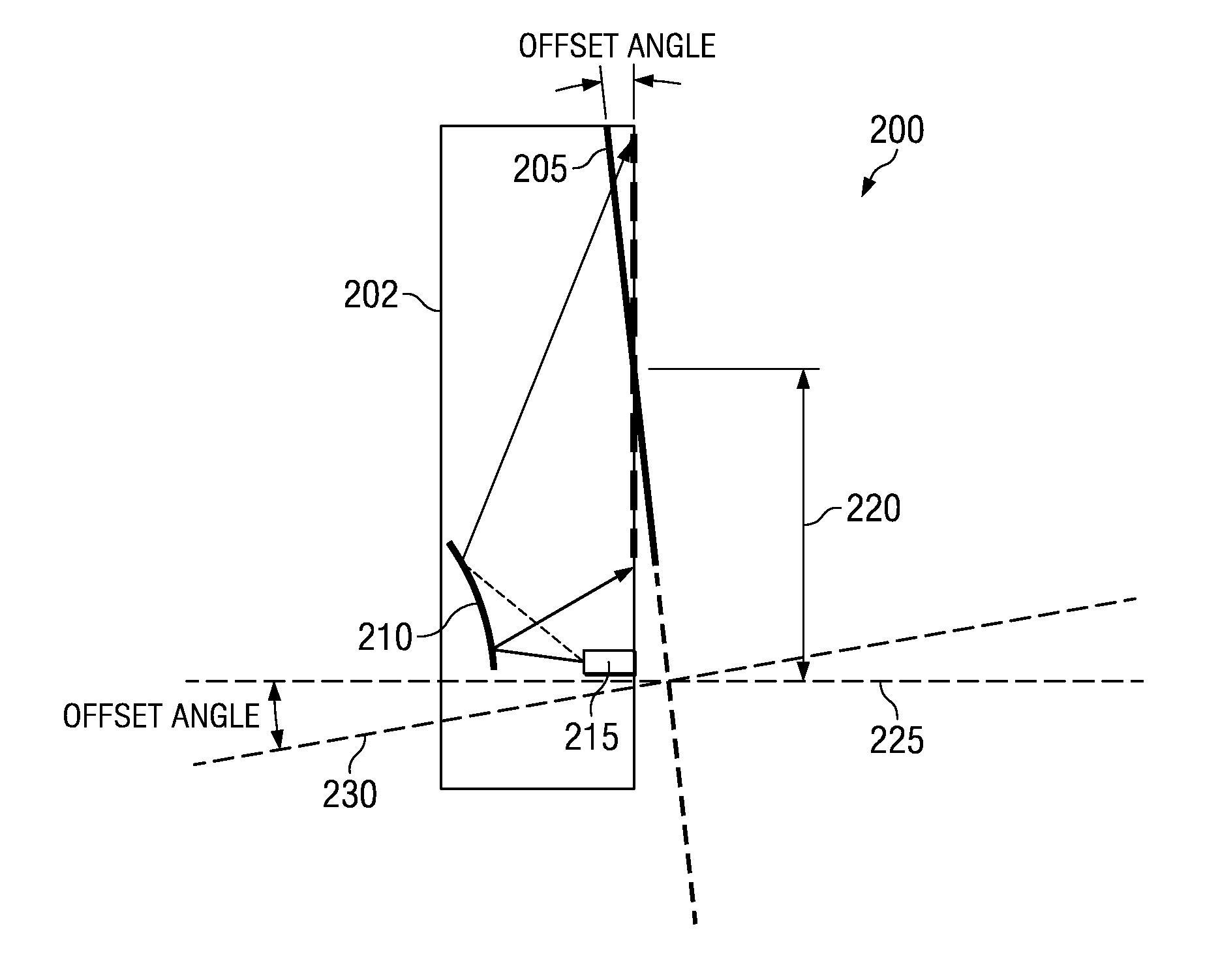

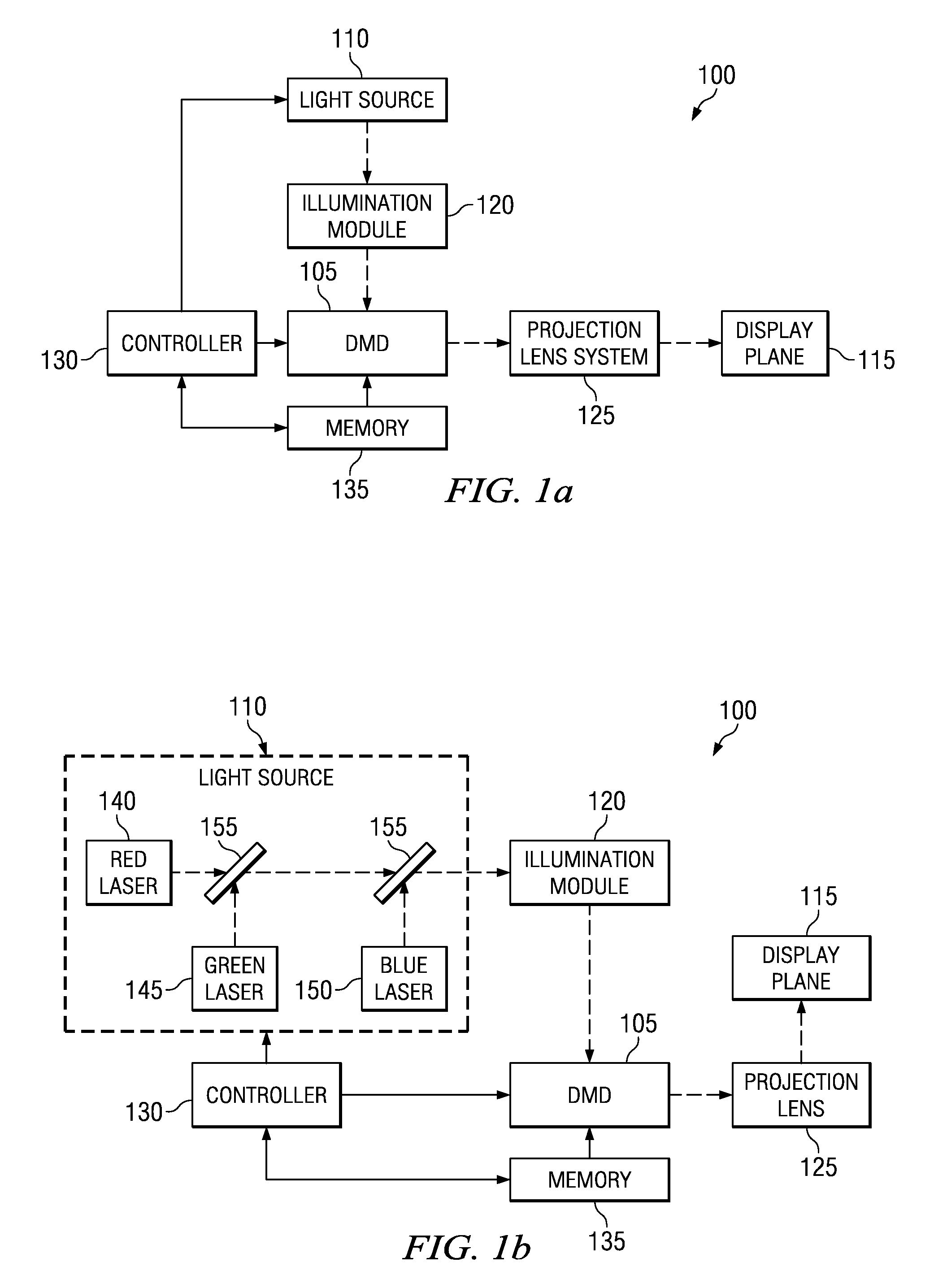

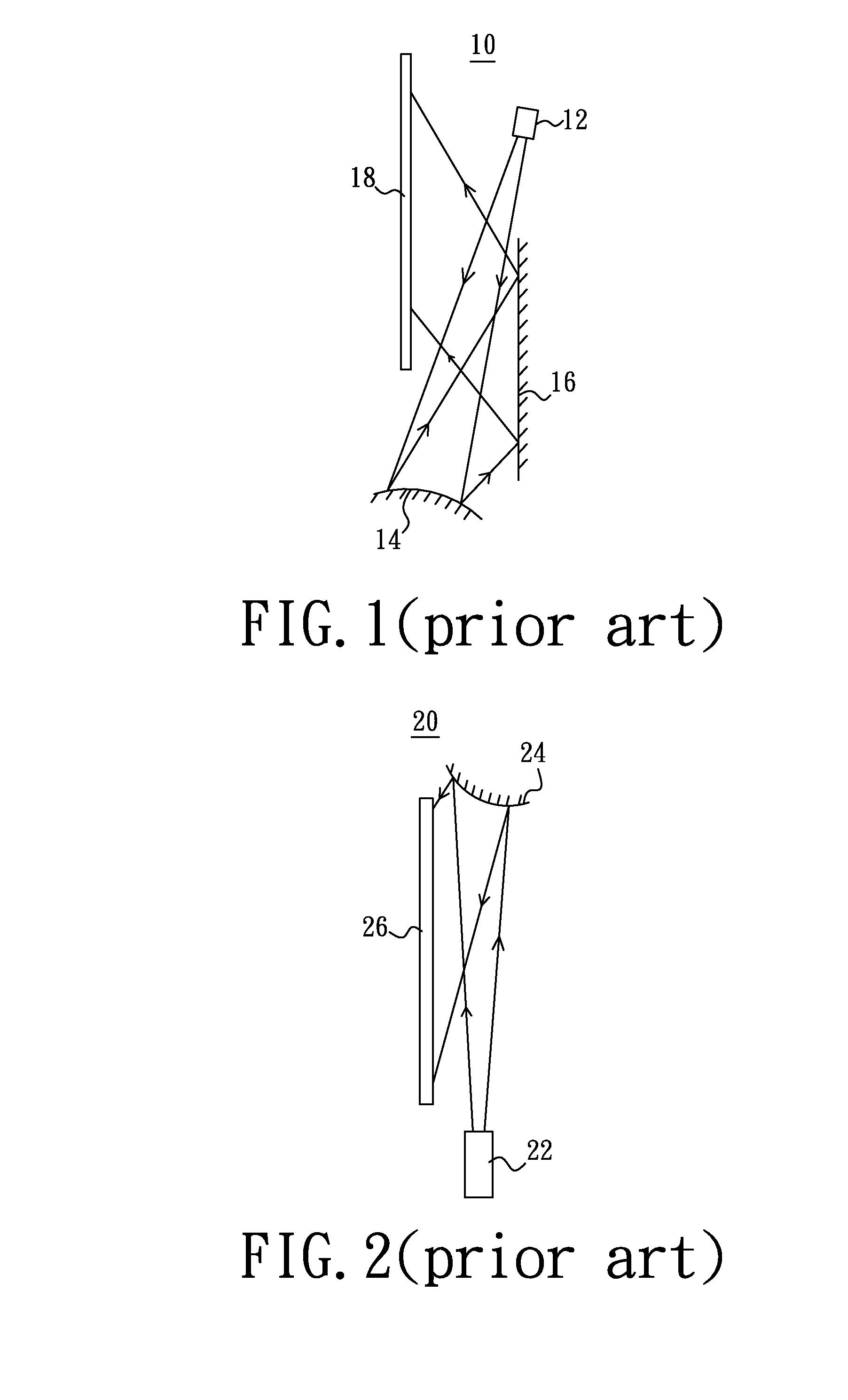

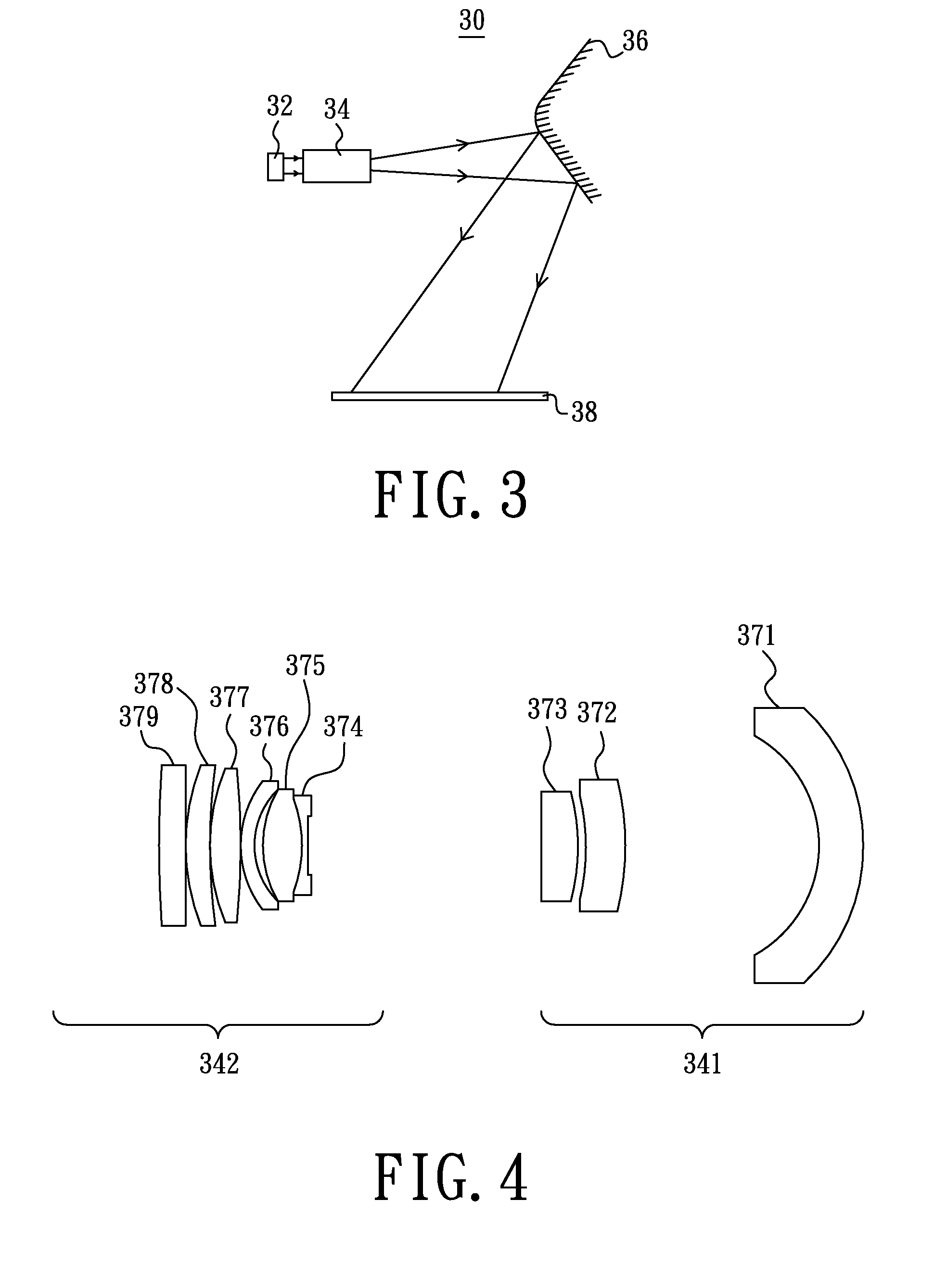

Offset projection distortion correction

A system and method for correcting optical distortion in an off-axis system is provided. The offset between the center of a display plane and the optical axis of the projection lens system is configured to be greater than half the vertical dimension of the display plane. As a result, the distortion, such as pincushion-type or barrel-type distortion, is not symmetrical about the horizontal axis. In this configuration, the display plane, the projection lens system, a folding mirror, and/or the spatial light modulator may be tilted so that a keystone effect is induced. This keystone effect may be used to offset the distortion, particularly pincushion-type or barrel-type distortion.

Owner:TEXAS INSTR INC

Operation device for game machine and hand-held game machine

Inactive | US20060267928A1 | Easy to operate | Easy to reach | Cathode-ray tube indicators | Video games | Hand held | Engineering

A hand-held game machine (10) includes a horizontally-long rectangular housing (12), and the housing is almost entirely covered with a cover (14). The top main surface of each of the housing and the cover has a pincushion planar shape, and thus the top main surface of the housing forms a lower inclined surface (26) connecting a lower side surface (22) and the top main surface of the housing. At the right and left edges of an upper side surface (20) of the housing, a left switch (32L) and a right switch (32R) are arranged as a first operating means. On the top main surface of the housing, a cross key (16), an A button (18a), and a B button (18b) are arranged as a second operating means, and on the lower inclined surface, a start switch (28) and a select switch (30) are arranged as a third operating means.

Owner:NINTENDO CO LTD

Image distortion correcting device and image distortion correcting method

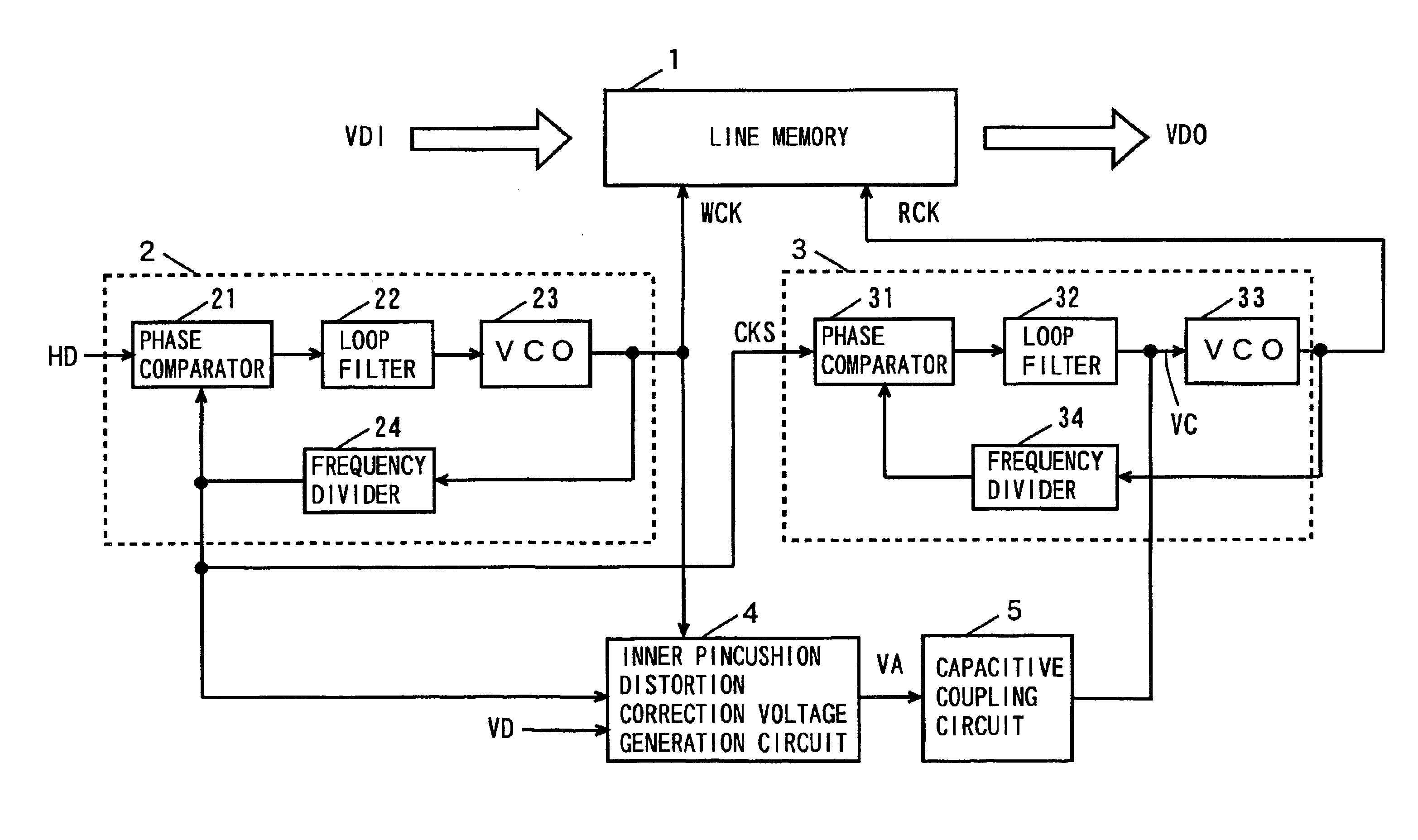

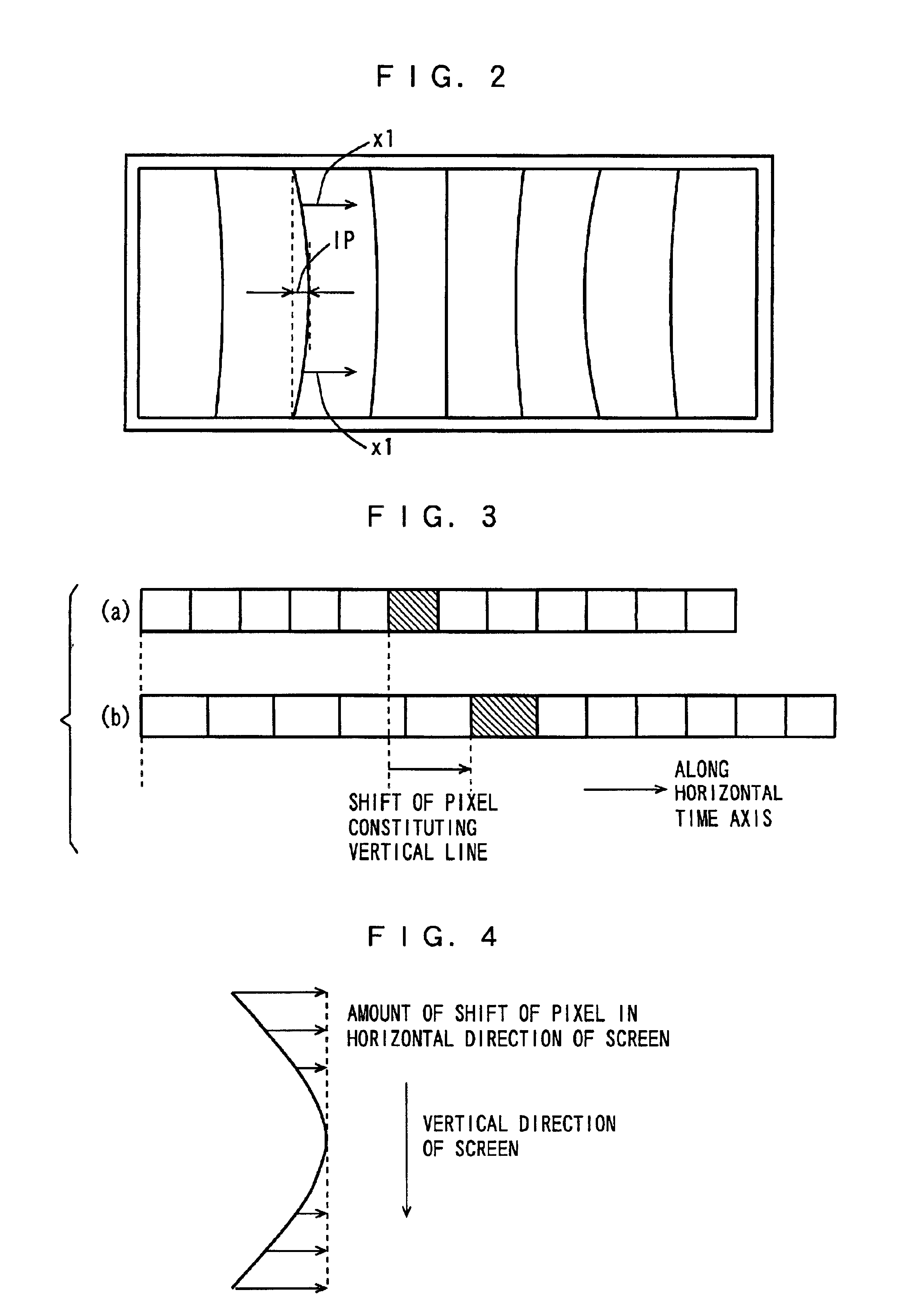

Inactive | US6989872B2 | Reduce power consumption | Television system details | Television system scanning details | Loop filter | Capacitance

A write PLL circuit generates a write clock signal for writing a video signal into a line memory. A readout PLL circuit generates a read clock signal for reading out the video signal stored in the line memory. An inner pincushion distortion correction voltage generation circuit modulates a correction waveform in the horizontal scanning period of time by a correction waveform in the vertical scanning period of time, to generate an inner pincushion distortion correction waveform, and adds a DC correction pulse to the inner pincushion distortion correction waveform and outputs the inner pincushion distortion correction waveform as an inner pincushion distortion correction voltage. A capacitive coupling circuit superimposes the inner pincushion distortion correction voltage on an output voltage of a loop filter of the readout PLL circuit, and feeds the inner pincushion distortion correction voltage to a VCO as a control voltage.

Owner:PANASONIC CORP

Off-Axis Projection System and Method

Active | US20090141250A1 | Various types | Built-on/built-in screen projectors | Spatial light modulator | Optical axis

A system and method for correcting optical distortion in an off-axis system is provided. The offset between the center of a display plane and the optical axis of the projection lens system is configured to be greater than half the vertical dimension of the display plane. As a result, the distortion, such as pincushion-type or barrel-type distortion, is not symmetrical about the horizontal axis. In this configuration, the display plane, the projection lens system, a folding mirror, and/or the spatial light modulator may be tilted so that a keystone effect is induced. This keystone effect may be used to offset the distortion, particularly pincushion-type or barrel-type distortion.

Owner:TEXAS INSTR INC

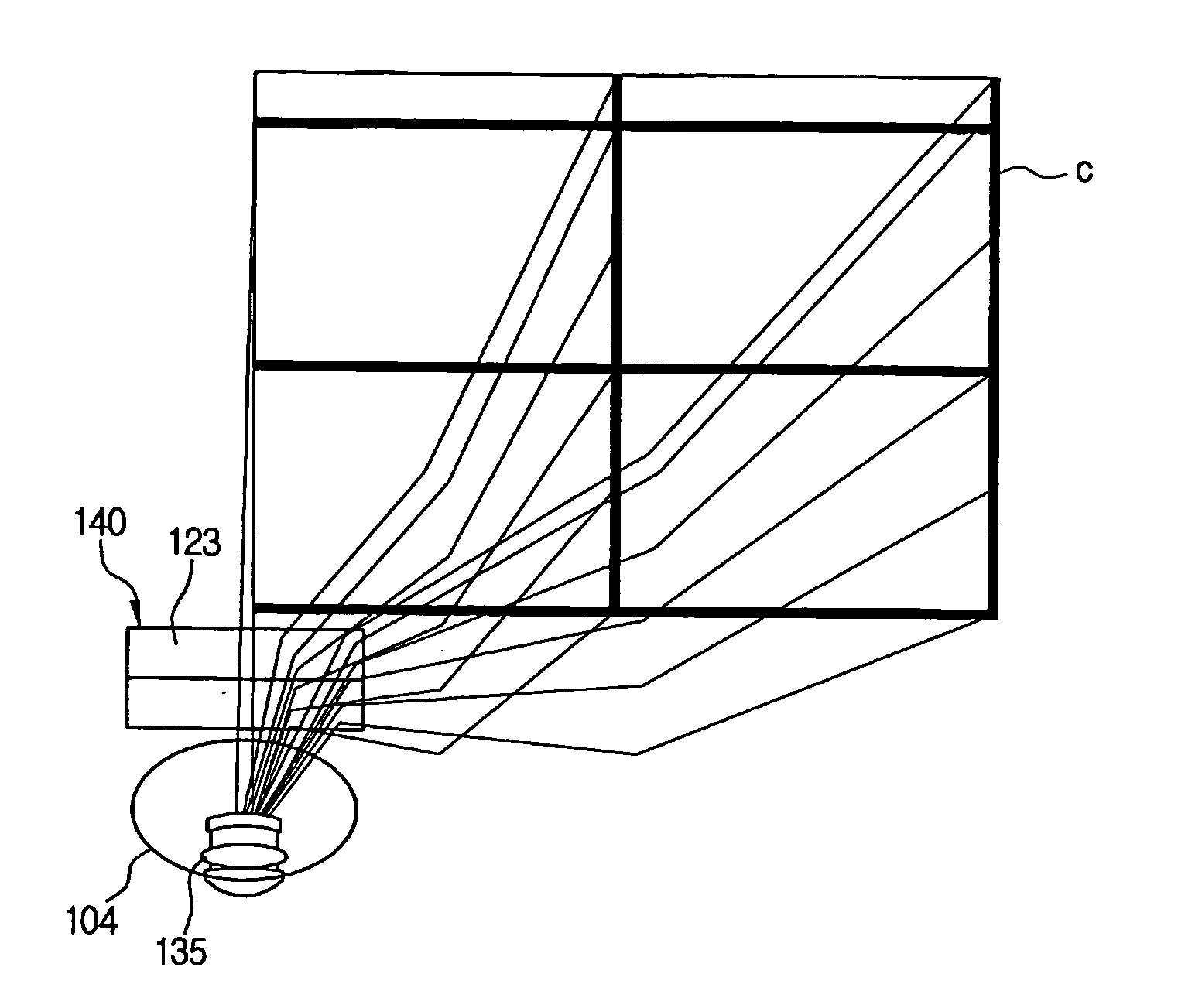

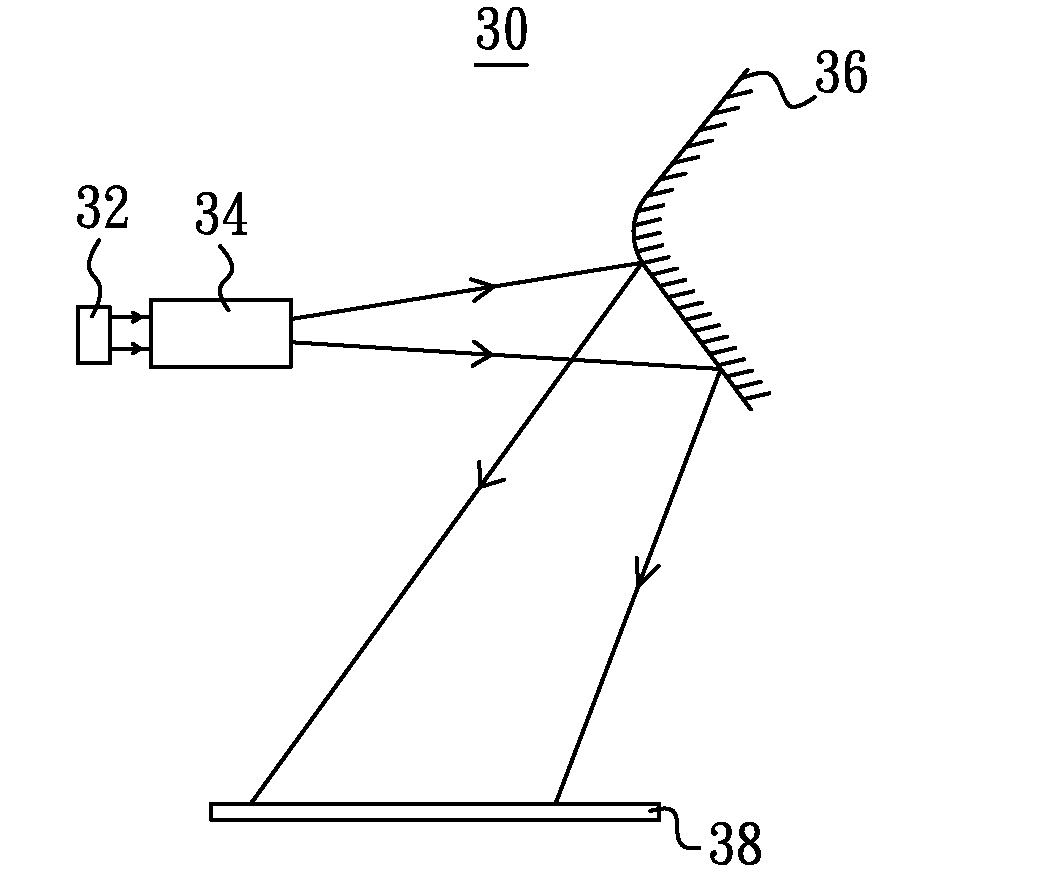

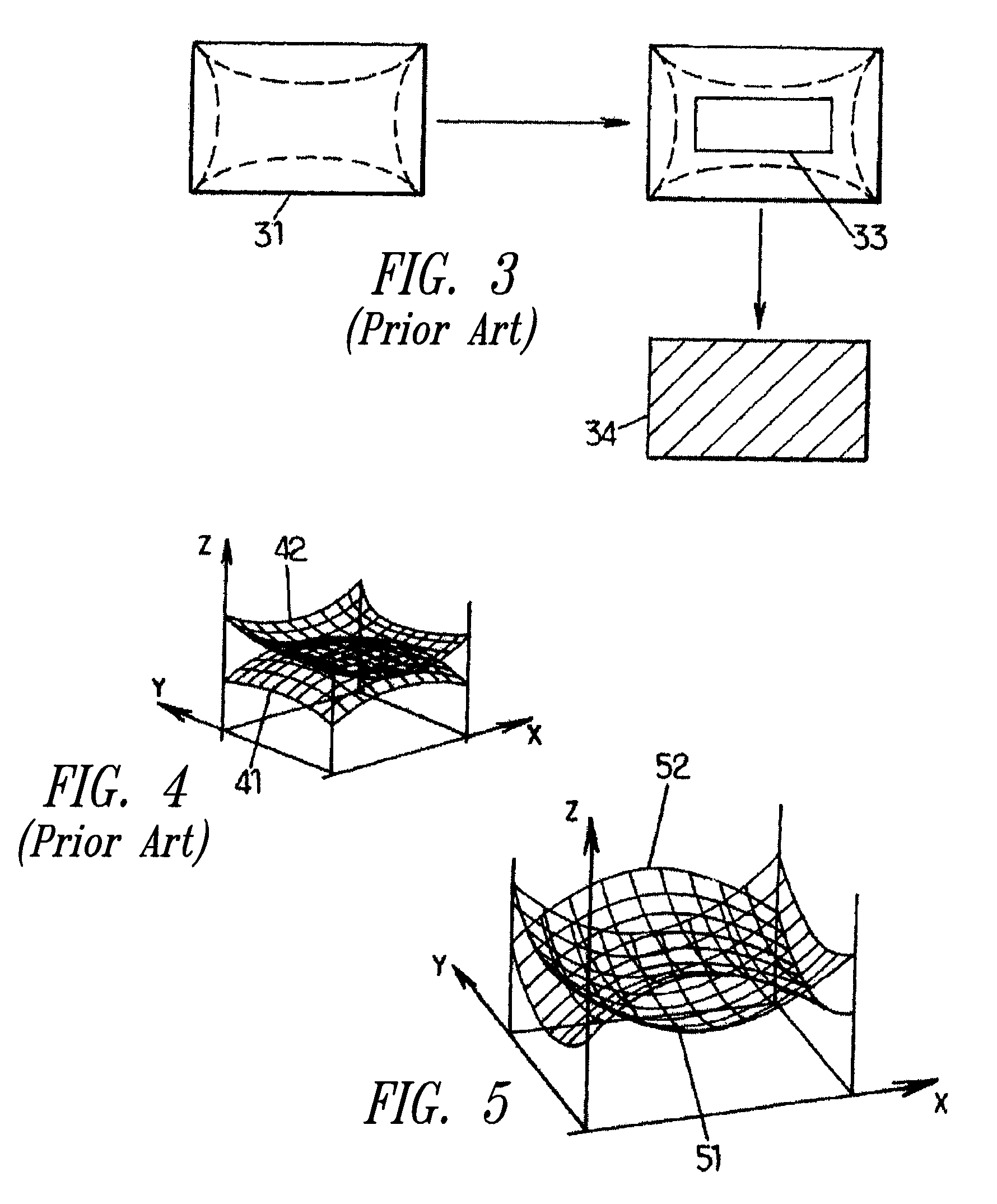

Optical projection system and image display device having the same

Inactive | US20050174545A1 | Quality improvement | Big image | Television system details | Projectors | Aspheric lens | Projection system

An optical projection system and an image display device having the same include a refraction part to create pincushion distortion in an image, and a reflection part to create barrel distortion in the pincushion-distorted image to compensate for the pincushion distortion and to project an enlarged image without distortion onto an image display surface. The refraction part includes a first cylinder symmetrical optic system having a first group of lenses including at least one aspheric lens, a second cylinder symmetrical optic system having a second group of lenses including at least one aspheric lens, a first planar reflecting optic system to bend the image that passes through the first cylinder symmetrical optic system by a first predetermined angle, and a second planar reflecting optic system to reflect the image that passes through the second cylinder symmetrical optic system to the reflection part. The reflection part includes a reflecting mirror inclinedly disposed at a predetermined angle and having an XY polynomial surface.

Owner:SAMSUNG ELECTRONICS CO LTD
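
The compensation idea in the abstract above (a pincushion stage followed by a barrel stage) can be illustrated with a toy calculation. This is only a sketch under a strong assumption: both stages are modeled with the same single-coefficient radial formula, which is not taken from the patent, and the names `radial` and `refract_then_reflect` are hypothetical.

```python
def radial(r, k):
    """Assumed single-coefficient radial model: r -> r * (1 + k * r^2).

    k > 0 stretches points outward more strongly with radius (pincushion);
    k < 0 pulls them inward (barrel).
    """
    return r * (1.0 + k * r * r)


def refract_then_reflect(r, k):
    """Pincushion stage with coefficient +k followed by a barrel stage with -k."""
    return radial(radial(r, k), -k)


if __name__ == "__main__":
    k = 0.05
    for r in (0.25, 0.5, 1.0):
        after_first = radial(r, k) - r
        after_both = refract_then_reflect(r, k) - r
        print(f"r={r:.2f}  pincushion only: {after_first:+.5f}  both stages: {after_both:+.5f}")
```

In this toy model equal and opposite coefficients cancel only approximately, leaving a residual of second order in `k`. That gives some intuition for why a real design would shape the compensating element freely (the abstract mentions an XY polynomial mirror surface) rather than simply mirroring one coefficient.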

Image processing apparatus, image processing method, and distortion correcting method

Active | US7813585B2 | Accurate imaging | Avoid overwriting | Television system details | Image enhancement | Pattern recognition | Imaging processing

Owner:OLYMPUS CORP

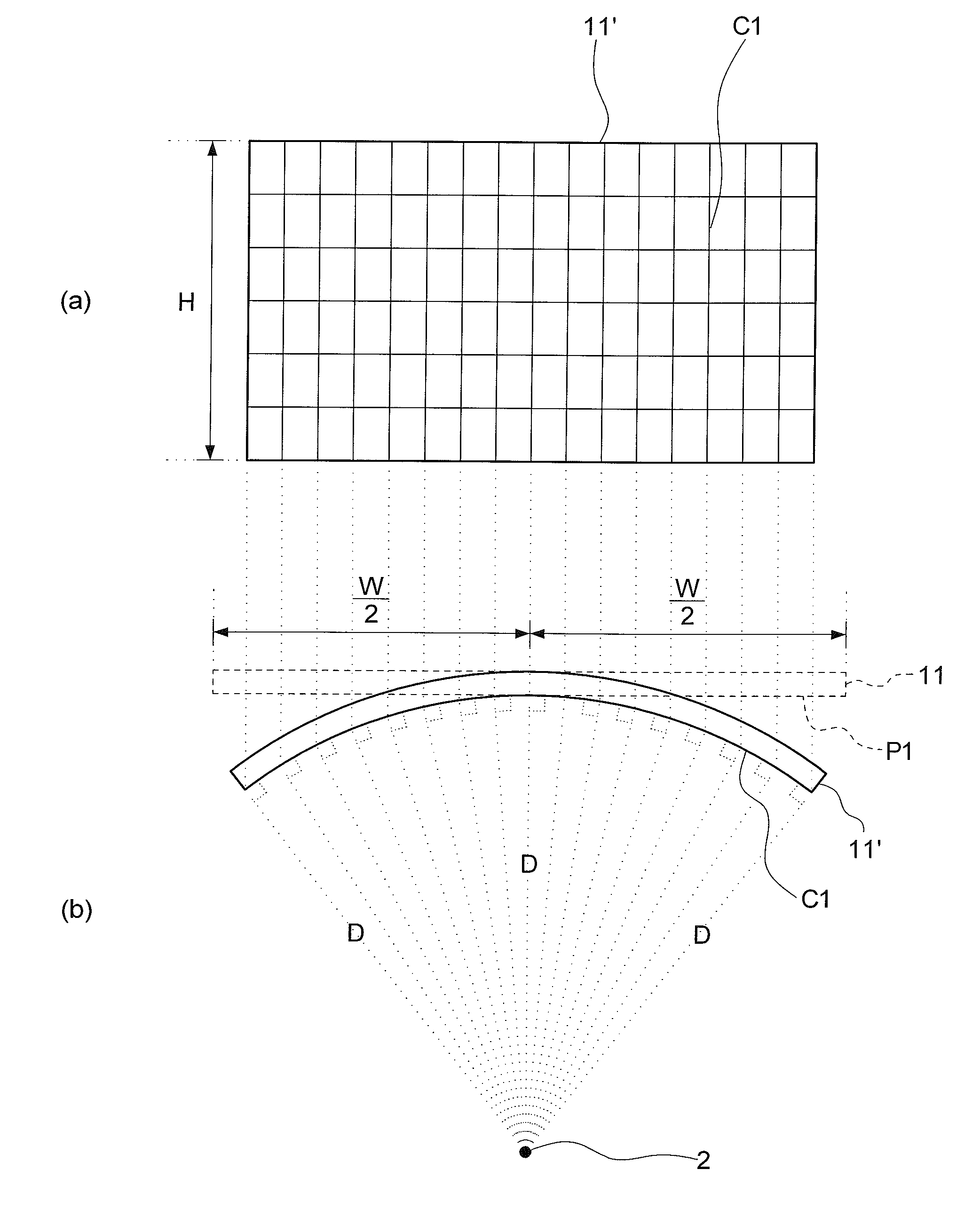

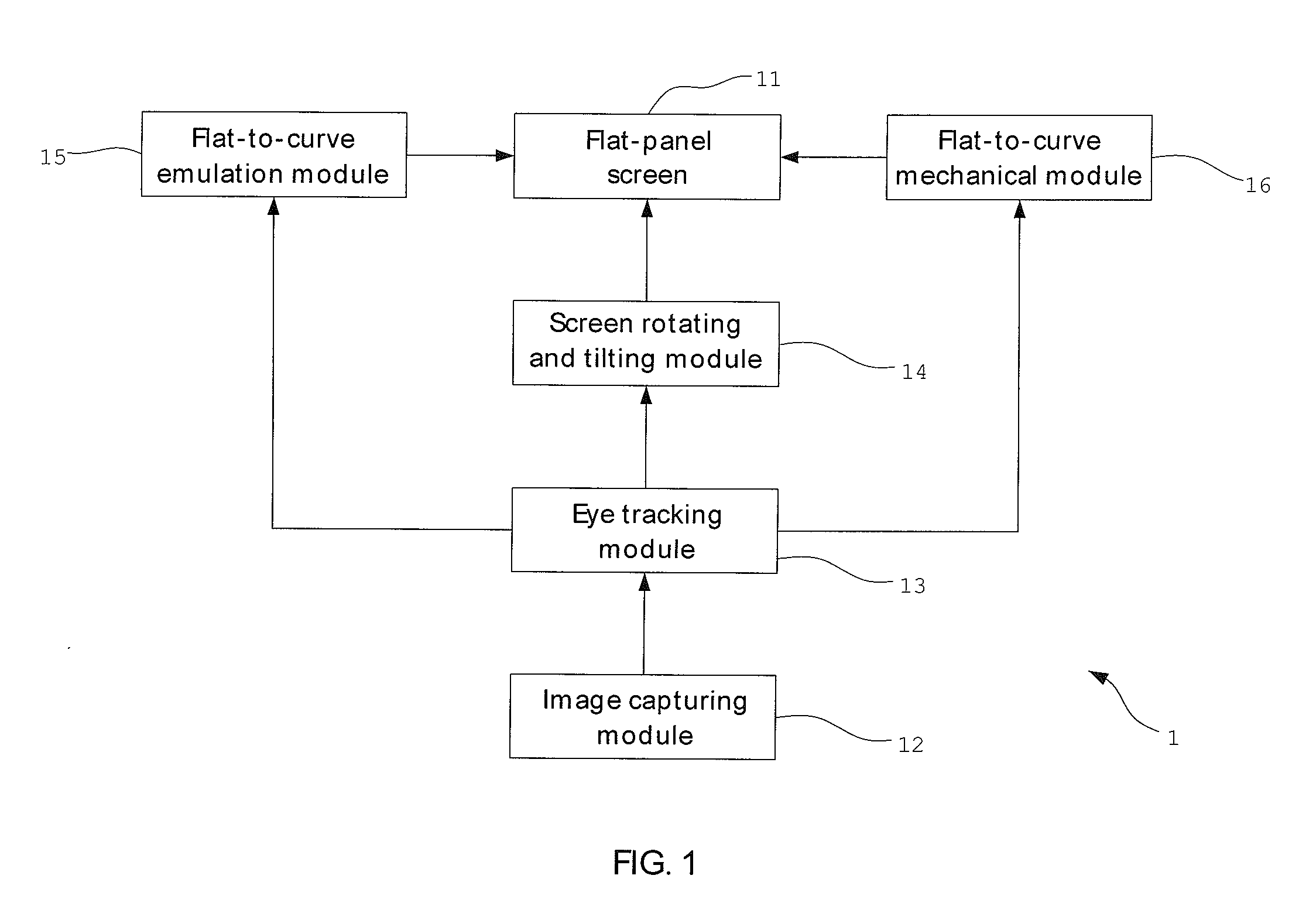

Display Device with Curved Display Function

Inactive | US20160100158A1 | Improve visual effects | Acquiring/recognising eyes | Cathode-ray tube indicators | Computer graphics (images) | Display device

A display device includes a flat-panel screen, an image capturing module, an eye tracking module, a screen rotating and tilting module, a flat-to-curve emulation module, and a flat-to-curve mechanical module. The display device employs the flat-to-curve emulation module to transform a rectangular flat image displayed by the flat-panel screen to a pincushion-like flat image according to a user's eye position; therefore, it may automatically provide the “emulated” curved image according to the user's eye position so that a user may perceive a visual effect like seeing a curved image. The display device further employs the flat-to-curve mechanical module to bend the flat-panel screen into a curved screen according to the user's eye position to bend the flat image displayed by the flat-panel screen into a curved image; therefore, it may automatically provide the “real” curved image according to the user's eye position to enhance the visual effect.

Owner:TOP VICTORY INVESTMENTS

Operation device for game machine and hand-held game machine

Active | US20060258456A1 | Easy to operate | Input/output for user-computer interaction | Emergency actuators | Hand held | Engineering

A hand-held game machine (10) includes a horizontally-long rectangular housing (12), and the housing is almost entirely covered with a cover (14). The top main surface of each of the housing and the cover has a pincushion planar shape, and thus the top main surface of the housing forms a lower inclined surface (26) connecting a lower side surface (22) and the top main surface of the housing. On the top main surface of the housing, a cross key (16), an A button (18a), and a B button (18b) are provided, and on the lower inclined surface (26), a start switch (28) and a select switch (30) are arranged. On the left and right edges of an upper side surface (20) of the housing, a left switch (32L) and a right switch (32R) are arranged. In each of the left switch and the right switch, a switch portion is formed at the end nearer to the center of the housing in the horizontal direction, and a pin is provided at the opposite end. The pin is inserted into a bearing formed on the housing and functions as a pivot at that end of each switch.

Owner:NINTENDO CO LTD

Method and device for correcting lens distortion

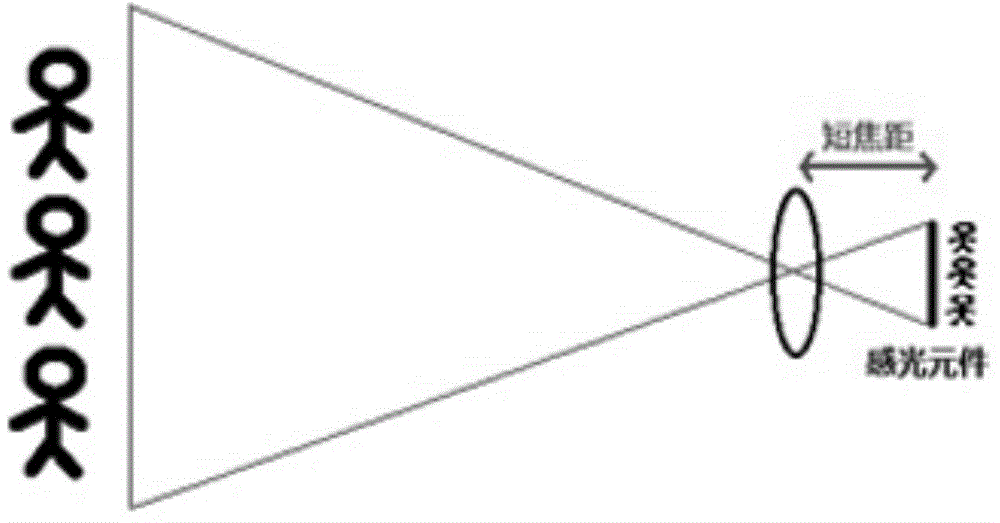

Inactive | CN105827899A | The impact of reducing image quality | Reduce use cost | Television system details | Color television details | Camera lens | Imaging quality

The present invention provides a method and device for correcting lens distortion. The method comprises a step of setting the viewing angle of a lens to a preset angle, at which the distortion type of the lens is pincushion distortion or barrel distortion, and a step of correcting the distortion type of the lens from the pincushion or barrel distortion into linear distortion. With the viewing angle set to the preset angle, a wide range of scenery can easily be captured when taking photos or recording video. Because the distortion is converted from pincushion or barrel distortion into linear distortion, it can then be corrected according to the linear image relation of that distortion, which reduces the effect on imaging quality of the lens distortion caused by a large viewing angle. No additional accessories are needed, so the cost of use is reduced and portability is improved.

Owner:VIVO MOBILE COMM CO LTD

Optical projection system and image display device having the same

Inactive | US7370977B2 | Reduce depth | Big image | Television system details | Projectors | Projection system | Pincushion

An optical projection system which creates pincushion distortion of an image and then creates a barrel distortion of the pincushion-distorted image to compensate for the pincushion distortion and then projects an enlarged image without substantial distortion onto an image display surface. An embodiment includes a polynomial reflector to compensate for the pincushion distortion, to enlarge an image, and to project the enlarged image toward a screen.

Owner:SAMSUNG ELECTRONICS CO LTD

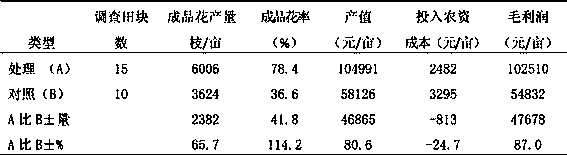

Method for improving cutting seedling quality of pincushion

Inactive | CN107637290A | Reduce morbidity | Improve seedling rate | Flowers cultivation | Cultivating equipments | Gluconic acid | Pincushion

The invention relates to the field of pincushion cuttage (cutting propagation), and in particular to a method for improving the quality of pincushion cutting seedlings. The method comprises: (1) a cuttage seedling bed 100 cm wide, 80 cm high, and 10-20 cm long, placed inside a 4 m high greenhouse under a sunshade screen with a 50-70% shading rate; (2) a seedling matrix that is a multilayered monomer raw material; (3) a rooting agent compounded from one or more of glucose, saccharose, gluconic acid, pimacol, indolebutyric acid, and amino acid, in which the cutting slips are immersed for 2-5 minutes and then dried against sunshine for 5 minutes; (4) water and fertilizer management; (5) selection and treatment of the cutting slips; (6) the cuttage method: a hole 10 cm deep is bored with a bamboo rod 0.5 cm in diameter, and the cutting slips are inserted 2-3 cm below the surface so that they remain breathable; (7) daily management. The resulting pincushion seedlings have well-developed roots, and the method is highly practical and gives a higher survival rate.

Owner:Yuxi Academy of Agricultural Sciences (玉溪市农业科学院) +1

Optical Projection System

Active | US20090079946A1 | Control distortion | Lower the volume | Projectors | Optical elements | Aspheric lens | Projection system

An optical projection system includes an image generation element, a projection lens set for receiving an image from the image generation element, refracting the image and projecting the image out, and a reflector for reflecting the image from the projection lens set and projecting the image to a screen. The projection lens set includes an aspherical lens with a distortion coefficient larger than 0.5, which produces a pincushion distortion of the image, and several other lenses; the absolute value of the sum of the distortion coefficients of the other lenses is less than the distortion coefficient of the aspherical lens. The reflector produces a barrel distortion of the image to compensate for the pincushion distortion produced by the projection lens set.

Owner:YOUNG OPTICS

Polyaniline pincushion array film having electric field responding hydrophilic/hydrophobic change and preparation method thereof

The invention provides a polyaniline pincushion array film having electric field responding hydrophilic/hydrophobic change and a preparation method thereof. The preparation method is characterized by comprising the preparation of a polystyrene ordered pincushion film template, the preparation of a polyurethane pincushion array matrix, and the preparation of the polyaniline pincushion array film. The preparation method is scientific and reasonable, and low in energy consumption and cost. Micron porous edge pinpoint projections are formed on the surface of the prepared polyaniline pincushion array film; the surface of the matrix material is rough and has extremely high adhesion, and therefore the coating quantity of the polyaniline on the surface of the matrix is increased, the electrical conductivity and the electrostriction are enhanced, and dimensional change can be induced by an electric field to further cause a change of the surface photonic structure of the film, and consequently a change of optical properties; the film is formed more evenly, and is controllable in tenacity and strength; due to the special pincushion structure, the contact angle of the polyaniline film is increased and the hydrophilic/hydrophobic change performance of the surface of the film is enhanced.

Owner:JILIN FEITE GENERATING PLANT MFG

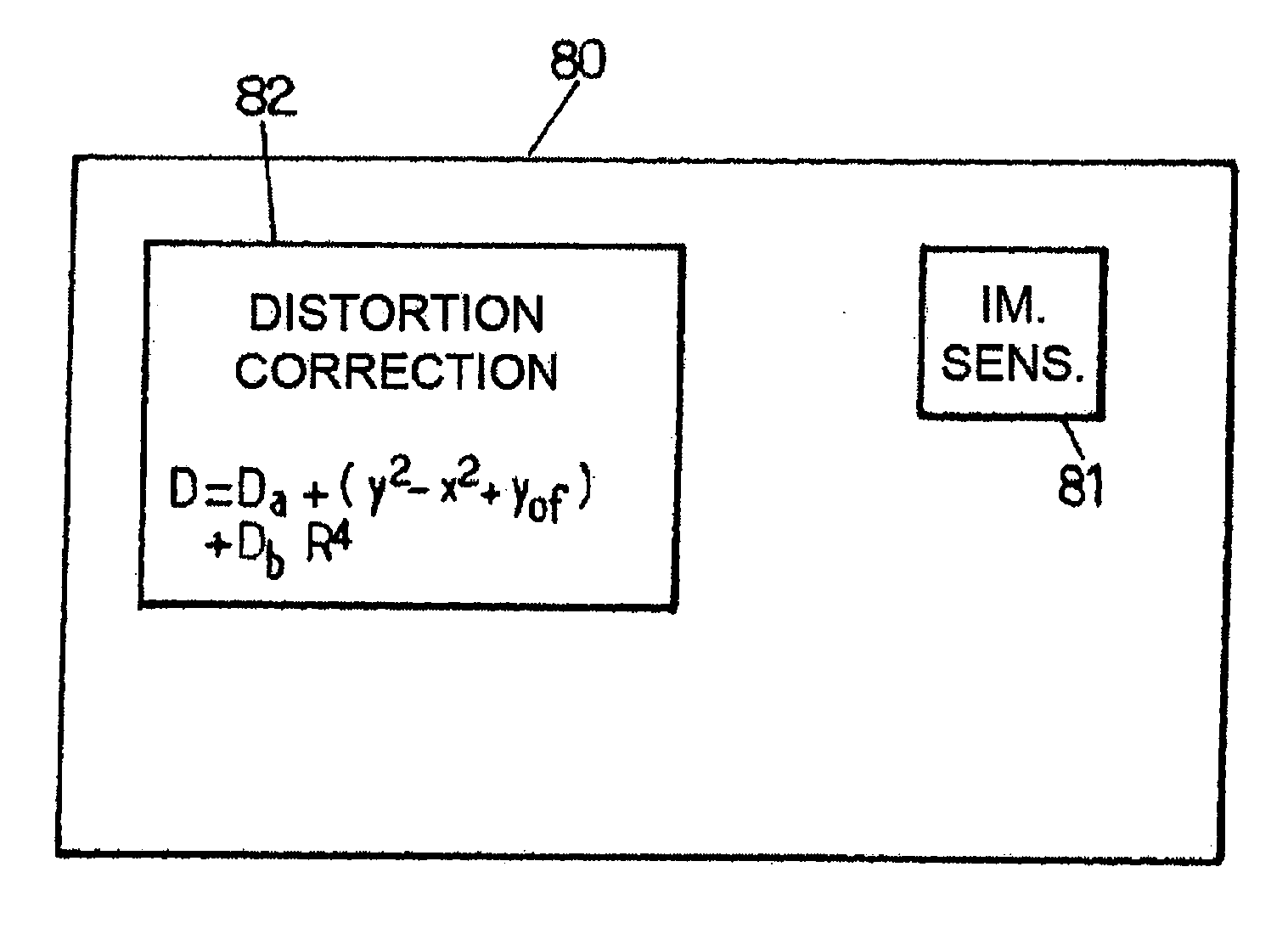

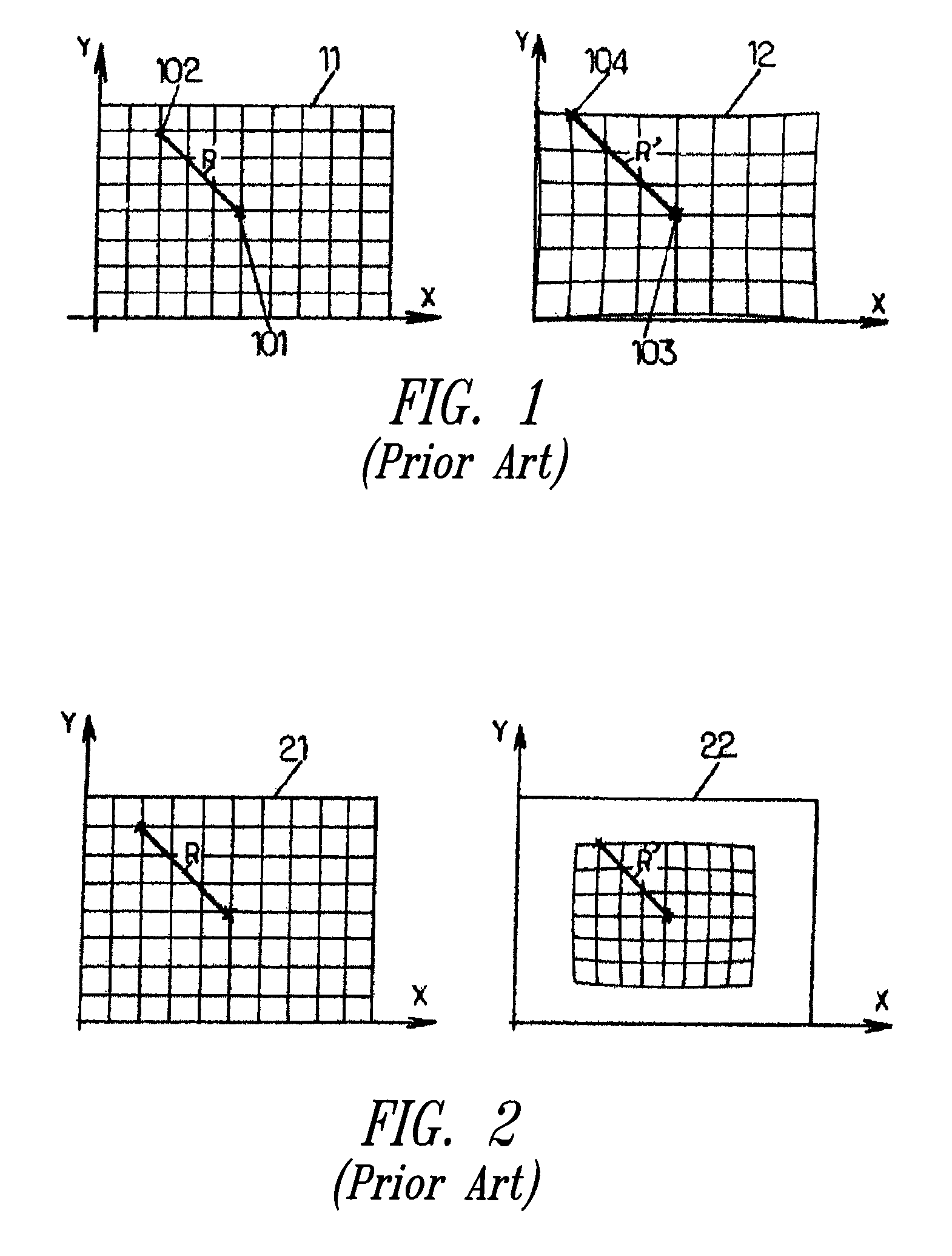

Correction of image distortion

Active | US20090067745A1 | Improve image quality | Television system details | Television system scanning details | Pincushion | Distortion

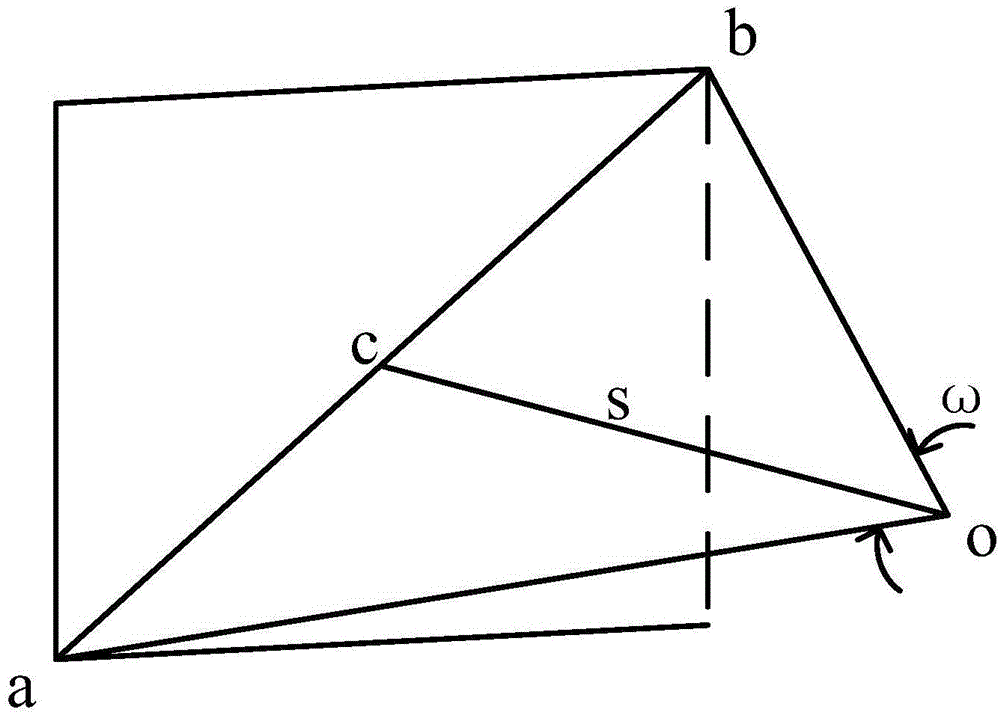

An image sensor captures an image of a scene. This captured image has a pincushion distortion relative to an undistorted image of the scene. The distortion is corrected based on an approximation of the distortion $D$ which satisfies the following equation for a given pixel in the captured image: $D = (R - R')/R = D_a (y^2 - x^2 + y_{\mathrm{offset}}) + D_b R^4$, where $R$ is the distance between a pixel, which corresponds in the undistorted image to the given pixel in the captured image, and the center of the undistorted image, $R'$ is the distance between the given pixel and the center of the captured image, $D_a$ and $D_b$ are respective distortion parameters of the image sensor, $x$ and $y$ are coordinates of the given pixel in the captured image, $R$ satisfies $R^2 = x^2 + y^2$, and $y_{\mathrm{offset}}$ corresponds to a constant value.

Owner:STMICROELECTRONICS SRL
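
The distortion approximation in the abstract above can be evaluated directly. The following is a minimal sketch, not the patented implementation: the function names and the parameter values in the usage lines are hypothetical, coordinates are assumed to be measured from the image center, and D is evaluated at the captured coordinates as a simplification.

```python
def distortion(x, y, da, db, y_offset=0.0):
    """Evaluate D = Da*(y^2 - x^2 + y_offset) + Db*R^4 with R^2 = x^2 + y^2.

    x and y are pixel coordinates relative to the image centre.
    """
    r_squared = x * x + y * y
    return da * (y * y - x * x + y_offset) + db * r_squared * r_squared


def corrected_position(x, y, da, db, y_offset=0.0):
    """Estimate where a captured pixel belongs in the undistorted image.

    From D = (R - R')/R it follows that R = R'/(1 - D), so the captured
    coordinates are scaled radially by 1/(1 - D). Evaluating D at the captured
    coordinates is a simplifying assumption of this sketch.
    """
    d = distortion(x, y, da, db, y_offset)
    if d >= 1.0:
        raise ValueError("distortion D must be below 1 for the scaling to be valid")
    scale = 1.0 / (1.0 - d)
    return x * scale, y * scale


if __name__ == "__main__":
    # Hypothetical parameter values, chosen only to exercise the formula.
    print(distortion(1.0, 0.0, da=0.1, db=0.01))
    print(corrected_position(1.0, 0.0, da=0.1, db=0.01))
```

Note that the $D_a$ term depends on $y^2 - x^2$ rather than on the radius alone, so the model treats the horizontal and vertical directions differently, unlike a purely radial correction.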

Planting method for improving commodity rate of pink pincushion

The invention relates to the field of pincushion cuttage planting, and in particular to a planting method for improving the commodity rate of pink pincushion. The method addresses the poor flower ball quality of planted pink pincushion. The method includes: (1) the pincushion seedling method; (2) use of a steel-frame greenhouse facility matched with a sunshade and an integrated water and fertilizer facility; (3) improvement of the root soil; (4) planting, by single-ridge single-line planting, single-ridge double-line planting, or other methods, with 800-1000 plants per mu transplanted into prepared ponds; (5) fertilizer application; (6) irrigation; (7) light and temperature control in the facility; (8) trimming; (9) pest control; (10) flower picking. The pincushion flowers are pure in color with a glossy pink; vegetative growth is robust; downy mildew and powdery mildew are light; flower ball quality is good; and the commodity rate is high.

Owner:Yuxi Academy of Agricultural Sciences (玉溪市农业科学院) +1

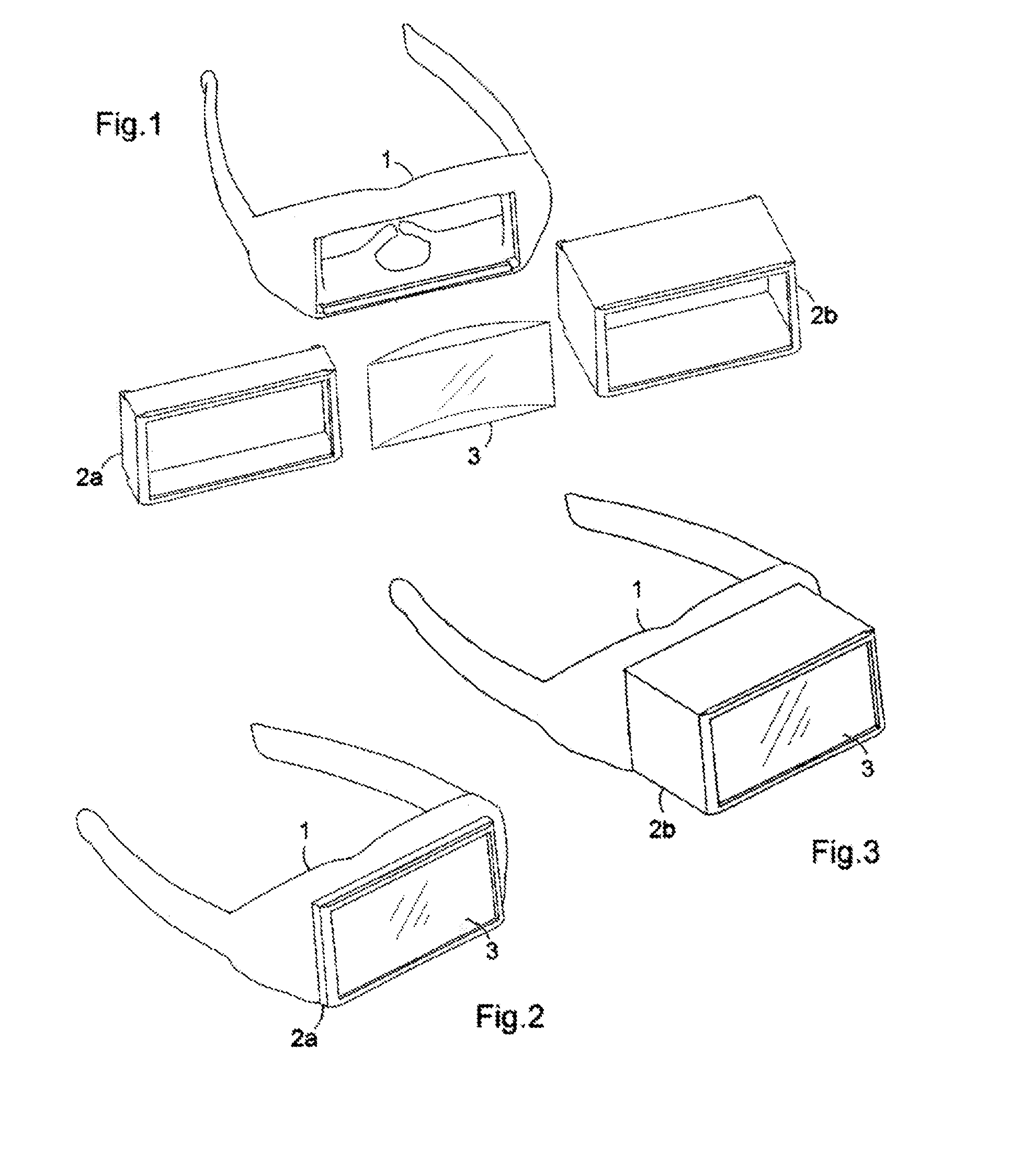

Holographic 3D eyewear for video gaming

3D eyewear that uses a cylindrical lens stretched across the viewer's field of view to capture and curve light (into pincushion and/or barrel distortion) from an image on a screen (such as 3D video games, videos, and pictures) and turn it into a holographic 3D scene with depth. The technology depends on the viewer's natural monocular cues combined with the optical distortion of the lens to achieve this holographic 3D effect. The eyewear has three parts: the eyewear frame, the eyewear drawer, and the cylindrical lens. No special applications or devices are needed for this method of 3D. All that is needed is the 3D eyewear, a monitor, and a normal 3D video game, video, or picture to view true 3D with depth (a 3D holographic scene). Note: this method of 3D is aimed mostly at 3D video gaming.

Owner:SKY IYIN

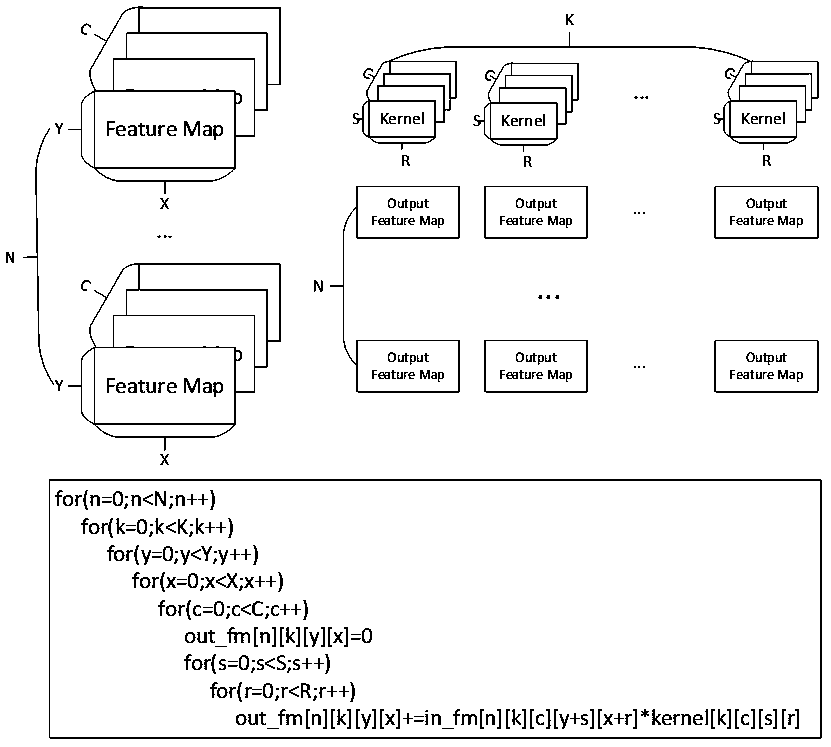

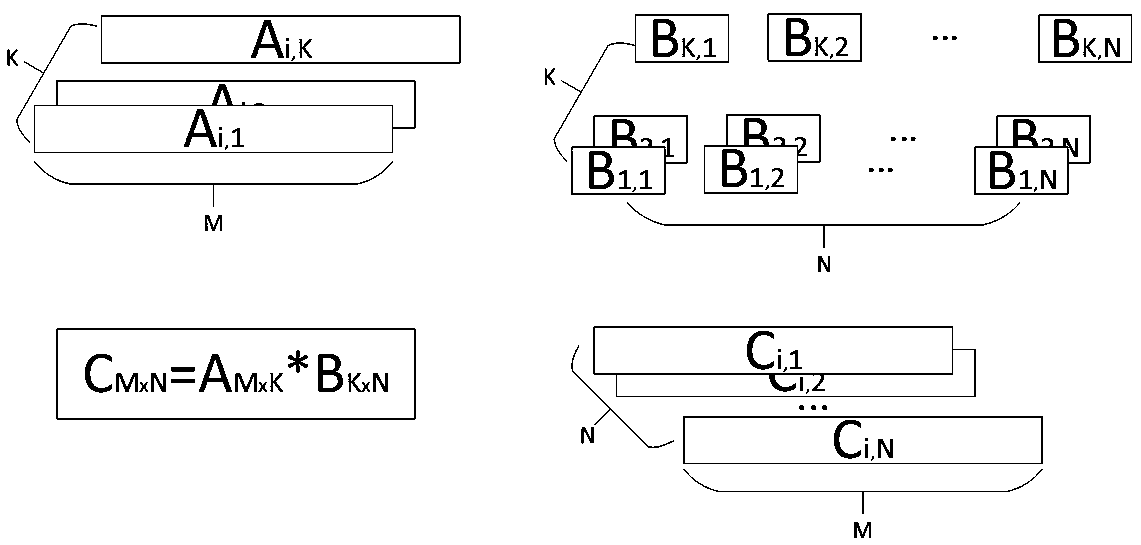

Hardware architecture of accelerated artificial intelligence processor

Inactive | CN109191364A | Work less | Meet needs | Processor architectures/configuration | Program control | Data compression | Hardware architecture

A hardware architecture for an accelerated artificial intelligence processor includes: a main engine, a front lobe engine, a parietal lobe engine, a renderer engine, a pincushion engine, a temporal lobe engine, and a memory. The front lobe engine obtains a 5D tensor from the host, divides it into several sets of tensors, and sends these sets to the parietal lobe engine. The parietal lobe engine acquires a set of tensors, divides it into a plurality of tensor waves, and sends the tensor waves to the renderer engine, which executes an input feature renderer and outputs partial tensors to the pincushion engine. The pincushion engine accumulates the partial tensors and executes an output feature renderer to obtain a final tensor, which is sent to the temporal lobe engine. The temporal lobe engine compresses the data and writes the final tensor to memory. In the invention, artificial intelligence work is divided into a plurality of highly parallel parts, with parts allocated to engines for processing; the number of engines is configurable, which improves scalability, and all work partitioning and distribution are realized in the architecture, thereby obtaining high performance efficiency.

Owner:NANJING ILUVATAR COREX TECH CO LTD (DBA ILUVATAR COREX INC NANJING)

Method for cultivating annually flowerable miniature pincushion and special inducer of method

Active | CN110622865A | Rich sources | Easy to get materials | Biocide | Plant growth regulators | Miniaturization | Inducer

The invention discloses a method for cultivating annually flowerable miniature pincushion and a special inducer for the method, and belongs to the technical field of plant biology. The method comprises the steps of explant selection, seedling culture, miniaturization culture, and the like. Explant materials are available from abundant sources; the inducers used for seedling culture and miniaturization culture are safe and efficient; the degree of miniaturization of the pincushion and the flowering time of the resulting annually flowerable miniature pincushion can be controlled; flower types and sizes are abundant; and large-scale production of miniaturized pincushion products is facilitated.

Owner:Zhejiang Zhonghuan Agricultural Development Co., Ltd. (浙江中环农业开发有限公司)

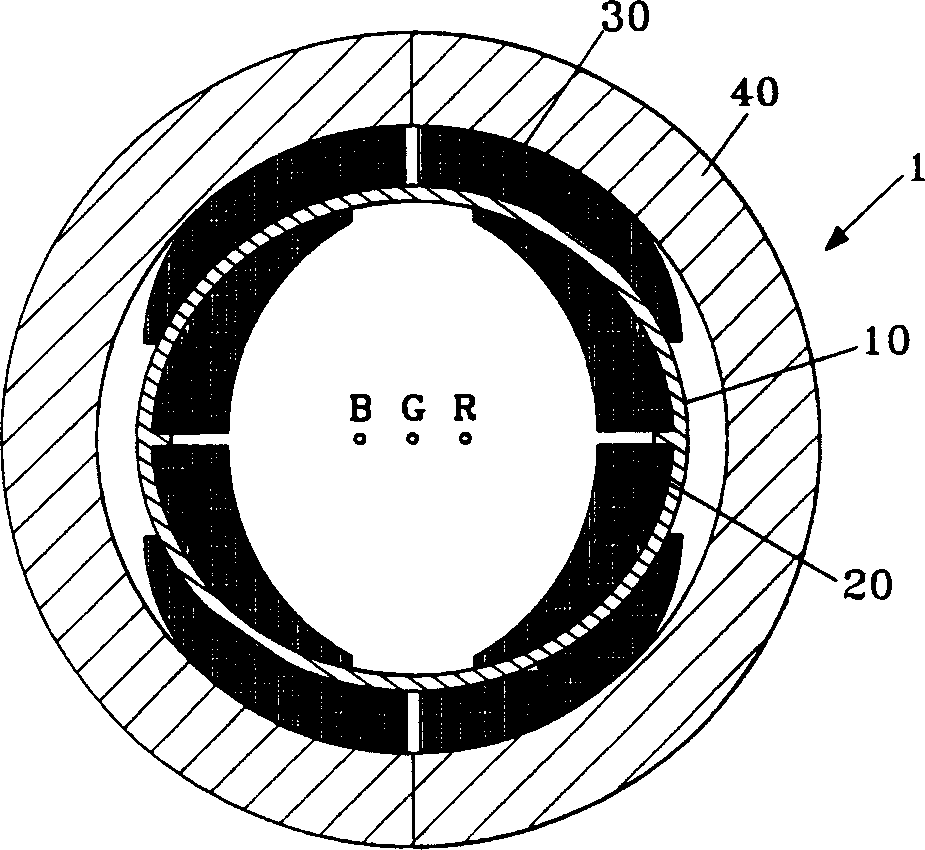

Deflection device

Inactive | CN1466165A | Avoid affecting features | Electrode and associated part arrangements | Vertical deflection | Pincushion

A deflection yoke attached to a cathode ray tube to deflect an electron beam generated from an electron gun includes a coil separator having a horn shape and electrically insulating deflection coils, a horizontal deflection coil having a pair of upper and lower coils disposed on an inside of the coil separator to generate a horizontal deflection magnetic field to deflect the electron beam in a horizontal direction, a vertical deflection coil having a pair of right and left coils disposed on an outside of the coil separator to generate a vertical deflection magnetic field, having a deflection coil portion and a bent coil portion extended from the deflection coil portion to generate a magnetic field, which is in an opposite direction to another magnetic field generated from a portion of the deflection coil portion disposed adjacent to the bent coil portion and weakens another magnetic field generated from the deflection coil portion, and having a vertical radius and a horizontal radius, which is different from the vertical radius, and a ferrite core having a cylindrical shape covering a portion of the deflection coil portion of the vertical deflection coil to strengthen the vertical deflection magnetic field and the horizontal deflection magnetic field. A magnetic field generated from a corner portion of the vertical deflection coil is weakened to correct the pincushion distortion of the screen as well as the misconvergence.

Owner:SAMSUNG ELECTRO MECHANICS CO LTD

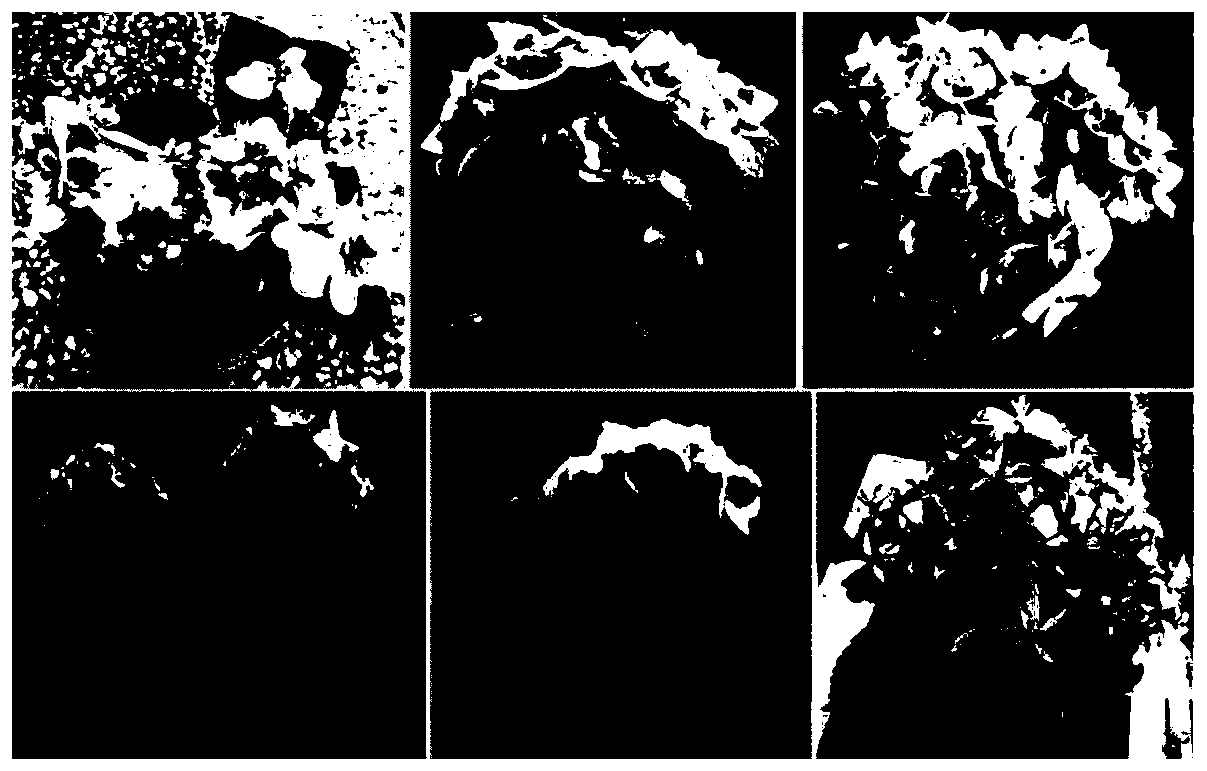

Sensors

Inactive | US20070285398A1 | Reduced pincushion error | Easy to manufacture | Electronic switching | Input/output processes for data processing | Spider web | Engineering

A sensor includes a rectangular conductive sheet. Electrical connectors are connected at each corner of the sheet. A number of non-conductive features, e.g. apertures, are formed in this sheet. In one embodiment, V-shaped features extend from near the corners of the sheet towards the centre of the sheet and then turn towards an adjacent corner. Smaller V-shaped features reside in the area between the larger features and the closest edge of the sheet. In another embodiment, a spider web formation of conductive tracks or paths is provided. This provides hardware correction of pincushion error and reduces or eliminates the need for software correction of pincushion error.

Owner:NOKIA CORP

Correction of image distortion

Inactive | US8045822B2 | Improve image quality | Television system details | Television system scanning details | Pincushion | Computer science

Owner:STMICROELECTRONICS SRL

Operation device for game machine and hand-held game machine

A hand-held game machine (10) includes a horizontally-long rectangular housing (12), and the housing is almost entirely covered with a cover (14). The top main surface of each of the housing and the cover has a pincushion planar shape, and thus the top main surface of the housing forms a lower inclined surface (26) connecting a lower side surface (22) and the top main surface of the housing. At the right and left edges of an upper side surface (20) of the housing, a left switch (32L) and a right switch (32R) are arranged as a first operating means. On the top main surface of the housing, a cross key (16), an A button (18a), and a B button (18b) are arranged as a second operating means, and on the lower inclined surface, a start switch (28) and a select switch (30) are arranged as a third operating means.

Owner:NINTENDO CO LTD

Image processing apparatus, image processing method, and distortion correcting method

Inactive | CN100552712C | Television system details | Geometric image transformation | Imaging processing | Pincushion

An image processing device has a distortion correction processing section (8) and a distortion correction range calculation section (12) that calculates the input image range required for the distortion correction processing section (8) to perform distortion correction processing. In this way, the corrected image (output image) obtained by distortion correction can be output without excess or deficiency relative to the image output range, distortion correction processing can make effective use of the input (photographed) image data as source data, and effective distortion correction processing can be performed for pincushion distortion, barrel distortion, curvilinear distortion, and the like.

Owner:OLYMPUS CORP

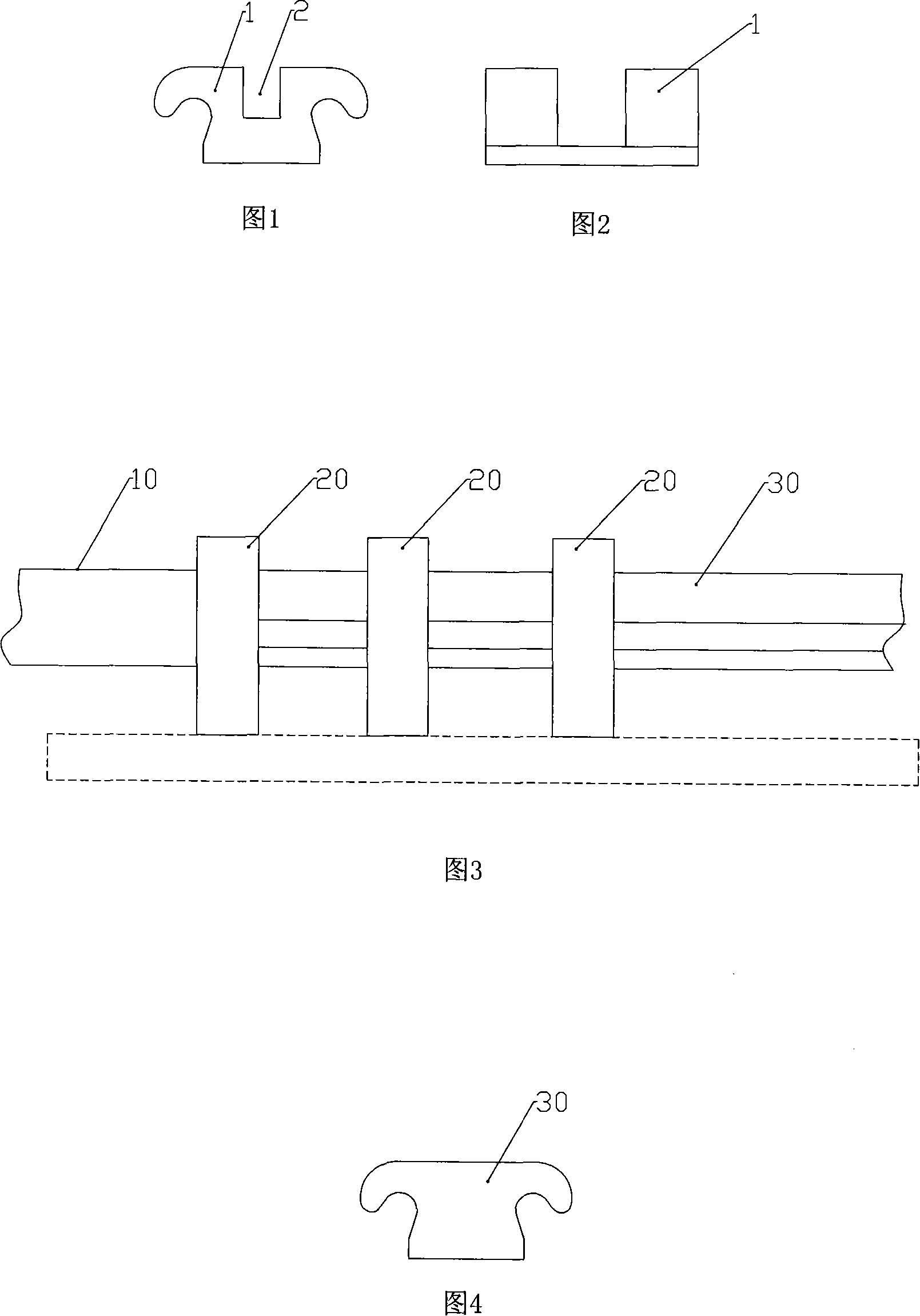

Method for producing dental orthodontic supporter groove

The invention discloses a method for making a dental orthodontic supporter groove, comprising the following steps: die rolling; cold drawing; extruding to form a bar-shaped fine blank of the supporter groove; machining the arch wire slot into the fine blank; and cutting the blank into individual products.

Owner:Changsha Tiantian Dental Equipment Co., Ltd. (长沙市天天齿科器材有限公司)

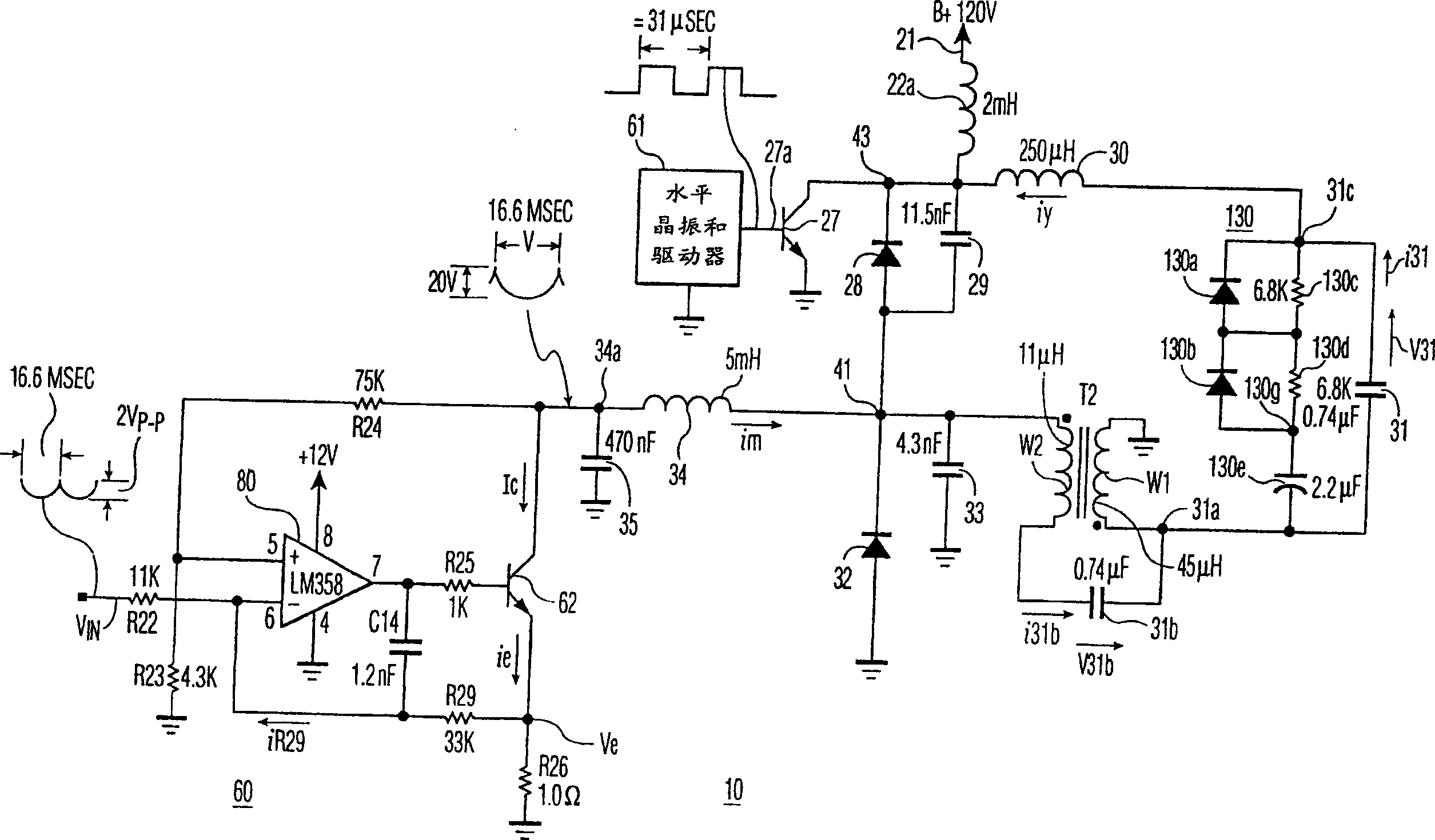

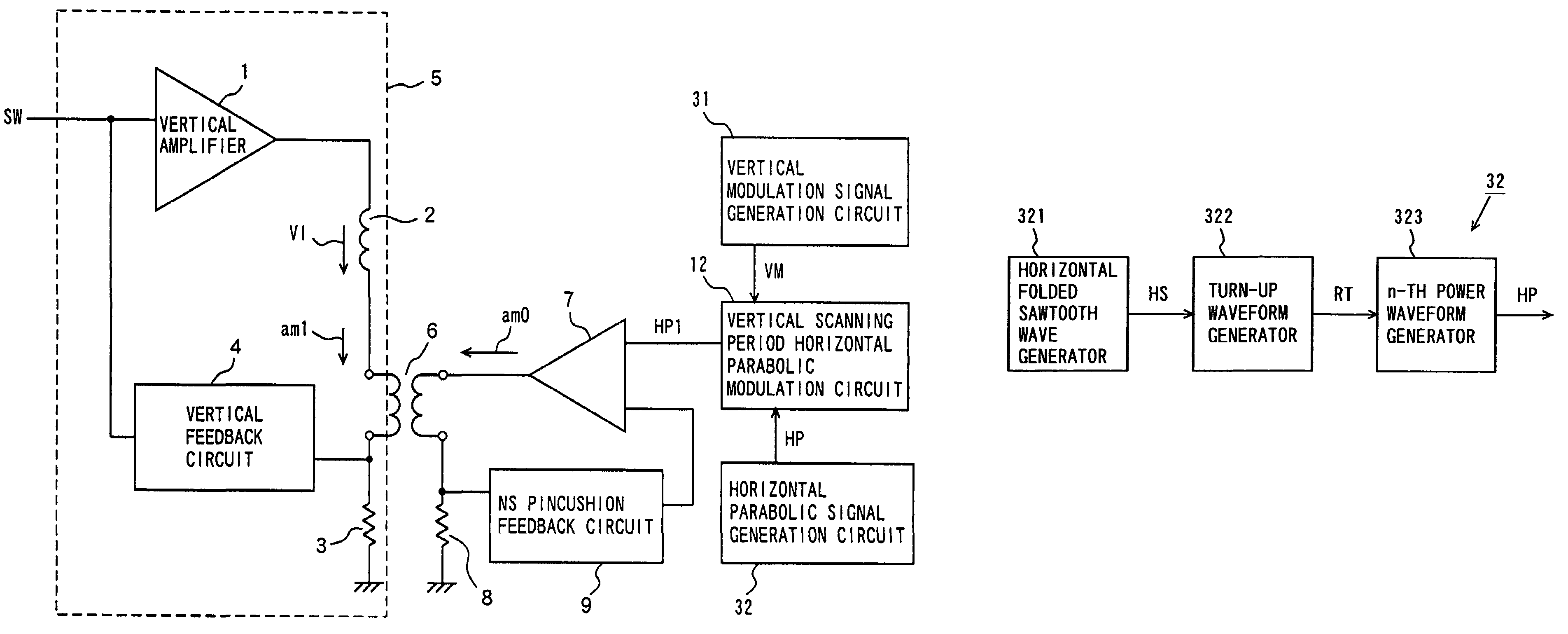

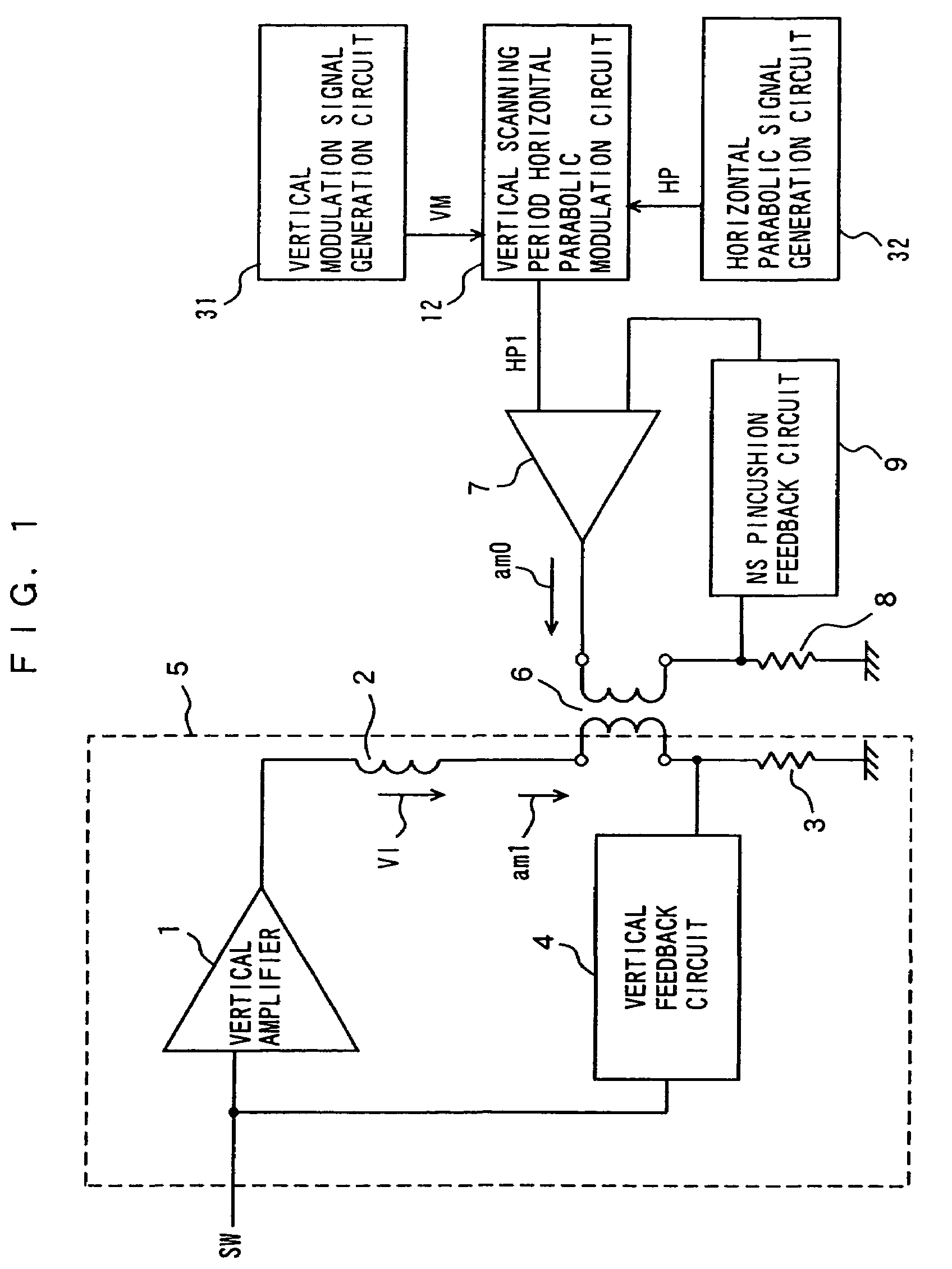

Deflection current modulating circuit

Inactive | CN1433218A | Television system details | Television system scanning details | Negative feedback | Inductor

A horizontal deflection circuit generates a horizontal deflection current (iy) in a deflection winding (30), during a trace interval and during a retrace interval of a deflection cycle. A transistor (26) is responsive to a vertical rate parabola signal (VIN) and to a negative feedback signal (Ve) for producing a vertical rate parabolic modulation voltage at a collector of the transistor. A negative feedback network (R26) generates the feedback signal that is indicative of a current (ie) flowing in the transistor for increasing an output impedance at the collector of the transistor. An inductor (34) is coupled to the collector of the transistor for producing in the inductor a modulation current (im) to provide for side pincushion distortion correction in an East-West modulator.

Owner:THOMSON LICENSING SA
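
The vertical-rate parabolic modulation mentioned in the abstract above can be pictured numerically. This sketch does not model the circuit (transistor, feedback network, or inductor); it only assumes, for illustration, that side pincushion correction scales the horizontal deflection amplitude of each scan line by a parabola that peaks at the vertical center. The function name `ew_amplitudes` and the `depth` parameter are hypothetical.

```python
def ew_amplitudes(num_lines, base=1.0, depth=0.1):
    """Return a horizontal deflection amplitude for each scan line.

    The vertical position v runs from -1 (top line) to +1 (bottom line) and
    the amplitude follows base * (1 - depth * v^2): largest at the vertical
    centre, smallest at the top and bottom. The parabola shape and the depth
    value are illustrative assumptions, not figures from the patent.
    """
    if num_lines < 2:
        raise ValueError("need at least two scan lines")
    amplitudes = []
    for line in range(num_lines):
        v = 2.0 * line / (num_lines - 1) - 1.0
        amplitudes.append(base * (1.0 - depth * v * v))
    return amplitudes


if __name__ == "__main__":
    for line, amplitude in enumerate(ew_amplitudes(5, base=1.0, depth=0.2)):
        print(line, round(amplitude, 3))
```

A larger `depth` corresponds to stronger correction; in the sketch it simply controls how much narrower the top and bottom lines are scanned relative to the center line.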

Vertical deflection apparatus

Inactive | US7166972B2 | Correct distortion | Television system scanning details | Electrode and associated part arrangements | Vertical deflection | Superimposition

A vertical deflection apparatus supplies a vertical deflection current to a vertical deflection coil to deflect an electron beam in the vertical direction of a screen. The apparatus includes: a vertical deflection current output circuit that outputs a vertical deflection current; a correction circuit that outputs a correction signal periodically changing in a parabolic shape in a horizontal scanning period to correct a north-south pincushion distortion; a modulation circuit that modulates the phase of the correction signal output from the correction circuit in a vertical scanning period; and a superimposition device that superimposes a correction current based on an output signal of the modulation circuit on the vertical deflection current. The correction circuit includes: a folded waveform generator; a turn-up waveform generator; and a correction signal generator that generates the correction signal having a peak corresponding to a turn-up point of a turn-up waveform generated by the turn-up waveform generator.

Owner:PANASONIC CORP

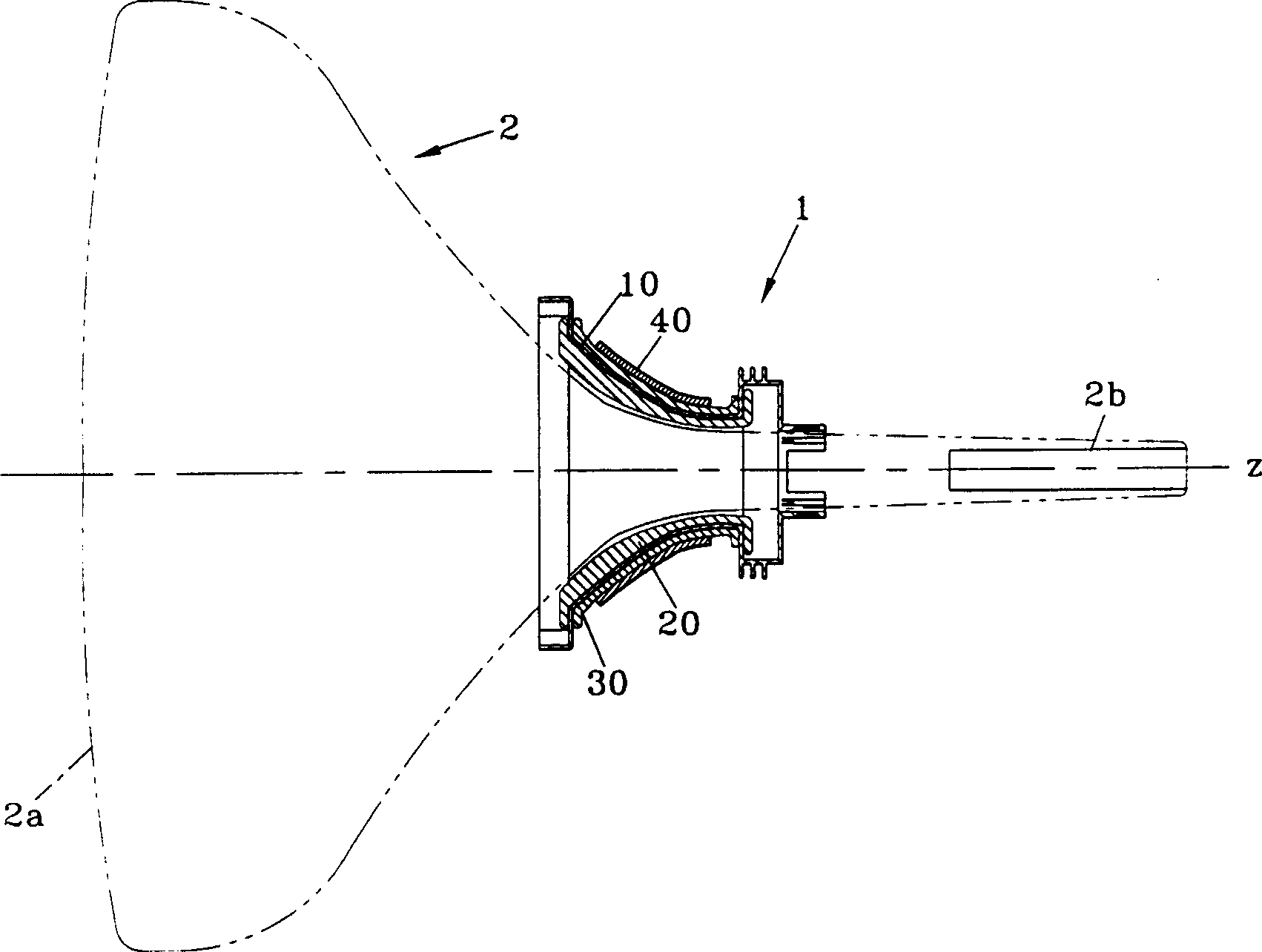

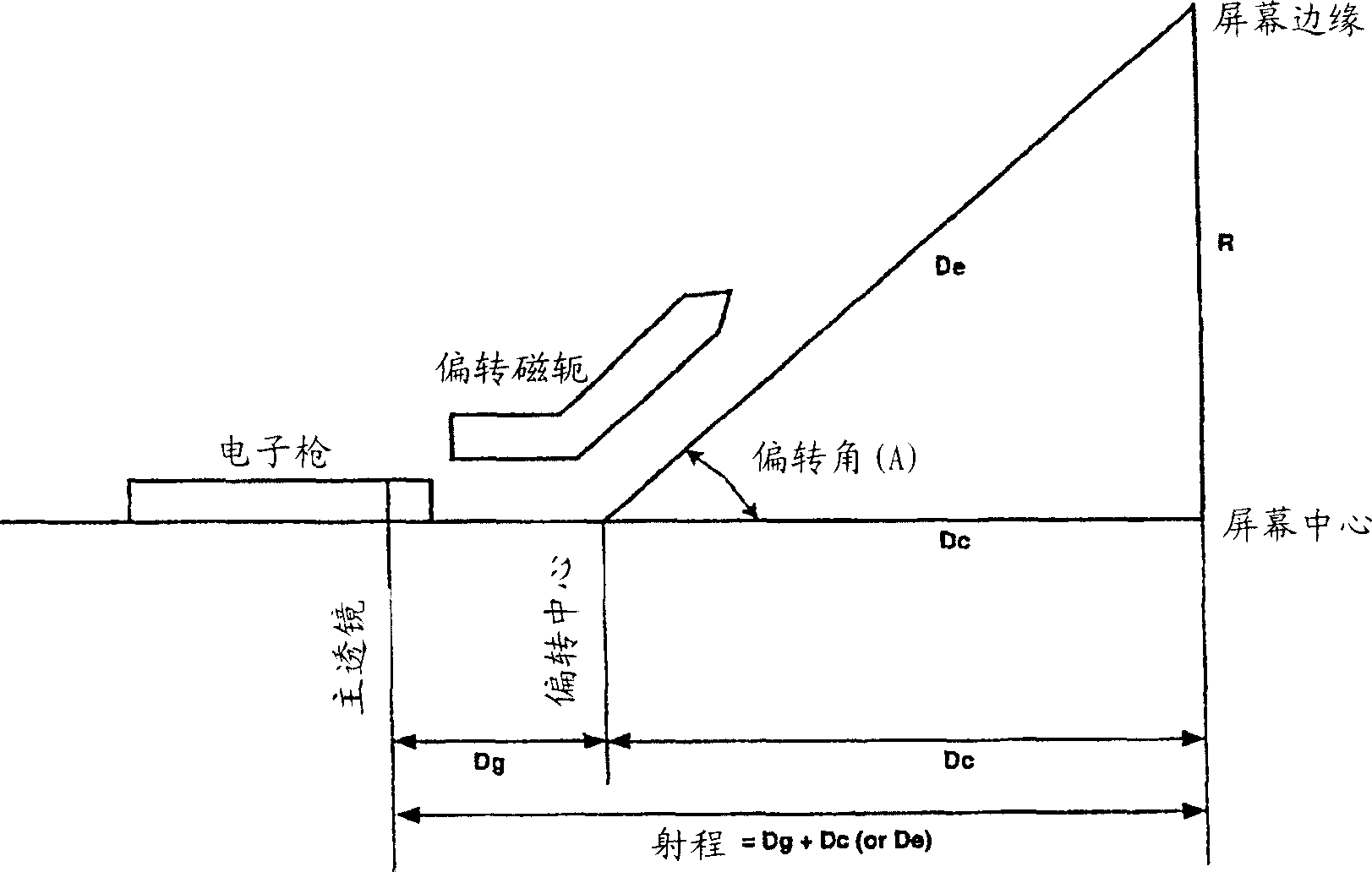

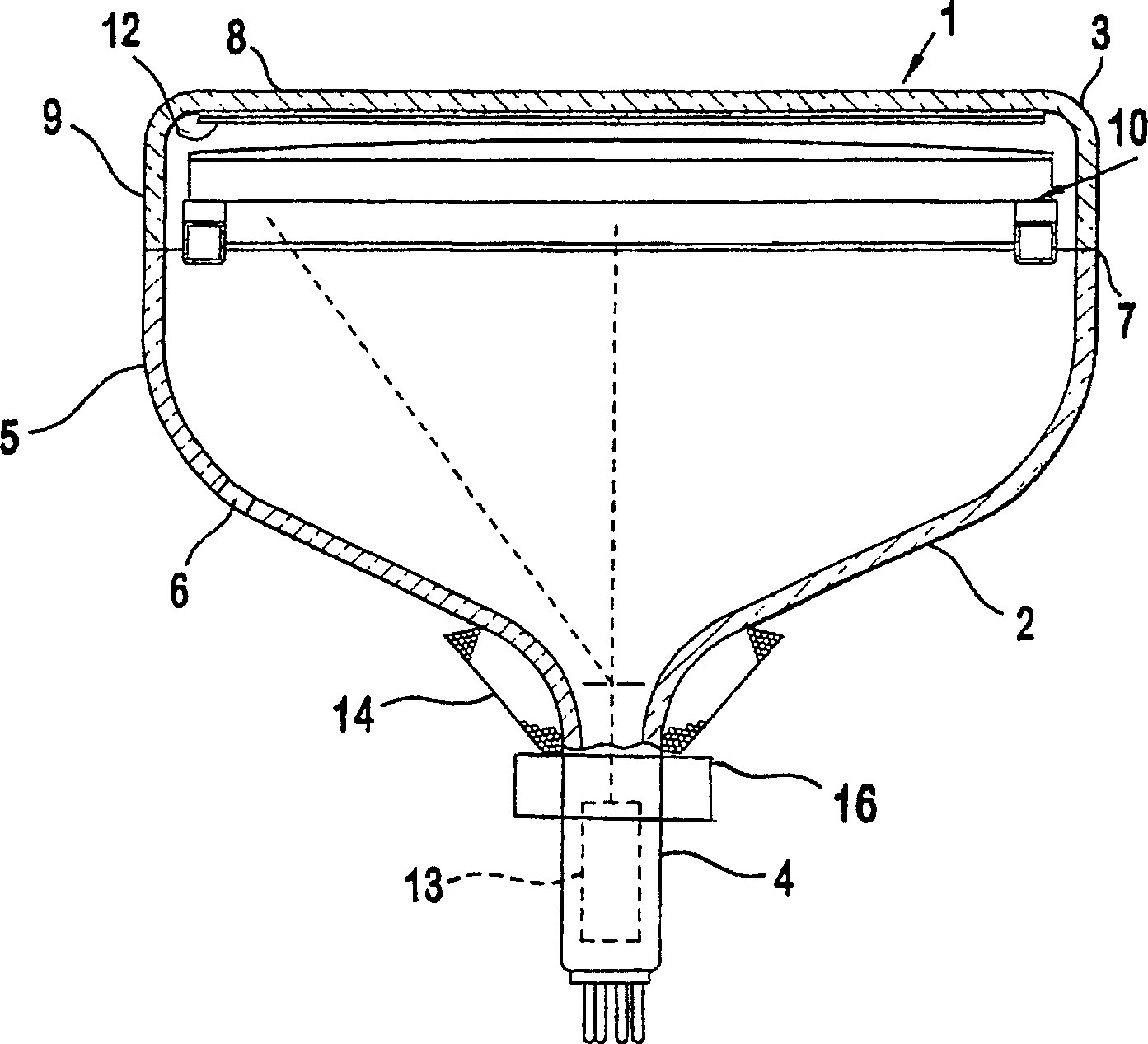

HDTV CRT display having optimized kinescope geometry, yoke field and electronic gun orientation

A cathode ray tube (1) has a faceplate panel (3) with a short axis and a long axis. The faceplate panel has a display screen on the inside of the panel and the panel extends back to a funnel (5) which houses an electron gun system within an integral neck (4). The electron gun system produces co-planar beams arranged in a linear array which is parallel to a short axis of the screen (12). A deflection system (14) is positioned over the neck of the funnel for applying electromagnetic control fields to electron beams emanating from the electron gun system directed toward the screen. The deflection system has a first deflection coil system for generating a substantially barrel shaped magnetic field for deflecting the beams in the direction of the long axis and a second deflection coil system for generating a substantially pincushion magnetic field for deflecting the beams in the direction of the short axis. At least one of the deflection coil systems generates a misconvergence along at least one of the axes parallel to the direction of the co-planar beam. Coils (16) for generating a quadrupolar magnetic field are coupled to the deflection coil systems for correcting misconvergence.

Owner:THOMSON LICENSING SA

Energy-saving and emission-reducing breeding method for Pincushion Urchin

Active | CN107736283A | Reduce harvesting pressure | Avoid pollution | Climate change adaptation | Pisciculture and aquaria | Water quality | Fishing

The invention discloses a breeding method for Pincushion Urchin. Compared with traditional sea urchin breeding methods, it breeds a large number of Pincushion Urchin larvae with quite low energy consumption and culture wastewater discharge, which reduces fishing pressure on natural resources and solves the problems of high transportation cost and low survival rate. A greenhouse is used as the breeding environment; under the effect of the greenhouse, the temperature of the culture water is kept within a large range without artificially heated seawater, which saves cost and avoids the environmental pollution caused by a boiler. During cultivation, the larvae are cultured under illumination of 1,500-2,500 lx, and Chaetoceros mulleri and seawater photosynthetic bacteria are added, which purifies the water and avoids the cost of frequently changing large amounts of water.

Owner:DALIAN OCEAN UNIV

© 2025 PatSnap. All rights reserved.