36 results for "Speech reconstruction" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

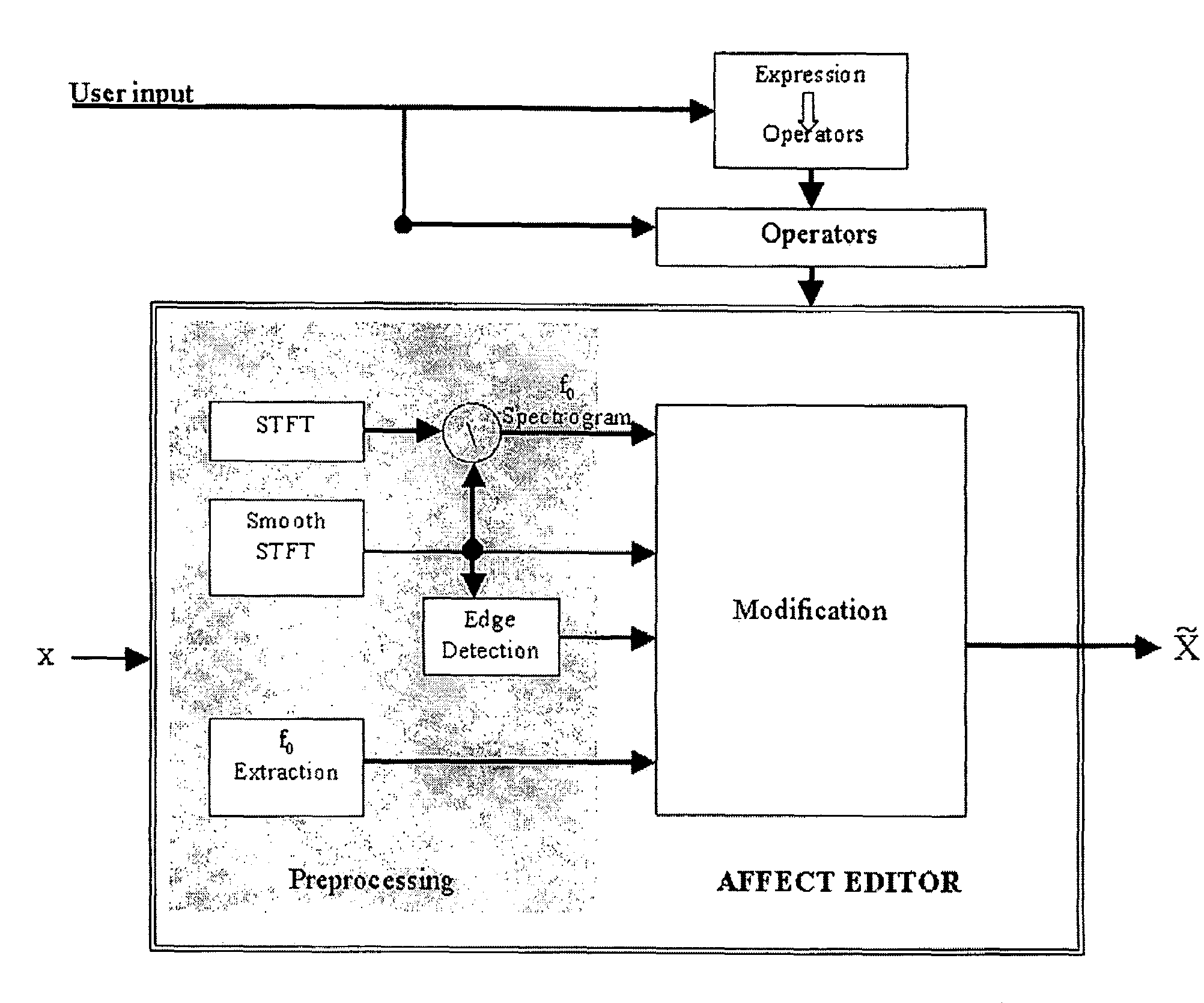

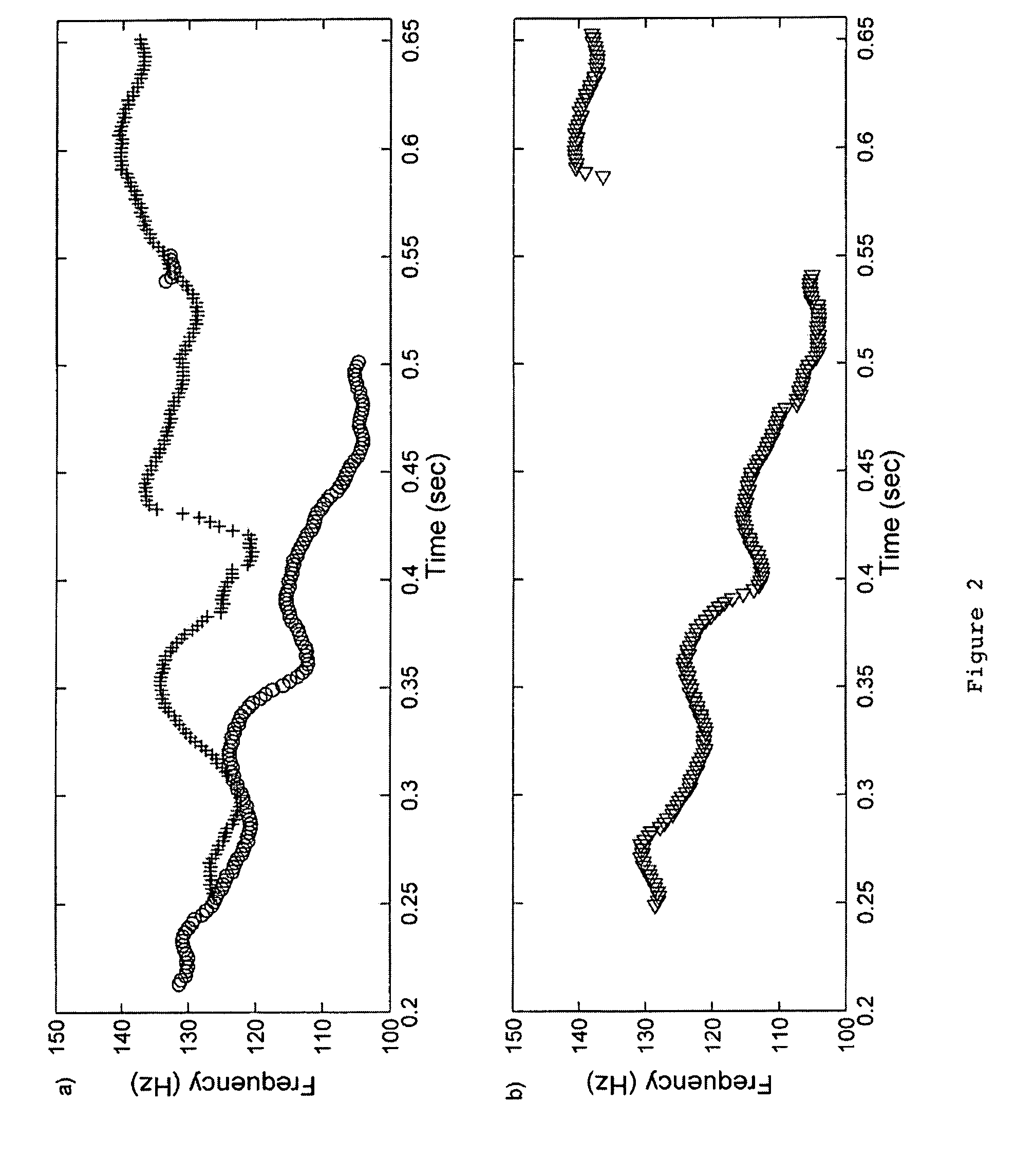

Speech Affect Editing Systems

Active | US20080147413A1 | Special data processing applications; Speech synthesis; Process systems; Analysis data

This invention generally relates to systems, methods and computer program code for editing or modifying speech affect. A speech affect processing system enables a user to edit the affect content of a speech signal. The system comprises: an input to receive speech analysis data from a speech processing system, said speech analysis data comprising a set of parameters representing said speech signal; a user input to receive user input data defining one or more affect-related operations to be performed on said speech signal; an affect modification system, coupled to said user input and to said speech processing system, to modify said parameters in accordance with said one or more affect-related operations, and further comprising a speech reconstruction system to reconstruct an affect-modified speech signal from said modified parameters; and an output coupled to said affect modification system to output said affect-modified speech signal.

Owner:SOBOL SHIKLER TAL
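
The analyse, modify, reconstruct loop in this claim can be illustrated with a toy example. This is a hypothetical sketch, not the patented system. It assumes the analysis parameters are per-frame pitch (F0, Hz) and amplitude, models an "affect-related operation" as scaling those values, and reconstructs speech with a plain phase-continuous sinusoid. `Frame`, `apply_affect` and `reconstruct` are invented names.

```python
import math
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Frame:
    f0_hz: float      # fundamental frequency of the frame (hypothetical parameter set)
    amplitude: float  # linear amplitude of the frame

def apply_affect(frames, pitch_scale=1.0, amp_scale=1.0):
    """Hypothetical affect operation: scale pitch and amplitude of every frame."""
    return [replace(f, f0_hz=f.f0_hz * pitch_scale, amplitude=f.amplitude * amp_scale)
            for f in frames]

def reconstruct(frames, sample_rate=16000, frame_len=160):
    """Toy reconstruction: one phase-continuous sinusoid per frame."""
    out, phase = [], 0.0
    for f in frames:
        step = 2 * math.pi * f.f0_hz / sample_rate
        for _ in range(frame_len):
            out.append(f.amplitude * math.sin(phase))
            phase += step
    return out

analysis = [Frame(120.0, 0.5), Frame(125.0, 0.6), Frame(118.0, 0.4)]
# e.g. a more "excited" rendering: higher pitch, louder
excited = apply_affect(analysis, pitch_scale=1.2, amp_scale=1.5)
signal = reconstruct(excited)
print(len(signal), round(excited[0].f0_hz, 1))  # 480 144.0
```

A real system would use a richer parameter set, such as spectral envelope, voice quality and timing, together with a proper vocoder. The sketch only shows where the edit sits between analysis and resynthesis.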

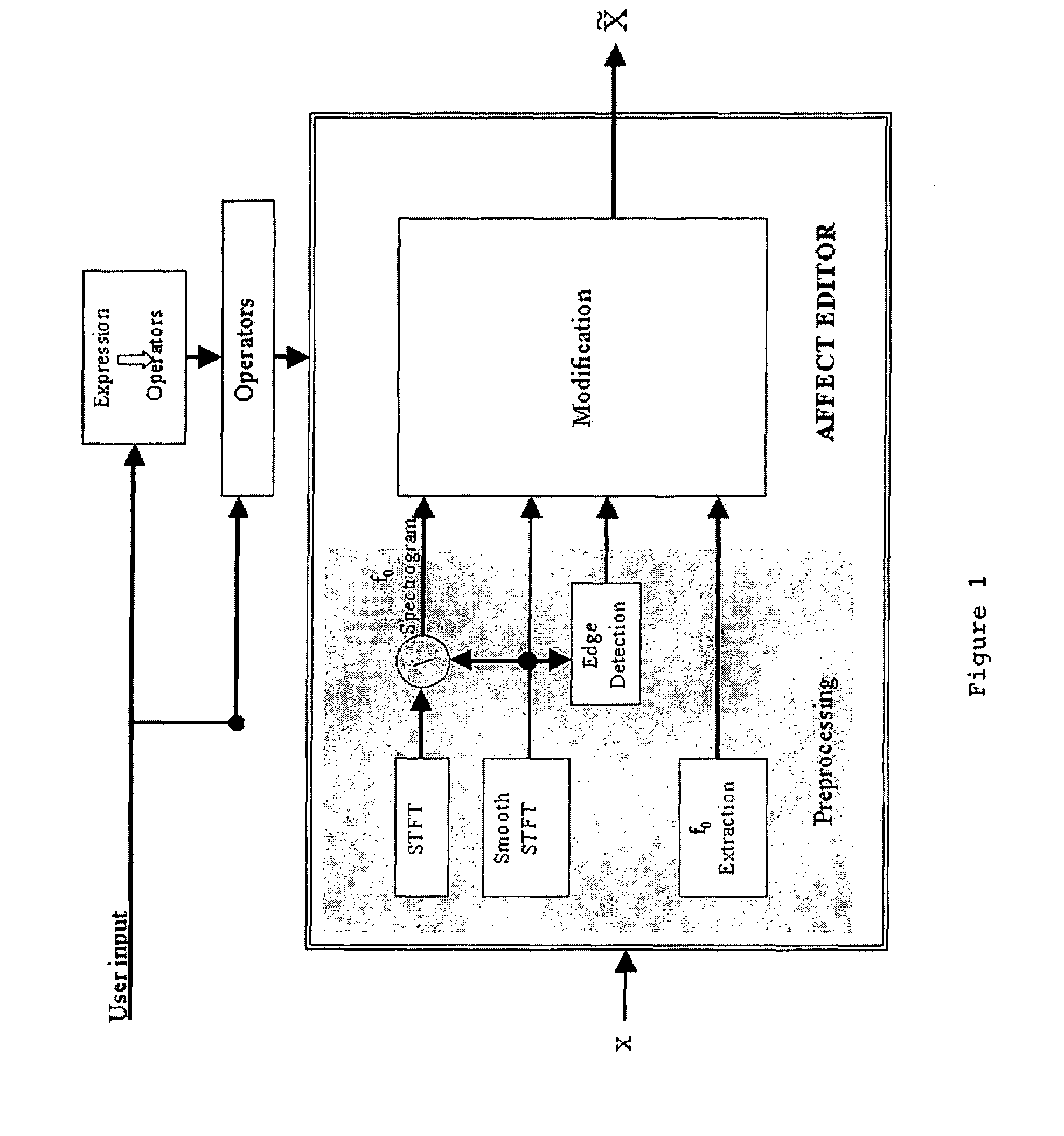

Partial speech reconstruction

Inactive | US20090119096A1 | Quality improvement; Microphones; Public address systems; Signal-to-noise ratio (imaging); Speech reconstruction

A system enhances the quality of a digital speech signal that may include noise. The system identifies vocal expressions that correspond to the digital speech signal. A signal-to-noise ratio of the digital speech signal is measured before a portion of the digital speech signal is synthesized. The portion selected for synthesis may have a signal-to-noise ratio below a predetermined level, and the synthesis may be based on speaker identification.

Owner:NUANCE COMM INC
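
The selection step can be sketched as follows: estimate each frame's SNR against a noise-power estimate, then flag frames below a threshold for synthesis. The noise-power value, the 10 dB threshold and the function names are assumptions for illustration. The abstract does not say how SNR is estimated, and the synthesis itself (which may rely on speaker identification) is not shown.

```python
import math

def frame_snr_db(frame, noise_power):
    """SNR of one frame in dB, given an estimated noise power per sample."""
    power = sum(x * x for x in frame) / len(frame)
    signal_power = max(power - noise_power, 1e-12)  # subtract noise, avoid log(0)
    return 10.0 * math.log10(signal_power / noise_power)

def frames_to_synthesize(frames, noise_power, threshold_db=10.0):
    """Indices of frames whose SNR falls below the threshold."""
    return [i for i, fr in enumerate(frames)
            if frame_snr_db(fr, noise_power) < threshold_db]

noise_power = 0.01
frames = [
    [1.0, -1.0] * 40,    # power 1.0    -> about 20 dB
    [0.12, -0.12] * 40,  # power 0.0144 -> well below 10 dB
    [0.5, -0.5] * 40,    # power 0.25   -> about 13.8 dB
]
print(frames_to_synthesize(frames, noise_power))  # [1]
```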

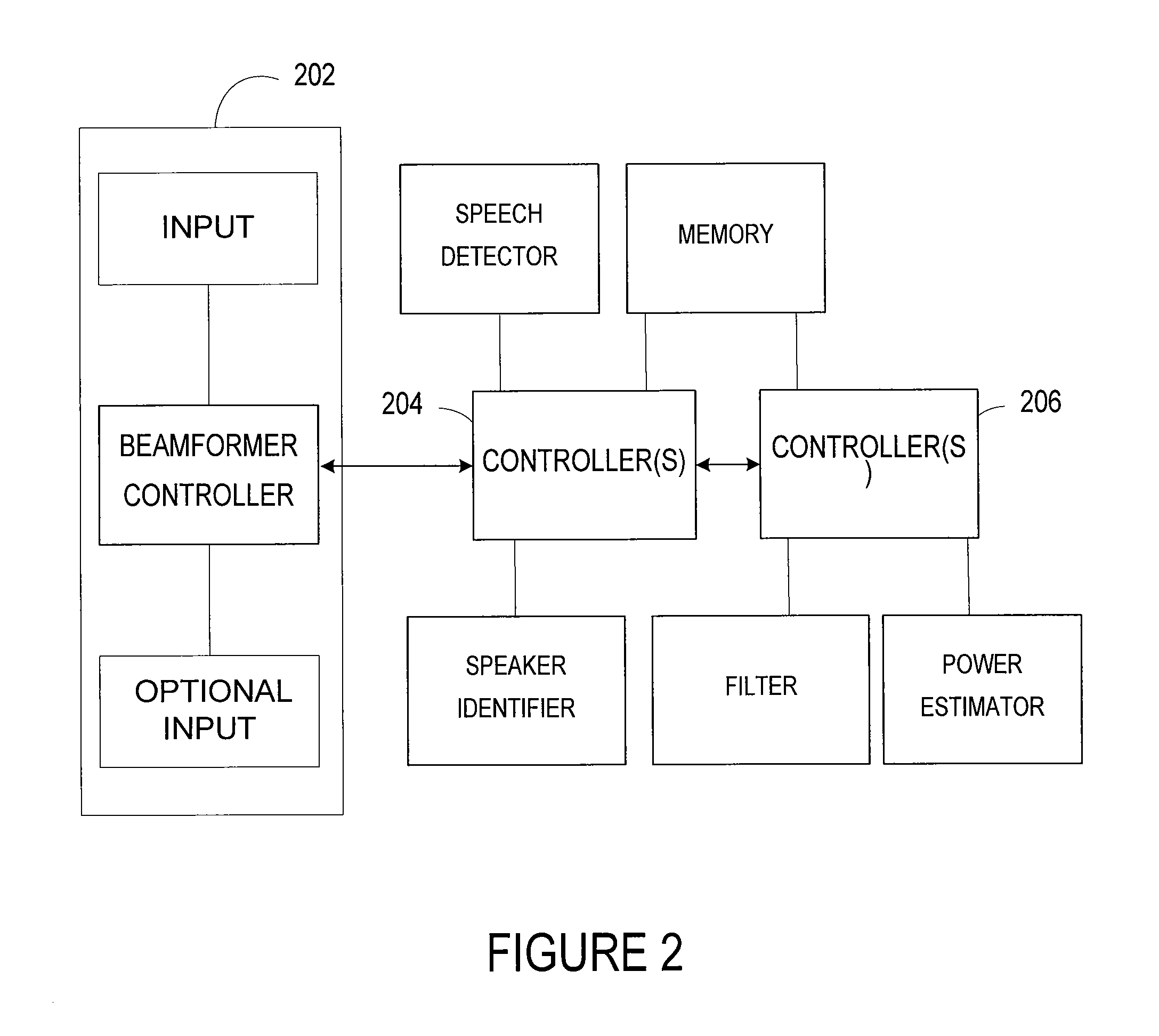

Voice conversion method and system

Active | US20090089063A1 | Reduce the difference; High similarity; Speech analysis; Frequency spectrum; Speech reconstruction

A method, system and computer program product for voice conversion. The method includes performing speech analysis on the speech of a source speaker to obtain speech information; performing spectral conversion based on said speech information to obtain at least a first spectrum similar to the speech of a target speaker; performing unit selection on the speech of said target speaker, using at least said first spectrum as a target; replacing at least part of said first spectrum with the spectrum of the selected speech unit of the target speaker; and performing speech reconstruction based at least on the replaced spectrum.

Owner:NUANCE COMM INC
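
The unit-selection and replacement steps can be illustrated with a nearest-neighbour search. For each converted spectrum, the sketch picks the closest target-speaker unit by Euclidean distance and substitutes that unit's spectrum when the match is close enough. Several simplifications are assumptions, not details from the patent:

- spectra are represented as short lists of band energies;
- Euclidean distance serves as the only target cost;
- concatenation costs are ignored;
- a distance threshold decides which frames count as "at least part" of the spectrum.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def select_units(converted, target_units):
    """For each converted spectrum, index of the closest target-speaker unit."""
    return [min(range(len(target_units)), key=lambda j: euclidean(c, target_units[j]))
            for c in converted]

def replace_spectra(converted, target_units, max_distance):
    """Replace a converted spectrum by its selected unit when the match is close enough."""
    out = []
    for c, j in zip(converted, select_units(converted, target_units)):
        unit = target_units[j]
        out.append(list(unit) if euclidean(c, unit) <= max_distance else list(c))
    return out

converted = [[1.0, 2.0, 3.0], [5.0, 5.0, 5.0]]
target_units = [[1.1, 2.1, 2.9], [9.0, 0.0, 0.0]]
print(replace_spectra(converted, target_units, max_distance=0.5))
# [[1.1, 2.1, 2.9], [5.0, 5.0, 5.0]]
```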

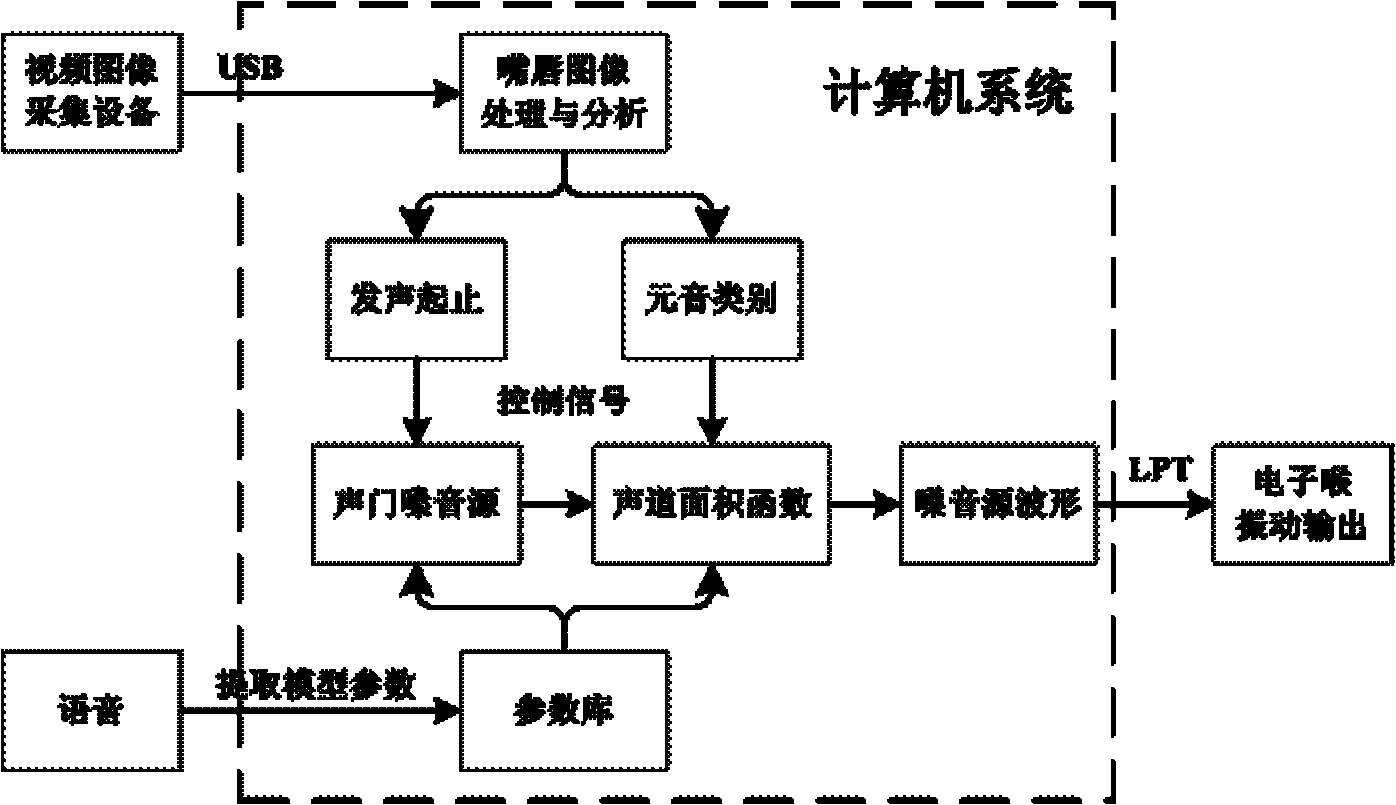

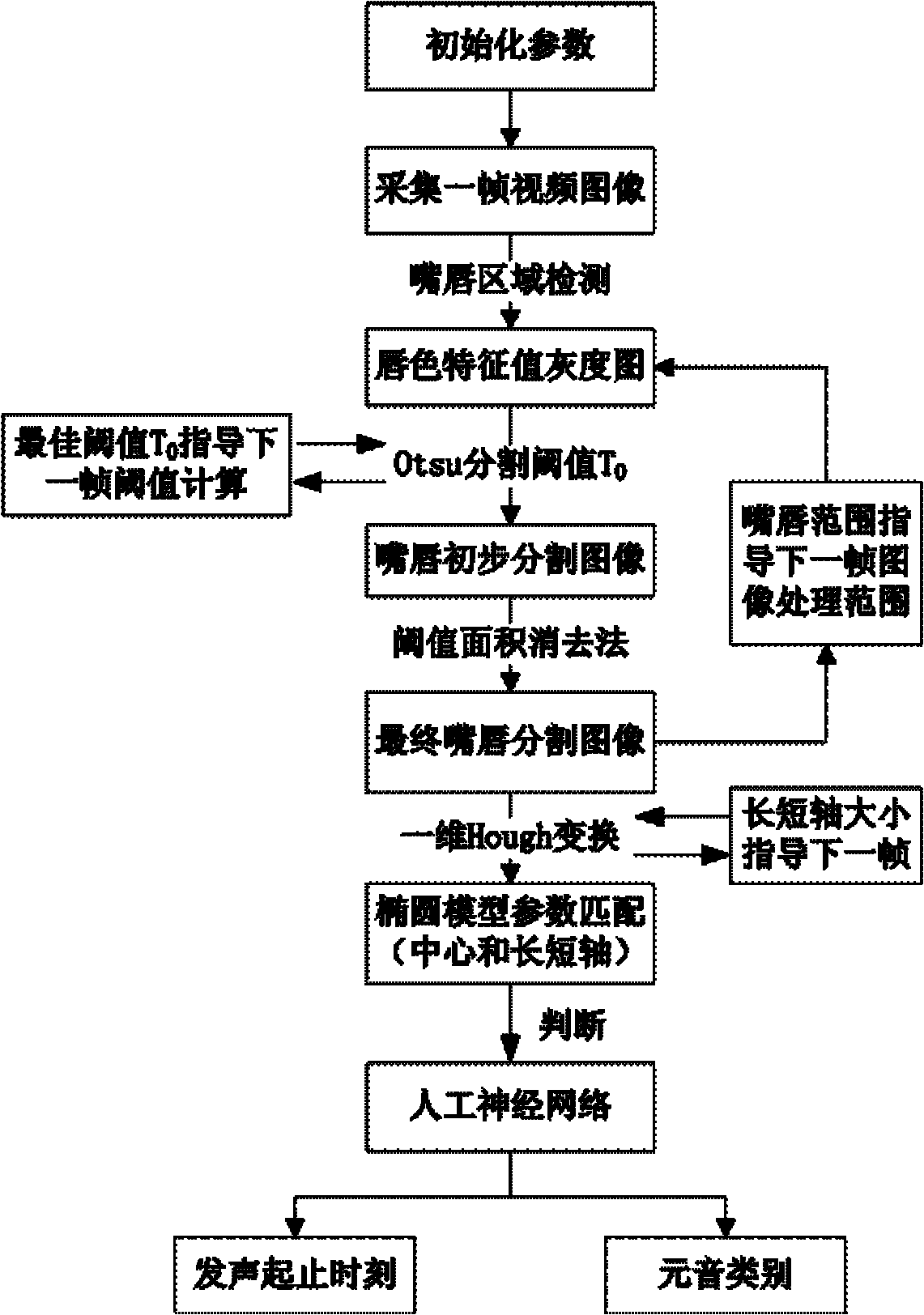

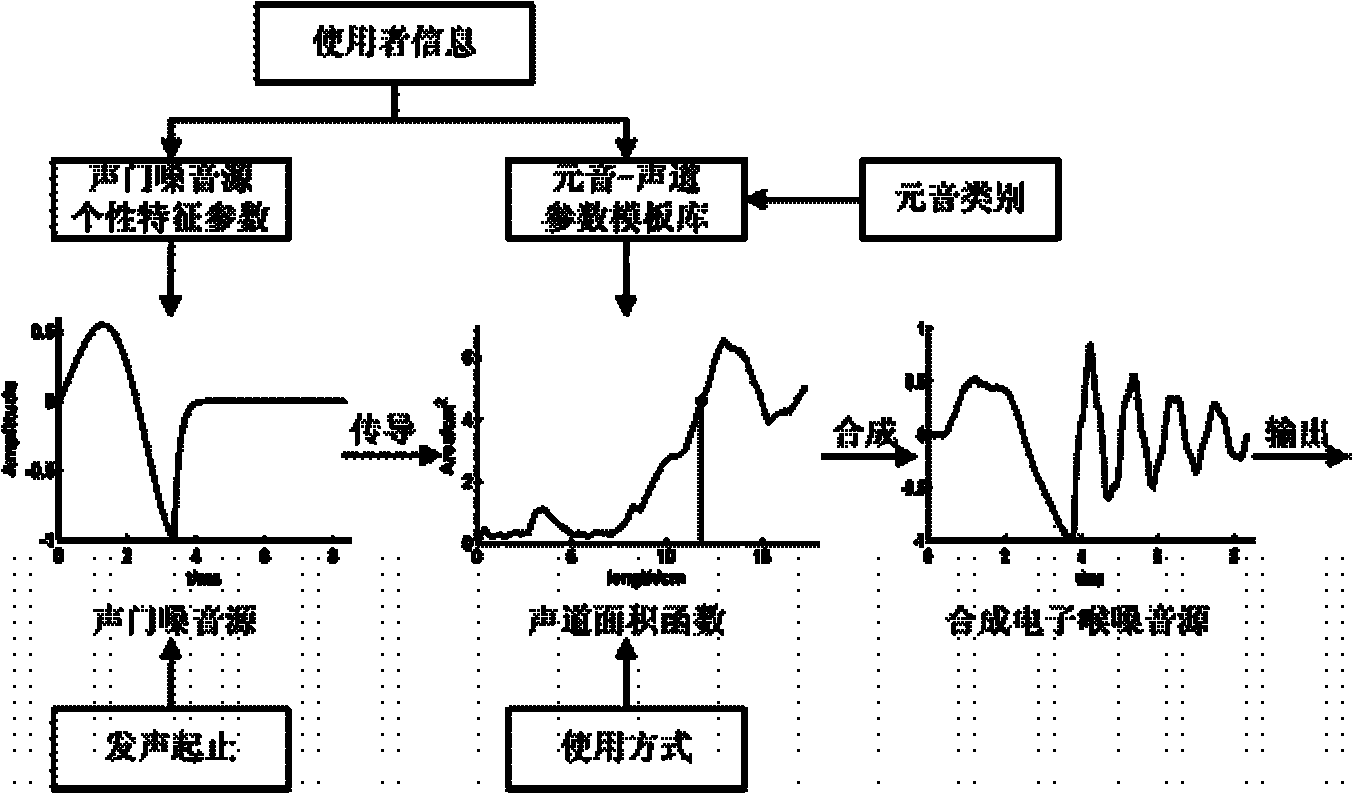

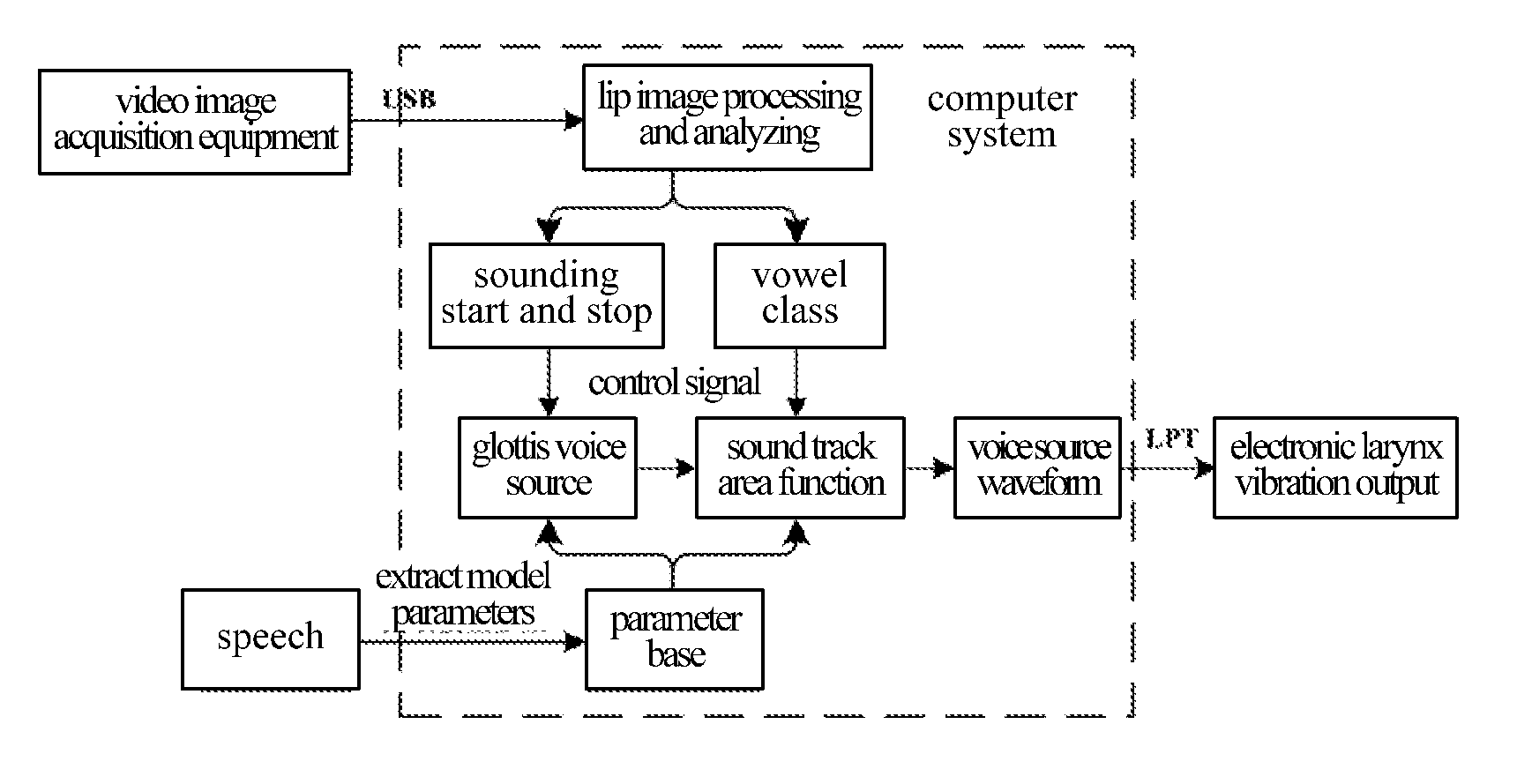

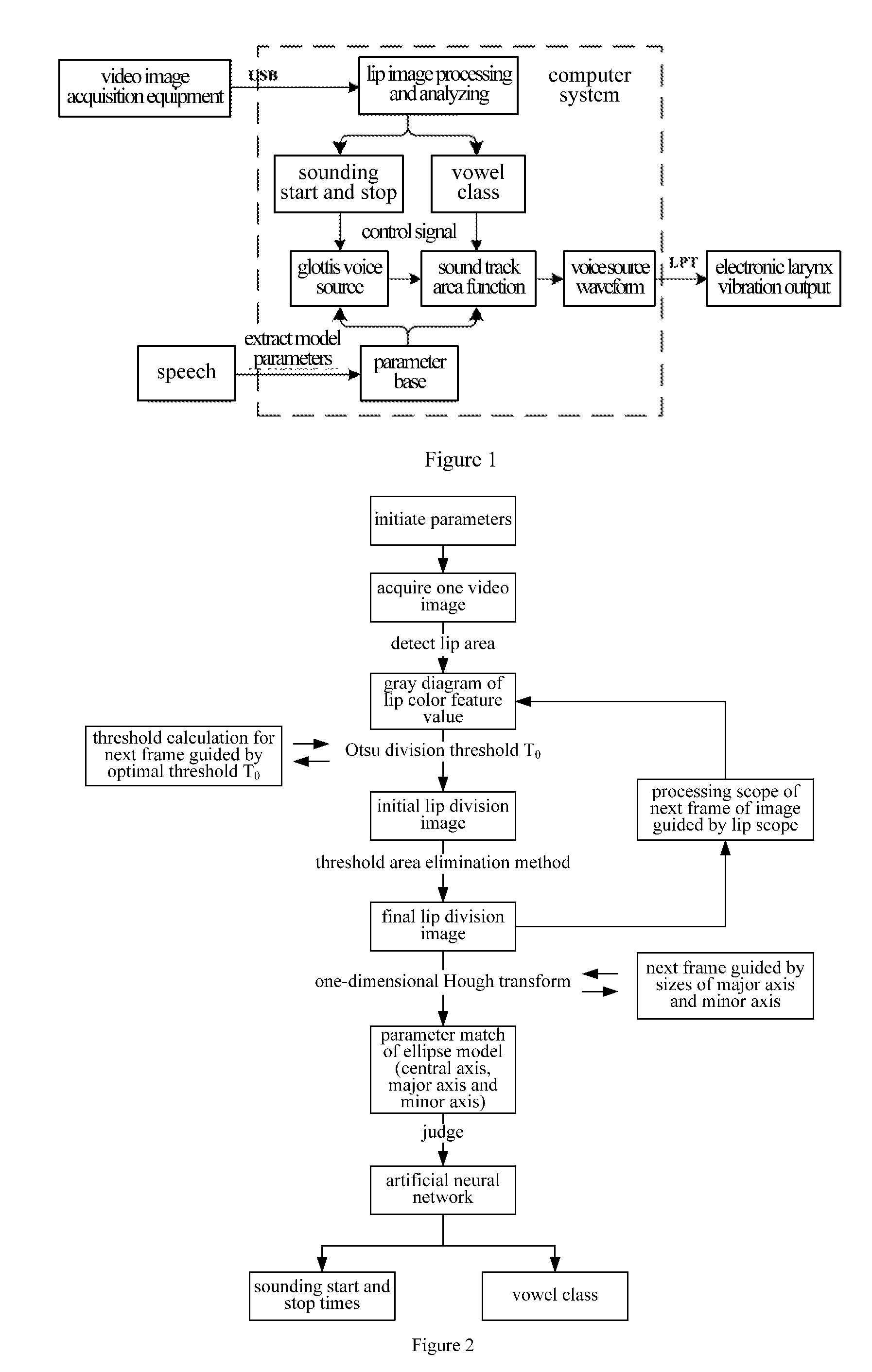

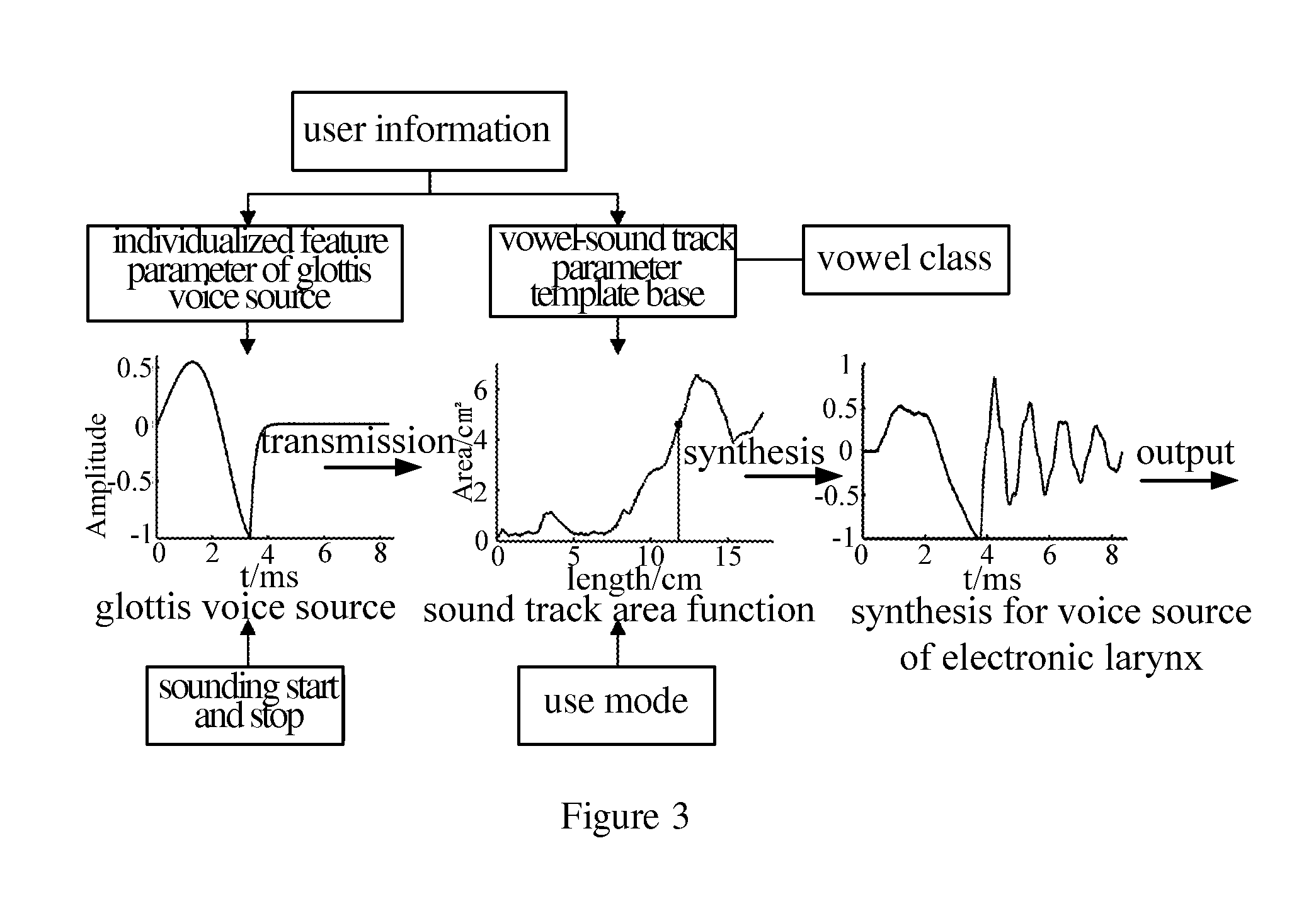

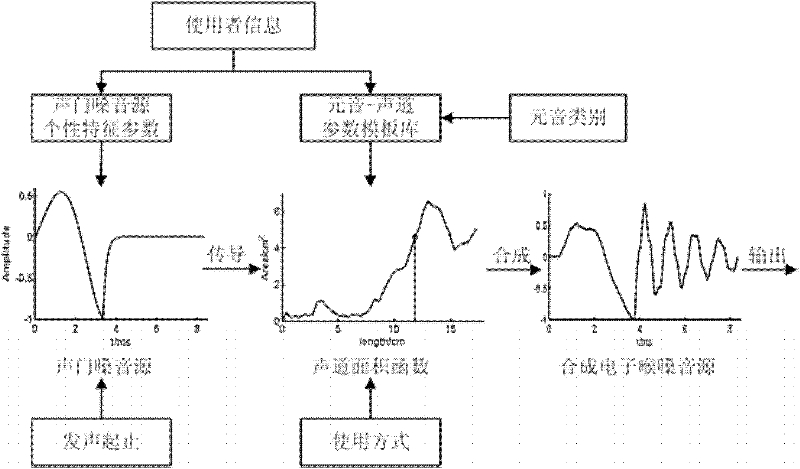

Electronic larynx speech reconstructing method and system thereof

Active | CN101916566A | Retain personality traits; Quality improvement; Character and pattern recognition; Speech recognition; Imaging analysis; Vocal tract

The invention provides an electronic larynx speech reconstruction method and system. The method comprises the following steps:

1. Extract model parameters from collected speech to form a parameter library.
2. Capture a facial image of the speaker and pass it to an image analysis and processing module, which obtains the voicing onset time, the voicing offset time and the vowel category.
3. Synthesize a voice source waveform with a voice source synthesis module.
4. Output the voice source waveform through an electronic larynx vibration output module.

The voice source synthesis module first sets the model parameters of a glottal voice source to synthesize the glottal source waveform. It then simulates sound propagation in the vocal tract with a waveguide model, selecting the vocal tract shape parameters according to the vowel category, to synthesize the electronic larynx voice source waveform. Speech reconstructed by this method and system sounds closer to the speaker's own voice.

Owner:XI AN JIAOTONG UNIV
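
The abstract does not name its glottal source model. As an illustration only, the sketch below assumes a Rosenberg-style glottal flow pulse: a raised-cosine opening phase, a quarter-cosine closing phase and a closed phase, repeated at the fundamental period. The `open_ratio` and `speed_quotient` parameters are assumptions. The waveguide vocal-tract stage is not shown.

```python
import math

def rosenberg_pulse(period, open_ratio=0.6, speed_quotient=2.0):
    """One period of a Rosenberg-style glottal flow pulse.

    open_ratio: fraction of the period during which the glottis is open.
    speed_quotient: ratio of opening time to closing time.
    """
    open_len = int(round(period * open_ratio))
    n1 = int(round(open_len * speed_quotient / (1.0 + speed_quotient)))  # opening phase
    n2 = open_len - n1                                                   # closing phase
    pulse = []
    for n in range(period):
        if n <= n1:
            pulse.append(0.5 * (1.0 - math.cos(math.pi * n / n1)))
        elif n <= n1 + n2:
            pulse.append(math.cos(math.pi * (n - n1) / (2.0 * n2)))
        else:
            pulse.append(0.0)  # closed phase
    return pulse

def glottal_train(f0_hz, duration_s, sample_rate=16000, **kw):
    """Repeat one pulse at the fundamental period for the requested duration."""
    period = int(round(sample_rate / f0_hz))
    n_periods = int(duration_s * f0_hz)
    return rosenberg_pulse(period, **kw) * n_periods

wave = glottal_train(100.0, 0.05)
print(len(wave), max(wave))  # 800 1.0
```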

Speech affect editing systems

This invention generally relates to systems, methods and computer program code for editing or modifying speech affect. A speech affect processing system enables a user to edit the affect content of a speech signal. The system comprises: an input to receive speech analysis data from a speech processing system, said speech analysis data comprising a set of parameters representing said speech signal; a user input to receive user input data defining one or more affect-related operations to be performed on said speech signal; an affect modification system, coupled to said user input and to said speech processing system, to modify said parameters in accordance with said one or more affect-related operations, and further comprising a speech reconstruction system to reconstruct an affect-modified speech signal from said modified parameters; and an output coupled to said affect modification system to output said affect-modified speech signal.

Owner:SOBOL SHIKLER TAL

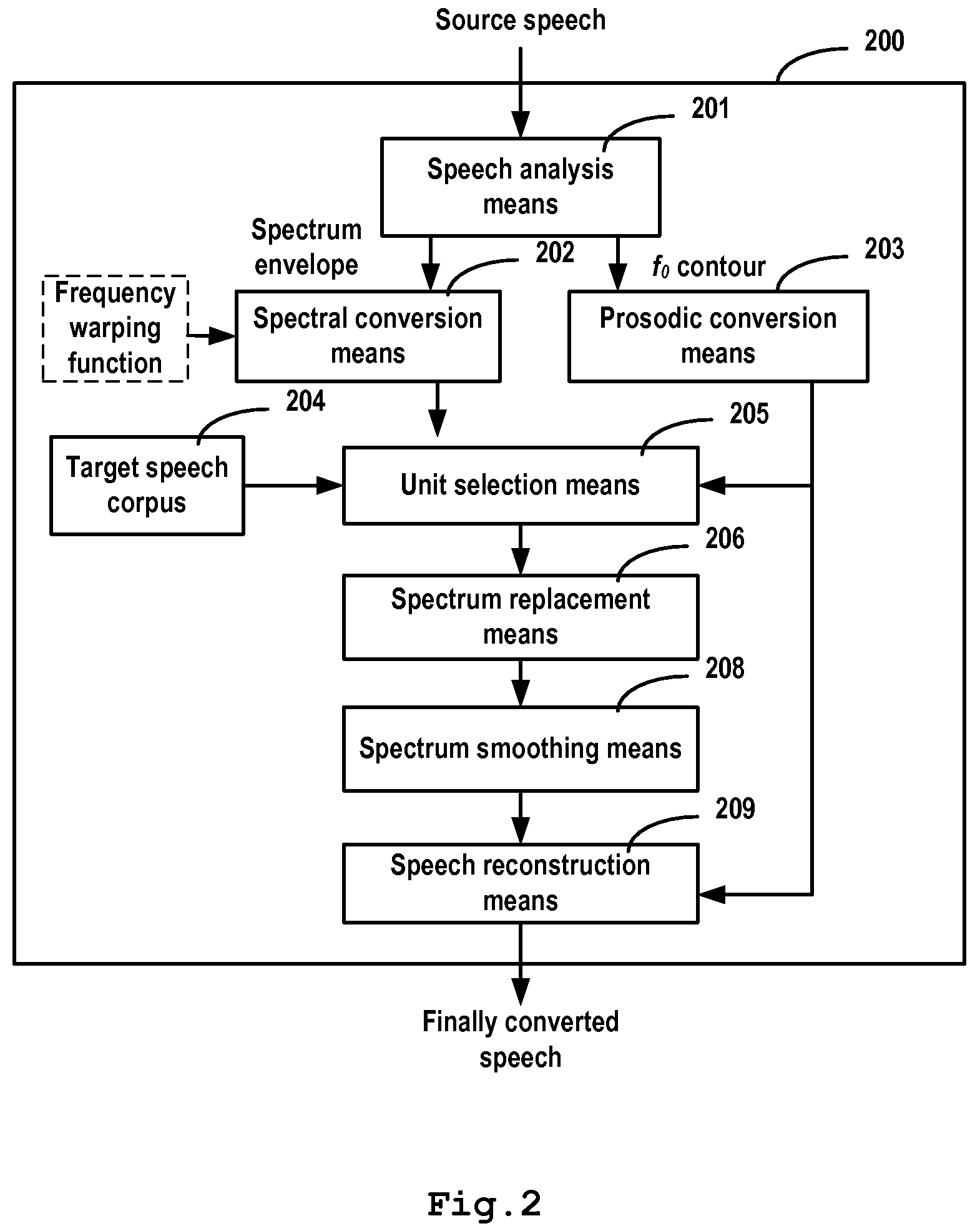

Speech enhancement

Active | US20150255083A1 | Enhanced speech component signal; Enhanced signal; Speech recognition; Speech synthesis; Algorithm; Speech reconstruction

A speech signal processing system is described for use with automatic speech recognition and hands free speech communication. A signal pre-processor module transforms an input microphone signal into corresponding speech component signals. A noise suppression module applies noise reduction to the speech component signals to generate noise reduced speech component signals. A speech reconstruction module produces corresponding synthesized speech component signals for distorted speech component signals. A signal combination block adaptively combines the noise reduced speech component signals and the synthesized speech component signals based on signal to noise conditions to generate enhanced speech component signals for automatic speech recognition and hands free speech communication.

Owner:CERENCE OPERATING CO
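
The adaptive combination block can be sketched as a per-component crossfade. At high SNR the output leans on the noise-reduced component; at low SNR it leans on the synthesized one. The sigmoid mapping from SNR to weight and its `center_db` and `slope_db` values are assumptions, since the abstract only says the combination depends on signal-to-noise conditions.

```python
import math

def mix_weight(snr_db, center_db=5.0, slope_db=2.0):
    """Weight given to the noise-reduced component (hypothetical sigmoid mapping)."""
    return 1.0 / (1.0 + math.exp(-(snr_db - center_db) / slope_db))

def combine(noise_reduced, synthesized, snr_db):
    """Per-component blend: high SNR favours noise-reduced, low SNR favours synthesized."""
    out = []
    for nr, syn, snr in zip(noise_reduced, synthesized, snr_db):
        w = mix_weight(snr)
        out.append(w * nr + (1.0 - w) * syn)
    return out

nr = [1.0, 1.0, 1.0]
syn = [0.0, 0.0, 0.0]
print([round(v, 3) for v in combine(nr, syn, [20.0, 5.0, -10.0])])  # [0.999, 0.5, 0.001]
```

A smooth weight avoids audible switching artefacts when the SNR estimate hovers around a hard threshold.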

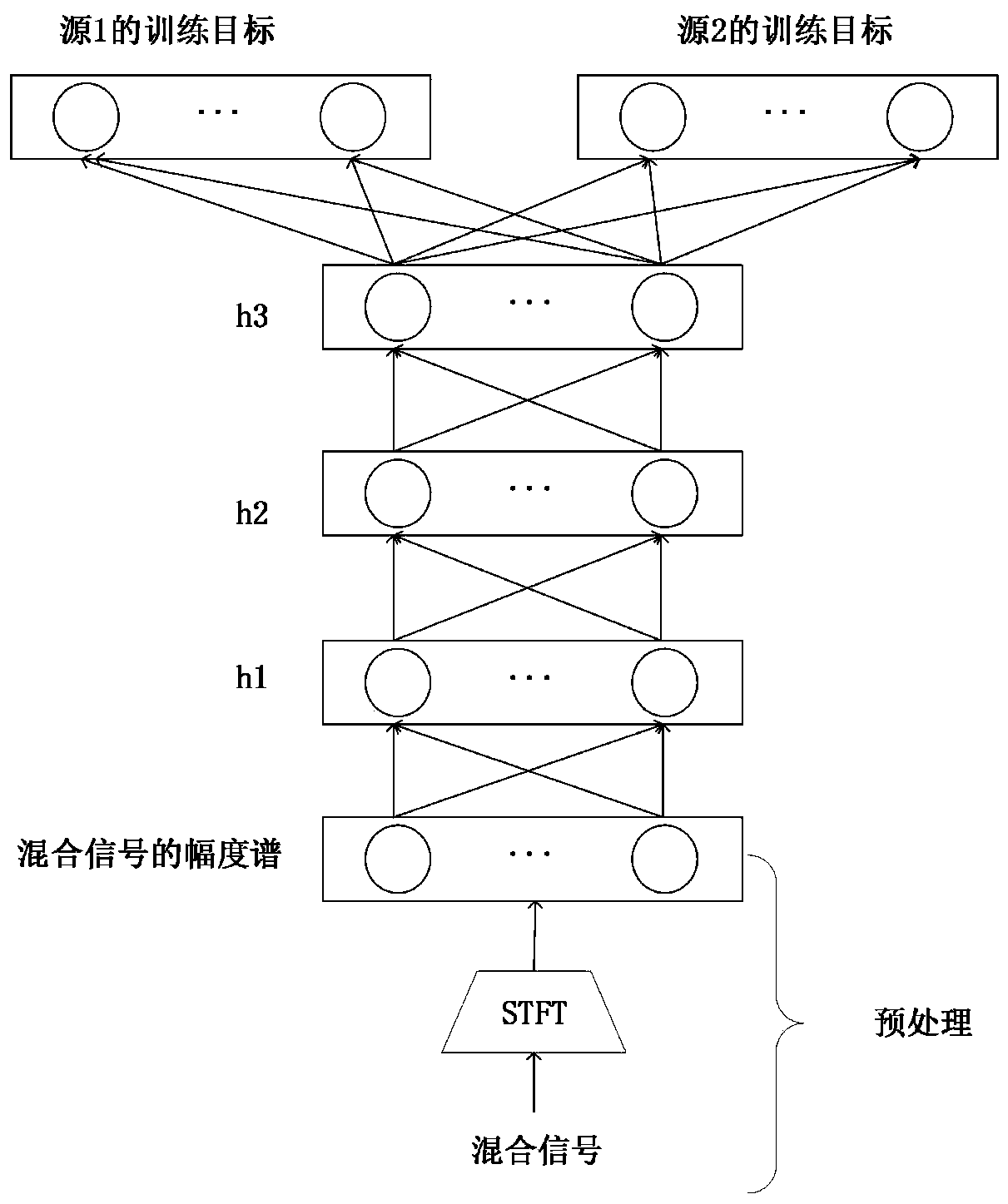

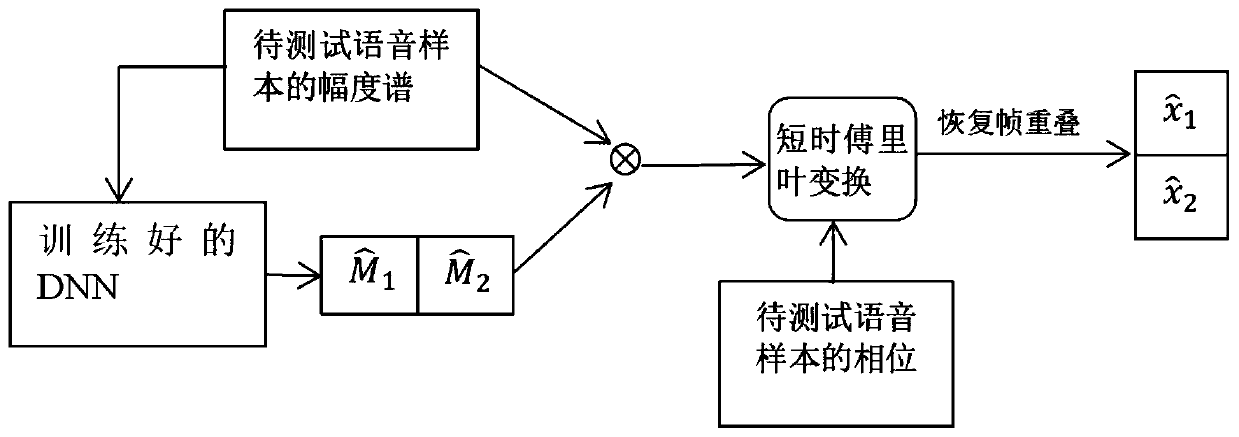

SCSS (Single Channel Speech Separation) algorithm based on DNN (Deep Neural Network)

Active | CN110634502A | Reduce distortion; Improve intelligibility; Speech recognition; Algorithm; Speech reconstruction

The invention provides an SCSS (Single Channel Speech Separation) algorithm based on a DNN (Deep Neural Network). The algorithm mainly comprises the following steps:

1. Preprocess a training speech sample and extract its feature information.
2. Train the DNN with a loss function to obtain a DNN model.
3. Preprocess a speech sample to be tested and extract its feature information.
4. Perform speech separation with the trained DNN model.
5. Obtain the separation result through speech reconstruction.

The DNN is trained on the nonlinear relationship between input and output. Compared with conventional separation methods based on a single-output DNN, the algorithm:

- fully exploits the joint relationship between the outputs;
- separates efficiently;
- separates two source speech signals in one step;
- effectively reduces speech distortion;
- improves the intelligibility of the separated speech.

Owner:NANJING UNIV OF POSTS & TELECOMM

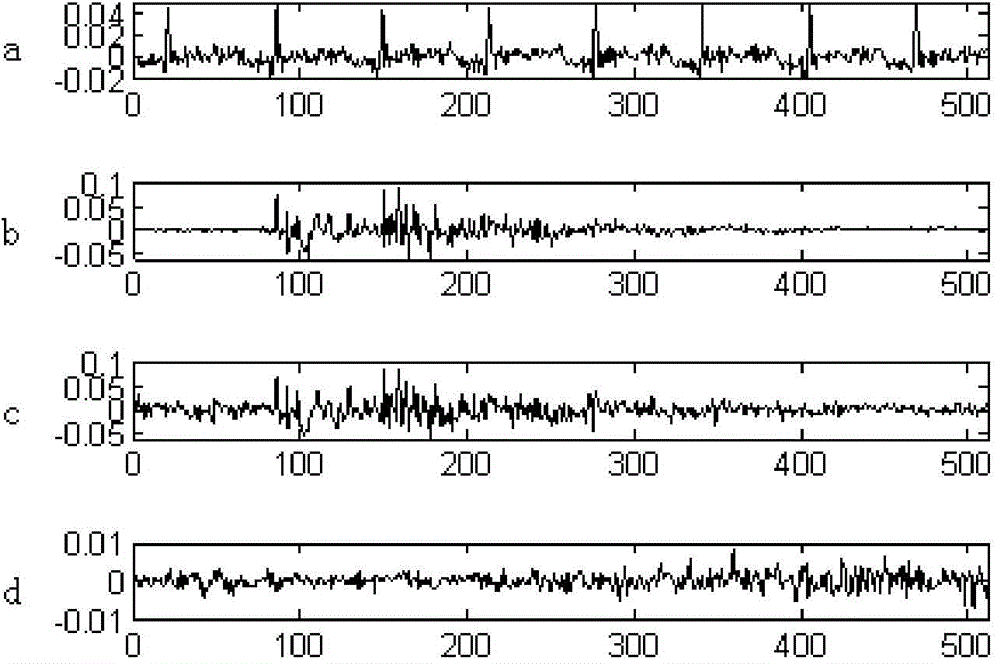

Speech reconstruction-based instantaneous noise suppressing method

Active | CN104599677A | Transient Noise Suppression; Transient Noise Cancellation; Speech analysis; Noise detection; Distribution characteristic

A speech-reconstruction-based method for suppressing instantaneous (transient) noise, in the technical field of audio processing. The method eliminates the influence of instantaneous noise in two stages, detection and suppression.

First, steady-state noise in the signal is removed with conventional methods, and instantaneous noise is detected from the differing distribution characteristics of white voice noise signals and instantaneous noise signals.

Second, once instantaneous noise is detected, a speech-reconstruction-based algorithm suppresses it. Frames containing the instantaneous noise are discarded, and the waveform is reconstructed from the adjacent undisturbed frames before and after them to replace the original signal. The instantaneous noise can thus be completely eliminated without obvious speech distortion.

The method is applicable to processing speech signals that contain instantaneous noise.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI +1
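
The discard-and-reconstruct idea can be sketched in two functions:

- `detect_transients` flags frames whose energy exceeds a multiple of the median frame energy.
- `repair` replaces each flagged frame with a sample-wise linear blend of the nearest clean frames on either side.

Both the energy-ratio detector and the linear blend are assumptions standing in for the patent's distribution-based detection and waveform reconstruction, neither of which the abstract specifies. The steady-state noise removal stage is omitted.

```python
import statistics

def frame_energy(frame):
    return sum(x * x for x in frame) / len(frame)

def detect_transients(frames, ratio=10.0):
    """Flag frames whose energy exceeds `ratio` times the median frame energy."""
    energies = [frame_energy(f) for f in frames]
    median = statistics.median(energies)
    return [e > ratio * median for e in energies]

def repair(frames, flags):
    """Replace flagged frames by a sample-wise blend of the nearest clean neighbours."""
    out = [list(f) for f in frames]
    clean = [i for i, bad in enumerate(flags) if not bad]
    for i, bad in enumerate(flags):
        if not bad:
            continue
        left = max((j for j in clean if j < i), default=None)
        right = min((j for j in clean if j > i), default=None)
        if left is None and right is None:
            out[i] = [0.0] * len(frames[i])  # nothing to reconstruct from
        elif left is None or right is None:
            out[i] = list(frames[left if right is None else right])
        else:
            t = (i - left) / (right - left)  # relative position between the neighbours
            out[i] = [(1 - t) * a + t * b for a, b in zip(frames[left], frames[right])]
    return out

frames = [[0.1, 0.2], [0.1, 0.2], [5.0, -5.0], [0.3, 0.4]]
flags = detect_transients(frames)
print(flags)  # [False, False, True, False]
repaired = repair(frames, flags)
```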

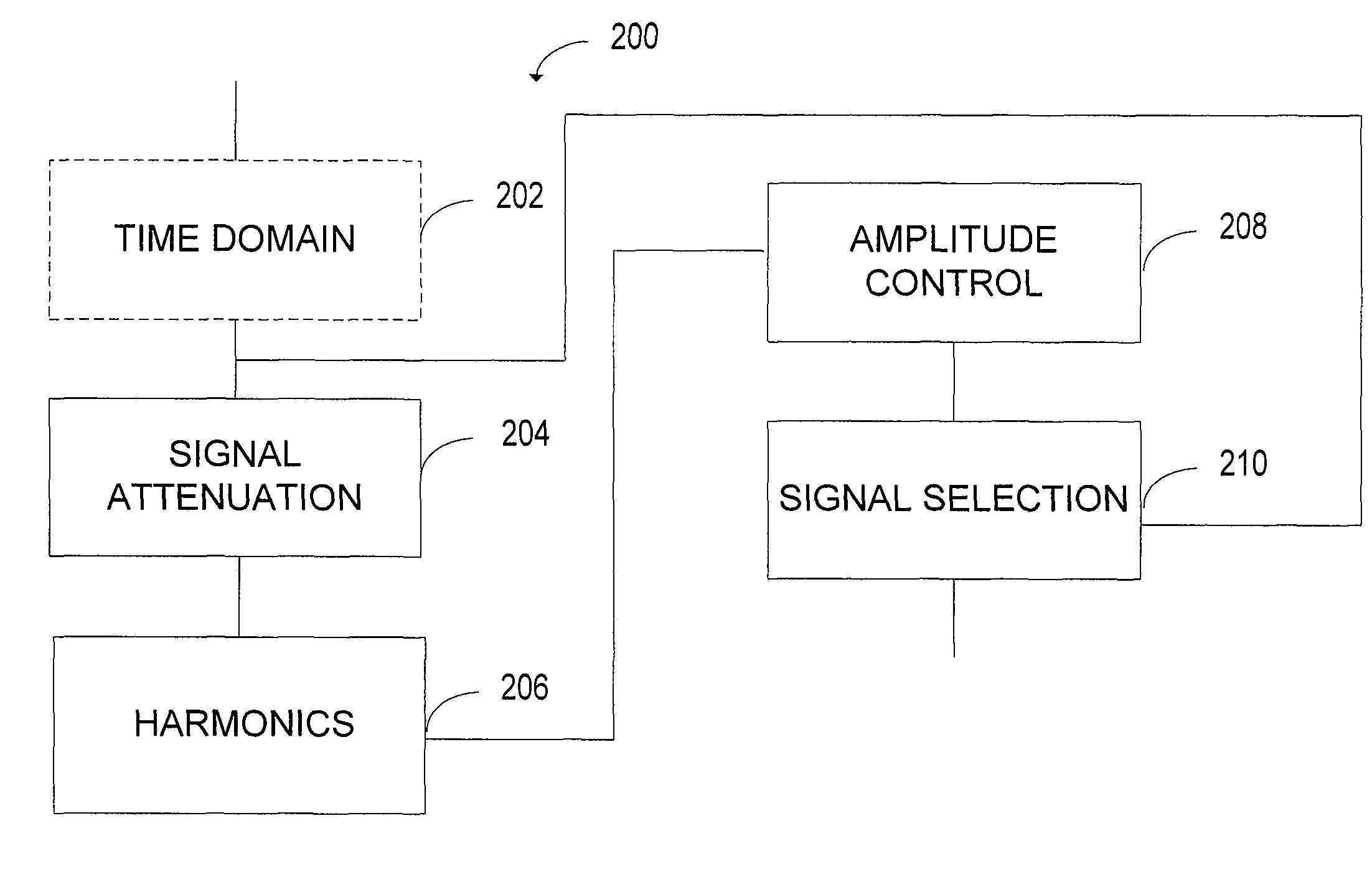

Speech enhancement through partial speech reconstruction

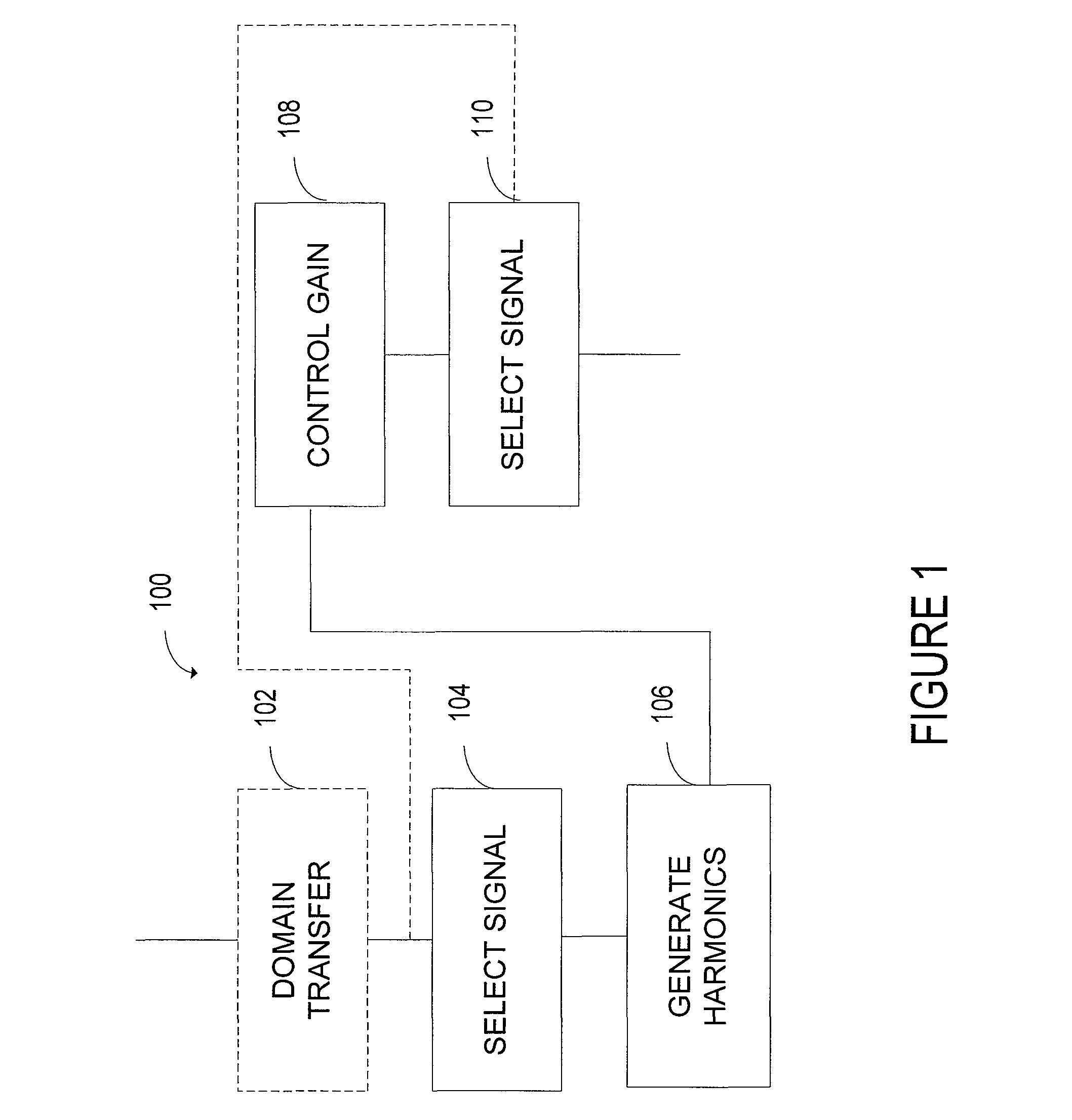

Active | US8606566B2 | Improve speech clarity; Speech recognition; Transmission noise suppression; Time domain; Harmonic

A system improves speech intelligibility by reconstructing speech segments. The system includes a low-frequency reconstruction controller programmed to select a predetermined portion of a time-domain signal. The controller substantially blocks signals above and below the selected portion. A harmonic generator generates low-frequency harmonics in the time domain that lie within a frequency range controlled by a background noise modeler. A gain controller adjusts the low-frequency harmonics so that their signal strength substantially matches that of the original time-domain input signal.

Owner:MALIKIE INNOVATIONS LTD
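
The harmonic-generator and gain-controller stages can be sketched as follows. The sketch generates the harmonics of a known F0 that fall inside a band, then scales them so their RMS matches the input segment. Several details are assumptions:

- the band is passed in directly, instead of coming from a background noise modeler;
- F0 is assumed known;
- RMS is the chosen measure of "signal strength";
- the band-blocking behaviour of the reconstruction controller is not modelled.

```python
import math

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def low_freq_harmonics(f0_hz, band_hz, n_samples, sample_rate=8000):
    """Sum of the harmonics of f0 that fall inside band_hz = (low, high)."""
    low, high = band_hz
    harmonics = [k * f0_hz for k in range(1, int(high // f0_hz) + 1)
                 if low <= k * f0_hz <= high]
    samples = [sum(math.sin(2 * math.pi * h * n / sample_rate) for h in harmonics)
               for n in range(n_samples)]
    return samples, harmonics

def match_gain(generated, reference):
    """Scale the generated harmonics so their RMS equals the reference RMS."""
    g = rms(reference) / max(rms(generated), 1e-12)
    return [g * v for v in generated]

segment = [0.3 * math.sin(2 * math.pi * 200 * n / 8000) for n in range(400)]
gen, used = low_freq_harmonics(100.0, (150.0, 450.0), len(segment))
out = match_gain(gen, segment)
print(used)  # [200.0, 300.0, 400.0]
```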

Electrolaryngeal speech reconstruction method and system thereof

Inactive | US20130035940A1 | Function increase; Improve voice quality; Character and pattern recognition; Speech synthesis; Process module; Vocal tract

The invention provides an electrolaryngeal speech reconstruction method and system. The method proceeds as follows:

1. Model parameters are extracted from collected speech to form a parameter library.
2. Facial images of the speaker are acquired and passed to an image analysis and processing module, which obtains the voice onset and offset times and the vowel classes.
3. A voice source synthesis module synthesizes a voice source waveform.
4. An electrolarynx vibration output module outputs that waveform.

The voice source synthesis module first sets the model parameters of a glottal voice source to synthesize the glottal source waveform. It then uses a waveguide model to simulate sound transmission in the vocal tract, selecting the vocal tract shape parameters according to the vowel class.

Owner:XI AN JIAOTONG UNIV

Method and apparatus for high resolution speech reconstruction

A method and apparatus identify a clean speech signal from a noisy speech signal. The noisy speech signal is converted into frequency values in the frequency domain. The parameters of at least one posterior probability distribution of at least one component of a clean signal value are then determined from the frequency values, without applying a frequency-based filter to the frequency values. These posterior parameters are used to estimate a set of frequency values for the clean speech signal, and the clean speech signal is then constructed from the estimated set of frequency values.

Owner:MICROSOFT TECH LICENSING LLC
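
For a concrete picture of "posterior parameters, then estimate", the sketch below uses the simplest conjugate case. It assumes each clean value x has a Gaussian prior N(μ, σ²) and the observation is y = x + n with Gaussian noise n ~ N(0, σ_n²). The posterior is then Gaussian, with mean μ + σ²/(σ² + σ_n²)·(y − μ) and variance σ²σ_n²/(σ² + σ_n²), and the posterior mean serves as the clean estimate. The patent's actual prior, noise model and domain are not given in the abstract, so this is an assumption-laden illustration only.

```python
def gaussian_posterior(y, prior_mean, prior_var, noise_var):
    """Posterior of clean value x given noisy y = x + n,
    with x ~ N(prior_mean, prior_var) and n ~ N(0, noise_var)."""
    gain = prior_var / (prior_var + noise_var)
    mean = prior_mean + gain * (y - prior_mean)
    var = prior_var * noise_var / (prior_var + noise_var)
    return mean, var

def estimate_clean(noisy_values, prior_means, prior_vars, noise_vars):
    """Posterior-mean estimate of each clean frequency value."""
    return [gaussian_posterior(y, m, s, n)[0]
            for y, m, s, n in zip(noisy_values, prior_means, prior_vars, noise_vars)]

print(gaussian_posterior(y=4.0, prior_mean=0.0, prior_var=3.0, noise_var=1.0))  # (3.0, 0.75)
```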

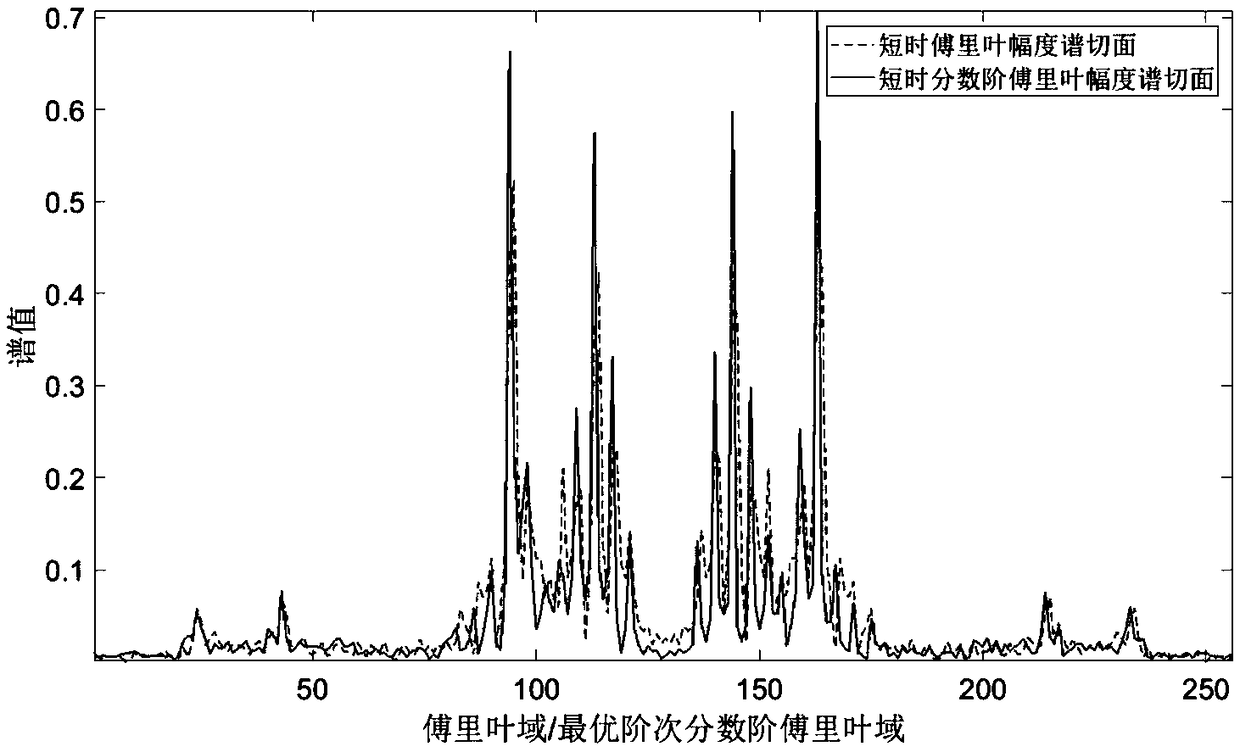

Speech enhancement system and method based on MFrSRRPCA algorithm

Active | CN109215671A | Reduces the possibility of false eliminations; Valid reservation; Speech analysis; Time domain; Time–frequency analysis

The invention discloses a speech enhancement system and method based on the MFrSRRPCA algorithm (multi-subband short-time fractional Fourier spectrum random rearrangement robust principal component analysis). The processing chain is as follows:

1. A time-frequency analysis module generates time-frequency information of the noisy speech.
2. A time-frequency subband division module divides the time-frequency amplitude spectrum of the noisy speech into several noisy subbands.
3. Each time-frequency amplitude spectrum enhancement module randomly rearranges the order of the spectral elements of each frame in its noisy subband. It then generates the corresponding enhanced subband with a robust principal component analysis algorithm, guided by the estimated noise intensity in that subband.
4. A time-frequency subband recombination module combines all enhanced subbands into the enhanced time-frequency amplitude spectrum.
5. A time-domain speech reconstruction module reconstructs the enhanced time-frequency amplitude spectrum into enhanced speech.

The invention can improve the sound quality and intelligibility of noisy speech. It can be used for speech enhancement and noise reduction in speech receiving systems.

Owner:XIDIAN UNIV

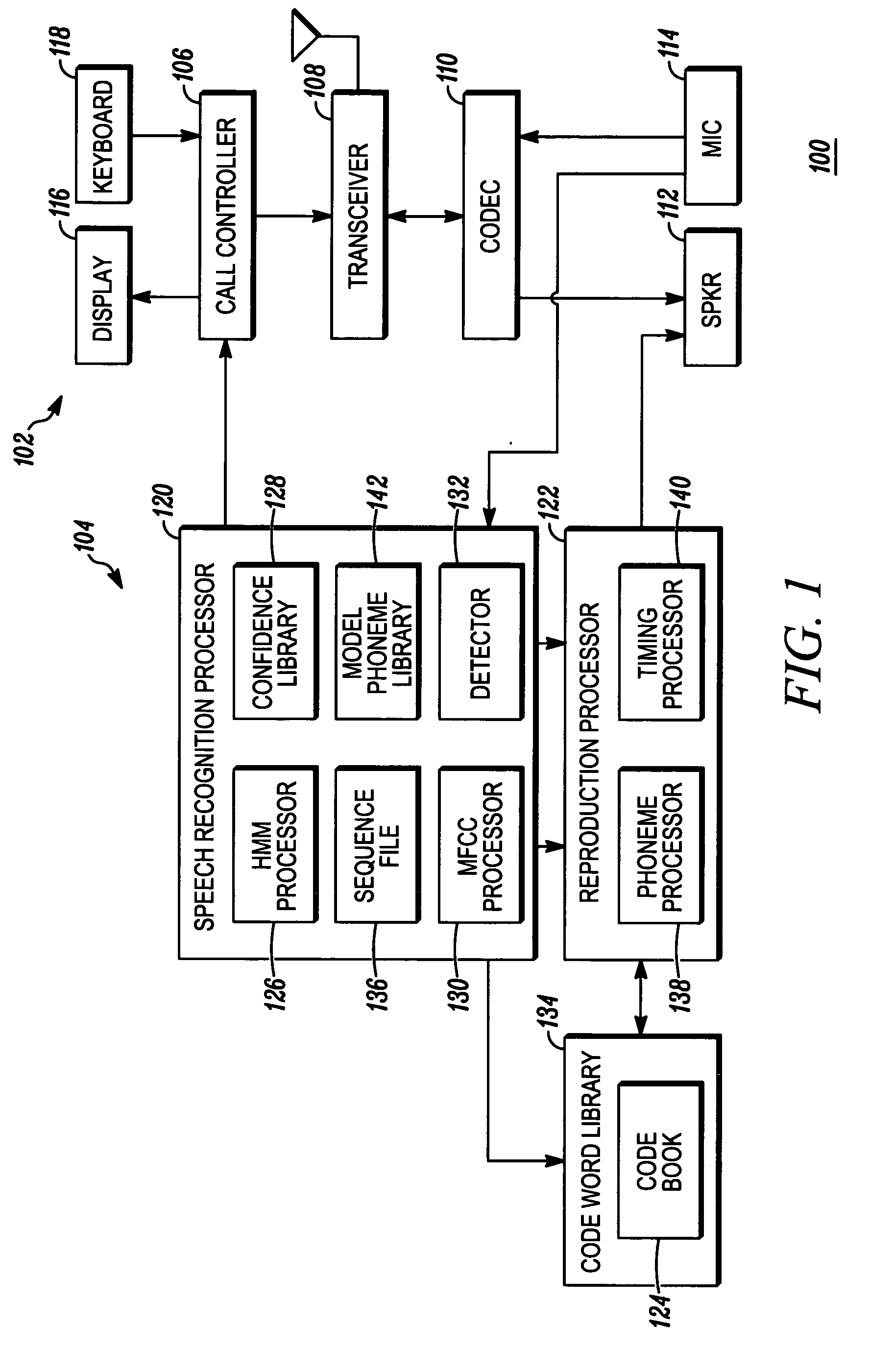

Voice quality control for high quality speech reconstruction

A method and apparatus are provided for reproducing a speech sequence of a user through a communication device of the user. The method includes the steps of detecting a speech sequence from the user through the communication device, recognizing a phoneme sequence within the detected speech sequence and forming a confidence level of each phoneme within the recognized phoneme sequence. The method further includes the steps of audibly reproducing the recognized phoneme sequence for the user through the communication device and gradually highlighting or degrading a voice quality of at least some phonemes of the recognized phoneme sequence based upon the formed confidence level of the at least some phonemes.

Owner:MOTOROLA INC
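
One way to picture "gradually degrading voice quality by confidence" is to map each phoneme's recognition confidence to a quality factor and render low-confidence phonemes at reduced fidelity. Everything in this sketch is hypothetical: the linear ramp between 0.3 and 0.9, and the use of bit-depth reduction (4 to 16 bits) as the degradation. The abstract does not say how quality is varied.

```python
def quality_from_confidence(conf, low=0.3, high=0.9):
    """Map recognizer confidence to a quality factor in [0, 1] (hypothetical linear ramp)."""
    if high <= low:
        raise ValueError("high must exceed low")
    return min(1.0, max(0.0, (conf - low) / (high - low)))

def quantize(samples, bits):
    """Degrade a segment by re-quantizing samples in [-1, 1] to `bits` bits."""
    levels = 2 ** (bits - 1)
    return [round(s * levels) / levels for s in samples]

def render(phonemes):
    """phonemes: list of (label, confidence, samples); returns (label, bits, degraded samples)."""
    out = []
    for label, conf, samples in phonemes:
        bits = 4 + round(12 * quality_from_confidence(conf))  # 4..16 bits
        out.append((label, bits, quantize(samples, bits)))
    return out

result = render([("k", 0.95, [0.123]), ("ae", 0.6, [0.123]), ("t", 0.2, [0.123])])
print([(label, bits) for label, bits, _ in result])  # [('k', 16), ('ae', 10), ('t', 4)]
```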

Voice conversion method and system

Active | US8234110B2 | Reduce the difference; High similarity; Speech analysis; Frequency spectrum; Speech reconstruction

A method, system and computer program product for voice conversion. The method includes performing speech analysis on the speech of a source speaker to obtain speech information; performing spectral conversion based on said speech information to obtain at least a first spectrum similar to the speech of a target speaker; performing unit selection on the speech of said target speaker, using at least said first spectrum as a target; replacing at least part of said first spectrum with the spectrum of the selected speech unit of the target speaker; and performing speech reconstruction based at least on the replaced spectrum.

Owner:NUANCE COMM INC

Deep-learning-based speech tone quality enhancement method, device and system

Active | CN109147806A | Improve voice quality; Easy to deploy; Speech analysis; Learning based; Feature extraction

The invention provides a deep-learning-based method, device and system for enhancing speech tone quality. The method comprises obtaining speech data to be processed and extracting its features. Based on those features, the speech data are reconstructed into output speech data by a trained speech reconstruction neural network. The output speech data have higher speech quality than the input. Low-quality speech is thus reconstructed by a deep neural network into high-quality speech, achieving a tone-quality improvement that conventional methods cannot.

Owner:ANKER INNOVATIONS TECH CO LTD

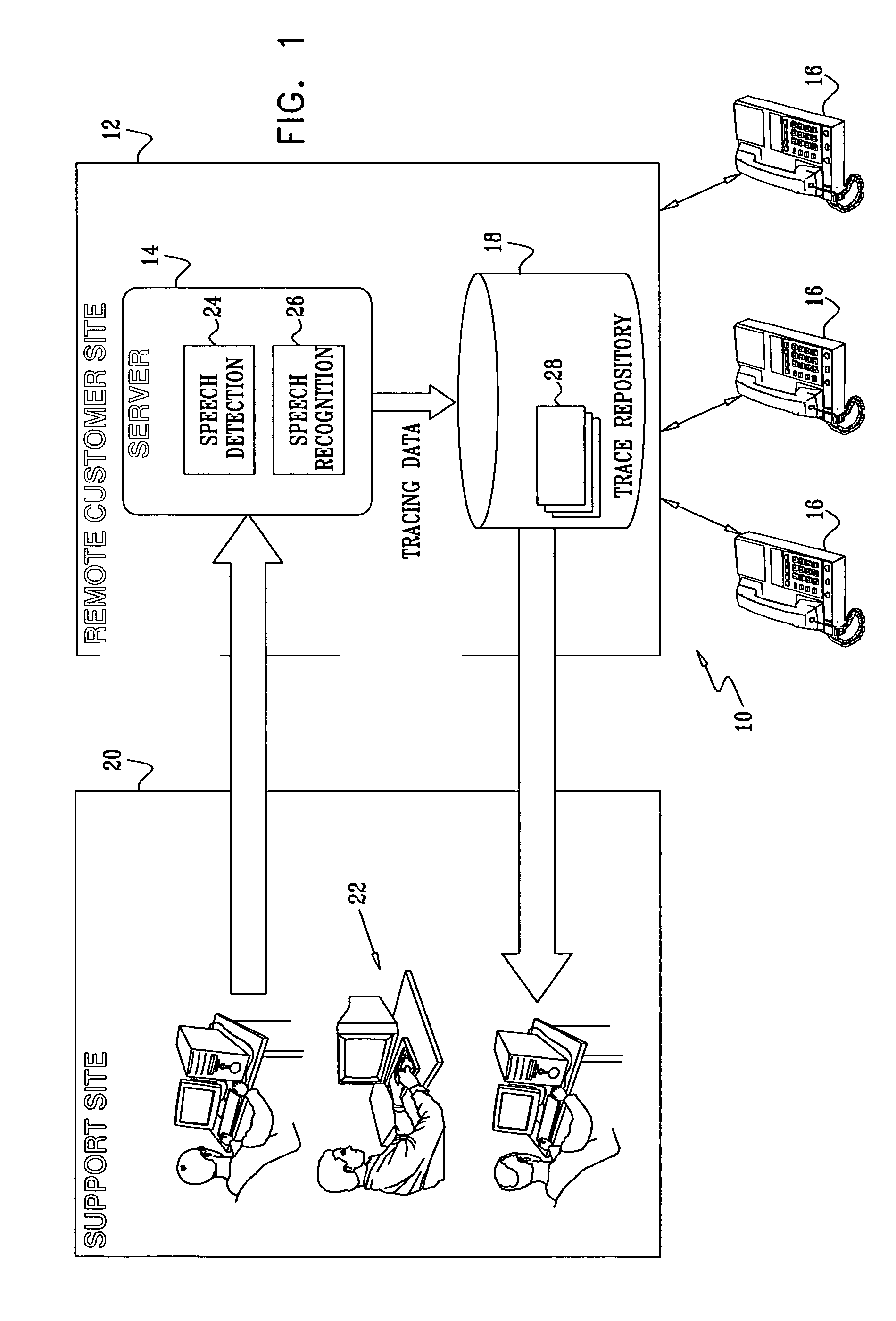

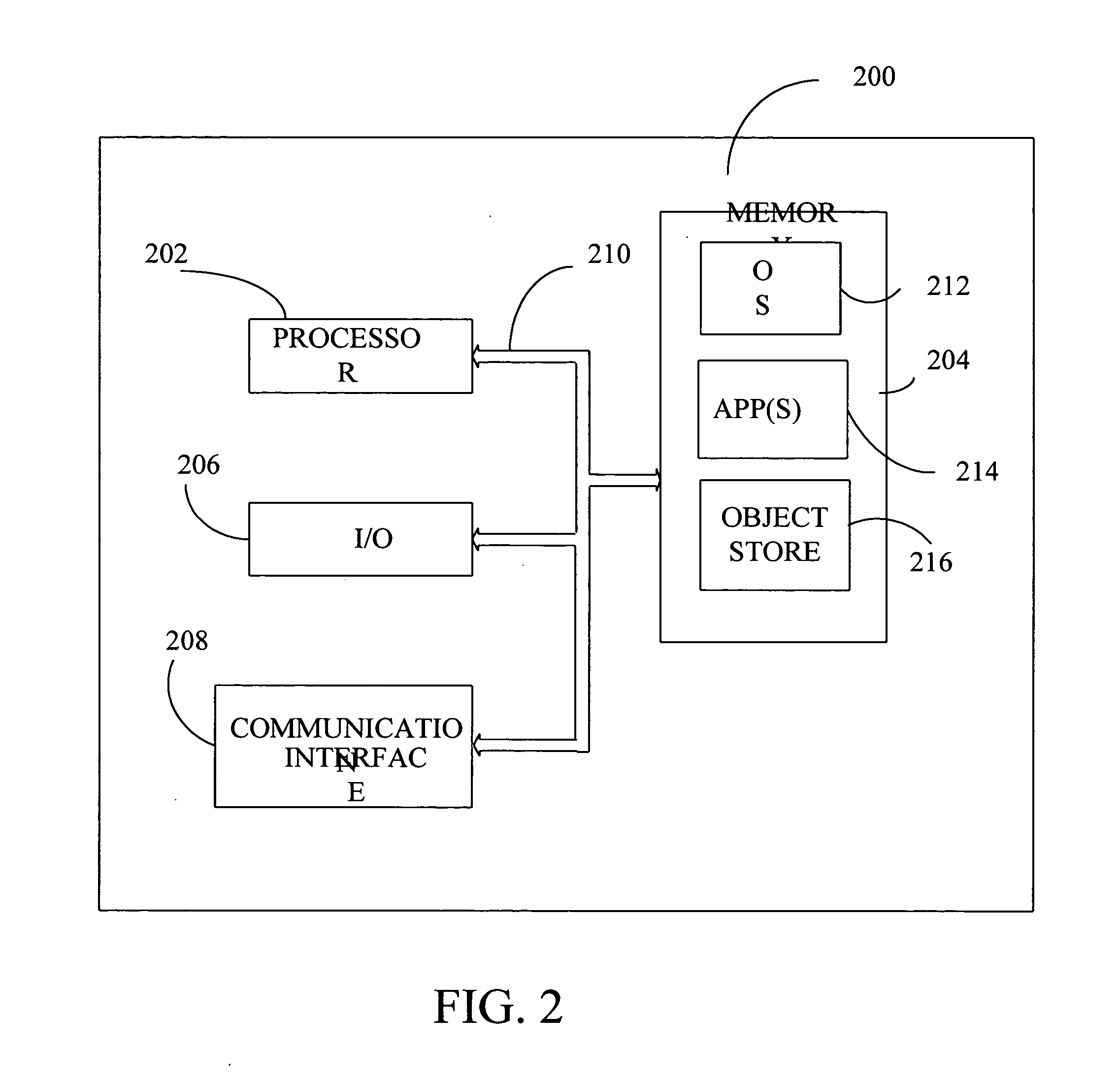

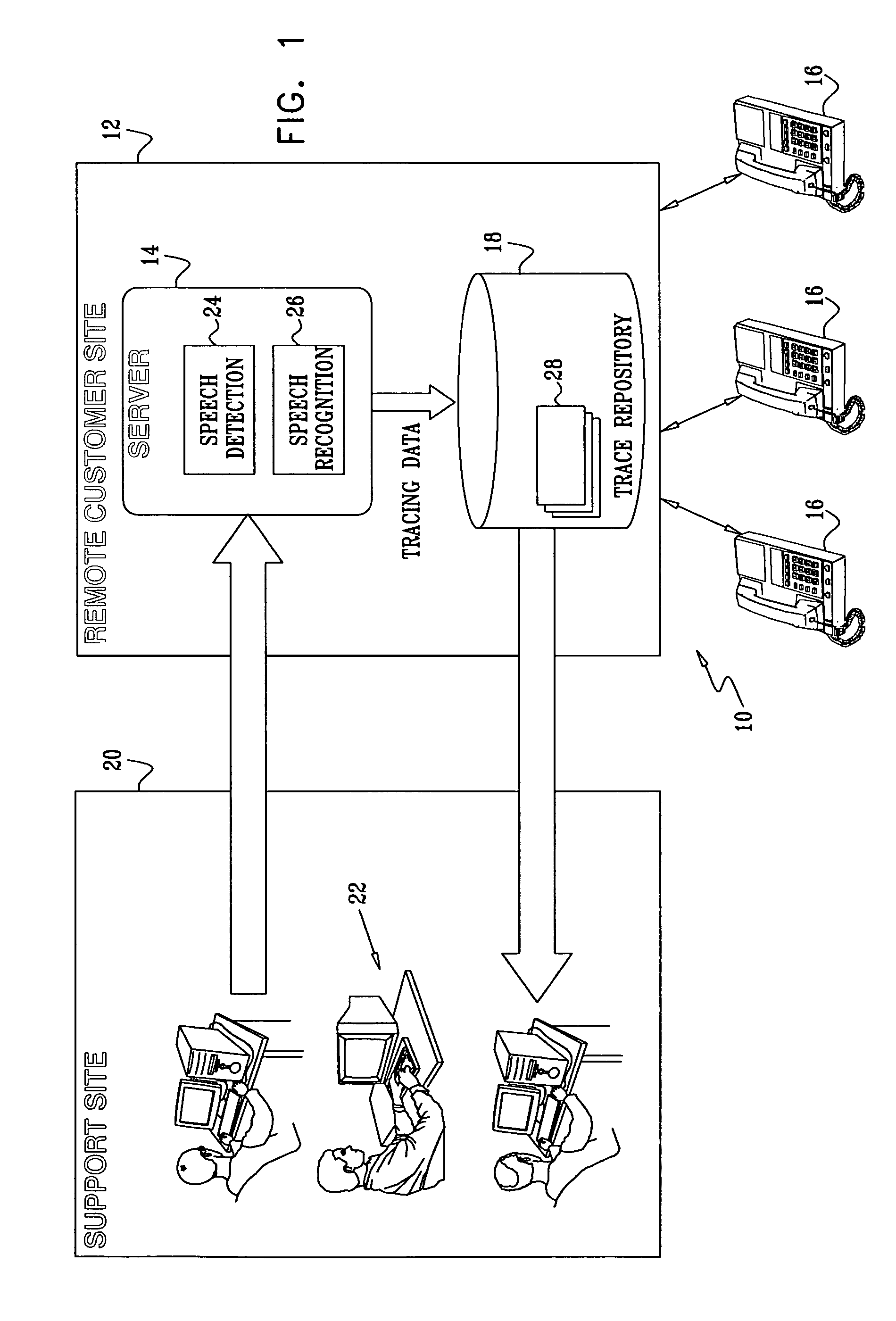

Remote tracing and debugging of automatic speech recognition servers by speech reconstruction from cepstra and pitch information

Inactive | US20070143107A1 | Character and pattern recognition; Speech recognition; Trace file; Speech reconstruction

Methods and systems are provided for remote tuning and debugging of an automatic speech recognition system. Trace files are generated on-site from input speech by efficient, lossless compression of MFCC data, which is merged with compressed pitch and voicing information and stored as trace files. The trace files are transferred to a remote site where human-intelligible speech is reconstructed and analyzed. Based on the analysis, parameters of the automatic speech recognition system are remotely adjusted.

Owner:NUANCE COMM INC
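
The trace-file idea (MFCC data merged with pitch and voicing, compressed losslessly, then shipped elsewhere) can be sketched with the standard library. The record layout is hypothetical: a header with frame and coefficient counts, then per frame float32 cepstra, a float32 pitch and a one-byte voicing flag. The whole record is compressed with zlib. The sketch is lossless with respect to the float32 values it stores. The patent's actual codec, and its reconstruction of speech from cepstra and pitch, are not shown.

```python
import struct
import zlib

def pack_trace(mfcc_frames, pitch_hz, voiced):
    """Serialize MFCC frames plus per-frame pitch/voicing, then compress losslessly."""
    n_frames, n_ceps = len(mfcc_frames), len(mfcc_frames[0])
    raw = bytearray(struct.pack("<II", n_frames, n_ceps))
    for ceps, f0, v in zip(mfcc_frames, pitch_hz, voiced):
        raw += struct.pack(f"<{n_ceps}f", *ceps)  # cepstra as float32
        raw += struct.pack("<fB", f0, int(v))     # pitch (Hz) and voicing flag
    return zlib.compress(bytes(raw), level=9)

def unpack_trace(blob):
    """Inverse of pack_trace: returns (mfcc_frames, pitch_hz, voiced)."""
    raw = zlib.decompress(blob)
    n_frames, n_ceps = struct.unpack_from("<II", raw, 0)
    offset, frame_fmt = 8, f"<{n_ceps}ffB"
    mfcc, pitch, voiced = [], [], []
    for _ in range(n_frames):
        *ceps, f0, v = struct.unpack_from(frame_fmt, raw, offset)
        offset += struct.calcsize(frame_fmt)
        mfcc.append(list(ceps))
        pitch.append(f0)
        voiced.append(bool(v))
    return mfcc, pitch, voiced

# Values chosen to be exactly representable in float32.
mfcc = [[1.5, -2.25, 0.5] for _ in range(100)]
pitch = [120.0] * 100
voiced = [k % 2 == 0 for k in range(100)]
blob = pack_trace(mfcc, pitch, voiced)
print(unpack_trace(blob) == (mfcc, pitch, voiced), len(blob) < 8 + 100 * 17)  # True True
```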

Language recognition method and related device

Active | CN111816159A | Improve accuracy; Speech recognition; Neural architectures; Feature extraction; Speech reconstruction

The invention discloses a language recognition method and a related device. The method comprises:

1. acquiring input speech;
2. feeding the input speech into the feature extraction layers of N parallel neural network models to obtain N pieces of speech feature information;
3. feeding the N pieces of speech feature information into a data reconstruction layer, which performs speech reconstruction to obtain N pieces of reconstruction loss information;
4. determining the language category of the input speech from the reconstruction loss information.

This realizes language recognition based on self-supervised learning. The per-language models are independent of one another, so the semantic and temporal features of different languages can be mined automatically without defining a large number of distinguishing features. This improves recognition accuracy.

Owner:TENCENT TECH (SHENZHEN) CO LTD
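
The decision rule (one independent model per language, pick the language whose model reconstructs the input best) can be sketched with toy models. Each hypothetical "language model" here reconstructs a feature vector by projecting it onto a one-dimensional subspace, and the language with the lowest mean-squared reconstruction loss wins. The projector stand-in and the feature values are invented. The patent uses neural feature-extraction and reconstruction layers.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def make_projector(direction):
    """Toy stand-in for one language's model: reconstruct by projecting onto a 1-D subspace."""
    u = normalize(direction)
    def reconstruct(x):
        dot = sum(a * b for a, b in zip(x, u))
        return [dot * b for b in u]
    return reconstruct

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def recognize(features, models):
    """Return the language whose model reconstructs the input with the lowest loss."""
    losses = {lang: mse(features, model(features)) for lang, model in models.items()}
    return min(losses, key=losses.get), losses

models = {"en": make_projector([1.0, 0.0, 0.0]),
          "zh": make_projector([0.0, 1.0, 0.0]),
          "ja": make_projector([0.0, 0.0, 1.0])}
lang, losses = recognize([0.1, 2.0, 0.2], models)
print(lang)  # zh
```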

Remote tracing and debugging of automatic speech recognition servers by speech reconstruction from cepstra and pitch information

Inactive | US7783488B2 | Character and pattern recognition; Speech recognition; Trace file; Speech reconstruction

Methods and systems are provided for remote tuning and debugging of an automatic speech recognition system. Trace files are generated on-site from input speech by efficient, lossless compression of MFCC data, which is merged with compressed pitch and voicing information and stored as trace files. The trace files are transferred to a remote site where human-intelligible speech is reconstructed and analyzed. Based on the analysis, parameters of the automatic speech recognition system are remotely adjusted.

Owner:NUANCE COMM INC

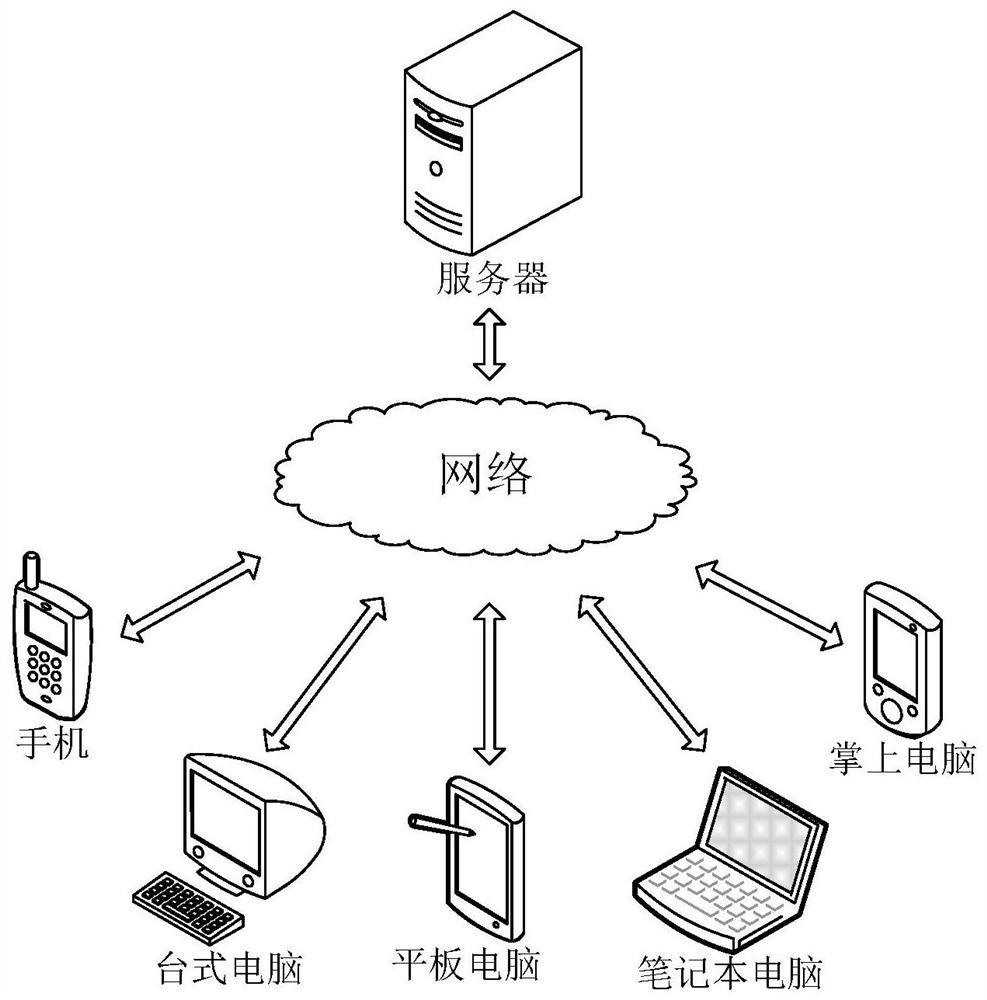

Voice processing method, device and equipment and storage medium

Active | CN112712813A | Reduce occupancy; Reduce time consumption; Speech analysis; Speech reconstruction; Engineering

The invention relates to a voice processing method, device, equipment and storage medium. The method comprises:

1. Obtain a first voice and a second voice to be processed.
2. Encode the obtained voices with the encoder of a voice processing model, obtained by optimization training on at least one utterance of a target speaker. This yields a first feature representing text information independent of speaker identity and a second feature representing the timbre of the target speaker.
3. Decode and perform voice reconstruction from the first and second features to obtain a target voice after timbre conversion.

Because the model is end-to-end, it does not need a large number of target-speaker utterances. The target speaker's timbre can be modeled from only a few utterances, which reduces the computing resources and time consumed by model training.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

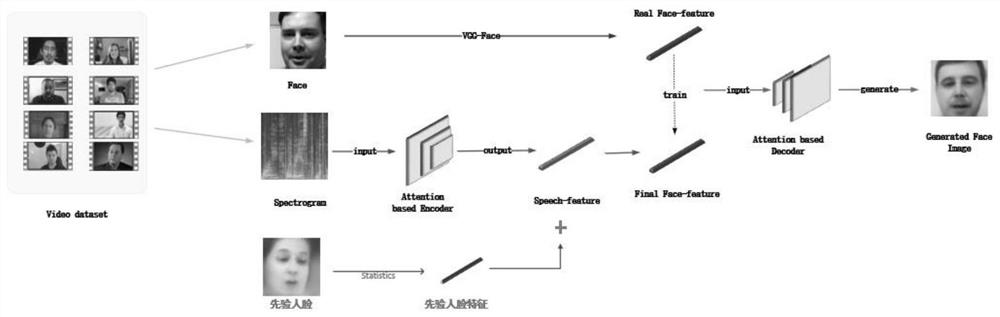

Cross-modal generation method based on voice and face images

Active | CN112381040A | Fast convergence; Scientific and reasonable design; Character and pattern recognition; Neural architectures; Pattern recognition; Speech reconstruction

The invention relates to a cross-modal generation method based on voice and face images. The method covers two tasks: reconstructing a face from voice, and synthesizing personalized voice from a face image.

- **Voice-to-face reconstruction.** A residual-prior voice-to-face reconstruction model generates a person's face from an input segment of unknown voice. The generated face images are very similar to the original face and the model is highly robust. The number of faces it can generate is not fixed, so a face resembling the speaker can be reconstructed from the voice of any input speaker.
- **Personalized voice synthesis from a face image.** A residual-prior personalized voice synthesis model synthesizes the person's voice from a given face image and a segment of text. It can synthesize speech from any face image.

In addition, the proposed residual prior knowledge method accelerates model convergence and achieves better results.

Owner:TIANJIN UNIV

Video synthesis model training method and device, video synthesis method and device, storage medium, program product and electronic equipment

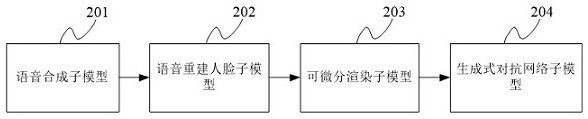

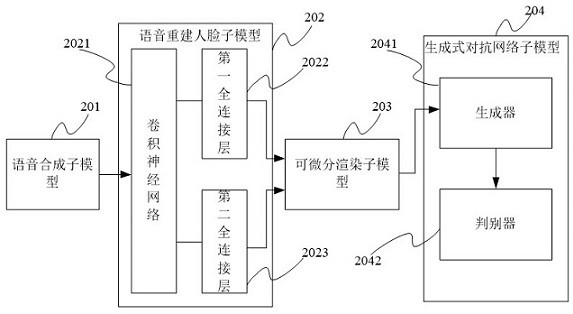

Inactive | CN113469292A | Improve relevance; Improve training efficiency; Character and pattern recognition; Neural learning methods; Feature vector; Synthesis methods

The invention provides a video synthesis model training method and device, a video synthesis method and device, a storage medium, a program product and electronic equipment. The training method comprises:

1. Obtain a sample text and a sample video of a real person reading the sample text.
2. Input the sample text into a speech synthesis sub-model to obtain feature vectors.
3. Input the feature vectors into a voice-to-face reconstruction sub-model to obtain face feature parameters.
4. Input the face feature parameters and the sample video into a differentiable rendering sub-model to obtain a face feature map.
5. Input the face feature map into a generative adversarial network sub-model to obtain a virtual real-person video.
6. Iteratively train the voice-to-face reconstruction, differentiable rendering and generative adversarial network sub-models on the virtual real-person video and the sample video. Training stops when the loss function value of the generative adversarial network sub-model meets a preset condition.

Owner:BEIJING CENTURY TAL EDUCATION TECH CO LTD

Electronic larynx speech reconstructing method and system thereof

Active | CN101916566B | Retain personality traits; Quality improvement; Character and pattern recognition; Speech recognition; Speech sounds; Imaging analysis

The invention provides an electronic larynx speech reconstruction method and system. The method comprises the following steps:

1. Extract model parameters from collected speech to form a parameter library.
2. Capture a facial image of the speaker and pass it to an image analysis and processing module, which obtains the voicing onset time, the voicing offset time and the vowel category.
3. Synthesize a voice source waveform with a voice source synthesis module.
4. Output the voice source waveform through an electronic larynx vibration output module.

The voice source synthesis module first sets the model parameters of a glottal voice source to synthesize the glottal source waveform. It then simulates sound propagation in the vocal tract with a waveguide model, selecting the vocal tract shape parameters according to the vowel category, to synthesize the electronic larynx voice source waveform. Speech reconstructed by this method and system sounds closer to the speaker's own voice.

Owner:XI AN JIAOTONG UNIV

Speech enhancement

Owner:CERENCE OPERATING CO

A language recognition method and related device

Active | CN111816159B | Improve accuracy; Speech recognition; Neural architectures; Feature extraction; Speech reconstruction

The application discloses a language recognition method and a related device. The method proceeds in three steps:

1. Input speech is obtained and fed separately into the feature extraction layers of N parallel neural network models to obtain N pieces of speech feature information.
2. The N pieces of speech feature information are fed into a data reconstruction layer for speech reconstruction, producing N pieces of reconstruction loss information.
3. The language category of the input speech is determined from the reconstruction loss information.

In this way, language recognition based on self-supervised learning is realized. Since the model for each language is independent of the others, the semantic and temporal features of different languages can be mined automatically without setting a large number of distinguishing features. This improves the accuracy of language recognition.

Owner:TENCENT TECH (SHENZHEN) CO LTD

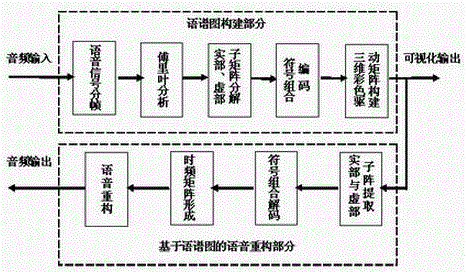

A colored complex spectrogram construction method enabling speech reconstruction

Inactive | CN104392728B | Implement speech reconstruction; Speech analysis; Fast Fourier transform; Imaging processing

The invention provides a colored complex spectrogram construction method for speech reconstruction, in the technical field of speech signal processing. Two color channels serve respectively as the real part and the imaginary part of the Fourier transform. The position coordinates of the R-B synthetic color in the R-G-B color space correspond to the real and imaginary parts, while G encodes the sign combination of the real and imaginary parts. The real part, the imaginary part and their signs, which together define the complex number, can therefore be recovered from the R-G-B color ratio. The spectrogram can be processed as an image, for example enhanced with image processing techniques, and the speech then reconstructed by inverting the Fourier transform. This is how speech reconstruction is achieved.

Owner:NORTHEAST NORMAL UNIVERSITY
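
The colour mapping described above can be illustrated per time-frequency bin. In this sketch, R carries |Re|, B carries |Im| (both scaled to 0 to 255 against a chosen full-scale value), and G carries one of four codes for the sign pattern. The specific G codes (0, 85, 170, 255), the 8-bit quantization and the clipping at `scale` are hypothetical choices, not taken from the patent. Image processing and the inverse transform back to speech are not shown.

```python
SIGN_CODES = {(False, False): 0, (False, True): 85, (True, False): 170, (True, True): 255}
CODE_SIGNS = {v: k for k, v in SIGN_CODES.items()}

def encode_pixel(z, scale):
    """Map complex z to (R, G, B): R ~ |Re|, B ~ |Im|, G = sign pattern (hypothetical codes)."""
    r = round(min(abs(z.real) / scale, 1.0) * 255)
    b = round(min(abs(z.imag) / scale, 1.0) * 255)
    g = SIGN_CODES[(z.real < 0, z.imag < 0)]
    return r, g, b

def decode_pixel(rgb, scale):
    """Recover an approximate complex value from an (R, G, B) pixel."""
    r, g, b = rgb
    neg_re, neg_im = CODE_SIGNS[g]
    re = (-1 if neg_re else 1) * r / 255 * scale
    im = (-1 if neg_im else 1) * b / 255 * scale
    return complex(re, im)

scale = 10.0
z = complex(-2.0, 4.0)
rgb = encode_pixel(z, scale)
print(rgb)  # (51, 170, 102)
```

Because of the 8-bit quantization the round trip is approximate, with an error of at most half a quantization step per component.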

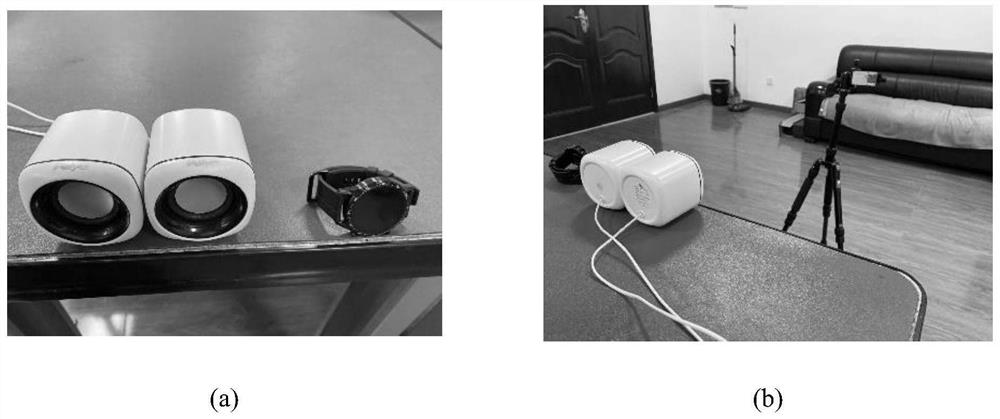

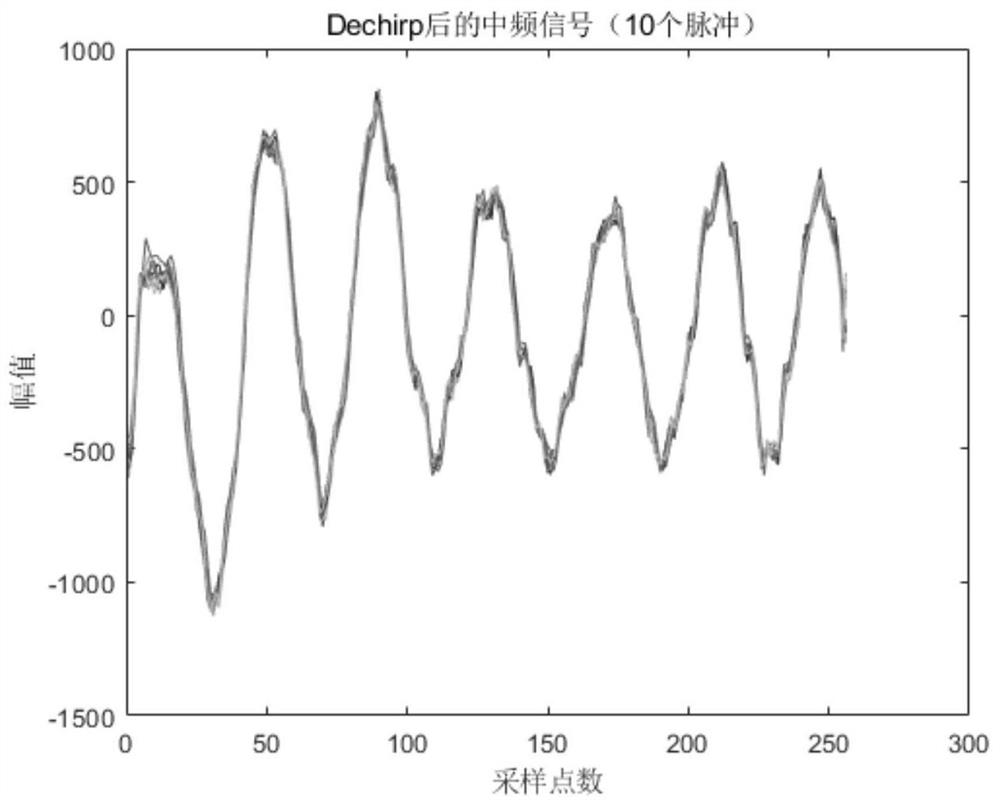

Speech reconstruction method based on millimeter wave radar phase ranging

Active | CN112254802B | Quality improvement; High Quality Speech Information Reconstruction; Subsonic/sonic/ultrasonic wave measurement; Using electrical means; Sound sources; Speech reconstruction

The invention belongs to the field of voice reconstruction and relates in particular to a voice reconstruction method based on millimeter-wave radar phase ranging. It comprises the following steps:

- S1: obtain a high-resolution one-dimensional range profile sequence of the sound source target using the discrete Fourier transform.
- S2: detect peak points in the range profile sequence with a cell-averaging constant false alarm rate (CA-CFAR) detector.
- S3: extract the phase information at the peak points and reconstruct the audio of the sound source.

The invention enables high-quality reconstruction of speech information. In a multi-source environment, a radar operating in the millimeter-wave band actively detects the sound source target, accurately obtains its one-dimensional range profile and detects the phase at the peak point. From this phase it obtains the vibration of the sound source target and reconstructs its audio, effectively eliminating interference from other sound sources.

Owner:NAT UNIV OF DEFENSE TECH
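
Steps S2 and S3 can be sketched on simulated range profiles. A cell-averaging CFAR picks the target's range bin. The phase at that bin is then unwrapped over time and converted to radial displacement with d = λ·Δφ / (4π), the standard two-way phase-ranging relation. Several elements are assumptions for illustration:

- the input frames are taken as already-computed range bins, so step S1's DFT is not shown;
- the CFAR guard, training and scale settings;
- the 3.9 mm wavelength, roughly 77 GHz;
- the exaggerated 1 mm vibration, chosen so the phase wraps.

```python
import cmath
import math

def ca_cfar(power, guard=1, train=4, scale=4.0):
    """Cell-averaging CFAR: indices whose power exceeds scale * mean of training cells."""
    hits = []
    for i in range(len(power)):
        cells = [power[j] for j in range(i - guard - train, i + guard + train + 1)
                 if 0 <= j < len(power) and abs(j - i) > guard]
        if cells and power[i] > scale * sum(cells) / len(cells):
            hits.append(i)
    return hits

def unwrap(phases):
    """Remove 2*pi jumps between consecutive phase samples."""
    out = [phases[0]]
    for p in phases[1:]:
        d = p - out[-1]
        d -= 2 * math.pi * round(d / (2 * math.pi))
        out.append(out[-1] + d)
    return out

def displacement_m(frames, bin_index, wavelength_m):
    """Radial displacement from the phase at one range bin: d = lambda * dphi / (4*pi)."""
    phases = unwrap([cmath.phase(frame[bin_index]) for frame in frames])
    return [wavelength_m * (p - phases[0]) / (4 * math.pi) for p in phases]

wavelength = 0.0039  # about 77 GHz (assumed)
disp = [1e-3 * math.sin(2 * math.pi * k / 20) for k in range(40)]  # exaggerated vibration
frames = []
for d in disp:
    frame = [complex(0.1, 0.0)] * 16                                # weak clutter
    frame[5] = 10 * cmath.exp(1j * 4 * math.pi * d / wavelength)    # target at bin 5
    frames.append(frame)

target = ca_cfar([abs(z) ** 2 for z in frames[0]])
print(target)  # [5]
est = displacement_m(frames, target[0], wavelength)
```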

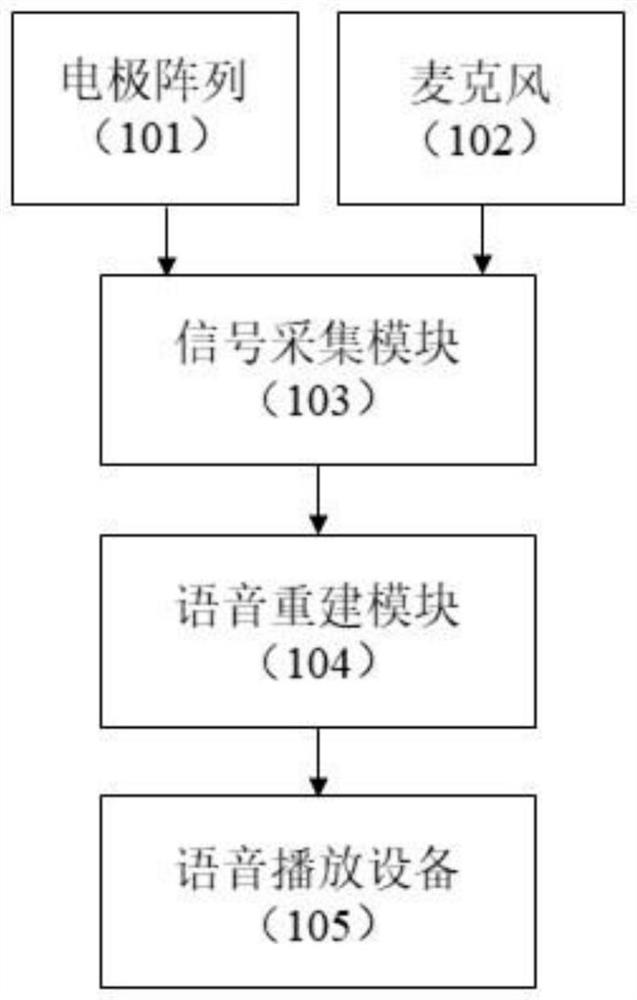

Silent voice reconstruction method based on sounding neural potential signal

Pending | CN114530165A | Speech analysis; Character and pattern recognition; Information transmission; Speech reconstruction

The invention discloses a silent voice reconstruction method based on speech-production neural potential signals. Electrodes collect the speech-related neural potential signals together with the voice signal during speech. They also collect the speech-related neural signals under silent conditions. A deep learning network learns the mapping between the silent neural potential signals and the voice signal for the same text. This allows the voice signal to be reconstructed under silent conditions and information to be transmitted directly without sound. In situations where sound cannot be produced, the vocal cords do not vibrate, and information transmission is completed through silent reading.

Owner:ZHEJIANG UNIV

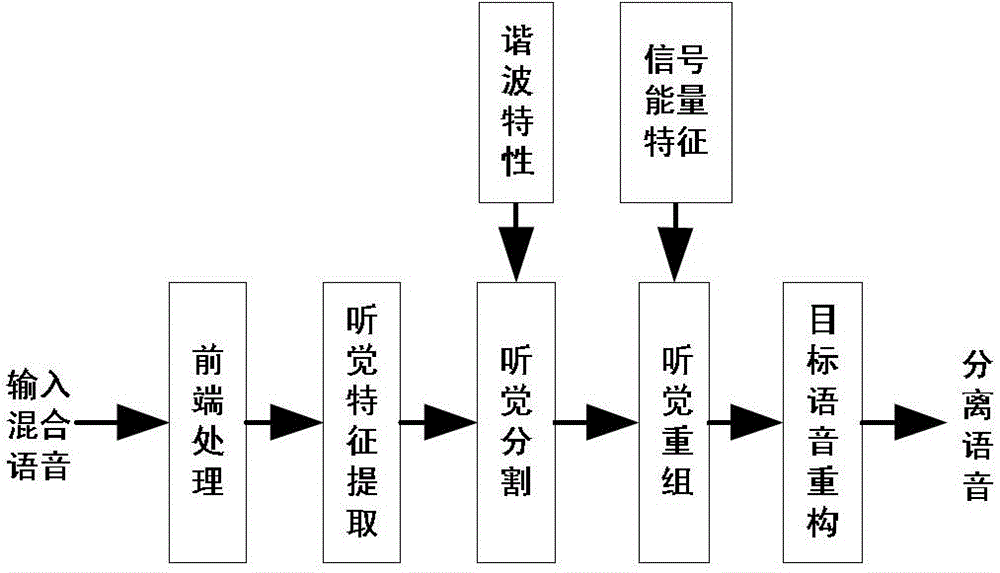

A Single-Channel Speech Blind Separation Method Based on Computational Auditory Scene Analysis

Inactive | CN103456312B | Troubleshooting Aliased Speech Separation Issues; Speech analysis; Speech segmentation; Voice communication

The invention relates to a single-channel blind speech separation method based on computational auditory scene analysis. The method comprises the following steps:

1. Front-end processing of the input mixed (aliased) speech.
2. Auditory feature extraction from the speech processed in step 1.
3. Auditory segmentation of the speech processed in step 2, based on harmonic characteristics.
4. Auditory regrouping of the speech processed in step 3, based on energy features.
5. Target speech reconstruction from the speech processed in step 4.

In the presence of noise, the invention solves the problem of single-channel mixed-speech separation well. The separated speech can serve as the front end of speech recognition, with broad application prospects in artificial intelligence, speech communication and sound signal enhancement.

Owner:TAIYUAN UNIV OF TECH

Transient Noise Suppression Method Based on Speech Reconstruction

Active | CN104599677B | Transient Noise Suppression; Transient Noise Cancellation; Speech analysis; Speech reconstruction; Distribution characteristic

A transient noise suppression method based on speech reconstruction, in the technical field of audio processing, which addresses the technical problem of suppressing transient noise. The method eliminates the influence of transient noise in two parts, transient noise detection and transient noise suppression.

First, transient noise is detected using its distribution characteristics.

Second, once transient noise is detected, an algorithm based on speech signal reconstruction suppresses it. Frames containing transient noise are discarded, and the signal of the adjacent undisturbed frames is used for waveform reconstruction to replace the original signal. This eliminates transient noise completely without significant speech distortion.

The method is suitable for processing speech signals containing transient noise.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI +1

© 2025 PatSnap. All rights reserved.