41 results for "Gammatone filter" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

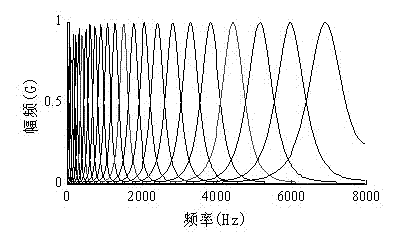

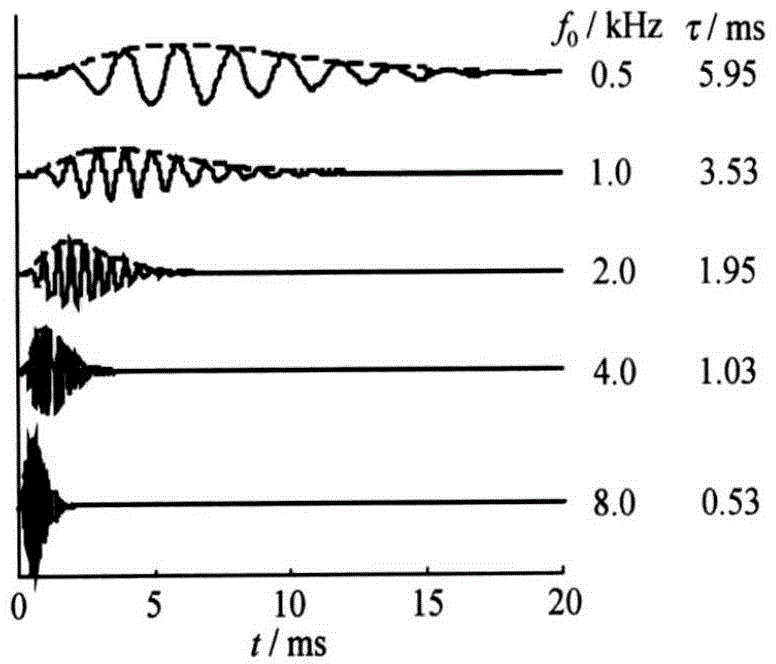

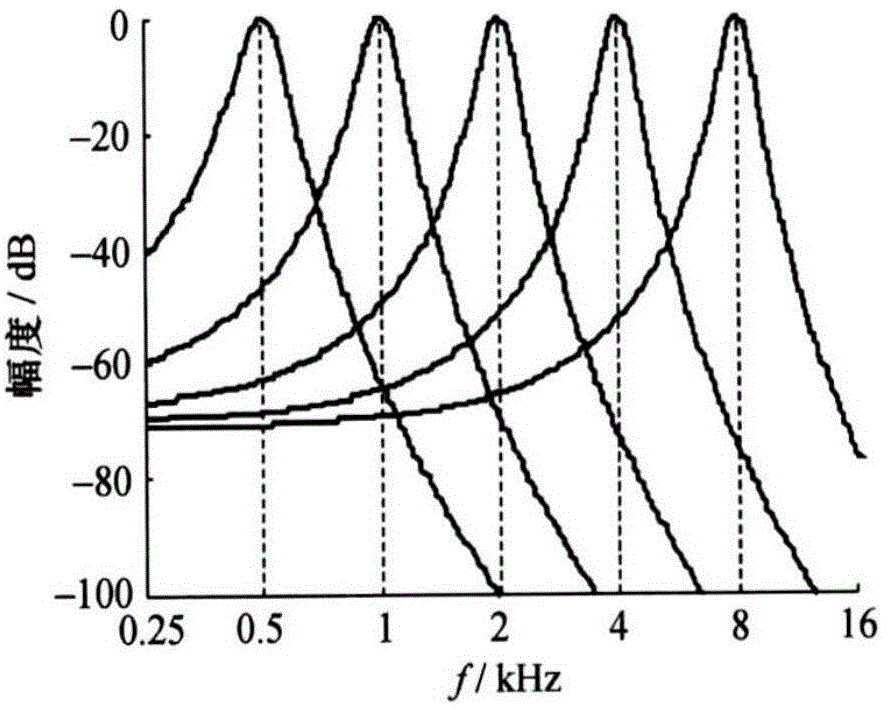

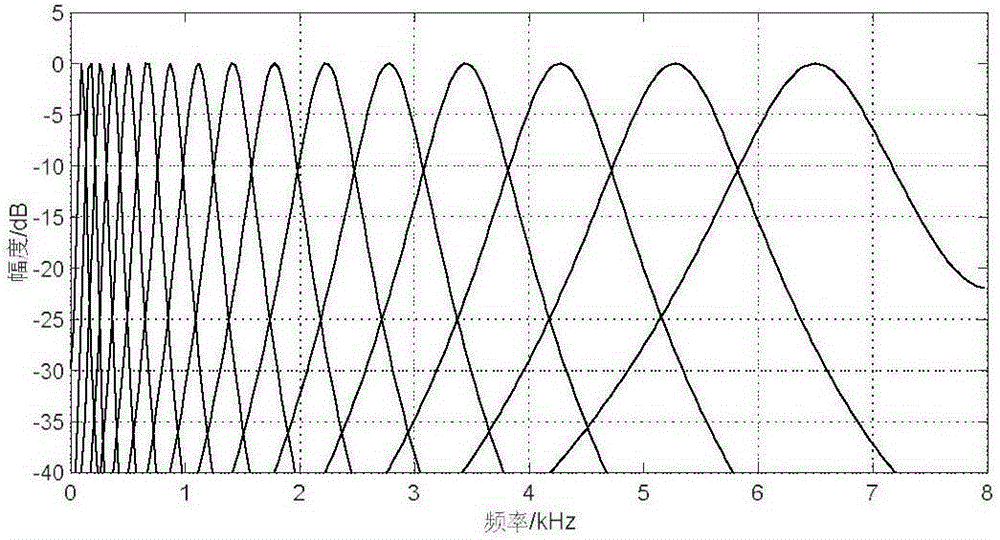

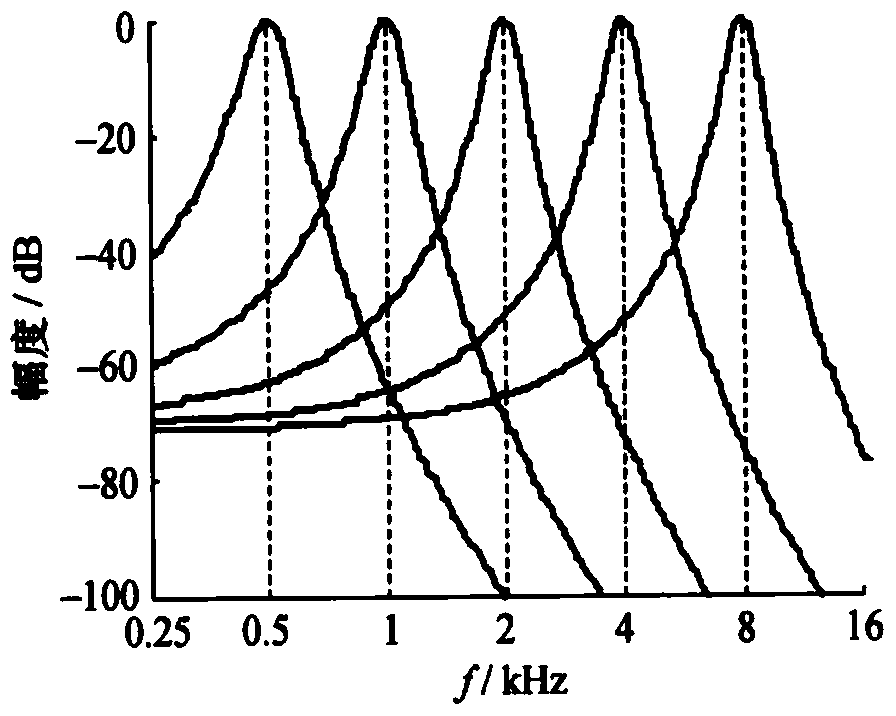

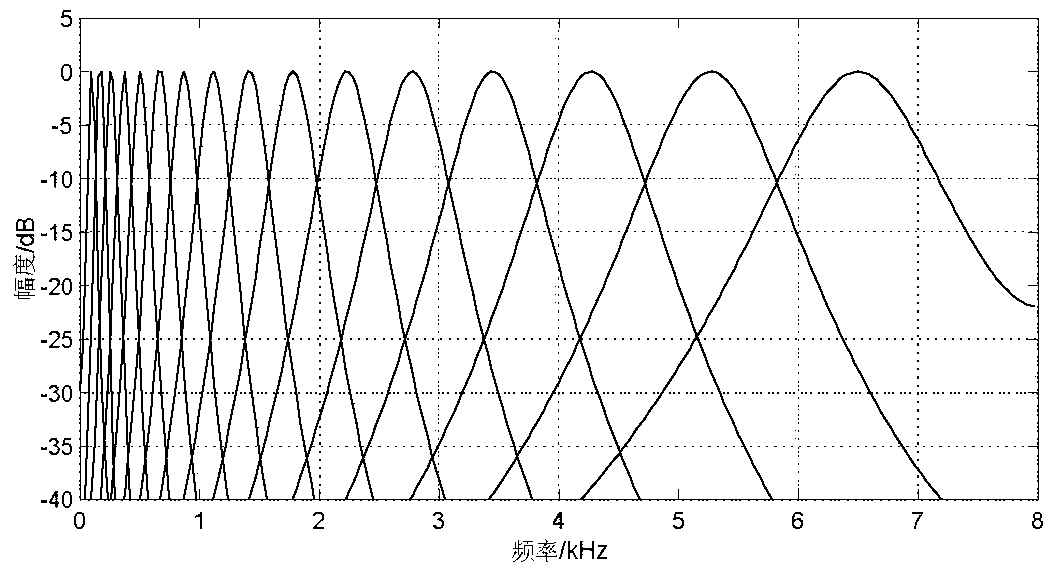

A gammatone filter is a linear filter whose impulse response is the product of a gamma distribution and a sinusoidal tone. It is widely used as a model of the auditory filters in the auditory system. The gammatone impulse response is given by $g(t) = a\,t^{n-1} e^{-2\pi b t} \cos(2\pi f t + \phi)$, where $f$ (in Hz) is the center frequency, $\phi$ (in radians) is the phase of the carrier, $a$ is the amplitude, $n$ is the filter's order, $b$ (in Hz) is the filter's bandwidth, and $t$ (in seconds) is time.
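
The formula above can be sampled directly. This is a minimal sketch assuming NumPy; the ERB formula and the default bandwidth b = 1.019·ERB(f) are common choices from the auditory-modelling literature (Glasberg and Moore), not part of the definition, and the function names are illustrative.

```python
import numpy as np


def erb(f_hz):
    """Equivalent rectangular bandwidth in Hz (Glasberg & Moore approximation)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def gammatone_ir(f, fs, n=4, b=None, a=1.0, phi=0.0, duration=0.05):
    """Sample g(t) = a * t**(n-1) * exp(-2*pi*b*t) * cos(2*pi*f*t + phi).

    If b is None it defaults to 1.019 * ERB(f), a common choice for n = 4
    (an assumption; the formula itself leaves b free).
    """
    if b is None:
        b = 1.019 * erb(f)
    t = np.arange(int(round(duration * fs))) / fs  # time axis in seconds
    return a * t ** (n - 1) * np.exp(-2 * np.pi * b * t) * np.cos(2 * np.pi * f * t + phi)


fs = 16000
g = gammatone_ir(1000.0, fs)                     # 800 samples (50 ms)
spectrum = np.abs(np.fft.rfft(g, n=fs))          # zero-padded to 1 Hz bin spacing
peak_hz = np.fft.rfftfreq(fs, d=1 / fs)[np.argmax(spectrum)]
print(len(g), round(peak_hz))                    # magnitude peak sits near f = 1000 Hz
```

Because the envelope $t^{n-1}e^{-2\pi b t}$ is a smooth gamma-shaped window, the magnitude response is a band-pass centred on $f$ whose width grows with $b$.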

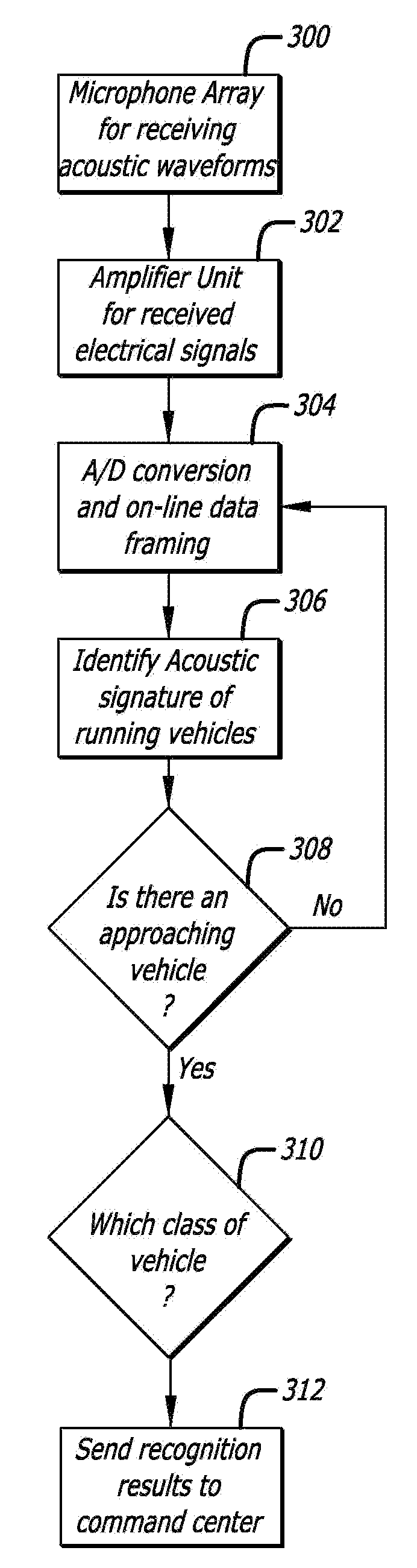

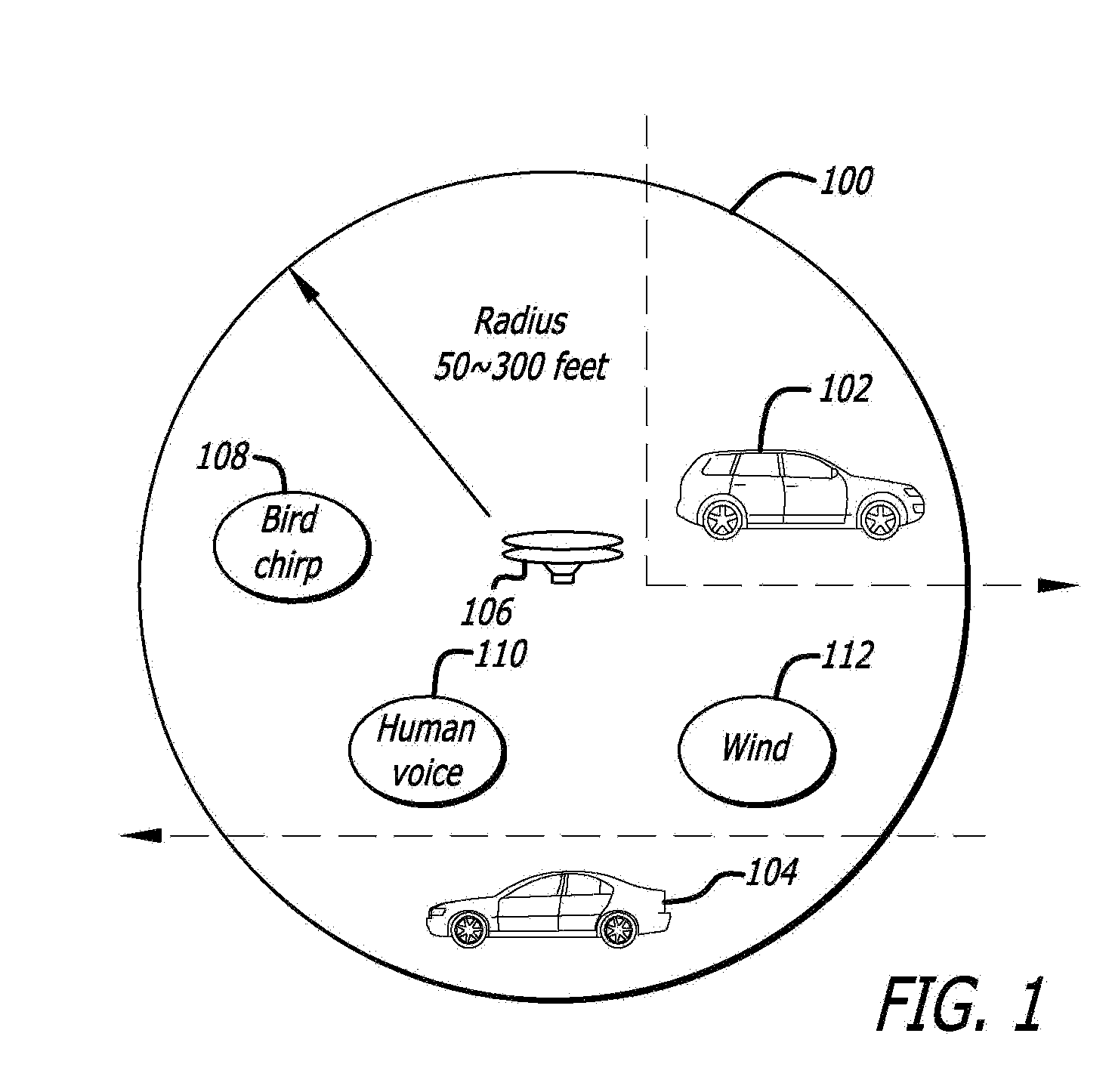

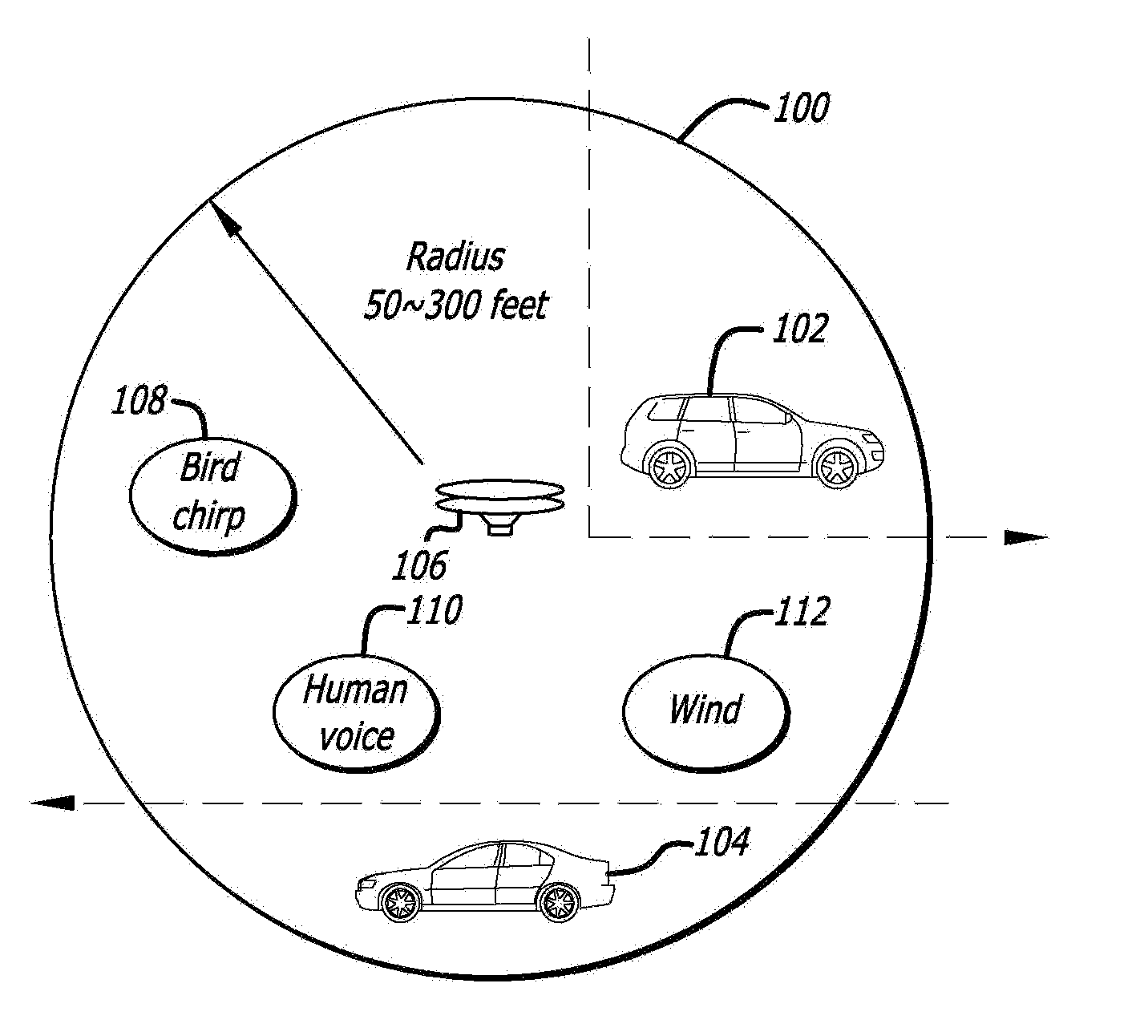

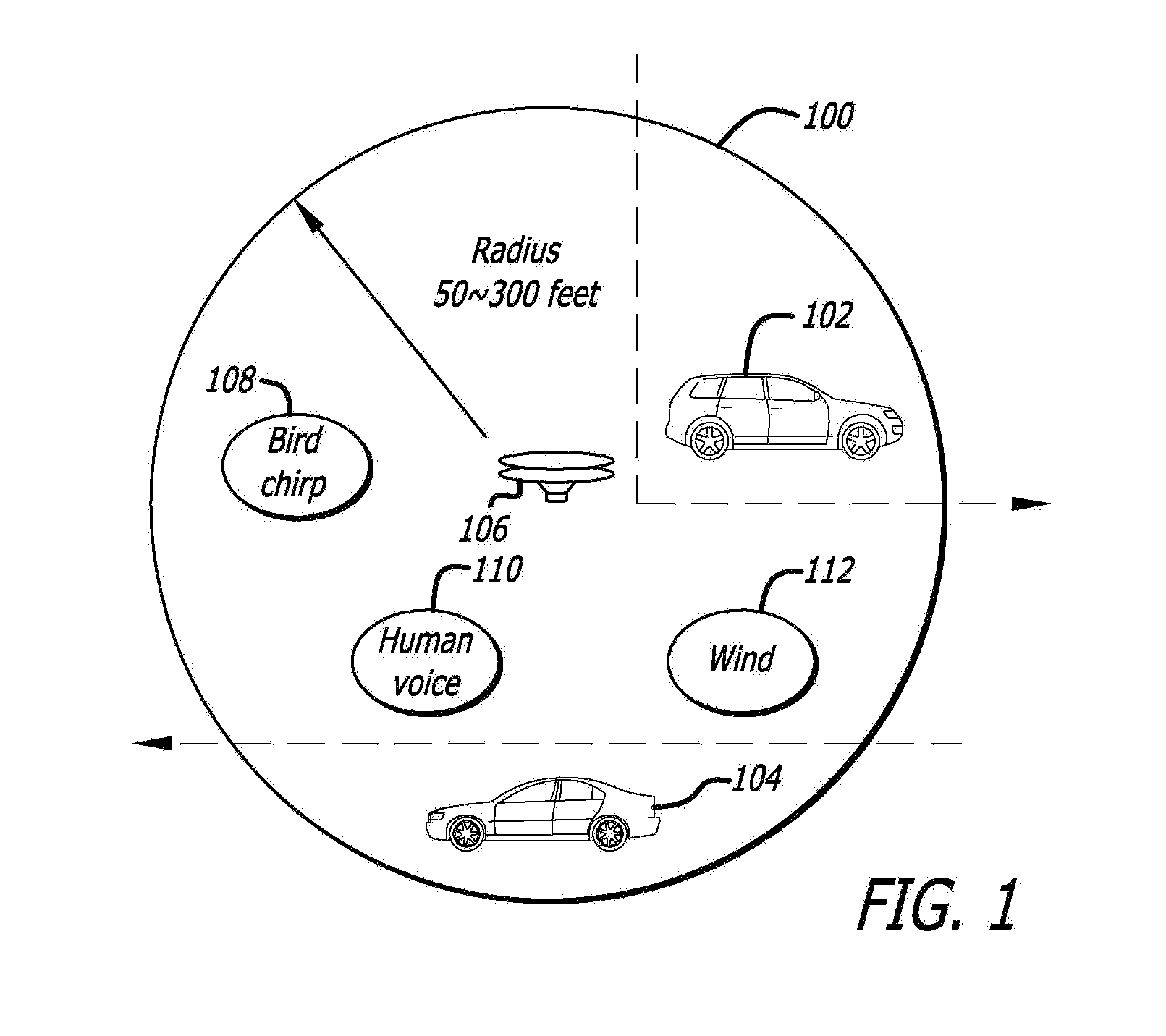

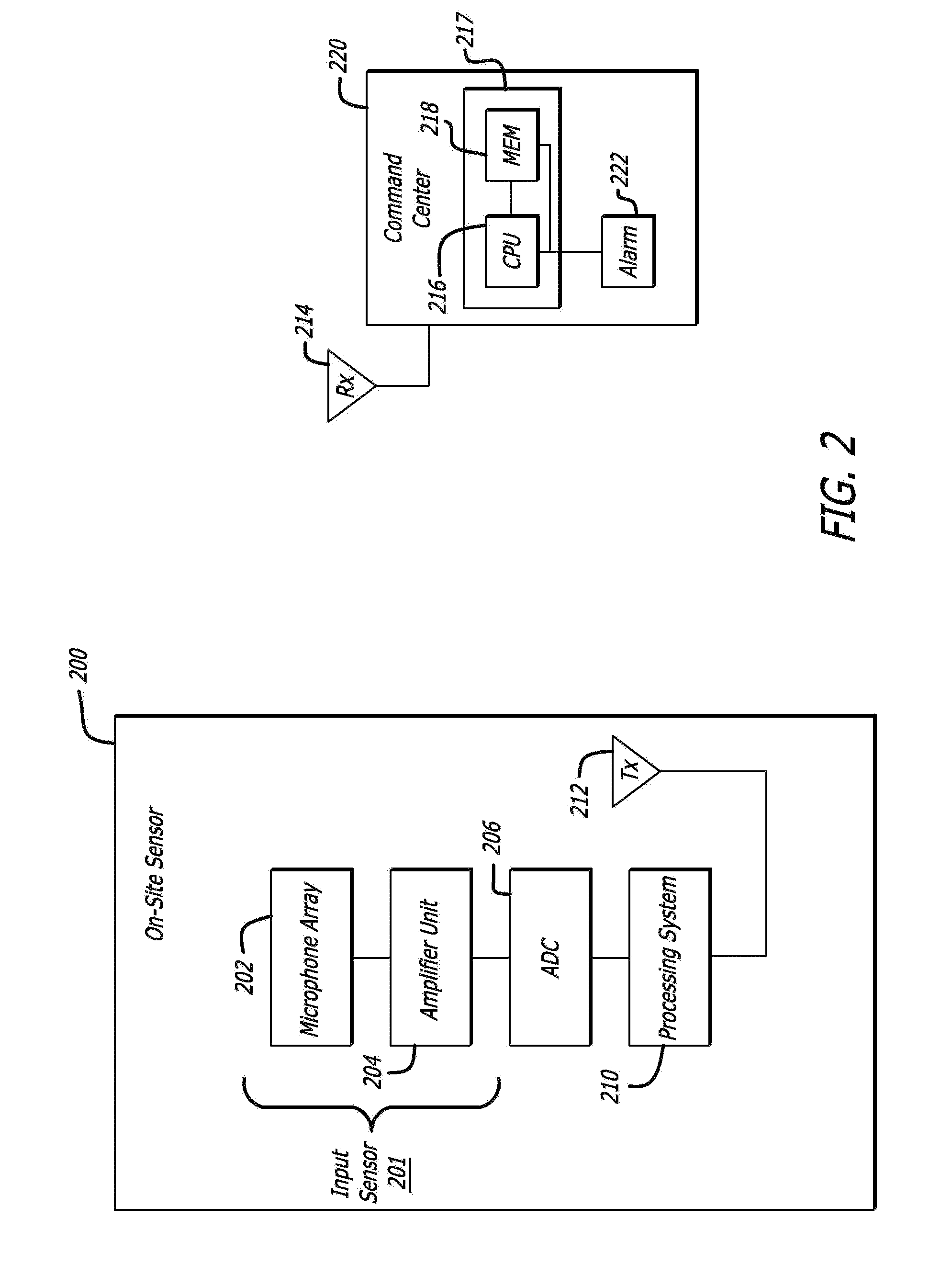

Detection and classification of running vehicles based on acoustic signatures

Active | US20090115635A1 | Analogue computers for vehicles; Vibration measurement in fluid; Feature vector; Synaptic weight

A method and apparatus for identifying running vehicles in an area to be monitored using acoustic signature recognition. The apparatus includes an input sensor for capturing an acoustic waveform produced by a vehicle source, and a processing system. The waveform is digitized and divided into frames. Each frame is filtered into a plurality of gammatone filtered signals. At least one spectral feature vector is computed for each frame. The vectors are integrated across a plurality of frames to create a spectro-temporal representation of the vehicle waveform. In a training mode, values from the spectro-temporal representation are used as inputs to a Nonlinear Hebbian learning function to extract acoustic signatures and synaptic weights. In an active mode, the synaptic weights and acoustic signatures are used as patterns in a supervised associative network to identify whether a vehicle is present in the area to be monitored. In response to a vehicle being present, the class of vehicle is identified. Results may be provided to a central computer.

Owner:UNIV OF SOUTHERN CALIFORNIA
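
The framing, gammatone filtering and frame-integration steps of this abstract can be sketched as follows. This is an illustration under stated assumptions, not the patented implementation: log channel energy is assumed as the per-frame spectral feature, the 5-frame context window and all function names are hypothetical, and the Nonlinear Hebbian learning stage is not shown.

```python
import numpy as np


def gammatone_ir(fc, fs, n=4, duration=0.025):
    """4th-order gammatone impulse response; 1.019*ERB bandwidth is an assumed choice."""
    b = 1.019 * 24.7 * (4.37 * fc / 1000.0 + 1.0)
    t = np.arange(int(round(duration * fs))) / fs
    g = t ** (n - 1) * np.exp(-2 * np.pi * b * t) * np.cos(2 * np.pi * fc * t)
    return g / np.sqrt(np.sum(g ** 2))  # unit-energy normalisation


def frame_vector(frame, fs, centers):
    """One spectral feature vector: log energy of the frame in each gammatone channel."""
    return np.array([
        np.log(np.mean(np.convolve(frame, gammatone_ir(fc, fs), mode="same") ** 2) + 1e-12)
        for fc in centers
    ])


def spectro_temporal(x, fs, centers, frame_len=400, hop=200, context=5):
    """Frame the waveform, compute per-frame vectors, then stack `context` consecutive
    vectors into one spectro-temporal pattern (hypothetical context size)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    feats = np.array([frame_vector(x[i * hop:i * hop + frame_len], fs, centers)
                      for i in range(n_frames)])
    patterns = np.array([feats[i:i + context].ravel()
                         for i in range(n_frames - context + 1)])
    return feats, patterns


rng = np.random.default_rng(0)
x = rng.standard_normal(16000)  # 1 s of noise standing in for a recorded vehicle
feats, patterns = spectro_temporal(x, 16000, centers=[200, 500, 1000, 2000])
print(feats.shape, patterns.shape)  # (79, 4) (75, 20)
```

Each row of `patterns` is one spectro-temporal input vector of the kind the training and active modes would consume.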

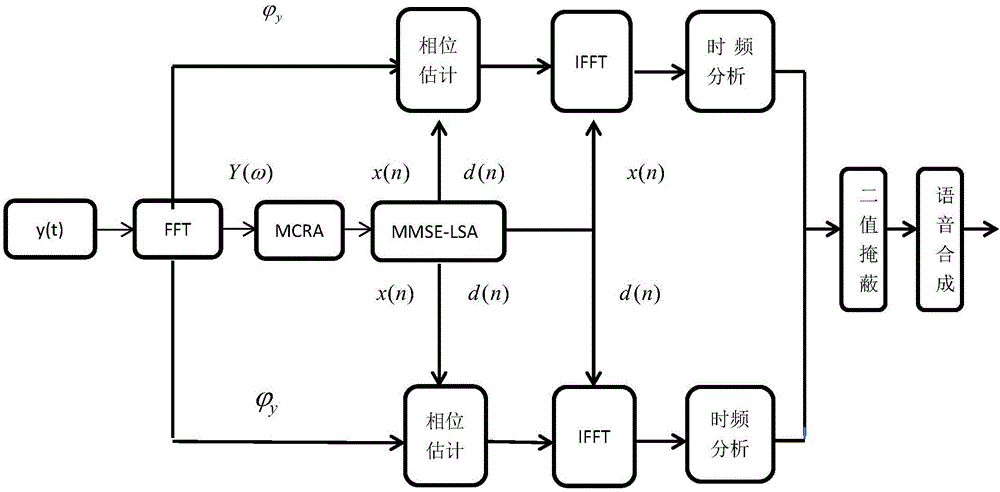

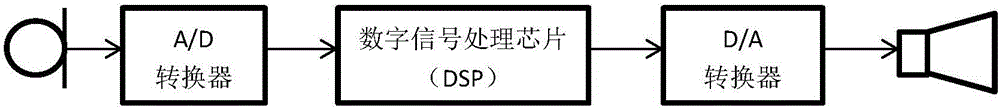

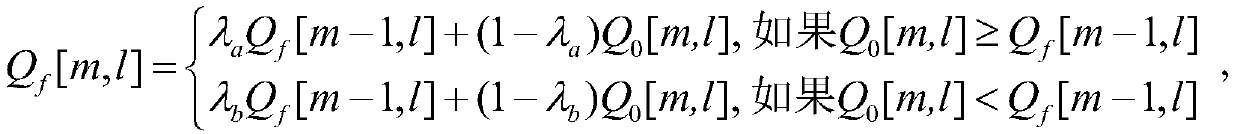

Voice enhancement method for fusing phase estimation and human ear hearing characteristics in digital hearing aid

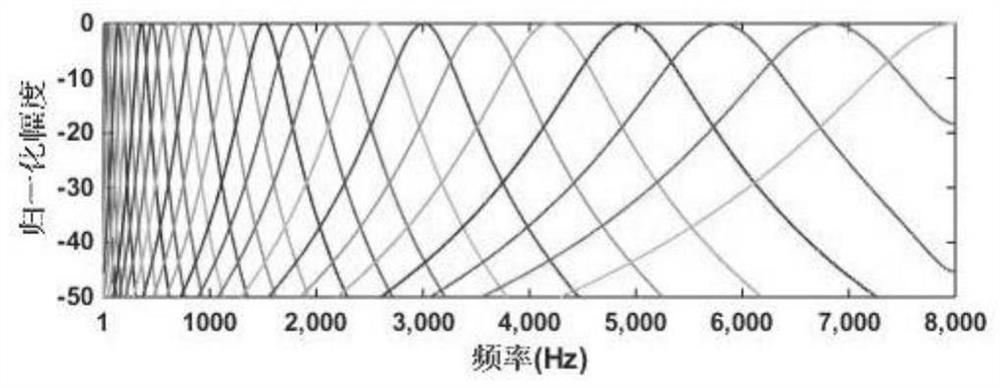

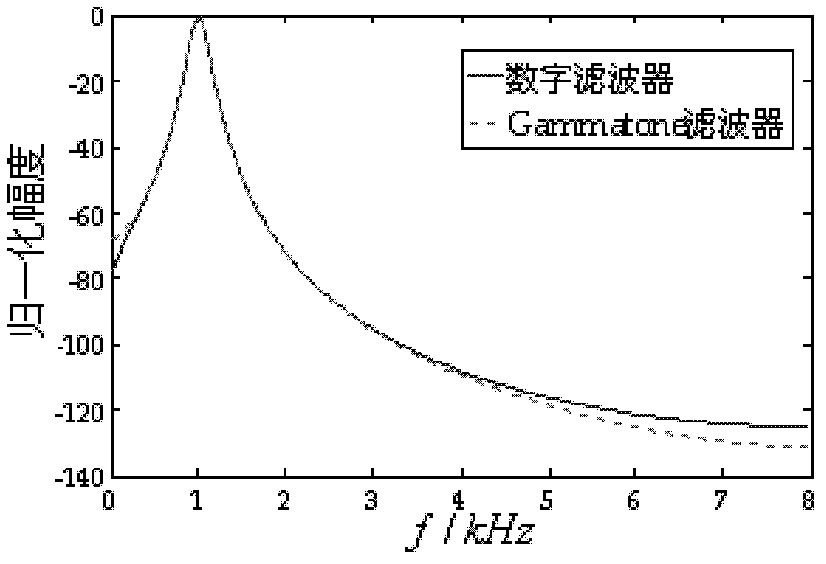

Active | CN105741849A | Quality improvement; Enhanced auditory characteristics; Speech analysis; Noise power spectrum; Gammatone filter

The invention discloses a speech enhancement method for a digital hearing aid that fuses phase estimation with the auditory characteristics of the human ear. The method comprises: obtaining a frequency-domain representation of the noisy speech through the Fourier transform; estimating the noise power spectrum with the minima-controlled recursive averaging method; obtaining an initial enhanced speech and a noise amplitude spectrum; correcting the phases of the speech and noise with an improved phase estimate that reduces speech distortion in low signal-to-noise-ratio environments; filtering the initial enhanced speech and the noise through a gammatone filter bank that simulates the working mechanism of the cochlea; performing time-frequency analysis on the gammatone filter bank output to obtain a time-frequency representation composed of time-frequency units; using the auditory characteristics of the human ear to compute a binary mask for the noisy speech in the time-frequency domain; and using the mask values to synthesize the enhanced speech.

Owner:BEIJING UNIV OF TECH
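
The binary-mask step can be sketched on toy time-frequency energies. This is a minimal sketch assuming NumPy; the local-SNR threshold `lc_db` is a hypothetical stand-in, since the abstract does not state the hearing-based criterion used to set the mask.

```python
import numpy as np


def binary_mask(speech_energy, noise_energy, lc_db=0.0):
    """Binary mask over T-F units: 1 where the local SNR exceeds lc_db, else 0.

    lc_db is a hypothetical local criterion; the patent's hearing-based rule is not given.
    """
    local_snr_db = 10 * np.log10((speech_energy + 1e-12) / (noise_energy + 1e-12))
    return (local_snr_db > lc_db).astype(float)


# rows = gammatone channels, columns = time frames (toy energies)
speech = np.array([[4.0, 1.0, 0.2],
                   [0.5, 9.0, 3.0]])
noise = np.ones_like(speech)
mask = binary_mask(speech, noise)
noisy = speech + noise
enhanced = noisy * mask  # masked units would then go to gammatone resynthesis
print(mask)
```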

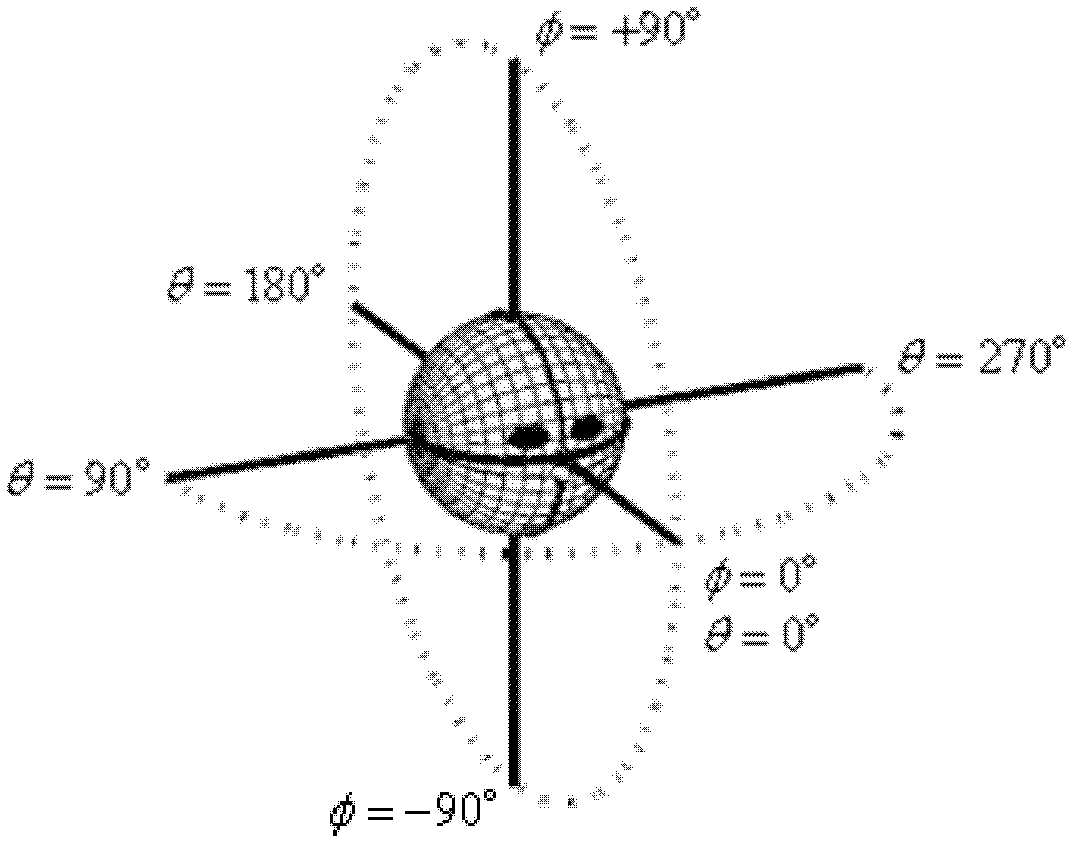

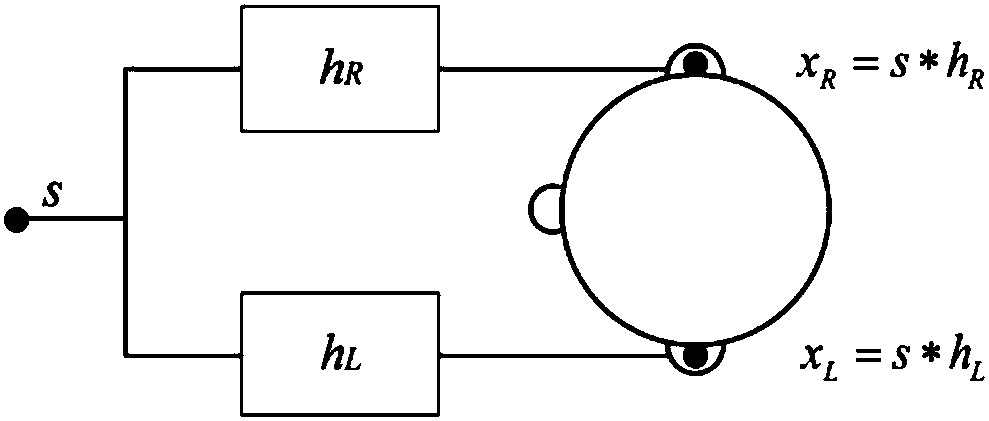

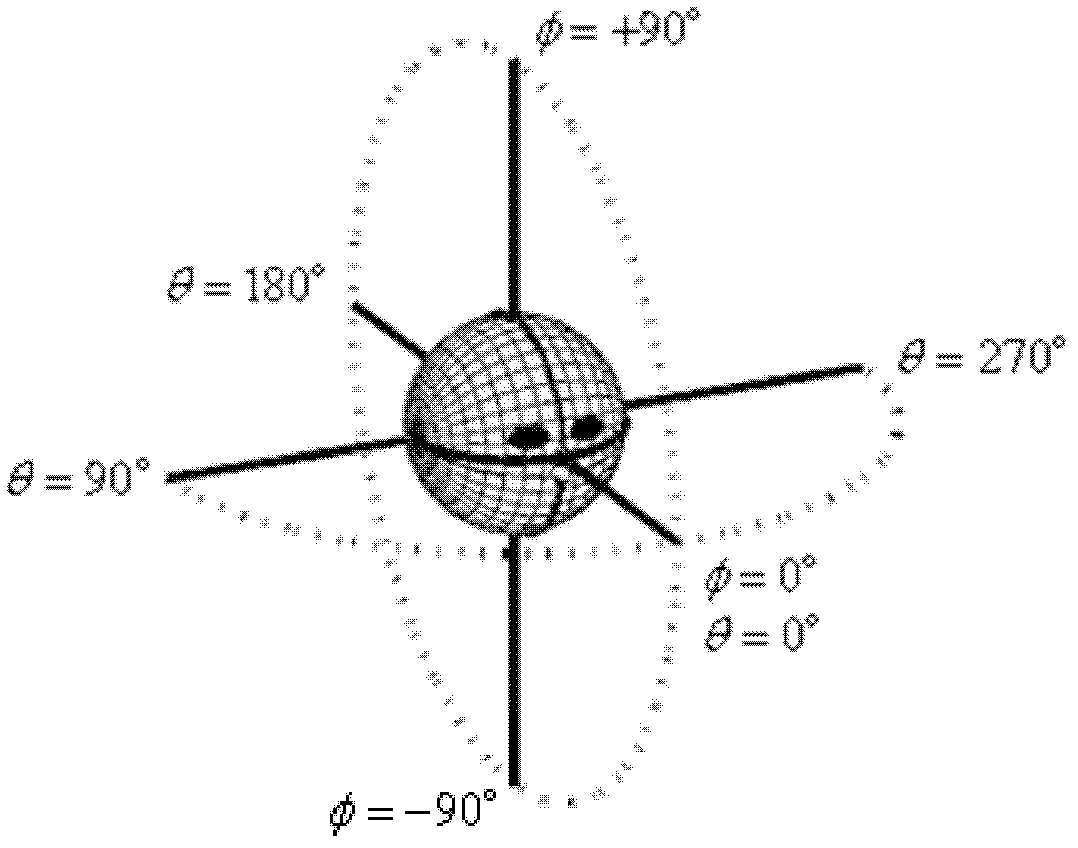

Dual-channel acoustic signal-based sound source localization method

Inactive | CN102438189A | Frequency/directions obtaining arrangements; Interaural time difference; Sound sources

The invention relates to an improved sound source localization method based on dual-channel acoustic signals. The mean and variance of the interaural time difference (ITD) and the interaural intensity difference (IID) in each frequency band are used as cues for localizing the azimuth of a sound source, from which an azimuth mapping model is built. During localization, the dual-channel acoustic signals are split into frequency bands by a Gammatone filter bank that resembles the human auditory filters and then passed to a feature extraction module, which extracts the ITD and IID localization cues of each subband. These cues are integrated with a Gaussian mixture model (GMM), and the likelihoods of the ITD and IID in the corresponding frequency band for each azimuth are used as the decision values for azimuth estimation. The system achieves higher sound source localization performance.

Owner:SOUTHEAST UNIV
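
For one Gammatone subband pair, the two localization cues described above can be computed as in the sketch below. It assumes NumPy, uses plain cross-correlation for the ITD and an energy ratio for the IID, and restricts lags to ±1 ms (a hypothetical physical limit); the GMM integration stage is not shown.

```python
import numpy as np


def itd_iid(left, right, fs, max_itd=1e-3):
    """ITD (s) and IID (dB) for one gammatone sub-band pair.

    ITD > 0 means the right channel lags the left; lags are limited to +/- max_itd.
    """
    cc = np.correlate(left, right, mode="full")
    lags = np.arange(-(len(right) - 1), len(left))  # lag k of each cc entry
    keep = np.abs(lags) <= int(round(max_itd * fs))
    k = lags[keep][np.argmax(cc[keep])]
    itd = -k / fs
    iid = 10 * np.log10((np.sum(left ** 2) + 1e-12) / (np.sum(right ** 2) + 1e-12))
    return itd, iid


fs = 16000
rng = np.random.default_rng(0)
left = rng.standard_normal(4000)
right = 0.5 * np.concatenate([np.zeros(8), left[:-8]])  # 8-sample delay, half amplitude
print(itd_iid(left, right, fs))  # roughly (0.0005, 6.0)
```

Collecting these two numbers per subband over many frames gives the per-band means and variances the method uses as cues.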

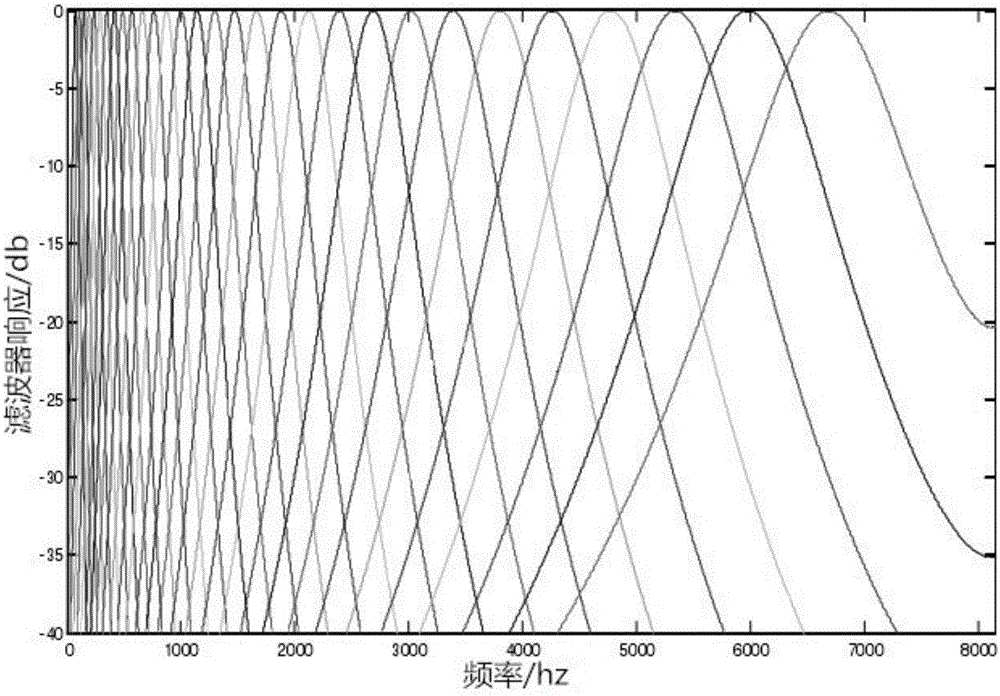

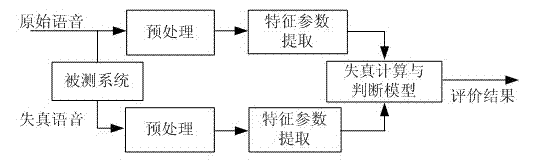

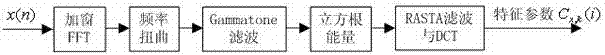

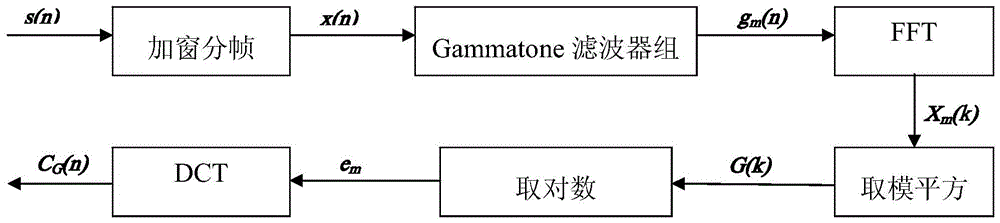

Hearing perception characteristic-based objective voice quality evaluation method

Inactive | CN102881289A | Improve relevance; Reduce complexity; Speech analysis; Mel-frequency cepstrum; Gammatone filter

The invention discloses a simple and effective objective speech quality evaluation method based on auditory perception characteristics. Following psychoacoustic principles, an auditory model and a nonlinear compression transform are introduced into the extraction of MFCC (Mel-frequency cepstral coefficient) feature parameters. A Gammatone filter is used to simulate the cochlear basilar membrane, and the intensity-loudness perception of speech is simulated by a cube-root nonlinear compression in the amplitude nonlinear transform. Using the new feature parameters yields a speech quality evaluation method that better matches the auditory perception of the human ear. Compared with other methods, the correlation between objective and subjective evaluation results is effectively improved, the computation time is shorter, the complexity is lower, and the method is more adaptable, reliable and practical. By simulating the auditory perception characteristics of the human ear, the method offers a new approach to improving objective speech quality evaluation.

Owner:CHONGQING UNIV
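
The cube-root step can be sketched as a drop-in replacement for the logarithm in an MFCC-style pipeline. This sketch assumes NumPy, takes the Gammatone channel energies as given (they are not computed here), and assumes an orthonormal DCT-II with 13 coefficients; both choices are illustrative.

```python
import numpy as np


def dct_ii(x, n_coeffs):
    """Orthonormal DCT-II along the last axis, keeping the first n_coeffs terms."""
    N = x.shape[-1]
    k = np.arange(n_coeffs)[:, None]
    n = np.arange(N)[None, :]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * N))
    basis[0] /= np.sqrt(2)  # orthonormal scaling of the DC term
    return np.sqrt(2.0 / N) * (x @ basis.T)


def cube_root_cepstrum(band_energy, n_coeffs=13):
    """Cube-root loudness compression, then DCT (replacing the log step of MFCC)."""
    return dct_ii(np.cbrt(band_energy), n_coeffs)


band_energy = np.full((1, 32), 8.0)  # one frame, 32 gammatone channels (toy values)
c = cube_root_cepstrum(band_energy)
print(c.shape)  # (1, 13)
```

A flat input compresses to a constant (cube root of 8 is 2), so all energy lands in the first coefficient.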

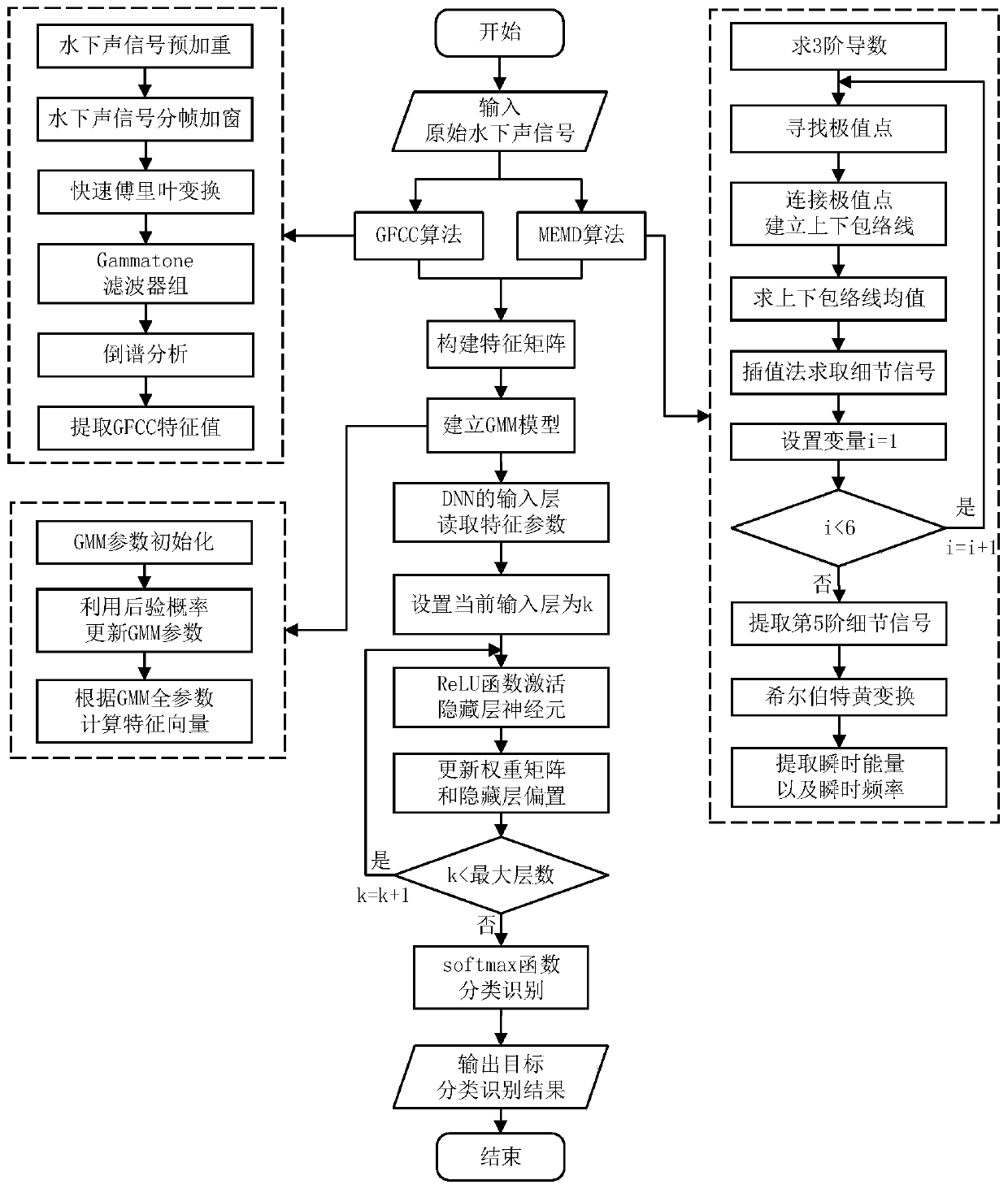

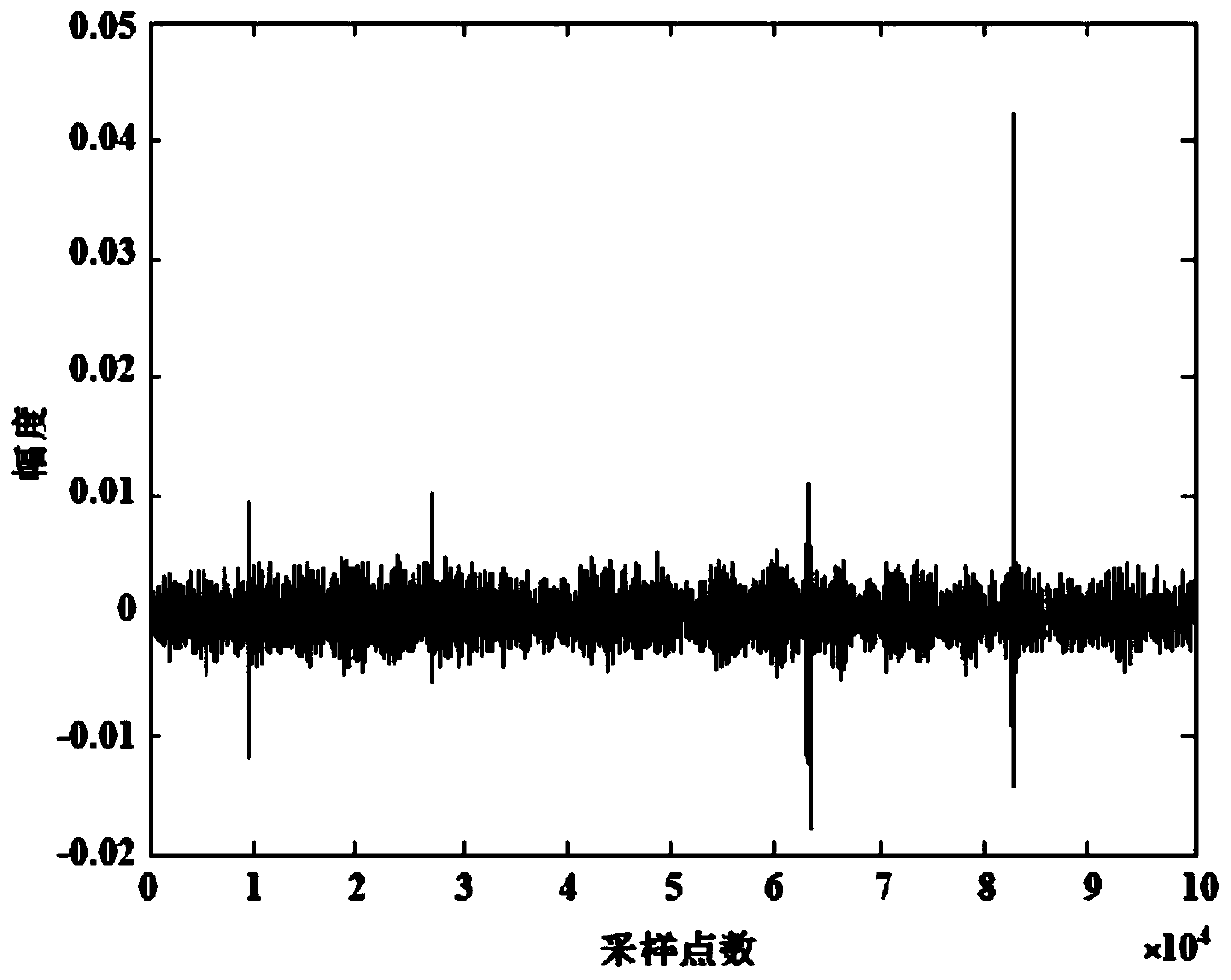

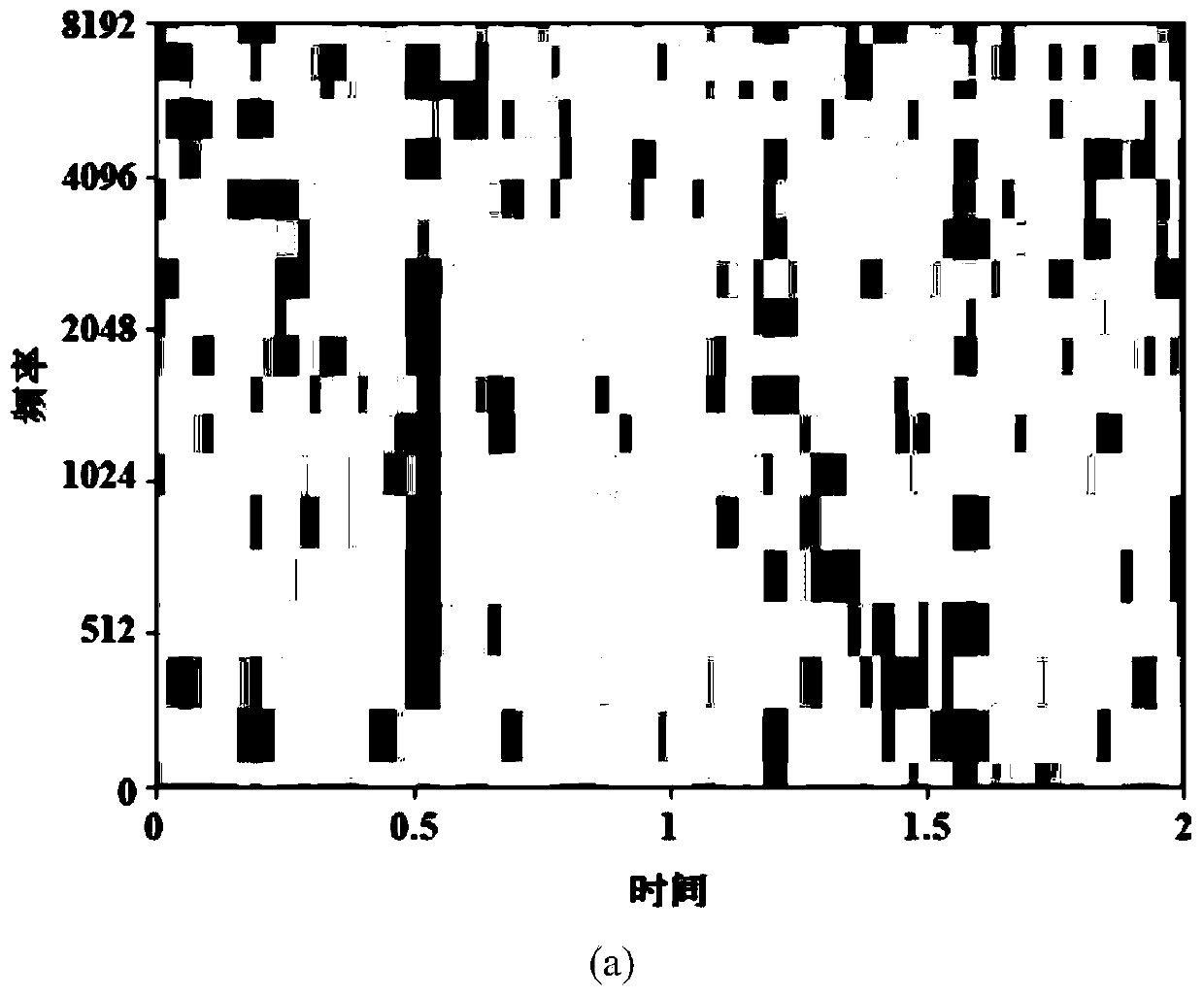

Underwater acoustic signal target classification and recognition method based on deep learning

Active | CN109800700A | Effective Voiceprint Features; Improve robustness; Biological neural network models; Character and pattern recognition; Feature extraction; Decomposition

The invention belongs to the technical field of underwater acoustic signal processing, and particularly relates to an underwater acoustic target classification and recognition method based on deep learning. The method comprises the following steps: (1) extracting features from the original underwater acoustic signal with the Gammatone filter cepstral coefficient (GFCC) algorithm; (2) extracting the instantaneous energy and instantaneous frequency with an improved empirical mode decomposition (MEMD) algorithm, fusing them with the GFCC features, and constructing a feature matrix; (3) building a Gaussian mixture model (GMM) that preserves the individual characteristics of the underwater acoustic target; and (4) completing underwater target classification and recognition with a deep neural network (DNN). The method addresses the single feature type and poor noise resistance of traditional underwater acoustic target classification and recognition methods, effectively improves classification and recognition accuracy, and retains some adaptability under conditions such as weak target signals and long range.

Owner:HARBIN ENG UNIV
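
Step (2) obtains instantaneous energy and frequency from the decomposed signal. A common way to do this for one intrinsic mode function (IMF) is the Hilbert transform, sketched below with NumPy only. The EMD/MEMD decomposition itself is not implemented; a pure tone stands in for one IMF, and summarizing by the mean is a hypothetical choice.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal via the FFT (zero negative frequencies, double positive ones)."""
    N = len(x)
    h = np.zeros(N)
    h[0] = 1.0
    if N % 2 == 0:
        h[N // 2] = 1.0
        h[1:N // 2] = 2.0
    else:
        h[1:(N + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def instantaneous_energy_frequency(imf, fs):
    """Instantaneous energy |z|^2 and frequency (Hz) of one intrinsic mode function."""
    z = analytic_signal(imf)
    energy = np.abs(z) ** 2
    phase = np.unwrap(np.angle(z))
    freq = np.diff(phase) * fs / (2 * np.pi)  # one sample shorter than the input
    return energy, freq


fs = 1000
t = np.arange(fs) / fs
imf = np.cos(2 * np.pi * 50 * t)  # stand-in for one IMF from the (M)EMD step
energy, freq = instantaneous_energy_frequency(imf, fs)
features = np.array([energy.mean(), freq.mean()])  # could be appended to the GFCC vector
```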

Bi-ear time delay estimating method based on frequency division and improved generalized cross correlation

Active | CN107479030A | Improve positioning accuracy; Good anti-reverberation performance; Speech analysis; Position fixation; Sound sources; Time delays

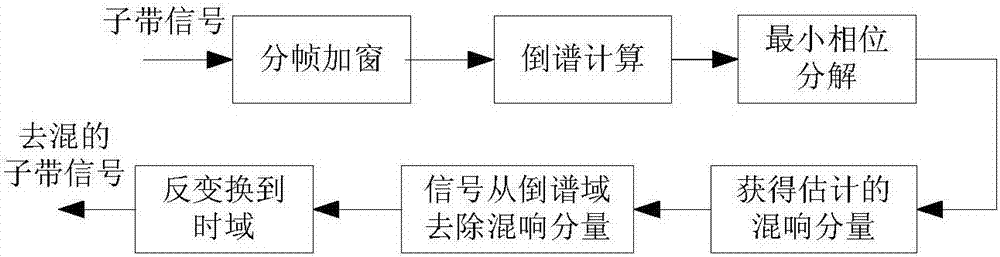

The invention provides a binaural time delay estimation method for reverberant environments based on frequency division and improved generalized cross-correlation, and relates to the field of sound source localization. A Gammatone filter, which effectively simulates the characteristics of the basilar membrane of the human ear, splits the speech signals into frequency bands, and the interaural cross-correlation delay is estimated in the reverberant environment. Compared with the generalized cross-correlation delay estimation method, the method estimates the time delay more accurately, and the resulting sound source localization system is more robust. Specifically, the Gammatone filter divides the binaural signals into sub-bands; each sub-band signal is dereverberated in the cepstral domain with pre-filtering and then transformed back to the time domain. The corresponding left- and right-ear sub-band signals undergo a generalized cross-correlation operation that uses an improved phase transform (PHAT) weighting function; the cross-correlation values of all sub-bands are summed, and the interaural time difference corresponding to the maximum cross-correlation value is taken as the estimate.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
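
The sub-band summation and peak picking can be sketched with the standard PHAT weighting. This assumes NumPy; standard PHAT stands in for the patent's "improved" weighting function (not specified in the abstract), the ±1 ms lag range is hypothetical, and the Gammatone split and cepstral dereverberation are not shown.

```python
import numpy as np


def gcc_phat_curve(x_left, x_right, fs, max_tau=1e-3):
    """PHAT-weighted cross-correlation for lags -max_shift..max_shift (samples).

    A positive lag means x_right lags x_left.
    """
    n = len(x_left) + len(x_right)  # zero-pad so the correlation is not circular
    cross = np.fft.rfft(x_right, n=n) * np.conj(np.fft.rfft(x_left, n=n))
    cross /= np.abs(cross) + 1e-12  # PHAT: keep phase, discard magnitude
    cc = np.fft.irfft(cross, n=n)
    max_shift = int(round(max_tau * fs))
    return np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))


def subband_delay(left_bands, right_bands, fs, max_tau=1e-3):
    """Sum the per-sub-band curves, then return the delay (s) of the summed peak."""
    total = sum(gcc_phat_curve(l, r, fs, max_tau) for l, r in zip(left_bands, right_bands))
    max_shift = int(round(max_tau * fs))
    return (np.argmax(total) - max_shift) / fs


fs = 16000
rng = np.random.default_rng(1)
left = rng.standard_normal(4000)
right = np.concatenate([np.zeros(5), left[:-5]])  # right ear lags by 5 samples
# in practice each list would hold gammatone-filtered (and dereverberated) sub-bands
print(subband_delay([left], [right], fs))
```

Summing the curves before picking the peak lets bands with a clear peak outvote bands smeared by reverberation.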

Acoustic signature recognition of running vehicles using spectro-temporal dynamic neural network

Inactive | US20110169664A1 | Vibration measurement in fluid; Detection of traffic movement; Feature vector; Dynamic neural network

Owner:UNIV OF SOUTHERN CALIFORNIA

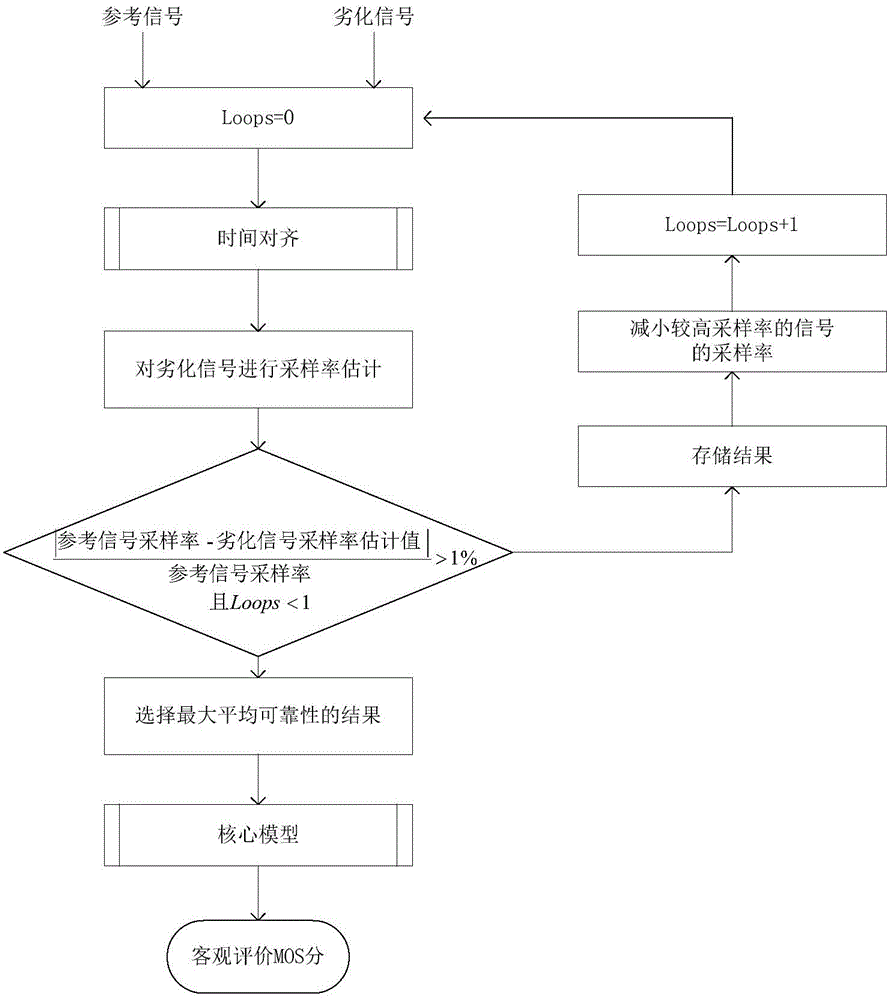

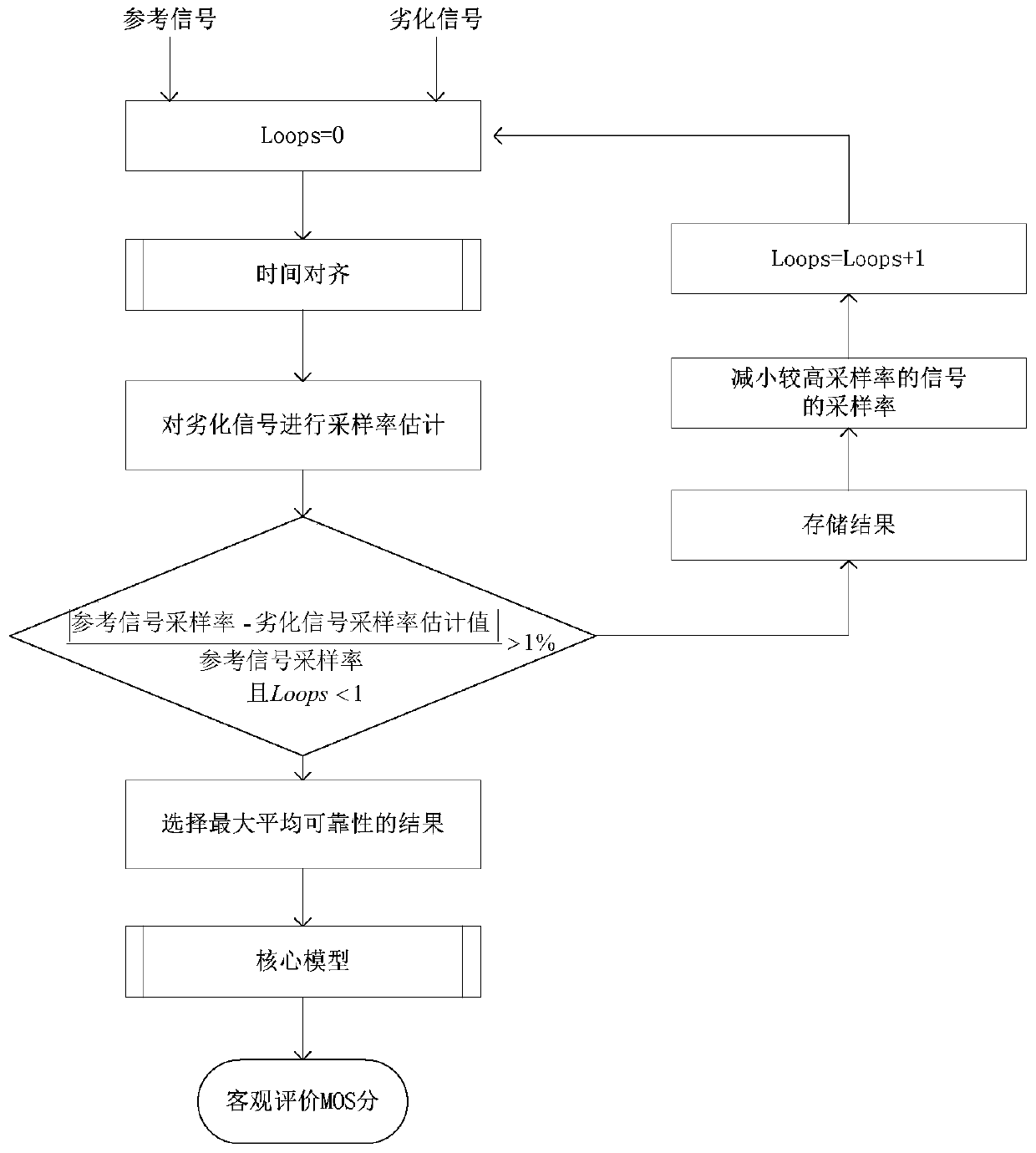

Auditory perception characteristic-based speech quality objective evaluating method

Active | CN104485114A | Improve relevance; Easy performance analysis; Speech analysis; Evaluation result; Frequency spectrum

The invention discloses an objective speech quality evaluation method based on auditory perception characteristics, characterized in that, during spectrum mapping, a Gammatone filter bank is added to the Bark spectrum module for filtering. The specific steps are: (1) process a reference signal and a degraded signal with POLQA (Perceptual Objective Listening Quality Analysis) and feed both into the core model; (2) in the core model's spectrum mapping, add the Gammatone filter bank to the Bark spectrum module for filtering, then perform an auditory transform so that the extracted auditory spectrum is closer to human auditory perception; (3) after the auditory transform, perform disturbance analysis of the distortion of the degraded signal relative to the reference signal to obtain an objective MOS score. Compared with other methods, the correlation between objective and subjective evaluation results is effectively improved.

Owner:HUNAN INST OF METROLOGY & TEST +1
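
As a rough illustration of the Bark spectrum stage, the sketch below maps FFT bins to the Bark scale and groups a power spectrum into integer-Bark bands. It assumes NumPy and the Zwicker-Terhardt approximation of the Bark scale; POLQA (ITU-T P.863) defines its own mapping, and how the patent inserts the Gammatone filter bank into this module is not reproduced.

```python
import numpy as np


def hz_to_bark(f_hz):
    """Zwicker-Terhardt approximation of the Bark scale (POLQA's own mapping may differ)."""
    f = np.asarray(f_hz, dtype=float)
    return 13.0 * np.arctan(0.00076 * f) + 3.5 * np.arctan((f / 7500.0) ** 2)


def bark_band_power(power_spectrum, fs):
    """Group a one-sided (rfft) power spectrum into integer-Bark bands."""
    n_fft = 2 * (len(power_spectrum) - 1)
    freqs = np.arange(len(power_spectrum)) * fs / n_fft
    band_index = np.floor(hz_to_bark(freqs)).astype(int)
    return np.bincount(band_index, weights=power_spectrum)


bands = bark_band_power(np.ones(257), fs=16000)  # flat spectrum, 512-point FFT
print(round(float(hz_to_bark(1000.0)), 2), len(bands))
```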

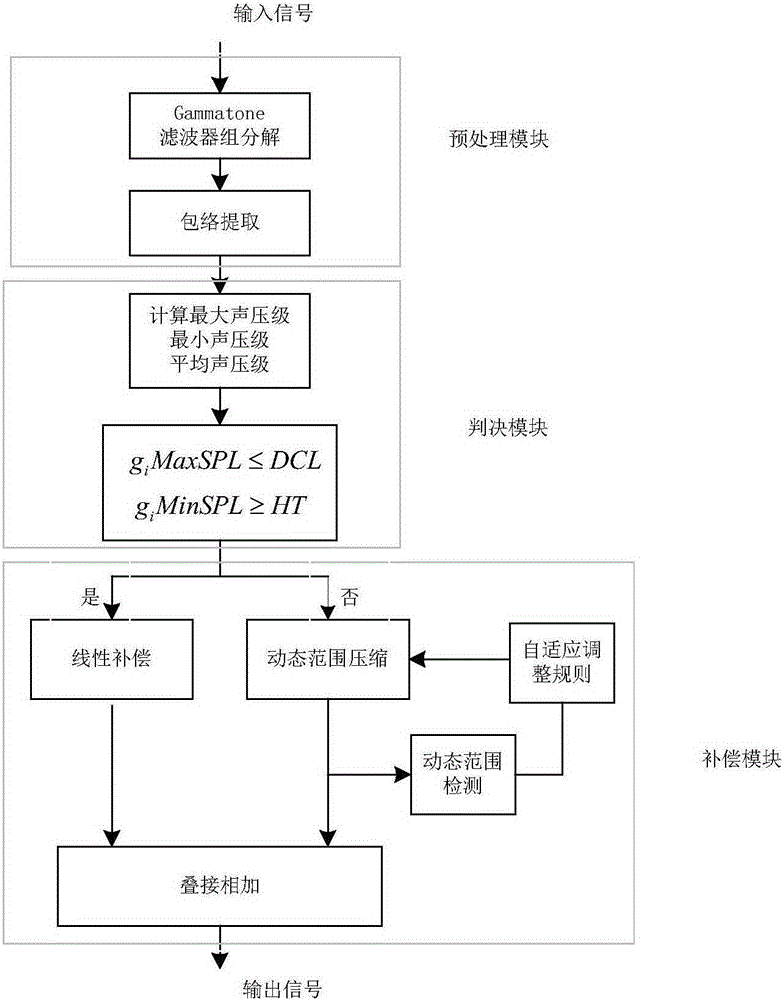

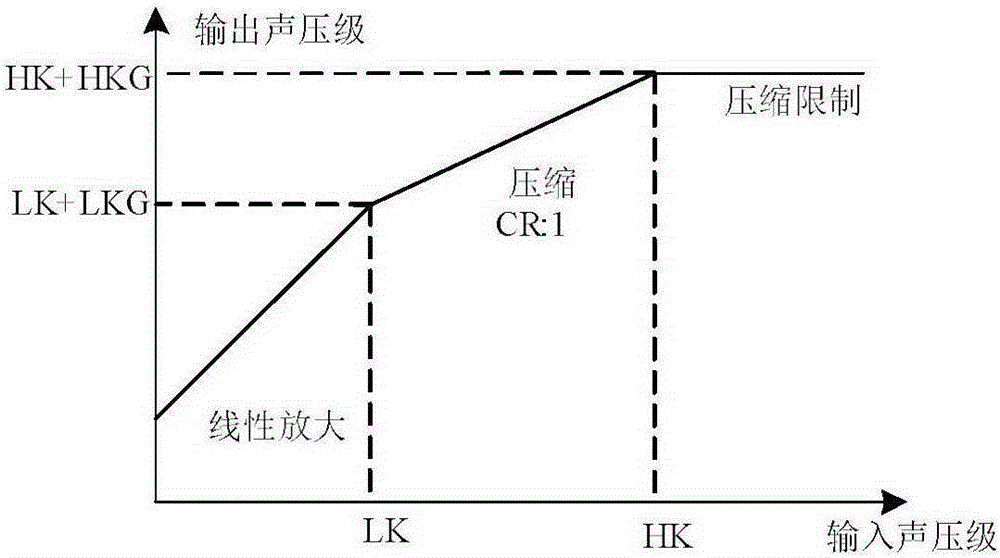

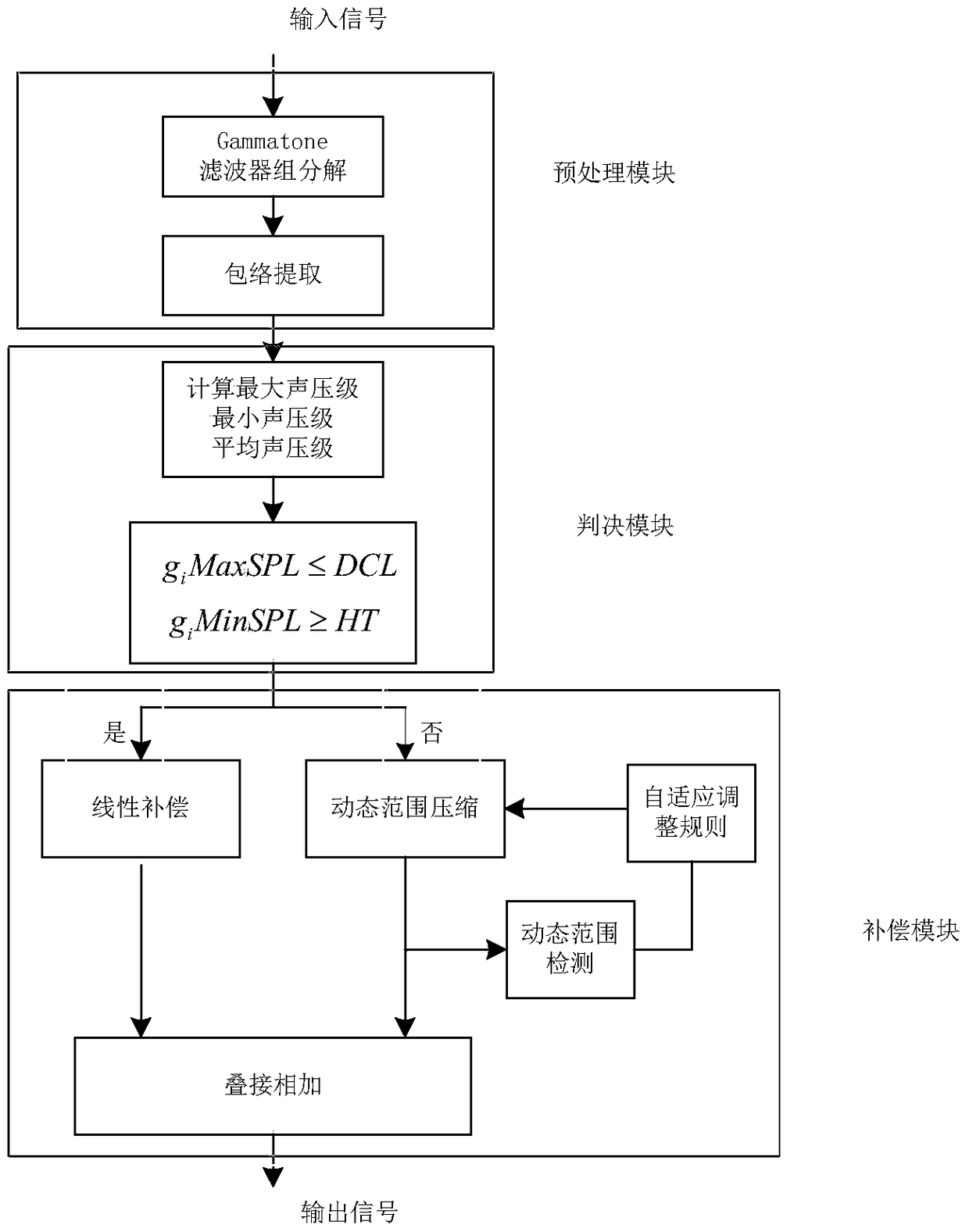

Self-adaptive hearing compensation method

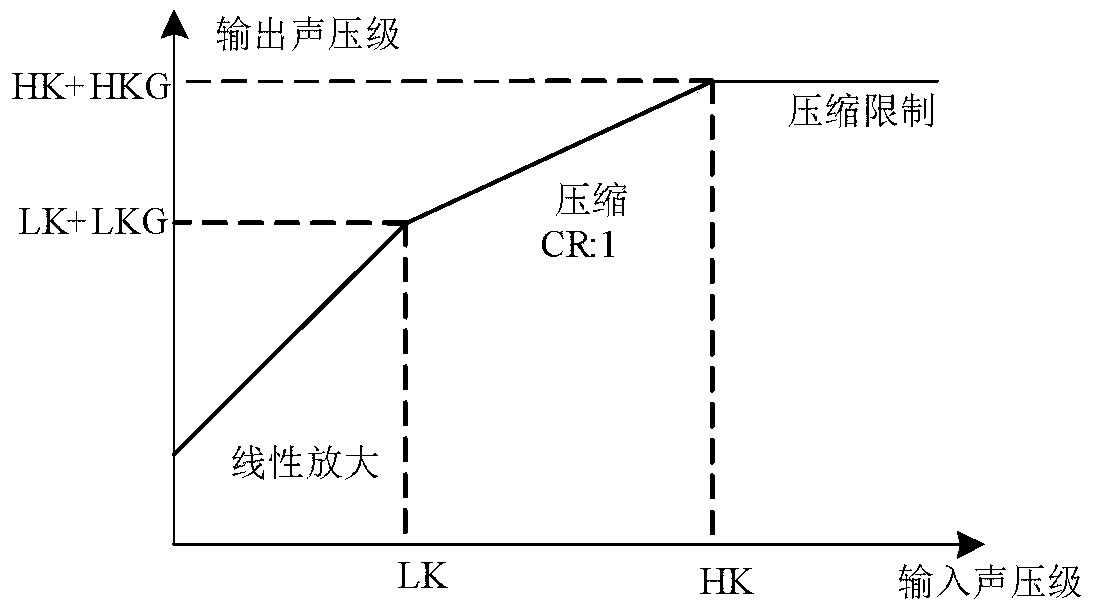

The invention discloses a self-adaptive hearing compensation method. First, the input signal is decomposed into multiple channels with a gammatone filter bank. Then a compensation strategy is chosen based on the dynamic range of the signal in each channel and the hearing range of the hearing-impaired patient: if the channel signal remains within the patient's hearing range after linear gain, linear amplification is used to reduce distortion; otherwise, dynamic range compression is used to restore audibility. In addition, to reduce the signal distortion caused by dynamic range compression and to increase the SNR (signal-to-noise ratio) of the output signal in noisy environments, an adaptive compression method keeps the compression ratio as close to 1 as possible. Compared with existing hearing compensation methods, the compensated speech is more intelligible, and the method is highly practical.

Owner:SOUTHEAST UNIV
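
The per-channel decision between linear gain and compression can be sketched in plain Python. The rule for choosing the compression ratio (the smallest ratio that fits the channel's range into the hearing range) is an assumed reading of "keep the compression ratio as close to 1 as possible"; the thresholds in the usage lines are hypothetical.

```python
def compensate_channel(levels_db, threshold_db, ucl_db, gain_db):
    """Return (output levels in dB, compression ratio) for one gammatone channel.

    Linear gain is kept when the amplified levels stay inside the patient's
    hearing range [threshold_db, ucl_db]. Otherwise the channel's dynamic range
    is mapped into that range with the smallest ratio that fits (ratio >= 1),
    an assumed reading of "keep the compression ratio as close to 1 as possible".
    """
    lo, hi = min(levels_db), max(levels_db)
    if lo + gain_db >= threshold_db and hi + gain_db <= ucl_db:
        return [level + gain_db for level in levels_db], 1.0  # linear amplification
    ratio = max(1.0, (hi - lo) / (ucl_db - threshold_db))
    return [threshold_db + (level - lo) / ratio for level in levels_db], ratio


# hypothetical channel: threshold 50 dB SPL, uncomfortable level 100 dB SPL, 20 dB gain
print(compensate_channel([40, 60], 50, 100, 20))  # fits: linear gain
print(compensate_channel([30, 90], 50, 100, 20))  # too wide: compressed with ratio 1.2
```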

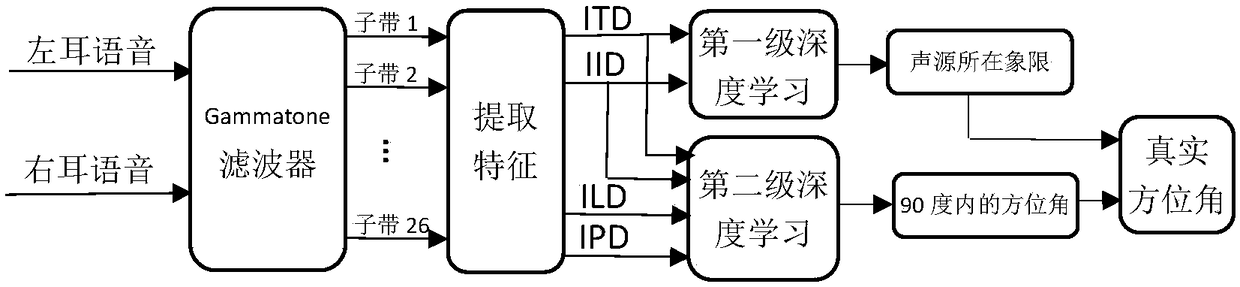

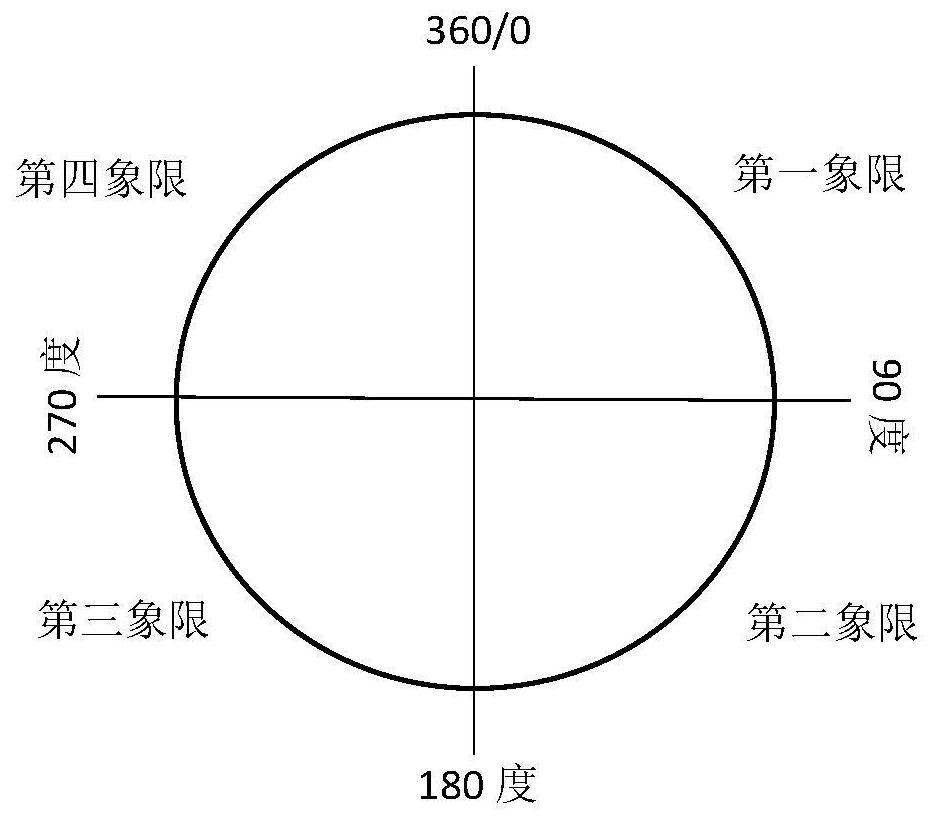

Deep learning-based binaural sound source positioning method in digital hearing aid

Active | CN108122559A | Improve learning ability; Strong offline training; Speech analysis; Sets with desired directivity; Interaural time difference; Pattern recognition

The invention discloses a deep learning-based binaural sound source localization method for a digital hearing aid. First, the binaural sound source signal is decomposed into multiple channels by a gammatone filter bank, and high-energy channels are selected with weighting coefficients. A first type of features derived from the head-related transfer function (HRTF), namely the interaural time difference (ITD) and the interaural intensity difference (IID), is then used as the input of a deep learning model, and the horizontal plane is divided into four quadrants to narrow the localization range. Second, a second type of head-related features is extracted, namely the interaural level difference (ILD) and the interaural phase difference (IPD). Finally, for more precise localization, all four features of the first and second types are used as inputs to the next deep learning stage, which yields the azimuth of the sound source. Precise localization of 72 azimuths is achieved on the horizontal plane from 0° to 360° in 5° steps.

Owner:BEIJING UNIV OF TECH
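
The output space of this two-stage scheme follows from simple arithmetic: 360° / 5° = 72 azimuth classes, of which each quadrant holds 18. The sketch below encodes that mapping in plain Python; the quadrant boundaries at 0°/90°/180°/270° are an assumption, and the networks themselves are not shown.

```python
STEP_DEG = 5
AZIMUTHS = list(range(0, 360, STEP_DEG))  # 360 / 5 = 72 candidate directions


def azimuth_to_class(az_deg):
    """Index of the nearest 5-degree azimuth class (wrapping 357.5+ back to 0)."""
    return int(round((az_deg % 360) / STEP_DEG)) % len(AZIMUTHS)


def quadrant(az_deg):
    """Coarse first-stage label 0..3; quadrant boundaries at 0/90/180/270 are assumed."""
    return int((az_deg % 360) // 90)


def candidates(q):
    """Azimuth classes left for the second-stage network once the quadrant is known."""
    return [az for az in AZIMUTHS if quadrant(az) == q]


print(len(AZIMUTHS), azimuth_to_class(92.0), quadrant(92.0), len(candidates(1)))  # 72 18 1 18
```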

Binaural speech separation method based on support vector machine

Active | CN108091345A | Achieve robustness; Improve robustness; Speech analysis; Character and pattern recognition; Interaural time difference; Sound sources

The invention discloses a binaural speech separation method based on a support vector machine (SVM). After the binaural signal passes through a Gammatone filter bank, the interaural time difference (ITD) and interaural intensity difference (IID) of each sub-band signal are extracted. In the training phase, the sub-band ITD and IID parameters extracted from a clean binaural mixture of two sound sources serve as the input features of the SVM, and one SVM classifier is trained per sub-band. In the test phase, in an environment with reverberation and noise, the sub-band features of a binaural test mixture of two sound sources are extracted, and each sub-band's SVM classifier classifies that sub-band's feature parameters, separating the individual sound sources in the mixed speech. Relying on the classification capability of the SVM model, the method achieves robust binaural speech separation in complex acoustic environments and effectively solves the problem of missing frequency-bin data.

Owner:SOUTHEAST UNIV
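
For a single sub-band, the classifier step can be sketched with a linear SVM trained by the Pegasos sub-gradient method in NumPy. Pegasos and the linear kernel are stand-ins (the abstract does not name a solver or kernel), and the (ITD, IID) training data below is synthetic and purely illustrative; in the method, one such classifier would be trained per sub-band.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, epochs=20, seed=0):
    """Linear SVM trained with the Pegasos sub-gradient method; y must be in {-1, +1}."""
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])  # constant column acts as the bias
    w = np.zeros(Xb.shape[1])
    step = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            step += 1
            eta = 1.0 / (lam * step)
            margin = y[i] * (Xb[i] @ w)
            w *= 1.0 - eta * lam  # shrink (L2 regularisation)
            if margin < 1.0:
                w += eta * y[i] * Xb[i]  # hinge-loss sub-gradient step
    return w


def predict(w, X):
    return np.sign(np.hstack([X, np.ones((len(X), 1))]) @ w)


# synthetic (ITD in ms, IID in dB) features for one sub-band:
# +1 = unit dominated by source A, -1 = unit dominated by source B
rng = np.random.default_rng(42)
X = np.vstack([rng.normal([0.3, 3.0], [0.05, 1.0], size=(100, 2)),
               rng.normal([-0.3, -3.0], [0.05, 1.0], size=(100, 2))])
y = np.concatenate([np.ones(100), -np.ones(100)])
w = train_linear_svm(X, y)
mask_a = predict(w, X) > 0  # T-F units assigned to source A
accuracy = np.mean(predict(w, X) == y)
```

The predicted labels act as a binary mask per sub-band, which is how classification turns into separation.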

Sound scene classification method based on network model fusion

Active | CN110600054A | Rich and three-dimensional information; Easy to identify; Speech analysis; Neural architectures; Support vector machine; Frequency spectrum

The invention discloses a sound scene classification method based on network model fusion. Multiple different input features are created through audio channel separation, audio segmentation and other techniques: Gammatone filter cepstral coefficients, Mel spectral features, and their first- and second-order differences are extracted from the audio signals as input features, a separate convolutional neural network model is trained on each, and finally a support vector machine stacking method produces the fused model. By extracting highly discriminative input features through channel separation, audio segmentation and similar techniques, and by constructing both single-channel and two-channel convolutional neural networks, the method produces a distinctive model fusion structure that captures richer, more spatial information, effectively improves the recognition rate and robustness across sound scene classes, and has good application prospects.

Owner:Nanjing Tianyue Electronic Technology Co., Ltd. (南京天悦电子科技有限公司)
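
The first- and second-order differences mentioned above are usually computed with a regression (delta) formula along time. This is a minimal NumPy sketch; the window half-width N = 2, edge padding, and stacking the results as three CNN input channels are assumptions, and a toy ramp stands in for a real GFCC matrix.

```python
import numpy as np


def deltas(feats, N=2):
    """Regression deltas along time (axis 0) with edge padding; N = 2 is an assumed window."""
    T = len(feats)
    padded = np.pad(feats, ((N, N), (0, 0)), mode="edge")
    denom = 2 * sum(n * n for n in range(1, N + 1))
    return sum(n * (padded[N + n:N + n + T] - padded[N - n:N - n + T])
               for n in range(1, N + 1)) / denom


gfcc = np.arange(10, dtype=float)[:, None] * np.ones((1, 3))  # toy: 10 frames x 3 coeffs, a ramp
d1 = deltas(gfcc)   # first-order difference
d2 = deltas(d1)     # second-order difference
stacked = np.stack([gfcc, d1, d2])  # three input "channels" for a CNN
print(stacked.shape)  # (3, 10, 3)
```

On a unit-slope ramp the interior first-order deltas are exactly 1 and the interior second-order deltas are 0.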

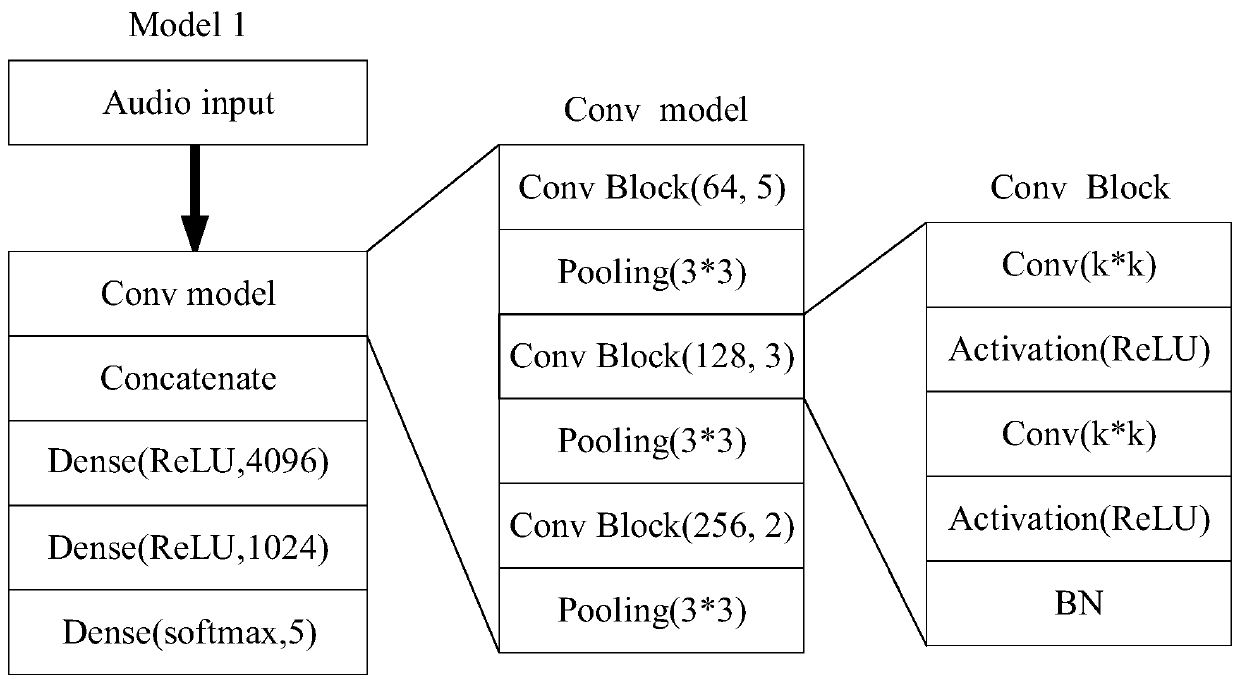

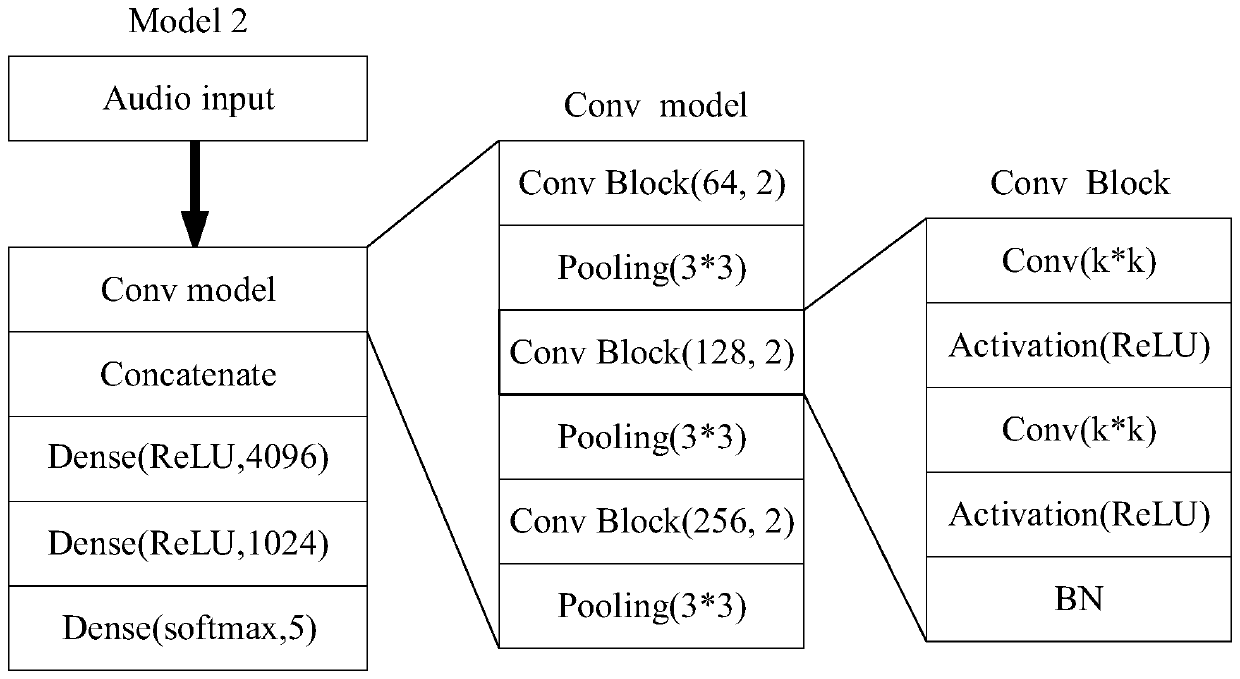

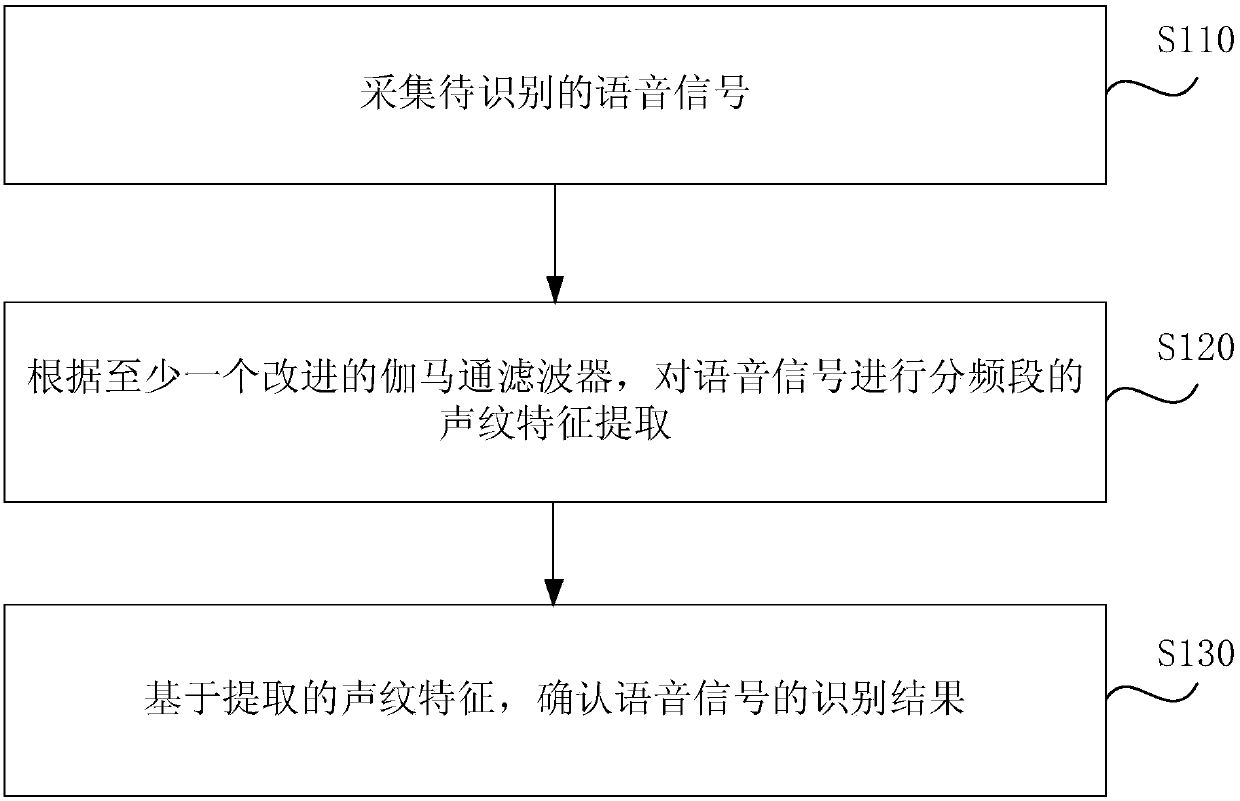

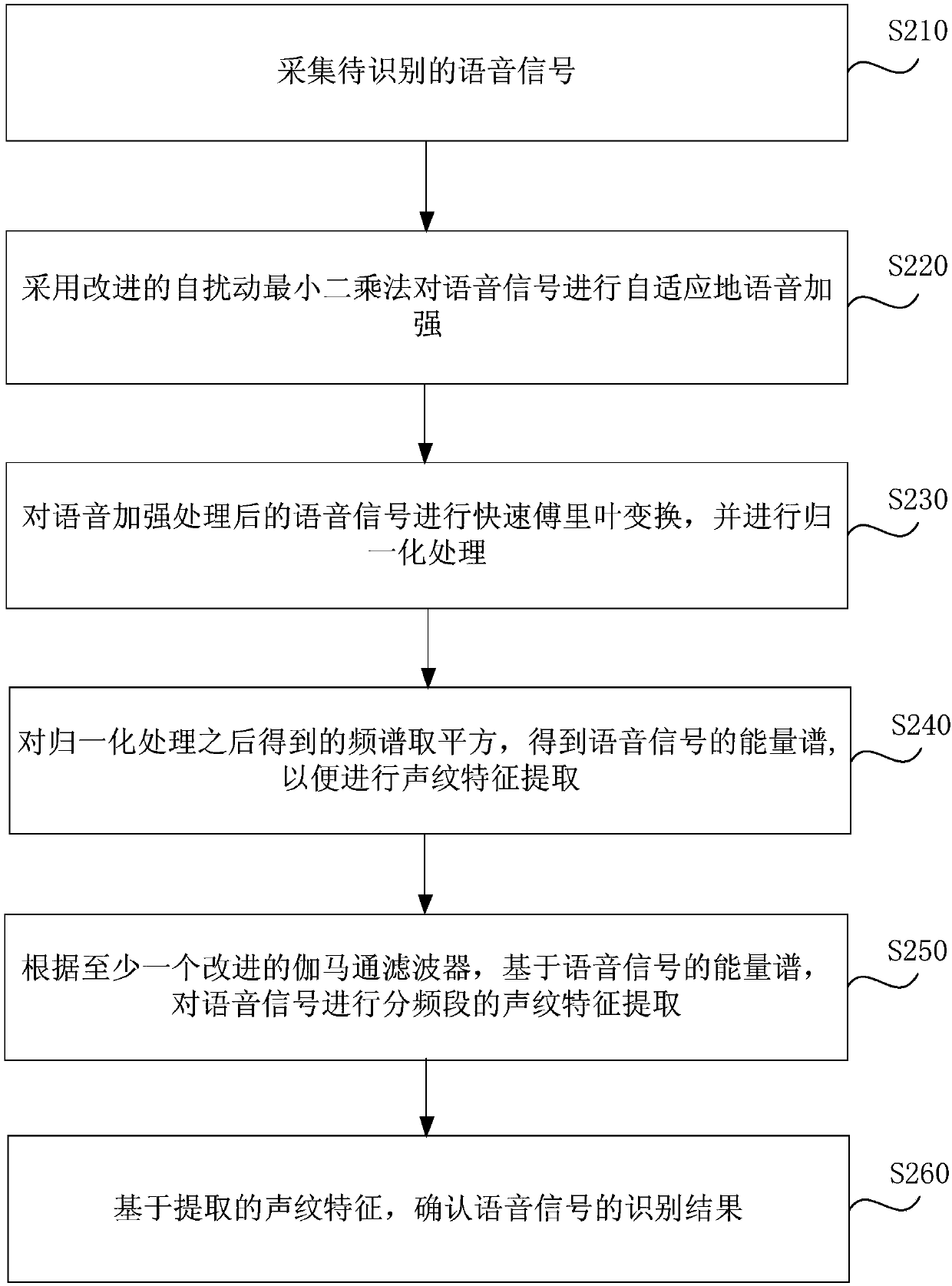

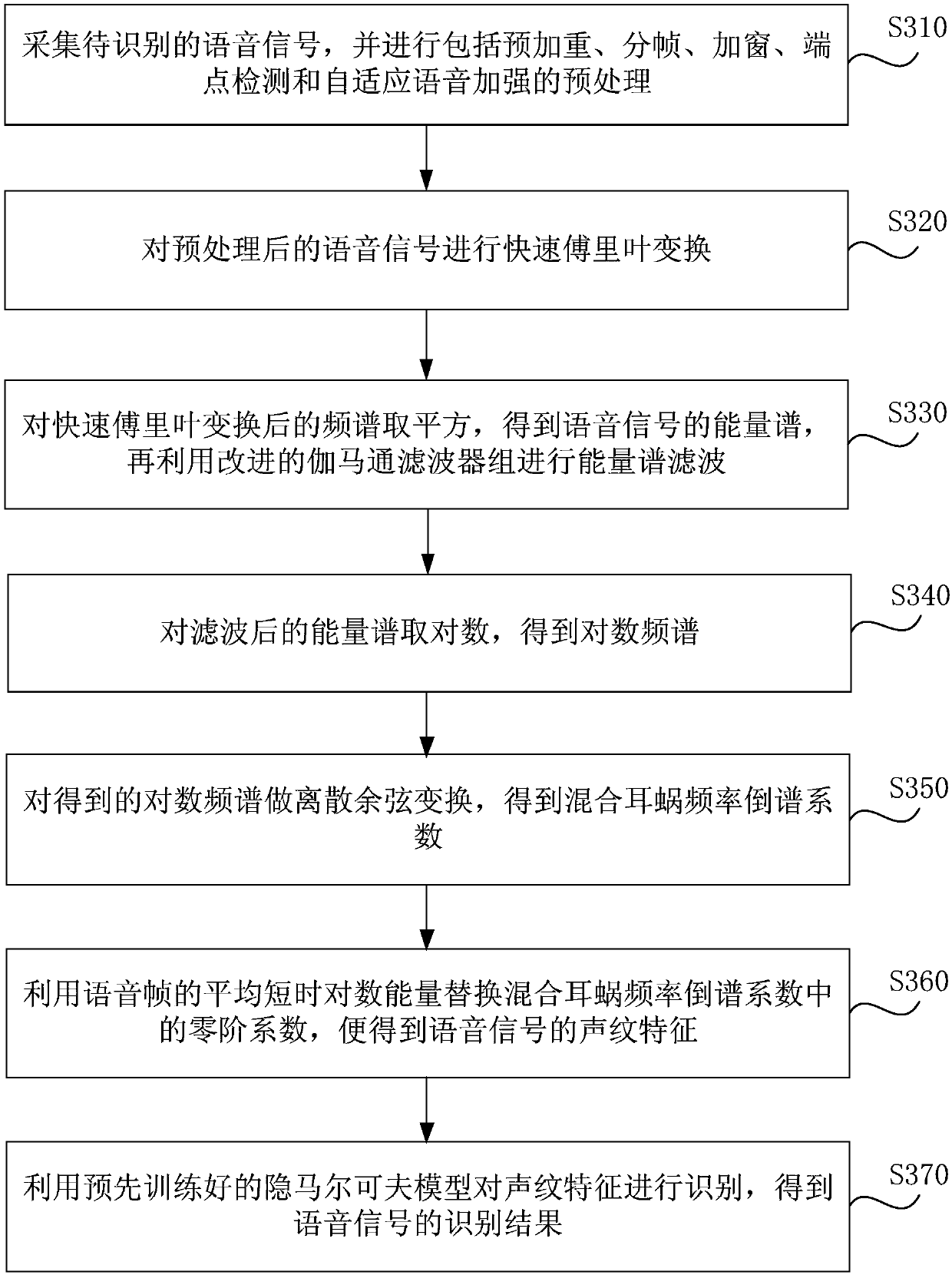

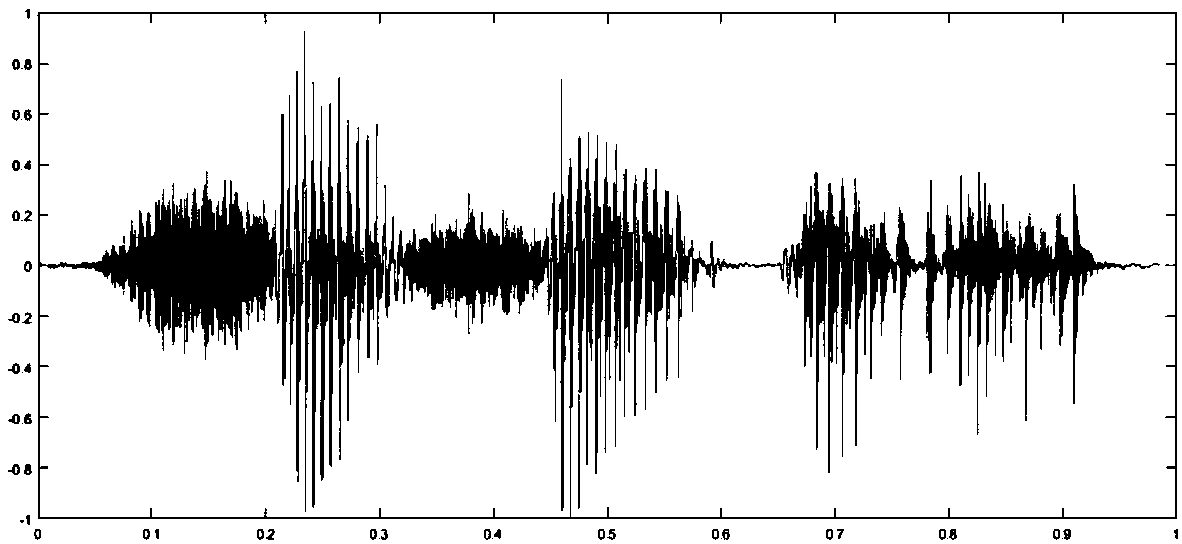

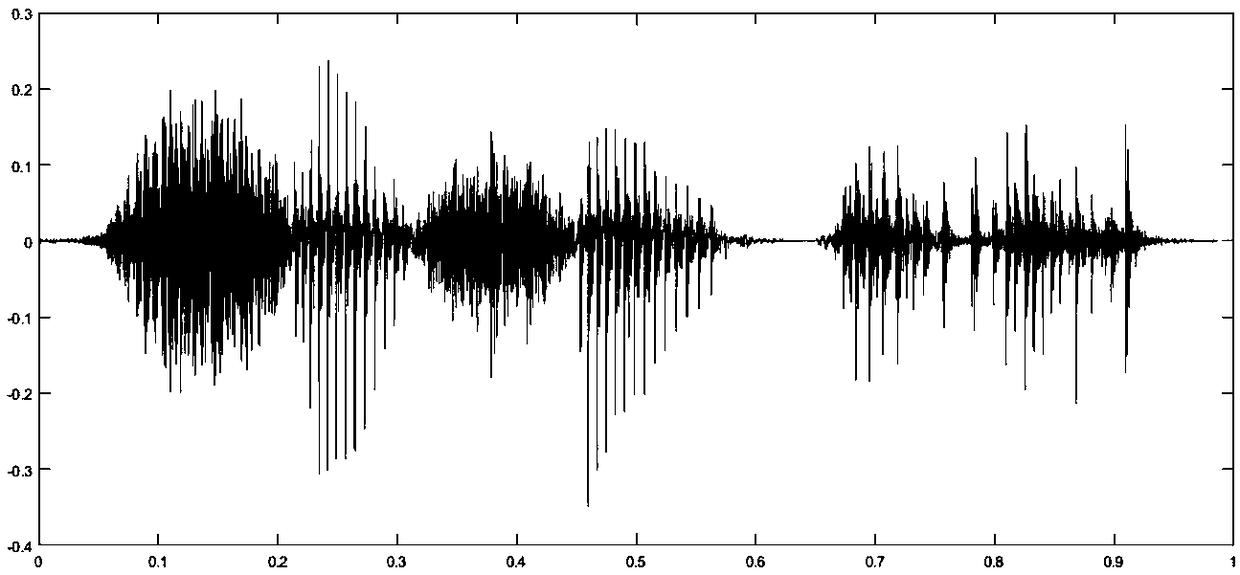

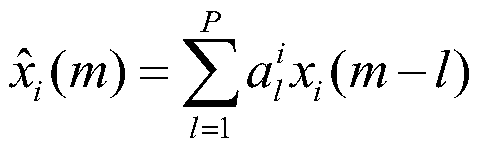

Voiceprint identifying method and device, server and storage medium

Active | CN108564956A | Improve accuracy; Improve recognition accuracy; Speech analysis; Feature extraction; Image resolution

An embodiment of the invention discloses a voiceprint recognition method and device, a server and a storage medium. The method comprises: collecting the speech signal to be recognized; performing band-wise voiceprint feature extraction on the speech signal with at least one improved Gammatone filter; and determining the recognition result of the speech signal from the extracted voiceprint features. The method, device, server and storage medium address the poor recognition caused by information loss in the high-frequency part of speech in prior-art techniques: they improve the filter's resolution in the high-frequency part of speech, improve the accuracy of voiceprint feature extraction and the recognition of high-frequency speech content, and reduce the computational complexity and response time of voiceprint recognition.

Owner:Jingbeifang Information Technology Co., Ltd. (京北方信息技术股份有限公司)

Automatic recognition method for pharyngeal fricative in cleft palate speech based on PICGTFs and SSMC enhancement

Active | CN109300486A | The test results are objective and accurate; Realize automatic measurement; Speech analysis; Signal classification; Gammatone filter

The invention discloses an automatic recognition method for pharyngeal fricatives in cleft palate speech based on PICGTFs and SSMC enhancement, and relates to the field of speech signal processing. The speech is filtered with piecewise index compression Gammatone filters (PICGTFs); the speech spectrogram in each channel is enhanced with an SSMC (Softsign-based Multi-Channel) model and with a DoG (Difference of Gaussian) model; a feature vector is extracted from each enhanced spectrogram and fed to a KNN classifier to determine whether the speech is a pharyngeal fricative; and the result shared by both classifications is taken as the final recognition result of the algorithm. The method makes full use of the differences in the frequency-domain distribution of spectral energy between pharyngeal fricatives and normal speech. Compared with the prior art, its detection results are objective and accurate and it achieves a high degree of automatic measurement, providing reliable reference data for the clinical digital assessment of pharyngeal fricatives, meeting the needs of precision medicine, and enabling more accurate and effective signal classification and recognition.

Owner:SICHUAN UNIV
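
The KNN decision step can be sketched as a Euclidean nearest-neighbour majority vote. This assumes NumPy; k = 3 and the 2-D toy vectors are illustrative placeholders for the features extracted from the enhanced spectrograms.

```python
import numpy as np
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k training vectors closest to x (Euclidean distance)."""
    dist = np.linalg.norm(np.asarray(train_X) - np.asarray(x), axis=1)
    nearest = np.argsort(dist)[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


# toy 2-D feature vectors standing in for features of an enhanced spectrogram
train_X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]]
train_y = ["normal"] * 3 + ["pharyngeal fricative"] * 3
print(knn_predict(train_X, train_y, [5.5, 5.5]))  # pharyngeal fricative
```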

Speaker recognition self-adaption method in complex environment based on GMM model

Active | CN111489763A | Reflect dynamic characteristics; Solve the low recognition rate; Speech analysis; Gammatone filter; Voice change

The invention relates to signal processing technology, and in particular to a GMM-based adaptive speaker recognition method for complex environments. The method comprises a GMM speaker-model construction stage: after the speech signal is preprocessed by low-pass filtering, pre-emphasis, windowing, framing and similar steps, it is filtered and denoised with a Gammatone filter, and combined GMFCC feature parameters are extracted. It further comprises a speaker recognition and adaptation stage: the speech feature parameters of the speaker to be recognized are extracted, recognition is performed, and the original model is adaptively adjusted. The method overcomes the drop in speaker recognition accuracy caused by illness or complex environments and provides a new way of combining feature parameters, so that different features can be analyzed jointly; errors caused by voice changes under different speaker conditions are effectively compensated, improving recognition accuracy.

Owner:WUHAN UNIV
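
The recognition stage scores the test features against each enrolled speaker's GMM. Below is a minimal NumPy sketch of diagonal-covariance GMM scoring with a log-sum-exp for numerical stability; the two-speaker models and 2-D features are hypothetical, and model training and the self-adaptation step are not shown.

```python
import numpy as np


def gmm_log_likelihood(X, weights, means, variances):
    """Mean per-frame log-likelihood of X (T, D) under a diagonal-covariance GMM
    with M components: weights (M,), means (M, D), variances (M, D)."""
    X = np.atleast_2d(X)
    diff = X[:, None, :] - means[None, :, :]                          # (T, M, D)
    log_norm = -0.5 * np.sum(np.log(2 * np.pi * variances), axis=1)  # (M,)
    mahal = np.sum(diff ** 2 / variances[None, :, :], axis=2)        # (T, M)
    log_comp = np.log(weights)[None, :] + log_norm[None, :] - 0.5 * mahal
    top = log_comp.max(axis=1, keepdims=True)                         # log-sum-exp
    frame_ll = top[:, 0] + np.log(np.exp(log_comp - top).sum(axis=1))
    return float(frame_ll.mean())


def identify(X, models):
    """Return the enrolled speaker whose GMM gives X the highest likelihood."""
    return max(models, key=lambda name: gmm_log_likelihood(X, *models[name]))


# hypothetical two-speaker set-up with 2-D features (e.g. two GMFCC coefficients)
models = {
    "speaker_a": (np.array([1.0]), np.array([[0.0, 0.0]]), np.array([[1.0, 1.0]])),
    "speaker_b": (np.array([0.5, 0.5]), np.array([[3.0, 3.0], [4.0, 2.0]]), np.ones((2, 2))),
}
frames = np.array([[0.1, -0.2], [0.3, 0.0]])
print(identify(frames, models))  # speaker_a
```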

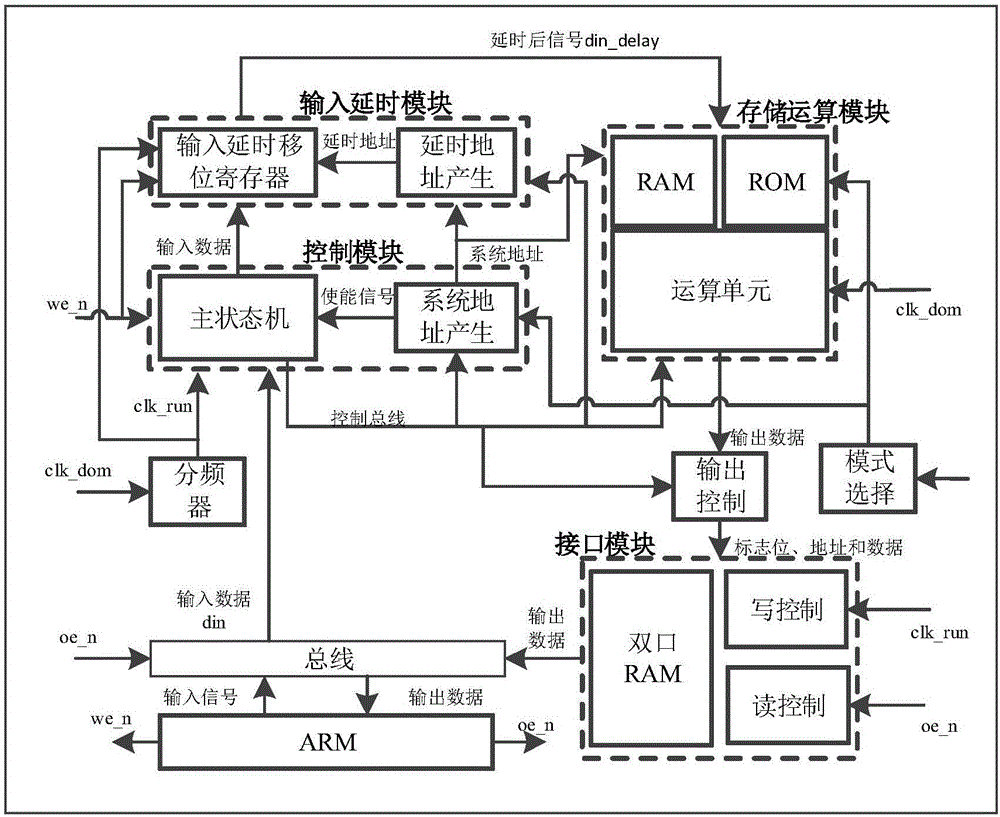

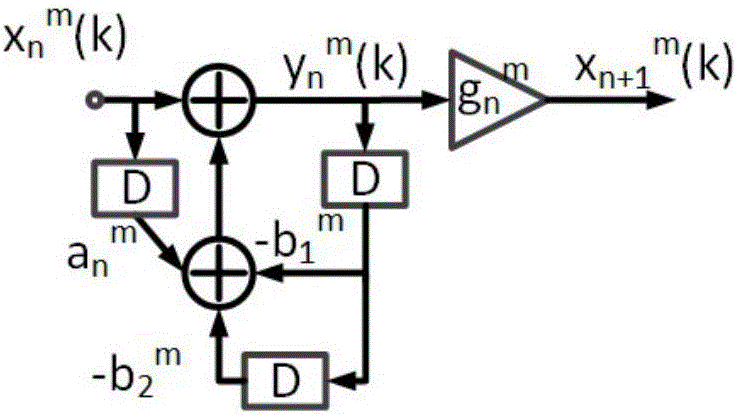

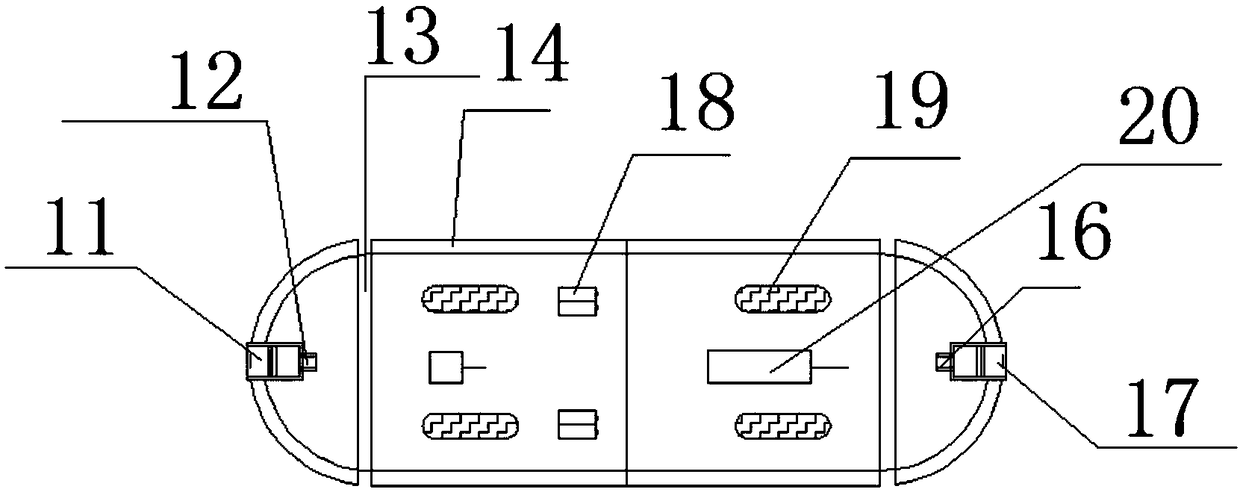

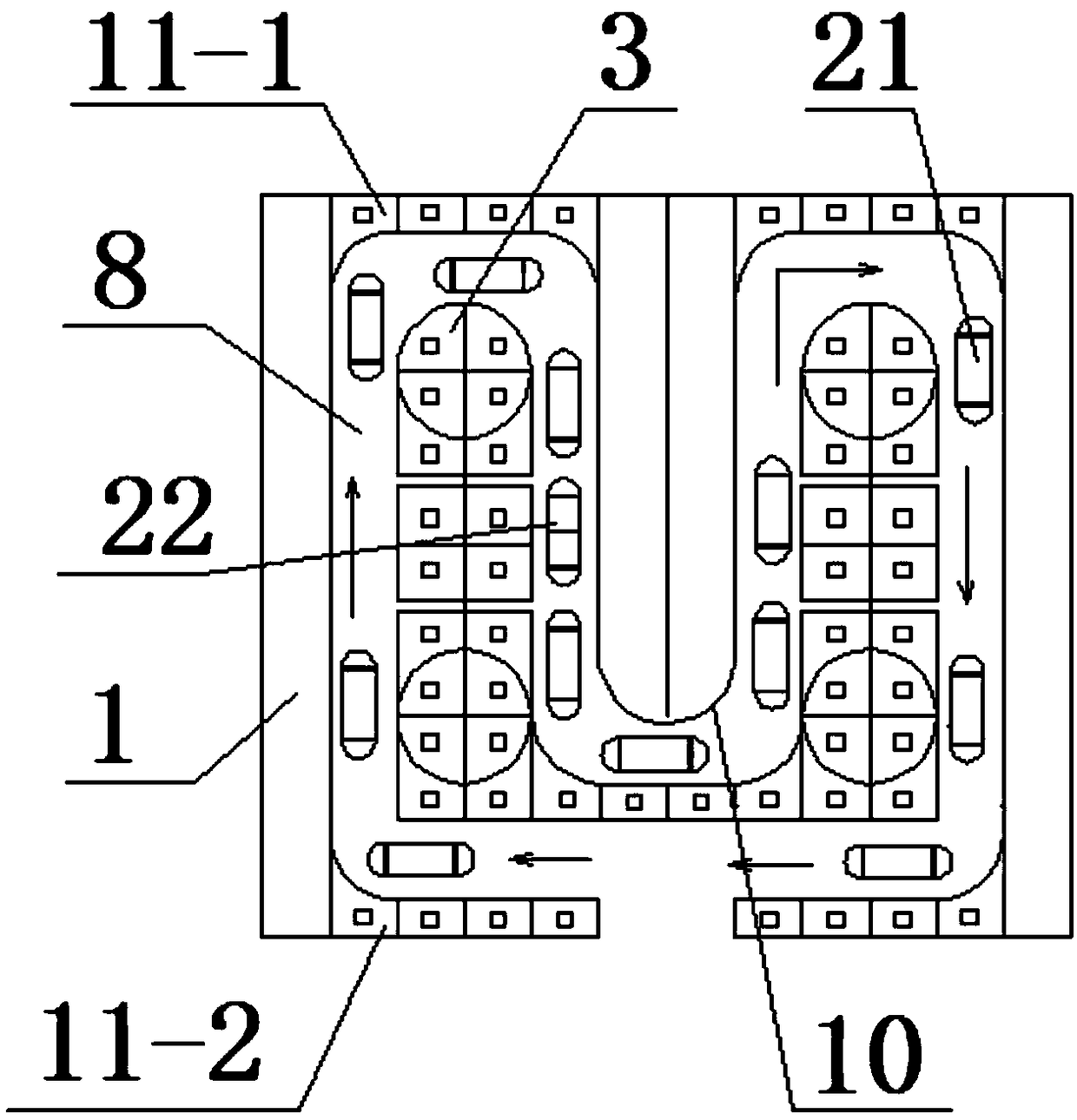

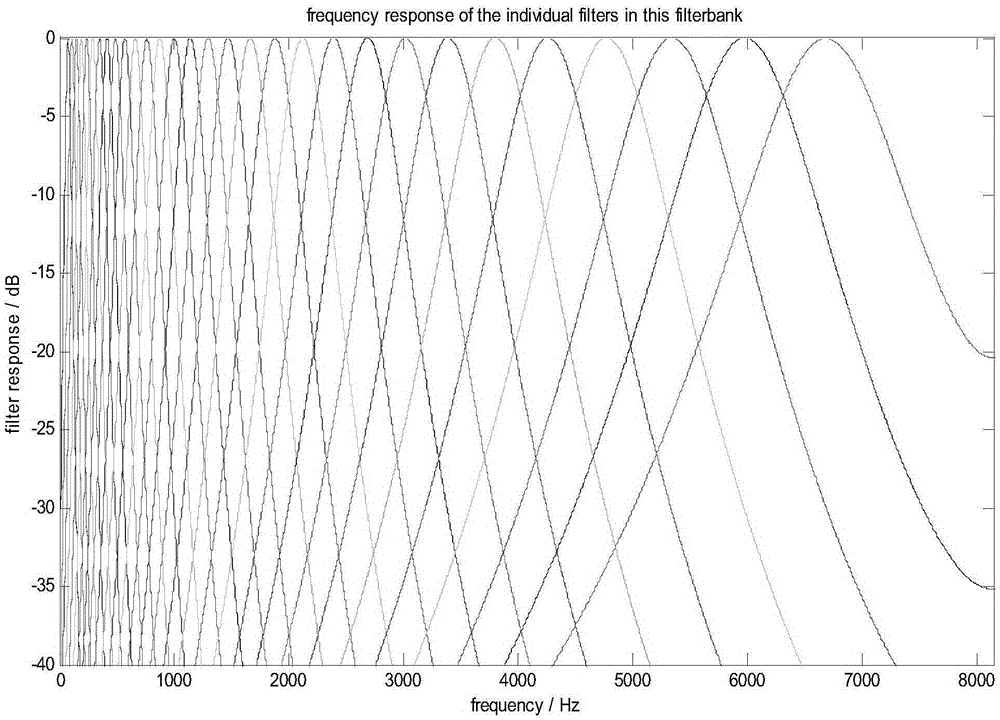

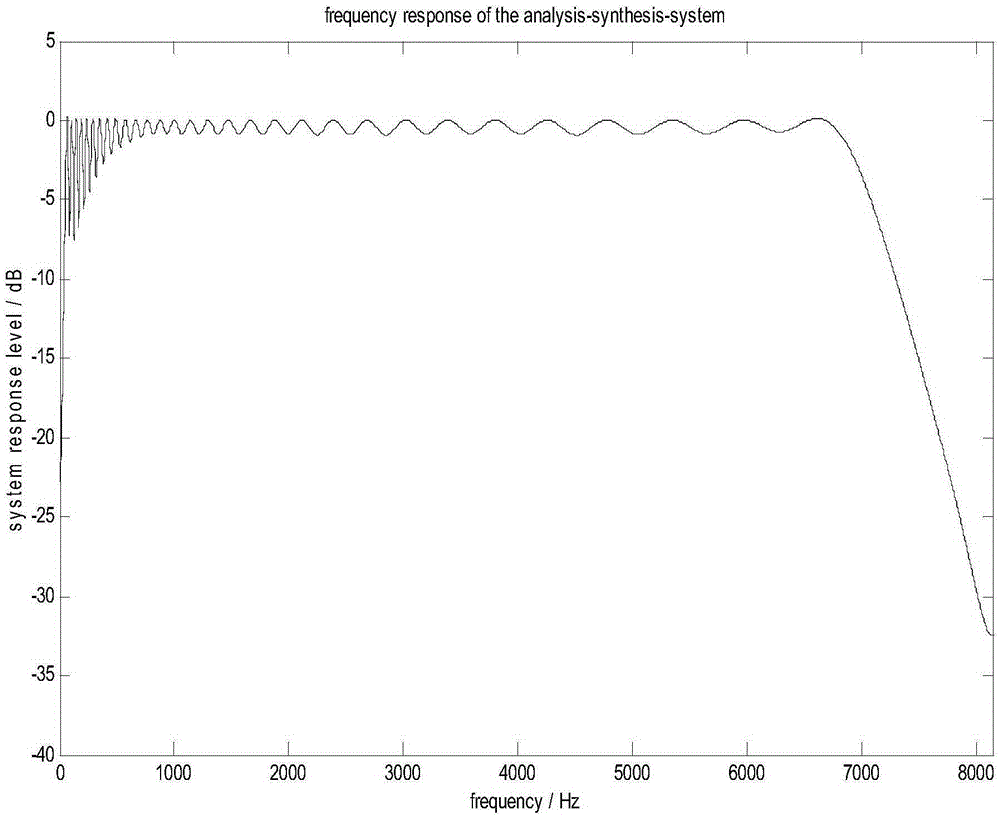

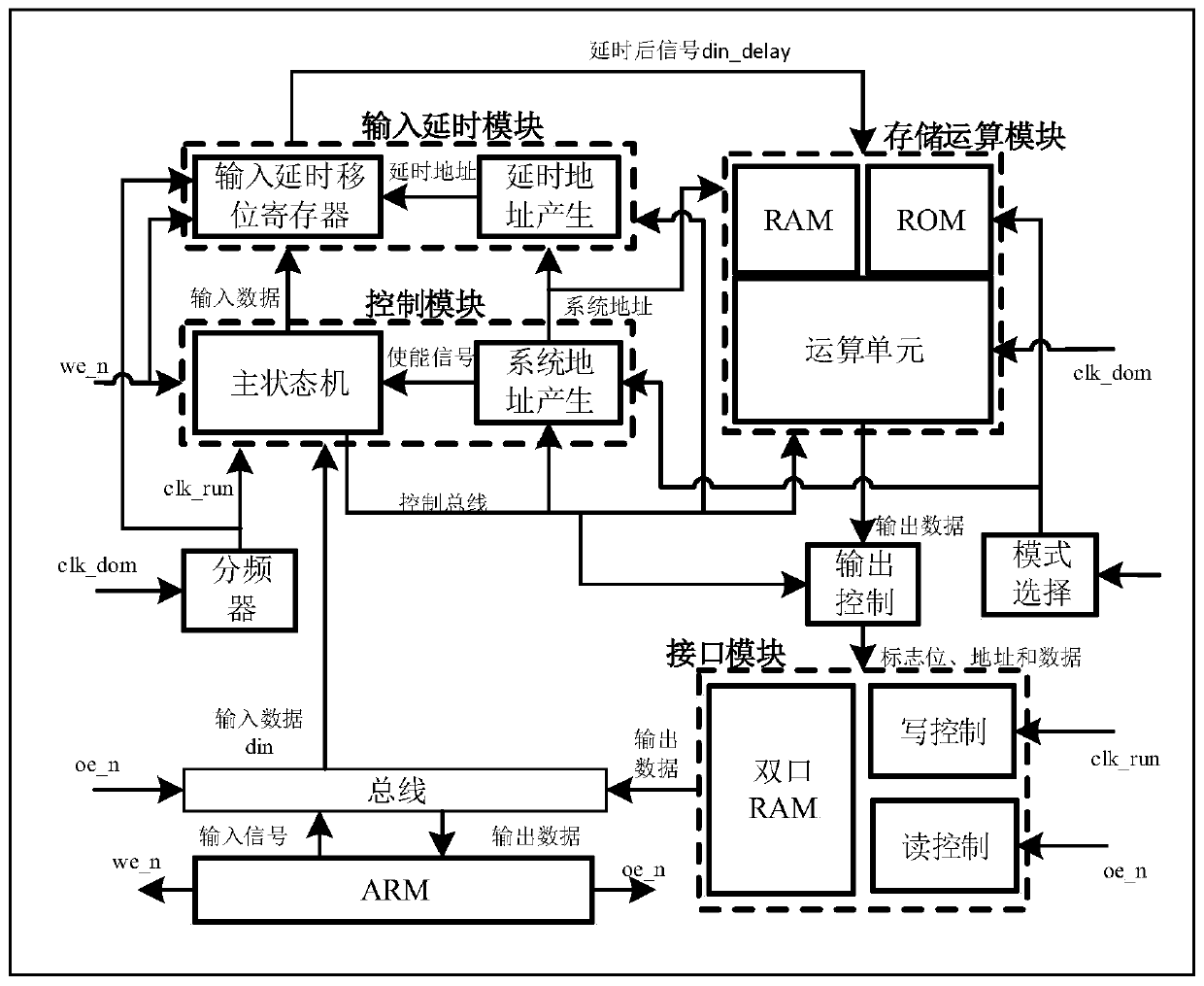

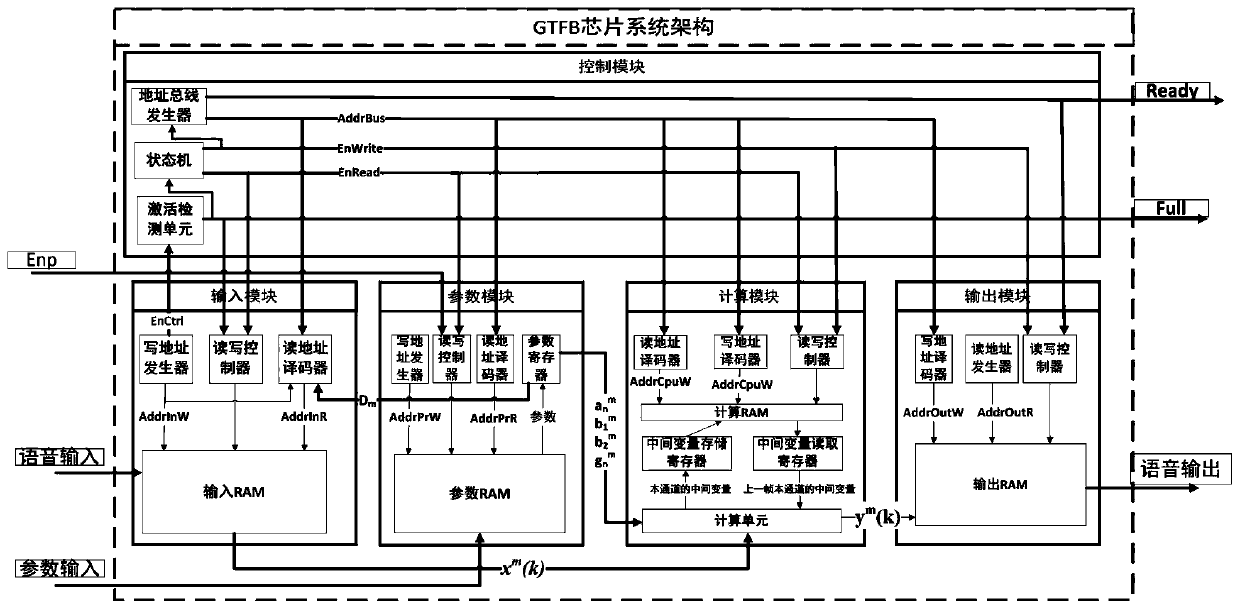

Gammatone filter bank chip system supporting voice real-time decomposition/synthesis

Active | CN106486110A | Low operating frequency; Reduce the total number of interfaces; Speech synthesis; Decomposition; Gammatone filter

The invention proposes a Gammatone filter bank chip system that supports real-time speech decomposition/synthesis, and belongs to the field of digital circuit design. The system consists of five parts: an input module, a parameter module, a control module, a calculation module and an output module. After receiving a frame of speech data, the input module activates the control module, adjusts the delay of each channel according to the delay of the human basilar membrane in different sub-bands, and sends the speech data to the control module. The control module has the parameter module read the parameters of the corresponding channels and pass them to the calculation module. The calculation module runs the Gammatone filtering algorithm for each channel and stores the result in the output module. Once the calculation module has processed all channels of the frame, the output module allows the stored data to be read externally. The system reduces the number of clock cycles consumed per channel and lowers power consumption. Its parameters are configurable and can be adjusted flexibly as needed. Both speech decomposition and synthesis are supported.

Owner:TSINGHUA UNIV
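
One way to model the per-channel delay is the peak of the gammatone envelope $t^{n-1}e^{-2\pi b t}$: setting its derivative to zero gives $t = (n-1)/(2\pi b)$, so low-frequency (narrower) channels peak later. The NumPy sketch below uses that as an assumed delay model, with an assumed ERB-based bandwidth; the abstract does not say how the chip derives its delays.

```python
import numpy as np


def envelope_peak_samples(fc, fs, n=4):
    """Delay (samples) at which the gammatone envelope t**(n-1)*exp(-2*pi*b*t) peaks.

    Setting its derivative to zero gives t = (n - 1) / (2*pi*b); b = 1.019*ERB(fc)
    is an assumed bandwidth.
    """
    b = 1.019 * 24.7 * (4.37 * fc / 1000.0 + 1.0)
    return (n - 1) / (2 * np.pi * b) * fs


def alignment_delays(centers, fs, n=4):
    """Extra integer delay per channel so that all envelope peaks line up."""
    d = np.array([envelope_peak_samples(fc, fs, n) for fc in centers])
    return np.round(d.max() - d).astype(int)


print(alignment_delays([250, 1000, 4000], 16000))
```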

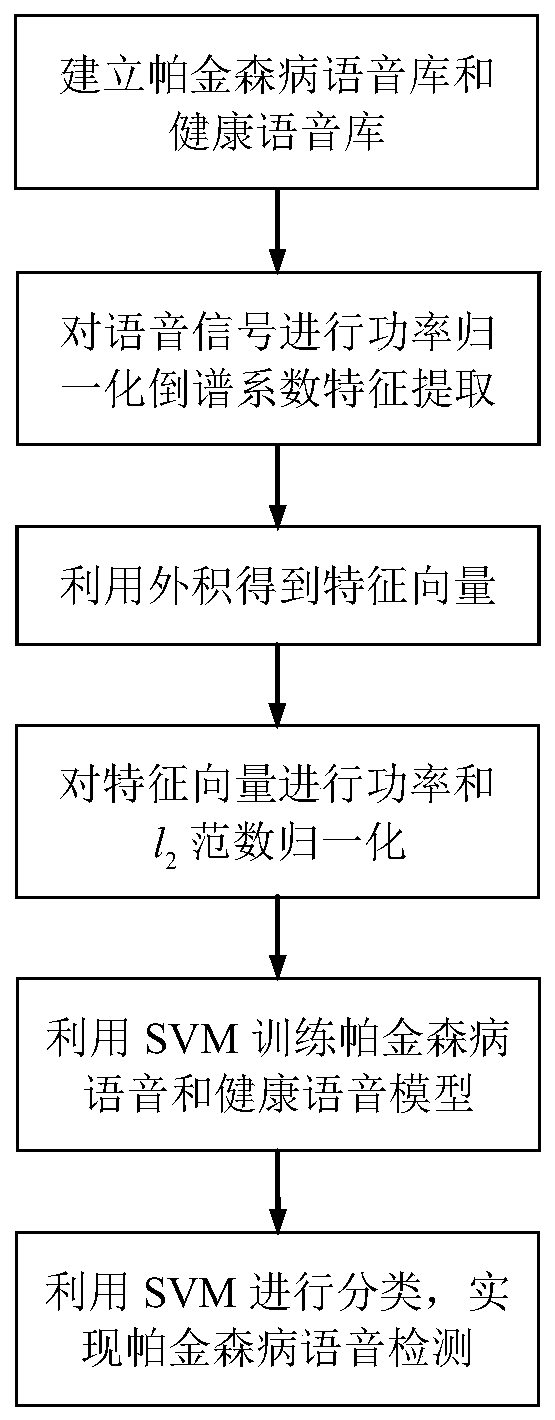

Parkinson's disease speech detection method based on characteristics of power normalized cepstrum coefficients

The invention discloses a Parkinson's disease speech detection method based on power normalized cepstral coefficient features, which addresses the susceptibility of Parkinson's disease speech detection to noise. The robustness of the extracted features is enhanced through a Gammatone filter, noise removal and power normalization. The method comprises the following steps: (1) building a Parkinson's disease speech library and a healthy speech library; (2) extracting power normalized cepstral coefficient features from the speech signal: the signal is first preprocessed, then filtered with the Gammatone filter to obtain the short-time power spectrum, which is weighted and smoothed before the power normalized cepstral coefficients are computed; (3) obtaining feature vectors with the outer product; (4) applying power normalization and ℓ2-norm normalization to the feature vectors; (5) training a Parkinson's disease speech model and a healthy speech model with an SVM; and (6) classifying with the SVM to detect Parkinson's disease speech.

Owner:JILIN UNIV
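
Steps (3) and (4) can be sketched as follows, assuming NumPy. The abstract does not say which vectors enter the outer product or which power is used, so pairing a mean PNCC vector with itself and a signed square root (alpha = 0.5) are hypothetical choices.

```python
import numpy as np


def outer_product_feature(u, v):
    """Flattened outer product of two feature vectors (which vectors are paired is assumed)."""
    return np.outer(u, v).ravel()


def power_l2_normalize(x, alpha=0.5):
    """Signed power normalisation (alpha assumed) followed by l2-norm normalisation."""
    y = np.sign(x) * np.abs(x) ** alpha
    norm = np.linalg.norm(y)
    return y / norm if norm > 0 else y


# hypothetical: pair the mean PNCC vector of an utterance with itself
pncc_mean = np.array([1.0, -2.0, 0.5])
feature = power_l2_normalize(outer_product_feature(pncc_mean, pncc_mean))
print(feature.shape)  # (9,)
```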

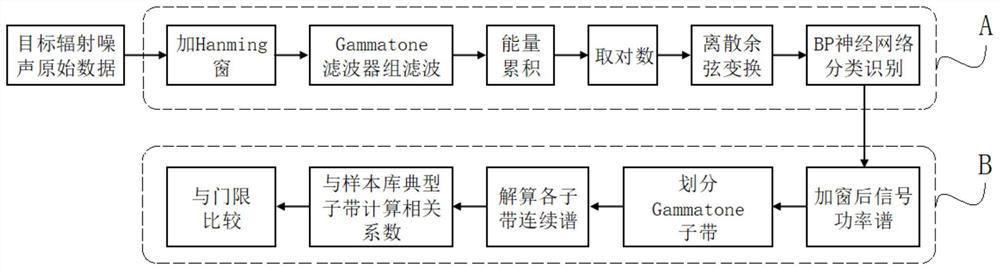

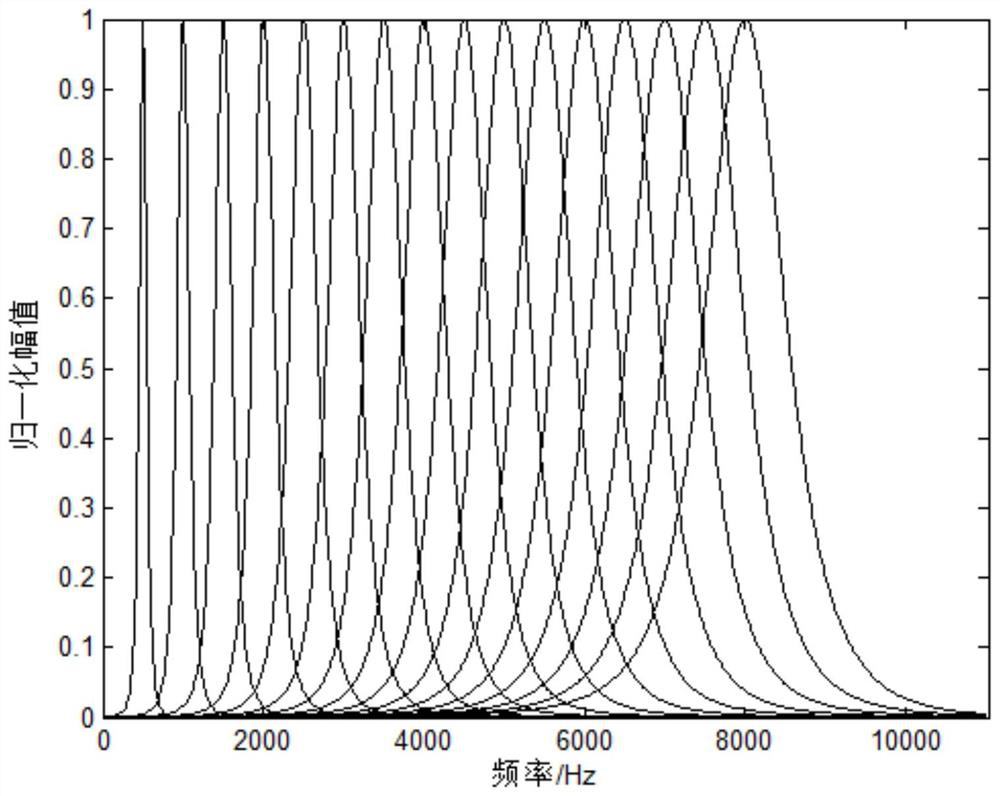

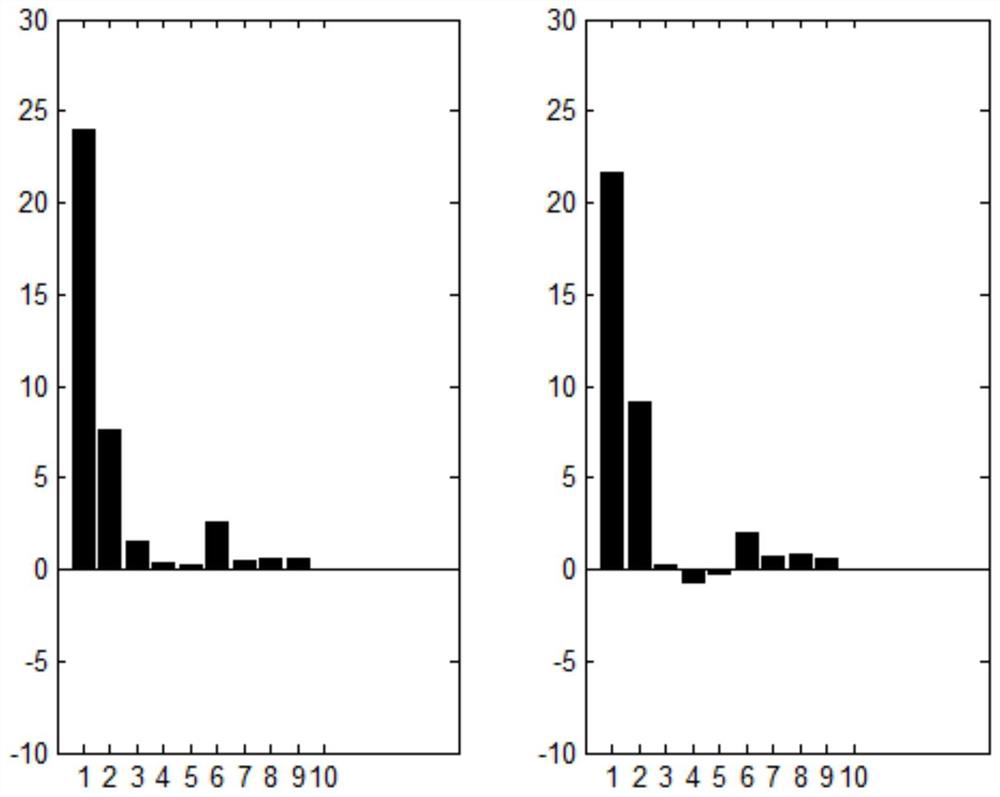

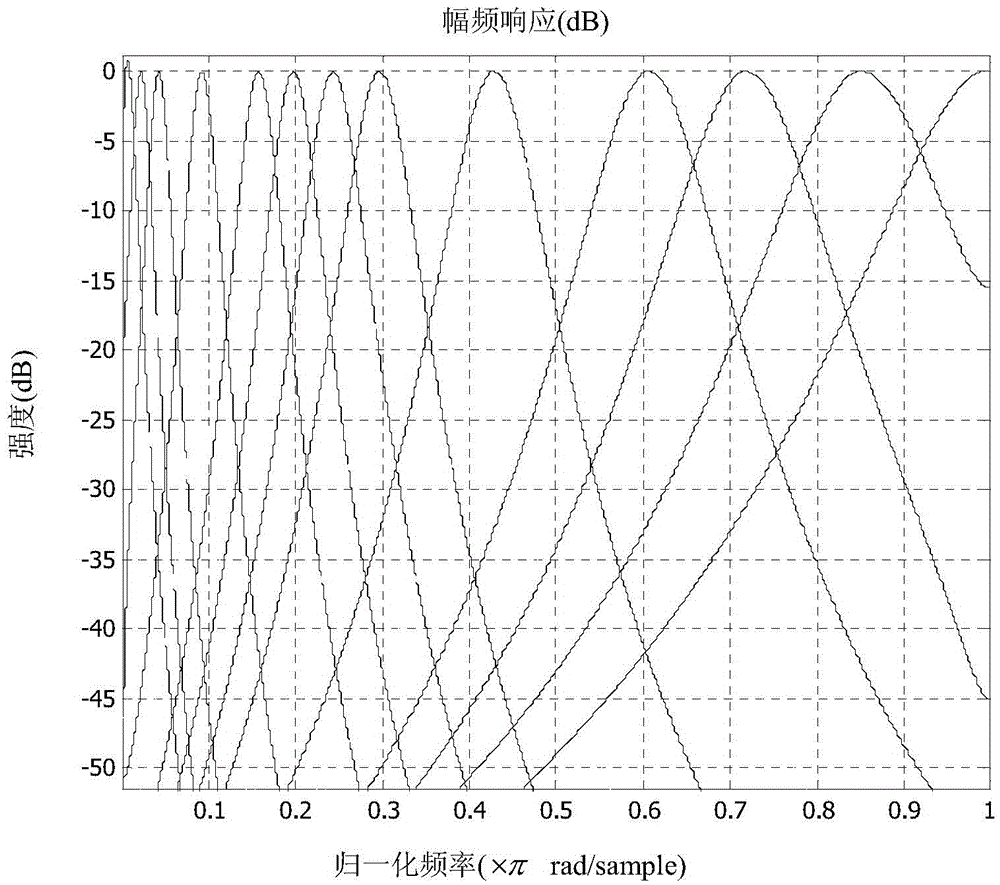

Target identification method based on continuous spectral characteristics of Gammatone frequency band

Active | CN112086105A | Realize precise identification; Improve the correct recognition rate; Speech analysis; Gammatone filter; Center frequency

The invention discloses a target identification method based on the continuous spectrum features of Gammatone frequency bands. First, the original target radiated noise data are windowed with a Hamming window (the corresponding window function is constructed), and a fast Fourier transform is applied to the windowed signal. Next, the number of filters in the Gammatone filter bank is determined, the center frequencies of the filters are placed at equal spacing across the original signal band, the impulse responses of the Gammatone filter bank are computed, fast Fourier transformed and normalized, and the corresponding Gammatone filter bank response function is constructed. Compared with conventional continuous-spectrum feature extraction, classification and identification methods, this method first performs a preliminary identification and then estimates the continuous spectrum within sub-bands; it can extract a stable sub-band continuous spectrum as a typical sub-band sample and estimates the full-band continuous spectrum more precisely, which increases the correct identification rate for target radiated noise.

Owner:750 TEST SITE OF CHINA SHIPBUILDING IND CORP
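
The filter-bank construction steps (equally spaced center frequencies, impulse responses, FFT, normalization) and their application to one Hamming-windowed frame can be sketched as below. This assumes NumPy; the filter count, FFT size, band edges, filter order and ERB-based bandwidth are illustrative choices, and the later continuous-spectrum estimation is not shown.

```python
import numpy as np


def gammatone_bank_response(n_filters, fs, n_fft, f_lo, f_hi, order=4, duration=0.064):
    """Peak-normalised magnitude responses (n_filters, n_fft//2 + 1) of a gammatone
    bank whose centre frequencies are equally spaced between f_lo and f_hi."""
    centers = np.linspace(f_lo, f_hi, n_filters)
    t = np.arange(int(round(duration * fs))) / fs
    responses = []
    for fc in centers:
        b = 1.019 * 24.7 * (4.37 * fc / 1000.0 + 1.0)  # assumed ERB-based bandwidth
        g = t ** (order - 1) * np.exp(-2 * np.pi * b * t) * np.cos(2 * np.pi * fc * t)
        mag = np.abs(np.fft.rfft(g, n=n_fft))           # FFT of the impulse response
        responses.append(mag / mag.max())              # normalise to unit peak
    return centers, np.array(responses)


def subband_spectra(x, fs, n_filters=16, n_fft=1024, f_lo=100.0, f_hi=3500.0):
    """Weight the spectrum of one Hamming-windowed frame by every filter response."""
    spectrum = np.abs(np.fft.rfft(x[:n_fft] * np.hamming(n_fft)))
    centers, H = gammatone_bank_response(n_filters, fs, n_fft, f_lo, f_hi)
    return centers, H * spectrum  # one sub-band spectrum per row


rng = np.random.default_rng(0)
noise = rng.standard_normal(2048)  # stand-in for target radiated-noise samples
centers, S = subband_spectra(noise, fs=8000)
```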

A method for objective assessment of speech quality based on auditory perception characteristics

Active | CN104485114B | Improve relevance; Easy performance analysis; Speech analysis; Evaluation result; Frequency spectrum

A method for objective evaluation of speech quality based on auditory perception characteristics, characterized in that a Gammatone filter bank is added to the Bark spectrum module in the spectrum mapping for filtering. The specific steps are: 1) the reference signal and the degraded signal are processed by POLQA and then enter the core model; 2) in the core model's spectrum mapping, the Bark spectrum module adds the Gammatone filter bank for filtering, and an auditory transform is then applied so that the extracted auditory spectrum is closer to human auditory perception; 3) after the auditory transform, disturbance analysis of the degraded signal's distortion relative to the reference signal yields the objective MOS score. Compared with other methods, the present invention effectively improves the correlation between objective and subjective evaluation results.

Owner:HUNAN INST OF METROLOGY & TEST +1

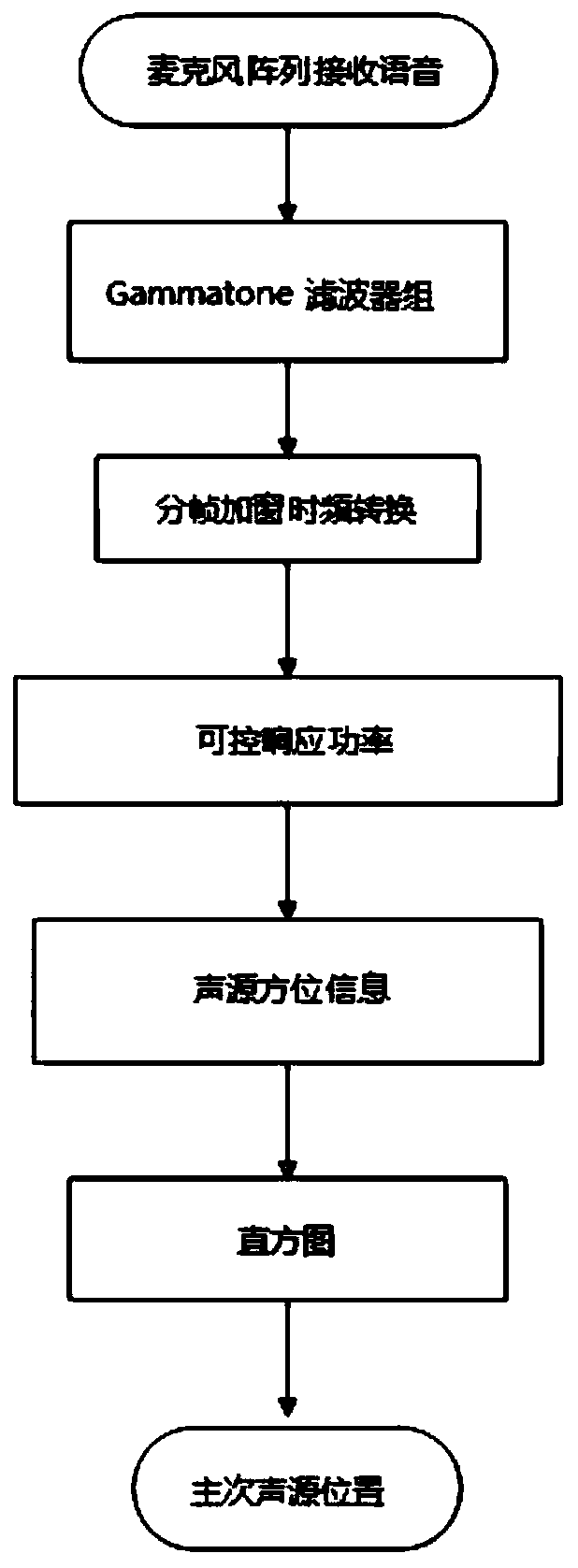

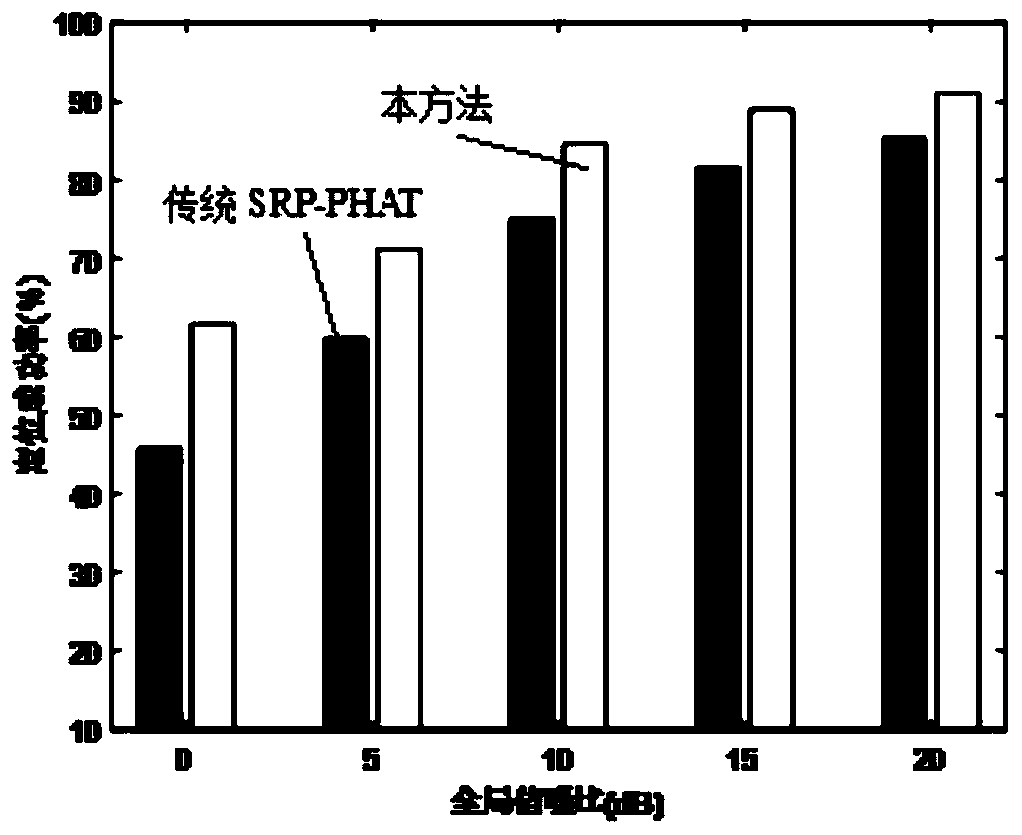

Multi-sound-source positioning method based on Gammatone filter and histogram

Active | CN110133572A | Easy to operate; Small amount of calculation; Position fixation; Beacon systems; Sound sources; Gammatone filter

Owner:NANJING INST OF TECH +1

Fault location method of capsule type floor heating device

Inactive | CN108919187A | Heating up fast; Disposable cooling radiation transfer fast; Position fixation; Time domain; Sound sources

The invention discloses a fault location method for a capsule-type floor heating device, applied to a floor heating track. The method comprises: acquiring fault sound signals and convolving them with unit impulse responses to obtain binaural signals; passing the binaural signals through a Gammatone filter to obtain frequency-divided sub-band signals; dereverberating each sub-band signal by minimum-phase decomposition; and computing the generalized cross-correlation function from the cross-correlation of the sub-bands after inverse transformation from the cepstral domain to the time domain. The method treats binaural sound localization as a multi-class classification problem: the GCCF (generalized cross-correlation function) and the interaural level difference are used as localization features and fed to DNNs topped by a softmax regression layer, which output the probability that the sound source lies in each direction; the azimuth with the maximum probability is taken as the source position. The method is simple and convenient to install and provides an innovative capsule-type floor heating device that can locate faults.

Owner:Mao Shuchun (毛述春)
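
The final decision of the softmax output layer can be sketched in a few lines of NumPy. The logits and the three candidate directions are hypothetical; the DNN that produces the logits from the GCCF and interaural level difference features is not shown.

```python
import numpy as np


def softmax(z):
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def pick_azimuth(logits, azimuths_deg):
    """Return the most probable direction and the full posterior over directions."""
    p = softmax(logits)
    return azimuths_deg[int(np.argmax(p))], p


# hypothetical network outputs for three candidate directions
azimuth, posterior = pick_azimuth([0.1, 2.0, -1.0], [0, 90, 180])
print(azimuth)  # 90
```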

Vehicle Feature Extraction Method Based on Gammatone Filter Bank

The invention discloses a vehicle model feature extraction method based on a Gammatone filter bank. It belongs to the field of pattern recognition and relates to feature extraction from vehicle radiated sound signals; specifically, it simulates the hearing characteristics of the human ear by computing cepstral coefficients of the vehicle sound signal under the Gammatone filter bank. The Gammatone filter bank simulates the nonlinear frequency resolution of the human ear, splitting the filtered vehicle sound signal into sub-band signals from which cepstral coefficients are obtained. Based on the frequency-resolution principle of human hearing, the method extracts Gammatone cepstral coefficients from the vehicle sound signal and obtains band-energy features of the original signal. The calculations involve only commonly used signal processing techniques; the principle is simple, the steps are clear, programming is straightforward, and the method is widely applicable.

Owner:NORTHWEST INST OF NUCLEAR TECH

A real-time decomposition/synthesis method of digital speech based on auditory perception characteristics

Active | CN106601249B | Easy to operate; Composite operations supported; Speech recognition; Speech synthesis; Synthesis methods; Gammatone filter

Owner:TSINGHUA UNIV

An Adaptive Hearing Compensation Method

The invention discloses a self-adaptive hearing compensation method. First, the input signal is decomposed into multiple channels with a gammatone filter bank. Then a compensation strategy is chosen based on the dynamic range of the signal in each channel and the hearing range of the hearing-impaired patient: if the channel signal remains within the patient's hearing range after linear gain, linear amplification is used to reduce distortion; otherwise, dynamic range compression is used to restore audibility. In addition, to reduce the signal distortion caused by dynamic range compression and to increase the SNR (signal-to-noise ratio) of the output signal in noisy environments, an adaptive compression method keeps the compression ratio as close to 1 as possible. Compared with existing hearing compensation methods, the compensated speech is more intelligible, and the method is highly practical.

Owner:SOUTHEAST UNIV
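The per-channel decision in the adaptive hearing compensation entry can be illustrated with levels in dB. This sketch assumes a simple rule that the abstract does not spell out: linear gain lifts the channel's quietest level to the patient's hearing threshold; if the loudest level then stays below the patient's upper comfortable level (UCL), linear amplification is kept, otherwise the smallest compression ratio that fits the channel's dynamic range into the residual range [threshold, UCL] is used, which keeps the ratio as close to 1 as possible. The function name and its parameters are hypothetical.

```python
def compensate_level(in_db, sig_min_db, sig_max_db, threshold_db, ucl_db):
    """Map one channel's input level (dB) to an output level.

    sig_min_db / sig_max_db: the channel signal's current dynamic range.
    threshold_db / ucl_db: the patient's hearing threshold and upper
    comfortable level in this channel (hypothetical inputs).
    Returns (output level in dB, mode, compression ratio).
    """
    if ucl_db <= threshold_db:
        raise ValueError("no residual hearing range in this channel")
    # Linear gain that lifts the quietest part of the channel to the threshold
    gain = max(0.0, threshold_db - sig_min_db)
    if sig_max_db + gain <= ucl_db:
        # Whole amplified range is audible and comfortable: linear amplification
        return in_db + gain, "linear", 1.0
    # Smallest ratio that squeezes the channel range into [threshold, UCL];
    # a ratio of 1 here means a pure level shift without compression
    ratio = max(1.0, (sig_max_db - sig_min_db) / (ucl_db - threshold_db))
    # Anchor the loudest level at the UCL and compress downwards from there
    return ucl_db - (sig_max_db - in_db) / ratio, "compress", ratio
```

Anchoring the loudest level at the UCL keeps every output level inside [threshold, UCL] whenever the compression branch is taken.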

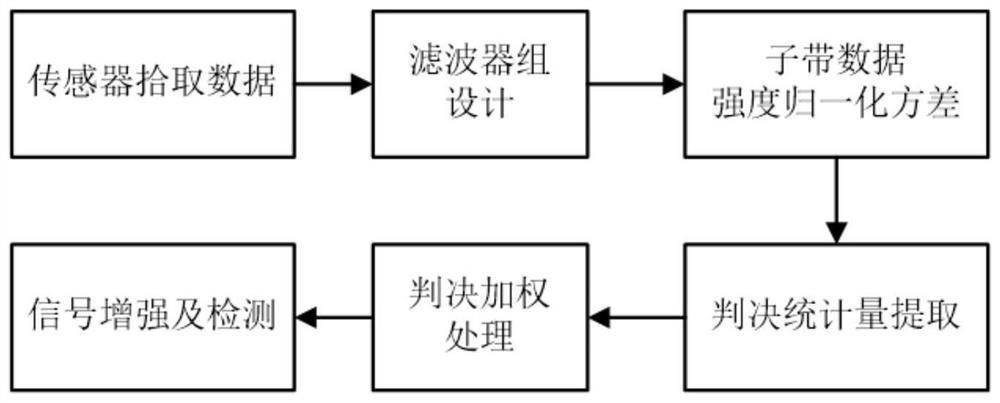

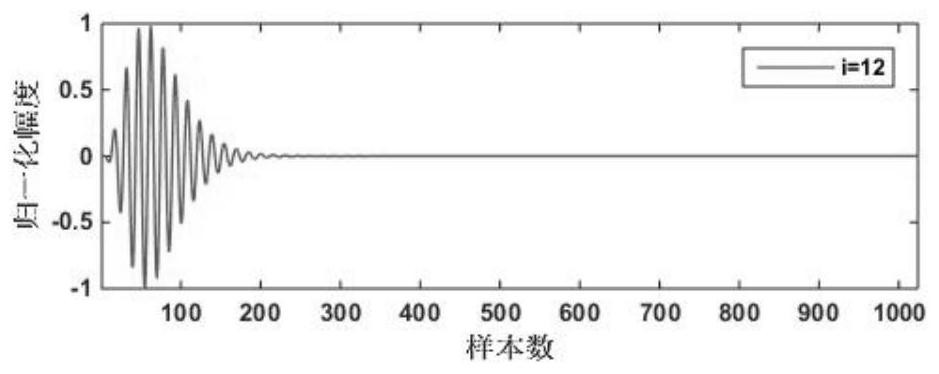

Signal detection method and system based on decision statistic design

Active | CN113238206A | Achieve enhancement | Easy to detect | Wave based measurement systems | Complex mathematical operations | Filter (signal processing) | Gammatone filter

The invention relates to sonar signal processing, in particular to a signal detection method and system based on decision statistic design. The method uses a Gammatone filter bank to split the sensor data into frequency bands; exploits the differences in intensity and stability between the signal and the background noise to apply variance normalization to the data of each band; builds a decision statistic; and applies it to the data of each band to achieve signal enhancement and detection. Without having to search for a single best filtering band, the method improves the signal-to-noise ratio of the combined data and achieves signal enhancement and detection.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
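For the sonar detection entry, per-band variance normalization followed by a combined decision statistic might look like the sketch below. Assumptions, not taken from the patent: the band signals are already the outputs of a Gammatone filter bank, each band is normalized by the median and standard deviation of its frame energies, the statistic is the normalized energies summed over bands and scaled by 1/sqrt(number of bands), and the threshold of 3 is arbitrary. Function names are hypothetical.

```python
import math
import statistics


def frame_energies(x, frame_len):
    # Mean power of consecutive non-overlapping frames
    return [sum(v * v for v in x[i:i + frame_len]) / frame_len
            for i in range(0, len(x) - frame_len + 1, frame_len)]


def decision_statistic(band_signals, frame_len):
    """band_signals: per-band sample lists, e.g. Gammatone filter-bank outputs.
    Returns one combined statistic per frame."""
    normalised = []
    for x in band_signals:
        e = frame_energies(x, frame_len)
        centre = statistics.median(e)            # robust background level
        spread = statistics.pstdev(e) or 1.0     # band-specific fluctuation
        normalised.append([(v - centre) / spread for v in e])
    # Combine all bands frame by frame instead of searching for one best band
    n_frames = min(len(b) for b in normalised)
    scale = math.sqrt(len(normalised))
    return [sum(b[t] for b in normalised) / scale for t in range(n_frames)]


def detect(stat, threshold=3.0):
    # Frames whose combined statistic exceeds the (arbitrary) threshold
    return [t for t, s in enumerate(stat) if s > threshold]
```

Normalizing each band before summing keeps a loud but noisy band from dominating, which is one plausible way to avoid selecting a single filtering band.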

A binaural sound source localization method based on deep learning in digital hearing aids

Active | CN108122559B | Improve learning ability | Narrow down your targeting | Sets with desired directivity | Speech analysis | Interaural time difference | Sound sources

The invention discloses a deep-learning-based binaural sound source localization method for digital hearing aids. First, the binaural sound source signals are decomposed into several channels by a gammatone filter bank, and high-energy channels are selected using weighting coefficients. Then a first set of features derived from the head-related transfer function (HRTF), namely the interaural time difference (ITD) and the interaural intensity difference (IID), is used as input to deep learning, which assigns the source to one of four quadrants of the horizontal plane to narrow the search range. Next, a second set of head-related features is extracted: the interaural level difference (ILD) and the interaural phase difference (IPD). Finally, for more accurate localization, all four features from the first and second sets are used as input to a second deep learning stage, which yields the azimuth of the sound source. The method precisely localizes 72 azimuths covering the full 0° to 360° horizontal plane in 5° steps.

Owner:BEIJING UNIV OF TECH
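The first-stage binaural cues in the hearing-aid localization entry, ITD and IID for one gammatone channel pair, can be estimated as in the sketch below. It is hedged: ITD is taken as the lag of the cross-correlation peak within an assumed ±1 ms range and IID as the left/right energy ratio in dB, both common textbook estimators rather than the patent's stated method; the 72-azimuth grid and the quadrant filter only illustrate the 5° search space described in the abstract. All names are hypothetical.

```python
import math

AZIMUTHS = list(range(0, 360, 5))  # 72 candidate azimuths in 5-degree steps


def itd_iid(left, right, fs, max_itd_s=0.001):
    """ITD (s) from the cross-correlation peak and IID (dB) from the energy
    ratio, for one pair of same-channel gammatone outputs."""
    n = len(left)
    max_lag = int(max_itd_s * fs)
    best_lag, best_corr = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Positive lag: the right-ear signal lags the left (source nearer the left ear)
        c = sum(left[i] * right[i + lag]
                for i in range(max(0, -lag), min(n, n - lag)))
        if c > best_corr:
            best_lag, best_corr = lag, c
    e_left = sum(v * v for v in left) + 1e-12
    e_right = sum(v * v for v in right) + 1e-12
    return best_lag / fs, 10 * math.log10(e_left / e_right)


def candidates(quadrant):
    """Azimuths left after a first-stage quadrant decision (quadrant 0..3)."""
    return [a for a in AZIMUTHS if a // 90 == quadrant]
```

A quadrant decision cuts the second stage's search from 72 to 18 candidate azimuths.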

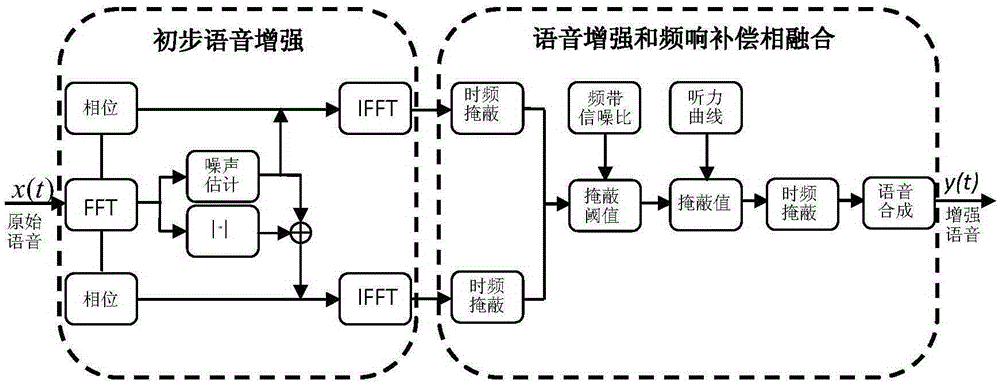

Speech Enhancement and Frequency Response Compensation Fusion Method in Digital Hearing Aid

Active | CN103778920B | Easy to implement | Improve intelligibility | Speech analysis | Gammatone filter | Pattern perception

The invention provides a method that fuses speech enhancement and frequency response compensation in a digital hearing aid. The method comprises the steps of: (1) obtaining the estimated noise and an initial enhanced speech signal with the MCRA (minima controlled recursive averaging) method, filtering both through a gammatone filter bank that divides the signal into M frequency bands following the cochlea's perception mechanism, and thereby obtaining a time-frequency representation of the signal; (2) computing the masking threshold of each frequency band from factors such as the auditory masking characteristics of the human ear and the per-band signal-to-noise ratio; (3) dynamically computing masking values for the noisy speech in the time-frequency domain from the hearing curve of the hearing-impaired patient, so that speech enhancement and frequency response compensation are performed at the same time; and (4) synthesizing the output speech of the hearing aid from the masking values. The method makes full use of the working mechanism of the human ear, preserves speech characteristics, eliminates the musical noise introduced by spectral subtraction, greatly improves the speech intelligibility of the hearing aid output, and achieves low complexity and low power consumption.

Owner:Hunan Miluo Circular Economy Industrial Park Science and Technology Innovation Service Center (湖南汨罗循环经济产业园区科技创新服务中心)
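Steps (2) to (4) of the hearing-aid fusion entry can be illustrated with a gain per time-frequency unit. The rule here is an assumption, a masking-based variant of Wiener suppression rather than the patent's formula: suppress noise only as far as needed to push the residual below the masking threshold (and never below the Wiener gain), then apply the patient's hearing-loss gain for the band, as read from the hearing curve. Powers are linear, gains are amplitude factors, and the function names are hypothetical.

```python
import math


def tf_gain(speech_pow, noise_pow, mask_thr_pow, hl_gain_db):
    """Amplitude gain for one time-frequency unit (all powers linear)."""
    eps = 1e-12
    # Wiener-style suppression from the speech and noise estimates
    wiener = speech_pow / (speech_pow + noise_pow + eps)
    # Gain at which the residual noise power G^2 * N just reaches the masking threshold
    masking_floor = math.sqrt(mask_thr_pow / (noise_pow + eps))
    # Never suppress more than needed for the noise to be masked, never amplify here
    suppression = min(1.0, max(wiener, masking_floor))
    # Frequency response compensation: the patient's hearing-loss gain for this band
    return suppression * 10 ** (hl_gain_db / 20.0)


def gain_matrix(speech, noise, thresholds, hl_gains_db):
    """speech, noise, thresholds: [band][frame] power estimates;
    hl_gains_db: one hearing-loss gain (dB) per band."""
    return [[tf_gain(s, n, t, hl_gains_db[b])
             for s, n, t in zip(speech[b], noise[b], thresholds[b])]
            for b in range(len(speech))]
```

Leaving noise that is already masked untouched, instead of removing it, is the usual motivation for masking-based suppression as a way to limit musical noise.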

Dual-channel acoustic signal-based sound source localization method

Inactive | CN102438189B | Frequency/directions obtaining arrangements | Interaural time difference | Sound sources

The invention relates to an improved sound source localization method based on dual-channel acoustic signals. The mean and variance of the interaural time difference (ITD) and the interaural intensity difference (IID) in each frequency band serve as cues for localizing the source azimuth and are used to build an azimuth mapping model. During localization, the dual-channel input signals are split into frequency bands by a Gammatone filter bank, which approximates the human auditory filters, and passed to a feature extraction module that extracts the ITD and IID of each sub-band. The sub-band ITD and IID cues are then integrated with a Gaussian mixture model (GMM), and the likelihoods of the observed ITD and IID in each frequency band for every azimuth serve as the decision values for azimuth estimation. The method achieves improved sound source localization performance.

Owner:SOUTHEAST UNIV
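The azimuth decision in the dual-channel localization entry can be sketched as a per-band Gaussian likelihood over the ITD and IID cues. As a simplification, each band and cue is modelled with a single Gaussian (its training mean and variance), a one-component special case of the GMM the abstract mentions, and the band log-likelihoods are summed. ITD is assumed to be in milliseconds and IID in dB; the data layout and function names are hypothetical.

```python
import math
import statistics


def log_gauss(x, mean, var):
    # Log of a 1-D normal density
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def fit_model(training, var_floor=1e-6):
    """training: {azimuth: [observation, ...]}, each observation a list of
    per-band (itd_ms, iid_db) pairs. Returns per-band mean/variance per azimuth."""
    model = {}
    for az, observations in training.items():
        bands = []
        for b in range(len(observations[0])):
            itds = [obs[b][0] for obs in observations]
            iids = [obs[b][1] for obs in observations]
            bands.append((statistics.fmean(itds), statistics.pvariance(itds) + var_floor,
                          statistics.fmean(iids), statistics.pvariance(iids) + var_floor))
        model[az] = bands
    return model


def localize(model, features):
    """features: per-band (itd_ms, iid_db) for the current input. Returns the
    azimuth whose summed band log-likelihood is highest."""
    def score(az):
        return sum(log_gauss(itd, m_t, v_t) + log_gauss(iid, m_i, v_i)
                   for (itd, iid), (m_t, v_t, m_i, v_i) in zip(features, model[az]))
    return max(model, key=score)
```

The variance floor keeps a band with nearly constant training cues from producing an infinite log-likelihood.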

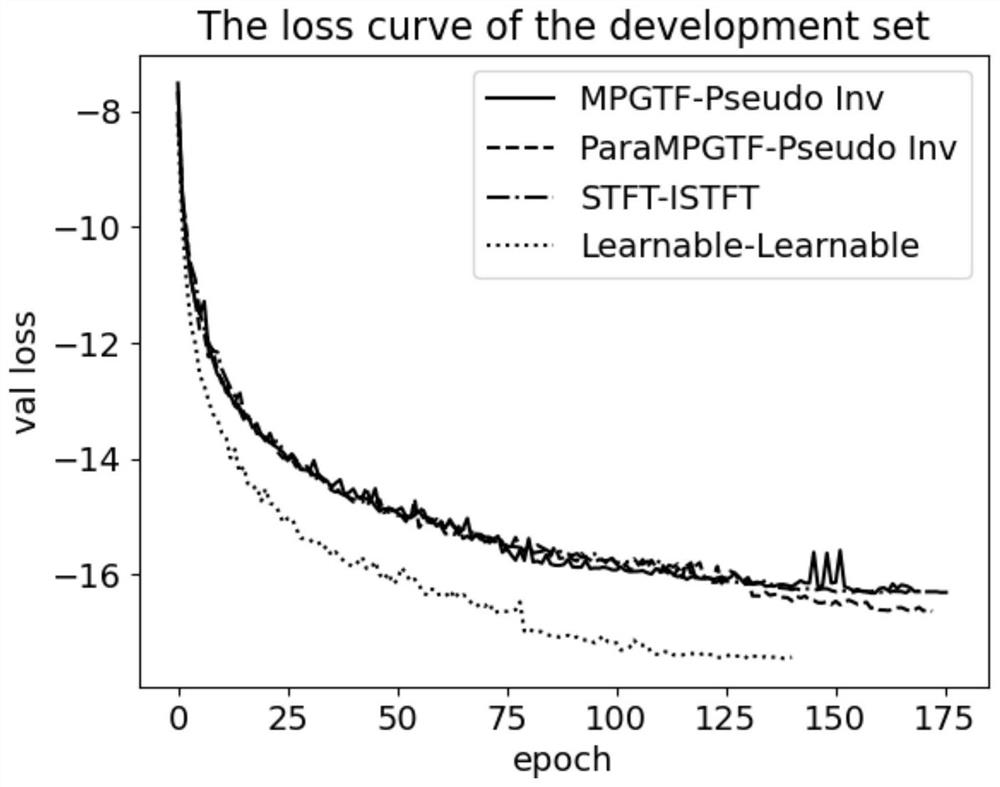

Voice separation method based on parameterized multi-phase gammatone filter bank

Active | CN113077811A | Competitive performance | Distinctive features | Speech analysis | Harmonic reduction arrangement | Encoder decoder | Gammatone filter

The invention discloses a speech separation method based on a parameterized multi-phase gammatone filter bank. First, a parameterized multi-phase gammatone filter bank is constructed from the gammatone filter. The encoder of a Conv-TasNet network is then replaced by this filter bank, while the decoder is either kept unchanged or replaced by the inverse transform of the filter bank, forming a new Conv-TasNet network that is trained to obtain the final speech separation network. With a learnable decoder, the method achieves competitive performance; with the decoder set to the inverse transform of the encoder, its features outperform hand-crafted features such as the STFT and the MPGTF (multi-phase gammatone filter bank).

Owner:NORTHWESTERN POLYTECHNICAL UNIV +1
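A multi-phase gammatone encoder of the kind described in the speech separation entry can be sketched as follows: for each (centre frequency, bandwidth) pair, several gammatone kernels with different carrier phases ϕ are built from g(t) at the top of this page, and the signal is encoded by a strided 1-D correlation followed by ReLU, as in a Conv-TasNet encoder. In a trainable version the (fc, b) pairs would be learned parameters; here they are fixed numbers, and the kernel length, number of phases and function names are assumptions rather than the patent's parameterization.

```python
import math


def gammatone_kernel(fc, b, phase, fs, length, order=4):
    # Sampled g(t) = t^(n-1) exp(-2*pi*b*t) cos(2*pi*fc*t + phase), unit L2 norm
    h = [(k / fs) ** (order - 1) * math.exp(-2 * math.pi * b * k / fs)
         * math.cos(2 * math.pi * fc * k / fs + phase) for k in range(length)]
    norm = math.sqrt(sum(v * v for v in h)) or 1.0
    return [v / norm for v in h]


def multiphase_bank(params, fs, length=32, n_phases=2):
    """params: list of (fc, b) pairs in Hz. In a trainable encoder these would be
    learnable parameters; here they are fixed. Phases are spread over [0, pi)."""
    return [gammatone_kernel(fc, b, math.pi * p / n_phases, fs, length)
            for fc, b in params for p in range(n_phases)]


def encode(x, bank, stride):
    """Strided 1-D correlation (a deep-learning 'convolution') plus ReLU,
    giving one non-negative feature row per kernel."""
    length = len(bank[0])
    starts = range(0, len(x) - length + 1, stride)
    return [[max(0.0, sum(k[j] * x[t + j] for j in range(length))) for t in starts]
            for k in bank]
```

Using several phases per centre frequency lets the non-negative ReLU outputs still capture the signal's fine-structure phase, which a single-phase bank followed by ReLU would partly discard.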

A Gammatone filter bank chip system supporting real-time speech decomposition/synthesis

Active | CN106486110B | Low operating frequency | Reduce the total number of interfaces | Speech synthesis | Decomposition | Gammatone filter

The invention proposes a Gammatone filter bank chip system supporting real-time speech decomposition/synthesis, in the field of digital circuit design. The system has five parts: an input module, a parameter module, a control module, a calculation module and an output module. After receiving a frame of speech data, the input module activates the control module, adjusts the delay of each channel according to the delay of the human basilar membrane in the corresponding sub-band, and sends the speech data to the control module. The control module has the parameter module read the parameters of the corresponding channel and pass them to the calculation module. The calculation module runs the Gammatone filtering algorithm for each channel and stores the result in the output module. Once the calculation module has processed all channels of the frame, the output module makes the stored data available for external reading. The system reduces the number of clock cycles consumed per channel and lowers power consumption. Its parameters are configurable and can be adjusted flexibly as needed. The system supports both speech decomposition and synthesis.

Owner:TSINGHUA UNIV
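The per-channel work of the chip system entry can be modelled in software as below: a recursive (IIR) approximation of a 4th-order gammatone filter that shifts the band to DC, runs four one-pole low-pass stages and shifts back, plus per-channel delays that align the envelope peaks before the channels are summed for synthesis, standing in for the basilar-membrane delay compensation. This is a floating-point sketch under assumptions (ERB bandwidths, the envelope-peak delay rule and all function names are hypothetical), not the chip's datapath.

```python
import cmath
import math


def erb(f_hz):
    # Glasberg & Moore ERB in Hz (assumed bandwidth rule)
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def gammatone_iir(x, fc, fs, order=4):
    """Recursive gammatone approximation: shift the band at fc down to DC,
    run `order` identical one-pole low-pass stages, shift back up."""
    a = math.exp(-2 * math.pi * 1.019 * erb(fc) / fs)  # pole radius per stage
    state = [0j] * order
    out = []
    for n, v in enumerate(x):
        w = cmath.exp(-2j * math.pi * fc * n / fs)
        s = v * w
        for k in range(order):
            state[k] = (1 - a) * s + a * state[k]  # unity-DC-gain one-pole stage
            s = state[k]
        # Factor 2 restores unit gain at fc (only the positive-frequency half passes)
        out.append(2 * (s * w.conjugate()).real)
    return out


def alignment_delays(fcs, fs, order=4):
    """Integer delays (samples) that line up each channel's envelope peak
    t = (order - 1) / (2*pi*b) with the slowest (lowest-frequency) channel."""
    peaks = [(order - 1) / (2 * math.pi * 1.019 * erb(fc)) * fs for fc in fcs]
    latest = max(peaks)
    return [round(latest - p) for p in peaks]


def resynthesize(channels, delays):
    """Sum delay-aligned channel outputs into one signal."""
    n = len(channels[0])
    out = [0.0] * n
    for y, d in zip(channels, delays):
        for i in range(d, n):
            out[i] += y[i - d]
    return out
```

Each channel needs only a handful of multiply-accumulates per sample, which is why recursive gammatone structures are a common choice for low-clock hardware.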

© 2025 PatSnap. All rights reserved.