186 results about "Formant" patented technology

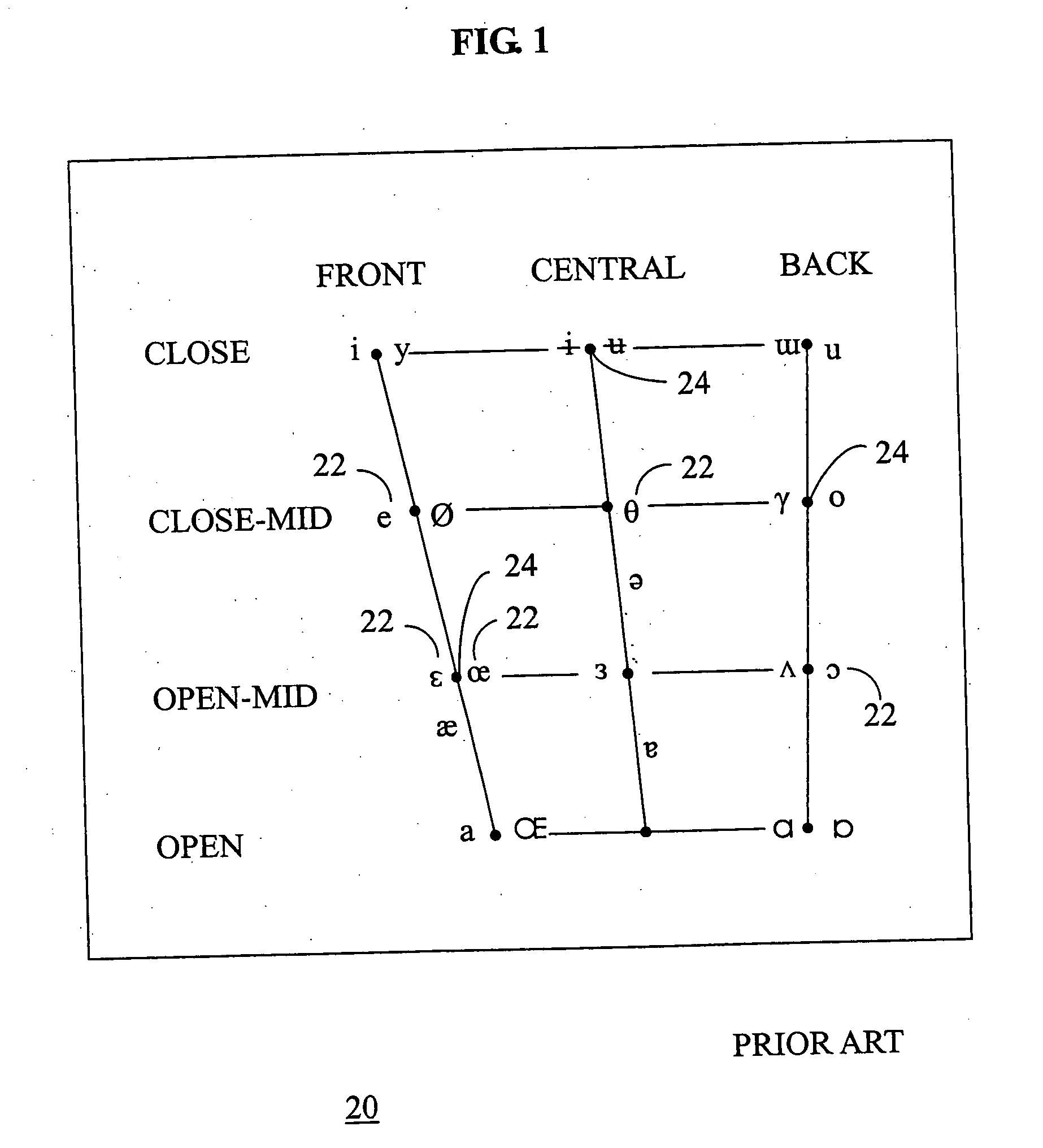

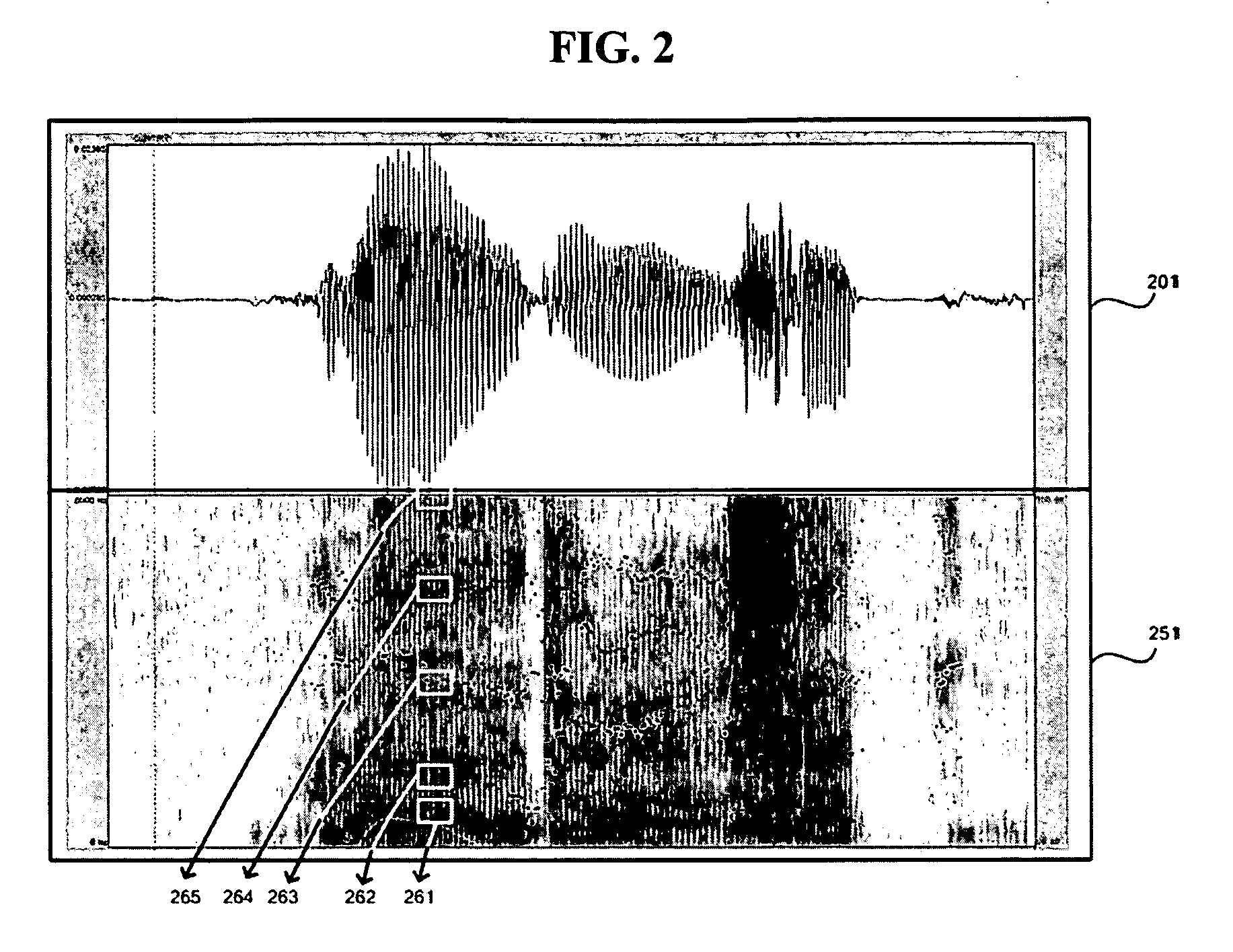

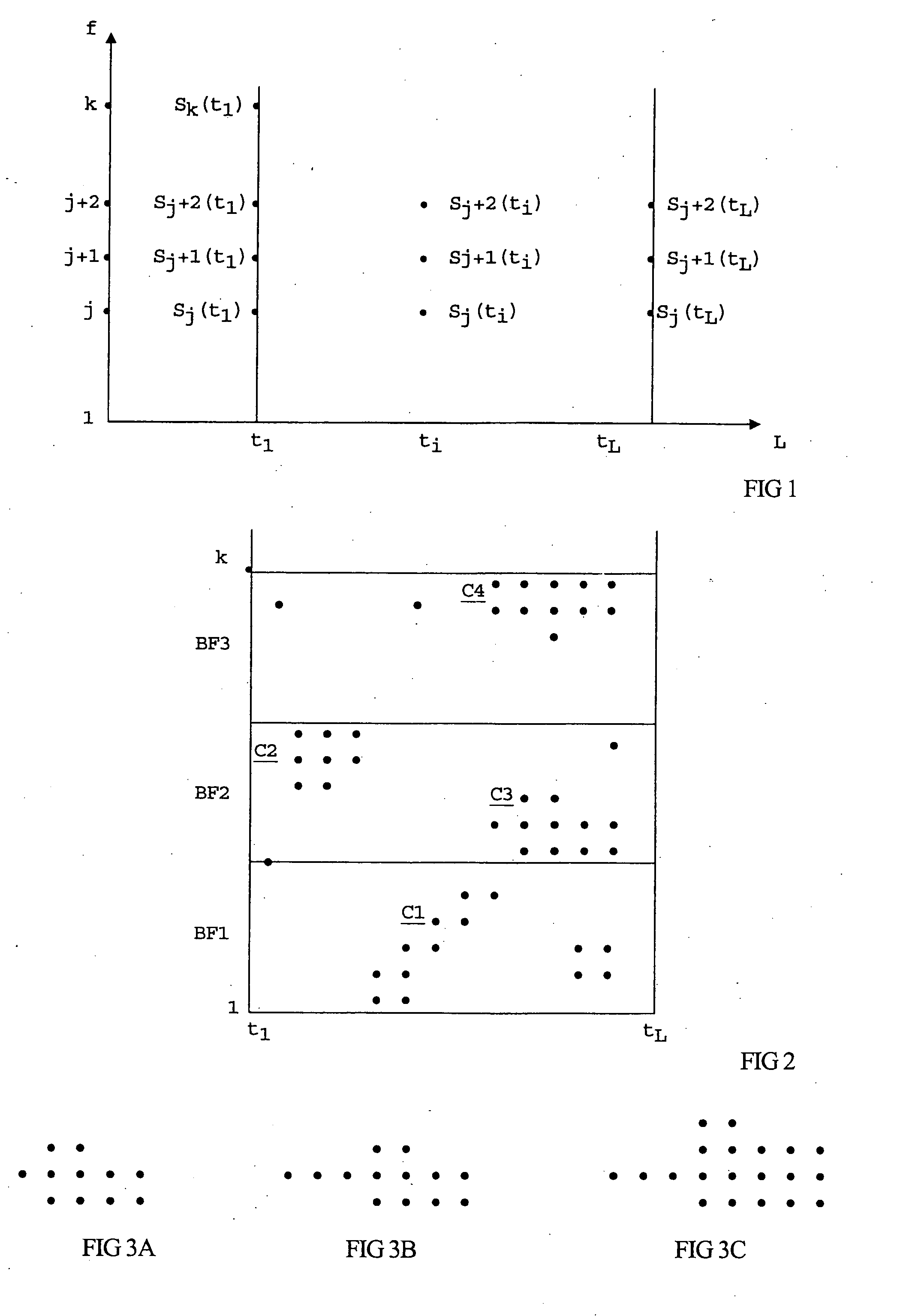

In speech science and phonetics, a formant is the spectral shaping that results from an acoustic resonance of the human vocal tract. However, in acoustics, the definition of a formant differs slightly as it is defined as a peak, or local maximum, in the spectrum. For harmonic sounds, with this definition, it is therefore the harmonic partial that is augmented by a resonance. The difference between these two definitions resides in whether "formants" characterise the production mechanisms of a sound or the produced sound itself. In practice, the frequency of a spectral peak can differ from the associated resonance frequency when, for instance, harmonics are not aligned with the resonance frequency. In most cases, this subtle difference is irrelevant and, in phonetics, formant can mean either a resonance or the spectral maximum that the resonance produces. Formants are often measured as amplitude peaks in the frequency spectrum of the sound, using a spectrogram (in the figure) or a spectrum analyzer and, in the case of the voice, this gives an estimate of the vocal tract resonances. In vowels spoken with a high fundamental frequency, as in a female or child voice, however, the frequency of the resonance may lie between the widely spaced harmonics and hence no corresponding peak is visible.

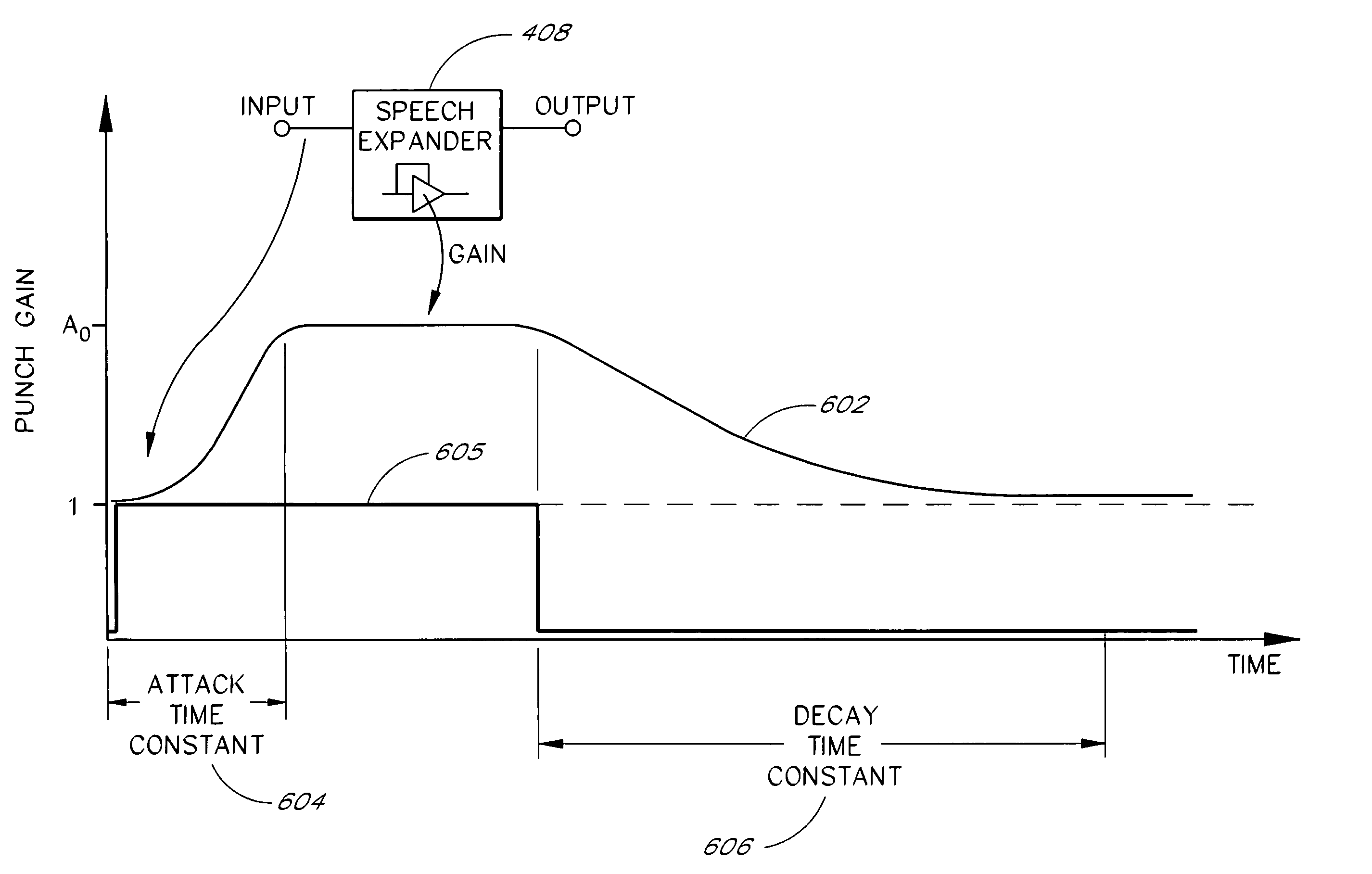

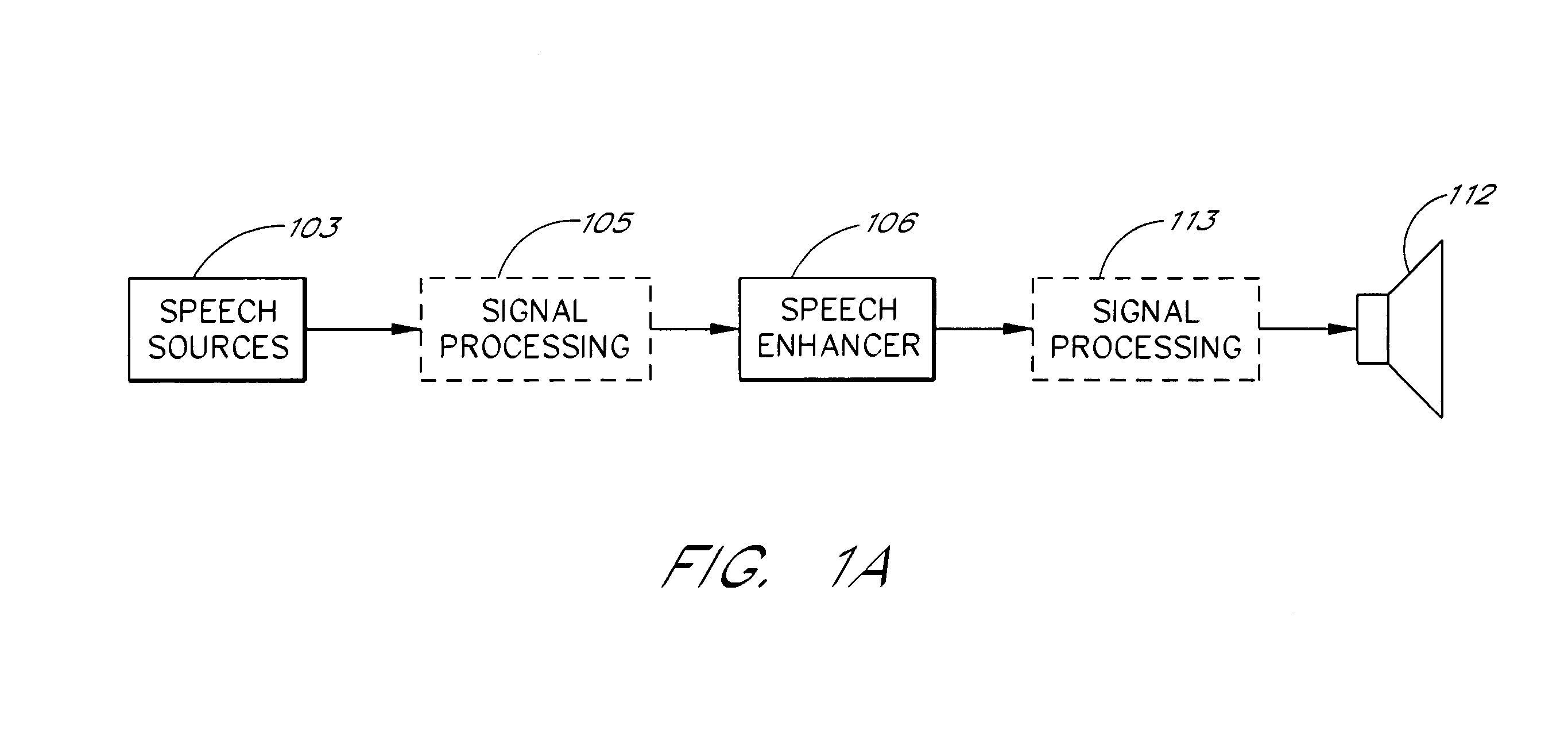

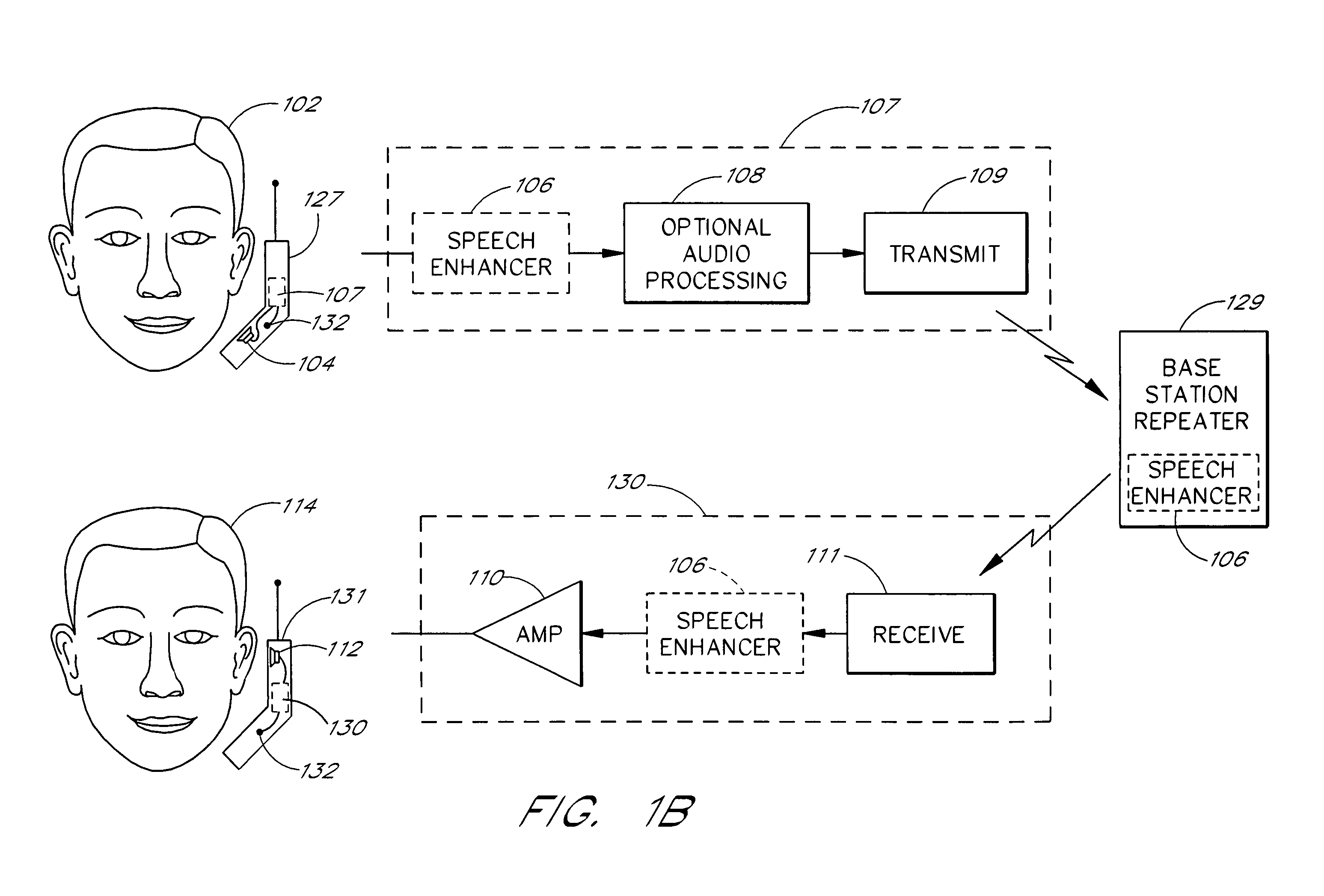

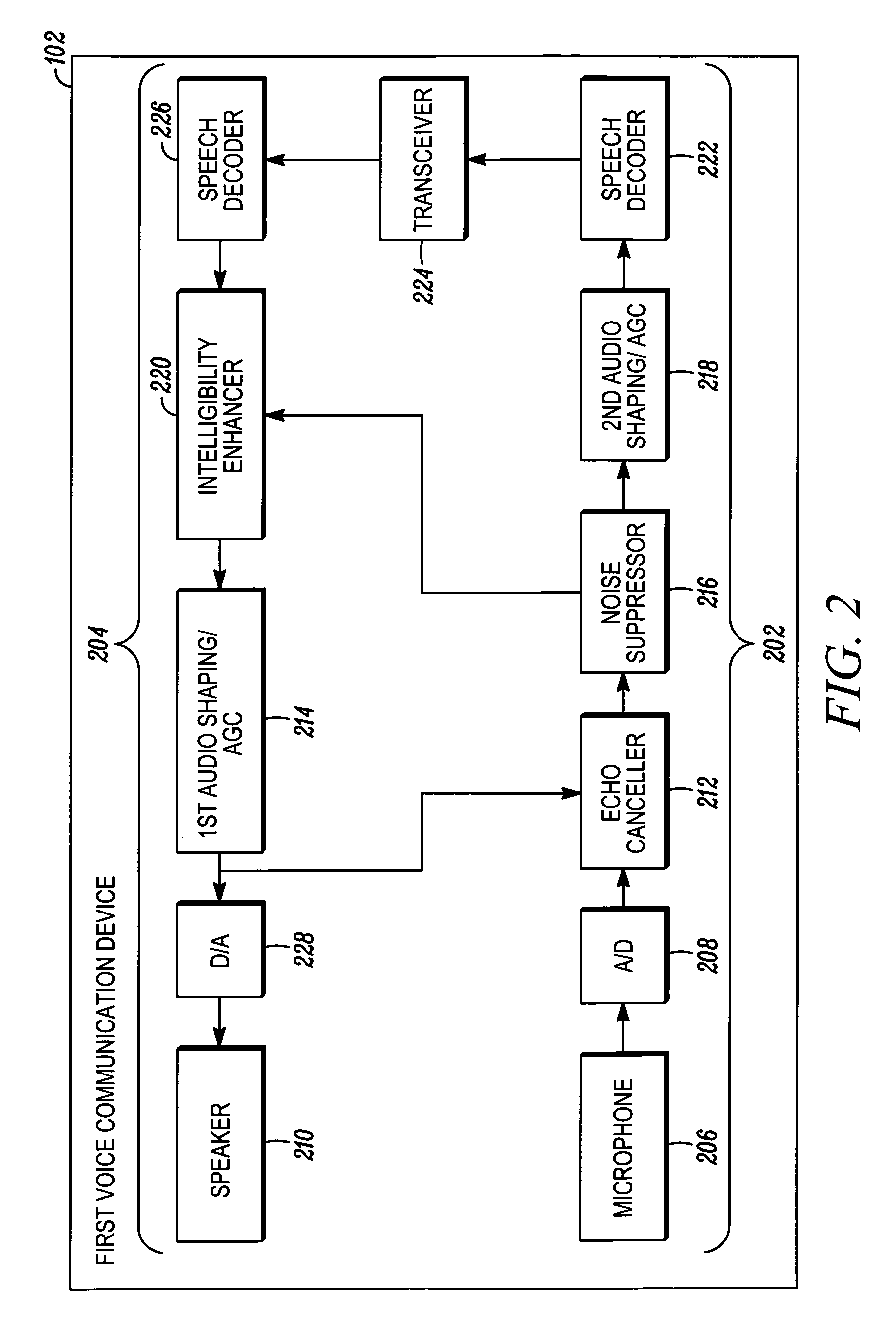

Voice intelligibility enhancement system

Inactive | US6993480B1 | Improve intelligibility | Improve speech clarity | Public address systems | Speech analysis | Environmental noise | Hearing acuity

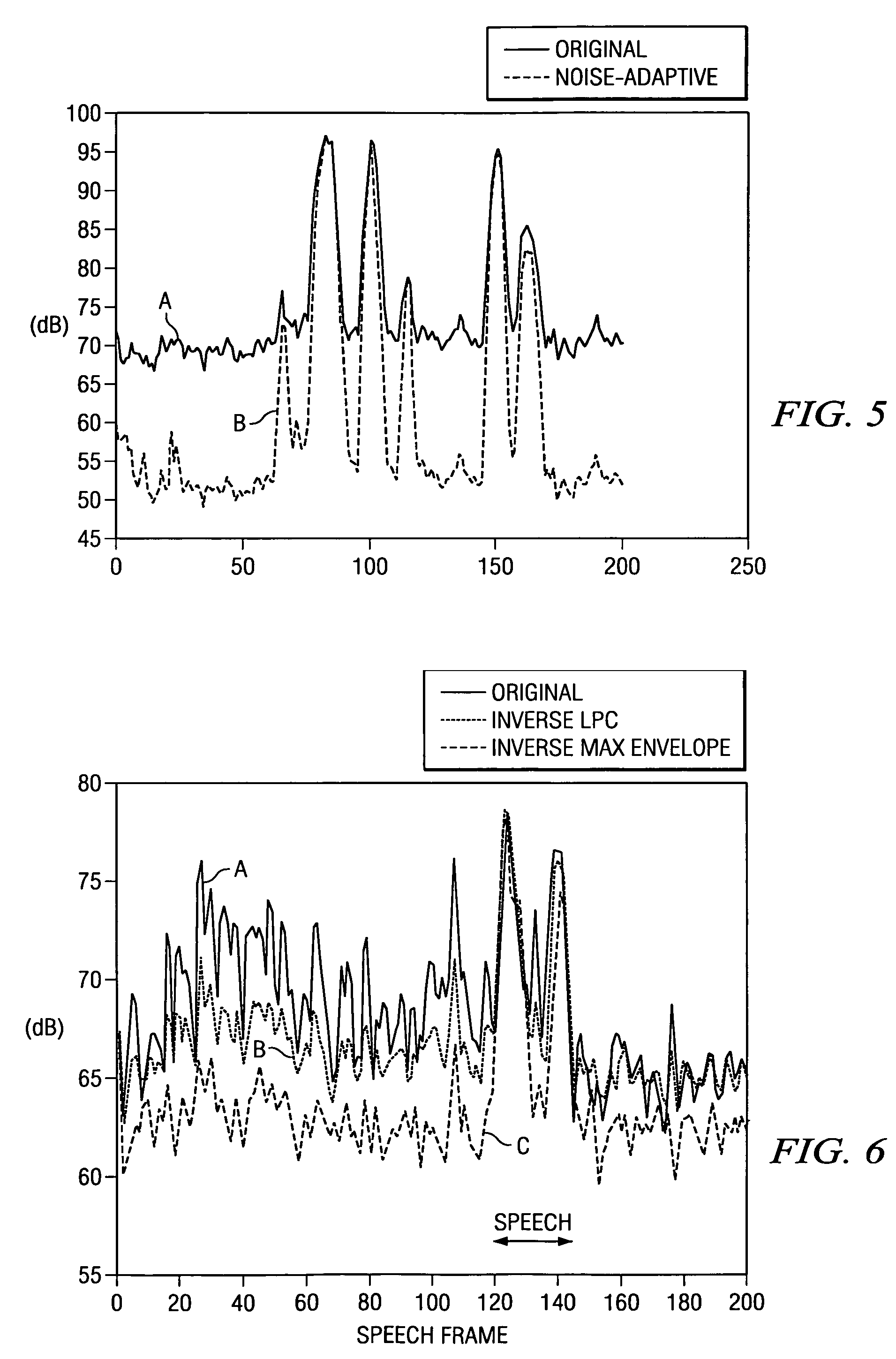

Intelligibility of a human voice projected by a loudspeaker in an environment of high ambient noise is enhanced by processing a voice signal in accordance with the frequency response characteristics of the human hearing system. Intelligibility of the human voice is derived largely from the pattern of frequency distribution of voice sounds, such as formants, as perceived by the human hearing system. Intelligibility of speech in a voice signal is enhanced by filtering and expanding the voice signal with a transfer function that approximates an inverse of equal loudness contours for tones in a frontal sound field for humans of average hearing acuity.

Owner:DTS

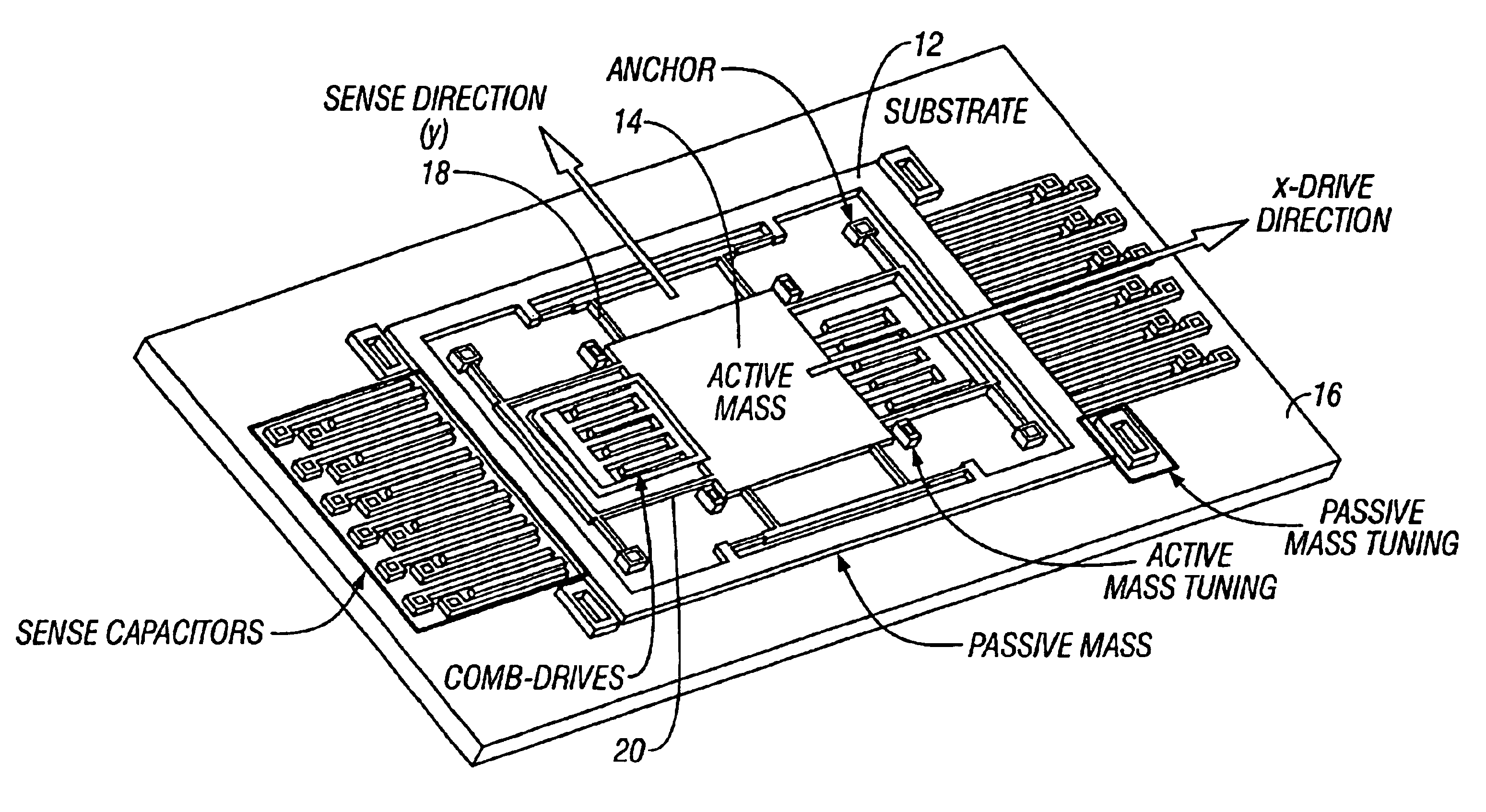

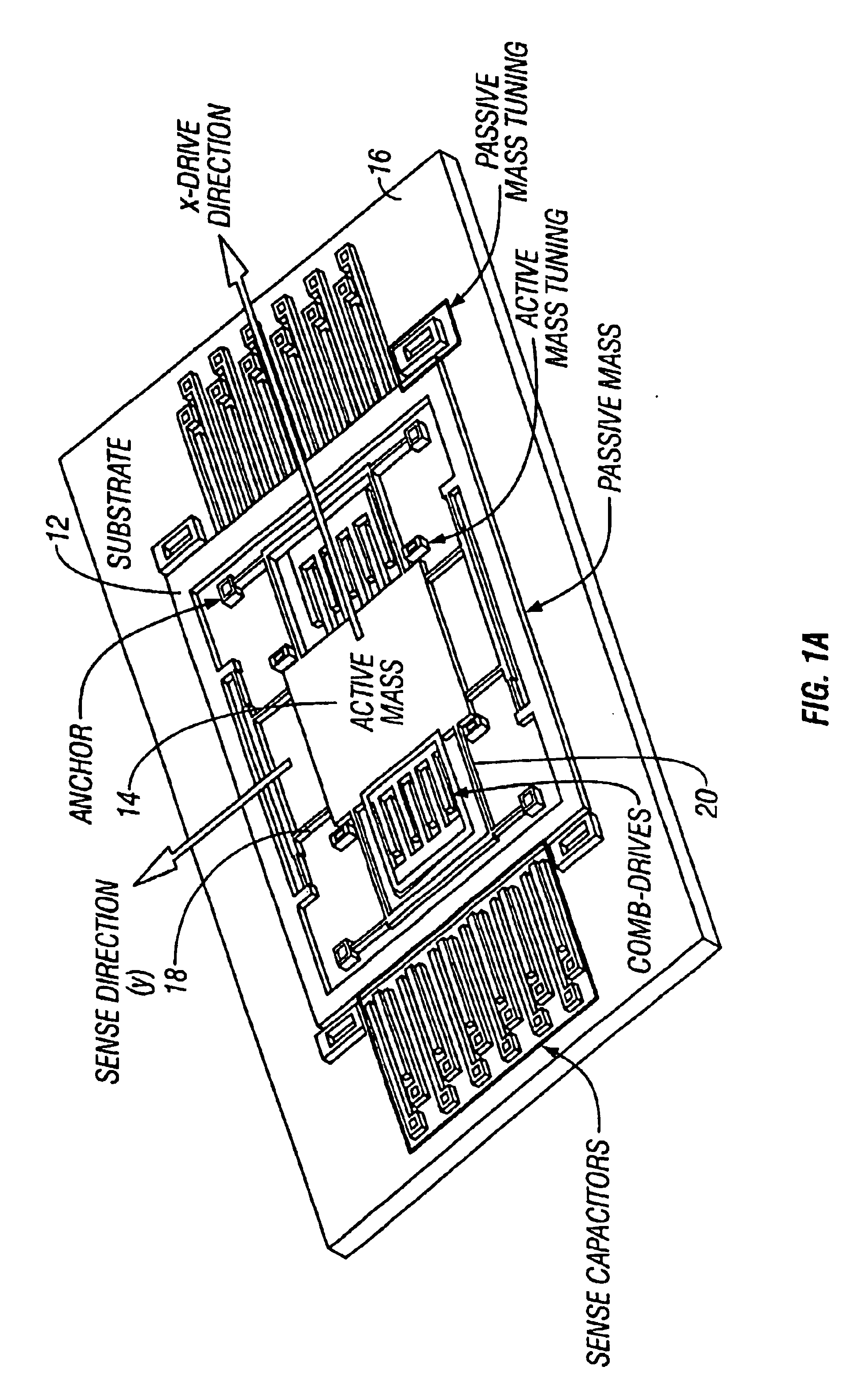

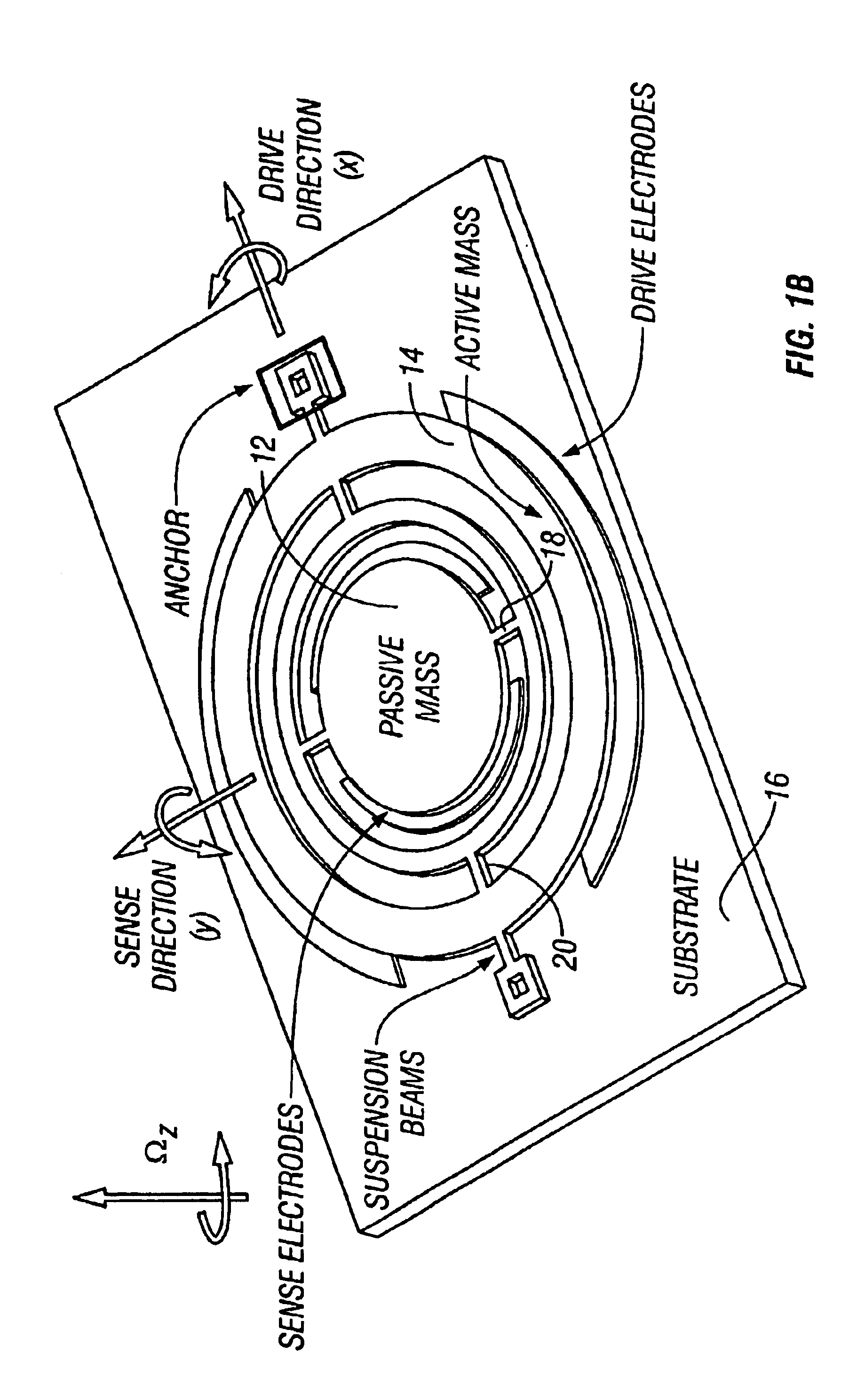

Non-resonant four degrees-of-freedom micromachined gyroscope

Inactive | US6845669B2 | Wide | Oscillation amplitude is large | Mechanical apparatus | Acceleration measurement using inertia forces | Gyroscope | Engineering

A micromachined design and method with inherent disturbance-rejection capabilities is based on increasing the degrees-of-freedom (DOF) of the oscillatory system by the use of two independently oscillating proof masses. Utilizing dynamical amplification in the 4-degrees-of-freedom system, inherent disturbance rejection is achieved, providing reduced sensitivity to structural and thermal parameter fluctuations and damping changes over the operating time of the device. In the proposed system, the first mass is forced to oscillate in the drive direction, and the response of the second mass in the orthogonal direction is sensed. The response has two resonant peaks and a flat region between peaks. Operation is in the flat region, where the gain is insensitive to frequency fluctuations. An over 15 times increase in the bandwidth of the system is achieved due to the use of the proposed architecture. In addition, the gain in the operation region has low sensitivity to damping changes. Consequently, by utilizing the disturbance-rejection capability of the dynamical system, improved robustness is achieved, which can relax tight fabrication tolerances and packaging requirements and thus result in reducing production cost of micromachined methods.

Owner:RGT UNIV OF CALIFORNIA

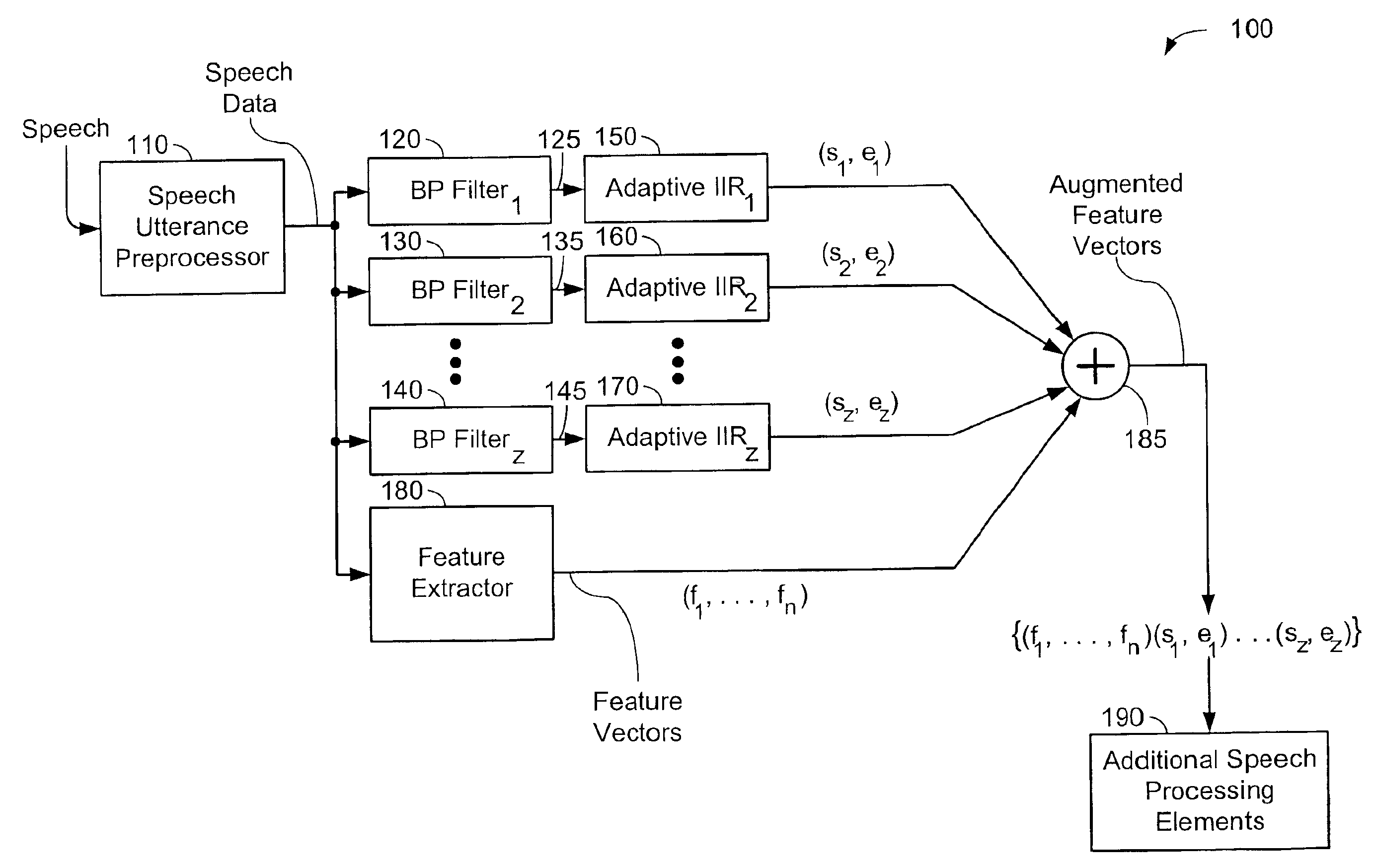

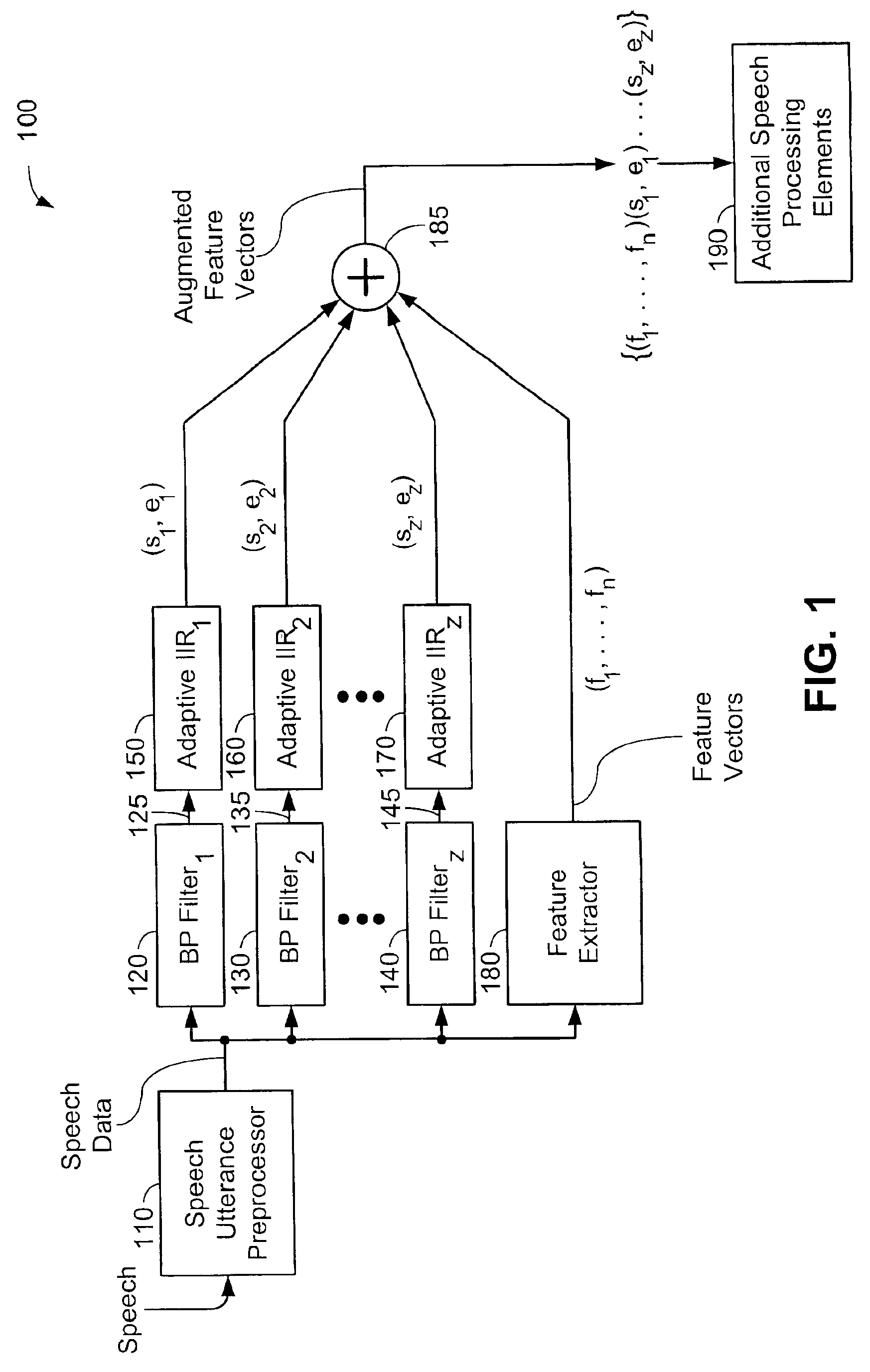

Determination and use of spectral peak information and incremental information in pattern recognition

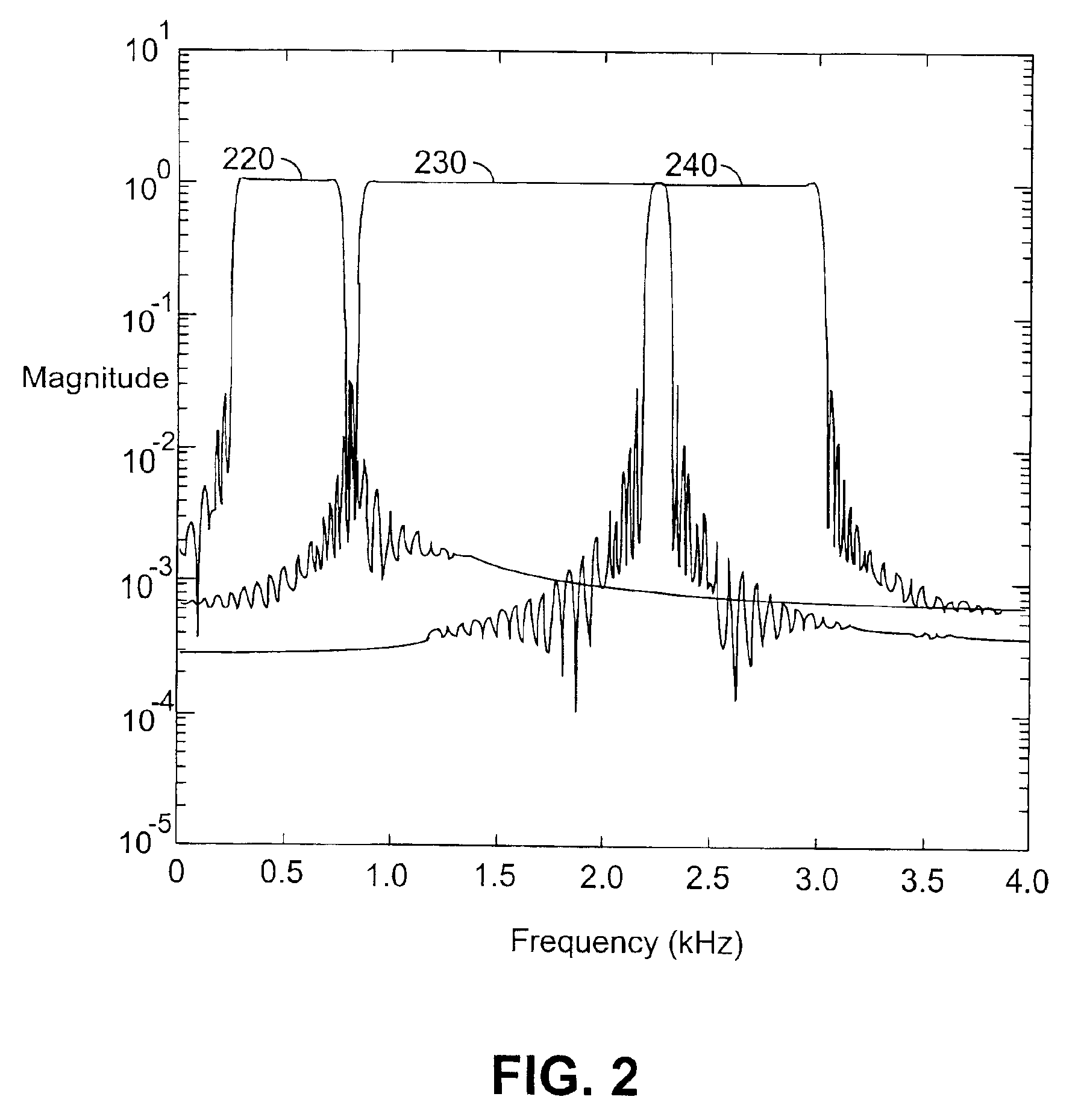

Inactive | US6920424B2 | Reduce errors | Improve noise immunity | Speech recognition | Feature vector | Frequency spectrum

Generally, the present invention determines and uses spectral peak information, which preferably augments feature vectors and creates augmented feature vectors. The augmented feature vectors decrease errors in pattern recognition, increase noise immunity for wide-band noise, and reduce reliance on noisy formant features. Illustratively, one way of determining spectral peak information is to split pattern data into a number of frequency ranges and determine spectral peak information for each of the frequency ranges. This allows single peak selection. All of the spectral peak information is then used to augment a feature vector. Another way of determining spectral peak information is to use an adaptive Infinite Impulse Response filter to provide this information. Additionally, the present invention can determine and use incremental information. The incremental information is relatively easy to calculate and helps to determine if additional or changed features are worthwhile. The incremental information is preferably determined by determining a difference between mutual information (between the feature vector and the classes to be disambiguated) for new or changed feature vectors and mutual information for old feature vectors.

Owner:IBM CORP
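The band-splitting step described in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: the function names `band_peaks` and `augment`, the equal-width bands, and the use of the peak's bin index as the "spectral peak information" are all hypothetical choices, not details taken from the patent.

```python
def band_peaks(magnitudes, n_bands):
    """Return the bin index of the largest magnitude in each of n_bands
    equal-width frequency ranges (one peak per range)."""
    n = len(magnitudes)
    peaks = []
    for b in range(n_bands):
        lo = b * n // n_bands          # first bin of this band
        hi = (b + 1) * n // n_bands    # one past the last bin of this band
        if hi > lo:                    # skip empty bands
            peaks.append(max(range(lo, hi), key=lambda i: magnitudes[i]))
    return peaks


def augment(features, magnitudes, n_bands):
    """Append the per-band peak positions to an existing feature vector."""
    return list(features) + band_peaks(magnitudes, n_bands)


spectrum = [1, 5, 2, 0, 3, 9, 4, 1]   # made-up magnitude spectrum
print(band_peaks(spectrum, 2))
print(augment([0.1, 0.2], spectrum, 2))
```

Restricting the search to one peak per range is what makes single-peak selection straightforward: each band contributes exactly one value to the augmented vector.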

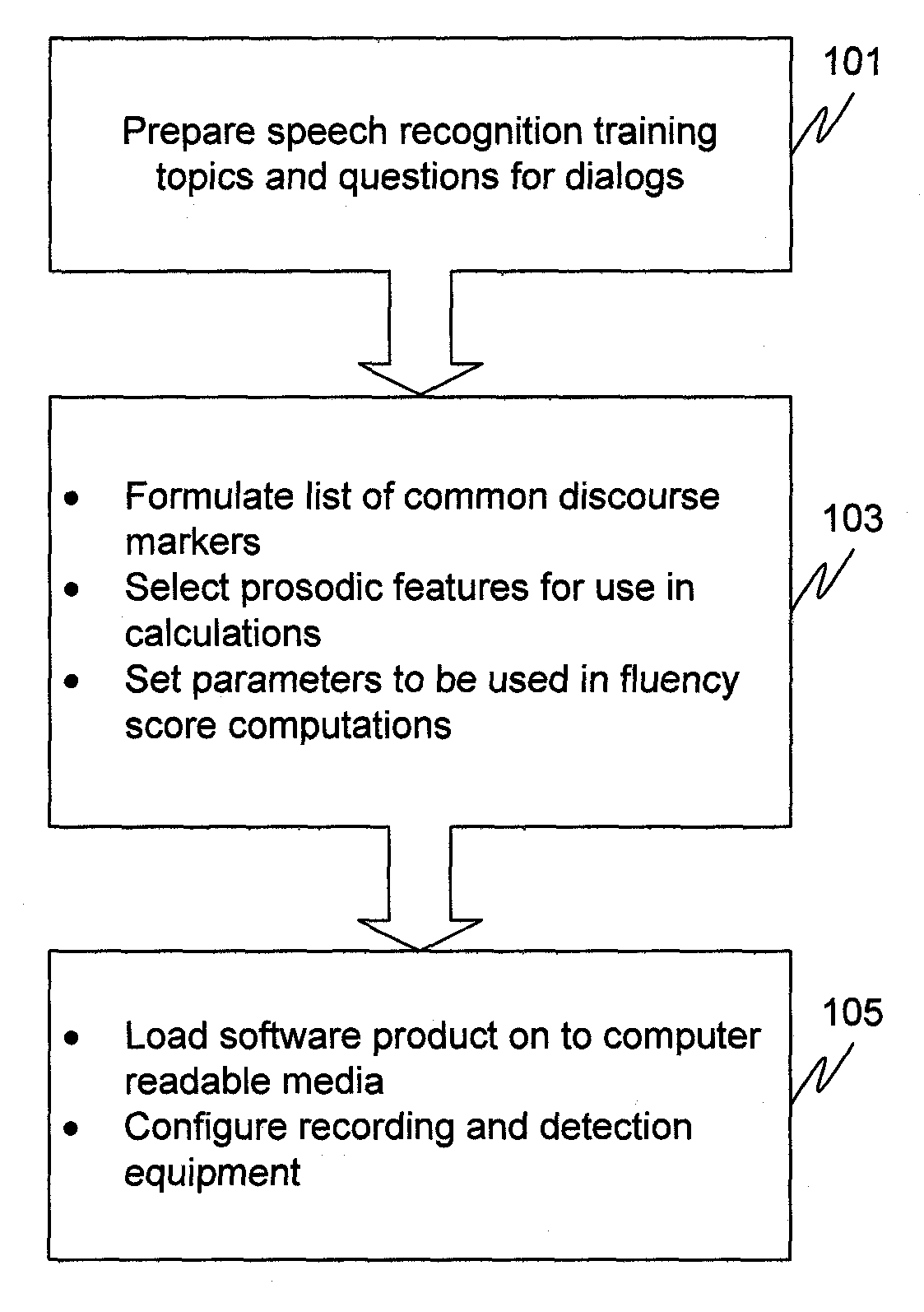

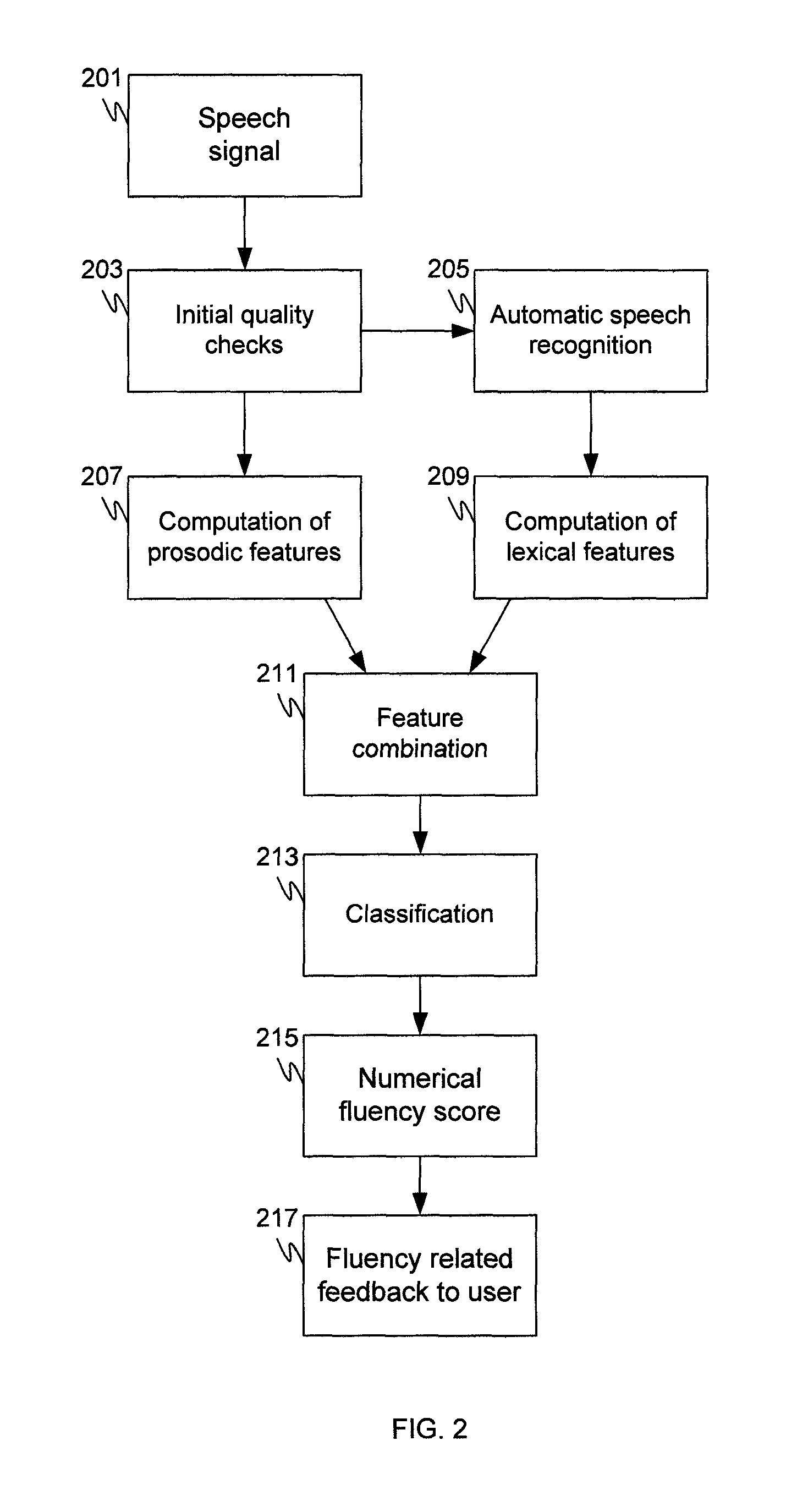

Automatic Evaluation of Spoken Fluency

A procedure to automatically evaluate the spoken fluency of a speaker by prompting the speaker to talk on a given topic, recording the speaker's speech to get a recorded sample of speech, and then analyzing the patterns of disfluencies in the speech to compute a numerical score to quantify the spoken fluency skills of the speakers. The numerical fluency score accounts for various prosodic and lexical features, including formant-based filled-pause detection, closely-occurring exact and inexact repeat N-grams, normalized average distance between consecutive occurrences of N-grams. The lexical features and prosodic features are combined to classify the speaker with a C-class classification and develop a rating for the speaker.

Owner:NUANCE COMM INC
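One of the lexical features named above, closely-occurring exact repeat N-grams, can be sketched roughly as below. The function name `close_repeats`, the default N of 2, and the `max_gap` window are assumptions made for illustration; the abstract does not specify how "closely-occurring" is defined.

```python
def close_repeats(tokens, n=2, max_gap=5):
    """Find exact N-grams that recur within max_gap token positions.

    Returns a list of (ngram, gap) pairs, where gap is the distance in
    tokens between consecutive occurrences of the same N-gram.
    """
    last_seen = {}   # N-gram -> start index of its most recent occurrence
    repeats = []
    for i in range(len(tokens) - n + 1):
        gram = tuple(tokens[i:i + n])
        if gram in last_seen and i - last_seen[gram] <= max_gap:
            repeats.append((gram, i - last_seen[gram]))
        last_seen[gram] = i
    return repeats


words = "i want i want to go".split()
print(close_repeats(words))
```

The gaps collected here could feed a normalized average-distance feature of the kind the abstract mentions, for example by dividing their mean by the utterance length.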

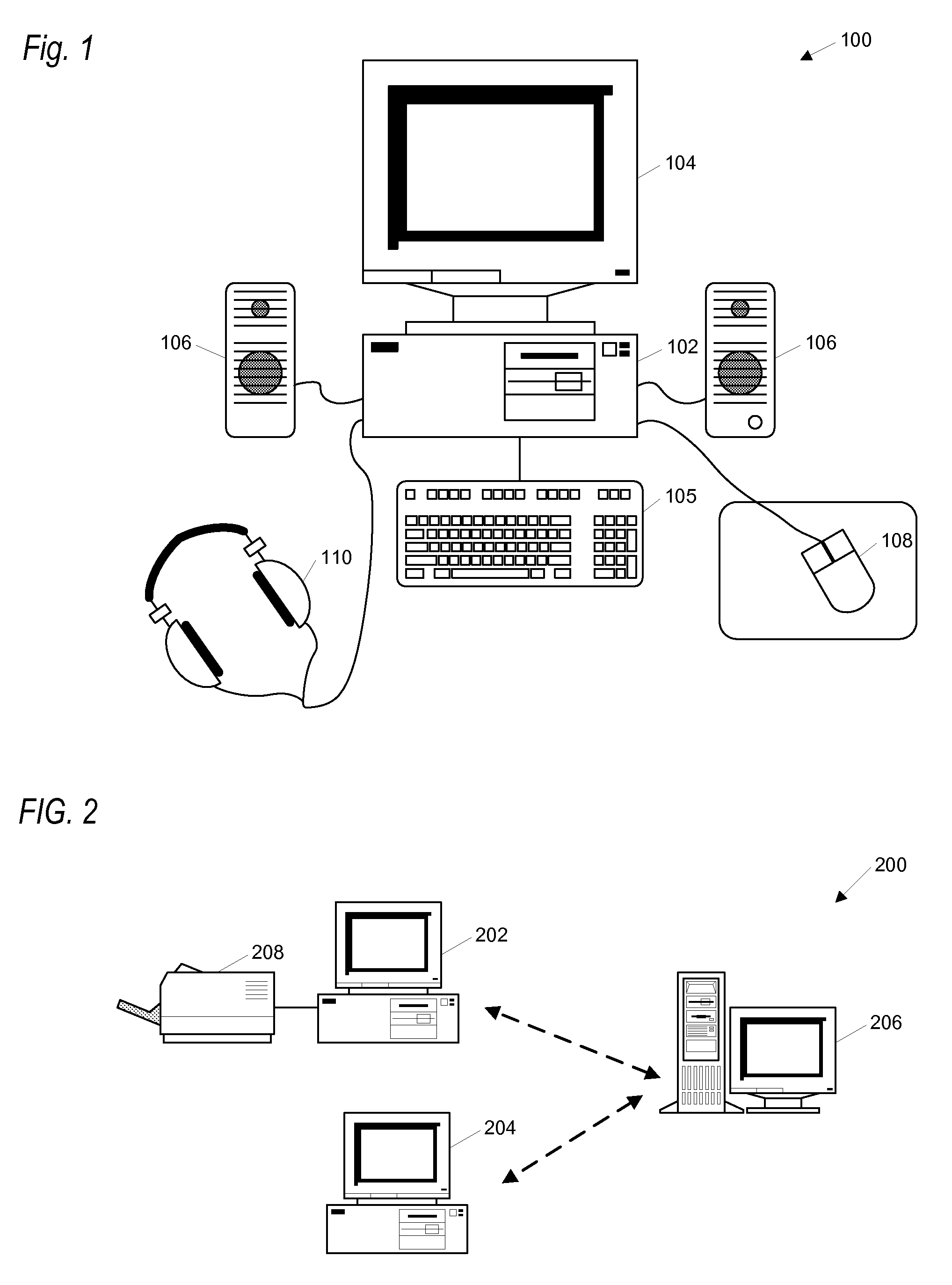

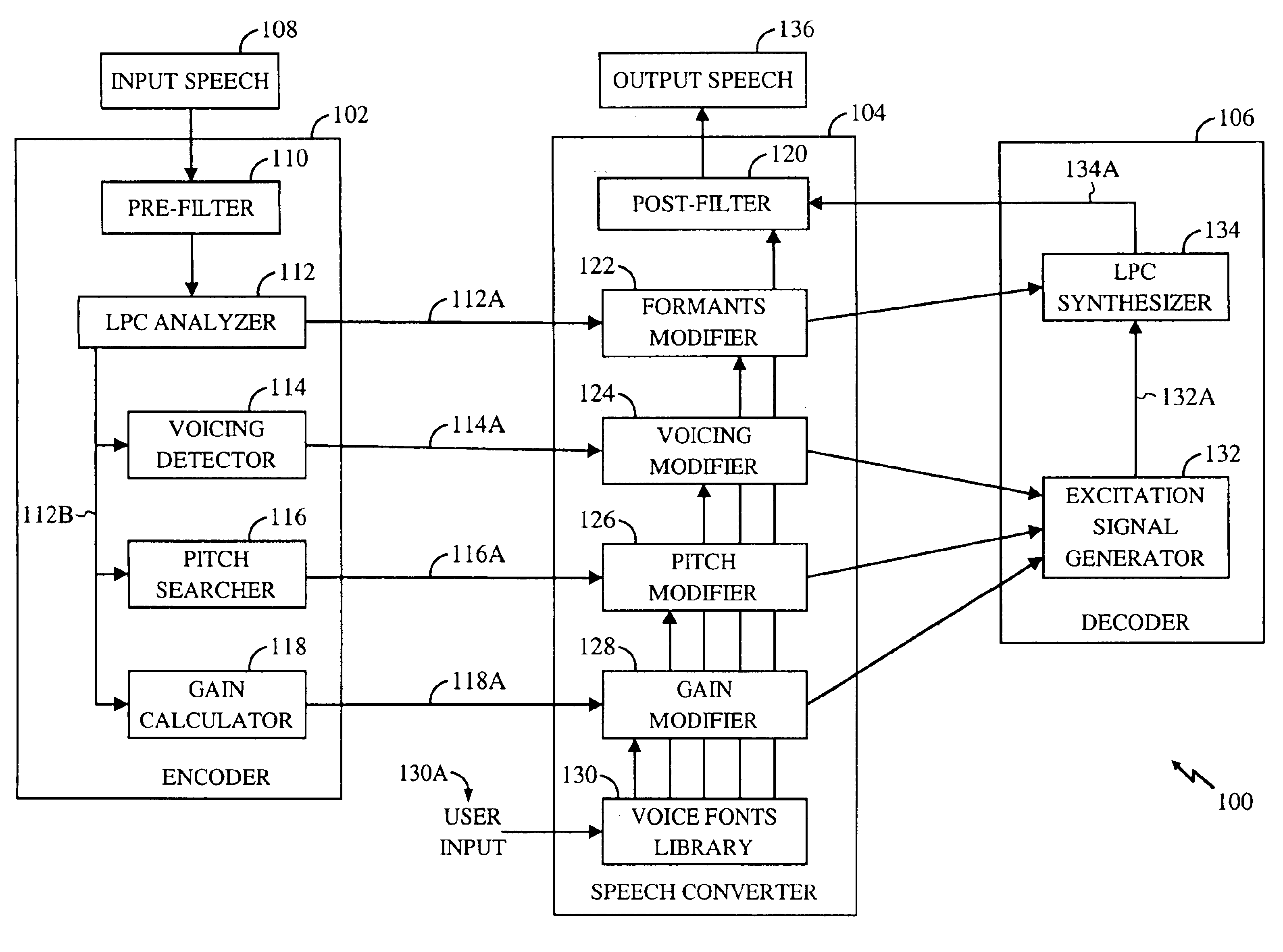

Method for enhancing memory and cognition in aging adults

Inactive | US20050175972A1 | Improve “noisy” sensory representation | Shorten time constant | Mental therapies | Electrical appliances | Random order | Formant

A method on a computing device is provided for enhancing the memory and cognitive ability of an older adult by requiring the adult to differentiate between rapidly presented stimuli. The method trains the time order judgment of the adult by presenting upward and downward frequency sweeps, in random order, separated by an inter-stimulus interval. The upward and downward frequency sweeps are at frequencies common in formants which form the frequency components common in speech. Icons are associated with the upward and downward frequency sweeps to allow the adult to indicate an order in which the frequency sweeps are presented (i.e., UP-UP, UP-DOWN, DOWN-UP, and DOWN-DOWN). Correct selection of an order causes the inter-stimulus interval to be reduced, and / or the duration of the frequency sweeps to be adaptively shortened.

Owner:POSIT SCI CORP

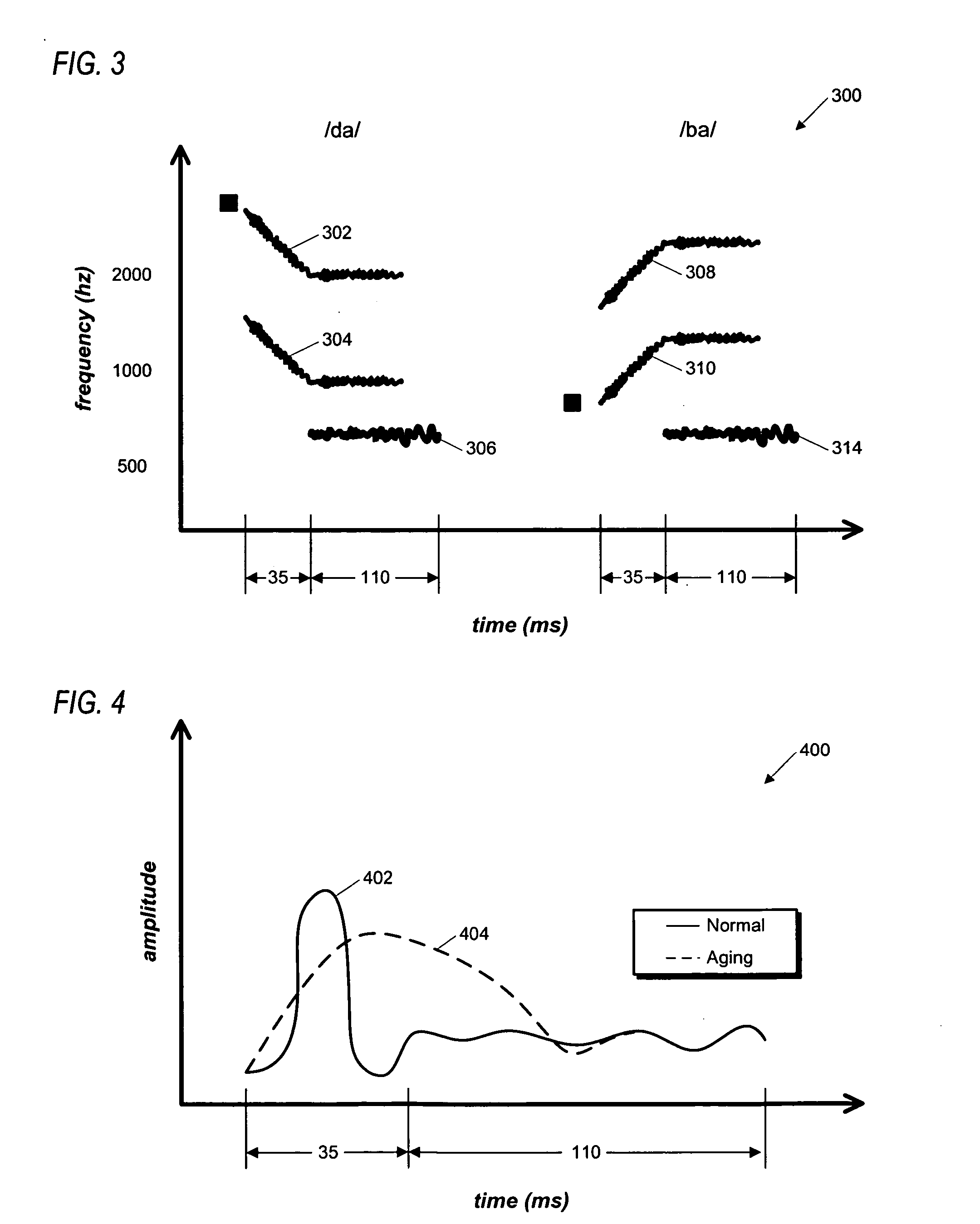

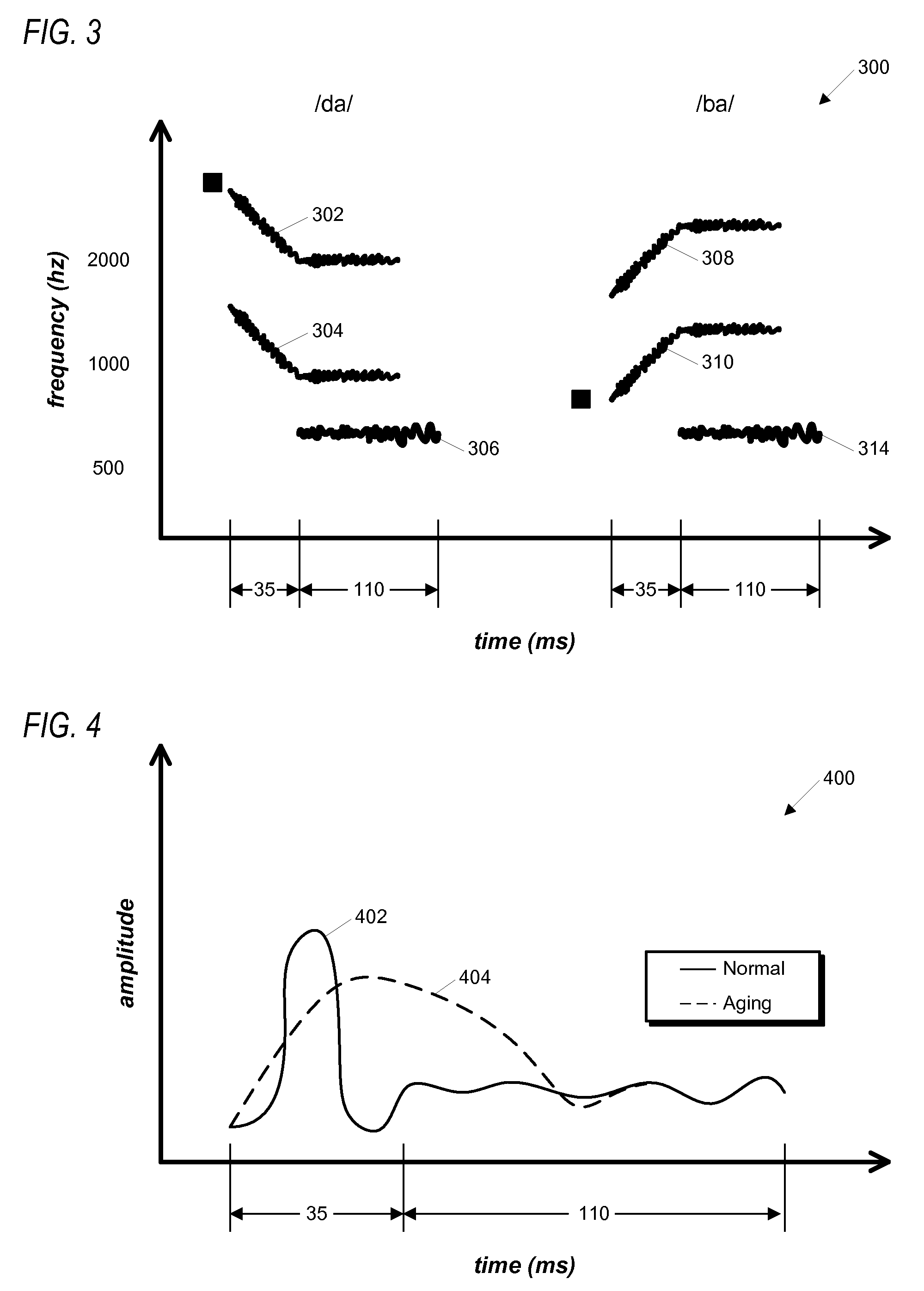

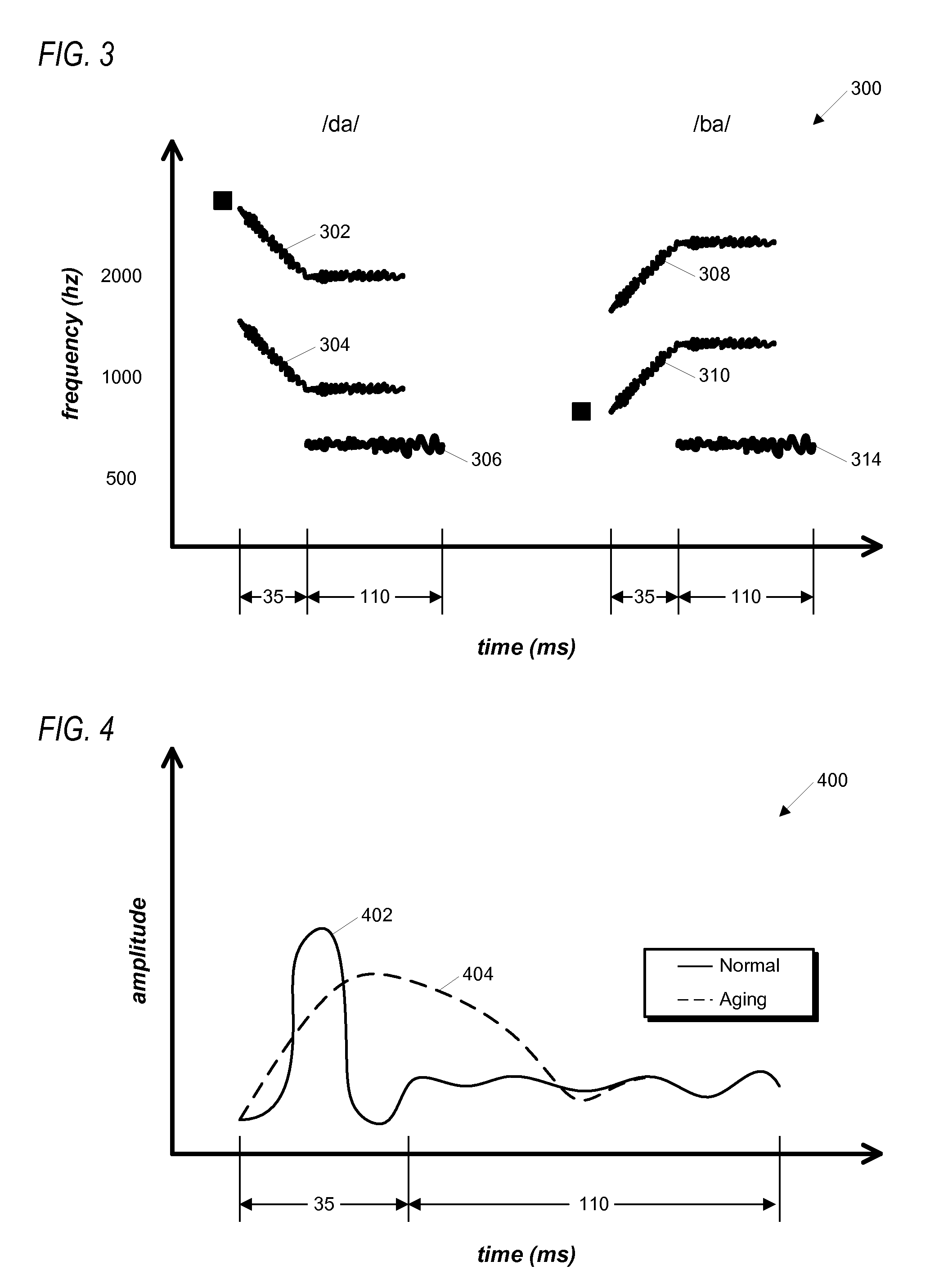

Progressions in HiFi assessments

Computer-implemented method for assessing an aging adult's ability to classify formant transition and segment duration information in making phonetic categorizations. A representative subset of multiple confusable pairs of phonemes is selected. A representative subset of multiple stimulus levels for the phonemes is selected for use with the phoneme subset. For each pair of phonemes of the phoneme subset, at each stimulus level of the stimulus level subset: icons for each phoneme are graphically presented, and a computer-generated phoneme from the pair is aurally presented at the stimulus level. The adult is required to select the icon corresponding to the aurally presented phoneme, and the correctness or incorrectness of the selection is recorded as a response result. A success rate is determined based on the response results, the success rate comprising an estimate of the adult's success rate with respect to the multiple confusable pairs of phonemes at the multiple stimulus levels.

Owner:POSIT SCI CORP

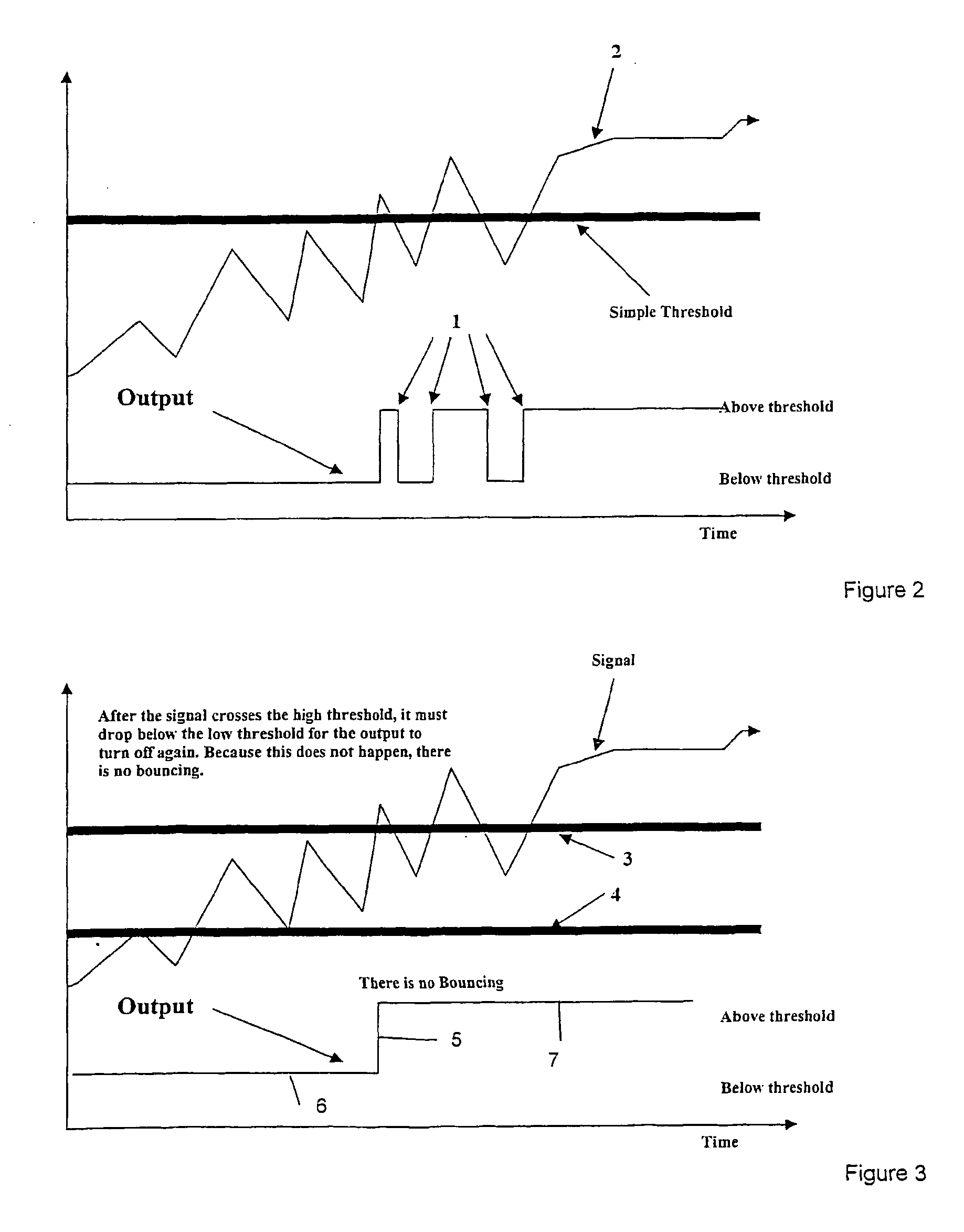

Method for improving speaker identification by determining usable speech

Inactive | US7177808B2 | Overcome limitations | Enhance a target speaker | Speech recognition | Dependability | Formant

Method for improving speaker identification by determining usable speech. Degraded speech is preprocessed in a speaker identification (SID) process to produce SID usable and SID unusable segments. Features are extracted and analyzed so as to produce a matrix of optimum classifiers for the detection of SID usable and SID unusable speech segments. Optimum classifiers possess a minimum distance from a speaker model. A decision tree based upon fixed thresholds indicates the presence of a speech feature in a given speech segment. Following preprocessing, degraded speech is measured in one or more time, frequency, cepstral or SID usable / unusable domains. The results of the measurements are multiplied by a weighting factor whose value is proportional to the reliability of the corresponding time, frequency, or cepstral measurements performed. The measurements are fused as information, and usable speech segments are extracted for further processing. Such further processing of co-channel speech may include speaker identification where a segment-by-segment decision is made on each usable speech segment to determine whether they correspond to speaker #1 or speaker #2. Further processing of co-channel speech may also include constructing the complete utterance of speaker #1 or speaker #2. Speech features such as pitch and formants may be extended back into the unusable segments to form a complete utterance from each speaker.

Owner:THE UNITED STATES OF AMERICA AS REPRESETNED BY THE SEC OF THE AIR FORCE

Method, system and software for teaching pronunciation

The present invention relates to a method for teaching pronunciation. More particularly, but not exclusively, the present invention relates to a method for teaching pronunciation using formant trajectories and for teaching pronunciation by splitting speech into phonemes. (A) A speech signal is received from a user; (B) word(s) is / are detected within the signal; (C) voiced / unvoiced segments are detected within the word(s); (D) formants of the voiced segments are calculated; (E) vowel phonemes are detected within the voiced segments; the vowel phonemes may be detected using a weighted sum of a Fourier transform measure of frequency energy and a measure based on the formants; and (F) a formant trajectory may be calculated for the vowel phonemes using the detected formants.

Owner:VISUAL PRONUNCIATION SOFTWARE

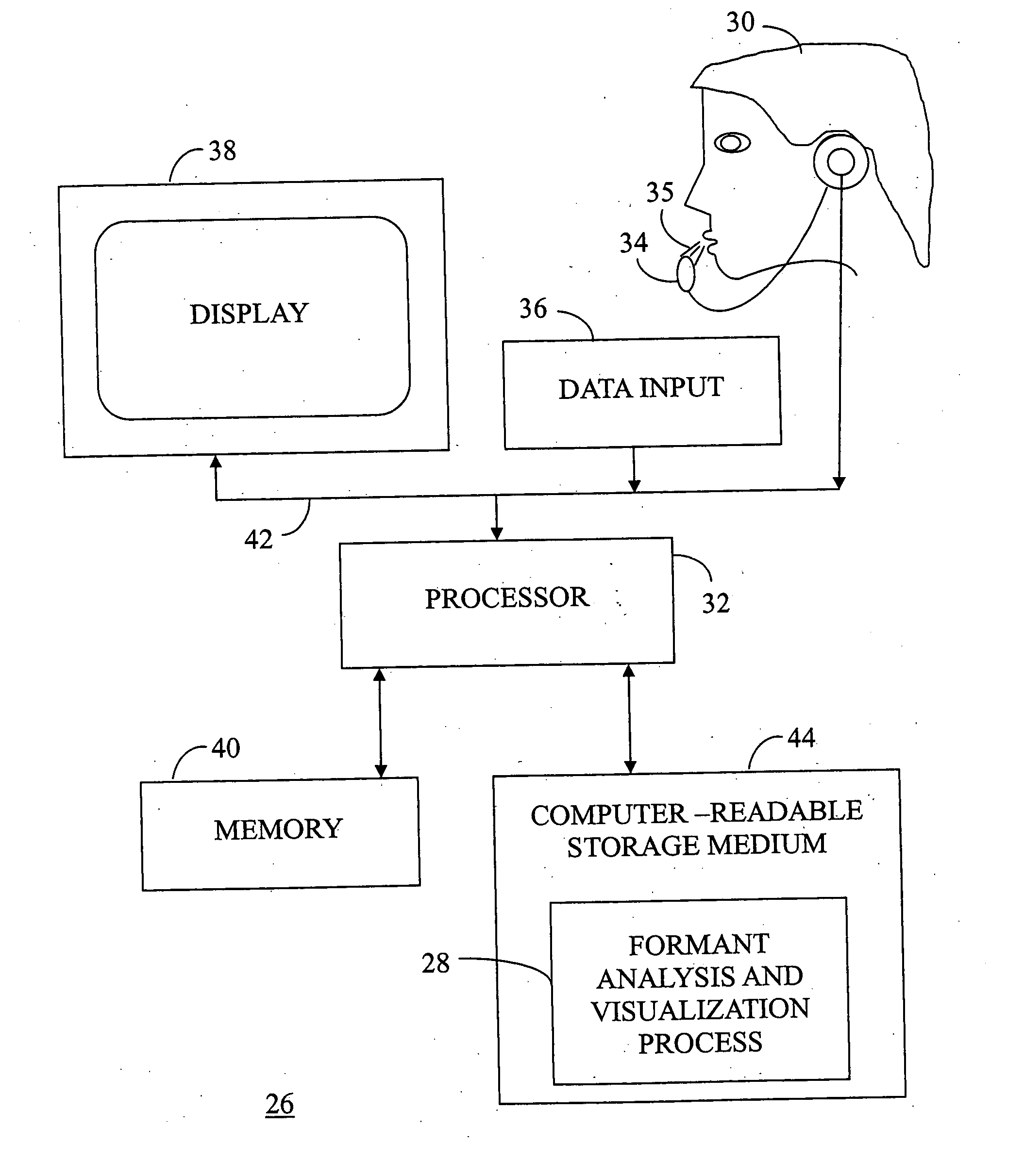

Real time voice analysis and method for providing speech therapy

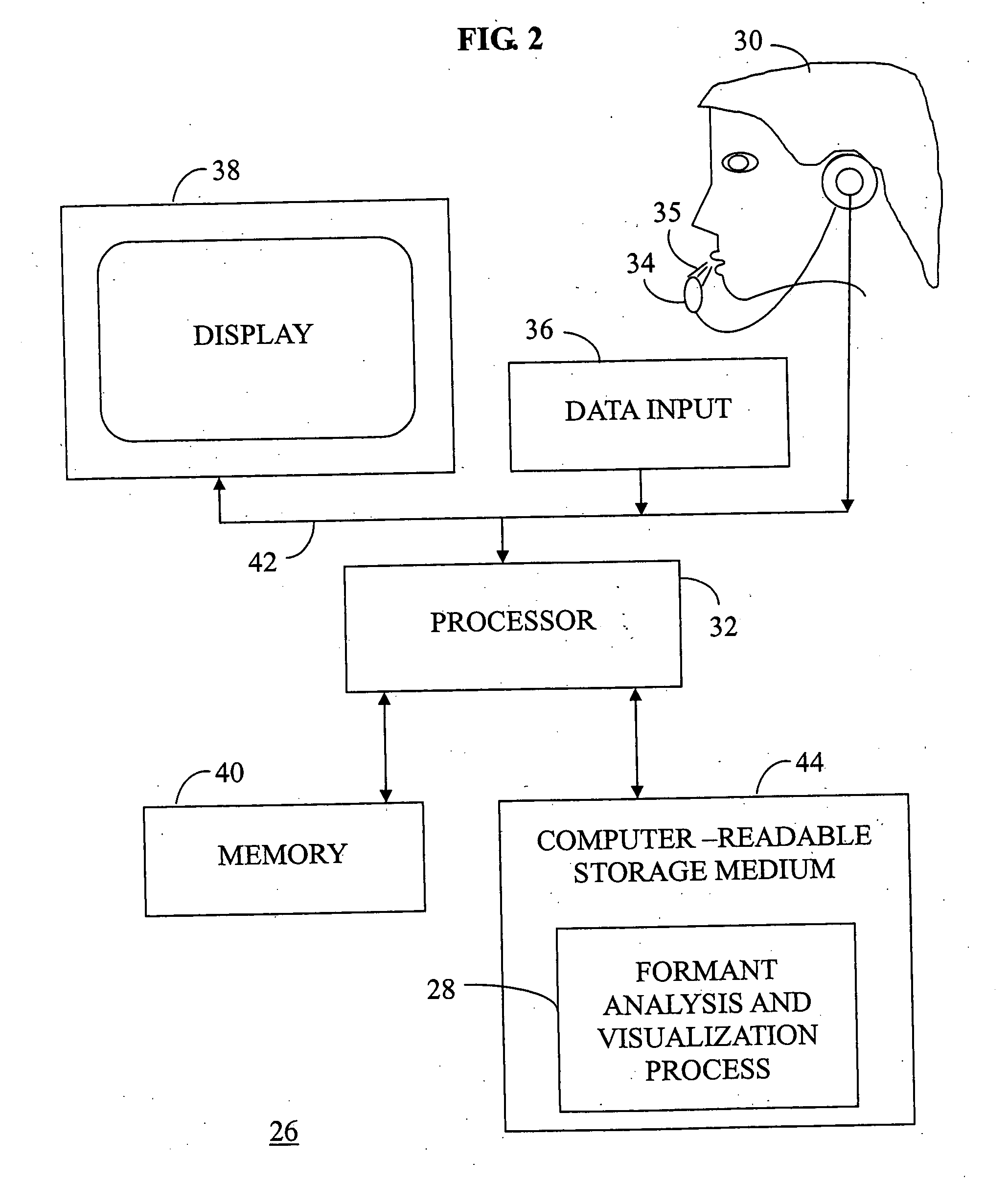

Inactive | US20070168187A1 | Easy to learn | Easy to carry | Speech analysis | Electrical appliances | Data element | Formant

A method (196) for providing speech therapy to a learner (30) utilizes a formant estimation and visualization process (28) executable on a computing system (26). The method (196) calls for receiving a speech signal (35) from the learner (30) at an audio input (34) of the computing system (26) and estimating first and second formants (136, 138) of the speech signal (35). A target (94) is incorporated into a vowel chart (70) on a display (38) of the computing system (26). The target (70) characterizes an ideal pronunciation of the speech signal (35). A data element (134) of a relationship between the first and second formants (136, 138) is incorporated into the vowel chart (76) on the display (38). The data element (134) is compared with the target (70) to visualize an accuracy of the speech signal (35) relative to the ideal pronunciation of the speech signal (35).

Owner:FLETCHER SAMUEL G
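The comparison between the data element and the target on the vowel chart might be quantified as in the sketch below. It assumes the chart is a plain (F1, F2) plane in hertz and that accuracy is judged by Euclidean distance; the function names, the tolerance, and the example frequencies are hypothetical and are not taken from the patent.

```python
import math


def distance_to_target(f1_hz, f2_hz, target_f1_hz, target_f2_hz):
    """Euclidean distance, in Hz, between a measured (F1, F2) point
    and a target point on a vowel chart."""
    return math.hypot(f1_hz - target_f1_hz, f2_hz - target_f2_hz)


def on_target(f1_hz, f2_hz, target_f1_hz, target_f2_hz, tolerance_hz=100.0):
    """True if the measured point falls within tolerance_hz of the target.
    The tolerance is an arbitrary placeholder value."""
    return distance_to_target(f1_hz, f2_hz, target_f1_hz, target_f2_hz) <= tolerance_hz


# Placeholder frequencies chosen only to exercise the functions.
print(distance_to_target(303.0, 2304.0, 300.0, 2300.0))
print(on_target(500.0, 1500.0, 300.0, 2300.0))
```

A perceptual frequency scale could be substituted for raw hertz without changing the structure of the comparison.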

Cognitive training using formant frequency sweeps

Inactive | US20070134635A1 | Increase awareness | Improve abilities | Electrical appliances | Teaching apparatus | Random oracle | Random order

A method on a computing device for enhancing the memory and cognitive ability of a participant by requiring the participant to differentiate between rapidly presented aural stimuli. The method trains the time order judgment of the participant by iteratively presenting sequences of upward and downward formant frequency sweeps, in random order, separated by an inter-stimulus interval (ISI). The upward and downward formant frequency sweeps utilize frequencies common in formants, i.e., the characteristic frequency components common in human speech. Icons are associated with the upward and downward formant frequency sweeps to allow the participant to indicate an order in which the sweeps are presented (i.e., UP-UP, UP-DOWN, DOWN-UP, and DOWN-DOWN). Correct / incorrect selection of an order causes the ISI and / or the duration of the frequency sweeps to be adaptively shortened / lengthened. A maximum likelihood procedure may be used to dynamically modify the stimulus presentation, and / or, to assess the participant's performance in the exercise.

Owner:POSIT SCI CORP
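The adaptive loop described above (random sweep order, ISI shortened after a correct answer and lengthened after an incorrect one) can be sketched as below. Note that this uses a simple multiplicative staircase, not the maximum likelihood procedure the abstract mentions; the step factor, the ISI bounds, and the function names are all assumptions.

```python
import random

# The four possible orders of two sweeps, as listed in the abstract.
ORDERS = [("UP", "UP"), ("UP", "DOWN"), ("DOWN", "UP"), ("DOWN", "DOWN")]


def next_trial(rng):
    """Pick the sweep order for the next trial at random."""
    return rng.choice(ORDERS)


def update_isi(isi_ms, correct, step=0.8, min_ms=10.0, max_ms=1000.0):
    """Shorten the inter-stimulus interval after a correct response and
    lengthen it after an incorrect one, clamped to [min_ms, max_ms]."""
    new_isi = isi_ms * step if correct else isi_ms / step
    return min(max_ms, max(min_ms, new_isi))


rng = random.Random(0)
isi = 200.0
for answer_correct in (True, True, False):   # made-up response history
    order = next_trial(rng)
    isi = update_isi(isi, answer_correct)
print(isi)
```

The same update could be applied to the sweep duration, which the abstract says may also be adapted.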

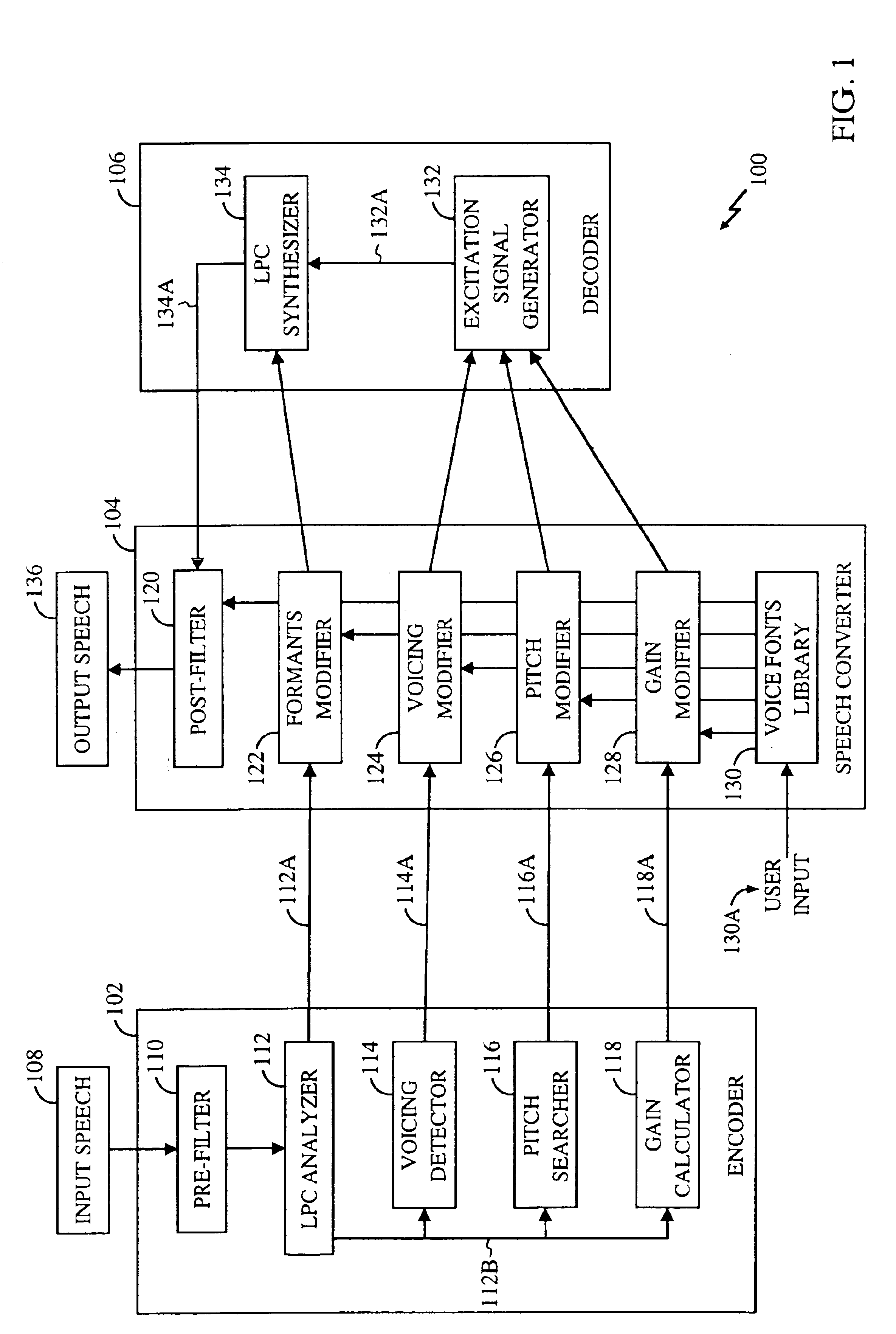

Speech converter utilizing preprogrammed voice profiles

A speech processing system modifies various aspects of input speech according to a user-selected one of various preprogrammed voice fonts. Initially, the speech converter receives a formants signal representing an input speech signal and a pitch signal representing the input signal's fundamental frequency. One or both of the following may also be received: a voicing signal comprising an indication of whether the input speech signal is voiced, unvoiced, or mixed, and / or a gain signal representing the input speech signal's energy. The speech converter also receives user selection of one of multiple preprogrammed voice fonts, each specifying a manner of modifying one or more of the received signals (i.e., formants, voicing, pitch, gain). The speech converter modifies at least one of the formants, voicing, pitch, and / or gain signals as specified by the selected voice font.

Owner:QUALCOMM INC

Method for improving speaker identification by determining usable speech

Inactive | US20050027528A1 | Enhance a target speaker | Prevent degradation | Speech recognition | Formant | Dependability

Method for improving speaker identification by determining usable speech. Degraded speech is preprocessed in a speaker identification (SID) process to produce SID usable and SID unusable segments. Features are extracted and analyzed so as to produce a matrix of optimum classifiers for the detection of SID usable and SID unusable speech segments. Optimum classifiers possess a minimum distance from a speaker model. A decision tree based upon fixed thresholds indicates the presence of a speech feature in a given speech segment. Following preprocessing, degraded speech is measured in one or more time, frequency, cepstral or SID usable / unusable domains. The results of the measurements are multiplied by a weighting factor whose value is proportional to the reliability of the corresponding time, frequency, or cepstral measurements performed. The measurements are fused as information, and usable speech segments are extracted for further processing. Such further processing of co-channel speech may include speaker identification where a segment-by-segment decision is made on each usable speech segment to determine whether they correspond to speaker #1 or speaker #2. Further processing of co-channel speech may also include constructing the complete utterance of speaker #1 or speaker #2. Speech features such as pitch and formants may be extended back into the unusable segments to form a complete utterance from each speaker.

Owner:THE UNITED STATES OF AMERICA AS REPRESETNED BY THE SEC OF THE AIR FORCE

Method for determining system time delay in acoustic echo cancellation and acoustic echo cancellation method

The invention relates to a method for determining the system time delay in acoustic echo cancellation, and to an acoustic echo cancellation method that uses it. The delay is determined in the following steps: the collected original and reference signals are each split into overlapping segments, windowed, and transformed into the frequency domain by fast Fourier transform, giving original and reference frequency-domain signals; in each segment of both signals, the frequencies of the n highest-energy peaks are found and taken as formant characteristic values; the formant frequency sequence of the reference signal is then shifted forwards step by step, by integer multiples of the segmentation time t1, and at each shift the two sets of formant characteristic values are compared. The shift that yields the most matches is the system time delay. Compared with the prior art, the method can determine dynamic and very large system delays with very little computation, has a wide application range, and gives stable results.

Owner:宁波菊风系统软件有限公司
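The shift-and-compare step of the delay search can be sketched as follows, assuming the per-segment peak frequencies have already been extracted (for example as FFT bin indices). The function name, the exact-match similarity count, and the `max_shift` bound are illustrative assumptions; the patent's actual similarity measure is not given in the abstract.

```python
def estimate_delay_segments(orig_peaks, ref_peaks, max_shift):
    """Return the shift, in segments, at which the reference peak sequence
    best matches the original peak sequence.

    orig_peaks and ref_peaks are lists with one entry per segment, each
    entry being the collection of that segment's n strongest peak bins.
    """
    best_shift, best_score = 0, -1
    for shift in range(max_shift + 1):
        score = 0
        for i, ref_segment in enumerate(ref_peaks):
            j = i + shift                  # original segment the echo would land in
            if j >= len(orig_peaks):
                break
            score += len(set(ref_segment) & set(orig_peaks[j]))
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


# Made-up peak bins: the reference pattern reappears two segments later.
ref = [[1, 2], [3, 4], [5, 6], [7, 8]]
orig = [[0, 9], [0, 9], [1, 2], [3, 4], [5, 6], [7, 8]]
t1_ms = 10.0                               # hypothetical segmentation time
print(estimate_delay_segments(orig, ref, max_shift=3) * t1_ms)
```

Because only a handful of integers per segment are compared, the cost stays small even for long search ranges, which is consistent with the low-computation claim in the abstract. In this simple form larger shifts compare fewer segments, so a real implementation would probably normalize the score by the overlap length.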

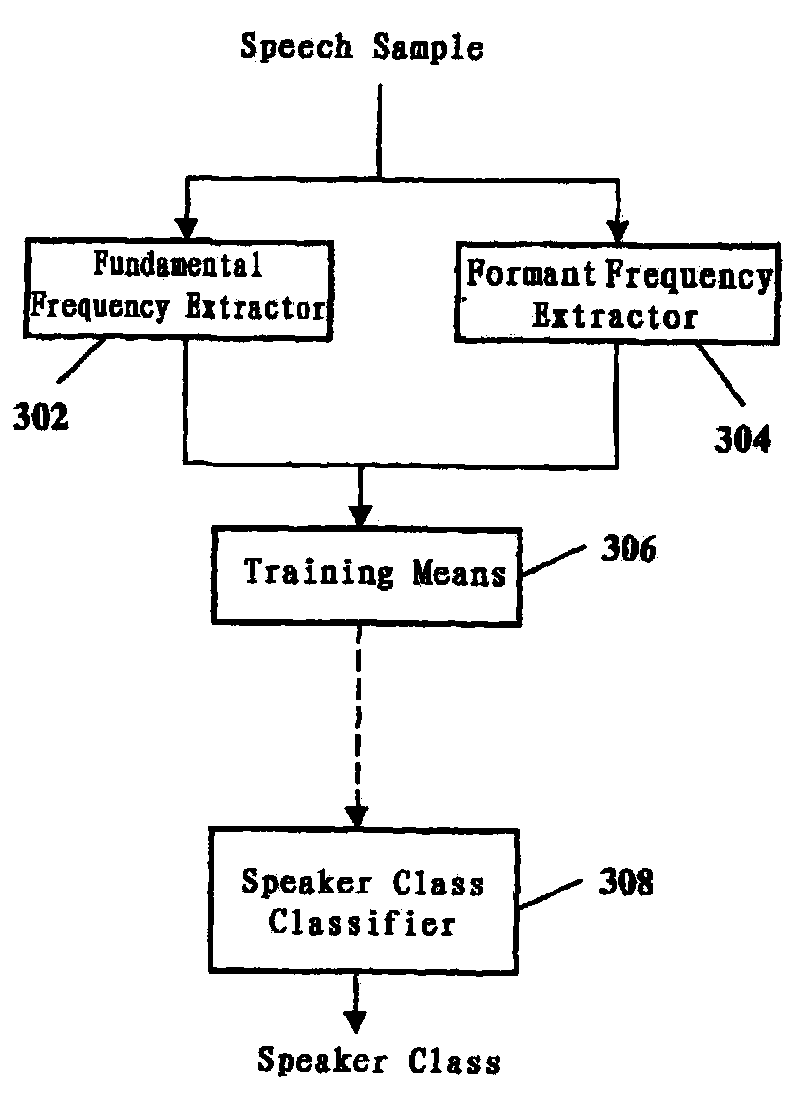

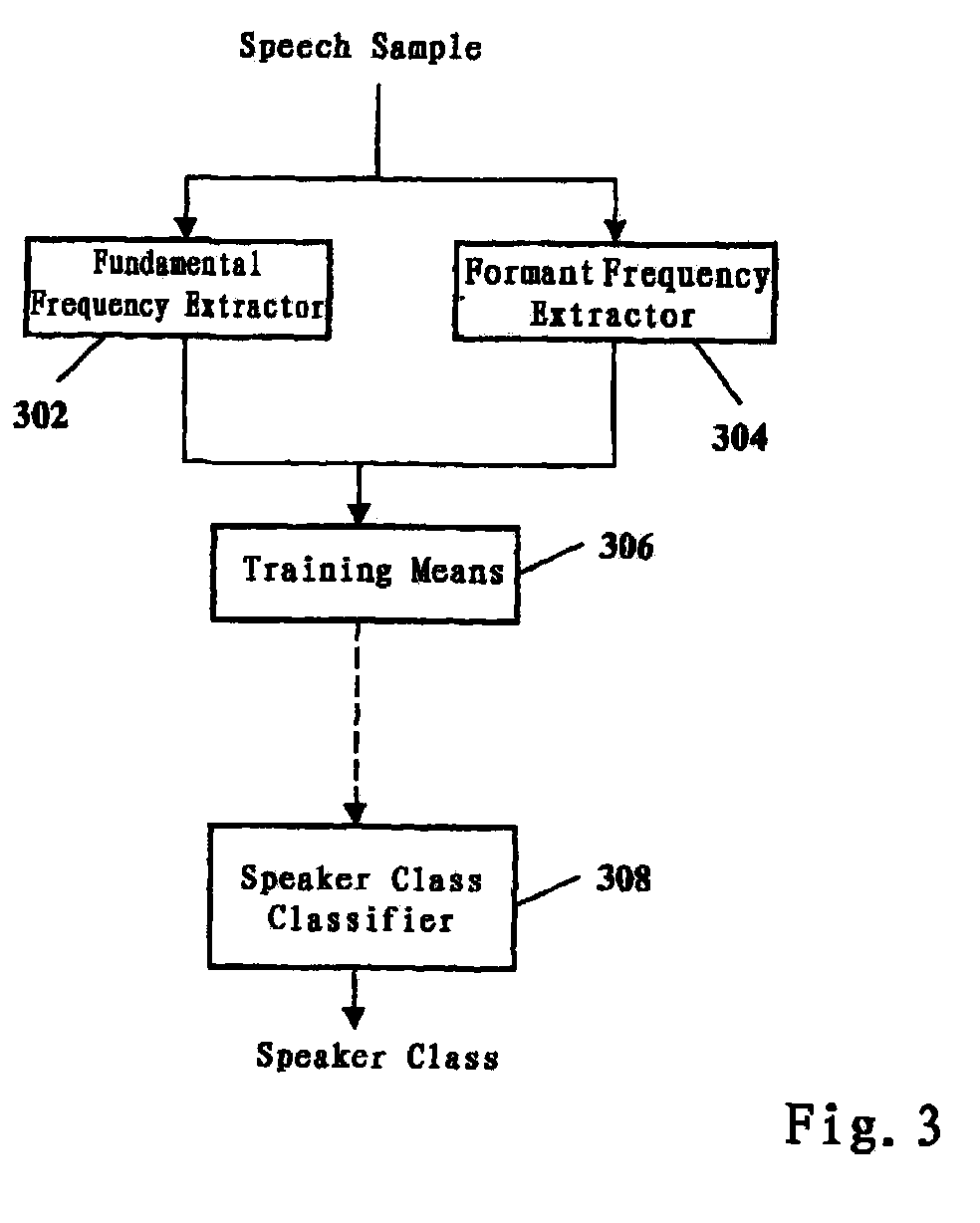

Method and apparatus for processing speech data

A method for processing speech data includes obtaining a pitch (fundamental frequency) and at least one formant frequency for each of a plurality of pieces of first speech data; constructing a first feature space with the obtained fundamental frequencies and formant frequencies as features; and classifying the plurality of pieces of first speech data using the first feature space, thereby obtaining a plurality of speech data classes and their corresponding descriptions.

Owner:NUANCE COMM INC

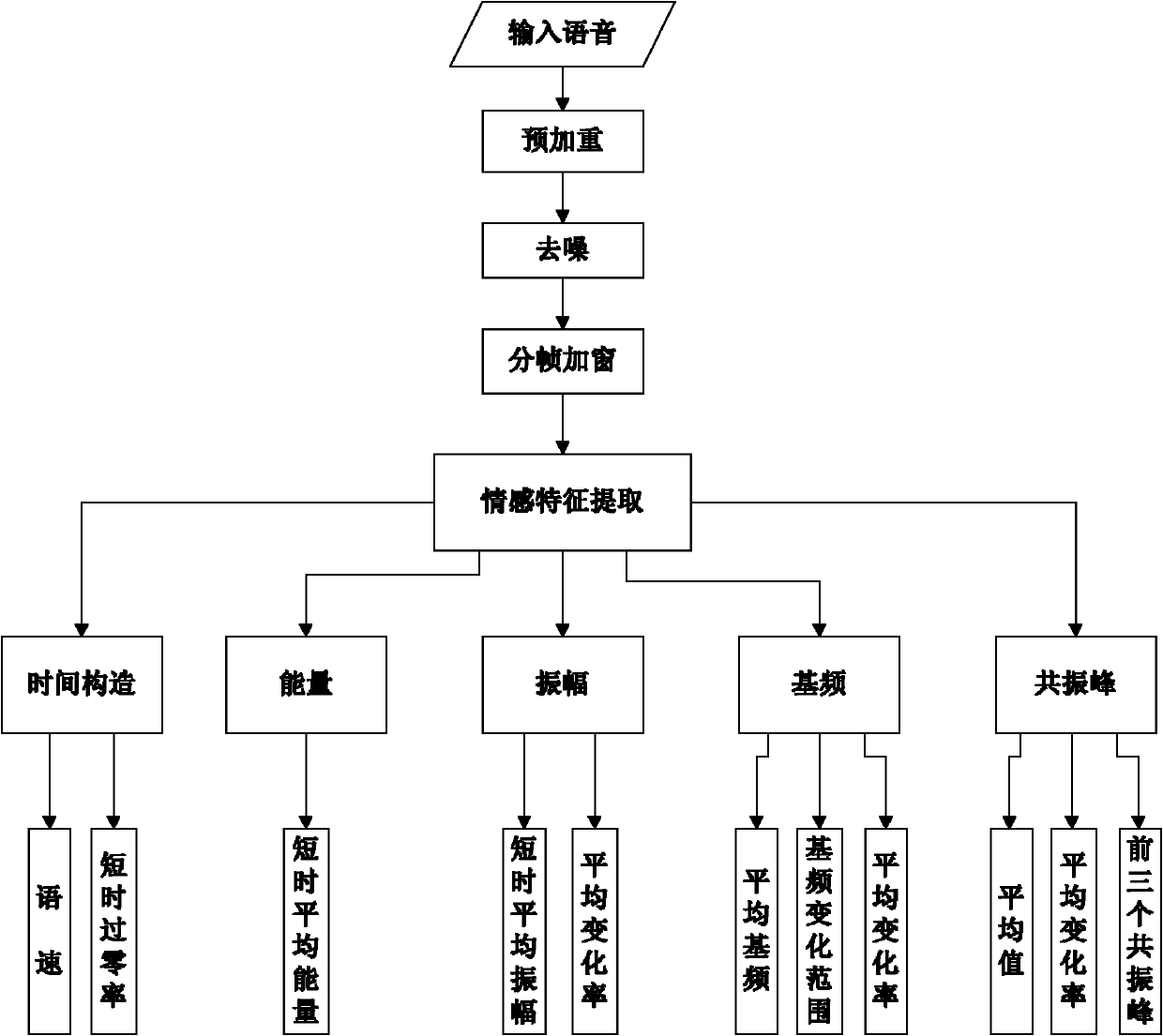

Speech emotion recognizing method based on hidden Markov model (HMM) / self-organizing feature map neural network (SOFMNN) hybrid model

Active | CN102890930A | Make up for the lack of access to timing information | Overcoming overlapping issues | Speech recognition | Feature extraction | Hidden Markov model

The invention relates to a speech emotion recognizing method based on a hidden Markov model (HMM) / self-organizing feature map neural network (SOFMNN) hybrid model. The method recognizes speech emotion by combining an HMM with an SOFMNN. It comprises the following steps: 1) establishing an emotional speech database; 2) preprocessing the speech signal, including weighting, denoising, framing and windowing; 3) extracting speech emotion features, including the time, energy, amplitude, fundamental frequency and formants of the emotional speech signal; and 4) training and recognizing with the HMM / SOFMNN hybrid model. Compared with the prior art, the invention overcomes the difficulty an HMM alone has in resolving overlap among model categories, and makes up for the SOFMNN's weakness in capturing timing information, so that the speech emotion recognition rate is improved.

Owner:SHANGHAI SHANGDA HAIRUN INFORMATION SYST

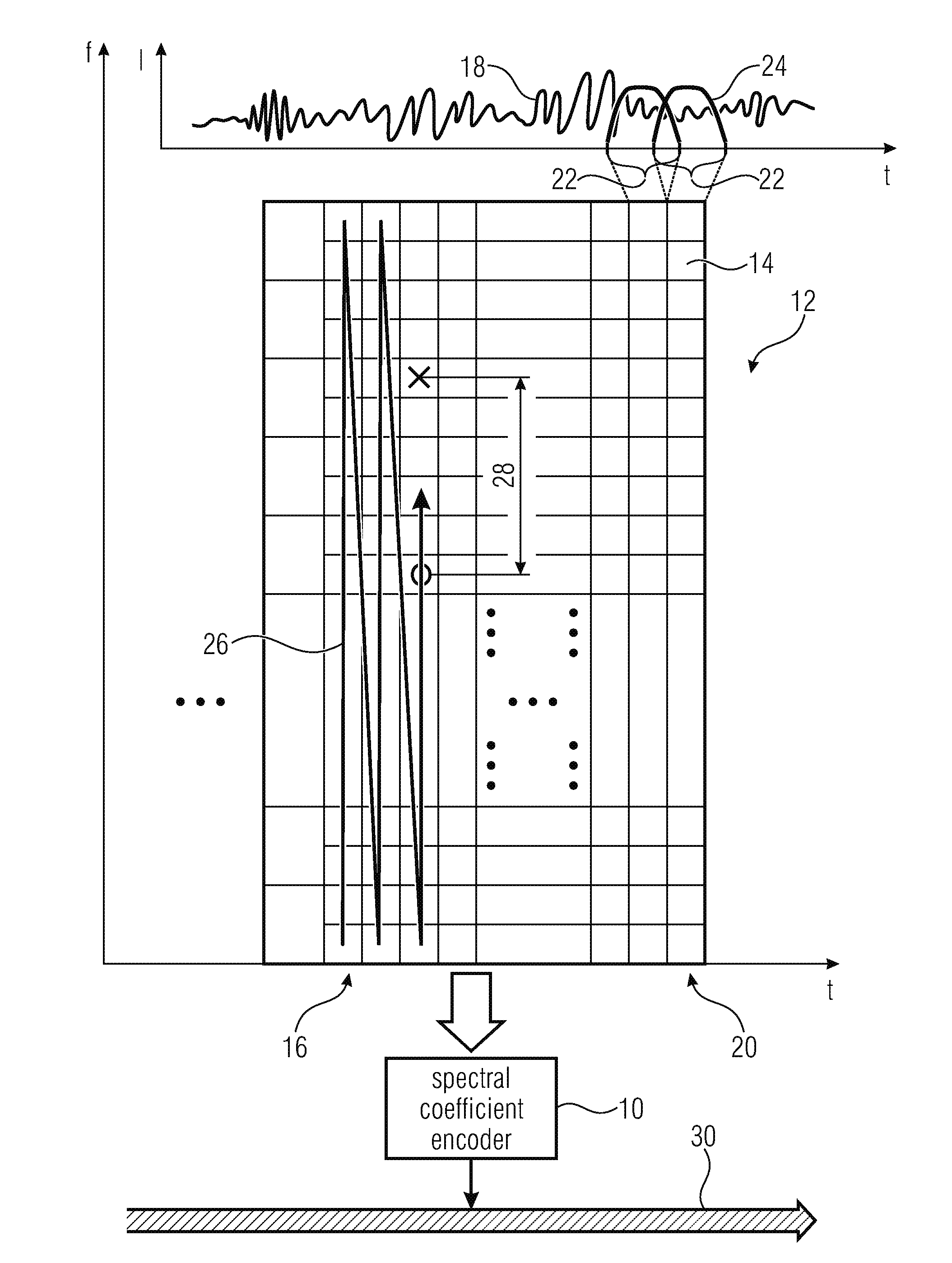

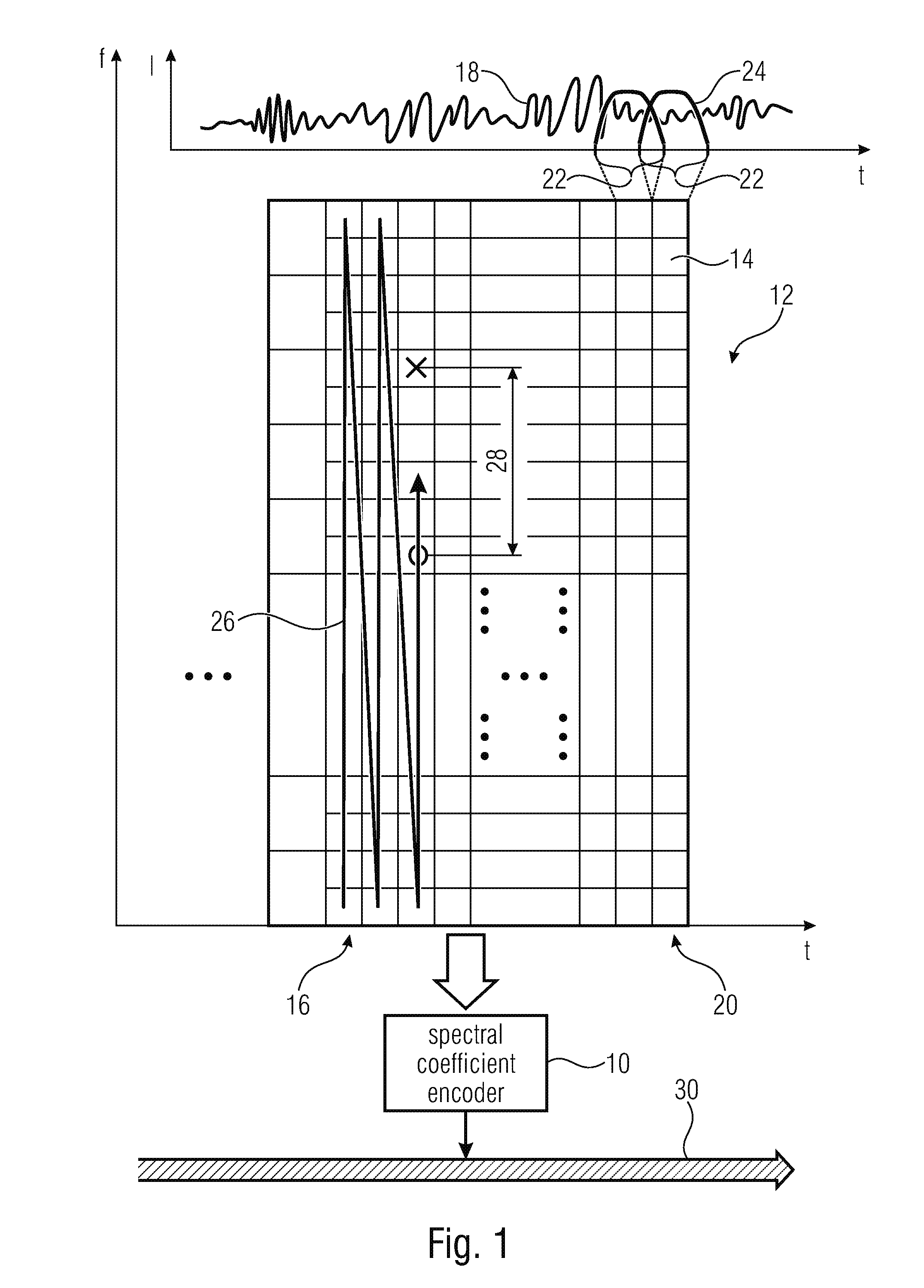

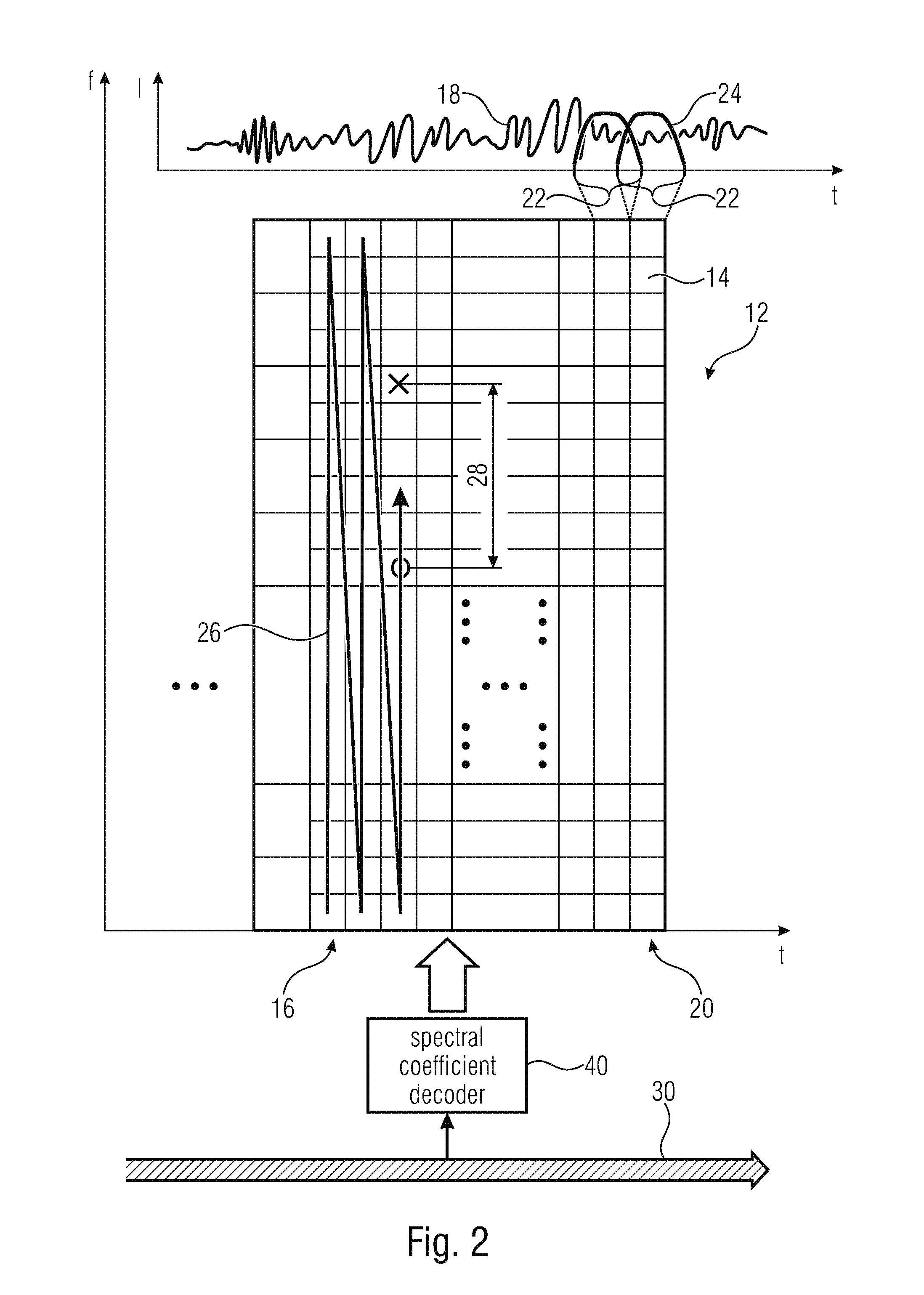

Coding of spectral coefficients of a spectrum of an audio signal

Active | US20160307576A1 | Enhancing entropy coding efficiency | Improve coding efficiency | Speech analysis | Code conversion | Frequency spectrum | Harmonic

A coding efficiency of coding spectral coefficients of a spectrum of an audio signal is increased by en / decoding a currently to be en / decoded spectral coefficient by entropy en / decoding and, in doing so, performing the entropy en / decoding depending, in a context-adaptive manner, on a previously en / decoded spectral coefficient, while adjusting a relative spectral distance between the previously en / decoded spectral coefficient and the currently en / decoded spectral coefficient depending on an information concerning a shape of the spectrum. The information concerning the shape of the spectrum may have a measure of a pitch or periodicity of the audio signal, a measure of an inter-harmonic distance of the audio signal's spectrum and / or relative locations of formants and / or valleys of a spectral envelope of the spectrum, and on the basis of this knowledge, the spectral neighborhood which is exploited in order to form the context of the currently to be en / decoded spectral coefficients may be adapted to the thus determined shape of the spectrum, thereby enhancing the entropy coding efficiency.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

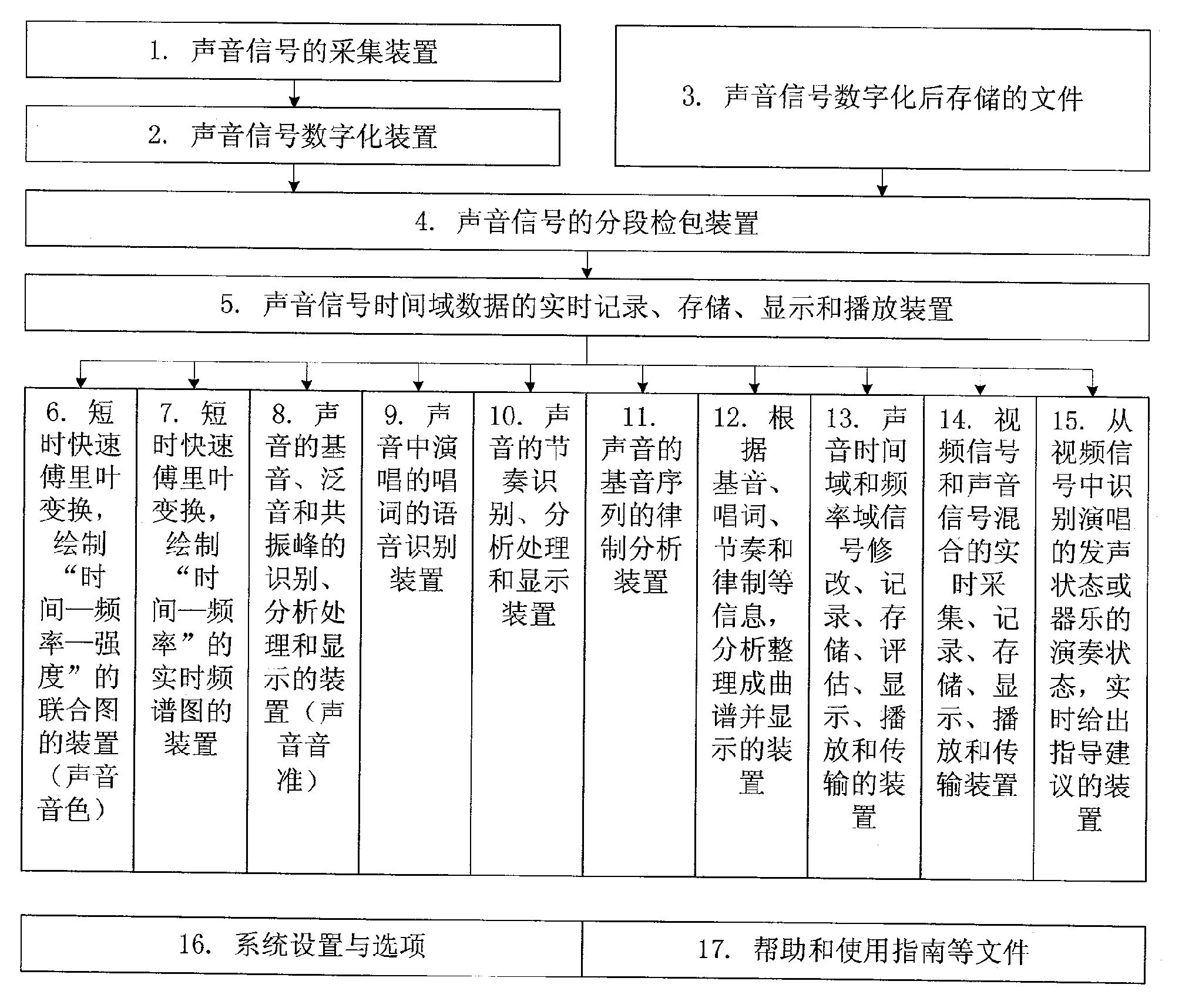

Computer real-time analysis system for singing and playing

The invention relates to a computer real-time analysis system for singing and playing, comprising: a segmental package-inspection device for an acoustical signal; a device for real-time recording, storing, displaying and playing of acoustical signal time-domain data; a device for drawing a joint time-frequency-intensity picture using the short-time fast Fourier transform; a device for drawing a real-time time-frequency spectrum using the short-time fast Fourier transform; a device for recognizing, analyzing and displaying the fundamental tone, harmonic tones and resonance peaks (formants) of the sound; a device for recognizing, analyzing and displaying the rhythm of the sound; a device for analyzing the sound, arranging it into a musical score and displaying the score according to information such as fundamental tone, libretto, rhythm and tuning system; and a device for modifying, recording, storing, evaluating, displaying, playing and transmitting acoustical time-domain and frequency-domain signals. The system provides real-time computer visualization and systematic, quantitative measurement and testing of vocal and instrumental music, and the singing state and playing state can be recognized and guided by adopting computer image and video automatic recognition technology.

Owner:李宋

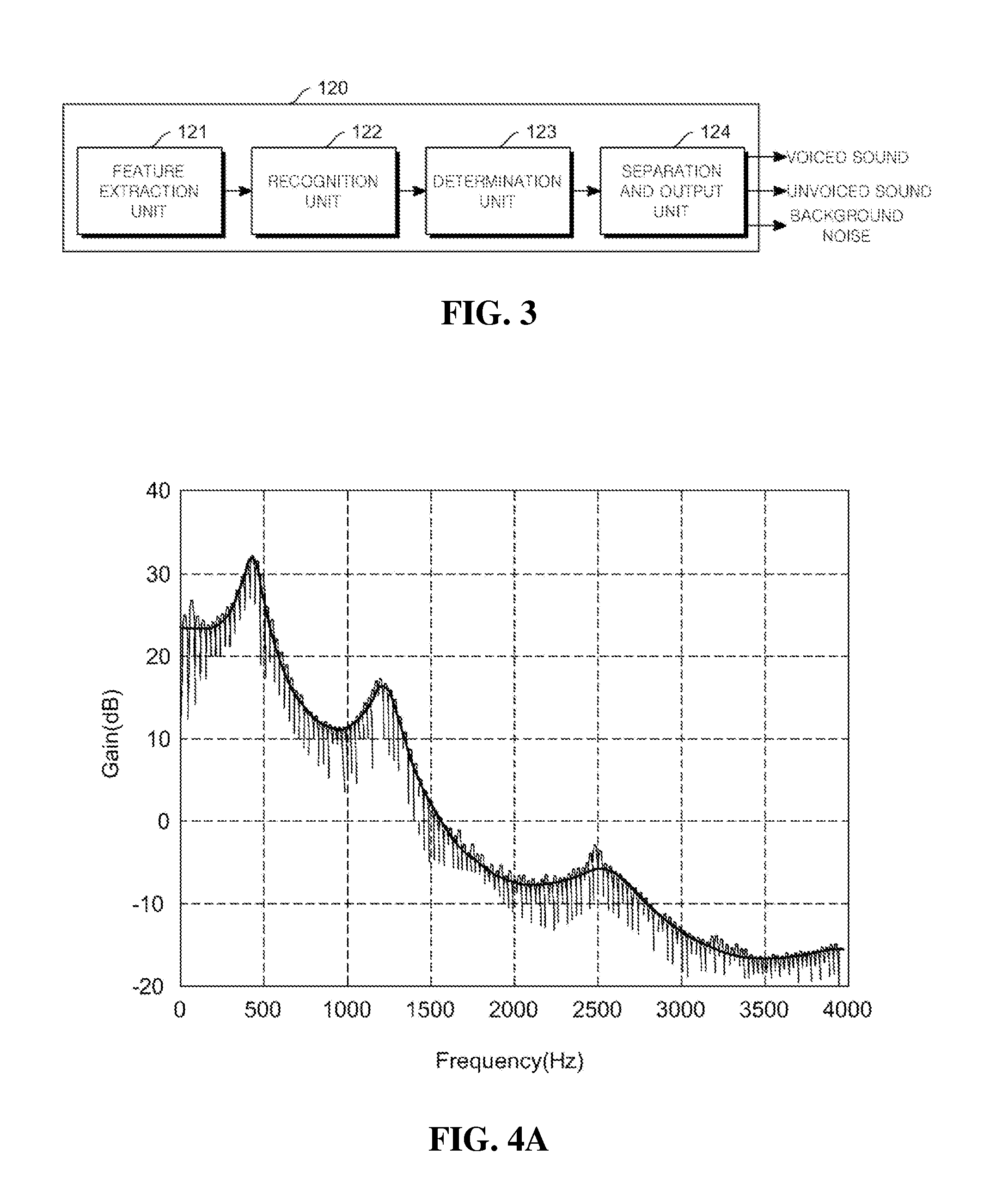

Methods and apparatus for formant-based voice systems

In one aspect, a method of processing a voice signal to extract information to facilitate training a speech synthesis model is provided. The method comprises acts of detecting a plurality of candidate features in the voice signal, performing at least one comparison between one or more combinations of the plurality of candidate features and the voice signal, and selecting a set of features from the plurality of candidate features based, at least in part, on the at least one comparison. In another aspect, the method is performed by executing a program encoded on a computer readable medium. In another aspect, a speech synthesis model is provided by, at least in part, performing the method.

Owner:CERENCE OPERATING CO

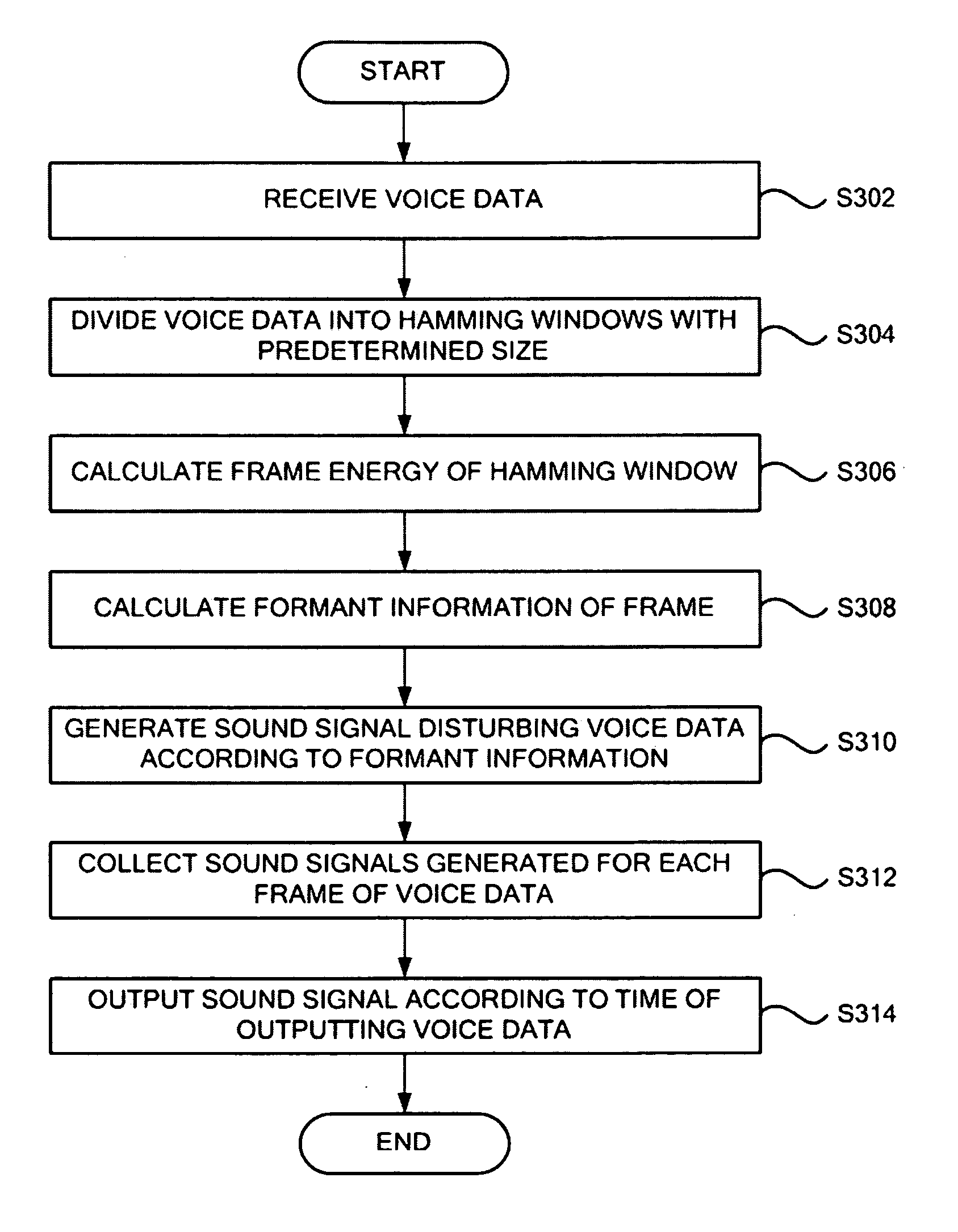

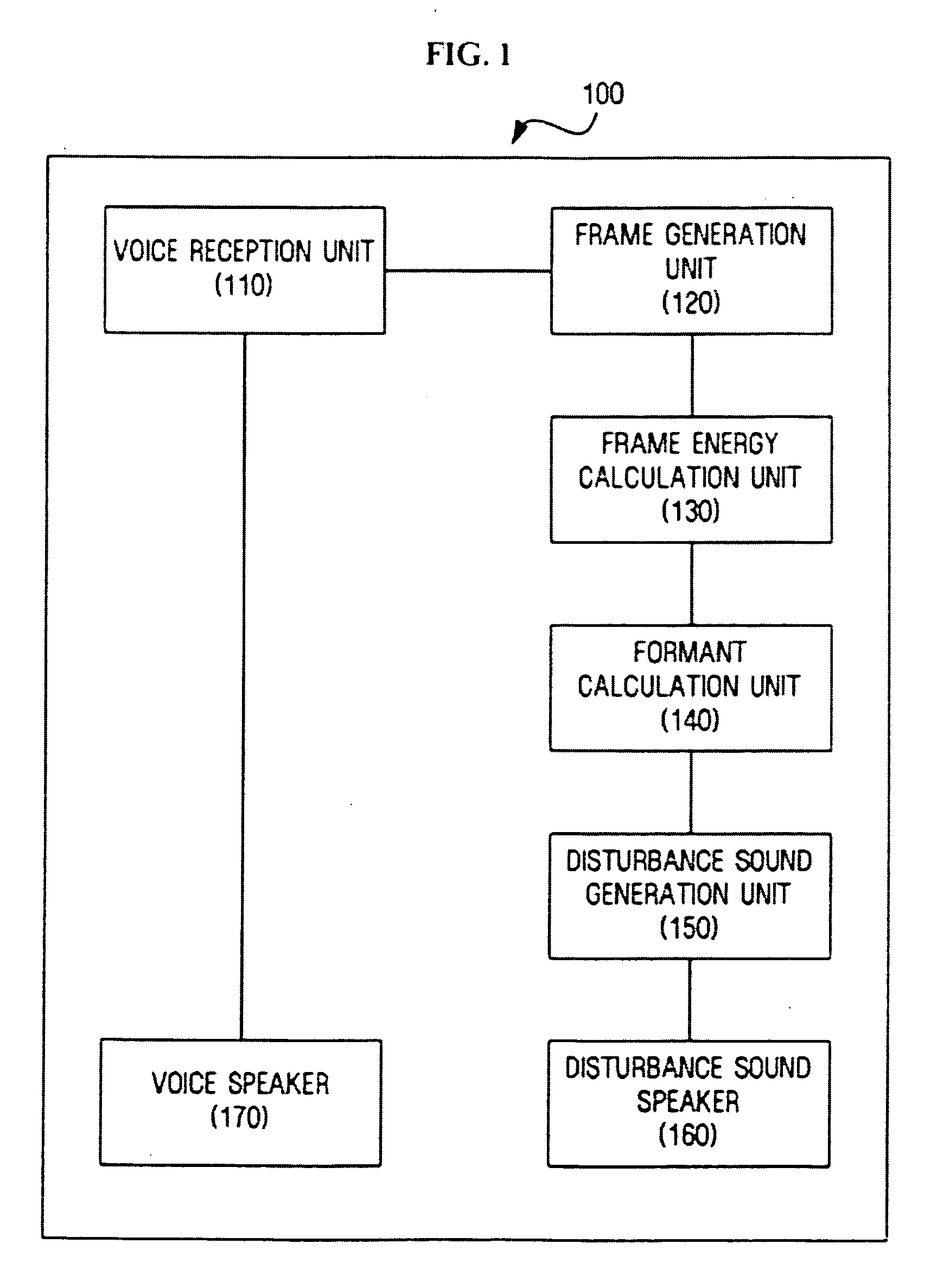

Method, medium, and system masking audio signals using voice formant information

Inactive | US20070055513A1 | Maintain privacy | Inhibitory content | Eavesdropping prevention circuits | Secret communication | Formant | A domain

A method, medium, and system for masking voice information of a communication device. The method of masking a user's voice through an output of a masking signal similar to a formant of voice data may include dividing the voice data received into frames of a predetermined size, transforming the frames on a frequency axis thereof, regarded as a domain, obtaining formant information of intensive signal regions in the transformed frames, generating a sound signal disturbing the formant information with reference to the formant information, and outputting the sound signal in accordance with a time point when the voice signal is output.

Owner:SAMSUNG ELECTRONICS CO LTD

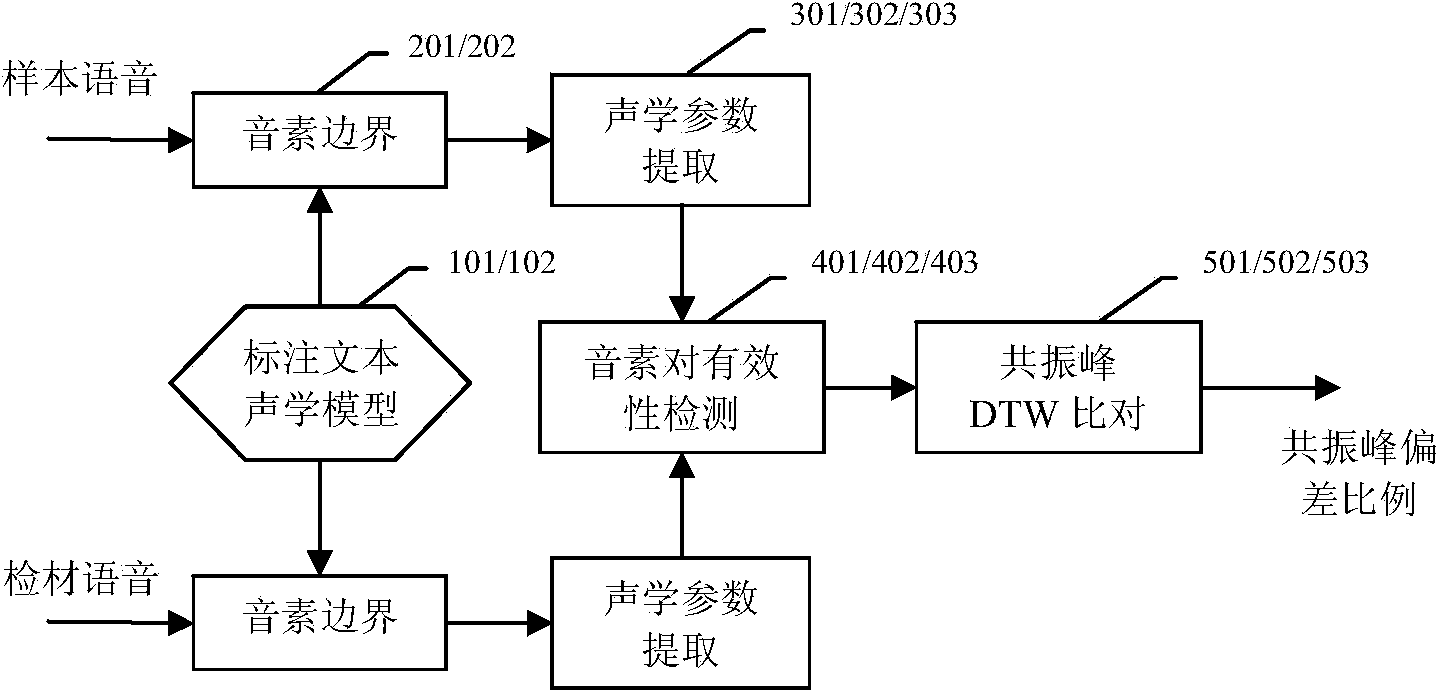

Resonance peak automatic matching method for voiceprint identification

Active | CN103714826A | High precision | Improve processing efficiency | Speech recognition | Peak alignment | Resonance

The invention provides a resonance peak automatic matching method for voiceprint identification. The method comprises the following steps that: phoneme boundary positions in an inspection material and a sample in the voiceprint identification can be automatically marked through using continuous speech recognition-based forced alignment (FA) technology; as for identical vowel phoneme segments of the inspection material and the sample, whether a current phoneme is a valid analysable phoneme is automatically judged through using fundamental frequencies, resonance peaks and power spectrum density parameters; and deviation ratios of corresponding resonance peak time-frequency areas can be automatically rendered through using a dynamic time warping (DTW) algorithm and are adopted as analysis basis of final manual voiceprint identification. With the resonance peak automatic matching method for the voiceprint identification of the invention adopted, the boundaries of phonemes can be automatically marked, and whether the pronunciation of the phonemes is valid is judged, and therefore, processing efficiency can be greatly improved; and at the same time, an automatic resonance peak deviation alignment algorithm is performed on effective phoneme pairs, and therefore, the accuracy of resonance peak alignment can be improved.

Owner:ANHUI IFLYTEK INTELLIGENT SYST
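The dynamic time warping (DTW) alignment mentioned in the abstract above can be illustrated with the textbook algorithm applied to two formant tracks. This is a generic sketch, not the patented procedure: the function name, the absolute-difference local cost, and the example tracks are assumptions, and the patent's "deviation ratio" is not computed here.

```python
def dtw_distance(track_a, track_b):
    """Classic DTW cost between two sequences of formant frequencies (Hz),
    using absolute difference as the local cost."""
    inf = float("inf")
    n, m = len(track_a), len(track_b)
    # cost[i][j] = best cumulative cost aligning track_a[:i] with track_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(track_a[i - 1] - track_b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # stretch track_b
                                     cost[i][j - 1],      # stretch track_a
                                     cost[i - 1][j - 1])  # step both
    return cost[n][m]


# Made-up tracks: the second holds the middle value one frame longer.
questioned = [500.0, 600.0, 700.0]
reference = [500.0, 600.0, 600.0, 700.0]
print(dtw_distance(questioned, reference))
```

Warping the time axis lets two utterances of the same vowel be compared even when one is spoken more slowly, so the remaining cost reflects frequency deviation rather than timing differences.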

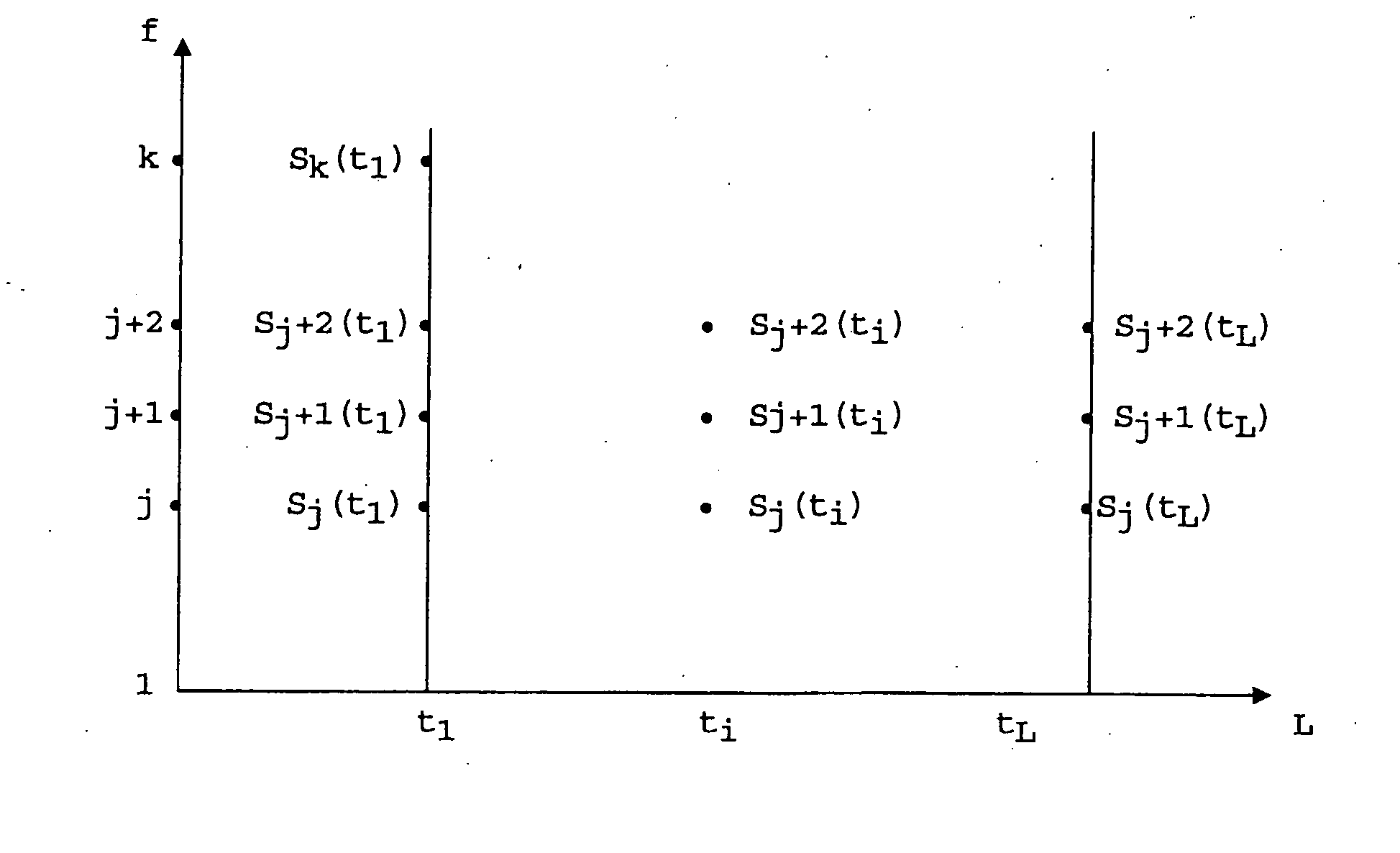

Method for identifying specific sounds

Inactive | US20050004797A1 | Reduce the amplitude | Minimum distance | Speech analysis | Burglar alarm mechanical vibrations actuation | Frequency spectrum | Slide window

A method of automated identification of specific sounds in a noise environment, comprising the steps of: a) continuously recording the noise environment, b) forming a spectral image of the sound recorded in a time / frequency coordinate system, c) analyzing time-sliding windows of the spectral image, d) selecting a family of filters, each of which defines a frequency band and an energy band, e) applying each of the filters to each of the sliding windows, and identifying connected components or formants, which are window fragments formed of neighboring points of close frequencies and powers, f) calculating descriptors of each formant, and g) calculating a distance between two formants by comparing the descriptors of the first formant with those of the second formant.

Owner:MIRIAD TECH

Automatic evaluation of spoken fluency

A procedure to automatically evaluate the spoken fluency of a speaker by prompting the speaker to talk on a given topic, recording the speaker's speech to get a recorded sample of speech, and then analyzing the patterns of disfluencies in the speech to compute a numerical score to quantify the spoken fluency skills of the speakers. The numerical fluency score accounts for various prosodic and lexical features, including formant-based filled-pause detection, closely-occurring exact and inexact repeat N-grams, normalized average distance between consecutive occurrences of N-grams. The lexical features and prosodic features are combined to classify the speaker with a C-class classification and develop a rating for the speaker.

Owner:MICROSOFT TECH LICENSING LLC

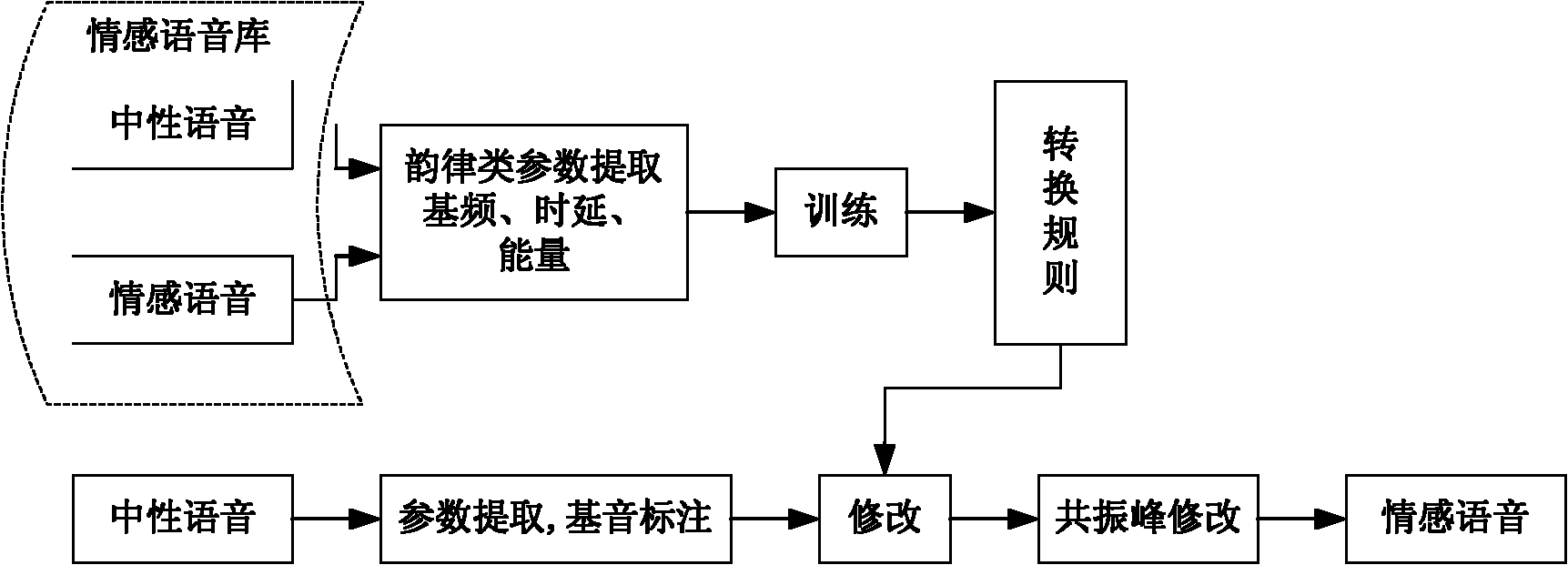

Method for converting emotional speech by combining rhythm parameters with tone parameters

Inactive | CN102184731A | The modification effect is good | Emotional Voice Natural | Speech recognition | Speech synthesis | Fundamental frequency | Formant

The invention discloses a method for converting emotional speech by combining rhythm parameters (fundamental frequency, time length and energy) with a tone parameter (a formant), which mainly comprises the following steps of: 1, carrying out extraction and analysis of feature parameters on a Beihang University emotional speech database (BHUDES) emotional speech sample (containing neutral speech and four types of emotional speech of sadness, anger, happiness and surprise); 2, making an emotional speech conversion rule and defining each conversion constant according to the extracted feature parameters; 3, carrying out extraction of the feature parameters and fundamental tone synchronous tagging on the neutral speech to be converted; 4, setting each conversion constant according to the emotional speech conversion rule in the step 2, modifying a fundamental frequency curve, the time length and the energy and synchronously overlaying fundamental tones to synthesize a speech signal; and 5, carrying out linear predictive coding (LPC) analysis on the speech signal in the step 4 and modifying the formant by a pole of a transfer function so as to finally obtain the emotional speech rich in expressive force.

Owner:BEIHANG UNIV

Method and apparatus of increasing speech intelligibility in noisy environments

A method (400, 600, 700) and apparatus (220) for enhancing the intelligibility of speech emitted into a noisy environment. After filtering (408) ambient noise with a filter (304) that simulates the physical blocking of noise by at least a part of a voice communication device (102), a frequency dependent SNR of received voice audio relative to ambient noise is computed (424) on a perceptual (e.g. Bark) frequency scale. Formants are identified (426, 600, 700) and the SNR in bands including certain formants is modified (508, 510) with formant enhancement gain factors in order to improve intelligibility. A set of high pass filter gains (338) is combined (516) with the formant enhancement gain factors, yielding combined gains which are clipped (518), scaled (520) according to a total SNR, normalized (526), smoothed across time (530) and frequency (532) and used to reconstruct (532, 534) an audio signal.

Owner:GOOGLE TECH HLDG LLC

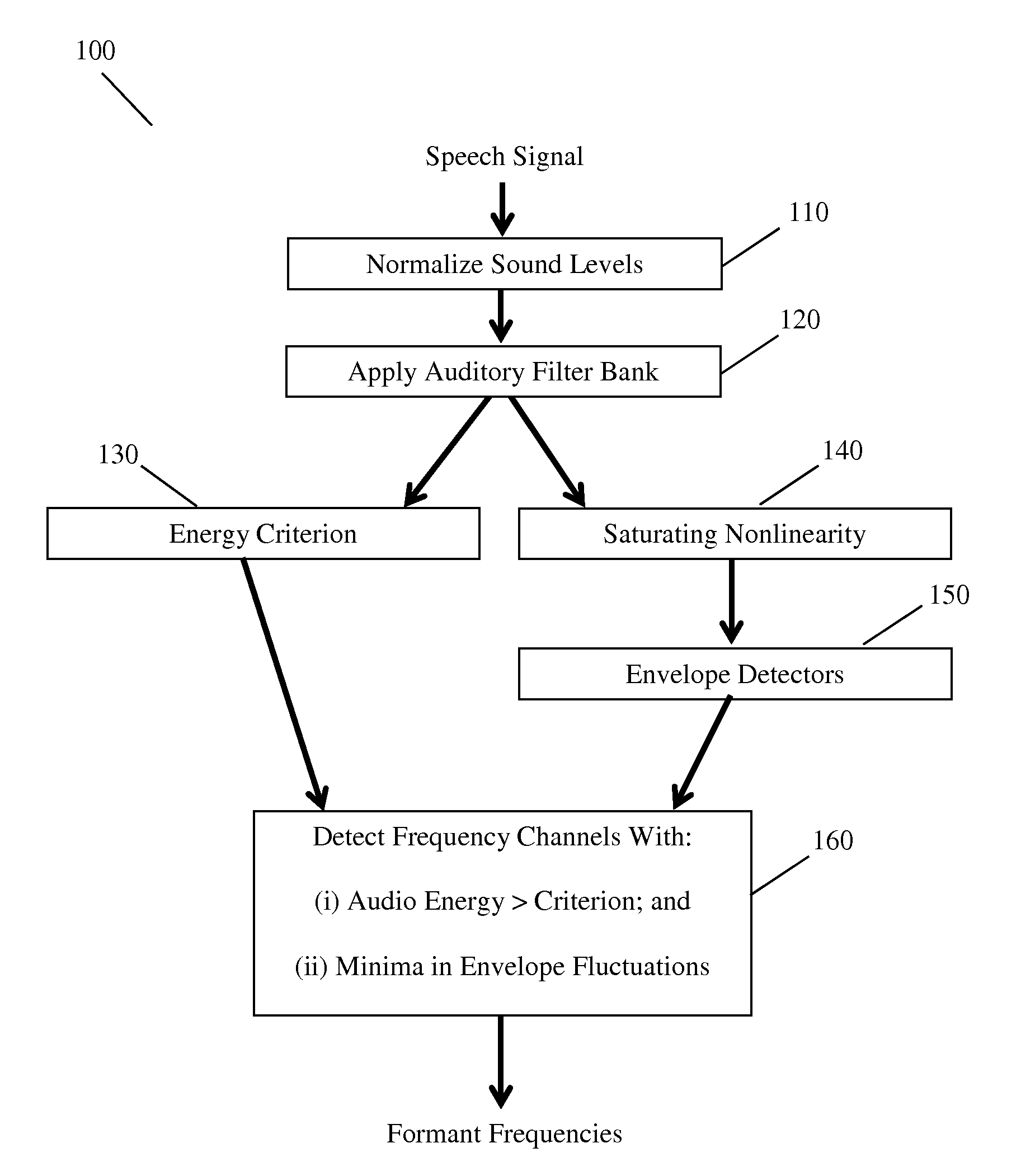

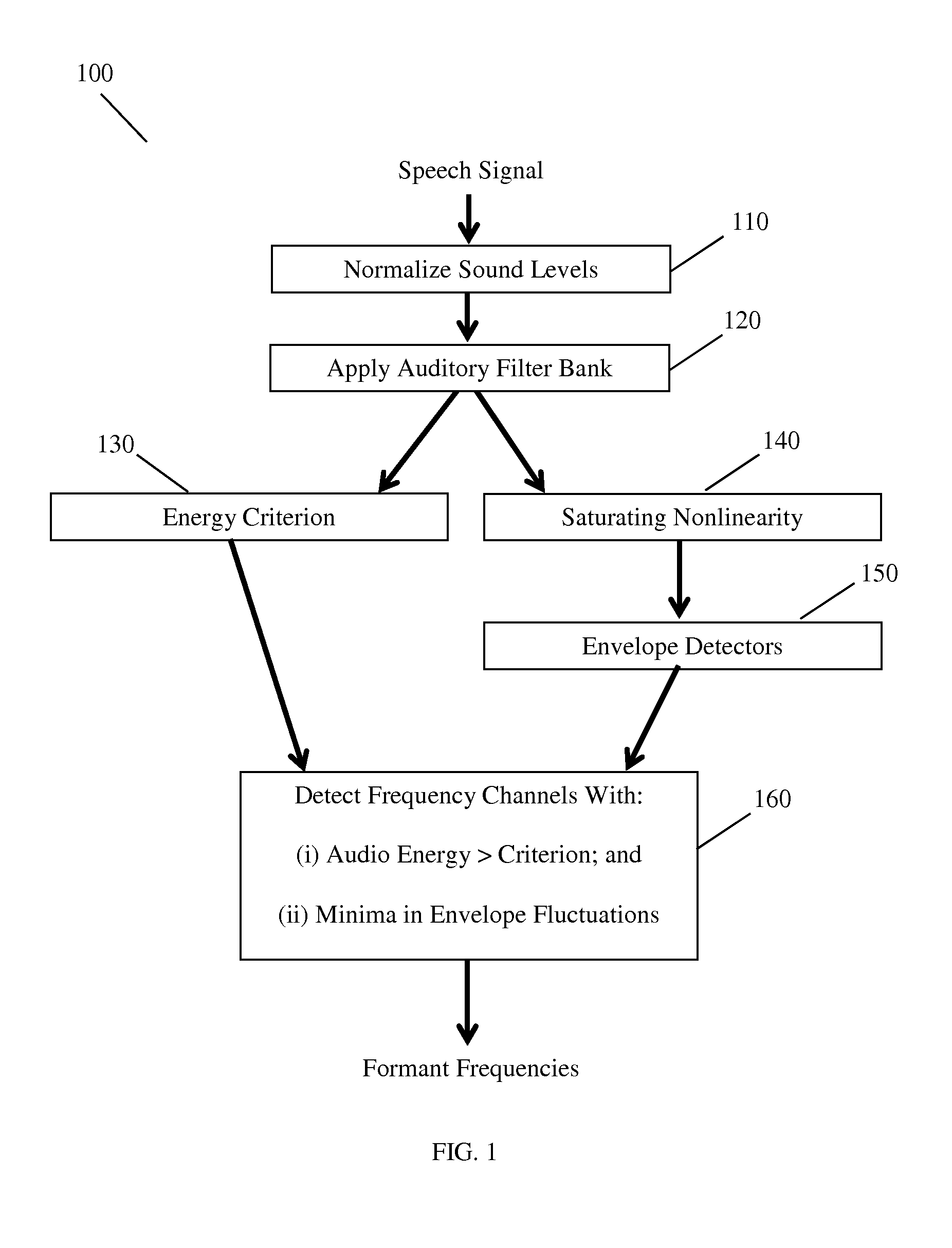

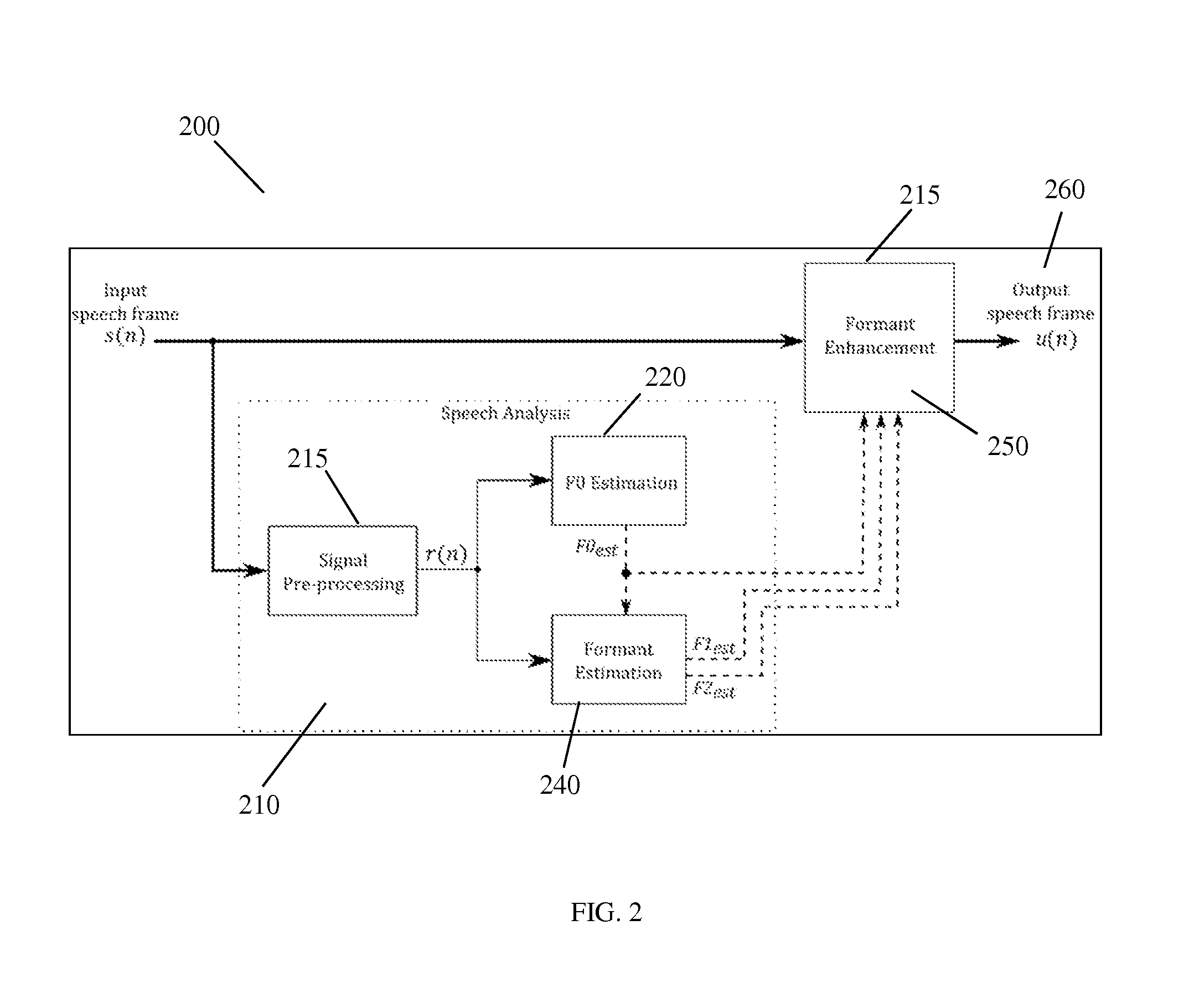

Method for detecting, identifying, and enhancing formant frequencies in voiced speech

Inactive | US20140309992A1 | Wide tuning | Improve discrimination | Speech analysis | Harmonic | Fundamental frequency

Formant frequencies in a voiced speech signal are detected by filtering the speech signal into multiple frequency channels, determining whether each of the frequency channels meets an energy criterion, and determining minima in envelope fluctuations. The identified formant frequencies can then be enhanced by identifying and amplifying the harmonic of the fundamental frequency (F0) closest to the formant frequency.

Owner:UNIVERSITY OF ROCHESTER
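The enhancement step in the abstract above, amplifying the harmonic of F0 closest to a detected formant, can be sketched as below. The representation of the signal as a list of harmonic amplitudes, the function name, and the gain value are assumptions made for illustration; the detection stage (channel filtering and envelope-fluctuation minima) is not modelled.

```python
def enhance_nearest_harmonic(harmonic_amps, f0_hz, formant_hz, gain=2.0):
    """Amplify the harmonic of f0_hz closest to formant_hz.

    harmonic_amps[k - 1] is the amplitude of the k-th harmonic (k * f0_hz).
    Returns (k, new_amplitudes), leaving the input list unchanged.
    """
    k = round(formant_hz / f0_hz)               # nearest harmonic number
    k = min(max(k, 1), len(harmonic_amps))      # keep it inside the list
    boosted = list(harmonic_amps)
    boosted[k - 1] *= gain
    return k, boosted


# Made-up values: F0 = 200 Hz and a formant near 730 Hz.
k, amps = enhance_nearest_harmonic([1.0, 1.0, 1.0, 1.0, 1.0], 200.0, 730.0)
print(k, k * 200.0, amps)
```

With a high F0 the nearest harmonic can sit well away from the formant itself (here 800 Hz against 730 Hz), which is why the method boosts an existing harmonic rather than trying to add energy exactly at the formant frequency.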

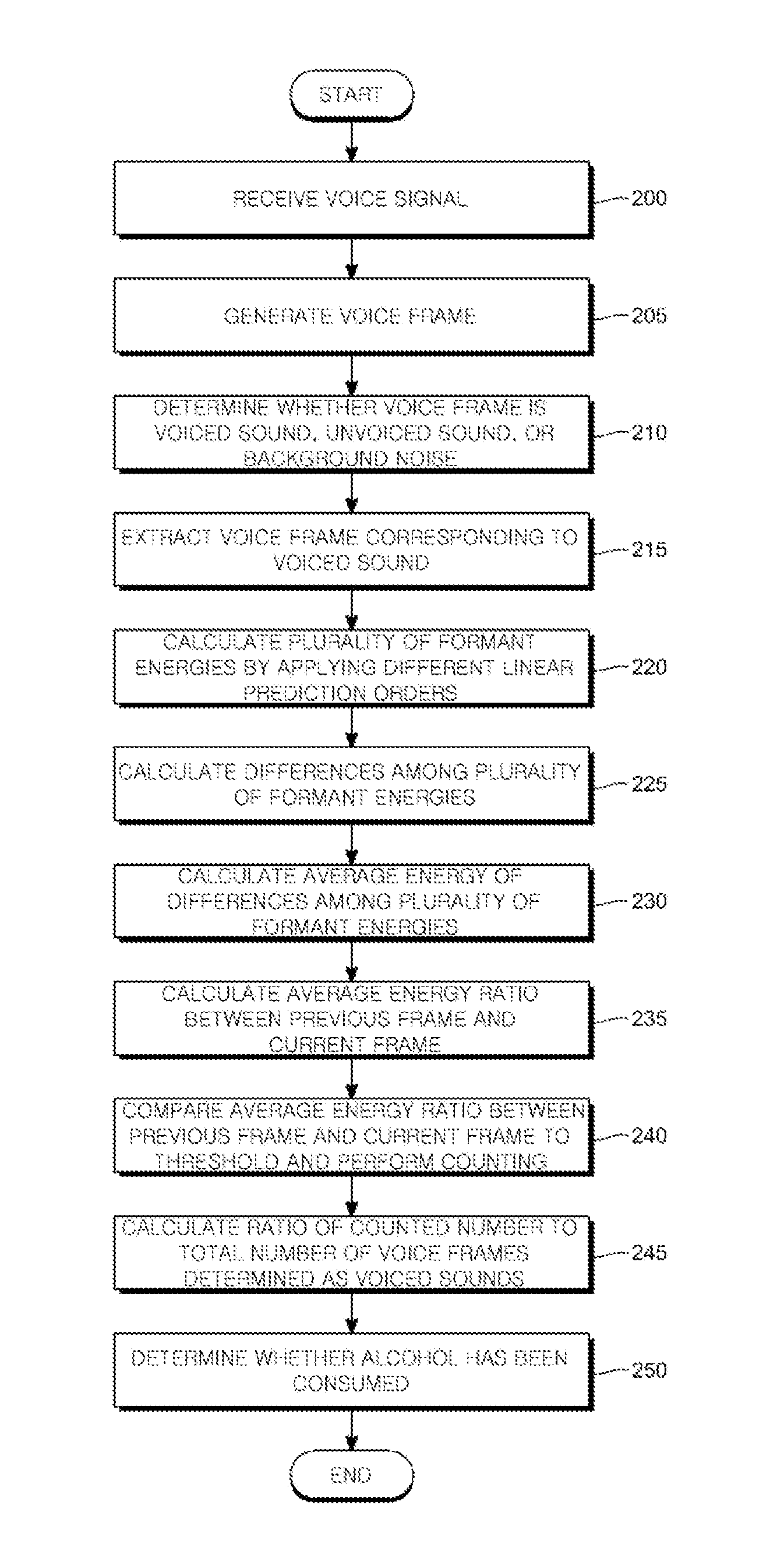

Method for determining alcohol consumption, and recording medium and terminal for carrying out same

Disclosed are a method for determining whether a person is drunk after consuming alcohol on the basis of a difference among a plurality of formant energies, which are generated by applying linear predictive coding (LPC) according to a plurality of linear prediction orders, and a recording medium and a terminal for carrying out the method. The alcohol consumption determining terminal comprises: a voice input unit for receiving voice signals, converting them into voice frames and outputting the voice frames; a voiced / unvoiced sound analysis unit for extracting the voice frames corresponding to a voiced sound from among the voice frames; an LPC processing unit for calculating a plurality of formant energies by applying linear predictive coding according to the plurality of linear prediction orders to the voice frames corresponding to the voiced sound; and an alcohol consumption determining unit for determining whether a person is drunk on the basis of a difference among the plurality of formant energies calculated by the LPC processing unit. Drunkenness is thereby determined from the change in the formant energies obtained by applying linear predictive coding of different orders to the voice signals.

Owner:FOUND OF SOONGSIL UNIV IND COOP
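
As a rough illustration of applying LPC to one voiced frame at two prediction orders and comparing the results, the sketch below uses the autocorrelation method with the Levinson-Durbin recursion. The abstract does not define how a "formant energy" is computed, so `peak_envelope_energy` is a hypothetical proxy (the largest value of the all-pole envelope), and the orders 4 and 10, the 8 kHz sampling rate, and the synthetic frame are likewise assumptions.

```python
import cmath
import math


def autocorrelation(frame, max_lag):
    """Unnormalized autocorrelation r[0..max_lag] of one frame."""
    n = len(frame)
    return [sum(frame[i] * frame[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]


def lpc(frame, order):
    """Levinson-Durbin recursion; returns (a, err) with a[0] == 1.

    A(z) = sum(a[k] * z**-k) is the inverse filter and err is the
    residual prediction-error energy at the requested order.
    """
    r = autocorrelation(frame, order)
    if r[0] <= 0.0:
        raise ValueError("frame has no energy")
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err  # reflection coefficient for this order
        prev = a[:]
        for j in range(1, i):
            a[j] = prev[j] + k * prev[i - j]
        a[i] = k
        err *= 1.0 - k * k
    return a, err


def envelope_power(a, err, freq_hz, fs_hz):
    """All-pole envelope err / |A(e^{jw})|^2 evaluated at freq_hz."""
    w = 2.0 * math.pi * freq_hz / fs_hz
    a_of_w = sum(c * cmath.exp(-1j * w * k) for k, c in enumerate(a))
    return err / abs(a_of_w) ** 2


def peak_envelope_energy(frame, order, fs_hz, n_points=256):
    """Hypothetical 'formant energy' proxy: envelope maximum on a 0..fs/2 grid."""
    a, err = lpc(frame, order)
    return max(envelope_power(a, err, i * fs_hz / (2 * n_points), fs_hz)
               for i in range(n_points + 1))


# Synthetic stand-in for a voiced frame: two decaying resonances at 8 kHz.
fs = 8000.0
frame = [0.97 ** n * math.sin(2 * math.pi * 700 * n / fs)
         + 0.5 * 0.95 ** n * math.sin(2 * math.pi * 1800 * n / fs)
         for n in range(240)]

# Difference between the proxy energies at a high and a low prediction order.
diff = peak_envelope_energy(frame, 10, fs) - peak_envelope_energy(frame, 4, fs)
```

A real system would run this on frames that a voiced / unvoiced detector has already selected, and the decision rule applied to the difference is not given in the abstract.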

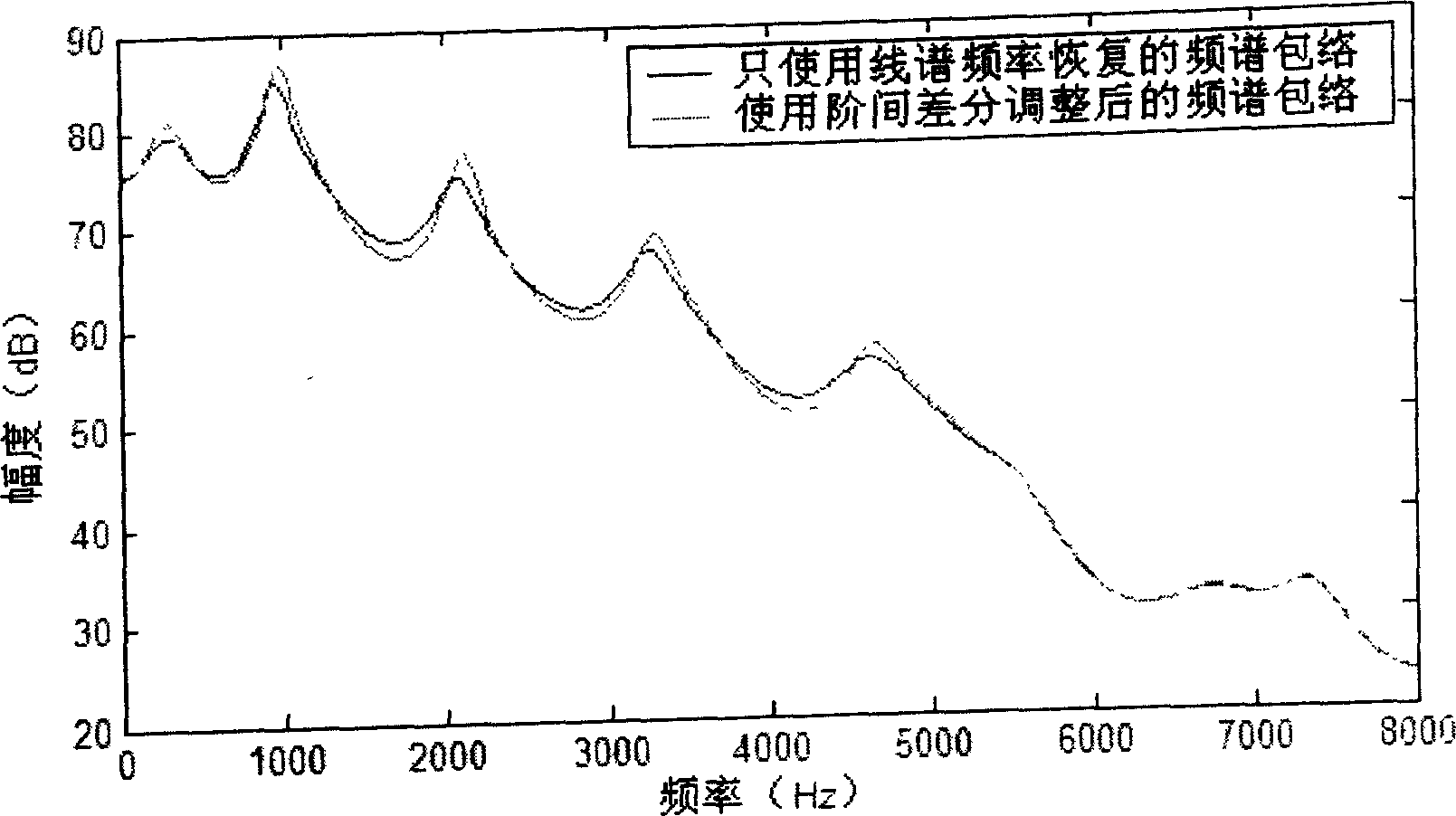

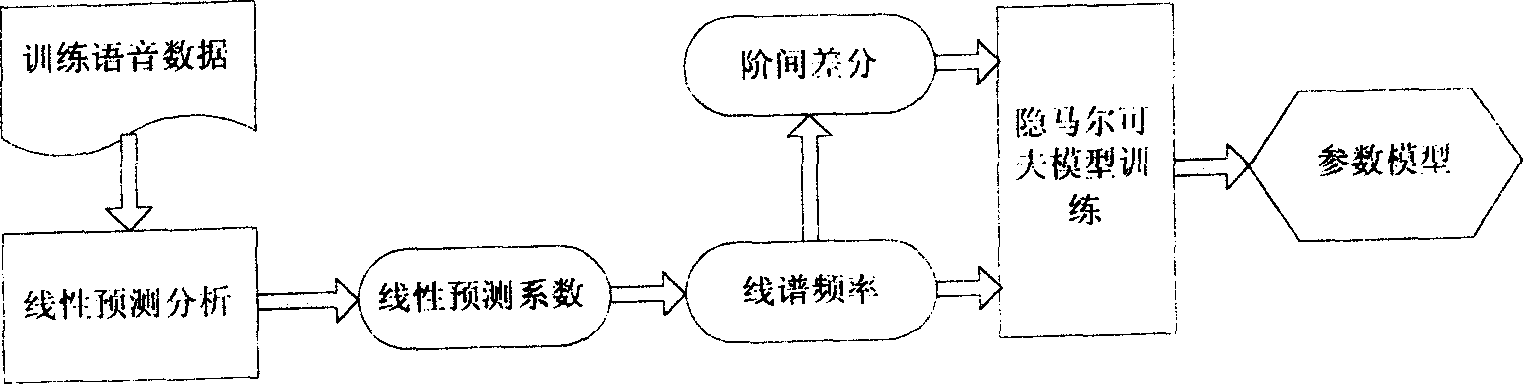

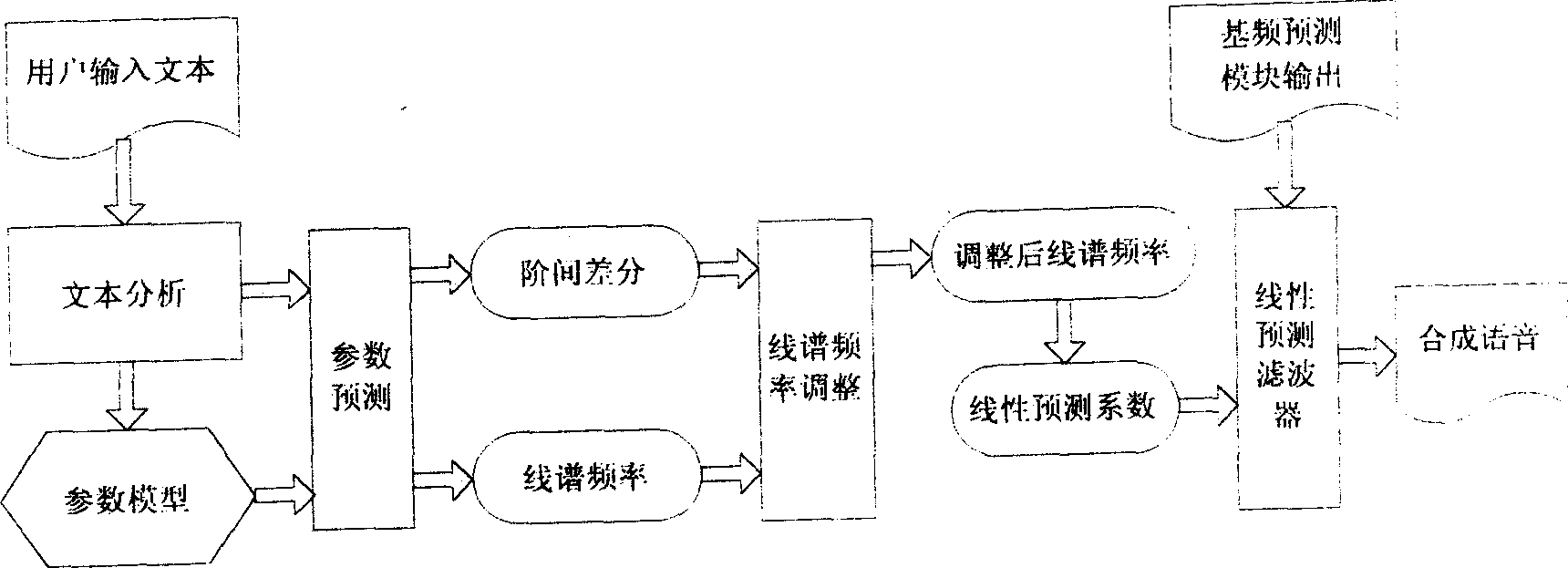

Frequency spectrum modelling and voice reinforcing method based on line spectrum frequency and its interorder differential parameter

The method includes the following steps. When extracting spectral parameters, the differences between orders of the line spectrum frequencies are included as part of the extracted result. During modeling and training, the line spectrum frequencies and the inter-order difference parameters are modeled and trained independently. During prediction, the line spectrum frequencies and the inter-order difference parameters are predicted separately, and the spectral parameters are then adjusted using the inter-order differences. Finally, the adjusted spectral parameters are used to synthesize the output voice, raising the tone quality of the synthesized voice by enhancing and sharpening its formants.

Owner:IFLYTEK CO LTD
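
The parameter extraction described above can be sketched as follows, assuming the line spectrum frequencies are given in radians and that the inter-order differences are taken between consecutive orders. Closely spaced line spectrum frequencies tend to mark a sharp spectral peak, which is why the differences carry formant information. The function names and example values are hypothetical, and the adjustment rule used at prediction time is not given in the abstract, so it is not shown.

```python
import math


def interorder_differences(lsf):
    """Differences between consecutive line spectrum frequencies (radians)."""
    return [hi - lo for lo, hi in zip(lsf, lsf[1:])]


def is_valid_lsf(lsf):
    """True if the values are strictly increasing and lie inside (0, pi)."""
    return (len(lsf) > 0
            and 0.0 < lsf[0]
            and lsf[-1] < math.pi
            and all(d > 0.0 for d in interorder_differences(lsf)))


def spectral_features(lsf):
    """Concatenate the LSFs with their inter-order differences."""
    return list(lsf) + interorder_differences(lsf)


# Hypothetical 4th-order example: two tight pairs suggest two sharp peaks.
lsf = [0.25, 0.5, 1.5, 1.75]
diffs = interorder_differences(lsf)
features = spectral_features(lsf)
```

Keeping the two parameter sets separate, as the abstract describes, lets each be modeled on its own before the differences are used to adjust the predicted spectrum.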

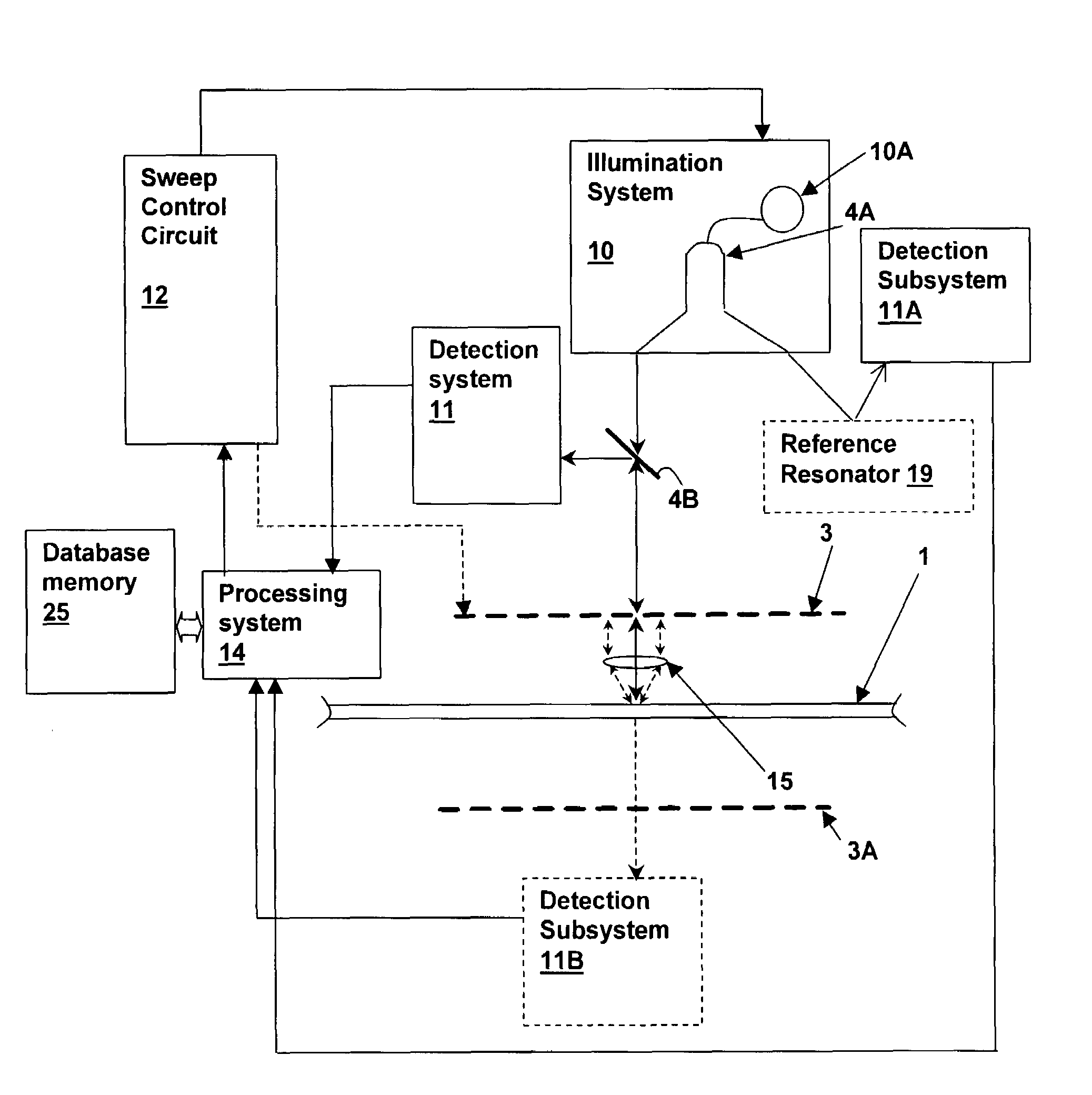

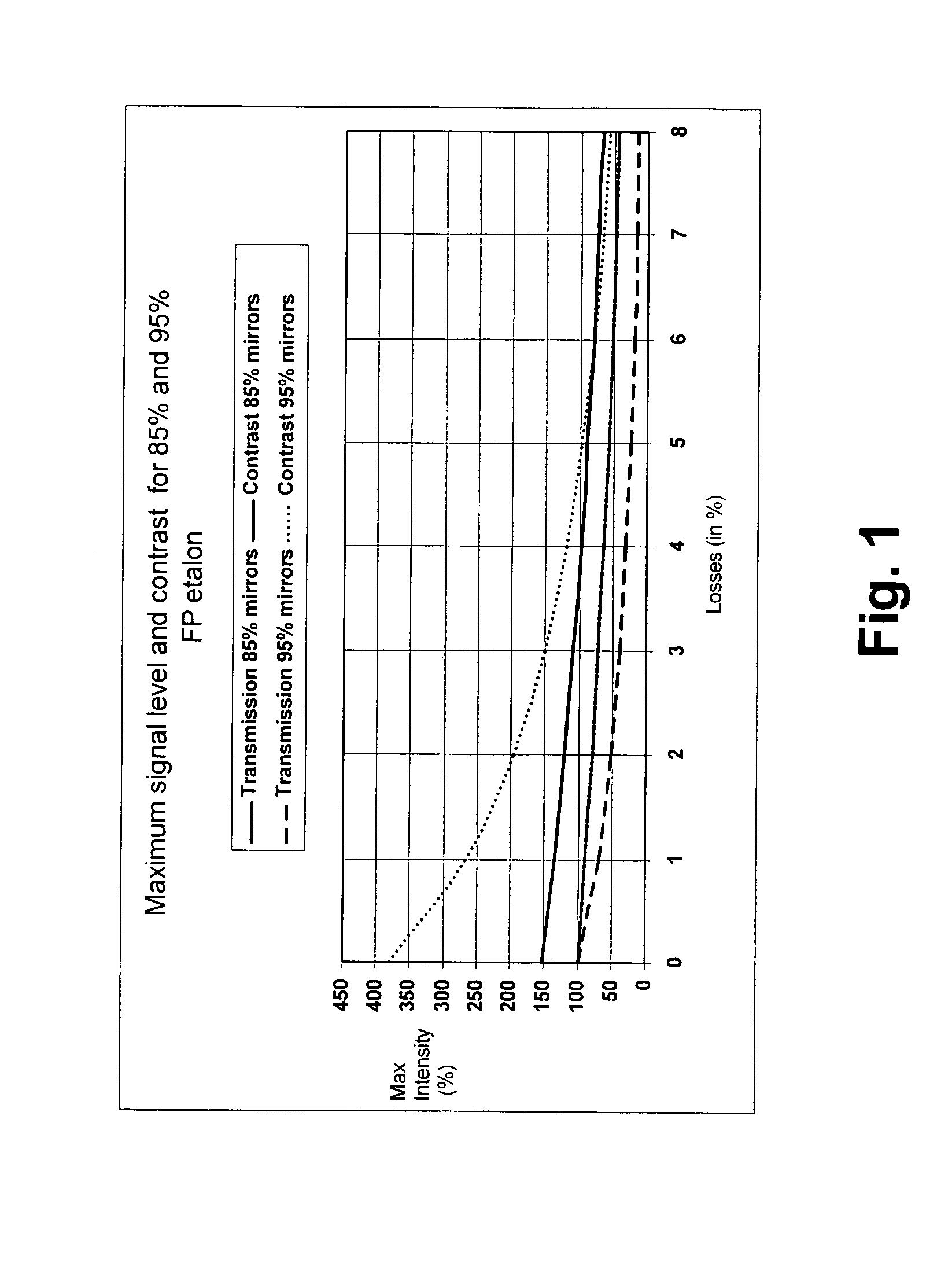

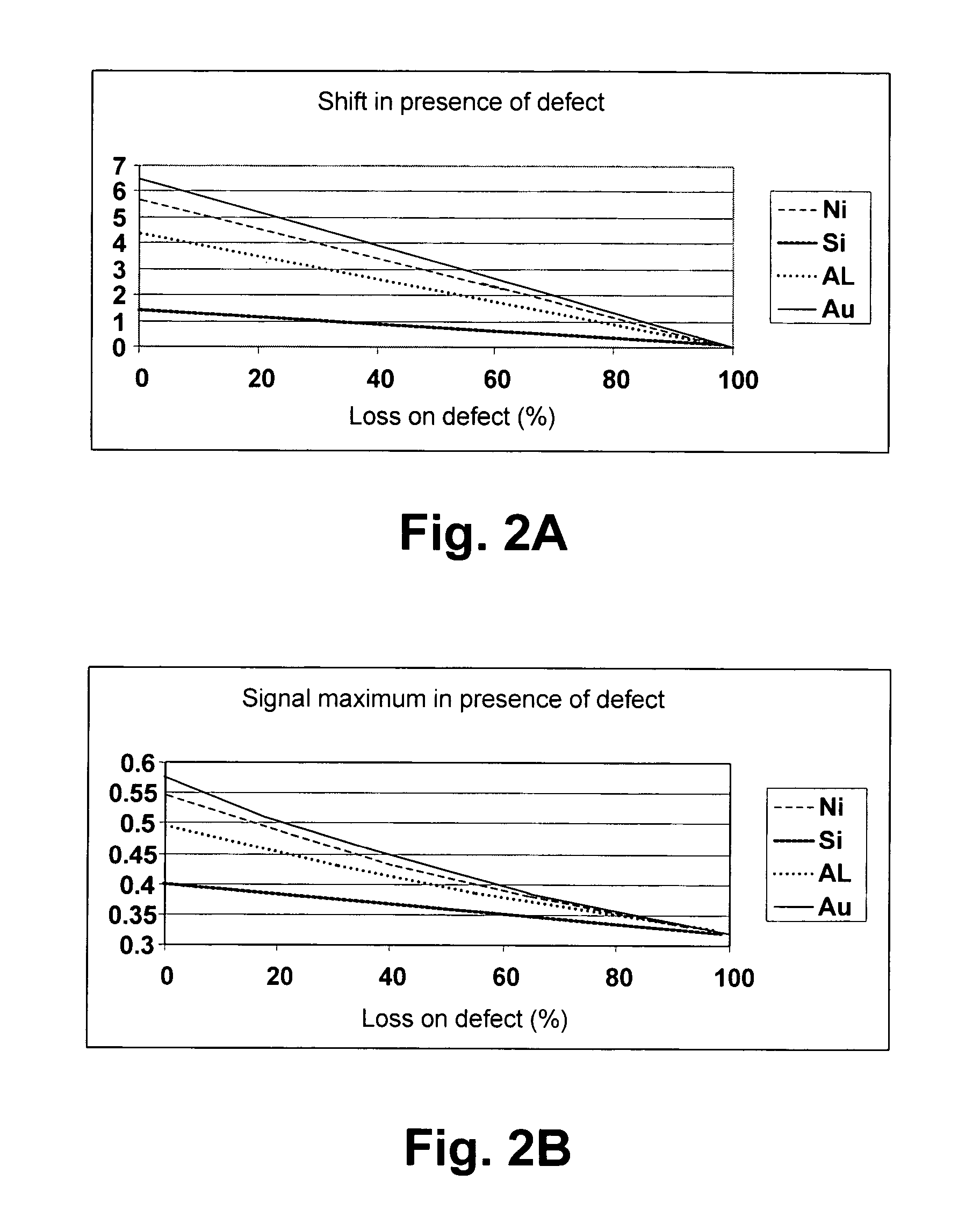

Resonator method and system for distinguishing characteristics of surface features or contaminants

InactiveUS7214932B2Beam/ray focussing/reflecting arrangementsPolarisation-affecting propertiesMaterial typeResonance

A resonator method and system for distinguishing characteristics of surface features or contaminants provides improved inspection or surface feature detection capability in scanning optical systems. A resonator including a surface of interest in the resonant path is coupled to a detector that detects light leaving the resonator. Changes in the resonance peak positions and peak intensities are evaluated against known changes for standard scatterers in order to determine the material characteristics of an artifact at the surface of interest that causes a resonance change. The lateral size of the artifact is determined by de-convolving a known illumination spot size with the changing resonance characteristics, and the standard scatterer data is selected in conformity with the determined artifact size. The differential analysis using resonance peak shifts corresponding to phase and amplitude information provides an identification algorithm that identifies at least one artifact / material type by matching known behaviors of artifacts / materials.

Owner:XYRATEX TECH LTD

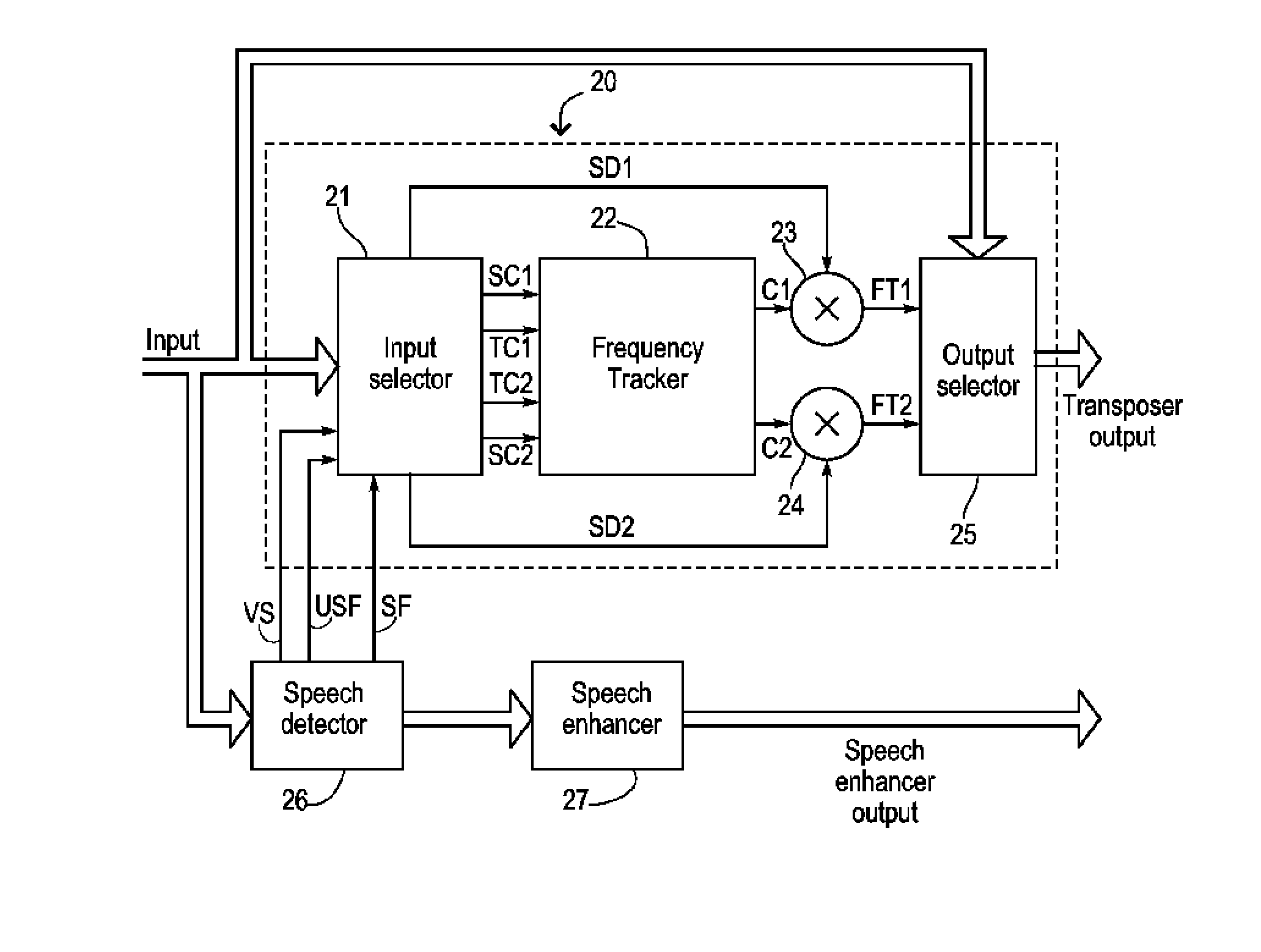

Hearing aid and a method of improved audio reproduction

A hearing aid comprising a frequency shifter (20) has means (22) for detecting a first frequency and a second frequency in an input signal. The frequency shifter (20) transposes a first frequency range of the input signal to a second frequency range of the input signal based on the presence of a fixed relationship between the first and the second detected frequency. The means (34, 35, 36) for detecting the fixed relationship between the first and the second frequency is used for controlling the frequency transposer (20). A speech detector (26) configured for detecting the presence of voiced and unvoiced speech is provided for suppressing the transposition of voiced-speech signals in order to preserve the speech formants. The purpose of transposing frequency bands in this way in a hearing aid is to render inaudible frequencies audible to a user of the hearing aid while maintaining the original envelope, harmonic coherence and speech intelligibility of the signal. The invention further provides a method for shifting a frequency range of an input signal in a hearing aid.

Owner:WIDEX AS

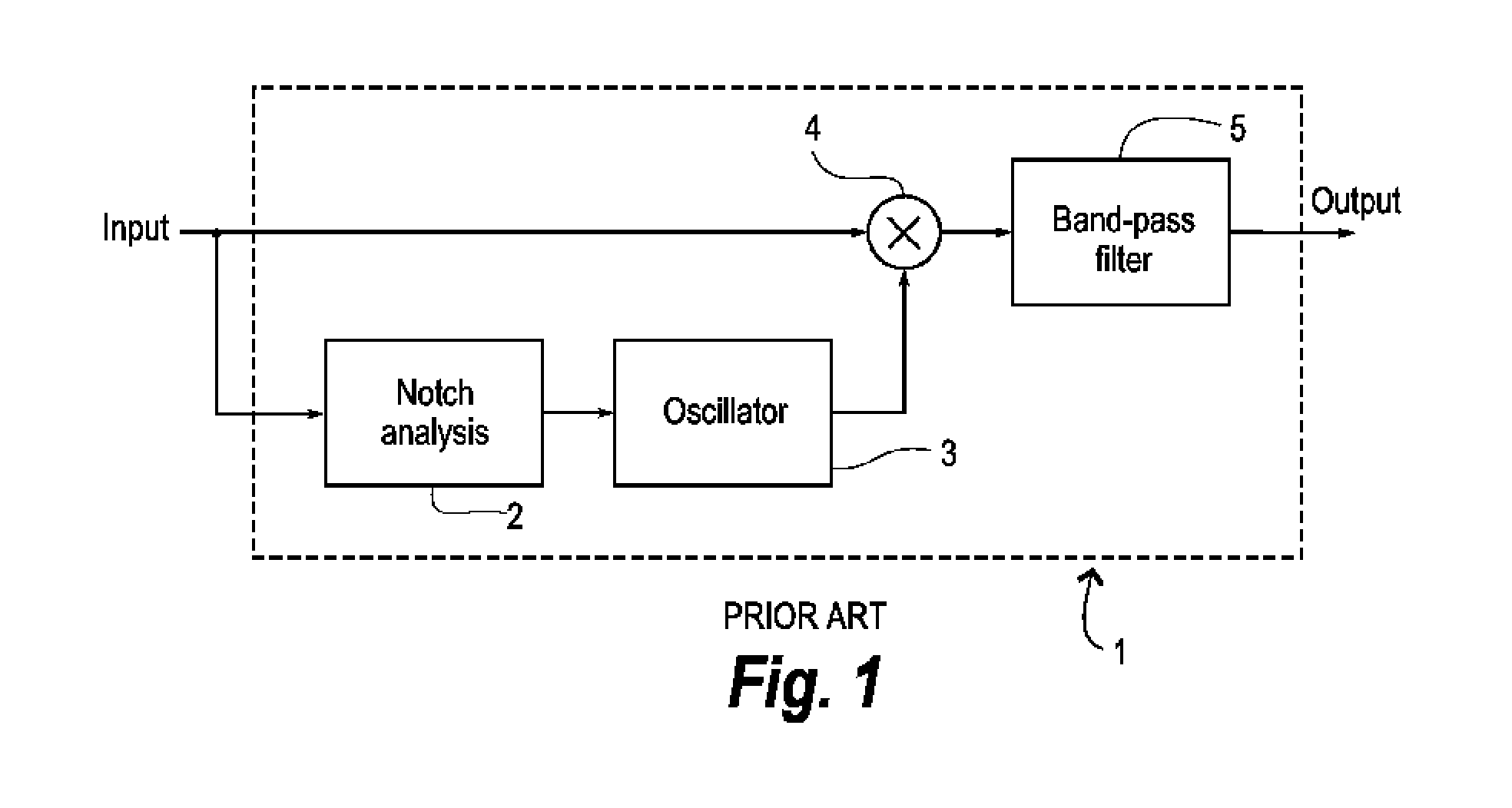

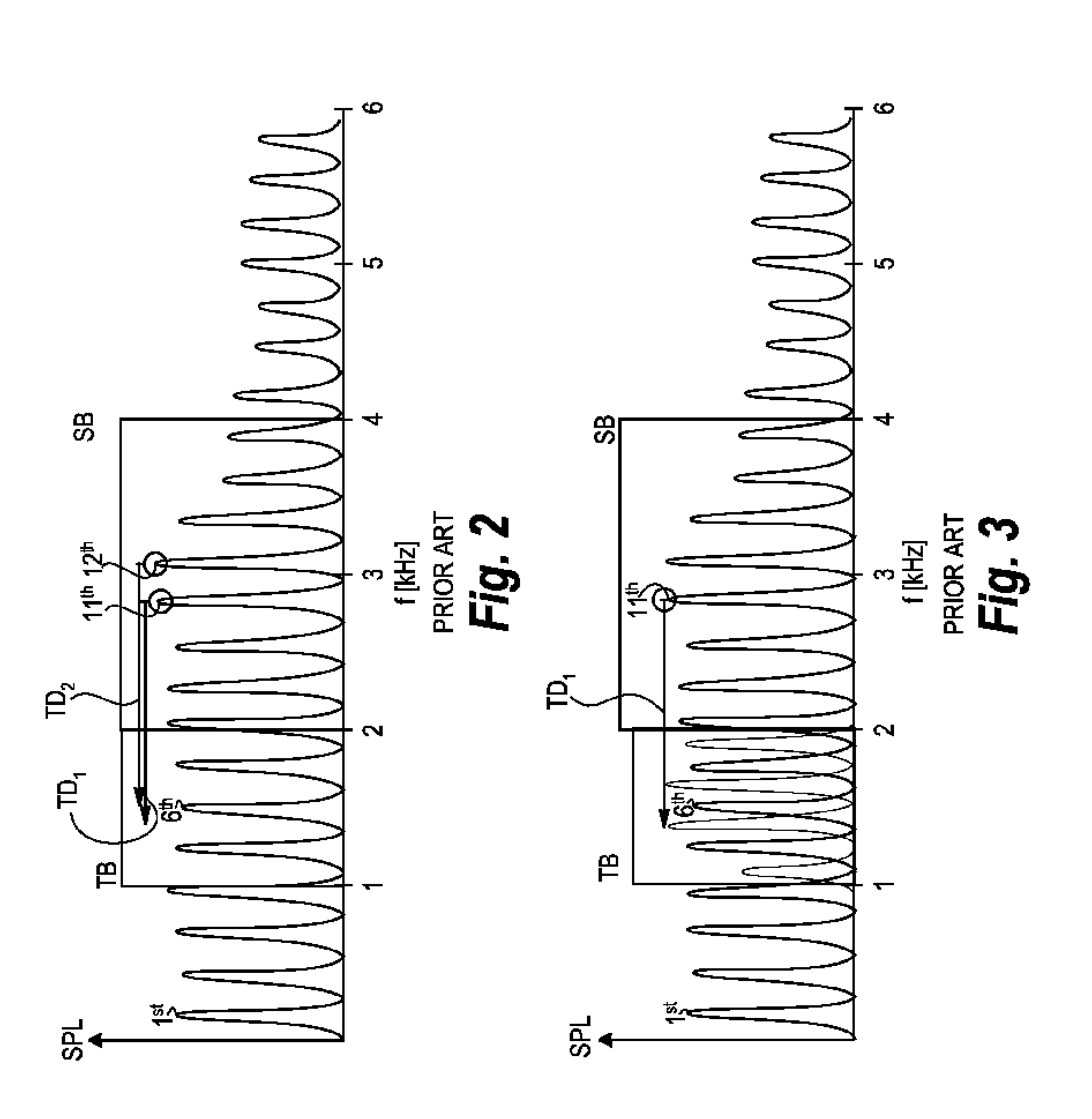

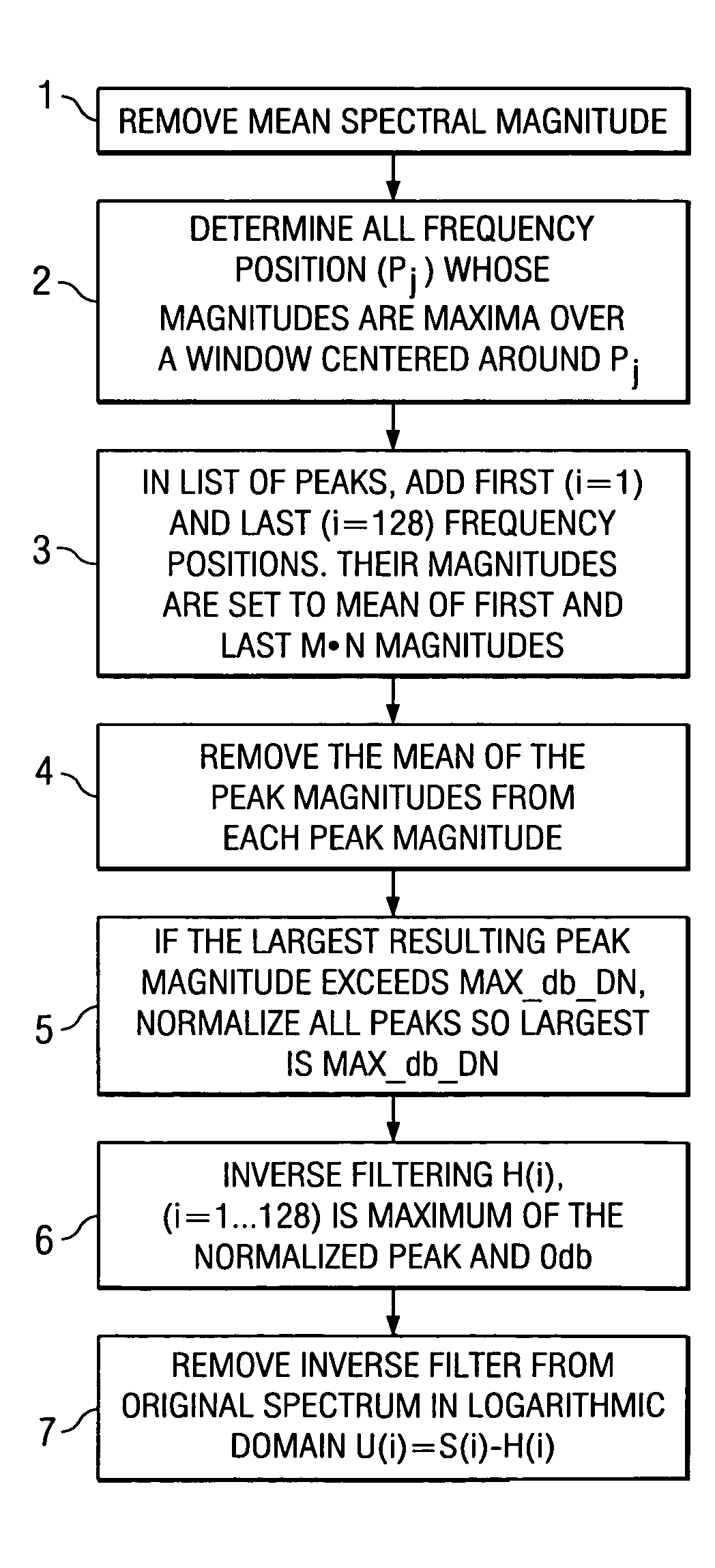

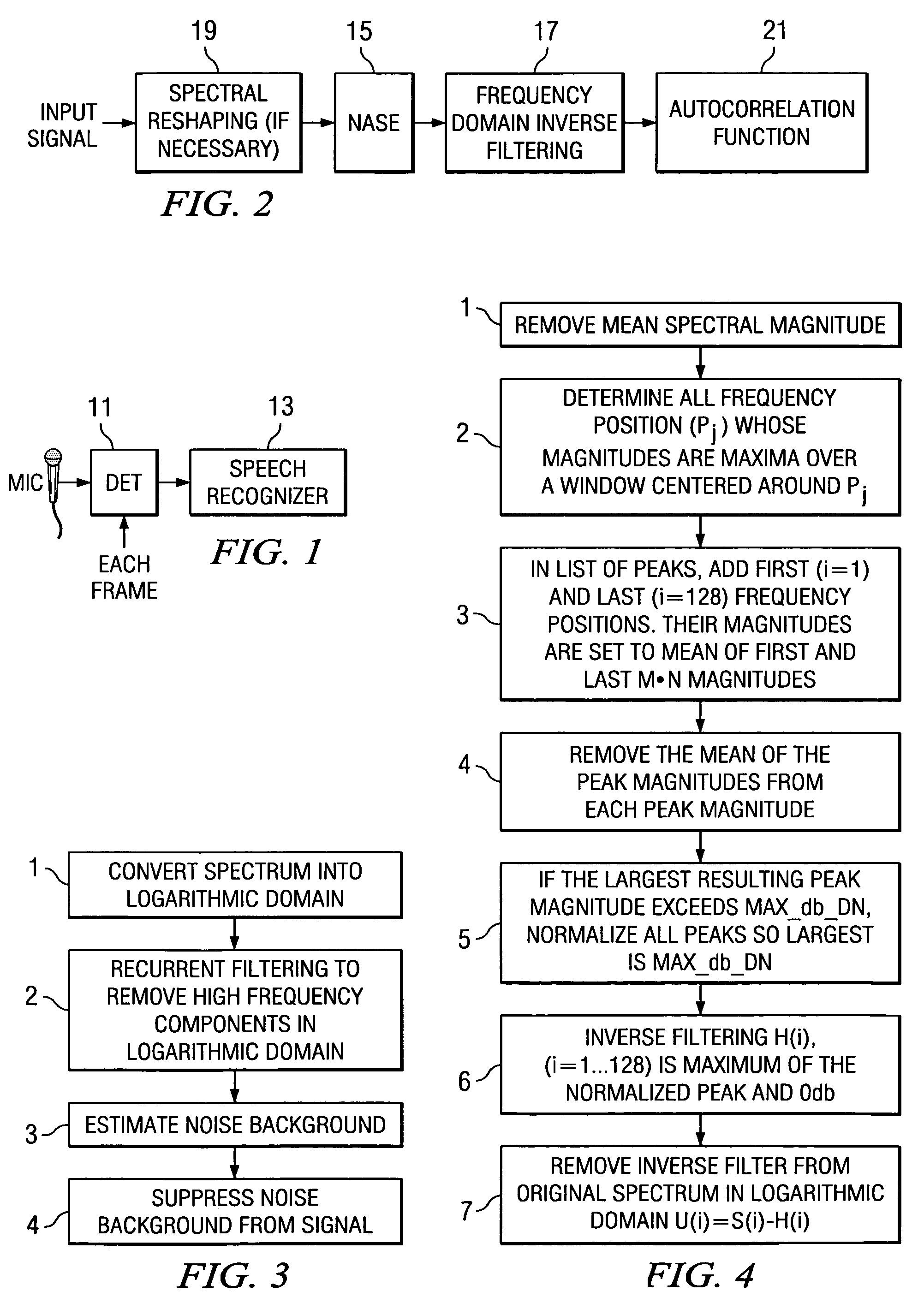

Noise-resistant utterance detector

A noise-resistant utterance detection method and detector are provided that extract a noise estimate (15) to augment the signal-to-noise ratio of the speech signal, apply inverse filtering (17) to the speech signal to focus on the periodic excitation part of the signal, and apply spectral reshaping (19) to accentuate the separation between formants.

Owner:TEXAS INSTR INC


© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com