
539 results for "Three dimensional vision" patented technology

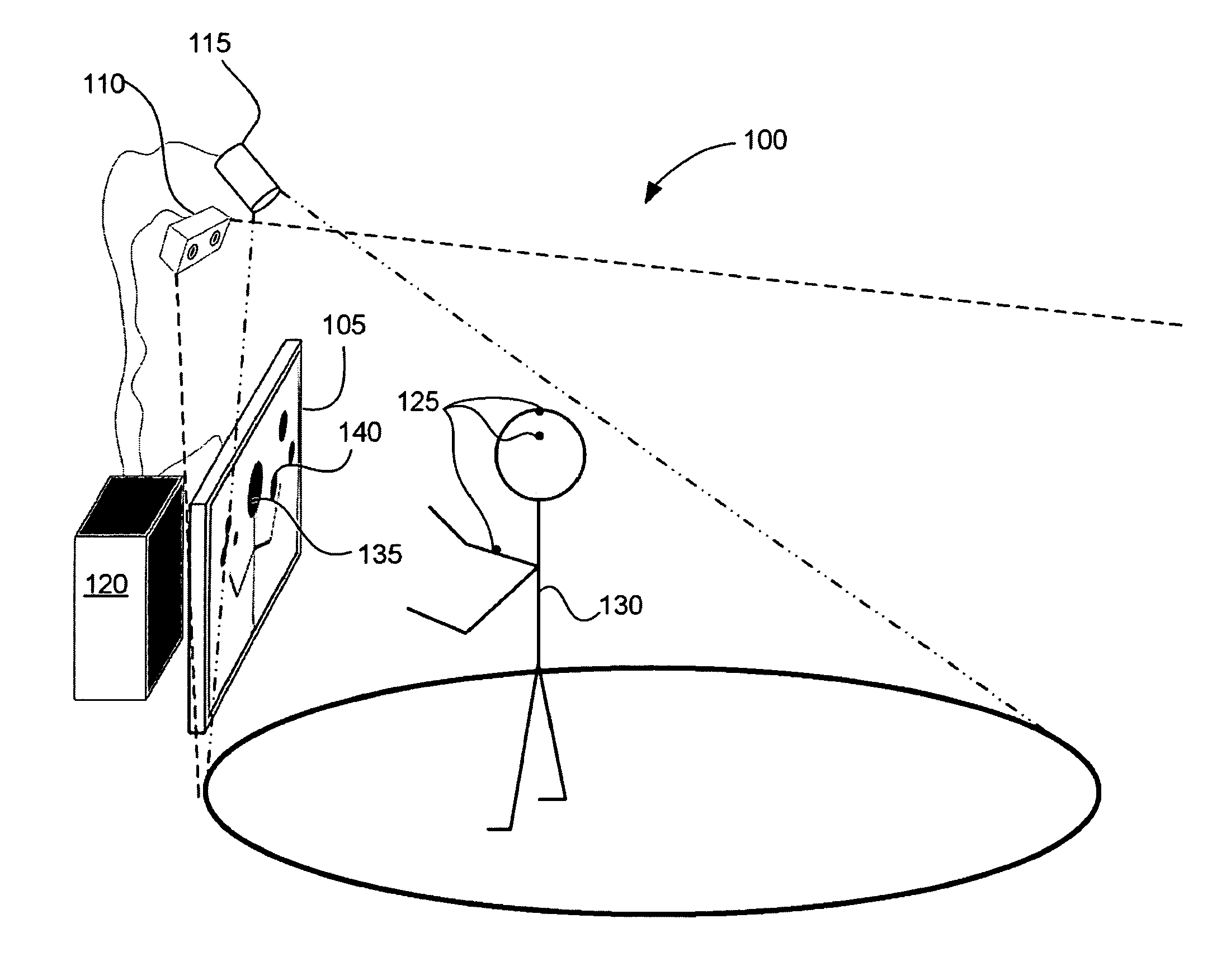

Display Using a Three-Dimensional Vision System

Status: Inactive | Publication: US20080252596A1 | Tags: Cathode-ray tube indicators; Input/output processes for data processing; Three-dimensional space; Interactive video

An interactive video display system allows a physical object to interact with a virtual object. A light source delivers a pattern of invisible light to a three-dimensional space occupied by the physical object. A camera detects invisible light scattered by the physical object. A computer system analyzes information generated by the camera, maps the position of the physical object in the three-dimensional space, and generates a responsive image that includes the virtual object. A display presents the responsive image.

Owner:INTELLECTUAL VENTURES HLDG 67

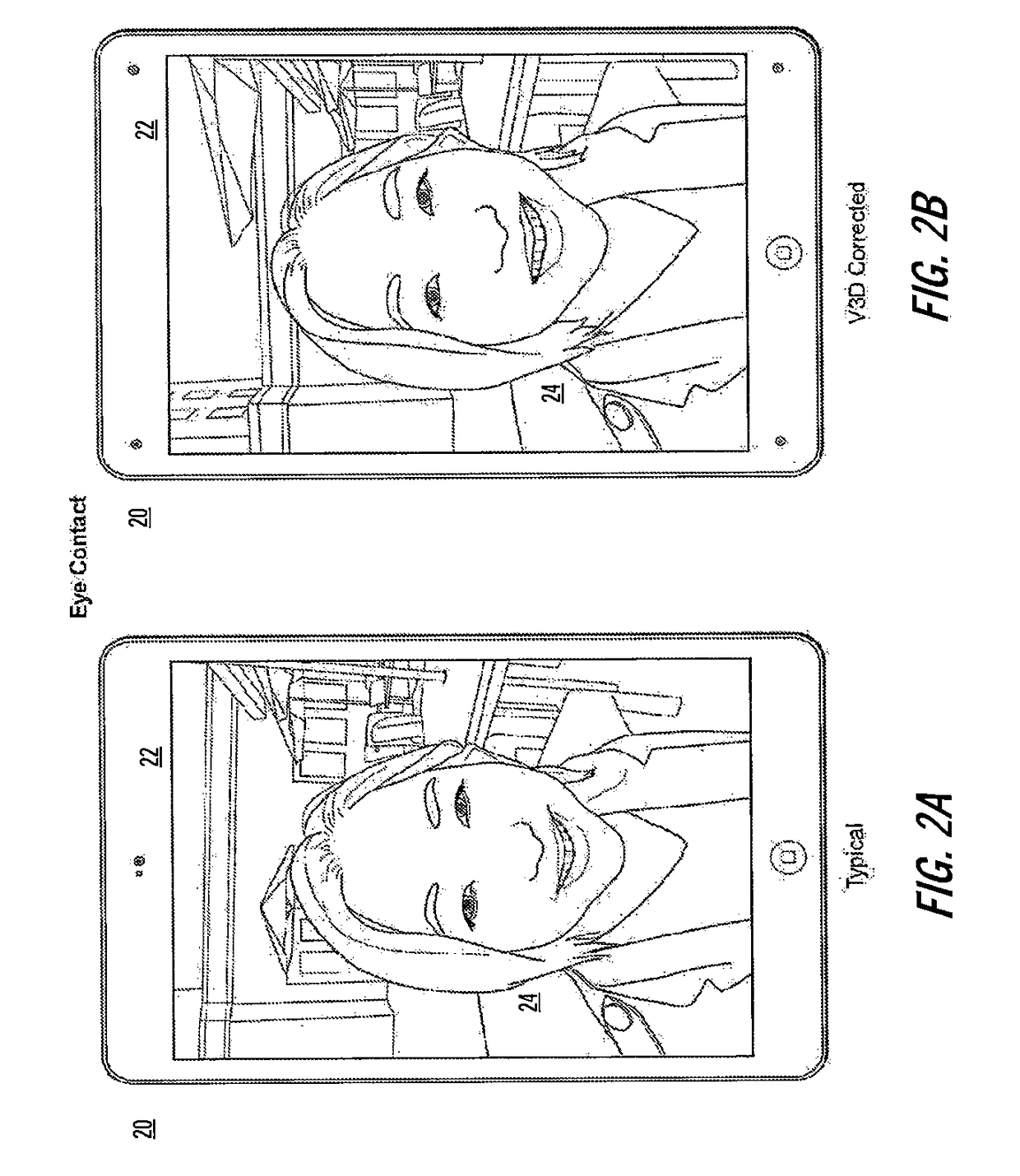

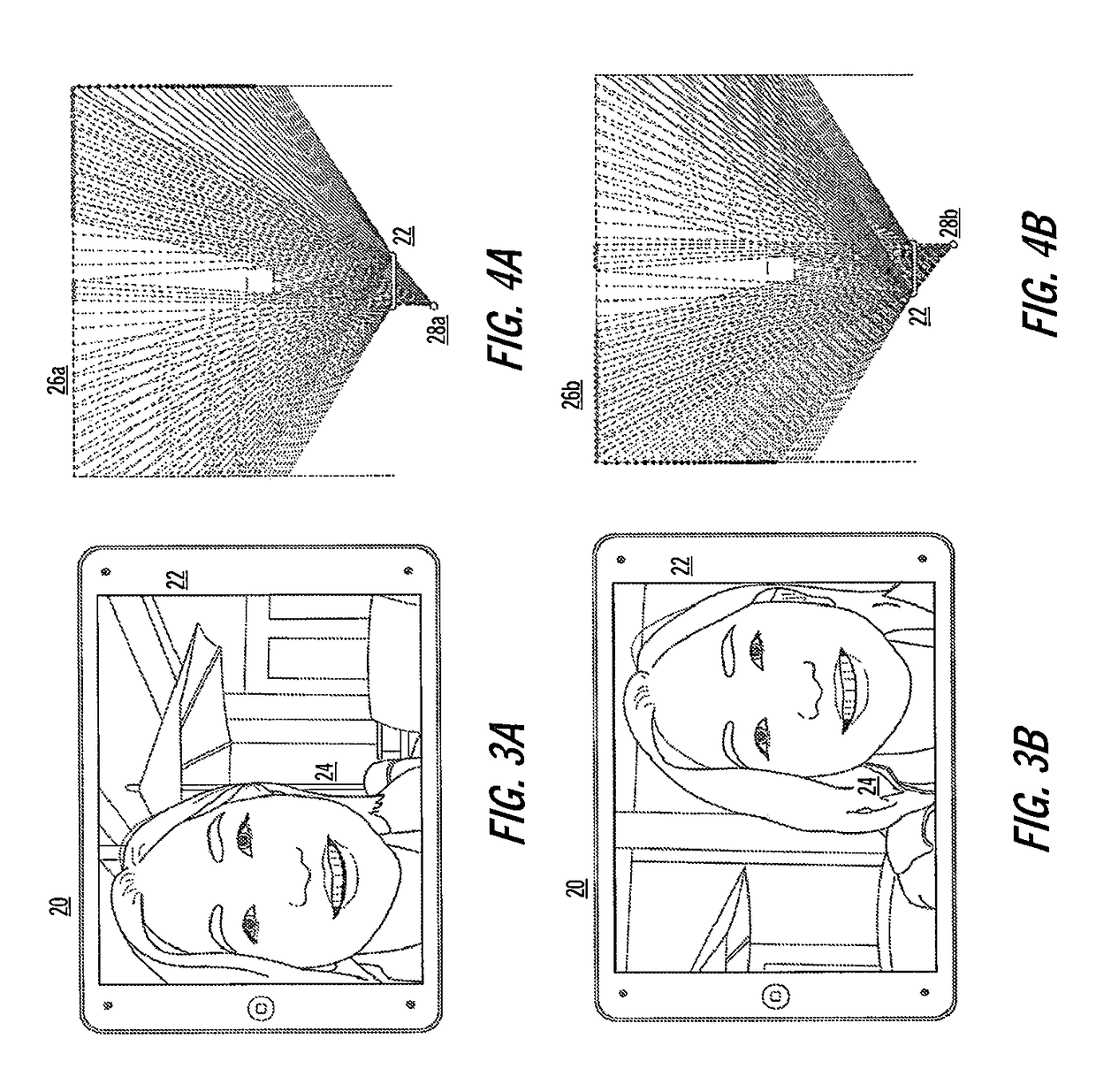

Virtual 3D methods, systems and software

Status: Active | Publication: US20180307310A1 | Tags: Facilitate composition; Eliminate the effects of; Input/output for user-computer interaction; Television system details; Computer graphics (images); Software

Methods, systems and computer program products (“software”) enable a virtual three-dimensional visual experience (referred to herein as “V3D”) in videoconferencing and other applications, as well as the capture, processing and display of images and image streams.

Owner:MINE ONE GMBH

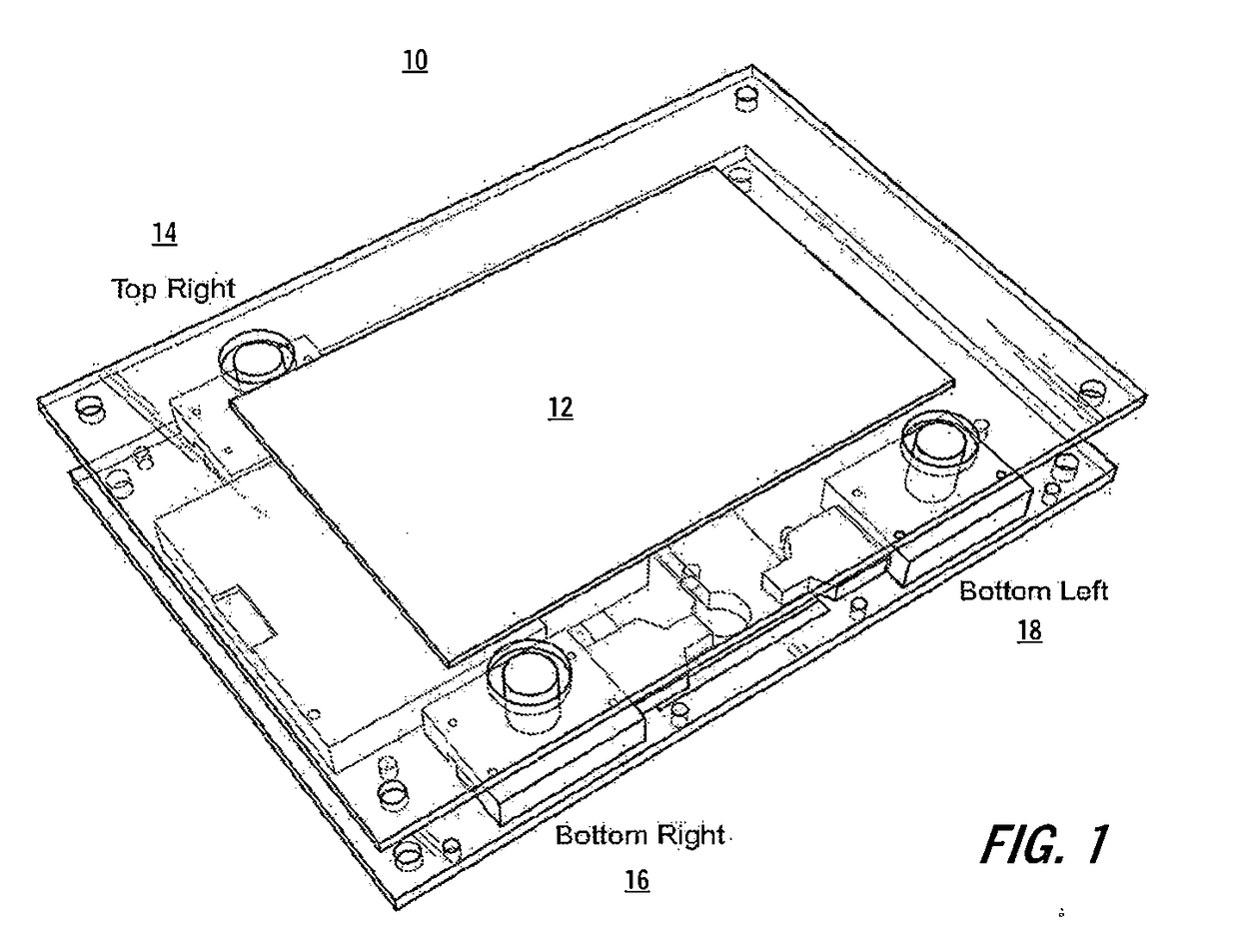

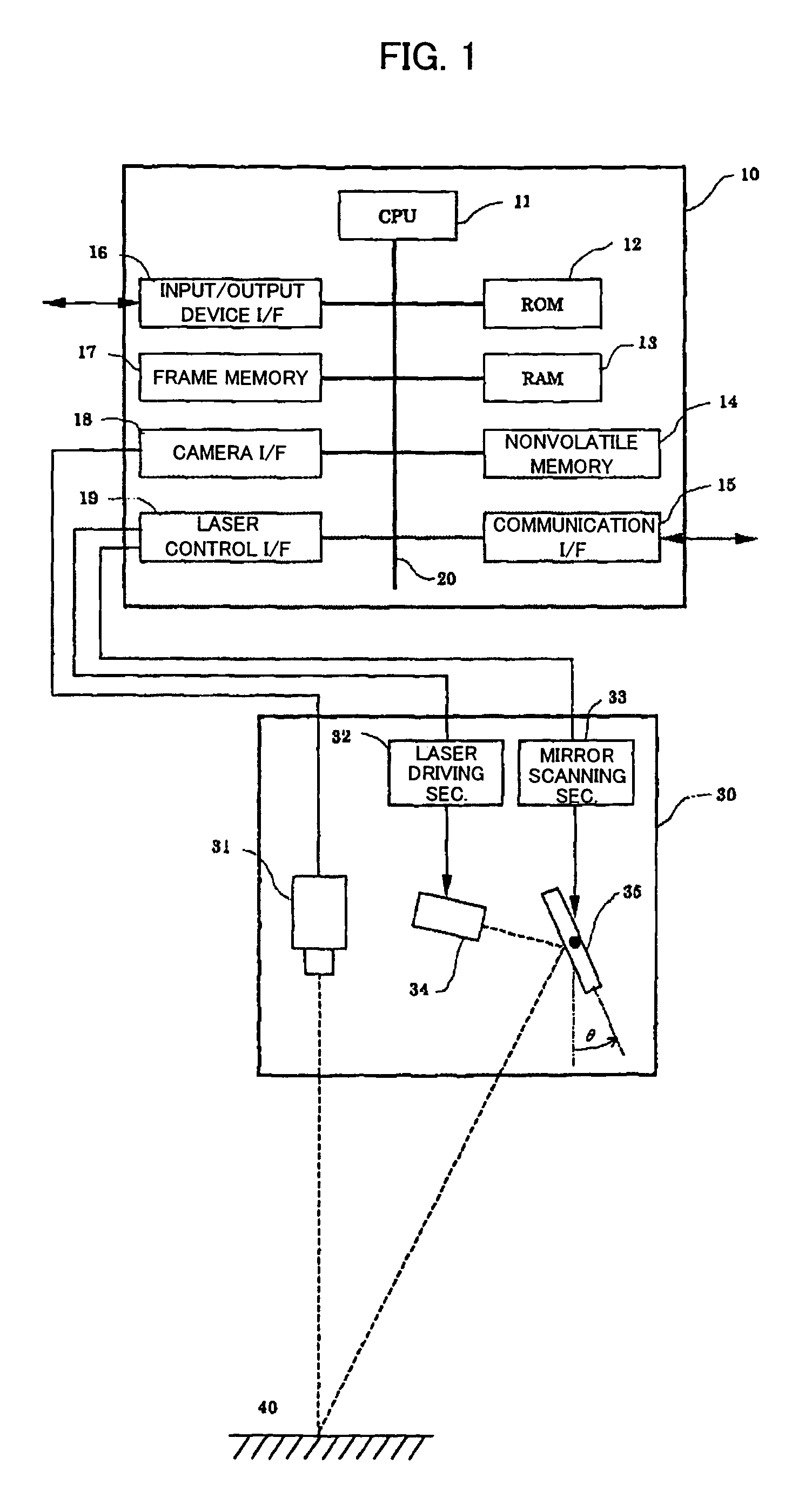

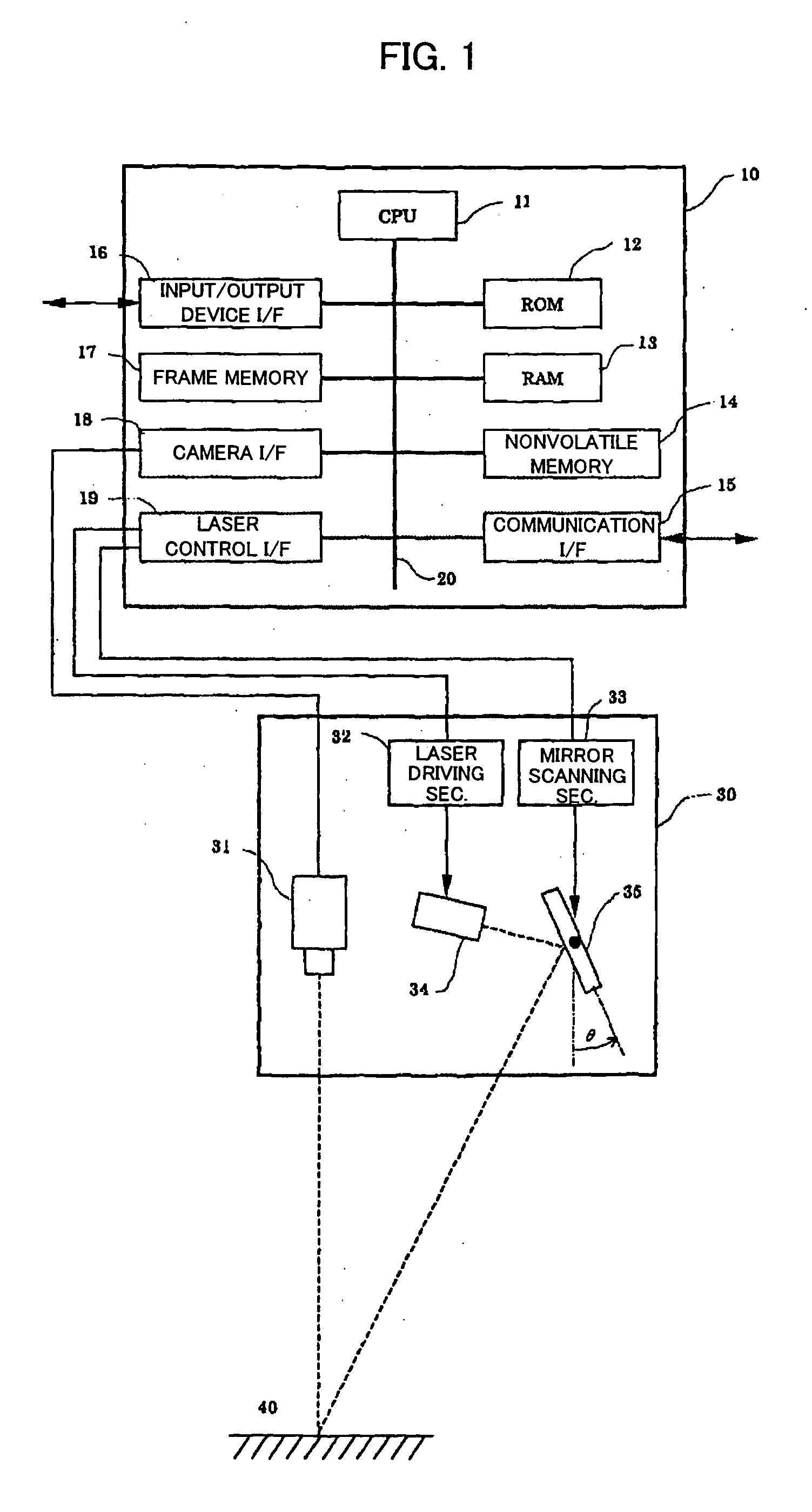

Three-dimensional vision sensor

Status: Inactive | Publication: US20100232681A1 | Tags: Avoid performance; Cancel noise; Image enhancement; Image analysis; Transformation parameter; Three dimensional measurement

An object of the present invention is to enable performing height recognition processing by setting a height of an arbitrary plane to zero for convenience of the recognition processing. A parameter for three-dimensional measurement is calculated and registered through calibration and, thereafter, an image pickup with a stereo camera is performed on a plane desired to be recognized as having a height of zero in actual recognition processing. Further, three-dimensional measurement using the registered parameter is performed on characteristic patterns (marks m1, m2 and m3) included in this plane. Three or more three-dimensional coordinates are obtained through this measurement and, then, a calculation equation expressing a plane including these coordinates is derived. Further, based on a positional relationship between a plane defined as having a height of zero through the calibration and the plane expressed by the calculation equation, a transformation parameter (a homogeneous transformation matrix) for displacing points in the former plane into the latter plane is determined, and the registered parameter is changed using the transformation parameter.

Owner:OMRON CORP
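
The zero-plane correction in this abstract reduces to two standard operations. The first is a least-squares plane through three or more measured mark coordinates. The second is a homogeneous transformation that carries the calibration plane z = 0 onto that fitted plane. The sketch below is a minimal illustration that assumes NumPy, an SVD plane fit, and a Rodrigues rotation aligning +Z with the fitted normal. The function names are hypothetical, and this is not presented as Omron's implementation.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane through >= 3 points; returns (unit normal, centroid)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    normal = np.linalg.svd(pts - centroid)[2][-1]
    if normal[2] < 0:  # orient towards +Z so the rotation below is well defined
        normal = -normal
    return normal, centroid


def rotation_aligning(a, b):
    """Rotation matrix taking unit vector a onto unit vector b (requires a . b > -1)."""
    v = np.cross(a, b)
    c = float(np.dot(a, b))
    vx = np.array([[0.0, -v[2], v[1]],
                   [v[2], 0.0, -v[0]],
                   [-v[1], v[0], 0.0]])
    return np.eye(3) + vx + vx @ vx / (1.0 + c)


def zero_plane_transform(marks):
    """4x4 homogeneous matrix mapping points of the calibration plane z = 0
    onto the plane fitted through the measured marks (e.g. m1, m2, m3)."""
    normal, centroid = fit_plane(marks)
    T = np.eye(4)
    T[:3, :3] = rotation_aligning(np.array([0.0, 0.0, 1.0]), normal)
    T[:3, 3] = centroid
    return T
```

Under this sketch, the height of a measured point relative to the new zero plane is the z component of `np.linalg.inv(T) @ [x, y, z, 1]`.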

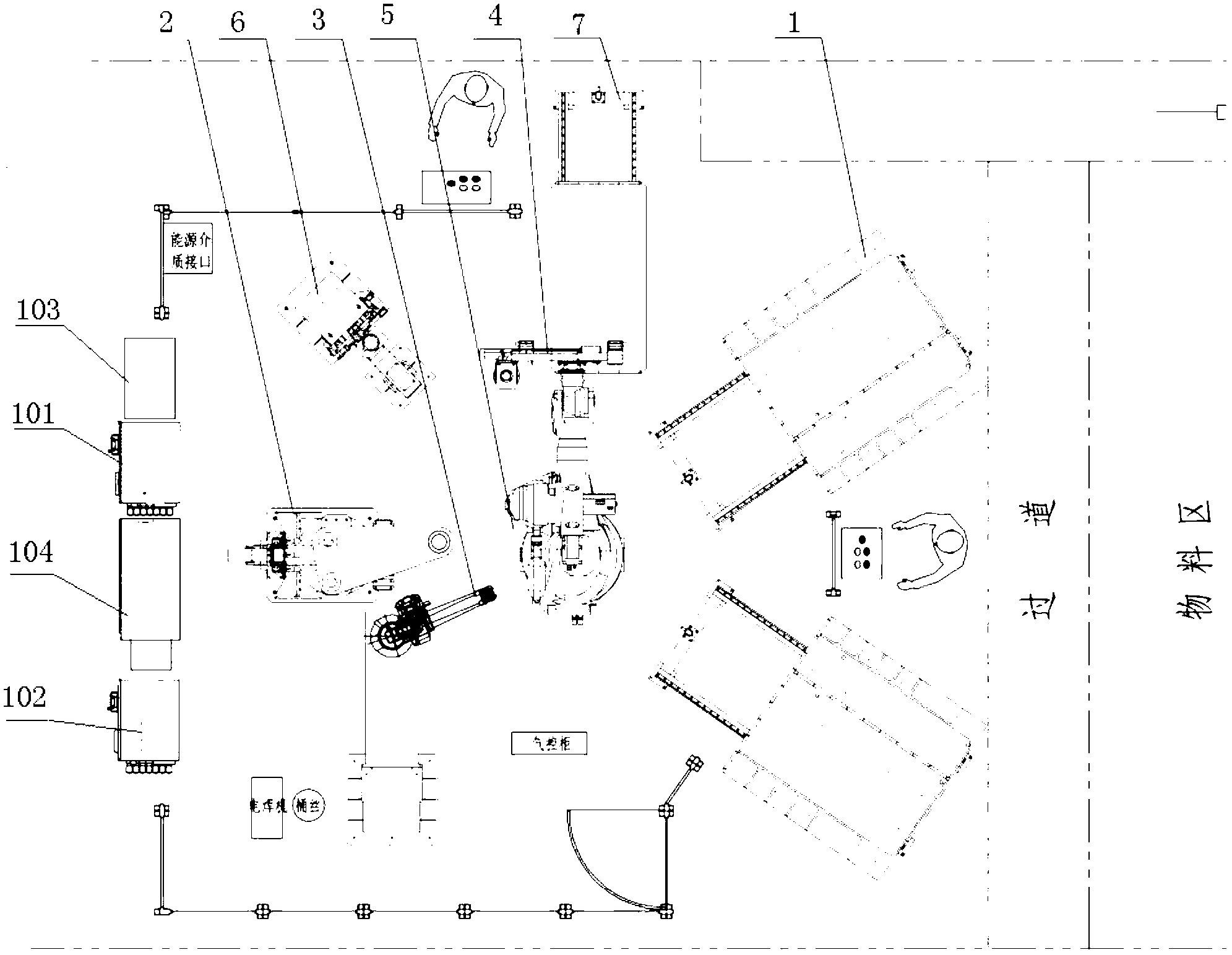

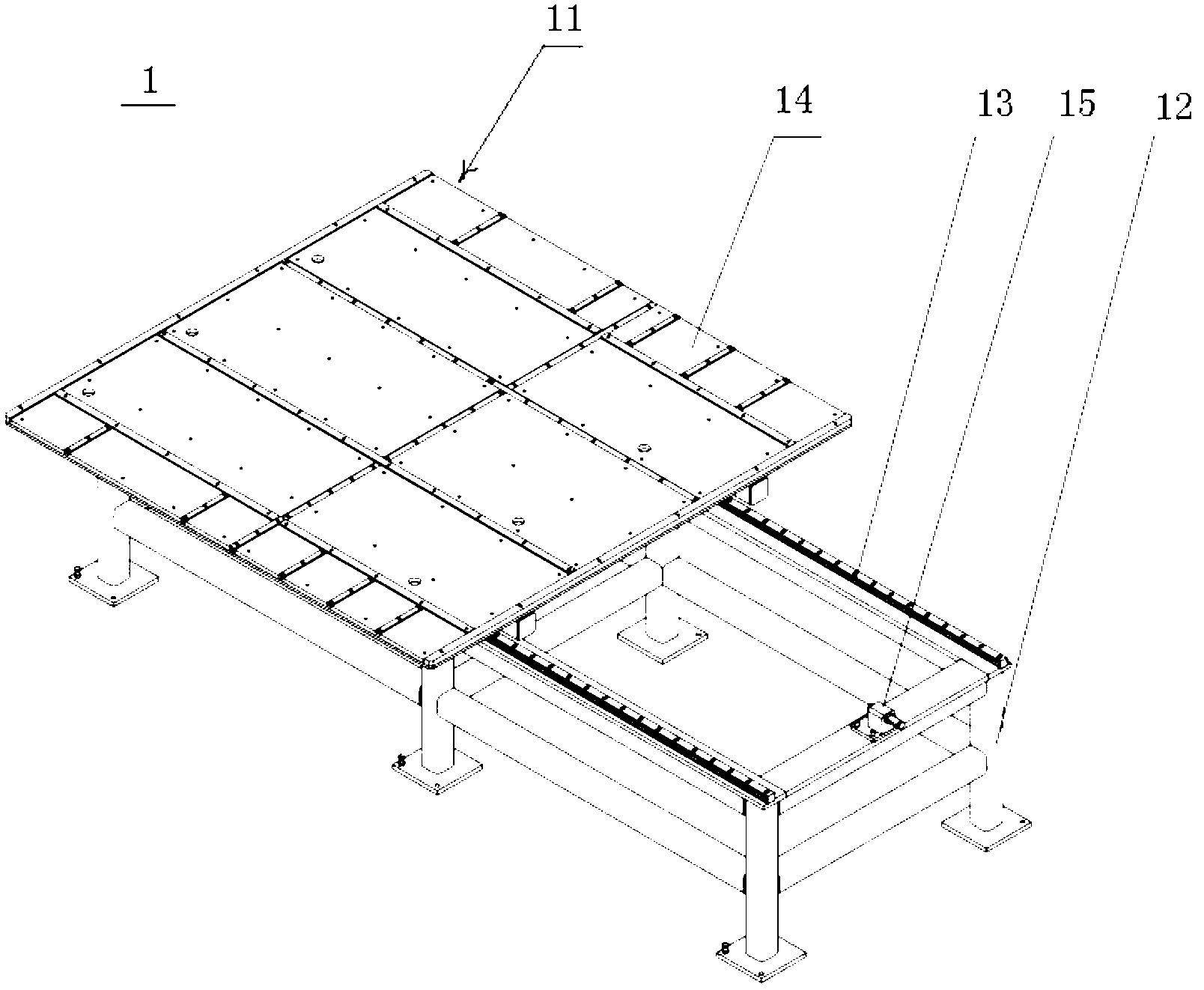

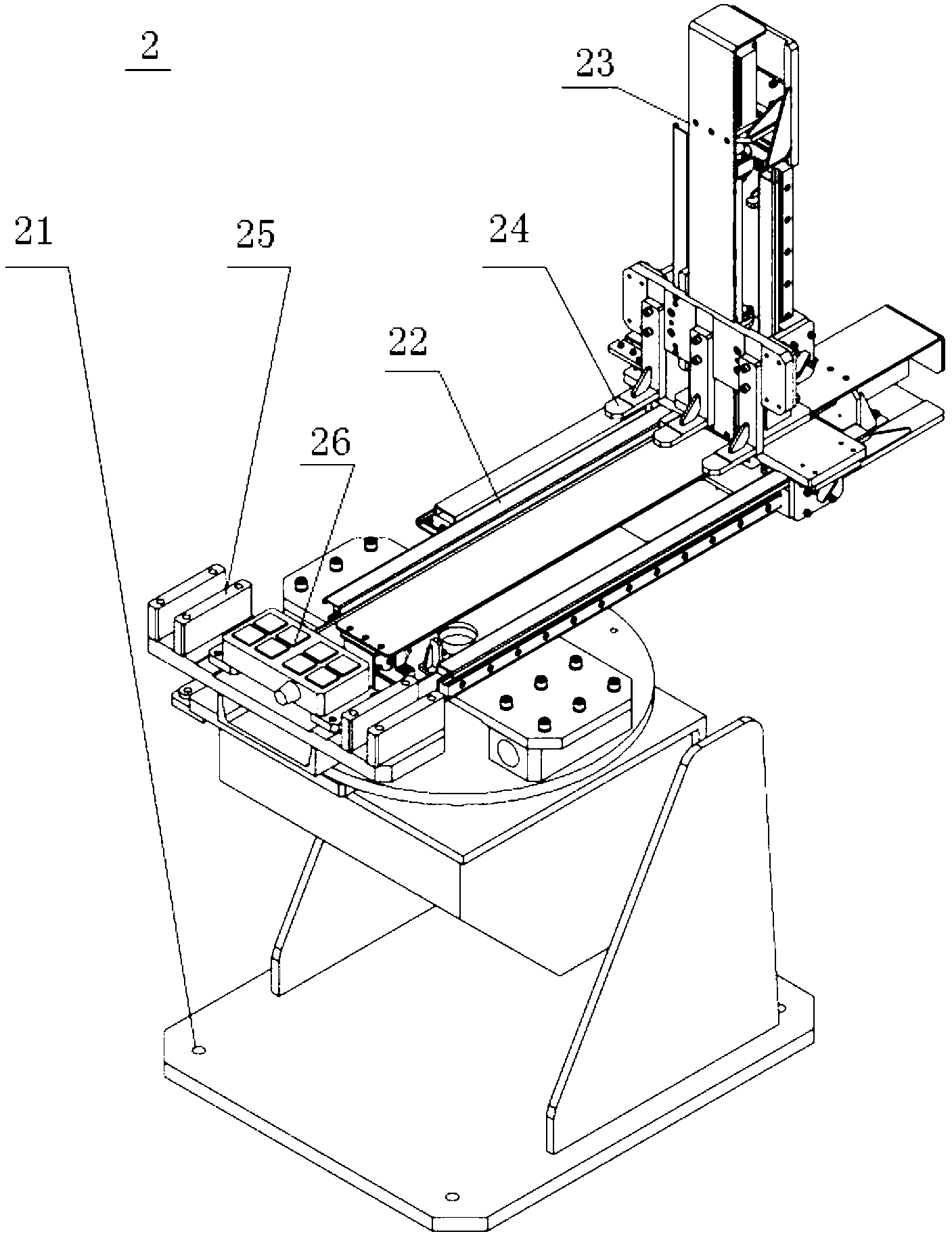

Automatic assembling and welding system based on three-dimensional laser vision

Status: Active | Publication: CN102837103A | Tags: High precision; Welding/cutting auxiliary devices; Auxiliary welding devices; Control system; Control engineering

The invention discloses an automatic assembling and welding system based on three-dimensional laser vision. The system comprises a loading station, a spot welding station, a welding robot, a turnover station, an unloading station, a transfer station, a transfer robot, a control system and an electrical system. The control system comprises a welding robot control cabinet that controls the welding robot and a transfer robot control cabinet that controls the transfer robot. Both cabinets can communicate with a three-dimensional vision system. The system enables high-precision automatic assembly of workpieces, which in turn provides the basis for automatic welding of the workpieces.

Owner:CHANGSHA CTR ROBOTICS

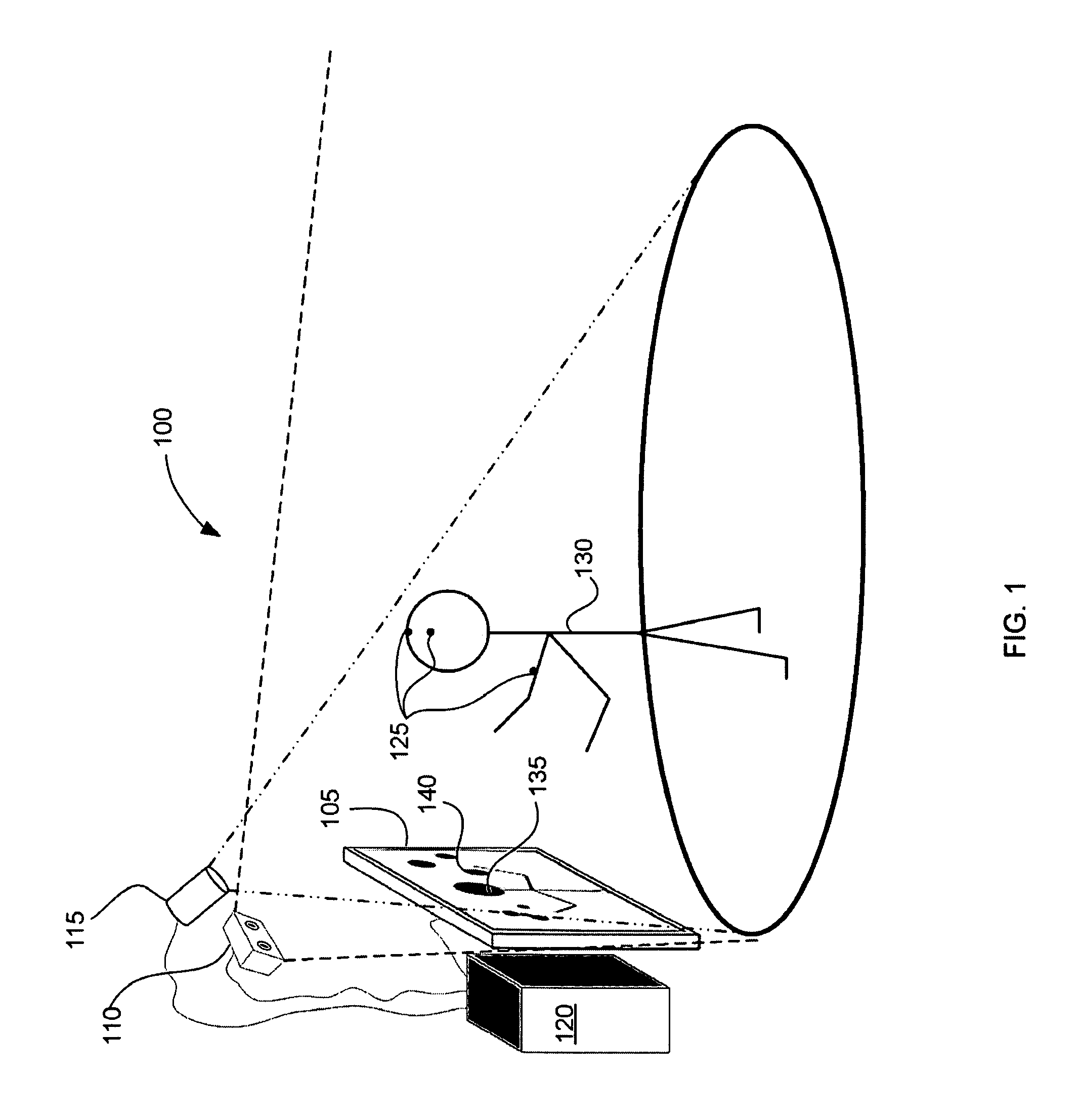

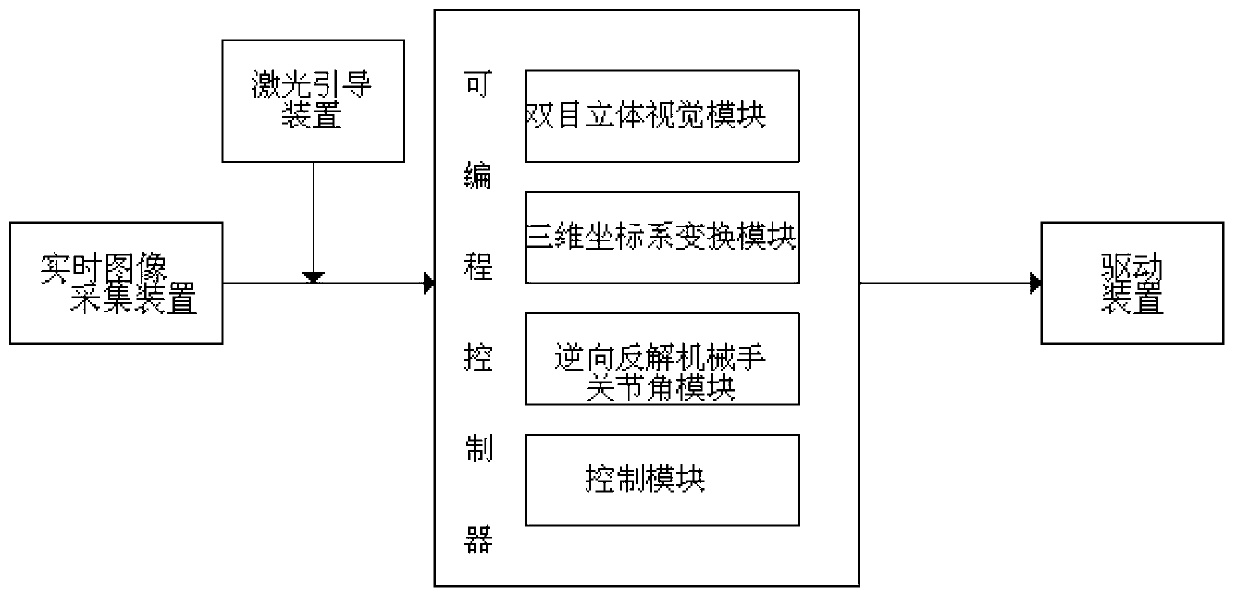

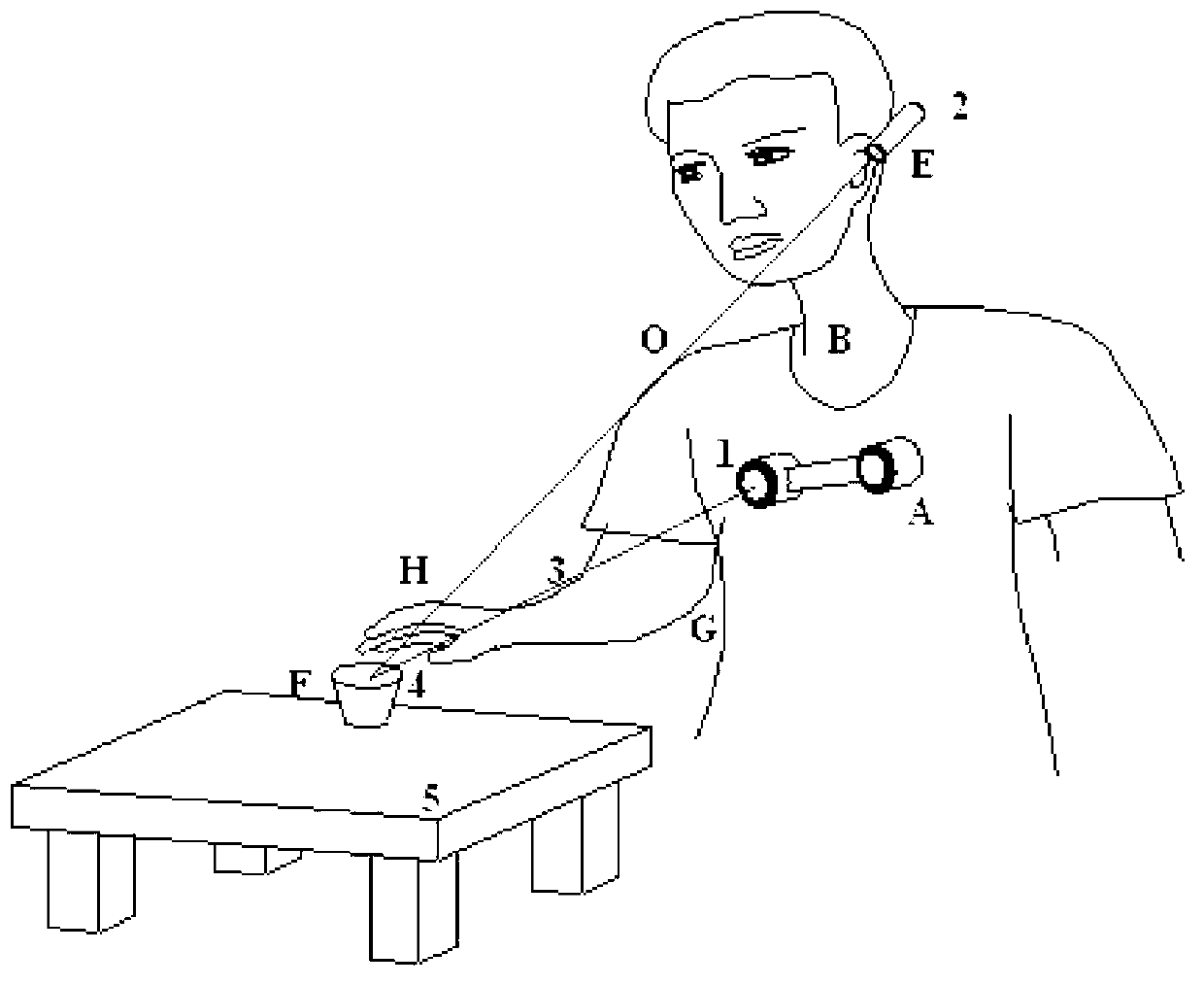

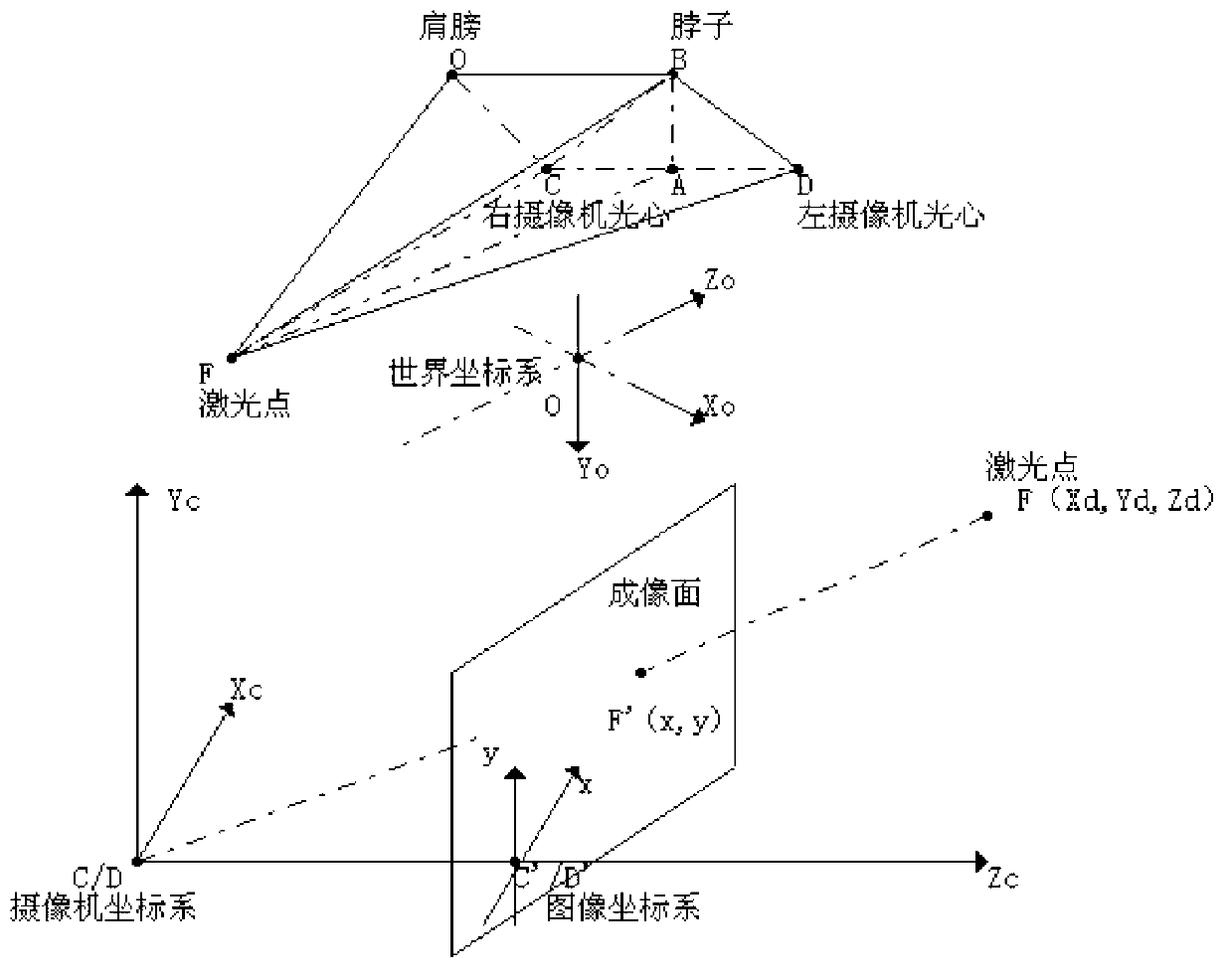

Man-machine interactive manipulator control system and method based on binocular vision

Status: Inactive | Publication: CN103271784A | Tags: Control tension reduction; Improve participation; Programme-controlled manipulator; Prosthesis; System transformation; Visual perception

The invention discloses a man-machine interactive manipulator control system and method based on binocular vision. The control system is composed of a real-time image collecting device, a laser guiding device, a programmable controller and a driving device. The programmable controller is composed of a binocular three-dimensional vision module, a three-dimensional coordinate system transformation module, an inverse joint-angle module for the manipulator, and a control module. Color features in the binocular images captured by the real-time image collecting device are extracted and used as the signal source for controlling the manipulator. The three-dimensional position of the red laser feature point in the real-time view is obtained through the binocular three-dimensional vision system and coordinate system transformation. This position is then used to control the manipulator during man-machine interactive object tracking. The system and method can effectively track and extract a moving target object in real time. They have broad applications such as intelligent prosthetic limbs, explosive-handling robots, and manipulators that assist the elderly and the disabled.

Owner:SHANDONG UNIV OF SCI & TECH
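
In a rectified binocular pair, the 3D position of a feature such as the red laser spot follows from its disparity. A homogeneous transform then moves that point into the manipulator base frame. This minimal sketch assumes the following:

- rectified images;
- a shared focal length `f` in pixels;
- a known `baseline` and principal point;
- a known 4x4 camera-to-base matrix.

All names are illustrative and not taken from the patent. The robot-specific inverse kinematics that yields joint angles is left out.

```python
import numpy as np


def triangulate_rectified(u_left, u_right, v, f, baseline, cx, cy):
    """3D point in the left-camera frame for a feature seen in a rectified stereo pair.

    u_left/u_right: column of the feature in each image; v: its (shared) row;
    f: focal length in pixels; baseline: camera separation; (cx, cy): principal point.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f * baseline / disparity
    x = (u_left - cx) * z / f
    y = (v - cy) * z / f
    return np.array([x, y, z])


def camera_to_base(p_cam, T_base_cam):
    """Express a camera-frame point in the manipulator base frame (4x4 homogeneous T)."""
    return (T_base_cam @ np.append(p_cam, 1.0))[:3]
```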

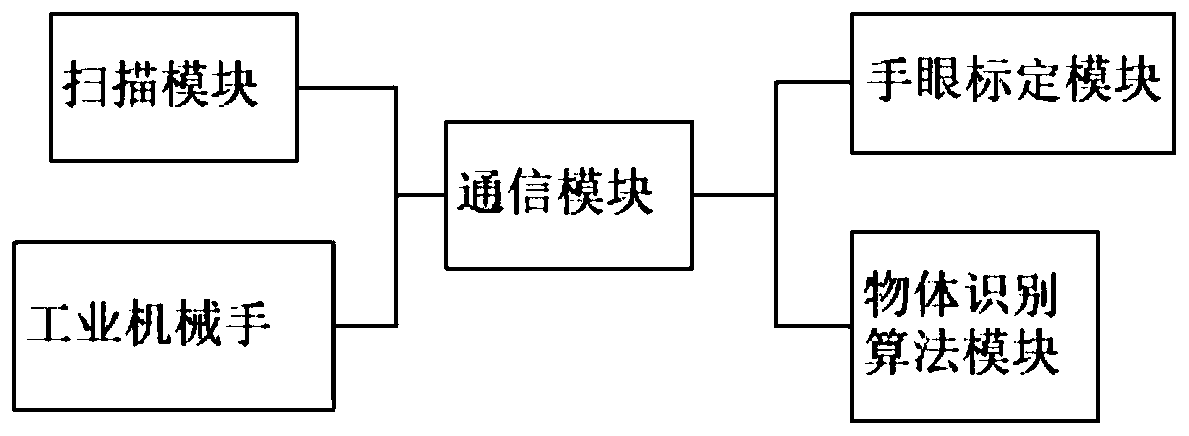

Robot grasp pose estimation method based on object recognition deep learning model

Status: Inactive | Publication: CN109102547A | Tags: Increase success rate; Improve computing efficiency; Image analysis; Estimation methods; Hand eye calibration

The invention discloses a robot grasping pose estimation method based on an object recognition deep learning model, and relates to the technical field of computer vision. The method is based on an RGB-D camera and deep learning, and comprises the following steps:

- S1, performing camera parameter calibration and hand-eye calibration;
- S2, training an object detection model;
- S3, establishing a three-dimensional point cloud template library of the target object;
- S4, identifying the type and position of each article in the area to be grasped;
- S5, fusing two-dimensional and three-dimensional vision information to obtain the point cloud of a specific target object;
- S6, completing pose estimation of the target object;
- S7, applying an error avoidance algorithm based on sample accumulation;
- S8, having the vision system repeat steps S4 to S7 continuously while the robot end moves toward the target object, so as to iteratively refine the pose estimate.

The method uses a YOLO object detection model for fast early-stage target detection. This reduces the computation required for three-dimensional point cloud segmentation and matching, and improves operating efficiency and accuracy.

Owner:SHANGHAI JIEKA ROBOT TECH CO LTD
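
Step S5 (fusing two-dimensional and three-dimensional information) can be pictured as cropping the depth image to a 2D detection box and back-projecting the valid pixels into a point cloud. That cloud would then be matched against the template library in S6. This is a minimal sketch under several assumptions:

- the depth image is registered to the color image;
- the intrinsics `fx`, `fy`, `cx`, `cy` follow a pinhole model;
- depth is stored in millimetres.

The function name is hypothetical.

```python
import numpy as np


def box_to_point_cloud(depth, box, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project the valid depth pixels inside a 2D detection box to camera-frame points.

    depth: HxW array of raw depth values, 0 meaning "no reading";
    box: (x0, y0, x1, y1) in pixels with exclusive upper bounds;
    depth_scale: raw units to metres (assumed millimetres here).
    """
    x0, y0, x1, y1 = box
    patch = depth[y0:y1, x0:x1].astype(float) * depth_scale
    rows, cols = np.nonzero(patch > 0)        # keep only pixels with a depth reading
    z = patch[rows, cols]
    u = cols + x0                             # back to full-image pixel coordinates
    v = rows + y0
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack([x, y, z])
```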

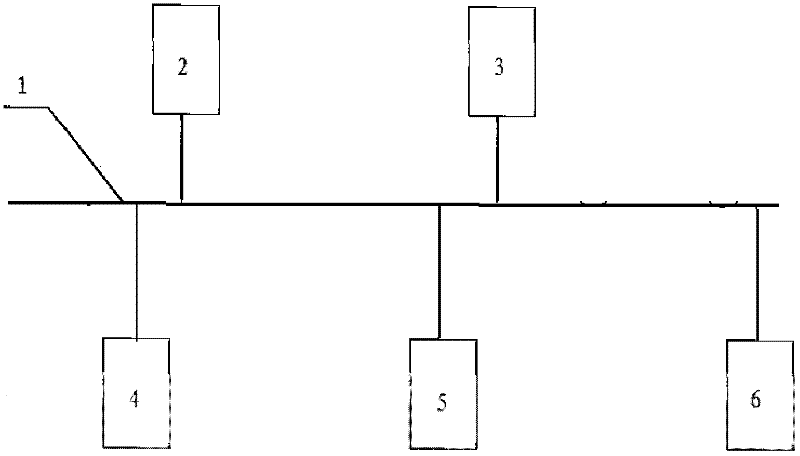

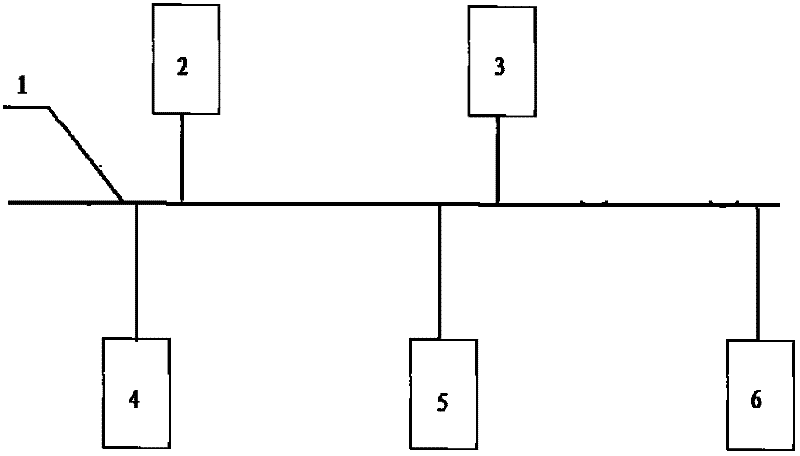

CBTC (Communications Based Train Control) signal system simulation testing platform

The invention provides a CBTC (Communications Based Train Control) signal system simulation testing platform. The platform comprises a signal bus, a simulated on-board and simulated vehicle system, a simulated station, a simulated trackside system, a three-dimensional vision system and a testing platform management system, all interconnected through the signal bus. The platform offers the following benefits:

- An integrated solution covering vehicle, scheduling and signal systems can be verified and demonstrated.
- The signal system can undergo functional and performance tests indoors, including failure-injection scenarios that cannot be reproduced on site. This enriches the available testing means and improves system safety.
- Simulation checks can be run with multiple vehicles, supporting in-depth research into methods for analyzing railway operating capacity and providing a reference for the layout of signal equipment.

Owner:TRAFFIC CONTROL TECH CO LTD

Visual positioning method for robot transport operation

Status: Active | Publication: CN101637908A | Tags: Realize switching; Realize automated production; Programme-controlled manipulator; Using optical means; Visual positioning; Ultimate tensile strength

The invention provides a visual positioning method for robot transport operations that combines two-dimensional and three-dimensional visual positioning. Two-dimensional vision removes the need for precise mechanical positioning of the workpieces, because the robot automatically compensates its grasp. Three-dimensional vision solves the problem that positional deviation of the workpieces' locating surfaces prevents automated production. Applied together, they handle the case where the workpieces are blanks and the surfaces at which they are grasped are also unmachined. In that case, the robot could otherwise not feed the grasped workpieces accurately. The method improves the feasibility of production, offers high flexibility, saves labor cost and reduces labor intensity.

Owner:SHANGHAI FANUC ROBOTICS

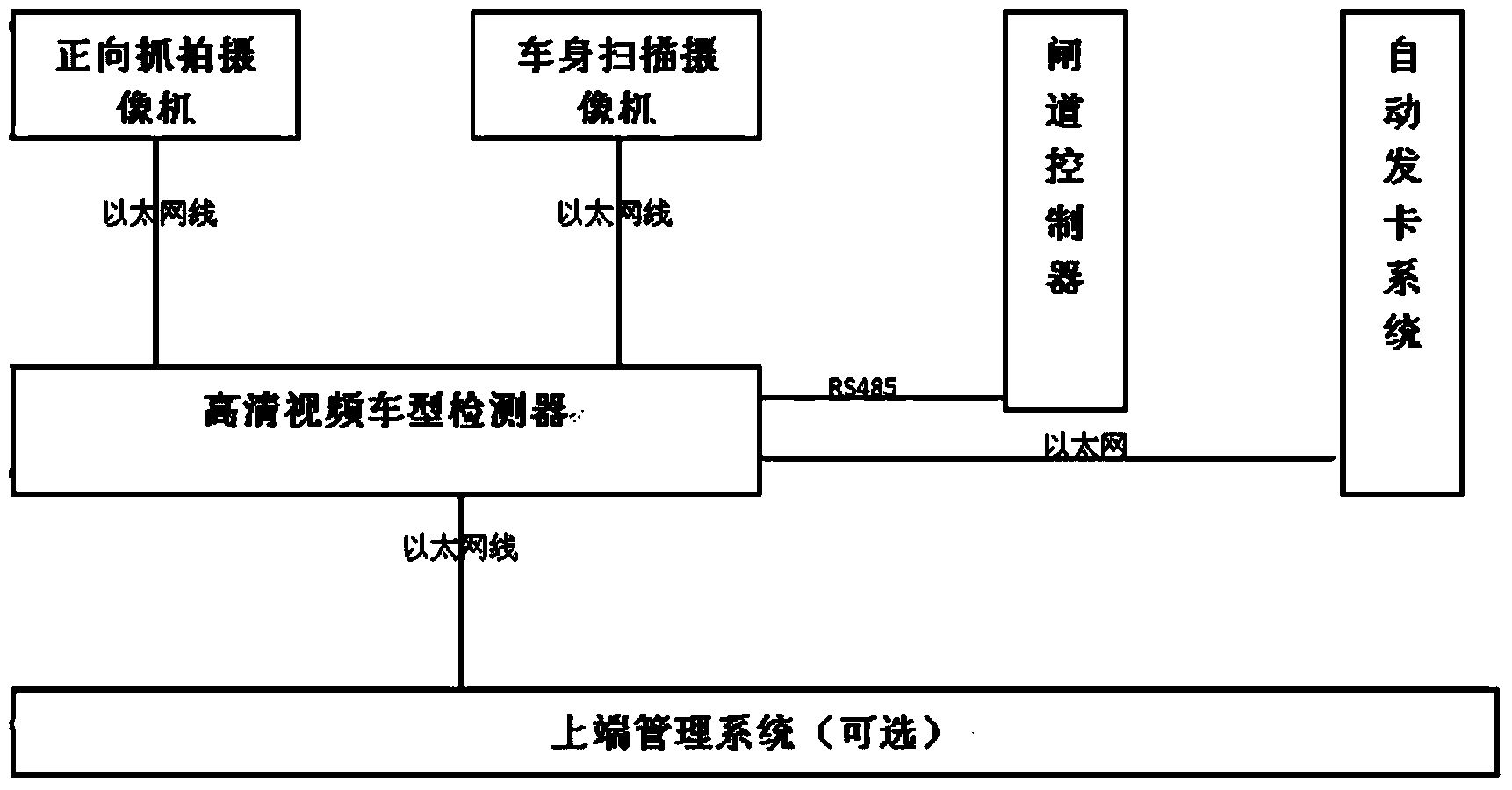

Vehicle type accurate classification system and method based on real-time double-line video stream

Status: Active | Publication: CN103794056A | Tags: Meet the technical requirements of automatic classification and detection; Road vehicles traffic control; Character and pattern recognition; Physical space; Three dimensional measurement

The invention discloses a vehicle type accurate classification system and method based on real-time double-line video streams. The system comprises a vehicle body scanning camera, a high-definition capturing camera and a video vehicle detector. The method comprises the following steps:

- The vehicle body scanning camera and the high-definition capturing camera capture two lines of video of passing vehicles.
- After field-of-view calibration, a one-to-one correspondence between virtual pixel space and real physical space is established.
- Vehicles in the field of view are separated as targets.
- Accurate physical vehicle data are derived, the vehicle model is rebuilt and measured in three dimensions, and the vehicle type is finally determined.

The system and method combine three elements: the two high-definition video streams from the two cameras, an embedded dual-channel video vehicle type detector, and three-dimensional vision-based rebuilding and fitting of the vehicle body model. Together, these provide multiple vehicle parameters simultaneously and classify vehicles accurately.

Owner:BEIJING SINOITS TECH
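
One common way to realize the pixel-to-physical-space correspondence after field-of-view calibration is a planar homography between image pixels and road-plane coordinates. This is offered as an assumption: the abstract does not name the technique. The minimal sketch below estimates the homography with the direct linear transform (DLT) from four or more surveyed reference points, then maps any pixel onto the road plane. Function names are hypothetical.

```python
import numpy as np


def estimate_homography(pixels, world):
    """Direct linear transform (DLT): 3x3 H mapping pixel (u, v) to road-plane (X, Y).

    Needs at least four correspondences, no three of them collinear.
    """
    rows = []
    for (u, v), (X, Y) in zip(pixels, world):
        rows.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * X, -v * X, -X])
        rows.append([0.0, 0.0, 0.0, u, v, 1.0, -u * Y, -v * Y, -Y])
    # The solution is the right singular vector with the smallest singular value.
    H = np.linalg.svd(np.asarray(rows, dtype=float))[2][-1].reshape(3, 3)
    return H / H[2, 2]


def pixel_to_world(H, u, v):
    """Map one pixel to road-plane coordinates through the homography."""
    X, Y, W = H @ np.array([u, v, 1.0])
    return X / W, Y / W
```

With `H` known, a vehicle's length could be estimated as the distance between its mapped front and rear ground-contact points. Production code would usually normalize the coordinates first (Hartley normalization) for numerical stability.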

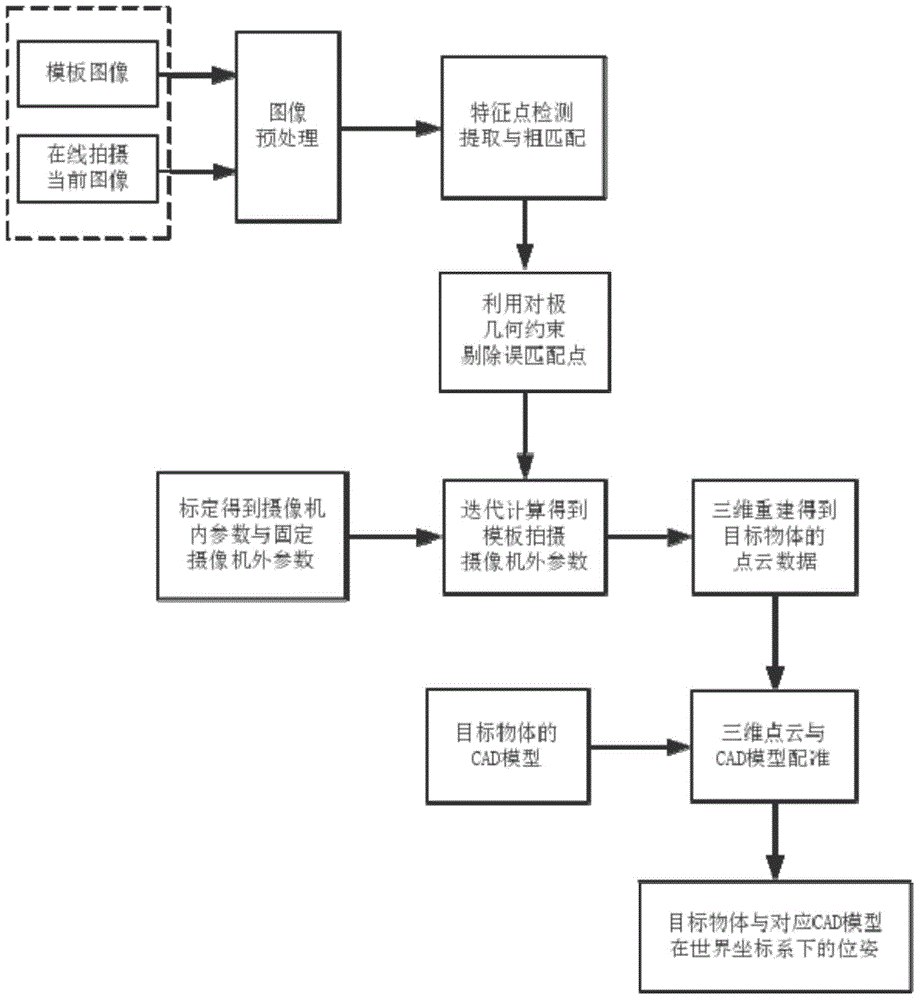

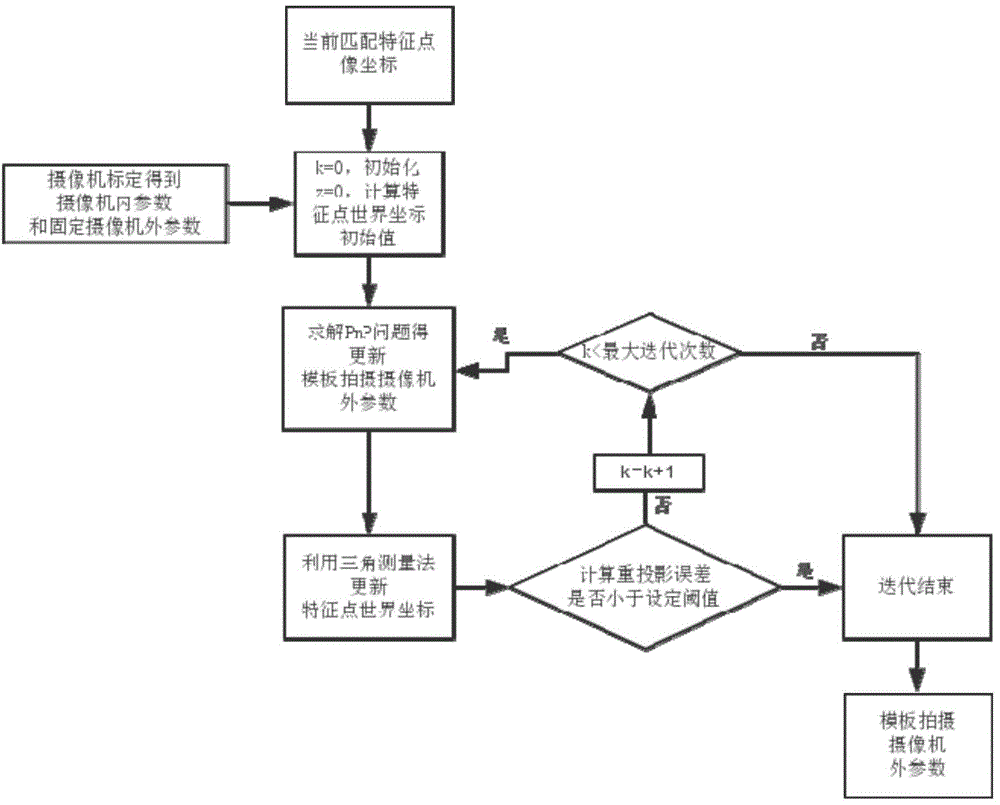

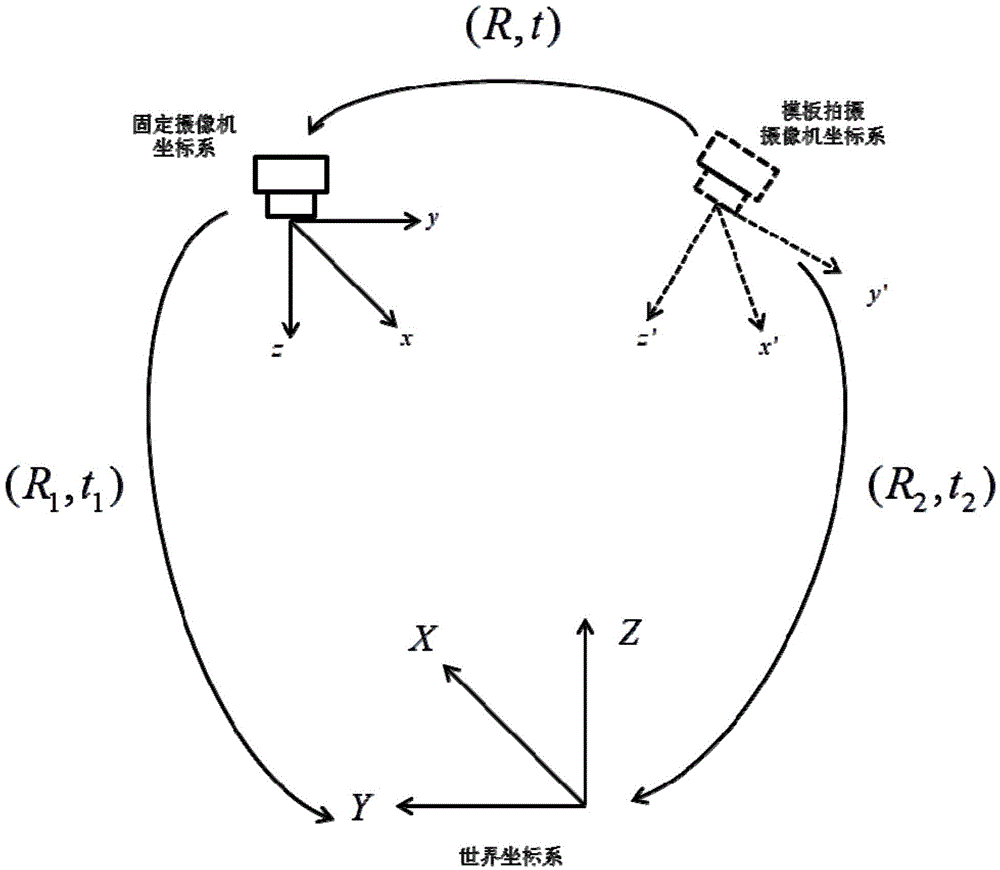

Object posture measuring method based on CAD model and monocular vision

Status: Active | Publication: CN104596502A | Tags: Low cost; High precision; Navigation instruments; Picture interpretation; Point cloud; Visual perception

The invention discloses an object posture measuring method based on a CAD model and monocular vision. The method comprises the following steps:

- Assume a movement of the template shooting camera, and iteratively calculate the movement relationship between this hypothetically moving camera and the fixed camera. This yields the extrinsic parameters of a binocular system formed by the two cameras.
- Perform three-dimensional reconstruction of the target to obtain its three-dimensional point cloud data.
- Align the CAD model, which contains the object's three-dimensional structure information, with this point cloud. This gives the correspondence between the target object's posture in the current world coordinate system and the CAD model, from which the posture of the target object is accurately calculated.

No movable camera mounting carrier is needed, and the CAD model is combined with the object's three-dimensional vision information. As a result, the method is low in cost and high in precision, and can meet the demands of practical industrial applications.

Owner:ZHEJIANG UNIV
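
Once the target's point cloud has been reconstructed, recovering its posture relative to the CAD model is a rigid registration problem. The minimal sketch below assumes that point correspondences between CAD model points and reconstructed points are already known, and applies the Kabsch (SVD) method. This is a swapped-in standard technique, not the patent's iterative procedure. A practical pipeline would typically wrap it in ICP to establish the correspondences.

```python
import numpy as np


def kabsch(model_pts, scene_pts):
    """Least-squares rigid transform (R, t) such that scene ~= R @ model + t."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(scene_pts, dtype=float)
    p0, q0 = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p0).T @ (Q - q0)                 # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # -1 would indicate a reflection
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = q0 - R @ p0
    return R, t
```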

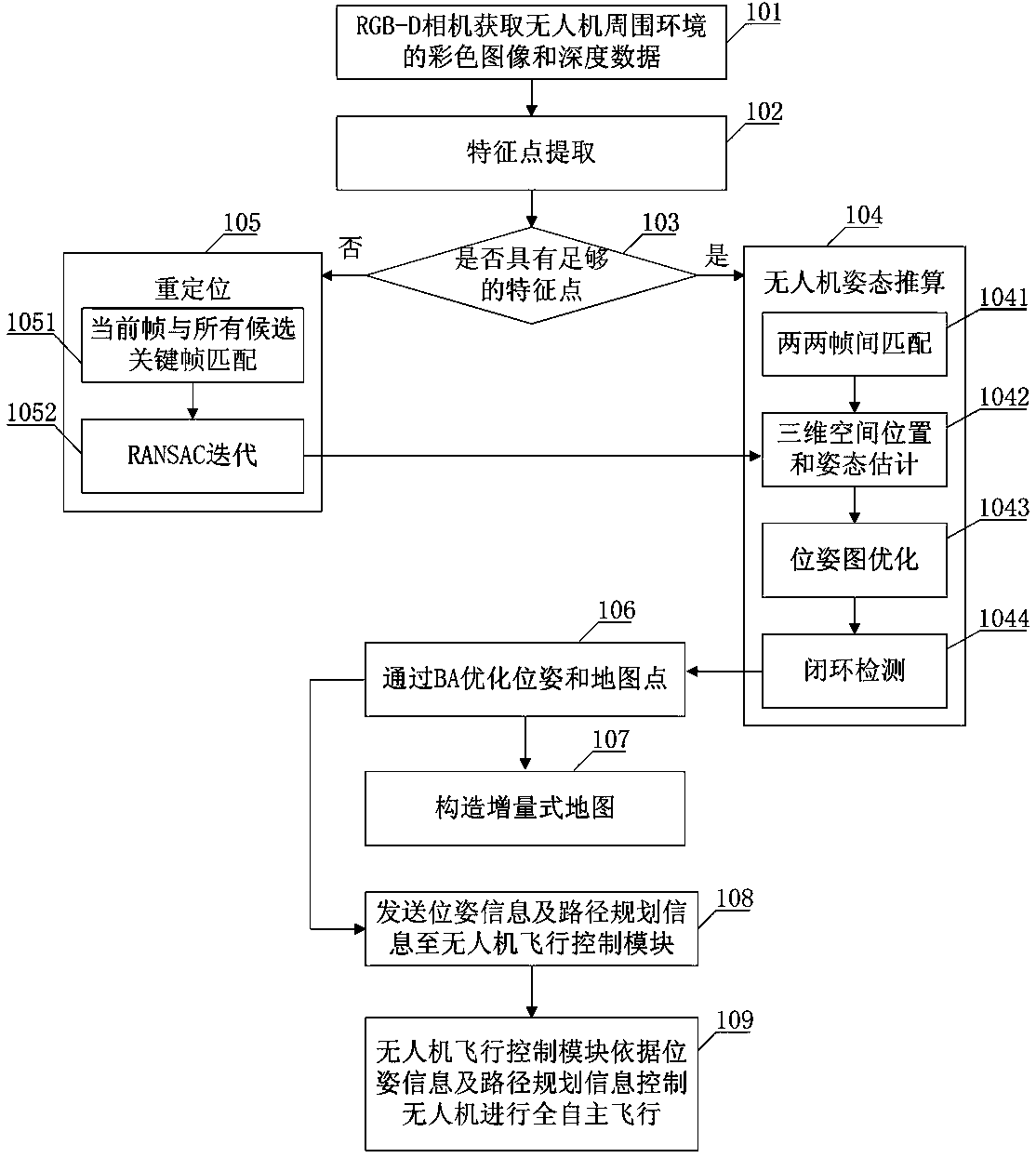

Indoor autonomous drone navigation method based on three-dimensional vision SLAM

Status: Active | Publication: CN108303099A | Tags: Avoid complex process; Solve complexity; Navigational calculation instruments; Uncrewed vehicle; Global optimization

The invention provides an indoor autonomous drone navigation method based on three-dimensional vision SLAM. The method comprises the following steps:

- An RGB-D camera obtains a color image and depth data of the drone's surroundings.
- The drone's operating system extracts feature points and checks whether enough of them exist. With more than 30 feature points, drone attitude calculation proceeds; otherwise, relocalization is performed.
- Bundle adjustment is used for global optimization.
- An incremental map is built.

A single RGB-D camera is sufficient to provide drone attitude information and rebuild the three-dimensional surroundings. This avoids the complex process by which a monocular camera recovers depth information, and sidesteps the complexity and robustness problems of matching algorithms for binocular cameras. Combining the iterative closest point (ICP) method with a reprojection error algorithm makes drone attitude estimation more accurate. The drone can localize, navigate and fly autonomously indoors and in other unknown environments, solving the problem that localization is impossible without a GPS signal.

Owner:Jiangsu Zhongke Intelligent Science and Technology Application Research Institute (江苏中科智能科学技术应用研究院)
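
This abstract states two concrete decision points: a feature-count gate (more than 30 points means track, otherwise relocalize) and a reprojection error used alongside ICP. The minimal sketch below illustrates both and makes two assumptions: a pinhole camera with intrinsics `K`, and a world-to-camera pose `(R, t)`. The error is taken as the mean Euclidean pixel distance, which is one common choice; the patent may define its cost differently. The function names are hypothetical.

```python
import numpy as np

MIN_FEATURES = 30  # from the abstract: more than 30 feature points counts as "enough"


def choose_step(num_features):
    """Track (estimate the pose) when enough features exist, otherwise relocalize."""
    return "estimate_pose" if num_features > MIN_FEATURES else "relocalize"


def mean_reprojection_error(K, R, t, points_3d, observed_px):
    """Mean pixel distance between projected map points and their observed keypoints.

    K: 3x3 intrinsics; (R, t): world-to-camera rotation and translation.
    """
    cam = np.asarray(points_3d, dtype=float) @ R.T + t   # world -> camera frame
    proj = cam @ K.T                                      # homogeneous pixel coordinates
    px = proj[:, :2] / proj[:, 2:3]
    residuals = px - np.asarray(observed_px, dtype=float)
    return float(np.mean(np.linalg.norm(residuals, axis=1)))
```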

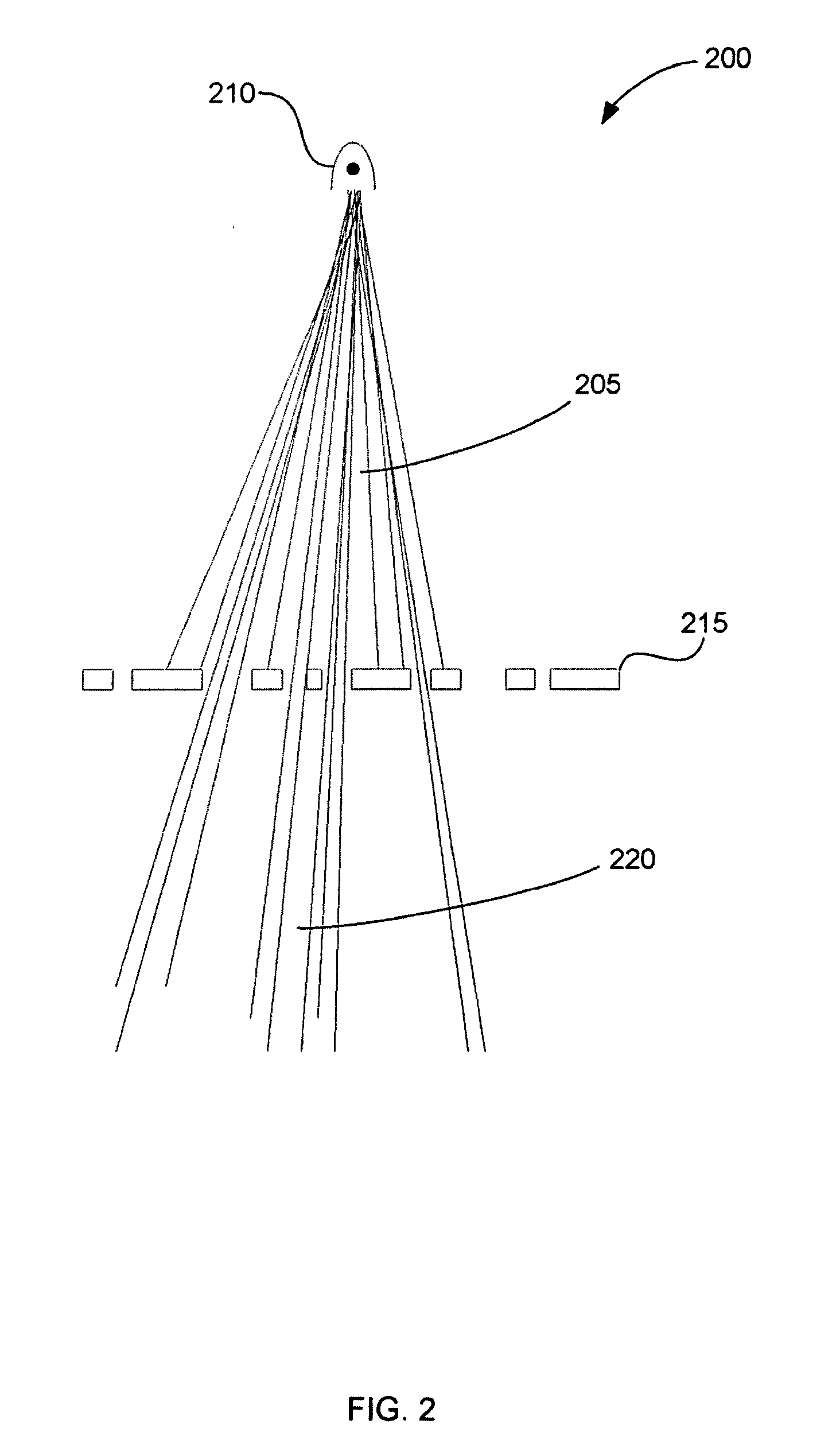

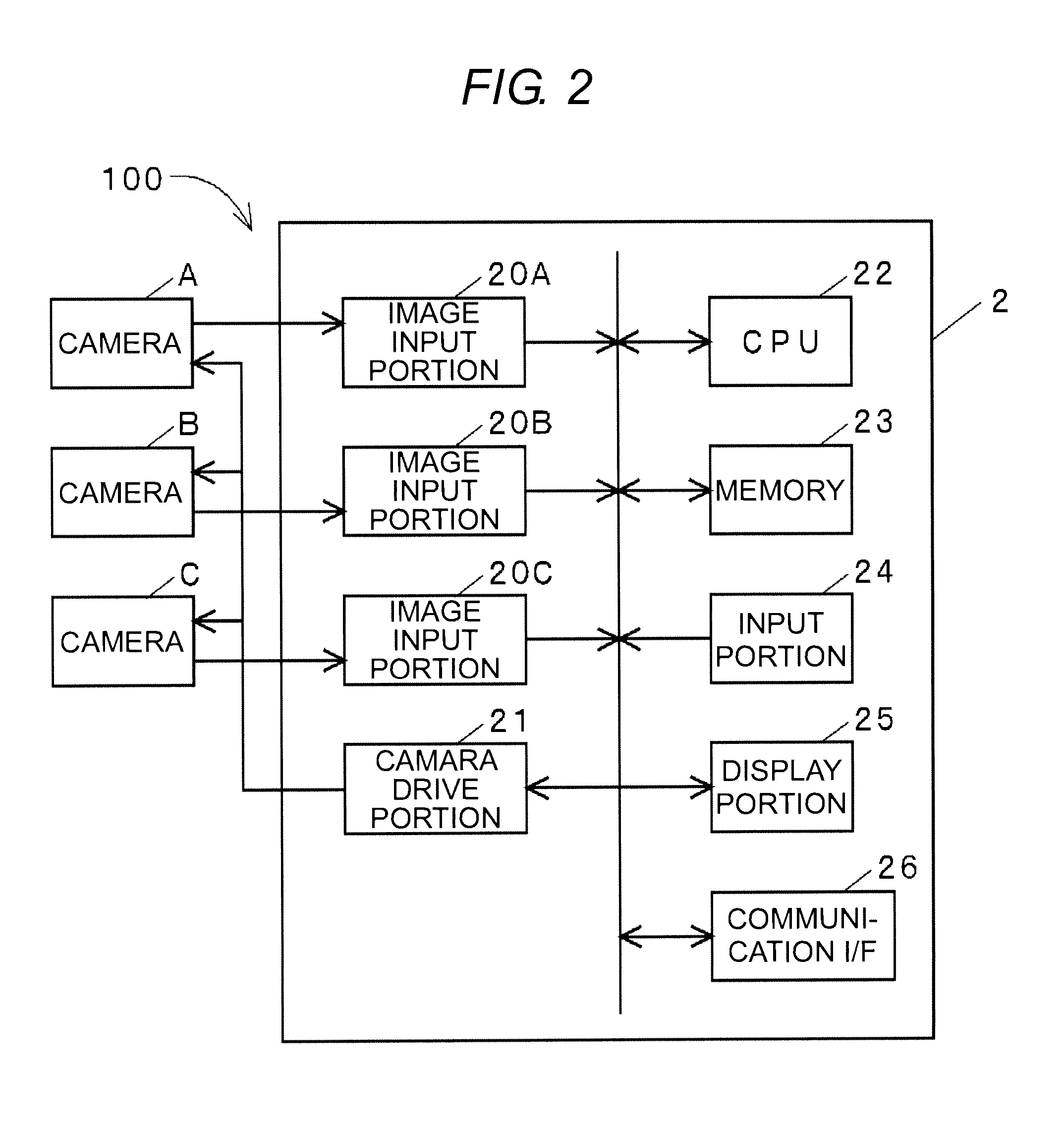

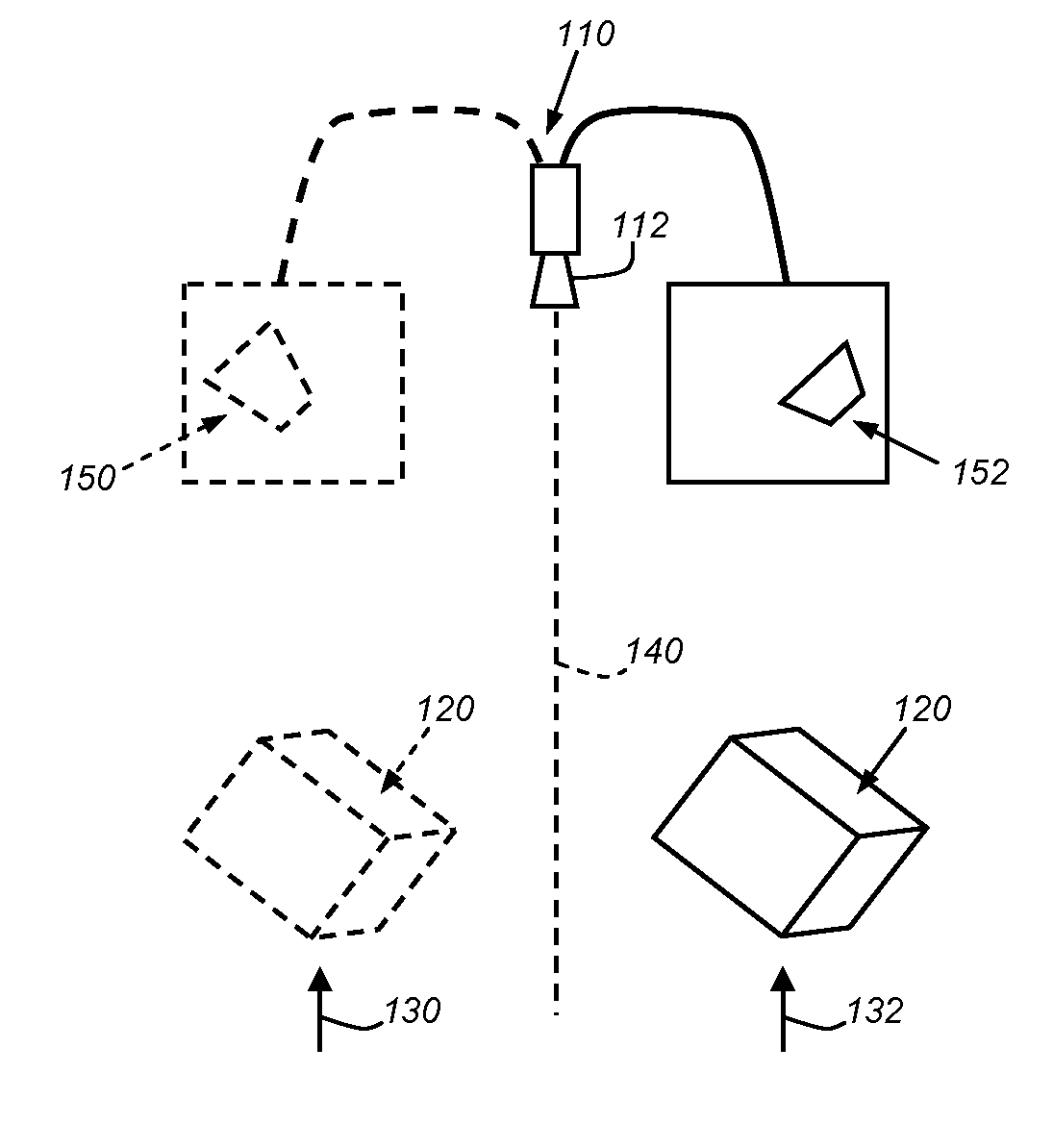

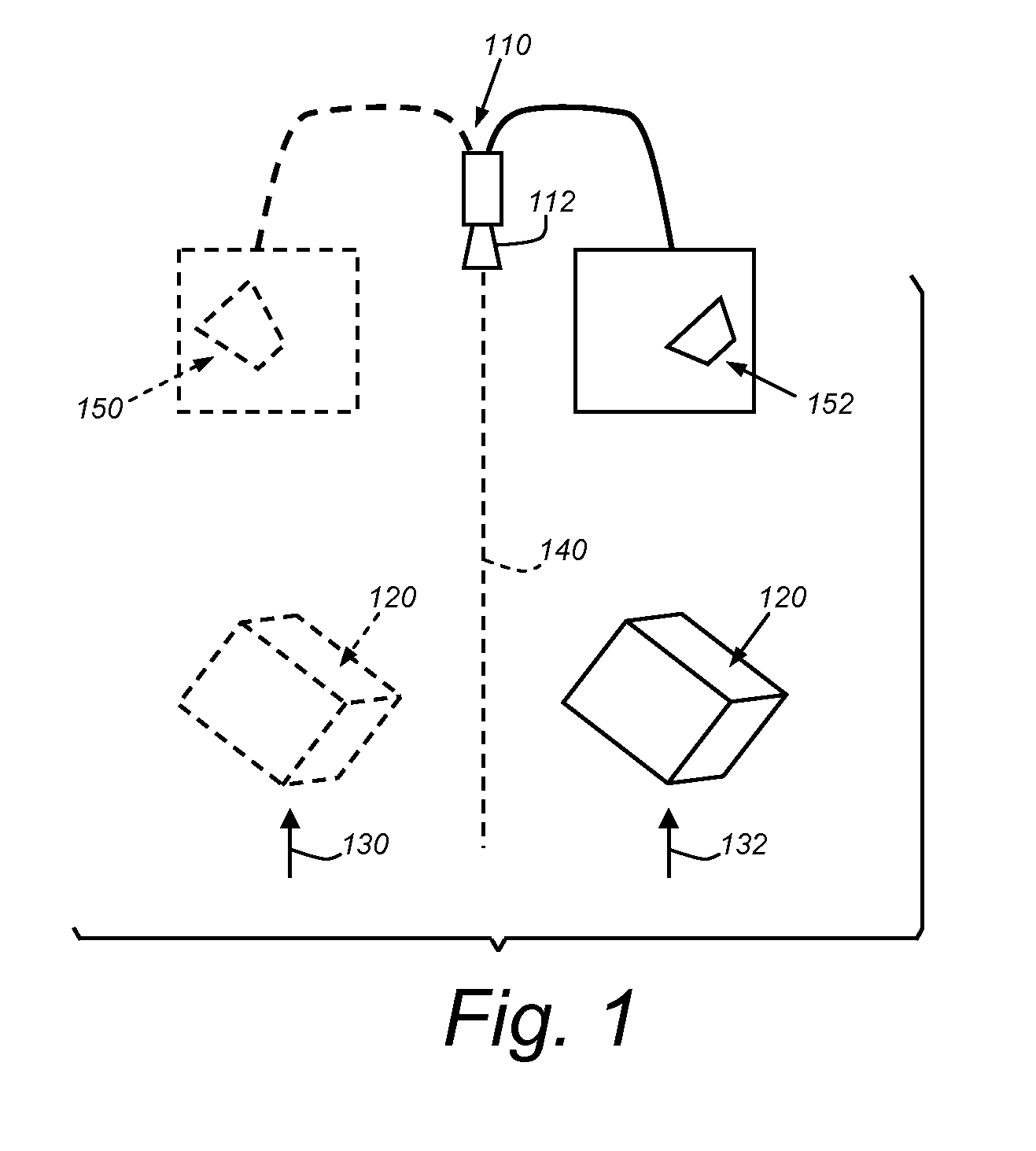

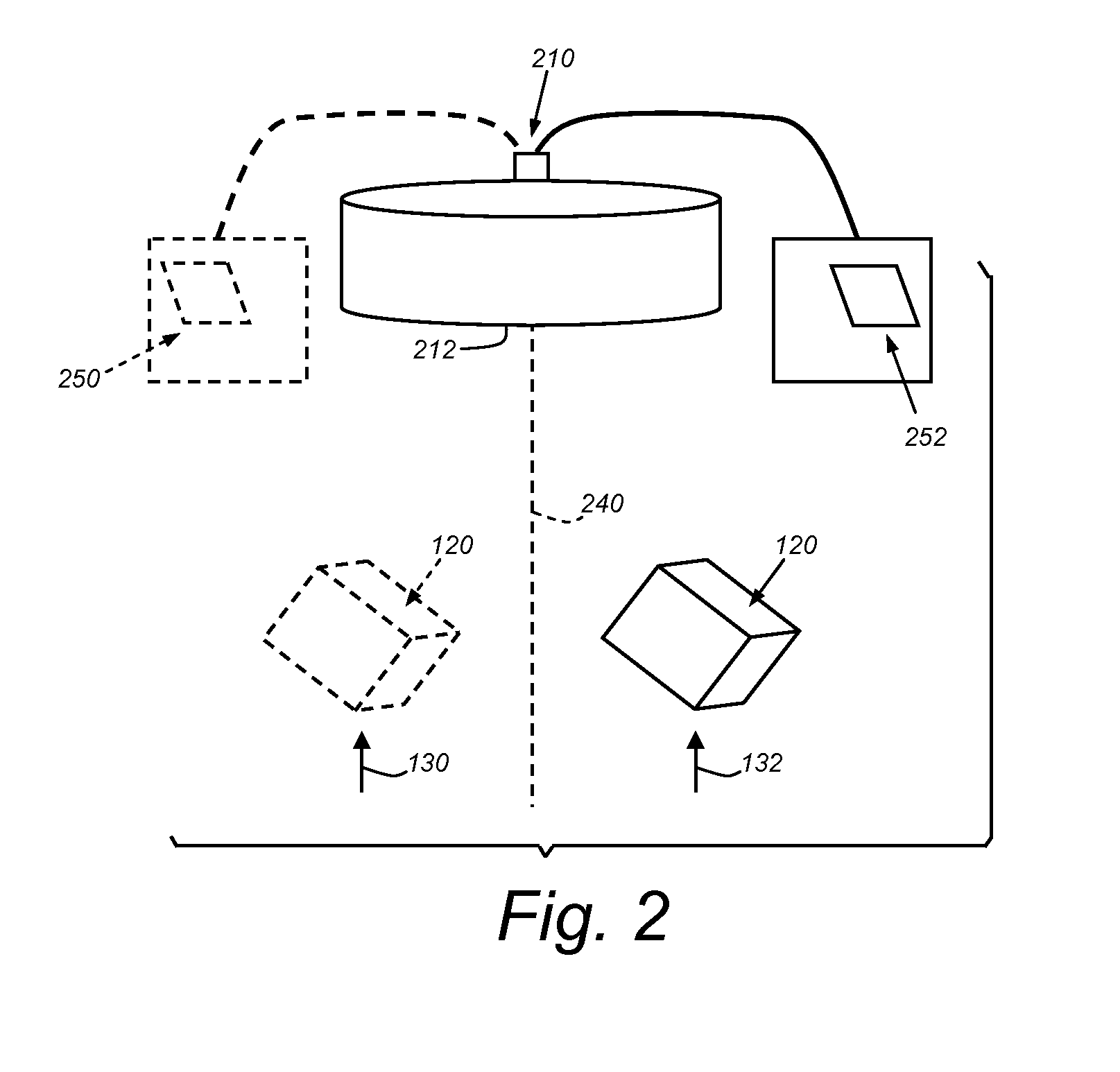

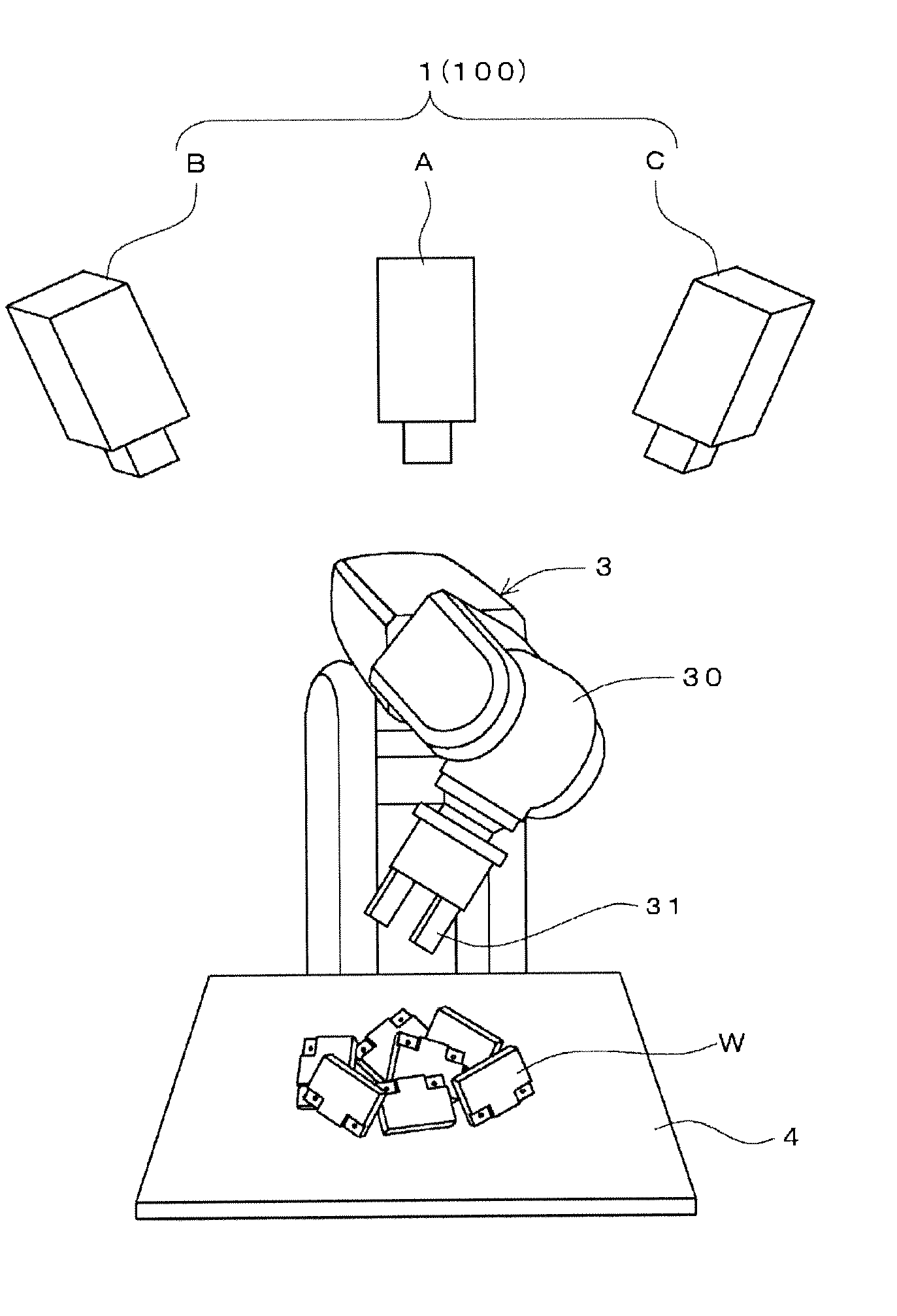

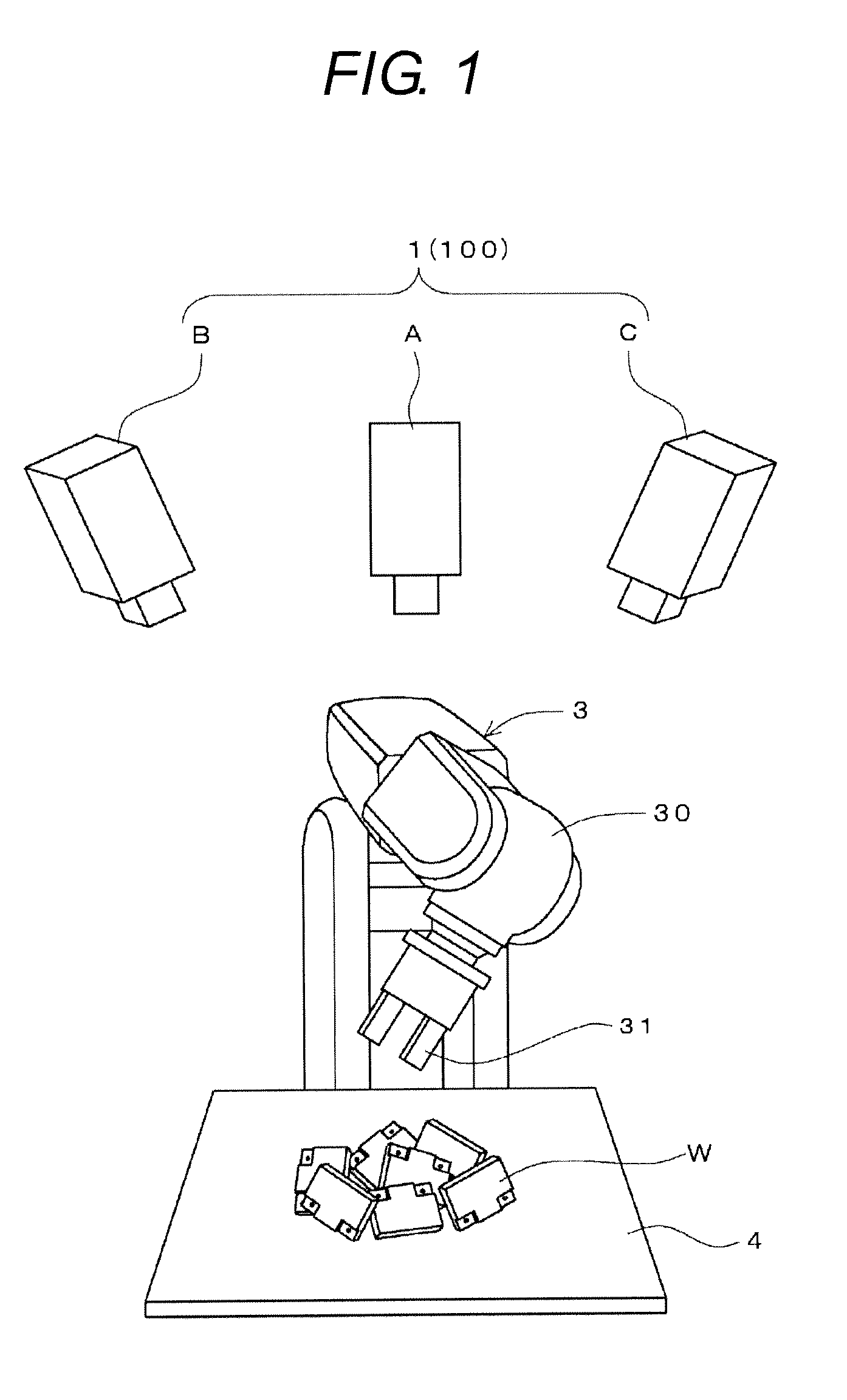

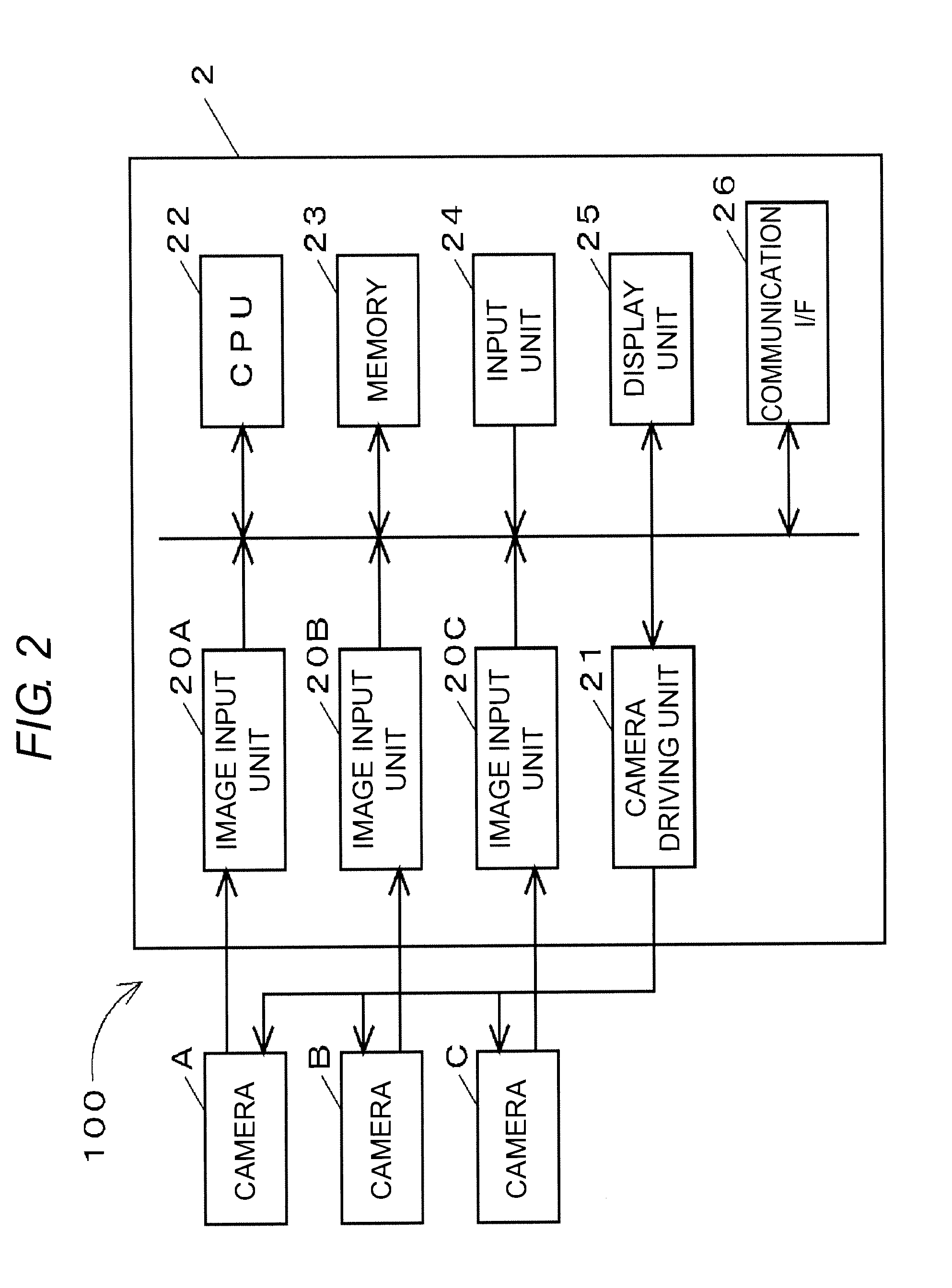

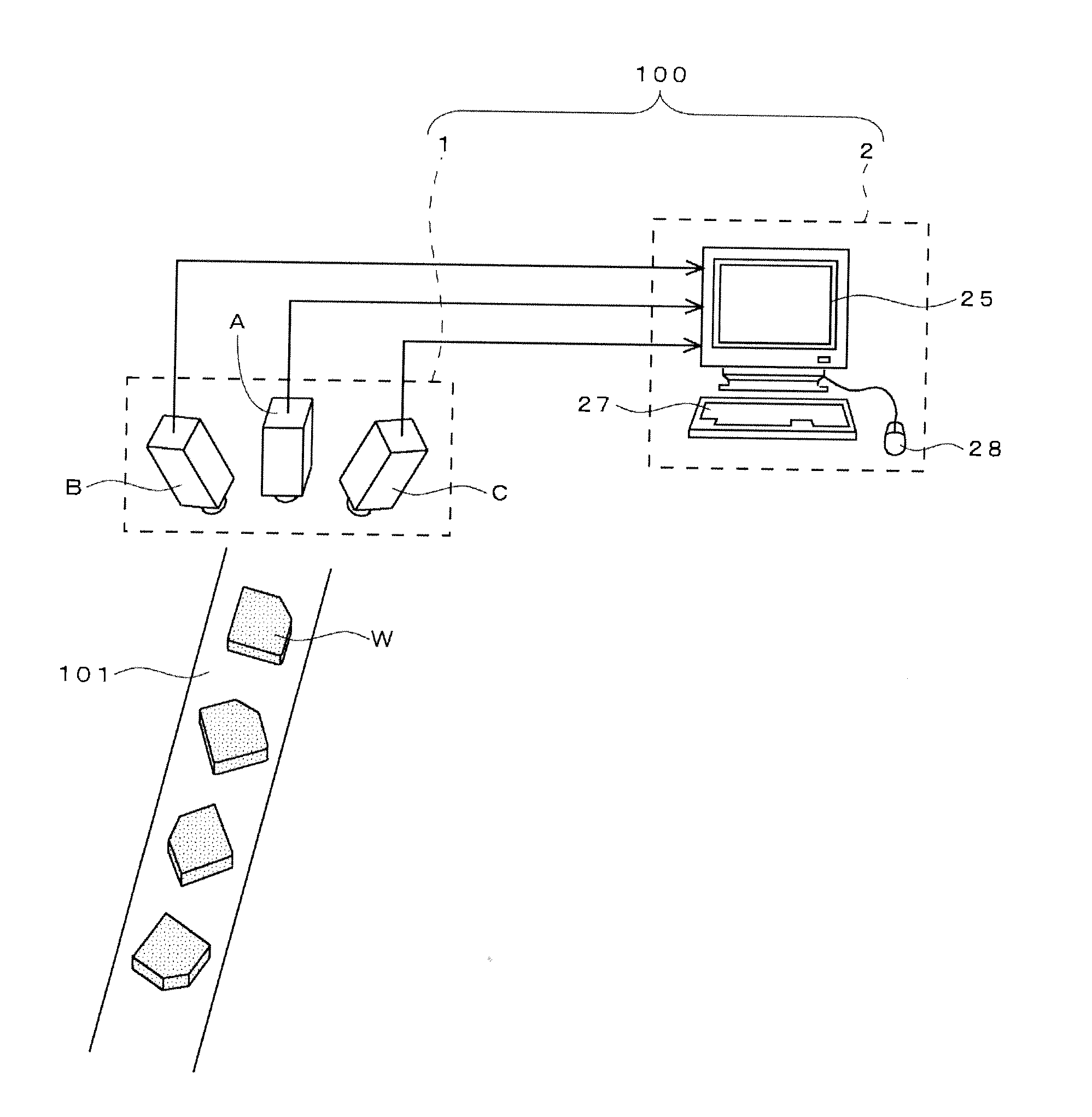

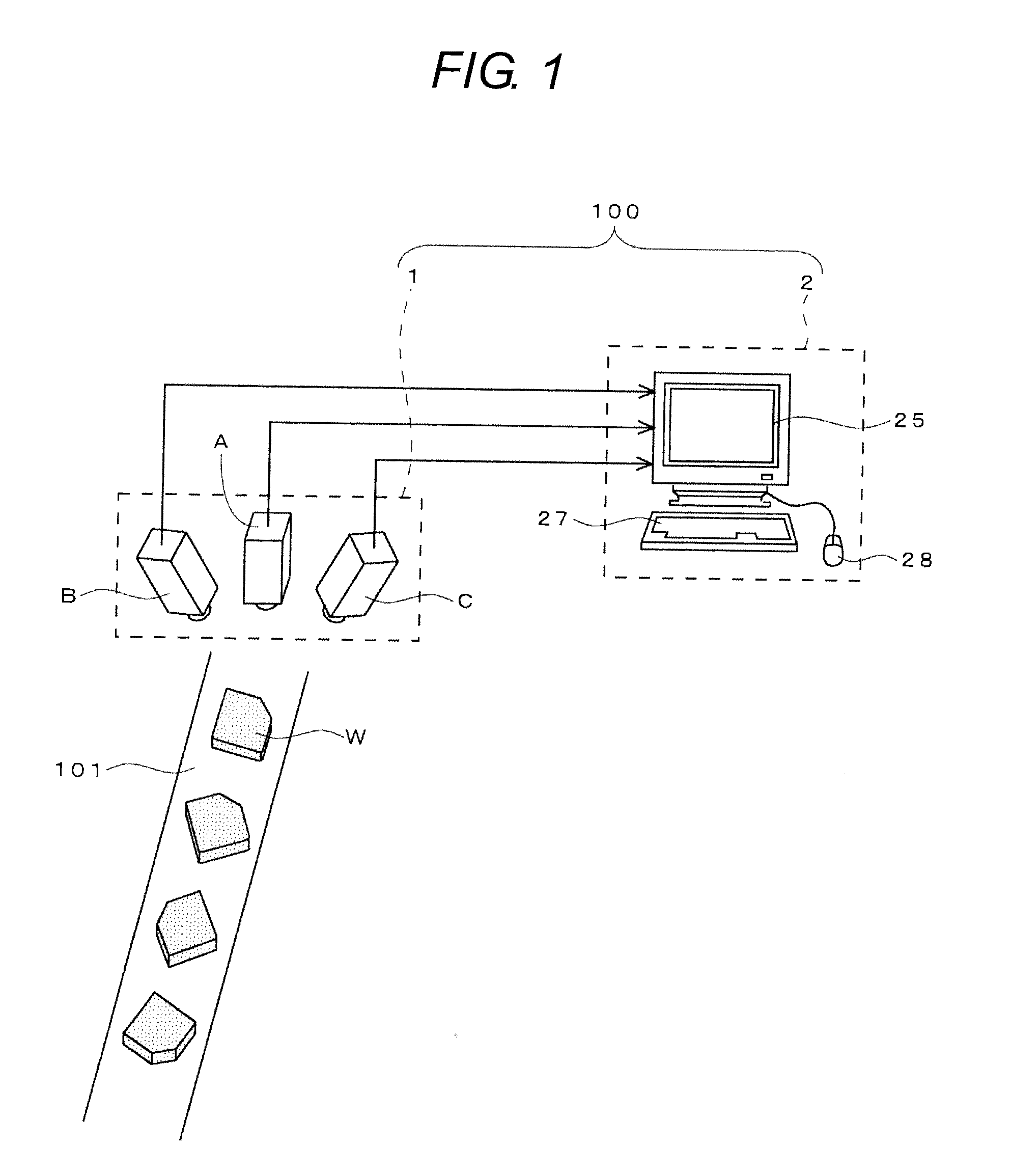

System and method for finding correspondence between cameras in a three-dimensional vision system

Status: Active | Publication: US20140118500A1 | Tags: Improve accuracy; Increase speed; Image enhancement; Image analysis; Manipulator; Visual perception

This invention provides a system and method for determining correspondence between camera assemblies in a 3D vision system implementation having a plurality of cameras arranged at different orientations with respect to a scene involving microscopic and near microscopic objects under manufacture moved by a manipulator, so as to acquire contemporaneous images of a runtime object and determine the pose of the object for the purpose of guiding manipulator motion. At least one of the camera assemblies includes a non-perspective lens. The searched 2D object features of the acquired non-perspective image, corresponding to trained object features in the non-perspective camera assembly can be combined with the searched 2D object features in images of other camera assemblies, based on their trained object features to generate a set of 3D features and thereby determine a 3D pose of the object.

Owner:COGNEX CORP
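
The final geometric step of this abstract, turning matched 2D features from several cameras into 3D features, can be illustrated with linear (DLT) triangulation. As an assumption for this sketch, a non-perspective (for example telecentric) camera is modeled as an affine camera: a 3x4 matrix whose last row is (0, 0, 0, 1). The same code then handles it alongside perspective cameras. This is a hedged illustration, not Cognex's method, and it omits the feature training and search the abstract describes.

```python
import numpy as np


def triangulate(projections, image_points):
    """Linear (DLT) triangulation of one 3D point from two or more 3x4 camera matrices.

    Works for perspective cameras and for affine (telecentric-style) cameras whose
    matrix has last row (0, 0, 0, 1).
    """
    rows = []
    for P, (u, v) in zip(projections, image_points):
        P = np.asarray(P, dtype=float)
        rows.append(u * P[2] - P[0])   # each view contributes two linear constraints
        rows.append(v * P[2] - P[1])
    X = np.linalg.svd(np.vstack(rows))[2][-1]
    return X[:3] / X[3]
```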

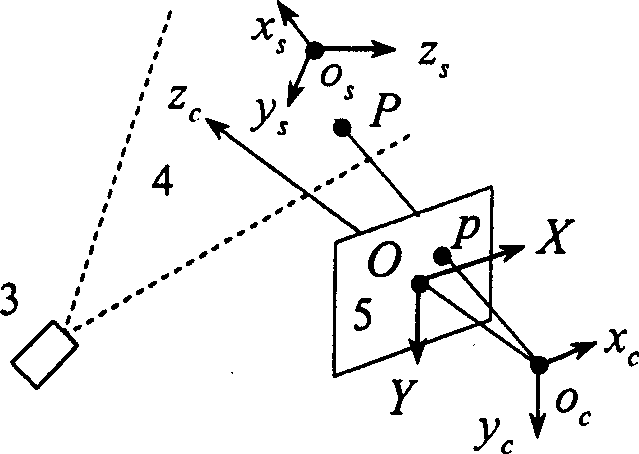

Structured-light vision sensor calibration method based on planar targets

Status: Inactive | Publication: CN1566906A | Tags: Improve calibration accuracy; Improve calibration efficiency; Converting sensor output optically; Vision sensor; Visual perception

This invention belongs to the field of measurement technology. It relates to an improvement in sensor parameter calibration for optical three-dimensional vision measurement. The steps are:

1. Set up the target and capture target images.
2. Calibrate the camera's intrinsic parameters.
3. Capture sensor calibration images.
4. Correct the distortion of the sensor calibration images.
5. Compute the sensor calibration feature points.
6. Compute the local world coordinates of the sensor calibration feature points.
7. Compute the camera transformation matrix.
8. Compute the global world coordinates of the calibration feature points.
9. Calibrate the structural parameters of the laser vision sensor.
10. Save the parameters.

The method offers high calibration accuracy and a simple process, and needs no costly auxiliary equipment. It is especially suitable for on-site calibration of optical vision sensors.

Owner:BEIHANG UNIV

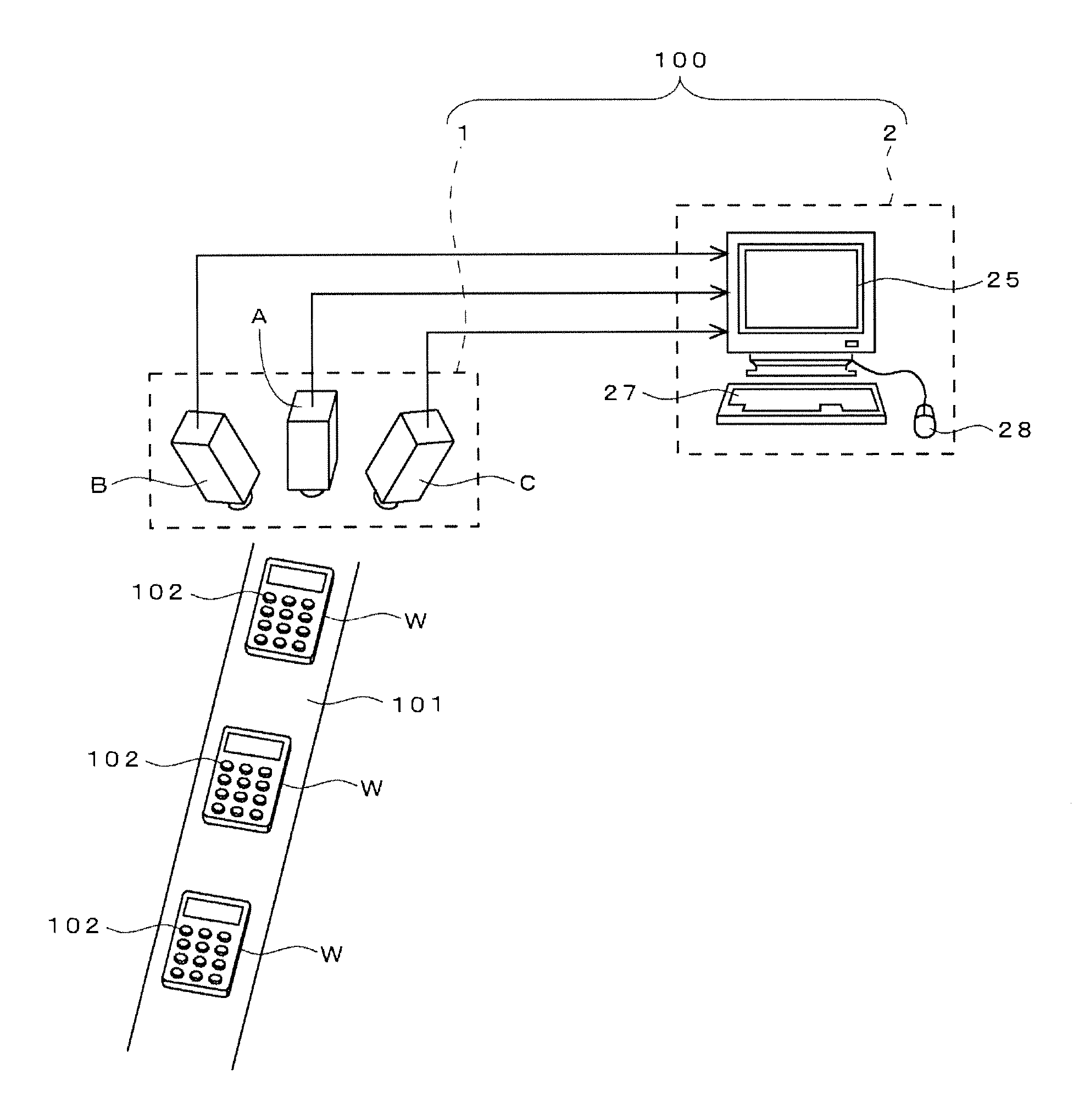

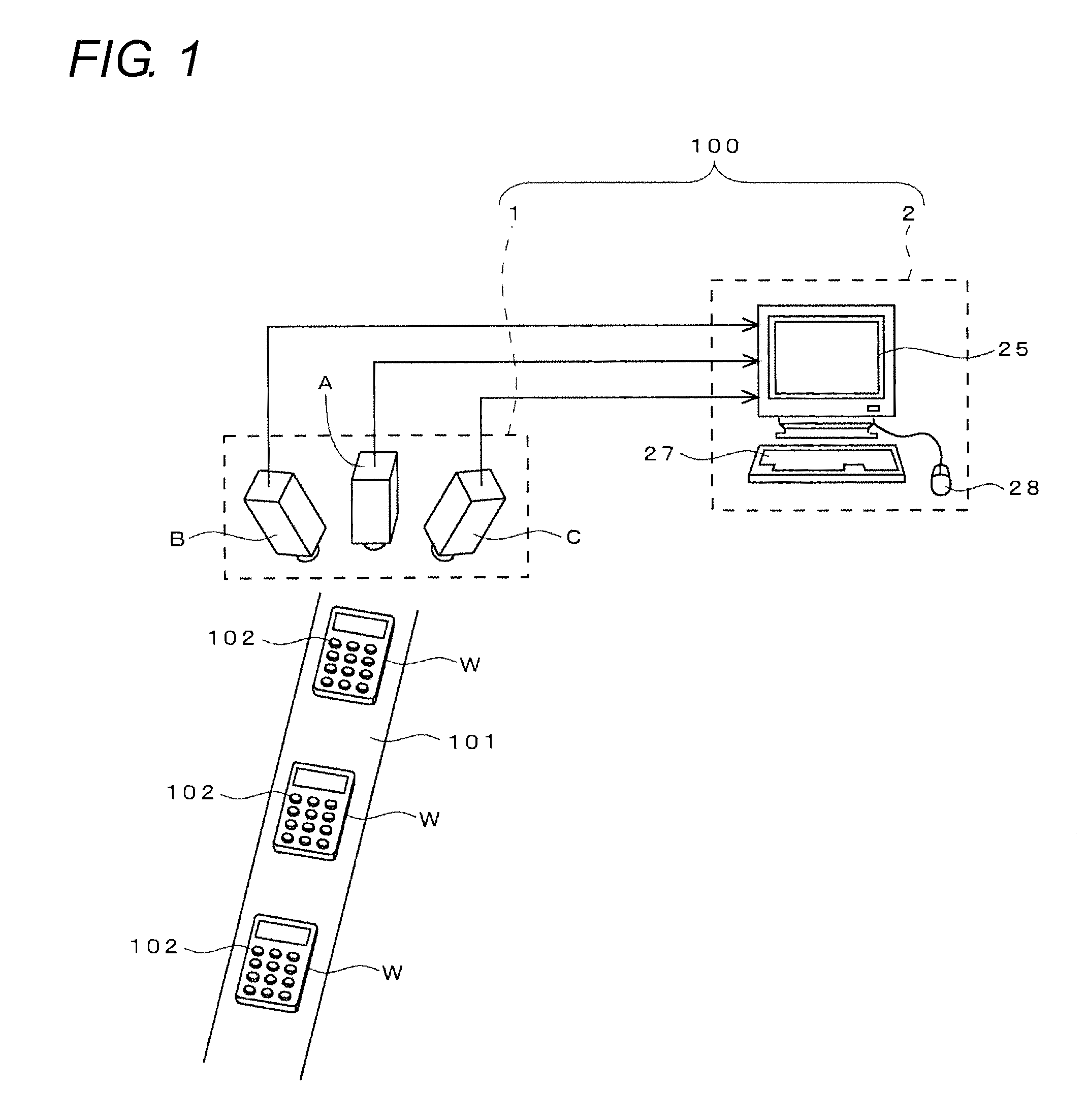

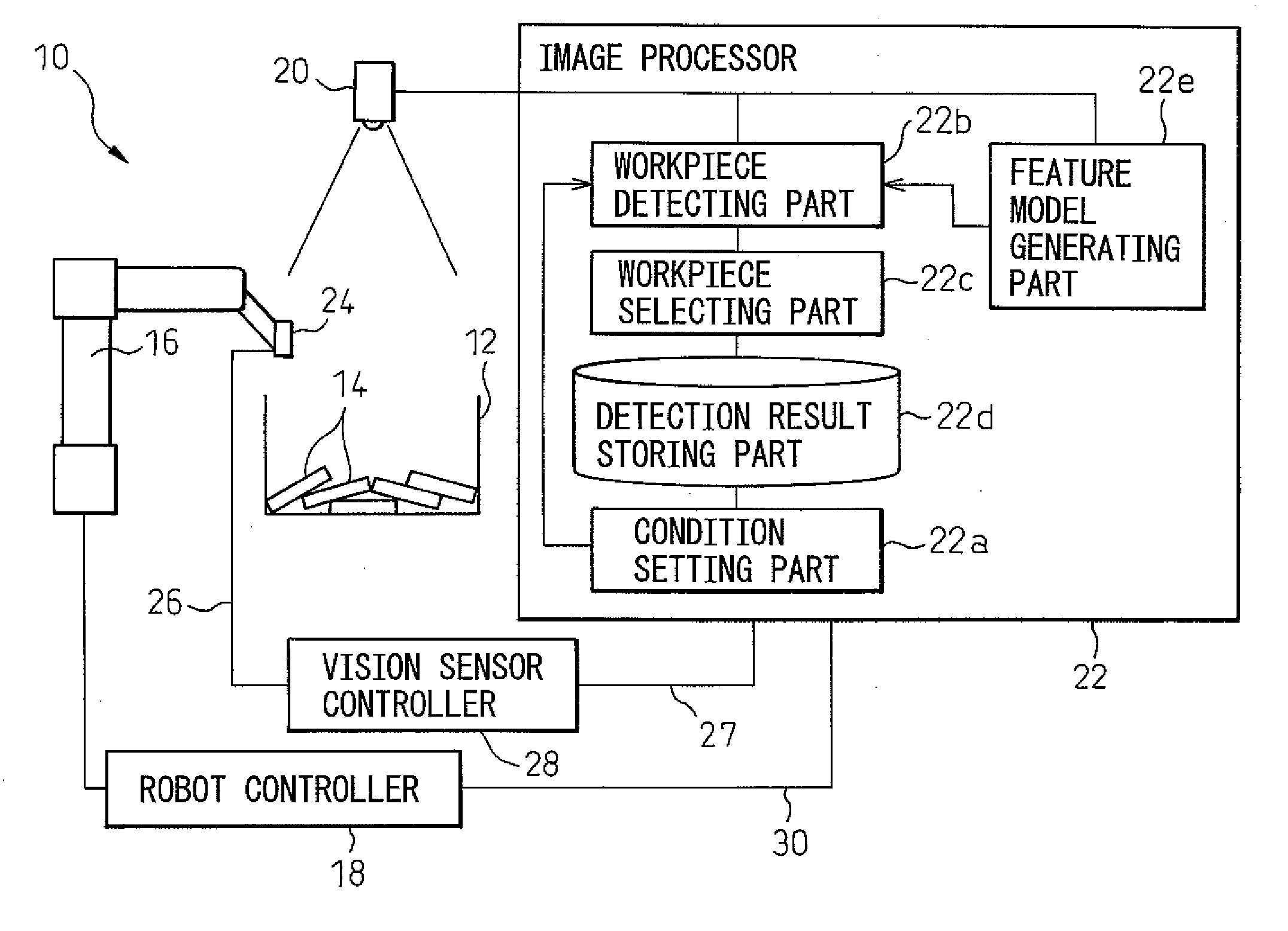

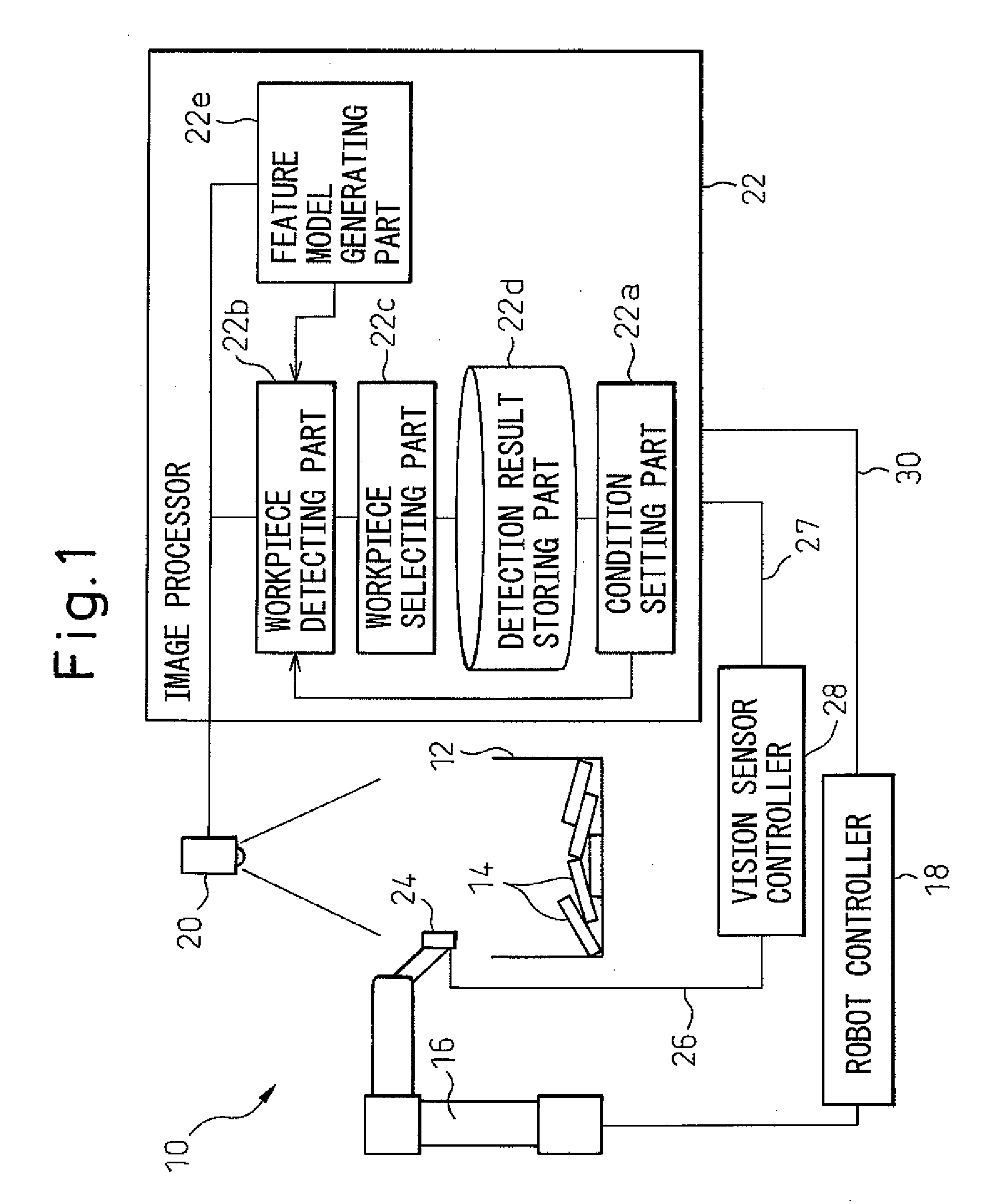

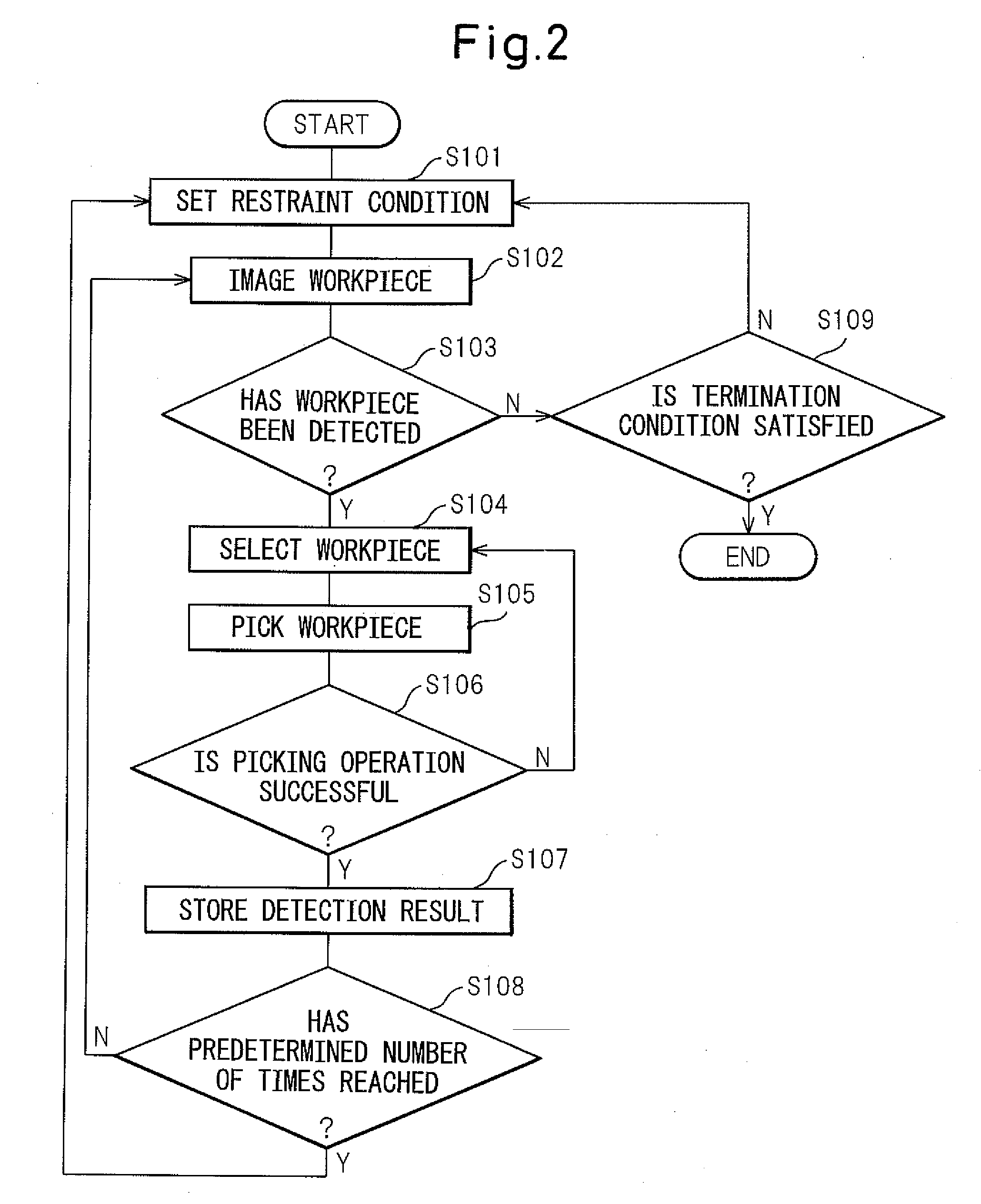

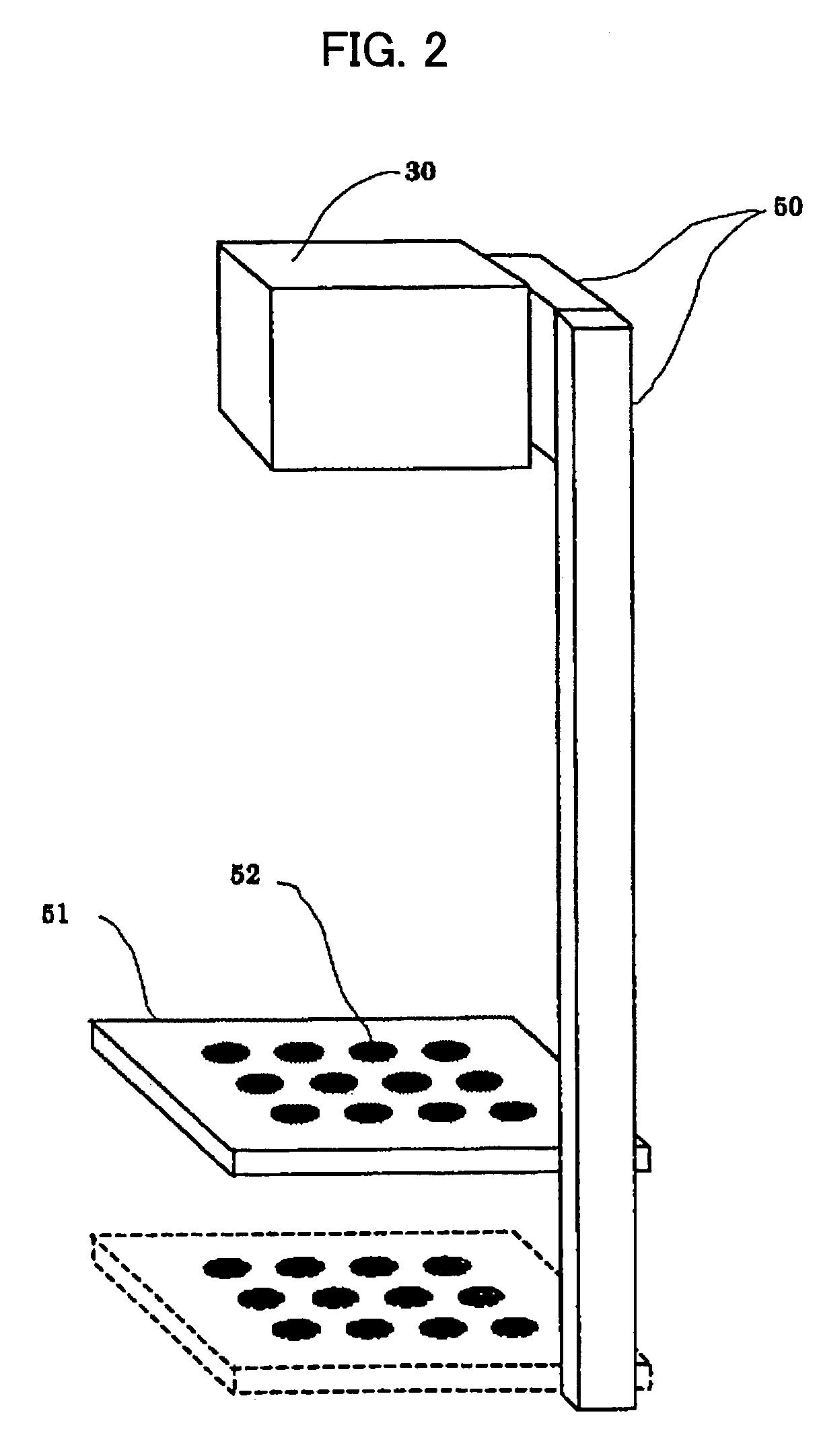

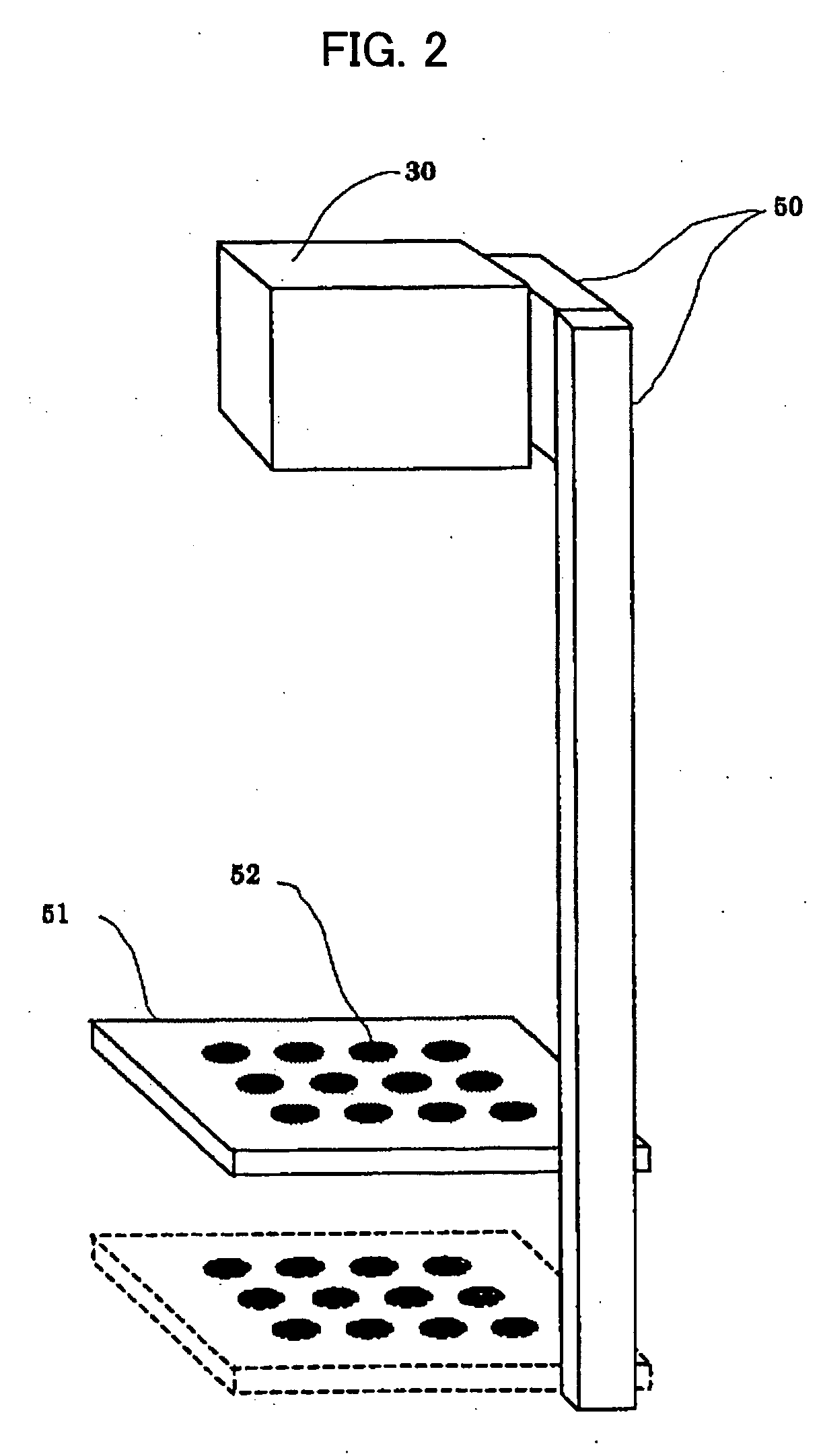

Workpiece picking device

Status: Active | Publication: US20070177790A1 | Tags: Small size; Accurate detection; Programme control; Programme-controlled manipulator; Three dimensional vision; Robot controller

A workpiece picking device capable of correctly detecting the size of a workpiece. The picking device has a robot capable of picking the same kind of workpieces contained in a work container, a robot controller for controlling the robot, a video camera positioned above the work container so as to widely image the workpieces and an image processor for processing an image obtained by the video camera. The three-dimensional position and posture of each workpiece is measured by a three-dimensional vision sensor arranged on a wrist element of the robot.

Owner:FANUC LTD

Method for rapidly constructing three-dimensional architecture scene through real orthophotos

Status: Inactive | Publication: CN101290222A | Tags: Avoid occlusion; Smooth transition; Photogrammetry/videogrammetry; Graphics; Three dimensional architecture

The invention provides a method for rapidly constructing a three-dimensional building scene from a true orthophoto. The method comprises the following steps:

1. Extract building roof outlines from the true orthophoto to obtain a planar vector map together with its plane coordinates.
2. Triangulate the building roofs and the ground polygons of this vector map. Generate a building model and a ground model by combining digital surface model data with digital elevation model data.
3. Combine the building model and the ground model into a three-dimensional scene model.
4. Apply the true orthophoto to the three-dimensional scene model as a texture map to generate the three-dimensional building scene.

The method accelerates modeling of three-dimensional building scenes and reduces the manual time spent on modeling and texture-mapping design. A given area can be modeled and texture-mapped in a single pass, producing a realistic and effective three-dimensional visual effect while greatly reducing the workload of scene modeling.

Owner:Guan Hongliang (关鸿亮)

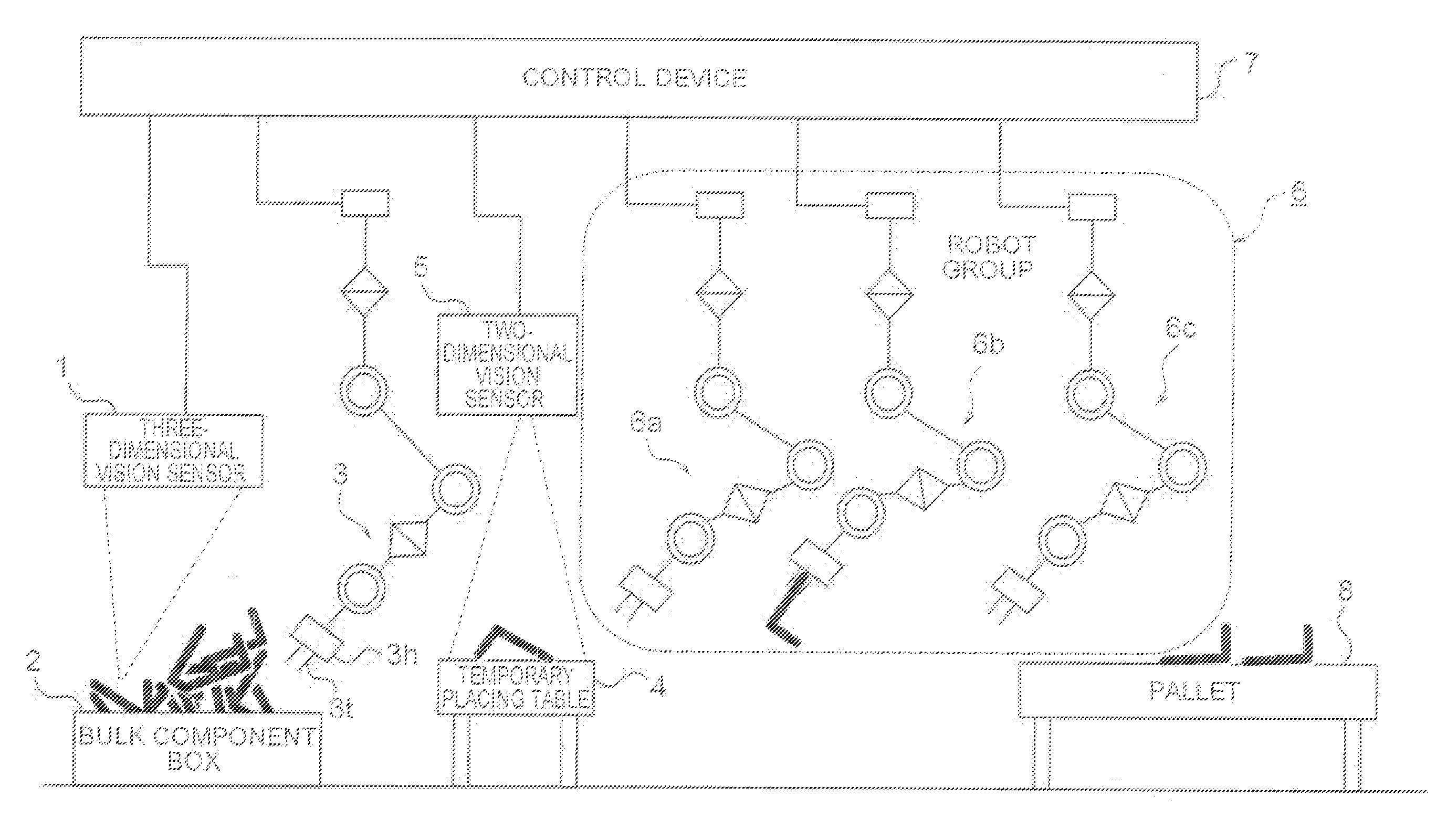

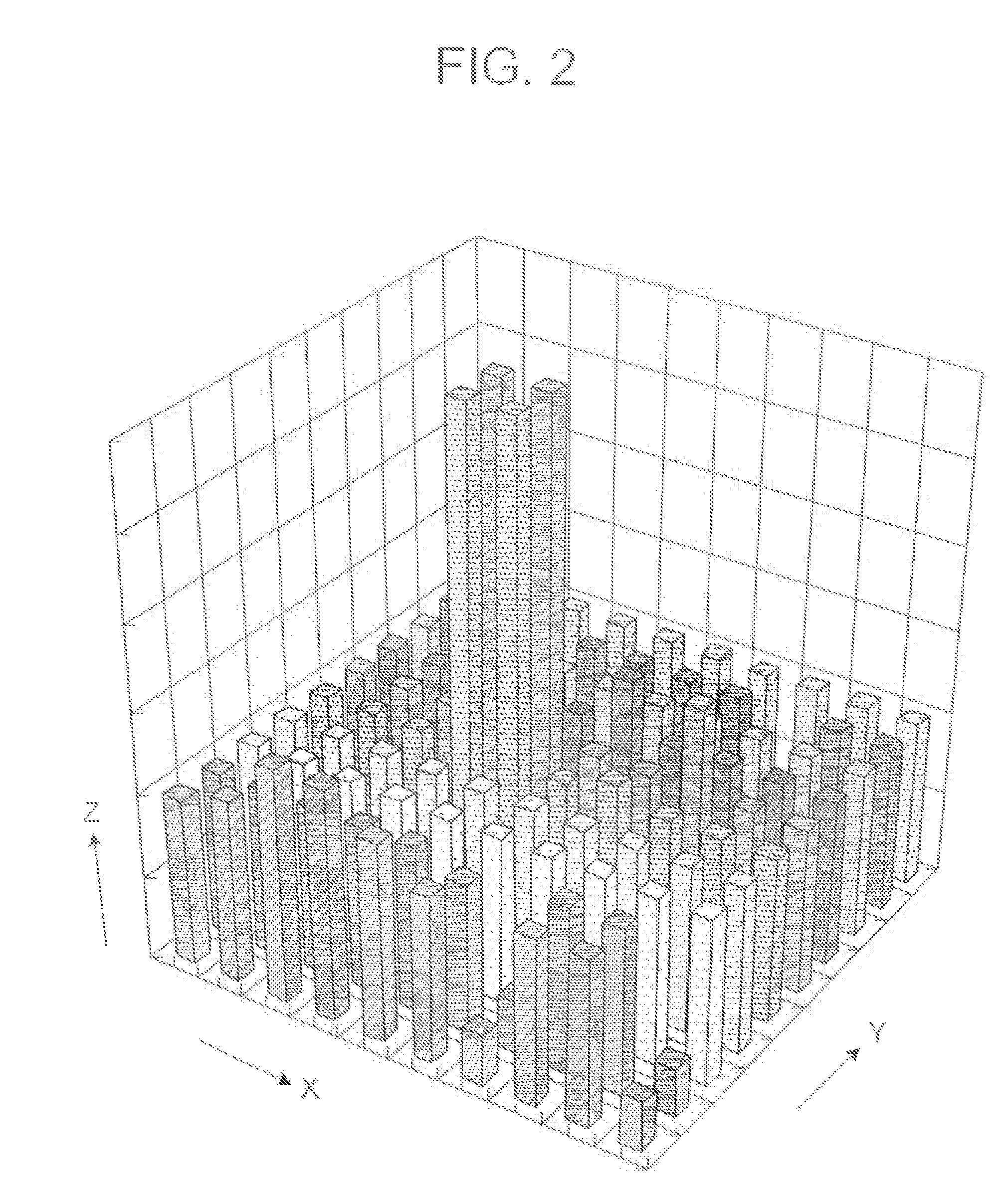

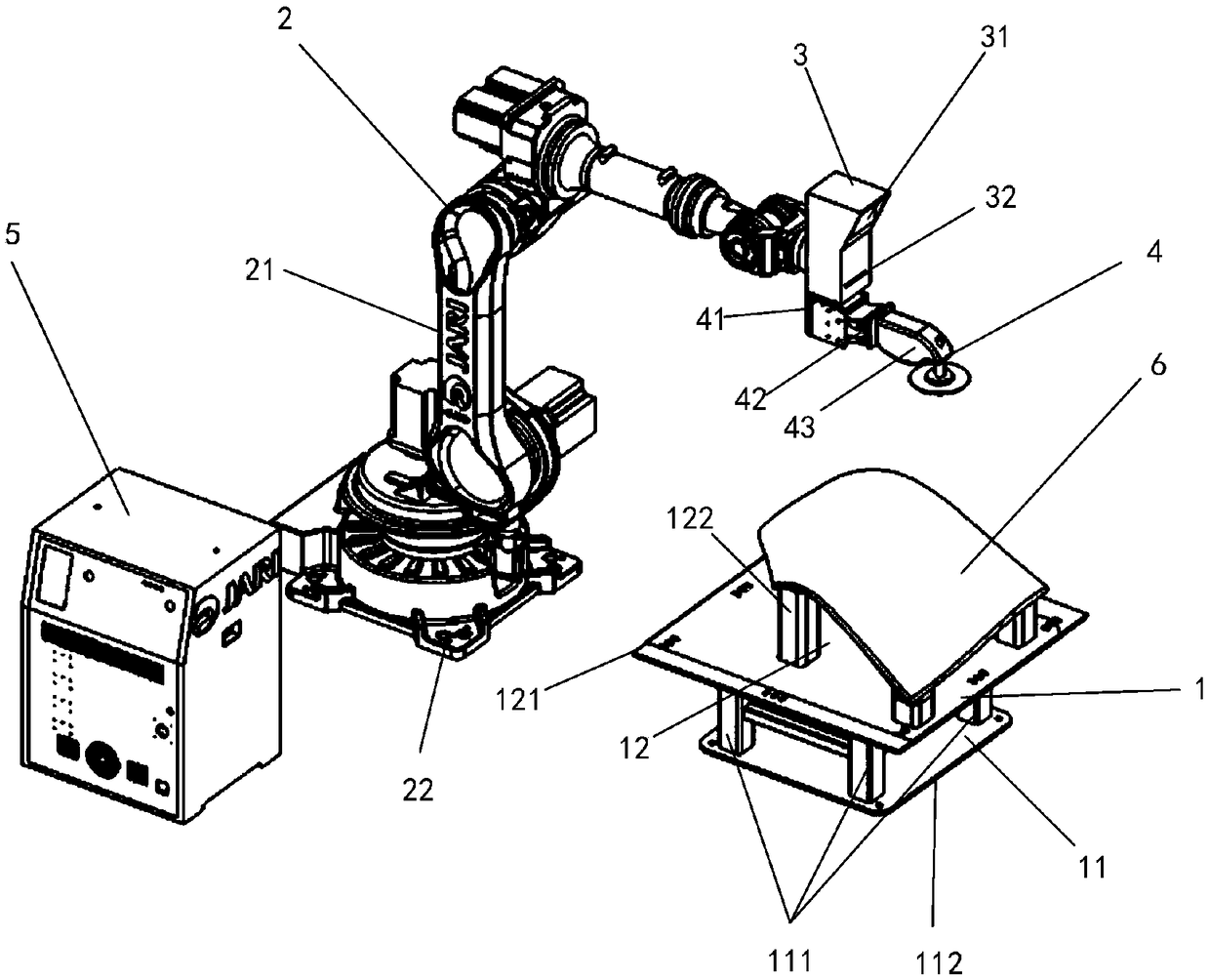

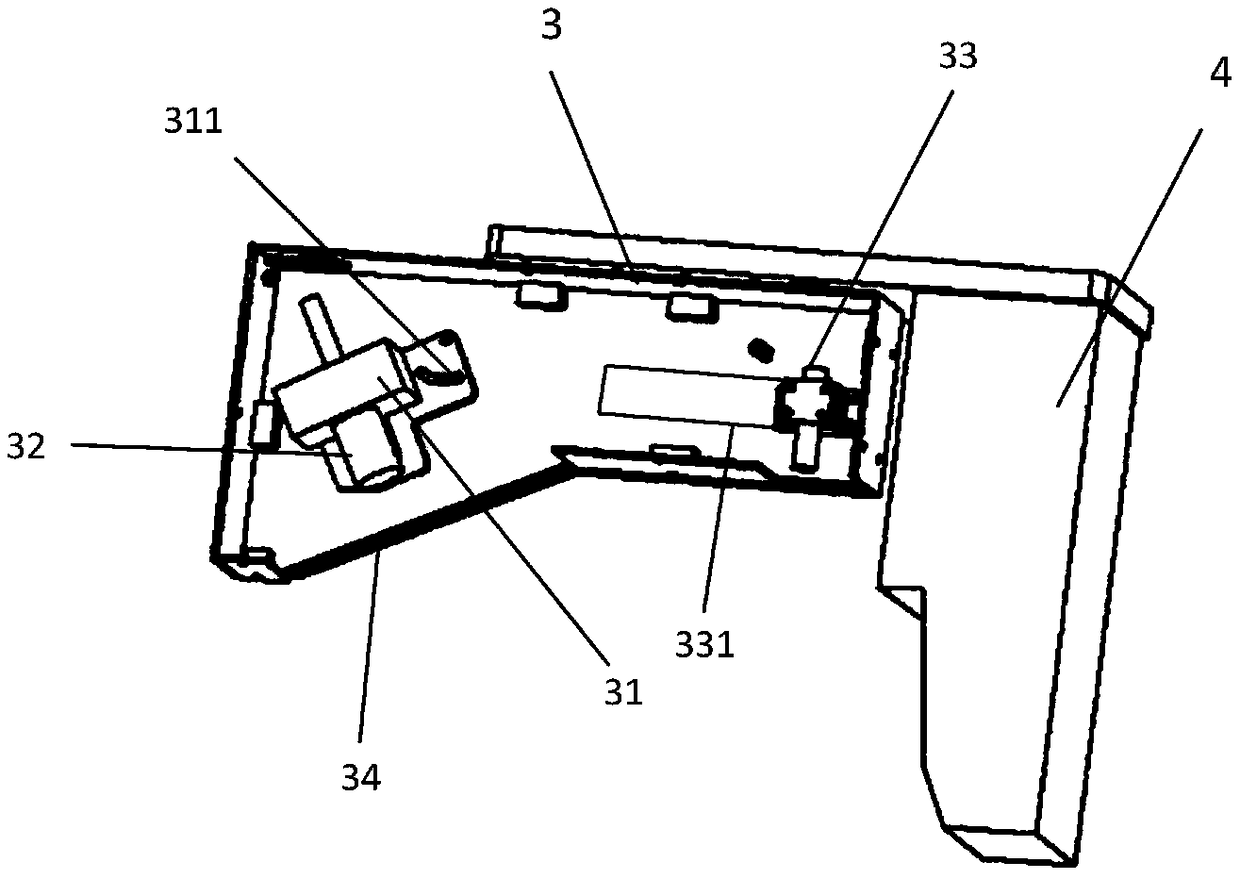

Component supply apparatus

Status: Active | Publication: US20140147240A1 | Tags: Low cost; Save time period; Programme control; Programme-controlled manipulator; Control engineering; Engineering

A component supply apparatus that can align components of various shapes, supplied in bulk, within a short period of time using general-purpose means. The apparatus includes:

- a three-dimensional vision sensor that measures a depth map;
- a bulk component box;
- a robot that picks up a component from the bulk component box;
- a temporary placing table onto which components are rolled;
- a two-dimensional vision sensor that measures the profile of the components;
- a robot group that picks up the component rolled onto the temporary placing table and, while handling it, changes its position and orientation until they deviate from a position and orientation specified in advance by no more than a certain error;
- a control device that controls these parts.

Owner:MITSUBISHI ELECTRIC CORP

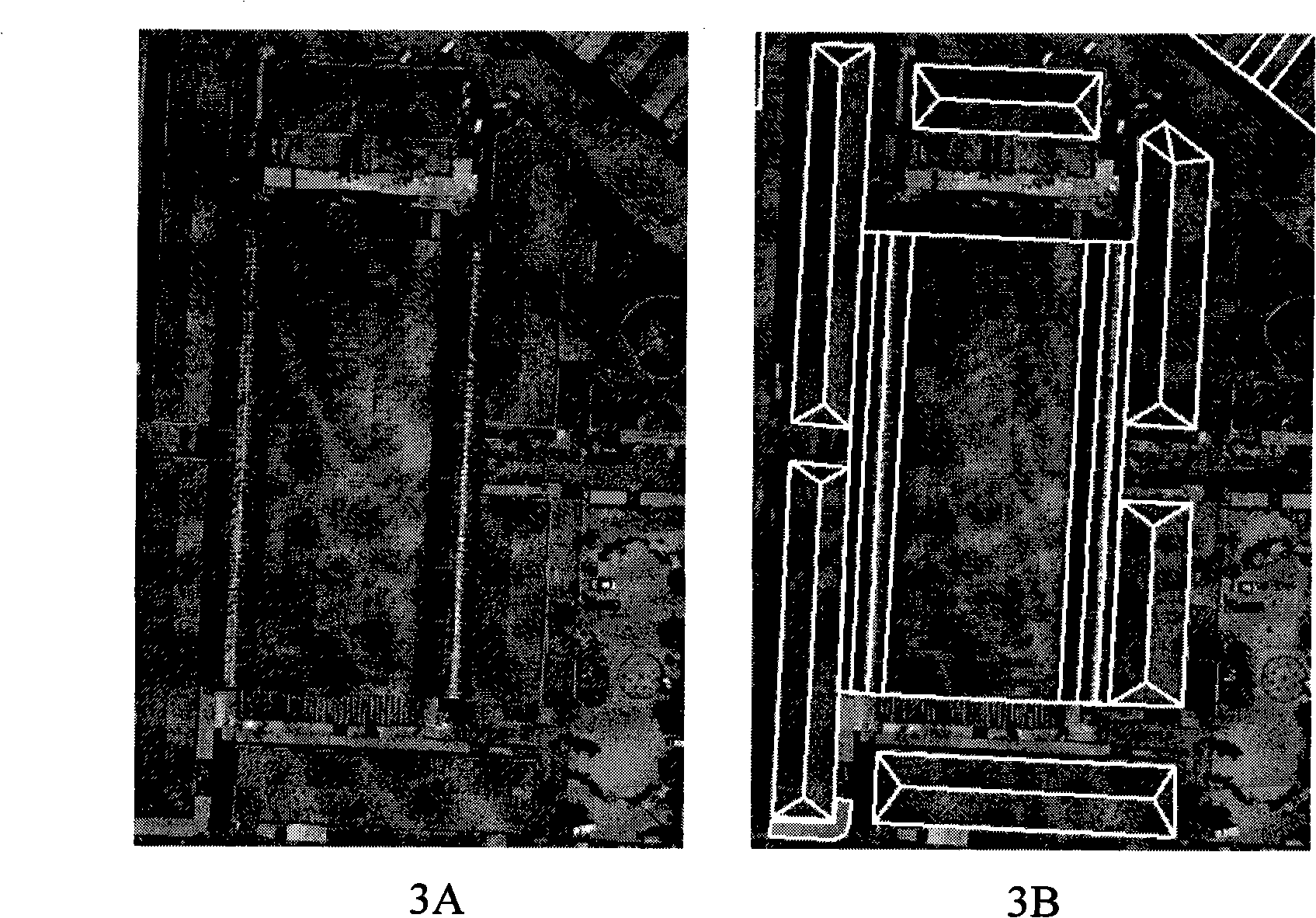

Three-dimensional recognition result displaying method and three-dimensional visual sensor

Status: Active | Publication: US20100232647A1 | Tags: Improve user friendliness; Easy to confirm; Image enhancement; Image analysis; Projection image; Three dimensional measurement

The present invention makes it easy to confirm, from the setting information and the recognition result, whether three-dimensional measurement and checking against a model were performed properly. Setting processing is first performed on a three-dimensional visual sensor that includes a stereo camera. A real workpiece is then imaged, and three-dimensional measurement is performed on the edges in the resulting stereo image. The restored three-dimensional information is checked against a three-dimensional model to compute the workpiece position and its rotation angle relative to the attitude represented by the model. Next, two sets of data are perspective-transformed into the coordinate system of the camera that captured the image: the measured three-dimensional edge information, and the three-dimensional model already coordinate-transformed according to the recognition result. The resulting projection images are displayed so that they can be compared with each other.

Owner:OMRON CORP

Method and apparatus for processing three-dimensional vision measurement data

Status: Active | Publication: US20190195616A1 | Tags: Easy to operate; Accurate features; Details involving processing steps; Image enhancement; Point cloud; Machine vision

A method and an apparatus for processing three-dimensional vision measurement data are provided. The method includes: obtaining three-dimensional point cloud data measured by a three-dimensional machine vision measurement system and establishing a visualized space based thereon; establishing a new three-dimensional rectangular coordinate system corresponding to the visualized space, and performing coordinate translation conversion on the three-dimensional point cloud data; determining three basic observation planes corresponding to the visualized space and a rectangular coordinate system corresponding to the basic observation planes; respectively projecting the three-dimensional point cloud data on the basic observation planes to obtain three plane projection functions; respectively generating, according to the three-dimensional point cloud data, three depth value functions corresponding to the plane projection functions; digitally processing three plane projection graphs and three depth graphs respectively, and converting same to two-dimensional images of a specified format; and compressing, storing and displaying the two-dimensional images of the specified format.

Owner:BEIJING QINGYING MACHINE VISUAL TECH CO LTD
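
Projecting the point cloud onto one basic observation plane, while keeping a depth value per projected cell, is essentially rasterization onto a grid. The minimal sketch below makes several assumptions not stated in the abstract:

- a square cell size `cell_size`;
- translating the cloud so that its minimum corner becomes the origin;
- keeping the largest coordinate along the viewing axis as the depth;
- scaling depths to 8-bit grey levels.

The function names are hypothetical.

```python
import numpy as np


def project_to_plane(points, plane_axes, depth_axis, cell_size):
    """Rasterize a point cloud onto one basic observation plane.

    Returns (projection, depth): a 0/1 occupancy image and, per pixel, the largest
    coordinate along depth_axis (NaN where no point falls).
    """
    pts = np.asarray(points, dtype=float)
    axes = list(plane_axes)
    origin = pts.min(axis=0)               # translate so every grid index is >= 0
    ij = np.floor((pts[:, axes] - origin[axes]) / cell_size).astype(int)
    shape = tuple(int(n) for n in ij.max(axis=0) + 1)
    projection = np.zeros(shape, dtype=np.uint8)
    depth = np.full(shape, np.nan)
    for (i, j), d in zip(ij, pts[:, depth_axis]):
        projection[i, j] = 1
        if np.isnan(depth[i, j]) or d > depth[i, j]:
            depth[i, j] = d
    return projection, depth


def to_uint8(depth):
    """Scale a depth image to 1..255 for storage or display; empty pixels stay 0."""
    valid = ~np.isnan(depth)
    out = np.zeros(depth.shape, dtype=np.uint8)
    if valid.any():
        lo, hi = depth[valid].min(), depth[valid].max()
        scale = 254.0 / (hi - lo) if hi > lo else 0.0
        out[valid] = (1 + np.round((depth[valid] - lo) * scale)).astype(np.uint8)
    return out
```

Calling `project_to_plane` with `plane_axes` of `(0, 1)`, `(1, 2)` and `(0, 2)` would give the three projection and depth image pairs. These could then be compressed with any lossless image codec.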

Robot grinding system with three-dimensional vision and control method thereof

Status: Pending | Publication: CN109483369A | Tags: Solve the problem of automatic grinding; Realize flexible grinding work; Grinding feed control; Character and pattern recognition; Engineering; 3D camera

The invention discloses a robot grinding system with three-dimensional vision and a control method thereof. The robot grinding system comprises a workpiece fixing device, a grinding robot, an optical device, pneumatic grinding equipment, and a power supply and control device. The control method comprises the following steps:

- The grinding robot moves the optical device to scan a curved-surface workpiece horizontally.
- The laser image collected by a 3D camera is transmitted to the control unit of the power supply and control device.
- The control unit converts the image from the 3D camera into a three-dimensional point cloud using the corresponding functions, and performs preliminary filtering and denoising.
- The point cloud is further processed with point cloud segmentation and a slicing algorithm.
- The results are analyzed and sent to the controller according to set rules, which drives the robot to grind the entire curved-surface workpiece in the correct attitude.

The system and method are mainly intended for automatic grinding in curved-surface structure manufacturing. They improve production efficiency and quality by raising the degree of automation in industrial grinding.

Owner:716TH RES INST OF CHINA SHIPBUILDING INDAL CORP +1
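
The slicing step can be pictured as cutting the cloud into parallel slabs along the scan axis. Each slab is ordered along a second axis so it can be followed as a path of waypoints. The minimal sketch below assumes a fixed slab `thickness` and orders each slice along the next coordinate axis. It is an illustrative stand-in for the unspecified slice algorithm in this abstract, and the function name is hypothetical.

```python
import numpy as np


def slice_point_cloud(points, axis=0, thickness=1.0):
    """Group points into parallel slices along `axis`; each slice is sorted along the
    next axis so it can be followed as an ordered path of waypoints."""
    pts = np.asarray(points, dtype=float)
    keys = np.floor((pts[:, axis] - pts[:, axis].min()) / thickness).astype(int)
    order_axis = (axis + 1) % 3
    slices = []
    for k in np.unique(keys):             # unique slab indices in ascending order
        s = pts[keys == k]
        slices.append(s[np.argsort(s[:, order_axis])])
    return slices
```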

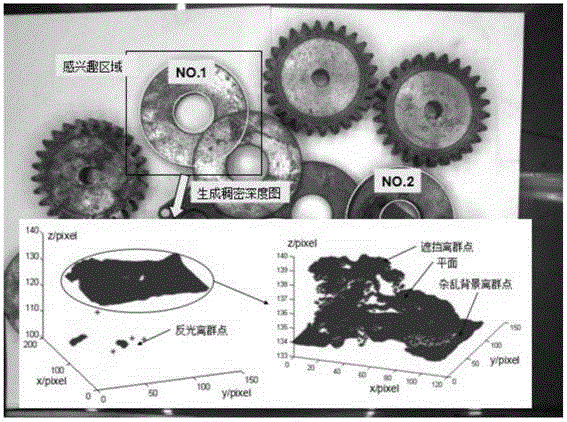

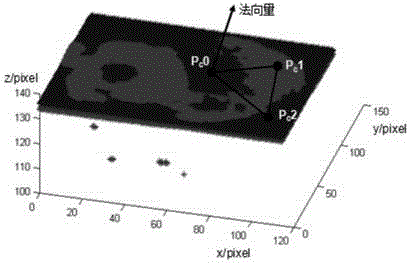

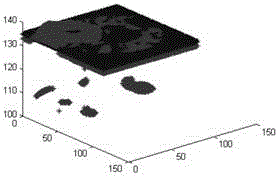

Planar component three-dimensional position and normal vector calculation method based on depth map

Status: Active | Publication: CN105021124A | Tags: Improve stability; Good precision; Using optical means; Statistical analysis; Shape matching

The invention discloses a method for calculating the three-dimensional position and normal vector of a planar component based on a depth map. The method comprises the following steps:

- Identify the component through shape matching, and acquire a dense depth map of the region of interest containing it with a binocular three-dimensional vision system.
- Perform non-uniform sampling and statistical analysis on the dense depth map to remove outliers.
- Fit a plane in the dense depth map with a robust random sample consensus (RANSAC) algorithm.
- Calculate the three-dimensional position and normal vector of the component from the plane equation, and convert between the camera coordinate system and the world coordinate system.

The method has high universality, high positioning accuracy and good stability, and is suitable for planar components.

Owner:SOUTH CHINA AGRI UNIV
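
The RANSAC plane-fitting step can be sketched as follows. The method repeatedly fits a plane to three random points, keeps the hypothesis with the most inliers, and then refines it on those inliers by least squares. The inlier centroid gives the component position, and the plane normal gives its orientation; the plane equation is `normal . x - normal . centroid = 0`. The `threshold`, `iterations` and `seed` values are illustrative assumptions, and the function name is hypothetical.

```python
import numpy as np


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """RANSAC plane fit refined by least squares.

    Returns (centroid, unit normal, inlier mask); the inlier centroid serves as
    the component's 3D position and the normal as its orientation.
    """
    pts = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(iterations):
        a, b, c = pts[rng.choice(len(pts), size=3, replace=False)]
        n = np.cross(b - a, c - a)
        length = np.linalg.norm(n)
        if length < 1e-12:                      # collinear sample: no unique plane
            continue
        inliers = np.abs((pts - a) @ (n / length)) < threshold
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        raise ValueError("no non-degenerate sample found")
    centroid = pts[best].mean(axis=0)
    normal = np.linalg.svd(pts[best] - centroid)[2][-1]   # least-squares refinement
    return centroid, normal, best
```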

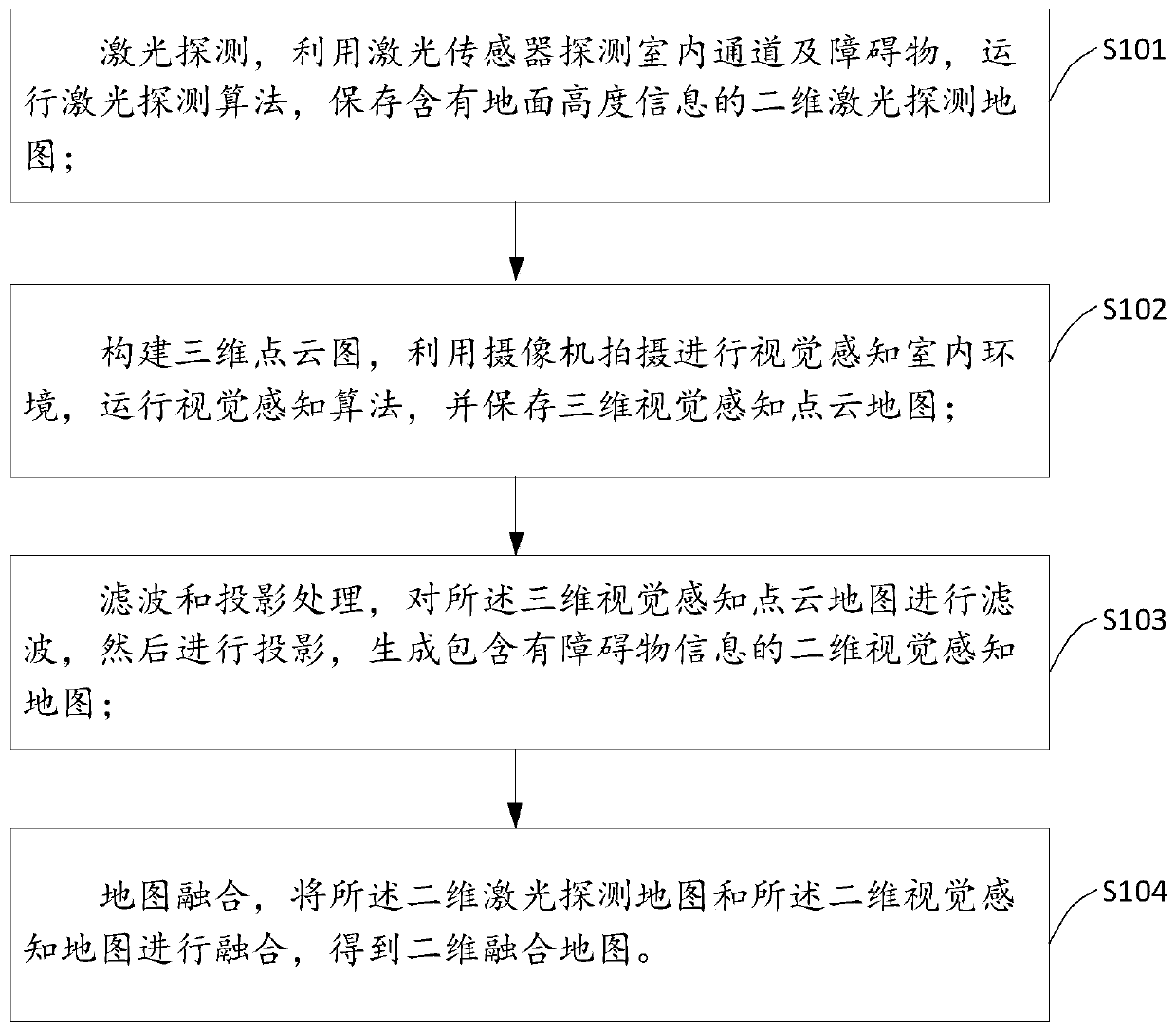

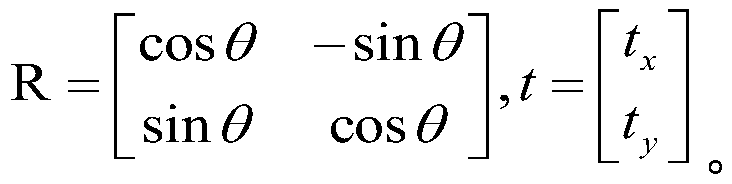

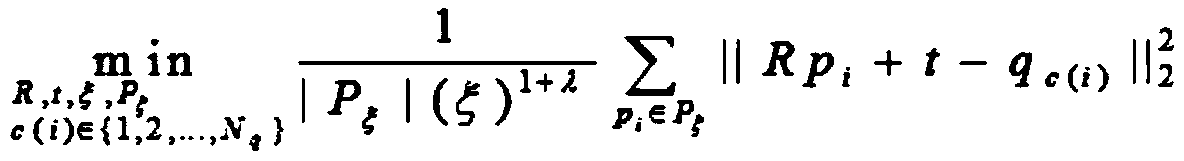

Fusion method of indoor SLAM map based on visual perception and laser detection

Status: Inactive | Publication: CN109857123A | Tags: Improve accuracy; Rich relevant information; Position/course control in two dimensions; Mobile navigation; Point cloud

The invention discloses a fusion method for indoor SLAM maps based on visual perception and laser detection. The method comprises laser detection, construction of a three-dimensional point cloud map, filtering and projection processing, and map fusion. These steps yield two maps:

- a two-dimensional laser detection map containing ground height information;
- a three-dimensional visual perception point cloud map, which is filtered and projected to obtain a two-dimensional visual perception map containing obstacle information.

Finally, the two-dimensional laser detection map and the two-dimensional visual perception map are fused into a two-dimensional fusion map. The method folds obstacle information that affects robot navigation into the two-dimensional map, producing an accurate, information-rich map. This makes it highly practical for mobile robot navigation and path planning in indoor environments.

Owner:ZHENGZHOU UNIV
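
The filtering, projection and fusion steps can be pictured with occupancy grids. Visual points within the robot's height band are projected onto a 2D grid. A cell in the fused map is then marked occupied if either the laser map or the visual map marks it. This minimal sketch assumes the following:

- 0/1 occupancy arrays;
- a grid origin at world (0, 0);
- a height band from `z_min` to `z_max`;
- a cell-wise OR as the fusion rule.

The patent's exact filtering and fusion rules are not given in the abstract, and the function names are hypothetical.

```python
import numpy as np


def cloud_to_grid(points, resolution, shape, z_min, z_max):
    """Project obstacle points within a height band onto a 2D occupancy grid (1 = occupied).
    The grid origin is assumed at world (0, 0); points outside the grid are dropped."""
    pts = np.asarray(points, dtype=float)
    band = pts[(pts[:, 2] >= z_min) & (pts[:, 2] <= z_max)]   # height filter
    grid = np.zeros(shape, dtype=np.uint8)
    rows = np.floor(band[:, 1] / resolution).astype(int)
    cols = np.floor(band[:, 0] / resolution).astype(int)
    ok = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
    grid[rows[ok], cols[ok]] = 1
    return grid


def fuse_maps(laser_grid, visual_grid):
    """A cell is occupied in the fused map if either source marks it occupied."""
    return np.maximum(laser_grid, visual_grid)
```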

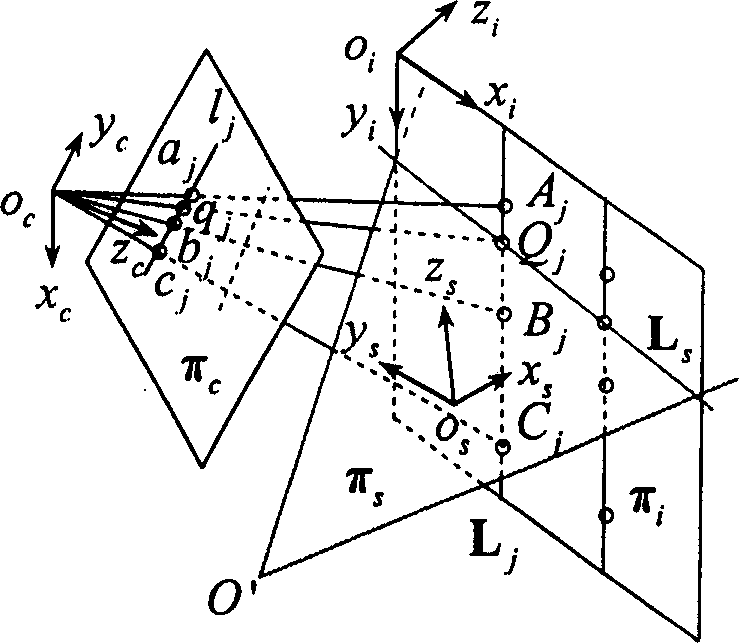

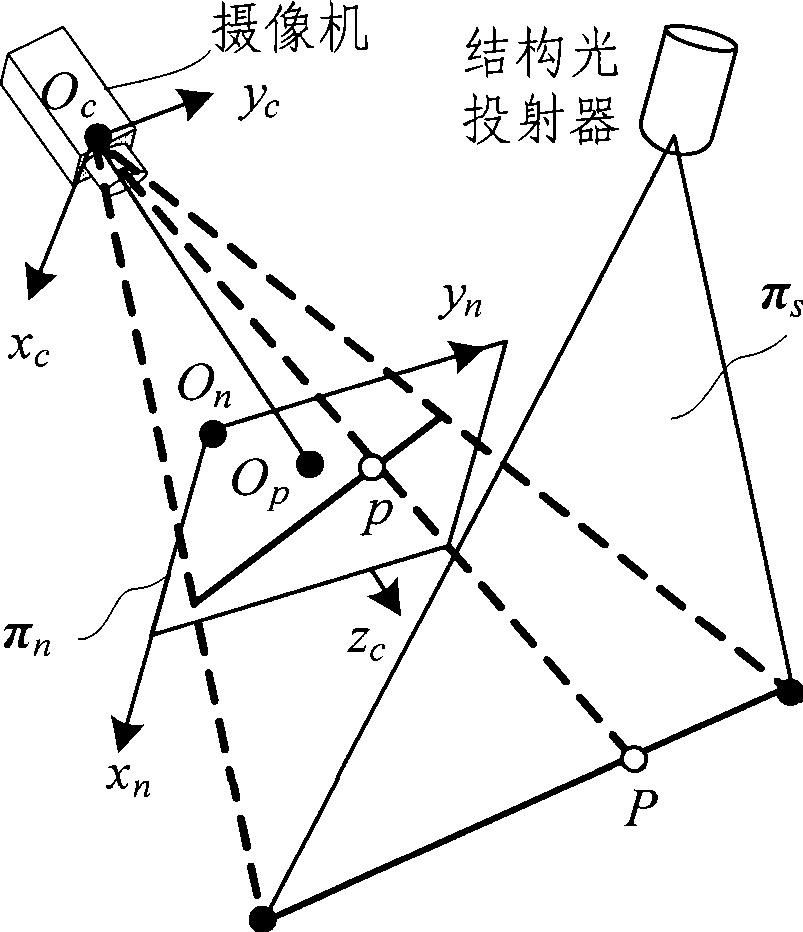

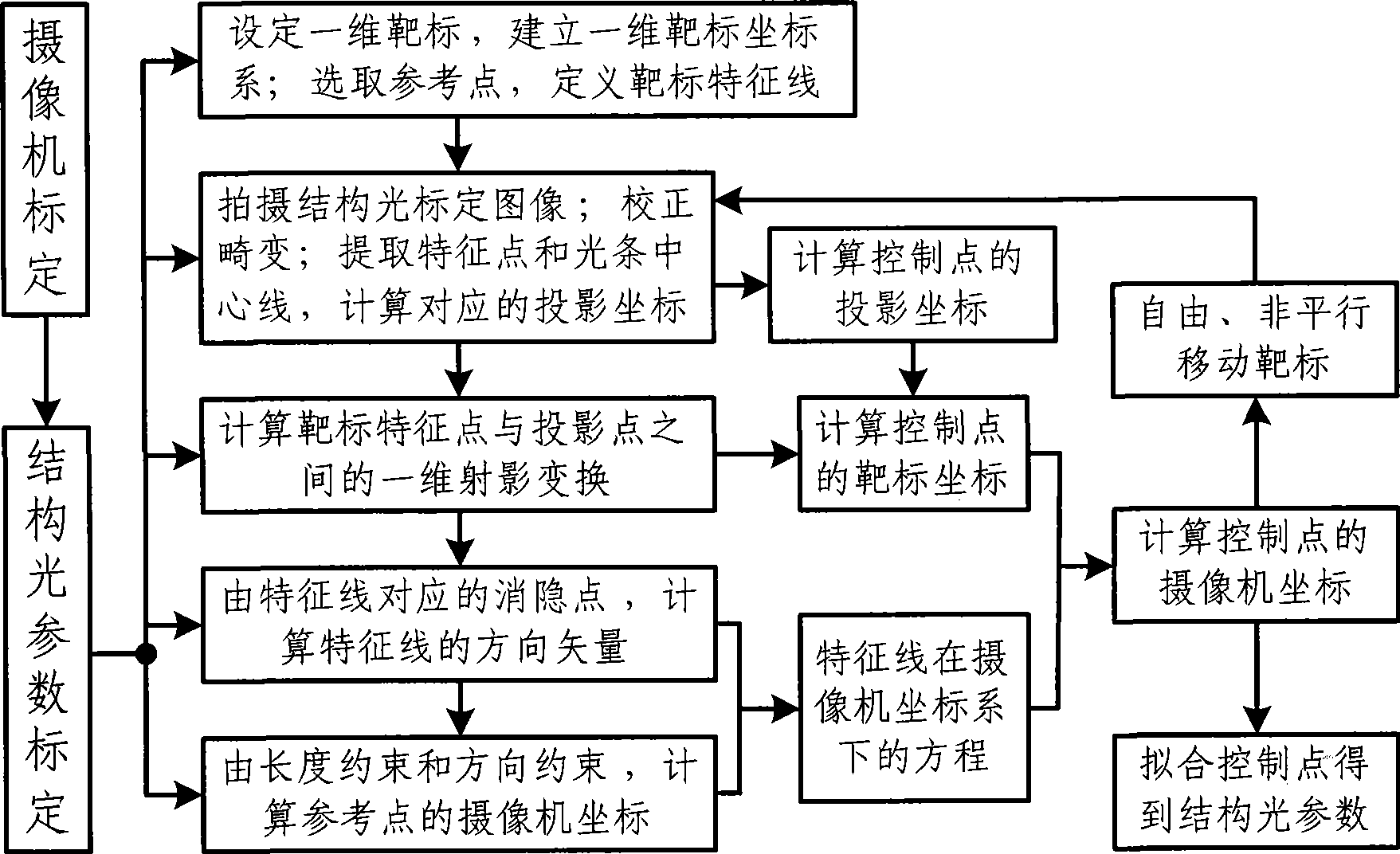

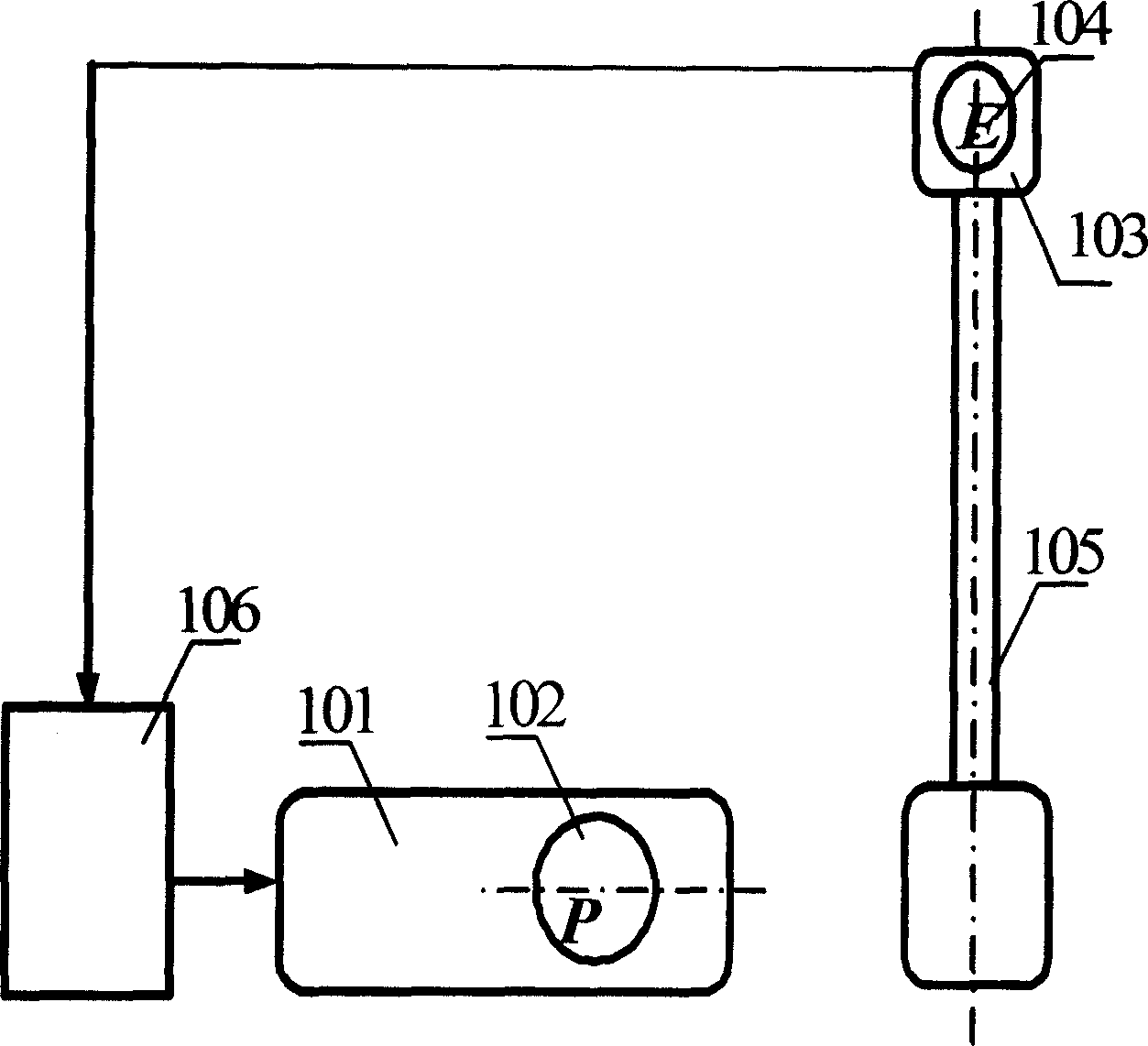

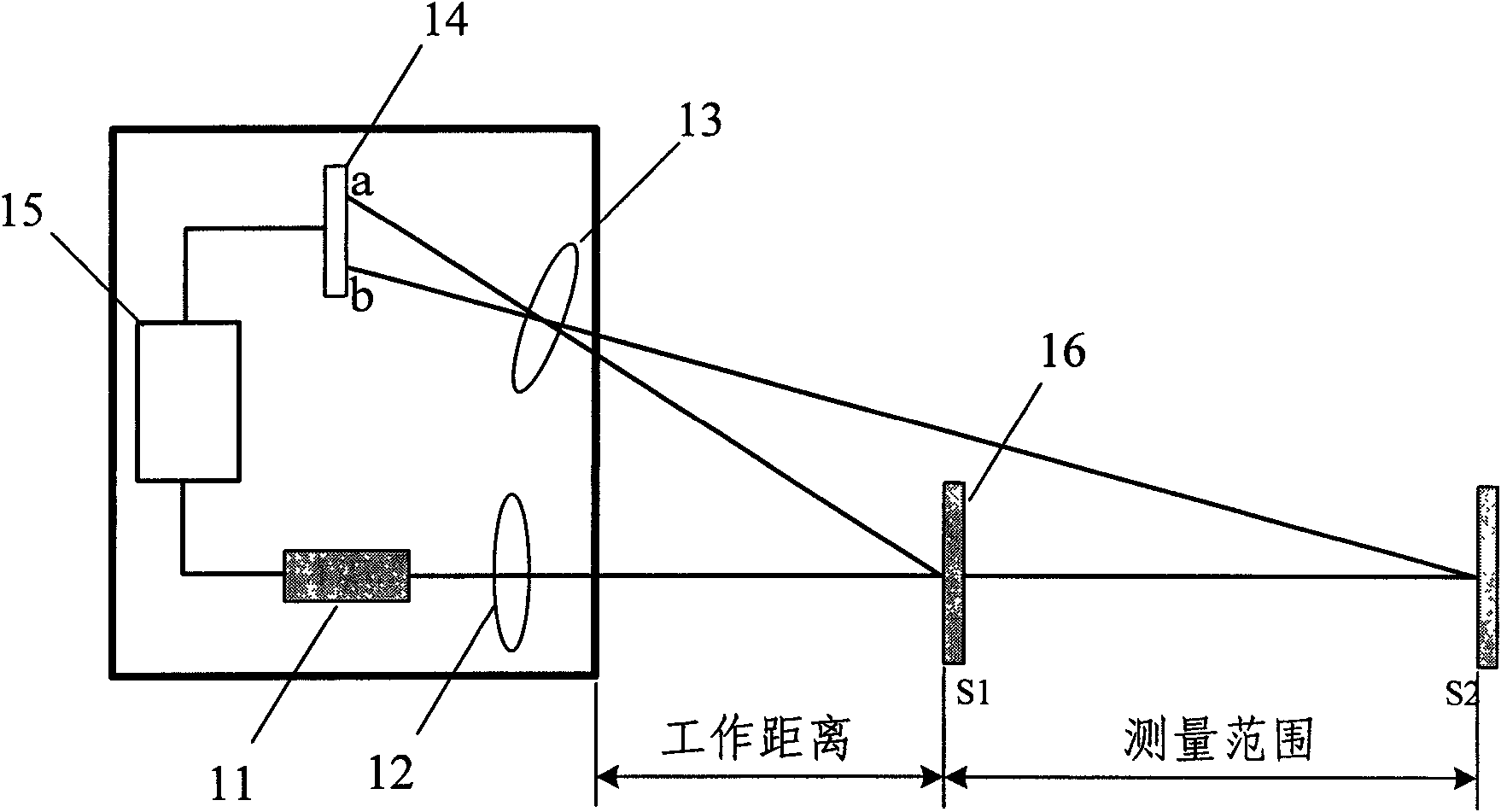

Structured light parameter calibration method based on a one-dimensional target

Status: Inactive | Publication: CN101419708A | Tags: Low cost; Easy maintenance; Image analysis; Using optical means; Three dimensional measurement; Visual perception

The invention belongs to the technical field of measurement. It provides an improved method, based on a one-dimensional target, for calibrating structured light parameters in structured-light 3D vision measurement. The method proceeds as follows:

1. After the sensor is set up, its camera captures multiple images of the one-dimensional target moved freely into non-parallel positions.
2. The vanishing point of the characteristic line on the target is obtained by a one-dimensional projective transformation. The direction vector of the characteristic line in the camera coordinate system is then determined from this vanishing point and the camera projection center.
3. The camera coordinates of a reference point on the characteristic line are computed from the length constraint among the feature points and the direction constraint of the characteristic line. This gives the equation of the characteristic line in the camera coordinate system.
4. The camera coordinates of control points on several non-collinear light stripes are obtained from the projective transformation and the characteristic-line equation.
5. These control points are fitted to obtain the structured light parameters.

The method requires no costly auxiliary adjustment equipment and offers high calibration precision with a simple process. It meets the on-site calibration needs of large-scale structured-light 3D vision measurement.

Owner:BEIHANG UNIV
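
The vanishing-point step can be illustrated with a 1D projective map `t = (a*s + b) / (c*s + d)`. It relates a feature point's known position `s` along the target to its image position `t`, measured along the imaged line. Three or more correspondences fix the map up to scale, and the vanishing point is the image of `s -> infinity`, namely `a / c`. This minimal sketch assumes image positions are already expressed as 1D coordinates along the imaged line, and that `c` is nonzero (if `c = 0`, the vanishing point lies at infinity). Function names are hypothetical.

```python
import numpy as np


def fit_1d_homography(s, t):
    """Fit t = (a*s + b) / (c*s + d) from >= 3 correspondences; returns (a, b, c, d) up to scale."""
    # Each pair gives one linear equation: a*s + b - c*s*t - d*t = 0.
    A = np.array([[si, 1.0, -ti * si, -ti] for si, ti in zip(s, t)])
    return np.linalg.svd(A)[2][-1]


def vanishing_coordinate(h):
    """Image coordinate of the point at infinity on the target line: lim t as s -> inf."""
    a, b, c, d = h
    return a / c
```

The 2D vanishing point would then be the first image point plus this coordinate times the unit direction of the imaged line.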

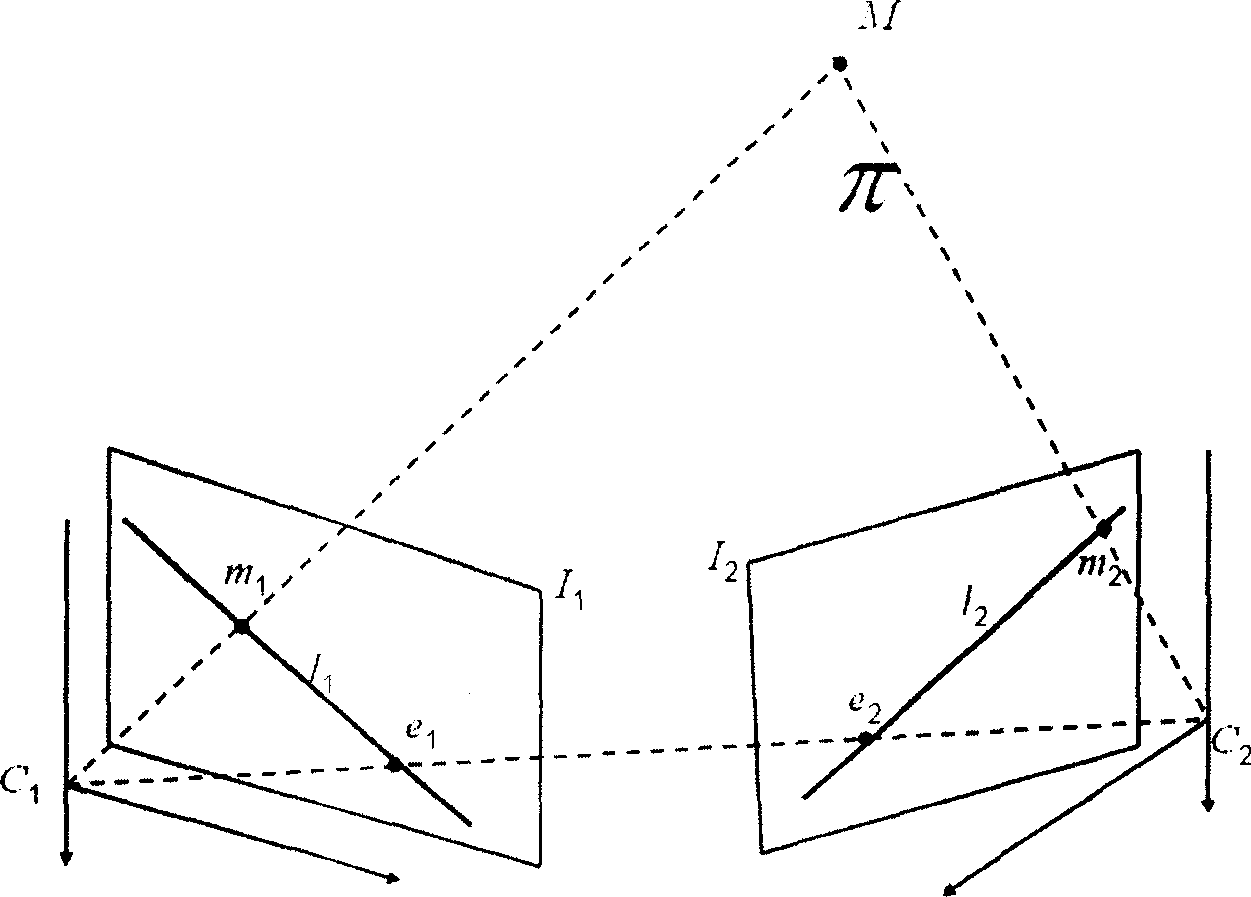

Multi-viewpoint attitude estimating and self-calibrating method for three-dimensional active vision sensor

Status: Active | Publication: CN1888814A | Tags: Improve calibration efficiency; Image analysis; Using optical means; Viewpoints; Vision sensor

A multi-viewpoint pose estimation and self-calibration method for a three-dimensional active vision sensor, belonging to three-dimensional digital imaging and modeling technology. The sensor consists of a digital projector and a camera. The method comprises the following steps:

- Capture a texture image of the object.
- Project a set of orthogonal fringe patterns onto the object in sequence, and capture the corresponding coded fringe images.
- Compute the two-dimensional coordinates of feature points in the texture image and the phase values in the coded fringe images.
- Use a phase-to-coordinate transformation to find the correspondence of the object's feature points between the projection plane of the digital projector and the imaging plane of the camera.
- Change the viewpoint and repeat these steps.
- Build an optimization equation from epipolar geometry constraints, and use it to automatically estimate the multi-viewpoint poses and self-calibrate the sensor.

The method is accurate and automatic, and is especially suited to on-site multi-viewpoint pose estimation and self-calibration of vision sensors.

Owner:SHENZHEN ESUN DISPLAY
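
The abstract does not say how phase values are computed from the coded fringe images. A common choice, assumed here, is four-step phase shifting: fringes are shifted by a quarter period between captures, and phase is recovered per pixel with `arctan2`. An unwrapped phase then maps to a projector coordinate through the fringe period. Projecting both orthogonal fringe sets gives both projector coordinates of each feature point. Phase unwrapping itself (for example with Gray codes) is outside this sketch, and the function names are hypothetical.

```python
import numpy as np


def wrapped_phase_4step(i1, i2, i3, i4):
    """Wrapped phase in [-pi, pi] from four fringe images shifted by 0, pi/2, pi, 3*pi/2.

    With I_k = A + B*cos(phi + k*pi/2) for k = 0..3:
    I4 - I2 = 2B*sin(phi) and I1 - I3 = 2B*cos(phi).
    """
    i1, i2, i3, i4 = (np.asarray(i, dtype=float) for i in (i1, i2, i3, i4))
    return np.arctan2(i4 - i2, i1 - i3)


def phase_to_projector_coord(unwrapped_phase, period_px):
    """Projector pixel coordinate across the fringes for an unwrapped phase value."""
    return np.asarray(unwrapped_phase, dtype=float) * period_px / (2.0 * np.pi)
```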

Method For Displaying Recognition Result Obtained By Three-Dimensional Visual Sensor And Three-Dimensional Visual Sensor

Status: Inactive | Publication: US20100232683A1 | Tags: Easily recognition result; Improve user friendliness; Image enhancement; Image analysis; Elevation angle; Projection image

When a three-dimensional model is displayed stereoscopically in correlation with an image used in three-dimensional recognition processing, the display is made appropriate to the actual three-dimensional model or recognition-target object. The processing runs as follows:

1. The position and rotation angle of a workpiece are recognized through recognition processing using the three-dimensional model.
2. The three-dimensional model is coordinate-transformed based on the recognition result.
3. The post-transformation Z-coordinate is corrected according to the angle (elevation angle f) formed between the line-of-sight direction and the imaging surface.
4. The corrected three-dimensional model is perspective-transformed into the coordinate system of the camera being processed.
5. Each point of the resulting projection image is assigned a height according to the pre-correction Z-coordinate of the corresponding point in the pre-transformation three-dimensional model.
6. The point group distributed three-dimensionally by this processing is projected from a specified line-of-sight direction, producing a stereoscopic image of the three-dimensional model.

Owner:OMRON CORP

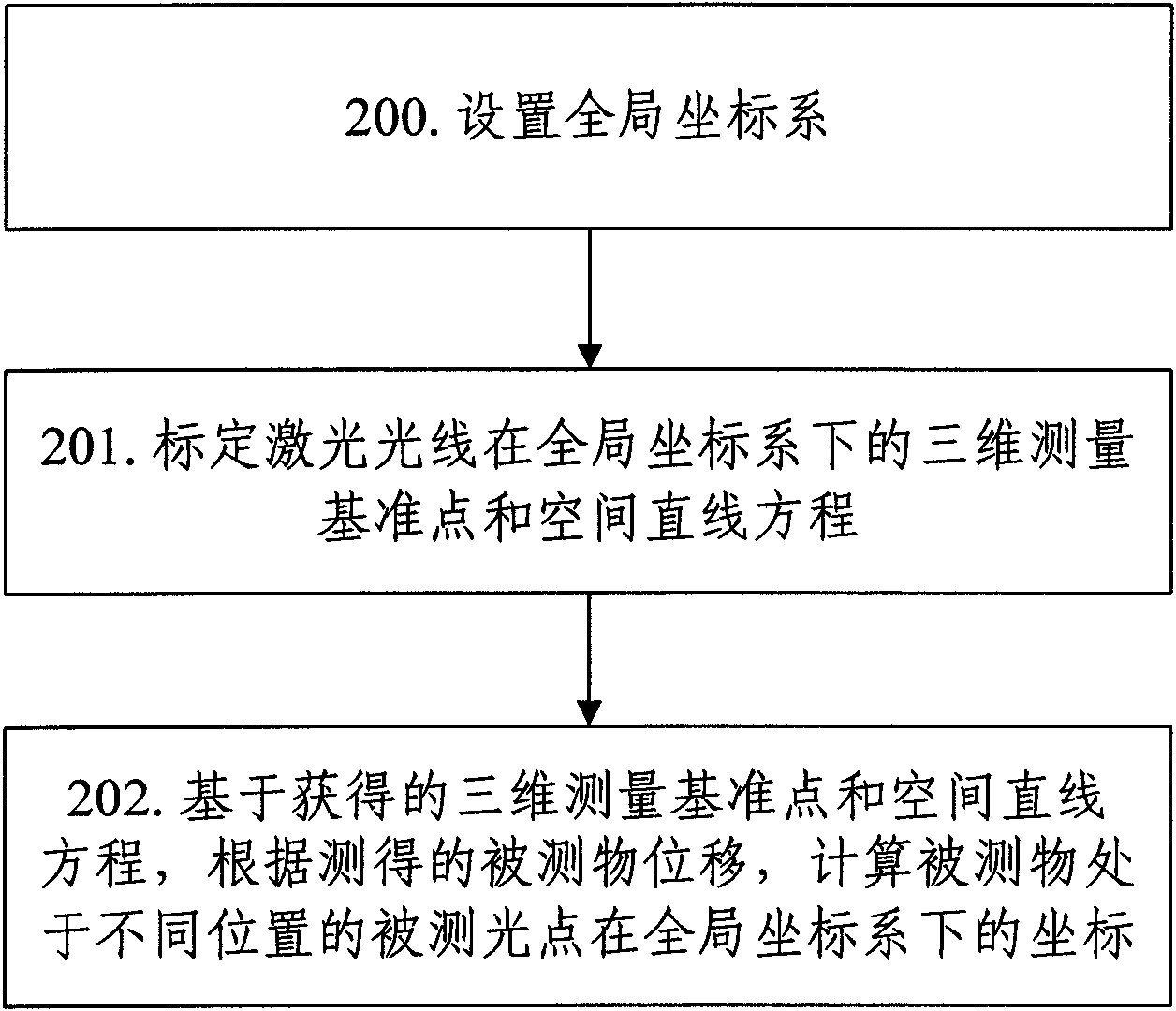

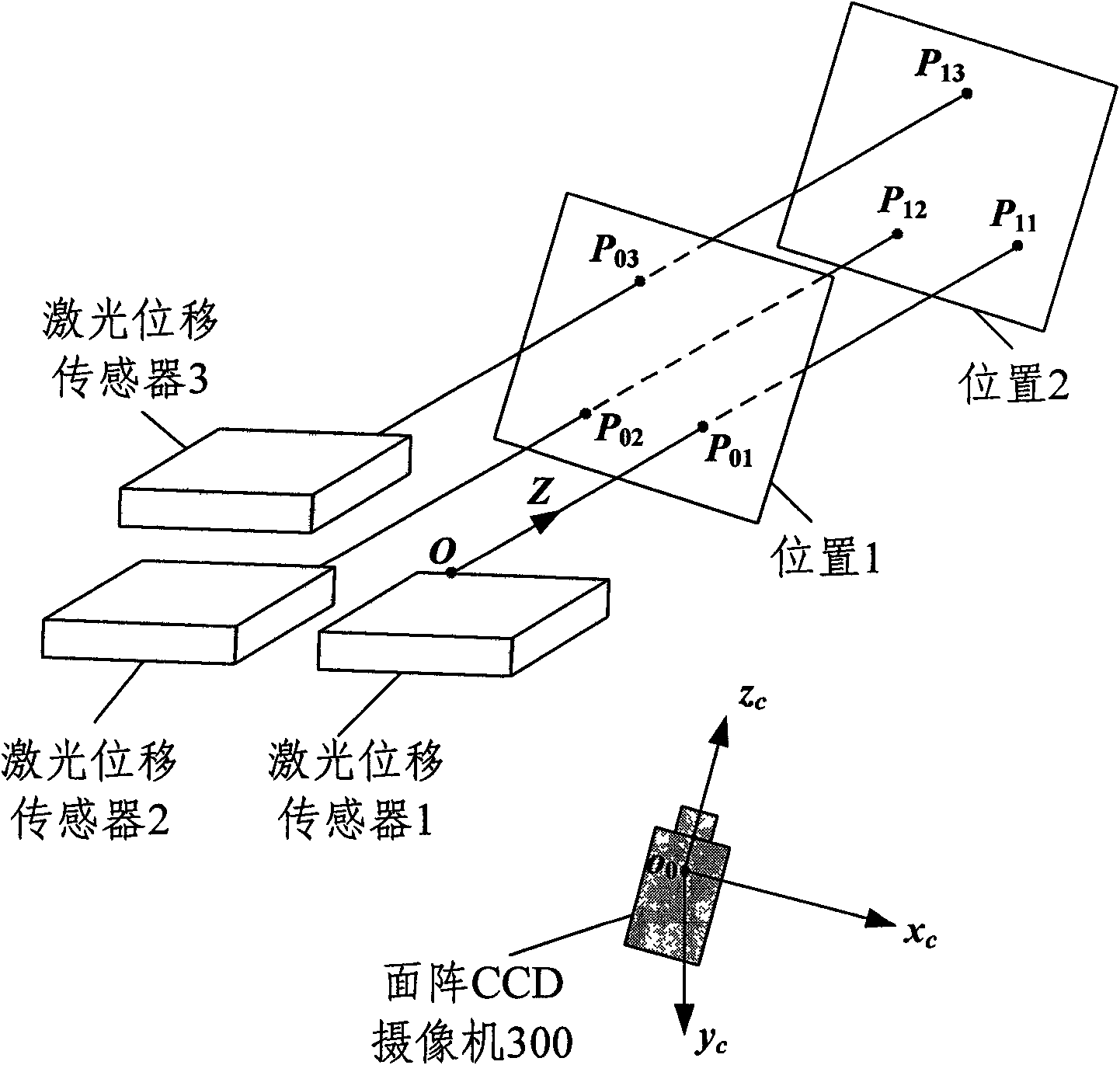

Ultrahigh speed real-time three-dimensional measuring device and method

Active | CN101603812A | Increase frame rate, High 3D measurement data update rate, Using optical means, Measurement device, Light spot

The invention discloses an ultrahigh-speed real-time three-dimensional measuring device and method. The measuring device comprises a matrix (area-array) camera and one or more laser displacement sensors. The measuring method comprises the following steps: obtaining a three-dimensional datum point and a spatial line equation using the laser displacement sensors, the matrix camera, and a planar pattern; then, based on the obtained datum point and spatial line equation and according to the measured displacement of the object, calculating the global coordinates of the measured light spots on the object at its different positions, thereby completing ultrahigh-speed real-time three-dimensional vision measurement of the object.
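Once the datum point and the spatial line of a laser beam are known, each spot position reduces to moving along that line by the measured displacement. The sketch below assumes the datum point is the spot position at zero displacement and that the sensor reports a signed displacement along the beam direction; both conventions, and the function name, are hypothetical rather than taken from the patent.

```python
import math

def spot_global_coords(datum, direction, displacement):
    """Global coordinates of a laser spot: datum + displacement * unit(direction).

    datum: (x, y, z) spot position at zero displacement (assumed convention).
    direction: direction vector of the calibrated spatial line of the beam.
    displacement: signed distance reported by the laser displacement sensor.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    unit = [d / norm for d in direction]
    return tuple(p + displacement * u for p, u in zip(datum, unit))
```

Because this is a single multiply-add per coordinate, the update rate is bounded by the displacement sensor rather than by image processing, which is consistent with the high data update rate claimed.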

Owner:BEIHANG UNIV

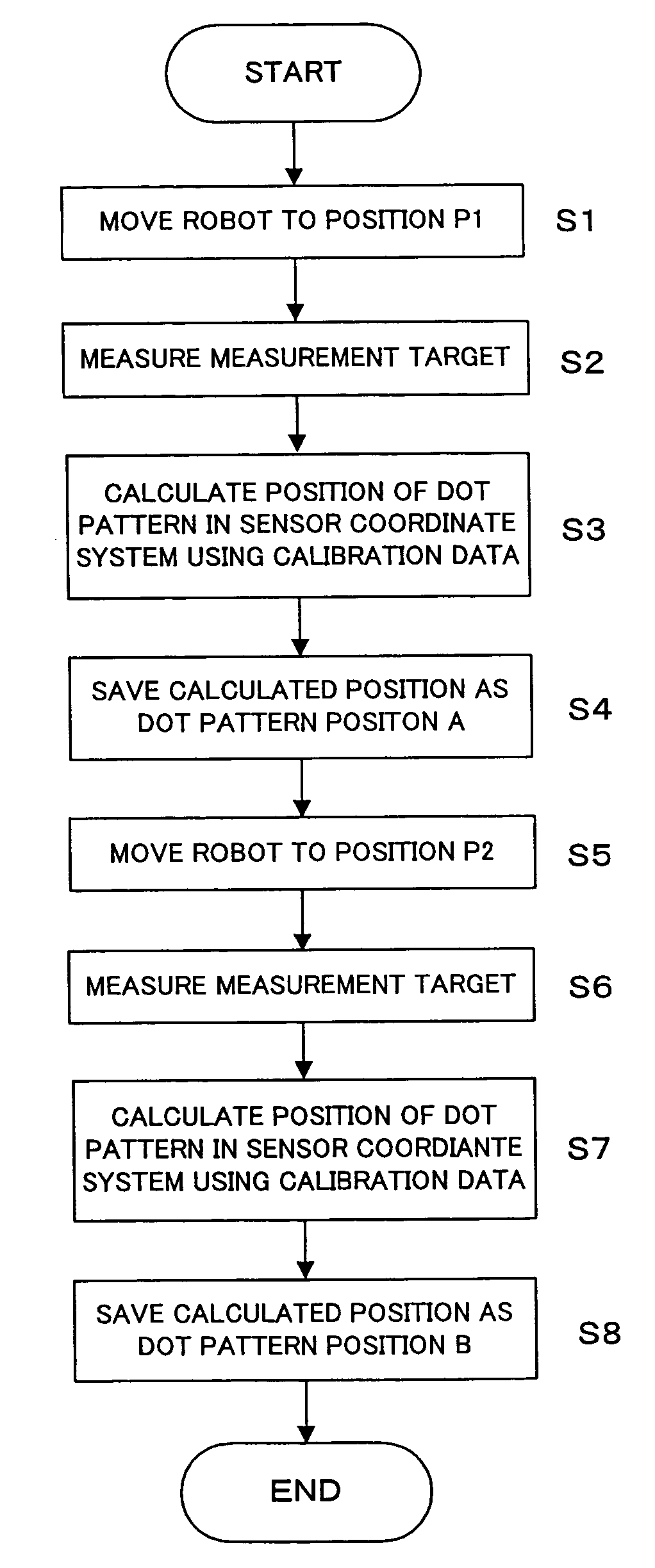

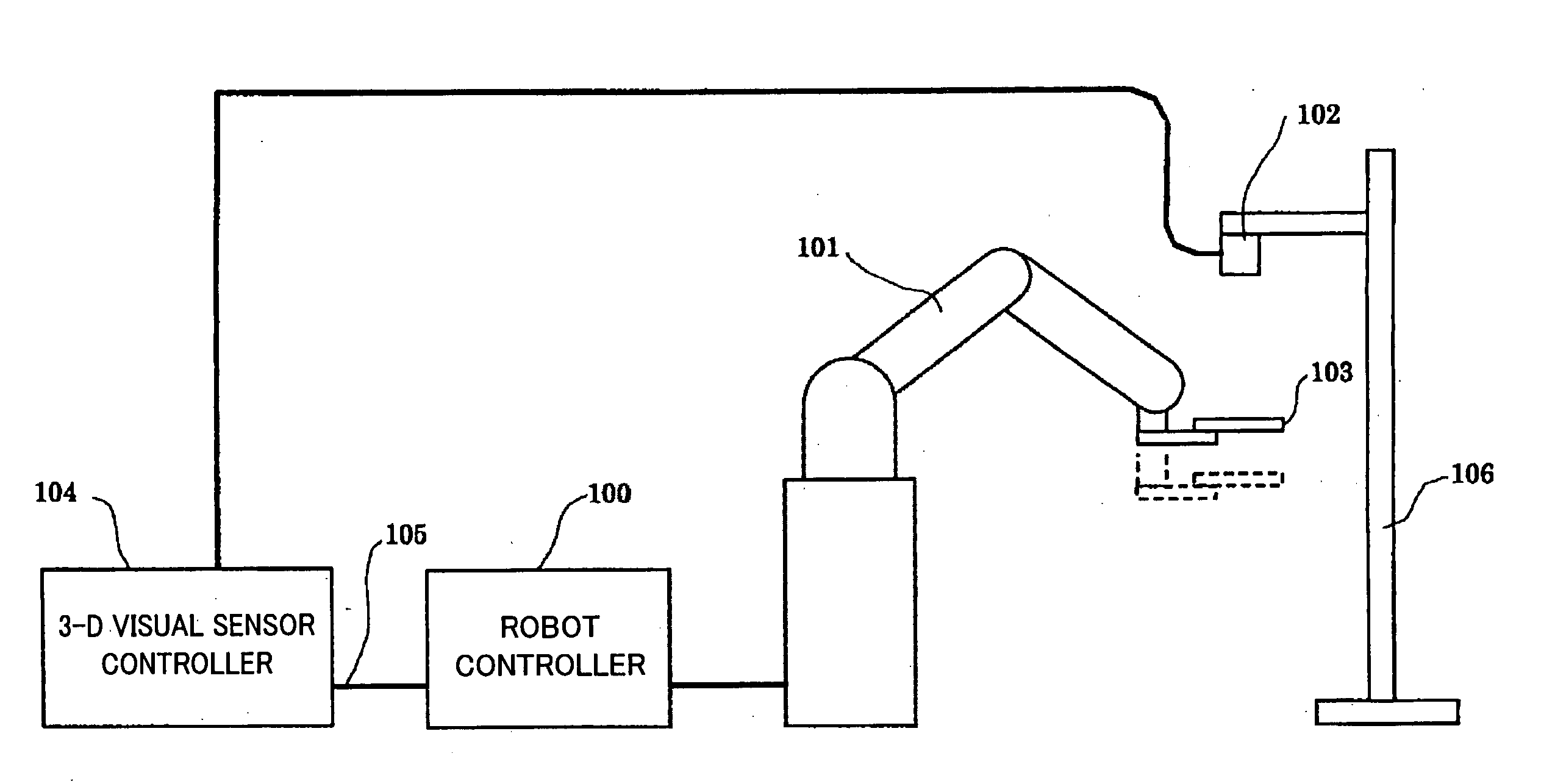

Method of and device for re-calibrating three-dimensional visual sensor in robot system

Inactive | US7359817B2 | Reduce workload, Easy to implement, Programme-controlled manipulator, Photogrammetry/videogrammetry, Robotic systems, Acquired characteristic

A re-calibration method and device for a three-dimensional visual sensor of a robot system that reduces the workload required for re-calibration. While the visual sensor is operating normally, the robot places the visual sensor and a measurement target in one or more relative positional relations, and the target is measured with the calibration parameters held at that time to acquire position/orientation information of a dot pattern or similar feature. During re-calibration, each relative positional relation is approximately reproduced, and the target is measured again to acquire feature-amount information or the position/orientation of the dot pattern on the image. The calibration parameters of the visual sensor are then updated based on the feature-amount data and the position information. At least one of the visual sensor and the target placed in the relative positional relation is mounted on the robot arm. During re-calibration, position information may be calculated using the held calibration parameters together with the feature-amount information obtained during normal operation and that obtained during re-calibration, and the calibration parameters may be updated based on the results.
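One way to quantify how far a sensor has drifted between normal operation and re-calibration is to compare the dot-pattern positions stored earlier with those measured again at the reproduced relative positions. The sketch below uses the Kabsch (SVD) rigid alignment, which is a swapped-in illustrative technique and not the update rule claimed in the patent; the function name is hypothetical.

```python
import numpy as np

def rigid_correction(ref_pts, new_pts):
    """Least-squares rigid transform (R, t) with new ≈ R @ ref + t (Kabsch/SVD).

    ref_pts: (N, 3) dot-pattern positions stored during normal operation.
    new_pts: (N, 3) positions measured again during re-calibration.
    Needs at least three non-collinear points.
    """
    ref = np.asarray(ref_pts, dtype=float)
    new = np.asarray(new_pts, dtype=float)
    c_ref, c_new = ref.mean(axis=0), new.mean(axis=0)
    H = (ref - c_ref).T @ (new - c_new)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    D = np.diag([1.0, 1.0, d])                   # guard against a reflection
    R = Vt.T @ D @ U.T
    t = c_new - R @ c_ref
    return R, t
```

An (R, t) close to identity suggests the held parameters are still valid; how a non-trivial correction is folded back into the sensor's calibration parameters depends on the sensor model and is not shown here.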

Owner:FANUC LTD

Depth camera hand-eye calibration method based on CALTag and point cloud information

The invention discloses a depth camera hand-eye calibration method based on CALTag and point cloud information. The method comprises the following steps: building a mathematical model of hand-eye calibration and obtaining the hand-eye calibration equation AX = XB; using a CALTag calibration board in place of a traditional checkerboard so that the pose of the calibration board is recognized more precisely, and computing matrix A of the hand-eye calibration equation; solving for matrix B using the obtained matrix A together with the forward kinematics of the mechanical arm, and solving the hand-eye calibration equation A·X = X·B based on Lie group theory; and finally, using the acquired point cloud depth information, obtaining a calibration matrix better suited to three-dimensional vision scenes through a trust-region-reflective iterative optimization algorithm. The method accurately determines the coordinate transformation between the point cloud coordinate system and the robot coordinate system, achieves high grasping precision, and is suitable for scenarios in which a mechanical arm grasps objects under three-dimensional vision.
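A well-known Lie-group solution of AX = XB is the Park-Martin method: the rotation logarithms satisfy log(R_A) = R_X·log(R_B), which gives R_X in closed form, after which t_X follows from (R_A − I)·t_X = R_X·t_B − t_A. The sketch below implements that method as an illustration; whether the patent uses exactly this formulation is an assumption, and the trust-region-reflective refinement on point cloud data (for example via `scipy.optimize.least_squares` with `method="trf"`) is not shown. All function names are hypothetical.

```python
import numpy as np

def log_so3(R):
    """Rotation vector (axis * angle) of R; assumes the angle is not close to pi."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < 1e-12:
        return np.zeros(3)
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta / (2.0 * np.sin(theta)) * w

def exp_so3(v):
    """Rodrigues' formula: rotation matrix from a rotation vector."""
    theta = np.linalg.norm(v)
    if theta < 1e-12:
        return np.eye(3)
    k = v / theta
    Kx = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(theta) * Kx + (1 - np.cos(theta)) * Kx @ Kx

def solve_ax_xb(As, Bs):
    """Park-Martin solution of A_i X = X B_i for a 4x4 homogeneous X.

    This sketch inverts M^T M, so supply three or more motion pairs
    whose rotation axes are not all parallel or coplanar.
    """
    M = np.zeros((3, 3))
    for A, B in zip(As, Bs):
        M += np.outer(log_so3(B[:3, :3]), log_so3(A[:3, :3]))  # beta_i alpha_i^T
    w, V = np.linalg.eigh(M.T @ M)
    R_x = V @ np.diag(w ** -0.5) @ V.T @ M.T                   # (M^T M)^(-1/2) M^T
    # Translation: stack (R_A - I) t_x = R_x t_B - t_A and solve by least squares.
    C = np.vstack([A[:3, :3] - np.eye(3) for A in As])
    d = np.concatenate([R_x @ B[:3, 3] - A[:3, 3] for A, B in zip(As, Bs)])
    t_x, *_ = np.linalg.lstsq(C, d, rcond=None)
    X = np.eye(4)
    X[:3, :3], X[:3, 3] = R_x, t_x
    return X
```

With noise-free pairs the closed form recovers X exactly; with real board detections it provides the initial estimate that an iterative refinement would then improve.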

Owner:XI AN JIAOTONG UNIV

Helicopter rotor blade motion parameter measuring method based on binocular three-dimensional vision

Active | CN103134477A | Realize measurement, Improve calibration accuracy, Photogrammetry/videogrammetry, Motion parameter, Contact type

A helicopter rotor blade motion parameter measuring method based on binocular three-dimensional vision comprises the following steps: (1) constructing a rotor blade motion image acquisition device from two binocular three-dimensional vision systems; (2) calibrating each binocular vision system with a standard calibration template; (3) attaching a number of marker points to each blade of the helicopter; (4) after matching the blade marker points between the left and right images of each binocular system, measuring the three-dimensional coordinates of the marker points on the blades using the calibrated intrinsic and extrinsic camera parameters; (5) using the three-dimensional coordinates obtained in step (4), calculating the motion parameters of the helicopter rotor blades according to the definitions of flapping, lead-lag, and torsion. The method is simple to operate, non-contact, low-risk, and highly accurate.
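Step (4) amounts to stereo triangulation of each matched marker. A minimal linear (DLT) triangulation sketch follows, assuming each calibrated camera is summarized by a 3×4 projection matrix P = K[R | t] and that marker matching has already been done; the patent does not state which triangulation method it uses, and the function name is hypothetical.

```python
import numpy as np

def triangulate_dlt(P_left, P_right, uv_left, uv_right):
    """Linear (DLT) triangulation of one marker from a calibrated stereo pair.

    P_left, P_right: 3x4 projection matrices K [R | t] from calibration.
    uv_left, uv_right: matched pixel coordinates (u, v) of the same marker.
    Returns the 3D point in the world frame shared by both projection matrices.
    """
    rows = []
    for P, (u, v) in ((P_left, uv_left), (P_right, uv_right)):
        rows.append(u * P[2] - P[0])   # u * (p3 . X) - (p1 . X) = 0
        rows.append(v * P[2] - P[1])   # v * (p3 . X) - (p2 . X) = 0
    _, _, Vt = np.linalg.svd(np.array(rows))
    X = Vt[-1]                         # null vector = homogeneous 3D point
    return X[:3] / X[3]
```

Tracking the triangulated markers on one blade over time gives the point trajectories from which flapping, lead-lag, and torsion angles can be computed under whatever angle definitions the measurement adopts.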

Owner:NANCHANG HANGKONG UNIVERSITY

Method of and device for re-calibrating three-dimensional visual sensor in robot system

Inactive | US20060023938A1 | Reduce workload, Easy to implement, Programme-controlled manipulator, Photogrammetry/videogrammetry, Robotic systems, Graphics

A re-calibration method and device for a three-dimensional visual sensor of a robot system that reduces the workload required for re-calibration. While the visual sensor is operating normally, the robot places the visual sensor and a measurement target in one or more relative positional relations, and the target is measured with the calibration parameters held at that time to acquire position/orientation information of a dot pattern or similar feature. During re-calibration, each relative positional relation is approximately reproduced, and the target is measured again to acquire feature-amount information or the position/orientation of the dot pattern on the image. The calibration parameters of the visual sensor are then updated based on the feature-amount data and the position information. At least one of the visual sensor and the target placed in the relative positional relation is mounted on the robot arm. During re-calibration, position information may be calculated using the held calibration parameters together with the feature-amount information obtained during normal operation and that obtained during re-calibration, and the calibration parameters may be updated based on the results.

Owner:FANUC LTD

Method and system for guiding mechanical hand grabbing through three-dimensional visual guidance

Inactive | CN109927036A | Not easy to break away, Guaranteed working accuracy, Programme-controlled manipulator, Work task, Engineering

The invention provides a method and system for guiding a mechanical hand to grasp objects through three-dimensional visual guidance. The whole system is fixed in space, so the field of view does not change as the mechanical arm moves, and the target object is unlikely to leave the camera's field of view. Because calibration is performed offline, the system model structure can be recalibrated repeatedly, which maintains working precision over long-running tasks. The robot can perceive its environment: using 3D machine-vision scanning together with point cloud feature recognition and analysis, it is guided to recognize and grasp disordered objects, freeing people from highly repetitive, dangerous labor.
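Because the camera is fixed in space (an eye-to-hand setup), a grasp target found in the point cloud only needs one fixed camera-to-base transform to become a robot command. The sketch below assumes that transform comes from the offline calibration and uses the centroid of an already segmented object cluster as a simplified grasp point; the segmentation and point cloud feature recognition themselves are not shown, and the function name is hypothetical.

```python
import numpy as np

def grasp_point_in_base(cluster_cam, T_base_cam):
    """Map a segmented object's point cloud centroid from camera to robot-base frame.

    cluster_cam: (N, 3) points of one object, expressed in the camera frame.
    T_base_cam: 4x4 homogeneous camera-to-base transform from an offline
    eye-to-hand calibration (assumed available).
    """
    centroid = np.asarray(cluster_cam, dtype=float).mean(axis=0)
    return (T_base_cam @ np.append(centroid, 1.0))[:3]
```

Since T_base_cam does not change with arm motion in this configuration, it can be re-estimated periodically without touching the grasp pipeline, which matches the repeated-recalibration benefit described above.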

Owner:QINGDAO XIAOYOU INTELLIGENT TECH CO LTD


© 2025 PatSnap. All rights reserved.