Multi-viewpoint attitude estimation and self-calibration method for a three-dimensional active vision sensor

A technology of active vision and attitude estimation, applied in instrumentation, computing, image analysis and related fields, that improves calibration efficiency.

Examples

Embodiment

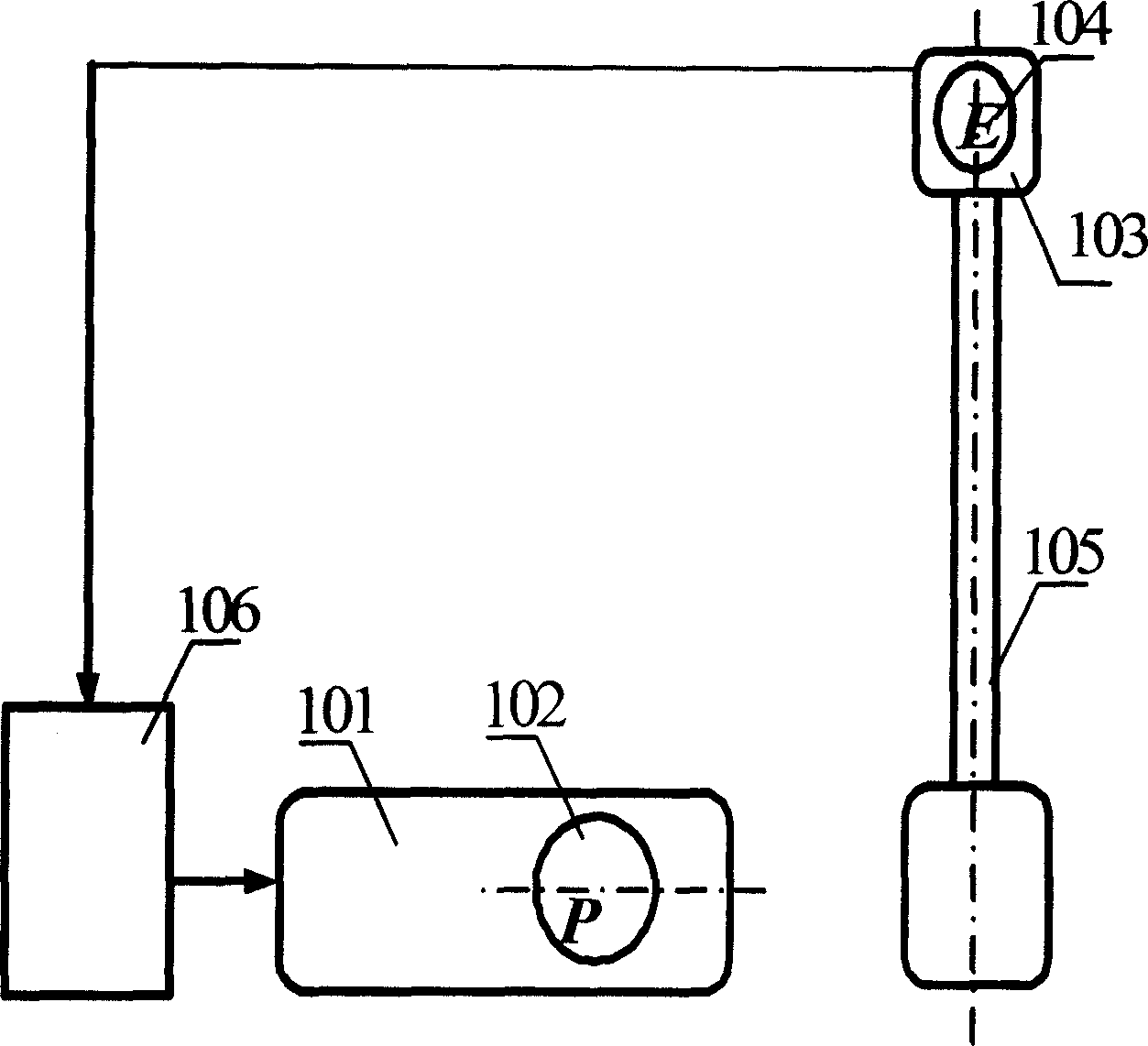

[0072] The structure of the 3D vision sensor as actually built is shown in Figure 1. 101 is a digital projector and 103 is a video camera. 102 is the exit pupil P of the projection lens of the digital projector 101, and 104 is the entrance pupil E of the imaging lens of the camera 103. The adjusting rod 105 is used to adjust the height and angle of the camera 103, and 106 is a computer.

[0073] Following the steps described above, data are collected from a real object (such as the one in Figure 7). The attitude and position of each viewpoint are solved, and the sensor parameters are calibrated at the same time.

[0074] The calibration result is:

[0075] (1) Internal parameters of the camera: $K_c = \begin{bmatrix} 3564.36 & -5.99209 & 252.127 \\ 0 & \cdots & \end{bmatrix}$
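As a rough illustration of how the camera's internal parameters are used, the sketch below computes the horizontal pixel coordinate of a point given in the camera frame, using only the first row of K_c quoted above. It assumes the usual pinhole convention in which the last row of the intrinsic matrix is (0, 0, 1), and it reads the printed "- 5.99209" as a negative skew term. Both are assumptions, not statements from the patent, and the function name `pixel_u` is hypothetical.

```python
# First row of K_c as printed in the calibration result.
FX = 3564.36      # focal length along u, in pixels
SKEW = -5.99209   # skew term (assumed negative: the source prints "- 5.99209")
CX = 252.127      # u coordinate of the principal point, in pixels


def pixel_u(x, y, z):
    """Horizontal pixel coordinate of the camera-frame point (x, y, z).

    Assumes a pinhole model whose intrinsic matrix has last row (0, 0, 1),
    so the homogeneous scale factor is simply the depth z.
    """
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (FX * x + SKEW * y + CX * z) / z


# A point on the optical axis projects onto the principal point column.
print(pixel_u(0.0, 0.0, 1.0))
```

The vertical coordinate would need the second row of K_c, which is truncated in the text above, so it is deliberately left out of the sketch.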
