229 patent results for "Reprojection error"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

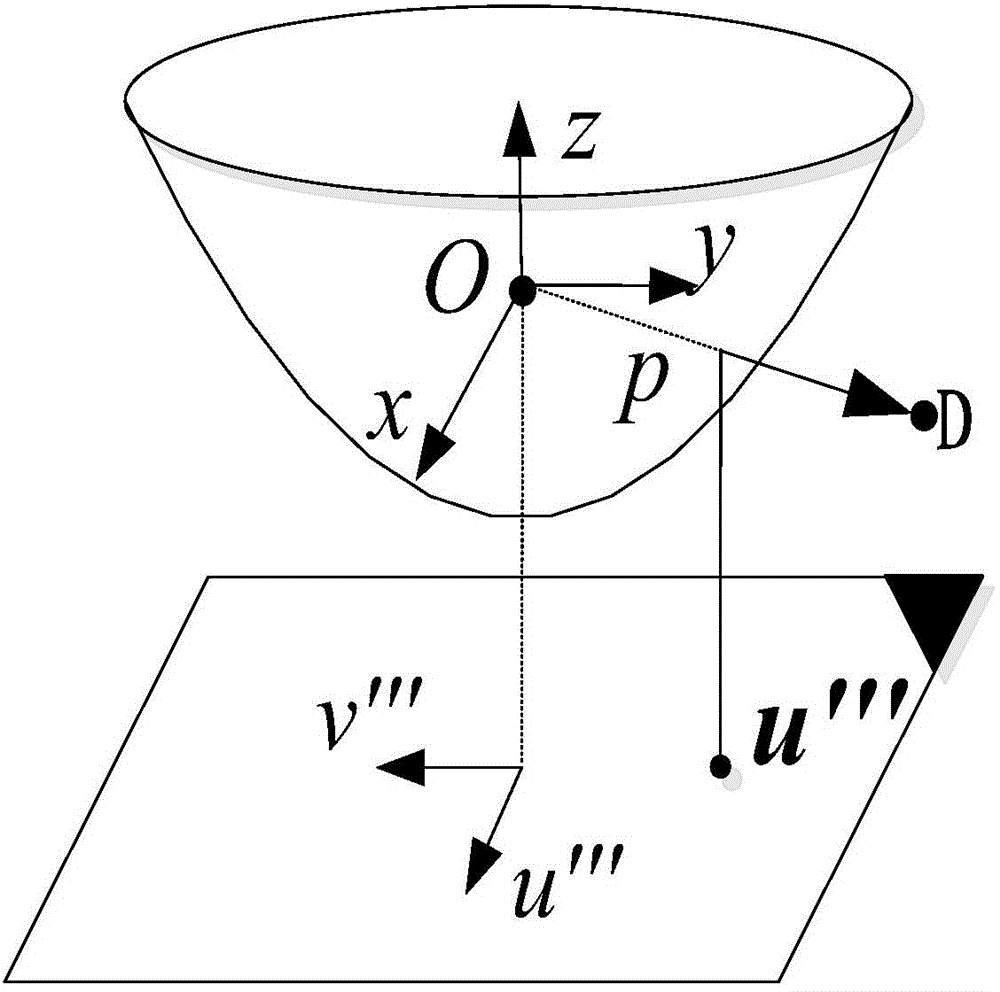

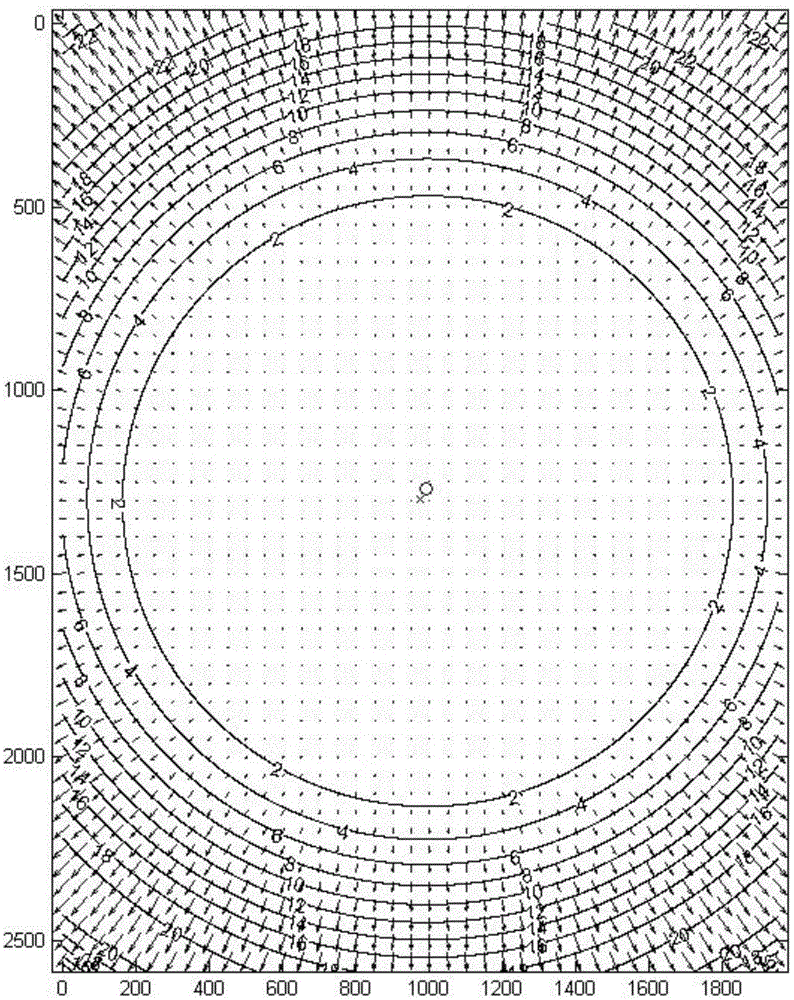

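The reprojection error is a geometric error corresponding to the image distance between a projected point and a measured one. It is used to quantify how closely an estimate $\hat{\mathbf{X}}$ of a 3D point $\mathbf{X}$ recreates the point's true projection $\mathbf{x}$. More precisely, let $\mathbf{P}$ be the projection matrix of a camera and $\hat{\mathbf{x}}$ be the image projection of $\hat{\mathbf{X}}$, i.e. $\hat{\mathbf{x}} = \mathbf{P}\hat{\mathbf{X}}$ (in homogeneous coordinates, up to scale). The reprojection error of $\hat{\mathbf{X}}$ is given by $d(\mathbf{x}, \hat{\mathbf{x}})$, where $d(\mathbf{x}, \hat{\mathbf{x}})$ denotes the Euclidean distance between the image points represented by vectors $\mathbf{x}$ and $\hat{\mathbf{x}}$.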
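
A minimal sketch of the definition above, assuming a 3x4 projection matrix stored as nested Python lists and points stored as tuples. The function names `project` and `reprojection_error` are illustrative, not from any library. The division by the third homogeneous coordinate is what turns the matrix product into pixel coordinates.

```python
import math


def project(P, X):
    """Project a 3D point X = (x, y, z) with a 3x4 camera matrix P.

    The point is lifted to homogeneous coordinates, multiplied by P and
    divided by the third coordinate to give the image point (u, v).
    """
    Xh = (X[0], X[1], X[2], 1.0)
    u, v, w = (sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3))
    return (u / w, v / w)


def reprojection_error(P, X_hat, x):
    """Euclidean distance between the measured image point x and the
    projection of the estimated 3D point X_hat."""
    u, v = project(P, X_hat)
    return math.hypot(x[0] - u, x[1] - v)


# Hypothetical camera: focal length 800 px, principal point (320, 240),
# camera centre at the world origin, so P = K [I | 0].
P = [[800.0, 0.0, 320.0, 0.0],
     [0.0, 800.0, 240.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
X_hat = (0.1, -0.05, 2.0)

print(project(P, X_hat))                             # (360.0, 220.0)
print(reprojection_error(P, X_hat, (363.0, 224.0)))  # 5.0
```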

Calibration method of correlation between single line laser radar and CCD (Charge Coupled Device) camera

Inactive | CN101882313A | Easy to make; Easy to buy; Image analysis; Electromagnetic wave reradiation; Laser scanning; Intersection of a polyhedron with a line

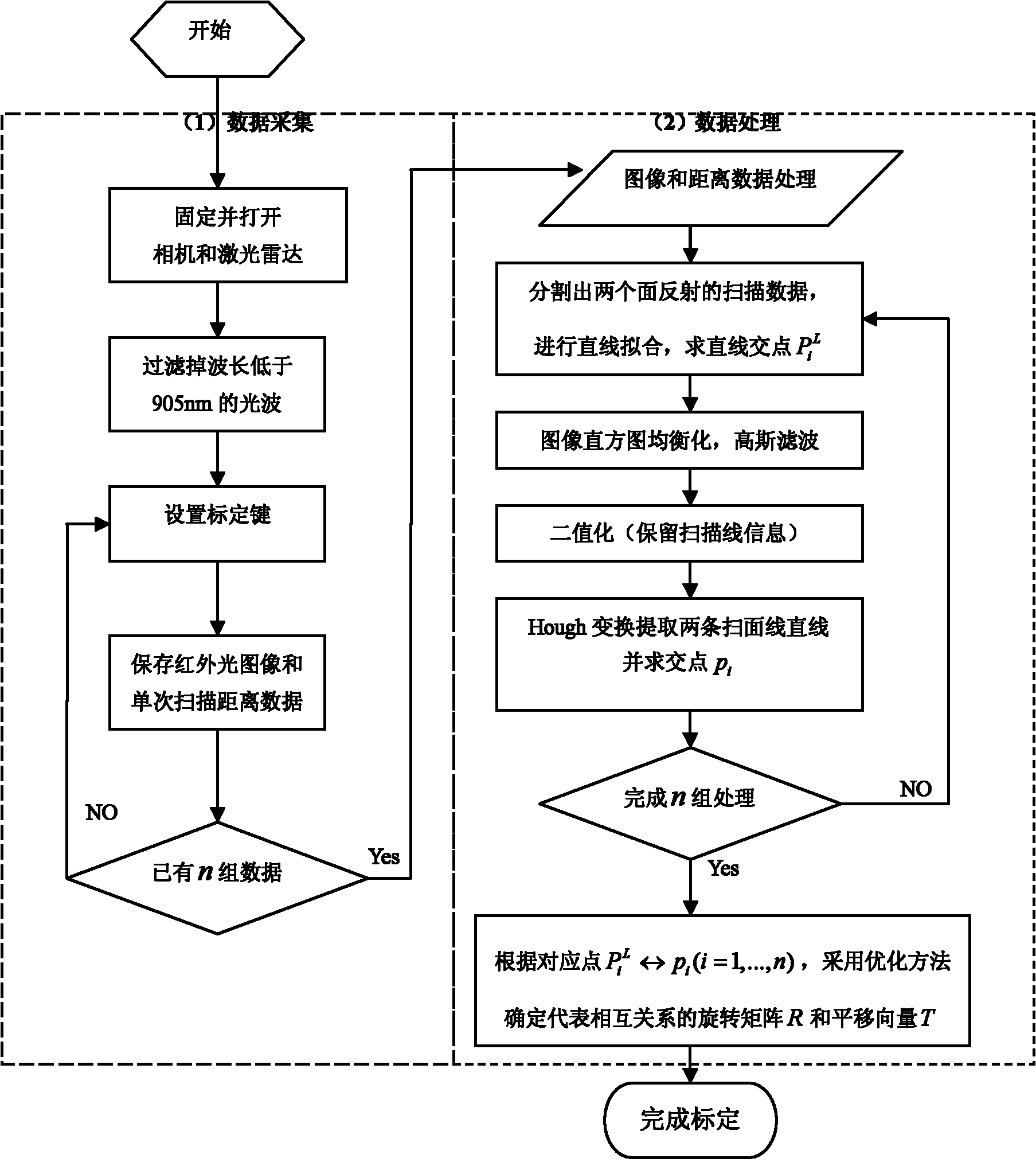

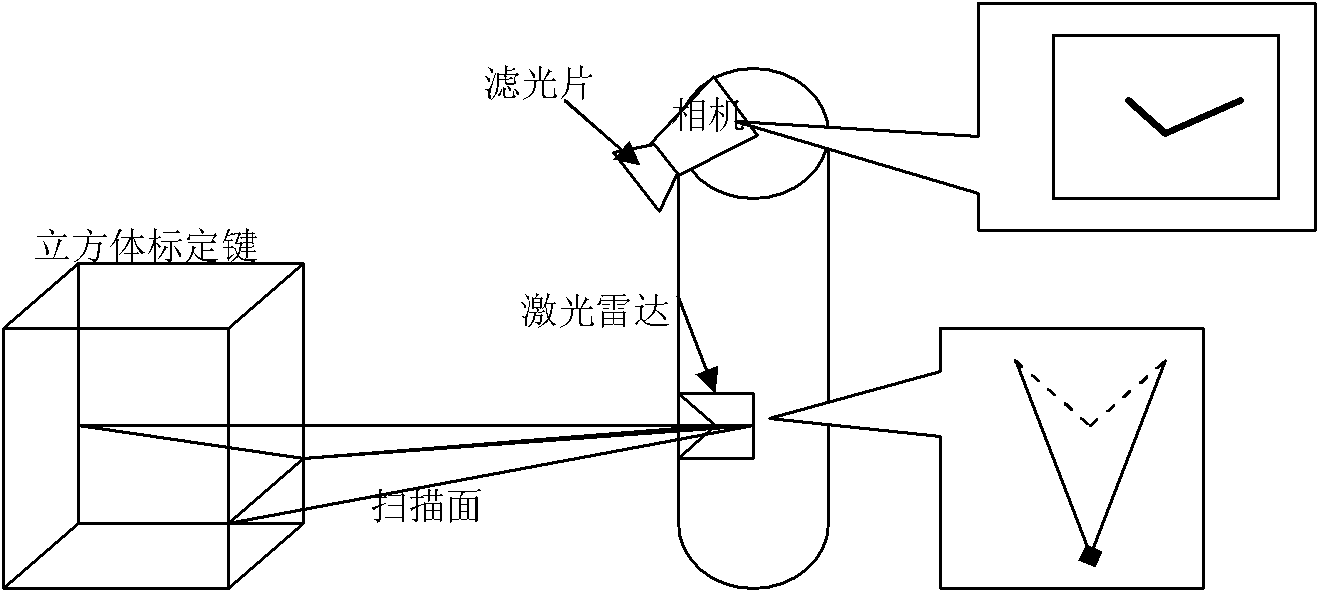

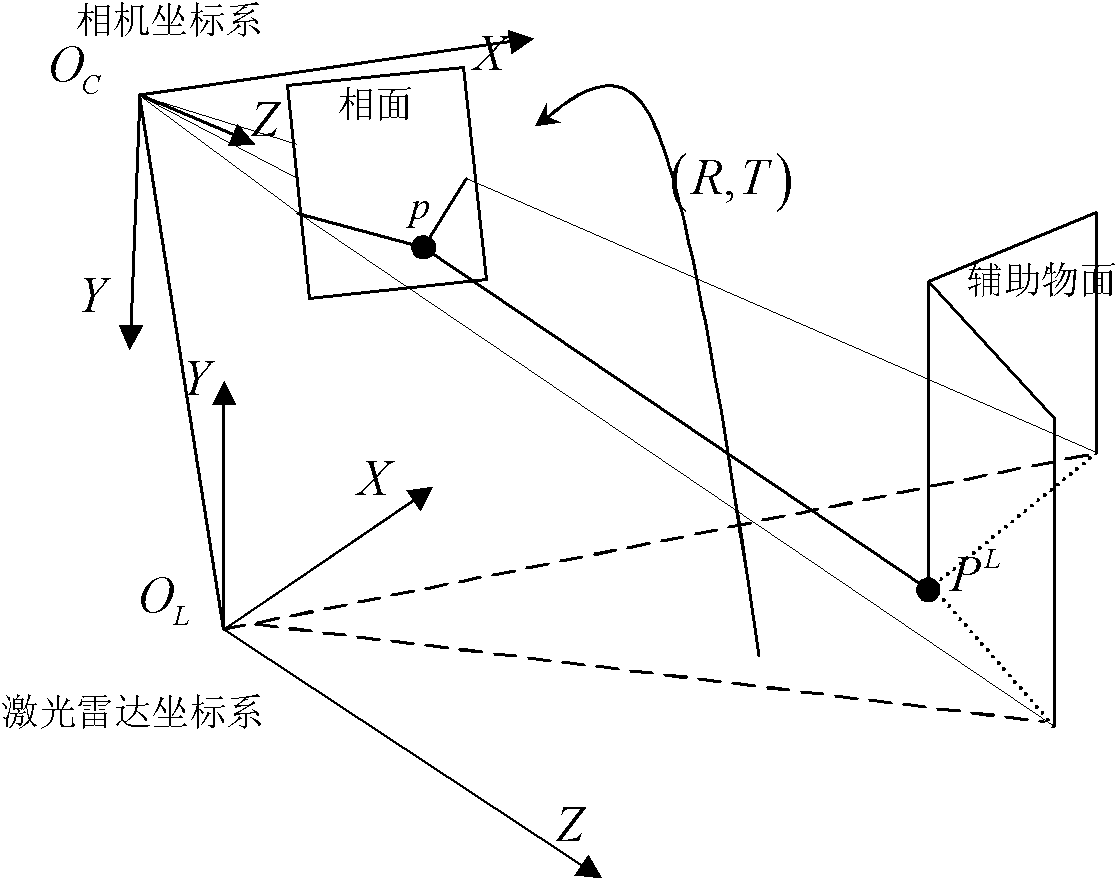

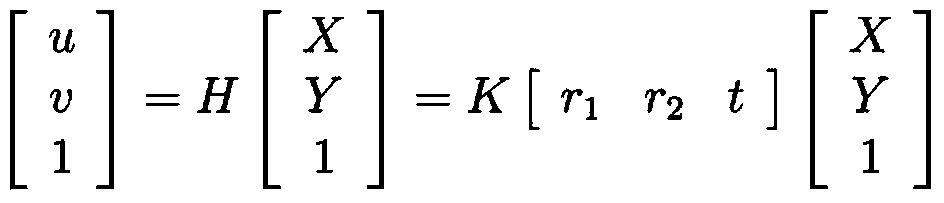

The invention discloses a calibration method for the correlation between a single-line laser radar and a CCD (Charge Coupled Device) camera, based on the fact that the CCD camera can weakly image the infrared light source used by the single-line laser radar. The method comprises the following steps: first, extracting a virtual control point in the scanning plane with the aid of a cubic calibration target; then filtering out visible light with an infrared filter so that only infrared light is imaged, applying enhancement, binarization and the Hough transform to the infrared image containing the scan-line information, and extracting two laser scanning lines, whose intersection point is the image coordinate of the virtual control point. After multiple groups of corresponding points are acquired through these steps, the correlation parameters between the laser radar and the camera can be solved by an optimization method that minimizes the reprojection error. Because the invention acquires the corresponding points directly, the calibration process becomes simpler and the precision is greatly improved, with a calibrated angle error smaller than 0.3 degrees and a position error smaller than 0.5 cm.

Owner:NAT UNIV OF DEFENSE TECH
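
In the calibration above, the virtual control point is the intersection of two laser scanning lines found with the Hough transform. The sketch below assumes each line is given in Hough normal form (rho, theta), the form returned by OpenCV's `HoughLines`, and solves the resulting 2x2 linear system by Cramer's rule. The function name is hypothetical, and the patent's own enhancement and binarization pipeline is not reproduced.

```python
import math


def intersect_hough_lines(line1, line2, eps=1e-9):
    """Intersect two image lines given in Hough normal form (rho, theta),
    i.e. x*cos(theta) + y*sin(theta) = rho.

    Returns (x, y), or None when the lines are (nearly) parallel.
    """
    rho1, theta1 = line1
    rho2, theta2 = line2
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1  # equals sin(theta2 - theta1)
    if abs(det) < eps:
        return None  # parallel lines have no unique intersection
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return (x, y)


# Two hypothetical scanning lines: x = 100 (theta = 0) and y = 50 (theta = pi/2).
print(intersect_hough_lines((100.0, 0.0), (50.0, math.pi / 2)))
```

The result is only approximately (100, 50) because `math.cos(math.pi / 2)` is not exactly zero in floating point.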

Wide-baseline visible light camera pose estimation method

Active | CN104200086A | Easy to detect; Achieve matching; Navigational calculation instruments; Picture interpretation; Visual field loss; Light sensing

The invention relates to a wide-baseline visible-light camera pose estimation method. First, the intrinsic parameters of the cameras are calibrated with a planar calibration board using Zhang's calibration method; eight datum points on the landing runway are selected within the common field of view of the cameras, and their world coordinates are measured accurately offline with a total station; during calibration, cooperative marker lamps are placed at the datum points, and the camera poses are calculated accurately by detecting these lamps. The method takes into account the complex natural scene of an unmanned aerial vehicle landing and the physical light-sensing characteristics of the cameras, and uses specially designed glare flashlights as the cooperative marker lamps for the visible-light cameras; the eight datum points are arranged on the landing runway and their spatial coordinates are measured with the total station to an accuracy on the order of 10^-6 m. The calibration result is accurate, with an image reprojection error below 0.5 pixels.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

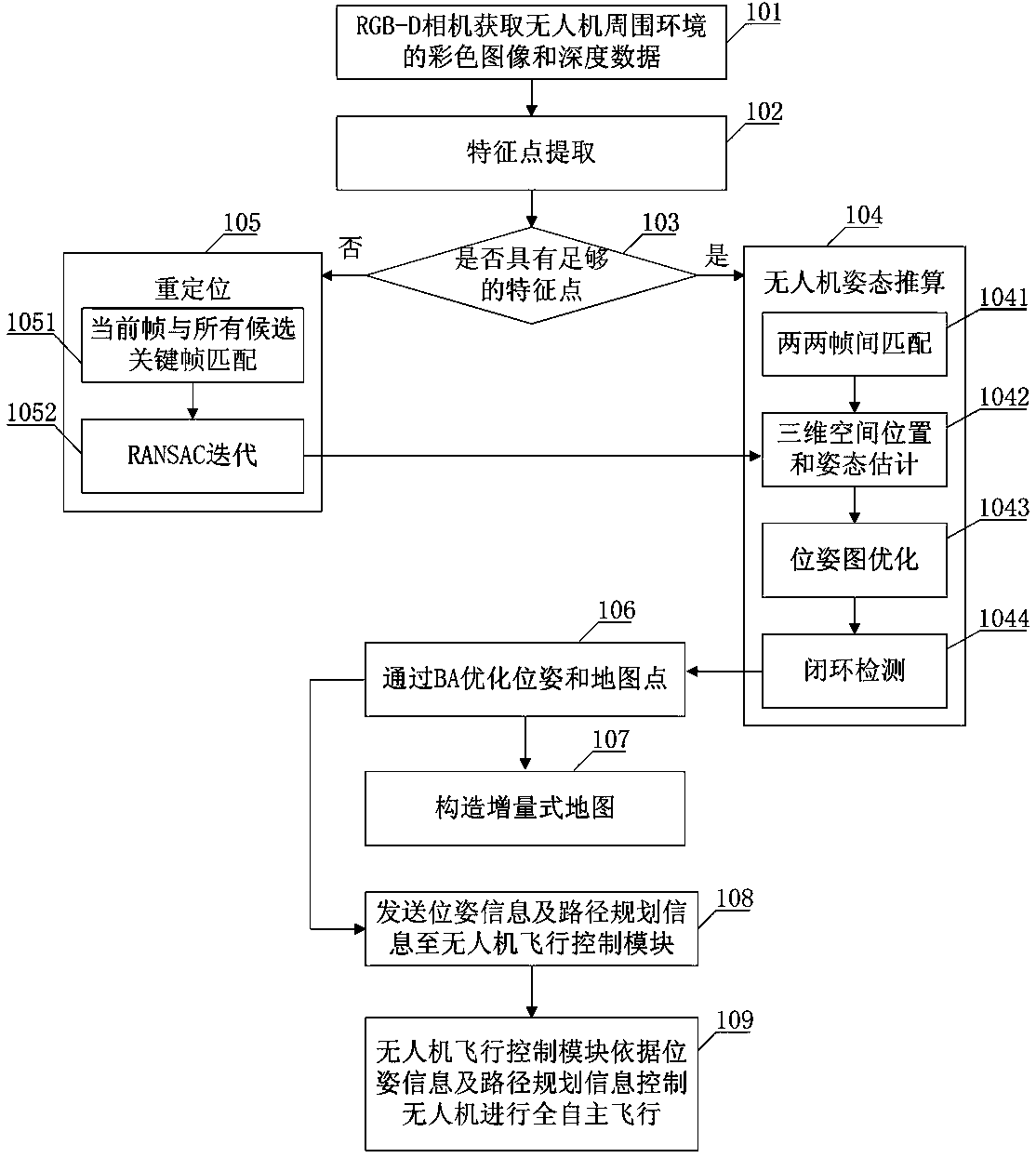

Indoor and independent drone navigation method based on three-dimensional vision SLAM

Active | CN108303099A | Avoid complex process; Solve complexity; Navigational calculation instruments; Uncrewed vehicle; Global optimization

The invention provides an indoor autonomous drone navigation method based on three-dimensional visual SLAM. The method comprises the following steps: an RGB-D camera obtains a color image and depth data of the drone's surroundings; the drone operating system extracts feature points and judges whether enough of them exist: if there are more than 30 feature points, the drone attitude calculation is performed; otherwise, relocalization is performed; bundle adjustment is used for global optimization; and an incremental map is built. With only one RGB-D camera, the method provides drone attitude information and reconstructs the three-dimensional surroundings, avoiding the complex process by which a monocular camera recovers depth and solving the complexity and robustness problems of the matching algorithm in a binocular camera. Combining the iterative closest point (ICP) method with a reprojection error algorithm makes drone attitude estimation more accurate. The drone can localize, navigate and fly autonomously indoors and in other unknown environments, solving the problem that localization is impossible without a GPS signal.

Owner:Jiangsu Zhongke Intelligent Science and Technology Application Research Institute (江苏中科智能科学技术应用研究院)

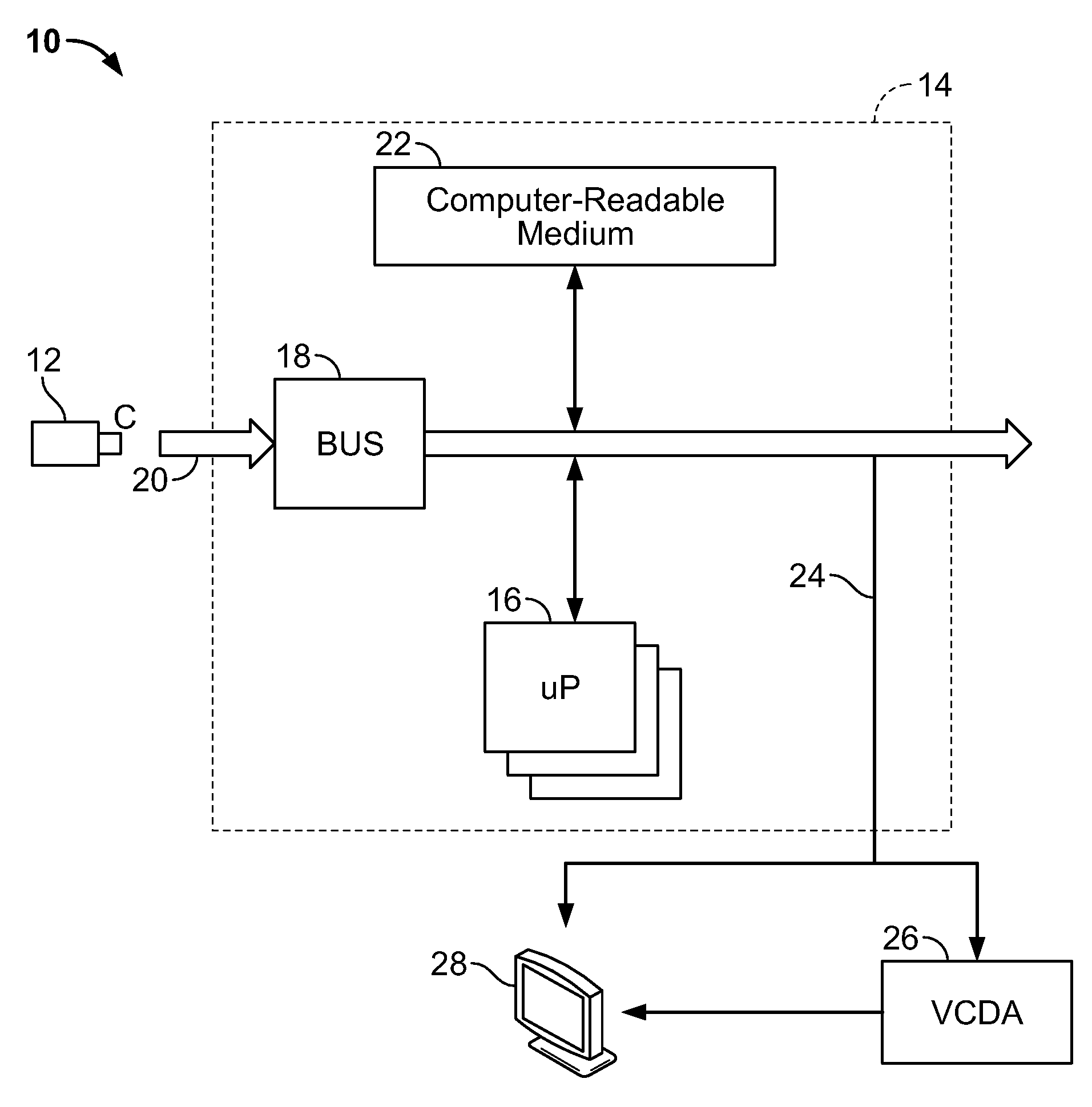

Camera egomotion estimation from an infra-red image sequence for night vision

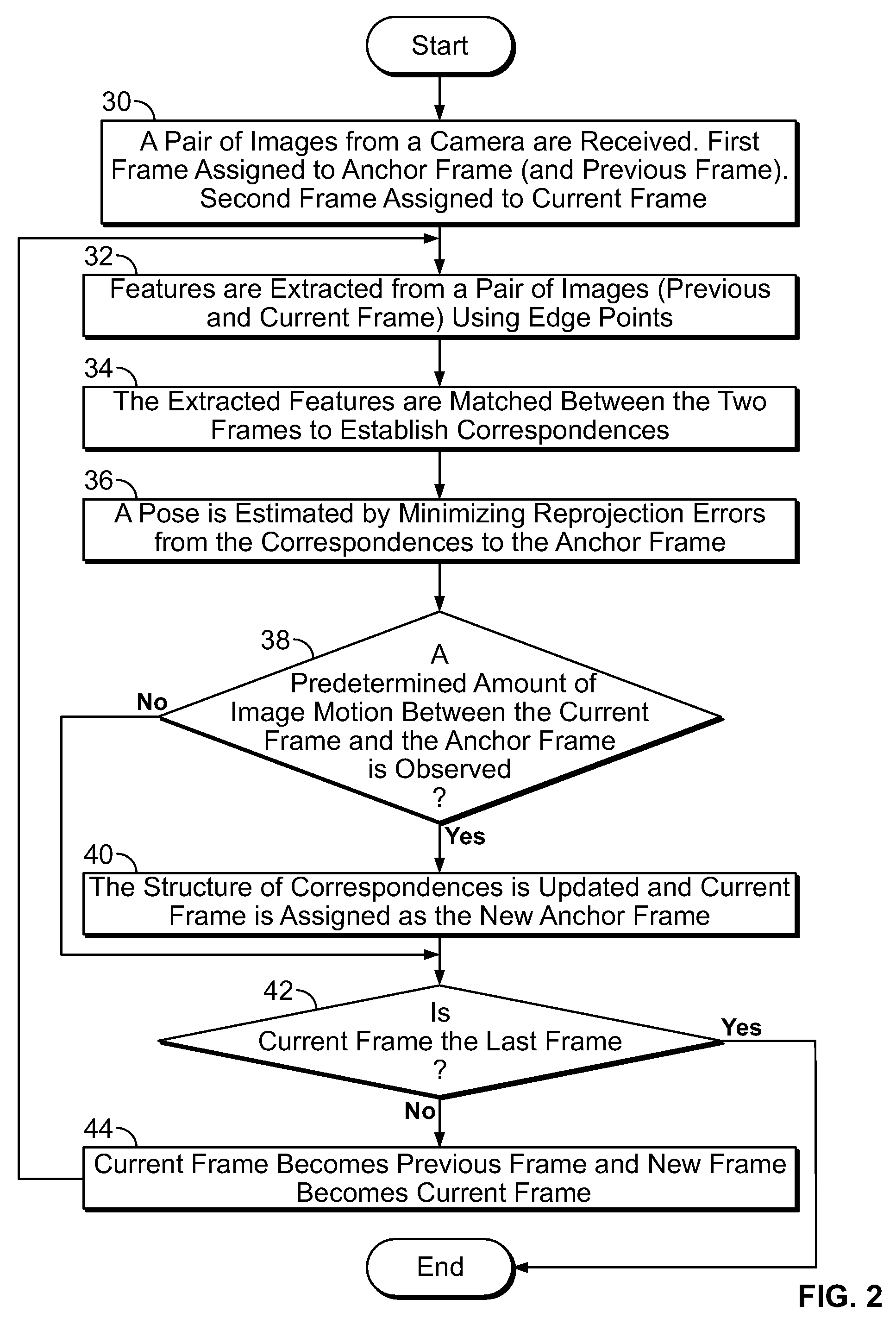

A method for estimating egomotion of a camera mounted on a vehicle that uses infra-red images is disclosed, comprising the steps of (a) receiving a pair of frames from a plurality of frames from the camera, the first frame being assigned to a previous frame and an anchor frame and the second frame being assigned to a current frame; (b) extracting features from the previous frame and the current frame; (c) finding correspondences between extracted features from the previous frame and the current frame; and (d) estimating the relative pose of the camera by minimizing reprojection errors from the correspondences to the anchor frame. The method can further comprise the steps of (e) assigning the current frame as the anchor frame when a predetermined amount of image motion between the current frame and the anchor frame is observed; (f) assigning the current frame to the previous frame and assigning a new frame from the plurality of frames to the current frame; and (g) repeating steps (b)-(f) until there are no more frames from the plurality of frames to process. Step (c) is based on an estimation of the focus of expansion between the previous frame and the current frame.

Owner:SRI INTERNATIONAL
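
Step (c) above relies on the focus of expansion (FOE). One common way to estimate it, sketched here assuming approximately pure forward translation and pre-computed sparse flow vectors, is the least-squares intersection of the lines traced by the flow vectors. The abstract does not say which FOE estimator is used; `estimate_foe` is a hypothetical name.

```python
def estimate_foe(points, flows):
    """Least-squares focus of expansion from sparse optical flow.

    Each correspondence gives an image point p and its flow vector v. Under
    pure forward translation the line through p along v passes through the
    FOE, so with the line normal n = (-v_y, v_x) every line satisfies
    n . foe = n . p. We solve the 2x2 normal equations of
    sum (n . foe - n . p)^2.
    """
    sxx = sxy = syy = bx = by = 0.0
    for (px, py), (vx, vy) in zip(points, flows):
        nx, ny = -vy, vx           # normal to the flow line
        c = nx * px + ny * py      # n . p
        sxx += nx * nx
        sxy += nx * ny
        syy += ny * ny
        bx += nx * c
        by += ny * c
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("flow lines are degenerate (e.g. all parallel)")
    return ((bx * syy - sxy * by) / det, (sxx * by - sxy * bx) / det)


# Synthetic flow radiating from a hypothetical FOE at (320, 240).
points = [(100.0, 100.0), (500.0, 120.0), (200.0, 400.0), (450.0, 380.0)]
flows = [(0.05 * (x - 320.0), 0.05 * (y - 240.0)) for x, y in points]
print(estimate_foe(points, flows))
```

Because the normals are not normalized, longer flow vectors receive more weight; dividing each normal by its length would weight all correspondences equally.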

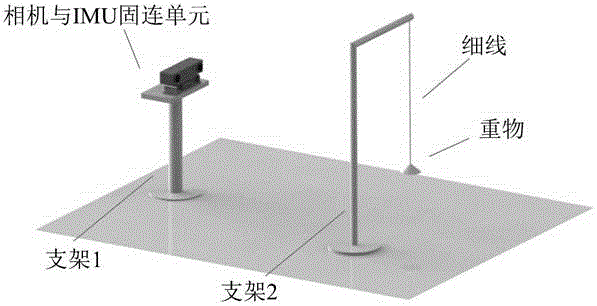

Calibration method for relative attitude of binocular stereo camera and inertial measurement unit

Inactive | CN105606127A | Reduce mistakes; Improve robustness; Measurement devices; Stereo camera; Reference vector

The invention discloses a simple and feasible method for calibrating the relative attitude between a binocular stereo camera and an inertial measurement unit (IMU) based on the absolute gravity direction. The gravity direction vector serves as the reference vector: its expressions in the camera coordinate system and in the IMU coordinate system are solved separately, giving the same vector in two different coordinate systems, from which the attitude relationship between the two coordinate systems can be solved. Finally, precision is verified through reprojection errors, and an application of the calibration method to visual odometry is presented. Experiments show that the method solves the relative attitude between the camera and the IMU accurately. The method can assist the visual positioning of mobile robots and spherical robots.

Owner:BEIJING UNIV OF POSTS & TELECOMM
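
The core step, relating one gravity vector measured in two frames, can be illustrated by the smallest rotation that maps the IMU-frame gravity direction onto the camera-frame one. This is a sketch under stated assumptions: vectors are plain Python lists and the frame names `cam` and `imu` are illustrative. A single vector pair fixes only two of the three rotational degrees of freedom, since any extra rotation about the gravity axis also fits, so practical calibrations combine gravity readings from several orientations; the abstract does not say how the patent resolves this.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def mat_vec(R, v):
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def rotation_aligning(a, b):
    """Smallest rotation R (3x3 nested lists) with R @ a_hat = b_hat.

    Uses R = I + [v]x + [v]x^2 / (1 + c), where a_hat, b_hat are the
    normalized inputs, v = a_hat x b_hat and c = a_hat . b_hat.
    """
    a, b = normalize(a), normalize(b)
    v = [a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0]]
    c = sum(x * y for x, y in zip(a, b))
    if c < -1.0 + 1e-9:
        raise ValueError("opposite vectors: the rotation axis is not unique")
    K = [[0.0, -v[2], v[1]],
         [v[2], 0.0, -v[0]],
         [-v[1], v[0], 0.0]]  # skew-symmetric matrix of v
    K2 = [[sum(K[i][k] * K[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    s = 1.0 / (1.0 + c)
    return [[(1.0 if i == j else 0.0) + K[i][j] + s * K2[i][j] for j in range(3)]
            for i in range(3)]


# Hypothetical readings of the same gravity vector (m/s^2) in both frames.
g_imu = [0.0, 0.0, -9.81]
g_cam = [0.0, 9.81, 0.0]
R_cam_imu = rotation_aligning(g_imu, g_cam)
print(mat_vec(R_cam_imu, normalize(g_imu)))  # close to the camera-frame direction
```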

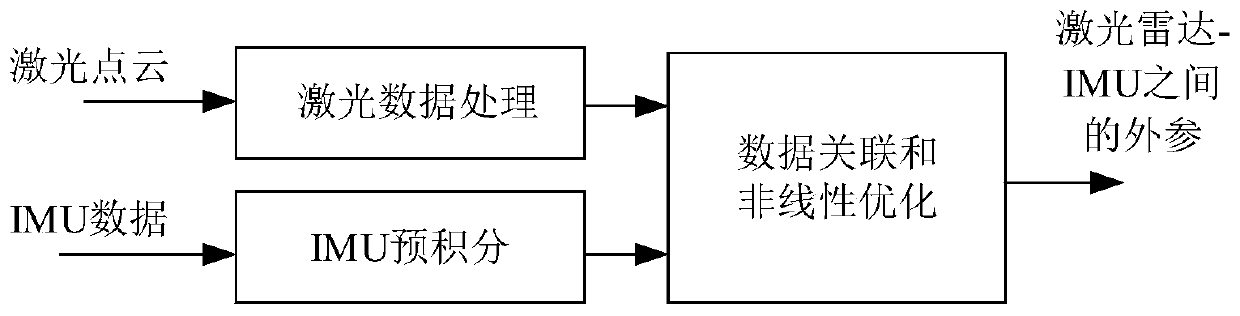

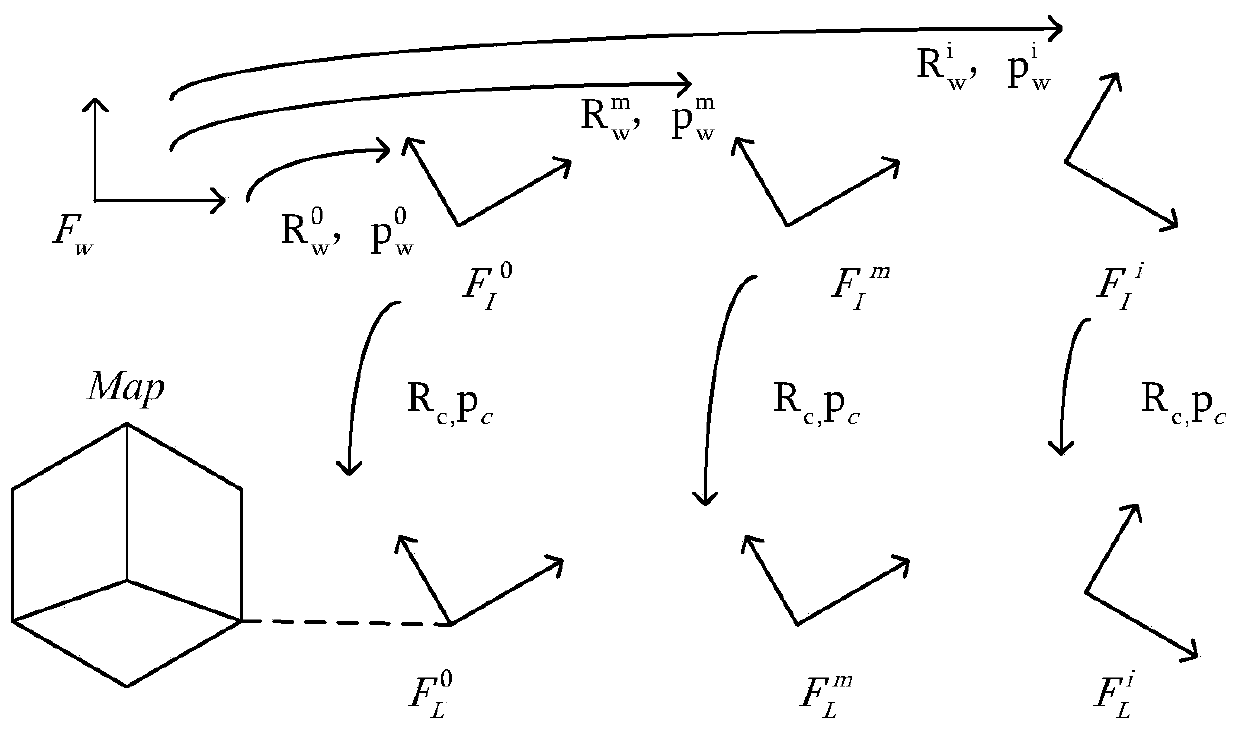

Method and system for laser-IMU external parameter calibration

Active | CN111207774A | Improve accuracy; Avoid problems such as inability to solve equations caused by data ambiguity; Wave based measurement systems; Engineering; Calibration result

The invention provides a method and system for laser-IMU external parameter calibration. The method comprises the following steps: acquiring IMU measurement data and laser radar measurement data; performing IMU pre-integration on the IMU measurement data to estimate the IMU pose transformation at the next moment relative to the initial IMU pose, and obtaining a residual associated with the data from this estimate and the actual IMU measurement at the next moment; processing the laser radar measurement data, using the IMU pre-integration to reproject a plurality of laser radar points into the world coordinate system, and calculating the reprojection error from each laser radar point to the calibration target map; and iteratively optimizing the laser radar-IMU external parameters with a nonlinear least-squares method to obtain the external parameter calibration result. The method addresses the problems that, in laser-IMU external parameter calibration, the mechanical external parameters are hard to obtain, manual measurement errors are large, and measurement is troublesome; the laser radar and the IMU compensate for each other's shortcomings to a certain extent, and the pose-solving precision and speed of SLAM methods can be improved.

Owner:Jinan Shizhong Future Industry Development Co., Ltd. (济南市中未来产业发展有限公司)

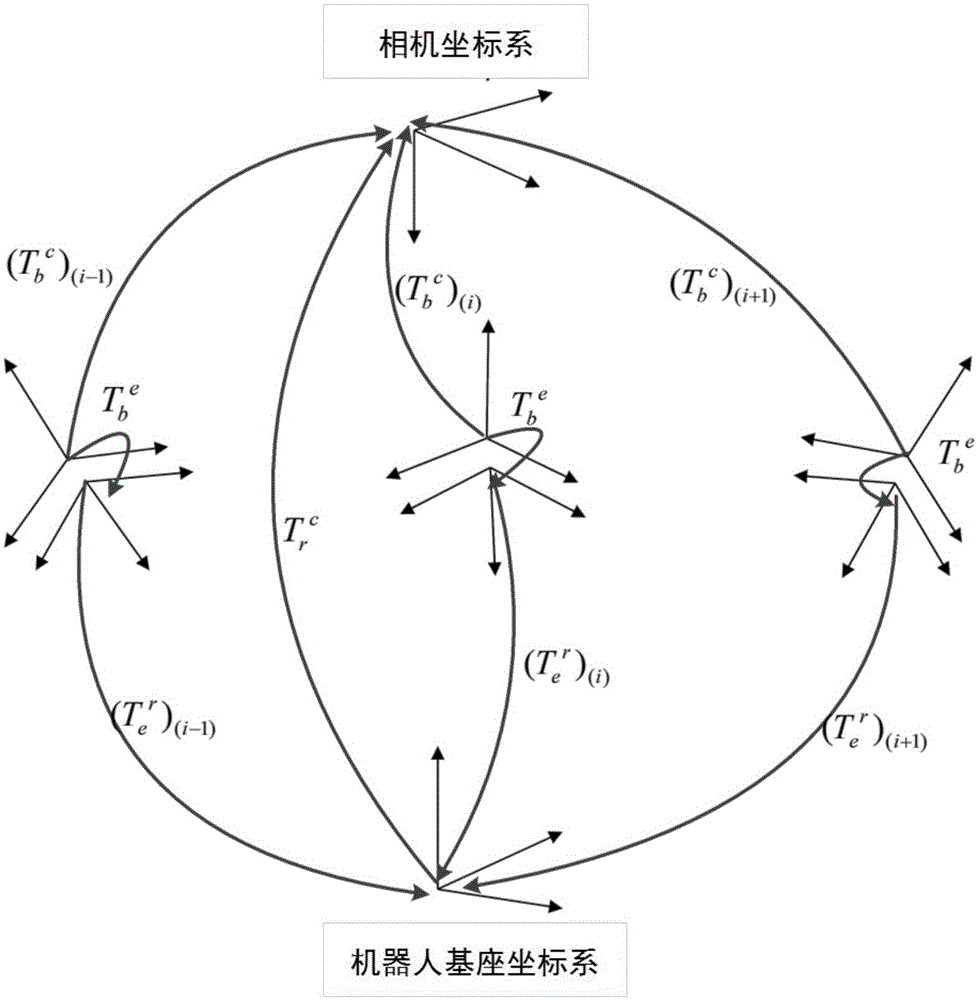

Camera and robot relative pose calibration method based on pixel space optimization

Active | CN105014667A | High precision; Small reprojection error; Programme-controlled manipulator; Calibration result; Reprojection error

The invention discloses a camera-robot relative pose calibration method based on pixel-space optimization. A calibration board carried by the robot's end effector is moved within the field of view of a fixed camera, and the spatial motion constraints of the calibration board are used to obtain the optimal relative pose between the camera and the robot base. First, the linear invariance of the rotation matrix is used to solve a homogeneous transformation matrix, giving a preliminary calibration result; this result is then used as the initial value for optimization in pixel space, so that the reprojection error is minimized. The method uses an iterative optimization algorithm, requires no precise external measurement equipment, exploits model constraints in the image pixel space, and combines them with an effective way of obtaining the initial optimization value, yielding a higher-precision calibration result that meets the positioning and grasping requirements of visual-servo robots in industrial applications.

Owner:ZHEJIANG UNIV

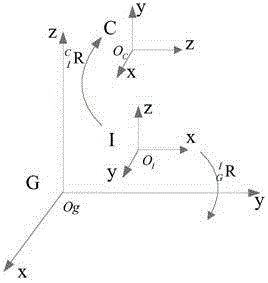

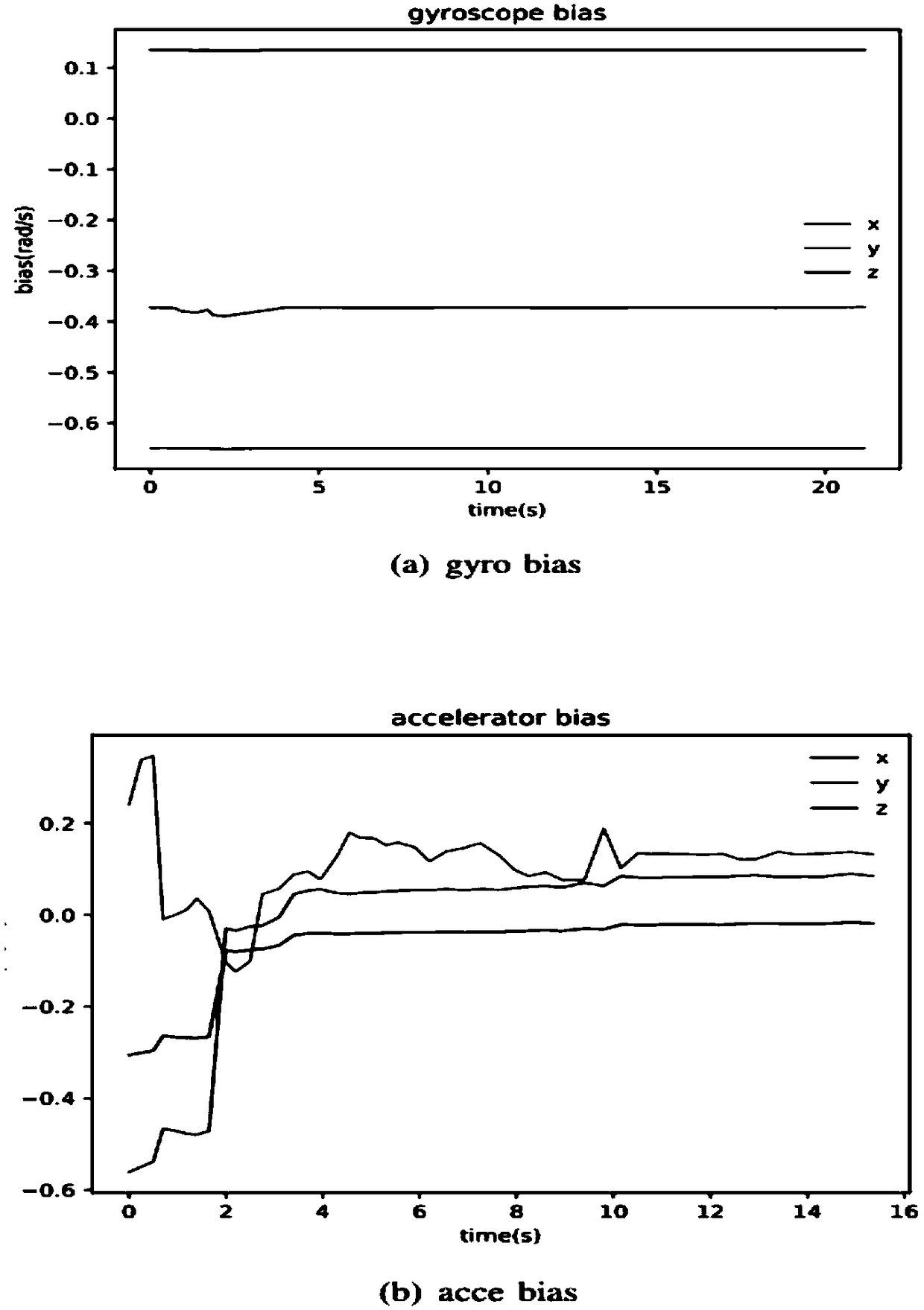

Tightly coupled binocular vision-inertial SLAM method using combined point-line features

Inactive | CN109579840A | Real-time pose output; Real-time continuous pose output; Navigational calculation instruments; Navigation by speed/acceleration measurements; Pattern recognition; Computer graphics (images)

The invention relates to a tightly coupled binocular vision-inertial SLAM method using combined point-line features, comprising the following steps: determining the transformation between the camera coordinate system and the inertial measurement unit (IMU) coordinate system; establishing a point-line feature and IMU tracking thread to solve the initial three-dimensional point and line coordinates, using the IMU to predict the positions of the point-line features so that feature-data associations are established correctly, and, after the IMU is initialized, combining the IMU error with the re-projection errors of the point-line features to solve the pose transformation between consecutive frames; establishing a local bundle adjustment thread for the point-line features and the IMU that optimizes the three-dimensional point-line coordinates, the keyframe poses and the IMU state within a local keyframe window; and establishing a loop closure detection thread that uses the point-line features to compute weighted scores in a bag-of-words model, detects loop closures and optimizes the global state. The method maintains stability and high precision when feature points are few and the camera moves quickly.

Owner:SHANGHAI INST OF MICROSYSTEM & INFORMATION TECH CHINESE ACAD OF SCI
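
For the line features, a widely used reprojection residual (assumed here; the abstract does not give the patent's exact form) is the distance from the projected endpoints of a 3D segment to the observed 2D line. The sketch below computes it in pure Python; `line_through` and `line_reprojection_error` are illustrative names.

```python
import math


def line_through(p, q):
    """Homogeneous line (a, b, c) through image points p and q, computed as
    the cross product (p, 1) x (q, 1) and scaled so that a^2 + b^2 = 1."""
    a = p[1] - q[1]
    b = q[0] - p[0]
    c = p[0] * q[1] - p[1] * q[0]
    n = math.hypot(a, b)
    if n == 0.0:
        raise ValueError("endpoints coincide; the line is undefined")
    return a / n, b / n, c / n


def line_reprojection_error(obs_start, obs_end, proj_start, proj_end):
    """Signed point-to-line distances of the projected 3D segment endpoints
    from the observed 2D line; a least-squares cost squares and sums them."""
    a, b, c = line_through(obs_start, obs_end)
    return tuple(a * u + b * v + c for (u, v) in (proj_start, proj_end))


# Observed segment along y = 0; projected endpoints 3 px above and 1 px below it.
print(line_reprojection_error((0.0, 0.0), (10.0, 0.0), (2.0, 3.0), (8.0, -1.0)))  # (3.0, -1.0)
```

Measuring distance to the infinite observed line, rather than endpoint-to-endpoint, keeps the residual insensitive to where the detector happens to cut the segment.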

Method for calibrating and optimizing camera parameters of vision measuring system

Inactive | CN102663767A | Improve operational efficiency; Fast convergence; Image analysis; Computer vision; Reprojection error

The invention provides a method for calibrating and optimizing the camera parameters of a vision measuring system, comprising the following steps: (1) extracting the circle center of the projection of a point on the calibration target in the image plane as its image-plane coordinate; (2) calculating initial values of the intrinsic and extrinsic parameters of the camera from the image-plane coordinates; (3) with the object-space coordinates of the calibration target held constant, optimizing the camera distortion coefficients and the intrinsic and extrinsic parameters, and calculating the sum C1 of the reprojection errors, in the different directions, of all feature points of the calibration target on the image plane; (4) with the optimized distortion coefficients and intrinsic and extrinsic parameters held constant and the object-space coordinates of the calibration target as variables, optimizing those coordinates and calculating the sum C2 of the reprojection errors; (5) checking the loop condition and returning to step (3) if it is not satisfied; and (6) minimizing C1 and C2 respectively, thereby obtaining the optimized intrinsic and extrinsic parameters of the camera and the object-space coordinates.

Owner:BEIJING INFORMATION SCI & TECH UNIV

Multi-view stereoscopic video acquisition system and camera parameter calibrating method thereof

Active | CN102982548A | Improve collection efficiency; Avoid Camera Parameter Calibration; Image analysis; Stereoscopic video; Point cloud

The invention provides a multi-view stereoscopic video acquisition system and a camera parameter calibration method for it. The method comprises the following steps: obtaining the intrinsic and extrinsic parameters of the cameras in the system; having the cameras simultaneously capture multi-view images of a common scene; detecting and matching feature points across the multi-view images to obtain matching points between views; reconstructing the three-dimensional point cloud coordinates of the matching points using the camera parameters; performing sparse bundle adjustment on the point cloud coordinates and the intrinsic and extrinsic parameters to obtain the reprojection error, and optimizing the reprojection error together with the intrinsic and extrinsic parameters; deciding from the optimized reprojection error whether to perform a secondary optimization; and deciding from the secondary optimization result whether to recalibrate the parameters. By using feature point detection and matching together with sparse bundle adjustment, the method avoids complicated camera parameter calibration and improves the efficiency of stereoscopic video acquisition.

Owner:TSINGHUA UNIV

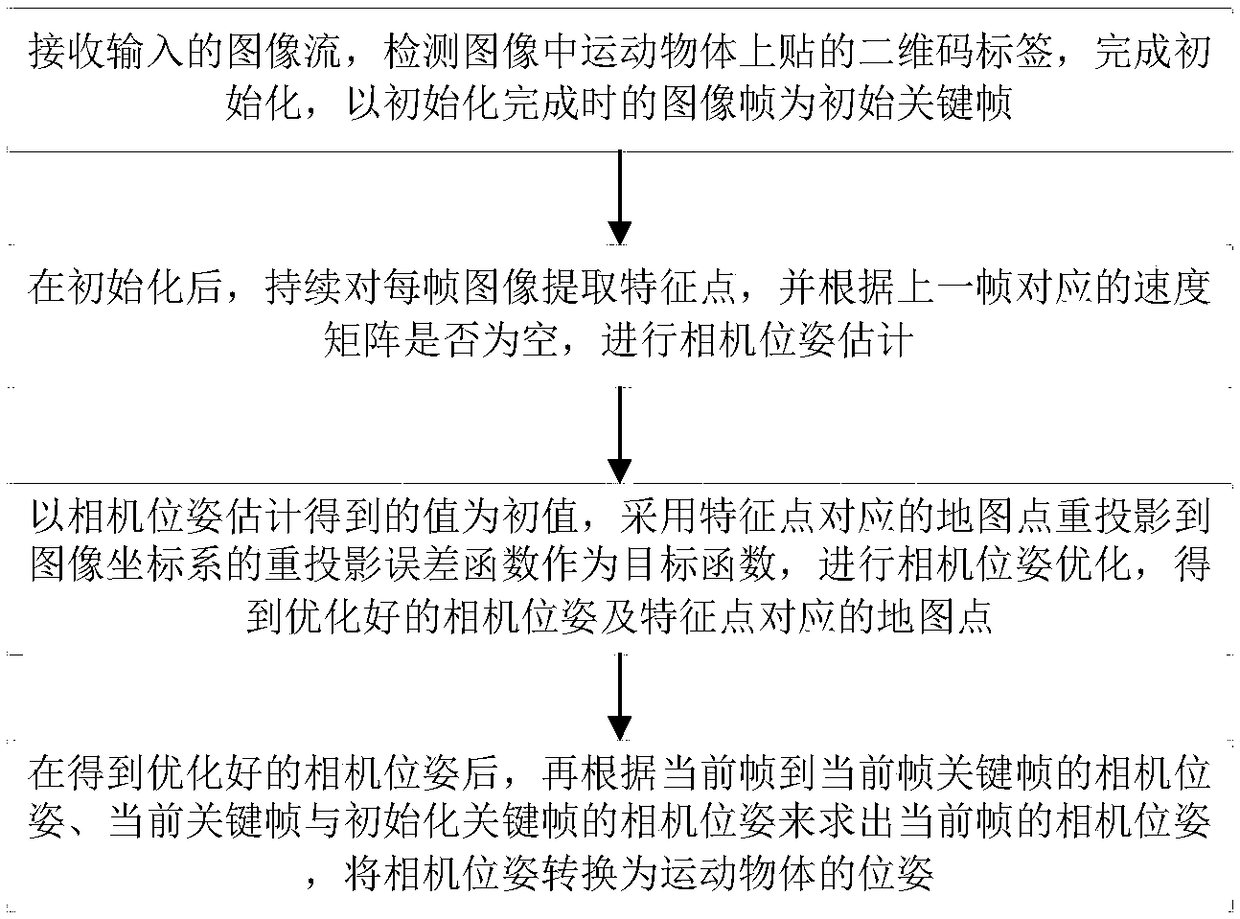

A six-degree-of-freedom attitude estimation method based on Tag

Active | CN109345588A | Improve robustness; Improve pose estimation accuracy; Image enhancement; Image analysis; Estimation methods; Imaging quality

The invention discloses a six-degree-of-freedom attitude estimation method based on a tag. A tag is attached to the object to aid detection; the camera identifies the tag on the object, which helps SLAM complete initialization. After initialization, feature points are continuously extracted from each image frame, and the camera pose is estimated in a way that depends on whether the velocity matrix of the previous frame is empty. This estimate is taken as the initial value, and the camera pose is optimized with the re-projection error of the map points corresponding to the feature points as the objective function, yielding the optimized camera pose and the map points corresponding to the feature points; the camera pose is then converted into the pose of the object. The method is robust when imaging quality is poor and the object moves at high speed, and achieves very high attitude estimation accuracy.

Owner:ZHEJIANG UNIV OF TECH

SLAM method and system based on laser radar point cloud and camera image data fusion

Active | CN111563442A | Improve robustness; High precision; Image enhancement; Image analysis; Camera image; Point cloud

The invention provides a SLAM method and system based on the fusion of laser radar point clouds and camera image data. The method comprises the following steps: extracting keyframes and performing object instance segmentation on each keyframe image to obtain the object instances in the image; performing object segmentation on the keyframe point cloud to obtain the objects in point cloud space; fusing and unifying the object instances in the image with the objects in point cloud space to obtain an object set; matching the objects of consecutive frames according to the object set; solving the camera pose from the point cloud matching error between consecutive frames, the image re-projection error and the object category error of the feature points in consecutive frames; and registering the images carrying object instance information into a point cloud map according to the camera pose, to obtain a point cloud map with image semantic information. The method improves the robustness of object instance segmentation and adds semantic constraints to the optimization equation, so the solved pose is more precise.

Owner:SHANGHAI JIAO TONG UNIV

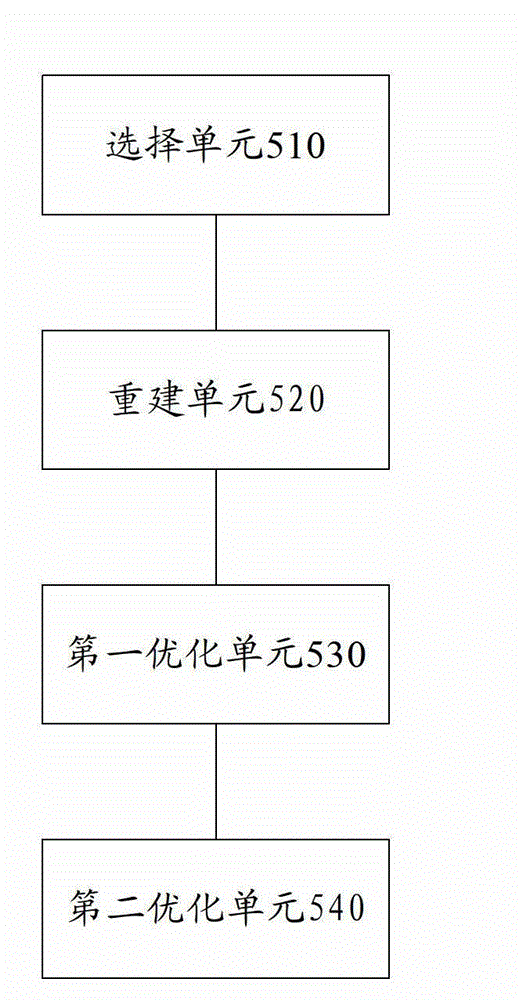

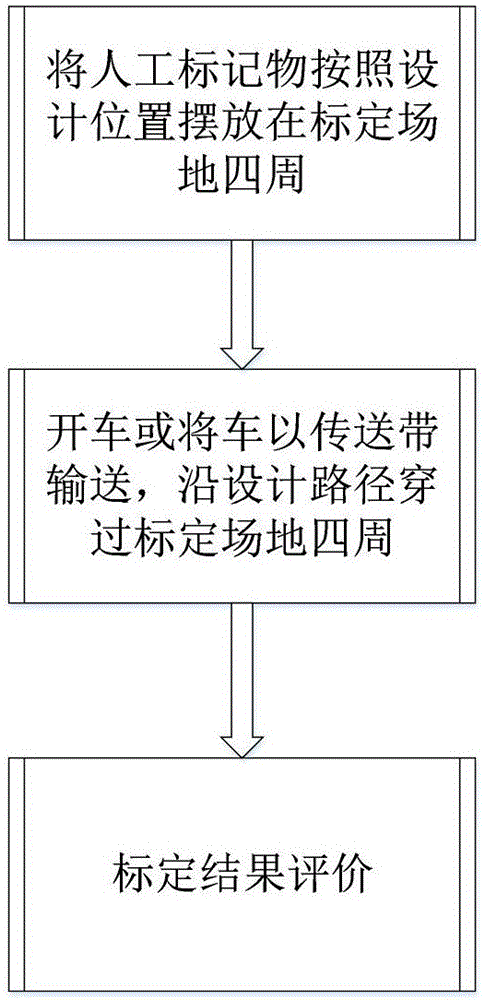

Dynamic calibration system, and combined optimization method and combined optimization device in dynamic calibration system

Active | CN105844624A | High precision; Improve execution efficiency; Image enhancement; Image analysis; Feature extraction; Calibration result

The invention provides a dynamic calibration system, and a combined optimization method and device for it. The dynamic calibration system comprises a marker geometric feature extraction module, a camera parameter estimation module and a calibration result evaluation module. The marker geometric feature extraction module detects a marker in the image captured by the camera, extracts its geometric features, and tracks and matches these features in the subsequent frames provided by the camera. The camera parameter estimation module computes the reprojection error from the extracted geometric features of the marker and the current poses of the cameras, and outputs a calibration result when the reprojection error satisfies a set threshold; on the first run, all cameras start from their initial poses. The calibration result evaluation module evaluates the accuracy of the calibration result and determines whether to accept the calibrated parameters. The calibration parameters obtained are relatively accurate, and the system, method and device are suitable for dynamic production-line calibration, with high execution efficiency, a wide application range and a simple system.

Owner:SHANGHAI OUFEI INTELLIGENT VEHICLE INTERNET TECH CO LTD

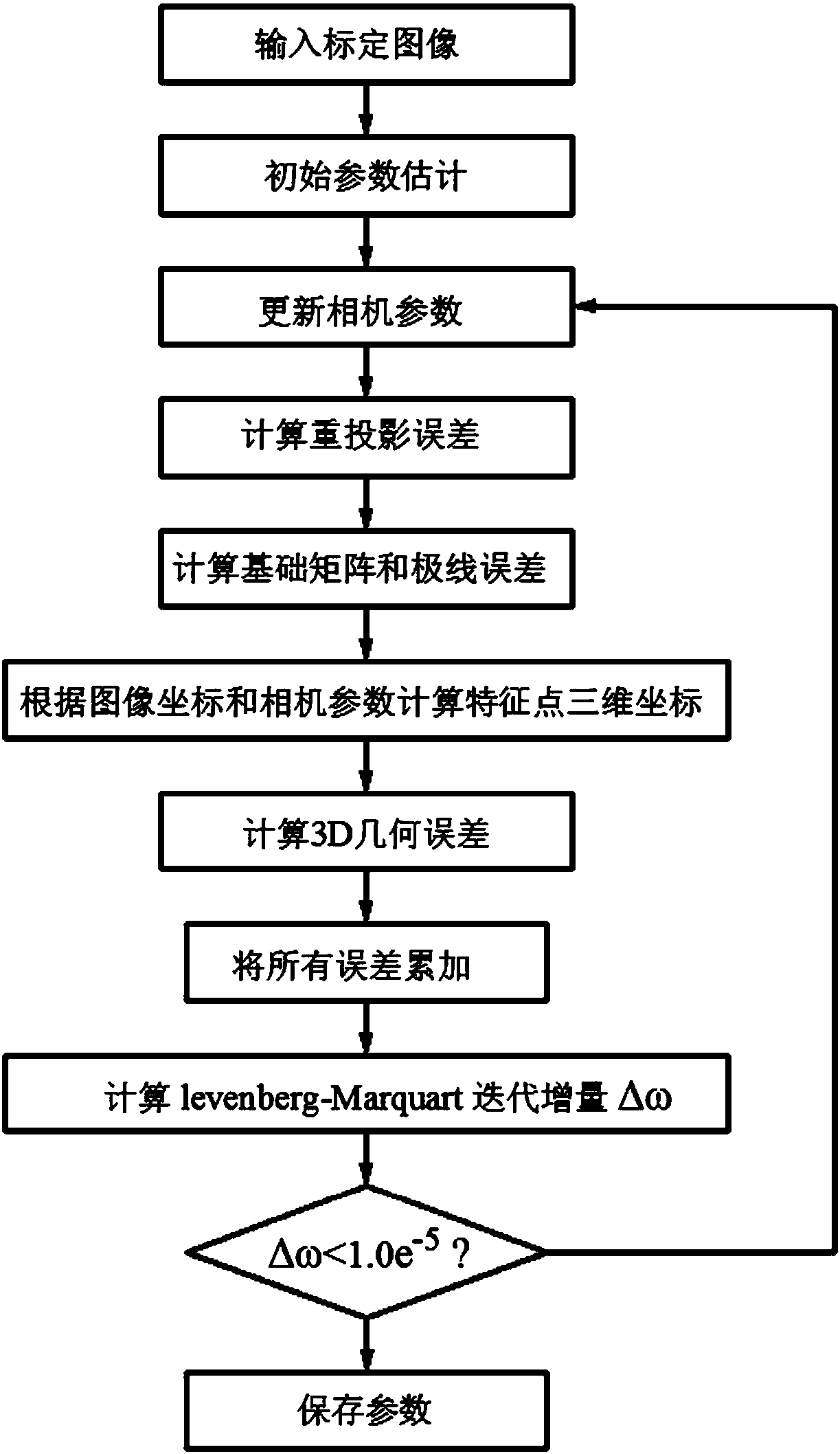

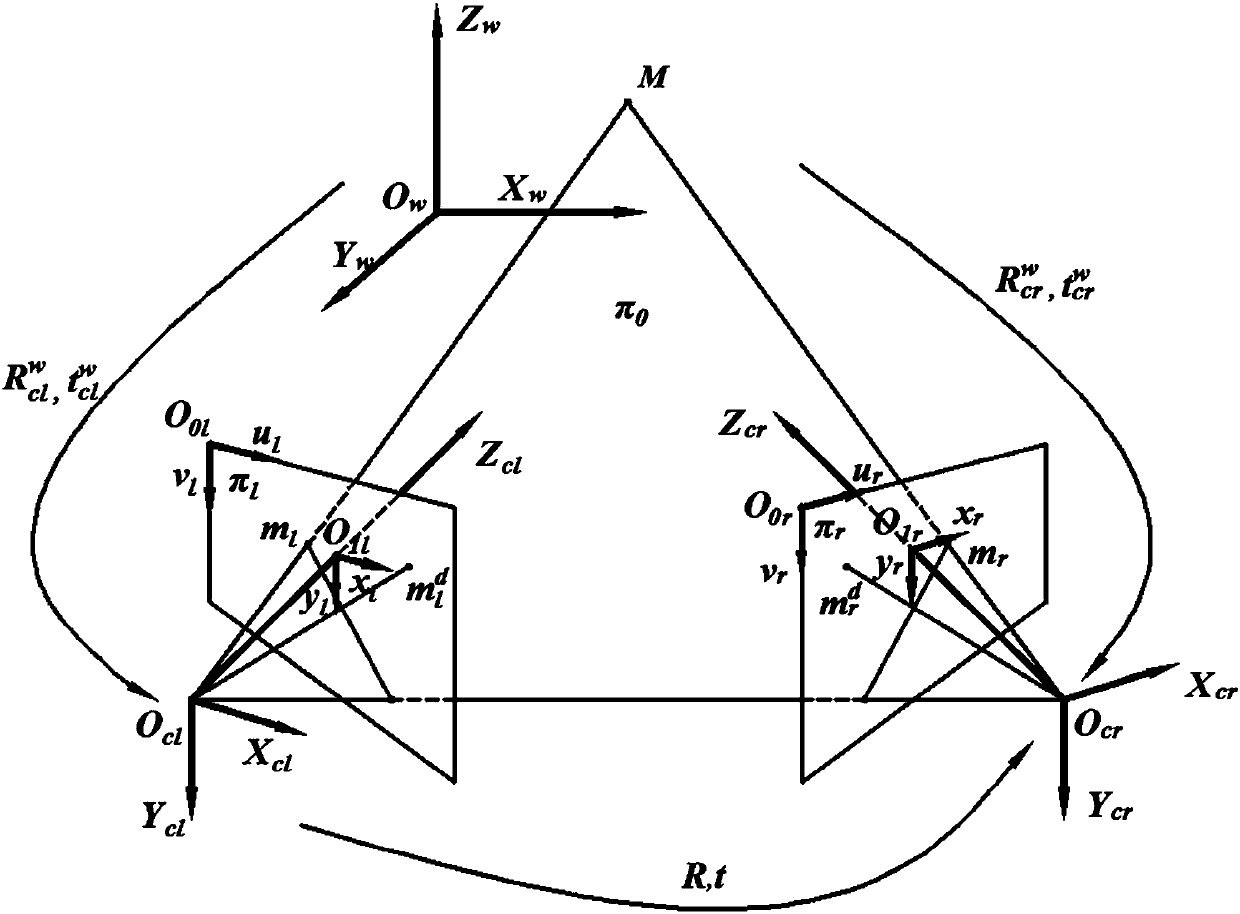

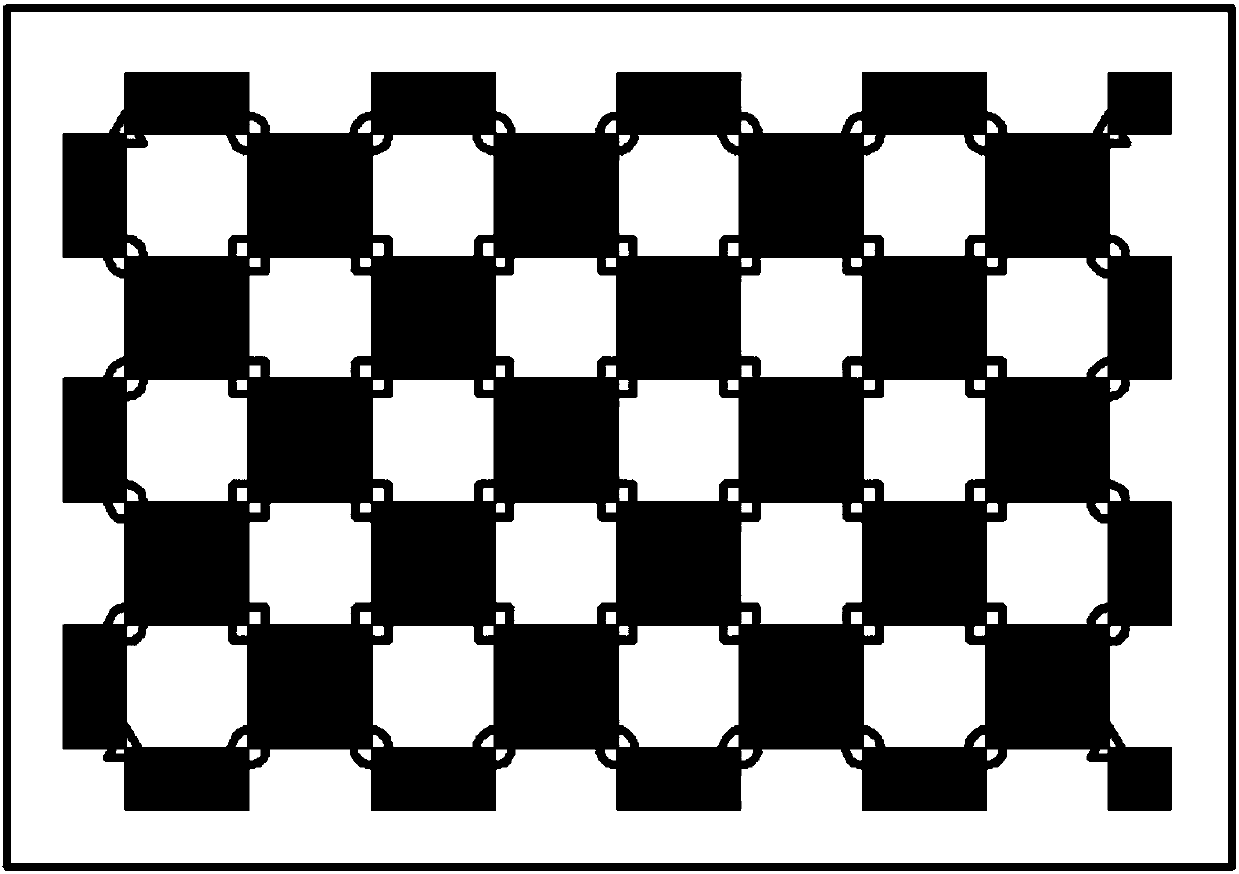

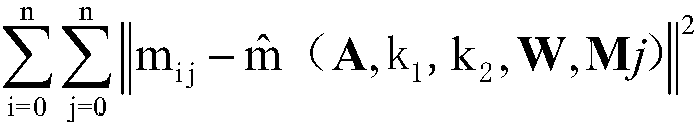

Multi-constraint-based high-precision binocular camera calibration method

Active | CN108053450A | High precision; Improve stability; Image enhancement; Image analysis; Geometric error; Camera image

The invention discloses a multi-constraint-based high-precision binocular camera calibration method. Initial binocular camera parameters are calculated with Zhang Zhengyou's calibration method, and the re-projection errors and epipolar errors are calculated; three-dimensional feature points are then reconstructed from the feature point coordinates in the left and right camera images and the binocular camera parameters, and 3D geometric errors consisting of adjacent-distance errors, collinearity errors and right-angle errors are calculated. An objective function consisting of the cumulative sums of the re-projection errors, adjacent-distance errors, collinearity errors and right-angle errors is built and solved with the Levenberg-Marquardt method to obtain the optimal binocular camera parameters. Compared with calibrating the binocular camera by Zhang Zhengyou's method alone, the distance measurement errors, collinearity errors and right-angle errors are significantly reduced.

Owner:ZHEJIANG UNIV
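
The abstract names three 3D geometric error terms but not their formulas. The sketch below shows one plausible definition of each for a reconstructed checkerboard, assuming corners stored as `grid[row][col]` 3D points and a known square size. The function name, the squared-error form and the use of the squared cosine as the right-angle penalty are all assumptions. In the patent these sums are added to the re-projection error sum and minimized with Levenberg-Marquardt, which this sketch does not do.

```python
import math


def _sub(p, q):
    return [p[i] - q[i] for i in range(3)]


def _dot(p, q):
    return sum(p[i] * q[i] for i in range(3))


def _norm(p):
    return math.sqrt(_dot(p, p))


def _cross(p, q):
    return [p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0]]


def grid_geometric_errors(grid, square_size):
    """Squared-error sums for a reconstructed checkerboard grid[row][col].

    Returns (adjacent_distance, collinearity, right_angle):
      - adjacent_distance: neighbouring corners should be square_size apart;
      - collinearity: interior corners of every row and column should lie on
        the line through that row's or column's two end corners;
      - right_angle: the cosine between row and column directions at each
        corner should be zero.
    """
    rows, cols = len(grid), len(grid[0])
    dist_err = line_err = angle_err = 0.0
    for r in range(rows):
        for c in range(cols):
            p = grid[r][c]
            right = _sub(grid[r][c + 1], p) if c + 1 < cols else None
            down = _sub(grid[r + 1][c], p) if r + 1 < rows else None
            for d in (right, down):
                if d is not None:
                    dist_err += (_norm(d) - square_size) ** 2
            if right is not None and down is not None:
                cos_angle = _dot(right, down) / (_norm(right) * _norm(down))
                angle_err += cos_angle ** 2
    columns = [[grid[r][c] for r in range(rows)] for c in range(cols)]
    for line in list(grid) + columns:
        start, end = line[0], line[-1]
        direction = _sub(end, start)
        for p in line[1:-1]:
            # Perpendicular distance from p to the line through start and end.
            line_err += (_norm(_cross(_sub(p, start), direction)) / _norm(direction)) ** 2
    return dist_err, line_err, angle_err


# Ideal 3 x 4 board with 20 mm squares, 1 m in front of the camera.
perfect = [[[0.02 * c, 0.02 * r, 1.0] for c in range(4)] for r in range(3)]
print(grid_geometric_errors(perfect, 0.02))  # all three terms essentially zero
```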

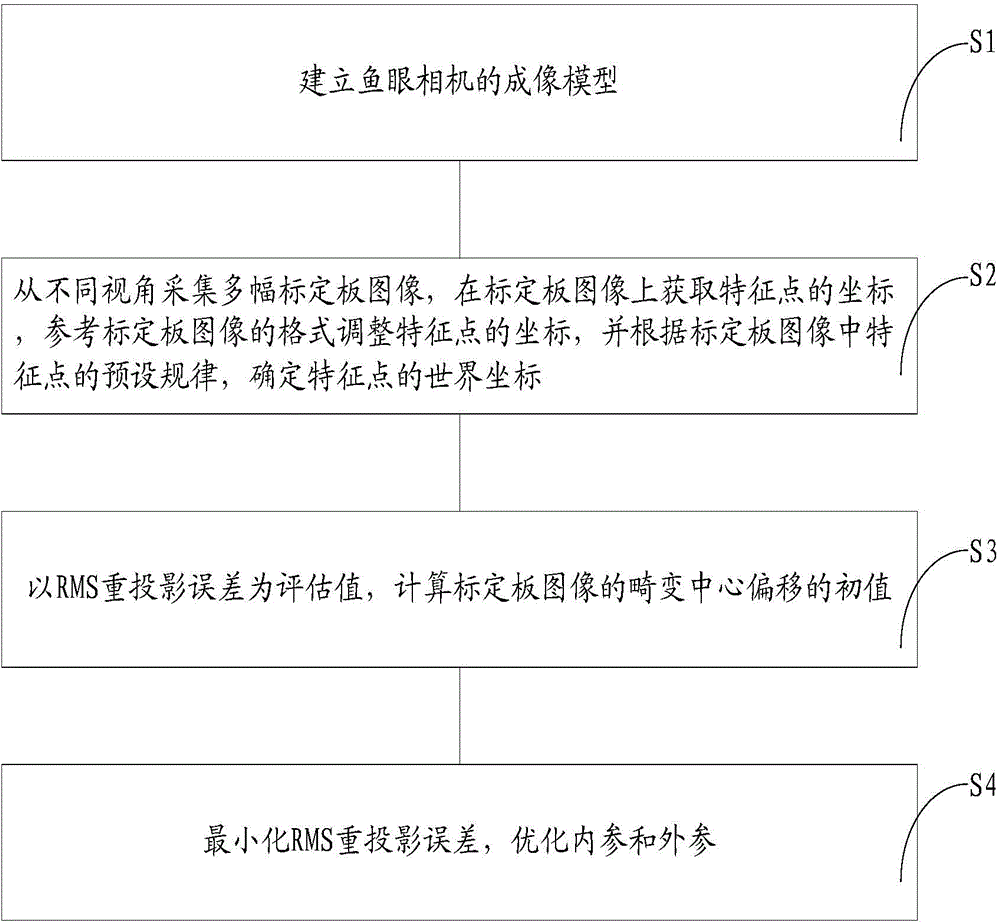

Fisheye camera calibration method and device

Active | CN104392435A | Improve applicability; High precision; Image analysis; Computer graphics (images); Calibration result

The invention discloses a fisheye camera calibration method and device. The method comprises the following steps: establishing an imaging model of the fisheye camera; collecting multiple calibration plate images from different viewing angles; obtaining the coordinates of feature points from the calibration plate images and adjusting them according to the formats of the images; determining the world coordinates of the feature points from their preset arrangement in the calibration plate images; calculating initial values of the distortion-center offset of the calibration plate images, using the RMS reprojection error as the evaluation value; and minimizing the RMS reprojection error to optimize the intrinsic and extrinsic parameters. The technical scheme requires no special calibration equipment and is suitable for most fisheye cameras, improving both the applicability of the calibration method and the accuracy of the calibration result.

Owner:KUNSHAN BRANCH INST OF MICROELECTRONICS OF CHINESE ACADEMY OF SCI
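
The RMS reprojection error used as the evaluation value above is the square root of the mean squared per-point distance. A minimal sketch with made-up pixel data; `rms_reprojection_error` is an illustrative name (OpenCV's `calibrateCamera` also reports an overall RMS re-projection error as its return value).

```python
import math


def rms_reprojection_error(observed, projected):
    """Root-mean-square of per-point Euclidean reprojection errors (pixels)."""
    if not observed or len(observed) != len(projected):
        raise ValueError("need two non-empty point lists of equal length")
    squared = [(uo - up) ** 2 + (vo - vp) ** 2
               for (uo, vo), (up, vp) in zip(observed, projected)]
    return math.sqrt(sum(squared) / len(squared))


observed = [(100.0, 100.0), (200.0, 150.0), (300.0, 220.0)]
projected = [(103.0, 104.0), (200.0, 150.0), (300.0, 221.0)]
print(rms_reprojection_error(observed, projected))  # sqrt((25 + 0 + 1) / 3), about 2.944
```

Compared with the mean distance, the RMS weights large residuals more heavily, so a few poorly detected corners raise it noticeably.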

SLAM-based visual perception mapping algorithm and mobile robot

The invention discloses a SLAM-based visual perception mapping algorithm and a mobile robot. The algorithm comprises the following steps: obtaining image data of the environment of the area to be mapped and rotational speed data of the driving wheels; performing feature extraction on the color images in the image data; after processing the rotational speed data with a kinematic equation, fusing and optimizing it with the depth images in the image data by jointly minimizing the encoder error and the re-projection error, to obtain the robot's current frame pose; screening the current-frame image data acquired as the robot moves through the area to obtain keyframes, identifying and classifying objects in the environment using the keyframe color images, deleting classified dynamic objects from the keyframes, sparsifying static objects, and performing local loop closure for correction; and taking the pixels of the keyframe color images and the corresponding depth images as map points, storing the map points in an octree, and building the map.

Owner:GUANGDONG UNIV OF TECH

Image processing method and device

Active | CN104363986A | High precision; Quality improvement; Entertainment; Image enhancement; Imaging processing; Optical distortion

The invention discloses an image processing method and device. The method comprises: obtaining a distorted image of a captured object; according to a mapping between the optical distortion models of at least one set of lenses and reprojection error values, selecting an optical distortion model whose reprojection error value is smaller than a set threshold, where the model includes an optical distortion type, a distortion order and distortion coefficients, and the reprojection error value represents the difference between the theoretical and the real distorted image coordinates of any marked object; and using the selected model to correct the optical distortion of the obtained distorted image. The method effectively eliminates the optical distortion introduced by the optical imaging principles of the imaging device when capturing an image of the object, and improves the quality of the captured image.

Owner:HUAWEI TECH CO LTD
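
The model-selection step can be sketched as follows, assuming a two-coefficient radial polynomial model on normalized image coordinates and a hypothetical table of candidate models. The abstract does not publish the patent's models (type, order, coefficients), so the names and numbers here are illustrative only. Errors are in normalized units; multiplying by the focal length expresses them in pixels.

```python
import math


def distort(point, k1, k2):
    """Radial model on a normalized point: x_d = x * (1 + k1 r^2 + k2 r^4)."""
    x, y = point
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return (x * scale, y * scale)


def select_distortion_model(models, ideal_pts, observed_pts, threshold):
    """Among candidate models {name: (k1, k2)}, return (name, mean_error) of
    the one with the smallest mean reprojection error below threshold, or
    None if no candidate qualifies."""
    best = None
    for name, (k1, k2) in models.items():
        errors = [math.dist(distort(p, k1, k2), q)
                  for p, q in zip(ideal_pts, observed_pts)]
        mean_err = sum(errors) / len(errors)
        if mean_err < threshold and (best is None or mean_err < best[1]):
            best = (name, mean_err)
    return best


# Hypothetical candidate table and synthetic marker observations.
models = {"none": (0.0, 0.0), "radial_k1": (-0.2, 0.0), "radial_k1k2": (-0.2, 0.05)}
ideal = [(0.1, 0.2), (-0.3, 0.1), (0.4, -0.4)]
observed = [distort(p, -0.2, 0.05) for p in ideal]
print(select_distortion_model(models, ideal, observed, threshold=1e-4))  # ('radial_k1k2', 0.0)
```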

A binocular vision SLAM method combining point and line characteristics

Pending | CN109934862A | Promote reduction; Reduce computational complexity; Image analysis; Feature extraction; Closed loop

The invention discloses a binocular vision SLAM method combining point and line features, comprising the following steps: S1, calibrating the intrinsic parameters of the binocular camera; S2, collecting environment images with the calibrated camera and using a gradient density filter to filter out feature-dense regions, obtaining an effective image detection region; S3, extracting feature points and feature lines; S4, merging broken segments among the extracted line features; S5, tracking and matching with the point and line features, and selecting keyframes; S6, constructing a cost function from the re-projection errors of the point and line features; S7, performing local map optimization; and S8, detecting loop closures with a combined point-line bag-of-words model and optimizing the global trajectory. The invention provides an image filtering mechanism, a line segment merging method and an accelerated back-end optimization method, which solve the problems that many invalid features are extracted from feature-dense image regions, that extracted line features are broken, and that traditional back-end optimization is time-consuming, thereby improving the robustness, precision and speed of the system.

Owner:SHANGHAI UNIV

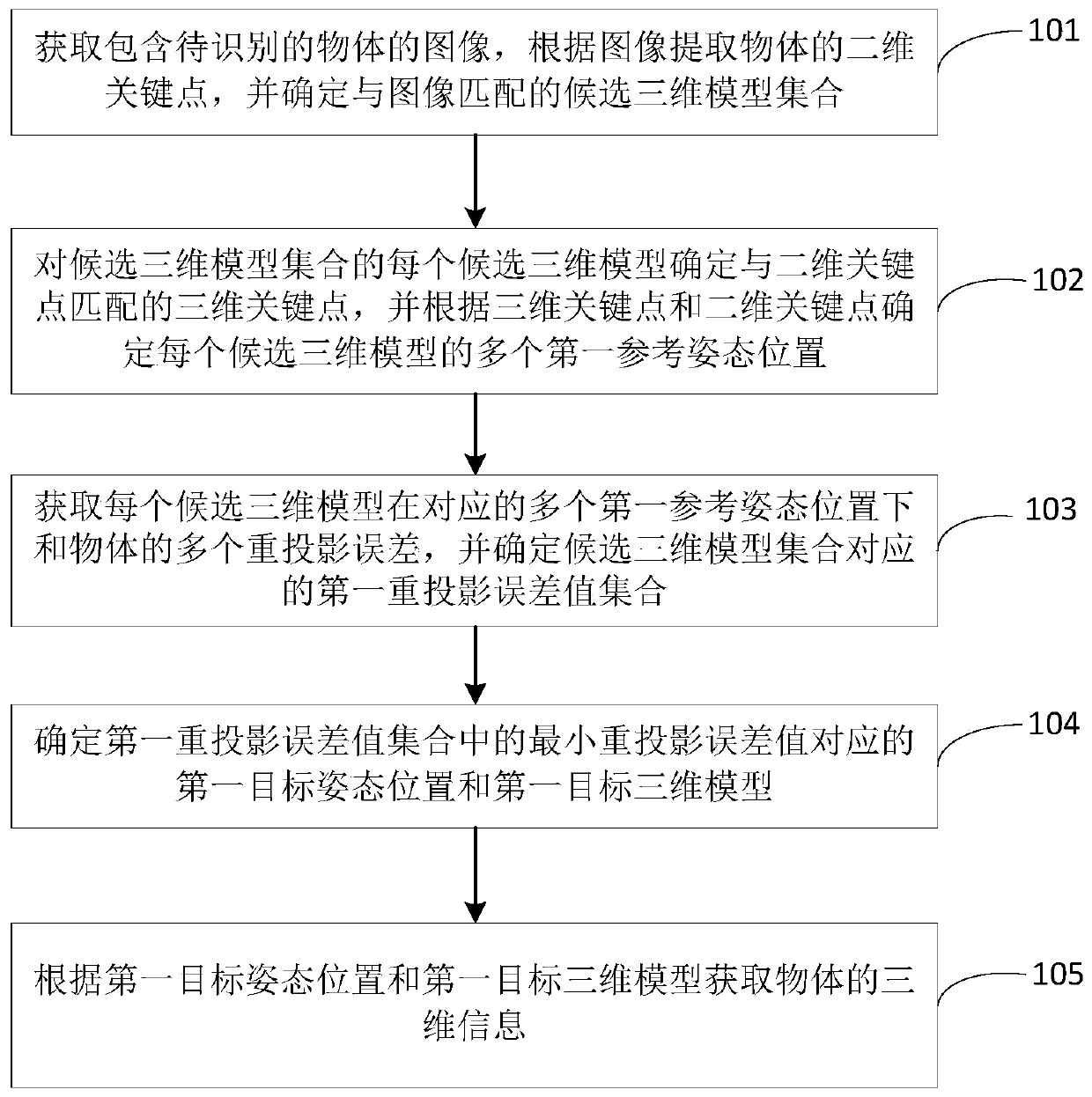

An object three-dimensional information acquisition method and device

Active | CN109816704A | Improve acquisition efficiency; High Acquisition Accuracy; Image enhancement; Image analysis; Image extraction; Reprojection error

The invention provides a method and device for acquiring three-dimensional information of an object. The method comprises the following steps: extracting two-dimensional key points of the object from an image, and determining, in each candidate three-dimensional model, the three-dimensional key points that match the two-dimensional key points; determining a plurality of first reference poses of each candidate three-dimensional model from the three-dimensional and two-dimensional key points; obtaining the re-projection error values between each candidate three-dimensional model and the object under these first reference poses, and determining the first target pose and the first target three-dimensional model with the minimum re-projection error value; and obtaining the three-dimensional information of the object from the first target pose and the first target three-dimensional model. This improves the efficiency and accuracy of obtaining three-dimensional information of an object from a two-dimensional image and ensures the robustness of the acquisition.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

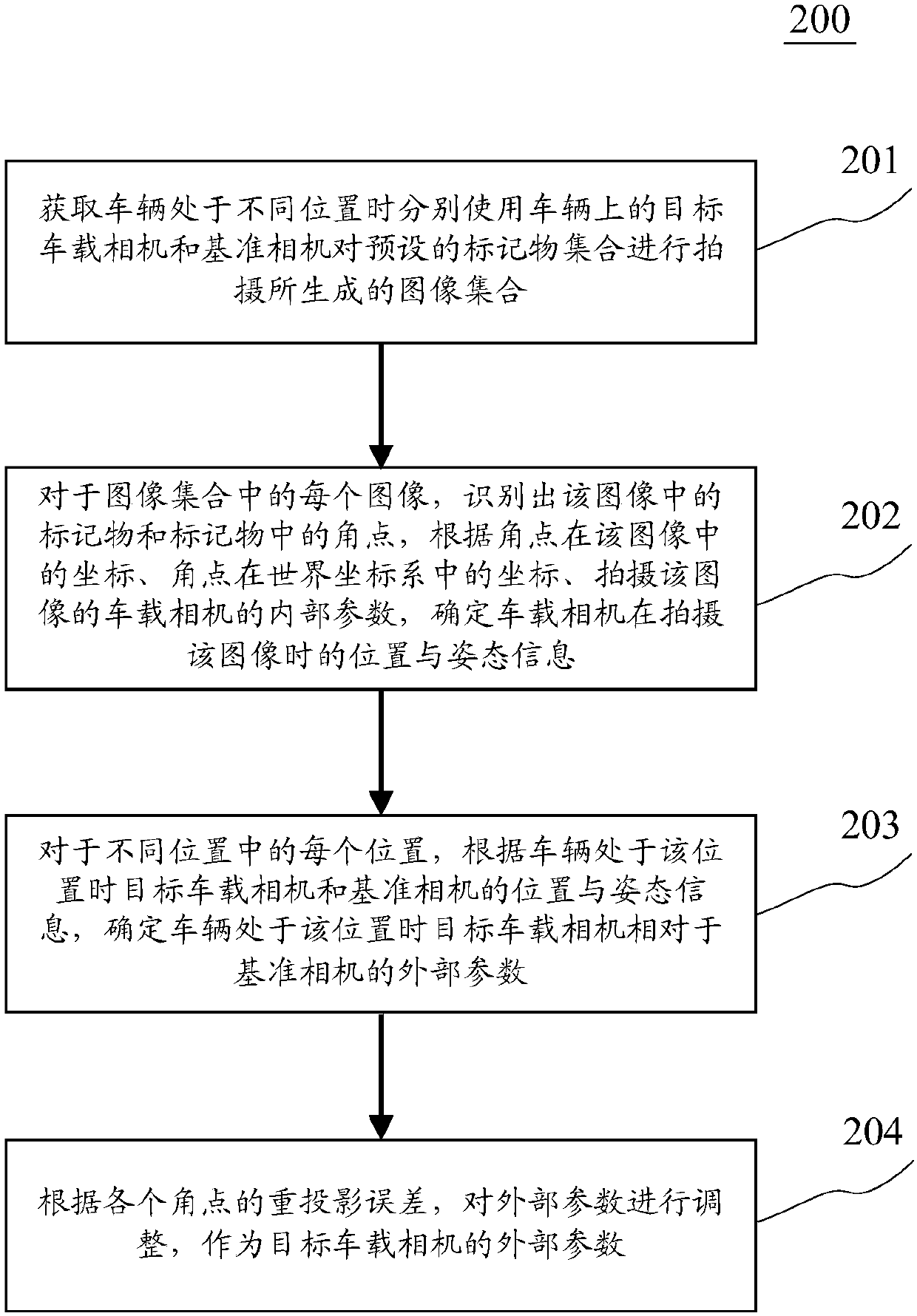

Method and device for acquiring external parameters of vehicle-mounted camera

The invention discloses a method and device for acquiring the external parameters of a vehicle-mounted camera. In one particular embodiment, the method comprises the following steps: acquiring an image set generated by a target vehicle-mounted camera and a reference camera on the vehicle photographing a preset marker set while the vehicle is at different positions; recognizing the markers in the images and the corner points in each marker, and determining the position and attitude of the vehicle-mounted camera at the time each image was taken from the corner coordinates in the image, their coordinates in the world coordinate system and the internal parameters of the vehicle-mounted camera; determining, from the positions and attitudes of the target vehicle-mounted camera and the reference camera at the different vehicle positions, the external parameters of the target camera relative to the reference camera at each position; and adjusting the external parameters according to the re-projection error of each corner point to obtain the final external parameters of the target vehicle-mounted camera. In this way, the external parameters of multiple vehicle-mounted cameras can be acquired accurately.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
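
Determining the target camera's external parameters relative to the reference camera from their two poses at one vehicle position is a pose composition. A sketch assuming camera-to-world poses (rotation `R`, translation `t`, with X_world = R X_cam + t) stored as nested lists; the helper names are illustrative. It yields one relative estimate per vehicle position; combining the positions and refining against corner re-projection errors, as the patent describes, is not shown.

```python
def transpose(R):
    return [[R[j][i] for j in range(3)] for i in range(3)]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def relative_extrinsics(R_ref, t_ref, R_tgt, t_tgt):
    """Pose of the target camera in the reference camera frame.

    Inputs are camera-to-world poses estimated at the same vehicle position.
    Returns (R_rel, t_rel) such that X_ref = R_rel @ X_tgt + t_rel.
    """
    R_ref_T = transpose(R_ref)  # inverse of a rotation matrix
    R_rel = mat_mul(R_ref_T, R_tgt)
    t_rel = mat_vec(R_ref_T, [t_tgt[i] - t_ref[i] for i in range(3)])
    return R_rel, t_rel


# Reference camera yawed 90 degrees at the origin; target camera with the same
# orientation, 1 m along the world x-axis.
Rz90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
R_rel, t_rel = relative_extrinsics(Rz90, [0.0, 0.0, 0.0], Rz90, [1.0, 0.0, 0.0])
print(R_rel, t_rel)  # identity rotation; target sits at (0, -1, 0) in the reference frame
```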

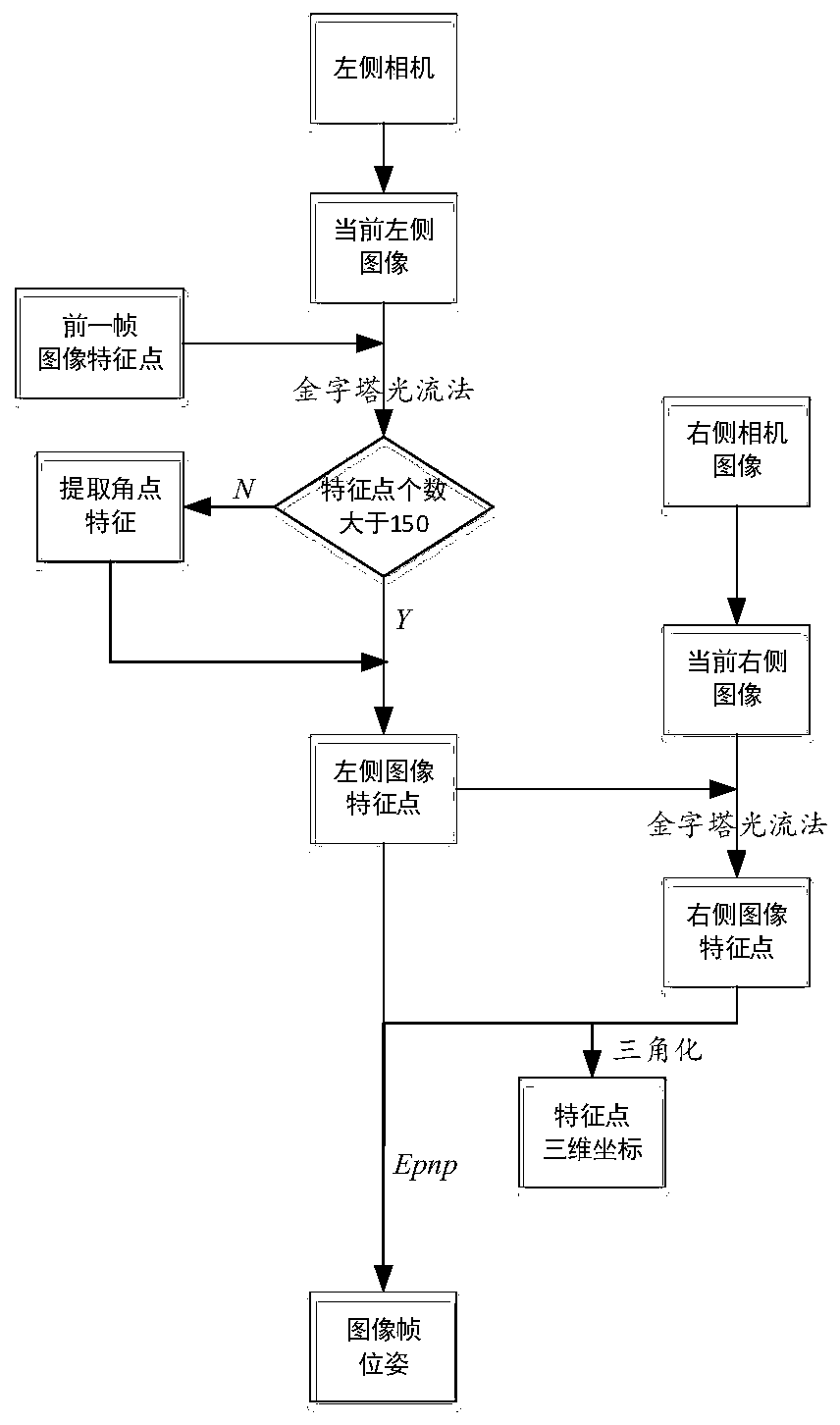

Vehicle mileage calculation method based on double-eye vision

Active | CN105300403A | High precision; Guaranteed computing speed; Distance measurement; Reprojection error; Visual perception

The invention belongs to the technical field of autonomous navigation for intelligent traffic vehicles, and particularly relates to a vehicle mileage calculation method based on double-eye (binocular) vision. The method comprises the following steps: obtaining the video stream of a double-eye camera fixed on top of the vehicle and transmitting it to the onboard processor; extracting features from the left and right images of each frame of the video stream and, combining them with the features of the left and right images of the previous frame, finding a set of matched feature points by feature matching; calculating, by stereo vision, the spatial coordinates of the three-dimensional points corresponding to the matched feature points of the previous frame; re-projecting these three-dimensional points onto the two-dimensional image coordinates of the current frame and using a GN iterative algorithm to minimize the re-projection error, thereby solving the vehicle's motion transformation between adjacent frames; and accumulating the motion transformations to update the vehicle's mileage information. By combining fast features with high-precision features in matching, the method improves precision while guaranteeing calculation speed.

Owner:TSINGHUA UNIV
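
The frame-to-frame motion step, minimizing the reprojection error of previously triangulated points in the current image with Gauss-Newton iterations, might look like the sketch below. It assumes a single pinhole camera with focal length f and principal point c, a rotation-vector-plus-translation parameterization, and a forward-difference Jacobian; all function names are hypothetical and this is not the patent's implementation.

```python
import numpy as np

def rodrigues(w):
    """Rotation matrix for a rotation vector w (axis times angle)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def project(params, points, f, c):
    """Project previous-frame 3D points into the current image.

    params = [rx, ry, rz, tx, ty, tz]: rotation vector and translation of the
    inter-frame motion; f is the focal length in pixels, c the principal point.
    """
    R = rodrigues(params[:3])
    P = points @ R.T + params[3:]
    return f * P[:, :2] / P[:, 2:3] + c

def residuals(params, points, observed, f, c):
    """Stacked reprojection errors (projected minus observed pixels)."""
    return (project(params, points, f, c) - observed).ravel()

def gauss_newton(points, observed, f, c, iters=20, eps=1e-6):
    """Estimate inter-frame motion by Gauss-Newton on the reprojection error."""
    x = np.zeros(6)  # start from "no motion"
    for _ in range(iters):
        r = residuals(x, points, observed, f, c)
        # Forward-difference Jacobian of the residuals w.r.t. the 6 motion parameters.
        J = np.empty((r.size, 6))
        for j in range(6):
            dx = np.zeros(6)
            dx[j] = eps
            J[:, j] = (residuals(x + dx, points, observed, f, c) - r) / eps
        # Gauss-Newton step from the normal equations (J^T J) delta = -J^T r.
        delta = np.linalg.solve(J.T @ J, -J.T @ r)
        x = x + delta
        if np.linalg.norm(delta) < 1e-12:
            break
    return x
```

In the patent's pipeline the 3D points would come from stereo triangulation of the previous frame, and chaining the recovered per-frame motions gives the accumulated mileage.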

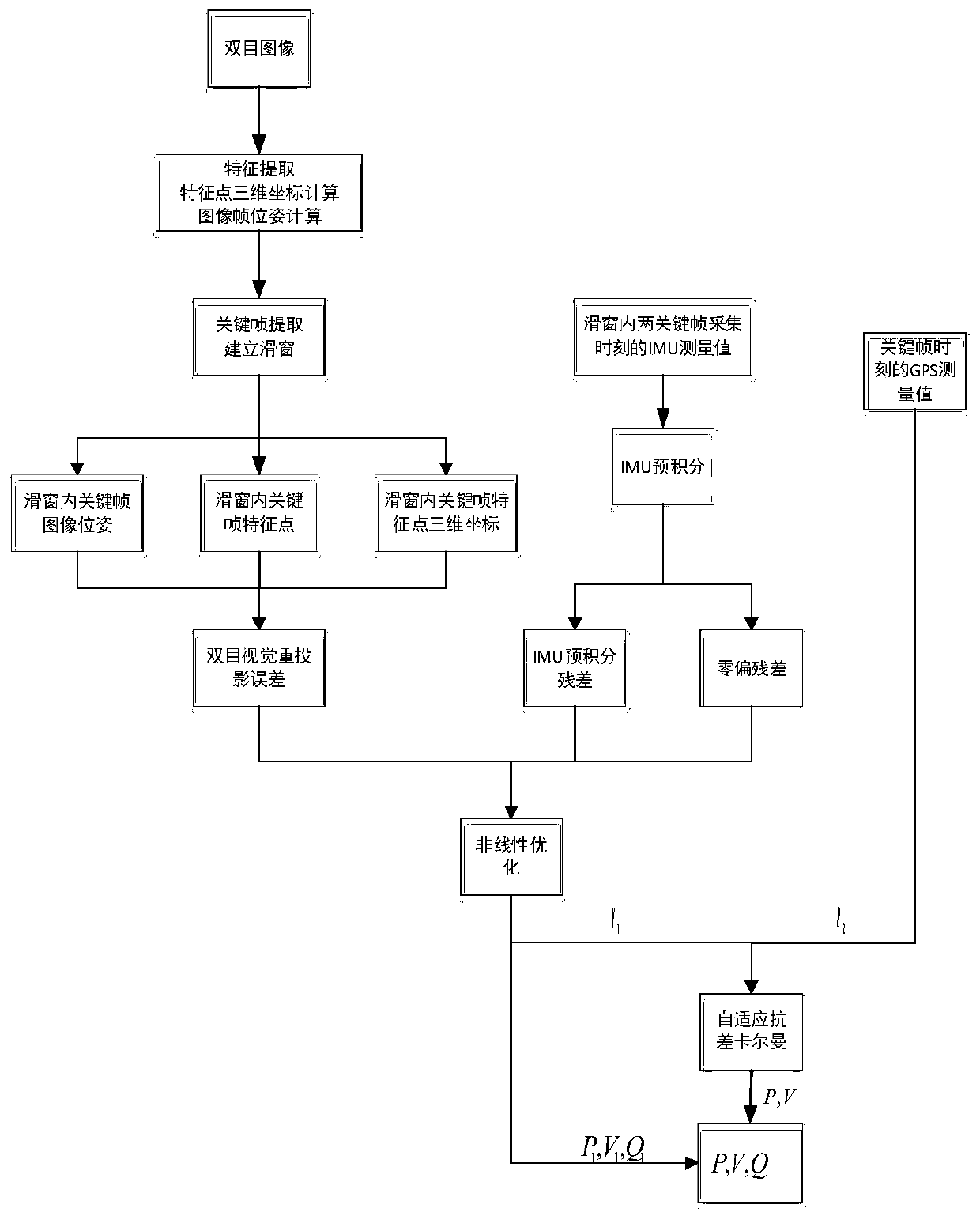

GPS-fused robot vision inertial navigation integrated positioning method

Active | CN111121767A | Local Relative Pose Accuracy; Reduce estimation error; Image enhancement; Image analysis; Pattern recognition; Engineering

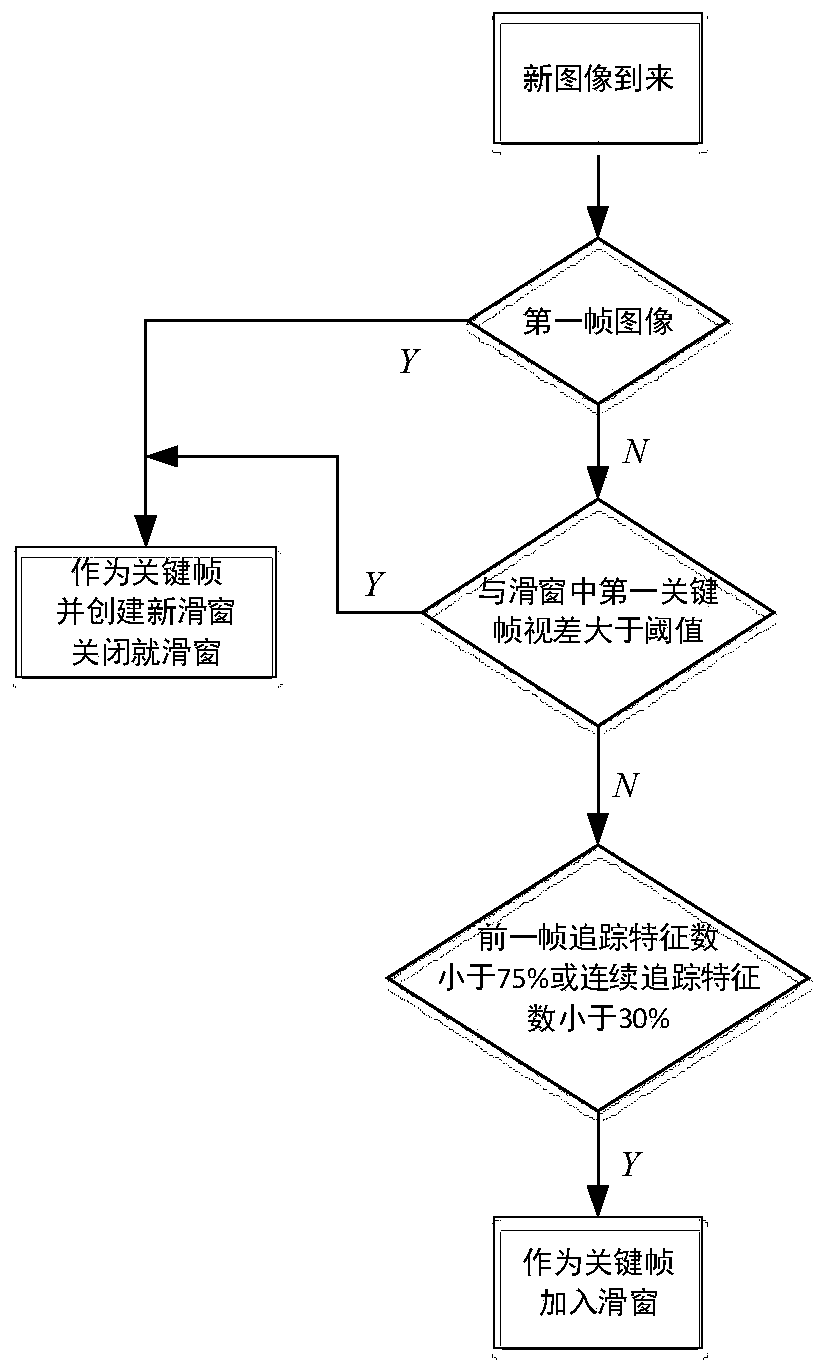

The invention discloses a GPS-fused robot visual-inertial integrated positioning method. The method comprises the following steps: extracting and matching feature points between the left and right images, and between consecutive images, of a binocular camera, and calculating the three-dimensional coordinates of the feature points and the relative poses of the image frames; selecting key frames from the image stream, creating a sliding window, and adding the key frames to it; calculating the visual reprojection error, the IMU pre-integration residual and the bias (zero-offset) residual, and combining them into a joint pose-estimation residual; performing nonlinear optimization of the joint residual with the Levenberg-Marquardt (L-M) method to obtain an optimized visual-inertial odometry (VIO) robot pose; if GPS data are available at the current moment, applying adaptive robust Kalman filtering to the GPS position data and the VIO pose estimate to obtain the final robot pose; and if no GPS data are available, using the VIO pose as the final pose. The method improves the robot's positioning precision, reduces computational cost, and meets the demands of large-area, long-duration inspection.

Owner:NANJING UNIV OF SCI & TECH
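
The final GPS/VIO fusion step, including the fallback to the VIO pose when no GPS fix exists, is sketched below as a single standard Kalman measurement update on position. This deliberately swaps in a plain linear Kalman filter for the patent's adaptive robust variant, assumes the GPS fix and the VIO position are expressed in the same frame, and uses hypothetical names.

```python
import numpy as np

def fuse_gps(vio_pos, vio_cov, gps_pos=None, gps_cov=None):
    """Fuse a VIO position estimate with an optional GPS fix (one Kalman update).

    Returns the VIO estimate unchanged when no GPS measurement is available.
    """
    if gps_pos is None:
        return vio_pos, vio_cov
    # Measurement model z = x + noise, i.e. observation matrix H = I.
    S = vio_cov + gps_cov                  # innovation covariance
    K = vio_cov @ np.linalg.inv(S)         # Kalman gain
    fused_pos = vio_pos + K @ (gps_pos - vio_pos)
    fused_cov = (np.eye(len(vio_pos)) - K) @ vio_cov
    return fused_pos, fused_cov
```

An adaptive robust filter would additionally inflate gps_cov when the innovation is implausibly large; that re-weighting is left out here.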

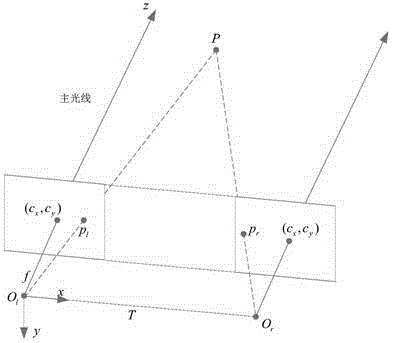

A method for solving the 3D coordinates of spatial points based on a mathematical model of stereo vision

Active | CN109272570A | Realization of high precision solution; Solution implementation; Image analysis; 3D modelling; Mathematical model; Optical axis

The invention provides a high-precision method for solving the three-dimensional coordinates of a space point in the world coordinate system, based on a stereo vision mathematical model that accounts for multiple factors in the internal parameters. The method uses a binocular stereo vision system: after the initial parameters of the left and right cameras are calibrated, the camera parameters are optimized iteratively by minimizing the image reprojection error. The internal parameters obtained for the left and right cameras include unequal focal lengths and the offset of each camera's optical axis in its camera coordinate system. When the same target point is photographed, optimizing the parameters of the left and right cameras improves the precision with which the target point's 3D information is recovered. The method establishes the relationship between the left image coordinate system and the world coordinate system, between the right camera coordinate system and the world coordinate system, and between the right image coordinate system and the right camera coordinate system; through these relationships among the left image, right image, world and right camera coordinate systems, the three-dimensional coordinates of the space point are solved with high precision.

Owner:HEFEI UNIV OF TECH
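
Recovering a space point from two calibrated cameras is commonly initialized by linear (DLT) triangulation, sketched below with NumPy. This is a generic technique shown under the assumption that both 3x4 projection matrices are known; it is not the patent's own solution, which further refines the camera parameters by minimizing reprojection error.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen at pixel x1 in camera P1 and x2 in P2."""
    # Each image observation contributes two linear equations in the homogeneous point X.
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The least-squares solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]
```

The intrinsic matrices in P1 and P2 may have unequal focal lengths fx and fy, matching the parameterization the abstract describes.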

Monocular vision odometer pose processing method based on IMU assistance

Active | CN110009681A | Improve robustness; Adaptable; Image enhancement; Image analysis; Feature extraction; Odometer

The invention discloses a monocular visual odometry pose processing method based on IMU assistance. The method comprises: performing ORB feature extraction on consecutive images collected by a monocular camera; pre-integrating the IMU data between the timestamps of each pair of adjacent image frames; and outputting the camera pose at each moment according to the relation between the number of extracted feature points and a set threshold. If the number of feature points is greater than the threshold, the image is judged to belong to a normal image sequence, the camera motion pose is solved with a purely visual model, and the pose is then optimized using the image reprojection error; if the number of feature points is smaller than the threshold, the image is judged to be a feature-deficient image, and the camera pose between feature-deficient images is obtained with the inertial measurement unit (IMU) pre-integration model. The method computes quickly, is robust to motion, and improves mapping accuracy.

Owner:CHINA JILIANG UNIV
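
The IMU branch of the method relies on pre-integrating gyroscope and accelerometer samples between image timestamps. A minimal bias-free sketch of that pre-integration, plus the feature-count switch, follows; it assumes a fixed sample interval dt, ignores bias and noise propagation, and sends the equal-to-threshold case (unspecified in the abstract) to the IMU branch. All names are hypothetical.

```python
import numpy as np

def exp_so3(w):
    """Rotation matrix for rotation vector w (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def preintegrate(gyro, accel, dt):
    """Bias-free IMU pre-integration between two image timestamps.

    gyro, accel: (N, 3) body-frame samples taken every dt seconds.
    Returns (dR, dv, dp) expressed in the body frame of the first sample.
    Gravity is not removed here; it enters when the deltas update the state.
    """
    dR, dv, dp = np.eye(3), np.zeros(3), np.zeros(3)
    for w, a in zip(gyro, accel):
        # Position and velocity use the rotation accumulated so far.
        dp = dp + dv * dt + 0.5 * (dR @ a) * dt**2
        dv = dv + (dR @ a) * dt
        dR = dR @ exp_so3(w * dt)
    return dR, dv, dp

def choose_pose_source(num_features, threshold):
    """Visual model when there are enough ORB features, IMU pre-integration otherwise."""
    return "visual" if num_features > threshold else "imu"
```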

Binocular calibration method based on chaotic particle swarm optimization algorithm

Active | CN105654476A | Avoids being trapped in local extrema; Guaranteed accuracy; Image enhancement; Image analysis; Chaotic particle swarm optimization; Image pair

The invention provides a binocular calibration method based on a chaotic particle swarm optimization algorithm. Two cameras simultaneously photograph multiple image pairs of a planar dot-array calibration board in different poses. Ignoring distortion, initial values of the intrinsic and extrinsic parameters of the left and right cameras are obtained with Zhang's linear planar-template calibration method. Then, taking second-order radial distortion and second-order tangential distortion into account, the three-dimensional reprojection error is minimized with the chaotic particle swarm optimization algorithm to obtain the final intrinsic and extrinsic parameters of both cameras. During the iterative optimization, a global adaptive inertia weight (GAIW) is introduced; a local neighborhood is constructed for each particle using a dynamic ring topology; each particle's velocity and current position are updated according to the best fitness value in its local neighborhood; and chaotic optimization is further applied to the best position corresponding to that best fitness value. The method overcomes the low calibration precision caused by earlier particle swarm optimization algorithms becoming trapped in local extrema, improving binocular calibration precision and ensuring high precision in subsequent binocular three-dimensional reconstruction.

Owner:湖州菱创科技有限公司 (Huzhou Lingchuang Technology Co., Ltd.)
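
The objective minimized by the particle swarm search needs a distortion model for the second-order radial and tangential terms. A sketch using the common Brown-Conrady form (the coefficient convention k1, k2, p1, p2 that OpenCV also uses) is below; whether the patent uses exactly this form is an assumption, and the chaotic PSO itself is not shown.

```python
def distort(xn, yn, k1, k2, p1, p2):
    """Apply radial (k1, k2) and tangential (p1, p2) distortion to
    normalized image coordinates (x/z, y/z)."""
    r2 = xn**2 + yn**2
    radial = 1.0 + k1 * r2 + k2 * r2**2
    xd = xn * radial + 2.0 * p1 * xn * yn + p2 * (r2 + 2.0 * xn**2)
    yd = yn * radial + p1 * (r2 + 2.0 * yn**2) + 2.0 * p2 * xn * yn
    return xd, yd

def to_pixels(xd, yd, fx, fy, cx, cy):
    """Map distorted normalized coordinates to pixels with the intrinsic parameters."""
    return fx * xd + cx, fy * yd + cy
```

A reprojection-error fitness for the swarm would project each board point with candidate parameters through distort and to_pixels and sum the squared pixel differences to the detected dots.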

Large visual field camera calibration method based on four sets of collinear constraint calibration rulers

Active | CN105139411A | Achieve high-precision calibration; Improve defects; Image analysis; Visual field loss; Cross-ratio

The invention discloses a large-field-of-view camera calibration method based on four sets of collinear-constraint calibration rulers, belonging to the field of optical measurement. The method comprises the steps of: arranging four sets of collinear-constraint calibration rulers in a large optical measurement field; solving the distortion parameters using the projective invariance of the cross ratio together with straight-line constraints; linearly solving the initial values of the calibration parameters from the calibration control points in space; and finally, combining the distortion coefficients with the initial calibration values, performing a global optimization with the Levenberg-Marquardt (L-M) method to minimize the reprojection error, which yields a precise calibration of the large-field-of-view camera. By flexibly arranging calibration control points in the large measurement space and combining the four sets of collinear-constraint rulers, the method globally optimizes the calibration results, achieves high-precision calibration of large-field-of-view cameras, and has broad application prospects.

Owner:DALIAN UNIV OF TECH
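
The distortion-solving step relies on the cross ratio of four collinear points being preserved by perspective projection. The sketch below, in plain Python with hypothetical function names, computes the cross ratio of four ruler marks and checks it against a 1D projective map; the ordering convention (A,B;C,D) used here is one common choice and is an assumption.

```python
def cross_ratio(a, b, c, d):
    """Cross ratio (A,B;C,D) of four collinear points given by 1D positions along the line."""
    return ((c - a) * (d - b)) / ((c - b) * (d - a))

def projective_1d(x, h):
    """1D projective map x -> (h00*x + h01) / (h10*x + h11), a model of imaging a line."""
    return (h[0][0] * x + h[0][1]) / (h[1][0] * x + h[1][1])
```

Since the ruler marks have known spacing, their cross ratio is known in advance; any deviation of the cross ratio measured in the image from that value is attributable to lens distortion, which is what makes it usable as a constraint.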

Measurement method of visual odometer, and visual odometer

The invention provides a measurement method for a visual odometer, and the visual odometer itself. The measurement method comprises the following steps: acquiring an image sequence of a photographed object; measuring the angular velocity and acceleration of the carrier; selecting a plurality of key frames from the image sequence using the ORB algorithm; using a nonlinear numerical optimization method to jointly optimize the reprojection error among the key frames and the motion-model error of the carrier, computing the carrier pose that minimizes the sum of the reprojection error and the motion-model error, and performing real-time three-dimensional reconstruction. By optimizing the system's motion states with nonlinear numerical optimization, the method and visual odometer facilitate accurate estimation of the carrier's own pose and allow the three-dimensional map of the scene to be reconstructed in real time.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
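
The joint cost described above, reprojection error plus carrier motion-model error, can be sketched as a single scalar function to hand to a nonlinear optimizer. This assumes a pinhole camera with intrinsic matrix K, a world-to-camera pose (R, t), and a simple constant-acceleration, translation-only motion model with gravity-compensated acceleration in the world frame; the patent does not specify its motion model, and all names are hypothetical.

```python
import numpy as np

def reprojection_residuals(R, t, points, observed, K):
    """Pixel residuals (projected minus observed) of world points under pose (R, t)."""
    P = points @ R.T + t          # world -> camera coordinates
    uvw = P @ K.T                 # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3] - observed

def motion_model_residual(p, p_prev, v_prev, a_meas, dt):
    """Position residual against a constant-acceleration prediction from IMU data."""
    p_pred = p_prev + v_prev * dt + 0.5 * a_meas * dt**2
    return p - p_pred

def total_cost(R, t, points, observed, K, p_prev, v_prev, a_meas, dt, w_motion=1.0):
    """Sum of squared reprojection errors plus weighted squared motion-model error."""
    e_rep = reprojection_residuals(R, t, points, observed, K)
    p = -R.T @ t                  # camera centre in world coordinates
    e_mot = motion_model_residual(p, p_prev, v_prev, a_meas, dt)
    return float(np.sum(e_rep**2) + w_motion * np.sum(e_mot**2))
```

The weight w_motion trades off trust in the image measurements against trust in the inertial prediction; how the patent balances the two terms is not stated.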

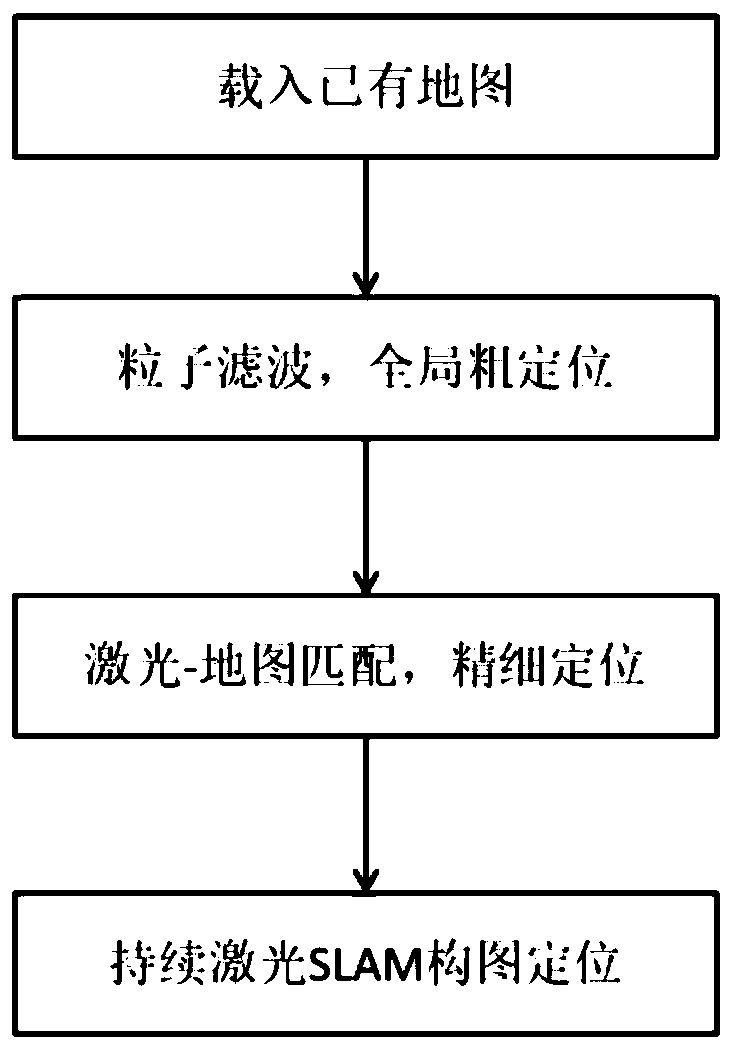

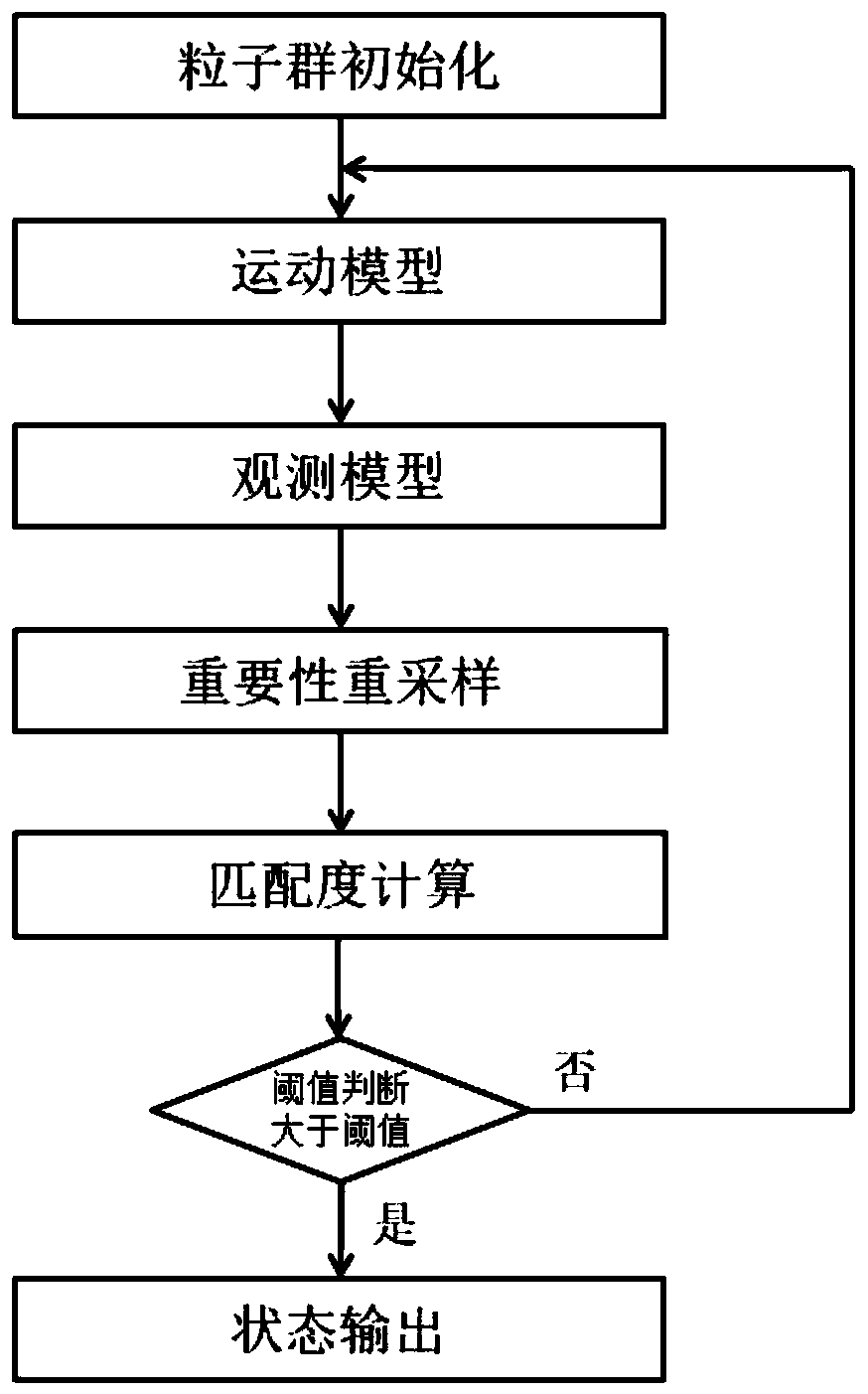

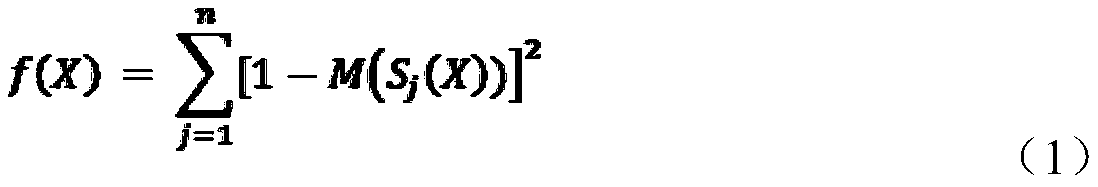

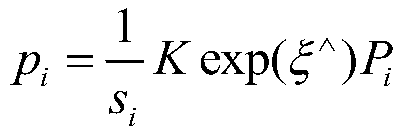

Known occupancy grid map-based continuous laser SLAM composition positioning method

Active | CN110531766A | Increase the speed of positioning; Improve positioning efficiency; Artificial life; Geographical information databases; Laser scanning; Reprojection error

The invention relates to a continuous laser SLAM mapping and positioning method based on a known occupancy grid map. The method comprises the steps of: loading a pre-built occupancy grid map file; outputting the mobile robot's state vector in a global coarse-positioning mode using a particle filter; matching the laser scan against the map, calculating the minimum reprojection error of the laser scanning points, and solving for the mobile robot's state vector to achieve fine positioning; and initializing the occupancy grid map from the known map and performing continuous laser SLAM mapping and positioning with the state vector from the fine positioning result as the SLAM initial state. The method can load a pre-built map file and update or extend the environment without reconstructing the map from scratch; it also requires no additional artificial landmarks in the environment, since positioning relies on natural contour features.

Owner:SEIZET TECH SHEN ZHEN CO LTD
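
The fine-positioning step scores a candidate robot pose by how well the transformed laser points land on occupied cells of the known map. A brute-force sketch of that error, assuming the map is given as an array of occupied cell centres in metres and the pose as (x, y, theta), is shown below; it is not the patent's implementation, and the names are hypothetical.

```python
import numpy as np

def transform_scan(scan_xy, pose):
    """Map 2D laser points from the robot frame into the map frame; pose = (x, y, theta)."""
    x, y, th = pose
    c, s = np.cos(th), np.sin(th)
    R = np.array([[c, -s], [s, c]])
    return scan_xy @ R.T + np.array([x, y])

def scan_to_map_error(scan_xy, pose, occupied_xy):
    """Mean squared distance from each transformed scan point to its nearest occupied cell."""
    pts = transform_scan(scan_xy, pose)
    # Brute-force nearest neighbour; a real system would use a distance map or k-d tree.
    d2 = np.sum((pts[:, None, :] - occupied_xy[None, :, :]) ** 2, axis=2)
    return float(np.mean(np.min(d2, axis=1)))
```

Starting from the particle filter's coarse pose, a local optimizer would then adjust (x, y, theta) to minimize this error.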

Light field camera calibration method based on multi-center projection model

The invention provides a light field camera calibration method based on a multi-center projection model. The method comprises the steps of: photographing a calibration plate in different poses by moving either the calibration plate or the light field camera; obtaining the light field data and determining the corner points on the calibration plate and the matched set of corner-point rays; constructing a linear constraint between the rays in the light field coordinate system of the camera and the three-dimensional space points, and computing the light field camera's internal parameters and the external parameters for each pose by linear initialization; and establishing a cost function based on the reprojection error and iterating to obtain the optimal internal parameters, external parameters and radial distortion parameters of the light field camera. Because a light field camera essentially records rays in space, and the rays are parameterized with two parallel planes and absolute coordinates, the method avoids inaccurate three-dimensional point reconstruction and calibrates the internal parameters of the light field camera accurately and robustly.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
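
The linear constraint between a light-field ray and a 3D point can be written out for the two-parallel-plane parameterization mentioned above. The sketch assumes planes at z = 0 and z = d in a common coordinate frame and uses hypothetical names; it shows why the constraint is linear in the point coordinates, not the patent's full multi-center projection model.

```python
import numpy as np

def ray_point_residual(ray, X, d=1.0):
    """Residual of the point-on-ray constraint for a two-plane-parameterized ray.

    ray = (u, v, s, t): the ray crosses plane z=0 at (u, v) and plane z=d at (s, t).
    X = (X, Y, Z): candidate 3D point. Both components are linear in X, Y, Z,
    which is what permits a linear initialization.
    """
    u, v, s, t = ray
    Xw, Yw, Zw = X
    return np.array([
        d * (Xw - u) - Zw * (s - u),
        d * (Yw - v) - Zw * (t - v),
    ])

def point_on_ray(ray, Z, d=1.0):
    """Point on the ray at depth Z (helper for the example)."""
    u, v, s, t = ray
    return np.array([u + (s - u) * Z / d, v + (t - v) * Z / d, Z])
```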

Pose estimation method for depth camera

Active | CN110503688A | Improve the success rate of convergence; Improve robustness; Image enhancement; Image analysis; Pattern recognition; Color image

The invention relates to the technical field of fixed-point tracking, and discloses a pose estimation method for a depth camera. The method comprises the steps of: mounting the depth camera on a moving mechanism for photographing, obtaining a depth image and an RGB color image, and extracting ORB feature-point pairs from every two adjacent images; calculating an estimate ξ_P of the camera pose change from N ORB feature-point pairs with missing depth information, and an estimate ξ_Q from M ORB feature-point pairs with complete depth information, so as to obtain a total estimate ξ_0; constructing a minimum-reprojection-error model that fuses the error terms of the pairs with missing depth and the pairs with complete depth, and deriving the corresponding Jacobian matrix J; and, from the total estimate ξ_0, the minimum-reprojection-error function and the Jacobian matrix J, calculating an optimized total estimate ξ_k with a nonlinear optimization method, thereby estimating the camera pose change between two adjacent frames during shooting.

Owner:SHANGHAI UNIV OF ENG SCI
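
The Jacobian J of the reprojection error with respect to the pose change is the piece the nonlinear optimizer needs. The sketch below computes it for a single pinhole projection of a camera-frame point, assuming a left-multiplied perturbation ordered translation first, rotation second; for an error defined as observed minus projected, the Jacobian is the negative of this result. The fusion of depth-missing and depth-complete pairs is not shown, and the names are hypothetical.

```python
import numpy as np

def skew(p):
    """Cross-product matrix: skew(p) @ q == np.cross(p, q)."""
    return np.array([[0.0, -p[2], p[1]], [p[2], 0.0, -p[0]], [-p[1], p[0], 0.0]])

def project(P, fx, fy, cx, cy):
    """Pinhole projection of camera-frame point P to pixel coordinates."""
    X, Y, Z = P
    return np.array([fx * X / Z + cx, fy * Y / Z + cy])

def projection_jacobian(P, fx, fy):
    """2x6 Jacobian of project(P) w.r.t. a pose perturbation [d_rho, d_phi]."""
    X, Y, Z = P
    # Derivative of the pixel coordinates w.r.t. the 3D point.
    J_proj = np.array([
        [fx / Z, 0.0, -fx * X / Z**2],
        [0.0, fy / Z, -fy * Y / Z**2],
    ])
    # To first order, exp(delta) P = P + d_rho + d_phi x P = P + d_rho - skew(P) d_phi.
    J_point = np.hstack([np.eye(3), -skew(P)])
    return J_proj @ J_point
```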

© 2025 PatSnap. All rights reserved.