GPS-fused visual-inertial integrated positioning method for robots

A visual-inertial integrated positioning technology for robots, applied in the field of GPS-fused visual-inertial positioning. It addresses problems such as the inability to achieve high-precision mapping and positioning, and has the effect of saving computing power and reducing estimation errors.

Detailed Description of the Embodiments

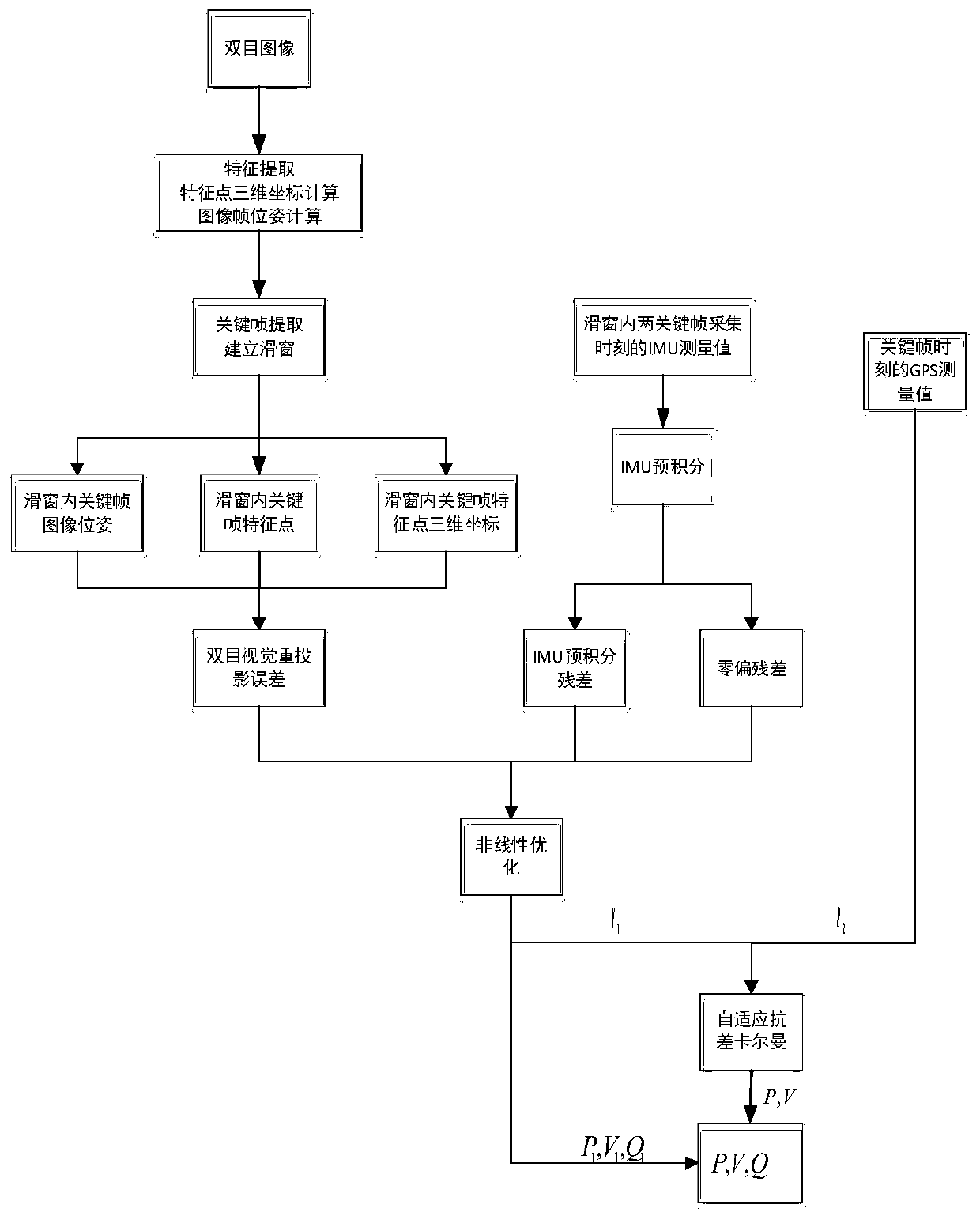

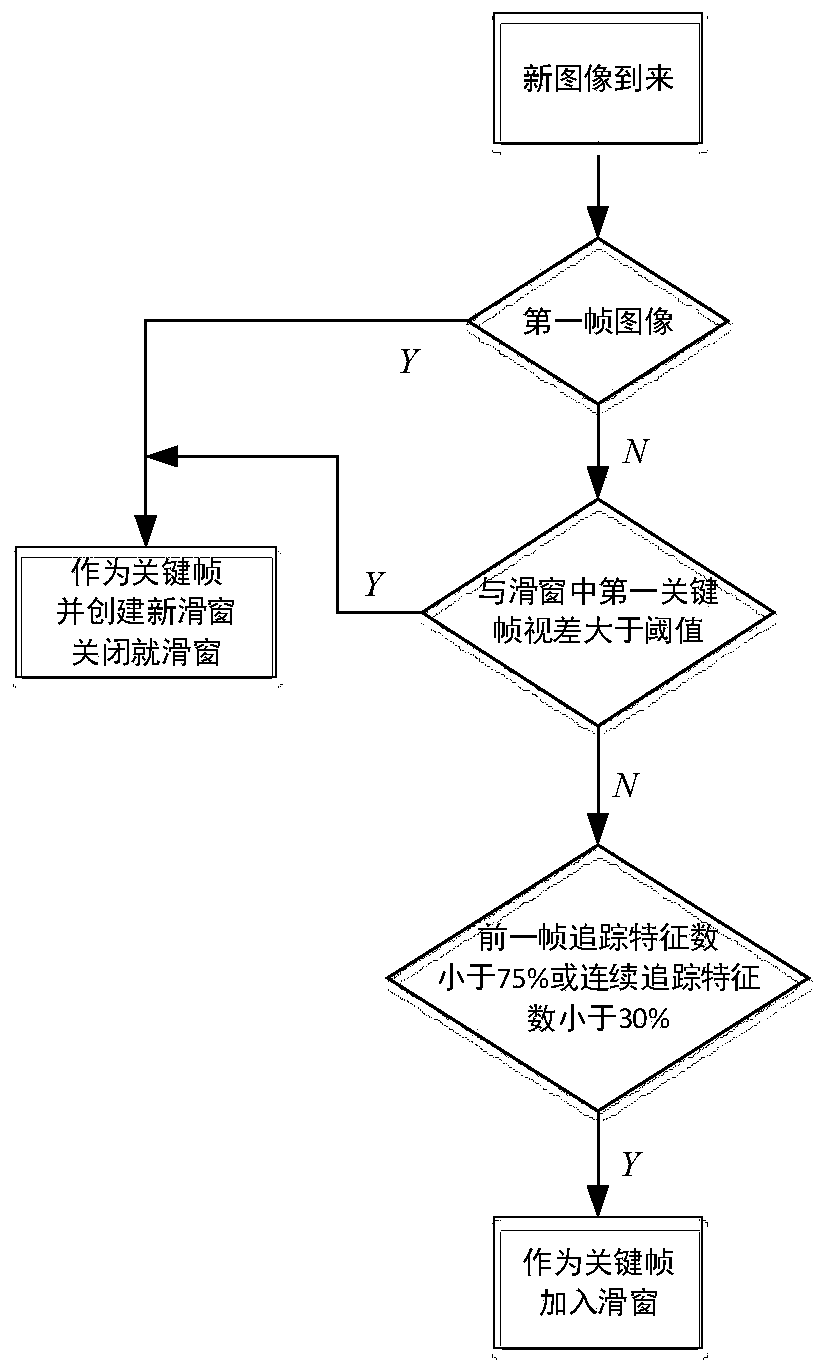

[0017] As shown in Figure 1, a GPS-fused visual-inertial integrated positioning method for a robot includes the following steps:

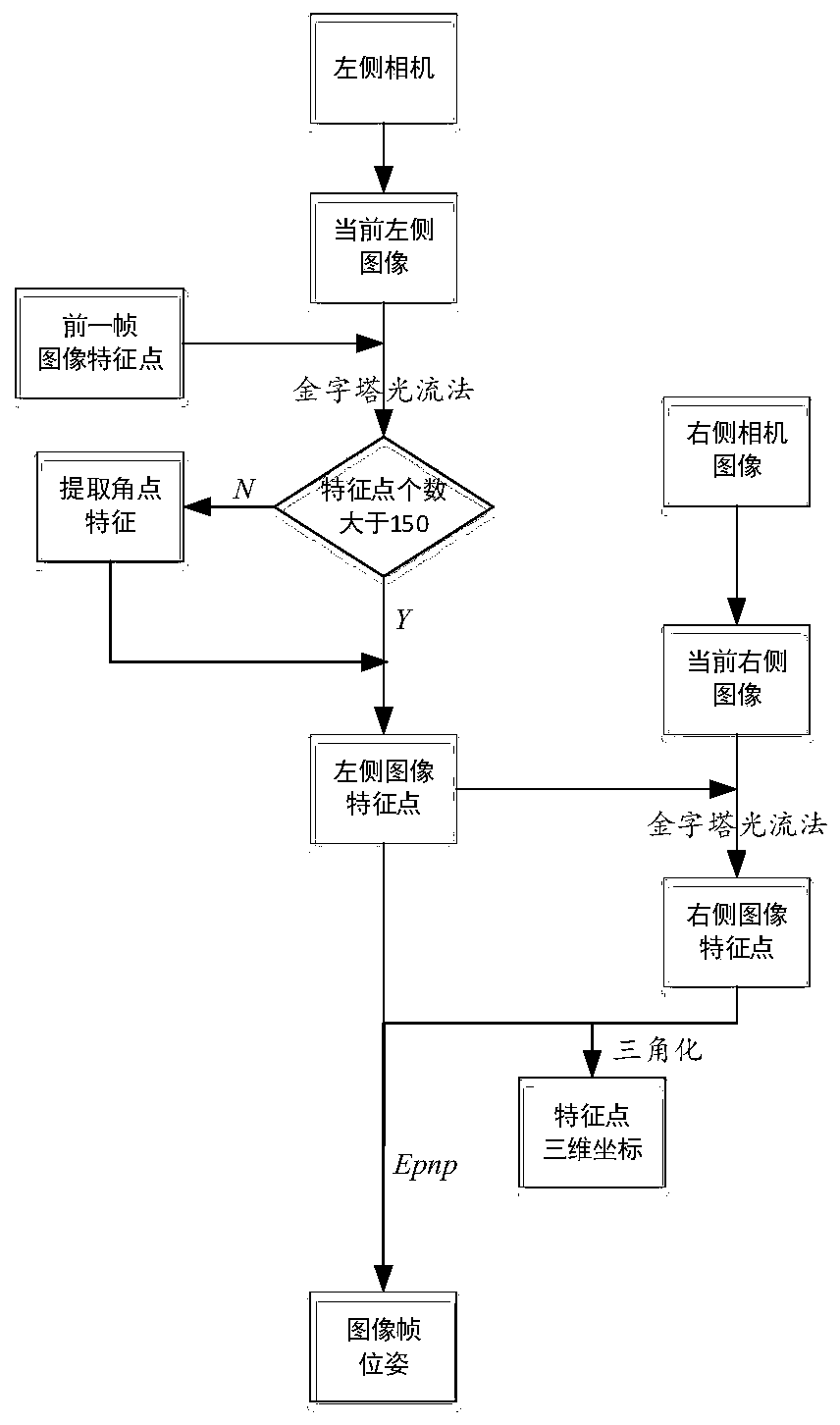

[0018] Step 1. Extract the feature points of the left camera image at the current moment based on the feature points of the left camera image at the previous moment, and match them. Then extract the feature points of the right camera image at the current moment based on the feature points of the left camera image at the current moment, and match them. Use the matched feature points to calculate the three-dimensional coordinates of the feature points and the relative pose of the image frame. The specific steps are shown in Figure 2 and are as follows.
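The "three-dimensional coordinates of the feature points" part of Step 1 can be illustrated with a minimal sketch. It assumes a rectified stereo pair with a pinhole model, which the text above does not state; the function name `triangulate_rectified` and the parameters `fx`, `fy`, `cx`, `cy`, and `baseline` are hypothetical names chosen for this illustration, not taken from the patent.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def triangulate_rectified(
    left_pts: List[Point2D],
    right_pts: List[Point2D],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    baseline: float,
    min_disparity: float = 1e-6,
) -> List[Point3D]:
    """Back-project matched left/right pixels into the left camera frame.

    Assumption (not from the source): the pair is rectified, so a match
    differs only in its horizontal pixel coordinate.
    """
    points: List[Point3D] = []
    for (ul, vl), (ur, _vr) in zip(left_pts, right_pts):
        disparity = ul - ur
        if disparity <= min_disparity:
            continue  # point at infinity or a bad match: skip it
        z = fx * baseline / disparity  # depth from similar triangles
        x = (ul - cx) * z / fx         # lateral offset in the left camera frame
        y = (vl - cy) * z / fy         # vertical offset in the left camera frame
        points.append((x, y, z))
    return points
```

With a focal length of 500 pixels and a baseline of 0.1 m, a match with a disparity of 25 pixels lies at a depth of 500 × 0.1 / 25 = 2 m. Matches with zero or negative disparity are dropped rather than triangulated.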

[0019] Step 1-1. Use the goodFeaturesToTrack() function in OpenCV to extract the Shi-Tomasi corner points of the first frame image of the left camera, and use the LK (Lucas-Kanade) optical flow method to track the subsequent image feature points o...
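To make the Shi-Tomasi criterion in Step 1-1 concrete, the sketch below computes the corner score for a single pixel in plain Python: the smaller eigenvalue of the gradient structure tensor summed over a small window. It is a simplification for illustration only. It uses central differences rather than the Sobel derivatives OpenCV applies, and the function name `shi_tomasi_score` and parameter `half_win` are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def shi_tomasi_score(img: Image, row: int, col: int, half_win: int = 1) -> float:
    """Smaller eigenvalue of the gradient structure tensor around (row, col).

    The window must stay at least one pixel inside the image border so that
    the central differences are defined.
    """
    sxx = sxy = syy = 0.0
    for r in range(row - half_win, row + half_win + 1):
        for c in range(col - half_win, col + half_win + 1):
            ix = (img[r][c + 1] - img[r][c - 1]) / 2.0  # horizontal gradient
            iy = (img[r + 1][c] - img[r - 1][c]) / 2.0  # vertical gradient
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
    # Closed-form smaller eigenvalue of [[sxx, sxy], [sxy, syy]].
    half_trace = (sxx + syy) / 2.0
    root = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy * sxy)
    return half_trace - root


# Synthetic 12x12 image: a bright square occupying rows >= 4 and columns >= 4.
image = [[1.0 if r >= 4 and c >= 4 else 0.0 for c in range(12)] for r in range(12)]
corner = shi_tomasi_score(image, 4, 4)  # at the corner of the square
edge = shi_tomasi_score(image, 4, 8)    # on a straight edge
flat = shi_tomasi_score(image, 8, 8)    # inside the uniform region
```

The score is large only where the intensity changes in two directions at once, so the corner of the square scores well above the straight edge and the flat region, both of which score zero here. Points selected this way are the ones that optical flow tracking can follow reliably from frame to frame.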
