47 results about "Spinlock" patented technology

In software engineering, a spinlock is a lock which causes a thread trying to acquire it to simply wait in a loop ("spin") while repeatedly checking if the lock is available. Since the thread remains active but is not performing a useful task, the use of such a lock is a kind of busy waiting. Once acquired, spinlocks will usually be held until they are explicitly released, although in some implementations they may be automatically released if the thread being waited on (the one which holds the lock) blocks, or "goes to sleep".
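
The busy-wait loop described above can be sketched in C++ roughly as follows. This is a minimal illustration and not any of the patented designs listed on this page; `SpinLock` and `run_counter_demo` are hypothetical names, and the `yield()` call in the inner loop is an optional courtesy to the scheduler rather than part of the definition.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Minimal test-and-set spinlock (illustrative name).
class SpinLock {
public:
    void lock() {
        // exchange() returns the previous value: true means another thread holds the lock.
        while (locked_.exchange(true, std::memory_order_acquire)) {
            // Wait on a plain load so spinning threads do not keep writing the cache line.
            while (locked_.load(std::memory_order_relaxed)) {
                std::this_thread::yield();
            }
        }
    }

    // Single attempt; returns true only if the lock was free.
    bool try_lock() {
        return !locked_.exchange(true, std::memory_order_acquire);
    }

    // The lock is held until it is explicitly released here.
    void unlock() { locked_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> locked_{false};
};

// Hypothetical demo: several threads increment one counter under the lock.
long run_counter_demo(int threads, int iterations) {
    assert(threads > 0 && iterations >= 0);
    SpinLock lock;
    long counter = 0;
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&] {
            for (int i = 0; i < iterations; ++i) {
                lock.lock();
                ++counter;  // protected by the spinlock
                lock.unlock();
            }
        });
    }
    for (auto& w : workers) w.join();
    return counter;
}
```

Because every increment happens while the lock is held, the demo should return exactly `threads * iterations`.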

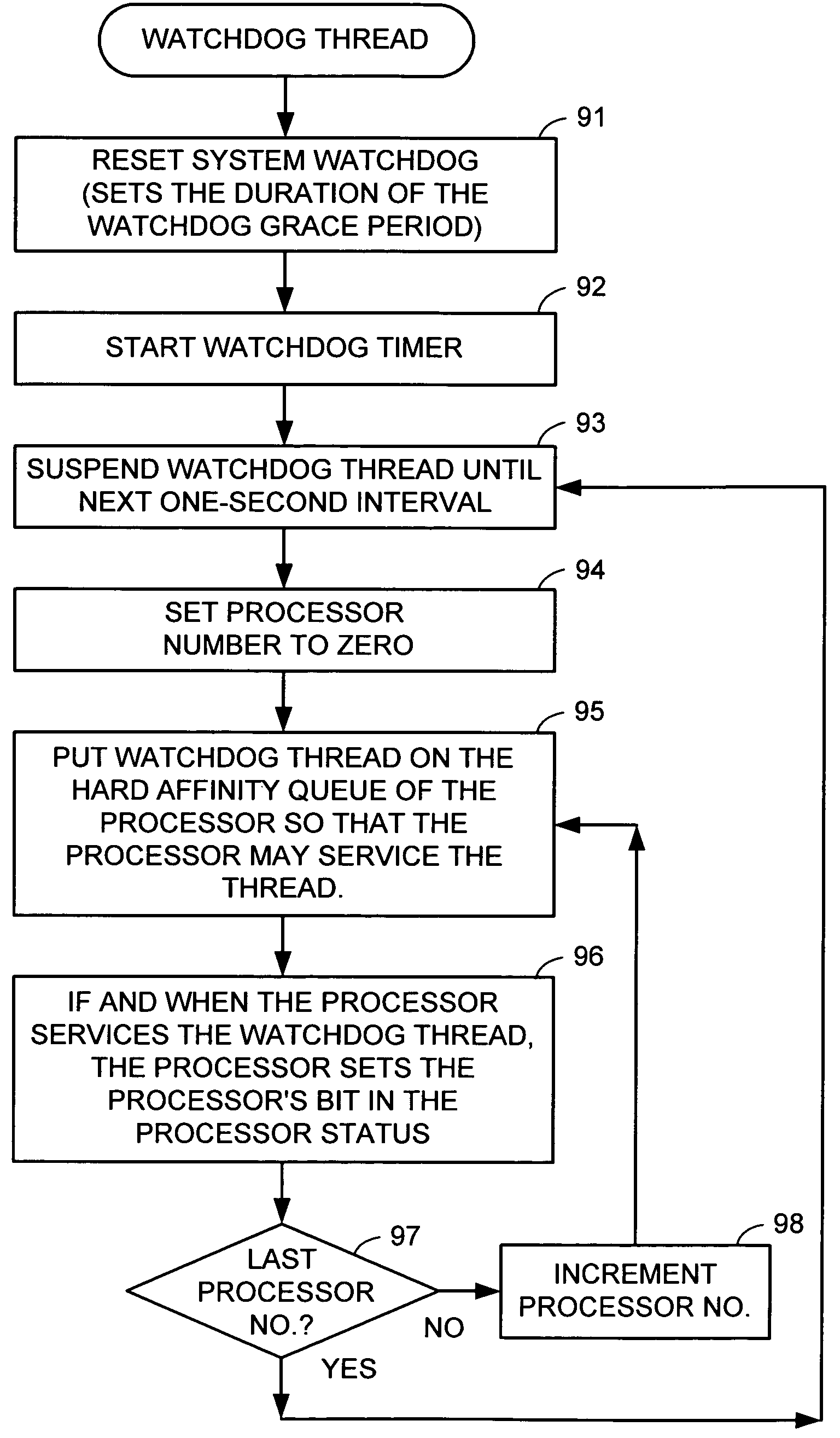

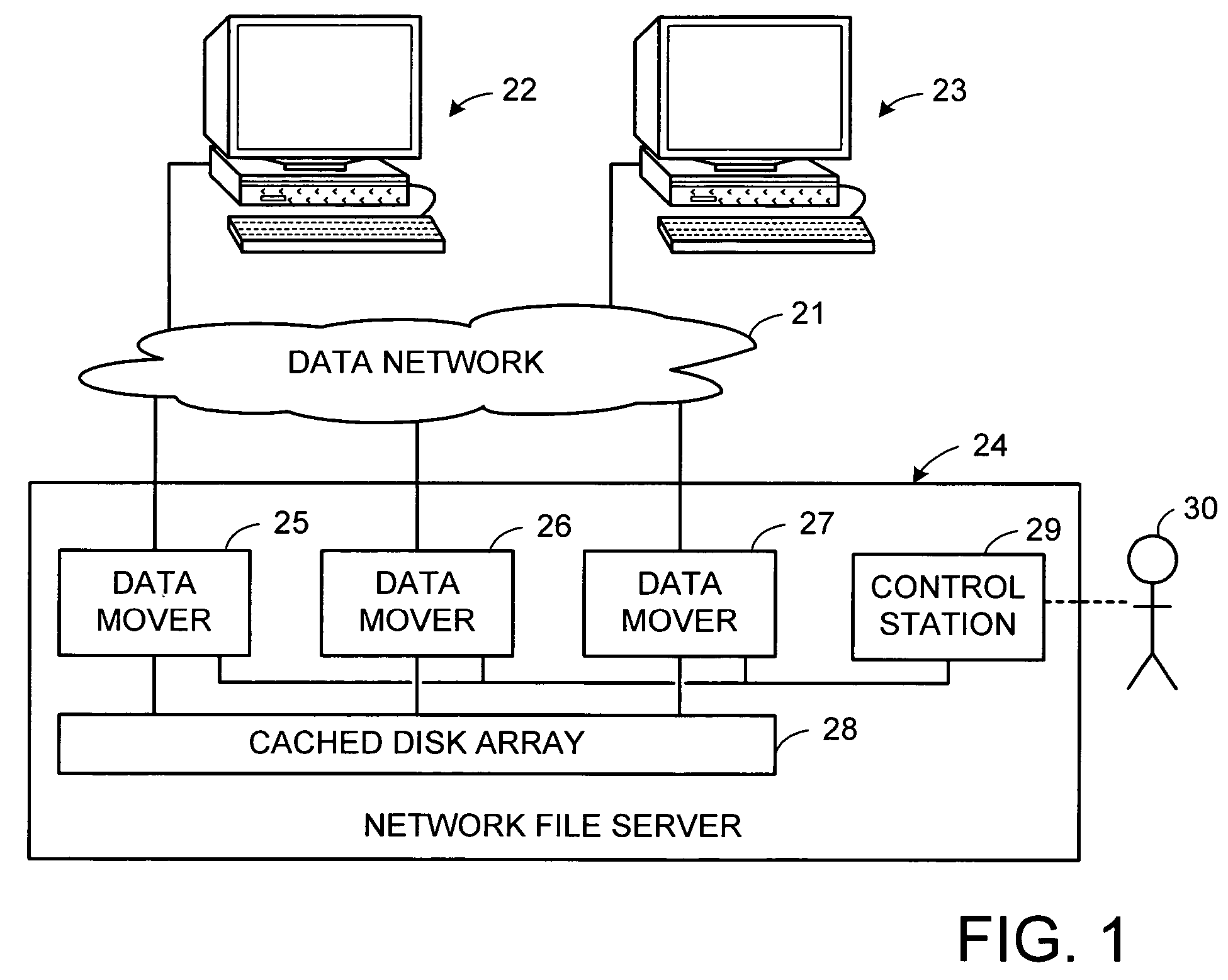

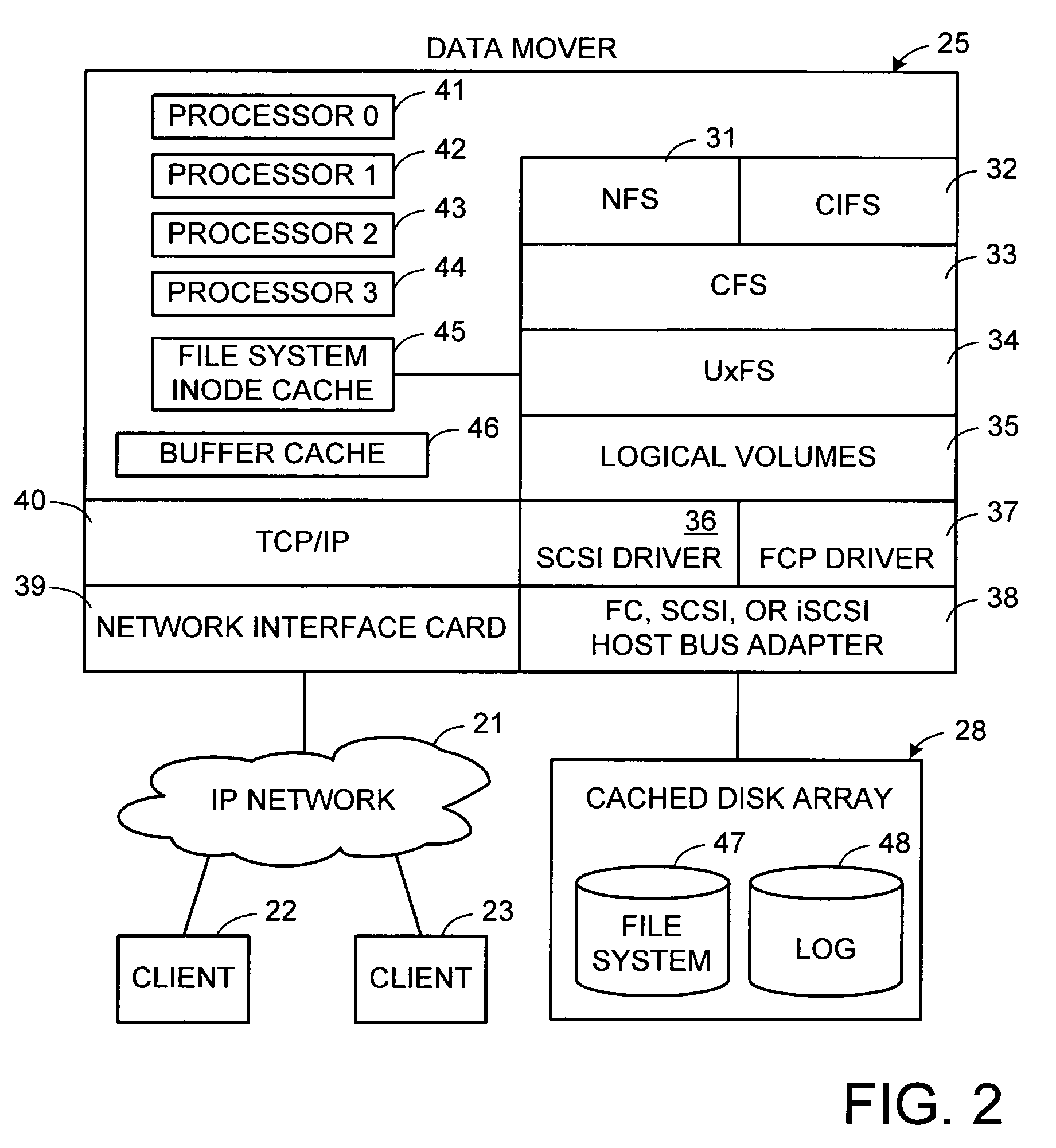

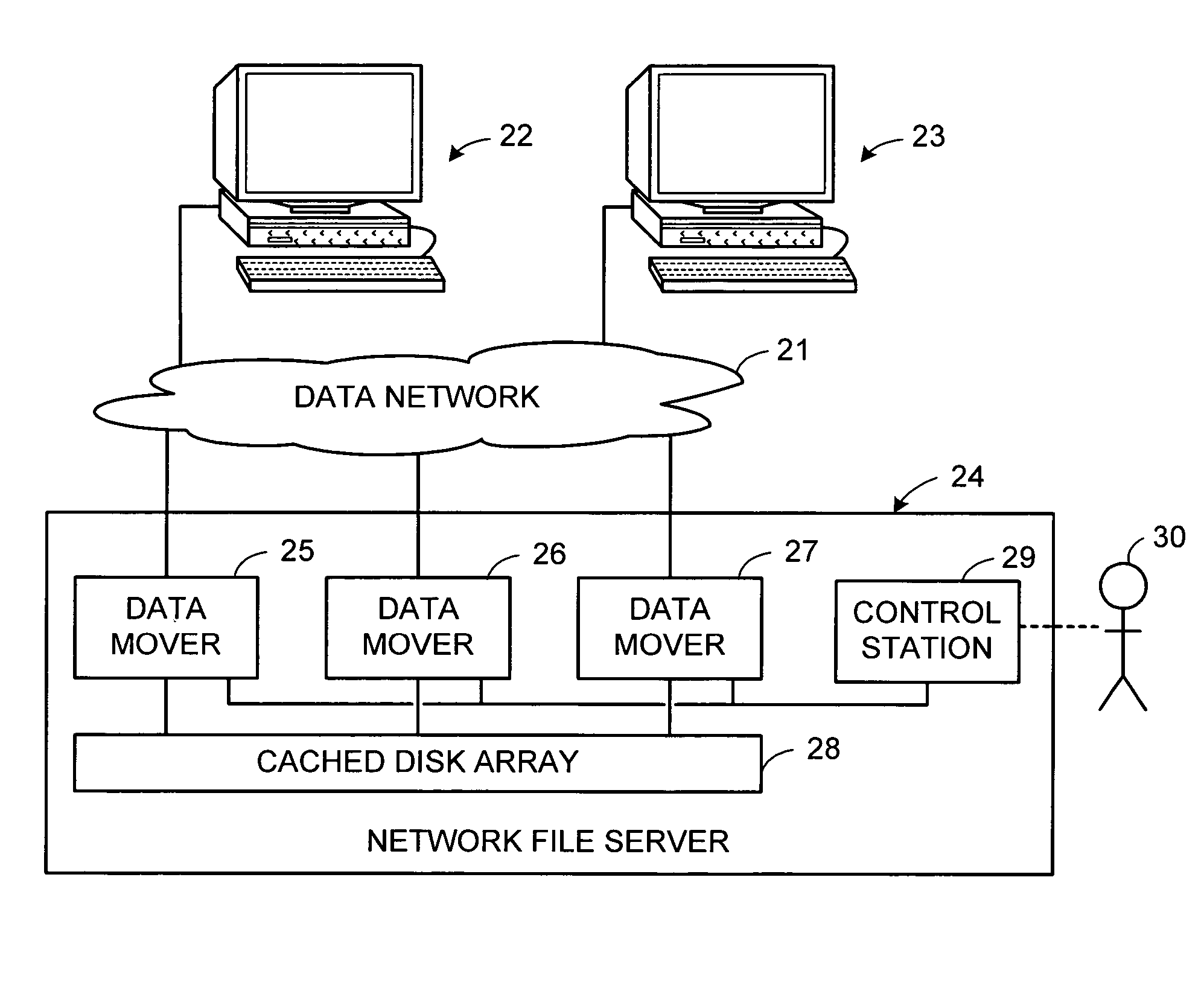

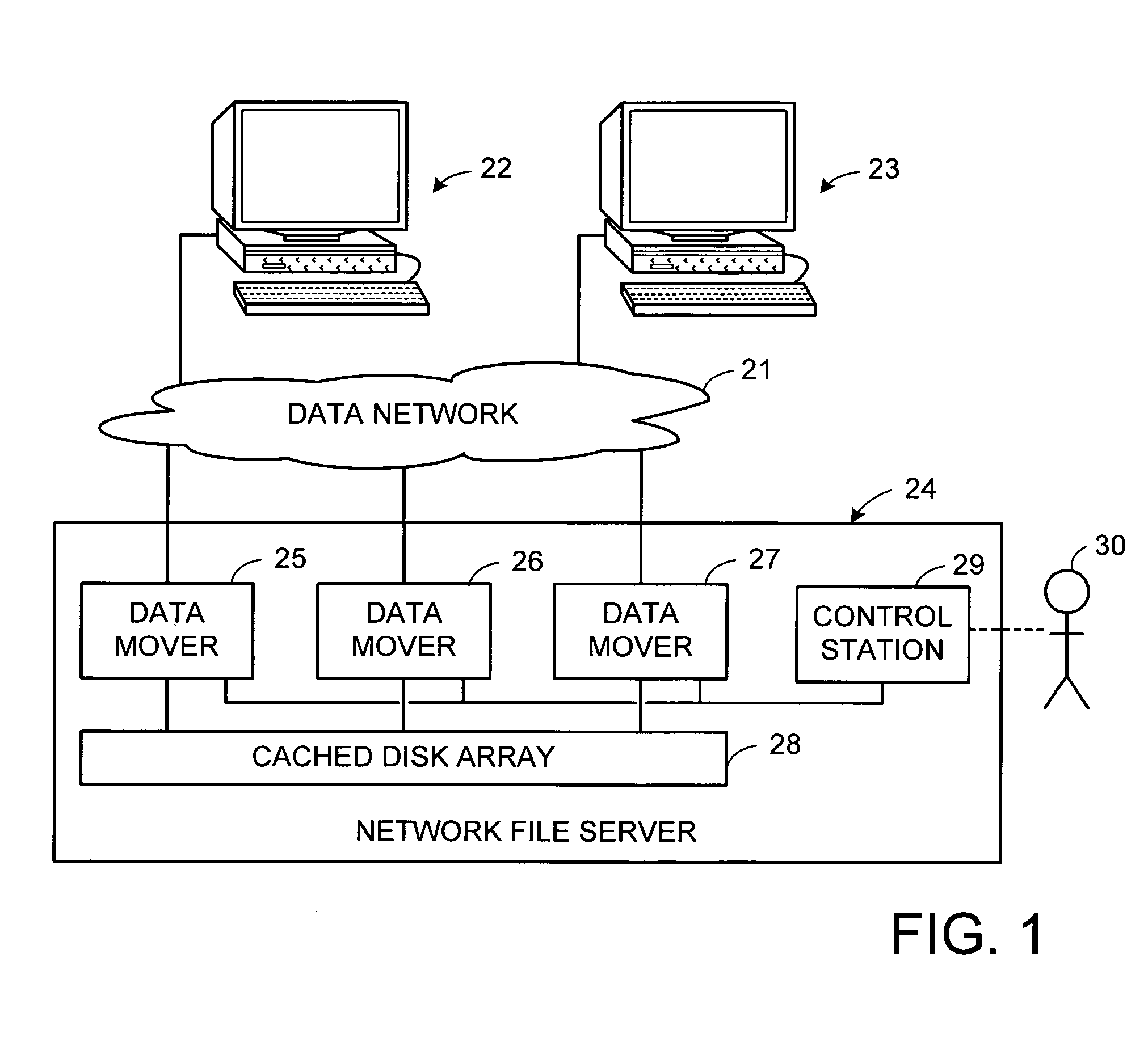

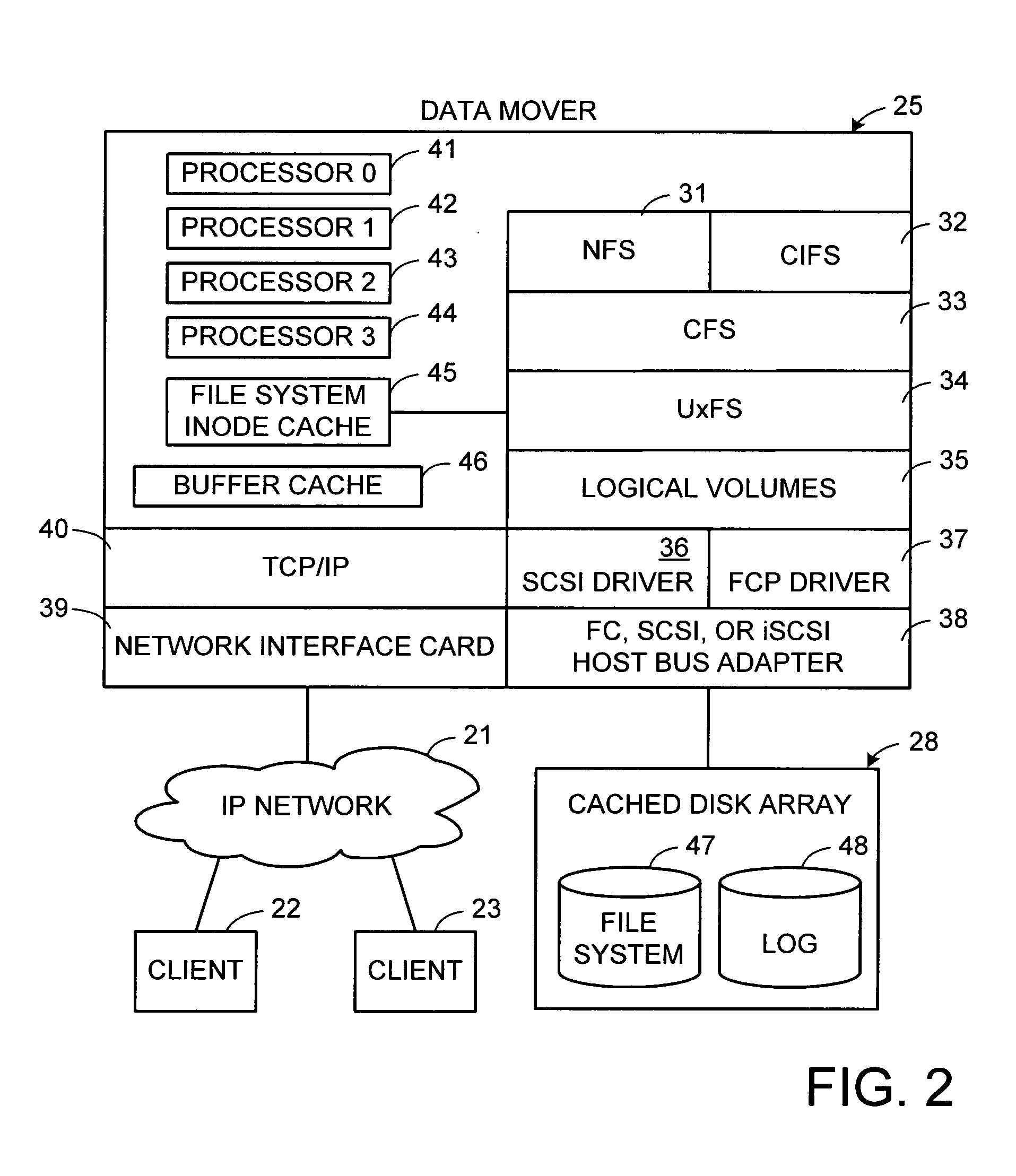

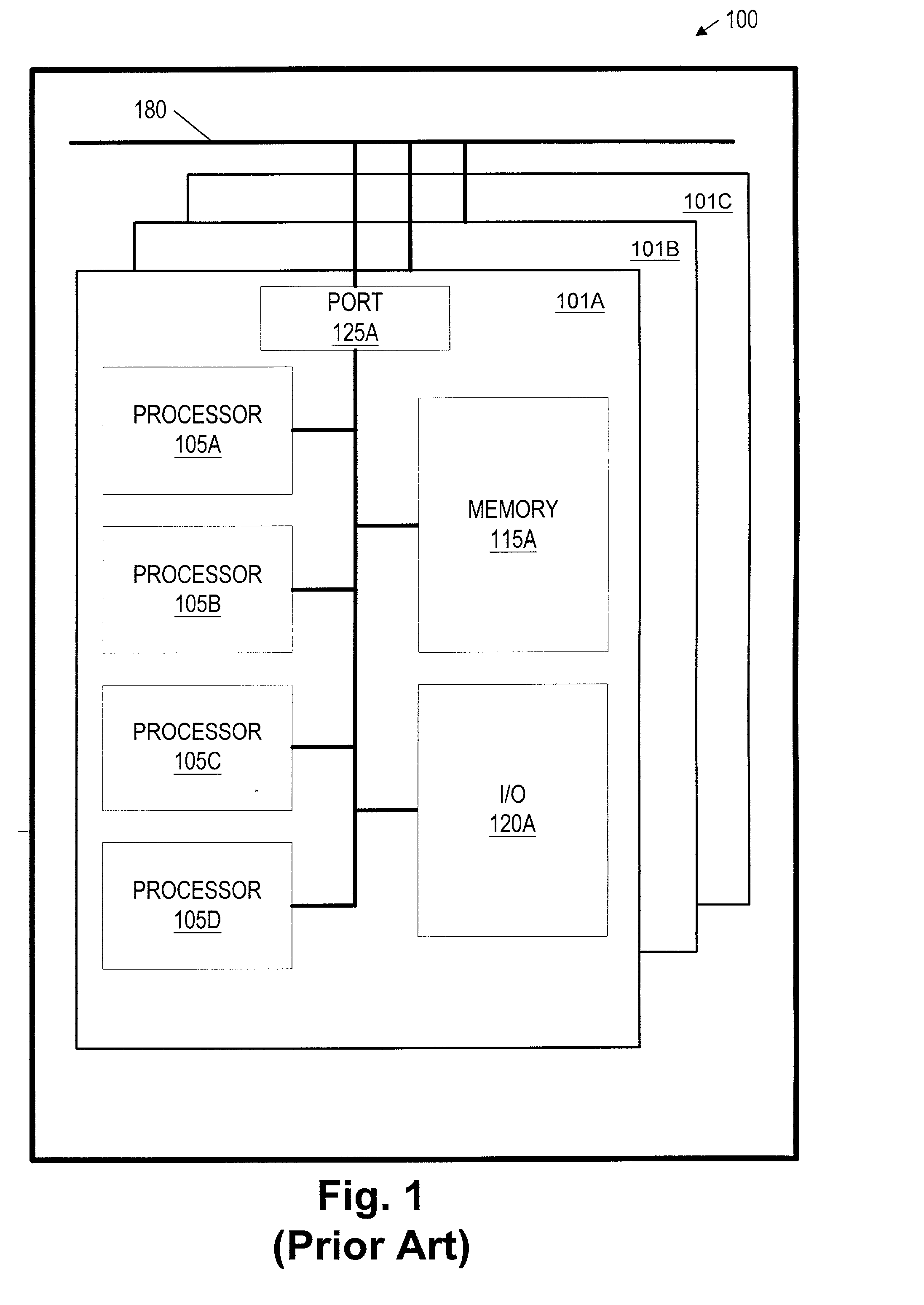

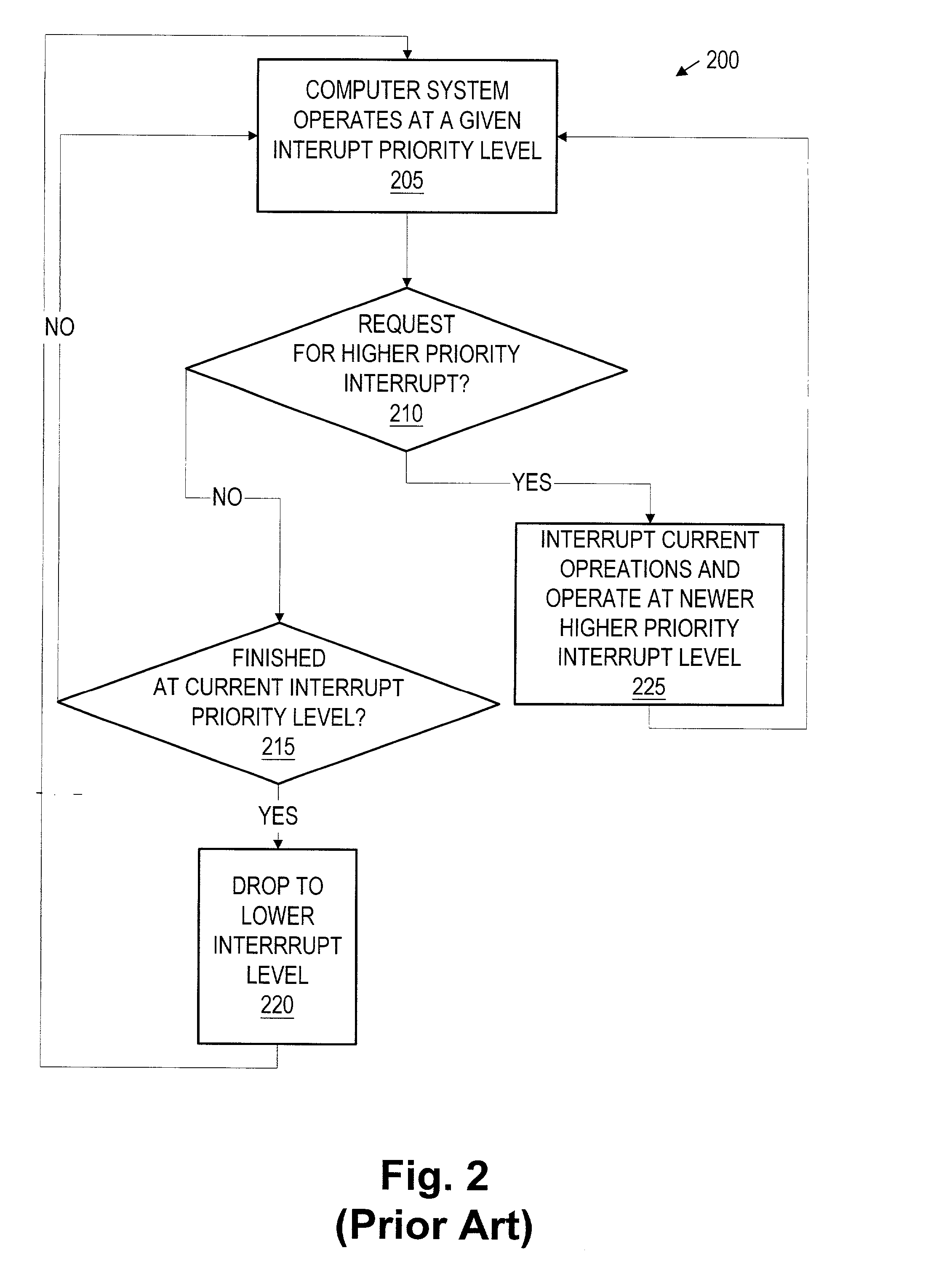

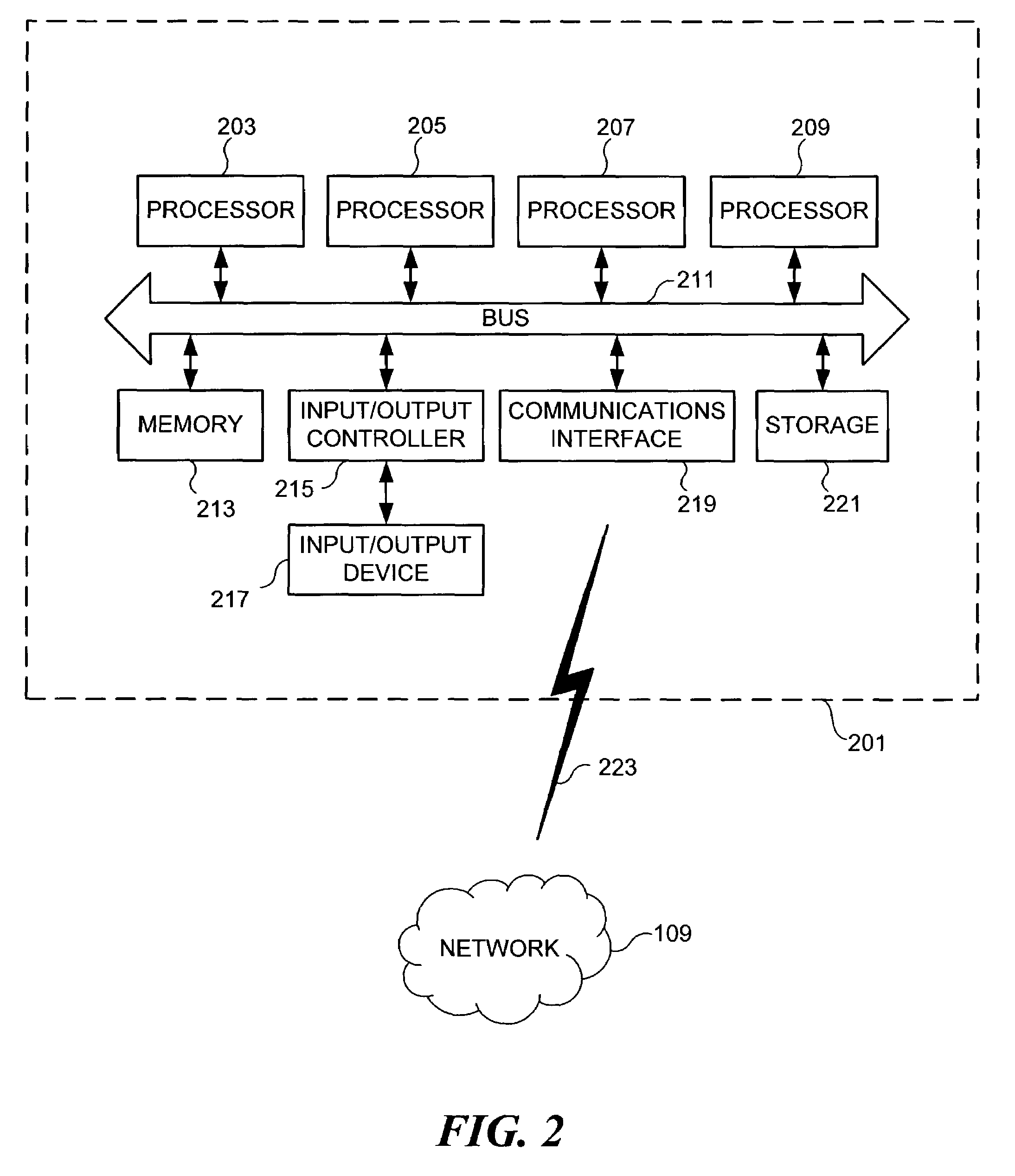

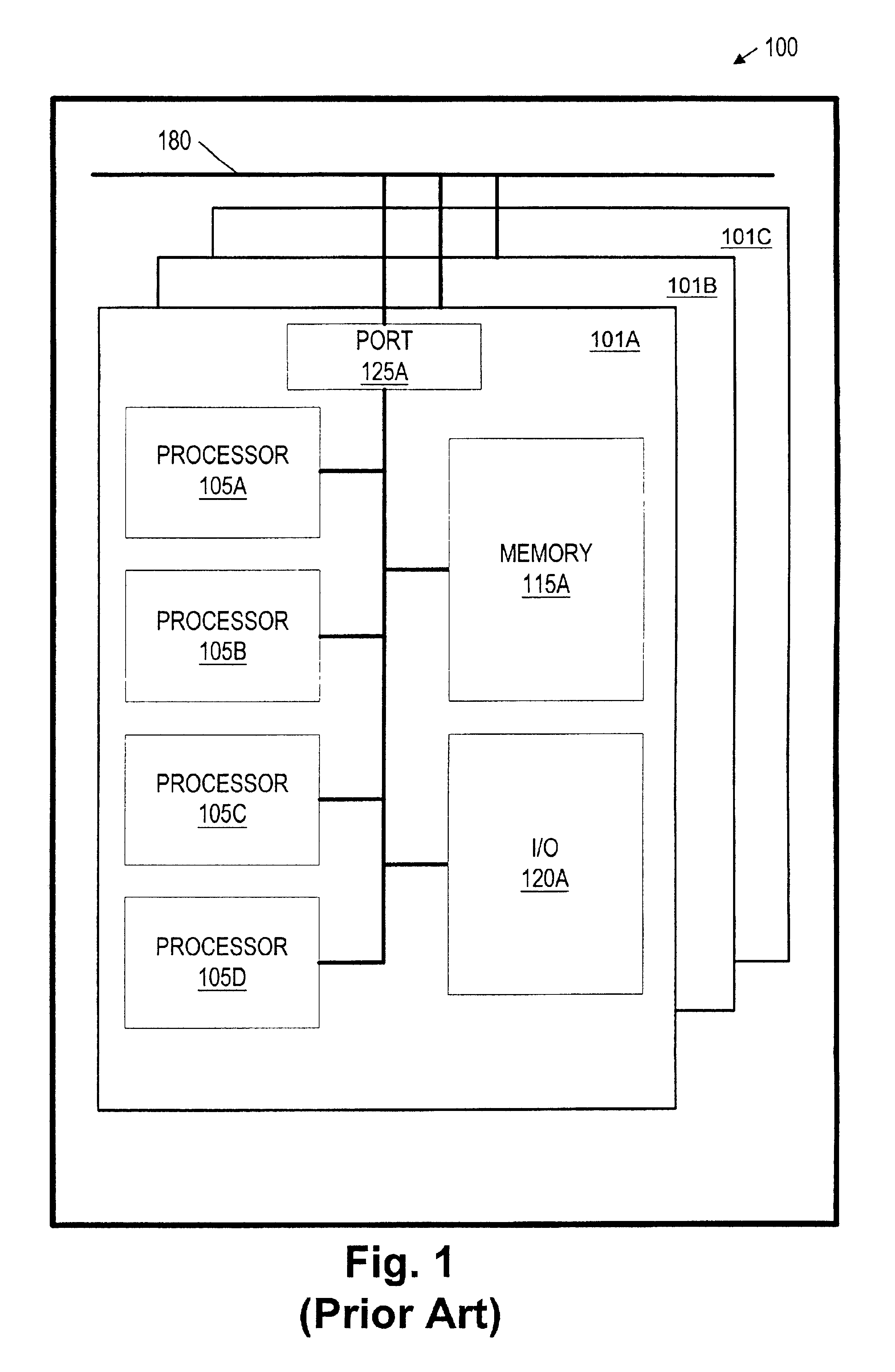

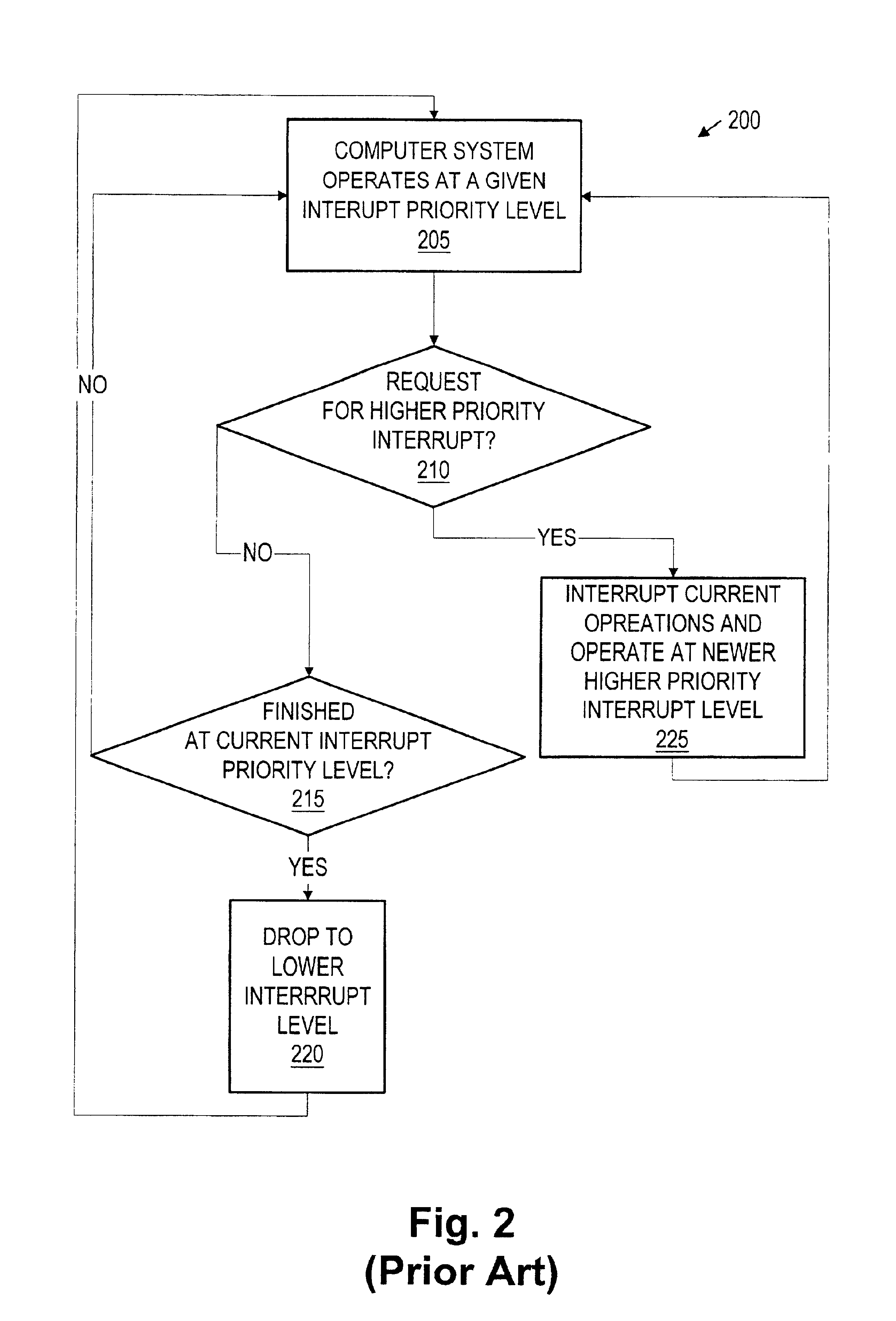

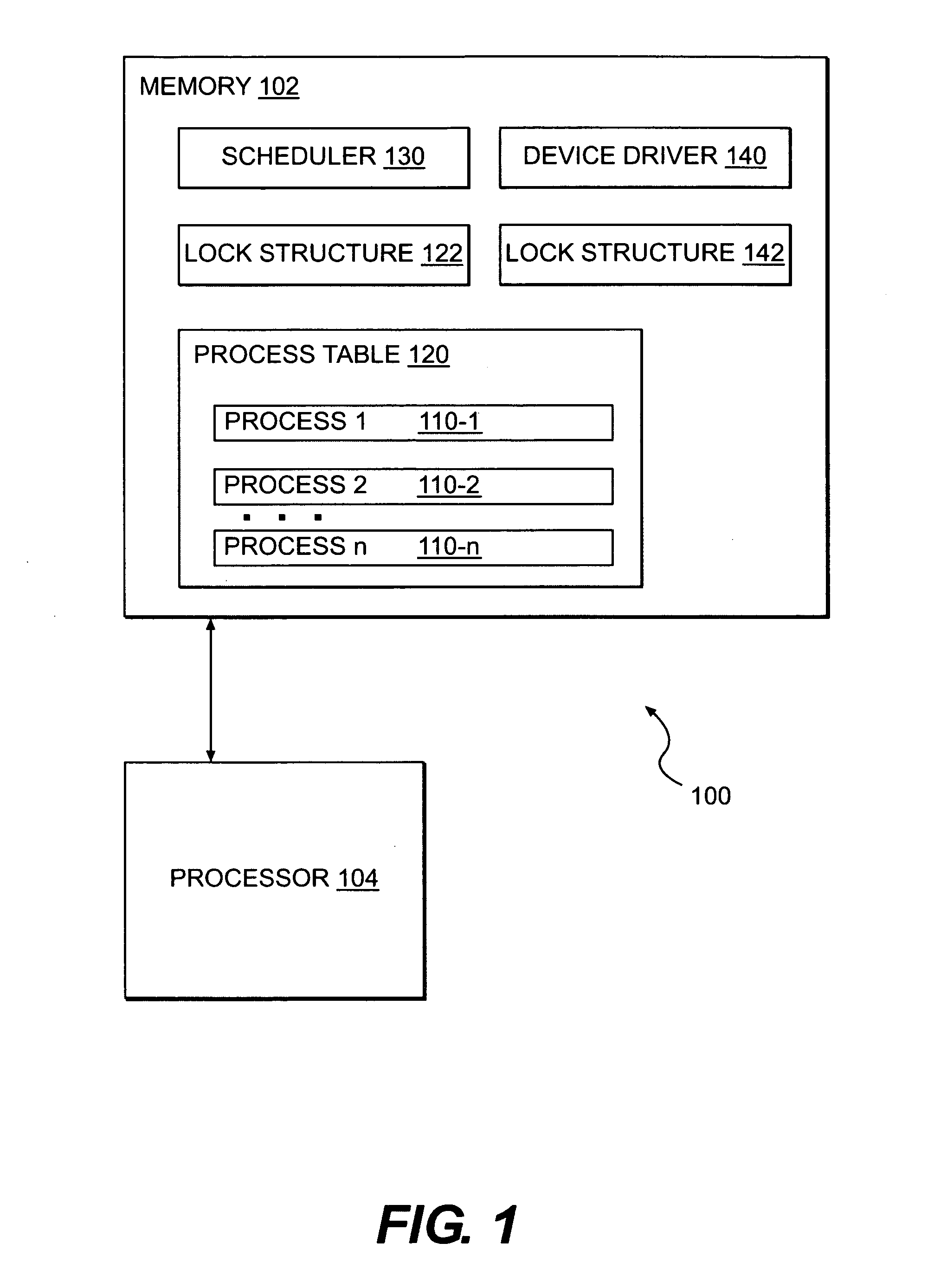

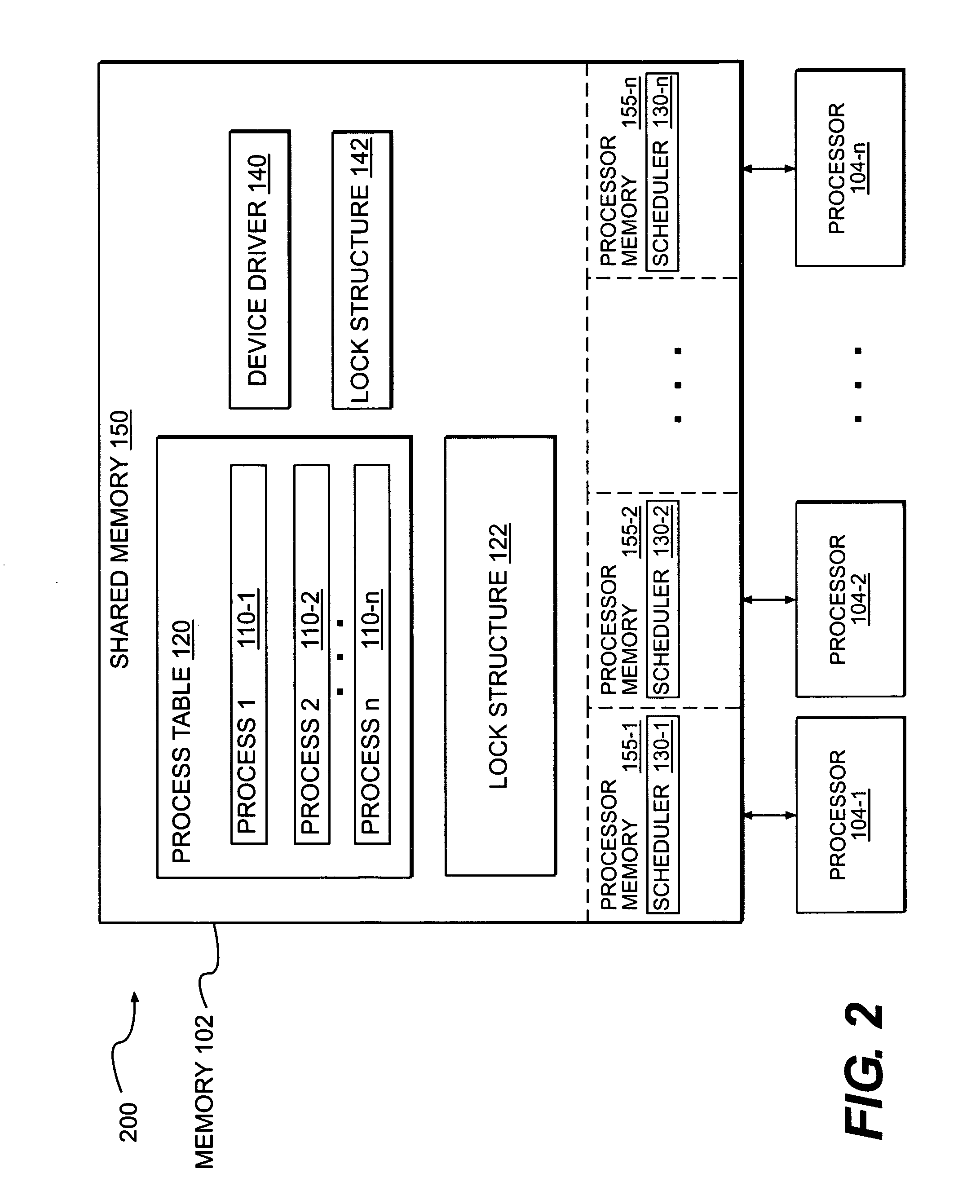

Multi-processor system having a watchdog for interrupting the multiple processors and deferring preemption until release of spinlocks

Each processor in a multi-processor system is periodically interrupted for preempting the current thread for servicing of a watchdog thread during normal operation. Upon failing to service the watchdog thread over a grace period, a system watchdog initiates an orderly shutdown and reboot of the system. In order to prevent spinlocks from causing fake panics, if the current thread is holding one or more spinlocks when the interrupt occurs, then preemption is deferred until the thread releases the spinlocks. For diagnostic purposes, a count is kept of the number of times that preemption is deferred for each processor during each watchdog grace period.

Owner:EMC IP HLDG CO LLC
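
The deferral rule in this abstract can be modelled as simple per-processor bookkeeping. The sketch below is a single-threaded simulation under assumed names (`CpuState`, `watchdog_interrupt`, `spin_release` and the rest are hypothetical); it only illustrates the idea of deferring preemption while spinlocks are held and counting the deferrals, and it does not reproduce the patented implementation.

```cpp
#include <cassert>

// Hypothetical per-processor state; all names are illustrative.
struct CpuState {
    int spinlocks_held = 0;        // spinlocks held by the current thread
    bool preempt_pending = false;  // an interrupt arrived while a lock was held
    int deferred_count = 0;        // deferrals in the current grace period (diagnostic)
    int watchdog_runs = 0;         // how often the watchdog thread was serviced
};

// Stands in for preempting the current thread and running the watchdog thread.
void service_watchdog(CpuState& cpu) { ++cpu.watchdog_runs; }

void spin_acquire(CpuState& cpu) { ++cpu.spinlocks_held; }

// Periodic interrupt: preempt immediately, or defer if any spinlock is held.
void watchdog_interrupt(CpuState& cpu) {
    if (cpu.spinlocks_held > 0) {
        cpu.preempt_pending = true;
        ++cpu.deferred_count;
    } else {
        service_watchdog(cpu);
    }
}

// Releasing the last spinlock performs any deferred preemption.
void spin_release(CpuState& cpu) {
    assert(cpu.spinlocks_held > 0);
    if (--cpu.spinlocks_held == 0 && cpu.preempt_pending) {
        cpu.preempt_pending = false;
        service_watchdog(cpu);
    }
}

// The diagnostic counter is kept per grace period.
void start_grace_period(CpuState& cpu) { cpu.deferred_count = 0; }
```

In this model the watchdog thread still runs once the last spinlock is released, so a thread that holds locks briefly does not trigger a false panic.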

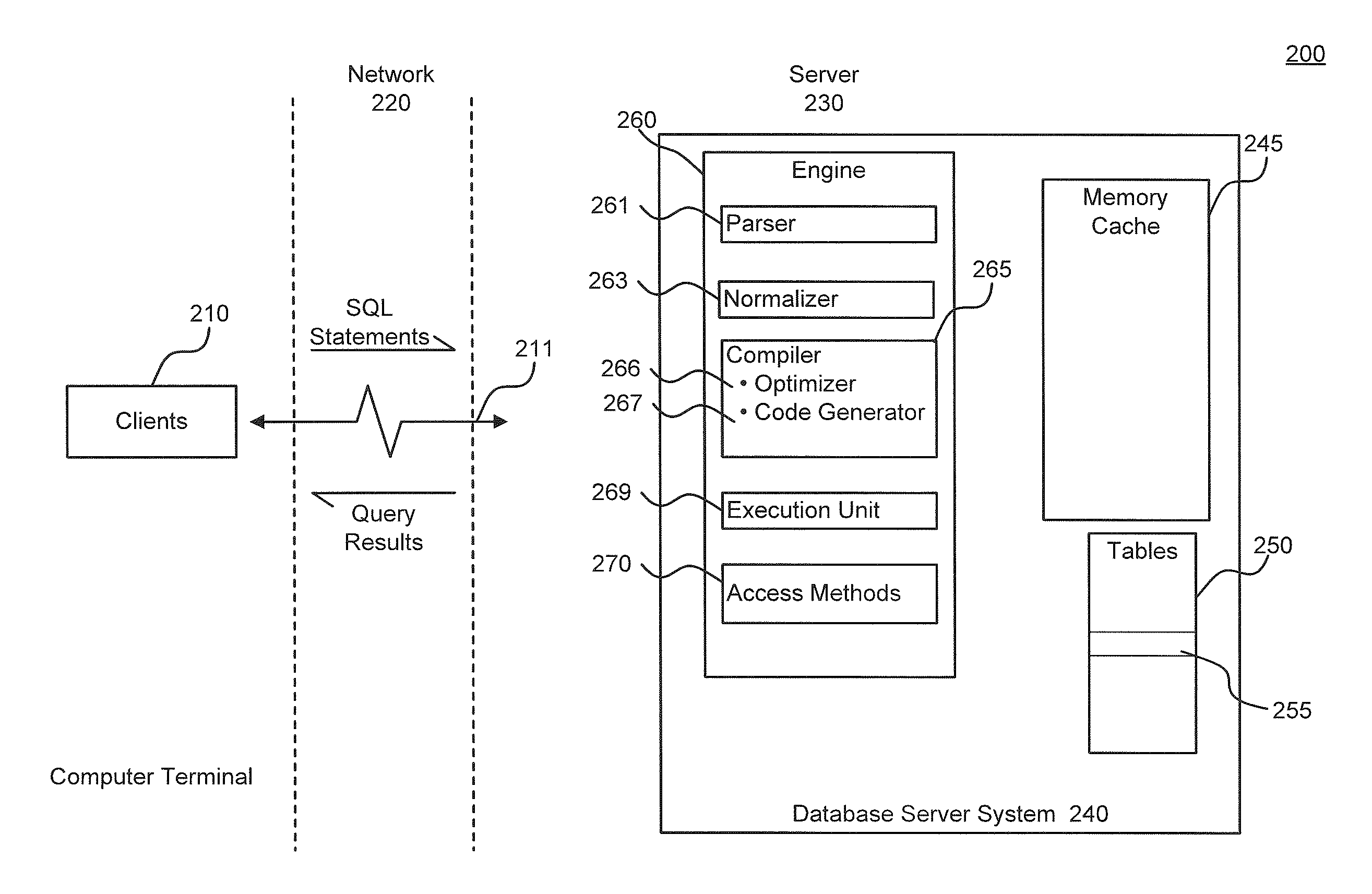

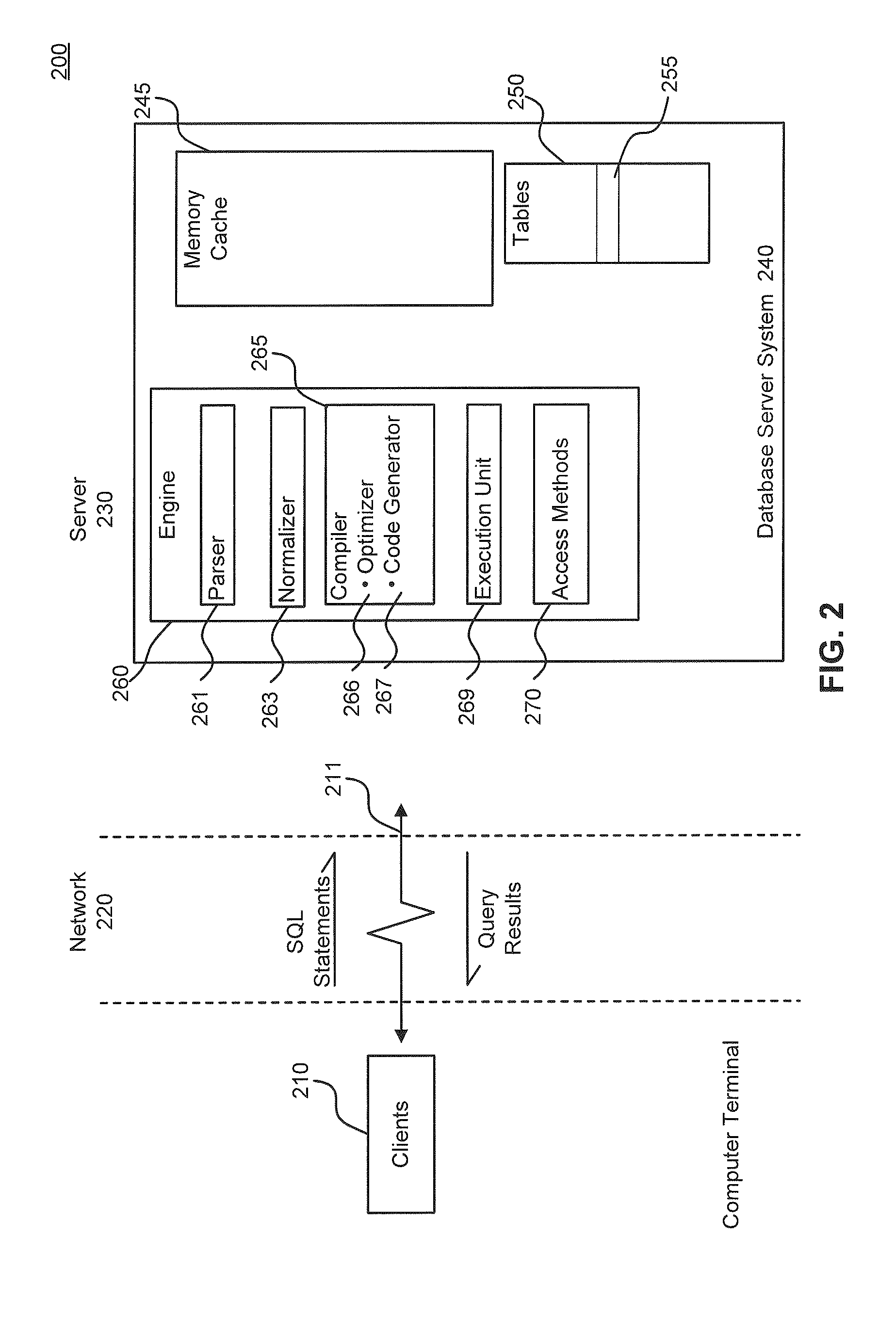

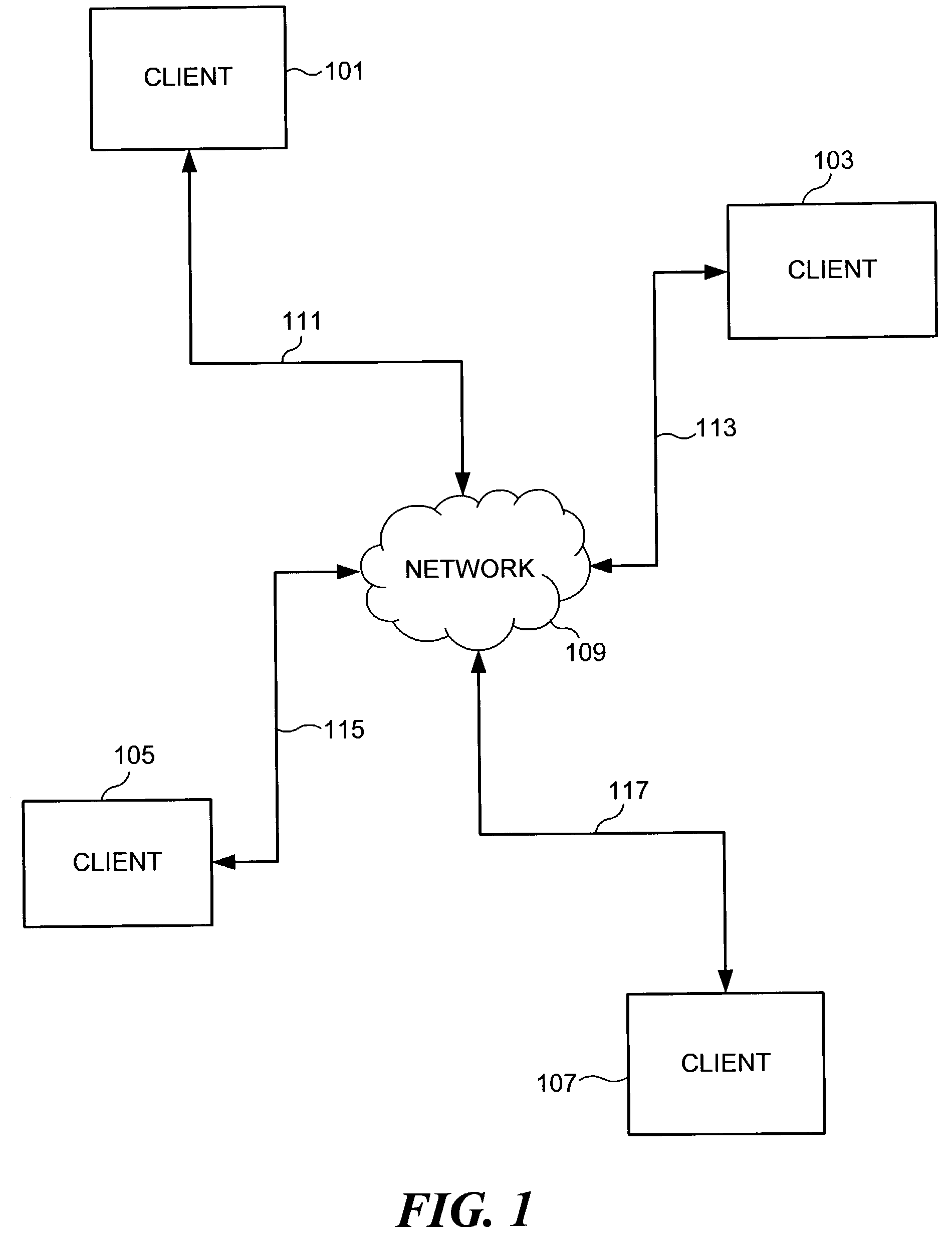

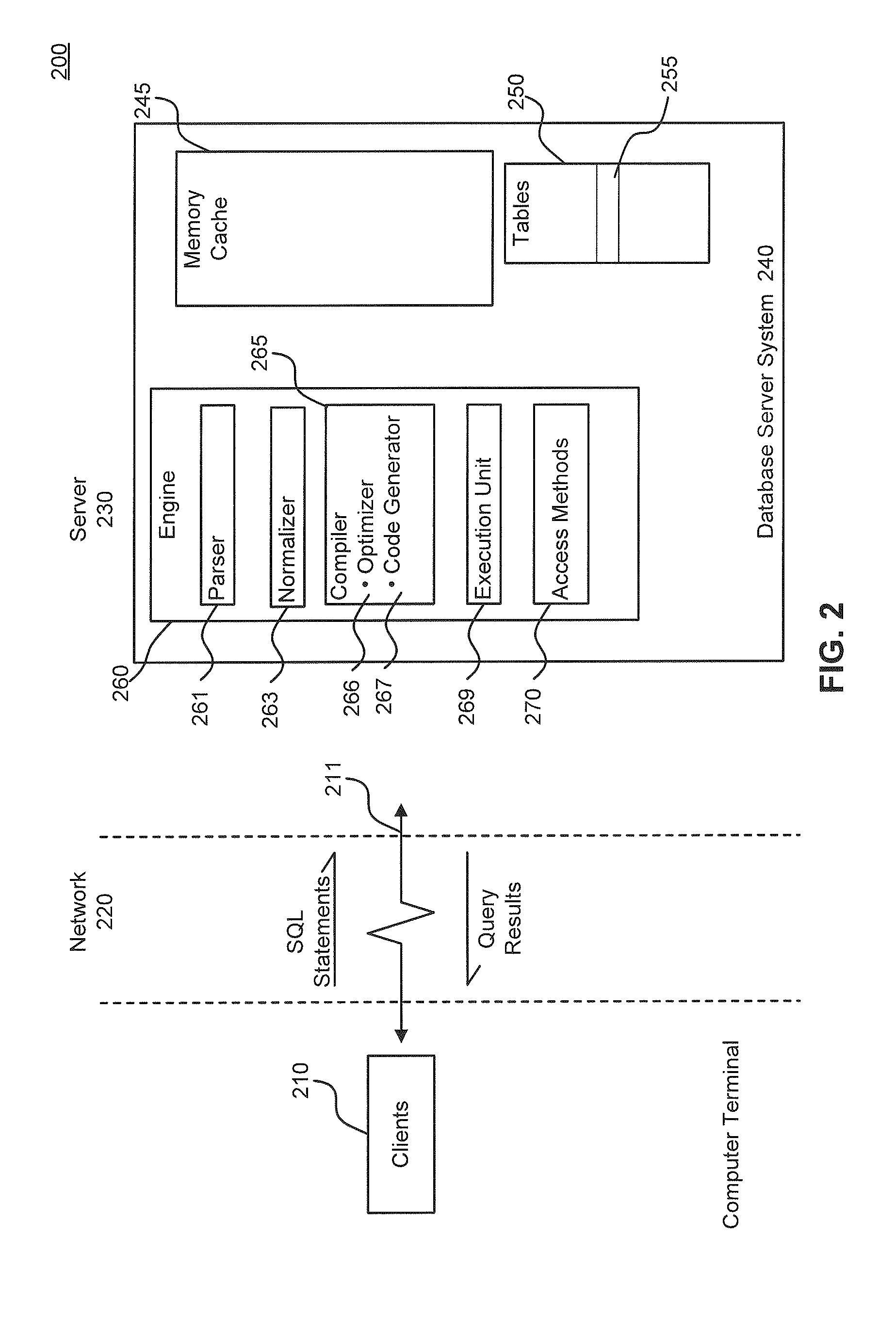

Query Plan Optimization for Prepared SQL Statements

Active | US20120084315A1 | Avoid access | Digital data information retrieval | Digital data processing details | Query plan | Database server

System, methods and articles of manufacture for optimizing a query plan reuse in a database server system accessible by a plurality of client connections. An embodiment comprises providing at least one global cache storage and a private cache storage to a plurality of client connections, and coordinating utilization of the at least one global cache storage and the private cache storage to share light weight stored procedures and query plans for prepared SQL statements across the plurality of client connections via the at least one global cache storage while avoiding a spinlock access for executing the prepared SQL statements.

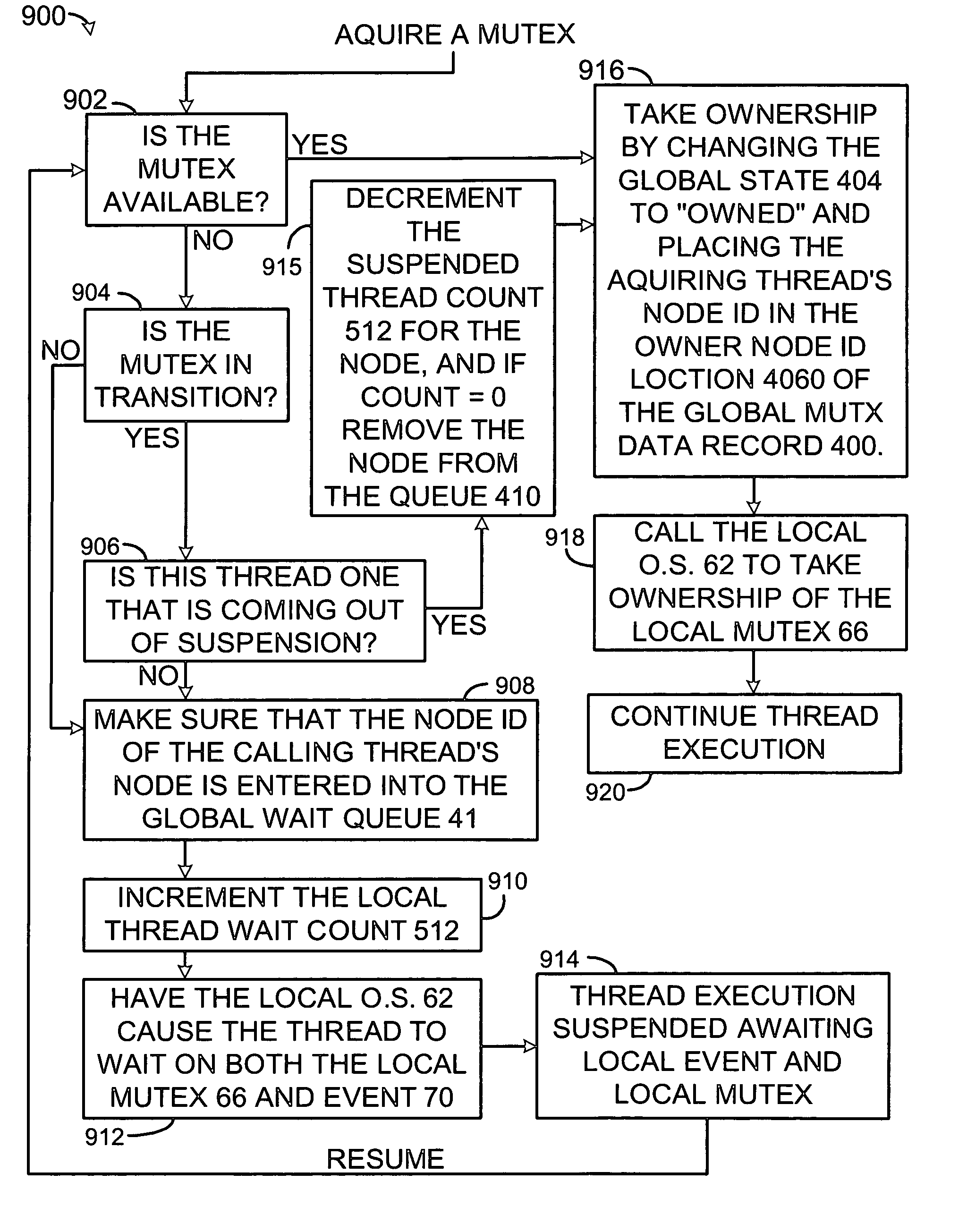

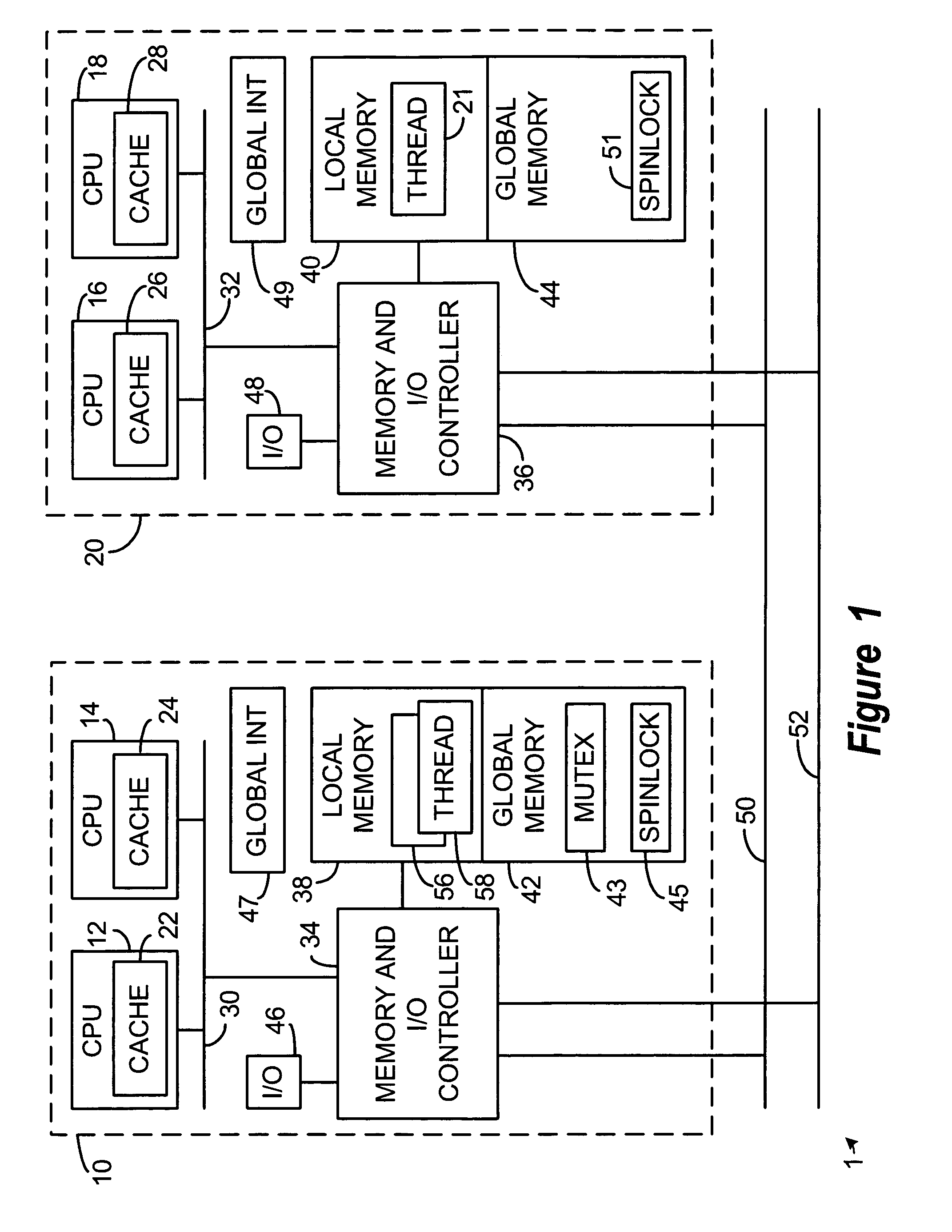

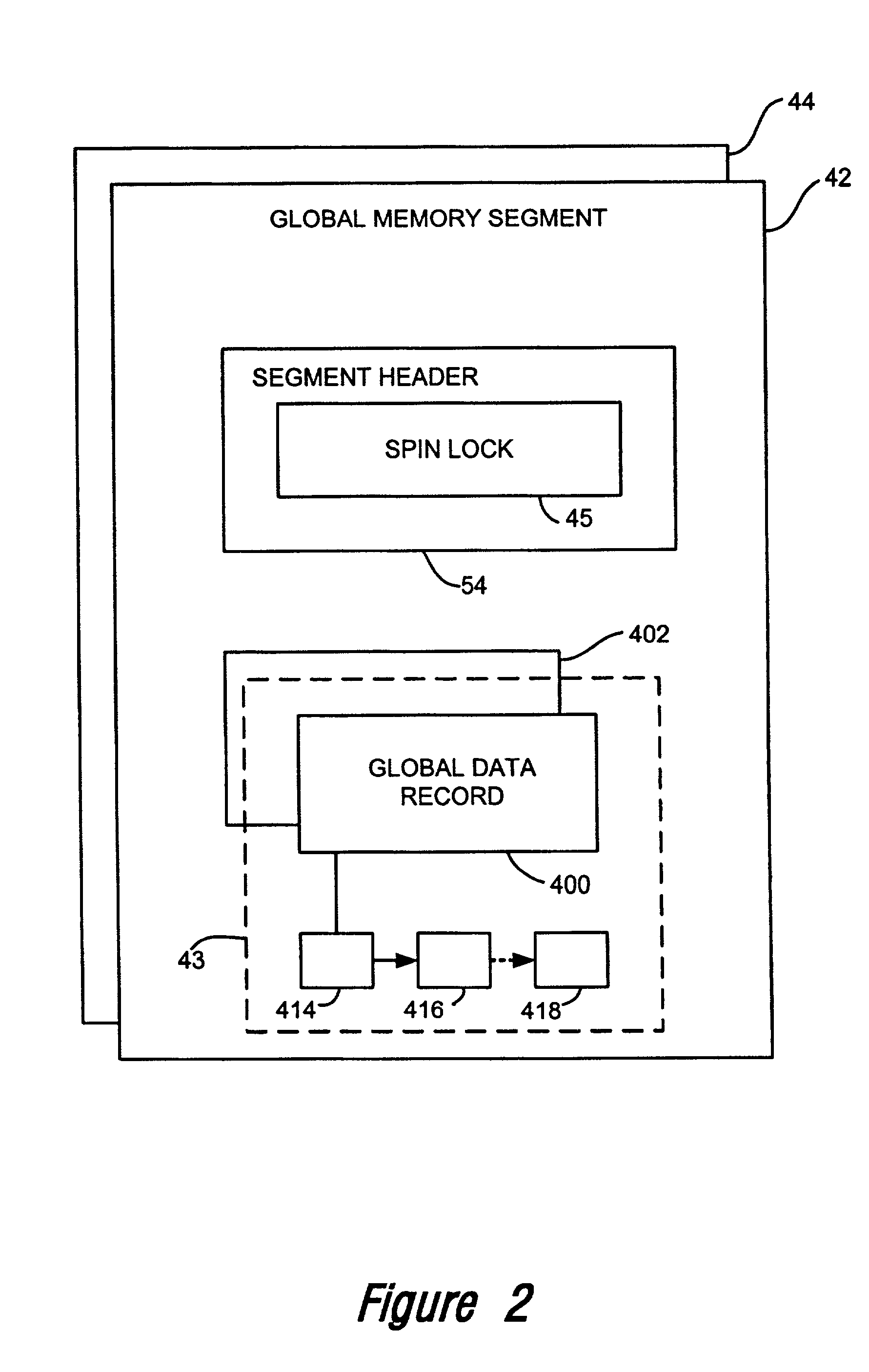

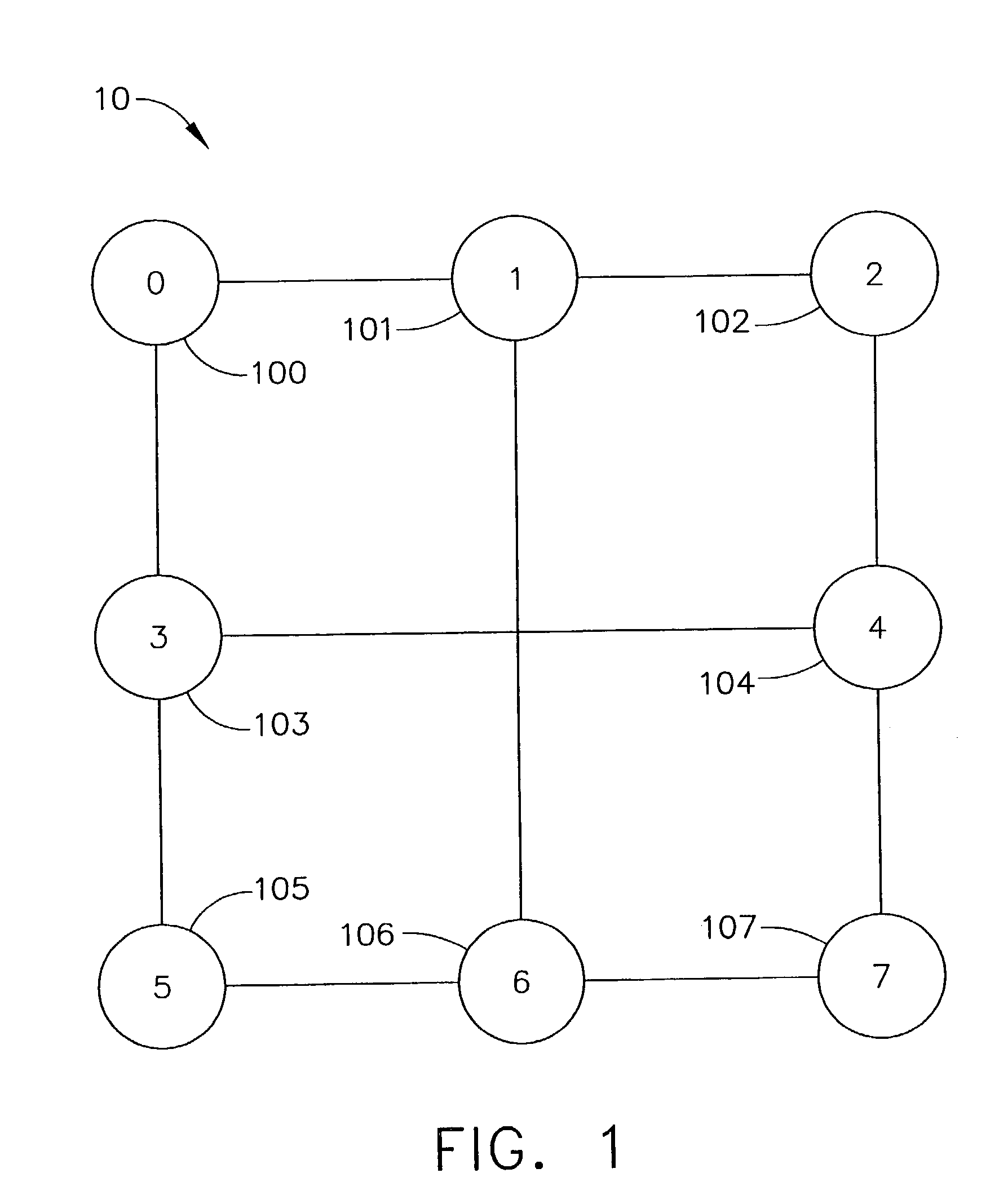

Synchronization objects for multi-computer systems

Inactive | US7058948B2 | Function increase | Data processing applications | Multiprogramming arrangements | Operational system | Multi processor

Several multiprocessor computer systems, each having its own copy of an operating system, are interconnected to form a multi-computer system having global memory accessible by any processor on any node and including provision for spinlock access control. In this environment, a global mutex, and other like synchronization objects, are realized that can control the coordination of multiple threads running on multiple processors and on multiple nodes or platforms. Each global mutex is supported by a local operating system shadow mutex on each node or platform where threads have opened access to the global mutex. Global mutex functionality is thus achieved that reflects and utilizes the local operating system's mutex system.

Owner:HEWLETT PACKARD DEV CO LP

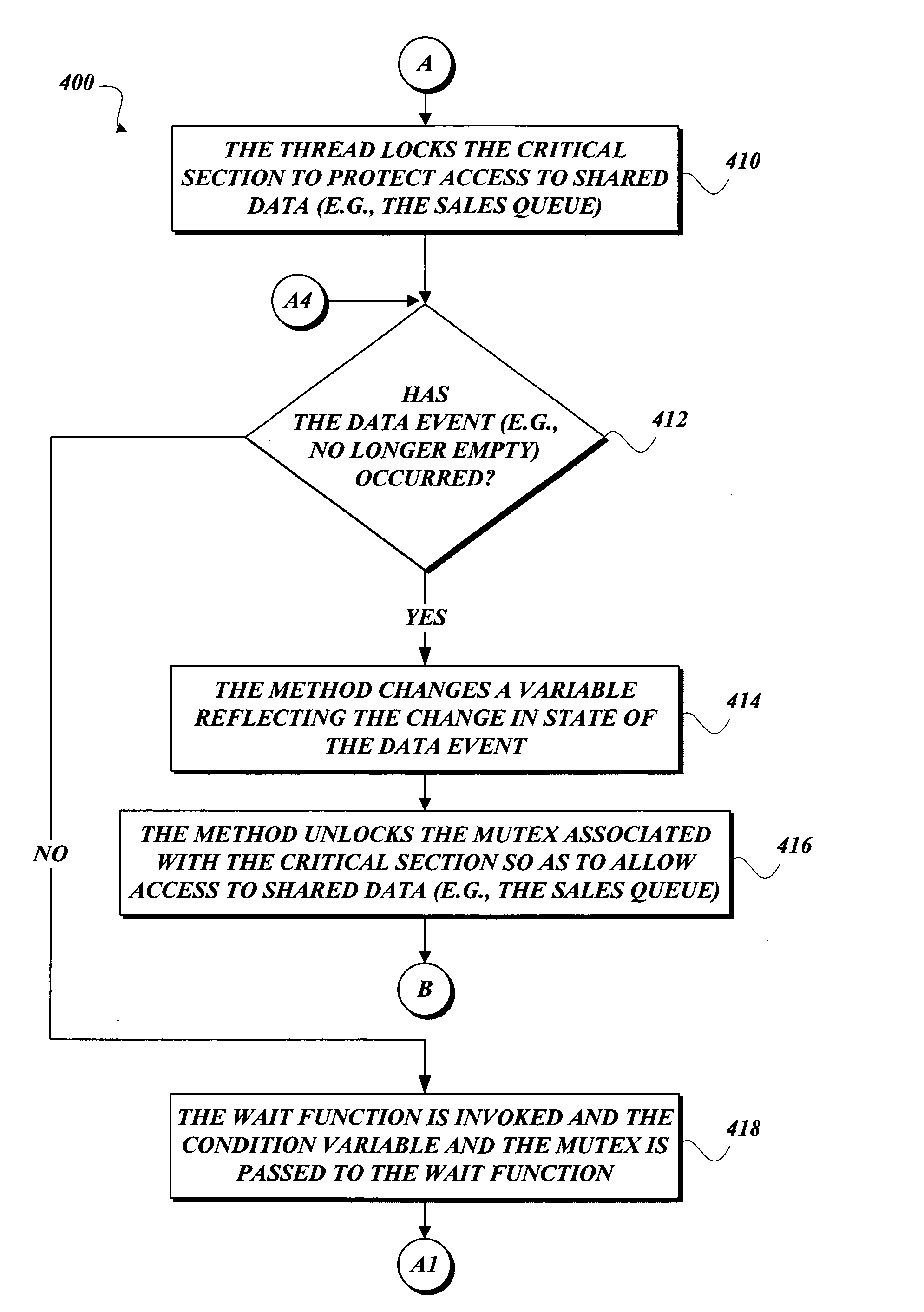

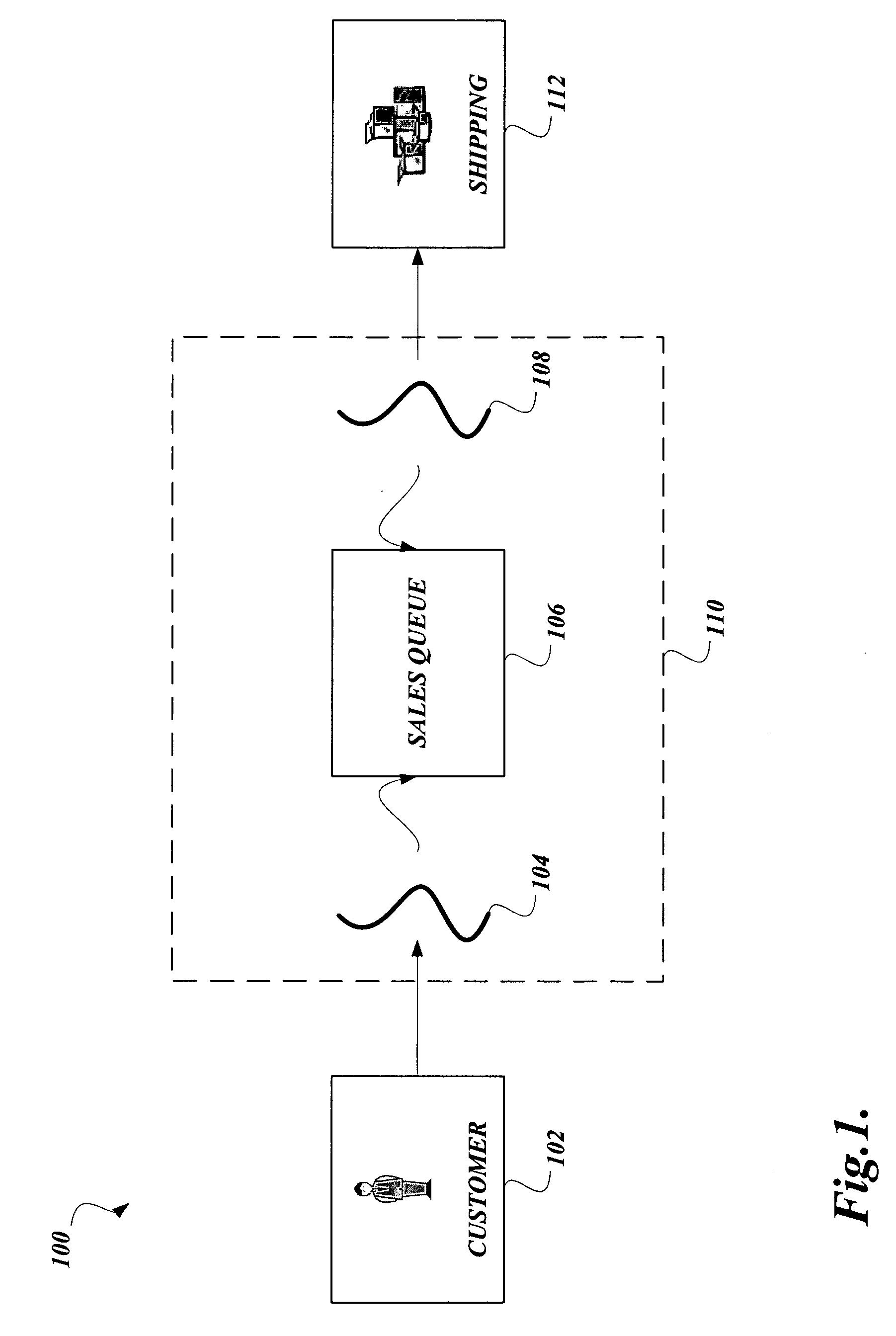

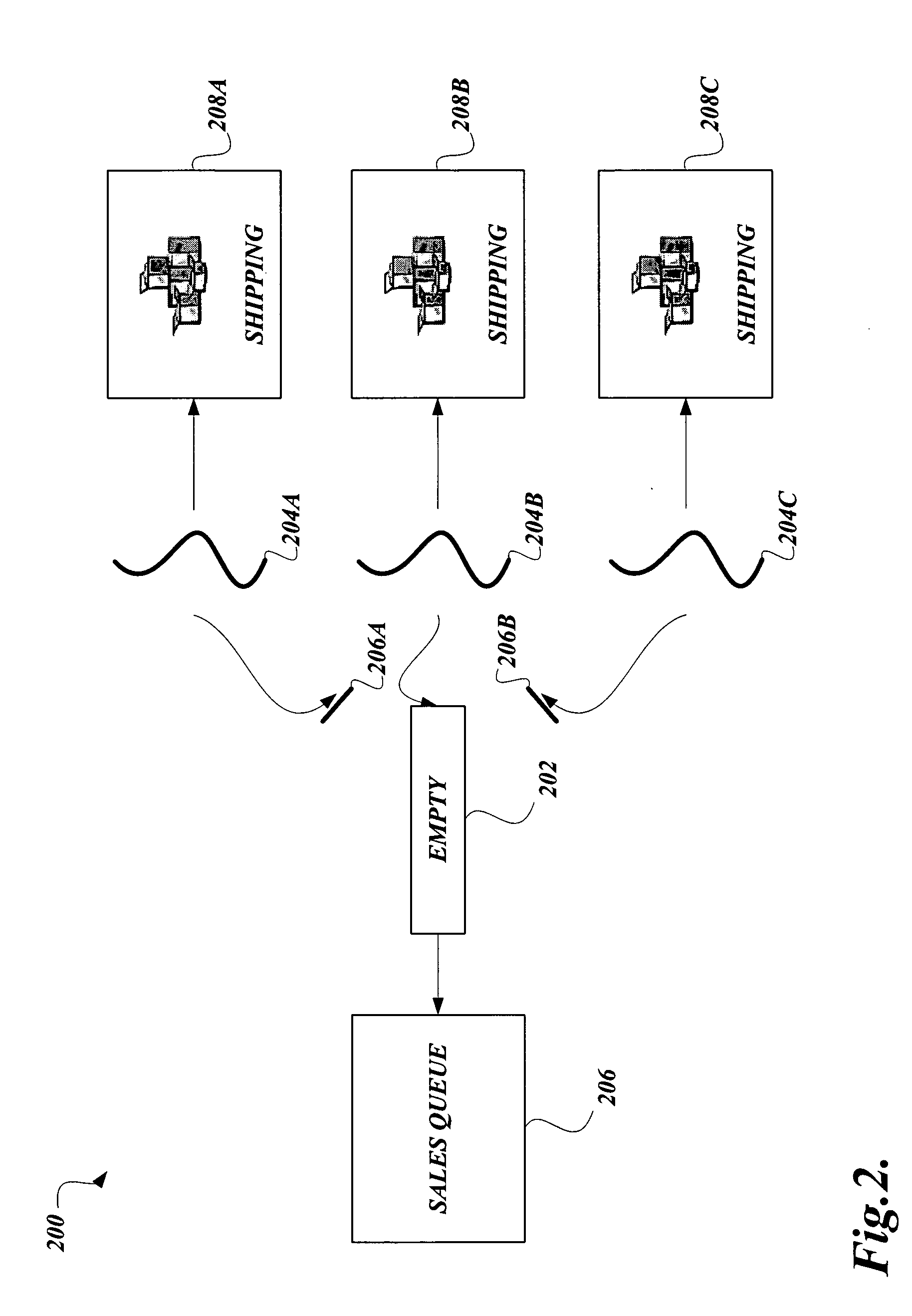

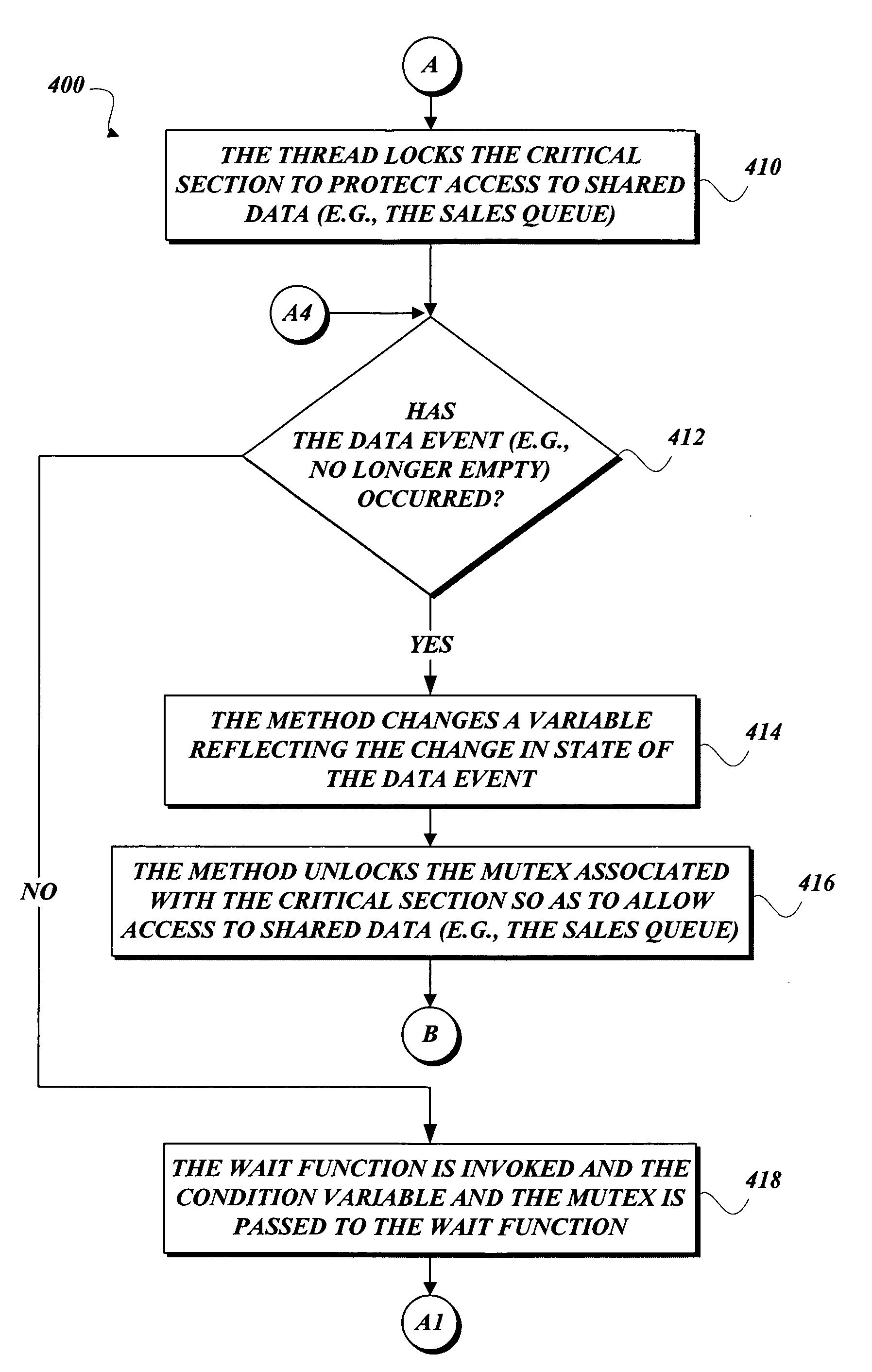

Conditional variables without spinlocks

The use of spinlocks is avoided in the combination of mutex and condition variables by using any suitable atomic compare and swap functionality to add a thread to a list of waiting threads that waits for a data event to occur. Various embodiments of the present invention also provide an organization scheme of data, which describes an access bit, an awaken count, and a pointer to the list of waiting threads. This organization scheme of data helps to optimize the list of waiting threads so as to better awaken a waiting thread or all waiting threads at once.

Owner:MICROSOFT TECH LICENSING LLC
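
The core step named in this abstract, adding a thread to a list of waiters with an atomic compare-and-swap instead of a spinlock, can be sketched as a lock-free push onto a singly linked list. `Waiter` and `WaitList` are hypothetical names; the access bit and awaken count that the abstract packs alongside the list pointer are deliberately left out of this sketch.

```cpp
#include <atomic>
#include <cassert>

// One node per waiting thread (illustrative layout).
struct Waiter {
    explicit Waiter(int id) : thread_id(id) {}
    int thread_id;
    Waiter* next = nullptr;
};

class WaitList {
public:
    // Lock-free push: retry the compare-and-swap until the head is updated.
    void push(Waiter* w) {
        assert(w != nullptr);
        Waiter* old_head = head_.load(std::memory_order_relaxed);
        do {
            w->next = old_head;  // on CAS failure old_head is refreshed automatically
        } while (!head_.compare_exchange_weak(old_head, w,
                                              std::memory_order_release,
                                              std::memory_order_relaxed));
    }

    // Detach the whole list in one atomic step, e.g. to wake all waiters at once.
    Waiter* take_all() {
        return head_.exchange(nullptr, std::memory_order_acquire);
    }

private:
    std::atomic<Waiter*> head_{nullptr};
};
```

The most recently added waiter ends up at the head of the list, so a caller that needs first-come ordering would have to reverse the detached list.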

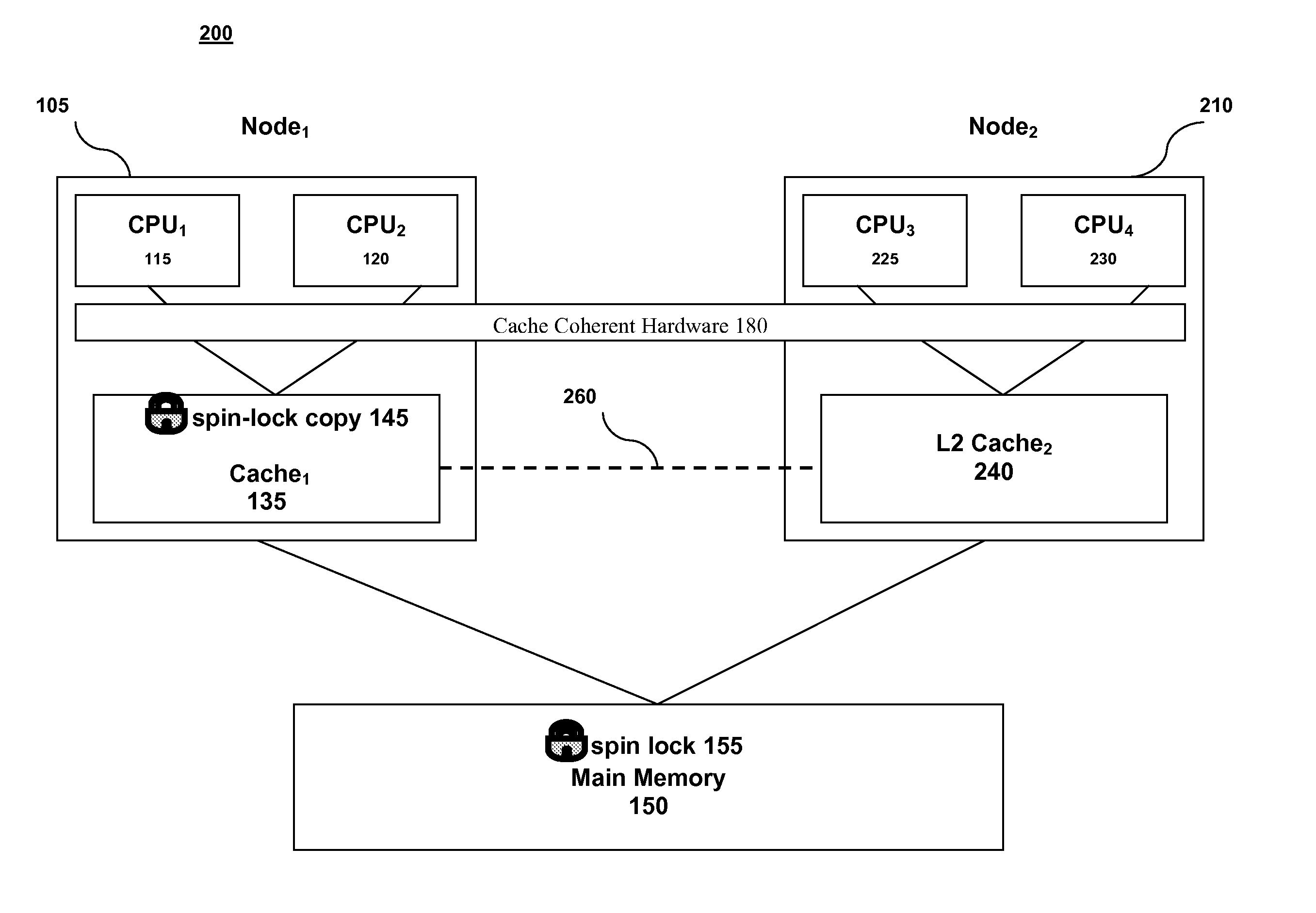

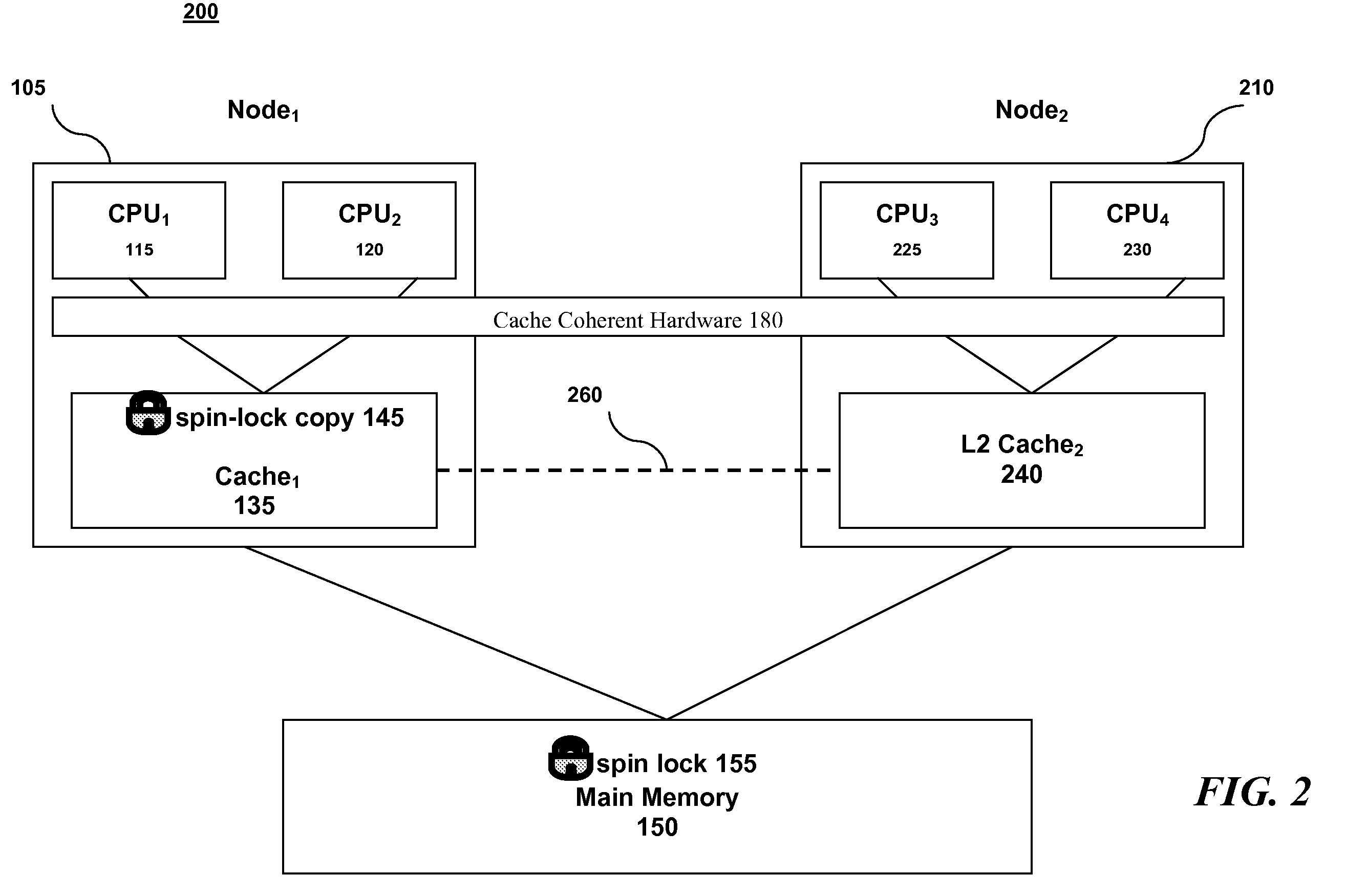

Achieving Both Locking Fairness and Locking Performance with Spin Locks

Active | US20080177955A1 | Digital computer details | Unauthorized memory use protection | Parallel computing | Spinlock

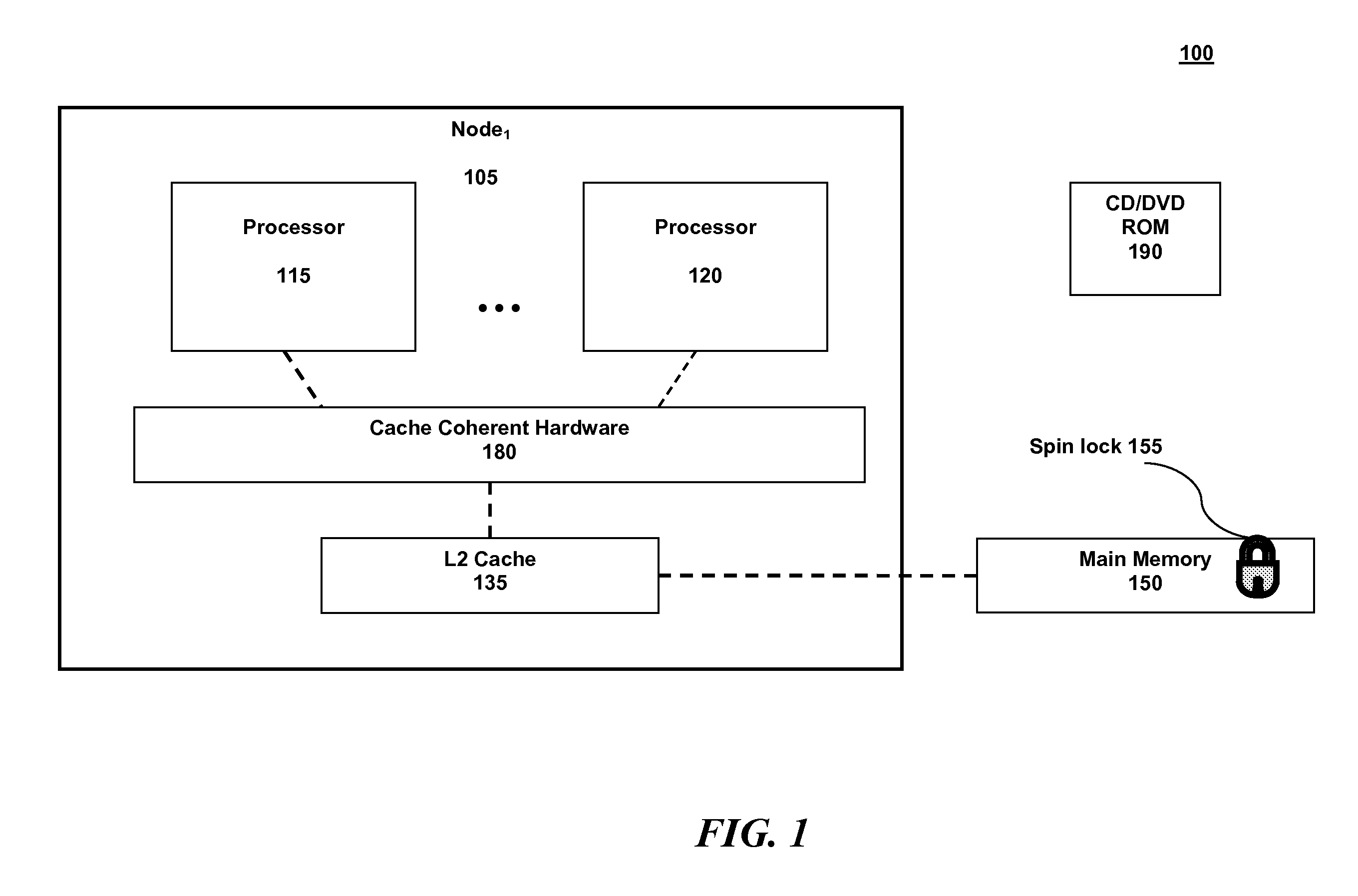

A method for implementing a spin lock in a system including a plurality of processing nodes, each node including at least one processor and a cache memory, the method including steps of: acquiring exclusivity to the cache memory; checking the availability of the spin lock; setting the spin lock to logical one if the spin lock is available; setting the spin lock to logical zero once processing is complete; and explicitly yielding the cache memory exclusivity. Yielding the cache memory exclusivity includes instructing the cache coherent hardware to mark the cache memory as non-exclusive. The cache memory is typically called level two cache.

Owner:IBM CORP

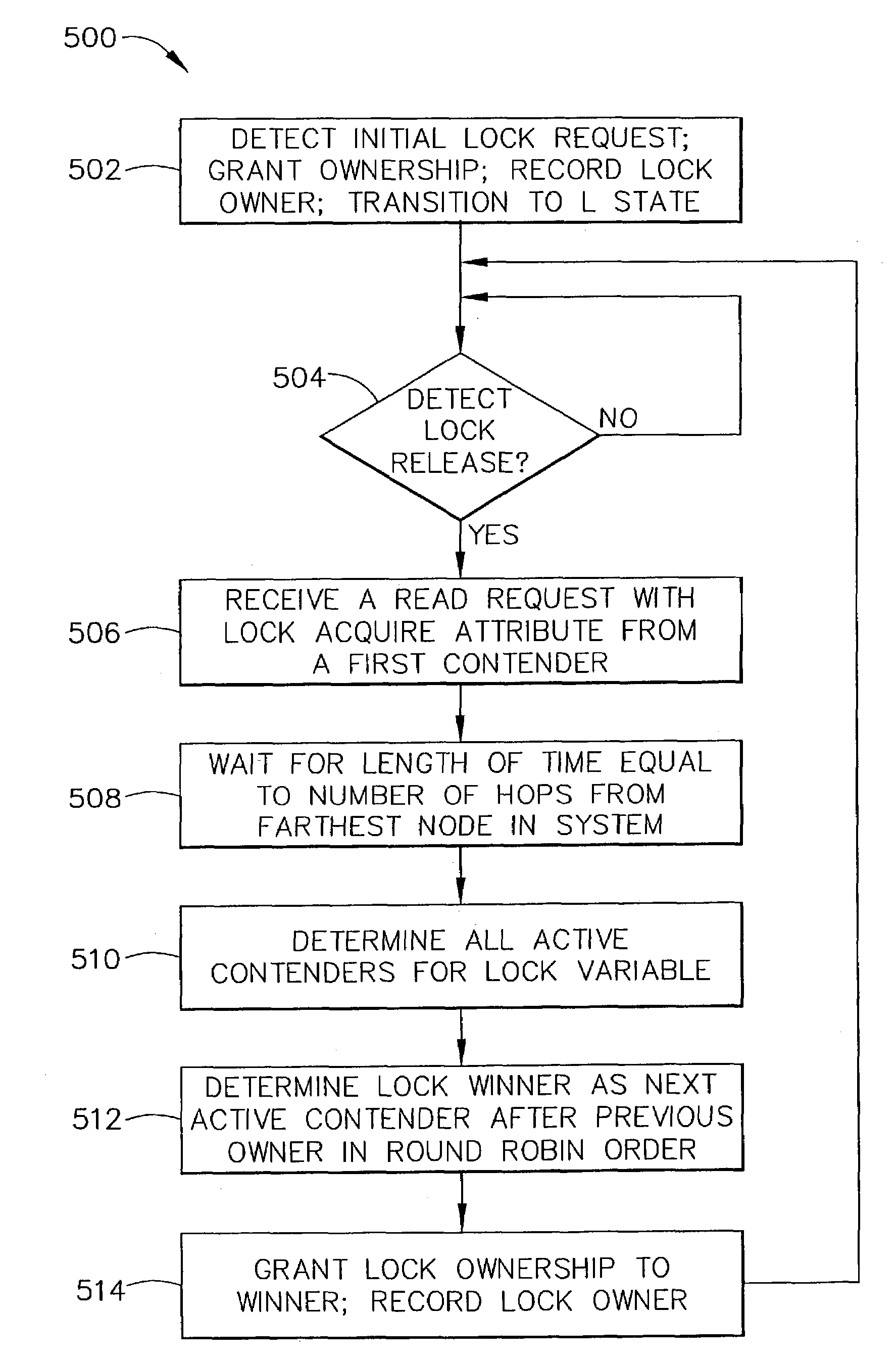

Apparatus and method for balanced spinlock support in NUMA systems

A data processor (300) is adapted for use in a non uniform memory access (NUMA) data processing system (10) having a local memory (320) and a remote memory. The data processor (300) includes a central processing unit (302) and a communication link controller (310). The central processing unit (302) executes a plurality of instructions including an atomic instruction on a lock variable, and generates an access request that includes a lock acquire attribute in response to executing the atomic instruction on the lock variable. The communication link controller (310) is coupled to the central processing unit (302) and has an output adapted to be coupled to the remote memory, and selectively provides the access request with the lock acquire attribute to the remote memory if an address of the access request corresponds to the remote memory.

Owner:MEDIATEK INC

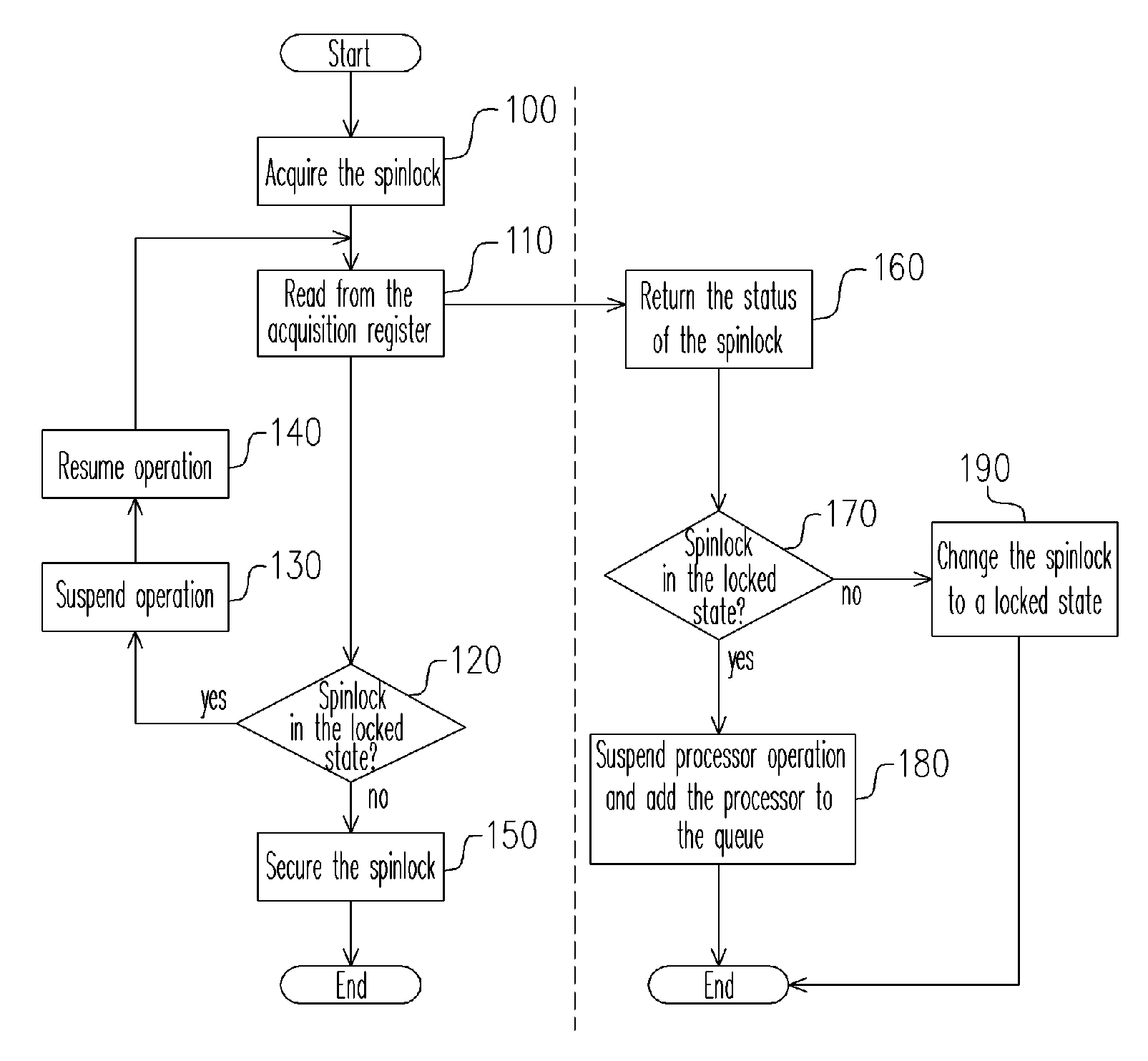

Synchronization method for a multi-processor system and the apparatus thereof

Inactive | US20070050527A1 | Lot of bus bandwidth bandwidth | Lot of bandwidth memory bandwidth | Program synchronisation | Unauthorized memory use protection | Multi processor | Spinlock

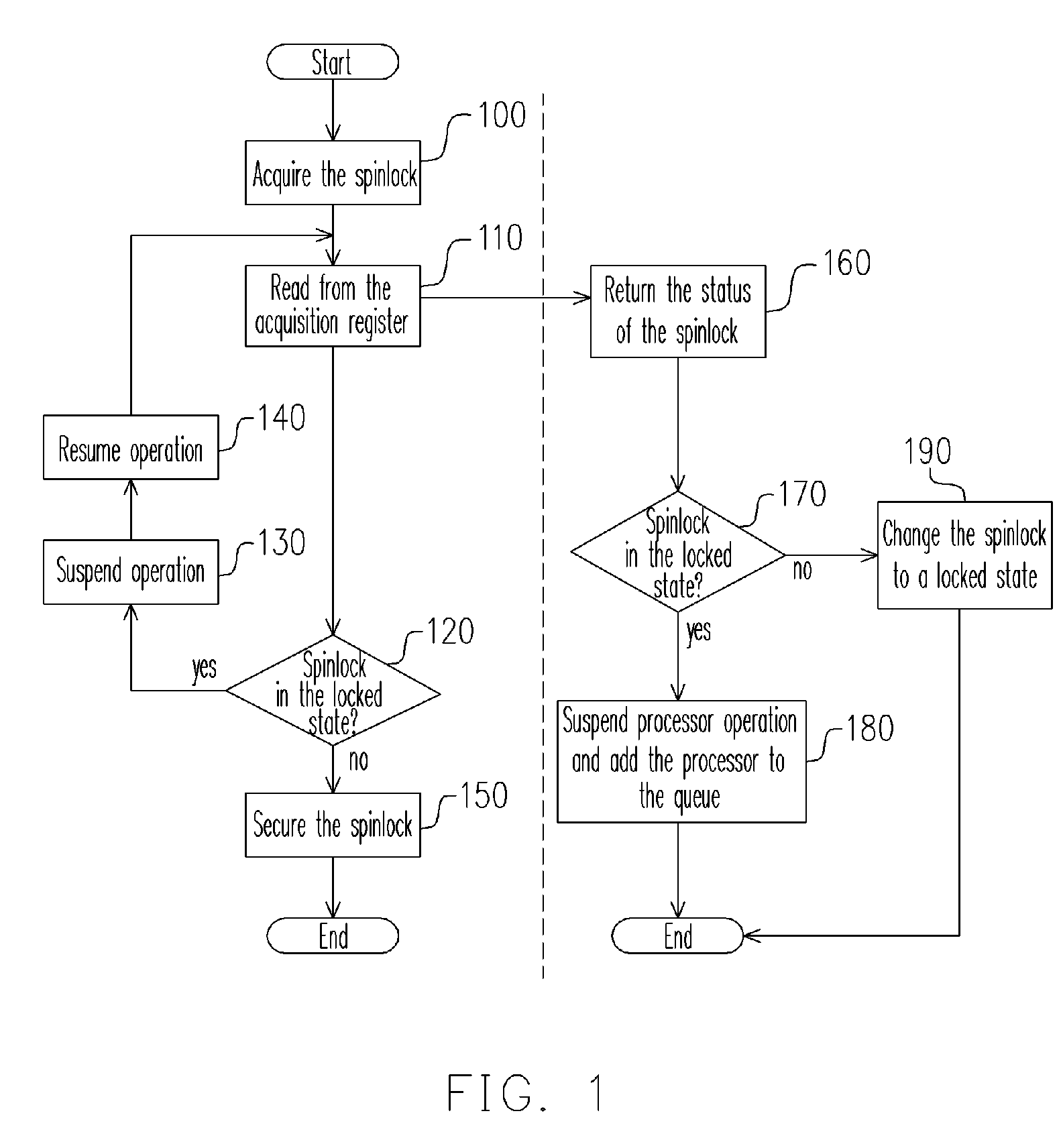

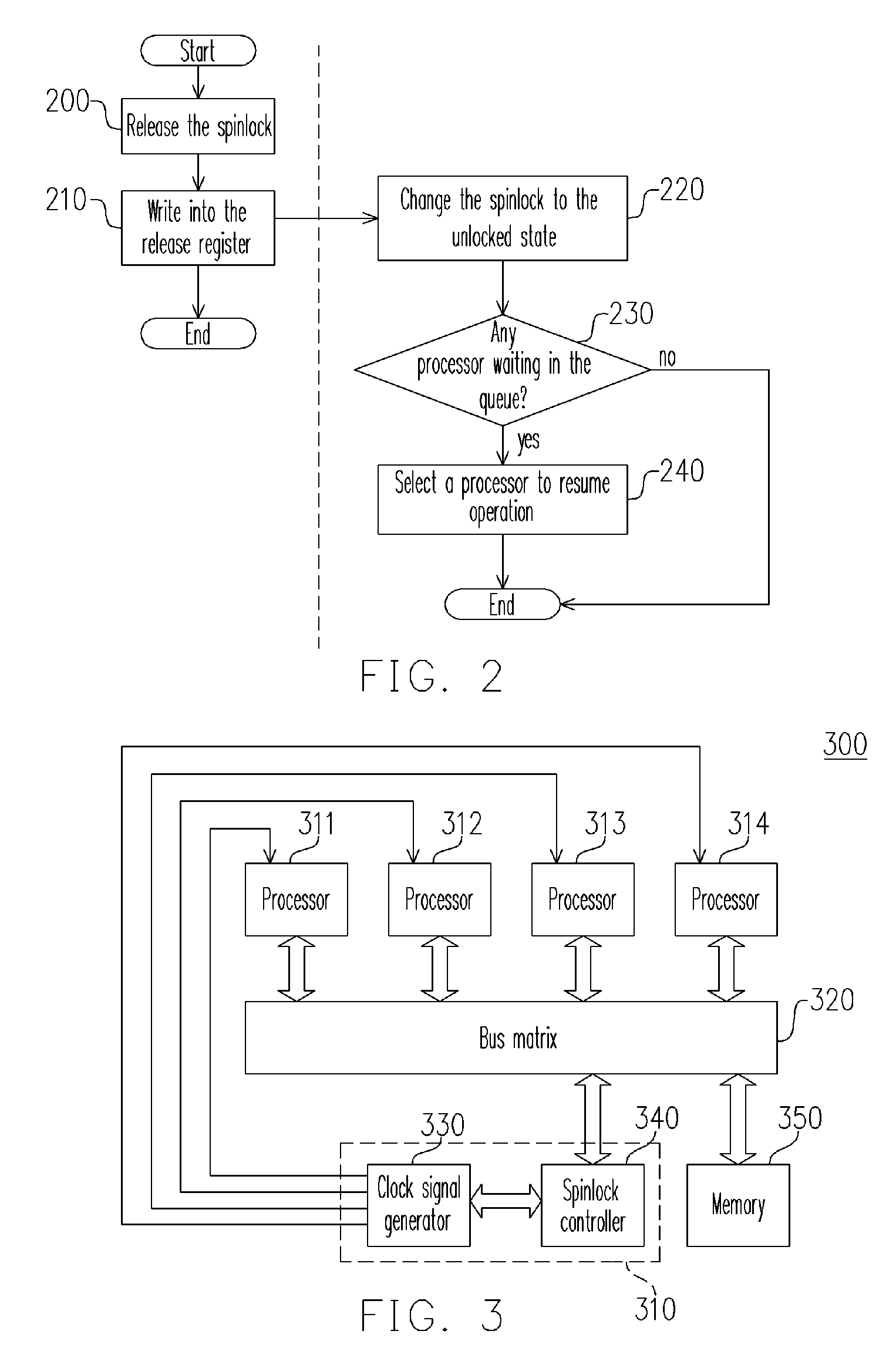

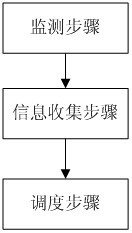

A synchronization method for a multi-processor system and the apparatus thereof are provided. The method comprises the following steps. First, a request to acquire a spinlock is received from a processor, and the status of the spinlock is returned to that processor. If the spinlock is in the unlocked state, it is changed to the locked state. If the spinlock is already in the locked state, the clock signal to the processor is suspended, so that the processor is suspended, and the suspended processor is added to a queue. Then, when a request to release the spinlock is received from a processor, the spinlock is changed to the unlocked state. Finally, if other processors are waiting in the queue, one of them is selected according to a predetermined policy and its clock signal is resumed.

Owner:IND TECH RES INST
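
The request handling in this abstract can be modelled in software as a small state machine. The sketch below is a single-threaded simulation only: the real mechanism gates processor clocks in hardware, `SpinlockController` is a hypothetical name, and first-in-first-out selection is an assumption standing in for the unspecified "predetermined policy".

```cpp
#include <cassert>
#include <deque>

// Software model of the hardware spinlock unit (illustrative only).
class SpinlockController {
public:
    // Returns true if `cpu` obtained the lock. Otherwise the CPU is queued,
    // which models suspending its clock signal.
    bool acquire(int cpu) {
        if (!locked_) {
            locked_ = true;
            return true;
        }
        suspended_.push_back(cpu);
        return false;
    }

    // Unlocks and returns the CPU whose clock is resumed, or -1 if none waits.
    // FIFO selection is an assumed policy.
    int release() {
        assert(locked_);
        locked_ = false;
        if (suspended_.empty()) return -1;
        int next = suspended_.front();
        suspended_.pop_front();
        return next;
    }

    bool locked() const { return locked_; }

private:
    bool locked_ = false;
    std::deque<int> suspended_;
};
```

In this model the resumed processor is expected to issue its acquire request again, at which point the lock is free.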

Virtual CPU dispatching method

Inactive | CN102053858A | Improve accuracy | High precision | Software simulation/interpretation/emulation | Virtualization | Extensibility

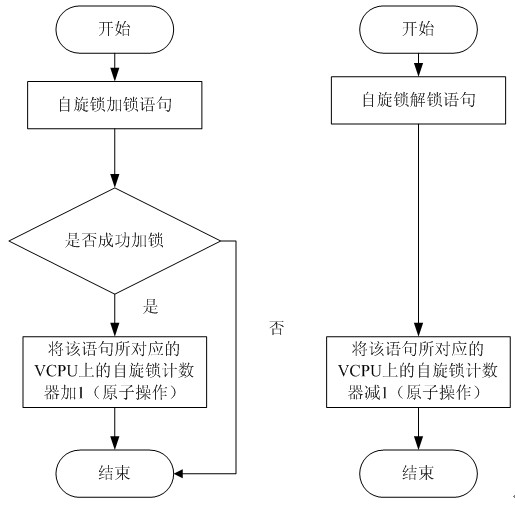

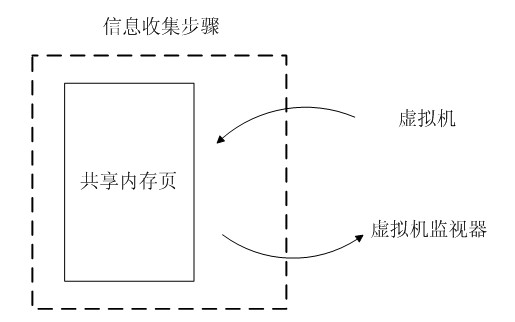

A virtual CPU (Central Processing Unit) dispatching method belongs to the technical field of computing system virtualization, solves the problem of lock holder preemption, and improves the performance of virtual machines in a multiprocessor system. The method is used in a multiprocessor virtualized environment and comprises a monitoring procedure, an information collecting procedure and a dispatching procedure. The monitoring procedure monitors, in real time, the spin lock operation instructions of all virtual machine operating systems; the information collecting procedure extracts the number of spin locks observed by the monitoring procedure to form spin lock counting information; and the dispatching procedure uses a fair and efficient dispatching method to dispatch the virtual CPUs according to that spin lock counting information. Compared with the prior art, the method detects more accurately whether dispatching is possible, which improves the utilization of the physical CPUs, keeps the dispatching strategy flexible, extensible and fair, effectively solves the problem of lock holder preemption, and greatly improves the performance of virtual machines in a multiprocessor system.

Owner:HUAZHONG UNIV OF SCI & TECH

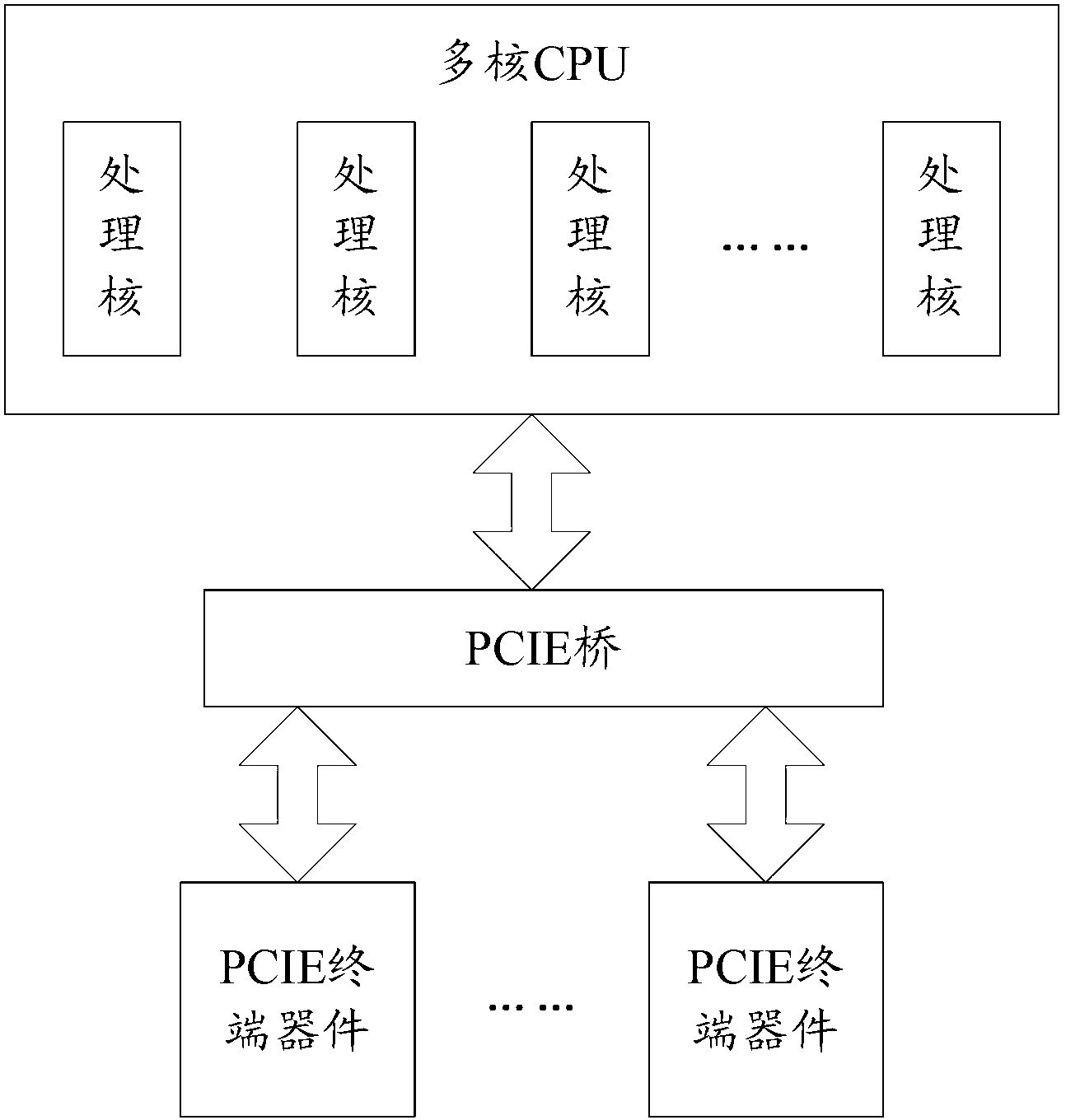

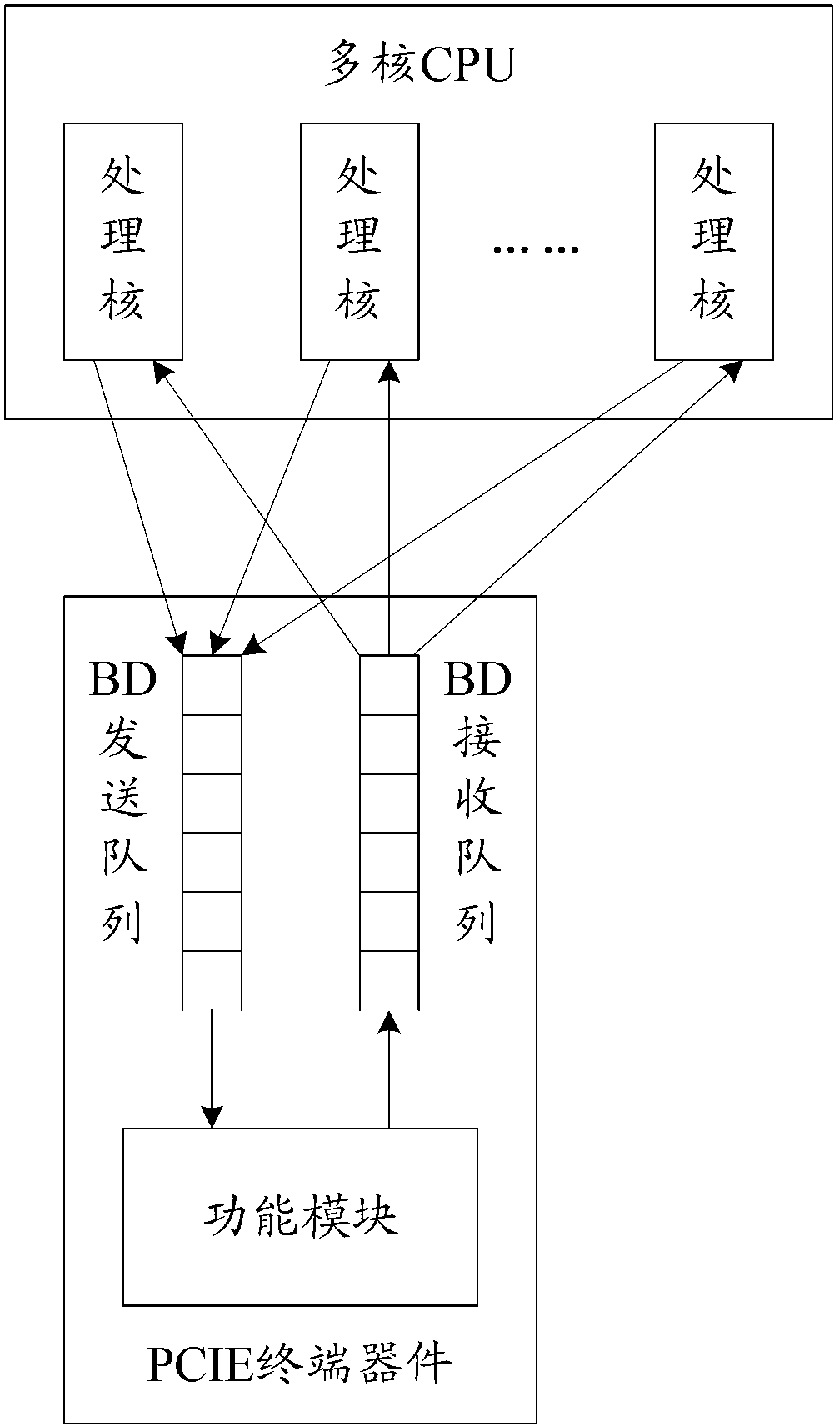

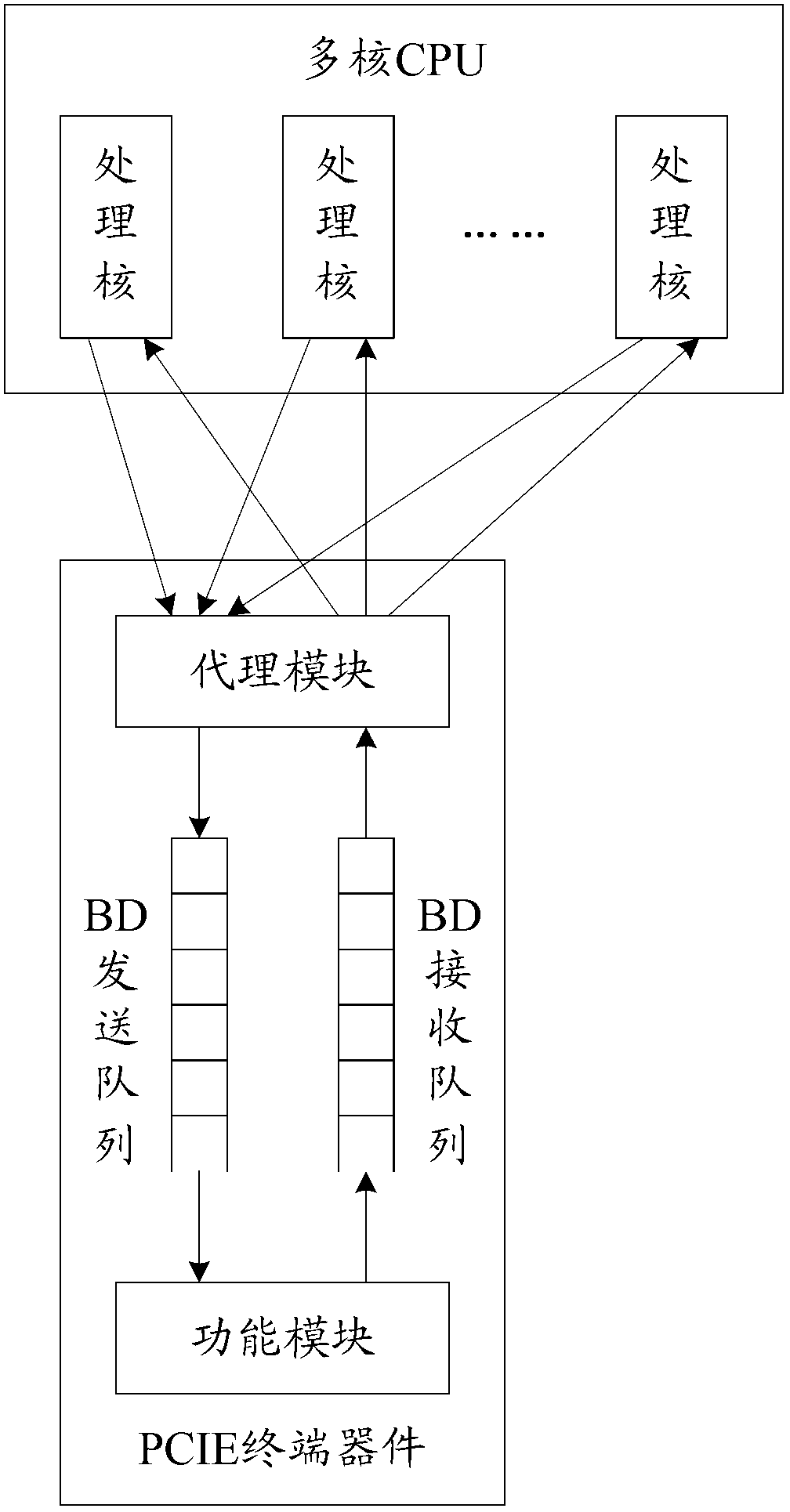

Method and electric device for interacting cache descriptors

Active | CN103218313A | Guaranteed read | Ensure that each processing core realizes the read operation through the proxy module | Memory addressing/allocation/relocation | Computer module | Terminal equipment

The invention discloses a method and an electronic device for exchanging cache (buffer) descriptors (BDs). A proxy module is added to the terminal device. The proxy module provides each processing core with an entry to a BD transmit queue and a BD receive queue, and the proxy module itself performs the pointer operations on both queues. Each processing core therefore writes to the BD transmit queue and reads from the BD receive queue through the proxy module, which avoids multiple processing cores contending to perform the pointer operations. As a result, neither a spin lock nor a polling core needs to be provided in the multi-core CPU (central processing unit), so the overall efficiency of the multi-core CPU is not reduced.

Owner:XINHUASAN INFORMATION TECH CO LTD

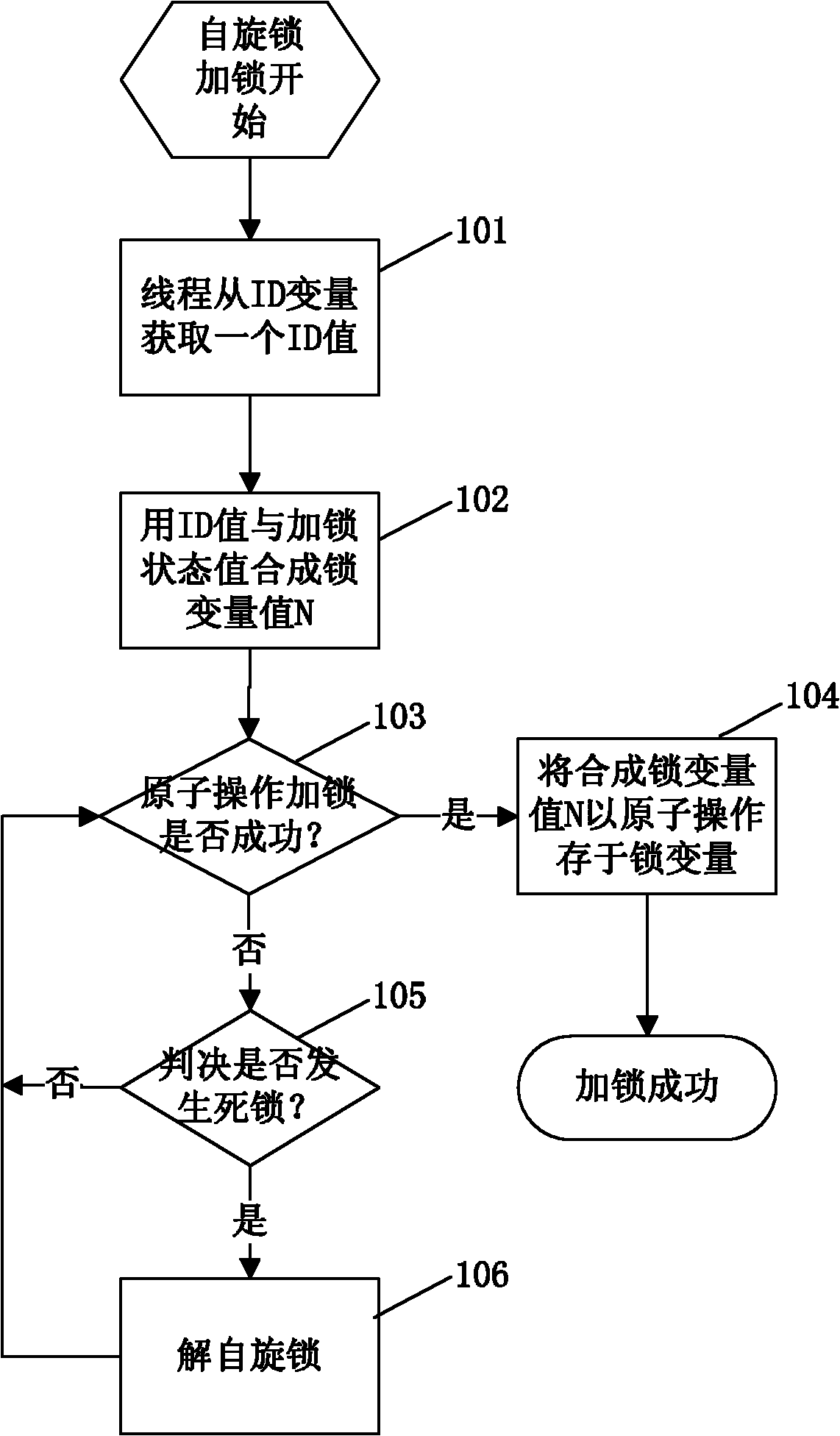

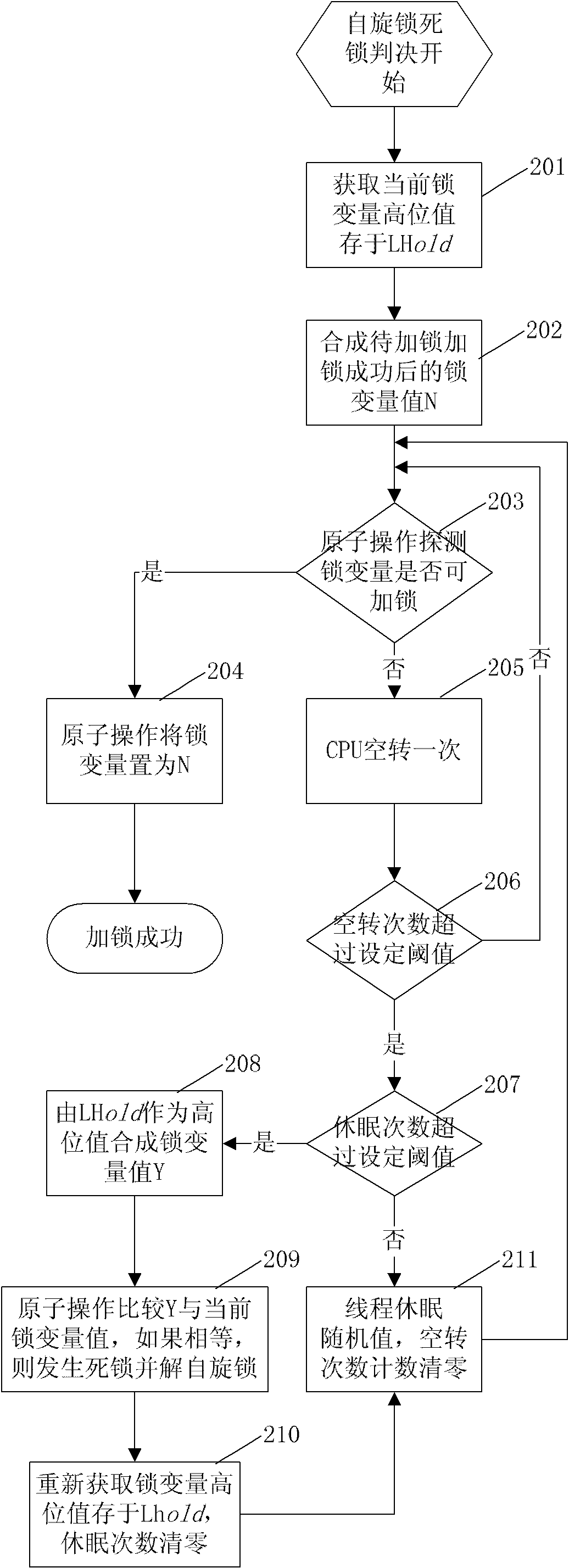

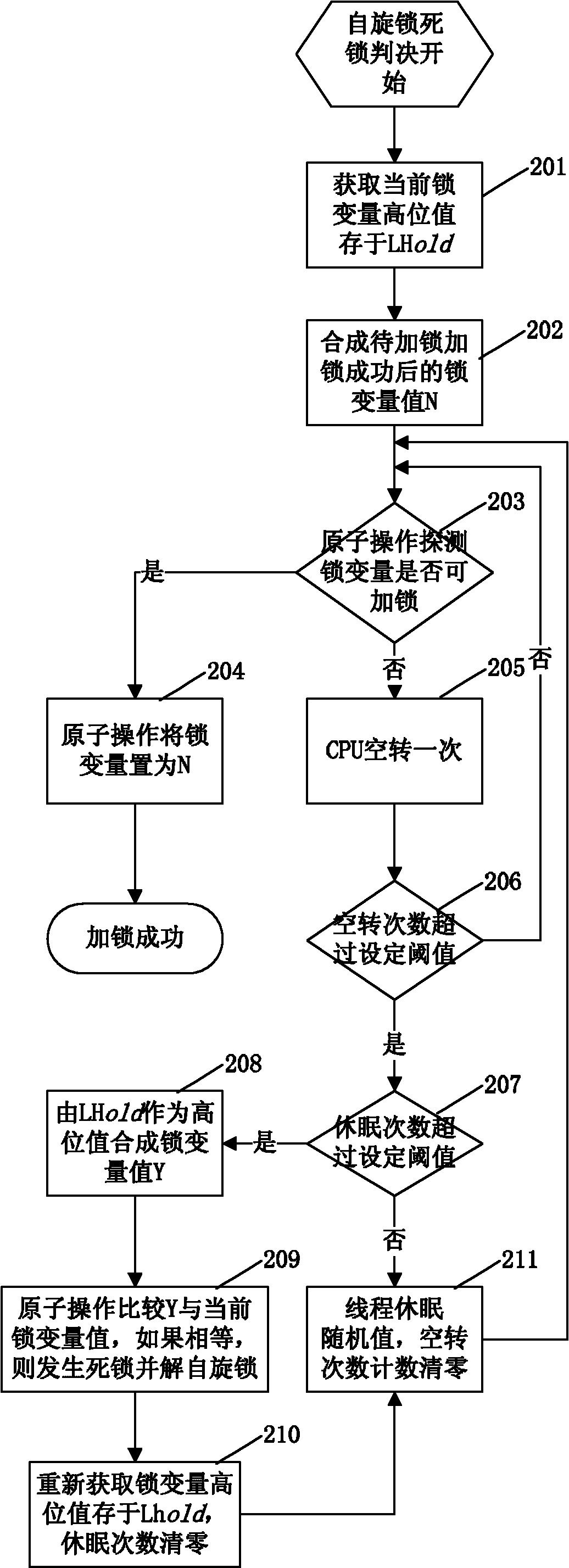

Method for implementing spin lock in database

Inactive | CN102129391A | Avoid deadlock situations | Protection operation | Multiprogramming arrangements | Spinlock | Computer science

The invention discloses a method for implementing a spin lock in a database. The method comprises the following steps: storing two global variables, a spin lock variable and an ID variable, in shared memory, and initializing both to 0 when the system is initialized; and recording the thread that currently holds the spin lock in the high storage value of the spin lock variable, so that the spin lock can be unlocked automatically according to that stored value when the lock-holding thread exits abnormally and would otherwise cause a deadlock. The method avoids the deadlock that can occur when multiple tasks compete for the same spin lock. By changing the locking flow and the deadlock judgment flow, the spin lock can protect operations on a data structure for a long time and can handle a deadlock caused by the abnormal termination of the locking thread during that period.

Owner:HUAZHONG UNIV OF SCI & TECH
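
One way to picture the idea of recording the holder inside the lock is a lock word that stores the owner's ID, so that a later contender can detect a dead holder and take the lock over. The sketch below is a simplification under stated assumptions: it uses a single word rather than the two shared variables the abstract mentions, thread IDs are assumed to be non-zero, and the liveness check `is_alive` is a hypothetical callback supplied by the caller.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

// Spin lock whose word holds the owner's ID (0 means free). Illustrative only.
class OwnerSpinLock {
public:
    using AliveFn = std::function<bool(std::uint32_t)>;

    explicit OwnerSpinLock(AliveFn is_alive) : is_alive_(std::move(is_alive)) {}

    // Single attempt; returns true if `self` owns the lock afterwards.
    bool try_lock(std::uint32_t self) {
        assert(self != 0);
        std::uint32_t owner = 0;
        if (word_.compare_exchange_strong(owner, self, std::memory_order_acquire)) {
            return true;  // lock was free
        }
        // On failure `owner` now contains the current holder's ID.
        if (owner != self && !is_alive_(owner)) {
            // Holder exited abnormally: take the lock over in one atomic step.
            return word_.compare_exchange_strong(owner, self, std::memory_order_acquire);
        }
        return false;
    }

    void lock(std::uint32_t self) {
        while (!try_lock(self)) { /* spin */ }
    }

    // Only the recorded owner can release; returns false otherwise.
    bool unlock(std::uint32_t self) {
        std::uint32_t expected = self;
        return word_.compare_exchange_strong(expected, 0, std::memory_order_release);
    }

    std::uint32_t owner() const { return word_.load(std::memory_order_relaxed); }

private:
    std::atomic<std::uint32_t> word_{0};
    AliveFn is_alive_;
};
```

A takeover leaves the protected data in whatever state the dead holder left it, so a real system would also need a recovery step that this sketch does not attempt.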

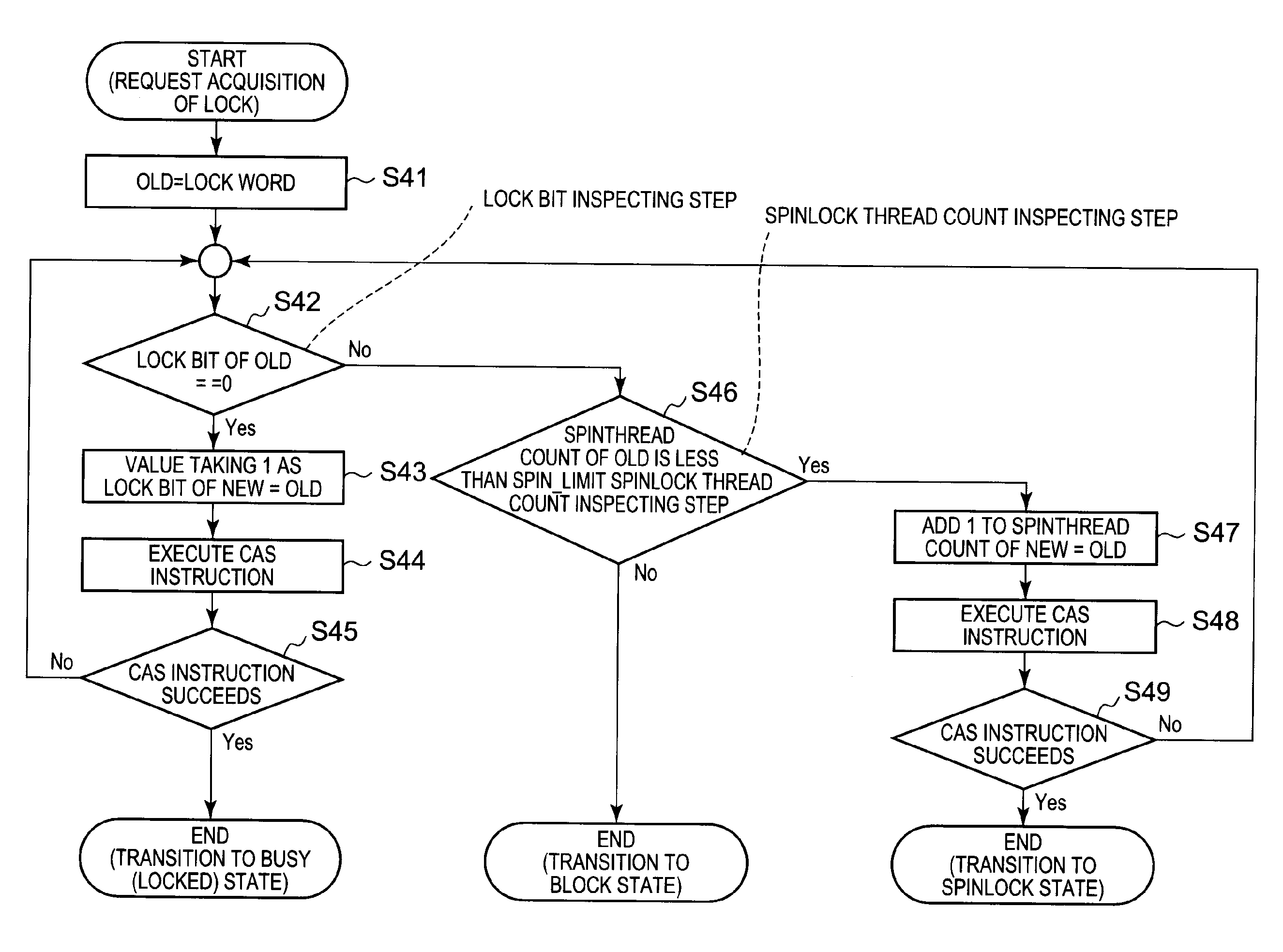

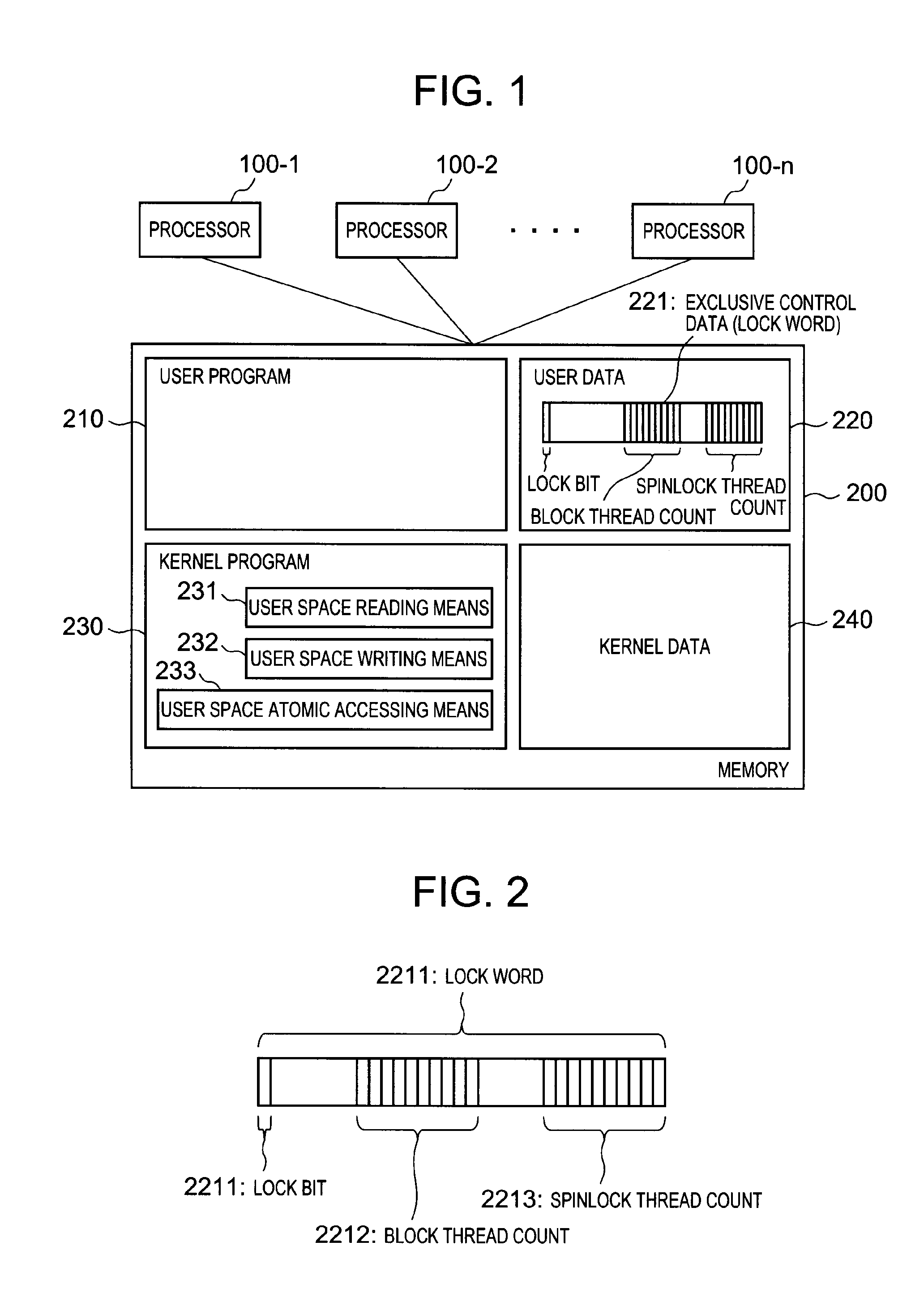

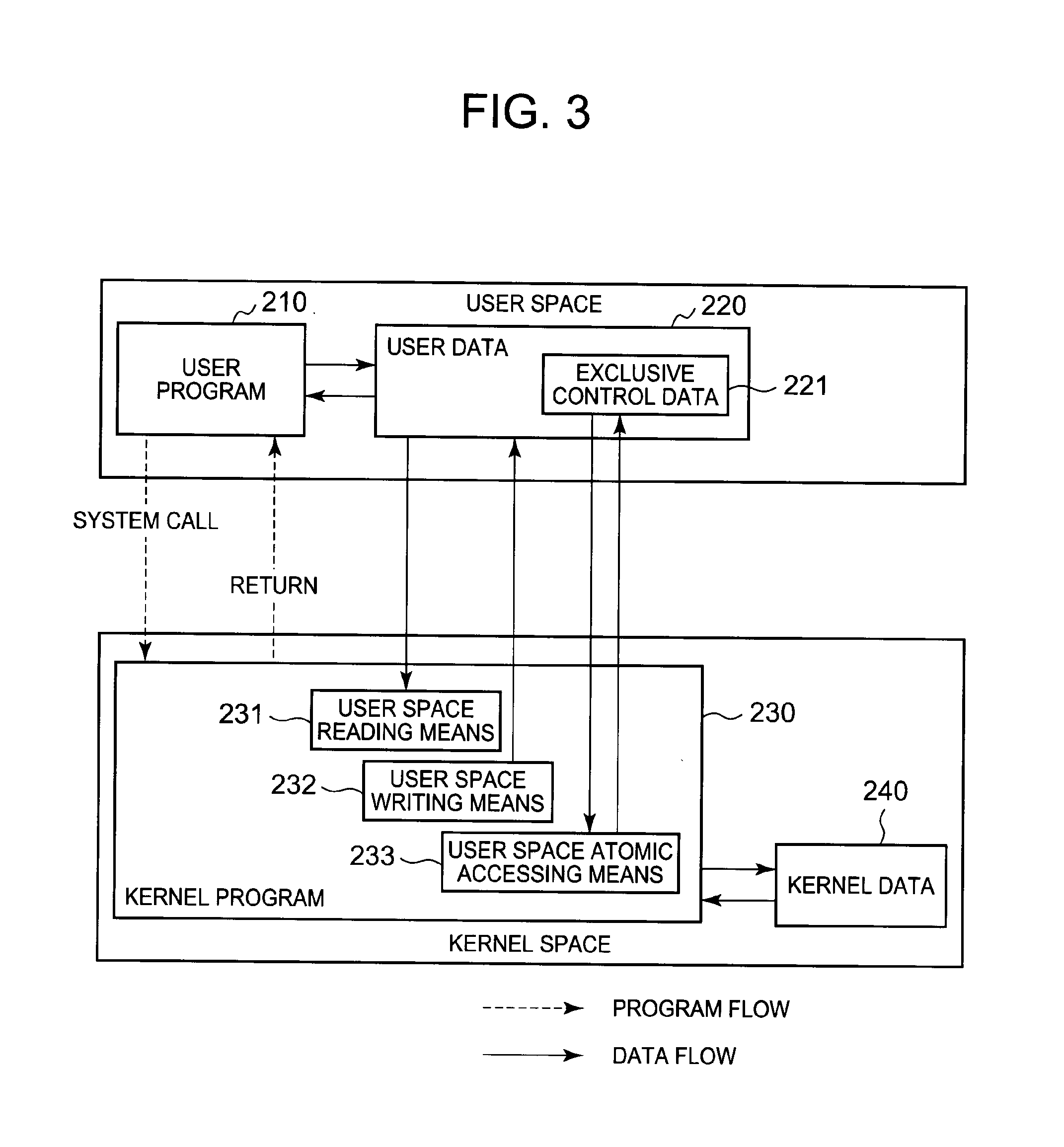

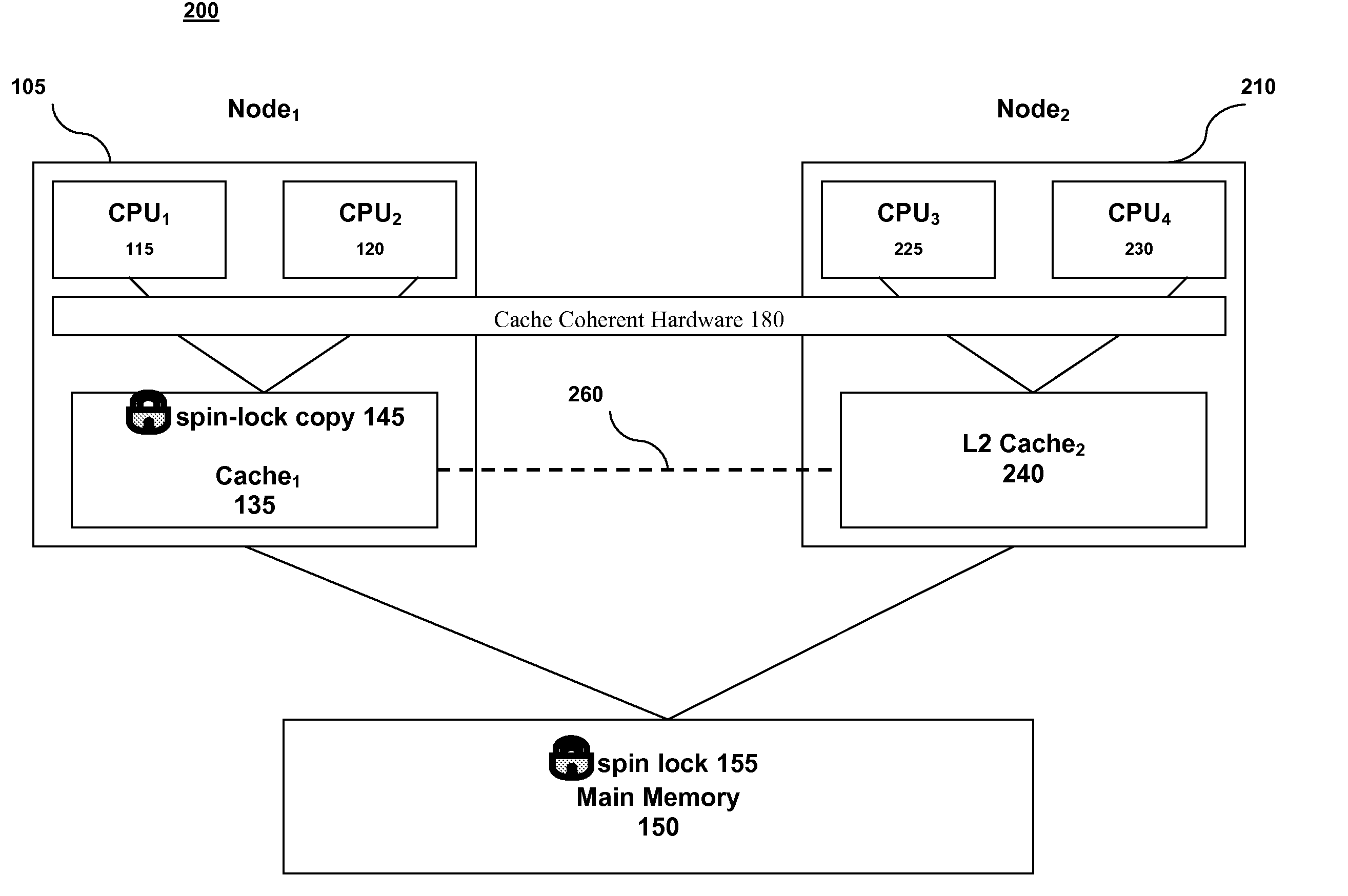

Information processing system, exclusive control method and exclusive control program

Inactive | US20120304185A1 | Avoid resources | Multiprogramming arrangements | Memory systems | Information processing | Spinlock

Features of an information processing system include a stand-by thread count information updating means that updates stand-by thread count information showing a number of threads which wait for release of lock according to a spinlock method, according to state transition of a thread which requests acquisition of predetermined lock; and a stand-by method determining means that determines a stand-by method of a thread which requests the acquisition of the lock based on the stand-by thread count information updated by the stand-by thread count information updating means and an upper limit value of the number of threads which wait according to the predetermined spinlock method.

Owner:NEC CORP
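
The decision described in this abstract, choosing a wait method from the current number of spinning threads and an upper limit, can be sketched as a small counter-based policy. `WaitPolicy`, `WaitMethod` and the method names are hypothetical, and what a thread does after being told to block (for example, sleeping on a condition variable) is outside this sketch.

```cpp
#include <atomic>
#include <cassert>

enum class WaitMethod { Spin, Block };

// Tracks how many threads are spinning and caps that number (illustrative).
class WaitPolicy {
public:
    explicit WaitPolicy(int max_spinners) : max_spinners_(max_spinners) {
        assert(max_spinners >= 0);
    }

    // Called by a thread that failed to get the lock and must wait.
    WaitMethod begin_wait() {
        int n = spinners_.load(std::memory_order_relaxed);
        while (n < max_spinners_) {
            // Claim a spinning slot; on failure n is reloaded and the limit rechecked.
            if (spinners_.compare_exchange_weak(n, n + 1, std::memory_order_relaxed)) {
                return WaitMethod::Spin;
            }
        }
        return WaitMethod::Block;  // limit reached: wait by another method
    }

    // Called when a spinning thread stops spinning (lock acquired or gave up).
    void end_spin() {
        int previous = spinners_.fetch_sub(1, std::memory_order_relaxed);
        assert(previous > 0);
        (void)previous;
    }

    int spinners() const { return spinners_.load(std::memory_order_relaxed); }

private:
    const int max_spinners_;
    std::atomic<int> spinners_{0};
};
```

Capping the spinners bounds the processor time burned in busy waiting while still letting the first few waiters react quickly to a release.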

Achieving both locking fairness and locking performance with spin locks

Active | US7487279B2 | Digital computer details | Unauthorized memory use protection | Parallel computing | Spinlock

A method for implementing a spin lock in a system including a plurality of processing nodes, each node including at least one processor and a cache memory, the method including steps of: acquiring exclusivity to the cache memory; checking the availability of the spin lock; setting the spin lock to logical one if the spin lock is available; setting the spin lock to logical zero once processing is complete; and explicitly yielding the cache memory exclusivity. Yielding the cache memory exclusivity includes instructing the cache coherent hardware to mark the cache memory as non-exclusive. The cache memory is typically called level two cache.

Owner:IBM CORP

Methods and systems for maintaining cache coherency in multi-processor systems

Active | US8725958B2 | Reduce power consumption | Energy efficient ICT | Memory addressing/allocation/relocation | Load instruction | Multi processor

Owner:RENESAS ELECTRONICS CORP

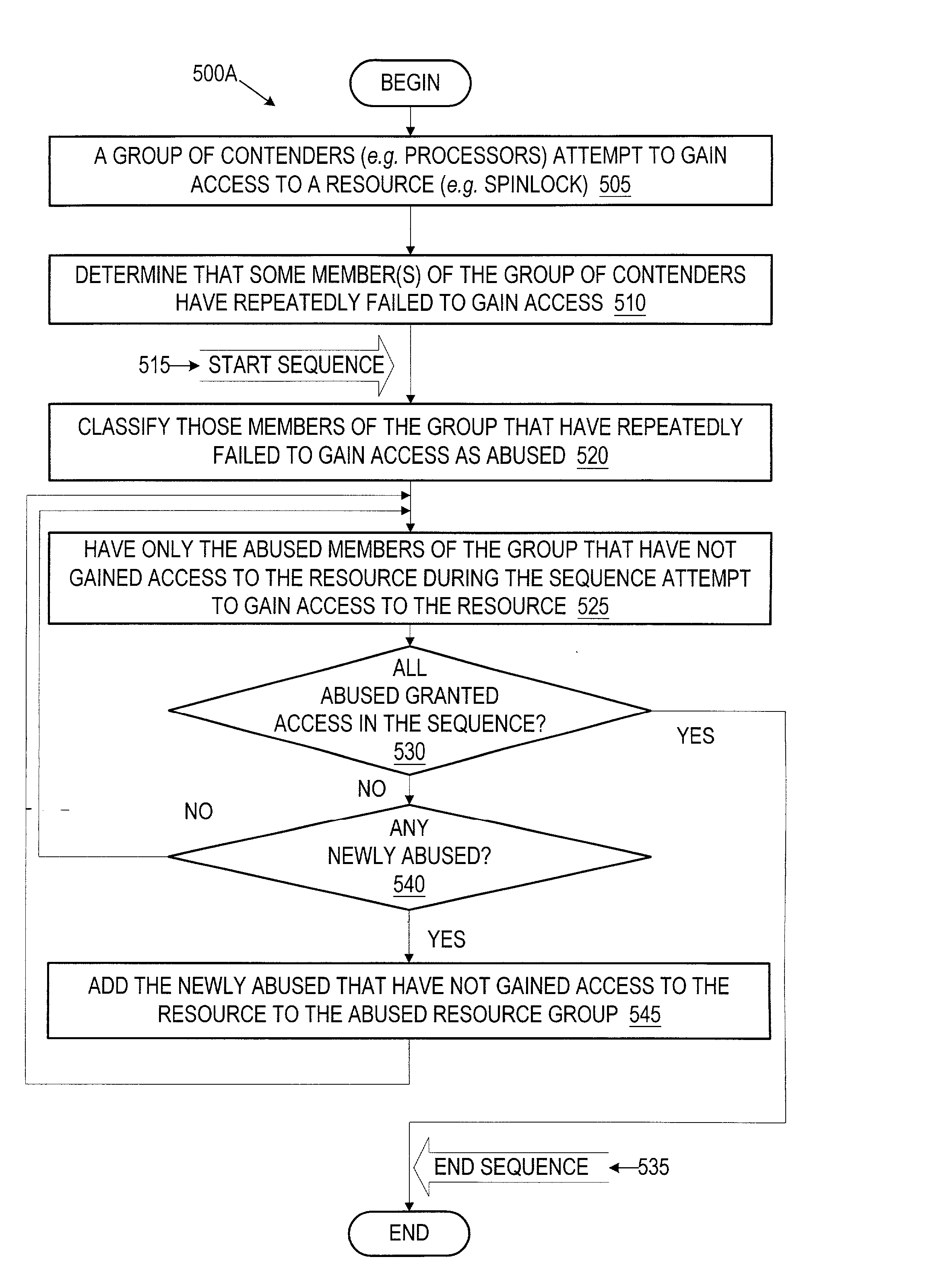

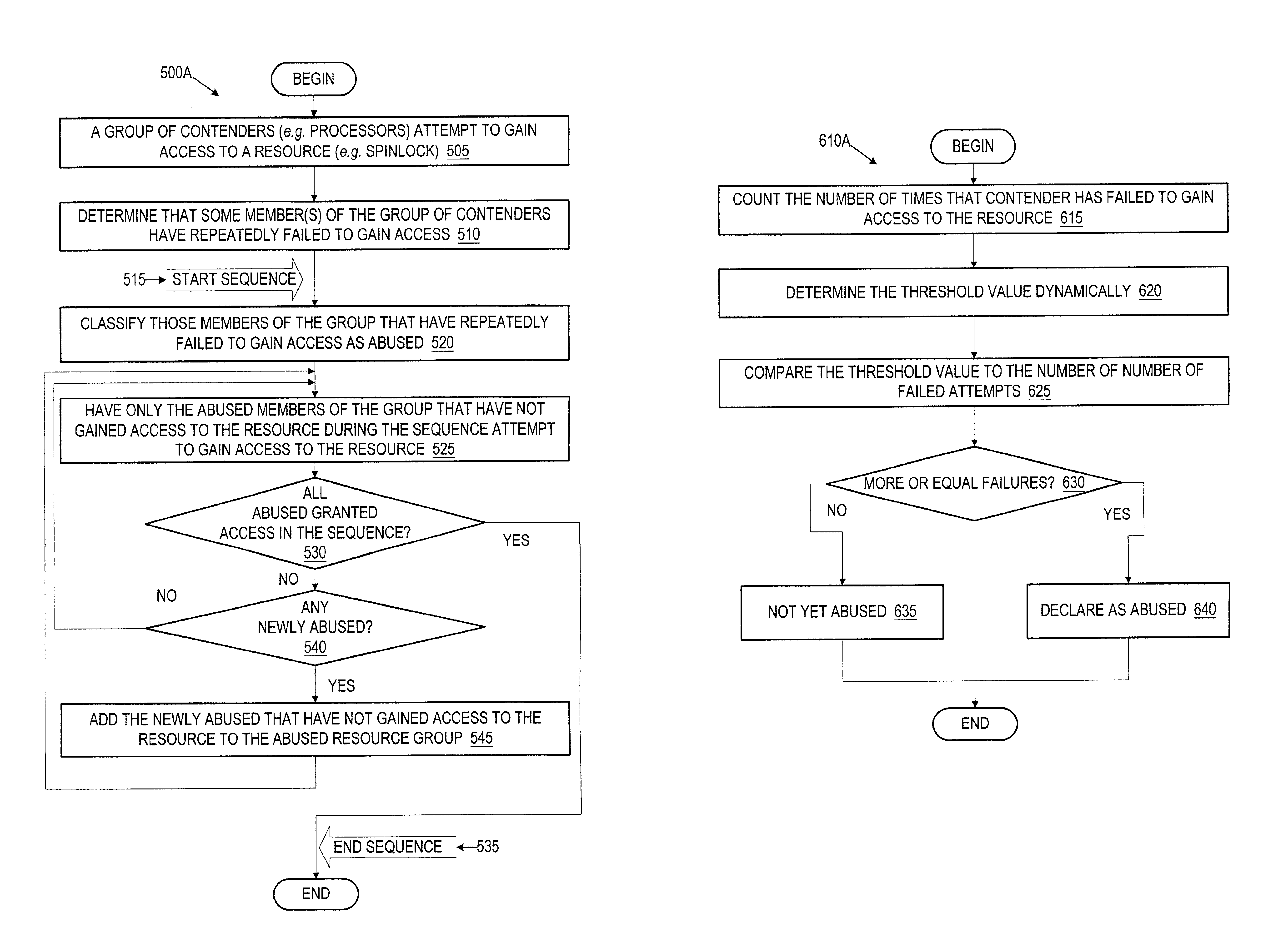

Ensuring fairness in a multiprocessor environment using historical abuse recognition in spinlock acquisition

A method and system for ordering equitable access to a limited resource (such as a spinlock) by a plurality of contenders (such as processors) where each of the contenders contends for access more than one time. The method classifies one or more contenders that have failed to gain access to the limited resource after at least a predetermined number of attempts as abused contenders. The abused contenders attempt among themselves to gain access to the limited resource. The method repeats the above until all of the abused contenders have gained access to the limited resource.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

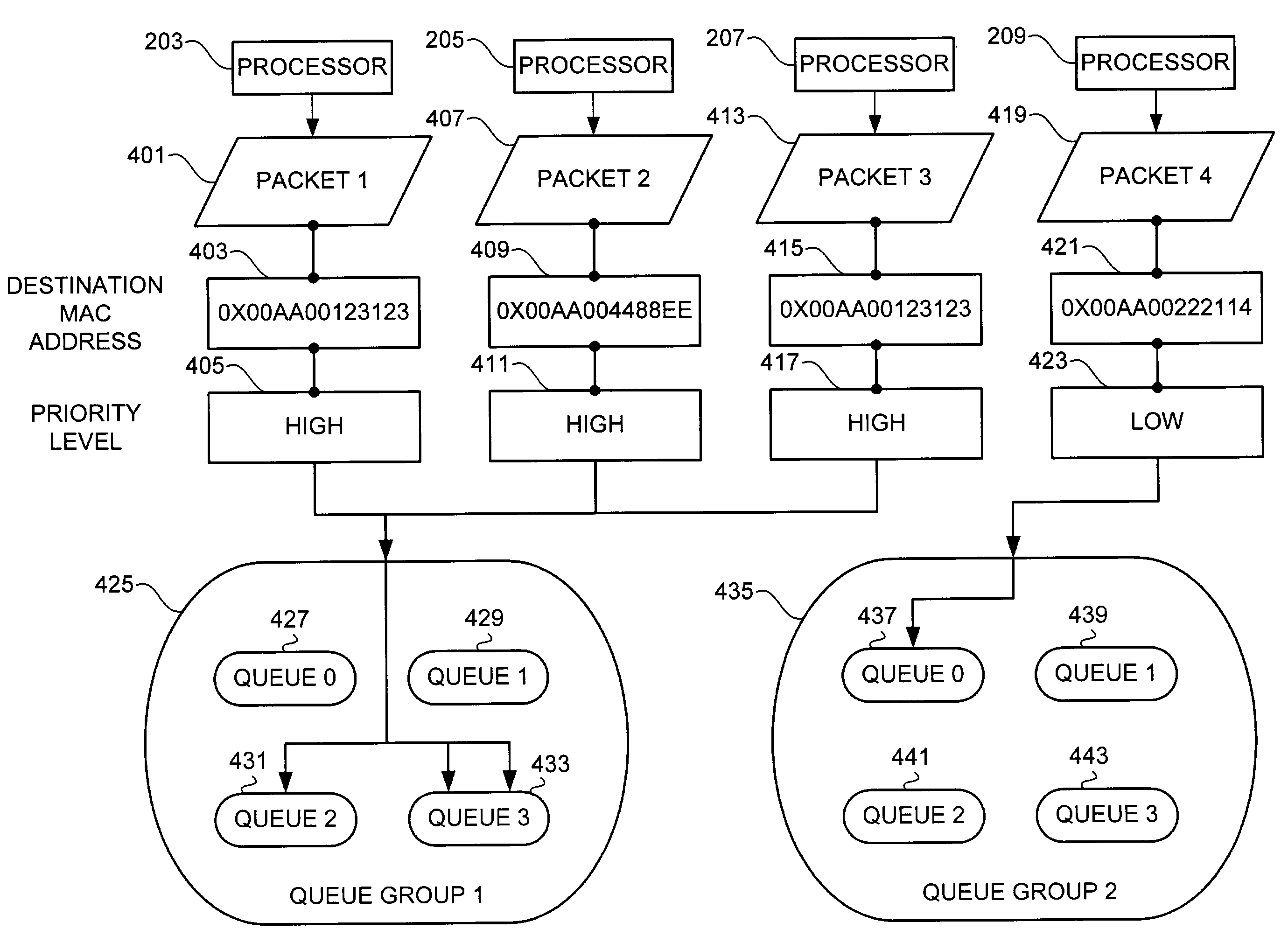

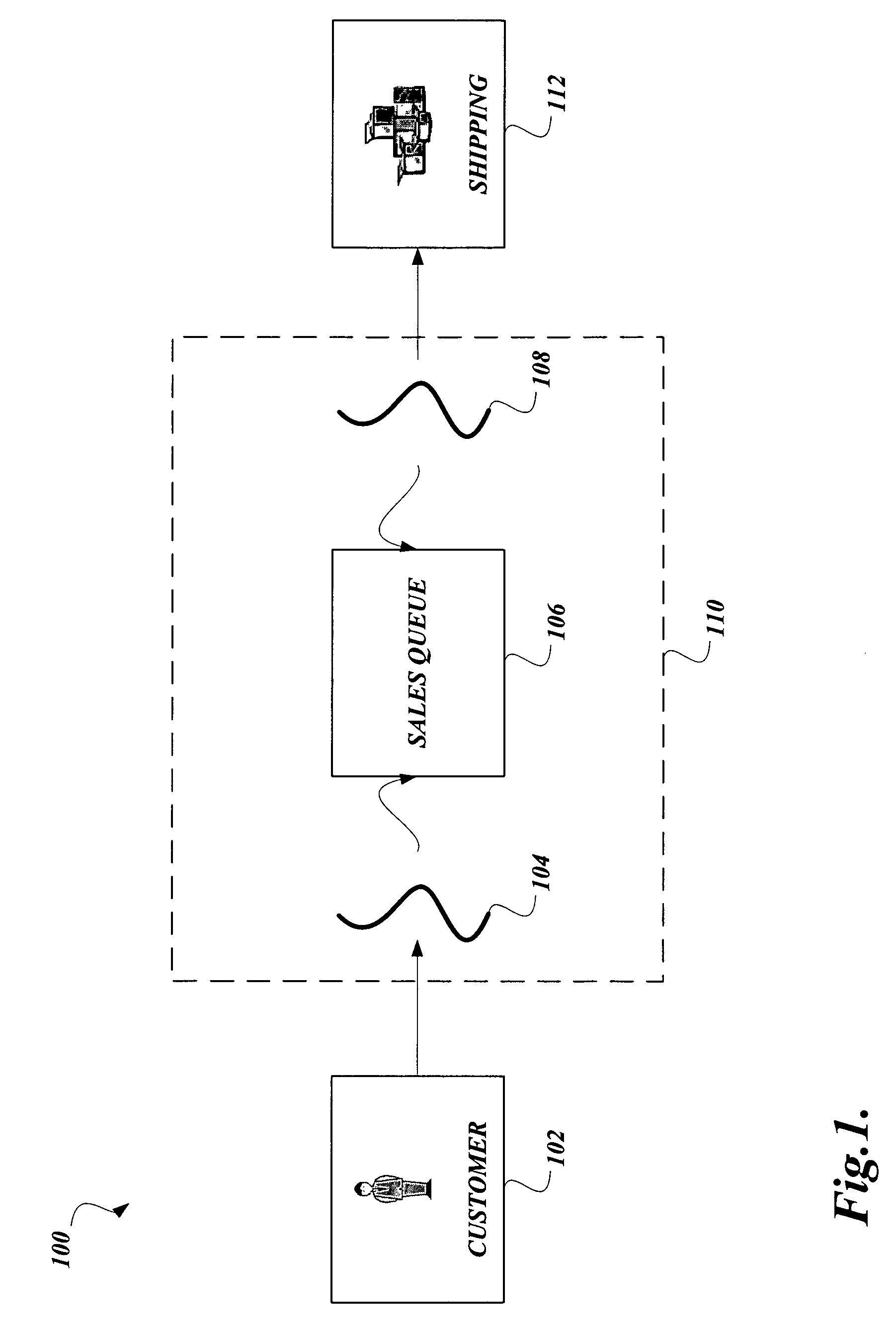

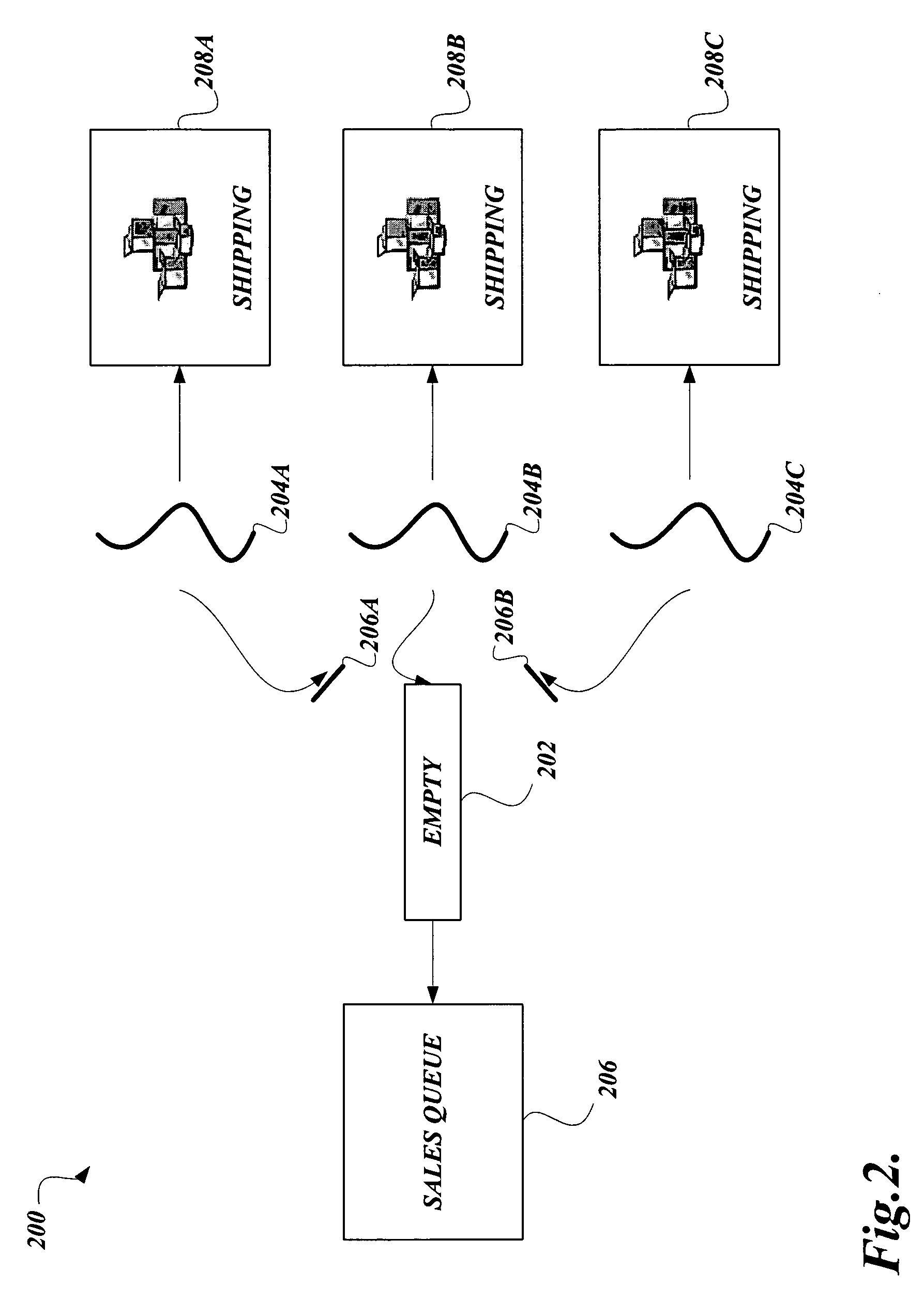

Method and apparatus for minimizing spinlocks and retaining packet order in systems utilizing multiple transmit queues

A method, apparatus, and article of manufacture for retaining packet order in multiprocessor systems utilizing multiple transmit queues while minimizing spinlocks are disclosed herein. Embodiments of the present invention define multiple transmit queues for a given priority level of packets to allow parallel processing and queuing of packets having equal priority in different transmit queues. Queuing packets of equal priority in different transmit queues minimizes processor time spent attempting to acquire queue-specific resources associated with one particular transmit queue. In addition, embodiments of the present invention provide an assignment mechanism to maximize utilization of the multiple transmit queues by queuing packets corresponding to each transmit request in a next available transmit queue defined for a given priority level. Coordination between hardware and software allows the order of the queued packets to be maintained in the transmission process.

Owner:INTEL CORP

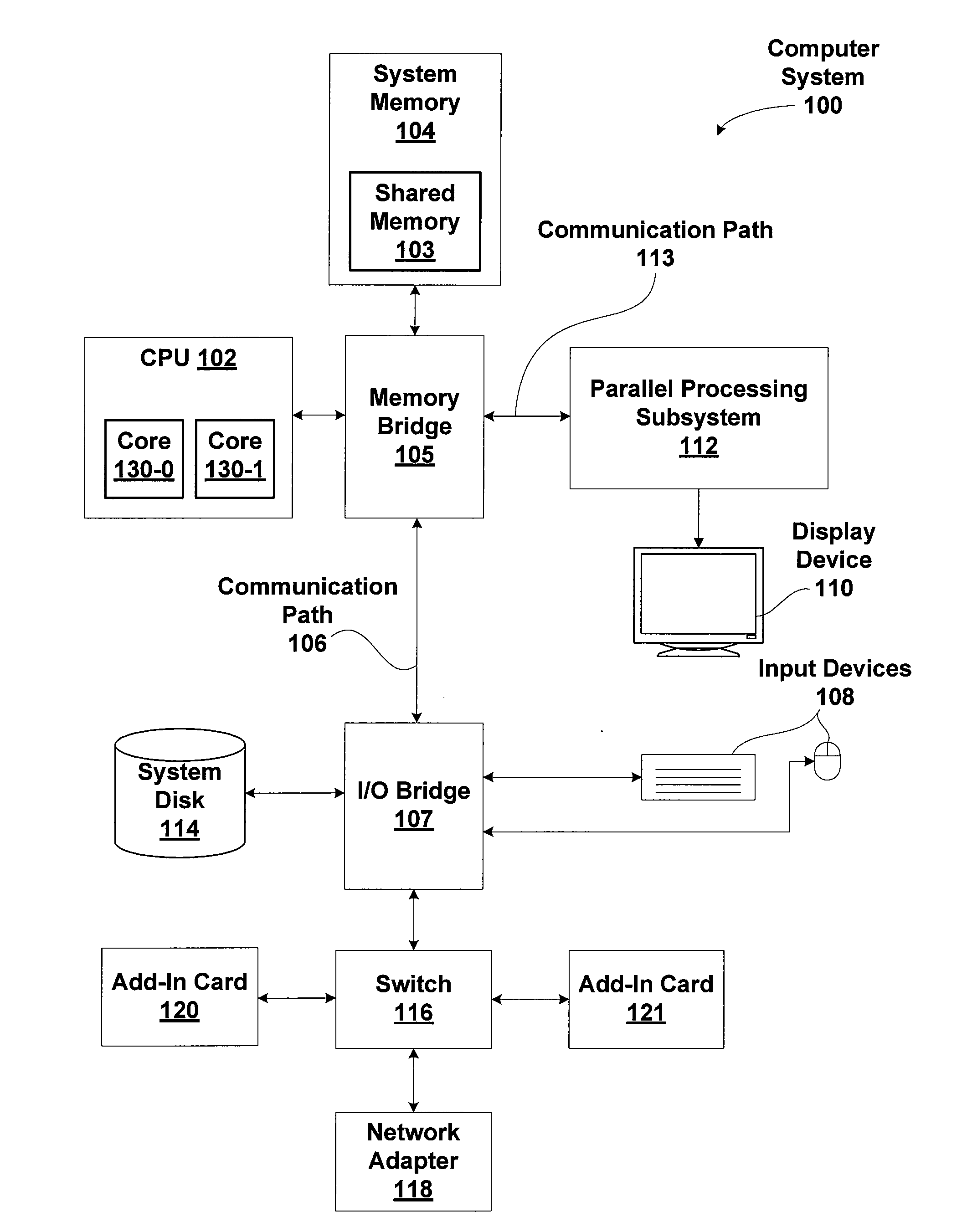

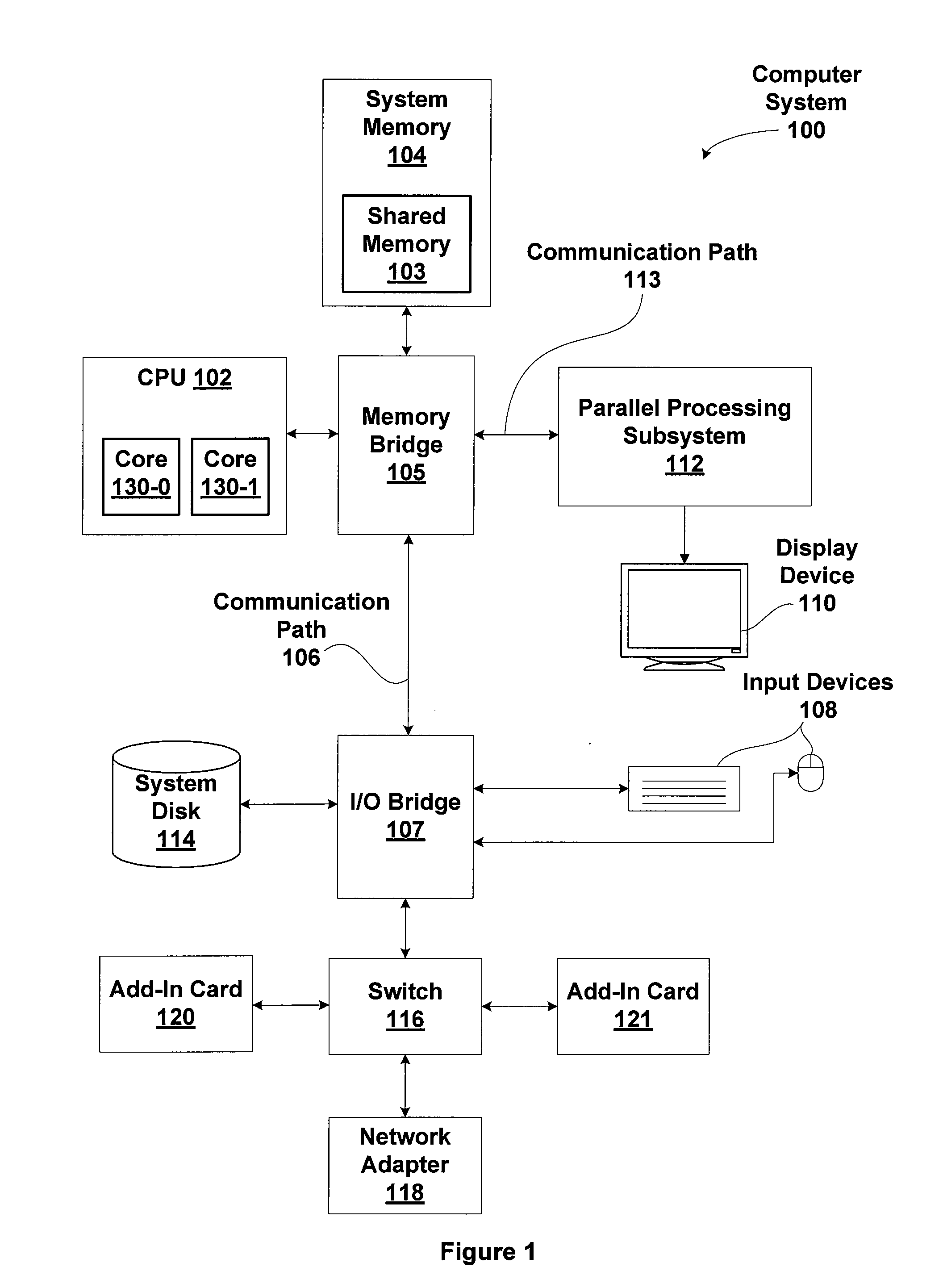

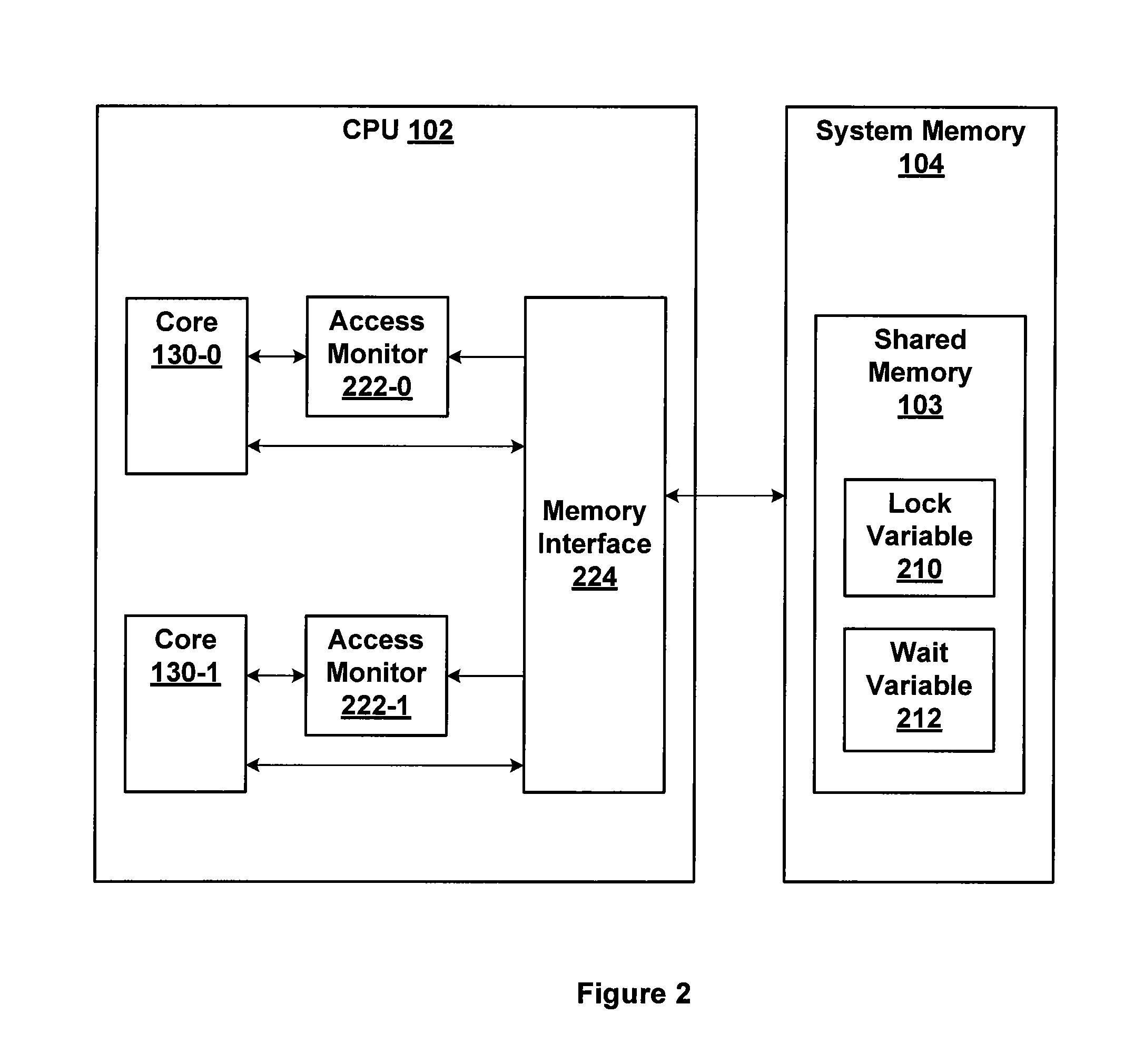

Method for power optimized multi-processor synchronization

Active | US20130061005A1 | Efficiently synchronize operation | Efficiently power resource | Program synchronisation | Digital data processing details | Multi processor | Parallel computing

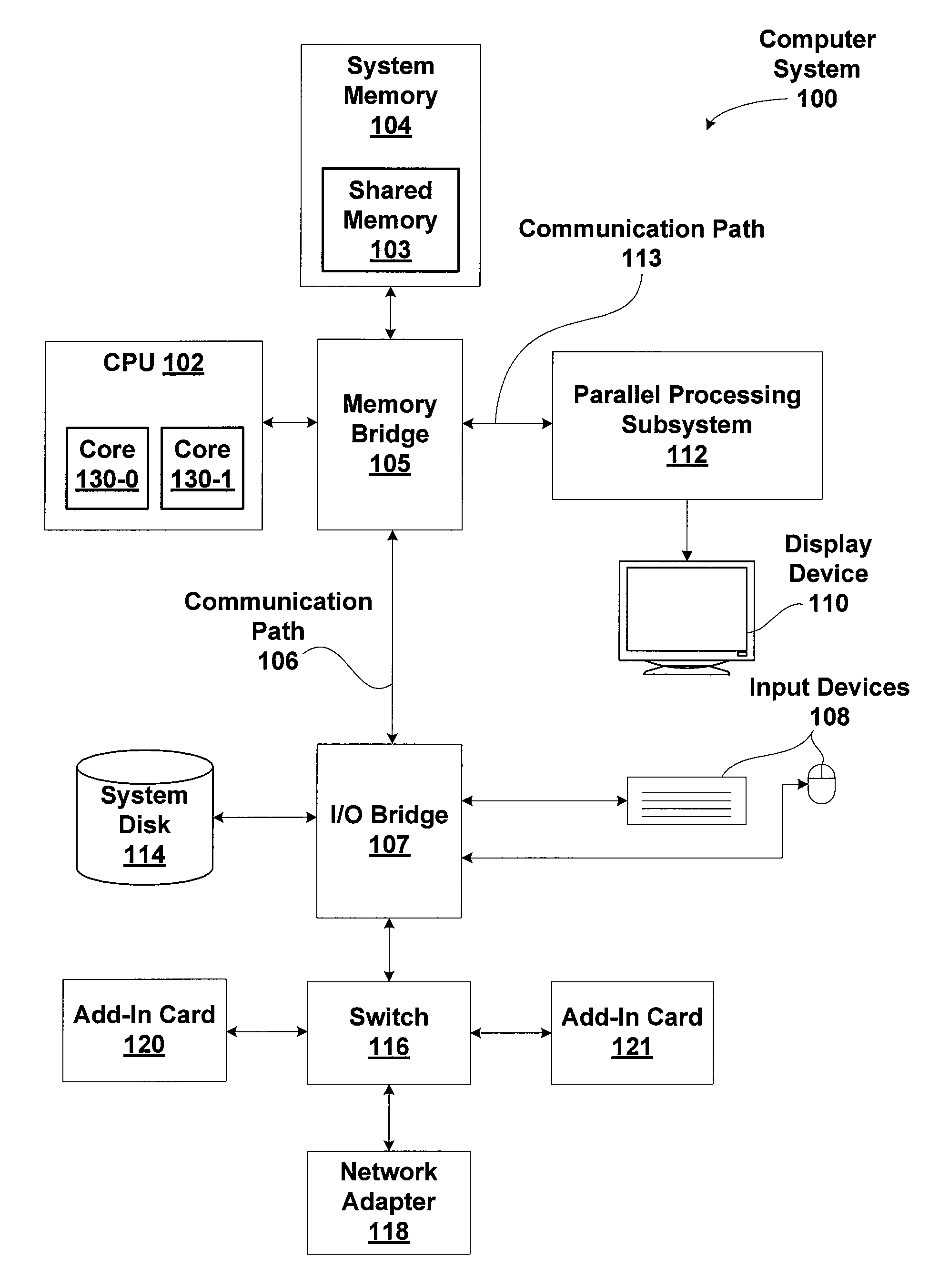

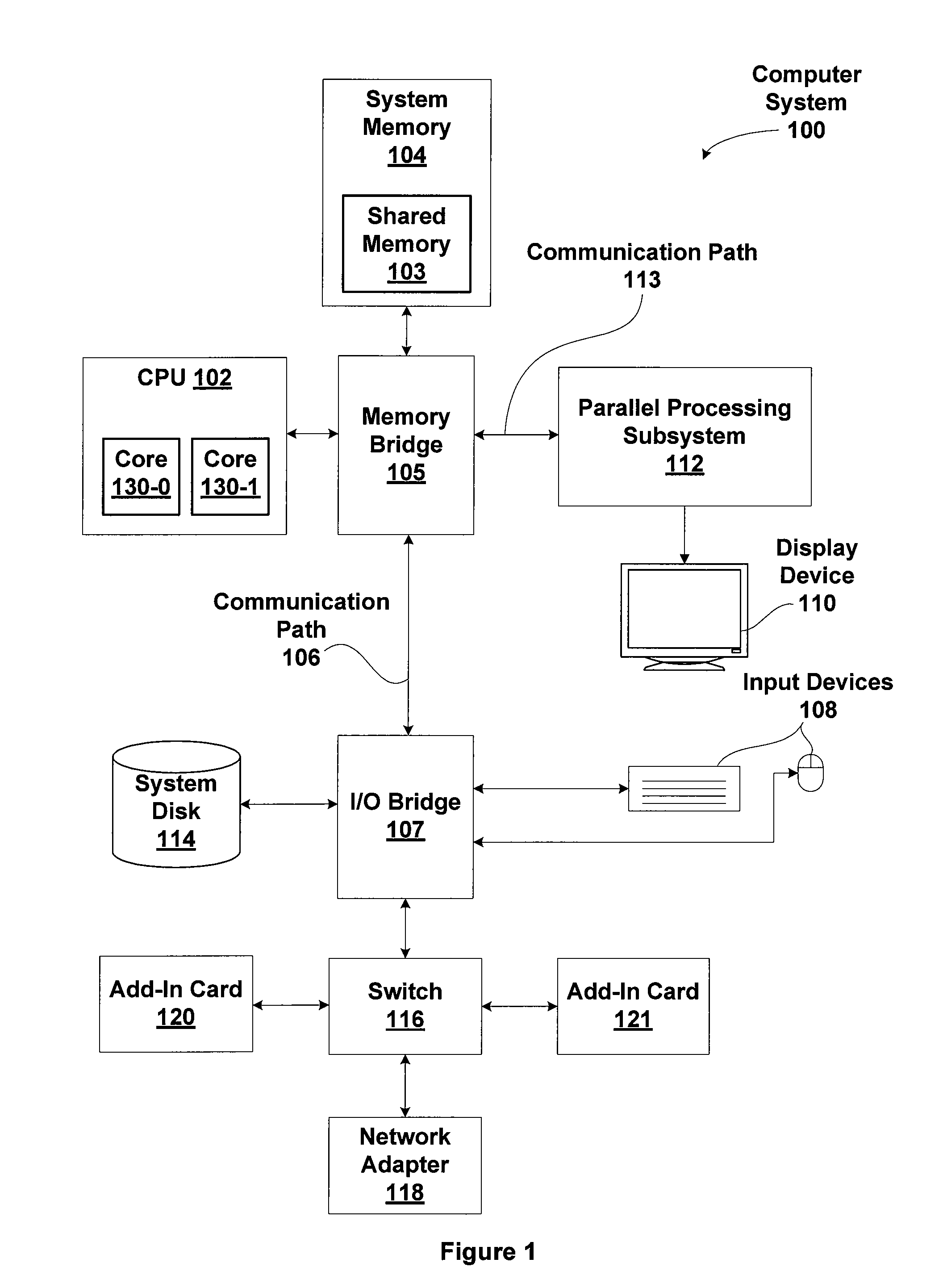

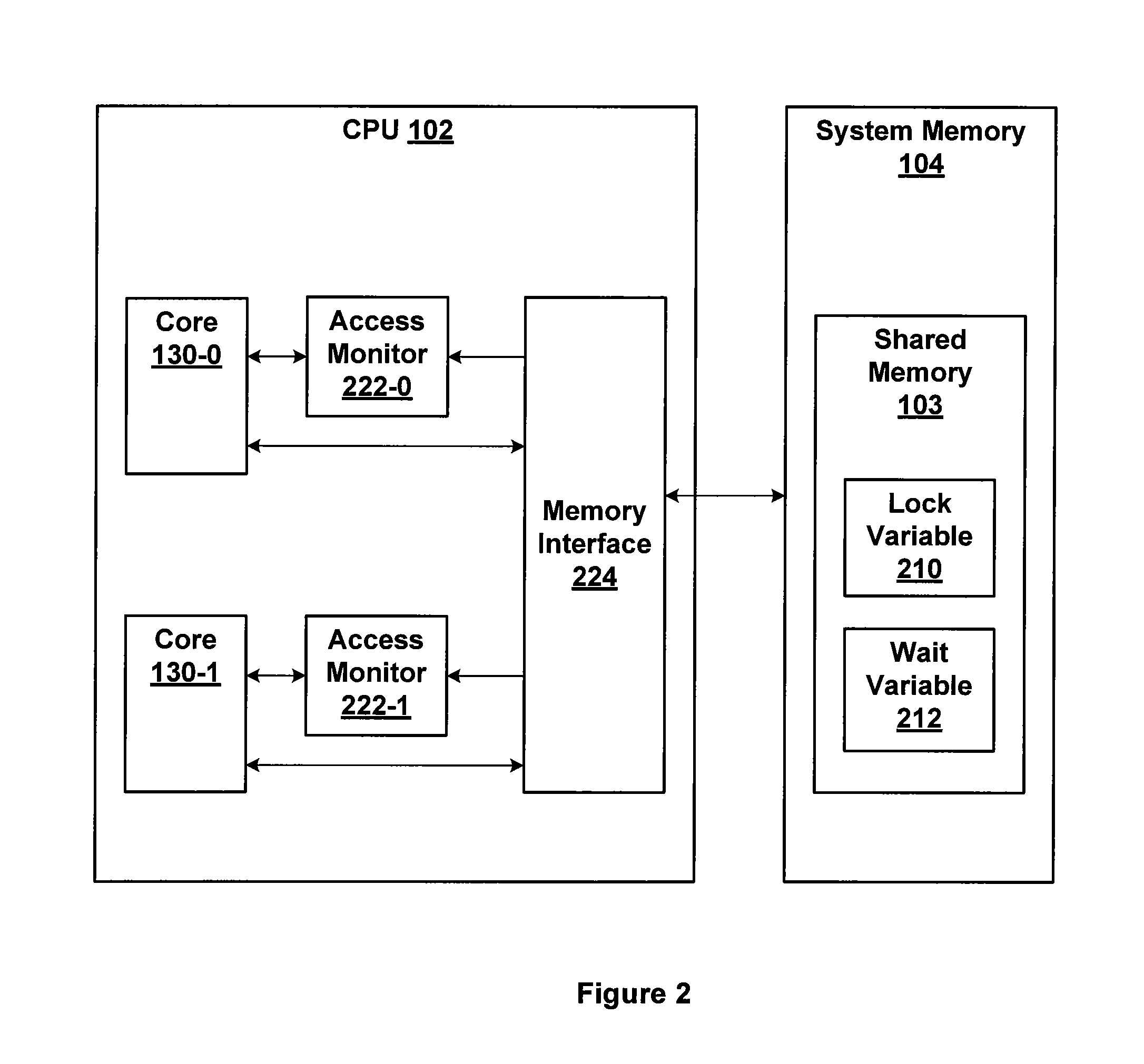

One embodiment of the present invention sets forth a technique for synchronization between two or more processors. The technique implements a spinlock acquire function and a spinlock release function. A processor executing the spinlock acquire function advantageously operates in a low power state while waiting for an opportunity to acquire spinlock. The spinlock acquire function configures a memory monitor to wake up the processor when spinlock is released by a different processor. The spinlock release function releases spinlock by clearing a lock variable and may clear a wait variable.

Owner:NVIDIA CORP

Data processor

Active | US20110179226A1 | Reduce power consumption | Energy efficient ICT | Memory addressing/allocation/relocation | Load instruction | Parallel computing

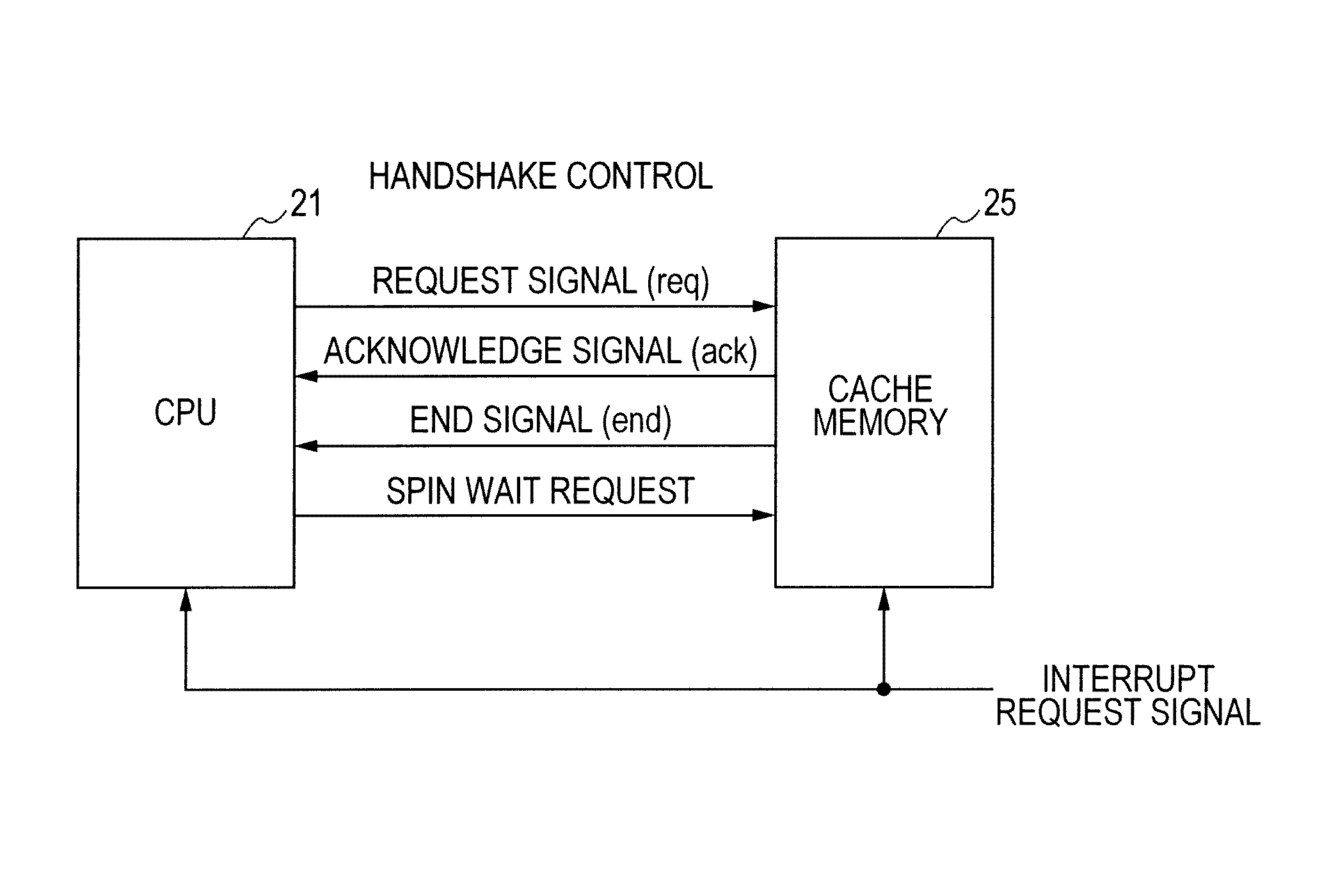

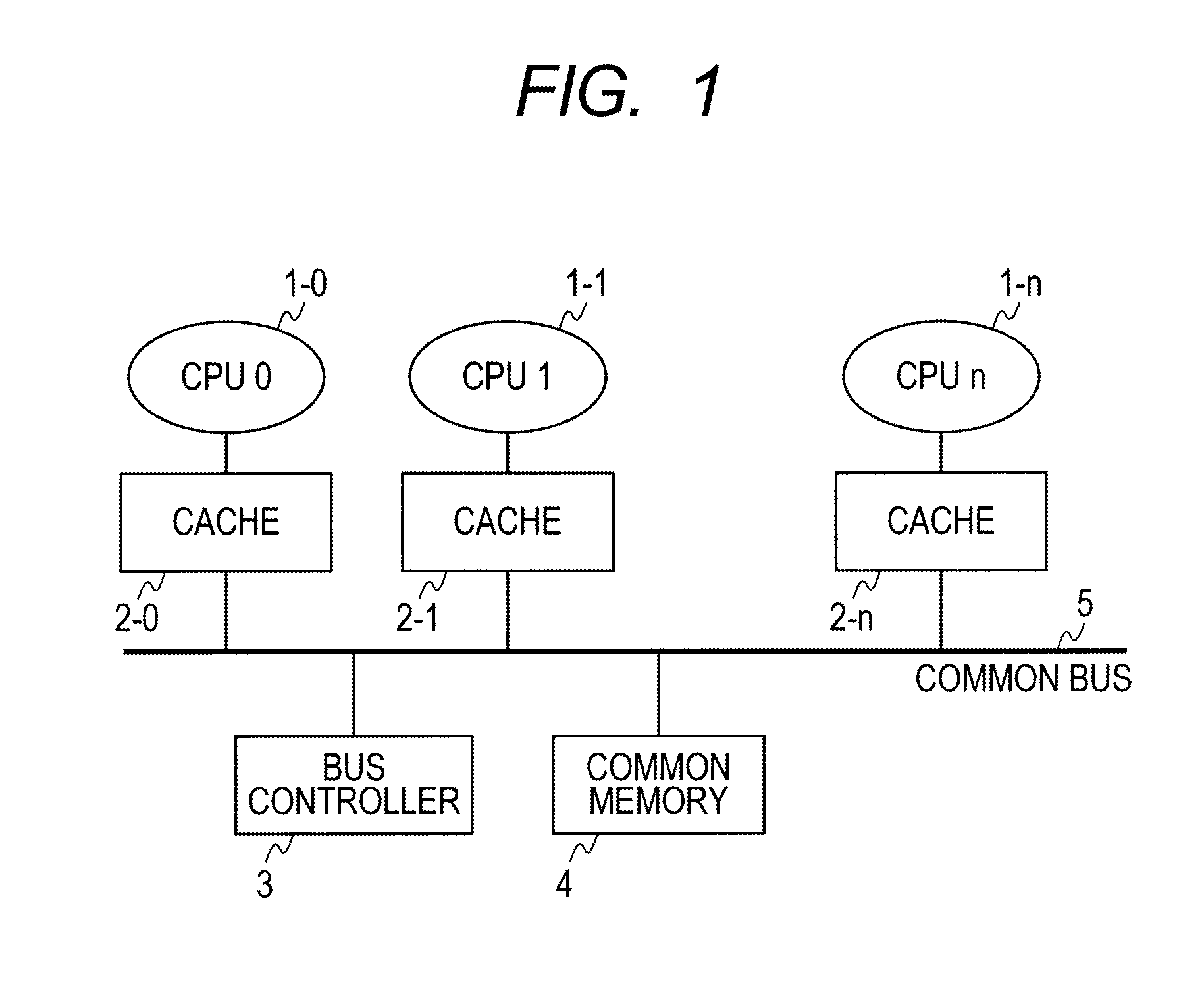

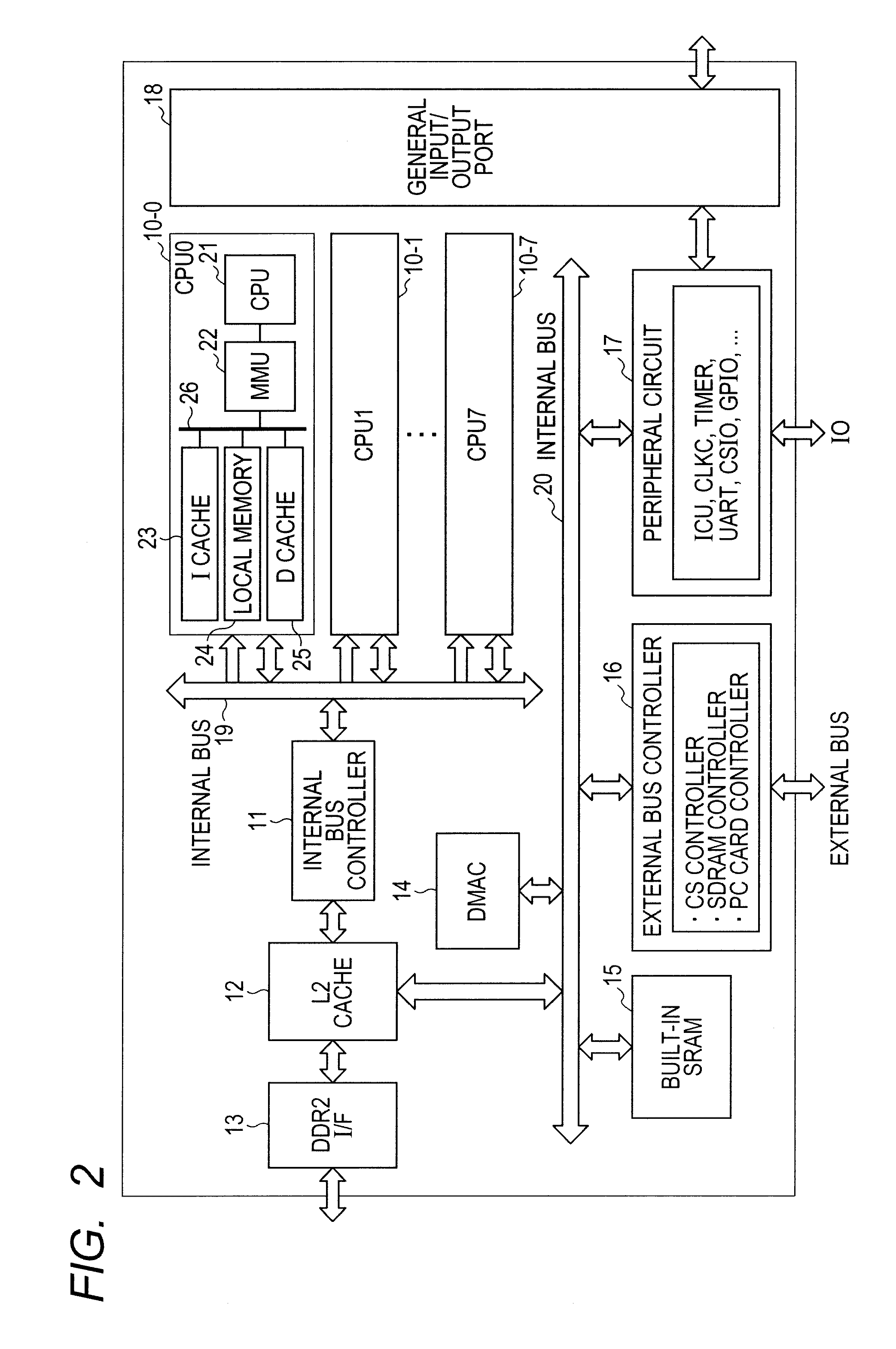

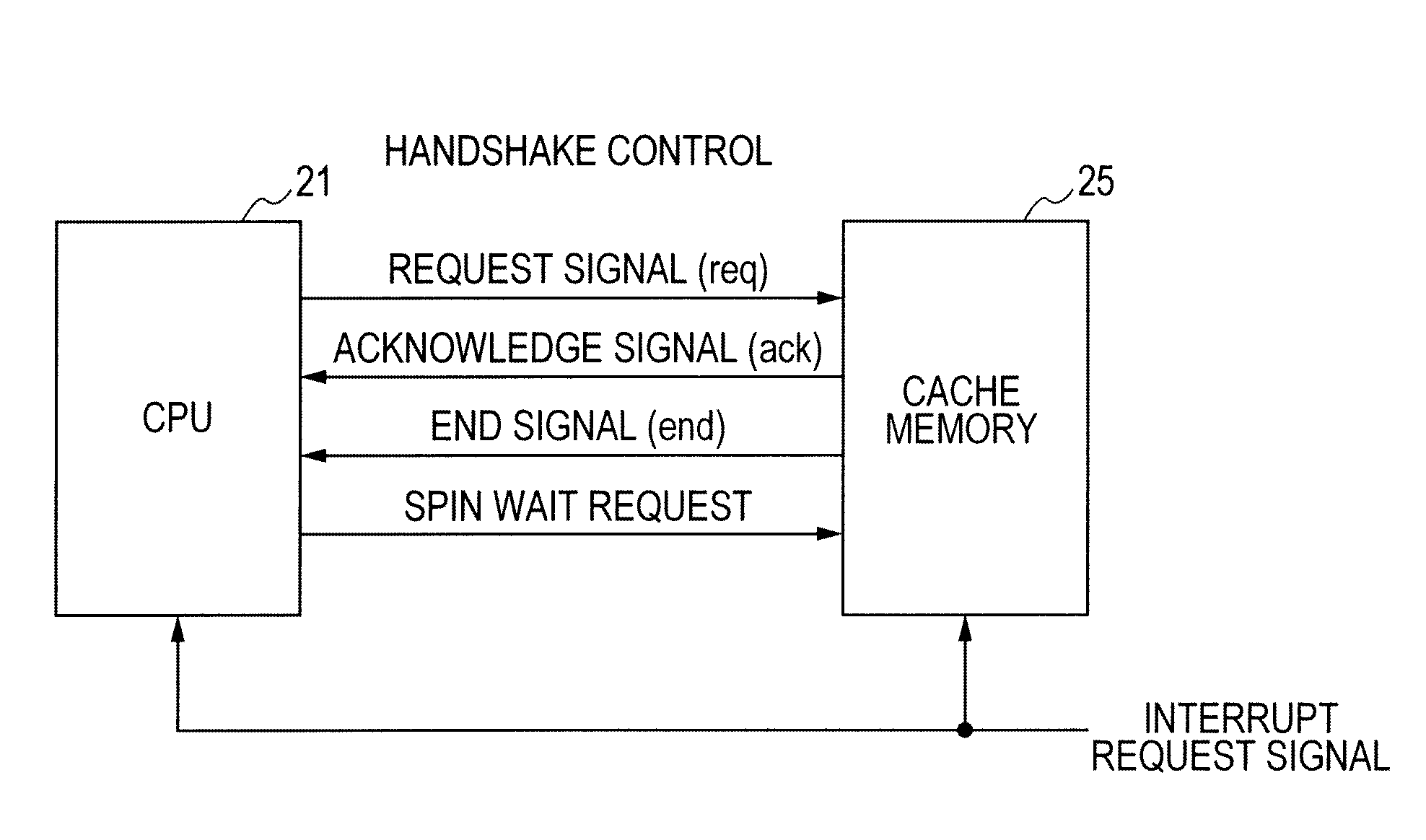

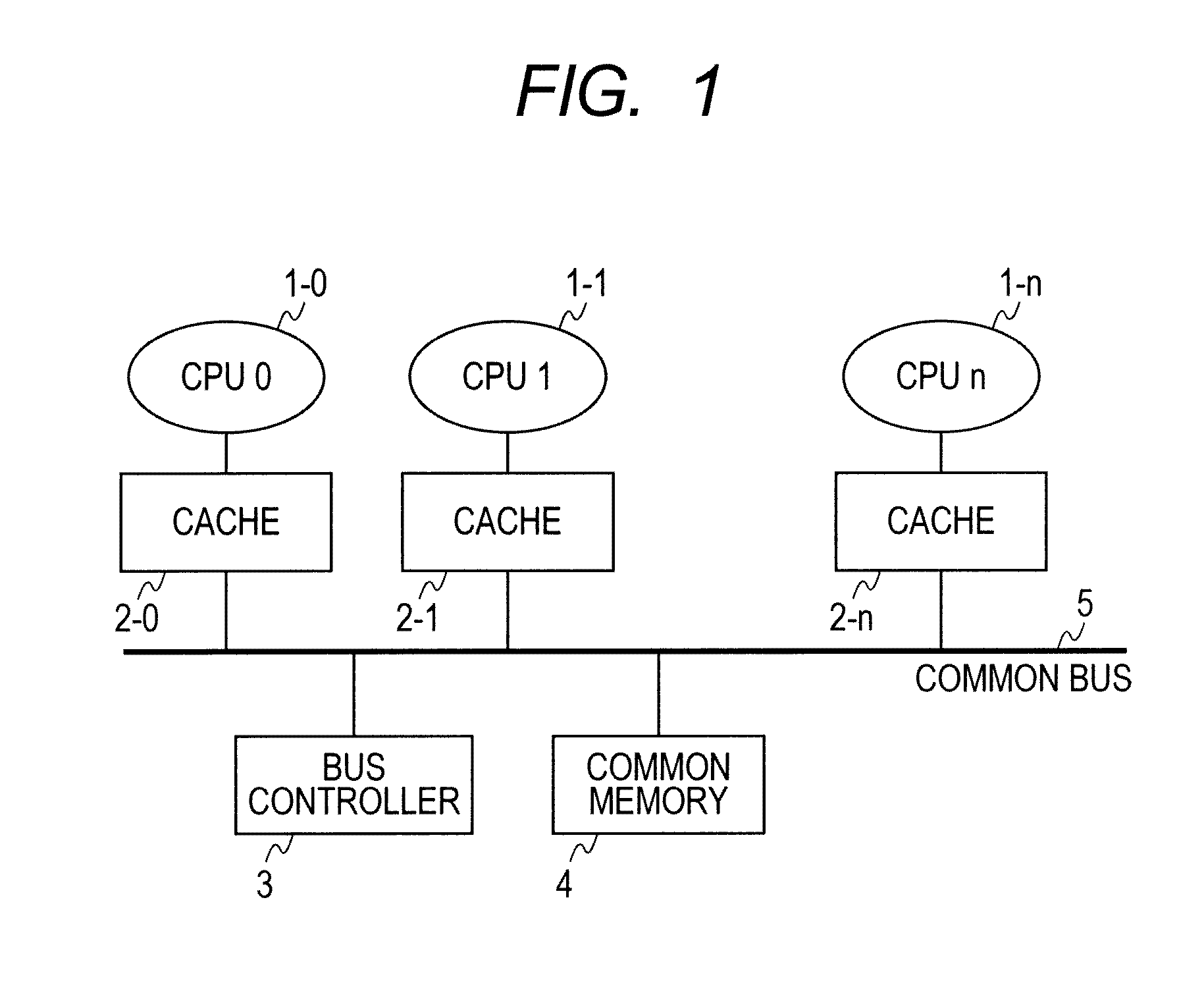

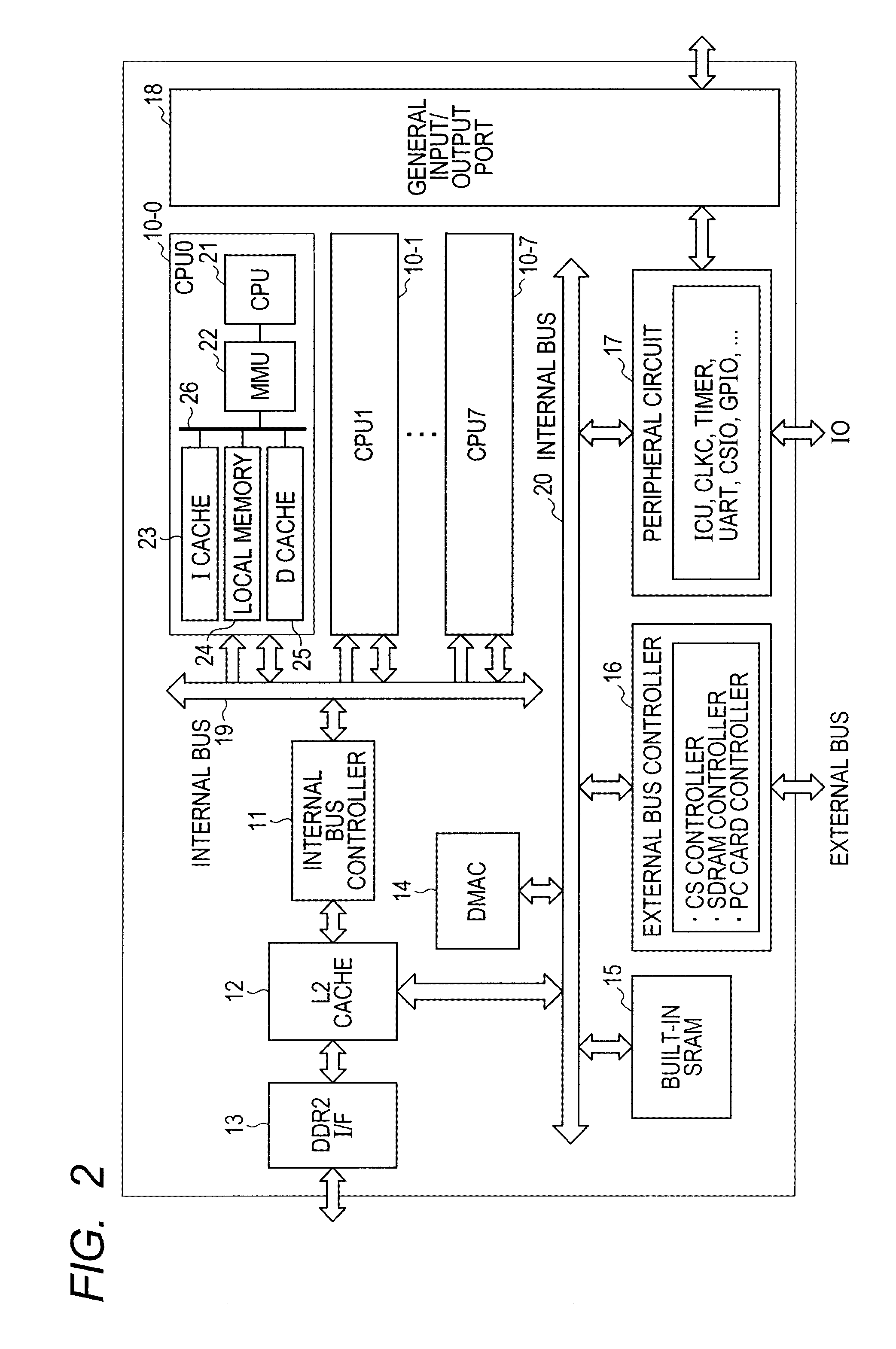

The present invention provides a data processor capable of reducing power consumption at the time of execution of a spin wait loop for a spinlock. A CPU executes a weighted load instruction at the time of performing a spinlock process and outputs a spin wait request to a corresponding cache memory. When the spin wait request is received from the CPU, the cache memory temporarily stops outputting an acknowledge response to a read request from the CPU until a predetermined condition (snoop write hit, interrupt request, or lapse of predetermined time) is satisfied. Therefore, pipeline execution of the CPU is stalled and the operation of the CPU and the cache memory can be temporarily stopped, and power consumption at the time of executing a spin wait loop can be reduced.

Owner:RENESAS ELECTRONICS CORP

Optimization of Data Locks for Improved Write Lock Performance and CPU Cache usage in Multi Core Architectures

Active | US20160098361A1 | Memory architecture accessing/allocation | Resource allocation | Multicore architecture | Data access

Data access optimization features the innovative use of a writer-present flag when acquiring read-locks and write-locks. Setting the writer-present flag indicates that a writer wants to modify particular data. This tells readers and writers waiting to acquire read-locks or write-locks not to acquire a lock, but to continue waiting (i.e., spinning) until the writer-present flag is cleared. In conventional techniques, readers and writers are not locked out until the writer acquires the write-lock; here, the writer-present flag locks out other readers and writers as soon as a writer begins waiting for a write-lock (that is, sets the writer-present flag). This allows a write-lock method to acquire a write-lock without having to contend with waiting readers and writers trying to obtain read-locks and write-locks, as happens with conventional spinlock implementations.

Owner:CHECK POINT SOFTWARE TECH LTD
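
A rough sketch of the writer-present idea follows. It exposes only non-blocking `try_` operations so the behaviour is easy to follow; a spinning lock would call them in a loop. `WriterPresentLock` and its method names are hypothetical, and the state encoding (positive for readers, -1 for a writer) is an assumption made for this illustration.

```cpp
#include <atomic>
#include <cassert>

// Reader-writer spin lock with a writer-present flag (illustrative only).
class WriterPresentLock {
public:
    // Readers back off as soon as a writer has announced itself.
    bool try_read_lock() {
        if (writer_present_.load(std::memory_order_acquire)) return false;
        int s = state_.load(std::memory_order_relaxed);
        while (s >= 0) {
            if (state_.compare_exchange_weak(s, s + 1, std::memory_order_acquire)) {
                return true;
            }
        }
        return false;  // a writer holds the lock
    }

    void read_unlock() {
        int previous = state_.fetch_sub(1, std::memory_order_release);
        assert(previous > 0);
        (void)previous;
    }

    // A writer sets the flag before it starts waiting.
    // Returns false if another writer is already present.
    bool announce_writer() {
        return !writer_present_.exchange(true, std::memory_order_acq_rel);
    }

    // Succeeds once existing readers have drained; call after announce_writer().
    bool try_write_lock() {
        int expected = 0;
        return state_.compare_exchange_strong(expected, -1, std::memory_order_acquire);
    }

    void write_unlock() {
        assert(state_.load(std::memory_order_relaxed) == -1);
        state_.store(0, std::memory_order_release);
        writer_present_.store(false, std::memory_order_release);
    }

private:
    std::atomic<bool> writer_present_{false};
    std::atomic<int> state_{0};  // >0: reader count, 0: free, -1: writer
};
```

A reader that passed the flag check just before the writer set it may still get in, but the writer then only has to wait for readers that already hold the lock, not for newly arriving ones.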

Ensuring fairness in a multiprocessor environment using historical abuse recognition in spinlock acquisition

A method and system for ordering equitable access to a limited resource (such as a spinlock) by a plurality of contenders (such as processors) where each of the contenders contends for access more than one time. The method classifies one or more contenders that have failed to gain access to the limited resource after at least a predetermined number of attempts as abused contenders. The abused contenders attempt among themselves to gain access to the limited resource. The method repeats the above until all of the abused contenders have gained access to the limited resource.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

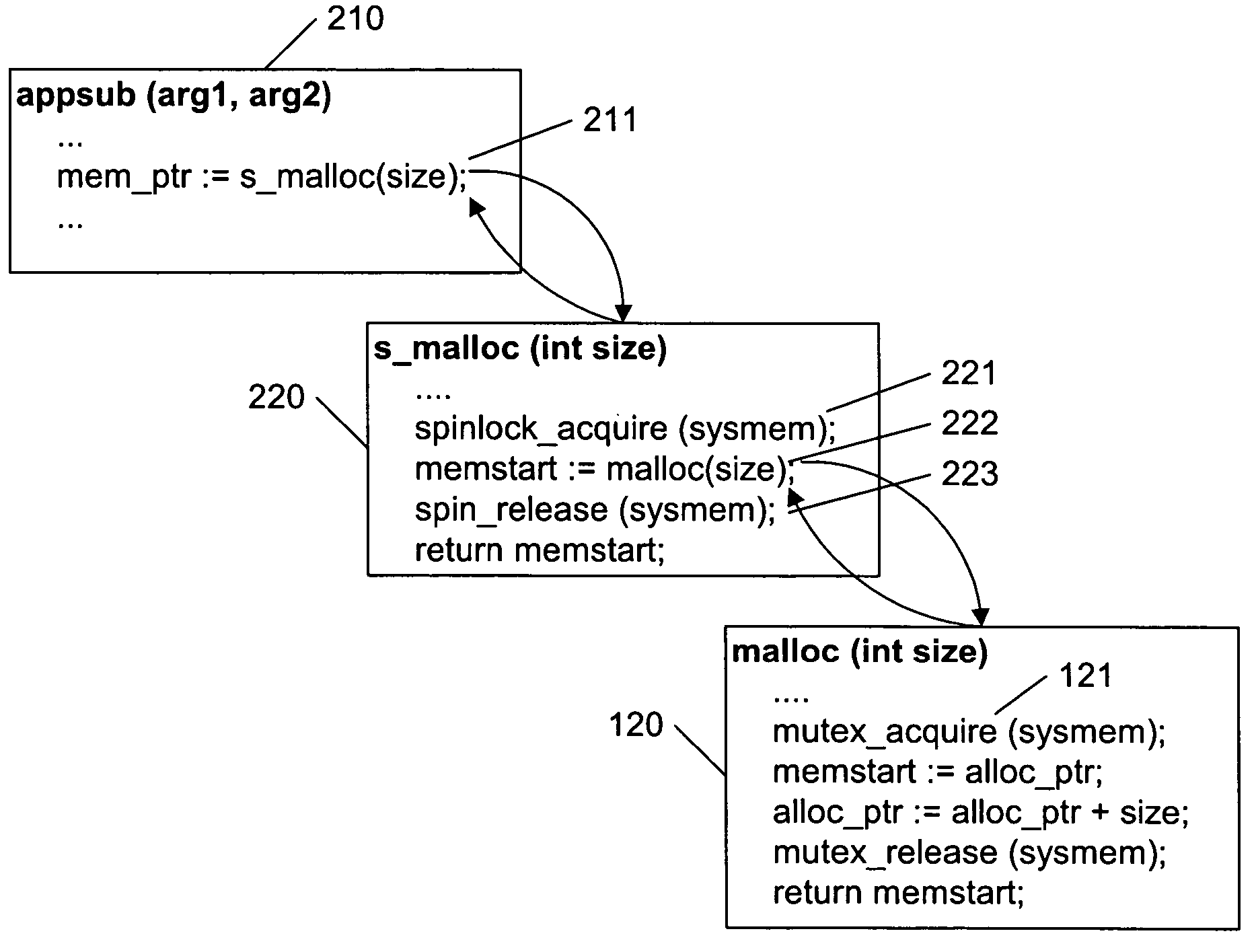

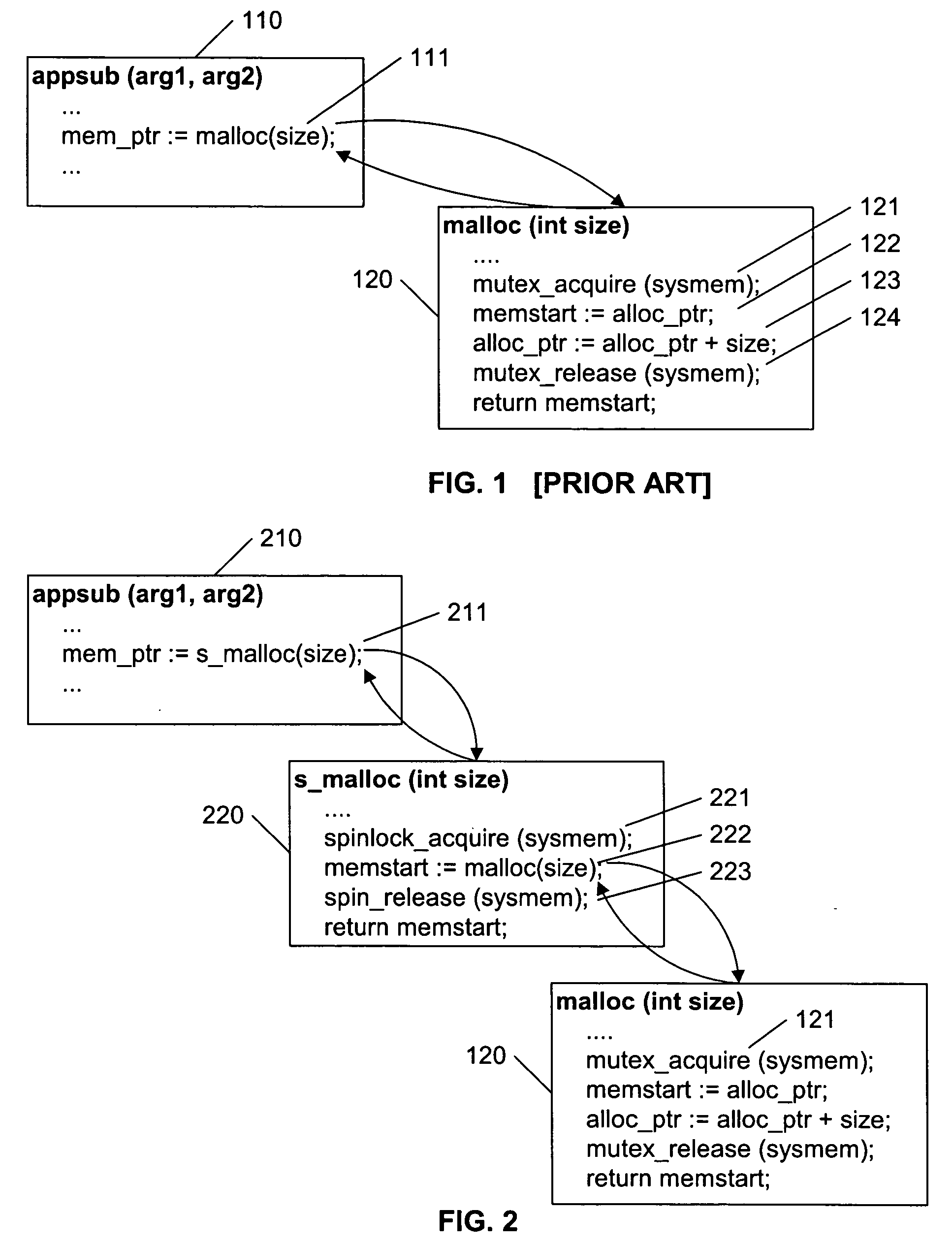

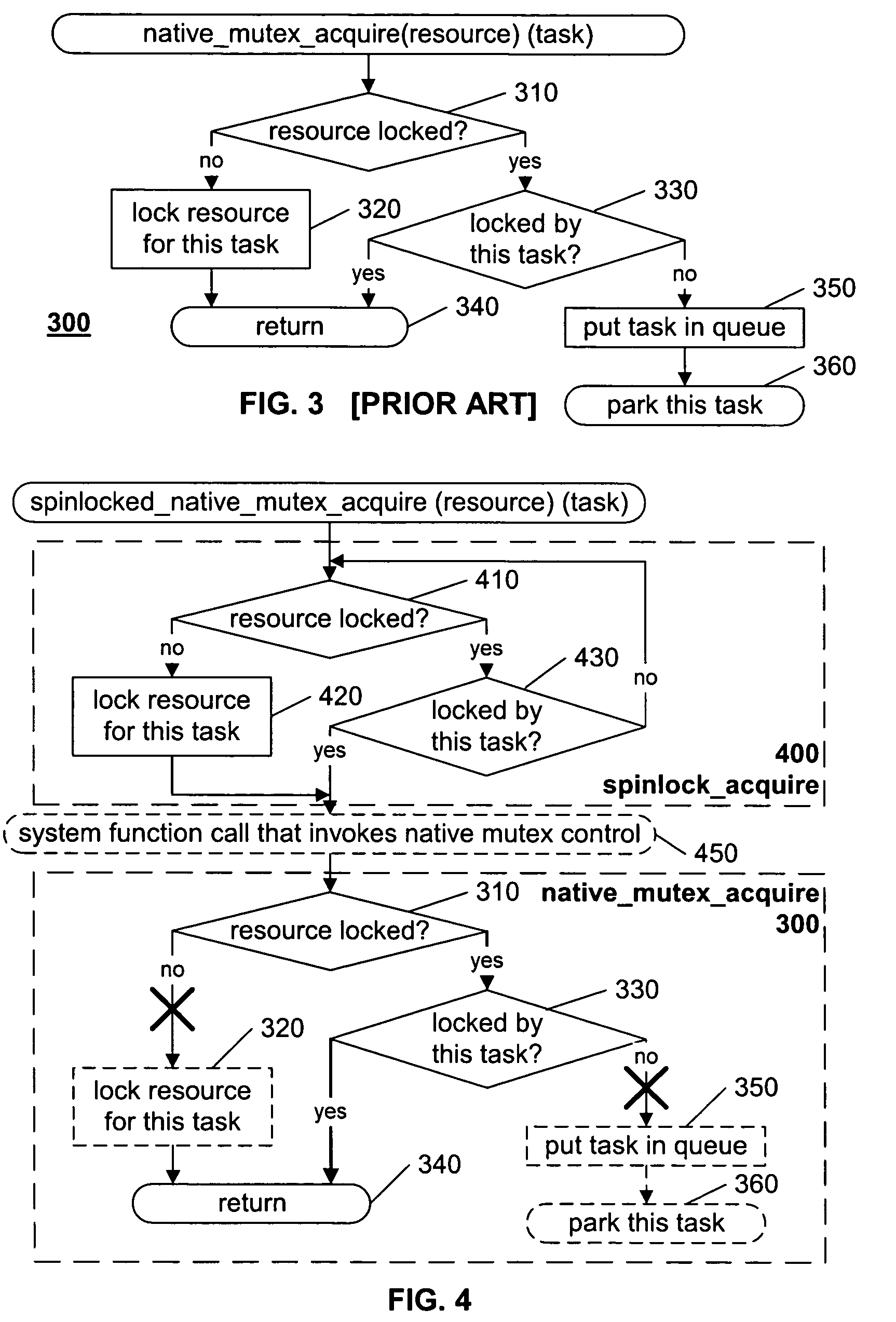

Nested locks to avoid mutex parking

Inactive | US20050050257A1 | Avoid inefficiency | Avoid overhead | Memory addressing/allocation/relocation | Multiprogramming arrangements | Operational system | System call

A native mutex lock of an operating system is embedded within an application-controlled spinlock. Each of these locks is applied to the same resource, in such a manner that, in select applications, and particularly in parallel processed applications, the adverse side-effects of the inner native mutex lock are avoided. In a preferred embodiment, each call to a system routine that is known to invoke a native mutex is replaced by a call to a corresponding routine that spinlocks the resource before calling the system routine that invokes the native mutex, then releases the spinlock when the system call is completed. By locking the resource before the native mutex is invoked, the calling task is assured that the resource is currently available to the task when the native mutex is invoked, and therefore the task will not be parked / deactivated by the native mutex.

Owner:OPNET TECH LLC
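
The nesting described in this abstract can be sketched as an outer spinlock wrapped around an inner mutex. Here `std::mutex` stands in for the operating system's native mutex, and `NestedLock` is a hypothetical wrapper; the abstract describes wrapping system routines that take the mutex internally, which this sketch condenses into a single lock class.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>

// Outer application-level spinlock around an inner native mutex (illustrative).
class NestedLock {
public:
    void lock() {
        // Contention is resolved here by spinning, so the mutex below is
        // uncontended when reached and does not park the caller.
        while (spin_.exchange(true, std::memory_order_acquire)) { /* busy-wait */ }
        native_.lock();
    }

    bool try_lock() {
        if (spin_.exchange(true, std::memory_order_acquire)) return false;
        if (native_.try_lock()) return true;
        spin_.store(false, std::memory_order_release);  // undo on failure
        return false;
    }

    void unlock() {
        assert(spin_.load(std::memory_order_relaxed));
        native_.unlock();                                // inner lock first
        spin_.store(false, std::memory_order_release);   // then the outer spinlock
    }

private:
    std::atomic<bool> spin_{false};
    std::mutex native_;  // stand-in for the OS-native mutex
};
```

Because the class provides `lock()`, `unlock()` and `try_lock()`, it can be used with `std::lock_guard<NestedLock>` like any other lockable type.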

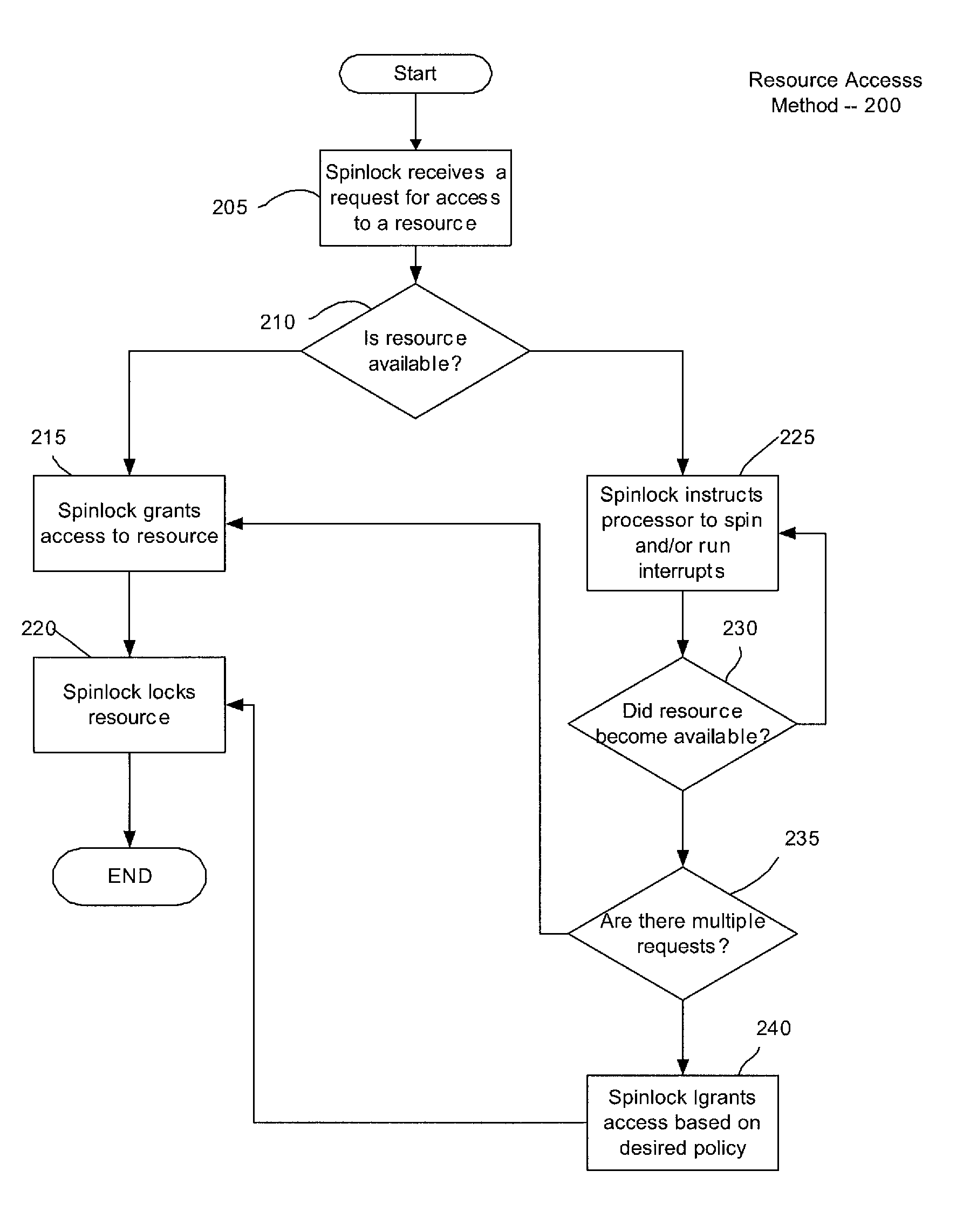

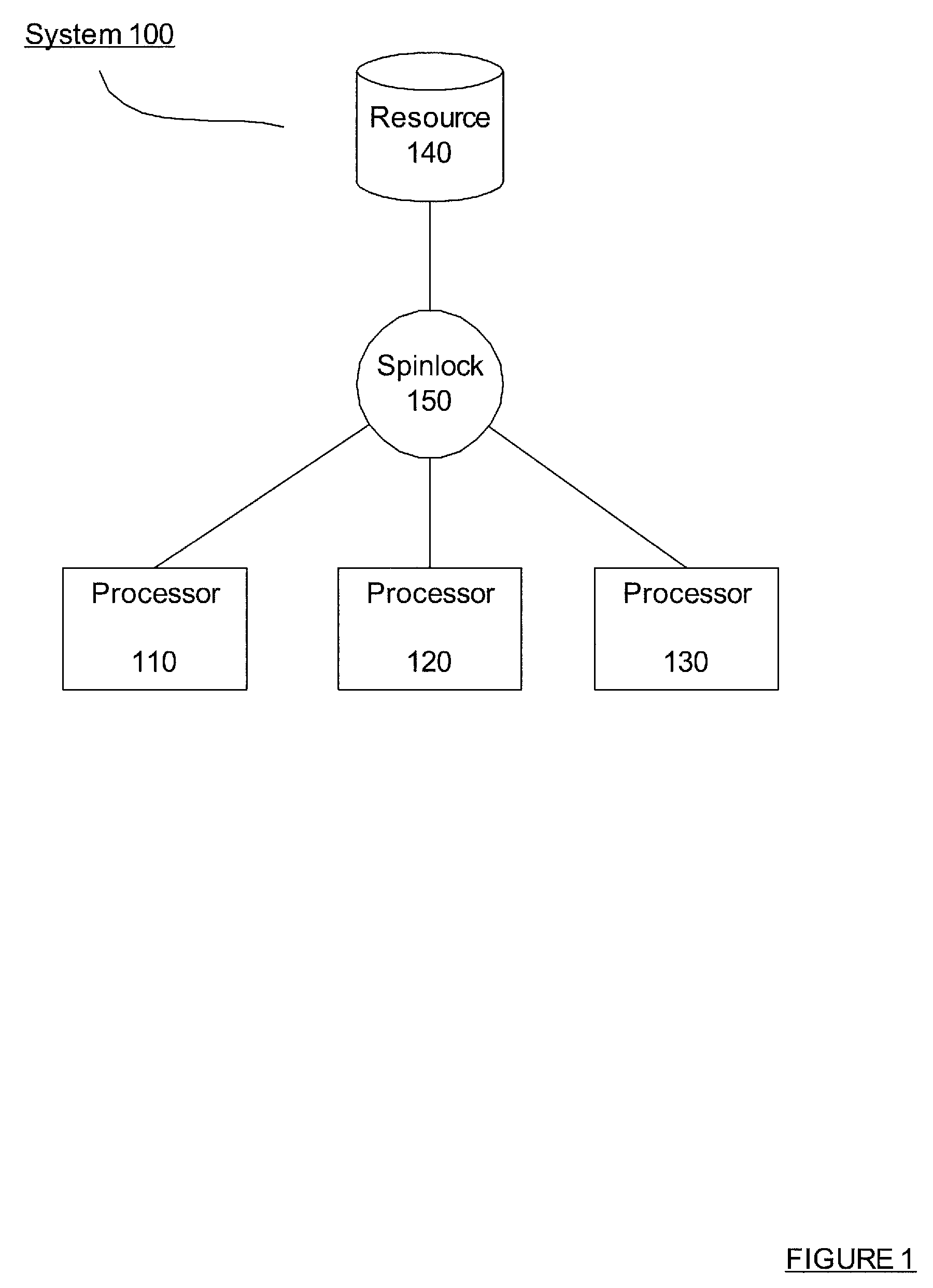

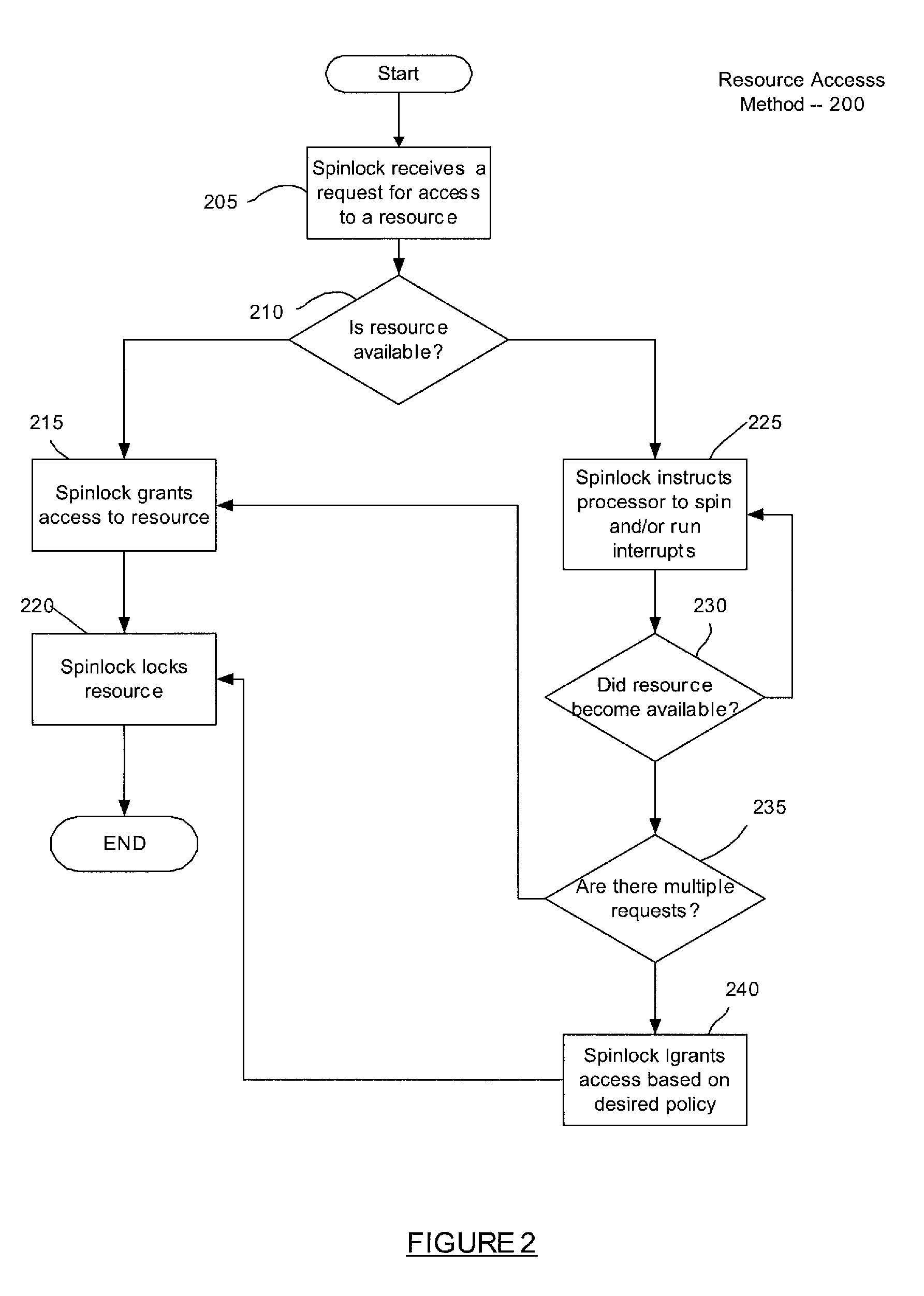

Method and system for implementing realtime spinlocks

Owner:WIND RIVER SYSTEMS

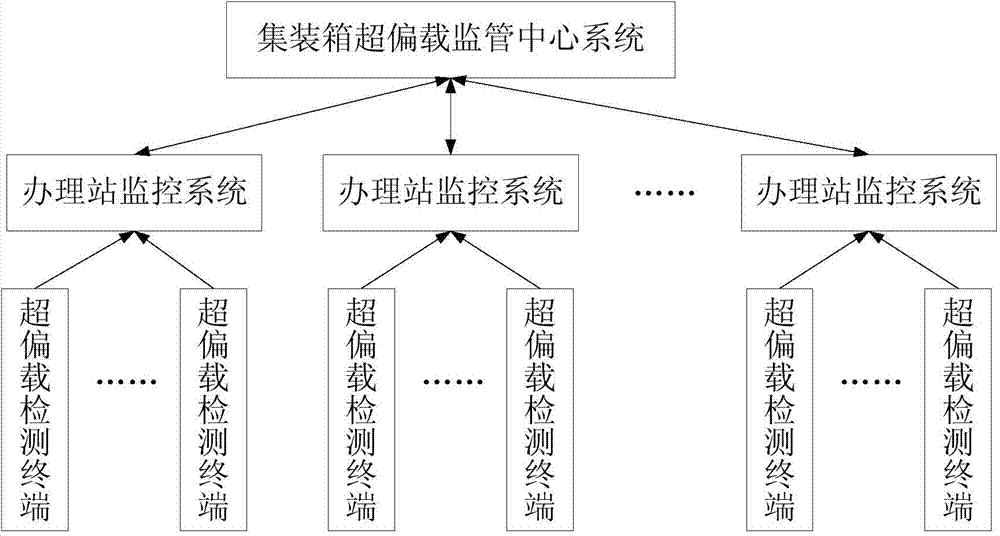

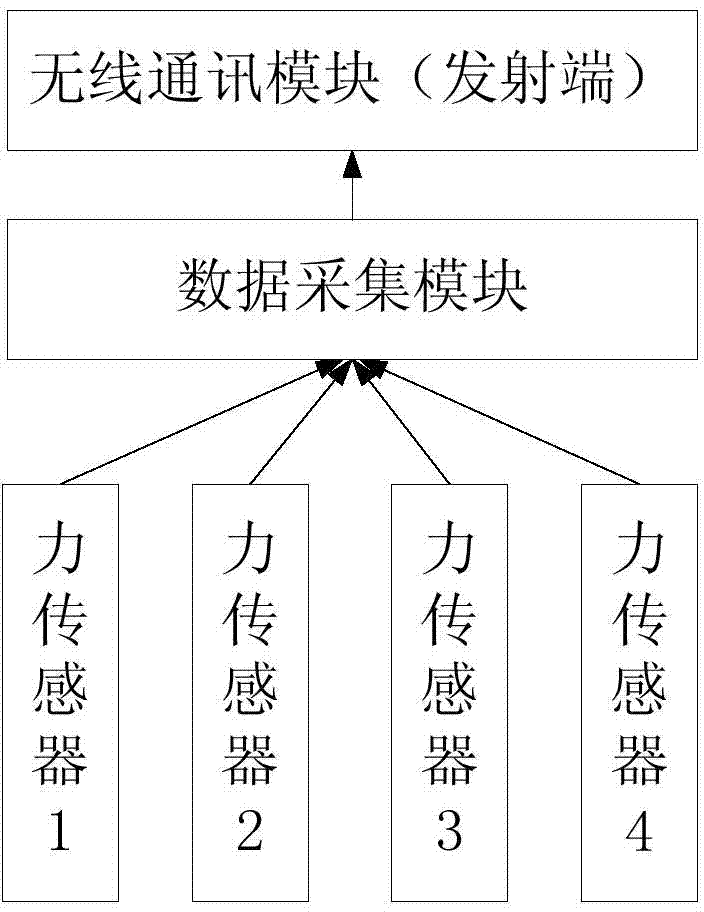

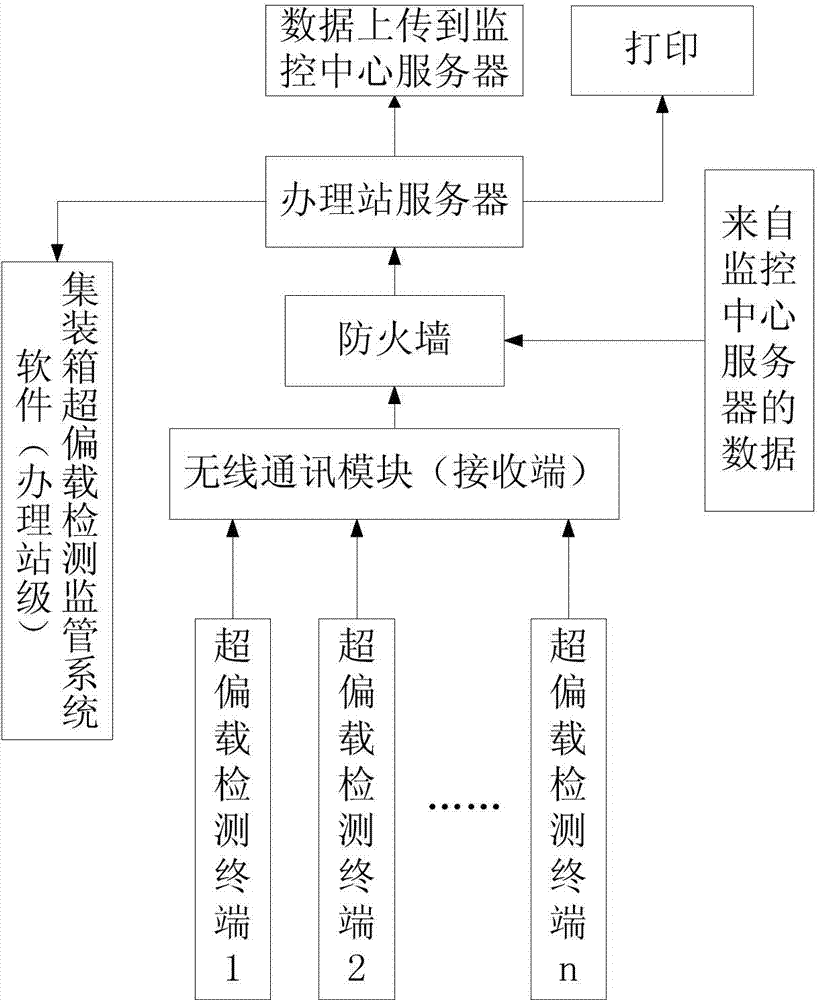

Railway container overload and unbalanced load detection monitoring system

Active | CN104330145A | Solve problems such as regulatory delays | Solve the island problem | Weighing indication devices | Terminal system | Spinlock

A railway container overload and unbalanced load detection monitoring system is composed of overload and unbalanced load detection terminal systems mounted on container spreaders, handling station monitoring systems and a monitoring center system. Each overload and unbalanced load detection terminal system comprises force sensors respectively arranged on four spinlocks of the corresponding container spreader, a data acquisition module and a wireless communication module. Each handling station monitoring system is connected with the overload and unbalanced load detection terminal systems on the container spreaders in a corresponding handling station through a wireless network, and the handling station monitoring systems carry out data analysis and processing on measured values of the force sensors on the container spreaders and calculate the total weight and the center of gravity of a container. The monitoring center system is connected with the handling station monitoring systems through the Internet, receives detection data uploaded by the handling station monitoring systems, and issues management information to the handling station monitoring systems in a timely manner. Unified management of railway container overload and unbalanced load detection means and results is realized.

Owner:TRANSPORTATION & ECONOMICS RES INST CHINA ACAD OF RAILWAY SCI CORP LTD

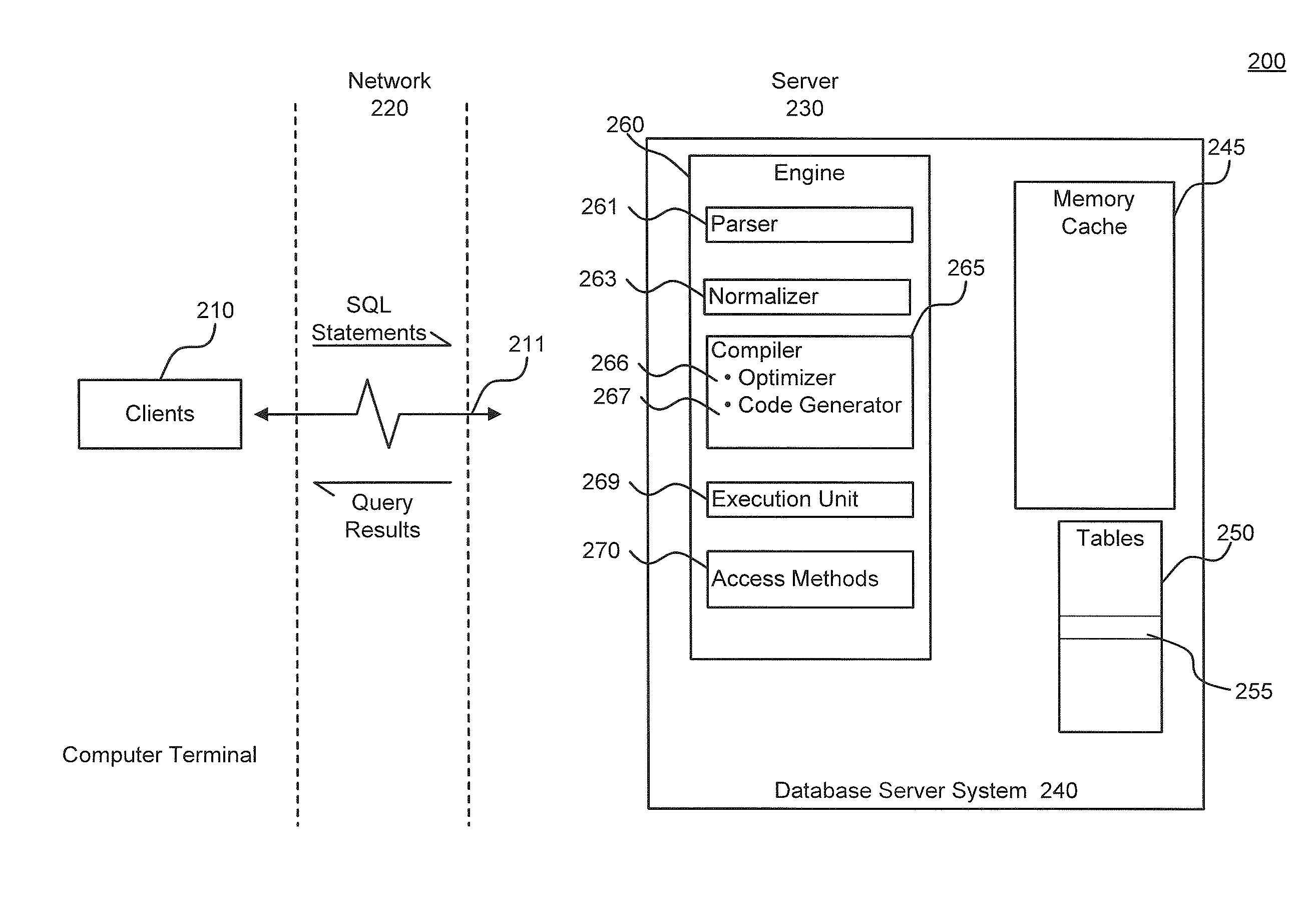

Query plan optimization for prepared SQL statements

Active | US8996503B2 | Digital data information retrieval | Digital data processing details | Query plan | Database server

System, methods and articles of manufacture for optimizing a query plan reuse in a database server system accessible by a plurality of client connections. An embodiment comprises providing at least one global cache storage and a private cache storage to a plurality of client connections, and coordinating utilization of the at least one global cache storage and the private cache storage to share light weight stored procedures and query plans for prepared SQL statements across the plurality of client connections via the at least one global cache storage while avoiding a spinlock access for executing the prepared SQL statements.

Owner:SYBASE INC

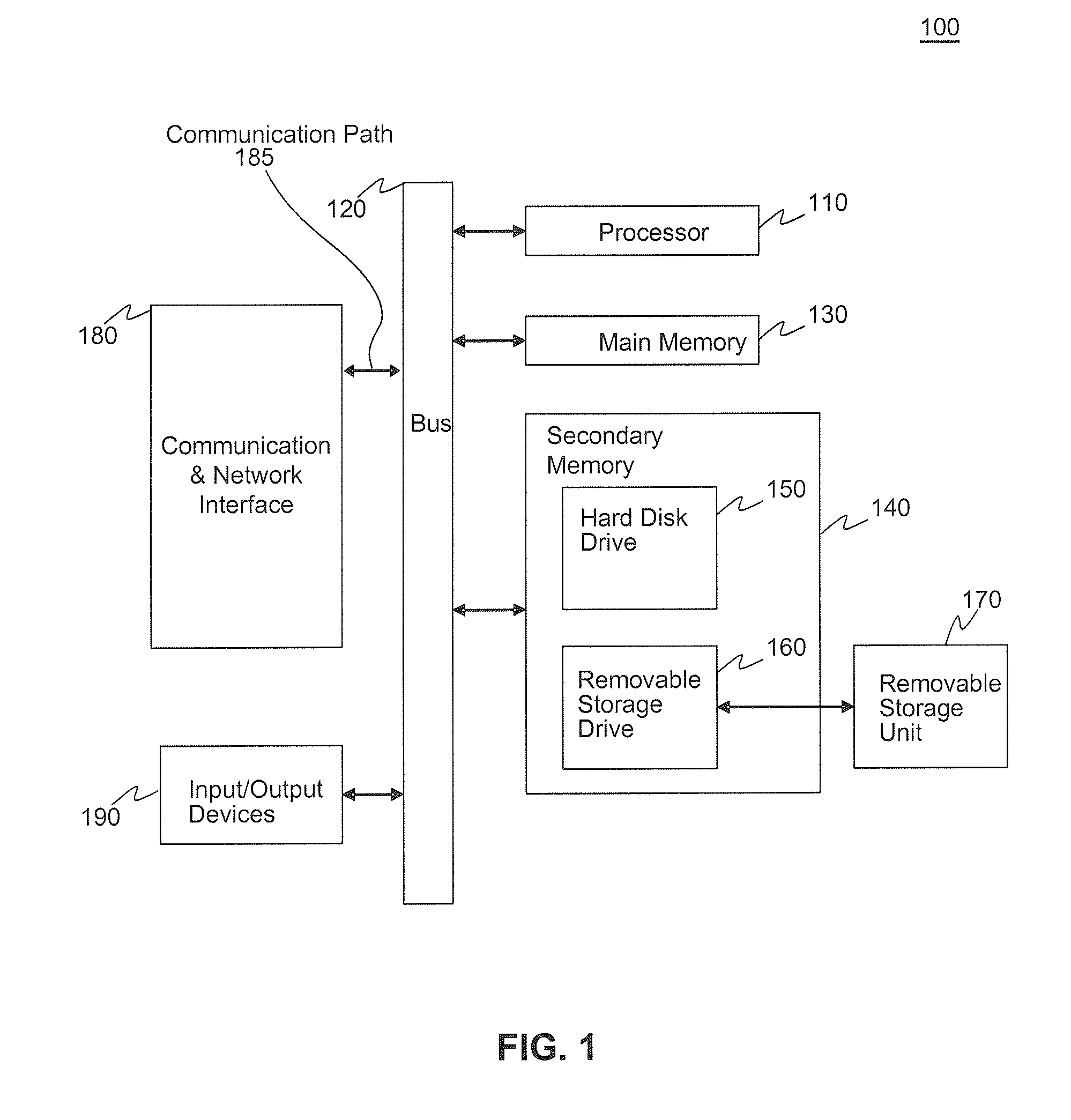

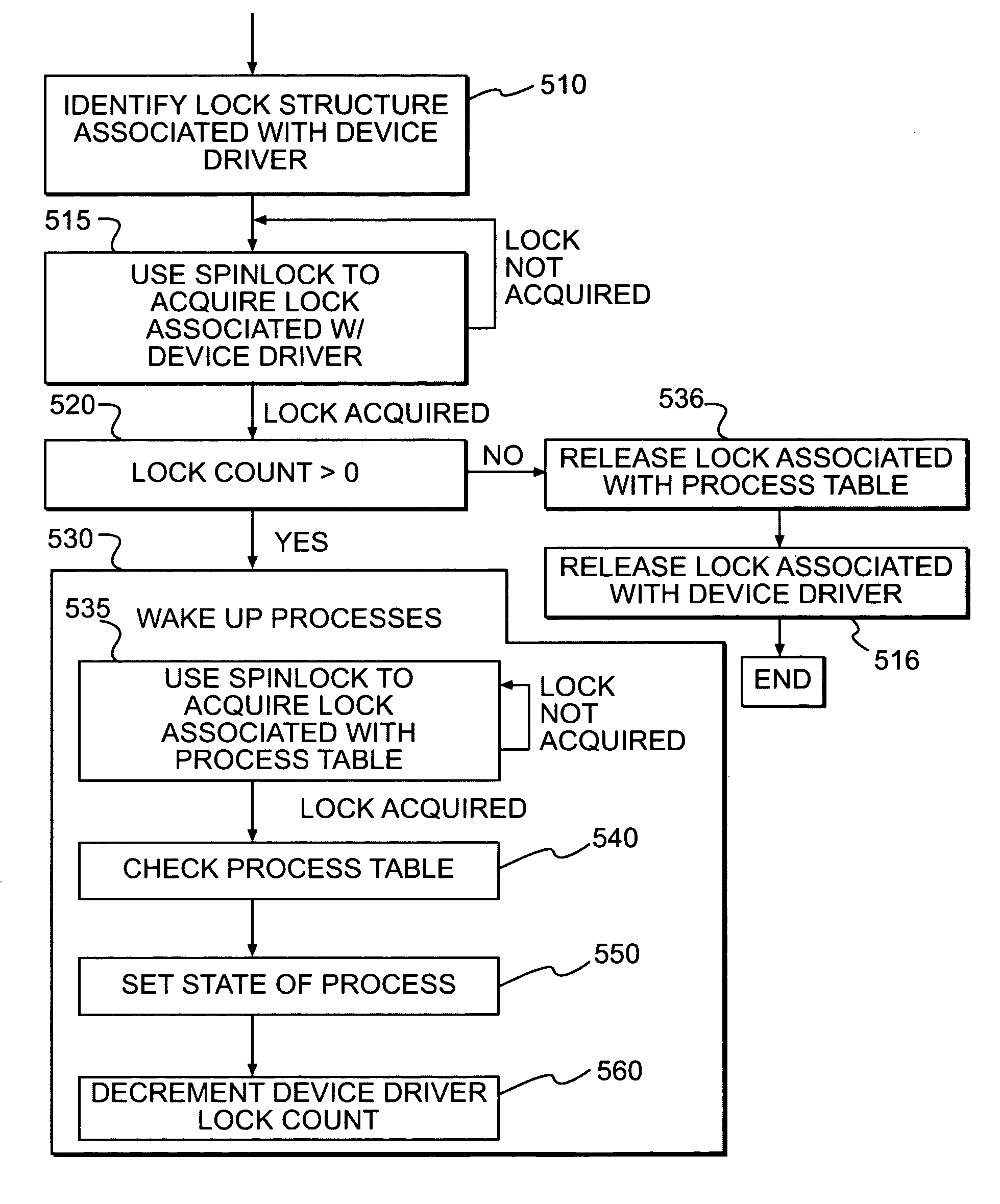

Systems and methods for suspending and resuming threads

Active | US7996848B1 | Digital data processing details | Unauthorized memory use protection | Spinlock | Wait state

In methods and systems for controlling a process's access to a device driver, a lock may be used to establish a process wait state or to wake up one or more processes. A spinlock may be used to acquire a lock associated with a device driver. The lock includes a lock value representing the availability of the lock. If the lock value is a first value, the process acquires the lock and sets the lock value to a second value. Otherwise, the process returns to the step of using the spinlock to acquire the lock associated with the device driver. If the lock is acquired, the process accesses the device driver. If the device is not ready, the process is set to wait for the lock. Waiting for the lock comprises setting a field of the process to a pointer to the lock and setting a state of the process to waiting. After the device has been successfully accessed or the process has been set to wait for the lock, the lock is released, typically by setting the lock value to the first value.

Owner:EMC IP HLDG CO LLC

Managing a spinlock indicative of exclusive access to a system resource

Active | US8713262B2 | Guaranteed uptime | Efficient power | Program synchronisation | Digital data processing details | Spinlock | Computer science

Owner:NVIDIA CORP

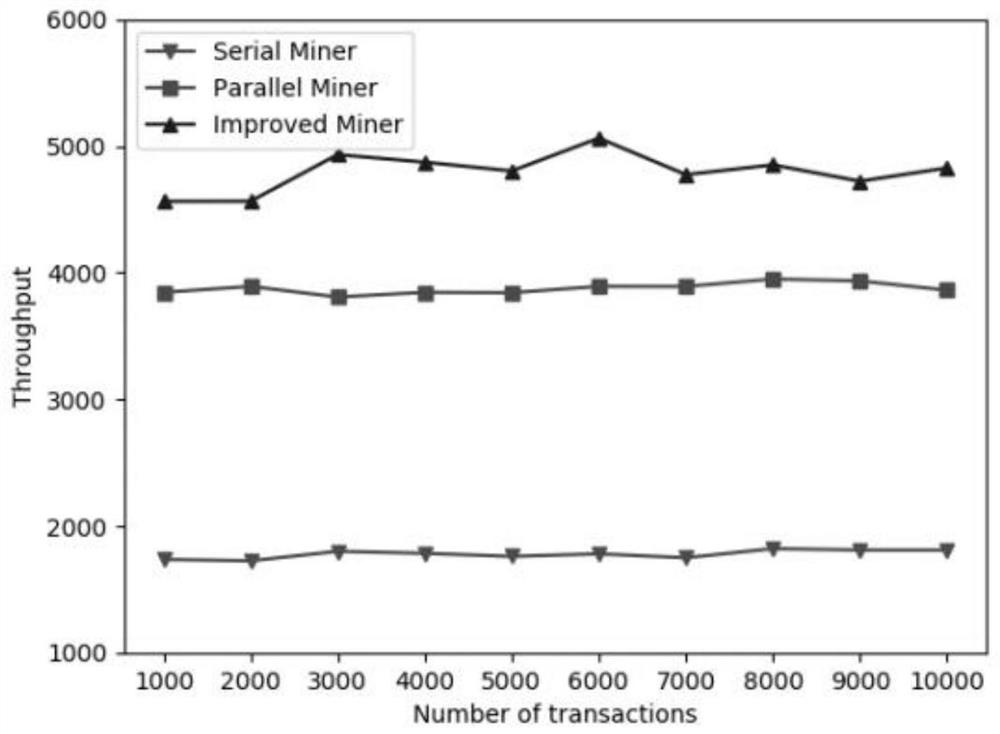

Intelligent contract execution optimization method based on multi-core architecture

Pending | CN111724256A | Solve the blockage | Solve concurrency issues | Finance | Forecasting | Computer architecture | Multicore architecture

The invention relates to an intelligent contract execution optimization method based on a multi-core architecture. The method comprises two stages: 1) a parallel mining stage; and 2) a parallel verification stage. Parallel mining and parallel verification strategies are designed and implemented for these two stages of intelligent contract execution, respectively. In the parallel mining stage, the problems of frequent thread context switching and blocking on read-write locks are solved by introducing Spinlock and MVCC. In the parallel verification stage, conflicting transactions are grouped, and the transactions are parallelized through a double-ended queue. The proposed intelligent contract parallelization strategy provides a new solution for executing intelligent contracts, also explores the restorability of the task execution order in a multi-threaded environment, and has theoretical value and research significance for effectively solving the concurrency problem.

Owner:TIANJIN UNIV

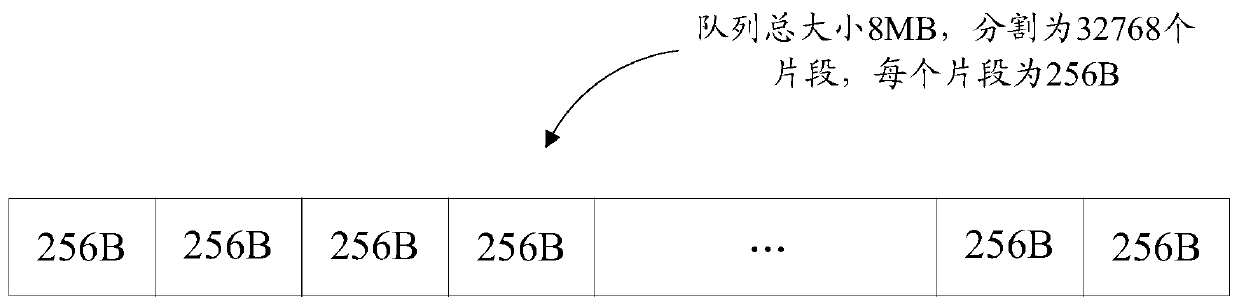

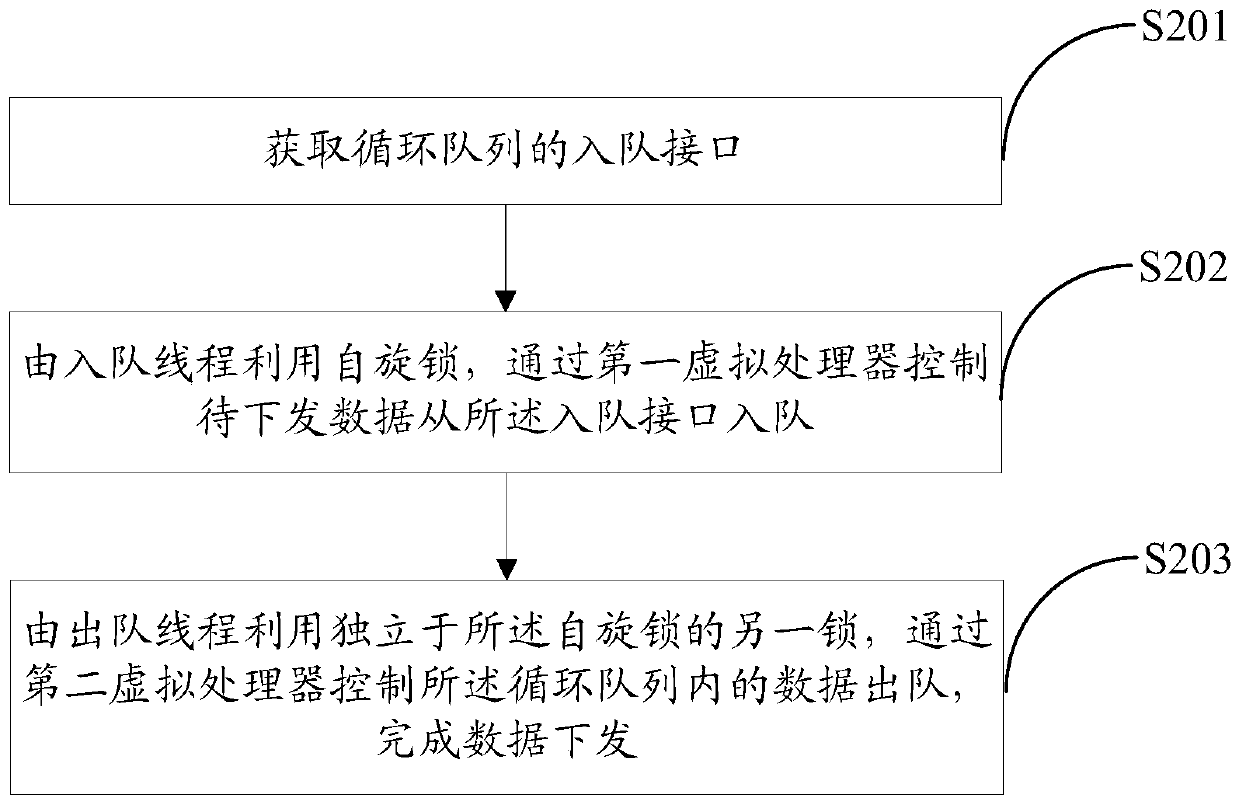

Data issuing method and device, equipment and medium

Active | CN109753479A | Convenient block analysis | Improve reliability | Digital computer details | Electric digital data processing | Spinlock | Operating system

The invention provides a data issuing method and device, equipment and a medium. The method comprises constructing a circular queue in pre-allocated memory for data issuing, and dividing the pre-allocated memory into a plurality of fragments so that the data is split into blocks and queued accordingly. The method comprises at least the following steps: obtaining an enqueue interface of the circular queue; an enqueue thread, running on a first virtual processor and using a spin lock, enqueues the to-be-issued data through the enqueue interface; and a dequeue thread, running on a second virtual processor and using another lock independent of the spin lock, dequeues the data from the circular queue to complete data issuing. With this embodiment, enqueue and dequeue use different, mutually independent locks and virtual processors, so the enqueue thread and the dequeue thread are decoupled. Because the queue memory is divided into a plurality of fragments, dequeuing does not have to follow the enqueue size, and dequeued data is convenient to parse block by block. These improvements help increase the reliability and efficiency of configuration data issuing.

Owner:HANGZHOU DPTECH TECH

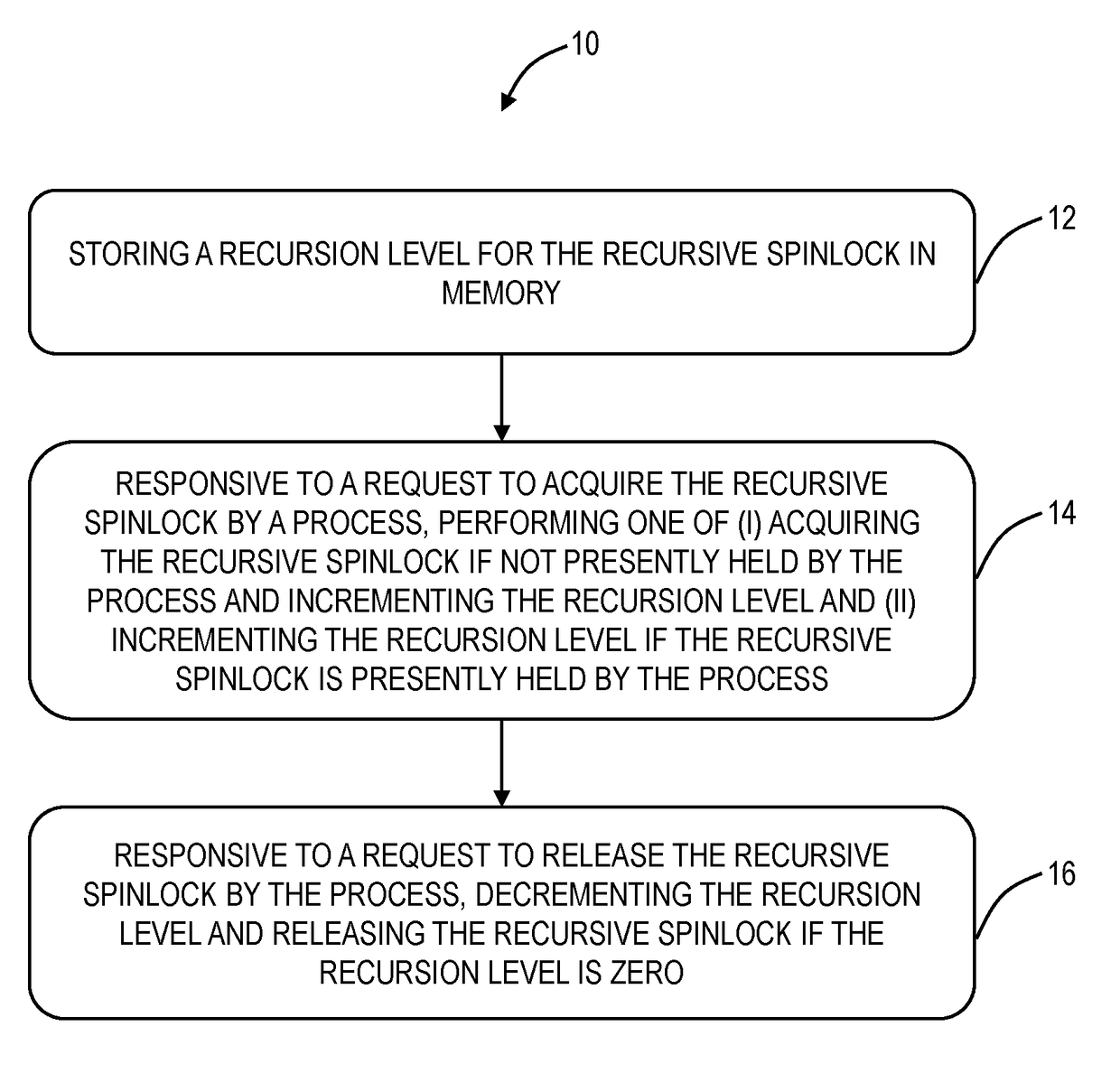

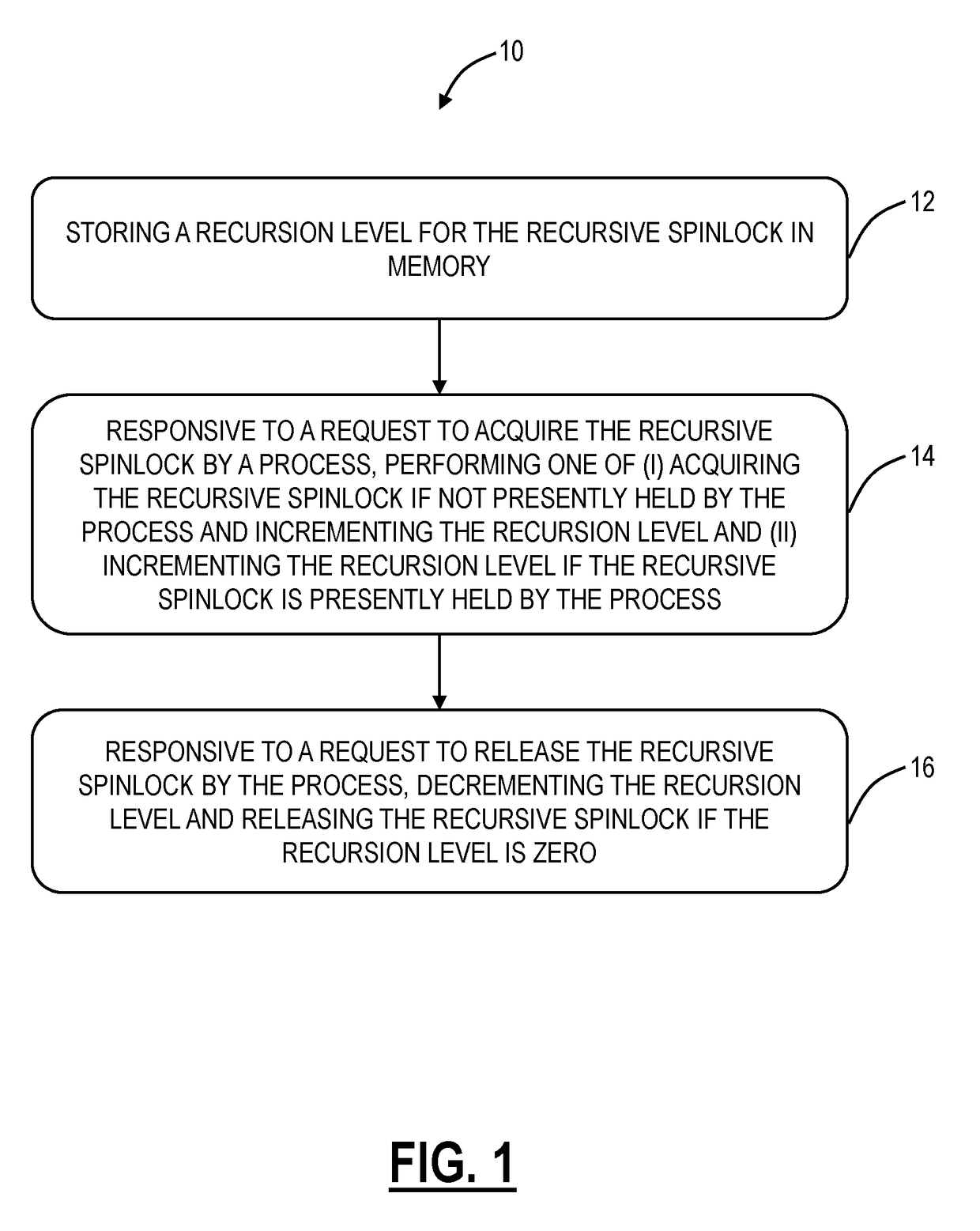

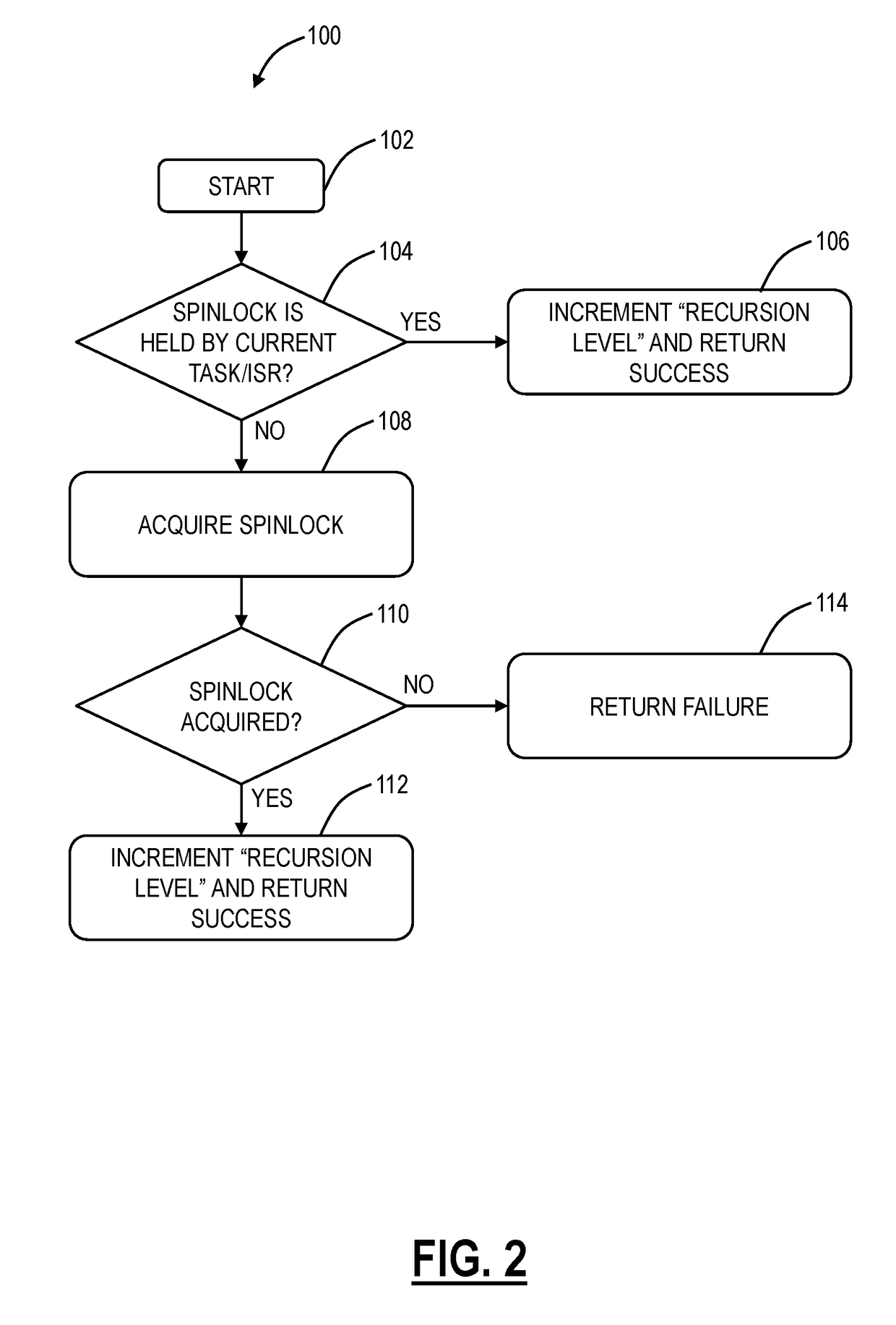

Methods and systems for recursively acquiring and releasing a spinlock

Active | US20170242736A1 | Avoid leveling | Program initiation/switching | Program synchronisation | Theoretical computer science | Spinlock

A computer-implemented method for a recursive spinlock includes storing a recursion level for the recursive spinlock in memory; responsive to a request to acquire the recursive spinlock by a process, performing one of (i) acquiring the recursive spinlock if not presently held by the process and incrementing the recursion level and (ii) incrementing the recursion level if the recursive spinlock is presently held by the process; and responsive to a request to release the recursive spinlock by the process, decrementing the recursion level and releasing the recursive spinlock if the recursion level is zero. The recursive spinlock can be implemented in a software wrapper used with existing software which supports recursive locks and the recursive spinlock is used in place of the recursive locks in the existing software. The computer-implemented method can be performed on a Symmetric Multiprocessor (SMP) hardware system.

Owner:CIENA
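
The acquire and release rules in this abstract map onto a small class fairly directly. In the sketch below the caller passes its own non-zero ID explicitly, which is an assumption made to keep the example deterministic; `RecursiveSpinLock` and its method names are hypothetical, and the software-wrapper aspect of the abstract is not covered.

```cpp
#include <atomic>
#include <cassert>

// Recursive spinlock with an explicit recursion level (illustrative only).
class RecursiveSpinLock {
public:
    // Single attempt. `self` is the caller's ID and must be non-zero.
    bool try_lock(int self) {
        assert(self != 0);
        if (owner_.load(std::memory_order_relaxed) == self) {
            ++level_;  // already held by the caller: only bump the level
            return true;
        }
        int expected = 0;  // 0 means free
        if (owner_.compare_exchange_strong(expected, self, std::memory_order_acquire)) {
            level_ = 1;  // first acquisition
            return true;
        }
        return false;  // held by someone else
    }

    void lock(int self) {
        while (!try_lock(self)) { /* spin */ }
    }

    // Decrement the level; release the lock when it reaches zero.
    void unlock(int self) {
        assert(owner_.load(std::memory_order_relaxed) == self && level_ > 0);
        (void)self;
        if (--level_ == 0) {
            owner_.store(0, std::memory_order_release);
        }
    }

    // Only meaningful when called by the current holder.
    int level() const { return level_; }

private:
    std::atomic<int> owner_{0};
    int level_ = 0;  // written only by the current owner
};
```

The recursion level needs no atomic access of its own because it is only read or written by the process that currently owns the lock.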
