272 results for "Speech generation" patented technology

Speech generation. Speech generation and recognition are used for communication between humans and machines: rather than your hands and eyes, you use your mouth and ears. This is convenient when your hands and eyes are needed for something else, such as driving a car, performing surgery, or (unfortunately) firing weapons at an enemy.

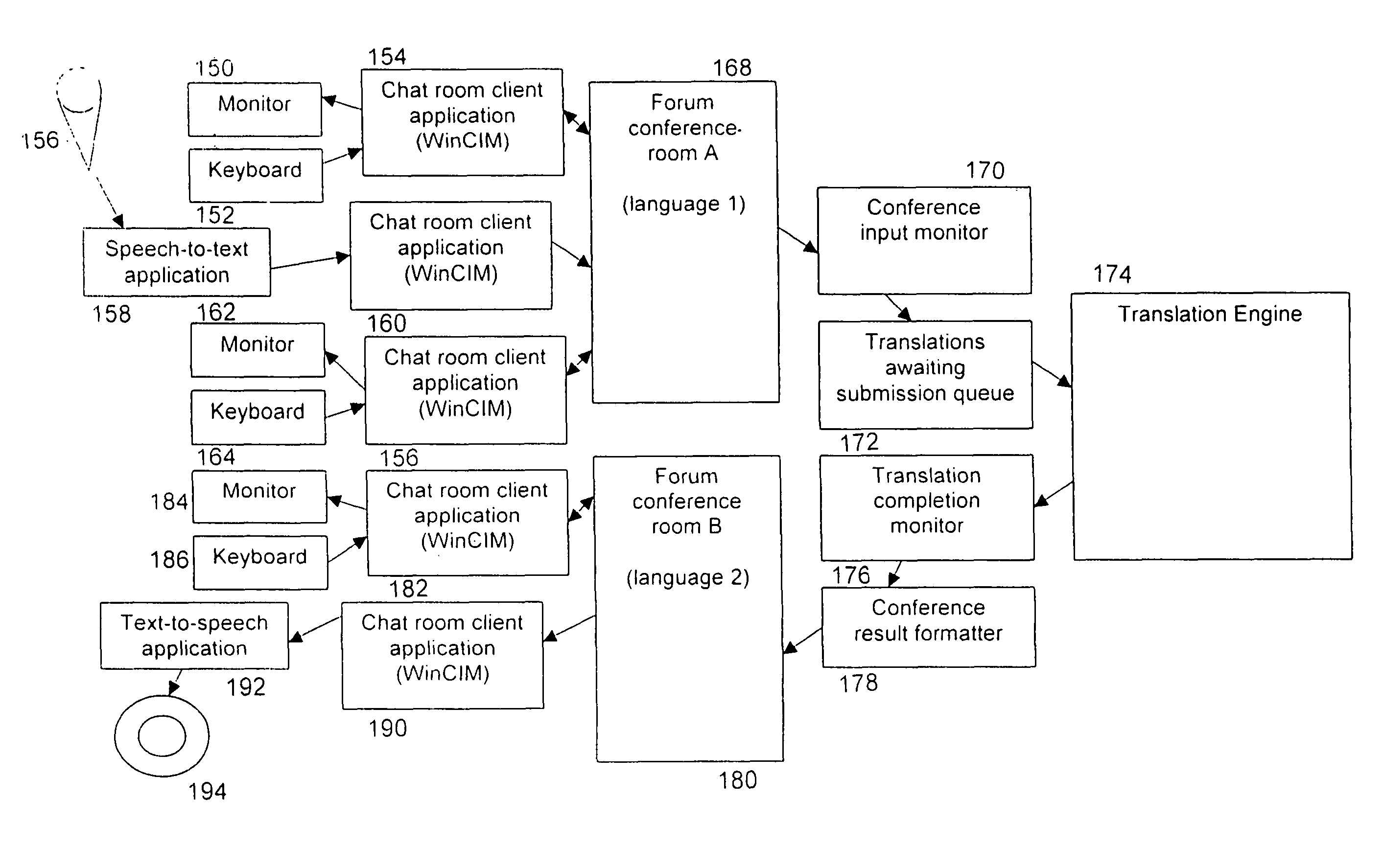

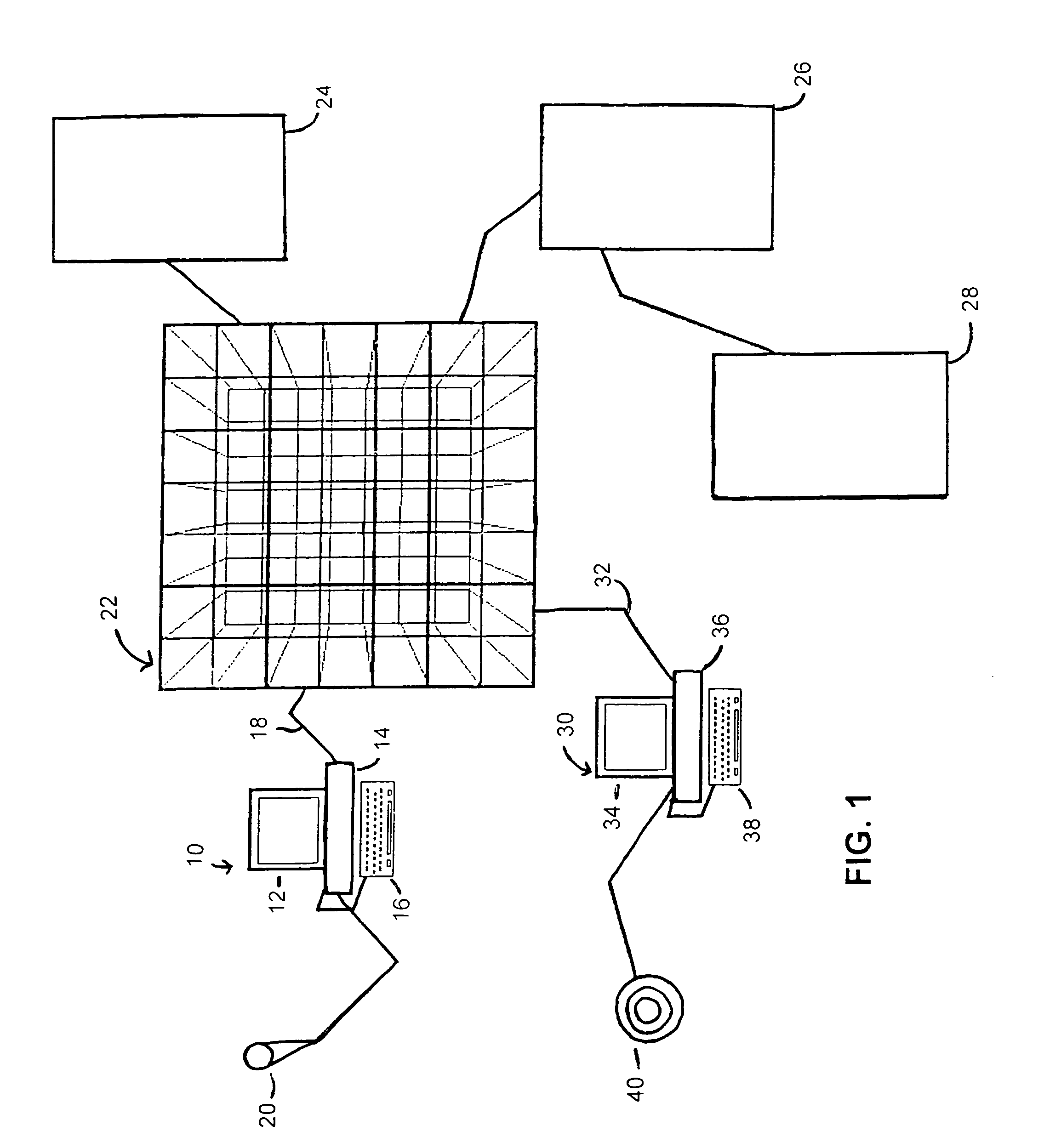

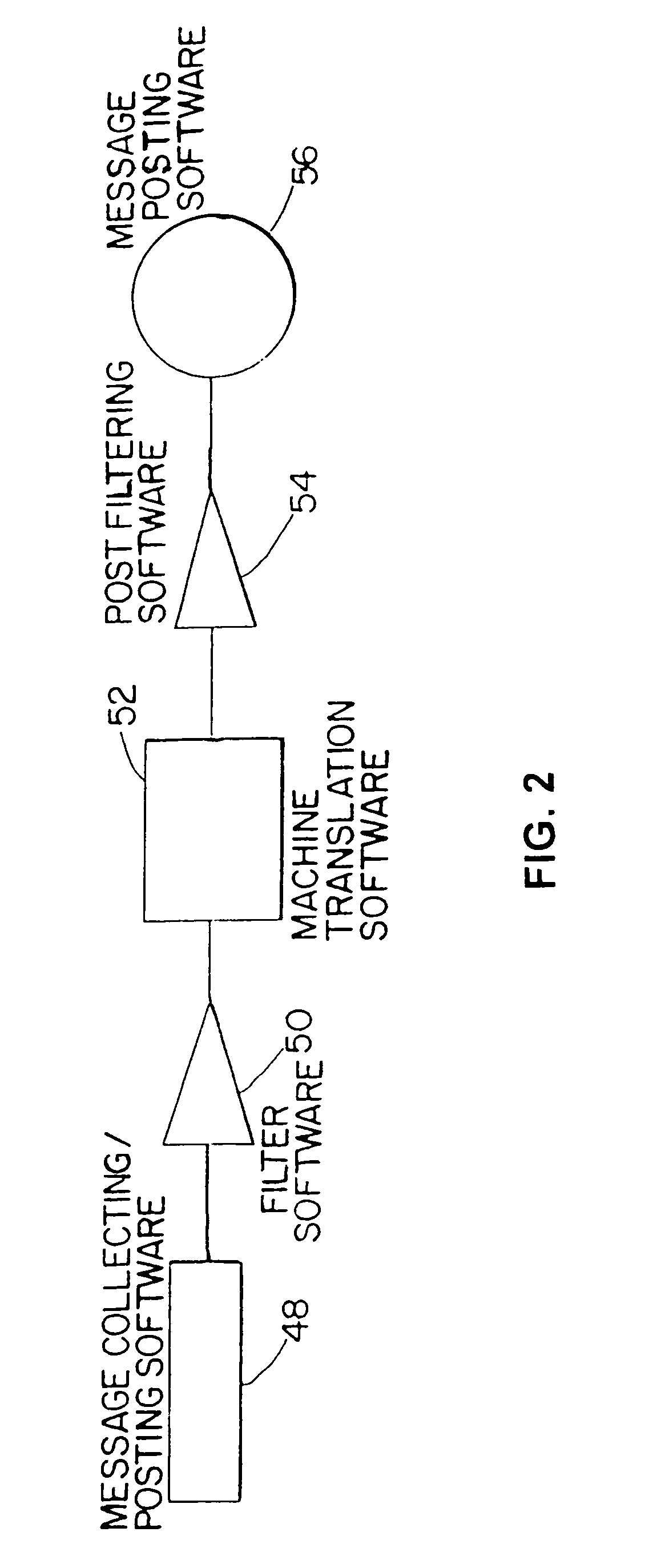

System for automated translation of speech

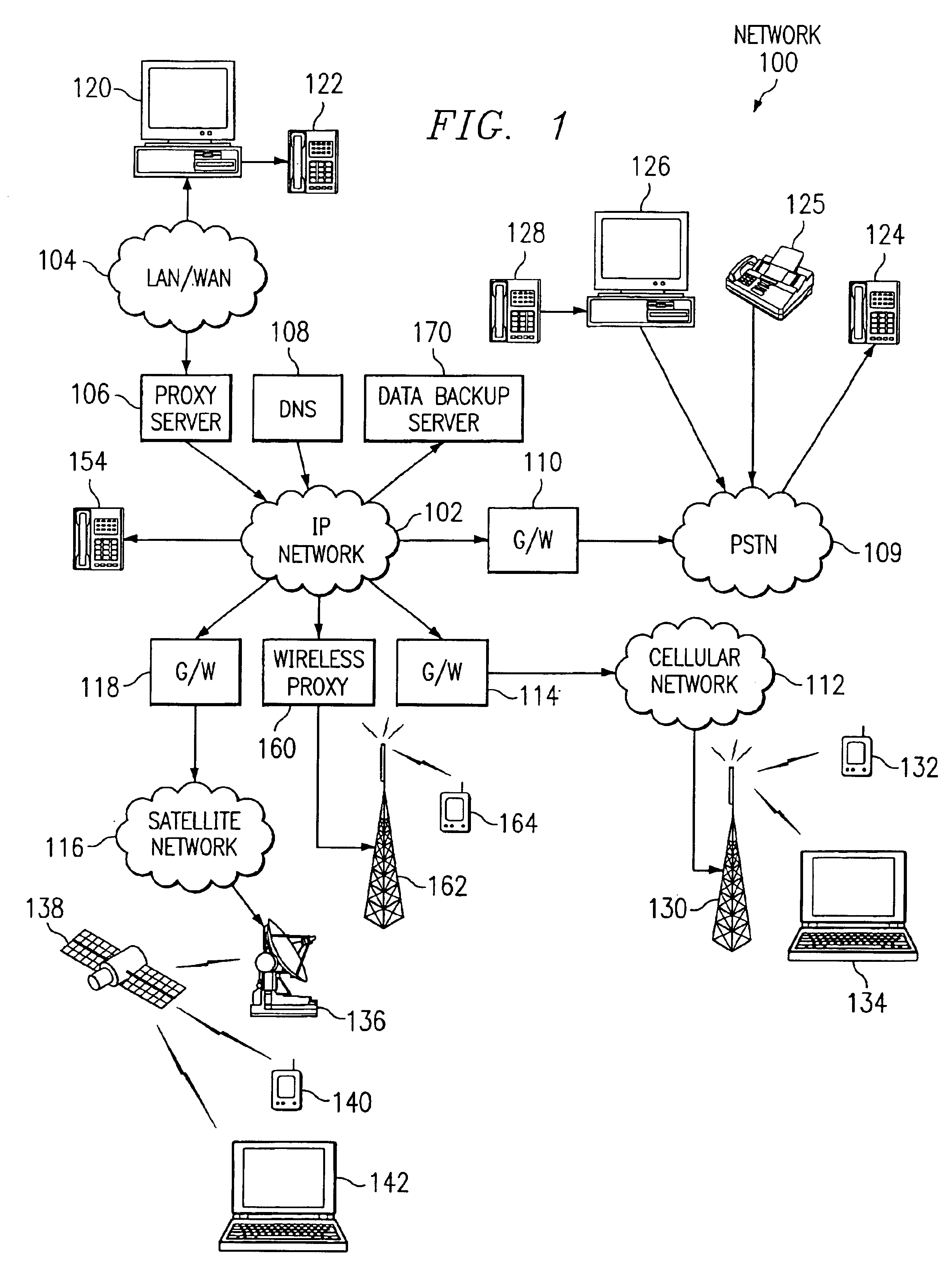

Status: Inactive | Publication: US6339754B1 | Tags: Natural language translation, Automatic exchanges, Speech to speech translation, Remote computing

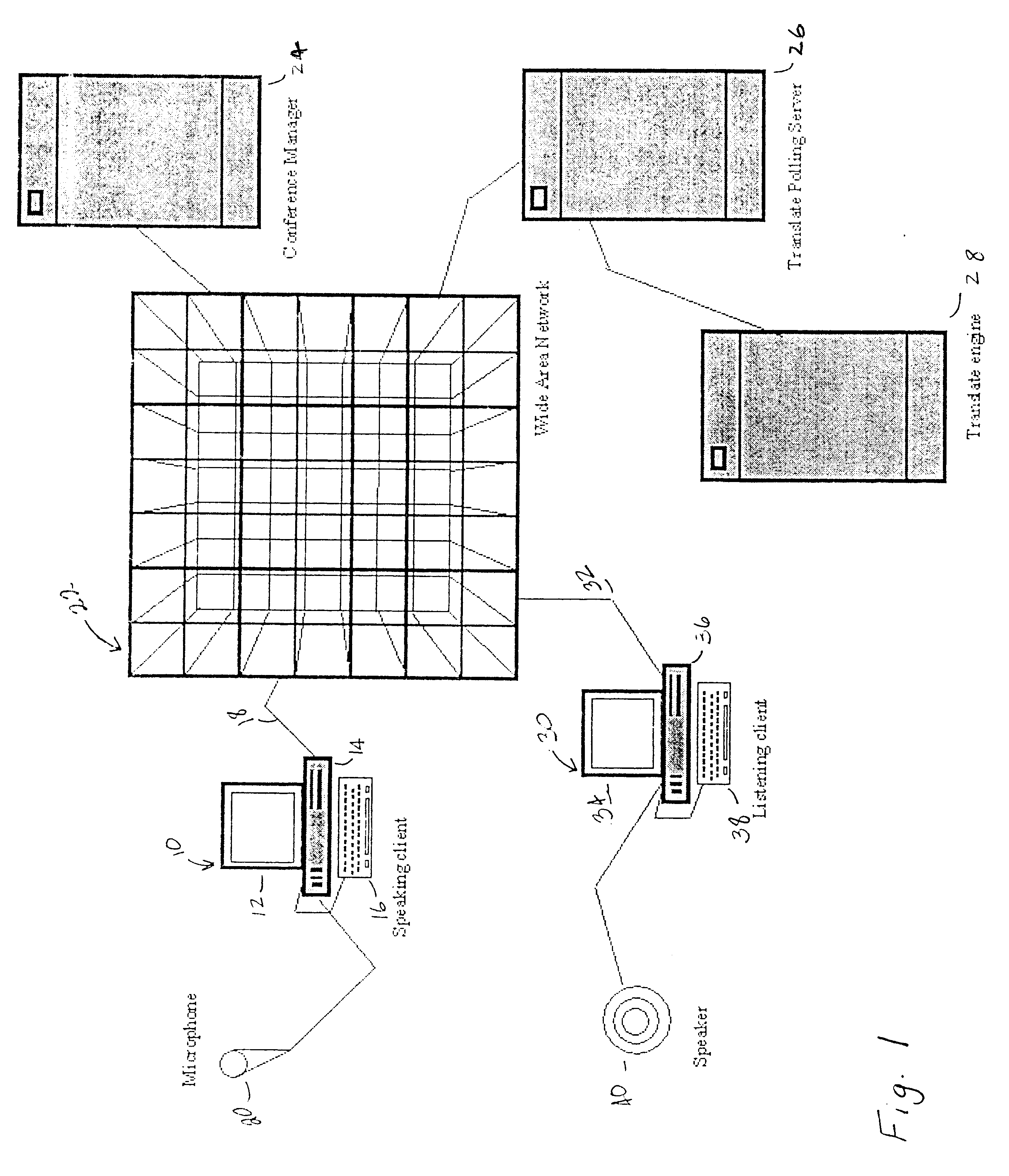

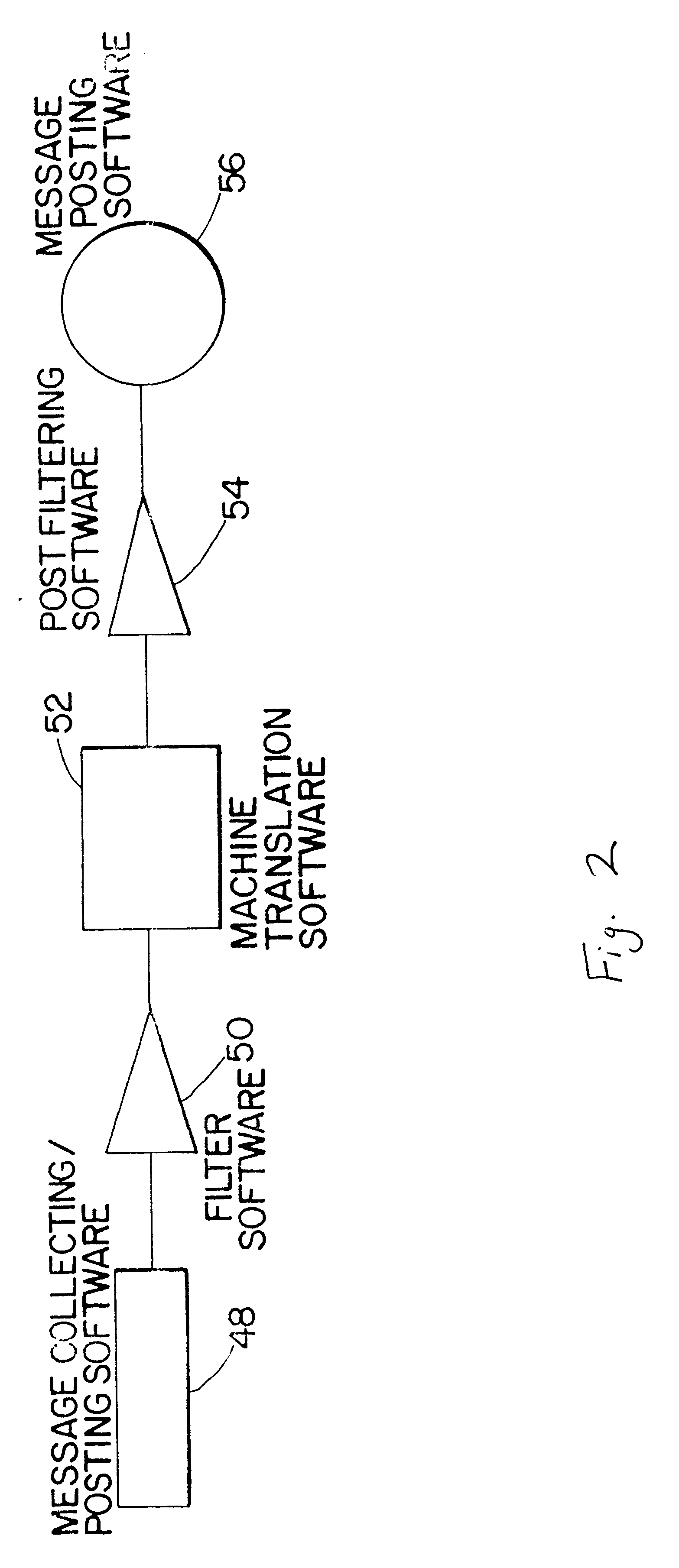

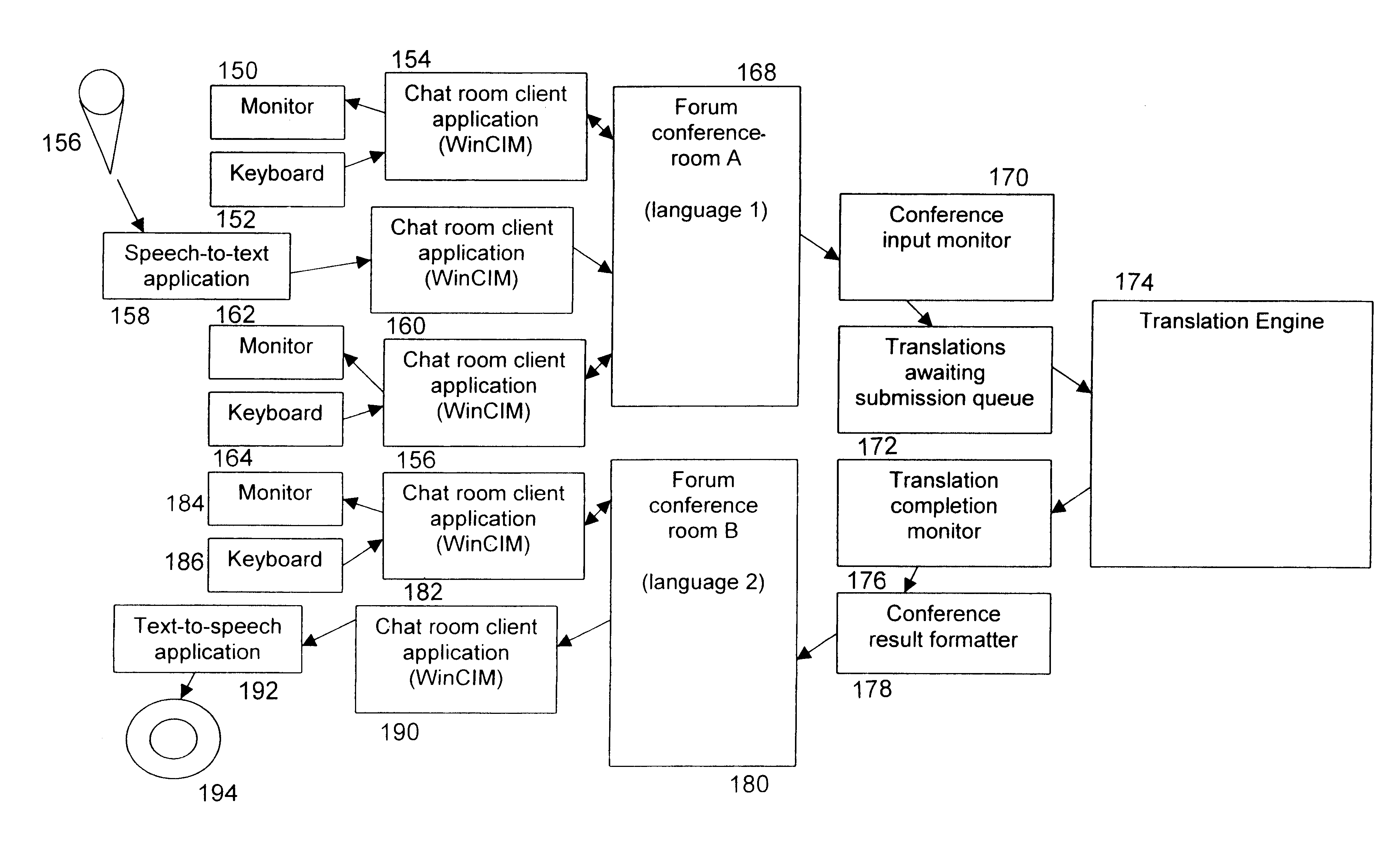

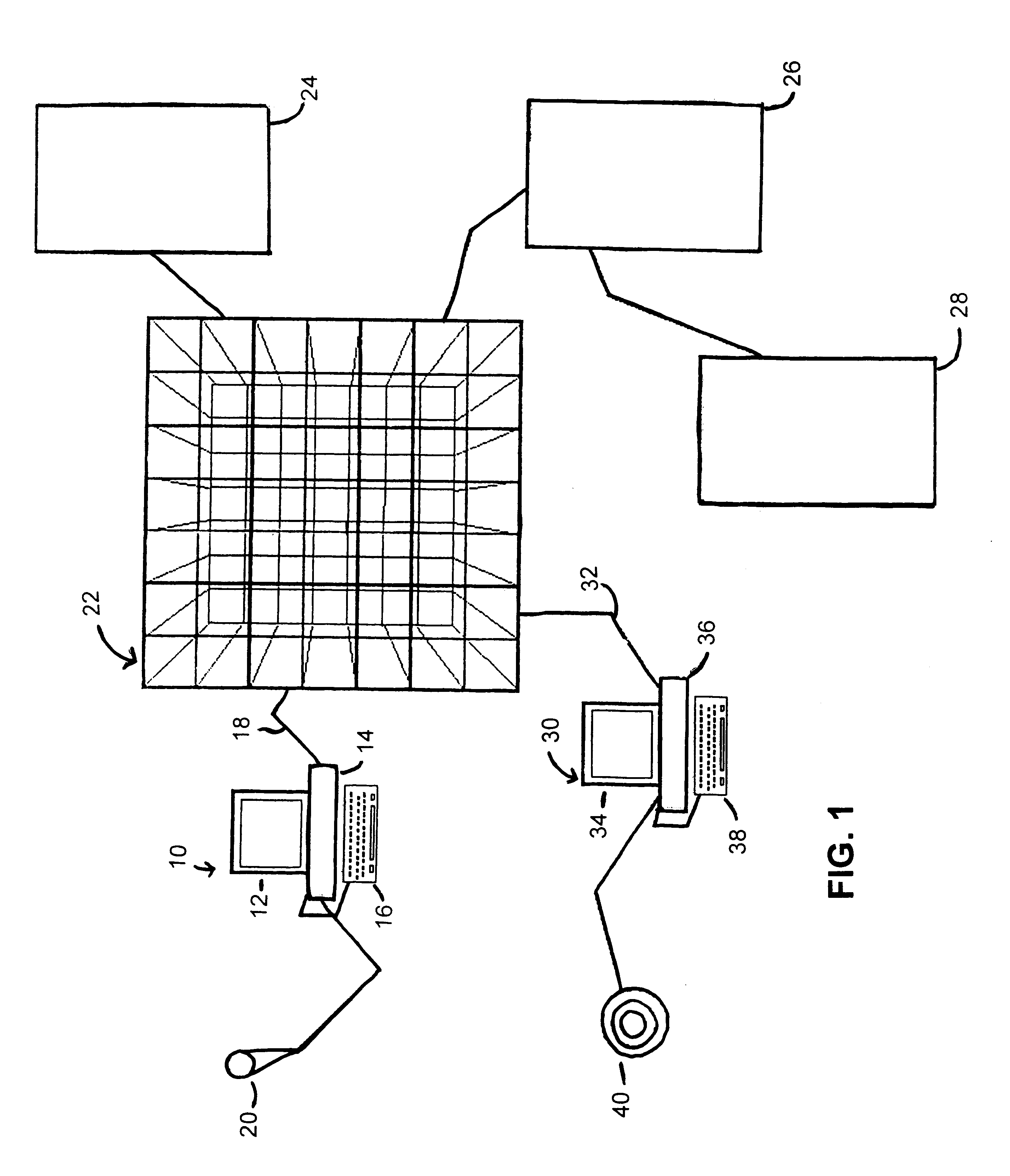

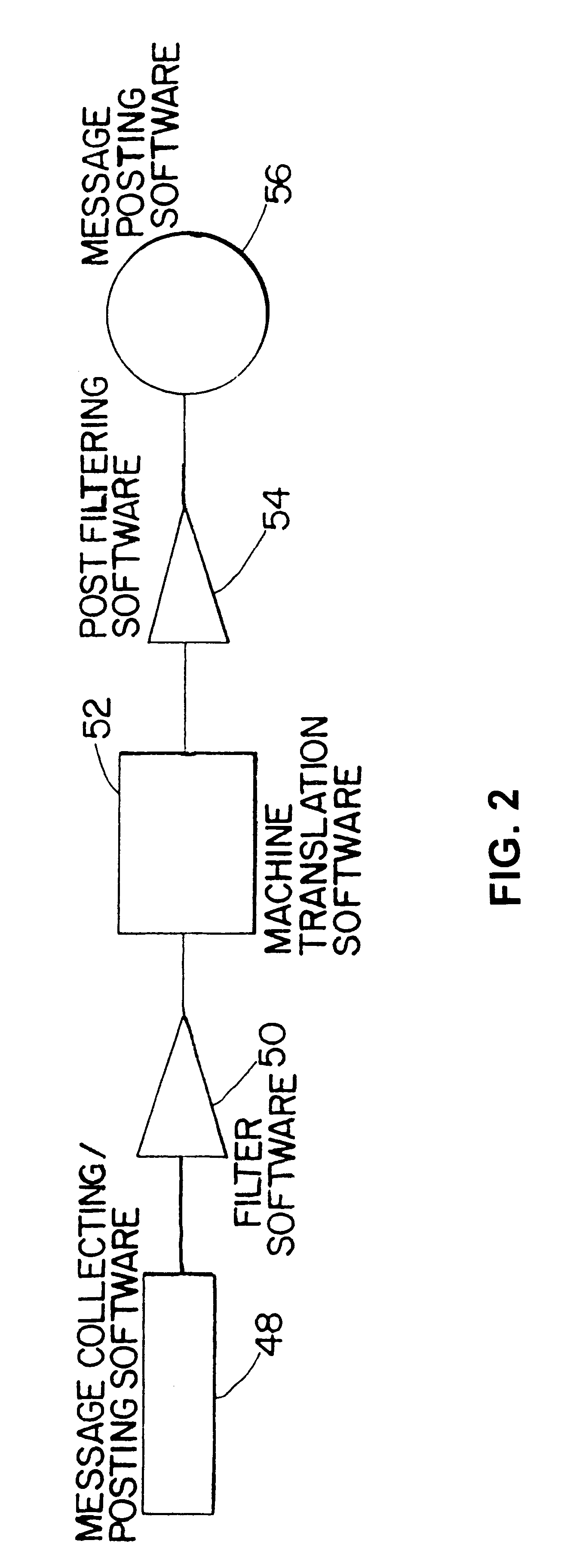

The present invention allows subscribers to an online information service to participate in real-time conferencing or chat sessions in which a message originating from a subscriber in accordance with a first language is translated to one or more languages before it is broadcast to the other conference areas. Messages in a first language are translated automatically to one or more other languages through language translation capabilities resident at online information service host computers. Access software that subscribers use for participating in conference is integrated with speech recognition and speech generation software such that a subscriber may speak the message he or she would like to share with other participants and may hear the messages from the other participants in the conference. Speech-to-speech translation may be accomplished as a message spoken into a computer microphone in accordance with a first language may be recited by a remote computer in accordance with a second language.

Owner:META PLATFORMS INC
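
The fan-out step in the abstract (one message, translated into each other participant's language before broadcast) can be sketched as follows. This is a minimal illustration, assuming a hypothetical `translate(text, source_lang, target_lang)` callable; the toy word-for-word translator stands in for the host's translation engine, and the speech recognition and speech generation stages are not shown.

```python
from typing import Callable, Dict

# translate(text, source_lang, target_lang) -> translated text (hypothetical interface)
Translator = Callable[[str, str, str], str]


def broadcast(message: str, sender: str, languages: Dict[str, str],
              translate: Translator) -> Dict[str, str]:
    """Return the text each participant other than the sender receives, in their own language."""
    src = languages[sender]
    cache: Dict[str, str] = {}  # translate once per target language, not once per participant
    out: Dict[str, str] = {}
    for participant, dst in languages.items():
        if participant == sender:
            continue
        if dst not in cache:
            cache[dst] = message if dst == src else translate(message, src, dst)
        out[participant] = cache[dst]
    return out


# Toy word-for-word translator standing in for a real translation engine (hypothetical).
TOY_LEXICON = {("en", "fr"): {"hello": "bonjour"}, ("en", "de"): {"hello": "hallo"}}


def toy_translate(text: str, src: str, dst: str) -> str:
    table = TOY_LEXICON.get((src, dst), {})
    return " ".join(table.get(word, word) for word in text.split())


print(broadcast("hello", "ann", {"ann": "en", "bob": "fr", "eve": "de", "joe": "en"}, toy_translate))
# {'bob': 'bonjour', 'eve': 'hallo', 'joe': 'hello'}
```

Caching per target language keeps the number of translation calls proportional to the number of distinct languages in the conference rather than the number of participants.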

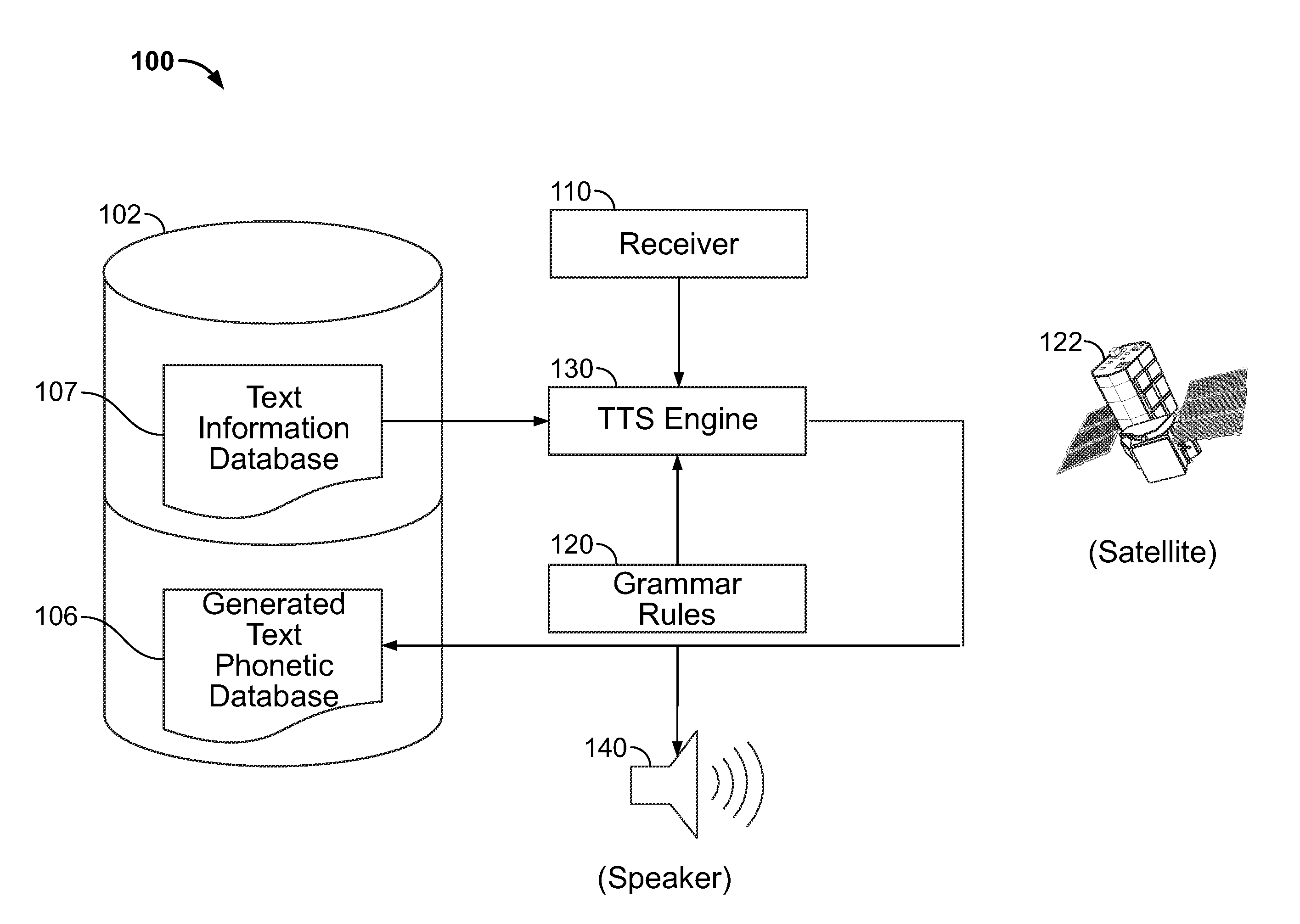

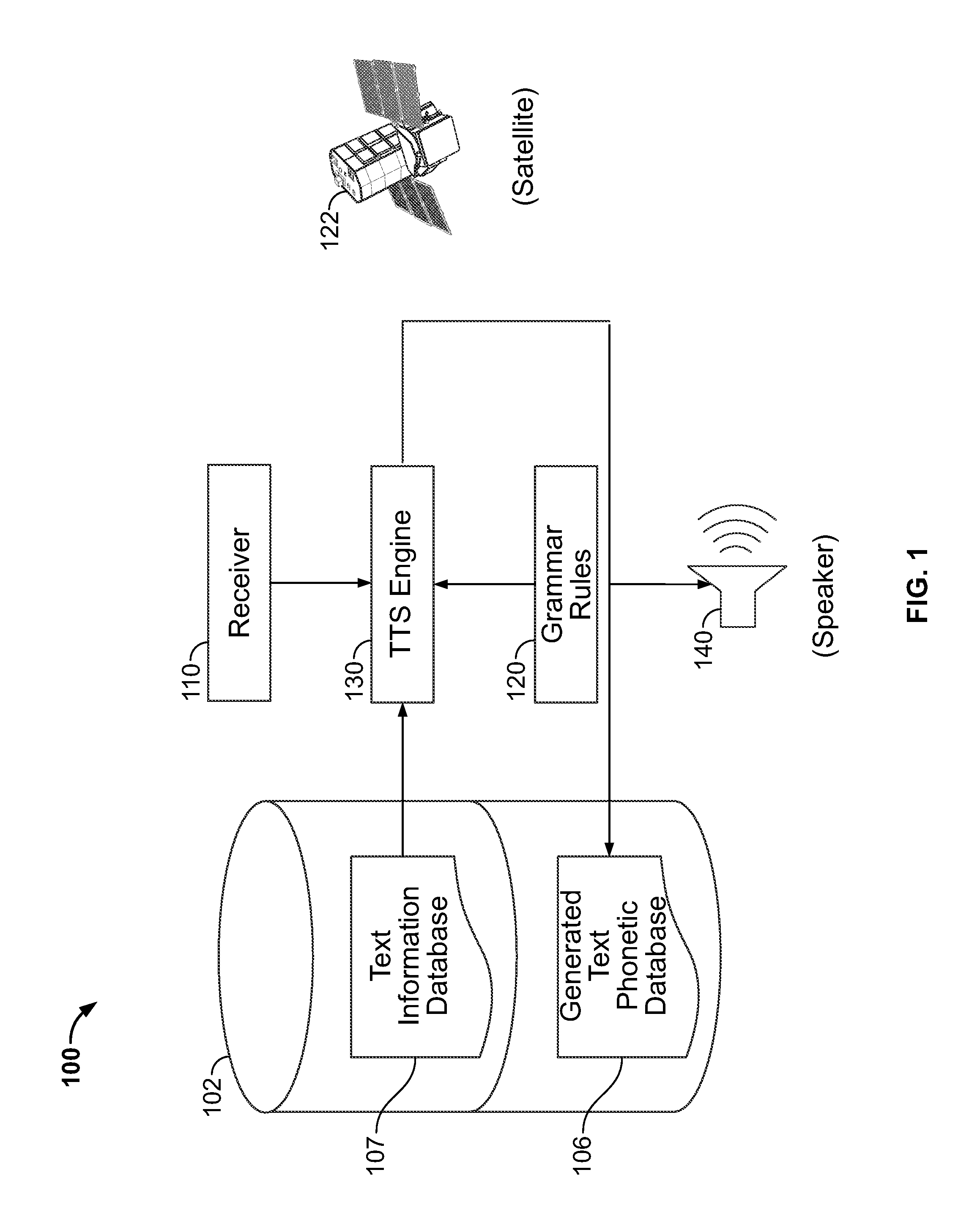

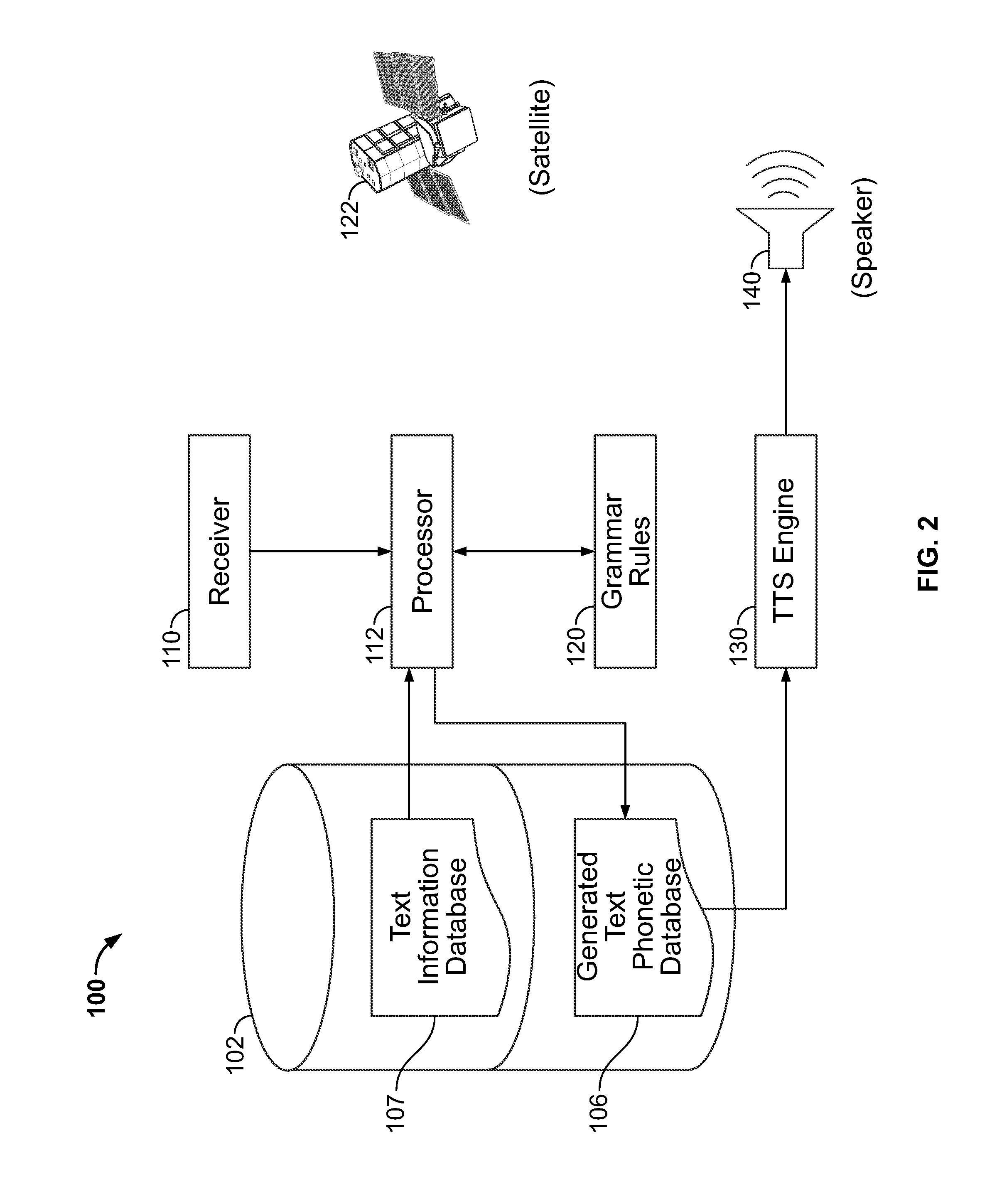

Text pre-processing for text-to-speech generation

A system and method are provided for improved speech synthesis, wherein text data is pre-processed according to updated grammar rules or a selected group of grammar rules. In one embodiment, the TTS system comprises a first memory adapted to store a text information database, a second memory adapted to store grammar rules, and a receiver adapted to receive update data regarding the grammar rules. The system also includes a TTS engine adapted to retrieve at least one text entry from the text information database, pre-process the at least one text entry by applying the updated grammar rules to it, and generate speech based at least in part on the at least one pre-processed text entry.

Owner:HONDA MOTOR CO LTD
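
The pre-processing idea above (grammar rules stored separately from the text, replaceable by update data, and applied before synthesis) can be sketched as below. This is a minimal illustration, assuming rules are hypothetical `(regex, replacement)` pairs applied in order; the abstract does not specify a rule format, and the speech generation step itself is not shown.

```python
import re
from typing import List, Tuple

Rule = Tuple[str, str]  # (regex pattern, replacement); hypothetical rule format


class TextPreprocessor:
    def __init__(self, rules: List[Rule]):
        self.rules = [(re.compile(p), r) for p, r in rules]

    def update_rules(self, new_rules: List[Rule]) -> None:
        """Replace the stored grammar rules with update data received later."""
        self.rules = [(re.compile(p), r) for p, r in new_rules]

    def preprocess(self, text: str) -> str:
        """Apply each rule in order; the result is what would be handed to the TTS engine."""
        for pattern, replacement in self.rules:
            text = pattern.sub(replacement, text)
        return text


entry = "Turn left on Main St. in 2 mi"
pre = TextPreprocessor([(r"\bSt\.", "Street")])
print(pre.preprocess(entry))  # Turn left on Main Street in 2 mi
pre.update_rules([(r"\bSt\.", "Street"), (r"\bmi\b", "miles")])
print(pre.preprocess(entry))  # Turn left on Main Street in 2 miles
```

Keeping the rules as data rather than code is what lets an update change pronunciation behavior without replacing the TTS engine.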

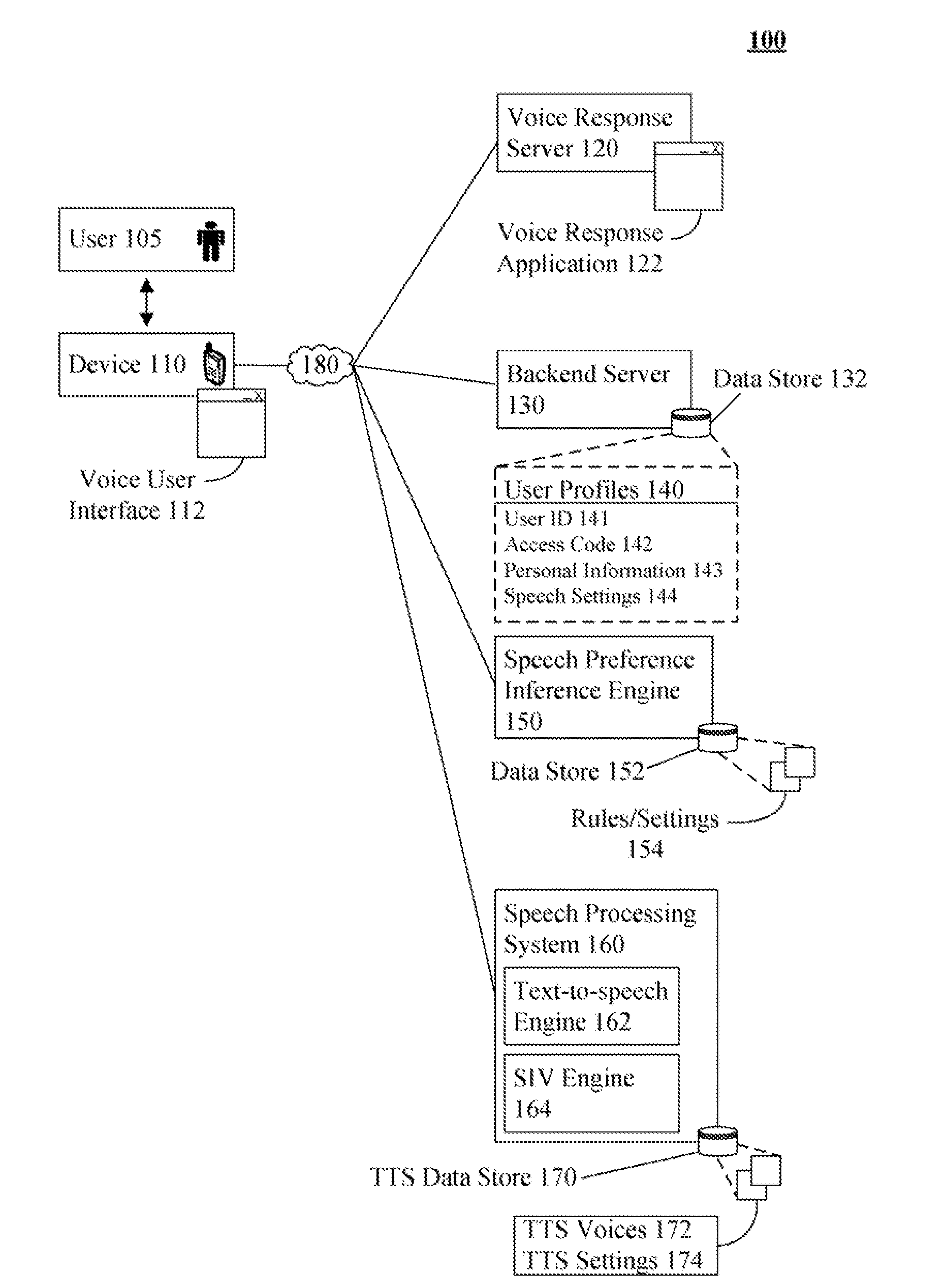

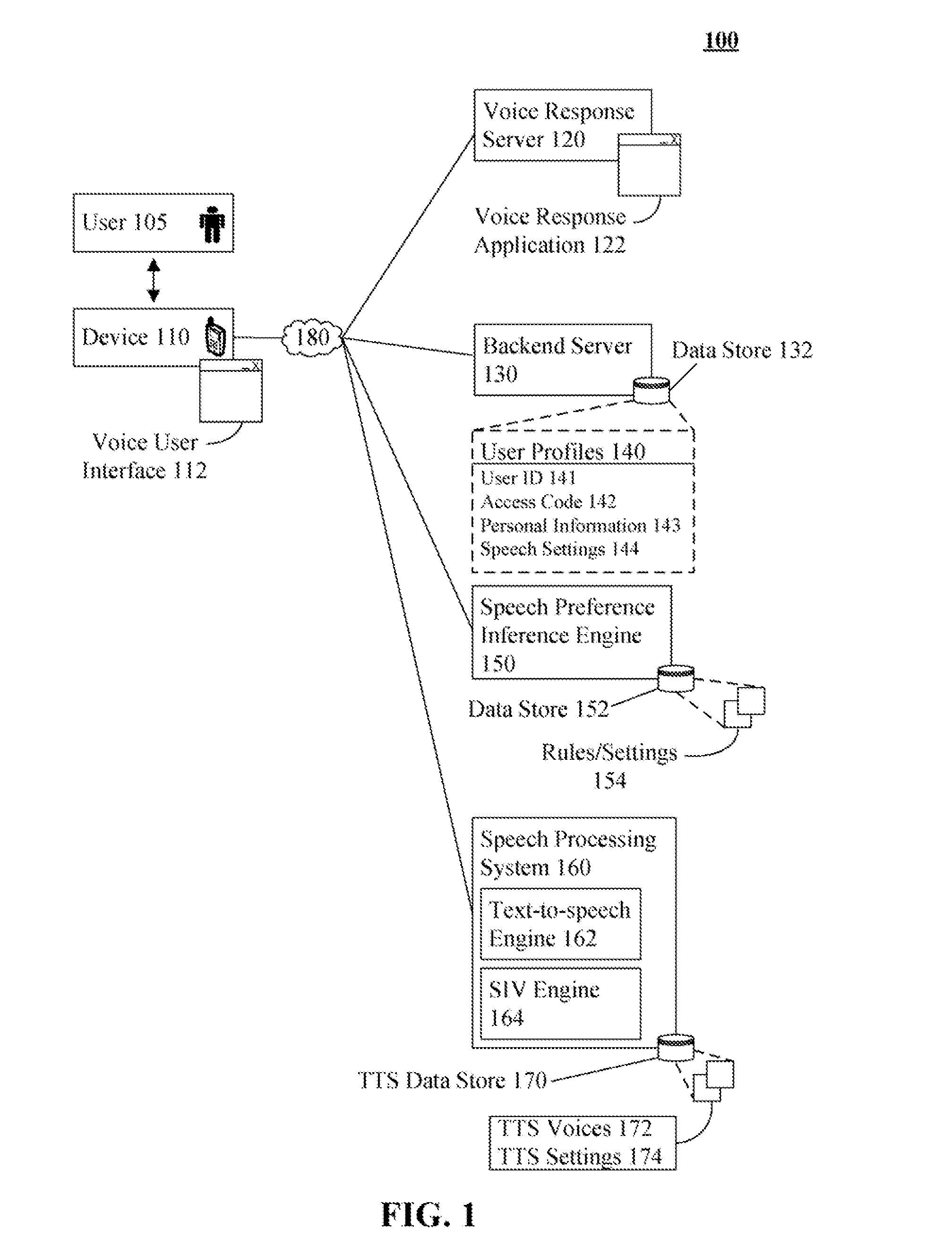

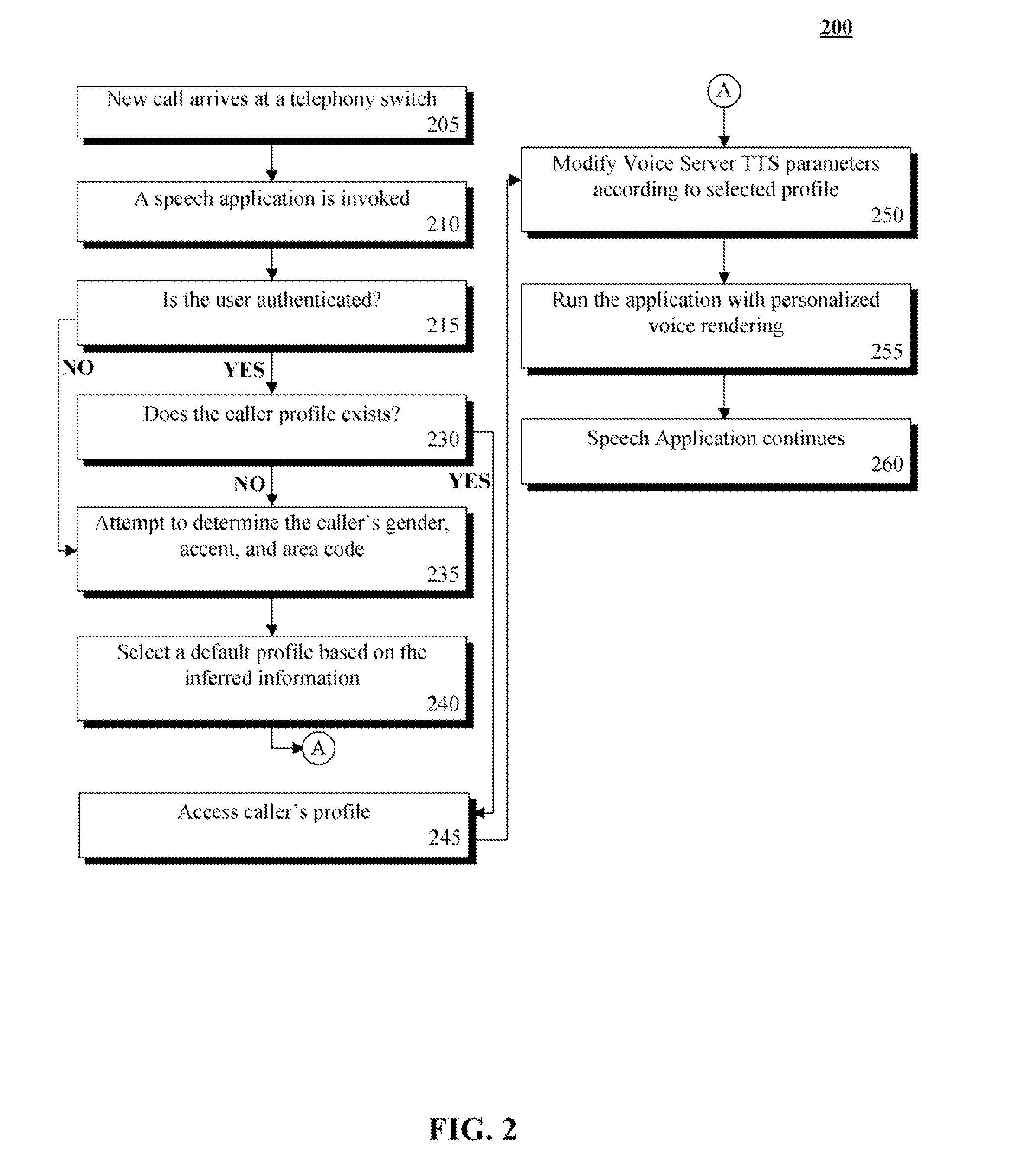

Dynamic modification of voice selection based on user specific factors

The present invention discloses a solution for customizing synthetic voice characteristics in a user-specific fashion. The solution can establish a communication between a user and a voice response system. A data store can be searched for a speech profile associated with the user. When a speech profile is found, a set of speech output characteristics established for the user can be determined from the profile. Parameters and settings of a text-to-speech engine can be adjusted in accordance with the determined set of speech output characteristics. During the established communication, synthetic speech can be generated using the adjusted text-to-speech engine. Thus, each detected user can hear synthetic speech generated by a different voice specifically selected for that user. When no user profile is detected, a default voice or a voice based upon the user's speech or communication details can be used.

Owner:IBM CORP
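
The selection order described above (stored profile first, then a voice inferred from the communication, then a default) can be sketched as a small lookup. This is a minimal illustration; the `VoiceSettings` fields, the voice names, and the use of a caller locale as the "communication details" are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class VoiceSettings:
    voice: str          # hypothetical TTS voice identifier
    rate: float = 1.0   # relative speaking rate
    pitch: float = 1.0  # relative pitch


DEFAULT = VoiceSettings(voice="default")


def select_voice(user_id: Optional[str], profiles: Dict[str, VoiceSettings],
                 caller_locale: Optional[str] = None,
                 locale_voices: Optional[Dict[str, str]] = None) -> VoiceSettings:
    """Pick TTS settings: user profile first, then a voice from call details, then the default."""
    if user_id is not None and user_id in profiles:
        return profiles[user_id]
    if caller_locale and locale_voices and caller_locale in locale_voices:
        return VoiceSettings(voice=locale_voices[caller_locale])
    return DEFAULT


profiles = {"alice": VoiceSettings("female_2", rate=0.9)}
print(select_voice("alice", profiles))  # VoiceSettings(voice='female_2', rate=0.9, pitch=1.0)
print(select_voice("bob", profiles, "es-MX", {"es-MX": "spanish_1"}).voice)  # spanish_1
```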

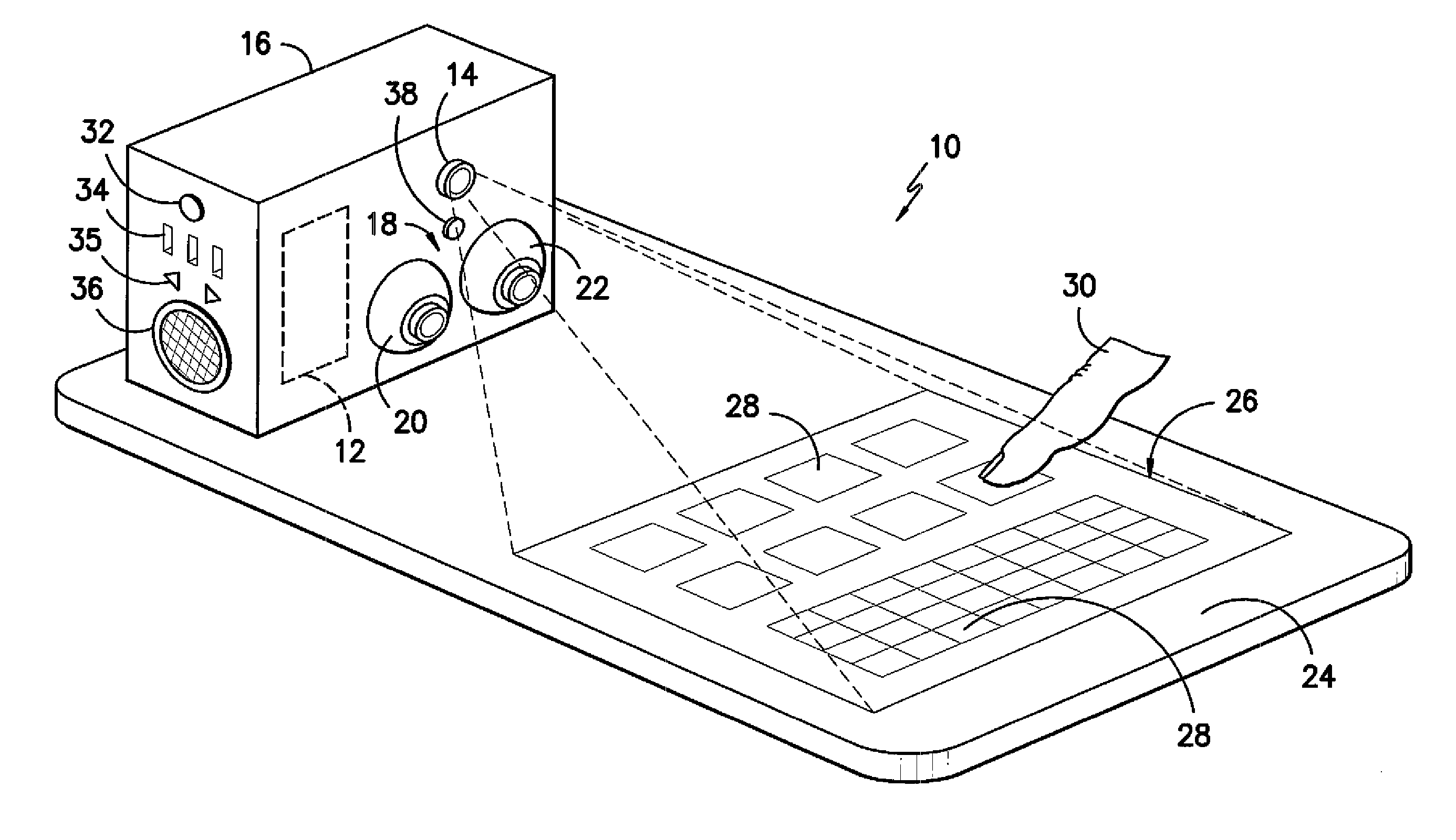

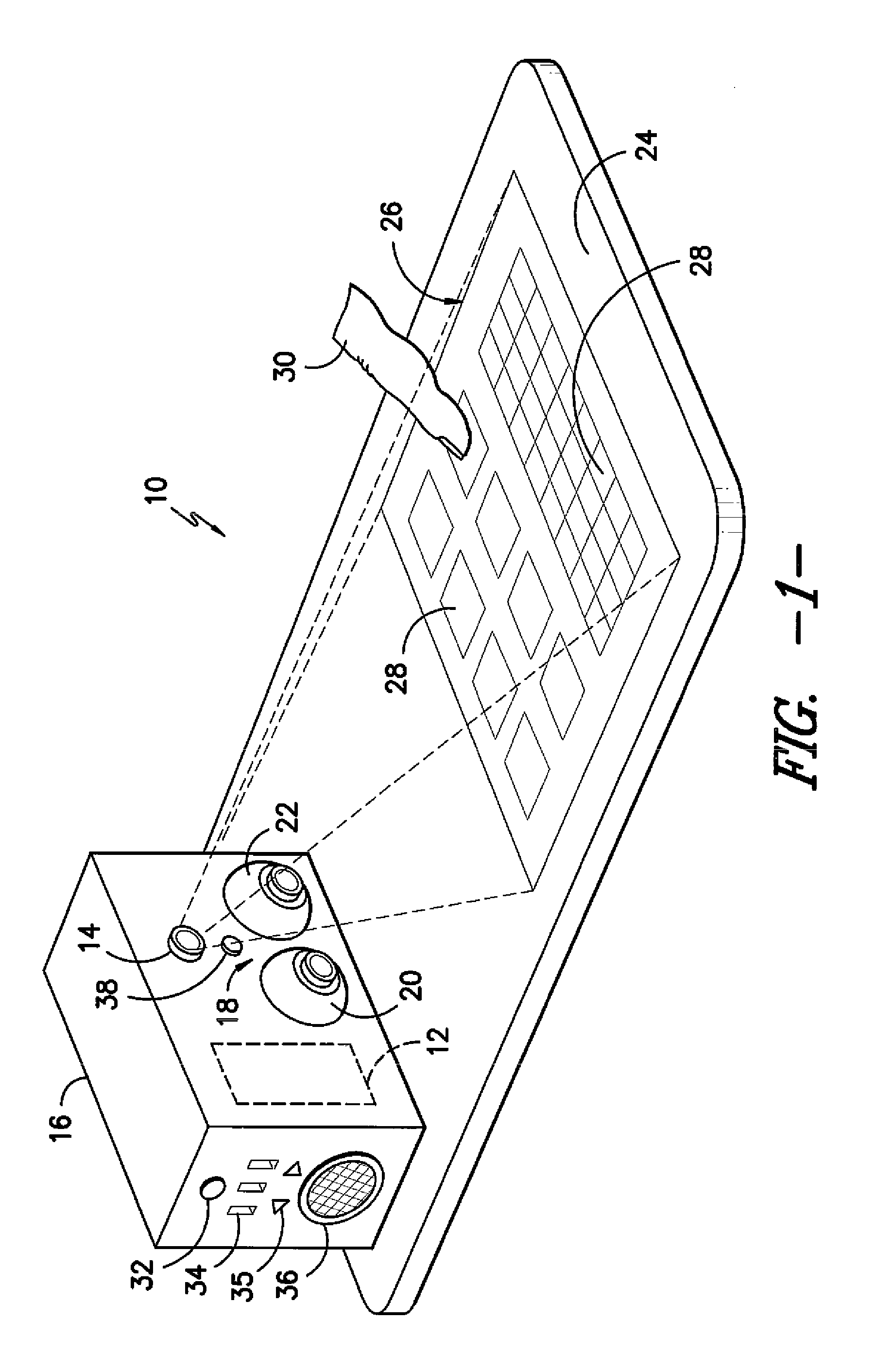

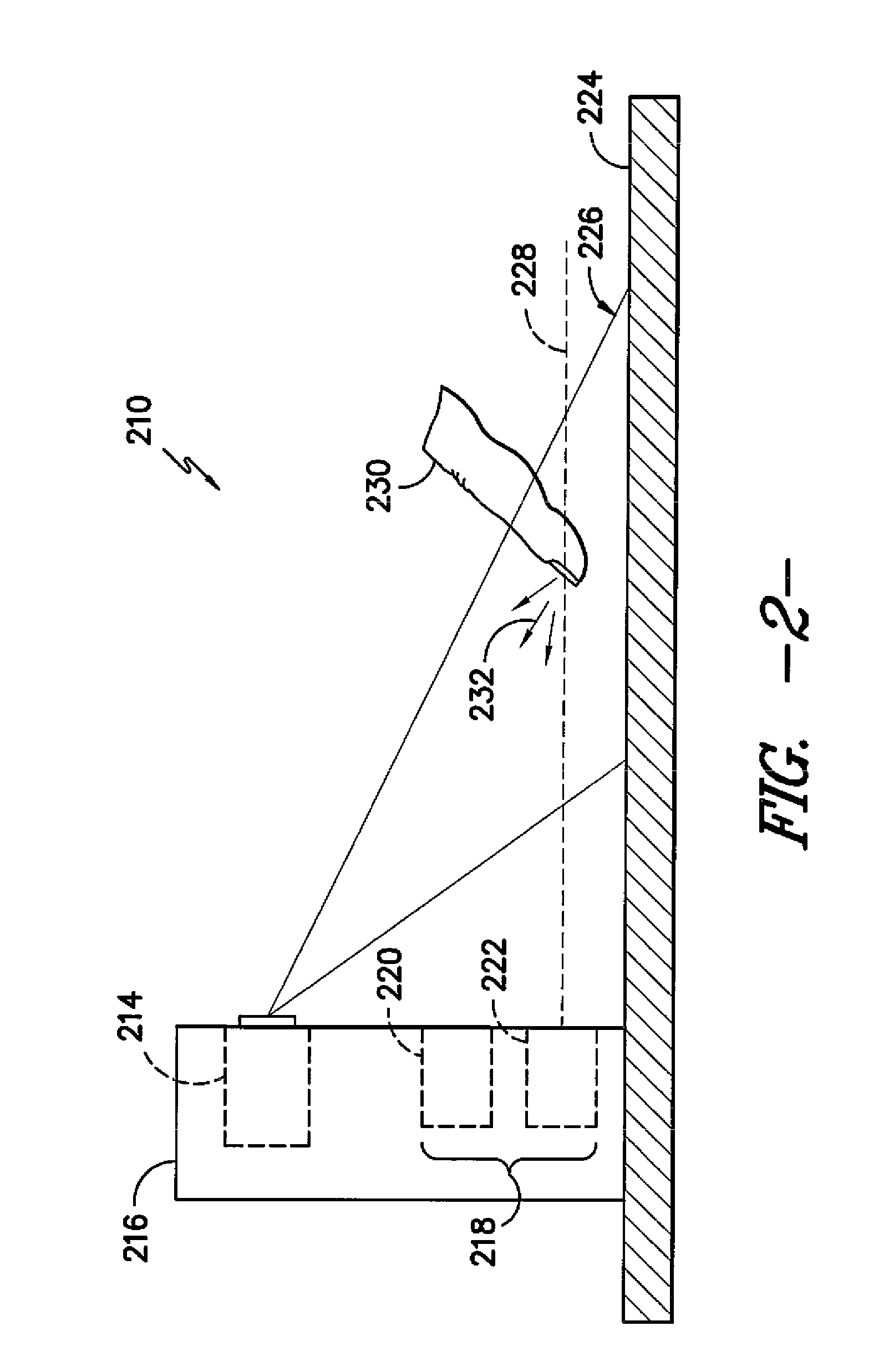

Speech generation device with a projected display and optical inputs

Status: Active | Publication: US20120035934A1 | Tags: Reduce the possibility, Widen the options, Digital data processing details, Speech synthesis, Display device, Loudspeaker

In several embodiments, a speech generation device is disclosed. The speech generation device may generally include a projector configured to project images in the form of a projected display onto a projection surface, an optical input device configured to detect an input directed towards the projected display, and a speaker configured to generate an audio output. In addition, the speech generation device may include a processing unit communicatively coupled to the projector, the optical input device, and the speaker. The processing unit may include a processor and a related computer-readable medium configured to store instructions executable by the processor, wherein the instructions stored on the computer-readable medium configure the speech generation device to generate text-to-speech output.

Owner:TOBII DYNAVOX AB

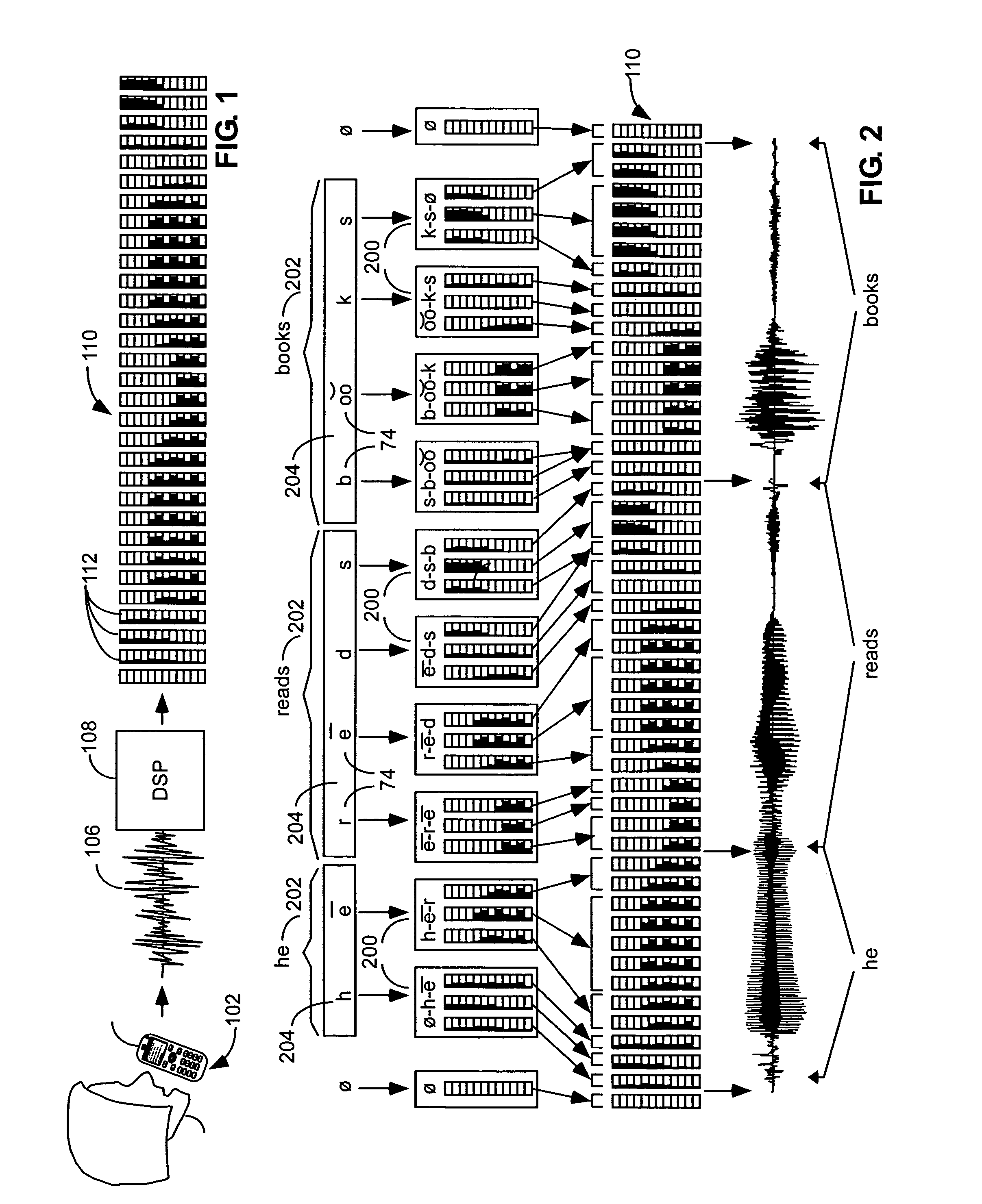

Method and apparatus for improved duration modeling of phonemes

Status: Inactive | Publication: US6553344B2 | Tags: Improved duration modeling, Pressure difference measurement between multiple valves, Speech synthesis, Additive model, Speech sound

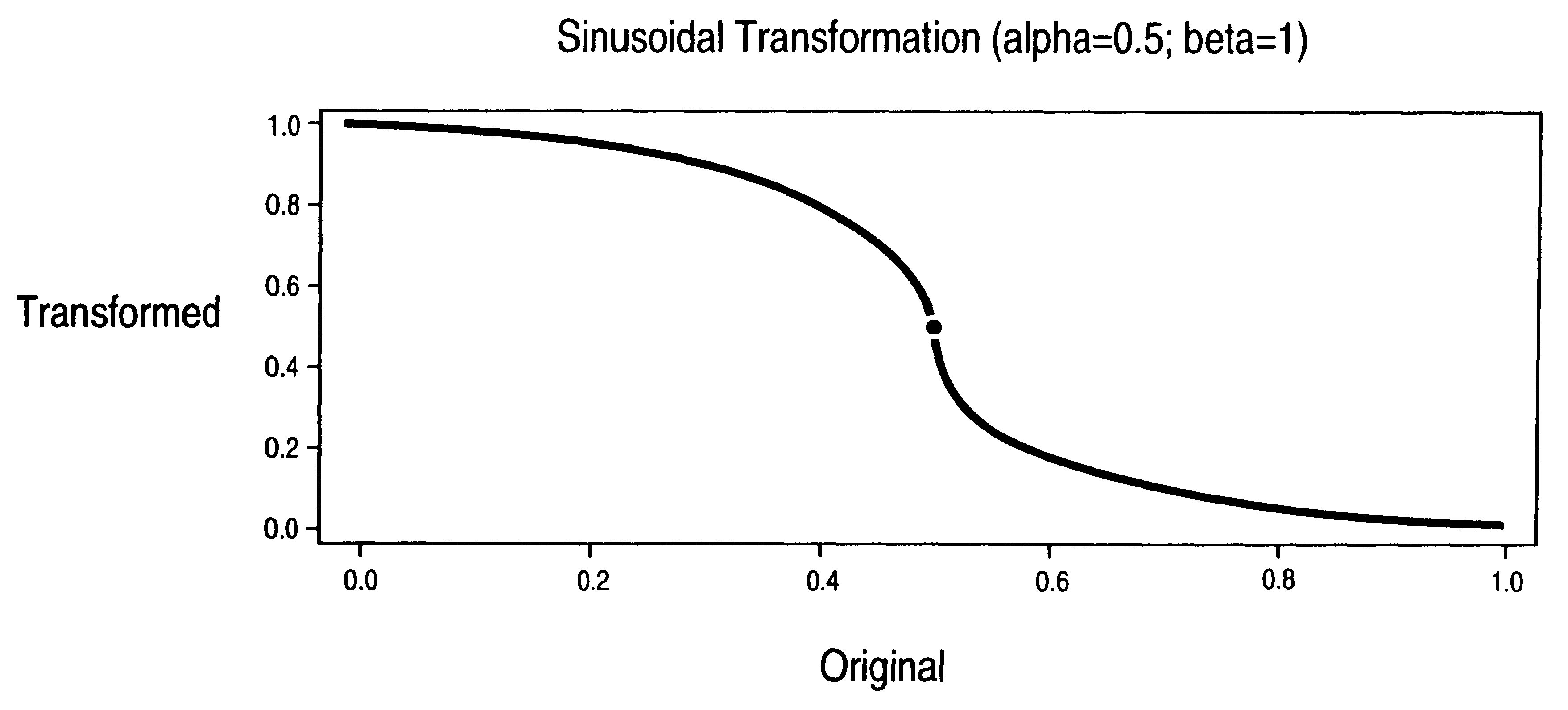

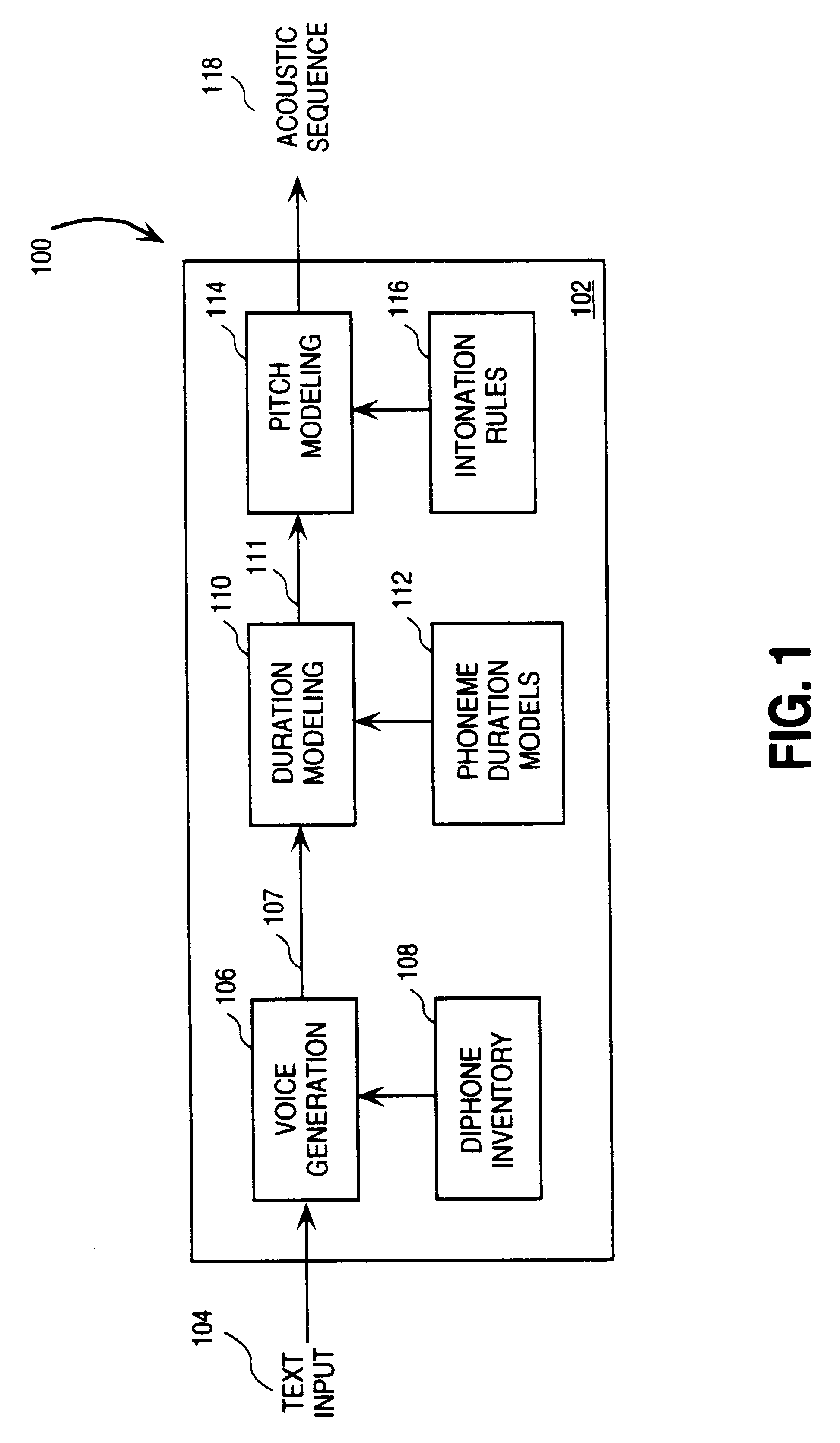

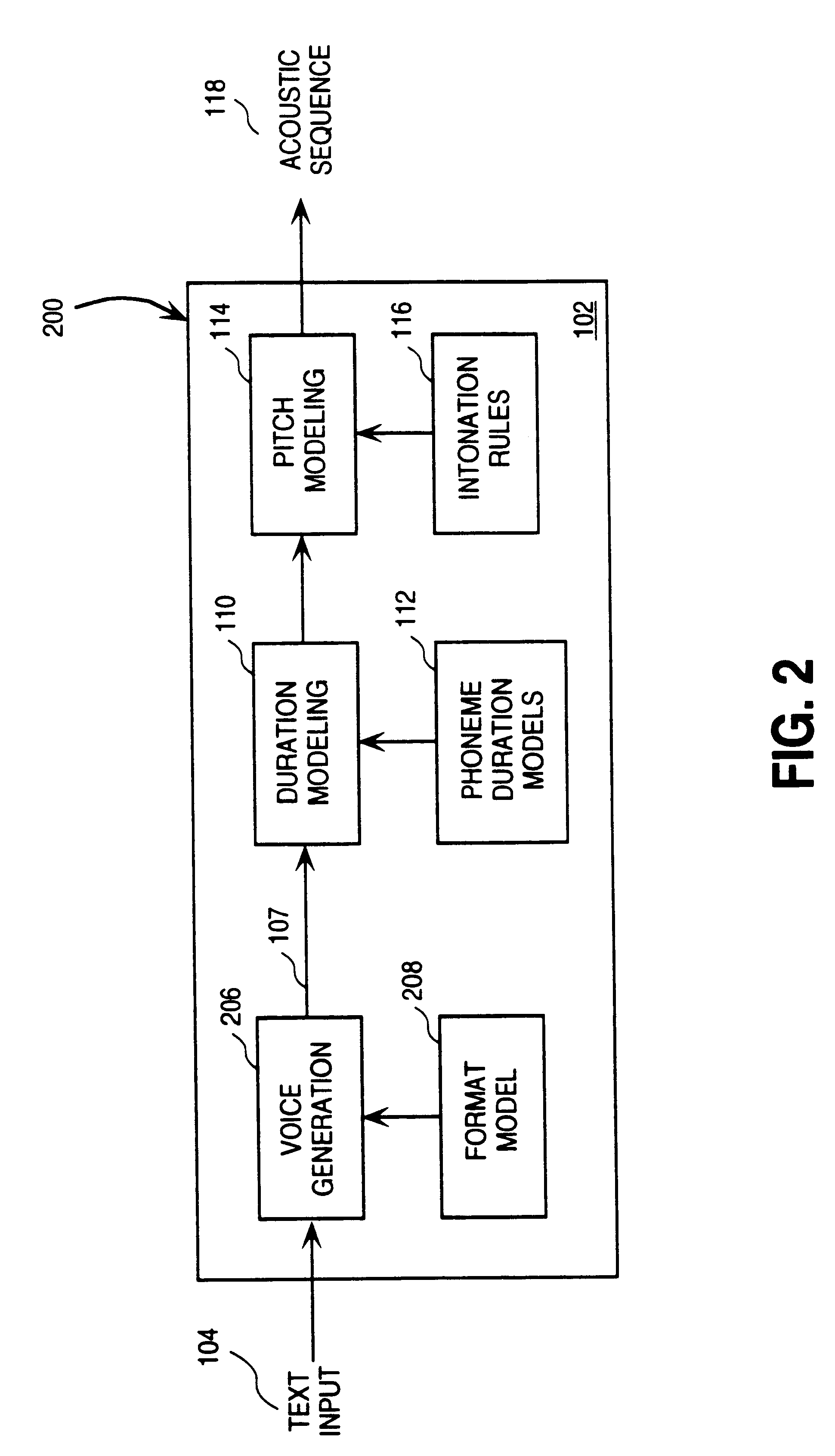

A method and an apparatus for improved duration modeling of phonemes in a speech synthesis system are provided. According to one aspect, text is received into a processor of a speech synthesis system. The received text is processed using a sum-of-products phoneme duration model that is used in either the formant method or the concatenative method of speech generation. The phoneme duration model, which is used along with a phoneme pitch model, is produced by developing a non-exponential functional transformation form for use with a generalized additive model. The non-exponential functional transformation form comprises a root sinusoidal transformation that is controlled in response to a minimum phoneme duration and a maximum phoneme duration. The minimum and maximum phoneme durations are observed in training data. The received text is processed by specifying at least one of a number of contextual factors for the generalized additive model. An inverse of the non-exponential functional transformation is applied to duration observations, or training data. Coefficients are generated for use with the generalized additive model. The generalized additive model comprising the coefficients is applied to at least one phoneme of the received text resulting in the generation of at least one phoneme having a duration. An acoustic sequence is generated comprising speech signals that are representative of the received text.

Owner:APPLE INC
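
The prediction half of a sum-of-products duration model can be sketched as below: each term multiplies per-factor scale values for the phoneme's context, the terms are summed in a transformed domain, and the inverse transform maps the sum back to a duration bounded by the observed minimum and maximum. This is a minimal illustration only. The abstract's root sinusoidal transformation is not specified in enough detail here, so a plain linear min/max normalization is used as a clearly labeled stand-in; the factor names, scale values, and durations are hypothetical, and coefficient fitting on training data is not shown.

```python
from typing import Dict, List

Context = Dict[str, str]            # contextual factor -> level, e.g. {"stress": "yes"}
Term = Dict[str, Dict[str, float]]  # one product term: factor -> {level: scale}


def to_unit(d: float, dmin: float, dmax: float) -> float:
    """STAND-IN transform (not the patent's root-sinusoidal form): map a duration into [0, 1]."""
    return (d - dmin) / (dmax - dmin)


def from_unit(y: float, dmin: float, dmax: float) -> float:
    """Inverse of to_unit, clipped so predictions stay within the observed duration range."""
    y = min(max(y, 0.0), 1.0)
    return dmin + y * (dmax - dmin)


def predict_duration(ctx: Context, terms: List[Term], dmin: float, dmax: float) -> float:
    """Sum over terms of the product of the scales for this phoneme's factor levels."""
    total = 0.0
    for term in terms:
        prod = 1.0
        for factor, scales in term.items():
            prod *= scales[ctx[factor]]
        total += prod
    return from_unit(total, dmin, dmax)


# Hypothetical fitted scales and observed min/max durations in milliseconds.
TERMS = [
    {"stress": {"yes": 0.5, "no": 0.25}, "position": {"final": 1.0, "medial": 0.5}},
    {"stress": {"yes": 0.25, "no": 0.0}},
]
print(predict_duration({"stress": "yes", "position": "final"}, TERMS, 40.0, 200.0))  # 160.0
print(predict_duration({"stress": "no", "position": "medial"}, TERMS, 40.0, 200.0))  # 60.0
```

In training, the forward transform (`to_unit` here) would be applied to observed durations before fitting the term scales, which matches the abstract's description of applying the inverse transformation to the training data.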

Combined speech recognition and text-to-speech generation

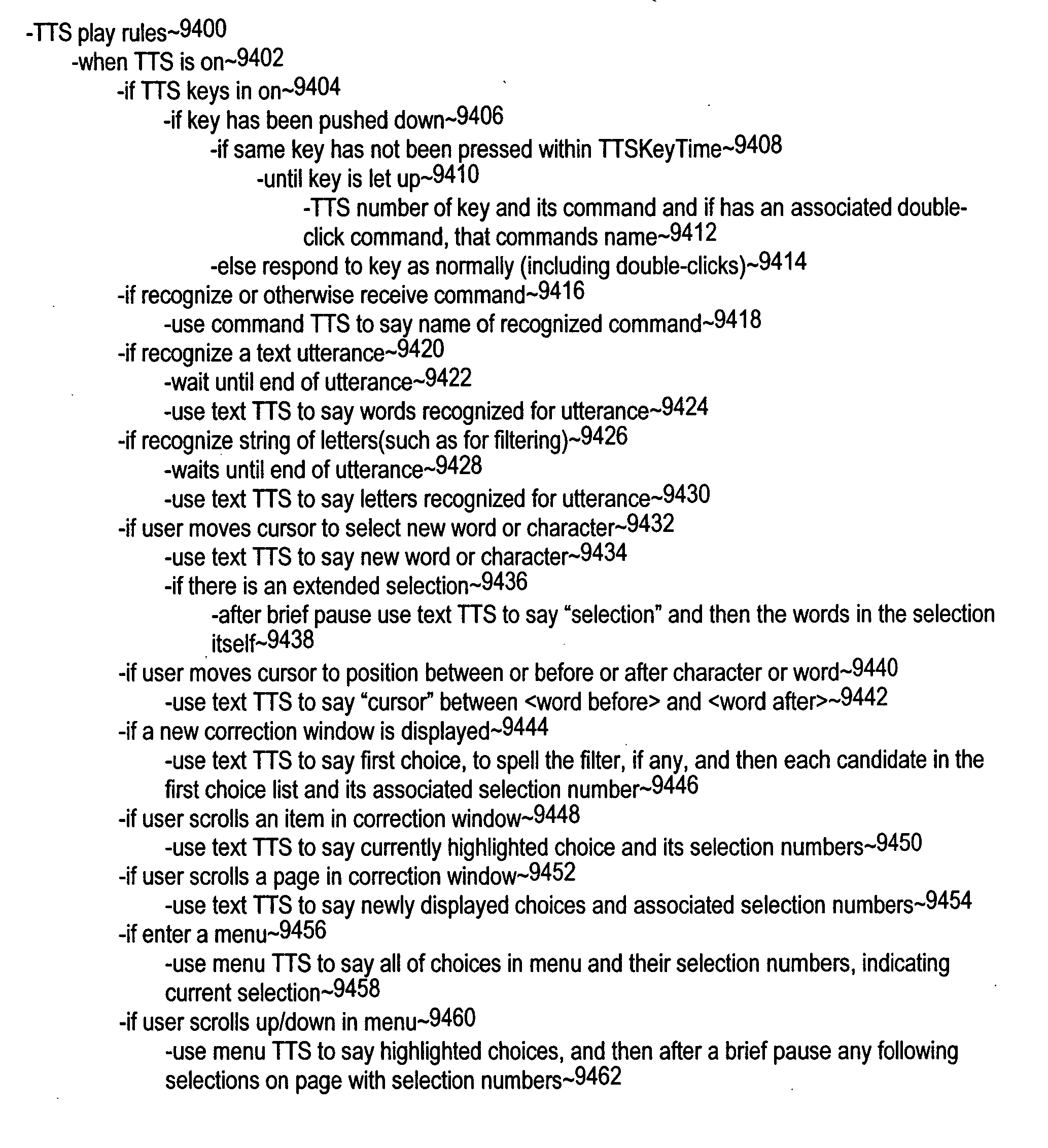

Text-to-speech (TTS) generation is used in conjunction with large-vocabulary speech recognition to say words selected by the speech recognition. The software that performs the large-vocabulary speech recognition can share speech modeling data with the TTS software. TTS or recorded audio can be used to automatically say both recognized text and the names of recognized commands after they are recognized. The TTS can automatically repeat text recognized by the speech recognition after each of a succession of end-of-utterance detections. A user can move a cursor back or forward in recognized text, and the TTS can speak one or more words at the cursor location after each such move. The speech recognition can be used to produce a choice list of possible recognition candidates, and the TTS can be used to provide spoken output of one or more of the candidates on the choice list.

Owner:CERENCE OPERATING CO

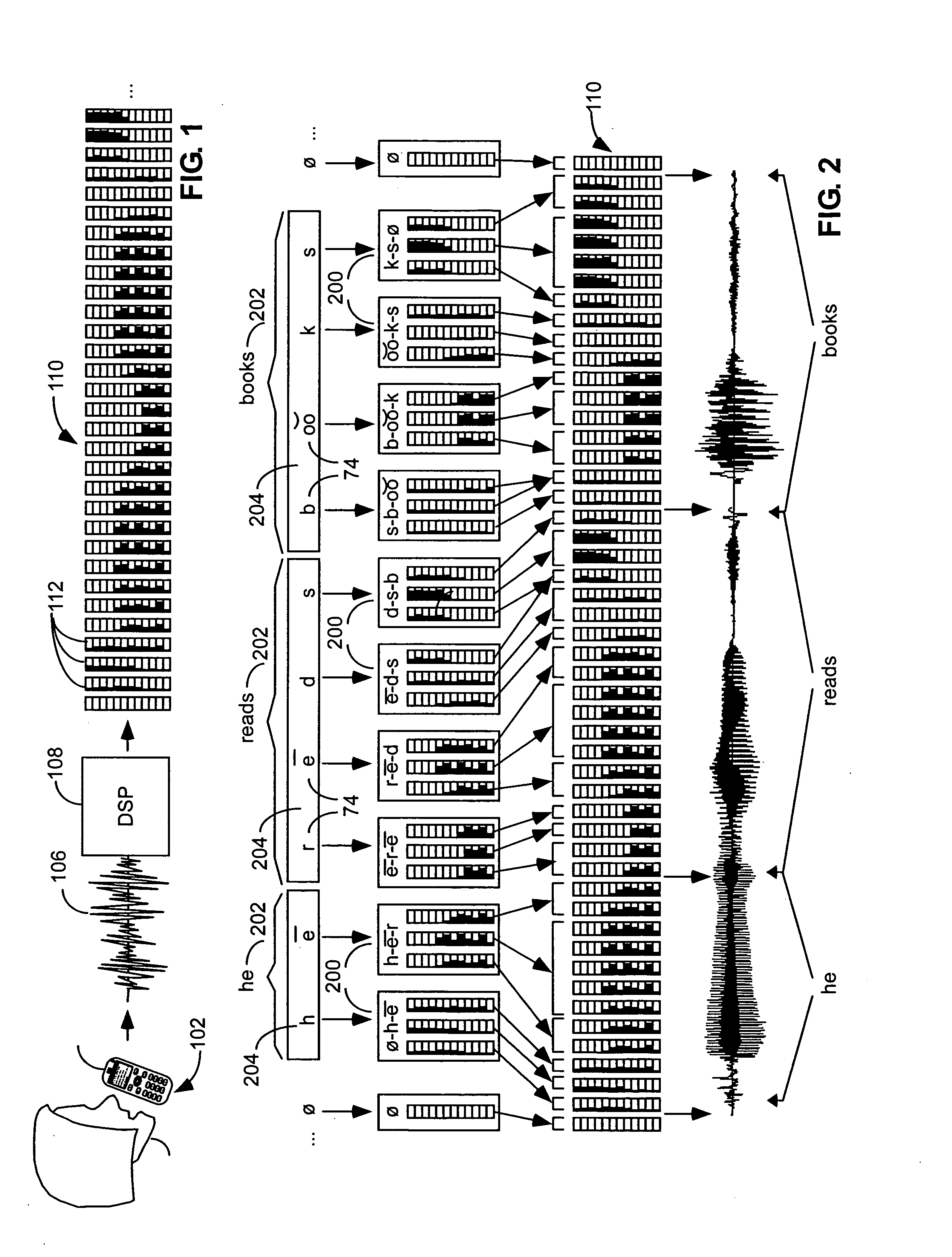

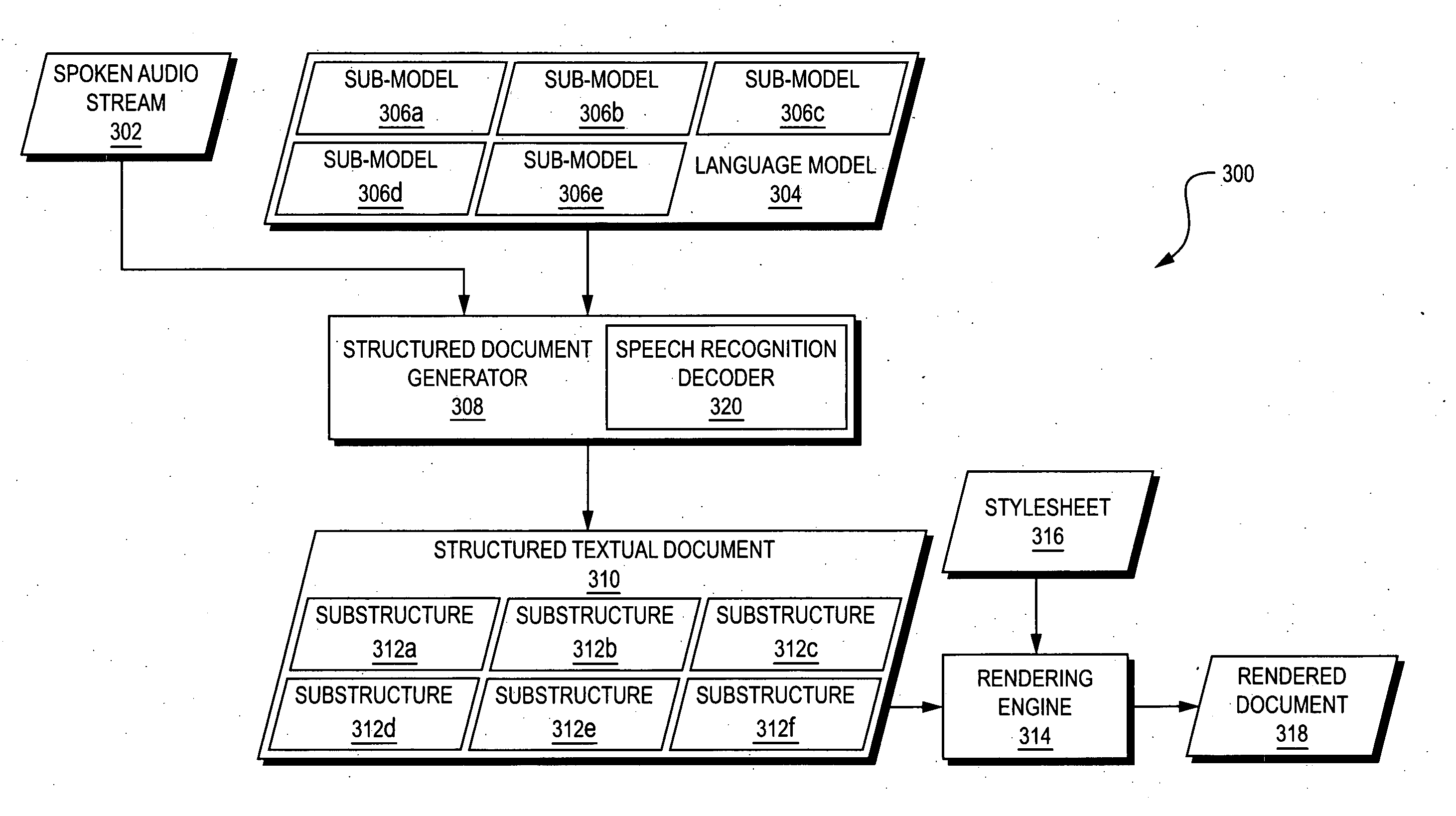

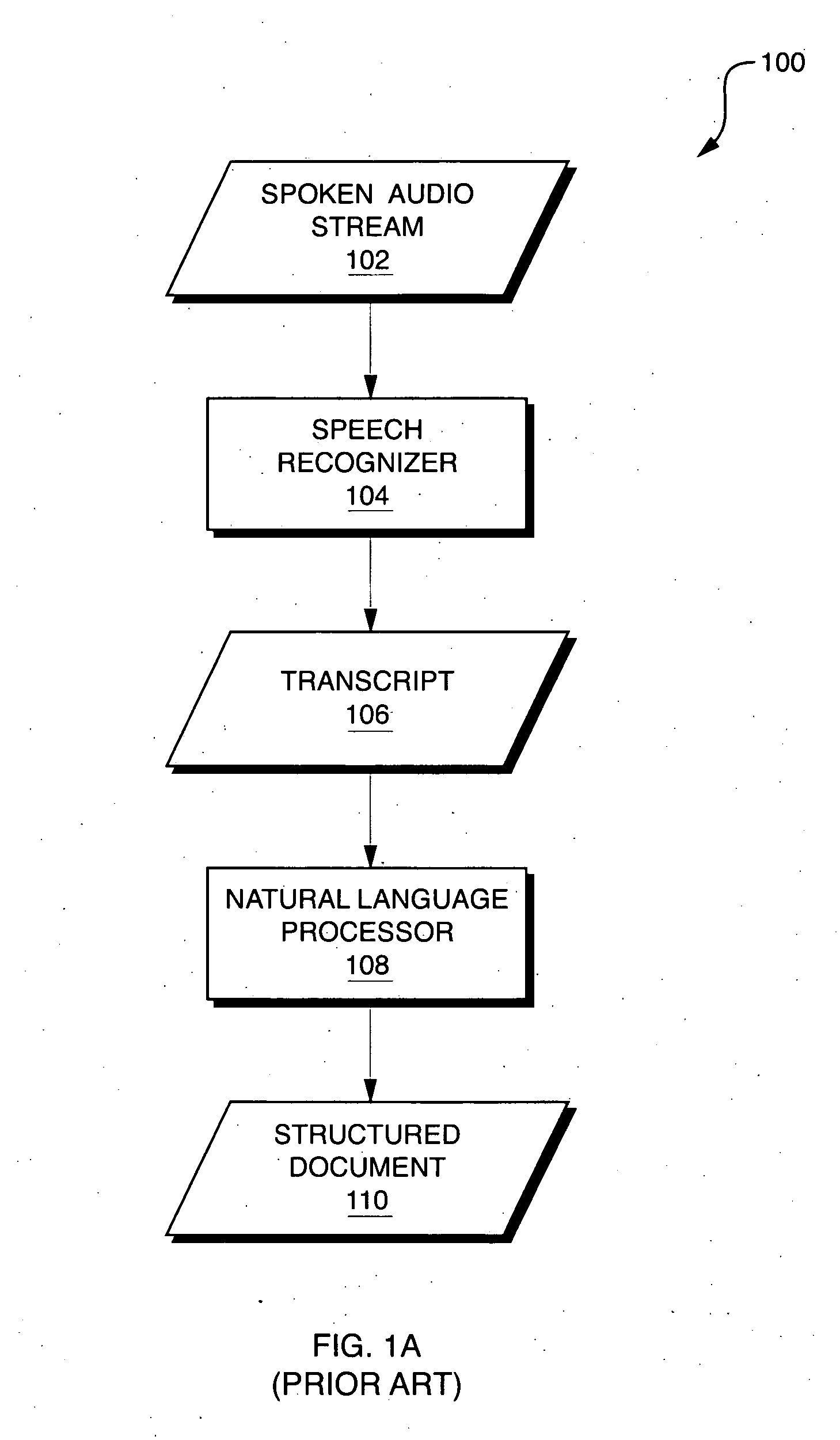

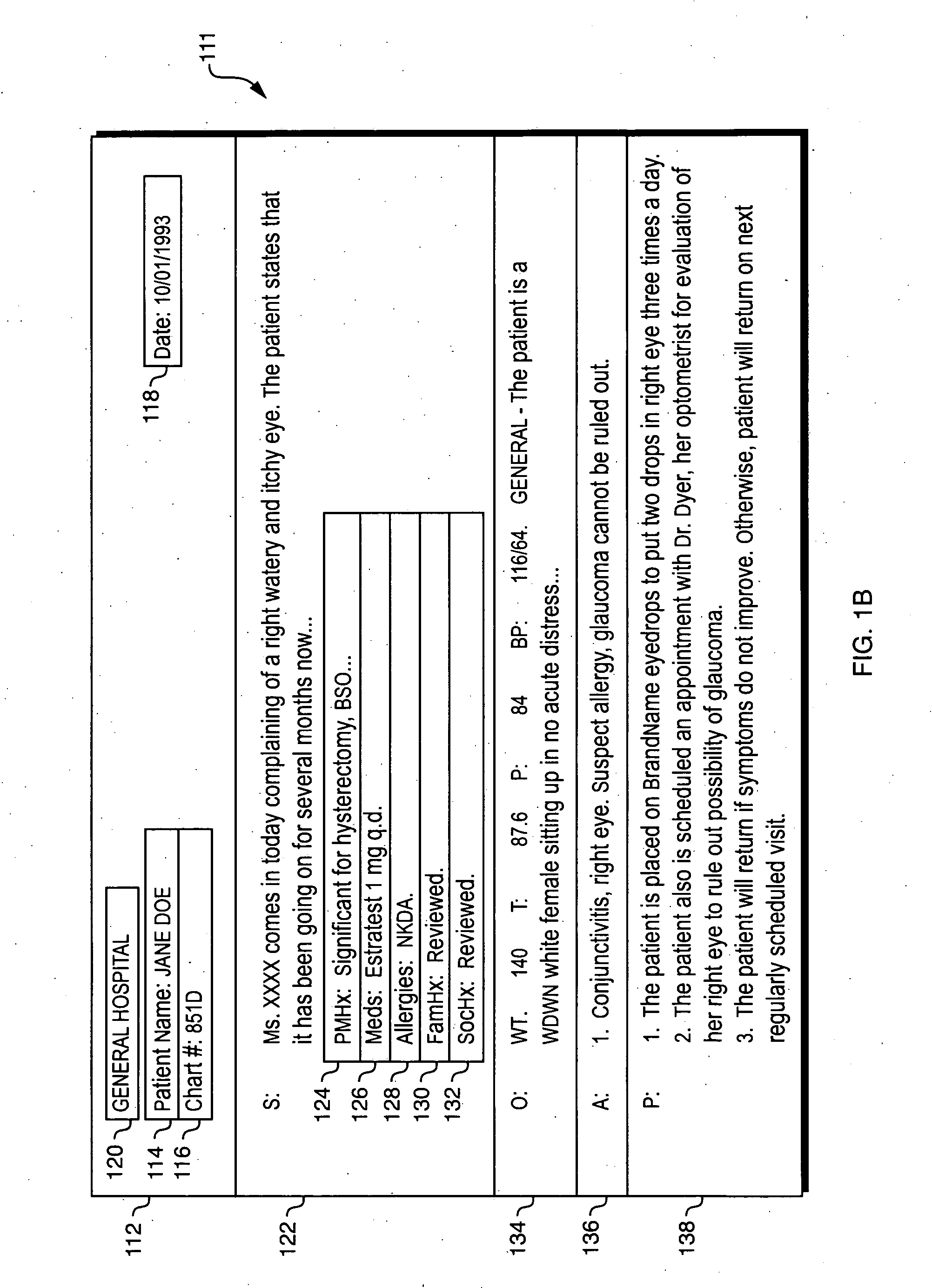

Automated extraction of semantic content and generation of a structured document from speech

Status: Inactive | Publication: US20060041428A1 | Tags: Simple technology, Medical report generation, Speech recognition, Documentation procedure, Speech sound

Techniques are disclosed for automatically generating structured documents based on speech, including identification of relevant concepts and their interpretation. In one embodiment, a structured document generator uses an integrated process to generate a structured textual document (such as a structured textual medical report) based on a spoken audio stream. The spoken audio stream may be recognized using a language model which includes a plurality of sub-models arranged in a hierarchical structure. Each of the sub-models may correspond to a concept that is expected to appear in the spoken audio stream. Different portions of the spoken audio stream may be recognized using different sub-models. The resulting structured textual document may have a hierarchical structure that corresponds to the hierarchical structure of the language sub-models that were used to generate the structured textual document.

Owner:MULTIMODAL TECH INC

System for automated translation of speech

Status: Inactive | Publication: US6292769B1 | Tags: Natural language translation, Speech analysis, Speech to speech translation, Remote computing

The present invention allows subscribers to an online information service to participate in real-time conferencing or chat sessions in which a message originating from a subscriber in accordance with a first language is translated to one or more languages before it is broadcast to the other conference areas. Messages in a first language are translated automatically to one or more other languages through language translation capabilities resident at online information service host computers. Access software that subscribers use for participating in conference is integrated with speech recognition and speech generation software such that a subscriber may speak the message he or she would like to share with other participants and may hear the messages from the other participants in the conference. Speech-to-speech translation may be accomplished as a message spoken into a computer microphone in accordance with a first language may be recited by a remote computer in accordance with a second language.

Owner:META PLATFORMS INC

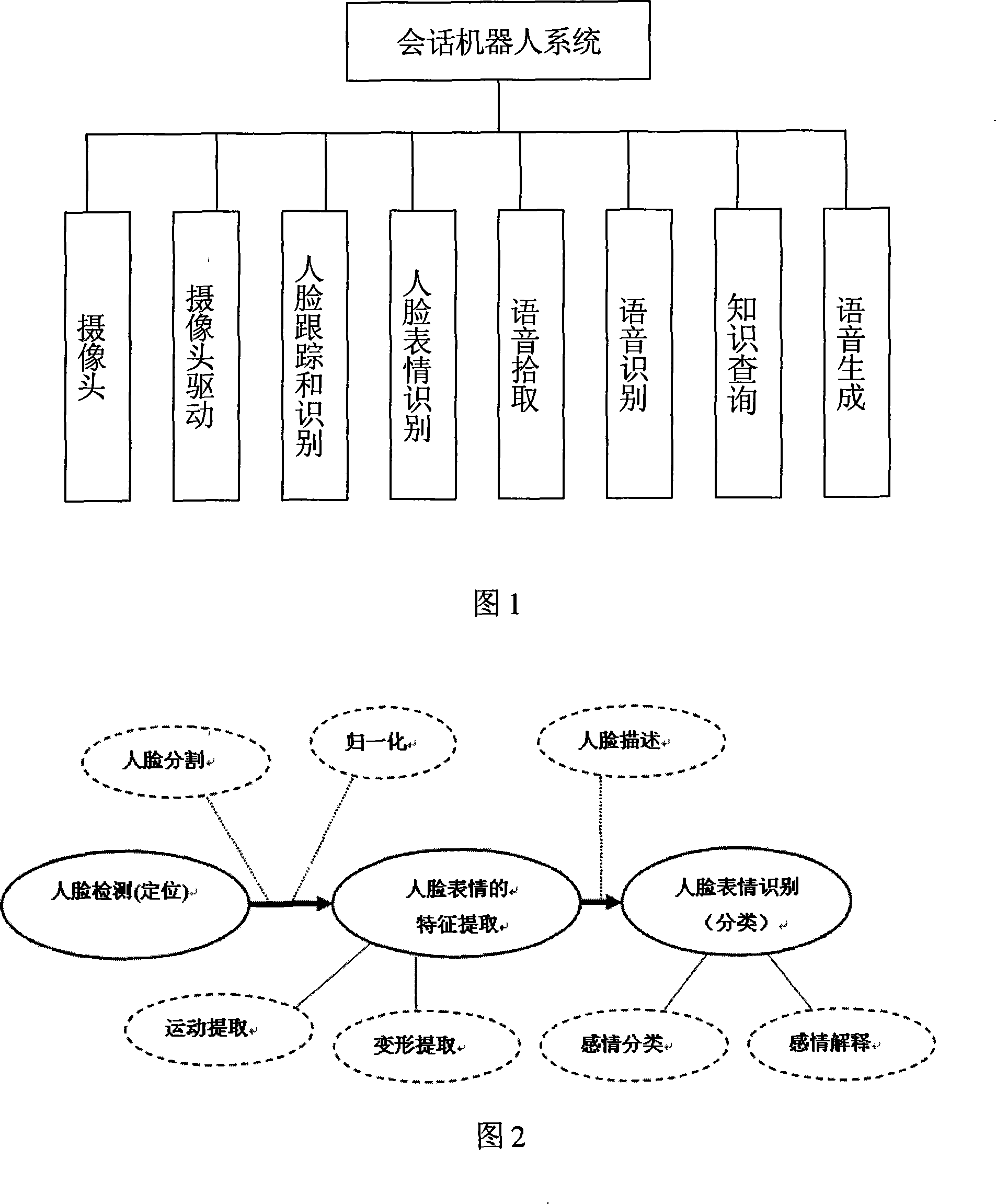

Conversational robot system

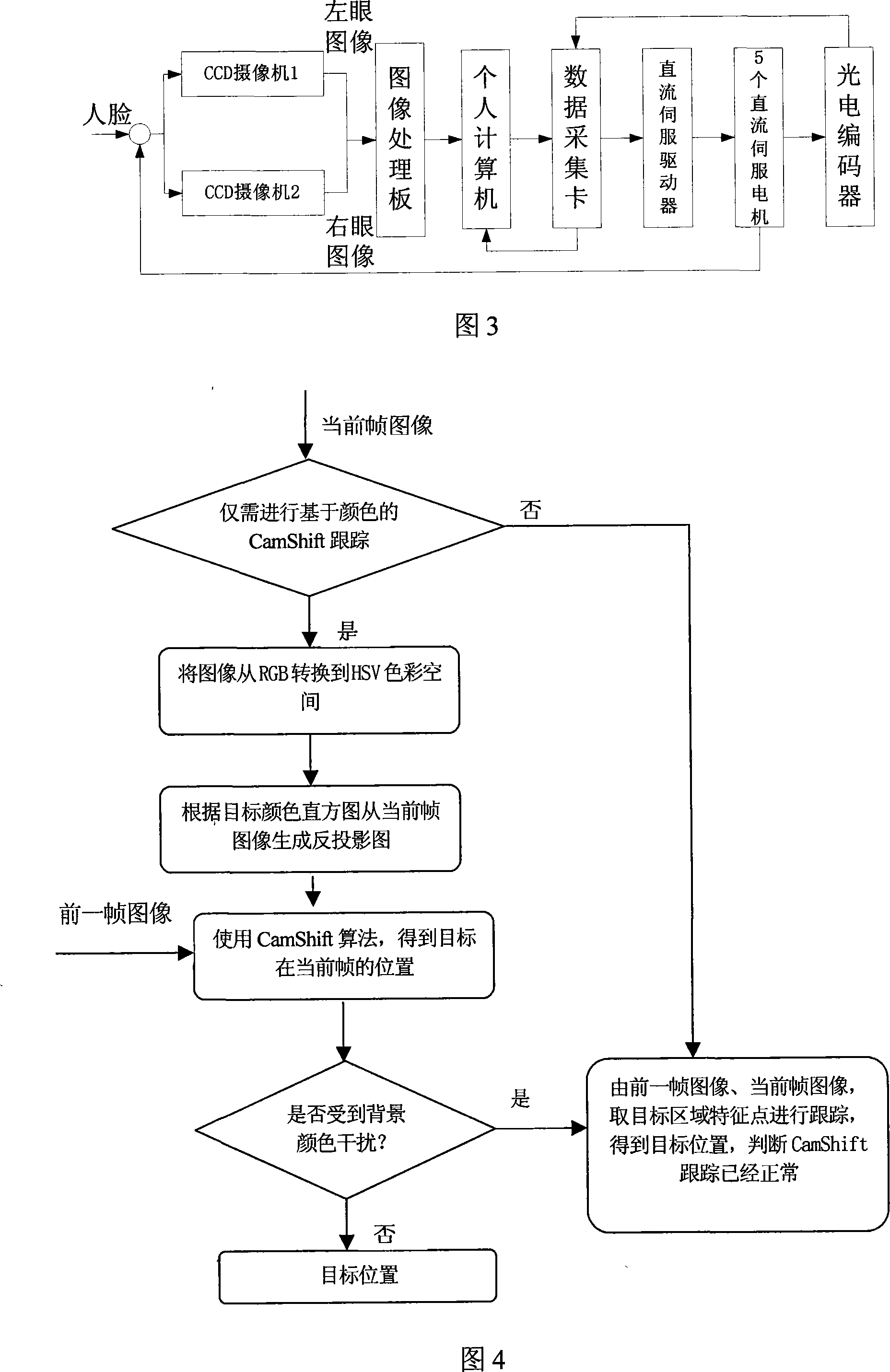

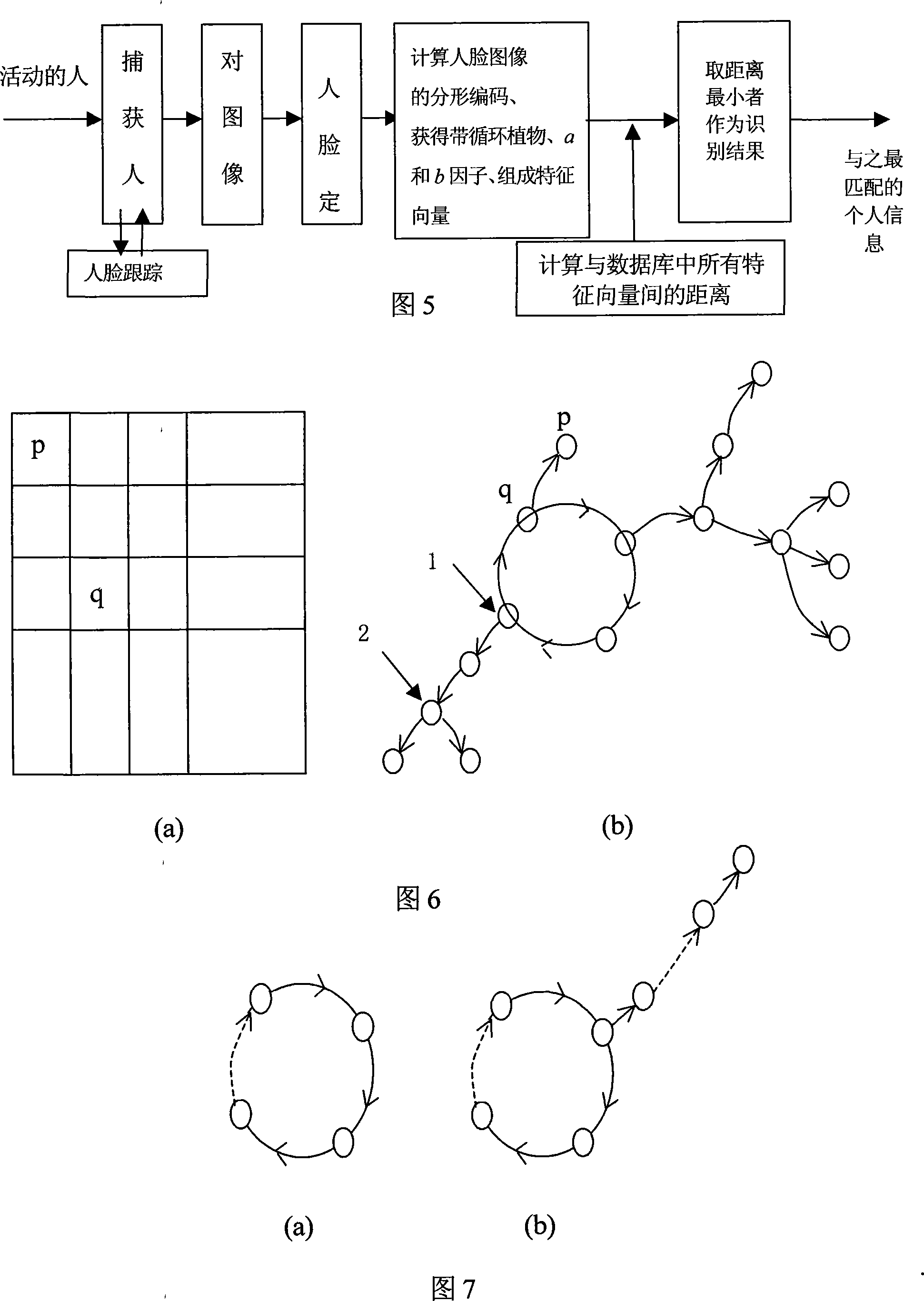

Status: Inactive | Publication: CN101187990A | Tags: Recognizable, Have the ability to understand, Input/output for user-computer interaction, Biological models, Robotic systems, Speech identification

The invention discloses a conversational robot system. A face tracking and recognition module tracks and recognizes face images captured by a camera; a facial expression recognition module recognizes the expression; and the semantic meaning of speech is recognized after voice signals pass through a voice pickup module and a speech recognition module. The robot system understands human needs from facial expressions and/or voice, forms a conversational response through a knowledge query module, and generates speech to communicate with humans through a speech generation module. The conversational robot system has speech recognition and understanding abilities and can understand user commands. The invention can be applied in schools, homes, hotels, companies, airports, bus stations, docks, meeting rooms, and similar settings for education, chat, conversation, consultation, and so on. The invention can also assist users with publicity and introductions, guest reception, business inquiries, secretarial services, foreign-language interpretation, and so on.

Owner:SOUTH CHINA UNIV OF TECH

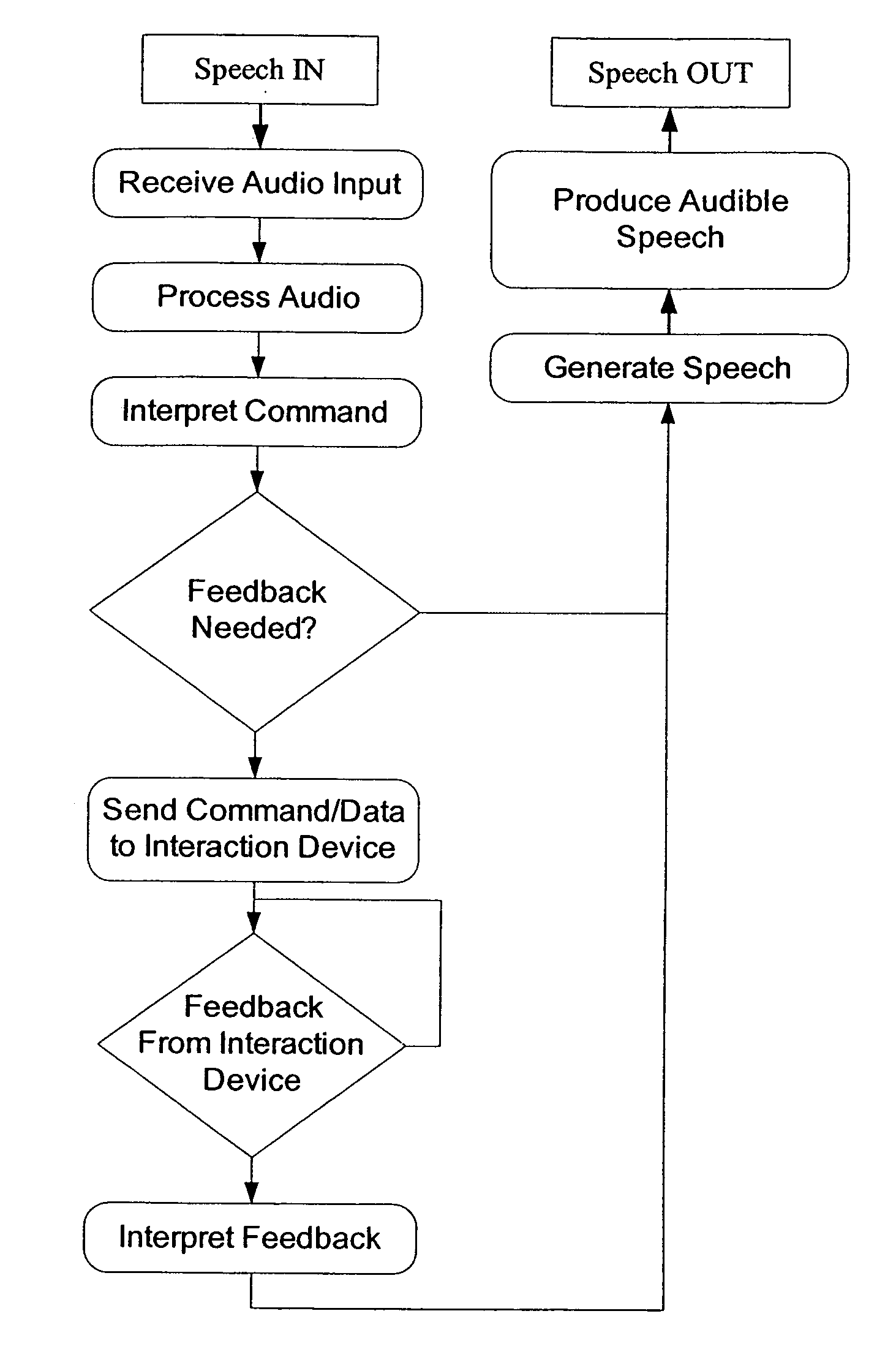

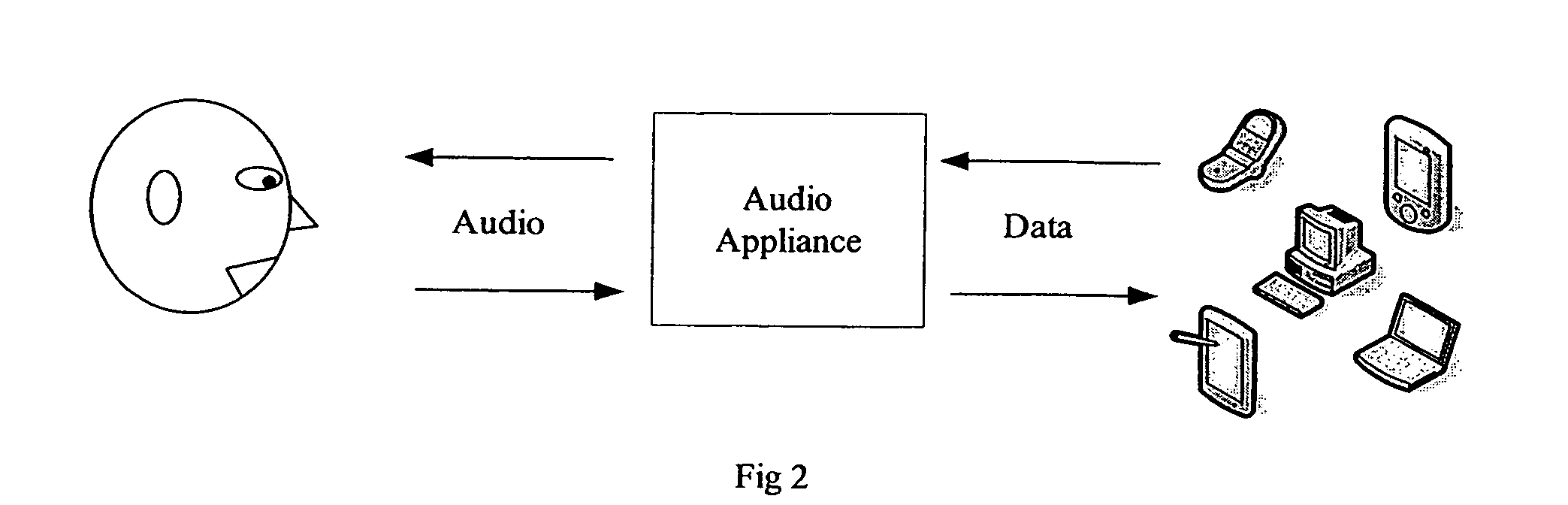

Audio appliance with speech recognition, voice command control, and speech generation

Status: Inactive | Publication: US20080037727A1 | Tags: Easy to operate, Easy command, Telephonic communication, Speech recognition, Command and control, Usability

Methods and devices are provided for an audio appliance system that remotely commands and controls cell phones and various IT and electronic products through a voice interface. The voice interface includes voice recognition and voice generation functions, enabling the appliance to process information by voice on cell phones and IT products and to streamline information transmission and exchange. Additionally, the appliance enables convenient command and control of various IT and consumer products through voice operation, enhancing the usability of these products and extending users' reach to the outside world.

Owner:SIVERTSEN CLAS +1

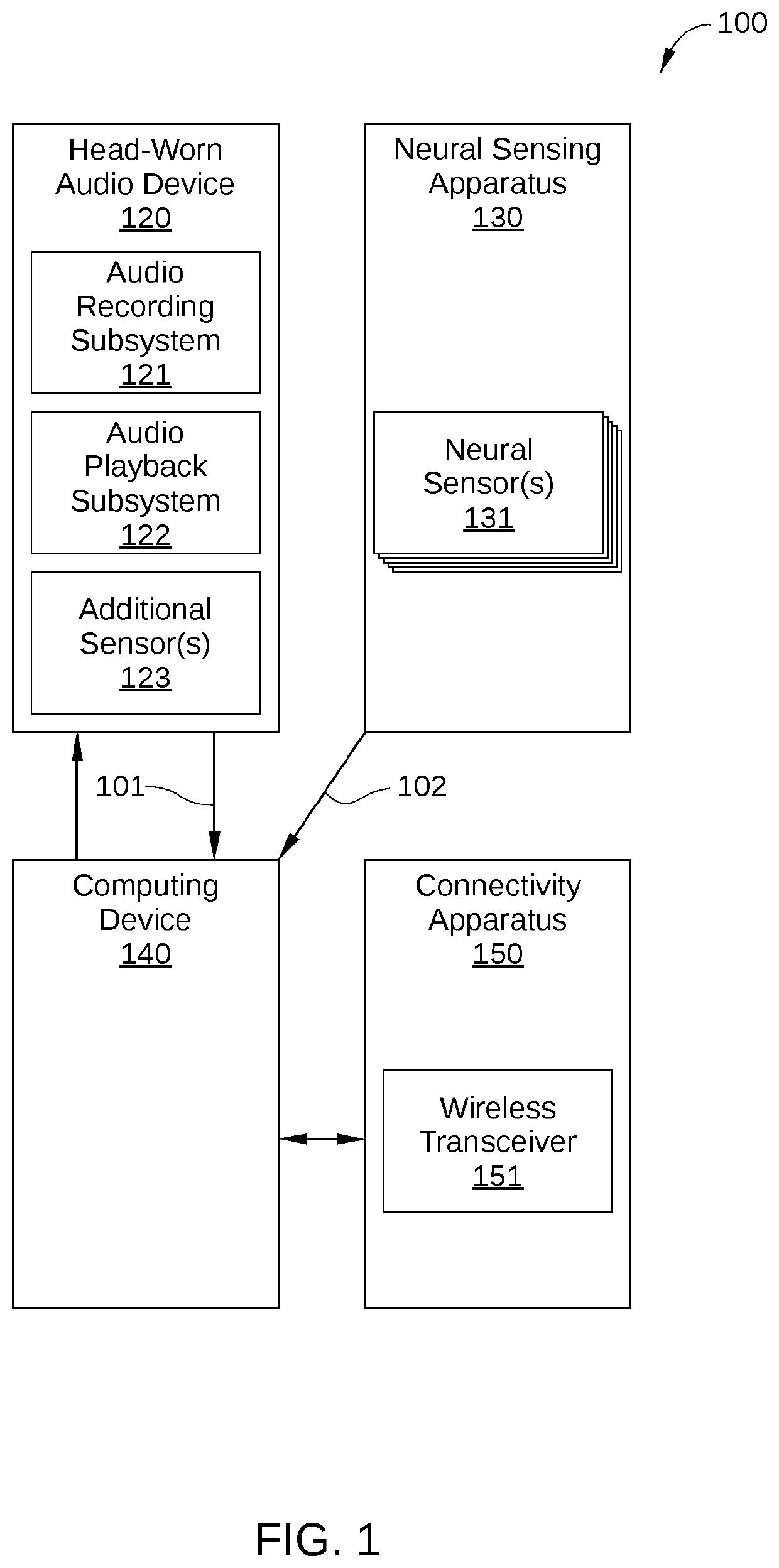

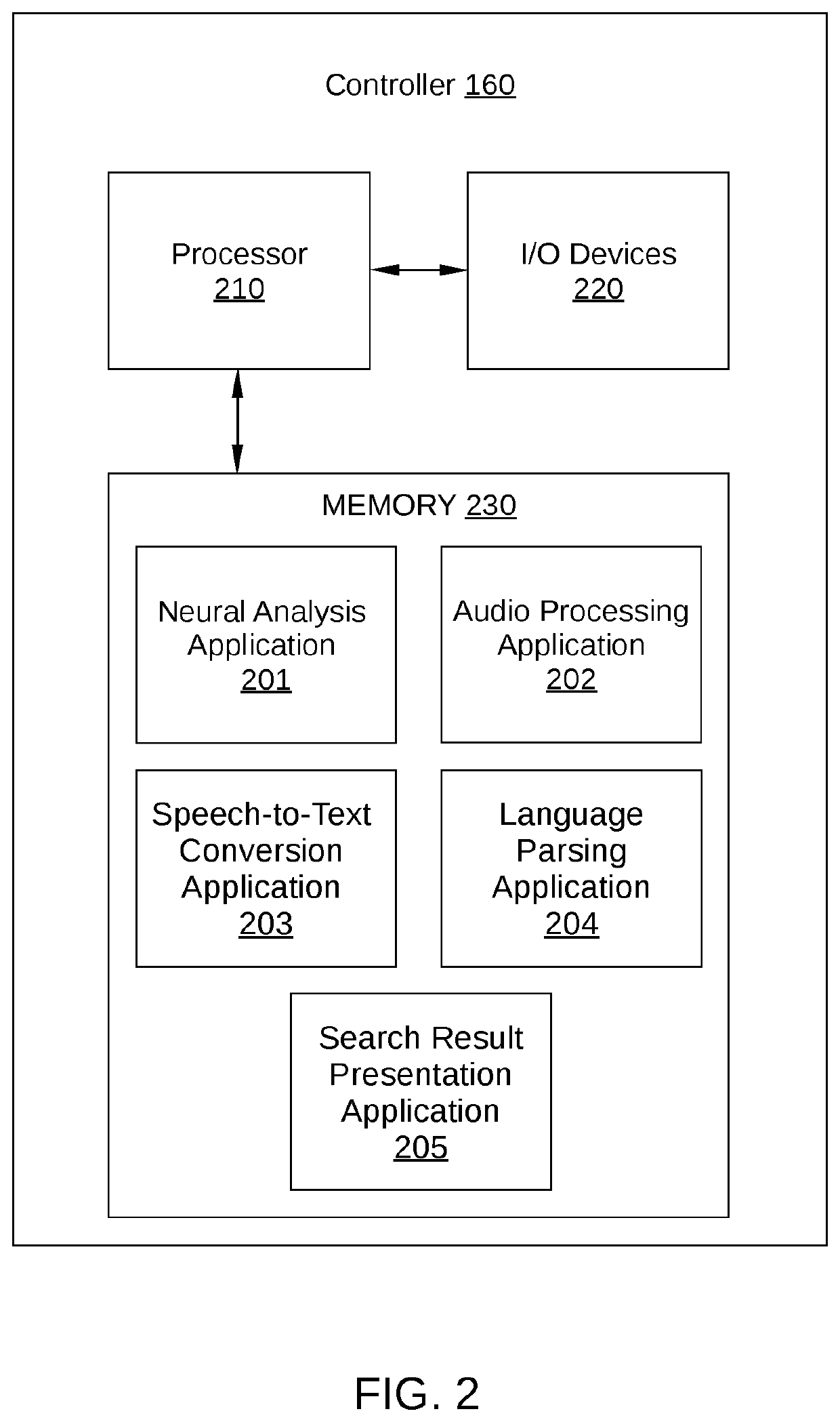

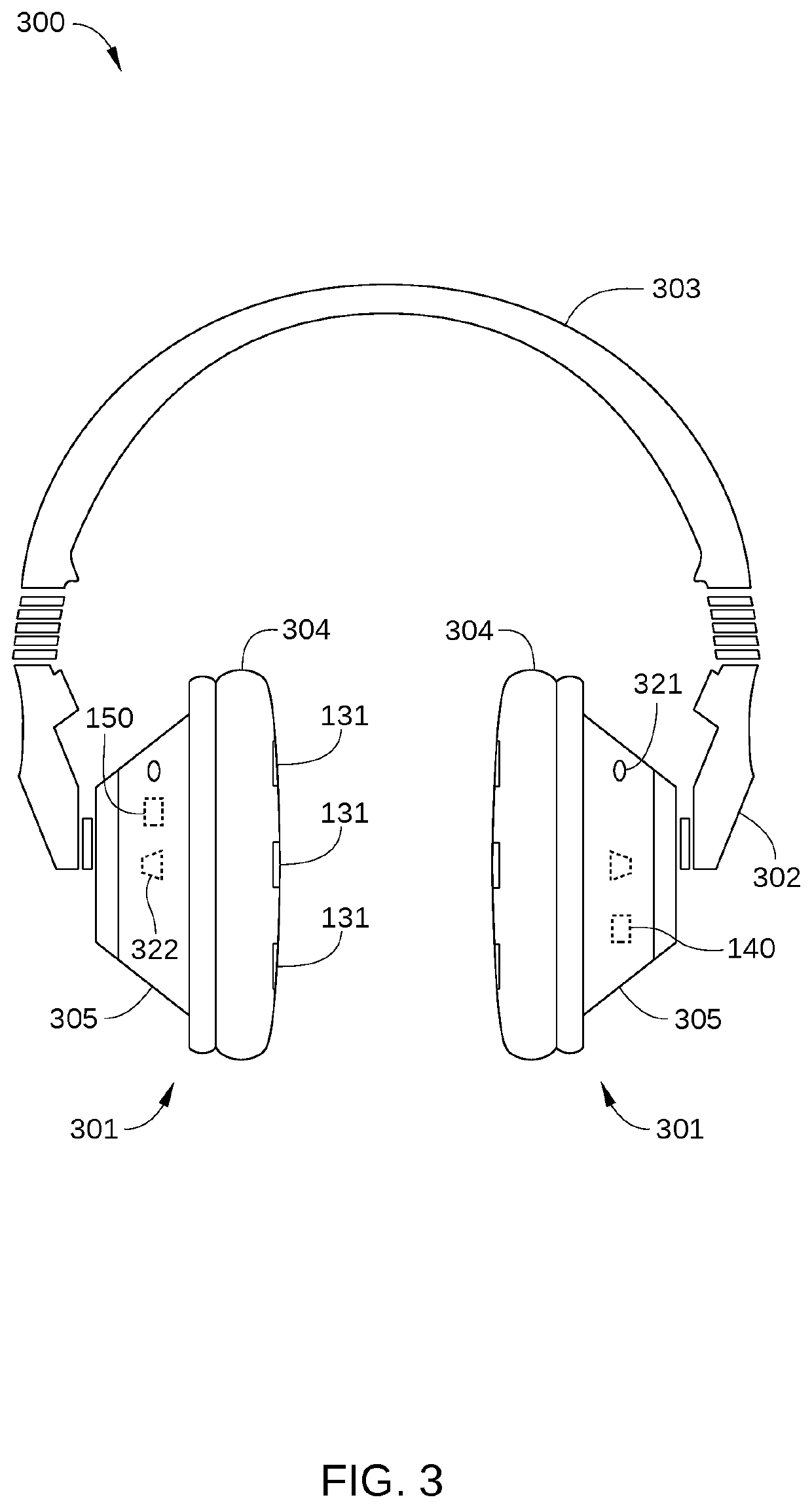

Retroactive information searching enabled by neural sensing

Status: Active | Publication: US20200034492A1 | Tags: Provide information, Natural language translation, Input/output for user-computer interaction, Output device, Information searching

A method for retrieving information includes detecting a first neural activity of a user, wherein the first neural activity corresponds to a key thought of the user; in response to detecting the first neural activity, generating a search query based on speech occurring prior to detecting the first neural activity; retrieving information based on the search query; and transmitting the information to one or more output devices.

Owner:HARMAN INT IND INC
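
The retroactive part of the method (building a query from speech that occurred before the trigger) implies keeping a rolling buffer of recent transcribed speech. A minimal sketch follows, assuming speech has already been transcribed into timestamped text; the neural-activity detector is replaced by an explicit trigger time, and the stop-word filtering used to form the query is a hypothetical choice, not taken from the abstract.

```python
from collections import deque
from typing import Deque, List, Tuple

# Hypothetical stop-word list used to turn recent speech into a keyword query.
STOPWORDS = {"the", "a", "an", "of", "to", "is", "was", "what", "who", "and", "in", "i"}


class SpeechBuffer:
    """Keeps recent transcribed speech so a later trigger can look backwards in time."""

    def __init__(self, max_age_s: float = 30.0):
        self.max_age_s = max_age_s
        self.items: Deque[Tuple[float, str]] = deque()

    def add(self, t: float, text: str) -> None:
        self.items.append((t, text))
        # Drop utterances older than max_age_s relative to the newest one.
        while self.items and t - self.items[0][0] > self.max_age_s:
            self.items.popleft()

    def query_before(self, trigger_t: float, lookback_s: float = 10.0) -> str:
        """Build a keyword query from speech in [trigger_t - lookback_s, trigger_t)."""
        words: List[str] = []
        for t, text in self.items:
            if trigger_t - lookback_s <= t < trigger_t:
                words += [w for w in text.lower().split() if w not in STOPWORDS]
        return " ".join(words)


buf = SpeechBuffer()
buf.add(0.0, "Good morning everyone")
buf.add(12.0, "The capital of Australia is")
buf.add(15.0, "something I always forget")
print(buf.query_before(16.0))  # capital australia something always forget
```

The resulting query string would then be passed to a search service and the results sent to the output devices; those steps are not shown.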

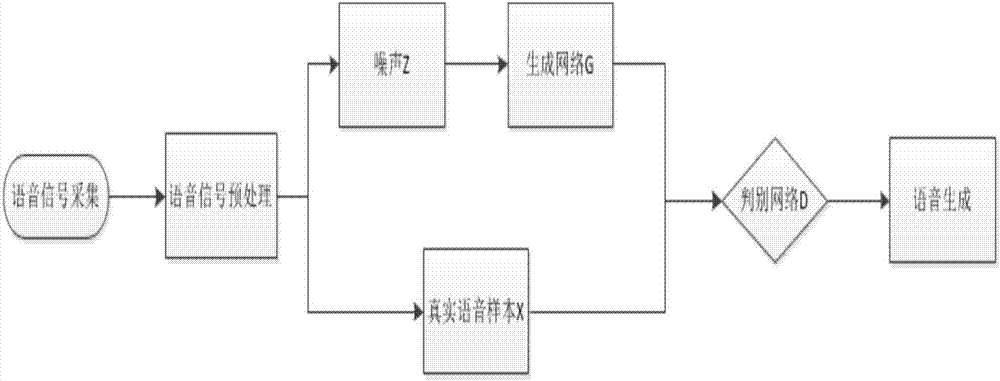

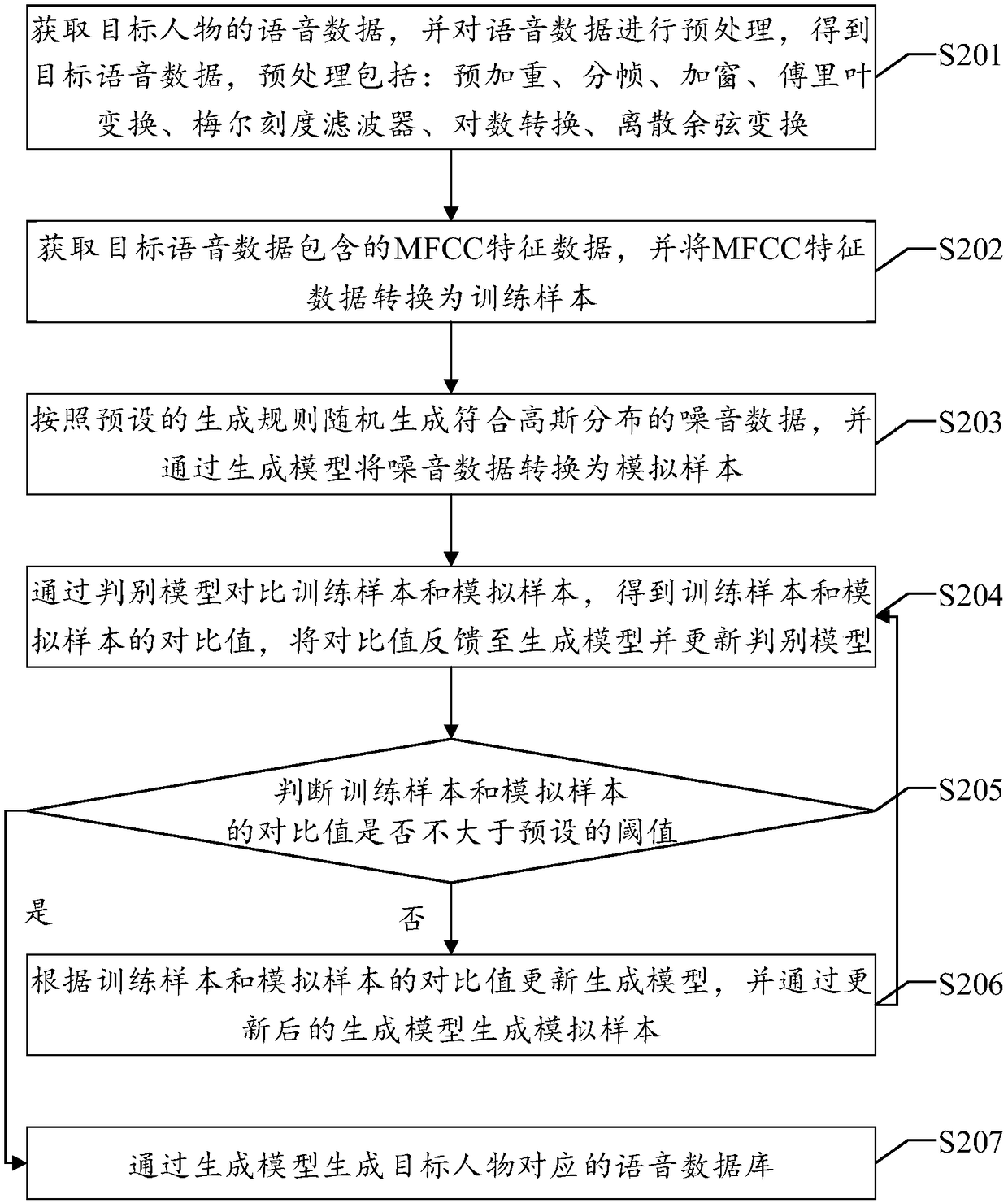

Voice production method based on deep convolutional generative adversarial network

Status: Active | Publication: CN107293289A | Tags: Speak clearly, Speak naturally, Speech recognition, Man machine, Generative adversarial network

The invention discloses a voice generation method based on a deep convolutional generative adversarial network. The steps are: (1) acquiring voice signal samples; (2) preprocessing the voice signal samples; (3) inputting the voice signal samples into a deep convolutional generative adversarial network; (4) training on the input voice signals; and (5) generating voice signals similar to real voice content. TensorFlow is used as the learning framework, and a deep convolutional generative adversarial network algorithm is trained on a large number of voice signals. The dynamic game between the discriminator network D and the generator network G ultimately produces a natural voice signal close to the original training content. Because the method generates voice with a deep convolutional generative adversarial network, it addresses the problems that, in face-to-face human-machine communication, an intelligent device depends too heavily on a fixed voice library to produce sound, and that its output is monotonous, lacks variation, and is not natural enough.

Owner:NANJING MEDICAL UNIV
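
The "dynamic game" between D and G mentioned above is, in the standard GAN formulation, the minimax objective below. The abstract does not state which loss the patent uses, so this is the textbook form rather than necessarily the patented one; here $x$ is a real voice sample and $z$ is the generator's random input.

```latex
\min_G \max_D \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

D is trained to push $D(x)$ toward 1 on real samples and $D(G(z))$ toward 0 on generated ones, while G is trained to make $D(G(z))$ approach 1. At the theoretical equilibrium the generated distribution equals the data distribution and $D$ outputs $1/2$ everywhere.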

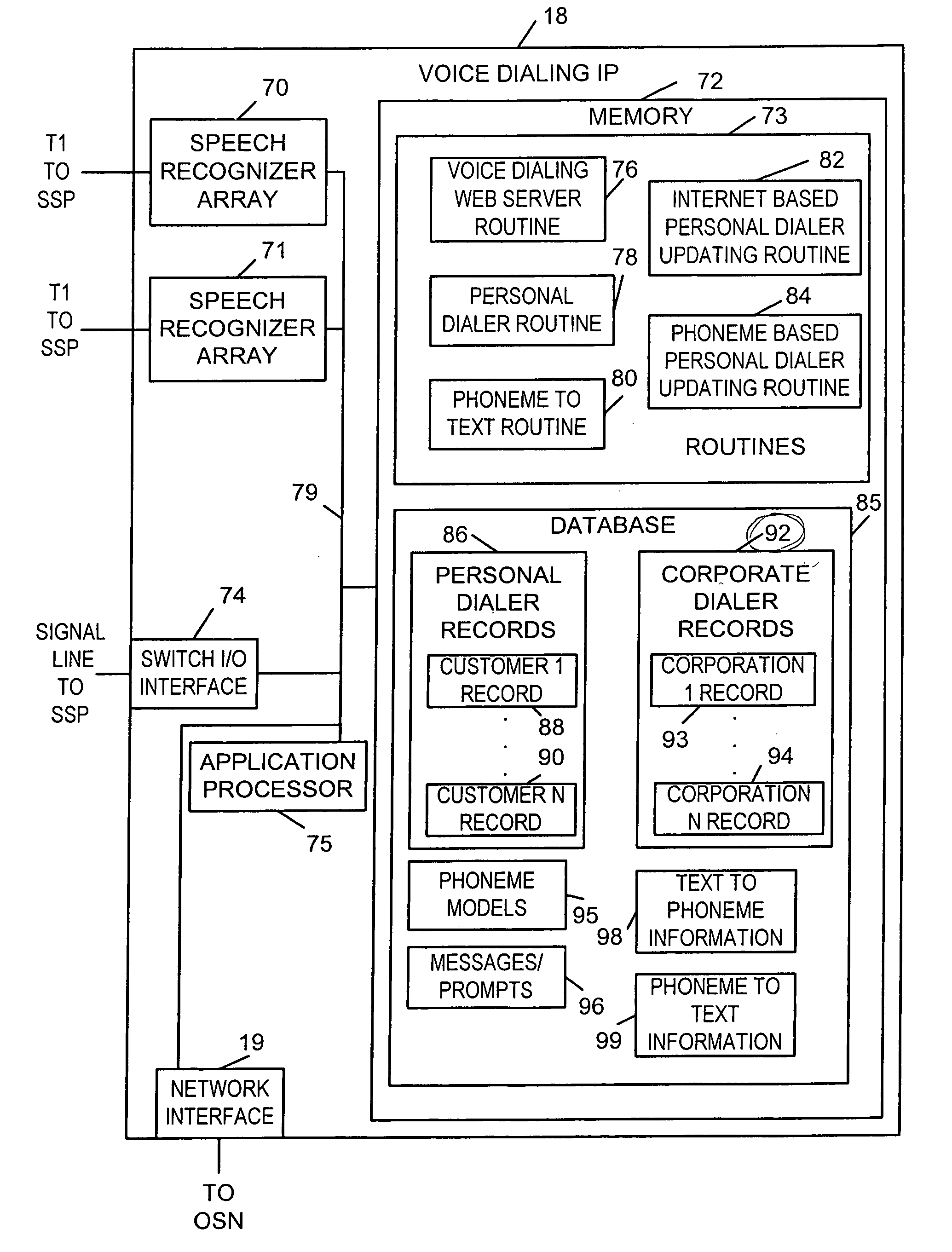

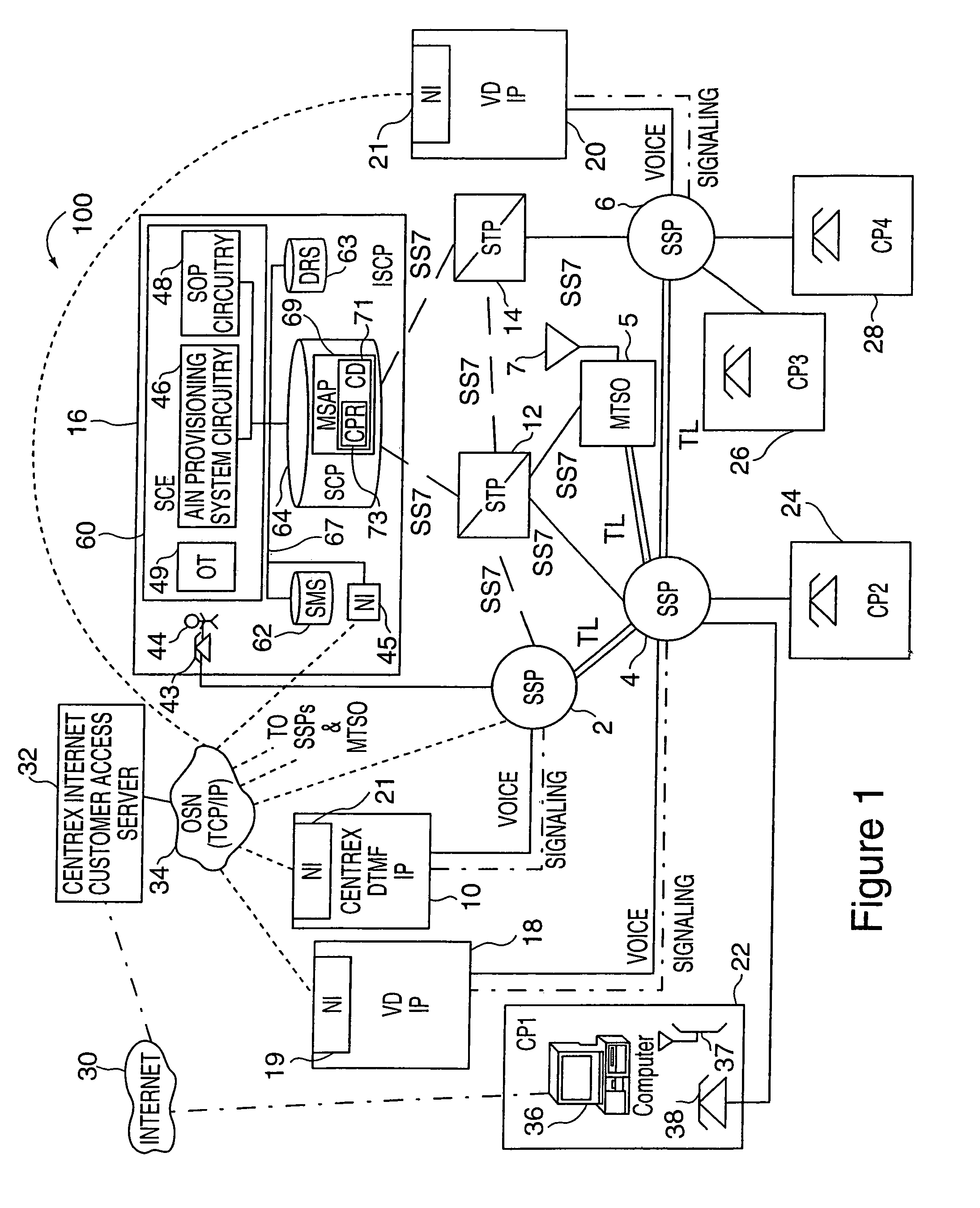

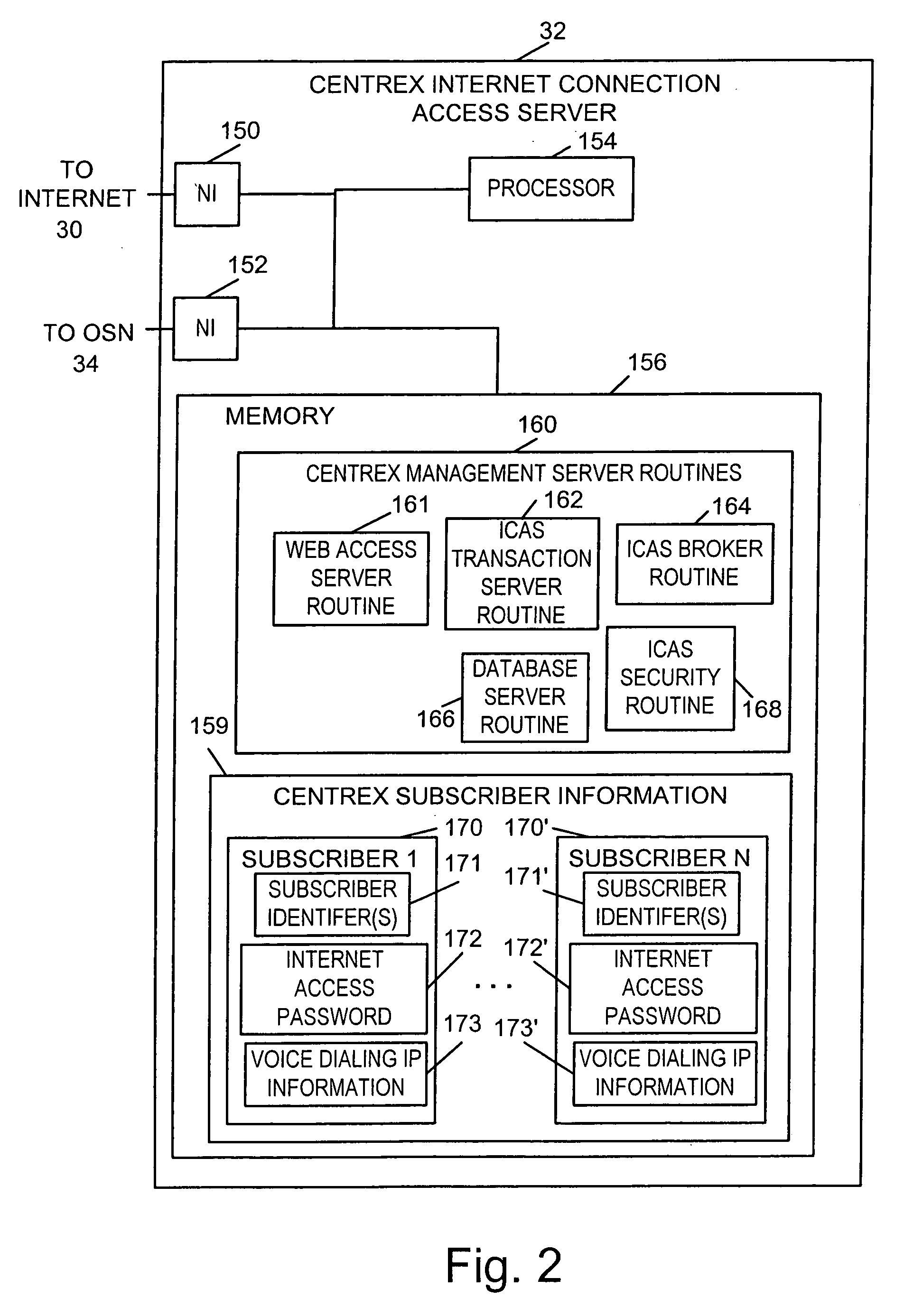

Voice dialing using text names

Status: Inactive | Publication: US6963633B1 | Tags: Easily integrate, Enhance service, Interconnection arrangements, Automatic call-answering/message-recording/conversation-recording, Loudspeaker, Speech identification

Methods and apparatus for implementing communication services such as voice dialing services are described. In one Centrex-based voice dialing embodiment, voice dialing service subscribers are given access to personal voice dialing records, including calling entries, via the Internet as well as via telephone connections. Each calling entry normally includes the name and, optionally, a nickname of a party to be called. It also includes one or more telephone numbers associated with each name. Different telephone number identifiers, e.g., locations, can be associated with different names. A user can create or update entries in a voice dialing directory using text conveyed over the Internet or speech supplied via a telephone connection. To facilitate updating and maintenance of voice dialing directories over the Internet, speaker-independent (SI) speech recognition models are used. When a calling entry is created via the Internet, the text of the name is processed to generate a corresponding speech recognition model therefrom. When an entry is created via speech obtained over the telephone, a speech recognition model is generated from the speech, and a text name is generated using speech-to-text technology. To avoid having to hang up and initiate a new voice dialing call, the outcome of a voice dialing call is monitored, and the subscriber is given the opportunity to initiate another call using voice dialing if the first call did not complete successfully, e.g., went unanswered.

Owner:GOOGLE LLC

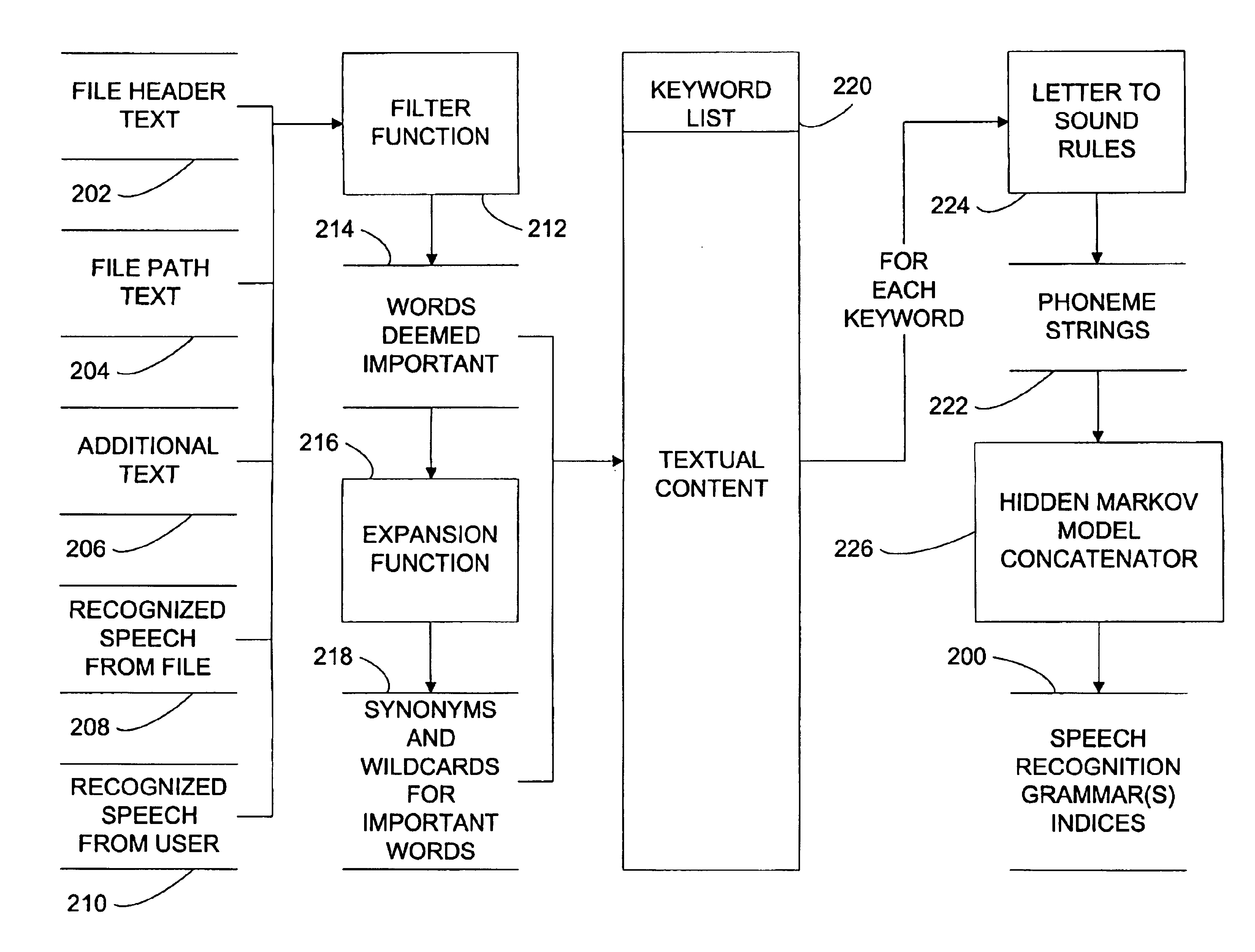

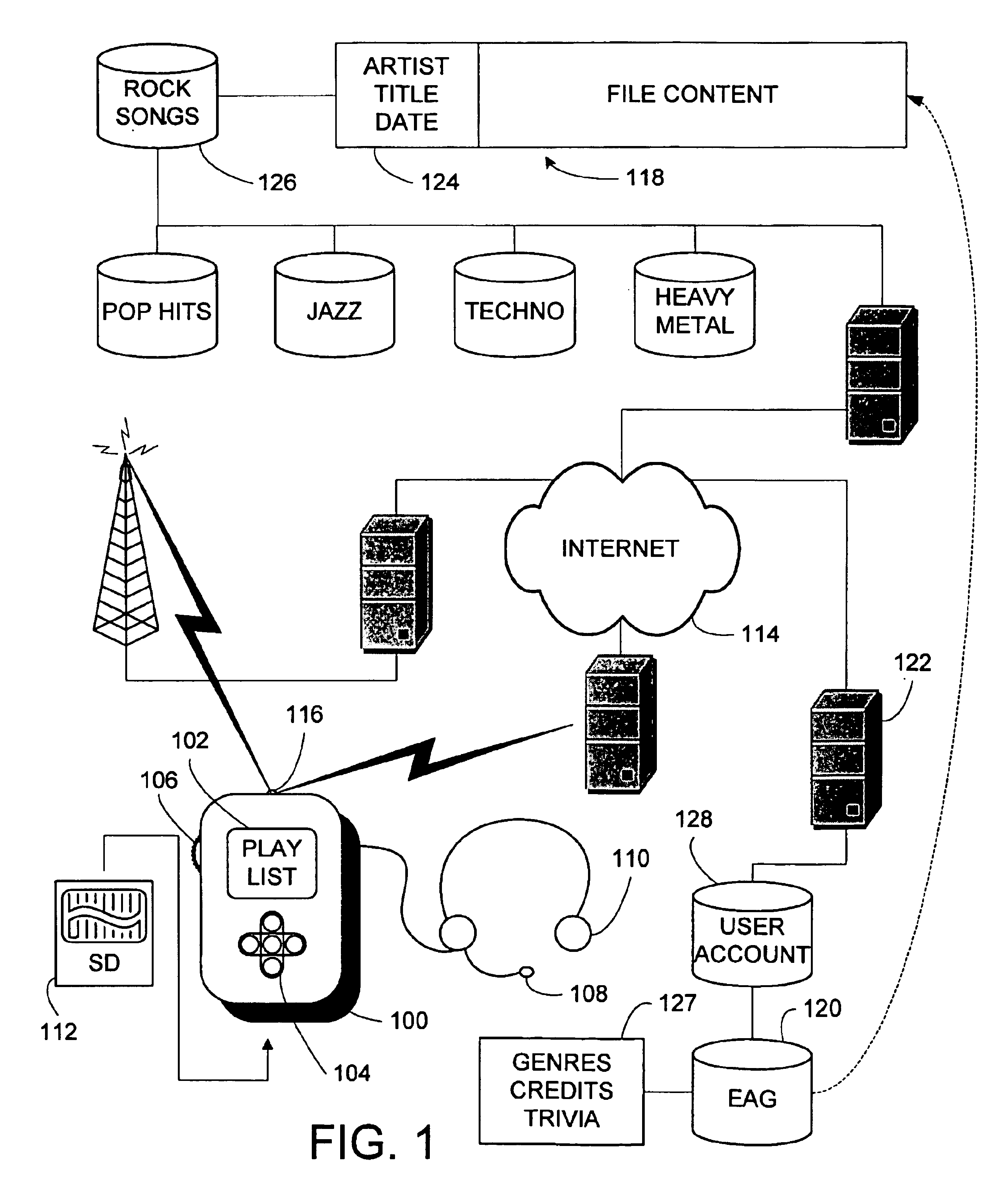

System and method of media file access and retrieval using speech recognition

Status: Inactive | Publication: US6907397B2 | Tags: Data processing applications, Digital data information retrieval, Speech identification, Speech sound

An embedded device for playing media files is capable of generating a play list of media files based on input speech from a user. It includes an indexer generating a plurality of speech recognition grammars. According to one aspect of the invention, the indexer generates speech recognition grammars based on contents of a media file header of the media file. According to another aspect of the invention, the indexer generates speech recognition grammars based on categories in a file path for retrieving the media file to a user location. When a speech recognizer receives an input speech from a user while in a selection mode, a media file selector compares the input speech received while in the selection mode to the plurality of speech recognition grammars, thereby selecting the media file.

Owner:INTERTRUST TECH CORP
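
The indexer described above draws selectable phrases from two sources, media file header fields and the folder categories in each file's path. A minimal sketch follows, assuming the recognizer has already turned the user's speech into text; normalized strings stand in for real speech recognition grammars, and the header field names and paths are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, List, Set


def normalize(s: str) -> str:
    """Lower-case, treat underscores as spaces, and collapse whitespace."""
    return " ".join(s.lower().replace("_", " ").split())


def build_index(files: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    """Map spoken phrases to file paths using header tags and path folder names."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for path, header in files.items():
        for value in header.values():                    # e.g. title, artist, album
            index[normalize(value)].add(path)
        for folder in PurePosixPath(path).parent.parts:  # path categories
            if folder != "/":
                index[normalize(folder)].add(path)
    return dict(index)


def select(utterance: str, index: Dict[str, Set[str]]) -> List[str]:
    """Return a sorted play list of files whose phrase matches the recognized utterance."""
    return sorted(index.get(normalize(utterance), set()))


FILES = {
    "/music/jazz/miles_davis/so_what.mp3": {"title": "So What", "artist": "Miles Davis"},
    "/music/rock/queen/bohemian.mp3": {"title": "Bohemian Rhapsody", "artist": "Queen"},
}
idx = build_index(FILES)
print(select("Jazz", idx))   # ['/music/jazz/miles_davis/so_what.mp3']
print(select("music", idx))  # both files, sorted by path
```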

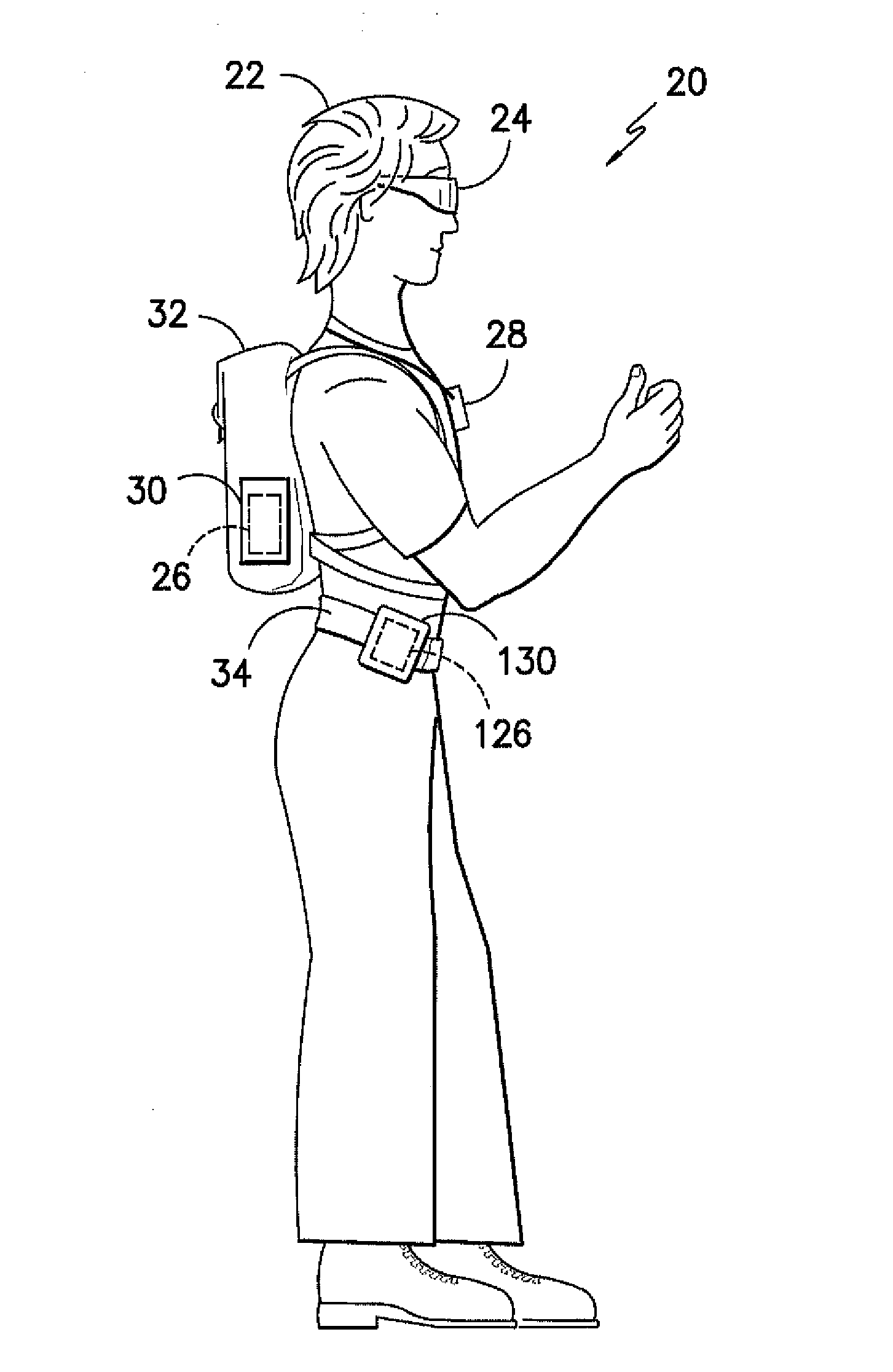

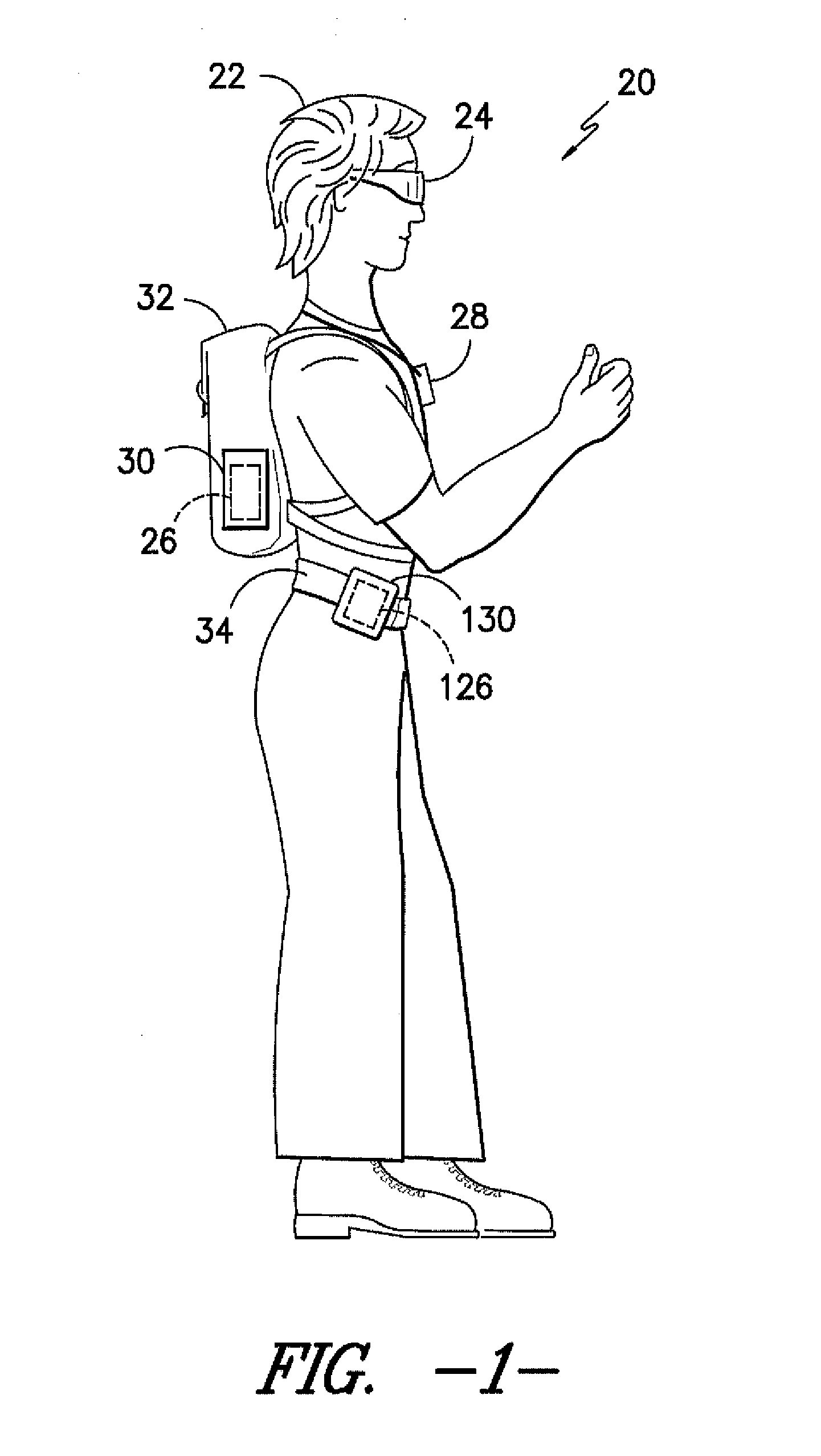

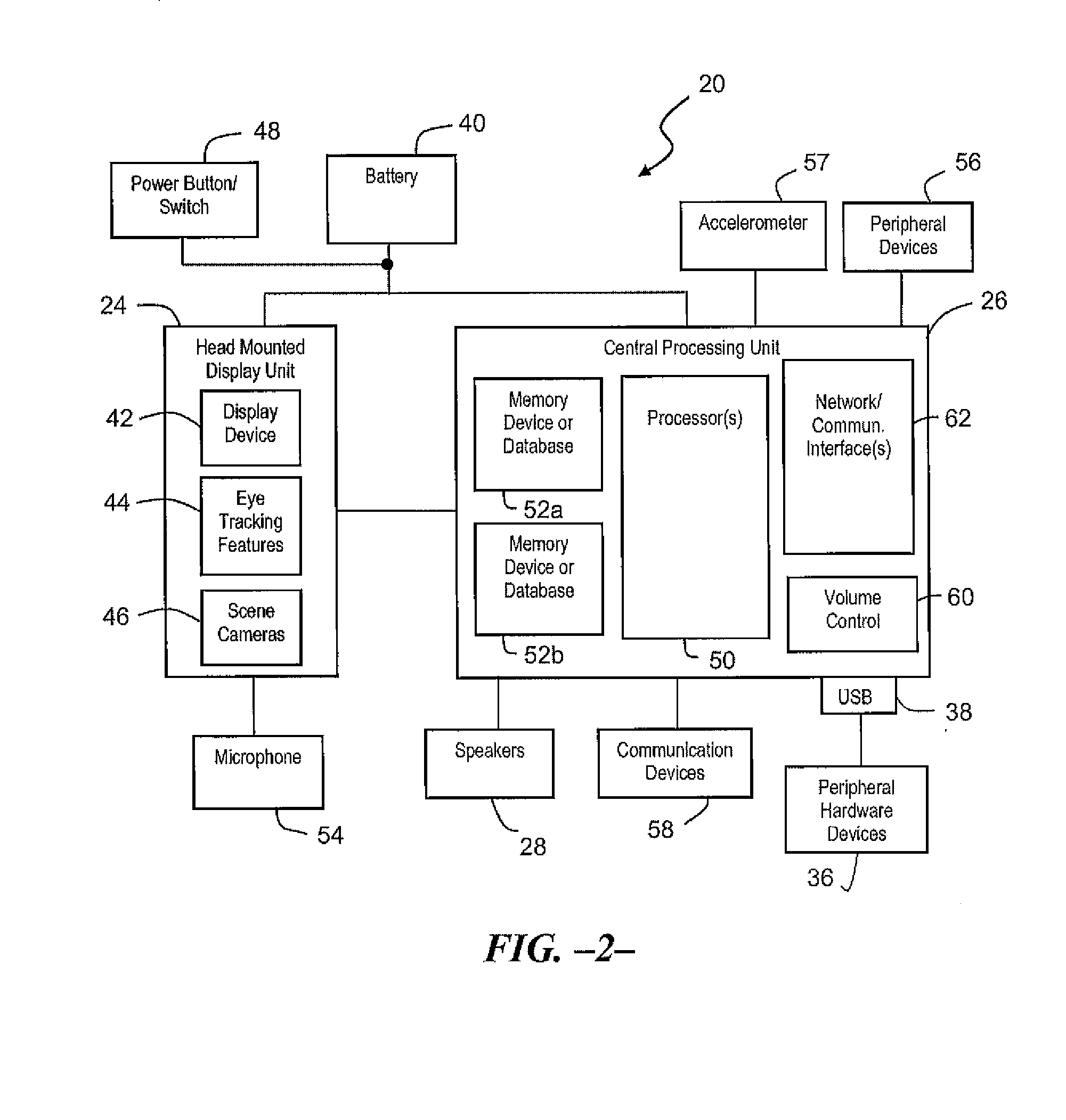

Speech generation device with a head mounted display unit

Status: Inactive | Publication: US20130300636A1 | Tags: Eliminates potential visual restriction, Reduce power consumption, Cathode-ray tube indicators, Speech synthesis, Speech sound, Speech generation

A speech generation device is disclosed. The speech generation device may include a head mounted display unit having a variety of different components that enhance the functionality of the speech generation device. The speech generation device may further include a computer-readable medium storing instructions that, when executed by a processor, instruct the speech generation device to perform desired functions.

Owner:DYNAVOX SYST

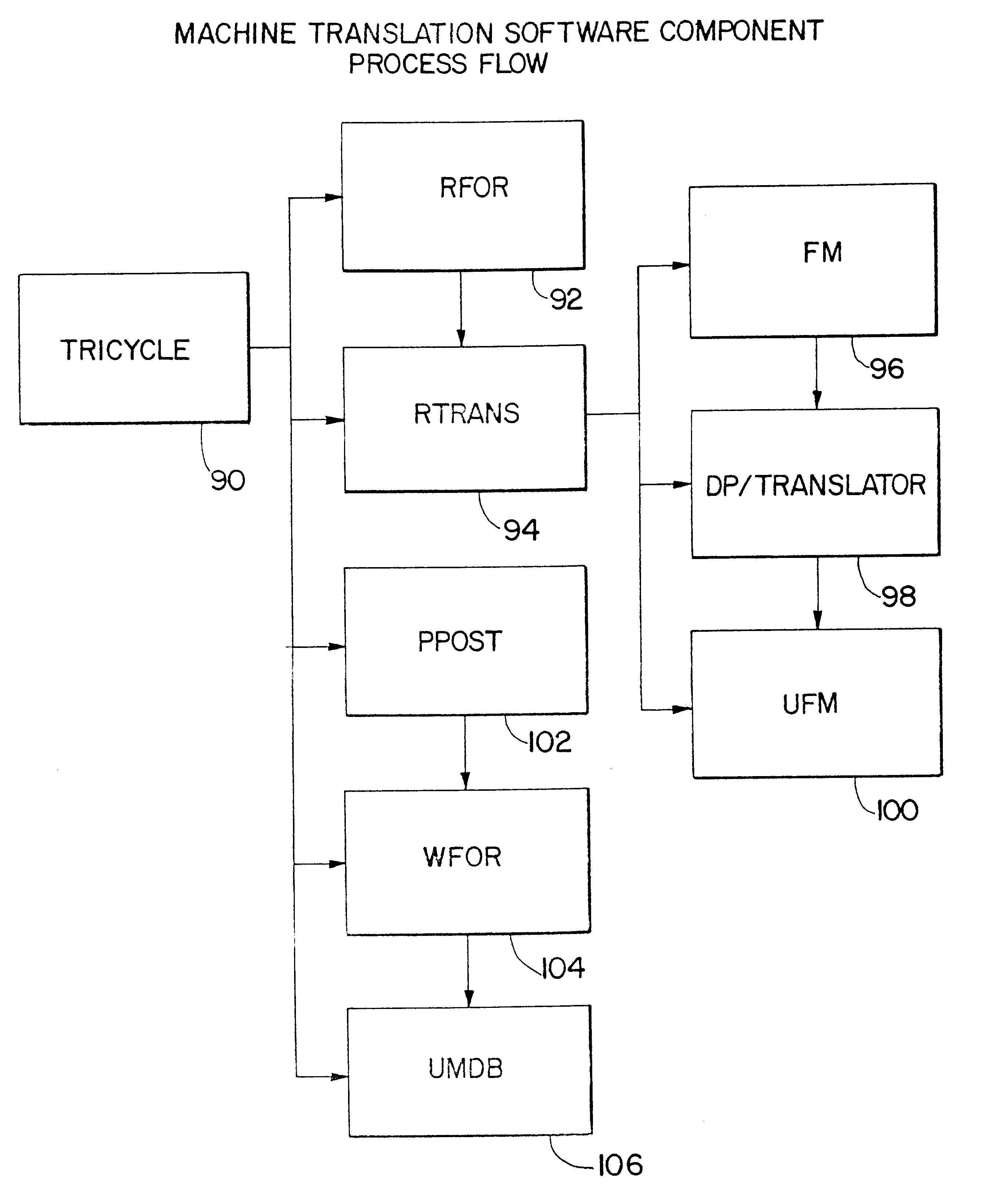

System for automated translation of speech

Status: Inactive | Publication: US7970598B1 | Tags: Natural language translation, Automatic exchanges, Speech to speech translation, More language

The present invention allows subscribers to an online information service to participate in real-time conferencing or chat sessions in which a message originating from a subscriber in accordance with a first language is translated to one or more languages before it is broadcast to the other conference areas. Messages in a first language are translated automatically to one or more other languages through language translation capabilities resident at online information service host computers. Access software that subscribers use for participating in conference is integrated with speech recognition and speech generation software such that a subscriber may speak the message he or she would like to share with other participants and may hear the messages from the other participants in the conference. Speech-to-speech translation may be accomplished as a message spoken into a computer microphone in accordance with a first language may be recited by a remote computer in accordance with a second language.

Owner:META PLATFORMS INC

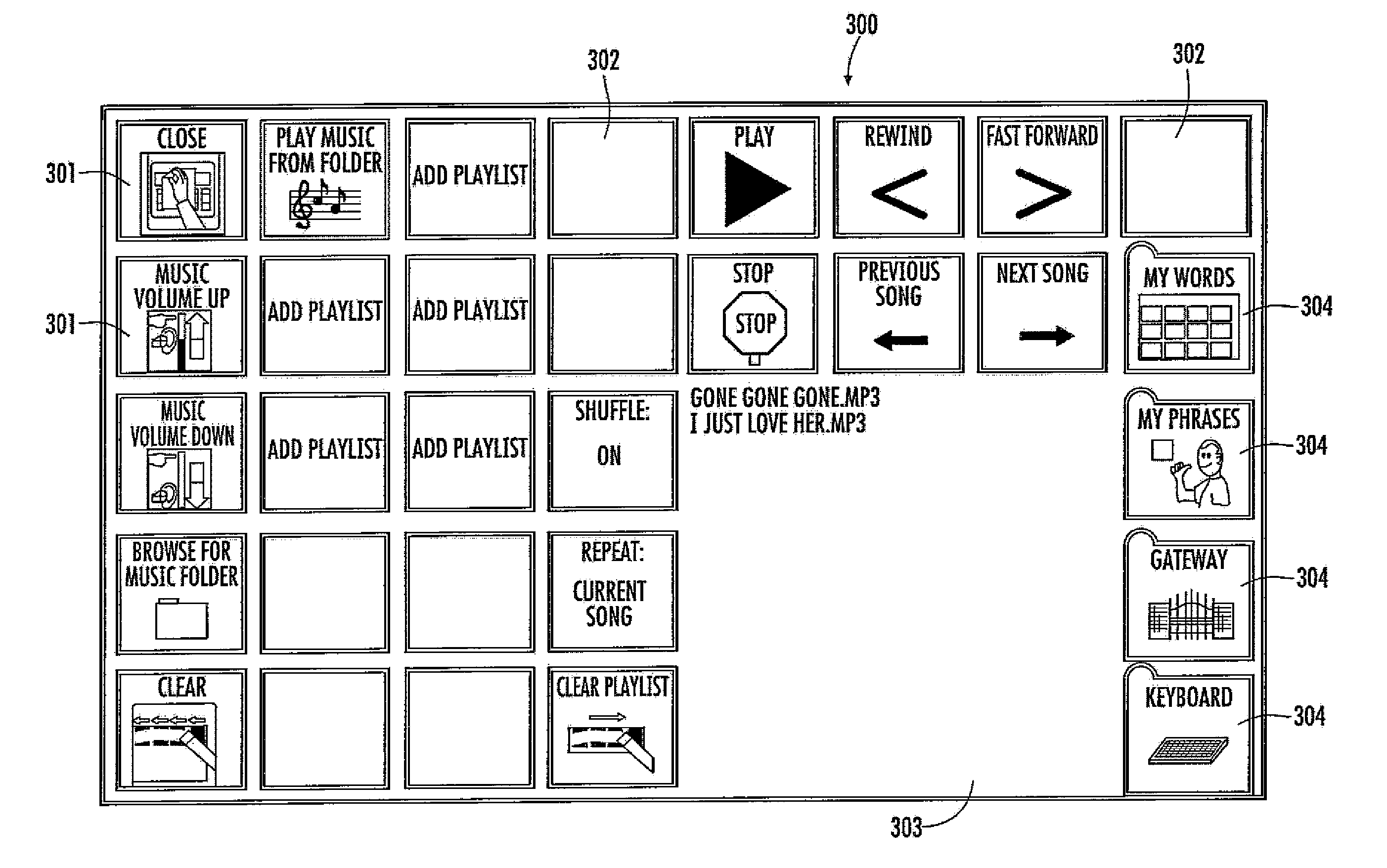

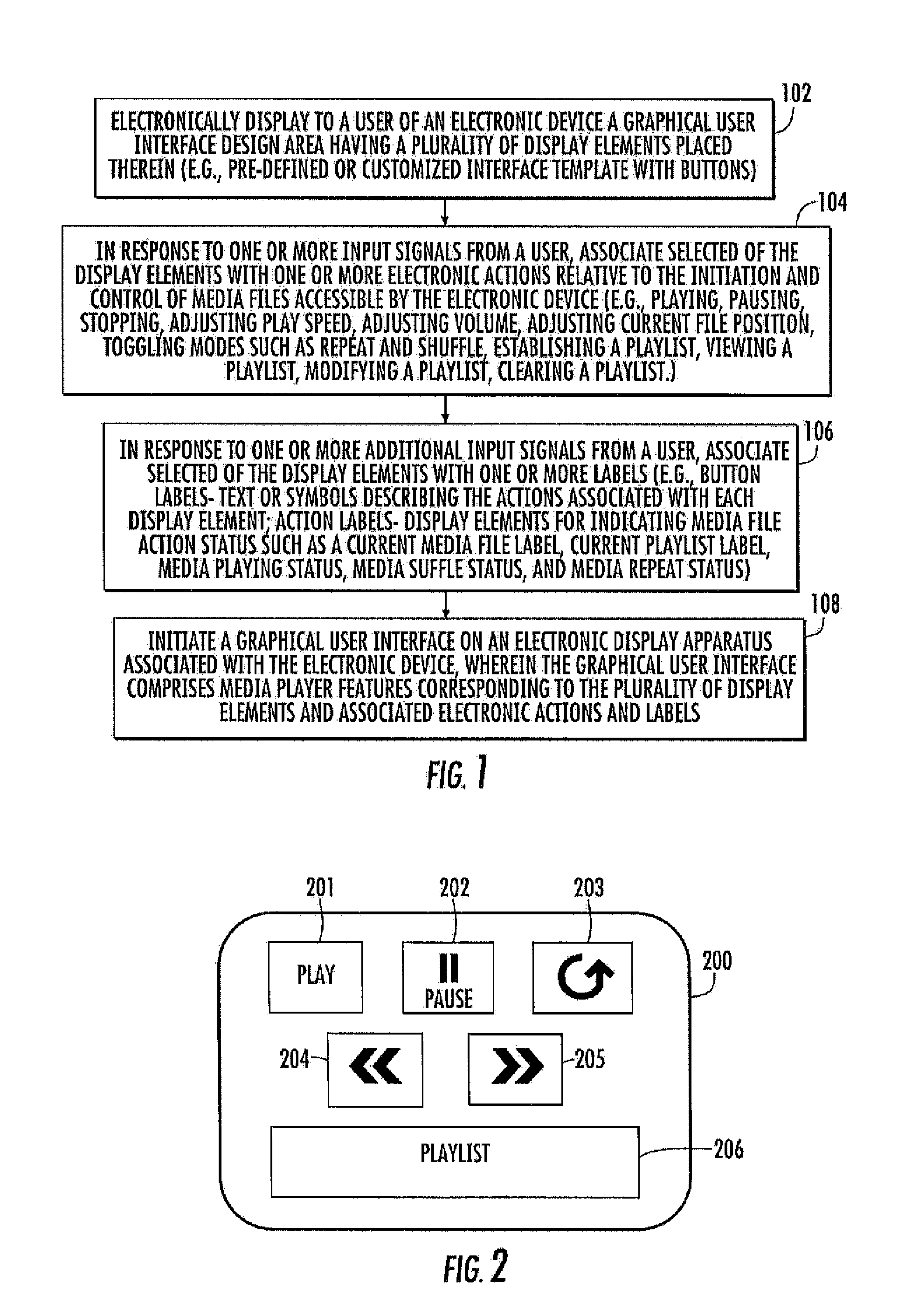

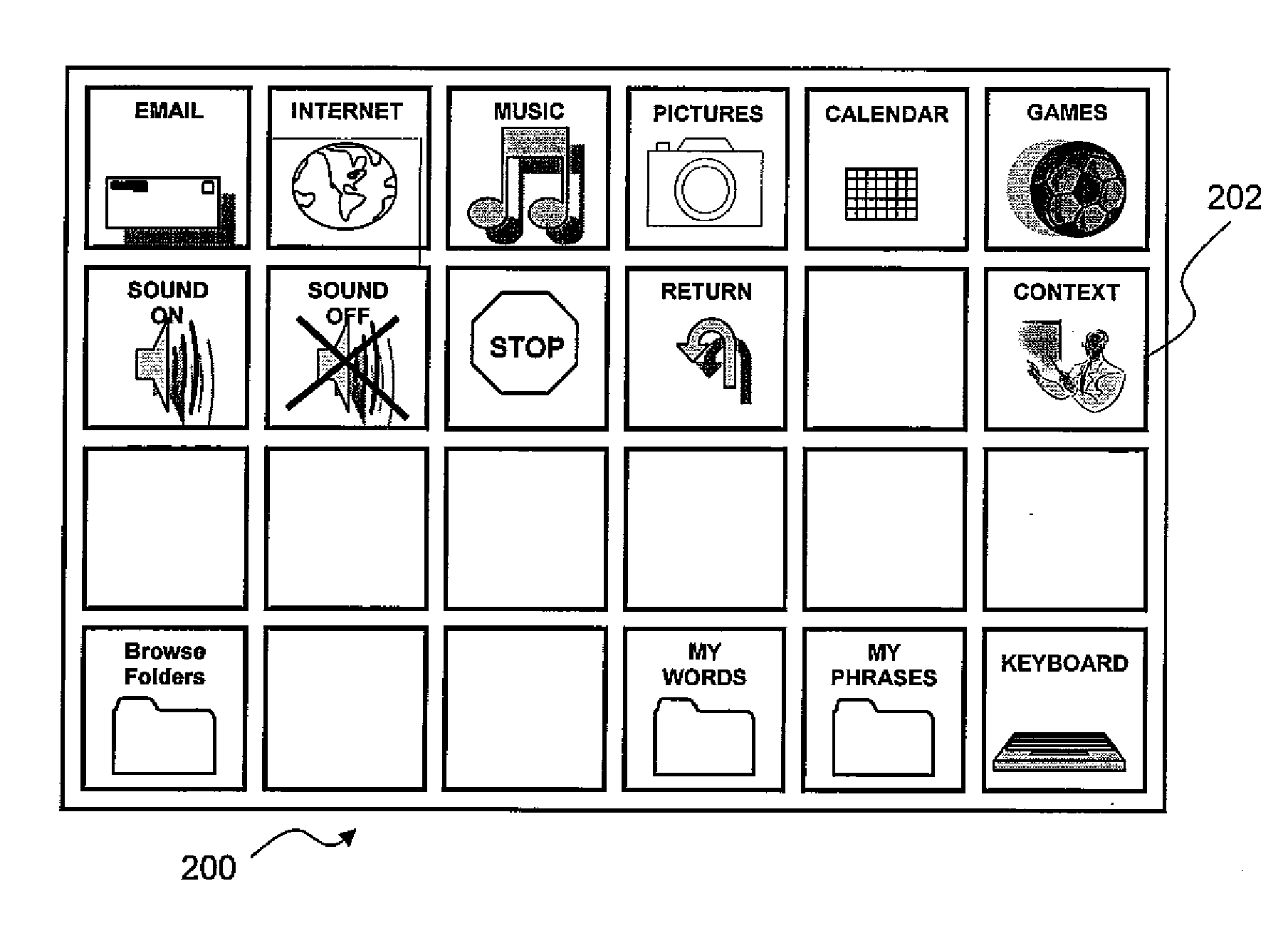

System and method of creating custom media player interface for speech generation device

Status: Inactive | Publication: US20110202842A1 | Tags: Optimization, Input/output for user-computer interaction, Software engineering, Graphics, Graphical user interface

Systems and methods of providing electronic features for creating a customized media player interface for an electronic device include providing a graphical user interface design area having a plurality of display elements. Electronic input signals may then define, for association with selected display elements, one or more electronic actions related to the initiation and control of media files accessible by the electronic device (e.g., playing, pausing, stopping, adjusting play speed, adjusting volume, adjusting current file position, toggling modes such as repeat or shuffle, and/or establishing, viewing, and/or clearing a playlist). Additional electronic input signals may define, for association with selected display elements, labels such as action identification labels or media status labels. A graphical user interface is then initiated on an electronic display associated with an electronic device, wherein the graphical user interface comprises media player features corresponding to the plurality of display elements and associated electronic actions and/or labels.

Owner:DYNAVOX SYST

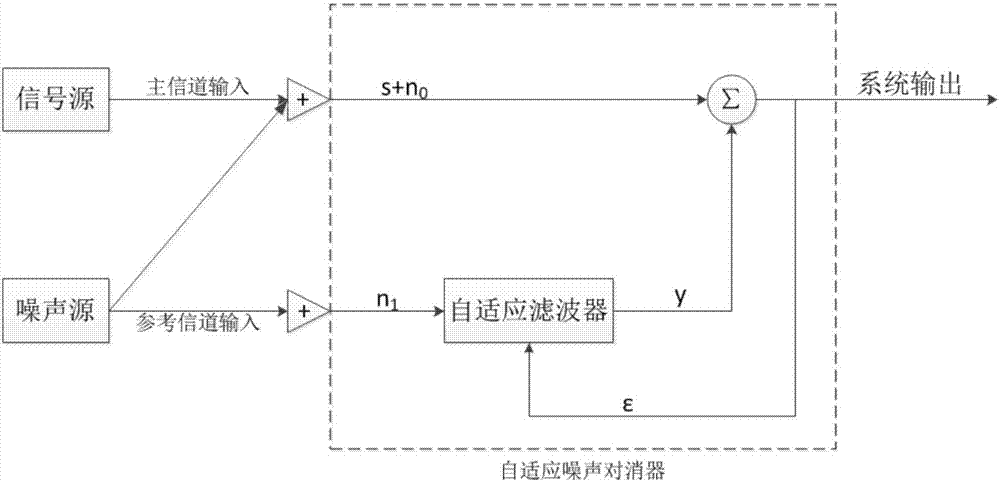

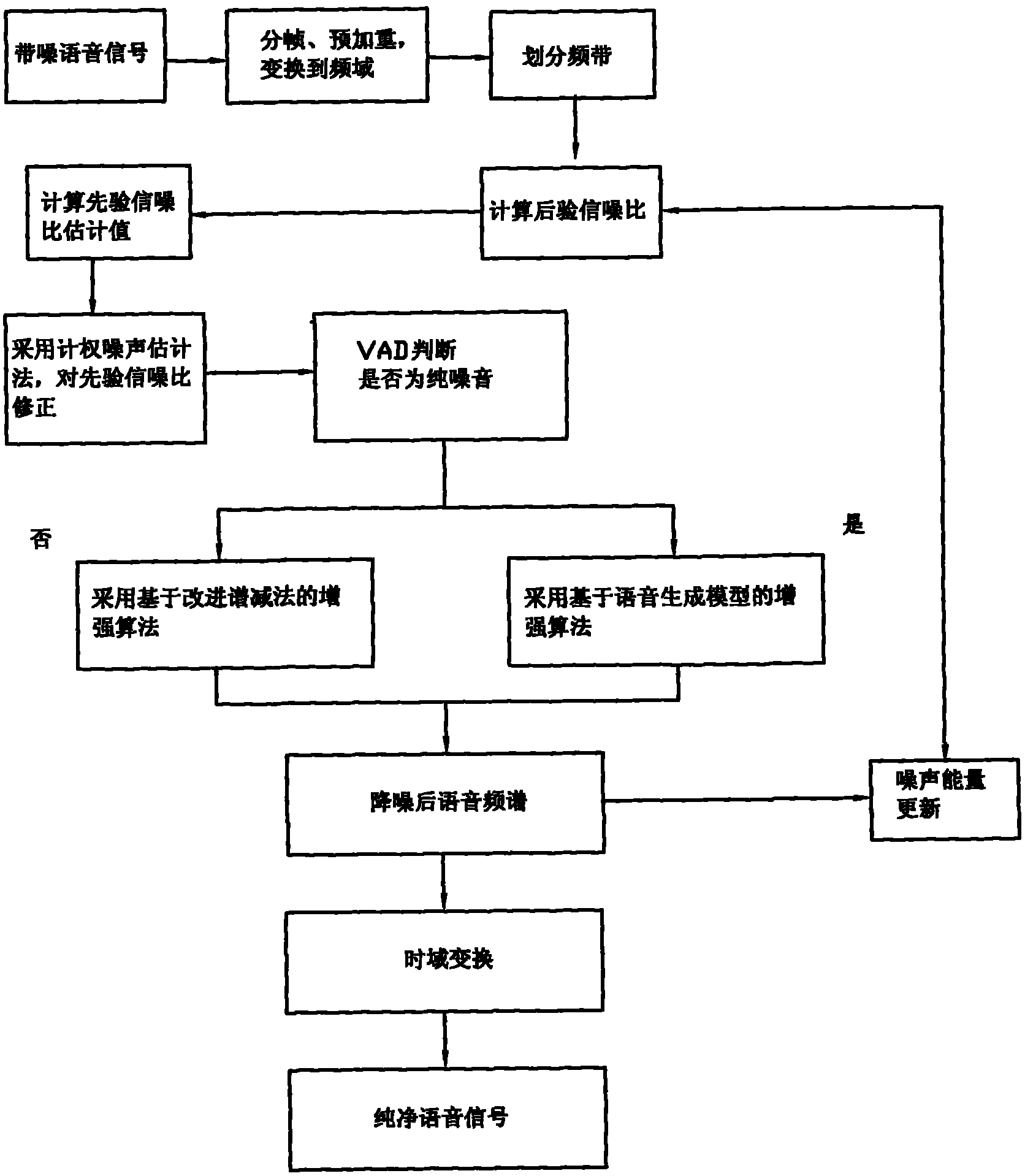

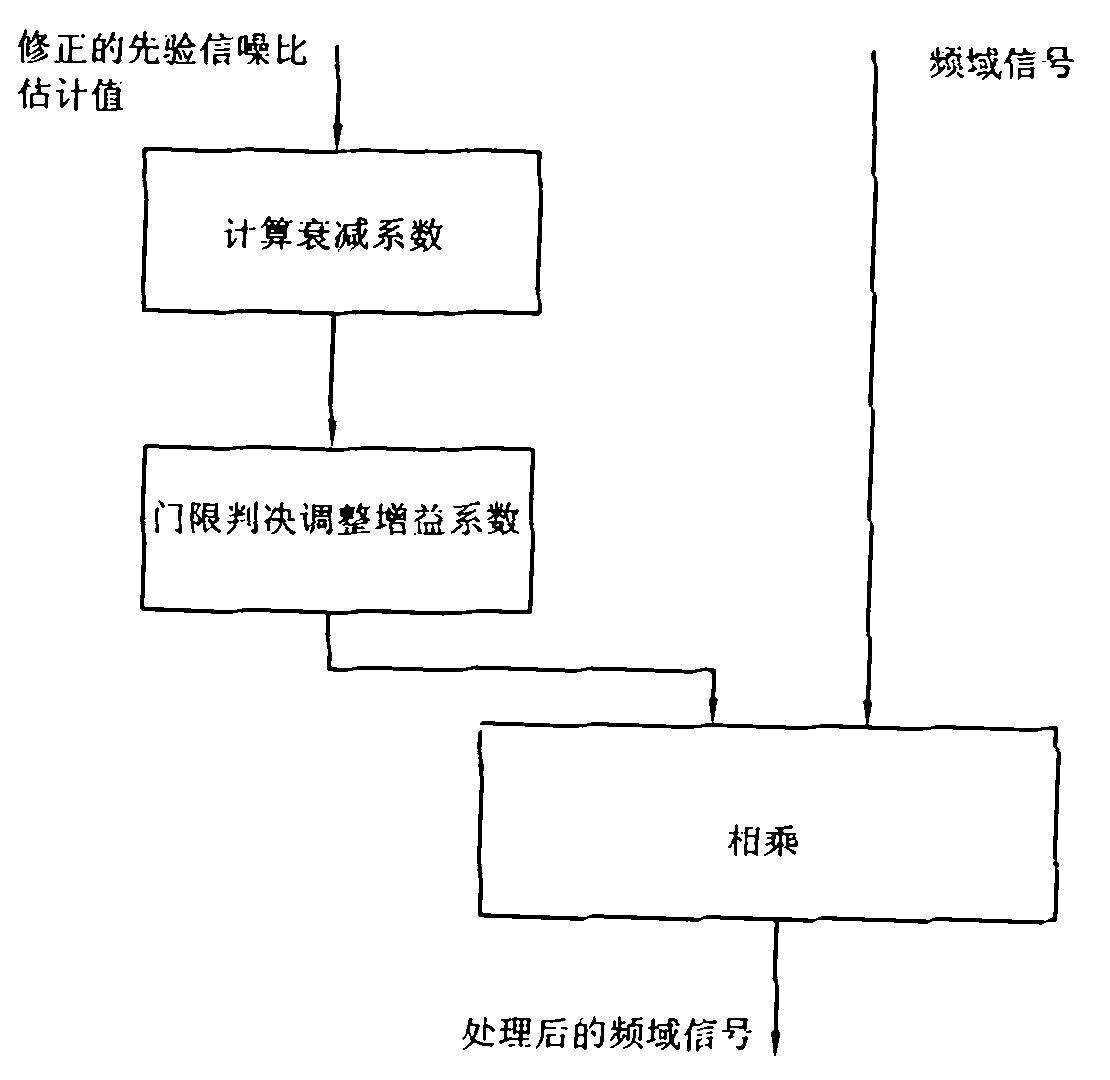

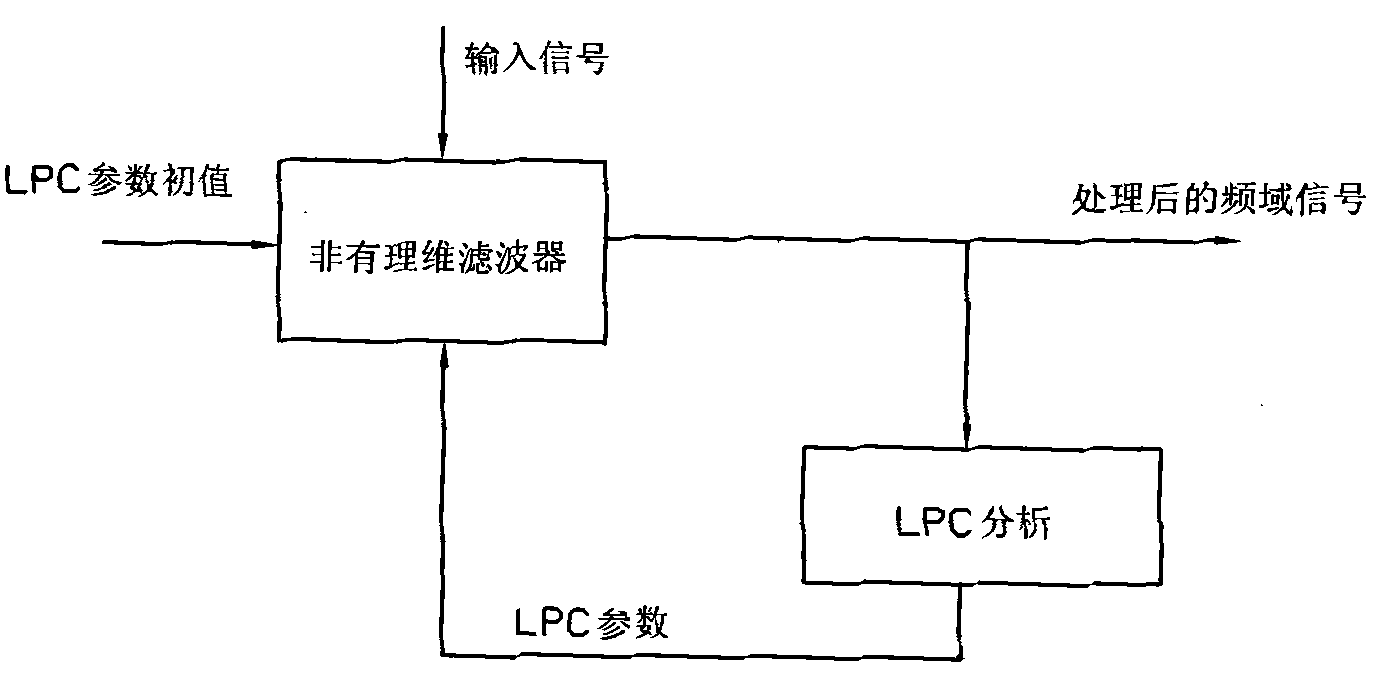

Voice enhancement method and device using same

Status: Active | Publication: CN101976566A | Tags: Guaranteed intelligibility, Suppress background noise, Speech analysis, Time domain, Ultrasound attenuation

The invention provides a voice enhancement method comprising the following steps: using a decision device to judge whether the current frame is pure noise; if the current frame and several preceding frames are all pure noise, enhancing the frequency-domain signals with an improved spectral subtraction algorithm, and otherwise enhancing them with an enhancement algorithm based on a voice generation model; and transforming the processed frequency-domain signals back to the time domain, performing de-emphasis, and obtaining the output signal. The invention also provides a device using the method. The method substantially increases the attenuation of residual noise while preserving speech intelligibility.

Owner:AAC TECH PTE LTD
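
Two concrete pieces of the method above, the spectral subtraction branch and the final de-emphasis step, can be sketched on plain lists of numbers. This is a minimal illustration, not the patented algorithm: it uses basic magnitude spectral subtraction with an over-subtraction factor and spectral floor, since the abstract does not say what the "improved" variant changes. The model-based branch, the FFT/IFFT, and the noise decision device are not shown, and the `run` length of noise-only frames and all parameter defaults are hypothetical.

```python
from typing import List


def spectral_subtract(mag: List[float], noise: List[float],
                      alpha: float = 2.0, beta: float = 0.01) -> List[float]:
    """Magnitude spectral subtraction with over-subtraction (alpha) and a spectral floor (beta)."""
    return [max(m - alpha * n, beta * m) for m, n in zip(mag, noise)]


def update_noise(noise: List[float], mag: List[float], smoothing: float = 0.9) -> List[float]:
    """Recursively average the noise magnitude spectrum; call only on noise-only frames."""
    return [smoothing * n + (1.0 - smoothing) * m for n, m in zip(noise, mag)]


def use_subtraction(noise_flags: List[bool], run: int = 3) -> bool:
    """Branch test: the current frame and the previous run-1 frames were all judged noise-only."""
    return len(noise_flags) >= run and all(noise_flags[-run:])


def de_emphasis(x: List[float], a: float = 0.9) -> List[float]:
    """Undo pre-emphasis x[n] - a*x[n-1] with the recursive filter y[n] = x[n] + a*y[n-1]."""
    y: List[float] = []
    prev = 0.0
    for sample in x:
        prev = sample + a * prev
        y.append(prev)
    return y


print(spectral_subtract([1.0, 0.5], [0.25, 0.25]))  # [0.5, 0.005]
print(de_emphasis([1.0, 1.5, 2.0], a=0.5))          # [1.0, 2.0, 3.0]
```

The second example feeds in the pre-emphasized form of `[1.0, 2.0, 3.0]` (with `a = 0.5`) and recovers the original samples, which is why de-emphasis is applied after the signal returns to the time domain.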

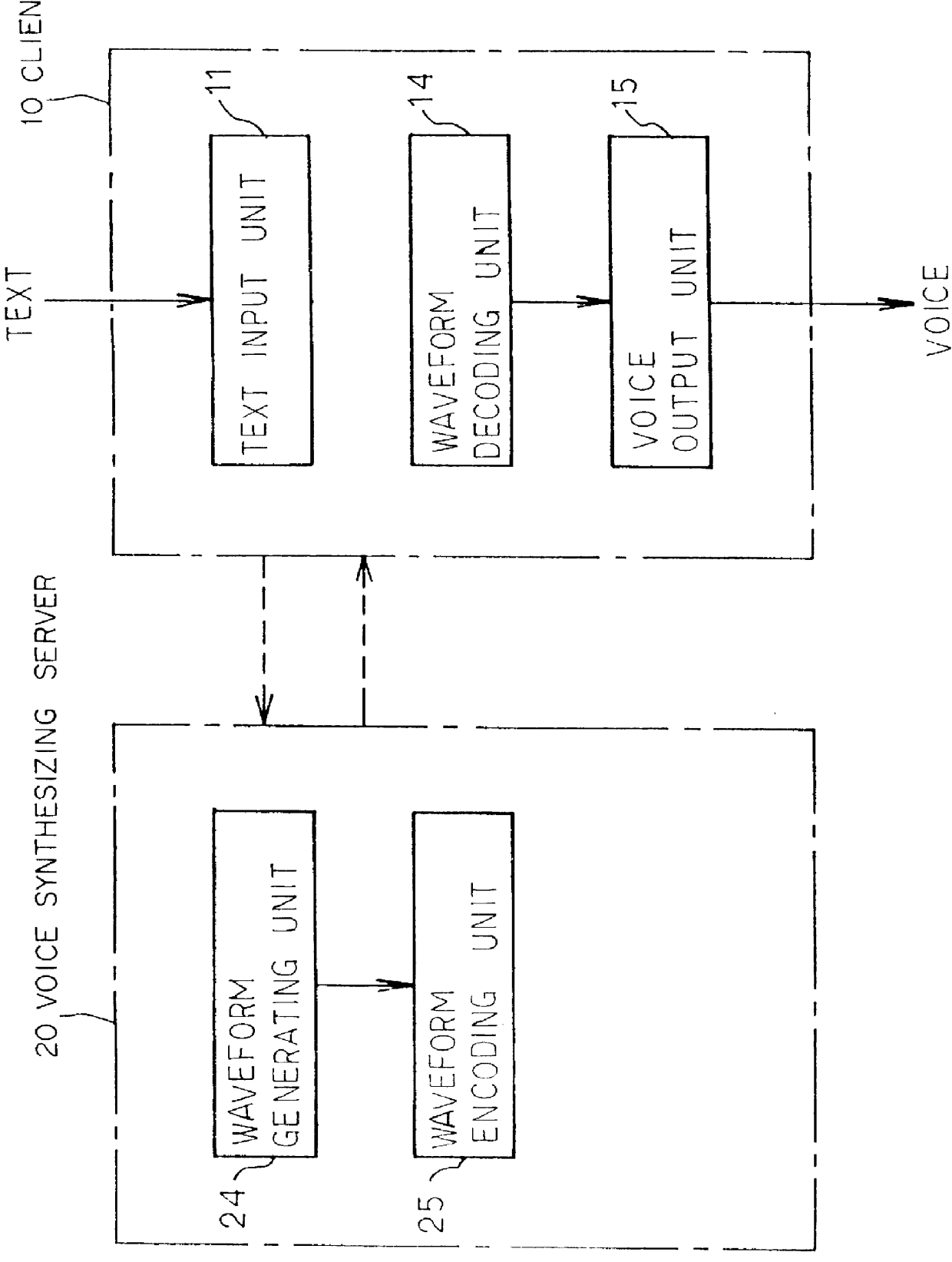

Speech synthesis system

The data control unit in the voice synthesizing server of the present invention receives, from a client, data to be used for voice synthesis and determines the type of the received data. If the received data are text data, they are passed to the pronunciation symbol generating unit to generate pronunciation symbols. If the received data are pronunciation symbols, they are passed to the acoustic parameter generating unit to generate acoustic parameters. If the received data are acoustic parameters, they are passed to the waveform generating unit to generate voice waveforms, and the generated voice waveforms are sent from the data sending unit to the client. The client receives the voice waveforms from the voice synthesizing server and outputs them as voice from its voice output unit.

Owner:FUJITSU LTD
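
The server's dispatch rule (enter the synthesis chain at whichever stage matches the received data type, then run the remaining stages) can be sketched as an ordered pipeline. This is a minimal illustration: the stage functions are hypothetical stubs that only tag their input so the path through the pipeline is visible, and the data type is passed explicitly instead of being detected from the data.

```python
from typing import Any, Callable, List, Tuple


# Hypothetical stub stages standing in for the server's generating units.
def generate_pronunciation(text: Any) -> Any:
    return ("symbols", text)


def generate_acoustic_params(symbols: Any) -> Any:
    return ("params", symbols)


def generate_waveform(params: Any) -> Any:
    return ("waveform", params)


# Ordered pipeline; each entry names the data type that stage consumes.
PIPELINE: List[Tuple[str, Callable[[Any], Any]]] = [
    ("text", generate_pronunciation),
    ("pronunciation_symbols", generate_acoustic_params),
    ("acoustic_params", generate_waveform),
]


def synthesize(data_type: str, data: Any) -> Any:
    """Start at the stage that consumes `data_type` and run the remaining stages in order."""
    types = [t for t, _ in PIPELINE]
    if data_type not in types:
        raise ValueError(f"unknown data type: {data_type}")
    for _, stage in PIPELINE[types.index(data_type):]:
        data = stage(data)
    return data  # the waveform that would be sent back to the client


print(synthesize("text", "hello"))                # ('waveform', ('params', ('symbols', 'hello')))
print(synthesize("acoustic_params", [0.1, 0.2]))  # ('waveform', [0.1, 0.2])
```

This arrangement lets a client that already does part of the work (for example, its own pronunciation symbol generation) skip the corresponding server stages.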

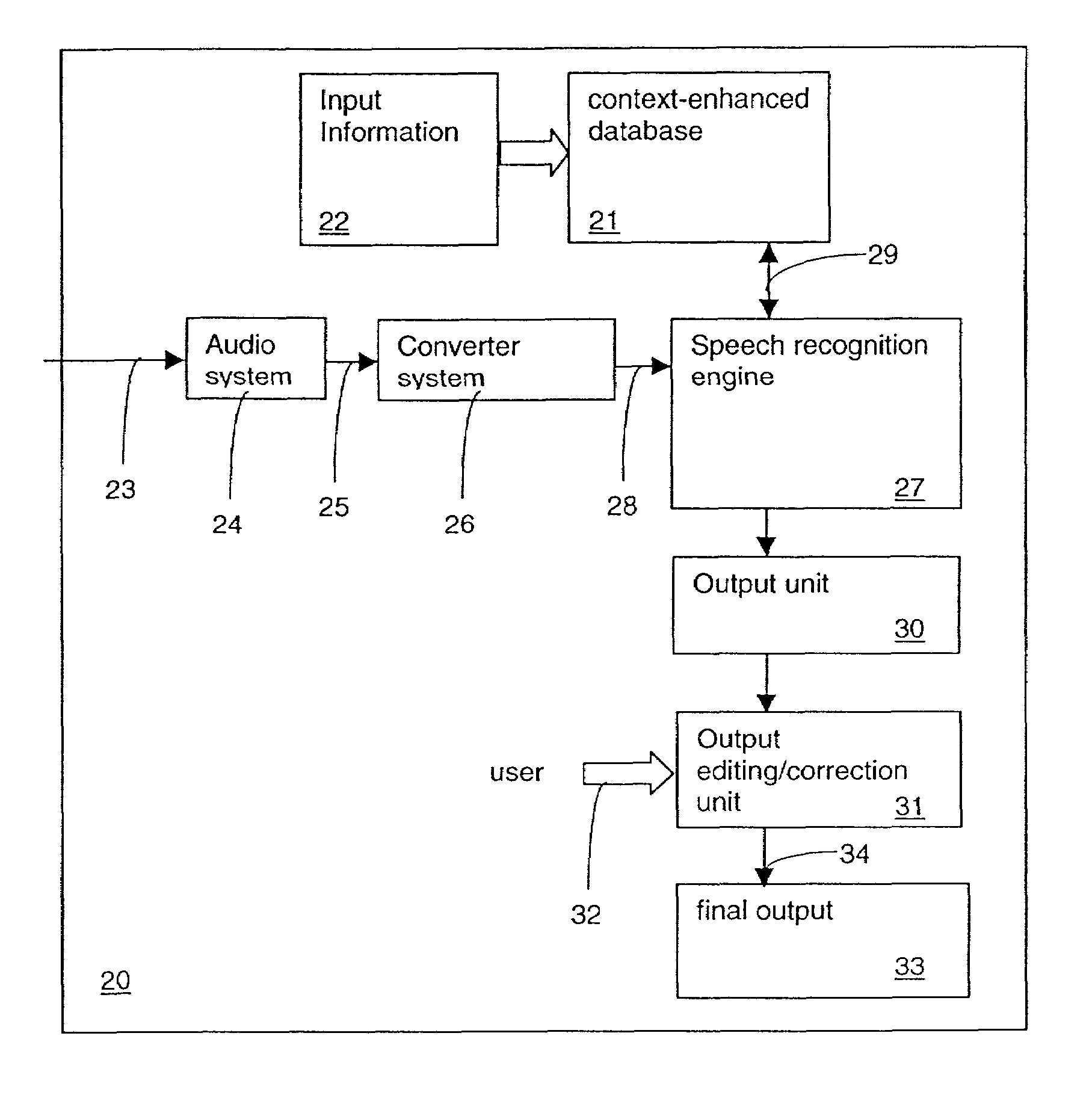

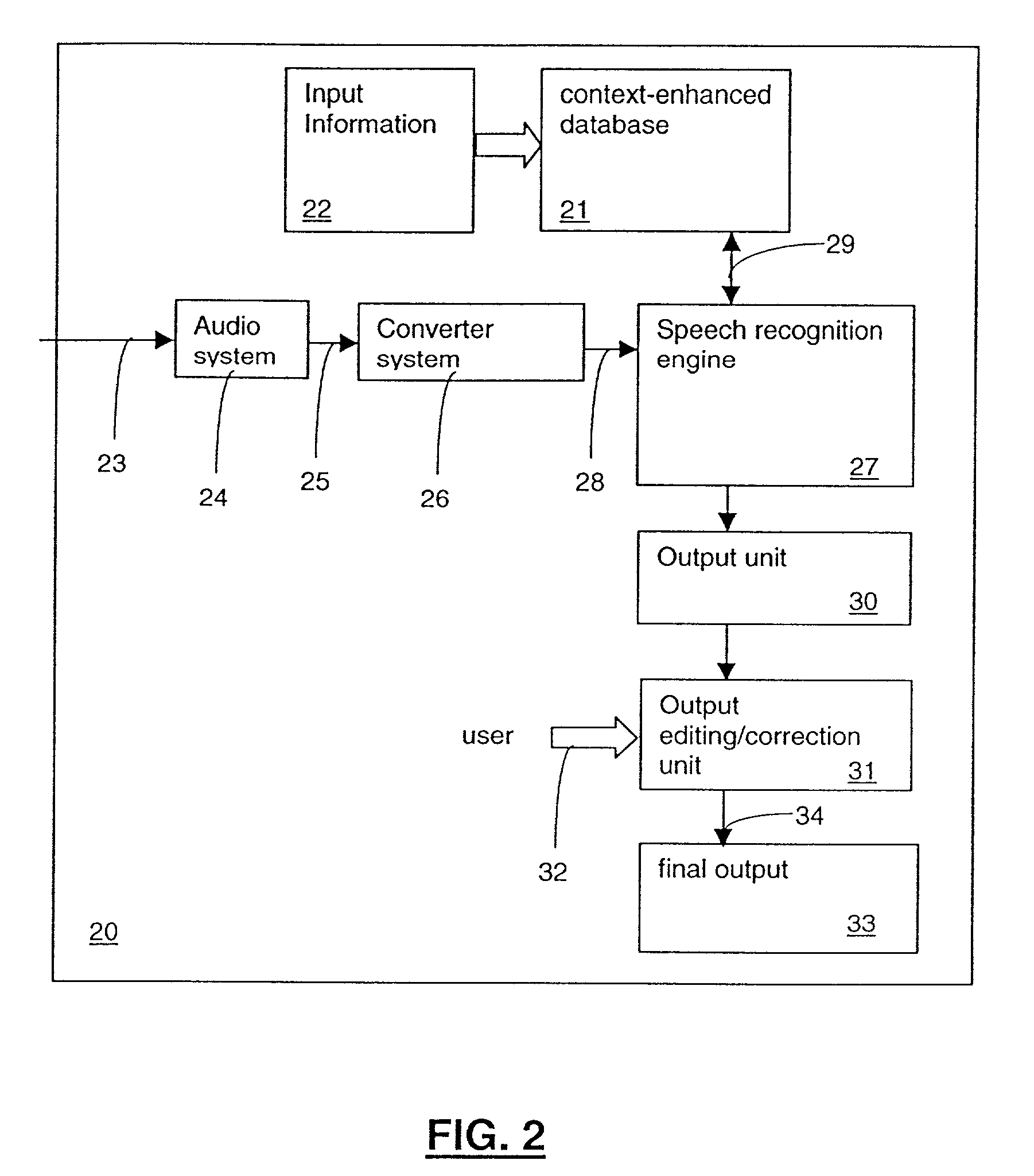

Speech recognition by automated context creation

Status: Active | Publication: US7243069B2 | Tags: Reduce in quantity, Improve usability, Speech recognition, Speech identification, Speech sound

A method for speech recognition can include generating a context-enhanced database from a system input. A voice-generated output can be generated from a speech signal by performing a speech recognition task to convert the speech signal into computer-processable segments. During the speech recognition task, the context-enhanced database can be accessed to improve the speech recognition rate. Accordingly, the speech signal can be interpreted with respect to words included within the context-enhanced database. Additionally, a user can edit or correct an output in order to generate the final voice-generated output which can be made available.

Owner:NUANCE COMM INC
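
One simple way a context database can "improve the speech recognition rate", as described above, is to rescore the recognizer's N-best hypotheses with a bonus for words that appear in the context. The sketch below assumes that approach; the abstract does not say how the database is used during recognition, so the bonus weighting, the N-best scores, and the example text are hypothetical.

```python
import re
from typing import List, Set, Tuple


def build_context(system_input: str) -> Set[str]:
    """Collect the words of the system input (e.g. an open document) into a context set."""
    return set(re.findall(r"[a-z']+", system_input.lower()))


def rescore(nbest: List[Tuple[str, float]], context: Set[str], bonus: float = 0.5) -> str:
    """Pick the hypothesis with the best recognizer score plus a bonus per in-context word."""
    def score(item: Tuple[str, float]) -> float:
        text, base = item
        return base + bonus * sum(w in context for w in text.lower().split())
    return max(nbest, key=score)[0]


ctx = build_context("Meeting notes: Dr. Kuhn presented the quarterly forecast.")
nbest = [("doctor coon presented", -1.0), ("dr kuhn presented", -1.4)]
print(rescore(nbest, ctx))    # dr kuhn presented
print(rescore(nbest, set()))  # doctor coon presented
```

Without context the acoustically preferred hypothesis wins; with the context set, the hypothesis containing names from the document overtakes it.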

Combined speech recognition and text-to-speech generation

Status: Active | Publication: US7577569B2 | Tags: Speech recognition, Speech synthesis, Vocabulary speech recognition, Speech identification

Text-to-speech (TTS) generation is used in conjunction with large-vocabulary speech recognition to say words selected by the speech recognition. The software that performs the large-vocabulary speech recognition can share speech modeling data with the TTS software. TTS or recorded audio can be used to automatically say both recognized text and the names of recognized commands after they are recognized. The TTS can automatically repeat text recognized by the speech recognition after each of a succession of end-of-utterance detections. A user can move a cursor back or forward in recognized text, and the TTS can speak one or more words at the cursor location after each such move. The speech recognition can be used to produce a choice list of possible recognition candidates, and the TTS can be used to provide spoken output of one or more of the candidates on the choice list.

Owner:CERENCE OPERATING CO

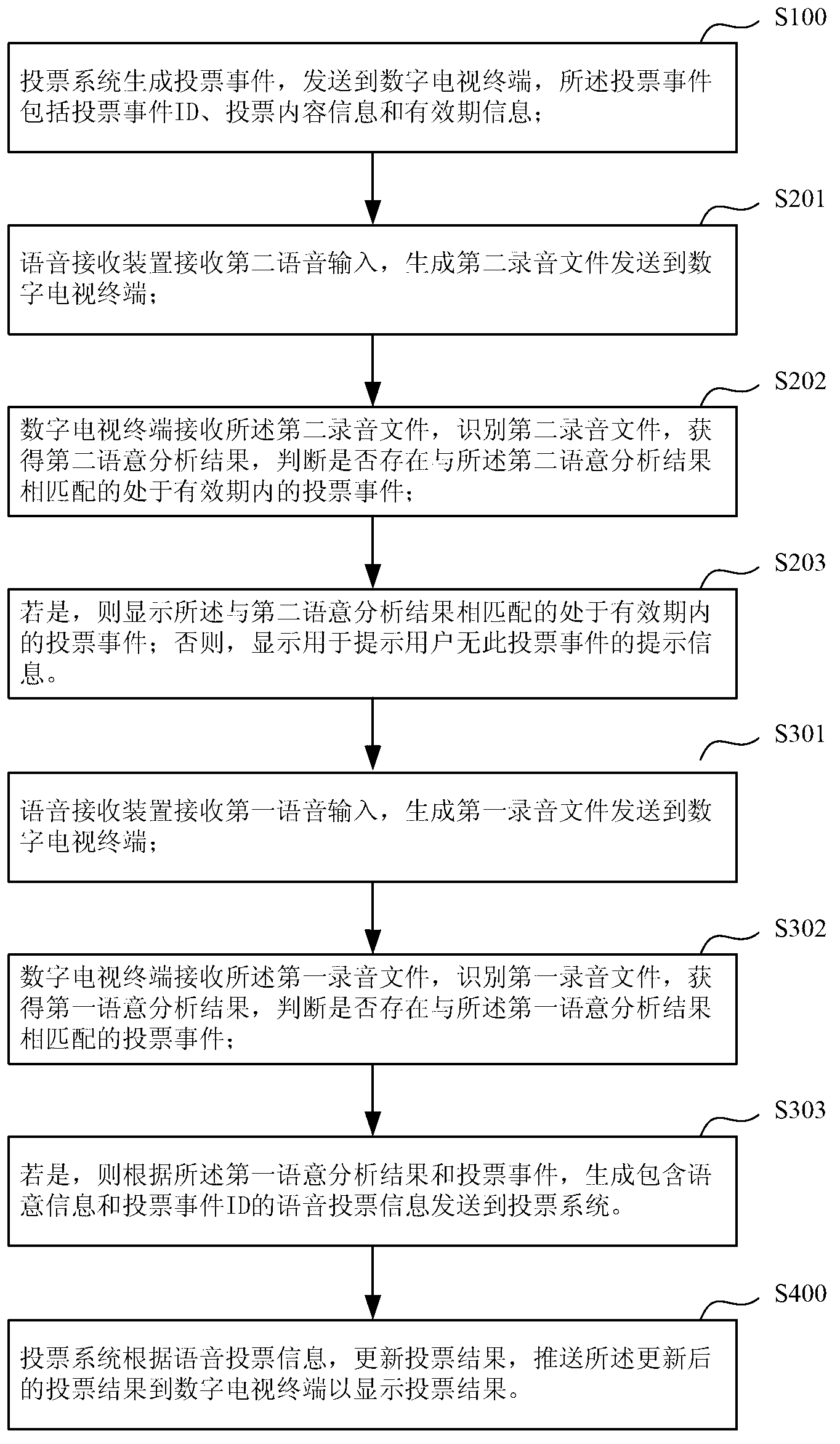

Television voice voting method, television voice voting system and television voice voting terminal

Status: Inactive | Publication: CN103067754A | Tags: The convenient way to participate in TV voting activities, Realize the function of voice TV voting, Selective content distribution, Computer hardware, User participation

The invention discloses a television voice voting method, a television voice voting system, and a television voice voting terminal. The television voice voting method comprises the following steps: the voting system creates a vote event and sends it to a digital television terminal, the vote event including a vote event identifier (ID), vote content information, and validity period information; the digital television terminal receives the vote event and displays it while it is within its validity period; the terminal receives voice input, recognizes it, generates voice voting information, and sends that information to the voting system; and the voting system updates the voting result according to the voice voting information and sends the result to the digital television terminal, which displays it. Because the digital television terminal receives the vote event sent by the voting system, recognizes the user's spoken vote, sends the chosen vote to the voting system, and receives and displays the updated result returned by the system, voice-based television voting is achieved. This makes taking part in television voting more convenient, faster, and more interesting for users.

Owner:SHENZHEN COSHIP ELECTRONICS CO LTD
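
The message flow above (a vote event with an ID, content, and validity period; a terminal that accepts spoken votes only while the event is valid; a voting system that updates and returns the tally) can be sketched as follows. This is a minimal illustration that assumes speech has already been recognized as text; the mapping from spoken keywords to options, the time units, and the example values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class VoteEvent:
    event_id: str
    options: Dict[str, str]  # spoken keyword -> option label (hypothetical mapping)
    valid_from: float        # validity period, e.g. seconds since broadcast start
    valid_until: float
    tally: Dict[str, int] = field(default_factory=dict)

    def is_valid(self, now: float) -> bool:
        return self.valid_from <= now <= self.valid_until


def terminal_vote(event: VoteEvent, recognized: str, now: float) -> Optional[str]:
    """Terminal side: map recognized speech to an option, only within the validity period."""
    if not event.is_valid(now):
        return None
    return event.options.get(recognized.strip().lower())


def system_record(event: VoteEvent, option: Optional[str]) -> Dict[str, int]:
    """Voting-system side: update the tally and return a copy to send back to terminals."""
    if option is not None:
        event.tally[option] = event.tally.get(option, 0) + 1
    return dict(event.tally)


ev = VoteEvent("ev42", {"one": "Singer A", "two": "Singer B"}, 0.0, 600.0)
print(system_record(ev, terminal_vote(ev, "Two", 30.0)))  # {'Singer B': 1}
print(terminal_vote(ev, "one", 700.0))                    # None (event expired)
```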

User interface using speech generation to answer cellular phones

Status: Inactive | Publication: US6842622B2 | Tags: Cordless telephones, Unauthorised/fraudulent call prevention, Speech sound, Cellular telephone

A method, computer program product, and system for answering a wireless telephone is provided. In one embodiment, the wireless telephone receives an incoming call and then determines whether the user has placed the phone in automatic call answering mode. If it is determined that automatic call answering has been selected by a user, then the phone answers the incoming call by providing the calling party with an indication that the user will take the call momentarily, such as by sending a voice message indicating that the user is busy but will take the call momentarily and instructing the calling party to not hang up. The phone also places itself into mute mode until the user has taken the incoming call to prevent the calling party from overhearing conversations that may be taking place around the user until the user has determined that it is convenient to take the phone call. In other embodiments, the wireless telephone allows the user to carry on conversations without speaking through selection of appropriate prerecorded or generated phrases.

Owner:TAIWAN SEMICON MFG CO LTD
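
The automatic-answer behavior described above is essentially a small state machine: answer, play a hold message, keep the microphone muted until the user takes the call. A minimal sketch follows; the state names, the hold-message text, and the use of a log list in place of actual audio playback are hypothetical.

```python
from enum import Enum, auto
from typing import List


class State(Enum):
    IDLE = auto()
    RINGING = auto()
    HELD_MUTED = auto()  # answered automatically, microphone muted
    CONNECTED = auto()


class Phone:
    def __init__(self, auto_answer: bool):
        self.auto_answer = auto_answer
        self.state = State.IDLE
        self.mic_muted = False
        self.log: List[str] = []  # stands in for audio played to the caller

    def incoming_call(self) -> None:
        if self.auto_answer:
            # Answer, tell the caller to hold, and mute so they cannot overhear the user.
            self.log.append("play: The user will take your call momentarily. Please hold.")
            self.mic_muted = True
            self.state = State.HELD_MUTED
        else:
            self.state = State.RINGING

    def user_takes_call(self) -> None:
        """Connect the user and unmute; ignored if there is no pending call."""
        if self.state in (State.RINGING, State.HELD_MUTED):
            self.mic_muted = False
            self.state = State.CONNECTED


phone = Phone(auto_answer=True)
phone.incoming_call()
print(phone.state, phone.mic_muted)  # State.HELD_MUTED True
phone.user_takes_call()
print(phone.state, phone.mic_muted)  # State.CONNECTED False
```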

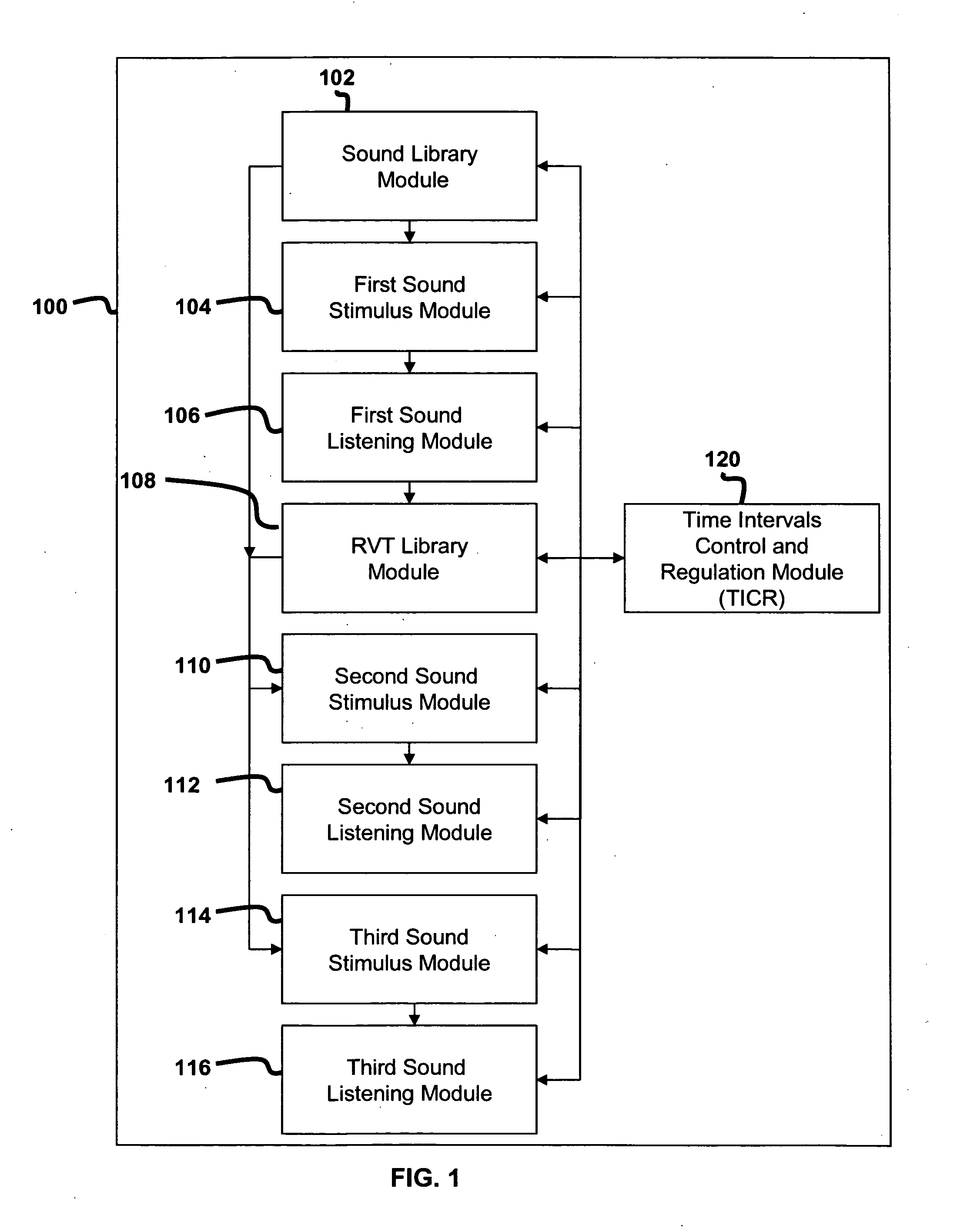

System for treating disabilities such as dyslexia by enhancing holistic speech perception

Status: Inactive | Publication: US20050142522A1 | Tags: Increased complexity, Easy to demonstrate, Reading, Electrical appliances, Deep dyslexia, Pattern perception

The present invention relates to systems and methods for enhancing the holistic and temporal speech perception processes of a learning-impaired subject. A subject listens to a sound stimulus that induces the perception of verbal transformations. The subject records the verbal transformations, which are then used to create further sound stimuli in the form of semantic-like phrases and an imaginary story. Exposure to the sound stimuli enhances the subject's holistic speech perception, with cross-modal benefits to speech production, reading, and writing. The present invention has application to a wide range of impairments, including Specific Language Impairment, language learning disabilities, dyslexia, autism, dementia, and Alzheimer's disease.

Owner:EPOCH INNOVATIONS

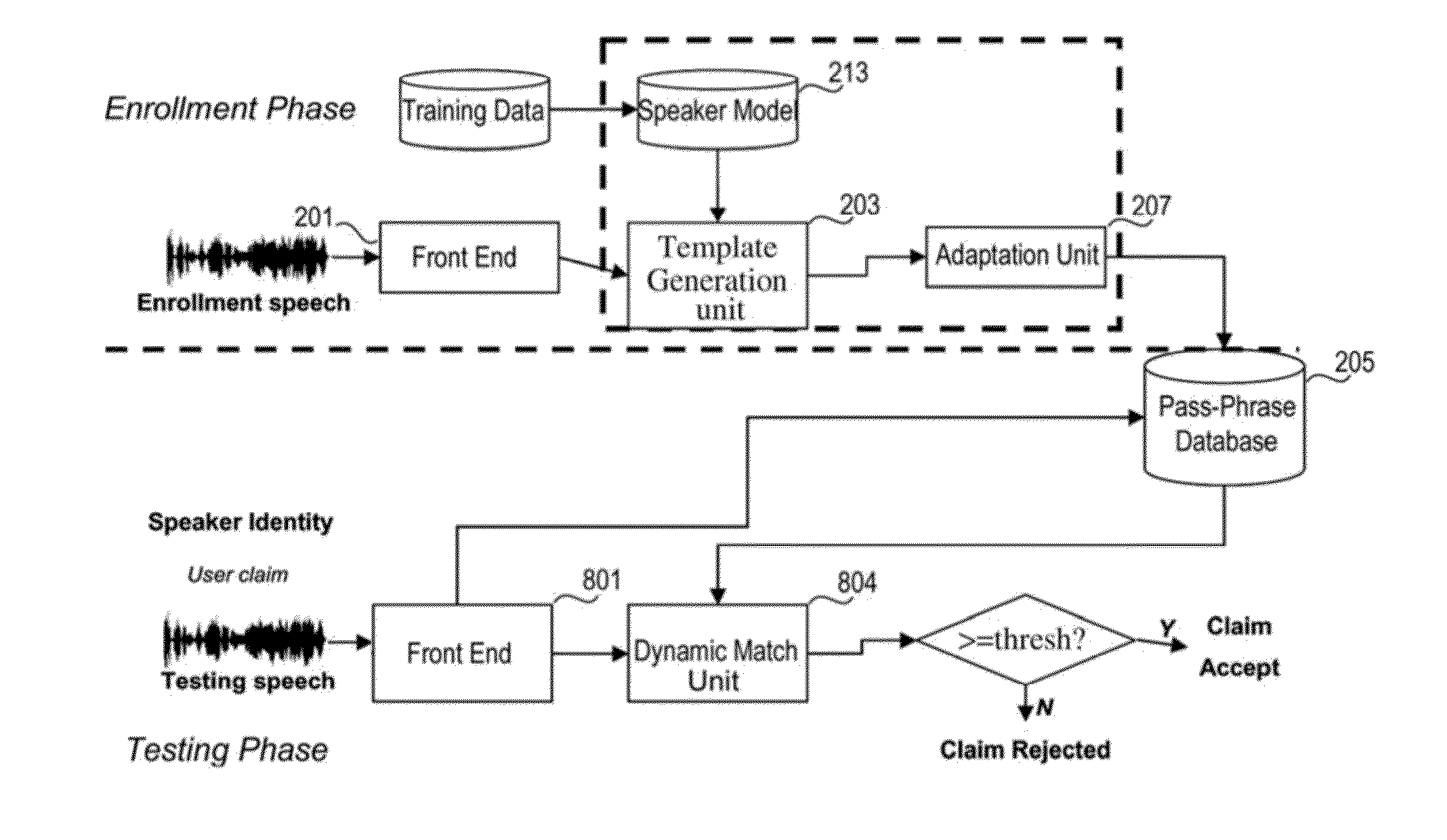

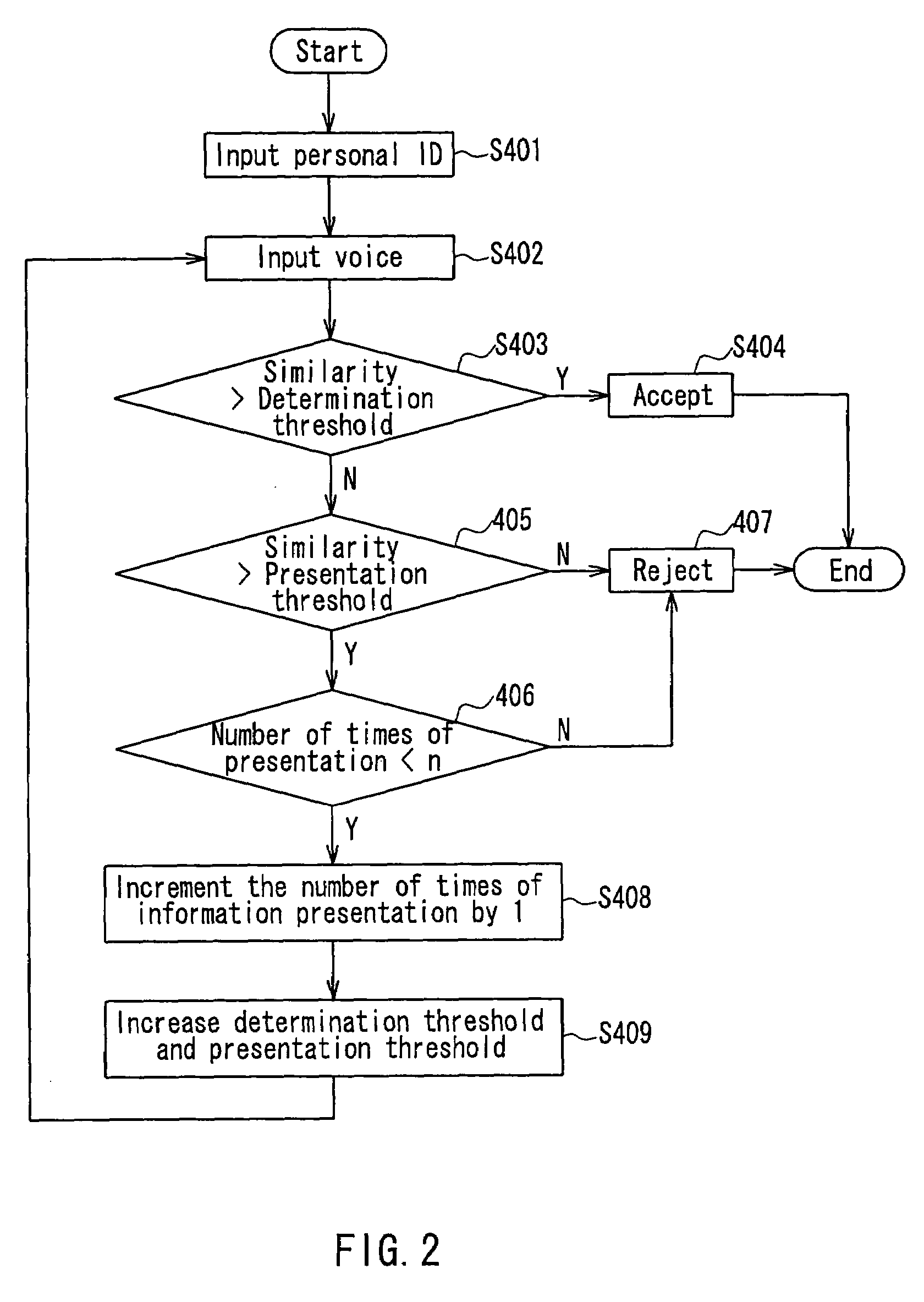

Device and method for pass-phrase modeling for speaker verification, and verification system

A device and method for pass-phrase modeling for speaker verification, and a speaker verification system, are provided. The device comprises a front end that receives enrollment speech from a target speaker, and a template generation unit that generates a pass-phrase template with a general speaker model based on the enrollment speech. With the device, method, and system of the present disclosure, taking into account the rich variations contained in a general speaker model ensures robust pass-phrase modeling even when the enrollment data is insufficient, even when only one pass-phrase utterance is available from the target speaker.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

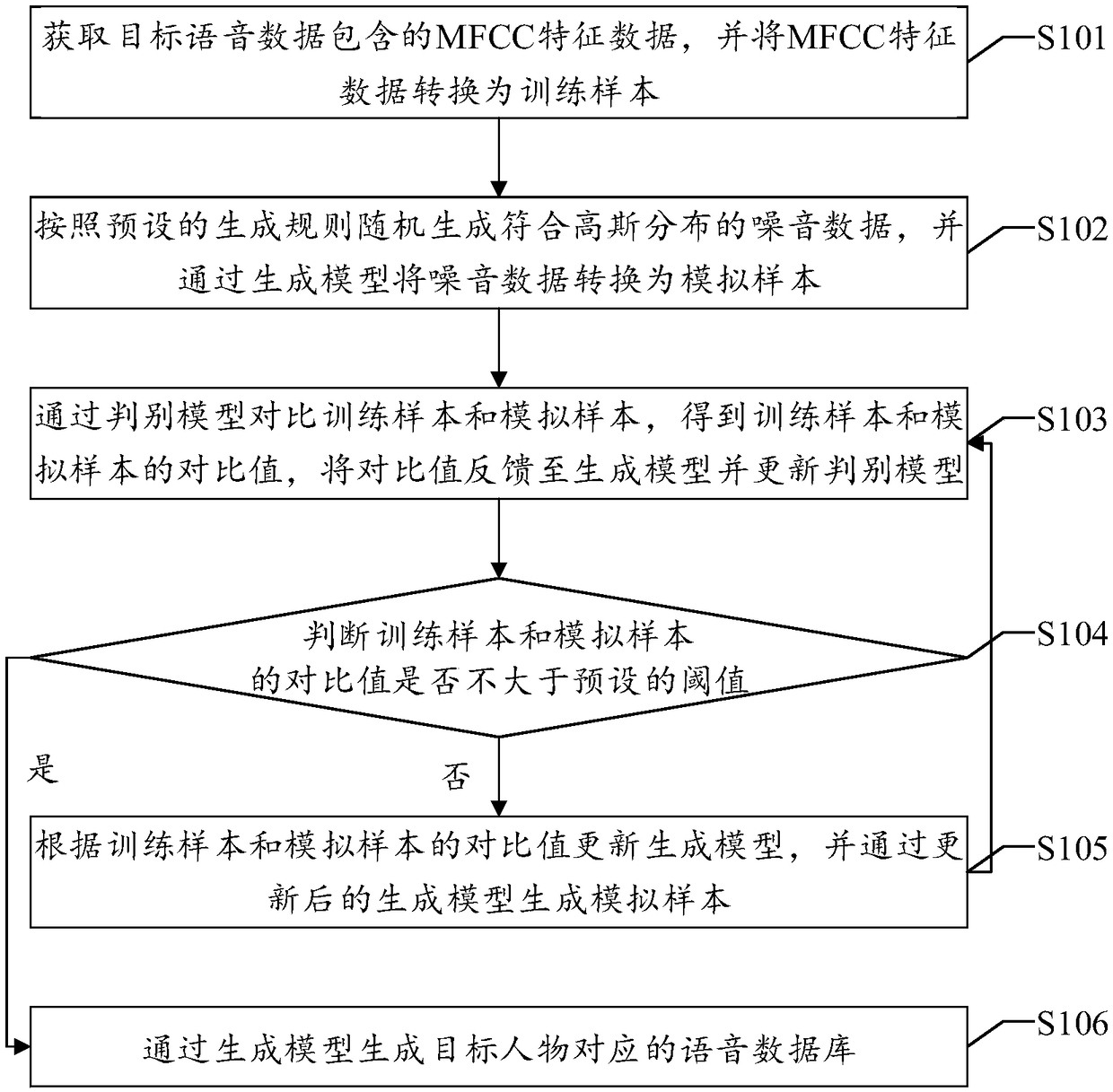

Voice generation method and device based on generative adversarial network

Status: Active | Publication: CN108597496A | Tags: Improve discrimination ability, Authentic enough, Speech recognition, Identity recognition, Algorithm

The invention discloses a voice generation method based on a generative adversarial network. In the method, randomly generated noise data following a Gaussian distribution is converted into a simulated sample by a generative model. Because the simulated sample has no linguistic content, as the generative model and the discrimination model are updated in alternation, the generative capability the generative model must learn and the discrimination capability the discrimination model must learn both increase, so both capabilities improve. When a contrast value between a training sample and the simulated sample is less than or equal to a preset threshold, the generative model is considered capable of generating realistic data; a voice database generated by the generative model is then sufficiently realistic, and the recognition rate can be increased when the generative model is applied to identity recognition. Correspondingly, the voice generation device, voice generation equipment, and computer-readable storage medium based on the generative adversarial network have the same advantages as the method.

Owner:SPEAKIN TECH CO LTD

Voice authentication system

Status: Inactive | Publication: US7447632B2 | Tags: High precision, Reduce rejection rate, Speech recognition, Computer hardware, Speech verification

A voice authentication system includes: a standard template storage part 17 in which a standard template that is generated from a registered voice of an authorized user and featured with a voice characteristic of the registered voice is stored preliminarily in a state of being associated with a personal ID of the authorized user; an identifier input part 15 that allows a user who intends to be authenticated to input a personal ID; a voice input part 11 that allows the user to input a voice; a standard template / registered voice selection part 16 that selects a standard template and a registered voice corresponding to the inputted identifier; a determination part 14 that refers to the selected standard template and determines whether or not the inputted voice is a voice of the authorized user him / herself and whether or not presentation-use information is to be outputted by referring to a predetermined determination reference; a presentation-use information extraction part 19 that extracts information regarding the registered voice of the authorized user corresponding to the inputted identifier; and a presentation-use information output part 18 that presents the presentation-use information to the user in the case where it is determined by the determination part that the presentation-use information is to be outputted to the user.

Owner: FUJITSU LTD
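The decision flow of the determination part 14 can be sketched as follows. This is a hypothetical illustration, not Fujitsu's method: voices are assumed to be already reduced to fixed-length feature vectors, similarity is assumed to be cosine similarity, and the "predetermined determination reference" is assumed to be two thresholds, one for acceptance and a lower one below which no presentation-use information is shown. The names `Enrollment`, `authenticate`, `accept`, and `hint` are invented for this example.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Enrollment:
    template: list[float]  # standard template: feature vector of the registered voice
    voice_info: str        # hypothetical presentation-use info about the registered voice


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def authenticate(store: dict[str, Enrollment], personal_id: str,
                 features: list[float], accept: float = 0.9,
                 hint: float = 0.7) -> tuple[bool, str | None]:
    """Return (accepted, presentation_info_or_None).

    Assumed determination reference: accept at or above `accept`; on a
    near miss (between `hint` and `accept`), reject but present info about
    the registered voice so a genuine user can retry.
    """
    enrollment = store.get(personal_id)  # select template by personal ID
    if enrollment is None:
        return False, None
    score = cosine(features, enrollment.template)
    if score >= accept:
        return True, None
    if score >= hint:
        return False, enrollment.voice_info
    return False, None
```

Presenting information only on a near miss is one plausible reading of how such a system could reduce the rejection rate listed in its tags: a genuine user who spoke differently from enrollment gets a cue for retrying, while a clearly mismatched voice gets nothing.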

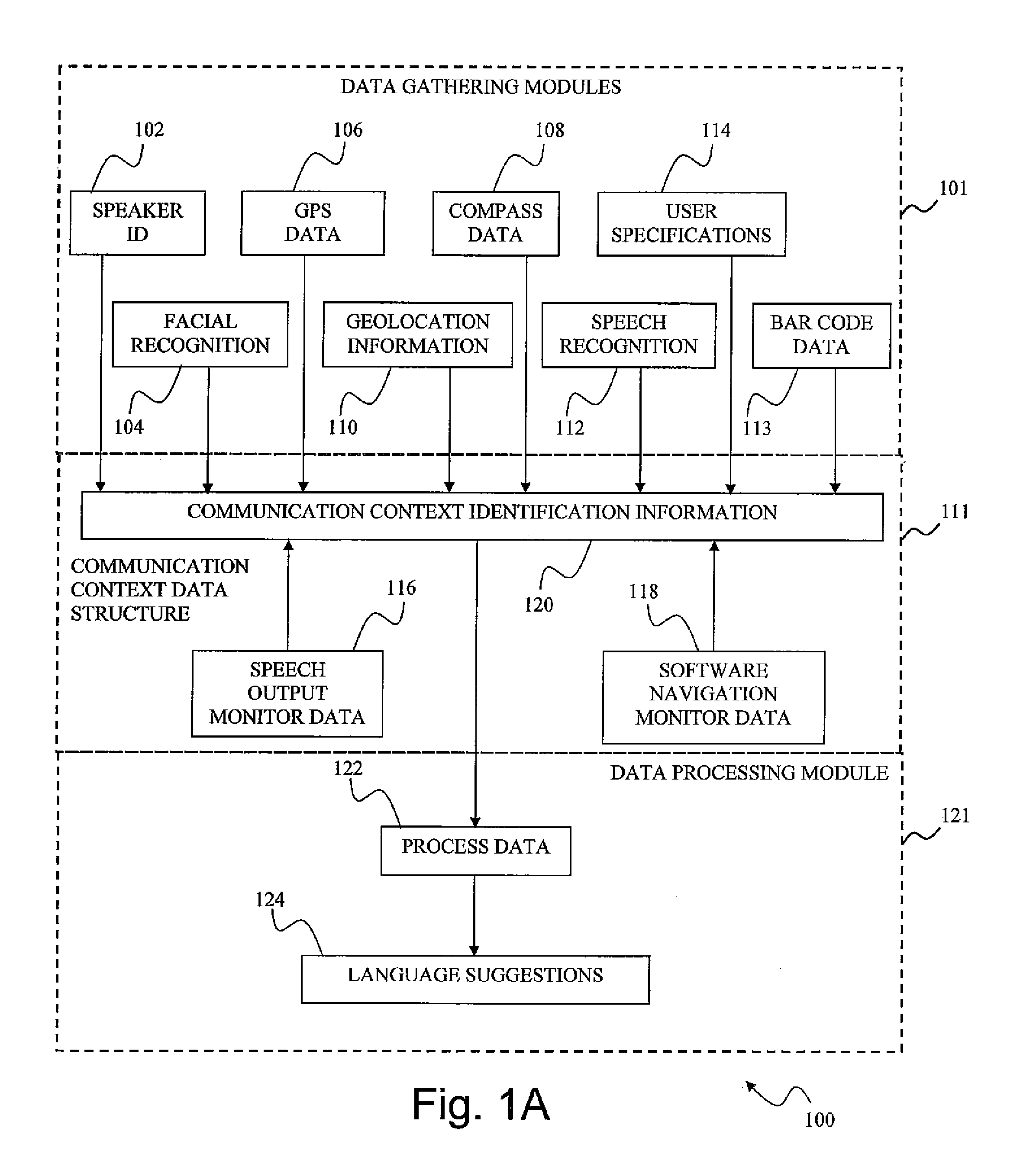

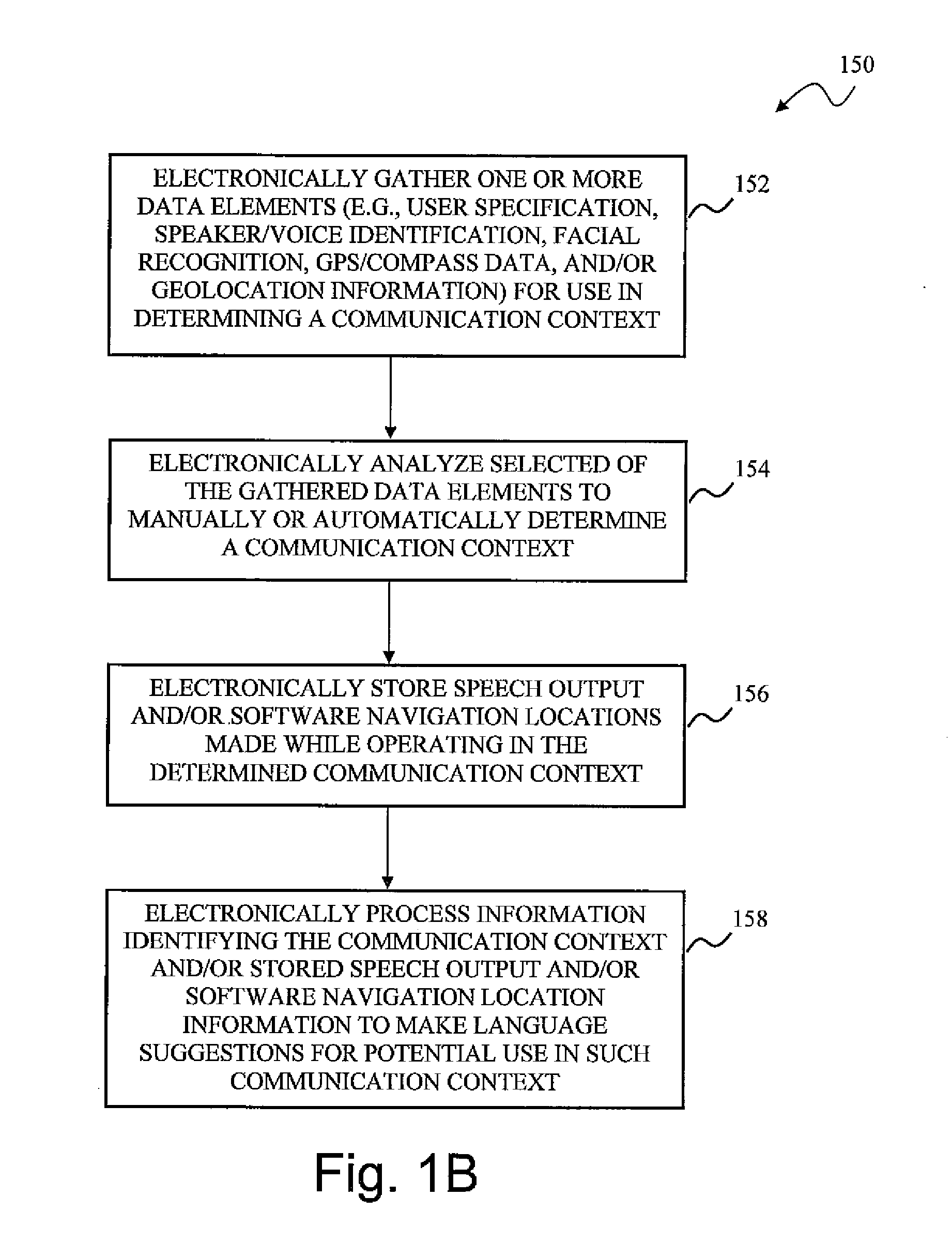

Context-aware augmented communication

Inactive · US20120137254A1 · Optimization · Input/output processes for data processing · Text display · Speech identification

Systems and methods for creating context-aware vocabulary suggestions on an electronic device include providing a graphical user interface design area having a plurality of display elements. The device may perform automated context-aware analysis of information from multiple sources, including GPS, compass, speaker identification (i.e., voice recognition), facial identification, speech content determination, user specifications, speech output monitoring, and software navigation monitoring, and use the results to offer a selectable display of suggested vocabulary, previously stored words and phrases, or a keyboard as input options for composing messages for text display and/or speech generation. The user may optionally specify a context manually.

Owner: DYNAVOX SYST
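A minimal sketch of how suggestions might be chosen from several context sources, assuming each source has already been reduced to a context label and that a simple majority vote picks the context. The vocabulary sets, labels, and the `infer_context` and `suggest` helpers are hypothetical; the abstract lists the sources but not how they are combined.

```python
from __future__ import annotations

KEYBOARD = "<keyboard>"  # fallback input option when no context is recognized

# Hypothetical vocabulary sets keyed by context label.
VOCABULARY: dict[str, list[str]] = {
    "restaurant": ["menu", "water, please", "the check"],
    "clinic": ["pain", "medicine", "appointment"],
    "home": ["hungry", "tired", "TV"],
}


def infer_context(signals: dict[str, str]) -> str | None:
    """Each source (GPS, speaker ID, speech content, ...) votes for a
    context label; the most-voted label wins (ties go to the first seen)."""
    votes: dict[str, int] = {}
    for label in signals.values():
        if label in VOCABULARY:
            votes[label] = votes.get(label, 0) + 1
    if not votes:
        return None
    return max(votes, key=votes.get)


def suggest(signals: dict[str, str], manual_context: str | None = None) -> list[str]:
    """A manually specified context overrides the automated analysis."""
    context = manual_context if manual_context is not None else infer_context(signals)
    if context is None:
        return [KEYBOARD]
    return list(VOCABULARY.get(context, [KEYBOARD]))
```

A manually specified context bypasses the vote, matching the abstract's provision that the user may specify a context, and an unrecognized context falls back to keyboard input.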

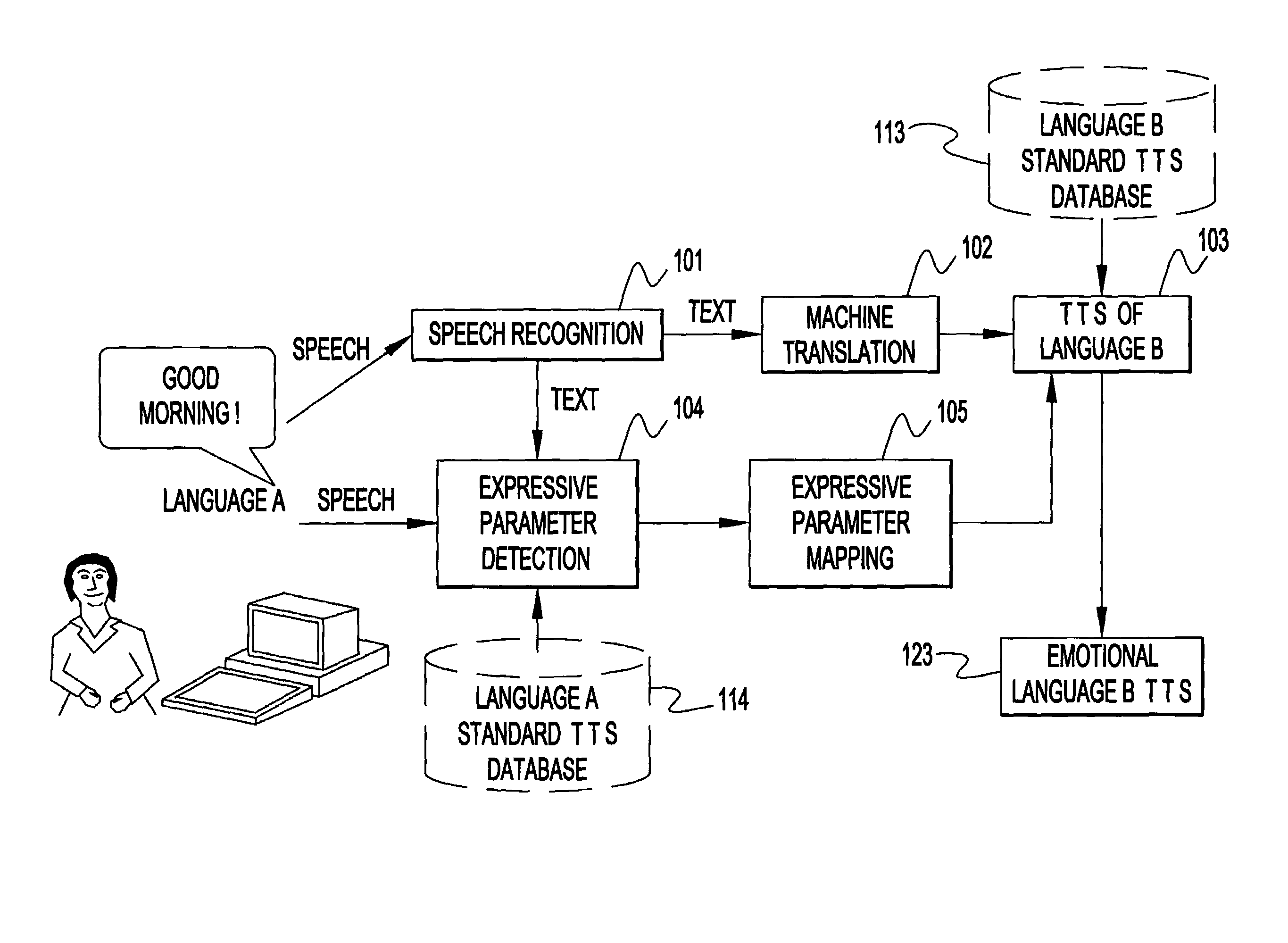

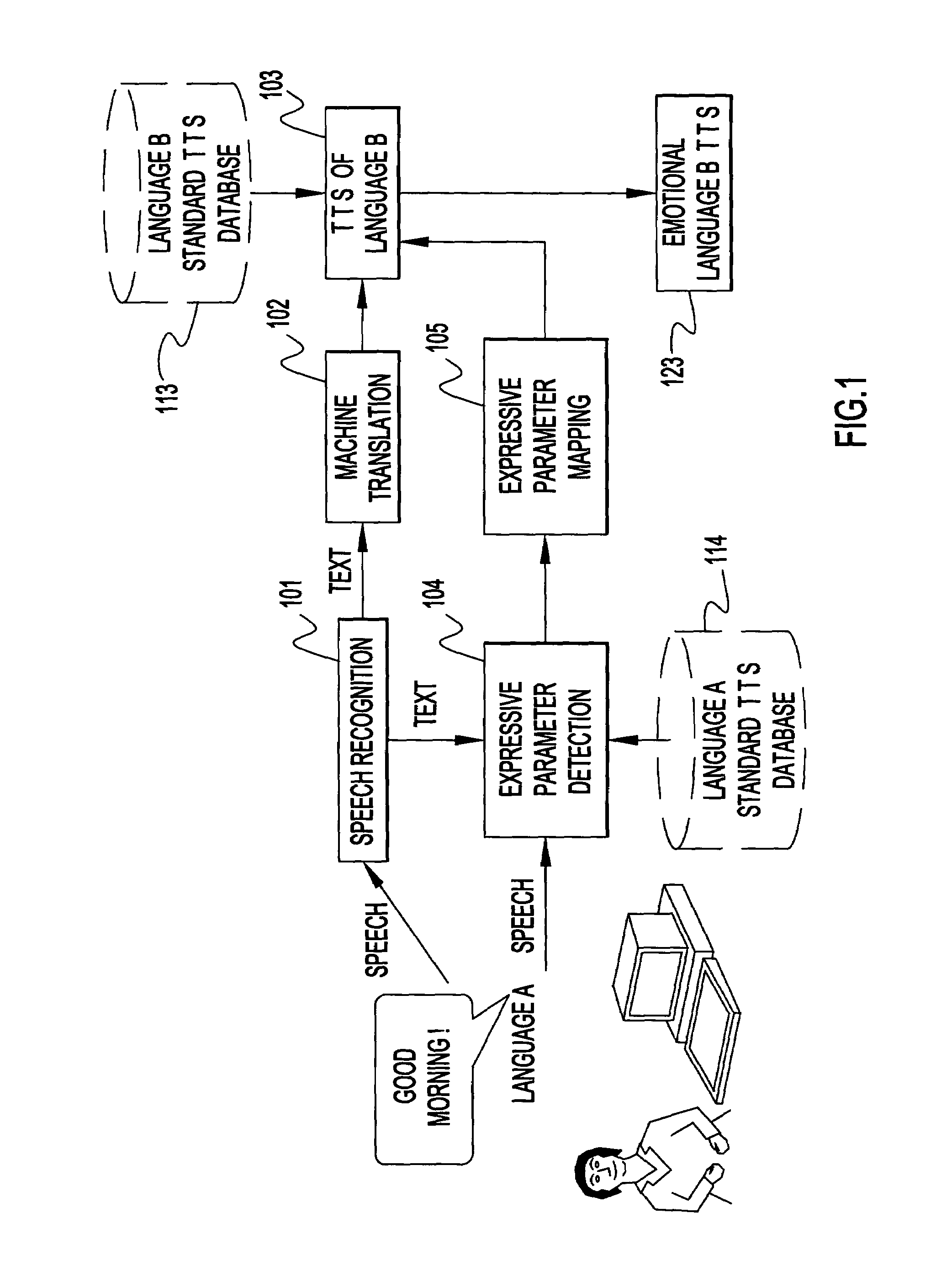

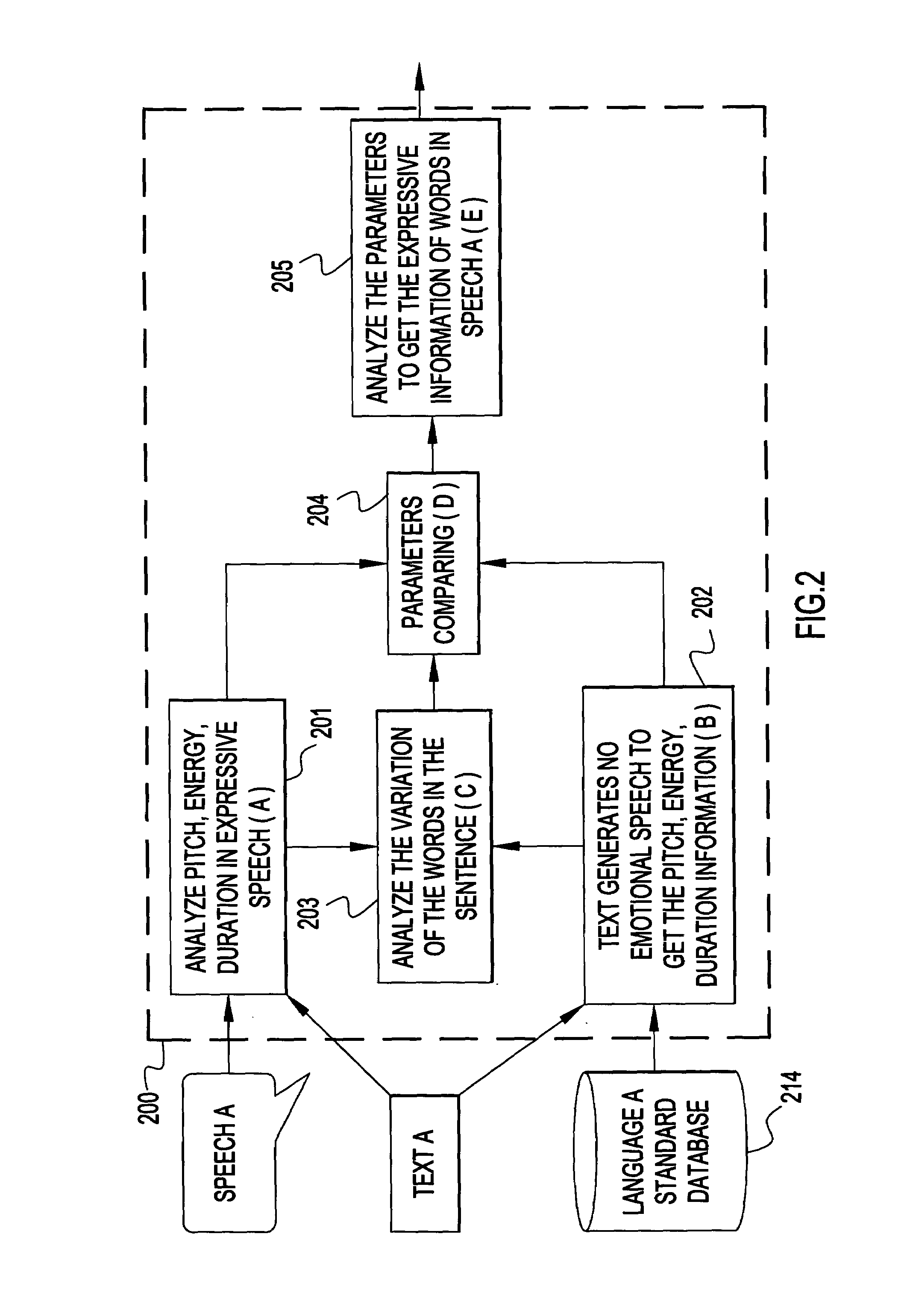

Speech-to-speech generation system and method

Inactive · US7461001B2 · Improve voice quality · Speech recognition · Special data processing applications · Speech identification · Machine translation

An expressive speech-to-speech generation system and method generate expressive speech output by using expressive parameters extracted from the original speech signal to drive a standard TTS system. The system comprises speech recognition means, machine translation means, text-to-speech generation means, expressive parameter detection means for extracting expressive parameters from speech in language A, and expressive parameter mapping means for mapping the extracted expressive parameters from language A to language B and using the mapping results to drive the text-to-speech generation means to synthesize expressive speech. The system and method can improve the quality of the speech output of a translation system or TTS system.

Owner: IBM CORP
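The data flow among the five means can be sketched as a pipeline. Every component here is a hypothetical stand-in: speech is represented symbolically as text plus two prosodic parameters (mean pitch in Hz and speaking rate), recognition simply reads the text, translation is a word-for-word toy lexicon, and the language A to language B mapping is assumed to be a per-parameter scale factor. The sketch only shows the structure: expressive parameters are detected on the source speech, mapped, and then used to drive synthesis of the translated text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Speech:
    """Symbolic stand-in for a speech signal."""
    text: str
    pitch_hz: float  # mean fundamental frequency
    rate: float      # speaking rate, syllables per second


# Hypothetical toy components (not real ASR/MT/TTS engines).
TOY_LEXICON = {"hello": "bonjour", "friend": "ami"}
MAPPING_A_TO_B = {"pitch_hz": 0.9, "rate": 1.1}  # assumed per-parameter scaling


def recognize(speech: Speech) -> str:             # speech recognition means
    return speech.text


def translate(text: str) -> str:                  # machine translation means
    return " ".join(TOY_LEXICON.get(w, w) for w in text.split())


def detect_expressive(speech: Speech) -> dict[str, float]:
    return {"pitch_hz": speech.pitch_hz, "rate": speech.rate}


def map_expressive(params: dict[str, float],
                   mapping: dict[str, float]) -> dict[str, float]:
    return {k: v * mapping.get(k, 1.0) for k, v in params.items()}


def synthesize(text: str, params: dict[str, float]) -> Speech:  # TTS driven by mapped params
    return Speech(text, params["pitch_hz"], params["rate"])


def speech_to_speech(source: Speech) -> Speech:
    text_b = translate(recognize(source))
    params_b = map_expressive(detect_expressive(source), MAPPING_A_TO_B)
    return synthesize(text_b, params_b)
```

In a real system each stub would be replaced by an ASR engine, an MT engine, and a TTS engine that accepts prosody controls; the abstract does not say how the mapping is learned or parameterized.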

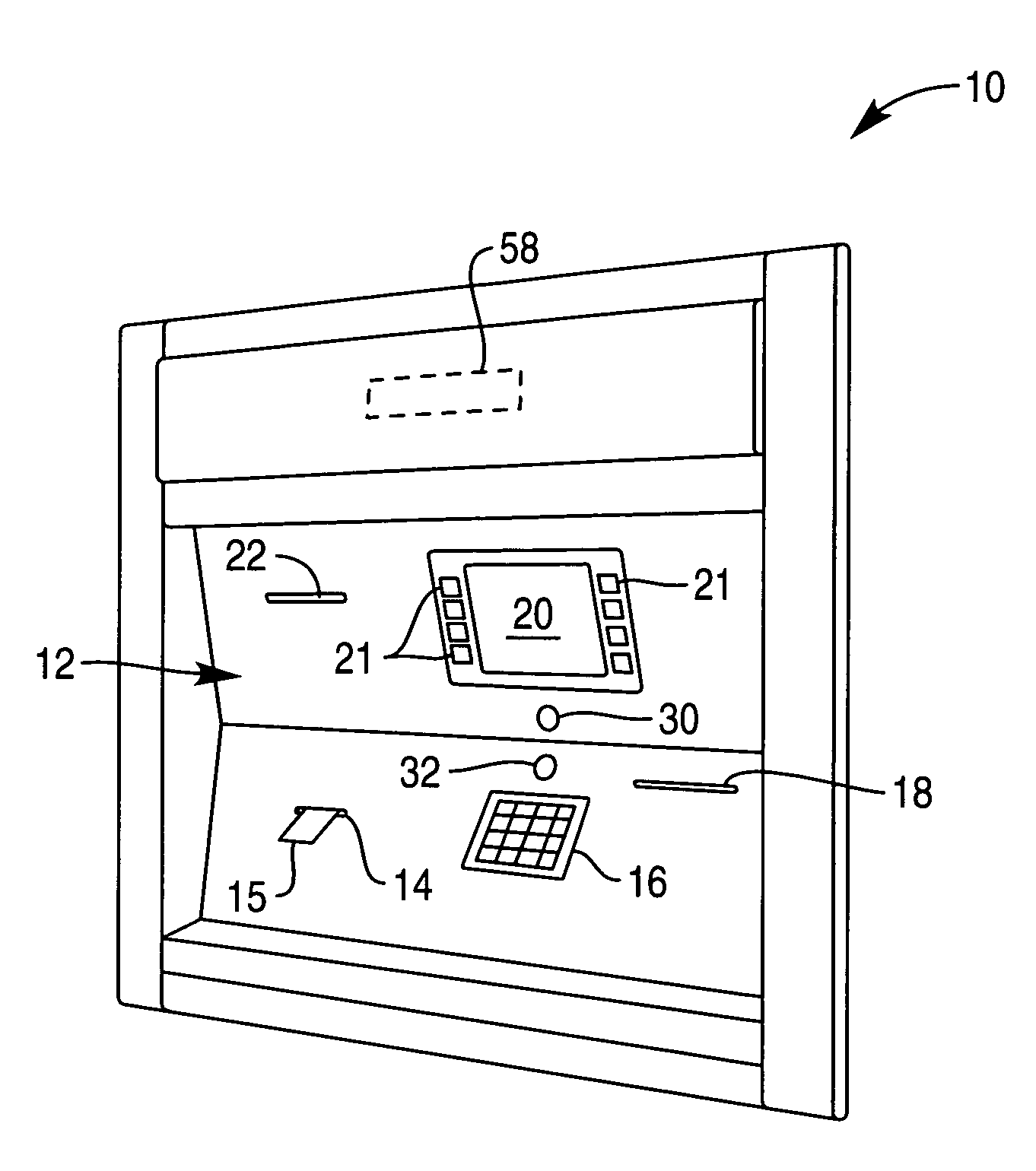

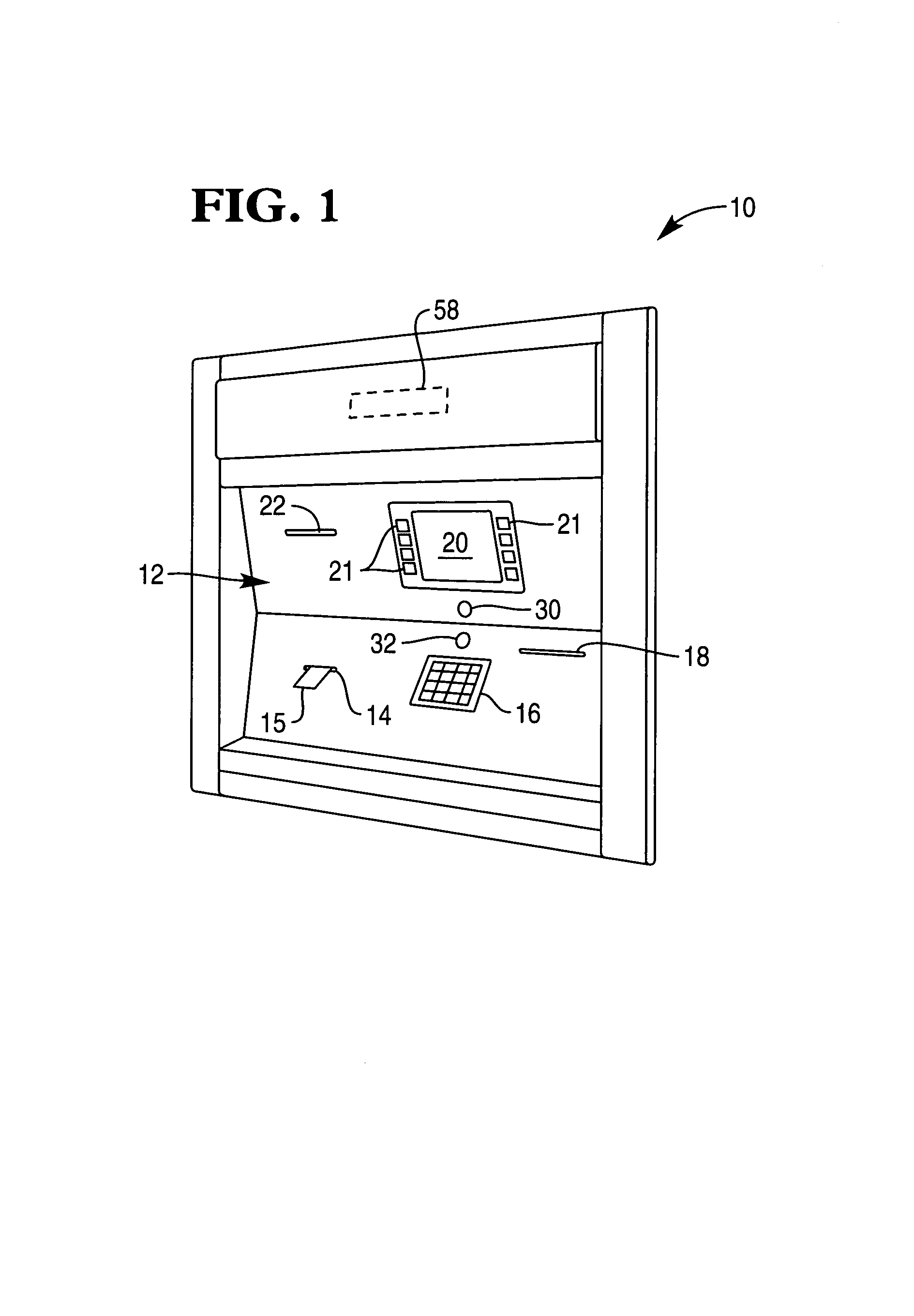

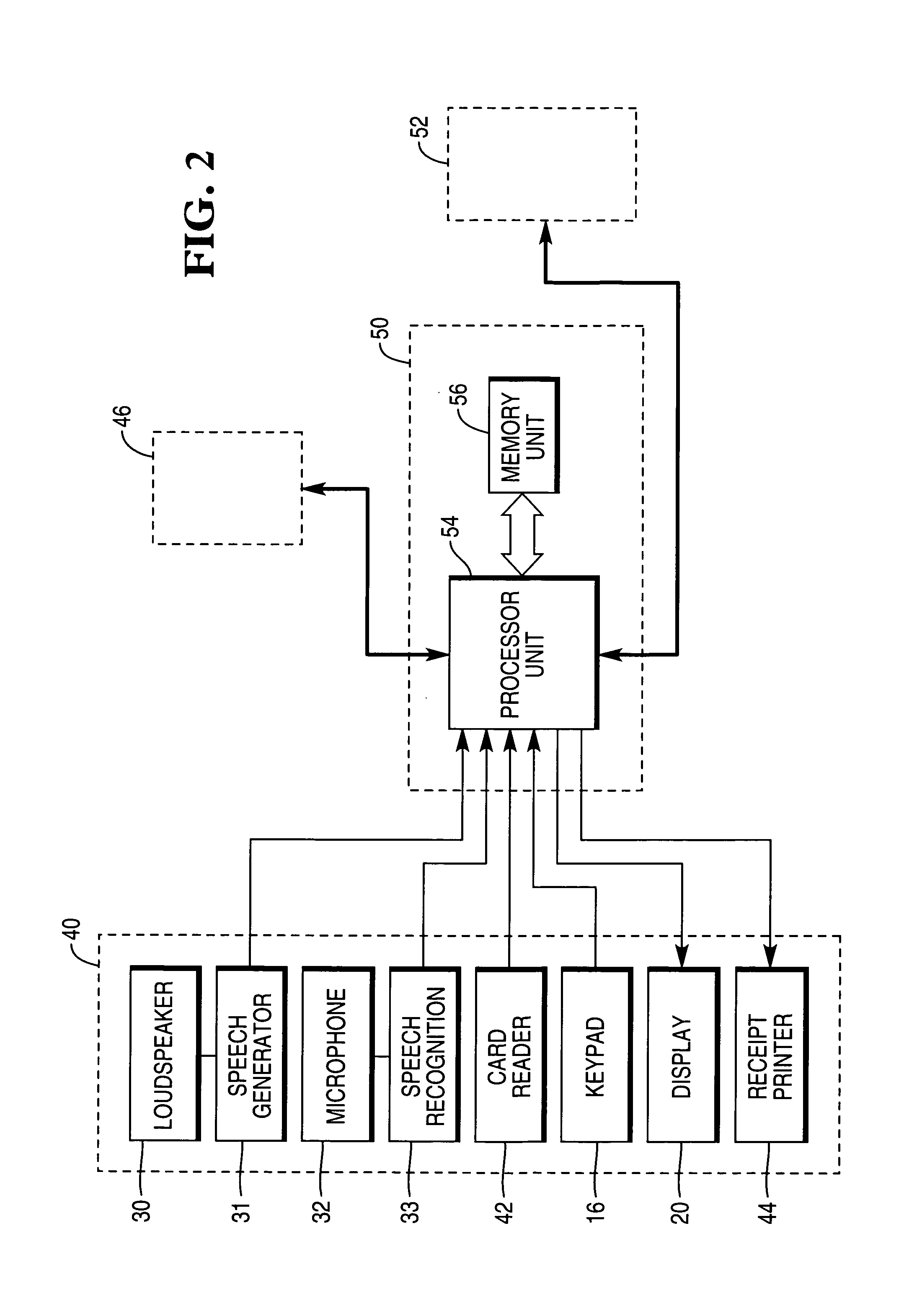

Self-service terminal

Inactive · US7194414B1 · Eliminate difficulties · Complete banking machines · Finance · Operating instruction · Computer terminal

A self-service terminal, such as an ATM (10), comprises a speech generator (31) and loudspeaker (30) for producing natural language operating instructions for a user, and a user interface (12) permitting the user to interact with the terminal (10) in response to the spoken instructions. The user interface may include a microphone (32) and a speech recognition module (33).

Owner: NCR CORP

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com