4371 results about "Compass" patented technology

A compass is an instrument used for navigation and orientation that shows direction relative to the geographic cardinal directions (or points). Usually, a diagram called a compass rose shows the directions north, south, east, and west on the compass face as abbreviated initials. When the compass is used, the rose can be aligned with the corresponding geographic directions; for example, the "N" mark on the rose points northward. Compasses often display markings for angles in degrees in addition to (or sometimes instead of) the rose. North corresponds to 0°, and the angles increase clockwise, so east is 90°, south is 180°, and west is 270°. These numbers allow the compass to show azimuths or bearings relative to magnetic north or true north, which are commonly stated in this notation. If the magnetic declination (the angle between magnetic north and true north) at a given latitude and longitude is known, then the direction of magnetic north also gives the direction of true north.
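
As a small illustration of the last two points, the sketch below converts a magnetic bearing to a true bearing by adding the local declination, then names the nearest cardinal point. It assumes the common sign convention in which declination is positive when magnetic north lies east of true north; the function names are illustrative only.

```python
def magnetic_to_true(magnetic_bearing_deg: float, declination_deg: float) -> float:
    """Convert a magnetic bearing to a true bearing, both in degrees clockwise from north.

    Assumed convention: declination is positive when magnetic north lies east of
    true north, and negative when it lies west.
    """
    return (magnetic_bearing_deg + declination_deg) % 360.0


def nearest_cardinal(bearing_deg: float) -> str:
    """Name the cardinal point nearest a bearing (0° = N, 90° = E, 180° = S, 270° = W)."""
    points = ["N", "E", "S", "W"]
    # Each point owns a 90° sector centred on it, e.g. N covers [315°, 45°).
    return points[int(((bearing_deg % 360.0) + 45.0) // 90.0) % 4]


if __name__ == "__main__":
    true_bearing = magnetic_to_true(350.0, 15.0)  # 15° east declination
    print(true_bearing, nearest_cardinal(true_bearing))  # 5.0 N
```

The modulo keeps results in the 0° to 360° range, so a west (negative) declination near north wraps correctly, e.g. 10° magnetic with 15° west declination gives 355° true.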

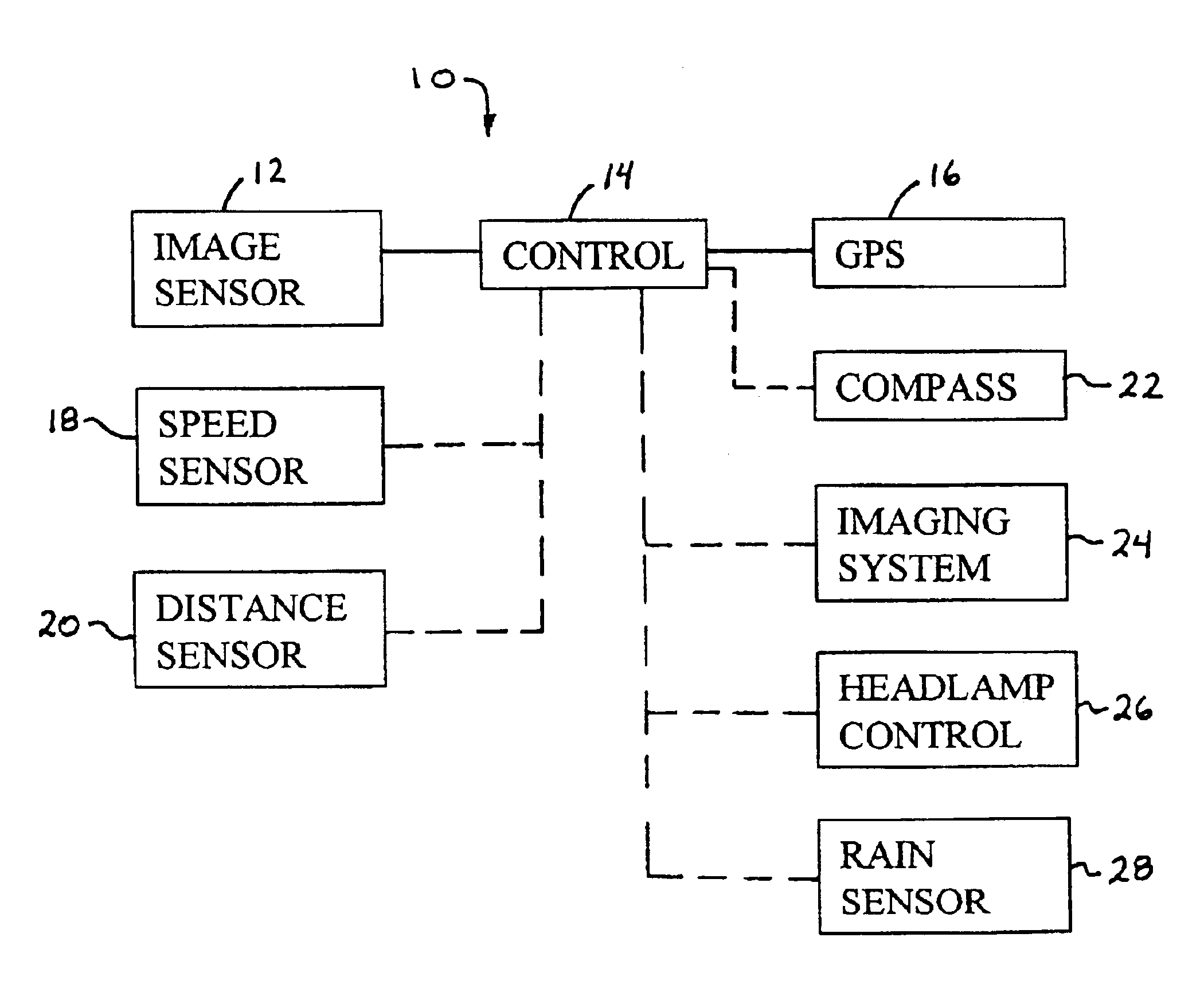

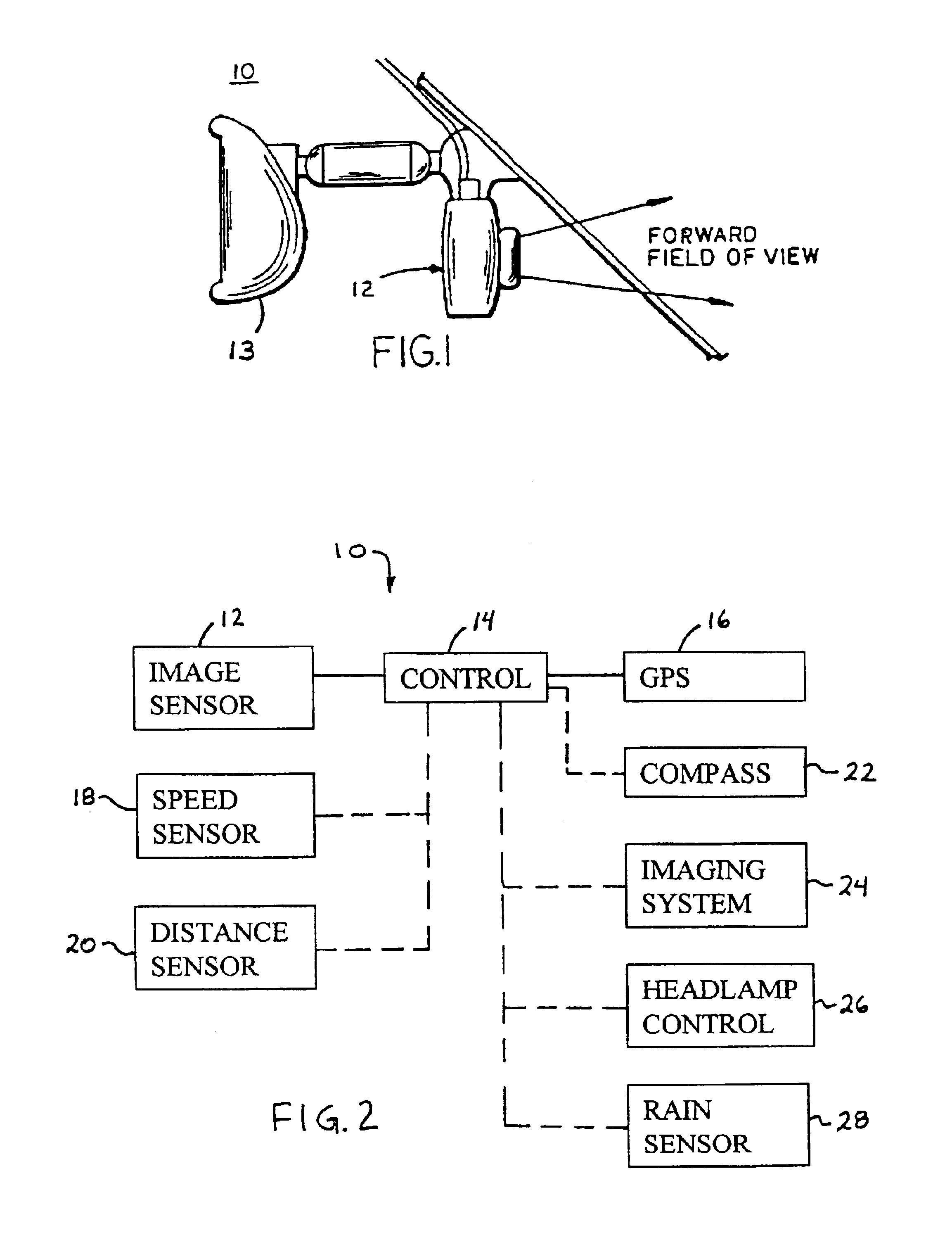

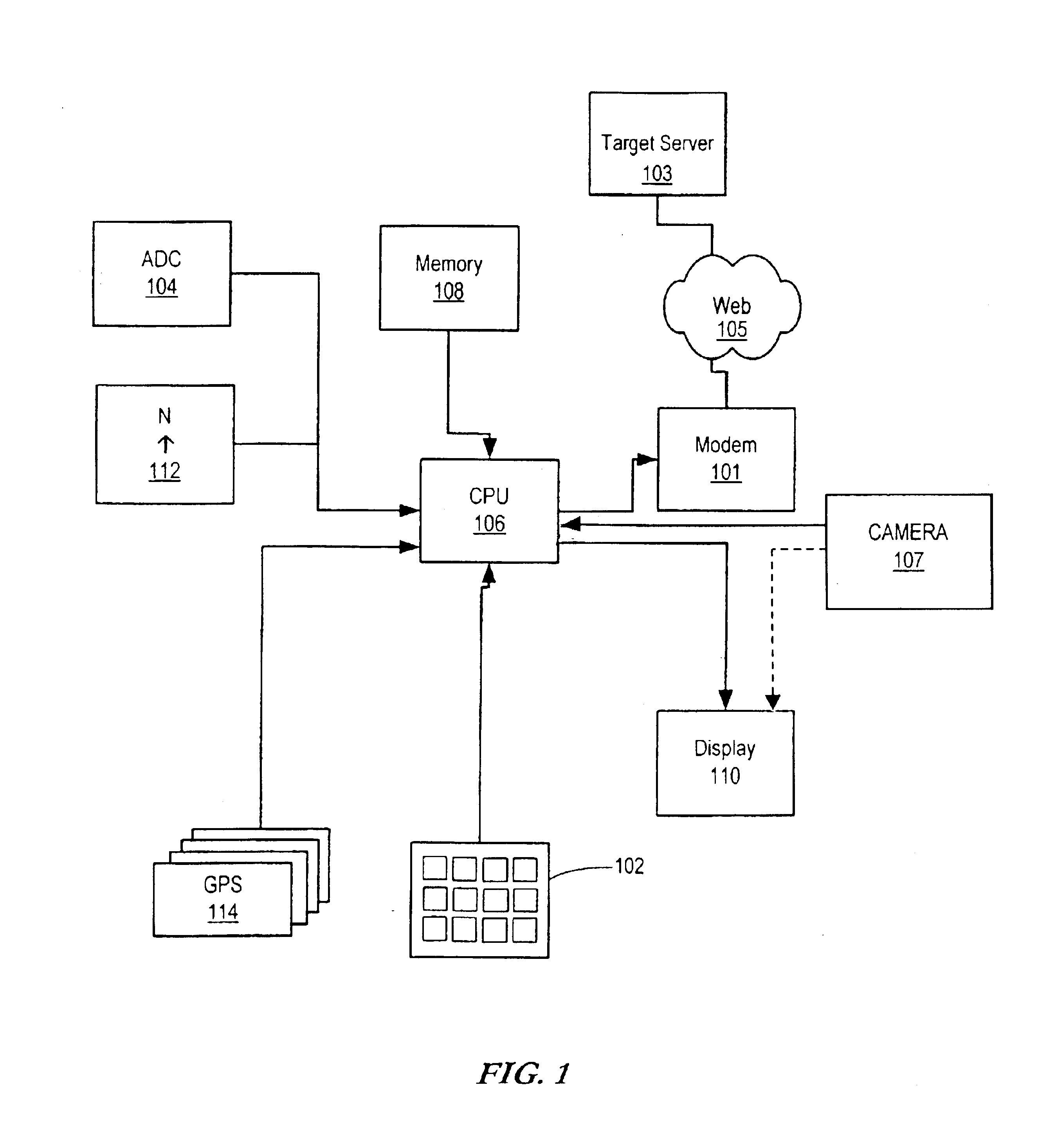

Imaging system for vehicle

An imaging system suitable for use with a vehicle based global positioning system includes an imaging sensor and a control. The imaging sensor is positioned at the vehicle and may face generally forwardly with respect to the direction of travel of the vehicle. The control may be operable to provide a record of vehicle movement since the last position data derived from the vehicle based global positioning system. The imaging system may also or otherwise be suitable for use with a vehicle based magnetic compass system. The imaging system may be operable in combination with a magnetic field sensor of the magnetic compass system to provide a substantially continuously calibrated heading indication, which is substantially resistant to local magnetic anomalies and changes in vehicle inclination. The imaging sensor may be associated with one or more other accessories of the vehicle.

Owner:DONNELLY CORP

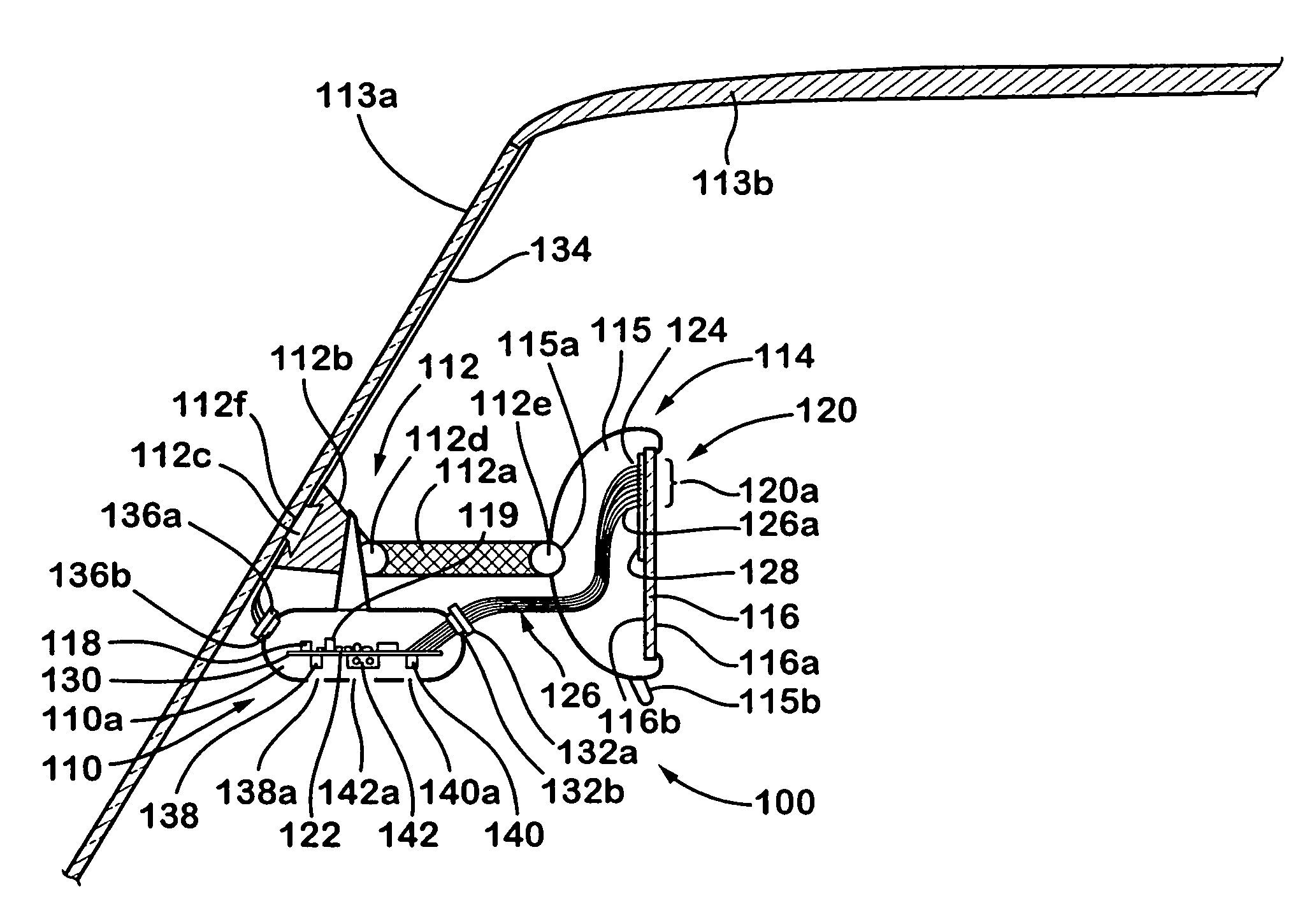

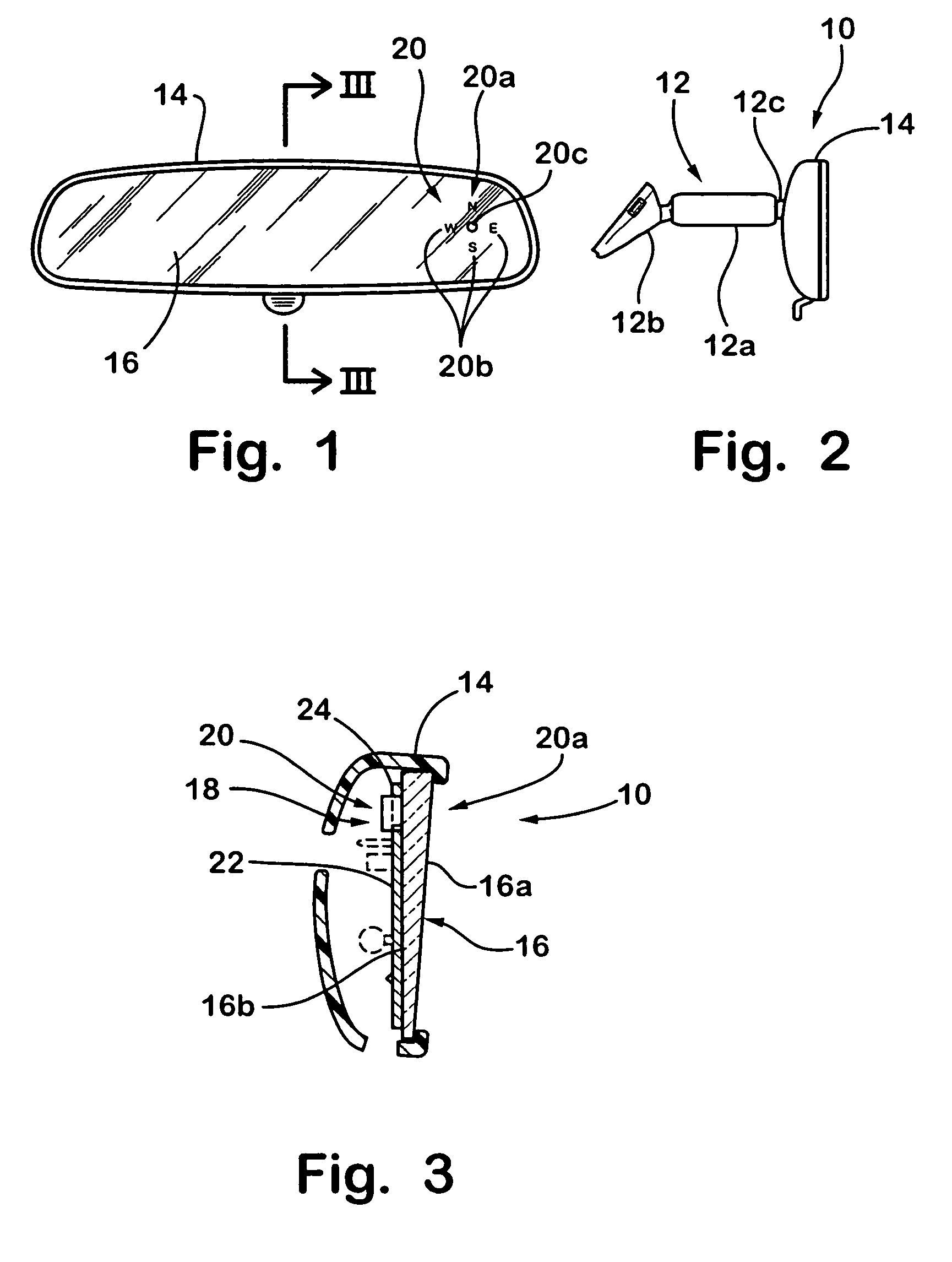

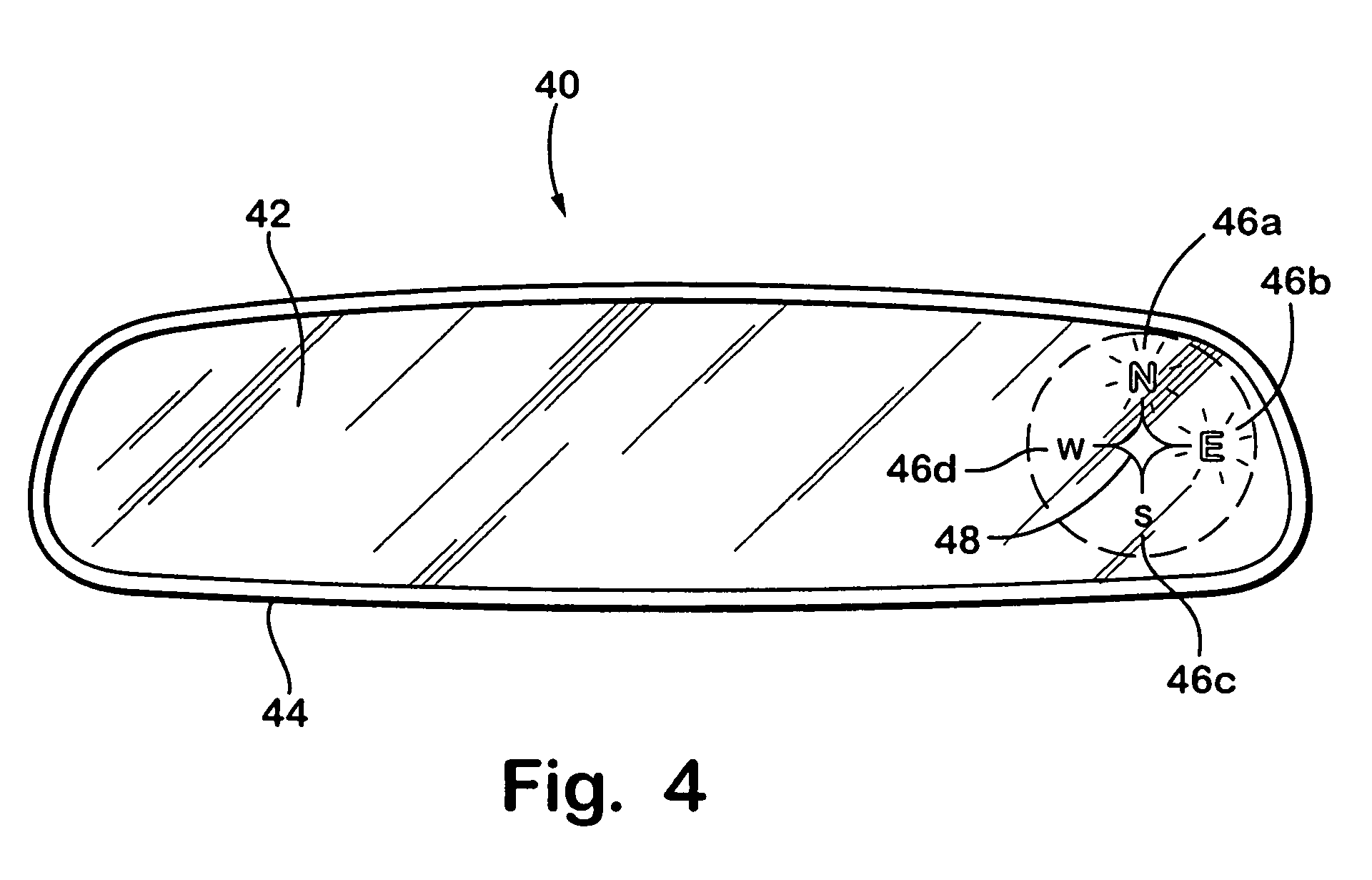

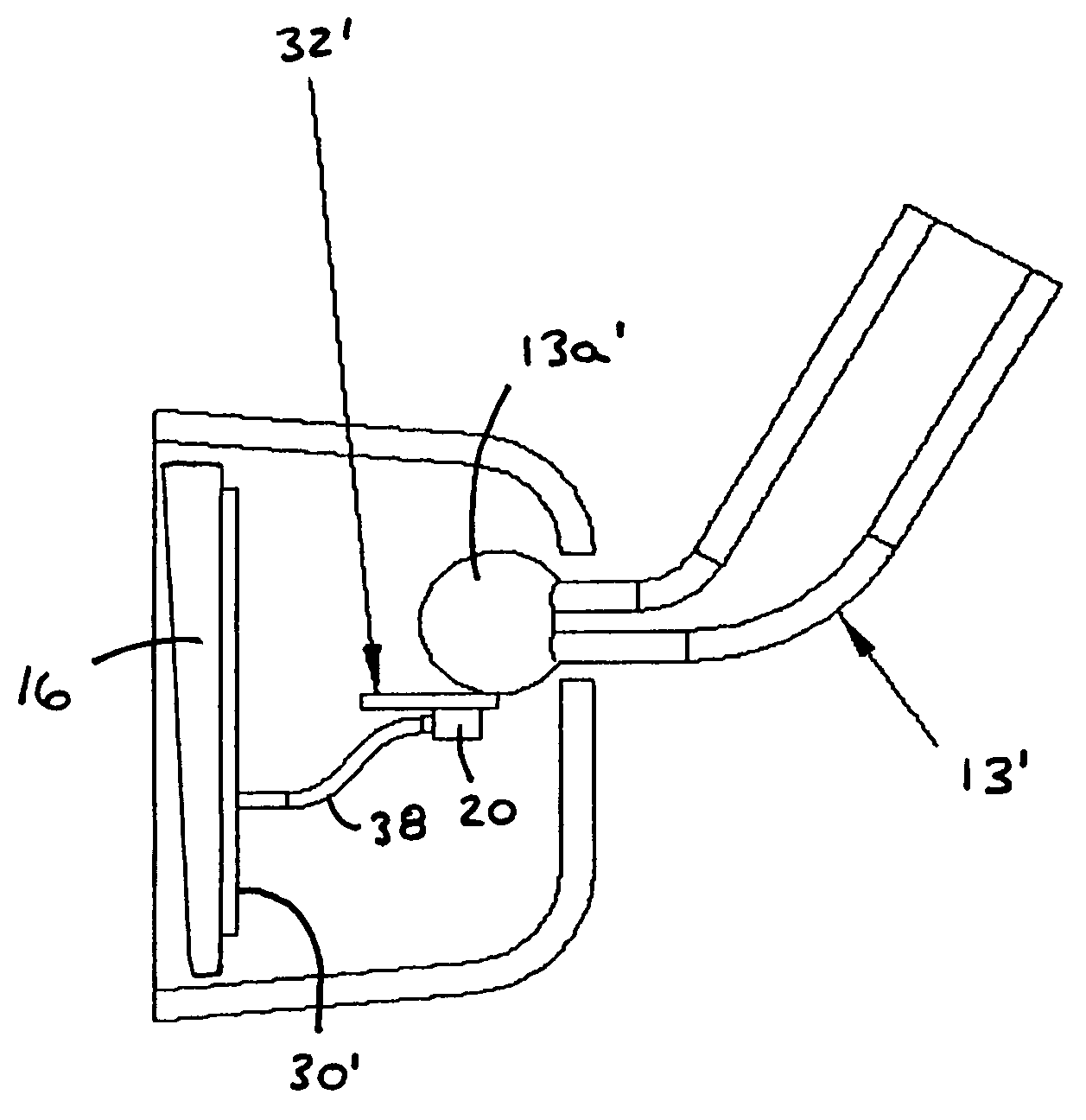

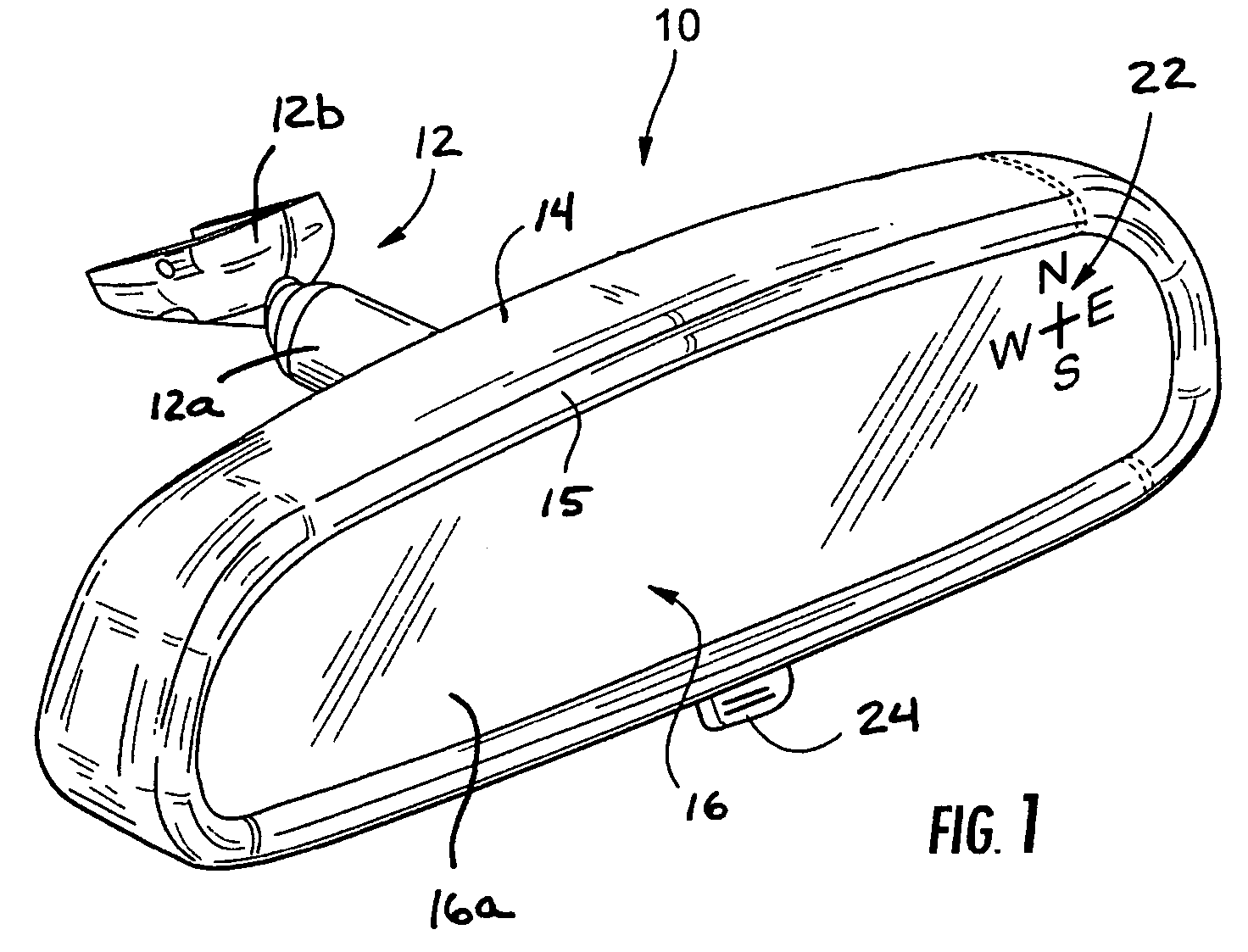

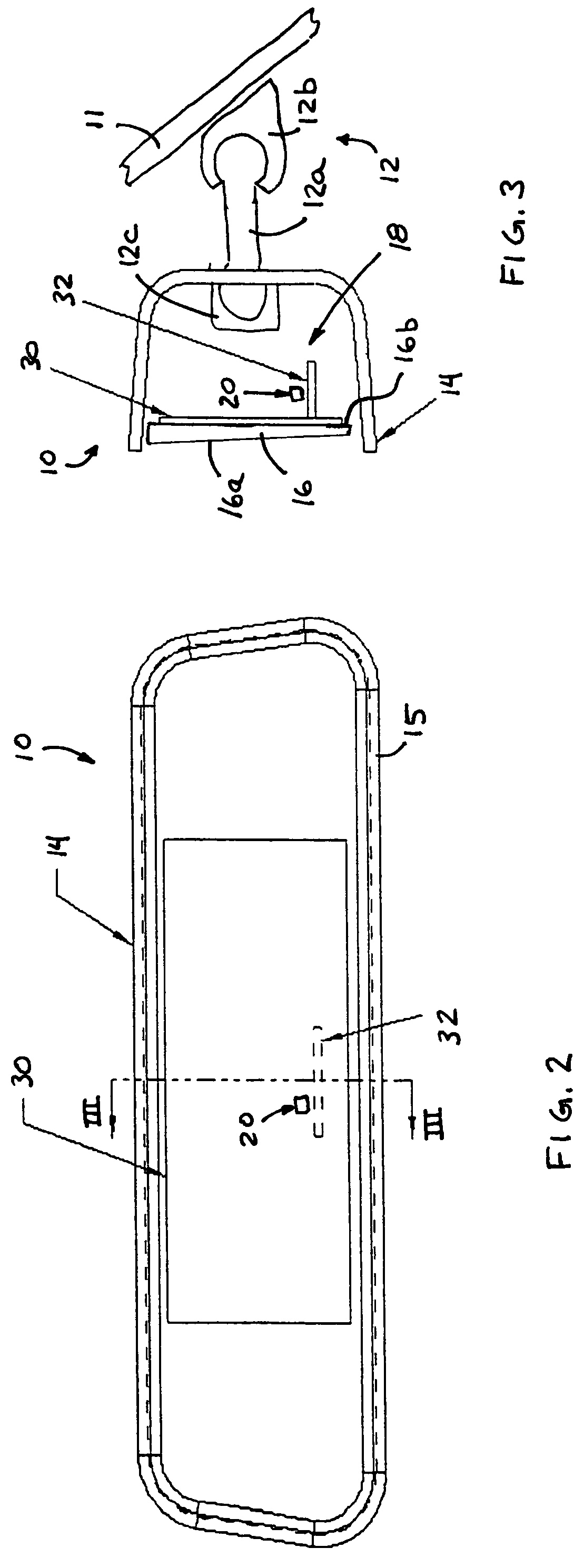

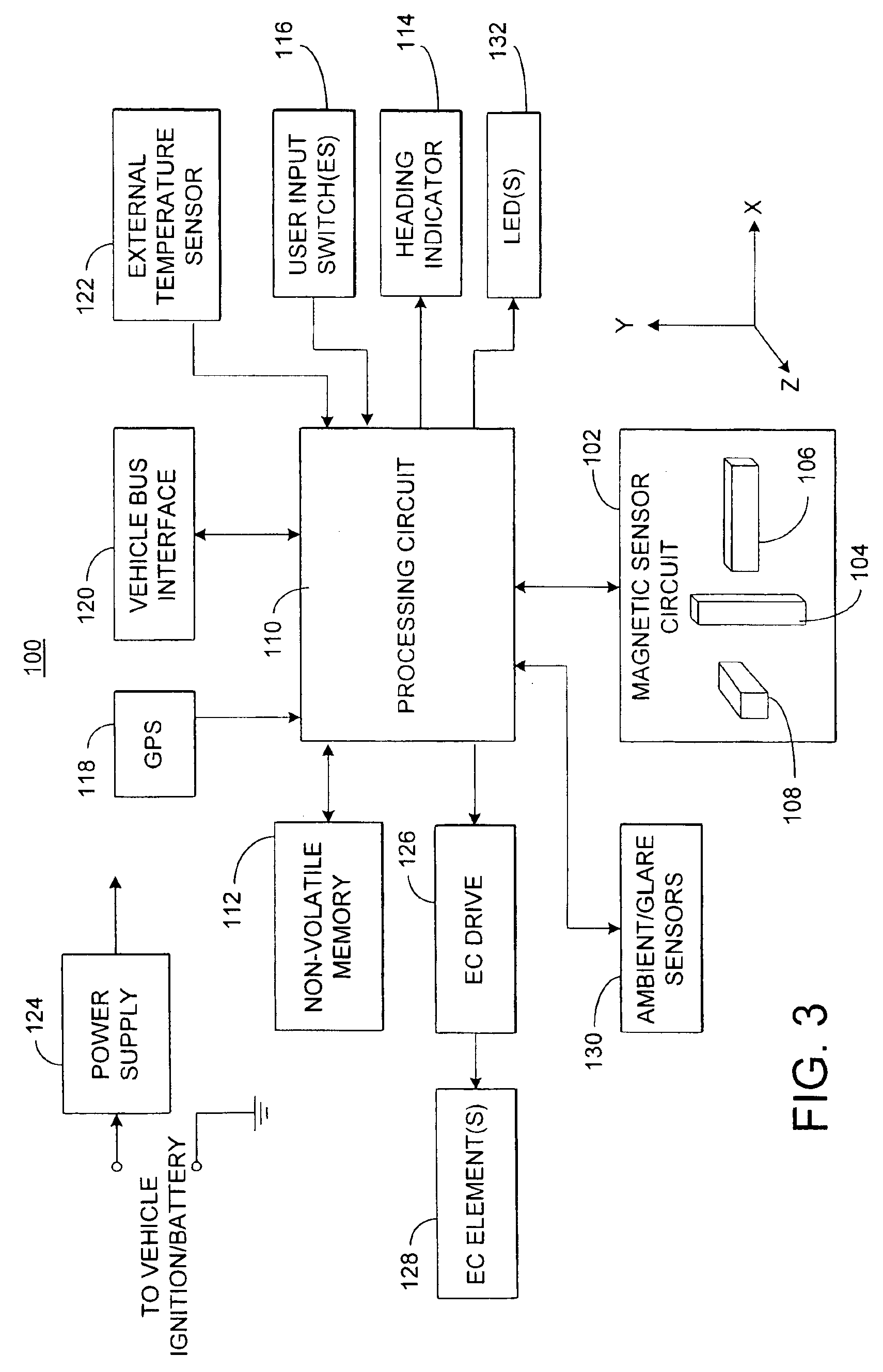

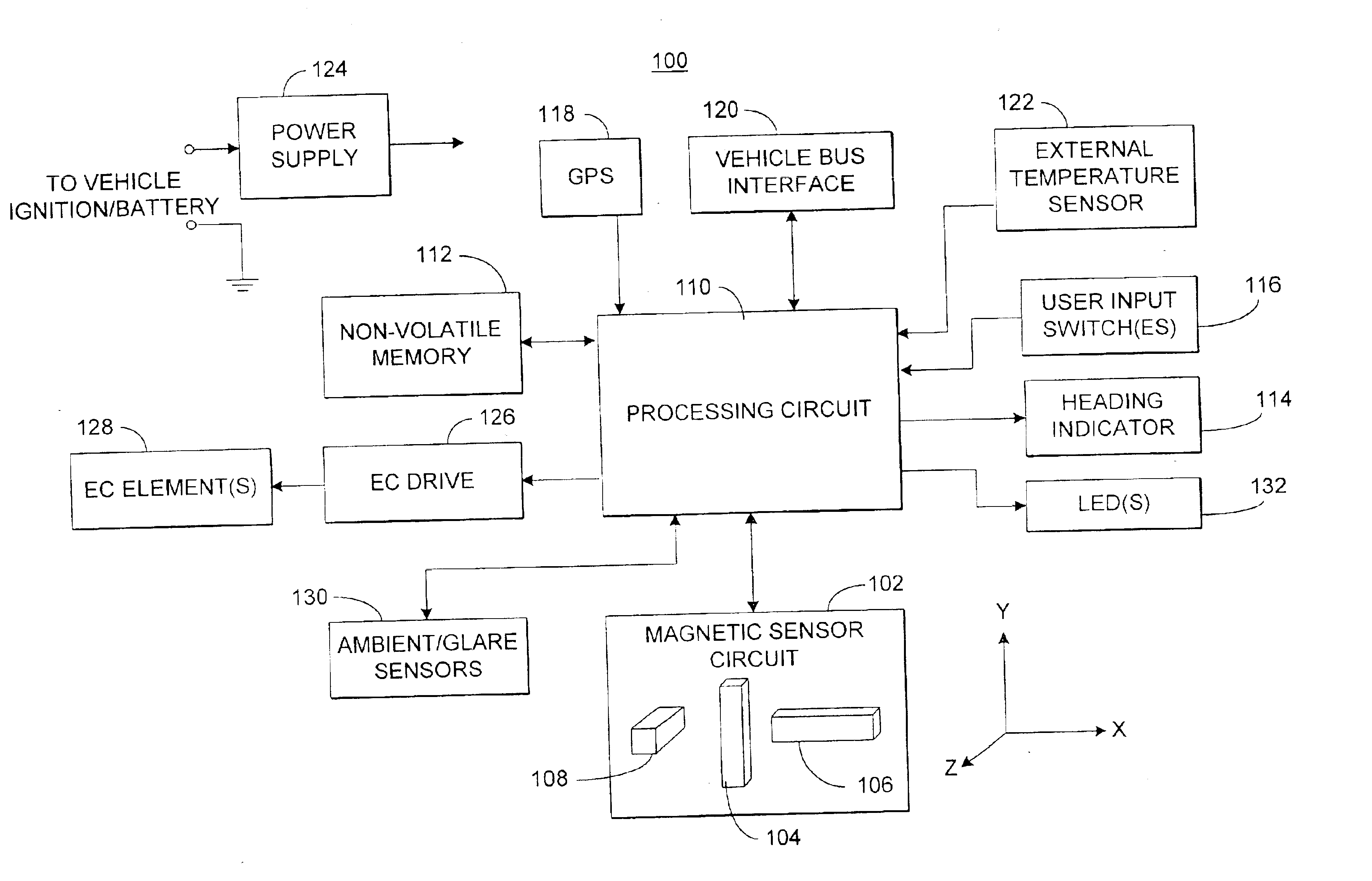

Interior rearview mirror system with compass

An interior rearview mirror system includes a compass system and a display. The compass system is operable to display information indicative of a directional heading of the vehicle to a driver of the vehicle. The display comprises a plurality of ports formed in a reflective coating of a mirror reflective element and includes illumination sources positioned behind and generally aligned with corresponding ones of the ports. Each of the illumination sources may be energized to project illumination through a respective one of the ports such that the ports are backlit by the respective illumination sources, in order to convey the directional information to the driver of the vehicle. The system may include a microprocessor operable to control each illumination source via a respective wire connected between the microprocessor and each illumination source. The compass system may utilize a global positioning system for vehicle direction determination.

Owner:DONNELLY CORP
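
The abstract describes one illumination source behind each port, each driven on its own wire. A minimal sketch of the heading-to-port logic, assuming a hypothetical layout of eight ports for the points N through NW (the abstract does not state the number of ports), could look like this:

```python
# Hypothetical layout: one backlit port per compass point, clockwise from north.
PORTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def port_for_heading(heading_deg):
    """Index of the port whose compass point is nearest the vehicle heading."""
    sector = 360.0 / len(PORTS)  # 45° per port
    return int(((heading_deg % 360.0) + sector / 2.0) // sector) % len(PORTS)


def illumination_states(heading_deg):
    """On/off flag for each illumination source, one per port (and per wire)."""
    lit = port_for_heading(heading_deg)
    return [index == lit for index in range(len(PORTS))]


if __name__ == "__main__":
    print(PORTS[port_for_heading(100.0)])  # E
    print(illumination_states(100.0))
```

Exactly one source is energized at a time in this sketch; a real display might also dim neighbouring ports or show intermediate headings differently.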

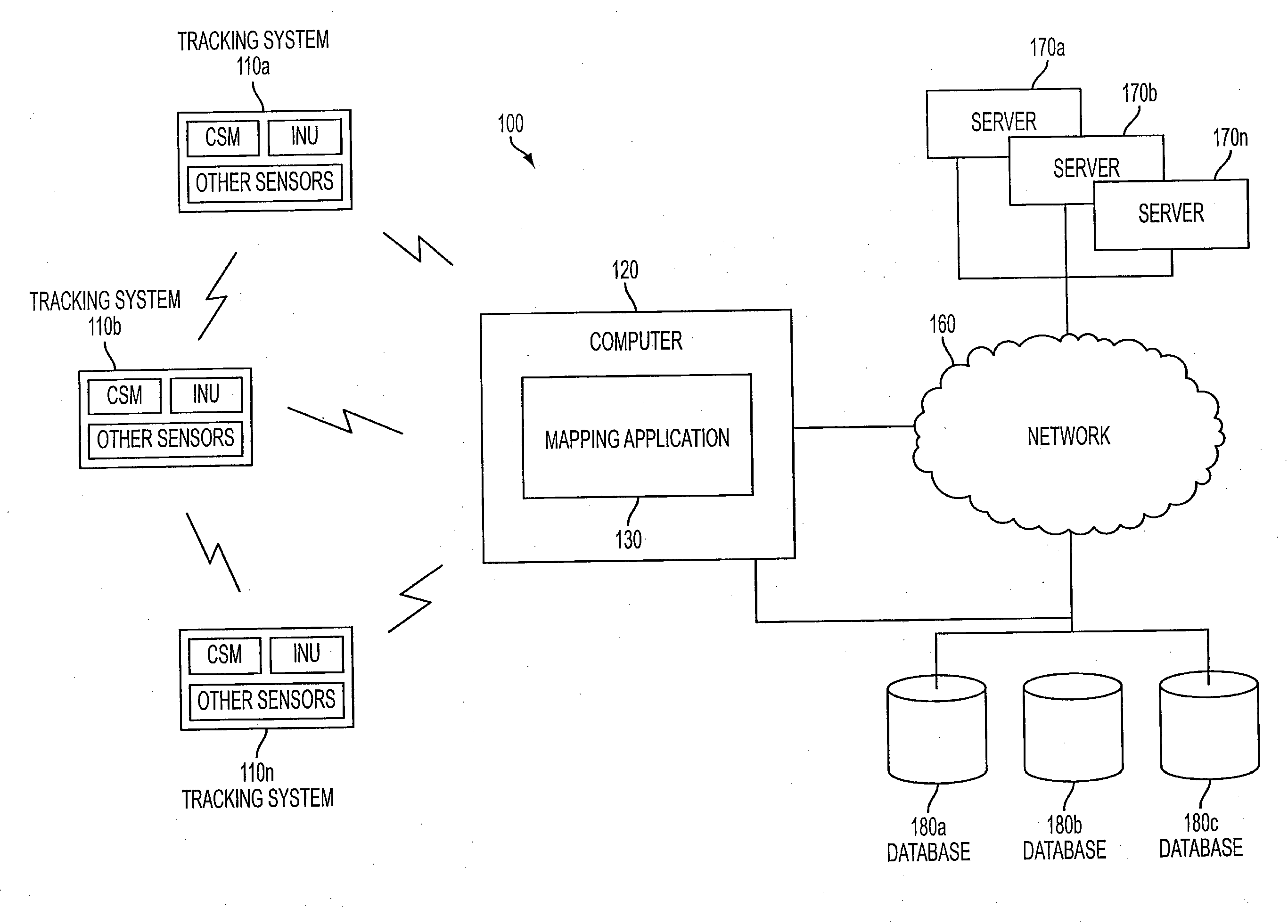

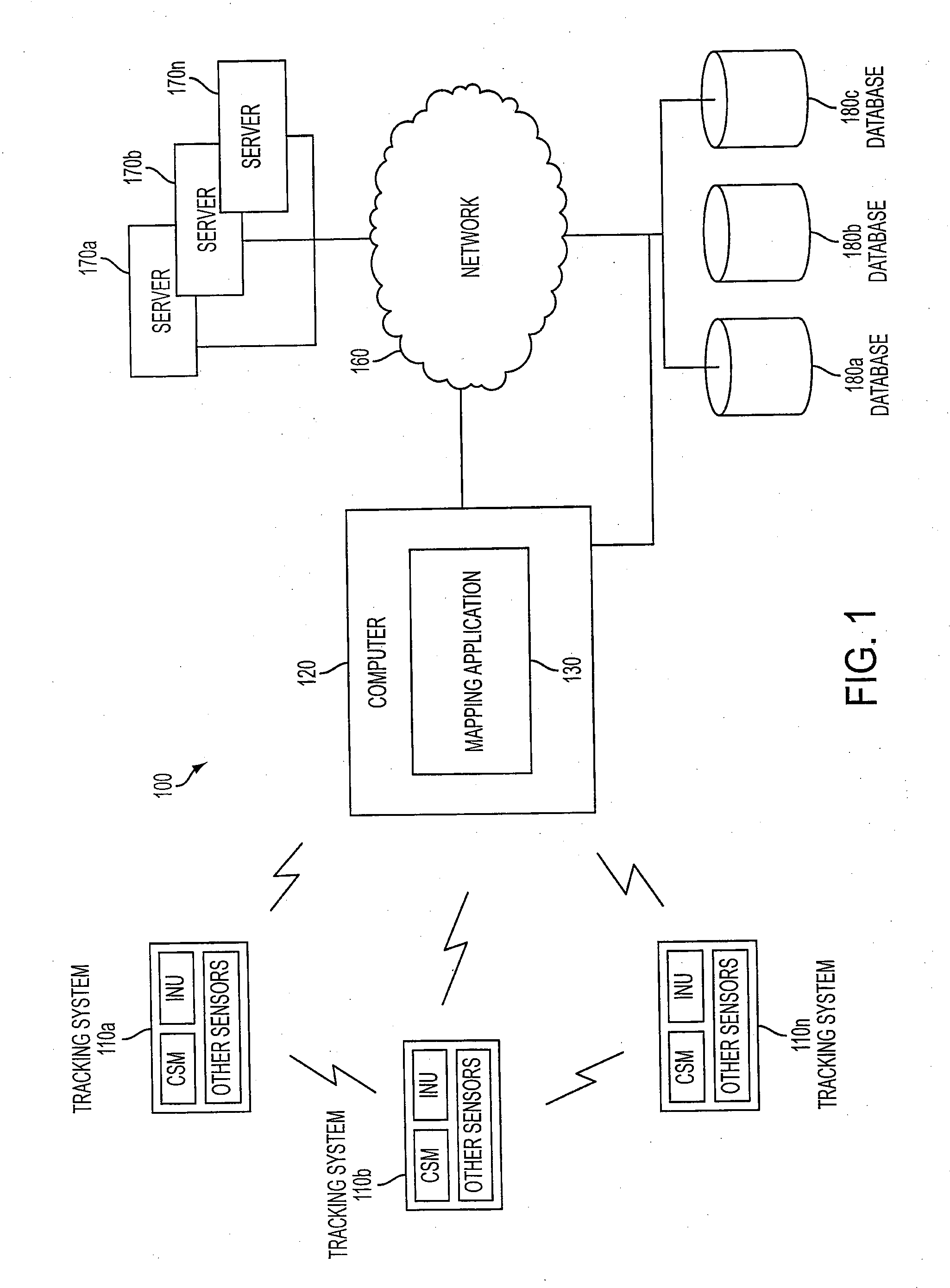

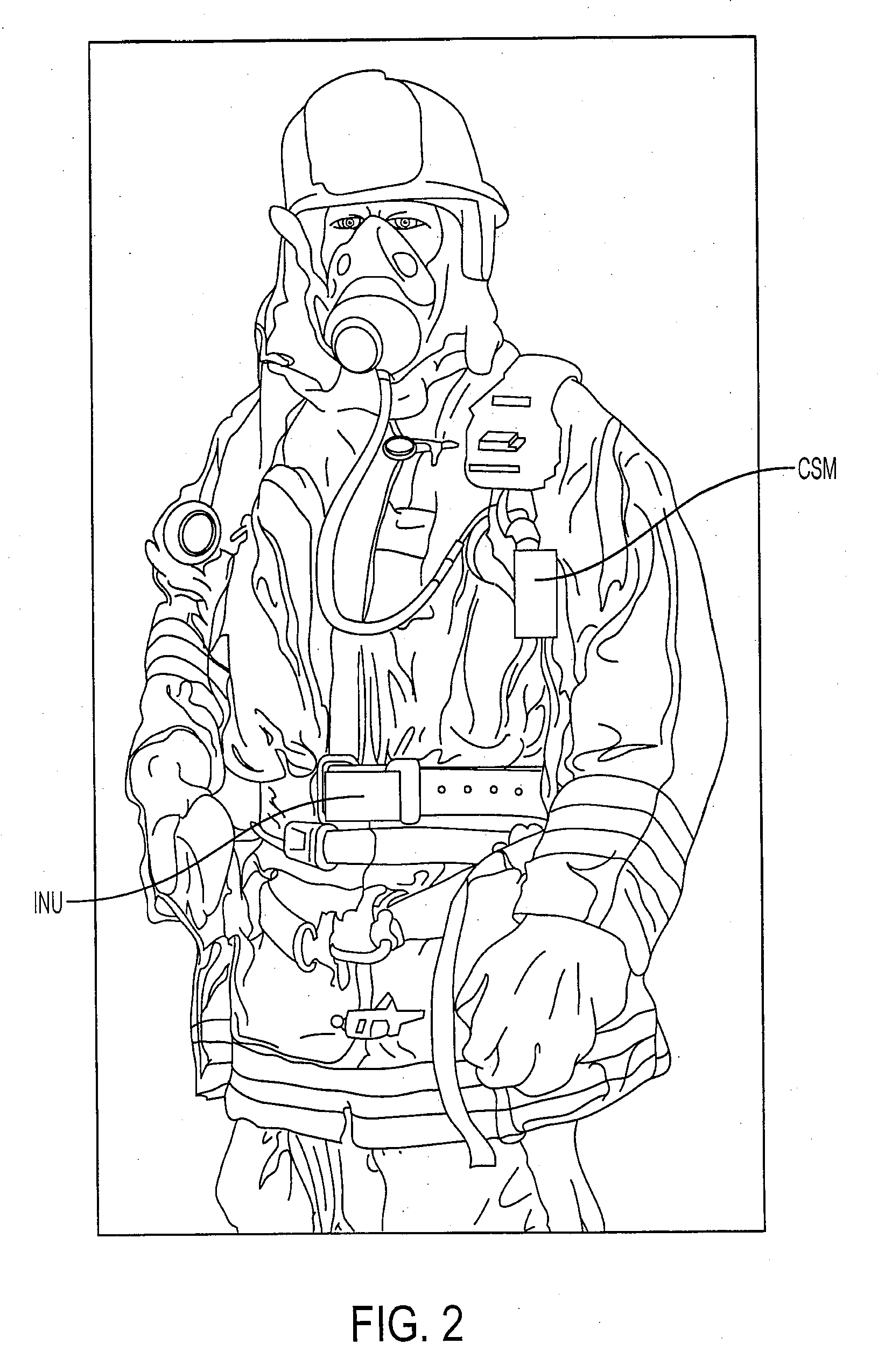

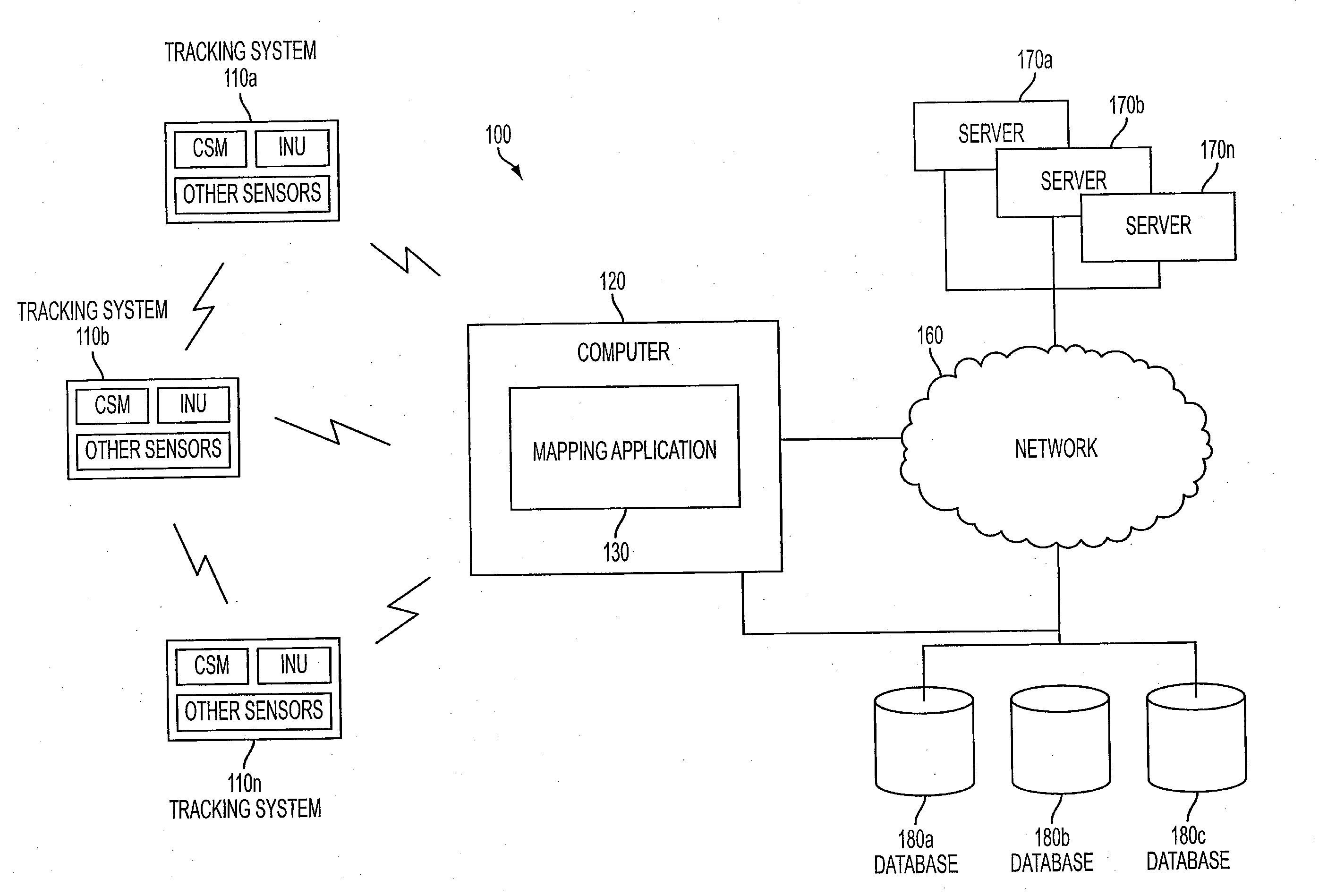

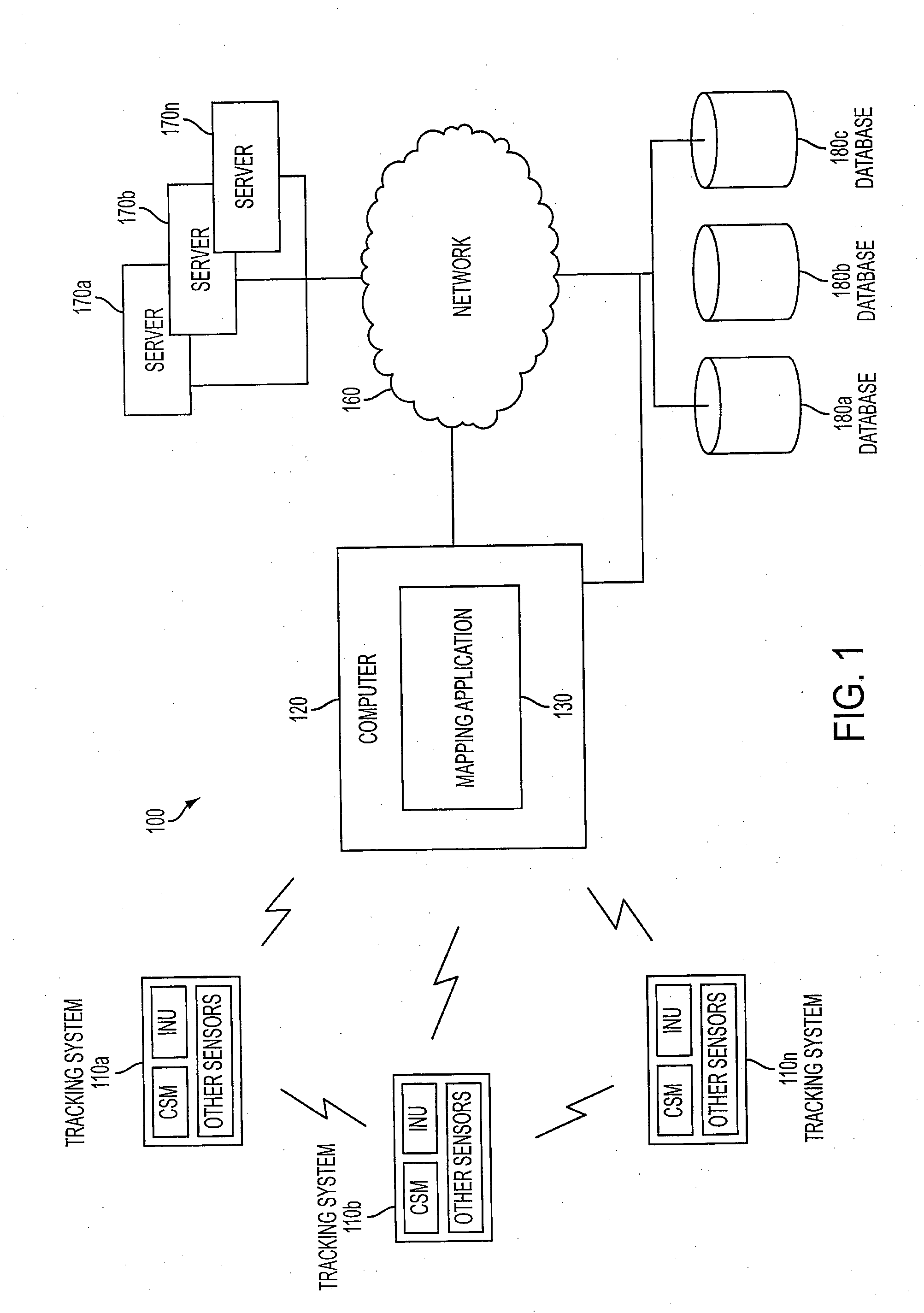

System and method for locating, tracking, and/or monitoring the status of personnel and/or assets both indoors and outdoors

Active | US20090043504A1 | Improving location estimates; Improve accuracy; Particular environment based services; Navigation by speed/acceleration measurements; Computer vision; Marine navigation

A system and method for locating, tracking, and/or monitoring the status of personnel and/or assets (collectively “trackees”), both indoors and outdoors, is provided. Tracking data obtained from any number of sources utilizing any number of tracking methods (e.g., inertial navigation and signal-based methods) may be provided as input to a mapping application. The mapping application may generate position estimates for trackees using a suite of mapping tools to make corrections to the tracking data. The mapping application may further use information from building data, when available, to enhance position estimates. Indoor tracking methods including, for example, sensor fusion methods, map matching methods, and map building methods may be implemented to take tracking data from one or more trackees and compute a more accurate tracking estimate for each trackee. Outdoor tracking methods may be implemented to enhance outdoor tracking data by combining tracking estimates such as inertial tracks with magnetic and/or compass data if and when available, and with GPS, if and when available.

Owner:TRX SYST

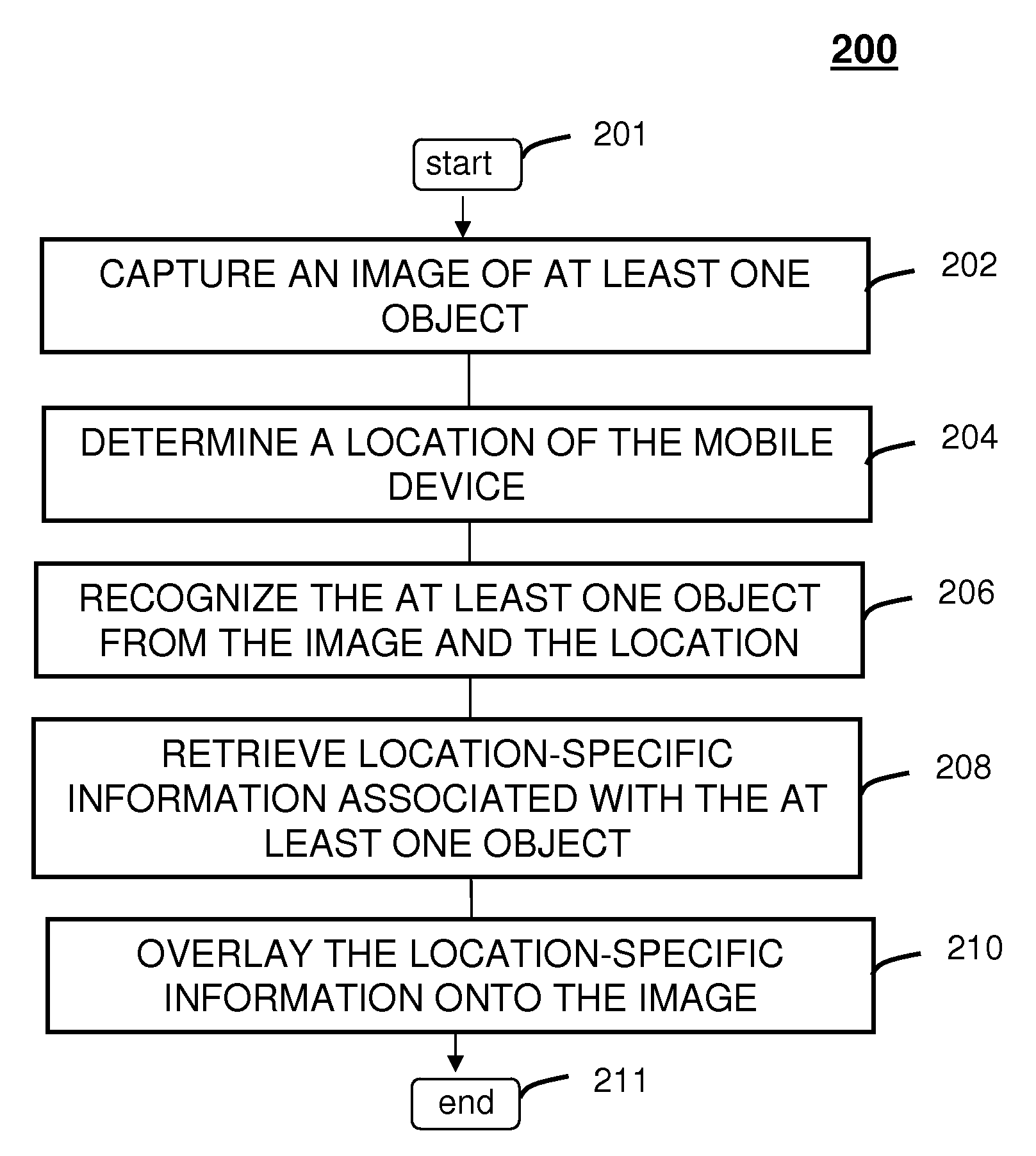

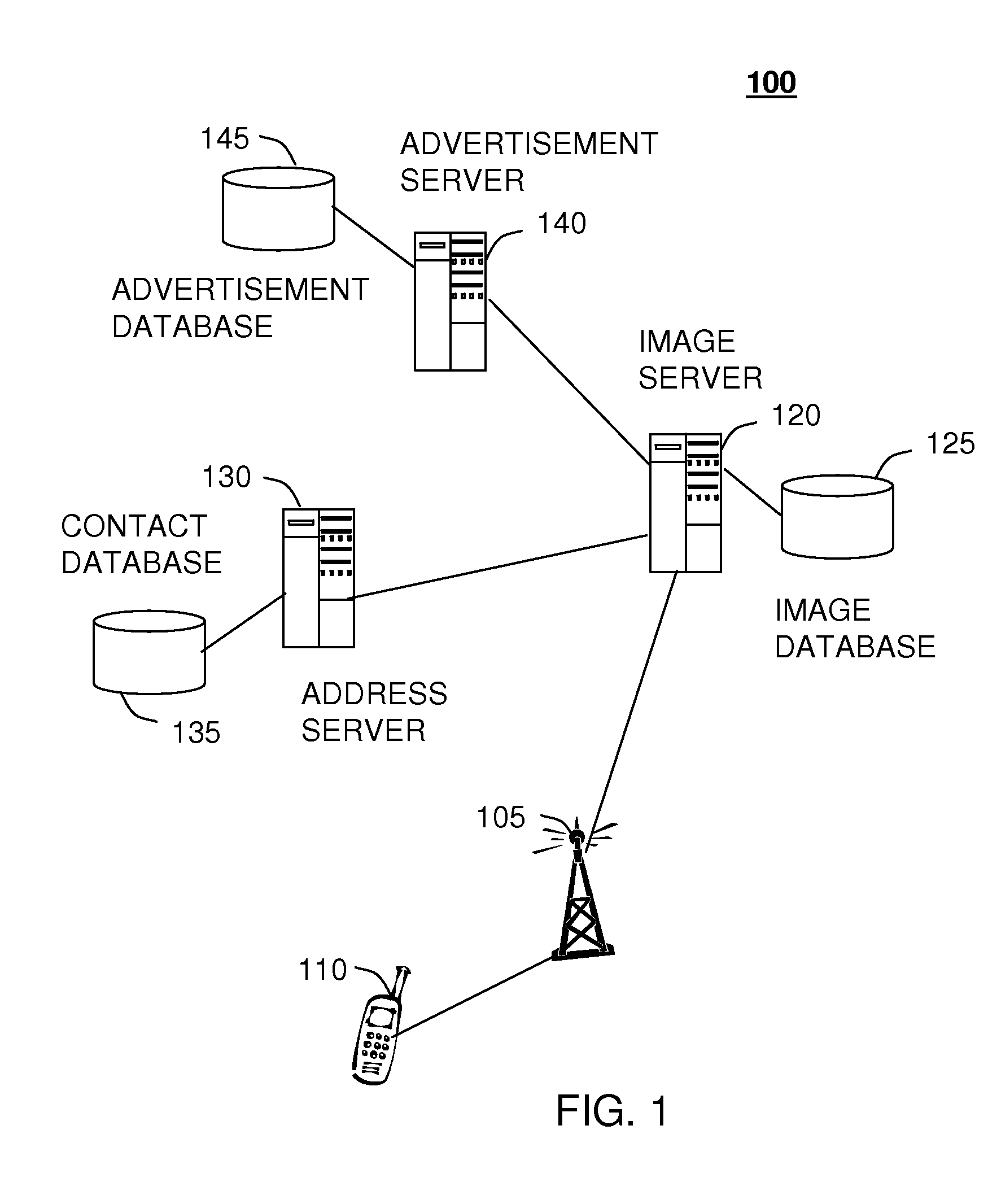

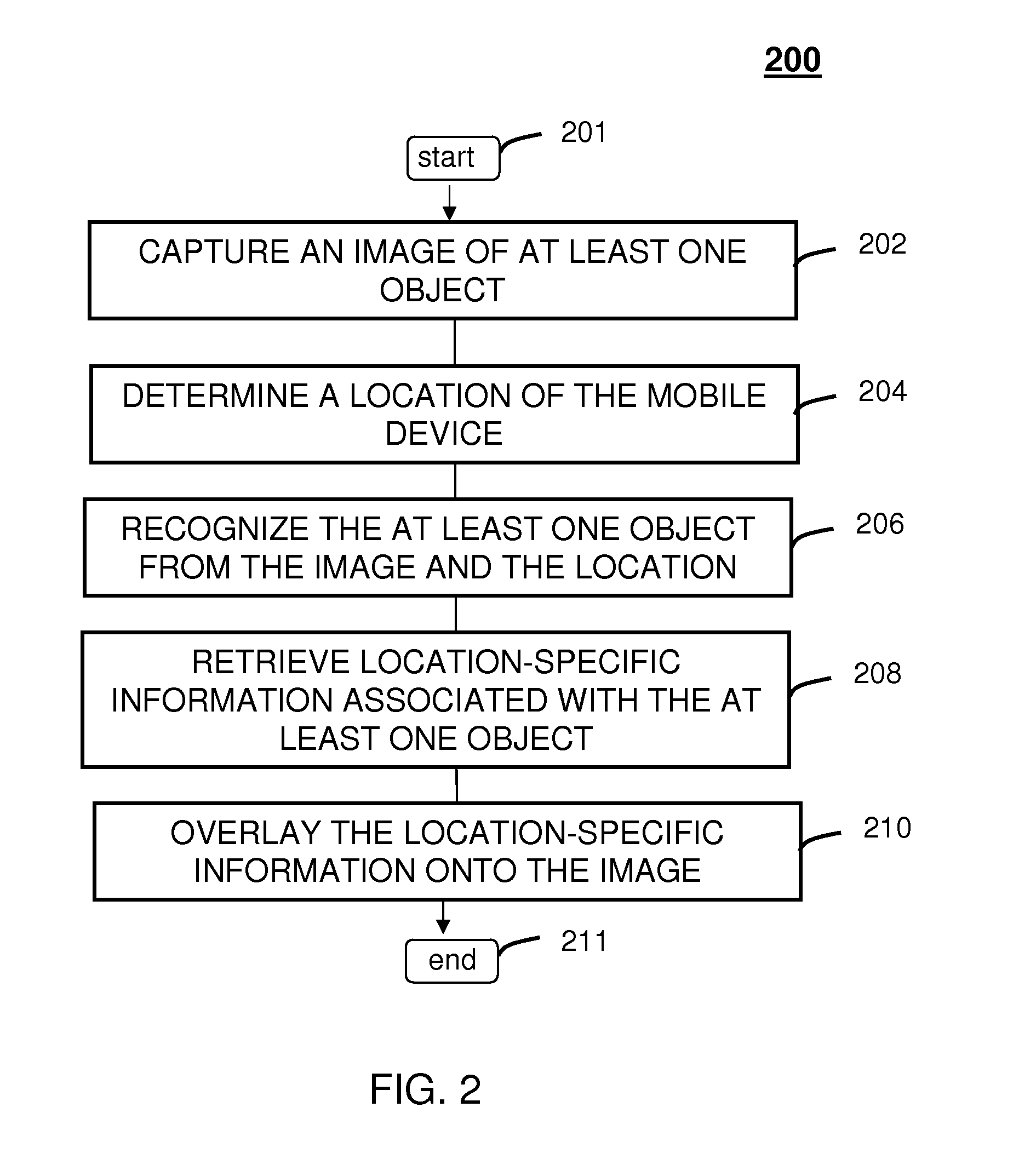

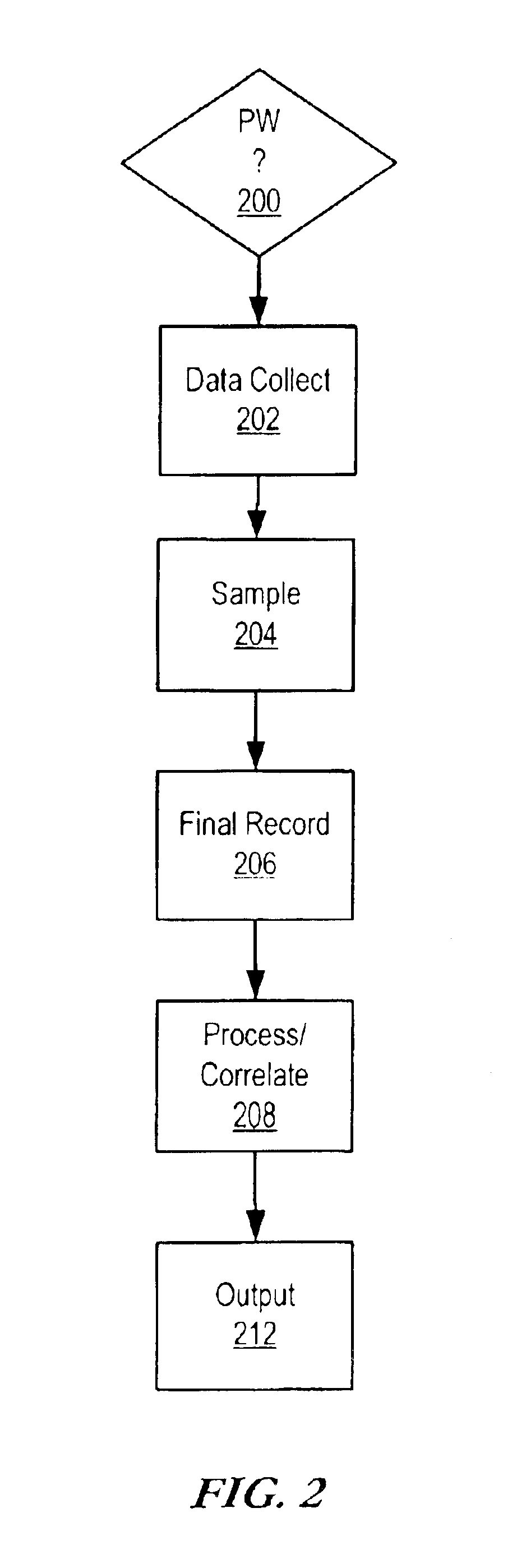

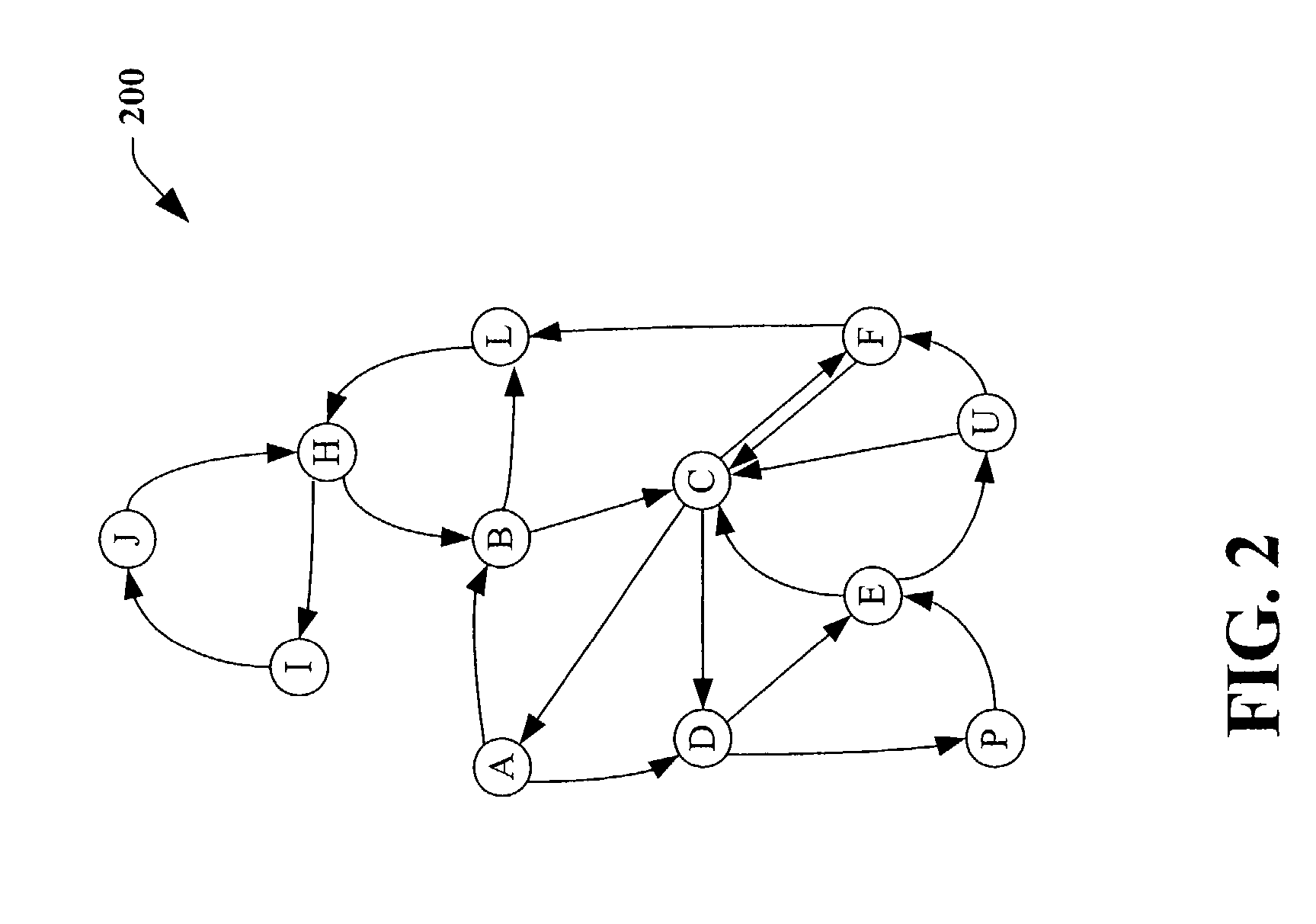

Method and system for providing location-specific image information

Inactive | US20080147730A1 | Television system details; Digital data information retrieval; Computer vision; Mobile device

A system (100) and method (200) for providing location-specific image information is provided. The method can include capturing (202) an image of an object from a mobile device (110), determining (204) a location of the mobile device, recognizing (206) the object from a database of images from the location, and retrieving (208) location-specific information associated with the object. A camera zoom (402), camera focus (412), and compass heading (422) can also be included for reducing a search scope. The method can include recognizing (306) a building in the image, identifying (320) a business associated with the building, retrieving an advertisement associated with the business, and overlaying the advertisement at a location of the building in the image. A list of contacts can also be retrieved (326) from an address of the recognized building and displayed on the mobile device.

Owner:MOTOROLA INC
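
One way a compass heading could narrow the recognition search is to keep only database objects that lie inside the camera's field of view. The sketch below assumes object positions on a local flat east/north grid and a known horizontal field of view; `candidates_in_view` and its input format are hypothetical, not Motorola's method.

```python
import math


def bearing_deg(origin, target):
    """Bearing from origin to target on a local flat (east, north) grid, 0° = north."""
    east = target[0] - origin[0]
    north = target[1] - origin[1]
    return math.degrees(math.atan2(east, north)) % 360.0


def angular_difference(a_deg, b_deg):
    """Smallest absolute difference between two bearings, in degrees."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def candidates_in_view(device_pos, compass_heading_deg, fov_deg, objects):
    """Names of database objects whose bearing falls inside the camera's field of view.

    objects: mapping of object name -> (east, north) position (hypothetical format).
    """
    half_fov = fov_deg / 2.0
    return [name for name, pos in objects.items()
            if angular_difference(bearing_deg(device_pos, pos), compass_heading_deg) <= half_fov]


if __name__ == "__main__":
    buildings = {"tower": (10.0, 100.0), "cafe": (100.0, 0.0), "bank": (-20.0, 80.0)}
    print(candidates_in_view((0.0, 0.0), 0.0, 60.0, buildings))  # ['tower', 'bank']
```

Only the surviving candidates would then need to be compared against the captured image.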

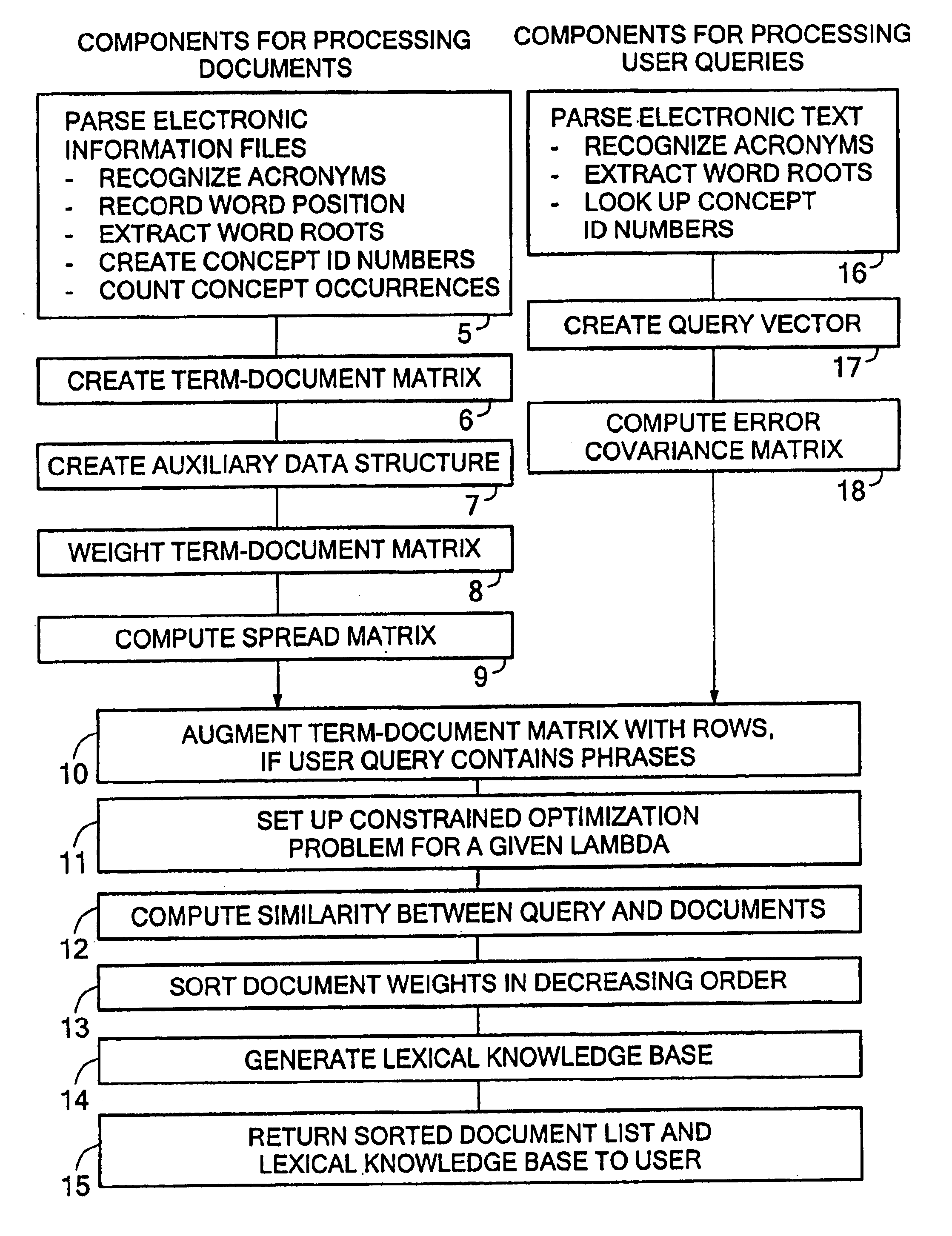

Internet navigation using soft hyperlinks

Inactive | US6862710B1 | High degree of correlation; High degree; Data processing applications; Web data indexing; Navigation system; Document preparation

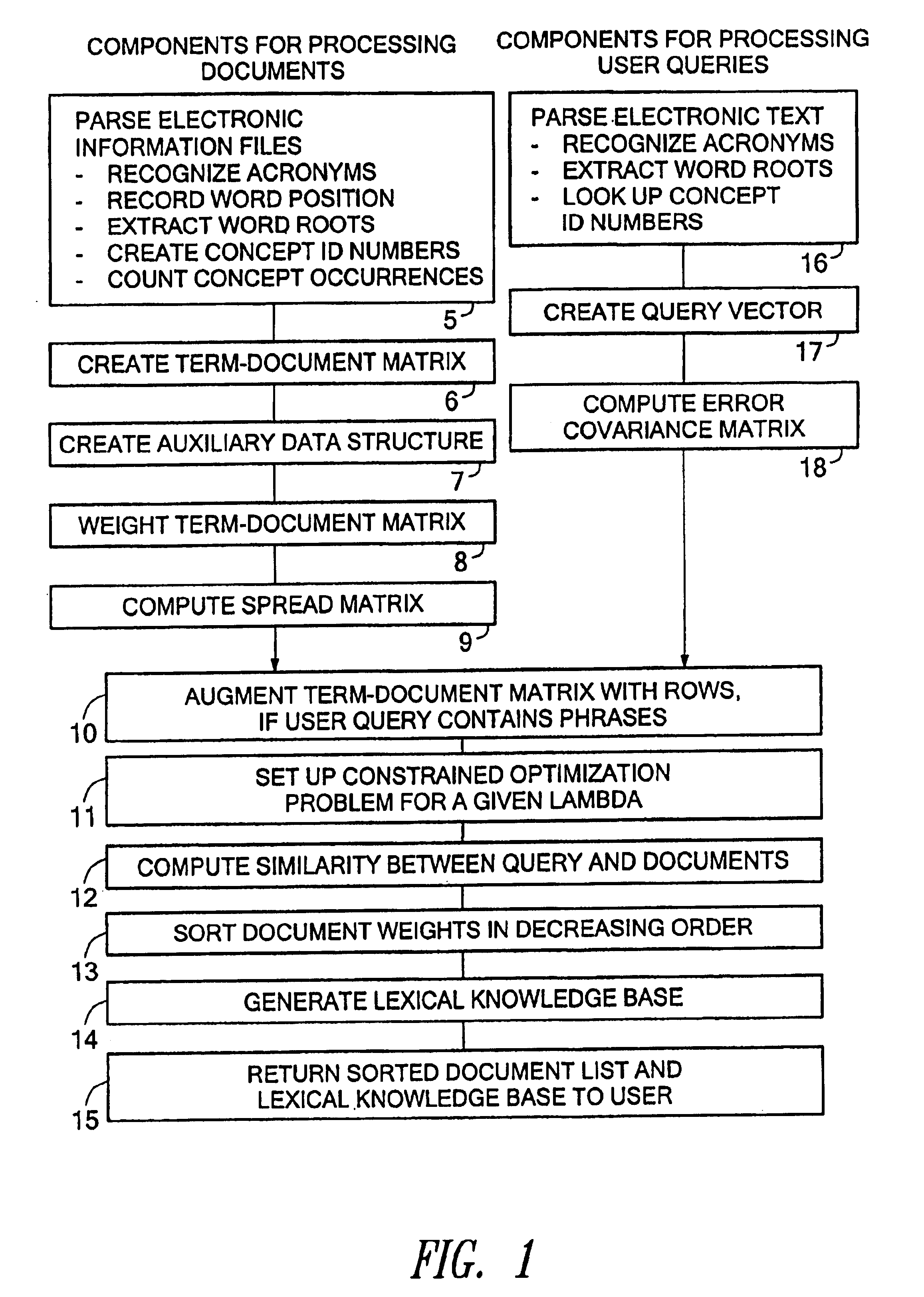

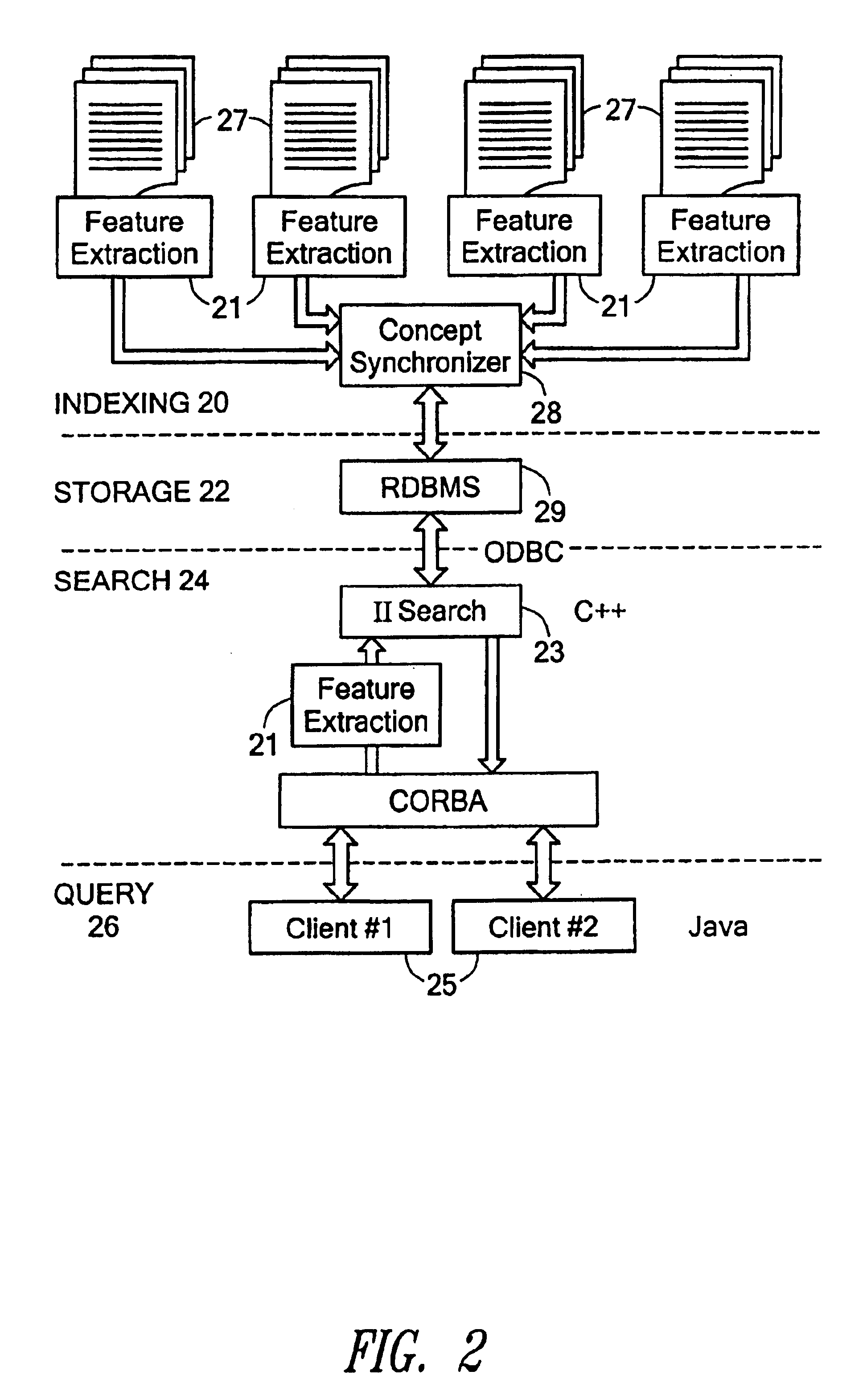

A system for internet navigation using soft hyperlinks is disclosed, in connection with an illustrative information retrieval system with which it may be used. The navigation tool provides freedom to move through a collection of electronic documents independent of any hyperlink which has been inserted within an HTML page. A user can click on any term in a document page, not only those that are hyperlinked. For example, when a user clicks on an initial word within the document, the disclosed system employs a search engine in the background to retrieve a list of related terms. In an illustrative embodiment, a compass-like display appears with pointers indicating the first four terms returned by the search engine. These returned terms have the highest degree of correlation with the initial search term in a lexical knowledge base that the search engine constructs automatically. The disclosed system allows the user to move from the current document to one of a number of document lists which cover different associations between the initial word clicked on by the user and other terms extracted from within the retrieved list of related terms. The disclosed system may further allow the user to move to a document that is considered most related to the initial word clicked on by the user, or to a list of documents that are relevant to a phrase or paragraph selection indicated by the user within the current page.

Owner:FIVER LLC
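
A minimal sketch of the compass-like display, assuming the search engine returns a mapping from related term to correlation score (a hypothetical format), places the four highest-scoring terms at the compass points:

```python
def compass_layout(related_terms):
    """Assign the four most correlated terms to the compass points N, E, S, W.

    related_terms: mapping of term -> correlation score (hypothetical format).
    """
    ranked = sorted(related_terms.items(), key=lambda item: item[1], reverse=True)
    # zip stops after four pairs, so lower-ranked terms are simply not shown.
    return {point: term for point, (term, _score) in zip(["N", "E", "S", "W"], ranked)}


if __name__ == "__main__":
    scores = {"magnet": 0.9, "needle": 0.7, "north": 0.95, "map": 0.4, "bearing": 0.8}
    print(compass_layout(scores))
    # {'N': 'north', 'E': 'magnet', 'S': 'bearing', 'W': 'needle'}
```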

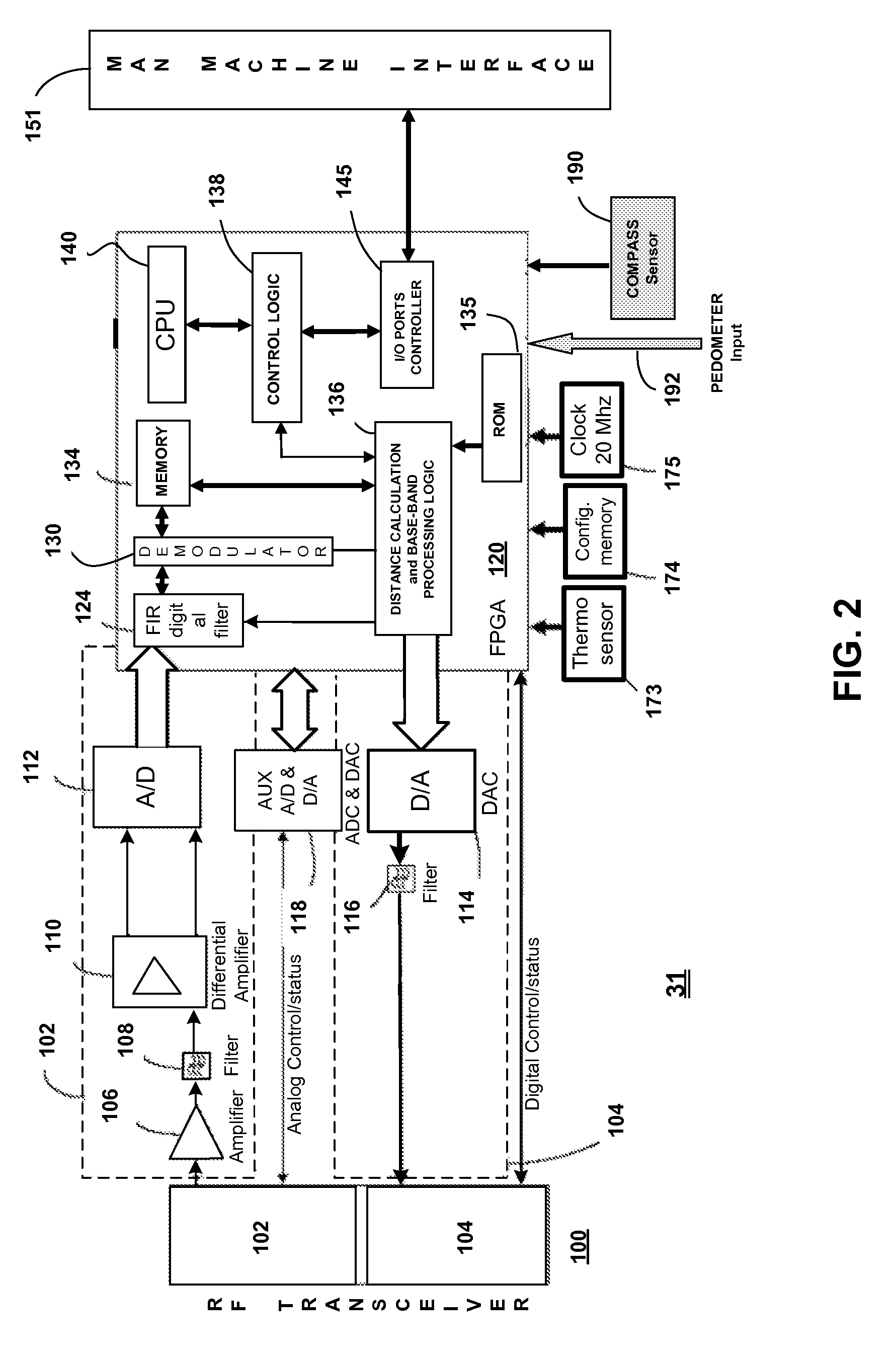

Method and system for positional finding using rf, continuous and/or combined movement

Active | US20070075898A1 | Eliminate disadvantages; Beacon systems using radio waves; Position fixation; Gyroscope; Transceiver

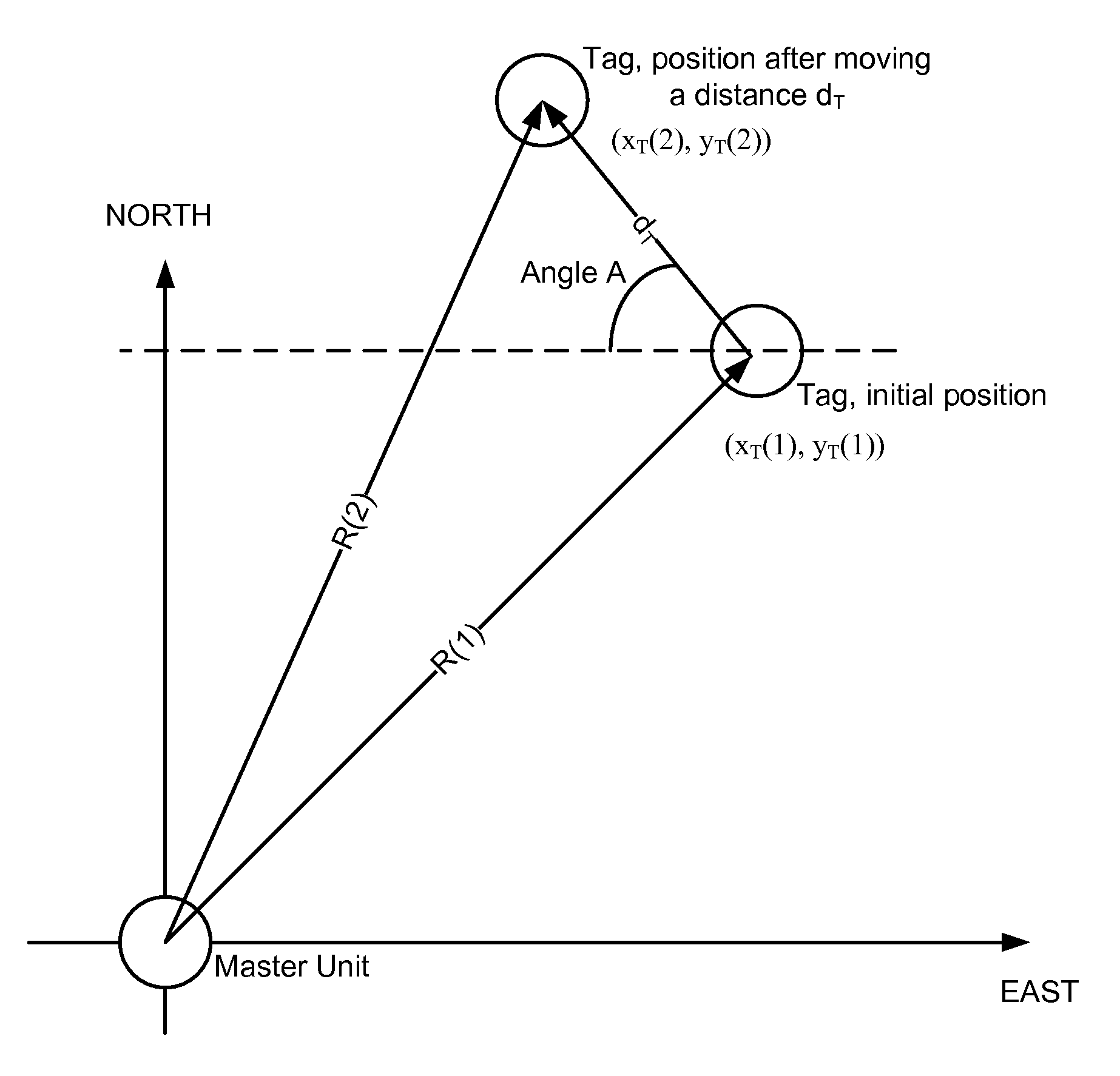

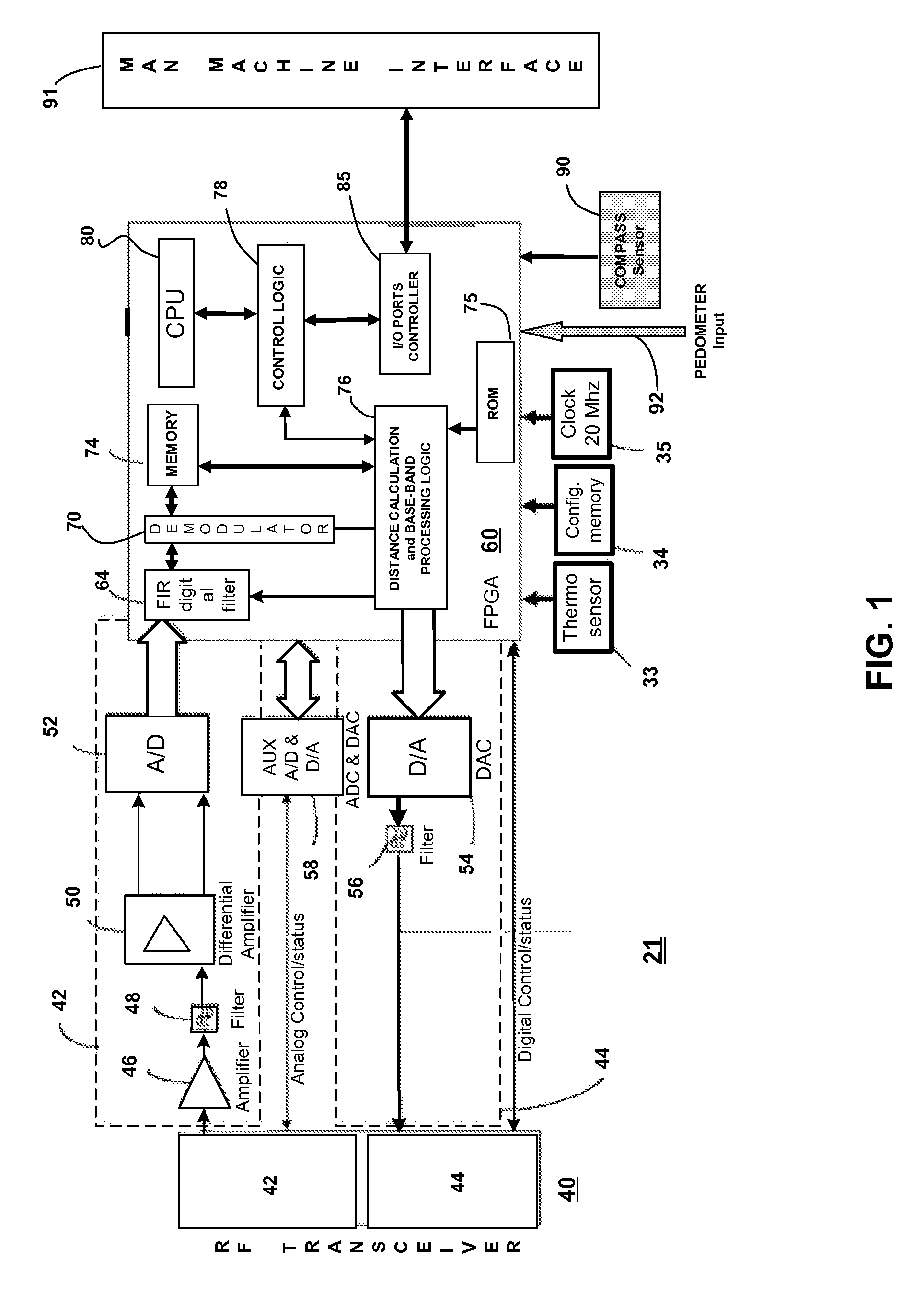

A system for determining the location of an object includes a Master Unit that has an RF transceiver and is adapted to measure the distance to a Tag. The Master Unit has a first input from which it can derive its current position. The Master Unit transmits instructions to the Tag to move in a predetermined direction, measures the distance to the Tag after the movement, and then determines the position of the Tag. The Tag can include a compass and a pedometer, and optionally an accelerometer, a solid-state gyroscope, and an altimeter, whose inputs the Master Unit uses to determine the Tag's current position. The Master Unit can optionally include a compass as well as a pedometer, an altimeter, an accelerometer, a solid-state gyroscope, and a GPS receiver. The Tag's movement does not have to follow the direction given by the Master Unit; the Master Unit will still be able to determine the Tag's location(s). The roles of the Master Unit and the Tag can also be reversed.

Owner:QUALCOMM TECHNOLOGIES INC
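
The abstract does not give the geometry, but one way to see why a commanded movement helps is that two range measurements plus a known Tag displacement (for example, from the Tag's compass heading and pedometer count) narrow the Tag's position to two mirror-image candidates; a second move in a different direction can then pick between them. The sketch assumes a flat 2-D plane with the Master Unit at the origin, and `tag_candidates` is a hypothetical name.

```python
import math


def tag_candidates(r0, r1, move):
    """Possible Tag positions (relative to the Master Unit) before the move.

    r0: Master-to-Tag range before the move.
    r1: Master-to-Tag range after the move.
    move: (east, north) displacement the Tag reports for the move.
    """
    vx, vy = move
    v = math.hypot(vx, vy)
    if v == 0:
        raise ValueError("the Tag must move to resolve its position")
    # |p|^2 = r0^2 and |p + v|^2 = r1^2  =>  p . v = (r1^2 - r0^2 - |v|^2) / 2
    along = (r1 * r1 - r0 * r0 - v * v) / (2.0 * v)  # component of p along the move
    perp2 = r0 * r0 - along * along
    if perp2 < -1e-9:
        raise ValueError("ranges are inconsistent with the reported move")
    perp = math.sqrt(max(perp2, 0.0))
    ux, uy = vx / v, vy / v  # unit vector along the move
    nx, ny = -uy, ux         # unit vector perpendicular to it
    return [(along * ux + s * perp * nx, along * uy + s * perp * ny) for s in (1.0, -1.0)]


if __name__ == "__main__":
    # Tag 5 m away; after stepping 1 m north it is sqrt(34) m away.
    print(tag_candidates(5.0, math.sqrt(34.0), (0.0, 1.0)))
```

With these inputs the two candidates are approximately (-3, 4) and (3, 4); the range equations alone cannot distinguish them.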

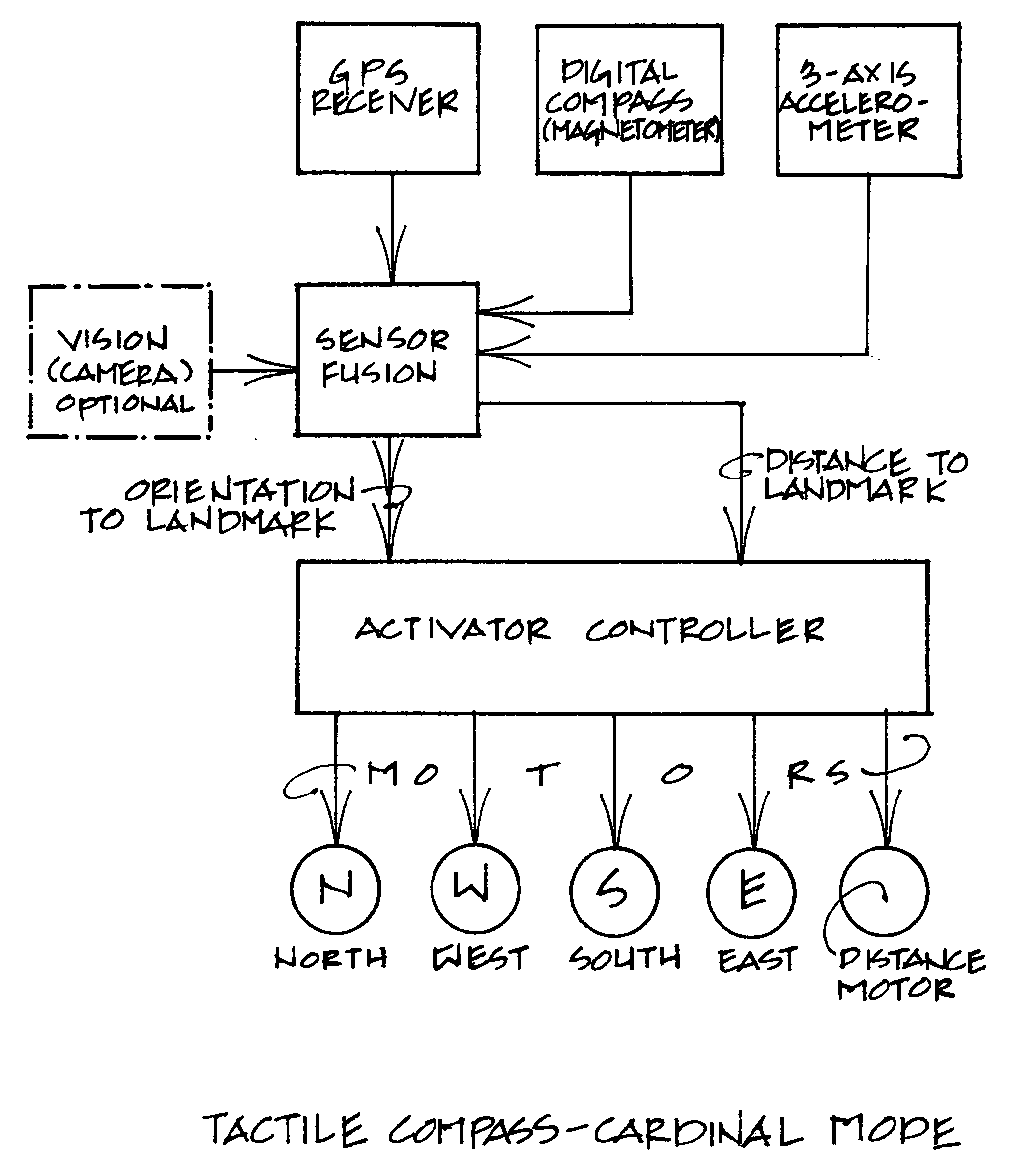

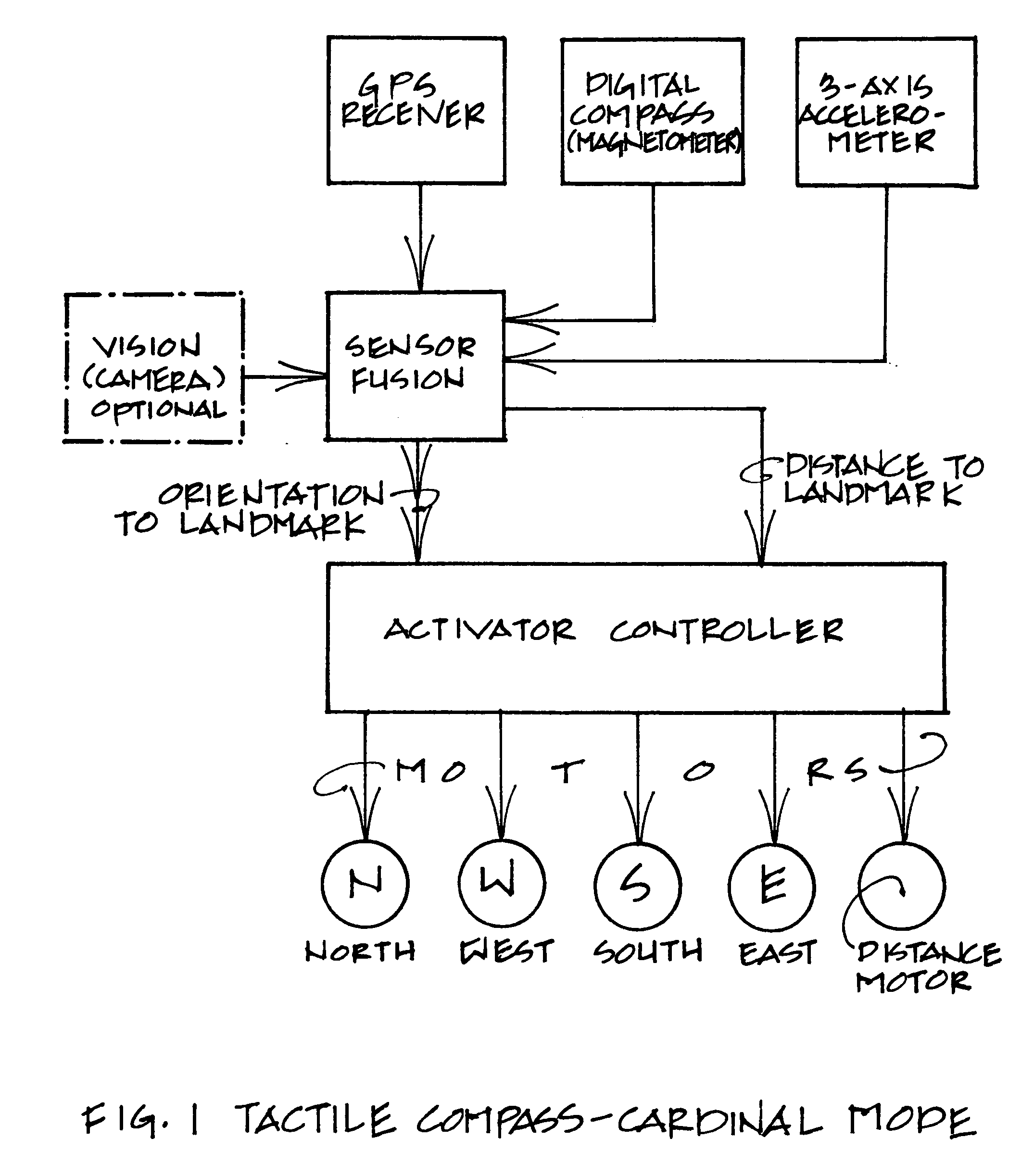

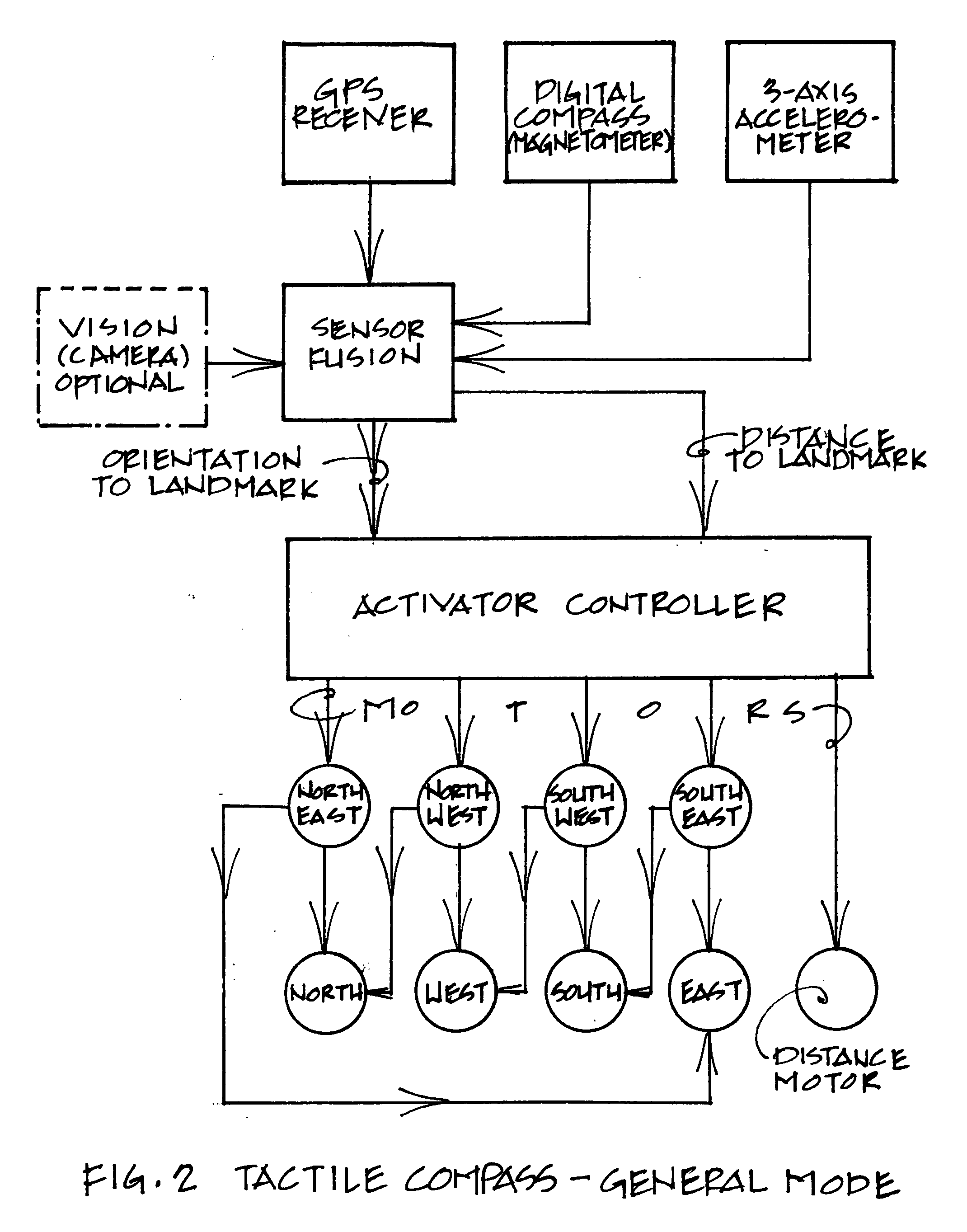

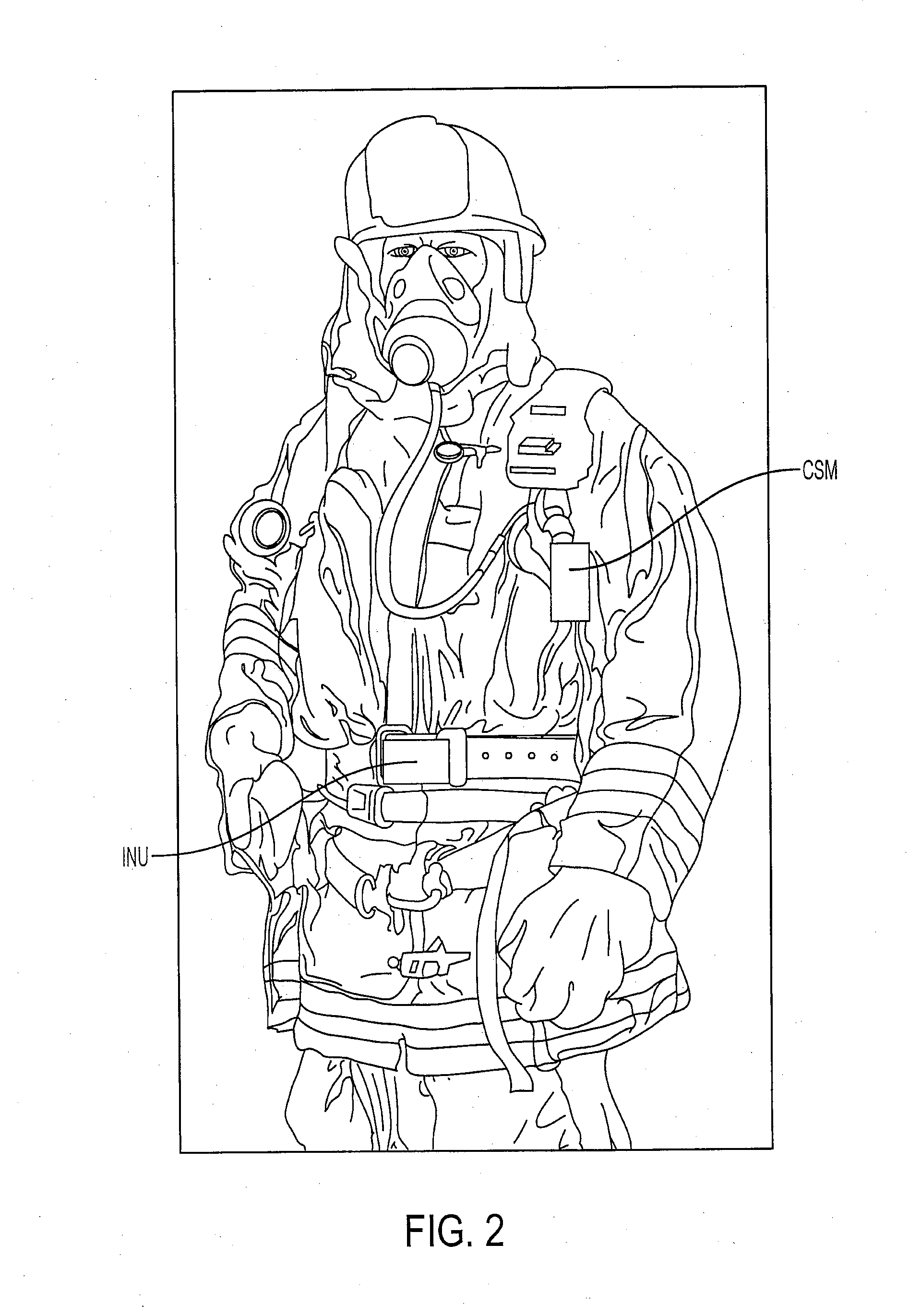

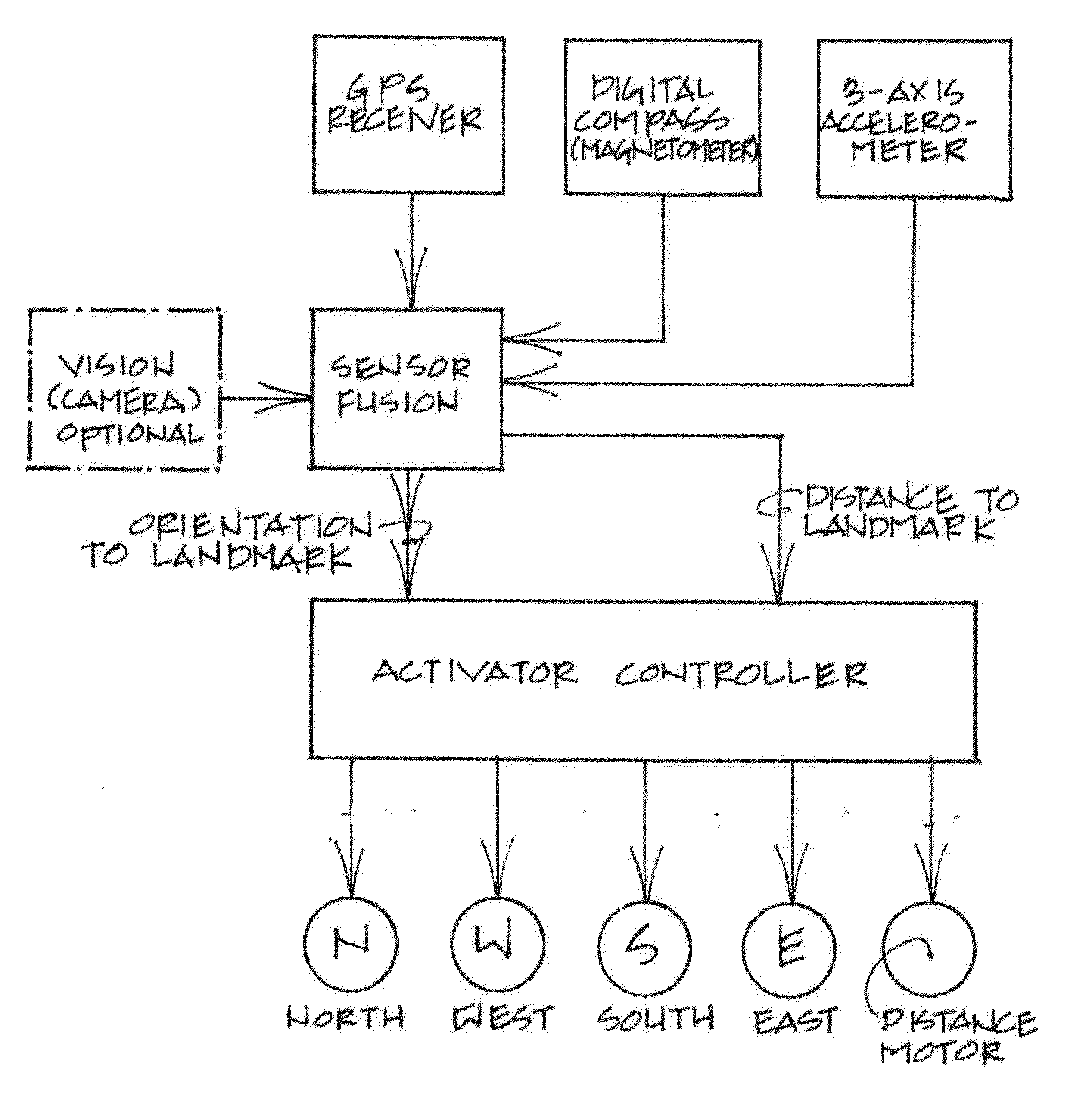

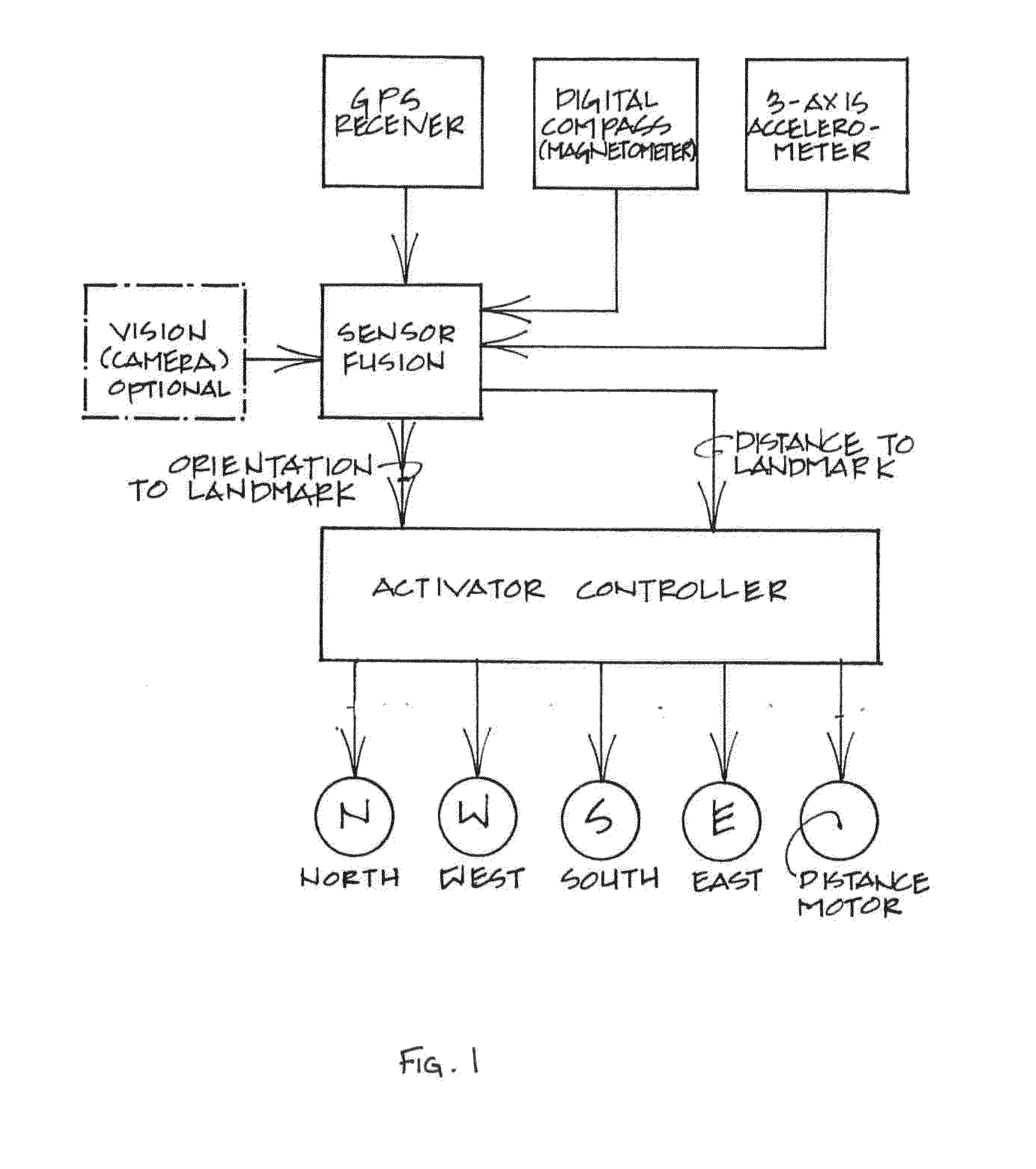

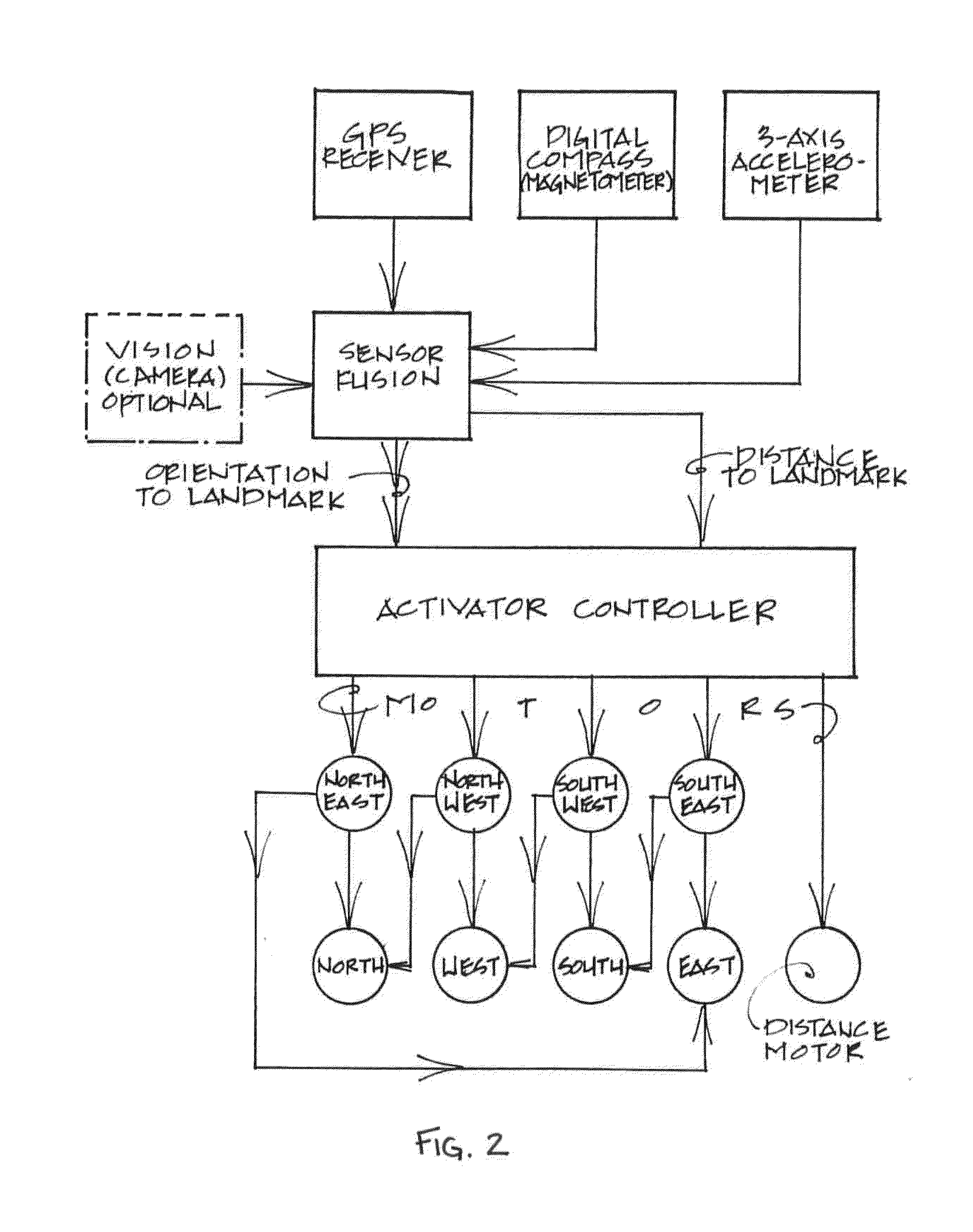

Wearable tactile navigation system

Inactive | US20080120029A1 | Navigational calculation instruments; Position fixation; Tactile perception; GPS navigation

The wearable tactile navigation system frees you from needing to use your eyes: there is no display, and all positional information is conveyed via touch. As a compass, the device nudges you toward north. As a GPS navigator, the device orients you toward a landmark (e.g., home) and lets you feel how far away it is. A Bluetooth interface provides network capabilities, allowing you to download map landmarks from a cell phone. The bidirectional networking capability turns the device into a general platform that can collect any sensor data as well as deliver tactile messages and touch telepresence. The main application of the device is wayfinding for people who are blind and for people with Alzheimer's disease, but there are many other applications in which it is desirable to provide geographical information in tactile rather than visual or auditory form.

Owner:ZELEK JOHN S +1
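
In compass mode the target bearing is simply 0° (north); in GPS mode it is the bearing to the landmark. A sketch of choosing which actuator to pulse, assuming a hypothetical ring of evenly spaced vibration motors with motor 0 facing forward:

```python
def motor_to_activate(heading_deg, target_bearing_deg, n_motors=8):
    """Index of the vibration motor that faces the target direction.

    Hypothetical layout: n_motors evenly spaced around the body, numbered
    clockwise, with motor 0 facing straight ahead.
    """
    relative = (target_bearing_deg - heading_deg) % 360.0  # clockwise from straight ahead
    sector = 360.0 / n_motors
    return int((relative + sector / 2.0) // sector) % n_motors


if __name__ == "__main__":
    # Compass mode: the target is north (0°). Facing east, north is on the wearer's left.
    print(motor_to_activate(heading_deg=90.0, target_bearing_deg=0.0))  # 6
```

Distance to the landmark could be conveyed separately, for example through pulse rate or intensity; the abstract does not say how.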

Optical see-through augmented reality modified-scale display

Inactive | US7002551B2 | Improve accuracy; Accurate current; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Display device

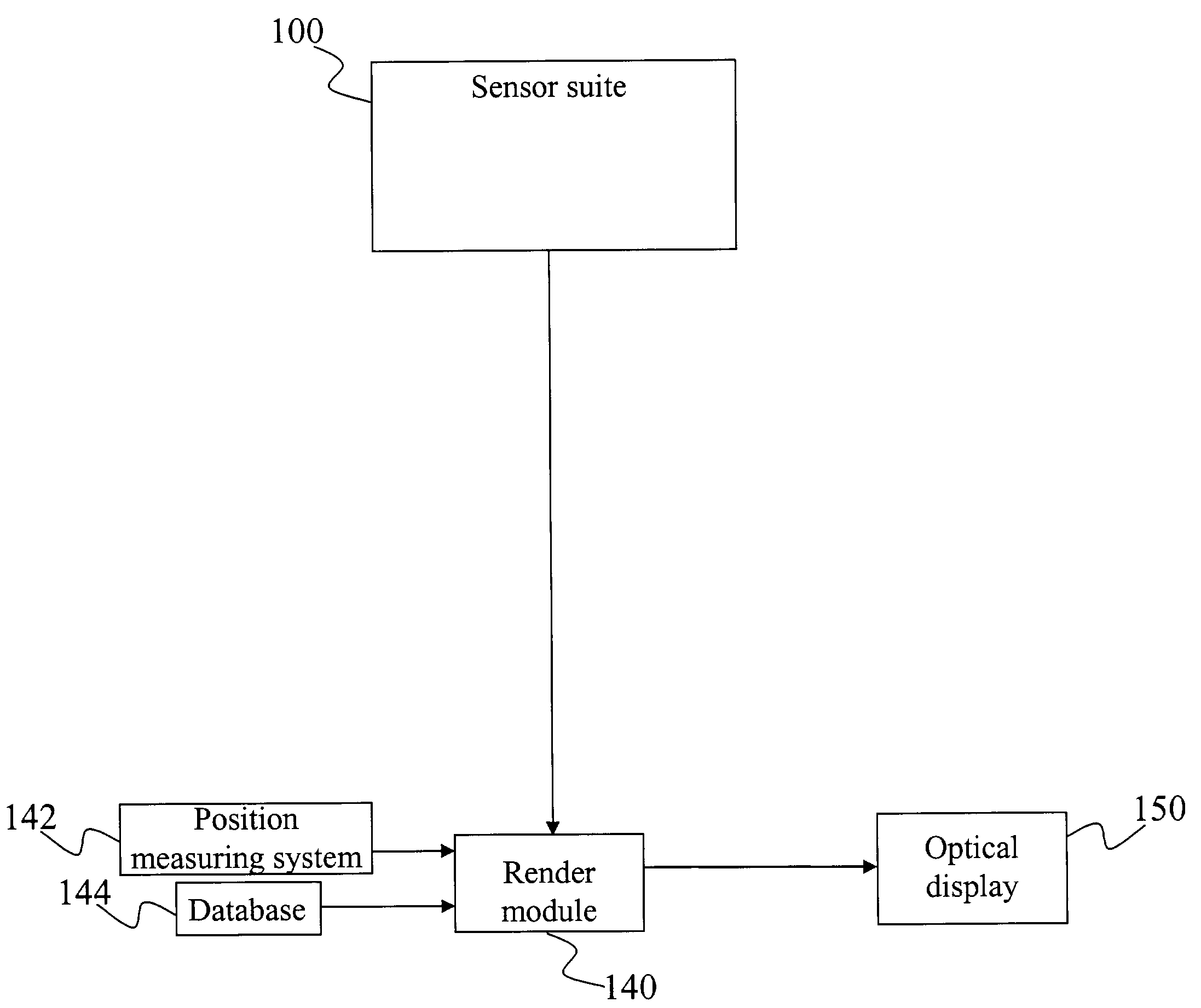

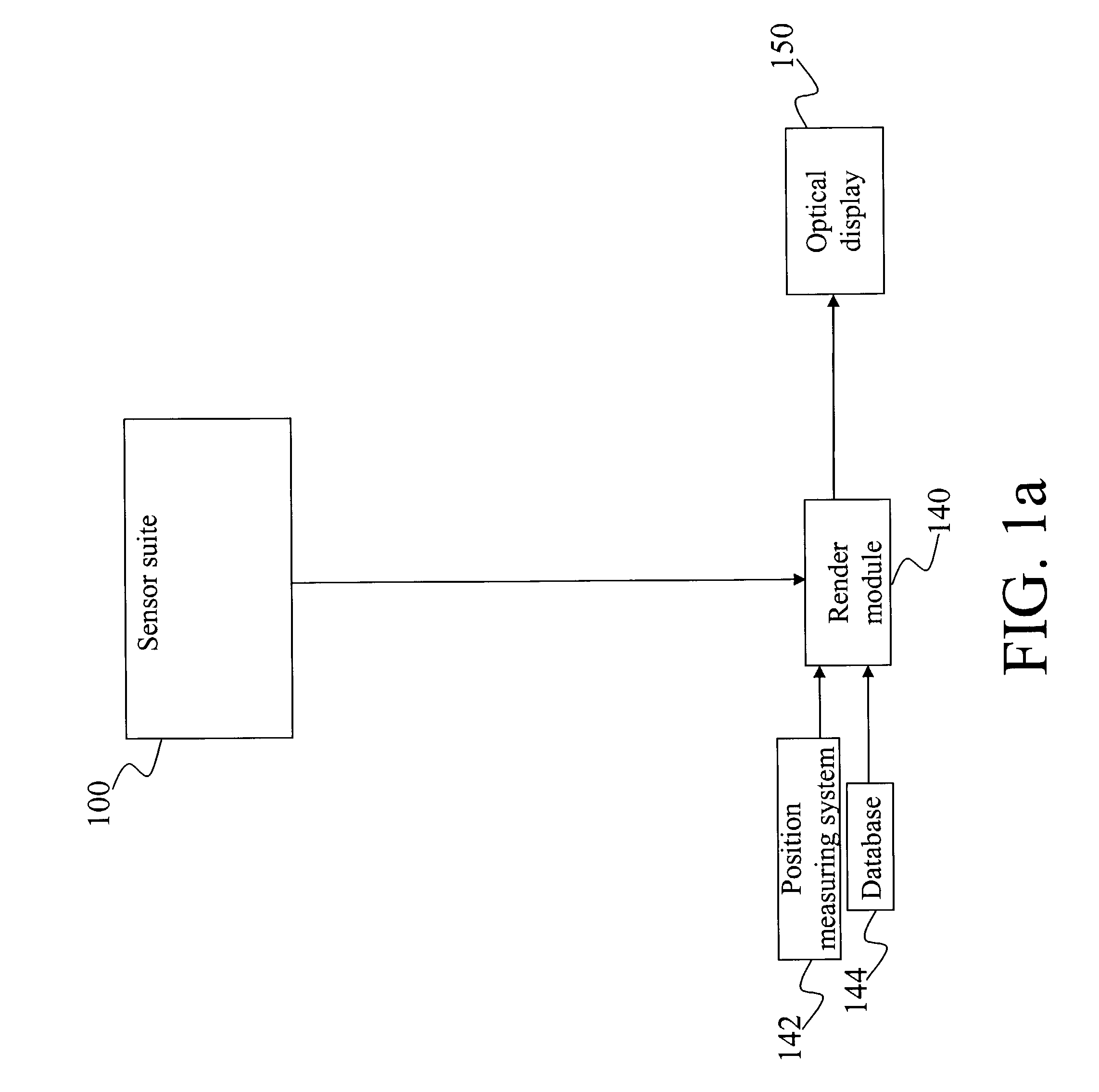

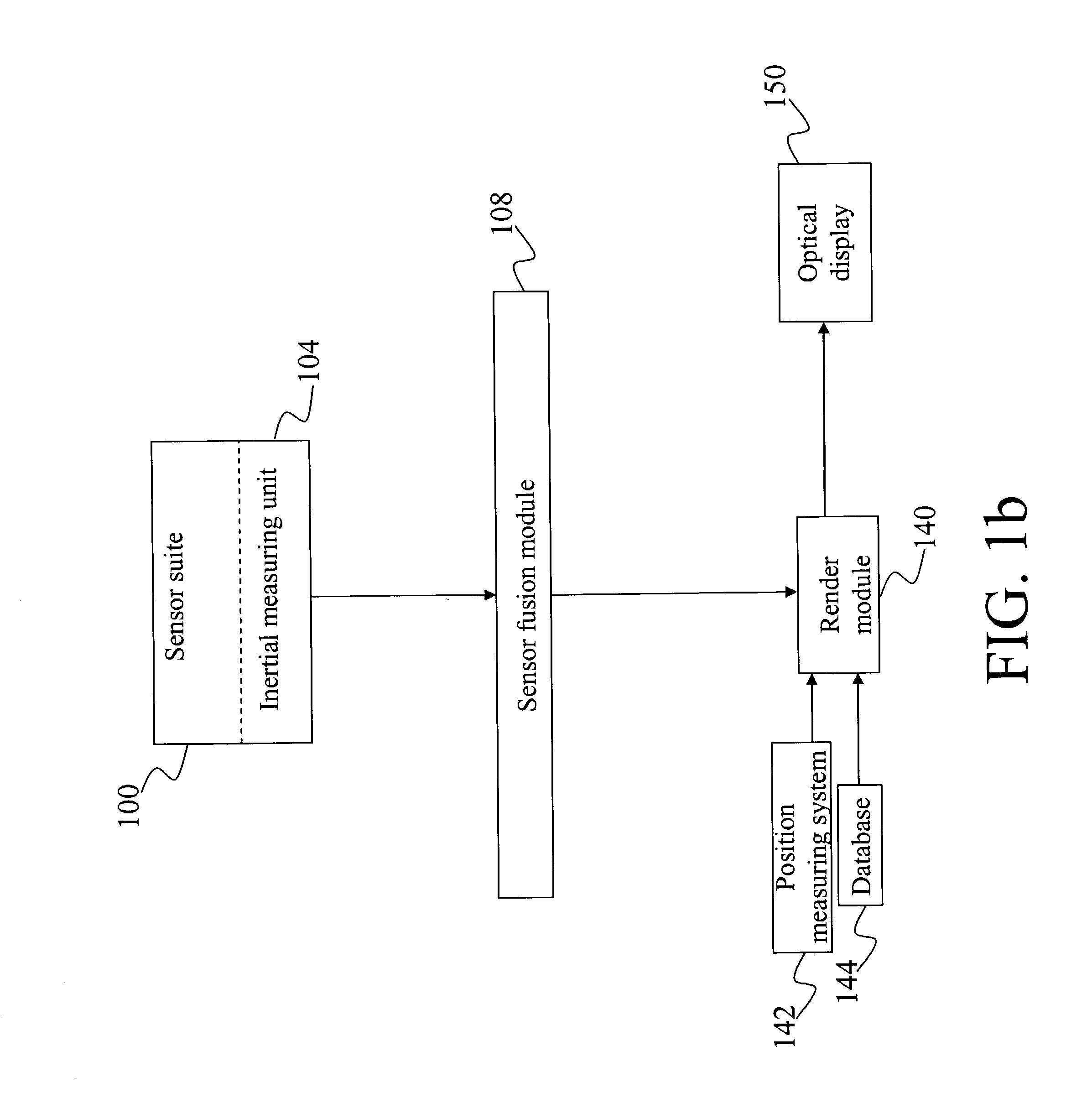

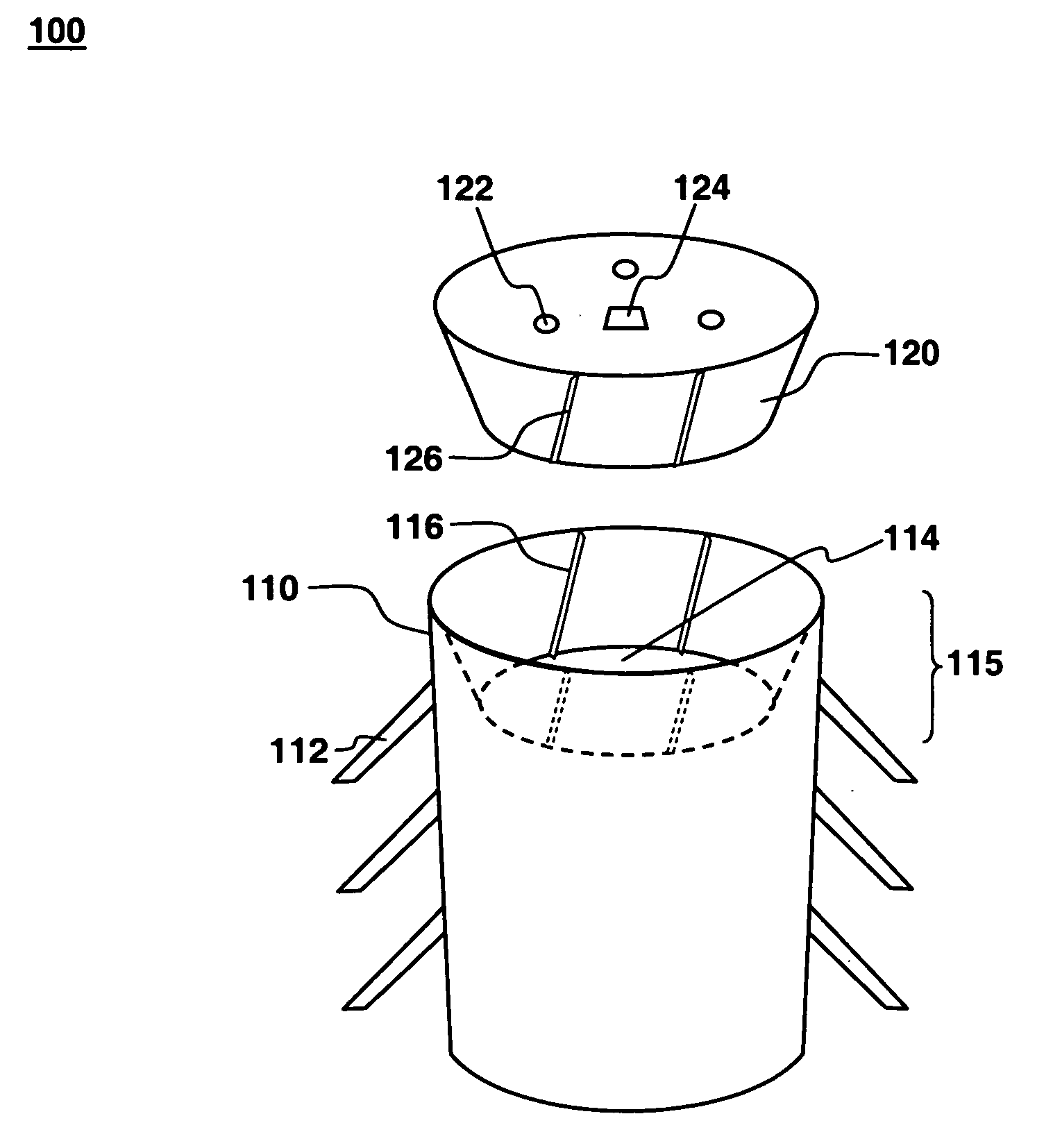

A method and system for providing an optical see-through augmented reality modified-scale display. This aspect includes a sensor suite 100, which includes a compass 102, an inertial measuring unit 104, and a video camera 106 for precise measurement of a user's current orientation and angular rotation rate. A sensor fusion module 108 may be included to produce a unified estimate of the user's angular rotation rate and current orientation, which is provided to an orientation and rate estimate module 120. The orientation and rate estimate module 120 operates in a static or dynamic (prediction) mode. A render module 140 receives an orientation and uses it, together with a position from a position measuring system 142 and data from a database 144, to render graphic images of an object in their correct orientations and positions in an optical display 150.

Owner:HRL LAB
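
The abstract leaves the sensor fusion module 108 unspecified. A common technique for combining a compass with an angular-rate sensor is a complementary filter, sketched below for yaw only; it is a swapped-in illustration, not HRL's method, and the blend factor `alpha` is an assumed value.

```python
def wrap180(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def fuse_yaw(prev_yaw_deg, gyro_rate_dps, compass_yaw_deg, dt_s, alpha=0.98):
    """One update of a complementary filter for yaw (heading), in degrees.

    The gyro rate is integrated for smooth short-term tracking, and a small
    fraction (1 - alpha) of the compass disagreement is blended in each step
    to cancel long-term gyro drift.
    """
    predicted = prev_yaw_deg + gyro_rate_dps * dt_s
    # Blend on the wrapped difference so 359° vs 1° is treated as 2° apart.
    correction = wrap180(compass_yaw_deg - predicted)
    return (predicted + (1.0 - alpha) * correction) % 360.0


if __name__ == "__main__":
    yaw = 0.0
    for _ in range(500):  # stationary device, compass reads 90°, gyro reads 0
        yaw = fuse_yaw(yaw, 0.0, 90.0, dt_s=0.01)
    print(round(yaw, 2))  # close to 90
```

A larger `alpha` trusts the gyro more and smooths compass noise, at the cost of slower drift correction.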

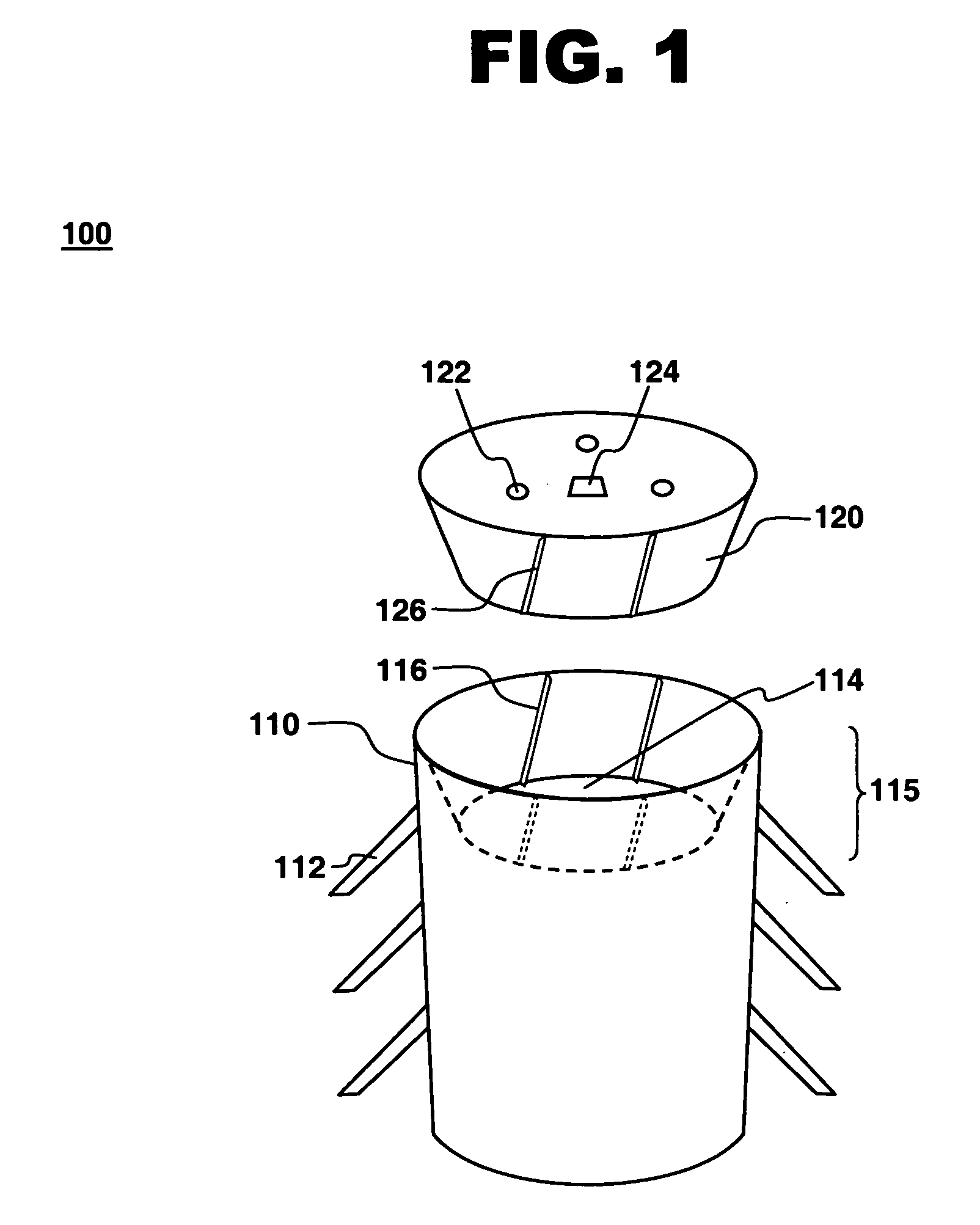

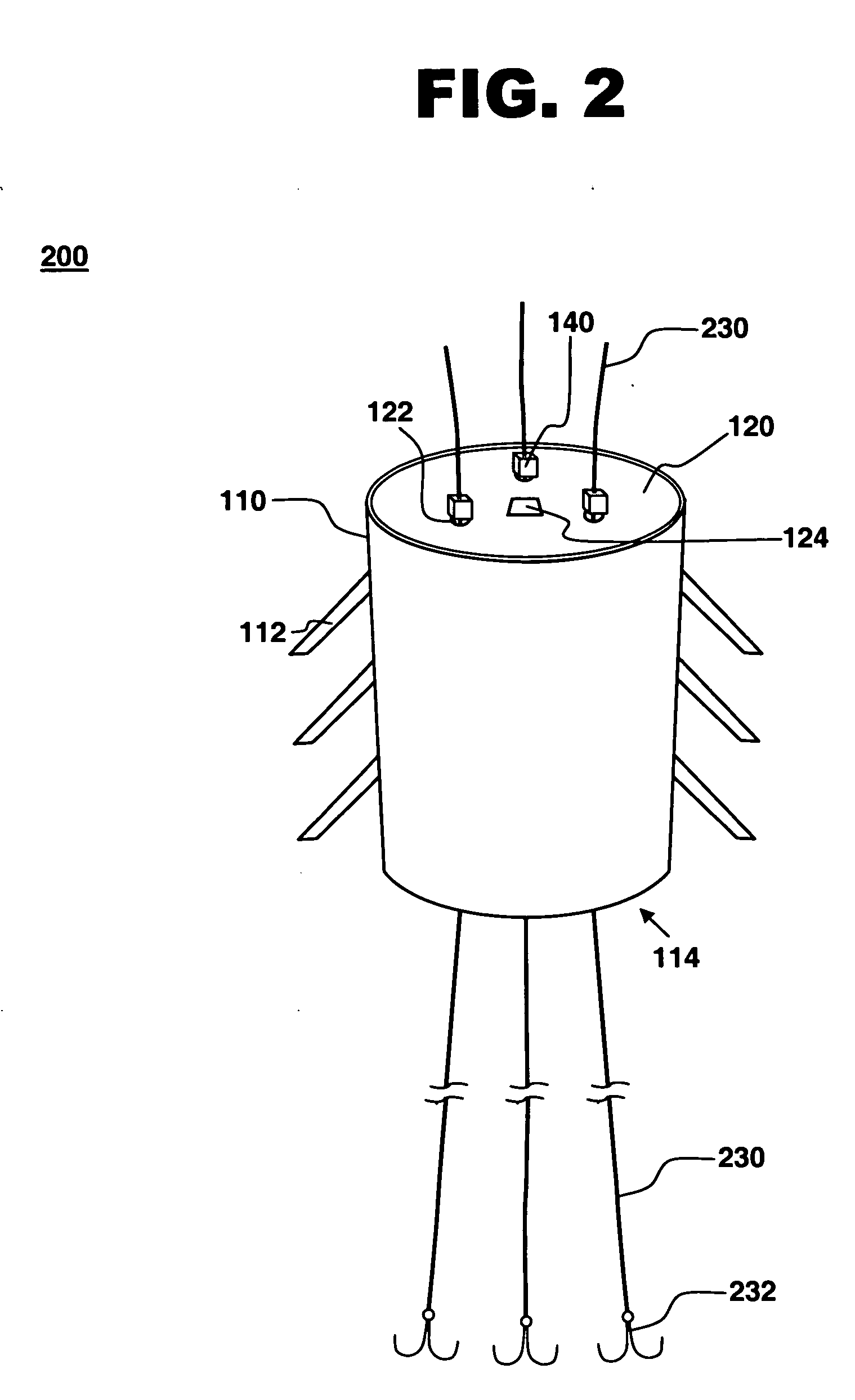

Multifilament anchor for reducing a compass of a lumen or structure in mammalian body

Inactive | US20060089711A1 | Reducing compass; Reduce compass; Suture equipments; Heart valves; Proximate; Distal portion

A system for reducing a compass of an opening or structure in a mammalian body comprises an anchor having a central aperture, a tensioner having a plurality of openings, and a plurality of filaments, each including a retaining member affixed to a distal portion of the filament. The tensioner is receivable within the anchor central aperture. A proximal portion of each filament is receivable within a tensioner opening. A method of reducing a compass of a lumen or structure in a mammalian body comprises delivering the anchor to a first location proximate target tissue, delivering the filaments to a second location proximate the target tissue, threading the filaments through the anchor and the tensioner openings, positioning the tensioner in the anchor aperture, retaining the filaments in the tensioner, and rotating the tensioner to twist the filaments, thereby shortening the length of the filaments and increasing the tension across the system.

Owner:MEDTRONIC VASCULAR INC

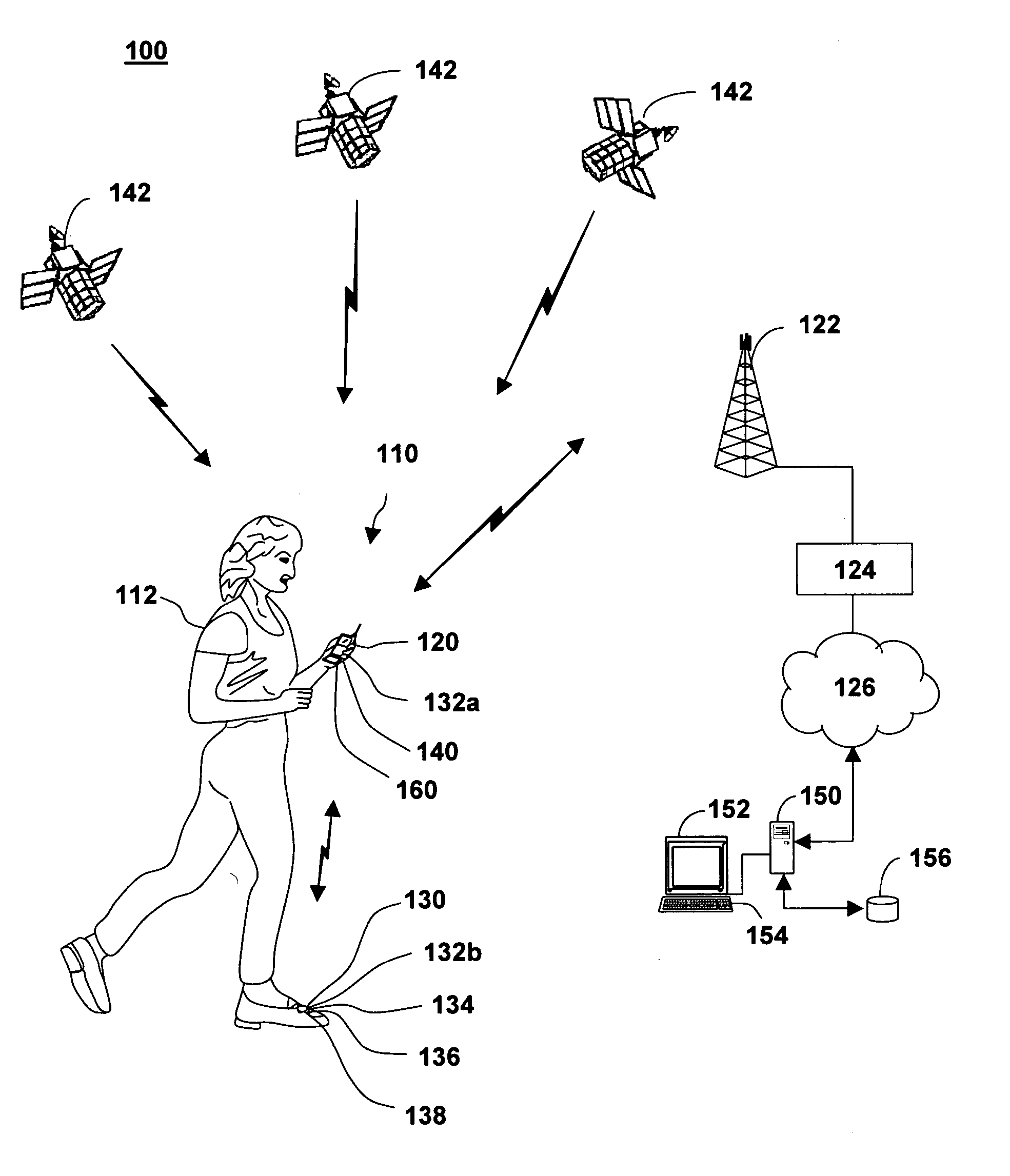

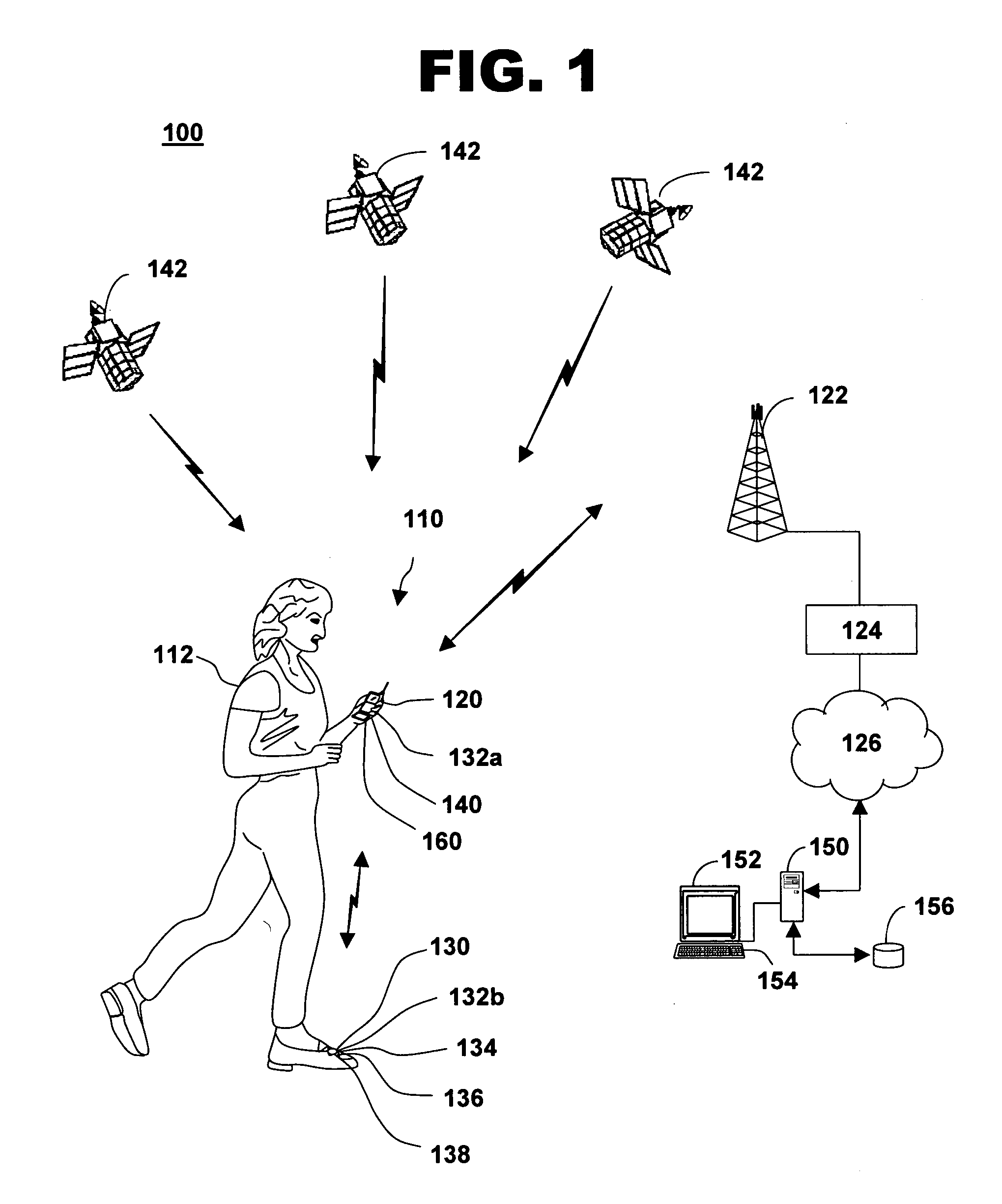

Wireless personal tracking and navigation system

Inactive | US20050033515A1 | Instruments for road network navigation; Navigation by speed/acceleration measurements; Electricity; Navigation system

The invention provides a personal tracking system comprising a wireless communication device, a pedometer electrically coupled to the wireless communication device, and an electronic compass operably positioned with respect to the pedometer. The wireless communication device receives readings from the pedometer and the electronic compass to provide position information. A method of tracking a location of a person, a system for tracking the location of a person, and an electronic module for a personal tracking system are also disclosed.

Owner:MOTOROLA INC
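
A pedometer and an electronic compass together support dead reckoning: each step advances the position by one stride in the current compass direction. A minimal sketch, assuming a fixed average stride length (a hypothetical 0.7 m) and headings measured clockwise from north:

```python
import math


def dead_reckon(start, step_headings_deg, stride_m=0.7):
    """Estimate the final (east, north) position in metres.

    step_headings_deg: one compass heading per pedometer step, measured
    clockwise from north. stride_m is an assumed average stride length.
    """
    east, north = start
    for heading in step_headings_deg:
        rad = math.radians(heading)
        east += stride_m * math.sin(rad)
        north += stride_m * math.cos(rad)
    return east, north


if __name__ == "__main__":
    # Ten steps north, then ten steps east, with a 0.7 m stride.
    print(dead_reckon((0.0, 0.0), [0.0] * 10 + [90.0] * 10))  # approximately (7.0, 7.0)
```

Errors in stride length and heading accumulate with distance, which is why such systems typically correct the estimate whenever an absolute position fix is available.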

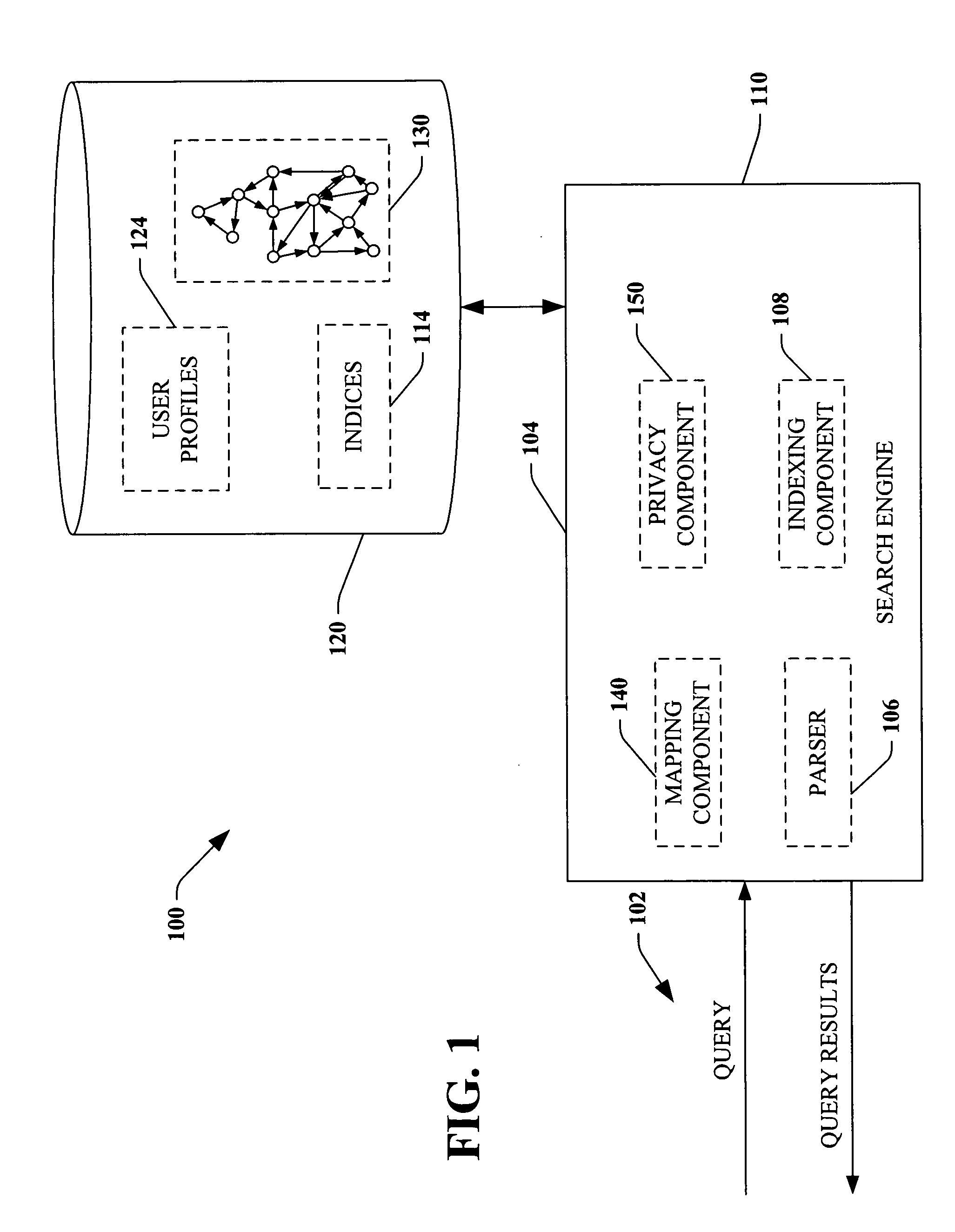

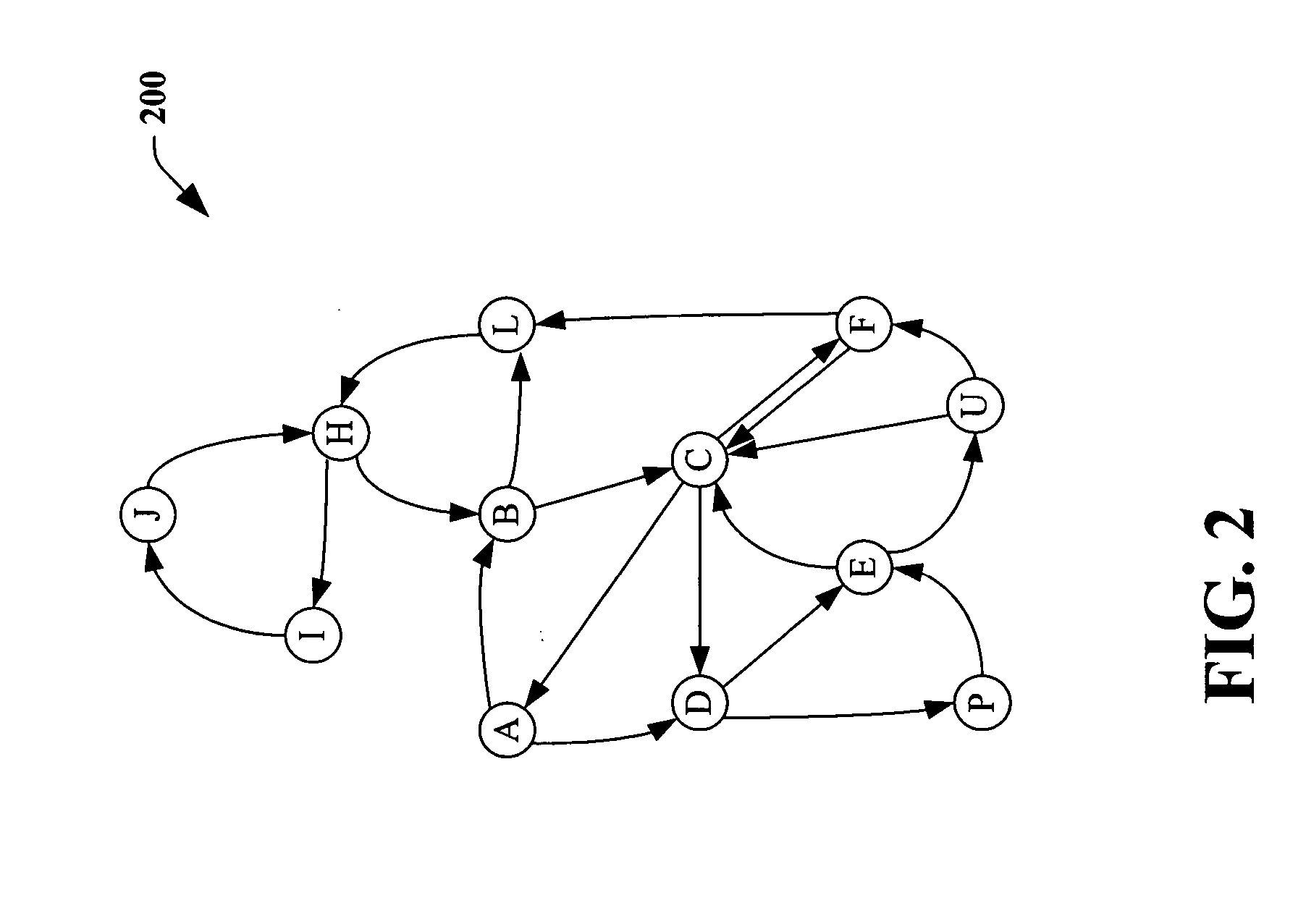

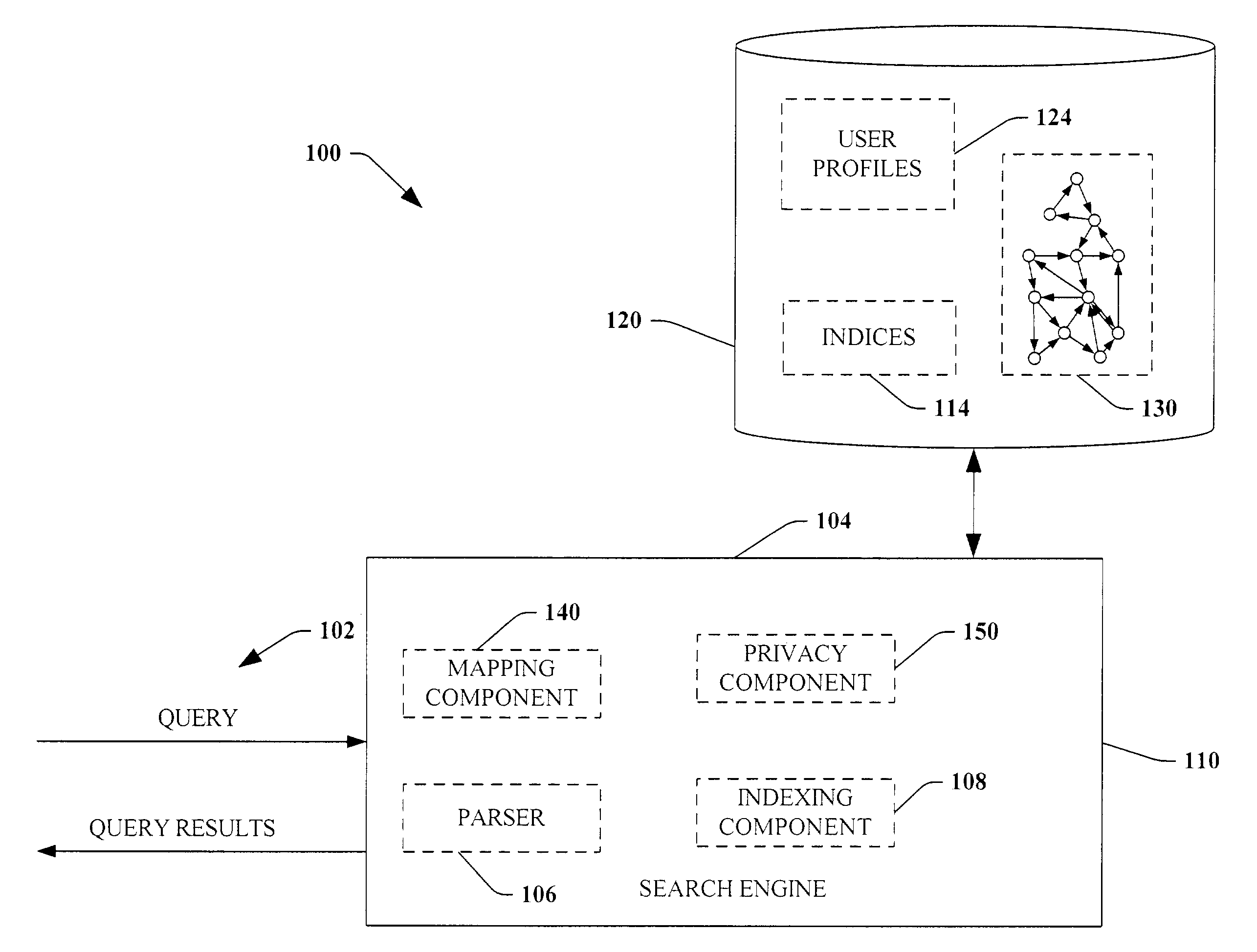

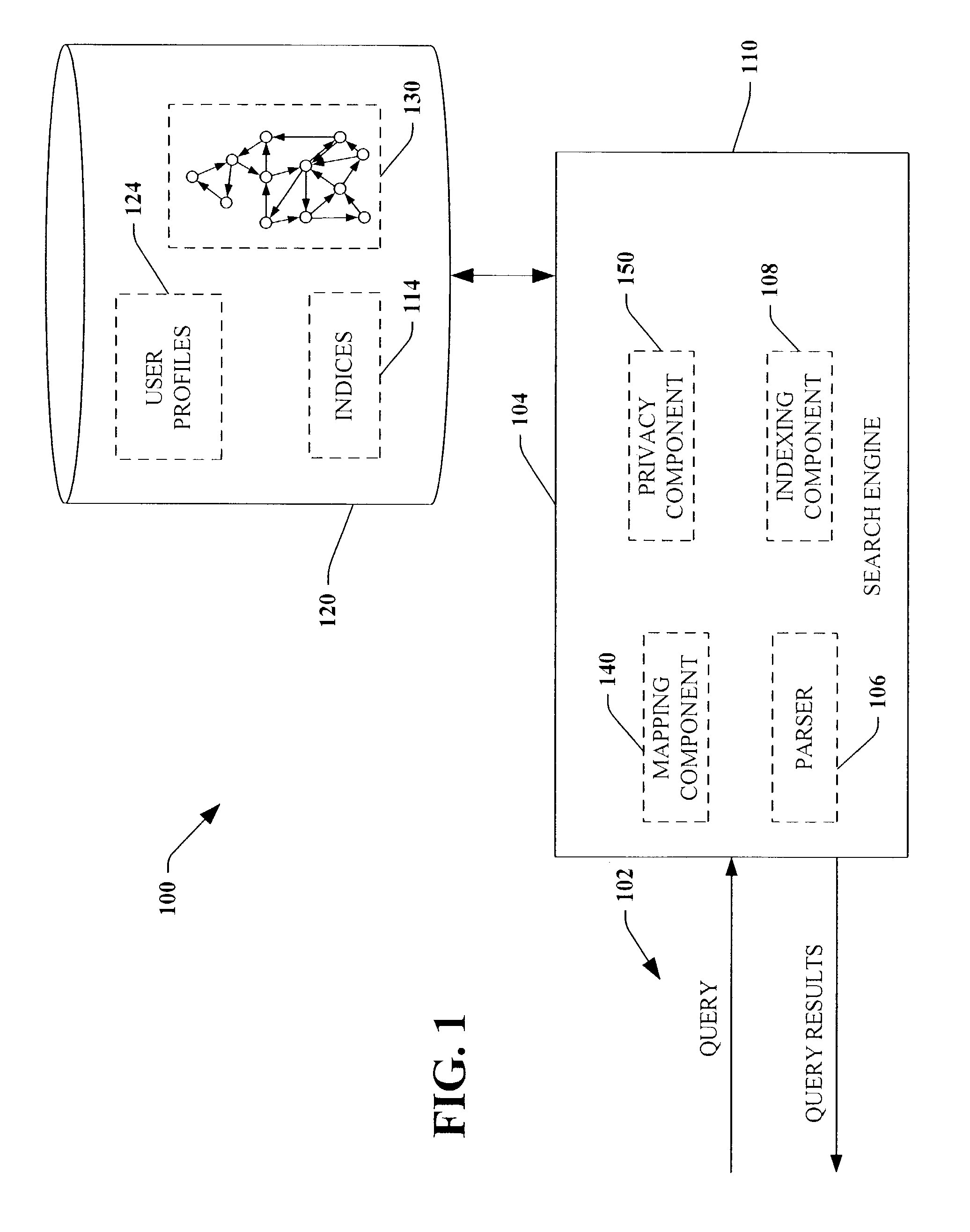

System and method for employing social networks for information discovery

Active | US20060041543A1 | Maintaining trust integrity; Accurate direction; Data processing applications; Digital data information retrieval; User participation; Information discovery

Systems and methods are provided that enable searches of social networks by acting as a “compass” that assists users in navigating the social network. Individual user participation is not required in response to queries from other users. The systems and methods offer navigational assistance or information as opposed to a traditional search which returns requested information, thus currently acceptable social mechanisms for arbitrating trust can be exploited. As a result, users do not make their personal information publicly searchable, while at the same time, they are protected from potential misrepresentations of facts.

Owner:MICROSOFT TECH LICENSING LLC

System and method for locating, tracking, and/or monitoring the status of personnel and/or assets both indoors and outdoors

Active | US20120130632A1 | Improving location estimates; Improve the accuracy of both outdoor; Instruments for road network navigation; Road vehicles traffic control; Sensor fusion; Orbit

Owner:TRX SYST

Wearable tactile navigation system

Inactive | US20130218456A1 | Portable solution; Instruments for road network navigation; Navigational calculation instruments; Tactile perception; GPS navigation

The wearable tactile navigation system frees you from needing to use your eyes: there is no display, and all positional information is conveyed via touch. As a compass, the device nudges you toward north. As a GPS navigator, the device orients you toward a landmark (e.g., home) and lets you feel how far away it is. A Bluetooth interface provides network capabilities, allowing you to download map landmarks from a cell phone. The bidirectional networking capability turns the device into a general platform that can collect any sensor data as well as deliver tactile messages and touch telepresence. The main application of the device is wayfinding for people who are blind and for people with Alzheimer's disease, but there are many other applications in which it is desirable to provide geographical information in tactile rather than visual or auditory form.

Owner:ZELEK JOHN S +1

Interior rearview mirror system with compass

Owner:DONNELLY CORP

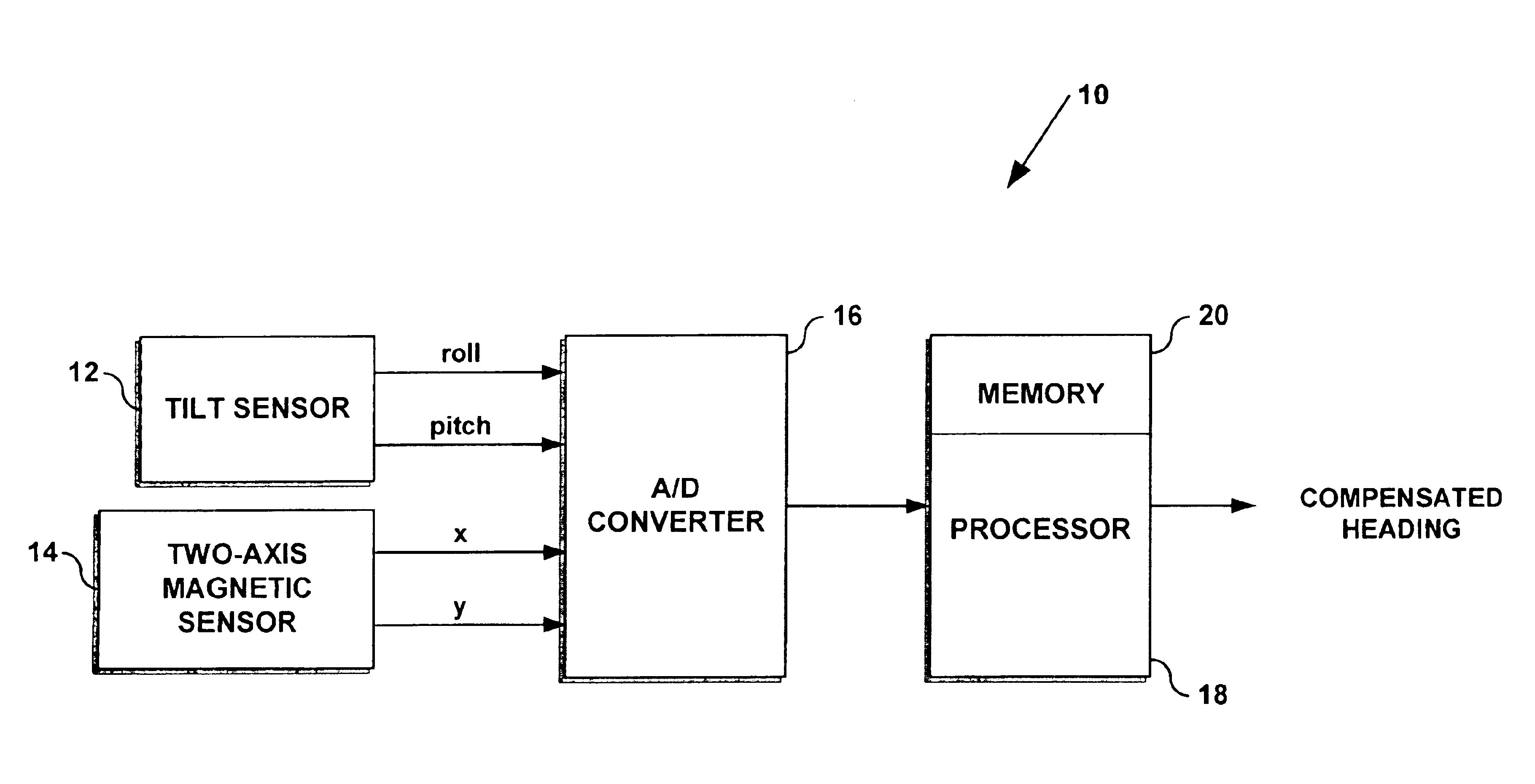

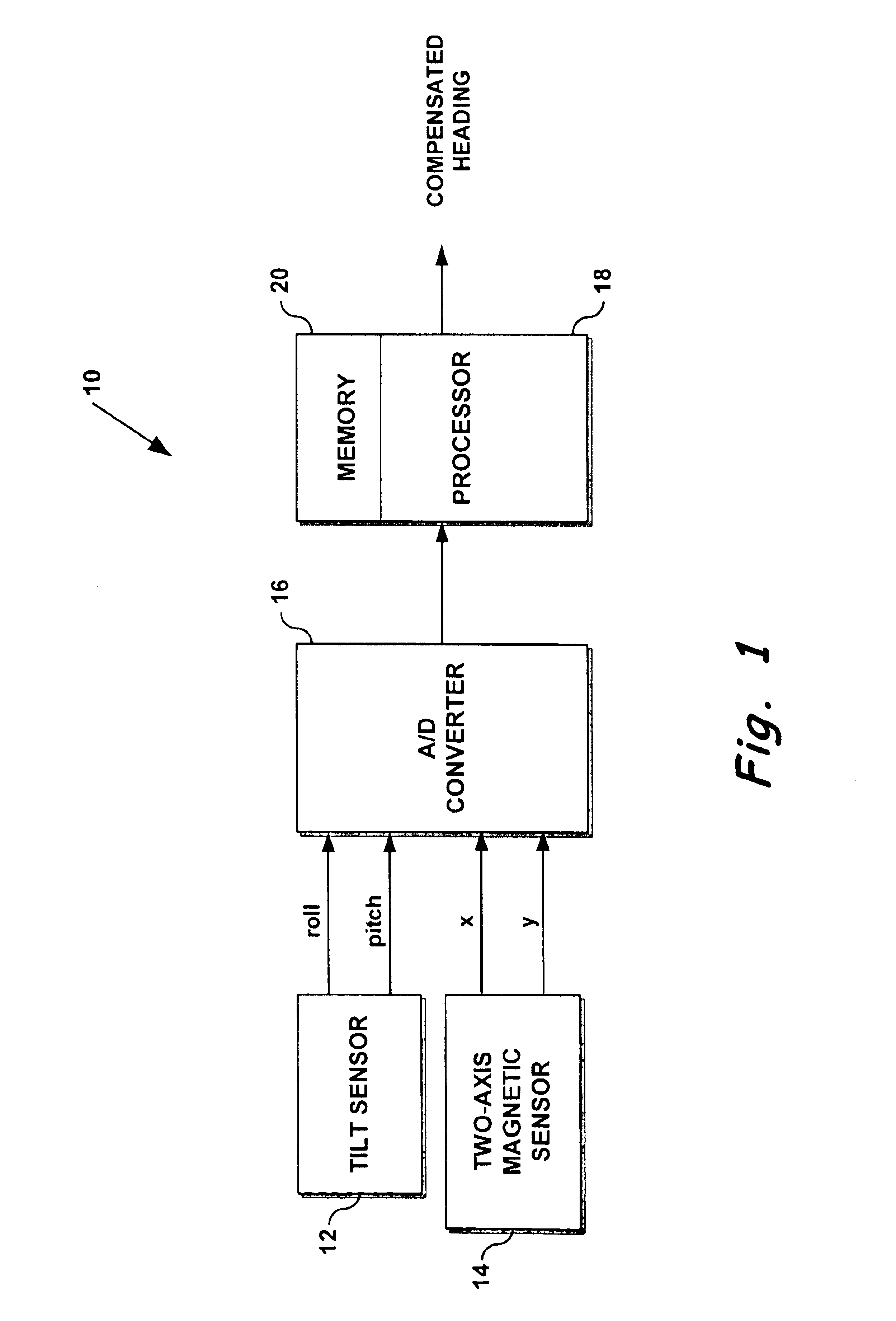

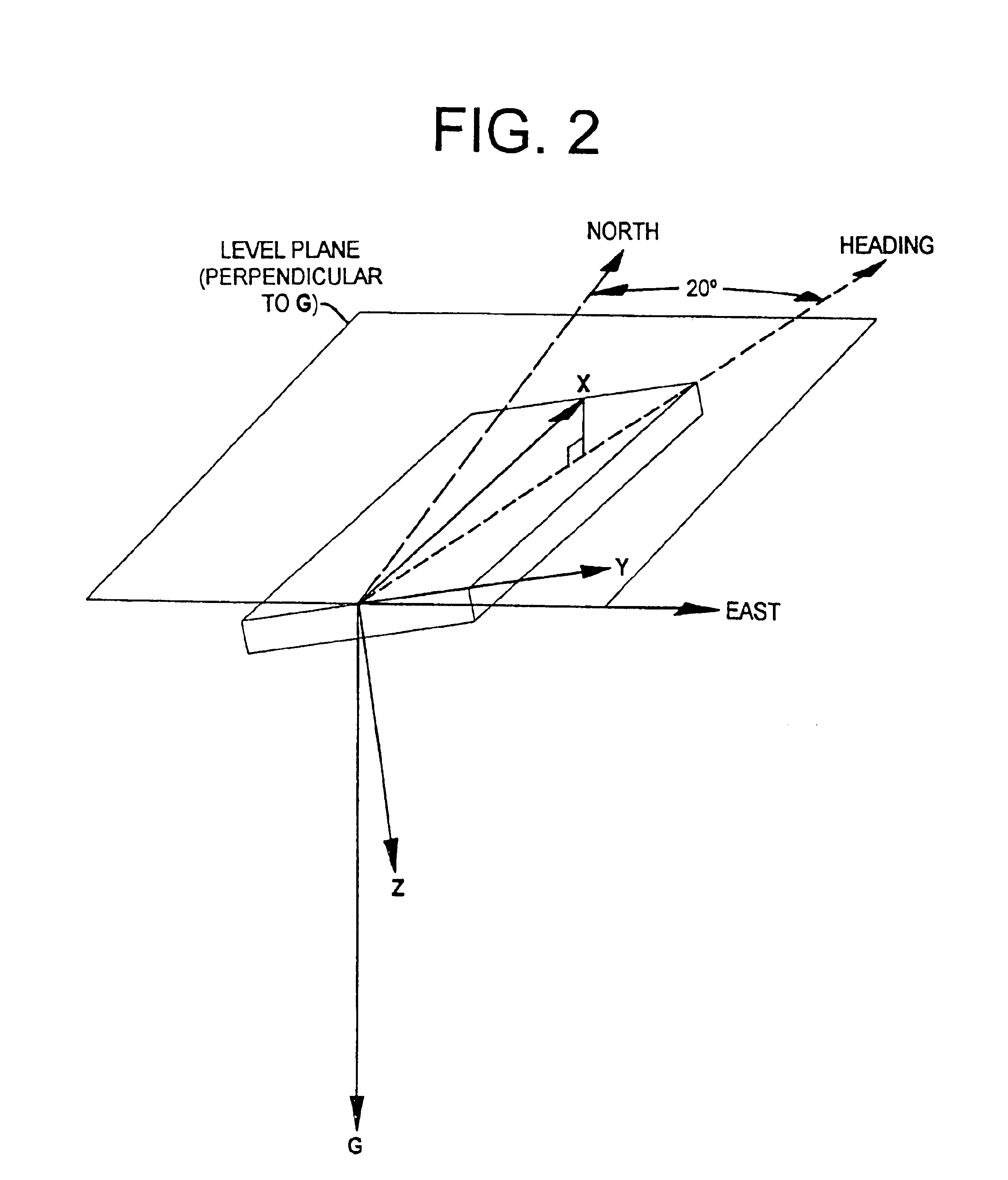

System for using a 2-axis magnetic sensor for a 3-axis compass solution

A tilt-compensated electronic compass can be realized by calculating rather than measuring Earth's magnetic field component Z in a direction orthogonal to the two measurement axes of a 2-axis magnetic sensor. The orthogonal component Z can be calculated using a stored value for the Earth's magnetic field strength applicable over a wide geographic region. The calculation also requires using measured field values from the 2-axis sensor. Once Z is known, and using input from a 2-axis tilt sensor, compensated orthogonal components X and Y can be calculated by mathematically rotating the measured field strength values from a tilted 2-axis sensor back to the local horizontal plane. Thus, a very flat and compact tilt-compensated electronic compass is possible.

Owner:HONEYWELL INT INC
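
The calculation described above can be sketched as follows. The axis convention (x forward, y right, z down), the particular tilt-compensation formula, and the choice of the positive root for Z (field pointing down through the sensor, as in the northern hemisphere at modest tilt) are assumptions of this sketch rather than details from the abstract.

```python
import math


def tilt_compensated_heading(x, y, field_strength, pitch_deg, roll_deg):
    """Heading (degrees clockwise from magnetic north) from a tilted 2-axis sensor.

    Assumed axes: x forward, y right, z down; z is never measured.
    field_strength: stored total Earth-field magnitude, in the same units as x and y.
    pitch_deg, roll_deg: angles from the 2-axis tilt sensor.
    """
    # Calculate, rather than measure, the component orthogonal to the sensor plane.
    # The positive root assumes the field points "down" through the sensor.
    z = math.sqrt(max(field_strength ** 2 - x ** 2 - y ** 2, 0.0))
    p, r = math.radians(pitch_deg), math.radians(roll_deg)
    # Rotate the measured vector back to the local horizontal plane.
    xh = x * math.cos(p) + y * math.sin(r) * math.sin(p) + z * math.cos(r) * math.sin(p)
    yh = y * math.cos(r) - z * math.sin(r)
    return math.degrees(math.atan2(-yh, xh)) % 360.0


if __name__ == "__main__":
    # Level sensor facing east: the horizontal field lies along -y.
    print(tilt_compensated_heading(0.0, -20.0, math.hypot(20.0, 40.0), 0.0, 0.0))  # about 90
```

Because Z comes from a stored magnitude, errors in that value or local field anomalies feed directly into the heading, which is the trade-off for avoiding a third sensing axis.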

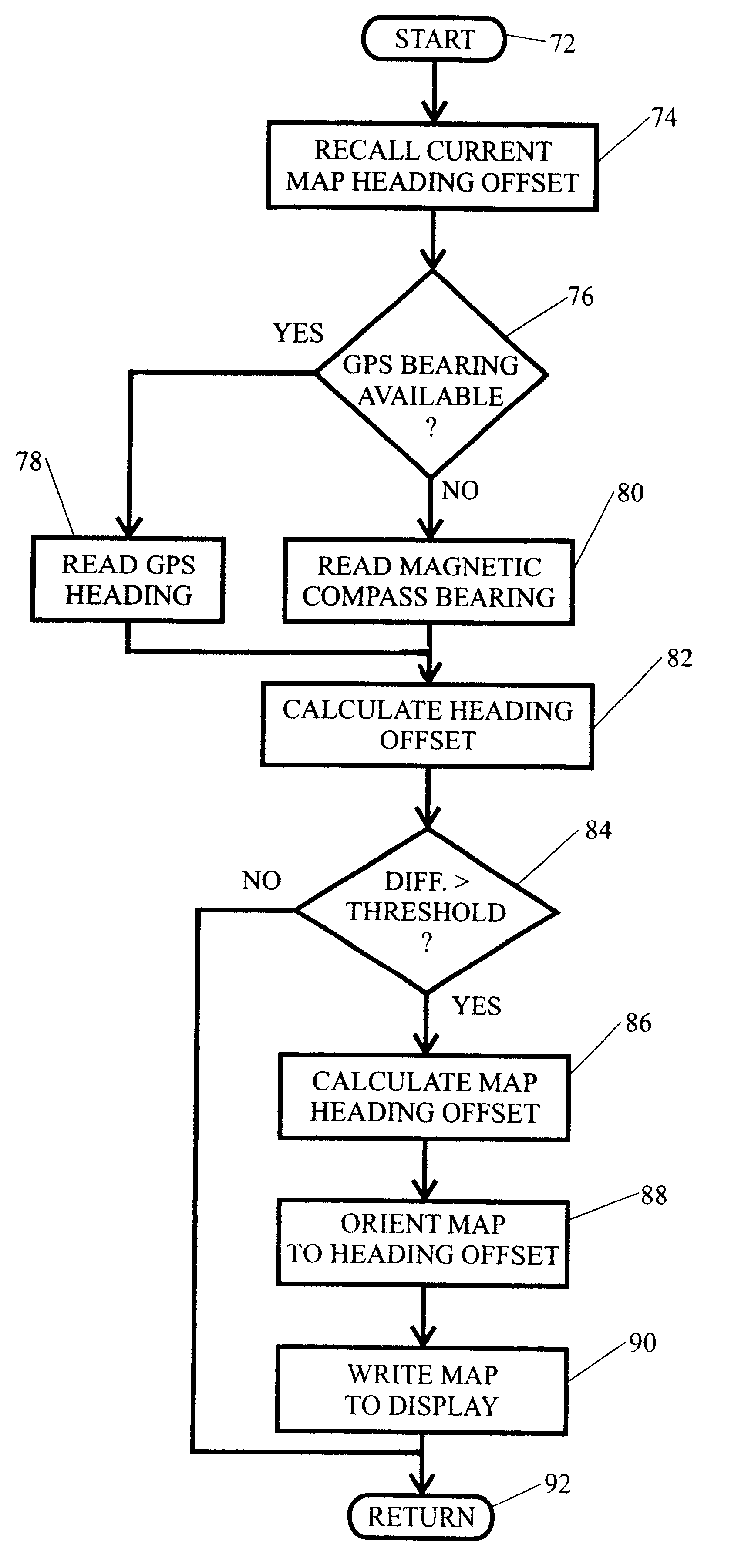

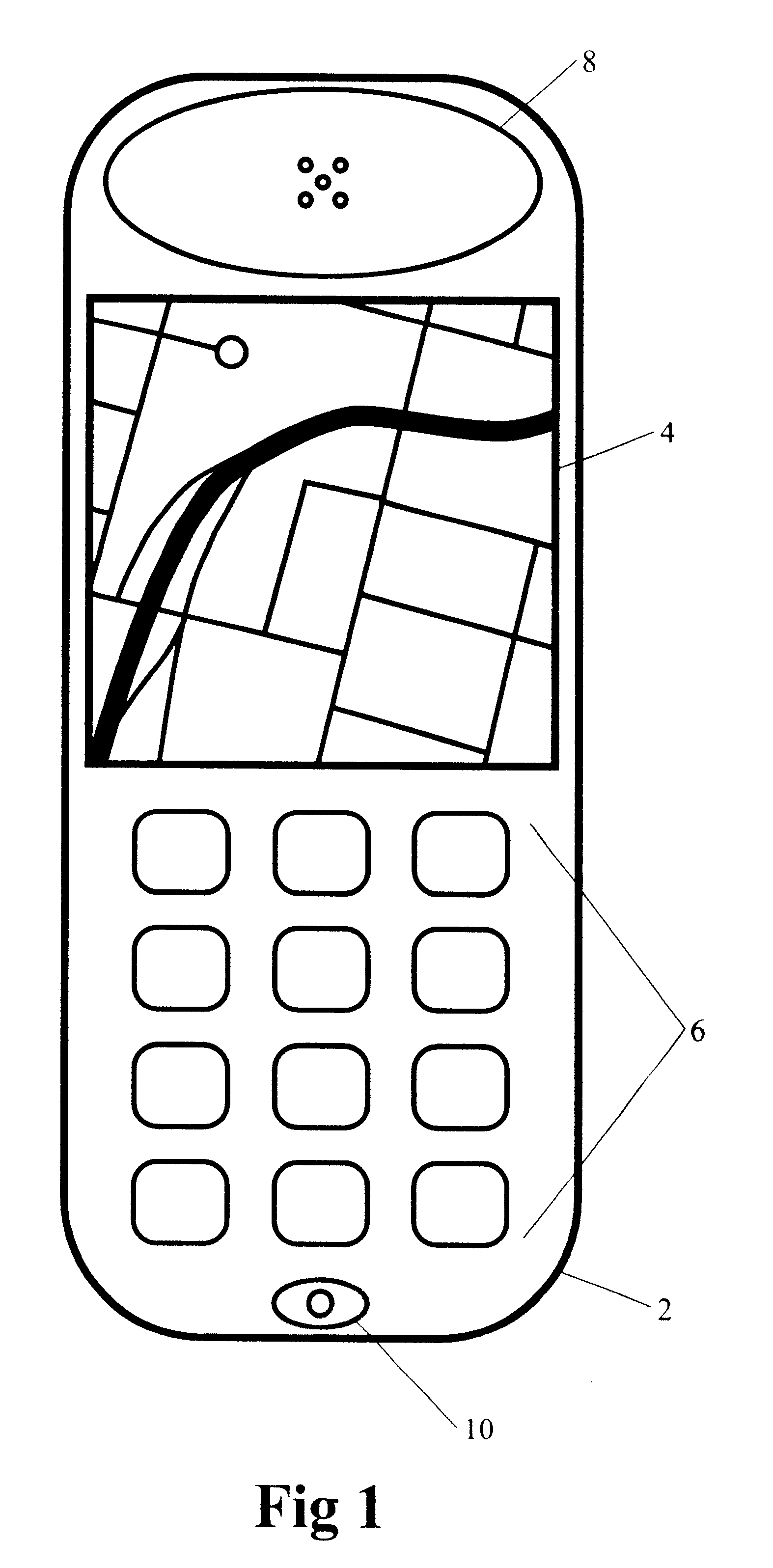

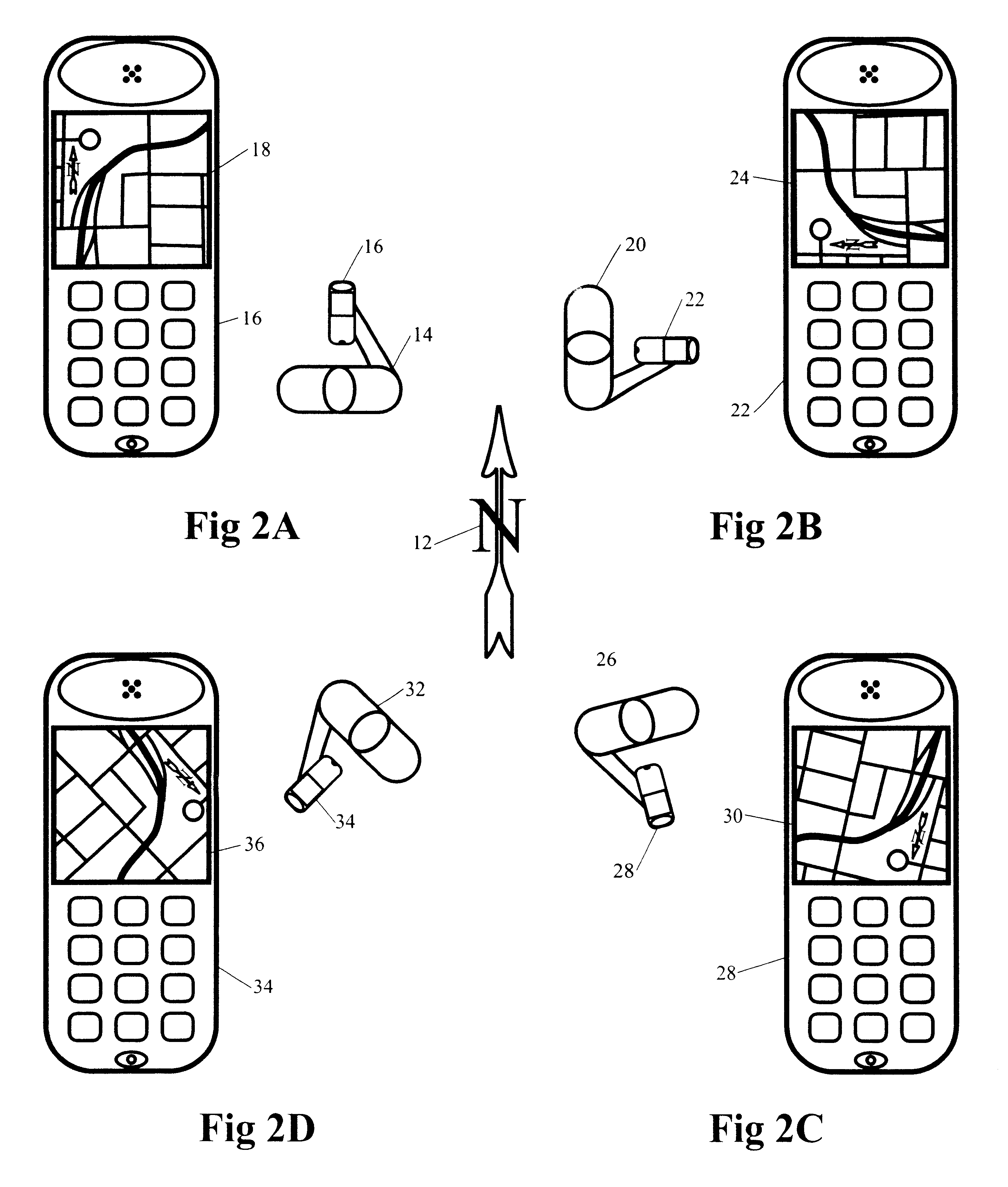

Method and apparatus for orienting a map display in a mobile or portable device

Inactive | US6366856B1 | Cordless telephones; Navigational calculation instruments; Mobile mapping; GPS receiver

A method and apparatus for orienting a map display in a mobile or portable device. An electronic compass is integrated with a hand-held portable or mobile mapping device, and an electronic compass bearing is used to calculate an offset value with respect to the map's default orientation. The map is thus reoriented in the display to match the direction of the device. In an alternative embodiment, a GPS receiver is also integrated with the device. A heading fix is calculated from two or more GPS position fixes to determine the orientation of the device. When a heading fix is unavailable, such as at initial start-up of the device, a compass heading is used in lieu of the GPS heading fix to orient the map on the display.

Owner:QUALCOMM INC
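
A sketch of the heading selection described above: the heading comes from the last two GPS fixes when at least two are available, and from the compass otherwise. The great-circle initial-bearing formula, the `(lat, lon)` tuple format, and the function names are assumptions for illustration.

```python
import math


def gps_heading(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing (degrees clockwise from true north) from fix 1 to fix 2."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlon)
    return math.degrees(math.atan2(x, y)) % 360.0


def map_rotation(fixes, compass_bearing, default_orientation=0.0):
    """Offset of the device heading from the map's default orientation, in degrees.

    fixes: list of (lat, lon) GPS fixes, oldest first. With fewer than two
    fixes (e.g. at start-up) the compass bearing is used instead.
    """
    if len(fixes) >= 2:
        heading = gps_heading(*fixes[-2], *fixes[-1])
    else:
        heading = compass_bearing
    return (heading - default_orientation) % 360.0


if __name__ == "__main__":
    print(map_rotation([], compass_bearing=45.0))                           # compass only
    print(map_rotation([(0.0, 0.0), (0.0, 0.001)], compass_bearing=45.0))  # moving east
```

A GPS heading is only meaningful while the device is moving; two fixes taken while stationary mostly reflect position noise, so a practical version would also check the distance between fixes.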

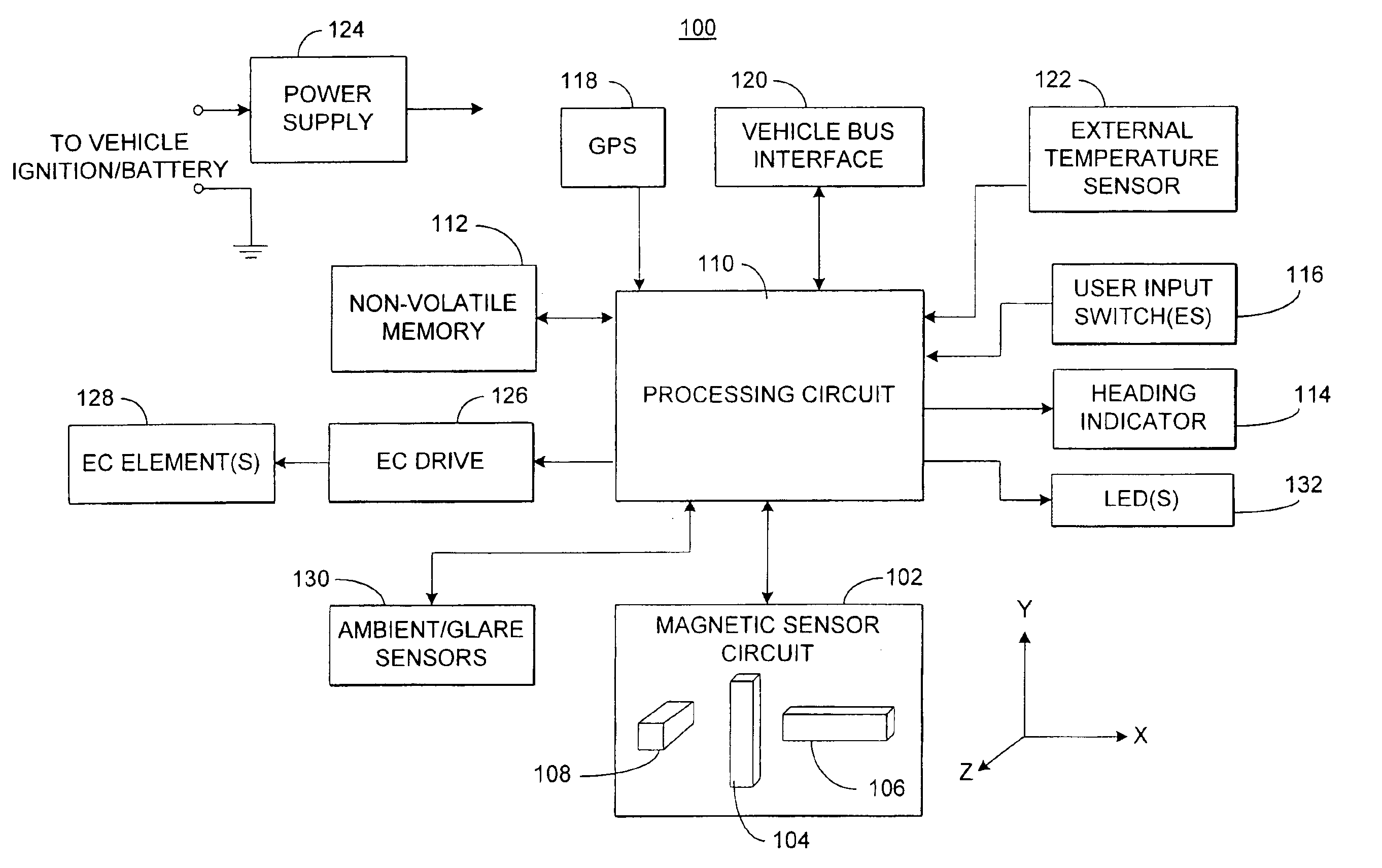

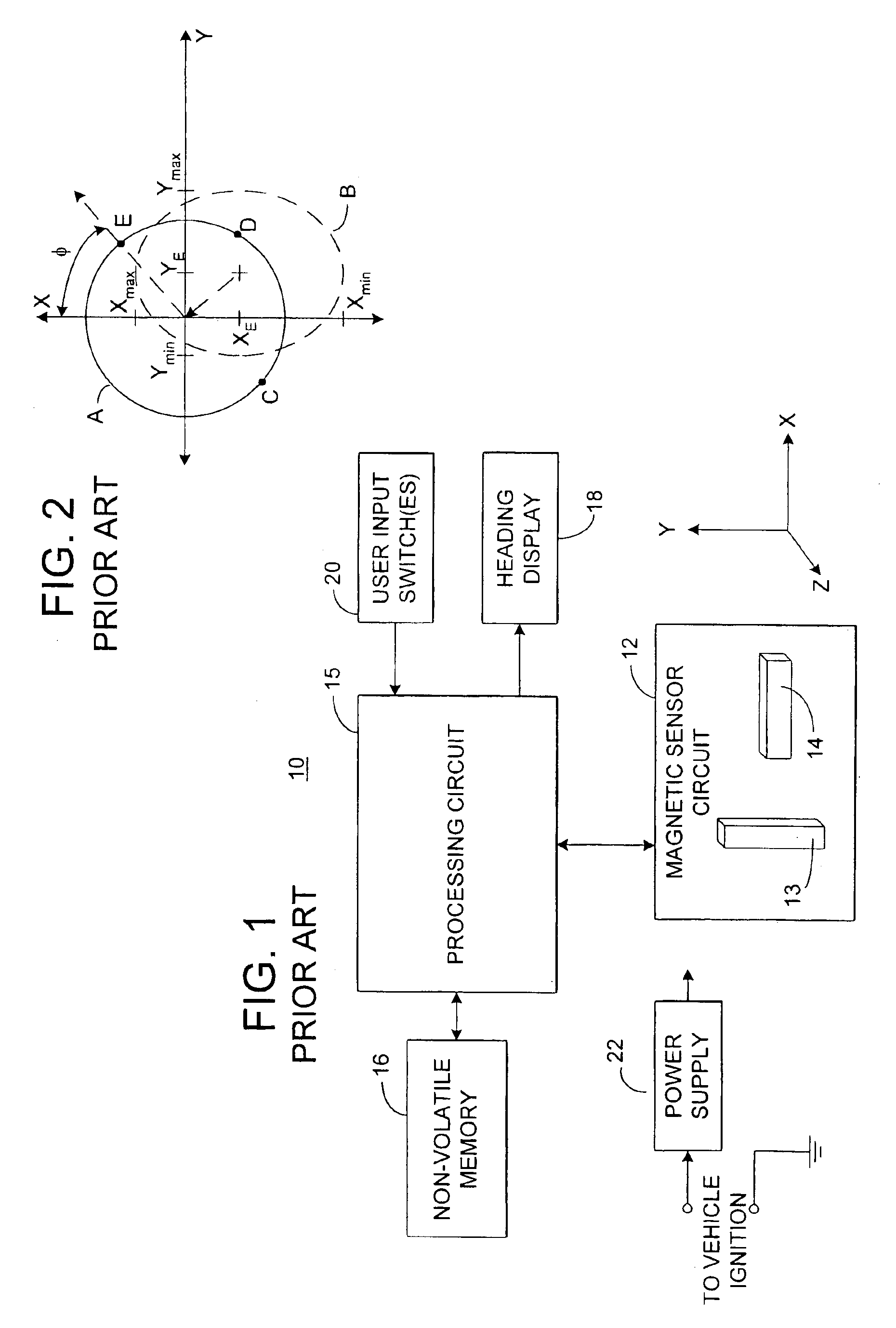

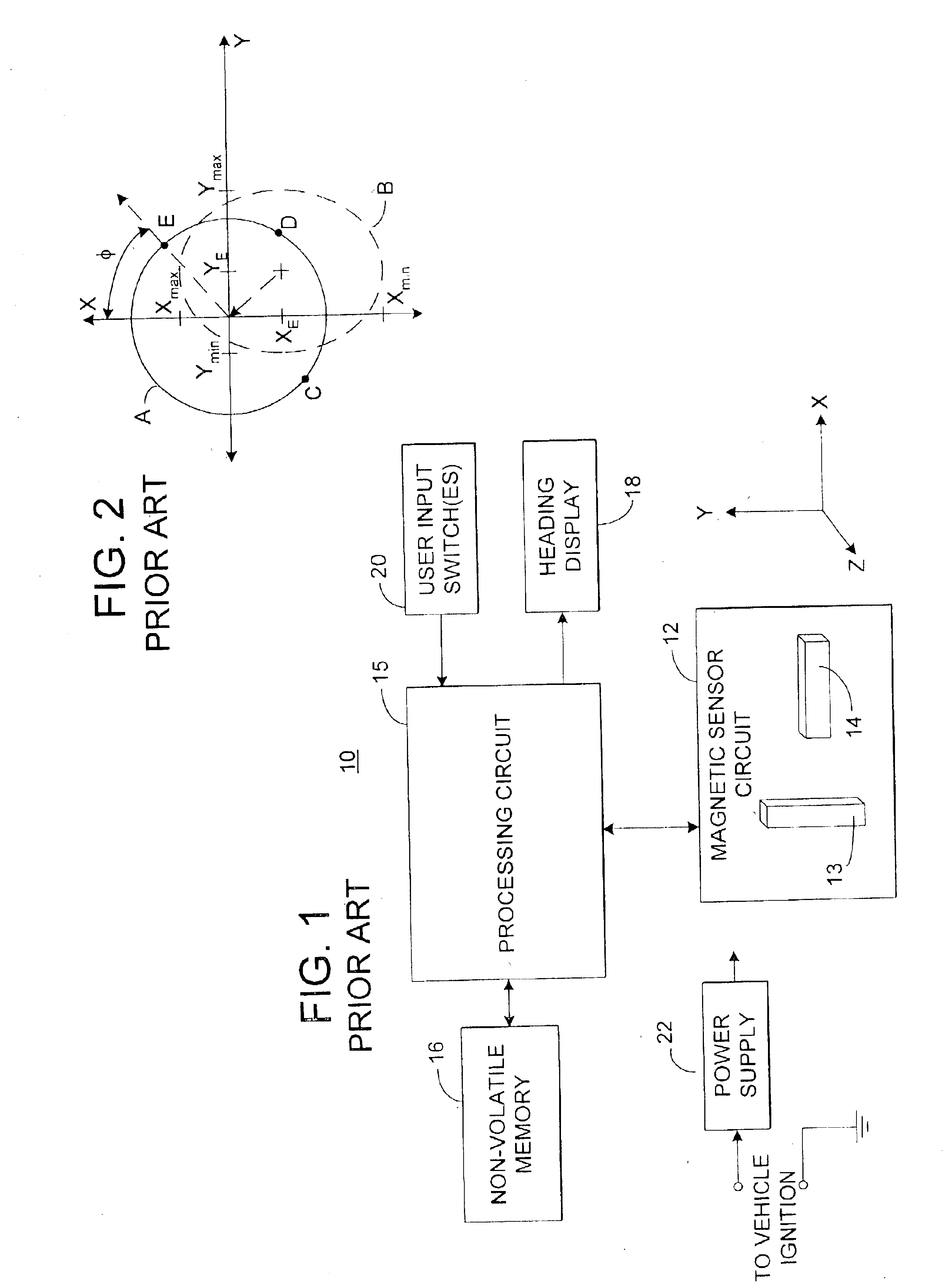

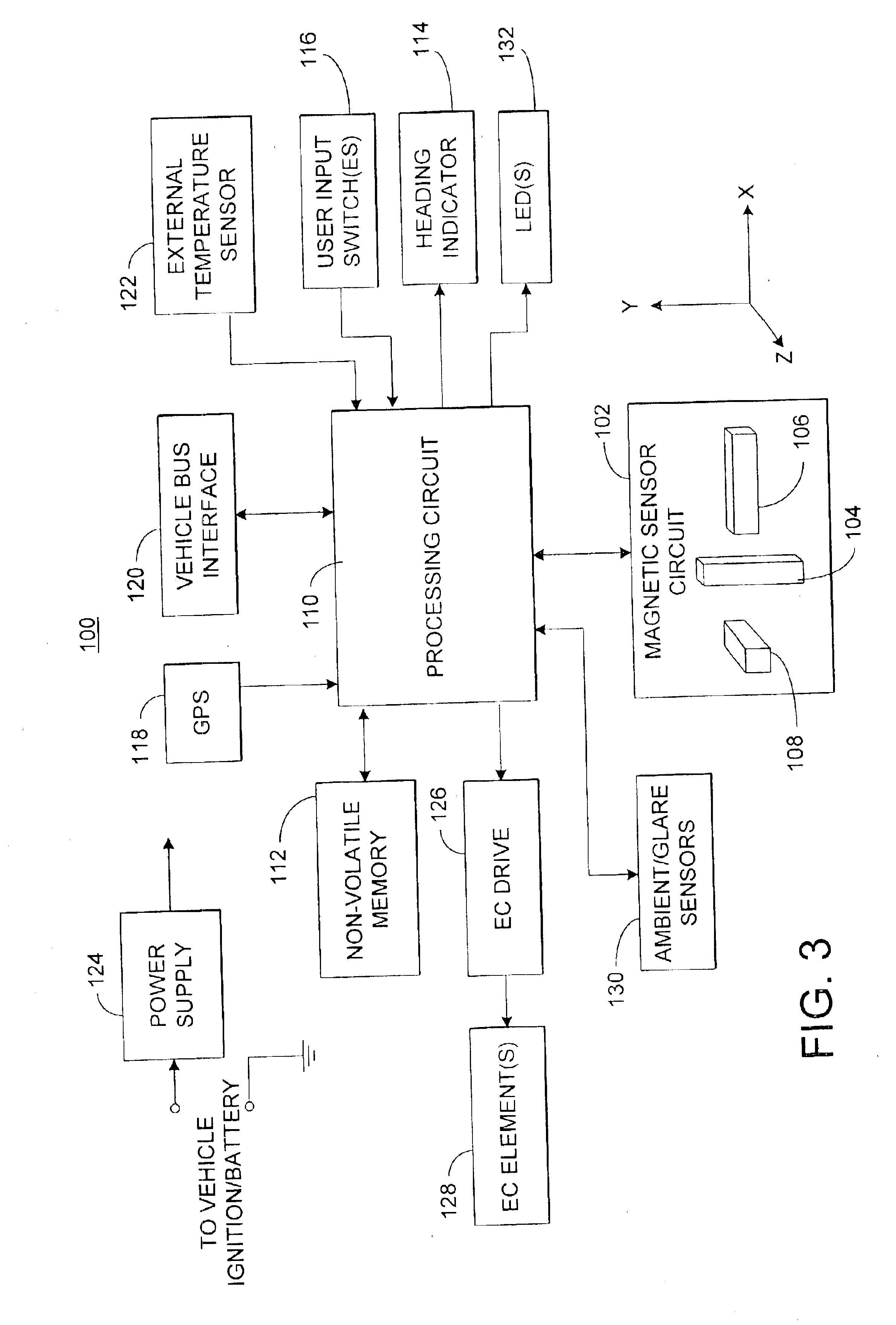

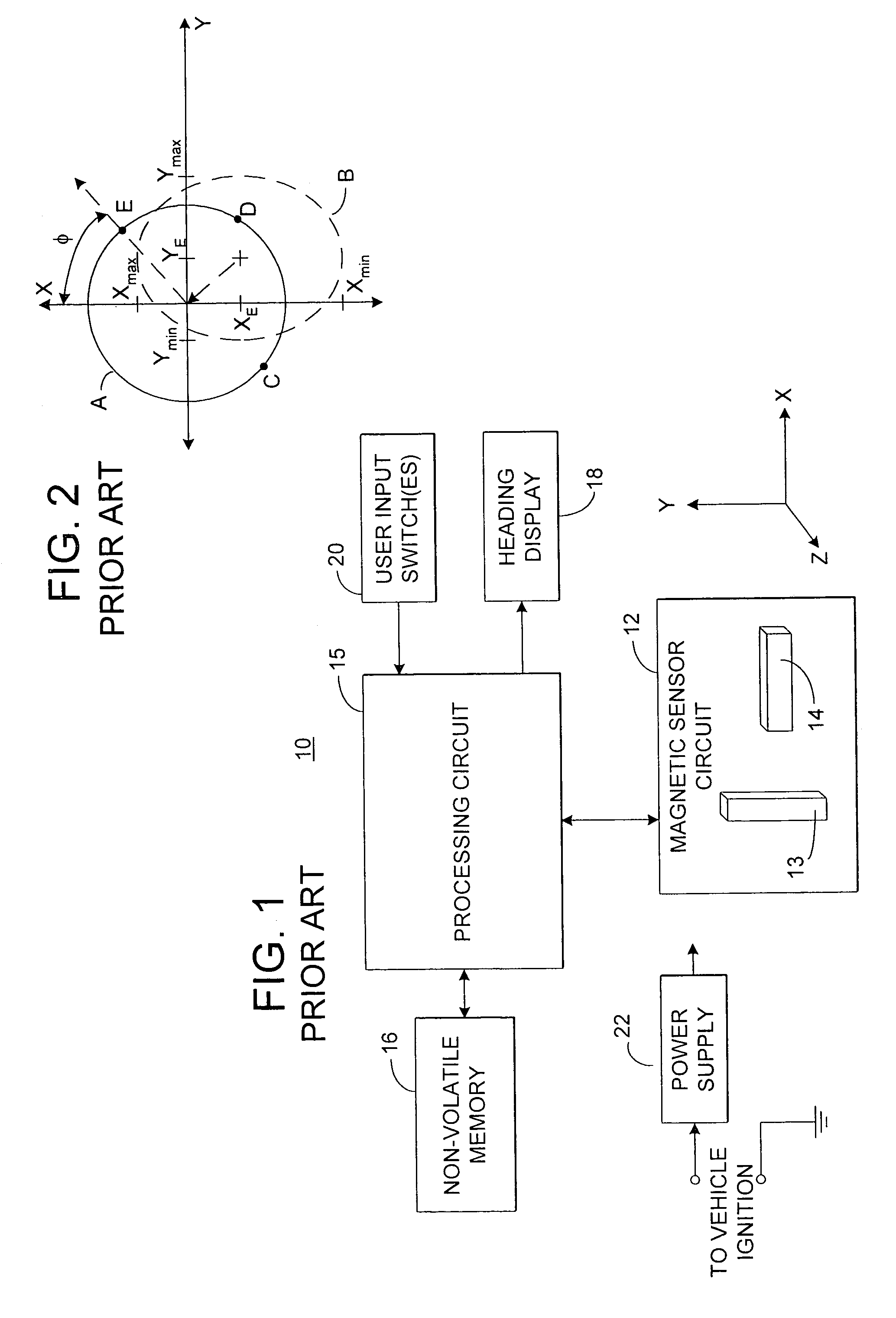

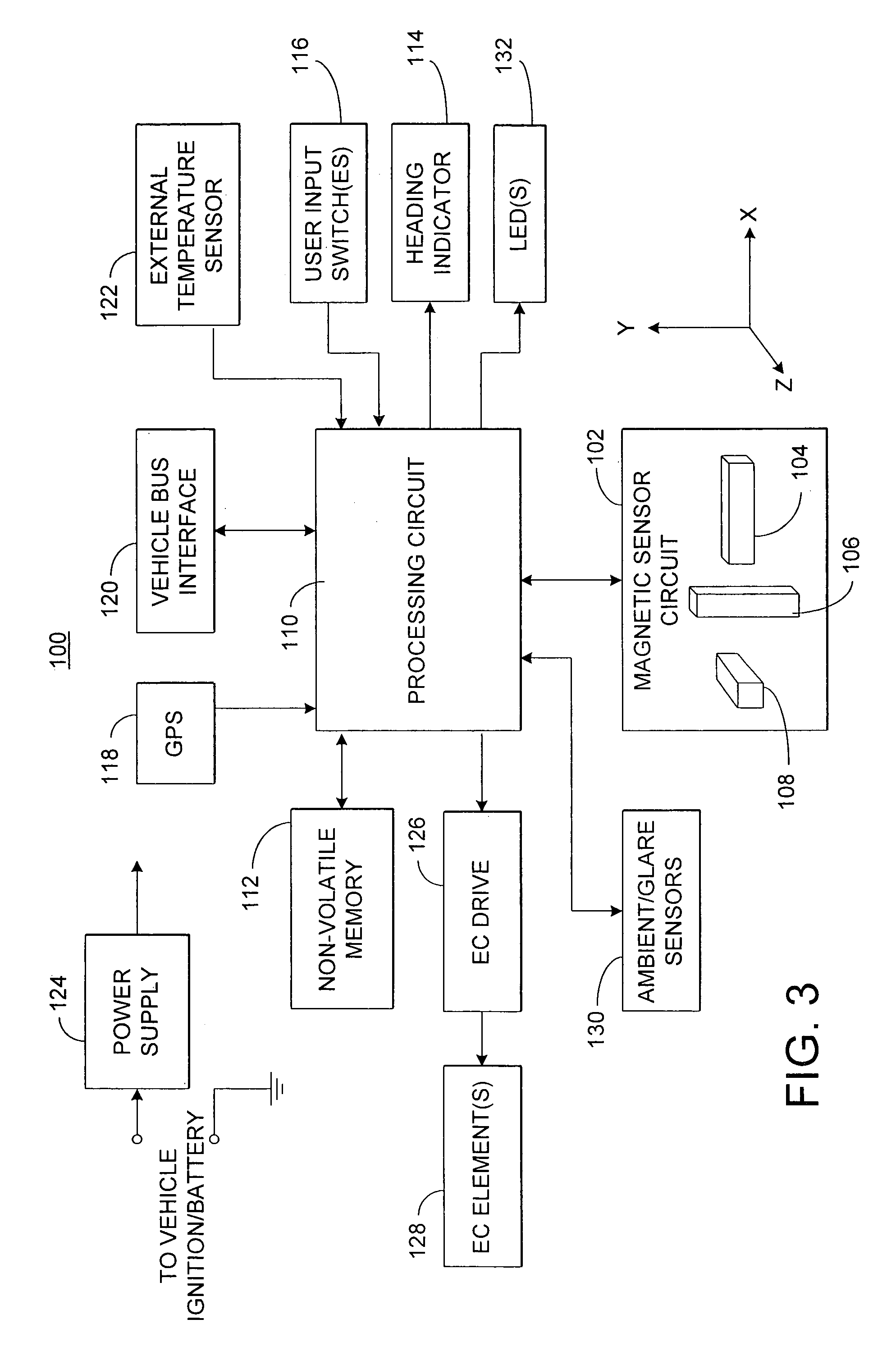

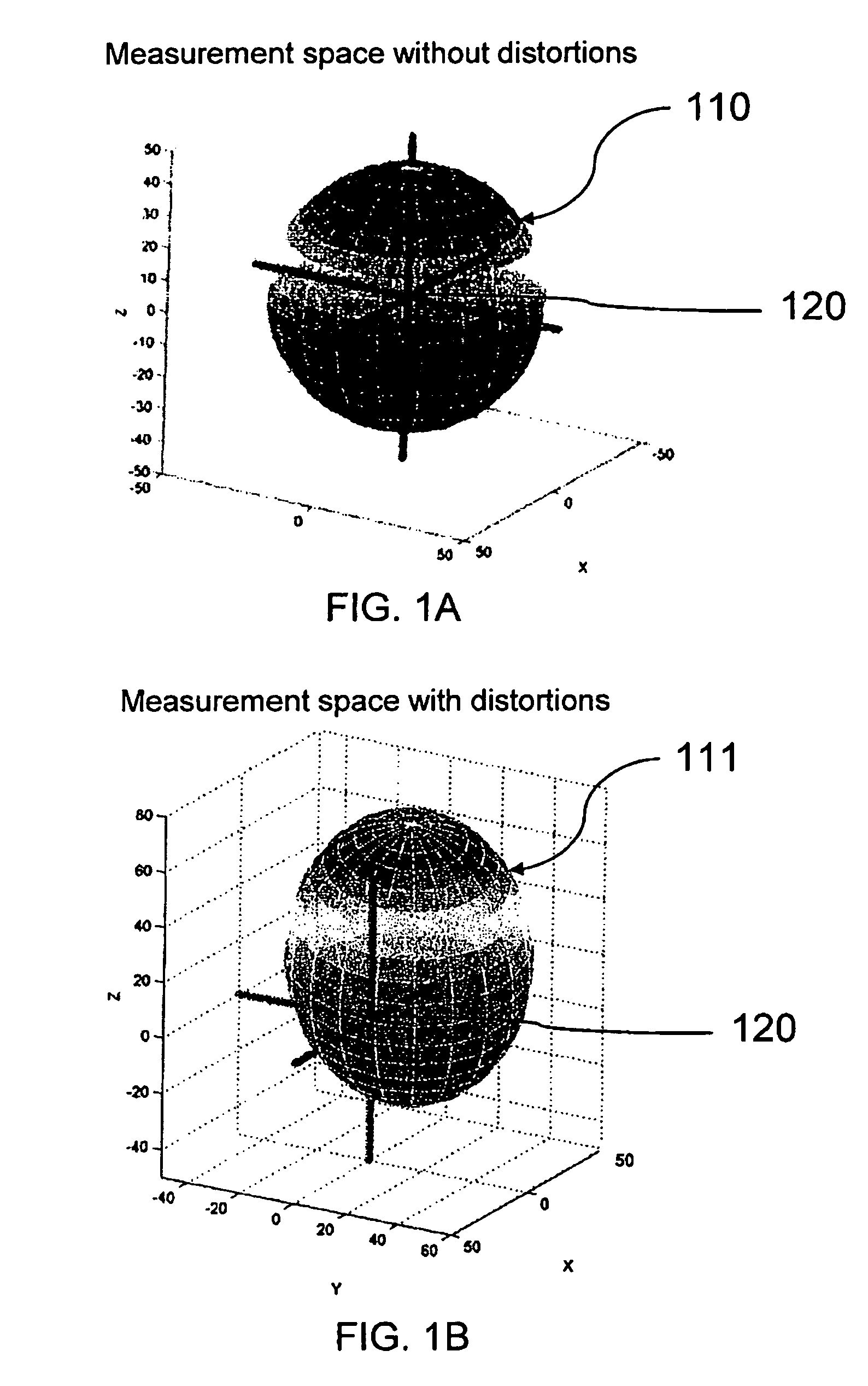

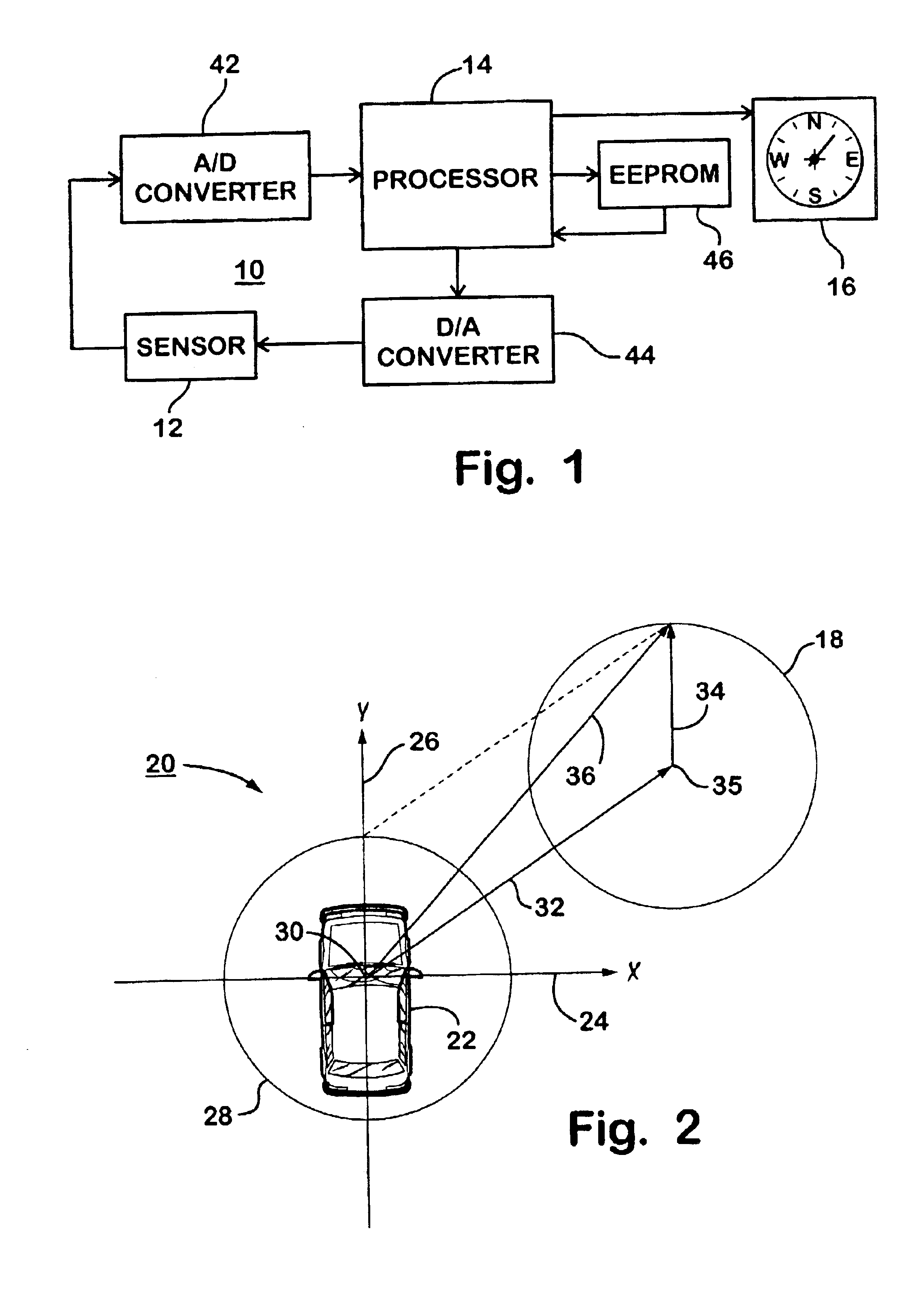

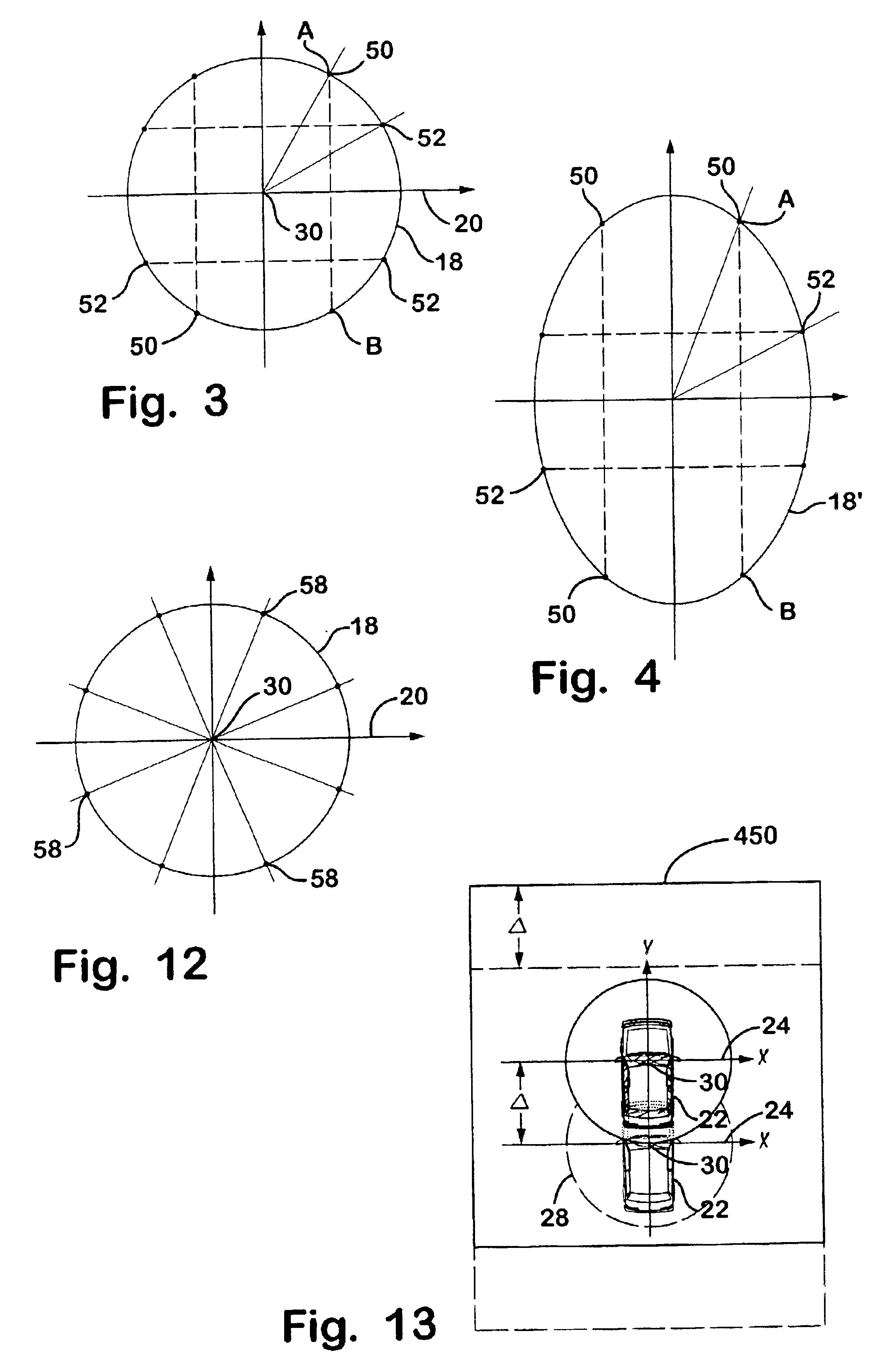

Electronic compass system

An electronic compass system includes a magnetic sensor circuit having at least two sensing elements for sensing perpendicular components of the Earth's magnetic field vector. A processing circuit is coupled to the sensor circuit to filter, process, and compute a heading. The processing circuit further selects an approximating geometric pattern, such as a sphere, ellipsoid, ellipse, or circle, determines an error metric of the data points relative to the approximating pattern, and adjusts the pattern to minimize the error, thereby obtaining a best fit pattern. The best fit pattern is then used to calculate the heading for each successive sensor reading, provided the readings are not excessively noisy, until a new best fit pattern is identified. The electronic compass system is particularly well suited for implementation in a vehicle rearview mirror assembly.

Owner:GENTEX CORP
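
The best-fit step can be illustrated with the simplest of the listed patterns, a circle. The sketch below uses an algebraic least-squares (Kasa) circle fit, a standard technique that is not necessarily Gentex's; the fitted center is the offset to subtract before computing the heading. It assumes a level two-axis sensor with x forward and y right.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points):
    """Kasa fit: least-squares solve of x^2 + y^2 + D*x + E*y + F = 0.

    Returns (cx, cy, radius) of the best-fit circle.
    """
    n = float(len(points))
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    sxz = sum(x * (x * x + y * y) for x, y in points)
    syz = sum(y * (x * x + y * y) for x, y in points)
    # Normal equations A @ [D, E, F] = b, solved with Cramer's rule.
    a = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    b = [-sxz, -syz, -(sxx + syy)]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("points are too few or collinear to fit a circle")
    sol = []
    for col in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][col] = b[i]
        sol.append(_det3(m) / det)
    d, e, f = sol
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)


def heading_deg(x, y, cx, cy):
    """Heading (clockwise from magnetic north) from a raw level reading after removing the offset."""
    return math.degrees(math.atan2(-(y - cy), x - cx)) % 360.0


if __name__ == "__main__":
    # Raw readings from a full turn: a 20-unit circle offset to (5, -3).
    turn = [(5.0 + 20.0 * math.cos(math.radians(a)), -3.0 + 20.0 * math.sin(math.radians(a)))
            for a in range(0, 360, 30)]
    cx, cy, r = fit_circle(turn)
    print(round(cx, 6), round(cy, 6), round(r, 6))
```

The algebraic fit is cheap and closed-form, which suits embedded use, though it is biased when readings cover only part of the circle; ellipse or ellipsoid fits handle soft-iron distortion that a circle cannot.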

Electronic compass system

Inactive | US7149627B2 | Change effect; Instruments for road network navigation; Testing/calibration of speed/acceleration/shock measurement devices; Engineering; Ultimate tensile strength

An electronic compass system includes a magnetic sensor circuit having at least two sensing elements for sensing perpendicular components of the Earth's magnetic field vector. A processing circuit is coupled to the sensor circuit to filter, process, and compute a heading. The processing circuit may determine whether too much noise is present in the output signals received from said magnetic sensor circuit as a function of the relative strength of the Earth's magnetic field vector. The magnetic sensor circuit may include three magnetic field sensing elements contained in a common integrated package having a plurality of leads extending therefrom for mounting to a circuit board. The sensing elements need not be perpendicular to each other or parallel or perpendicular with the circuit board. The electronic compass system is particularly well suited for implementation in a vehicle rearview mirror assembly.

Owner:GENTEX CORP

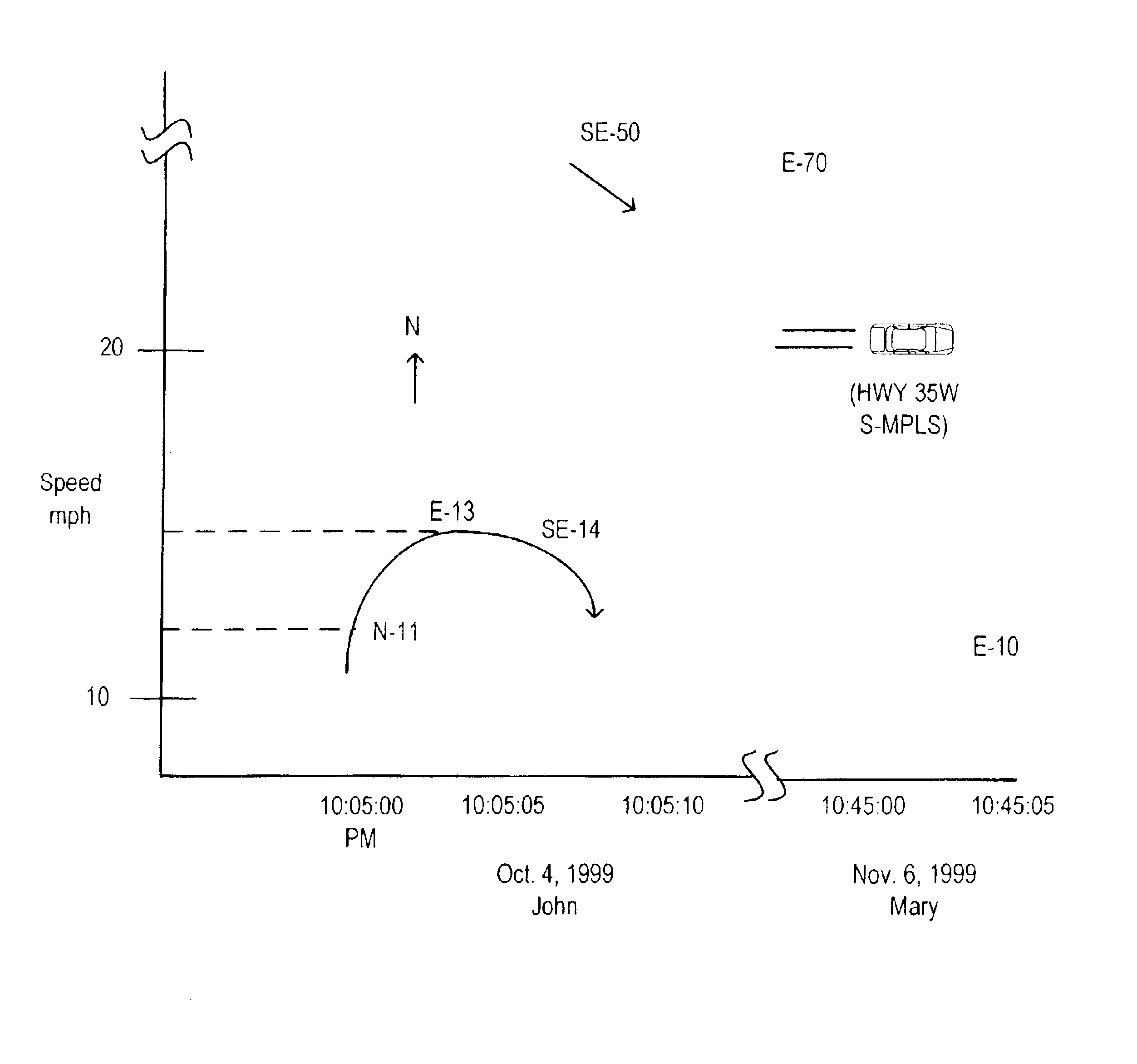

Automobile monitoring for operation analysis

An automobile monitoring arrangement tracks and records automobile operation for post-use analysis. In one specific embodiment, a record of automobile-operation data contains periodic recordings of the speed and direction of an automobile while it was being driven, as provided by a conventional electronic compass and the automobile's electronic speed-indicating signal. A processor uses the speed and directional data to calculate acceleration and rate of directional change. The recorded operation data and the results of these calculations are compared to stored reference data to determine whether the vehicle was abused or driven in an unsafe manner by the operator. The data is output to a display showing automobile operating data and instances where the automobile was abused or driven in an unsafe manner.

Owner:THE TORONTO DOMINION BANK
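
The calculations described above can be sketched as finite differences over the periodic recordings. Heading differences must be wrapped so that a change from 359° to 1° counts as +2°, not -358°. The limits in `flag_unsafe` stand in for the stored reference data and are hypothetical values.

```python
def wrap180(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def derived_metrics(samples, dt_s):
    """Acceleration (m/s^2) and rate of directional change (deg/s) per interval.

    samples: (speed_m_per_s, heading_deg) pairs recorded every dt_s seconds.
    """
    return [((v1 - v0) / dt_s, wrap180(h1 - h0) / dt_s)
            for (v0, h0), (v1, h1) in zip(samples, samples[1:])]


def flag_unsafe(metrics, max_accel=3.0, max_turn_rate=30.0):
    """Indices of intervals that exceed the (hypothetical) reference limits."""
    return [i for i, (accel, turn_rate) in enumerate(metrics)
            if abs(accel) > max_accel or abs(turn_rate) > max_turn_rate]


if __name__ == "__main__":
    log = [(10.0, 359.0), (12.0, 1.0), (5.0, 1.0)]  # one sample per second
    m = derived_metrics(log, dt_s=1.0)
    print(m)               # [(2.0, 2.0), (-7.0, 0.0)]
    print(flag_unsafe(m))  # [1]
```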

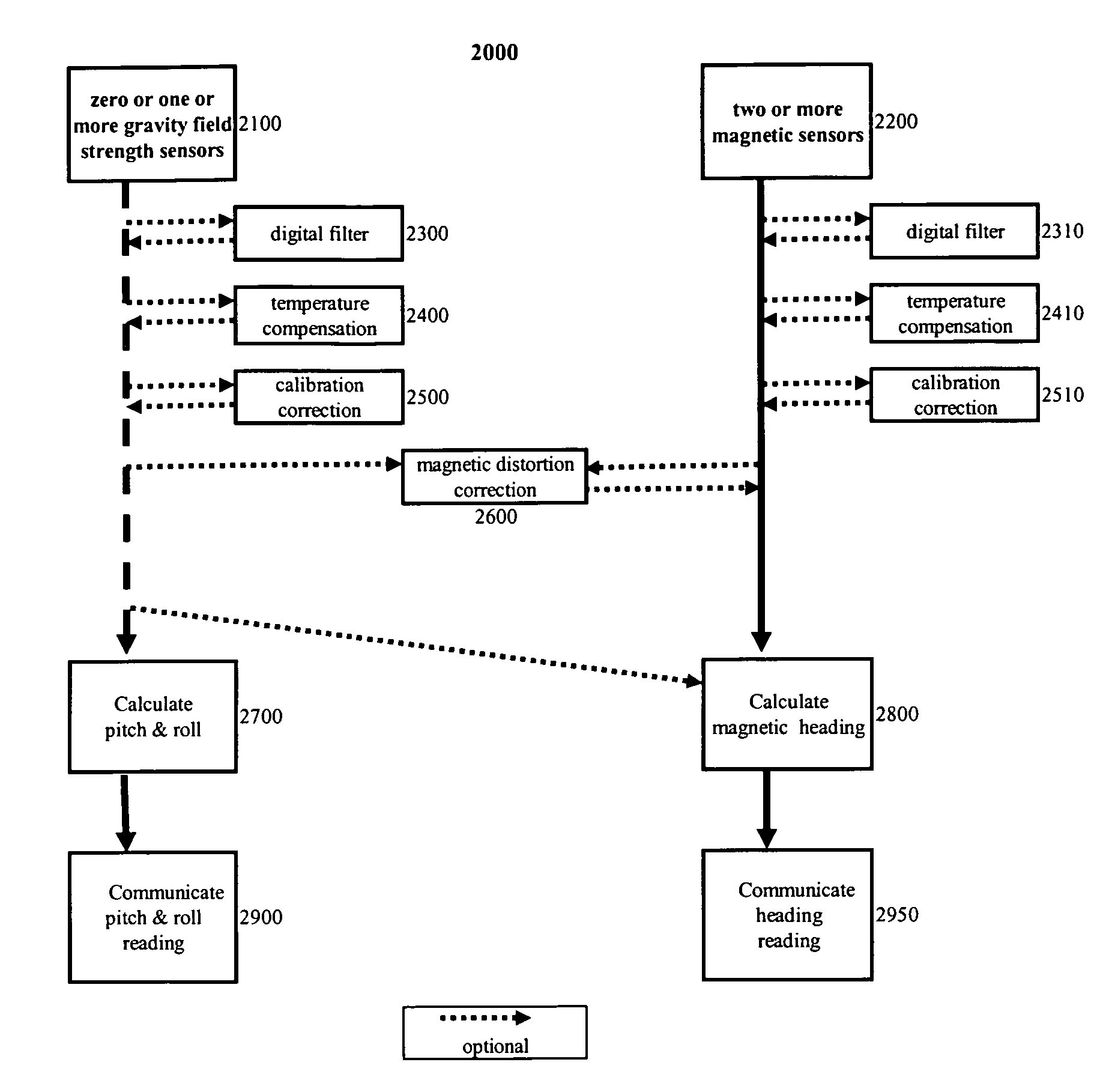

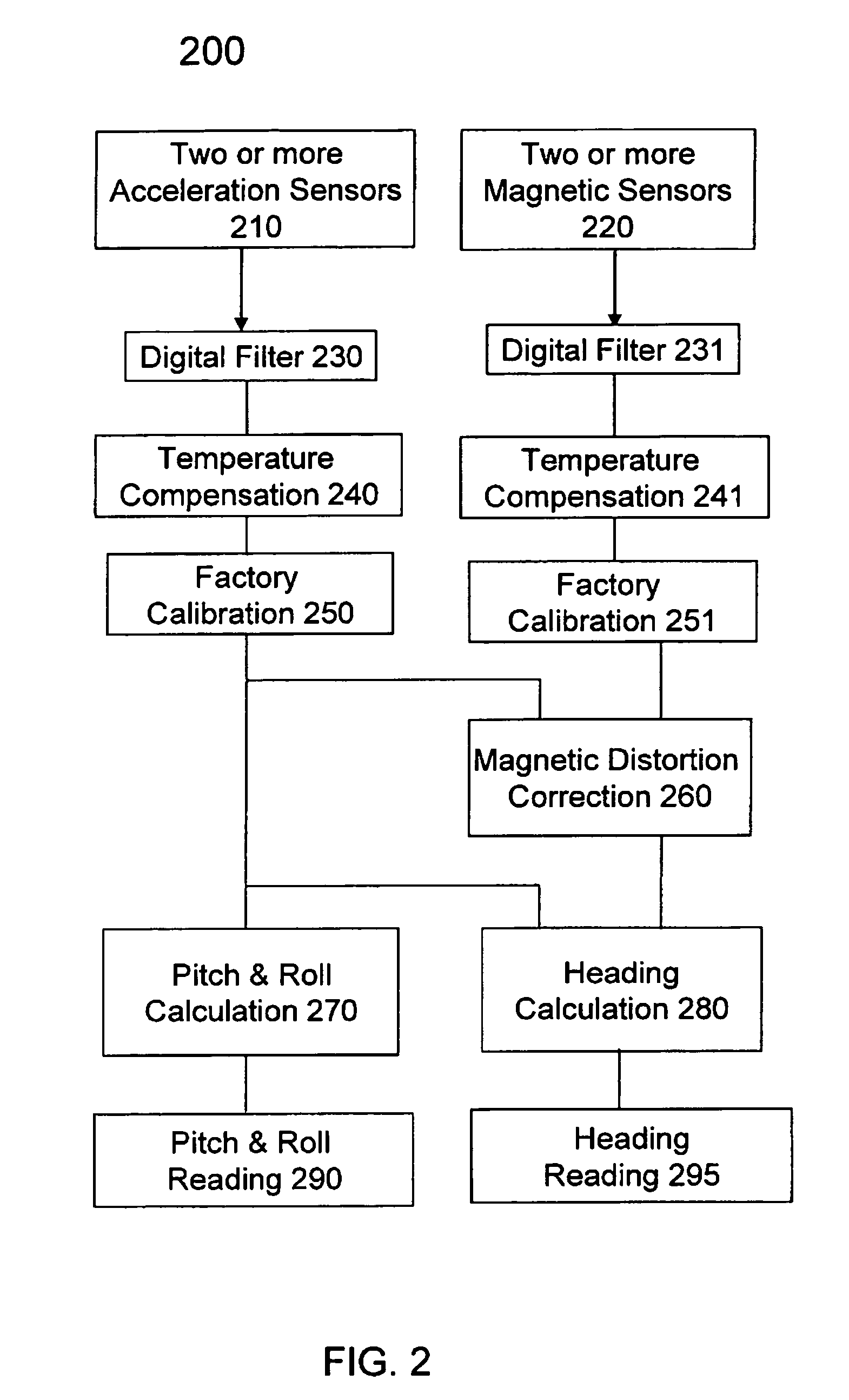

Automatic calibration of a three-axis magnetic compass

Inactive | US7451549B1 | Sure easy; Less computing power; Navigation instruments; Testing/calibration of speed/acceleration/shock measurement devices; Data set; Accelerometer

A magnetic compass apparatus and method that account for magnetic distortion while determining a magnetic heading are disclosed. The method enables a compass module comprising at least two magnetometers to characterize its magnetic environment dynamically while it collects geomagnetic field data as a user moves the apparatus through various orientations; the environment may or may not contain sources of magnetic distortion. Data gathered by the magnetometers and, optionally, accelerometers are processed through at least two filters before being transferred as a processed data set for repeated measurement calculations. A series of calculations is executed recursively in time by solving one or more linear vector equations using the processed data.

Owner:P&I

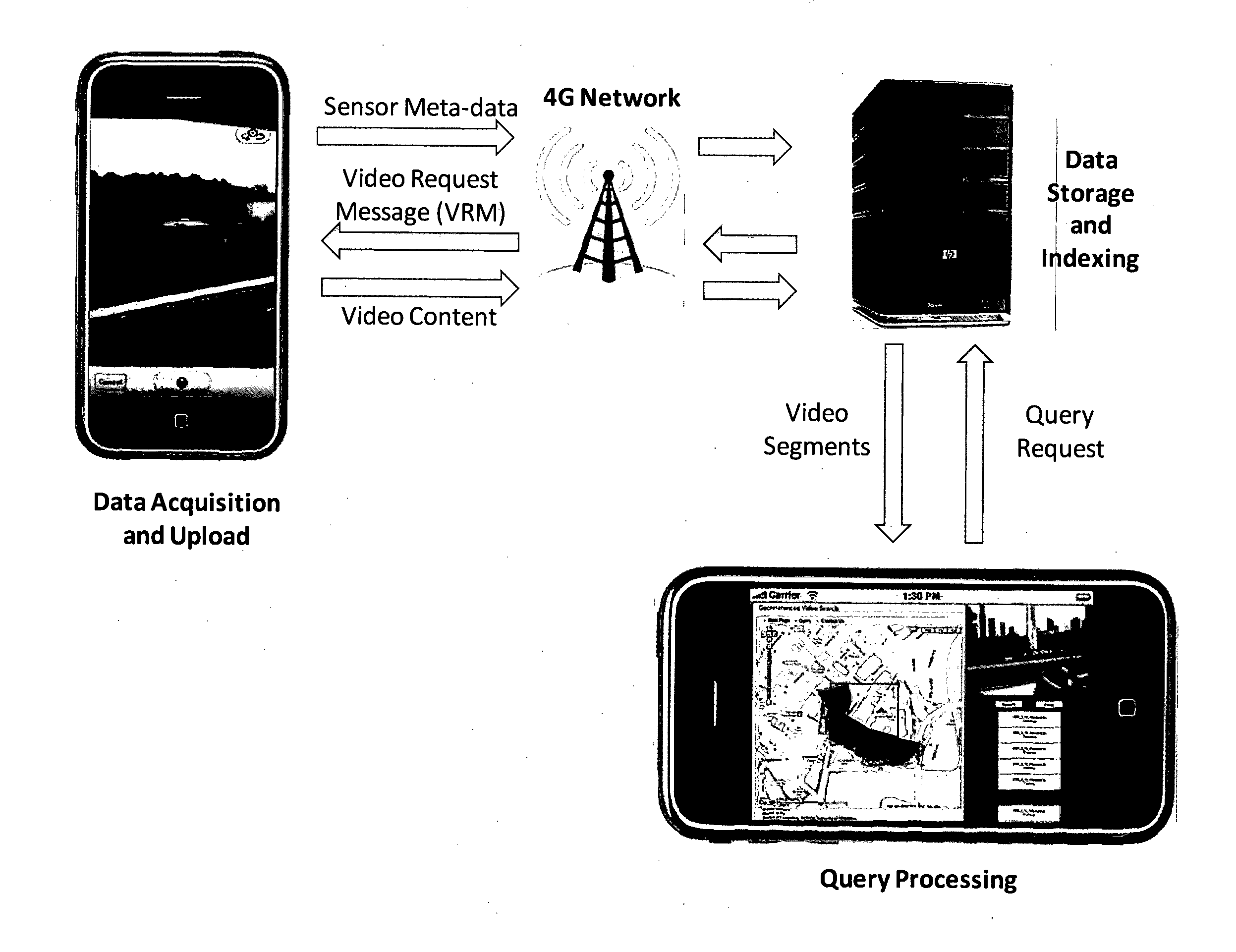

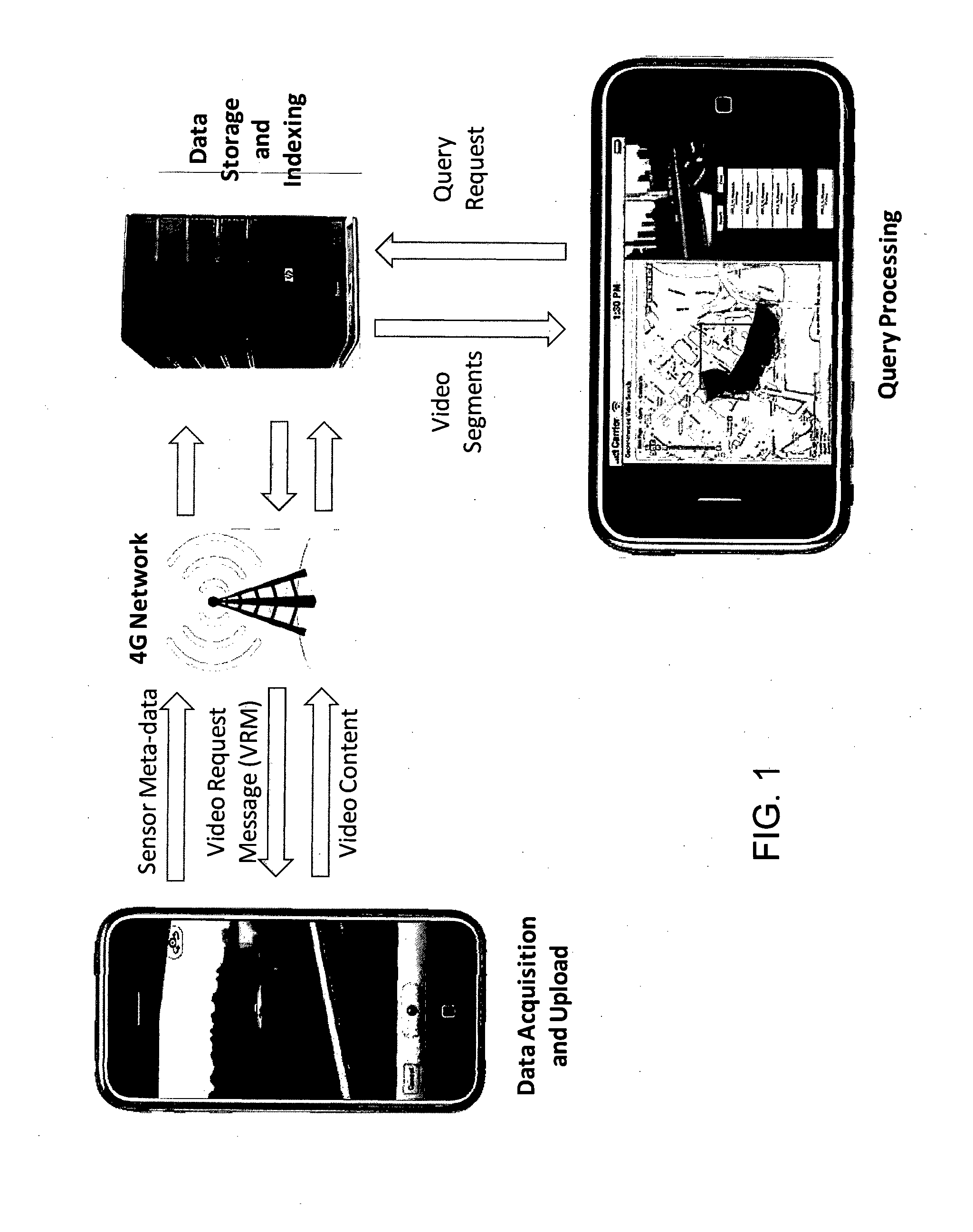

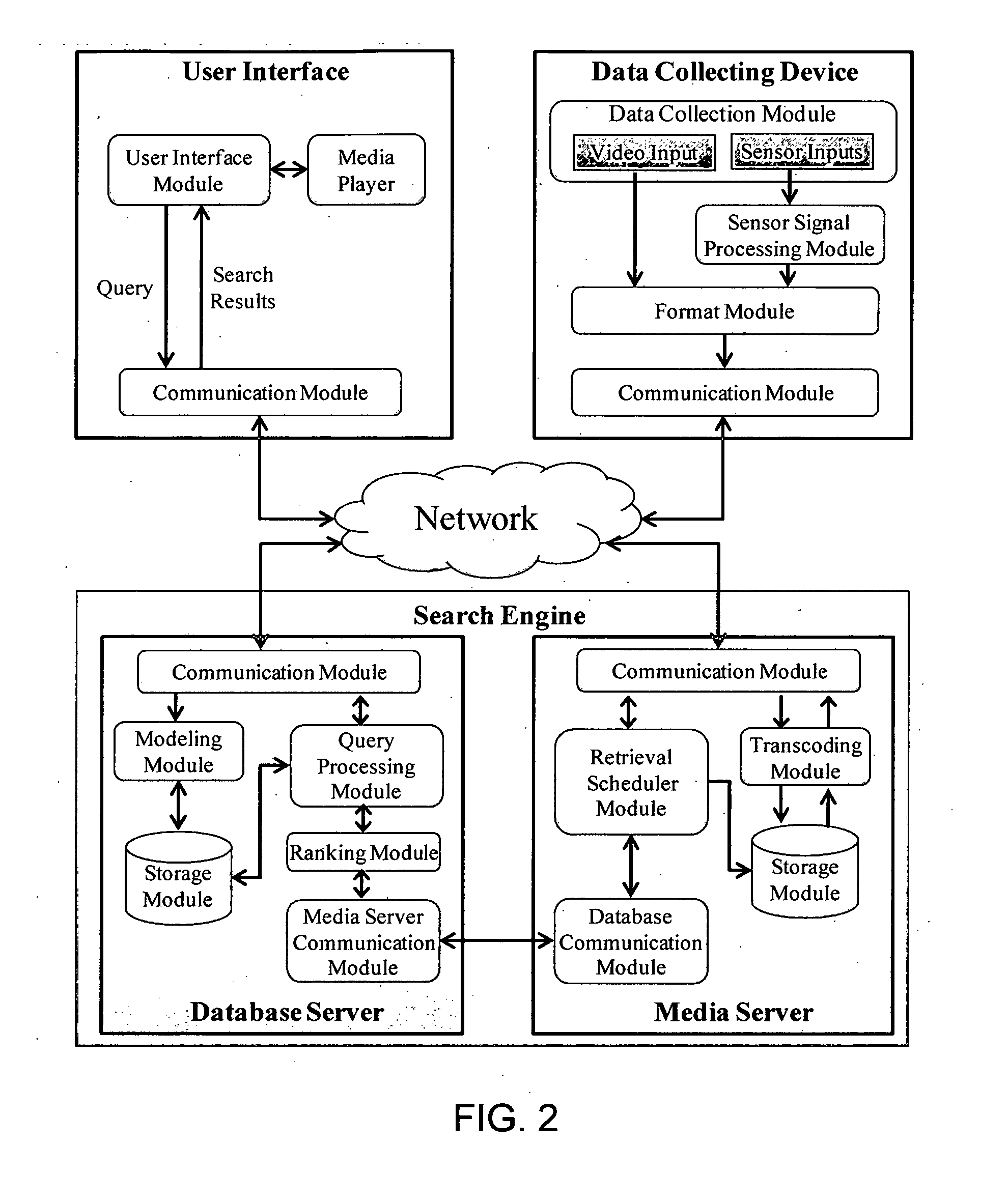

Apparatus, System, and Method for Annotation of Media Files with Sensor Data

Active | US20130330055A1 | Novel and efficient; Easily handle such integrated recording task; Color television signals processing; Metadata video data retrieval; Ambient lighting; Acquisition apparatus

Embodiments of methods for multimedia annotation with sensor data (referred to herein as sensor-rich video) include acquisition, management, storage, indexing, transmission, search, and display of video, images, or sound that has been recorded in conjunction with additional sensor information (such as, but not limited to, global positioning system information (latitude, longitude, altitude), compass directions, WiFi fingerprints, ambient lighting conditions, etc.). The sensor information is acquired continuously during recording. For example, GPS information may be acquired from the corresponding sensor every second during the recording of a video. The acquisition apparatus therefore generates a continuous stream of video frames and a continuous stream of sensor metadata values. The two streams are correlated in that every video frame is associated with a set of sensor values. Note that the sampling frequency (i.e., the frequency at which sensor values can be measured) depends on the type of sensor. For example, a GPS sensor may be sampled at 1-second intervals while a compass sensor may be sampled at 50-millisecond intervals. Video is also sampled at a specific rate, such as 25 or 30 frames per second. Sensor data are associated with each frame. If the sensor data have not changed since the previous frame (due to a low sampling rate), the previously measured values are used. The resulting combination of a video and a sensor stream is called a sensor-rich video.

Owner:NAT UNIV OF SINGAPORE
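
The frame association rule described above (reuse the previous value when no new sample has arrived) is a sample-and-hold lookup. A minimal sketch, assuming sensor samples arrive as time-sorted `(timestamp_s, value)` pairs and frames are evenly spaced from time zero:

```python
import bisect


def annotate_frames(n_frames, fps, sensor_samples):
    """Pair each video frame time with the most recent sensor value.

    sensor_samples: (timestamp_s, value) pairs sorted by timestamp. A frame
    reuses the previous value when no new sample has arrived; frames before the
    first sample get None.
    """
    times = [t for t, _ in sensor_samples]
    frames = []
    for i in range(n_frames):
        t = i / fps
        k = bisect.bisect_right(times, t) - 1  # last sample at or before t
        frames.append((t, sensor_samples[k][1] if k >= 0 else None))
    return frames


if __name__ == "__main__":
    gps = [(0.0, "fix A"), (1.0, "fix B")]  # 1-second GPS sampling
    print(annotate_frames(4, 2, gps))
    # [(0.0, 'fix A'), (0.5, 'fix A'), (1.0, 'fix B'), (1.5, 'fix B')]
```

Each sensor type (GPS, compass, and so on) would get its own sample list, so a fast compass stream and a slow GPS stream can be attached to the same frames independently.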

System and method for employing social networks for information discovery

Inactive | US7472110B2 | Facilitate maintaining privacy; Facilitate conduction; Data processing applications; Digital data information retrieval; Internet privacy; User participation

Systems and methods are provided that enable searches of social networks by acting as a “compass” that assists users in navigating the social network. Individual user participation is not required in response to queries from other users. The systems and methods offer navigational assistance or information as opposed to a traditional search which returns requested information, thus currently acceptable social mechanisms for arbitrating trust can be exploited. As a result, users do not make their personal information publicly searchable, while at the same time, they are protected from potential misrepresentations of facts.

Owner:MICROSOFT TECH LICENSING LLC

Vehicle compass compensation

A compass compensation system is provided for automatically and continuously calibrating an electronic compass for a vehicle, without requiring an initial manual calibration or a preset of the vehicle's magnetic signature. The system initially adjusts a two-axis sensor of the compass in response to a sampling of at least one initial data point. The system further calibrates the compass by sampling data points that are substantially opposite one another on a plot of the magnetic field and averaging an ordinate of the data points to determine a respective zero value for the Earth's magnetic field. The system also identifies a change in magnetic signature and adjusts the sensor assembly.

Owner:DONNELLY CORP
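
The averaging step can be sketched in its simplest form: while the vehicle turns through a full circle, the largest and smallest readings on each axis come from roughly opposite headings, and their midpoint estimates that axis's zero value. This is a simplified reading of the abstract, and the function names are hypothetical.

```python
def zero_offsets(readings):
    """Per-axis zero values from (x, y) readings taken during a full turn.

    On each axis, the largest and smallest readings come from roughly opposite
    headings; their average estimates the offset added by the vehicle.
    """
    xs = [x for x, _ in readings]
    ys = [y for _, y in readings]
    return (max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0


def compensate(reading, offsets):
    """Subtract the estimated zero values from a raw reading."""
    return reading[0] - offsets[0], reading[1] - offsets[1]


if __name__ == "__main__":
    turn = [(30.0, 5.0), (10.0, 25.0), (-10.0, 5.0), (10.0, -15.0)]
    offsets = zero_offsets(turn)
    print(offsets)                           # (10.0, 5.0)
    print(compensate((30.0, 5.0), offsets))  # (20.0, 0.0)
```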

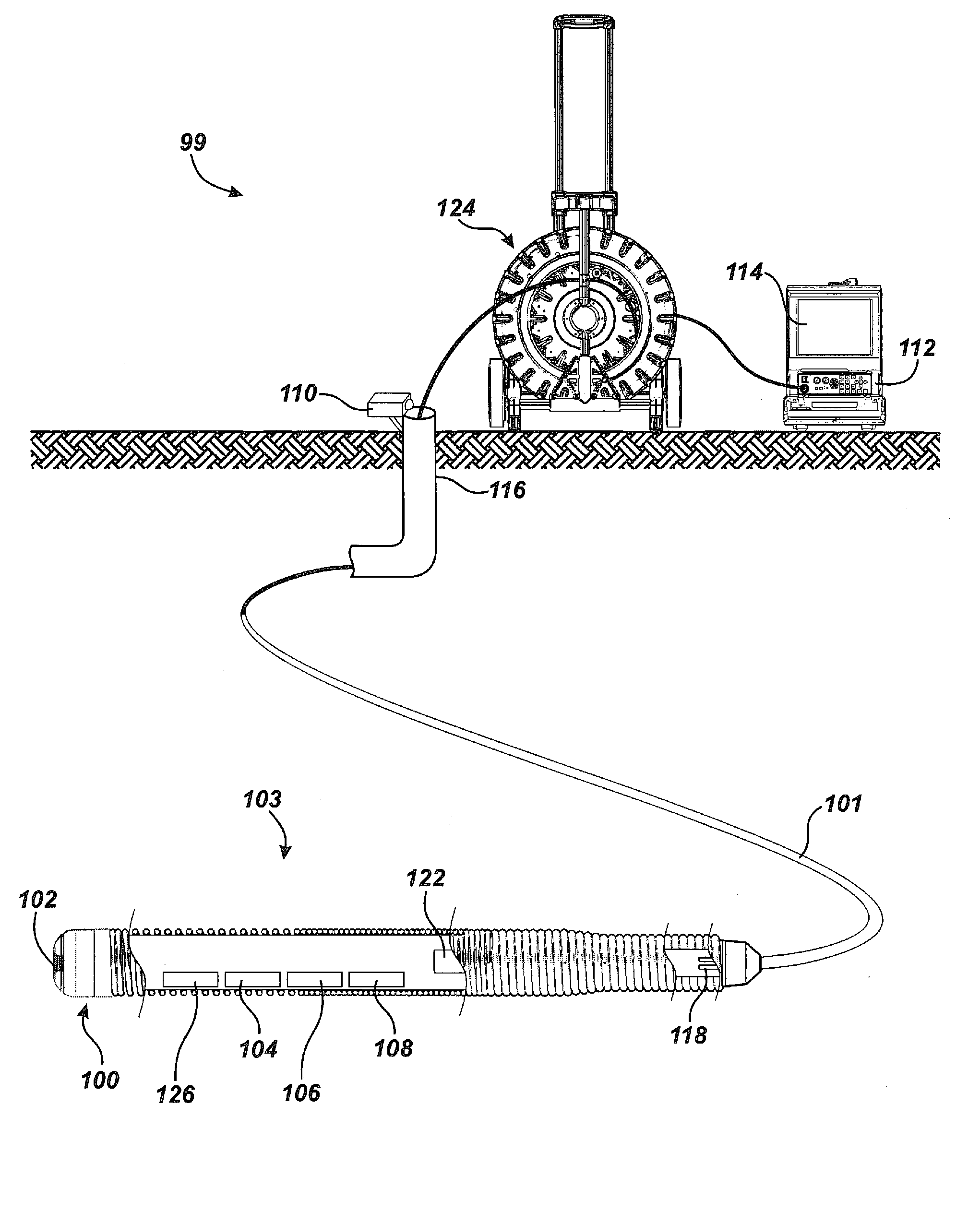

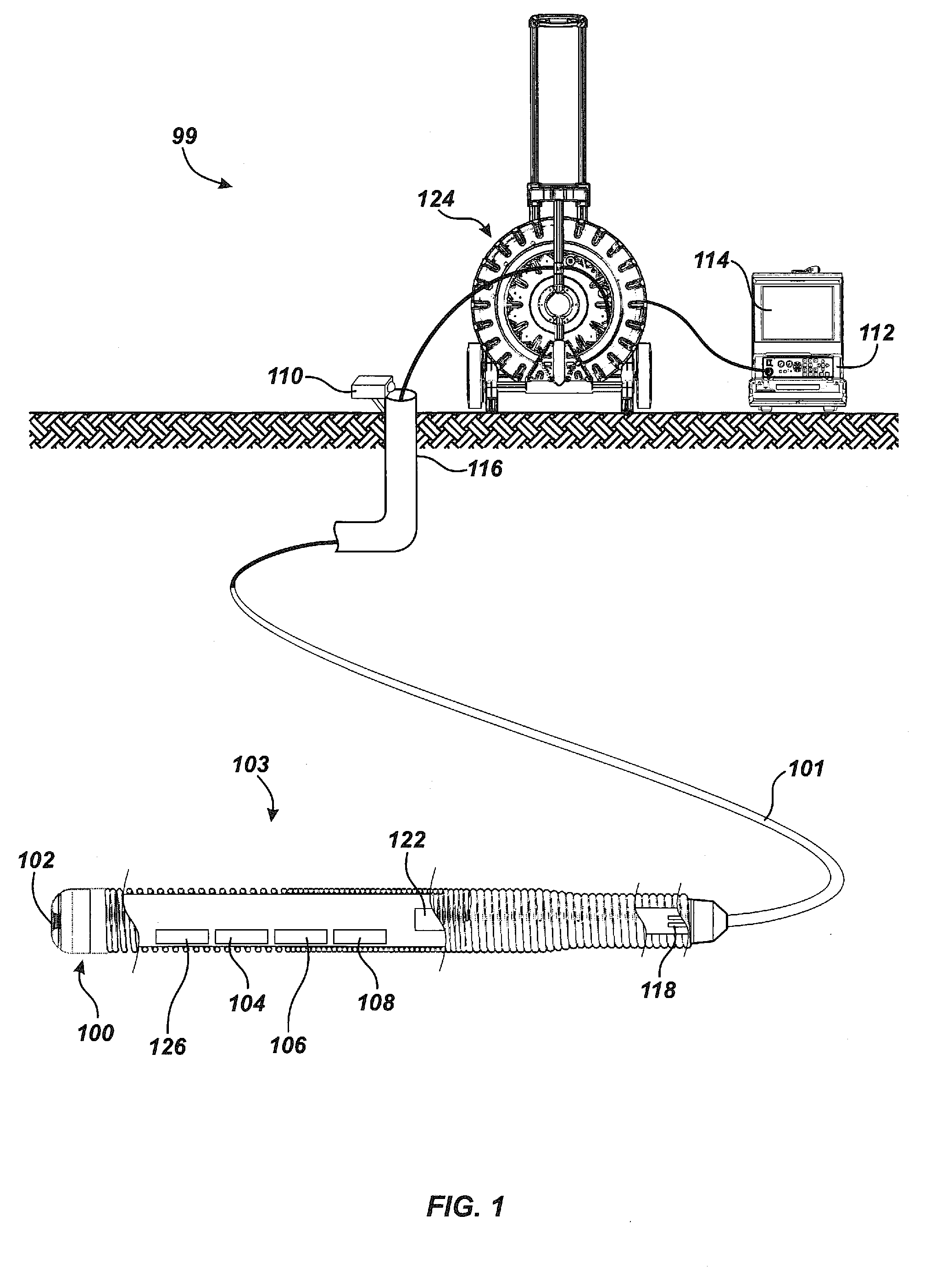

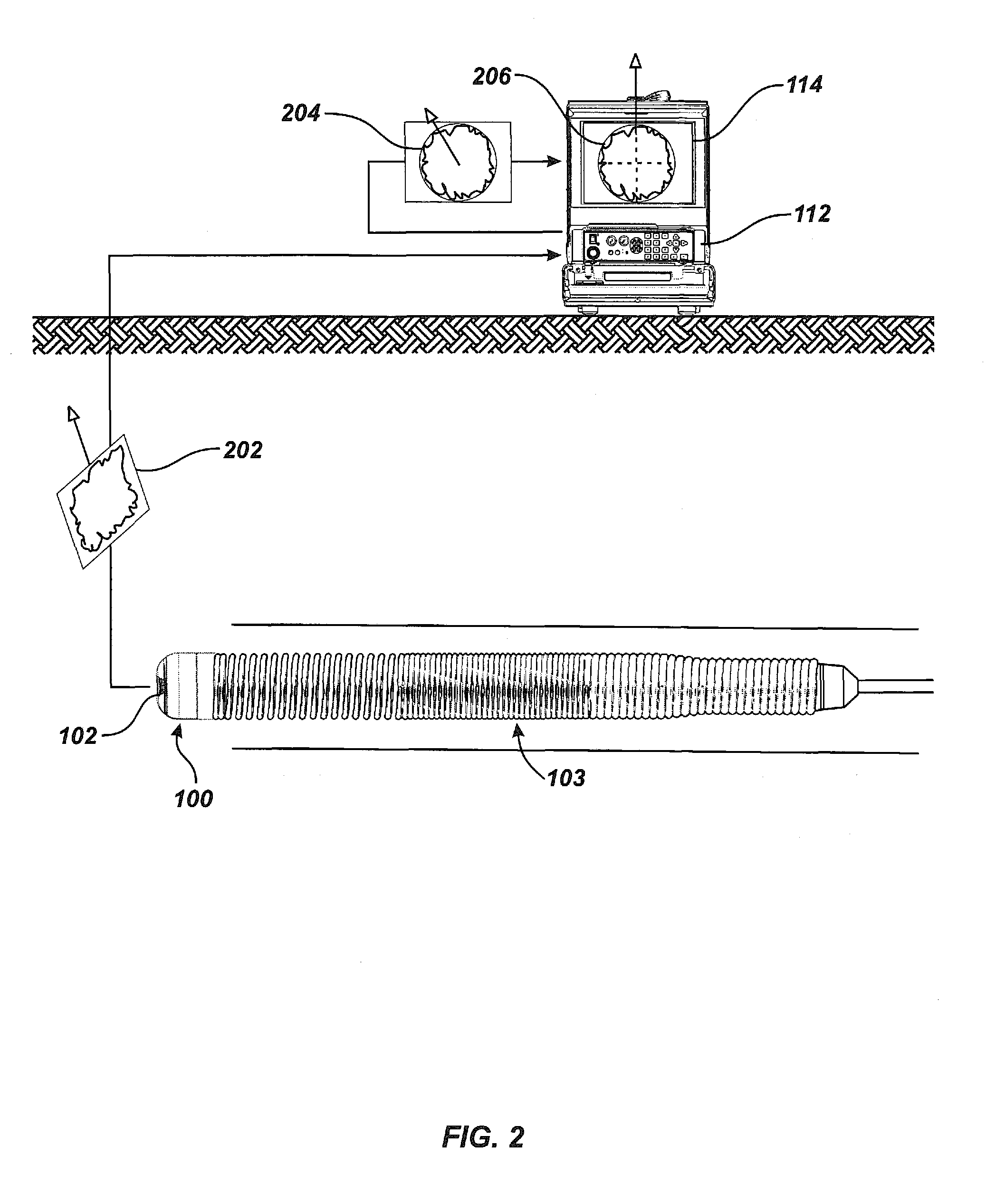

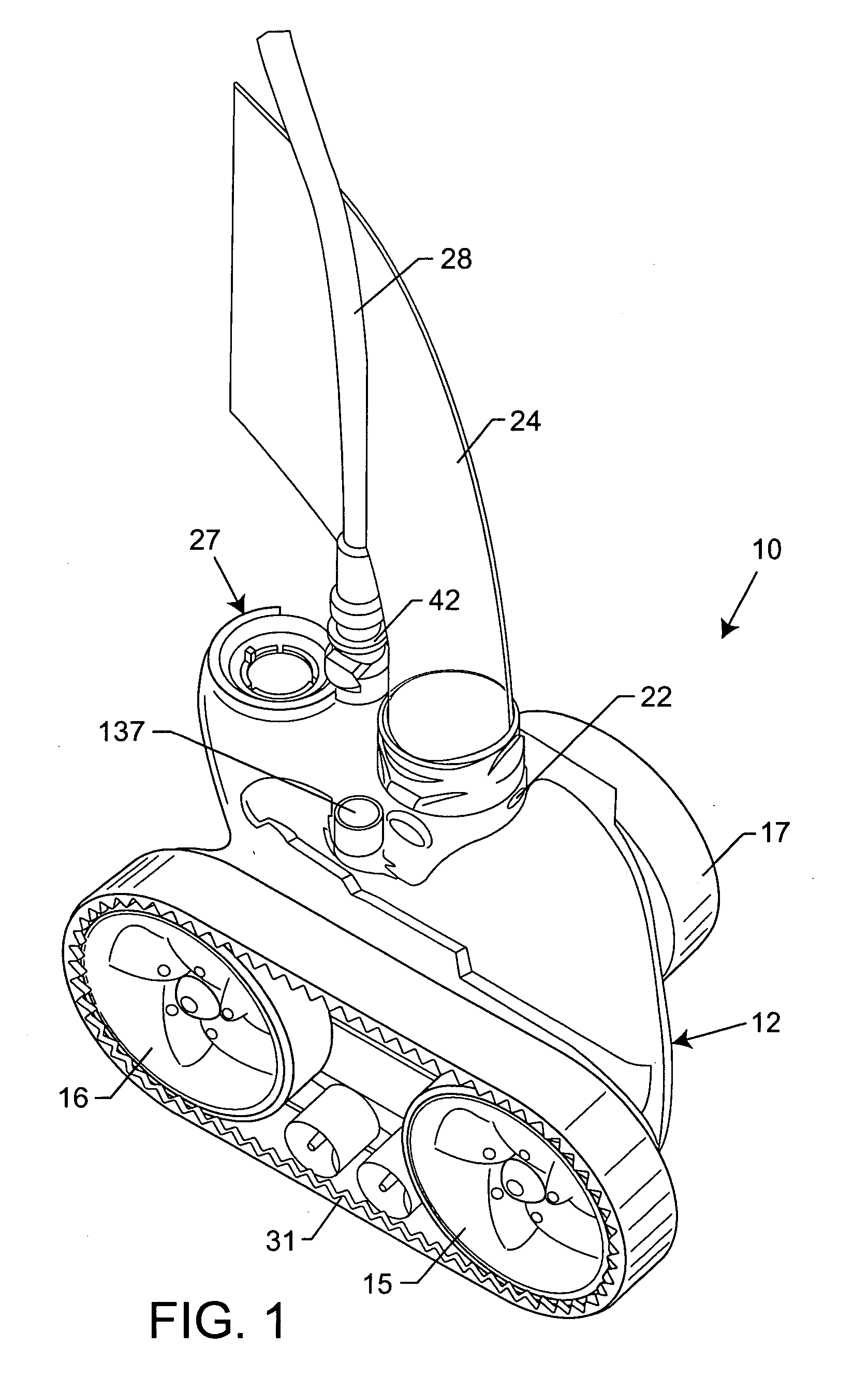

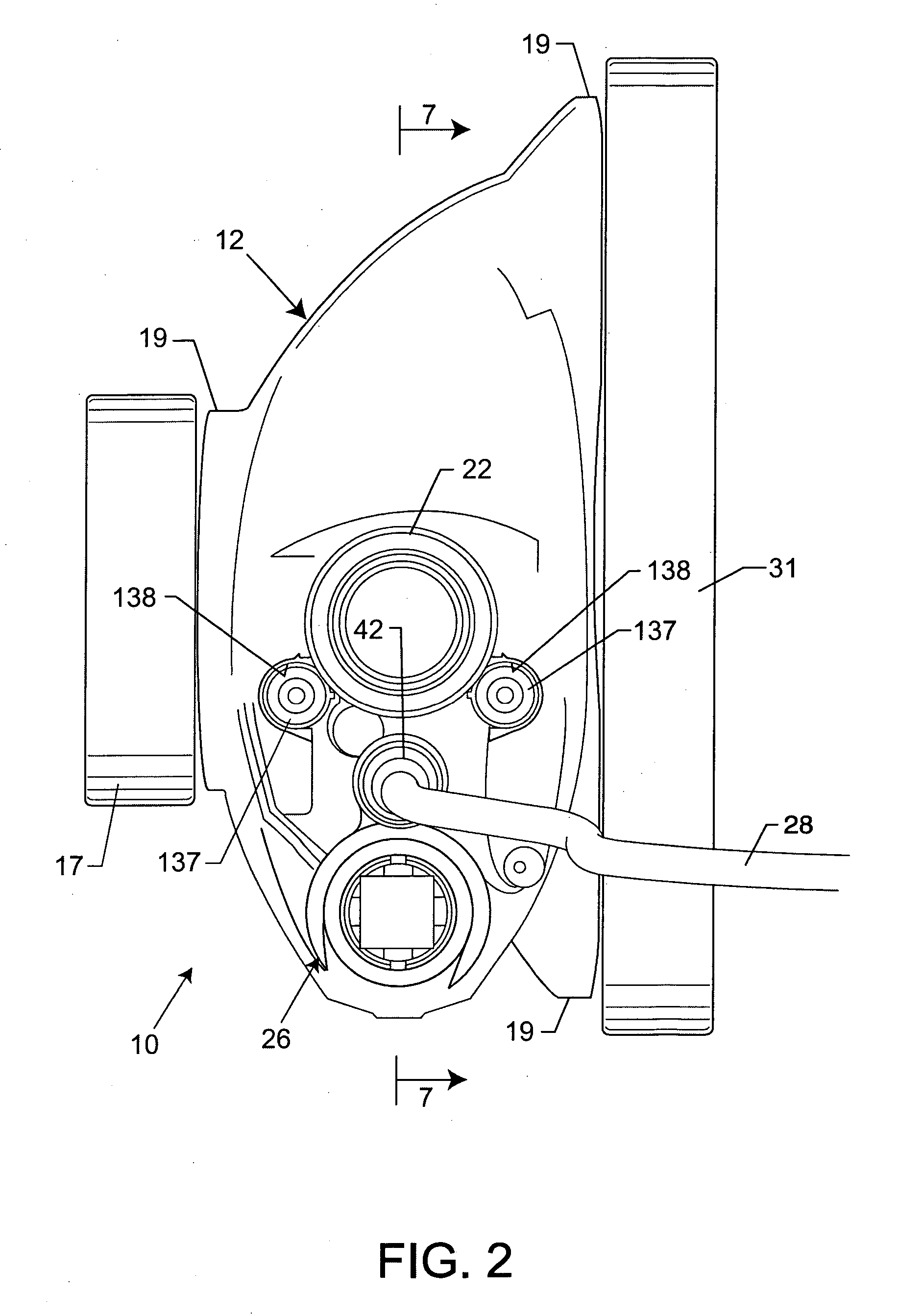

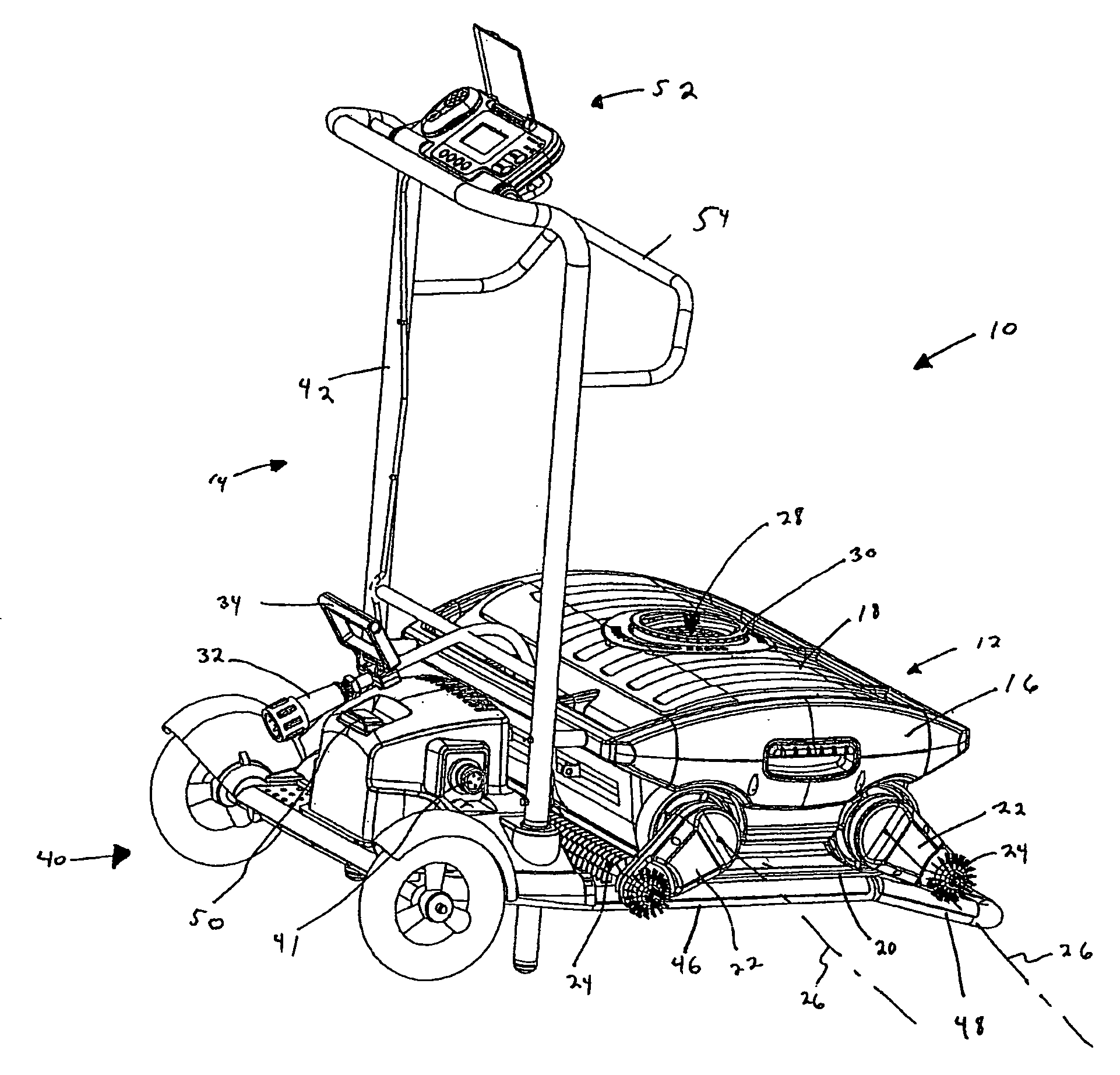

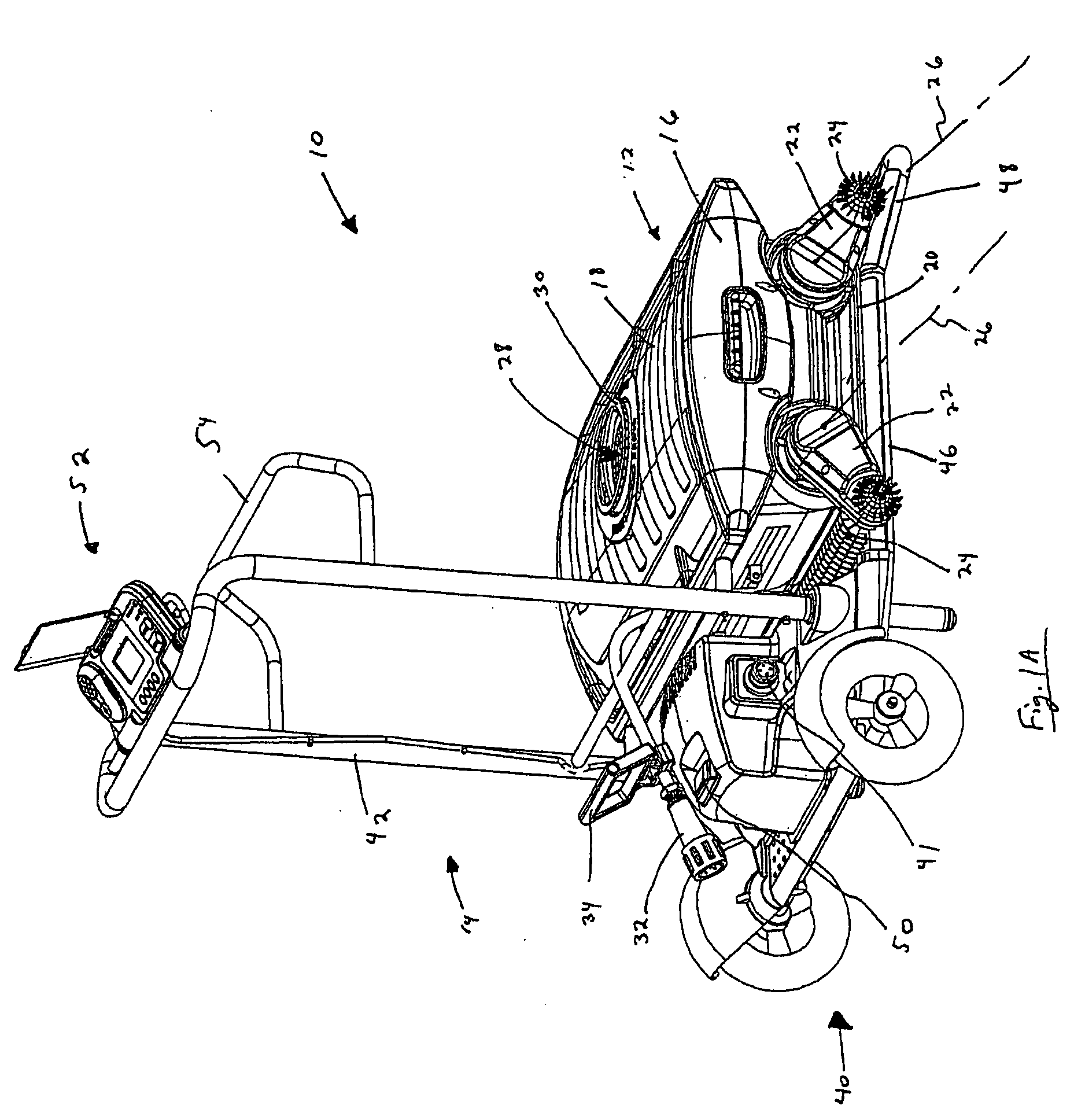

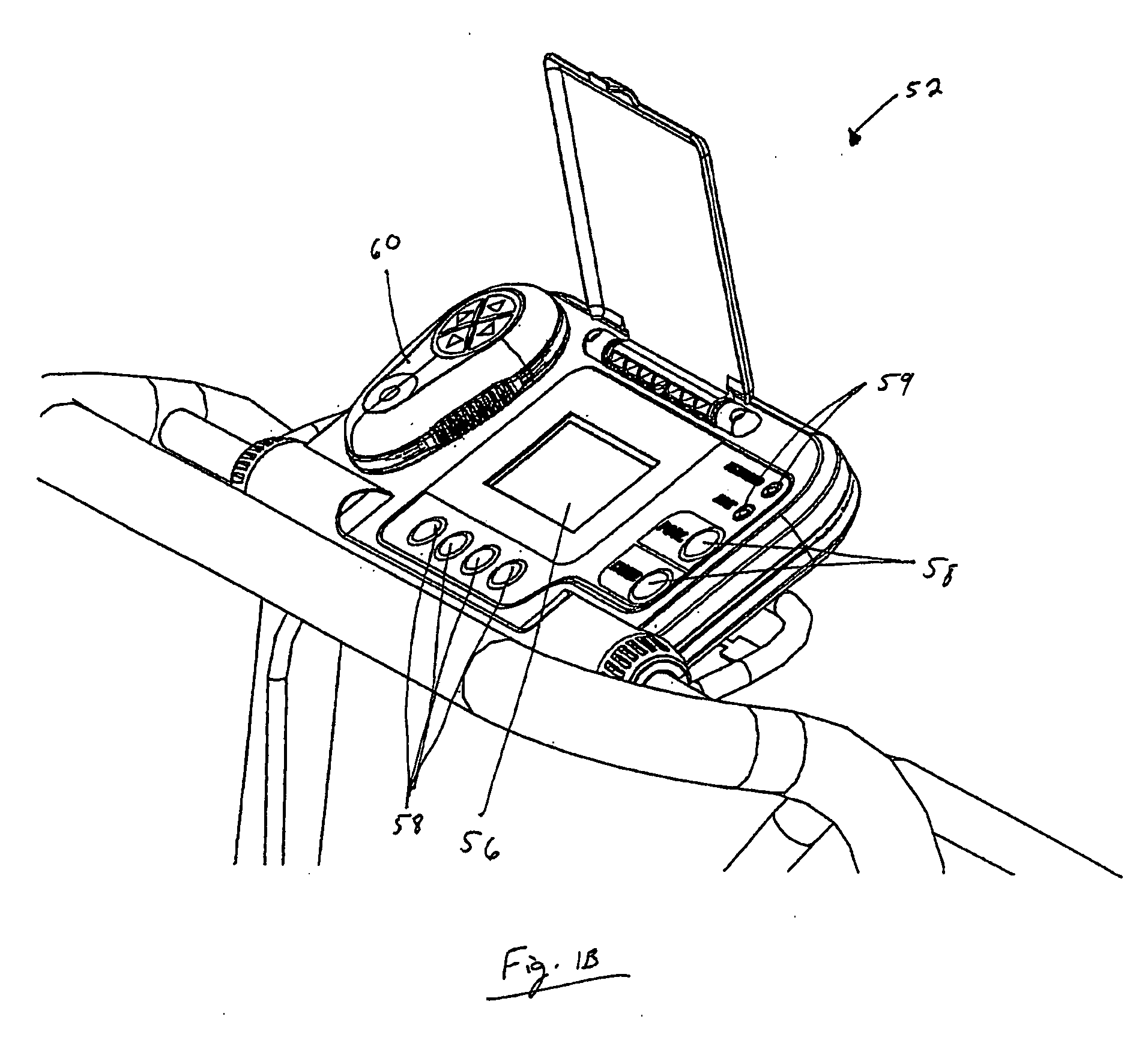

Pipe mapping system

Active | US8547428B1 | Simple method; Television system details; Image enhancement; Data connection; Three axis accelerometer

A pipe inspection system employing a camera head assembly incorporating multiple local condition sensors, an integral dipole Sonde, a three-axis compass, and a three-axis accelerometer. The camera head assembly terminates a multi-channel push-cable that relays local condition sensor and video information to a processor and display subsystem. A cable storage structure includes data connection and wireless capability with tool storage and one or more battery mounts for powering remote operation. During operation, the inspection system may produce a two- or three-dimensional (3D) map of the pipe or conduit from local condition sensor data and video image data acquired from structured light techniques or LED illumination.

Owner:SEESCAN

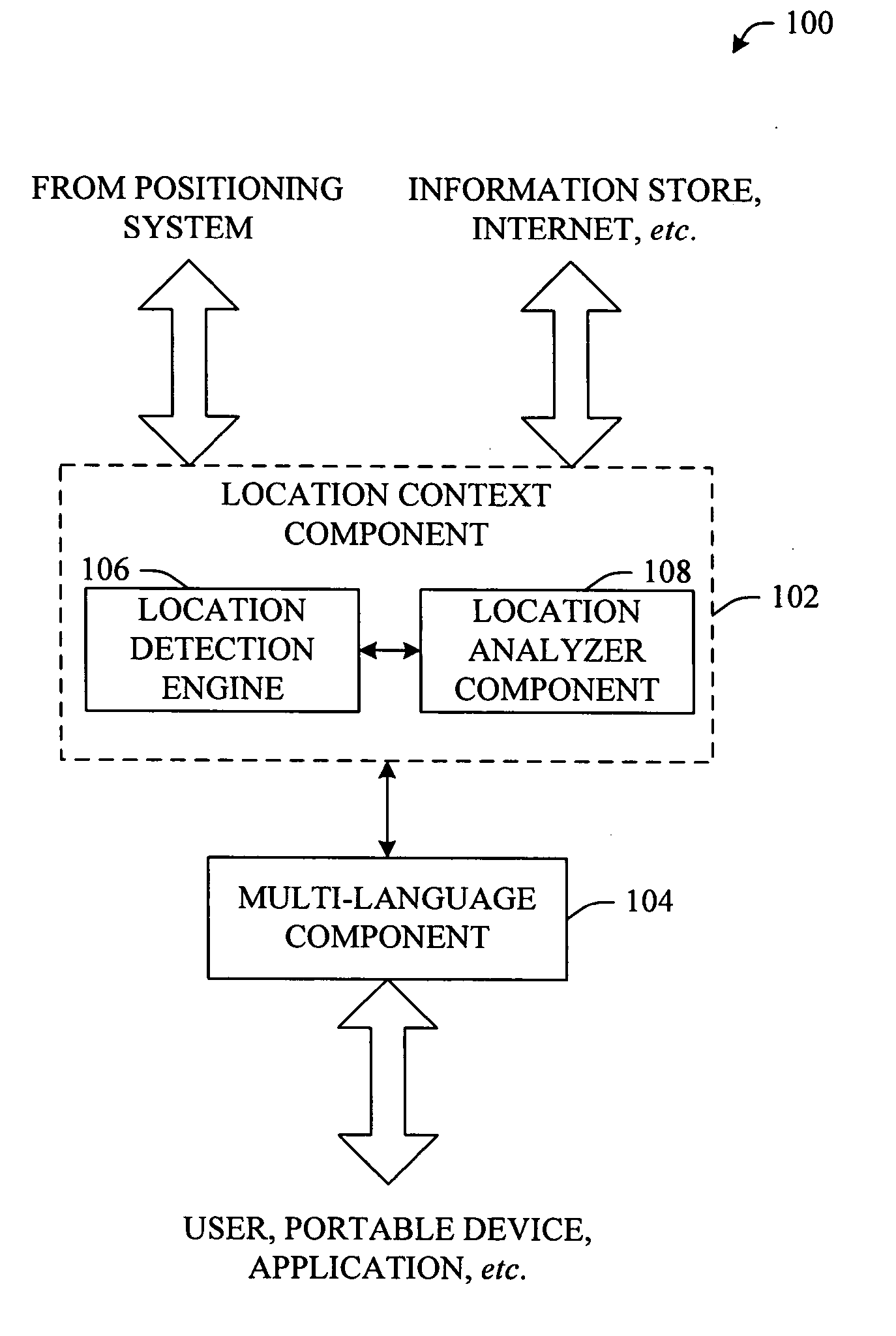

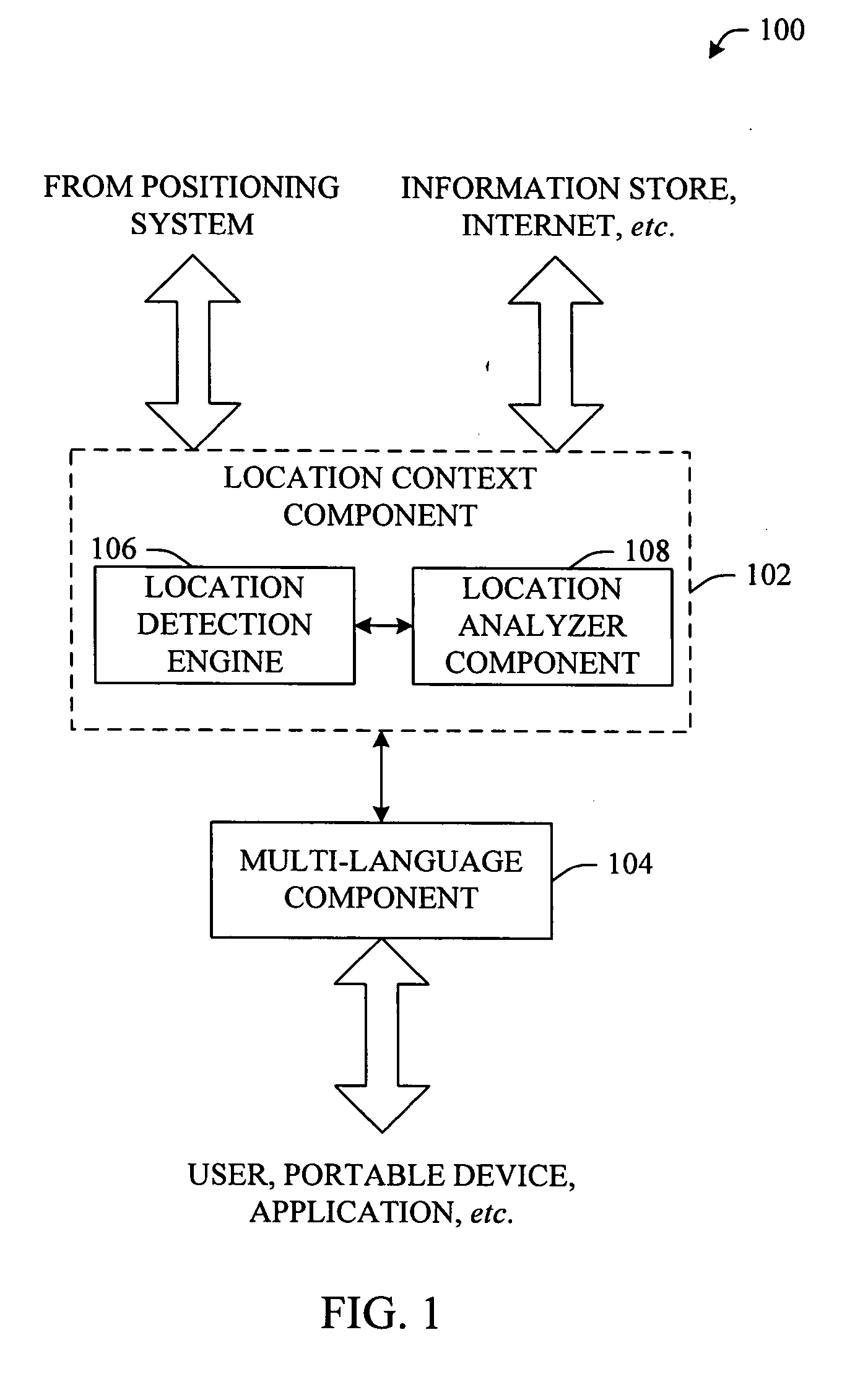

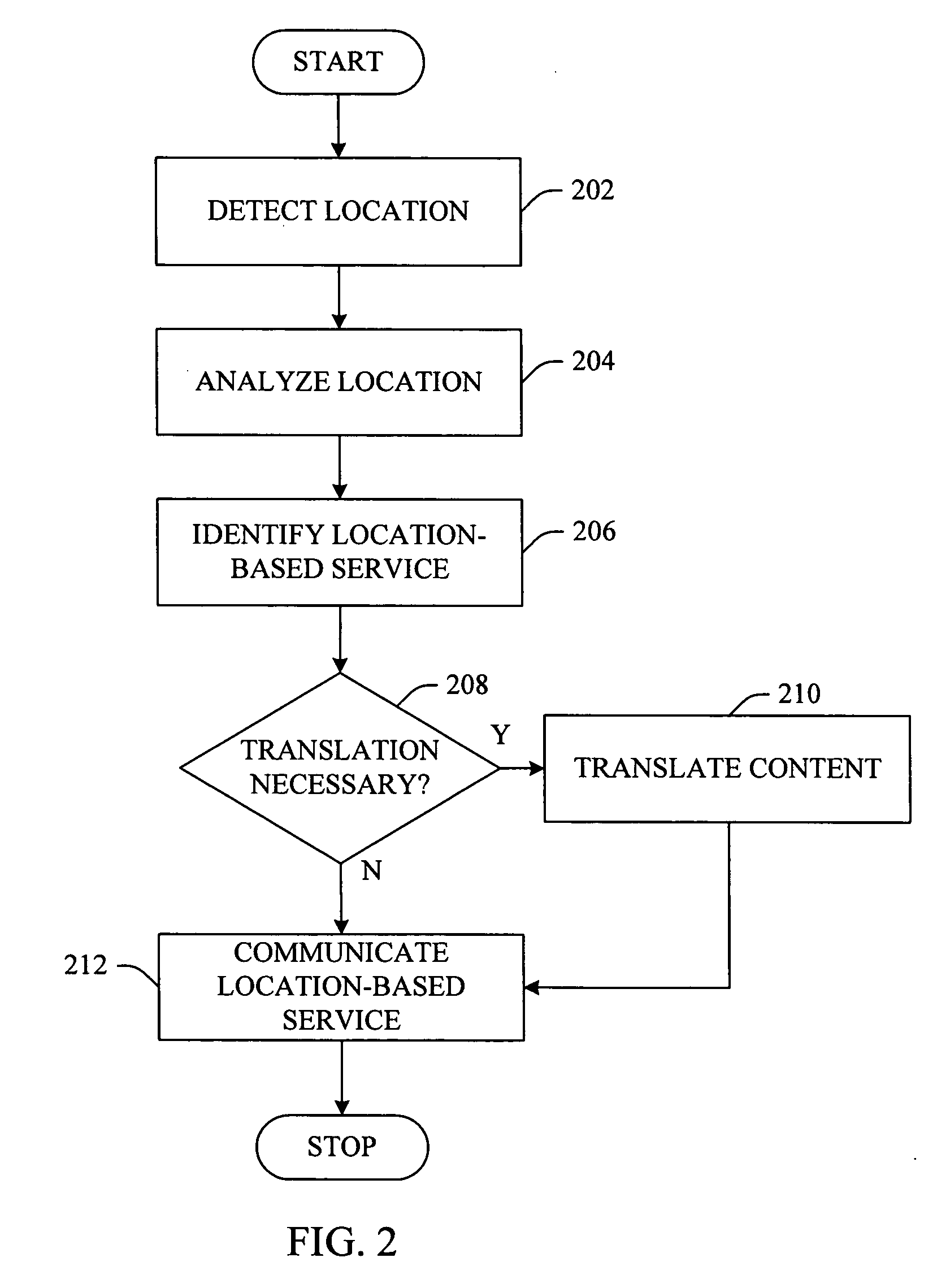

Location aware multi-modal multi-lingual device

Inactive | US20070005363A1 | Facilitates inference; Good automation; Road vehicles traffic control; Services signalling; Accelerometer; Triangulation

Location-based technologies (e.g., the global positioning system (GPS)) can be employed to facilitate providing multi-modal, multi-lingual location-based services. Identification of location can provide significant context for identifying user state and intentions. Thus, location identification can facilitate providing/augmenting data and services (e.g., location-aware suggestions, truncating contact lists based upon location, location-based reminders as a user approaches a predetermined location, truncating pre-loaded tasks, suggesting routes to accomplish pre-loaded tasks in a PIM). Still other aspects can augment GPS location identification with a compass, accelerometer, azimuth control, cellular triangulation, SPOT services of telephone, etc. Effectively, these alternative aspects can facilitate determination of a target location by detecting movement and direction of a user and/or portable device.

Owner:MICROSOFT TECH LICENSING LLC

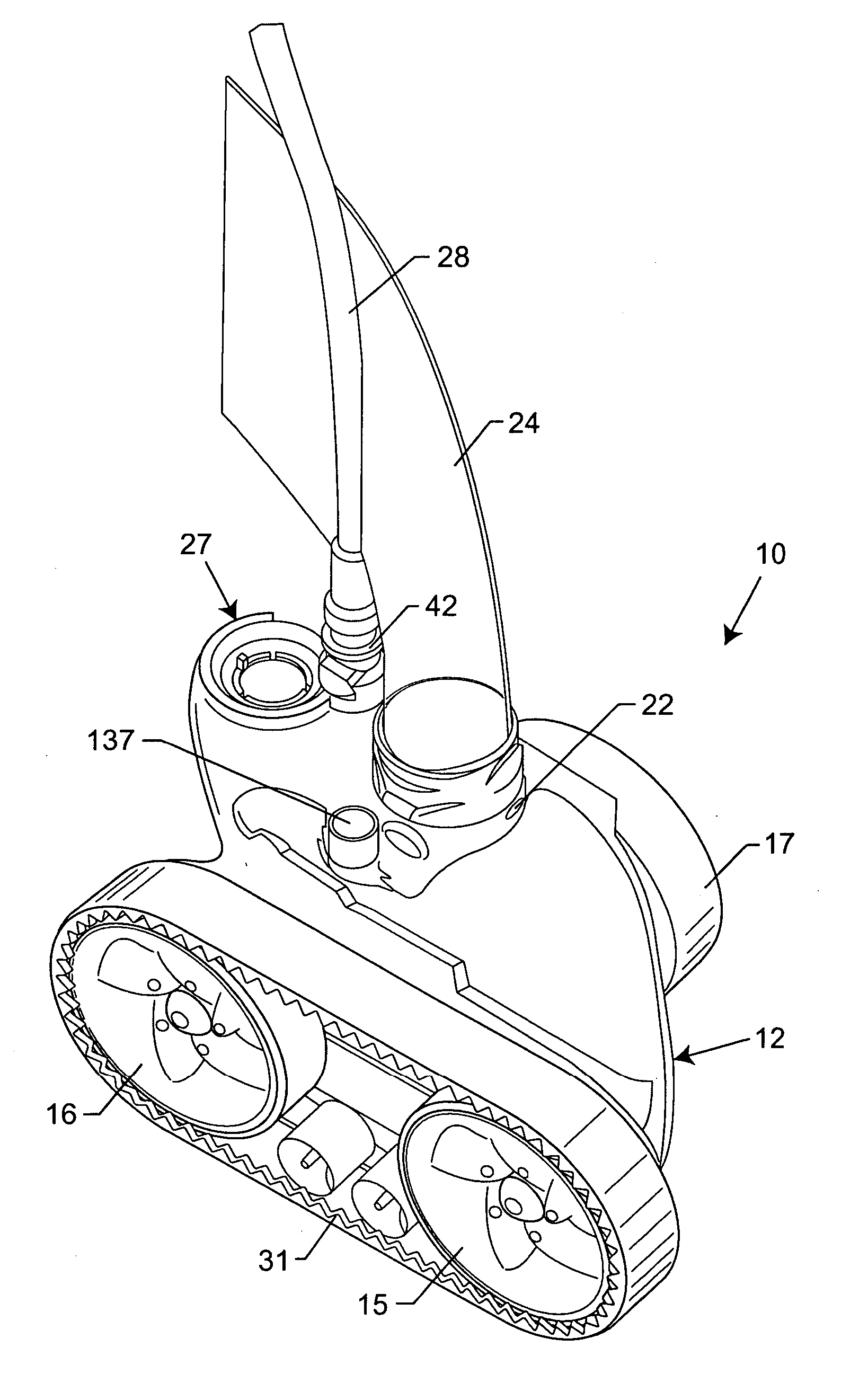

Automatic pool cleaner

Active | US20070094817A1 | Prevent movement; Efficient vacuuming; Gymnasium; Swimming pools; Power cable; Marine engineering

An automatic pool cleaner is provided of the type for random travel over submerged floor and side wall surfaces of a swimming pool or the like to dislodge and collect debris. The pool cleaner includes an electric-powered traction drive system for rotatably driving cleaner wheels, and an electric-powered water management system including a water supply pump and related manifold unit for venturi-vacuuming and collection of settled debris within a porous filter bag. A directional control system including an on-board compass monitors turning movements of the pool cleaner during normal random travel operation, and functions to regulate the traction drive system in a manner to prevent, e.g., excess twisting of a conduit such as a power cable tethered to the pool cleaner.

Owner:ZODIAC POOL SYST LLC
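
One way to monitor turning from compass readings is to accumulate the wrapped change in heading between samples, which gives the net rotation, and so the twist, applied to the tethered cable. The sketch assumes samples are frequent enough that the cleaner turns less than 180° between readings; the 720° limit and the class name are hypothetical.

```python
def wrap180(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


class TwistMonitor:
    """Track the net rotation of a tethered cleaner from compass headings."""

    def __init__(self, limit_deg=720.0):
        self.limit_deg = limit_deg  # hypothetical allowed net twist either way
        self.net_deg = 0.0          # positive = net clockwise rotation
        self.last_heading = None

    def update(self, heading_deg):
        """Record a heading and return the turning restriction to apply."""
        if self.last_heading is not None:
            self.net_deg += wrap180(heading_deg - self.last_heading)
        self.last_heading = heading_deg
        if self.net_deg > self.limit_deg:
            return "turn counterclockwise"
        if self.net_deg < -self.limit_deg:
            return "turn clockwise"
        return "free"


if __name__ == "__main__":
    monitor = TwistMonitor(limit_deg=360.0)
    for h in [0.0, 90.0, 180.0, 270.0, 0.0, 90.0]:
        action = monitor.update(h)
    print(monitor.net_deg, action)  # 450.0 turn counterclockwise
```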

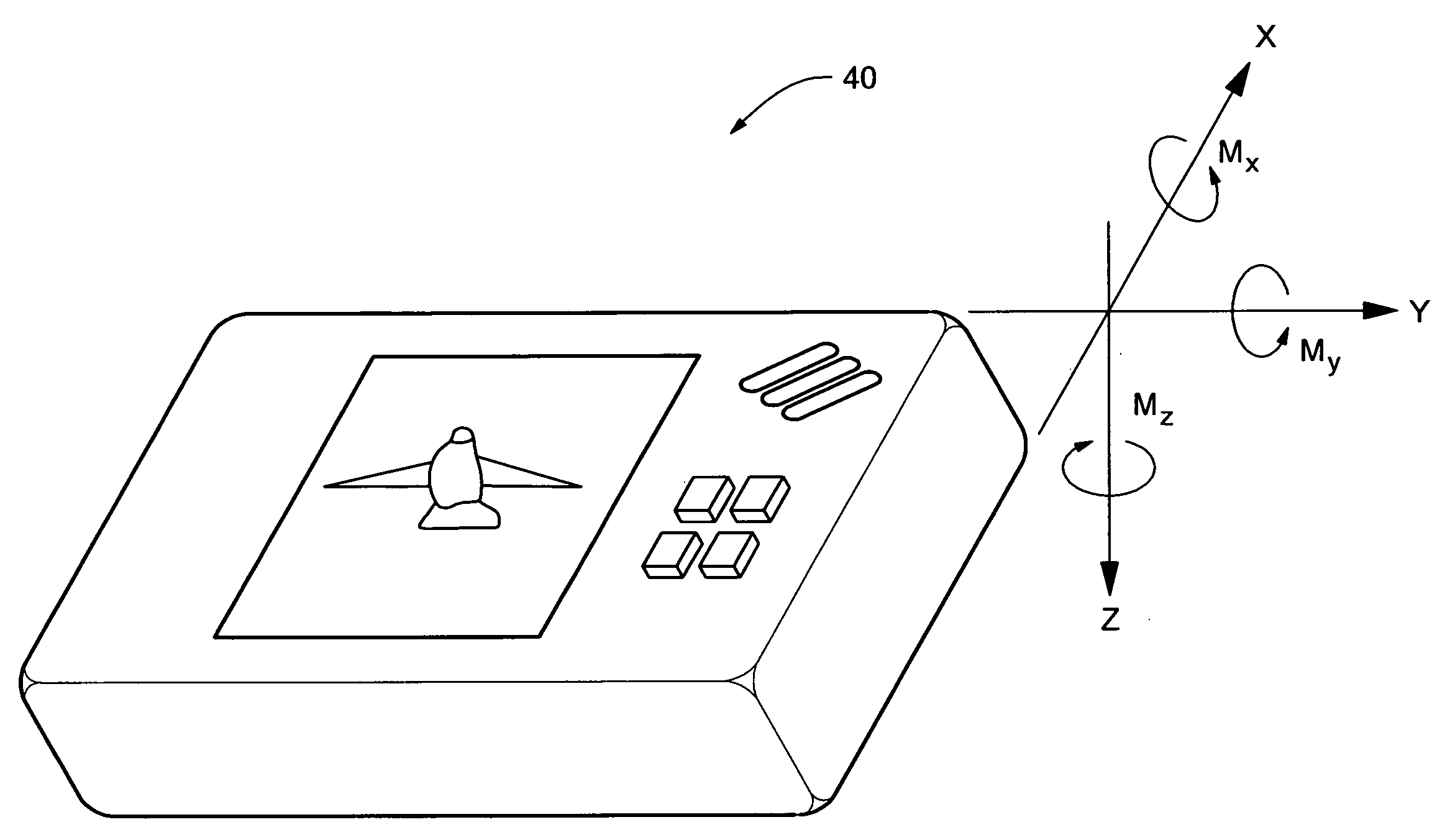

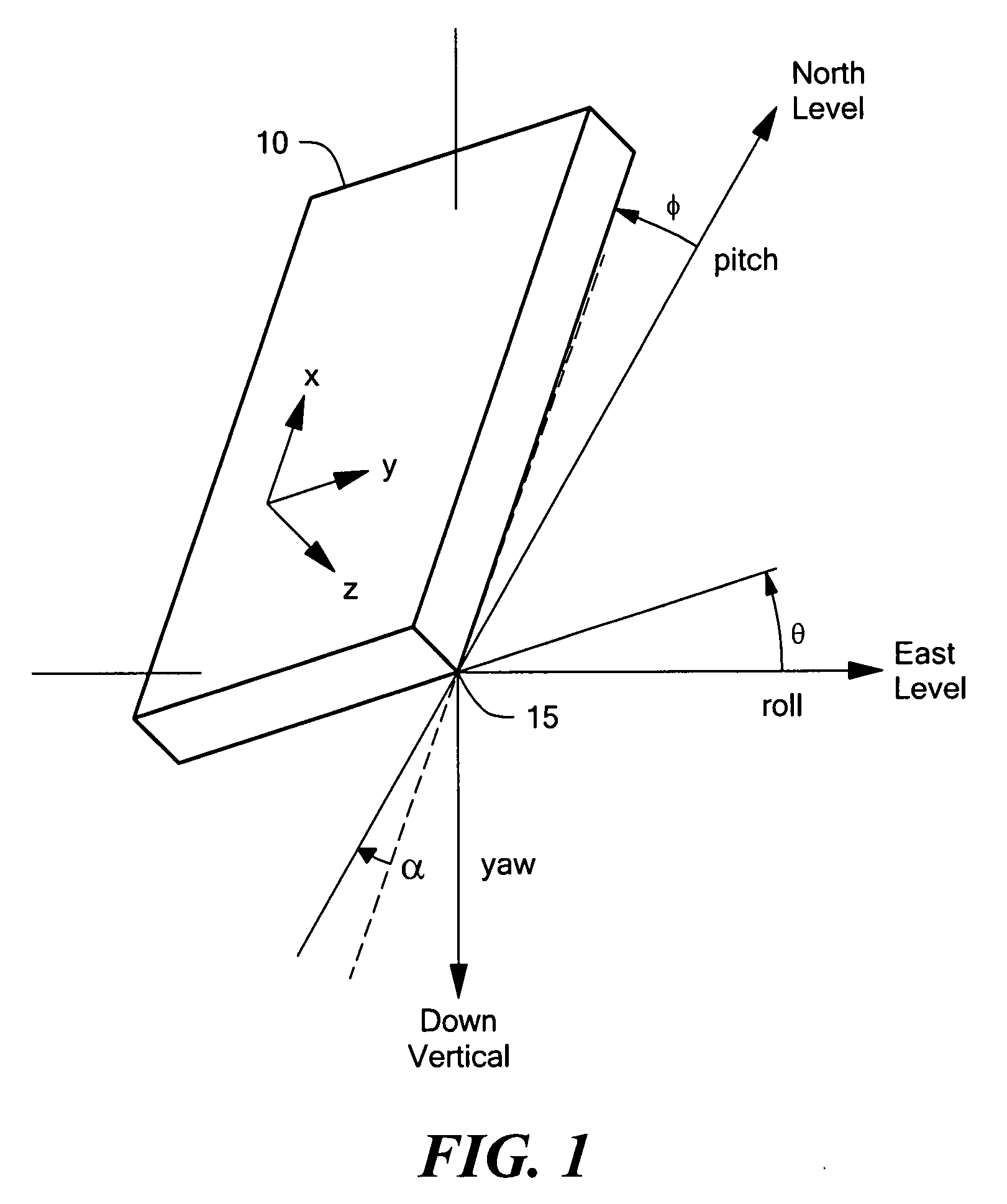

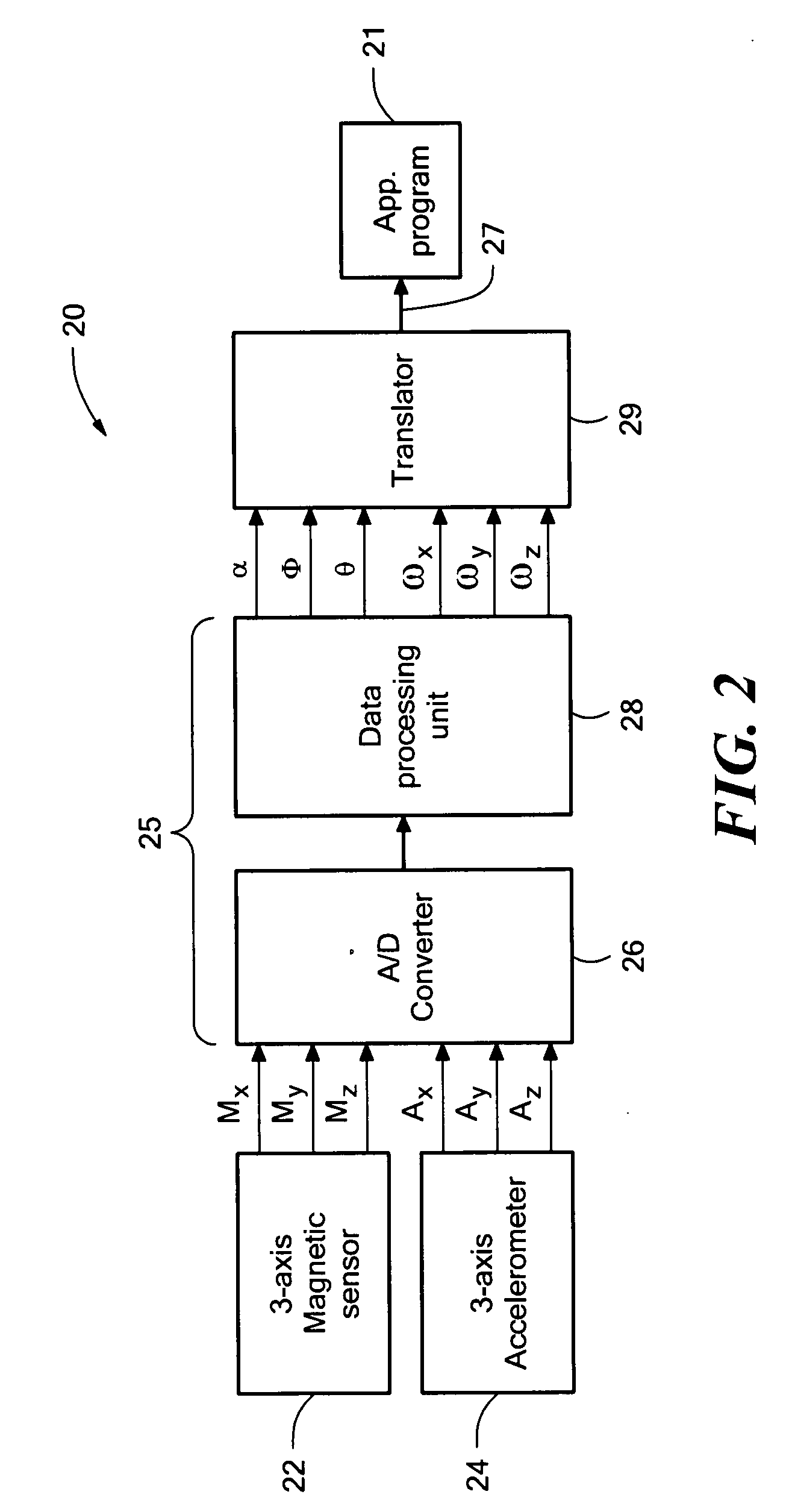

System for sensing yaw rate using a magnetic field sensor and portable electronic devices using the same

Inactive | US20080042973A1 | Digital data processing details; Acceleration measurement; Triaxial accelerometer; MEMS magnetic field sensor

An attitude- and motion-sensing system for an electronic device, such as a cellular telephone, a game device, and the like, is disclosed. The system, which can be integrated into the portable electronic device, includes a two- or three-axis accelerometer and a three-axis magnetic compass. Data about the attitude of the electronic device from the accelerometer and magnetic compass are first processed by a signal processing unit that calculates attitude angles (pitch, roll, and yaw) and rotational angular velocities. These data are then translated into input signals for a specific application program associated with the electronic device.

Owner:MEMSIC

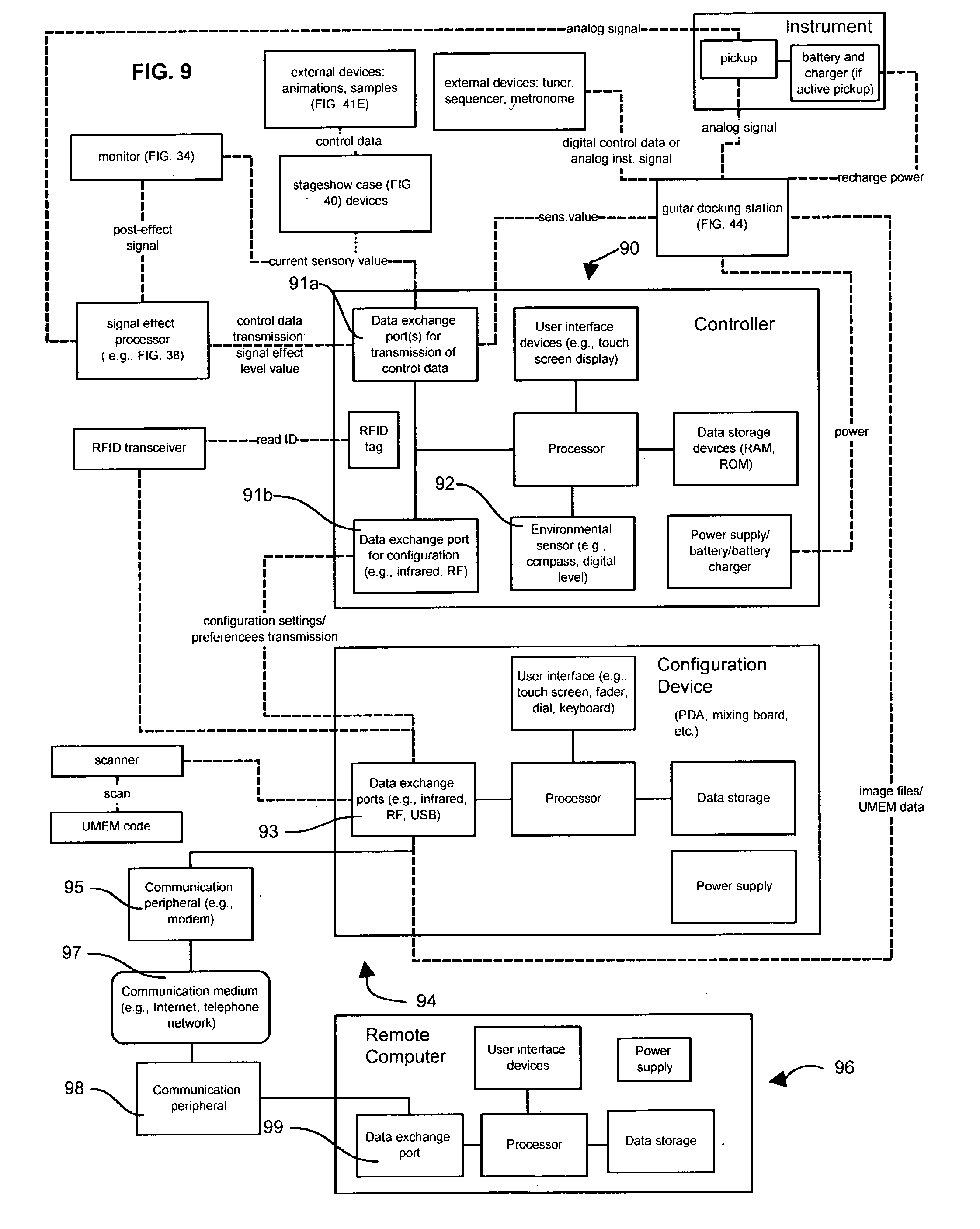

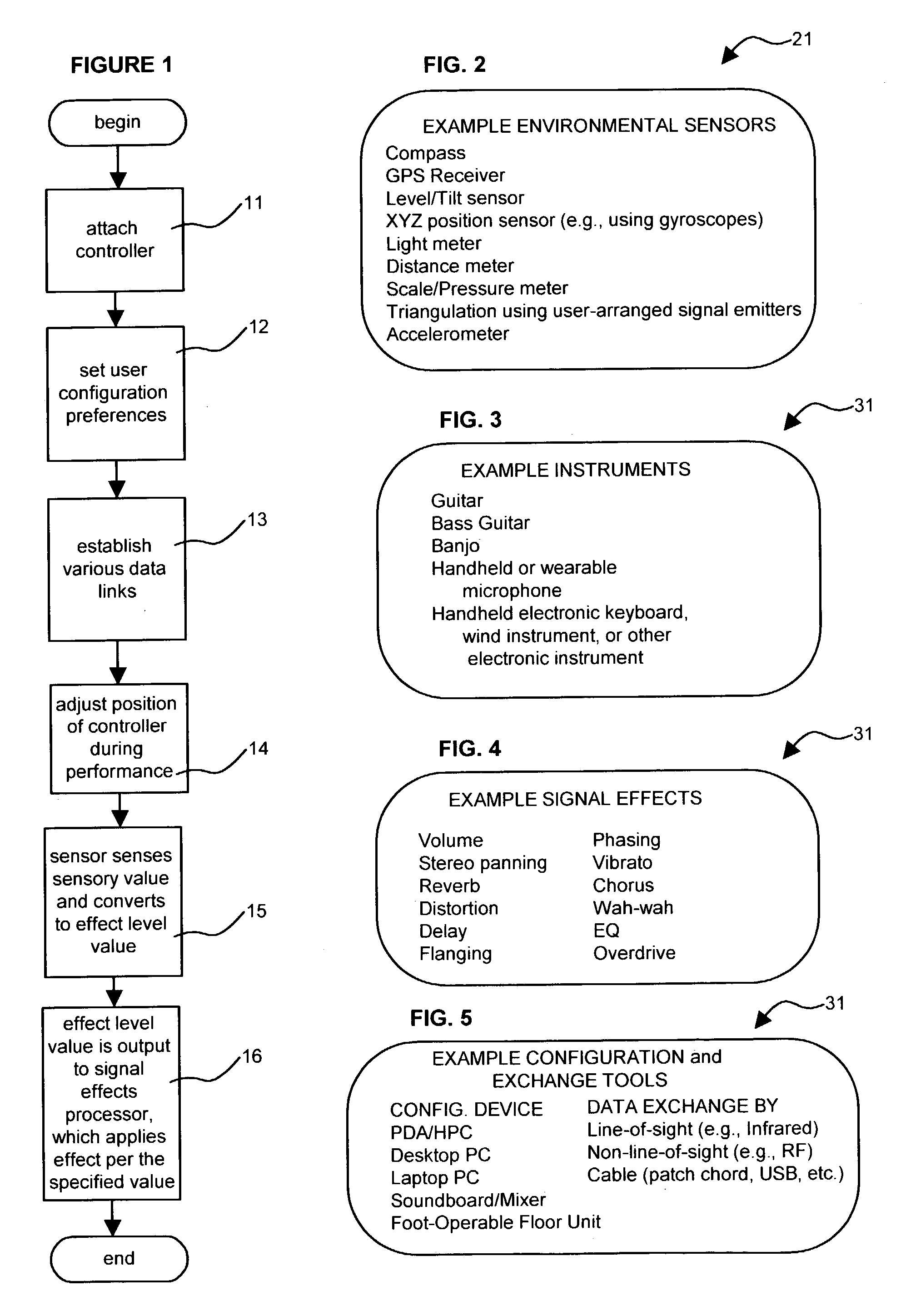

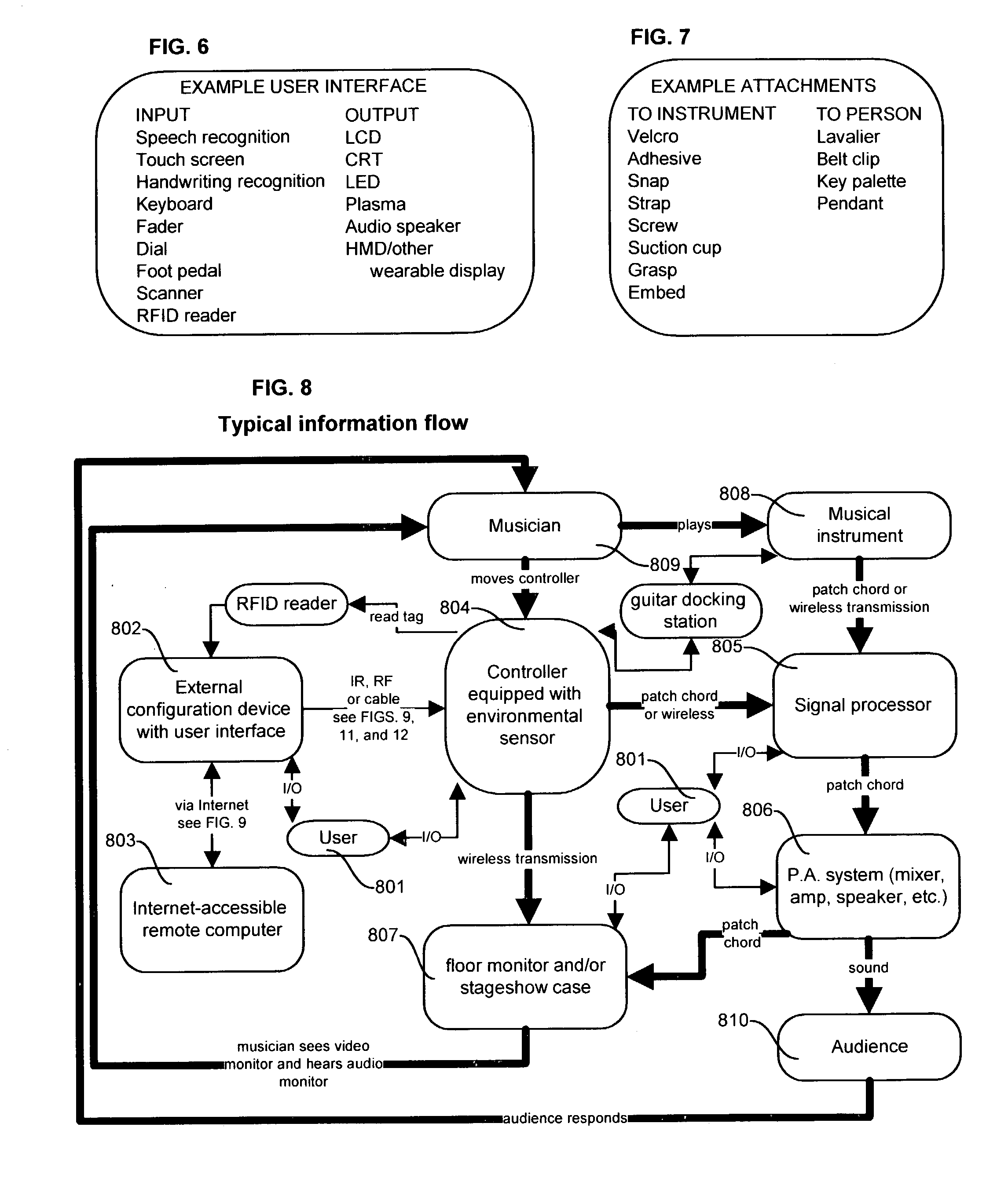

Guitar effects control system, method and devices

Inactive | US20030196542A1 | Function increase; Electrophonic musical instruments; Visual indications; Docking station; Control system

Disclosed is a guitar effects controller comprising a digital compass and means for converting directional degree information to signal effect level values. Alternate embodiments provide different sensors, e.g., GPS receiver or tilt sensor. The invention allows user control of instrument volume or other signal effects by turning, tilting, or otherwise manipulating the guitar. Also disclosed is a user configuration system whereby an effects controller can be configured using RF or infrared technology, RFID tags, the Internet, and other tools. Controller function is enhanced by a multipurpose guitar docking station and case. Also disclosed is a universal music exchange medium to facilitate the rapid configuration of system components.

Owner:HARRISON SHELTON E JR
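
A minimal sketch of converting directional degree information to an effect level, assuming a 0 to 127 output range (the range of a MIDI control-change value; the abstract does not specify one) and a hypothetical 90° arc of rotation around a chosen center heading:

```python
def heading_to_level(heading_deg, center_deg, span_deg=90.0, max_level=127):
    """Map the guitar's compass heading to an effect level from 0 to max_level.

    Turning from center - span/2 to center + span/2 sweeps the level from 0
    to max_level; headings outside that arc are clamped to the ends.
    """
    offset = (heading_deg - center_deg + 180.0) % 360.0 - 180.0  # signed offset in [-180, 180)
    fraction = (offset + span_deg / 2.0) / span_deg
    fraction = min(max(fraction, 0.0), 1.0)
    return round(fraction * max_level)


if __name__ == "__main__":
    for h in (315.0, 0.0, 22.5, 45.0):
        print(h, heading_to_level(h, center_deg=0.0))
```

Measuring relative to a center heading, rather than to north, lets the player calibrate to wherever they happen to be facing on stage.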

Pool cleaning robot

Inactive | US20090057238A1 | Navigational calculation instruments; Water/sewage treatment; Control theory; Reference orientation

A pool cleaning robot adapted to move in a direction along the bottom surface of a pool. The robot comprises a compass, a rate gyroscope, and a controller adapted to determine the orientation of the robot, relative to a reference orientation thereof, based on readings of the compass and the gyroscope.

Owner:MAYTRONICS

© 2025 PatSnap. All rights reserved.