41 results about "Rendering (computer graphics)" patented technology

Rendering or image synthesis is the automatic process of generating a photorealistic or non-photorealistic image from a 2D or 3D model (or models in what collectively could be called a scene file) by means of computer programs. Also, the results of displaying such a model can be called a render. A scene file contains objects in a strictly defined language or data structure; it would contain geometry, viewpoint, texture, lighting, and shading information as a description of the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" may be by analogy with an "artist's rendering" of a scene.

System method and computer program product for remote graphics processing

Inactive | US7274368B1 | Great utility | Cathode-ray tube indicators | Multiple digital computer combinations | Client-side | Framebuffer

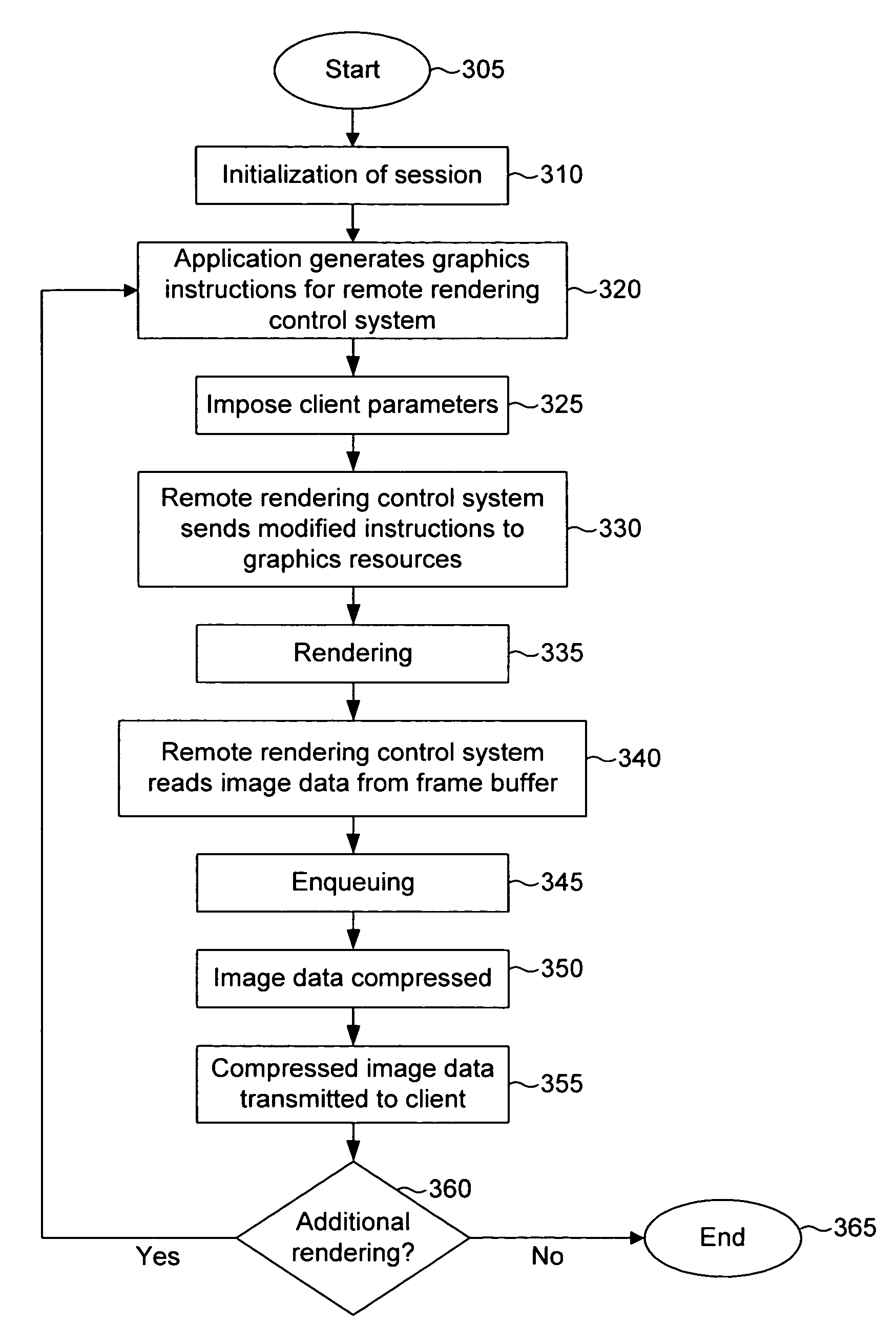

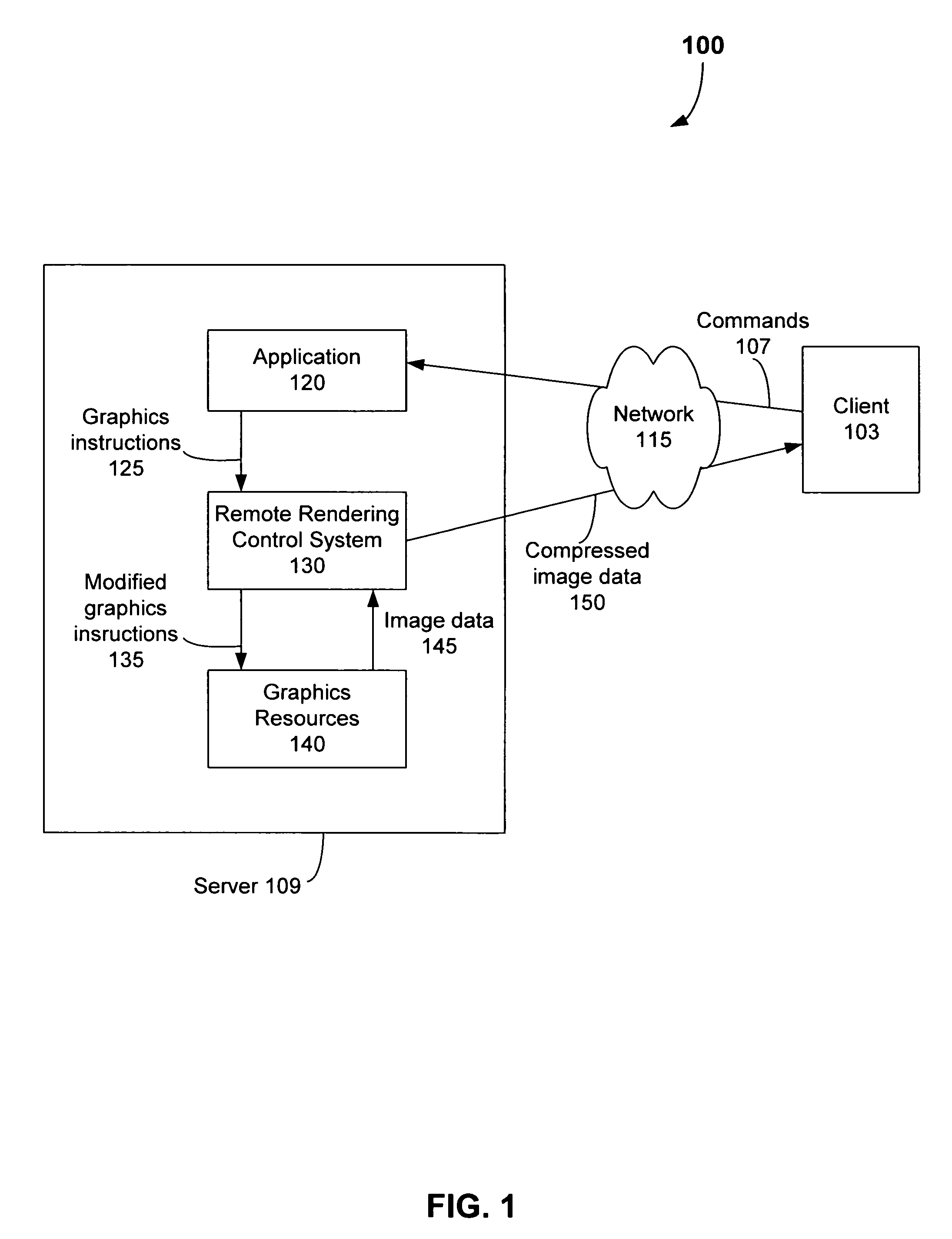

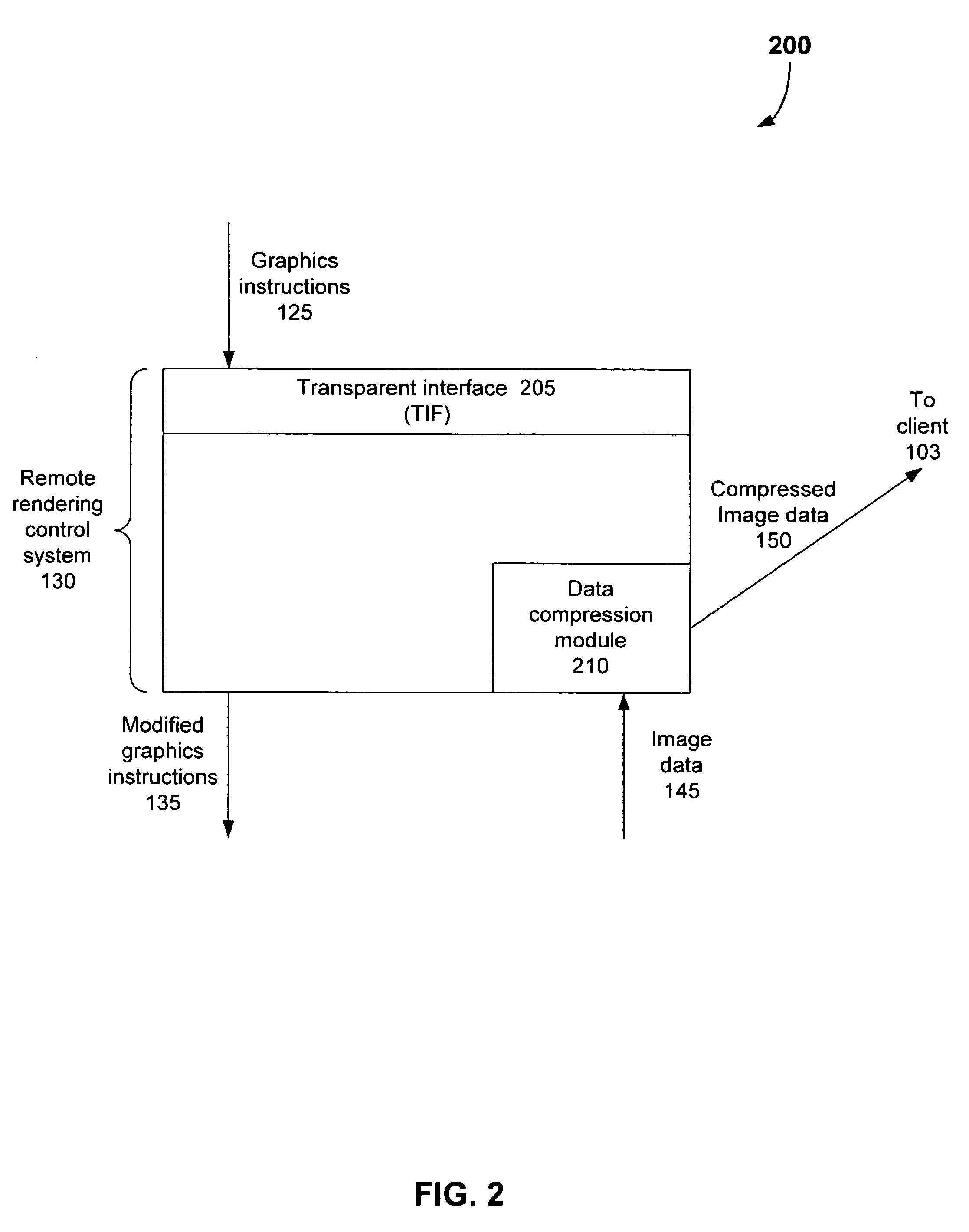

A system, method, and computer program product are provided for remote rendering of computer graphics. The system includes a graphics application program resident at a remote server. The graphics application is invoked by a user or process located at a client. The invoked graphics application proceeds to issue graphics instructions. The graphics instructions are received by a remote rendering control system. Given that the client and server differ with respect to graphics context and image processing capability, the remote rendering control system modifies the graphics instructions in order to accommodate these differences. The modified graphics instructions are sent to graphics rendering resources, which produce one or more rendered images. Data representing the rendered images is written to one or more frame buffers. The remote rendering control system then reads this image data from the frame buffers. The image data is transmitted to the client for display or processing. In an embodiment of the system, the image data is compressed before being transmitted to the client. In such an embodiment, the steps of rendering, compression, and transmission can be performed asynchronously in a pipelined manner.

Owner:GOOGLE LLC +1

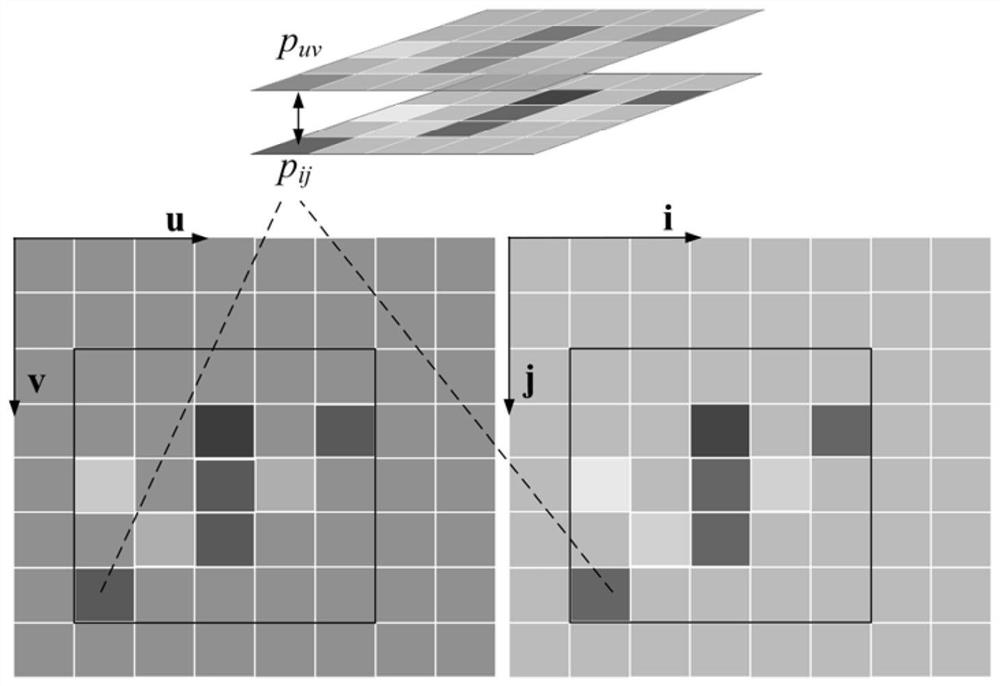

Systems and methods for providing controllable texture sampling

Inactive | US7324116B2 | Increase level of realism in rendering | Image enhancement | Character and pattern recognition | Pattern recognition | Graphics

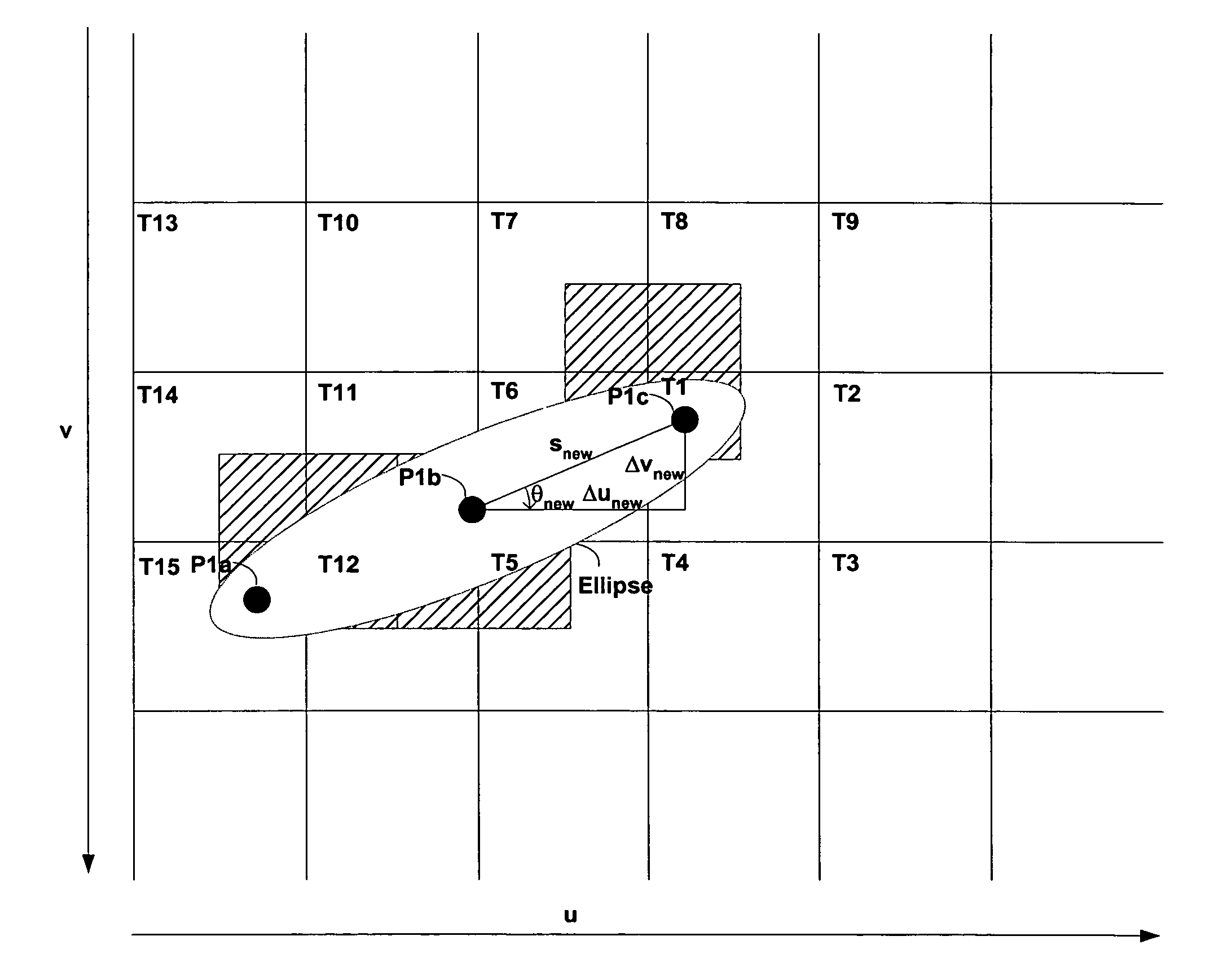

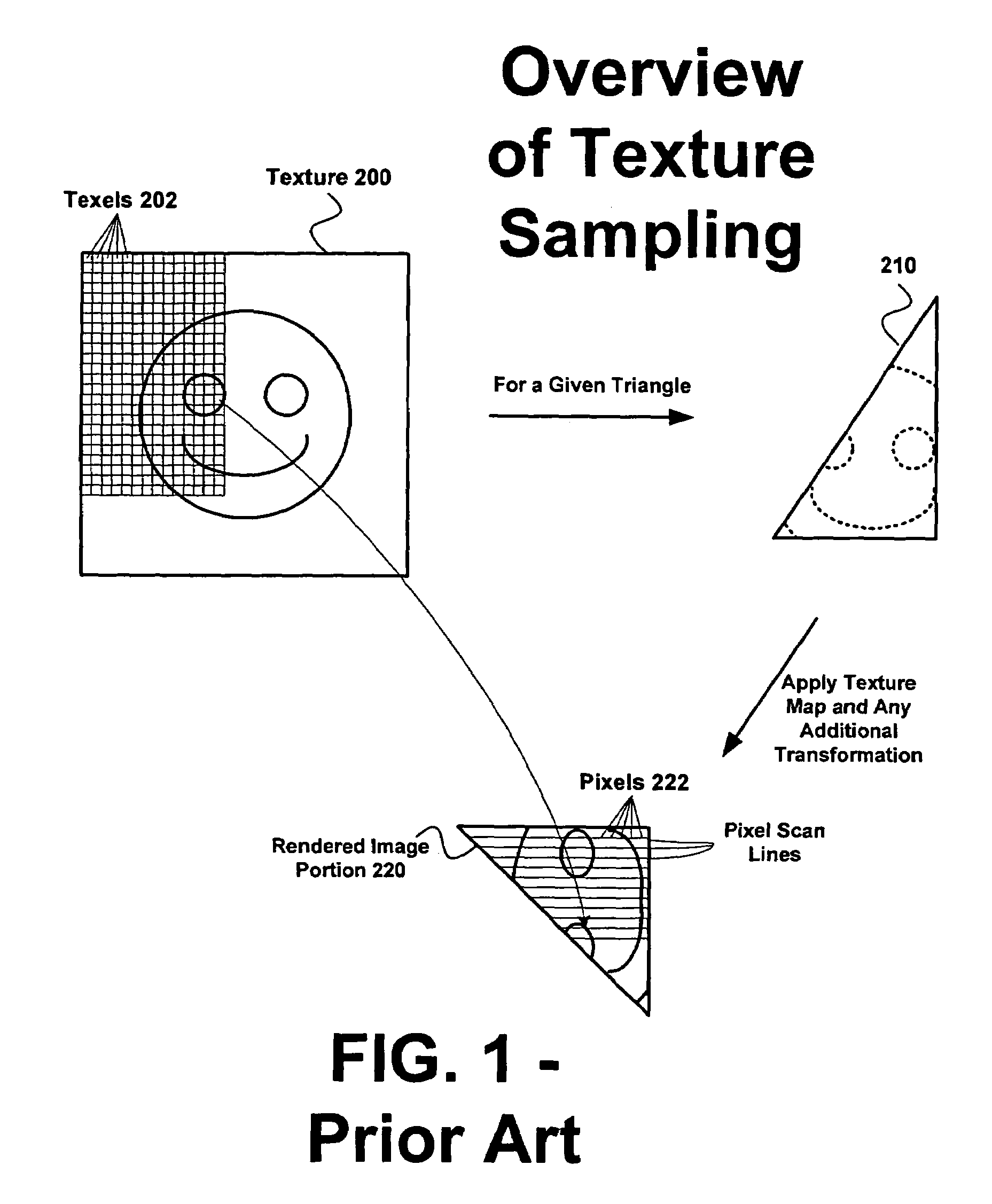

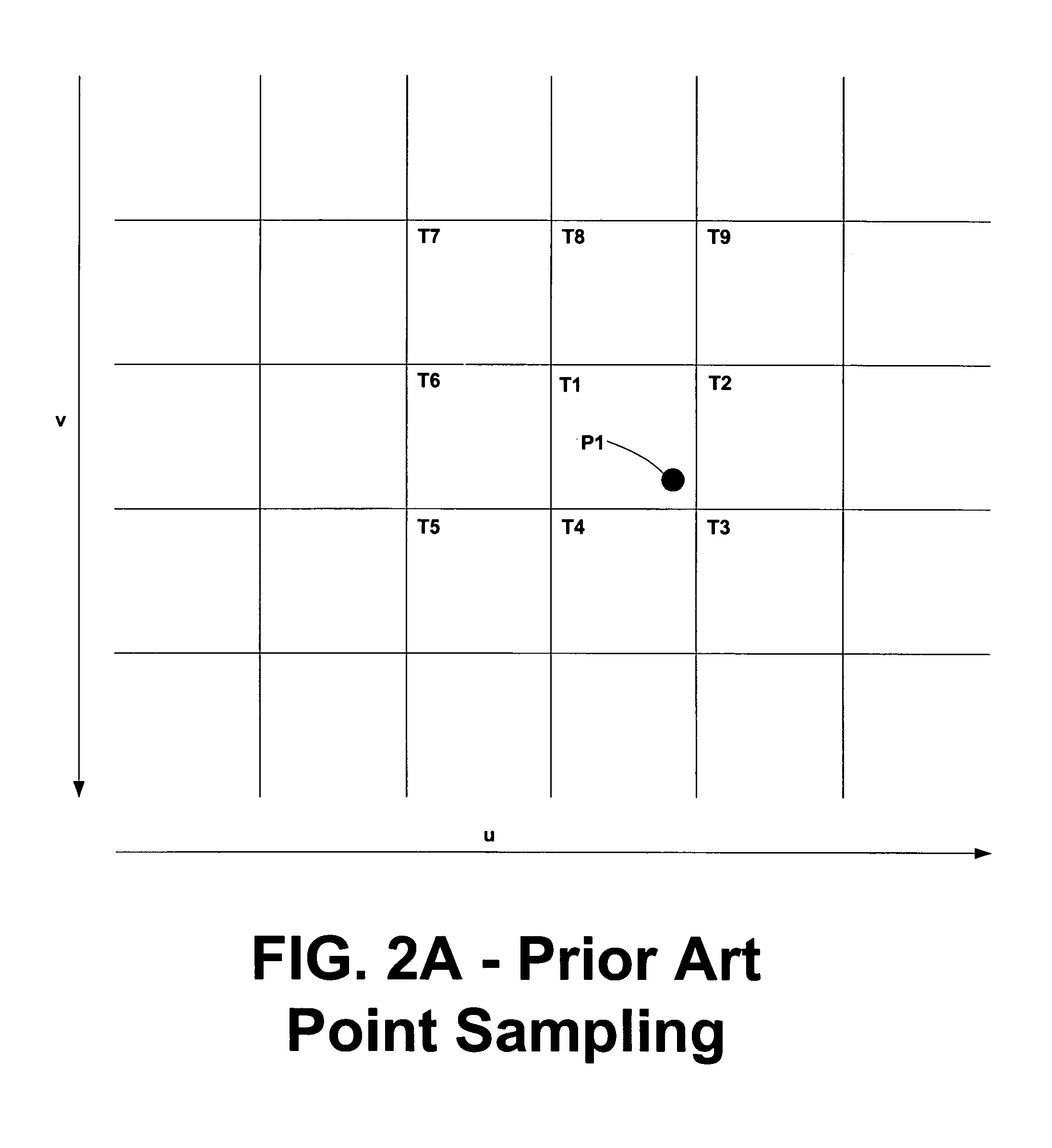

Systems and methods are provided for controlling texture sampling in connection with computer graphics in a computer system. In various embodiments, improved mechanisms for controlling texture sampling are provided that enable 3-D accelerator hardware to greatly increase the level of realism in rendering, including improved mechanisms for (1) motion blur; (2) generating anisotropic surface reflections; (3) generating surface self-shadowing; (4) ray-cast volumetric sampling; (5) self-shadowed volumetric rendering; and (6) self-shadowed volumetric ray-casting. In supplementing existing texture sampling techniques, parameters for texture sampling may be replaced and/or modified.

Owner:MICROSOFT TECH LICENSING LLC

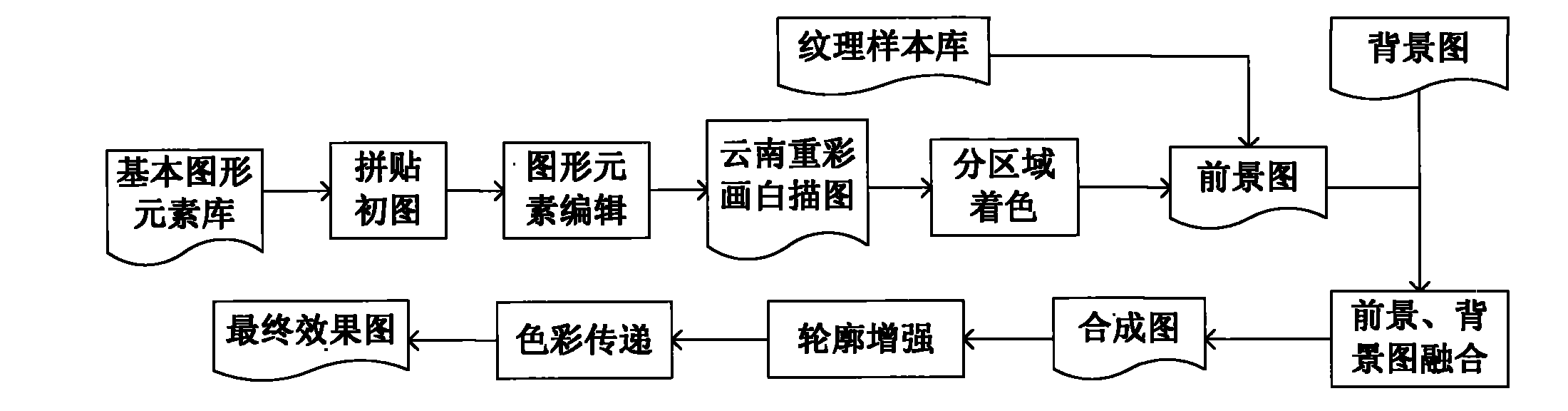

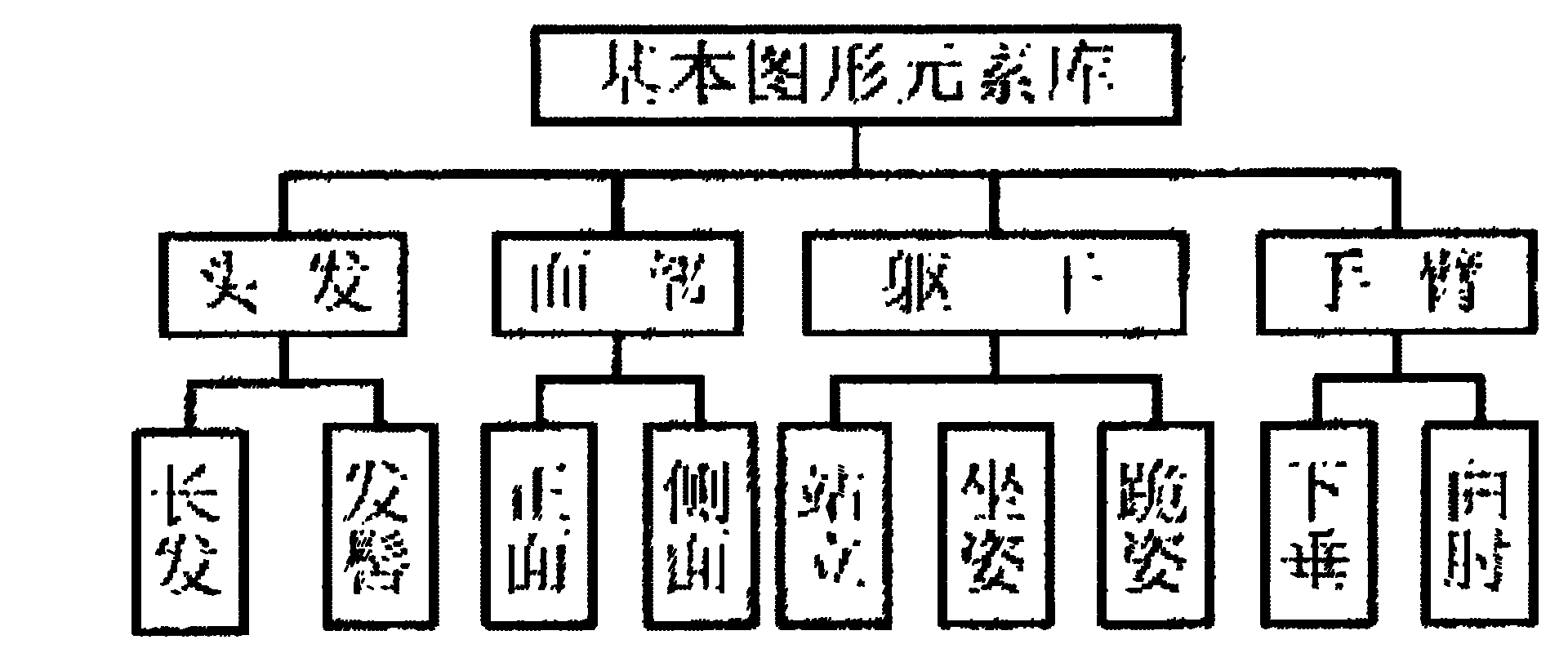

Digital simulation and synthesis technology with artistic style of Yunnan heavy-color painting

Active | CN101887366A | Avoid mutual interference | Independent | Drawing from basic elements | Specific program execution arrangements | Graphics | Computer graphics

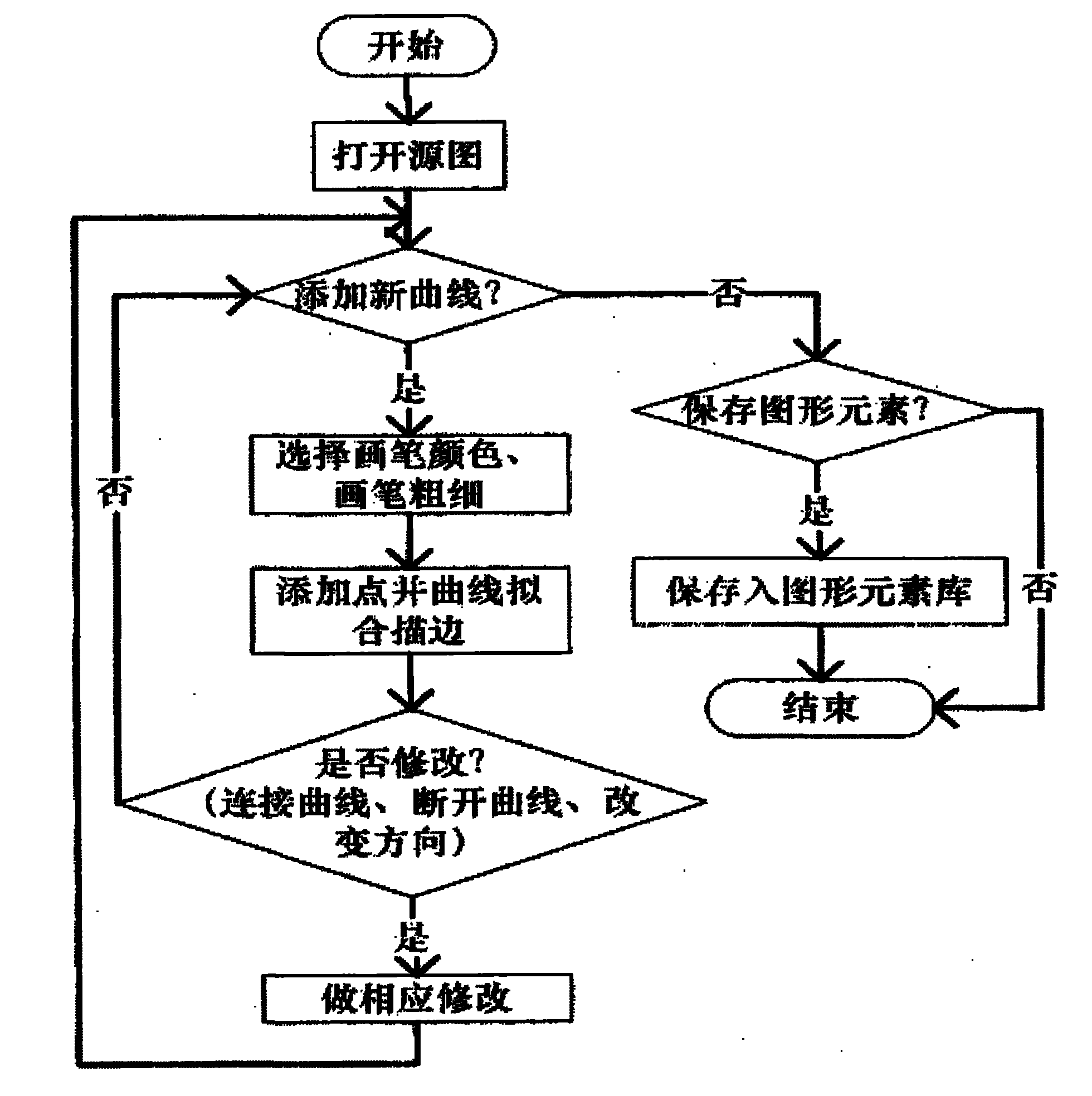

The invention relates to non-photorealistic rendering in computer graphics, in particular to the digital simulation and synthesis of the artistic style of Yunnan heavy-color painting. The system comprises three modules: a module for establishing and managing a library of basic graphic elements for Yunnan heavy-color painting; a module that renders traditional delineation drawings from that library; and a module that colors and renders those delineation drawings. The basic graphic element library provides the graphic elements needed for foreground delineation drawings, such as hair, facial forms, trunks and the like. The delineation drawing rendering module selects appropriate graphic elements from the library and combines them in layers to generate a traditional Yunnan heavy-color delineation drawing. So that all the graphic elements can be arranged harmoniously, the system provides multiple operations on them, such as translation, selection and scaling, and can also modify their shapes. The coloring and rendering module colors the delineation drawings so that the result reflects the texture and color characteristics of an original heavy-color painting, and it simulates effects specific to Yunnan heavy-color painting, such as the hollow look of character edges and background fusion.

Owner:YUNNAN UNIV

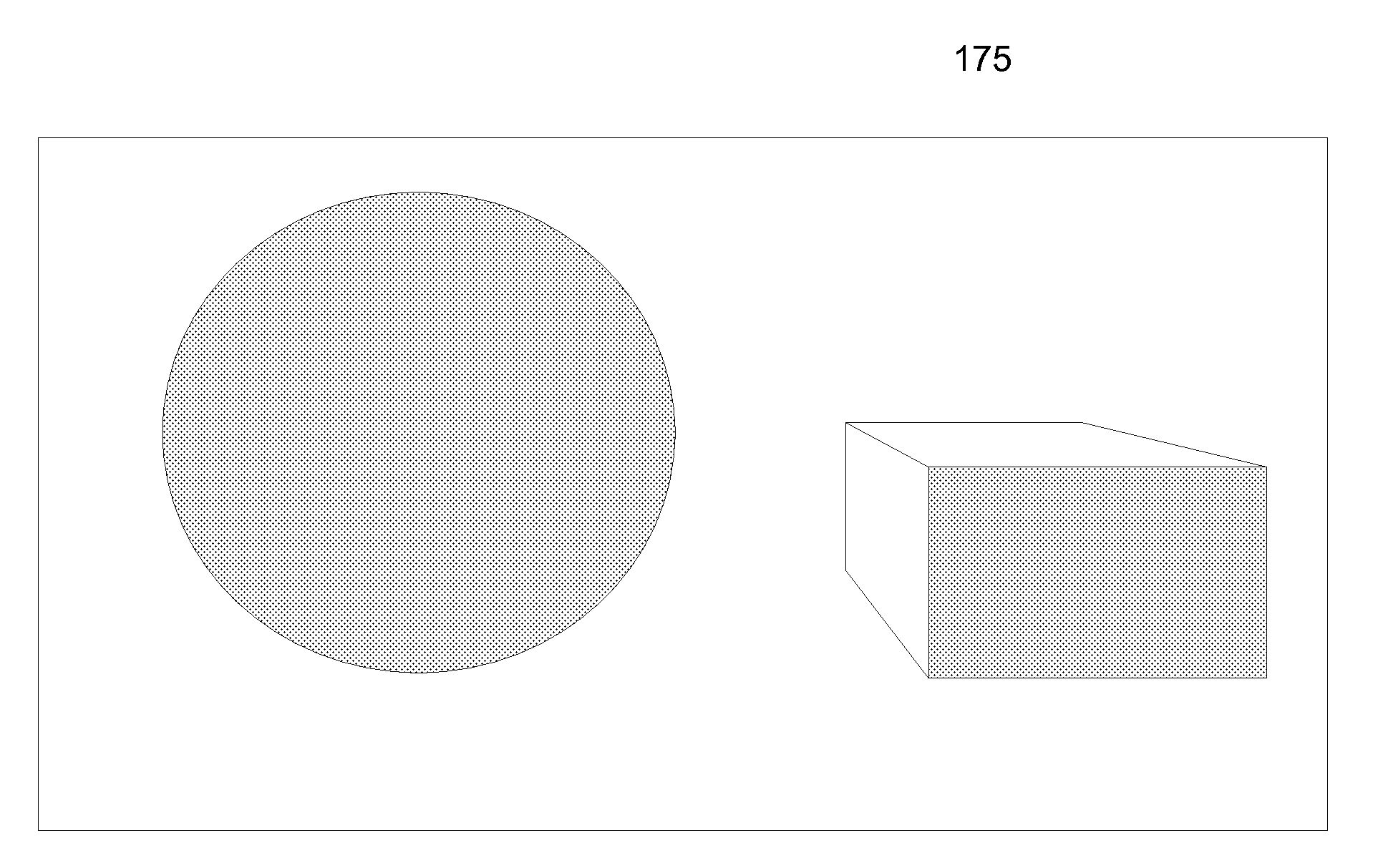

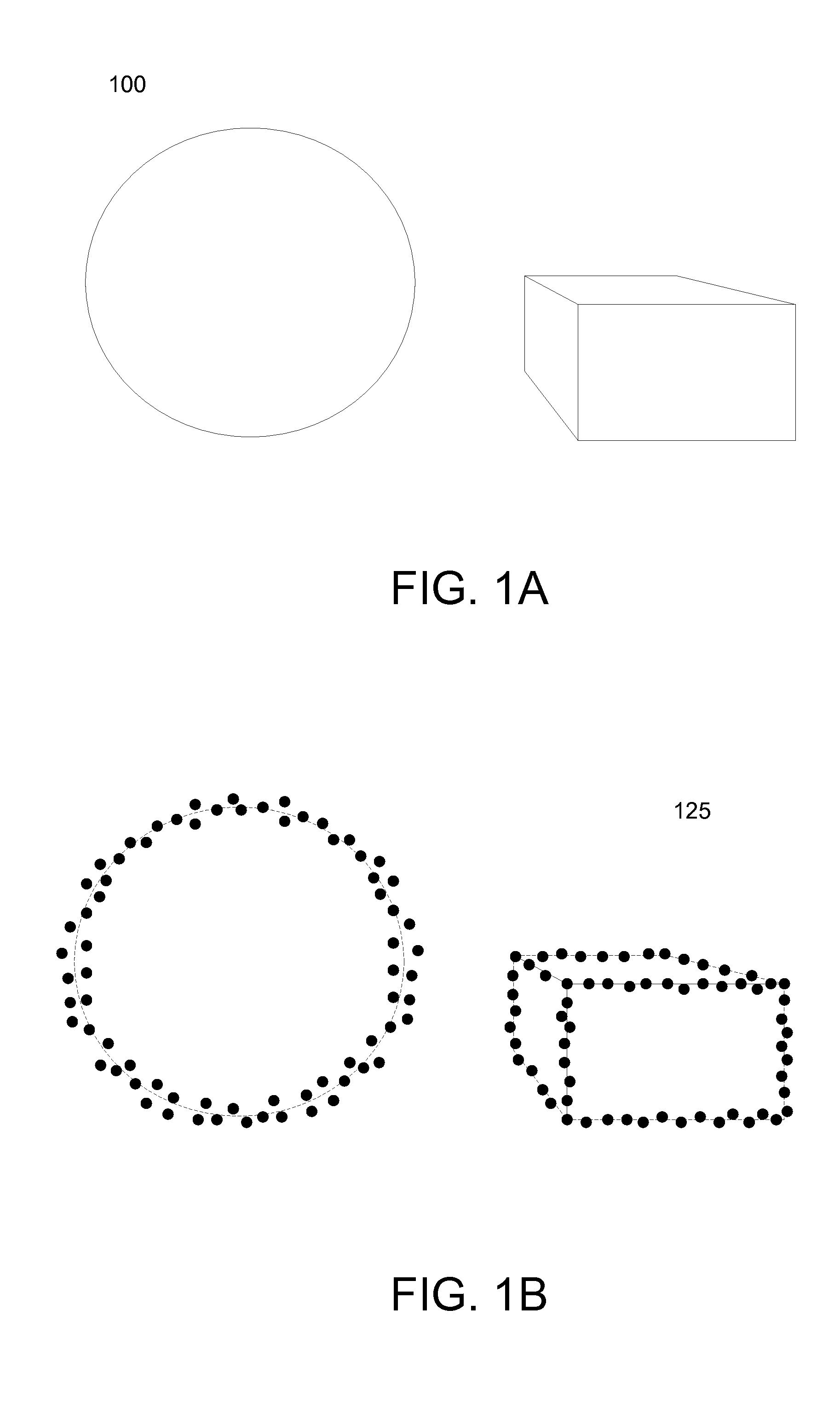

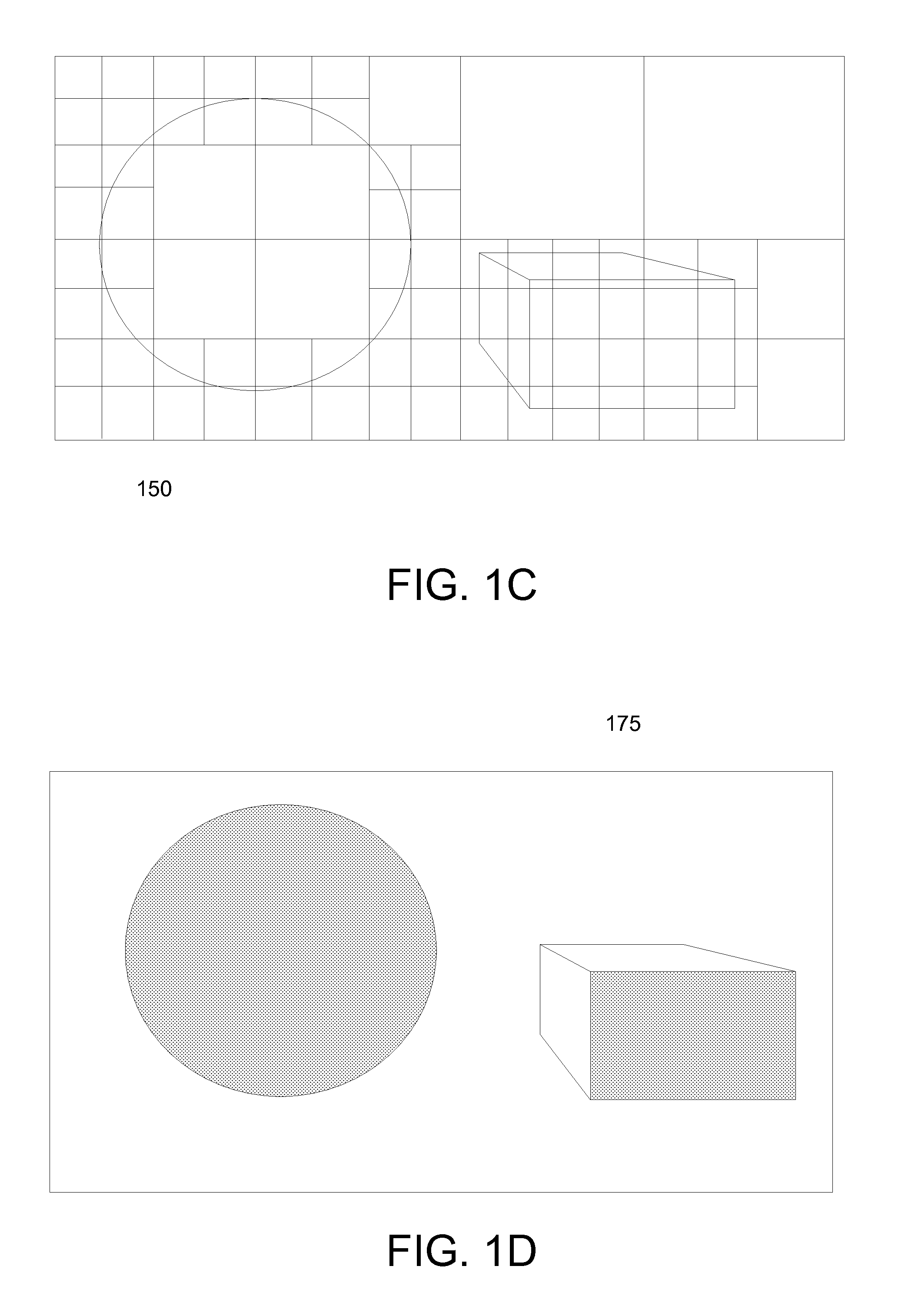

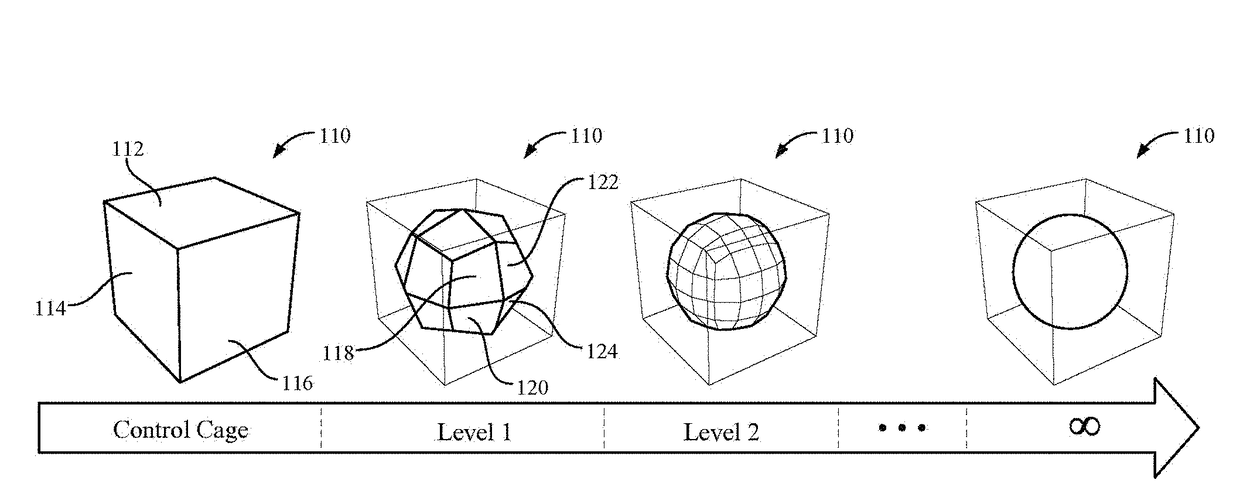

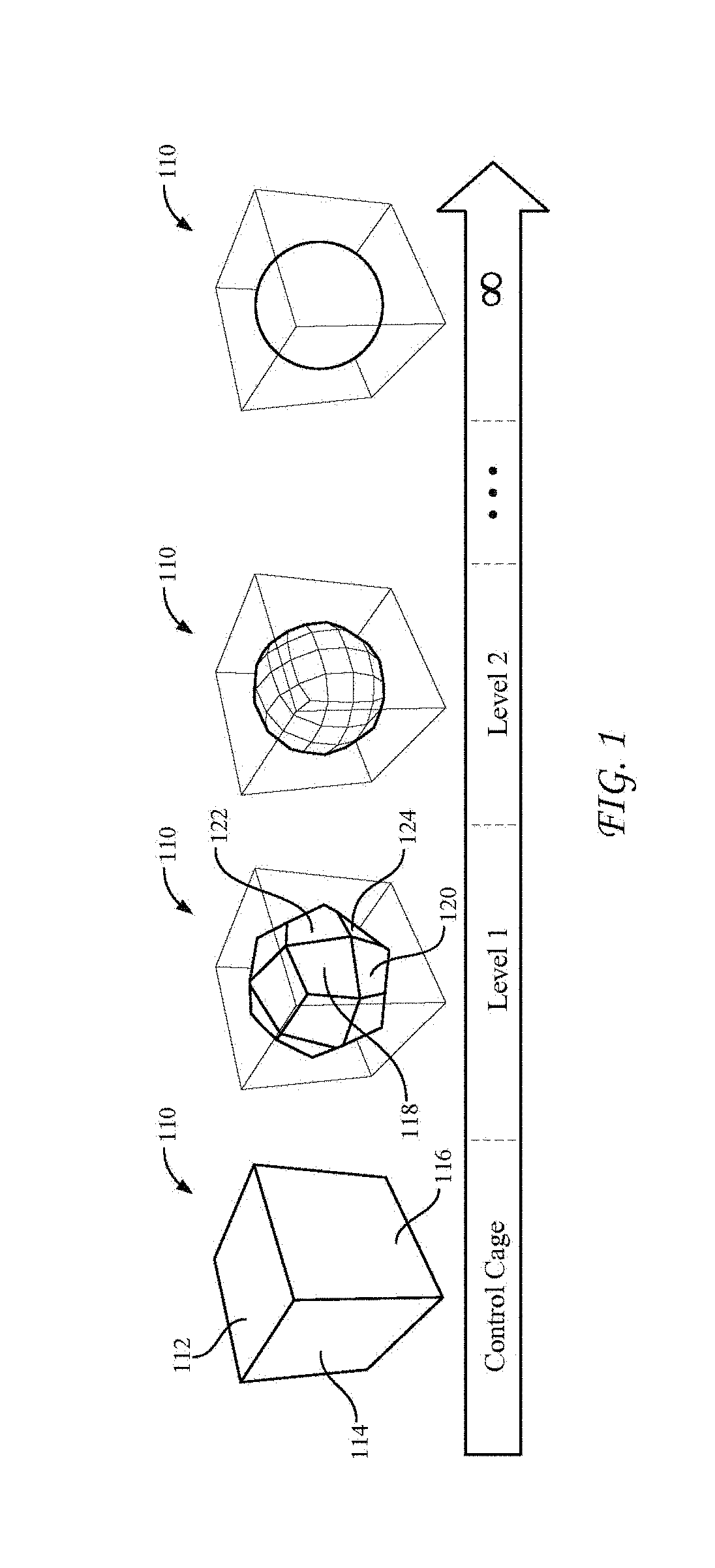

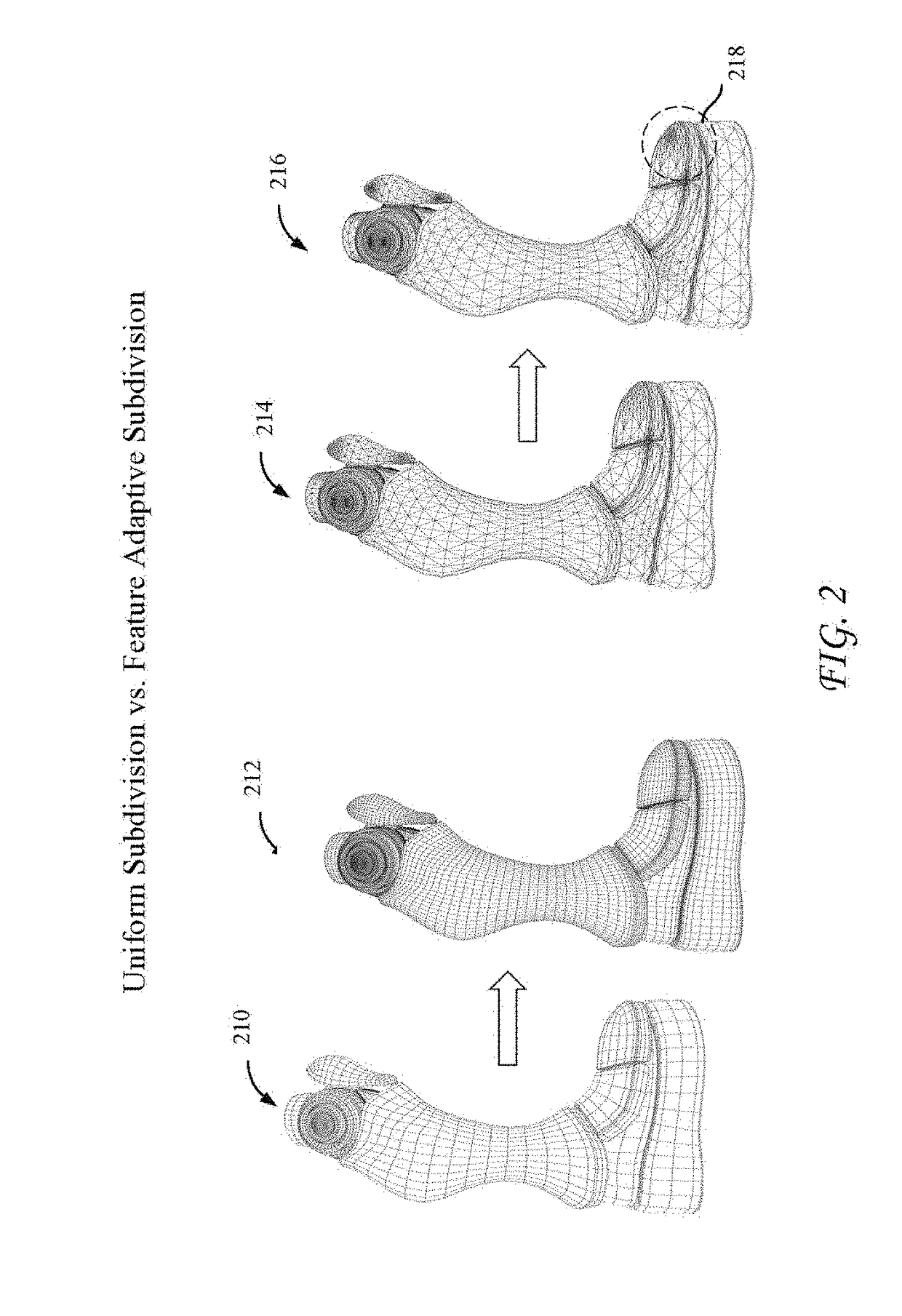

Alternate Scene Representations for Optimizing Rendering of Computer Graphics

Active | US20130027417A1 | Improves render performance | Different degree of detail | Cathode-ray tube indicators | Image generation | Computer vision | Graphics

Shading attributes for scene geometry are predetermined and cached in one or more alternate scene representations. Lighting, shading, geometric, and/or other attributes of the scene may be precalculated and stored for at least one of the scene representations at an appropriate level of detail. Rendering performance is improved generally and for a variety of visual effects by selecting between alternate scene representations during rendering. A renderer selects one or more of the alternate scene representations for each of the samples based on the size of its filter area relative to the feature sizes or other attributes of the alternate scene representations. If two or more alternate scene representations are selected, the sampled values from these scene representations may be blended. The selection of scene representations may vary on a per-sample basis and thus different scene representations may be used for different samples within the same image.

Owner:PIXAR ANIMATION
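
As a rough illustration of the per-sample selection described in the abstract above, the following Python sketch picks one or two cached representations by comparing a sample's filter size with each representation's feature size, and blends when two are picked. The function names, the linear interpolation used as the blend, and the idea of summarizing each representation by a single scalar feature size are assumptions made for illustration; the abstract does not specify them.

```python
from bisect import bisect_left


def select_representations(filter_size, feature_sizes):
    """Return [(index, weight)] of cached representations to use for one sample.

    feature_sizes is a strictly ascending list (finest representation first),
    one hypothetical scalar feature size per alternate scene representation.
    """
    if filter_size <= feature_sizes[0]:
        return [(0, 1.0)]
    if filter_size >= feature_sizes[-1]:
        return [(len(feature_sizes) - 1, 1.0)]
    hi = bisect_left(feature_sizes, filter_size)
    lo = hi - 1
    # Blend weight grows linearly as the filter approaches the coarser level.
    t = (filter_size - feature_sizes[lo]) / (feature_sizes[hi] - feature_sizes[lo])
    return [(lo, 1.0 - t), (hi, t)]


def shade_sample(filter_size, feature_sizes, cached_values):
    """Blend the precomputed (cached) values of the selected representations."""
    picks = select_representations(filter_size, feature_sizes)
    return sum(weight * cached_values[index] for index, weight in picks)
```

Because the selection runs per sample, two samples in the same image with different filter sizes can read from different representations, which is the behavior the abstract emphasizes.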

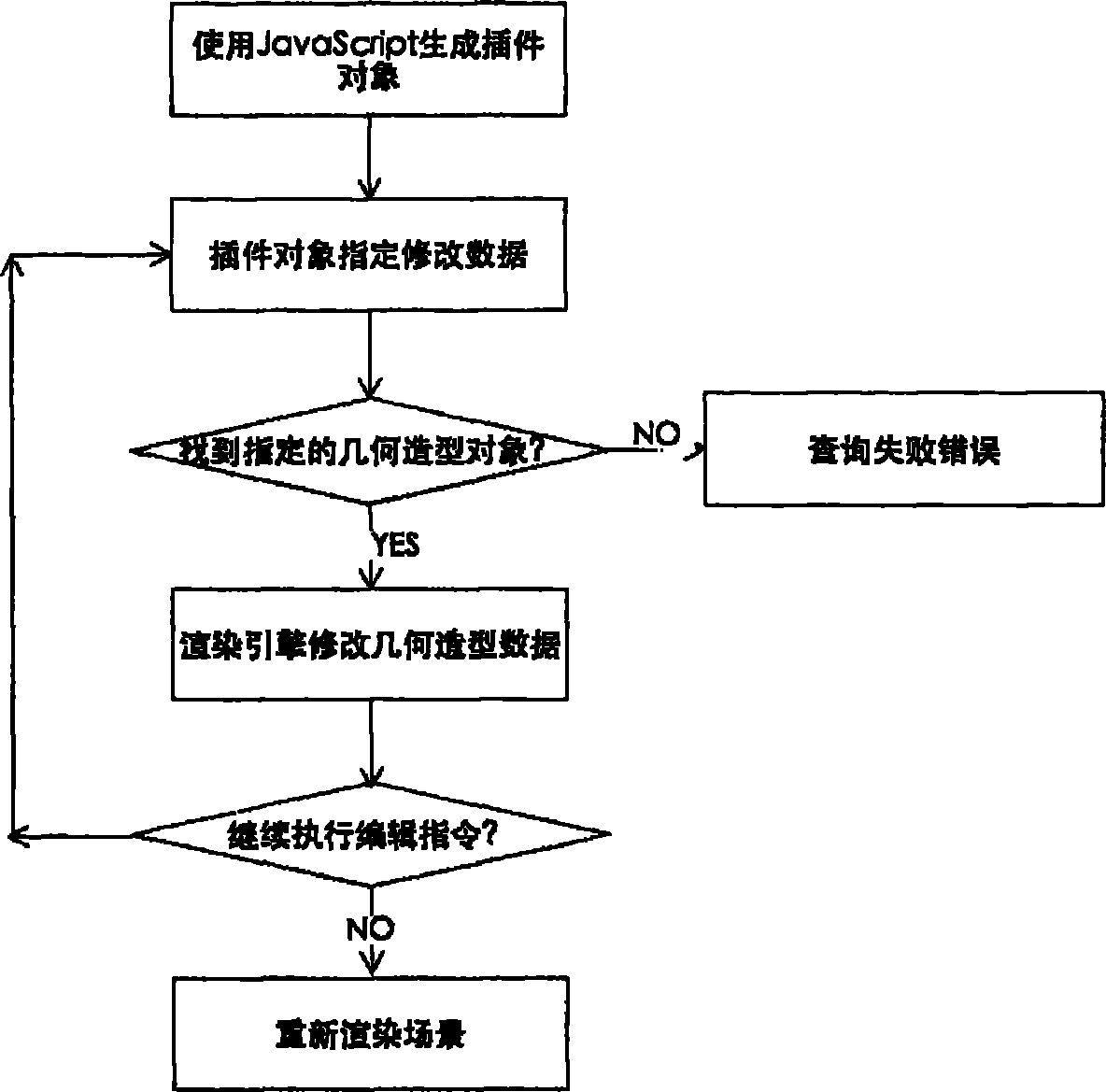

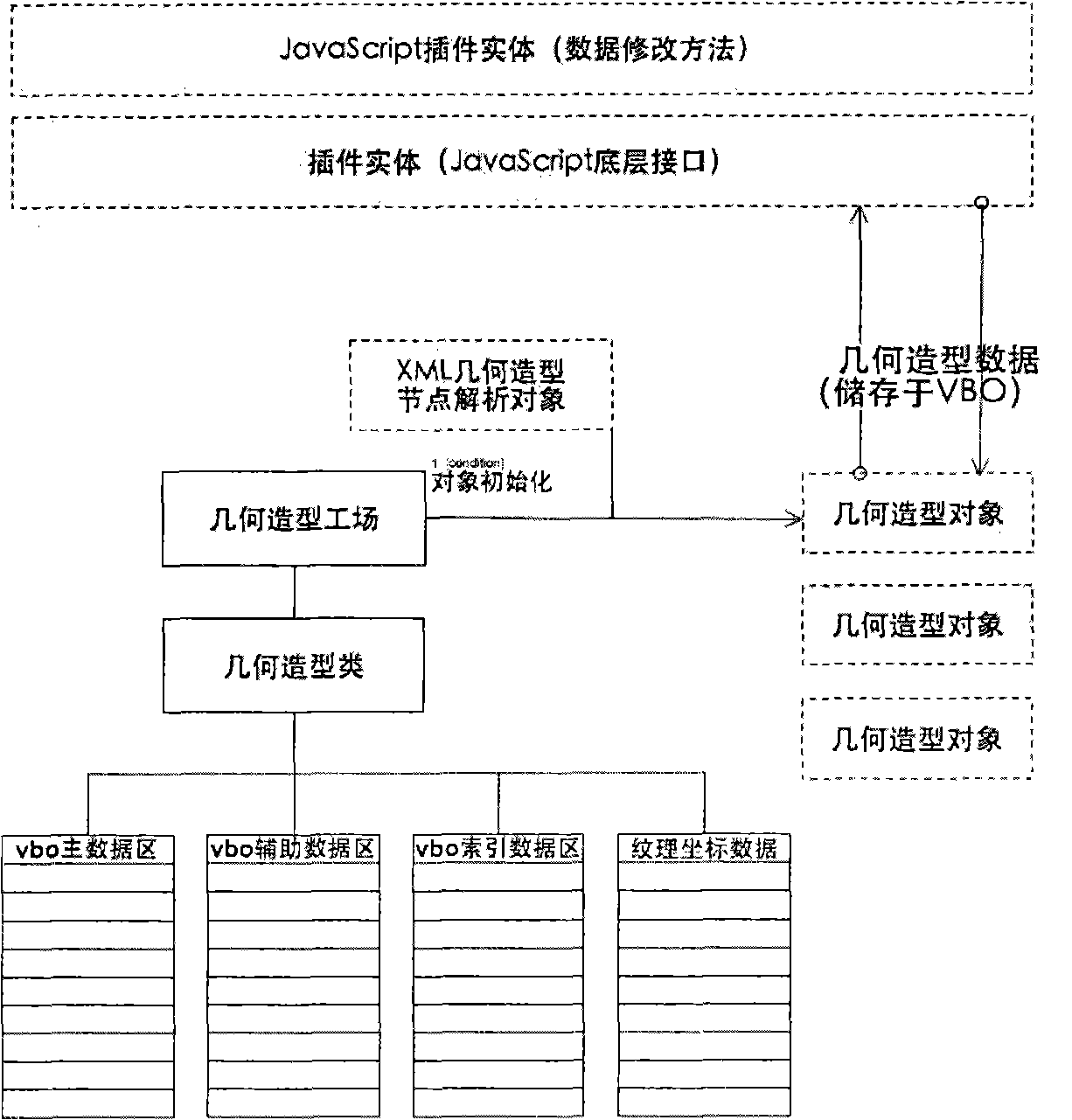

Method for rendering editable webpage three-dimensional (Web3D) geometric modeling

Inactive | CN101853162A | Specific program execution arrangements | 3D-image rendering | Data exchange | Scenario based

The invention discloses a method for rendering editable webpage three-dimensional (Web3D) geometric models and belongs to the technical field of computer graphics processing. Web3D is realized by parsing a data exchange protocol and using a rendering engine based on a scene tree. On that basis, a geometric modeling object is generated by the rendering engine's geometric modeling workshop, which parses the geometric model in an image node, and rendering of the editable three-dimensional geometric model is realized by extending the low-level JavaScript application program interface (API) with a plug-in component. The method combines the two basic approaches to Web3D rendering and improves the redrawing efficiency of the geometric model while supporting editing at vertex granularity. It is a three-dimensional geometric modeling method for Web rich clients and can be applied in the animation industry.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

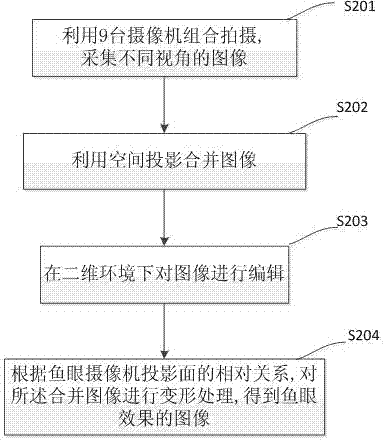

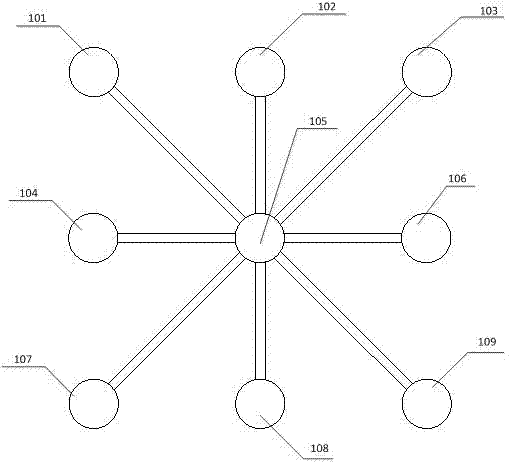

Method for generating image with fisheye effect by utilizing a plurality of video cameras

Inactive | CN102340633A | Low cost | Easy to operate | Television system details | Color television details | Radiology | Computer graphics

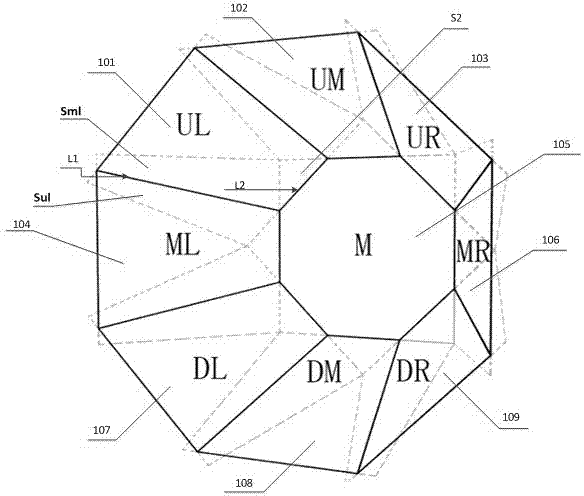

The invention discloses a method for generating an image with a fisheye effect by utilizing a plurality of video cameras, and relates to a digital film technology, in particular to the method for shooting a real field of view by utilizing nine video cameras to generate the image with the fisheye effect by adopting an optical principle and a computer graphics technology. The method is suitable for projection imaging on a curved screen. The method comprises the following steps of: combining and arranging the nine video cameras, wherein one video camera is arranged in a center as a positioning reference video camera around which the other eight video cameras at different angles are uniformly distributed; and acquiring images of different visual angles by utilizing the nine video cameras in a combined way, combining the images rendered by the nine video cameras, and performing deformation processing on the combined image to obtain the image with the fisheye effect. By the method, semispherical images can be seamlessly jointed in a way of combined shooting of specific angles by adopting the plurality of video cameras, image rendering cost is low and operations are simple; and the method is suitable for projecting real image frames on the curved screen.

Owner:深圳市远望淦拓科技有限公司

Implementation method and device of fluff effect and terminal

Pending | CN111462313A | Alleviate high performance consumption | Reduce limitations | 3D modelling | Manufacturing computing systems | Computer hardware | Computational science

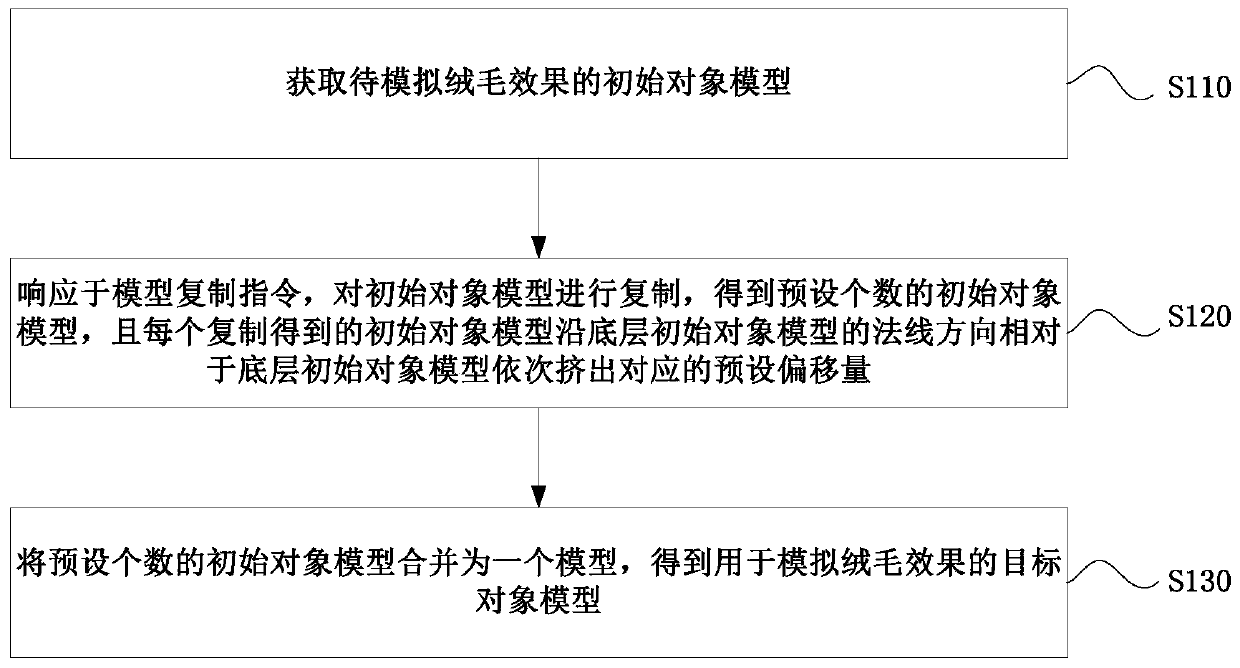

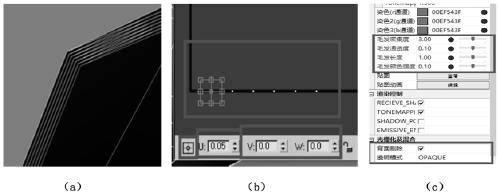

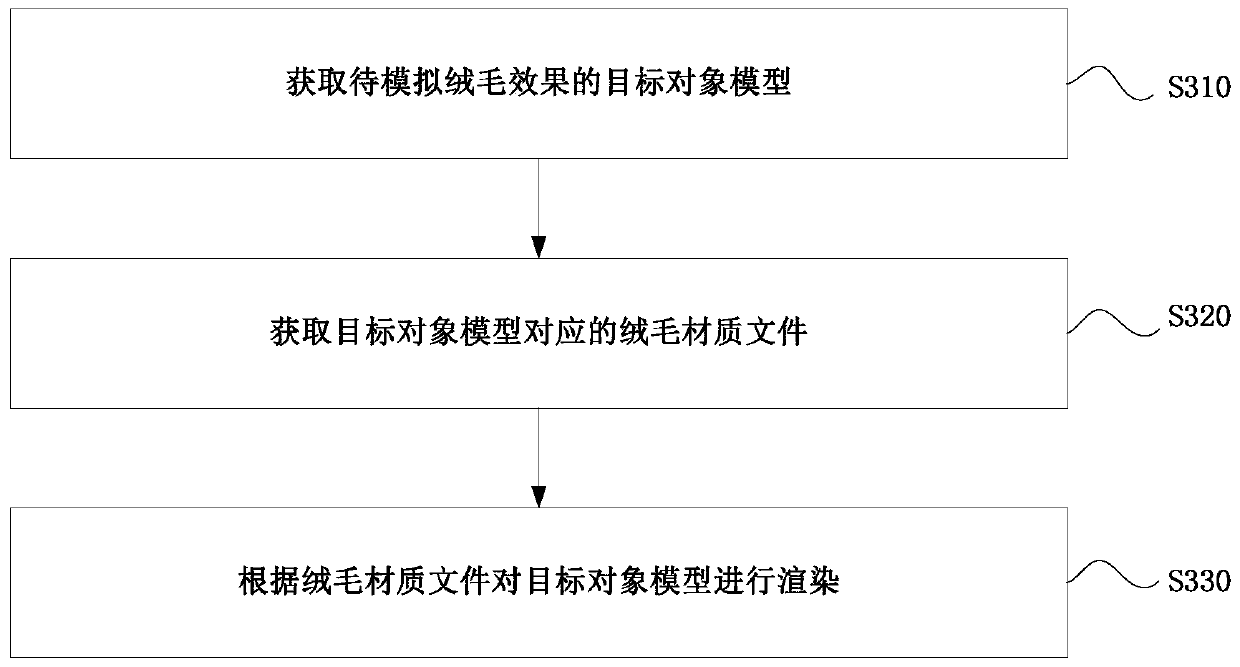

The invention provides a fluff effect implementation method and device and a terminal, and relates to the technical field of computer graphics images. The method comprises the steps that initial object models of a to-be-simulated fluff effect are obtained and copied in response to a model copying instruction, and a preset number of initial object models are obtained; each copied initial object model extrudes corresponding preset offsets in sequence relative to the bottom-layer initial object model along the normal direction of the bottom-layer initial object model; and combining the preset number of initial object models into one model to obtain a target object model for simulating a fluff effect. According to the method, the target object model for simulating the fluff effect can be directly obtained; according to the method, fluff with a multi-layer effect can be simulated without multi-channel rendering, and the problems of high performance consumption and high limitation of a method for simulating the fluff effect by rendering in the prior art are solved, so that the technical effects of reducing the rendering times, reducing the performance consumption and meeting the configuration requirements are achieved.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
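
The copy, extrude and merge steps in the fluff-effect abstract above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the mesh is given as plain lists of vertex positions, per-vertex normals and triangle index tuples, every layer uses the same hypothetical `layer_offset` spacing, and the function name `build_shell_model` is invented for illustration.

```python
def build_shell_model(vertices, normals, faces, layer_count, layer_offset):
    """Merge layer_count copies of a base mesh into one shell model.

    Copy k is pushed k * layer_offset along the base mesh's vertex normals,
    so copy 0 is the bottom layer itself. Face indices of each copy are
    shifted so that they point at that copy's vertices in the merged list.
    """
    merged_vertices = []
    merged_faces = []
    base_count = len(vertices)
    for k in range(layer_count):
        distance = k * layer_offset
        for (x, y, z), (nx, ny, nz) in zip(vertices, normals):
            merged_vertices.append(
                (x + nx * distance, y + ny * distance, z + nz * distance)
            )
        shift = k * base_count
        for a, b, c in faces:
            merged_faces.append((a + shift, b + shift, c + shift))
    return merged_vertices, merged_faces
```

Since the result is a single mesh, it can be submitted for drawing once, which matches the abstract's stated goal of avoiding multi-pass rendering.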

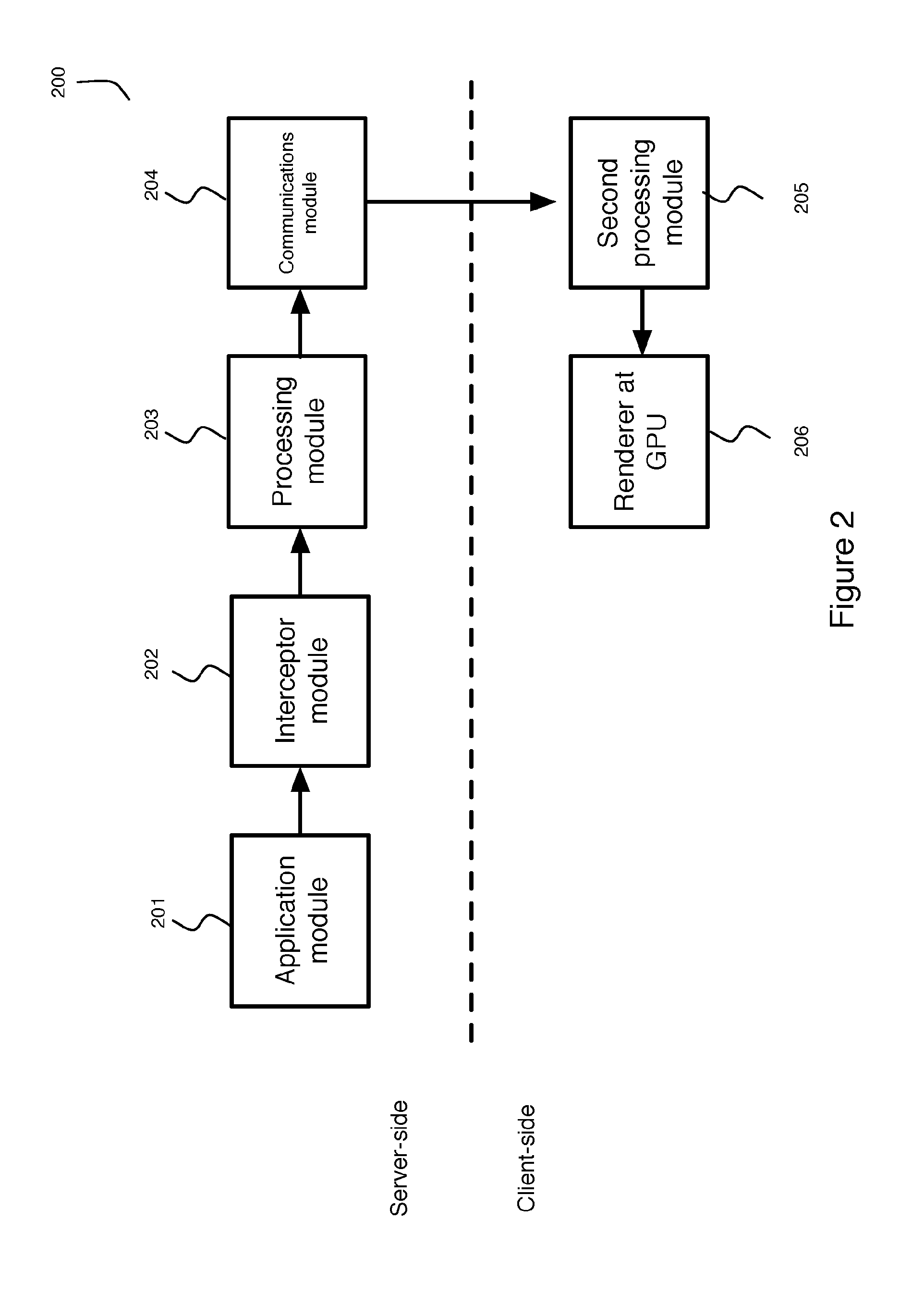

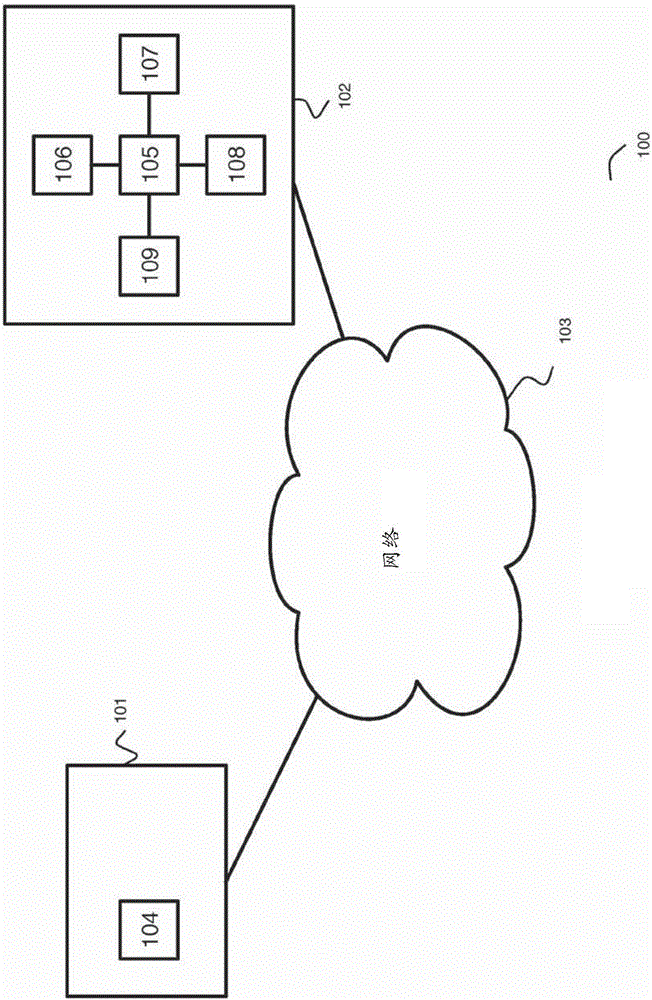

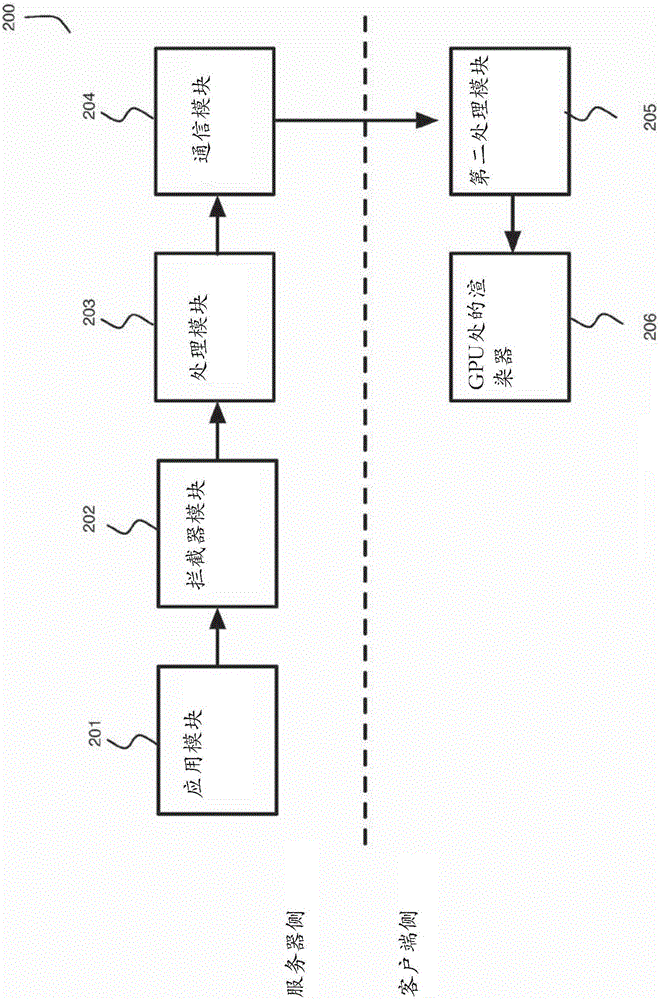

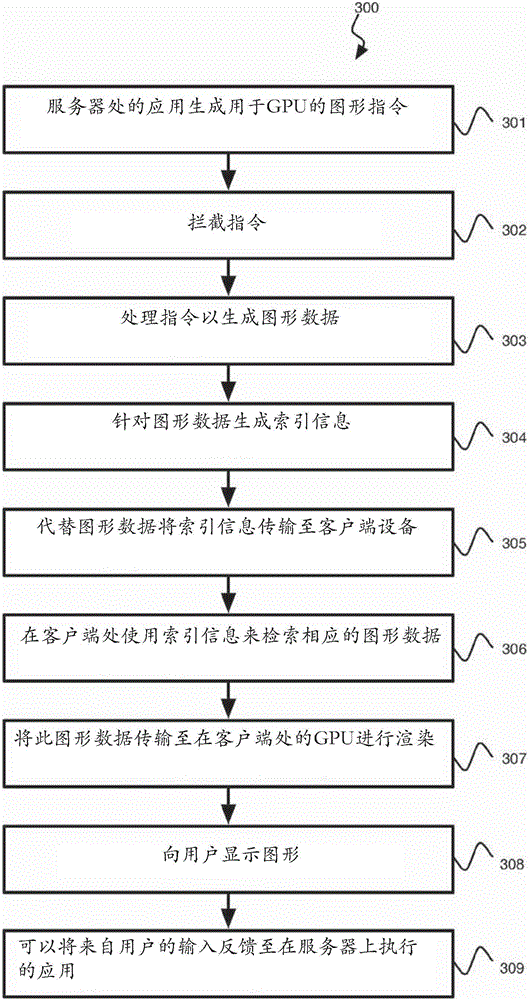

A method and system for interactive graphics streaming

Active | US20170011487A1 | Increase the number of | Low cost | Image coding | Processor architectures/configuration | Interactive graphics | Client-side

The present invention relates to a method of streaming interactive computer graphics from a server to a client device. The method includes the steps of: intercepting graphics instructions transmitted from an application destined for a graphical processing unit (GPU) at the server; processing the graphics instructions to generate graphics data at the server; generating index information for, at least, some of the graphics data at the server; transmitting the index information in place of the graphics data to a client device; extracting corresponding graphics data stored at the client device utilizing the index information; and rendering computer graphics at a graphical processing unit (GPU) at the client device using the corresponding graphics data. A system for streaming interactive computer graphics is also disclosed.

Owner:MYTHICAL INC
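
The core idea in the streaming abstract above, sending index information in place of graphics data the client already holds, can be illustrated with a small Python sketch. Using a SHA-256 digest as the index, the tuple message layout, and the class names `StreamServer` and `StreamClient` are all assumptions for illustration; the abstract does not say how the index is generated or transmitted.

```python
import hashlib


class StreamServer:
    """Tracks which graphics data blobs have already been sent to the client."""

    def __init__(self):
        self.sent_keys = set()

    def encode(self, data: bytes):
        # Assumption: a content hash serves as the index for the data.
        key = hashlib.sha256(data).hexdigest()
        if key in self.sent_keys:
            return ("index", key)        # index only, no payload
        self.sent_keys.add(key)
        return ("data", key, data)       # first transmission carries the payload


class StreamClient:
    """Stores received graphics data and resolves index-only messages."""

    def __init__(self):
        self.cache = {}

    def decode(self, message):
        if message[0] == "data":
            _, key, data = message
            self.cache[key] = data
            return data
        return self.cache[message[1]]
```

In this sketch a texture sent twice costs its full size only once; the second message carries just the 64-character key, and the client looks the bytes up locally before rendering on its own GPU.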

Method and system for interactive graphics streaming

Active | CN105917382A | Increase the number of users | Low running cost | Image coding | Processor architectures/configuration | Interactive graphics | Client-side

The present invention relates to a method of streaming interactive computer graphics from a server to a client device. The method includes the steps of: intercepting graphics instructions transmitted from an application destined for a graphical processing unit (GPU) at the server; processing the graphics instructions to generate graphics data at the server; generating index information for, at least, some of the graphics data at the server; transmitting the index information in place of the graphics data to a client device; extracting corresponding graphics data stored at the client device utilizing the index information; and rendering computer graphics at a graphical processing unit (GPU) at the client device using the corresponding graphics data. A system for streaming interactive computer graphics is also disclosed.

Owner:MYTHICAL GAMES UK LTD

BIM rendering method and equipment based on lightweight equipment, and computer readable storage medium

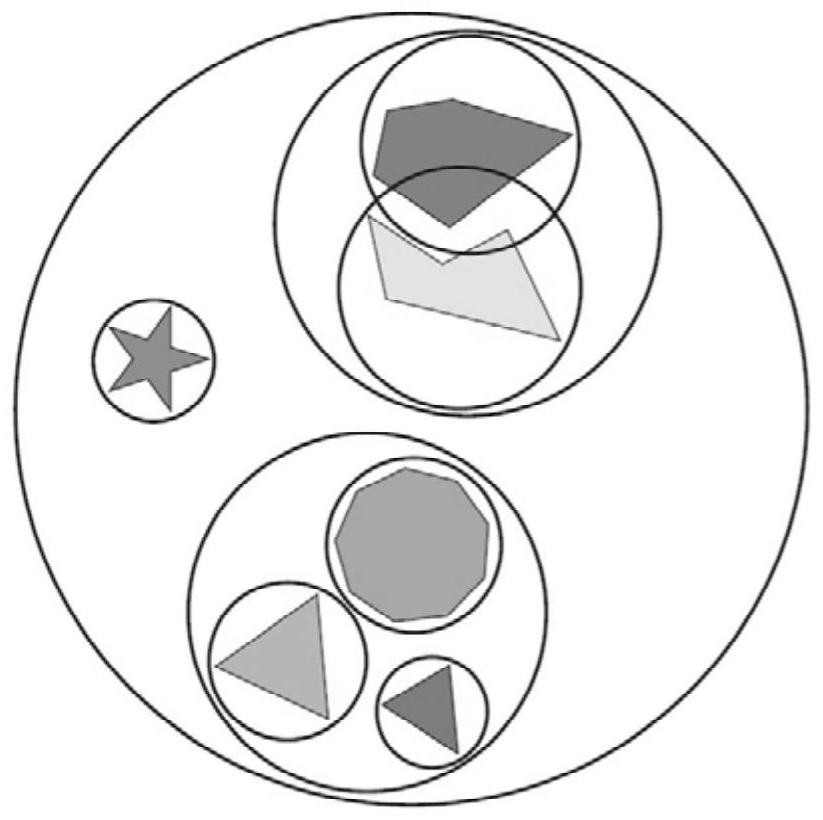

Inactive | CN112347546A | Reduce occupancy | Speed up the rendering process | Geometric CAD | Design optimisation/simulation | PERQ | Binary segmentation

The invention relates to the field of computer graphics, and provides a BIM rendering method and equipment based on a lightweight device, and a computer readable storage medium, so as to prevent program crashes while accelerating BIM rendering. The method comprises the following steps: analyzing the complexity, dynamic and static characteristics and/or geometrical characteristics of a building three-dimensional model according to geometrical structure data of the building three-dimensional model; generating a corresponding hierarchical bounding box tree structure, a binary segmentation space tree structure or an octree structure according to the analysis result, so as to record information of the building three-dimensional model; and, based on an algorithm for the hierarchical bounding box tree structure, binary segmentation space tree structure or octree structure, reading information of the building three-dimensional model from a memory and rendering an effective part of the building three-dimensional model. According to the technical scheme, when the building three-dimensional model is rendered, memory occupation can be reduced, and therefore the rendering process can be accelerated.

Owner:久瓴(江苏)数字智能科技有限公司

Method for manufacturing computer skin animation based on high-precision three-dimensional scanning model

Active | CN111369649A | Reduce workload | Uses less memory space | Animation | Manufacturing computing systems | Skin | Computational model

The invention belongs to the technical field of computer graphics and relates to a method for generating skin animation for a three-dimensional model. In the method for manufacturing computer skin animation based on a high-precision three-dimensional scanning model, skeleton binding is first completed using the skeleton line of a digital three-dimensional model scanned by a three-dimensional scanner and an action template library of skeletons; skin weights are then calculated and model vertex coordinates are updated in real time to complete the computer skin animation. The method can process a three-dimensional model scanned by a high-precision three-dimensional scanner, and the weights of the model's vertices are calculated by an algorithm, which greatly reduces the workload of model processing compared with manual processing. Using the skin animation principle, only the texture coordinates, indexes and node weights of the model are stored, and new vertex data is calculated from the node weights during the rendering of each frame. Compared with a frame data merging method and a skeletal animation method, the method occupies little memory, achieves non-rigid vertex deformation, and produces a more natural animation effect.

Owner:SUZHOU DEKA TESTING TECH CO LTD

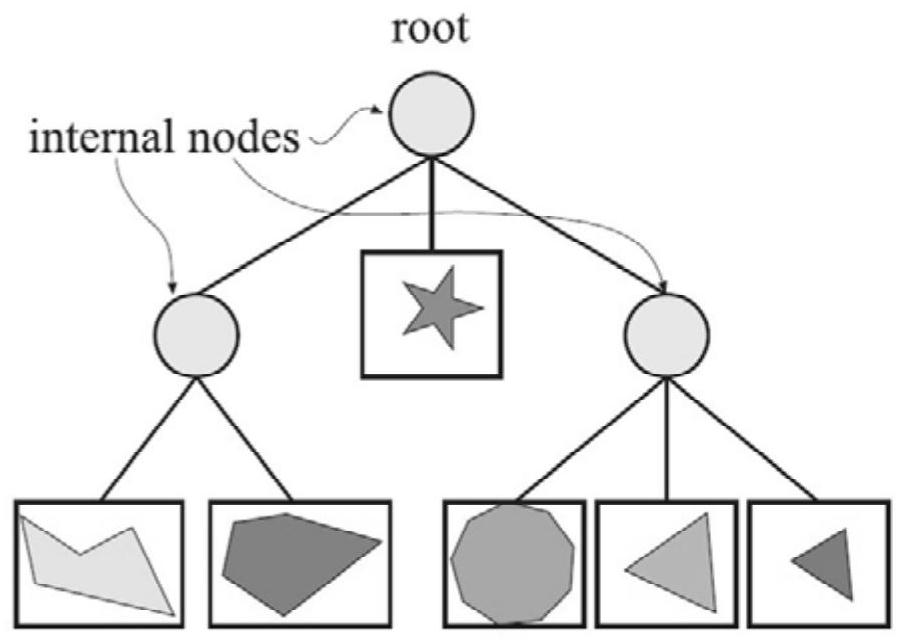

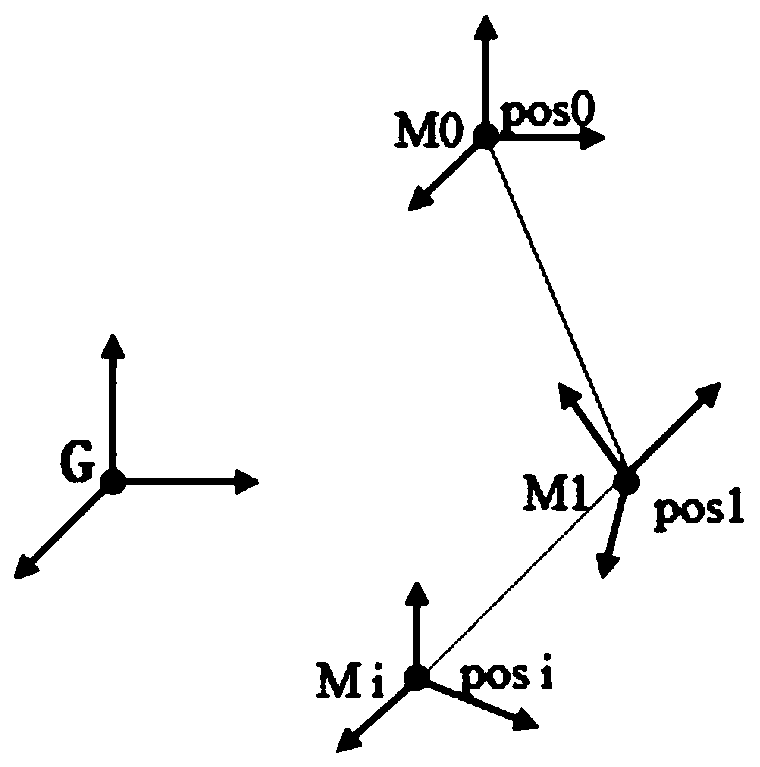

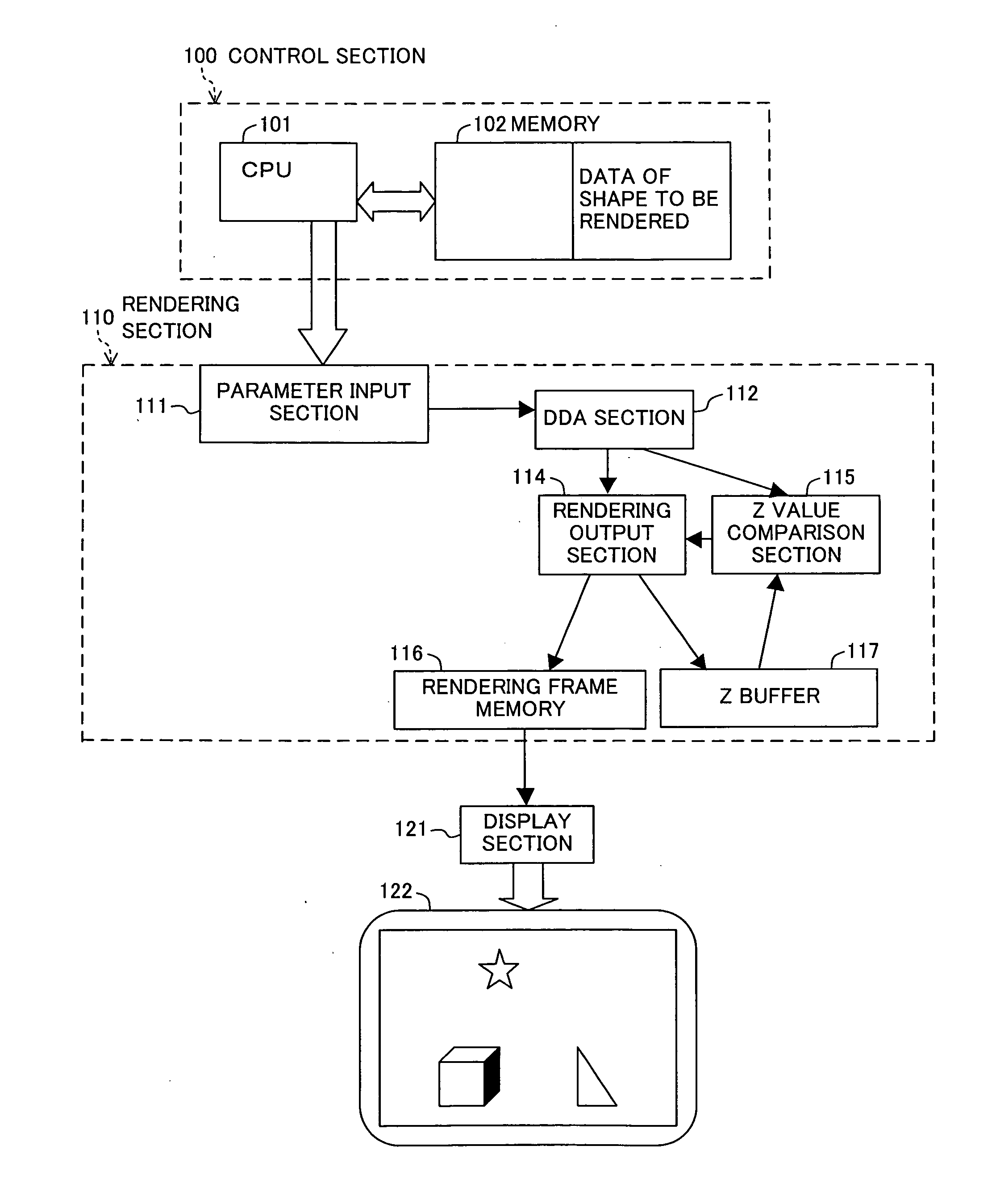

Computer graphics rendering method and apparatus

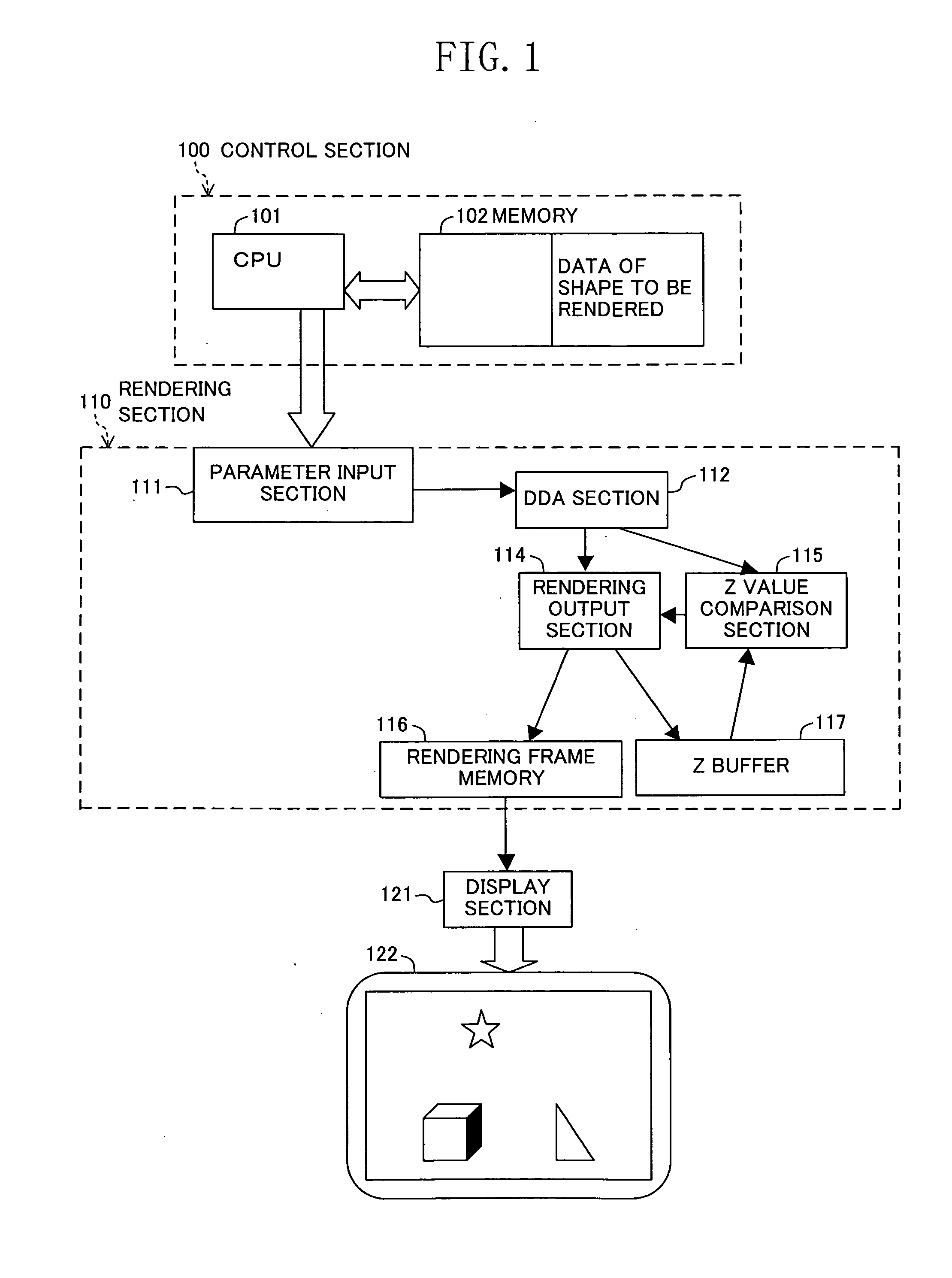

When a plurality of coplanar shapes are rendered over one another by performing a hidden surface removal operation using the Z value, a reference plane is specified, and the Z value of each point of a shape to be rendered on the reference plane is uniquely calculated based on the Z value of the rendering start point, the Z value gradient dZdx for the X direction, and the Z value gradient dZdy for the Y direction. Thus, any coplanar shapes will have the same Z value for each point shared therebetween. Therefore, if the rendering process is performed under such a rule that a shape is overwritten when the Z value of the new shape is less than or equal to the current Z value, it is ensured that the previously-rendered shape is always overwritten with a later-rendered, coplanar shape. Thus, it is possible to prevent a phenomenon that some pixels of a later-rendered shape that are supposed to be displayed are not displayed.

Owner:PANASONIC CORP
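
The depth rule in the abstract above can be made concrete with a short Python sketch. It assumes a dictionary-based Z buffer and color buffer, integer pixel coordinates, and hypothetical names (`plane_z`, `draw`); the only parts taken from the abstract are that Z is derived from the reference plane's start point and its gradients dZdx and dZdy, and that a pixel is overwritten when the new Z is less than or equal to the stored Z.

```python
def plane_z(x, y, start, dzdx, dzdy):
    """Z of pixel (x, y) on the reference plane, independent of which shape asks."""
    x0, y0, z0 = start
    return z0 + dzdx * (x - x0) + dzdy * (y - y0)


def draw(shape_pixels, color, plane, zbuf, cbuf):
    """Rasterize one coplanar shape with a less-than-or-equal depth test."""
    start, dzdx, dzdy = plane
    for x, y in shape_pixels:
        z = plane_z(x, y, start, dzdx, dzdy)
        # An empty Z-buffer entry is treated as infinitely far away.
        if z <= zbuf.get((x, y), float("inf")):
            zbuf[(x, y)] = z
            cbuf[(x, y)] = color
```

Because every shape on the plane evaluates the same expression for a shared pixel, the two Z values are bit-identical and the later shape always passes the test. If each shape instead interpolated Z from its own vertices, rounding differences could make the later shape fail at some pixels, which is the artifact the patent aims to prevent.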

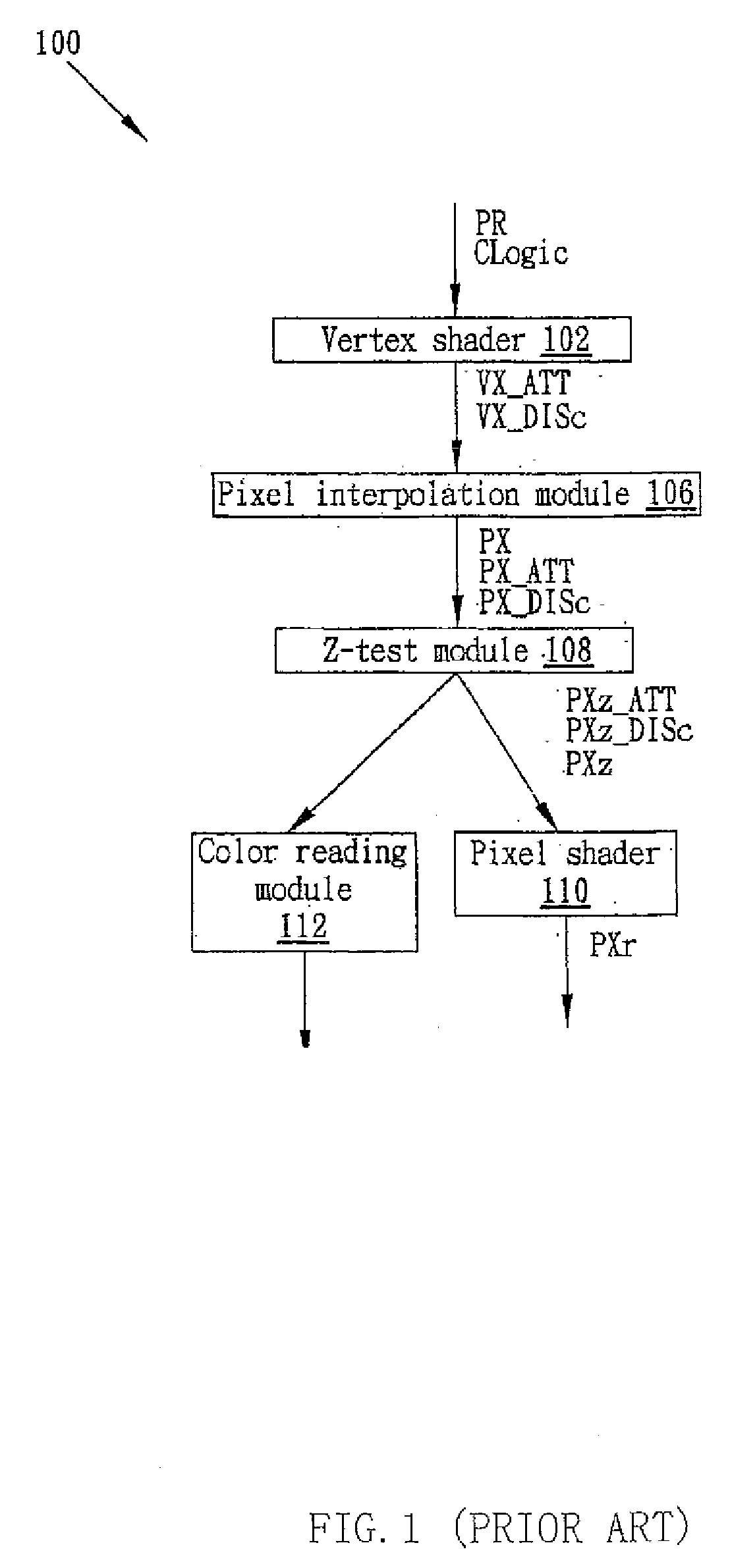

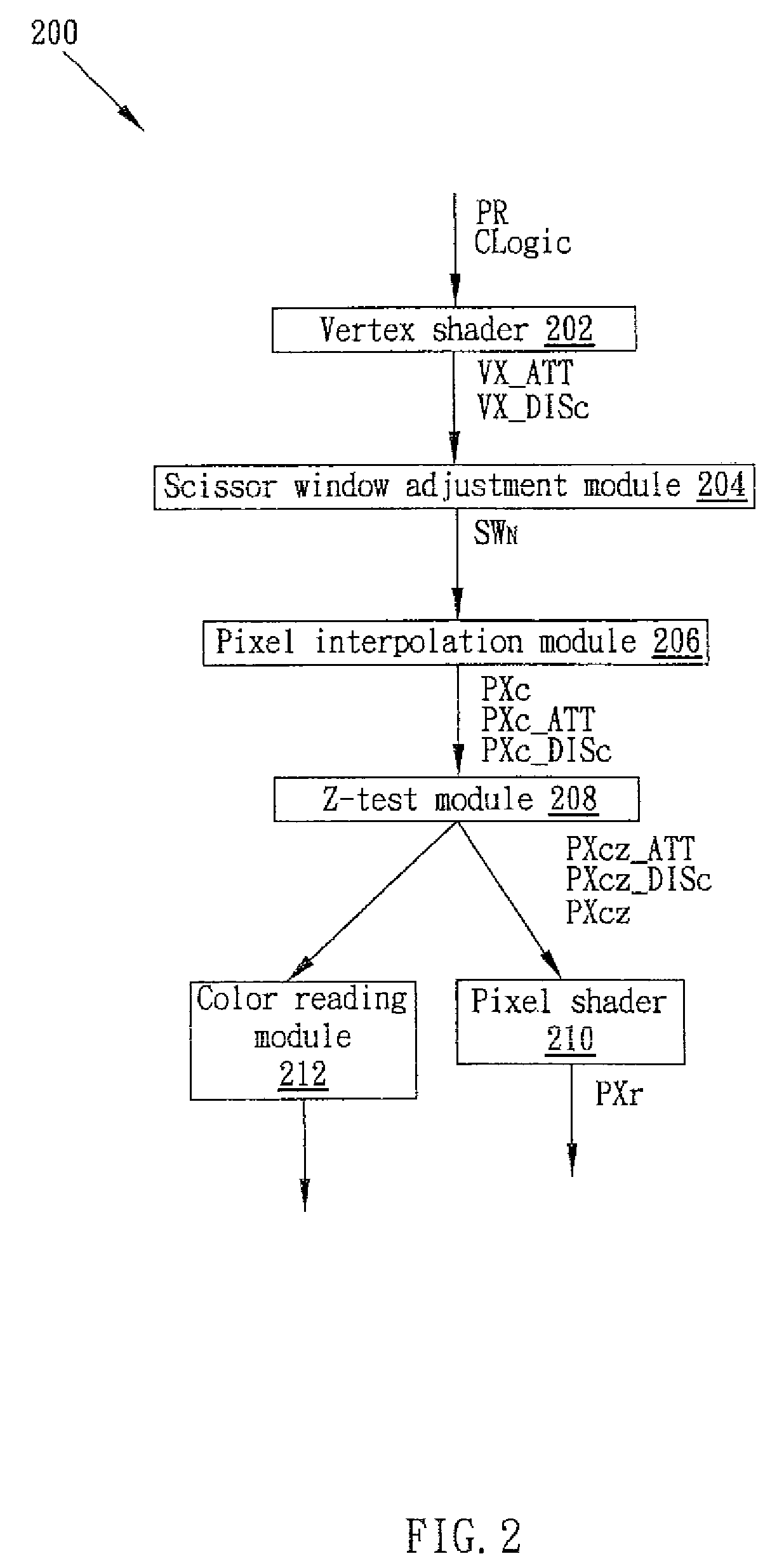

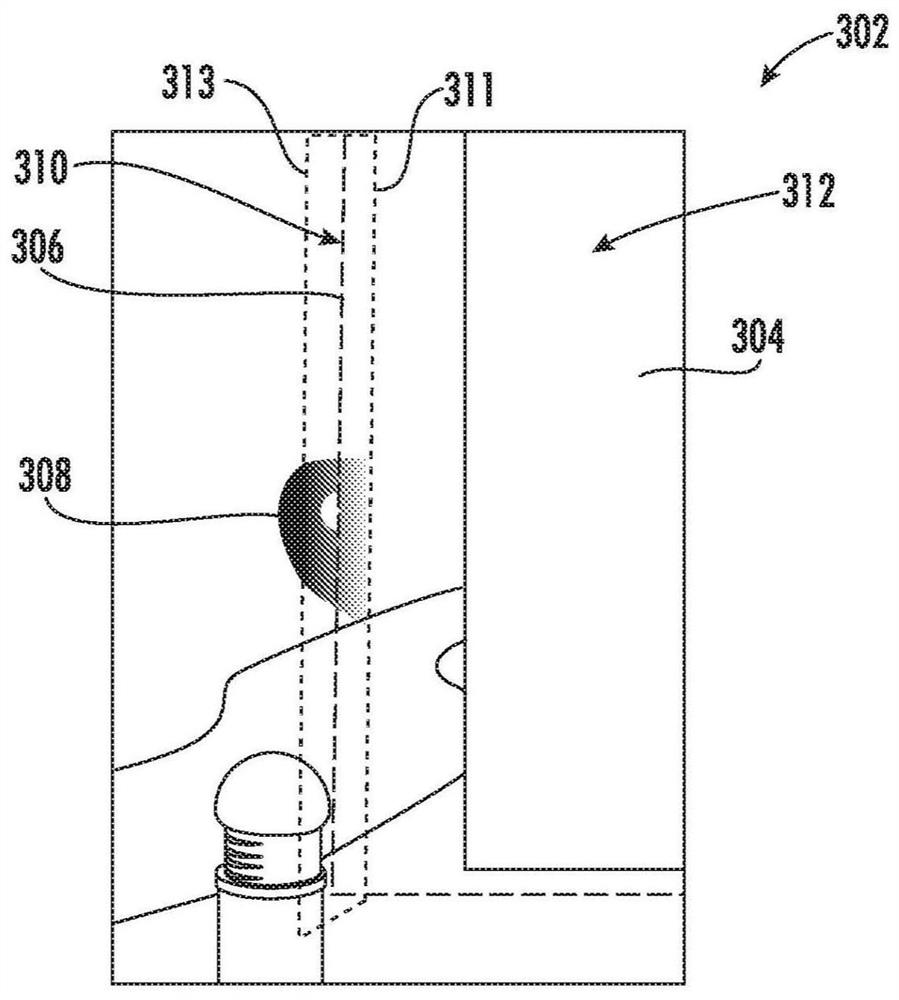

Method and apparatus for rendering computer graphics primitive

Active | US20090002393A1 | Avoid system resource wasting | Avoid redundant calculations | Cathode-ray tube indicators | 3D-image rendering | Graphics | User defined

The present invention is directed to a method for rendering a computer graphics primitive intersected with one or more user-defined clipping planes. The method includes receiving a primitive, a clipping plane and a default scissor window; determining a second scissor window according to the spatial relationship among a first scissor window, the clipping plane and the vertices of the primitive; determining a group of pixels to be rendered by eliminating pixels not covered by an adjusted scissor window from the primitive; and determining a group of actually rendered pixels, in which the actually rendered pixels determining step includes removing the pixels meeting a clipping criterion from the group of pixels to be rendered. The present invention also includes an apparatus for performing the method.

Owner:VIA TECH INC

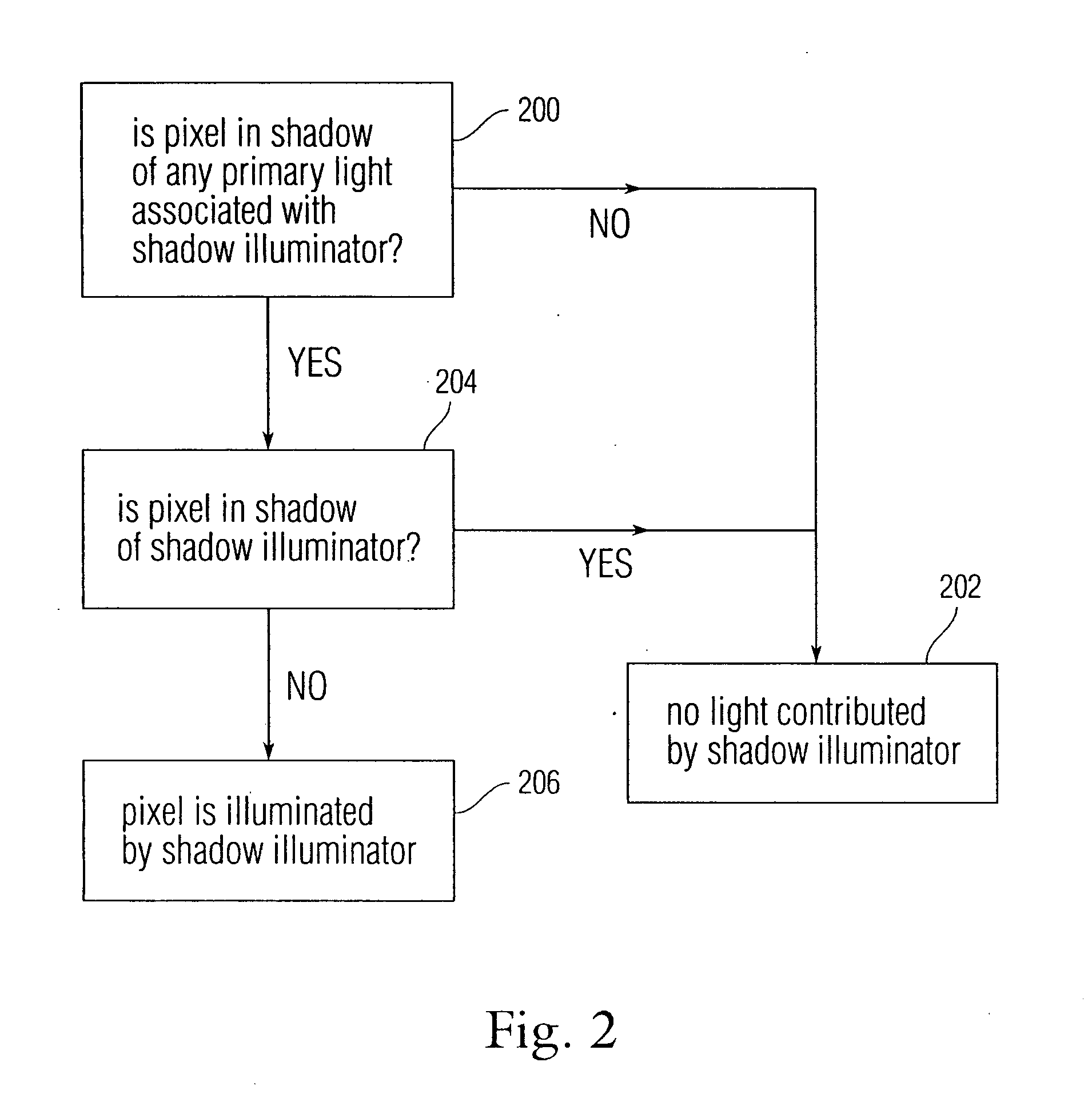

System and method for rendering computer graphics utilizing a shadow illuminator

Embodiments of the present invention are directed to rendering computer graphics using an augmented direct light model which approximates the effect of indirect light in shadows. More specifically, a shadow illuminator light source is provided for. The shadow illuminator light source is associated with an ordinary, or primary light source and is used to provide illumination in areas which are in shadows with respect to the primary light source. The shadow illuminator provides illumination only to areas which are considered to be in the shadows with respect to the light source the shadow illuminator is associated with. Thus, the shadow illuminator may be used to approximate the effects of indirect light.

Owner:DREAMWORKS ANIMATION LLC
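
One way to read the shadow illuminator described above is as a second light whose contribution is gated by the primary light's shadow term. The Python sketch below assumes a scalar albedo, a simple Lambert term for the primary light, a shadow factor in [0, 1], and an illuminator with no directional falloff; these simplifications and the function name `shade` are assumptions, not details from the abstract.

```python
def shade(albedo, n_dot_l, shadow, primary_intensity, illuminator_intensity):
    """Direct lighting plus a shadow illuminator tied to the same primary light.

    shadow is 0.0 where the point is fully lit by the primary light and 1.0
    where it is fully in that light's shadow.
    """
    primary = primary_intensity * max(n_dot_l, 0.0) * (1.0 - shadow)
    # The illuminator contributes only where the primary light is blocked.
    fill = illuminator_intensity * shadow
    return albedo * (primary + fill)
```

With `shadow = 0.0` the illuminator adds nothing, so lit areas are unchanged, while fully shadowed areas receive only the fill term, approximating indirect light without a global illumination pass.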

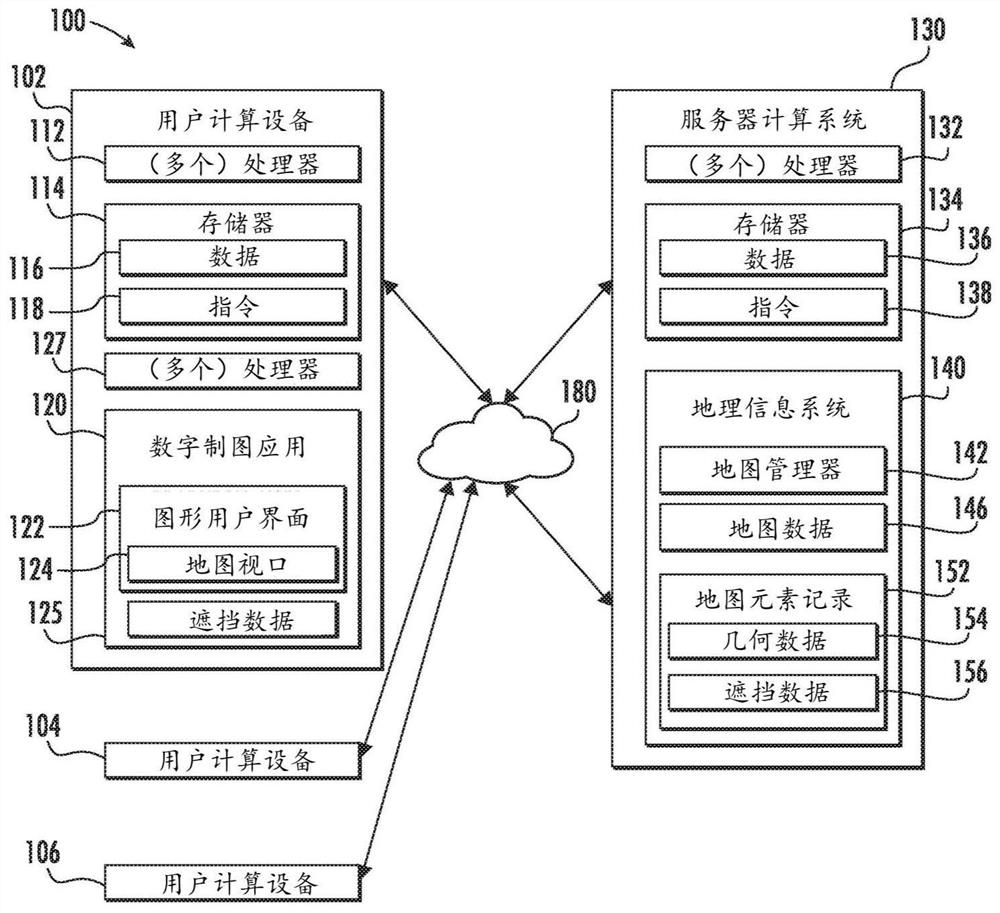

Soft Occlusion for Computer Graphics Rendering

Systems and methods for rendering computer graphics using soft occlusion are provided. A computing system may obtain display data for virtual elements to be displayed in association with imagery depicting an environment including physical objects. The computing system may generate a set of graphical occlusion parameters associated with the rendered image data and the display data based at least in part on the estimated geometry of the physical object. The set of graphical occlusion parameters may define a blend of display data and imagery for virtual elements at regions of soft occlusion including one or more locations within the estimated geometry. The computing system can render a composite image from the display data and imagery of the virtual element based at least in part on the set of graphical occlusion parameters.

Owner:GOOGLE LLC
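
A minimal sketch of the blending step described above, assuming the occlusion parameter is a per-pixel alpha derived from the depth difference between the virtual element and the estimated physical geometry. The linear ramp, the `softness` width and both function names are hypothetical; the abstract only states that the parameters define a blend of display data and imagery in the soft-occlusion region.

```python
def soft_occlusion_alpha(virtual_depth, physical_depth, softness):
    """Visibility of the virtual element at one pixel, between 0.0 and 1.0.

    Instead of a hard depth test, visibility ramps linearly across a band of
    width 2 * softness centered on the estimated physical surface.
    """
    t = (physical_depth - virtual_depth) / softness * 0.5 + 0.5
    return min(1.0, max(0.0, t))


def composite(virtual_rgb, camera_rgb, alpha):
    """Blend the virtual element over the camera imagery with the given alpha."""
    return tuple(alpha * v + (1.0 - alpha) * c for v, c in zip(virtual_rgb, camera_rgb))
```

A ramp of this kind hides errors in the estimated geometry: where a hard test would produce a jagged cutout along the object's edge, the virtual element fades out over a few centimeters of depth instead.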

Image character removing method, system and device based on gate circulation unit

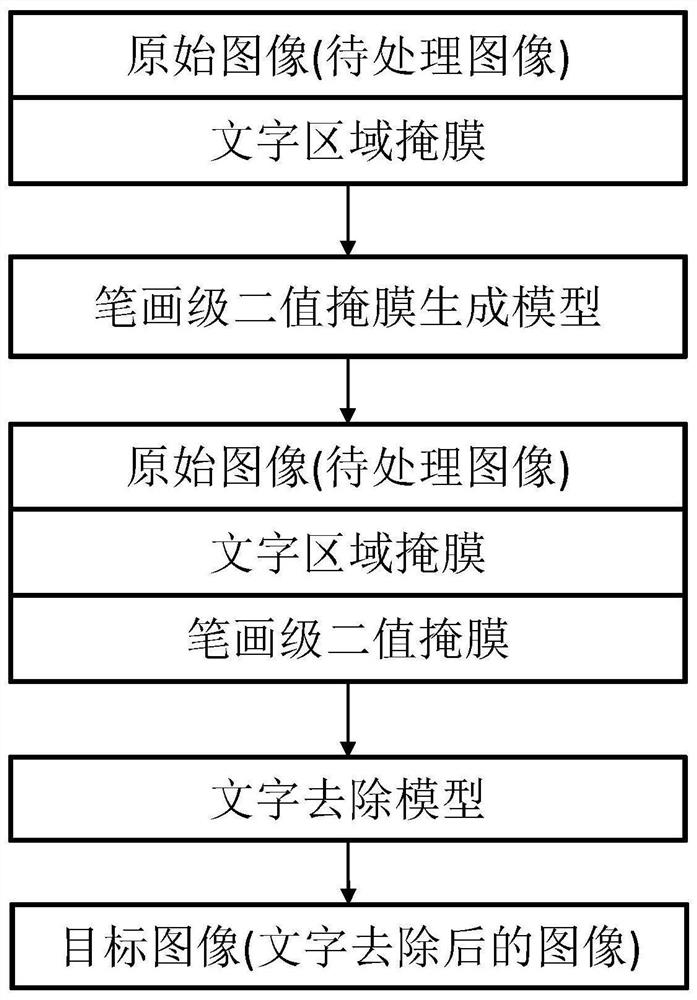

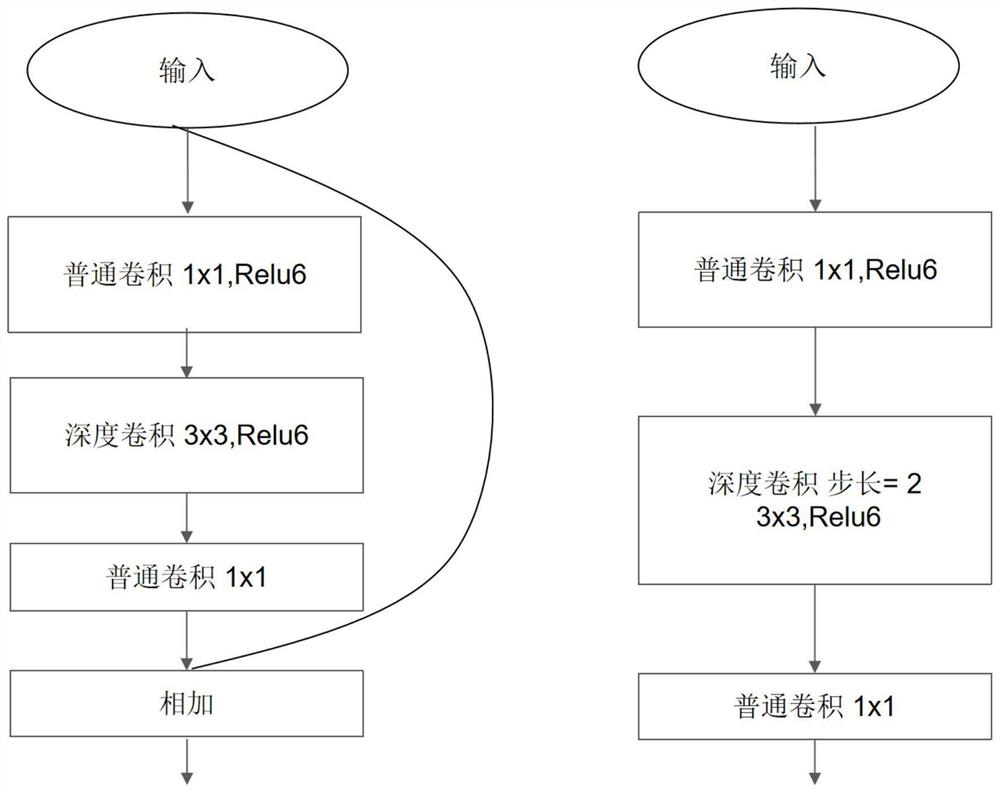

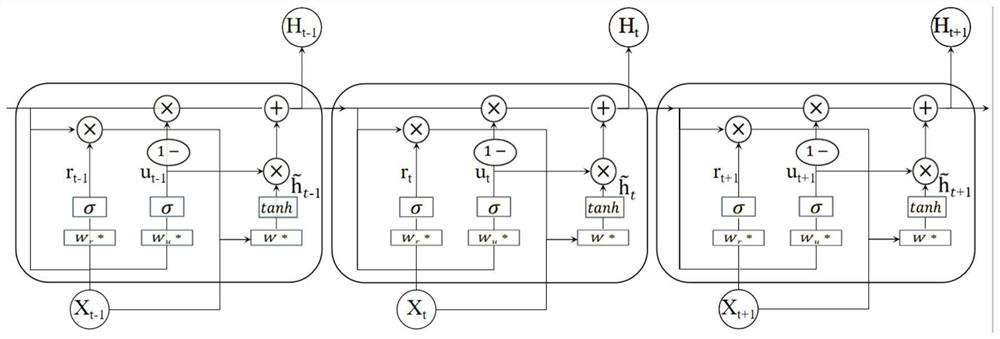

Active | CN112419174A | Excellent peak signal to noise ratio | High structural similarity | Image enhancement | Image analysis | Engineering | Computer graphics

The invention belongs to the field of computer graphics and computer vision, particularly relates to an image character removing method, system and device based on a gate circulation unit, and aims to solve the problem that characters in an image cannot be effectively removed due to the fact that accurate character strokes cannot be extracted in the prior art. The method comprises the following steps: constructing a stroke-level binary mask generation model based on one or more inverse residual modules connected in series and a gate cycle unit, and obtaining a stroke-level binary mask of an input image based on the input image and a character region mask; and constructing a character removal model based on the one or more encoders, the one or more decoders and the feature processing module, and obtaining a character-removed image based on the fusion features of the input image, the character region mask and the stroke-level binary mask. The fine stroke-level character mask can be obtained, so that the model is effectively guided to remove the characters with high quality, and the rendering and backfilling effects in the character removing process are improved.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Displacement directed tessellation

Active | US20180240272A1 | Geometric image transformation | Image generation | Graphics | Rendering (computer graphics)

Systems, methods, and devices are disclosed for rendering computer graphics. In various embodiments, a displacement map is created for a plurality of surfaces and a tessellation process is initiated. It is determined that the tessellation density of a first set of surfaces and a second set of surfaces should be modified based on the displacement map. Based on the displacement map, a tessellation factor scale for each surface of the first set of surfaces is increased and a tessellation factor scale for each surface of the second set of surfaces is decreased, respectively.

Owner:SONY COMPUTER ENTERTAINMENT INC
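
The abstract above says the tessellation factor scale goes up for one set of surfaces and down for another, based on the displacement map. A small Python sketch of one possible criterion follows: surfaces whose displacement samples vary a lot get a larger scale, and nearly flat ones get a smaller scale. The variation measure, the threshold and the scale values are assumptions chosen for illustration, as is the function name.

```python
def tessellation_scales(displacement_samples, threshold=0.1, up=2.0, down=0.5):
    """Map each surface id to a tessellation factor scale.

    displacement_samples maps a surface id to the displacement-map values
    sampled over that surface (a non-empty list of floats).
    """
    scales = {}
    for surface_id, samples in displacement_samples.items():
        variation = max(samples) - min(samples)
        # More displacement detail needs denser geometry; flat areas need less.
        scales[surface_id] = up if variation > threshold else down
    return scales
```

The effect is to spend triangles where the displacement map will actually move them, while keeping the overall triangle budget roughly constant.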

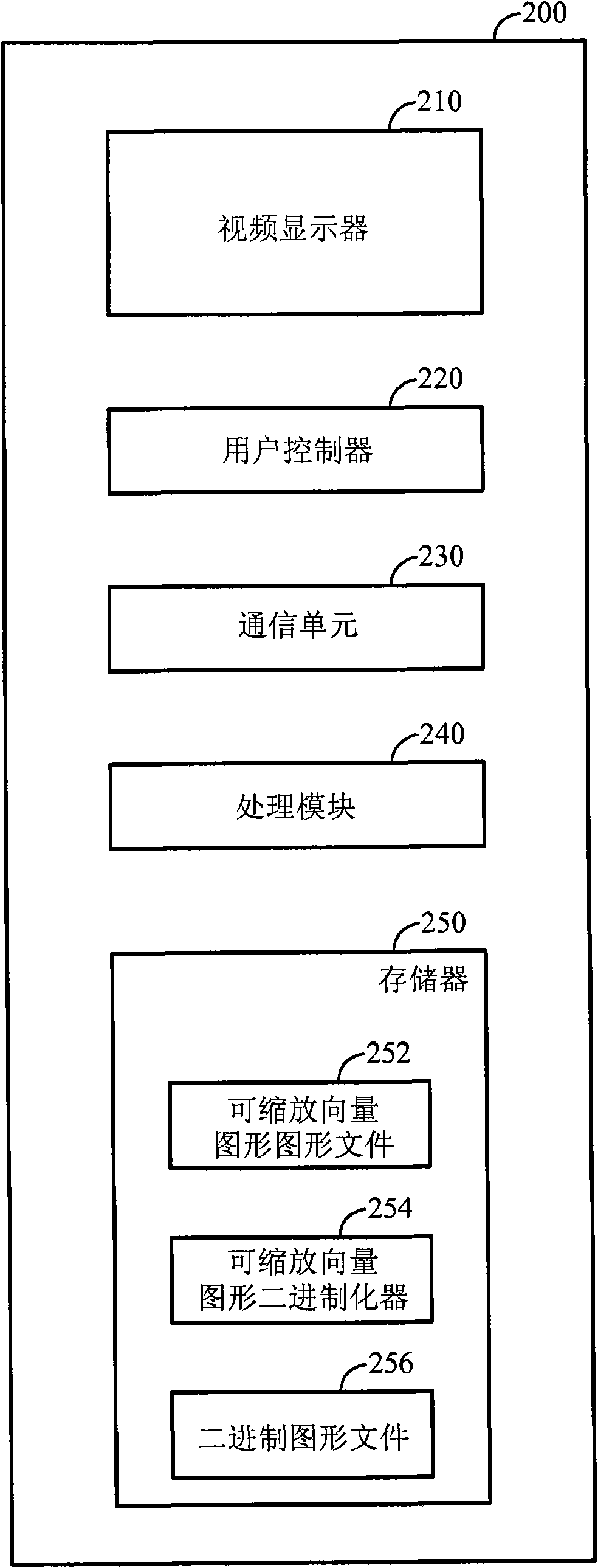

Computer graphics rendering

Inactive | CN101627368A | Drawing from basic elements | Image data processing details | Computer science | Wireless

Techniques for rendering computer graphics are described. The techniques include binarization of graphics files generated using a vector graphics language (e.g., Scalable Vector Graphics (SVG)). In exemplary applications, the method is used for rendering video information in cellular phones, video game consoles, personal digital assistants (PDA), or laptop computers, among other video-enabled or audio/video-enabled wireless or wired devices.

Owner:QUALCOMM INC

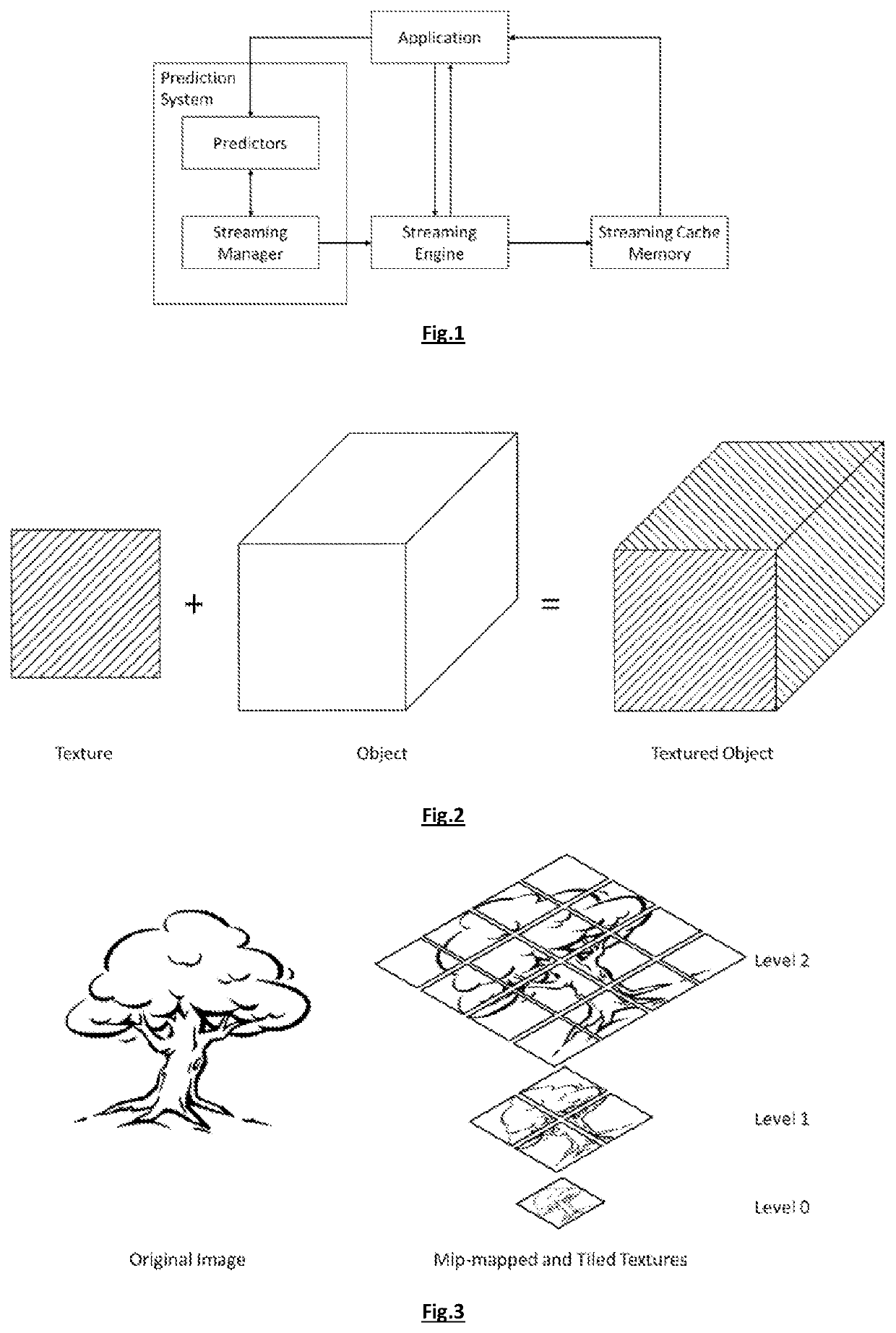

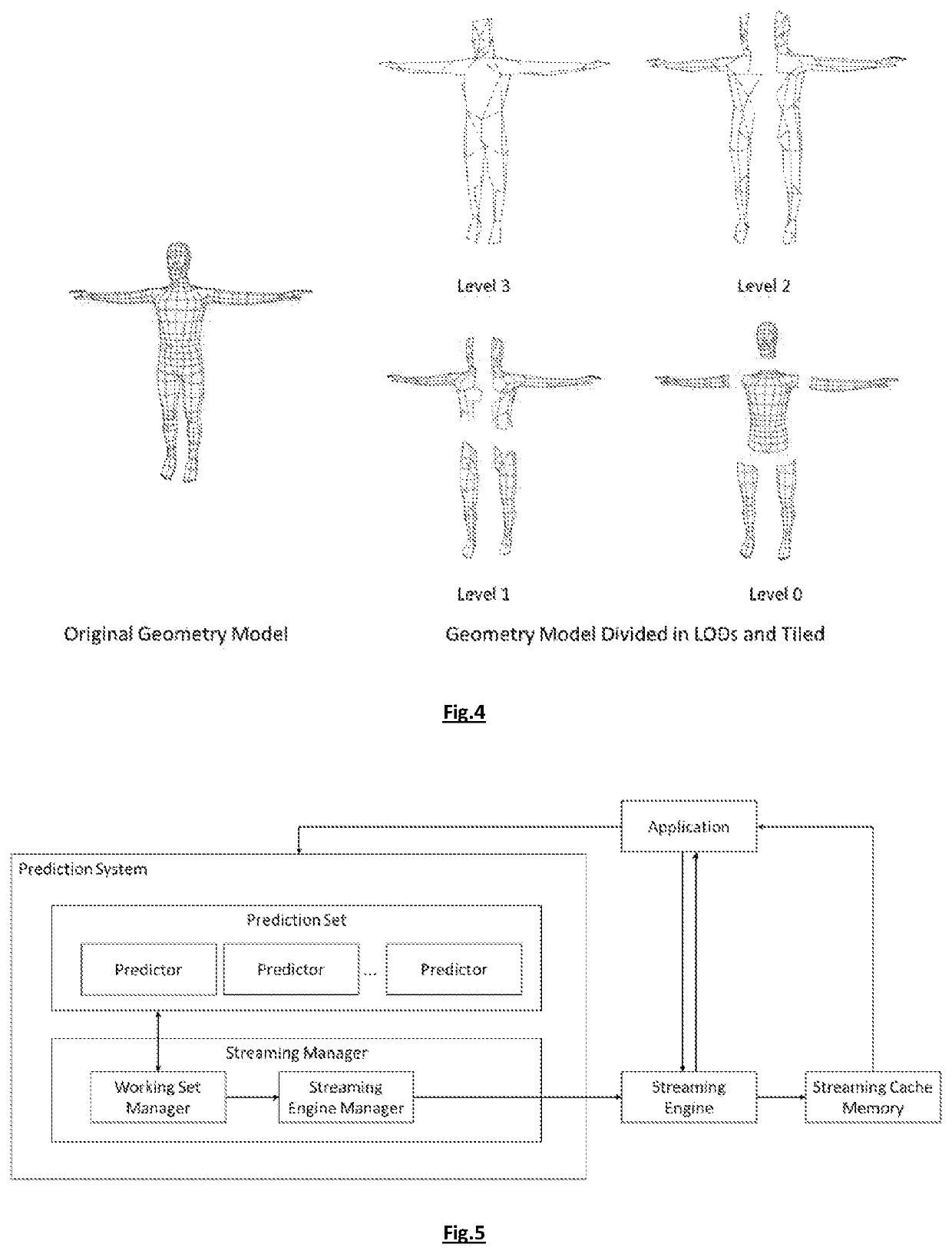

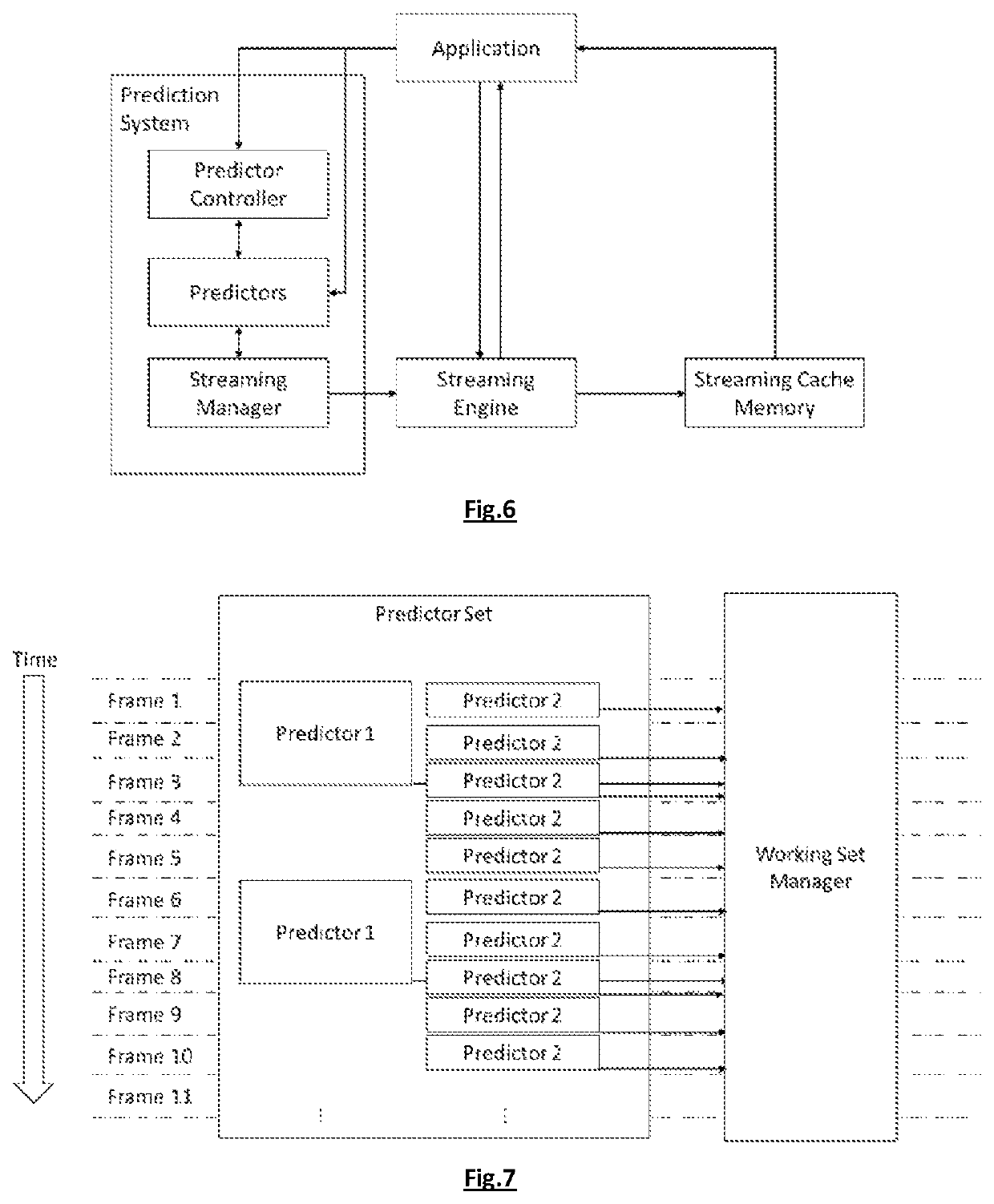

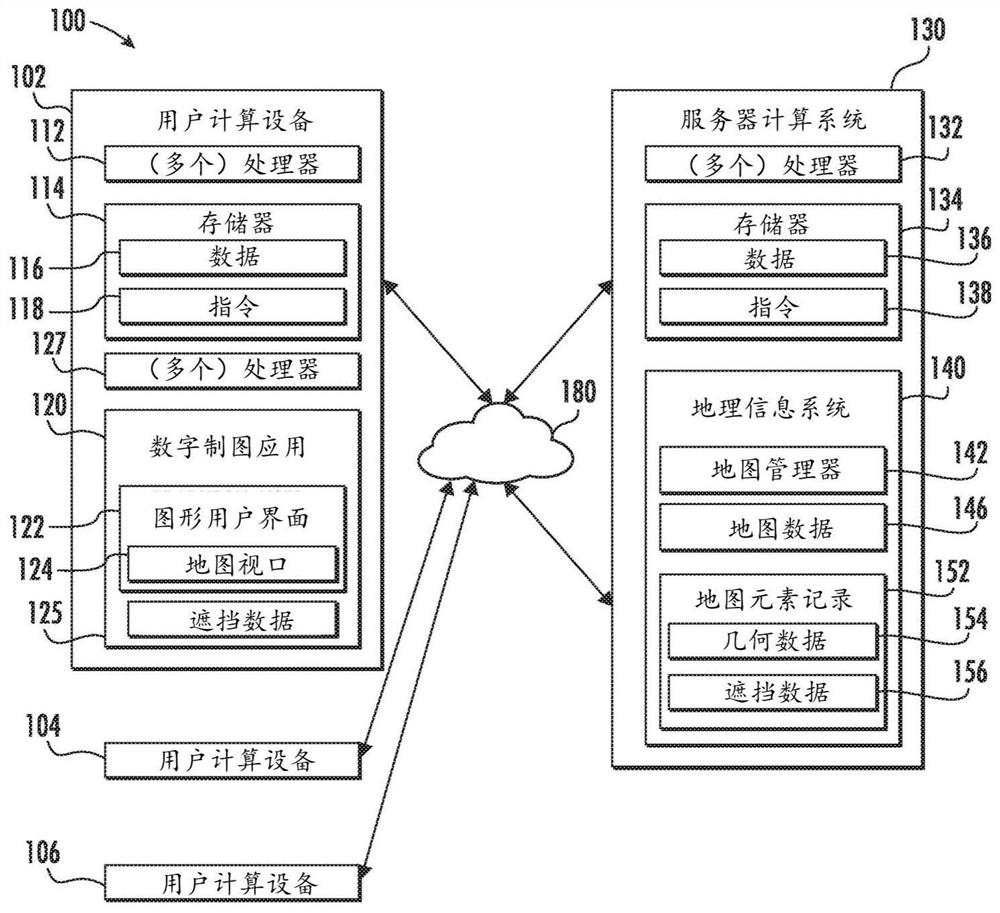

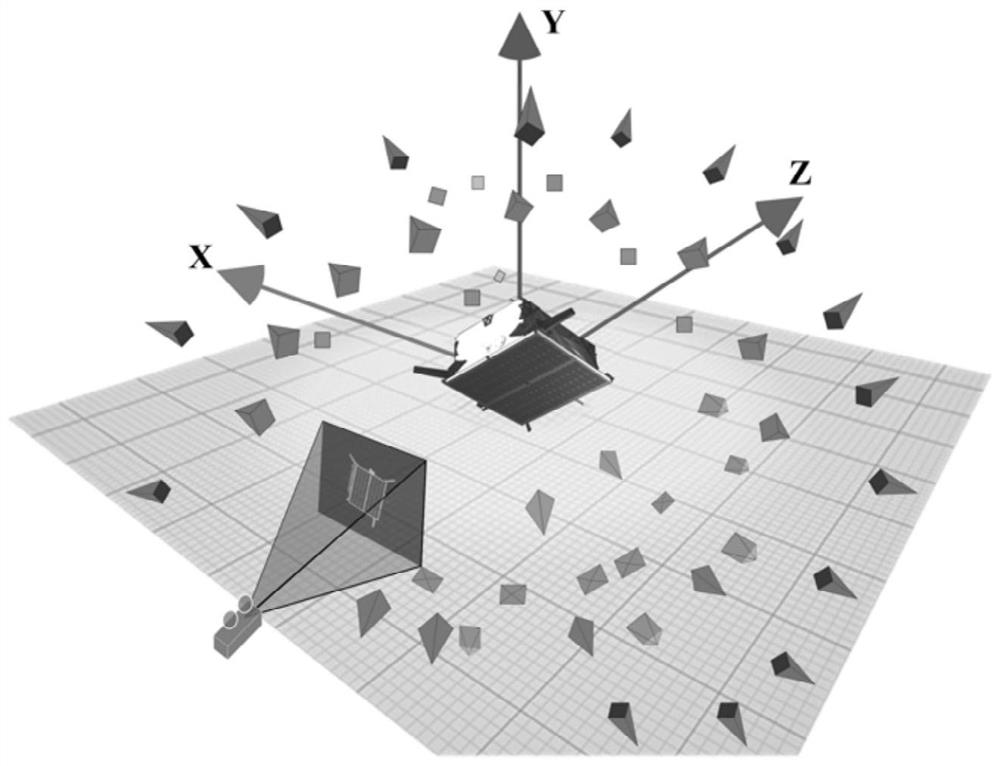

Prediction system for texture streaming

Active | US10748241B2 | Optimizing streaming | Improve visual quality | Image memory management | Transmission | Graphics | Algorithm

A prediction system for determining a set of subregions can be used for rendering a virtual world of a computer graphics application. The subregions belong to streamable objects to be used for rendering the virtual world. The streamable objects each comprise a plurality of subregions. The prediction system comprises a plurality of predictor units arranged for receiving from a computer graphics application information on the virtual world and each arranged for obtaining a predicted set of subregions for rendering a virtual world using streamable objects. Each predicted set can be obtained by applying a different prediction scheme. A streaming manager is arranged for receiving the predicted sets of subregions, for deriving from the predicted sets a working set of subregions to be used for rendering and for outputting, based on the working set of subregions, steering instructions concerning the set of subregions to be actually used.

Owner:UNITY TECH APS

Soft-occlusion for computer graphics rendering

Systems and methods for rendering computer graphics using soft-occlusion are provided. A computing system can obtain display data for a virtual element to be displayed in association with imagery depicting an environment including a physical object. The computing system can generate a set of graphics occlusion parameters associated with rendering the image data and the display data based at least in part on an estimated geometry of the physical object. The set of graphics occlusion parameters can define blending of the display data for the virtual element and the imagery at a soft-occlusion region that includes one or more locations within the estimated geometry. The computing system can render a composite image from the display data for the virtual element and the imagery based at least in part on the set of graphics occlusion parameters.

Owner:GOOGLE LLC

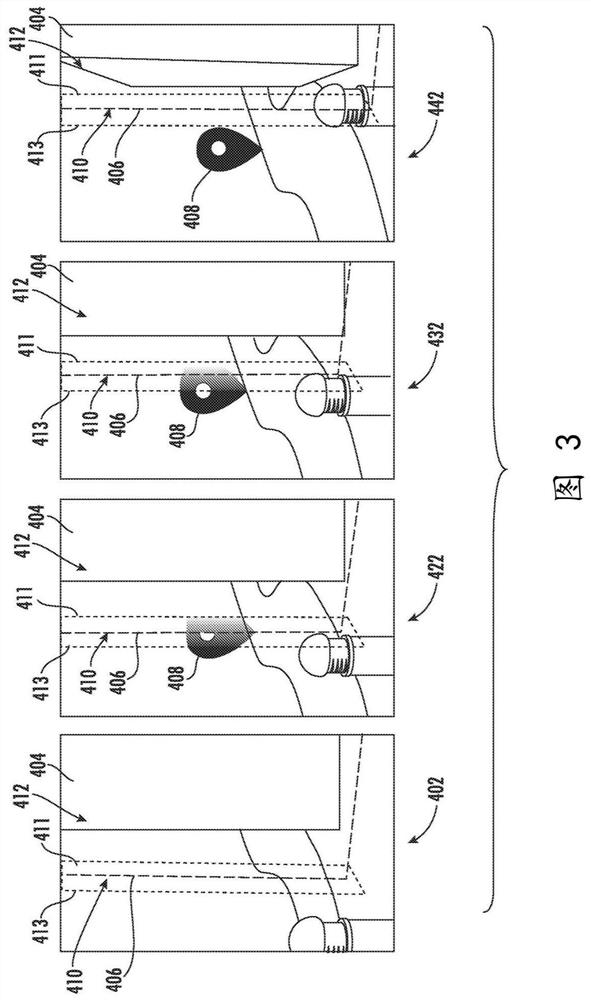

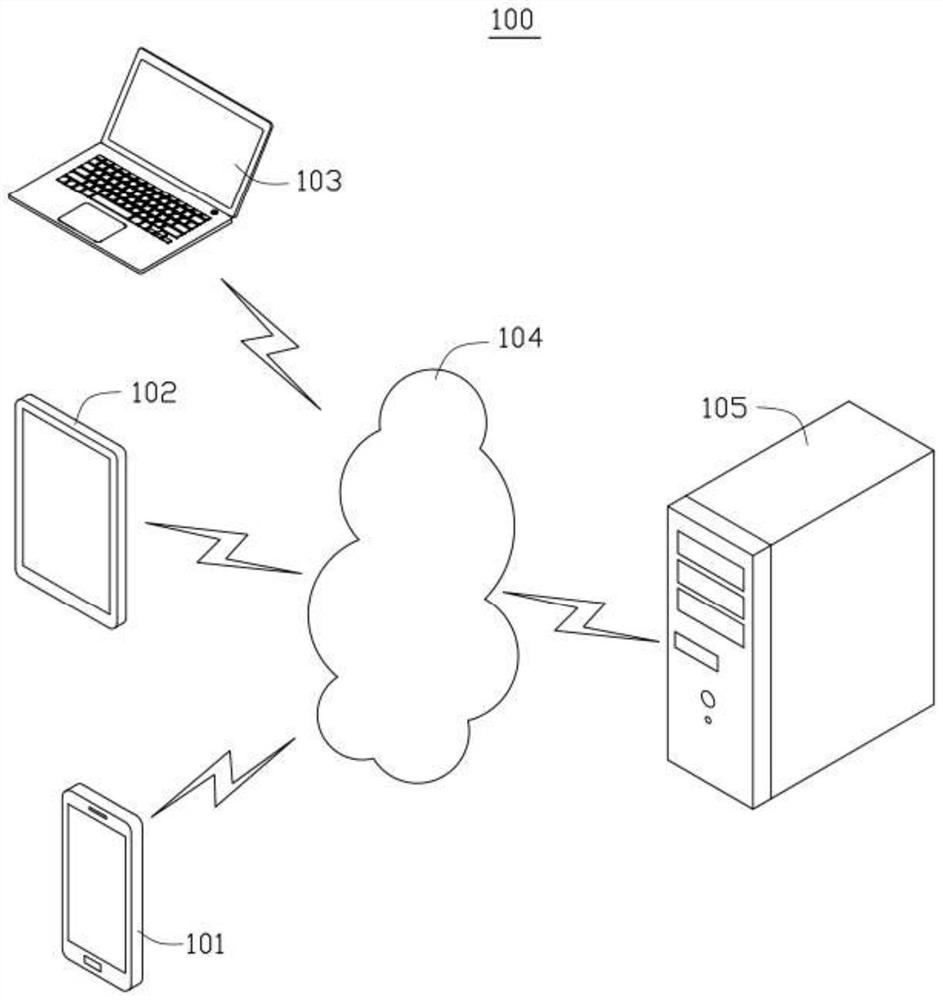

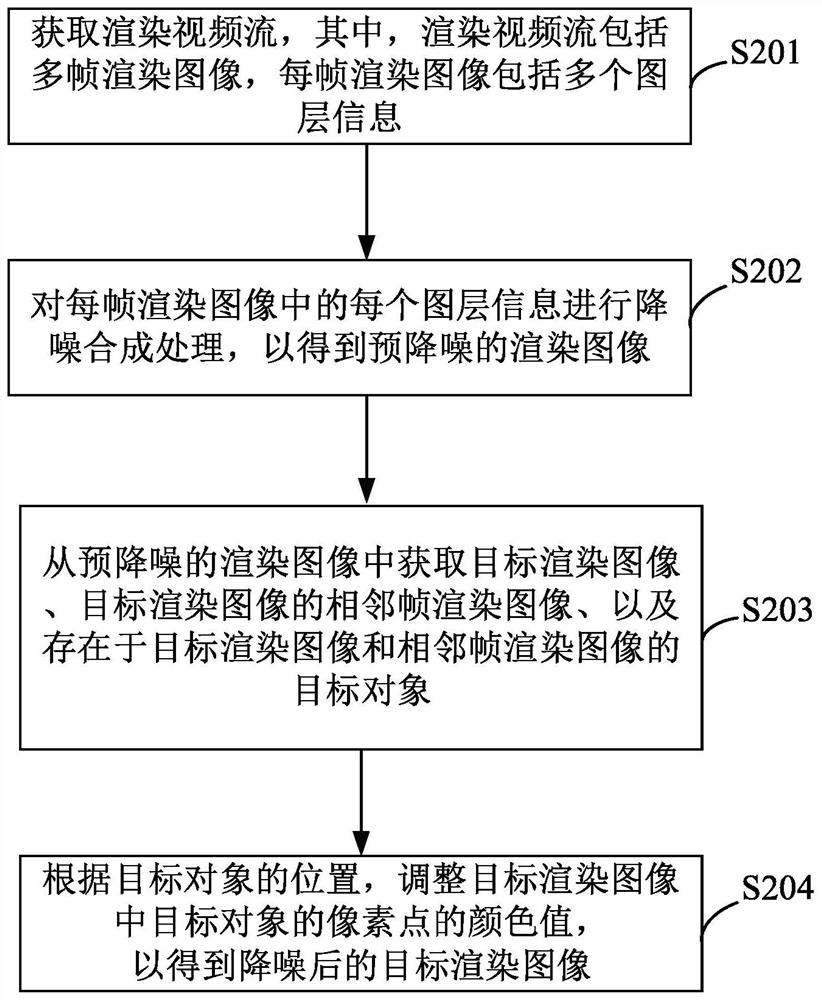

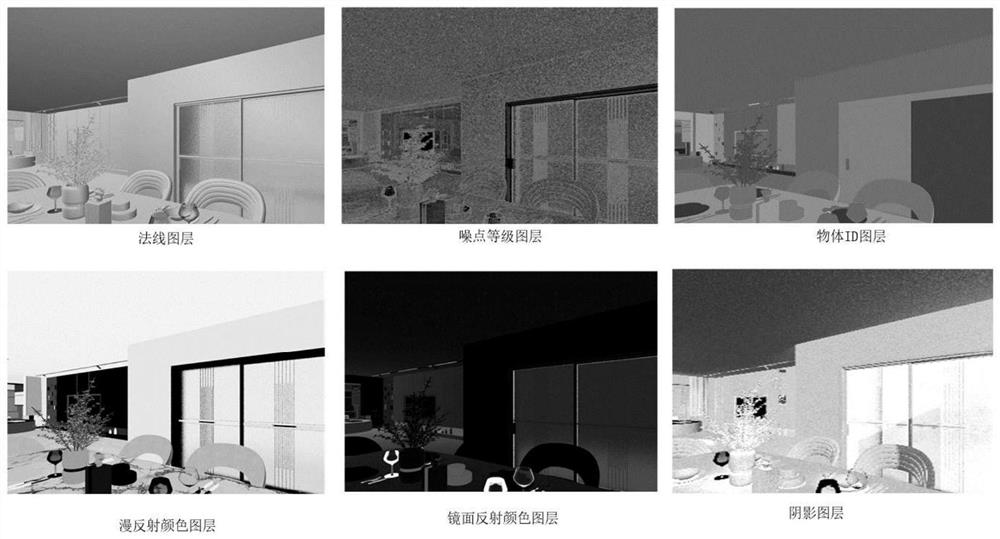

Image noise reduction method and device, computer equipment and medium

Pending | CN114663314A | Quality improvement | Improve efficiency | Image enhancement | Image analysis | Radiology | Image noise reduction

The embodiment of the invention belongs to the technical field of computer graphics, and relates to an image noise reduction method and device, computer equipment and a medium. The method comprises the following steps: obtaining a rendering video stream, which comprises multiple frames of rendered images, each frame comprising multiple pieces of layer information; performing noise reduction synthesis processing on the layer information of each frame to obtain pre-noise-reduced rendered images; obtaining, from the pre-noise-reduced rendered images, a target rendered image, an adjacent frame of the target rendered image, and a target object present in both; and, according to the position of the target object, adjusting the color values of the target object's pixels in the target rendered image according to the color values of its pixels in the adjacent frame, so as to obtain the denoised target rendered image. On one hand, this helps reduce denoising errors when multiple frames of rendered images are denoised together; on the other hand, inter-frame information is reused, and the denoising quality and denoising efficiency of the rendered images are improved.

Owner:HANGZHOU QUNHE INFORMATION TECHNOLOGIES CO LTD

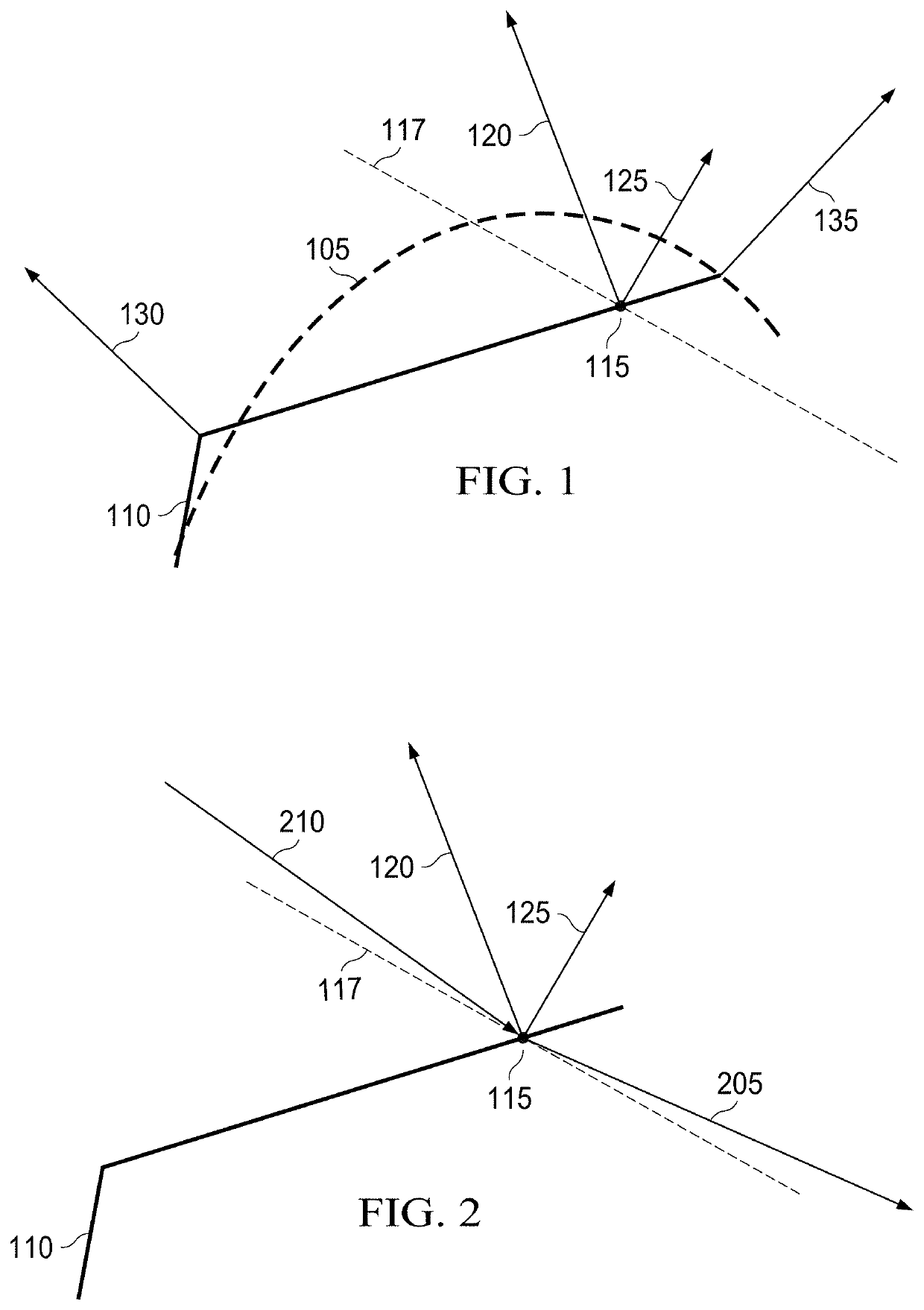

View-dependant shading normal adaptation

A method of adjusting a shading normal vector for a computer graphics rendering program. Calculating a normalized shading normal vector pointing outwards from an origin point on a tessellated surface modeling a target surface to be rendered. Calculating a normalized outgoing reflection vector projecting from the origin point for an incoming view vector directed towards the origin point and reflecting relative to the normalized shading normal vector. Calculating a correction vector such that when the correction vector is added to the normalized outgoing reflection vector a resulting vector sum is yielded that is equal to a maximum reflection vector, wherein the maximum reflection vector is on or above the tessellated surface. Calculating a normalized maximum reflection vector by normalizing a vector sum of the correction vector plus the maximum reflection vector. Calculating a normalized adjusted shading normal vector by normalizing a vector difference of the normalized maximum reflection vector minus the incoming view vector.

Owner:NVIDIA CORP
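
The vector steps in the abstract above can be sketched in plain Python. This is a sketch under stated assumptions, not the patented procedure: the correction vector is taken to be the projection that lifts a below-surface reflection onto the surface plane, "on or above the tessellated surface" is tested against a geometric normal, and all function names are hypothetical. The view vector points toward the surface, as in the abstract.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def reflect(view, normal):
    """Mirror a unit view vector (pointing at the surface) about a unit normal."""
    k = 2.0 * dot(view, normal)
    return tuple(v - k * n for v, n in zip(view, normal))


def adjusted_shading_normal(view, shading_normal, geometric_normal):
    v = normalize(view)
    ns = normalize(shading_normal)
    ng = normalize(geometric_normal)
    r = reflect(v, ns)
    below = dot(r, ng)
    if below >= 0.0:
        return ns  # reflection already on or above the surface: keep the normal
    # Assumed correction: remove the component of r that points into the surface.
    r_lifted = tuple(ri - below * gi for ri, gi in zip(r, ng))
    if dot(r_lifted, r_lifted) == 0.0:
        return ng  # degenerate case: reflection pointed straight into the surface
    r_max = normalize(r_lifted)
    # The normal that reflects v into r_max is the normalized difference r_max - v.
    return normalize(tuple(m - vi for m, vi in zip(r_max, v)))
```

The last line relies on a standard identity: for unit vectors, the mirror normal that maps an incoming direction `v` to an outgoing direction `r_max` is parallel to `r_max - v`, which matches the final step listed in the abstract.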

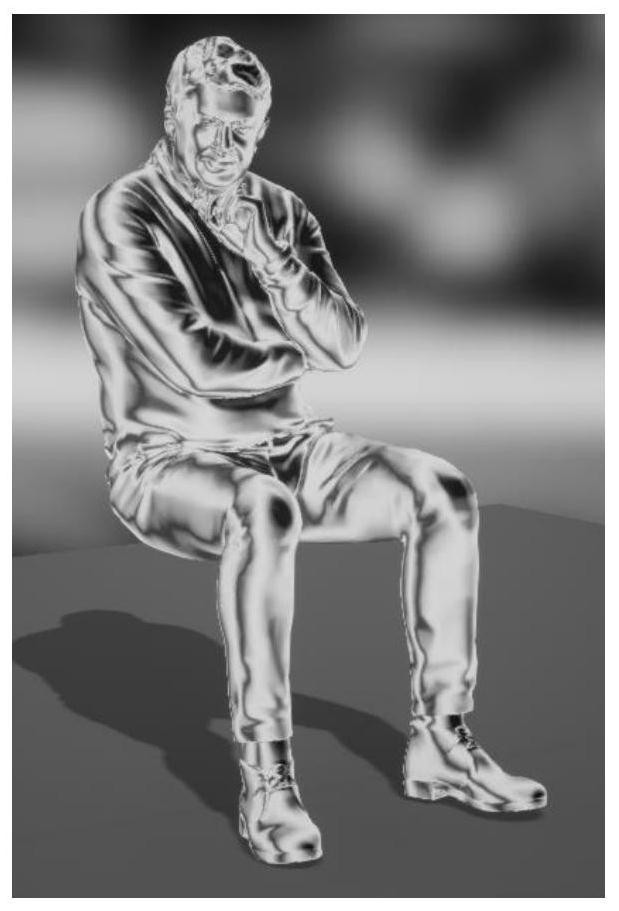

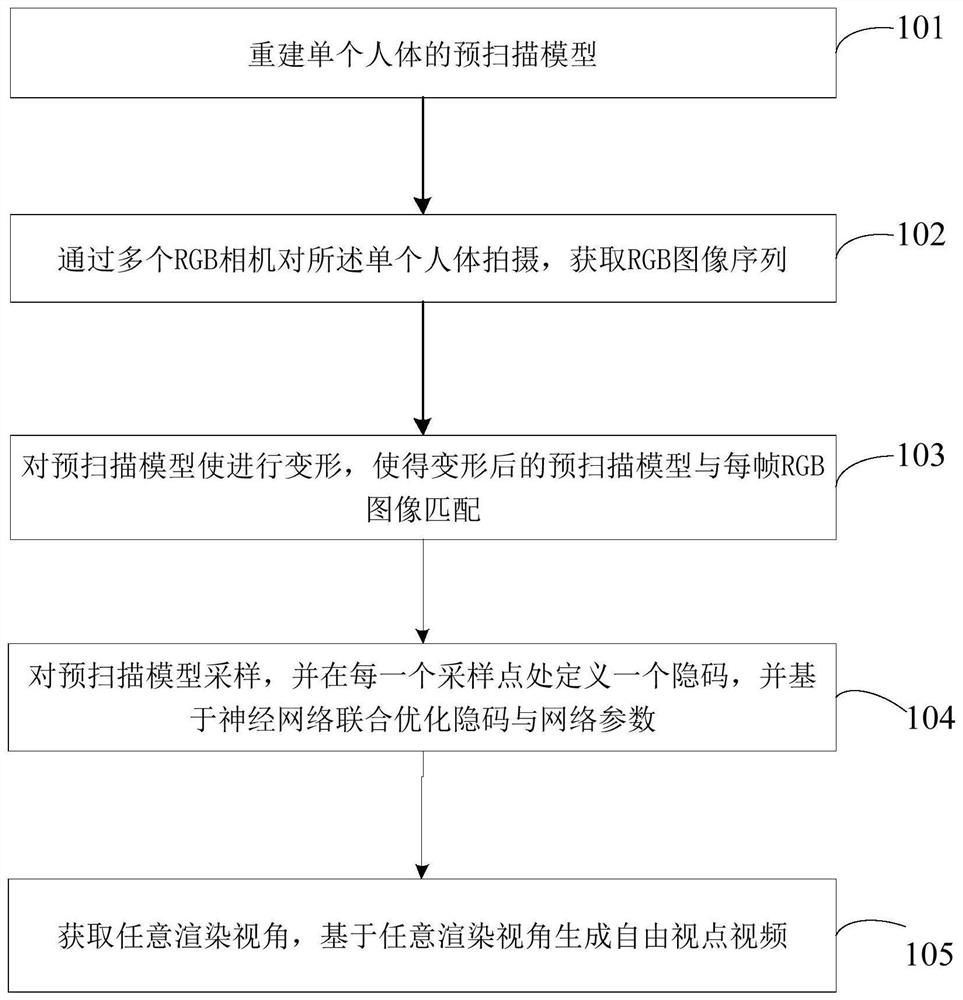

Dynamic human body free viewpoint video generation method and device based on neural network

The invention provides a method and device for generating a dynamic human body free viewpoint video based on a neural network, and relates to the technical field of computer vision and computer graphics, and the method comprises the steps: reconstructing a pre-scanning model of a single human body; shooting the single human body through a plurality of RGB cameras to obtain an RGB image sequence; the pre-scanning model being deformed, so that the deformed pre-scanning model is matched with each frame of RGB image; sampling the pre-scanning model, defining a hidden code at each sampling point, and jointly optimizing the hidden codes and network parameters based on a neural network; and obtaining any rendering view angle, and generating a free viewpoint video based on the any rendering view angle. Therefore, the RGB image sequence is captured based on the plurality of RGB cameras, and the free viewpoint video with continuous and dynamic time domain is generated according to the sequence, so that a more real and dynamic rendering result is generated.

Owner:杭州新畅元科技有限公司

Space target 6D attitude estimation technology based on image segmentation Mask and neural rendering

Pending | CN112508007A | Reduce dependence | Improve calculation accuracy | Character and pattern recognition | Neural architectures | Feature extraction | Image segmentation

The invention discloses a space target 6D attitude estimation technology based on image segmentation Mask and neural rendering in order to solve the problems that an existing method is low in feature extraction stability and high in multi-instance high-granularity viewpoint sampling labor time cost. Image segmentation Mask is taken as stable image representation and neural network differentiable rendering is taken as an attitude true value for matching calculation, attitude representation extraction and generation are carried out by introducing new image attitude representation and computer vision instance segmentation and computer graphics differentiable rendering technologies, the feature extraction stability is improved. Micro-rendering and silhouette mask binarization operations are performed on a target three-dimensional model by using a neural rendering technology, so that the rendering precision and the matching efficiency are improved.

Owner:PLA PEOPLES LIBERATION ARMY OF CHINA STRATEGIC SUPPORT FORCE AEROSPACE ENG UNIV

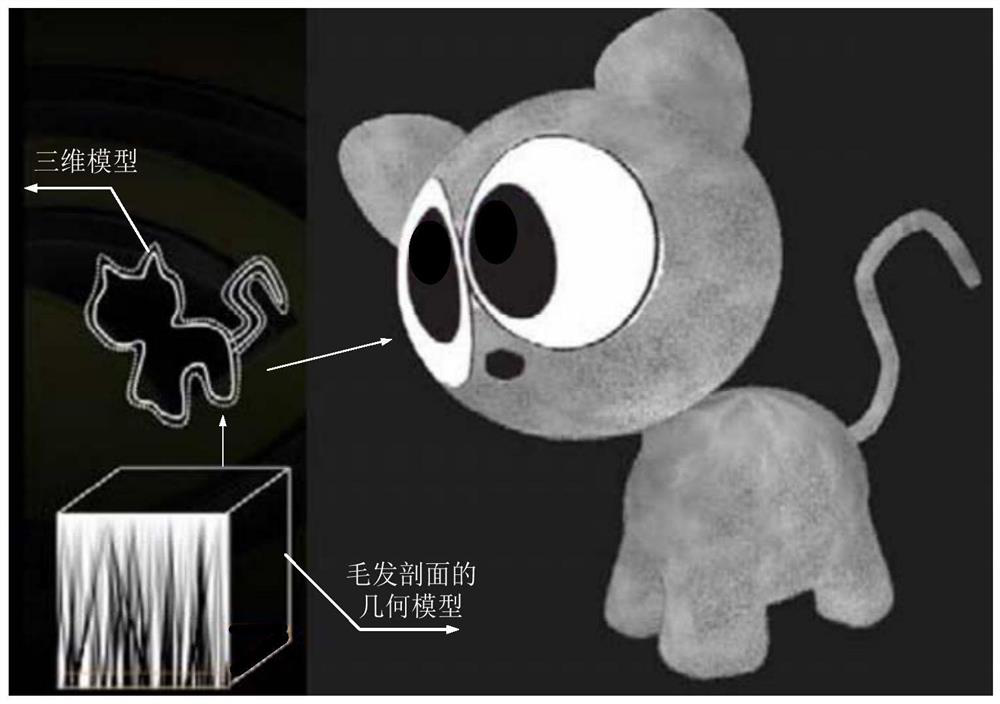

Hair rendering method, device, electronic device and storage medium

Active | CN109685876B | Improve efficiency | Lower performance requirements | Image generation | 3D-image rendering | Graphics | Engineering

The present application relates to a hair rendering method, device, electronic device and storage medium, and belongs to the technical field of computer graphics. The method includes: acquiring a plurality of initial sections, which are used to simulate cross sections of hair growth; determining hair distribution points on each of the initial sections, according to the rule that hair distribution density decreases from one section to the next, to obtain a plurality of hair sections; and rendering the hair sections in sequence onto the three-dimensional model of the hair to be drawn, through a preset lighting model that simulates the lighting effect of hair, to obtain a target three-dimensional model. The application provides a simple and efficient hair rendering method that improves rendering efficiency and lowers the performance requirements on rendering devices.
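The step of distributing hair points with decreasing density can be sketched as follows. This is a minimal sketch, not the patented procedure: the geometric falloff factor, the random placement in unit (u, v) coordinates, and the choice to make each section a prefix of the previous one are all assumptions introduced here.

```python
import random


def hair_sections(num_sections, base_points, falloff=0.8, seed=0):
    """Build one list of (u, v) hair points per section, from root to tip.

    Each section reuses a prefix of the root section's points, so strands
    thin out toward the tip instead of moving between sections.
    """
    rng = random.Random(seed)
    points = [(rng.random(), rng.random()) for _ in range(base_points)]
    sections = []
    count = base_points
    for _ in range(num_sections):
        sections.append(points[:count])
        # Assumed rule: each section keeps a fixed fraction of the previous one.
        count = max(1, int(count * falloff))
    return sections
```

A renderer would then draw the sections in order, root first, shading each point with the lighting model.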

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

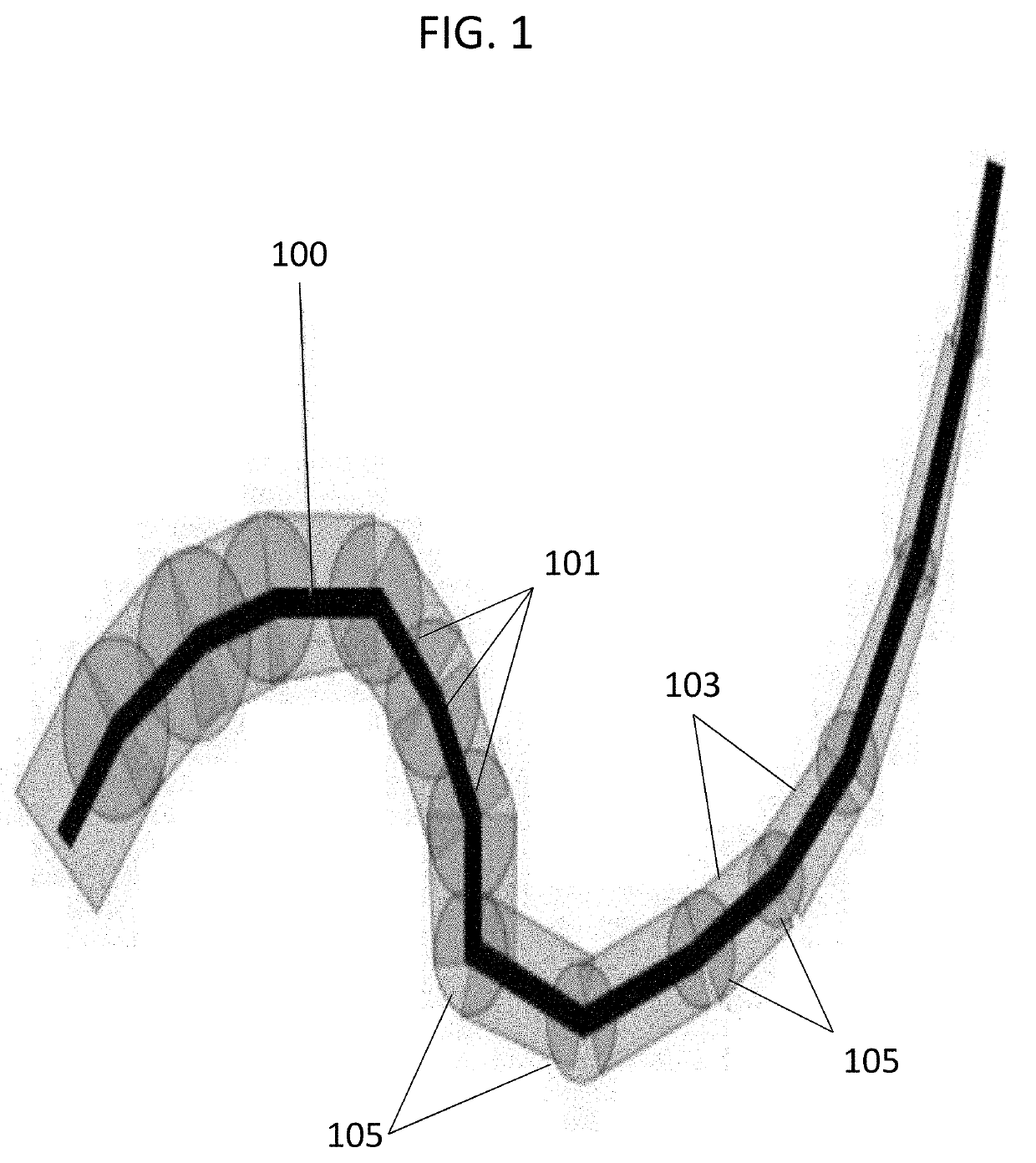

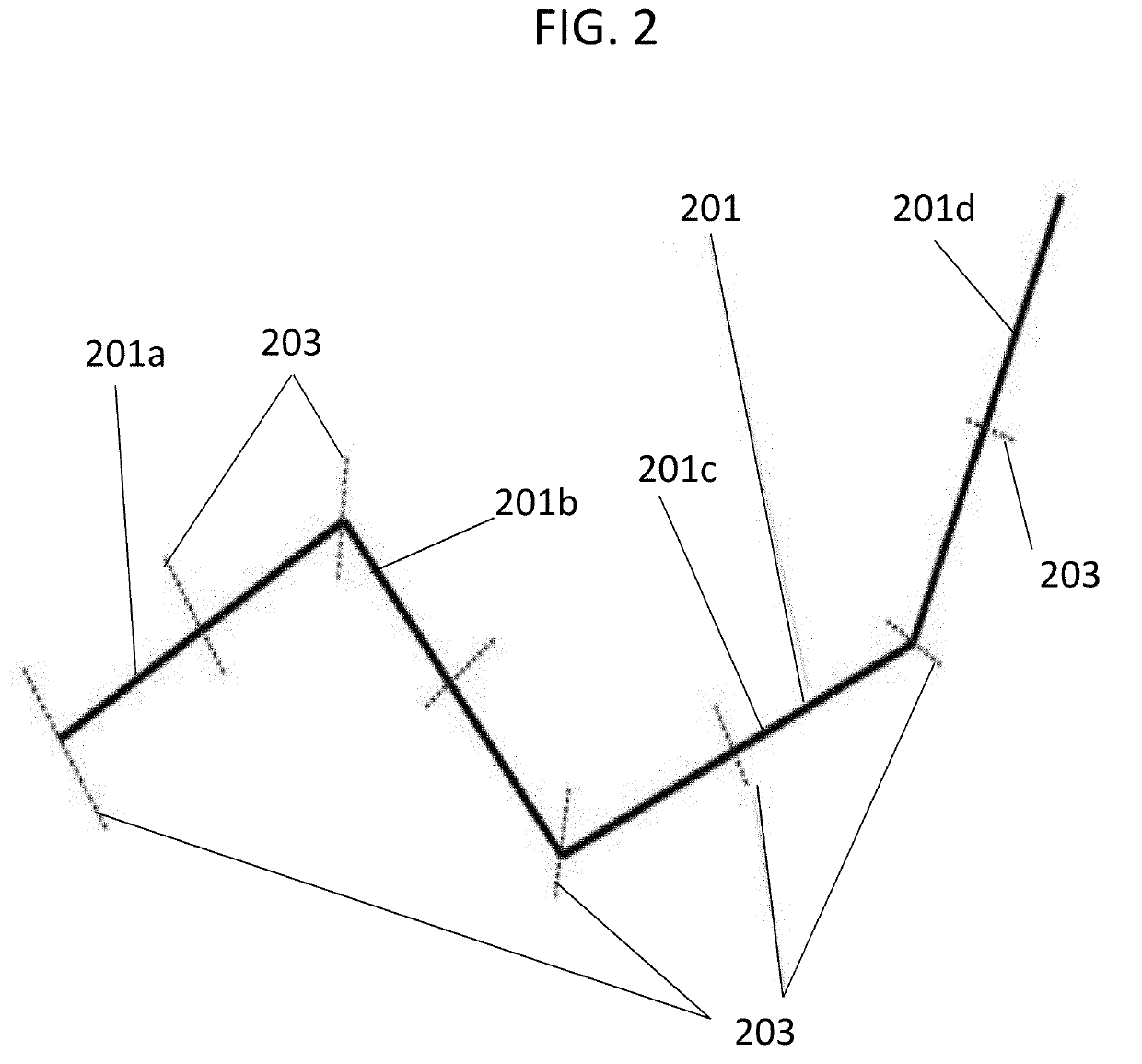

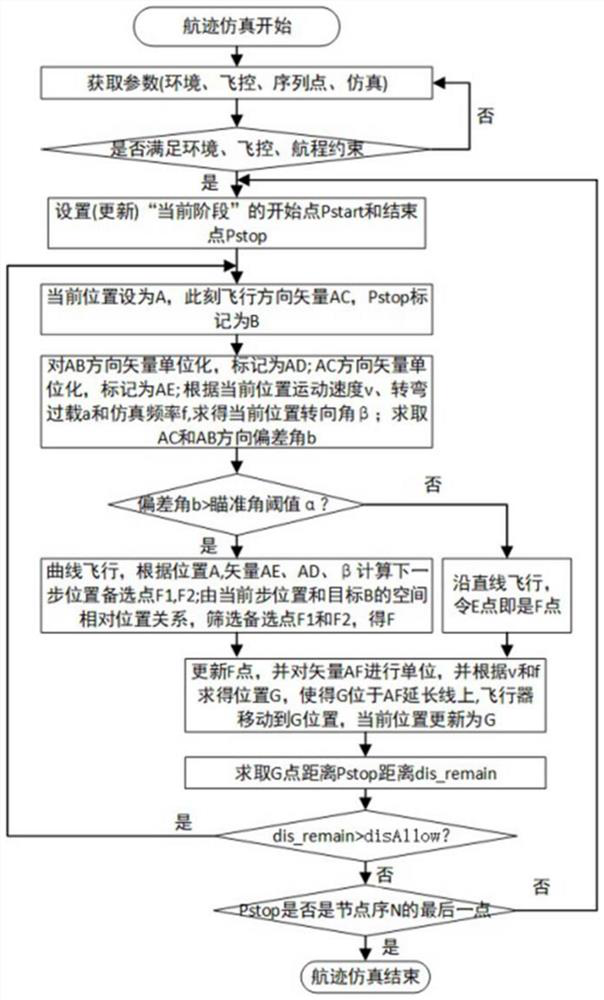

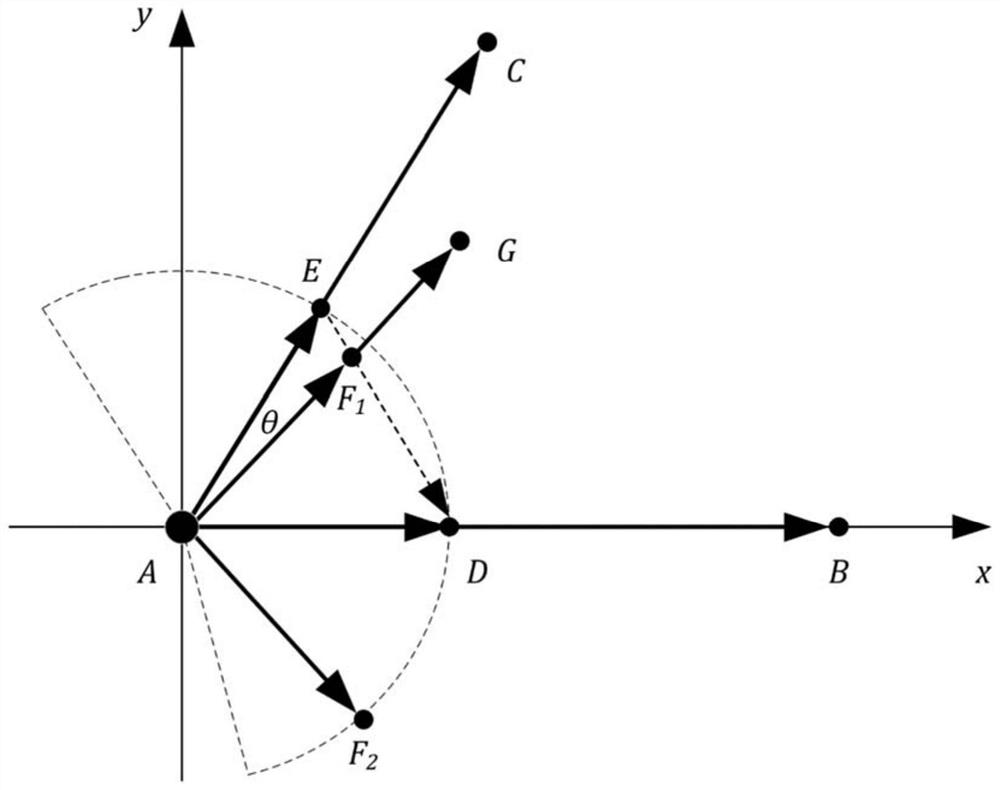

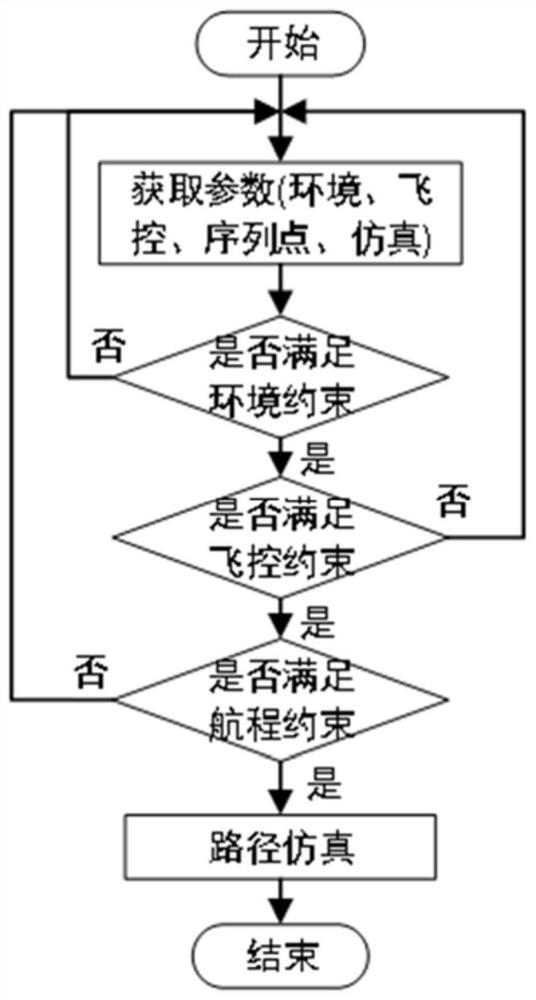

Track rationality evaluation and self-generation method and system based on given node sequence

ActiveCN112857372ASolve the self-generation problemNavigational calculation instrumentsAlgorithmComputer graphics

The invention provides a track rationality evaluation and self-generation method and system based on a given node sequence, and relates to the technical field of real-time rendering in computer graphics. The method comprises: step 1, defining the necessary parameters of the algorithm; step 2, judging the legality of the node sequence according to the defined parameters; and step 3, automatically generating a track from a legal track node sequence. Using this method of judging node-sequence rationality under environmental parameter constraints, a track that conforms to kinematic constraints can be generated automatically from the node sequence.
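The three steps above can be sketched in 2D as follows. The abstract names neither the parameters nor the curve type, so the minimum segment length, the maximum turn angle, and the uniform Catmull-Rom spline used here are stand-ins chosen for illustration; a Catmull-Rom spline passes through the nodes but does not by itself enforce kinematic constraints.

```python
import math


def turn_angle_deg(p0, p1, p2):
    """Angle in degrees between segment p0->p1 and segment p1->p2."""
    a = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
    b = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    diff = abs(b - a) % (2 * math.pi)
    return math.degrees(min(diff, 2 * math.pi - diff))


def is_legal(nodes, min_segment, max_turn_deg):
    """Step 2: check every segment length and every turn against the limits."""
    if len(nodes) < 2:
        return False
    for p, q in zip(nodes, nodes[1:]):
        if math.dist(p, q) < min_segment:
            return False
    for p0, p1, p2 in zip(nodes, nodes[1:], nodes[2:]):
        if turn_angle_deg(p0, p1, p2) > max_turn_deg:
            return False
    return True


def generate_track(nodes, samples_per_segment=8):
    """Step 3: sample a uniform Catmull-Rom spline through the nodes."""
    # Duplicate the end nodes so the first and last segments have neighbours.
    padded = [nodes[0]] + list(nodes) + [nodes[-1]]
    track = []
    for i in range(len(nodes) - 1):
        p0, p1, p2, p3 = padded[i:i + 4]
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            track.append(tuple(
                0.5 * (2 * b + (c - a) * t
                       + (2 * a - 5 * b + 4 * c - d) * t ** 2
                       + (3 * b - a - 3 * c + d) * t ** 3)
                for a, b, c, d in zip(p0, p1, p2, p3)))
    track.append(tuple(nodes[-1]))
    return track
```

Step 1 corresponds to choosing `min_segment` and `max_turn_deg`; a caller would run `is_legal` first and call `generate_track` only on sequences that pass.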

Owner:SHANGHAI JIAO TONG UNIV

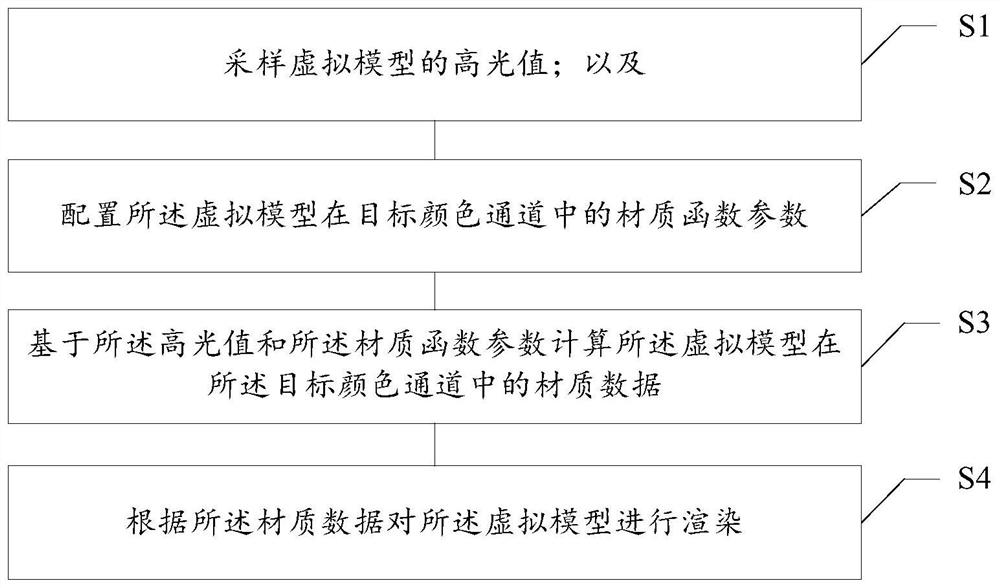

Material rendering method and device, storage medium and electronic equipment

ActiveCN113160379AReduce consumptionImprove controllability3D-image renderingComputational scienceGraphics

The invention relates to the field of computer graphics, in particular to a material rendering method and device, a storage medium and electronic equipment. The material rendering method comprises the steps of sampling a highlight value of a virtual model; configuring a material function parameter of the virtual model in a target color channel; calculating material data of the virtual model in the target color channel based on the highlight value and the material function parameter; and rendering the virtual model according to the material data. According to the material rendering method provided by the invention, the controllability of laser material rendering can be enhanced, and meanwhile, the precision of the rendering effect is improved.
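To make the data flow in this abstract concrete, the sketch below maps a sampled highlight value to a per-channel material value using per-channel function parameters. The cosine material function and the `frequency` and `phase` parameters are purely hypothetical; the abstract does not say which function or parameters the method uses.

```python
import math
from dataclasses import dataclass


@dataclass
class ChannelParams:
    """Hypothetical material function parameters for one color channel."""
    frequency: float
    phase: float


def channel_value(highlight, params):
    """Map a highlight value in [0, 1] to a channel intensity in [0, 1]."""
    angle = 2 * math.pi * (params.frequency * highlight + params.phase)
    return 0.5 + 0.5 * math.cos(angle)


def material_color(highlight, rgb_params):
    """Evaluate the assumed material function once per color channel."""
    return tuple(channel_value(highlight, p) for p in rgb_params)
```

Giving each channel a different phase makes the color shift as the highlight value changes, which is one simple way to get an iridescent look that an artist can control per channel.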

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

Method and device for generating dynamic human free viewpoint video based on neural network

The present application proposes a method and device for generating a free-viewpoint video of a dynamic human body based on a neural network, which relates to the technical fields of computer vision and computer graphics. The method includes: reconstructing a pre-scan model of a single human body; shooting the single human body with multiple RGB cameras to obtain a sequence of RGB images; deforming the pre-scan model so that the deformed model matches each frame of the RGB images; sampling the pre-scan model, defining a latent (hidden) code at each sampling point, and jointly optimizing the latent codes and the network parameters with the neural network; and obtaining an arbitrary rendering perspective and generating the free-viewpoint video from that perspective. As a result, a sequence of RGB images is captured by multiple RGB cameras, and a temporally continuous and dynamic free-viewpoint video is generated from this sequence, giving more realistic and dynamic rendering results.

Owner:杭州新畅元科技有限公司

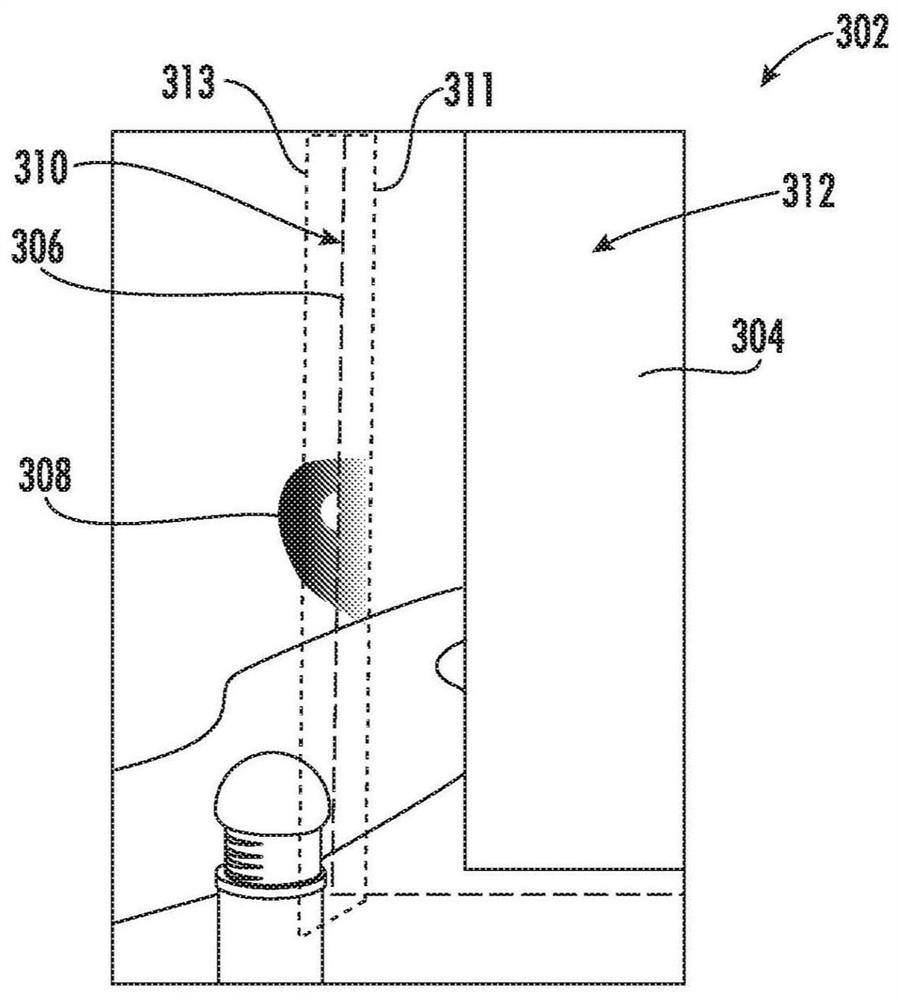

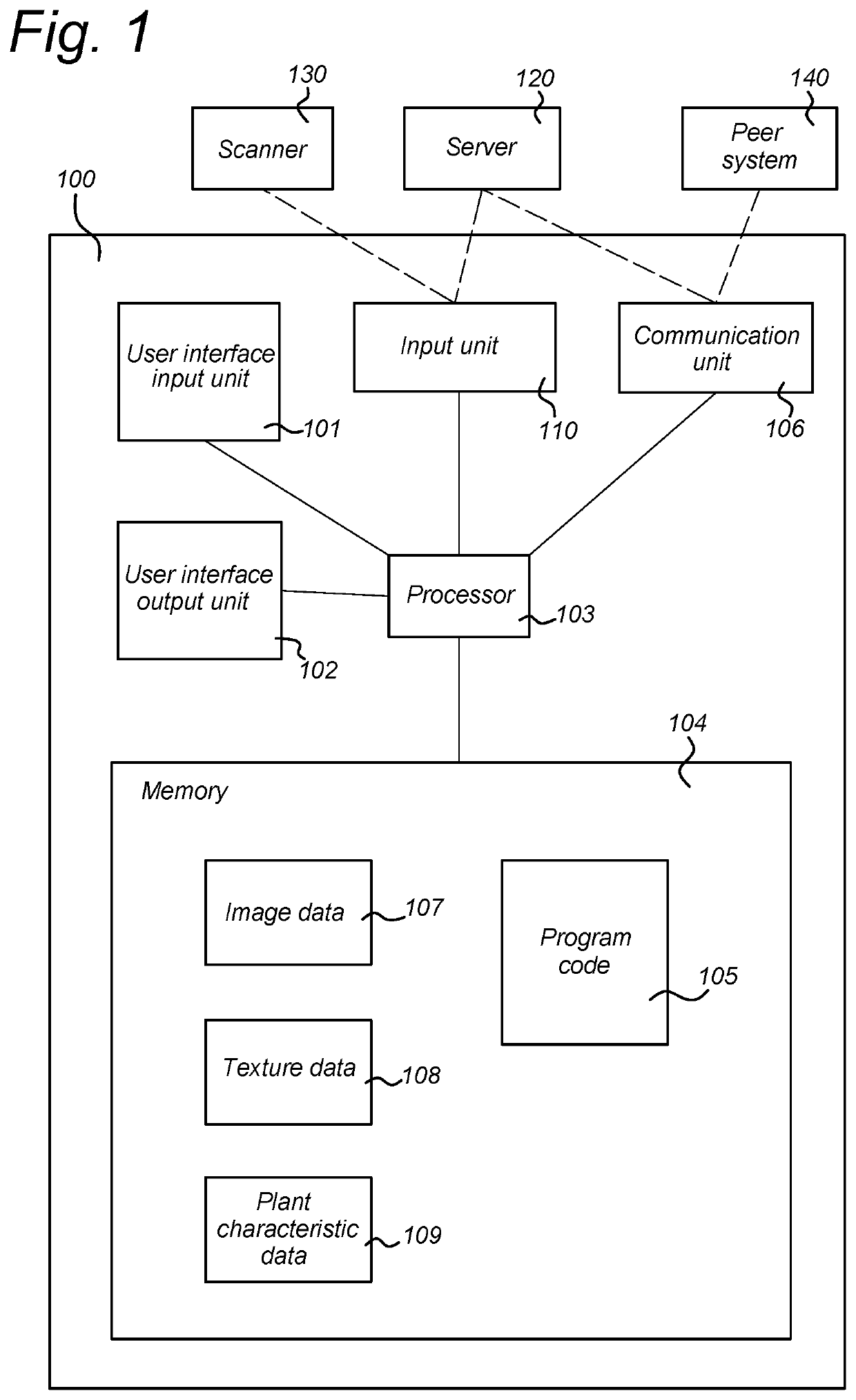

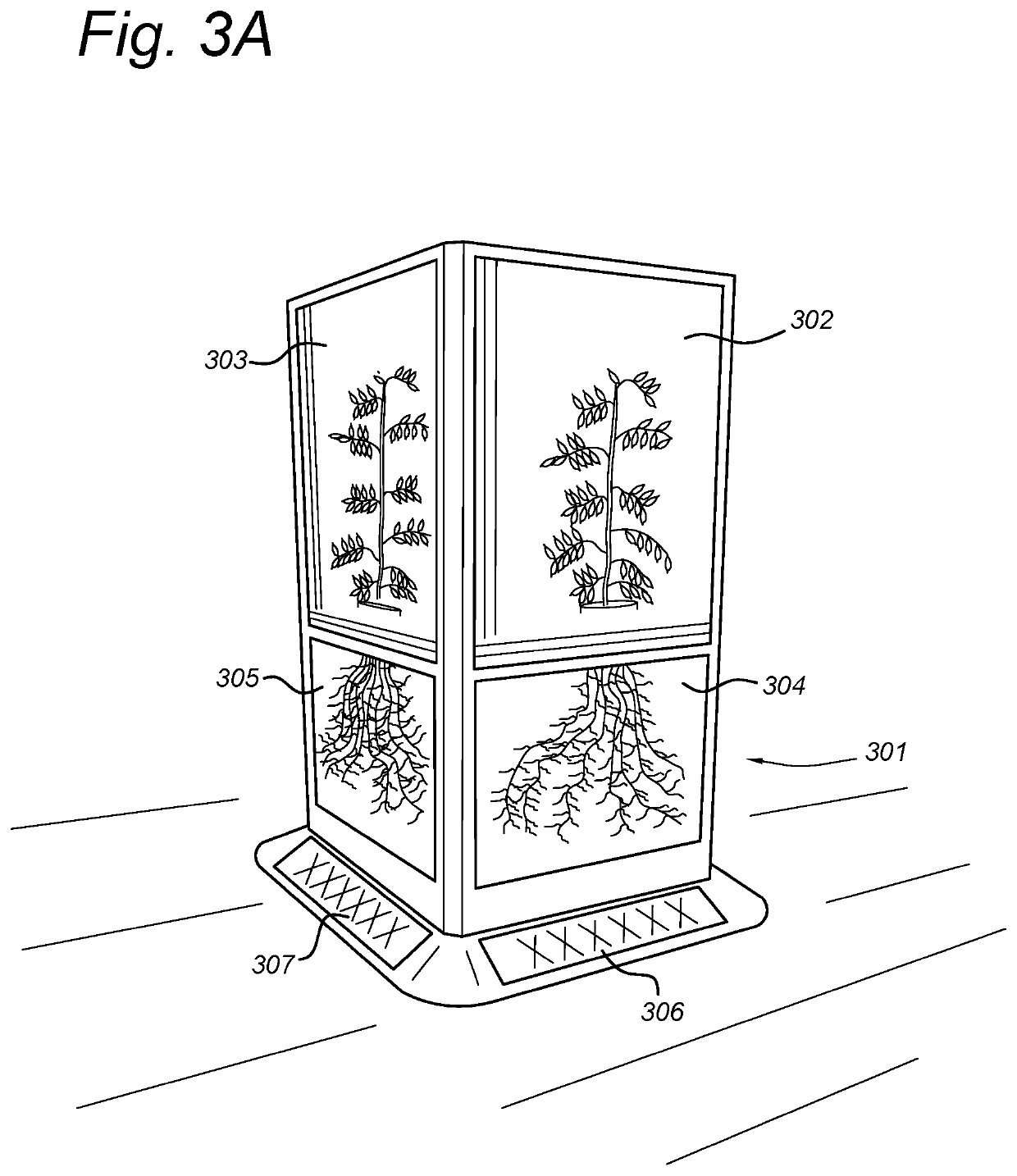

Monitoring plants

ActiveUS11360068B2Improve visualizationFind desirable features in plants more easilyTesting plants/treesImage data processingGraphicsThree-dimensional space

A system for monitoring plants comprises an input unit, an output unit, and a processor. The processor provides (201) image data (107) for a plurality of plants, each image data being associated with an individual plant. It associates (204) the image data (107) of each plant with corresponding plant characteristic data (109). It selects (206) a subset of the plants based on the plant characteristic data (109). It generates (207) a plurality of computer graphics objects corresponding to the selected plants. It applies (208) the image data (107) of each selected plant, in the form of a computer graphics texture, to the corresponding computer graphics object. It determines (209) a position of each computer graphics object in a three-dimensional space of a computer graphics scene. It creates (210) a computer graphics rendering of the scene. It displays (211) the computer graphics rendering.
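The selection and placement steps (206 to 209) can be sketched as a small data pipeline. The class names, the grid layout, and the spacing below are assumptions for illustration only; the abstract does not say how positions are chosen, and the rendering and display steps are left out.

```python
from dataclasses import dataclass


@dataclass
class Plant:
    plant_id: str
    image_path: str        # image data associated with this plant
    characteristics: dict  # plant characteristic data, e.g. {"height_cm": 12}


@dataclass
class SceneObject:
    plant_id: str
    texture: str           # image applied as a texture
    position: tuple        # (x, y, z) in the scene


def build_scene(plants, predicate, columns=4, spacing=1.5):
    """Select plants by their characteristics and lay them out on a grid."""
    selected = [p for p in plants if predicate(p.characteristics)]
    objects = []
    for index, plant in enumerate(selected):
        row, col = divmod(index, columns)
        # Assumed layout: a flat grid on the ground plane (y = 0).
        position = (col * spacing, 0.0, row * spacing)
        objects.append(SceneObject(plant.plant_id, plant.image_path, position))
    return objects
```

For example, `build_scene(plants, lambda c: c["height_cm"] > 10)` would keep only the taller plants and return one textured object per plant for a renderer to draw.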

Owner:KEYGENE NV

