57 results about "Pinhole camera model" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

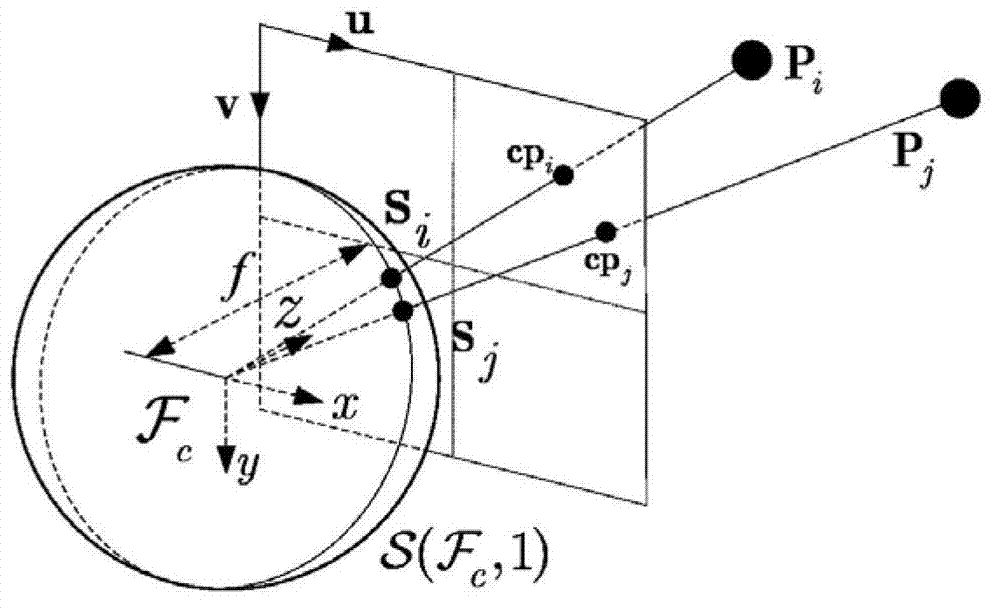

Inventor

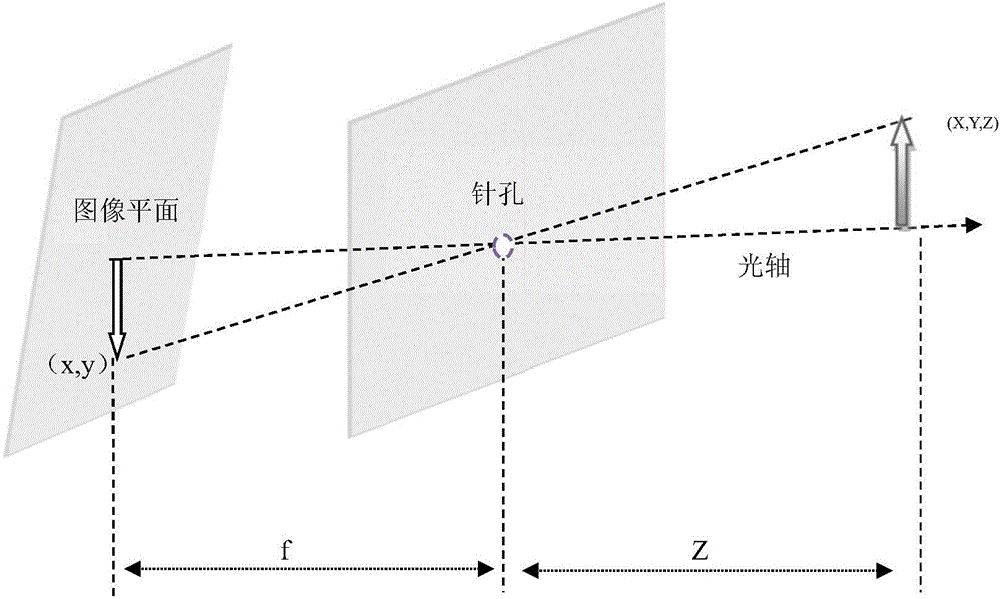

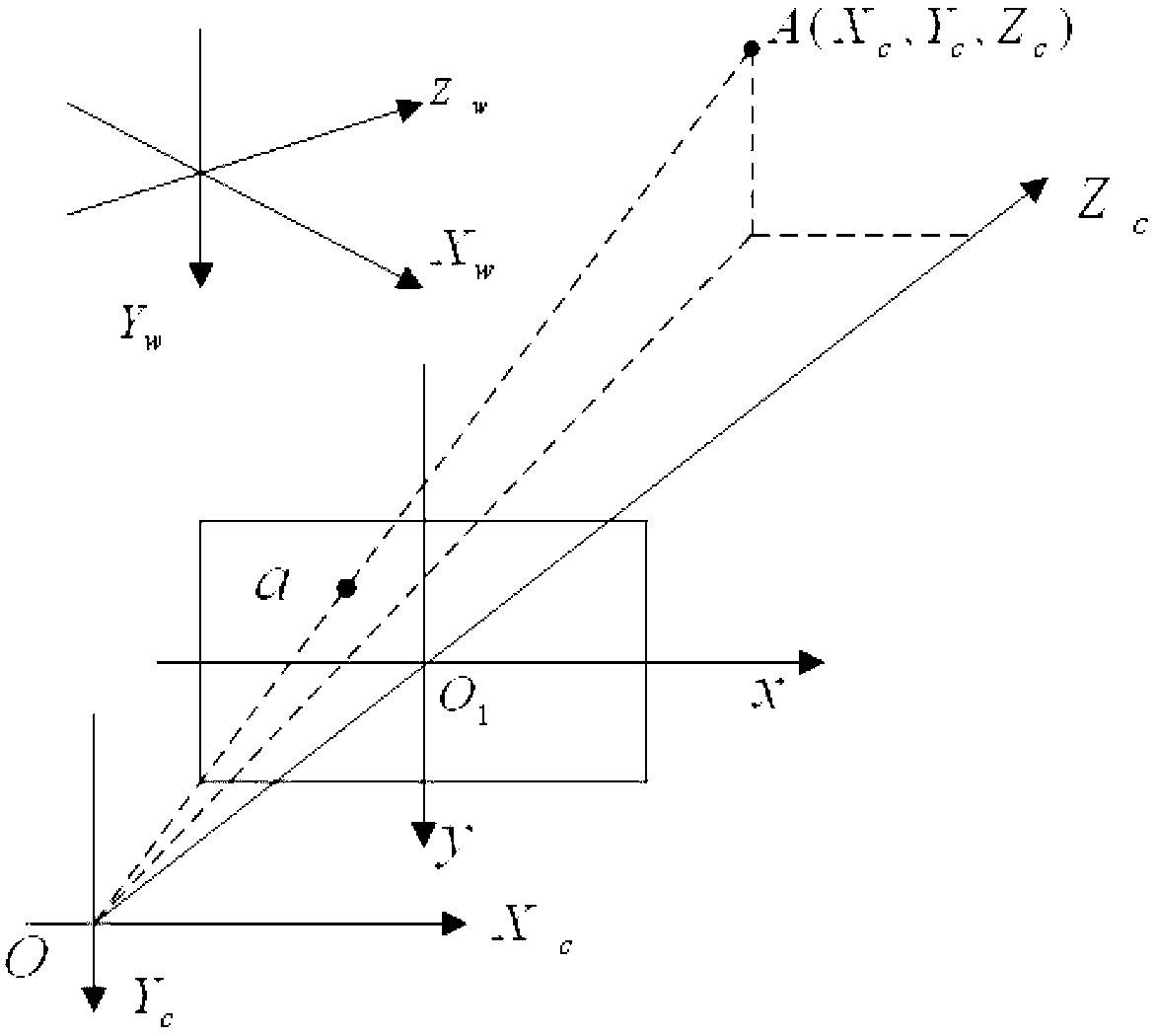

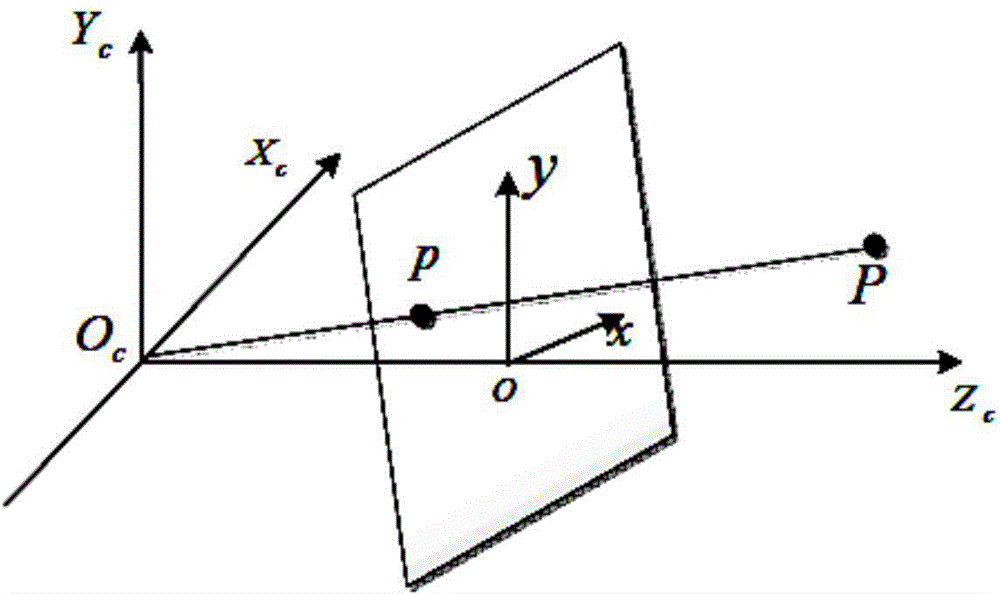

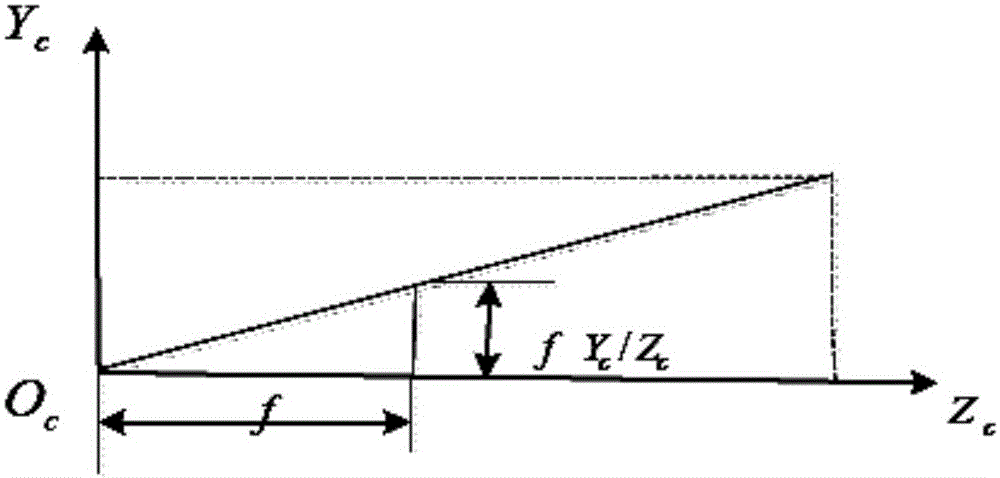

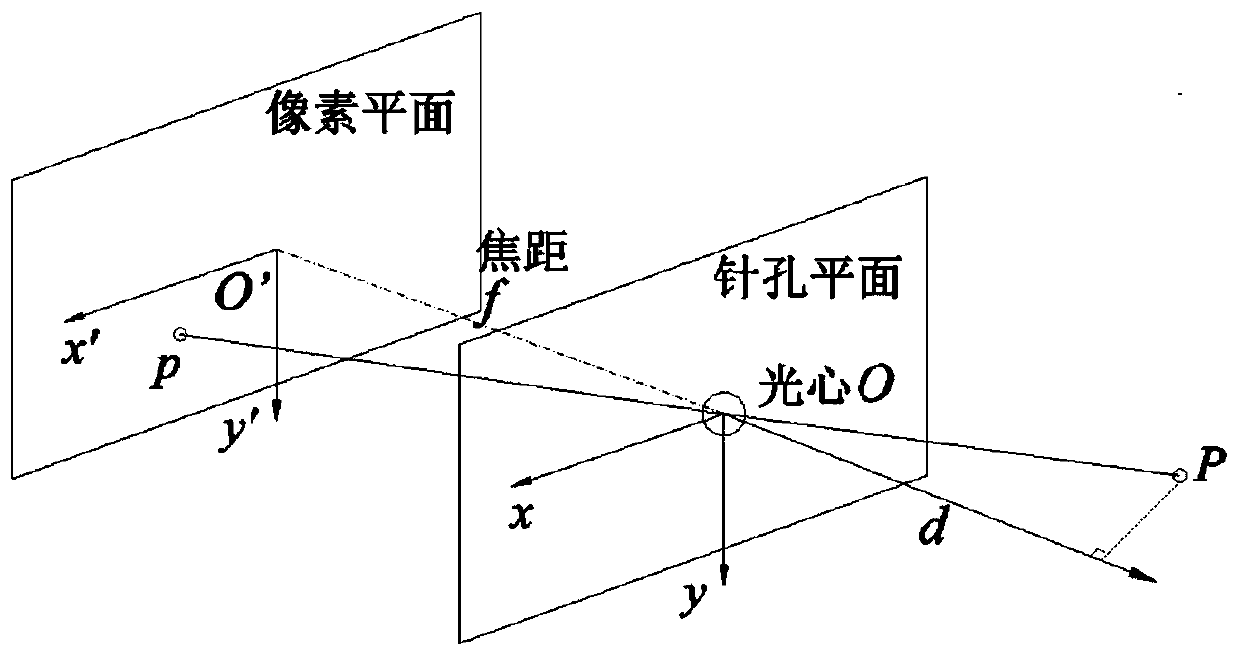

The pinhole camera model describes the mathematical relationship between the coordinates of a point in three-dimensional space and its projection onto the image plane of an ideal pinhole camera, where the camera aperture is described as a point and no lenses are used to focus light. The model does not include, for example, geometric distortions or blurring of unfocused objects caused by lenses and finite sized apertures. It also does not take into account that most practical cameras have only discrete image coordinates. This means that the pinhole camera model can only be used as a first order approximation of the mapping from a 3D scene to a 2D image. Its validity depends on the quality of the camera and, in general, decreases from the center of the image to the edges as lens distortion effects increase.
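The mapping described above can be sketched in a few lines. This is a minimal illustration, assuming the point is already expressed in camera coordinates and that the intrinsics are given as focal lengths `fx`, `fy` and principal point `cx`, `cy` in pixels (names chosen here for illustration); lens distortion and pixel quantization are deliberately ignored, as in the ideal model.

```python
def project_point(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates to ideal pixel coordinates."""
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Perspective division followed by scaling and shifting into pixel units.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


u, v = project_point((0.5, -0.25, 2.0), fx=800.0, fy=800.0, cx=320.0, cy=240.0)
print(u, v)  # 520.0 140.0
```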

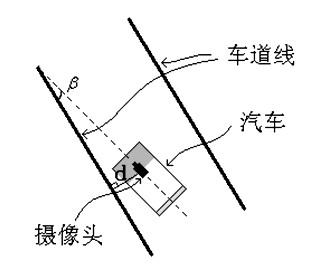

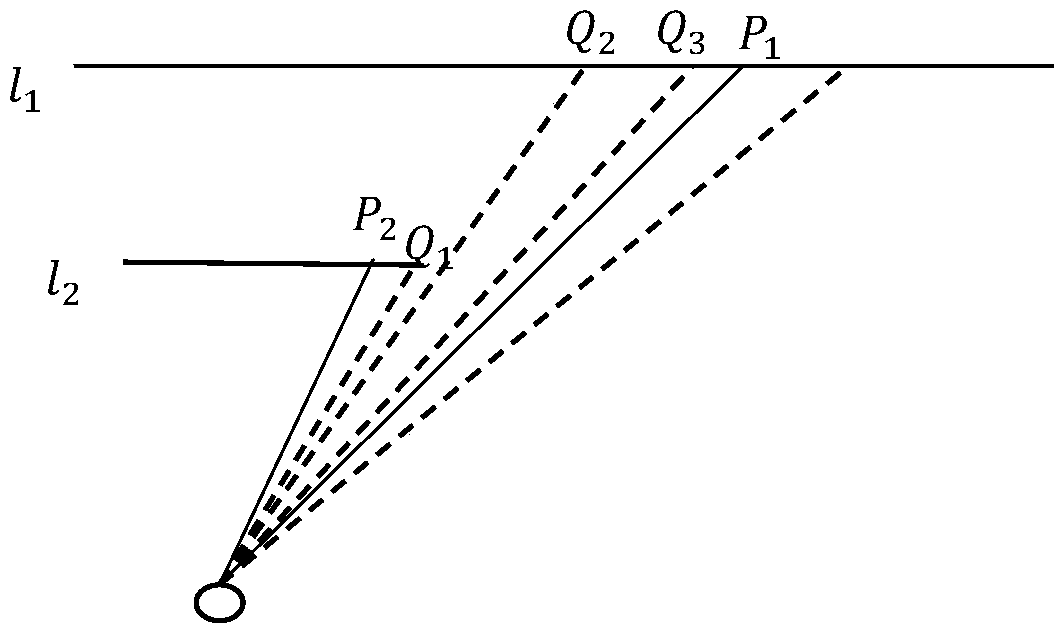

Visual computing and prewarning method of deviation angle and distance of automobile from lane line

Inactive | CN101894271A | High speed | Meet real-time detection requirements | Image analysis | Character and pattern recognition | Pinhole camera model | Imaging processing

The invention relates to a visual computing and prewarning method of deviation angle and distance of an automobile from a lane line. The image processing and computer vision technologies are utilized, and the deviation angle and distance of the automobile from the lane line are computed in real time according to the road surface image acquired by a vehicle-mounted camera, thereby estimating the line crossing time for safety prewarning. The method comprises the following steps: detecting the lane lines of the road surface image to obtain a linear equation of partial lane lines; establishing a three-dimensional coordinate system by using the camera as the initial point, and recording the mounting height and depression angle of the camera; calibrating the focal length according to the lane detection result under the condition of a given deflection angle; computing the deflection angle and vertical distance of the automobile relative to the lane line according to a pinhole camera model; and estimating the deviation time from the lane according to the instantaneous running speed of the automobile, thereby obtaining the safety prewarning or intelligent control information of the running automobile.
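The final estimation step can be illustrated with a small sketch. The abstract does not give the formula, so the one below is an assumption: the vehicle is taken to approach the lane line at lateral speed `v * sin(angle)`, where `angle` is the deviation angle between the heading and the lane line, and the function name and parameters are hypothetical.

```python
import math


def time_to_line_crossing(lateral_distance_m, deviation_angle_rad, speed_mps):
    """Estimate the time until the vehicle reaches the lane line.

    Assumes constant speed and heading (an illustrative simplification).
    """
    lateral_speed = speed_mps * math.sin(deviation_angle_rad)
    if lateral_speed <= 0.0:
        # Driving parallel to or away from the line: no crossing predicted.
        return math.inf
    return lateral_distance_m / lateral_speed


t_cross = time_to_line_crossing(1.0, math.pi / 6, 20.0)
print(round(t_cross, 3))
```

A warning could then be raised when the estimate falls below a chosen threshold; the threshold itself is not specified in the abstract.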

Owner:CHONGQING UNIV

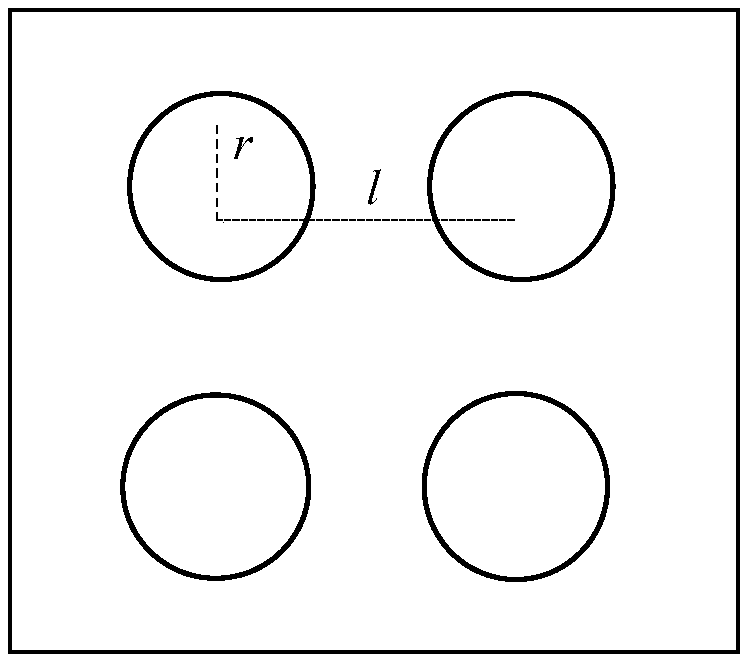

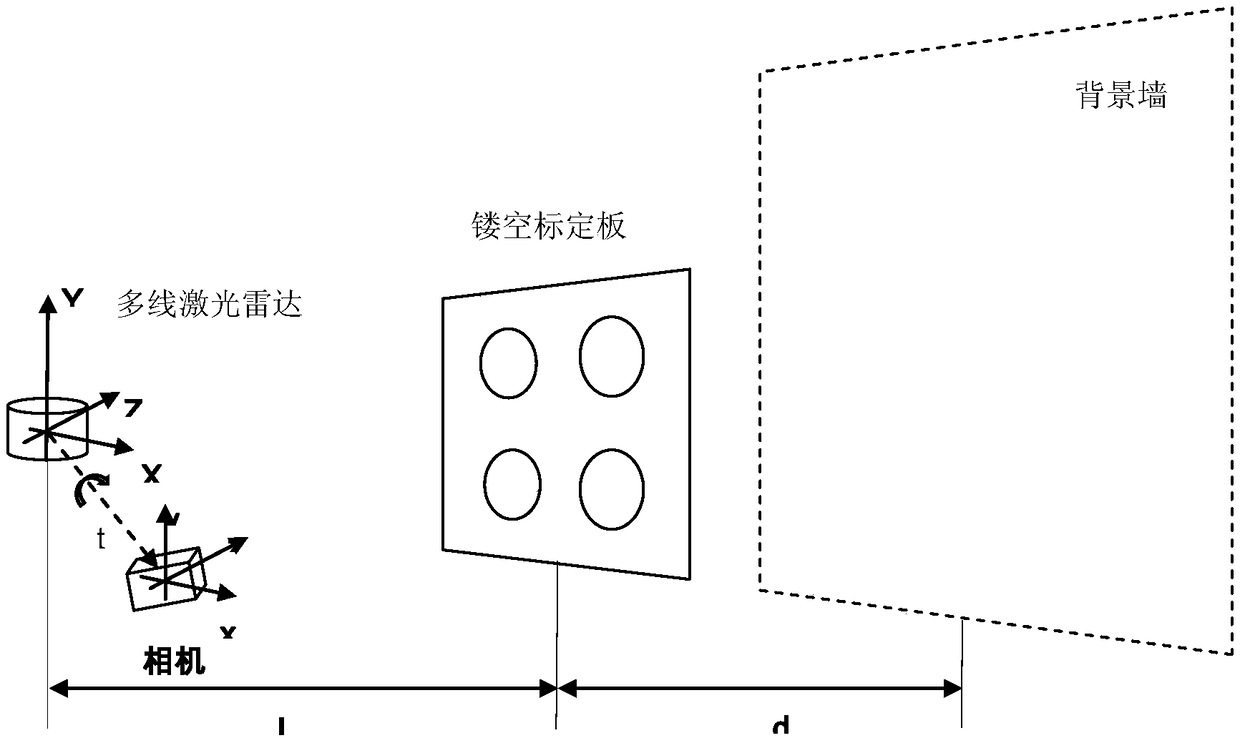

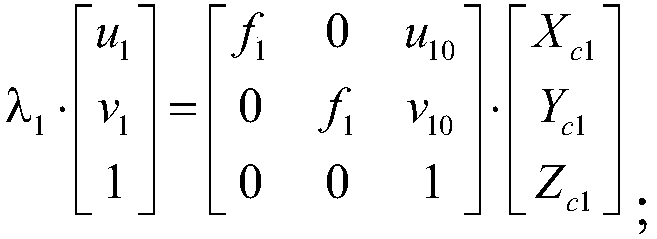

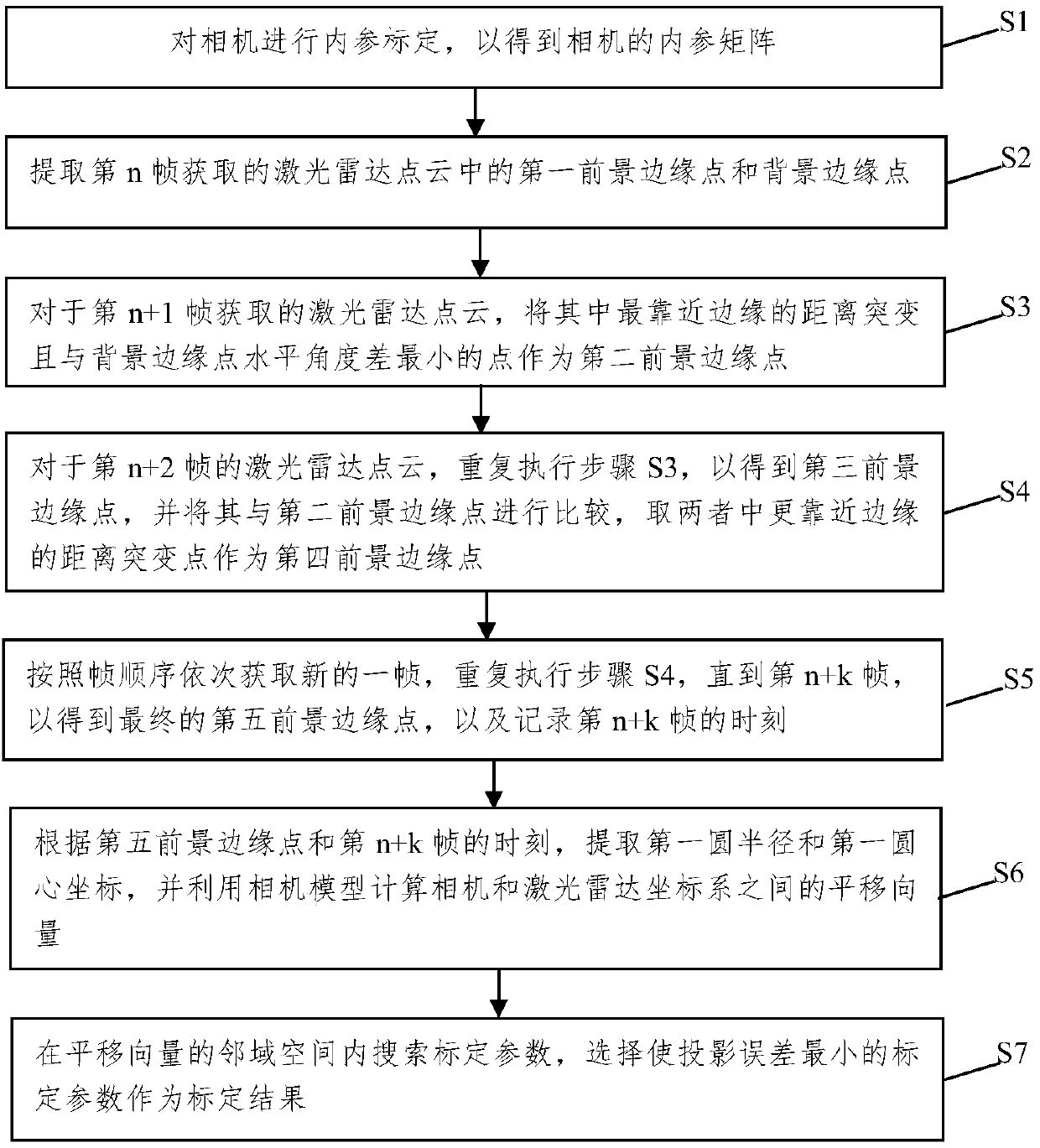

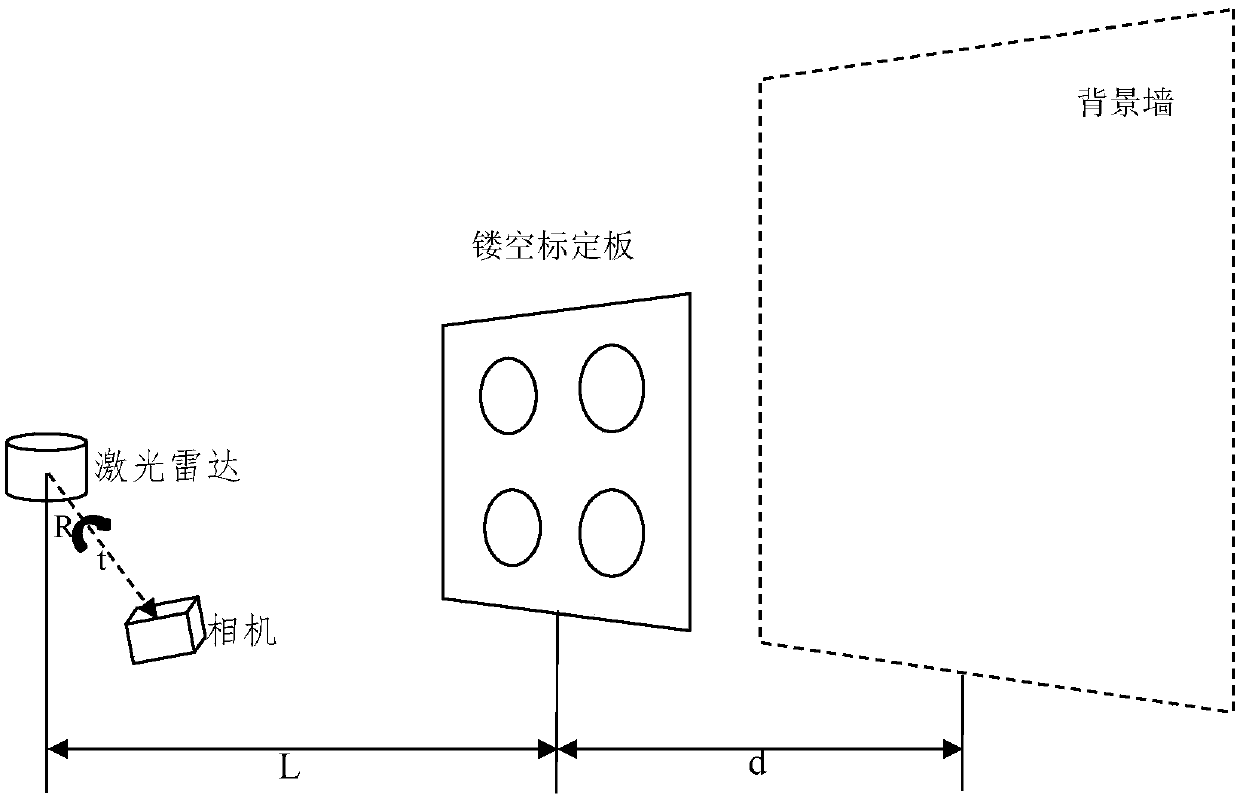

A multi-line lidar and camera joint calibration method based on fine radar scanning edge points

Active | CN109300162A | High precision extraction | Solve the lack of precision | Image enhancement | Image analysis | Calibration result | Visual perception

The invention relates to a multi-line lidar and camera joint calibration method based on fine radar scanning edge points, mainly in the technical fields of robot vision and multi-sensor fusion. Because of the limited resolution of the lidar, scanned edge points are often not accurate enough, so the calibration results are not accurate enough either. Exploiting the fact that the range of lidar points changes abruptly at an edge, the invention searches and compares repeatedly and takes the point closer to the edge as the standard point. By detecting the circle in the camera image and in the radar edge points, the translation between the camera and the lidar is calculated according to the pinhole camera model. The calibration parameter C is then searched in the neighborhood space of the obtained translation vector to find the calibration result that minimizes the projection error. The invention can extract the points swept by the lidar on the edge of the object with high precision, avoids the problem of insufficient precision caused by the low resolution of the lidar, and improves the calibration precision.
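The neighborhood search at the end of the abstract can be sketched as a brute-force grid search. This is only an illustration under stated assumptions: the rotation between lidar and camera is taken as identity, the error is the mean squared pixel distance, and the grid `radius` and `step` as well as all function names are hypothetical rather than taken from the patent.

```python
import itertools


def project(point, f, cx, cy):
    """Ideal pinhole projection of a point in camera coordinates."""
    x, y, z = point
    return f * x / z + cx, f * y / z + cy


def mean_sq_error(points, pixels, t, f, cx, cy):
    """Mean squared pixel error after shifting lidar points by translation t."""
    total = 0.0
    for (x, y, z), (u_obs, v_obs) in zip(points, pixels):
        u, v = project((x + t[0], y + t[1], z + t[2]), f, cx, cy)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total / len(points)


def refine_translation(points, pixels, t0, f, cx, cy, radius=0.03, step=0.01):
    """Search a cubic neighborhood of t0 for the lowest projection error."""
    n = int(round(radius / step))
    offsets = [k * step for k in range(-n, n + 1)]
    best_t, best_err = t0, mean_sq_error(points, pixels, t0, f, cx, cy)
    for dx, dy, dz in itertools.product(offsets, repeat=3):
        t = (t0[0] + dx, t0[1] + dy, t0[2] + dz)
        err = mean_sq_error(points, pixels, t, f, cx, cy)
        if err < best_err:
            best_t, best_err = t, err
    return best_t, best_err


# Synthetic check: pixels are generated with a known translation.
true_t = (0.10, -0.02, 0.0)
points = [(0.5, 0.2, 4.0), (-0.3, 0.1, 5.0), (0.2, -0.4, 6.0), (-0.6, -0.2, 3.0)]
pixels = [project((x + true_t[0], y + true_t[1], z + true_t[2]), 800.0, 320.0, 240.0)
          for x, y, z in points]
best_t, best_err = refine_translation(points, pixels, (0.08, 0.0, 0.01),
                                      800.0, 320.0, 240.0)
print(best_t, best_err)
```

A real implementation would likely search rotation parameters as well and use a finer or adaptive grid.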

Owner:ZHEJIANG UNIV OF TECH

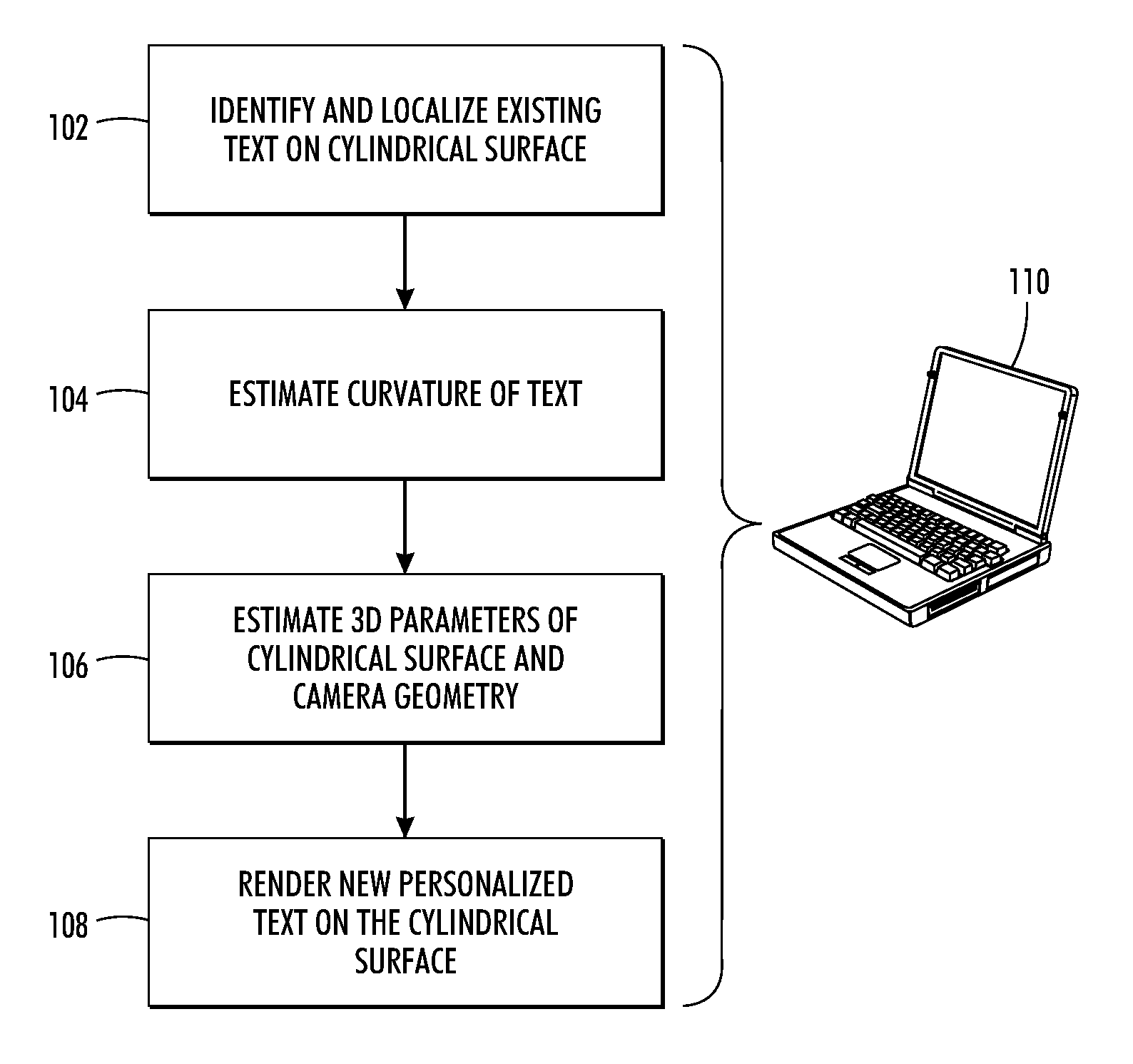

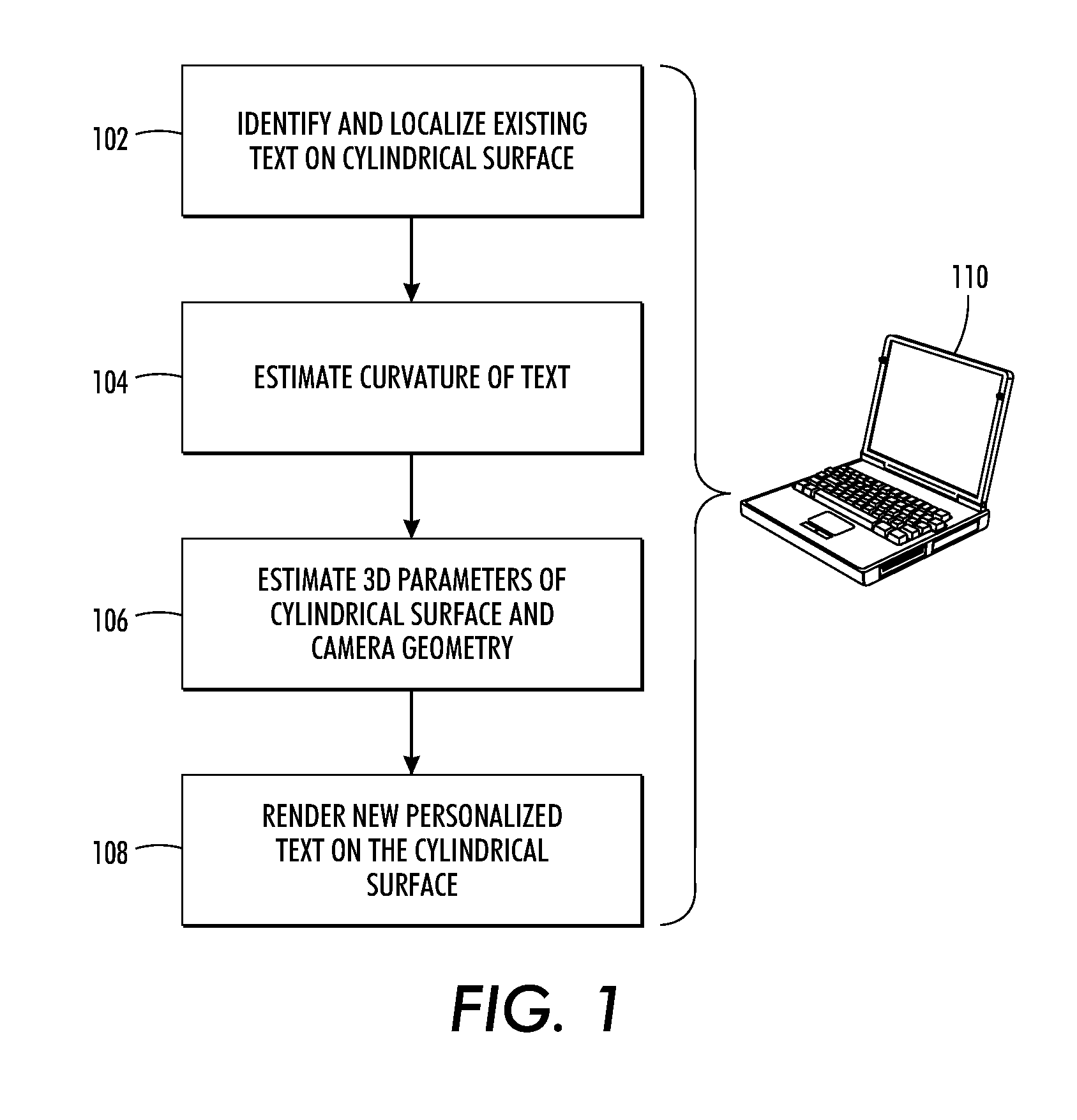

Rendering personalized text on curved image surfaces

Inactive | US20120146991A1 | Drawing from basic elements | Character and pattern recognition | Pinhole camera model | Personalization

As set forth herein, a computer-implemented method facilitates replacing text on cylindrical or curved surfaces in images. For instance, the user is first asked to perform a multi-click selection of a polygon to bound the text. A triangulation scheme is carried out to identify the pixels. Segmentation and erasing algorithms are then applied. The ellipses are estimated accurately through constrained least squares fitting. A 3D framework for rendering the text, including the central projection pinhole camera model and specification of the cylindrical object, is generated. These parameters are jointly estimated from the fitted ellipses as well as the two vertical edges of the cylinder. The personalized text is wrapped around the cylinder and subsequently rendered.

Owner:XEROX CORP

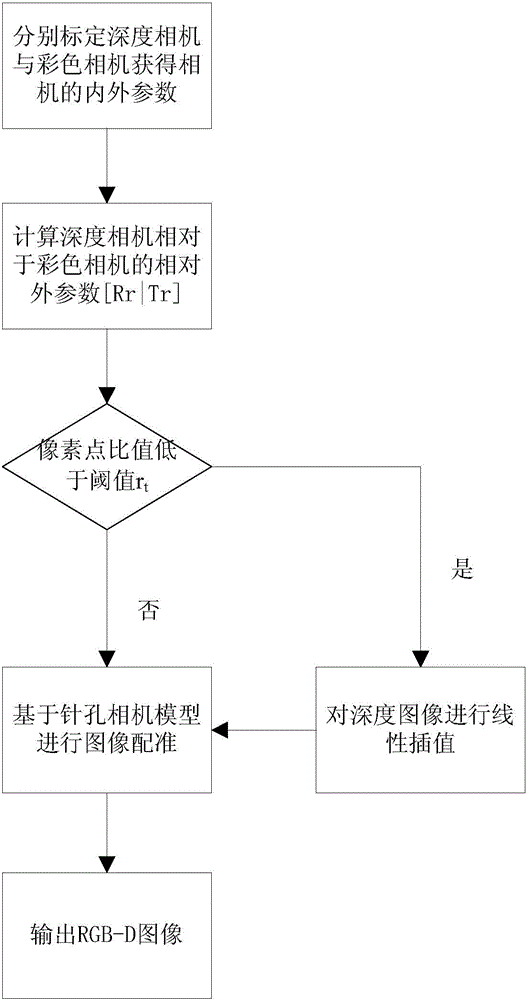

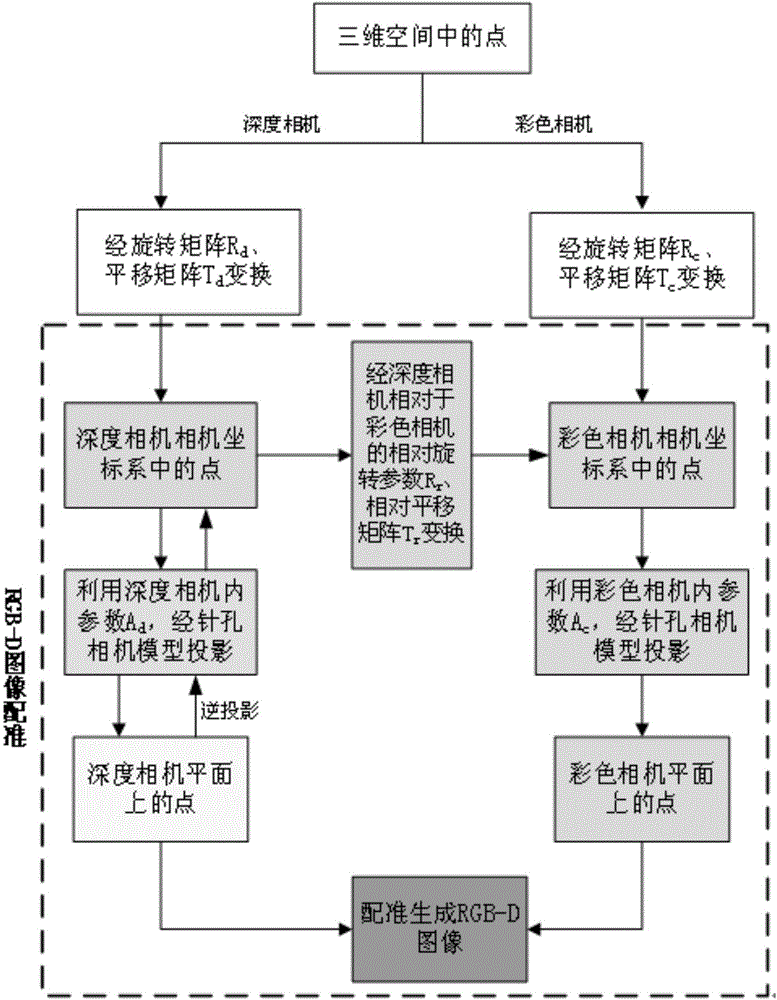

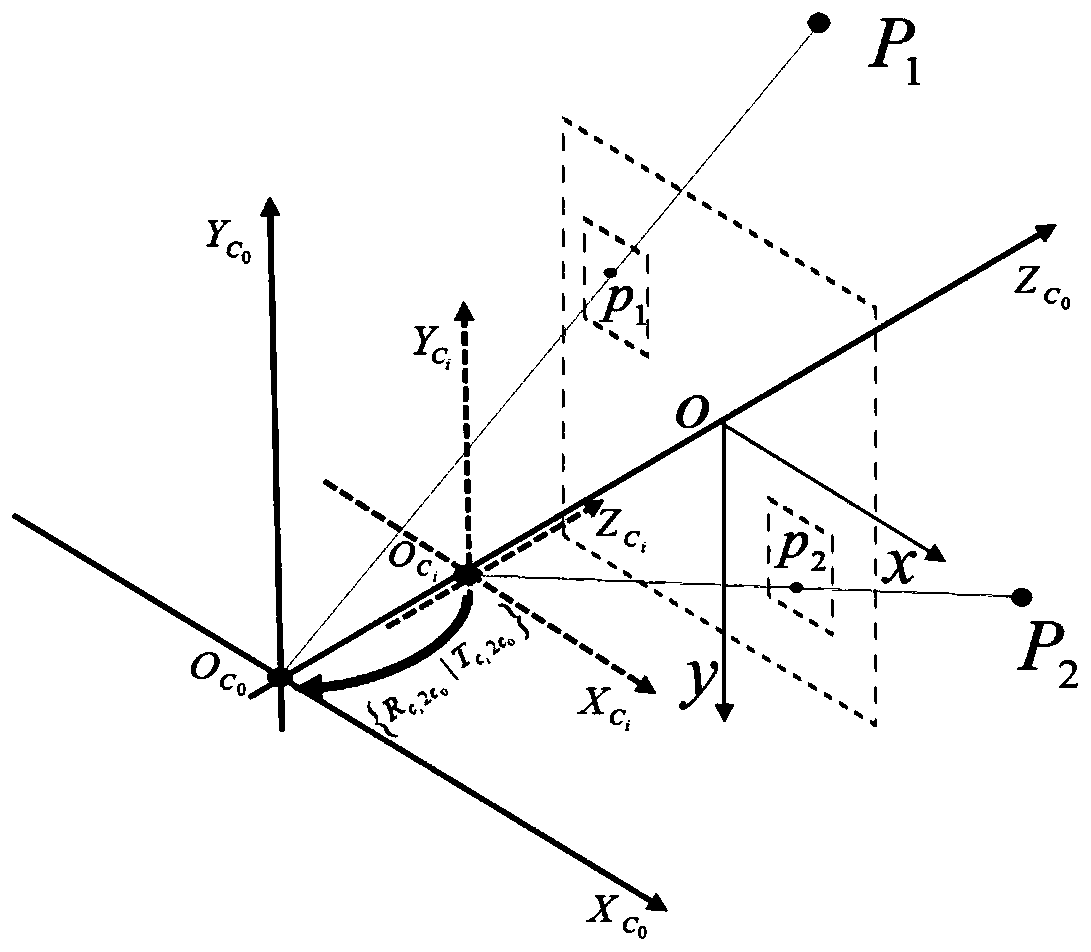

RGB-D image acquisition method

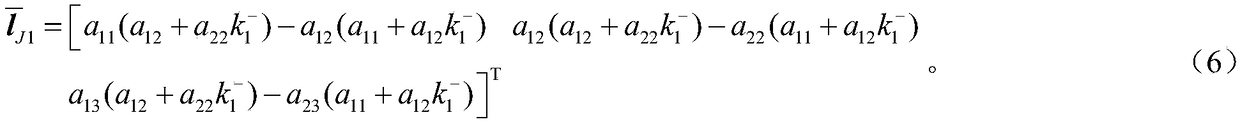

Active | CN104463880A | Quality improvement | Practical | Image enhancement | Image analysis | Pinhole camera model | Color image

The invention discloses an RGB-D image acquisition method. The RGB-D image acquisition method includes the steps that a depth camera is calibrated to obtain internal parameters Ad and external parameters [Rd|Td] of the depth camera, a color camera is calibrated to obtain internal parameters Ac and external parameters [Rc|Tc] of the color camera, and relative external parameters [Rr|Tr] of the depth camera under a camera coordinate system of the color camera are calculated; a depth image and a color image of one photographic field are obtained through the depth camera and the color camera respectively, the obtained Ad, the obtained Ac and the obtained [Rr|Tr] are used for projecting points on the depth image to the color image based on a pinhole camera model, registering of the depth image and the color image is carried out, and a registered RGB-D image is obtained. The RGB-D image acquisition method has the good application value for obtaining the RGB-D image in the outdoor environment.
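The registration step can be sketched for a single depth pixel. The sketch assumes that [Rr|Tr] maps depth-camera coordinates into color-camera coordinates, that Ad and Ac have zero skew and are passed as `(fx, fy, cx, cy)` tuples, and that depth is measured along the optical axis; the function name is hypothetical.

```python
def register_depth_pixel(u_d, v_d, depth, A_d, A_c, R_r, T_r):
    """Map one depth-image pixel to color-image coordinates."""
    fx_d, fy_d, cx_d, cy_d = A_d
    fx_c, fy_c, cx_c, cy_c = A_c
    # Back-project the depth pixel to a 3D point in the depth-camera frame.
    p_d = ((u_d - cx_d) * depth / fx_d, (v_d - cy_d) * depth / fy_d, depth)
    # Move the point into the color-camera frame: p_c = R_r * p_d + T_r.
    p_c = [sum(R_r[i][j] * p_d[j] for j in range(3)) + T_r[i] for i in range(3)]
    if p_c[2] <= 0:
        return None  # behind the color camera, cannot be registered
    # Project with the color-camera intrinsics.
    u_c = fx_c * p_c[0] / p_c[2] + cx_c
    v_c = fy_c * p_c[1] / p_c[2] + cy_c
    return u_c, v_c, p_c[2]


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
result = register_depth_pixel(420.0, 240.0, 2.0,
                              (500.0, 500.0, 320.0, 240.0),
                              (1000.0, 1000.0, 640.0, 360.0),
                              identity, (0.1, 0.0, 0.0))
print(result)
```

Applying this to every valid depth pixel, and keeping the nearest depth when several pixels land on the same color pixel, would give a registered RGB-D image.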

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

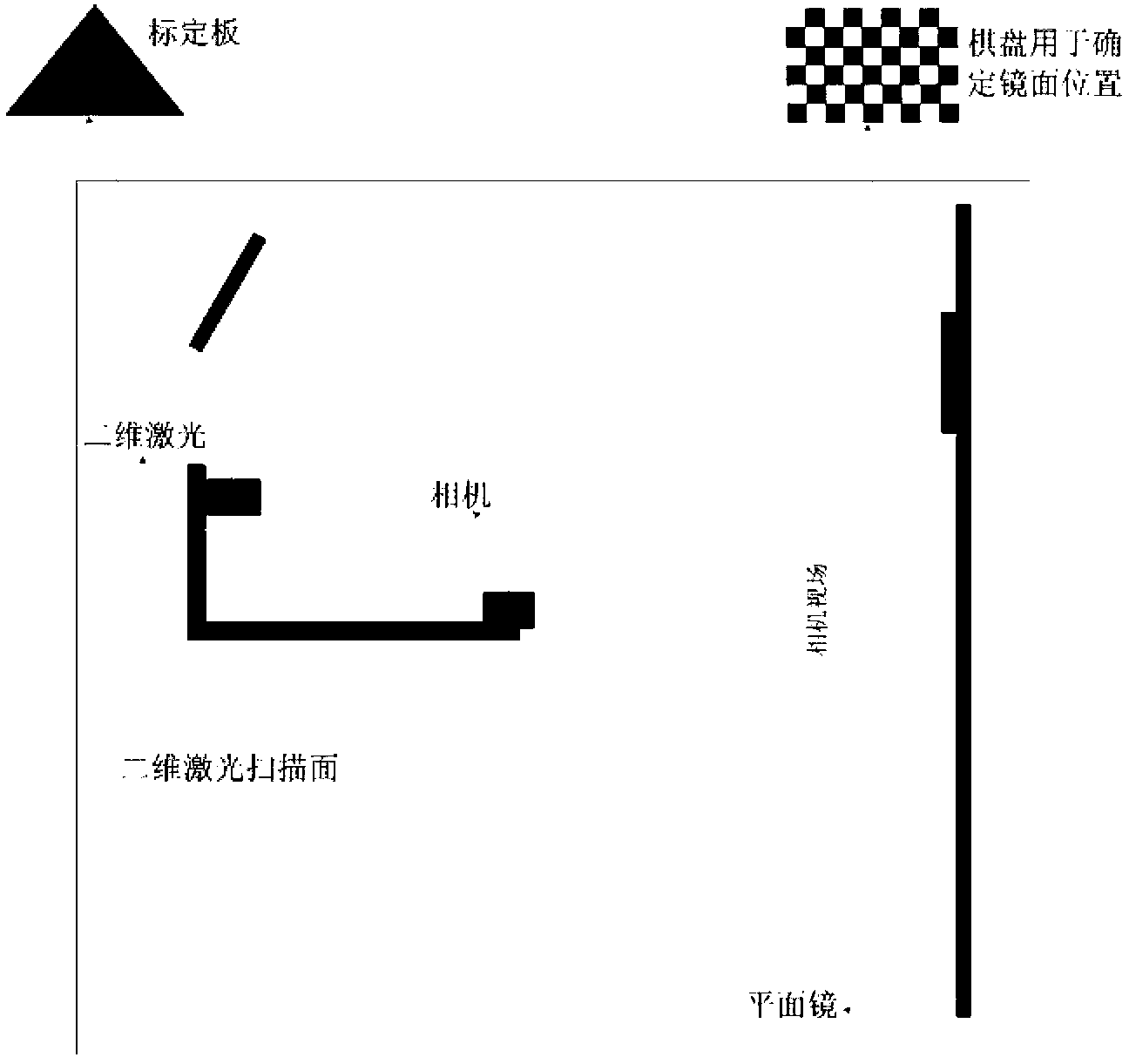

Calibrating method for two-dimensional laser and camera without overlapped viewing fields

Inactive | CN103177442A | Expand the field of view | High degree of automation | Image analysis | Pinhole camera model | Camera image

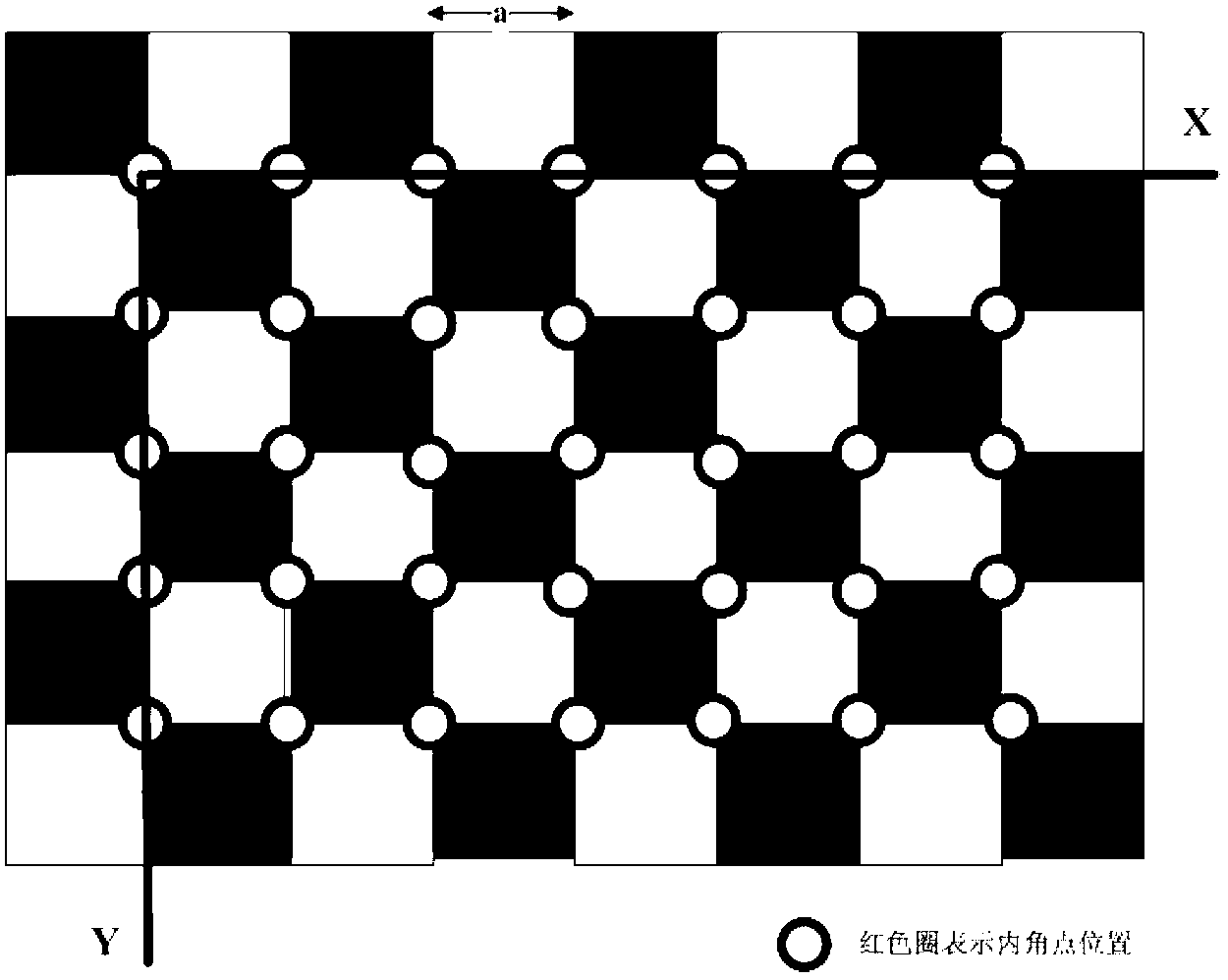

The invention discloses a calibrating method for two-dimensional laser and a camera without overlapped viewing fields. A calibrating system comprises a two-dimensional laser scanner, the camera, a plane mirror and the like. The calibrating method includes calibrating intrinsic parameters of the camera by the aid of the traditional chessboard table via a pinhole camera model; reasonably configuring the laser scanner, the camera and the plane mirror, adhering the black and white chessboard table on the plane mirror, locating the chessboard table within the range of a viewing field of the camera and determining a transformation matrix between a coordinate system of the chessboard and a coordinate system of the camera; determining a transformation relation between the coordinate system of the chessboard and a coordinate system of a camera image reflected in the mirror according to a symmetric relation of mirror surface images so as to acquire a transformation relation between the actual camera and the camera image reflected in the mirror; performing observation at selected sufficient frequency by changing the position and gestures of a black triangular calibrating plate to acquire sufficient observation samples, estimating by a Gauss-Newton iterative process to acquire a transformation matrix between the coordinate system of the camera image and the two-dimensional laser scanner; and acquiring a rotation and translation transformation matrix between the coordinate system of the camera and a coordinate system of the two-dimensional laser scanner via relations between positions and gestures of the camera and the camera image and relations between positions and gestures of the camera image and the two-dimensional laser scanner.
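The symmetric relation between the real camera and its mirror image comes down to reflecting points across the mirror plane. A minimal sketch, assuming the plane is described by a unit normal `n` and an offset `d` such that `n . x = d` (names chosen for illustration, not taken from the patent):

```python
def reflect_point(p, n, d):
    """Reflect point p across the plane n . x = d, where n is a unit normal."""
    signed_dist = sum(ni * pi for ni, pi in zip(n, p)) - d
    return tuple(pi - 2.0 * signed_dist * ni for pi, ni in zip(p, n))


# A mirror at z = 1 maps a point at z = 0 to z = 2.
mirrored = reflect_point((0.2, 0.3, 0.0), (0.0, 0.0, 1.0), 1.0)
print(mirrored)  # (0.2, 0.3, 2.0)
```

Reflecting twice returns the original point, which is a convenient sanity check when composing the mirror transform with the chessboard-to-camera transform.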

Owner:BEIJING UNIV OF POSTS & TELECOMM

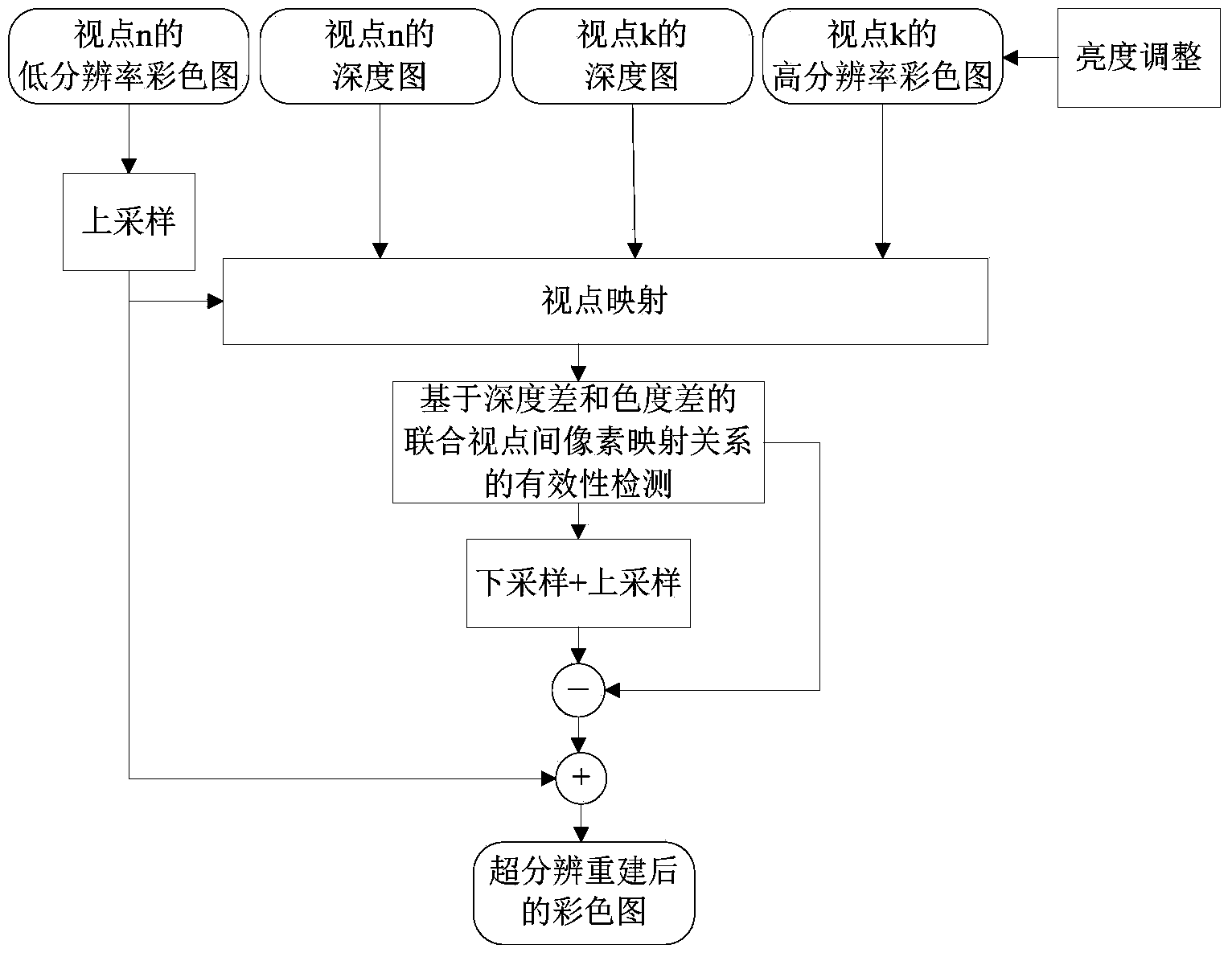

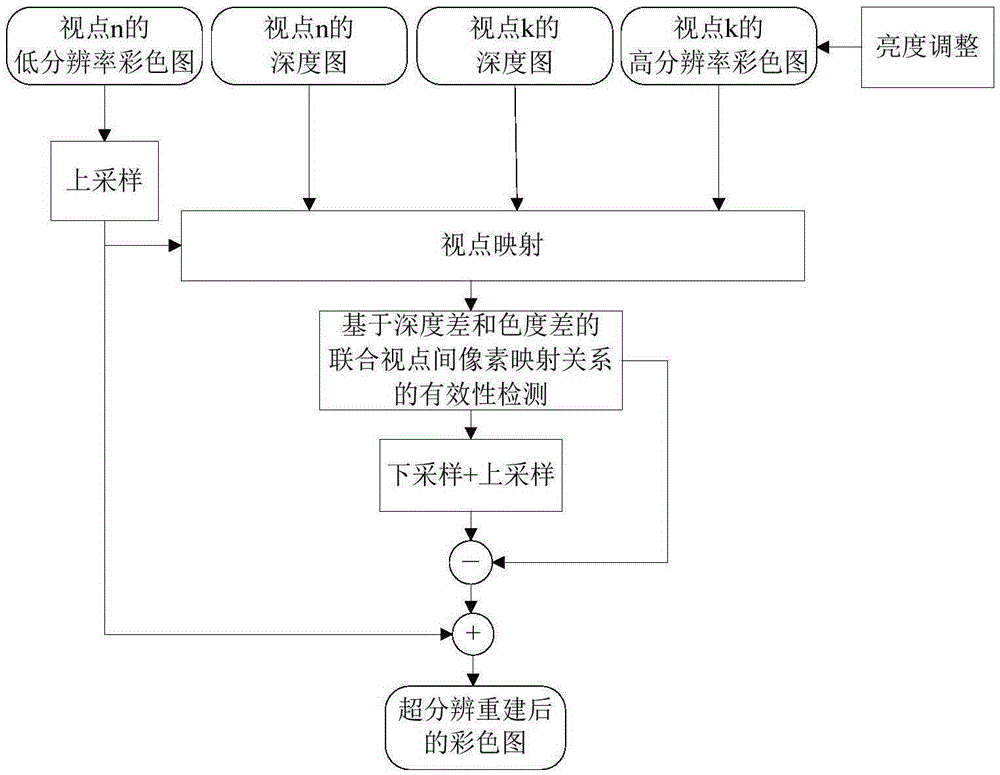

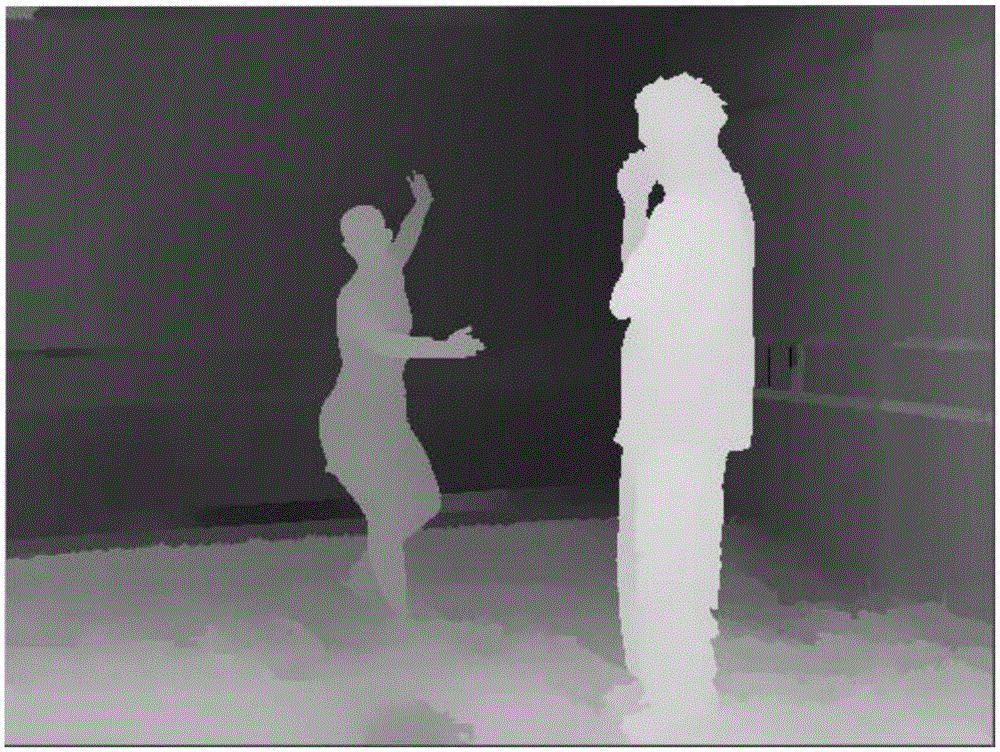

Multi-view-point image super-resolution method based on deep information

Inactive | CN104079914A | Quality improvement | Reduce computational complexity | Stereoscopic systems | Color image | Pinhole camera model

The invention provides a multi-view-point image super-resolution method based on deep information. The multi-view-point image super-resolution method mainly solves the problem that in the prior art, the edge artifact phenomenon occurs when super-resolution reconstruction is conducted on a low-resolution view point image. The method includes the steps of mapping a high-resolution colored image of a view point k on the image position of a view point n according to a pinhole camera model through the deep information, related camera parameters and a backward projection method, conducting effectiveness detection based on pixel mapping relations between the joint view points of the depth differences and the color differences on the projected image, only reserving pixel points conforming to effectiveness detection so as to prevent luminance differences between different view points from influencing illumination regulation conducted on the colored image of the view point k in advance, separating out high-frequency information of the projected image, and adding the high-frequency information to the image obtained after the low-resolution colored image of the view point n is sampled so as to obtain a super-resolution reconstructed image of the view point n. By means of the method, the edge artifact phenomenon is effectively relieved when super-resolution reconstruction is conducted on the low-resolution view point, and the quality of the super-resolution reconstructed image is improved.

Owner:SHANDONG UNIV

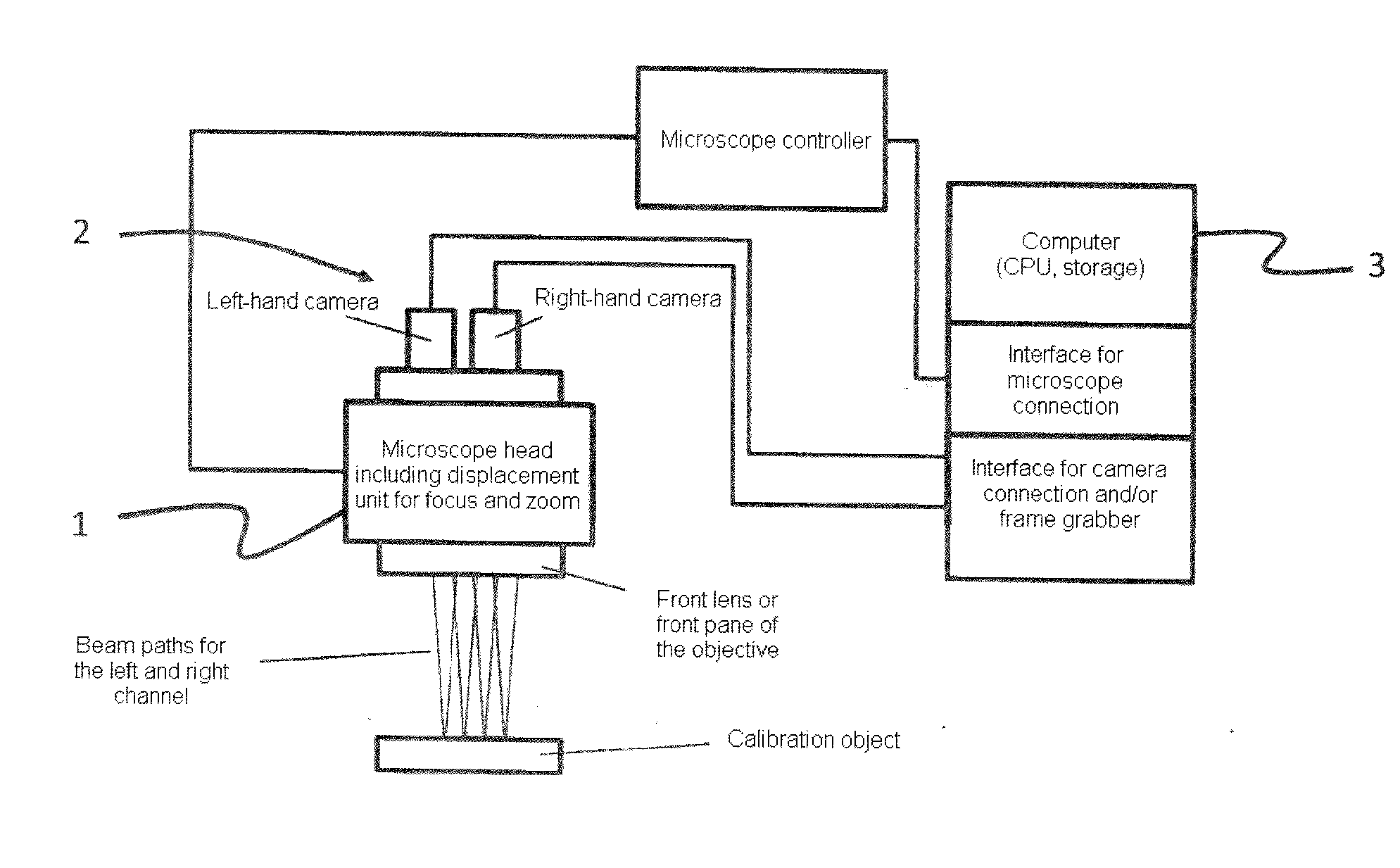

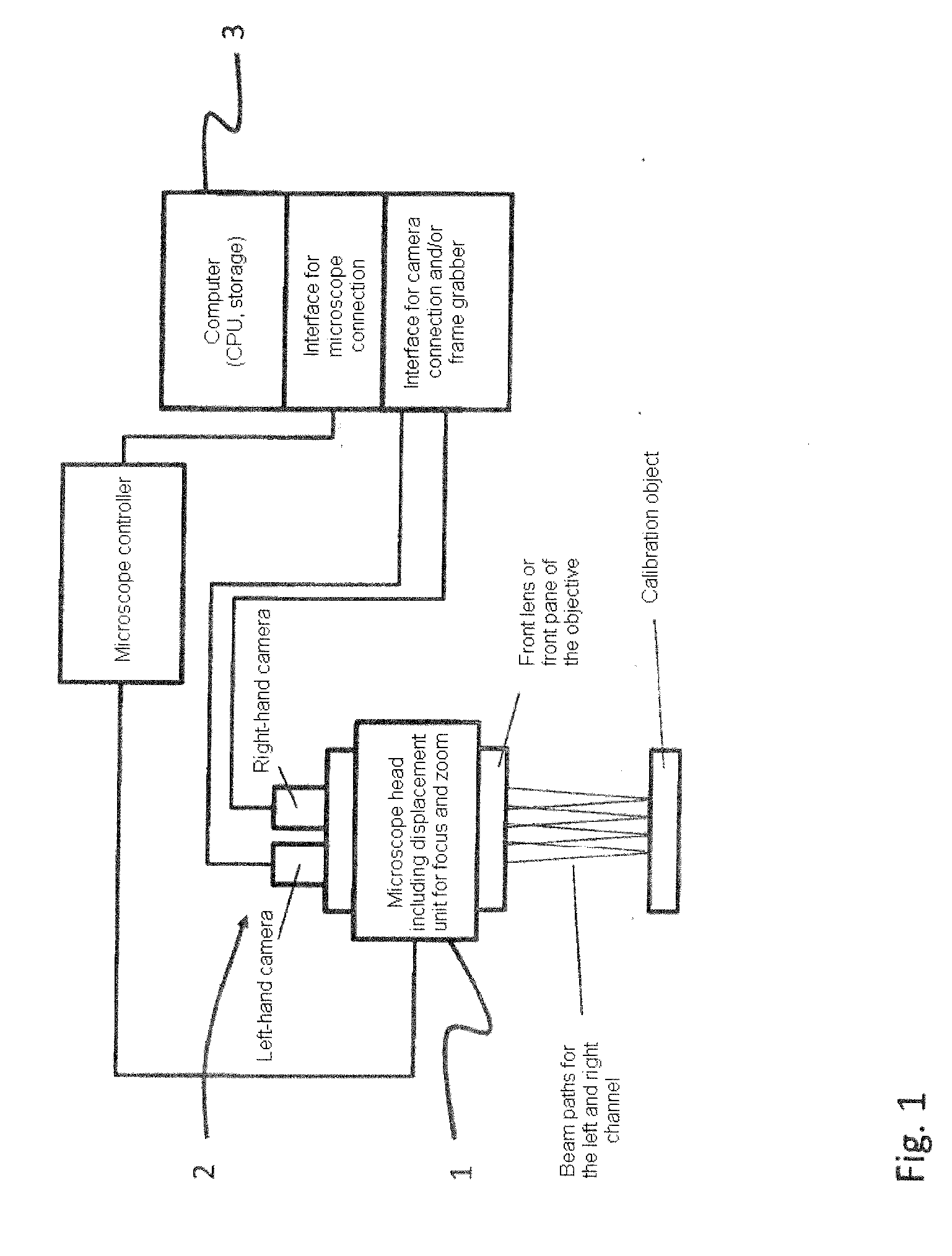

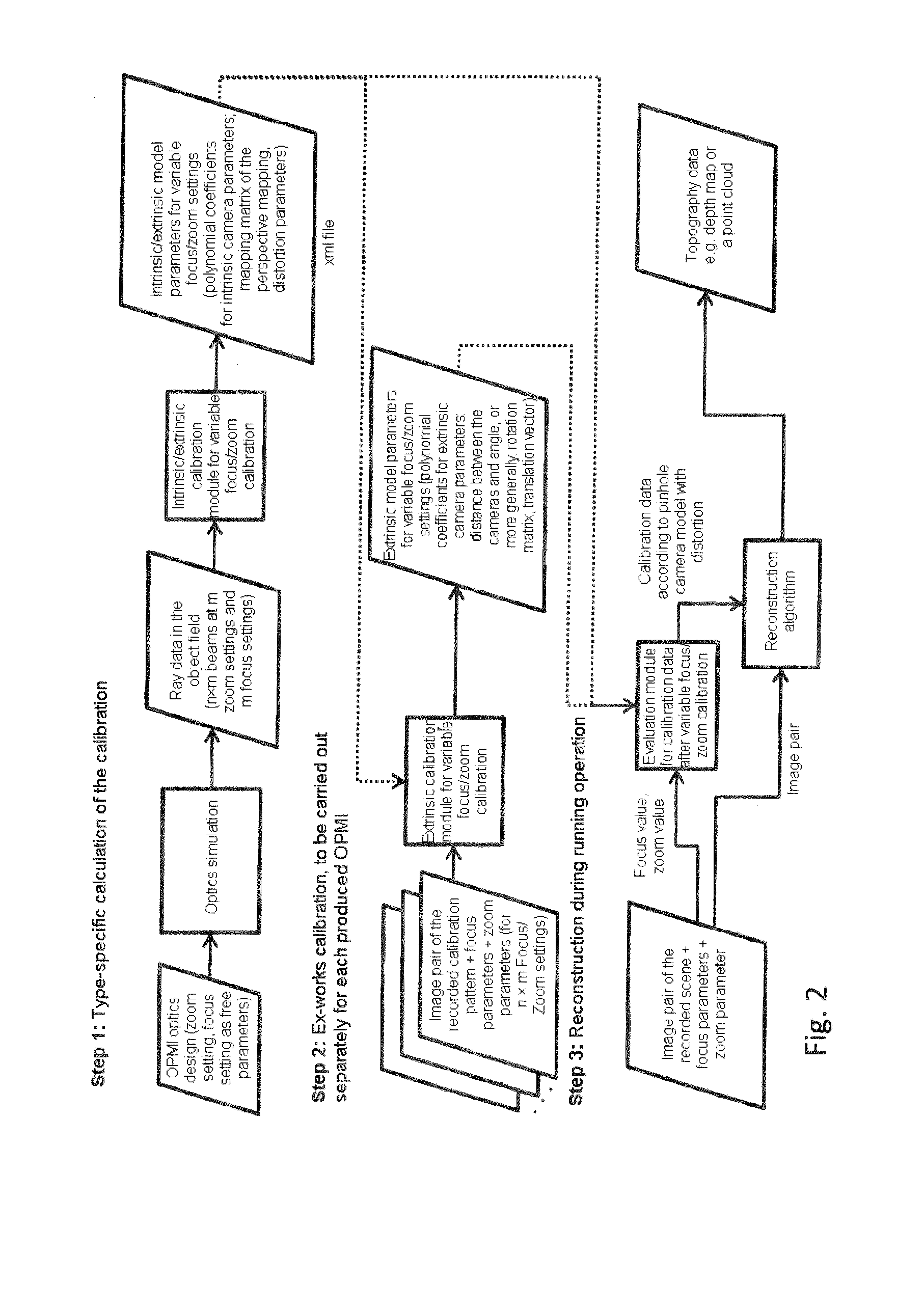

Method for the image-based calibration of multi-camera systems with adjustable focus and/or zoom

Inactive | US20150346471A1 | Improve accuracy | Improve robustness | Image enhancement | Television system details | Pinhole camera model | Multi camera

The invention relates to a method for the image-based calibration of multi-camera systems with adjustable focus and/or zoom, including the following method steps: calculating a number of beams from an optics simulation for different focus and/or zoom settings or combinations of focus and zoom settings; reading out the focus and/or zoom settings and storing these values with the beams such that a unique assignment is ensured; parameterizing a continuous pinhole camera model for extrinsic and intrinsic calibration of different zoom and/or focus settings of the multi-camera system on the basis of the data from the optics simulation.

Owner:CARL ZEISS MEDITEC AG

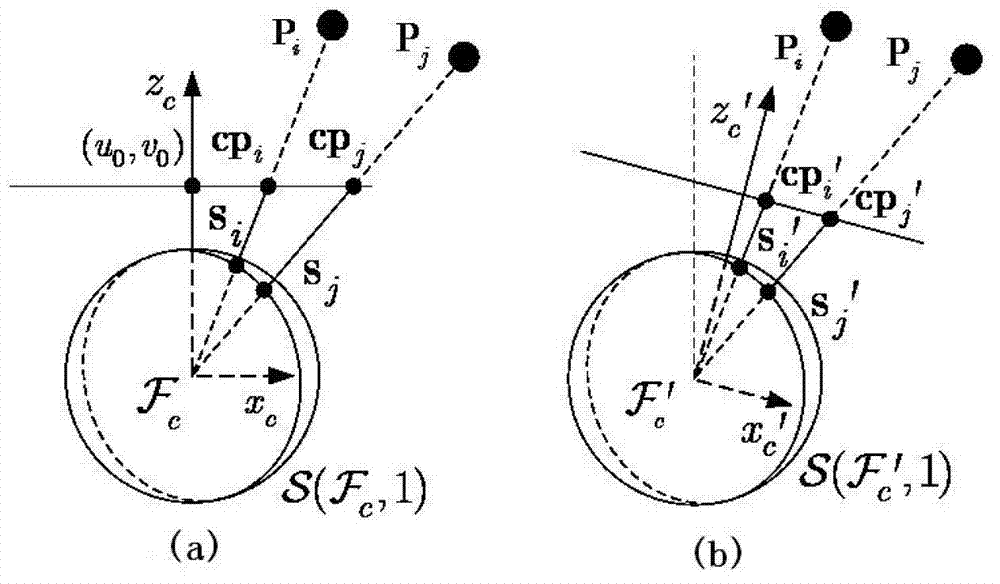

Pure rotation camera self-calibration method based on spherical projection model

Active | CN102855620A | Improve calibration accuracy | Improve robustness | Image analysis | Pinhole camera model | Pinhole camera

The invention discloses a novel pure rotation self-calibration method based on a spherical projection model, aimed at the internal parameter calibration of a pinhole camera. A spherical projection model of the pinhole camera is constructed, and analysis shows that the distance between a stationary point in space and its corresponding spherical projection point remains constant during pure rotation of the camera; a constraint equation set for the internal parameters is constructed from this property; and the equation set is solved by a nonlinear least squares algorithm. Compared with prior methods, the internal parameters can be obtained from corresponding point features on two images, no complicated matrix numerical calculation is needed, only four matching points on the two images are required to calibrate the four internal parameters of the camera, and the method is suitable for both on-line and off-line calibration. Simulation and experiment results show that the method is simple and practical, achieves high calibration accuracy, and is robust to image noise and translation noise.

Owner:NANKAI UNIV

Target space positioning method based on single camera

Active | CN104574415A | To achieve the effect of real-time positioning | Improve practicality | Image enhancement | Image analysis | Camera lens | Spatial positioning

The invention discloses a target space positioning method based on a single camera, and belongs to the technical field of intelligent video monitoring. Aiming at the problem that an existing target space positioning technology based on a linear camera model does not consider imaging errors caused by lens distortion, based on the isotropous characteristic of lens distortion, when an ideal linear camera model is used for target space positioning, space positioning auxiliary information such as the position of the camera and geodetic absolute coordinates of a reference point and an identification point of the scene center are obtained by combining a GPS, the position, in an image, of a target is compensated and corrected, and therefore the actual geodetic absolute coordinates of the target can be obtained fast and accurately. Compared with the prior art, the robustness, the real-time performance and the applicability of the target space positioning method are improved greatly.

Owner:NANJING UNIV OF POSTS & TELECOMM

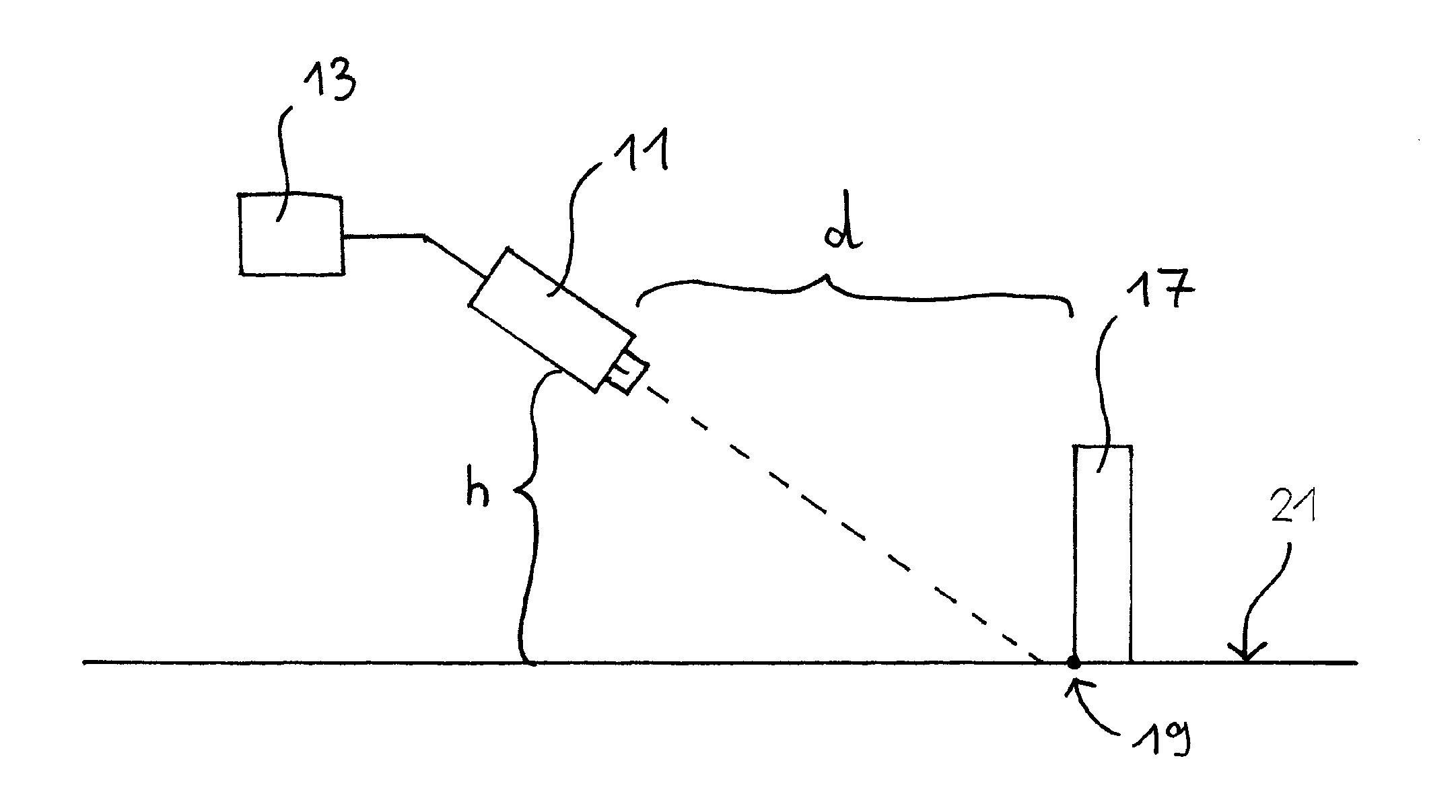

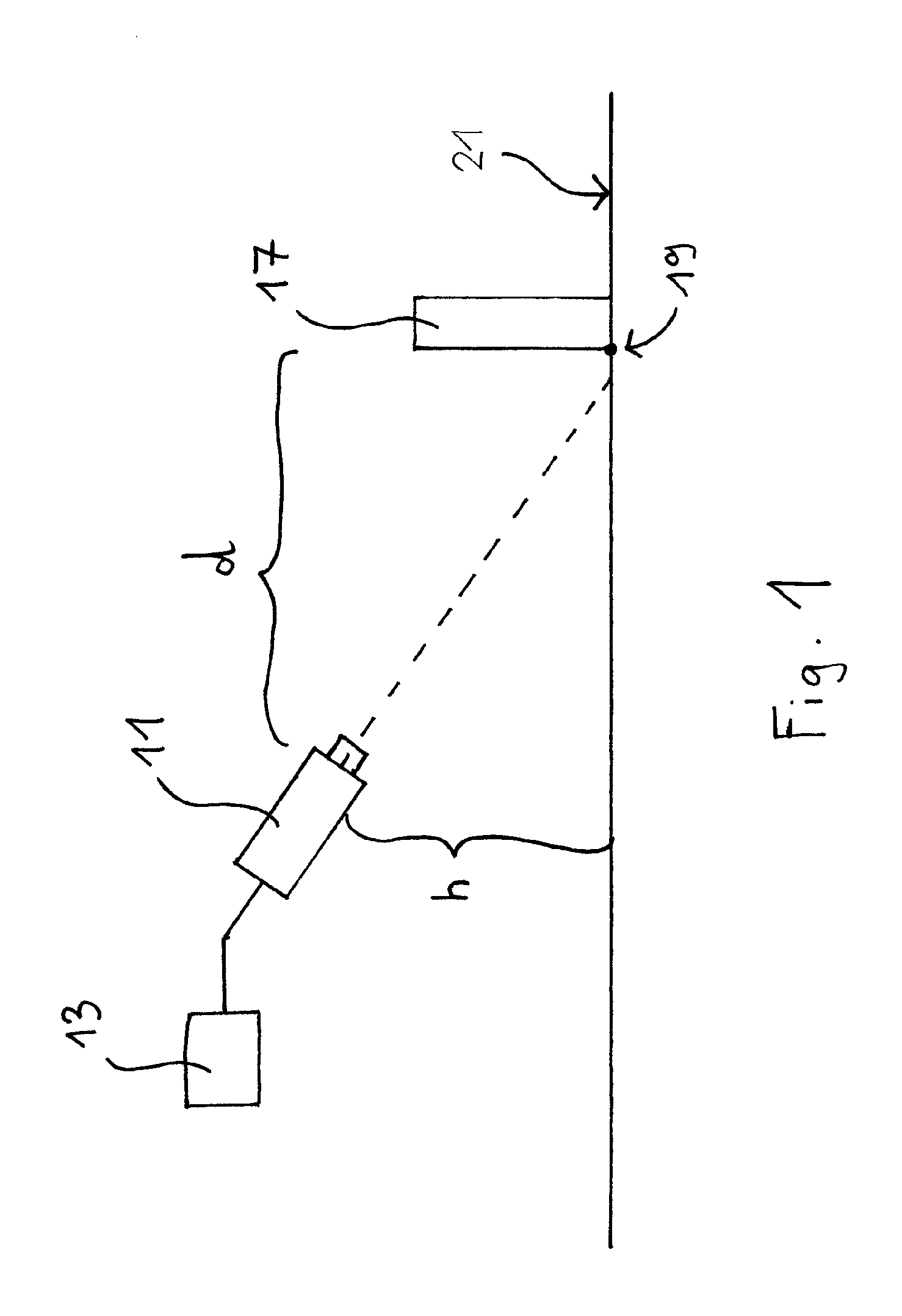

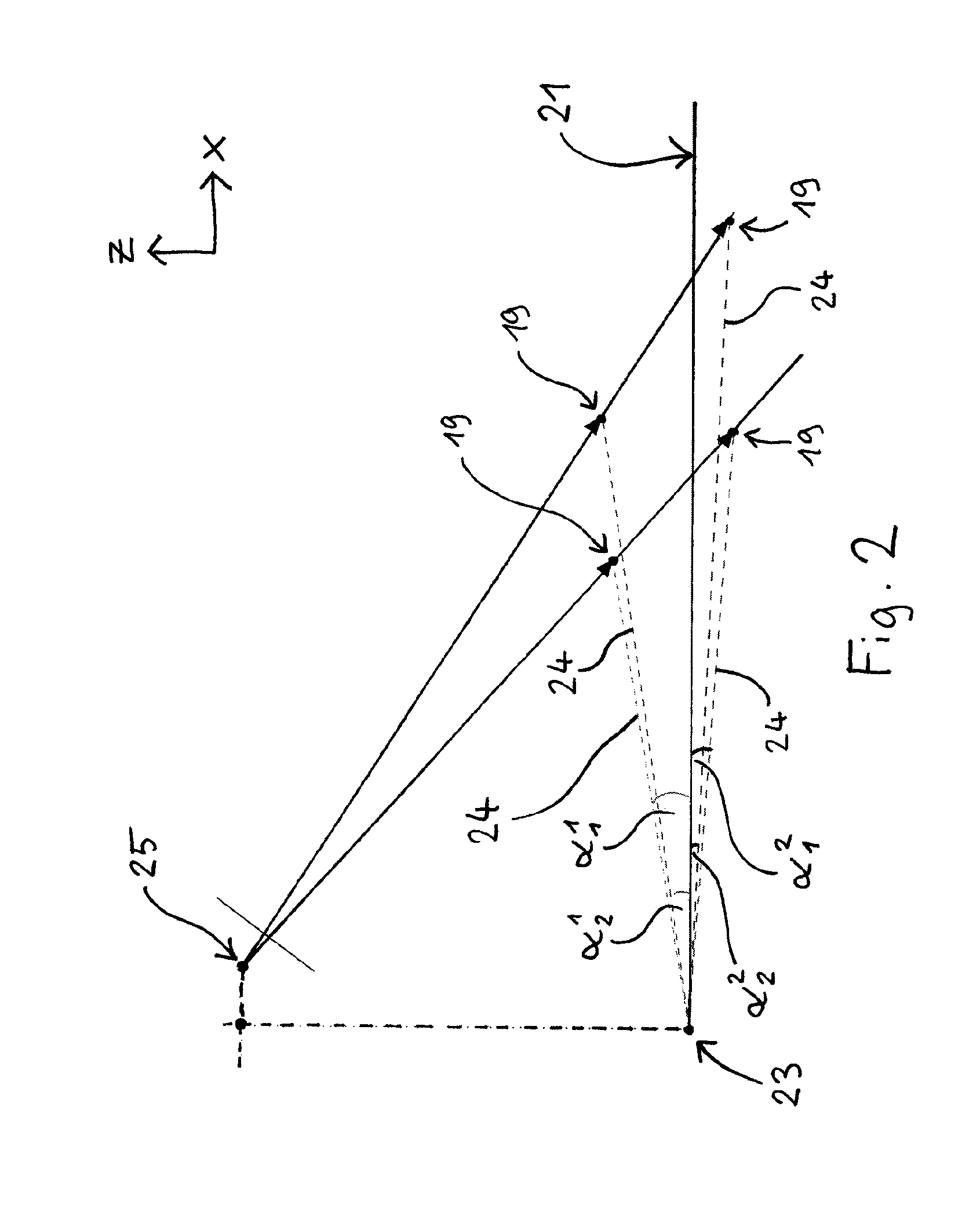

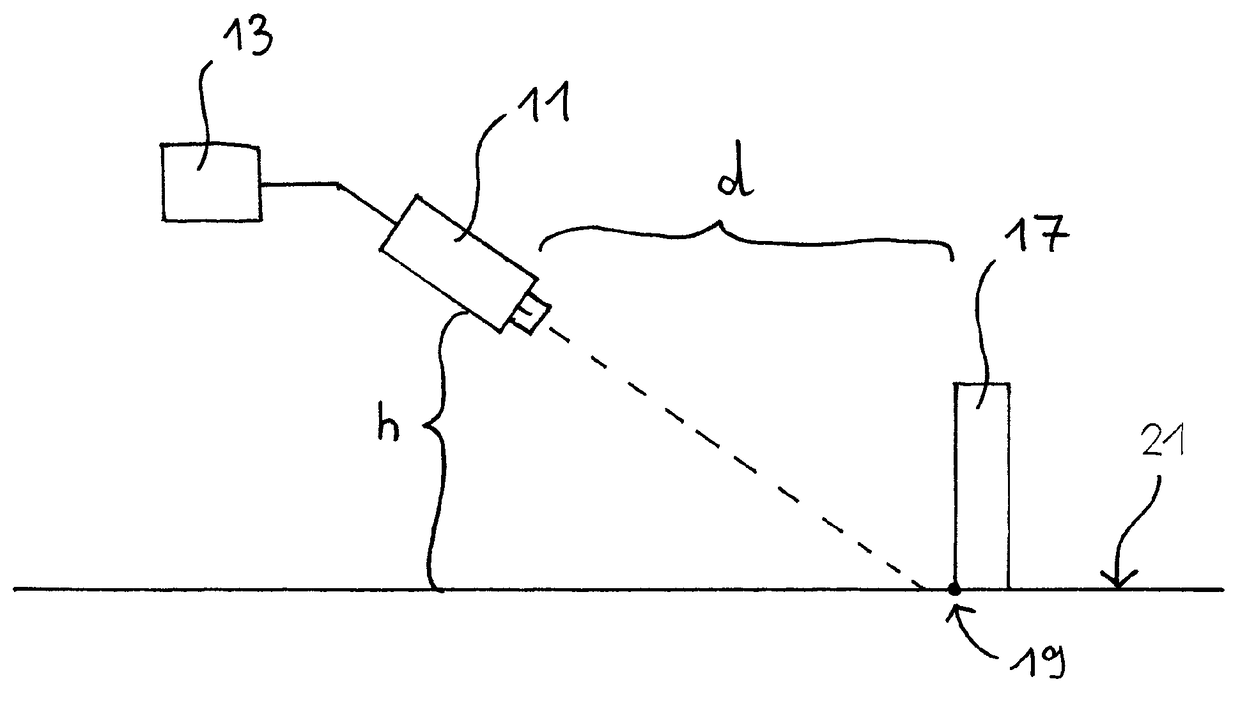

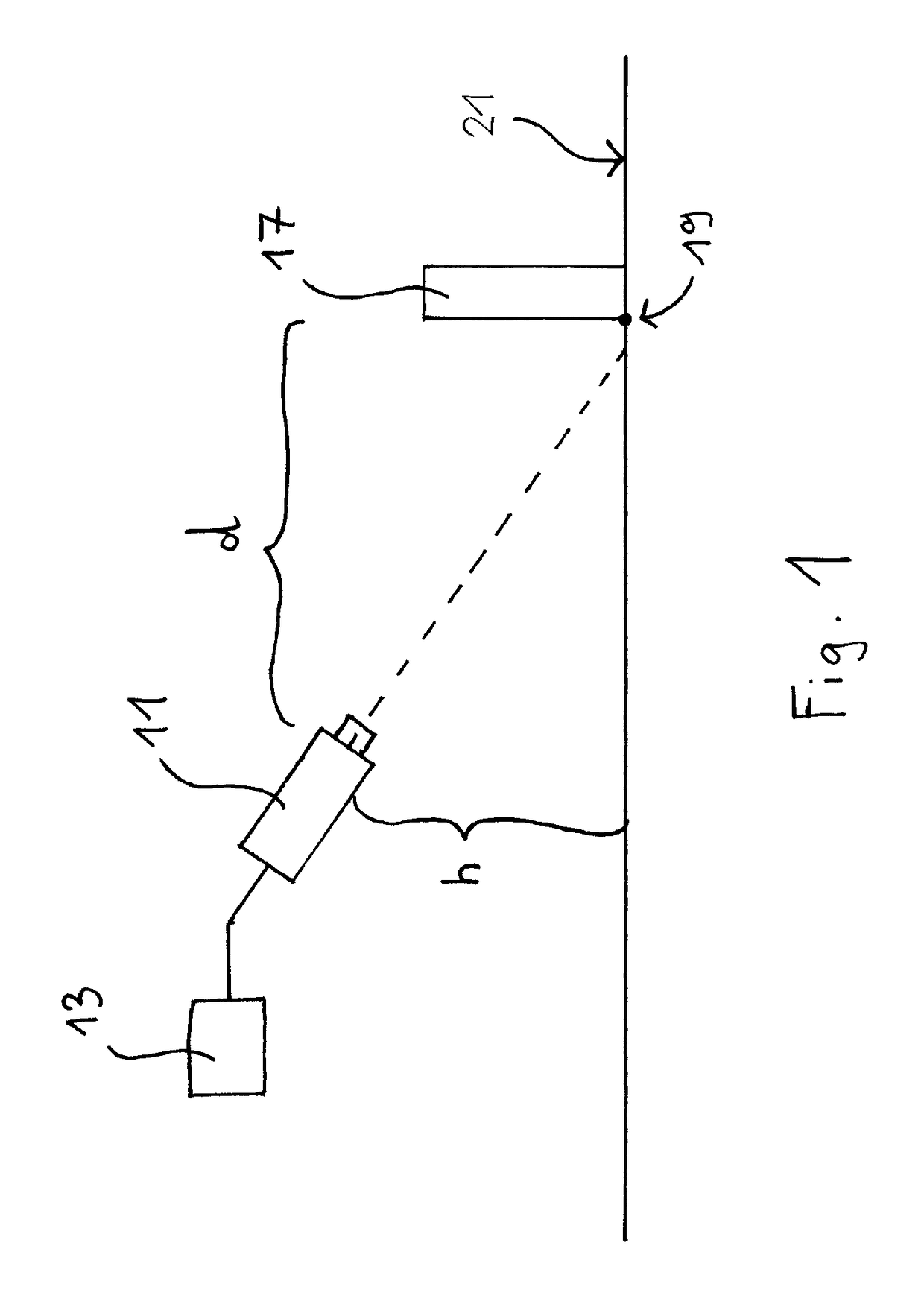

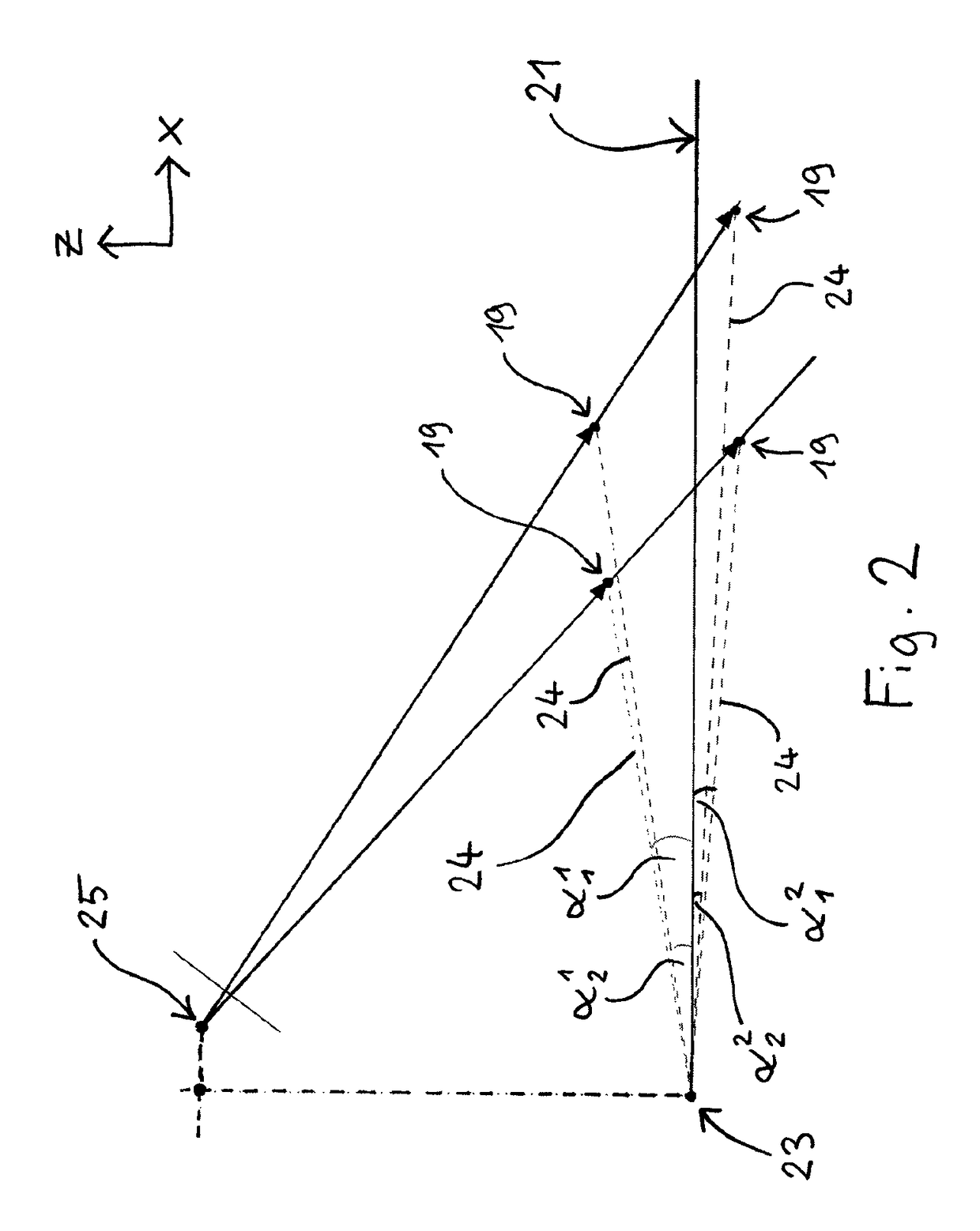

Method To Determine Distance Of An Object From An Automated Vehicle With A Monocular Device

Active | US20160180531A1 | Solve the lack of reliability | Saving of computing step | Television system details | Image analysis | Pinhole camera model | Pinhole camera

A method of determining the distance of an object from an automated vehicle based on images taken by a monocular image acquiring device. The object is recognized with an object-class by means of an image processing system. Respective position data are determined from the images using a pinhole camera model based on the object-class. Position data indicating in world coordinates the position of a reference point of the object with respect to the plane of the road is used with a scaling factor of the pinhole camera model estimated by means of a Bayes estimator using the position data as observations and under the assumption that the reference point of the object is located on the plane of the road with a predefined probability. The distance of the object from the automated vehicle is calculated from the estimated scaling factor using the pinhole camera model.
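The road-plane assumption in this abstract has a simple geometric core, sketched below. This is not the Bayes estimator of the patent; it only shows the deterministic flat-road case, assuming a camera mounted at a known height with its optical axis parallel to the road, and a reference point that touches the road at image row `v`. All names and parameters are hypothetical.

```python
def ground_plane_distance(v_pixel, fy, cy, camera_height_m):
    """Distance along the optical axis to a road point seen at image row v_pixel.

    Assumes a flat road and an optical axis parallel to it (illustrative only).
    """
    rows_below_horizon = v_pixel - cy
    if rows_below_horizon <= 0:
        raise ValueError("point must be imaged below the horizon row")
    # Similar triangles: camera_height / distance = rows_below_horizon / fy.
    return fy * camera_height_m / rows_below_horizon


distance = ground_plane_distance(460.0, fy=1000.0, cy=360.0, camera_height_m=1.5)
print(distance)  # 15.0
```

The estimator described in the abstract additionally treats the contact with the road as holding only with a predefined probability, which this sketch does not model.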

Owner:APTIV TECH LTD

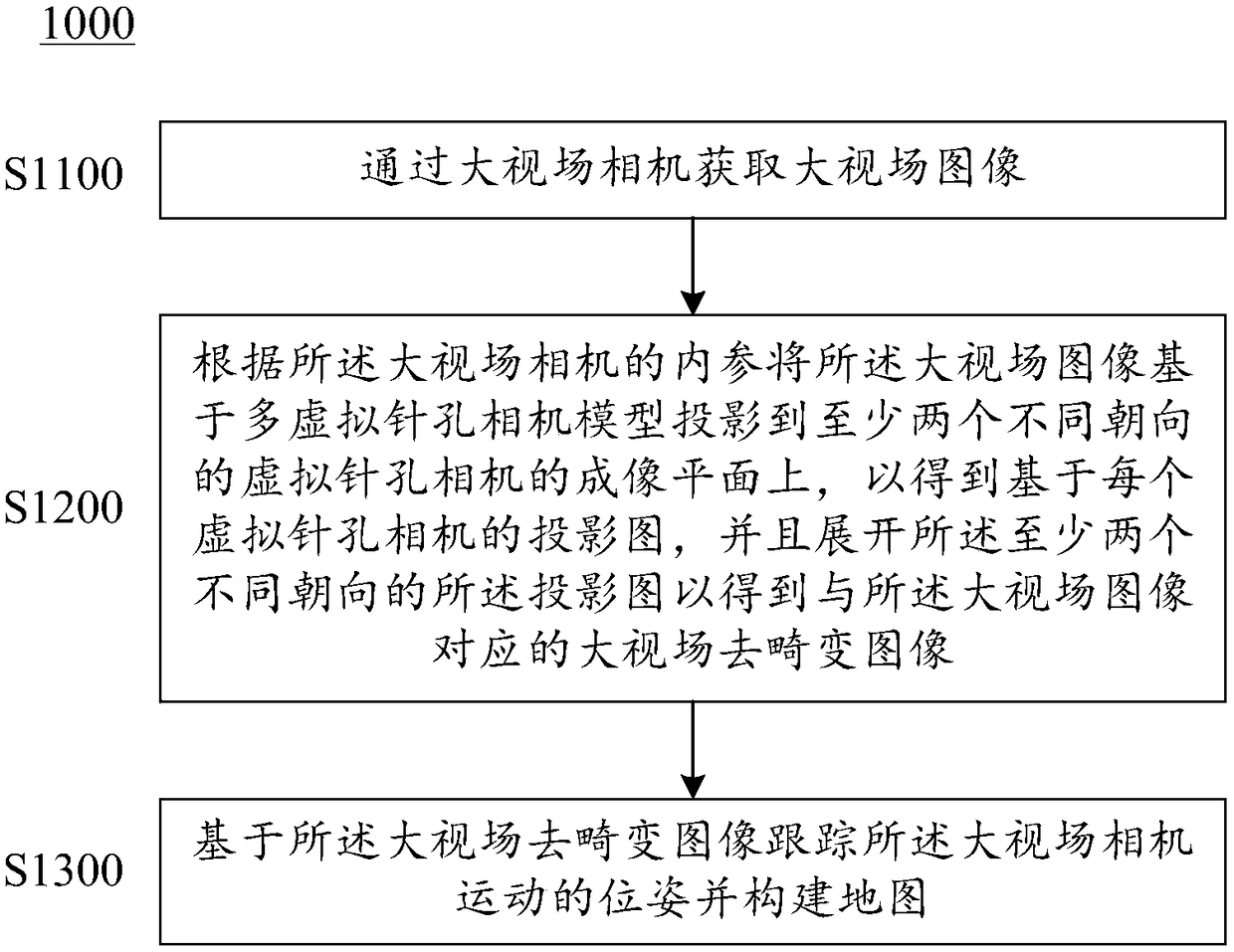

Method and system for simultaneous positioning and mapping, and storage medium

Active | CN108776976A | Reserve vision | Unaffected by image distortion | Image enhancement | Image analysis | Pinhole camera model | Pinhole camera

The embodiment of the invention provides a method and system for the simultaneous positioning and mapping, and a storage medium. The method comprises the steps: obtaining a big-view-field image through a big-view-field camera; projecting the big-view-field image to imaging planes of at least two virtual pin hole cameras in different directions based on a multiple virtual pin hole camera model according to the internal parameters of the big-view-field camera, so as to obtain a projection map based on each virtual pin hole camera; unfolding at least two projection maps in different directions, so as to obtain a big-view-field distortion removal image corresponding to the big-view-field image, wherein the multiple virtual pin hole camera model comprises at least two virtual pin hole cameras in different directions, and the centers of the virtual pin hole cameras are coincided with the center of the big-view-field camera; tracking the movement of the big-view-field camera based on the big-view-field distortion removal image, and building a map. Through the above scheme, the method can maintain all view fields of the big-view-field camera, and is not affected by the image distortion.

Owner:UISEE TECH BEIJING LTD

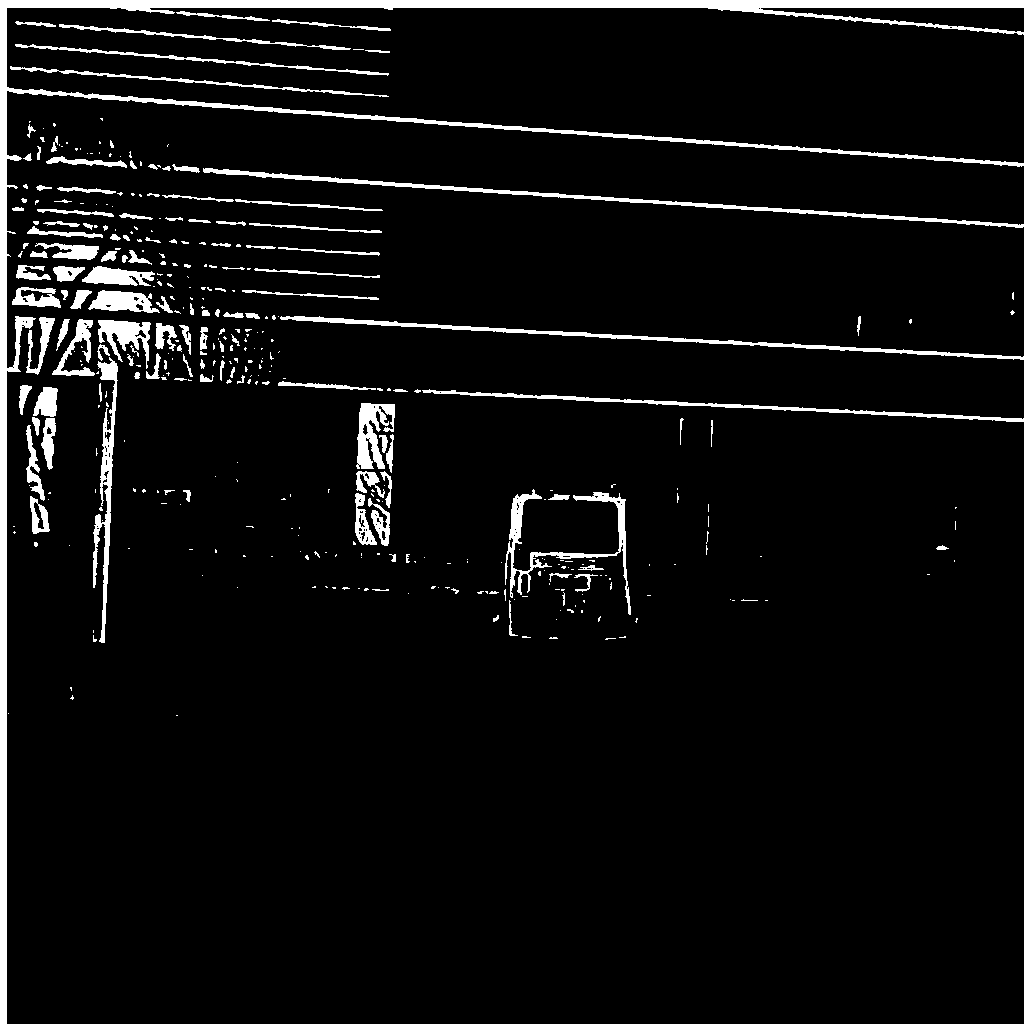

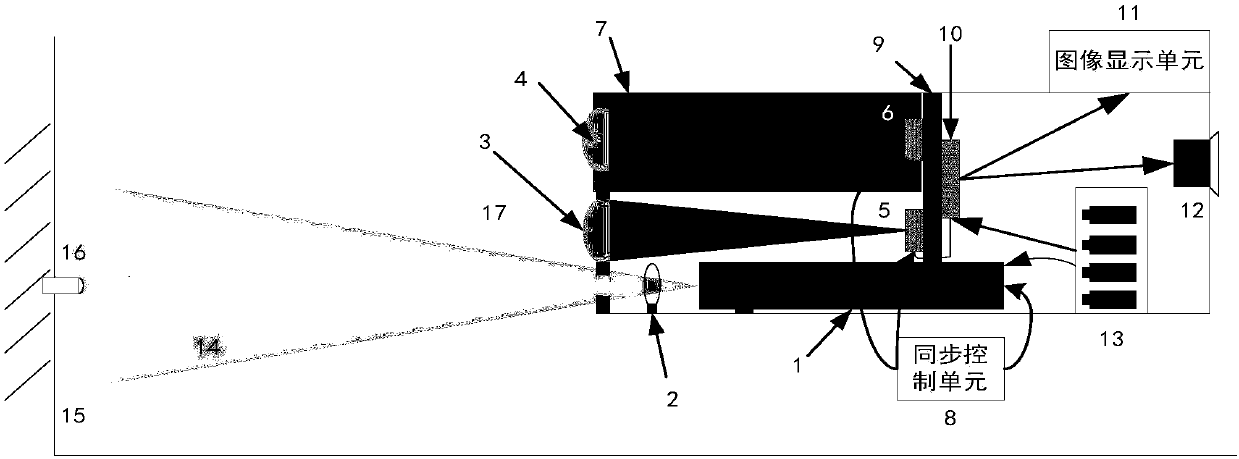

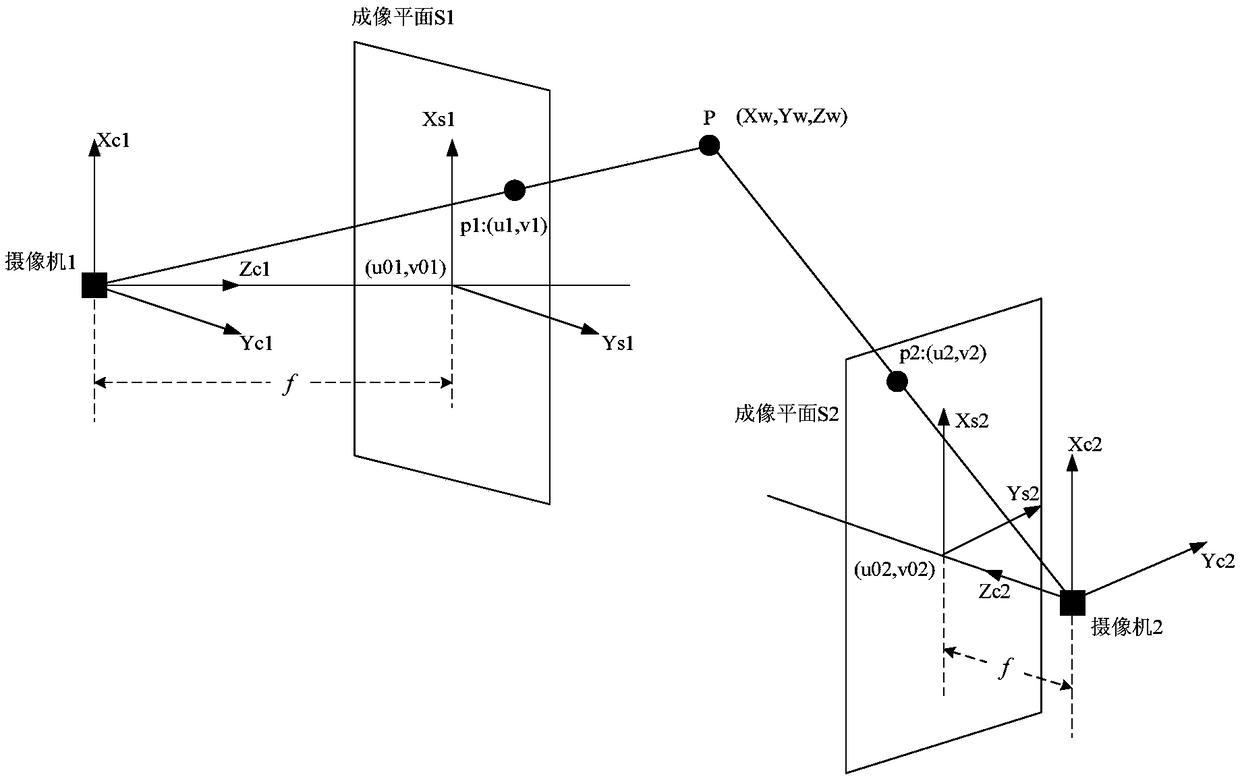

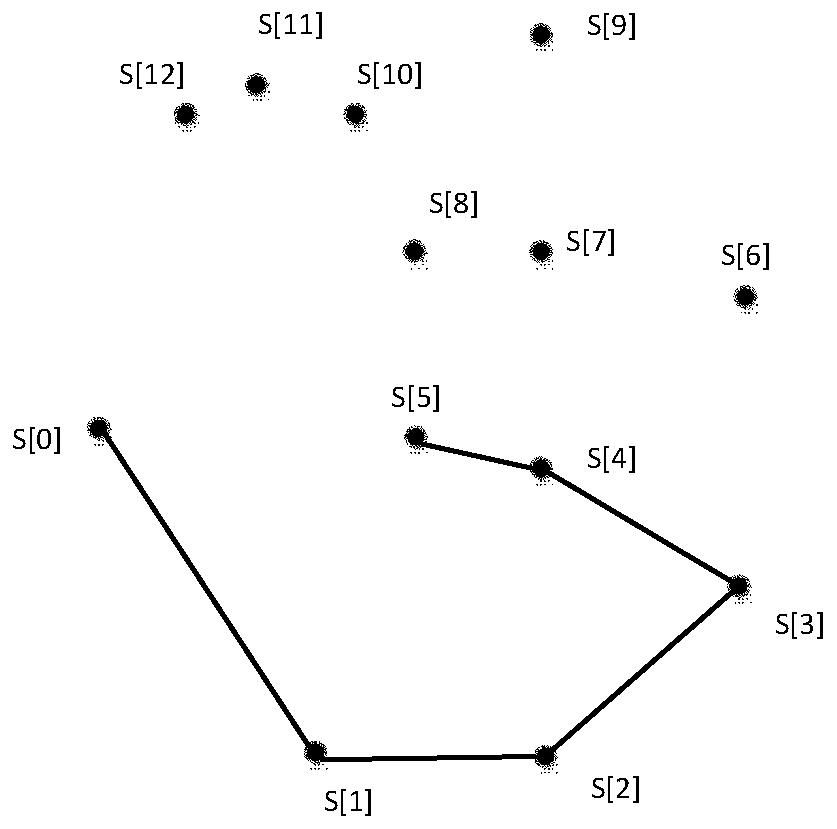

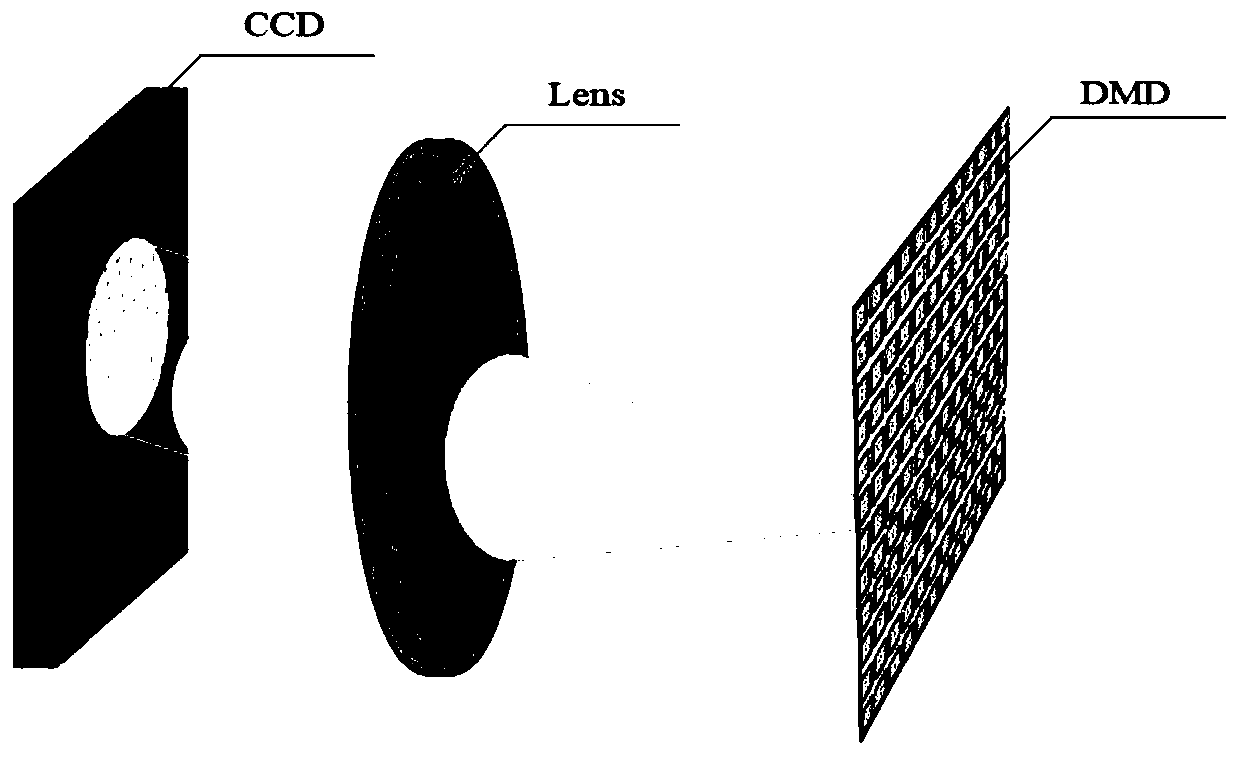

Pinhole camera detecting method based on binocular detection

Inactive | CN108320303A | Easy to identify | Efficient identification | Image analysis | Information processing | Pinhole camera model

The invention discloses a pinhole camera detecting method based on binocular detection, with which pinhole cameras hidden in a room can be effectively detected and their accurate positions determined. The method relies on the cat-eye effect of pinhole cameras, in which reflected light returns along the original path with a very small scattering angle: an invisible laser beam scans suspicious areas of a room that may contain pinhole cameras, and two CCDs separated by a certain transverse distance simultaneously detect the light reflected from the suspicious area. The imaging timing of the two CCDs is kept synchronized, and after the image sequences of the two CCDs undergo differencing and information processing, difference images with a high degree of recognition are obtained, enabling effective identification. Because both images are active detection images, the difference in reflected light from the background is small while the difference in reflected light from a pinhole camera is large, so images of the targeted pinhole camera with a high degree of recognition can be obtained without much subsequent information processing; identification accuracy is greatly increased and the false-alarm rate is reduced.

Owner:PLA PEOPLES LIBERATION ARMY OF CHINA STRATEGIC SUPPORT FORCE AEROSPACE ENG UNIV

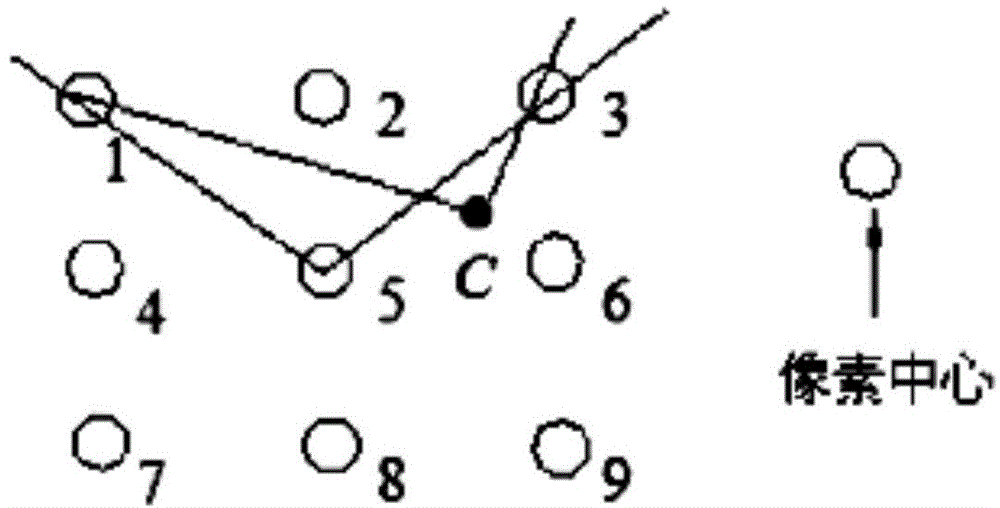

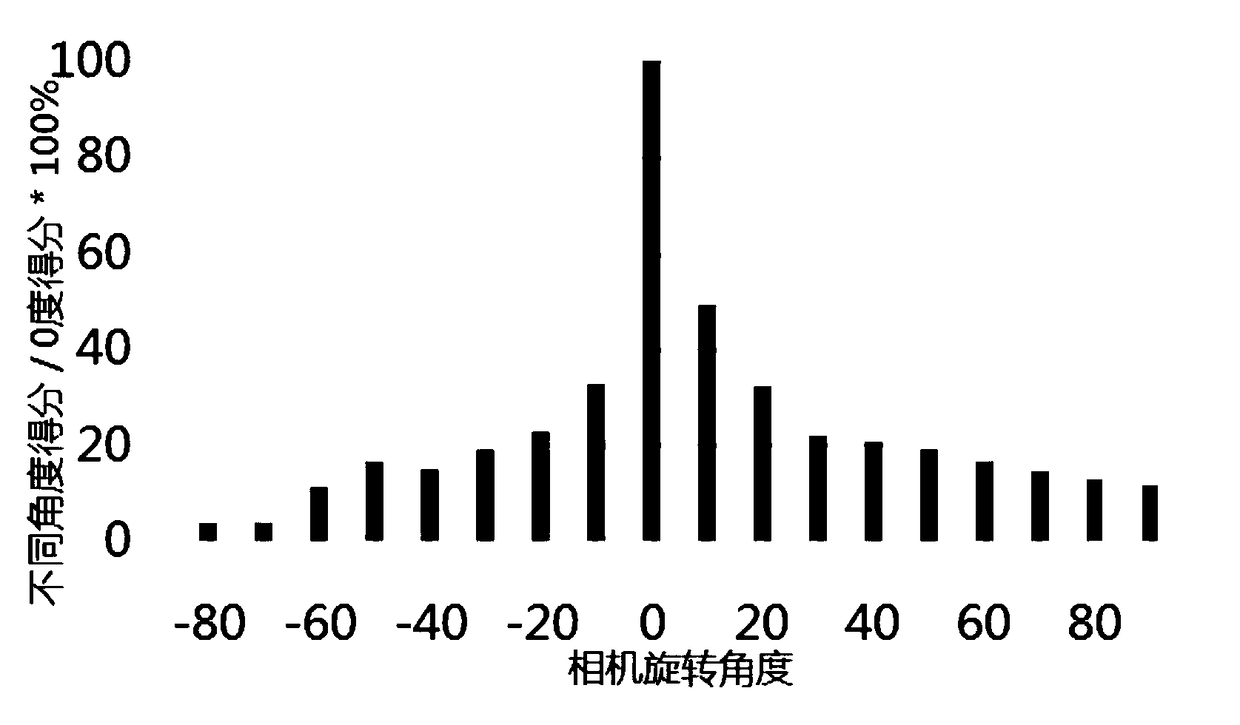

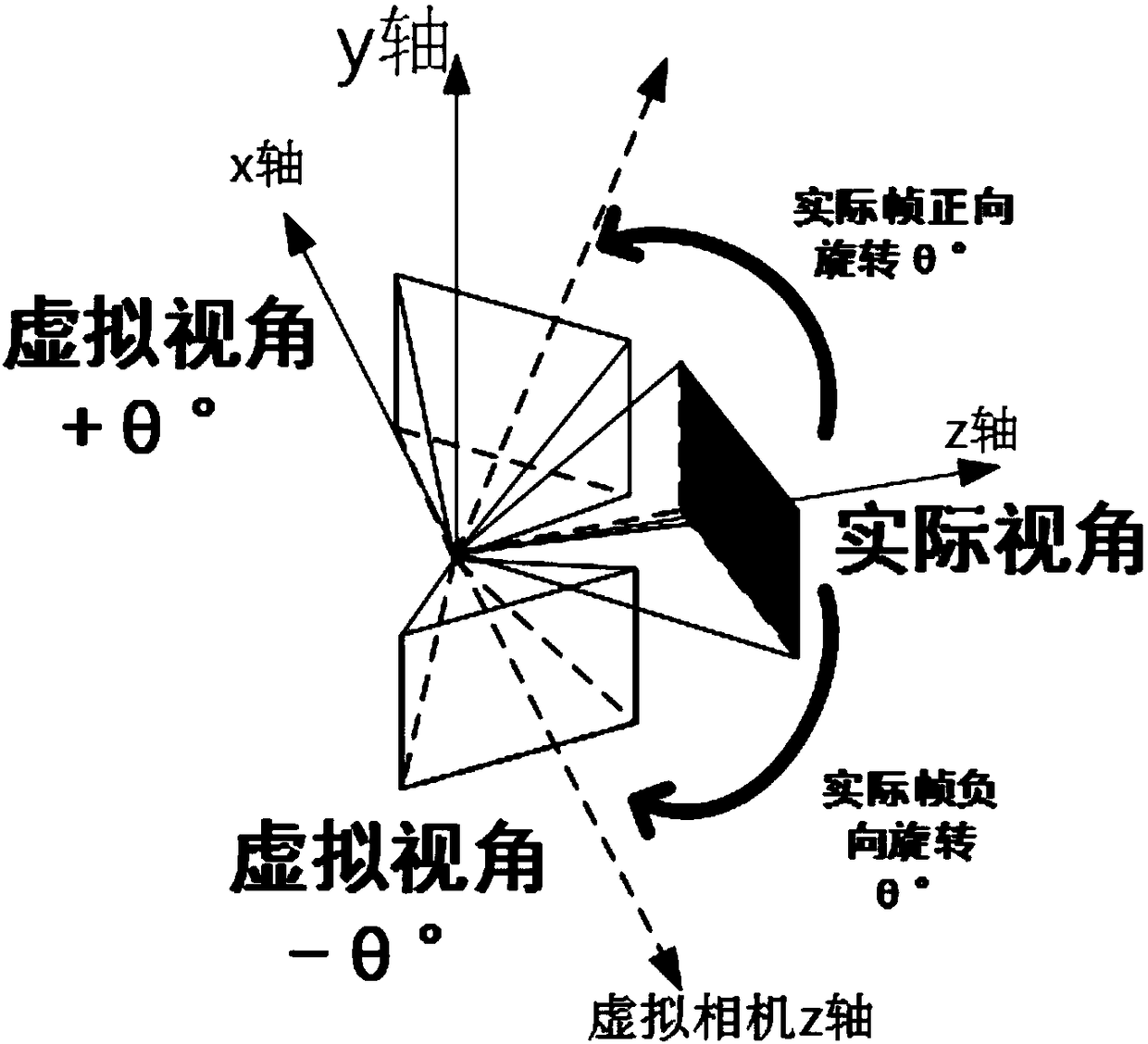

Visual SLAM loopback detection method fusing with geometric information

Active | CN108537844A | Improve the efficiency of loop detection | Low cost | Image analysis | Special data processing applications | Pinhole camera model | Point cloud

The invention discloses a visual SLAM loopback detection method fusing with geometric information, comprising: S1, acquiring a real key frame; S2, determining a change of view of a virtual camera, and calculating the pose of the virtual camera in a SLAM system; S3, reconstructing a three-dimensional point cloud in the SLAM system according to the real key frame; S4, calculating the pixel coordinates of each three-dimensional point of the three-dimensional point cloud in a virtual key frame according to a pinhole camera model and projective geometry; S5, rendering the virtual key frame of the virtual camera; S6, extracting the feature descriptors of the real key frame and the virtual key frame; S7, calculating the BoVs of the real key frame and the virtual key frame, adding the BoVs to a database, and searching all real key frames and virtual key frames in the database during loopback detection to obtain loopback detection information. The method can not only directly generate loopback detection information for different viewing angles by using monocular camera information, but is also more efficient than a traditional loopback detection method.

Owner:SHANGHAI JIAO TONG UNIV

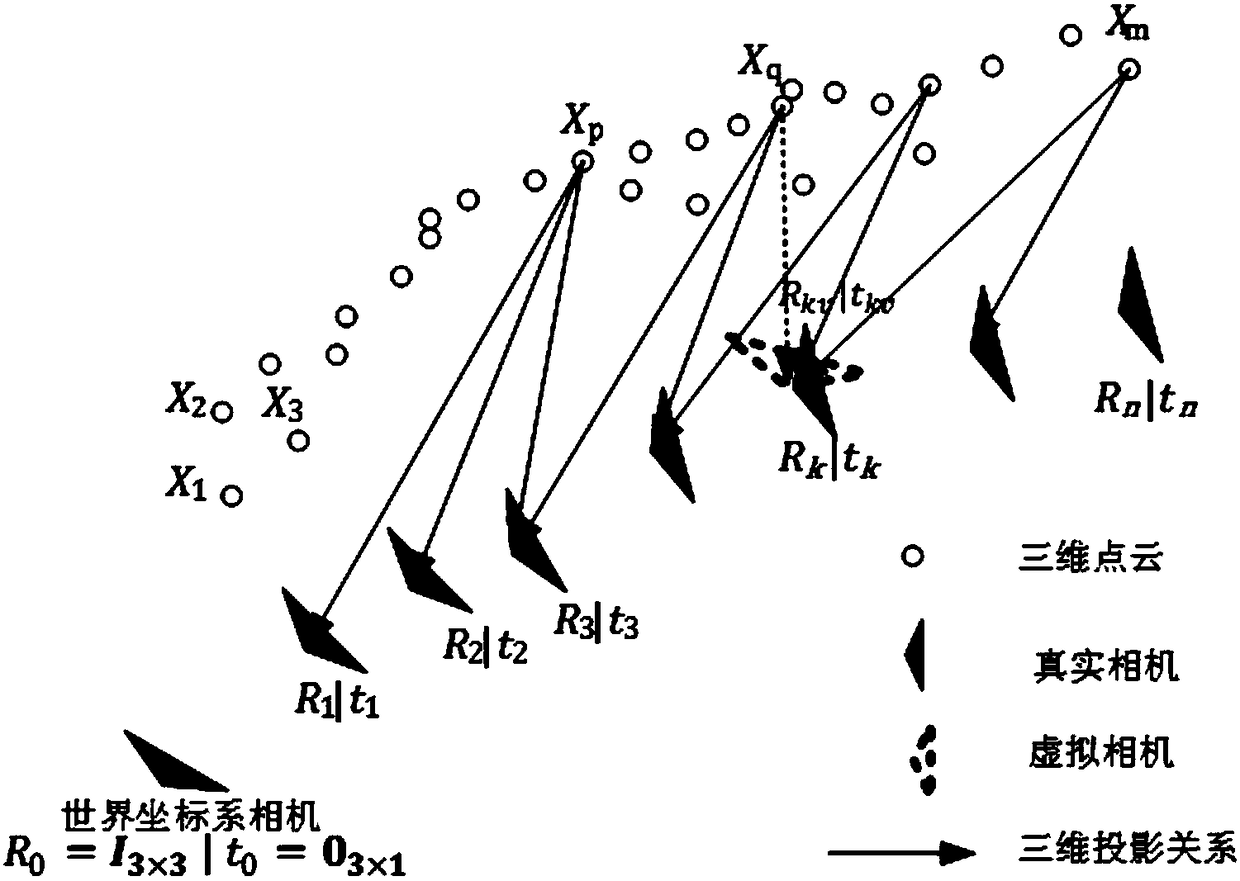

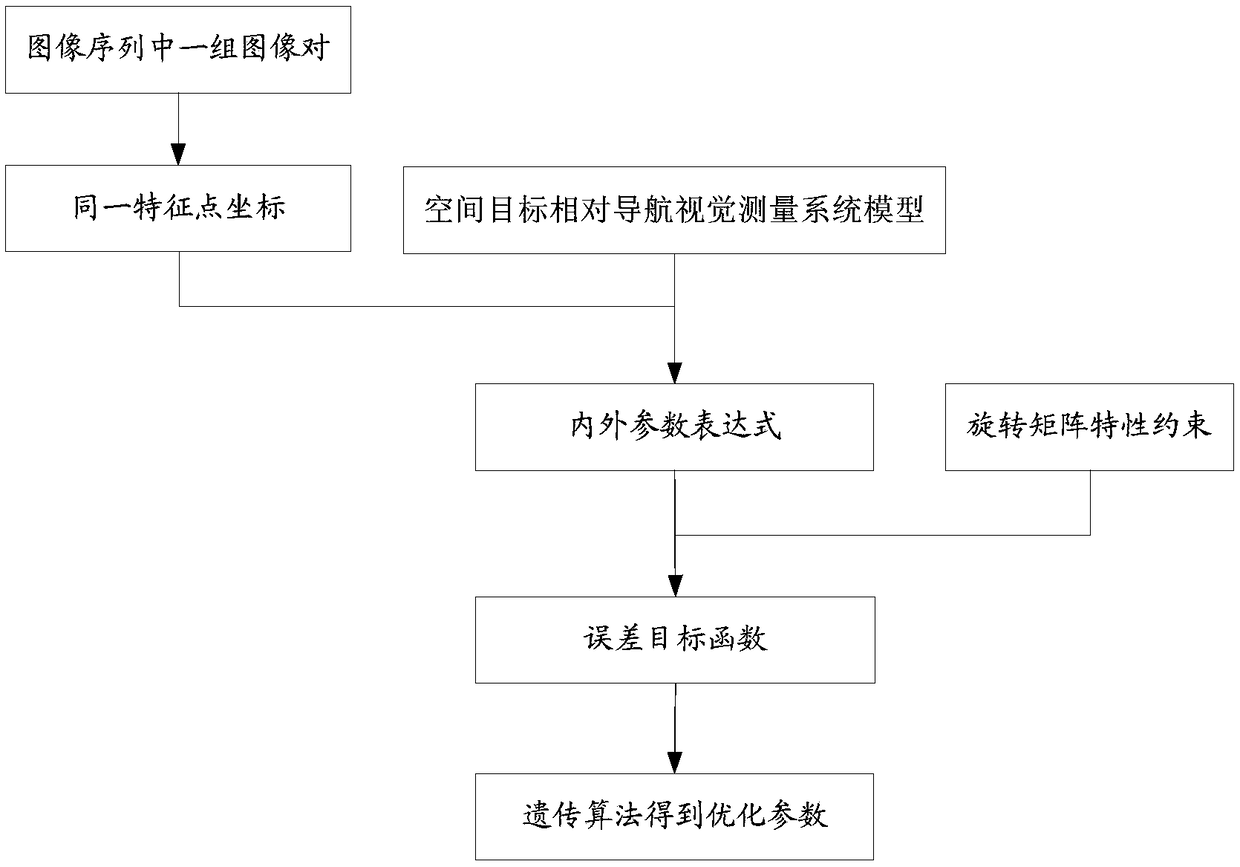

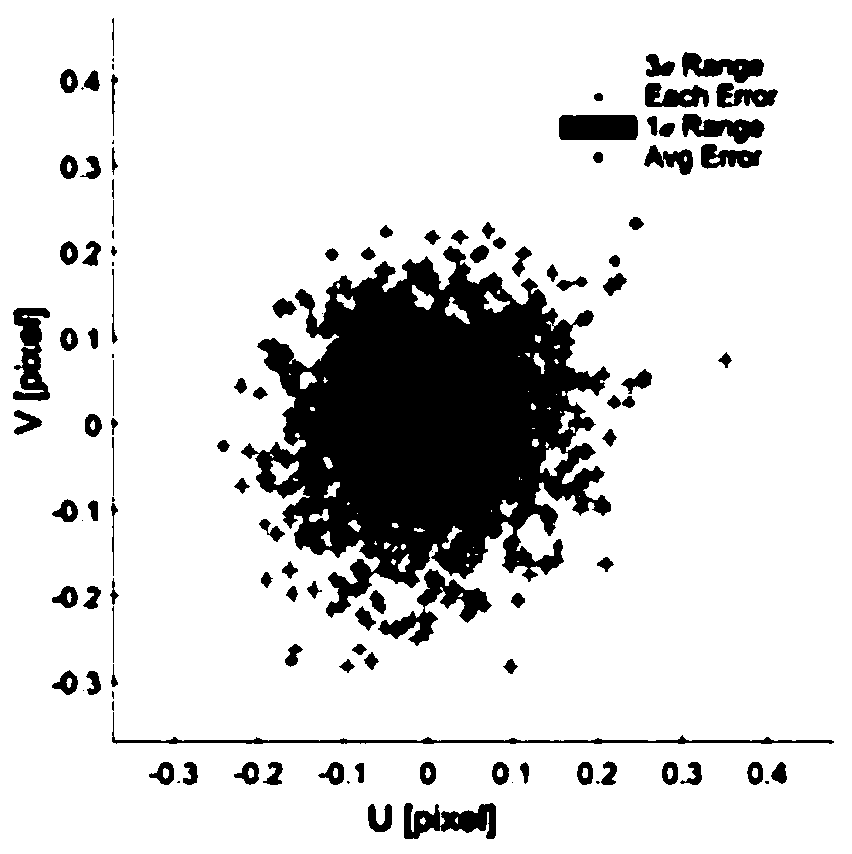

On-orbit self-calibration method for space target relative navigation vision measurement system

Active | CN108645426A | Guaranteed Calibration Correction | Guaranteed Calibration Issues | Measurement devices | Pinhole camera model | Error function

An on-orbit self-calibration method for a space target relative navigation vision measurement system comprises the following steps: establishing the space target relative navigation vision measurement system based on a pinhole camera model; obtaining an internal and external parameter expression for the system by using an identified common feature point in a group of corresponding images in an image sequence; further obtaining an ideal constraint equation for the internal and external parameter expression by using the properties of a rotation matrix; and constructing the error function of the ideal constraint equation as an objective function, preliminarily obtaining some of the system parameters through optimization with a fast particle swarm algorithm, and obtaining all remaining parameters through further optimization based on the internal and external parameter expression, thereby achieving on-orbit self-calibration of the space target relative navigation vision measurement system.

Owner:BEIJING INST OF SPACECRAFT SYST ENG

Joint calibration method based on laser radar and camera and computer readable storage medium

Active | CN111123242A | High precision extraction | Solve the lack of precision | Image analysis | Wave based measurement systems | Pinhole camera model | Point cloud

The invention relates to a joint calibration method based on a laser radar and a camera and a computer readable storage medium. The joint calibration method comprises the following steps: carrying out edge detection on a laser radar point cloud extracted by the laser radar and extracting edge points of a background part; searching for the foreground edge point corresponding to each background edge point through a depth difference of laser radar points; performing repeated searching and comparison to obtain foreground edge points closer to an edge; then respectively extracting the laser radar edge point cloud and the circle center and radius of a circle in an edge image acquired by the camera at the same moment; calculating a translation vector between the camera and the laser radar through a pinhole camera model; and searching calibration parameters in a neighborhood space of the obtained translation vector so as to find a calibration result minimizing the projection error. Points scanned by the laser radar at the edge of an object can be extracted with high precision, and the problem of insufficient precision caused by low resolution of the laser radar can be avoided. Through the obtained precise edge points, a more accurate calibration result can be obtained.

Owner:海南智博睿科技有限公司

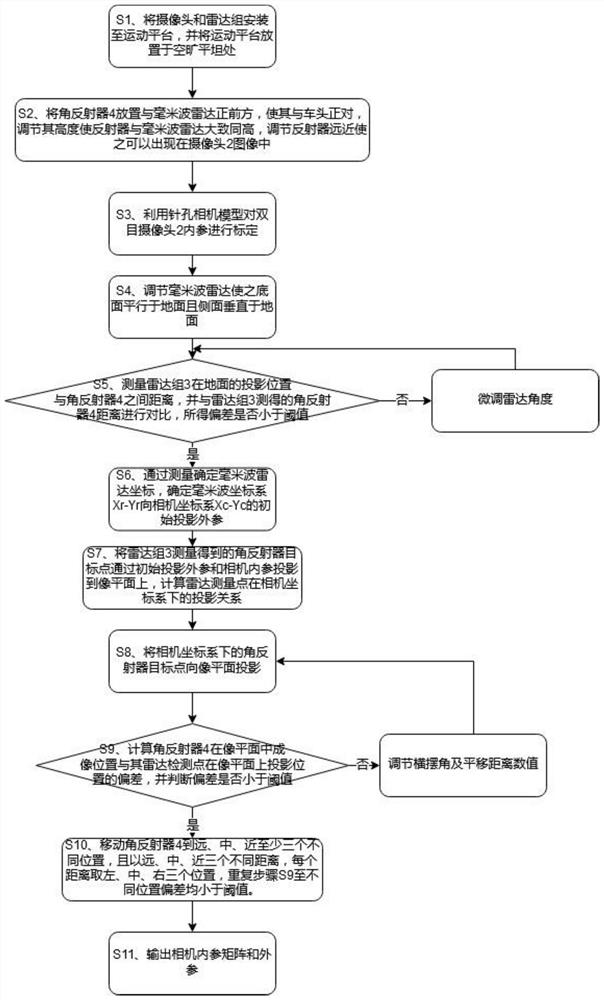

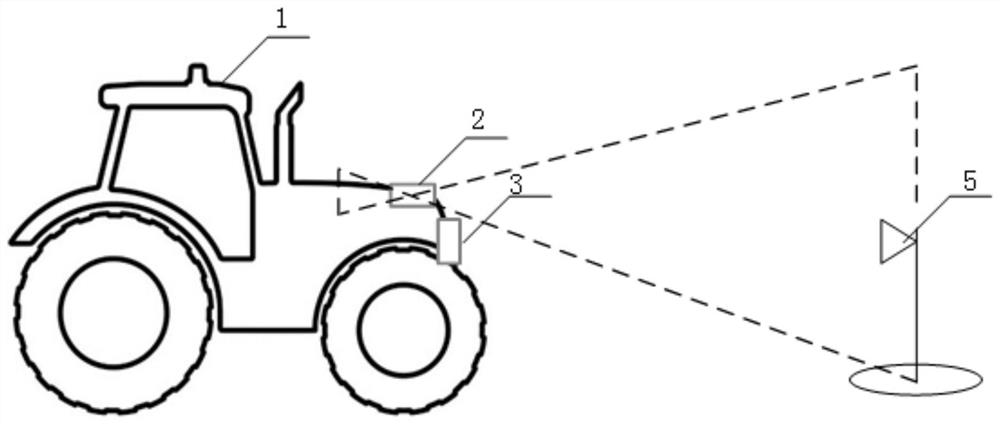

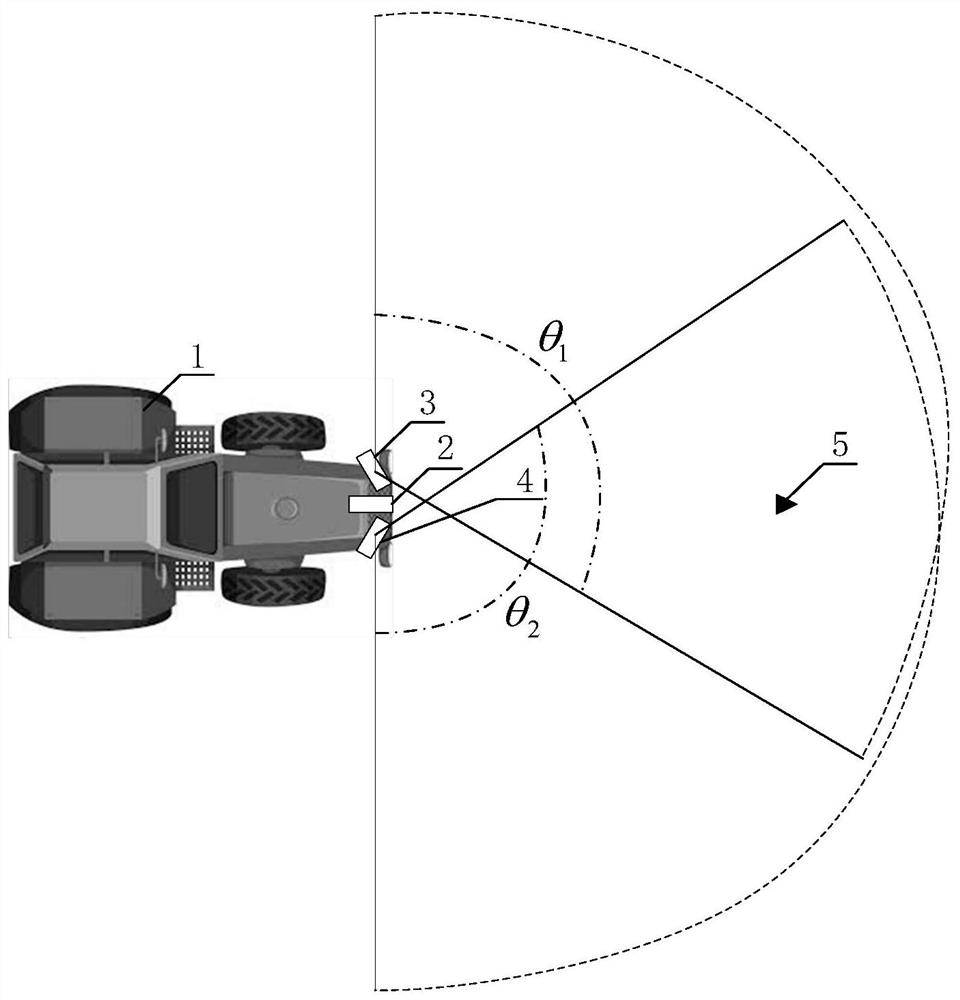

Millimeter-wave radar and camera rapid joint calibration method

Pending | CN112070841A | Increase reflection strength | Avoid affecting joint calibration accuracy | Image analysis | Wave based measurement systems | Pinhole camera model | Radar detection

The invention relates to a millimeter-wave radar and camera rapid joint calibration method, and the method comprises the following steps: S1, installing a camera and a radar group on a motion platform, and placing the motion platform in an open, flat place; S2, arranging a corner reflector right in front of the motion platform, adjusting the height of the corner reflector to be the same as the height of the radar set, and adjusting the distance between the corner reflector and the radar set until the corner reflector appears in the image range of the camera; S3, calibrating the internal parameters of the camera by using the pinhole camera model and adjusting the radar angle at the same time; S4, determining an initial projection external parameter from the millimeter wave coordinate system Xr-Yr to the camera coordinate system Xc-Yc; S5, adjusting a yaw angle and a translation distance value in the external parameter by calculating the deviation between the imaging position of the corner reflector in the image plane and the projection position of the radar detection point on the image plane; S6, outputting an internal parameter matrix and an external parameter of the camera, and completing the calibration. The corner reflector is high in reflection intensity and small in size, so better feedback can be obtained.

Owner:北京中科原动力科技有限公司

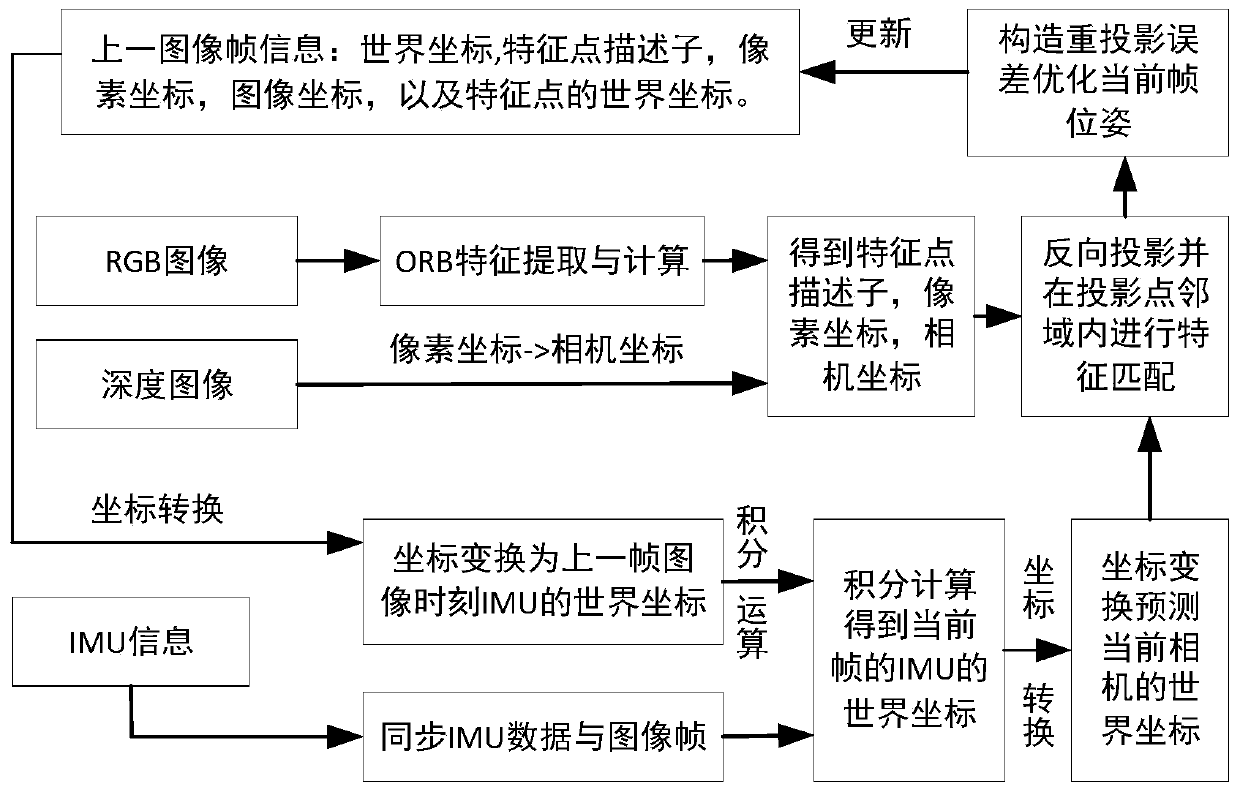

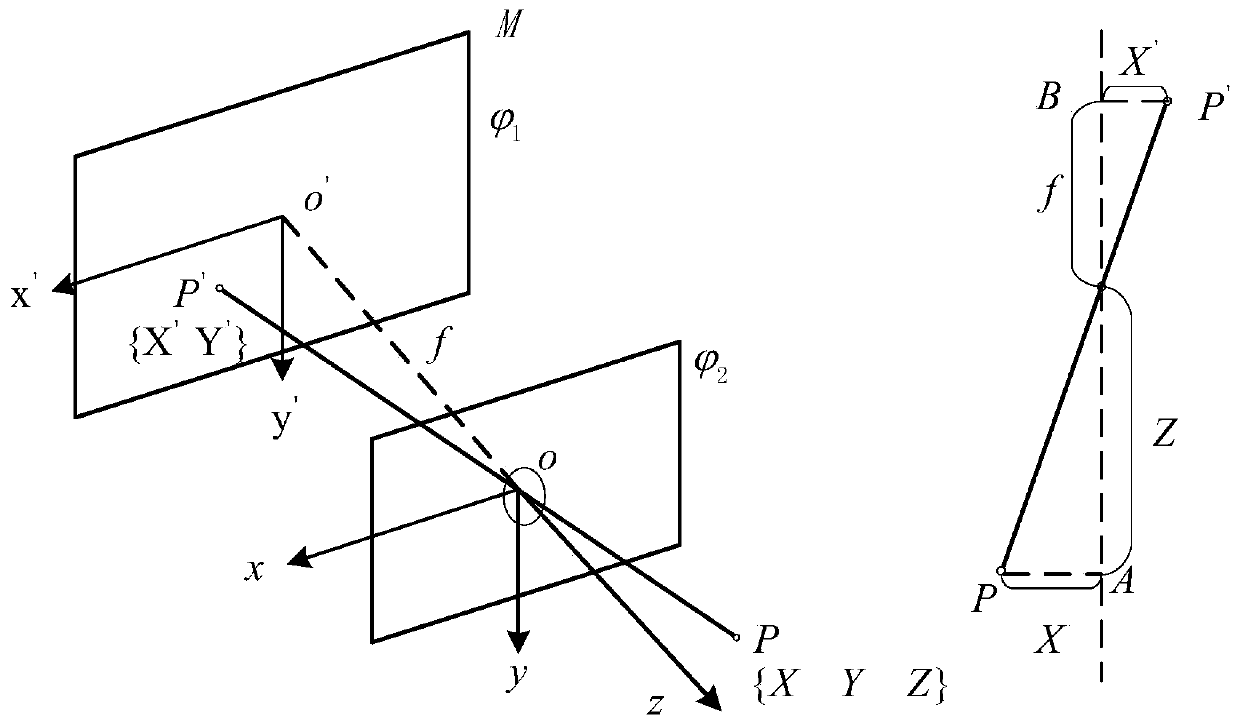

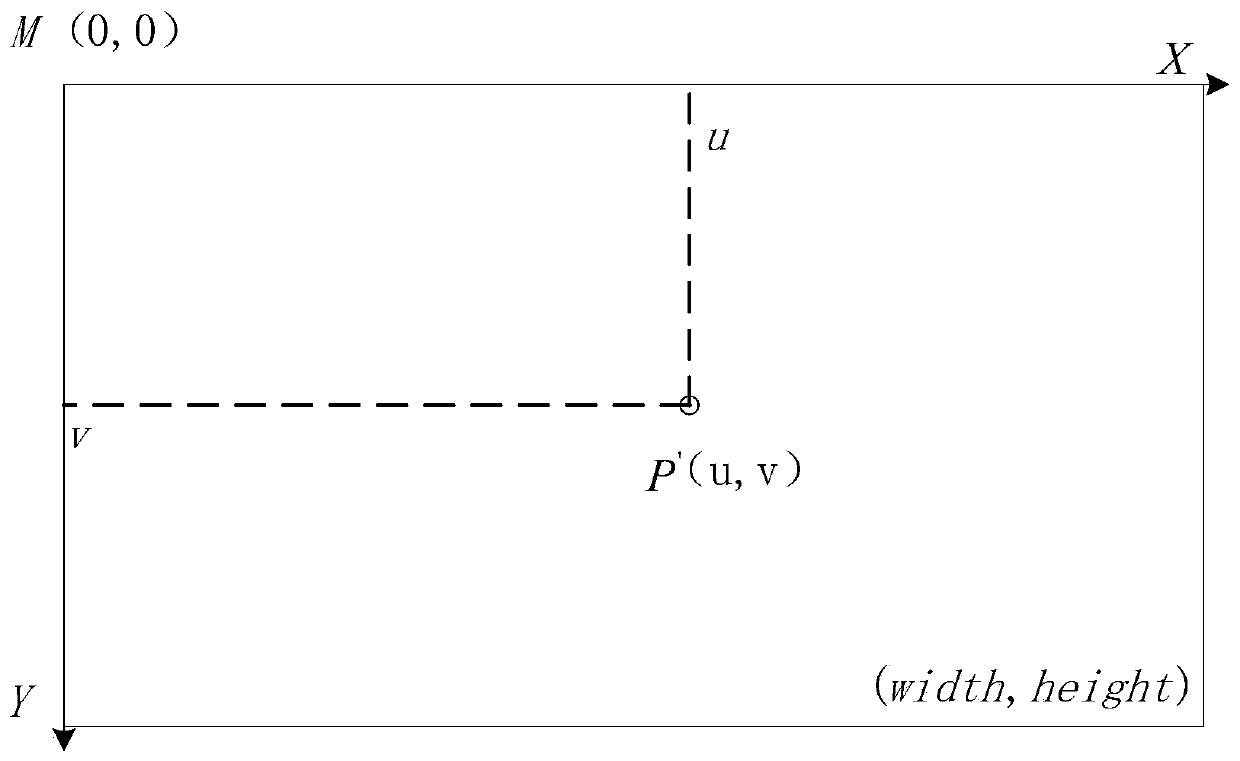

Mobile robot positioning method based on RGB-D camera and IMU information fusion

Active | CN111156998A | Precise positioning attitude | Improve features | Photogrammetry/videogrammetry | Navigation by speed/acceleration measurements | Positioning system | Mobile robot

The invention discloses a mobile robot positioning method based on RGB-D camera and IMU information fusion. The method comprises the following steps: (1) establishing a pinhole camera model; (2) establishing an IMU measurement model; (3) performing structured light camera depth calculation and pose transformation calculation based on feature point matching; (4) carrying out IMU pre-integration attitude calculation and conversion between an IMU coordinate system and a camera coordinate system; and (5) performing an RGB-D data and IMU data fusion process and a camera pose optimization process, and finally obtaining an accurate positioning pose. According to the invention, the RGB-D camera and the IMU sensor are combined for positioning; the characteristic that the IMU has good state estimation in short-time rapid movement is well utilized, and the camera has the characteristic that the camera basically does not drift under the static condition, so that the positioning system has good static characteristics and dynamic characteristics, and the robot can adapt to low-speed movement occasions and high-speed movement occasions.

Owner:SOUTH CHINA UNIV OF TECH +1

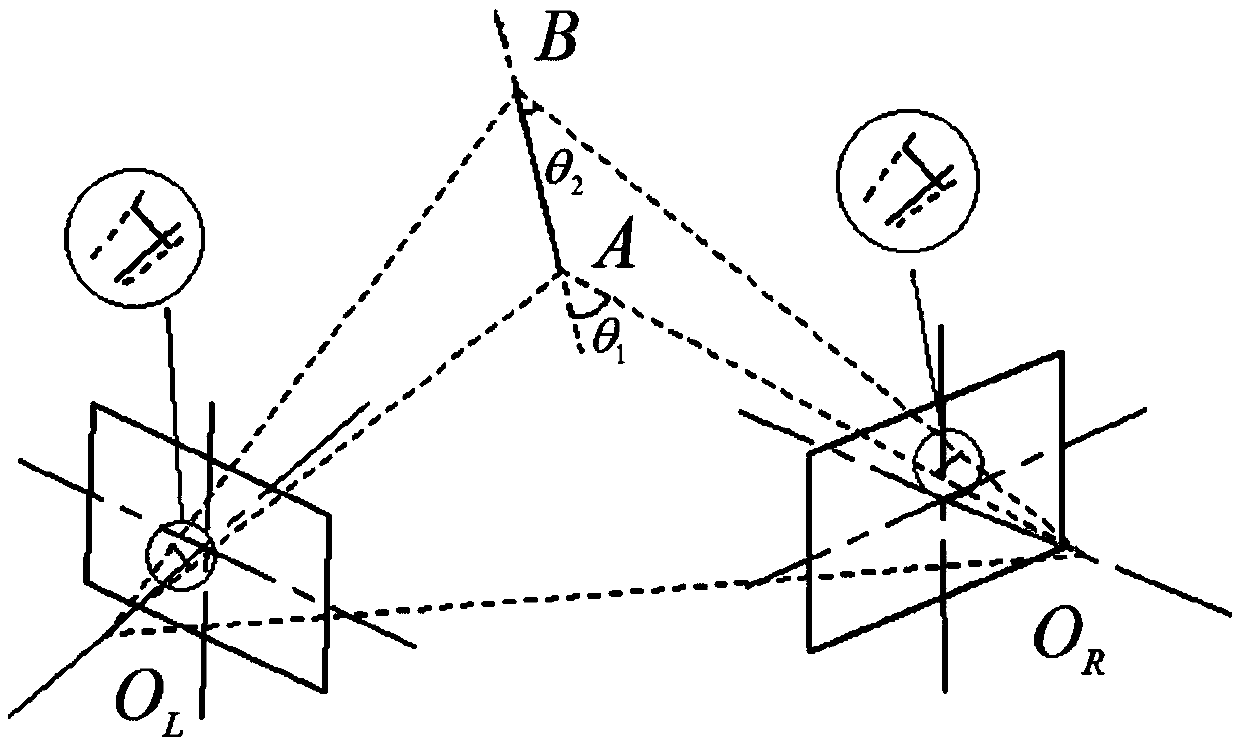

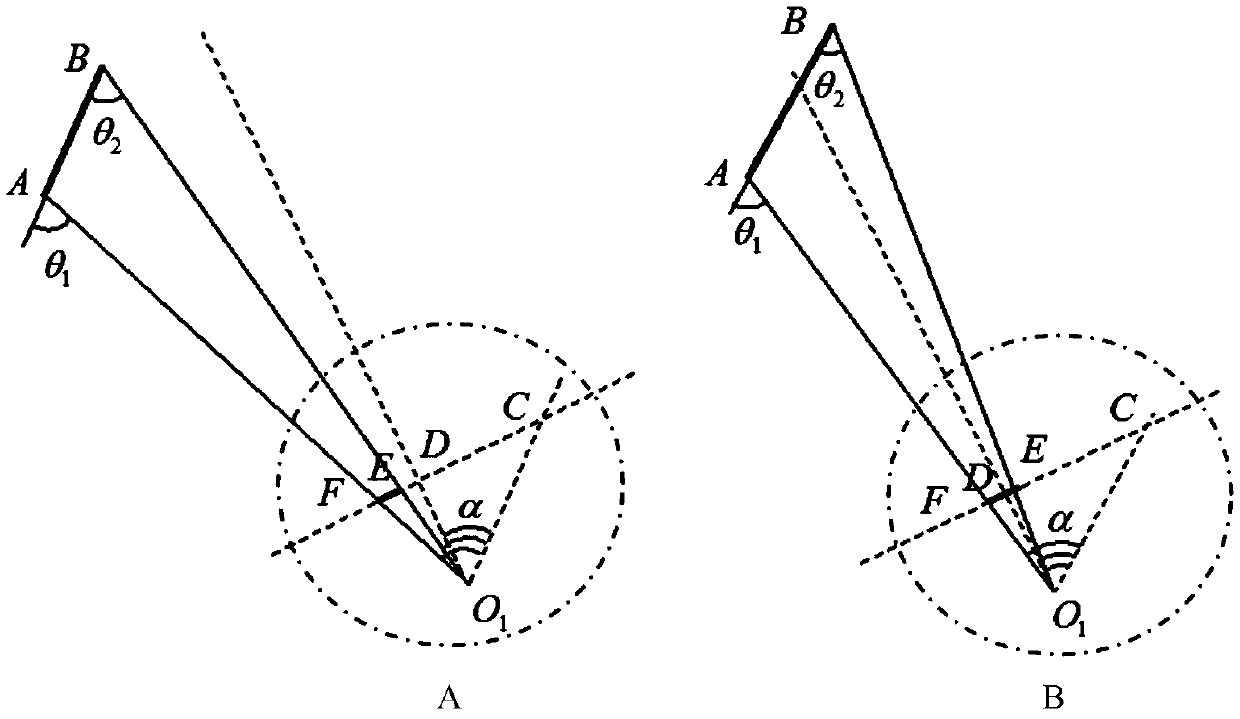

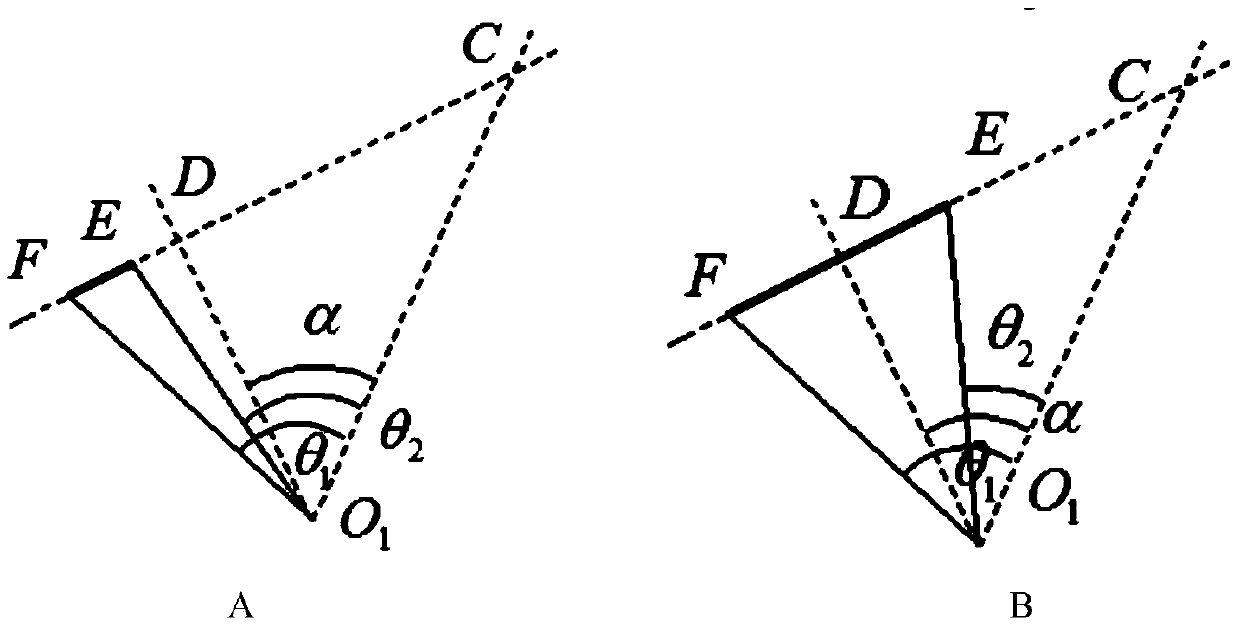

Balancing optimization algorithm for target measuring head

Active | CN105678088A | Improve robustness | Improve stability | Informatics | Special data processing applications | Pinhole camera model | Target surface

The invention discloses a balancing optimization algorithm for a target measuring head. The invariant relation of a vector in the Euclidean space and the photographic space is found and used as a constraint condition; space vectors from handheld target surface feature points B to the target measuring head A under different poses, together with the imaging rules under a pinhole camera model, are built; the constraint condition is used as a condition equation to build a function model; Helmert posterior variance estimation is adopted as a random model; finally, the difference value is substituted into the condition equation, and difference value testing is completed. The algorithm makes full use of the space position information of each handheld target surface feature point, is robust and stable, improves the 3D space reduction precision of the handheld target measuring head, and provides an effective method for further improving binocular stereoscopic vision measuring precision.

Owner:XI AN JIAOTONG UNIV

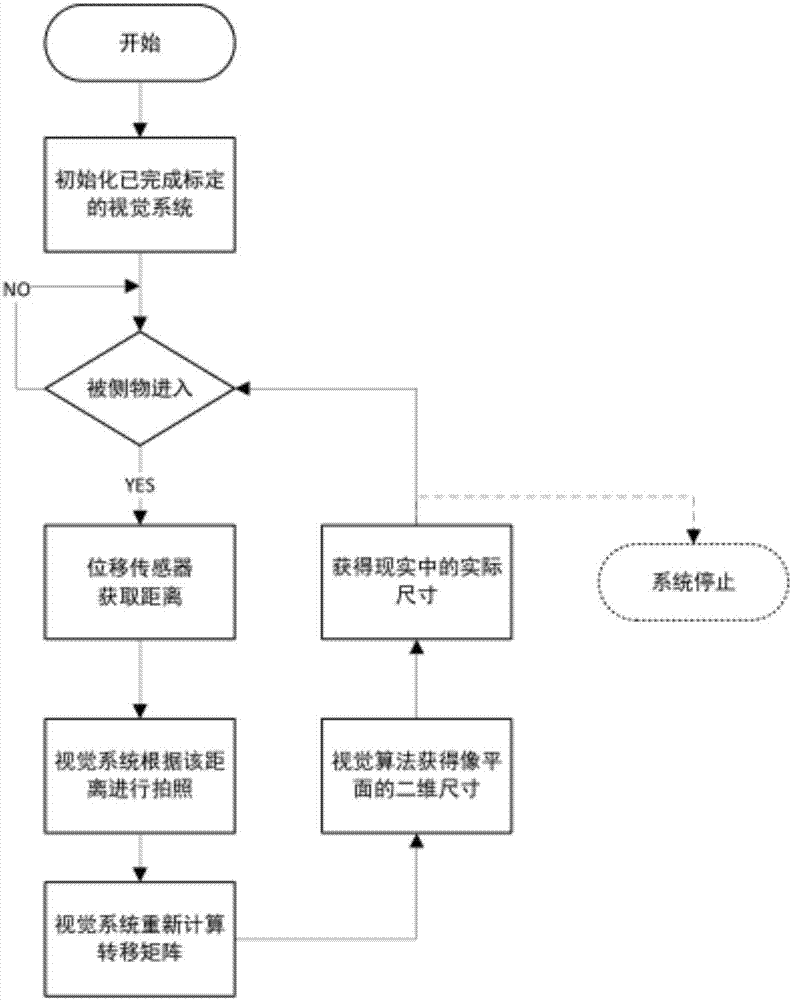

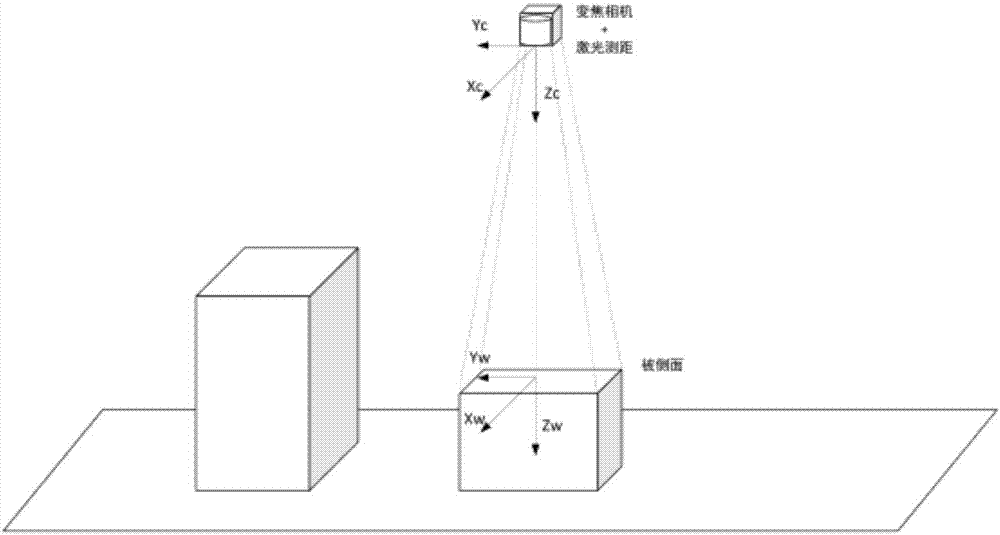

Vision-based multi-depth two-dimensional plane size measurement method

Inactive | CN106871785A | Quick measurement | Solve the problem that cannot be accurately calibrated | Using optical means | Pinhole camera model | Imaging processing

The invention provides a vision-based multi-depth two-dimensional plane size measurement method which is characterized in that firstly camera internal parameters are calibrated so that plane parameters are obtained; the distance from the camera to a measured object is measured by a laser distance measurement instrument or a displacement sensor according to the perspective projection relation of a pinhole camera model and transmitted to a vision system to be adjusted and then photographing is performed; and the size of the measured object is calculated through image processing. With application of the method, "dynamic calibration" is realized by the system by using vision instead of using the relatively expensive 2D laser sensor, and mapping from the plane to the actual space coordinates is realized by the system according to difference distances from the measured object to the camera so as to complete size measurement.
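The perspective relation that makes this "dynamic calibration" possible can be written as a one-line scaling. A minimal sketch, assuming the measured plane is perpendicular to the optical axis, the distance comes from the range sensor, and the focal length is expressed in pixels (function and parameter names are hypothetical):

```python
def real_size_from_pixels(pixel_length, distance_m, focal_length_px):
    """Convert an image-plane length to a real length on a fronto-parallel plane."""
    # Similar triangles: real_size / distance = pixel_length / focal_length.
    return pixel_length * distance_m / focal_length_px


size_m = real_size_from_pixels(200.0, 0.5, 1000.0)
print(size_m)
```

Because the scale factor `distance_m / focal_length_px` changes with depth, the same pixel length corresponds to different real sizes at different measured distances.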

Owner:成都天衡电科科技有限公司

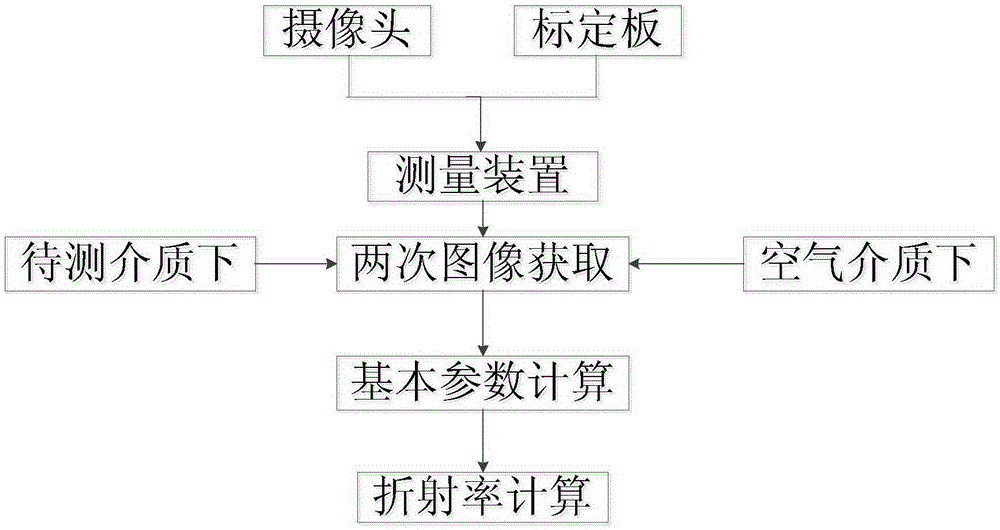

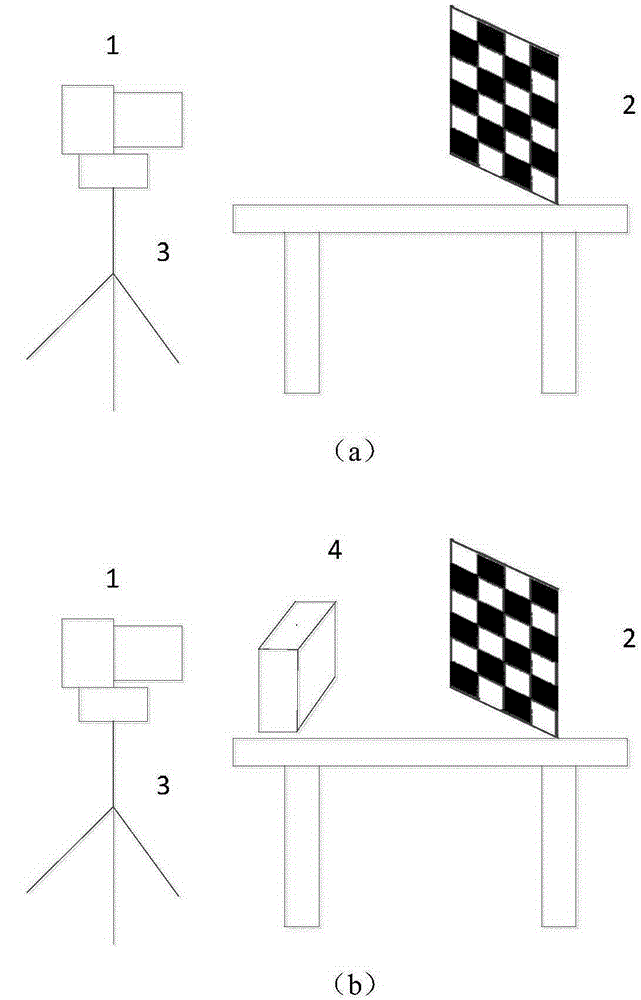

Computer vision based transparent medium refractivity measurement method

Inactive | CN105181646A | Simple calculation | Simplify the measurement steps | Phase-affecting property measurements | Measurement cost | Refractive index

The invention provides a computer vision based method for measuring the refractivity of a transparent medium. The method includes: selecting a camera and a calibration plate; establishing a camera coordinate system, an image coordinate system and the relationship between the two; acquiring two images of the calibration plate with the camera, one through air only and the other with the to-be-measured medium placed between the calibration plate and the camera; using a pinhole camera model to calculate the depth of a corner point in the camera coordinate system, the angle between the corner point's back-projection ray and the optical axis, and the change in the corner point's position between the two images; and measuring the thickness of the to-be-measured medium, deriving the refractivity formula for the medium from the pinhole imaging model and Snell's law of refraction, and substituting the calculated parameters to obtain the refractivity of the medium. The method measures refractivity from only two image acquisitions of the calibration plate, which greatly simplifies the calculation, simplifies the measurement steps, reduces the measurement cost, and achieves high calculation accuracy.
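The abstract does not state the refractivity formula, so the following is a sketch of one possible derivation rather than the patented one. It assumes the medium is a flat slab perpendicular to the optical axis. A ray seen at angle `angle_medium` travels a depth of `depth - thickness` in air and `thickness` inside the slab, so the true lateral offset of the corner satisfies `X = (depth - thickness) * tan(angle_medium) + thickness * tan(angle_inside)`, while the air image gives `X = depth * tan(angle_air)`. Snell's law then yields the index.

```python
import math


def refractive_index(depth, angle_air, angle_medium, thickness):
    """Estimate the index of a slab from two views of the same corner point."""
    lateral = depth * math.tan(angle_air)  # true lateral offset of the corner
    tan_inside = (lateral - (depth - thickness) * math.tan(angle_medium)) / thickness
    angle_inside = math.atan(tan_inside)
    # Snell's law with air on the outside: sin(outside) = n * sin(inside).
    return math.sin(angle_medium) / math.sin(angle_inside)


# Synthetic check: simulate a slab with a known index, then recover it.
n_true, t, z, theta1 = 1.5, 0.02, 0.5, 0.3
theta2 = math.asin(math.sin(theta1) / n_true)
x = (z - t) * math.tan(theta1) + t * math.tan(theta2)
theta0 = math.atan(x / z)
n_est = refractive_index(z, theta0, theta1, t)
print(round(n_est, 6))
```

The estimate is undefined for a corner on the optical axis, where both angles are zero, so off-axis corners would be needed in practice.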

Owner:WUHAN UNIV OF TECH

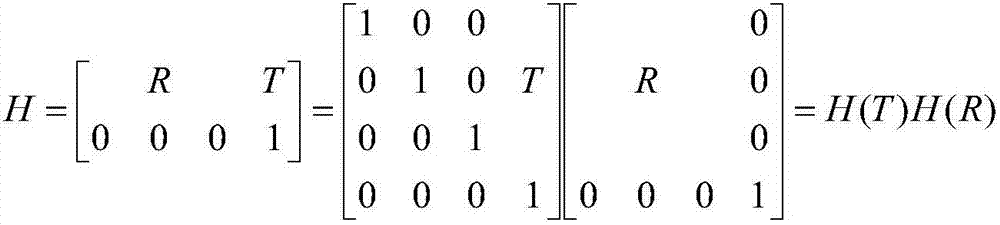

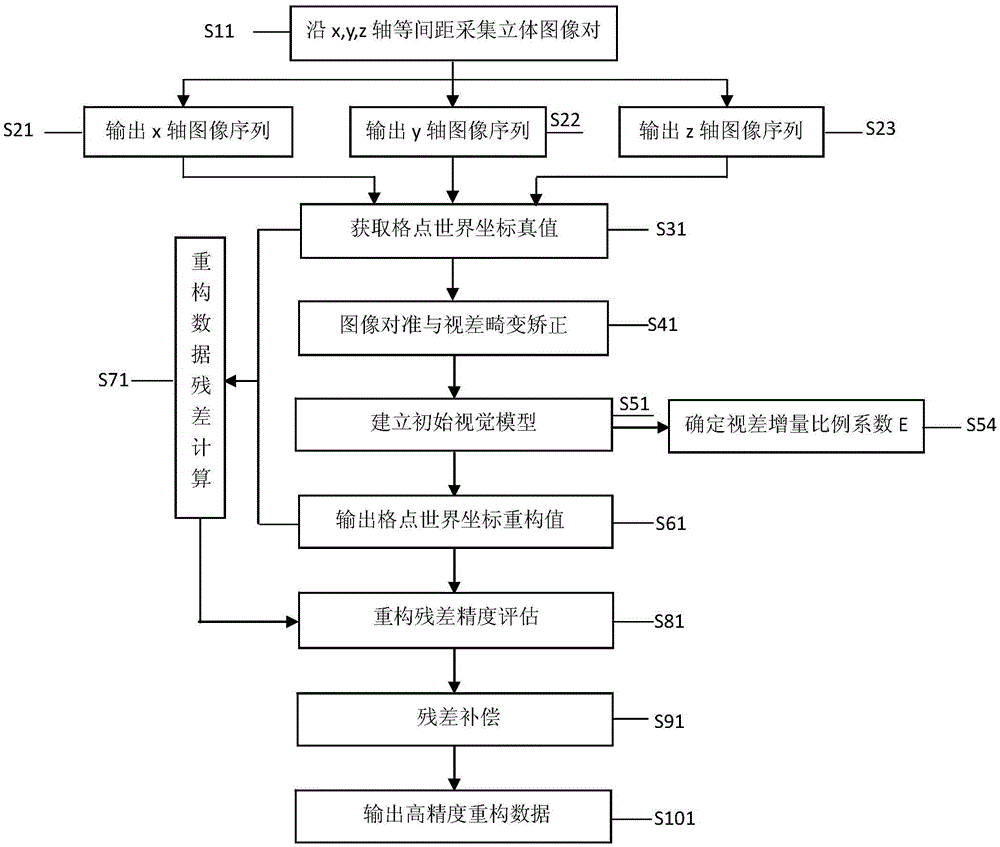

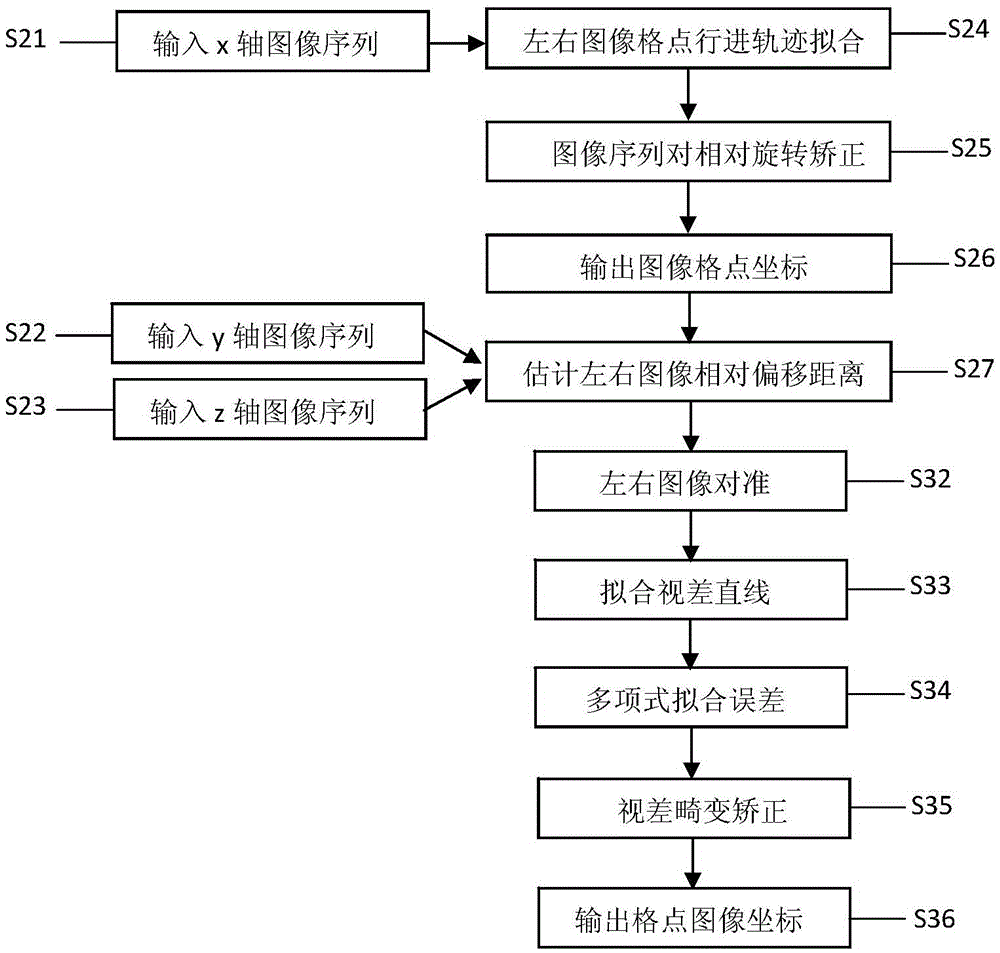

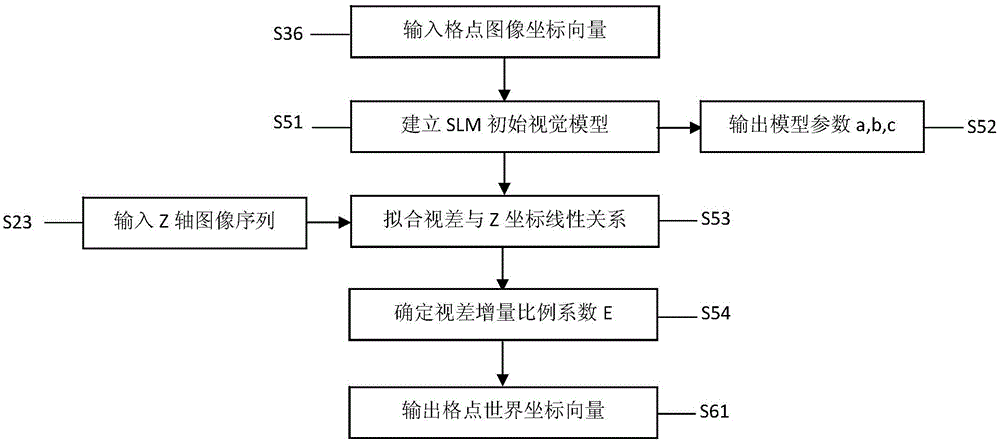

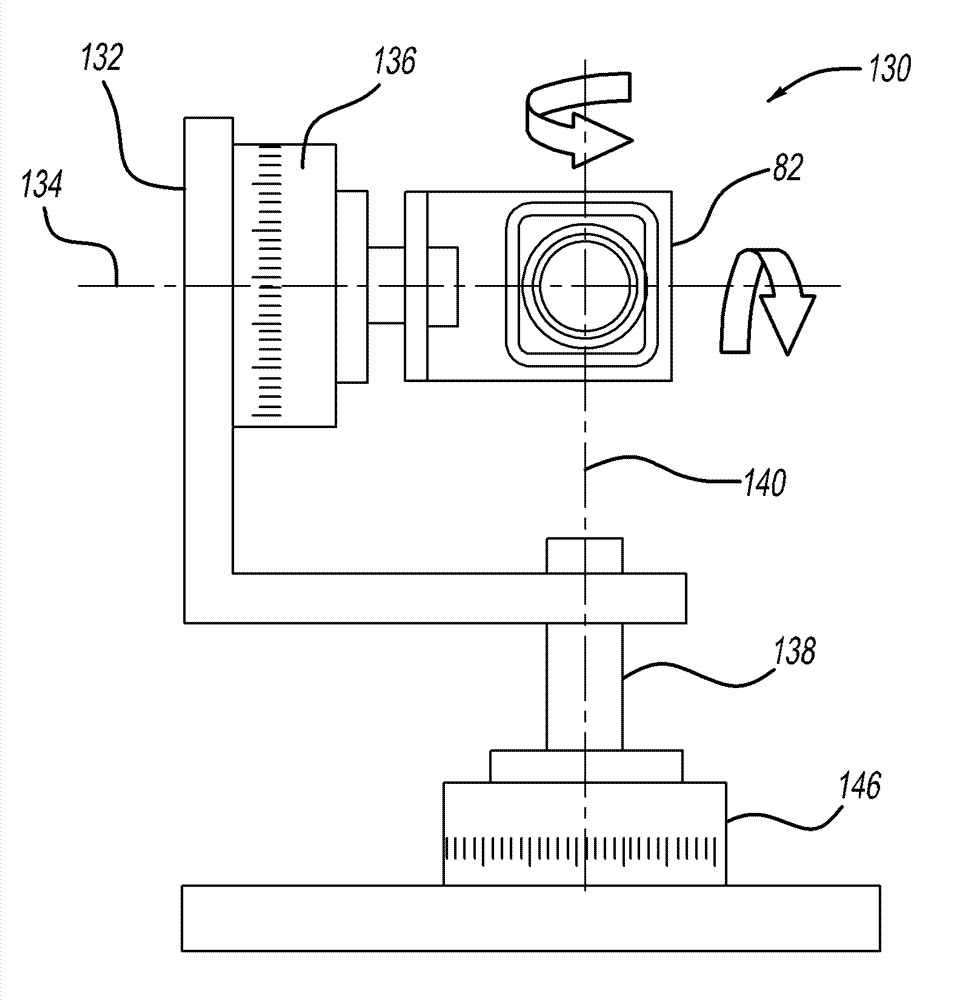

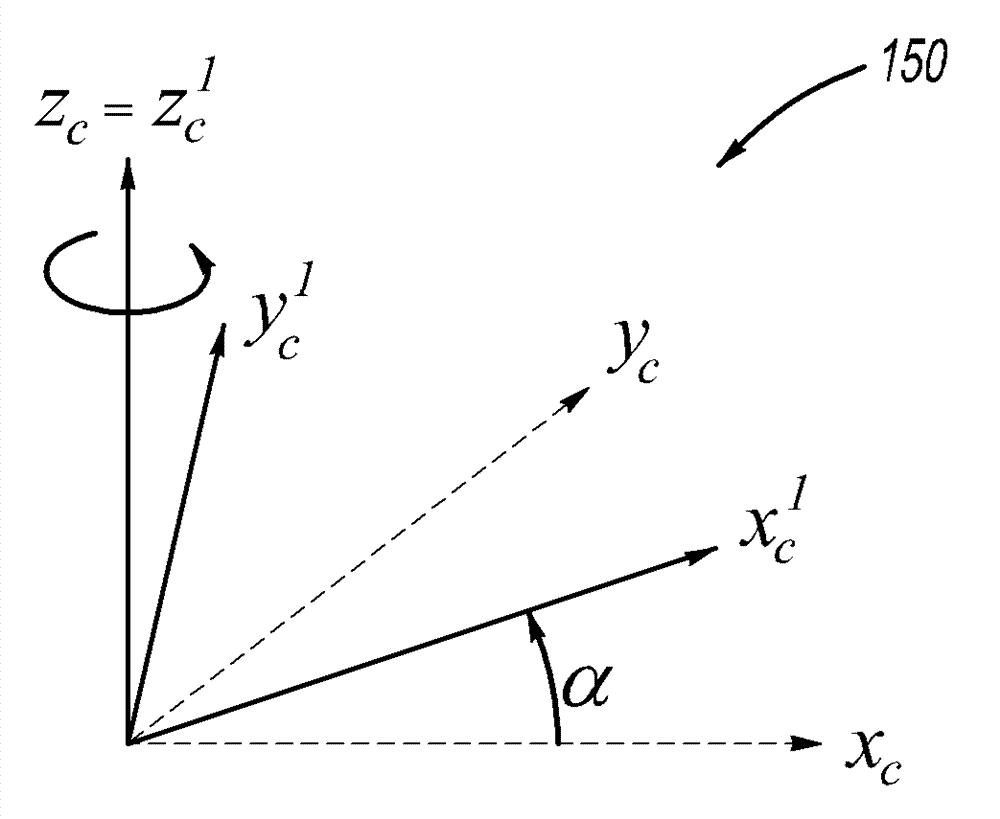

SLM microscopic vision data reconstruction method by using residual feedback

The invention relates to an SLM microscopic vision data reconstruction method by using residual feedback, in particular, a method for improving SLM microscopic vision system reconstruction precision based on a pinhole camera model by using residual analysis to improve a residual compensation model. The method mainly comprises the following steps of: equal-interval SLM stereo image pair acquisition; image alignment and parallax distortion correction; initial visual model establishment; reconstruction residual calculation; reconstruction residual precision evaluation; and residual compensation. According to the method of the invention, based on the advantages of a pinhole camera model, a residual compensation model is established, a plurality of kinds of errors in a data reconstruction process of an SLM vision system are compensated, and therefore, parameter calibration difficulty can be decreased, and defects in the application of existing pinhole models to the micro optical field can be eliminated, and the novel model has high practicability. With the method of the invention adopted, as for any kind of SLM vision system, high-precision reconstruction data can be outputted as long as a residual compensation model is determined.

Owner:BEIJING UNIV OF TECH
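
The abstract above does not state the form of the residual compensation model. As an illustration only, the sketch below assumes the residual can be modeled as a low-order polynomial of the reconstructed value, fitted against known reference positions. The function names and the polynomial form are assumptions, not the patent's method.

```python
import numpy as np

def fit_residual_model(reconstructed, reference, degree=2):
    """Fit residual = reconstructed - reference as a polynomial of the
    reconstructed value (assumed model form)."""
    residual = reconstructed - reference
    return np.polyfit(reconstructed, residual, degree)

def compensate(reconstructed, coeffs):
    """Subtract the predicted residual from the pinhole-model reconstruction."""
    return reconstructed - np.polyval(coeffs, reconstructed)
```

Once the coefficients are fitted on a calibration sequence, `compensate` can be applied to any later reconstruction from the same system.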

Wide field of view camera image calibration and de-warping

The invention relates to calibration and de-warping of a wide field of view camera image, and specifically provides a system and a method for calibrating and de-warping an ultra-wide field of view camera. The method comprises estimating intrinsic parameters such as the focal length of the camera and an image center of the camera by using multiple measurement values of the near optical axis object points and a pinhole camera model. The method further includes estimating distortion parameters of the camera by using an angular distortion model that defines an angular relationship between an incident optical ray passing an object point in an object space and an image point on an image plane that is an image of the object point on the incident optical ray. The method can include a parameter optimization process to further refine the parameter estimation.

Owner:GM GLOBAL TECH OPERATIONS LLC
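
The two estimation stages in the abstract above can be sketched roughly as follows. This is a one-dimensional illustration under stated assumptions: near the optical axis the pinhole relation `u = f * (X / Z) + c` is taken as exact, and the angular distortion is assumed to be an odd polynomial in the incident angle. The polynomial form, function names, and parameters are hypothetical and are not taken from the patent.

```python
import numpy as np

def estimate_focal_and_center(x_over_z, u):
    """Least-squares fit of u = f * (X/Z) + c using near-axis points,
    where the pinhole model is a good approximation."""
    A = np.column_stack([x_over_z, np.ones_like(x_over_z)])
    (f, c), *_ = np.linalg.lstsq(A, u, rcond=None)
    return float(f), float(c)

def fit_angular_distortion(theta, r, f):
    """Fit r / f = theta + k1 * theta**3 + k2 * theta**5 (assumed model),
    where theta is the incident ray angle and r the image radius in pixels."""
    A = np.column_stack([theta**3, theta**5])
    b = r / f - theta
    k, *_ = np.linalg.lstsq(A, b, rcond=None)
    return k
```

A subsequent nonlinear refinement over all parameters, as the abstract mentions, could start from these linear estimates.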

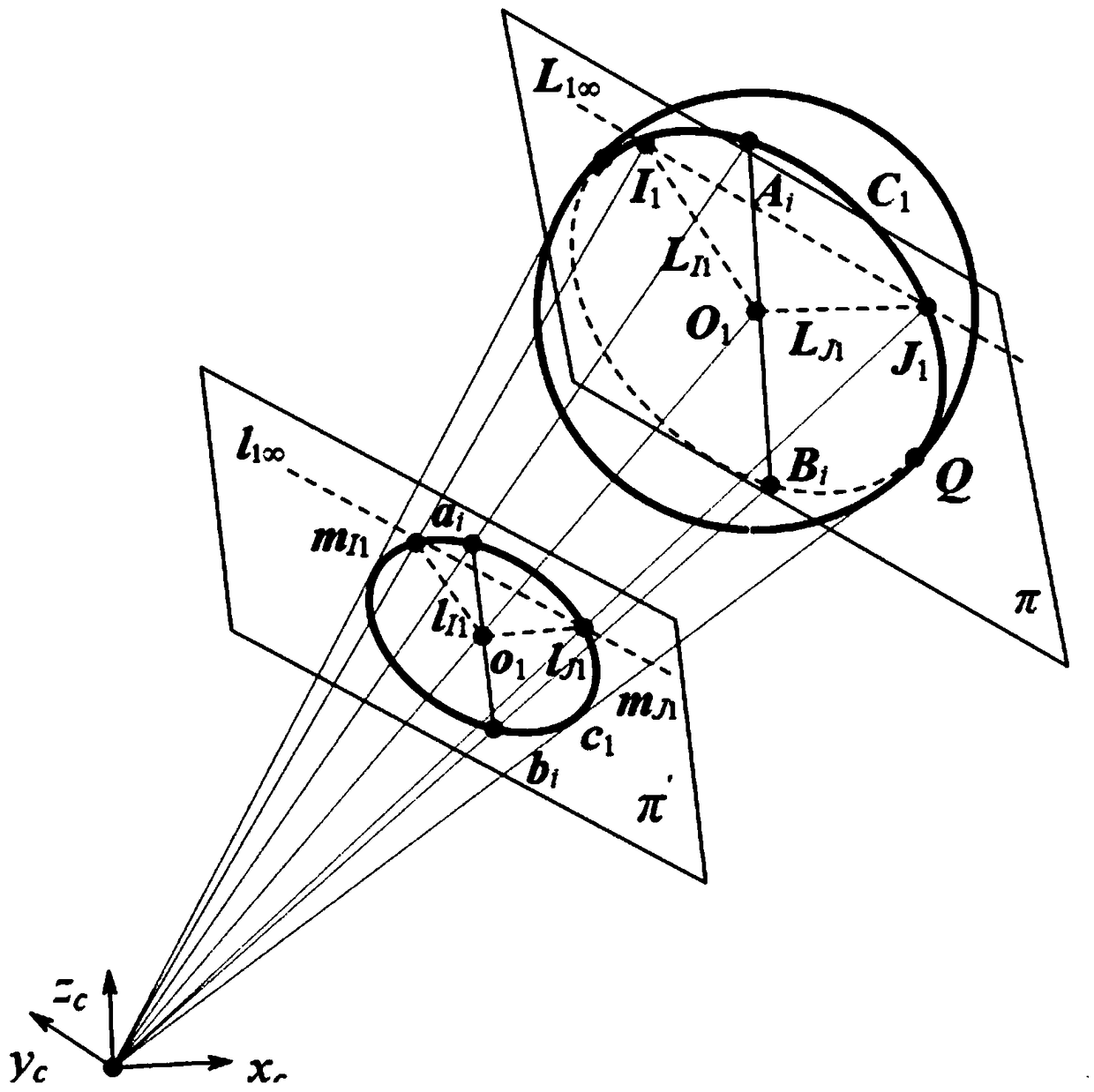

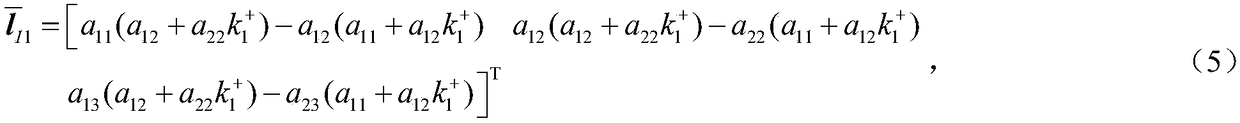

Method for qualitatively calibrating pinhole camera by utilizing single ball and asymptotic line

Active | CN108921904A | Easy to make | Physical scale is not required | Image analysis | Pinhole camera | Pinhole camera model

The invention relates to a method for qualitatively calibrating a pinhole camera by utilizing a single ball and an asymptotic line, characterized in that only a ball element is used. First, pixel coordinates of target image edge points are extracted from three images, and a ball image equation is obtained by least-squares fitting. From the ball image equation, an asymptotic line of the ball image is solved. The asymptotic line of the ball image is the polar line of the image of a circular point with respect to the ball image, so the image of the circle center is determined according to the polarity principle; orthogonal end points can then be obtained from the image of the circle center, and the three images provide six groups of orthogonal end points. Finally, the intrinsic parameters of the camera are solved using the constraints that the orthogonal end points place on the image of the absolute conic. The method comprises the following specific steps: fitting a target projection equation, estimating the asymptotic line of the ball image, determining the orthogonal end points, and solving the intrinsic parameters of the pinhole camera.

Owner:YUNNAN UNIV

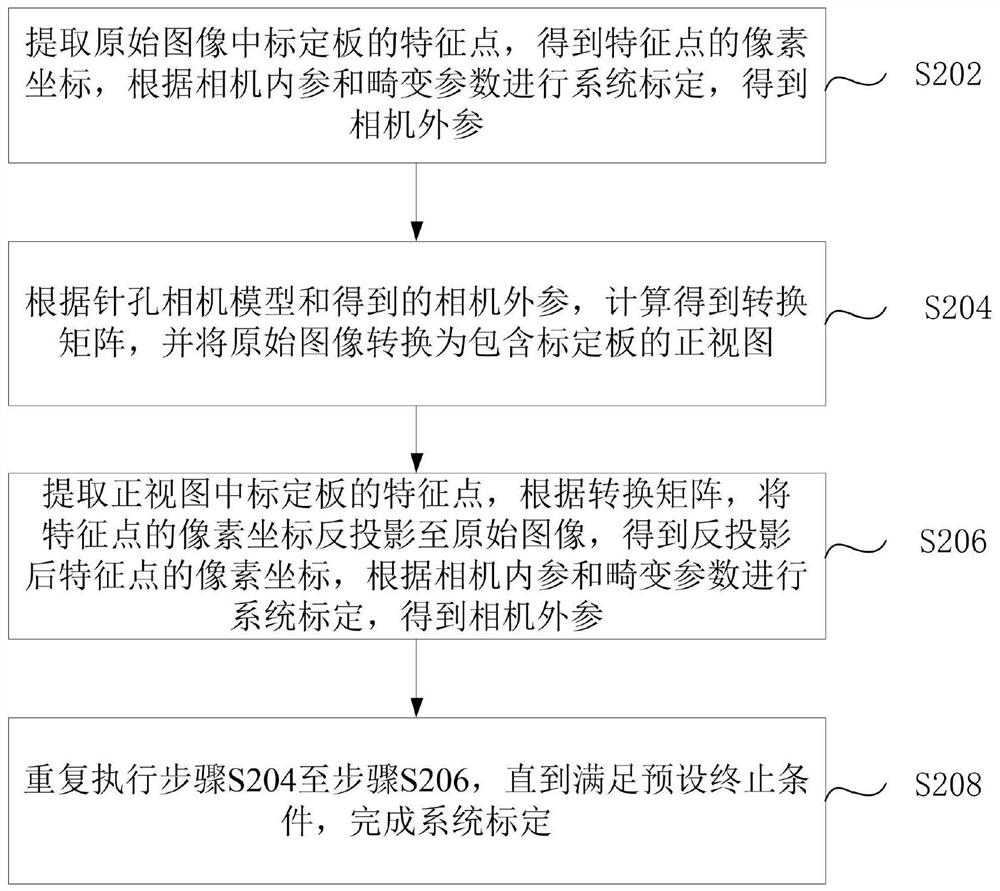

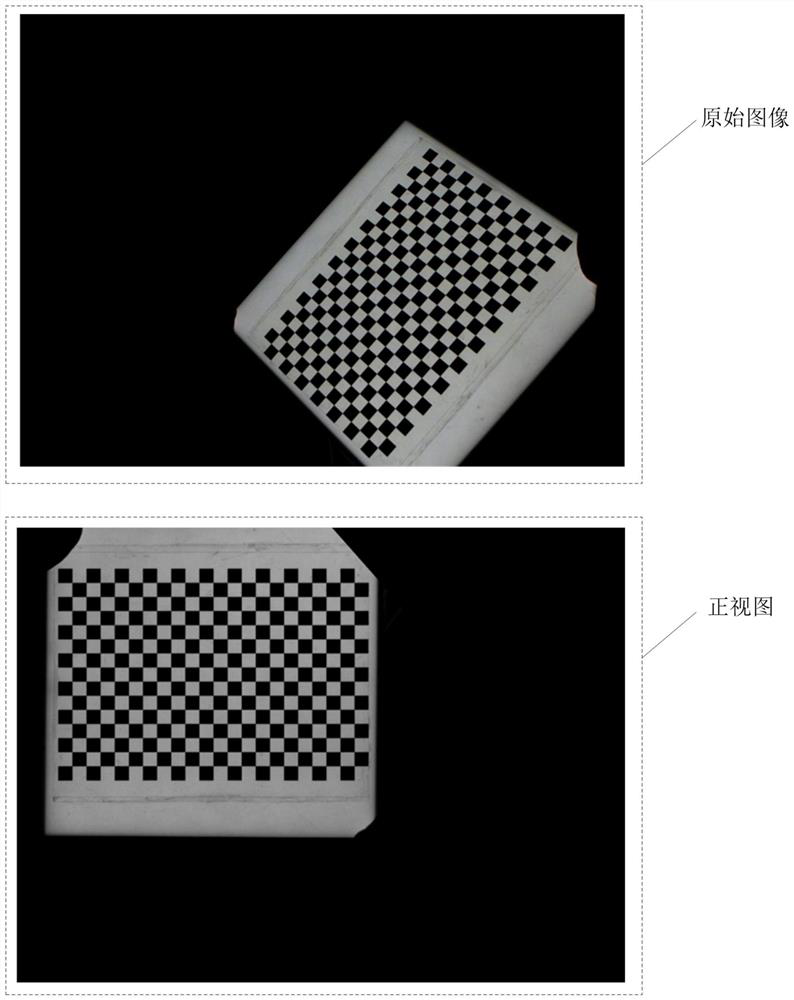

System calibration method, system and device for optimizing feature extraction and medium

Pending | CN113838138A | Reduce mistakes | Solve the problem of low calibration accuracy | Image enhancement | Image analysis | Pinhole camera model | Feature extraction

The invention relates to a system calibration method, system and device for optimizing feature extraction and a medium, and the method comprises the steps: carrying out system calibration through extracting feature points of a calibration plate in an original image, and obtaining external parameters of a camera; repeatedly executing the preset iterative calculation until a preset termination condition is met, and completing system calibration. The preset iterative calculation comprises the following steps: calculating to obtain a conversion matrix according to a pinhole camera model and obtained camera external parameters, and converting an original image into a front view containing a calibration plate; extracting the feature points of the calibration plate in the front view, back-projecting the pixel coordinates of the feature points to the original image according to the conversion matrix, and performing system calibration to obtain the external parameters of the camera by obtaining the pixel coordinates of the back-projected feature points. According to the invention, the problem of low system calibration accuracy caused by image factors is solved, the accuracy of feature point extraction of the calibration plate is improved, and the influence of feature point extraction errors introduced by distortion and perspective back projection on a final system calibration result is reduced.

Owner:杭州灵西机器人智能科技有限公司
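
One common way to obtain a conversion matrix between an image and a front view of a planar calibration plate is the plane-induced homography of the pinhole model. The sketch below assumes the plate lies in the plane Z = 0 of its own coordinate frame and that the front view is simply a scaled copy of plate coordinates. The matrix `S` and the function names are hypothetical, and the patent may construct its matrix differently.

```python
import numpy as np

def plane_homography(K, R, t):
    """Homography mapping plate coordinates (X, Y, 1) on the plane Z = 0
    to original-image pixels: H = K [r1 r2 t]."""
    return K @ np.column_stack([R[:, 0], R[:, 1], t])

def front_to_original(pts_front, H, S):
    """Back-project front-view pixels to the original image.

    S maps plate coordinates to front-view pixels (assumed scale/offset),
    so original = H @ inv(S) @ front in homogeneous coordinates."""
    M = H @ np.linalg.inv(S)
    homog = np.column_stack([pts_front, np.ones(len(pts_front))])
    proj = homog @ M.T
    return proj[:, :2] / proj[:, 2:3]
```

Feature points detected in the front view can be passed through `front_to_original` and then fed back into the calibration, which is the loop the abstract describes.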

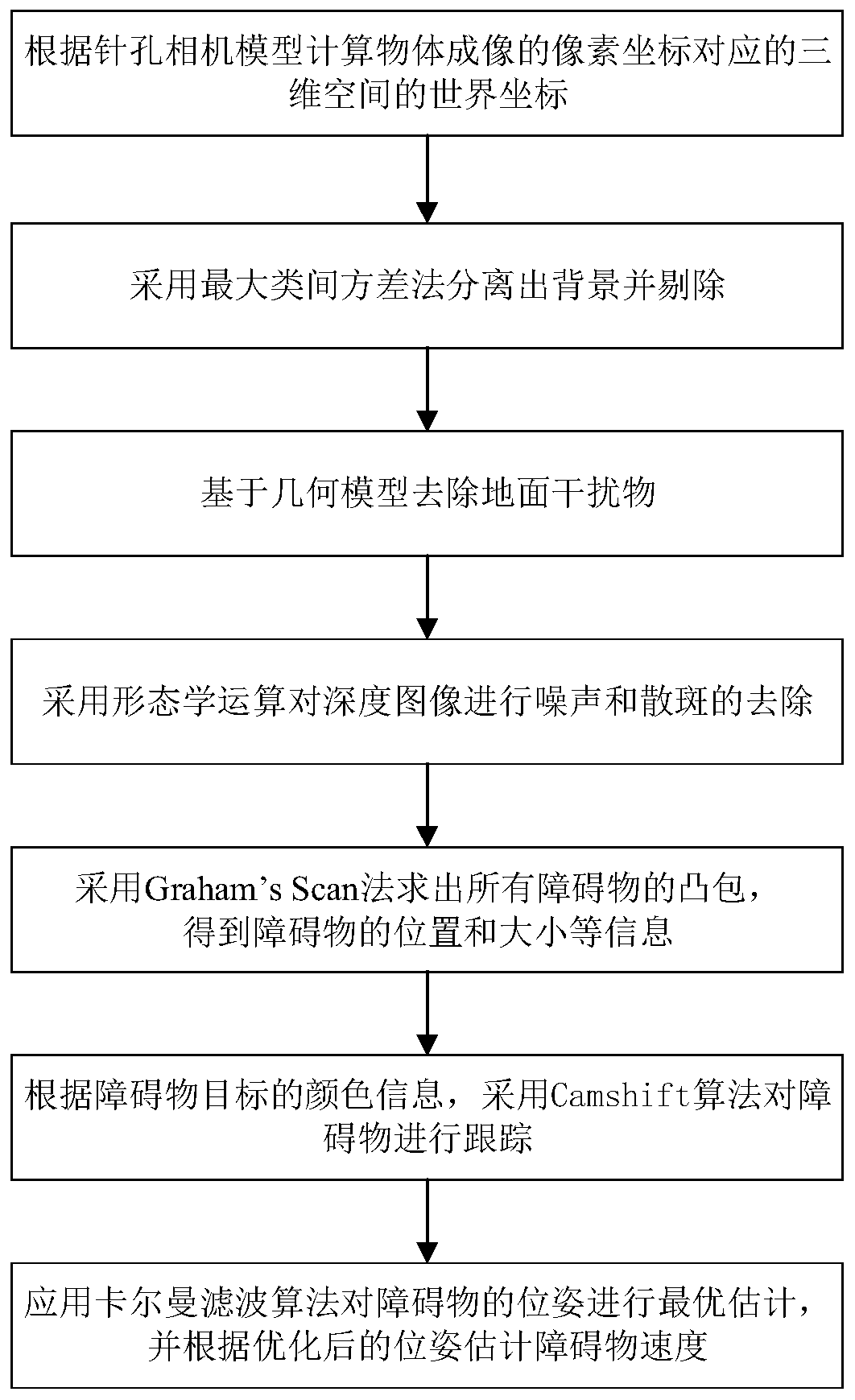

Barrier perception method based on an RGB-D camera

Active | CN109801309A | Implement tracking | Improve adaptability | Image analysis | Internal combustion piston engines | Pinhole camera model | Three-dimensional space

The invention discloses a barrier perception method based on an RGB-D camera. The method comprises the following steps: using an RGB-D camera to collect RGB and depth images of an object, and calculating the three-dimensional world coordinates corresponding to the pixel coordinates of the imaged object according to the pinhole camera model; separating and removing the background using the maximum between-class variance (Otsu) method; removing ground interference based on a geometric model; removing speckle from the depth image using morphological operations; and solving the convex hulls of all obstacles using the Graham scan method to obtain information such as obstacle positions and sizes. A CamShift algorithm is used to track an obstacle and obtain an observation of its pose; a predicted pose is obtained from the obstacle's speed; the pose is optimally estimated by applying a Kalman filtering algorithm; and the obstacle's speed is estimated from the optimized pose. The method has high real-time performance and applicability.

Owner:SOUTH CHINA UNIV OF TECH
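
The first step of the abstract above, going from pixel coordinates plus depth to 3D coordinates, follows directly from the pinhole model. The sketch below is a minimal version that returns points in the camera frame; converting them to world coordinates would additionally need the camera's extrinsic parameters, which are assumed to be identity here. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative.

```python
import numpy as np

def backproject_depth(depth, fx, fy, cx, cy):
    """Turn an (H, W) depth image into an (H, W, 3) array of camera-frame
    points using X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column, v: row
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)
```

The resulting point cloud is what later steps such as ground removal and convex-hull extraction would operate on.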

Synthetic aperture camera calibration method based on light field distribution

Active | CN110146032A | Efficient construction | Overcoming direction ambiguity | Image analysis | Using optical means | Physics | Pinhole camera model

The invention belongs to the technical field of precision measurement and particularly relates to a synthetic aperture camera calibration method based on light field distribution. The method comprises steps: in order to overcome the pupil aberration problem of a pinhole camera model, an imaging pupil is divided into a plurality of sub pupils, and according to actual light field distribution, an imaging sensor is divided into different imaging areas corresponding to sub pupils; and in combination of synthetic aperture camera model joint calibration optimization, a camera model more in line with the actual imaging process is constructed. The pupil aberration caused by single pinhole imaging hypothesis can be effectively eliminated, the direction ambiguity of monocular vision is overcome, and great significance is achieved for improving the measurement precision of a photogrammetry technology.

Owner:FUDAN UNIV

Method to determine distance of an object from an automated vehicle with a monocular device

Active | US9862318B2 | The result is accurate | Improve robustness | Television system details | Image analysis | Pinhole camera model | Imaging processing

A method of determining the distance of an object from an automated vehicle based on images taken by a monocular image acquiring device. The object is recognized with an object-class by means of an image processing system. Respective position data are determined from the images using a pinhole camera model based on the object-class. Position data indicating in world coordinates the position of a reference point of the object with respect to the plane of the road is used with a scaling factor of the pinhole camera model estimated by means of a Bayes estimator using the position data as observations and under the assumption that the reference point of the object is located on the plane of the road with a predefined probability. The distance of the object from the automated vehicle is calculated from the estimated scaling factor using the pinhole camera model.

Owner:APTIV TECH LTD
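
The role of the scaling factor in the abstract above can be illustrated with the simplest case: a reference point known to lie exactly on a flat road. The sketch below assumes a camera whose optical axis is parallel to the road, with the camera y-axis pointing down, and picks the scale that places the back-projected ray on the road plane. It does not implement the Bayes estimator from the patent, and the function name and parameters are hypothetical.

```python
import numpy as np

def ground_point_from_pixel(pixel, K, cam_height):
    """Scale the back-projected ray so that it meets the road plane.

    pixel      : (u, v) image coordinates of the object's road contact point
    K          : 3x3 intrinsic matrix
    cam_height : camera height above the road (same unit as the result)
    Returns the 3D point in camera coordinates; its z component is the
    distance along the optical axis."""
    ray = np.linalg.inv(K) @ np.array([pixel[0], pixel[1], 1.0])
    if ray[1] <= 0.0:
        raise ValueError("pixel is at or above the horizon")
    scale = cam_height / ray[1]  # scaling factor of the pinhole model
    return scale * ray
```

In the patent the road-plane assumption holds only with a predefined probability, which is why the scaling factor is estimated statistically rather than computed in closed form as here.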

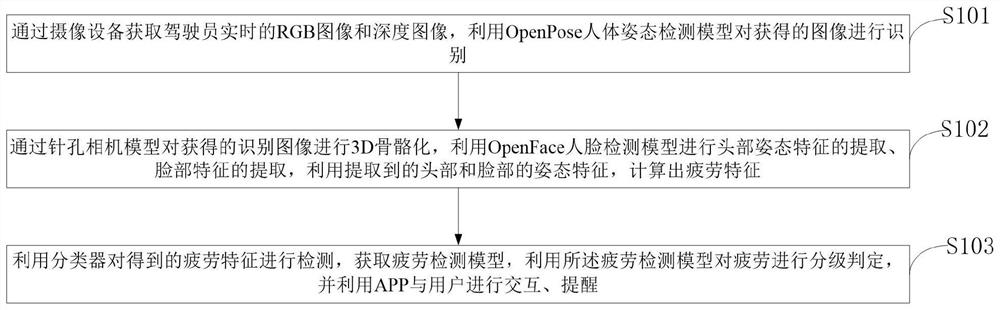

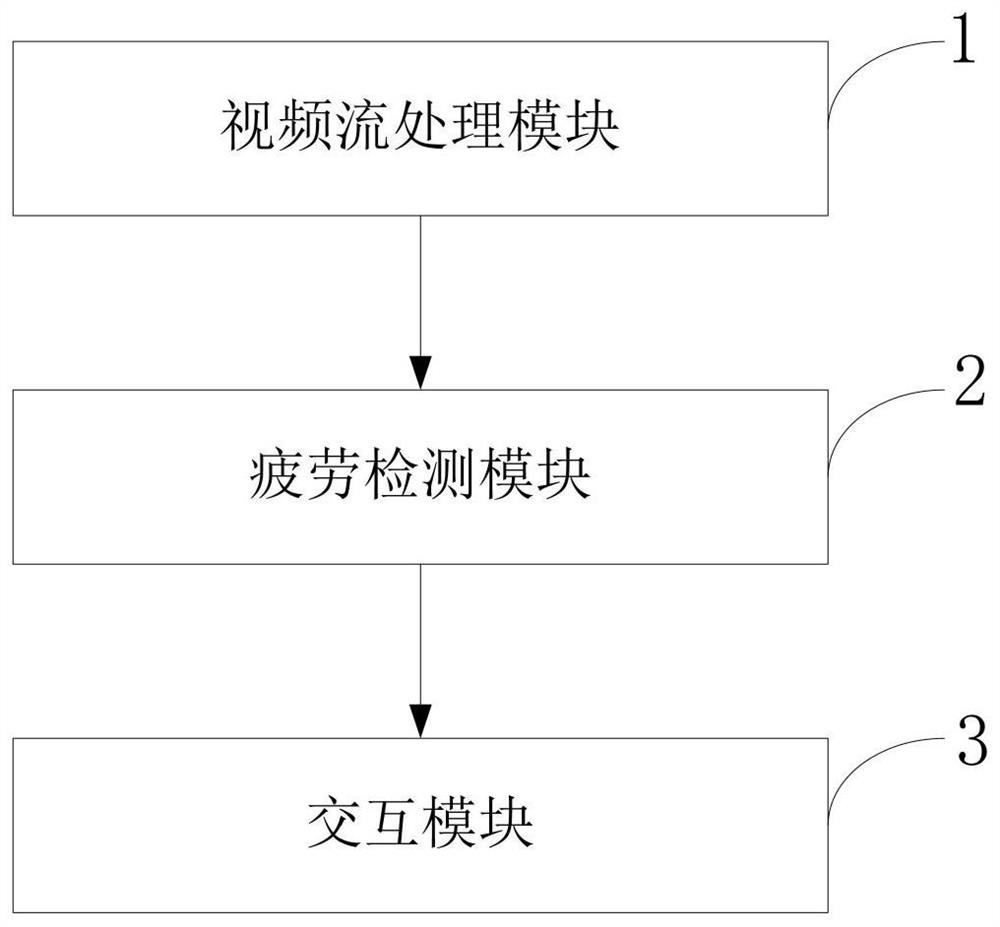

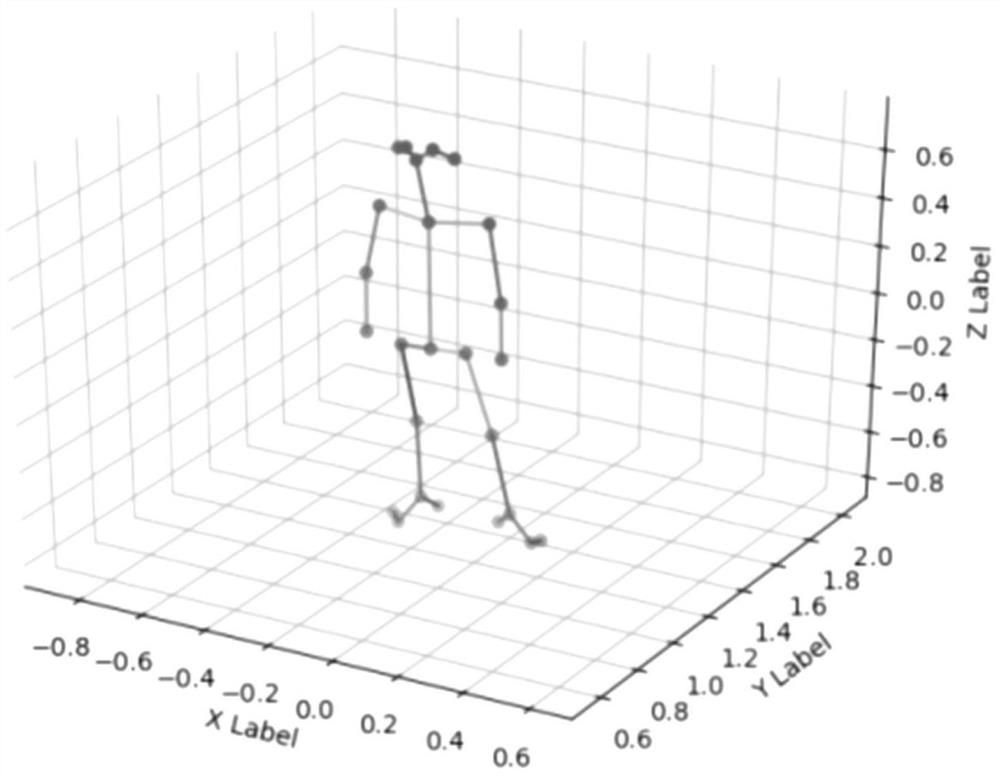

Fatigue detection system and method based on facial skeleton model, medium and detection equipment

Pending | CN113920491A | Solve the convenience | Solve the problem of inconvenient wearing | Character and pattern recognition | Pattern recognition | Pinhole camera model

The invention discloses a driving fatigue detection system and method based on a facial skeleton model, a medium and detection equipment, and relates to the technical field of image recognition. The method comprises the following steps: identifying an obtained image by using a human body posture detection model; performing 3D skeletization on the obtained recognition image through a pinhole camera model, extracting head posture features and face features by using a face detection model, and calculating fatigue features by using the extracted head and face posture features; detecting the obtained fatigue features by using a classifier to obtain a fatigue detection model, carrying out grading judgment on fatigue by using the fatigue detection model, and interacting with a user by using an APP. According to the method, fatigue behaviors are analyzed, fatigue recognition features are extracted, and a fatigue detection model fusing multiple fatigue features is obtained by using a random forest algorithm. The whole system is realized on the Raspberry Pi, development of a rear end and an APP end is completed, and the whole fatigue detection system has high integrity.

Owner:HARBIN INST OF TECH AT WEIHAI

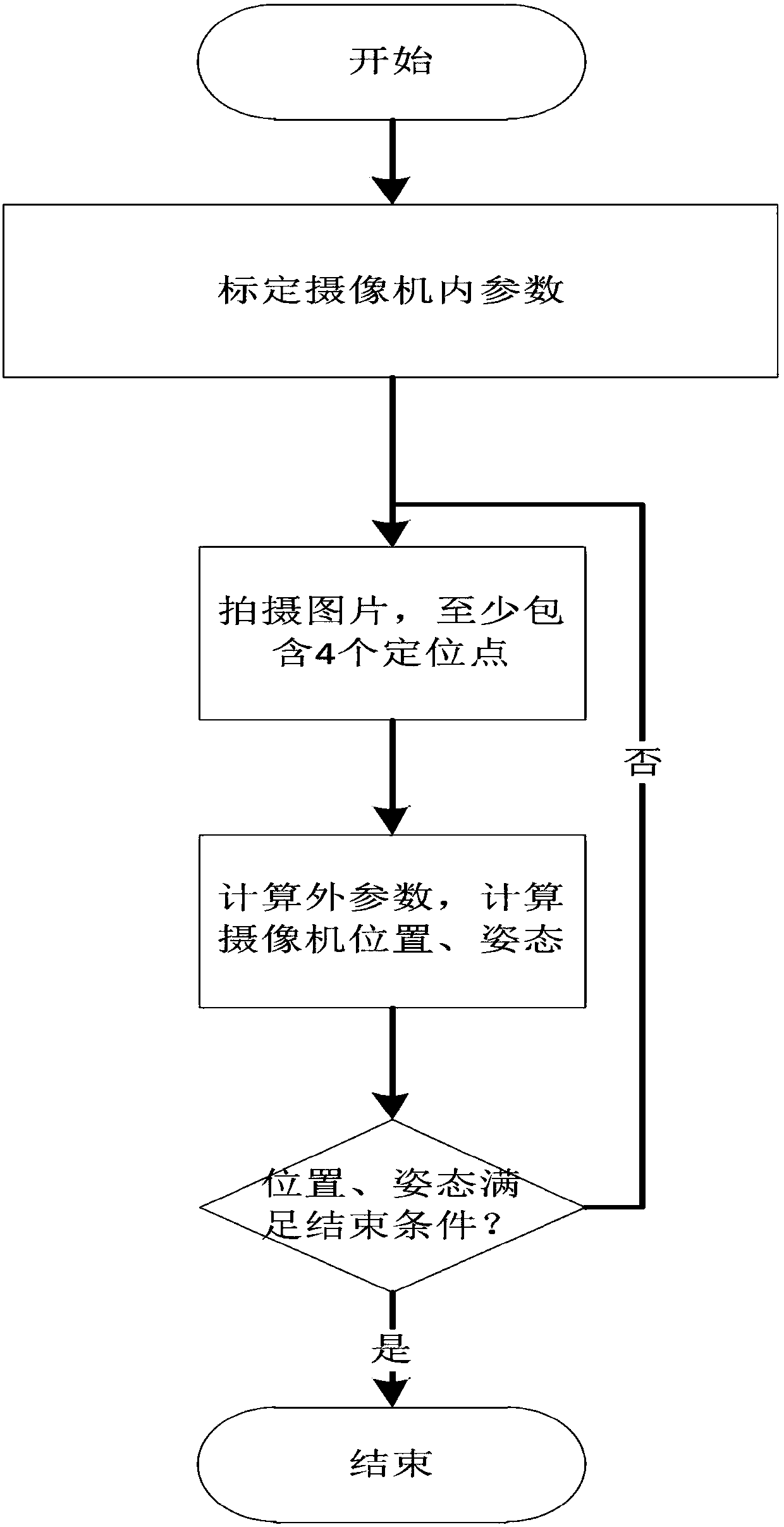

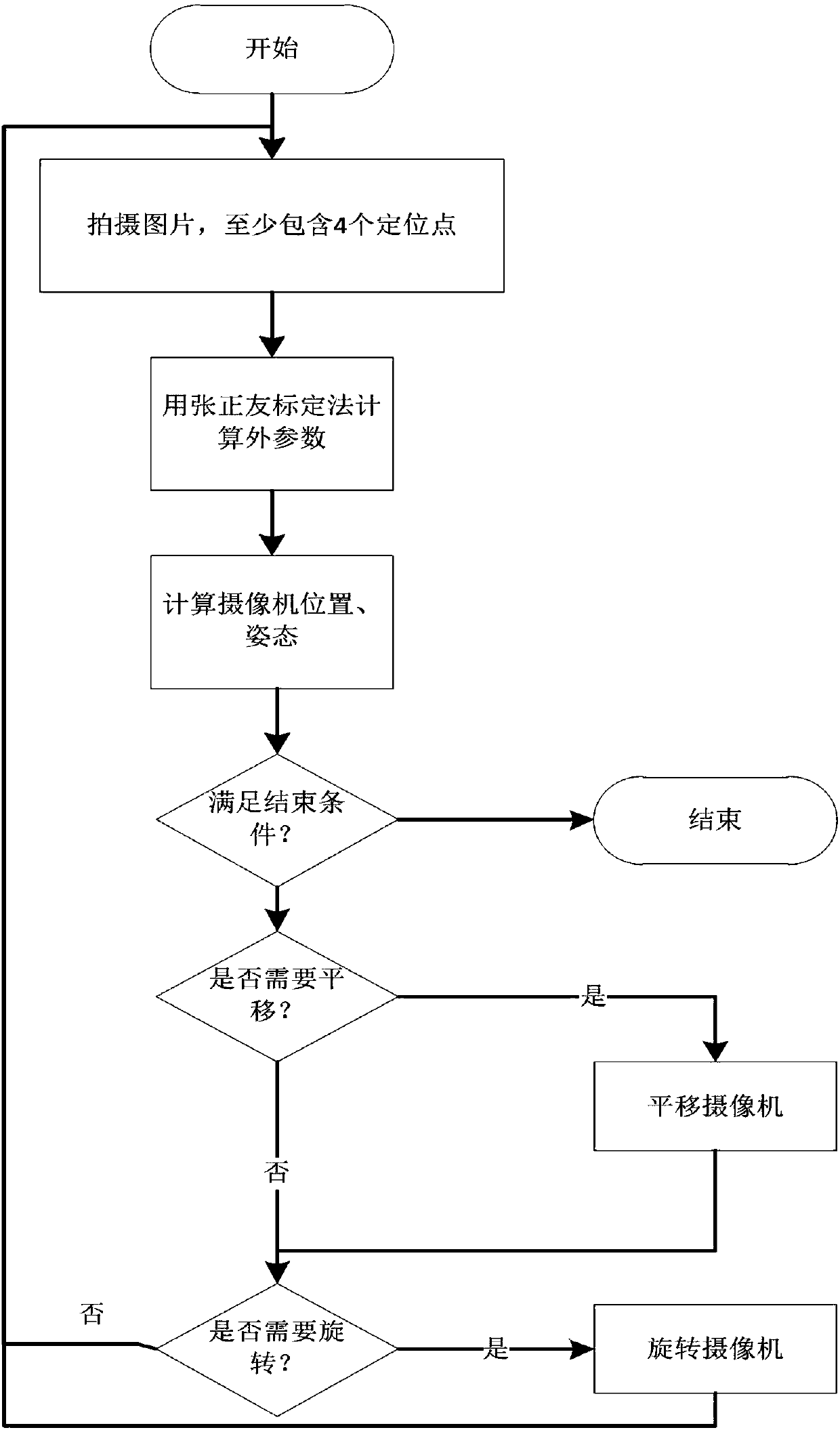

Pinhole camera model-based camera posture correcting method

Active | CN108109179A | Realize the camera attitude correction function | Realize the attitude correction function | Image analysis | Pinhole camera model | Correction method

The invention relates to a pinhole camera model-based camera posture correcting method. Its main technical features are: shooting calibration images at a plurality of angles and positions with the same camera and calibrating the camera's internal parameters; placing the camera in its initial state; shooting images and calculating the camera's external parameters; calculating the camera's position and posture information; and, if the error is large, controlling and adjusting the camera's position and posture. The method is reasonably designed. During correction, the relationship between the camera coordinate system and the world coordinate system is calculated by a simple method, so the camera's position and posture are adjusted quickly and specific application requirements are met. No additional real-time computation is required, which greatly improves the software performance of the system, and no extra hardware is added, which greatly reduces system cost.

Owner:TIANJIN UNIV OF SCI & TECH
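
The step from external parameters to position and posture can be sketched as follows, assuming the usual convention that the extrinsics map world to camera coordinates as `X_cam = R @ X_world + t`. The function names, the target pose, and the way the error is summarized are assumptions for illustration; the abstract does not specify them.

```python
import numpy as np

def camera_pose_from_extrinsics(R, t):
    """Return the camera center in world coordinates and the
    camera-to-world rotation, given X_cam = R @ X_world + t."""
    center = -R.T @ t
    return center, R.T

def pose_error(R, t, target_center, target_rotation):
    """Position error (same unit as t) and rotation error (radians)
    between the current pose and a desired pose."""
    center, rotation = camera_pose_from_extrinsics(R, t)
    position_error = float(np.linalg.norm(center - target_center))
    relative = target_rotation.T @ rotation
    cos_angle = np.clip((np.trace(relative) - 1.0) / 2.0, -1.0, 1.0)
    return position_error, float(np.arccos(cos_angle))
```

If either error exceeds a chosen threshold, the camera would be adjusted and the measurement repeated, as the abstract describes.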


© 2025 PatSnap. All rights reserved.