81 results about "Neural network language models" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

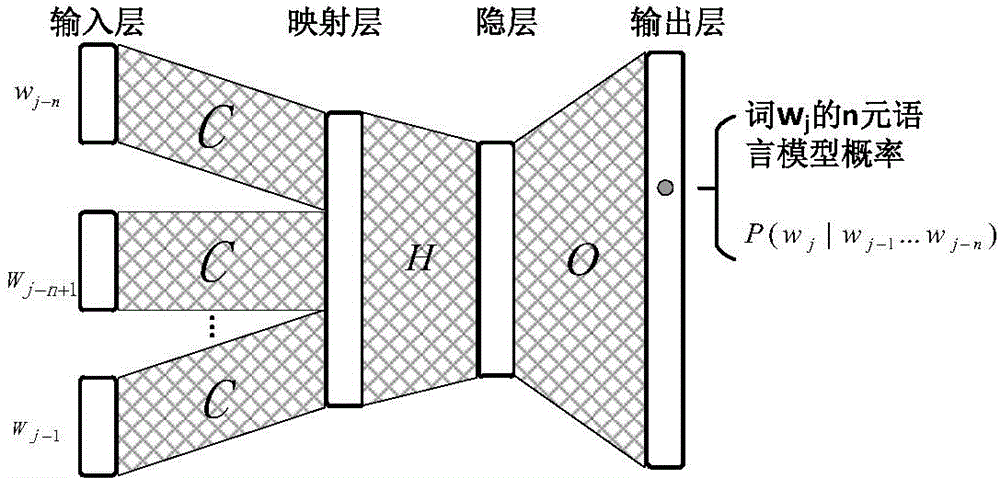

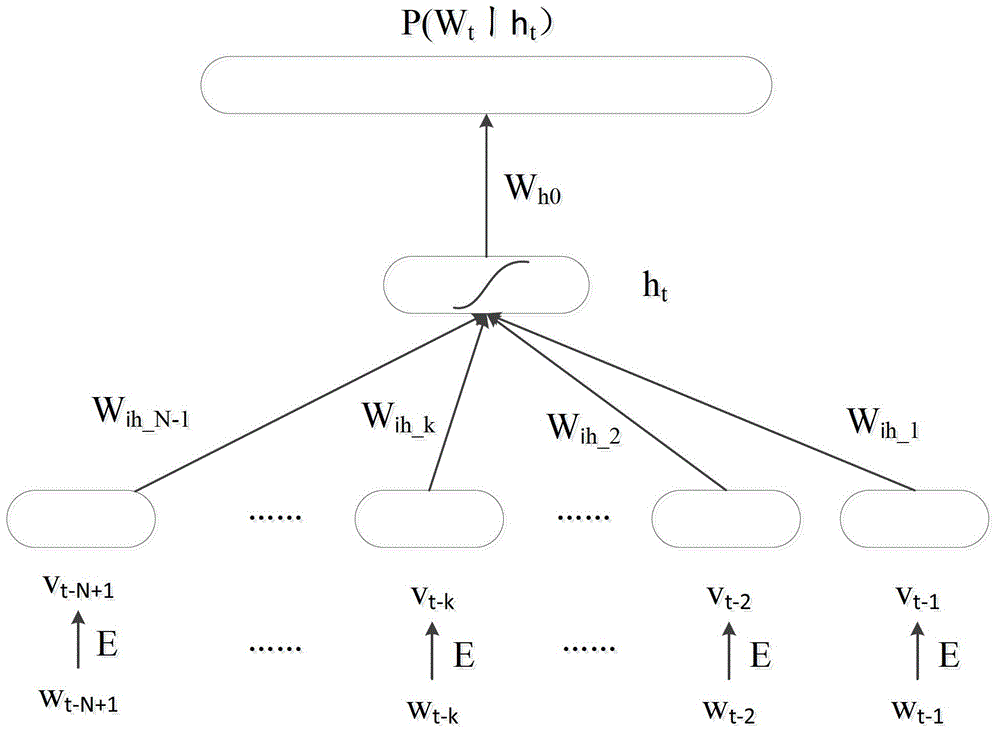

A neural network language model is a language model based on neural networks, exploiting their ability to learn distributed representations to reduce the impact of the curse of dimensionality.
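
To make the definition concrete, here is a minimal sketch of a feedforward NNLM forward pass in plain Python. It assumes a Bengio-style architecture (shared word embeddings, concatenation, one tanh hidden layer, softmax output); the class name, sizes and random weights are illustrative, and training is not shown.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class TinyNNLM:
    """Feedforward NNLM sketch: embed the previous `context` words,
    concatenate the embeddings, apply a tanh hidden layer, then a
    softmax over the whole vocabulary."""

    def __init__(self, vocab_size, context=2, emb_dim=4, hidden=8, seed=0):
        rng = random.Random(seed)
        self.context = context
        # Shared embedding table: the distributed representation of each word id.
        self.emb = [[rng.uniform(-0.1, 0.1) for _ in range(emb_dim)]
                    for _ in range(vocab_size)]
        in_dim = context * emb_dim
        self.w_hidden = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                         for _ in range(hidden)]
        self.w_out = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)]
                      for _ in range(vocab_size)]

    def next_word_probs(self, history):
        """Return P(w | last `context` word ids) for every word id w."""
        if len(history) < self.context:
            raise ValueError("history is shorter than the model context")
        x = [v for wid in history[-self.context:] for v in self.emb[wid]]
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.w_hidden]
        logits = [sum(w * hi for w, hi in zip(row, h)) for row in self.w_out]
        return softmax(logits)


model = TinyNNLM(vocab_size=10)
probs = model.next_word_probs([3, 7])
print(round(sum(probs), 6))  # 1.0
```

Because similar words can end up with nearby embeddings, the model can share statistical strength across word histories it has never seen verbatim, which is the sense in which distributed representations ease the curse of dimensionality.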

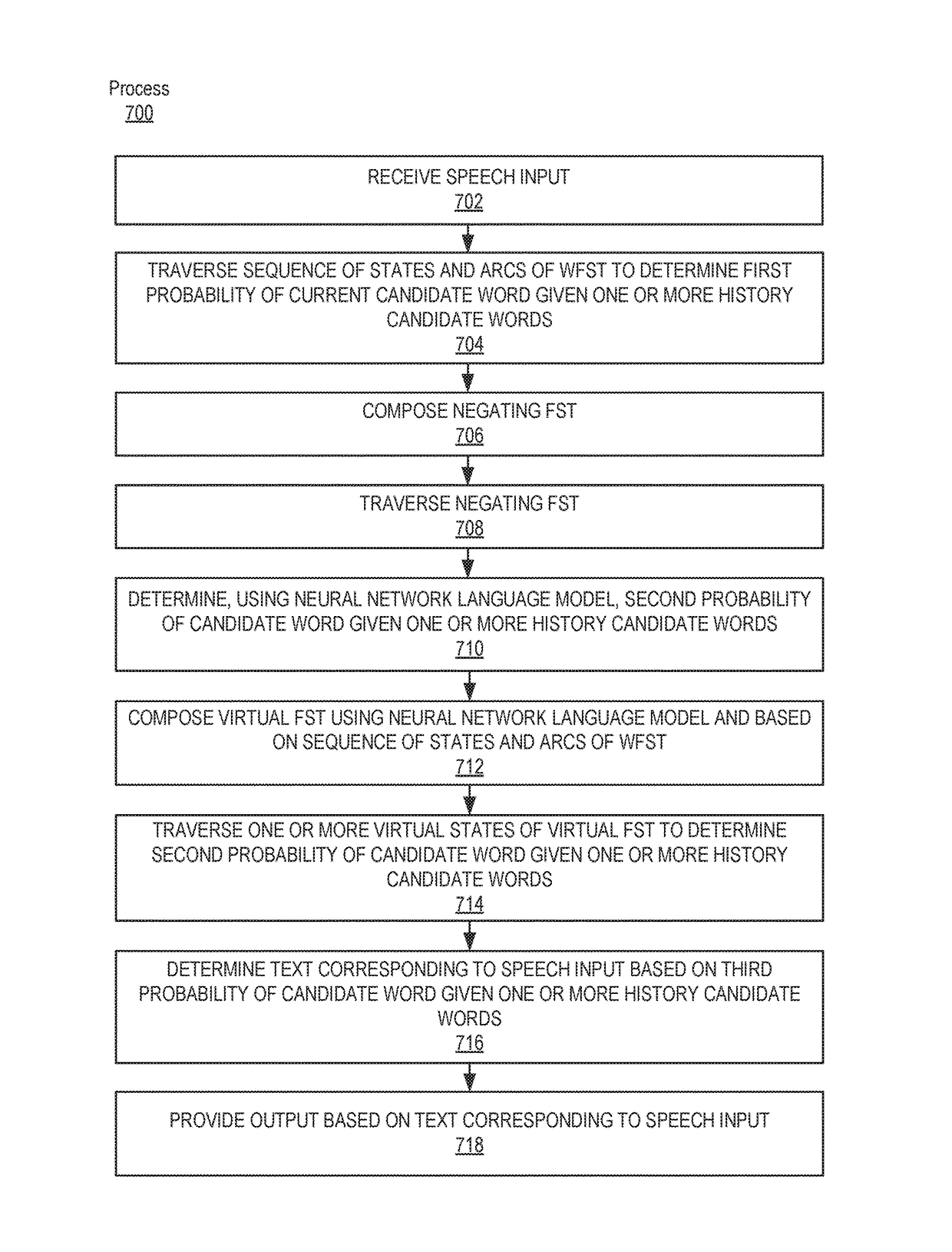

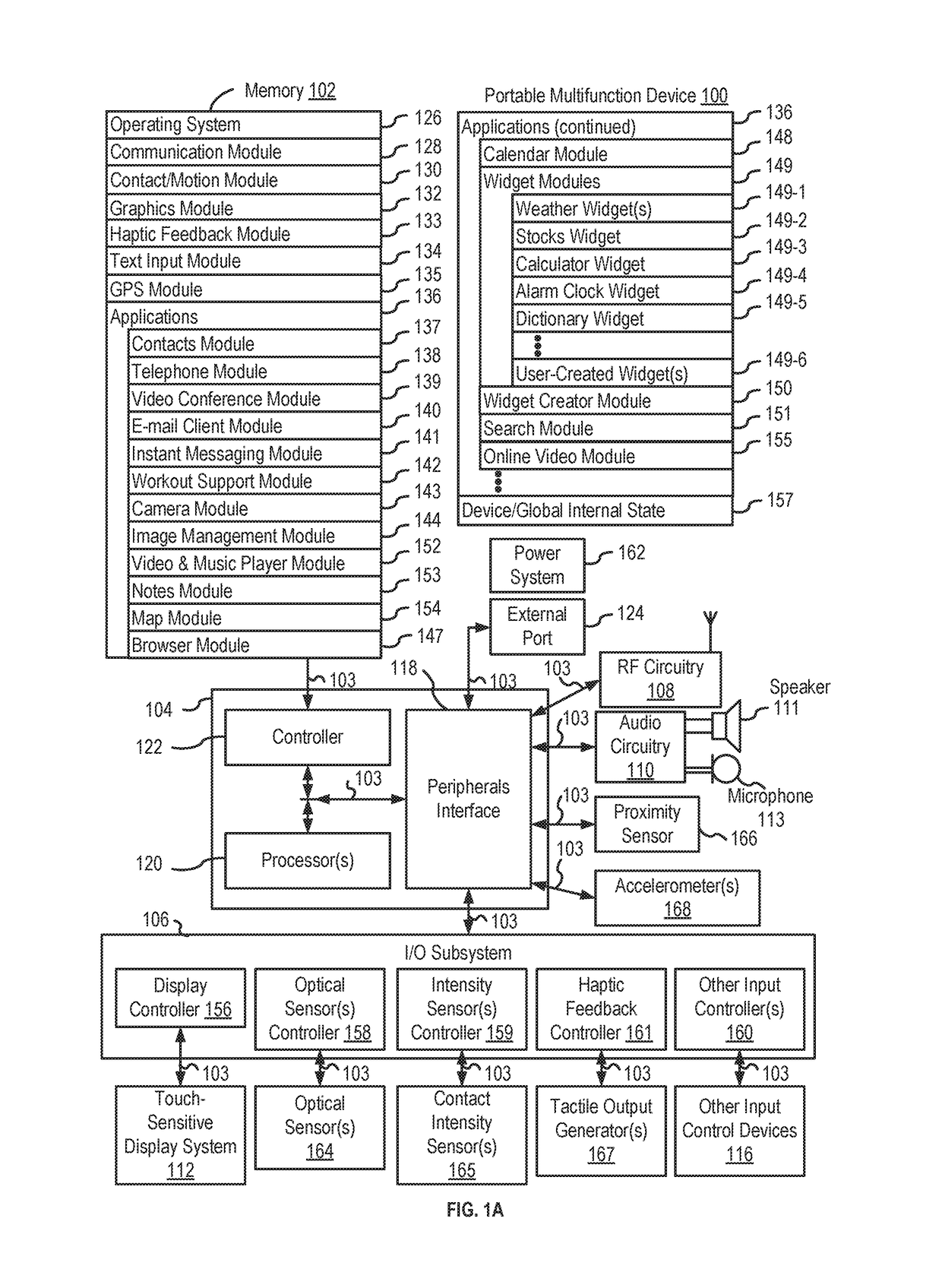

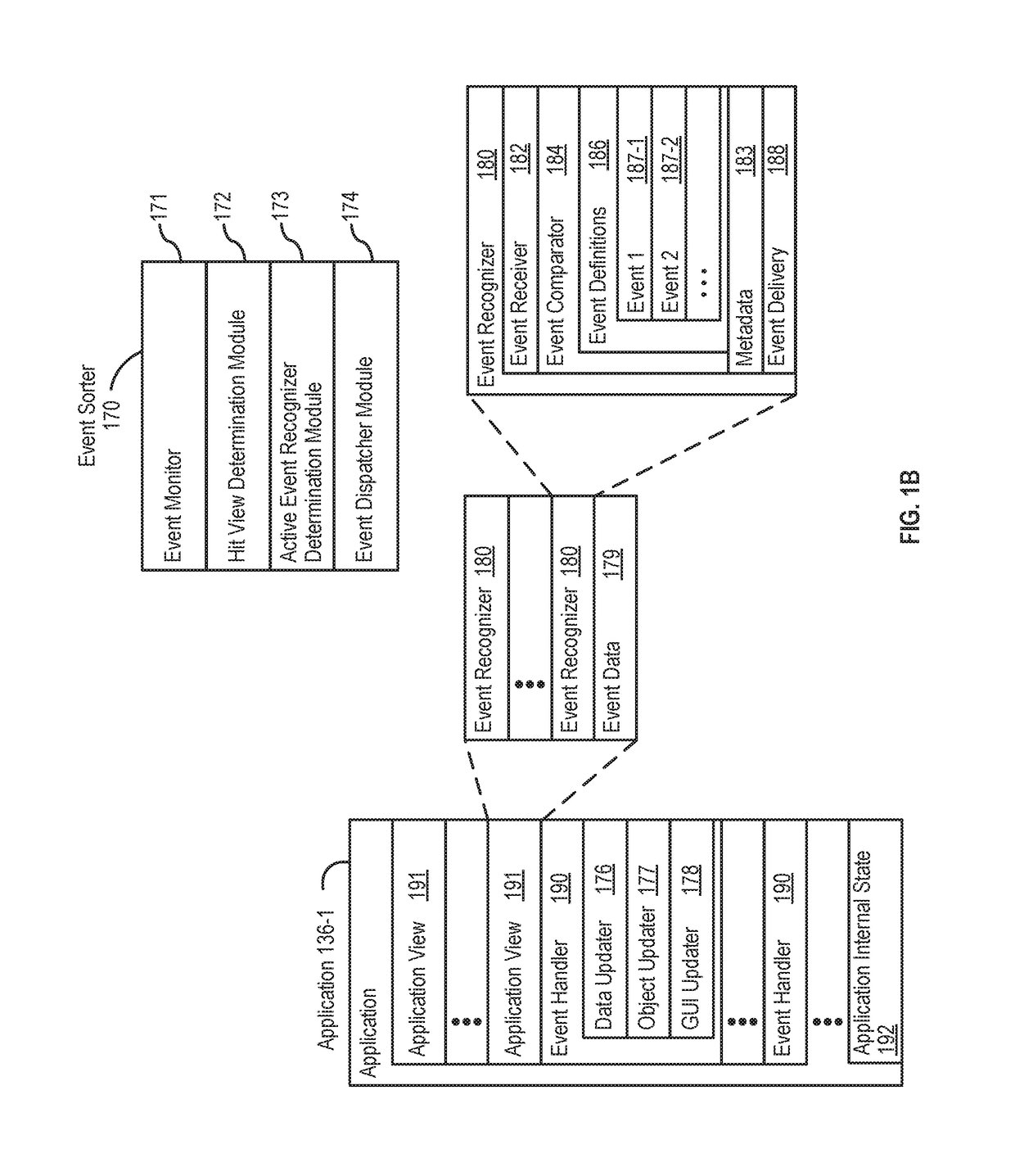

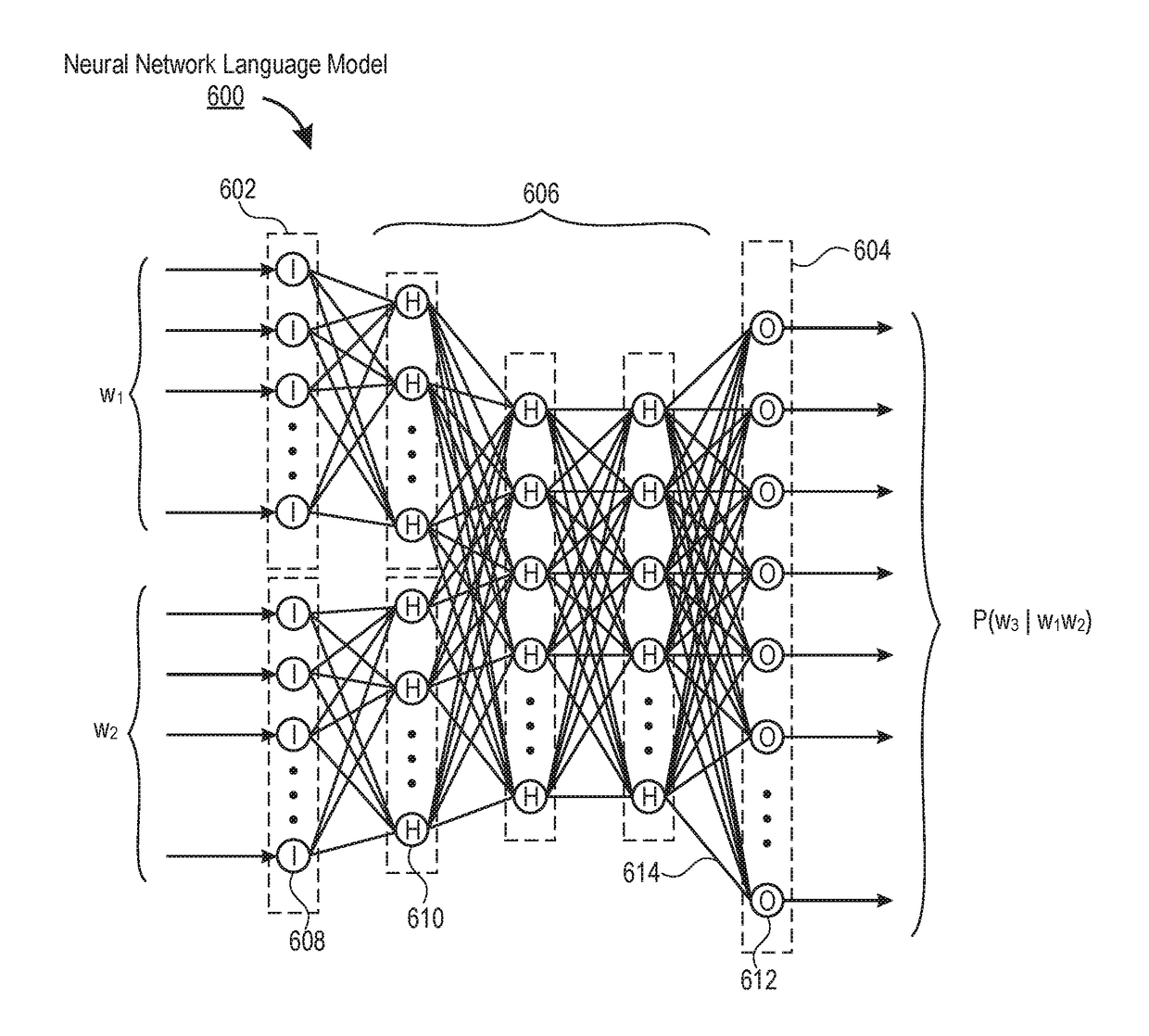

Applying neural network language models to weighted finite state transducers for automatic speech recognition

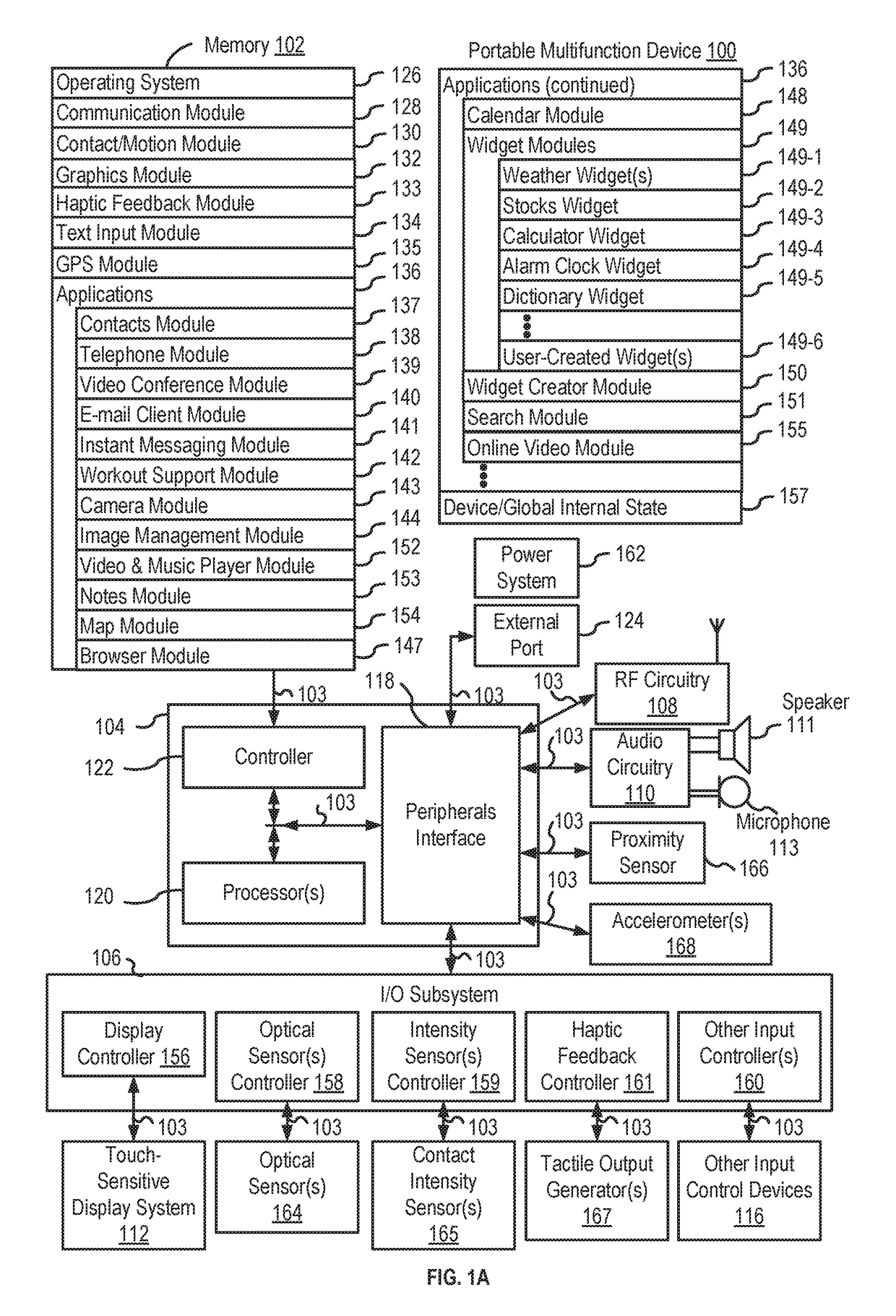

Systems and processes for converting speech-to-text are provided. In one example process, speech input can be received. A sequence of states and arcs of a weighted finite state transducer (WFST) can be traversed. A negating finite state transducer (FST) can be traversed. A virtual FST can be composed using a neural network language model and based on the sequence of states and arcs of the WFST. One or more virtual states of the virtual FST can be traversed to determine a probability of a candidate word given one or more history candidate words. Text corresponding to the speech input can be determined based on the probability of the candidate word given the one or more history candidate words. An output can be provided based on the text corresponding to the speech input.

Owner:APPLE INC

Applying neural network language models to weighted finite state transducers for automatic speech recognition

Systems and processes for converting speech-to-text are provided. In one example process, speech input can be received. A sequence of states and arcs of a weighted finite state transducer (WFST) can be traversed. A negating finite state transducer (FST) can be traversed. A virtual FST can be composed using a neural network language model and based on the sequence of states and arcs of the WFST. The one or more virtual states of the virtual FST can be traversed to determine a probability of a candidate word given one or more history candidate words. Text corresponding to the speech input can be determined based on the probability of the candidate word given the one or more history candidate words. An output can be provided based on the text corresponding to the speech input.

Owner:APPLE INC

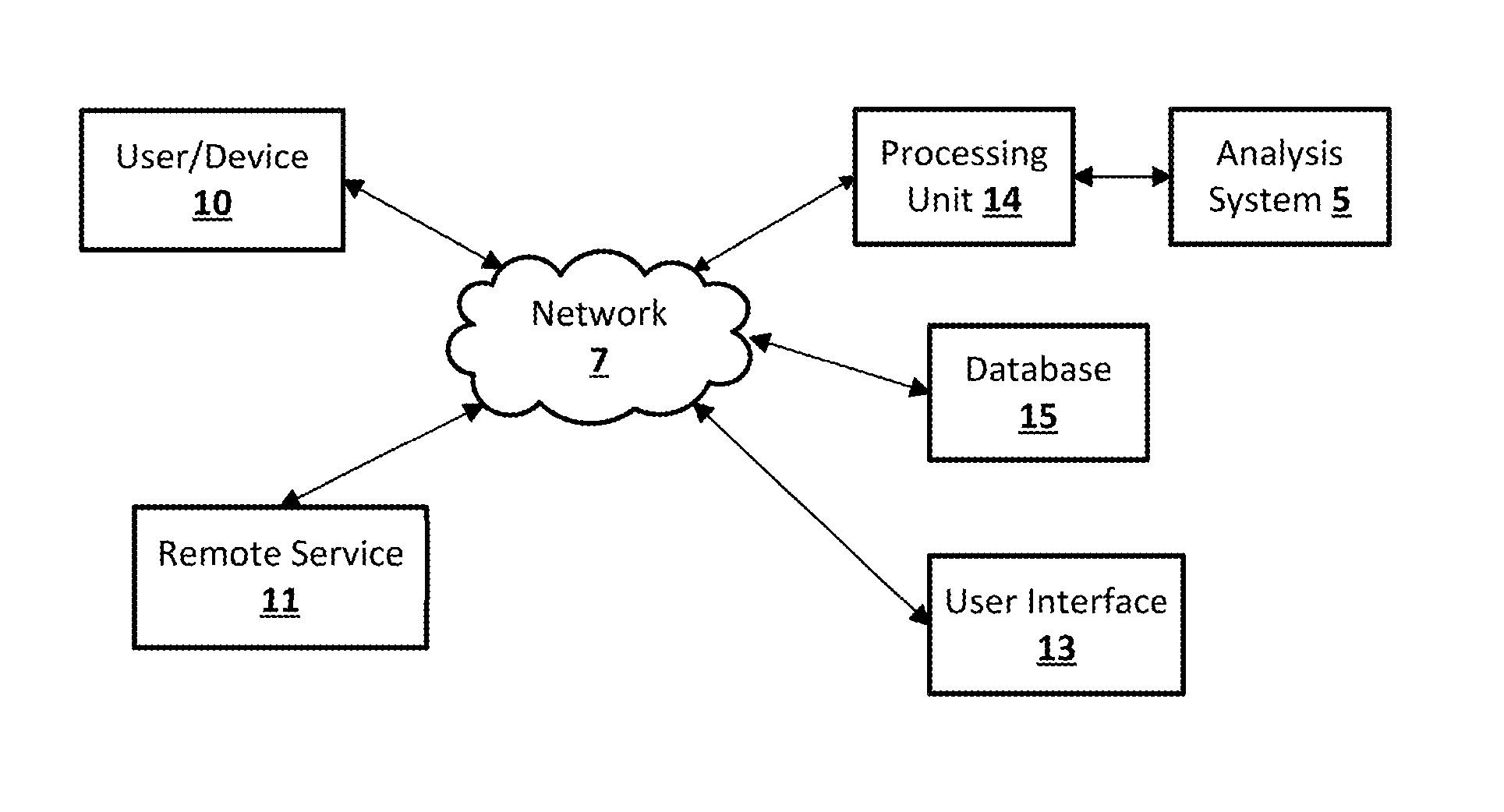

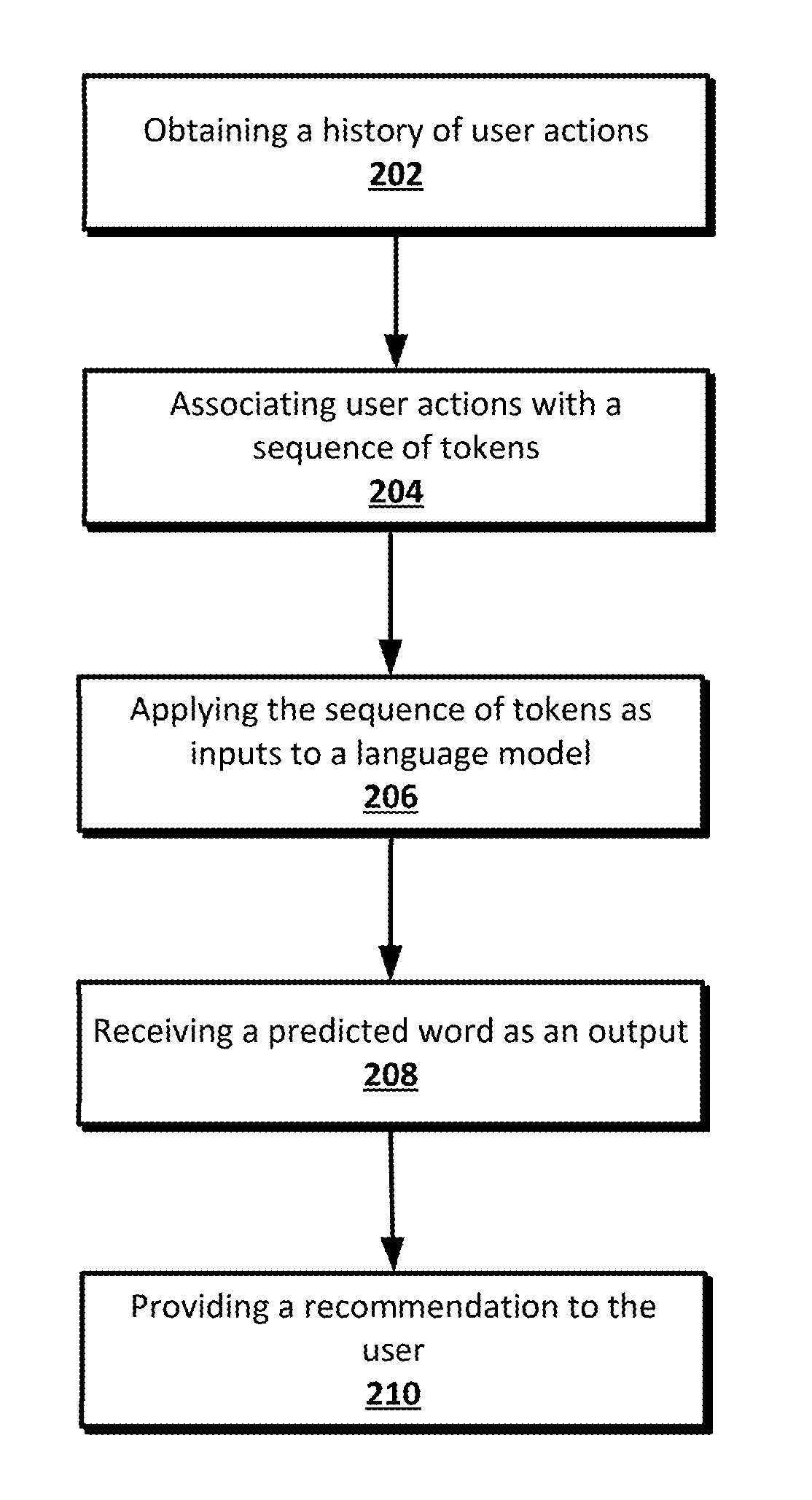

Content Recommendation System using a Neural Network Language Model

The present disclosure relates to applying techniques similar to those used in neural network language modeling systems to a content recommendation system. For example, by associating items of consumed media content with words of a language model, the system may provide content predictions based on the order in which content was consumed. Thus, the systems and techniques described herein may produce enhanced prediction results for recommending content (each item playing the role of a word) in a given sequence of consumed content. In addition, the system may account for additional user actions by representing particular actions as punctuation in the language model.

Owner:GOOGLE LLC
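
The idea of treating consumed items as words and user actions as punctuation can be sketched as below. This is a minimal illustration under stated assumptions: the event format, the `ACTION_TOKENS` mapping and all function names are hypothetical, and a count-based bigram model stands in for the neural network language model so the example stays short and dependency-free.

```python
from collections import Counter, defaultdict

# Hypothetical mapping from user actions to punctuation tokens.
ACTION_TOKENS = {"skip": ",", "session_end": "."}


def to_tokens(events):
    """Turn (kind, value) events into a 'sentence' of content ids and punctuation."""
    tokens = []
    for kind, value in events:
        if kind == "consume":
            tokens.append(value)
        elif kind in ACTION_TOKENS:
            tokens.append(ACTION_TOKENS[kind])
    return tokens


def train_bigram(sequences):
    """Count next-token frequencies (a stand-in for the neural LM)."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def recommend(counts, history, k=2):
    """Top-k content ids predicted after the last token; punctuation is never recommended."""
    candidates = counts.get(history[-1], Counter())
    punctuation = set(ACTION_TOKENS.values())
    ranked = [t for t, _ in candidates.most_common() if t not in punctuation]
    return ranked[:k]


logs = [
    [("consume", "ep1"), ("consume", "ep2"), ("session_end", None)],
    [("consume", "ep1"), ("consume", "ep2"), ("consume", "ep3")],
    [("consume", "ep1"), ("skip", None), ("consume", "movieA")],
]
model = train_bigram([to_tokens(e) for e in logs])
print(recommend(model, ["ep1"]))       # ['ep2']
print(recommend(model, ["ep1", ","]))  # ['movieA']
```

The second call shows why actions are encoded as tokens: the same watch history followed by a "skip" punctuation mark leads to a different prediction.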

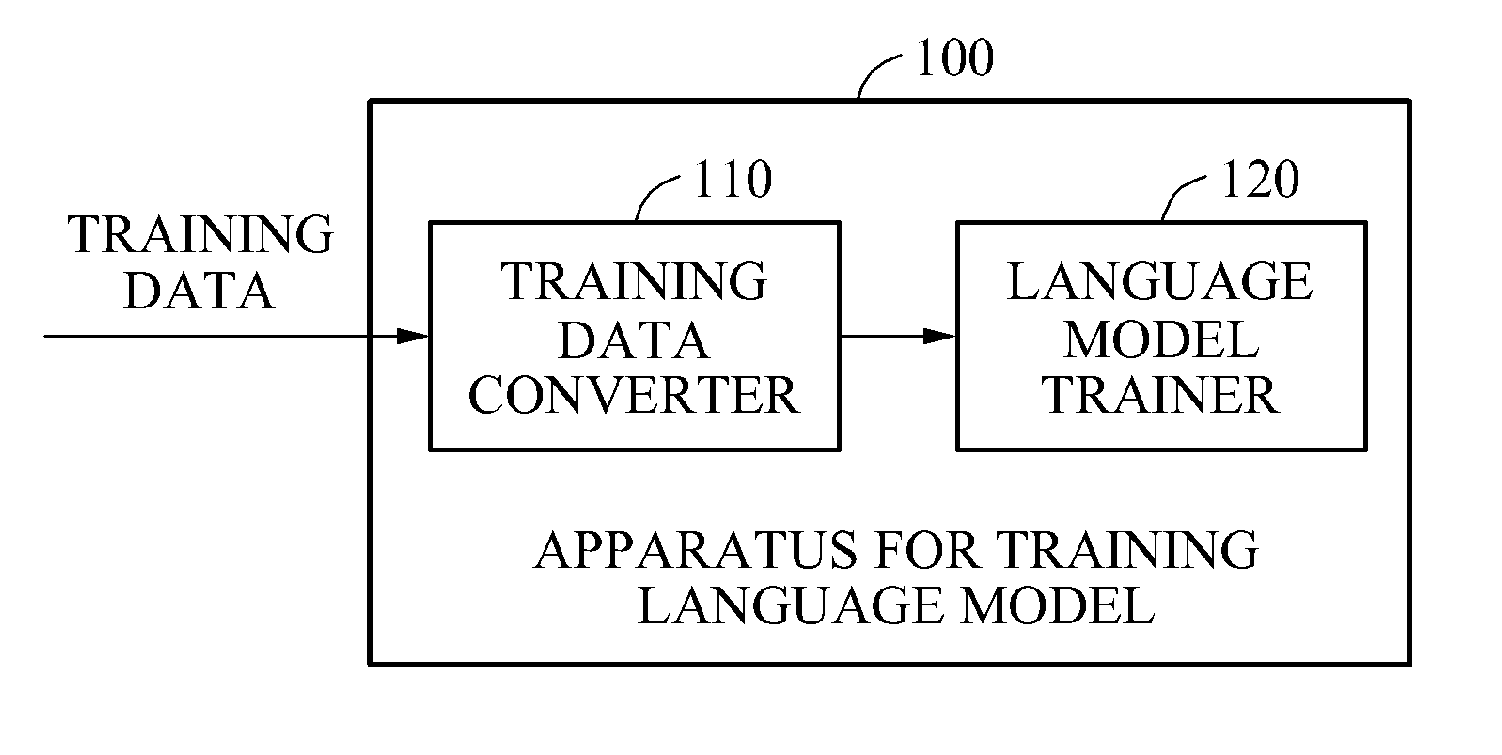

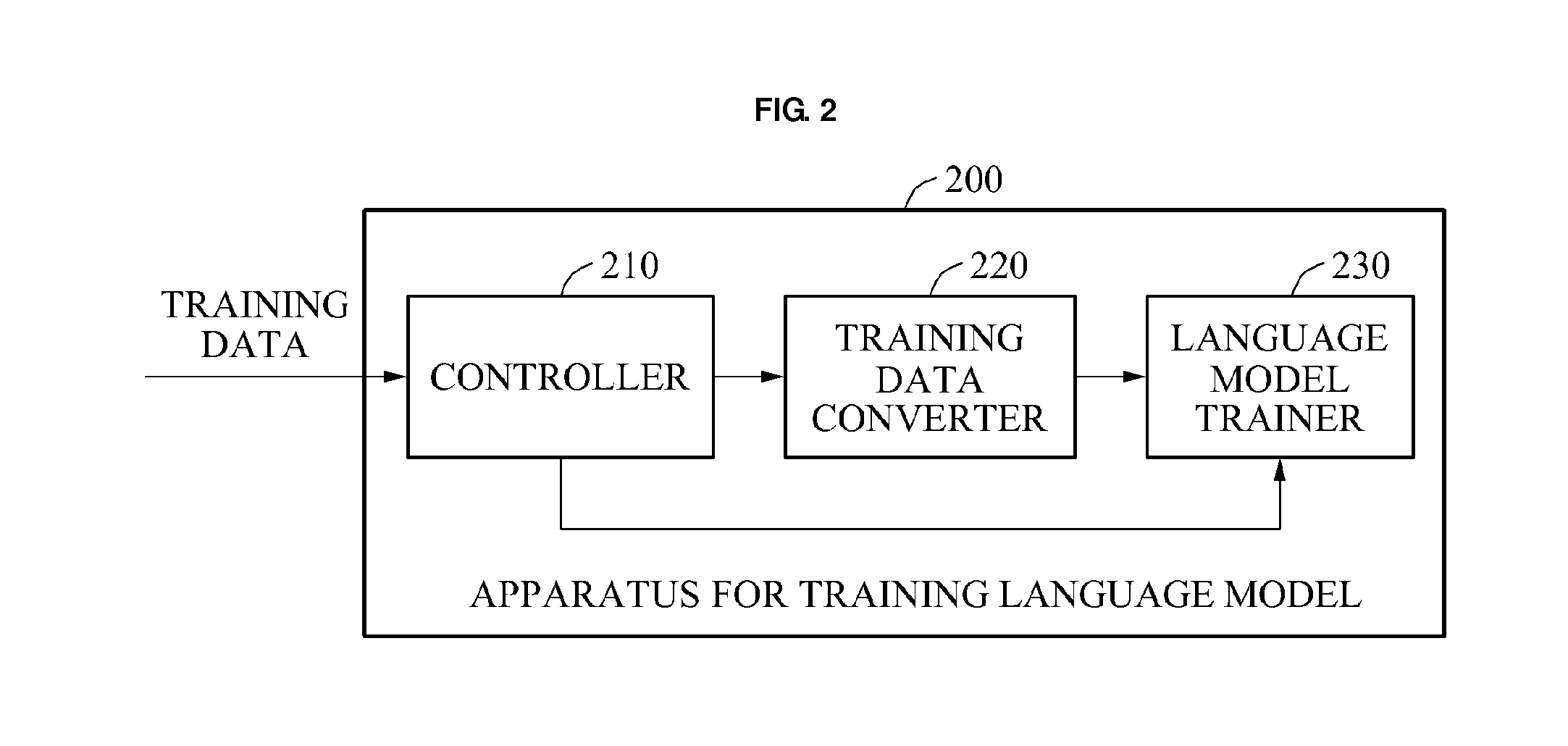

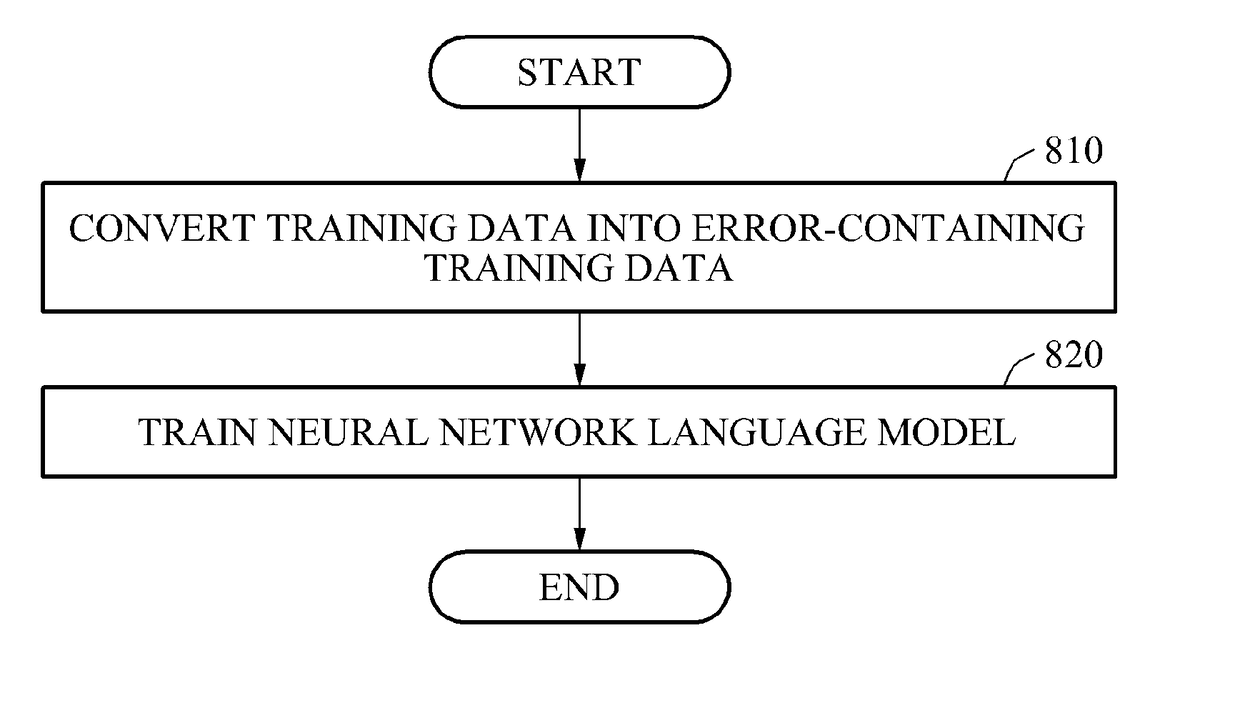

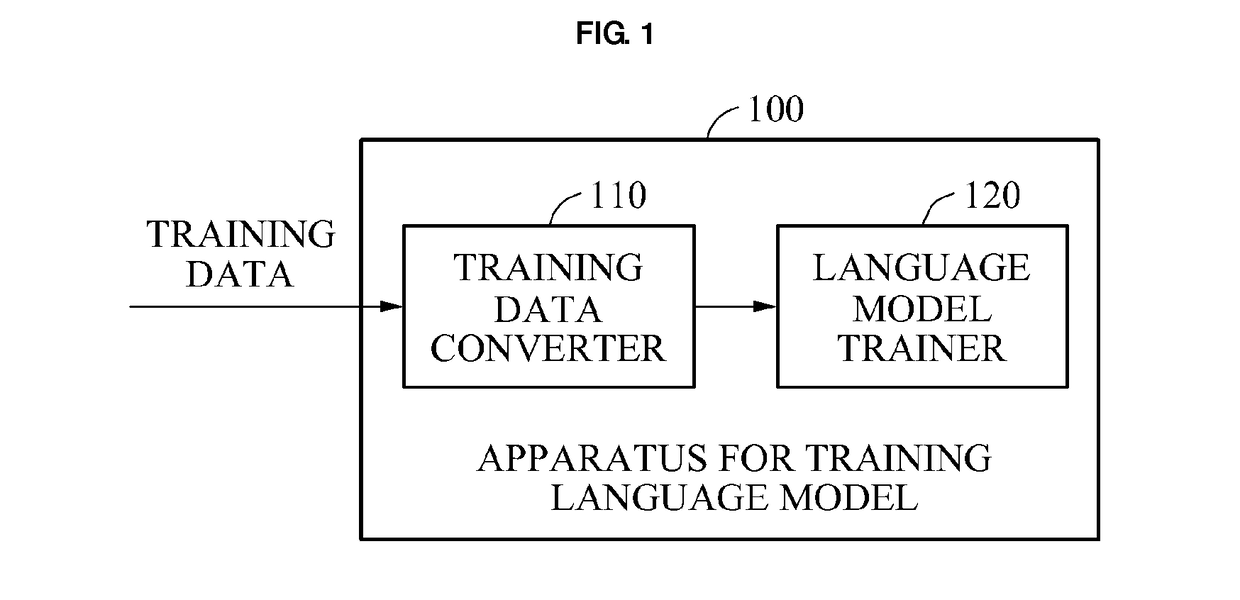

Method and apparatus for training language model and recognizing speech

A method and apparatus for training a neural network language model, and a method and apparatus for recognizing speech data based on a trained language model are provided. The method of training a language model involves converting, using a processor, training data into error-containing training data, and training a neural network language model using the error-containing training data.

Owner:SAMSUNG ELECTRONICS CO LTD
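
The abstract does not say how the error-containing training data are produced. A minimal sketch, assuming word substitutions drawn from a hypothetical confusion table (`CONFUSIONS`) at a fixed rate, might look like this; the function names and the table are illustrative only.

```python
import random

# Hypothetical confusion table: words a recognizer might mistake for others.
CONFUSIONS = {
    "recognize": ["wreck a nice"],
    "speech": ["beach"],
    "their": ["there"],
}


def inject_errors(sentence, rate, rng):
    """Return a copy of `sentence` with some words replaced by confusable ones."""
    noisy = []
    for word in sentence.split():
        alternatives = CONFUSIONS.get(word)
        if alternatives and rng.random() < rate:
            noisy.append(rng.choice(alternatives))
        else:
            noisy.append(word)
    return " ".join(noisy)


def make_error_containing_corpus(corpus, rate=0.1, seed=0):
    """Convert clean training sentences into error-containing ones."""
    rng = random.Random(seed)
    return [inject_errors(s, rate, rng) for s in corpus]


corpus = ["recognize speech with their model"]
print(make_error_containing_corpus(corpus, rate=1.0))
# ['wreck a nice beach with there model']
```

Training a language model on such data is meant to make it robust to the kinds of errors it will see at recognition time; whether the noisy copies replace or augment the clean data is not stated in the abstract.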

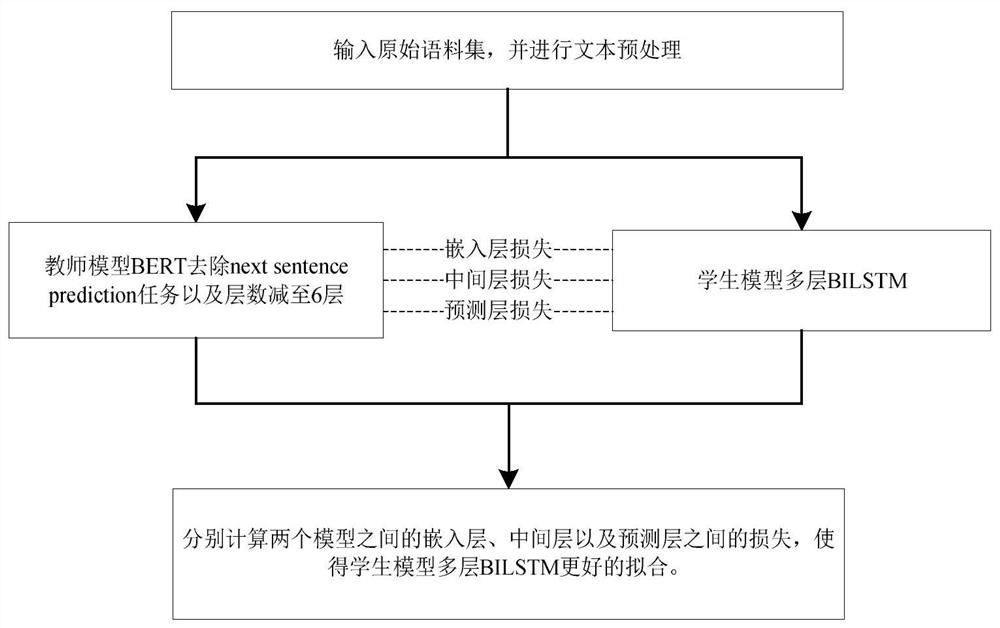

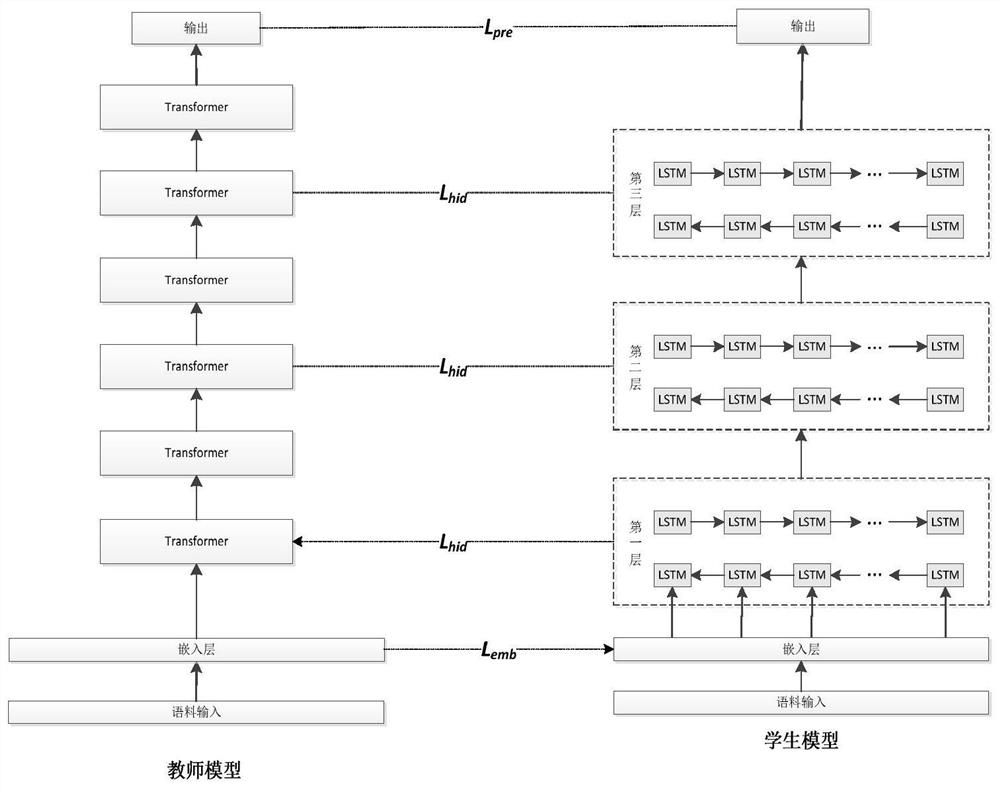

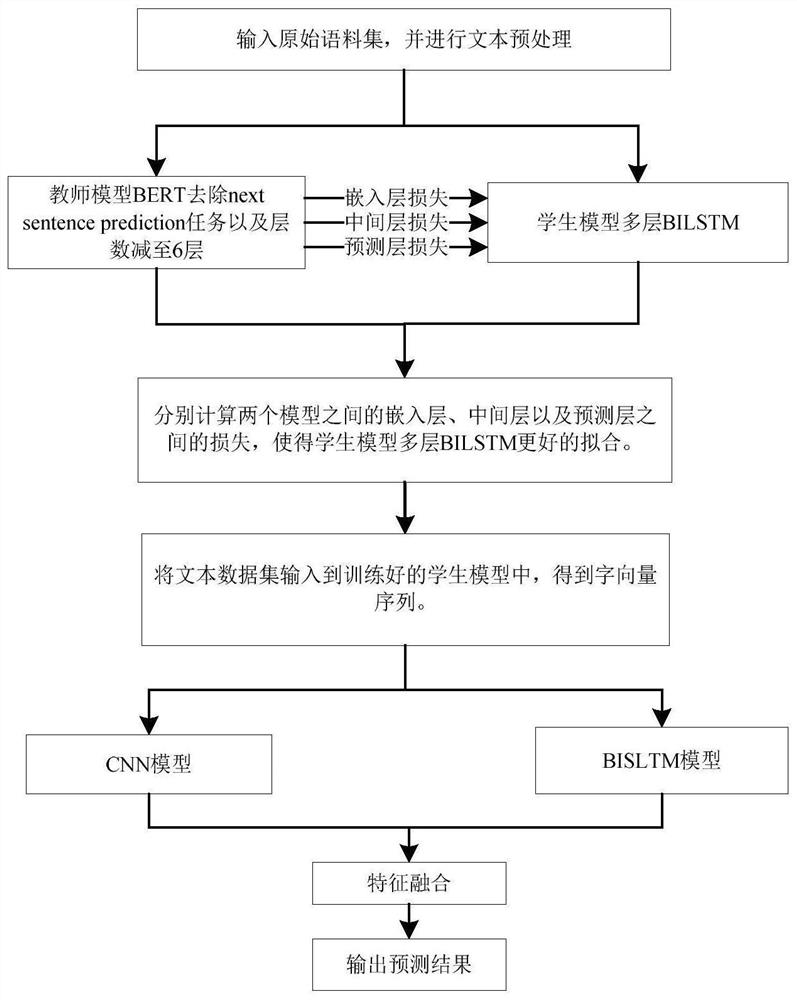

Multilayer neural network language model training method and device based on knowledge distillation

Active | CN111611377A | High precision; Improve learning effect; Semantic analysis; Neural architectures; Hidden layer; Linguistic model

The invention discloses a multilayer neural network language model training method and device based on knowledge distillation. First, a BERT language model and a multi-layer BiLSTM model are constructed to serve as the teacher model and the student model, respectively; the BERT language model comprises six Transformer layers, and the multi-layer BiLSTM model comprises three BiLSTM layers. Then, after the text corpus is preprocessed, the BERT language model is trained to obtain the teacher model. The preprocessed text corpus is then input into the multi-layer BiLSTM model to train the student model by knowledge distillation; when the student learns from the embedding layer, hidden layers and output layer of the teacher model, the representations in the different spaces are computed through linear transformations. The trained student model can convert text into vectors, on which a downstream network is trained to classify text better. The method can effectively improve text pre-training efficiency and the accuracy of text classification.

Owner:HUAIYIN INSTITUTE OF TECHNOLOGY
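
The output-layer part of this teacher-student setup can be sketched with the common temperature-scaled distillation loss. This is an assumption: the abstract does not give the loss, and the layer-wise matching of embedding and hidden representations through linear transformations is not shown. `temperature` and `alpha` are illustrative hyperparameters.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a temperature (higher T gives softer targets)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


teacher = [2.0, 0.5, -1.0]
student_close = [1.8, 0.6, -0.9]
student_far = [-1.0, 0.5, 2.0]
print(distillation_loss(student_close, teacher, label=0)
      < distillation_loss(student_far, teacher, label=0))  # True
```

The `T^2` factor keeps the soft-target gradient on roughly the same scale as the hard-label term when the temperature changes.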

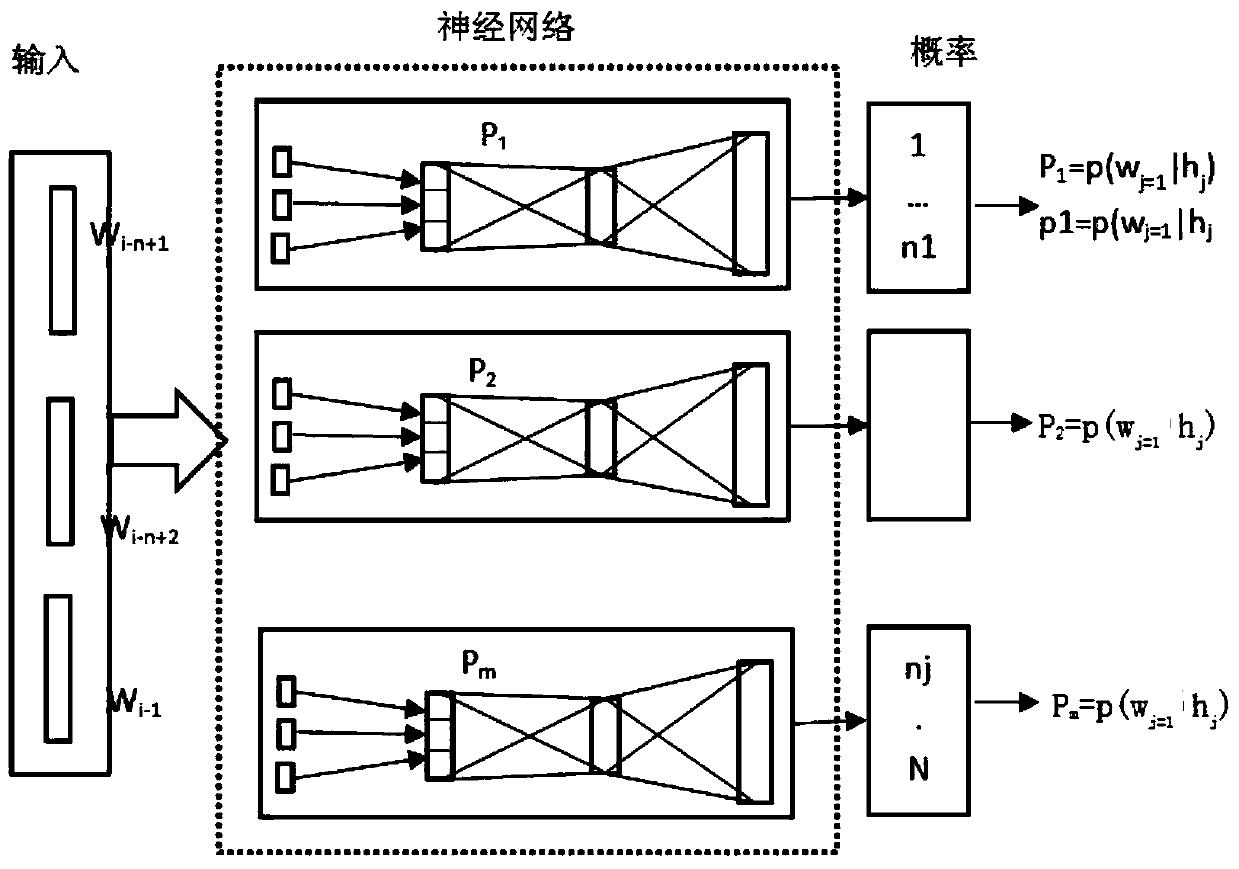

Linguistic model training method and system based on distributed neural networks

Inactive | CN103810999A | Resolution time; Solving the problem of underutilizing neural networks; Speech recognition; Linguistic model; Speech identification

The invention discloses a linguistic model training method and system based on distributed neural networks. The method comprises the following steps: splitting a large vocabulary into a plurality of small vocabularies; assigning each small vocabulary to its own neural network linguistic model, where every model has the same number of input dimensions and undergoes a first training stage independently; merging the output vectors of all the neural network linguistic models and performing a second training stage; and obtaining a normalized neural network linguistic model. The system comprises an input module, a first training module, a second training module and an output module. Because several neural networks learn different sub-vocabularies, the learning ability of each network is fully used and the training time for large vocabularies is greatly reduced. In addition, the outputs over the large vocabulary are normalized so that the multiple neural networks are normalized jointly and share information, which lets the NNLM learn as much information as possible and improves the accuracy of related applications such as large-scale speech recognition and machine translation.

Owner:TSINGHUA UNIV
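
Splitting the vocabulary and then normalizing the merged outputs over the full vocabulary can be sketched as follows. The sub-model scores are hypothetical placeholders for the outputs of the independently trained sub-networks, and the single joint softmax is an assumed stand-in for the patent's second training and normalization stage.

```python
import math


def split_vocab(vocab, n_parts):
    """Split a vocabulary into at most n_parts contiguous sub-vocabularies."""
    size = math.ceil(len(vocab) / n_parts)
    return [vocab[i:i + size] for i in range(0, len(vocab), size)]


def merged_distribution(sub_logits):
    """Concatenate the scores from every sub-model and apply one softmax,
    so the probabilities are normalized over the full vocabulary."""
    flat = [z for part in sub_logits for z in part]
    m = max(flat)
    exps = [math.exp(z - m) for z in flat]
    total = sum(exps)
    return [e / total for e in exps]


vocab = [f"w{i}" for i in range(10)]
parts = split_vocab(vocab, 3)
print([len(p) for p in parts])  # [4, 4, 2]

# Hypothetical per-sub-model scores; in the patent these would come from
# the separately trained sub-networks.
sub_logits = [[0.1 * i for i in range(len(p))] for p in parts]
probs = merged_distribution(sub_logits)
```

Normalizing each sub-model on its own would give probabilities that do not sum to one across the whole vocabulary; the joint softmax is what makes the merged outputs comparable.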

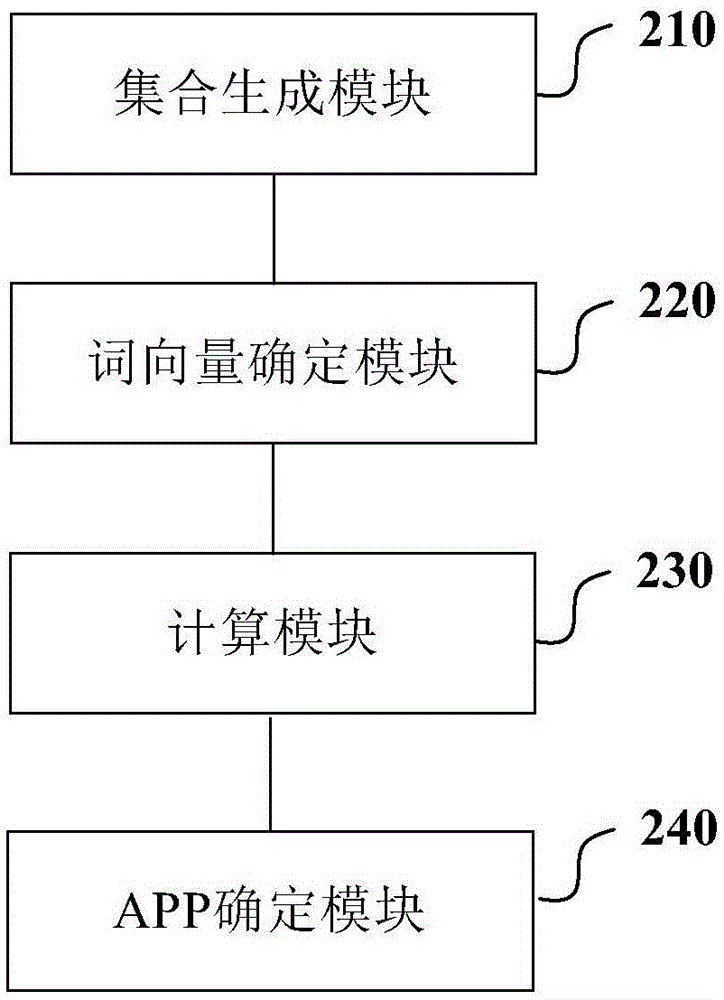

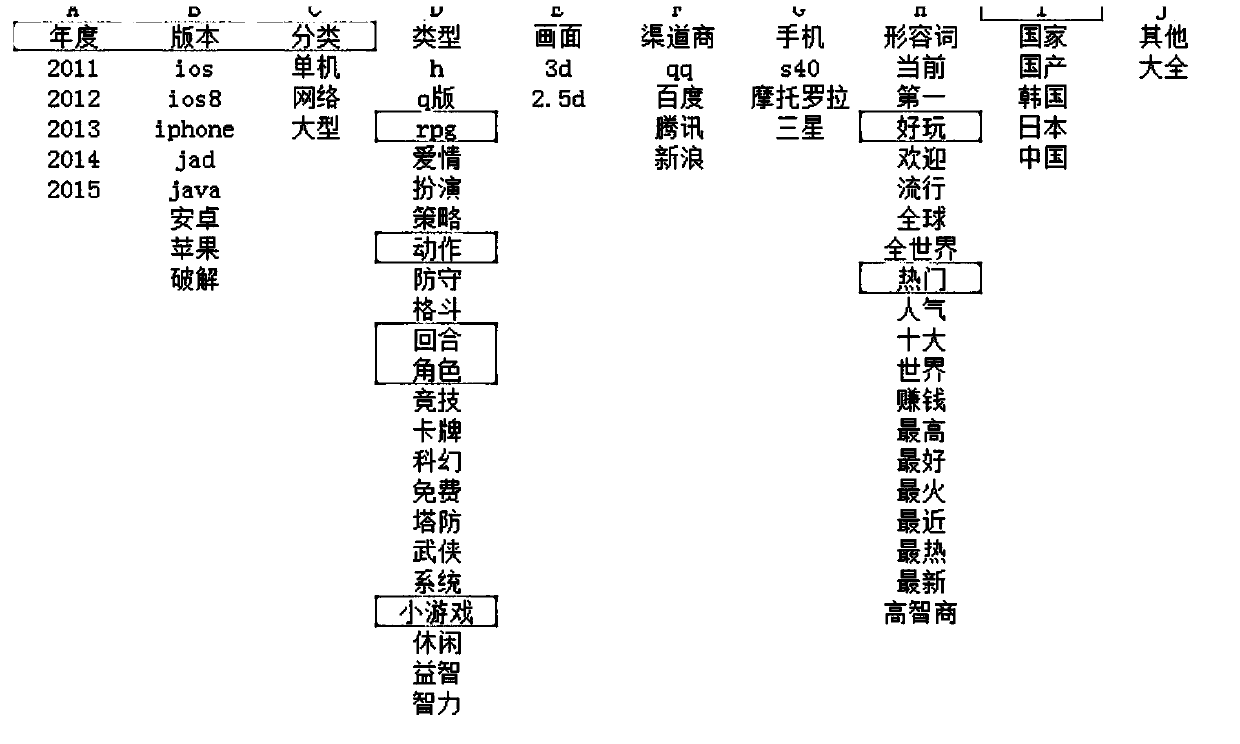

Method and apparatus for determining to-be-recommended application (APP)

Inactive | CN105117440A | Improve user experience; Increase the odds of clicking to download a recommended app; Marketing; Special data processing applications; Habit; Data mining

The invention provides a method and an apparatus for determining a to-be-recommended application (APP). The method comprises: obtaining the names of a plurality of APPs installed by a terminal user within a predetermined duration and, based on the installation time sequence, generating a word set comprising the APP names; training on the word set with a neural network language model to determine a first word vector corresponding to the word set; performing prediction calculation on the first word vector through a prediction model; and determining the to-be-recommended APP according to the prediction result. Because the word vector is constructed from the terminal user's historical installation data, the user's actual usage habits and demands are taken into account during recommendation, so the determined APP matches the user relatively well. After such an APP is recommended, the user can quickly obtain an APP that matches his or her usage demands and habits, which improves the user experience.

Owner:BEIJING QIHOO TECH CO LTD +1

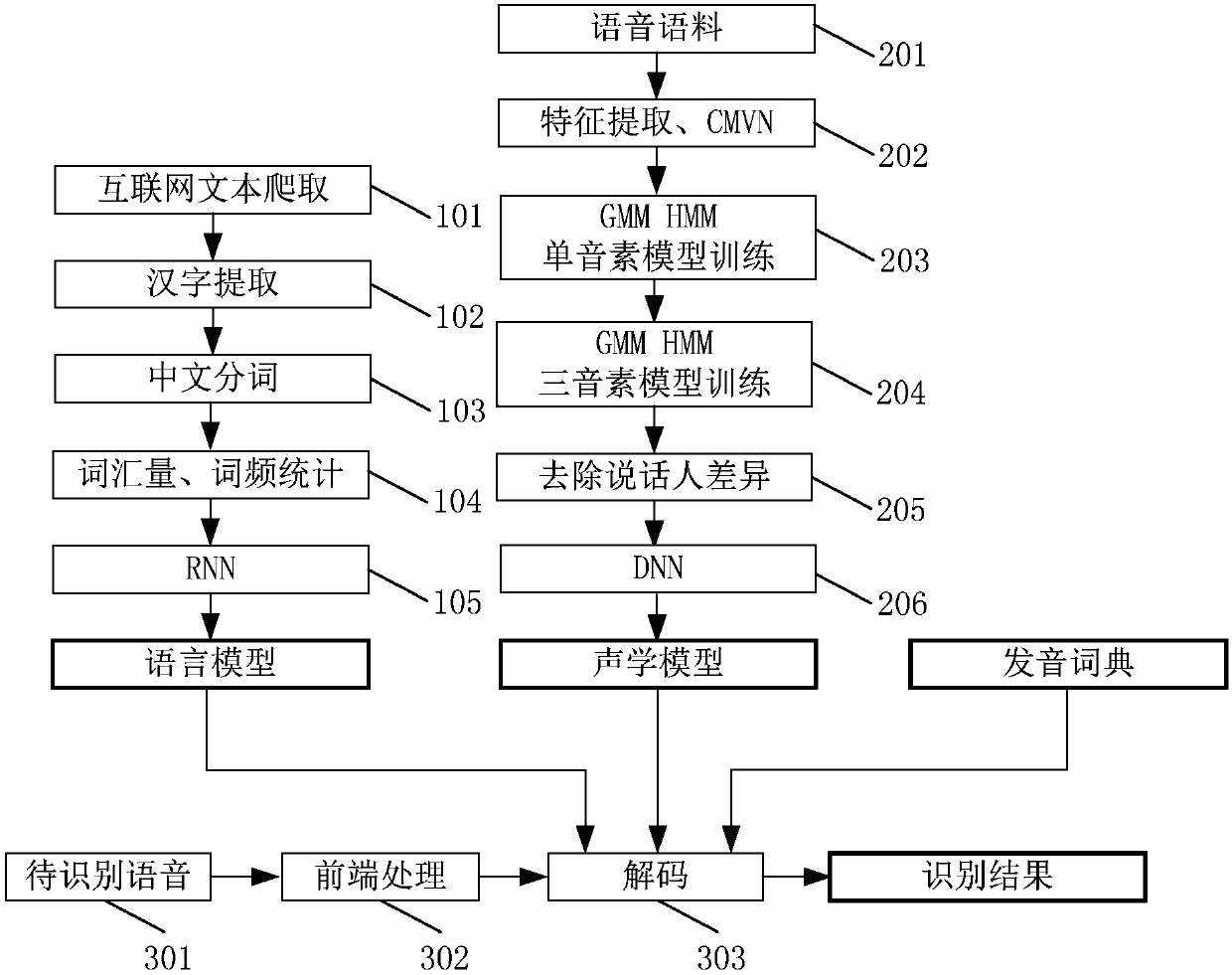

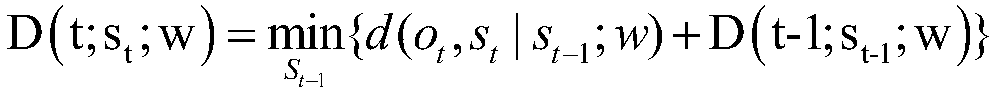

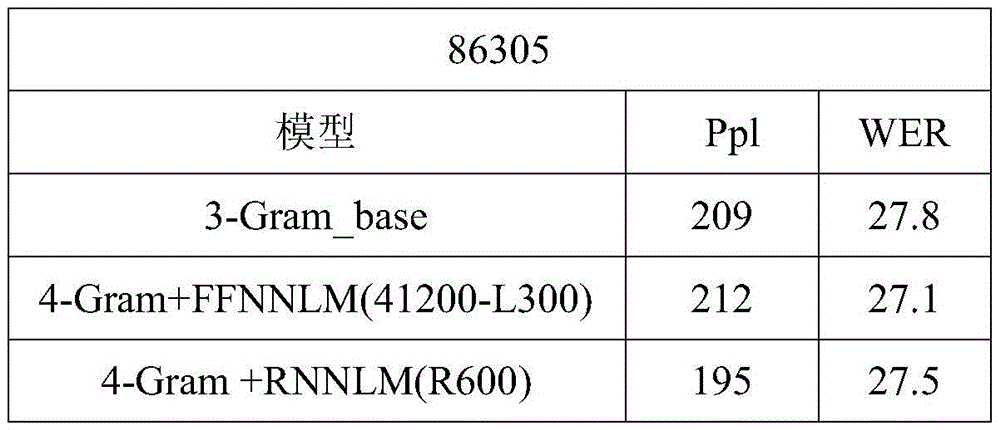

Chinese speech recognition method based on cyclic neural network language model and deep neural network acoustic model

Active | CN108492820A | Resolve accuracy; Solve the delay problem; Natural language data processing; Speech recognition; Speech identification; Acoustic model

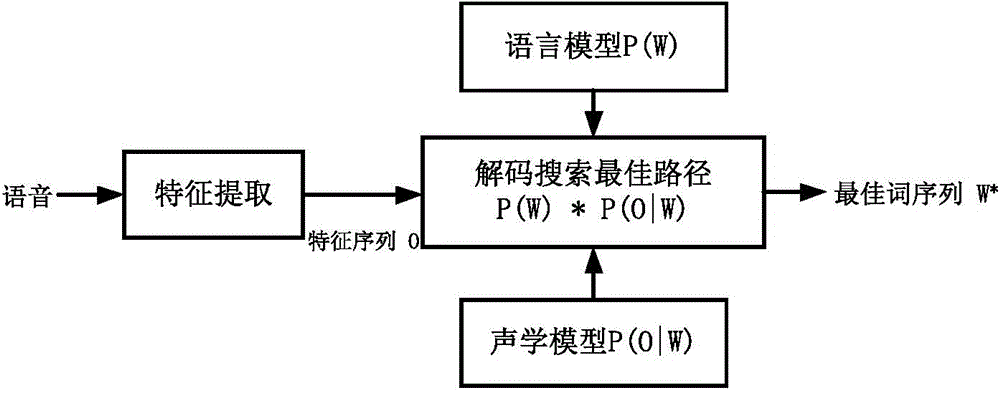

The invention discloses a Chinese speech recognition method based on a recurrent ("cyclic") neural network language model and a deep neural network acoustic model. The method mainly includes the following steps: S1, training the language model based on a recurrent neural network; S2, training the acoustic model based on a deep neural network; and S3, performing Chinese speech recognition with a decoder that uses a Viterbi search over the recurrent neural network language model and the deep neural network acoustic model. The method combines the accuracy of the recurrent neural network with the low latency of the deep neural network, overcomes the low accuracy of current n-gram language models and the high latency of long short-term memory (LSTM) acoustic models, and achieves Chinese speech recognition with low latency and higher accuracy.

Owner:SOUTH CHINA UNIV OF TECH
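
Step S3 relies on Viterbi search. The textbook algorithm is sketched below on a toy two-state HMM with made-up probabilities (the state and observation names are hypothetical); a real decoder searches a much larger graph and combines acoustic-model and language-model scores, which this sketch does not model.

```python
import math


def viterbi(observations, states, log_start, log_trans, log_emit):
    """Return the most likely state sequence and its log-probability."""
    # best[t][s]: best log-prob of any path that ends in state s at time t.
    best = [{s: log_start[s] + log_emit[s][observations[0]] for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + log_trans[p][s]) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log_emit[s][observations[t]]
            back[t][s] = prev
    # Trace back from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


states = ["quiet", "voiced"]
start = {"quiet": 0.6, "voiced": 0.4}
trans = {"quiet": {"quiet": 0.7, "voiced": 0.3},
         "voiced": {"quiet": 0.4, "voiced": 0.6}}
emit = {"quiet": {"low": 0.5, "mid": 0.4, "high": 0.1},
        "voiced": {"low": 0.1, "mid": 0.3, "high": 0.6}}

log_start = {s: math.log(p) for s, p in start.items()}
log_trans = {s: {t: math.log(p) for t, p in row.items()} for s, row in trans.items()}
log_emit = {s: {o: math.log(p) for o, p in row.items()} for s, row in emit.items()}

path, score = viterbi(["low", "mid", "high"], states, log_start, log_trans, log_emit)
print(path)  # ['quiet', 'quiet', 'voiced']
```

Working in log space turns products of probabilities into sums and avoids numerical underflow on long utterances.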

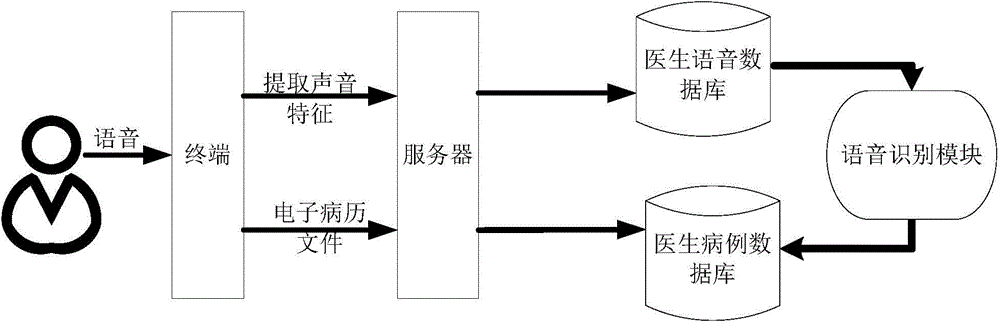

Electronic medical record generating method and electronic medical record system

Active | CN104485105A | Improve production efficiency; Improve accuracy; Speech recognition; Medical record; Speech identification

The invention discloses an electronic medical record generating method and an electronic medical record system. The method includes the following steps: a terminal collects input voice upon receiving an instruction to create an electronic medical record; the terminal extracts sound characteristics of the input voice to generate a sound characteristic file; the terminal transmits the sound characteristic file to a server; the server receives the sound characteristic file from the terminal and performs voice identification to obtain a voice identification result; and the server stores the voice identification result in an electronic medical record file. In the voice identification step, the sound characteristic file is processed sequentially by an acoustic model, an N-gram language model and a neural network language model to obtain the voice identification result. This technical scheme can effectively improve the efficiency of generating electronic medical records.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

N-gram grammar model constructing method for voice identification and voice identification system

Inactive | CN105261358A | Reduce sparsity; Controlling the Search Path; Speech recognition; Part of speech; Speech identification

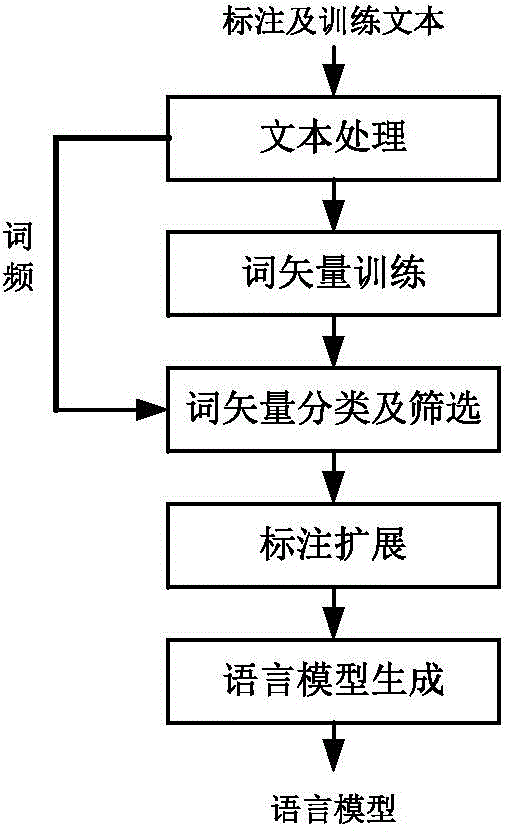

The invention provides an n-gram grammar model constructing method for voice identification and a voice identification system. The method comprises: step (101), training a neural network language model to obtain word vectors, then classifying the word vectors and screening them in multiple layers to obtain parts of speech; step (102), expanding the manual annotations with a direct word-frequency statistics method: when words of the same class are substituted, the 1-gram to n-gram combination units that change relative to the original sentence are counted directly, yielding an n-gram grammar model of the expanded part; step (103), generating a preliminary n-gram grammar model from the manual annotations and interpolating it with the n-gram grammar model of the expanded part to obtain the final n-gram grammar model. Step (101) further includes: step (101-1), inputting annotations and a training text; step (101-2), training a neural network language model to obtain the word vectors of the words in a dictionary; step (101-3), classifying the word vectors with the k-means method; and step (101-4), screening the classification result in multiple layers to obtain the final parts of speech.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
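
Step (101-3) clusters word vectors with k-means. A plain Lloyd's k-means on toy two-dimensional "word vectors" is sketched below; the vectors are invented for illustration, and the multi-layer screening of step (101-4) is not shown.

```python
import math
import random


def kmeans(vectors, k, iterations=20, seed=0):
    """Plain Lloyd's k-means; returns a cluster index for each vector."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    labels = [0] * len(vectors)
    for _ in range(iterations):
        # Assignment step: nearest centroid by Euclidean distance.
        labels = [min(range(k), key=lambda c: math.dist(v, centroids[c]))
                  for v in vectors]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


# Hypothetical word vectors: two nouns and two verbs.
words = ["cat", "dog", "run", "walk"]
vectors = [[0.9, 0.1], [1.0, 0.0], [0.0, 1.0], [0.1, 0.9]]
labels = kmeans(vectors, 2)
print(dict(zip(words, labels)))
```

With real NNLM embeddings the clusters tend to group words that occur in similar contexts, which is what lets them serve as approximate part-of-speech classes.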

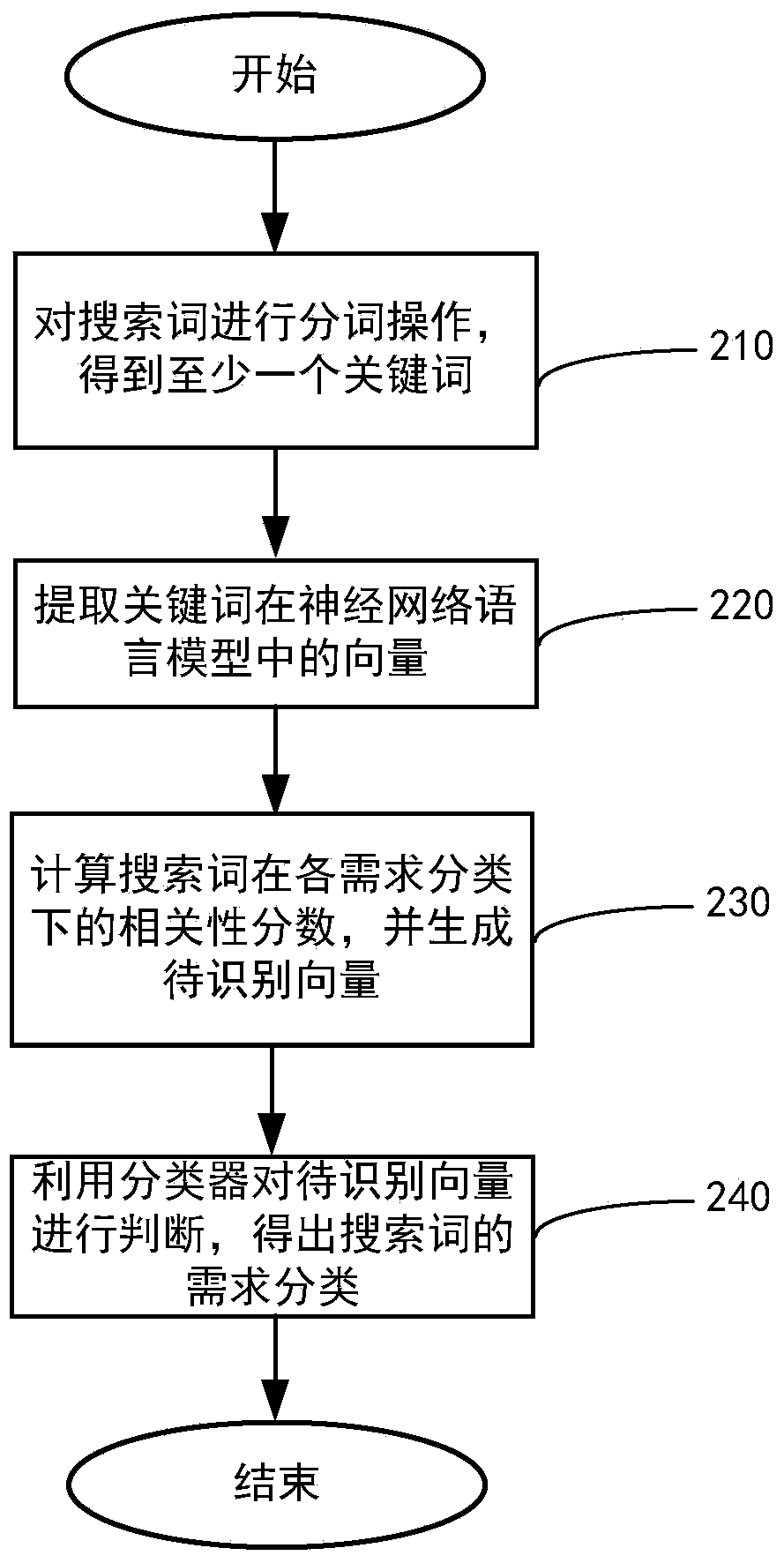

Method and system for identifying demand classification corresponding to searching

Active | CN104199822A | Efficient and accurate identification; Web data indexing; Special data processing applications; Search words; User input

The invention provides a method and system for identifying the demand classification corresponding to a search. The method comprises the following steps: (a) word segmentation is performed on the input search words to obtain at least one keyword; (b) the model vector of the keyword in a neural network language model is extracted; (c) correlation scores of the search words for multiple demand classifications are calculated from the model vector; (d) the resulting vector to be identified is judged by a classifier to obtain the demand classification corresponding to the search words. With this method and system, the search keywords entered by a user can be identified and matched to a specific category of classified information, so that targeted classified-information search can be conducted within that demand category, and search demands are identified accurately and efficiently.

Owner:BEIJING 58 INFORMATION TECH

Neural network language model training method and device and voice recognition method

Inactive | CN104376842A | Calculation speed; Reduce complexity; Speech recognition; Computation complexity; Speech sound

The invention discloses a neural network language model training method and device and a voice recognition method and relates to the voice recognition technology. The neural network language model training method and device and the voice recognition method aim to solve the problem that in the prior art, when the computation complexity of a neural network language model is lowered, the recognition accuracy of the neural network language model is lowered. According to the technical scheme, in the process of training parameters of the neural network language model, normalization factors of an output layer are adopted to modify a target cost function, and a modified target cost function is obtained; the parameters of the neural network language model are updated according to the modified target cost function to obtain trained target parameters of the neural network language model, wherein the target parameters enable the normalization factors in the trained neural network language model to be constants. The neural network language model training method and device and the voice recognition method can be applied to the neural network voice recognition process.

Owner:TSINGHUA UNIV +1
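
The abstract does not give the modified cost function. One common way to make the output-layer normalization factor Z approximately constant, assumed here, is to add a penalty on (log Z)^2 to the cross-entropy, so that at recognition time the unnormalized output score can be used directly as a log-probability without summing over the vocabulary. The weight `alpha` is a hypothetical hyperparameter.

```python
import math


def log_sum_exp(xs):
    """Stable log(sum(exp(x))) over a list: this is log Z for the output layer."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def self_normalized_loss(logits, target, alpha=0.1):
    """Cross-entropy plus a penalty pushing log Z toward 0 (Z toward 1)."""
    log_z = log_sum_exp(logits)
    cross_entropy = log_z - logits[target]
    return cross_entropy + alpha * log_z ** 2


# Logits that are already normalized (Z = 1) incur no penalty.
base = [math.log(0.7), math.log(0.2), math.log(0.1)]
print(round(self_normalized_loss(base, 0), 6))  # 0.356675
```

Shifting every logit by a constant leaves the cross-entropy unchanged but moves log Z away from 0, so the penalty term is what ties the training objective to a constant normalizer.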

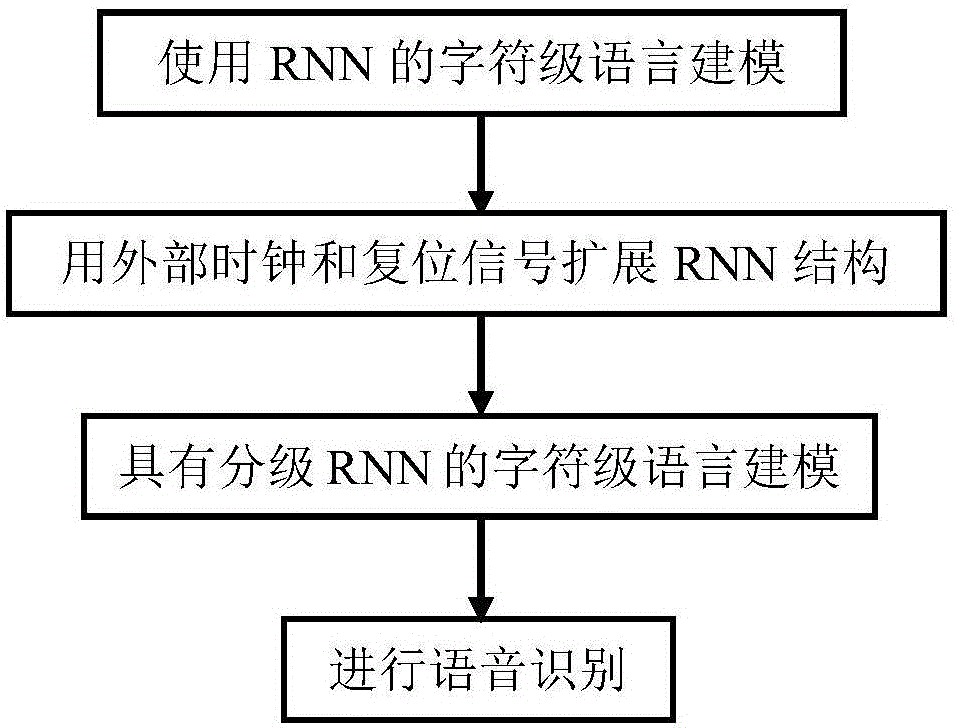

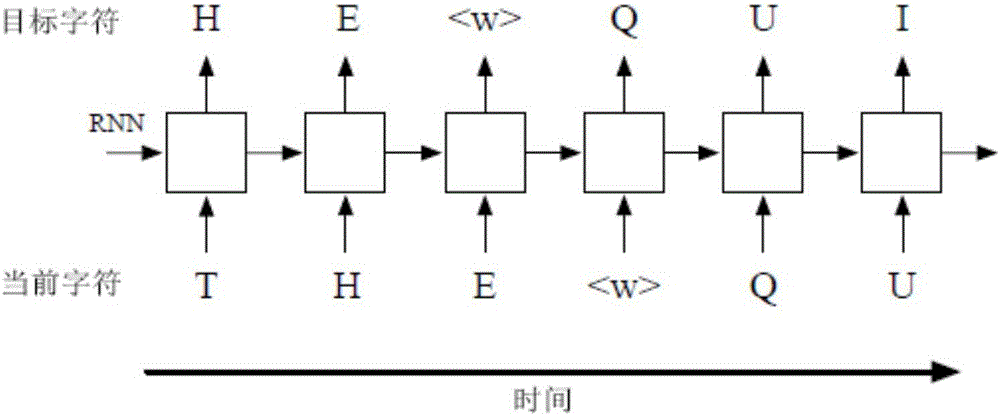

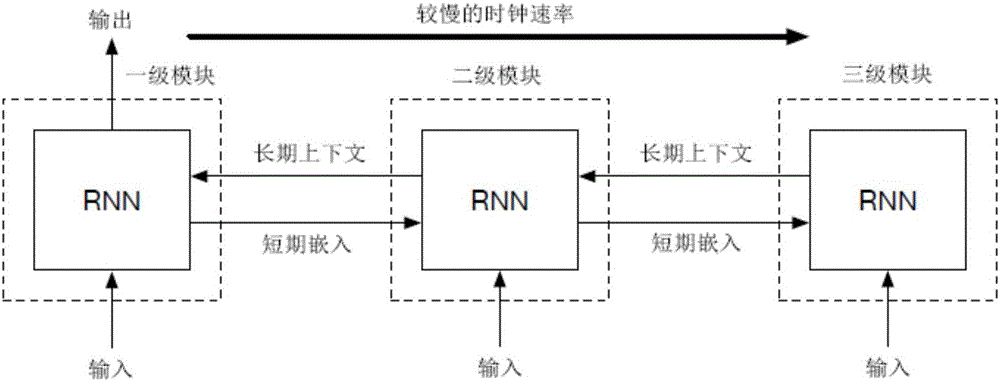

Voice recognition method based on layered circulation neural network language model

Inactive | CN106782518A | Speech recognition; Neural learning methods; Speech identification; Process information

The invention provides a voice recognition method based on a layered (hierarchical) recurrent neural network language model. The method mainly comprises: character-level language modeling with an RNN; extending the RNN structure with an external clock and a reset signal; character-level language modeling with a hierarchical RNN; and voice recognition. By replacing the traditional single-clock RNN character-level language model with a hierarchical recurrent neural network language model, the invention achieves high recognition accuracy with fewer parameters; the language model supports a very large vocabulary while requiring little storage space, and the hierarchical language model can be extended to process information spanning long periods, such as sentences, topics or other contexts.

Owner:SHENZHEN WEITESHI TECH

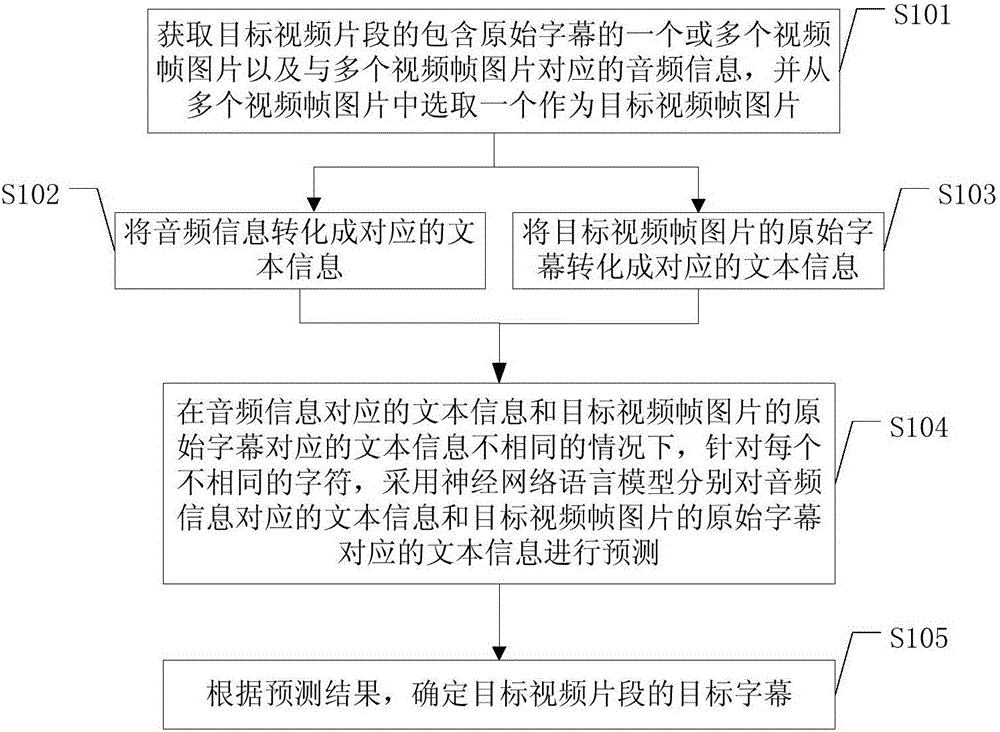

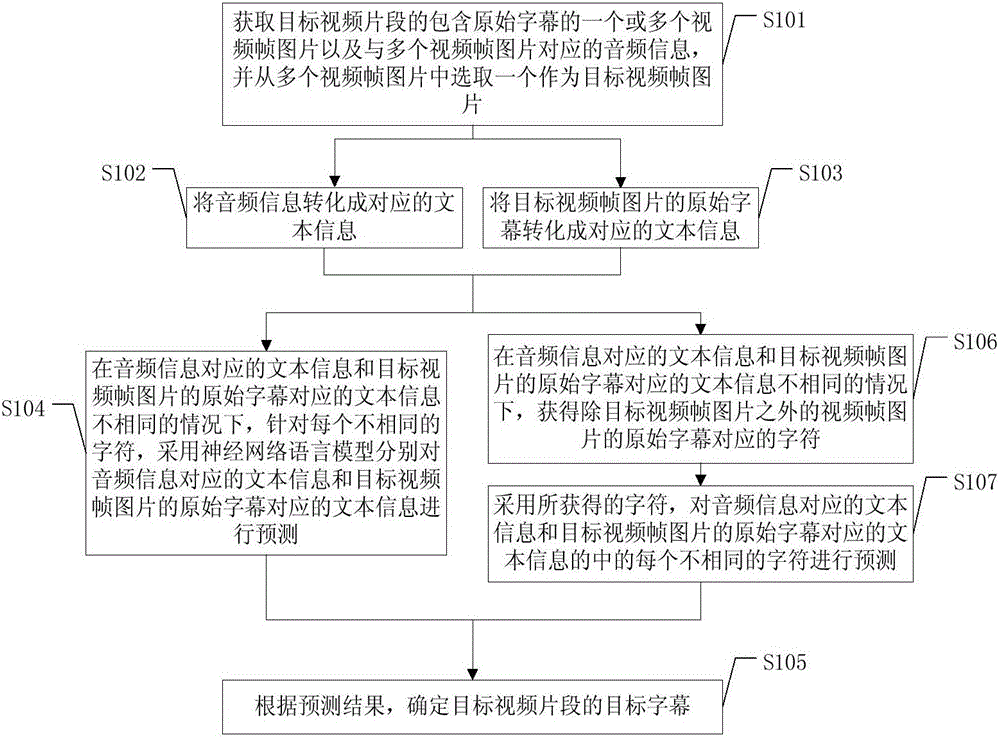

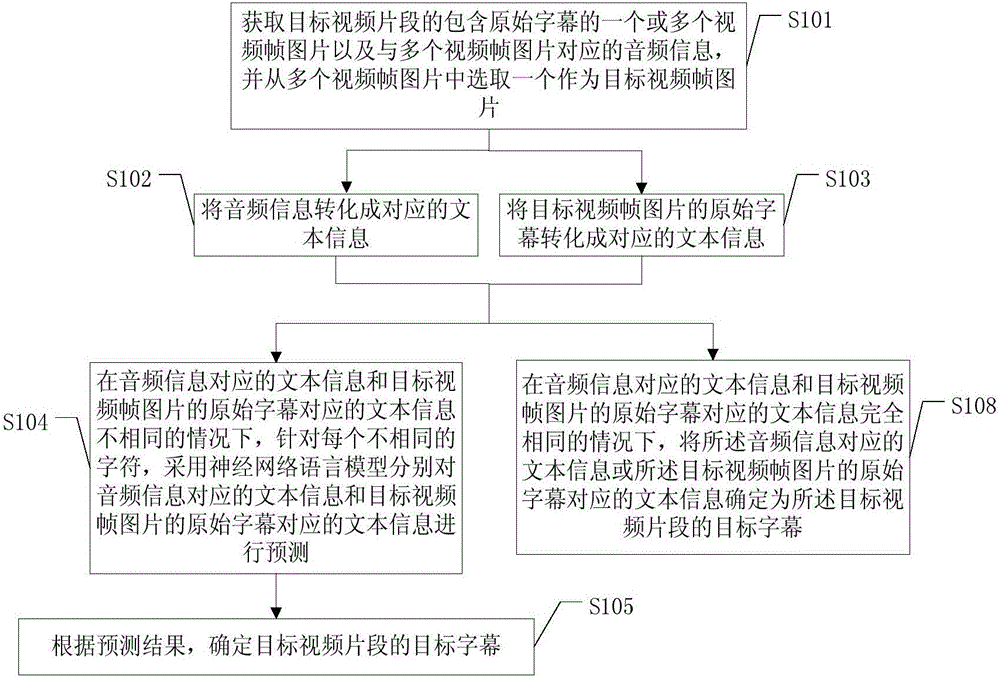

Video subtitle determining method and video subtitle determining device

Active | CN106604125A | Improve accuracy; Fix bugs; Speech recognition; Selective content distribution; Computer graphics (images); Computer vision

The embodiments of the invention provide a video subtitle determining method and a video subtitle determining device. The method comprises the following steps: acquiring one or more video frame images of a target video clip that contain original subtitles, together with the corresponding audio information, and selecting one of the video frame images as the target video frame image; converting the audio information into corresponding text information; converting the original subtitle of the target video frame image into corresponding text information; when the text corresponding to the audio information differs from the text corresponding to the original subtitle, using a neural network language model to make a prediction, for each differing character, between the text from the audio information and the text from the original subtitle; and determining the target subtitles of the target video clip according to the prediction result. The embodiments improve the accuracy of the target video subtitles.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD

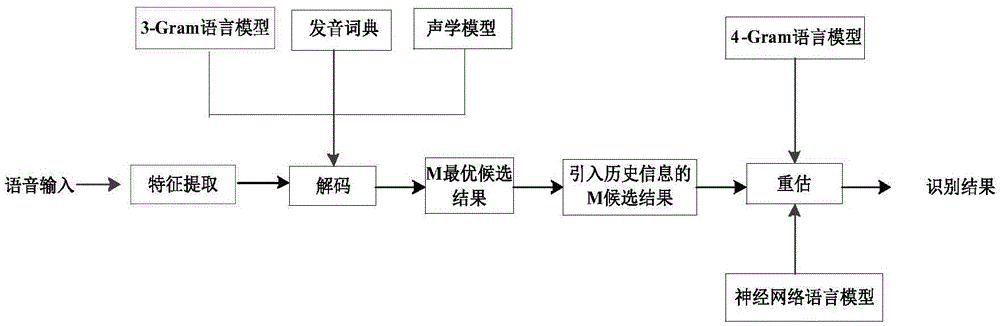

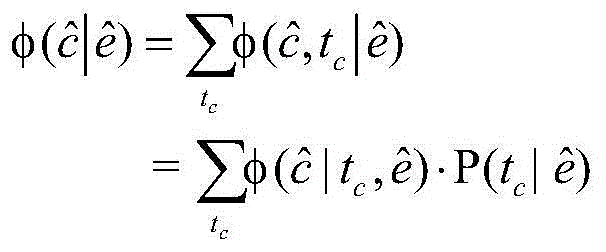

Language model re-evaluation method based on long and short memory network

Active | CN106803422A | Improve memory function; Improve performance; Speech recognition; Algorithm; Process information

The invention provides a language model re-evaluation (rescoring) method based on a long short-term memory (LSTM) network. The method includes the following steps: step 100, inputting the language information to be recognized and preprocessing it; step 101, performing first-pass decoding on the preprocessed information with an N-gram language model and selecting the M best candidate results; step 102, introducing the recognition result of the first-pass decoding into the M best candidates as historical sentence information; step 103, re-evaluating the M best candidates with the n-gram language model; step 104, re-evaluating the M best candidates, with the historical sentence information introduced, using an LSTM-based neural network language model; and step 105, combining the n-gram re-evaluation results with the LSTM re-evaluation results and selecting the best result as the final recognition result for the language information.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
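
Step 105 combines the two rescoring results. A minimal sketch, assuming a weighted sum of the n-gram and LSTM log-probabilities with a hypothetical interpolation weight, is shown below; the scores are invented, and the acoustic score and the historical sentence context of step 102 are left out.

```python
def rescore_nbest(candidates, weight=0.5):
    """Pick the hypothesis with the best interpolated language-model score.

    candidates: list of (text, ngram_logprob, lstm_logprob) tuples.
    weight: interpolation weight on the LSTM score (hypothetical value).
    """
    def combined(item):
        _, ngram_lp, lstm_lp = item
        return (1 - weight) * ngram_lp + weight * lstm_lp

    return max(candidates, key=combined)[0]


# Hypothetical N-best list with made-up log-probabilities.
nbest = [
    ("recognize speech", -12.0, -9.5),
    ("wreck a nice beach", -11.5, -13.0),
]
print(rescore_nbest(nbest))              # recognize speech
print(rescore_nbest(nbest, weight=0.0))  # wreck a nice beach
```

With `weight=0.0` only the n-gram score counts and the first-pass ranking is kept; giving weight to the LSTM score lets its longer context overturn that ranking.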

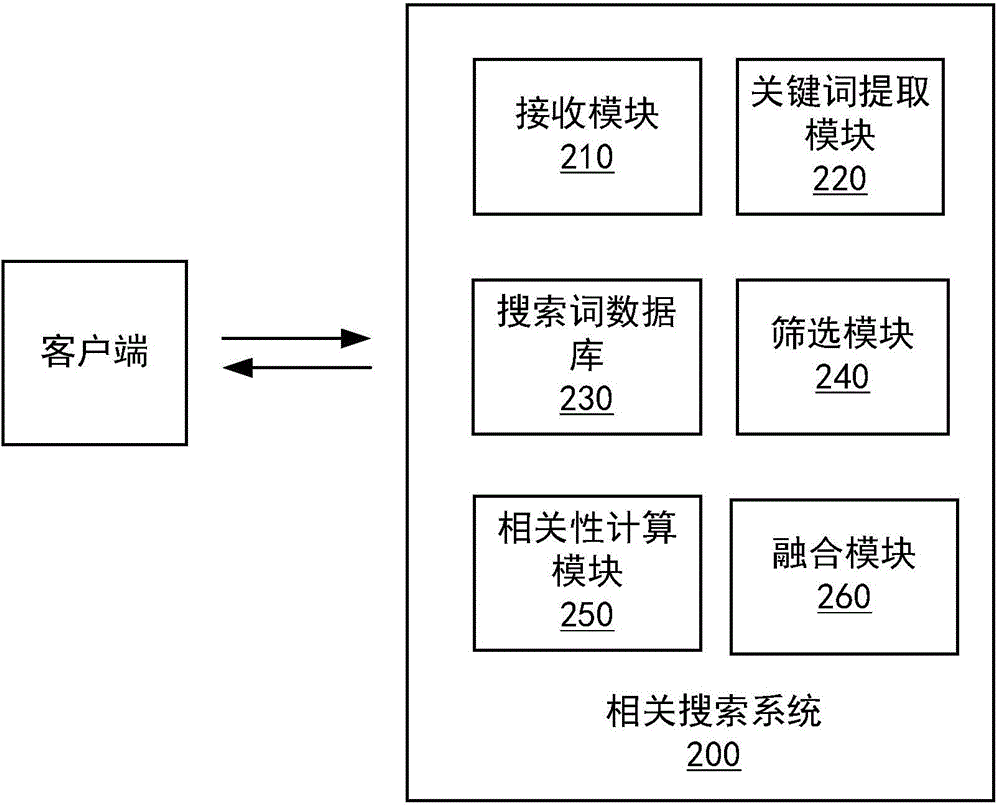

Related searching system and method

Active | CN104143005A | Efficient and accurate search; Special data processing applications; Data mining; Search terms

The invention provides a related searching system and method. The method comprises the following steps: (a) receiving search terms and extracting keywords and parameters; (b) screening candidate search terms based on the keywords and parameters; (c) using a neural network language model to calculate the correlation between the search terms and the candidate search terms, and obtaining other feature correlations; (d) performing a weighted calculation over the multiple feature correlations to obtain the related search term results. The related searching system comprises a receiving module for receiving search terms, a keyword extraction module for extracting keywords and parameters, a search term database storing the candidate search terms, a screening module for retrieving candidate search terms using the keywords and parameters as screening conditions, a correlation calculating module for calculating the multiple feature correlations, and a fusion module for performing the weighted calculation over the multiple feature correlation scores to obtain the related search term results. The system and method achieve efficient and accurate related searching with a simple structure.

Owner:BEIJING 58 INFORMATION TECH
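
Steps (c) and (d) can be sketched as cosine similarity between hypothetical NNLM-derived vectors, fused with other feature correlations by a weighted sum. The vectors, the extra `popularity` feature and the weights are invented for illustration; the patent does not specify them.

```python
import math


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_related(query_vec, candidates, weights):
    """Rank candidate terms by a weighted sum of feature correlations.

    candidates: list of (term, vector, extra_features) tuples.
    weights: dict mapping each feature name to its fusion weight.
    """
    scored = []
    for term, vec, extra in candidates:
        features = {"nnlm_similarity": cosine(query_vec, vec), **extra}
        score = sum(weights[name] * value for name, value in features.items())
        scored.append((score, term))
    return [term for _, term in sorted(scored, reverse=True)]


# Hypothetical vectors and features.
query = [1.0, 0.0]
candidates = [
    ("used cars", [0.0, 1.0], {"popularity": 0.9}),
    ("apartment rental", [0.9, 0.1], {"popularity": 0.2}),
]
weights = {"nnlm_similarity": 0.8, "popularity": 0.2}
print(rank_related(query, candidates, weights))  # ['apartment rental', 'used cars']
```

The fusion weights decide how much semantic similarity can outweigh other signals; here the semantically closer term wins despite lower popularity.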

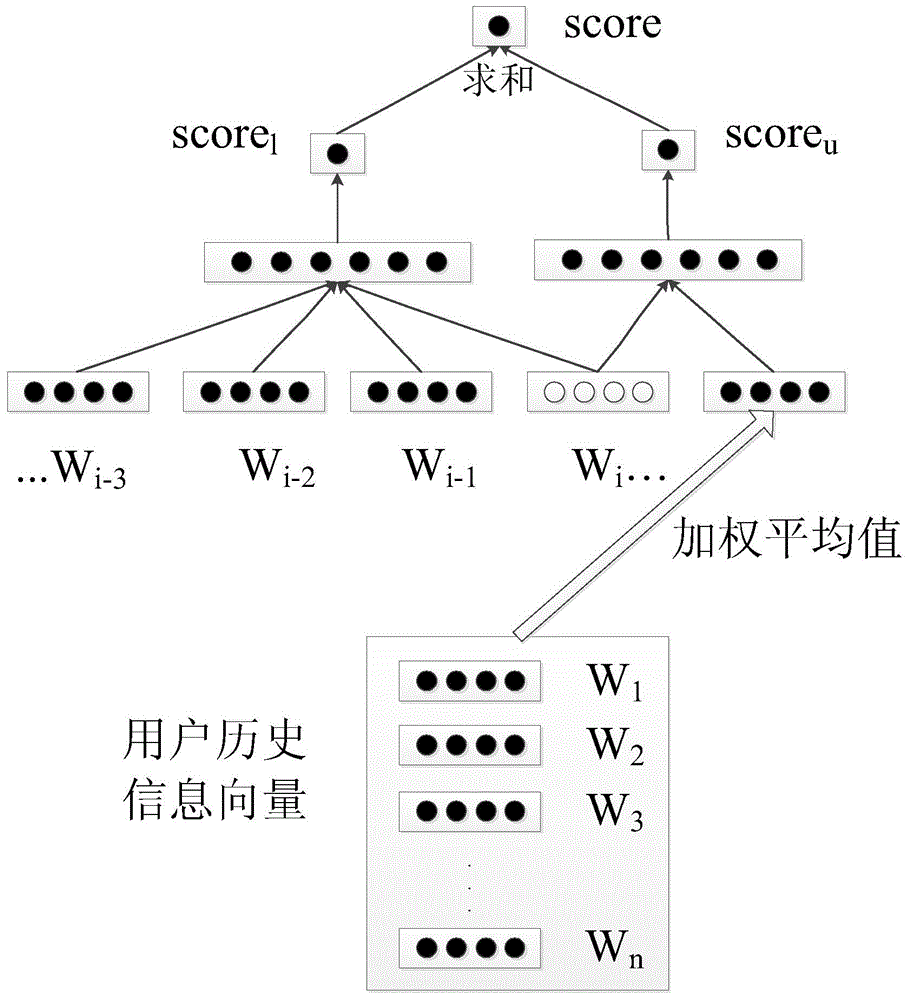

Method for standardizing Chinese and English hybrid texts in Chinese social networks

Inactive | CN104102630A | Easy to read; Data preprocessing works well; Special data processing applications; Semantic space; Machine translation

The invention belongs to the technical field of machine translation and discloses a method for standardizing mixed Chinese-English texts in Chinese social networks. The method includes: identifying non-standard words; generating translation candidates for the English words with hidden topic translation models; re-ranking the translation candidates with neural network language models that take the user's historical information into account; and selecting the standard words corresponding to the non-standard words. The method preprocesses social network text so that it suits most downstream natural language processing tasks; by topic mapping, bilingual aligned training corpora from the semantic spaces of non-social networks are made to correspond to the semantic spaces of social networks, so the method extends well and maintains translation accuracy.

Owner:FUDAN UNIV

Chinese phonetic symbol keyword retrieving method based on feed forward neural network language model

Active | CN106856092A | Reduce processing; Increase training speed; Speech recognition; Pattern recognition; NODAL

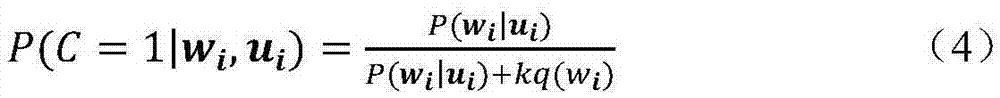

The invention provides a Chinese phonetic symbol keyword retrieving method based on a feed forward neural network language model. The method comprises: (1) inputting a training sample, consisting of history words and a target word, into a feed forward neural network model; for each target word w_i, adding several noise words drawn from the probability distribution q(w_i), passing the activation output of the last hidden layer to the nodes of the target word and of the noise words, and calculating the transformation matrices between all layers based on an objective function; calculating the error between the output of the output layer and the target words, and updating all transformation matrices until training of the feed forward neural network model is complete; (2) using the trained model to calculate the probability of a target word given the input word history; and (3) applying the target word probability in a decoder and decoding speech with it to obtain word graphs (lattices) of multiple candidate recognition results, converting the word graphs into a confusion network, and generating an inverted index; a keyword is then retrieved from the inverted index, and the matched keyword and its time of occurrence are returned.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
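
Adding noise words drawn from q(w) for each target word matches noise-contrastive estimation (NCE). A minimal sketch of the standard NCE loss for one target word is given below, assuming unnormalized model scores and a known noise distribution; the toy distribution `q` and the scores are hypothetical, and this is not claimed to be the patent's exact objective function.

```python
import math
import random


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def sample_noise(vocab, q, k, rng):
    """Draw k noise words from the noise distribution q (dict word -> prob)."""
    return rng.choices(vocab, weights=[q[w] for w in vocab], k=k)


def nce_loss(target_score, noise_scores, q_target, q_noise):
    """NCE loss for one target word and k noise words.

    Scores are unnormalized model outputs s(w, history). The model learns to
    tell the target (label 1) from noise words (label 0) using the logit
    s(w) - log(k * q(w)), so no softmax over the vocabulary is needed.
    """
    k = len(noise_scores)
    loss = -log_sigmoid(target_score - math.log(k * q_target))
    for s, q in zip(noise_scores, q_noise):
        loss -= log_sigmoid(-(s - math.log(k * q)))
    return loss


rng = random.Random(0)
q = {"the": 0.5, "cat": 0.3, "sat": 0.2}
noise = sample_noise(list(q), q, k=2, rng=rng)
# Hypothetical scores: the target "cat" gets 2.0, each noise word gets 0.0.
loss = nce_loss(2.0, [0.0] * len(noise), q["cat"], [q[w] for w in noise])
```

Because each update touches only the target and k noise words, training cost no longer grows with vocabulary size, which is consistent with the faster training claimed for this method.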

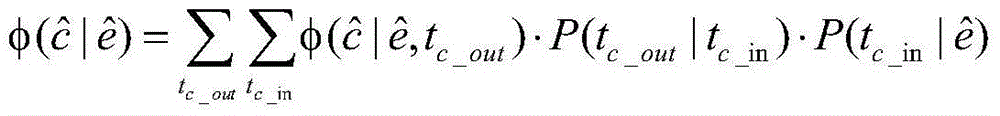

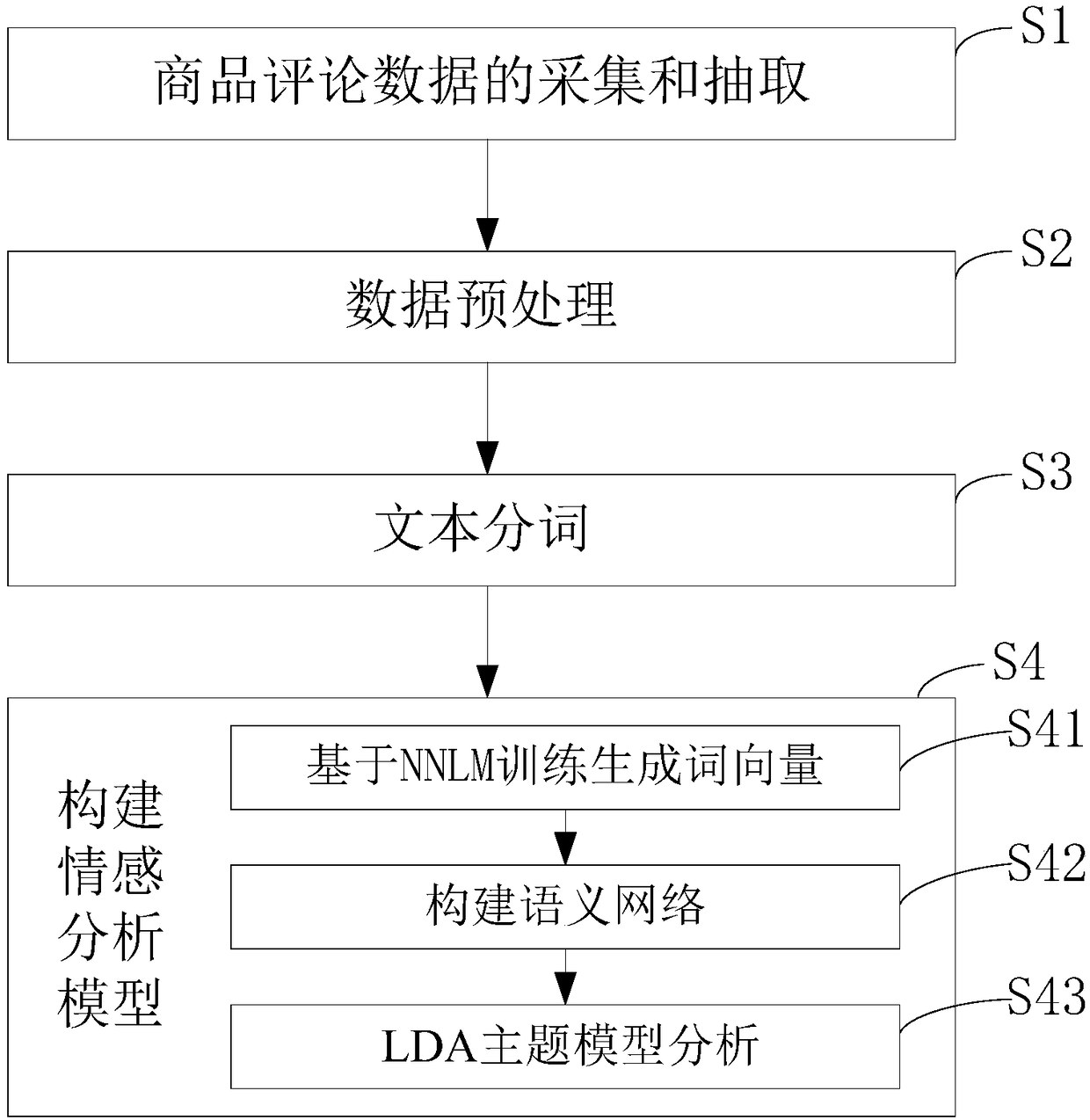

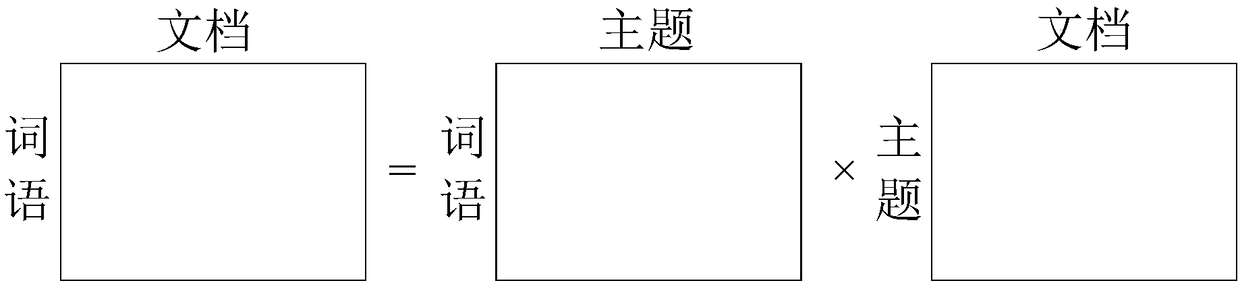

Machine learning-based commodity comment data sentiment analysis method

The invention discloses a machine learning-based commodity comment data sentiment analysis method. The method comprises the steps of collecting and extracting commodity comment data; preprocessing the data, where preprocessing comprises text de-duplication, mechanical compression word removal and short sentence deletion; performing word segmentation on the preprocessed data with the Jieba segmenter; building a sentiment analysis model by generating word vectors through NNLM (Neural Network Language Model) training and establishing a semantic network; and performing semantic mining based on an LDA topic model to generate topics in an unsupervised manner. The method realizes unsupervised sentiment analysis, and the results show that the sentiment analysis model can effectively analyze the sentiment of user comments.

Owner:BEIJING UNIV OF TECH

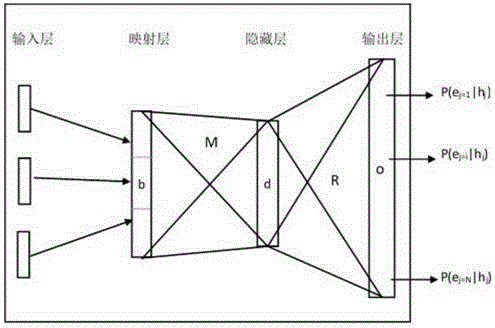

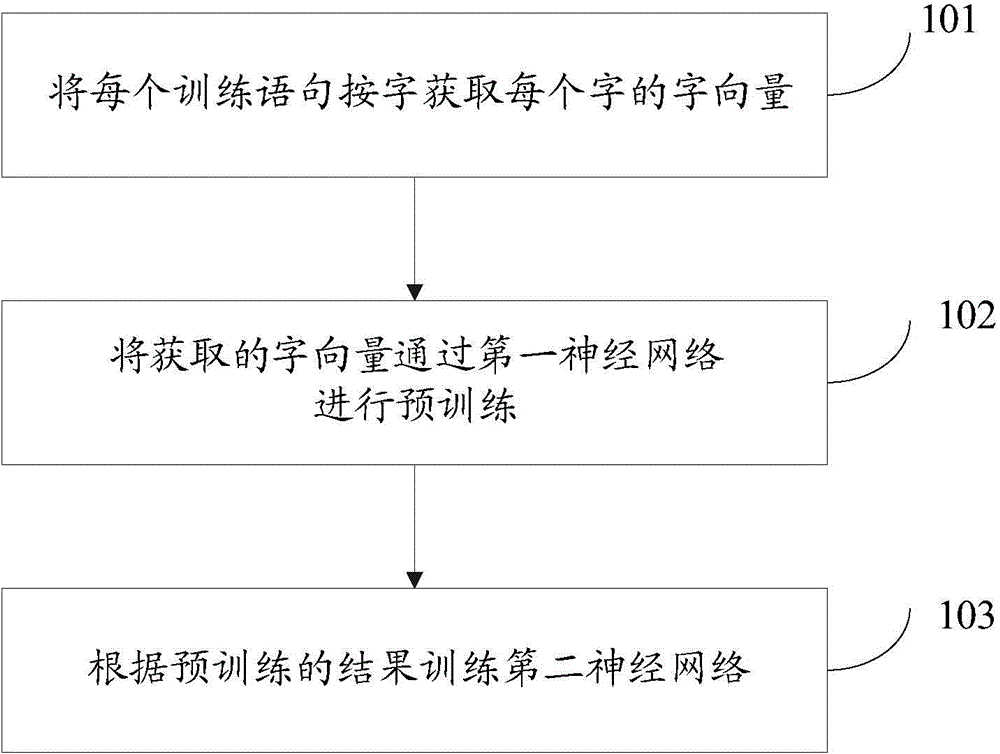

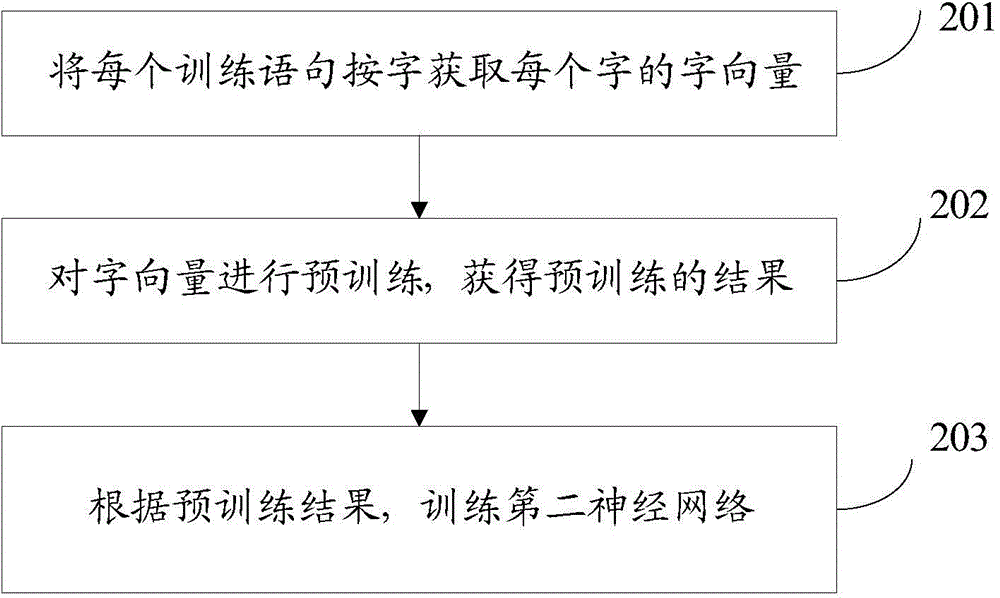

Character based neural network training method and device

Active | CN105279552A | Reduce complexity; Improve training efficiency; Biological neural network models; Feature vector; Euclidean vector

The invention provides a character-based neural network training method and device. The method comprises: obtaining the vector of each character in each training sentence; feeding the character vectors as parameters into a first neural network for pre-training, to obtain a pre-training result comprising feature vectors of the preceding and following context of each character vector; and feeding these context feature vectors as parameters into a second neural network to train it. The method and device address the low training efficiency of word-based neural network language models.

Owner:TSINGHUA UNIV +1

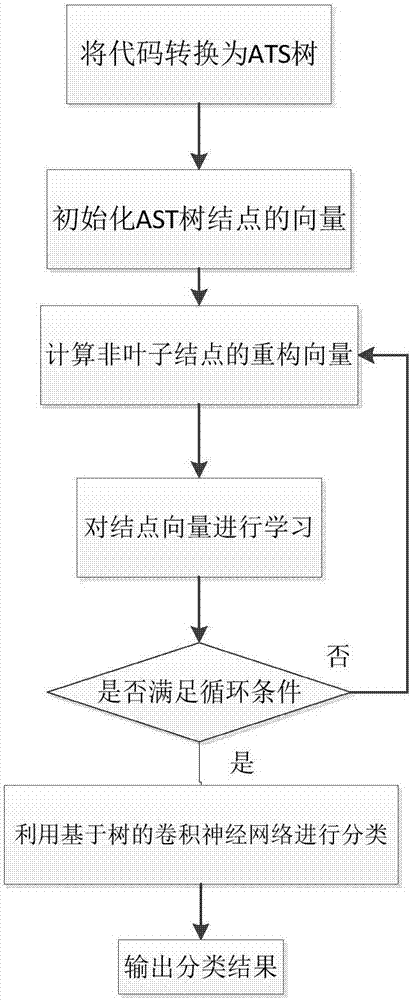

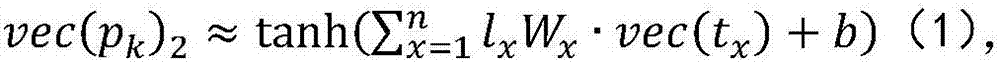

Code classification method based on neural network linguistic model

Active | CN107220180A | Improve development efficiency; Avoid the curse of dimensionality; Software testing/debugging; Special data processing applications; Linguistic model; Algorithm

The invention belongs to the field of software engineering and discloses a code classification method based on a neural network linguistic model. The method comprises the following steps: first converting the code into an AST (abstract syntax tree), initializing the vector of each AST node c_i, and obtaining a reconstruction vector for each non-leaf node p_k from the vectors of its child nodes t_x; updating the vector of node c_i with an AST-Node2Vec model and continuing to iterate while the loop condition is not satisfied; once the loop condition is satisfied, outputting the AST with the updated node vectors and the updated reconstruction vectors of the non-leaf nodes; and using this AST as the input of a tree-based convolutional neural network, which completes the code classification. Classifying code in this way effectively avoids the curse of dimensionality, captures semantic similarity, and groups code better by function.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

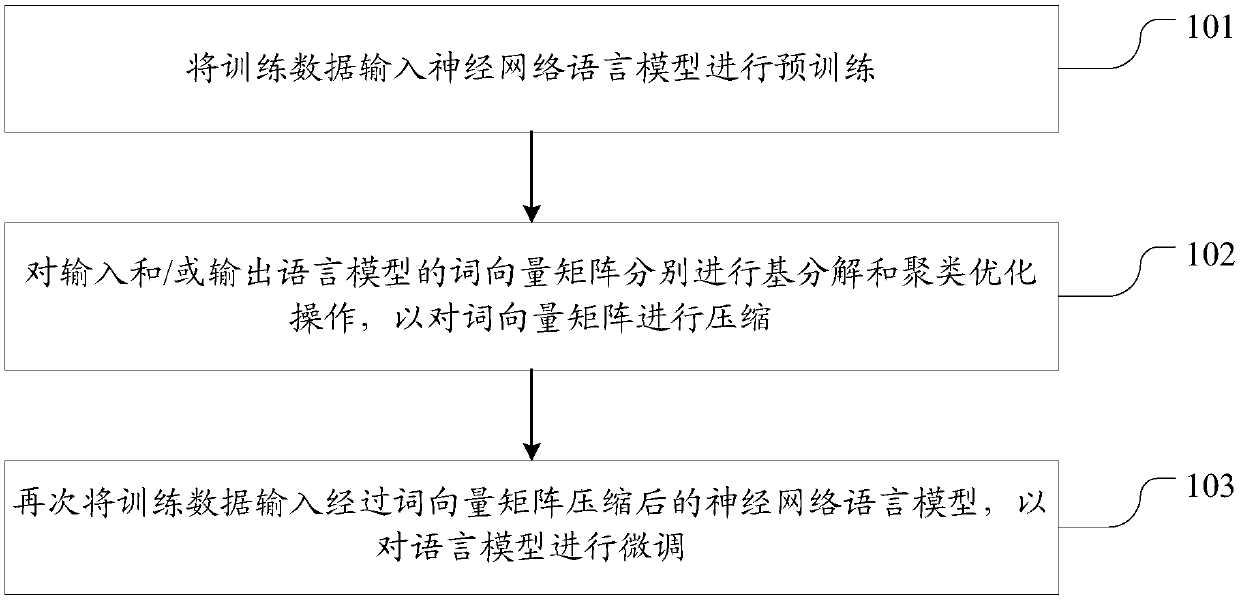

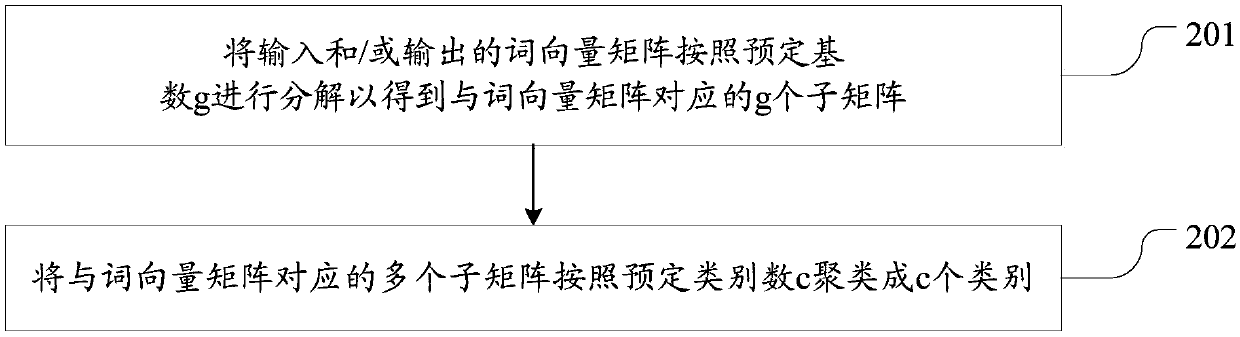

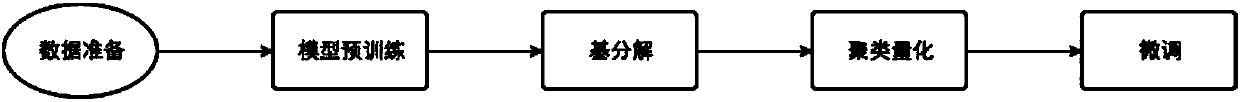

Compression method and system used for neural network language model (NN LM)

Inactive | CN108415888A | Significant memory reduction rate; Text processing; Special data processing applications; Product quantization; Decomposition

The invention discloses a compression method and system for a neural network language model (NN LM). The method includes: inputting training data into the neural network language model for pre-training; performing base decomposition and clustering quantization on the input and/or output word vector matrices of the language model to compress them; and inputting the training data again into the neural network language model with the compressed word vector matrices to fine-tune it. The invention provides a novel and efficient structured word embedding framework based on product quantization, which is used to compress the input/output word vector matrices and achieves a significant memory reduction without harming NN LM performance.

Owner:AISPEECH CO LTD
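
Product quantization of a word vector matrix can be sketched in plain Python: each row is cut into sub-vectors, each subspace gets its own small k-means codebook, and every row is stored as a tuple of codebook indices. This is a generic sketch with arbitrary sizes and function names of our choosing; the patent's base decomposition and its fine-tuning pass are not shown.

```python
import math
import random


def kmeans(points, k, iterations=15, rng=None):
    """Small Lloyd's k-means returning the k centroids."""
    rng = rng or random.Random(0)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            groups[nearest].append(p)
        for c, members in enumerate(groups):
            if members:
                centroids[c] = [sum(d) / len(members) for d in zip(*members)]
    return centroids


def product_quantize(matrix, n_sub, k):
    """Quantize each of n_sub subspaces separately.

    Returns (codebooks, codes): codebooks[j] holds k centroids for subspace j,
    and codes[i][j] is the centroid index used for row i in subspace j.
    """
    dim = len(matrix[0])
    step = dim // n_sub  # assumes dim is divisible by n_sub
    codebooks, codes = [], [[] for _ in matrix]
    for j in range(n_sub):
        sub = [row[j * step:(j + 1) * step] for row in matrix]
        book = kmeans(sub, k)
        codebooks.append(book)
        for i, vec in enumerate(sub):
            codes[i].append(min(range(k), key=lambda c: math.dist(vec, book[c])))
    return codebooks, codes


def reconstruct(codebooks, codes):
    """Rebuild approximate rows by concatenating the chosen centroids."""
    return [[x for j, c in enumerate(row) for x in codebooks[j][c]] for row in codes]


rng = random.Random(1)
matrix = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(20)]
codebooks, codes = product_quantize(matrix, n_sub=2, k=4)
approx = reconstruct(codebooks, codes)
```

Here 20 rows of 4 floats are replaced by 20 pairs of small integer codes plus two codebooks of four 2-dimensional centroids; for a real vocabulary the codebooks are tiny relative to the matrix, which is where the memory saving comes from.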

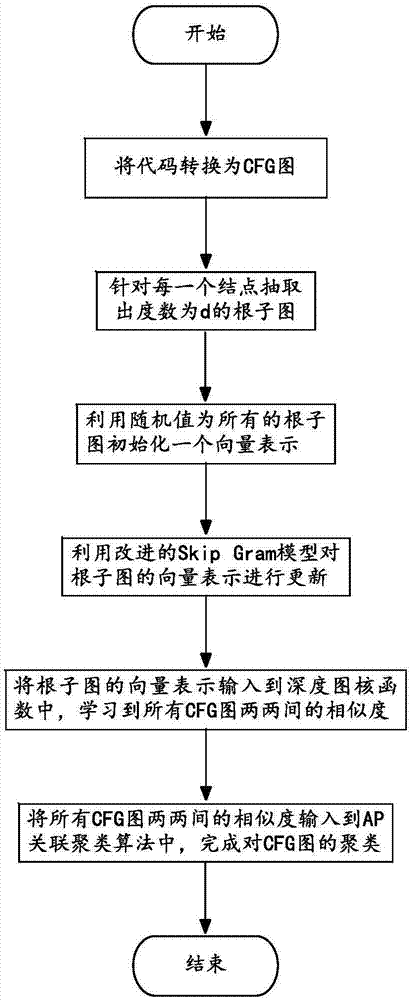

Duplicated code detecting method based on neural network language model

Active | CN107273294A | Avoid the curse of dimensionality; Get similarity; Biological neural network models; Software testing/debugging; Cluster algorithm; Pattern recognition

The invention discloses a duplicated code detecting method based on a neural network language model, in the technical field of duplicated code detection. It addresses the problem that prior-art methods cannot detect duplicated code whose essential semantics are unchanged, which results in low detection accuracy and may cause economic losses to code originators. The method comprises the following steps: 1, converting each piece of code into its CFG (control flow graph); 2, extracting the rooted subgraph of each node in each CFG; 3, representing all the rooted subgraphs as vectors; 4, feeding the vector representations of the rooted subgraphs into a deep graph kernel for learning to obtain the similarity between all CFGs; 5, feeding these similarities into an affinity propagation (AP) clustering algorithm to cluster the CFGs into multiple clusters, where code whose CFGs fall in the same cluster is duplicated code. The method is used to find duplicated code.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

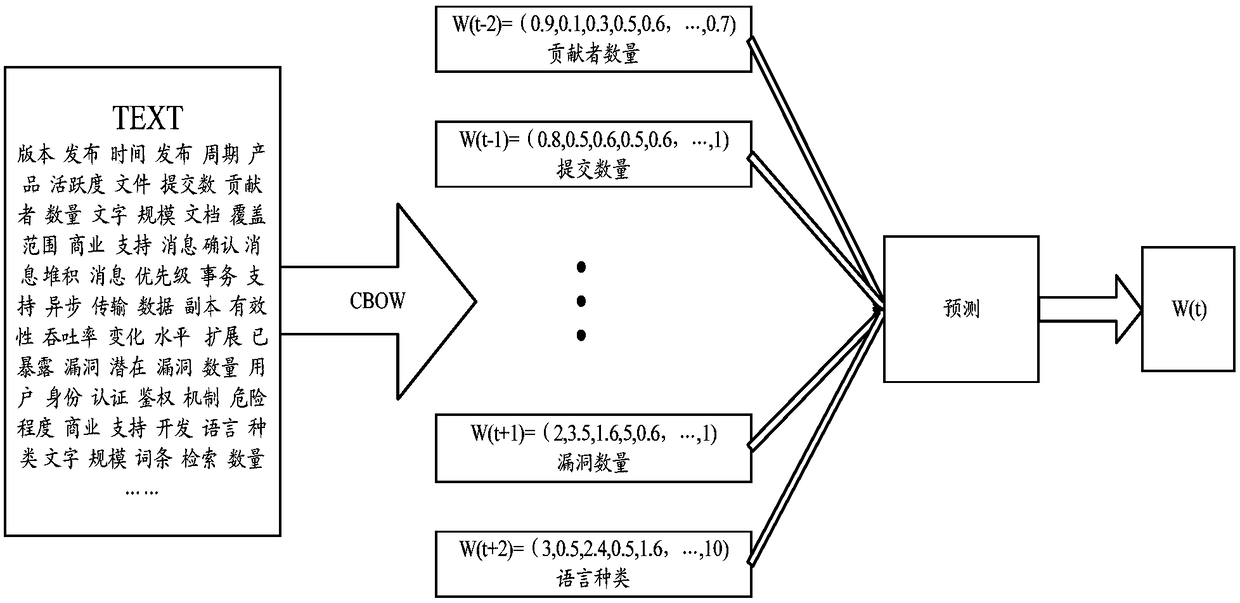

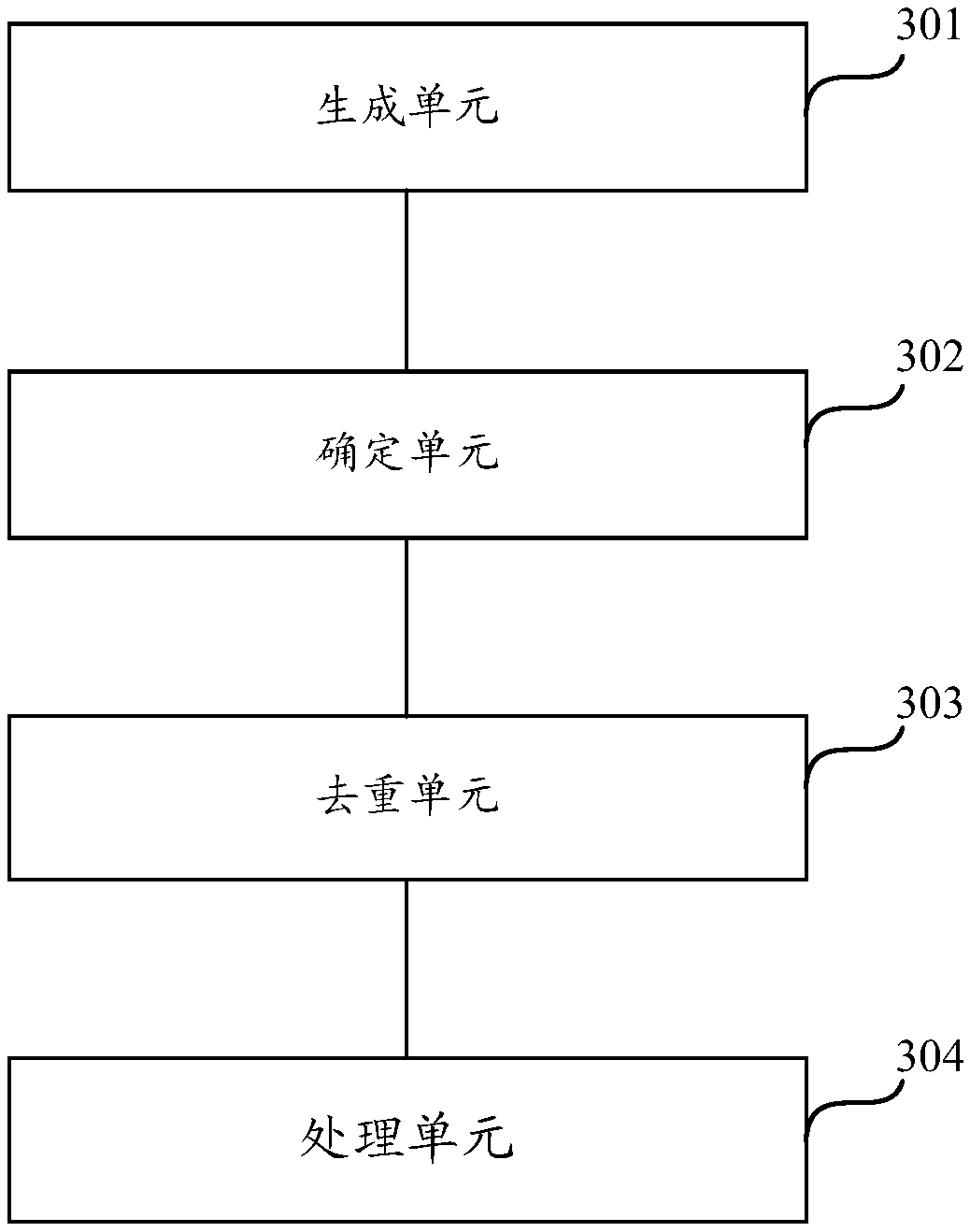

Text quality index obtaining method and device

Active | CN108182175A | Improve accuracy; Accurate and detailed quality index results; Natural language data processing; Special data processing applications; Algorithm; Open source

The invention discloses a text quality index obtaining method and device. The method comprises: obtaining the name and keywords of a text and generating a text data source; training on the entries in the text data source with a preset neural network language model to obtain multiple word vectors; determining the sentence vectors of multiple sentences and de-duplicating them, where the sentences involve P-type quality indexes; saving the content corresponding to the sentence vectors of the P-type quality indexes; determining an importance value for each P-type quality index based on random forest; and, from the content corresponding to the sentence vectors and the importance values of the P-type quality indexes, obtaining the details and importance ranking of the quality indexes to be evaluated. Sentences about open source software are quantified as vectors to obtain a quality index set, which improves the accuracy of the subsequent ranking; because the importance values are obtained with random forest, the resulting quality indexes are more accurate and detailed.

Owner:CHINA UNIONPAY
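The sentence-vector and deduplication steps can be sketched as below. Assumptions, not taken from the abstract: a sentence vector is the average of its word vectors, near-duplicates are detected by a cosine-similarity threshold, and the toy word vectors are made up. The random-forest importance step is omitted.

```python
import math


def sentence_vector(tokens, word_vectors):
    """Average the vectors of in-vocabulary tokens (an assumed, common choice)."""
    vecs = [word_vectors[t] for t in tokens if t in word_vectors]
    if not vecs:
        return None
    dim = len(vecs[0])
    return [sum(v[d] for v in vecs) / len(vecs) for d in range(dim)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def deduplicate(sentences, word_vectors, threshold=0.95):
    """Keep a sentence only if it is not near-identical to one already kept."""
    kept, kept_vecs = [], []
    for tokens in sentences:
        vec = sentence_vector(tokens, word_vectors)
        if vec is None:
            continue  # no known words: nothing to compare
        if all(cosine(vec, k) < threshold for k in kept_vecs):
            kept.append(tokens)
            kept_vecs.append(vec)
    return kept


# Hypothetical 2-d word vectors; "fast" and "quick" are synonyms here.
wv = {"fast": [1.0, 0.0], "quick": [1.0, 0.0], "startup": [0.0, 1.0], "memory": [1.0, -1.0]}
print(deduplicate([["fast", "startup"], ["quick", "startup"], ["memory"]], wv))
# [['fast', 'startup'], ['memory']]
```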

Content recommendation system using a neural network language model

The present disclosure relates to applying techniques similar to those used in neural network language modeling systems to a content recommendation system. For example, by associating items of consumed media content with words of a language model, the system may provide content predictions based on the order in which content was consumed. Thus, the systems and techniques described herein may produce enhanced prediction results when recommending content (the counterpart of a word) in a given sequence of consumed content. In addition, the system may account for additional user actions by representing particular actions as punctuation in the language model.

Owner:GOOGLE LLC
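The "content as words, actions as punctuation" idea can be sketched with a simple bigram count model. This swaps in a count-based model for the neural network language model to keep the example short; the event format, the action-to-punctuation mapping, and the item names are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical mapping from user actions to "punctuation" tokens.
ACTION_TOKENS = {"skip": ",", "end_session": "."}


def to_tokens(events):
    """Items become words; user actions become punctuation tokens."""
    return [value if kind == "item" else ACTION_TOKENS[value] for kind, value in events]


def train_bigram(histories):
    """Count next-token frequencies for each token across all user histories."""
    counts = defaultdict(Counter)
    for events in histories:
        tokens = to_tokens(events)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def recommend(counts, last_token, k=2):
    """Most likely next items after `last_token`, excluding punctuation tokens."""
    punct = set(ACTION_TOKENS.values())
    ranked = [tok for tok, _ in counts[last_token].most_common() if tok not in punct]
    return ranked[:k]


histories = [
    [("item", "intro_video"), ("item", "lesson_1"), ("item", "lesson_2")],
    [("item", "intro_video"), ("item", "lesson_1"), ("action", "skip"), ("item", "quiz")],
    [("item", "intro_video"), ("item", "trailer"), ("action", "end_session")],
]
model = train_bigram(histories)
print(recommend(model, "intro_video"))  # ['lesson_1', 'trailer']
```

Encoding a skip as punctuation keeps it in the sequence, so the model can learn that what follows a skip differs from what follows a completed item.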

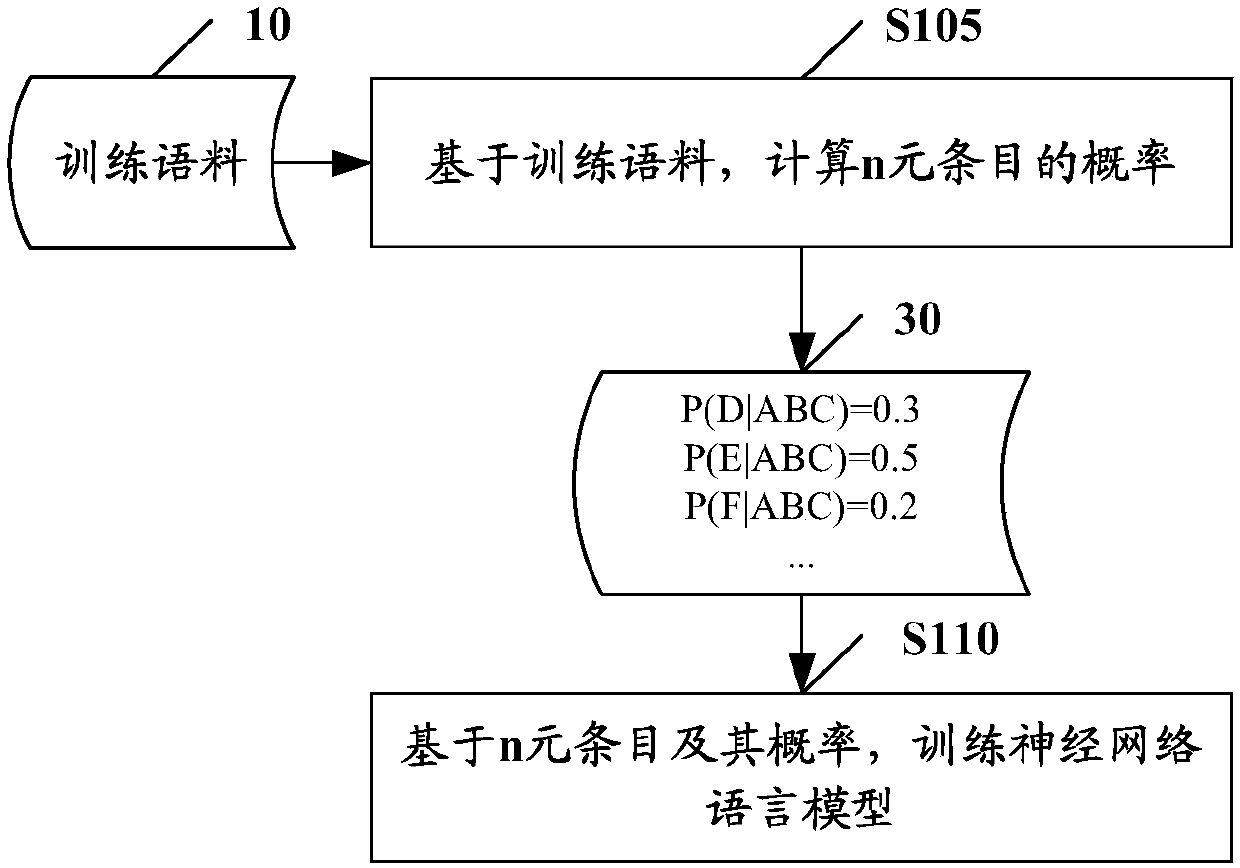

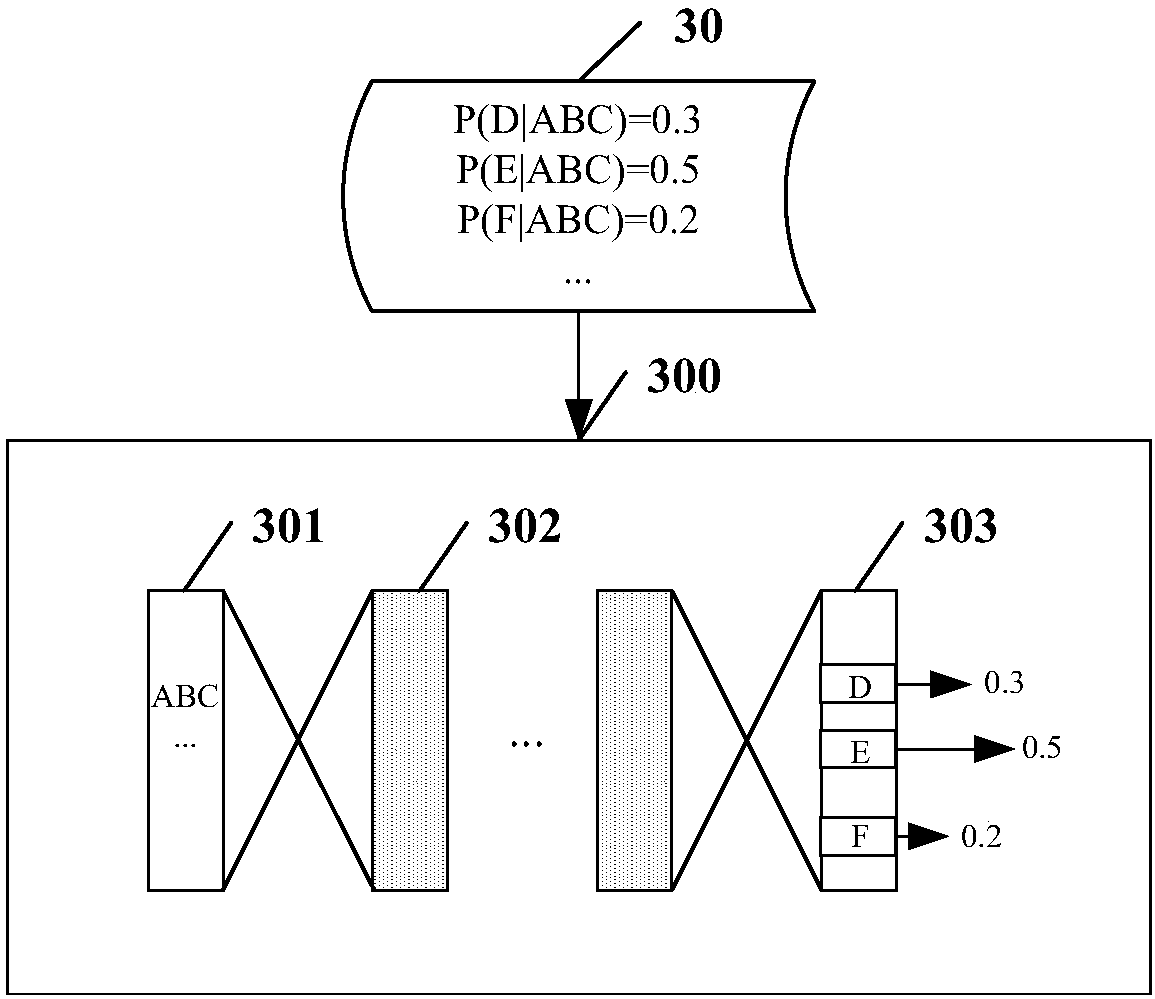

Method and device for training language model of neural network and voice recognition method and device

Inactive | CN107808660A | Improve performance | Reasonable training goals | Speech recognition | Algorithm | Identification device

The invention provides a method and device for training a neural network language model, and a speech recognition method and device. According to one embodiment, the device for training the neural network language model includes a calculation unit that calculates the probabilities of n entries based on a training corpus, and a training unit that trains the neural network language model based on the n entries and their probabilities.

Owner:KK TOSHIBA
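One way to read "train on the n entries and their probabilities" is training against soft targets: estimate P(next | history) from the corpus, then fit the network's softmax output to that distribution with cross-entropy. The sketch below assumes the entries are bigrams and uses the simplest possible network (one-hot history, one linear layer, softmax), which reduces to one trainable logit row per history; both choices are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict


def ngram_targets(corpus):
    """Estimate P(next | prev) from bigram counts (the assumed 'n entries')."""
    counts = defaultdict(Counter)
    for sent in corpus:
        for prev, nxt in zip(sent, sent[1:]):
            counts[prev][nxt] += 1
    return {h: {w: c / sum(ws.values()) for w, c in ws.items()} for h, ws in counts.items()}


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def train_soft_targets(targets, vocab, lr=1.0, epochs=1000):
    """Fit one logit row per history so softmax(row) matches the n-gram distribution.

    For cross-entropy against a soft target q, the gradient w.r.t. the logits is p - q.
    """
    logits = {h: [0.0] * len(vocab) for h in targets}
    for _ in range(epochs):
        for h, dist in targets.items():
            p = softmax(logits[h])
            q = [dist.get(w, 0.0) for w in vocab]
            logits[h] = [z - lr * (pi - qi) for z, pi, qi in zip(logits[h], p, q)]
    return logits


corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
vocab = sorted({w for s in corpus for w in s})
targets = ngram_targets(corpus)
logits = train_soft_targets(targets, vocab)
print(round(softmax(logits["the"])[vocab.index("cat")], 2))
```

Soft targets give the model the full next-word distribution per history in one step, instead of one sampled word at a time.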

Corpus expansion method and apparatus

Active | CN108021551A | Perfect sentence path | Improve the probability of sentence | Natural language data processing | Special data processing applications | Extension method | Human language

The invention provides a corpus expansion method and apparatus. The method comprises: training an n-gram language model and a neural network language model on first corpus data, where the first corpus data is sparse; using the neural network language model to predict the words or phrases that follow words or phrases in the first corpus data, thereby generating second corpus data; inputting the second corpus data into the n-gram language model for filtering, thereby generating third corpus data; and adding the third corpus data to the first corpus data to produce updated first corpus data. This solves a problem with sparse corpora in practical applications: because necessary words are missing, the probability of completing a sentence is low and usage suffers.

Owner:BEIJING SINOVOICE TECH CO LTD
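The filtering step (second corpus data through the n-gram model, yielding third corpus data) can be sketched as below. Assumptions: the n-gram model is a bigram model with add-one smoothing, candidates are scored by average per-token log-probability against a hand-picked threshold, and the neural generation step is not shown; `generated` simply stands for its output.

```python
import math
from collections import Counter


def train_bigram_lm(corpus):
    """Return a scorer giving the average add-one-smoothed bigram log-probability."""
    histories, bigrams = Counter(), Counter()
    for sent in corpus:
        toks = ["<s>"] + sent + ["</s>"]
        histories.update(toks[:-1])  # tokens that act as a bigram history
        bigrams.update(zip(toks, toks[1:]))
    vocab_size = len({t for s in corpus for t in s}) + 1  # words plus </s>

    def score(sent):
        toks = ["<s>"] + sent + ["</s>"]
        total = sum(math.log((bigrams[(a, b)] + 1) / (histories[a] + vocab_size))
                    for a, b in zip(toks, toks[1:]))
        return total / (len(toks) - 1)

    return score


def expand_corpus(first_corpus, generated, threshold):
    """Keep generated sentences the n-gram LM finds plausible, then merge them in."""
    score = train_bigram_lm(first_corpus)
    third = [s for s in generated if score(s) >= threshold and s not in first_corpus]
    return first_corpus + third


first = [["turn", "on", "the", "light"], ["turn", "off", "the", "fan"], ["turn", "on", "the", "fan"]]
good = ["turn", "off", "the", "light"]  # every bigram already seen
bad = ["light", "the", "off", "turn"]   # no bigram seen
print(expand_corpus(first, [good, bad], threshold=-1.8))
```

Here `good` scores about -1.38 and `bad` about -2.21, so only `good` is appended.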

Method and apparatus for training language model and recognizing speech

A method and apparatus for training a neural network language model, and a method and apparatus for recognizing speech data based on a trained language model are provided. The method of training a language model involves converting, using a processor, training data into error-containing training data, and training a neural network language model using the error-containing training data.

Owner:SAMSUNG ELECTRONICS CO LTD
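Converting training data into error-containing training data can be sketched as random token corruption. The abstract does not say which errors are introduced; the substitution, deletion, and insertion types, their equal split, and the error rate below are assumptions chosen to mimic speech recognition errors.

```python
import random


def inject_errors(tokens, vocab, error_rate=0.1, rng=None):
    """Return a copy of `tokens` with random deletions, substitutions and insertions."""
    rng = rng or random.Random()
    noisy = []
    for tok in tokens:
        r = rng.random()
        if r < error_rate / 3:
            continue  # deletion: drop the token
        elif r < 2 * error_rate / 3:
            noisy.append(rng.choice(vocab))  # substitution: random vocabulary word
        elif r < error_rate:
            noisy.extend([tok, rng.choice(vocab)])  # insertion after the token
        else:
            noisy.append(tok)  # unchanged
    return noisy


vocab = ["call", "mom", "now", "please", "tom"]
sentence = ["call", "mom", "now"]
noisy_corpus = [inject_errors(sentence, vocab, 0.3, random.Random(seed)) for seed in range(3)]
print(noisy_corpus)
```

A seeded `random.Random` makes the corrupted corpus reproducible across training runs.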

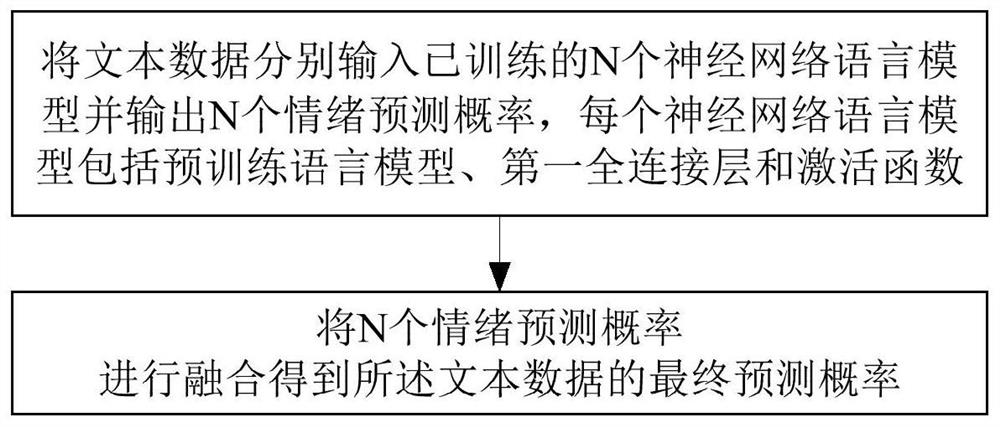

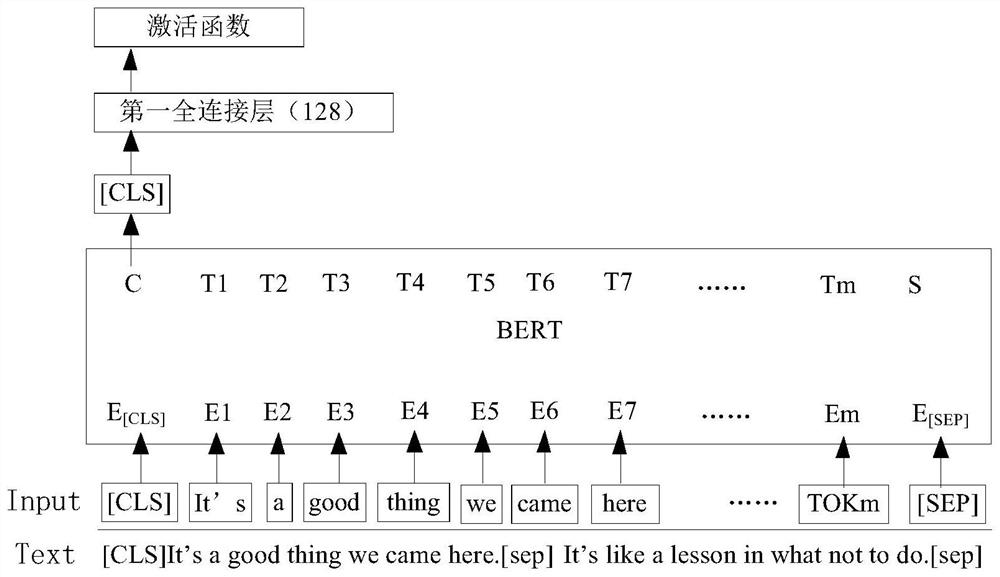

Text-based emotion detection method and device, computer equipment and medium

Pending | CN112597759A | Improving Emotion Detection Efficiency | Improve predictive performance | Character and pattern recognition | Natural language data processing | Activation function | Prediction probability

The invention discloses a text-based emotion detection method and device, computer equipment, and a medium. The emotion detection method comprises: inputting text data into N trained neural network language models and outputting N emotion prediction probabilities, where each neural network language model comprises a pre-trained language model, a first fully connected layer, and an activation function; and fusing the N emotion prediction probabilities to obtain a final prediction probability for the text data, where N is a natural number greater than or equal to 2. In the embodiments, multiple neural network language models are set up, each pre-trained language model makes predictions from different extracted features to generate the emotion prediction probabilities, and these are fused into the final prediction probability of the input text data. This markedly improves prediction capability, and the invention has wide application prospects.

Owner:深延科技(北京)有限公司
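The per-model head and the fusion step can be sketched as below. Assumptions: the activation after the fully connected layer is softmax, fusion is a (weighted) average of the N probability vectors, and the feature vectors, weights, and emotion classes are made up. The pre-trained encoders themselves are not shown.

```python
import math


def classification_head(features, weights, bias):
    """One fully connected layer followed by softmax (assumed activation)."""
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_predictions(prob_lists, weights=None):
    """Fuse N >= 2 per-model emotion distributions by (weighted) averaging."""
    if len(prob_lists) < 2:
        raise ValueError("fusion expects N >= 2 models")
    weights = weights or [1.0] * len(prob_lists)
    total = sum(weights)
    return [sum(w * p[c] for w, p in zip(weights, prob_lists)) / total
            for c in range(len(prob_lists[0]))]


# Hypothetical outputs of three models over (positive, neutral, negative).
fused = fuse_predictions([[0.7, 0.2, 0.1], [0.5, 0.3, 0.2], [0.6, 0.1, 0.3]])
print([round(p, 3) for p in fused])  # [0.6, 0.2, 0.2]
```

Averaging keeps the fused output a valid probability distribution, since each input sums to 1 and the weights are normalized.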

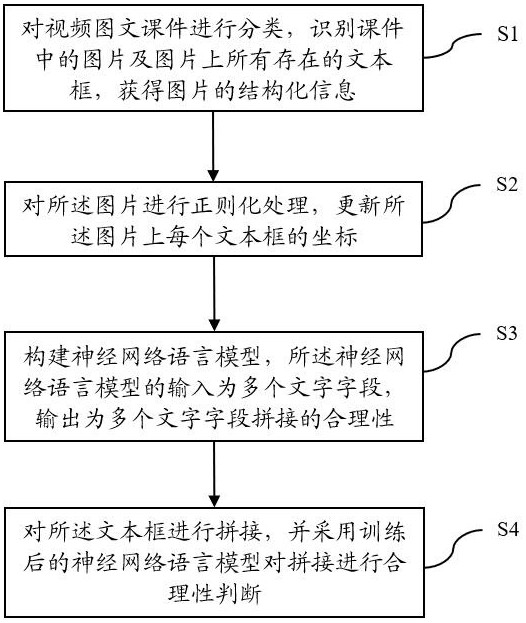

Video image-text courseware text extraction method and device, equipment and medium

Active | CN112287916A | Readable | Processable | Character and pattern recognition | Neural architectures | Pattern recognition | Video image

The invention relates to artificial intelligence and discloses a method for extracting text from video image-text courseware. The method comprises: classifying the video image-text courseware; recognizing the pictures in the courseware and all text boxes on those pictures with a text recognition method to obtain structured information about the pictures; performing regularization processing on each picture to update the coordinates of each text box on it; constructing and training a neural network language model whose input is several character fields and whose output is how reasonable it is to splice those fields together; and splicing the text boxes, using the trained neural network language model to judge whether each splice is reasonable, and extracting the spliced text if the reasonableness meets a preset value. The invention also relates to blockchain technology, in that the video image-text courseware can be stored in a blockchain. The invention can provide complete, readable, processable, and structured text from images and videos.

Owner:PINGAN INT SMART CITY TECH CO LTD
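The coordinate-normalization and splice-and-judge steps can be sketched as below. Assumptions: "regularization" is read as rescaling coordinates to [0, 1], boxes are ordered top-to-bottom then left-to-right, splices are greedy and joined with a space, and `toy_score` is a hypothetical stand-in for the trained neural network language model, not the patented scorer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TextBox:
    text: str
    x: float  # left edge
    y: float  # top edge


def normalize(boxes: List[TextBox], width: float, height: float) -> List[TextBox]:
    """Rescale pixel coordinates to [0, 1] by the picture's width and height."""
    return [TextBox(b.text, b.x / width, b.y / height) for b in boxes]


def splice(boxes: List[TextBox], score: Callable[[str, str], float],
           threshold: float = 0.5) -> List[str]:
    """Join boxes in reading order while `score` rates the join as reasonable."""
    ordered = sorted(boxes, key=lambda b: (round(b.y, 2), b.x))
    texts: List[str] = []
    for box in ordered:
        if texts and score(texts[-1], box.text) >= threshold:
            texts[-1] = texts[-1] + " " + box.text
        else:
            texts.append(box.text)
    return texts


def toy_score(left: str, right: str) -> float:
    """Hypothetical stand-in for the trained LM: join unless `left` ends a sentence."""
    return 0.0 if left.rstrip().endswith((".", "?", "!", ":")) else 1.0


boxes = normalize([TextBox("assign probabilities to text.", 100, 130),
                   TextBox("Summary:", 100, 50),
                   TextBox("Neural language models", 100, 100)], 1000, 500)
print(splice(boxes, toy_score))
# ['Summary:', 'Neural language models assign probabilities to text.']
```

Passing the scorer in as a function keeps the splicing loop independent of how the reasonableness model is implemented.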

© 2025 PatSnap. All rights reserved.