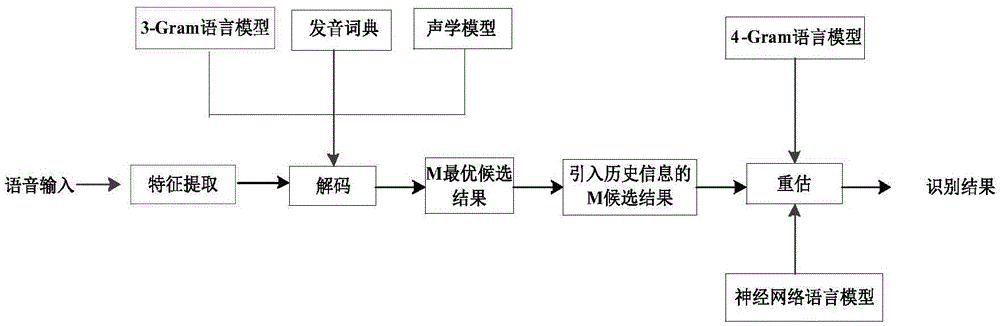

Language model re-evaluation (rescoring) method based on long short-term memory network

A long short-term memory (LSTM) language model technique, applied in speech analysis, speech recognition, instruments, and related fields. It addresses three problems: conventional models cannot remember long-range history, performance gains are limited, and re-evaluating (rescoring) with higher-order n-gram language models brings little benefit. Its stated effects are strong learning ability, improved performance, and good memory of history.
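The abstract does not spell out how the LSTM model is combined with first-pass recognition scores. A common arrangement, assumed here, is n-best rescoring: each hypothesis keeps its acoustic and n-gram scores, an LSTM language model scores the word sequence, and the two language-model scores are mixed with a weight before re-ranking. The sketch below uses a tiny pure-Python LSTM with random, untrained weights only to show the computation. `TinyLSTMLM`, `rescore`, `lam`, and `lm_weight` are hypothetical names, and the sentence-level log-linear mix is a simplification of the per-word linear interpolation often used in practice.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyLSTMLM:
    """Hypothetical one-layer LSTM language model with random (untrained) weights."""

    def __init__(self, vocab, hidden=8, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.index = {w: i for i, w in enumerate(vocab)}  # vocab must include "<s>" and "</s>"
        self.H = hidden
        V = len(vocab)
        self.emb = rand(V, hidden)           # word embeddings, one row per word
        self.W = rand(4 * hidden, 2 * hidden)  # stacked gates (i, f, g, o) over [x; h]
        self.b = [0.0] * (4 * hidden)
        self.out = rand(V, hidden)           # projection from hidden state to vocabulary logits

    def step(self, word, h, c):
        """One LSTM time step: returns the new hidden and cell states."""
        x = self.emb[self.index[word]]
        z = [a + b for a, b in zip(matvec(self.W, x + h), self.b)]  # x + h concatenates lists
        H = self.H
        i = [sigmoid(v) for v in z[0:H]]          # input gate
        f = [sigmoid(v) for v in z[H:2 * H]]      # forget gate
        g = [math.tanh(v) for v in z[2 * H:3 * H]]  # candidate cell values
        o = [sigmoid(v) for v in z[3 * H:4 * H]]  # output gate
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c

    def log_prob(self, words):
        """Natural-log probability of the sentence, summing log P(w_t | w_<t) including </s>."""
        h, c = [0.0] * self.H, [0.0] * self.H
        total, prev = 0.0, "<s>"
        for w in list(words) + ["</s>"]:
            h, c = self.step(prev, h, c)
            logits = matvec(self.out, h)
            m = max(logits)
            log_z = m + math.log(sum(math.exp(l - m) for l in logits))  # stable log-softmax
            total += logits[self.index[w]] - log_z
            prev = w
        return total


def rescore(nbest, lm, lam=0.5, lm_weight=10.0):
    """nbest: list of (words, acoustic_logp, ngram_logp). Returns (score, words), best first."""
    scored = []
    for words, ac, ng in nbest:
        mixed_lm = lam * lm.log_prob(words) + (1.0 - lam) * ng  # sentence-level log-linear mix
        scored.append((ac + lm_weight * mixed_lm, words))
    scored.sort(key=lambda t: t[0], reverse=True)
    return scored


if __name__ == "__main__":
    lm = TinyLSTMLM(["<s>", "</s>", "ni", "hao", "ma"])
    nbest = [(["ni", "hao"], -120.0, -8.0), (["ni", "hao", "ma"], -121.5, -9.2)]
    for score, words in rescore(nbest, lm):
        print(f"{score:.2f}  {' '.join(words)}")
```

Setting `lam=0` reproduces the first-pass ranking, which gives a simple baseline when tuning the weight on held-out data.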

Embodiment Construction

[0037] The present invention will be described in detail below in conjunction with the accompanying drawings and preferred embodiments.

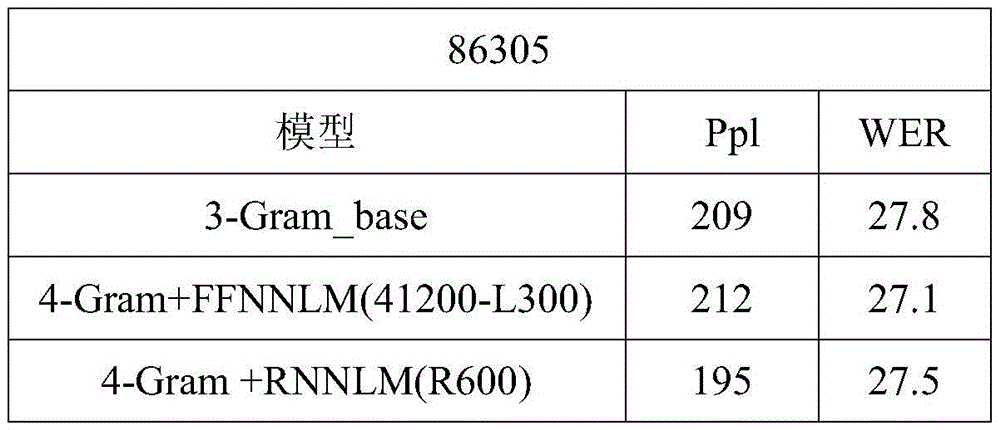

[0038] The data sets used in this experiment are as follows:

[0039] Training set: The training data include the Chinese text corpora provided by the Linguistic Data Consortium (LDC), namely Call-Home, Call-Friend, and Call-HKUST, together with self-collected natural spoken dialogue data; these are collectively referred to as the CTS (Conversational Telephone Speech) corpus. The remaining training data are text corpora downloaded from the Internet, collectively referred to as the general corpus.

[0040] Development set: A self-collected telephone-channel dataset.

[0041] Test set: The dataset (86305) provided by the National 863 High-Tech Program in 2005, and part of the natural spoken telephone conversation data (LDC) collected by the University of Hong Kong in 2004.

[0042] 1. Training phase

[0043] 1) Use the CTS corpus to train the trigram language...
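Step 1) trains a trigram language model on the CTS corpus; the rest of the step is cut off, so the smoothing method and toolkit are not visible. The minimal sketch below assumes add-k smoothing for brevity (practical systems usually rely on Kneser-Ney-style smoothing from a dedicated toolkit); `TrigramLM` and `k` are hypothetical names. It pads each sentence with two `<s>` tokens and one `</s>`, estimates P(w3 | w1, w2) from counts, and reports perplexity, the usual metric for checking such a model against held-out text.

```python
import math
from collections import Counter


class TrigramLM:
    """Hypothetical trigram LM with add-k smoothing; unknown words get only the k mass."""

    def __init__(self, sentences, k=0.1):
        self.k = k
        self.tri = Counter()   # counts of (w1, w2, w3)
        self.hist = Counter()  # counts of the history (w1, w2)
        vocab = {"</s>"}       # predictable words: training words plus </s> (never <s>)
        for s in sentences:
            toks = ["<s>", "<s>"] + list(s) + ["</s>"]
            vocab.update(s)
            for a, b, c in zip(toks, toks[1:], toks[2:]):
                self.tri[(a, b, c)] += 1
                self.hist[(a, b)] += 1
        self.V = len(vocab)

    def prob(self, a, b, c):
        """Add-k estimate of P(c | a, b)."""
        return (self.tri[(a, b, c)] + self.k) / (self.hist[(a, b)] + self.k * self.V)

    def perplexity(self, sentences):
        """exp of the average negative log-probability per predicted token."""
        log_sum, n = 0.0, 0
        for s in sentences:
            toks = ["<s>", "<s>"] + list(s) + ["</s>"]
            for a, b, c in zip(toks, toks[1:], toks[2:]):
                log_sum += math.log(self.prob(a, b, c))
                n += 1
        return math.exp(-log_sum / n)


if __name__ == "__main__":
    lm = TrigramLM([["ni", "hao"], ["ni", "hao", "ma"]])
    print(lm.perplexity([["ni", "hao", "ma"]]))
```

Because the `k` mass is spread over the same vocabulary in every history, the probabilities for a seen history sum to one, which keeps perplexity comparisons between corpora meaningful.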
