Linguistic model training method and system based on distributed neural networks

A neural network and language model technology, applied in fields such as speech analysis, speech recognition, and instruments, that improves accuracy and reduces the time needed for learning and training.

Embodiment Construction

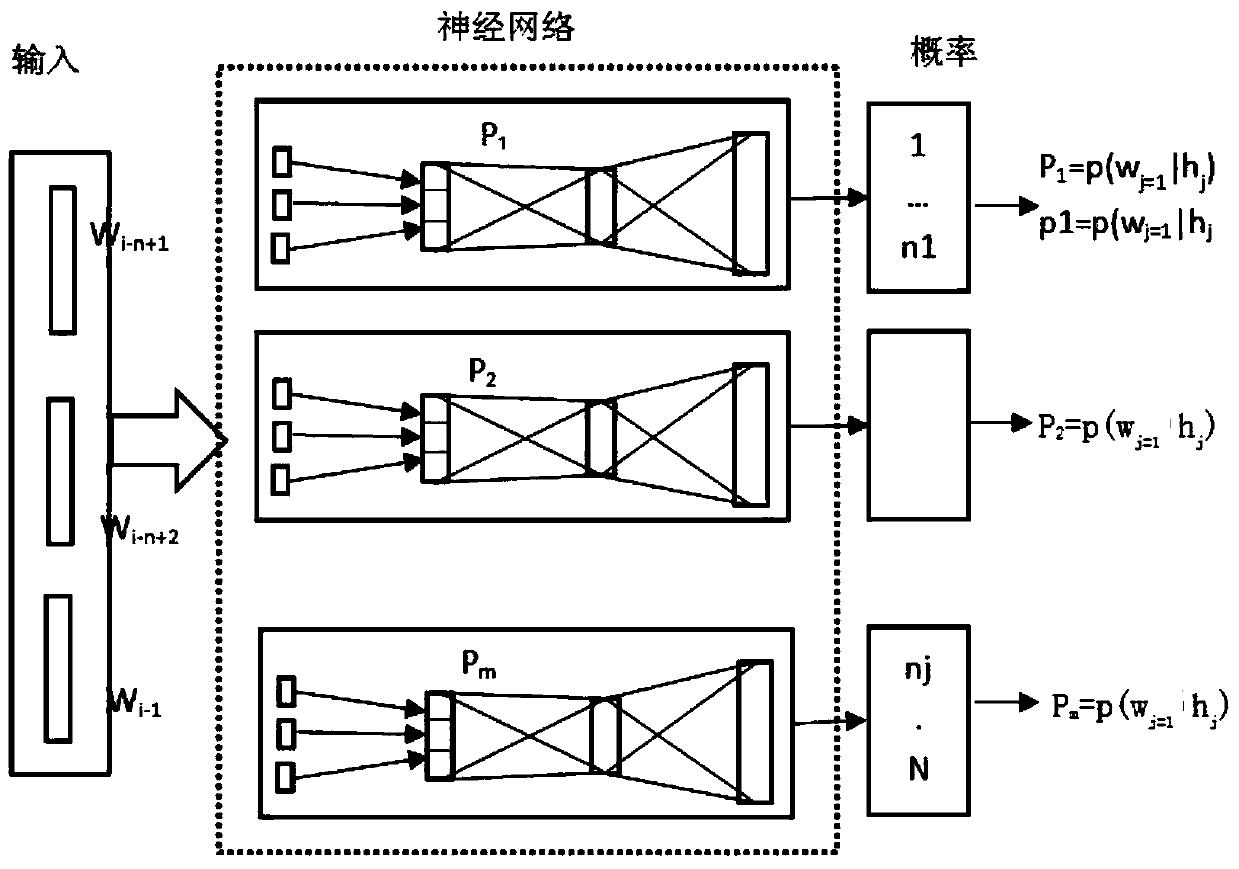

[0044] As shown in Figure 2, to address the problem that training a neural network language model over a large vocabulary takes too long, we propose a language model based on distributed neural networks. The large vocabulary is split into multiple small vocabularies, each small vocabulary corresponds to a small neural network, and every small neural network has the same input dimension.
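The vocabulary split described above can be sketched as follows. This is a minimal illustration only: it assumes the small vocabularies are contiguous, near-equal index ranges, which the text does not state, and the names `split_vocabulary` and `shard_of` are hypothetical helpers introduced here.

```python
def split_vocabulary(vocab_size, num_shards):
    """Return half-open (start, end) index ranges, one per small vocabulary.

    Assumption: contiguous ranges of near-equal size. The source only says
    that the large vocabulary is split into multiple small vocabularies.
    """
    if num_shards <= 0 or vocab_size < num_shards:
        raise ValueError("need 0 < num_shards <= vocab_size")
    base, extra = divmod(vocab_size, num_shards)
    ranges = []
    start = 0
    for k in range(num_shards):
        # The first `extra` shards take one additional word each.
        size = base + (1 if k < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def shard_of(word_id, ranges):
    """Return the index of the small vocabulary that contains word_id."""
    for k, (start, end) in enumerate(ranges):
        if start <= word_id < end:
            return k
    raise IndexError("word_id is outside the vocabulary")


# Example sizes: a 100,000-word vocabulary split into 10 small vocabularies.
ranges = split_vocabulary(100_000, 10)
```

Each range would then be handled by its own small network, and all of those networks receive a history vector of the same dimension.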

[0045] For example, as shown in Figure 1, suppose the vocabulary currently contains 100,000 words, so that the output layer of the neural network is 100,000-dimensional and, in $P(w_j \mid h)$, the word index $j$ ranges from 1 to 100,000. The distributed neural network language model of the present invention splits this output layer into 10 parts, that is, it uses 10 small neural network models to train on different parts of the vocabulary: in $p_1(w_j \mid h)$, $j$ ranges from 1 to 10,000; in $p_2(w_j \mid h)$, $j$ ranges from 10,000 to 20,000; and so on. Finally, the networks are merged.
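The sketch below illustrates one way the per-vocabulary outputs could be combined at inference time. It is not the patent's stated procedure: the visible text does not say how the networks are merged, so the merge rule here (weighting each small network's local softmax by its share of a global normaliser, which reproduces a softmax over the whole vocabulary) is an assumption. Each "small network" is reduced to a single randomly initialised linear output layer, training is omitted, and the sizes are scaled down from 100,000 words to 100. All function names are hypothetical.

```python
import math
import random


def make_subnetwork(input_dim, shard_size, rng):
    """Toy small network: one linear output layer covering one small vocabulary."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(input_dim)]
               for _ in range(shard_size)]
    bias = [0.0] * shard_size
    return weights, bias


def shard_logits(subnet, h):
    """Unnormalised scores of one small network for the history vector h."""
    weights, bias = subnet
    return [sum(w * x for w, x in zip(row, h)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def merged_distribution(subnets, h):
    """Assumed merge rule: scale each local p_k(w_j | h) by that shard's
    share of the global normaliser, giving one distribution over all words."""
    all_logits = [shard_logits(s, h) for s in subnets]
    global_max = max(max(logits) for logits in all_logits)
    shard_mass = [sum(math.exp(x - global_max) for x in logits)
                  for logits in all_logits]
    total = sum(shard_mass)
    merged = []
    for logits, mass in zip(all_logits, shard_mass):
        local = softmax(logits)  # p_k(w_j | h) within one small vocabulary
        merged.extend(p * mass / total for p in local)
    return merged


rng = random.Random(0)
input_dim, vocab_size, num_shards = 8, 100, 10  # scaled-down example sizes
shard_size = vocab_size // num_shards
# Every small network takes an input of the same dimension.
subnets = [make_subnetwork(input_dim, shard_size, rng) for _ in range(num_shards)]
h = [rng.uniform(-1.0, 1.0) for _ in range(input_dim)]
p = merged_distribution(subnets, h)
```

Under this assumed rule the merged vector sums to 1 over the full vocabulary, while each small network only ever computes scores for its own slice of the output layer.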

[0046] More specifically, it can be seen from Figure 2 that for t...
