Chinese speech keyword retrieval method based on a feedforward neural network language model

A neural network and language model technology, applied in speech analysis, speech recognition, instruments, and related fields, that improves performance and speed.

Embodiment Construction

[0039] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

[0040] As shown in Figure 1, the Chinese speech keyword retrieval method based on a feedforward neural network language model comprises the following steps:

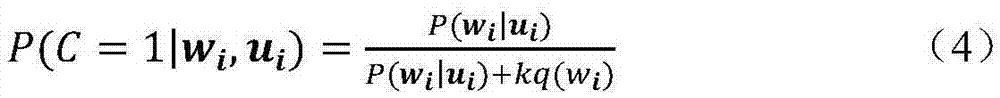

[0041] Step 1) Train the feedforward neural network language model on the training samples using the noise contrastive estimation (NCE) criterion. This step specifically comprises:

[0042] Step 101) When the N training samples are input, also input the unigram probabilities obtained from target-word statistics over the training samples;
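Step 101 supplies unigram probabilities to NCE training. In the standard NCE formulation, such probabilities serve as the noise distribution q(w). The network learns to separate each true target word from k words sampled from q, which avoids normalizing over the whole vocabulary. The minimal sketch below assumes that role for the unigram probabilities. It also assumes the network's output score s(w|u) is treated as a self-normalized log-probability. The helper names `unigram_probs`, `sample_noise`, and `nce_loss` are hypothetical and do not come from the patent.

```python
import math
import random
from collections import Counter

def unigram_probs(targets):
    """Relative frequency of each target word over the training samples."""
    counts = Counter(targets)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def sample_noise(q, k, rng=random):
    """Draw k noise words from the unigram distribution q (hypothetical helper)."""
    words = list(q)
    return rng.choices(words, weights=[q[w] for w in words], k=k)

def log_sigmoid(x):
    # Numerically stable log(sigmoid(x)).
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def nce_loss(target_score, target_word, noise_scores, noise_words, q, k):
    """NCE loss for one sample (assumed self-normalized scores, Z = 1).

    P(true | w, u) = sigmoid(s(w|u) - log(k * q(w))).
    """
    delta_t = target_score - math.log(k * q[target_word])
    loss = -log_sigmoid(delta_t)  # the true target should be classified as data
    for s, w in zip(noise_scores, noise_words):
        delta_n = s - math.log(k * q[w])
        loss -= log_sigmoid(-delta_n)  # log(1 - sigmoid(x)) == log_sigmoid(-x)
    return loss
```

During training, this per-sample loss would typically be averaged over the N samples of a batch. The scores come from the network's output layer evaluated only at the target word and the k sampled noise words.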

[0043] As shown in Figure 2, the feedforward neural network language model of this embodiment comprises an input layer, a mapping (projection) layer, two hidden layers, and an output layer;
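The text names the layers but not their sizes or activation functions, so the sketch below fills those in as assumptions. The mapping layer is modelled as a shared embedding table, and the vectors for the n-1 history words are concatenated. Both hidden layers use tanh. The output layer returns one unnormalized score per vocabulary word, which is the quantity an NCE criterion works with. The class name `FeedforwardNNLM`, the initialization, and all dimensions are hypothetical.

```python
import numpy as np

class FeedforwardNNLM:
    """Hypothetical sketch: input -> mapping -> hidden 1 -> hidden 2 -> output."""

    def __init__(self, vocab_size, embed_dim, context_len, hidden1, hidden2, seed=0):
        rng = np.random.default_rng(seed)
        # Mapping (projection) layer: one embedding row per vocabulary word.
        self.E = rng.normal(0.0, 0.1, (vocab_size, embed_dim))
        self.W1 = rng.normal(0.0, 0.1, (context_len * embed_dim, hidden1))
        self.b1 = np.zeros(hidden1)
        self.W2 = rng.normal(0.0, 0.1, (hidden1, hidden2))
        self.b2 = np.zeros(hidden2)
        self.Wo = rng.normal(0.0, 0.1, (hidden2, vocab_size))
        self.bo = np.zeros(vocab_size)

    def scores(self, history_ids):
        """Unnormalized scores, shape (batch, vocab), for (batch, n-1) word ids."""
        x = self.E[history_ids].reshape(len(history_ids), -1)  # concatenate history vectors
        h1 = np.tanh(x @ self.W1 + self.b1)
        h2 = np.tanh(h1 @ self.W2 + self.b2)
        return h2 @ self.Wo + self.bo

    def log_probs(self, history_ids):
        """Softmax-normalized log-probabilities (explicit normalization)."""
        s = self.scores(history_ids)
        s = s - s.max(axis=1, keepdims=True)  # shift for numerical stability
        return s - np.log(np.exp(s).sum(axis=1, keepdims=True))
```

For example, `FeedforwardNNLM(10, 4, 2, 8, 6).scores(np.array([[1, 2], [3, 4]]))` returns a 2 x 10 score array for a trigram model (n - 1 = 2). Training with NCE only needs `scores` at a handful of words, whereas `log_probs` pays for the full-vocabulary normalization.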

[0044] The training samples comprise input samples and target words. The N input samples are $u_i$ ($1 \le i \le N$), and each $u_i$ consists of an $(n-1)$-word history $v_{ij}$ ($1 \le j \le n-1$): $u_i = (v_{i1}, v_{i2}, \ldots$...
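One way to obtain input samples of this form is to cut them from a tokenized sentence, pairing each target word with the n-1 words that precede it. The minimal sketch below does this. Left-padding with a sentence-start token `<s>` and the helper name `make_samples` are assumptions, not details given in the patent.

```python
def make_samples(sentence, n, bos="<s>"):
    """Hypothetical helper: split a sentence into (history, target) pairs.

    Each history is a tuple of the n-1 preceding words v_ij (1 <= j <= n-1).
    The sentence is left-padded with `bos` so every word gets a full history.
    """
    padded = [bos] * (n - 1) + list(sentence)
    samples = []
    for t in range(n - 1, len(padded)):
        history = tuple(padded[t - n + 1:t])  # the n-1 words before position t
        samples.append((history, padded[t]))  # (input sample u_i, target word)
    return samples
```

For a trigram model (n = 3), `make_samples(["a", "b", "c"], 3)` yields `[(("<s>", "<s>"), "a"), (("<s>", "a"), "b"), (("a", "b"), "c")]`. The target words collected this way are also the statistics from which the unigram probabilities of Step 101 would be counted.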
