156 results about "Iterative analysis" patented technology

Iterative refers to a systematic, repetitive, and recursive process in qualitative data analysis. An iterative approach involves a sequence of tasks carried out in exactly the same manner each time and executed multiple times.

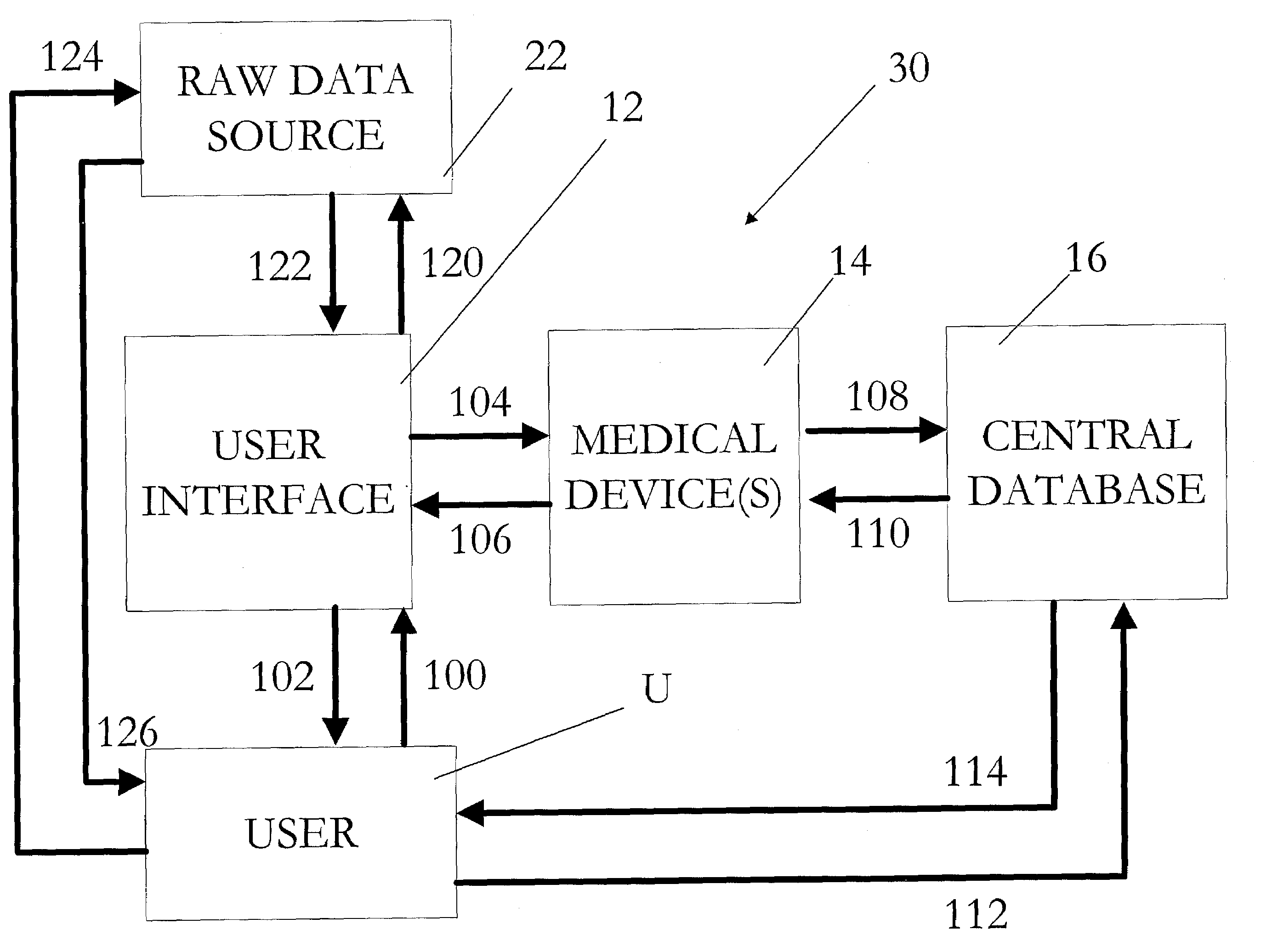

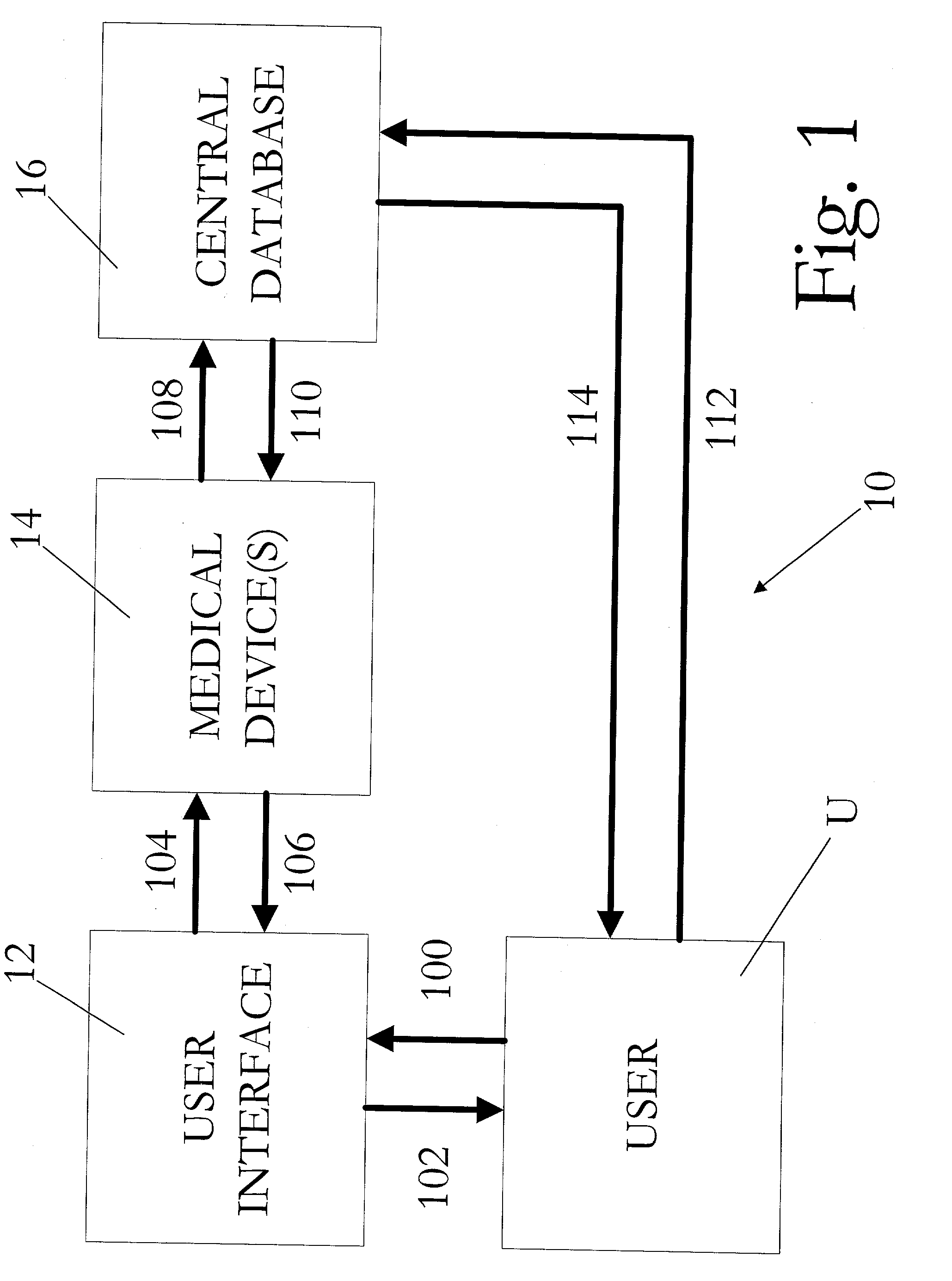

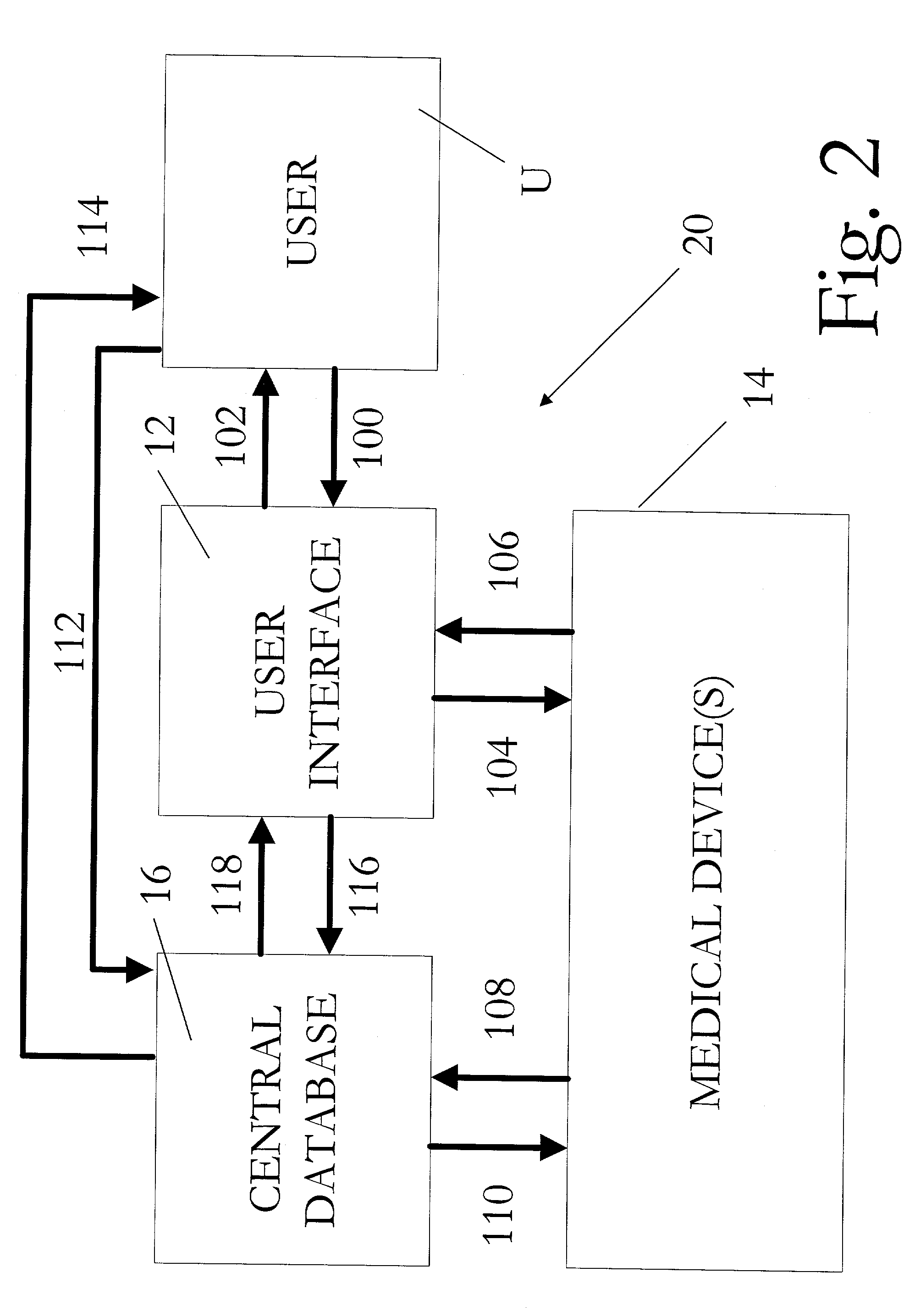

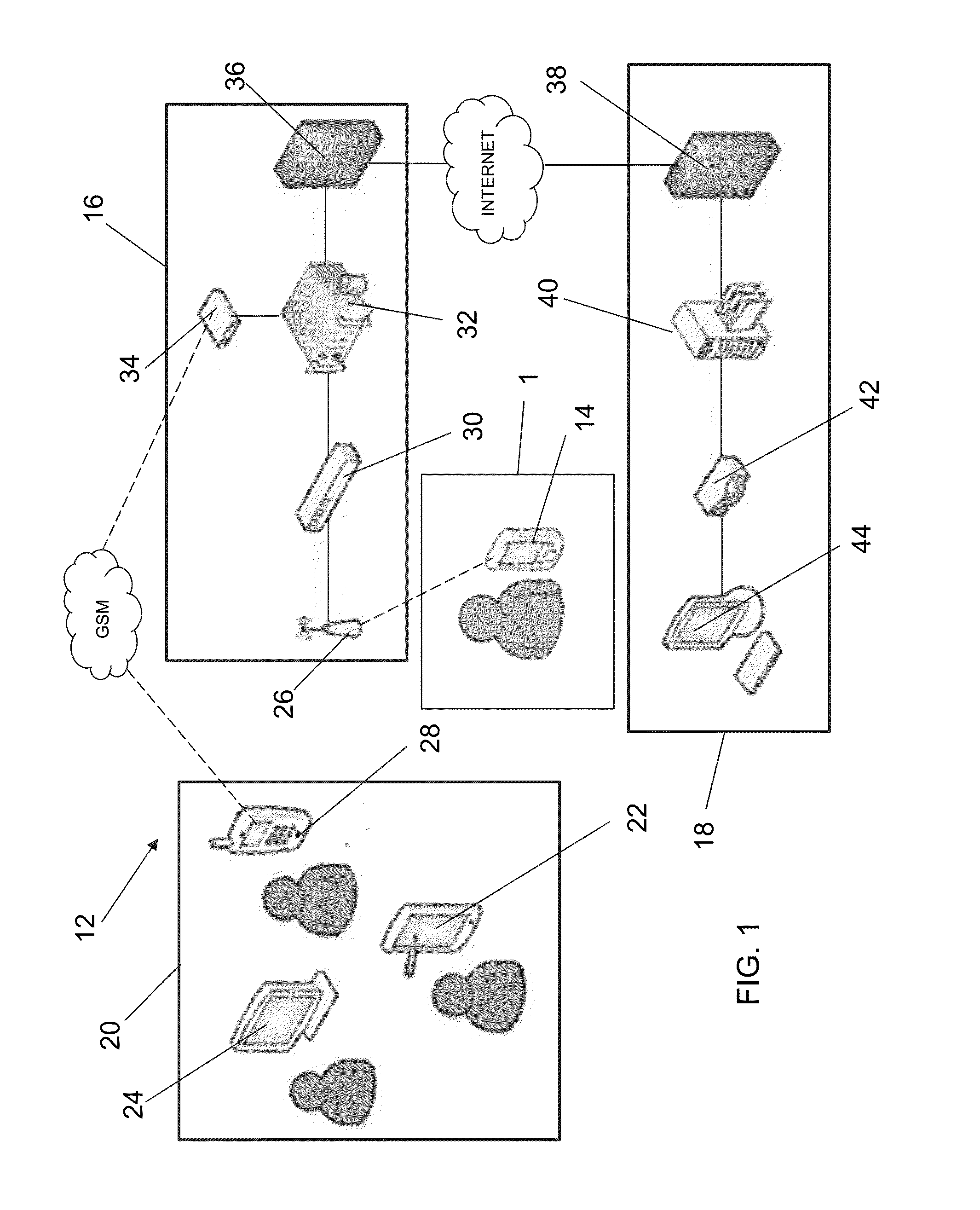

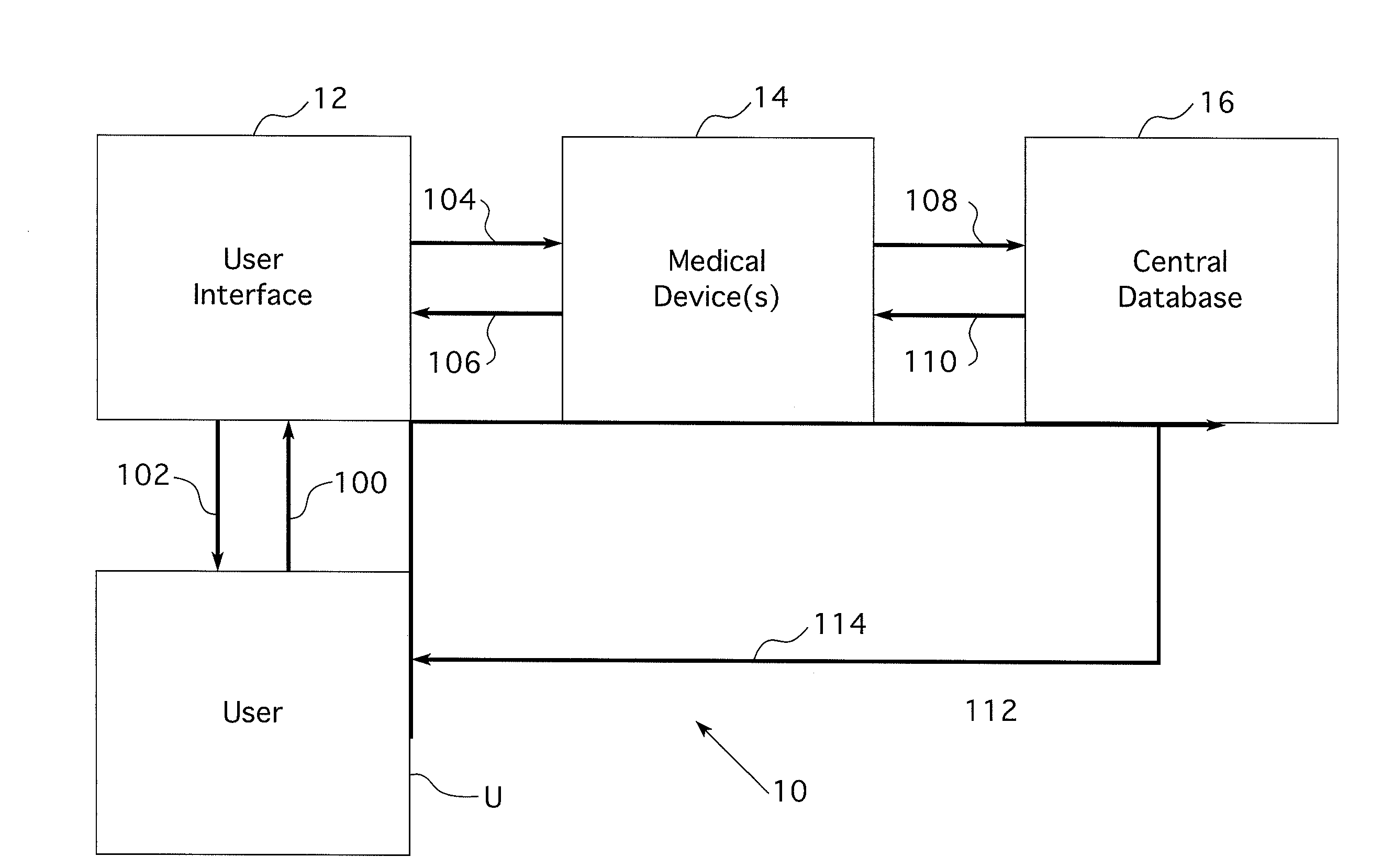

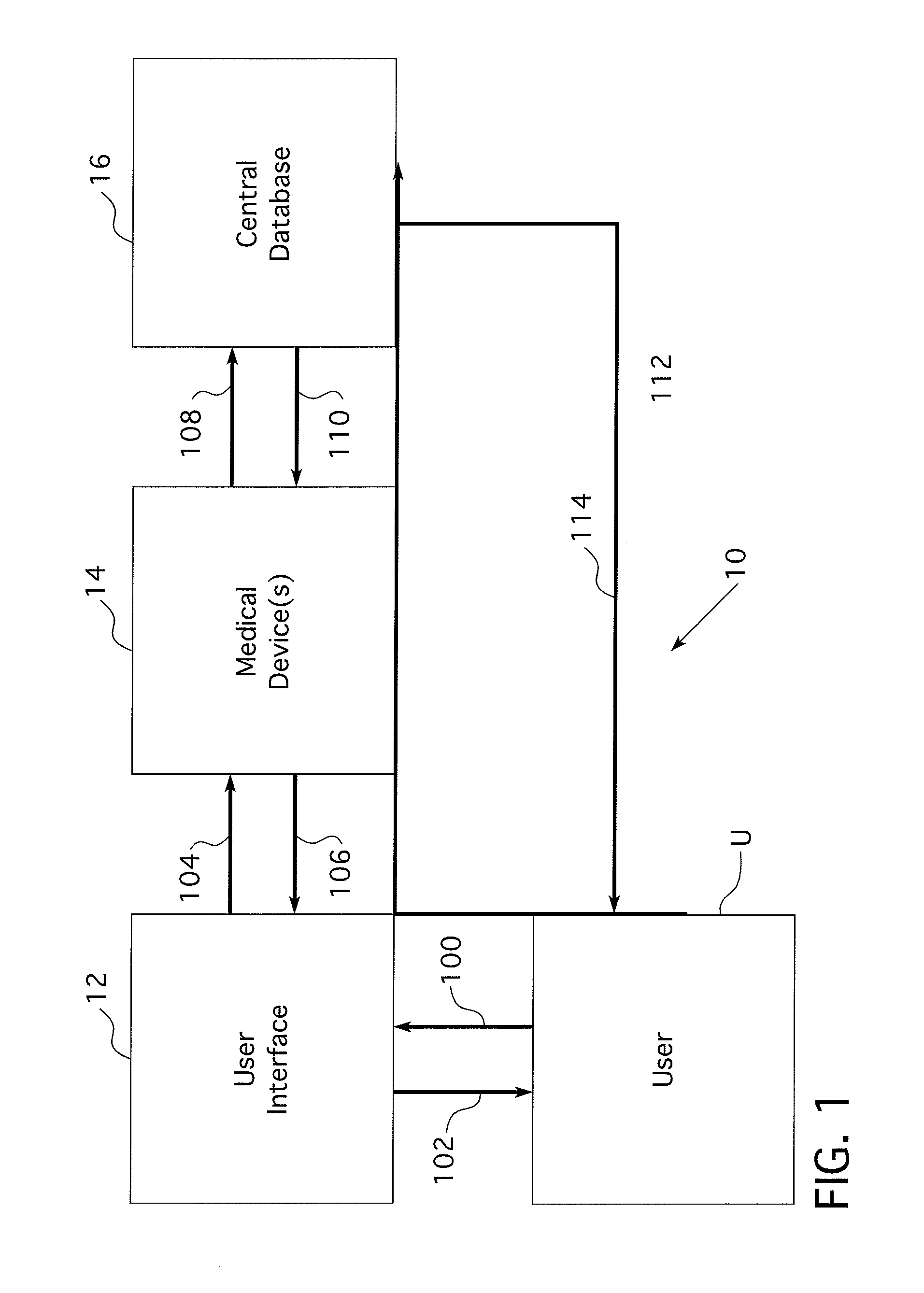

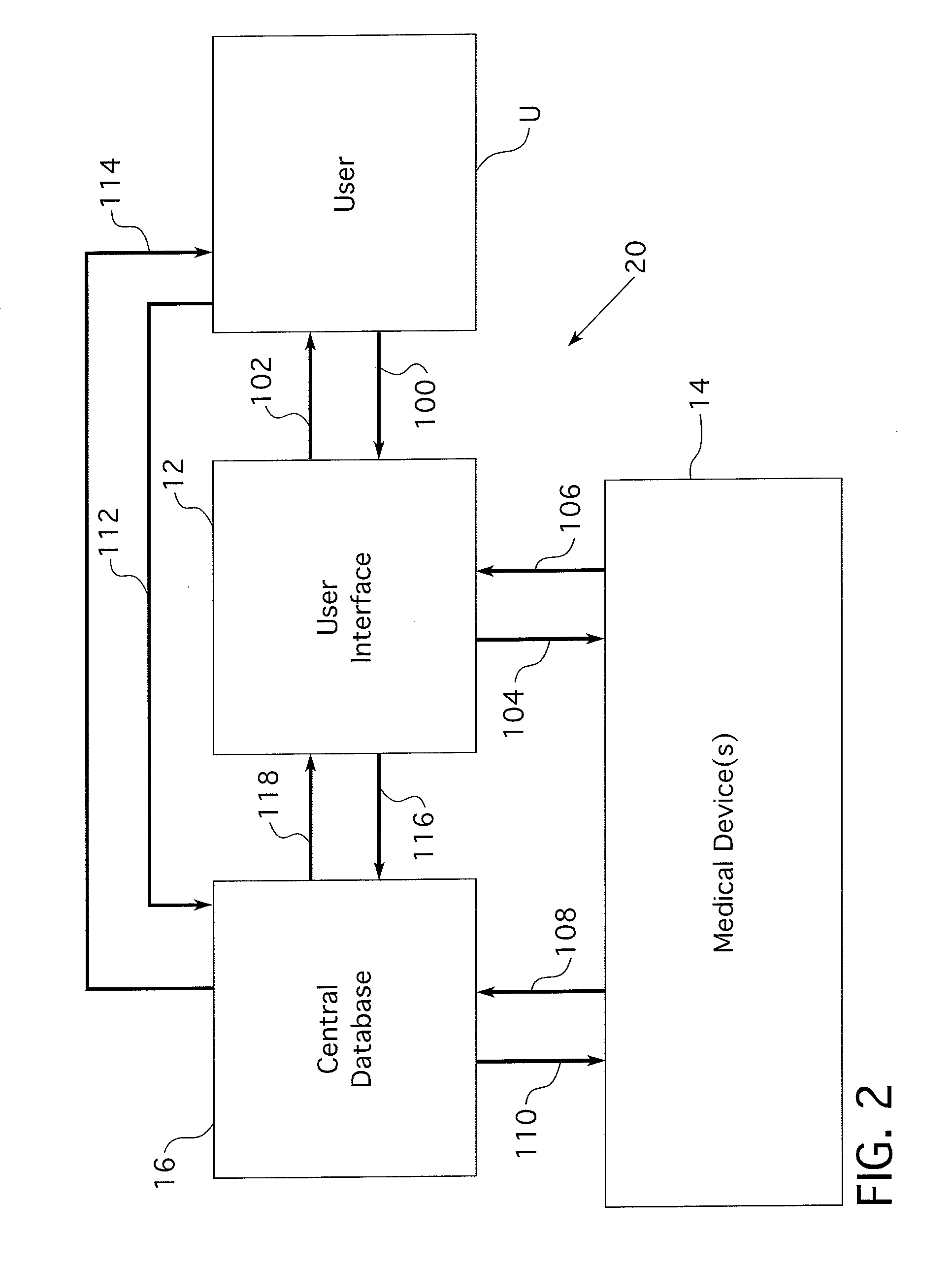

System and method for automated benchmarking for the recognition of best medical practices and products and for establishing standards for medical procedures

Active | US7457804B2 | Quality improvement; Low cost; Digital data processing details; Drug and medications; Medical equipment; Central database

A system for the collection, management, and dissemination of information relating at least to a medical procedure is disclosed. The system includes a user interface adapted to provide raw data information at least about one of a patient, the medical procedure, and a result of the medical procedure. A medical device communicates with the user interface, receives the raw data information from the user interface, and generates operational information during use. A central database communicates at least with the medical device and receives data from the medical device. The central database is used to create related entries based on the raw data information and the operational information and optionally transmits the related entry to the medical device or the medical device user. The related entry includes information that provides guidance based on previously tabulated, related medical procedures. A system for the iterative analysis of medical standards is also disclosed along with methods for the evaluation of medical procedures and standards.

Owner:BAYER HEALTHCARE LLC

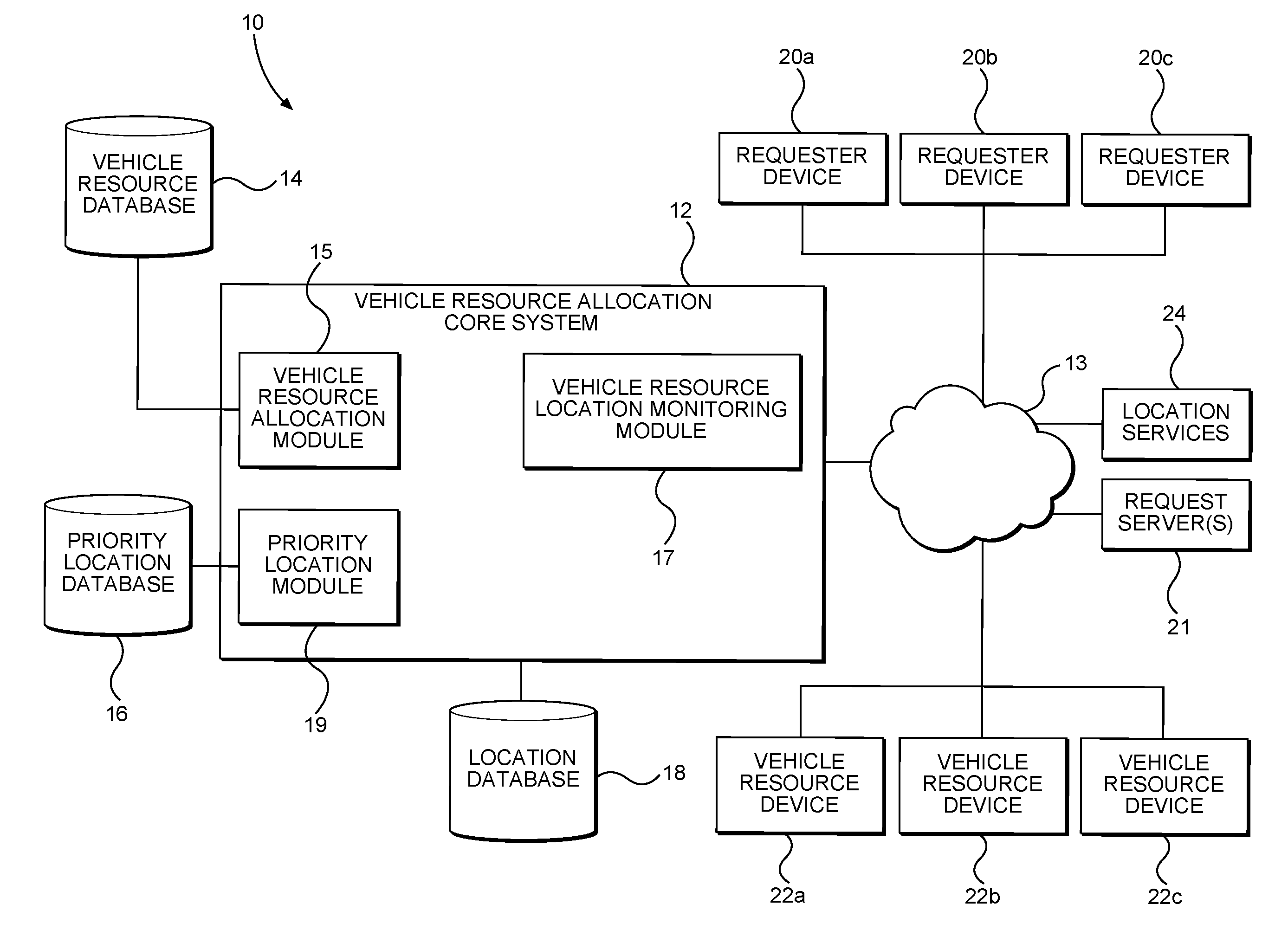

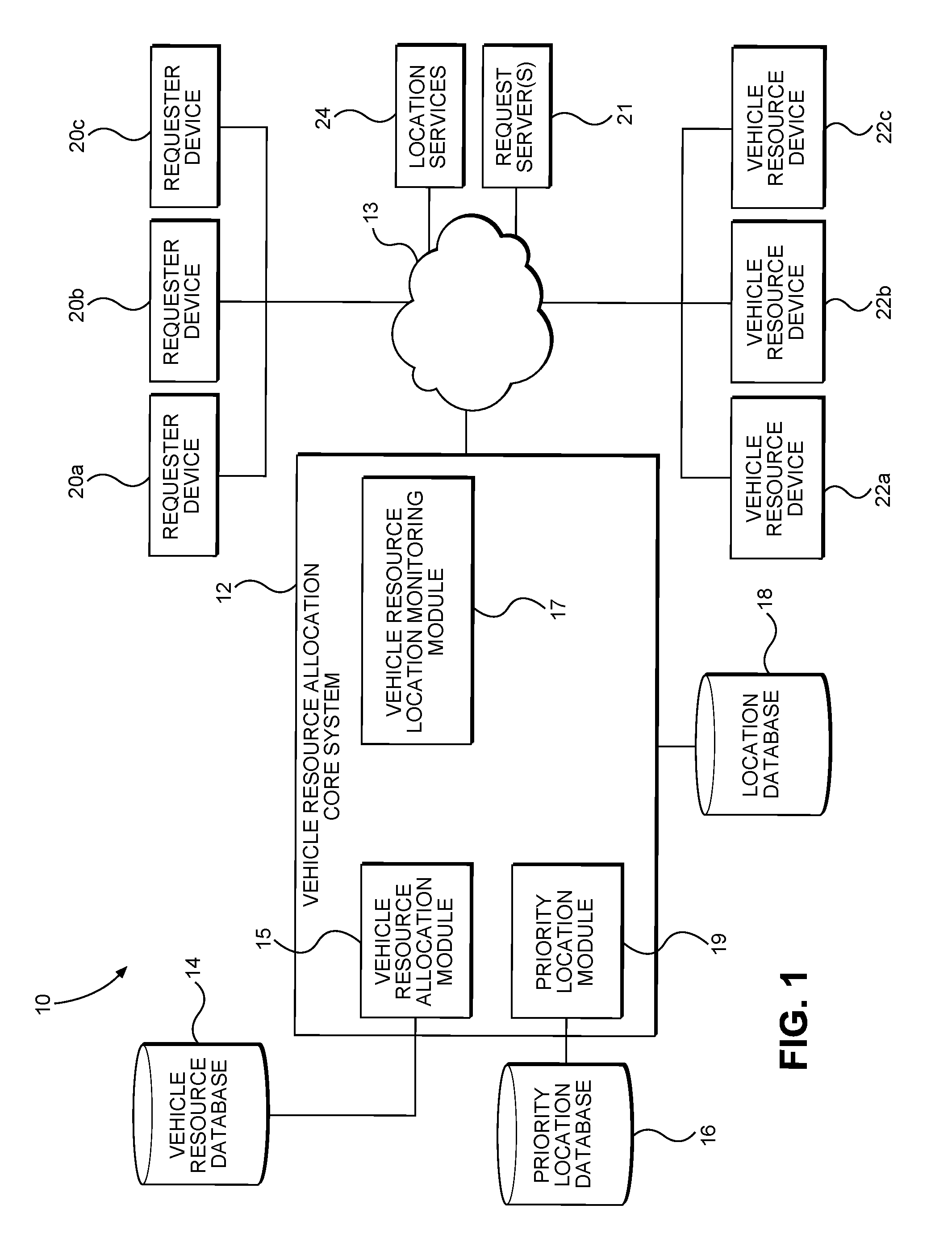

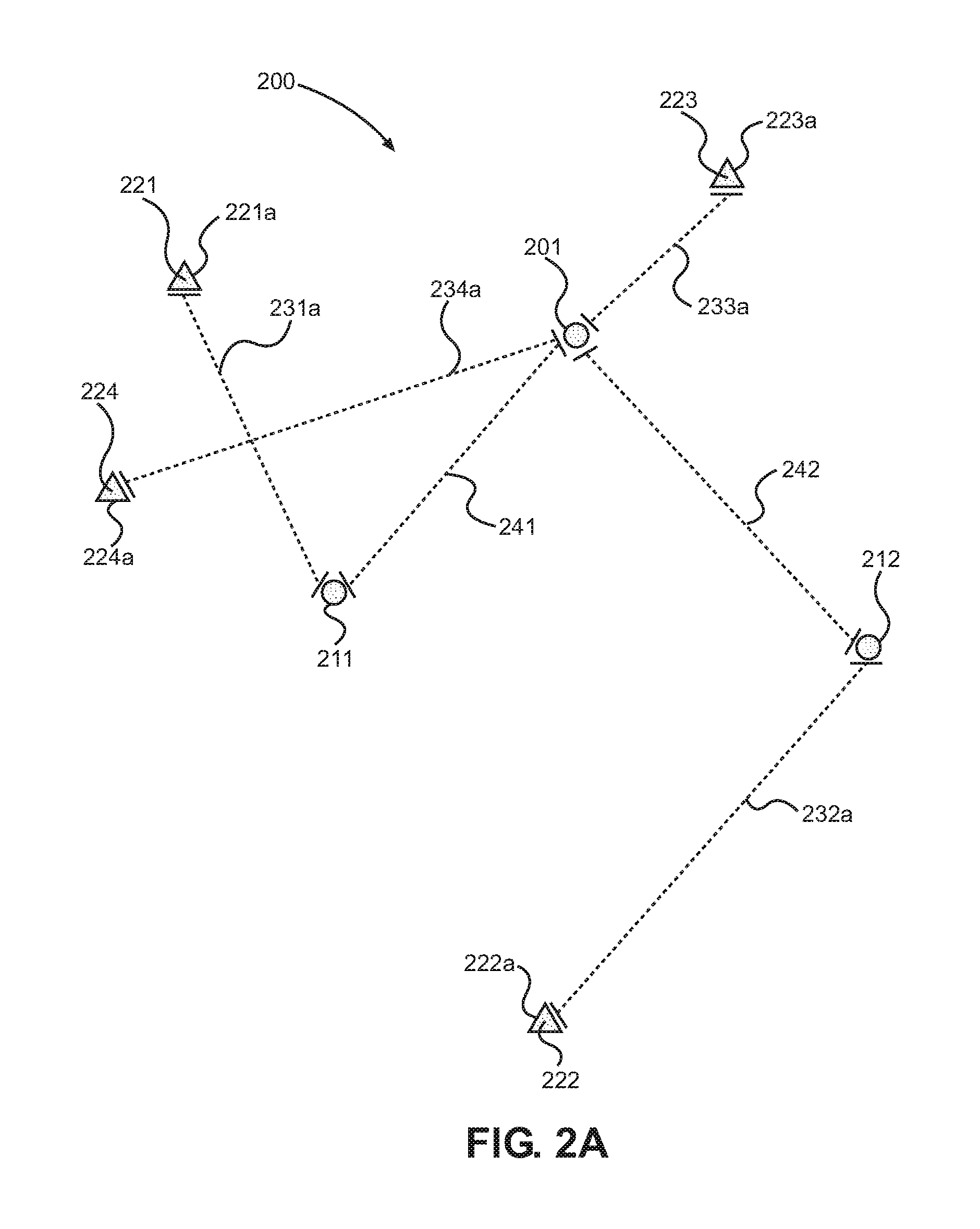

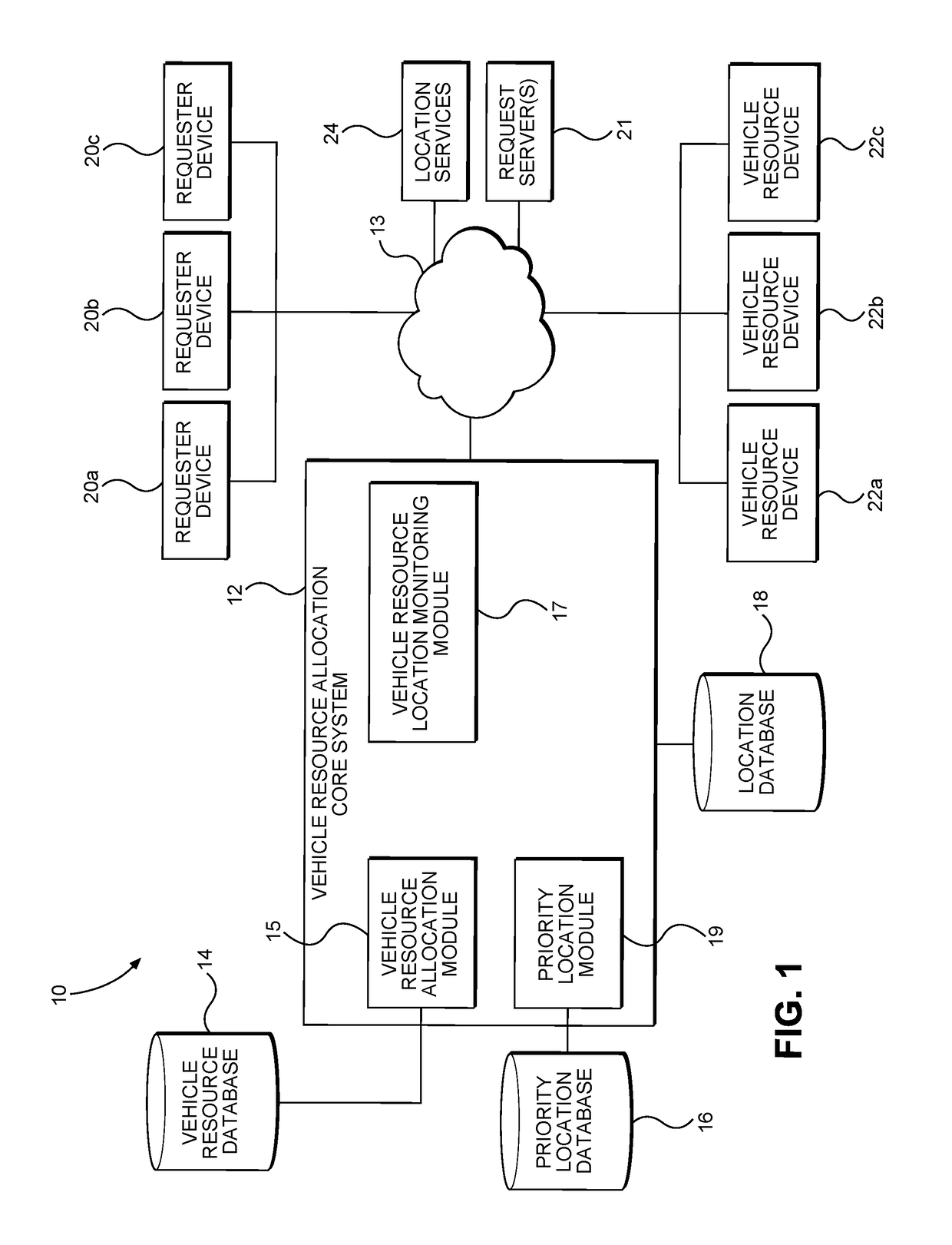

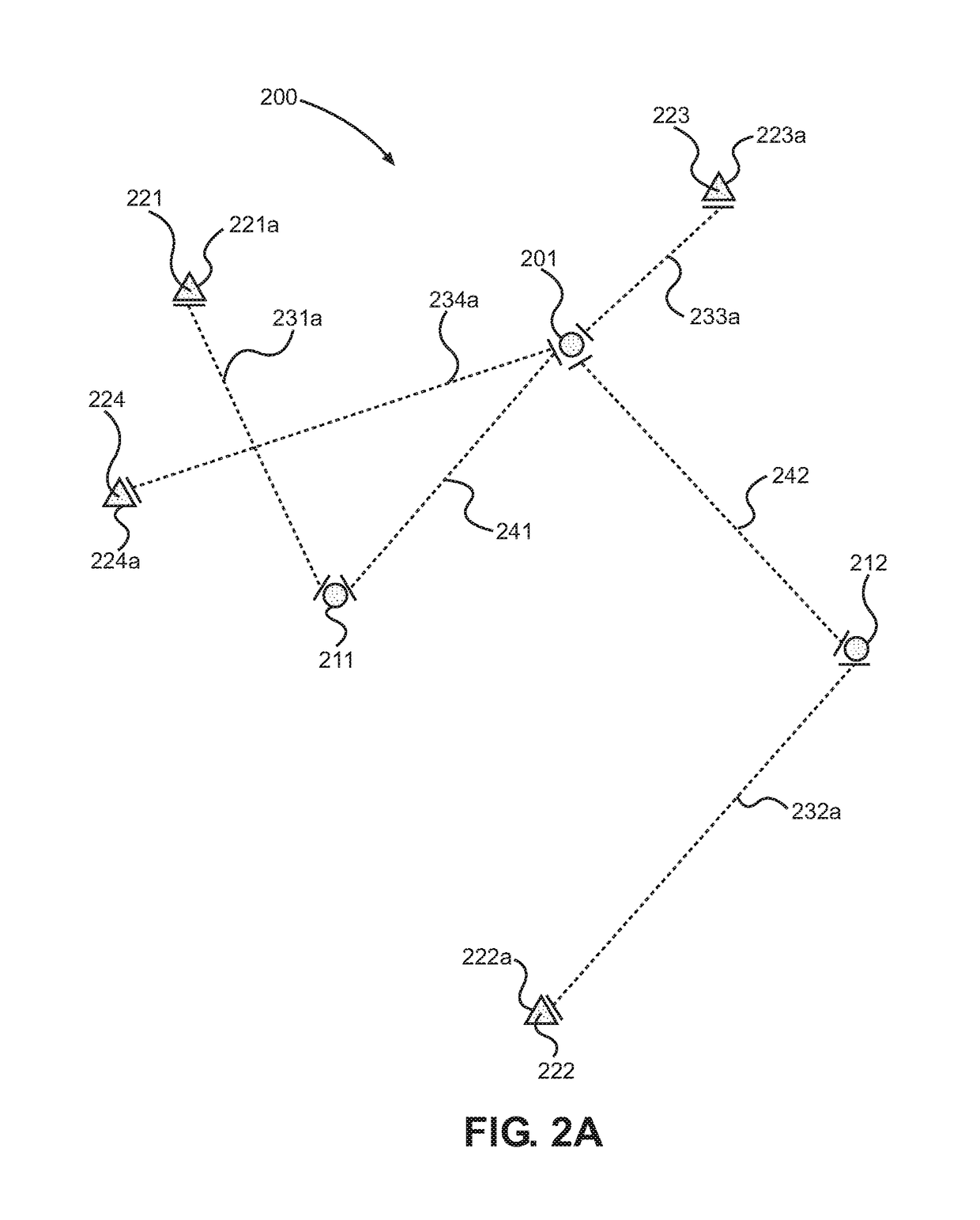

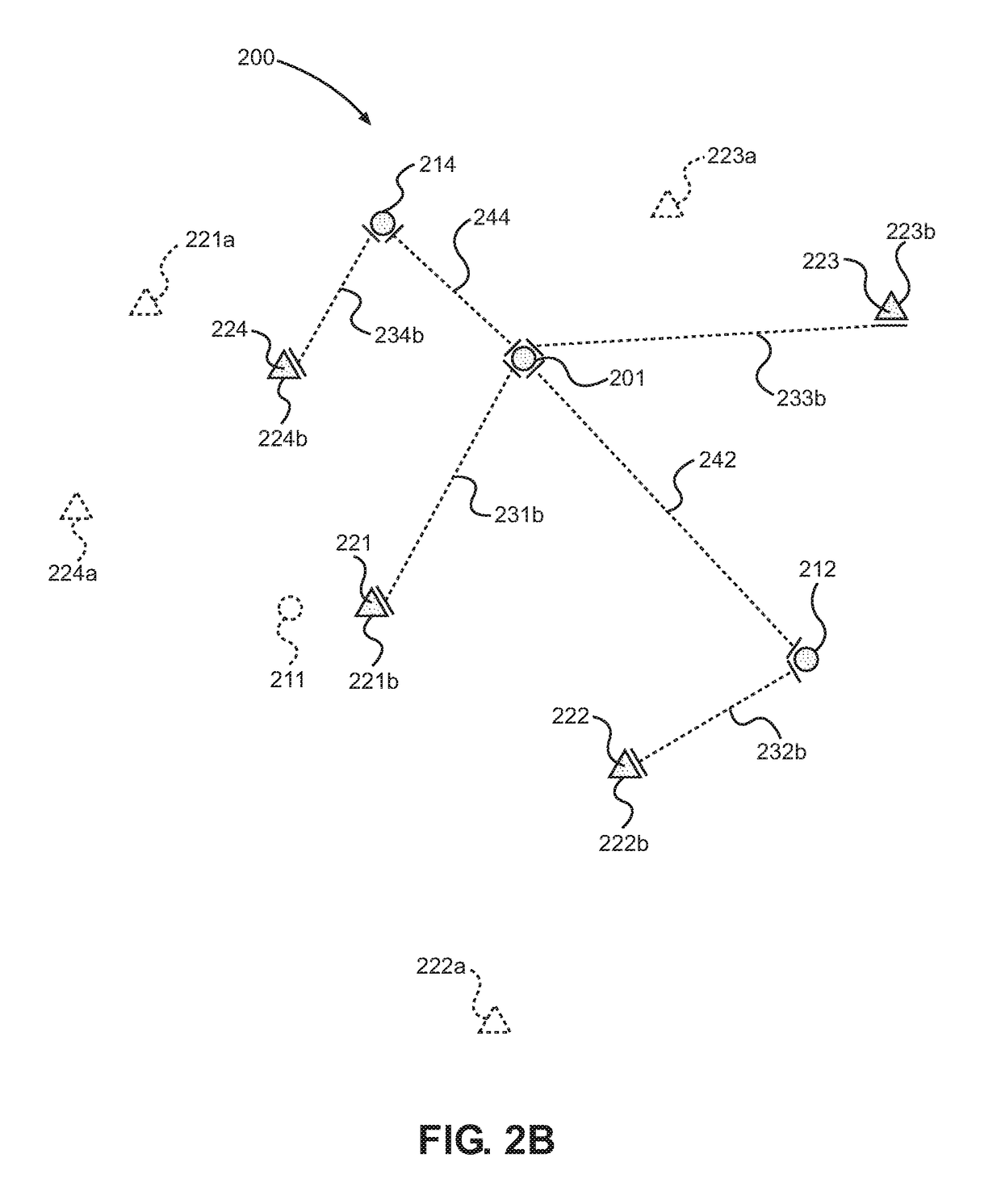

Systems and Methods for Vehicle Resource Management

Active | US20160247109A1 | Instruments for road network navigation; Road vehicles traffic control; Start time; Resource Management System

Systems, methods, apparatus, and computer-readable media provide for allocating vehicle resources to future vehicle requirements. In some embodiments, allocating a vehicle resource to a vehicle requirement may be based on an iterative analysis of candidate vehicle resources using one or more of: a suitability of a candidate vehicle resource to fulfil the vehicle requirement, a journey time from a vehicle location to a start location, and/or a start time for the vehicle requirement.

Owner:ADDISON LEE
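
A minimal sketch of the kind of iterative candidate analysis this abstract describes, assuming a hypothetical `Vehicle`/`Requirement` model in which suitability is reduced to seat capacity and the journey time to the start location is already known in minutes. It walks every candidate, discards unsuitable or late ones, and keeps the shortest journey; the patent's actual scoring is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    vehicle_id: str
    capacity: int            # hypothetical stand-in for "suitability"
    minutes_to_start: float  # hypothetical journey time to the start location


@dataclass
class Requirement:
    passengers: int
    minutes_until_start: float  # time remaining before the requirement's start time


def allocate(requirement, candidates):
    """Iterate over candidates and return the suitable, on-time vehicle with the shortest journey."""
    best = None
    for vehicle in candidates:
        if vehicle.capacity < requirement.passengers:
            continue  # not suitable for this requirement
        if vehicle.minutes_to_start > requirement.minutes_until_start:
            continue  # cannot reach the start location before the start time
        if best is None or vehicle.minutes_to_start < best.minutes_to_start:
            best = vehicle
    return best
```

With a fleet of `Vehicle("A", 4, 12.0)`, `Vehicle("B", 6, 8.0)` and `Vehicle("C", 8, 25.0)`, a five-passenger job starting in 20 minutes goes to B: A is too small and C would arrive late.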

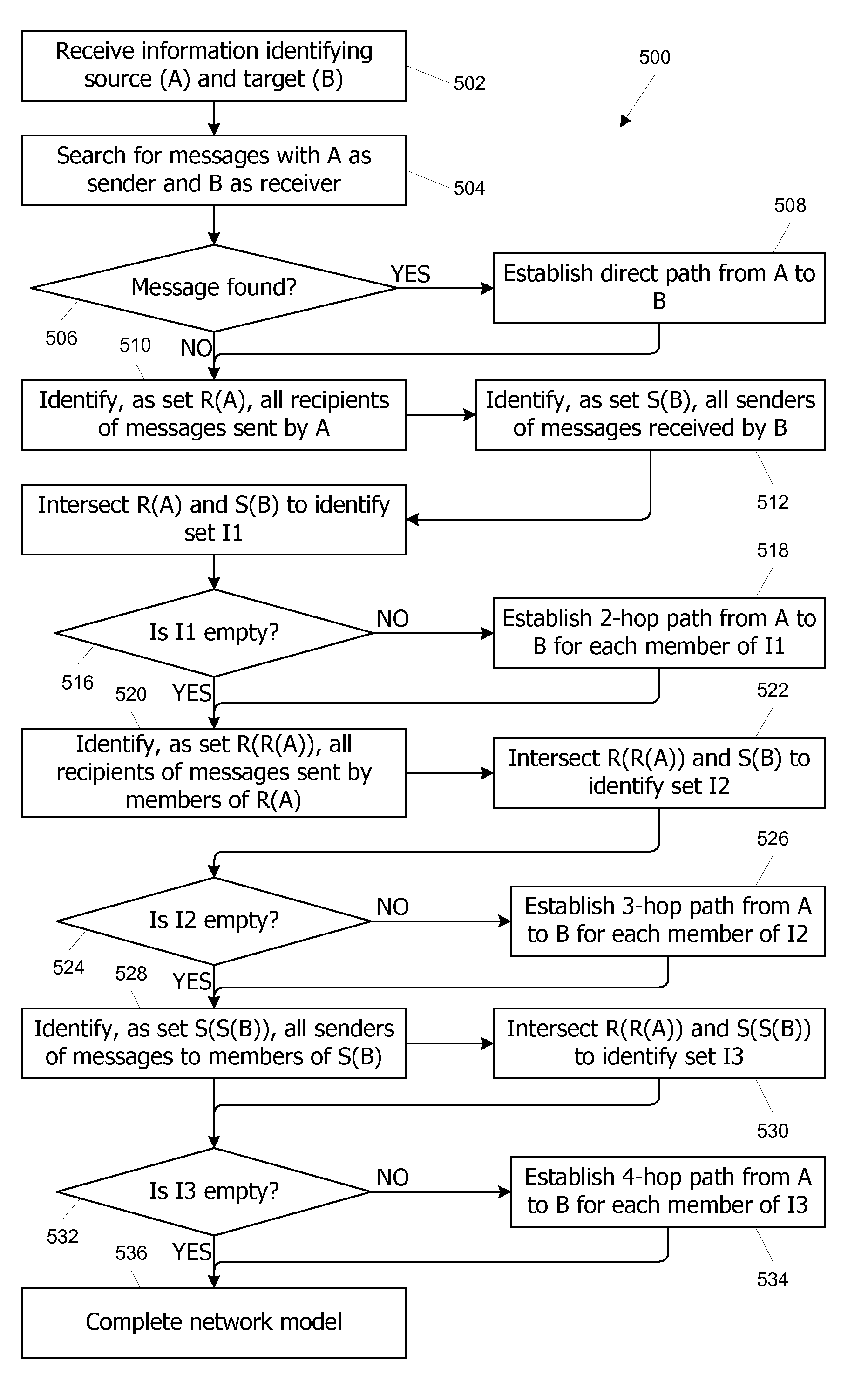

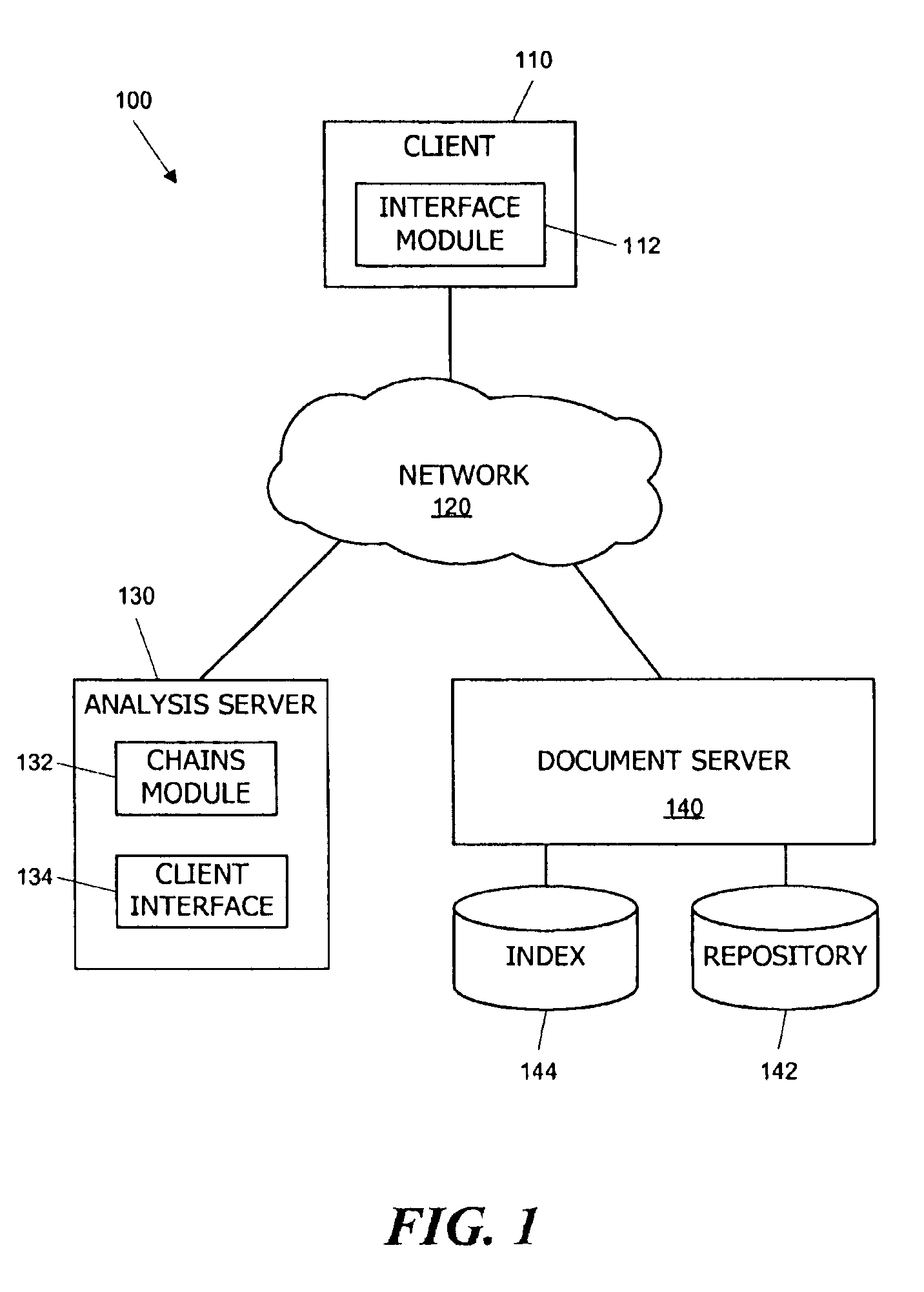

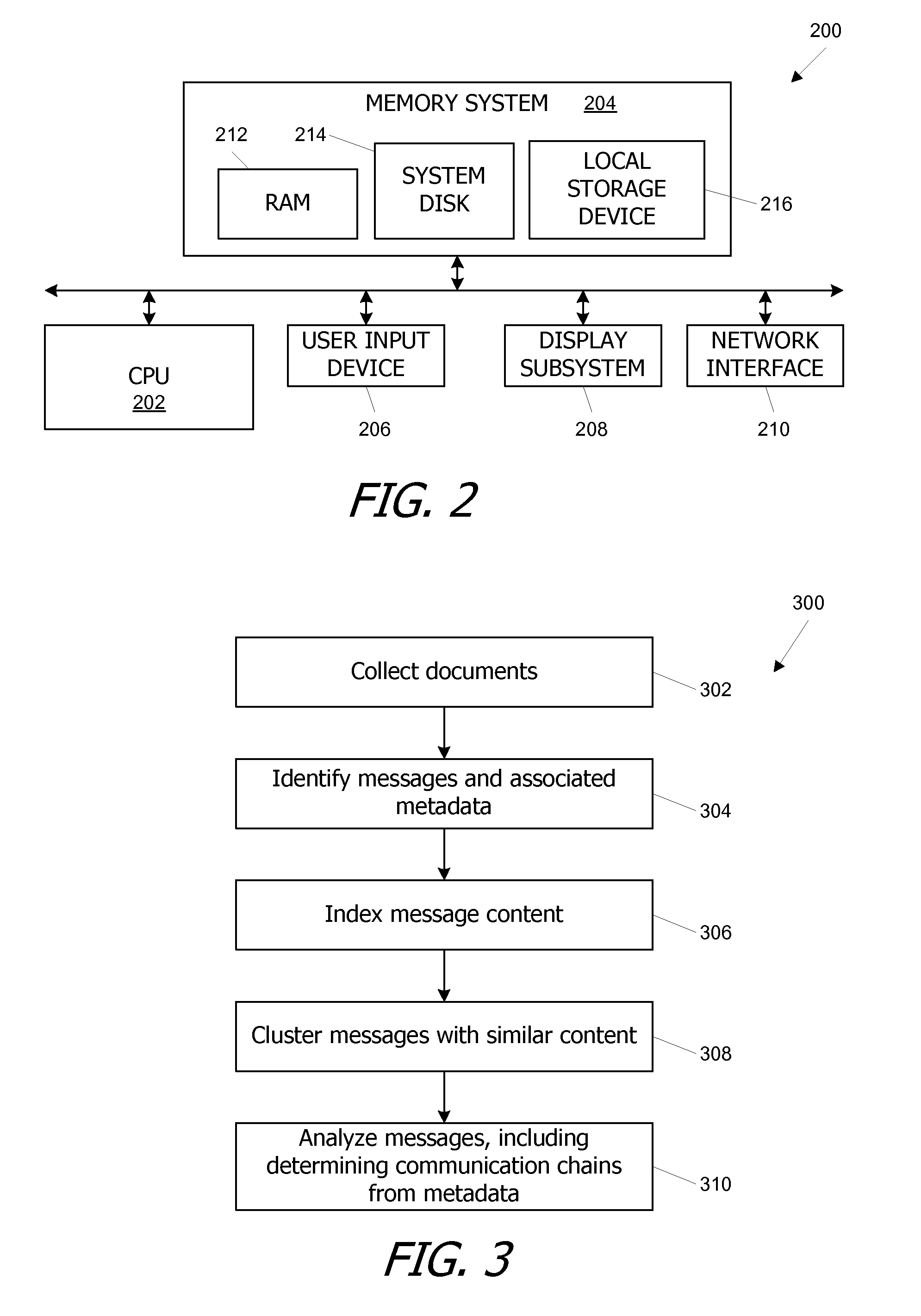

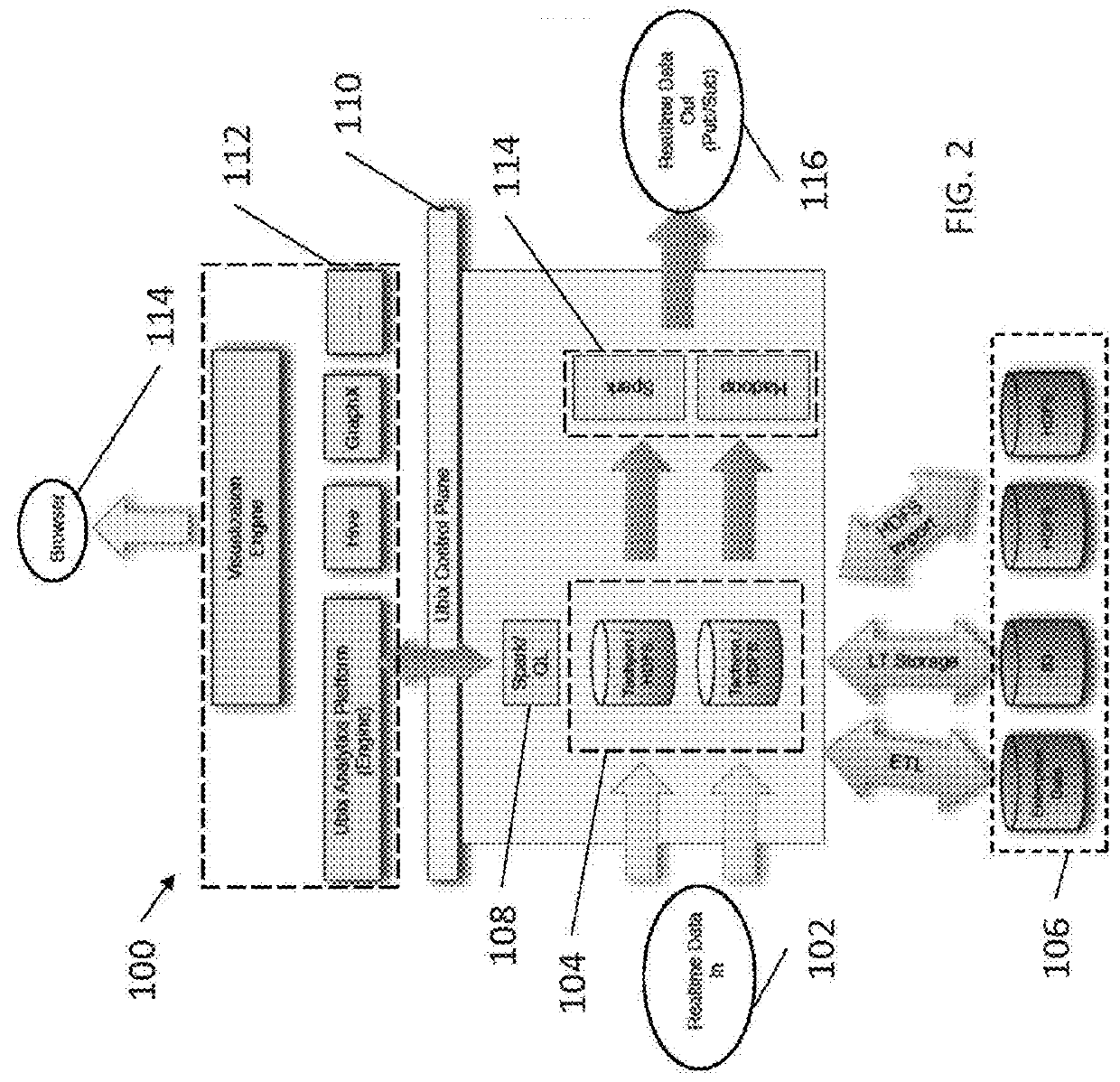

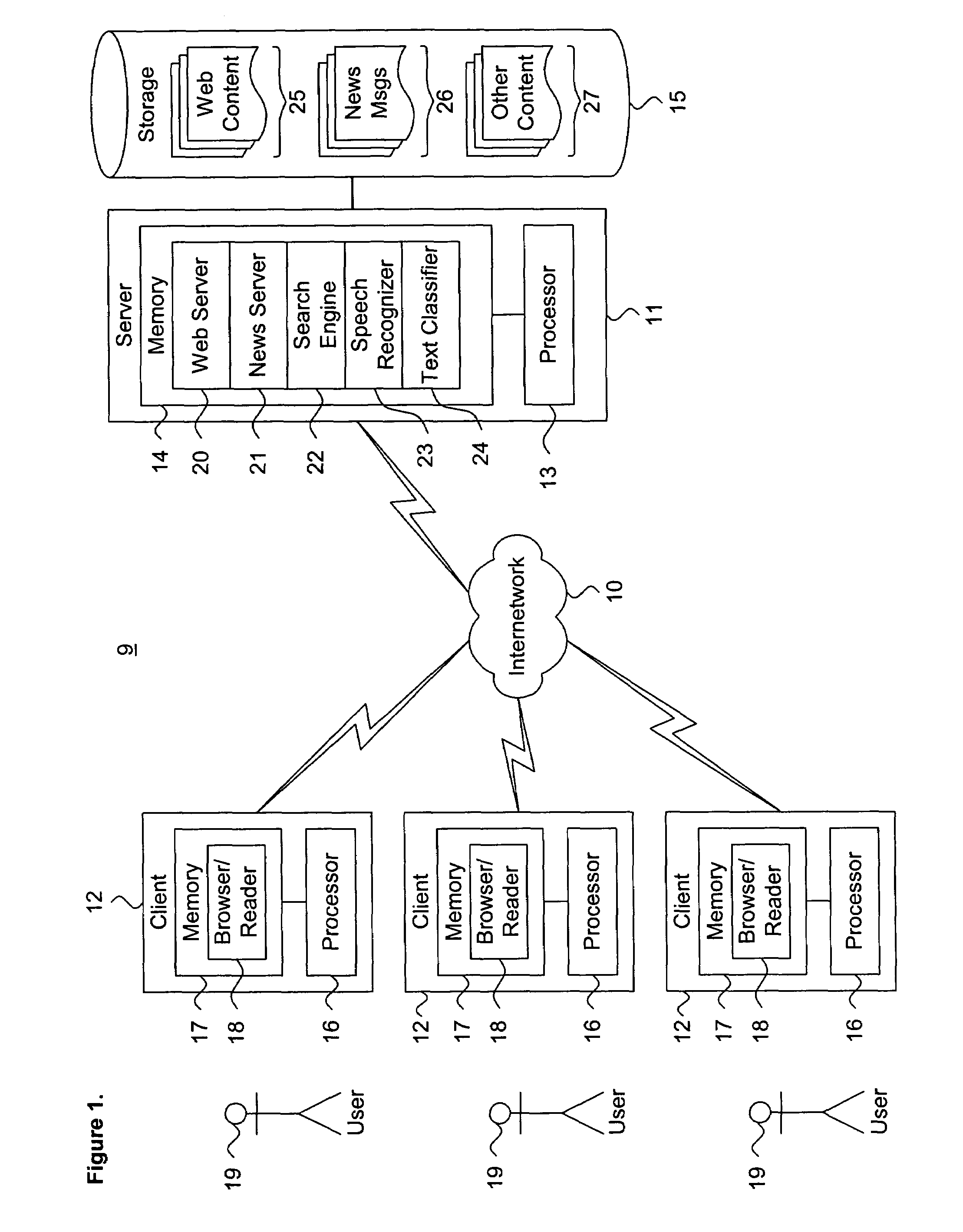

Systems and methods for determining communication chains based on messages

Inactive | US7664821B1 | Easy to identify; Multiple digital computer combinations; Data switching networks; Electronic document; Communication link

A pool of messages, e.g., e-mails and/or other electronic documents that each correspond to a communication from a sender to a recipient, is analyzed to identify communication chains between a source and a target. Sender and recipient identifiers extracted from the messages are used to detect communication links between pairs of entities. Indirect chains of any desired length can be found by iteratively tracing a communication path one step forward from the source, then one step backward from the target, and so on; at each new step, entities at end points of the forward paths and backward paths are compared to detect any entities that complete a communication chain from source to target. Information related to the identified communication chains can be presented to a user via an interactive report that supports iterative analysis of the communication-chain data.

Owner:MICRO FOCUS LLC
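
The alternating forward/backward tracing in this abstract resembles a bidirectional breadth-first search. The sketch below assumes messages are simple `(sender, recipient)` pairs and uses hypothetical helpers `find_chain`, `_expand`, and `_join`; it advances one step from each side in turn and stops as soon as the two path fronts share an entity. It returns one chain, not necessarily every chain or the shortest one.

```python
from collections import defaultdict


def _expand(frontier, adjacency, parent):
    """Advance a path front by one hop, recording where each new entity was reached from."""
    nxt = set()
    for node in frontier:
        for neighbour in adjacency[node]:
            if neighbour not in parent:
                parent[neighbour] = node
                nxt.add(neighbour)
    return nxt


def _join(meet, fwd_parent, bwd_parent):
    """Stitch the source->meet path and the meet->target path together."""
    path, node = [], meet
    while node is not None:
        path.append(node)
        node = fwd_parent[node]
    path.reverse()
    node = bwd_parent[meet]
    while node is not None:
        path.append(node)
        node = bwd_parent[node]
    return path


def find_chain(messages, source, target, max_steps=6):
    """Alternate one forward step from source and one backward step from target."""
    forward, backward = defaultdict(set), defaultdict(set)
    for sender, recipient in messages:
        forward[sender].add(recipient)    # links usable when tracing forward
        backward[recipient].add(sender)   # links usable when tracing backward

    fwd_parent, bwd_parent = {source: None}, {target: None}
    fwd_frontier, bwd_frontier = {source}, {target}
    for step in range(max_steps + 1):
        # compare end points of forward and backward paths
        meeting = fwd_parent.keys() & bwd_parent.keys()
        if meeting:
            return _join(min(meeting), fwd_parent, bwd_parent)
        if step == max_steps:
            break
        if step % 2 == 0:
            fwd_frontier = _expand(fwd_frontier, forward, fwd_parent)
        else:
            bwd_frontier = _expand(bwd_frontier, backward, bwd_parent)
    return None
```

For messages alice→bob, bob→carol, carol→dave and eve→dave, `find_chain(msgs, "alice", "dave")` meets at carol on the fourth check and returns `["alice", "bob", "carol", "dave"]`.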

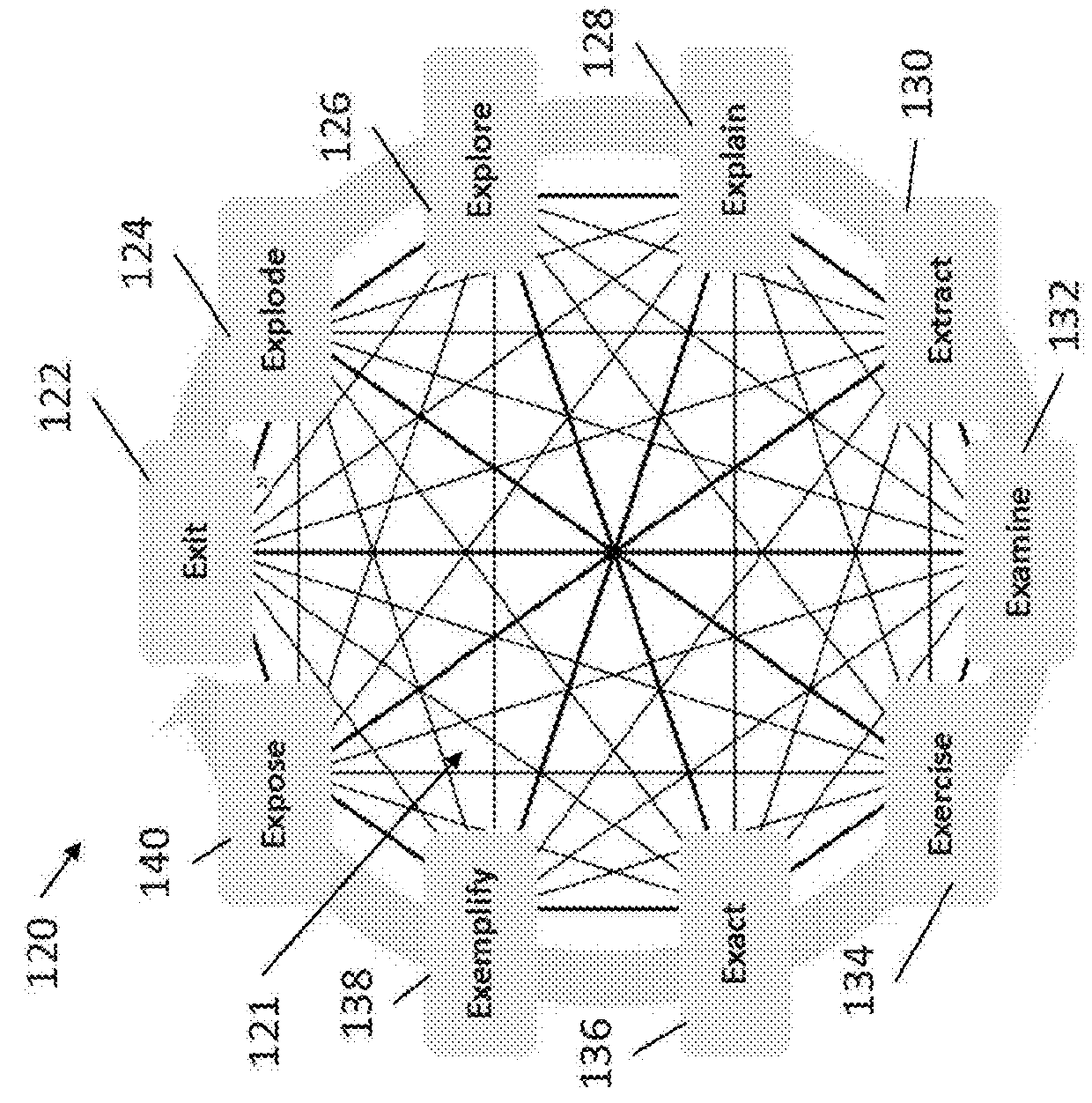

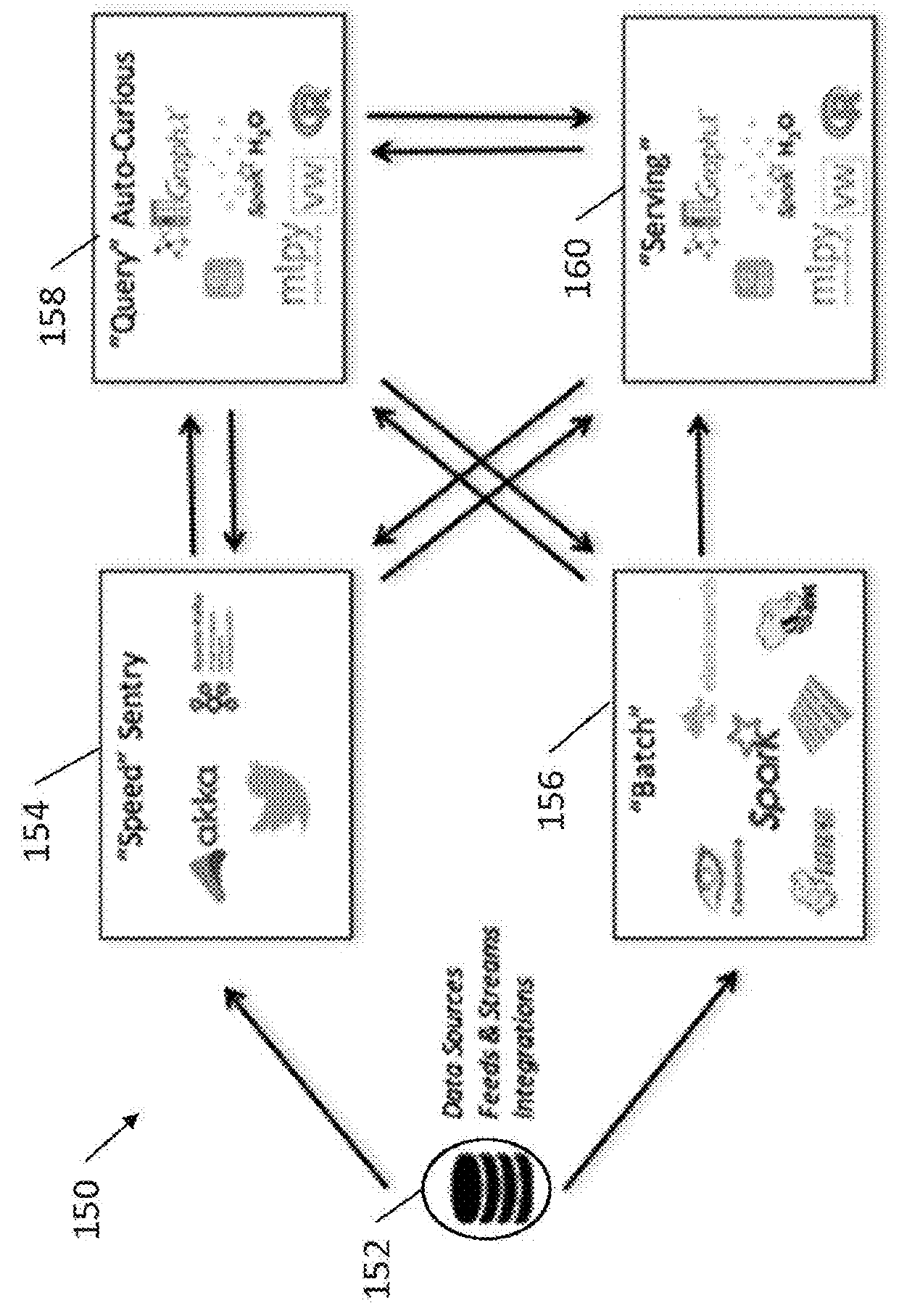

Systems and methods for automating data science machine learning analytical workflows

Systems and methods are disclosed for automating data science machine learning using analytical workflows; they provide for user interaction and iterative analysis, including automated suggestions based on at least one analysis of a dataset.

Owner:U2 SCI LABS INC

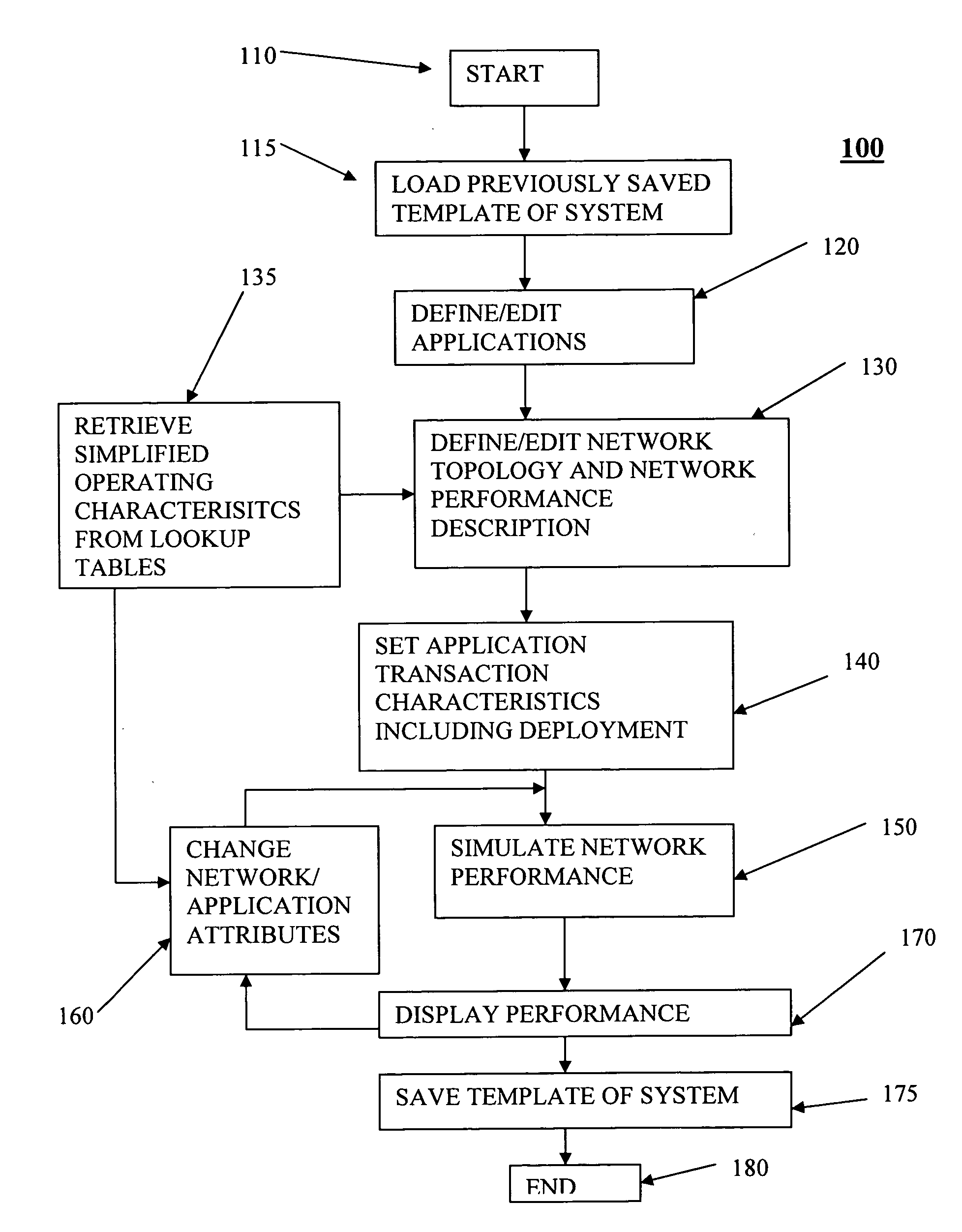

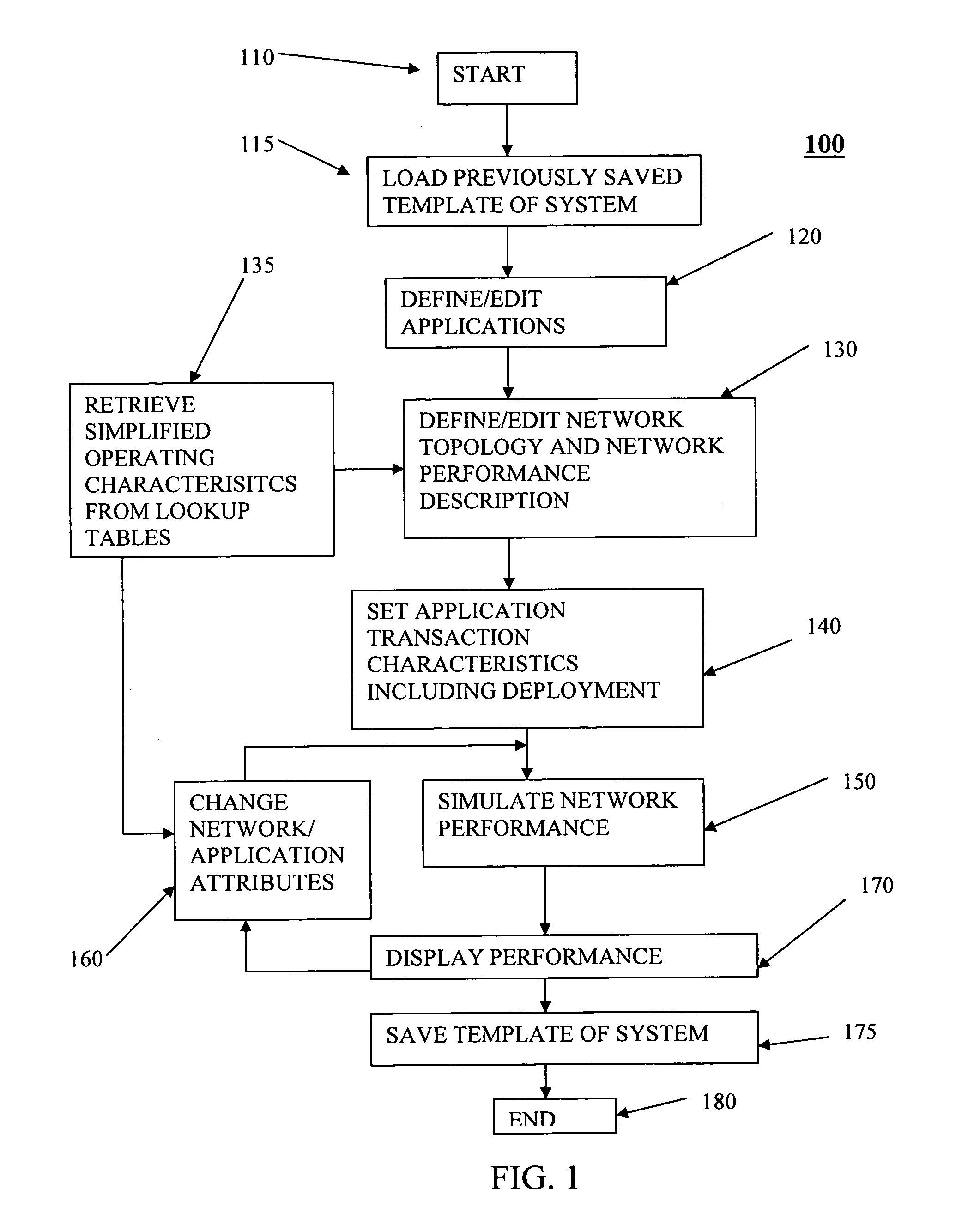

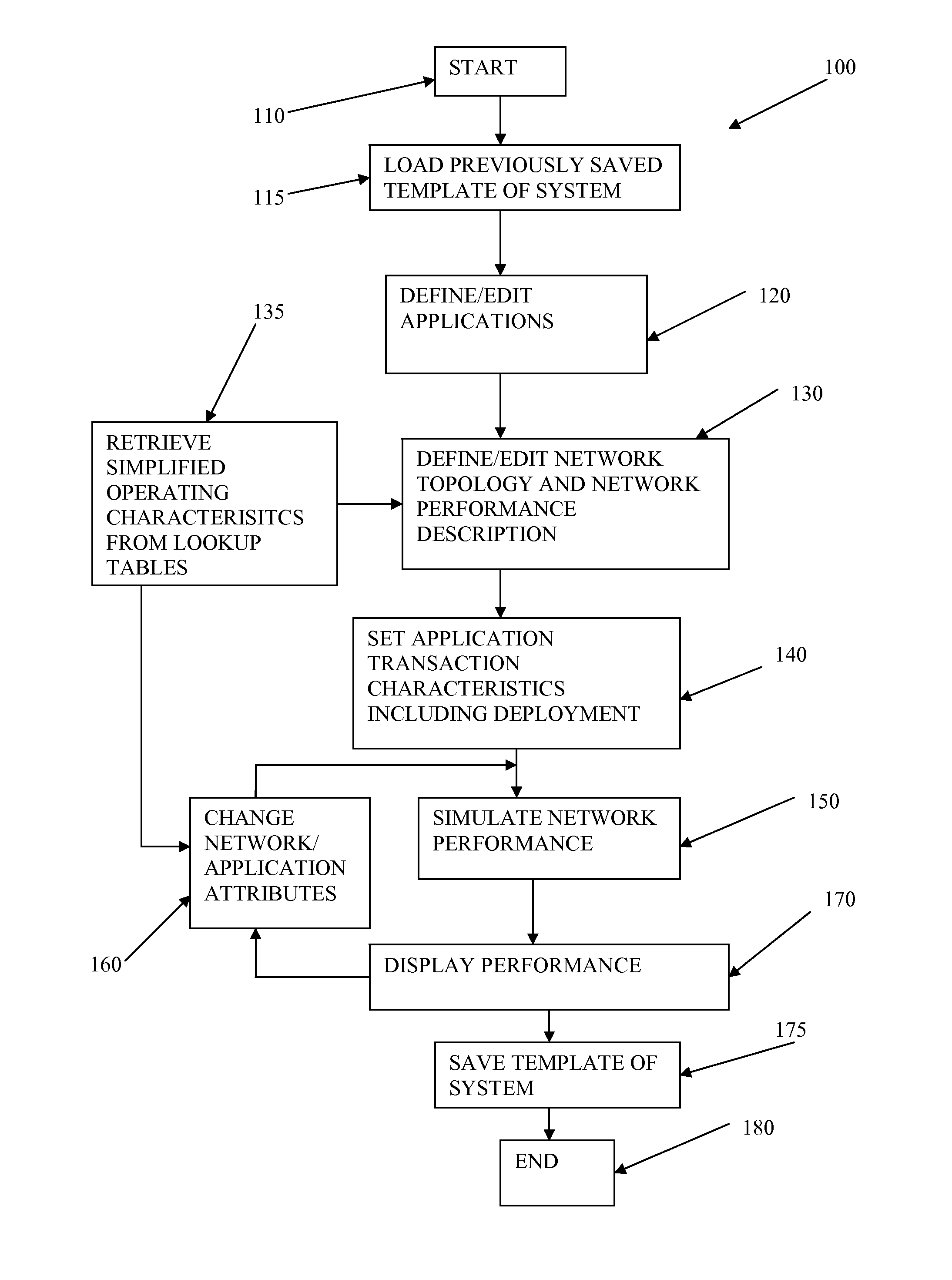

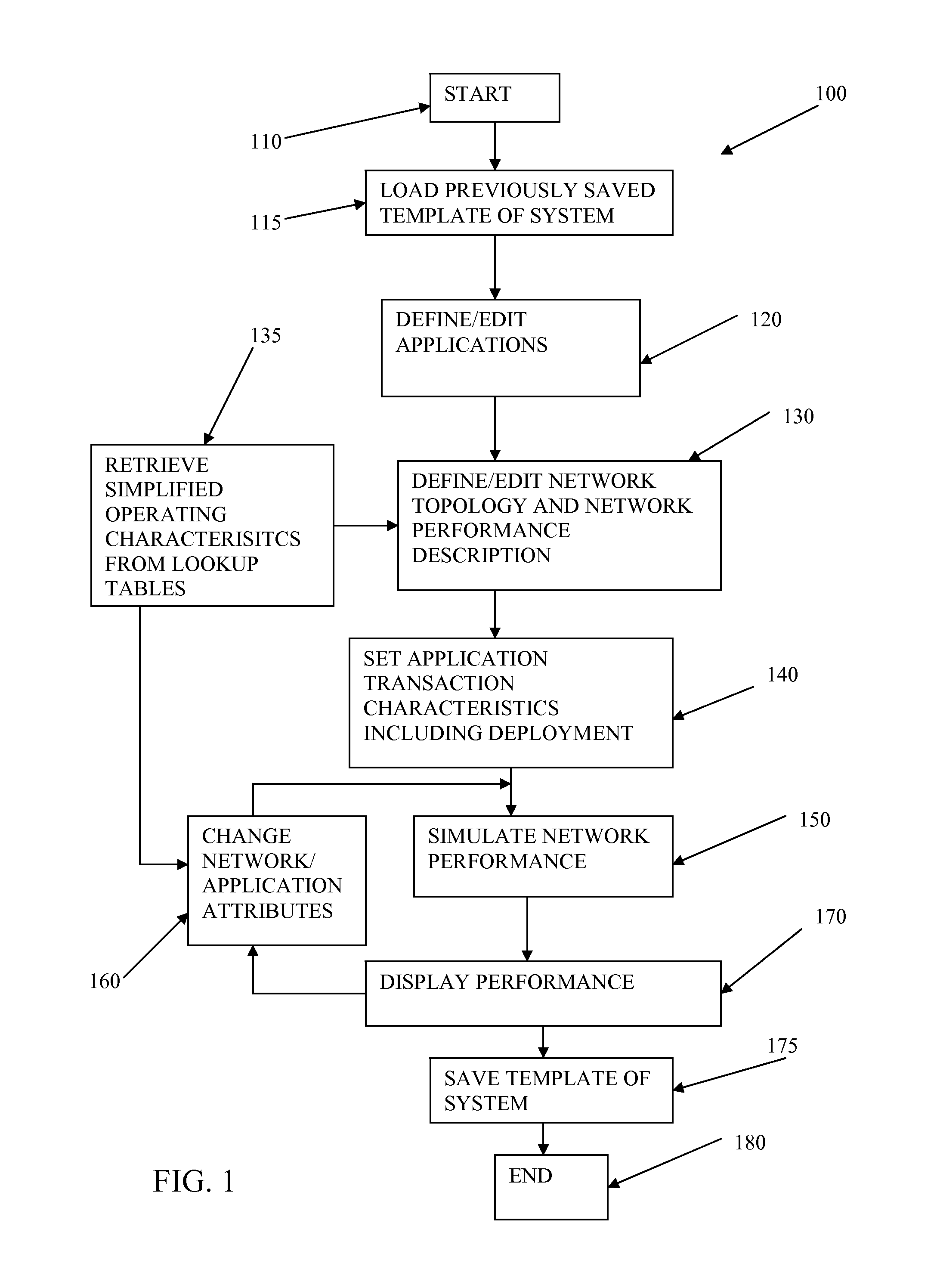

Network capacity planning

Data representing application deployment attributes, network topology, and network performance attributes, based on a reduced set of element attributes, is used to simulate application deployment. The data may be received directly from a user, from a program that models a network topology or application behavior, or from a wizard that infers the data through an interview process. The simulation may be based on application deployment attributes including application traffic pattern, application message sizes, network topology, and network performance attributes. The element attributes may be determined from a lookup table of element operating characteristics, which may contain element maximum and minimum boundary operating values used to interpolate other operating conditions. Application response time may be derived by an iterative analysis over multiple instances of one or more applications, running either a predetermined number of iterations or until network performance reaches a substantially steady state.

Owner:OPNET TECH LLC
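
The stopping rule described above (a predetermined number of iterations, or a substantially steady state) can be sketched generically. `iterate_until_steady` is a hypothetical helper, and the toy `update` function passed to it stands in for one simulation pass; it is not the patented network model.

```python
def iterate_until_steady(update, initial, max_iterations=100, tolerance=1e-6):
    """Apply update repeatedly; stop after max_iterations or once successive
    estimates differ by less than tolerance (treated as a steady state).

    Returns (estimate, iterations_used, reached_steady_state)."""
    current = initial
    for iteration in range(1, max_iterations + 1):
        nxt = update(current)
        if abs(nxt - current) < tolerance:
            return nxt, iteration, True
        current = nxt
    return current, max_iterations, False


# Toy stand-in for one simulation pass: response time r -> 0.5 * r + 1 (fixed point at 2.0).
value, iterations, steady = iterate_until_steady(lambda r: 0.5 * r + 1.0, 0.0)
```

Capping the run with `max_iterations=3` instead returns `(1.75, 3, False)`, which is the "predetermined number of iterations" branch.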

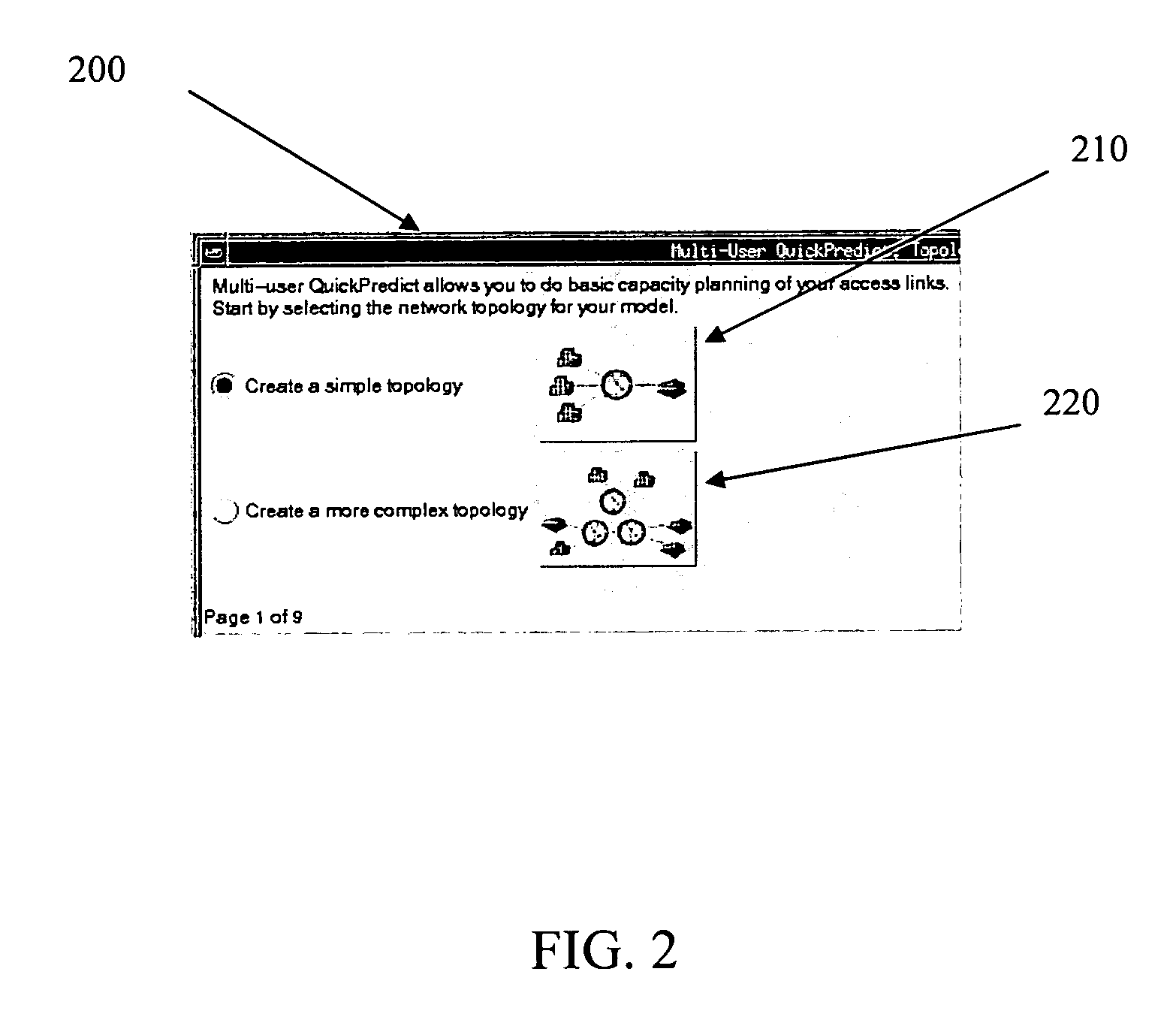

Method and apparatus for identifying feedback in a circuit

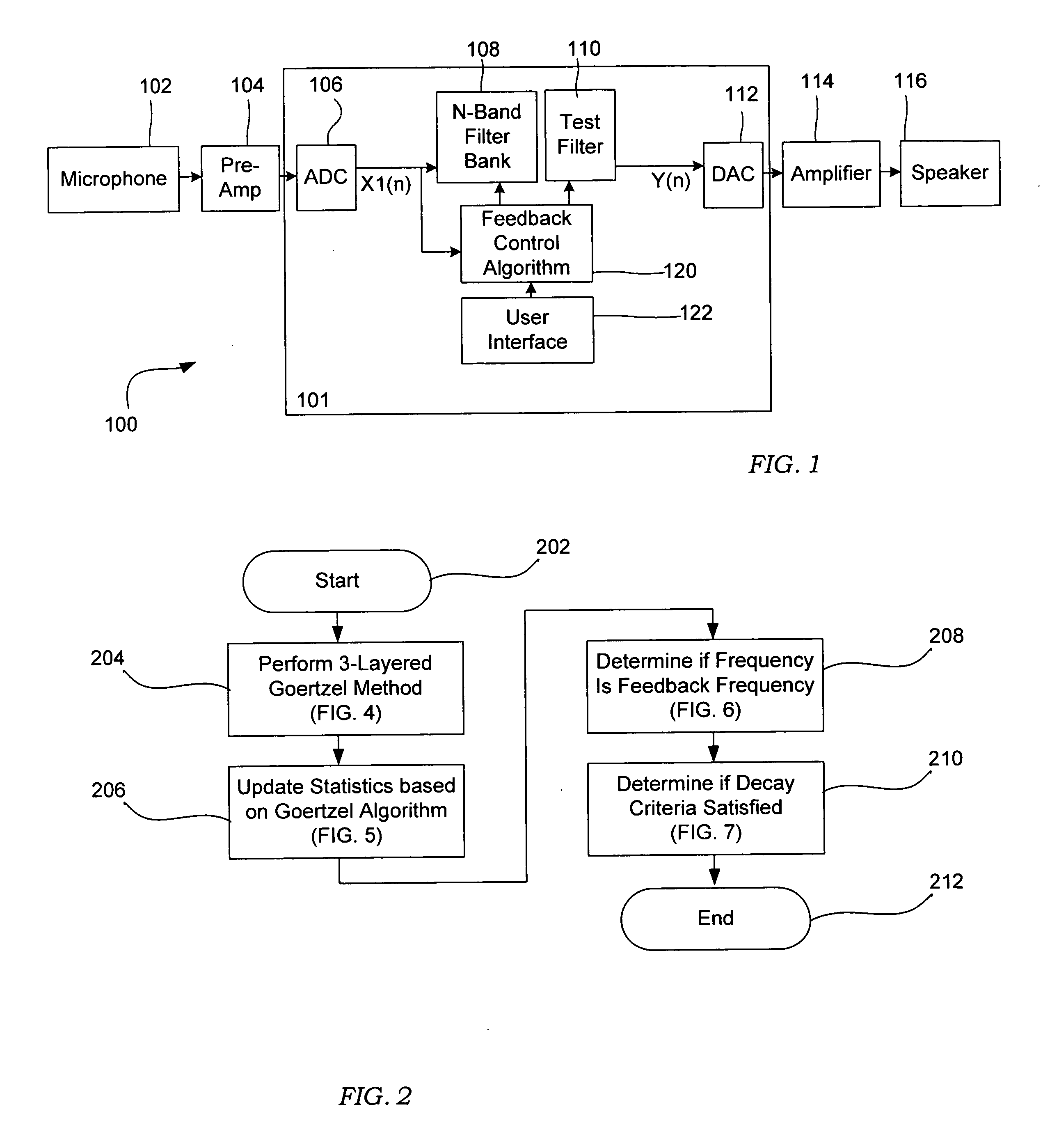

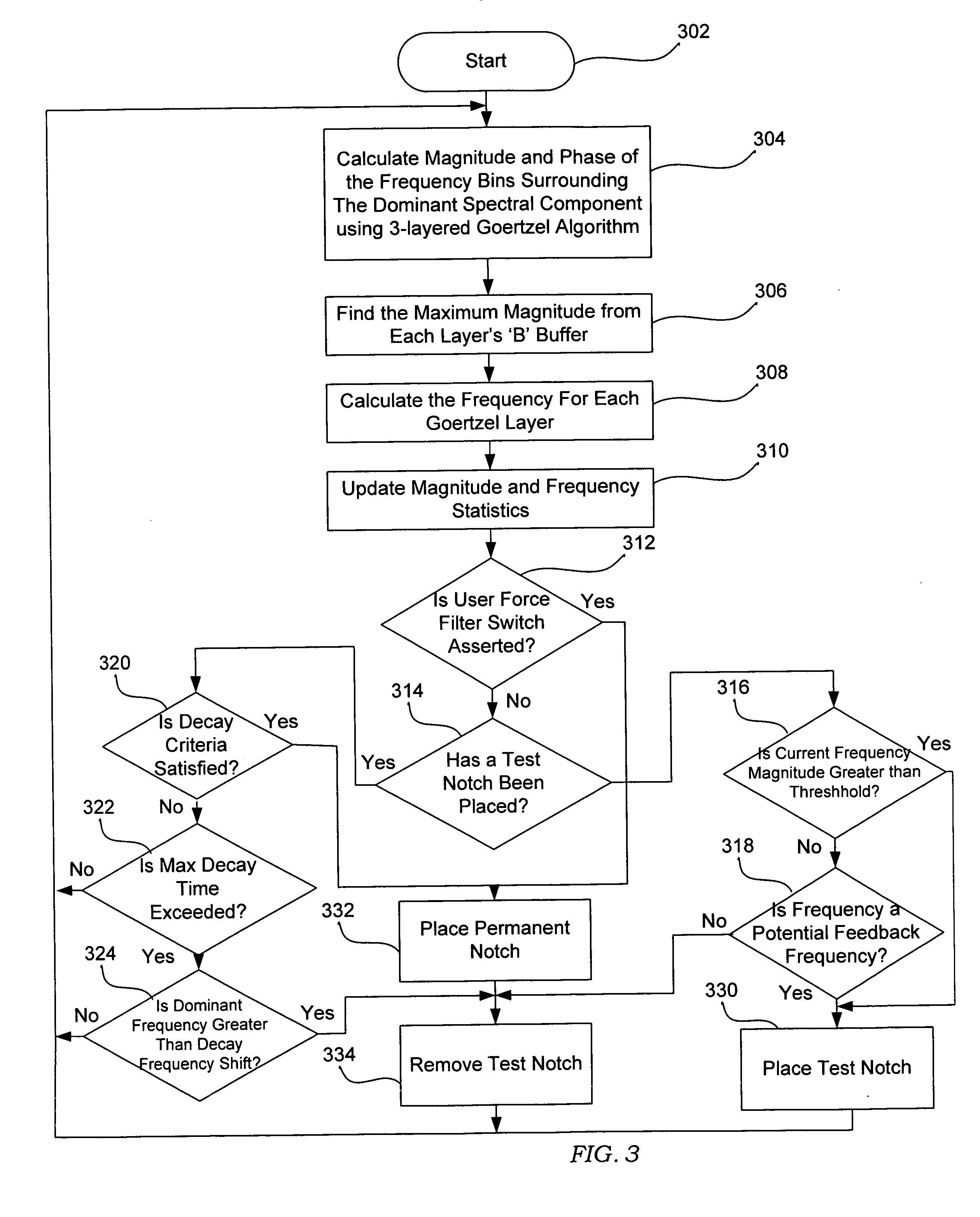

Active | US20060215852A1 | Improve abilities; Public address systems; Speech analysis; Frequency characteristic; Feedback control

A system and method for analyzing a signal to monitor the dynamics of its magnitude and frequency characteristics over time. An electronic circuit for identifying feedback in an audio signal, formed in accordance with embodiments of the invention, may comprise a feedback control block operable to determine a candidate frequency having potential feedback and to perform an iterative analysis of the magnitude of the audio signal at the candidate frequency to determine the growth characteristics of the signal. The electronic circuit may further include a test filter block operable to deploy a test filter at the candidate frequency, and a permanent filter block operable to deploy a permanent filter at the candidate frequency if, after the test filter has been deployed, the feedback control block determines that the growth characteristics of the signal at the candidate frequency exhibit feedback characteristics.

Owner:INMUSIC BRANDS
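
One way to measure growth at a candidate frequency is to compute that frequency's magnitude in successive frames and check whether it keeps rising. This sketch assumes the Goertzel algorithm for the per-frame magnitude and a hypothetical growth ratio `min_growth`; the patent's test-filter and permanent-filter logic is not reproduced.

```python
import math


def goertzel_magnitude(frame, sample_rate, freq):
    """Magnitude of the DFT bin nearest freq, computed with the Goertzel recurrence."""
    n = len(frame)
    k = round(n * freq / sample_rate)          # nearest bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in frame:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2
    return math.sqrt(max(power, 0.0))          # guard against tiny negative rounding


def looks_like_feedback(frames, sample_rate, freq, min_growth=1.05):
    """Flag feedback if the magnitude at freq grows by at least min_growth
    from every frame to the next (hypothetical criterion)."""
    mags = [goertzel_magnitude(f, sample_rate, freq) for f in frames]
    return all(later >= earlier * min_growth for earlier, later in zip(mags, mags[1:]))
```

For an 80-sample frame at 8 kHz, a 1 kHz sine of amplitude `a` falls exactly on bin 10 and yields a magnitude of `40 * a`, so frames with amplitudes 1, 2, 4 are flagged while the reversed sequence is not.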

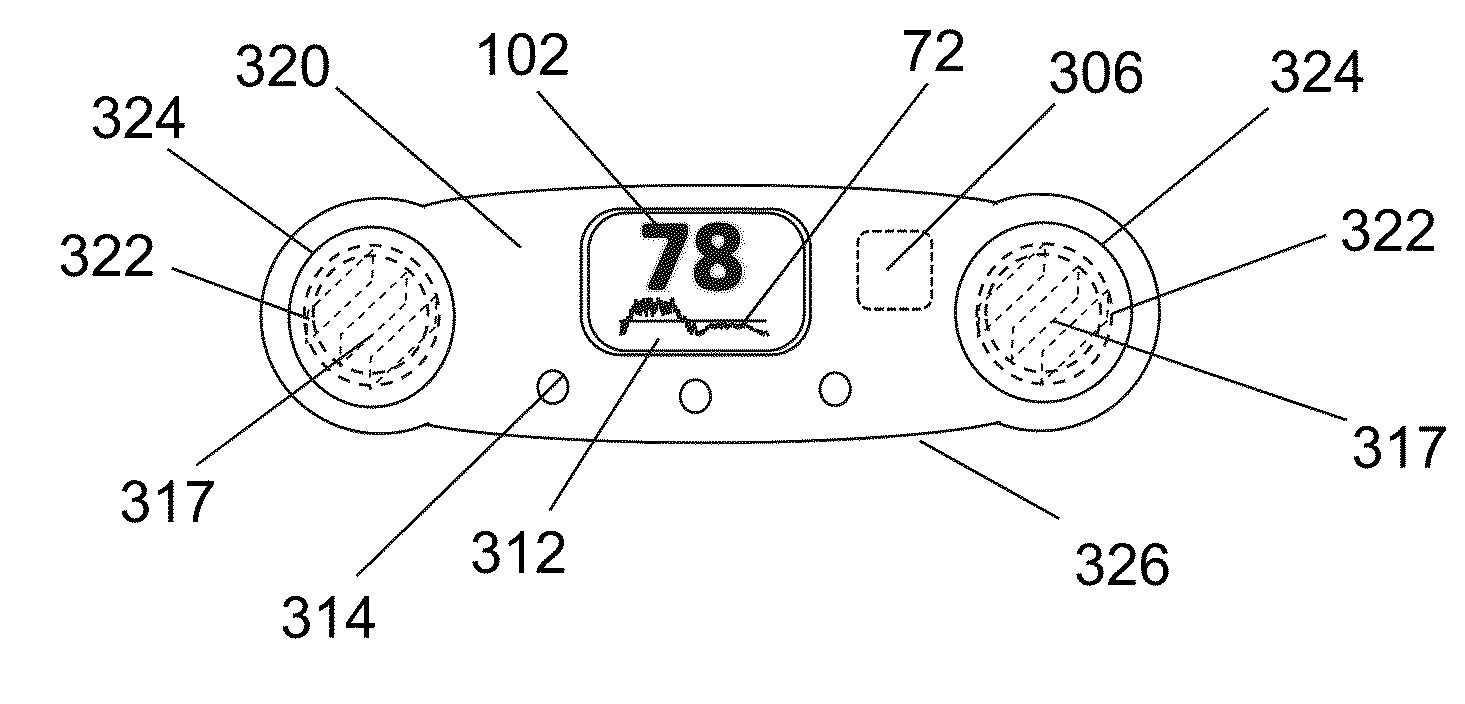

Method and apparatus for the measurement of autonomic function for the diagnosis and validation of patient treatments and outcomes

A pain measurement and diagnostic system (PMD) for bioanalytical analysis of pain matrix activity and the autonomic nervous system to diagnose and validate patient treatments, health status and outcomes. The PMD is implemented using medical devices that measure and report objective measurements of pain through patient monitoring, and analyze related biological, psychological, social, environmental, and demographic factors that may contribute to and affect physiological outcomes for patients; through this integrated and iterative analysis, the PMD improves the diagnosis of pain and the evaluation of related disease states, health status, and treatment options.

Owner:DULLEN DEBORAH

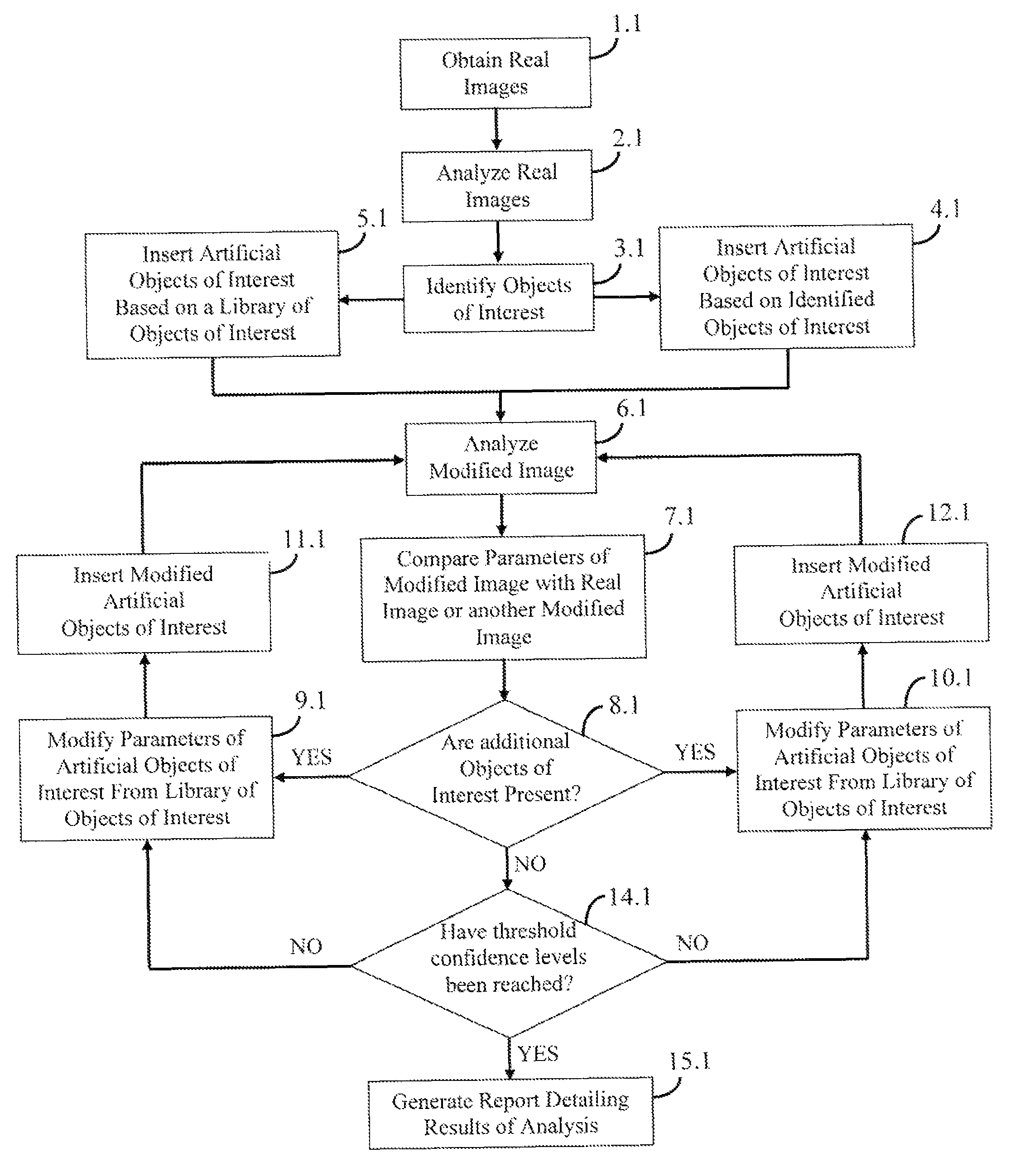

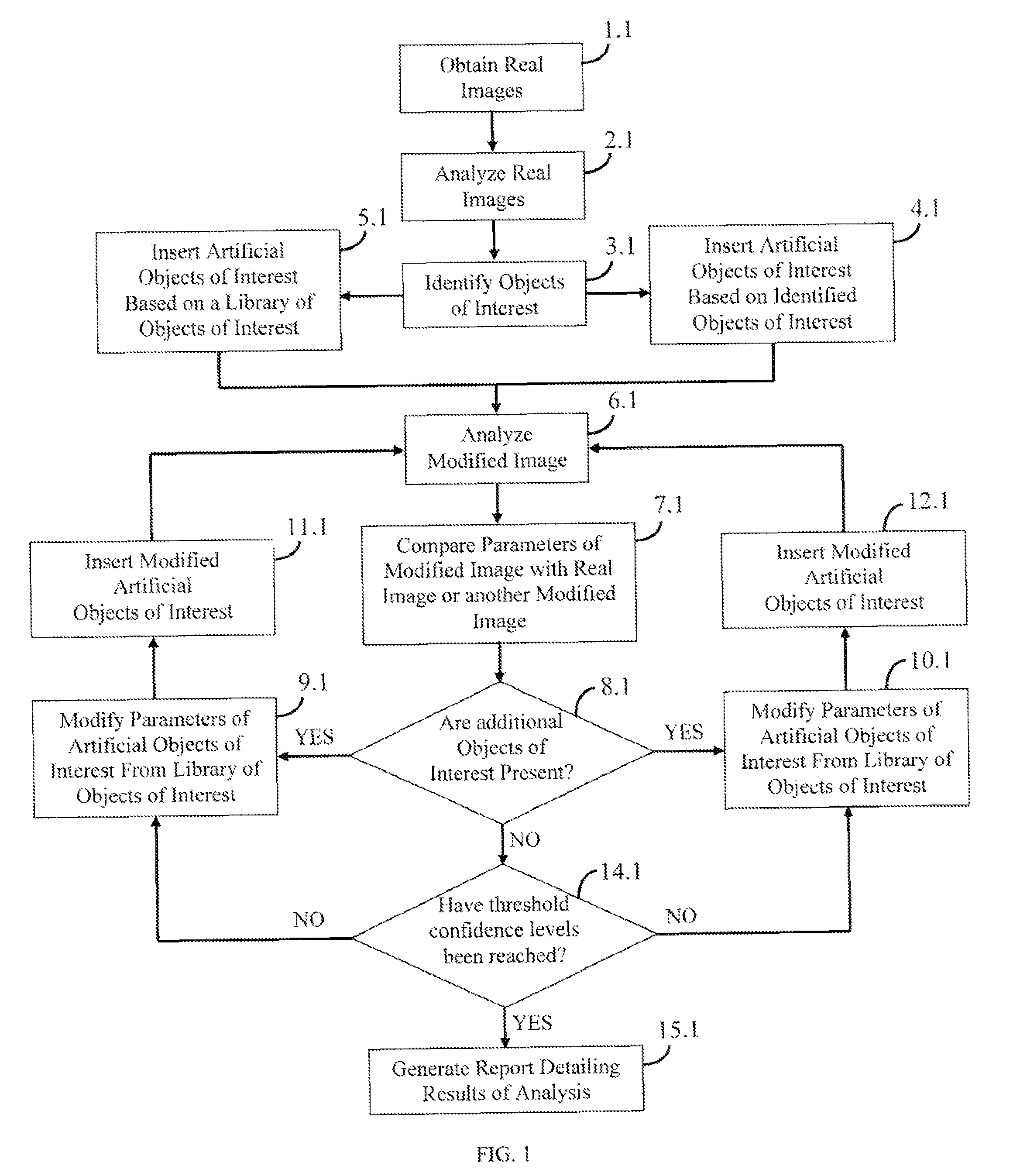

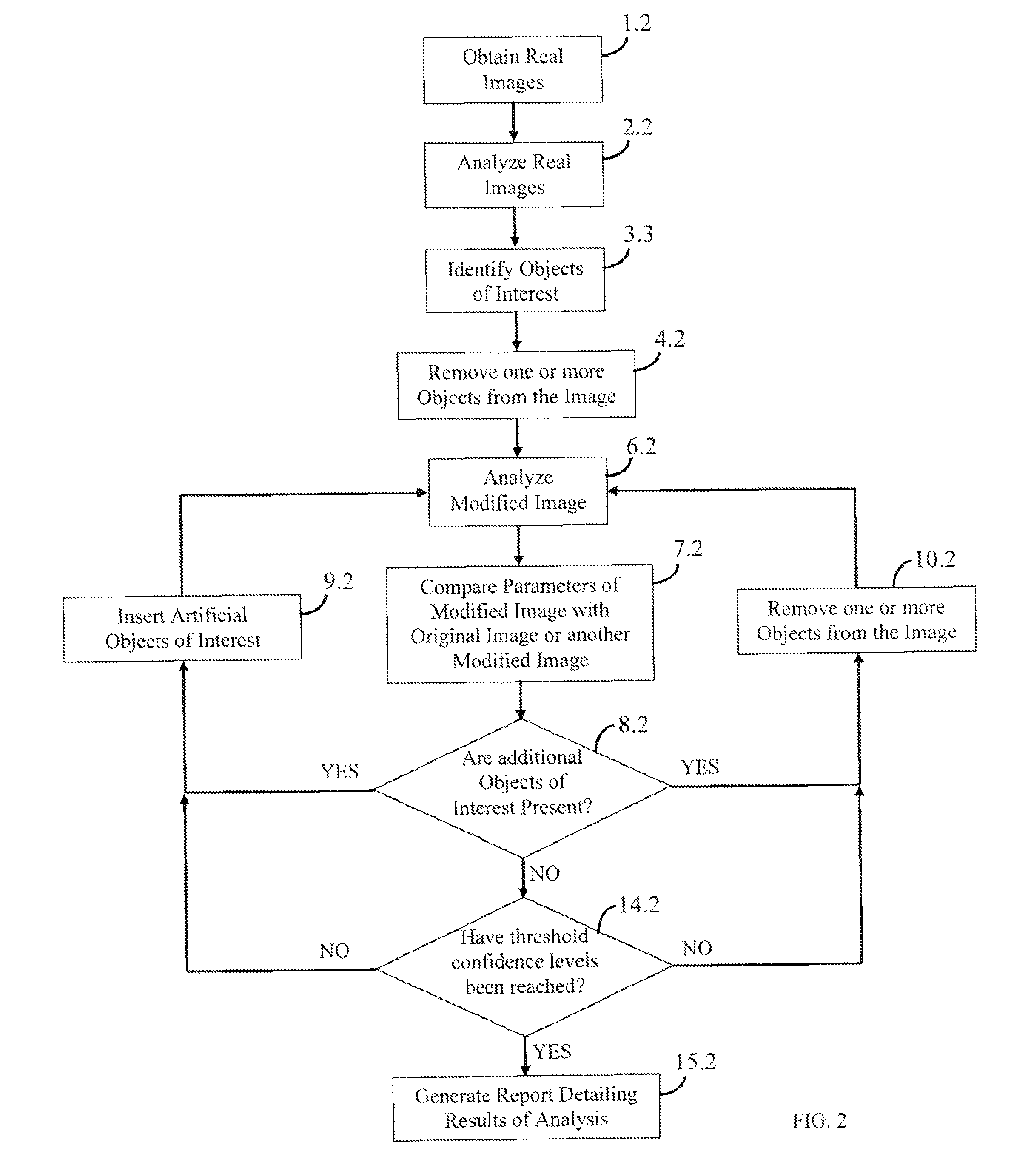

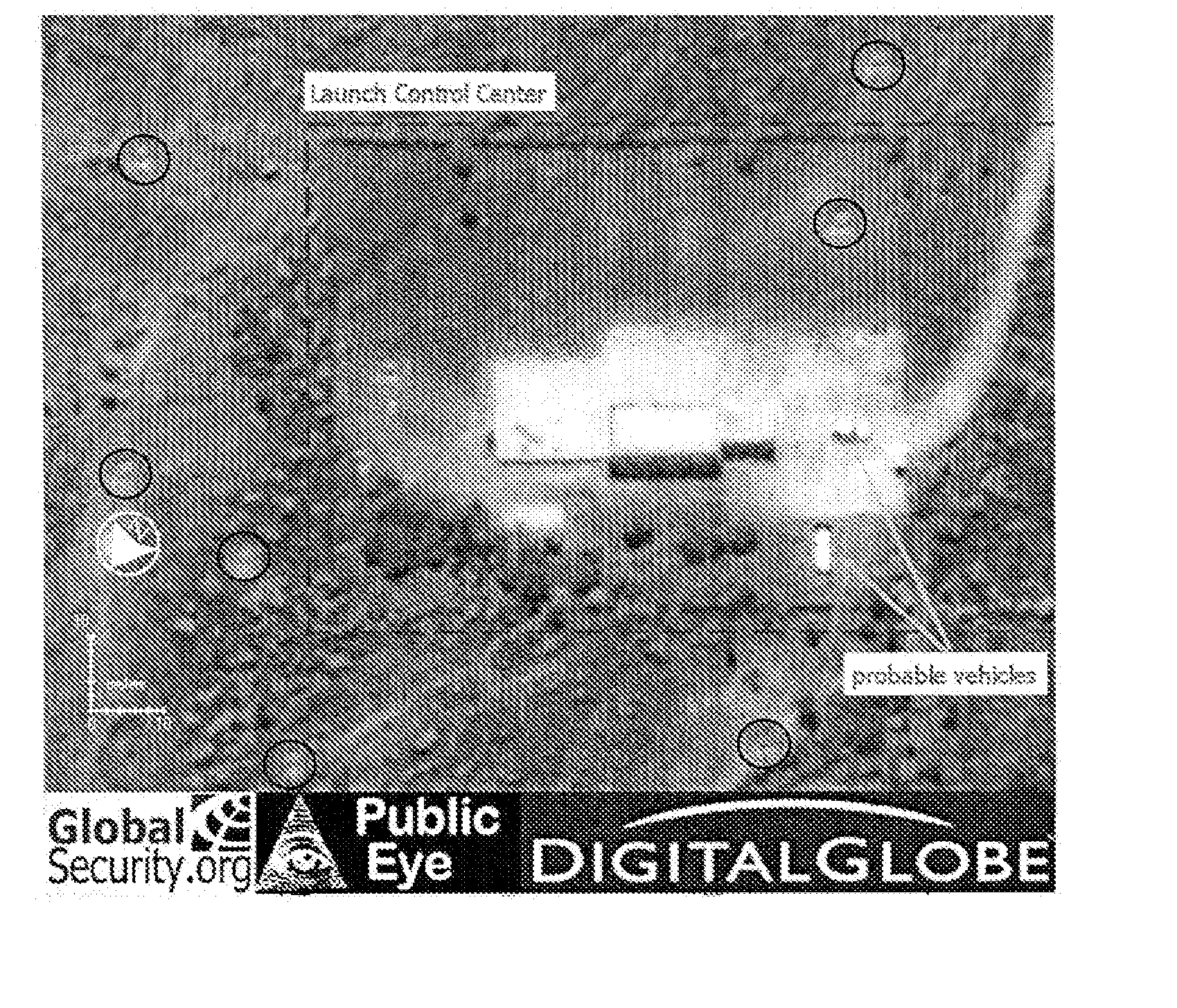

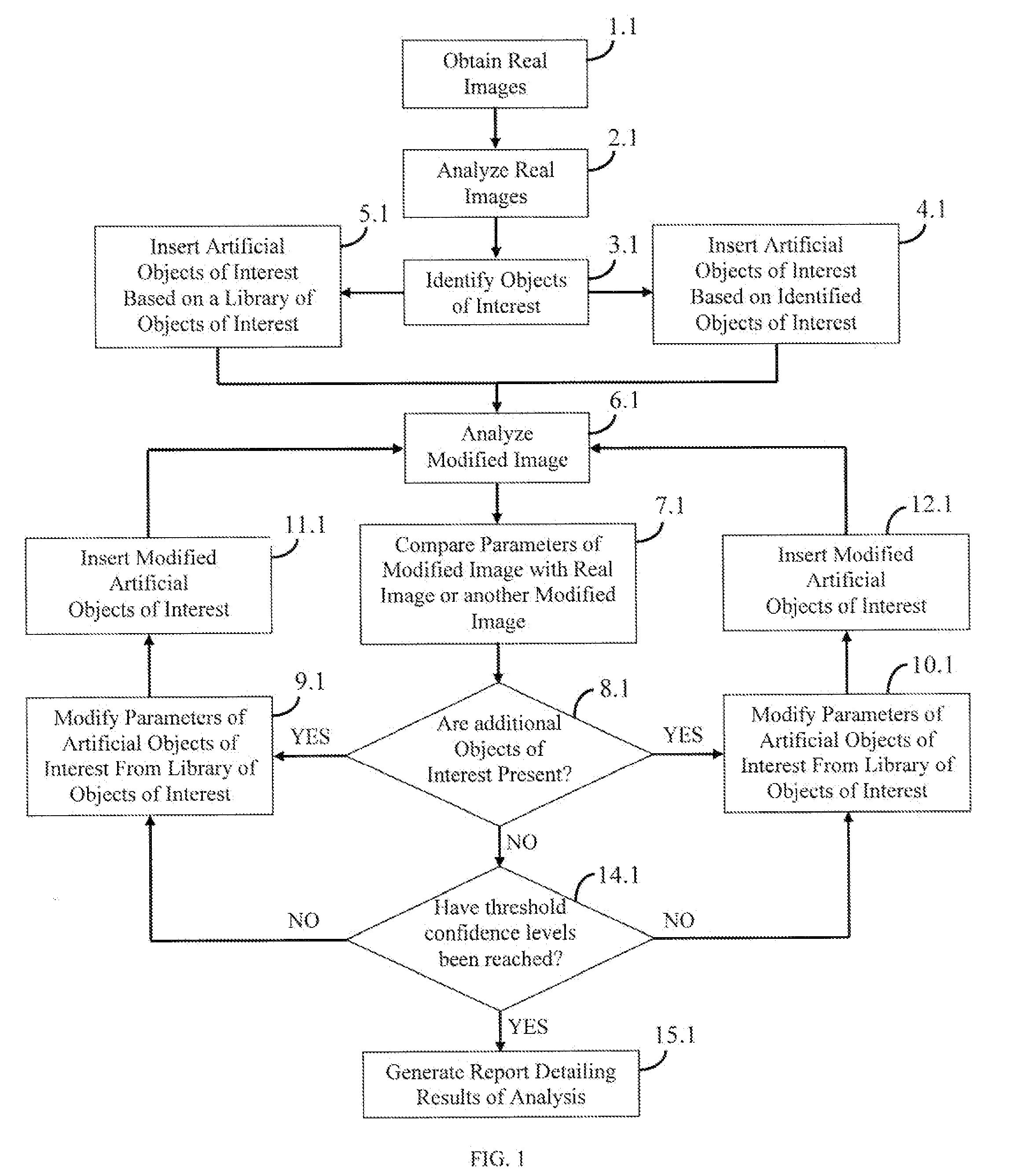

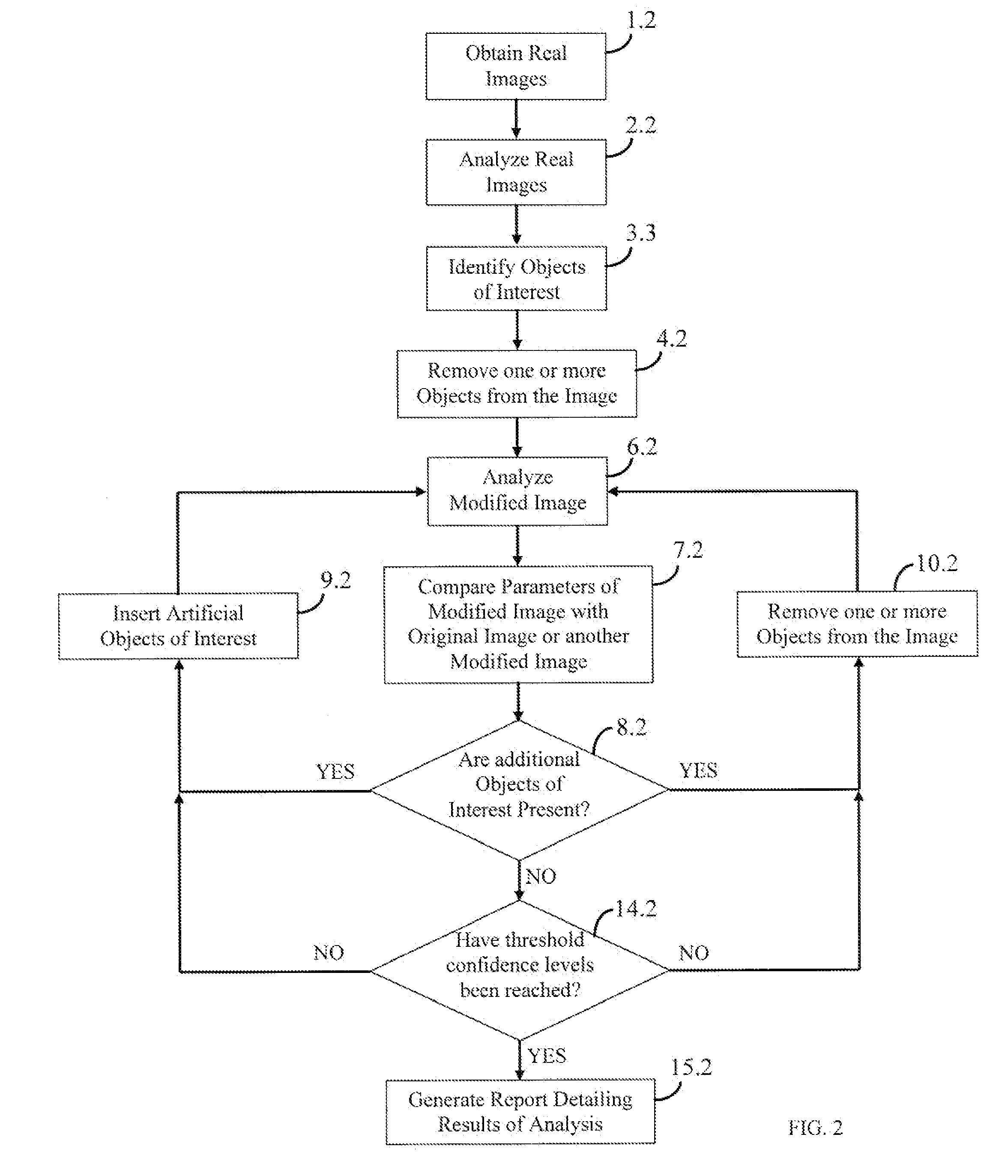

Image analysis by object addition and recovery

The invention described herein is generally directed to methods for analyzing an image. In particular, crowded field images may be analyzed for unidentified, unobserved objects based on an iterative analysis of modified images including artificial objects or removed real objects. The results can provide an estimate of the completeness of analysis of the image, an estimate of the number of objects that are unobserved in the image, and an assessment of the quality of other similar images.

Owner:IMAGE INSIGHT

Image analysis by object addition and recovery

The invention described herein is generally directed to methods for analyzing an image. In particular, crowded field images may be analyzed for unidentified, unobserved objects based on an iterative analysis of modified images including artificial objects or removed real objects. The results can provide an estimate of the completeness of analysis of the image, an estimate of the number of objects that are unobserved in the image, and an assessment of the quality of other similar images.

Owner:IMAGE INSIGHT

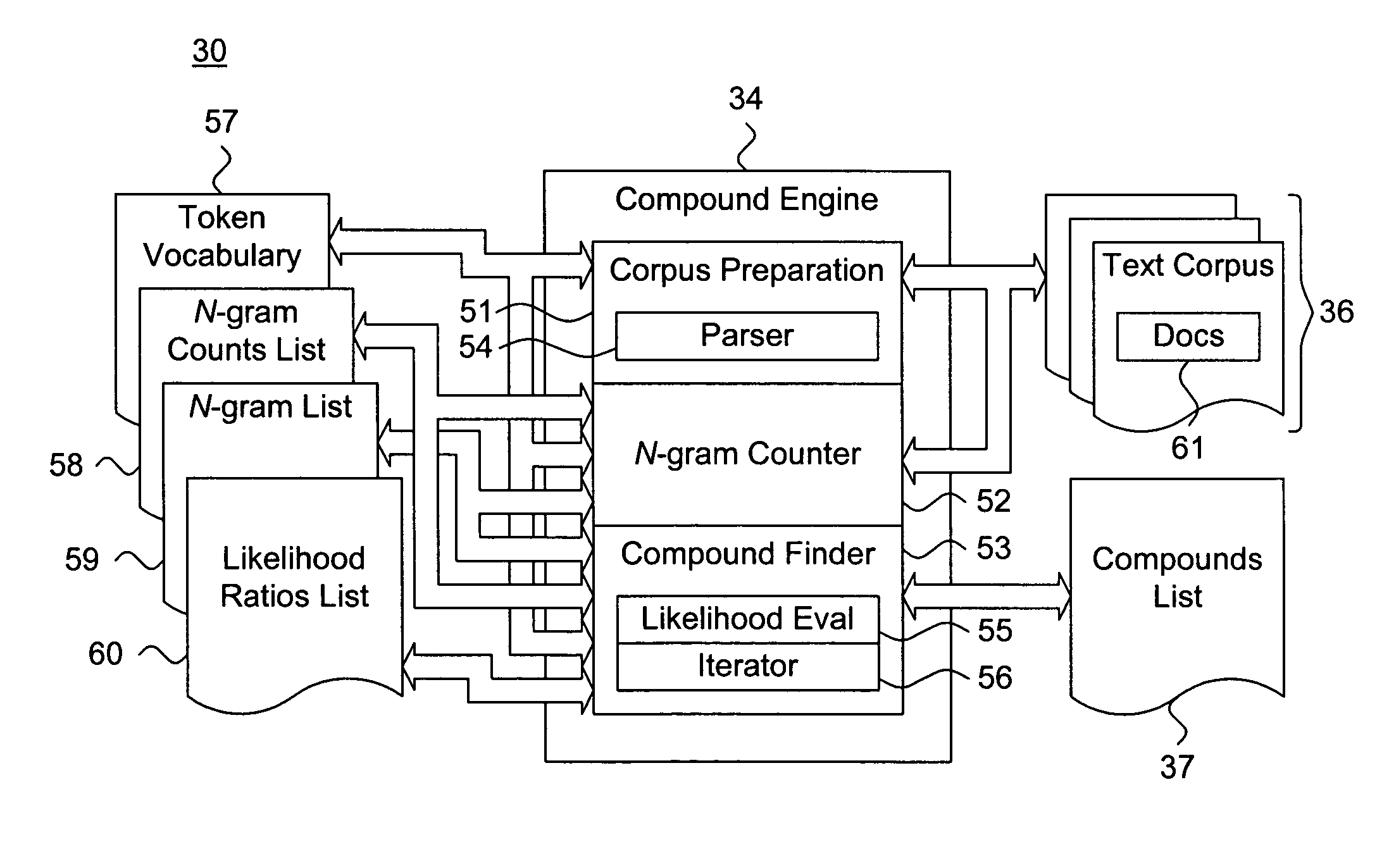

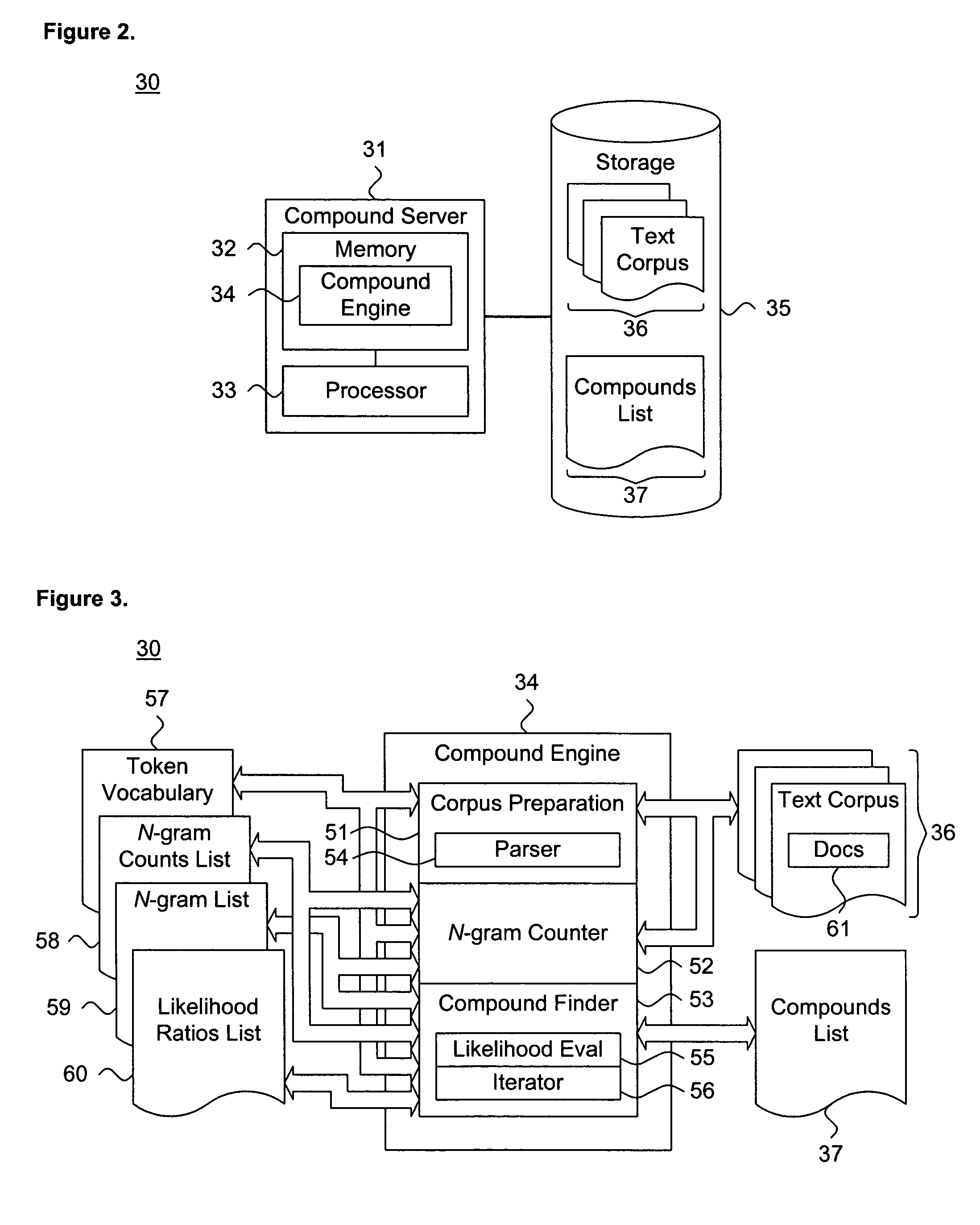

System and method for identifying compounds through iterative analysis

Active | US7555428B1 | Low score; Natural language data processing; Special data processing applications; Iterative analysis; Text corpus

A system and method for identifying compounds through iterative analysis of a measure of association is disclosed. A limit on the number of tokens per compound is specified. Compounds within a text corpus are iteratively evaluated. The number of occurrences of one or more n-grams within the text corpus is determined. Each n-gram includes up to a maximum number of tokens, each of which is provided in a vocabulary for the text corpus. Based on the number of occurrences, at least one n-gram whose number of tokens equals the limit is identified. A measure of association between the tokens in the identified n-gram is determined. Each identified n-gram with a sufficient measure of association is added to the vocabulary as a compound token, and the limit is adjusted.

Owner:GOOGLE LLC
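
A simplified sketch of compound discovery, assuming pointwise mutual information (PMI) as the measure of association and repeated merging of adjacent token pairs in place of the patent's explicit token-count limit. The thresholds `min_count`, `min_pmi` and `max_passes` are illustrative, and `find_compounds` is a hypothetical name. Because merged tokens can be merged again, compounds can grow longer on later passes.

```python
import math
from collections import Counter


def find_compounds(tokens, min_count=3, min_pmi=1.0, max_passes=4):
    """Repeatedly merge frequent, strongly associated adjacent pairs into compound tokens."""
    tokens = list(tokens)
    compounds = set()
    for _ in range(max_passes):
        unigrams = Counter(tokens)
        bigrams = Counter(zip(tokens, tokens[1:]))
        n_uni, n_bi = len(tokens), max(len(tokens) - 1, 1)
        accepted = set()
        for (a, b), count in bigrams.items():
            if count < min_count:
                continue  # too rare to judge
            # PMI = log( p(ab) / (p(a) * p(b)) )
            pmi = math.log((count / n_bi) / ((unigrams[a] / n_uni) * (unigrams[b] / n_uni)))
            if pmi >= min_pmi:
                accepted.add((a, b))
        if not accepted:
            break  # no new compounds: vocabulary is stable
        compounds.update(f"{a}_{b}" for a, b in accepted)
        # rewrite the corpus, greedily merging accepted pairs left to right
        merged, i = [], 0
        while i < len(tokens):
            if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) in accepted:
                merged.append(f"{tokens[i]}_{tokens[i + 1]}")
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return tokens, compounds
```

On a small corpus in which "new york city" occurs three times, the first pass accepts `new_york` (and `york_city`), and the second pass merges `new_york` with `city` into `new_york_city`.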

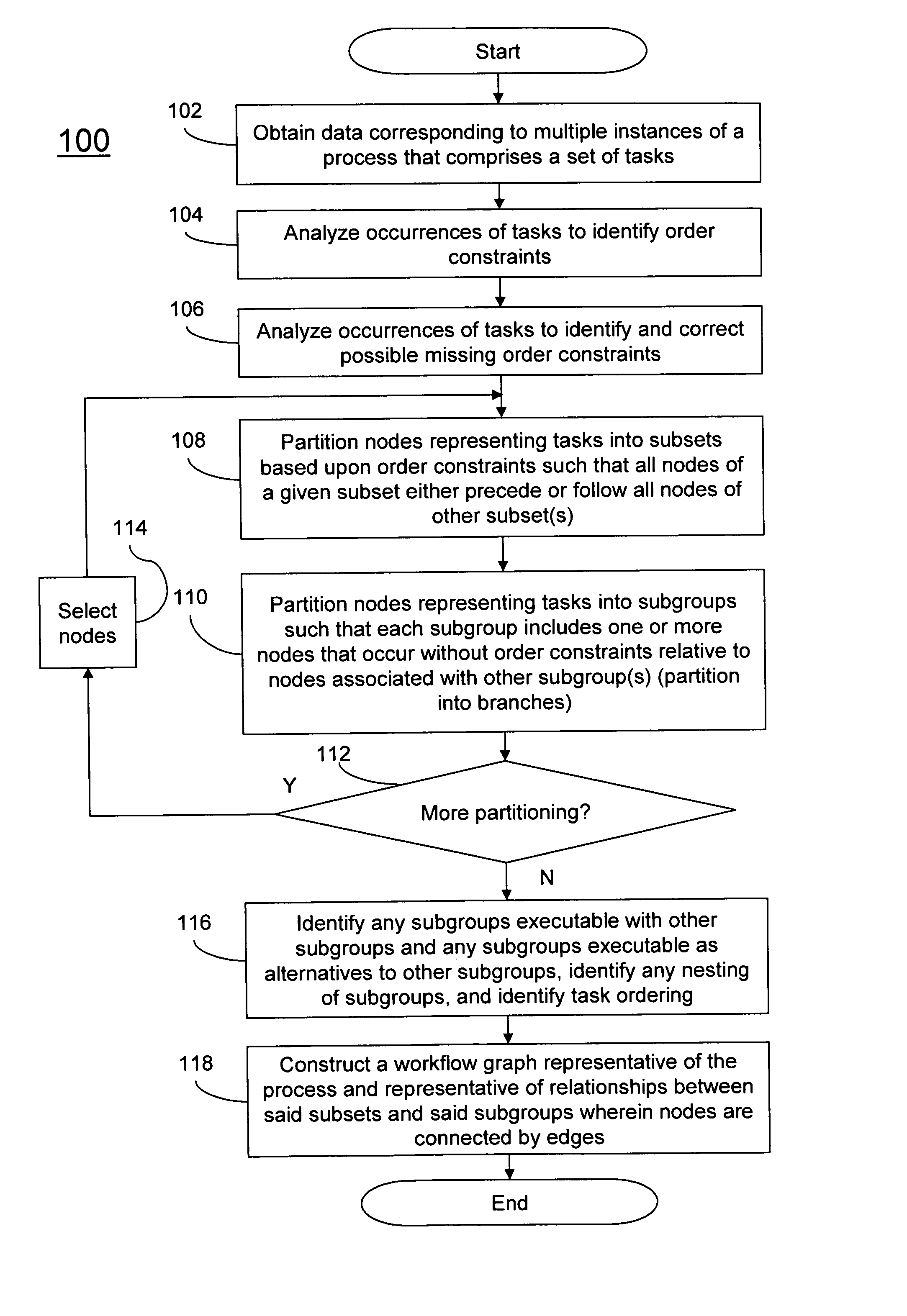

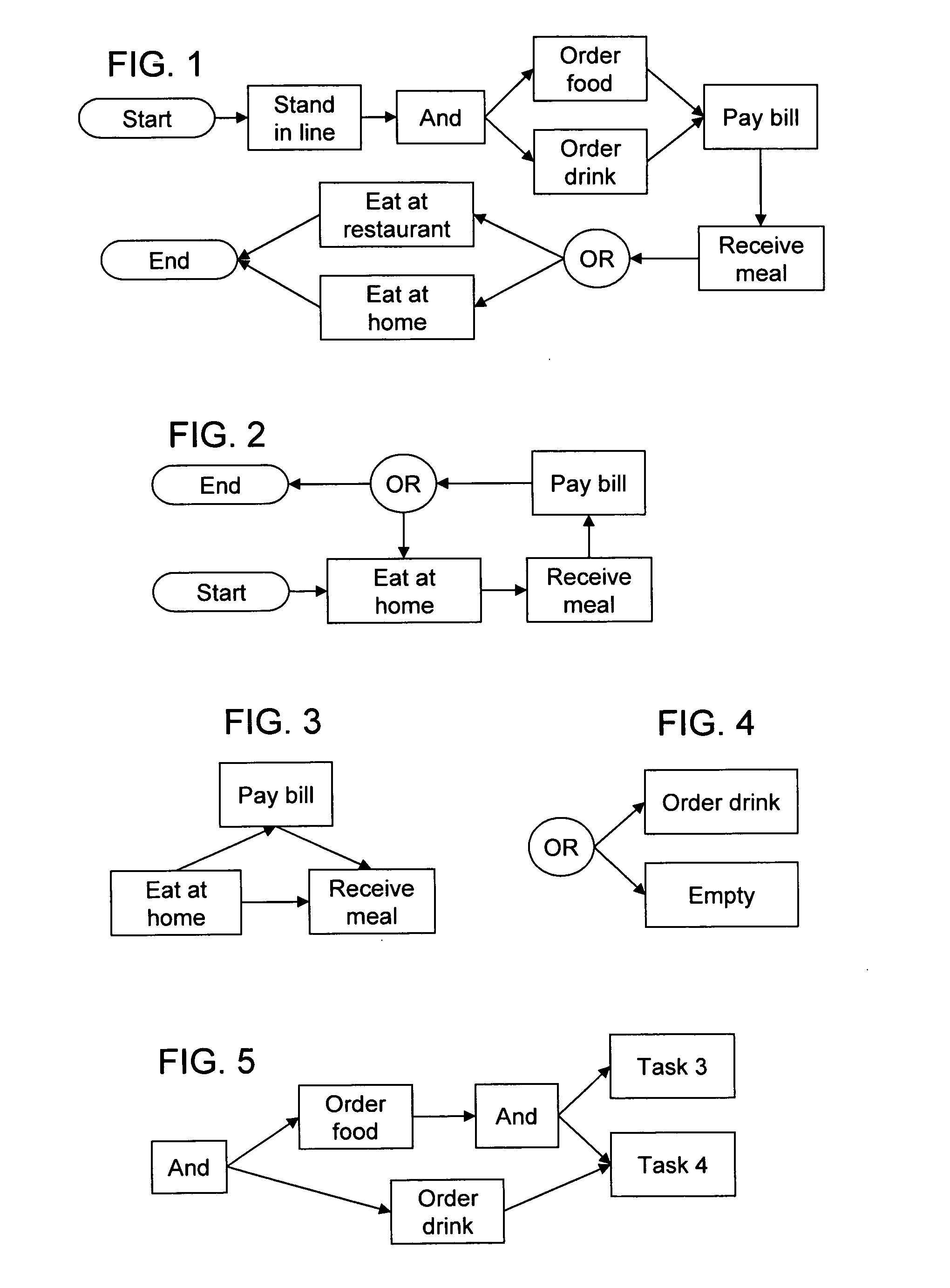

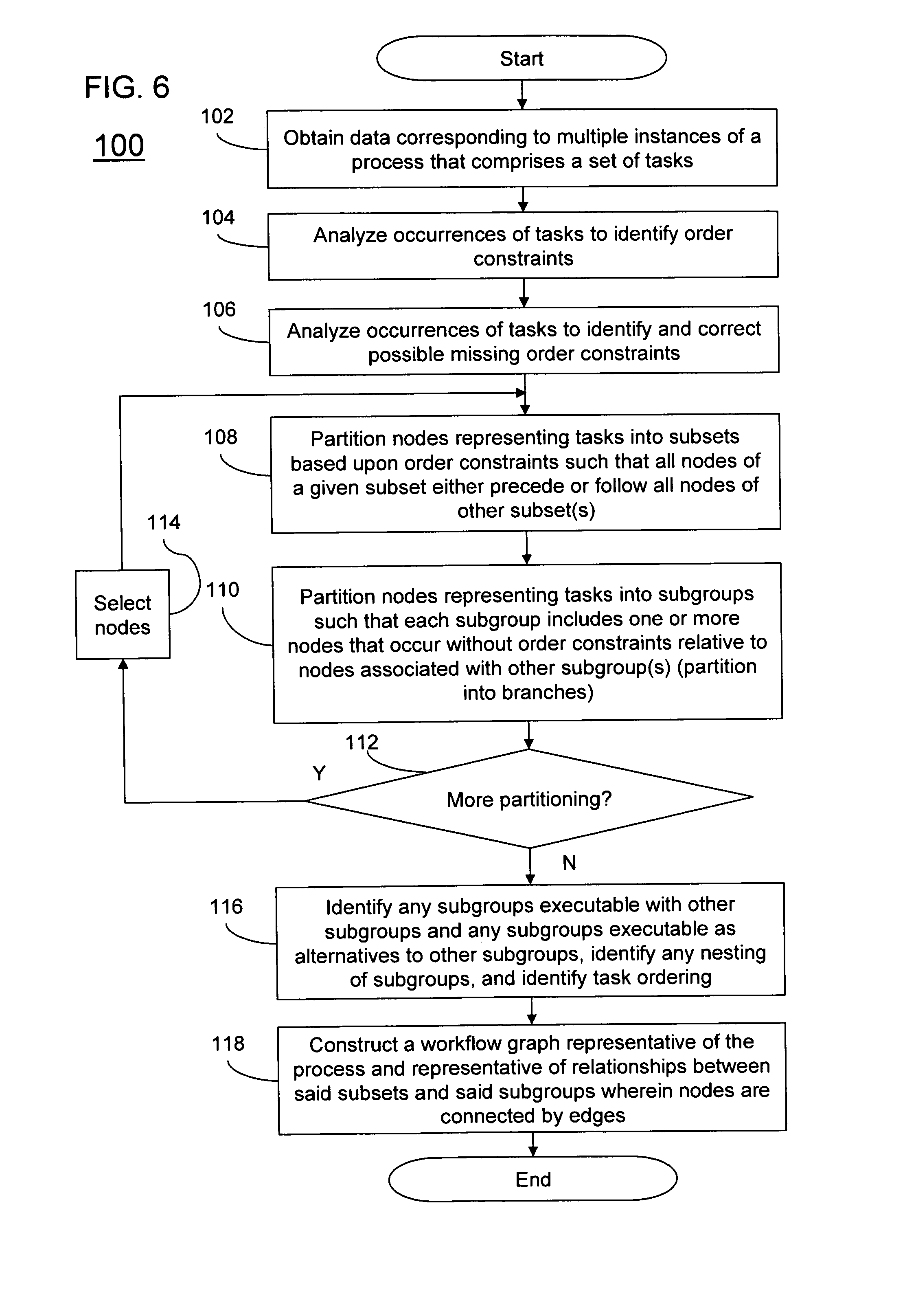

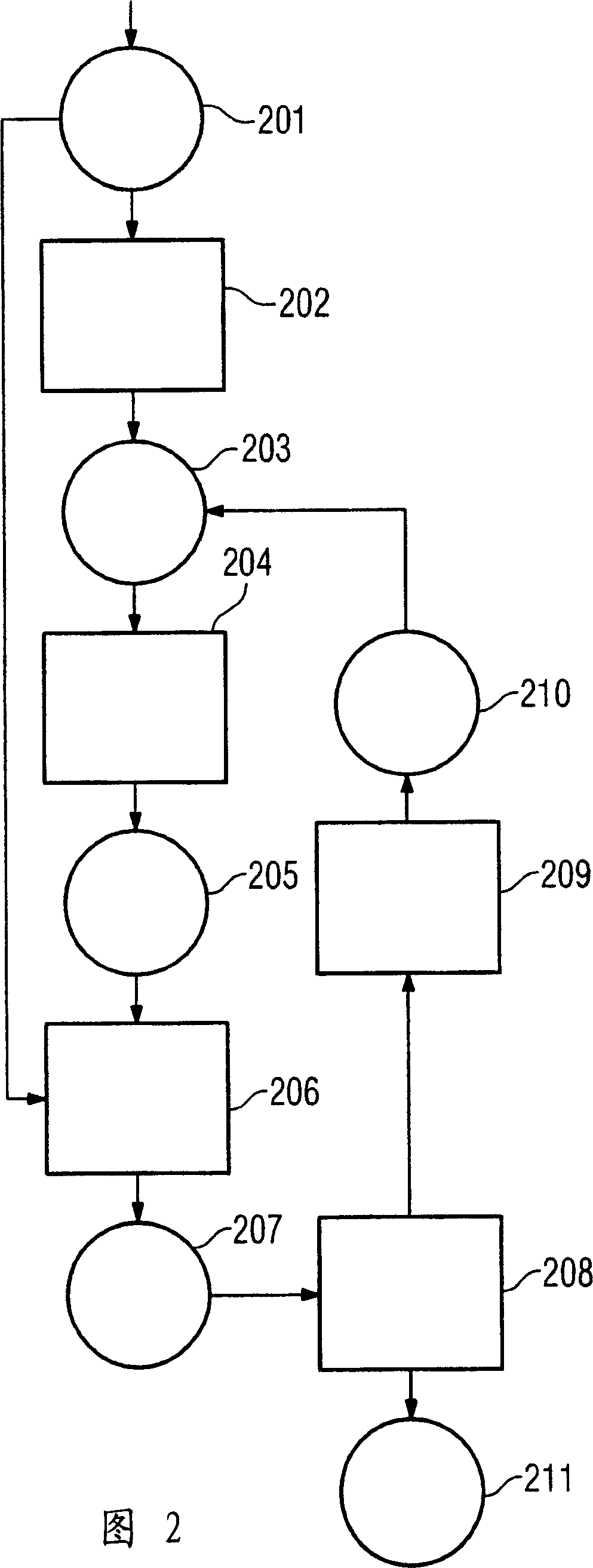

Methods and apparatus for identifying workflow graphs using an iterative analysis of empirical data

Inactive | US20080065448A1 | Improve understanding; Facilitate process optimization; Forecasting; Multiprogramming arrangements; Order form; Theoretical computer science

A method and system for generating a workflow graph from empirical data of a process are described. A processing system obtains data corresponding to multiple instances of a process, the process including a set of tasks, the data including information about order of occurrences of the tasks. The processing system analyzes the occurrences of the tasks to identify order constraints. The processing system partitions nodes representing tasks into subsets based upon the order constraints, wherein the subsets are sequence ordered with respect to each other such that all nodes associated with a given subset either precede or follow all nodes associated with another subset. The processing system partitions nodes representing tasks into subgroups, wherein each subgroup includes one or more nodes that occur without order constraints relative to nodes associated with other subgroups. A workflow graph representative of the process is constructed wherein nodes are connected by edges.

Owner:JUSTSYST EVANS RES
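
The order-constraint and partitioning steps can be sketched as follows, assuming each trace lists every task at most once. `order_constraints`, `sequential_subsets` and `parallel_subgroups` are hypothetical names: the first derives "always before" constraints, the second cuts a topological order wherever every earlier task precedes every later task, and the third splits a subset into groups with no order constraints between them.

```python
from itertools import combinations


def order_constraints(traces):
    """(a, b) is a constraint if a occurs before b in every trace containing both."""
    tasks = sorted({t for trace in traces for t in trace})
    constraints = set()
    for a, b in combinations(tasks, 2):
        seen_ab = seen_ba = False
        for trace in traces:
            if a in trace and b in trace:
                if trace.index(a) < trace.index(b):
                    seen_ab = True
                else:
                    seen_ba = True
        if seen_ab and not seen_ba:
            constraints.add((a, b))
        elif seen_ba and not seen_ab:
            constraints.add((b, a))
    return tasks, constraints


def sequential_subsets(tasks, constraints):
    """Topologically sort (Kahn's algorithm), then cut where all earlier tasks precede all later ones."""
    indegree = {t: 0 for t in tasks}
    for _, b in constraints:
        indegree[b] += 1
    ready, order = sorted(t for t in tasks if indegree[t] == 0), []
    while ready:
        task = ready.pop(0)
        order.append(task)
        for a, b in sorted(constraints):
            if a == task:
                indegree[b] -= 1
                if indegree[b] == 0:
                    ready.append(b)
        ready.sort()  # alphabetical tie-breaking keeps the output deterministic
    subsets, current = [], []
    for i, task in enumerate(order):
        current.append(task)
        before, after = order[: i + 1], order[i + 1:]
        if all((a, b) in constraints for a in before for b in after):
            subsets.append(current)
            current = []
    return subsets


def parallel_subgroups(subset, constraints):
    """Connected components of the constraint graph restricted to one subset."""
    remaining, groups = set(subset), []
    while remaining:
        stack, group = [min(remaining)], set()
        while stack:
            task = stack.pop()
            if task in group:
                continue
            group.add(task)
            stack.extend(u for u in remaining
                         if (task, u) in constraints or (u, task) in constraints)
        remaining -= group
        groups.append(sorted(group))
    return groups
```

For the traces `A, B, C, D` and `A, C, B, D`, the subsets come out as `[["A"], ["B", "C"], ["D"]]`, and the middle subset splits into the parallel subgroups `["B"]` and `["C"]`.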

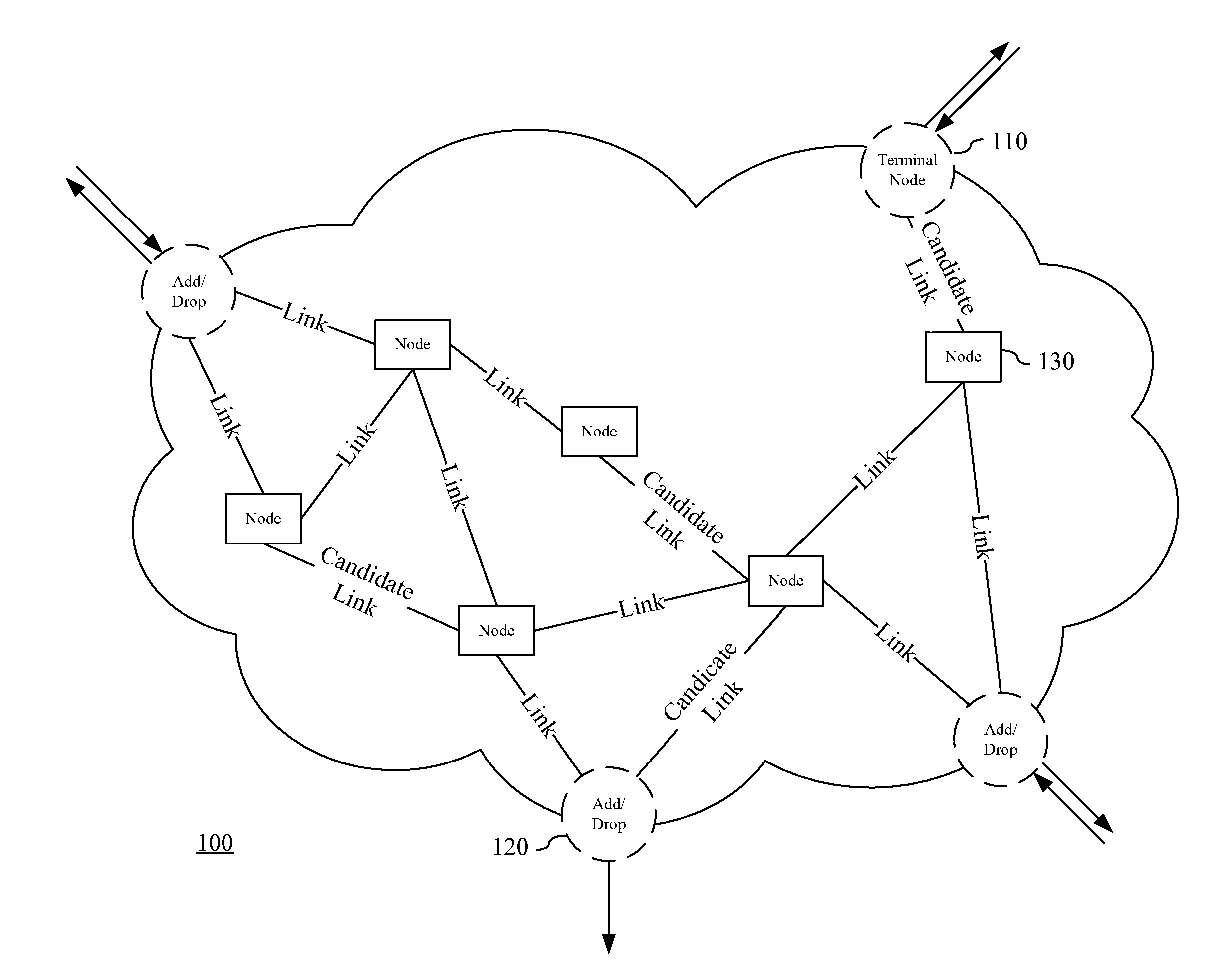

Network Planning and Optimization of Equipment Deployment

Active | US20090103453A1 | Solve insufficient bandwidth; Sufficient connectivity; Wavelength-division multiplex systems; Data switching by path configuration; Networked system; Engineering

Embodiments of the present invention provide systems, devices and methods for improving the efficiency of deploying and configuring networking equipment within a network build-out. In certain embodiments of the invention, an iterative analysis of inter-node equipment placement and connectivity, and of inter- and intra-node traffic flow, is performed to identify a preferred deployment solution. This deployment-optimization analysis considers configurations from both a network node perspective and a network system perspective. Deployment solutions are iteratively progressed and analyzed to determine a preferred solution based on both the cost of deployment and the satisfaction of the network demands. In various embodiments of the invention, a baseline marker is generated from which the accuracy of the solution may be approximated, indicating to an engineer whether the deployment is approaching an optimal solution.

Owner:INFINERA CORP

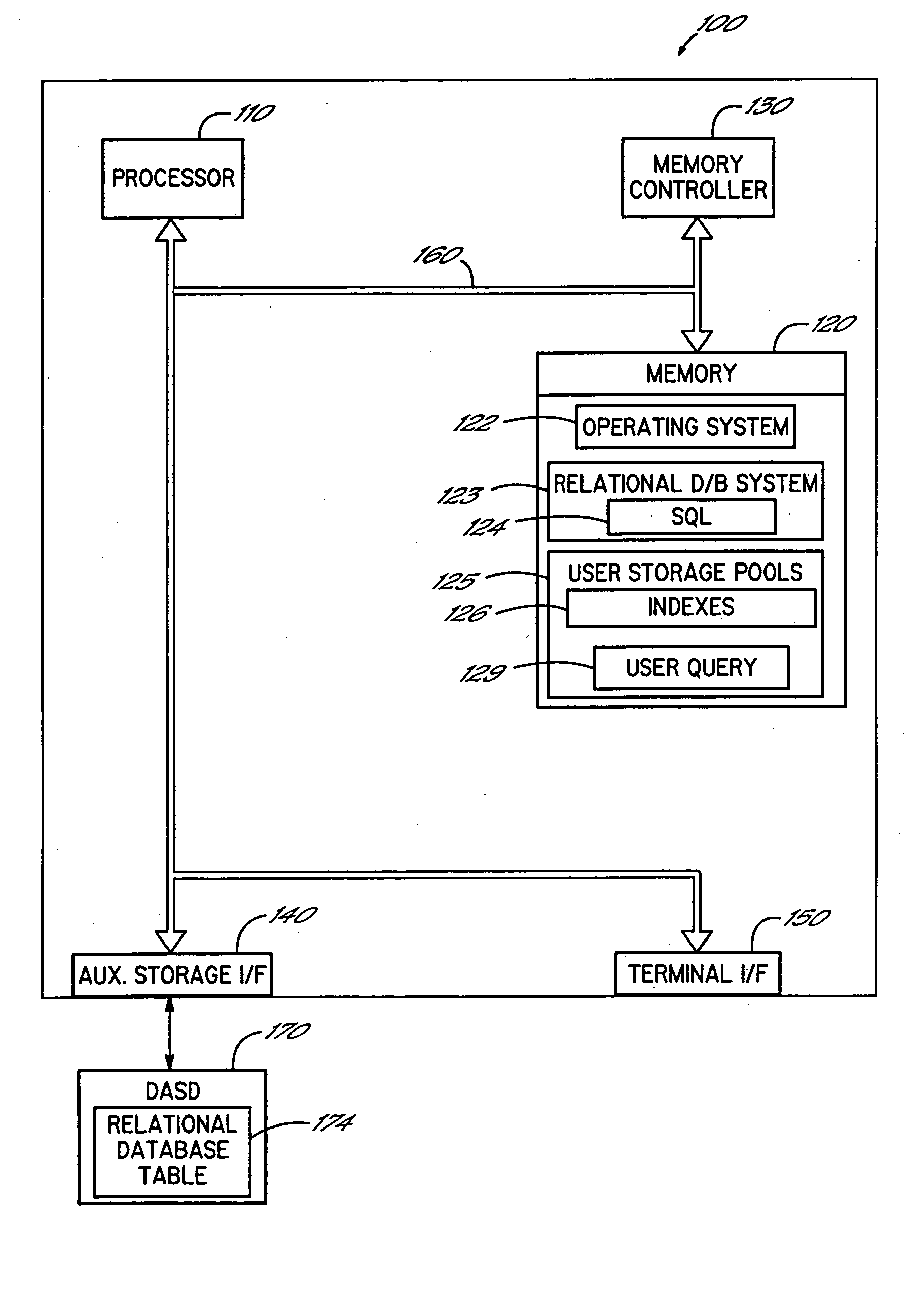

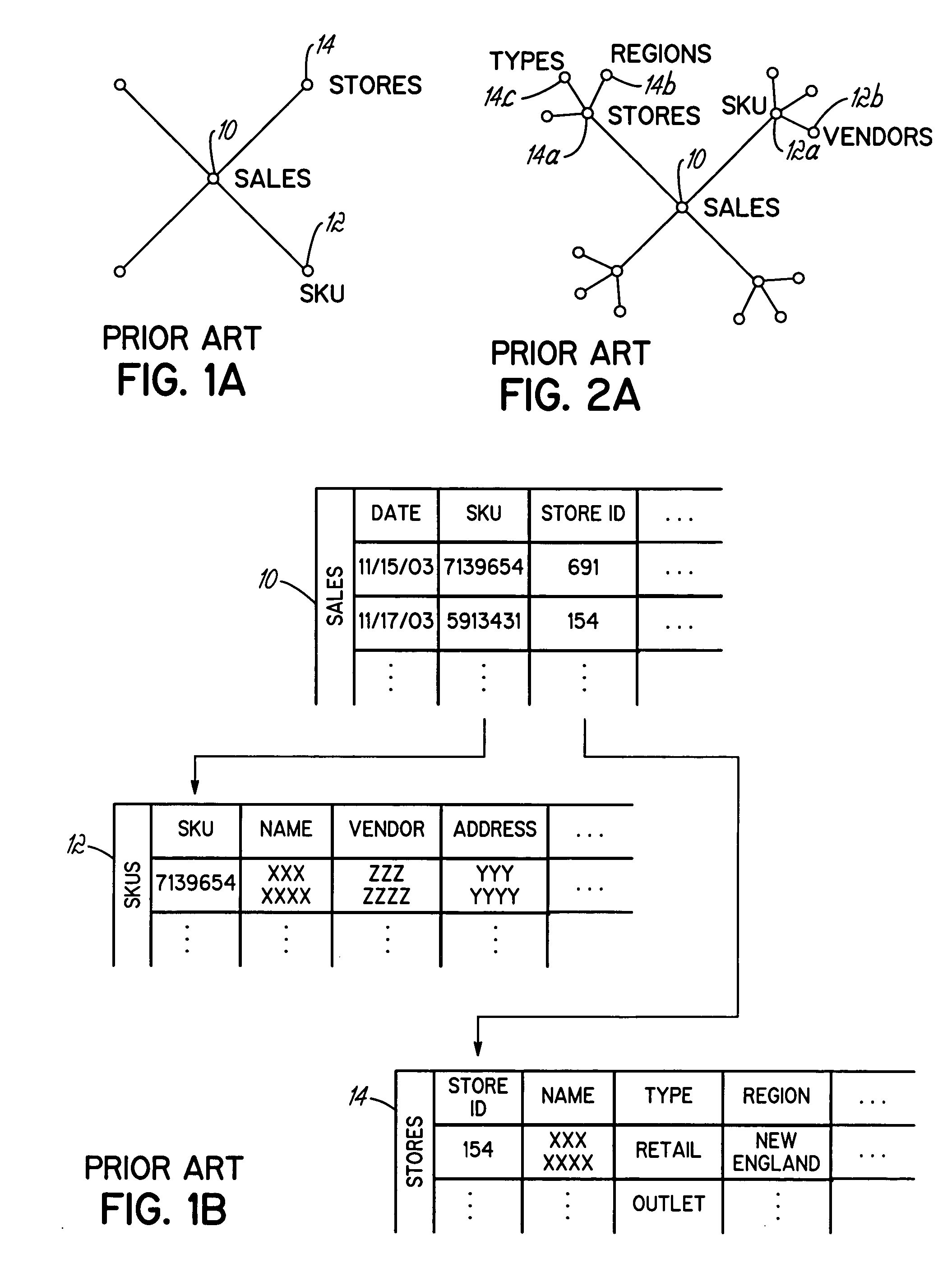

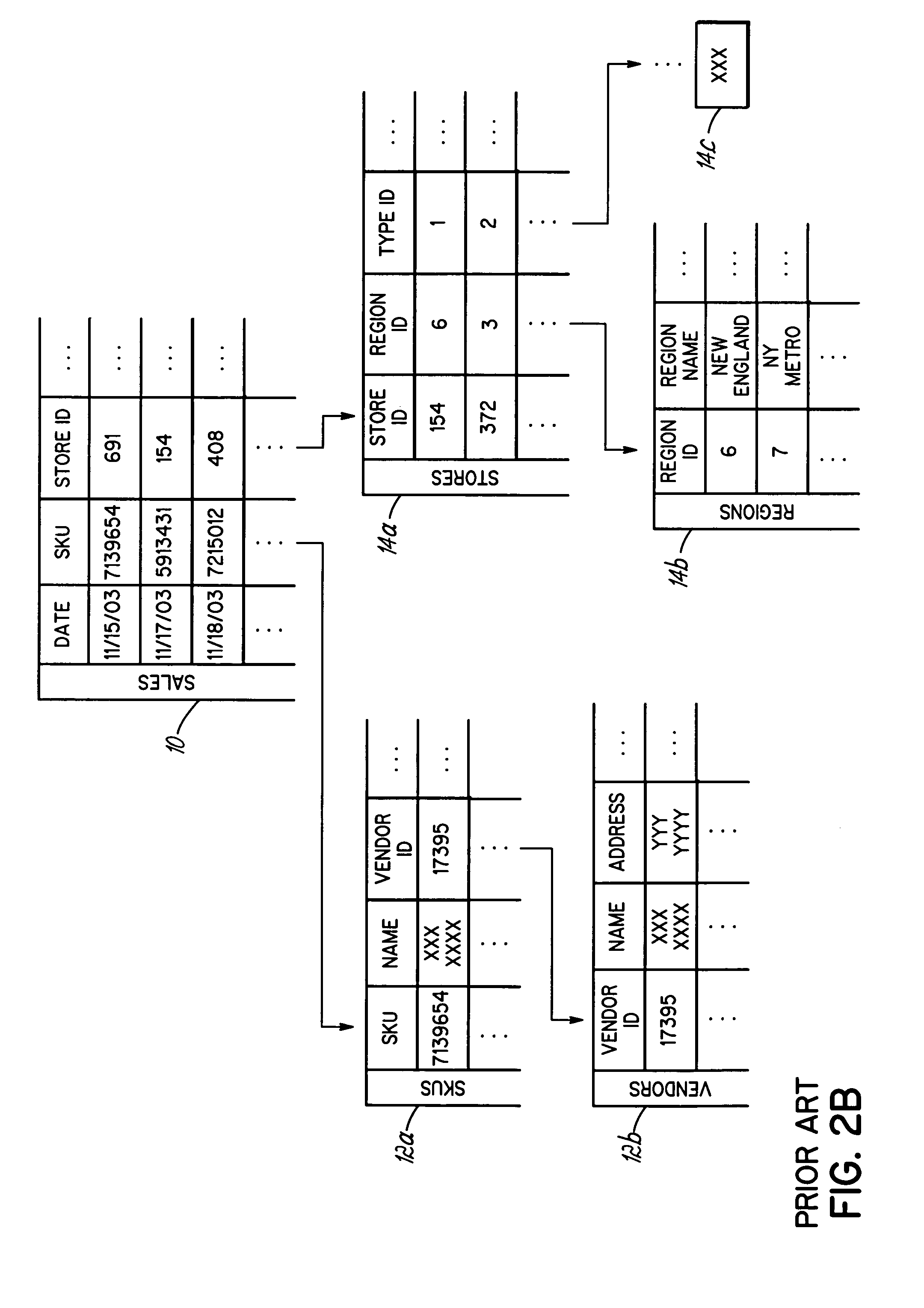

Look-ahead predicate generation for join costing and optimization

Inactive | US20050160102A1 | Digital data information retrieval; Digital data processing details; Relational database; Theoretical computer science

A relational database system analyzes each potential join in a query to determine whether a relation involved in the join is subject to a selection criterion, and evaluates whether that selection criterion or the join itself effects a join reduction. The computational expense of generating a look-ahead predicate, comprising the tuples of the second relation that match any applicable selection criterion, is compared to the computational savings that result from the join reduction. The most beneficial look-ahead predicate among all potential joins of relations in the query is identified through iterative analysis of all possible joins. Thereafter, membership in the look-ahead predicate is added as a selection criterion on the first relation, and further iterative analysis is performed on all possible joins of the remaining relations and the look-ahead predicate, to iteratively identify additional joins in the query that benefit from the formation of the look-ahead predicate, and potentially to form further look-ahead predicates.

Owner:IBM CORP

Network capacity planning

Active | US20110055390A1 | Multiple digital computer combinations; Transmission; Receiver operating characteristic; Lookup table

Data representing application deployment attributes, network topology, and network performance attributes, based on a reduced set of element attributes, is used to simulate application deployment. The data may be received directly from a user, from a program that models a network topology or application behavior, or from a wizard that infers the data through an interview process. The simulation may be based on application deployment attributes including application traffic pattern, application message sizes, network topology, and network performance attributes. The element attributes may be determined from a lookup table of element operating characteristics, which may contain element maximum and minimum boundary operating values used to interpolate other operating conditions. Application response time may be derived by an iterative analysis over multiple instances of one or more applications, running either a predetermined number of iterations or until network performance reaches a substantially steady state.

Owner:RIVERBED TECH LLC

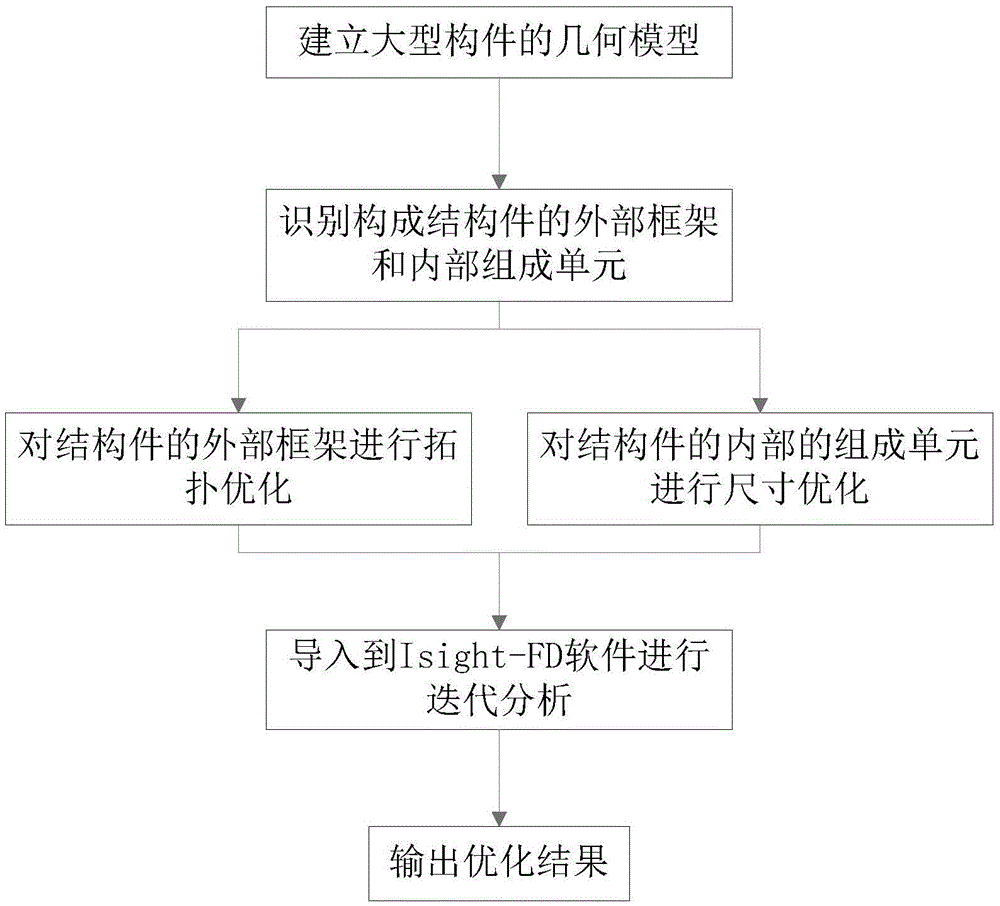

Large structure dynamic optimization design method based on structural decomposition

Inactive | CN105528503A | Meet the mechanical performance requirements; Geometric CAD; Design optimisation/simulation; Structural decomposition; Topology optimization

The invention relates to a dynamic optimization design method for large structures based on structural decomposition. In three-dimensional modeling software, a geometric model of a large member is established, the size variables of the external frame forming the structural member are identified, and the basic pattern and size variables of the internal composition units are identified. The external frame of the structural member is topology-optimized, the sizes of the internal composition units are optimized, and the size data are acquired. The optimized size data are imported into Isight-FD software, an iterative optimization analysis is performed on different combinations and size configurations of the external frame and internal composition units according to the constraint conditions and the objective function, and the optimization result is output. The method can be widely applied to the lightweight, weight-reduction design of large, heavy-load, complex structural parts, and it supports design that progresses from simple to detailed while being fast and reliable. Owing to the robustness of the algorithm, a geometric structure that meets the mechanical property requirements can be found with only a few sampling points, providing reliable technical support for the structural design of current large heavy-load equipment.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI

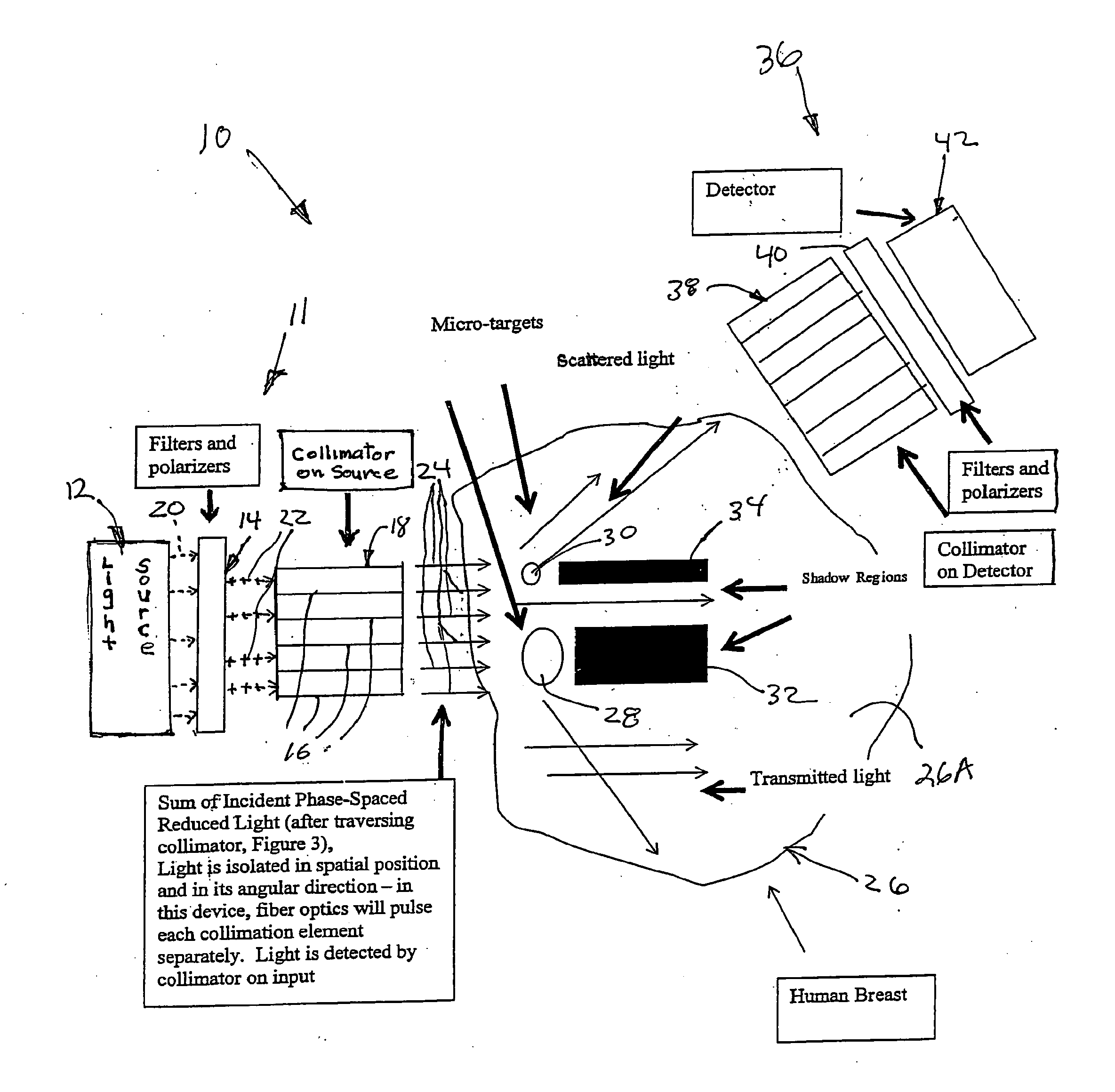

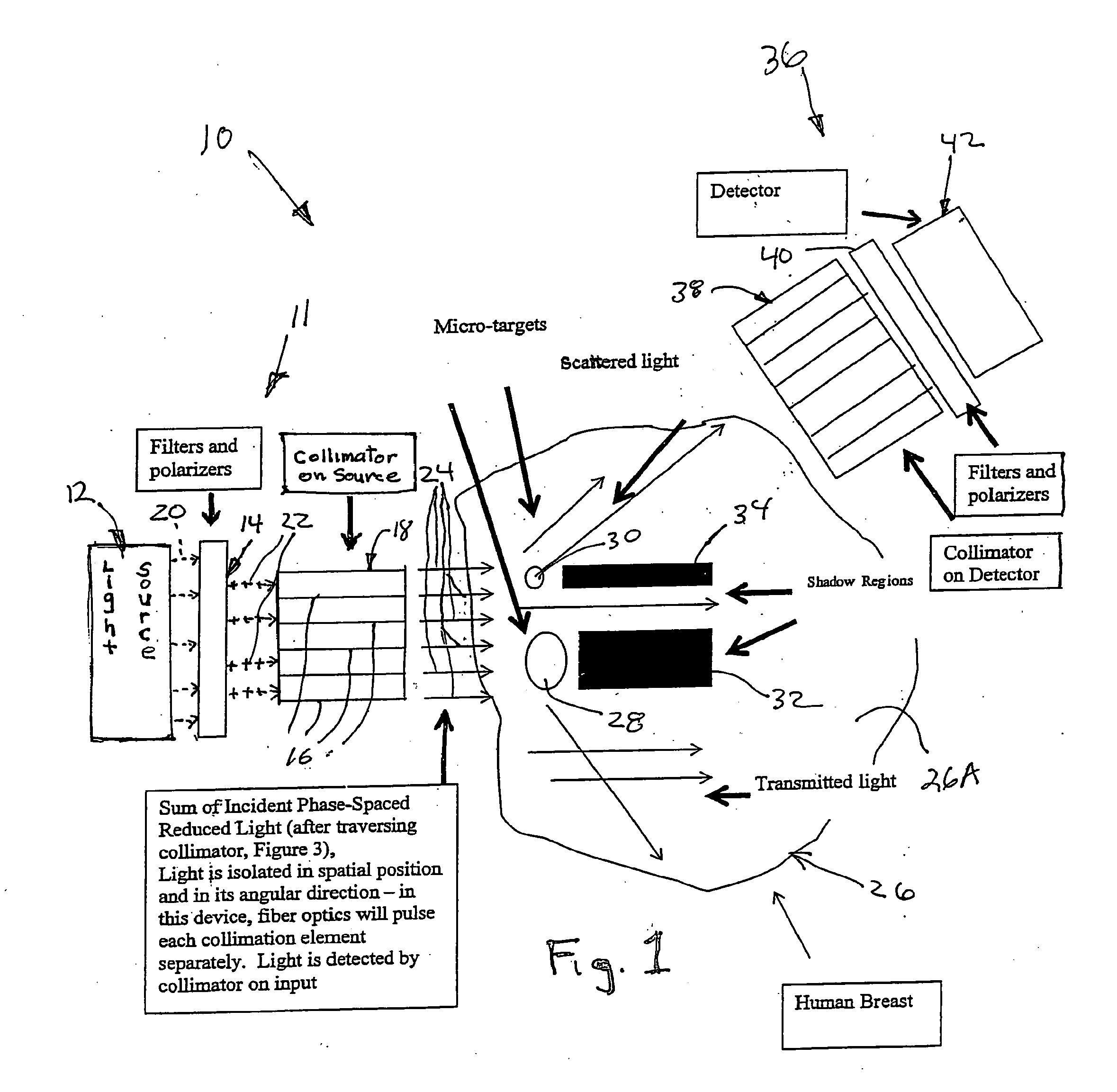

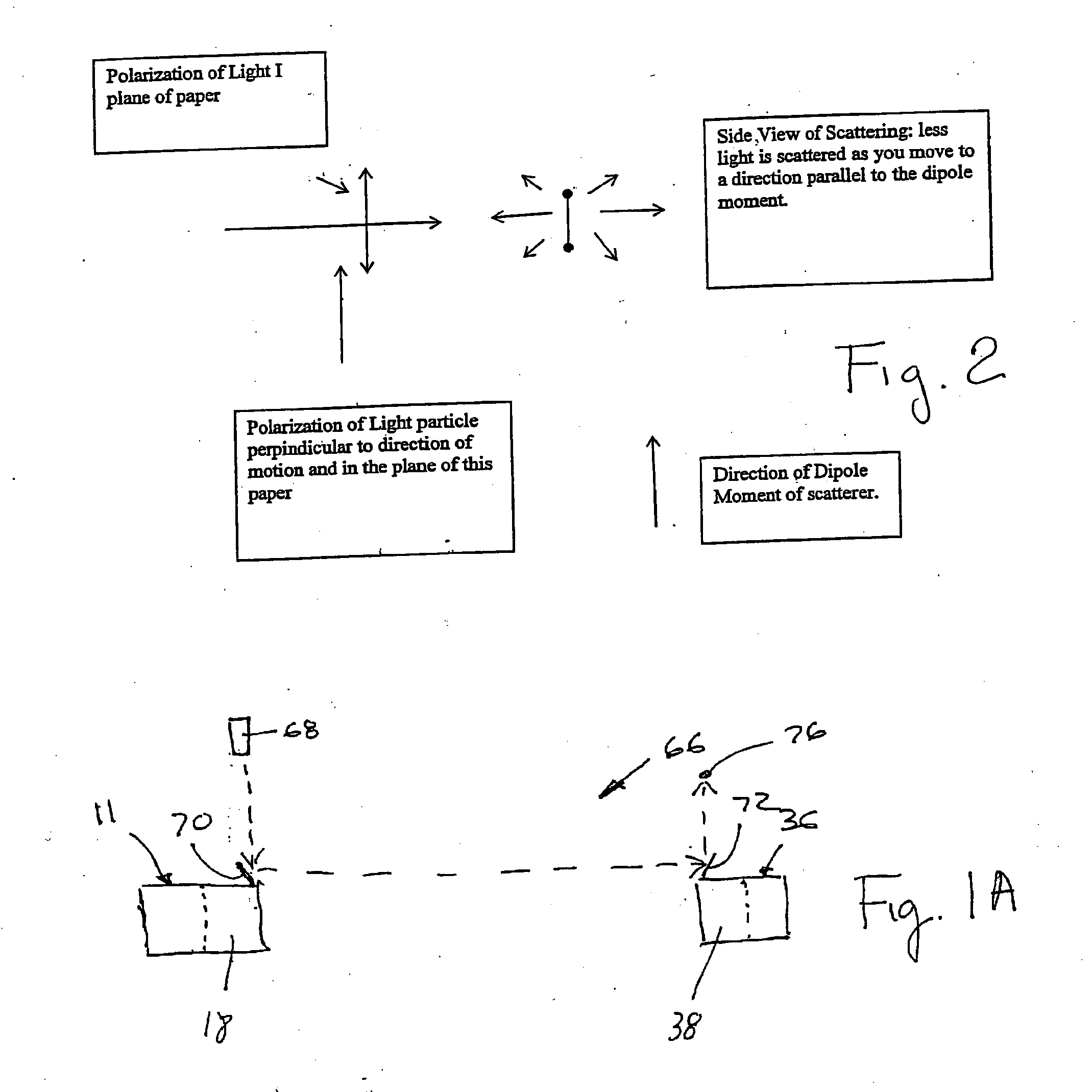

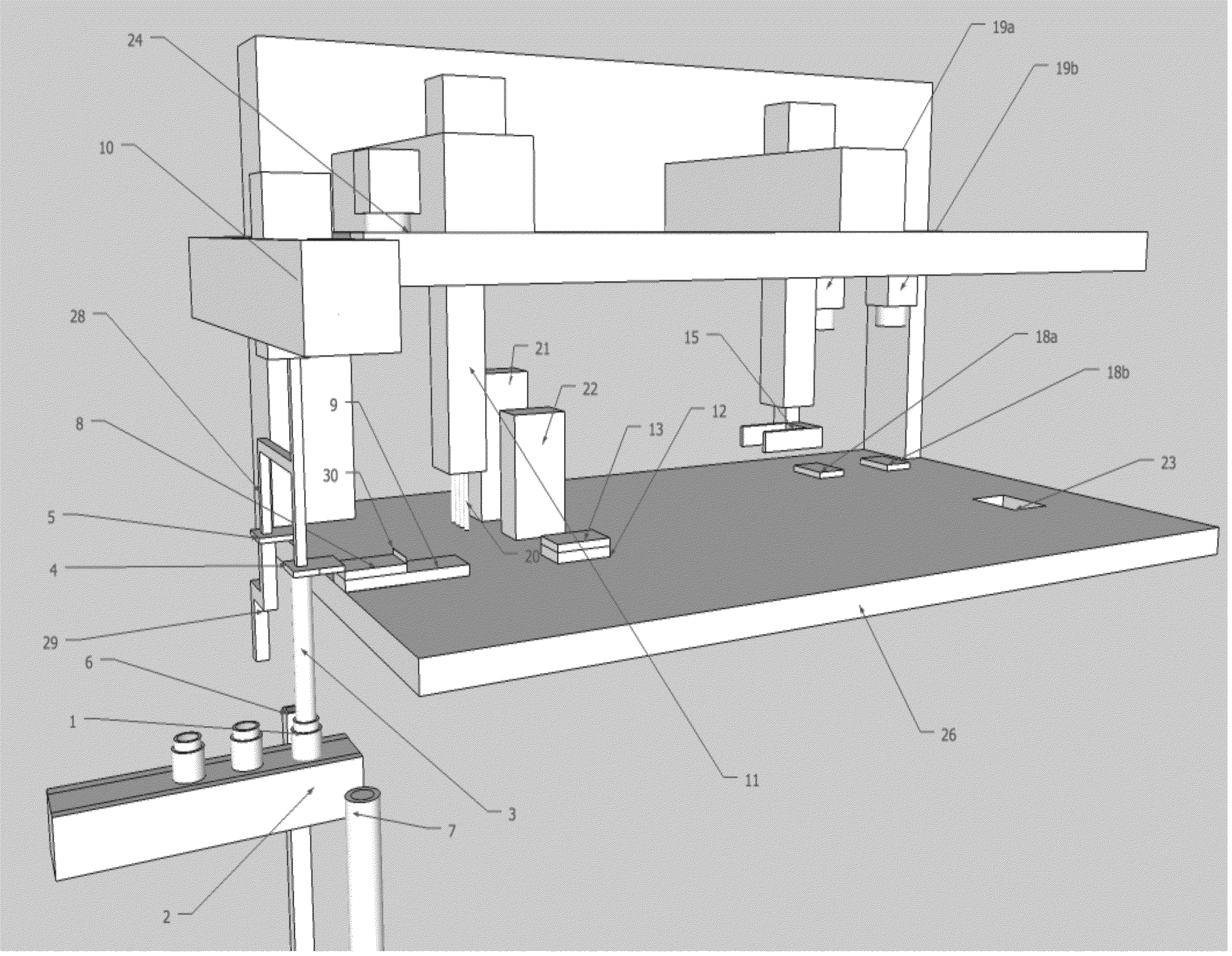

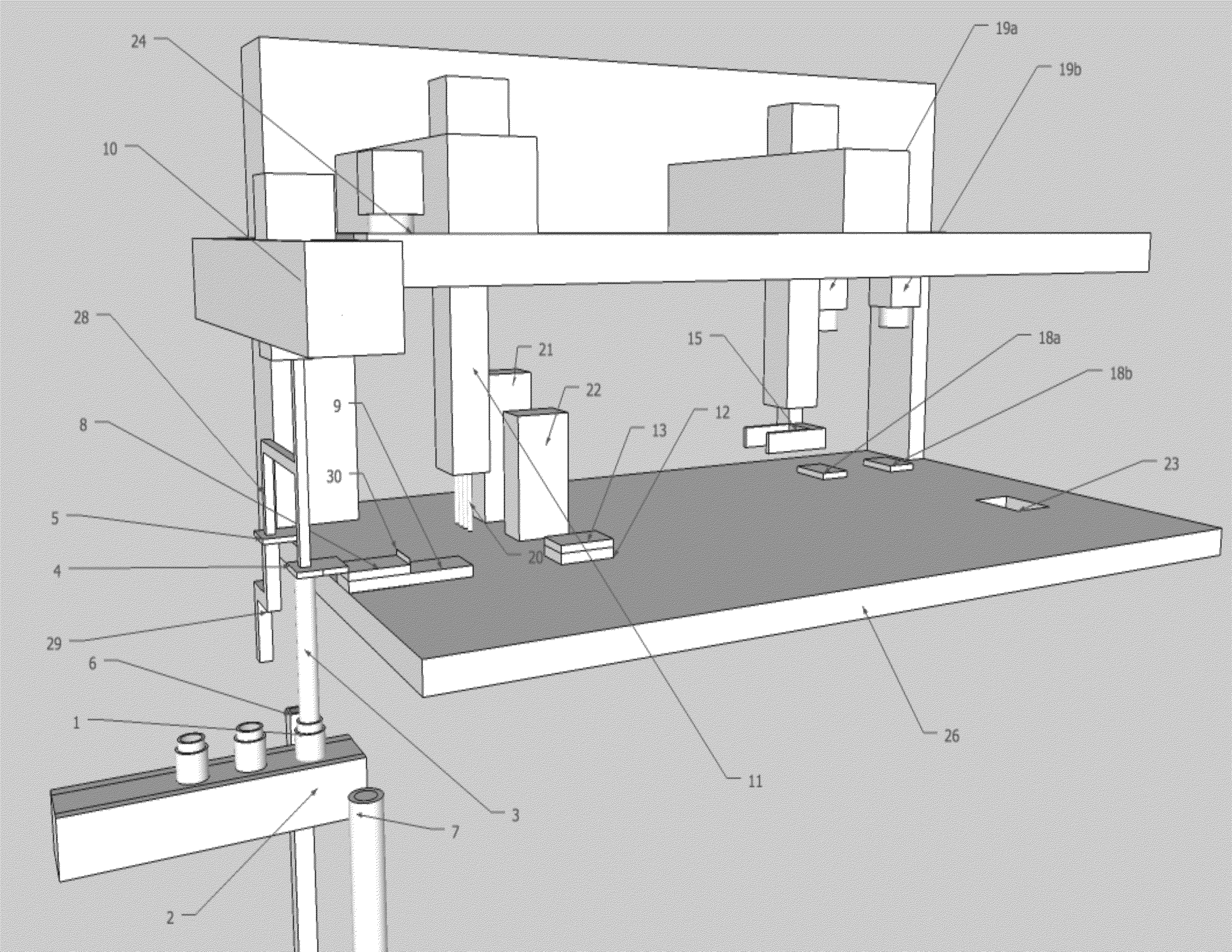

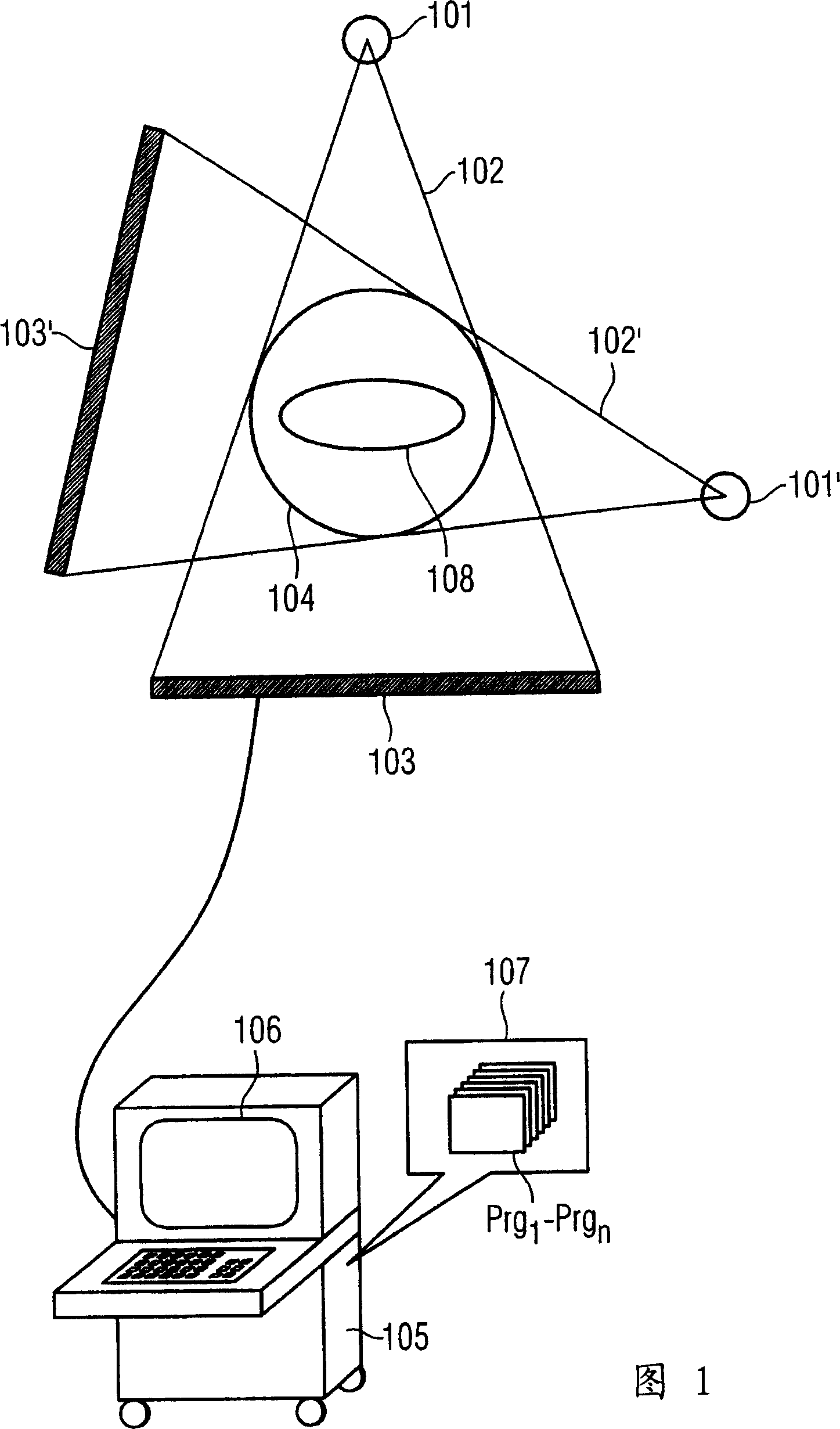

Apparatus and method for phase-space reduction for imaging of fluorescing, scattering and/or absorbing structures

Inactive | US20120059254A1 | Ease of use and safety; Maximum safety; Endoscopes; Luminescent dosimeters; Infrared; Fluorescence

A method and apparatus are disclosed for utilizing light, including ultraviolet, optical and/or infrared light, for detecting a body in an object, such as biomaterial or animal and/or human tissue. The body or object may be made fluorescent by the use of dyes or agents. Light is used to illuminate the body and object, and the scattered, fluorescent and/or emitted, reflected, and transmitted light is detected and used to reconstruct the body and/or object using an iterative analysis. Further, the method and apparatus may be extended to endoscopic applications to make subcutaneous images of internal tissue above, on, in, or beyond endoscopic pathways such as the esophagus, stomach, colon, bronchial tubes, and/or other openings, cavities, and spaces, animate or inanimate, and in man-made or industrial materials such as carbon/resin structures.

Owner:LIFAN WANG +4

Device for automating behavioral experiments on animals

The present invention enables testing the effect of one or more test agents on one or more animals of a group of animals, preferably insects, to identify agents that affect behavioral properties of the animals. The invention comprises the steps of providing animals suitable for testing, bringing those animals into contact with the test agent(s), moving the animals from a growth container to isolate them, preparing and separating the animals for singulation, relocating the animals to a behavior arena, subjecting the animals to behavioral tracking to assess their behavioral state, and removing the animals from behavioral tracking so that further groups can be analyzed iteratively. The invention enables a method for preparing a therapeutic compound for the treatment of an animal disease.

Owner:CLARIDGE CHANG ADAM

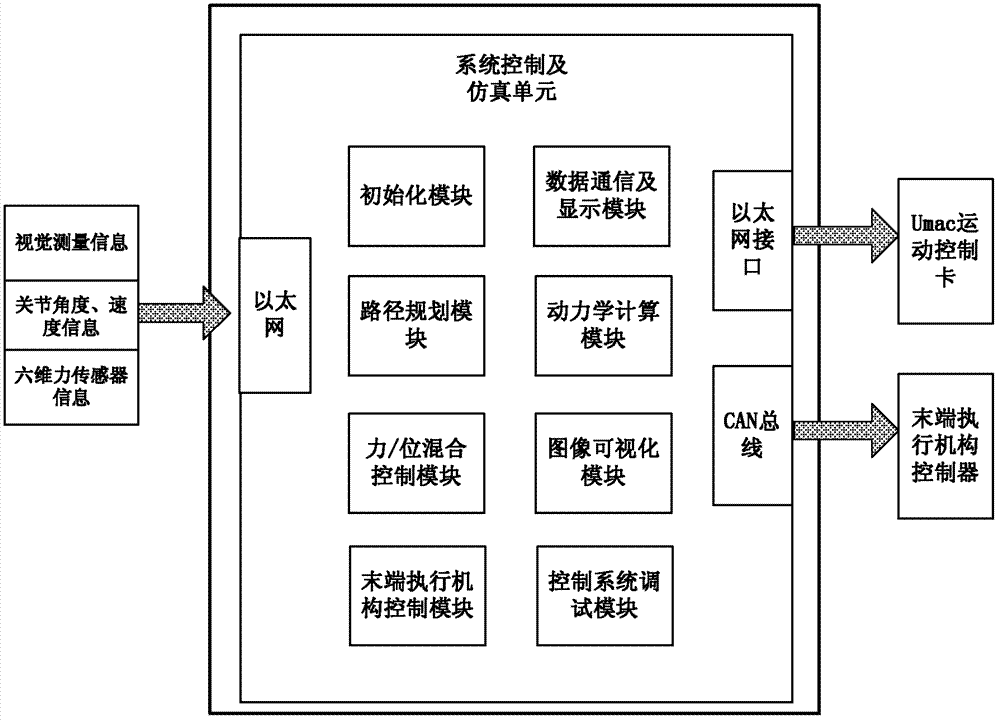

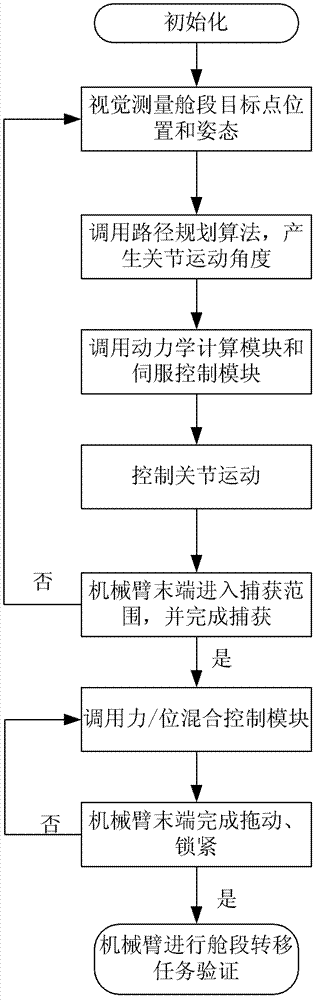

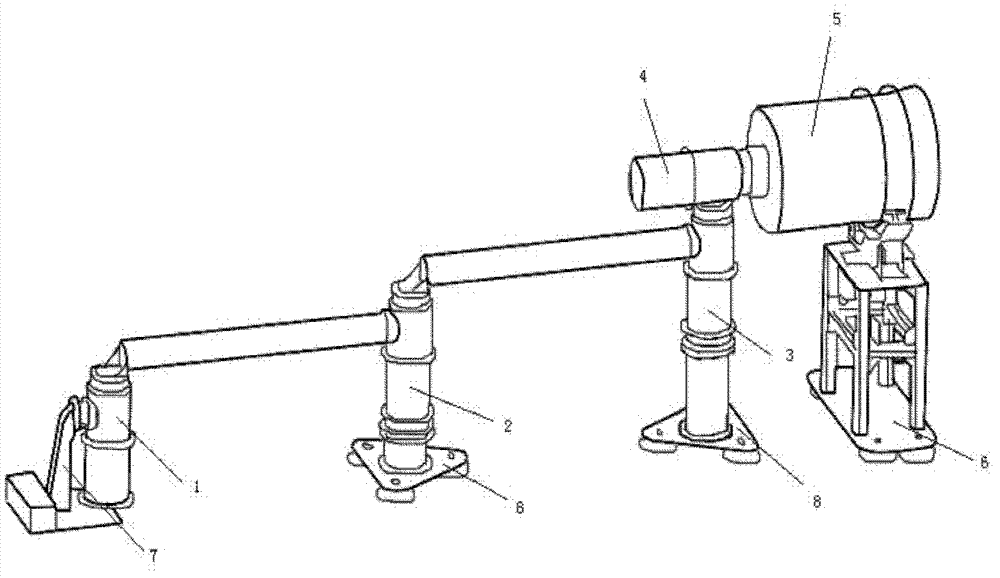

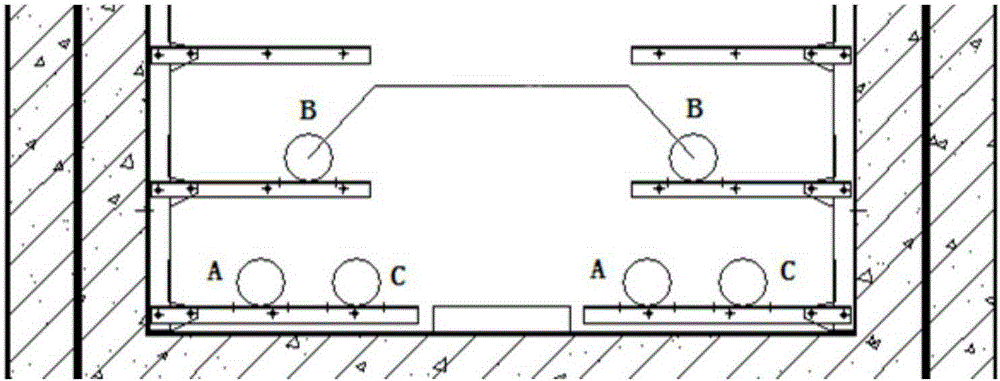

Planar simulation and verification platform for four-degree-of-freedom robot arm control system

Active | CN102778886A | High precision; Simulator control; Electric testing/monitoring; Collision dynamics; Simulation

The invention discloses a planar simulation and verification platform for a four-degree-of-freedom robot arm control system, comprising four simulated robot arm joints, a set of six-dimensional force sensors, two arm rods, an end effector with a binocular vision camera, a long-distance measurement camera with its controller, a middle-distance measurement camera with its controller, a UMAC (Universal Motion and Automation Controller) motion control card, an industrial personal computer, an air-floating platform, and a simulated fixing wall. Under planar motion, the platform can verify, by simulation test, a high-precision, high-stability servo control algorithm for the heavy-load, multi-degree-of-freedom system of a large space robot arm; verify, by control test, the grasping, collision dynamics, and control of the end effector; and verify, by simulation test, the coupling characteristics between the dynamics of the space robot arm and its control system. Moreover, the platform undergoes iterative analysis together with a simulation model, thereby providing a verification method for breakthroughs in control-system algorithms and key technologies for large space robot arms.

Owner:BEIJING INST OF SPACECRAFT SYST ENG

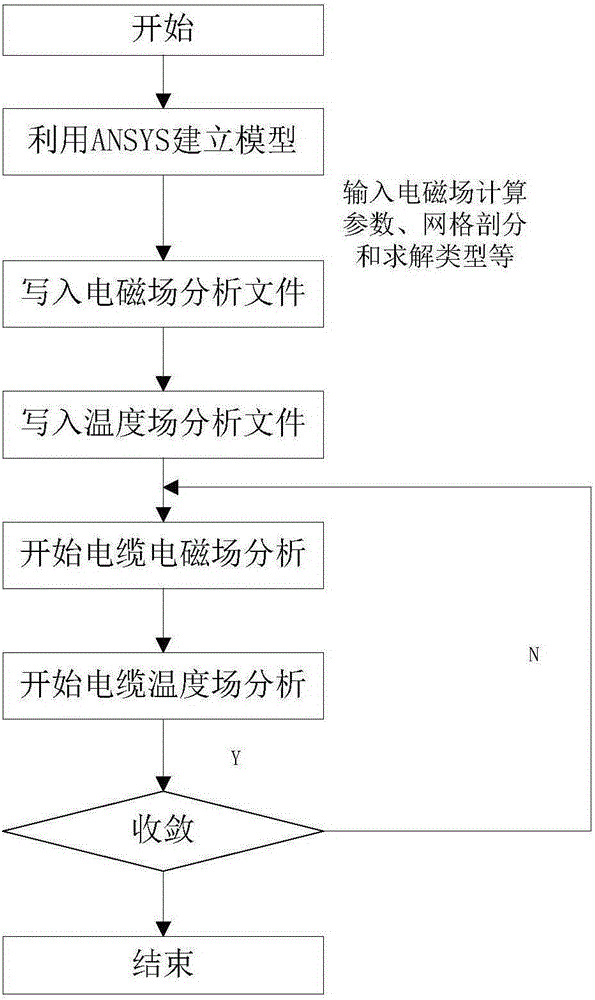

Calculating method for power-cable magnetic-thermal coupling field

Inactive | CN105184003A | Addressing Magnetic-Thermal Coupling Issues; Improve capacity utilization; Special data processing applications; Power cable; Physical model

The invention provides a method for calculating the magnetic-thermal coupled field of a power cable. The method comprises the following steps: 1) a physical model of the power cable's electromagnetic field and a physical model of its temperature field are built; 2) physical simulation models of the power cable are built, and the calculation parameters and boundary conditions are determined; 3) the corresponding calculation parameters are obtained by measuring the power cable; 4) the electromagnetic field and temperature field of the power cable are solved using the electromagnetic-field and temperature-field physical simulation models; 5) if the results have converged, the electromagnetic and temperature fields of the power cable are obtained and the magnetic-thermal coupling analysis is complete; otherwise, the method returns to step 4 and iterates until the results converge. The method offers a flexible solution approach and is well suited to magnetic-thermal coupling problems involving cable losses and temperature rise.

Owner:BEIJING ELECTRIC POWER ECONOMIC RES INST +2
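
The convergence loop in steps 4 and 5 can be illustrated with lumped scalar models in place of field solutions. This sketch assumes conductor resistance rises linearly with temperature, R(T) = R20 * (1 + alpha * (T - 20)), and that the temperature rise equals the loss per metre times a hypothetical thermal resistance; each pass alternates the electrical and thermal steps until the temperature stops changing. `coupled_cable_temperature` and its parameters are illustrative names, not the patented solver.

```python
def coupled_cable_temperature(current_a, r20_ohm_per_m, alpha_per_k, r_thermal_k_m_per_w,
                              ambient_c=20.0, tol=1e-6, max_iter=100):
    """Alternate loss and temperature calculations until the temperature converges.

    Returns (conductor_temperature_c, iterations_used)."""
    temperature = ambient_c
    for iteration in range(1, max_iter + 1):
        # electrical step: resistance and Joule loss per metre at the current temperature
        resistance = r20_ohm_per_m * (1.0 + alpha_per_k * (temperature - 20.0))
        loss_w_per_m = current_a ** 2 * resistance
        # thermal step: steady-state temperature produced by that loss
        new_temperature = ambient_c + loss_w_per_m * r_thermal_k_m_per_w
        if abs(new_temperature - temperature) < tol:
            return new_temperature, iteration  # converged: coupled solution found
        temperature = new_temperature          # not converged: go back to the electrical step
    raise RuntimeError("magnetic-thermal iteration did not converge")
```

Because this toy model is linear in T, the converged value can be checked against the closed form T = (T_amb + k * (1 - 20 * alpha)) / (1 - k * alpha) with k = I^2 * R20 * R_th; for 500 A, 3.0e-5 ohm/m, alpha = 0.00393 and 1.0 K·m/W it settles near 27.7 °C in a handful of passes.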

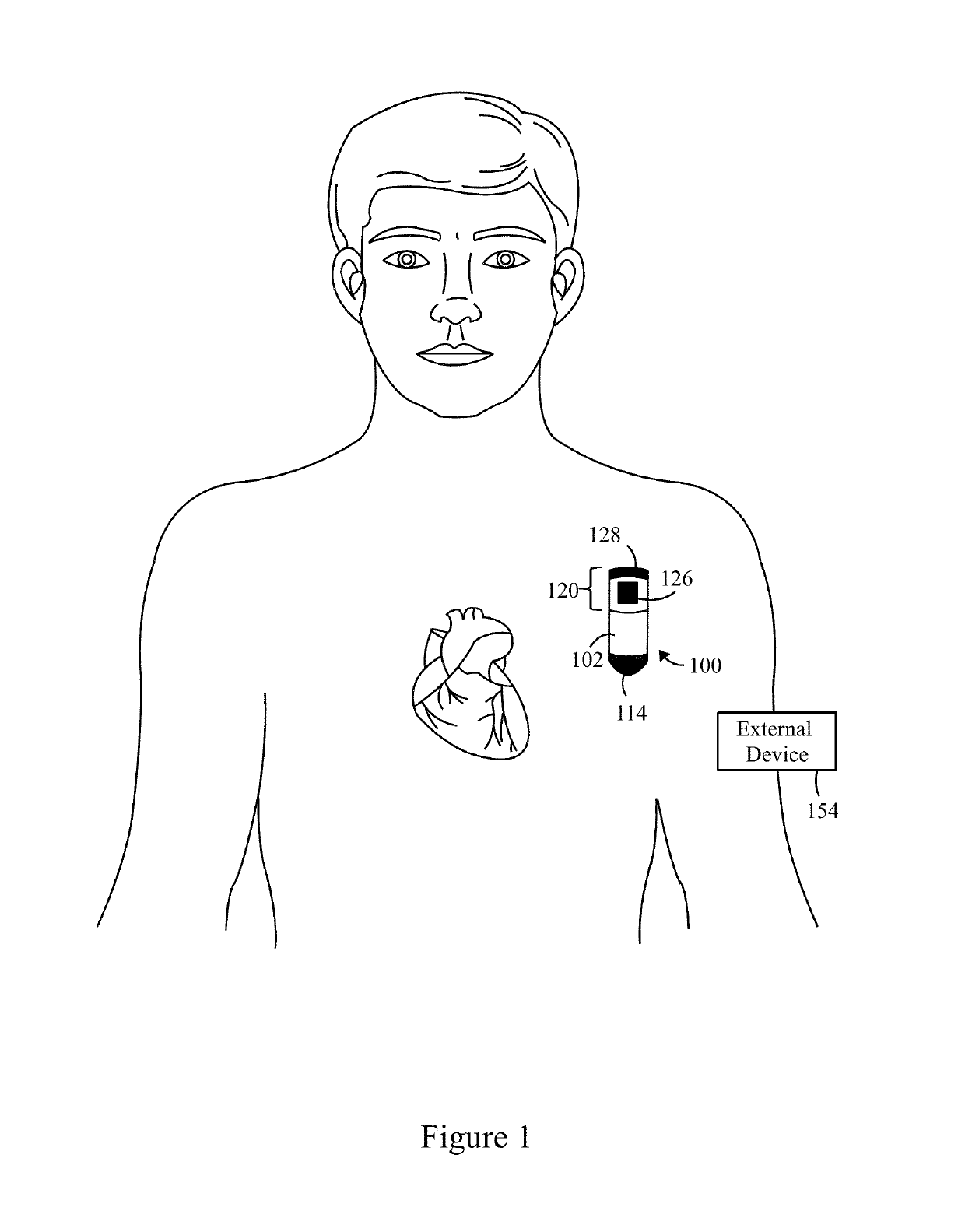

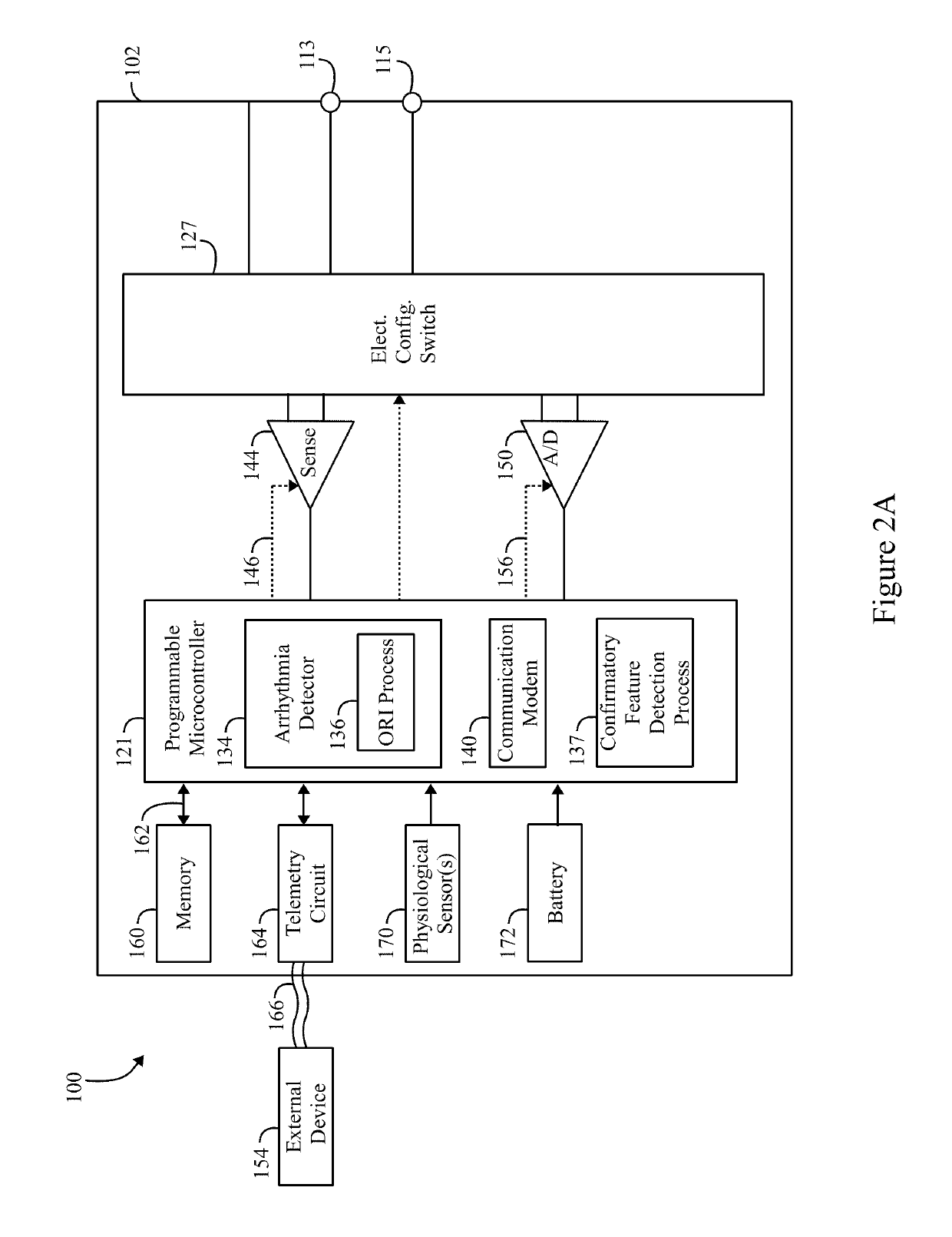

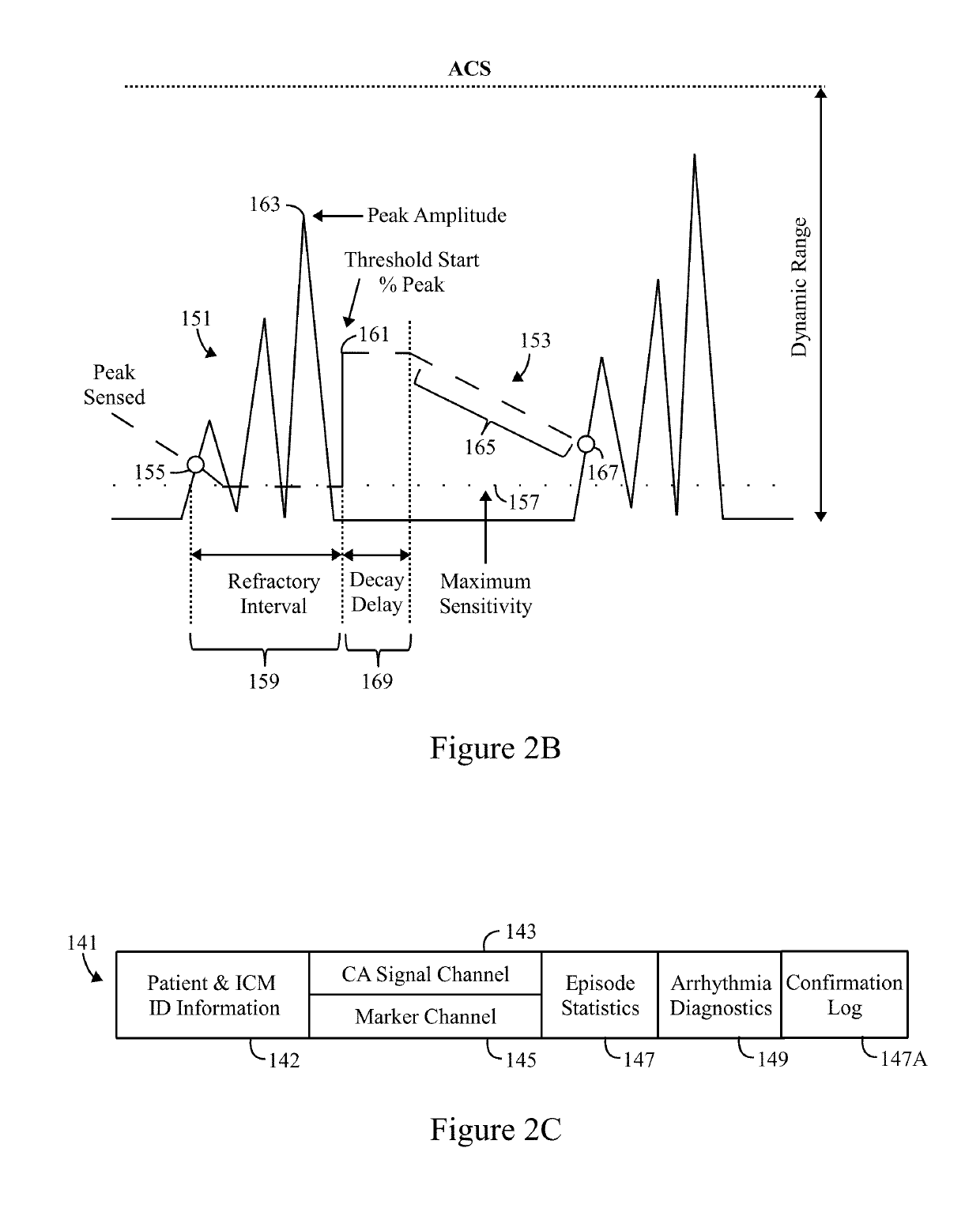

Method and system to detect r-waves in cardiac arrhythmic patterns

Computer implemented methods and systems for detecting arrhythmias in cardiac activity are provided. The method is under control of one or more processors configured with specific executable instructions. The method obtains a far field cardiac activity (CA) data set that includes far field CA signals for beats. The method applies a feature enhancement function to the CA signals to form an enhanced feature in the CA data set. The method calculates an adaptive sensitivity level and sensitivity limit based on the enhanced feature from one or more beats within the CA data set and automatically iteratively analyzes a beat segment of interest by comparing the beat segment of interest to the current sensitivity level to determine whether one or more R-waves are present within the beat segment of interest. The method repeats the iterative analyzing operation while progressively adjusting the current sensitivity level until i) the one or more R-waves are detected in the beat segment of interest and/or ii) the current sensitivity level reaches the sensitivity limit. The method detects an arrhythmia within the beat segment of interest based on a presence or absence of the one or more R-waves and records results of the detecting of the arrhythmia.

Owner:PACESETTER INC
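
The adjust-until-detected loop can be sketched as below. The starting level, the geometric step `step_fraction`, and the plain amplitude comparison are assumptions, `detect_r_wave` is a hypothetical name, and `sensitivity_limit` is assumed to be positive. The patent's feature enhancement and its adaptive calculation of the level and limit are not reproduced.

```python
def detect_r_wave(segment, start_level, sensitivity_limit, step_fraction=0.1):
    """Lower the sensitivity level step by step until a sample reaches it
    (R-wave detected) or the level reaches the sensitivity limit.

    Returns (detected, level_used)."""
    level = start_level
    while True:
        if any(abs(x) >= level for x in segment):
            return True, level   # an R-wave candidate crosses the current level
        if level <= sensitivity_limit:
            return False, level  # most sensitive setting reached without detection
        # make detection more sensitive, but never go below the limit
        level = max(level * (1.0 - step_fraction), sensitivity_limit)
```

A segment peaking at 0.6 is detected once the level drops to about 0.59, whereas a segment peaking at 0.2 ends at the 0.3 limit with `(False, 0.3)`.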

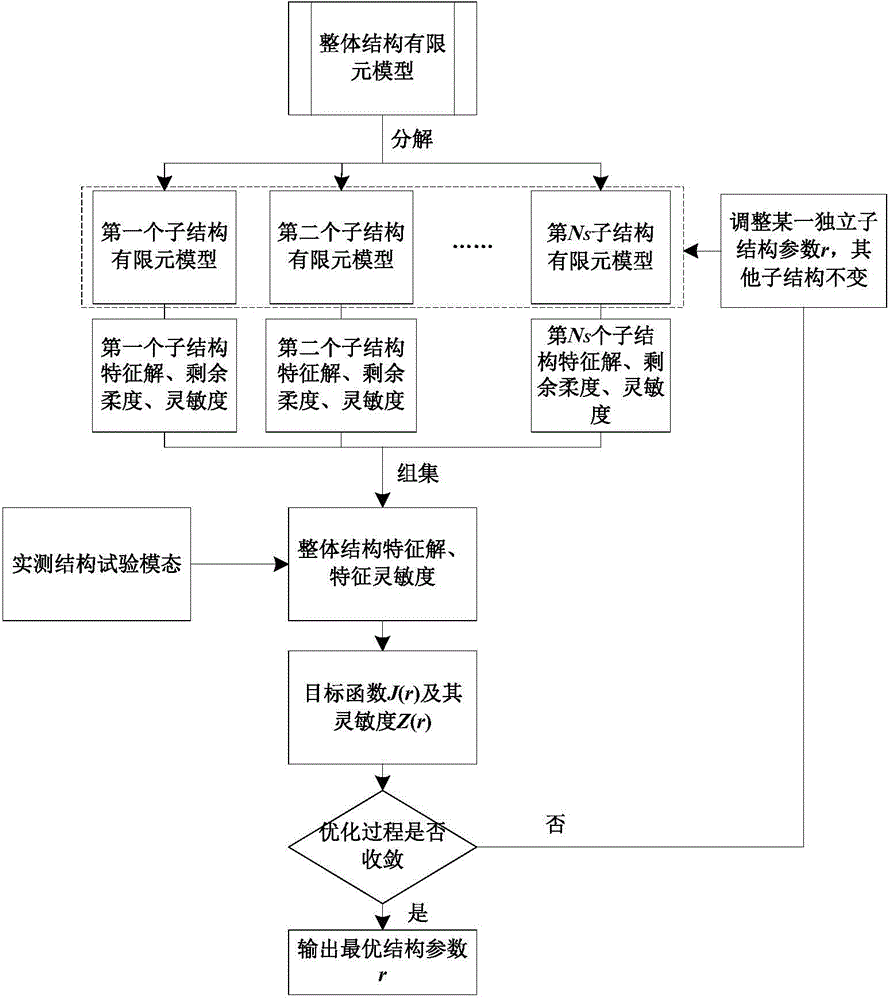

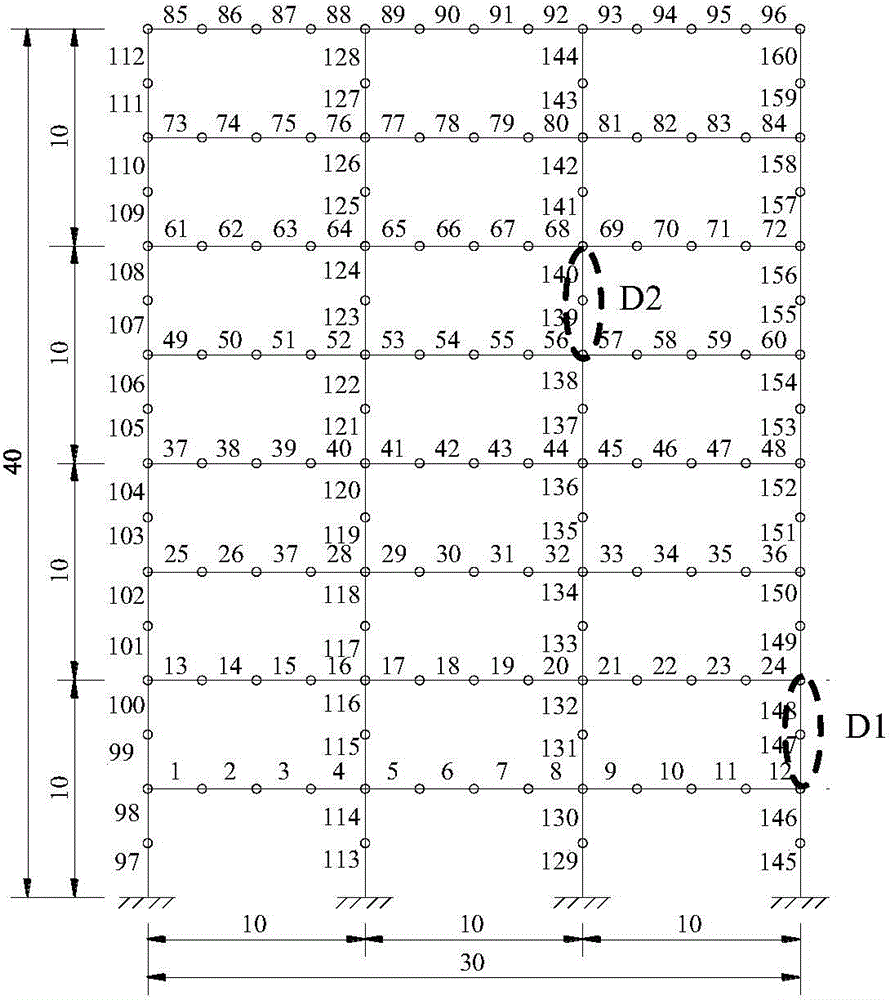

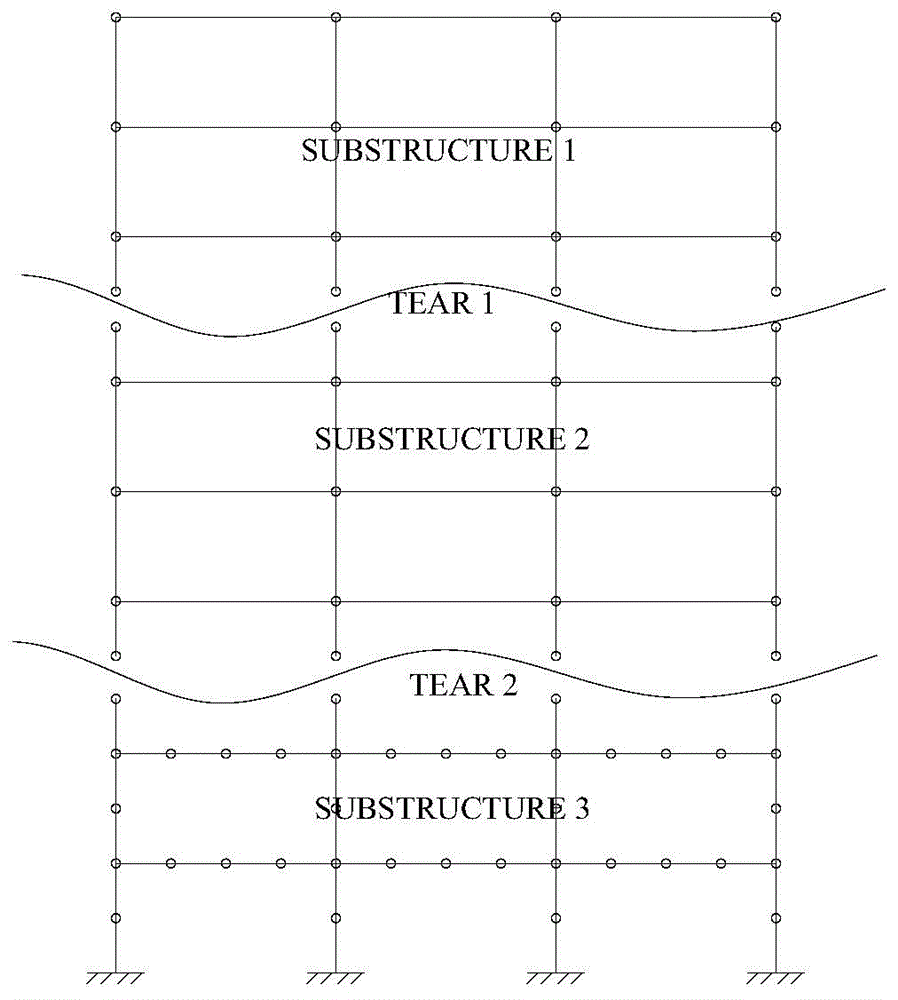

Finite element model correction method based on positive substructure

Inactive | CN104484502A | Lighten the computational burden; Reduce analytical computing equipment requirements; Special data processing applications; Element model; Correction method

The invention discloses a finite element model correction method based on a positive substructure. The finite element model correction method includes the steps: firstly, dividing an integral structure finite element model into independent substructure models; building an integral structure characteristic equation according to the substructure models to obtain an integral structure characteristic solution; building an integral structure characteristic sensitivity equation according to the integral structure characteristic equation to obtain an integral structure characteristic solution sensitivity matrix; building a target function according to residual errors of the integral structure characteristic solution and a measured integral structure test mode; adjusting structural parameters of the substructure and minimizing the target function to obtain optimal structural parameters; adjusting structural parameters of the finite element model and correcting the finite element model; recognizing structural damage according to change of result parameters of the finite element model. When a structure is locally damaged and a local area needs to be corrected, large structure finite element model correction efficiency and precision are effectively improved only by iteratively analyzing the local substructure models without repeatedly analyzing the integral structure model.

Owner:HUAZHONG UNIV OF SCI & TECH

Systems and methods for vehicle resource management

Systems, methods, apparatus, and computer-readable media provide for allocating vehicle resources to future vehicle requirements. In some embodiments, allocating a vehicle resource to a vehicle requirement may be based on an iterative analysis of candidate vehicle resources using one or more of: a suitability of a candidate vehicle resource to fulfill the vehicle requirement, a journey time from a vehicle location to a start location, and/or a start time for the vehicle requirement.

Owner:ADDISON LEE

System and method for automated benchmarking for the recognition of best medical practices and products and for establishing standards for medical procedures

Inactive | US20090070342A1 | Reduce gap; Low cost; Drug and medications; Digital data processing details; Medical equipment; Central database

A system for the collection, management, and dissemination of information relating at least to a medical procedure is disclosed. The system includes a user interface adapted to provide raw data information at least about one of a patient, the medical procedure, and a result of the medical procedure. A medical device communicates with the user interface, receives the raw data information from the user interface, and generates operational information during use. A central database communicates at least with the medical device and receives data from the medical device. The central database is used to create related entries based on the raw data information and the operational information and optionally transmits the related entry to the medical device or the medical device user. The related entry includes information that provides guidance based on previously tabulated, related medical procedures. A system for the iterative analysis of medical standards is also disclosed along with methods for the evaluation of medical procedures and standards.

Owner:BAYER HEALTHCARE LLC

Method of reproducing tomography image of object

Inactive | CN1956006A | Reduce the number of times; Reduce computing time; Image enhancement; Reconstruction from projection; Back projection; Tomography

A method is disclosed for the iterative analytical reconstruction of a tomographic representation of an object from projection data of a moving radiation source through this object onto a detector. In an embodiment of the method, corrections are undertaken iteratively with the aid of back projections of the object to be represented from calculated projection data, wherein the corrections are performed on the projections.

Owner:SIEMENS AG
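
As a generic illustration of iterative reconstruction in which corrections are computed on projections and then back-projected, the sketch below uses the classic algebraic reconstruction technique (Kaczmarz method). This is a swapped-in textbook technique, not the patented method, and it assumes a static object described by an explicit ray-weight matrix rather than a moving radiation source. `art_reconstruct` is a hypothetical name.

```python
def art_reconstruct(rays, measured, n_pixels, sweeps=50, relaxation=1.0):
    """Algebraic reconstruction technique (Kaczmarz).

    rays[i] holds the weight of each pixel along ray i; measured[i] is that ray's projection."""
    image = [0.0] * n_pixels
    for _ in range(sweeps):
        for weights, p in zip(rays, measured):
            # forward-project the current estimate along this ray
            estimate = sum(w * x for w, x in zip(weights, image))
            norm = sum(w * w for w in weights)
            if norm == 0.0:
                continue  # ray misses every pixel
            # correction computed in projection space, then back-projected along the ray
            correction = relaxation * (p - estimate) / norm
            image = [x + correction * w for x, w in zip(image, weights)]
    return image
```

For a 2x2 image `[1, 2, 3, 4]` probed by two row sums, two column sums and one diagonal sum, the row and column rays alone leave one direction undetermined; the diagonal ray removes it, and the estimate converges to the original pixel values.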

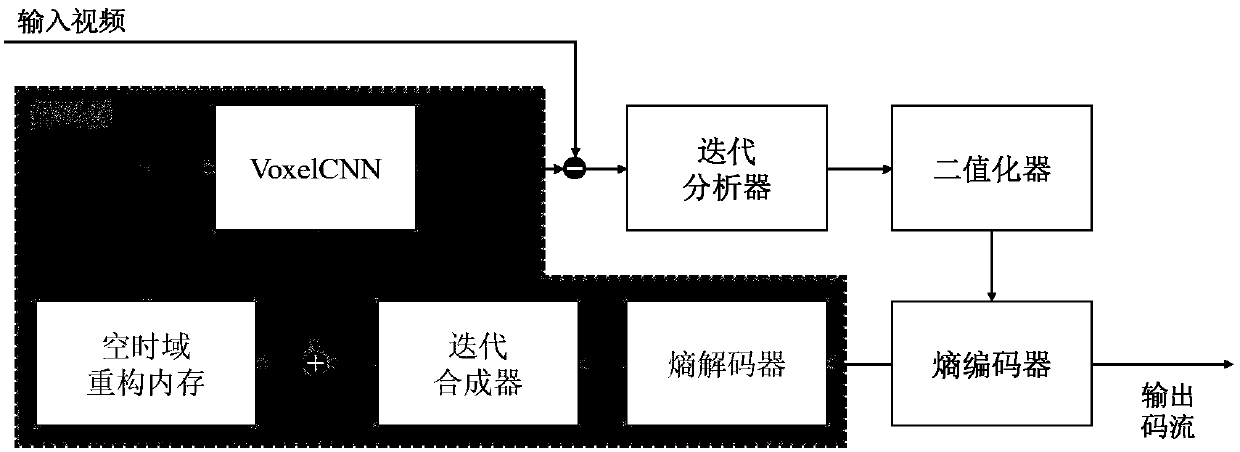

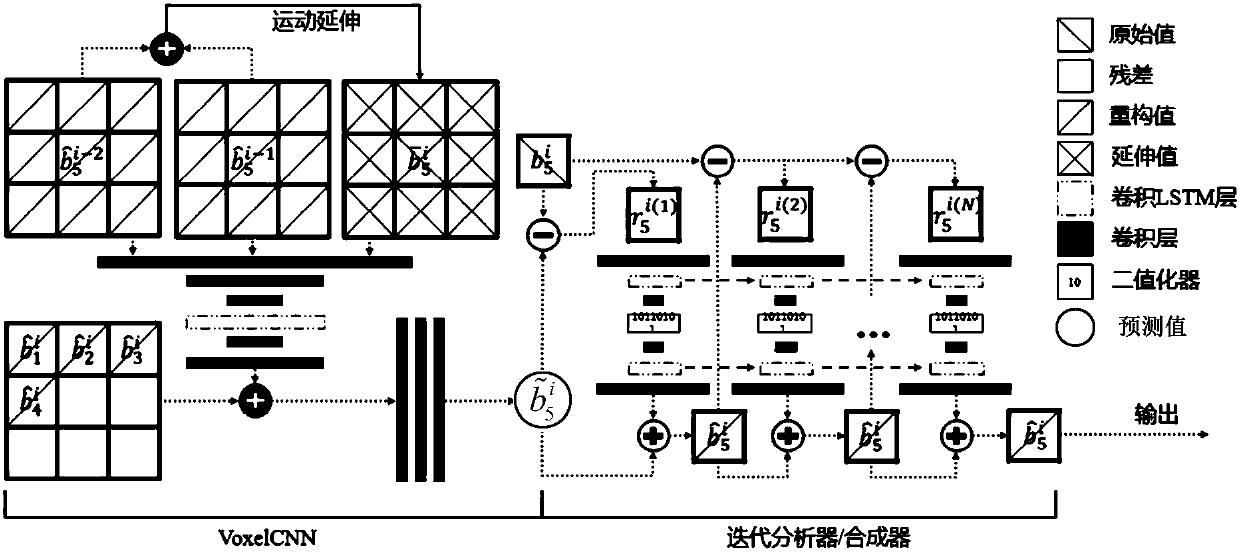

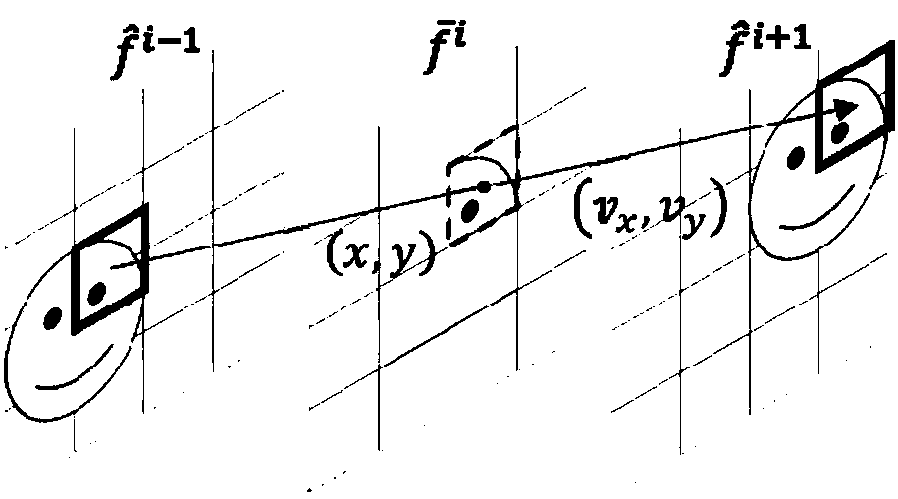

Learning-based video coding and decoding framework

Active | CN108174218A | Encoding rate-distortion optimization control; Digital video signal modification; Time domain; Coding block

The invention discloses a learning-based video coding and decoding framework comprising a space-time domain reconstruction memory, a space-time domain prediction network, an iterative analyzer, an iterative synthesizer, a binarization device, an entropy coder, and an entropy decoder. The space-time domain reconstruction memory stores the reconstructed video content after coding and decoding. The space-time domain prediction network exploits the space-time correlation of the reconstructed video content, models it with a convolutional neural network and a recurrent neural network, and outputs a predicted value for the current coding block; the residual is formed by subtracting the predicted value from the original value. The iterative analyzer and the iterative synthesizer encode and decode the input residual step by step. The binarization device quantizes the output of the iterative analyzer into a binary representation. The entropy coder entropy-codes the quantized output to obtain an output code stream, and the entropy decoder entropy-decodes the code stream and passes the result to the iterative synthesizer. In this framework, space-time domain prediction is realized by the learning-based VoxelCNN (the space-time domain prediction network), and rate-distortion optimization of video coding is controlled through a residual iterative coding method.

Owner:UNIV OF SCI & TECH OF CHINA

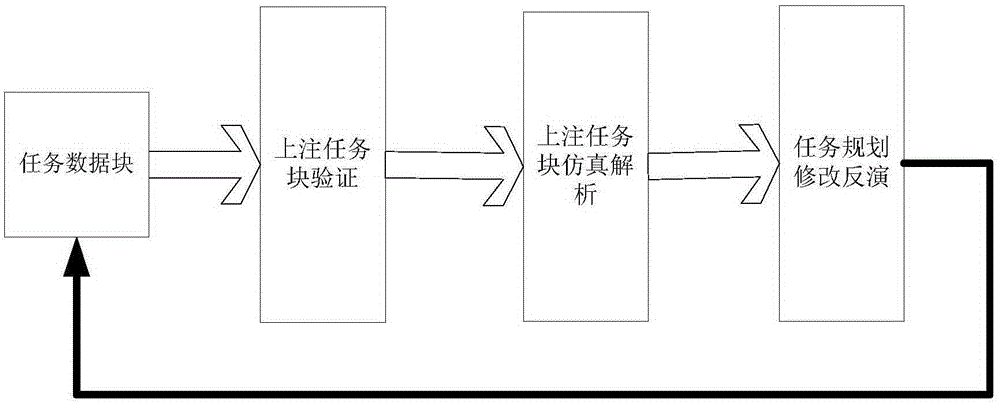

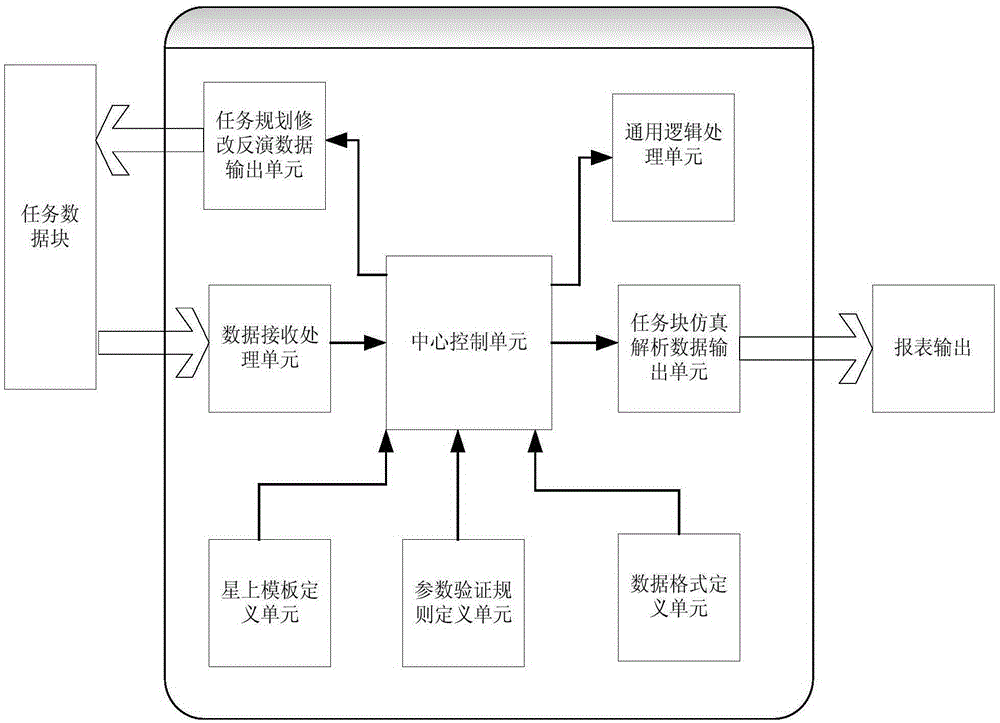

Agile satellite mission interpretation closed loop simulation verification system and method

Active | CN105334756A | Ensure proper implementation; Solve Verification Simulation; Programme control in sequence/logic controllers; Closed loop simulation; Verification system

The invention provides an agile satellite mission interpretation closed-loop simulation verification system and method that can effectively improve the efficiency of key steps in the simulation verification and inverse modification of current agile satellite mission planning, and help ensure that agile satellite mission planning is implemented correctly. First, the system simulates the mission interpretation function of the satellite mission host, replacing a conventional satellite mission test bed: mission blocks are interpreted into instruction sets executable by the mission host, the instruction names, parameters, and execution times are displayed visually in tabular form, and the interpretation results are assessed automatically with abnormal information output, so that erroneous mission blocks are not uplinked to the satellite. Second, the parameters and settings of the mission blocks can be modified and the mission blocks regenerated by the system, with iterative analysis performed on them to ensure correctness; this avoids the risk of arranging mission blocks manually and improves the efficiency and reliability of mission block generation. Finally, the interpretation results of the final, correct mission blocks are compared with the action sequences generated by the mission planning system to verify the correctness and rationality of the mission planning.

Owner:AEROSPACE DONGFANGHONG SATELLITE
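A hedged sketch of the interpret, check and compare loop described above. Everything concrete here is hypothetical: the mission-block fields (`start`, `duration`, `roll`), the instruction names, the 45° roll limit and the 30 s maneuver lead time are invented for illustration and are not taken from the patent or from any real mission host.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical limits; a real mission host defines its own constraints.
MAX_ROLL_DEG = 45.0
MANEUVER_LEAD_S = 30.0


@dataclass
class Instruction:
    name: str
    exec_time: float
    params: Dict[str, float] = field(default_factory=dict)


def interpret(block: List[dict]) -> List[Instruction]:
    """Expand each imaging task of a mission block into timed instructions."""
    instrs: List[Instruction] = []
    for task in block:
        t0, dur, roll = task["start"], task["duration"], task["roll"]
        instrs.append(Instruction("ATTITUDE_MANEUVER", t0 - MANEUVER_LEAD_S, {"roll": roll}))
        instrs.append(Instruction("CAMERA_ON", t0))
        instrs.append(Instruction("CAMERA_OFF", t0 + dur))
    return instrs


def check(instrs: List[Instruction]) -> List[str]:
    """Return anomaly messages; an empty list means the block may be uplinked."""
    errors = []
    for i, ins in enumerate(instrs):
        if ins.name == "ATTITUDE_MANEUVER" and abs(ins.params["roll"]) > MAX_ROLL_DEG:
            errors.append(f"instruction {i}: roll {ins.params['roll']} deg exceeds limit")
        if i > 0 and ins.exec_time < instrs[i - 1].exec_time:
            errors.append(f"instruction {i}: {ins.name} starts before the previous instruction")
    return errors


def matches_plan(instrs: List[Instruction], plan: List[Tuple[float, float]]) -> bool:
    """Compare the interpreted imaging windows with the planner's action sequence."""
    ons = [i.exec_time for i in instrs if i.name == "CAMERA_ON"]
    offs = [i.exec_time for i in instrs if i.name == "CAMERA_OFF"]
    return list(zip(ons, offs)) == plan
```

In the closed loop the abstract describes, a block that fails `check` would have its parameters edited and be regenerated, repeating until `check` reports no anomalies and `matches_plan` agrees with the mission planning system's action sequence.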

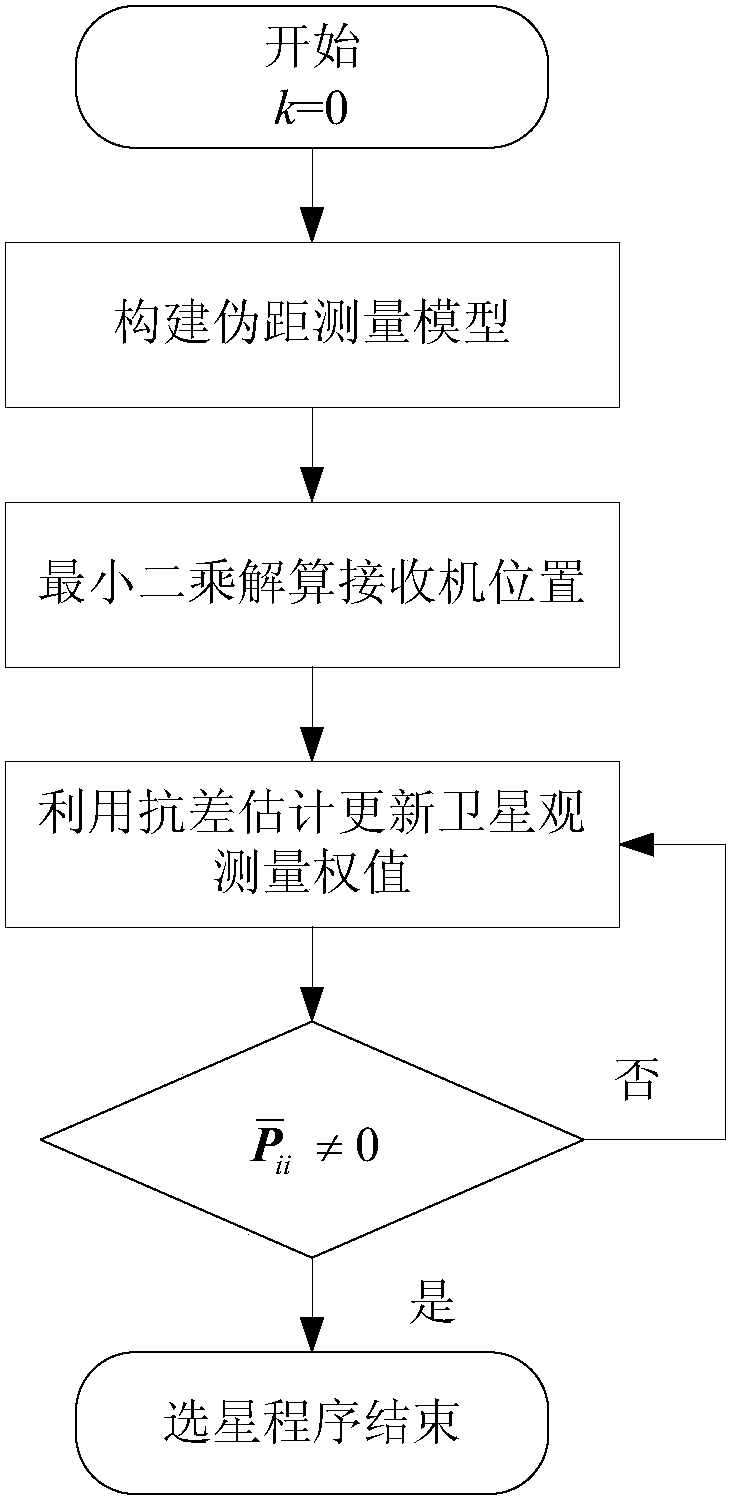

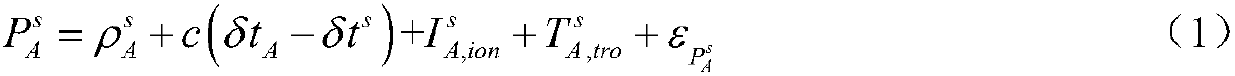

GNSS satellite selection method based on robust least square

Inactive | CN107765269A | Improve computing efficiency | Improve real-time performance | Satellite radio beaconing | Robust least squares | Equivalent weight

The invention discloses a GNSS (Global Navigation Satellite System) satellite selection method based on robust least squares, comprising the following steps: (1) construct a pseudorange measurement model; (2) set a prior value for the weight matrix and solve the model from step (1) by least squares to obtain the receiver position and clock error; (3) compute the standardized residuals from the receiver position and clock error, and update the weight matrix using robust equivalent weight coefficients; (4) examine the weight of each satellite in the updated weight matrix: if a satellite's weight equals 0, eliminate that satellite from the pseudorange measurement model, remove its element from the weight matrix, recompute the receiver position and clock error by least squares, and return to step (3) for the next iteration; if no satellite has a weight of 0, output the available satellites and end the selection procedure. The method adaptively adjusts the weight of each observed satellite and eliminates satellites with poor observation quality.

Owner:CHINESE AERONAUTICAL RADIO ELECTRONICS RES INST
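Steps (2) to (4) can be sketched in Python as below. Several details are assumptions rather than the patent's: an IGG III-style equivalent-weight function with thresholds `k0 = 1.5` and `k1 = 3.0`, a known a-priori standard deviation `sigma0`, standardized residuals computed from the diagonal of the residual cofactor matrix, and two safeguards the abstract does not mention (only the single worst zero-weight satellite is removed per round, and carried-over weights are floored at `w_min` so the normal matrix stays invertible).

```python
import math
from typing import List


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense A)."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def geometry(sats, x):
    """Geometric ranges and design rows h_i = [(r - s_i)/|r - s_i|, 1] at state x."""
    rows, ranges = [], []
    for s in sats:
        d = math.dist(s, x[:3])
        rows.append([(x[j] - s[j]) / d for j in range(3)] + [1.0])
        ranges.append(d)
    return rows, ranges


def normal_matrix(rows, w):
    return [[sum(wi * h[i] * h[j] for h, wi in zip(rows, w)) for j in range(4)] for i in range(4)]


def wls(sats, rho, w, iters=20):
    """Step 2: weighted Gauss-Newton for rho_i = |s_i - r| + b; returns [x, y, z, b]."""
    x = [0.0, 0.0, 0.0, 0.0]  # start at the Earth's centre with zero clock bias
    for _ in range(iters):
        rows, ranges = geometry(sats, x)
        v = [p - (d + x[3]) for p, d in zip(rho, ranges)]  # observed minus computed
        u = [sum(wi * h[i] * vi for h, wi, vi in zip(rows, w, v)) for i in range(4)]
        dx = solve(normal_matrix(rows, w), u)
        x = [a + da for a, da in zip(x, dx)]
        if max(abs(d) for d in dx) < 1e-6:
            break
    return x


def standardized_residuals(sats, rho, w, x, sigma0):
    """Step 3: v_i / (sigma0 * sqrt(Qvv_ii)), with Qvv = W^-1 - H N^-1 H^T."""
    rows, ranges = geometry(sats, x)
    N = normal_matrix(rows, w)
    z = []
    for h, d, p, wi in zip(rows, ranges, rho, w):
        v = p - (d + x[3])
        q = sum(a * c for a, c in zip(h, solve(N, h)))  # h^T N^-1 h
        z.append(v / (sigma0 * math.sqrt(max(1.0 / wi - q, 1e-12))))
    return z


def igg3_weight(z, k0=1.5, k1=3.0):
    """IGG III-style equivalent weight factor: keep, taper, or reject (0)."""
    a = abs(z)
    if a <= k0:
        return 1.0
    if a <= k1:
        return (k0 / a) * ((k1 - a) / (k1 - k0)) ** 2
    return 0.0


def select_satellites(sats, rho, sigma0=1.0, w_min=1e-3):
    """Steps 2-4: iterate robust reweighting and drop zero-weight satellites."""
    keep = list(range(len(sats)))
    w = {i: 1.0 for i in keep}  # prior weights
    while True:
        S, P, W = [sats[i] for i in keep], [rho[i] for i in keep], [w[i] for i in keep]
        x = wls(S, P, W)
        if len(keep) <= 4:  # no redundancy left to test
            return keep, x
        z = standardized_residuals(S, P, W, x, sigma0)
        new_w = [igg3_weight(zi) for zi in z]
        if all(nw > 0.0 for nw in new_w):
            return keep, x  # no zero weights: output the available satellites
        worst = max(range(len(keep)), key=lambda j: abs(z[j]))
        for j, i in enumerate(keep):
            w[i] = max(new_w[j], w_min)  # carry the updated weights forward
        keep.pop(worst)
```

Removing one satellite per round guards against least squares smearing a single gross error into the residuals of healthy satellites: for one gross error in otherwise consistent data with unit prior weights, the contaminated satellite has the largest standardized residual, so it is the one dropped.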

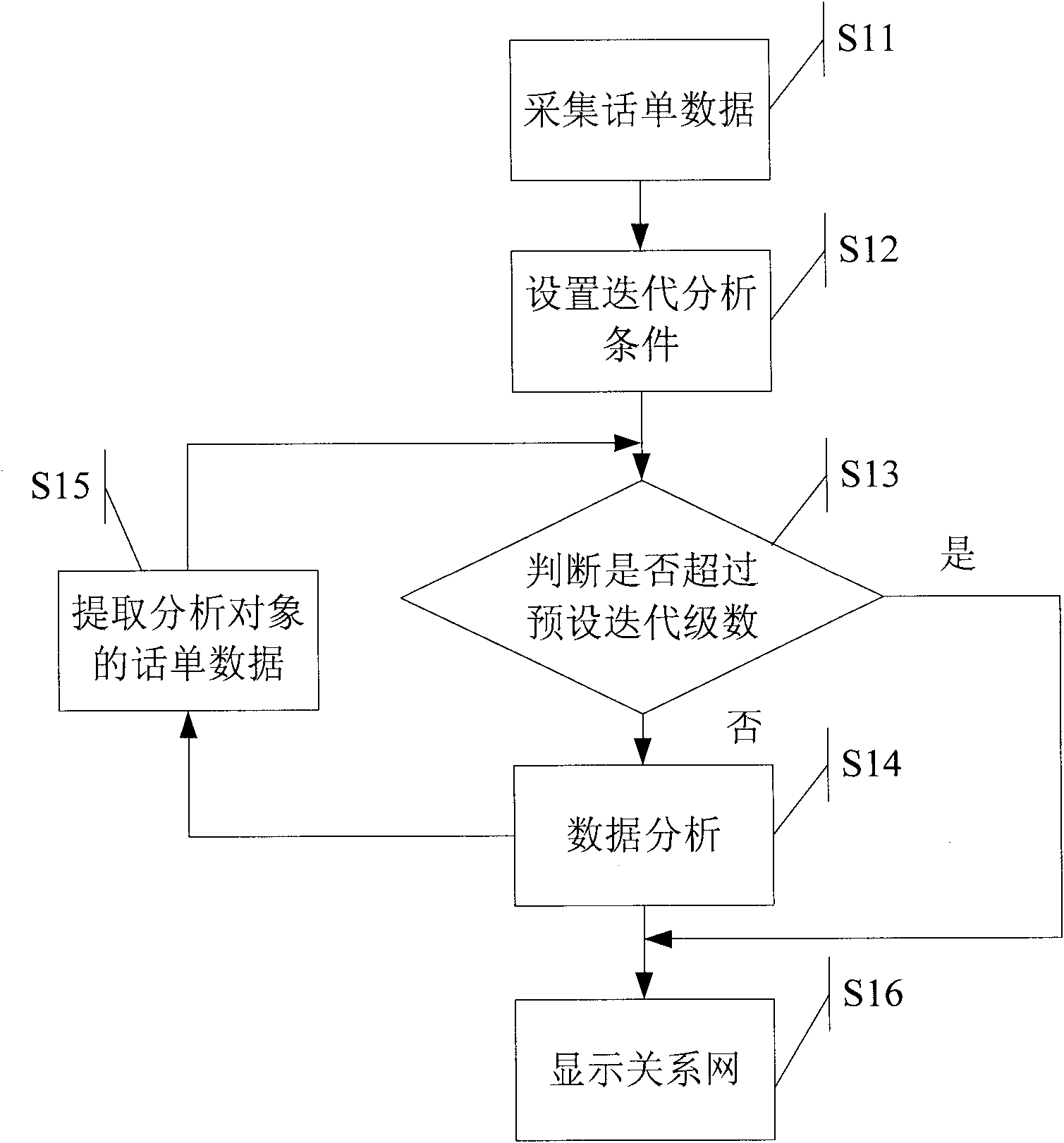

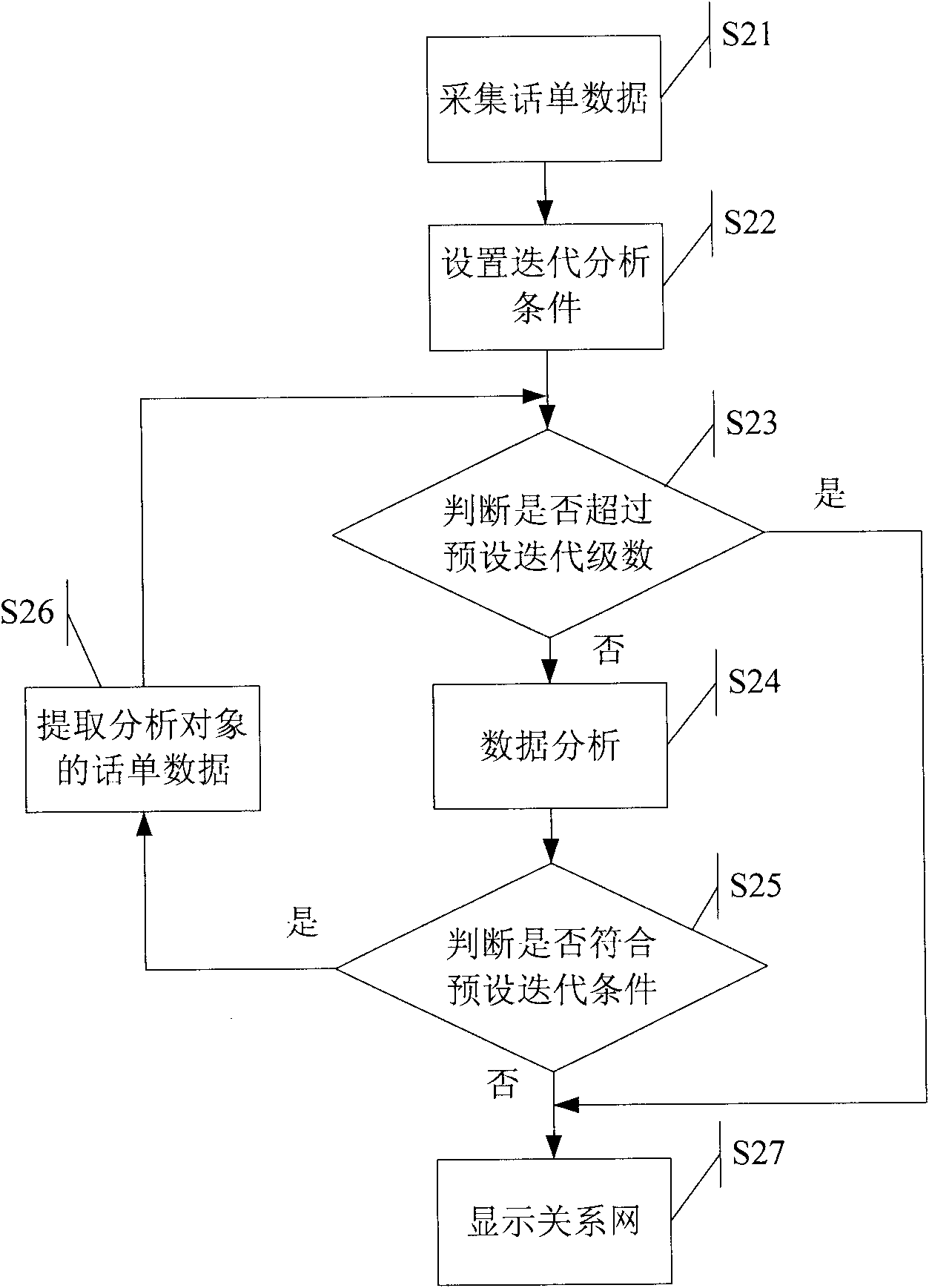

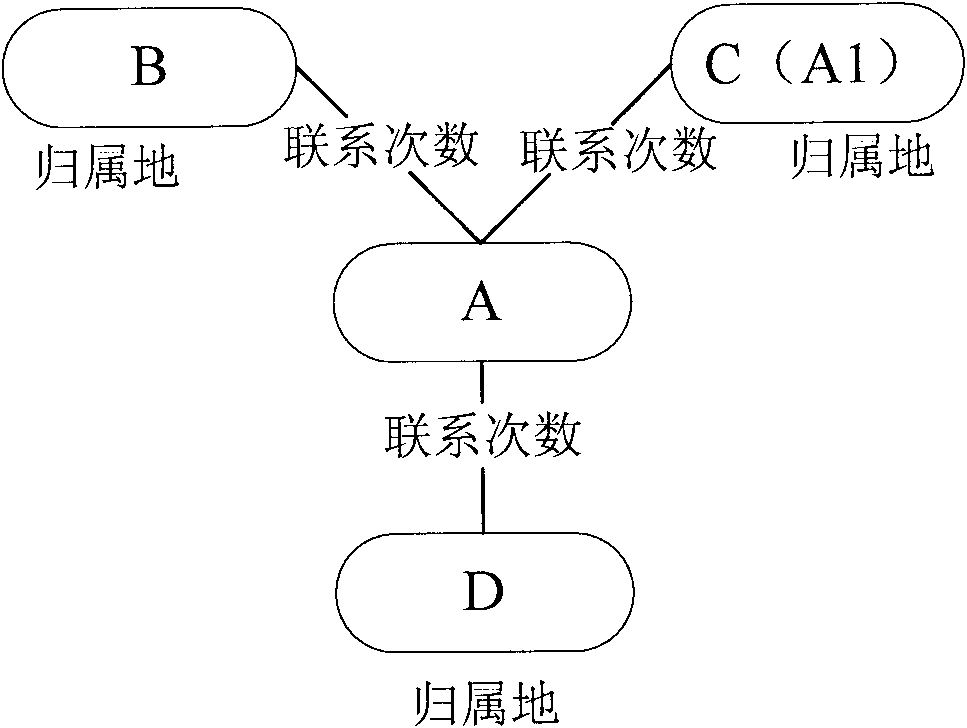

Interpersonal relationship analysis method and system based on ticket

Inactive | CN101854439A | Easy to analyze | Supervisory/monitoring/testing arrangements | Analysis method | Data science

The invention discloses an interpersonal relationship analysis method based on call tickets (call detail records), comprising the following steps: collect the ticket data generated by a target number and take the target number as the analysis object; use the ticket data to check the iterative-analysis condition of the analysis object; while the level of the analysis object does not exceed a preset iteration level, analyze the relationship between the analysis object and each contact number and record the results; take each contact number in turn as the analysis object, extract its ticket data and check the iterative-analysis condition again; once the level of the analysis object exceeds the preset iteration level, process the recorded results into a relationship network diagram centered on the target number and display it. The method can obtain a fairly complete interpersonal relationship network for the target number. The invention also discloses a ticket-based interpersonal relationship analysis system.

Owner:SHENZHEN COSHIP ELECTRONICS CO LTD
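The level-limited expansion from the target number described above behaves like a breadth-first search over the call graph. A minimal sketch, assuming each ticket is reduced to a `(caller, callee)` pair, that the target number sits at level 1, and that relationship strength is simply the number of calls between two numbers (the patent does not say how strength is measured):

```python
from collections import Counter, defaultdict, deque
from typing import Dict, FrozenSet, List, Tuple


def relationship_network(tickets: List[Tuple[str, str]], target: str, max_level: int):
    """Level-limited breadth-first expansion of the call graph around `target`.

    Returns (edges, level): edges maps an unordered pair of numbers to their
    call count; level records the level at which each number was reached.
    """
    calls: Dict[str, Counter] = defaultdict(Counter)
    for caller, callee in tickets:
        calls[caller][callee] += 1
        calls[callee][caller] += 1

    edges: Dict[FrozenSet[str], int] = {}
    level = {target: 1}
    queue = deque([target])
    while queue:
        number = queue.popleft()
        if level[number] > max_level:
            continue  # beyond the preset iteration level: not analysed further
        for peer, count in calls[number].items():
            edges[frozenset((number, peer))] = count
            if peer not in level:
                level[peer] = level[number] + 1
                queue.append(peer)
    return edges, level
```

The returned edges can be handed to any graph-drawing tool to render the diagram centred on the target number.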

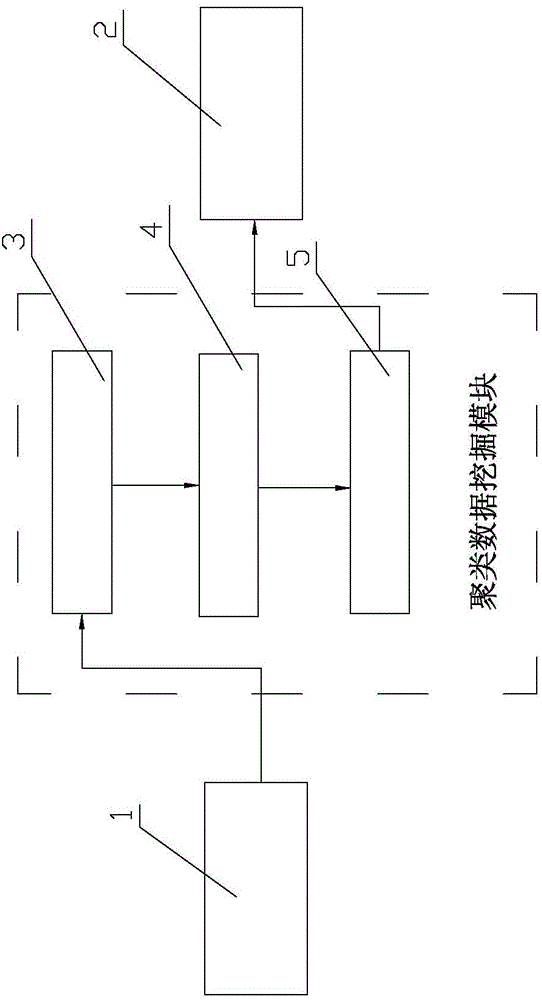

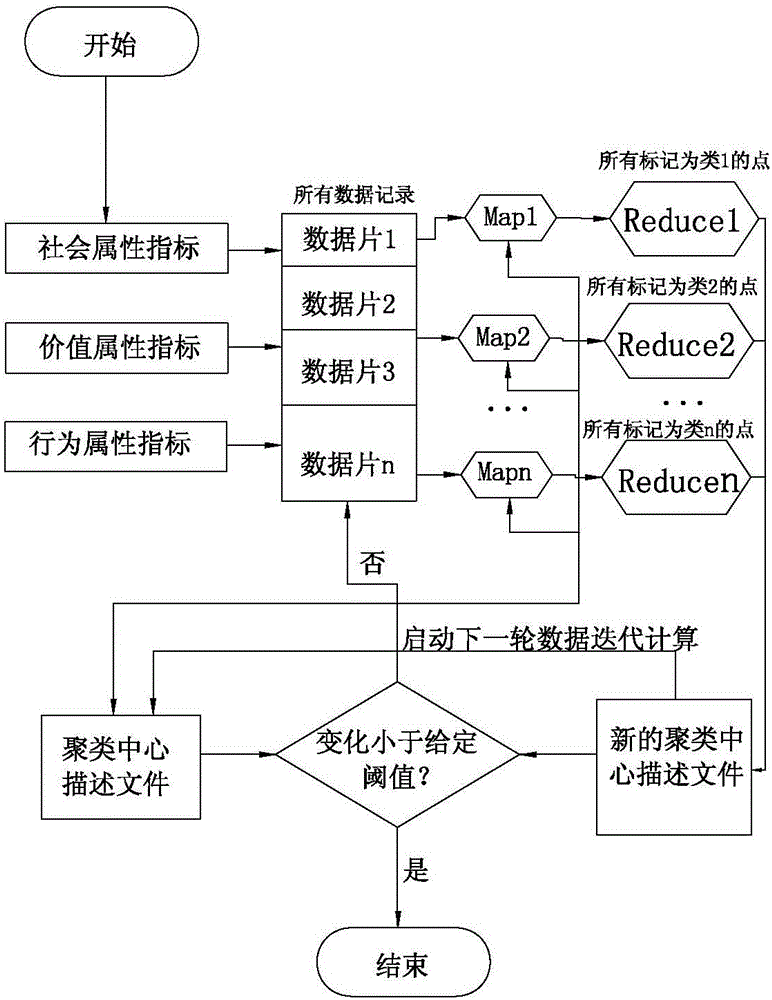

Electricity fee collection risk assessment device based on big data platform clustering algorithm and method thereof

Inactive | CN104992297A | Avoid the risk of not paying bills on time | Reduce the risk of not being able to return on time | Resources | Clustered data | Cluster algorithm

The invention relates to the technical field of fee collection risk assessment and provides an electricity fee collection risk assessment device and method based on a big data platform clustering algorithm. The device comprises an electricity consuming unit feature data import module, a clustering data mining module and an electricity consuming unit credit evaluation system output module. The feature data import module extracts large volumes of data about each electricity consuming unit, such as social attribute indicators, value attribute indicators and behavior attribute indicators, and stores them on the big data platform. The clustering data mining module performs parallel iterative analysis on the data and makes a preliminary judgment of the credit rating to which each electricity consuming unit belongs. The credit evaluation system output module confirms and outputs each unit's credit rating according to the cluster partition produced by the clustering data mining module. This effectively reduces the risk that electricity consuming units fail to pay their electricity fees on time, and in turn the risk that power companies cannot recover funds on time.

Owner:STATE GRID CORP OF CHINA +1
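The abstract does not name the clustering algorithm. Assuming k-means as a stand-in, the iteration alternates an assignment step, which is independent per electricity consuming unit and is the part a big data platform would distribute, with a centroid update. The feature tuples, the fixed initial centroids and the rule of ranking clusters by the sum of their centroid coordinates to assign grades are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], init: Sequence[Point],
           iters: int = 100) -> Tuple[List[Point], List[int]]:
    """Plain k-means with caller-supplied initial centroids."""
    centroids = [tuple(c) for c in init]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each unit is handled independently (parallelizable map).
        labels = [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
                  for p in points]
        # Update step: mean of each cluster; keep the old centroid if a cluster empties.
        new = []
        for k, c in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            new.append(tuple(sum(col) / len(members) for col in zip(*members)) if members else c)
        if new == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new
    return centroids, labels


def credit_ratings(points: Sequence[Point], init: Sequence[Point],
                   grades: Sequence[str] = ("A", "B", "C")) -> List[str]:
    """Hypothetical rule: the cluster with the lowest centroid sum gets the best grade."""
    centroids, labels = kmeans(points, init)
    order = sorted(range(len(centroids)), key=lambda k: sum(centroids[k]))
    grade_of = {k: grades[rank] for rank, k in enumerate(order)}
    return [grade_of[lab] for lab in labels]
```

In practice features on different scales would be normalized first; in a toy feature tuple such as (days overdue, share of late bills), the first coordinate would otherwise dominate the distance.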

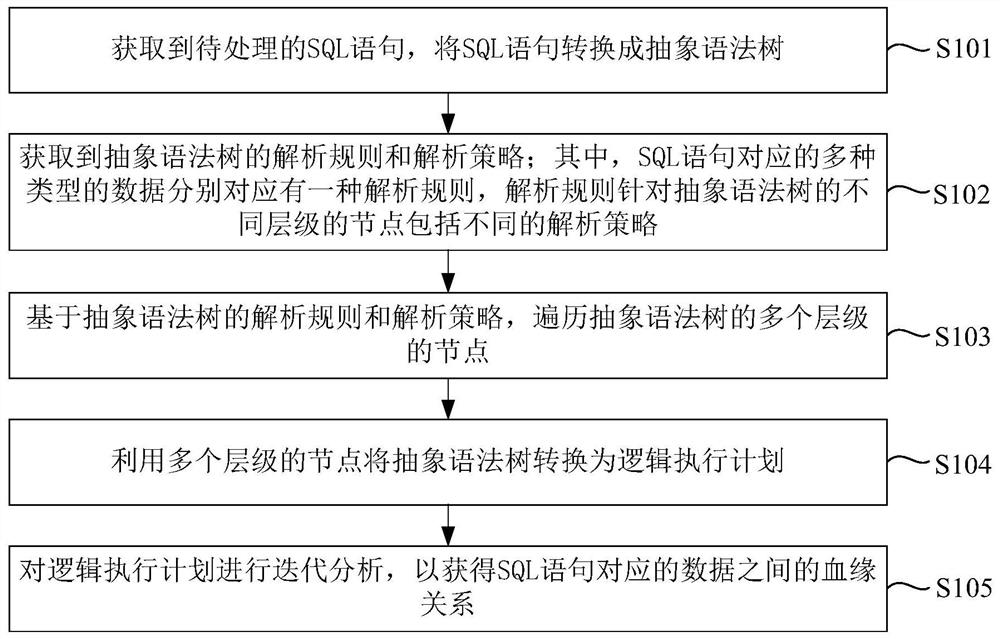

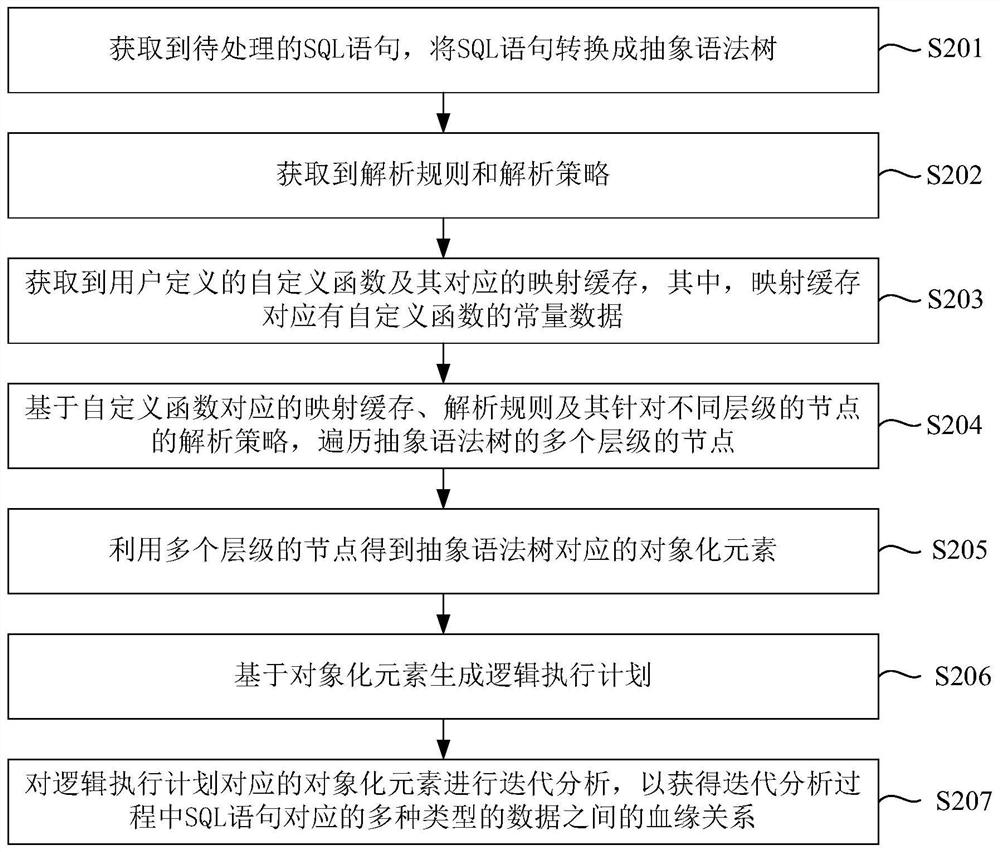

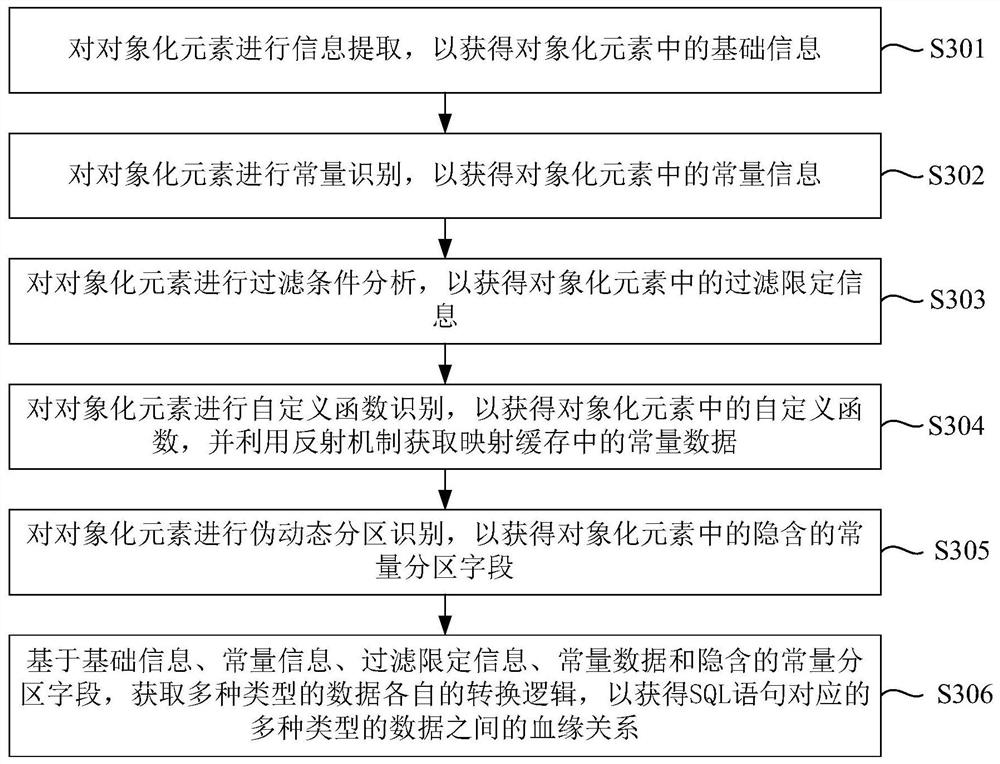

Data consanguinity analysis method and device, electronic equipment and storage medium

Pending | CN113032362A | Low cost | Improve efficiency | Reverse engineering | Special data processing applications | Execution plan | Theoretical computer science

The invention discloses a data consanguinity (data lineage) analysis method and device, electronic equipment and a storage medium. The method comprises: obtaining an SQL statement to be processed and converting it into an abstract syntax tree; obtaining the analysis rules and analysis strategies for the abstract syntax tree, where each type of data corresponding to the SQL statement has its own analysis rule, and an analysis rule specifies different analysis strategies for nodes at different levels of the tree; traversing the nodes at multiple levels of the abstract syntax tree according to these rules and strategies; converting the abstract syntax tree into a logical execution plan using the traversed nodes; and performing iterative analysis on the logical execution plan to obtain the lineage relationships among the data referenced by the SQL statement. In this way, the lineage of data during processing can be obtained and the cost of data screening reduced.

Owner:GUANGZHOU HUYA TECH CO LTD
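Without committing to any particular SQL parser, the final step in the abstract above (deriving lineage from the logical execution plan) can be sketched as a recursive walk over plan nodes. The node types `Scan`, `Project` and `Join` and their fields are hypothetical simplifications, the plan is built by hand instead of from an abstract syntax tree, and join-condition columns are ignored.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Union


@dataclass
class Scan:
    table: str
    alias: str
    columns: List[str]


@dataclass
class Project:
    child: "Node"
    outputs: Dict[str, List[str]]  # output column -> qualified input columns it reads


@dataclass
class Join:
    left: "Node"
    right: "Node"


Node = Union[Scan, Project, Join]


def lineage(node: Node) -> Dict[str, Set[str]]:
    """Map each output column of `node` to the source table columns it derives from."""
    if isinstance(node, Scan):
        return {f"{node.alias}.{c}": {f"{node.table}.{c}"} for c in node.columns}
    if isinstance(node, Join):
        merged: Dict[str, Set[str]] = {}
        for side in (lineage(node.left), lineage(node.right)):
            for col, srcs in side.items():
                merged.setdefault(col, set()).update(srcs)
        return merged
    if isinstance(node, Project):
        below = lineage(node.child)
        return {out: set().union(*(below[c] for c in ins)) for out, ins in node.outputs.items()}
    raise TypeError(f"unsupported plan node: {type(node).__name__}")
```

For example, a plan for `SELECT o.user_id AS uid, o.amount * r.rate AS amount_usd FROM orders o JOIN rates r ...` maps `amount_usd` back to both `orders.amount` and `rates.rate`.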


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com