79 results for "Data anonymization" patented technology

Data anonymization is a type of information sanitization whose intent is privacy protection. It is the process of either encrypting or removing personally identifiable information from data sets, so that the people whom the data describe remain anonymous.

Data anonymization based on guessing anonymity

Status: Active | Publication: US8627483B2 | Tags: Maximizes guessing anonymity, Minimize distortion, Digital data processing details, Public key for secure communication, Game based, Optimization problem

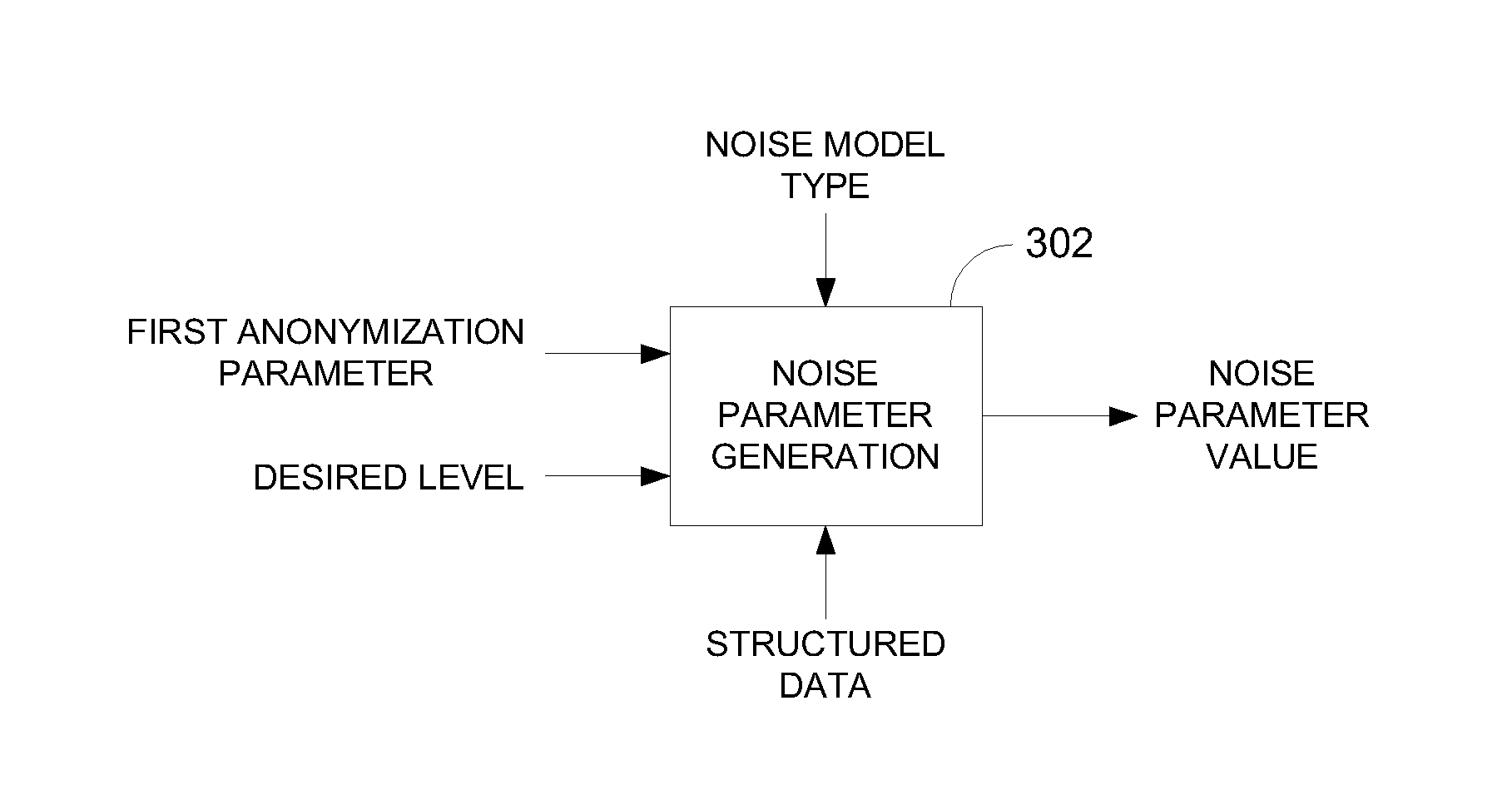

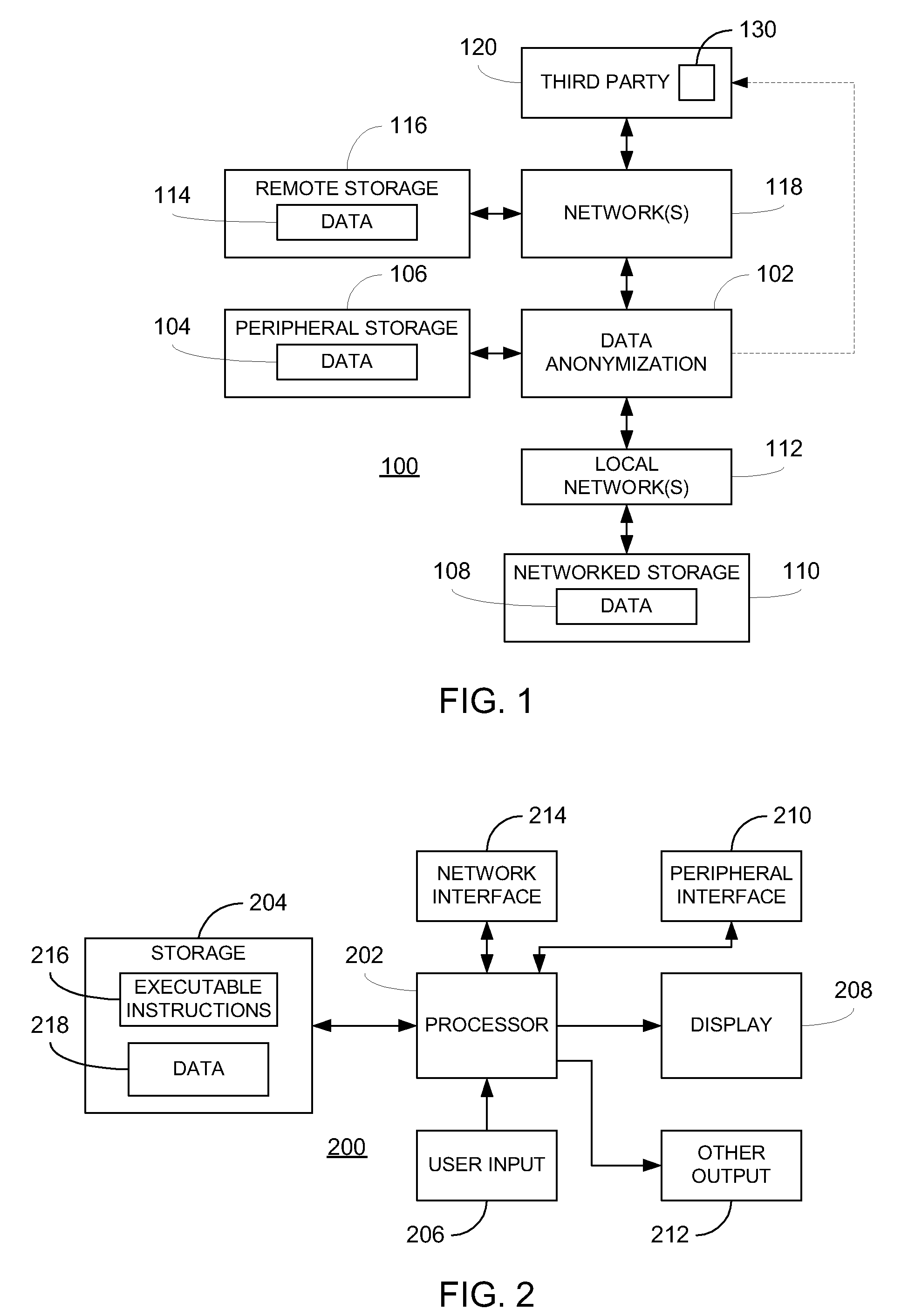

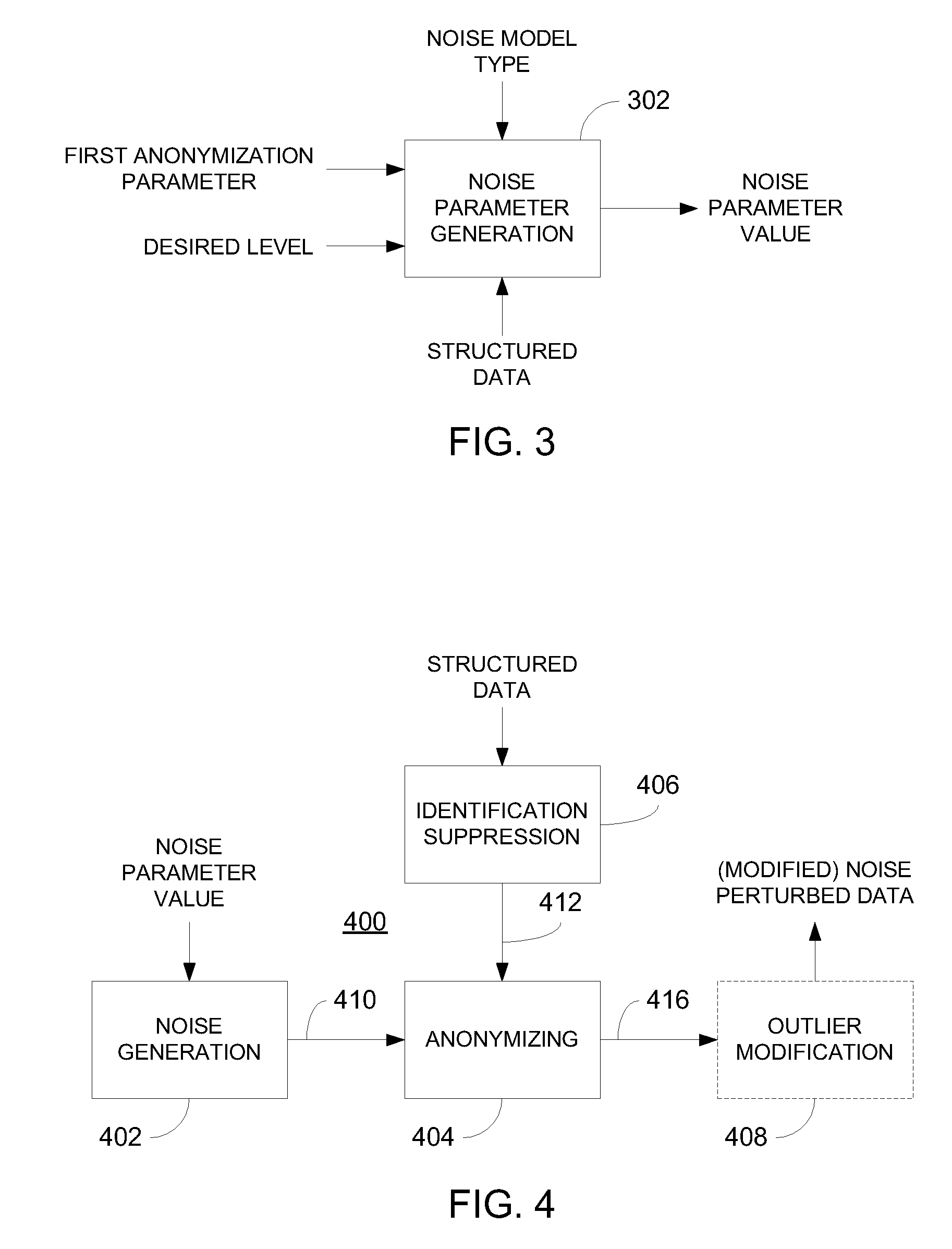

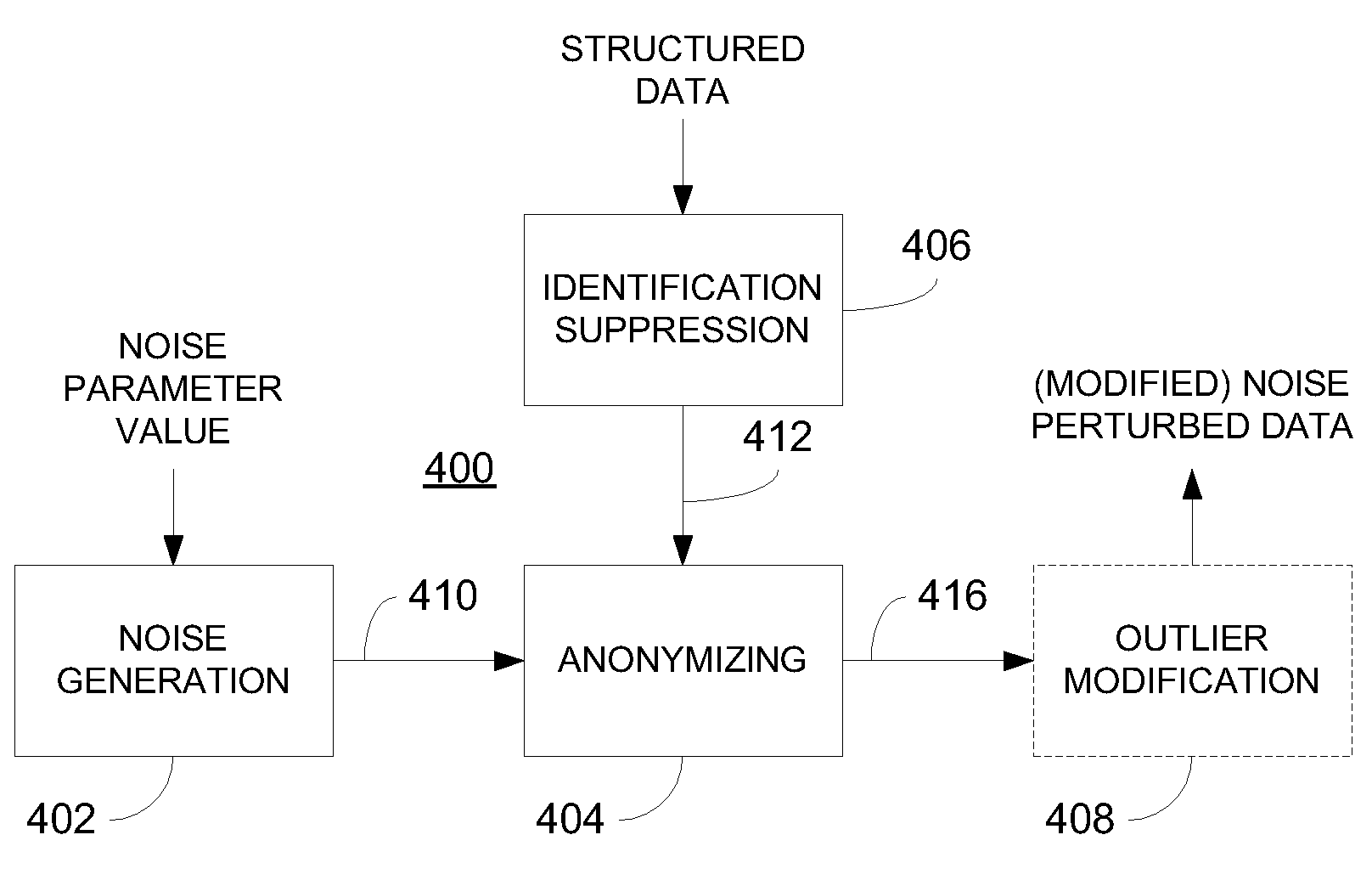

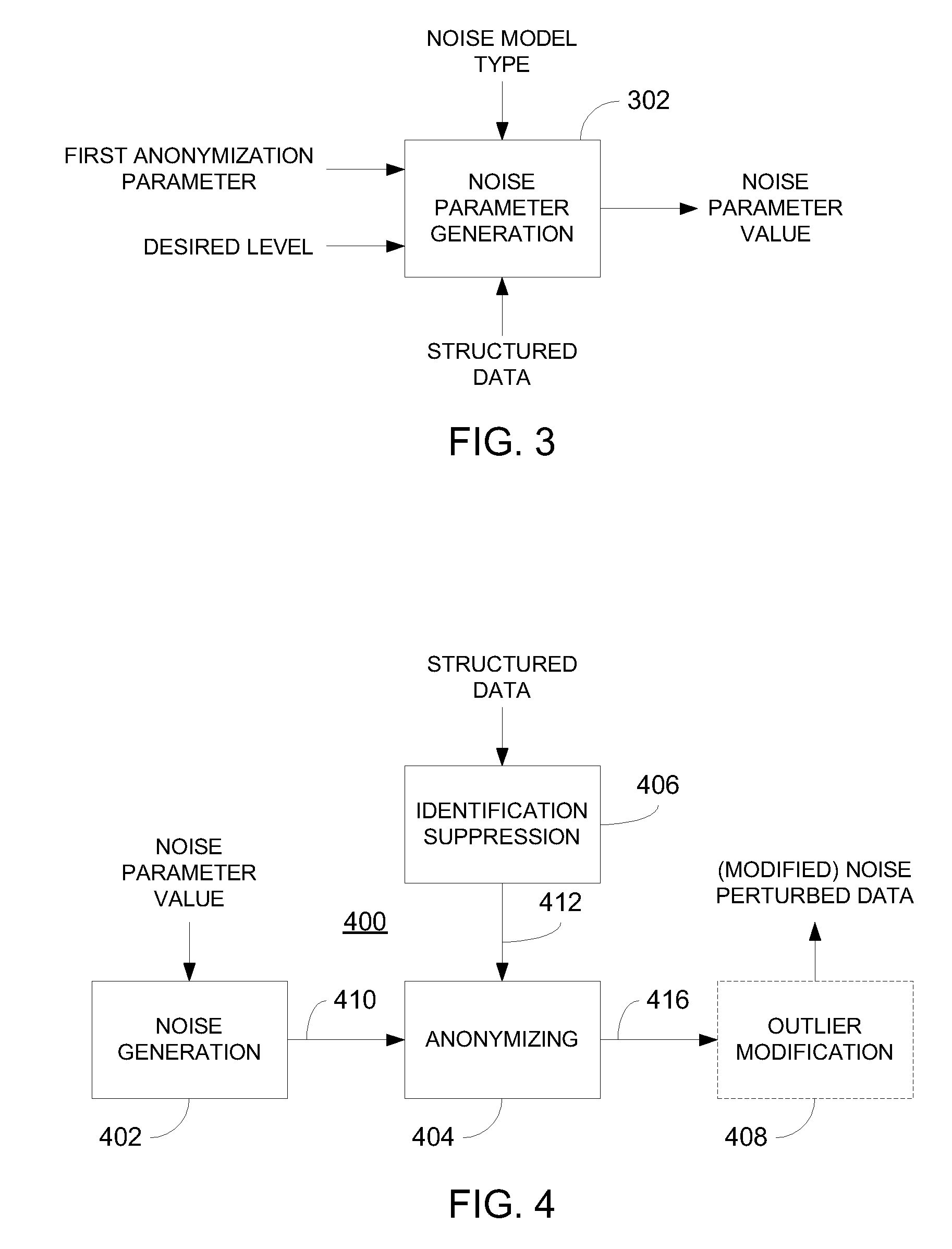

Privacy is defined in the context of a guessing game based on the so-called guessing inequality. The privacy of a sanitized record, i.e., its guessing anonymity, is the number of guesses an attacker needs to correctly identify the original record used to generate it. Using this definition, optimization problems are formulated that optimize a second anonymization parameter (privacy or data distortion) subject to constraints on a first anonymization parameter (data distortion or privacy, respectively). The optimization is performed across a spectrum of possible values for at least one noise parameter within a noise model. Noise is then generated based on the noise parameter value(s) and applied to the data, which may comprise real and/or categorical data. Prior to anonymization, identifiers may be suppressed from the data, and outlier values in the noise-perturbed data may likewise be modified to further ensure privacy.
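To make the guessing-anonymity measure concrete, here is a minimal sketch, assuming numeric records, additive Gaussian noise with a single standard-deviation parameter `sigma`, and an attacker who guesses original records in decreasing order of likelihood (for isotropic Gaussian noise, increasing Euclidean distance to the sanitized record). The function names are hypothetical, and the sketch shows only the privacy measure, not the patented optimization over noise parameters.

```python
import numpy as np


def sanitize(records: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Perturb every value with i.i.d. Gaussian noise of standard deviation sigma."""
    return records + rng.normal(0.0, sigma, size=records.shape)


def guessing_anonymity(records: np.ndarray, sanitized: np.ndarray, i: int) -> int:
    """Number of guesses needed to recover original record i from sanitized[i].

    Under isotropic Gaussian noise the most likely original is the nearest one,
    so the attacker tries candidates in order of increasing distance.
    Ties are counted in the attacker's favour.
    """
    dists = np.linalg.norm(records - sanitized[i], axis=1)
    return 1 + int(np.sum(dists < dists[i]))


def mean_guessing_anonymity(records: np.ndarray, sigma: float, seed: int = 0) -> float:
    """Average guessing anonymity over all records for one noise level."""
    rng = np.random.default_rng(seed)
    noisy = sanitize(records, sigma, rng)
    return float(np.mean([guessing_anonymity(records, noisy, i) for i in range(len(records))]))
```

Sweeping `sigma` and recording both the mean guessing anonymity and the distortion (for example, the mean squared difference between `records` and the noisy copy) gives the kind of privacy/distortion trade-off curve the optimization problems are defined over.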

Owner:ACCENTURE GLOBAL SERVICES LTD

Systems and methods for enforcing third party oversight of data anonymization

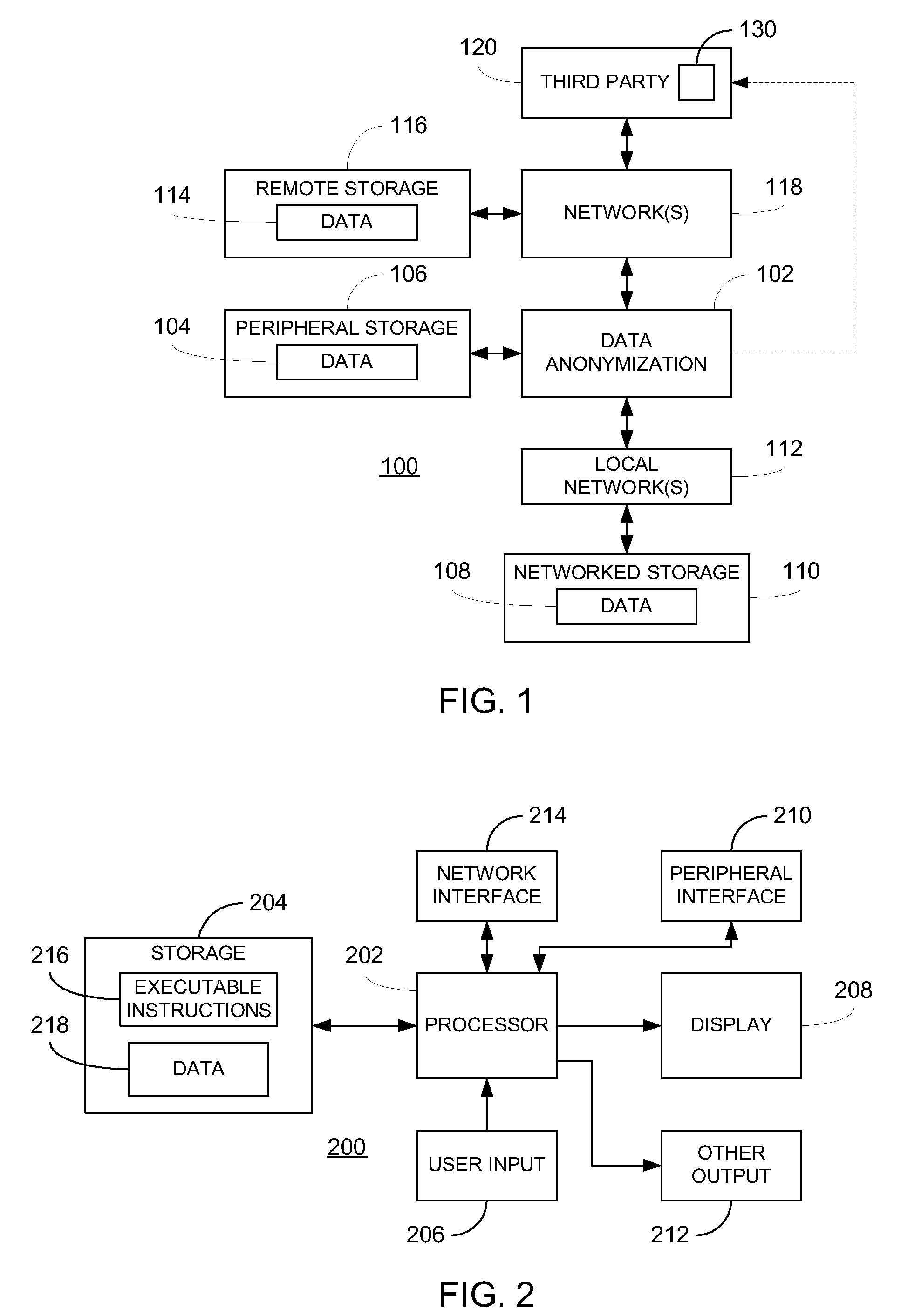

Status: Active | Publication: US9542568B2 | Tags: Reduce steps, User identity/authority verification, Digital data protection, Third Party Oversight, Database

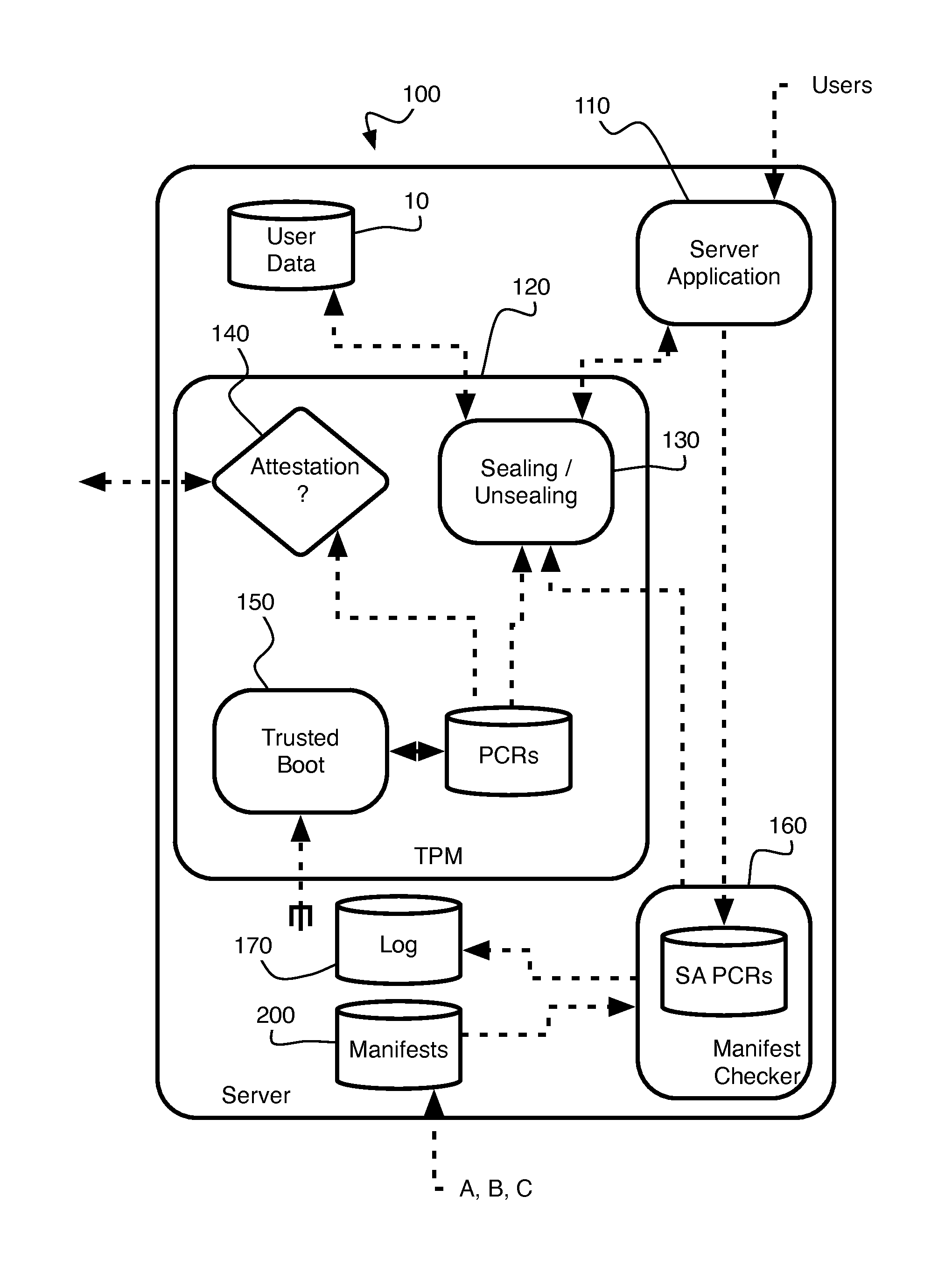

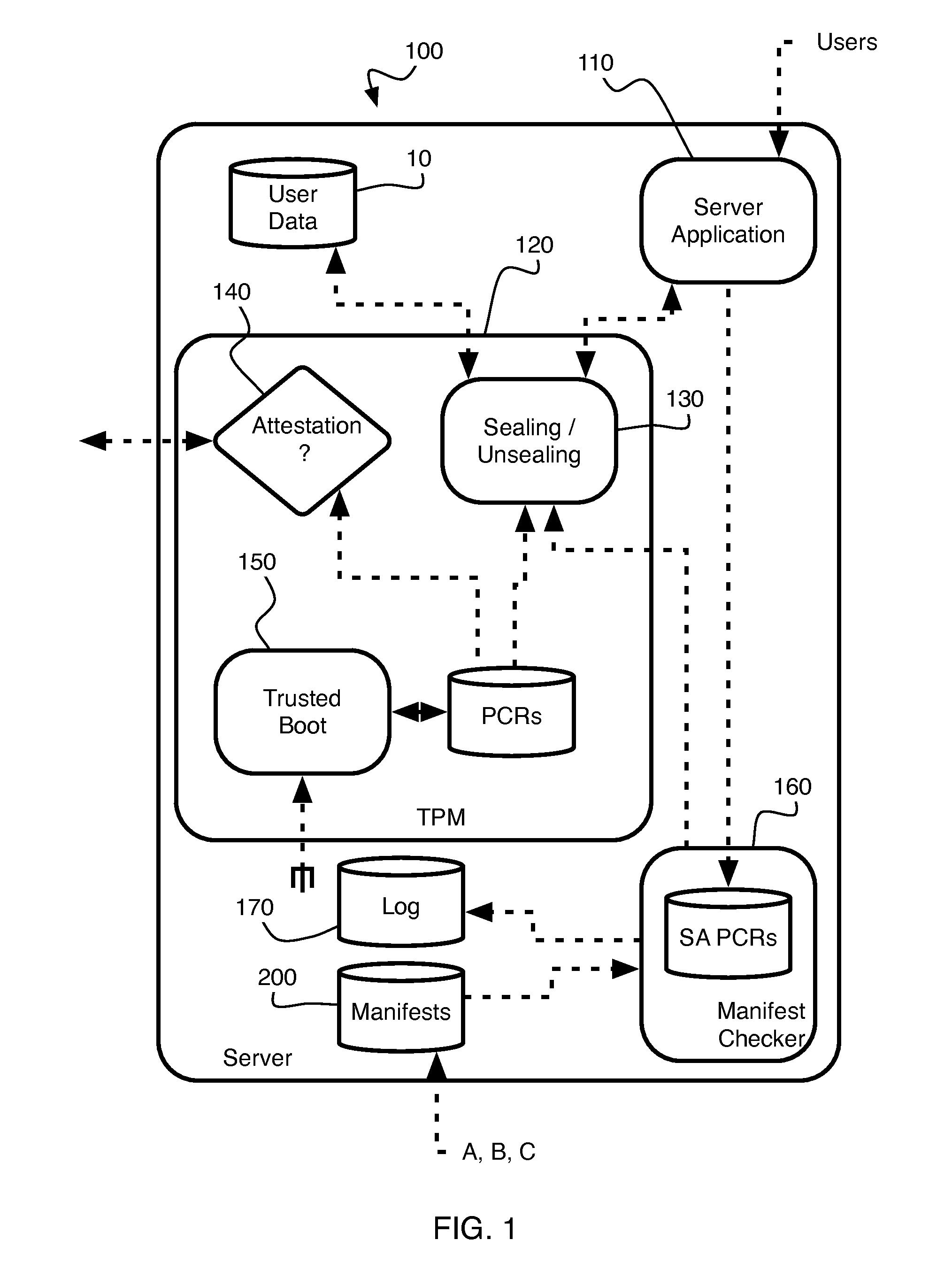

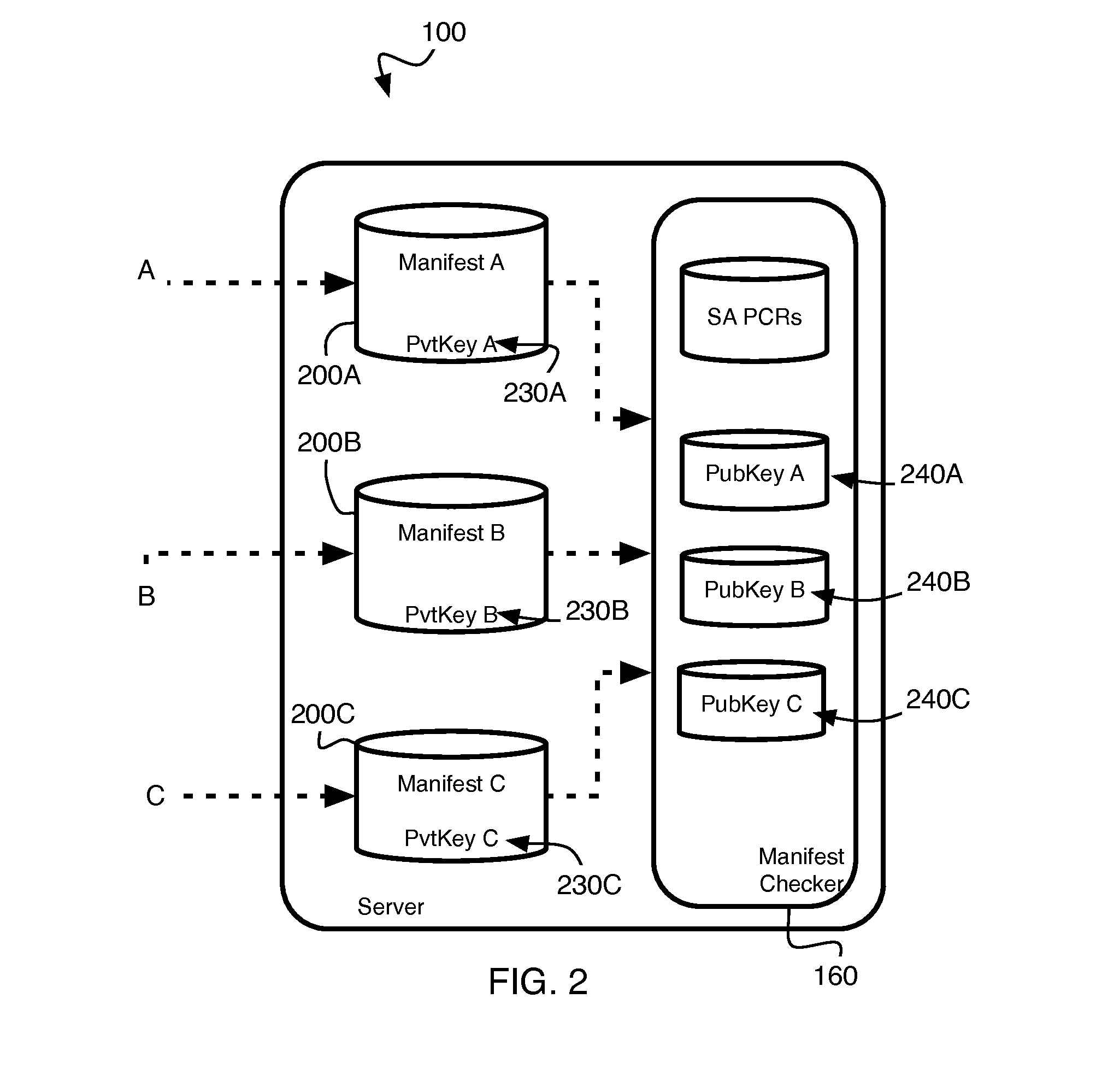

A modifiable server is used to reliably seal and unseal data according to a measurement of the server. The server is structured to have a modifiable sandbox component for sealing and unsealing the data, and a non-modifiable checker component for enabling or disabling the sandbox component. The checker component determines whether the sandbox component complies with predetermined standards. If it does, the checker component enables the sandbox component to seal and unseal the data using a measurement of the checker component; otherwise, the checker component disables the sandbox component.
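The gating logic can be sketched as follows, assuming that a "measurement" is a SHA-256 hash of a component's code, that the "predetermined standards" are an allow-list of approved sandbox hashes, and that the sealing key is derived with HMAC from a hypothetical platform secret and the checker's measurement. Class and parameter names are illustrative; the actual sealing would use an authenticated cipher keyed with the returned key, which is not shown.

```python
import hashlib
import hmac
from typing import FrozenSet, Optional


class Checker:
    """Hypothetical non-modifiable checker component.

    The sealing key is bound to the checker's own measurement rather than the
    sandbox's, so any compliant sandbox version receives the same key and can
    unseal data sealed by an earlier compliant version.
    """

    def __init__(self, checker_code: bytes, approved: FrozenSet[str], platform_secret: bytes):
        measurement = hashlib.sha256(checker_code).digest()
        self._approved = approved  # hex SHA-256 digests of compliant sandbox builds
        self._sealing_key = hmac.new(platform_secret, measurement, hashlib.sha256).digest()

    def enable_sandbox(self, sandbox_code: bytes) -> Optional[bytes]:
        """Return the sealing key if the sandbox complies; None means it stays disabled."""
        if hashlib.sha256(sandbox_code).hexdigest() in self._approved:
            return self._sealing_key
        return None
```

Deriving the key from the checker rather than the sandbox is what lets the sandbox be modified without losing access to previously sealed data, as long as each new version passes the check.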

Owner:MAX PLANCK GESELLSCHAFT ZUR FOERDERUNG DER WISSENSCHAFTEN EV

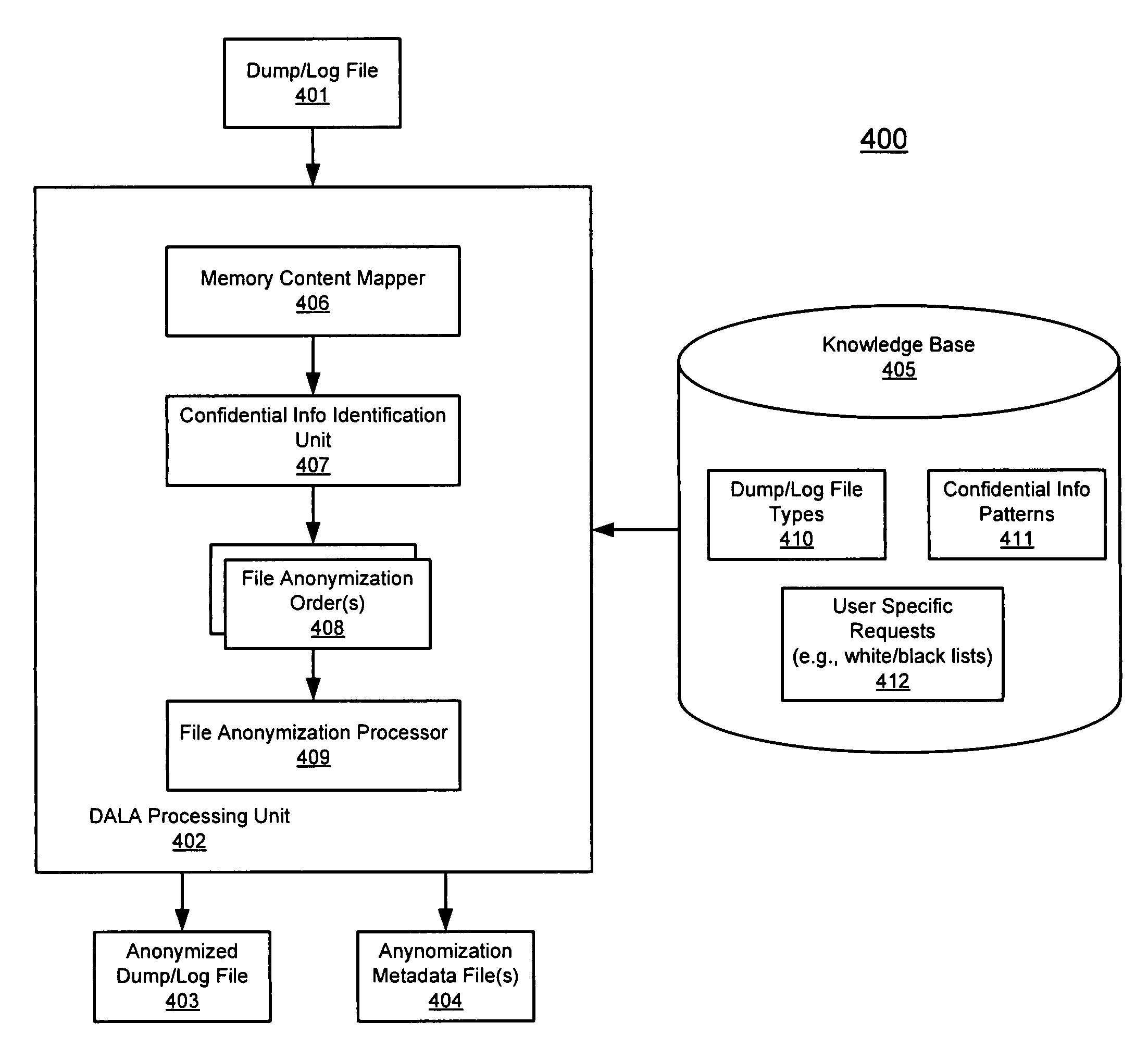

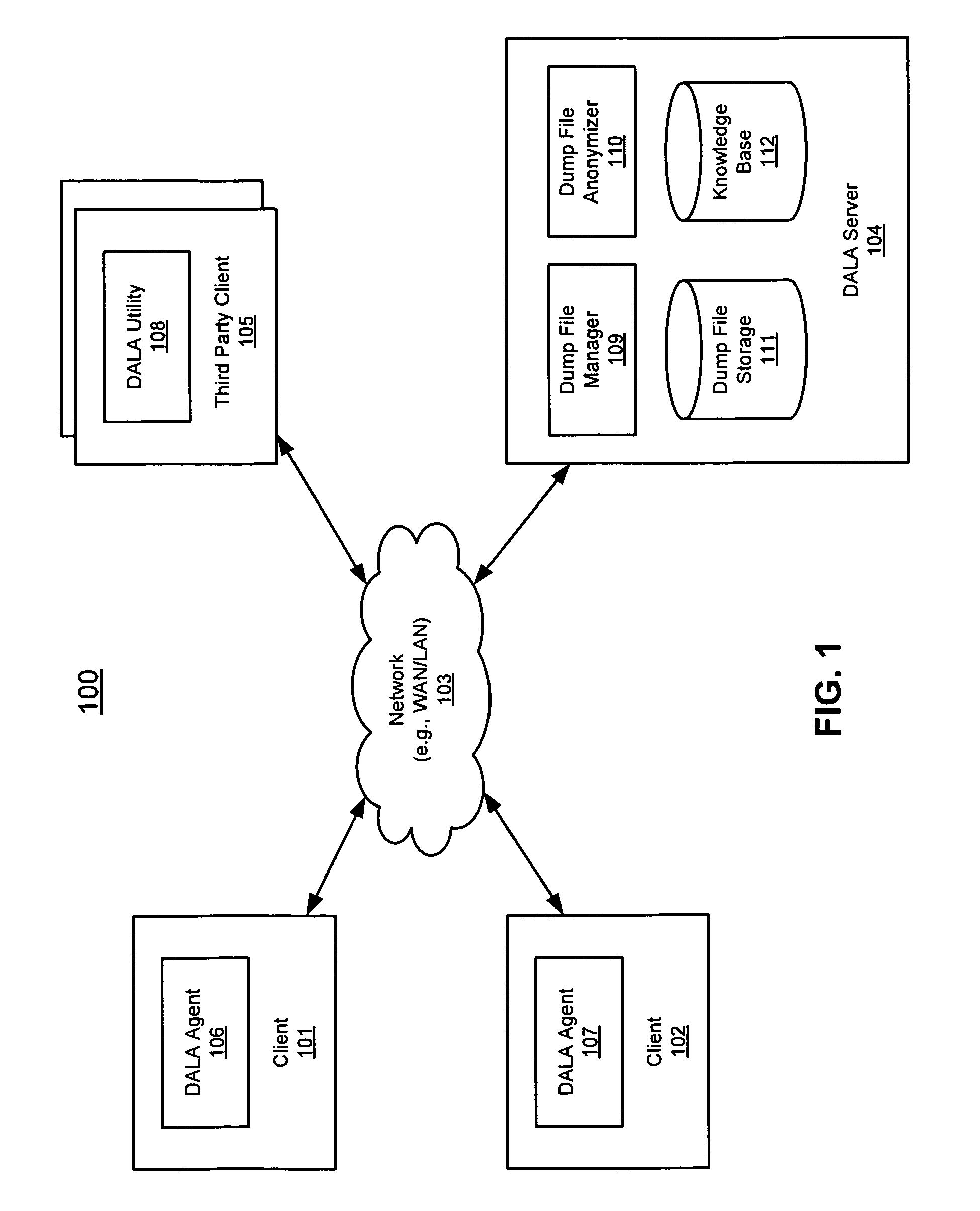

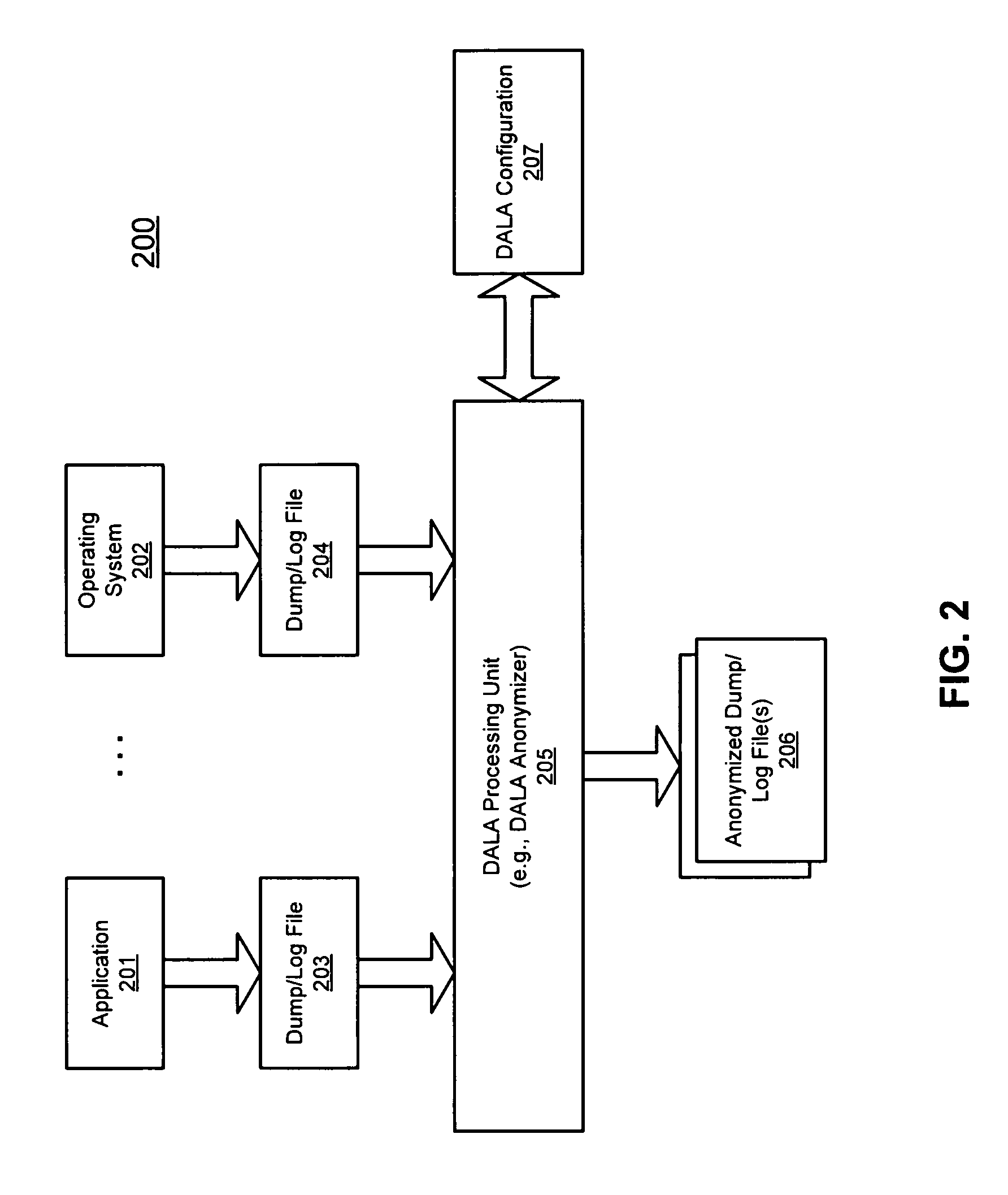

Method and apparatus for dump and log anonymization (DALA)

Status: Active | Publication: US20090282036A1 | Tags: Digital data processing details, Unauthorized memory use protection, File transmission, Client machine

According to one embodiment of the invention, an original dump file is received from a client machine to be forwarded to a dump file recipient. The original dump file is parsed to identify content that matches certain data patterns/categories. The dump file is anonymized by modifying the identified content according to a predetermined algorithm so that it is no longer exposed, producing an anonymized dump file, which is then transmitted to the dump file recipient. The technical content and infrastructure of the original dump file are preserved in the anonymized dump file, so a utility application designed to process the original dump file can still process the anonymized one without exposing the identified content to the recipient. Other methods and apparatuses are also described.
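A minimal sketch of pattern-based dump anonymization, assuming a text dump and two hypothetical pattern categories (IPv4 addresses and e-mail addresses). Each match is replaced by a mask of the same length, which is one simple way to keep offsets and layout intact so that tools expecting the original structure can still parse the file; the patent does not specify this particular algorithm.

```python
import re

# Hypothetical pattern categories; a real deployment would configure its own.
PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def anonymize_dump(text: str, mask_char: str = "X") -> str:
    """Replace every match with a same-length run of mask_char.

    Keeping the length unchanged preserves byte offsets and column layout,
    so the anonymized dump has the same structure as the original.
    """
    for pattern in PATTERNS.values():
        text = pattern.sub(lambda m: mask_char * len(m.group(0)), text)
    return text


dump = "conn from 192.168.1.20 user=alice@example.com status=OK"
print(anonymize_dump(dump))
```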

Owner:FEDTKE STEPHEN U

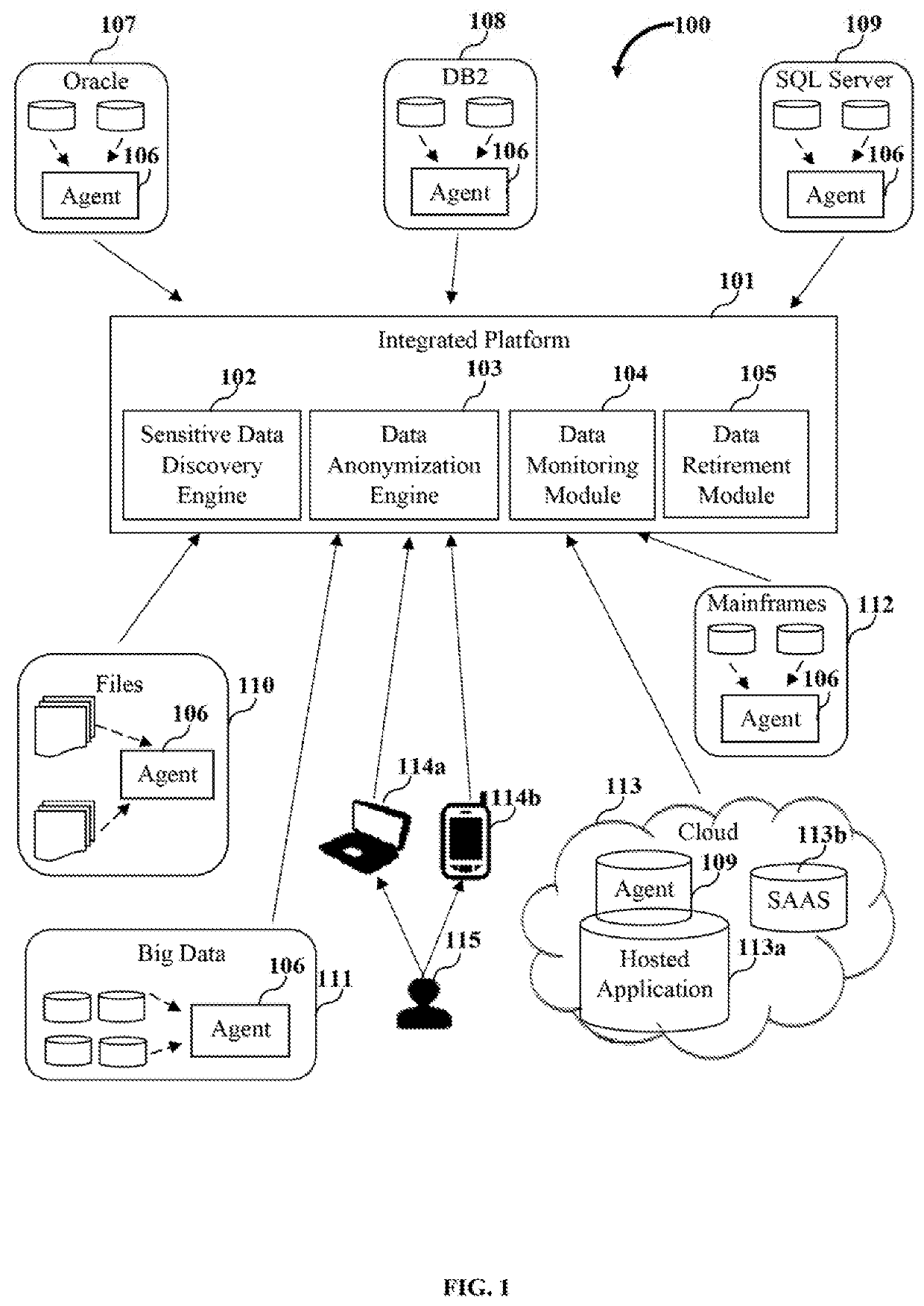

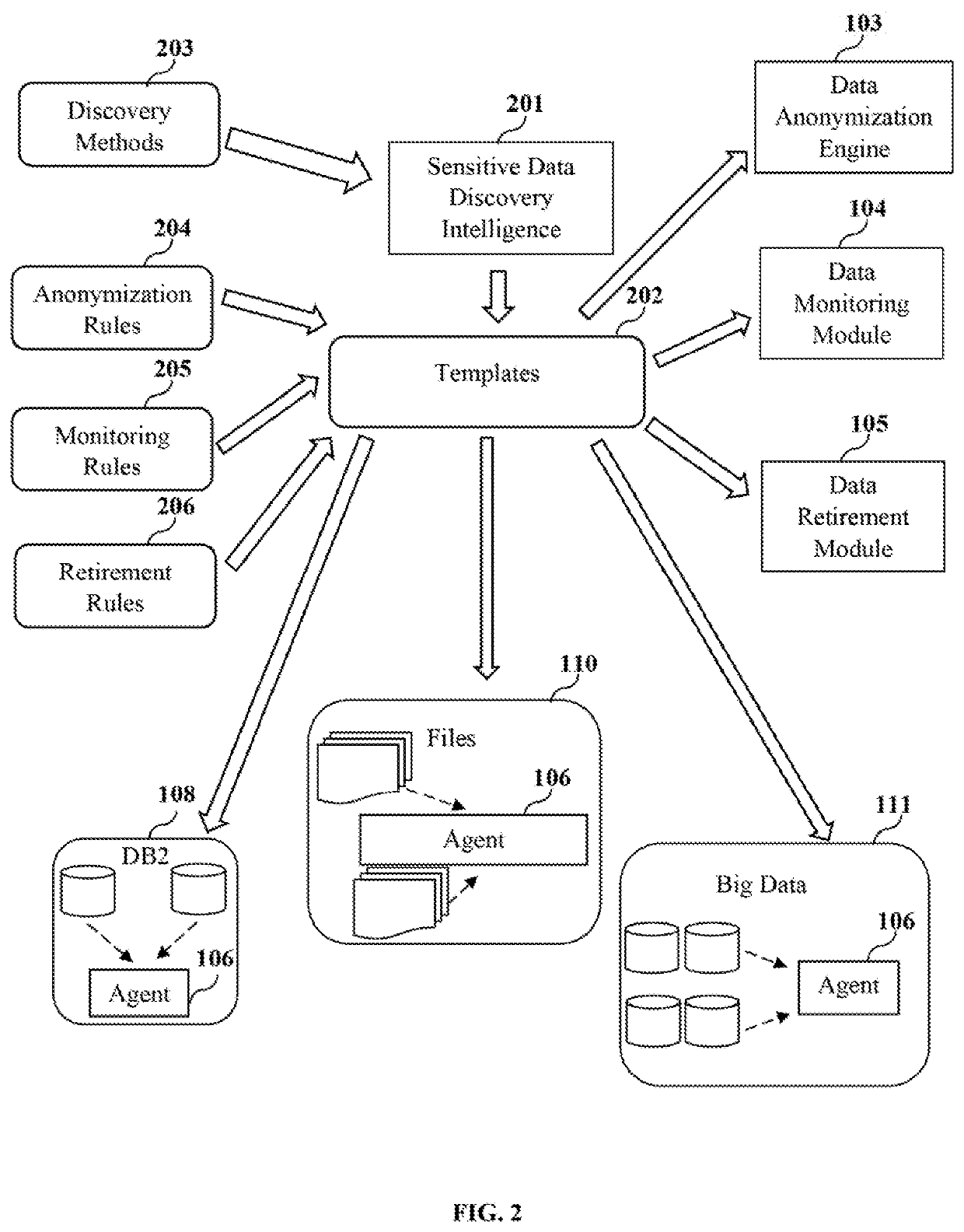

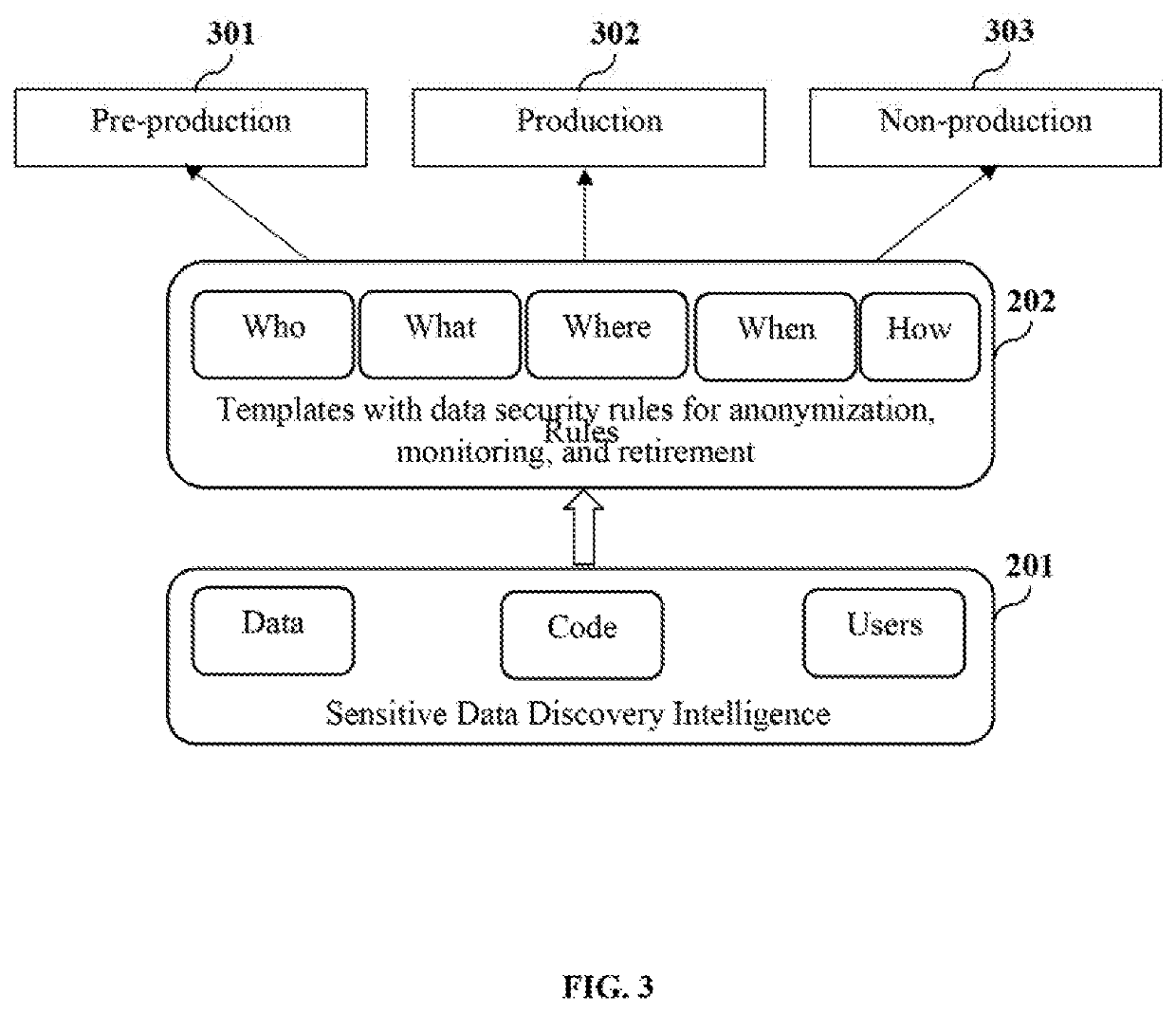

Integrated system and method for sensitive data security

Owner:MENTIS INC

Method and apparatus for providing anonymization of data

Status: Active | Publication: US20100268719A1 | Tags: Digital data processing details, Computer security arrangements, Data mining, Data anonymization

Owner:AT&T INTPROP I L P

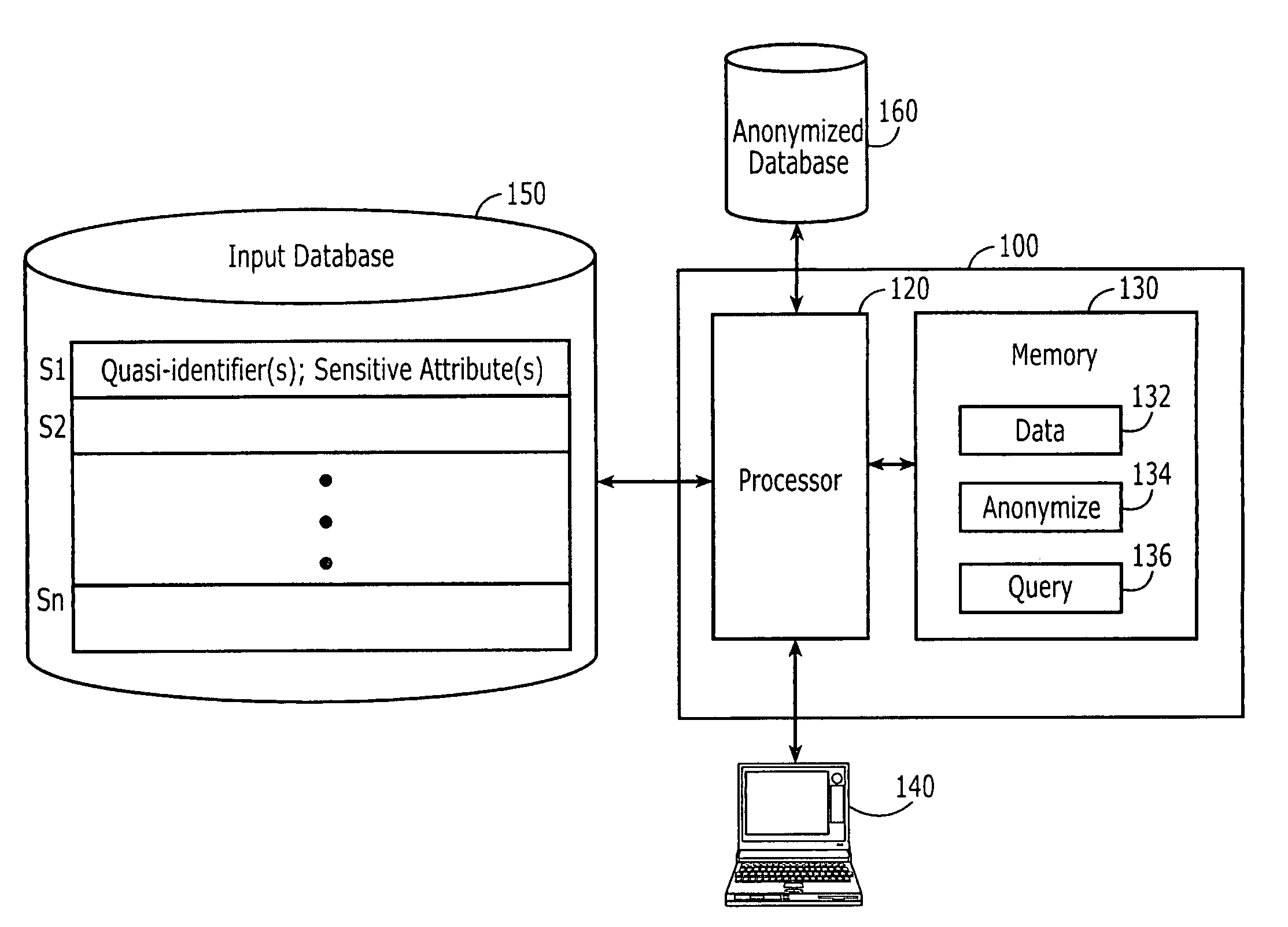

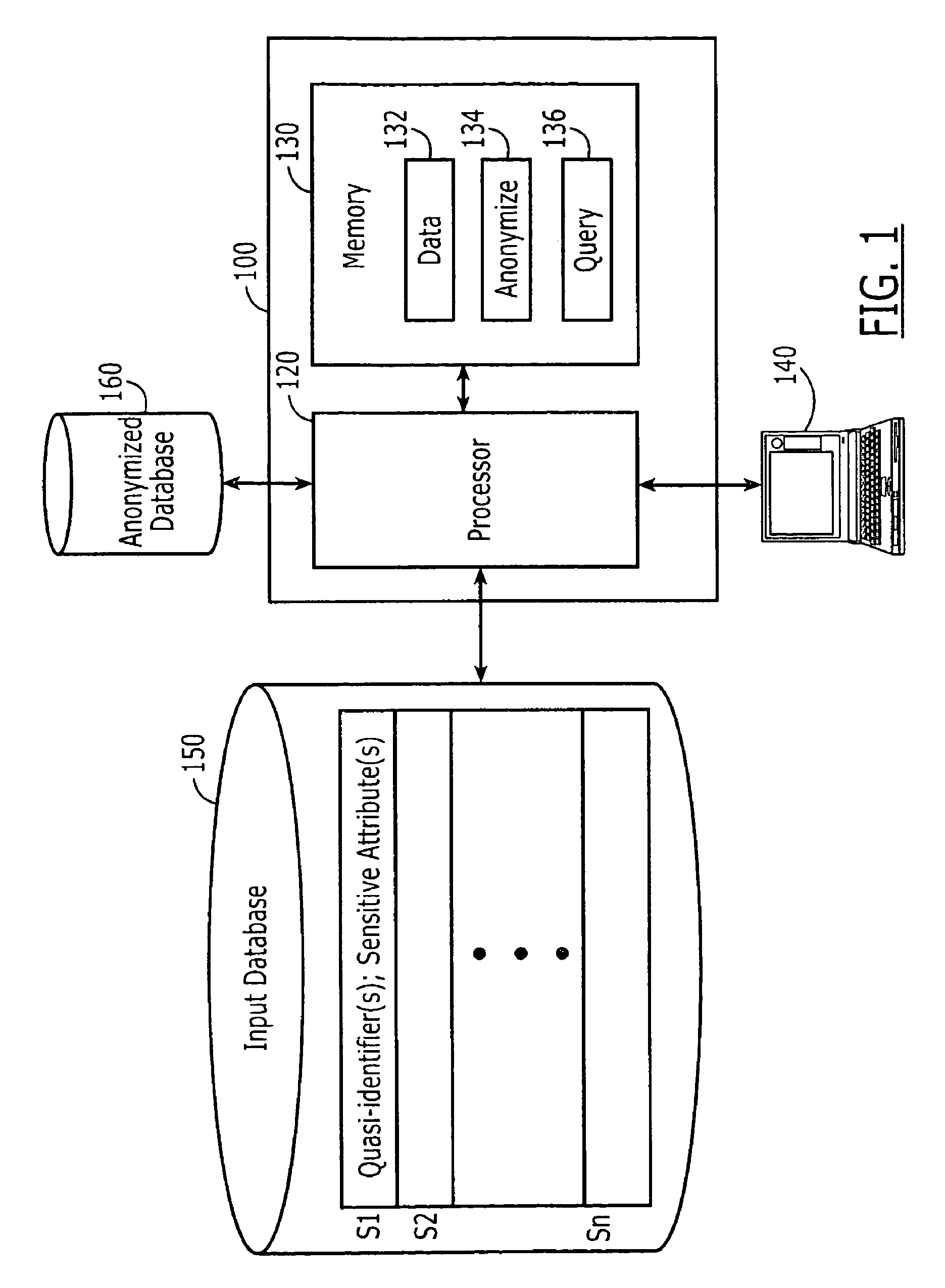

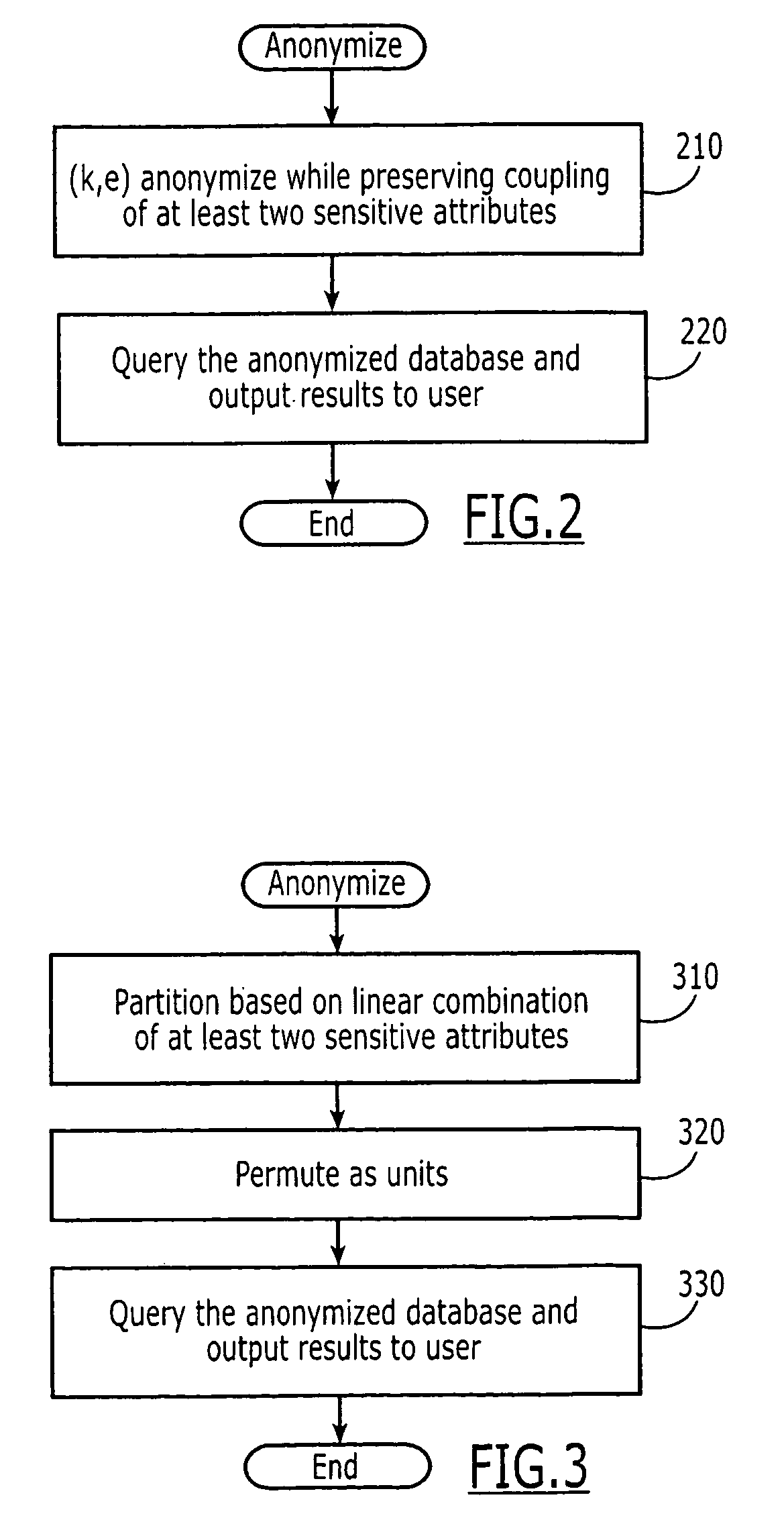

Computer systems, methods and computer program products for data anonymization for aggregate query answering

Status: Active | Publication: US20100114920A1 | Tags: Small cost increase, Low cost, Digital data processing details, Computer security arrangements, Computer system design, Computerized system

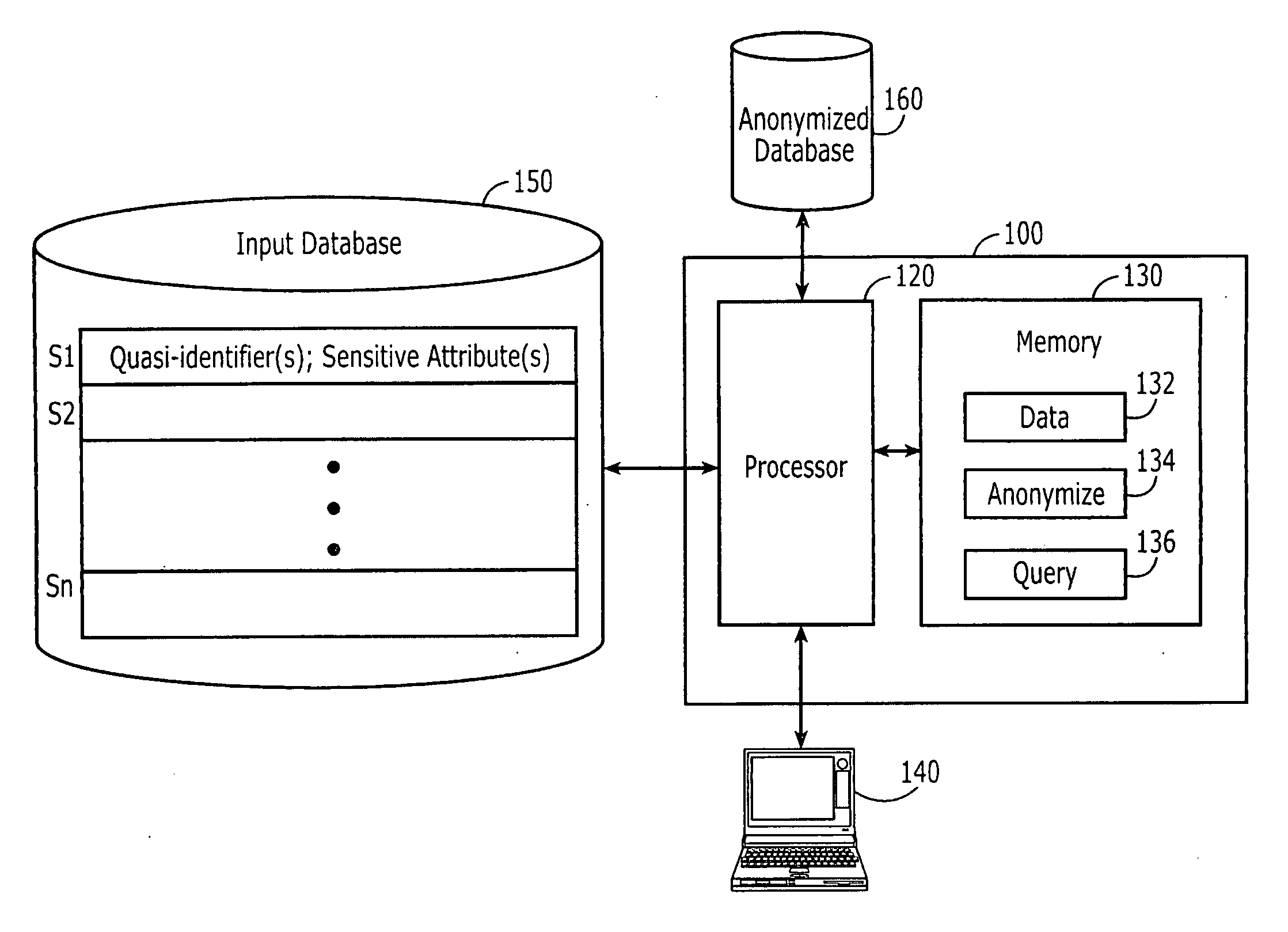

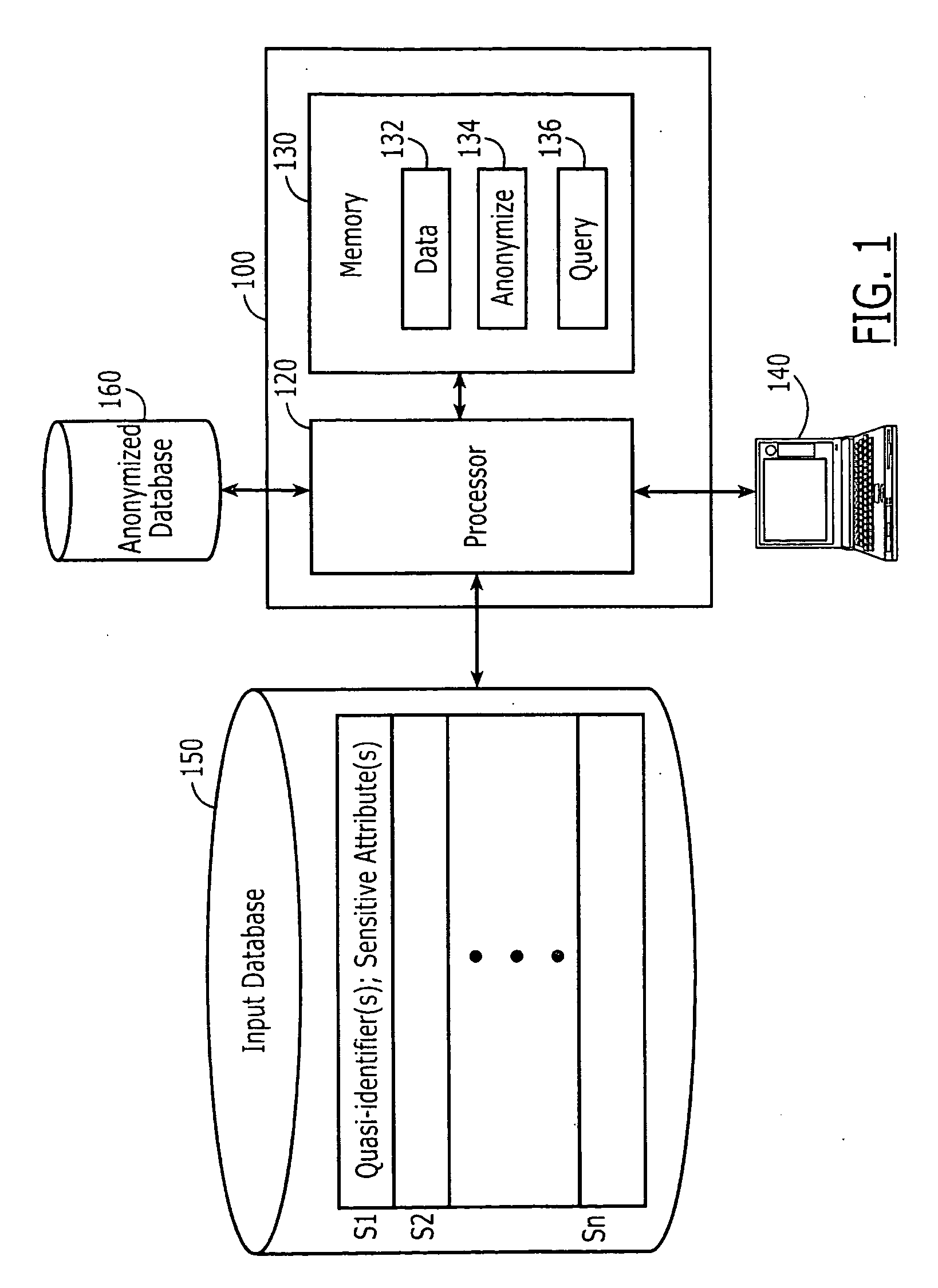

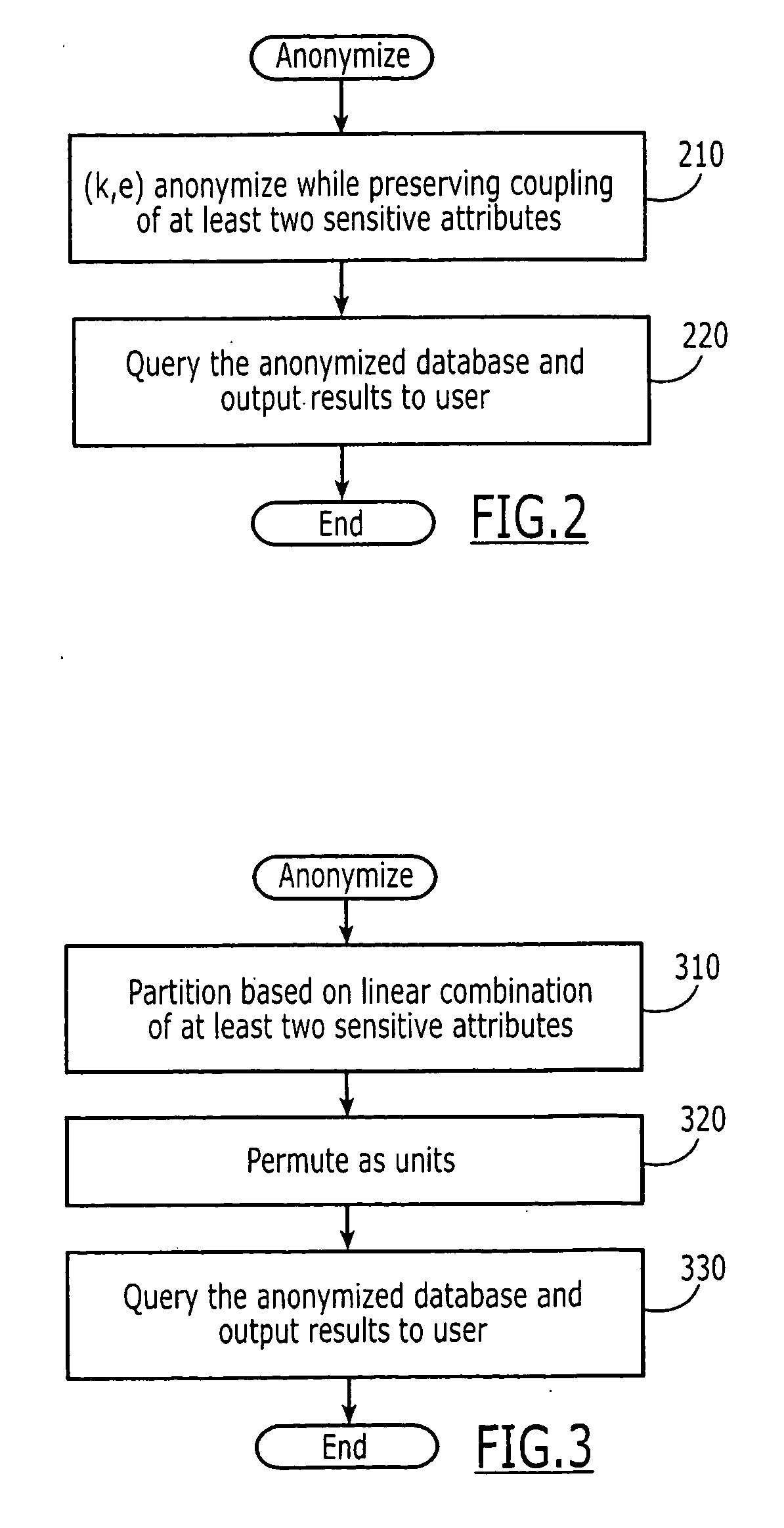

Computer program products are provided for anonymizing a database that includes tuples. Each tuple includes at least one quasi-identifier and sensitive attributes associated with the quasi-identifier. These computer program products include computer-readable program code configured to (k,e)-anonymize the tuples over a number k of different values in a range e of values, while preserving the coupling of at least two of the sensitive attributes to one another in the sets of attributes that are anonymized, to provide a (k,e)-anonymized database. Related computer systems and methods are also provided.
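A sketch of the two ingredients, under the usual reading of (k,e)-anonymity: every group of tuples must contain at least k distinct values of a numeric sensitive attribute, spanning a range of at least e. As an assumption about what "preserving coupling" means, the anonymization step shuffles whole sensitive-attribute tuples within each group, so the sensitive attributes of a record move together. The grouping itself is taken as given; the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Row = Tuple[str, Tuple[float, ...]]  # (quasi-identifier, tuple of sensitive attributes)


def is_k_e_anonymous(groups: Sequence[Sequence[Row]], k: int, e: float, attr: int = 0) -> bool:
    """True if every group has >= k distinct values of sensitive attribute `attr`
    and those values span a range of at least e."""
    for group in groups:
        values = [sens[attr] for _, sens in group]
        if len(set(values)) < k or max(values) - min(values) < e:
            return False
    return True


def permute_within_groups(groups: Sequence[Sequence[Row]], seed: int = 0) -> List[List[Row]]:
    """Break the link between quasi-identifiers and sensitive values inside each group.

    Whole sensitive tuples are shuffled, so the sensitive attributes of one
    original record stay coupled to one another.
    """
    rng = random.Random(seed)
    out = []
    for group in groups:
        qids = [q for q, _ in group]
        sens = [s for _, s in group]
        rng.shuffle(sens)
        out.append(list(zip(qids, sens)))
    return out
```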

Owner:AT&T INTPROP I L P

Data anonymization based on guessing anonymity

Status: Active | Publication: US20100162402A1 | Tags: Minimize distortion, Maximizes guessing anonymity, Public key for secure communication, Digital data processing details, Game based, Data value

Privacy is defined in the context of a guessing game based on the so-called guessing inequality. The privacy of a sanitized record, i.e., its guessing anonymity, is the number of guesses an attacker needs to correctly identify the original record used to generate it. Using this definition, optimization problems are formulated that optimize a second anonymization parameter (privacy or data distortion) subject to constraints on a first anonymization parameter (data distortion or privacy, respectively). The optimization is performed across a spectrum of possible values for at least one noise parameter within a noise model. Noise is then generated based on the noise parameter value(s) and applied to the data, which may comprise real and/or categorical data. Prior to anonymization, identifiers may be suppressed from the data, and outlier values in the noise-perturbed data may likewise be modified to further ensure privacy.

Owner:ACCENTURE GLOBAL SERVICES LTD

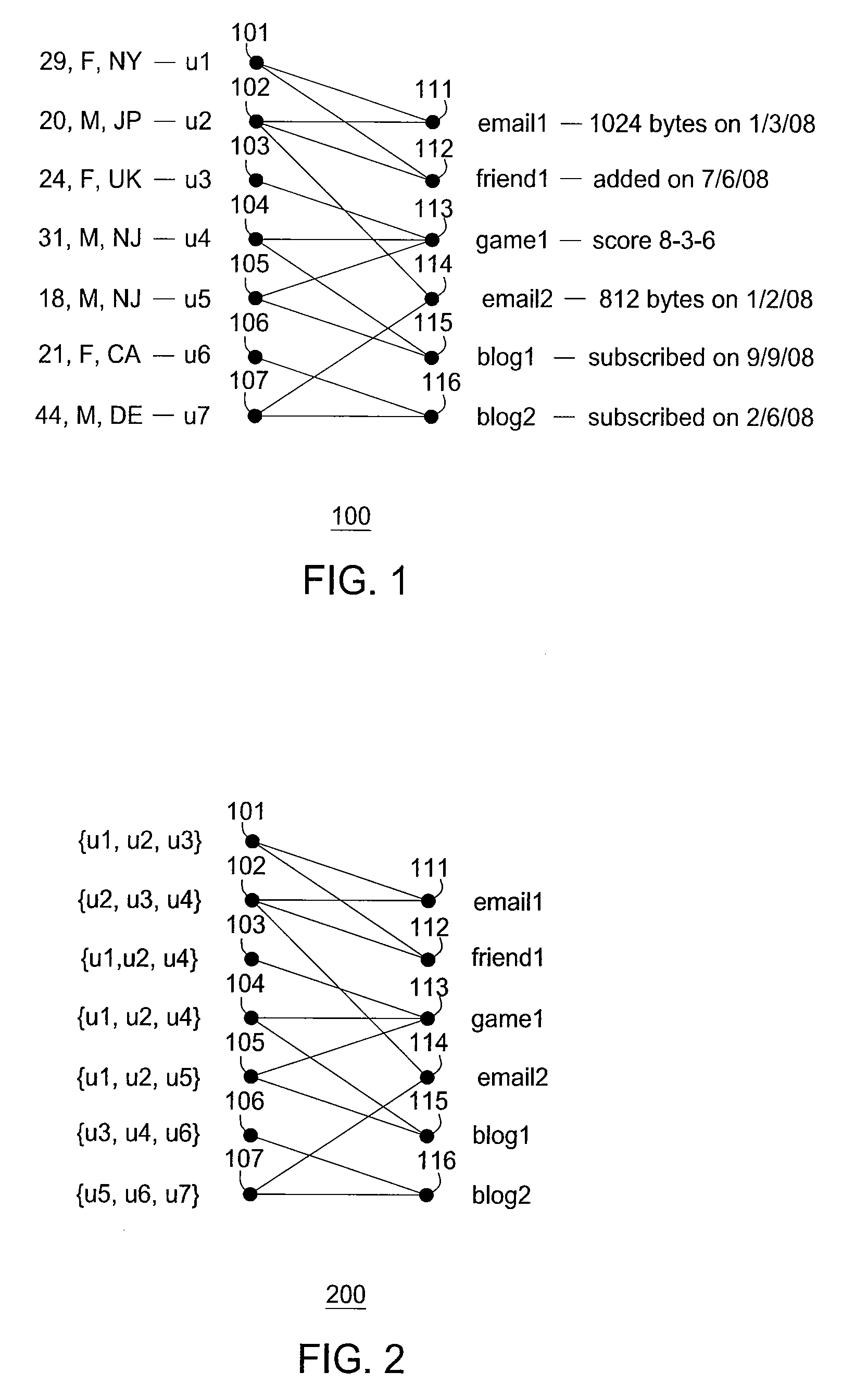

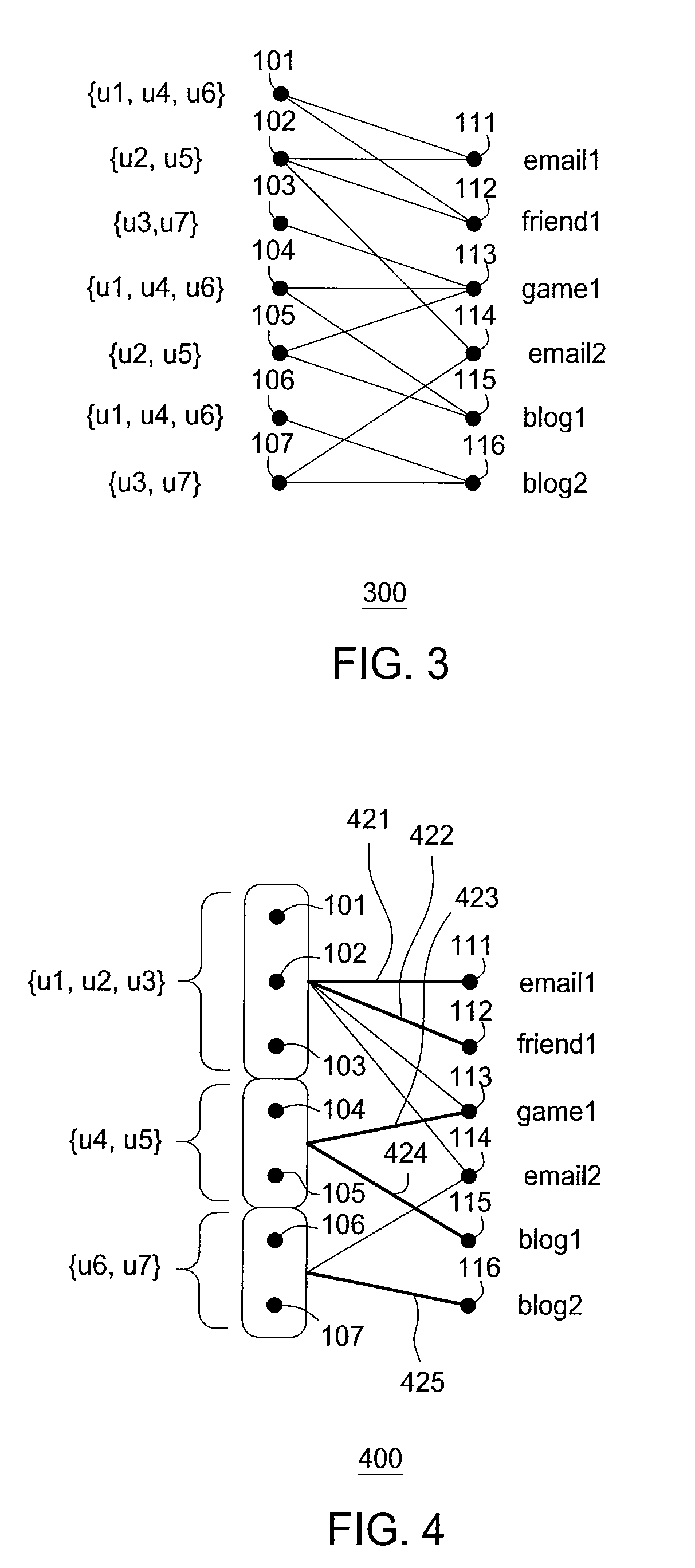

Method and apparatus for providing anonymization of data

Status: Active | Publication: US20110041184A1 | Tags: Digital data processing details, Analogue secrecy/subscription systems, Data mining, Data anonymization

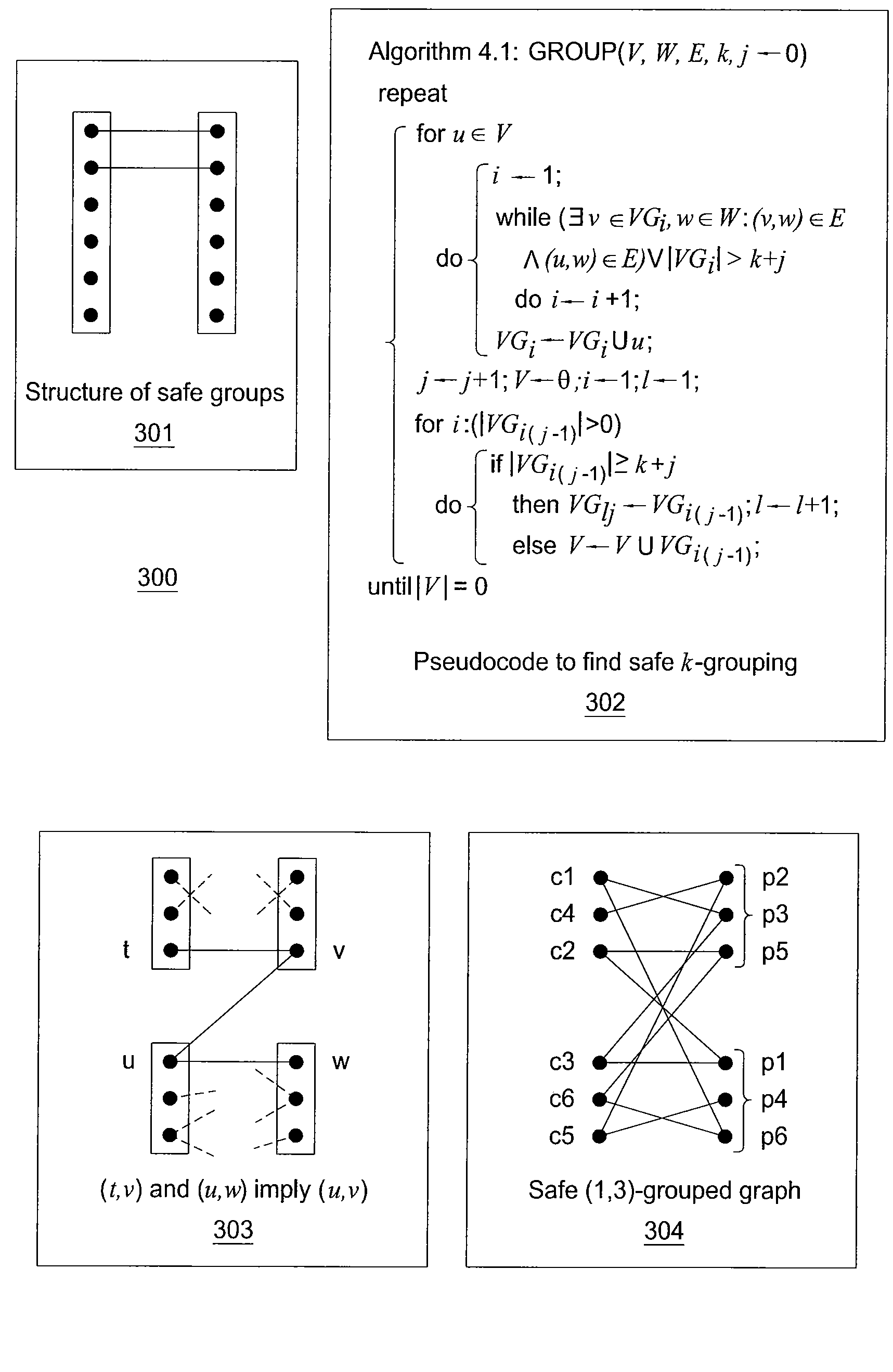

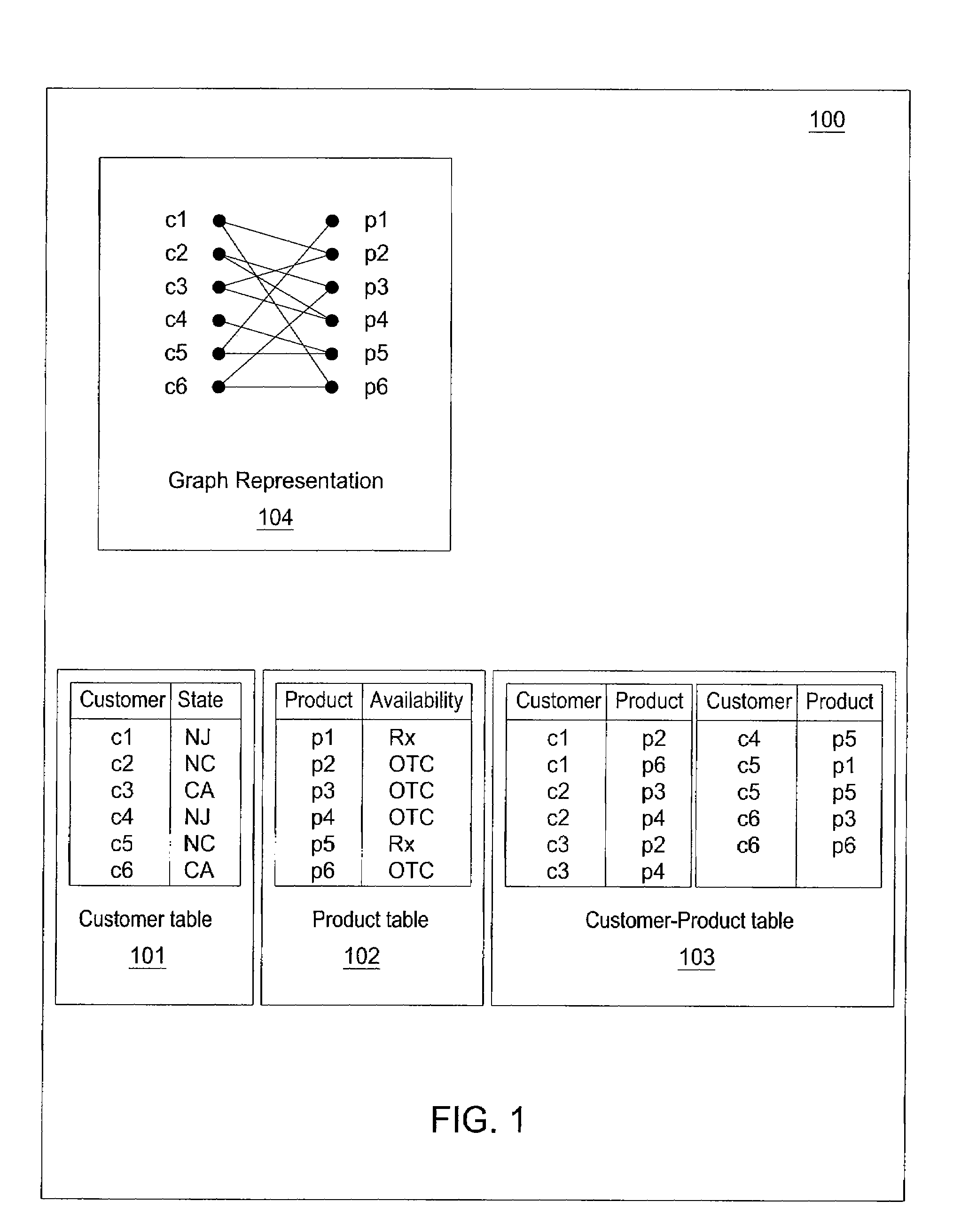

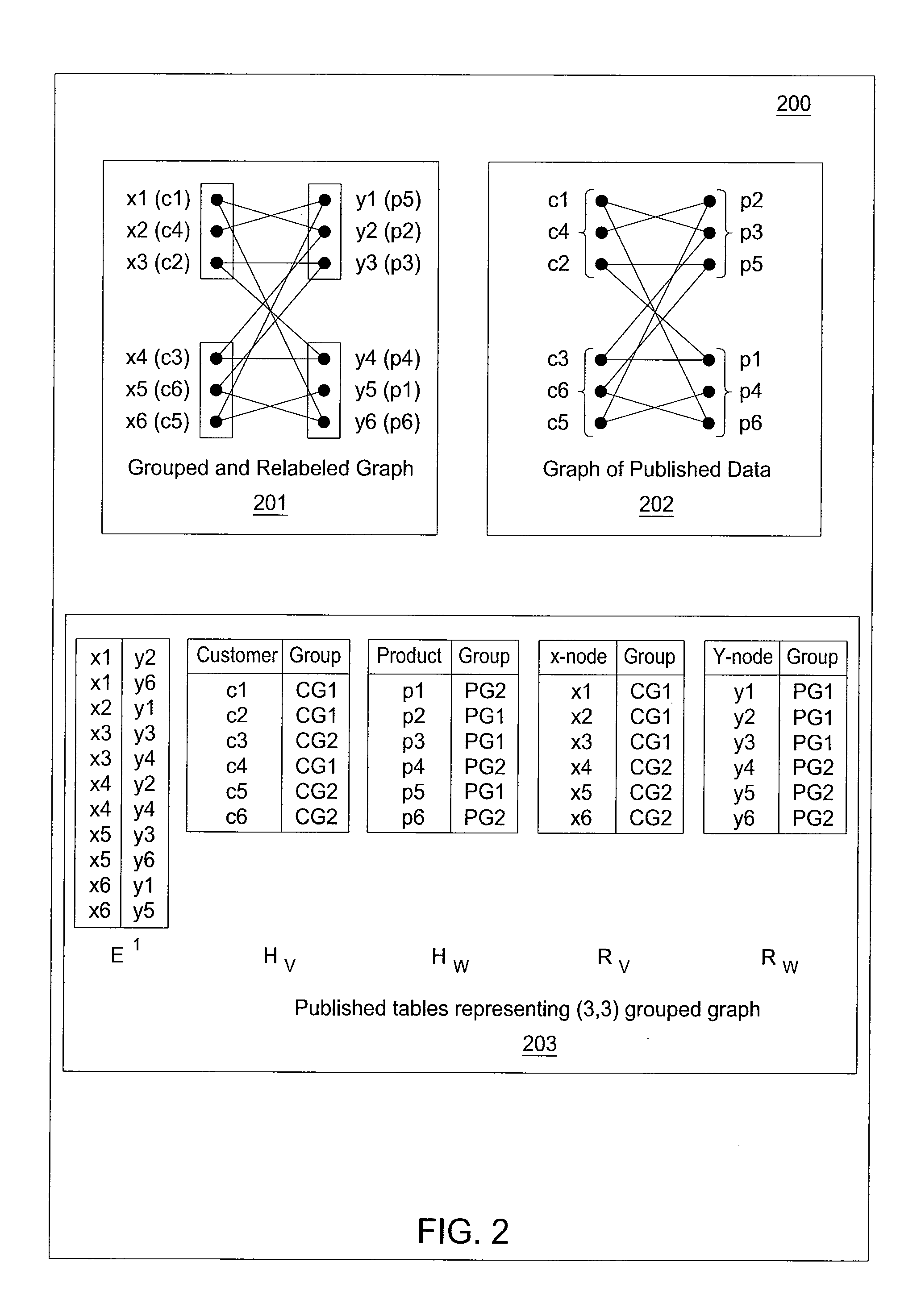

A method and apparatus for providing an anonymization of data are disclosed. For example, the method receives a request for anonymizing, wherein the request comprises a bipartite graph for a plurality of associations or a table that encodes the plurality of associations for the bipartite graph. The method places each node in the bipartite graph in a safe group and provides an anonymized graph that encodes the plurality of associations of the bipartite graph, if a safe group for all nodes of the bipartite graph is found.
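A greedy sketch of safe grouping for one side of a bipartite graph, assuming the safety condition that no two nodes placed in the same group share a neighbour on the other side, and that each group must reach size k. Both the condition as stated and the greedy order (highest degree first) are assumptions for illustration; the function returns `None` when some node cannot be placed in a full safe group, mirroring the "if a safe group for all nodes is found" clause.

```python
from typing import Dict, List, Optional, Set


def safe_groups(adj: Dict[str, Set[str]], k: int) -> Optional[List[List[str]]]:
    """Greedily partition left-side nodes into groups of size k such that
    no two members of a group share a right-side neighbour."""
    groups: List[List[str]] = []
    covered: List[Set[str]] = []  # union of neighbours already used by each group
    for node in sorted(adj, key=lambda n: -len(adj[n])):  # high-degree nodes first
        for members, nbrs in zip(groups, covered):
            if len(members) < k and not (adj[node] & nbrs):
                members.append(node)
                nbrs |= adj[node]  # in-place update of this group's neighbour set
                break
        else:
            groups.append([node])
            covered.append(set(adj[node]))
    if any(len(g) < k for g in groups):
        return None  # no safe grouping found for every node
    return groups
```

Once each node has a group, the published graph can list edges between group identifiers instead of individual nodes, which is what hides the exact associations.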

Owner:AT&T INTPROP I L P

Location anonymization-based privacy protection method and apparatus

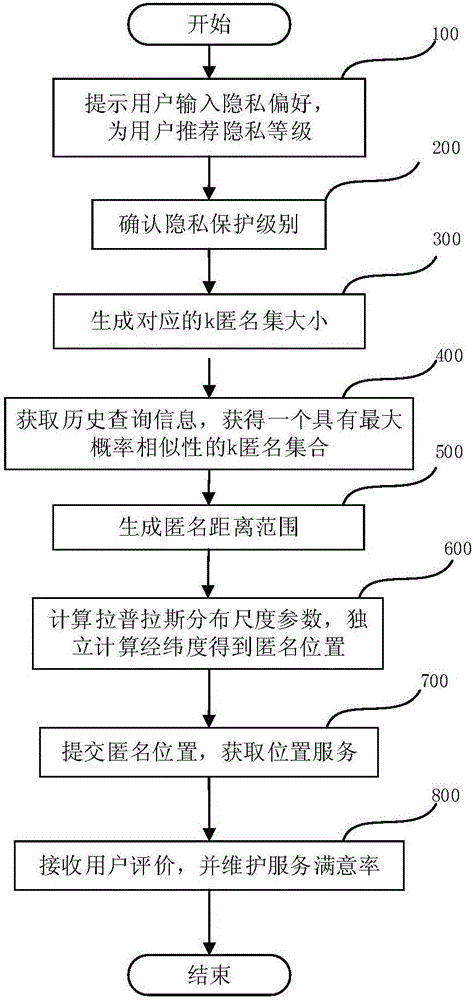

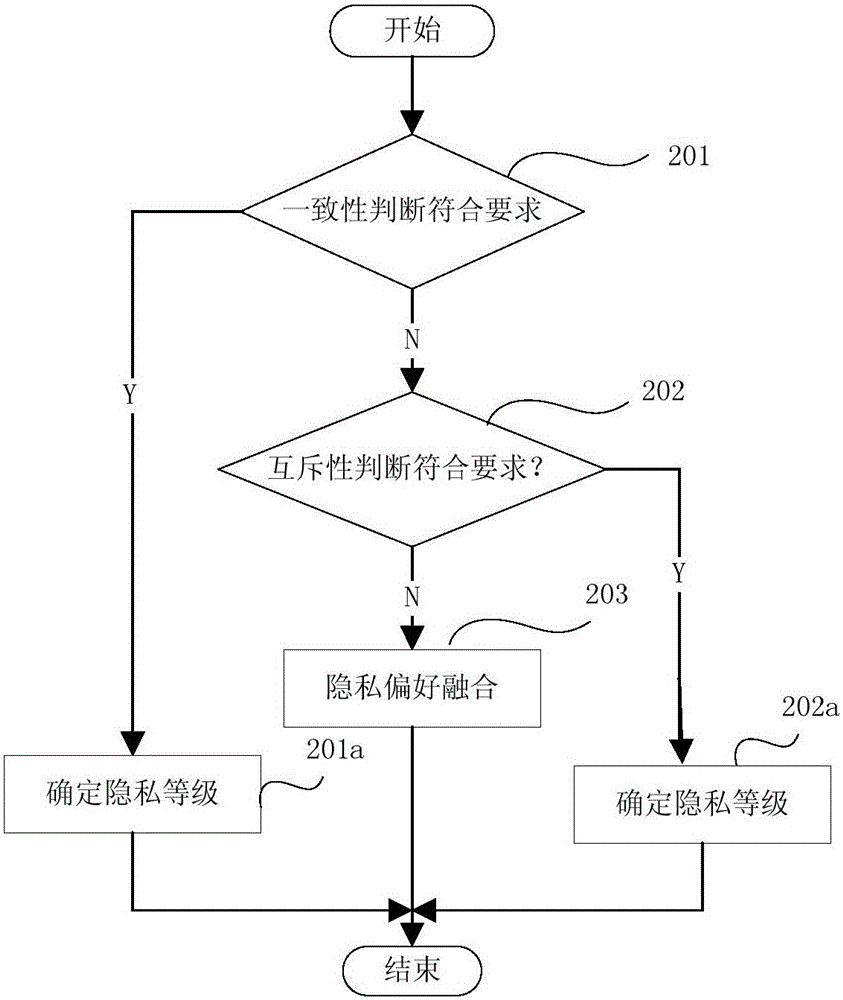

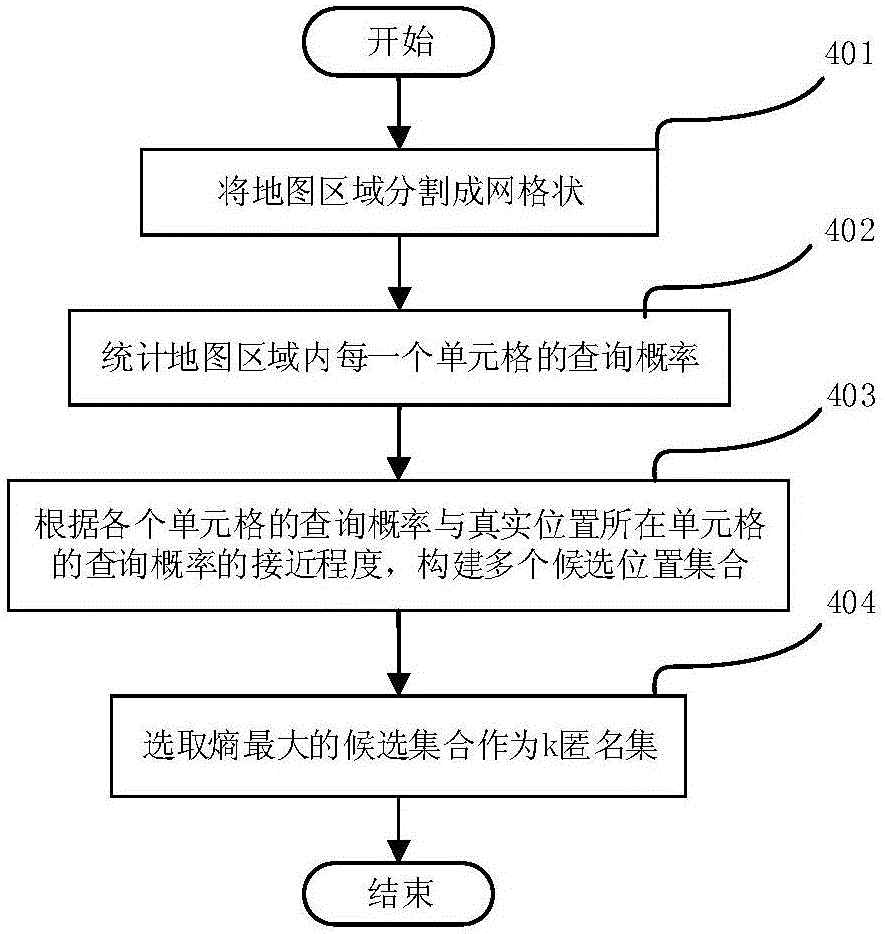

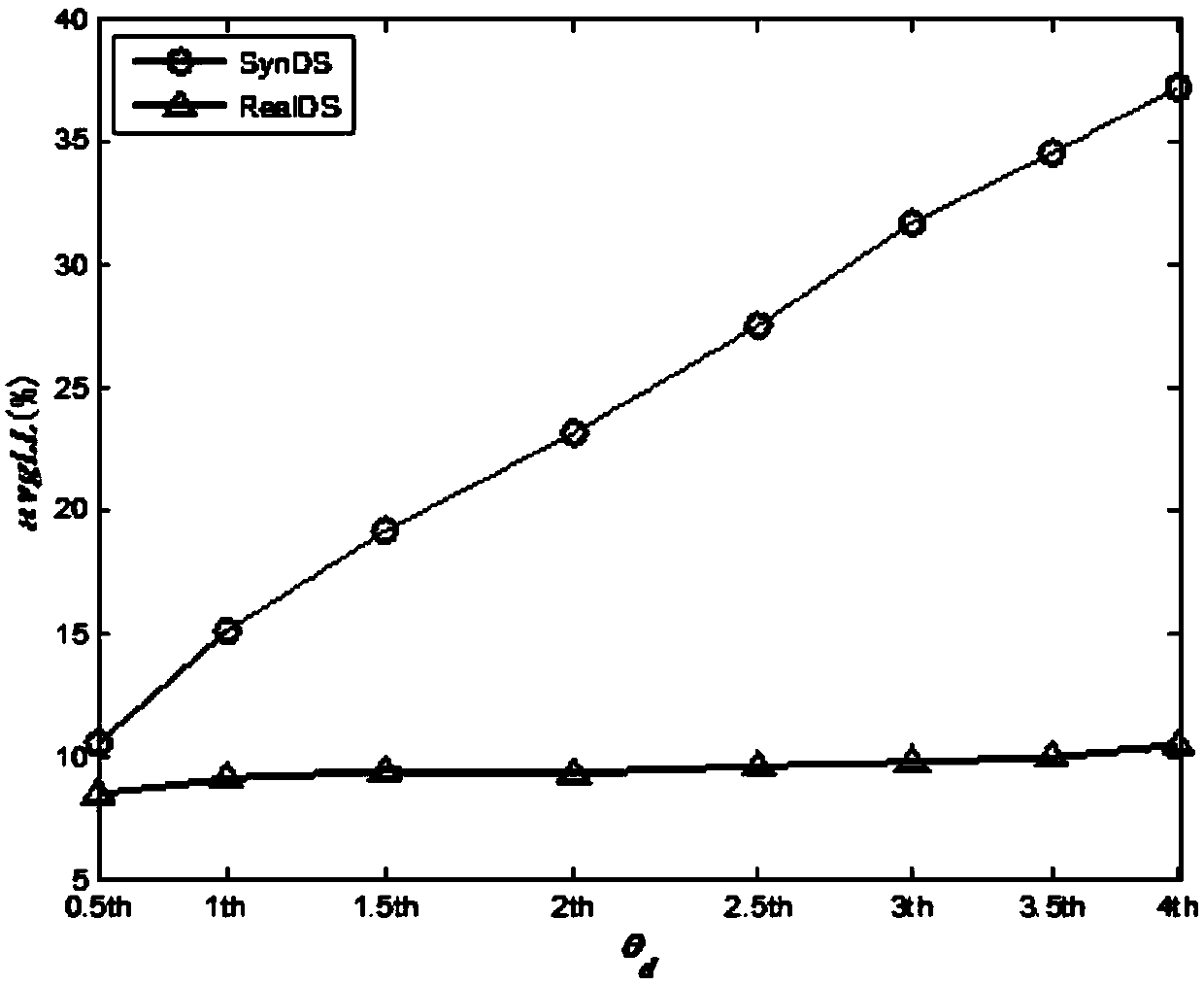

Status: Active | Publication: CN106209813A | Tags: Improve service quality, Implement location privacy protection, Transmission, Privacy protection, Anonymity

The invention provides a location anonymization-based privacy protection method. The method comprises the steps of: 1) determining an anonymity degree k according to the privacy level of the current query, where a higher privacy level yields a higher k; 2) using k as the size of the anonymity set for the current query, creating a plurality of candidate anonymity sets with k elements each; 3) selecting, from the candidate anonymity sets, the one whose query-probability entropy is maximal as the anonymity set; 4) generating an upper bound and a lower bound on the anonymity distance according to the privacy level, where a higher privacy level yields larger upper and lower bounds; and 5) within the range of the anonymity set, generating an anonymous location to replace the real location of the current query according to the upper and lower bounds of the anonymity distance. The invention also provides a corresponding privacy protection apparatus. The method and apparatus improve the quality of LBS (Location Based Service) while protecting location privacy, and they accommodate different users' differing privacy protection requirements.
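Steps 3 and 5 can be sketched as follows, assuming each candidate location (a cell identifier) has a known historical query probability, that the entropy is computed over probabilities normalized within each candidate set, and that the anonymous location is drawn in planar coordinates at a uniformly random distance between the lower and upper bounds. These modelling choices and the function names are assumptions, not the patented procedure.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def set_entropy(cells: Sequence[str], query_prob: Dict[str, float]) -> float:
    """Shannon entropy (bits) of the query probabilities, normalized within the set."""
    total = sum(query_prob[c] for c in cells)
    ps = [query_prob[c] / total for c in cells if query_prob[c] > 0]
    return -sum(p * math.log2(p) for p in ps)


def pick_anonymity_set(candidates: List[List[str]], query_prob: Dict[str, float]) -> List[str]:
    """Step 3: choose the candidate set with maximal query-probability entropy."""
    return max(candidates, key=lambda s: set_entropy(s, query_prob))


def fake_location(real: Point, d_min: float, d_max: float, rng: random.Random) -> Point:
    """Step 5: displace the real location by a distance drawn from [d_min, d_max]."""
    d = rng.uniform(d_min, d_max)
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return (real[0] + d * math.cos(theta), real[1] + d * math.sin(theta))
```

Maximizing entropy favours sets whose members are queried about equally often, so an observer cannot single out the real location by its popularity.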

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

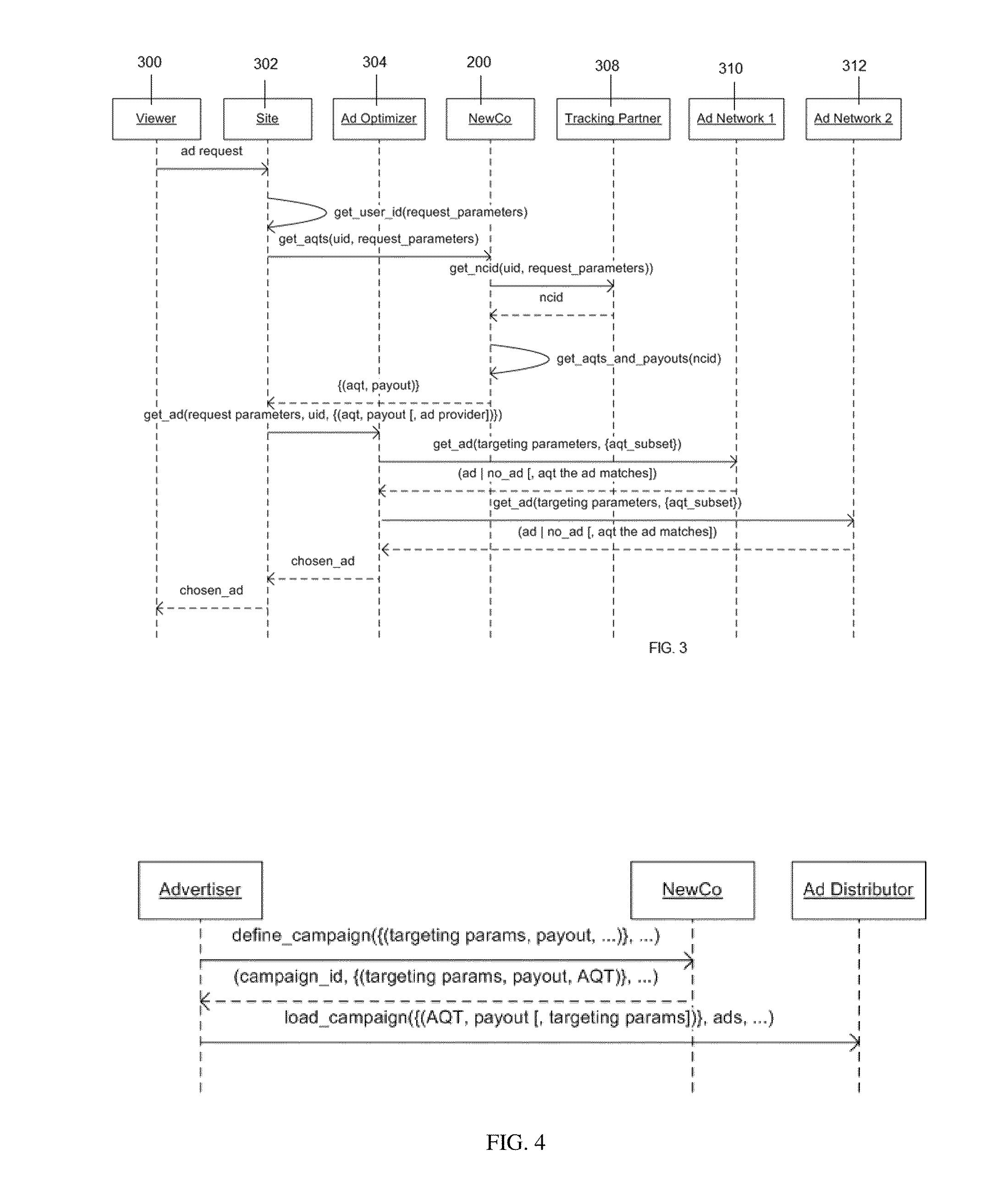

Privacy-safe targeted advertising method and system

A method and system for sophisticated consumer deep profile building provided as a service in a certifiably clean, i.e., privacy-safe, manner to advertisers, ad networks, publishers, aggregators and service providers. In an embodiment, an entity provides a tracking and targeting service that acts as a container for sensitive consumer information. The entity preferably implements a stringent policy of transparency and disclosure, deploys sophisticated security and data anonymization technologies, and it offers a simple, centralized consumer service for privacy disclosure, review and deletion of collected profile information, opt-in, opt-out, and the like. In exchange, advertisers, ad networks, publishers, aggregators and service providers (including, without limitation, mobile operators, multiple service providers (MSPs), Internet service providers (ISPs), and the like), receive privacy-safe targeting services without ever having to touch sensitive information.

Owner:SIMEONOV SIMEON S

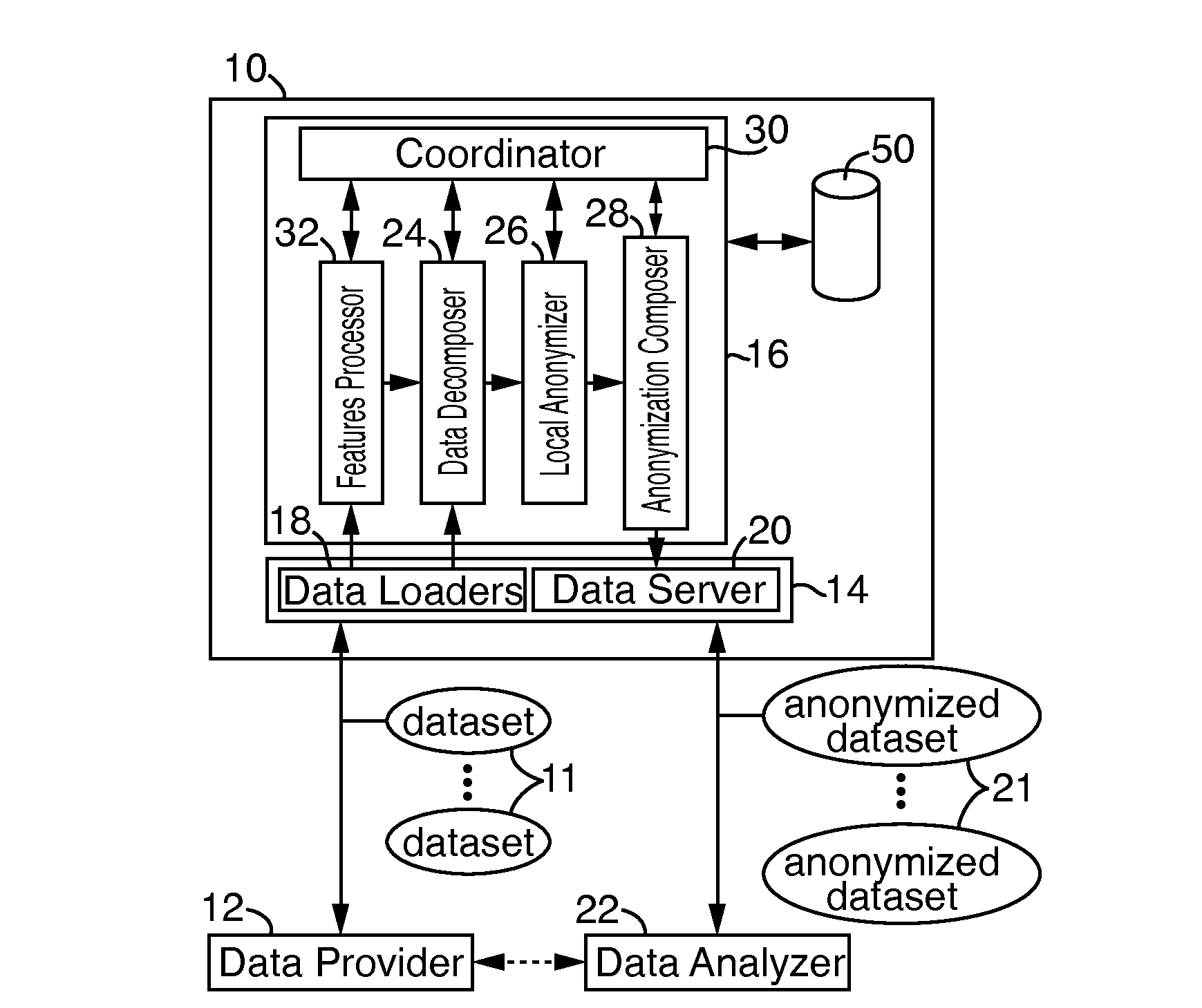

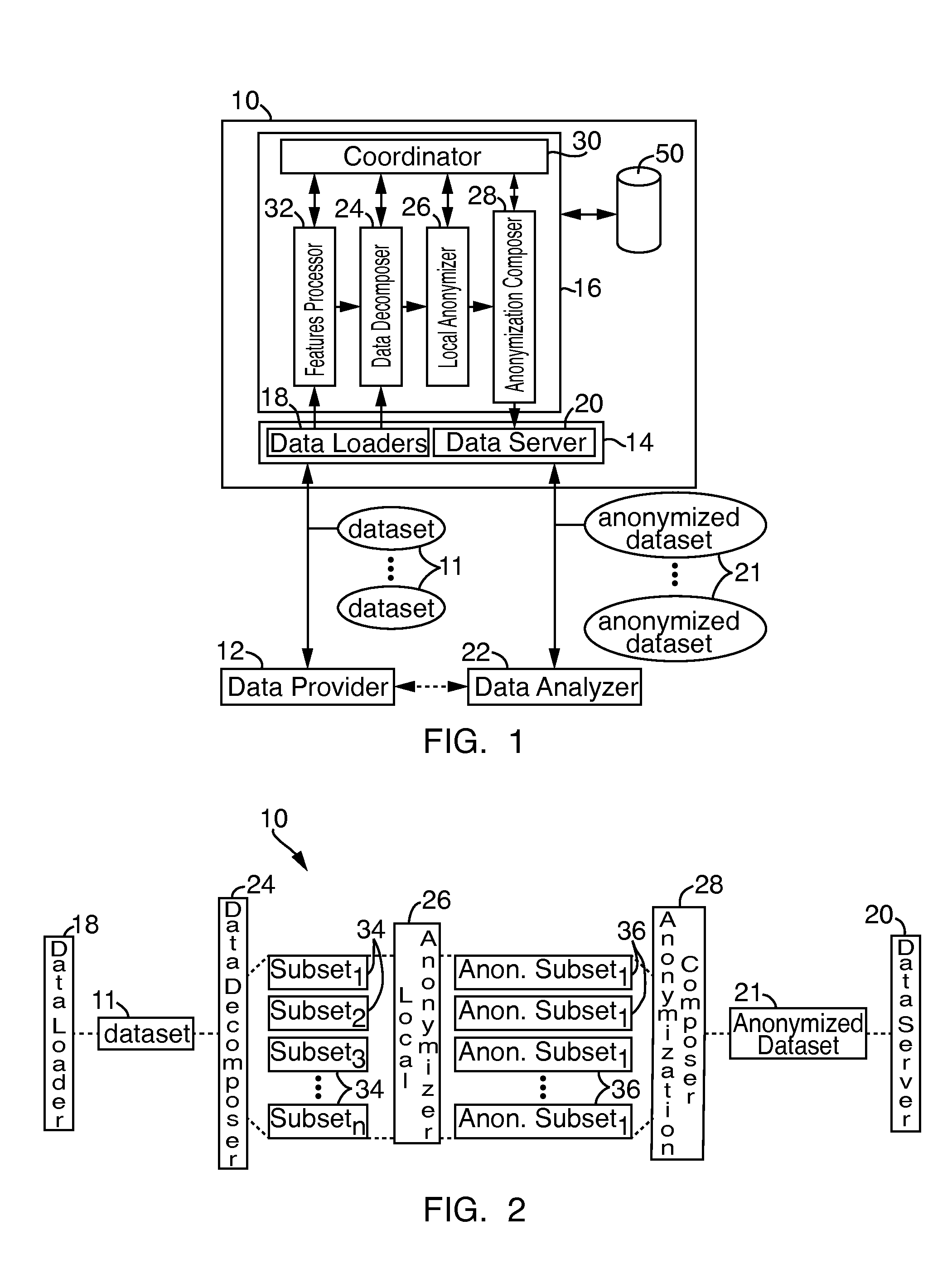

Systems and methods for data anonymization

Status: Inactive | Publication: US20140380489A1 | Tags: Digital data processing details, Analogue secrecy/subscription systems, Data set, Theoretical computer science

A system and method for dynamic anonymization of a dataset includes decomposing, at at least one processor, the dataset into a plurality of subsets and applying an anonymization strategy on each subset of the plurality of subsets. The system and method further includes aggregating, at the at least one processor, the individually anonymized subsets to provide an anonymized dataset.
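The decompose, anonymize, aggregate pipeline can be sketched as below, assuming the dataset is split on one column and that a hypothetical `choose` callback picks a strategy for each subset. The example strategy (coarser age ranges for smaller subsets) is an illustration, not something the abstract specifies.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
Strategy = Callable[[List[Record]], List[Record]]


def generalize_age(width: int) -> Strategy:
    """Hypothetical strategy: replace the exact age with a range of the given width."""
    def apply(subset: List[Record]) -> List[Record]:
        out = []
        for r in subset:
            lo = (r["age"] // width) * width
            out.append({**r, "age": f"{lo}-{lo + width - 1}"})
        return out
    return apply


def anonymize_by_subsets(data: List[Record], key: str,
                         choose: Callable[[Any, List[Record]], Strategy]) -> List[Record]:
    subsets: Dict[Any, List[Record]] = defaultdict(list)
    for r in data:  # 1) decompose the dataset into subsets
        subsets[r[key]].append(r)
    result: List[Record] = []
    for value, subset in subsets.items():
        strategy = choose(value, subset)  # 2) pick a strategy per subset
        result.extend(strategy(subset))   # 3) aggregate the anonymized subsets
    return result
```

Usage: `anonymize_by_subsets(data, "region", lambda v, s: generalize_age(10 if len(s) >= 2 else 20))` applies finer generalization where a subset is large enough to hide individuals.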

Owner:ALCATEL LUCENT SAS

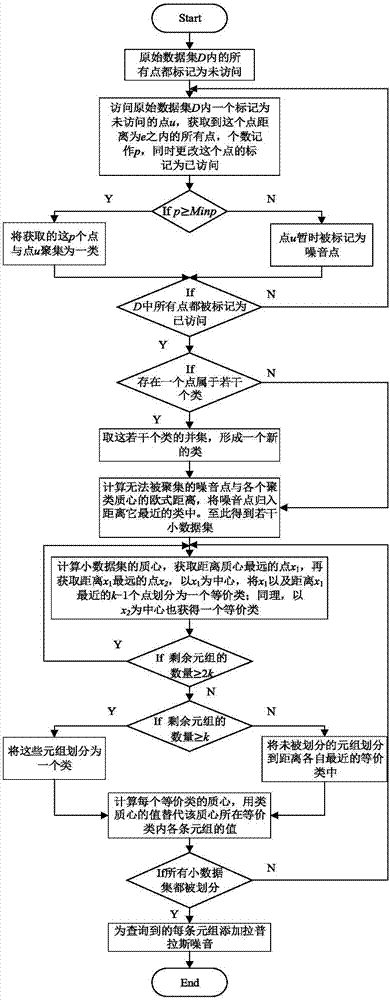

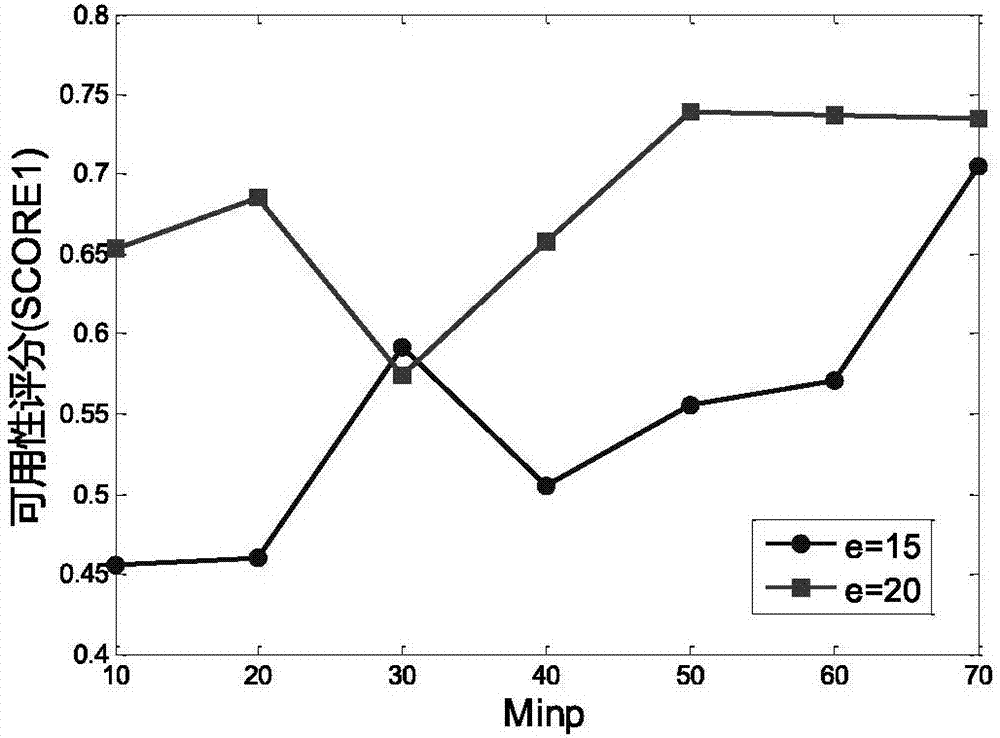

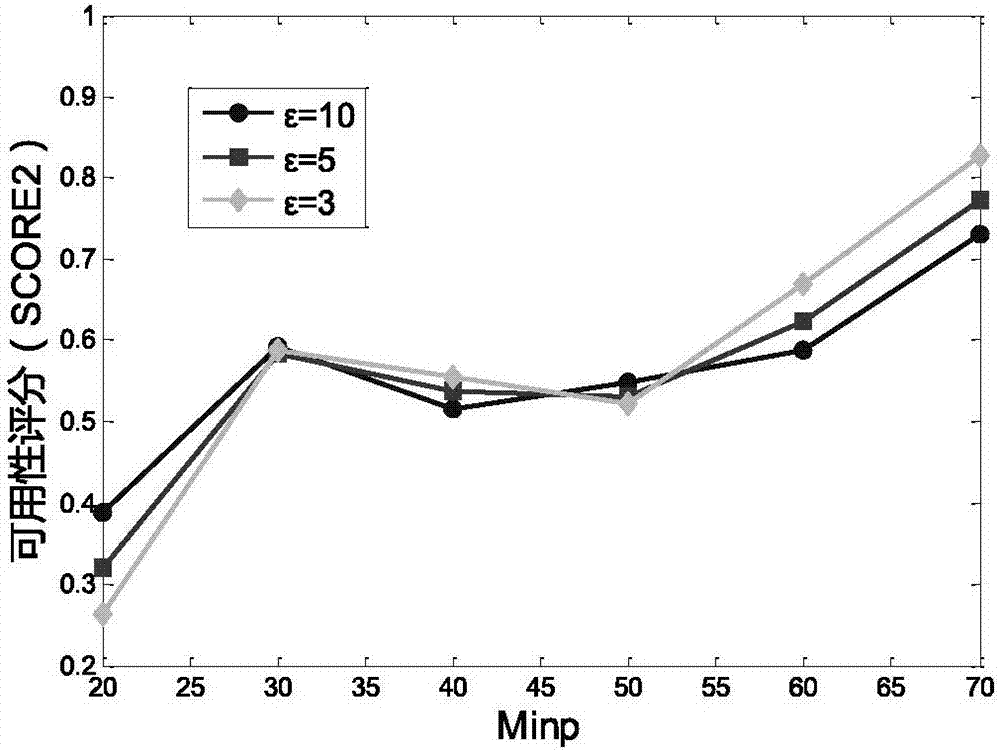

Differential privacy protection method based on microaggregation anonymity

The invention discloses a differential privacy protection method based on microaggregation anonymity, relating to the technical field of data anonymization and differential privacy protection. First, the original data set is clustered by density with the DBSCAN algorithm, and outliers and noise points are assigned to their nearest clusters. Each cluster is then partitioned a second time with the MDAV algorithm and anonymized, keeping the number of records in each equivalence class between k and 2k-1. Finally, differential privacy is applied by adding Laplace noise to each data record. Experiments show that, compared with existing methods, the DCMVDP method incurs less information loss and offers higher data utility while still protecting private data.
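The MDAV and Laplace stages can be sketched as follows. This omits the DBSCAN pre-clustering, replaces each record by its group centroid, and assumes the caller supplies the sensitivity used to scale the noise (`sensitivity / epsilon`); how the patent sets that sensitivity is not stated in the abstract.

```python
import numpy as np


def mdav(X: np.ndarray, k: int) -> list:
    """MDAV (Maximum Distance to Average Vector) microaggregation.

    Returns a list of index arrays; every group has between k and 2k-1
    records when len(X) >= k.
    """
    remaining = np.arange(len(X))
    groups = []

    def farthest_from(point):
        d = np.linalg.norm(X[remaining] - point, axis=1)
        return remaining[np.argmax(d)]

    def take_nearest(idx):
        # Form a group from the k remaining records closest to record idx.
        nonlocal remaining
        d = np.linalg.norm(X[remaining] - X[idx], axis=1)
        chosen = remaining[np.argsort(d)[:k]]
        remaining = np.setdiff1d(remaining, chosen)
        return chosen

    while len(remaining) >= 3 * k:
        r = farthest_from(X[remaining].mean(axis=0))
        groups.append(take_nearest(r))
        s = farthest_from(X[r])  # most distant remaining record from r
        groups.append(take_nearest(s))
    if len(remaining) >= 2 * k:
        r = farthest_from(X[remaining].mean(axis=0))
        groups.append(take_nearest(r))
    if len(remaining):
        groups.append(remaining)  # leftover records form the last group
    return groups


def microaggregate_with_laplace(X: np.ndarray, k: int, epsilon: float,
                                sensitivity: float, seed: int = 0) -> np.ndarray:
    """Replace each record by its MDAV group centroid, then add Laplace noise
    with scale sensitivity / epsilon to every value."""
    rng = np.random.default_rng(seed)
    out = np.empty(X.shape, dtype=float)
    for g in mdav(X, k):
        out[g] = X[g].mean(axis=0)
    return out + rng.laplace(0.0, sensitivity / epsilon, size=out.shape)
```

Microaggregating first means the noise is added to centroids of k or more records, which is why this family of methods can use less noise than perturbing raw records directly.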

Owner:XUZHOU MEDICAL UNIV

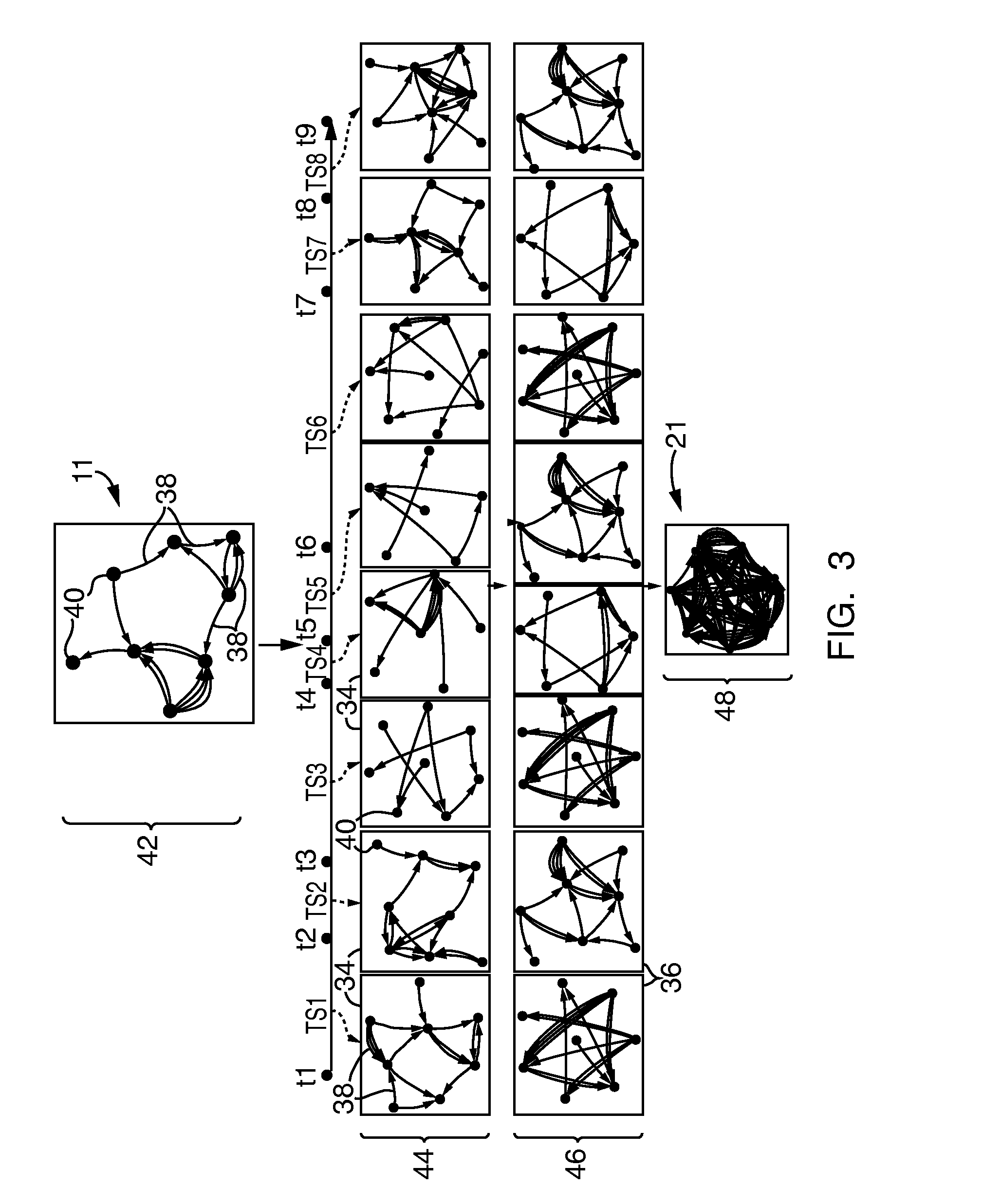

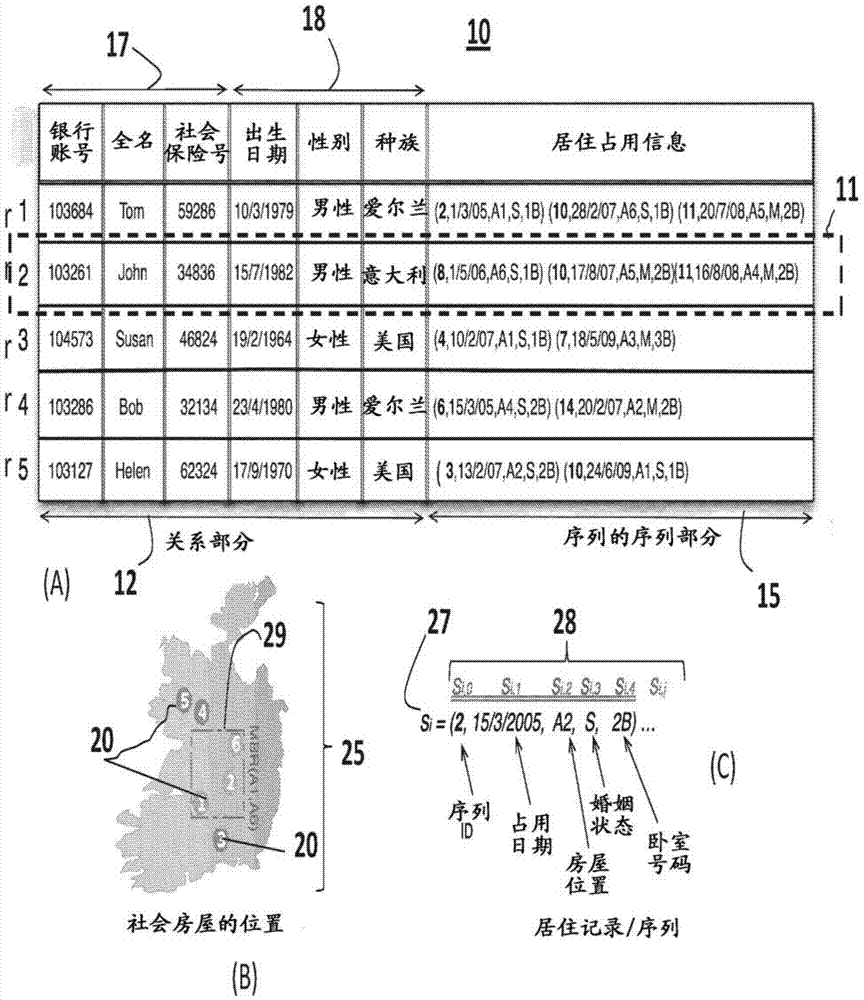

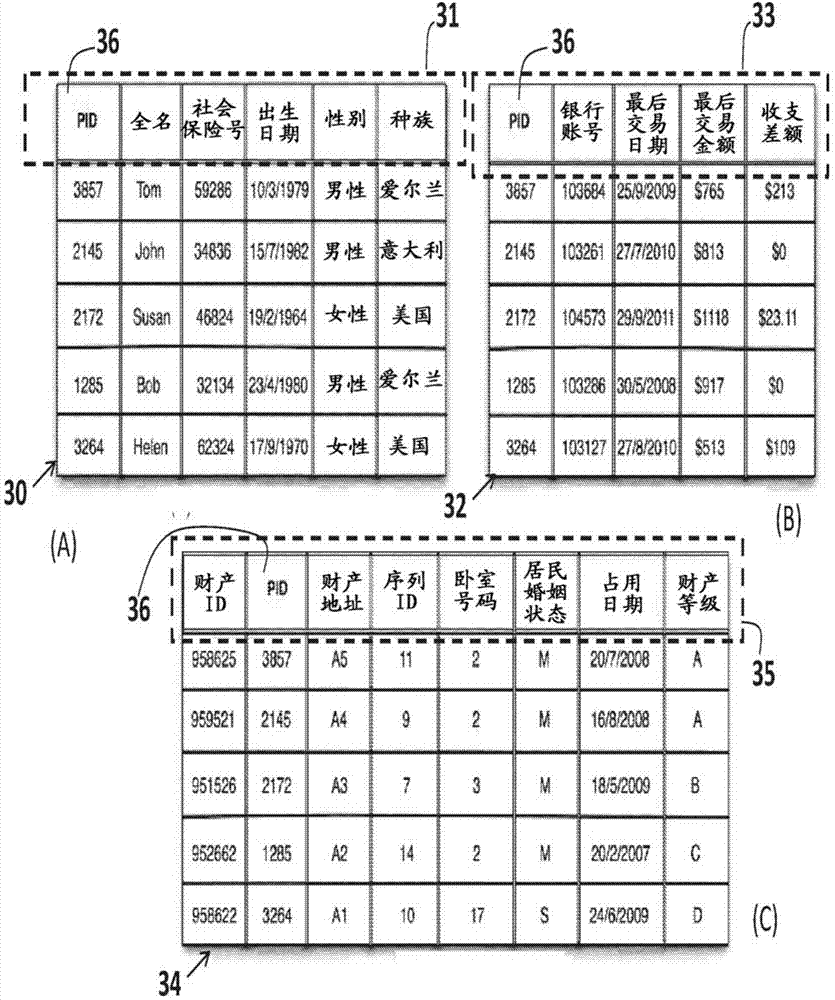

Method And System For Anonymizing Data

Status: Active | Publication: CN104732154A | Tags: Digital data protection, Special data processing applications, Data set, Privacy protection

A system, method and computer program product for anonymizing data. Datasets anonymized according to the method have a relational part, consisting of multiple tables of relational data, and a sequential part, consisting of tables of time-ordered data. The sequential part may include data representing a “sequence-of-sequences”, that is, a sequence that itself consists of a number of sequences. Each kind of data may be anonymized using k-anonymization techniques, protecting individuals or entities against attackers whose knowledge spans the two (or more) kinds of attribute data.

Owner:IBM CORP

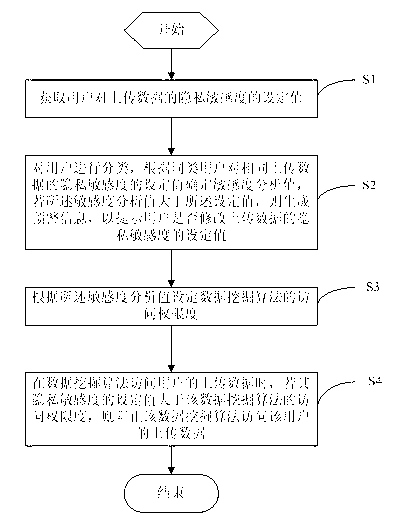

Method and system for protecting privacy of users in big data mining environments

Status: Inactive | Publication: CN103294967A | Tags: Avoid access, Easy to implement, Digital data protection, Special data processing applications, Internet privacy, User privacy

The invention discloses a method and system for protecting user privacy in big data mining environments. The method comprises: acquiring the privacy-sensitivity values that users set for their uploaded data; classifying the users and, for users of the same class and the same uploaded data, determining a sensitivity analysis value from their set values; if the sensitivity analysis value is larger than a user's set value, generating early-warning information that prompts the user to decide whether to modify the privacy-sensitivity setting for that data; setting the access-permission level of each data mining algorithm according to the sensitivity analysis values; and, when a data mining algorithm is about to access a user's uploaded data, blocking the access if the user's privacy-sensitivity value is larger than the algorithm's access-permission level, or correspondingly processing the data with data-anonymization obfuscation and data-fragmentation obfuscation. The method and system make it possible to judge clearly whether big data mining would cause a privacy leak, and they effectively protect user privacy.
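The warning and access-control rules can be sketched as two small functions. The abstract does not say how the sensitivity analysis value is computed, so this sketch assumes the mean of the settings within a user class; it also assumes that obfuscation applies whenever access is not blocked, which is one reading of the "or" in the abstract. All names are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def users_to_warn(settings: Dict[str, float]) -> List[str]:
    """settings: privacy-sensitivity values set by users of one class for the same data.

    Assumed analysis value: the class mean. Users whose own setting is below it
    receive an early warning suggesting they reconsider their setting.
    """
    analysis = mean(settings.values())
    return [user for user, value in settings.items() if analysis > value]


def access_decision(user_sensitivity: float, algorithm_permission: float) -> str:
    """Block a mining algorithm whose permission level is below the user's
    sensitivity; otherwise release only obfuscated (anonymized/fragmented) data."""
    if user_sensitivity > algorithm_permission:
        return "block"
    return "obfuscate"
```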

Owner:Horgos Zhirong Future Information Technology Co., Ltd. (霍尔果斯智融未来信息科技有限公司)

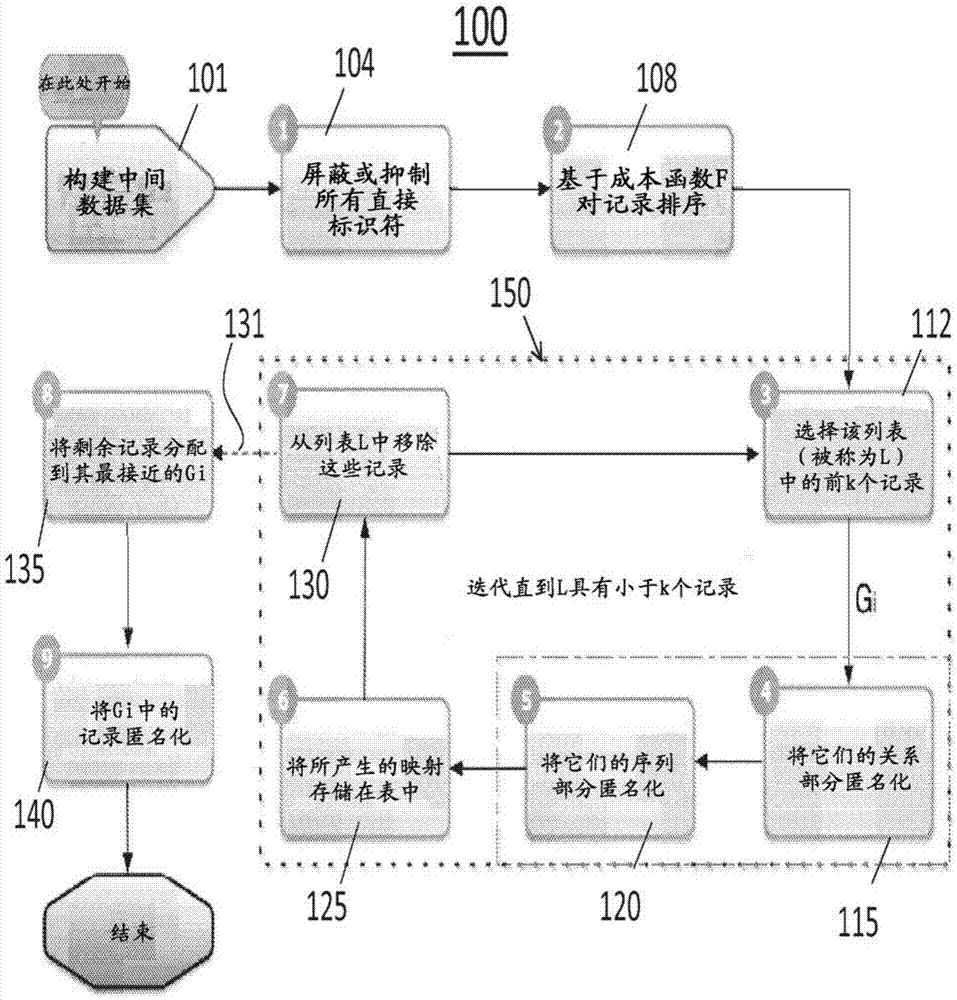

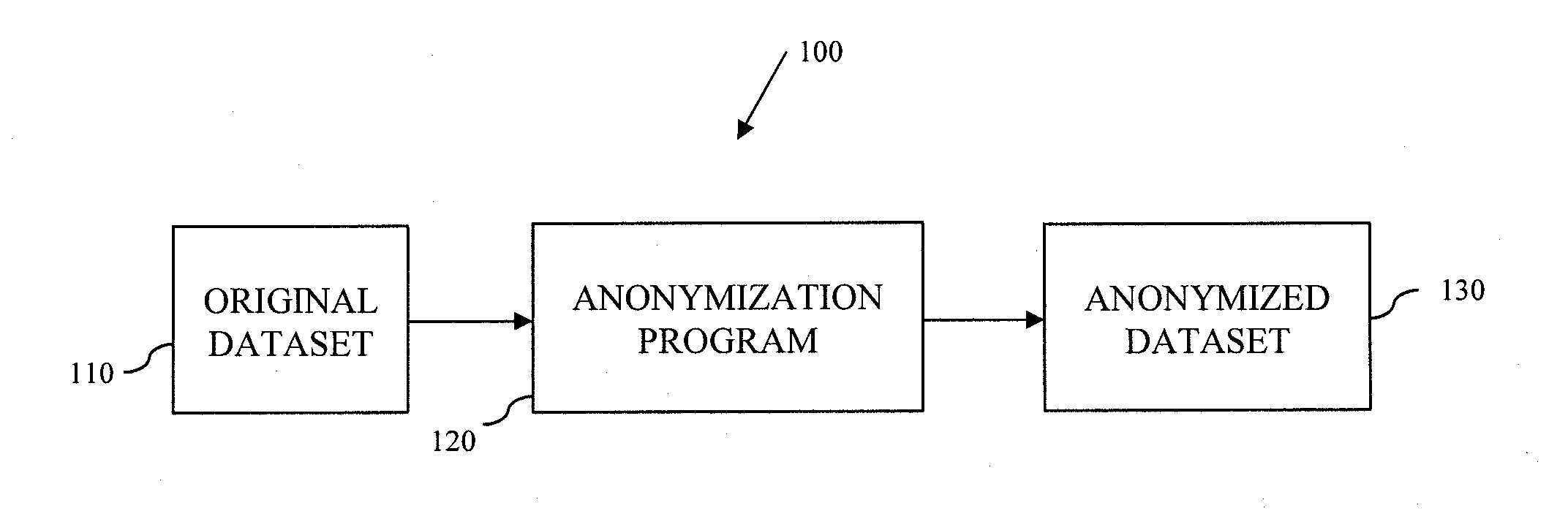

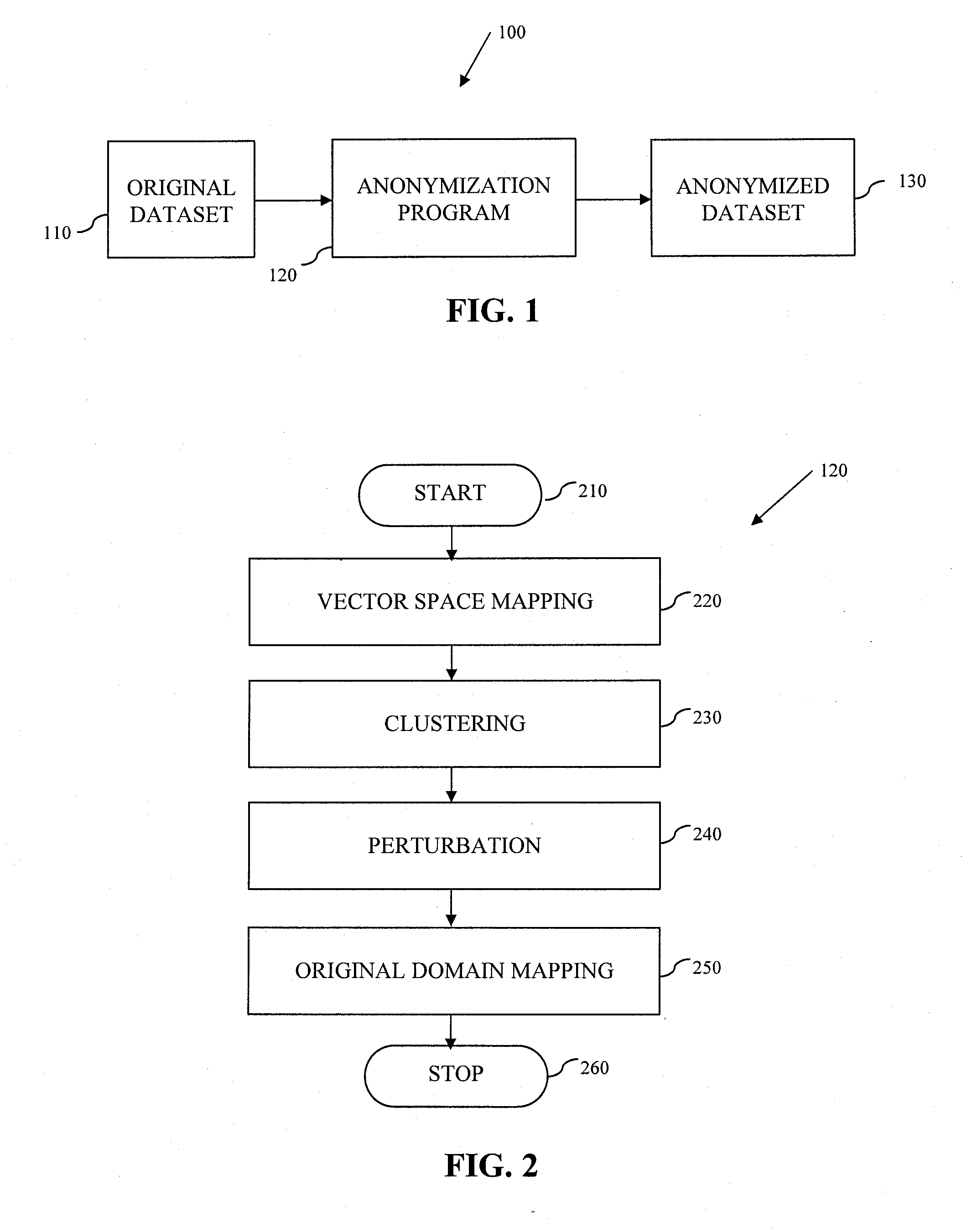

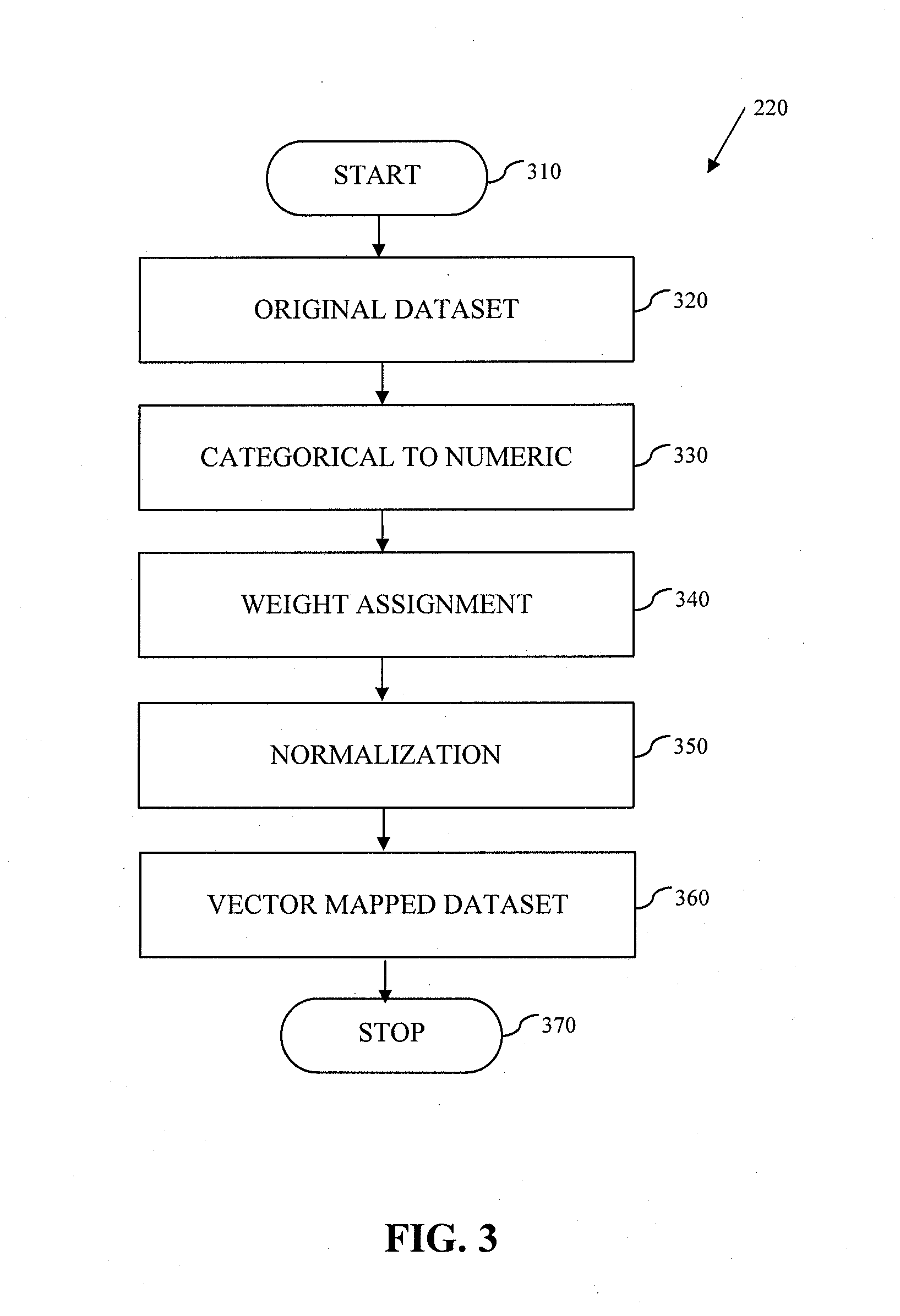

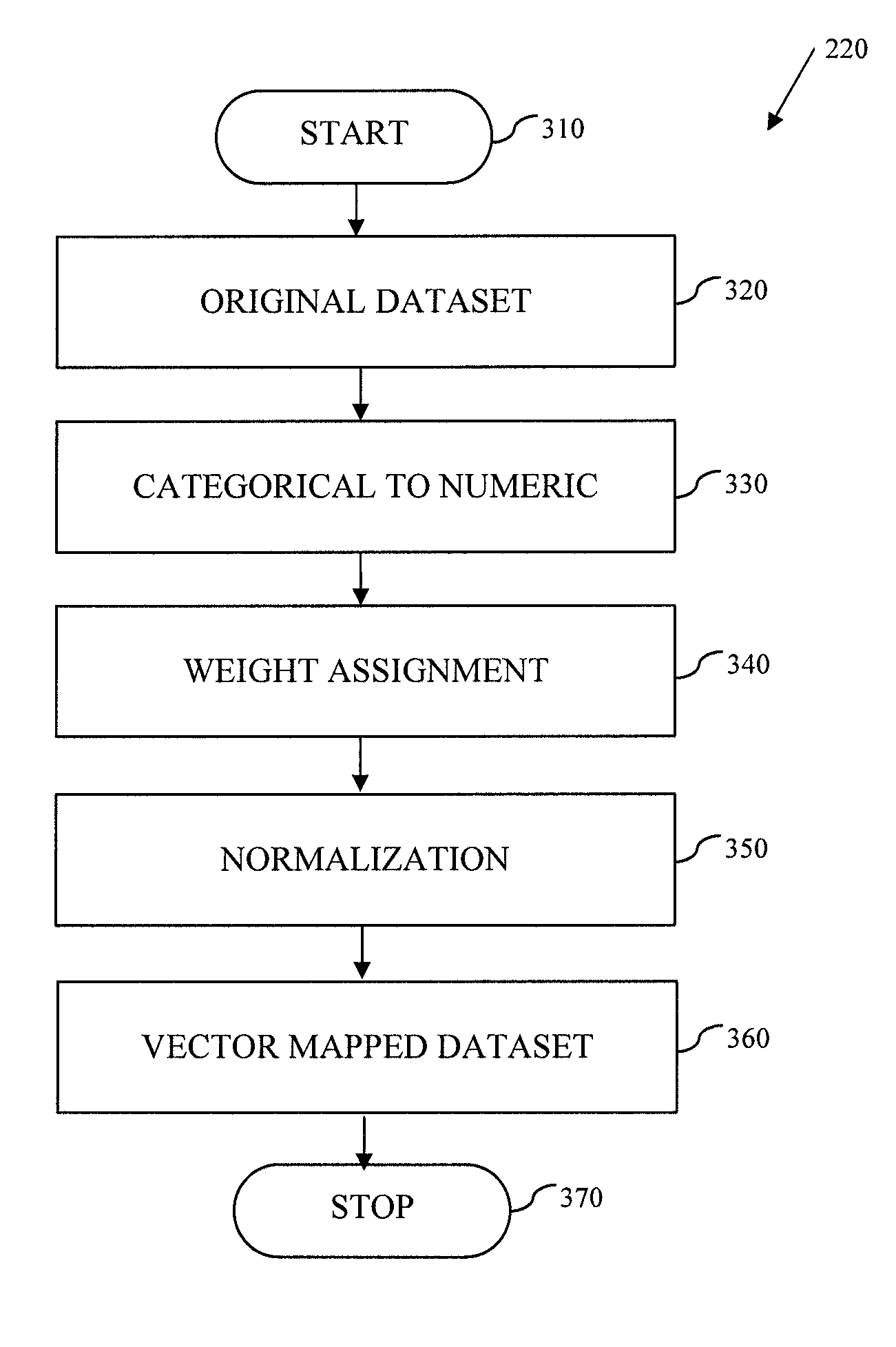

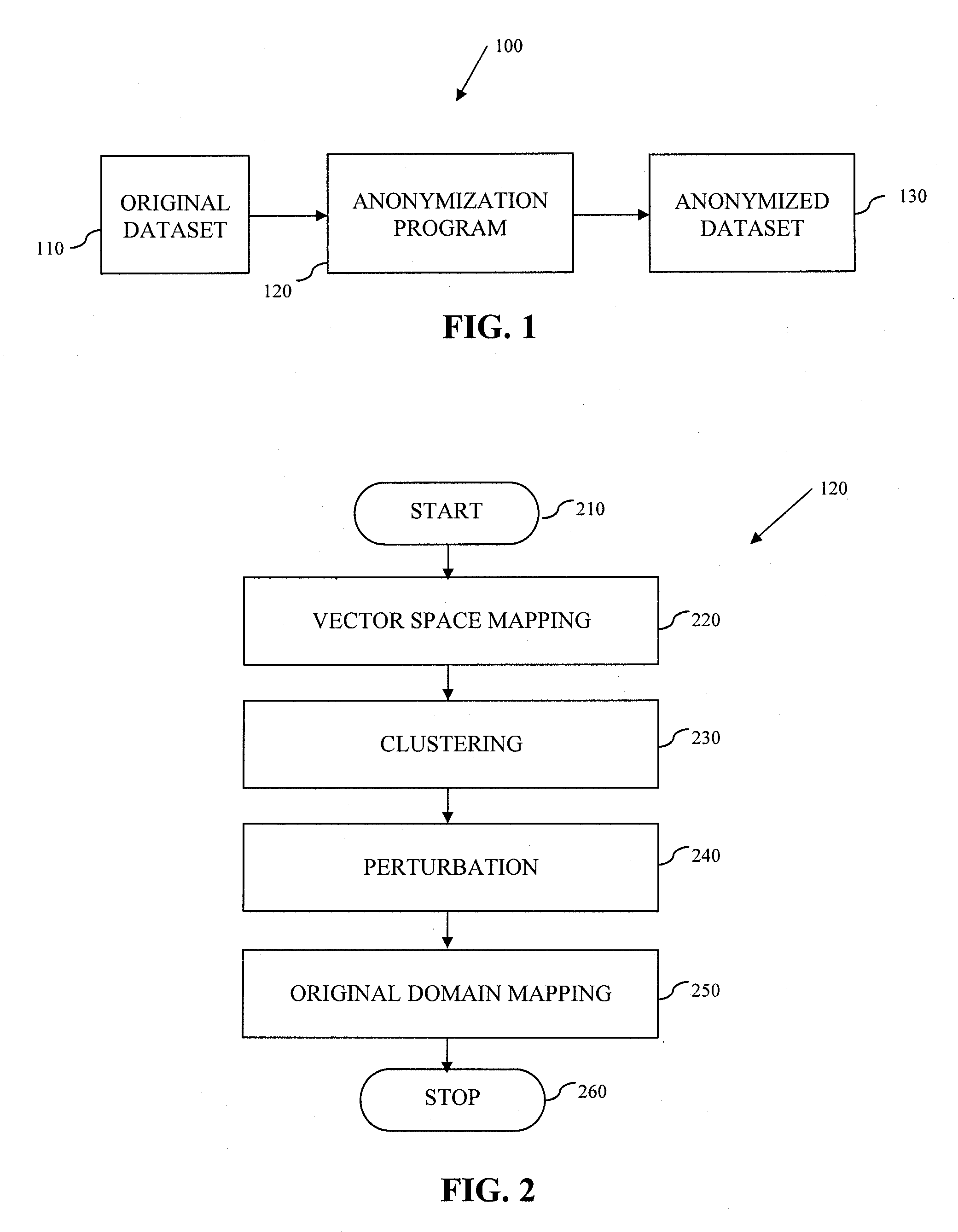

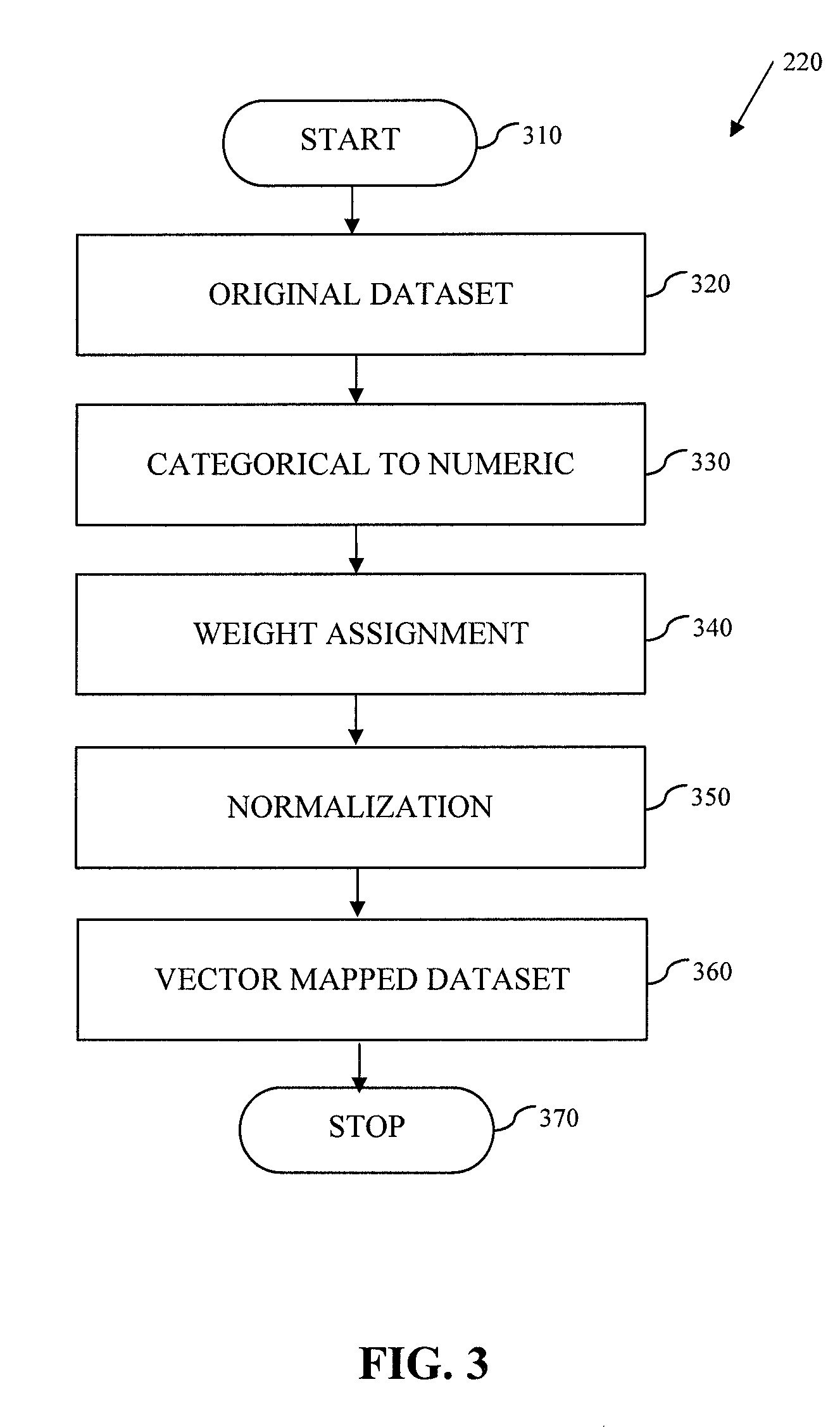

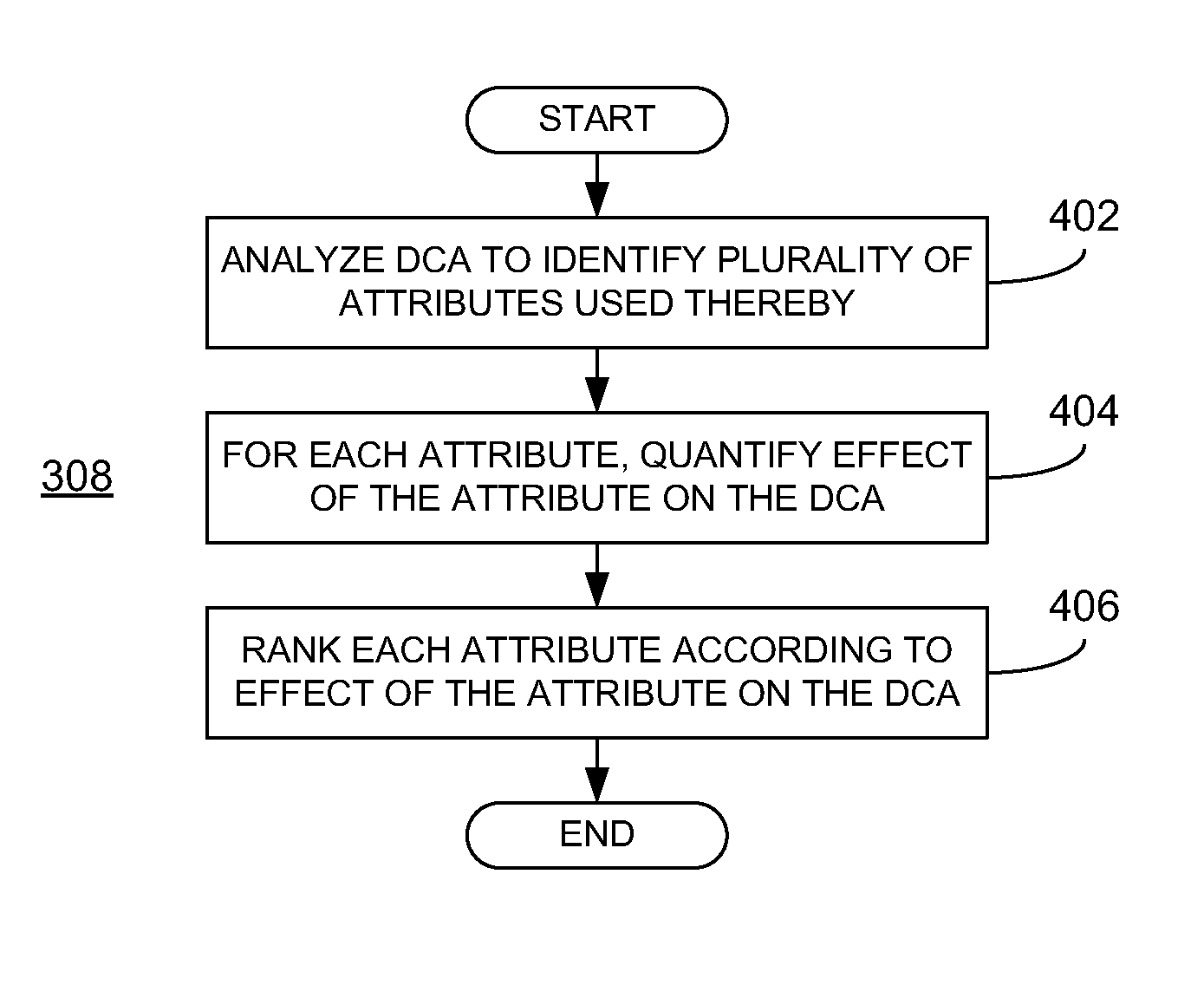

System and Method for Data Anonymization Using Hierarchical Data Clustering and Perturbation

Status: Active | Publication: US20130339359A1 | Tags: Digital data information retrieval, Digital data processing details, Data transformation, Computerized system

A system and method for data anonymization using hierarchical data clustering and perturbation is provided. The system includes a computer system and an anonymization program executed by the computer system. The system converts the data of a high-dimensional dataset to a normalized vector space and applies clustering and perturbation techniques to anonymize the data. The conversion turns each record of the dataset into a normalized vector that can be compared with other vectors. The vectors are divided into disjoint, small clusters using hierarchical clustering. Multi-level clustering can be performed using suitable algorithms at different clustering levels. The records within each cluster are then perturbed such that the statistical properties of the clusters remain unchanged.
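The normalization and perturbation steps can be sketched as below. Cluster labels are taken as input (they could come from any hierarchical clustering, for example SciPy's `scipy.cluster.hierarchy.fcluster`), and "statistical properties" is assumed to mean each cluster's per-column mean and standard deviation. The perturbation replaces a cluster's values with random draws rescaled to match those statistics exactly; this is an illustrative choice, not the patented procedure.

```python
import numpy as np


def normalize(X: np.ndarray) -> np.ndarray:
    """Z-score each column so that records become comparable vectors."""
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant columns
    return (X - X.mean(axis=0)) / std


def perturb_clusters(X: np.ndarray, labels: np.ndarray, seed: int = 0) -> np.ndarray:
    """Replace each cluster's records with random values rescaled so that the
    cluster's per-column mean and standard deviation are unchanged."""
    rng = np.random.default_rng(seed)
    out = X.astype(float).copy()
    for lab in np.unique(labels):
        idx = labels == lab
        block = X[idx]
        if len(block) < 2:
            continue  # a singleton cannot be perturbed without changing its mean
        noise = rng.standard_normal(block.shape)
        noise = (noise - noise.mean(axis=0)) / noise.std(axis=0)  # mean 0, std 1 per column
        out[idx] = block.mean(axis=0) + noise * block.std(axis=0)
    return out
```

Keeping clusters small matters here: the smaller the cluster, the closer its preserved statistics are to the original records, which is how utility is retained while individual values change.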

Owner:ELECTRIFAI LLC

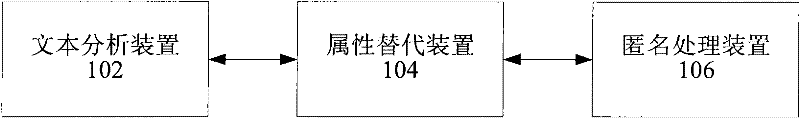

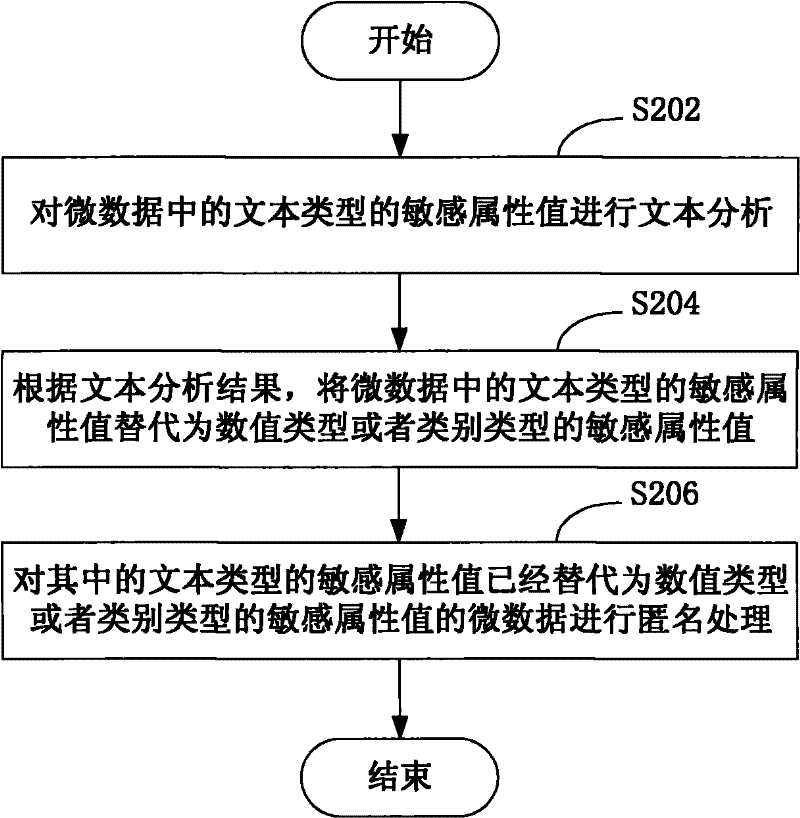

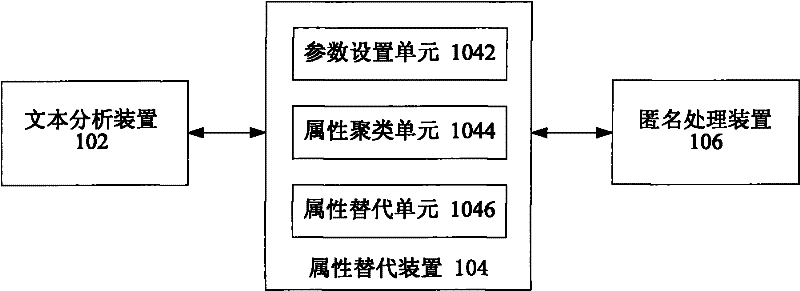

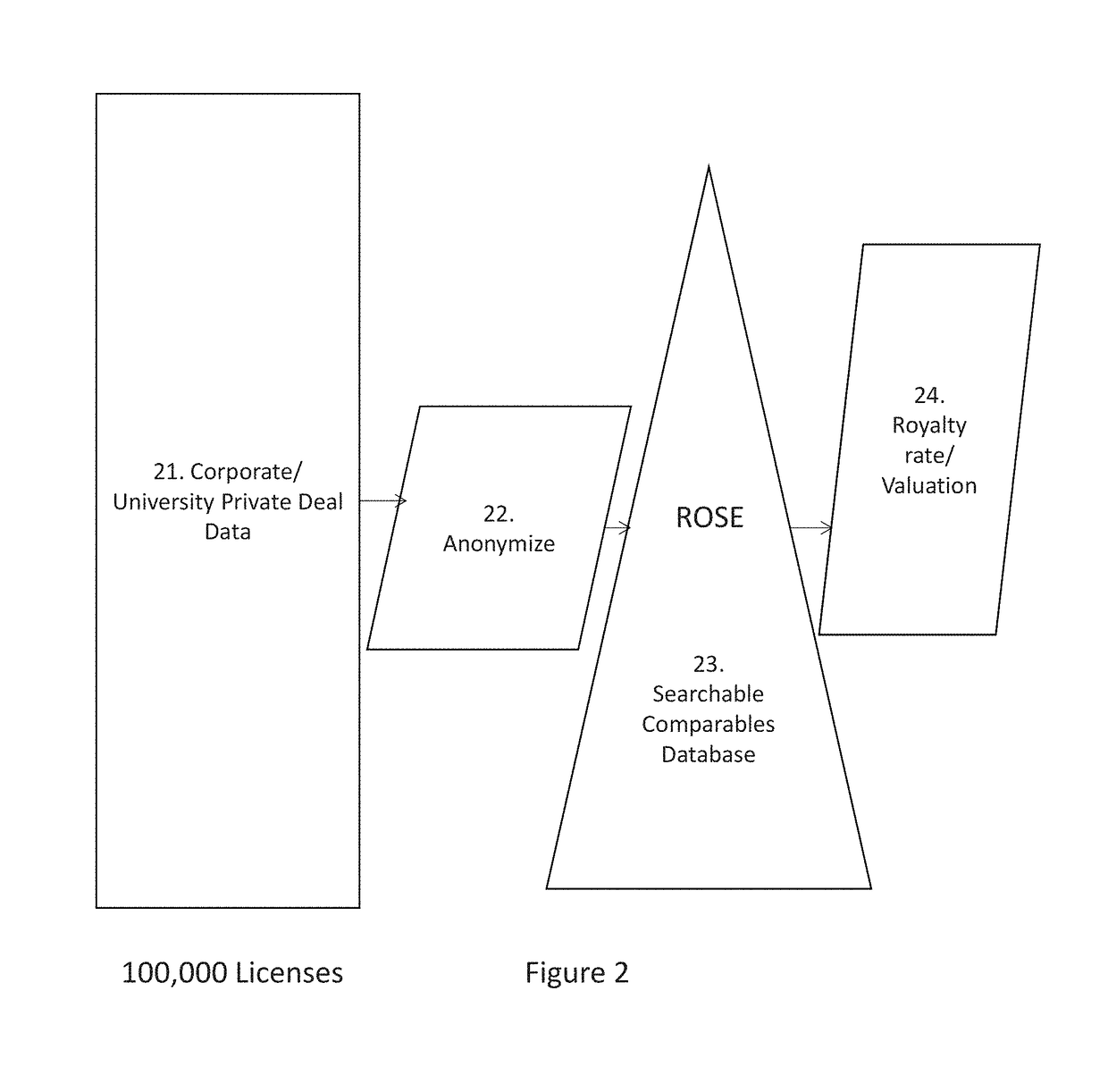

Data anonymization method and system

The invention provides a data anonymization method and system. The method comprises: performing text analysis on text-type attribute values in the data; replacing each text-type attribute value with a numeric-type or category-type attribute value according to the text analysis result; and anonymizing the data after this replacement. With this approach, data containing text-type attribute values is protected against attribute-value-based privacy leakage after anonymization while still retaining its usefulness.

Owner:NEC (CHINA) CO LTD

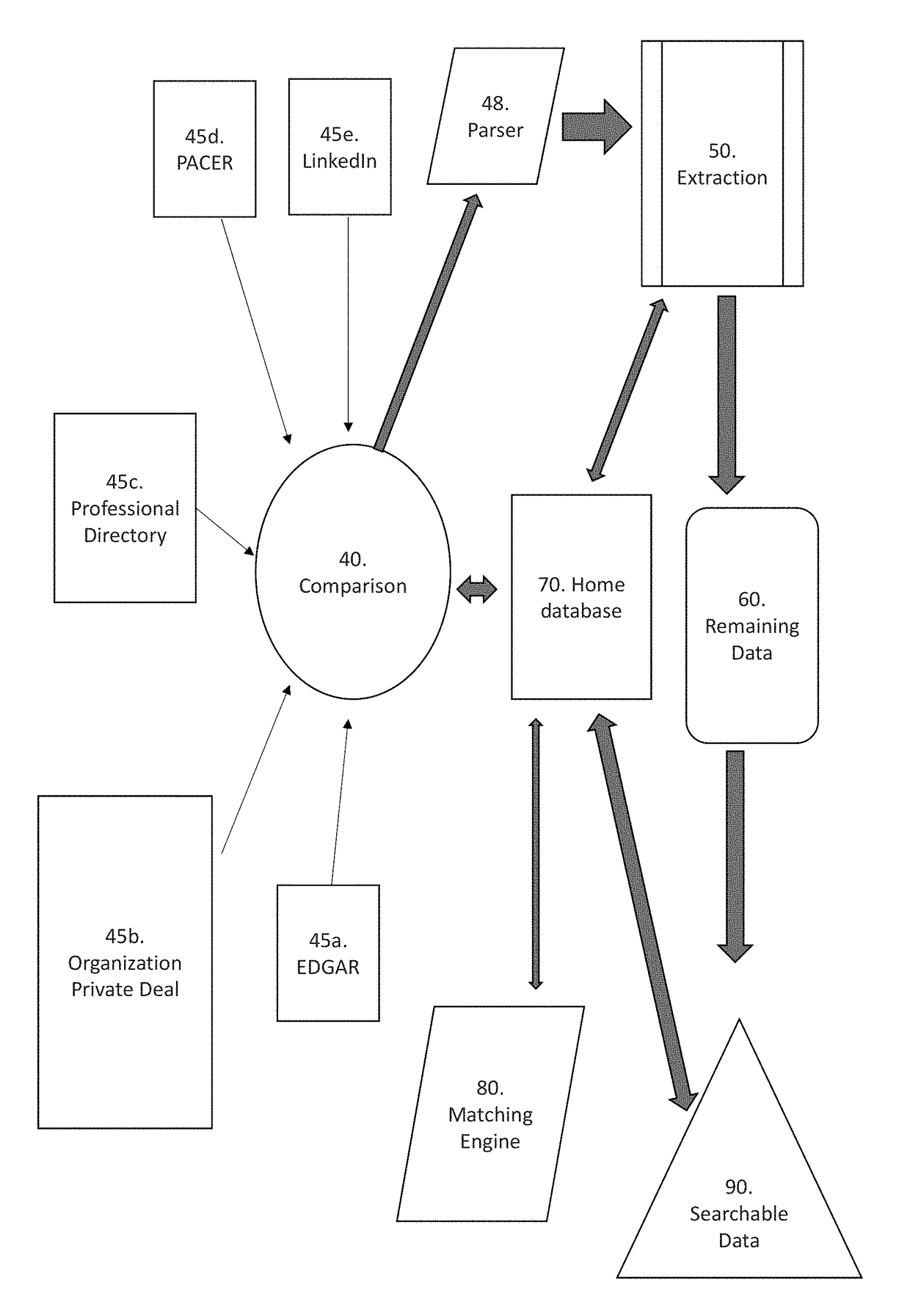

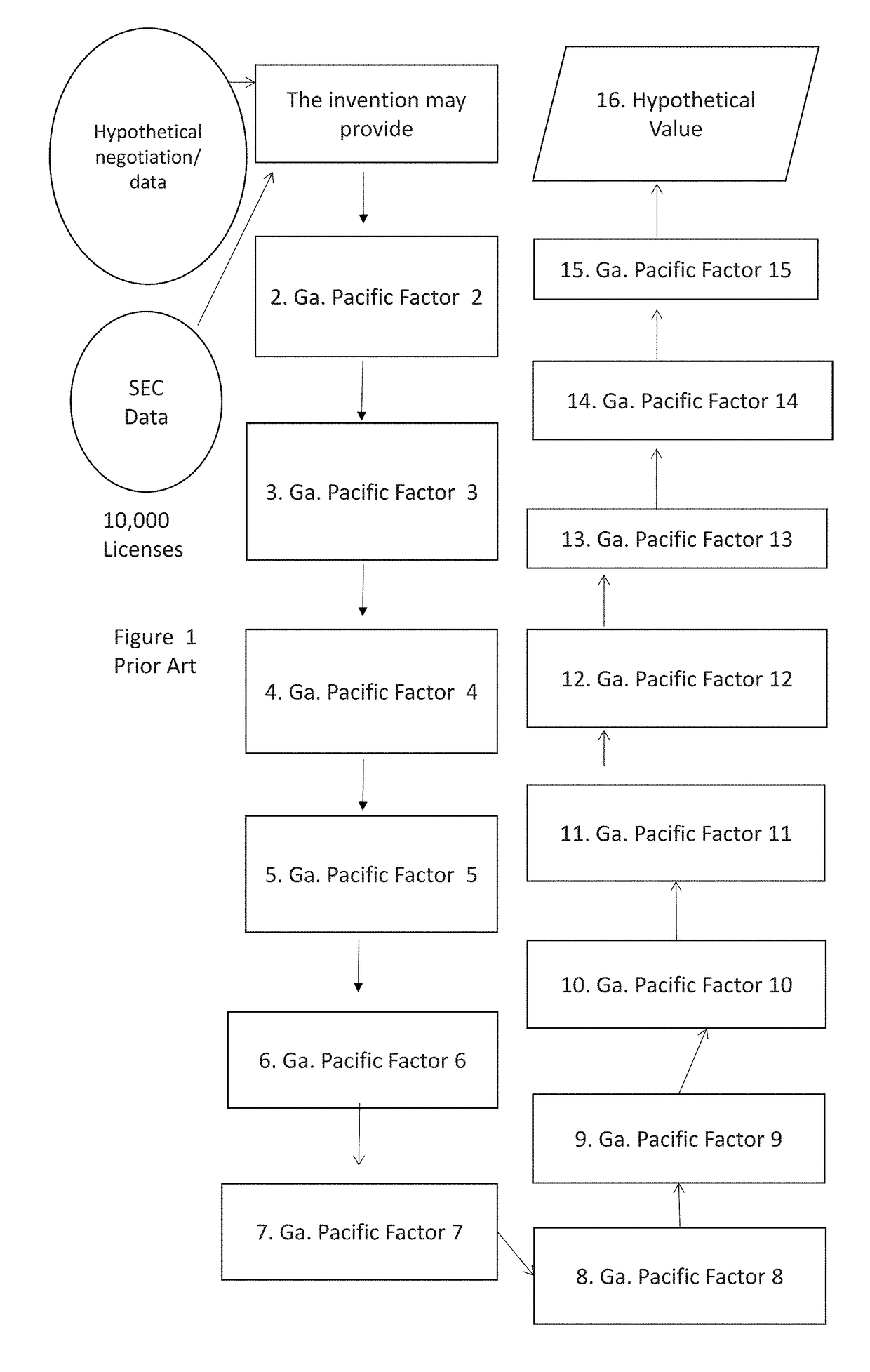

System for anonymization and filtering of data

Status: Inactive | Publication: US20180247078A1 | Tags: Data processing applications, Digital data protection, Client-side, Document preparation

Owner:GOULD & RATNER LLP

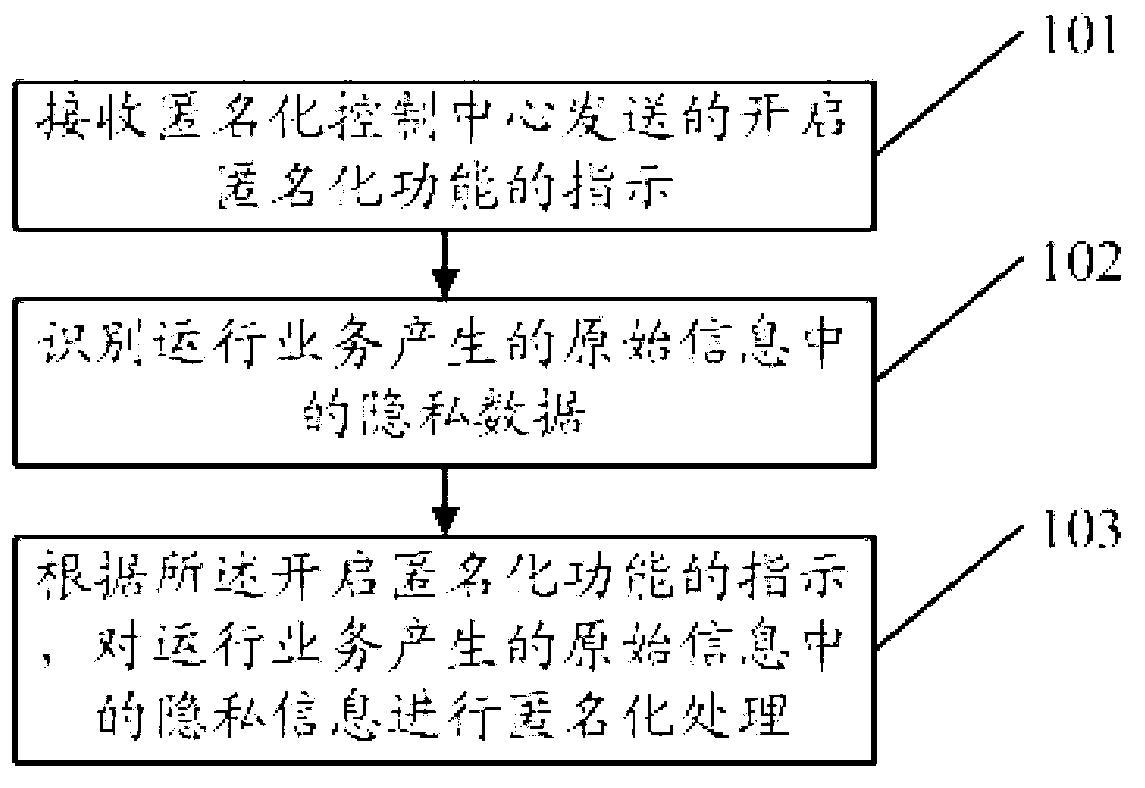

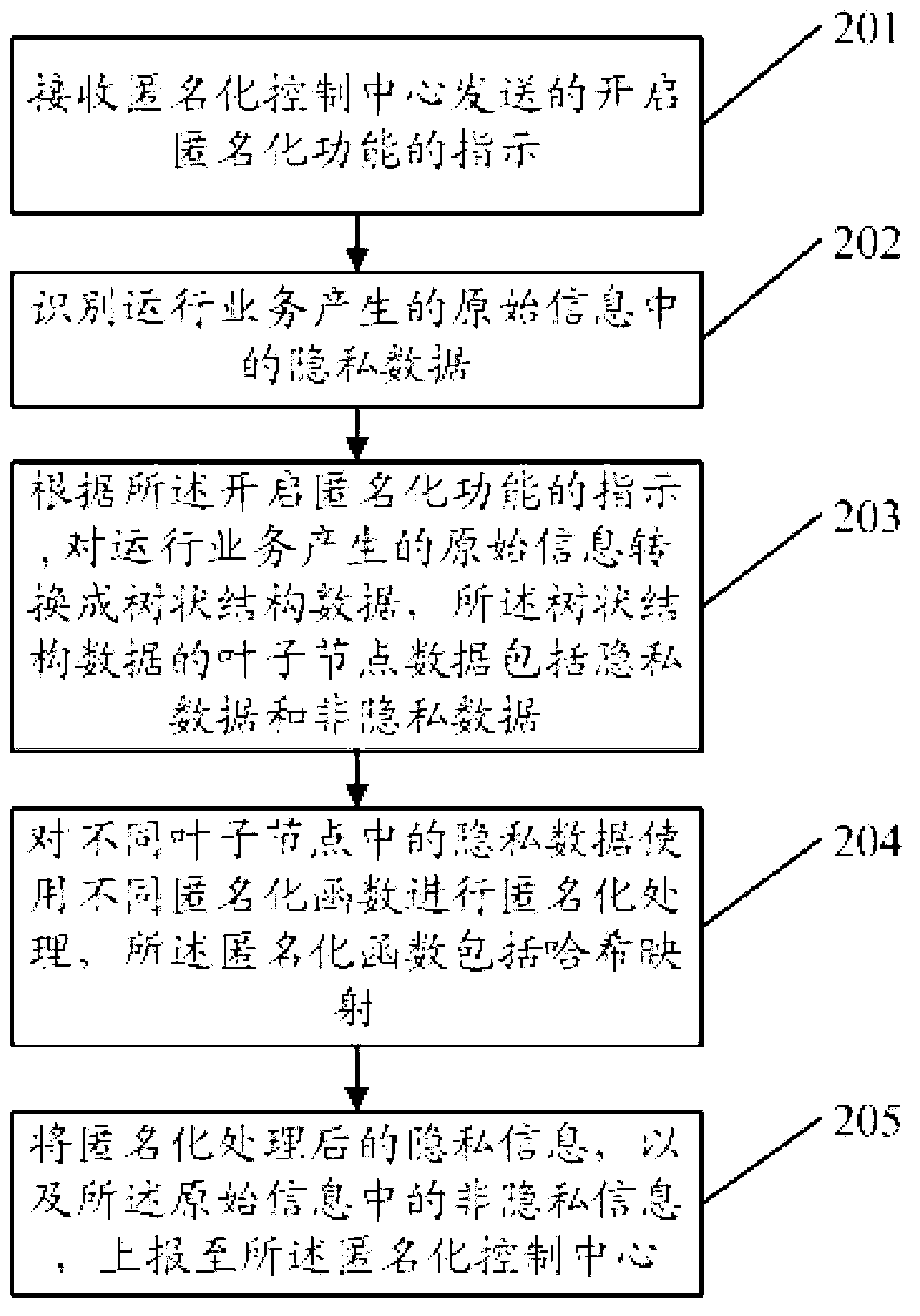

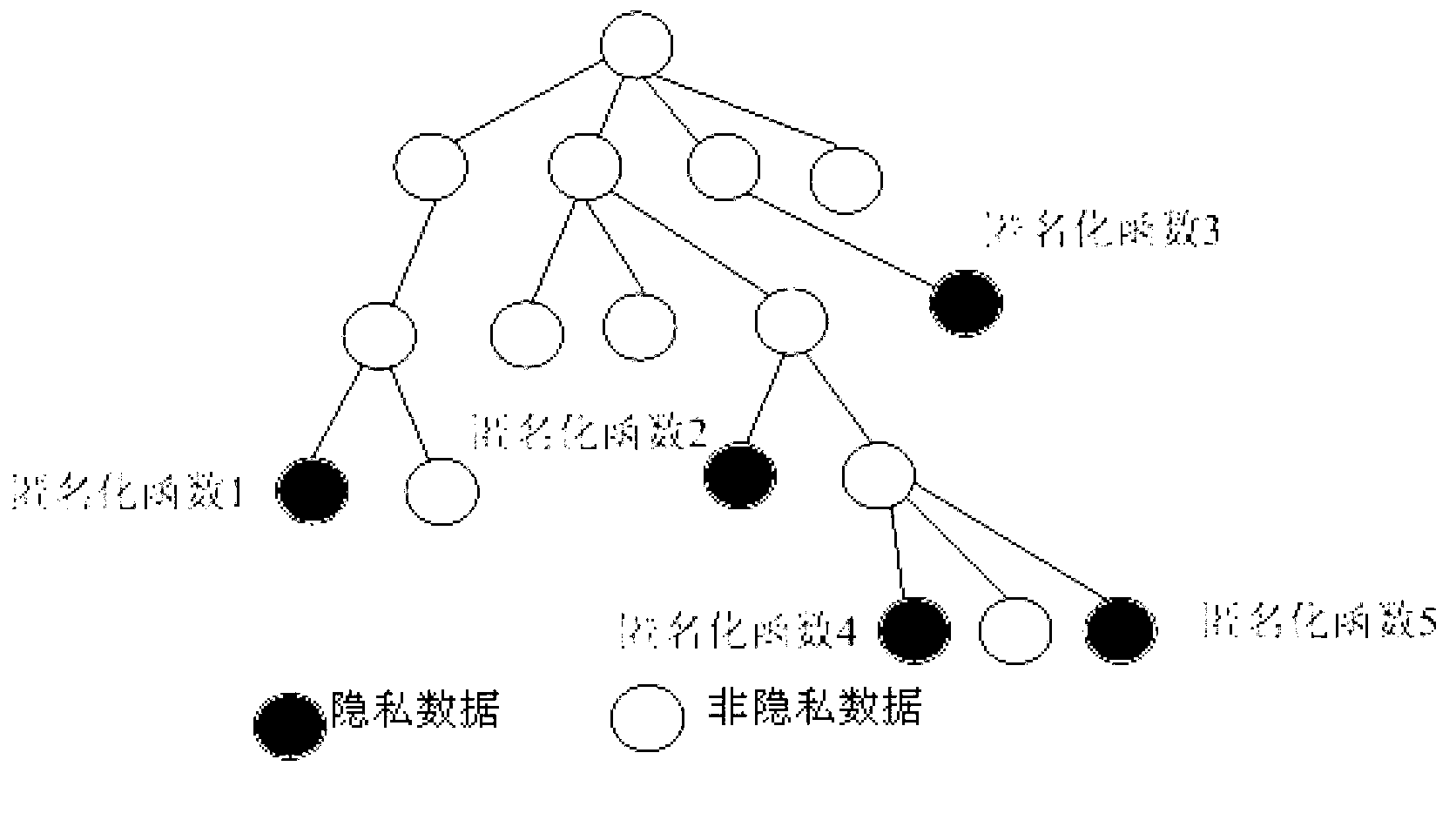

Method, device and system of privacy data anonymization in communication network

Status: Active | Publication: CN103067918A | Tags: Prevent leakage, Meet legal requirements to protect personal privacy, Security arrangement, Internet privacy, Data anonymization

Provided are a method, a device and a system for privacy data anonymization in a communication network. The method comprises: receiving an instruction, sent by an anonymization control center, to enable the anonymization function; identifying private data in the raw information generated by running services; and anonymizing the private data in that raw information. With the method, device and system, sensitive data in the raw information is anonymized so that equipment maintenance vendors cannot restore the sensitive data from the anonymized data, which prevents leakage of the sensitive data.
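A minimal sketch of a network element that anonymizes only after the control center enables the function, assuming 11-digit subscriber numbers as the private data and keyed hashing (HMAC-SHA256) as the anonymization. The regex, token format and class name are hypothetical. Because the input space of phone numbers is small, the key must stay with the operator: anyone holding it could brute-force the tokens.

```python
import hashlib
import hmac
import re

PHONE = re.compile(r"\b\d{11}\b")  # hypothetical: 11-digit subscriber numbers


class Anonymizer:
    def __init__(self, key: bytes):
        self._key = key      # secret held by the operator, not by maintenance vendors
        self.enabled = False

    def on_directive(self, enable: bool) -> None:
        """Called when the anonymization control center switches the function on or off."""
        self.enabled = enable

    def _token(self, value: str) -> str:
        # Keyed hash: the same input always maps to the same token, so logs stay
        # correlatable, but the original cannot be recovered without the key.
        digest = hmac.new(self._key, value.encode(), hashlib.sha256).hexdigest()
        return "anon_" + digest[:12]

    def process(self, raw: str) -> str:
        if not self.enabled:
            return raw
        return PHONE.sub(lambda m: self._token(m.group(0)), raw)
```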

Owner:HUAWEI TECH CO LTD

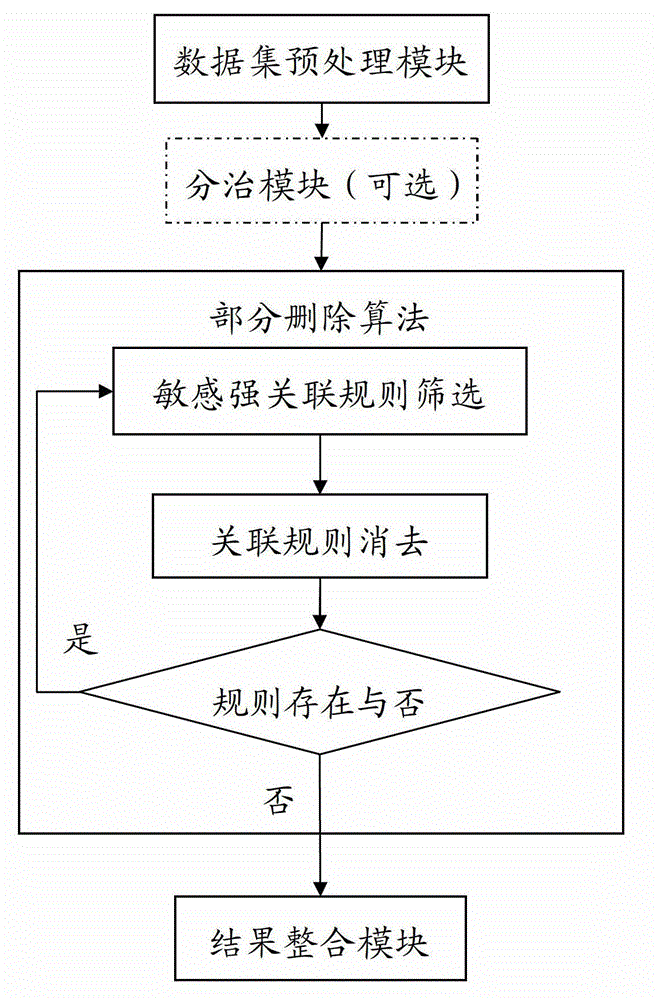

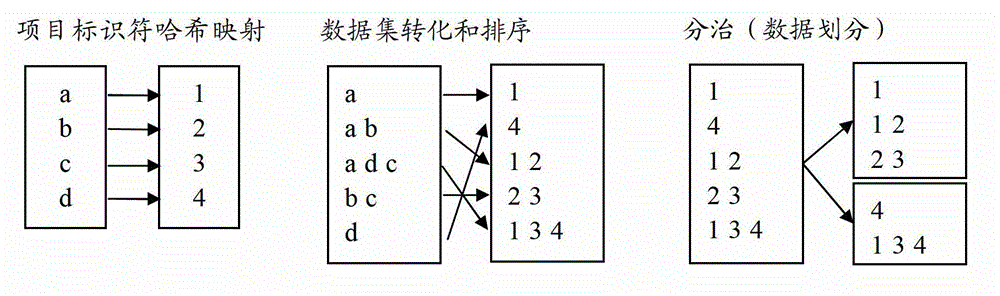

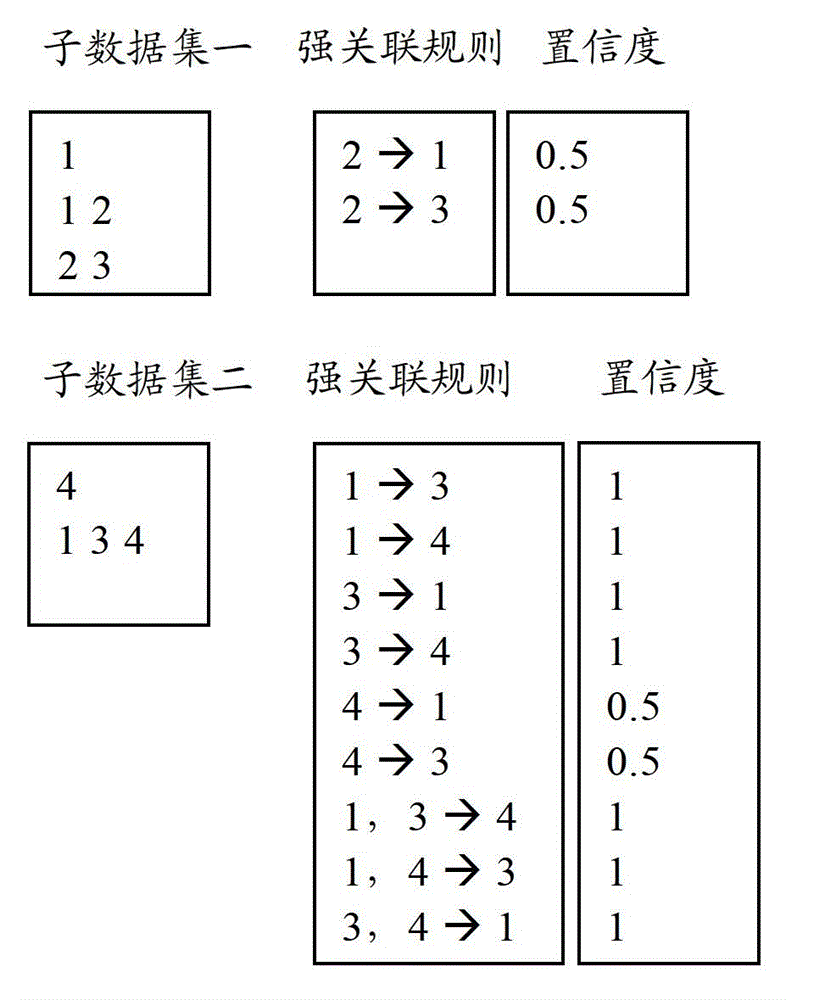

System for anonymizing set type data by partially deleting certain items

Status: Inactive | Publication: CN102867022A | Tags: Anonymization is correct and efficient, Guaranteed value in use, Special data processing applications, Data set, Weak association

The invention provides a system for anonymizing set-type data by partially deleting certain items. The system preprocesses a dataset and then eliminates dangerous sensitive strong association rules from it through multiple rounds of iteration, deleting as few items as possible. Each iteration comprises: screening the sensitive strong association rules in the dataset; and partially deleting certain items of those rules from the dataset, so that the dangerous sensitive strong association rules become safe sensitive weak association rules or are removed from the dataset. The iteration stops once no dangerous sensitive strong association rules remain in the dataset. The system also applies a divide-and-conquer approach so that the anonymization can run concurrently on multiple threads, which greatly improves its efficiency without sharply increasing the number of deleted items.
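One round of rule weakening can be sketched as follows, assuming a rule X → Y counts as "strong" when its confidence, support(X ∪ Y) / support(X), reaches `max_conf`. Deleting a consequent item from supporting transactions lowers support(X ∪ Y) without changing support(X), so each deletion lowers the confidence, and the loop stops as soon as the rule becomes weak. The choice of which item to delete is a hypothetical simplification.

```python
from typing import FrozenSet, List, Set, Tuple


def support(db: List[Set[str]], items: FrozenSet[str]) -> int:
    """Number of transactions containing all of the given items."""
    return sum(1 for t in db if items <= t)


def confidence(db: List[Set[str]], lhs: FrozenSet[str], rhs: FrozenSet[str]) -> float:
    s = support(db, lhs)
    return support(db, lhs | rhs) / s if s else 0.0


def hide_rule(db: List[Set[str]], lhs: FrozenSet[str], rhs: FrozenSet[str],
              max_conf: float) -> Tuple[List[Set[str]], int]:
    """Delete one consequent item from supporting transactions, one at a time,
    until the rule's confidence drops below max_conf. Returns a new database
    and the number of deleted items."""
    db = [set(t) for t in db]  # work on a copy
    victim = min(rhs)          # hypothetical choice of item to delete
    deleted = 0
    for t in db:
        if confidence(db, lhs, rhs) < max_conf:
            break
        if (lhs | rhs) <= t:
            t.discard(victim)
            deleted += 1
    return db, deleted
```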

Owner:SHANGHAI JIAO TONG UNIV

Computer systems, methods and computer program products for data anonymization for aggregate query answering

Status: Active | Publication: US8112422B2 | Tags: Low cost, Digital data information retrieval, Digital data processing details, Computer system design, Computerized system

Computer program products are provided for anonymizing a database that includes tuples. Each tuple includes at least one quasi-identifier and sensitive attributes associated with the quasi-identifier. These computer program products include computer-readable program code configured to (k,e)-anonymize the tuples over a number k of different values in a range e of values, while preserving the coupling of at least two of the sensitive attributes to one another in the sets of attributes that are anonymized, to provide a (k,e)-anonymized database. Related computer systems and methods are also provided.

Owner:AT&T INTPROP I LP

Method and apparatus for dump and log anonymization (DALA)

Status: Active | Publication: US8166313B2 | Tags: Unauthorized memory use protection, Hardware monitoring, File transmission, Client machine

According to one embodiment of the invention, an original dump file is received from a client machine to be forwarded to a dump file recipient. The original dump file is parsed to identify content that matches certain data patterns/categories. The dump file is anonymized by modifying the identified content according to a predetermined algorithm so that it is no longer exposed, producing an anonymized dump file, which is then transmitted to the dump file recipient. The technical content and infrastructure of the original dump file are preserved in the anonymized dump file, so a utility application designed to process the original dump file can still process the anonymized one without exposing the identified content to the recipient. Other methods and apparatuses are also described.

Owner:FEDTKE STEPHEN U

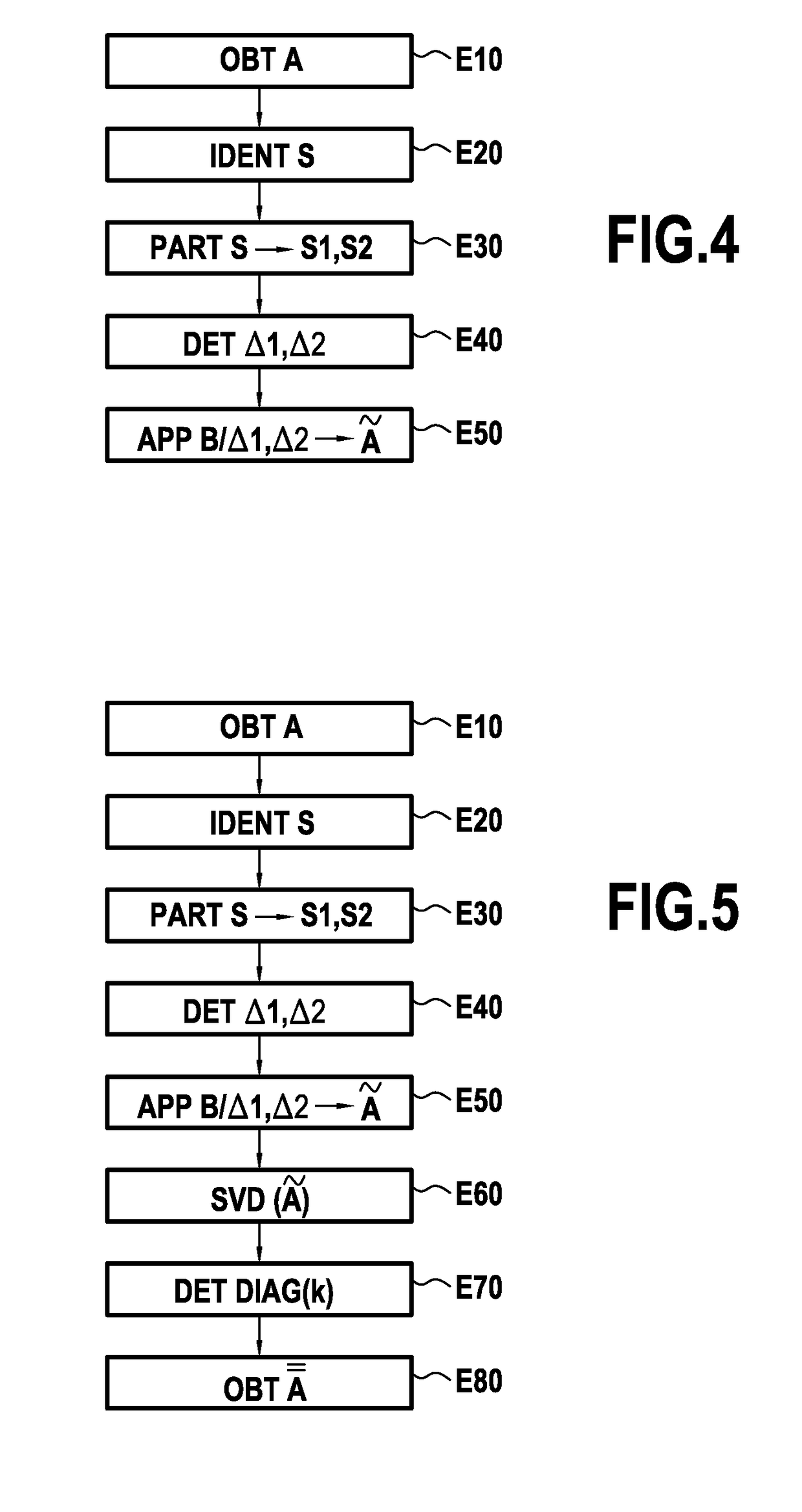

Method and device for anonymizing data stored in a database

Status: Active | Publication: US20190050599A1 | Tags: Quality improvement, Fine granularity, Digital data protection, Securing communication, Data set, Noise level

A method is provided to anonymize “initial” data stored in a database of a computer system and resulting from aggregating personal data relating to a plurality of individuals. The method includes: an identification act identifying in the initial data a set of data that is “sensitive” that would be affected by personal data relating to one individual being added to or removed from the database; a partitioning act partitioning the sensitive data set into a plurality of subsets as a function of a sensitivity level of the sensitive data; a determination act determining a sensitivity level for each subset; and an anonymization act anonymizing the initial data and including, for each subset, adding noise to the sensitive data of that subset with a noise level that depends on the sensitivity level determined for the subset.
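The partition-then-noise idea can be sketched with the Laplace mechanism, assuming each sensitive value comes with a precomputed sensitivity (how much it can change when one individual is added or removed), that subsets are formed by hypothetical ascending sensitivity thresholds `levels`, and that each subset's level is the maximum sensitivity it contains. Noise scale is `level / epsilon`, so low-sensitivity subsets receive less noise than they would under a single global level.

```python
from typing import Sequence

import numpy as np


def anonymize_by_sensitivity(values: np.ndarray, sensitivity: np.ndarray,
                             levels: Sequence[float], epsilon: float,
                             seed: int = 0) -> np.ndarray:
    """Partition entries by sensitivity and add Laplace noise scaled per subset."""
    rng = np.random.default_rng(seed)
    out = np.asarray(values, dtype=float).copy()
    subset = np.digitize(sensitivity, levels, right=True)  # subset index of each entry
    for s in np.unique(subset):
        idx = subset == s
        level = float(np.max(sensitivity[idx]))  # sensitivity level of this subset
        if level > 0:  # entries no individual can affect need no noise
            out[idx] += rng.laplace(0.0, level / epsilon, size=int(idx.sum()))
    return out
```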

Owner:ORANGE SA (FR)

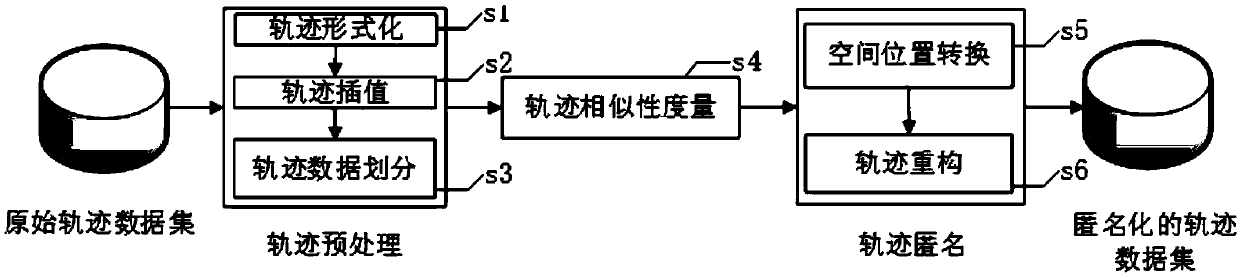

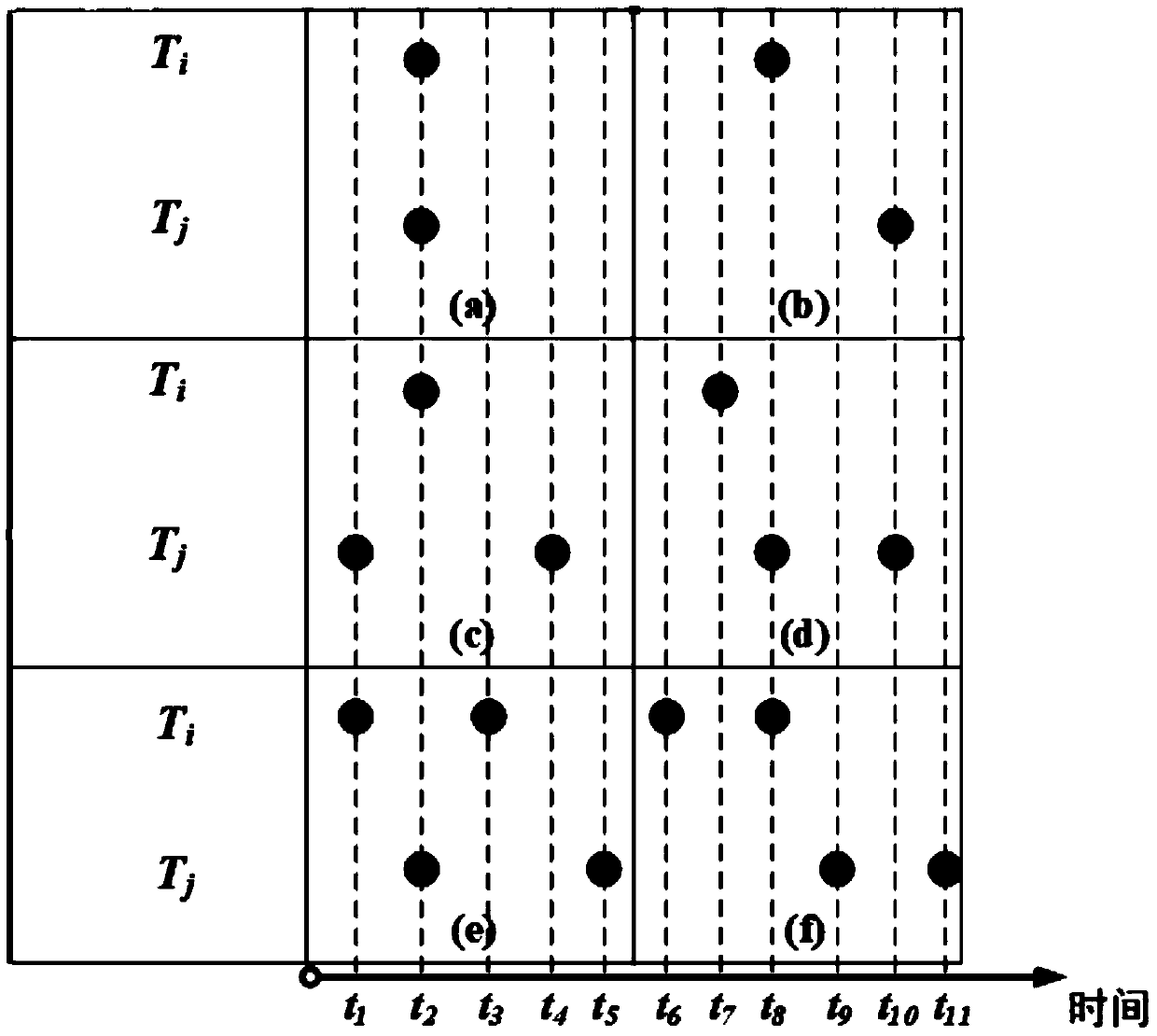

Privacy protection track data publishing method based on three-dimensional meshing

Status: Active | Publication: CN108734022A | Tags: Protect private information, Improve usability, Digital data protection, Data set, Privacy protection

The invention belongs to the field of data mining technology and provides a privacy-preserving track data publishing method based on three-dimensional meshing. The method comprises the following steps: the track data is preprocessed; based on the sampling times of the location points, missing locations between the first and last location points of each track are interpolated; the track region is meshed into a plurality of space-time units; within each space-time unit, the distance between sub-tracks is calculated from their time, direction and spatial location; location point pairs meeting the constraint conditions are found on the two closest sub-tracks, and the times and spatial locations of each pair are exchanged to obtain anonymous sub-tracks; and the anonymous sub-tracks that belong to the same track but are distributed across the space-time units are reassembled to obtain an anonymous track dataset. By exchanging locations according to the similarity of sub-tracks within each space-time unit, the method achieves track anonymization and effectively improves the usability of published track data while protecting user privacy.

Owner:ANHUI NORMAL UNIV

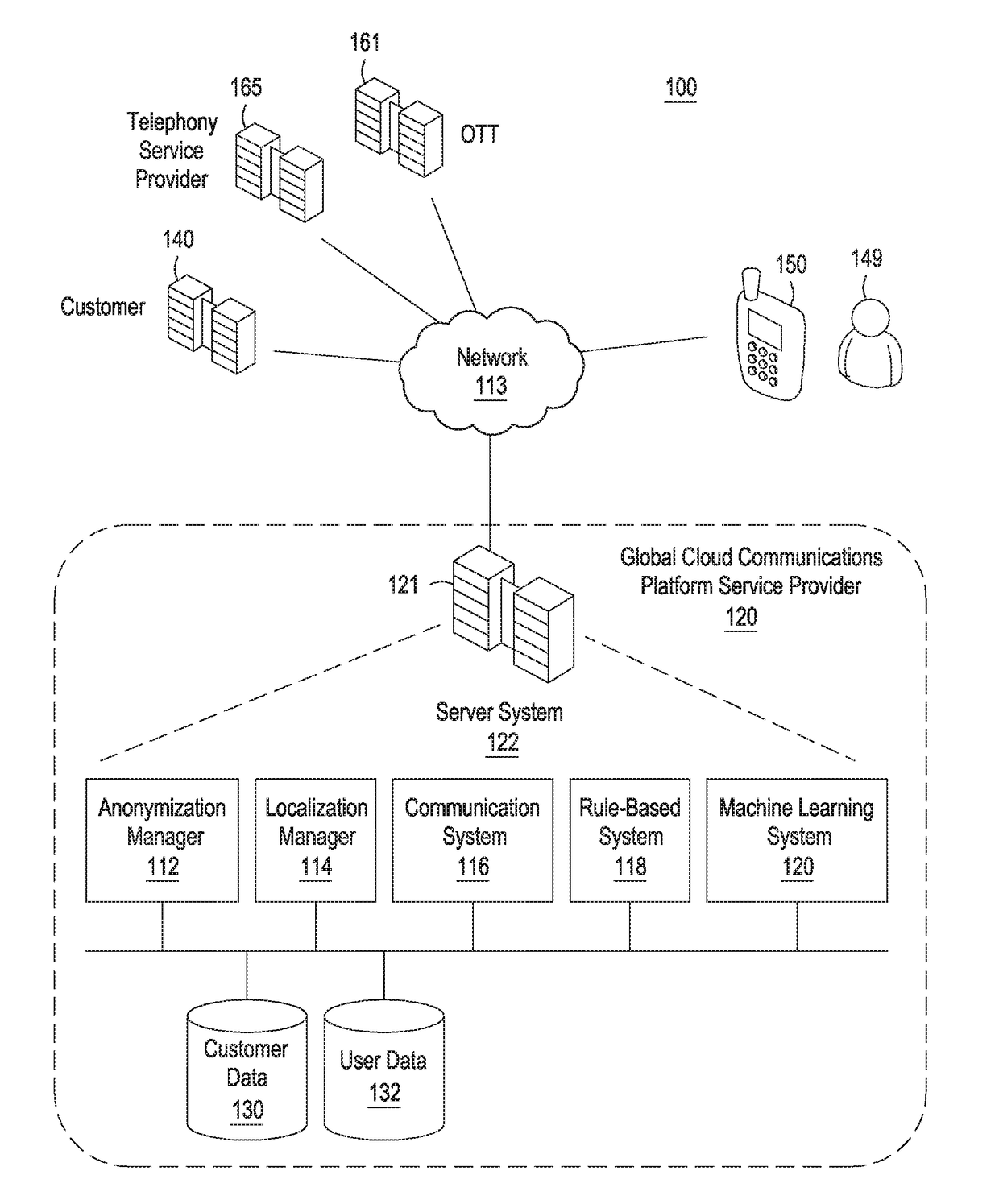

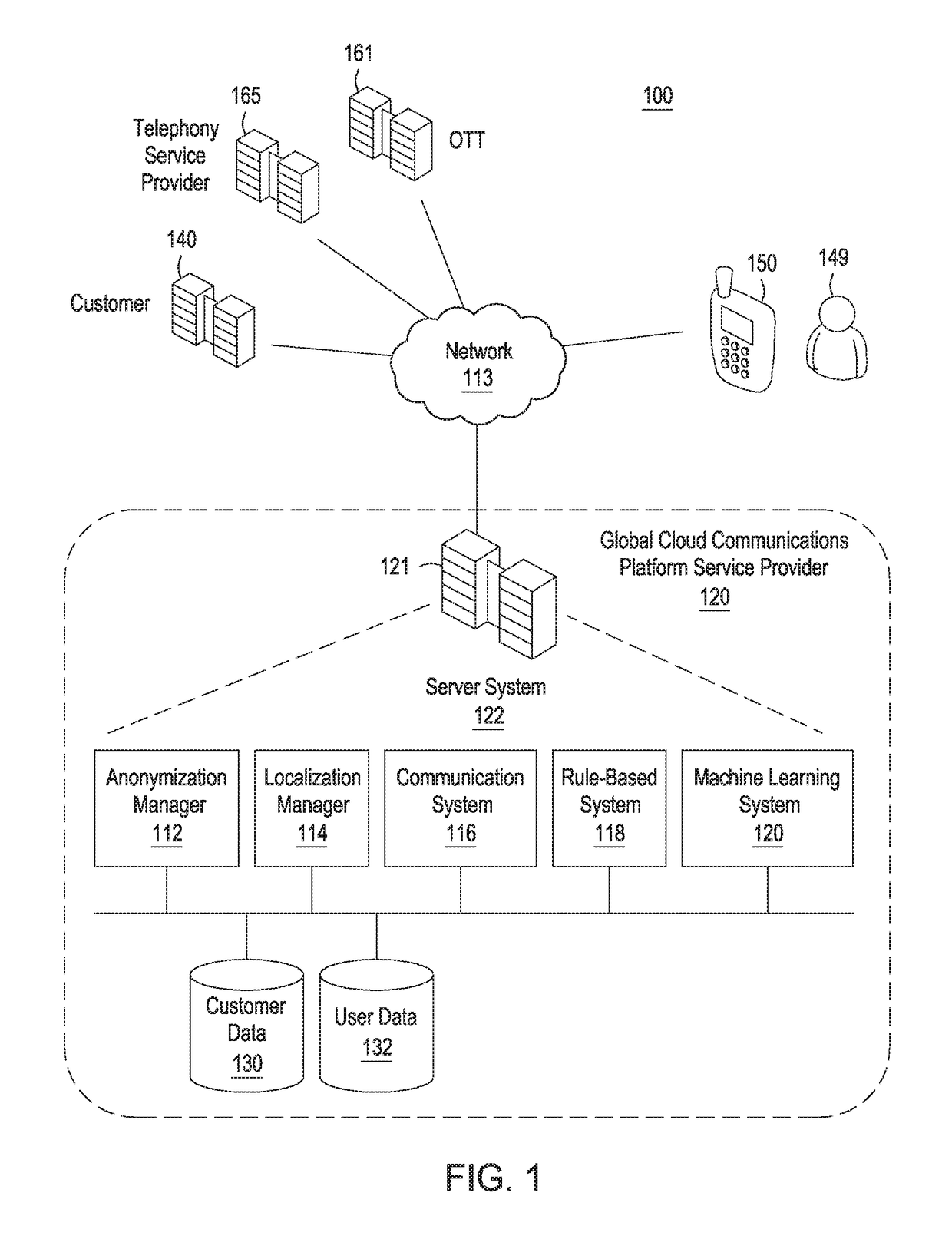

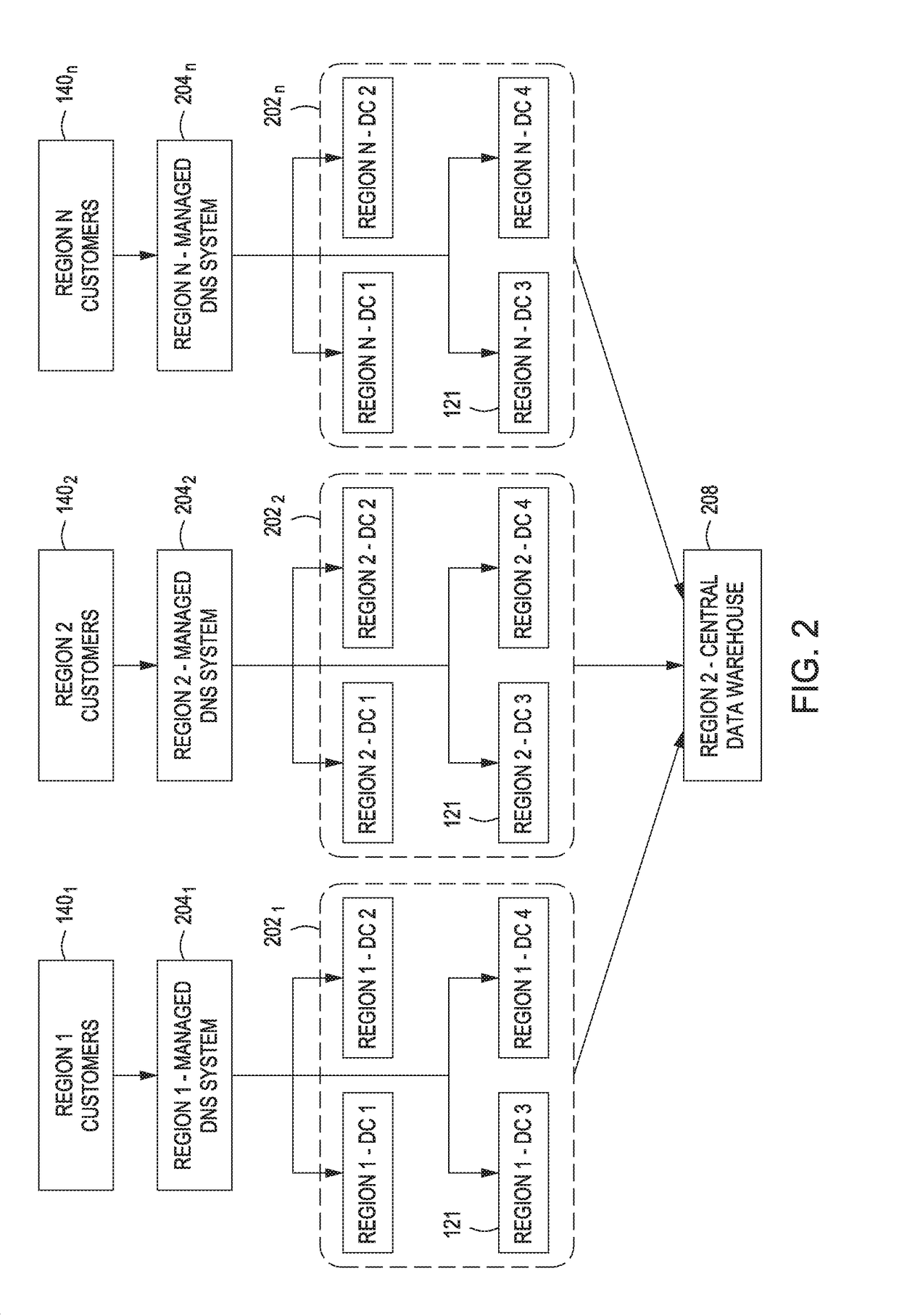

Systems and methods for regional data storage and data anonymization

Systems and methods for data localization and anonymization are provided herein. In some embodiments, systems and methods for data localization and anonymization may include receiving a communication request to send a message or establish a call between a first service provider and an end user device associated with an end user, determining that the communication request is associated with a requirement for securing personally identifiable information (PII) of the end user, and processing the communication request based on that requirement, wherein the requirement includes at least one of (A) localization of the communication request processing or (B) anonymization of any data records associated with the communication request that include the PII of the end user.

Owner:VONAGE BUSINESS

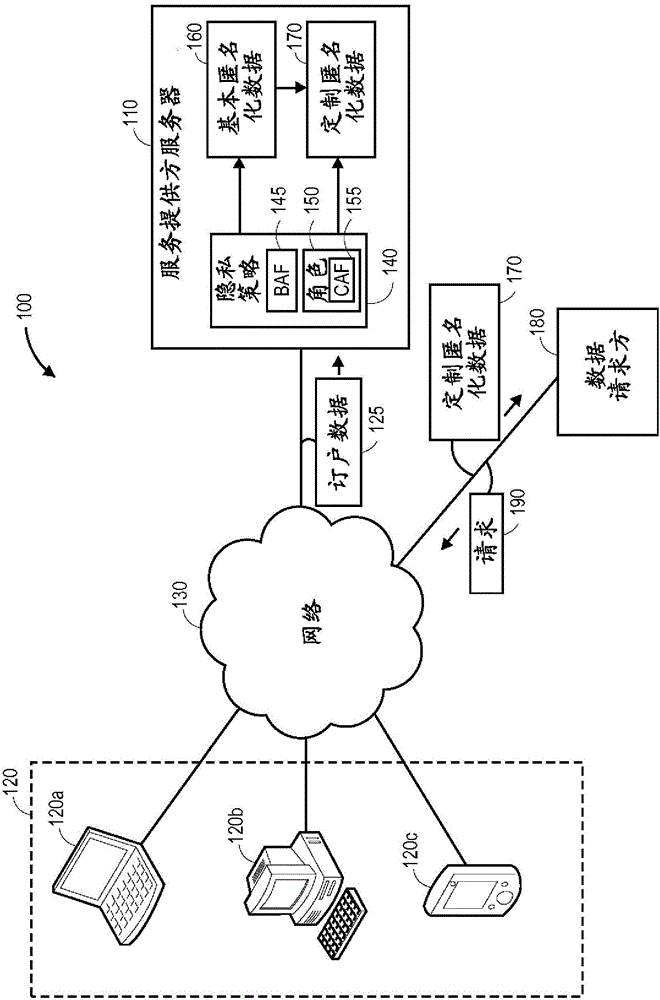

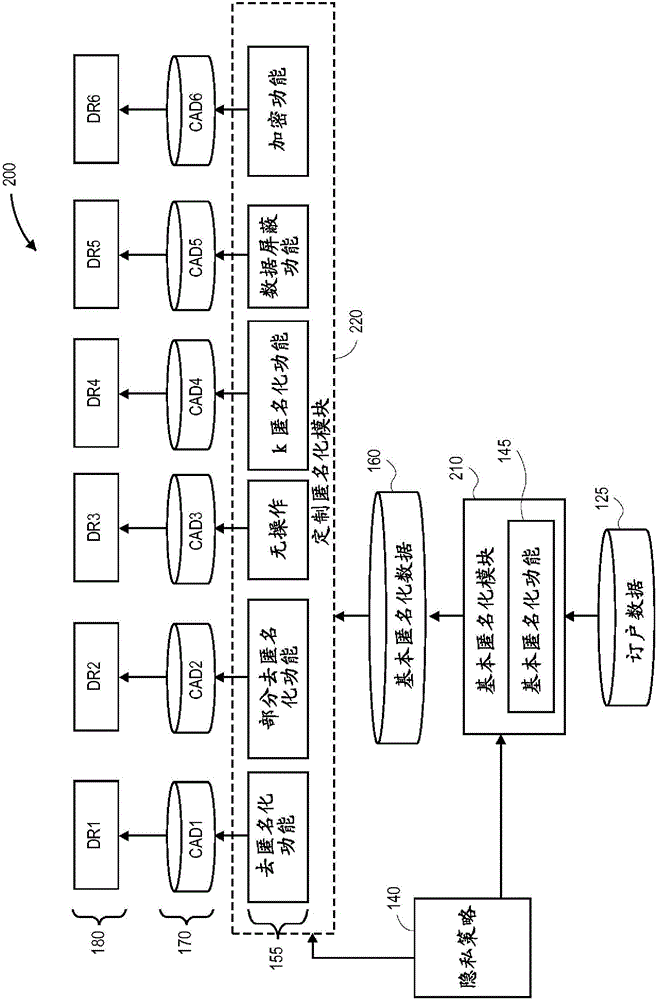

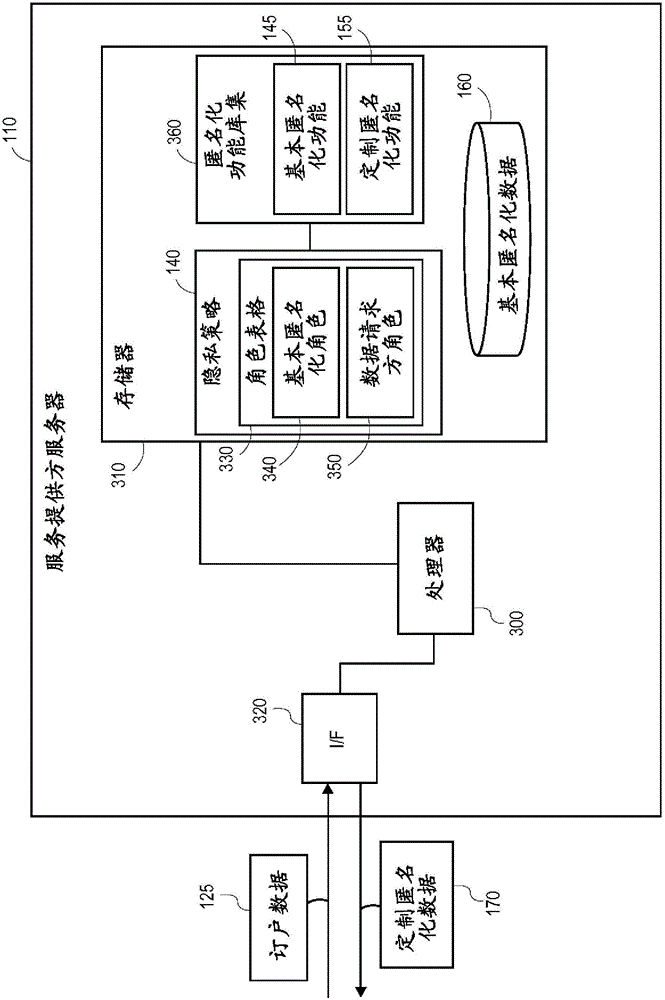

Role-based anonymization

A data anonymization system provides role-based anonymization for data requesters. The system applies a base anonymization function to subscriber data related to at least one subscriber of a service provider to produce base anonymized subscriber data. Upon receiving a request for the subscriber data from a data requester, a role assigned to that data requester is determined to identify a custom anonymization function to be applied to the subscriber data in order to produce custom anonymized subscriber data for the data requester.
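The two-stage design (a base function for everyone, then a custom function per requester role) can be sketched as below. The roles, field names, per-role rules and the key are all hypothetical; the base stage drops the name and replaces the phone number with a keyed hash, and an unknown role is refused rather than given raw data.

```python
import hashlib
import hmac
from typing import Callable, Dict

Record = Dict[str, str]
BASE_KEY = b"replace-with-a-managed-secret"  # hypothetical operator-held key


def base_anonymize(rec: Record) -> Record:
    """Base function applied to all subscriber data."""
    out = {k: v for k, v in rec.items() if k != "name"}
    out["msisdn"] = hmac.new(BASE_KEY, rec["msisdn"].encode(), hashlib.sha256).hexdigest()[:16]
    return out


# Hypothetical role -> custom anonymization function table.
CUSTOM_BY_ROLE: Dict[str, Callable[[Record], Record]] = {
    "marketing": lambda r: {**r, "zip": r["zip"][:3] + "**"},
    "researcher": lambda r: {k: v for k, v in r.items() if k != "zip"},
    "network_ops": lambda r: dict(r),
}


def serve(rec: Record, role: str) -> Record:
    """Look up the requester's role and apply its custom function to the base output."""
    custom = CUSTOM_BY_ROLE.get(role)
    if custom is None:
        raise PermissionError(f"no anonymization profile for role {role!r}")
    return custom(base_anonymize(rec))
```

Applying the base function first guarantees a minimum level of protection even if a role's custom function is permissive.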

Owner:ALCATEL LUCENT SAS

System and method for data anonymization using hierarchical data clustering and perturbation

Status: Active | Publication: US9135320B2 | Tags: Digital data information retrieval, Digital data processing details, Computerized system, Euclidean vector

Owner:ELECTRIFAI LLC

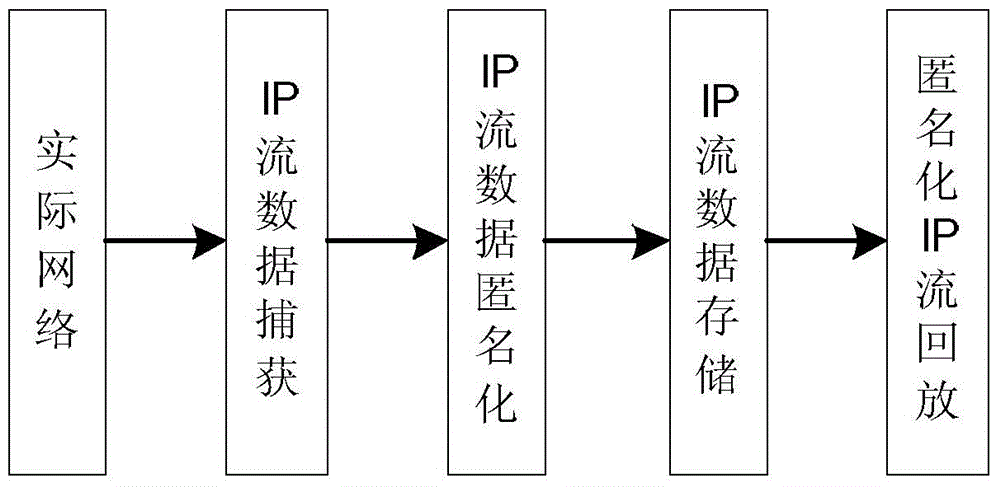

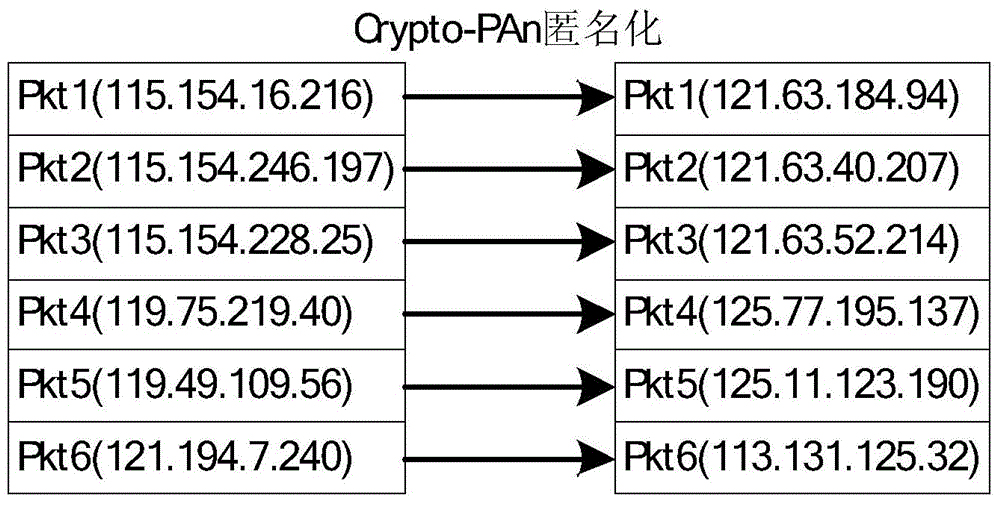

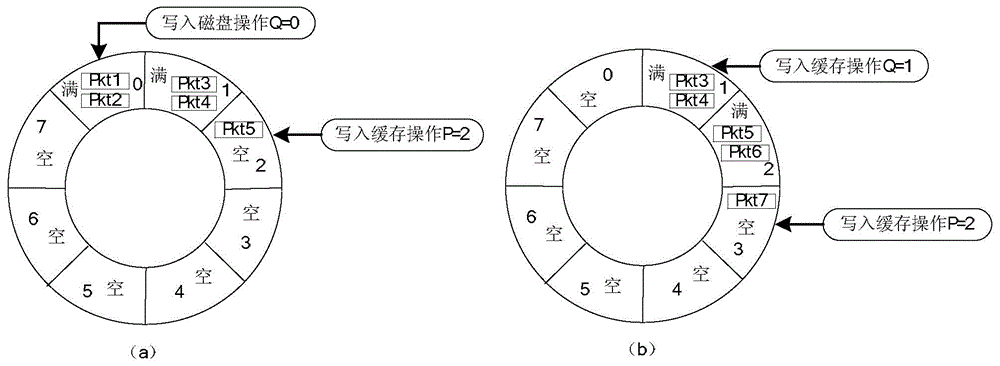

Online real-time anonymization system and method for IP stream data

Status: Inactive | Publication: CN104601583A | Tags: Make up cycle, Make up for real-time, Data switching networks, Traffic capacity, Streaming data

The invention provides an online real-time anonymization system and method for IP stream data. The system comprises an IP stream data capturing module, an IP stream data anonymization module, an IP stream data storage module and an anonymized IP stream replay module. The capturing module extracts packet IP address information and packet header information from received network traffic; the anonymization module anonymizes IP addresses online and in real time with an anonymization algorithm while the IP stream data is being captured; the storage module stores the anonymized IP stream data on a storage device; and the replay module specifies the source and destination MAC addresses of the packets to be replayed and recalculates the checksum of each IP packet header during replay, so that the anonymized IP stream data can be replayed online and in real time.
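Because rewriting addresses invalidates the IPv4 header checksum, any anonymizer or replayer has to recompute it. The sketch below rewrites the source and destination addresses of a raw IPv4 header with a hypothetical keyed-hash mapping and recomputes the checksum as specified in RFC 791 (ones' complement of the ones' complement sum of the header's 16-bit words). The address mapping is an assumption: it is neither prefix-preserving nor guaranteed collision-free.

```python
import hashlib
import hmac
import struct


def ipv4_checksum(header: bytes) -> int:
    """RFC 791 header checksum over 16-bit big-endian words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def anon_addr(addr: bytes, key: bytes) -> bytes:
    """Hypothetical mapping: first 4 bytes of an HMAC-SHA256 of the address."""
    return hmac.new(key, addr, hashlib.sha256).digest()[:4]


def anonymize_header(packet: bytes, key: bytes) -> bytes:
    ihl = (packet[0] & 0x0F) * 4                     # header length in bytes
    h = bytearray(packet[:ihl])
    h[12:16] = anon_addr(bytes(h[12:16]), key)       # source address
    h[16:20] = anon_addr(bytes(h[16:20]), key)       # destination address
    h[10:12] = b"\x00\x00"                           # zero the field before recomputing
    h[10:12] = struct.pack("!H", ipv4_checksum(bytes(h)))
    return bytes(h) + packet[ihl:]
```

A quick self-check: running `ipv4_checksum` over a header that already contains a correct checksum returns 0.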

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1

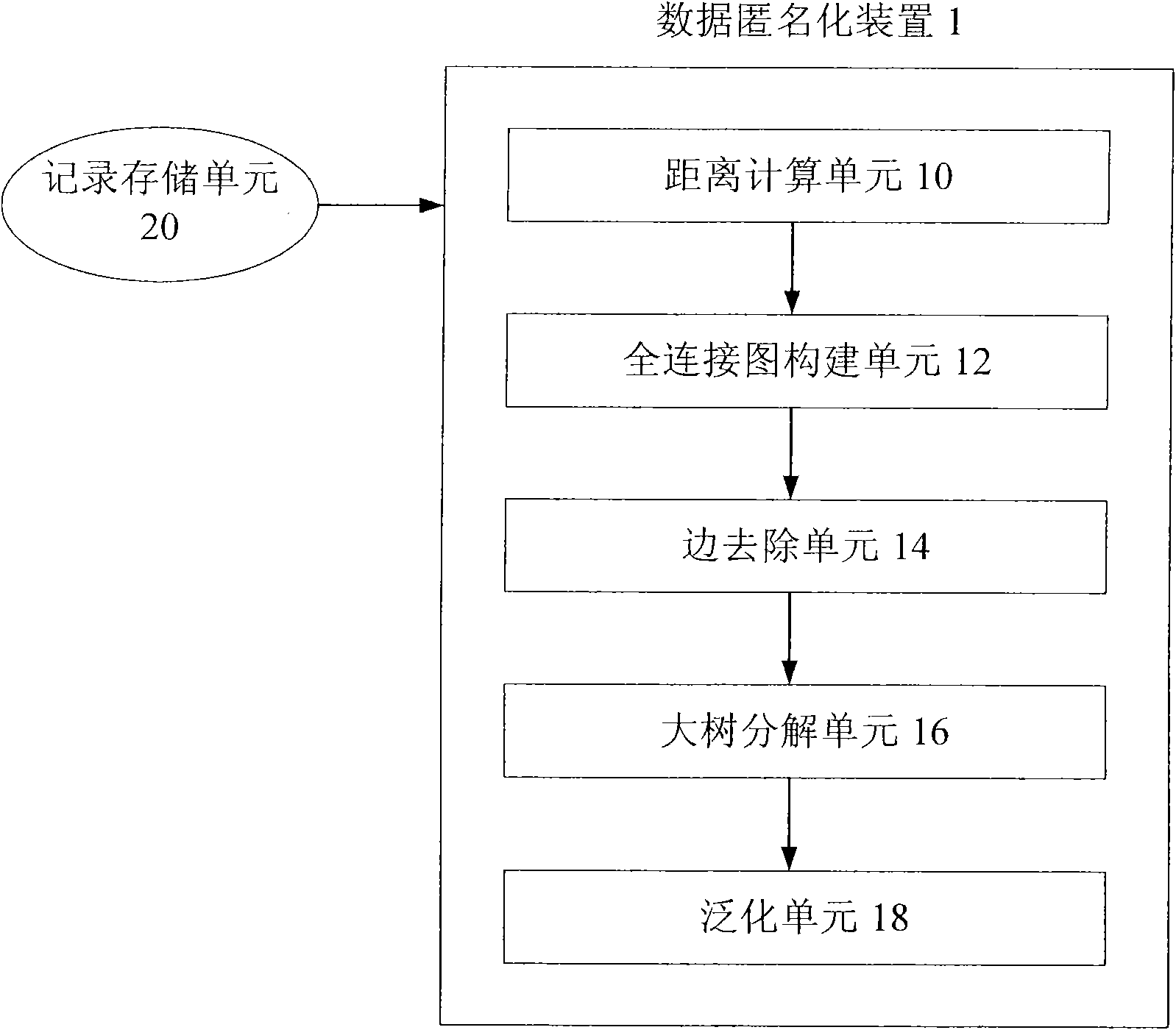

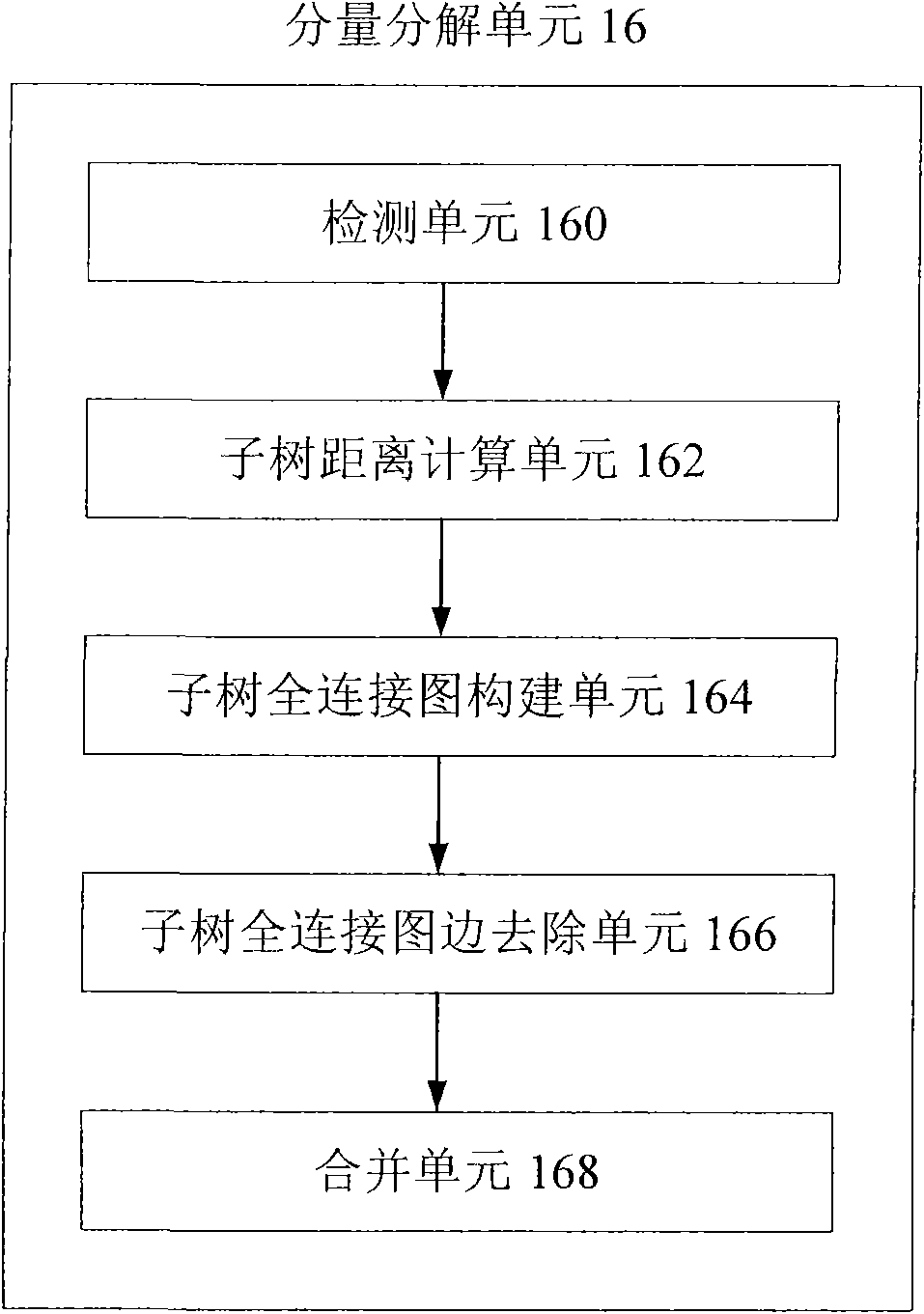

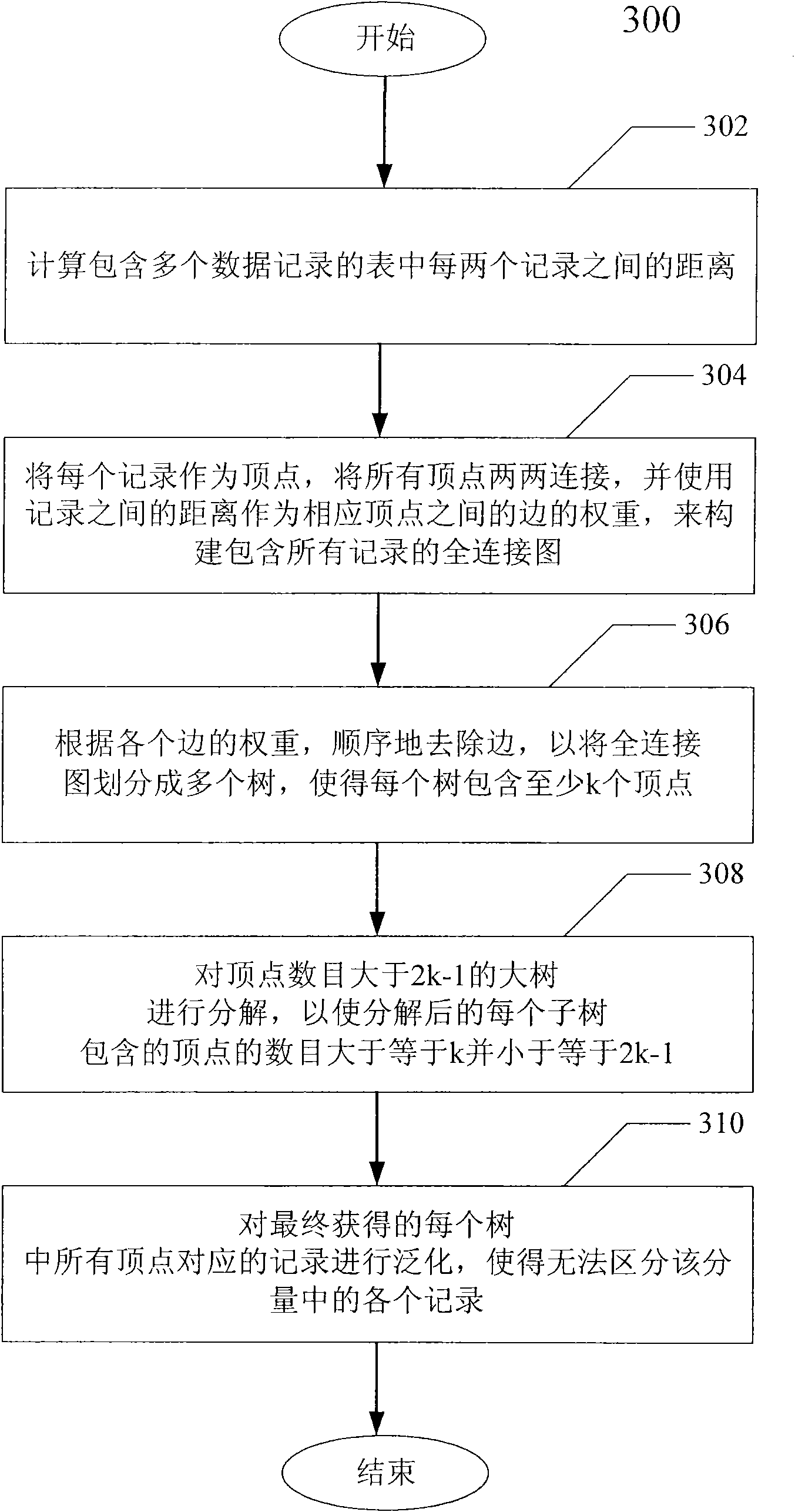

Data anonymization device and method

Status: Inactive | Publication: CN102314565A | Tags: Reduce losses, Local optimization implementation, Computer security arrangements, Theoretical computer science, Global optimization

The invention provides a data anonymization device and method. The device comprises a distance calculation unit, a complete-graph construction unit, an edge removal unit, a large-tree decomposition unit and a generalization unit. The distance calculation unit calculates the distances between data records. The complete-graph construction unit treats the records as vertices, connects every pair of vertices, and uses the distance between two records as the weight of the corresponding edge, producing a complete graph containing all the records. The edge removal unit removes edges in sequence according to their weights, splitting the complete graph into a plurality of trees, each containing at least k vertices. The large-tree decomposition unit further decomposes trees with more than 2k-1 vertices so that each resulting subtree contains between k and 2k-1 vertices inclusive. The generalization unit generalizes the records corresponding to all vertices in each final tree so that the records within a tree cannot be distinguished. The device and method further reduce information loss through a global optimization mechanism.
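A sketch of the graph-based partitioning, assuming a common way to realize the edge-removal step: build a minimum spanning tree of the complete distance graph (Prim's algorithm), then consider tree edges from heaviest to lightest and cut an edge only if both resulting trees keep at least k vertices. The large-tree decomposition into subtrees of at most 2k-1 vertices is omitted, and generalization is shown as per-attribute [min, max] ranges. Function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

Point = Sequence[float]


def mst_edges(points: List[Point]) -> List[Tuple[float, int, int]]:
    """Prim's algorithm on the complete graph weighted by Euclidean distance."""
    n = len(points)
    in_tree, best, parent = [False] * n, [math.inf] * n, [-1] * n
    best[0] = 0.0
    edges = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((best[u], parent[u], u))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return edges


def component(adj: Dict[int, Set[int]], start: int) -> Set[int]:
    """Vertices reachable from start."""
    seen, stack = {start}, [start]
    while stack:
        for v in adj[stack.pop()]:
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return seen


def partition(points: List[Point], k: int) -> List[Set[int]]:
    """Cut heavy tree edges whenever both resulting trees keep >= k vertices."""
    adj: Dict[int, Set[int]] = {i: set() for i in range(len(points))}
    edges = mst_edges(points)
    for _, u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    for _, u, v in sorted(edges, reverse=True):  # heaviest edges first
        adj[u].discard(v)
        adj[v].discard(u)
        if len(component(adj, u)) < k or len(component(adj, v)) < k:
            adj[u].add(v)  # cut would leave a tree that is too small; restore it
            adj[v].add(u)
    groups: List[Set[int]] = []
    seen: Set[int] = set()
    for i in adj:
        if i not in seen:
            c = component(adj, i)
            seen |= c
            groups.append(c)
    return groups


def generalize(points: List[Point], group: Set[int]) -> List[Tuple[float, float]]:
    """Replace a group's records by per-attribute [min, max] ranges."""
    cols = list(zip(*(points[i] for i in group)))
    return [(min(c), max(c)) for c in cols]
```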

Owner:NEC (CHINA) CO LTD

Database anonymization for use in testing database-centric applications

Status: Active | Publication: US8682910B2 | Tags: Digital data information retrieval, Digital data processing details, Analysis data, Application software

Owner:ACCENTURE GLOBAL SERVICES LTD

Multi-domain data privacy protection method for cloud platform

Status: Active | Publication: CN110378148A | Tags: Meet needs, Reduce the risk of privacy breaches, Digital data protection, Data privacy protection, Original data

The invention discloses a multi-domain data privacy protection method for a cloud platform, relating to the technical field of multi-domain data privacy protection. It addresses the problems that existing multi-domain data privacy protection methods generally publish data with static anonymization, which is inflexible, cannot limit the range of data that data analysts obtain, and cannot satisfy the need to use the original data. The method comprises data anonymization processing and original-data recovery processing. Targeting the specific requirements of data analysis and transaction processing scenarios, it adopts corresponding privacy protection strategies: for structured data tables in different domains, a privacy protection technique based primarily on data anonymization reduces the risk of privacy leakage from cloud data, while certain transaction processing requirements are still met. The anonymized data is stored and used for data analysis, which reduces the risk of leaking private information. Because the data is anonymized dynamically, the range of data available to analysts is limited and data privacy is better protected.
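The combination of anonymization for analysis and recovery of original data for authorized transaction processing can be sketched with reversible tokenization, assuming a hypothetical in-memory vault that maps sensitive values to random tokens and releases originals only to authorized callers. A production system would persist and protect the vault separately from the anonymized tables; the class and function names are illustrative.

```python
import secrets
from typing import Dict, Iterable, List, Set


class PseudonymVault:
    """Replace sensitive values with random tokens and keep the mapping so that
    authorized transaction processing can recover the original values."""

    def __init__(self) -> None:
        self._forward: Dict[str, str] = {}
        self._reverse: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        if value not in self._forward:  # same value always maps to the same token
            token = "tok_" + secrets.token_hex(8)
            self._forward[value] = token
            self._reverse[token] = value
        return self._forward[value]

    def recover(self, token: str, authorized: bool) -> str:
        if not authorized:
            raise PermissionError("original-data recovery requires authorization")
        return self._reverse[token]


def anonymize_rows(rows: Iterable[Dict[str, str]], sensitive: Set[str],
                   vault: PseudonymVault) -> List[Dict[str, str]]:
    """Tokenize the sensitive columns of each row; other columns pass through."""
    return [{c: vault.tokenize(v) if c in sensitive else v for c, v in r.items()} for r in rows]
```

Tokens are consistent across rows, so analysts can still join and count on the anonymized columns without seeing the underlying values.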

Owner:HARBIN INST OF TECH

© 2025 PatSnap. All rights reserved.