706 results for "Facial affect" patented technology

Method and system for measuring shopper response to products based on behavior and facial expression

Active | US8219438B1 | Reliable information, Accurate location, Market predictions, Acquiring/recognising eyes, Pattern recognition, Product base

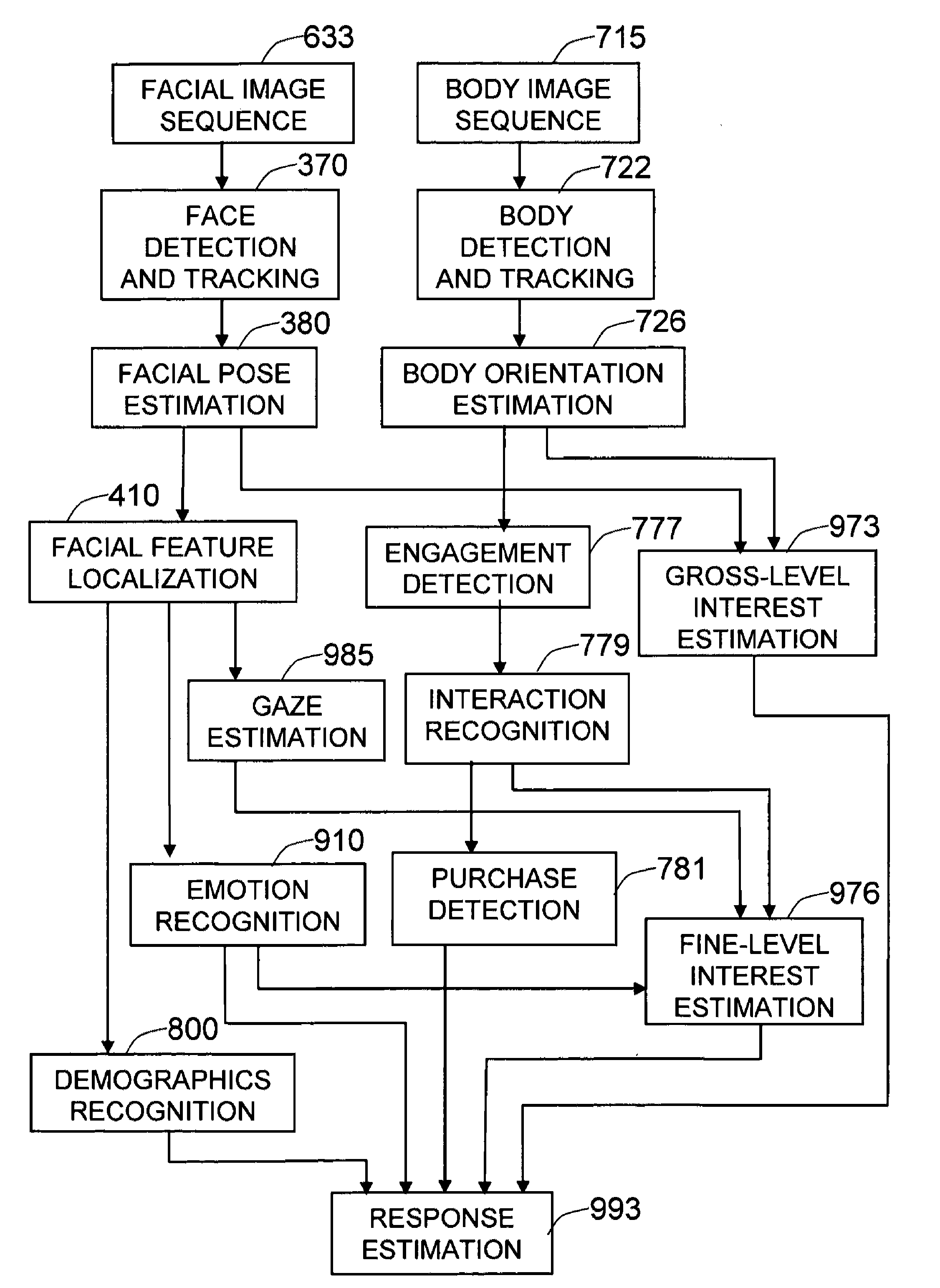

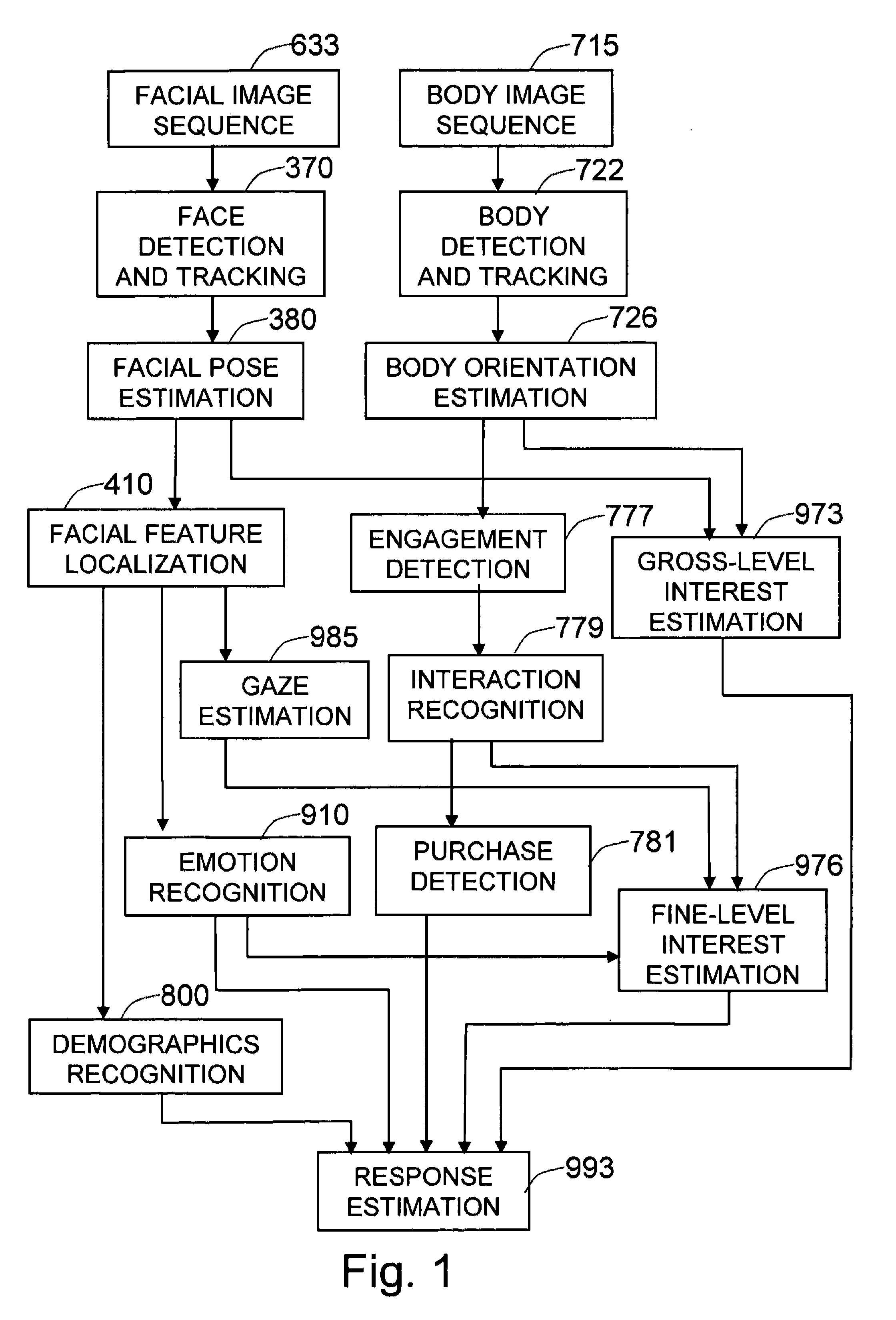

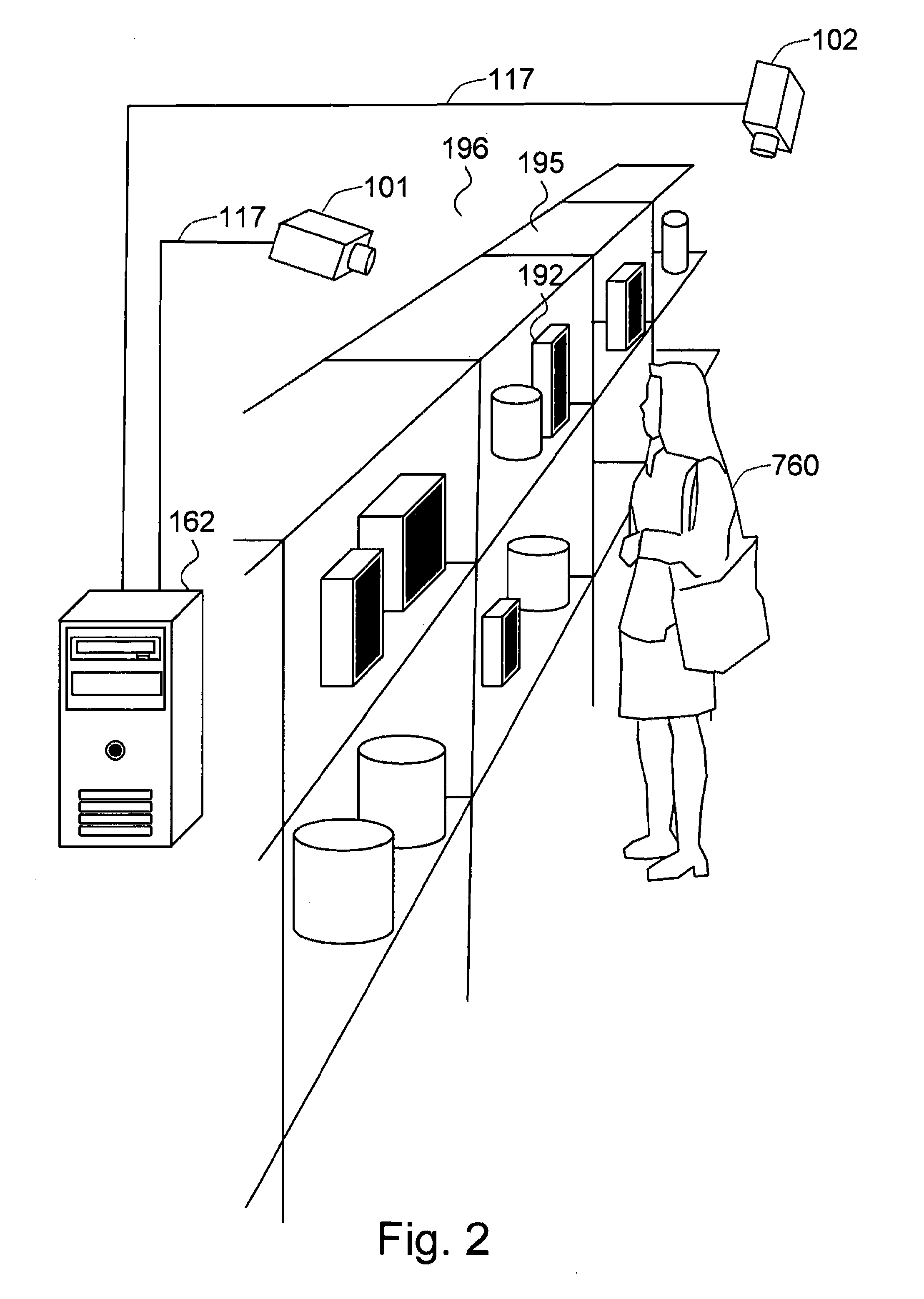

The present invention is a method and system for measuring human response to retail elements based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper, and the gaze is translated into the target and level of the shopper's interest. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes in the affective state and in the interest toward the retail element, measured from the facial image sequence, are analyzed in the context of the recognized interaction and the demographic categories to estimate both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner: PARMER GEORGE A

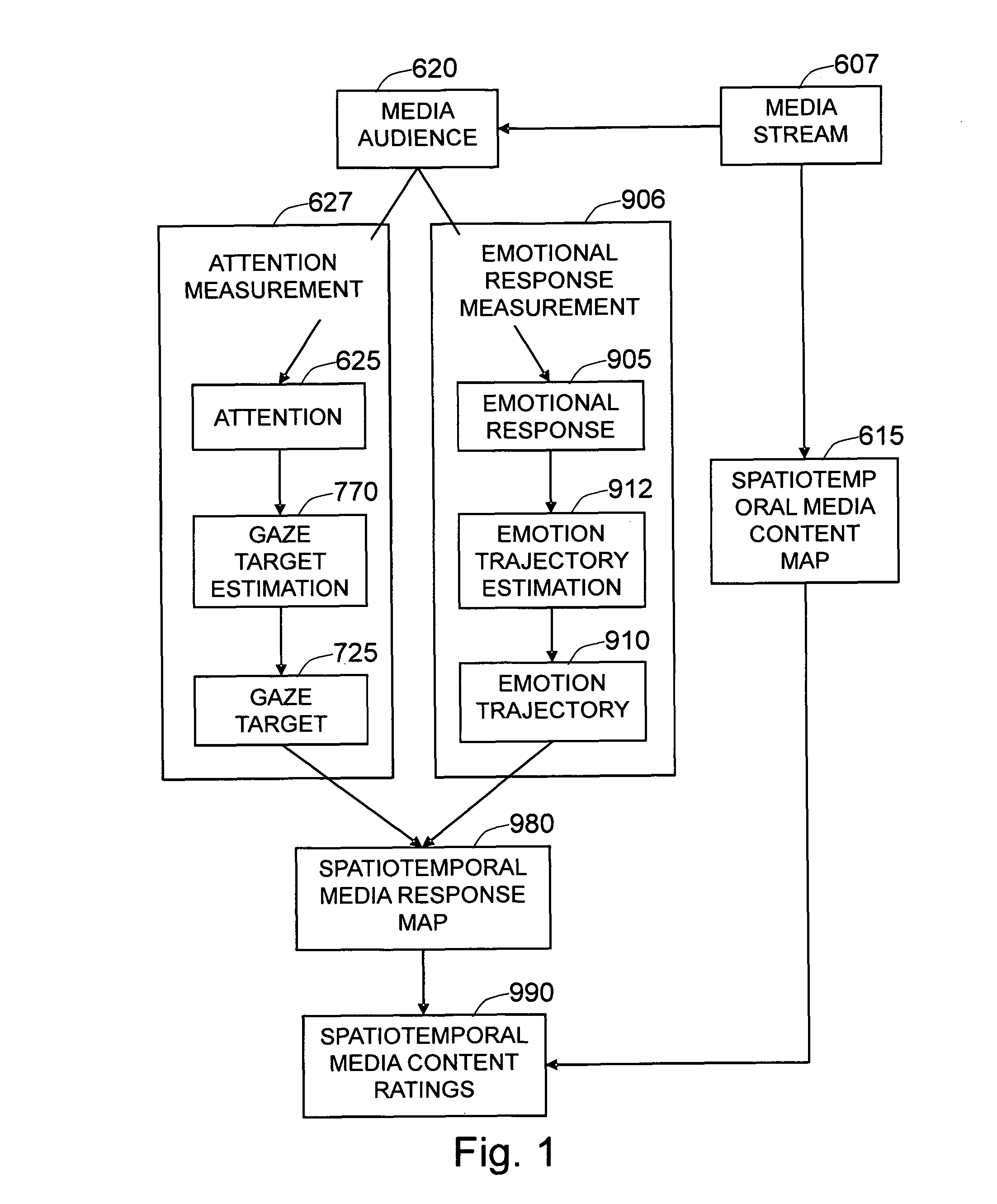

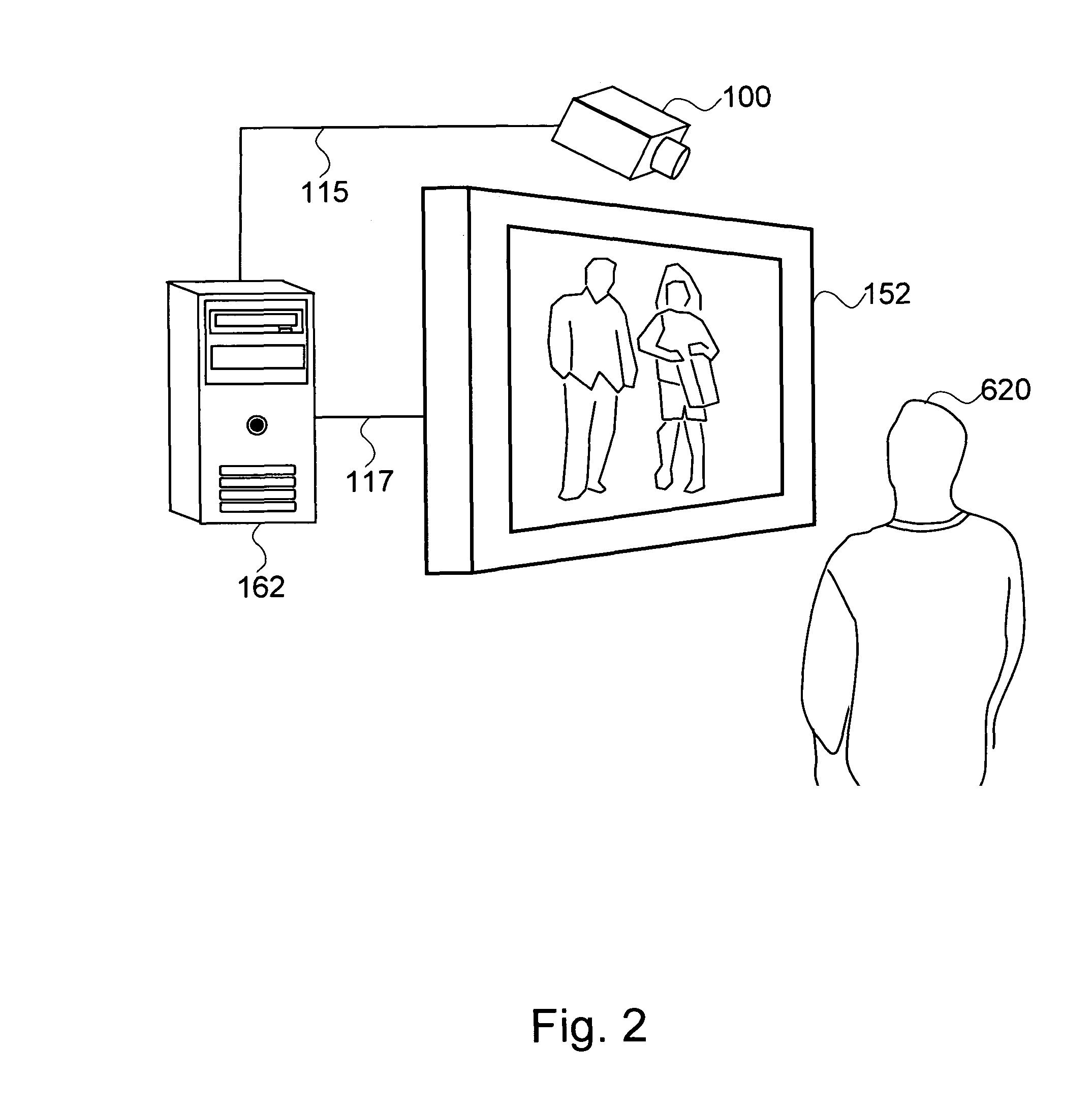

Method and system for measuring emotional and attentional response to dynamic digital media content

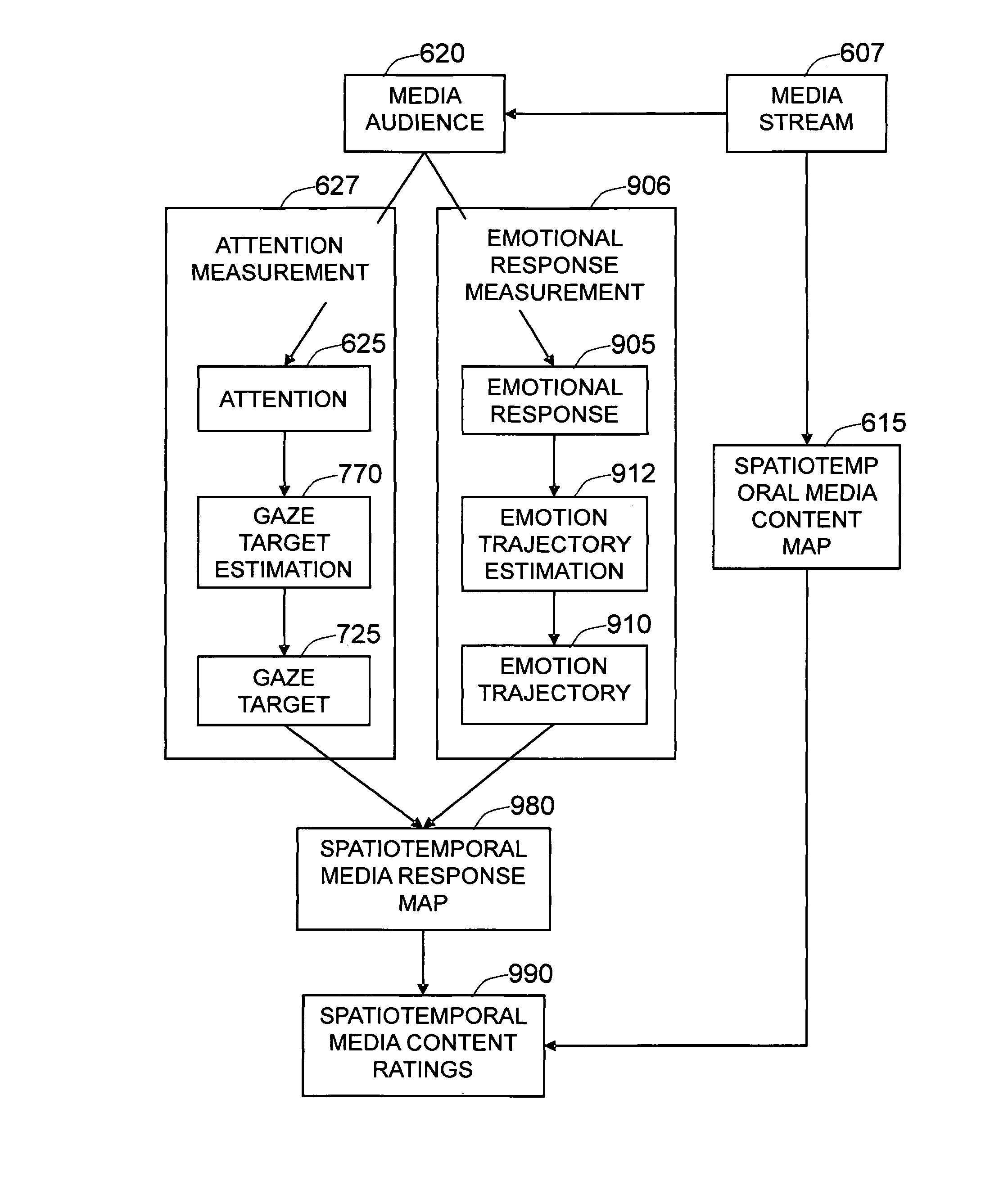

The present invention is a method and system that automatically measures people's responses to dynamic digital media based on changes in their facial expressions and their attention to specific content. First, the method detects and tracks faces in the audience. It then localizes each face and its facial features and applies emotion-sensitive feature filters to extract emotion-sensitive features, from which the facial muscle actions of the face are determined. The changes in facial muscle actions are then converted into changes in affective state, called an emotion trajectory. In parallel, the method estimates eye gaze from extracted eye images and the three-dimensional facial pose from localized facial images. The person's gaze direction is estimated from the eye gaze and the three-dimensional facial pose, and the gaze target on the media display is then estimated from the gaze direction and the person's position. Finally, the person's response to the dynamic digital media content is determined by analyzing the emotion trajectory in relation to the time and screen positions of the specific digital media sub-content that the person is watching.

Owner: MOTOROLA SOLUTIONS INC
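
The last step of the abstract above, relating the emotion trajectory to the sub-content a person is looking at, can be illustrated with a minimal Python sketch. It assumes the trajectory is a list of `(time, valence)` samples aligned one-to-one with gaze-target samples, and that each sub-content item is a hypothetical `SubContent` record with a time window and a rectangular screen region; the patent's actual data structures and scoring are not described in the abstract.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SubContent:
    """Hypothetical record for one piece of sub-content on the display."""
    name: str
    start: float  # seconds
    end: float    # seconds
    region: tuple[float, float, float, float]  # (x0, y0, x1, y1) in screen coordinates


def in_region(point, region):
    x, y = point
    x0, y0, x1, y1 = region
    return x0 <= x <= x1 and y0 <= y <= y1


def response_per_subcontent(trajectory, gaze, items):
    """Average valence per sub-content item, counting only samples taken while
    the item was on screen AND the gaze target fell inside its region.

    trajectory: list of (t, valence) samples (valence is an assumed scalar)
    gaze: list of (t, (x, y)) gaze targets, aligned sample-for-sample with trajectory
    """
    scores = {}
    for item in items:
        values = [
            valence
            for (t, valence), (_, target) in zip(trajectory, gaze)
            if item.start <= t < item.end and in_region(target, item.region)
        ]
        scores[item.name] = mean(values) if values else None
    return scores
```

Scoring by mean valence is only one simple choice; a real system might weight samples by gaze confidence or look at the trajectory's shape over time.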

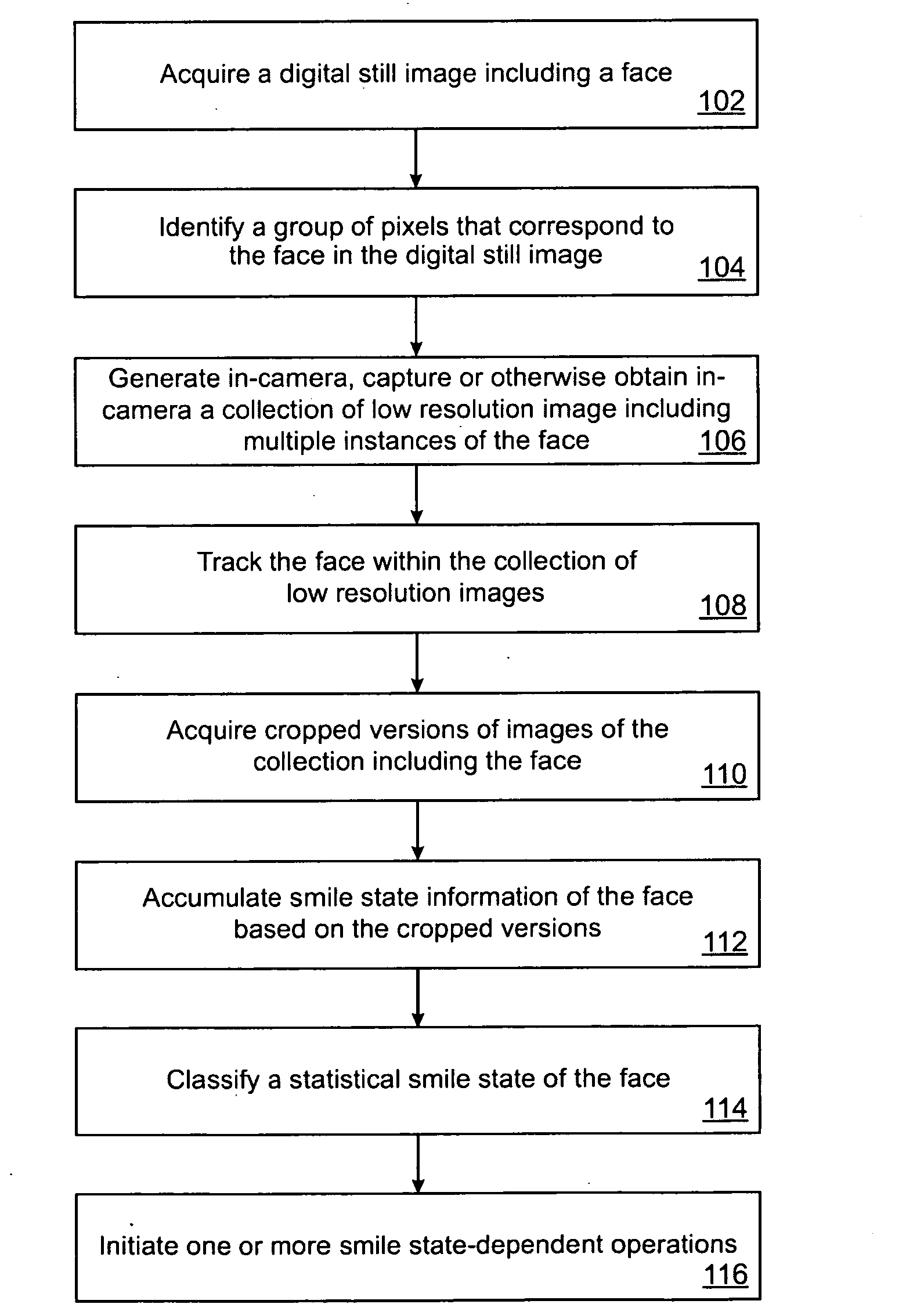

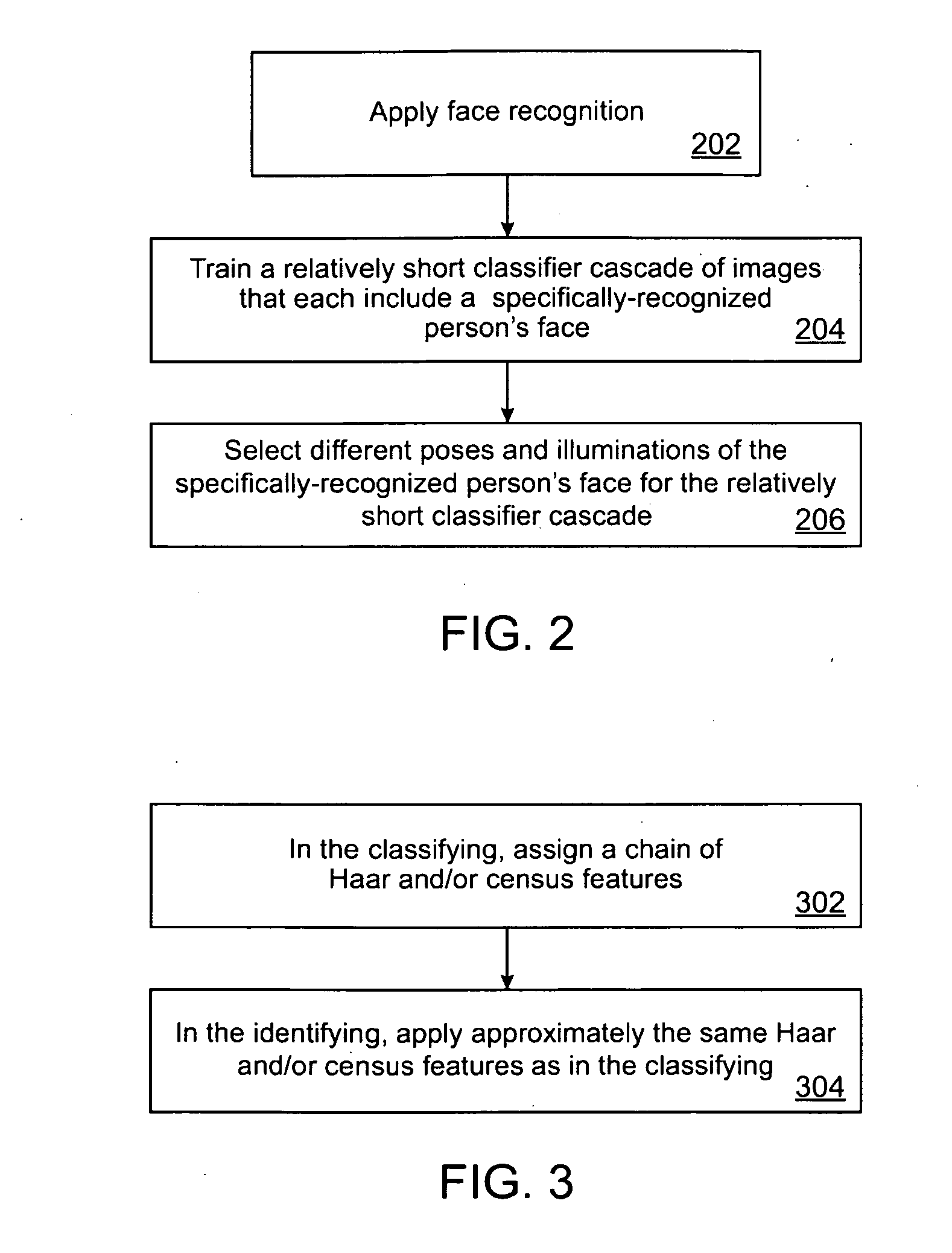

Detecting facial expressions in digital images

Active | US20090190803A1 | Reduce resolution, Television system details, Image analysis, Pattern recognition, Radiology

A method and system for detecting facial expressions in digital images, and applications thereof, are disclosed. Analysis of a digital image determines whether a smile and/or a blink is present on a person's face. Face recognition and/or a determination of pose or illumination conditions permits the application of a specific, relatively small classifier cascade.

Owner: FOTONATION LTD
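
The "relatively small classifier cascade" mentioned above follows the general cascade idea: a sequence of stages, any of which can reject a candidate early so the remaining stages never run. The sketch below is a generic illustration, not FotoNation's classifier. The feature names, thresholds, and per-condition cascades are hypothetical, and choosing a cascade by pose/illumination condition is modeled as a simple dictionary lookup.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Stage = Callable[[Features], float]  # maps features to a stage score


def run_cascade(stages: List[Tuple[Stage, float]], features: Features) -> bool:
    """Accept only if every stage score reaches its threshold; stop at the first failure."""
    for score_fn, threshold in stages:
        if score_fn(features) < threshold:
            return False  # early rejection: the remaining stages are skipped
    return True


# Hypothetical smile cascades, one per pose/illumination condition.
SMILE_CASCADES: Dict[str, List[Tuple[Stage, float]]] = {
    "frontal_bright": [
        (lambda f: f["mouth_width_ratio"], 0.45),
        (lambda f: f["mouth_corner_lift"], 0.10),
    ],
    "frontal_dim": [
        (lambda f: f["mouth_width_ratio"], 0.40),
        (lambda f: f["mouth_corner_lift"], 0.05),
    ],
}


def detect_smile(condition: str, features: Features) -> bool:
    # Use the small cascade matched to the detected pose/illumination condition.
    return run_cascade(SMILE_CASCADES[condition], features)
```

Keeping one small cascade per condition, rather than one large cascade covering every condition, is the trade-off the abstract points to.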

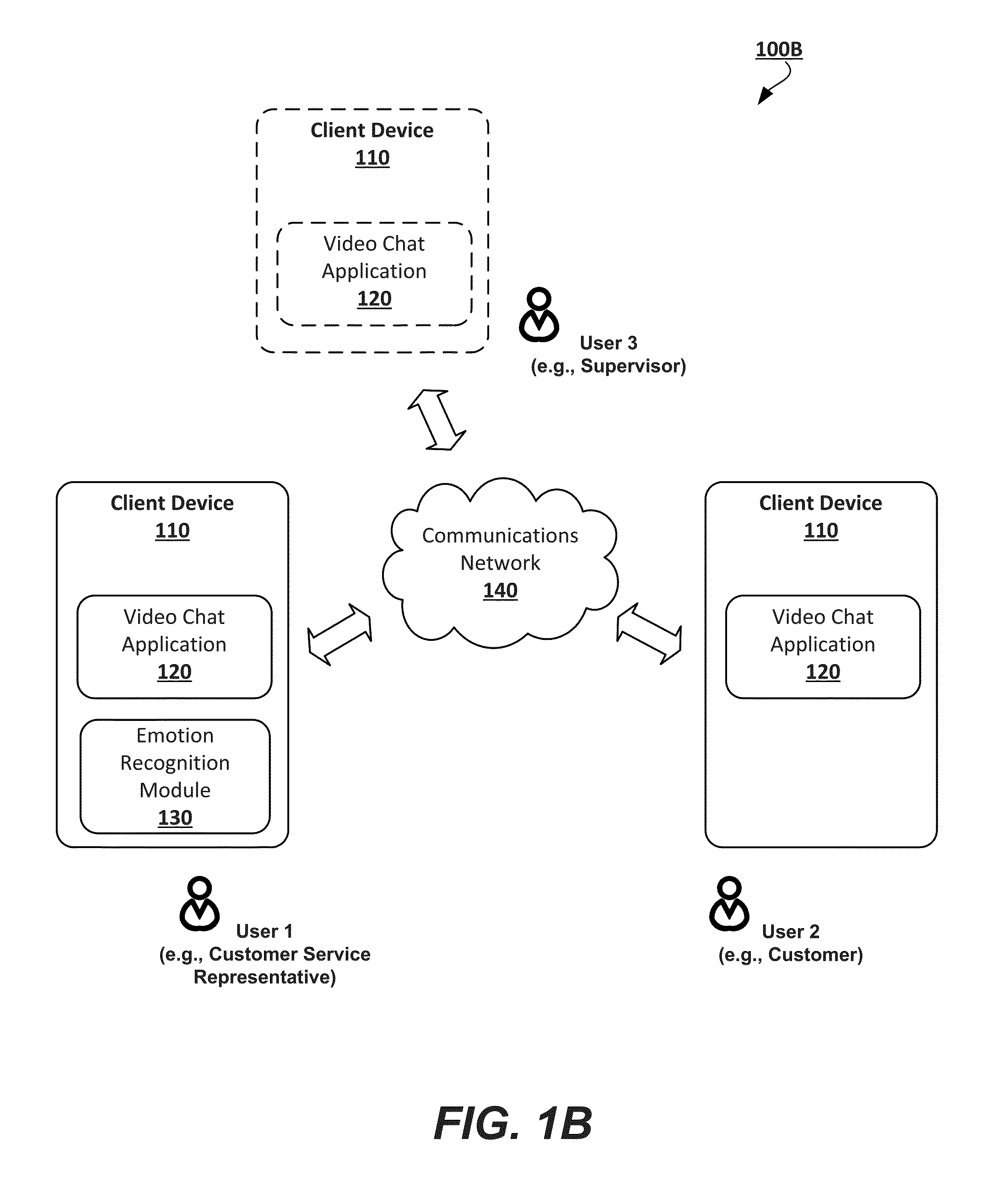

Emotion recognition in video conferencing

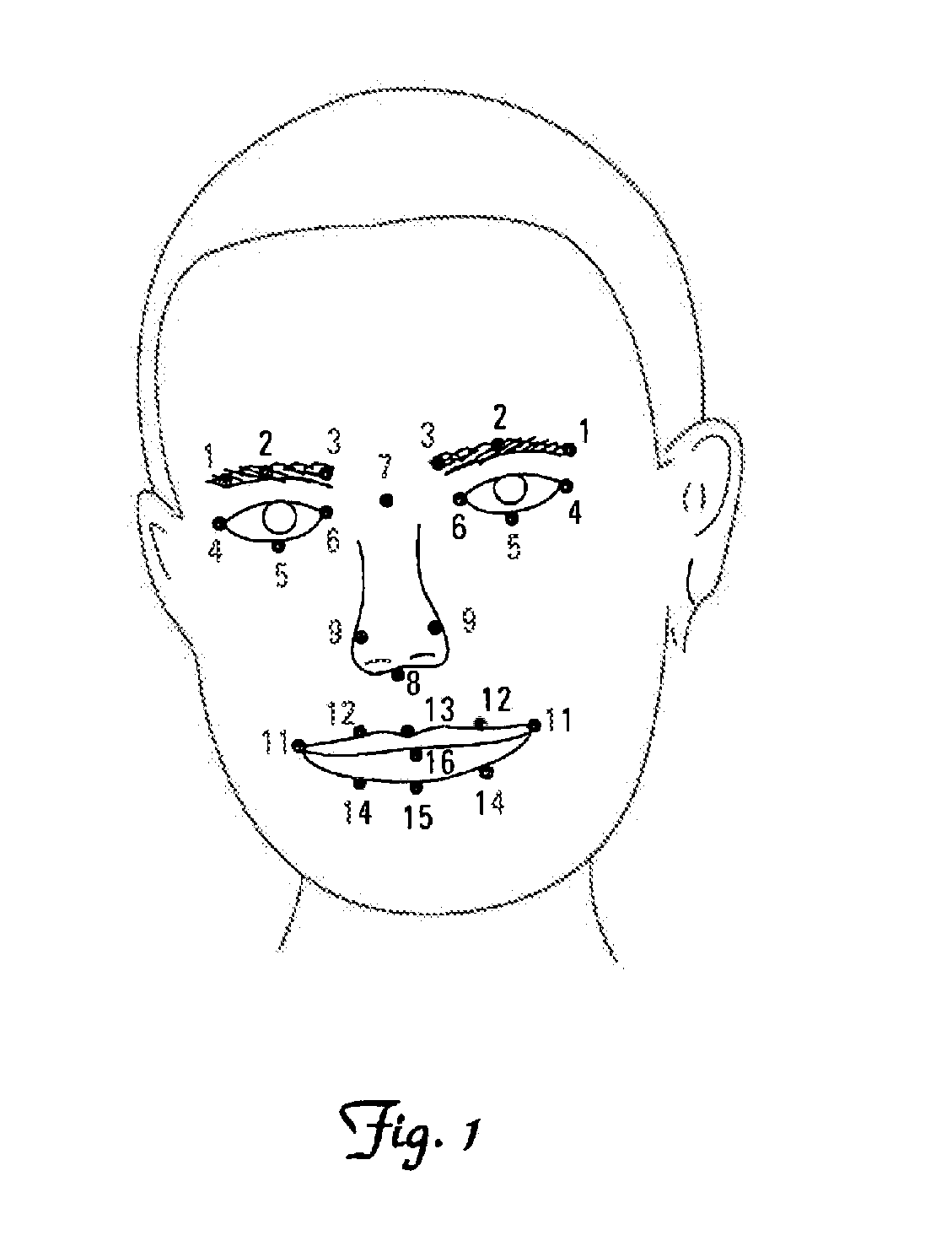

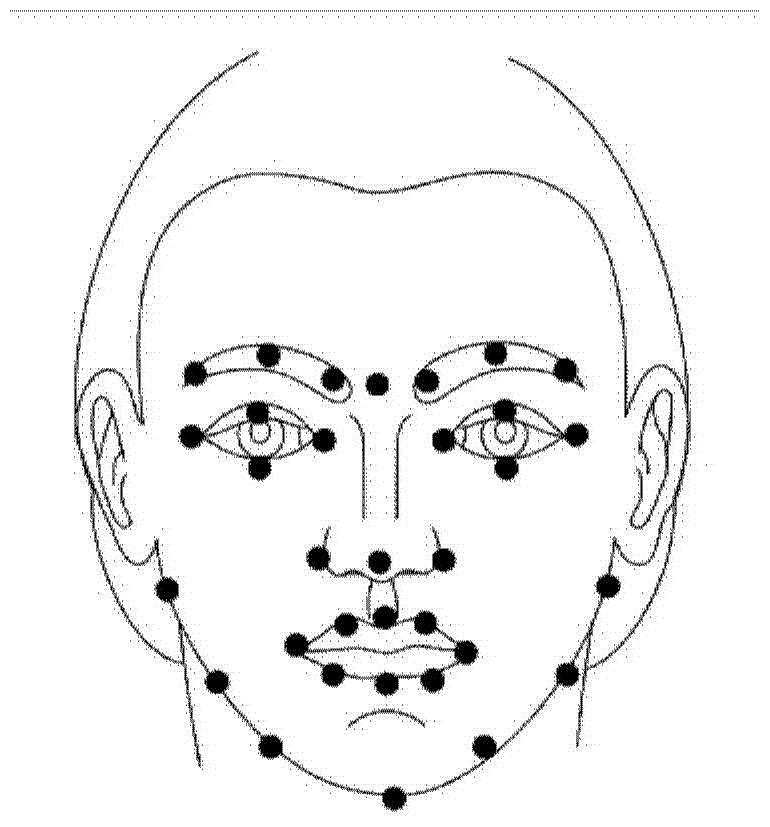

Methods and systems for videoconferencing include recognition of the emotions of one videoconference participant, such as a customer. This enables another participant, such as a service provider or supervisor, to handle angry, annoyed, or distressed customers. One example method includes receiving a video that includes a sequence of images; detecting at least one object of interest (e.g., a face); locating feature reference points of the object of interest; aligning a virtual face mesh to the object of interest based on the feature reference points; finding, over the sequence of images, at least one deformation of the virtual face mesh that reflects facial mimics; determining that the deformation corresponds to a facial emotion selected from a plurality of reference facial emotions; and generating a communication bearing data associated with that facial emotion.

Owner: SNAP INC

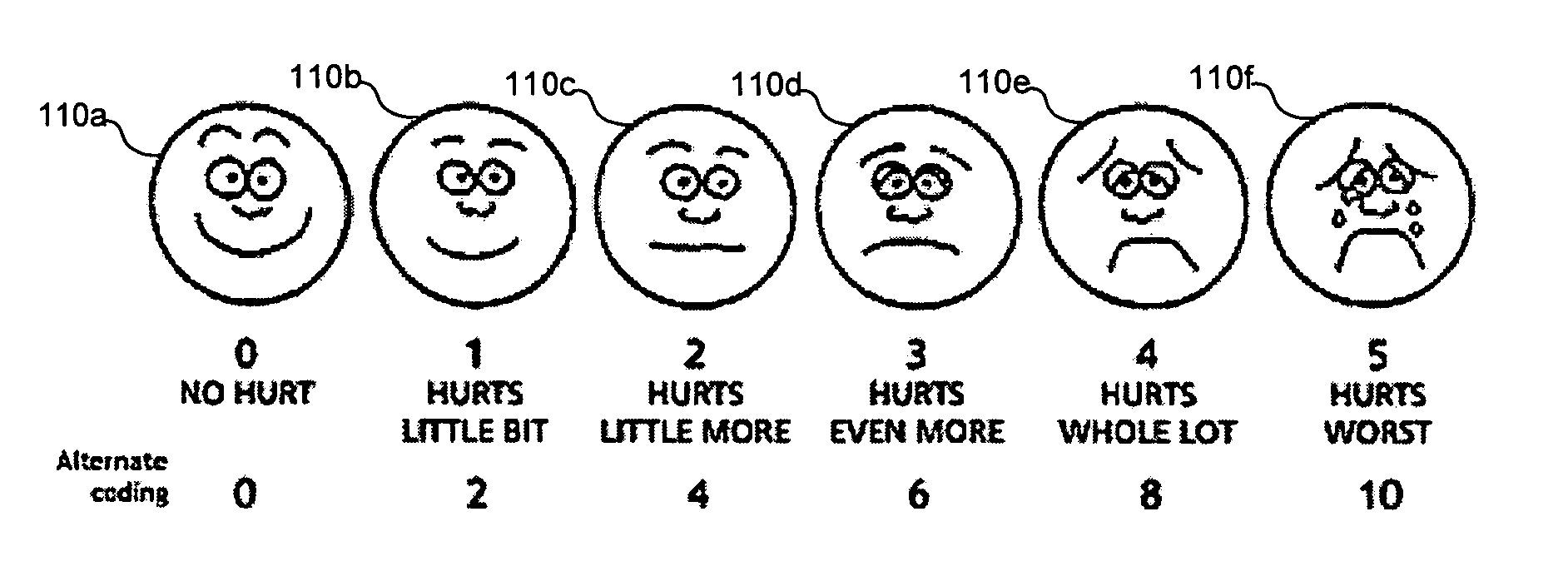

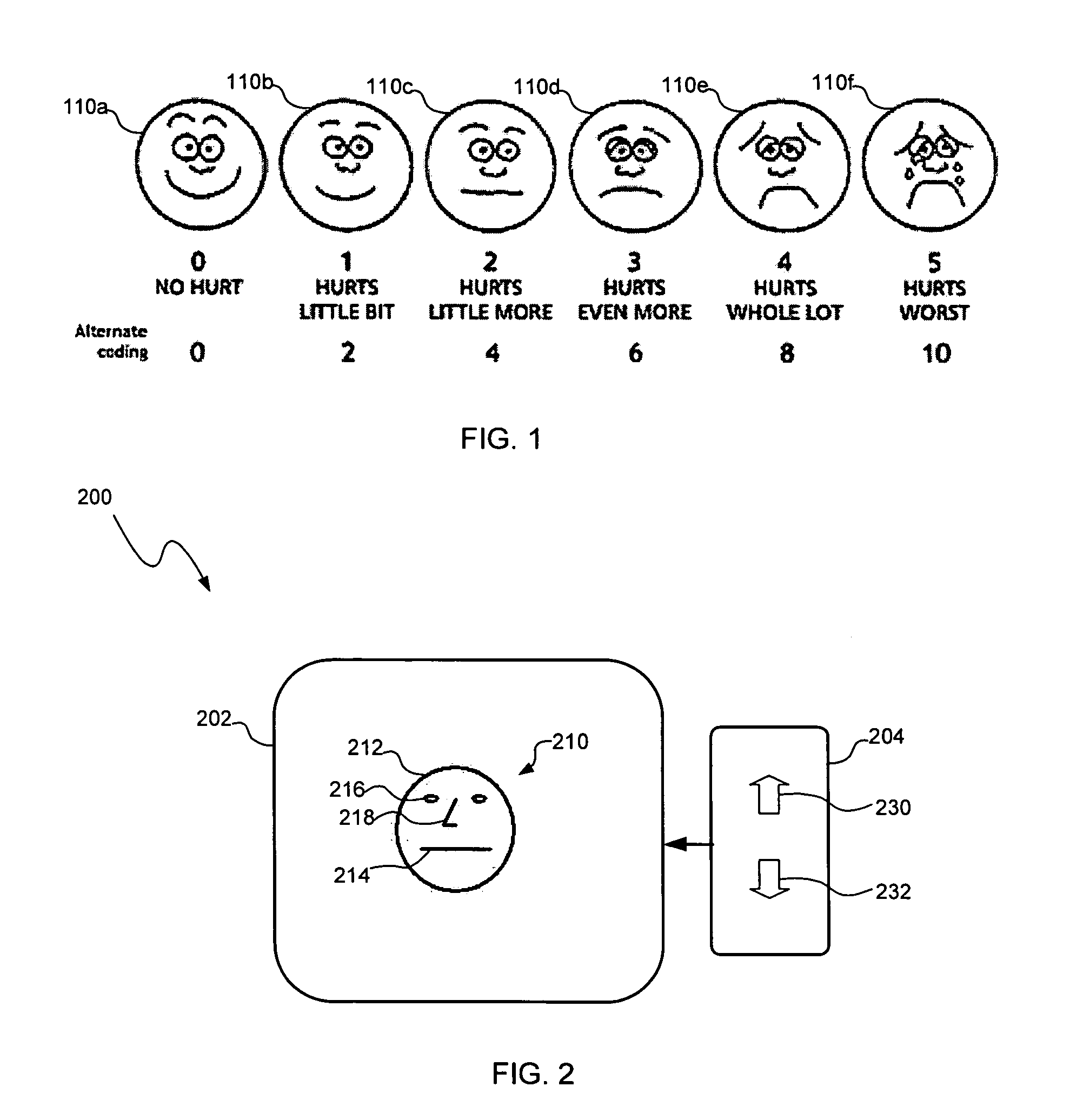

Computerized assessment system and method for assessing opinions or feelings

A computerized assessment system and method may be used to assess opinions or feelings of a subject (e.g., a child patient). The system and method may display a computer-generated face image having a variable facial expression (e.g., changing mouth and eyes) capable of changing to correspond to opinions or feelings of the subject (e.g., smiling or frowning). The system and method may receive a user input signal in accordance with the opinions or feelings of the subject and may display the changes in the variable facial expression in response to the user input signal. The system and method may also prompt the subject to express an opinion or feeling about a matter to be assessed.

Owner: PSYCHOLOGICAL APPL

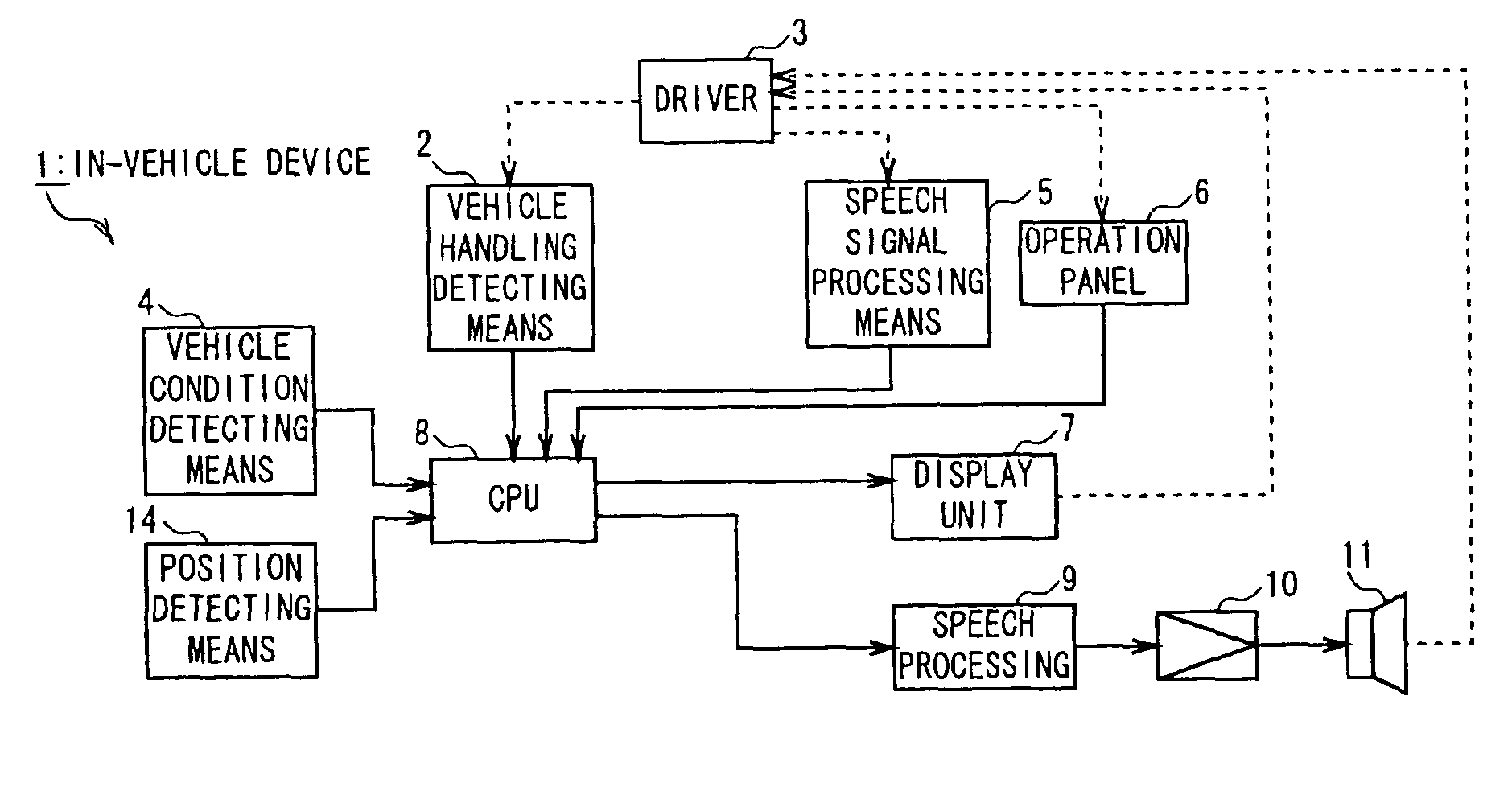

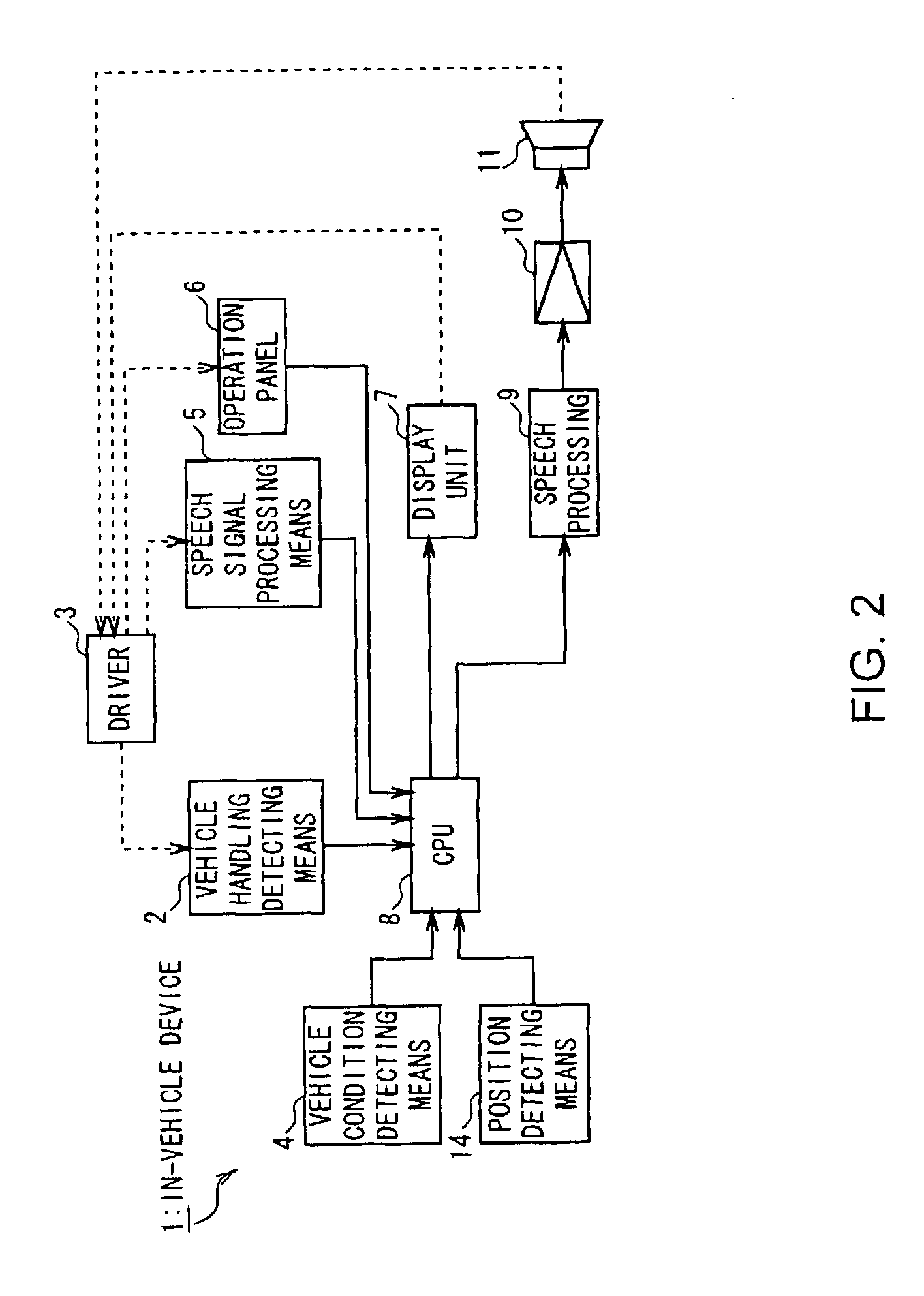

Vehicle information processing device, vehicle, and vehicle information processing method

Inactive | US7020544B2 | Accurate communication, Instruments for road network navigation, Digital data processing details, Driver/operator, In vehicle

An in-vehicle device, a vehicle, and a vehicle information processing method are used, for example, to assist in driving an automobile and to properly communicate the vehicle's situation to the driver. On the assumption that the vehicle has a personality, the user's handling of the vehicle is expressed as virtual feelings, which are displayed as facial expressions of a predetermined character.

Owner: SONY CORP +1

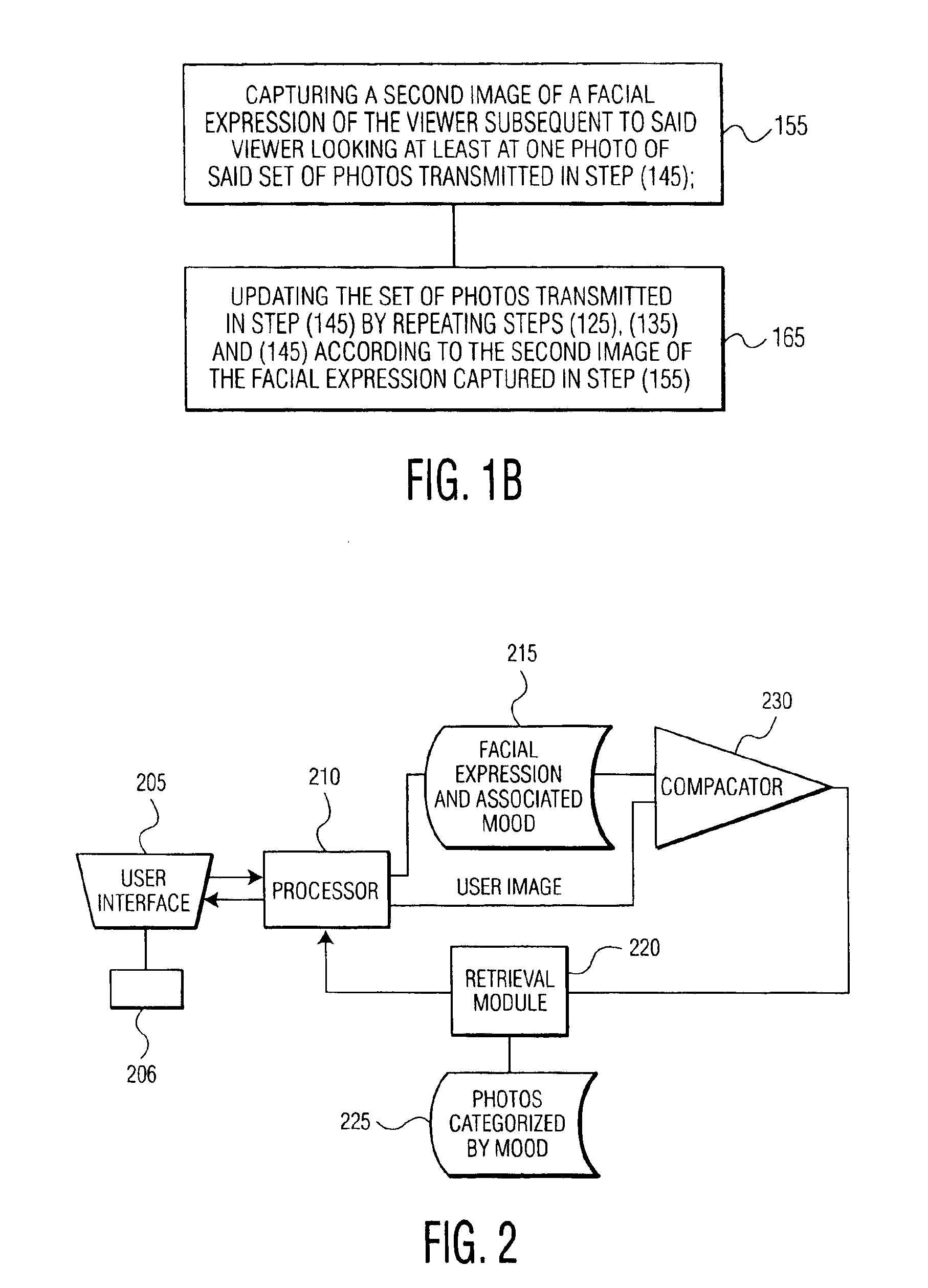

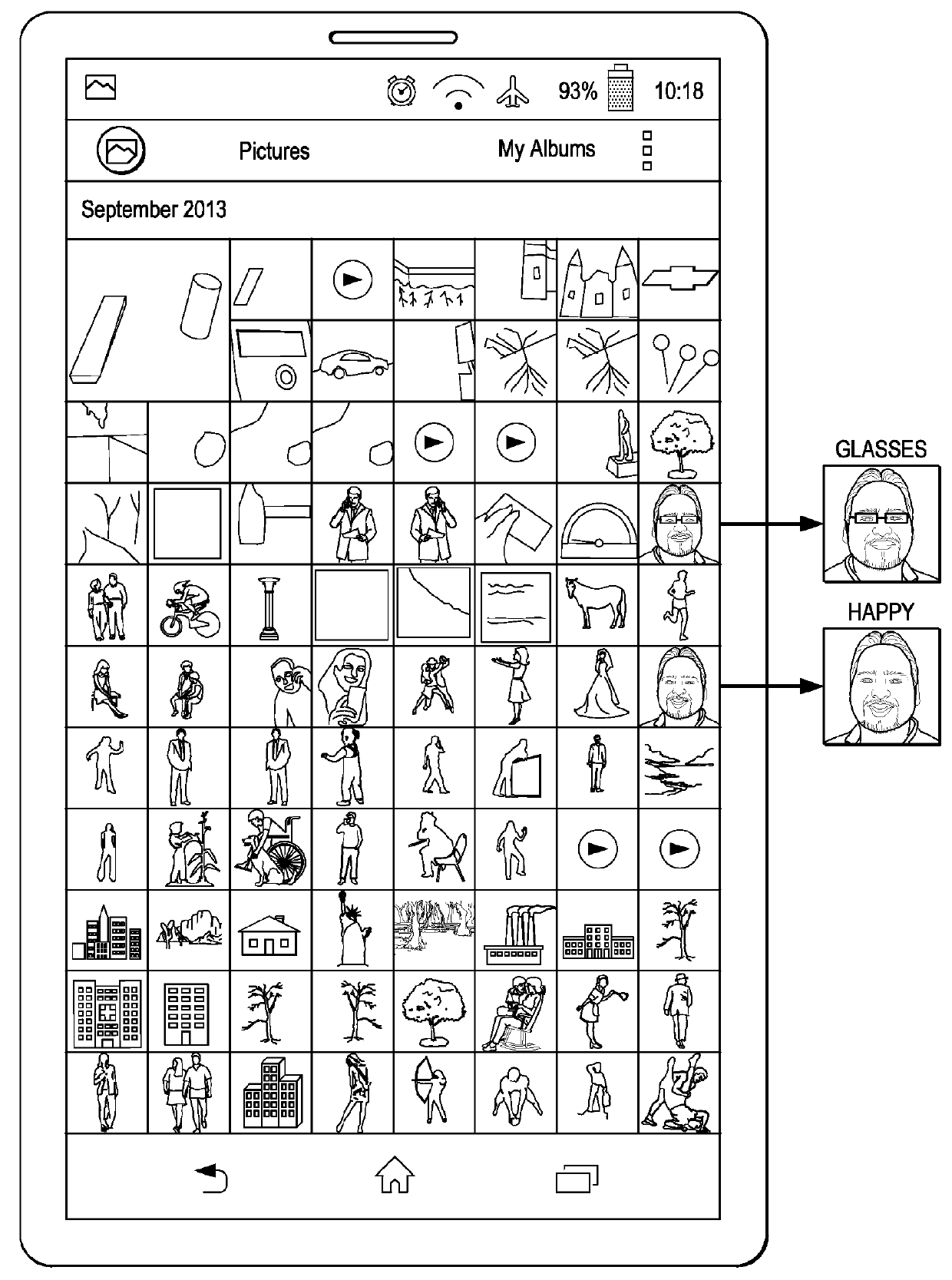

Mood based virtual photo album

A method and system provide a mood-based virtual photo album that presents photos based on the sensed mood of the viewer. The method may include capturing a first image of the viewer's facial expression with a camera; providing the image to a pattern recognition module of a processor; determining the viewer's mood by comparing the facial expression with a plurality of previously stored facial expression images, each having an associated emotional identifier that indicates its mood; retrieving a set of photos from storage for transmission to the viewer based on the emotional identifier associated with the determined mood; and transmitting the set of photos in the form of an electronic photo album. A system includes a camera; a user interface for transmitting a first image of the viewer's facial expression captured by the camera; and a processor that receives the image transmitted by the user interface and includes a pattern recognition module for comparing the received image with a plurality of facial expression images from a storage area to determine the viewer's mood. A retrieval unit retrieves a set of electronic photos corresponding to the viewer's mood and transmits them for display as a virtual photo album.

Owner: KONINKLIJKE PHILIPS ELECTRONICS NV
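
The comparison step above (matching a captured expression against stored expressions that carry emotional identifiers, then retrieving photos for that mood) can be sketched as nearest-neighbour matching. This sketch assumes each expression has already been reduced to a numeric feature vector, which the abstract does not specify, and uses Euclidean distance as a stand-in for the pattern recognition module. The reference vectors and photo library are hypothetical.

```python
import math

# Hypothetical stored expressions: (feature vector, emotional identifier)
REFERENCE_EXPRESSIONS = [
    ((0.9, 0.1), "happy"),
    ((0.1, 0.8), "sad"),
    ((0.5, 0.5), "neutral"),
]

# Hypothetical photo storage indexed by emotional identifier
PHOTOS_BY_MOOD = {
    "happy": ["beach.jpg", "party.jpg"],
    "sad": ["rainy_day.jpg"],
    "neutral": ["landscape.jpg"],
}


def determine_mood(features):
    """Return the emotional identifier of the closest stored expression."""
    _, mood = min(REFERENCE_EXPRESSIONS, key=lambda ref: math.dist(features, ref[0]))
    return mood


def build_album(features):
    """Retrieve the set of photos associated with the viewer's determined mood."""
    mood = determine_mood(features)
    return {"mood": mood, "photos": PHOTOS_BY_MOOD.get(mood, [])}
```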

Combining effective images in electronic device having a plurality of cameras

Active | US20140232904A1 | Quality assurance, Television system details, Color television details, Combined method, Facial expression

An image combining method is provided for an electronic device having a plurality of cameras. In response to a photographing signal, images successively photographed through at least a first camera are successively stored. From these images, a first image is selected that satisfies a predetermined classification criterion, such as a degree of blurring, a facial expression, and/or a shooting composition. A second image is captured through a second camera, and the first and second images are then combined. The combined image may be a picture-in-picture (PIP) type combination image. The first and second cameras may be the front and rear cameras of a portable terminal, or vice versa. The successive image capture and selection technique may also be applied to the second camera.

Owner: SAMSUNG ELECTRONICS CO LTD

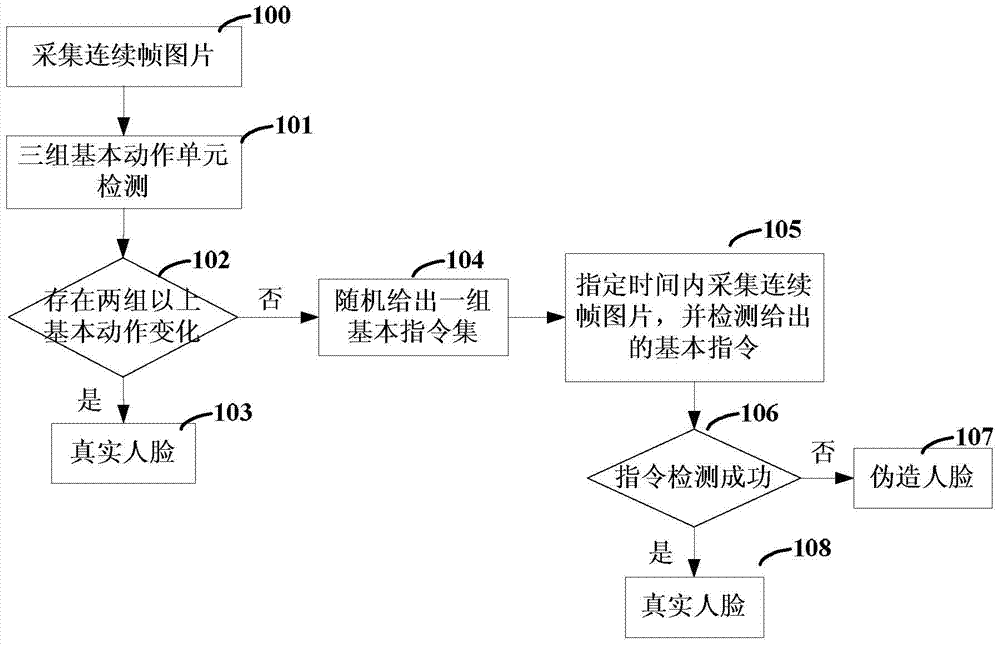

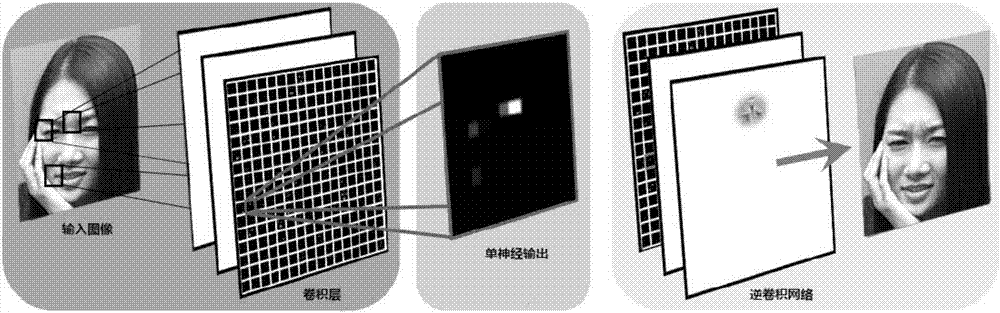

Bio-assay detection method and device

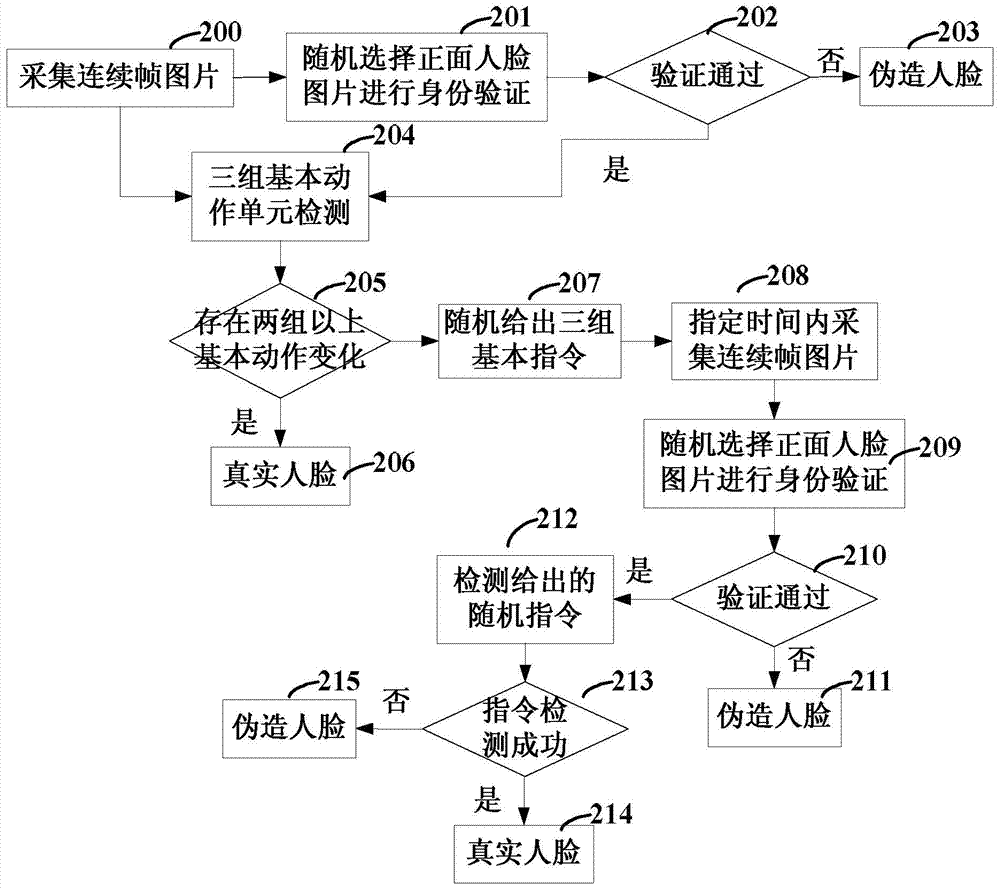

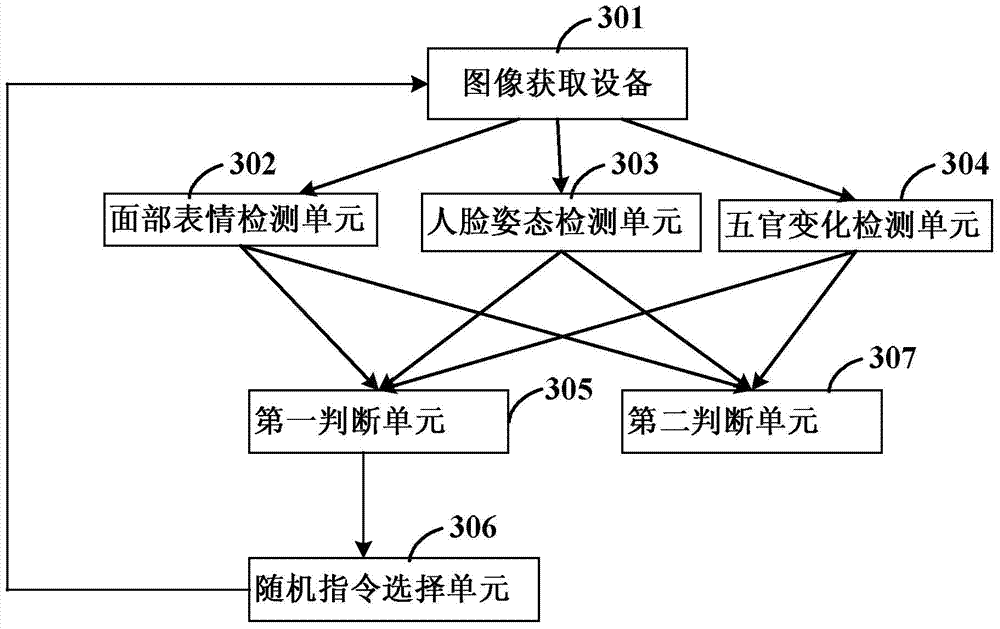

Active | CN104751110A | Improve accuracy, Improve reliability, Character and pattern recognition, Assay, Consecutive frame

The invention discloses a bio-assay (liveness) detection method comprising the following steps: 1, continuously acquiring frames of a face through an image acquisition device; 2, analyzing the consecutive frames with a trained facial expression detecting unit, a face posture detecting unit, and an expression change detecting unit; 3, if the detection results of step 2 show that more than two groups of basic actions have changed, determining that the face is a real human face, and otherwise proceeding to step 4; 4, randomly selecting a group of basic instructions from the state element sets of the three detecting units and prompting the user to complete them within a specified time; if the detection results of the three units show that the user completed the instructions, determining that the face is a real human face, and otherwise determining that it is a fake face. The method accurately captures and detects effective deformations of the human face, so that real and fake faces can be distinguished and intrusions into face recognition systems can be reduced.

Owner: HANVON CORP
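
The decision procedure in steps 3 and 4 of the abstract above can be written out as a short Python sketch. It assumes each detecting unit reports the names of the basic-action groups that changed across the frames, and that a hypothetical `perform_challenge` callback prompts the user and returns the actions observed within the time limit. The state-element sets are illustrative, and picking one instruction per unit is an assumed reading of "a group of basic instructions".

```python
import random
from typing import Callable, Iterable, Optional, Set

# Hypothetical state-element sets for the three detecting units.
STATE_ELEMENTS = {
    "expression": ["smile", "open_mouth"],
    "pose": ["turn_left", "turn_right", "nod"],
    "expression_change": ["blink", "raise_eyebrows"],
}


def is_live_face(
    observed_changes: Set[str],
    perform_challenge: Callable[[Set[str]], Iterable[str]],
    rng: Optional[random.Random] = None,
) -> bool:
    rng = rng or random.Random()
    # Step 3 (passive): more than two changed action groups means a real face.
    if len(observed_changes) > 2:
        return True
    # Step 4 (active): randomly pick one instruction per unit (assumed grouping)
    # and require the user to complete all of them.
    challenge = {rng.choice(actions) for actions in STATE_ELEMENTS.values()}
    completed = set(perform_challenge(challenge))
    return challenge <= completed
```

Randomizing the challenge is what makes a replayed video unlikely to pass step 4, since the attacker cannot know in advance which actions will be requested.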

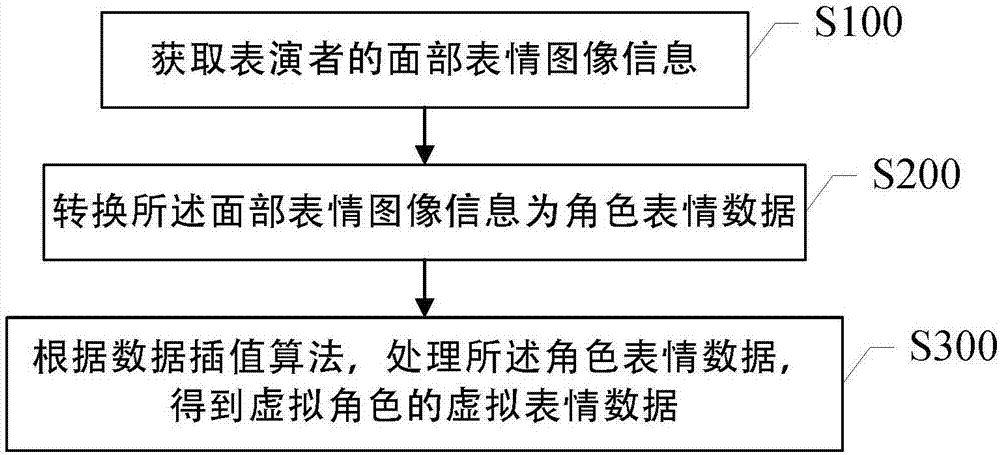

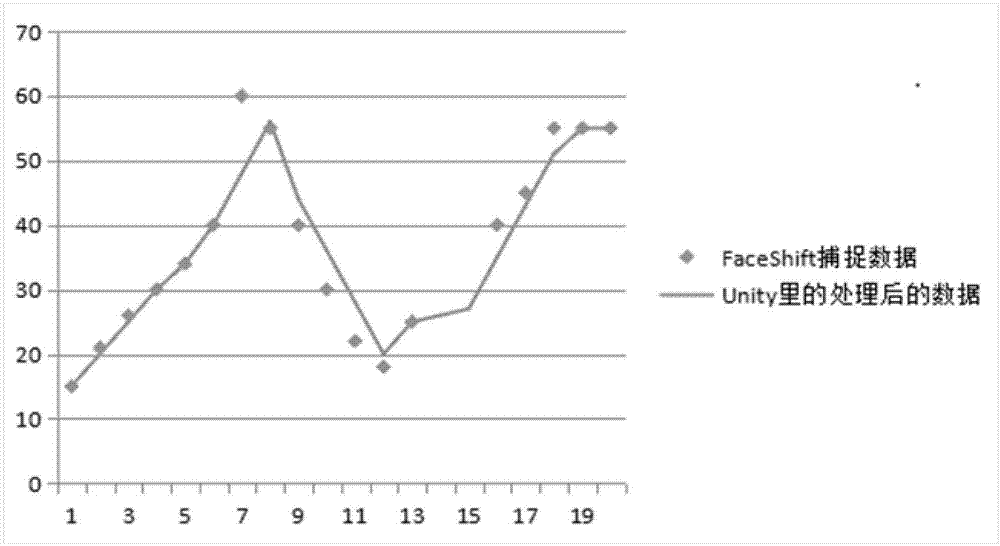

Data processing method and system based on virtual character

The invention discloses a data processing method and system based on a virtual character. The method comprises the following steps: S100, acquiring facial expression image information of a performer; S200, converting the facial expression image information into character expression data; and S300, processing the character expression data with a data interpolation algorithm to obtain virtual expression data of the virtual character. The facial movements of a human face can thus be transferred to, and displayed by, any virtual character chosen by the user, so that the performer's current facial expression changes are reflected by the virtual character in real time. This produces a more vivid virtual character, adds interest, and enhances the user experience. Moreover, when a recognition error occurs, the character's motion can be kept stably within an interval, making the virtual character's performance more vivid and natural.

Owner: 上海微漫网络科技有限公司 (Shanghai Weiman Network Technology Co., Ltd.)
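
Step S300 and the remark about keeping the character's motion within an interval during recognition errors suggest two operations: interpolating toward each new expression value and clamping the result to a valid range. The sketch below assumes expression data are per-channel weights in [0, 1] and uses linear interpolation toward the newest frame (equivalent to exponential smoothing). The abstract does not specify the actual interpolation algorithm, and the channel names are hypothetical.

```python
def clamp(value, low=0.0, high=1.0):
    """Keep a value inside [low, high]."""
    return max(low, min(high, value))


def interpolate_expression(previous, current, alpha=0.5, low=0.0, high=1.0):
    """Move each expression channel a fraction `alpha` of the way from the
    previous frame's value toward the current recognition result, then clamp.

    previous, current: dicts mapping an expression channel -> weight
    alpha: 0 keeps the previous value, 1 jumps straight to the current one
    """
    result = {}
    for channel, target in current.items():
        start = previous.get(channel, target)  # new channels start at their target
        blended = start + alpha * (target - start)
        result[channel] = clamp(blended, low, high)
    return result
```

Clamping bounds the damage from a single spurious recognition result, and a smaller `alpha` smooths jitter at the cost of some lag.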

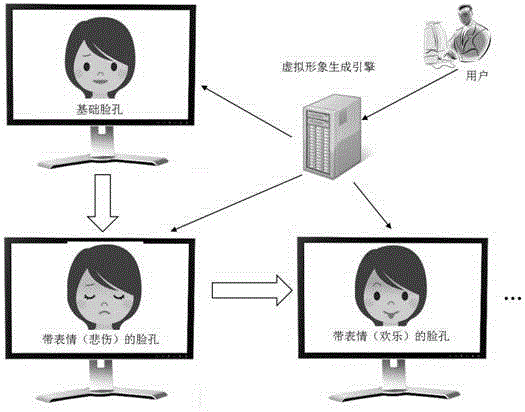

Method and system for generating and using facial expression for virtual image created by free combination

The invention relates to a method of generating and using facial expressions for a virtual image created by free combination. The method comprises the following steps: facial organs or local facial regions are selected as parts from a virtual image organ set to form a basic virtual image; a specific facial expression is selected; and organ parts that satisfy the selected expression replace the corresponding parts in the basic virtual image, so that the virtual image presents that expression. The invention also provides a method of generating a virtual image organ database to support such virtual image facial expressions. A small amount of image resources can thus generate a very large number of freely combined virtual images, from which a user can select one to represent himself or herself, and the virtual image's facial expressions can be used to show personal feelings or emotions. The method can be applied to various two-dimensional and three-dimensional virtual images, such as a human face, a whole body, an animal, a cartoon model, and an abstract emotion face.

Owner: LANXII PLAY BEIJING TECH CO LTD

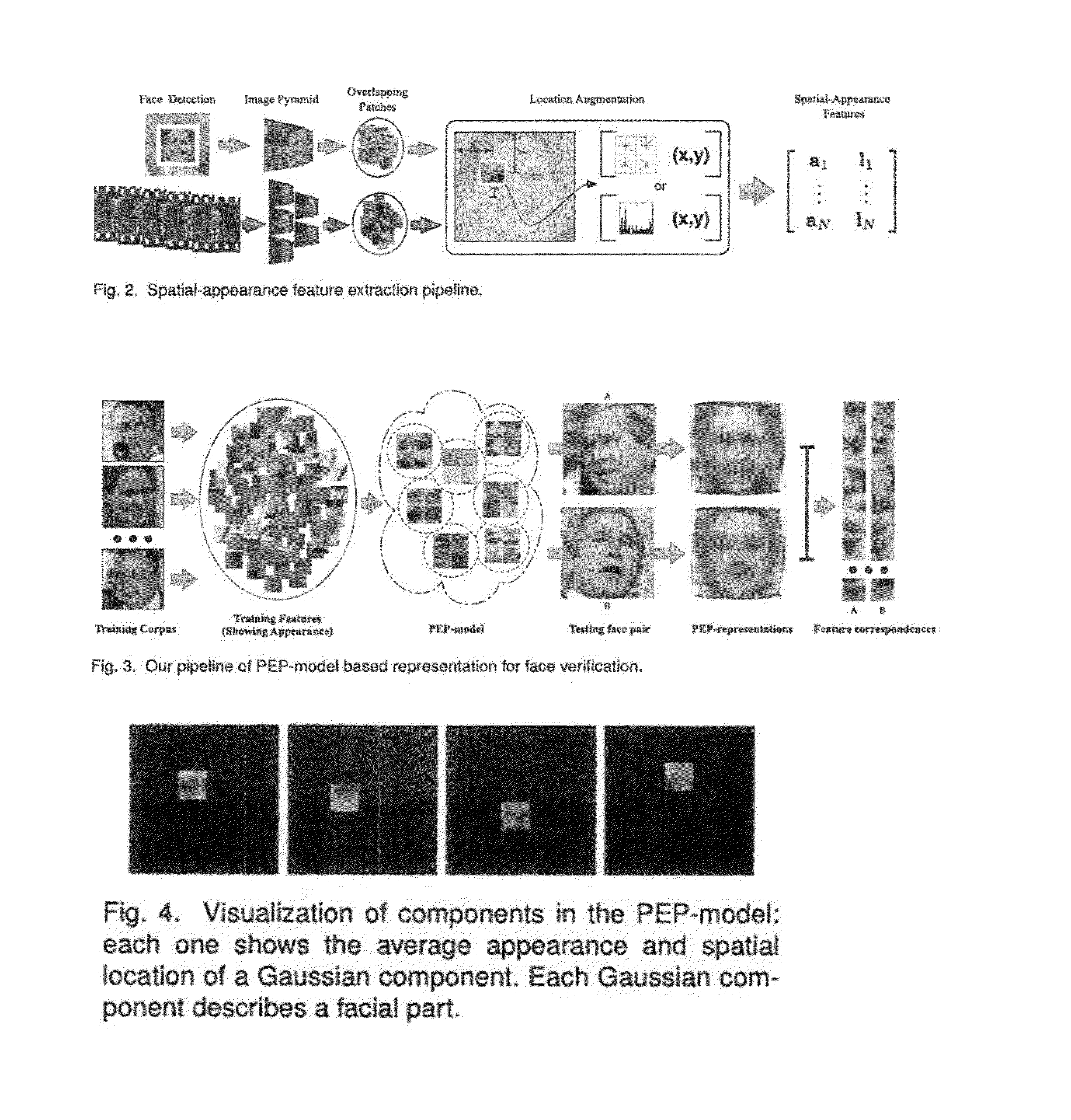

Flexible part-based representation for real-world face recognition apparatus and methods

An automated face recognition apparatus and method employ a programmed computer that computes a fixed-dimensional numerical signature from either a single face image or a set/track of face images of a human subject. The signature may be compared with a similar signature derived from another image to ascertain the identity of the person depicted in the compared images. The signature is invariant to visual variations induced by pose, illumination, and facial expression changes, and can subsequently be used for face verification, identification, and detection on real-world photos and videos. The face recognition system uses a probabilistic elastic part model and demonstrates its accuracy on several real-world face recognition benchmark datasets.

Owner: STEVENS INSTITUTE OF TECHNOLOGY

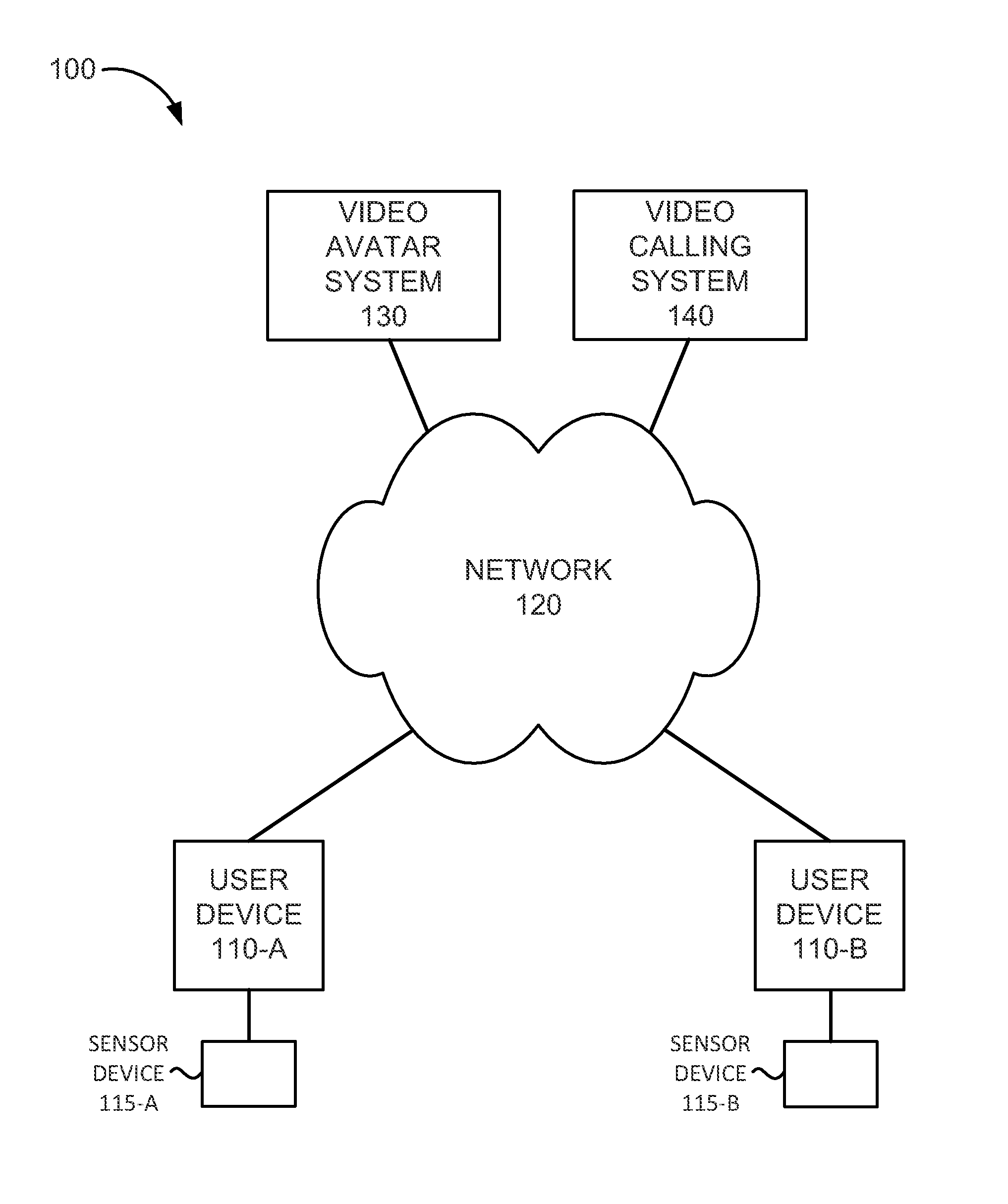

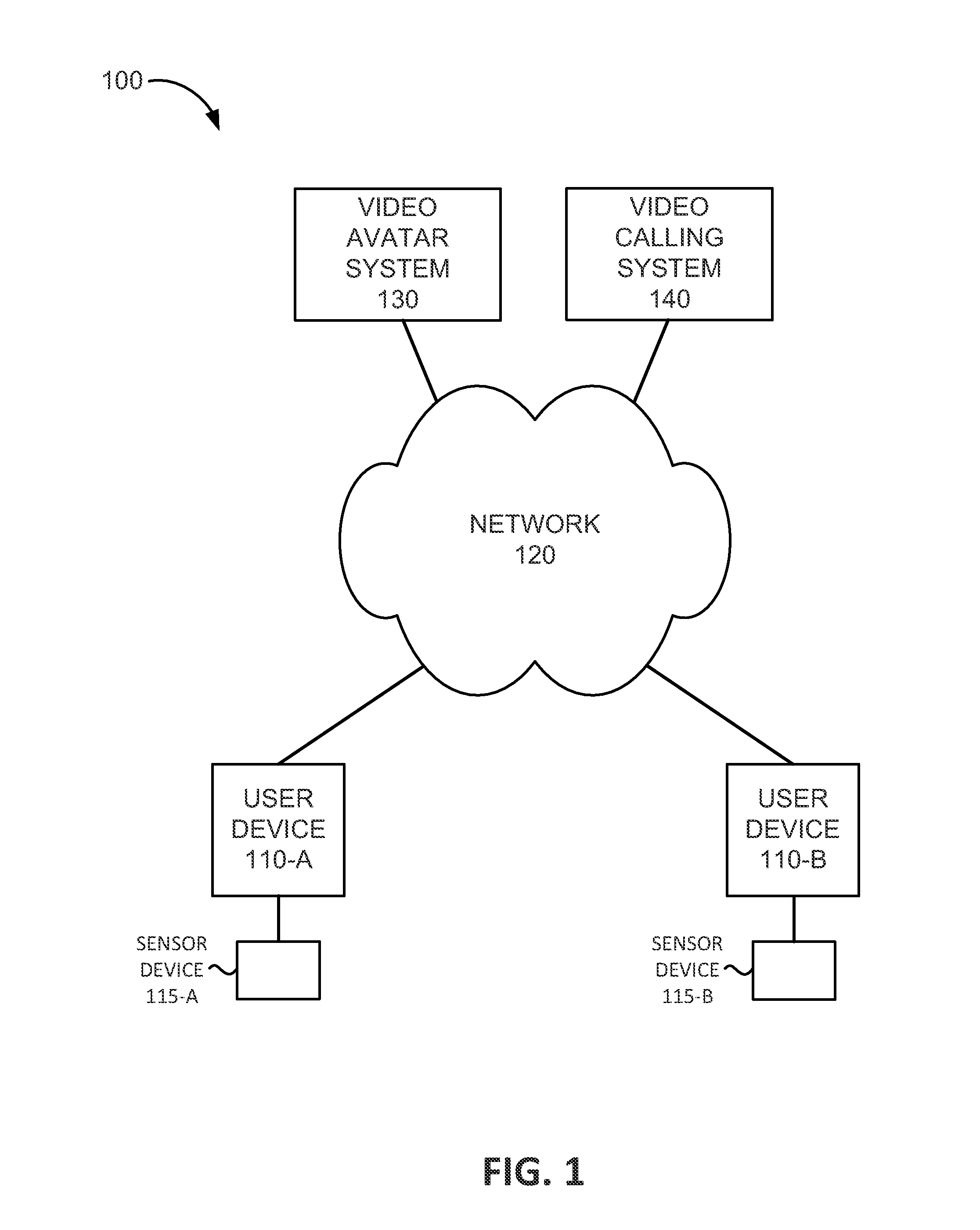

Static and dynamic video calling avatars

Active | US20140176662A1 | Services signalling, Two-way working systems, Human–computer interaction, Communication device

A communication device may include logic configured to detect a request to initiate a video call by the user of the communication device; select an avatar for the video call, wherein the avatar corresponds to an image selected by the user to be used as a replacement for a video stream for video calls associated with the user; determine a facial expression associated with the user of the communication device; select an avatar facial expression for the selected avatar, based on the determined facial expression; and incorporate the selected avatar facial expression into a video stream associated with the video call.

Owner: VERIZON PATENT & LICENSING INC

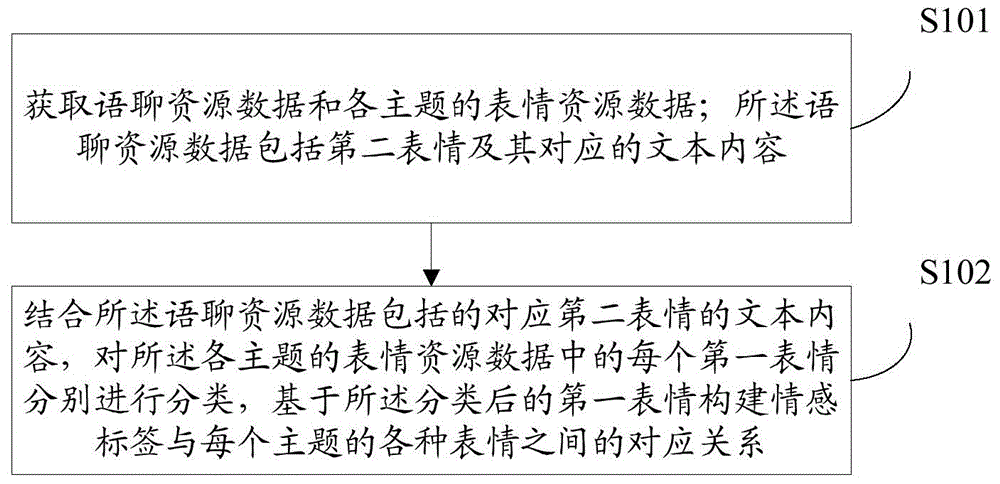

Expression input method and device based on face identification

Active | CN104063683A | Avoid selectivity, Avoid the situation, Character and pattern recognition, Special data processing applications, Computer vision, Subject specific

The invention discloses an expression input method and device based on face recognition, relating to the technical field of input methods. The method comprises: starting an input method; acquiring a captured picture of the user; determining, with a facial expression recognition model, the emotion label corresponding to the facial expression in the picture; acquiring the expressions of each theme that correspond to that emotion label, based on the correspondence between emotion labels and the expressions in each theme; and sorting the expressions of all themes and displaying them as candidates on the client. Because the label is recognized and matched directly from the user's current picture, the user can input expressions conveniently and with high accuracy, and a rich and wide range of expression resources is provided.

Owner: BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD
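
Once the recognition model has produced an emotion label, the candidate-building steps above reduce to a lookup across themes followed by sorting. The sketch below represents the recognition model only by its output label. The theme table, the per-expression scores used for ordering, and the function name are all hypothetical, since the abstract does not say how candidates are ranked.

```python
# Hypothetical correspondence table: theme -> emotion label -> [(expression, score)]
THEMES = {
    "cartoon_cat": {
        "happy": [("cat_grin", 0.9), ("cat_wave", 0.6)],
        "sad": [("cat_tear", 0.8)],
    },
    "classic": {
        "happy": [(":-)", 0.7)],
        "sad": [(":-(", 0.75)],
    },
}


def candidate_expressions(emotion_label, themes=THEMES):
    """Collect the expressions matching the label in every theme and order
    them (highest score first) as input-method candidates."""
    matches = []
    for theme, table in themes.items():
        for expression, score in table.get(emotion_label, []):
            matches.append((score, theme, expression))
    matches.sort(key=lambda m: m[0], reverse=True)
    return [(theme, expression) for _, theme, expression in matches]
```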

Method and report assessing consumer reaction to a stimulus by matching eye position with facial coding

Inactive | US20120046993A1 | Digital data information retrieval, Special data processing applications, Facial expression, Eye position

Owner: HILL DANIEL A

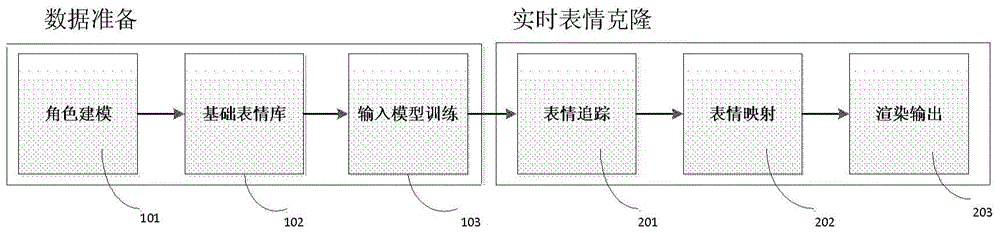

Expression cloning method and device capable of realizing real-time interaction with virtual character

The invention discloses an expression cloning method and device that enable real-time interaction with a virtual character, in fields such as computer graphics and virtual reality. The method comprises the following steps: 1, modeling the virtual character and binding its skeleton; 2, establishing the basic expression bases of the virtual character; 3, expression input training, in which the maximum displacement of facial feature points under each basic expression is recorded; 4, expression tracking, in which the facial expression changes of a real person are recorded with motion capture equipment and the weights of the basic expressions are calculated; and 5, expression mapping, in which the obtained weights are transferred to the virtual character in real time, rotation interpolation is performed on the corresponding skeletons, and the virtual character's expression is rendered in real time. The method synthesizes the virtual character's expressions rapidly, stably, and vividly, so that the virtual character can interact with a real person through expressions stably and in real time.

Owner: INST OF AUTOMATION CHINESE ACAD OF SCI
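
Steps 3 and 4 above pair a recorded maximum displacement per basic expression with a tracked displacement at run time. One simple way to turn these into weights, shown here as an assumption rather than the patent's calculation, is to divide each driving feature point's current displacement from its neutral position by the recorded maximum and clamp the result to [0, 1]. The expression names, feature points, and maxima are hypothetical, and step 5 (skeleton rotation interpolation and rendering) is not modeled.

```python
import math

# Hypothetical step-3 output: basic expression -> (driving feature point, max displacement)
MAX_DISPLACEMENT = {
    "jaw_open": ("chin", 12.0),
    "smile_left": ("mouth_corner_left", 6.0),
    "brow_raise": ("brow_center", 4.0),
}


def expression_weights(neutral, tracked):
    """Estimate basic-expression weights (step 4) as tracked displacement divided
    by the recorded maximum, capped at 1.0 (displacements are never negative).

    neutral, tracked: dicts mapping feature-point name -> (x, y, z)
    """
    weights = {}
    for expression, (point, max_disp) in MAX_DISPLACEMENT.items():
        displacement = math.dist(neutral[point], tracked[point])
        weights[expression] = min(1.0, displacement / max_disp)
    return weights
```

Real systems often solve for all weights jointly (for example, by least squares over many feature points), because one point can be moved by several basic expressions at once; the one-point-per-expression ratio keeps the sketch short.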

Method of micro facial expression detection based on facial action coding system (FACS)

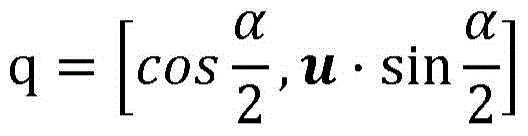

Inactive | CN107194347A | Improve recognition rate, Accurate identification, Character and pattern recognition, Network architecture, Base function

The invention provides a method of micro facial expression detection based on the facial action coding system (FACS). Its main components are CNN filter visualization, network architecture and training, transfer learning, and micro facial expression detection. The method first builds a robust emotion classification framework, analyzes the proposed network learning model, visualizes the trained network filters on different emotion classification tasks, and then applies the model to micro facial expression detection. Compared with existing methods, the recognition rate of micro facial expression detection is improved; a strong correlation is shown between the features produced by the unsupervised learning process and the action units used in facial expression analysis; and the cross-dataset and cross-task generalization ability of FACS-based features in providing high-precision scores is verified. As a result, facial expressions can be recognized more accurately and emotional states inferred, improving the effectiveness and accuracy of applications in various fields and advancing the development of artificial intelligence.

Owner: SHENZHEN WEITESHI TECH

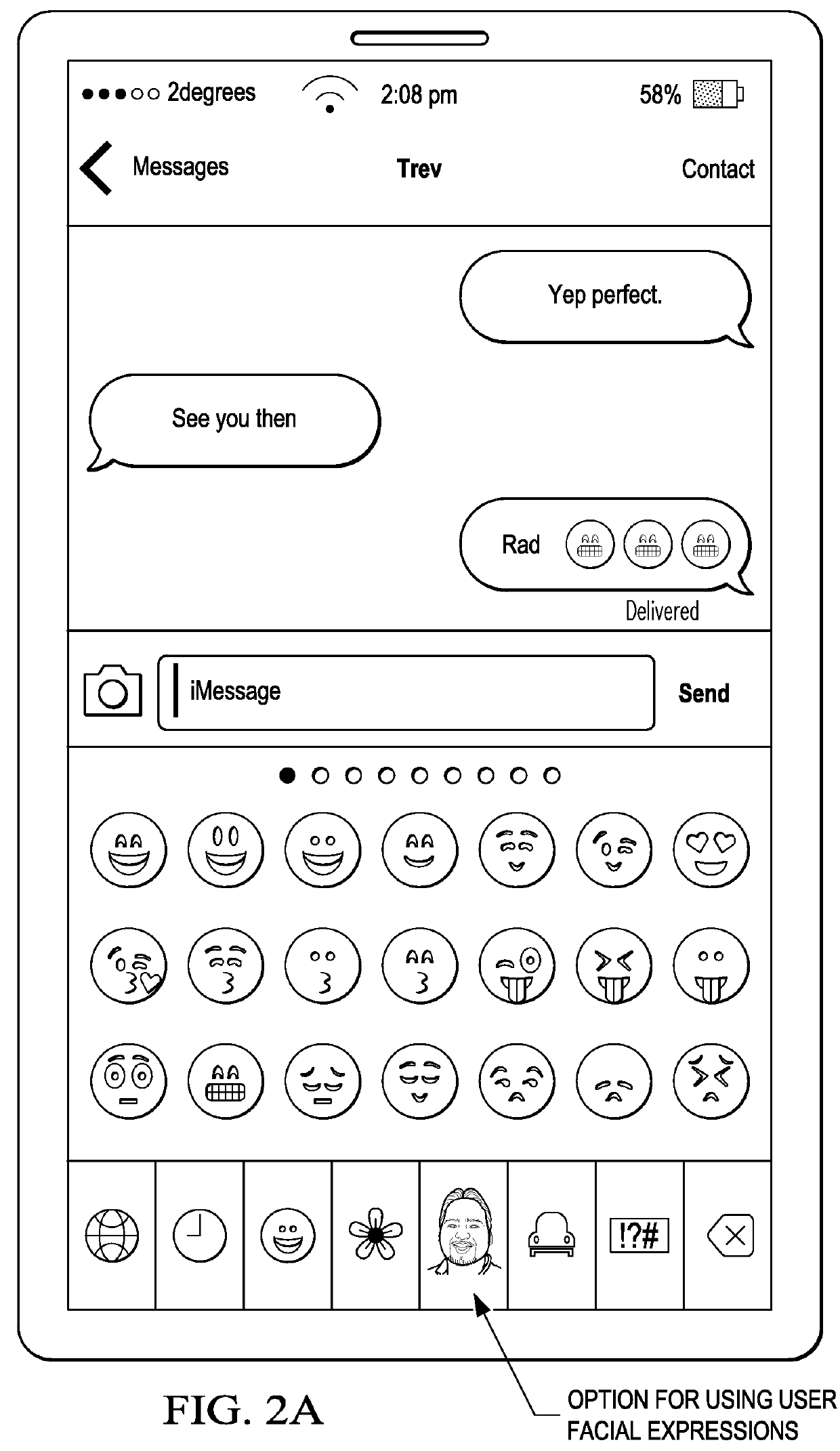

System and Methods of Generating User Facial Expression Library for Messaging and Social Networking Applications

Inactive | US20160055370A1 | Easy to use, Substation equipment, Messaging/mailboxes/announcements, User device, Facial expression

Embodiments are provided that utilize images of users to represent true or personalized emotions for the users in messaging or social networking applications. A library of user facial expression images is generated for this purpose, and made accessible to messaging or social networking applications, such as on a smartphone or other user devices. The images of facial expressions include face photographs of the user that convey emotions or expressions of the user, such as a happy face or a sad face. An embodiment method includes detecting an image accessible by an electronic device, determining whether the image shows a face of the user and whether the image shows a facial expression expressed by the face of the user, adding the image to a library of facial expressions of the user in accordance with the determining step, and sending a message including the image as an emoticon.

Owner: FUTUREWEI TECH INC

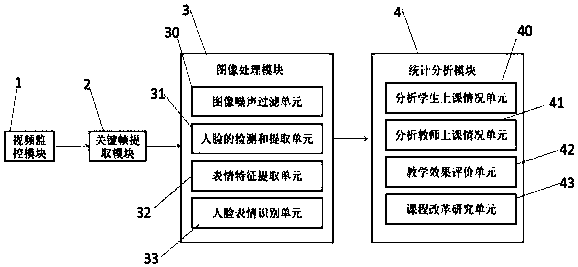

A classroom teaching effect evaluation system based on facial expression recognition

Inactive | CN109657529A | Advancement of Curriculum Reform, Improve teaching quality, Closed circuit television systems, Resources, Activity time, Evaluation system

The invention discloses a classroom teaching effect evaluation system based on facial expression recognition. Classroom video is analyzed in real time: the students' facial expressions are extracted, and index information on the students' degree of understanding, degree of activity, degree of doubt, and activity time is collected and analyzed. Teachers can thus understand the students' psychological states and their mastery of knowledge points and adopt corresponding teaching adjustment and control measures, improving the quality of the class. The teacher's classroom expressions are also analyzed automatically and the teacher's classroom expression indexes are computed, giving teaching management personnel an emotional basis for evaluating the teacher. The classroom expressions of teachers and students are recorded, and their expression indexes are obtained through statistical analysis; these indexes serve as comprehensive and objective reference indexes for classroom teaching evaluation. The classroom teaching effect of the target course in each school undergoing course reform is also computed as a reference. With this technical scheme, teachers and teaching management personnel can better implement a student-centered teaching concept.

Owner: TAIZHOU UNIV

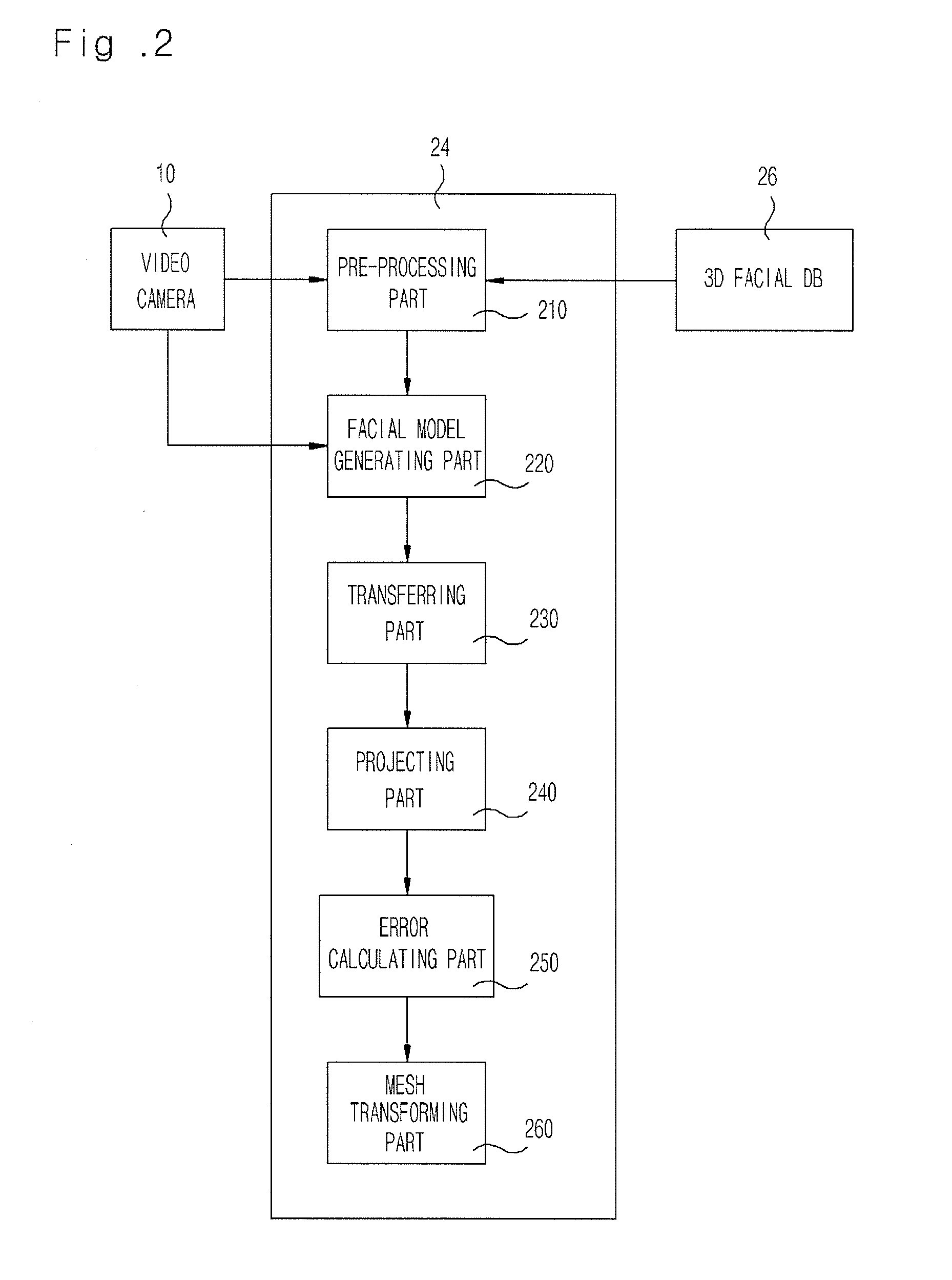

System and method for generating 3-D facial model and animation using one video camera

Inactive | US20080136814A1 | Solve exact calculations, Error minimization, Animation, 3D-image rendering, Geometric modeling

Provided are a system and a method for generating a 3D facial model and animation using one video camera. The system includes a pre-processing part, a facial model generating part, a transferring part, a projecting part, an error calculating part, and a mesh transforming part. The pre-processing part sets correspondence relations with other meshes, generates an average 3D facial model, and generates a geometric model and a texture dispersion model. The facial model generating part projects the average 3D facial model onto an expressionless, frontal facial image frame to generate the performer's 3D facial model. The transferring part transfers a 3D facial model template having an animation-controlled model to the performer's 3D facial model to generate the performer's animation-controlled 3D facial model. The projecting part projects the performer's 3D facial model onto a facial animation video frame that includes a facial expression. The error calculating part calculates the error of the projection made by the projecting part. The mesh transforming part moves or rotates a joint in the direction that minimizes the error.

Owner: ELECTRONICS & TELECOMM RES INST

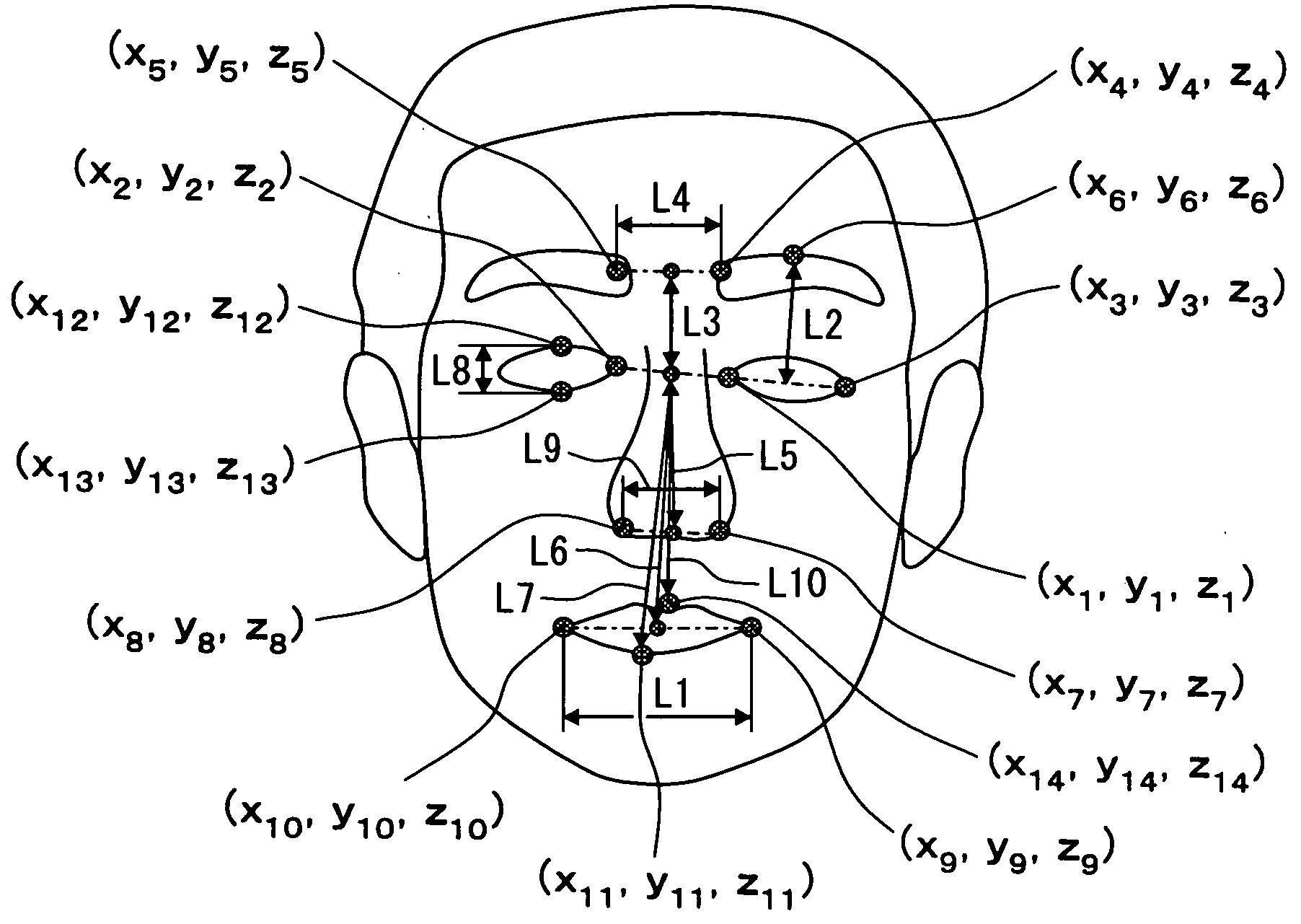

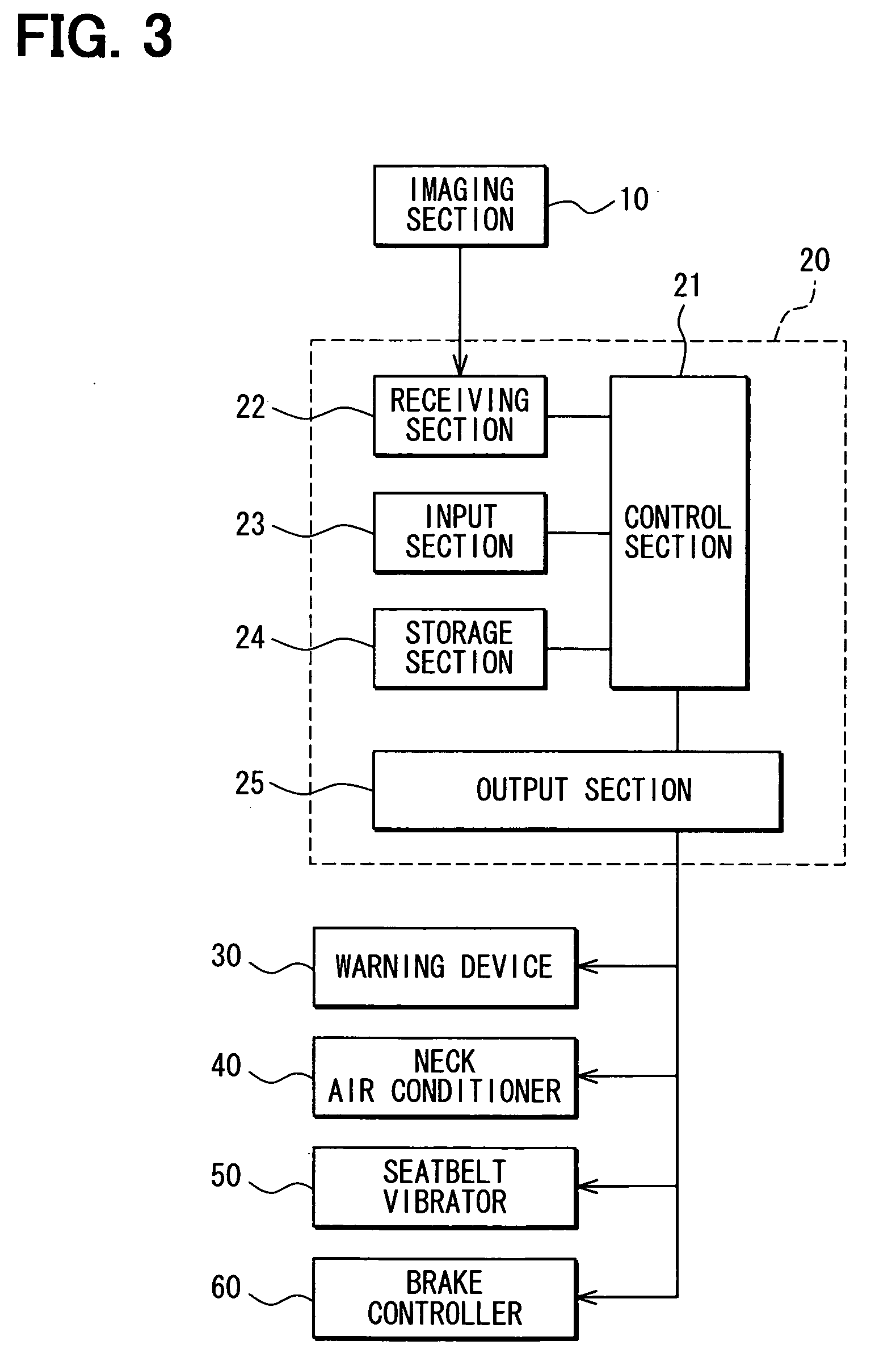

Device, program, and method for determining sleepiness

Active | US20080212828A1 | Sure easy, Reduce chance, Character and pattern recognition, Physical medicine and rehabilitation, Driver/operator

A sleep prevention system captures a facial image of a driver, determines a sleepiness level from the image, and, if necessary based on that determination, operates warning devices including a neck air conditioner, a seatbelt vibrator, and a brake controller. A sleepiness determination device determines sleepiness from facial expression information such as the distance between the corners of the mouth, the distance between an eyebrow and an eye, the tilt angle of the head, and other facial feature distances. These distances are calculated from the captured images and from reference information gathered during wakefulness. The sleepiness level is determined from the individual determination results, including combinations thereof.

Owner: DENSO CORP
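
The comparison described above, current facial distances against reference values gathered while the driver was awake, can be sketched as follows. The rule is hypothetical and is not DENSO's: count how many measurements deviate from their reference by more than a relative tolerance, then map that count to a level from 0 to 3. The feature names, tolerance, and level cap are illustrative.

```python
def sleepiness_level(current, reference, tolerance=0.15, max_level=3):
    """Return 0 (awake) .. max_level from relative changes in facial measurements.

    current, reference: dicts mapping a measurement name (e.g. "mouth_corners",
    "eyebrow_to_eye", "head_tilt_deg") -> value; reference values are the
    ones gathered during wakefulness.
    """
    deviating = 0
    for name, ref_value in reference.items():
        scale = abs(ref_value) or 1.0  # avoid dividing by a zero reference (e.g., head tilt)
        if abs(current[name] - ref_value) / scale > tolerance:
            deviating += 1
    # Combine the individual results: more deviating measurements, higher level.
    return min(deviating, max_level)
```

Per-driver reference values matter here because resting facial geometry varies from person to person, so absolute distances alone would say little about sleepiness.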

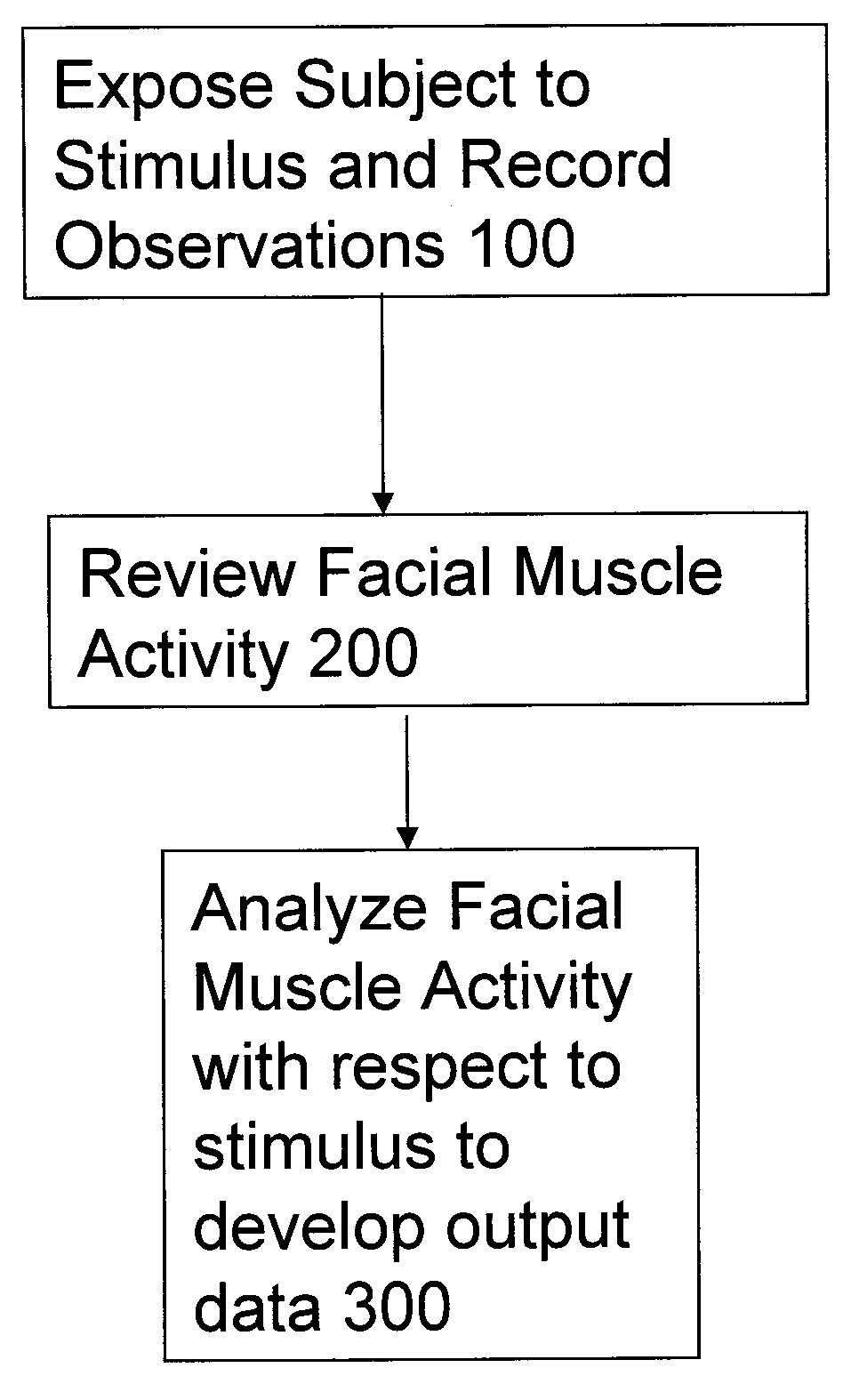

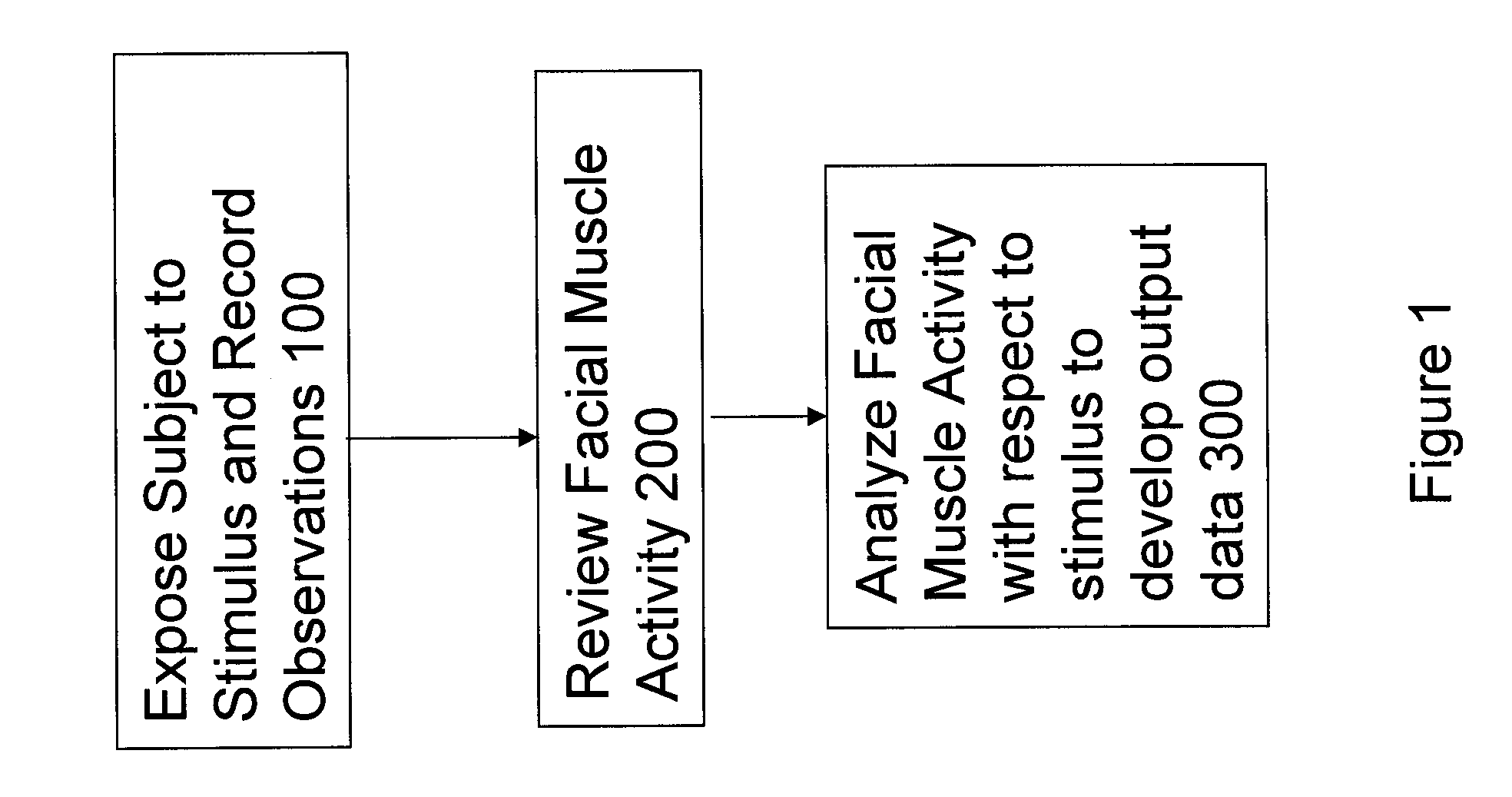

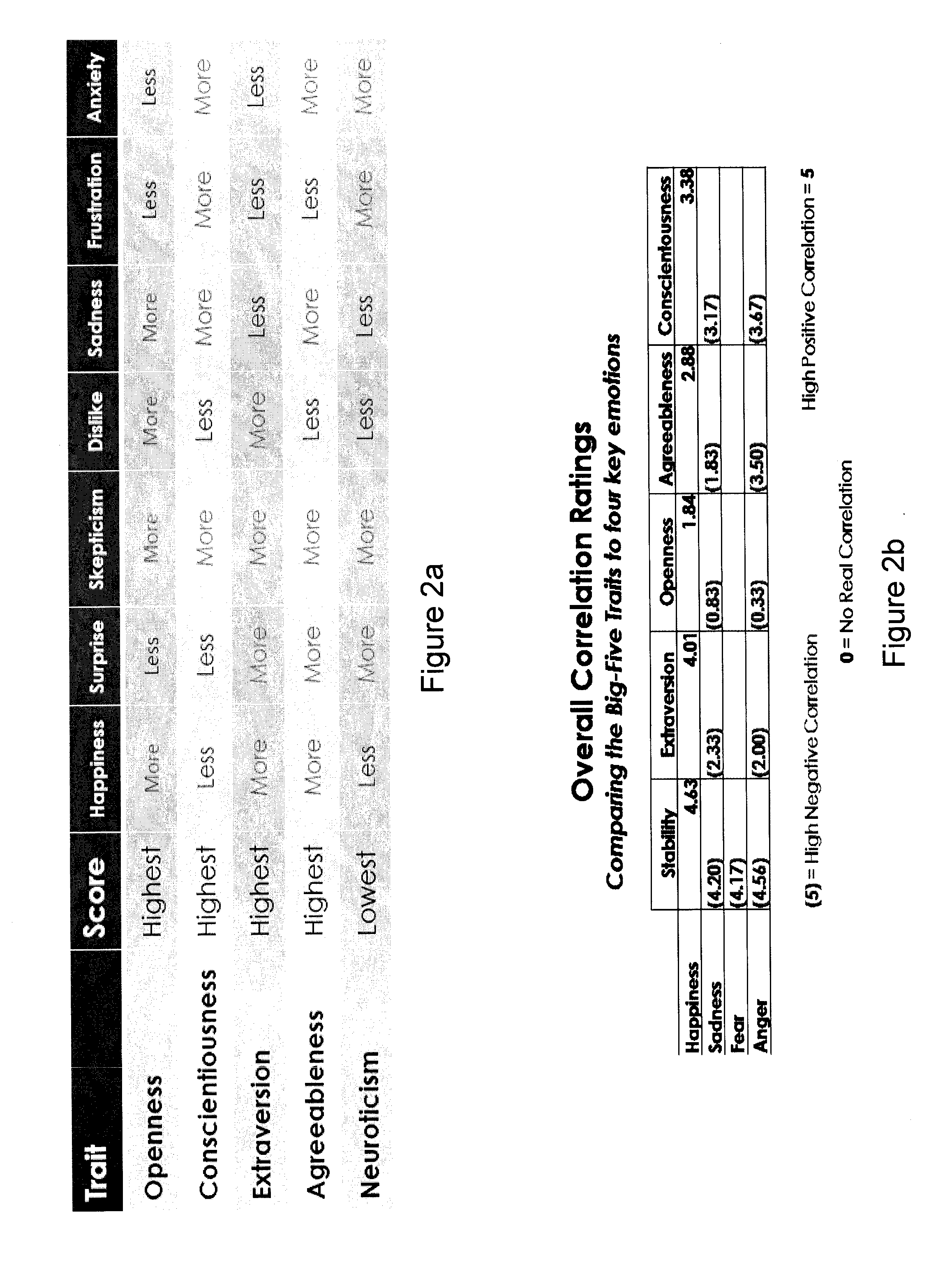

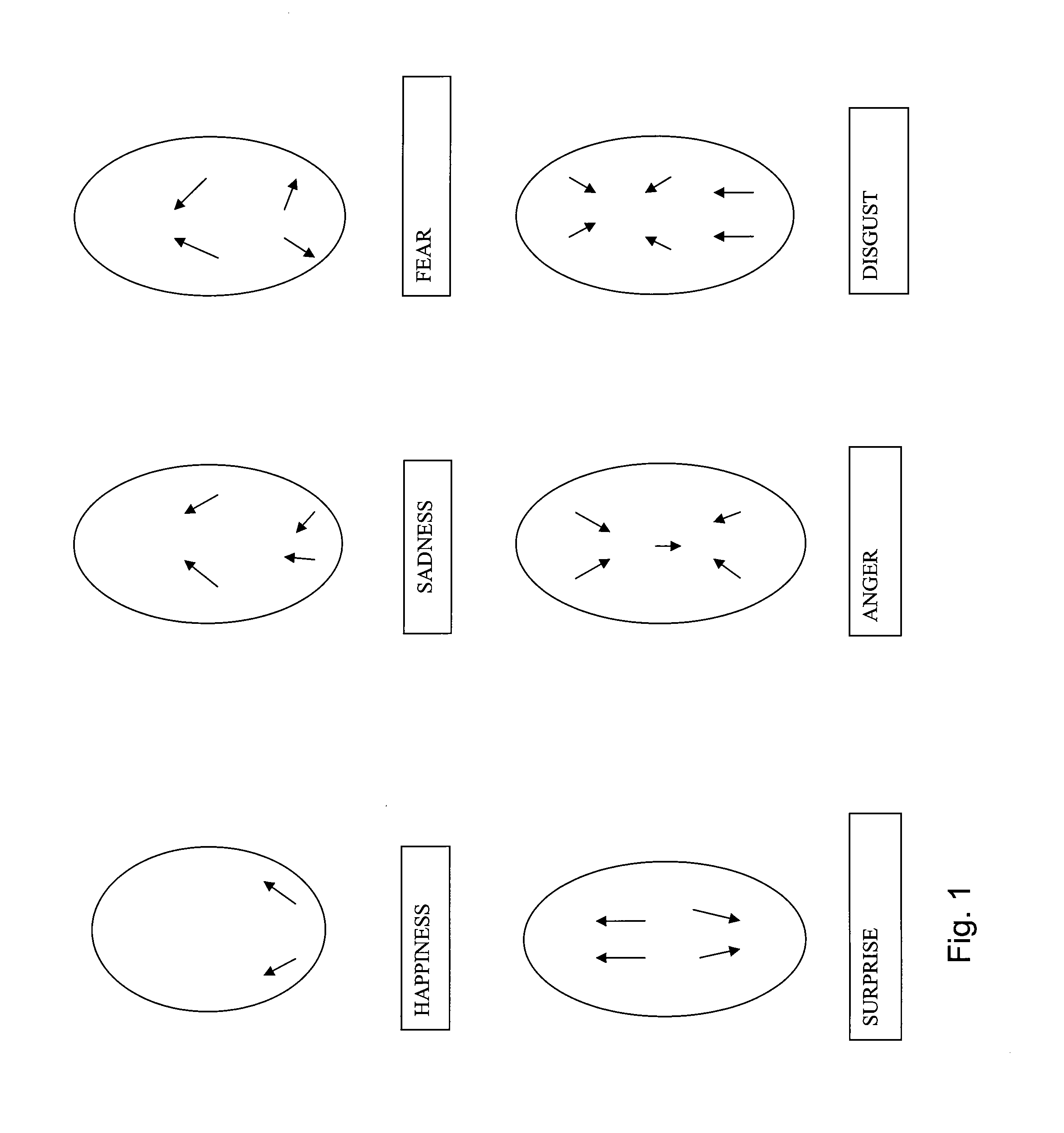

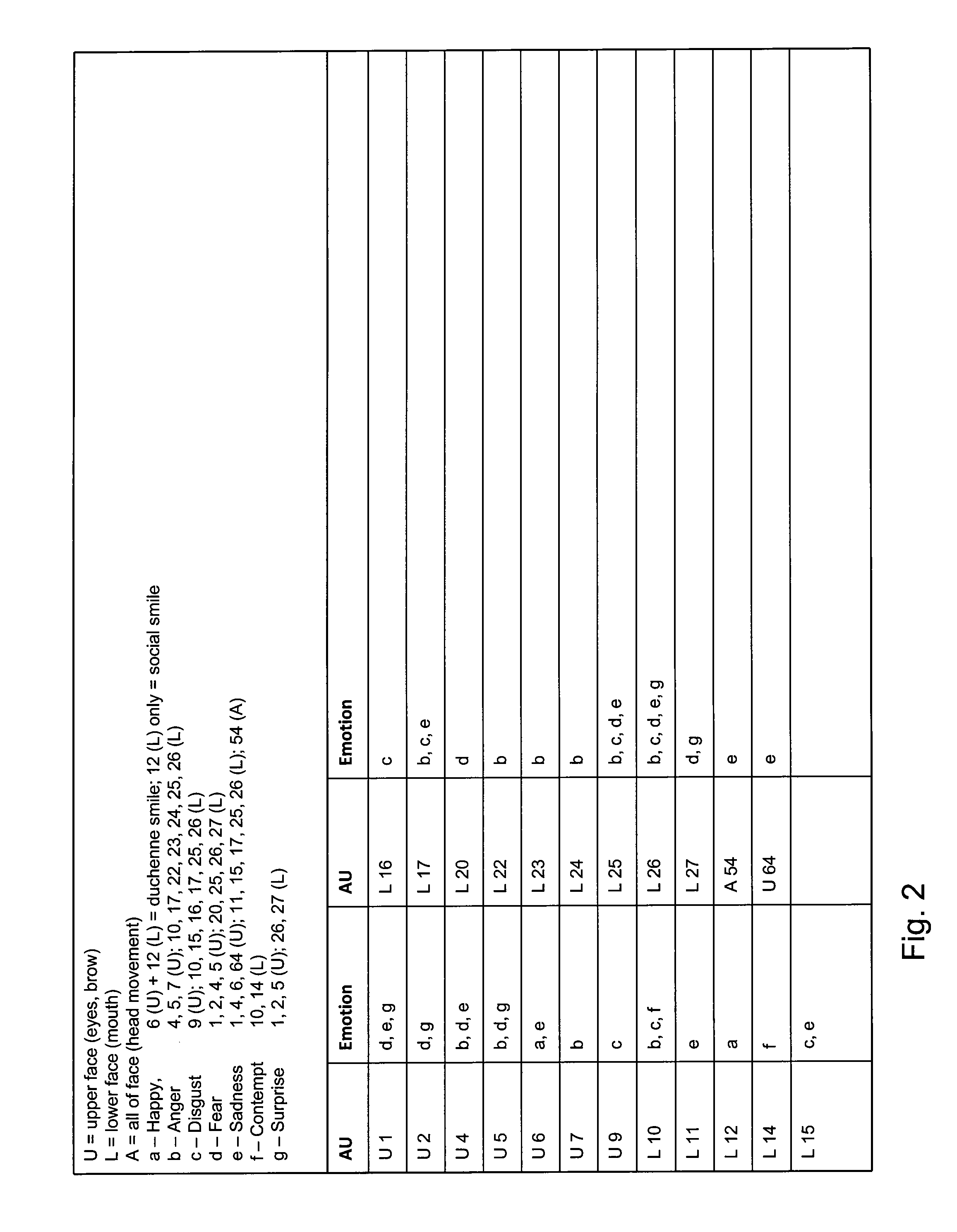

Method of assessing people's self-presentation and actions to evaluate personality type, behavioral tendencies, credibility, motivations and other insights through facial muscle activity and expressions

A method of assessing an individual through facial muscle activity and expressions includes receiving a visual recording stored on a computer-readable medium of an individual's non-verbal responses to a stimulus, the non-verbal response comprising facial expressions of the individual. The recording is accessed to automatically detect and record expressional repositioning of each of a plurality of selected facial features by conducting a computerized comparison of the facial position of each selected facial feature through sequential facial images. The contemporaneously detected and recorded expressional repositionings are automatically coded to an action unit, a combination of action units, and / or at least one emotion. The action unit, combination of action units, and / or at least one emotion are analyzed to assess one or more characteristics of the individual to develop a profile of the individual's personality in relation to the objective for which the individual is being assessed.

Owner: SENSORY LOGIC

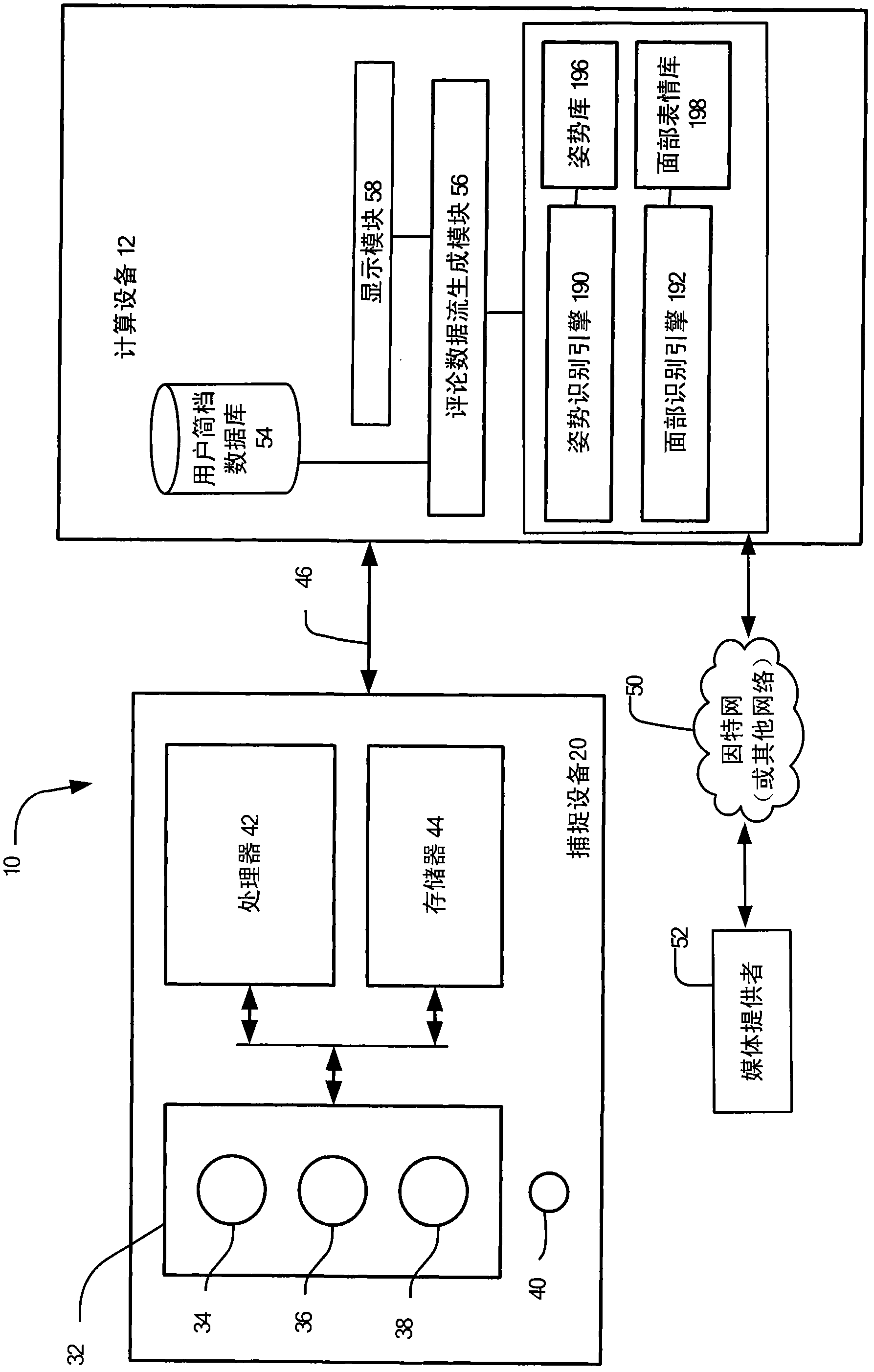

Simulated group interaction with multimedia content

The invention discloses simulated group interaction with multimedia content: a method and system for generating time-synchronized data streams based on a viewer's interactions with a multimedia content stream. The viewer's interactions with the content stream being viewed are recorded; they include comments the viewer provides while viewing the stream, such as text messages, audio messages, video feeds, gestures, or facial expressions. A time-synchronized commented data stream is generated from these interactions, each time-stamped relative to a virtual start time at which the multimedia content stream is rendered to the viewer. One or more time-synchronized data streams are then rendered to the viewer, via an audiovisual device, while the viewer views a multimedia content stream.

Owner: MICROSOFT TECH LICENSING LLC
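
The key idea in the abstract above is that each interaction is stored as an offset from the virtual start time, not as a wall-clock time. Another viewer's comments can then be replayed at the same point in the content whenever that viewer watches it. A minimal sketch follows; the class names, fields, and the replay window are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Interaction:
    offset: float  # seconds since the virtual start time of the content stream
    kind: str      # e.g. "text", "audio", "gesture", "facial_expression"
    payload: str


@dataclass
class CommentedStream:
    virtual_start: float  # wall-clock time at which rendering began for this viewer
    interactions: List[Interaction] = field(default_factory=list)

    def record(self, wall_clock: float, kind: str, payload: str) -> None:
        # Store the interaction relative to the virtual start, not in absolute time.
        self.interactions.append(Interaction(wall_clock - self.virtual_start, kind, payload))

    def due(self, playback_position: float, window: float = 1.0) -> List[Interaction]:
        """Interactions to render for another viewer whose playback is at
        `playback_position` seconds (those falling within the last `window` seconds)."""
        return [
            i for i in self.interactions
            if playback_position - window < i.offset <= playback_position
        ]
```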

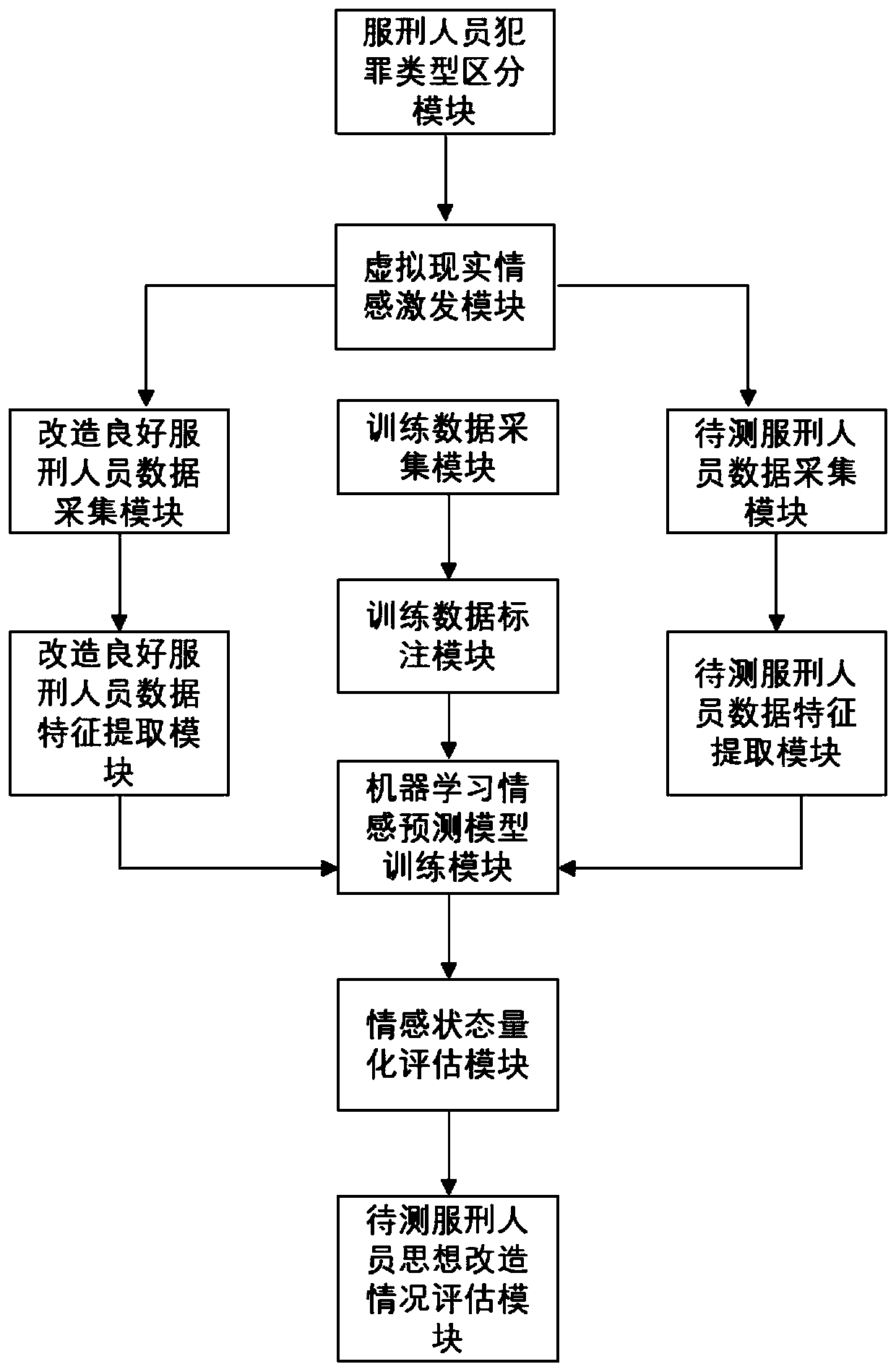

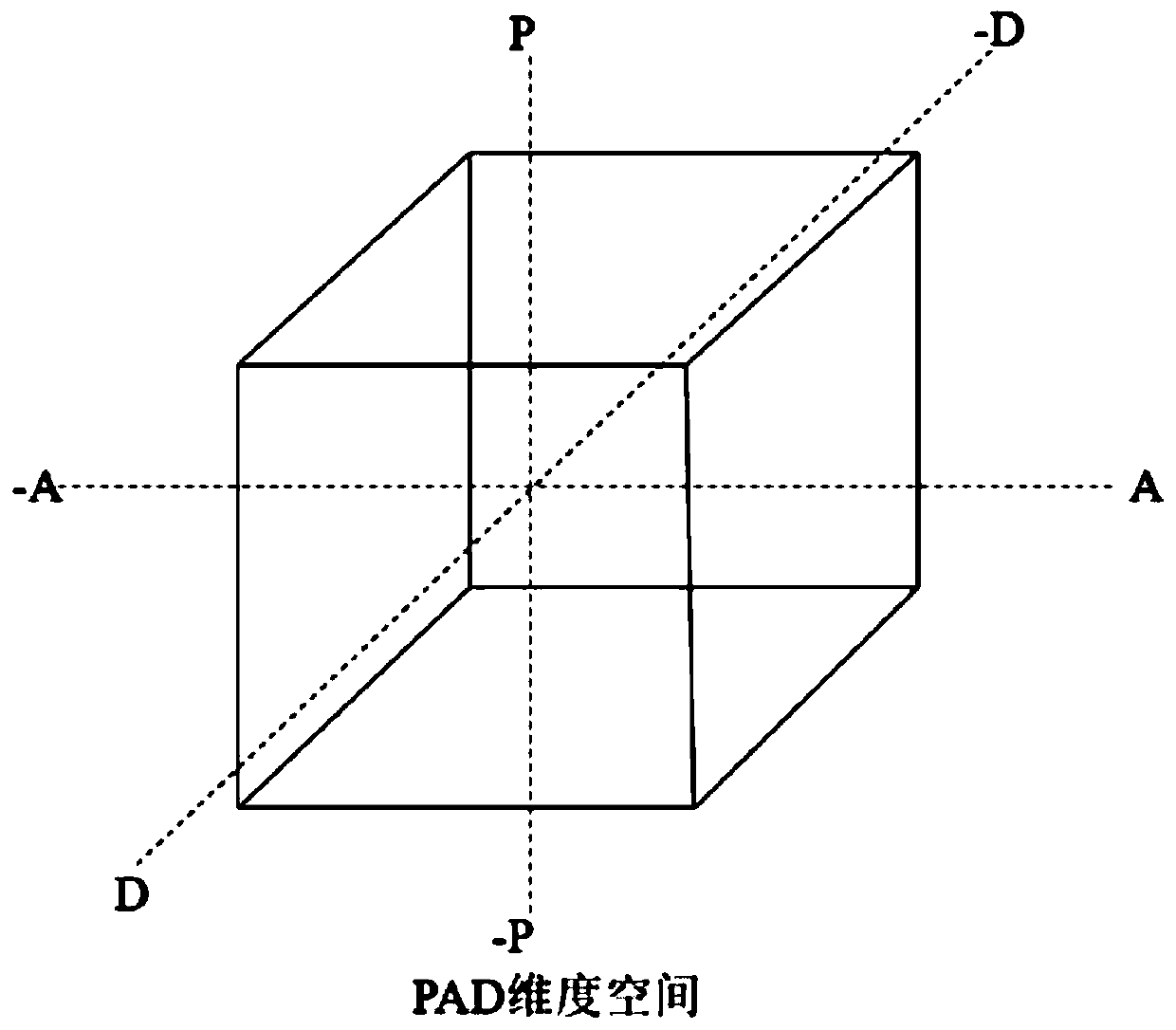

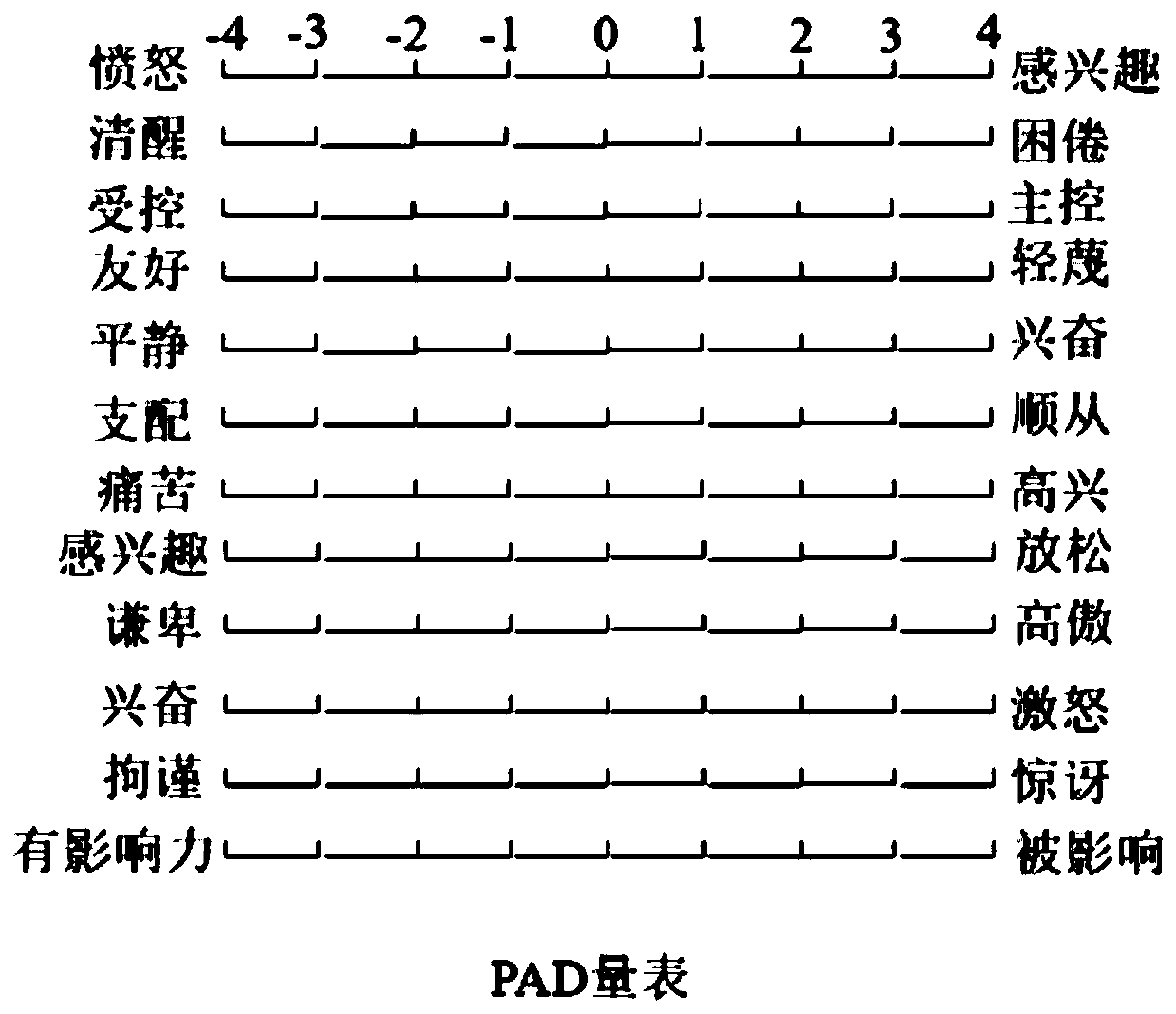

Prisoner psychological health state assessment method and system based on multi-modal information

Active | CN110507335A | Avoid interference, Rapid assessment, Sensors, Psychotechnic devices, Mental health, Network model

The invention discloses a method and system for assessing the psychological health state of prisoners based on multi-modal information. The method comprises: obtaining physiological signals, facial expression images, and voice signals from reformed prisoners and from prisoners to be tested after they experience virtual reality scenes, and extracting physiological signal features, facial expression image features, and voice signal features from them; inputting the features of the reformed prisoners into a pre-trained neural network model, which outputs their psychological state assessment vectors; inputting the features of the prisoners to be tested into a pre-trained neural network model, which outputs their psychological state assessment vectors; calculating the distance between the assessment vectors of the prisoners to be tested and those of the reformed prisoners; and assessing the mental health state of the prisoners according to this distance.

Owner: SHANDONG UNIV +1
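
The final two steps above reduce to a distance between psychological-state assessment vectors. The abstract names neither the metric nor the decision rule. The sketch therefore assumes Euclidean distance to the centroid of the reformed prisoners' vectors and a hypothetical threshold; the neural network that produces the vectors is out of scope.

```python
import math
from typing import List, Sequence, Tuple


def centroid(vectors: List[Sequence[float]]) -> List[float]:
    """Component-wise mean of the reference (reformed) assessment vectors."""
    n = len(vectors)
    return [sum(components) / n for components in zip(*vectors)]


def assess(
    test_vector: Sequence[float],
    reformed_vectors: List[Sequence[float]],
    threshold: float = 1.0,  # hypothetical decision boundary
) -> Tuple[float, str]:
    """Return (distance, label); a smaller distance means the tested person's
    assessment vector is closer to the reformed group's."""
    distance = math.dist(test_vector, centroid(reformed_vectors))
    label = "similar to reformed group" if distance <= threshold else "differs from reformed group"
    return distance, label
```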

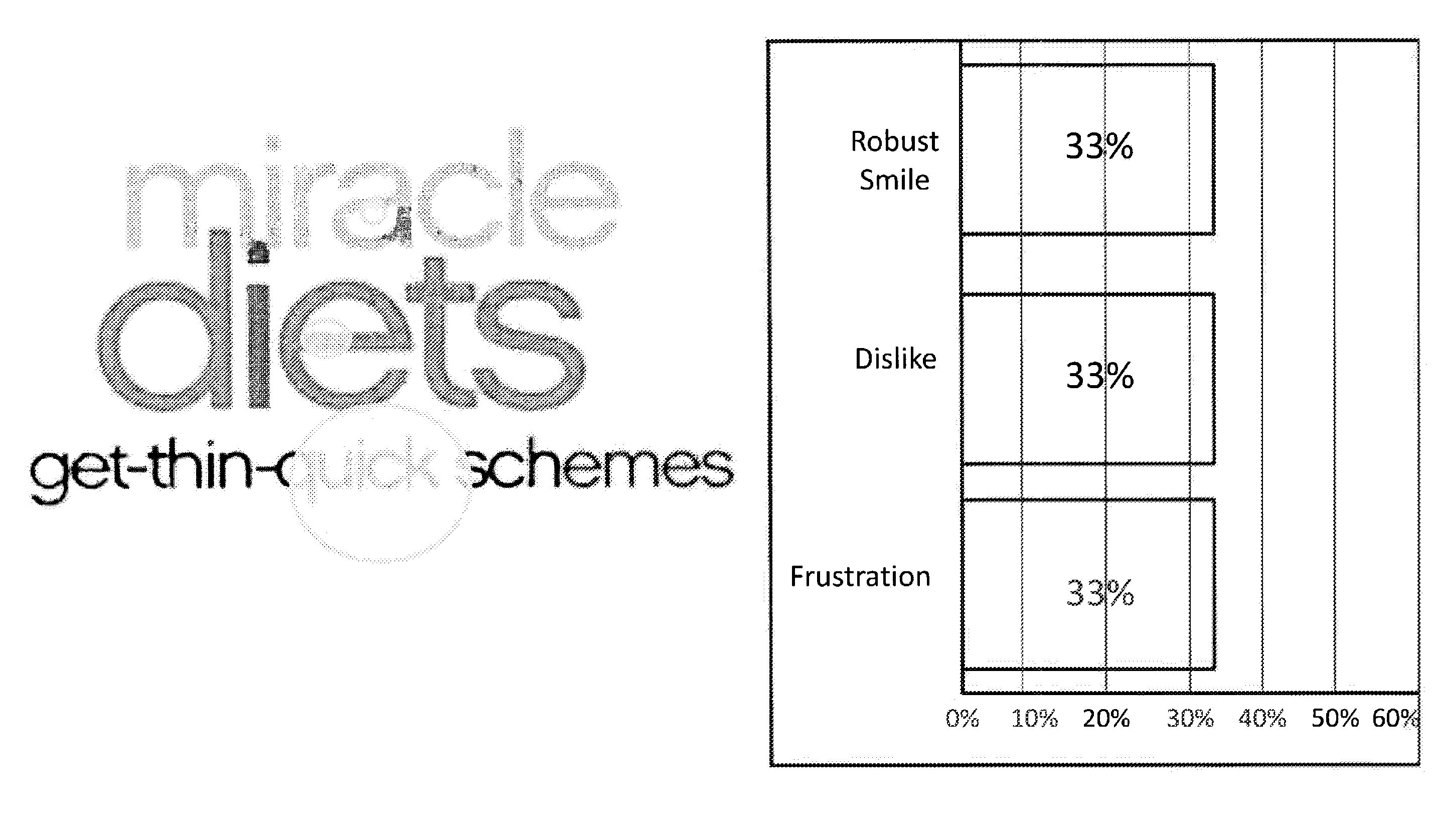

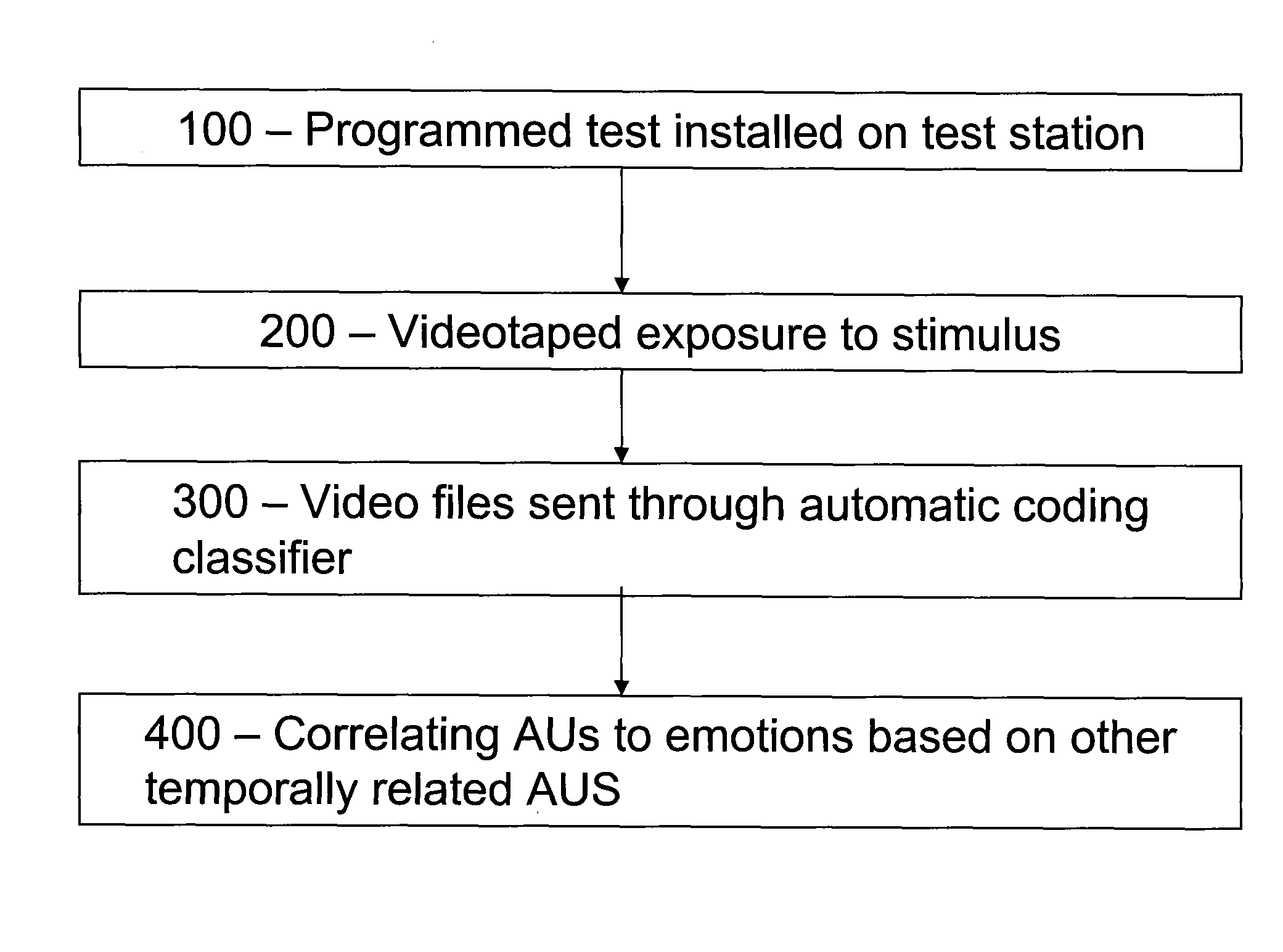

Methods of facial coding scoring for optimally identifying consumers' responses to arrive at effective, incisive, actionable conclusions

Inactive | US8326002B2 | Acquiring/recognising facial features, Marketing, Pattern recognition, Facial expression

The present disclosure relates to a method of assessing consumer reaction to a stimulus, comprising: receiving a visual recording, stored on a computer-readable medium, of facial expressions of at least one human subject as the subject is exposed to a business stimulus, so as to generate a chronological sequence of recorded facial images; accessing the computer-readable medium to automatically detect and record expressional repositioning of each of a plurality of selected facial features by conducting a computerized comparison of the facial position of each selected facial feature through sequential facial images; automatically coding contemporaneously detected and recorded expressional repositionings to at least a first action unit, wherein the action unit maps to a first set of one or more possible emotions expressed by the human subject; and assigning a numerical weight to each of the one or more possible emotions of the first set based on both the number of emotions in the set and the emotions it shares with at least a second set of one or more possible emotions related to at least a second action unit observed within a predetermined time period.

Owner: SENSORY LOGIC
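
The weighting step above depends on two quantities: the size of an action unit's emotion set and its overlap with the set of a second action unit observed in the same time window. The sketch below shows one hypothetical way to combine them, not Sensory Logic's scoring. Each emotion starts at 1/(set size), and emotions shared with the second set are multiplied by a bonus factor. The action-unit-to-emotion mapping and the bonus value are illustrative.

```python
from typing import Dict, Set

# Hypothetical action unit (AU) -> possible emotions mapping.
AU_EMOTIONS: Dict[str, Set[str]] = {
    "AU12": {"happiness", "contempt"},
    "AU6": {"happiness"},
    "AU4": {"anger", "sadness", "fear"},
}


def weight_emotions(first_au: str, second_au: str, bonus: float = 2.0) -> Dict[str, float]:
    """Weight each possible emotion of `first_au`.

    Base weight: 1 / (number of emotions in the first set), so an AU that maps to
    many emotions spreads its evidence thinly. Emotions also present in the set of
    `second_au` (observed within the same time window) are multiplied by `bonus`.
    """
    first = AU_EMOTIONS[first_au]
    second = AU_EMOTIONS[second_au]
    base = 1.0 / len(first)
    return {emotion: base * (bonus if emotion in second else 1.0) for emotion in first}
```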

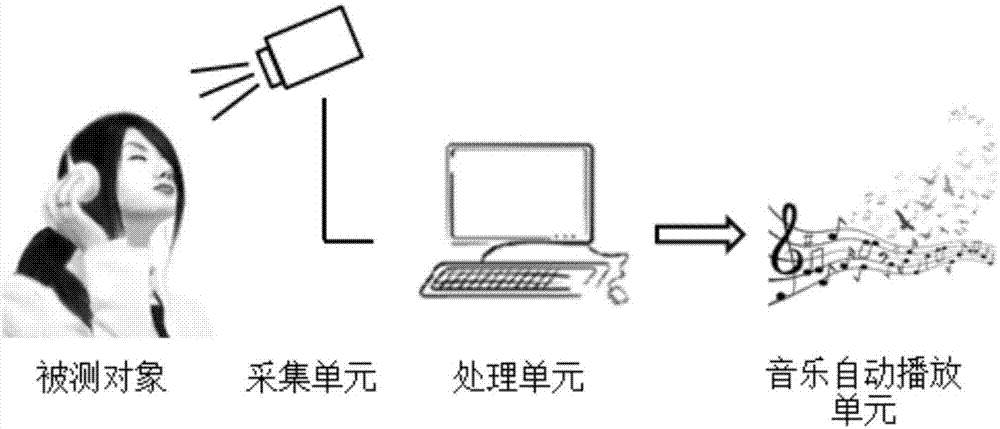

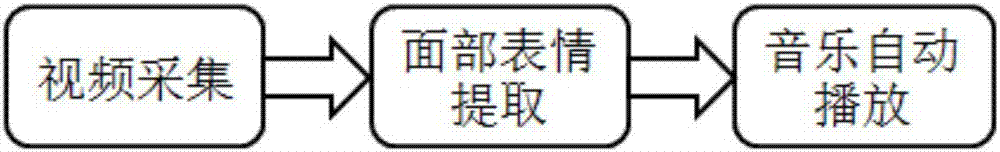

Automatic music playing system and method based on target facial expression acquisition

Active | CN107145326A | Improve experience, No hardware purchases involved, Input/output for user-computer interaction, Sound input/output, Pattern recognition, Facial expression

The present invention discloses an automatic music playing system and method based on target facial expression acquisition. The system comprises an acquisition unit, a processing unit, and a music playing unit. The acquisition unit acquires video of the user's facial emotions and transfers it to the processing unit. The processing unit controls the acquisition unit to record the user, integrates the acquired video into a video signal, extracts the user's emotion signal from the integrated signal, and transfers the emotion signal to the music playing unit. The music playing unit plays music automatically according to changes in the user's facial expression. According to the system and method provided by the present invention, the facial emotion and heart rate of the target can be accurately detected, and music can be played intelligently according to the user's real-time psychological changes, providing the best experience for the user.

Owner: ZHEJIANG UNIV

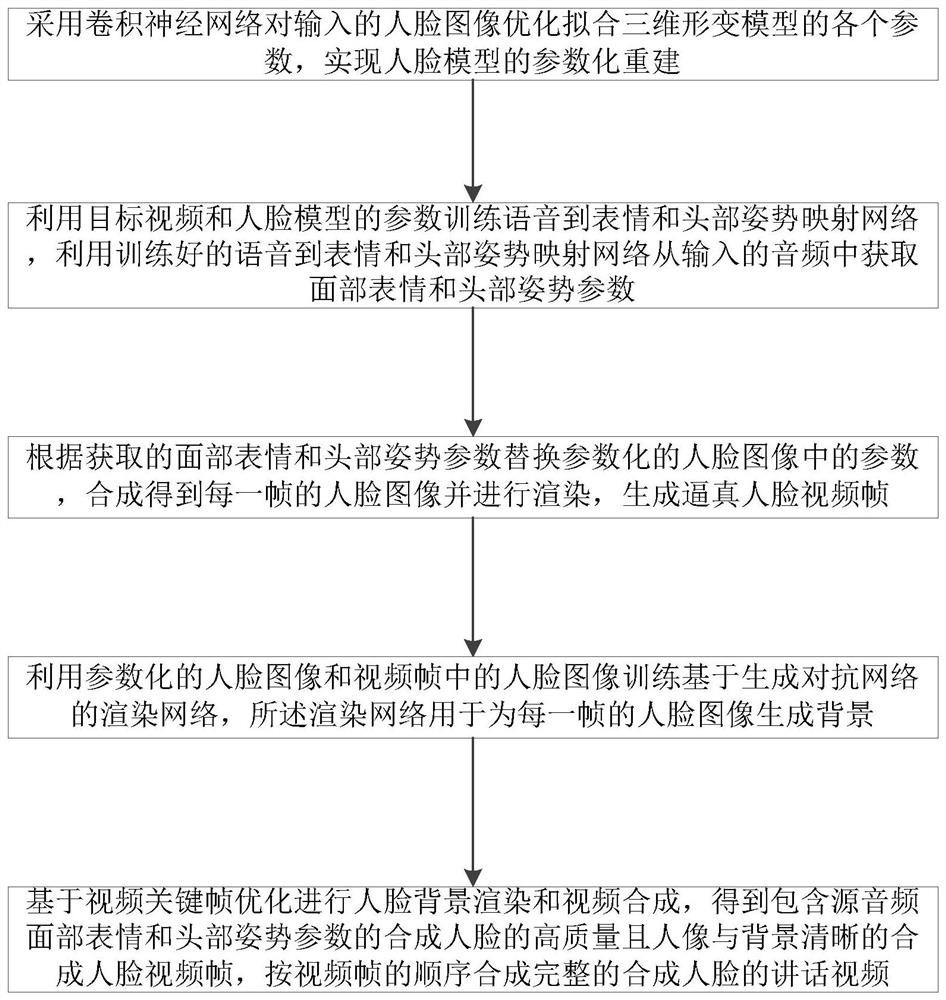

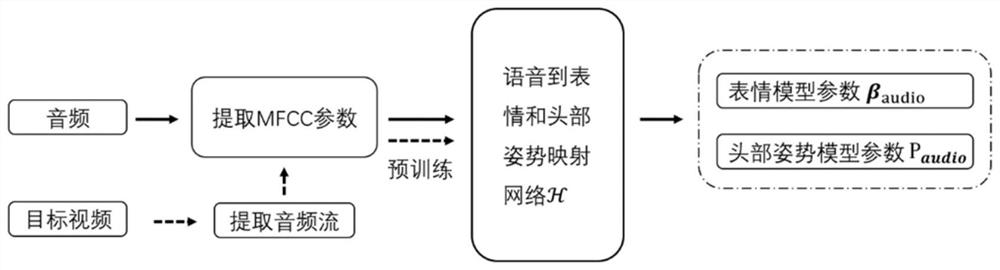

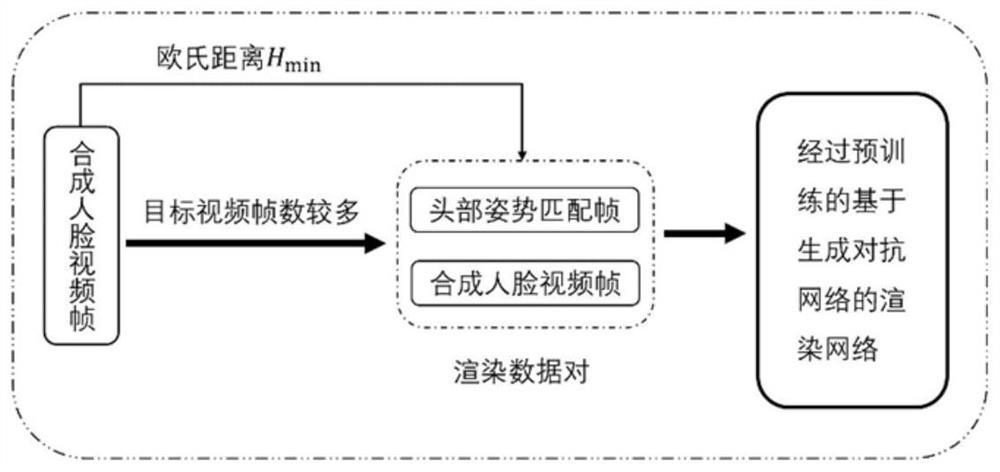

Synthetic video generation method based on three-dimensional face reconstruction and video key frame optimization

Pending | CN113269872A | Time or labor cost reduction, Reduce labor costs, Details involving 3D image data, Character and pattern recognition, Computer graphics (images), Generative adversarial network

The invention discloses a synthetic video generation method based on three-dimensional face reconstruction and video key frame optimization. The synthetic video generation method comprises the following steps: optimizing and fitting each parameter of a three-dimensional face deformation model for an input face image by adopting a convolutional neural network; training a voice-to-expression and head posture mapping network by using the parameters of the target video and the face model, and acquiring facial expression and head posture parameters from the input audio by using the trained voice-to-expression and head posture mapping network; synthesizing a human face and rendering the synthesized human face to generate a vivid human face video frame; training a rendering network based on a generative adversarial network by using the parameterized face image and the face image in the video frame, wherein the rendering network is used for generating a background for each frame of face image; and performing face background rendering and video synthesis based on video key frame optimization. The background transition of each frame of the output synthesized face video is natural and vivid, and the usability and practicability of the synthesized face video can be greatly enhanced.

Owner:GUANGDONG UNIV OF TECH
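A three-dimensional face deformation model is commonly a linear morphable model, in which a face mesh is the mean shape plus weighted identity and expression bases. Assuming that form (the abstract does not give the model or its basis sizes), each output frame could keep the identity coefficients fitted by the CNN fixed and take the expression coefficients and head pose from the audio-driven mapping network. The sketch below covers only that geometry step; the function names and the yaw-only rotation are illustrative simplifications, and no trained networks are included.

```python
import math


def morph_shape(mean, id_basis, exp_basis, alpha, beta):
    """Linear morphable model: S = mean + sum_i alpha_i * B_id_i + sum_j beta_j * B_exp_j.

    mean: list of (x, y, z) vertices; each basis entry is a list of per-vertex offsets.
    alpha: identity coefficients (fitted once per subject, assumed).
    beta: expression coefficients (predicted per frame from audio, assumed).
    """
    verts = [list(v) for v in mean]
    for coeff, basis in list(zip(alpha, id_basis)) + list(zip(beta, exp_basis)):
        for v, offset in zip(verts, basis):
            for k in range(3):
                v[k] += coeff * offset[k]
    return verts


def apply_head_pose(verts, yaw, translation):
    """Rotate vertices about the vertical (y) axis by `yaw` radians, then translate."""
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [(c * x + s * z + tx, y + ty, -s * x + c * z + tz) for x, y, z in verts]
```

In a full pipeline this step would run once per audio frame, with `beta` and the pose coming from the mapping network, before the GAN-based rendering network supplies texture and background.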

Mobile device image capture and image modification including filters, superimposing and geofenced comments in augmented reality

A camera may capture real-world content, which may be displayed on a mobile device or glasses and modified in real time. The captured objects and their transformations are displayed. The captured images may be processed and altered so that objects are displayed virtually differently from how they appear physically. Various micro-expressions and emotions may be detected from a person's facial expressions. Digital notes may be saved and associated with objects. A user may save these notes and environments and make them viewable either by anyone in the geofenced area or by all users. An individual may capture an image of an object using a mobile device, intelligent electronic glasses, or a headset, tap the object on the display screen, and write or speak notes about the object. A keyboard, keypad, image, icons, or other items may be virtually placed onto a surface.

Owner:AIRSPACE REALITY
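The abstract does not say how geofence membership is tested. One minimal sketch, assuming each note stores the latitude and longitude of its object and a circular geofence radius, uses the haversine great-circle distance; a note flagged for all users is shown regardless of the viewer's location. `GeoNote`, `visible_notes`, and their fields are hypothetical names.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (spherical Earth)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    # Clamp guards against rounding pushing the argument slightly above 1.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


@dataclass
class GeoNote:
    """A note attached to a real-world object (hypothetical schema)."""
    text: str
    lat: float
    lon: float
    radius_m: float               # geofence radius around the object
    visible_to_all: bool = False  # True: any user, anywhere, can view it


def visible_notes(notes, lat, lon):
    """Return the notes a viewer standing at (lat, lon) is allowed to see."""
    return [
        n for n in notes
        if n.visible_to_all or haversine_m(lat, lon, n.lat, n.lon) <= n.radius_m
    ]
```

A spherical Earth is accurate to well under one percent, which is ample for geofences of tens or hundreds of metres.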

Methods and systems for image and voice processing

Active | US20210056348A1 | Reduce in quantity | Minimize and reduce error | Image enhancement | Image analysis | Latent image | Computer graphics (images)

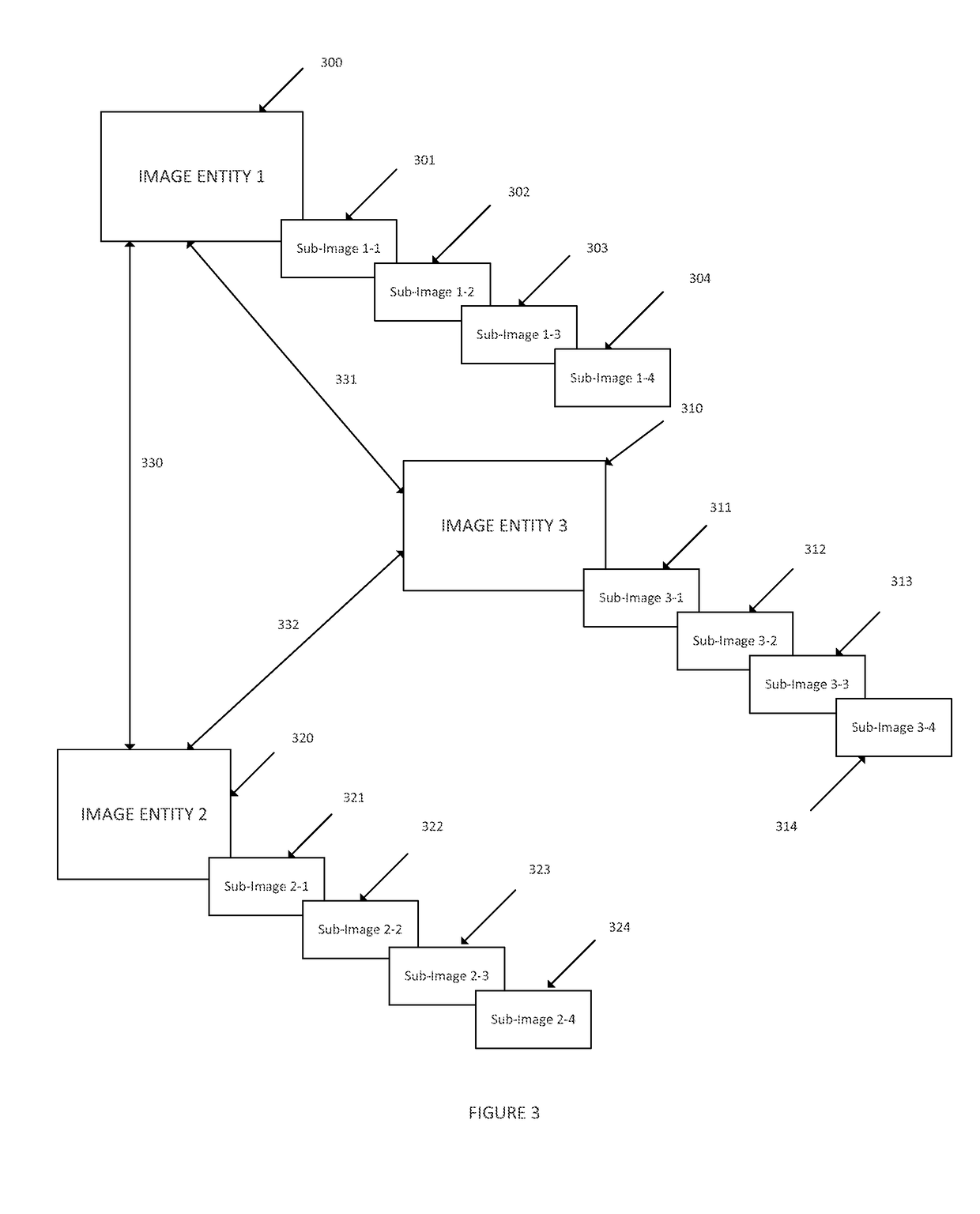

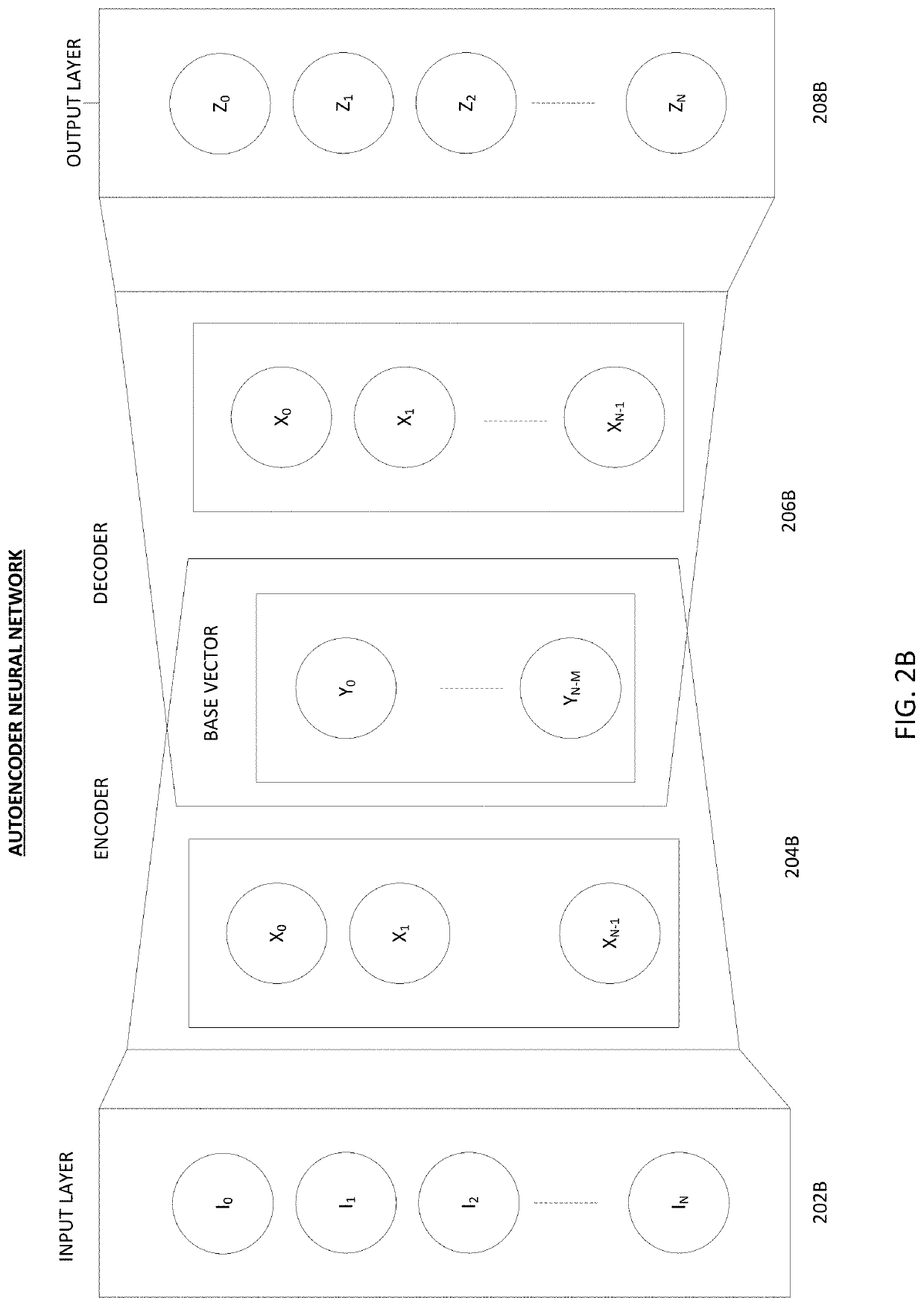

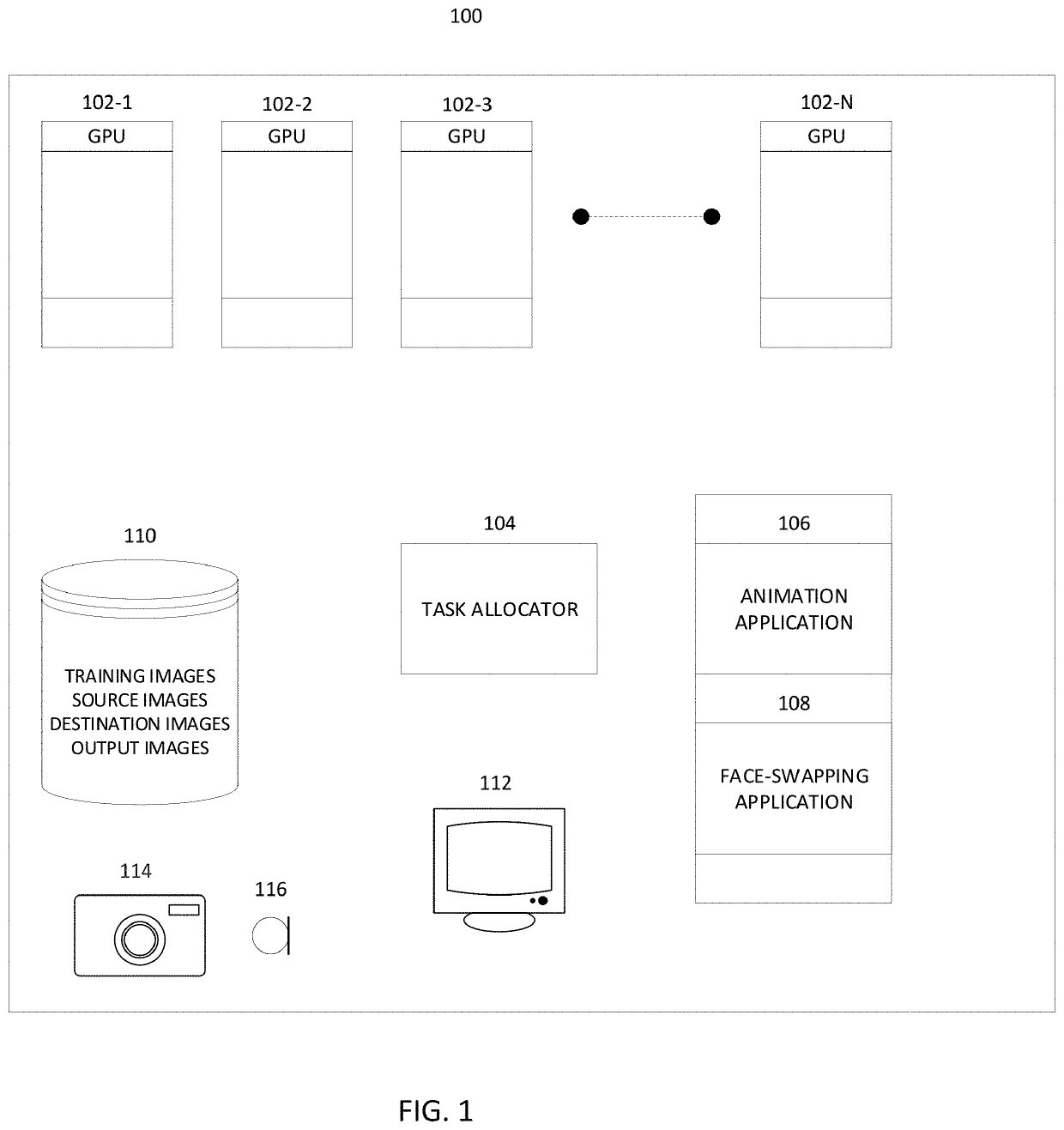

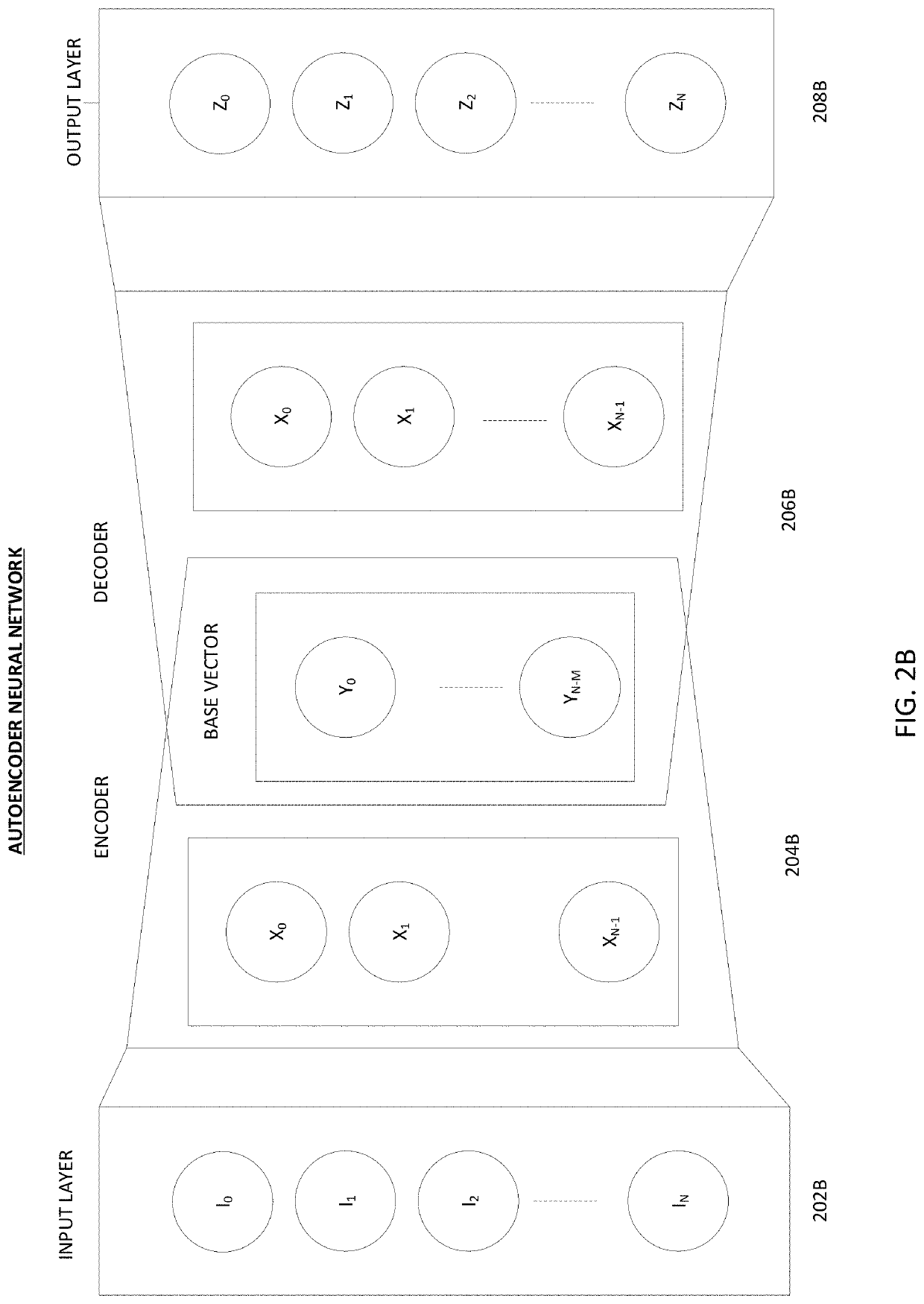

Systems and methods are disclosed configured to train an autoencoder using images that include faces, wherein the autoencoder comprises an input layer, an encoder configured to output a latent image from a corresponding input image, and a decoder configured to attempt to reconstruct the input image from the latent image. An image sequence of a face exhibiting a plurality of facial expressions and transitions between facial expressions is generated and accessed. Images of the plurality of facial expressions and transitions between facial expressions are captured from a plurality of different angles and using different lighting. An autoencoder is trained using source images that include the face with different facial expressions captured at different angles with different lighting, and using destination images that include a destination face. The trained autoencoder is used to generate an output where the likeness of the face in the destination images is swapped with the likeness of the source face, while preserving expressions of the destination face.

Owner:NEON EVOLUTION INC
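The abstract describes an encoder that maps an image to a latent image and a decoder that reconstructs it, but not how one trained autoencoder outputs the source likeness while keeping the destination's expression. Open-source face-swap tools commonly share one encoder across both faces and train a separate decoder for each; the swap encodes a destination frame and decodes it with the source decoder. The toy below assumes that arrangement and replaces the convolutional networks with small linear maps trained by plain gradient descent on flattened vectors, so it illustrates only the data flow. Whether Neon Evolution uses this arrangement is not stated, and the class and method names are hypothetical.

```python
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rng, rows, cols, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class SharedEncoderFaceSwap:
    """Linear autoencoder with one shared encoder and one decoder per face identity."""

    def __init__(self, dim, latent, seed=0):
        rng = random.Random(seed)
        self.encoder = rand_matrix(rng, latent, dim)  # image -> latent image
        self.decoders = {
            "source": rand_matrix(rng, dim, latent),       # latent -> source face
            "destination": rand_matrix(rng, dim, latent),  # latent -> destination face
        }

    def reconstruct(self, x, identity):
        return matvec(self.decoders[identity], matvec(self.encoder, x))

    def loss(self, x, identity):
        """Squared reconstruction error for one flattened image."""
        return sum((y - t) ** 2 for y, t in zip(self.reconstruct(x, identity), x))

    def sgd_step(self, x, identity, lr=0.01):
        """One gradient step on ||D E x - x||^2 for the given identity's decoder D."""
        dec = self.decoders[identity]
        z = matvec(self.encoder, x)
        r = [y - t for y, t in zip(matvec(dec, z), x)]  # reconstruction residual
        # Back-propagate the residual through the decoder before updating it.
        back = [sum(dec[i][j] * r[i] for i in range(len(r))) for j in range(len(z))]
        for i, ri in enumerate(r):
            for j, zj in enumerate(z):
                dec[i][j] -= lr * 2 * ri * zj
        for j, bj in enumerate(back):
            for k, xk in enumerate(x):
                self.encoder[j][k] -= lr * 2 * bj * xk

    def train(self, source_images, destination_images, epochs=200, lr=0.01):
        for _ in range(epochs):
            for x in source_images:
                self.sgd_step(x, "source", lr)
            for x in destination_images:
                self.sgd_step(x, "destination", lr)

    def swap(self, destination_image):
        """Encode a destination face, then decode it with the source decoder."""
        return self.reconstruct(destination_image, "source")
```

With real images, `source_images` would be the multi-angle, multi-lighting captures of the source face and `destination_images` the frames whose expressions should be preserved; sharing the encoder is what lets one latent space describe expression for both faces.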

Methods and systems for image processing

Systems and methods are disclosed configured to pre-train an autoencoder using images that include faces, wherein the autoencoder comprises an input layer, an encoder configured to output a latent image from a corresponding input image, and a decoder configured to attempt to reconstruct the input image from the latent image. An image sequence of a CGI sculpted and textured face exhibiting a plurality of facial expressions and transitions between facial expressions is accessed. Images of the plurality of facial expressions and transitions between facial expressions are captured from a plurality of different angles. The pre-trained autoencoder is trained using source images that include a CGI face with different facial expressions captured at different angles, and using destination images that include a real face. The trained autoencoder is used to generate an output where the real face in the destination images is swapped with the CGI face, while preserving expressions of the real face.

Owner:NEON EVOLUTION INC

© 2025 PatSnap. All rights reserved.