Expression input method and device based on face identification

This expression input and face recognition technology, applied in the field of input methods, addresses the problems of monotonous default expressions, an excessive number of expression options, and complex selection interfaces. Its effects are to reduce constraints on development and widespread use, improve input efficiency, and reduce time costs.


Examples

Embodiment 1

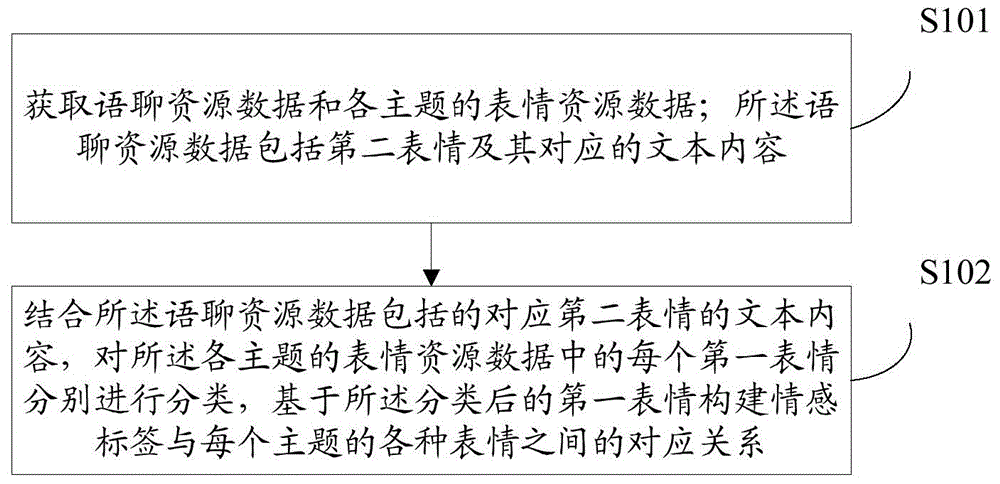

[0051] Refer to Figure 1, which shows a schematic flowchart of an expression input method based on face recognition in the present invention.

[0052] In this embodiment of the present invention, the correspondence between emotion tags and the expressions in each topic, as well as the facial expression recognition model, are constructed in advance.

[0053] The following describes how the correspondence between emotion tags and the expressions in each topic is constructed:

[0054] Step S100, construct the correspondence between emotion tags and the expressions in each topic from the collected chat resource data and the expression resource data of each topic.

[0055] In the present invention, the correspondence between emotion tags and the expressions in each topic can be obtained by collecting chat resource data and expression resource data for each topic, and using the chat resource data to analyze the expression r...
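The source text is truncated at this point, so the exact analysis is not shown. As a rough illustration only, one way to derive such a correspondence is to count, per topic, how often each expression co-occurs with emotion words in chat messages. The keyword lexicon (`EMOTION_KEYWORDS`), the `(topic, text, expression)` record format, and the `min_count` threshold below are hypothetical assumptions, not the patent's method.

```python
from collections import defaultdict

# Hypothetical lexicon (assumption): emotion label -> keywords signalling it.
EMOTION_KEYWORDS = {
    "happy": {"haha", "great", "lol"},
    "sad": {"sigh", "sorry", "cry"},
}

def build_correspondence(chat_records, min_count=2):
    """Map topic -> emotion label -> list of expressions.

    chat_records: iterable of (topic, text, expression) tuples, where
    `expression` is the emoticon sent alongside `text` (assumed format).
    An expression is kept for a label once it co-occurs with that label's
    keywords at least `min_count` times within the same topic.
    """
    counts = defaultdict(lambda: defaultdict(lambda: defaultdict(int)))
    for topic, text, expression in chat_records:
        words = set(text.lower().split())
        for label, keywords in EMOTION_KEYWORDS.items():
            if words & keywords:
                counts[topic][label][expression] += 1

    table = {}
    for topic, by_label in counts.items():
        table[topic] = {}
        for label, expr_counts in by_label.items():
            kept = [e for e, c in expr_counts.items() if c >= min_count]
            # Most frequent expressions first; ties broken alphabetically.
            kept.sort(key=lambda e: (-expr_counts[e], e))
            if kept:
                table[topic][label] = kept
    return table
```

For example, two "cats" messages containing "haha" or "lol" next to `cat_laugh` would map `cat_laugh` to the `happy` label for that topic, while an expression seen only once stays below the default threshold.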

Embodiment 2

[0177] Refer to Figure 5, which shows a schematic flowchart of an expression input method based on face recognition in the present invention. The method includes:

[0178] Step 510, start the input method;

[0179] Step 520, judge whether the current input environment of the client input method requires emoticon input; if it does, proceed to Step 530; if not, proceed to the traditional input mode.

[0180] That is, the input method identifies the environment in which the user is typing. If it is a chat environment, web page input, or another setting where emoticon input is likely, Step 530 is executed. If not, the input method directly receives the user's input sequence, converts it into words to generate candidates, and displays them to the user.
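A minimal sketch of the Step 520 decision, assuming the input method can read the host application's identifier and the type of the focused text field. The app identifiers, field types, and the `needs_emoticon_input` name are illustrative assumptions; the source only states that chat and web page input are likely to need emoticons.

```python
from dataclasses import dataclass

# Hypothetical examples (assumption) of contexts where emoticons are likely.
CHAT_APPS = {"com.example.chat", "com.example.messenger"}
EMOTICON_FIELD_TYPES = {"chat_message", "web_comment"}
# Hypothetical field types where emoticon input makes no sense.
NON_EMOTICON_FIELD_TYPES = {"password", "number", "email"}

@dataclass
class InputEnvironment:
    app_id: str
    field_type: str

def needs_emoticon_input(env: InputEnvironment) -> bool:
    """True: go on to the photo step; False: use traditional input."""
    if env.field_type in NON_EMOTICON_FIELD_TYPES:
        return False
    return env.app_id in CHAT_APPS or env.field_type in EMOTICON_FIELD_TYPES
```

Checking the excluded field types first means a password box inside a chat app still falls back to traditional input.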

[0181] Step 530, obtain the photo taken by the user;

[0182] When the user triggers the camera function during input, this embodiment of the present invention acquires the photo taken by the user.

[0183] ...

Embodiment 3

[0190] Refer to Figure 6, which shows a schematic flowchart of an expression input method based on face recognition in the present invention. The method includes:

[0191] Step 610, the mobile client starts the input method;

[0192] Step 620, the mobile client judges whether the current input environment of its input method requires emoticon input; if it does, proceed to Step 630; if not, proceed to the traditional input mode.

[0193] Step 630, acquire the photo of the user taken by the front camera of the mobile client, and transmit it to the cloud server.
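The source does not specify how the photo travels to the cloud server. As one possible sketch, the client could wrap the image bytes in a JSON body using Base64, and the server could unwrap it. The field names `session_id` and `photo` are hypothetical assumptions.

```python
import base64
import json

def build_upload_request(photo_bytes: bytes, session_id: str) -> str:
    """Client side: wrap the front-camera photo in a JSON body (assumed format)."""
    return json.dumps({
        "session_id": session_id,
        "photo": base64.b64encode(photo_bytes).decode("ascii"),
    })

def parse_upload_request(body: str):
    """Server side: recover the session id and the raw photo bytes."""
    payload = json.loads(body)
    return payload["session_id"], base64.b64decode(payload["photo"])
```

Base64 keeps the binary image safe inside a text format; a real deployment might instead send the raw bytes as a multipart upload to avoid the roughly one-third size overhead.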

[0194] Step 640, the cloud server uses the facial expression recognition model to determine the emotion label corresponding to the facial expression in the photo;
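The source does not describe the model's output. Assuming it returns one raw score (logit) per emotion label, a minimal sketch of turning those scores into a single label is a softmax followed by an argmax, with a confidence threshold so that an uncertain prediction yields no label. The label set and the `min_confidence` value are hypothetical.

```python
import math

# Hypothetical label set (assumption); the source does not list the labels.
EMOTION_LABELS = ["happy", "sad", "angry", "surprised", "neutral"]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def emotion_label_from_logits(logits, min_confidence=0.5):
    """Return the most probable emotion label, or None if the model is unsure."""
    if len(logits) != len(EMOTION_LABELS):
        raise ValueError("expected one score per emotion label")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTION_LABELS[best] if probs[best] >= min_confidence else None
```

Returning `None` lets the caller fall back to, for example, the traditional input mode instead of offering expressions for a guessed emotion.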

[0195] Step 650, based on the correspondence between emotion tags and the expressions in each topic, the cloud server acquires, from each topic, the expressions corresponding to the emotion label;
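A minimal sketch of the Step 650 lookup, assuming the prebuilt correspondence is stored as a nested mapping of topic to emotion label to a ranked list of expressions. That layout and the optional `per_topic_limit` parameter are illustrative assumptions.

```python
def expressions_for_label(correspondence, label, per_topic_limit=None):
    """Collect each topic's expressions for one emotion label.

    correspondence: dict of topic -> emotion label -> list of expressions
    (assumed layout). Topics with no entry for `label` are skipped.
    """
    result = {}
    for topic, by_label in correspondence.items():
        exprs = by_label.get(label, [])
        if per_topic_limit is not None:
            exprs = exprs[:per_topic_limit]
        if exprs:
            result[topic] = list(exprs)
    return result
```

Keeping the results grouped by topic lets the client show one row of candidates per expression theme rather than a single mixed list.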

[0196] According t...
