240 results for "Optical flow estimation" patented technology

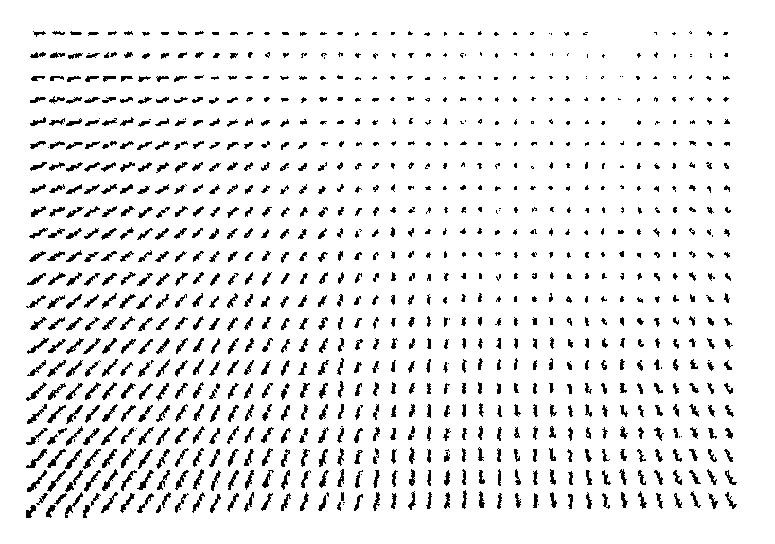

Optical flow estimation is used in computer vision to characterize and quantify the motion of objects in a video stream, often in motion-based object detection and tracking systems. A typical example is detecting moving objects across a series of frames using optical flow.
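As a minimal illustration of what "estimating optical flow" means, the sketch below assumes the classic Lucas-Kanade formulation (not a method from any patent listed here): brightness constancy gives `Ix*u + Iy*v + It = 0` at every pixel, and the flow `(u, v)` of a small window is found by least squares. The synthetic quadratic pattern and all function names are illustrative.

```python
def lucas_kanade(frame0, frame1, x0, y0, half=2):
    """Estimate the flow (u, v) of the window centred at (x0, y0).

    Solves the 2x2 normal equations of sum((Ix*u + Iy*v + It)^2) over the window.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            ix = (frame0[y][x + 1] - frame0[y][x - 1]) / 2.0  # central difference in x
            iy = (frame0[y + 1][x] - frame0[y - 1][x]) / 2.0  # central difference in y
            it = frame1[y][x] - frame0[y][x]                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window is textureless or suffers from the aperture problem")
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


def make_frame(shift_x, shift_y, size=11, centre=5.0):
    """Synthetic smooth pattern translated by (shift_x, shift_y) pixels."""
    def intensity(x, y):
        dx, dy = x - centre, y - centre
        return dx * dx + 2.0 * dy * dy + dx * dy
    return [[intensity(x - shift_x, y - shift_y) for x in range(size)] for y in range(size)]


frame0 = make_frame(0.0, 0.0)
frame1 = make_frame(0.5, -0.25)   # content moves +0.5 px in x and -0.25 px in y
u, v = lucas_kanade(frame0, frame1, 5, 5)
print(round(u, 6), round(v, 6))   # 0.5 -0.25
```

The estimate is exact here only because the pattern's gradients average to zero over the window, so the constant second-order residual cancels; on real images the result is approximate, which is why coarse-to-fine pyramids are used for larger motions.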

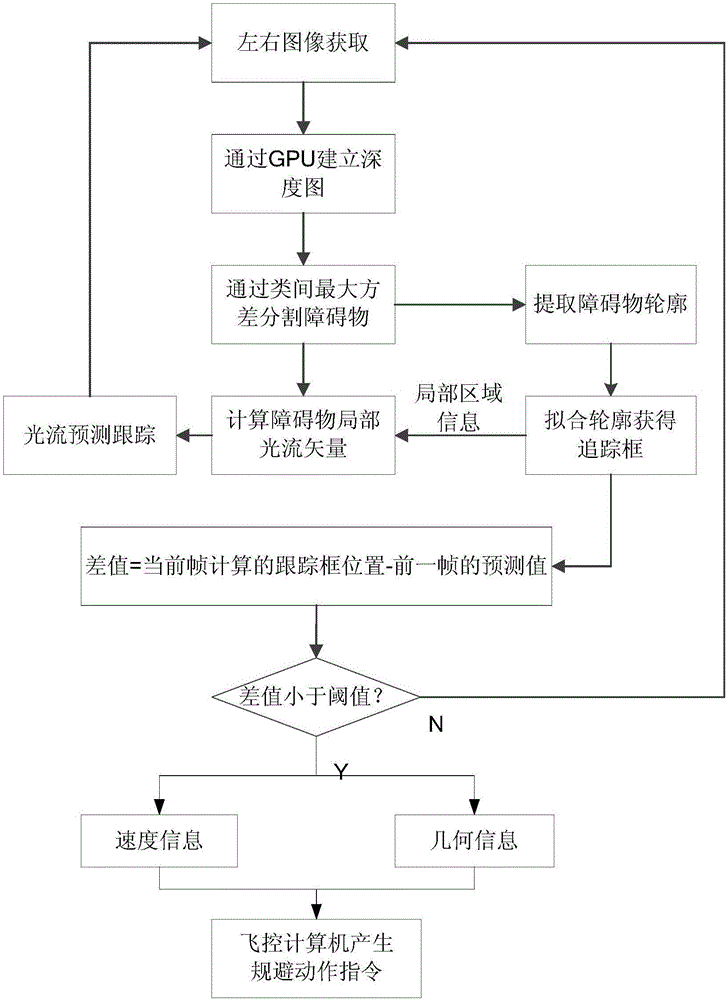

Unmanned aerial vehicle (UAV) obstacle avoidance method and system based on binocular vision and optical flow fusion

Inactive | CN106681353A | Time-consuming to solve | Improve real-time performance | Image analysis | Position/course control in three dimensions | Graphics | Uncrewed vehicle

The invention discloses an unmanned aerial vehicle (UAV) obstacle avoidance method and system based on binocular vision and optical flow fusion. The method includes the following steps: acquiring image information in real time through an airborne binocular camera; computing image depth information on a graphics processing unit (GPU); using the depth information to extract the geometric contour of the most threatening obstacle and calculating its threat distance with a threat depth model; obtaining an obstacle tracking window by fitting a rectangle to the obstacle's geometric contour, and calculating the optical flow field of the region containing the obstacle to obtain the obstacle's velocity relative to the UAV; and issuing, from the flight control computer, an avoidance maneuver command based on the calculated obstacle distance, geometric contour and relative velocity. The invention effectively fuses the obstacle's depth information with its optical flow vectors, obtains the obstacle's motion relative to the UAV in real time, improves the UAV's visual obstacle avoidance capability, and substantially improves real-time performance and accuracy compared with traditional algorithms.
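The abstract does not give the threat depth model. As a hedged illustration only, the pinhole-camera relations below show how stereo disparity yields distance (`Z = f * B / d`) and how optical flow inside the obstacle window can be converted into a metric relative velocity; the camera parameters and function names are hypothetical, not taken from the patent.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Pinhole stereo model: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def metric_velocity(flow_px, depth_m, focal_px, dt_s):
    """Convert image-plane flow (pixels per frame) to metres per second,
    assuming motion roughly parallel to the image plane at depth Z."""
    return flow_px * depth_m / (focal_px * dt_s)


def time_to_collision(depth_prev_m, depth_now_m, dt_s):
    """Seconds until contact if the closing speed stays constant."""
    closing_speed = (depth_prev_m - depth_now_m) / dt_s
    return float("inf") if closing_speed <= 0 else depth_now_m / closing_speed


z = stereo_depth(disparity_px=42.0, focal_px=700.0, baseline_m=0.12)
vx = metric_velocity(flow_px=14.0, depth_m=z, focal_px=700.0, dt_s=0.05)
ttc = time_to_collision(2.1, 2.0, dt_s=0.05)
print(round(z, 3), round(vx, 3), round(ttc, 3))  # 2.0 0.8 1.0
```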

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

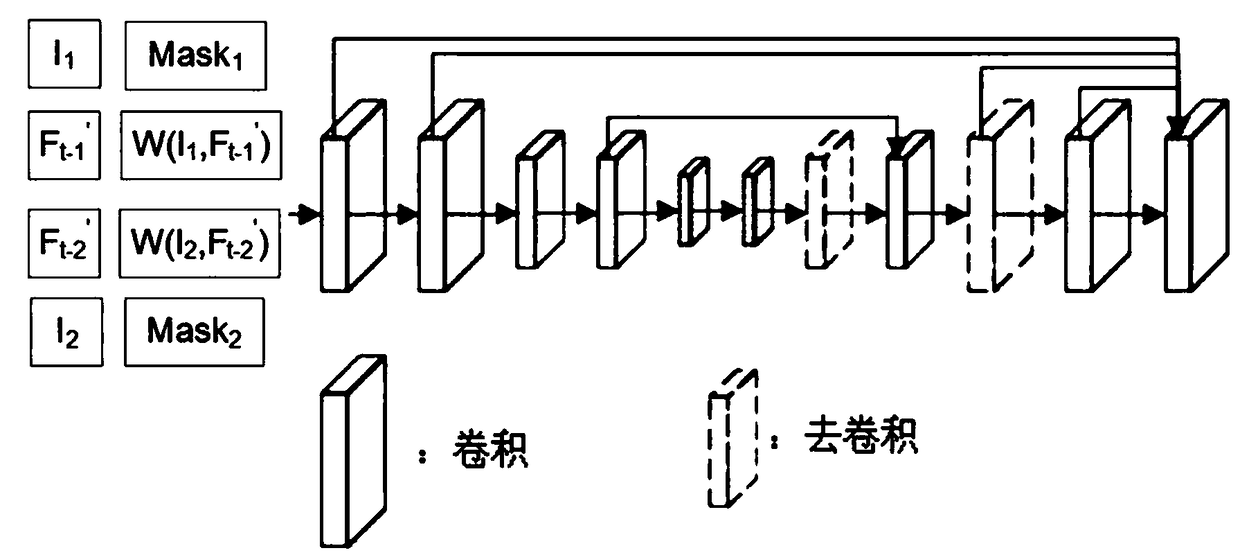

Method for generating new video frame

Active | CN109151474A | Achieving Motion Coherence Features | Guaranteed smoothness | Digital video signal modification | Time processing | Video editing

The invention relates to a method for generating a new video frame and belongs to the technical field of video editing. The optical flow between the preceding and following video frames is used to estimate the optical flow from a newly generated intermediate frame to each of those frames, and the preceding and following frames are then inversely interpolated according to these flow values to generate the new intermediate frame. By combining the association between the preceding and following frames with a multi-scale framework, the new frame is predicted from coarse to fine. Experimental results show that the method generates higher-quality intermediate frames, maintains the temporal continuity of the new video, and achieves fast, near-real-time processing; compared with traditional frame interpolation methods, it has higher application value and broader research significance.
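A minimal sketch of flow-based intermediate frame synthesis, assuming a simple linear-motion approximation: the flows from the new frame at time `t` are taken as `F_t->0 = -t * F_0->1` and `F_t->1 = (1 - t) * F_0->1`. Both frames are backward-warped with bilinear sampling and blended. The approximation, the omitted multi-scale refinement and the function names are assumptions, not the patent's exact procedure.

```python
import math


def bilinear(img, x, y):
    """Sample img at a real-valued position, clamping to the image border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def interpolate_frame(f0, f1, flow01, t=0.5):
    """Backward-warp f0 and f1 towards time t and blend them."""
    h, w = len(f0), len(f0[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            u, v = flow01[y][x]
            p0 = bilinear(f0, x - t * u, y - t * v)              # sample along F_t->0
            p1 = bilinear(f1, x + (1 - t) * u, y + (1 - t) * v)  # sample along F_t->1
            row.append((1 - t) * p0 + t * p1)
        out.append(row)
    return out


f0 = [[float(x) for x in range(8)] for _ in range(3)]       # horizontal ramp
f1 = [[float(x - 2) for x in range(8)] for _ in range(3)]   # same ramp moved 2 px right
flow01 = [[(2.0, 0.0)] * 8 for _ in range(3)]
mid = interpolate_frame(f0, f1, flow01, t=0.5)
print(mid[1][1:7])  # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
```

The interior pixels of the result equal the ramp moved by 1 px, i.e. halfway between the two inputs; border pixels are affected by clamping.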

Owner:FUDAN UNIV

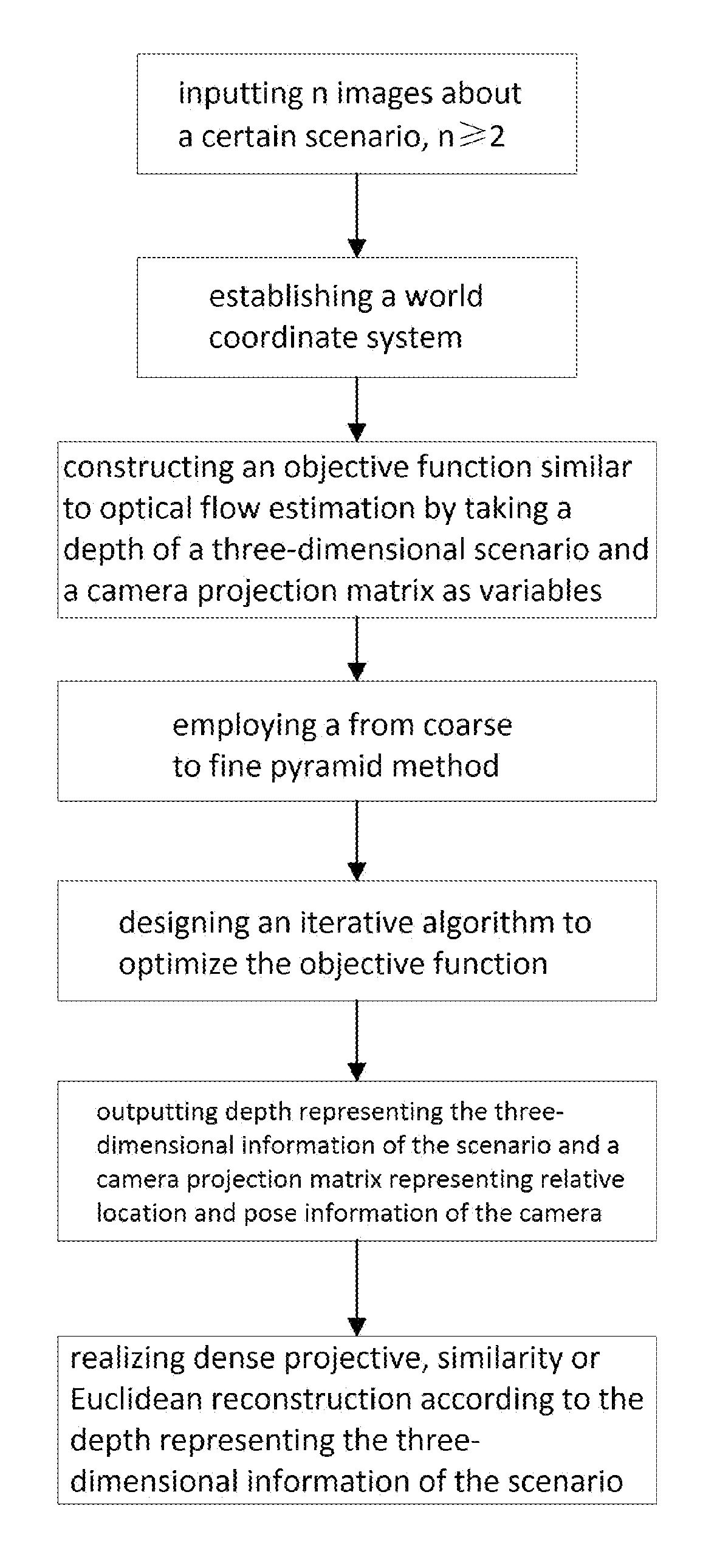

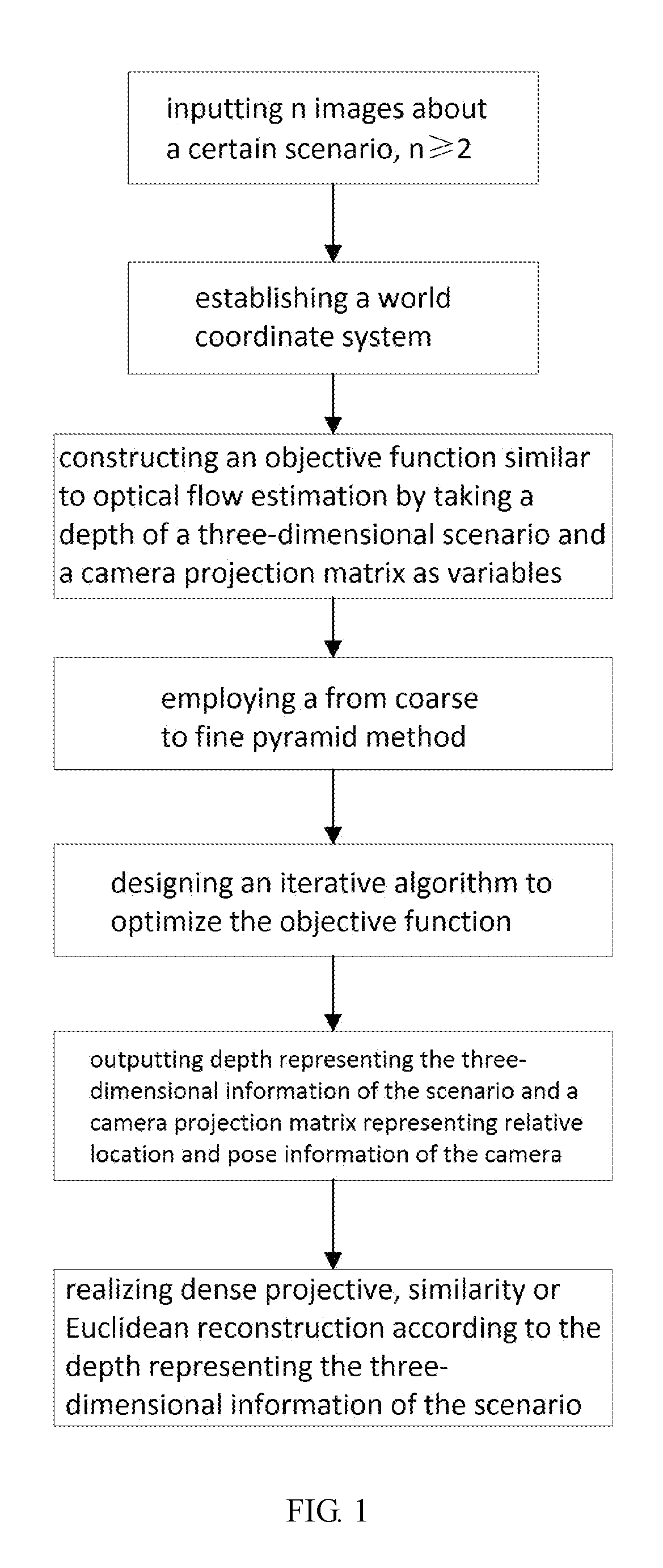

Non-feature-extraction-based dense SFM three-dimensional reconstruction method

The present invention discloses a dense SFM three-dimensional reconstruction method that requires no feature extraction, comprising: inputting n images of a scene, n ≥ 2; establishing a world coordinate system that coincides with the coordinate system of one of the cameras; constructing an objective function similar to that of optical flow estimation, with the depth of the three-dimensional scene and a camera projection matrix as variables; employing a coarse-to-fine pyramid method; designing an iterative algorithm to optimize the objective function; outputting the depth, which represents the three-dimensional information of the scene, and the camera projection matrix, which represents the relative position and pose of the camera; and achieving dense projective, similarity or Euclidean reconstruction from that depth. The present invention accomplishes dense SFM three-dimensional reconstruction in a single step. Because dense three-dimensional information is estimated by one-step optimization with the objective function value as the criterion, an optimal solution, or at least a locally optimal one, can be obtained. The method significantly improves on existing methods, which has been preliminarily verified by experiments.

Owner:SUN YAT SEN UNIV

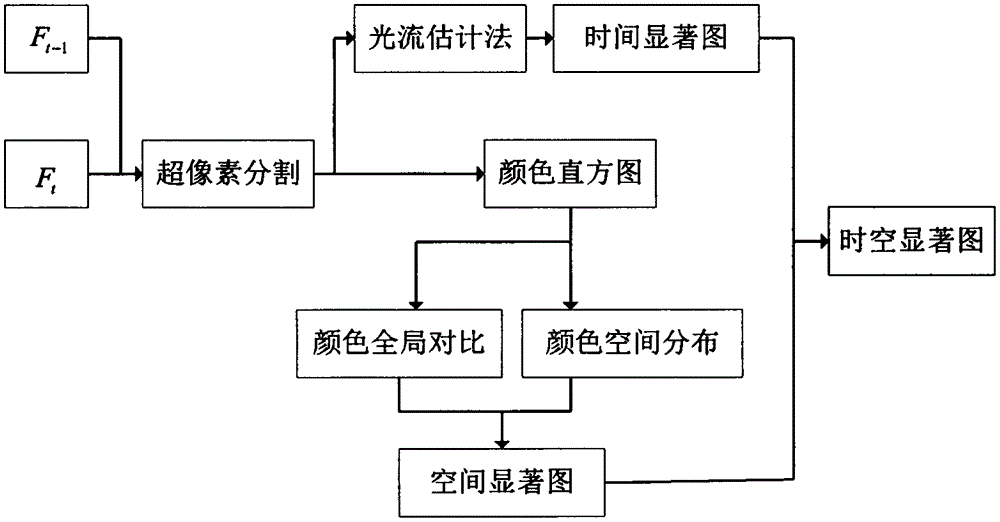

Motion-feature-fused space-time significance detection method

The invention belongs to the field of image and video processing, and in particular relates to a space-time significance (saliency) detection method that fuses spatial saliency with motion features. The method comprises the following steps: first, a superpixel segmentation algorithm represents each frame as a set of superpixels, and a superpixel-level color histogram is extracted as the feature; second, a spatial saliency map is computed from the global contrast and the spatial distribution of colors; third, a temporal saliency map is obtained through optical flow estimation and block matching; and finally, a dynamic fusion strategy combines the spatial and temporal saliency maps into the final space-time saliency map. Because the method combines spatial saliency with motion features, it can be applied to saliency detection in both dynamic and static scenes.

Owner:JIANGNAN UNIV

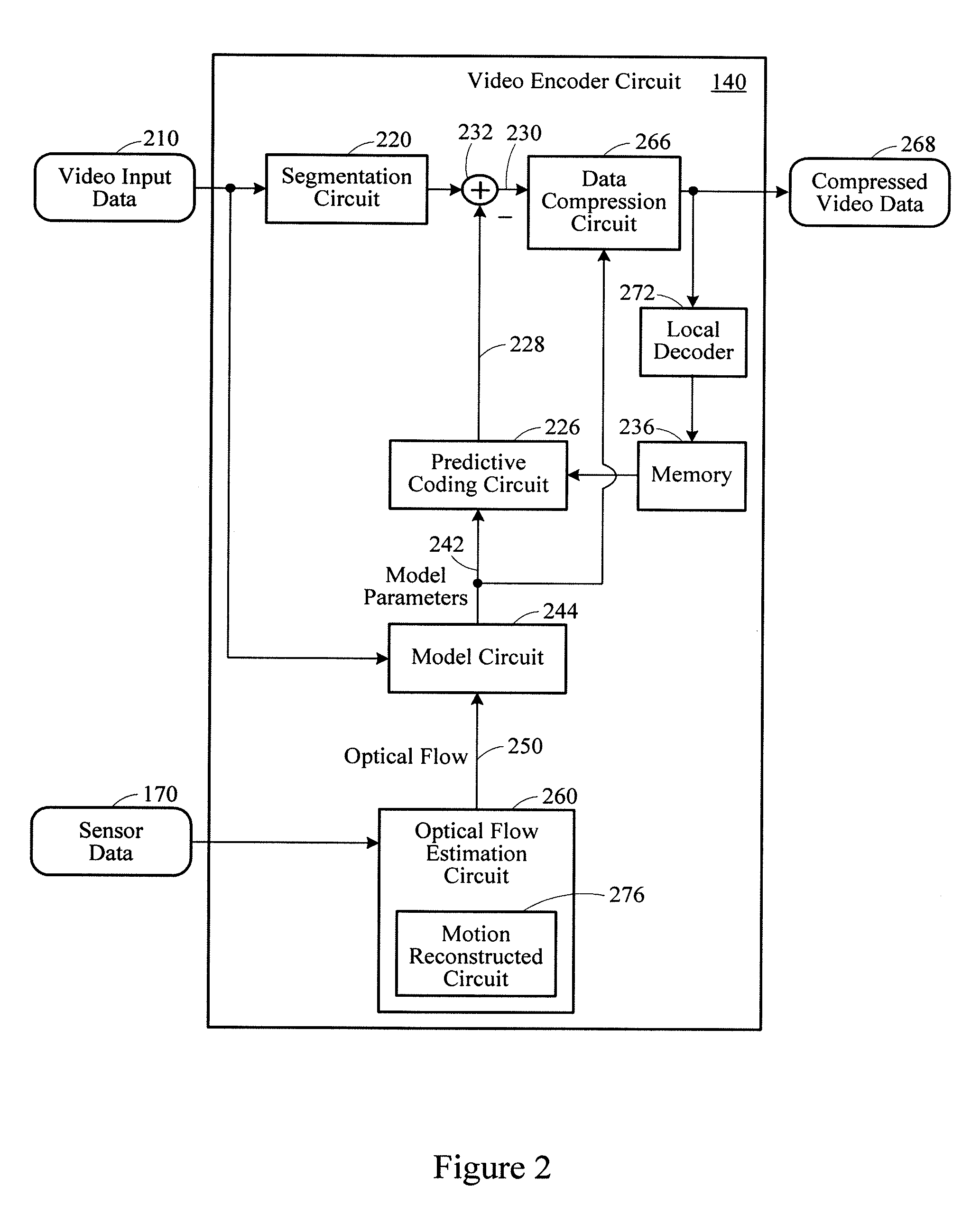

Video compression system

A video compression system processes images captured from a video camera mounted to a vehicle. Vehicle-mounted sensors generate vehicle motion information corresponding to a current state of motion of the vehicle. An optical flow estimation circuit estimates apparent motion of objects within a visual field. A video encoder circuit in communication with the optical flow estimation circuit compresses the video data from the video camera based on the estimated apparent motion.
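One plausible way an encoder could use such an estimate, offered as a hypothetical sketch rather than the patented design: average the dense flow over each macroblock and use the rounded result as the starting point (predictor) for block-matching motion search, so the search window can stay small. The 16 x 16 block size, the flow direction convention and the function name are assumptions.

```python
def block_motion_predictors(flow, block=16):
    """Average a dense flow field over each block and round to integer-pel vectors.

    flow[y][x] = (u, v) is assumed to point from the current frame to the
    reference frame, matching the direction of the encoder's motion vectors.
    Returns {(block_col, block_row): (mv_x, mv_y)}.
    """
    height, width = len(flow), len(flow[0])
    predictors = {}
    for by in range(0, height, block):
        for bx in range(0, width, block):
            su = sv = 0.0
            count = 0
            for y in range(by, min(by + block, height)):
                for x in range(bx, min(bx + block, width)):
                    u, v = flow[y][x]
                    su += u
                    sv += v
                    count += 1
            predictors[(bx // block, by // block)] = (round(su / count), round(sv / count))
    return predictors


# 32x16 frame: the left half moves (3.2, -1.0) px, the right half is static.
flow = [[(3.2, -1.0) if x < 16 else (0.0, 0.0) for x in range(32)] for _ in range(16)]
print(block_motion_predictors(flow))  # {(0, 0): (3, -1), (1, 0): (0, 0)}
```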

Owner:HARMAN BECKER AUTOMOTIVE SYST

Camera attitude estimation method based on deep neural network

Active | CN110490928A | With scene geometry | High precision | Image enhancement | Image analysis | Feature extraction | Viewpoints

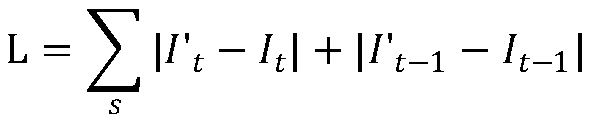

The invention discloses a camera attitude (pose) estimation method based on a deep neural network. The method comprises the following steps: 1) constructing a camera pose estimation network; 2) constructing an unsupervised training scheme in which the estimated depth map, inter-frame relative pose and optical flow are used to reconstruct each of the input preceding and following frames, and the network's loss function is built from the photometric error between each input image and its reconstruction; 3) letting the pose estimation module and the optical flow estimation module share a feature extraction part, which strengthens the inter-frame geometric relationships captured by the features; and 4) feeding in a single-viewpoint training video, outputting the corresponding inter-frame relative poses, and minimizing the loss function with an optimizer until the network converges. Given a single-viewpoint video sequence as input, the model outputs the camera pose for that sequence; training is end to end and unsupervised, and joint training of optical flow and pose improves pose estimation performance.
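The unsupervised loss in step 2 compares each input frame with its reconstruction. A minimal sketch of such a photometric term, assuming a plain mean absolute (L1) difference with an optional validity mask; the abstract does not say whether the patent adds other terms (for example a structural-similarity term), and the function name is hypothetical.

```python
def photometric_l1(target, reconstructed, valid=None):
    """Mean absolute intensity difference between a frame and its reconstruction.

    valid is an optional boolean mask; pixels marked False (e.g. those whose
    warped source falls outside the image) are ignored.
    """
    total, count = 0.0, 0
    for y, (t_row, r_row) in enumerate(zip(target, reconstructed)):
        for x, (t, r) in enumerate(zip(t_row, r_row)):
            if valid is not None and not valid[y][x]:
                continue
            total += abs(t - r)
            count += 1
    return total / count if count else 0.0


target = [[1.0, 2.0], [3.0, 4.0]]
recon = [[1.0, 3.0], [3.0, 2.0]]
print(photometric_l1(target, recon))                                 # 0.75
print(photometric_l1(target, recon, [[True, True], [True, False]]))  # about 0.333
```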

Owner:TIANJIN UNIV

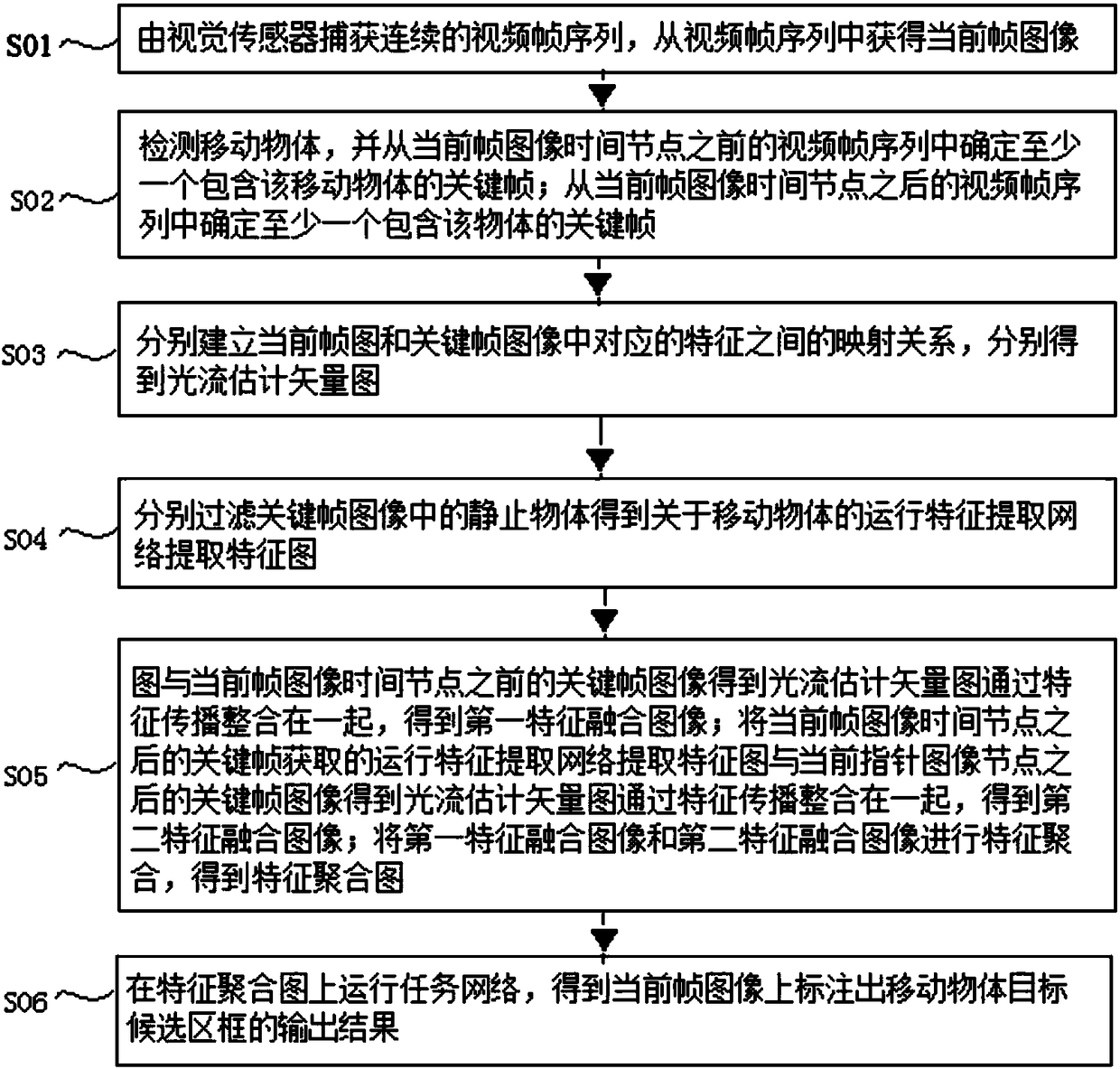

Object tracking method and system based on depth characteristic flow, terminal and medium

Active | CN108242062A | High similarity | Processing speed | Image enhancement | Image analysis | Feature extraction | Frame sequence

The invention provides an object tracking method and system based on a depth characteristic flow (i.e., a deep feature flow), a terminal and a medium. The method comprises the following steps: capturing a continuous video frame sequence with a visual sensor and obtaining the current frame image from it; detecting a moving object, determining at least one key frame containing the moving object, building a mapping between the current frame image and the corresponding features in the key frame image, and obtaining an estimated optical flow vector map; filtering out static objects in the key frame image to obtain a motion feature extraction network related to the moving object, and extracting a feature map; and propagating the key frame's moving-object features to the current frame. Because the method processes images with the deep feature flow, it is fast, does not degrade video segmentation or recognition tasks, and supports end-to-end mapping, which improves precision.

Owner:Beijing Zongmu Anchi Intelligent Technology Co., Ltd. (北京纵目安驰智能科技有限公司)

Over-Parameterized Variational Optical Flow Method

Inactive | US20100272311A1 | Rich meaning | Image analysis | Character and pattern recognition | Spacetime | Computer science

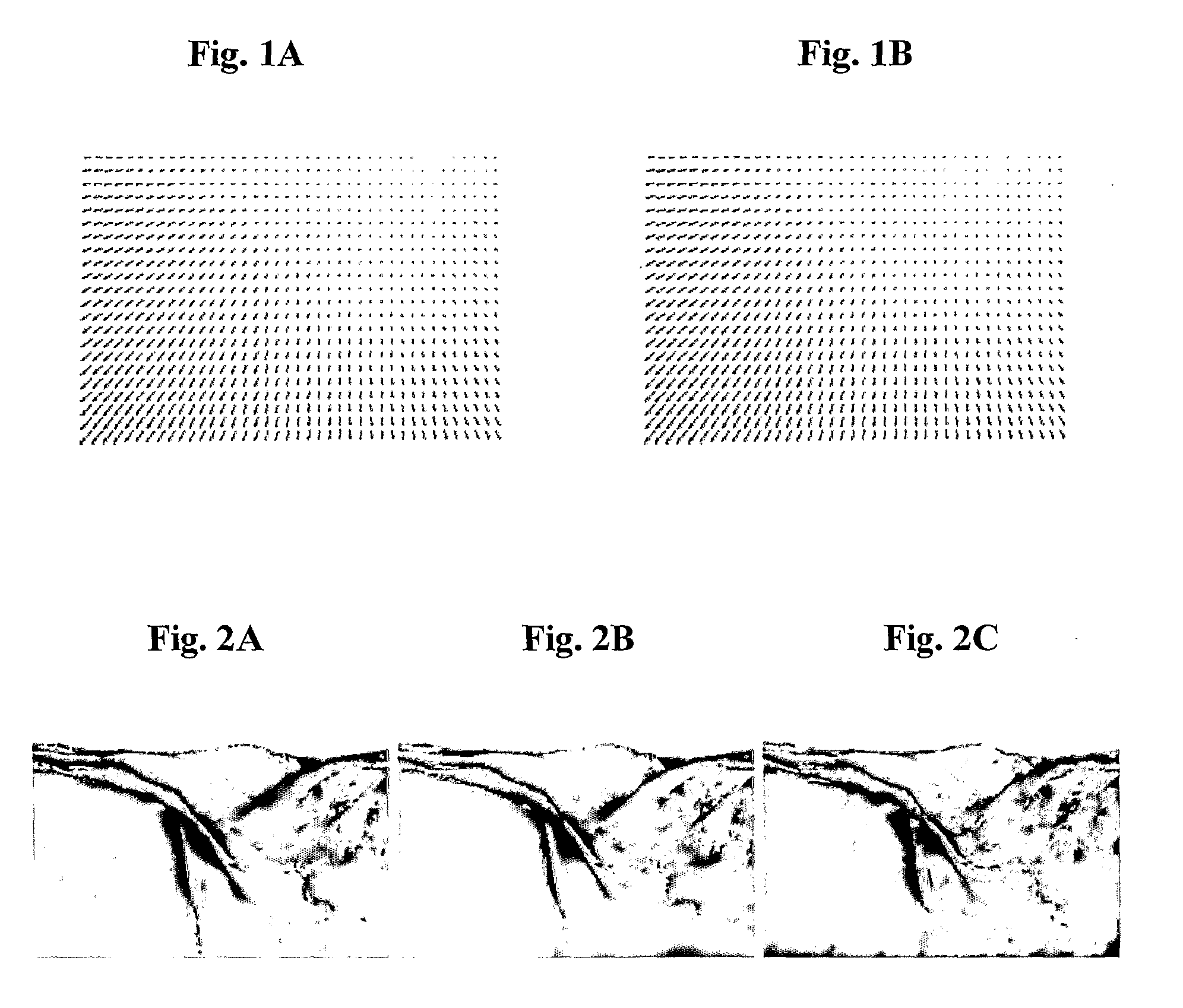

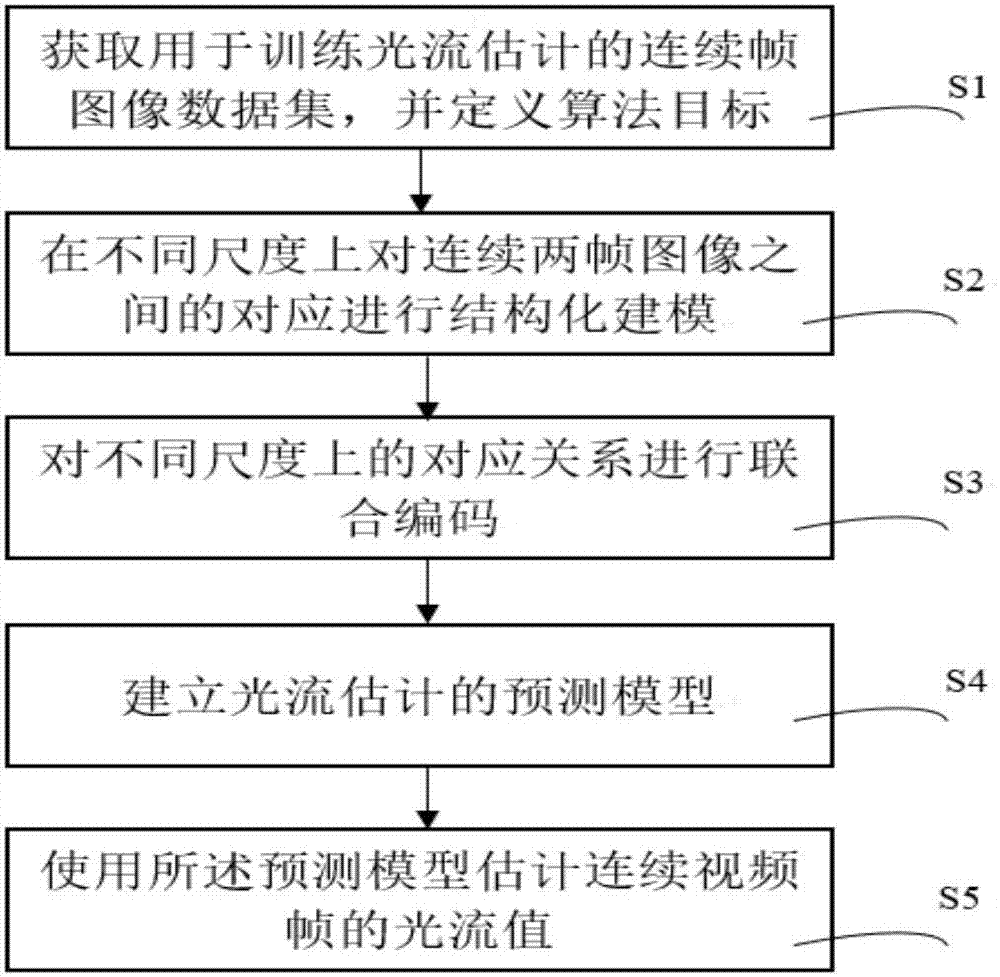

An optical flow estimation process based on a spatio-temporal model with varying coefficients multiplying a set of basis functions at each pixel. The benefit of over-parameterization becomes evident in the smoothness term, which instead of directly penalizing for changes in the optic flow, accumulates a cost of deviating from the assumed optic flow model. The optical flow field is represented by a general space-time model comprising a selected set of basis functions. The optical flow parameters are computed at each pixel in terms of coefficients of the basis functions. The model is thus highly over-parameterized, and regularization is applied at the level of the coefficients, rather than the model itself. As a result, the actual optical flow in the group of images is represented more accurately than in methods that are known in the art.
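To see why regularizing coefficients rather than the flow itself helps, the sketch below assumes the simplest spatial basis, the affine set {1, x, y} (the patent allows more general spatio-temporal bases). A small rotation has spatially varying flow, so a classic flow-smoothness term penalizes it, while its coefficients are the same at every pixel and cost nothing. Function names are illustrative.

```python
def flow_at(coeffs, x, y):
    """Flow at (x, y) from per-pixel coefficients of the affine basis {1, x, y}."""
    a0, a1, a2, b0, b1, b2 = coeffs
    return a0 + a1 * x + a2 * y, b0 + b1 * x + b2 * y


def _neighbour_pairs(h, w):
    """Yield each pixel paired with its right and bottom neighbours."""
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                yield (y, x), (y, x + 1)
            if y + 1 < h:
                yield (y, x), (y + 1, x)


def coefficient_smoothness(field):
    """Penalise changes of the coefficients between neighbouring pixels."""
    h, w = len(field), len(field[0])
    return sum(
        sum((p - q) ** 2 for p, q in zip(field[y0][x0], field[y1][x1]))
        for (y0, x0), (y1, x1) in _neighbour_pairs(h, w)
    )


def flow_smoothness(field):
    """Penalise changes of the flow vectors themselves (the classic approach)."""
    h, w = len(field), len(field[0])
    total = 0.0
    for (y0, x0), (y1, x1) in _neighbour_pairs(h, w):
        u0, v0 = flow_at(field[y0][x0], x0, y0)
        u1, v1 = flow_at(field[y1][x1], x1, y1)
        total += (u1 - u0) ** 2 + (v1 - v0) ** 2
    return total


# A small rotation u = -0.1*y, v = 0.1*x: identical coefficients at every pixel.
rotation = (0.0, 0.0, -0.1, 0.0, 0.1, 0.0)
field = [[rotation] * 3 for _ in range(3)]
print(coefficient_smoothness(field))     # 0.0
print(round(flow_smoothness(field), 6))  # 0.12
```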

Owner:TECHNION RES & DEV FOUND LTD

Defocus depth estimation and full focus image acquisition method of dynamic scene

The invention provides a defocus depth estimation and full-focus (all-in-focus) image acquisition method for dynamic scenes. The method comprises the following steps: acquiring first depth maps and globally inconsistent (spatially varying) blur kernels of a plurality of defocused images, and applying an image deblurring algorithm based on defocus depth estimation with feedback iterative optimization to obtain a full-focus image and a second depth map for each moment; performing color segmentation on the full-focus image of each moment, plane fitting on the depth map and spatial refinement to obtain a third depth map, and optimizing again to obtain an optimized full-focus image; and performing optical flow estimation on the full-focus images, smoothing the third depth map and refining it temporally to obtain a temporally consistent depth estimation result. The method yields more precise depth estimates for dynamic scenes together with sharp full-focus images, and is easy to implement.

Owner:TSINGHUA UNIV

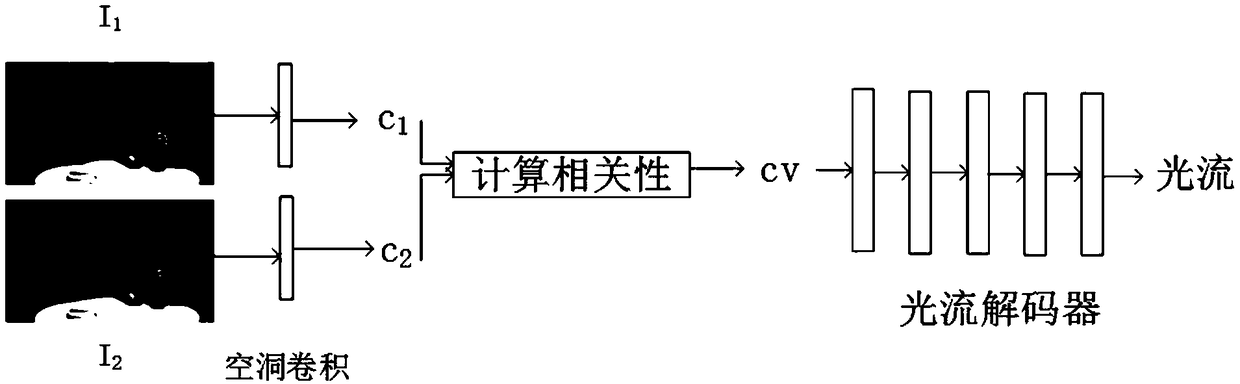

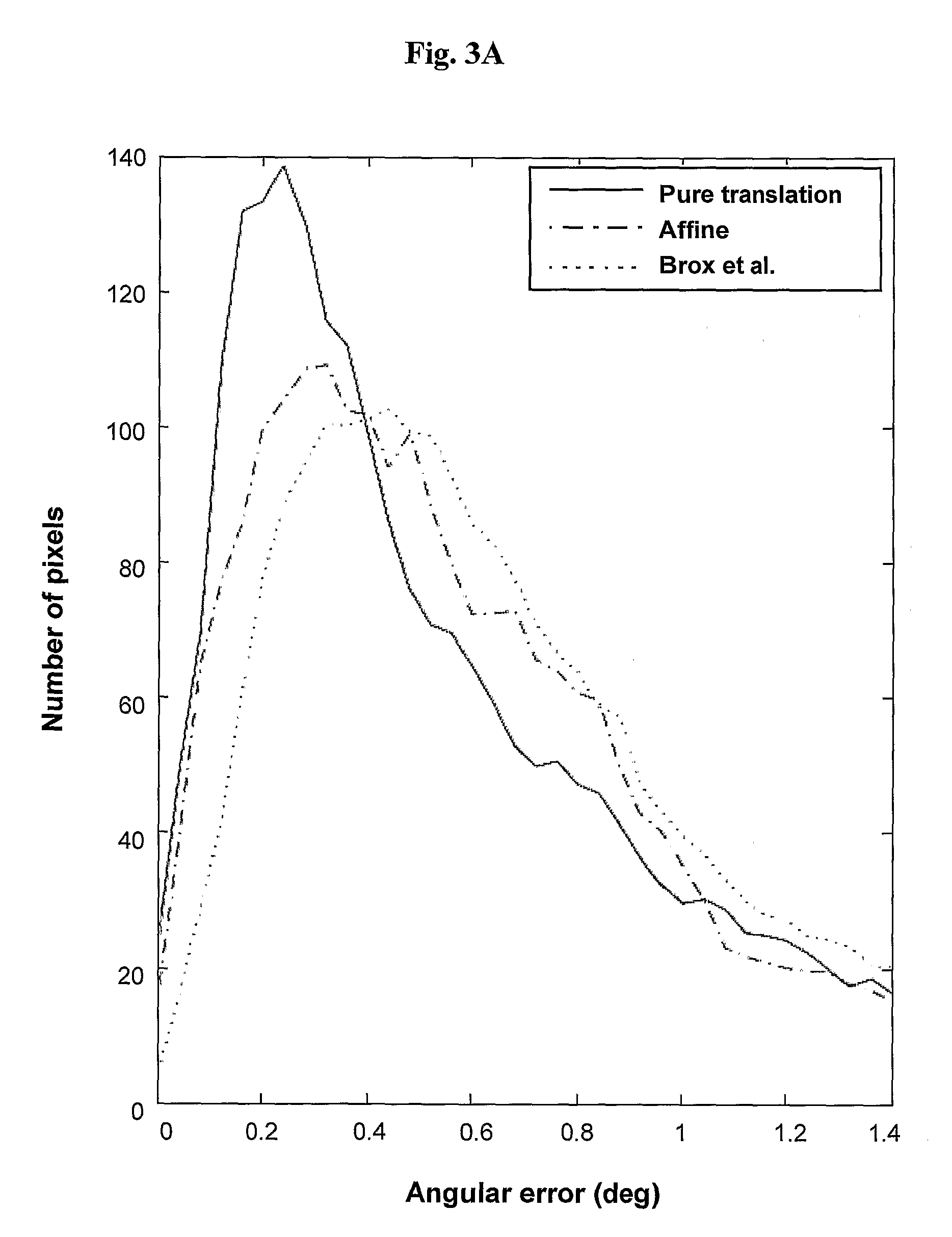

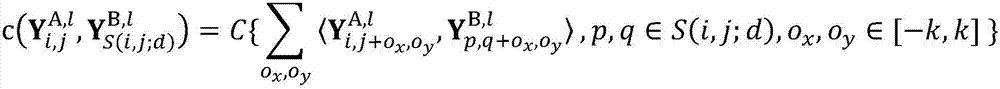

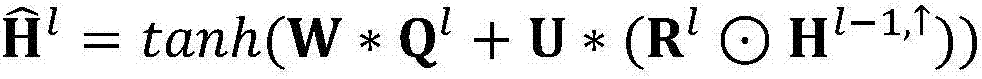

Optical flow estimation method based on multiple dimensioned corresponding structuring learning

The invention discloses an optical flow estimation method based on multi-scale correspondence structured learning. Given successive video frames, the method analyzes the motion of the first frame relative to the second. It comprises the following steps: obtaining a data set of successive frame images for training optical flow estimation and defining the algorithm objective; performing structured modeling of the correspondences between two successive frames at different scales; jointly encoding the correspondences across scales; building an optical flow prediction model; and using the prediction model to estimate optical flow values for successive video frames. The method is applied to optical flow motion analysis in real videos and provides better results and robustness under various complex conditions.

Owner:ZHEJIANG UNIV

Digital processing method and system for determination of optical flow

Inactive | US20100124361A1 | Reduce resolution | High resolution | Image enhancement | Image analysis | Octave | Gradient estimation

A method and system for determining an optical flow field between a pair of images is disclosed. Each of the pair of images is decomposed into image pyramids using a non-octave pyramid factor. The pair of decomposed images is transformed at a first pyramid scale to second derivative representations under an assumption that a brightness gradient of pixels in the pair of decomposed images is constant. Discrete-time derivatives of the second derivative image representations are estimated. An optical flow estimation process is applied to the discrete-time derivatives to produce a raw optical flow field. The raw optical flow field is scaled by the non-octave pyramid factor. The above-cited steps are repeated for the pair of images at another pyramid scale until all pyramid scales have been visited to produce a final optical flow field, wherein spatiotemporal gradient estimations are warped by a previous raw optical flow estimation.
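A hedged sketch of the bookkeeping a non-octave pyramid needs, assuming a downscaling factor of 0.75 per level and a minimum level size of 16 pixels (the abstract gives neither value): level sizes shrink by the factor, and a flow vector estimated at a coarser level is divided by the factor when carried to the next finer level, which is the "scaled by the non-octave pyramid factor" step.

```python
def pyramid_sizes(width, height, factor=0.75, min_size=16):
    """Image sizes from finest (level 0) to coarsest for a non-octave pyramid."""
    if not 0.0 < factor < 1.0:
        raise ValueError("factor must lie in (0, 1)")
    sizes = [(width, height)]
    while True:
        w, h = sizes[-1]
        nw, nh = int(round(w * factor)), int(round(h * factor))
        if min(nw, nh) < min_size:
            return sizes
        sizes.append((nw, nh))


def to_finer_level(u, v, factor=0.75):
    """Rescale a flow vector from level k+1 to level k (pixels grow by 1/factor)."""
    return u / factor, v / factor


print(pyramid_sizes(64, 48))       # [(64, 48), (48, 36), (36, 27), (27, 20)]
print(to_finer_level(1.5, -0.75))  # (2.0, -1.0)
```

Compared with an octave pyramid (factor 0.5), a factor such as 0.75 gives more levels with smaller resolution jumps, so the flow passed down from each level is a closer initial guess.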

Owner:CHEYTEC TECH LLC

Weak supervision video sequential action positioning method and system based on deep learning

Active | CN111079646A | Accurate detection | Internal combustion piston engines | Character and pattern recognition | Engineering | Action recognition

The invention discloses a deep-learning-based method and system for weakly supervised temporal action localization (video sequential action positioning). The method comprises the following steps: S1, extracting the current frame and the previous frame of a video, extracting optical flow with an optical flow estimation network, and feeding the optical flow together with frames sampled from the video at equal intervals into a two-stream action recognition network to extract video features; S2, performing semantic consistency modeling on the video features to obtain embedded features; S3, training a classification module to map the embedded features to a class activation sequence; S4, updating the video features with an attention module; S5, using the updated video features as the input of the next cycle and repeating S2 to S4 until a stopping condition is met; S6, fusing the class activation sequences generated in each cycle and calculating the classification loss between the estimated action classes and the ground-truth class labels; S7, fusing the embedded features of each cycle to calculate a similarity loss between action features; and S8, obtaining the target loss from the classification loss and the similarity loss, and updating the system model parameters.

Owner:SUN YAT SEN UNIV

Compact SFM three-dimensional reconstruction method without feature extraction

The invention discloses a compact (i.e., dense) SFM three-dimensional reconstruction method without feature extraction. In the method, n images of a scene are input, where n ≥ 2; a world coordinate system identical to the coordinate system of one of the cameras is established; with the depth of the three-dimensional scene and the camera projection matrix as variables, an objective function similar to that of optical flow estimation is constructed; a coarse-to-fine pyramid method is adopted and an iterative algorithm is designed to optimize the objective function; and the depth, representing the three-dimensional information of the scene, and the camera projection matrix, representing the relative pose of the camera, are output. From the depth, dense projective, similarity or Euclidean reconstruction is achieved. The method completes dense SFM three-dimensional reconstruction in one step. Because the dense three-dimensional information is estimated by one-step optimization with the objective function value as the criterion, an optimal solution, or at least a locally optimal one, can be obtained; the method is a substantial improvement over existing methods and has been preliminarily verified by experiments.

Owner:SUN YAT SEN UNIV

Image restoration method in computer vision system, including method and apparatus for identifying raindrops on a windshield

Inactive | US8797417B2 | Improve robustness | Image enhancement | Television system details | Stereo camera | Restoration method

A vehicle is equipped with a camera (which may be a stereoscopic camera) and a computer for processing the image data acquired by the camera. The image acquired by the camera is processed by the computer, and features are extracted therefrom. The features are further processed by various techniques such as object detection / segmentation and object tracking / classification. The acquired images are sometimes contaminated by optical occlusions such as raindrops, stone-chippings and dirt on the windshield. In such a case, the occluded parts of the image are reconstructed by optical flow estimation or stereo disparity estimation. The fully reconstructed image is then used for intended applications.

Owner:HONDA MOTOR CO LTD

End-to-end optical flow estimation method based on multi-stage loss

Active | CN110111366A | Improve accuracy | Easy to converge | Image enhancement | Image analysis | Correlation analysis | Imaging Feature

The invention discloses an end-to-end optical flow estimation method based on multi-stage loss. The method comprises the following steps: feeding two adjacent images into the same feature extraction convolutional neural network to obtain multi-scale feature maps of both frames; performing a correlation operation on the two frames' feature maps at each scale to obtain multi-scale cost information; at each scale, combining the cost information of that scale, the feature map of the first frame at that scale and the optical flow predicted at the previous stage, feeding the combination into an optical flow prediction convolutional neural network to obtain a residual flow at that scale, and adding the residual flow to the upsampled optical flow of the previous stage to obtain the optical flow at that scale; and fusing the optical flow at the second-level scale with the two input frames and sending the fused information to a motion edge optimization network to obtain the final optical flow prediction. The method improves both the accuracy and the efficiency of optical flow estimation.
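The correlation step can be sketched as a generic correlation (cost-volume) layer of the kind used in learned optical flow networks: for each pixel, the mean over channels of the product between its feature vector in frame 1 and the feature vectors within a small displacement range in frame 2. This is an assumption about what the patent's correlation operation computes; the search radius and names are illustrative.

```python
def correlation(feat1, feat2, x, y, max_disp=1):
    """Cost-volume entries for pixel (x, y): mean over channels of f1(x, y) . f2(x+dx, y+dy).

    feat1/feat2 are [row][col][channel] nested lists; out-of-image displacements get 0.
    """
    height, width = len(feat1), len(feat1[0])
    channels = len(feat1[0][0])
    costs = {}
    for dy in range(-max_disp, max_disp + 1):
        for dx in range(-max_disp, max_disp + 1):
            xx, yy = x + dx, y + dy
            if 0 <= xx < width and 0 <= yy < height:
                dot = sum(a * b for a, b in zip(feat1[y][x], feat2[yy][xx]))
                costs[(dx, dy)] = dot / channels
            else:
                costs[(dx, dy)] = 0.0
    return costs


# Toy 4x4 feature maps with a unique one-hot feature per pixel;
# frame 2 is frame 1 shifted one pixel to the right.
H = W = 4
C = H * W
feat1 = [[[1.0 if c == y * W + x else 0.0 for c in range(C)] for x in range(W)] for y in range(H)]
feat2 = [[feat1[y][x - 1] if x > 0 else [0.0] * C for x in range(W)] for y in range(H)]
costs = correlation(feat1, feat2, x=1, y=1)
print(max(costs, key=costs.get))  # (1, 0)
```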

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Optical flow estimation method and apparatus

Inactive | CN105261042A | Accurate Optical Flow Estimation | Image enhancement | Image analysis | Reference image | Energy equation

The embodiments of the invention provide an optical flow estimation method and apparatus. The method comprises: determining M first inliers in a first image and M second inliers in a second image, where the first and second inliers correspond one to one, the M first inliers are M pixels of the first image that meet preset conditions, the M second inliers are M pixels of the second image that meet preset conditions, and the second image serves as the reference image for the first image; obtaining a first optical flow field of the first image from the M first inliers and the M second inliers; determining a second optical flow field from the first optical flow field according to a second algorithm, which makes the number of optical flow vectors in the second field greater than in the first; and using the second optical flow field as the coupling-term constraint of an energy equation, then solving the energy equation to obtain the target optical flow field. The method and apparatus achieve more accurate optical flow estimation in large-displacement scenes.

Owner:HUAWEI TECH CO LTD

Background repairing method of road scene video image sequence

Active | CN104021525A | Avoid generating | Good restorative | Image enhancement | Neural learning methods | Video image | Gaussian process

The invention provides a background repair method for road scene video image sequences. First, the optical flow map from the current frame to an adjacent frame is calculated; then, based on Gaussian process regression, the splashes in the missing region of the optical flow map are calculated and used to initialize optical flow prediction; after initialization, a three-layer BP neural network performs row-by-row optical flow prediction. In the subsequent image repair stage, the initialized and predicted optical flow maps the pixels in the missing region of the current frame to the background region of an adjacent frame, and a Gaussian mixture model completes the repair. For video image sequences with a moving background, the method uses optical flow to match the pixels of the current frame's missing foreground regions to background pixels in adjacent frames, effectively repairing the regions left by moving foreground objects in road scene video; the method is simple and effective.

Owner:XI AN JIAOTONG UNIV

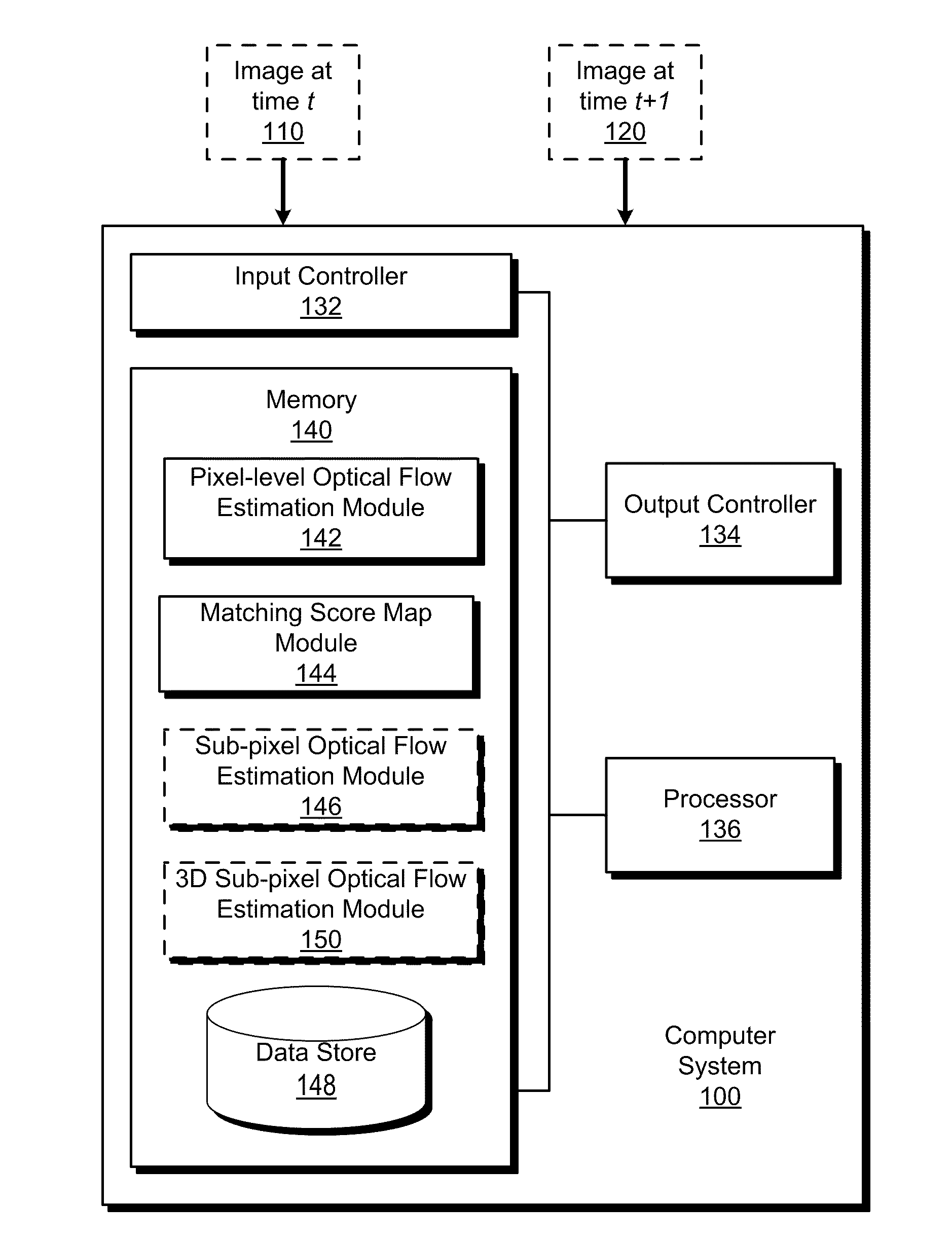

Fast sub-pixel optical flow estimation

A system and method are disclosed for fast sub-pixel optical flow estimation in a two-dimensional or a three-dimensional space. The system includes a pixel-level optical flow estimation module, a matching score map module, and a sub-pixel optical flow estimation module. The pixel-level optical flow estimation module is configured to estimate pixel-level optical flow for each pixel of an input image using a reference image. The matching score map module is configured to generate a matching score map for each pixel being estimated based on pixel-level optical flow estimation. The sub-pixel optical flow estimation module is configured to select the best pixel-level optical flow from multiple pixel-level optical flow candidates, and use the selected pixel-level optical flow, its four neighboring optical flow vectors and matching scores associated with the pixel-level optical flows to estimate the sub-pixel optical flow for the pixel of the input image.
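The abstract states that the best pixel-level flow, its four neighbouring flow vectors and their matching scores are used to reach sub-pixel precision, but not the formula. A common choice, assumed in this sketch, is to fit a 1-D parabola through the costs at offsets -1, 0 and +1 separately along x and y and take its vertex (lower cost means a better match). Function names are illustrative.

```python
def parabolic_offset(c_minus, c_centre, c_plus):
    """Vertex of the parabola through (-1, c_minus), (0, c_centre), (1, c_plus)."""
    denom = c_minus - 2.0 * c_centre + c_plus
    if denom <= 0.0:   # flat or not a minimum: keep the integer estimate
        return 0.0
    offset = 0.5 * (c_minus - c_plus) / denom
    return max(-0.5, min(0.5, offset))


def subpixel_flow(u, v, cost):
    """Refine an integer flow (u, v); cost(u, v) returns the matching cost."""
    c0 = cost(u, v)
    du = parabolic_offset(cost(u - 1, v), c0, cost(u + 1, v))  # left/right neighbours
    dv = parabolic_offset(cost(u, v - 1), c0, cost(u, v + 1))  # up/down neighbours
    return u + du, v + dv


# Synthetic quadratic cost whose true minimum lies at (2.3, -0.4).
def cost(u, v):
    return (u - 2.3) ** 2 + (v + 0.4) ** 2


print(subpixel_flow(2, 0, cost))  # approximately (2.3, -0.4)
```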

Owner:HONDA MOTOR CO LTD

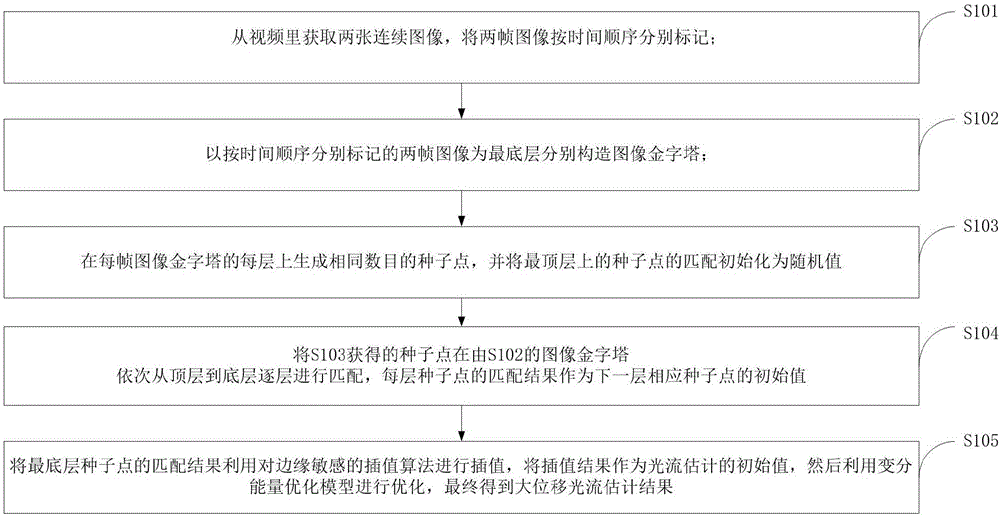

Effective estimation method for large displacement optical flows

Active | CN105809712A | Broadcast restrictions | Accurate Large Displacement Matching | Image enhancement | Image analysis | Estimation methods | Computer science

The invention discloses an effective estimation method for large-displacement optical flow, comprising: capturing two consecutive images from a video and denoting them I1 and I2 in temporal order; building an image pyramid for each of I1 and I2 with the original image as the lowest layer (described in the specification); generating equal numbers of seed points on each pyramid layer (described in the specification); initializing the matches of the seed points at the top layer (described in the specification) with random values; propagating the seed-point matches from the top layer down to the lowest layer of the pyramids, with the matching result at each layer serving as the initial value for the next layer; interpolating the seed-point matches at the lowest layer with an edge-sensitive interpolation algorithm; and using the interpolated result as the initial value for optical flow estimation, refined by a variational energy optimization model, to obtain the final large-displacement optical flow estimate. The invention achieves a more effective and flexible result: the number of seed points can be adjusted for different application scenarios to trade off the efficiency and accuracy of the optical flow results.

Owner:XIDIAN UNIV

Edge-aware spatio-temporal filtering and optical flow estimation in real time

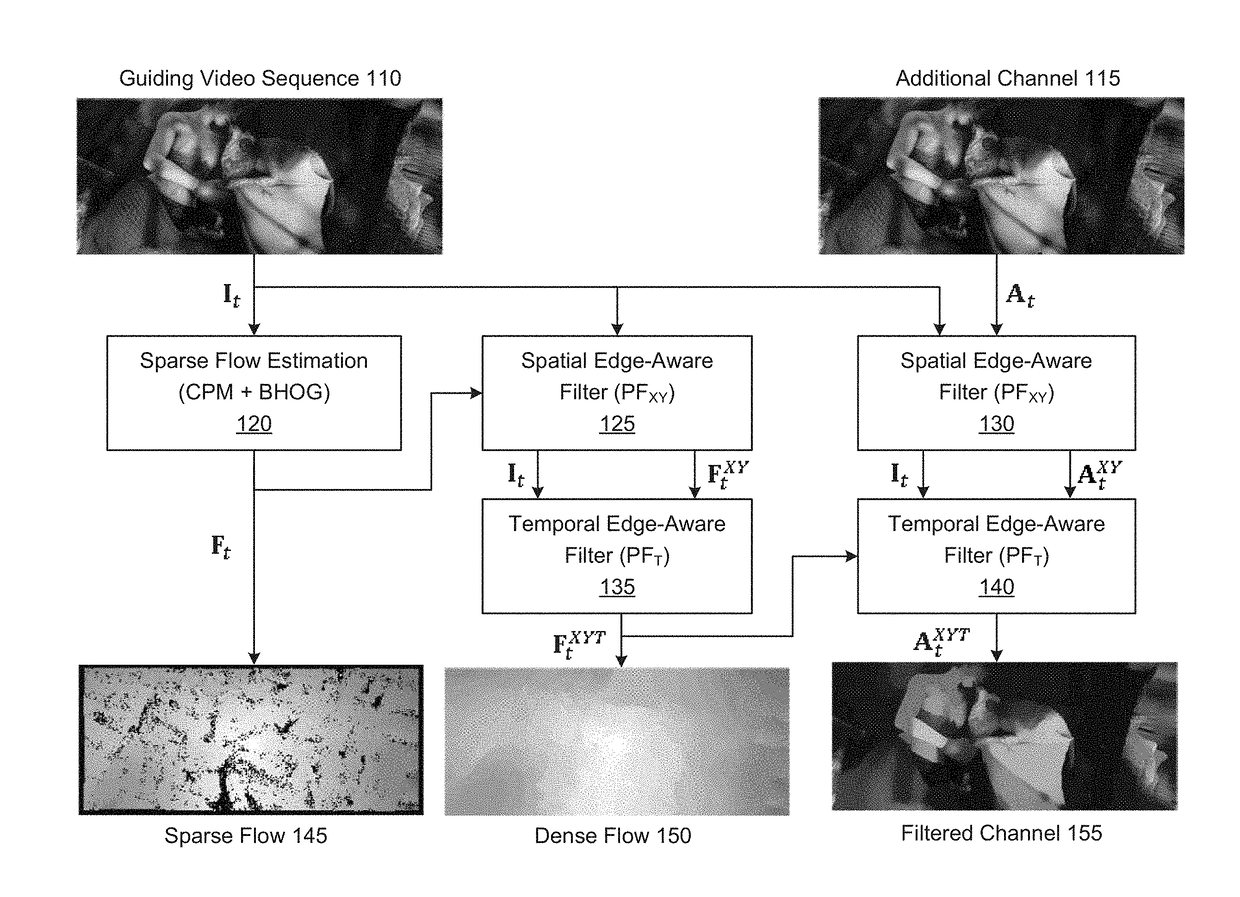

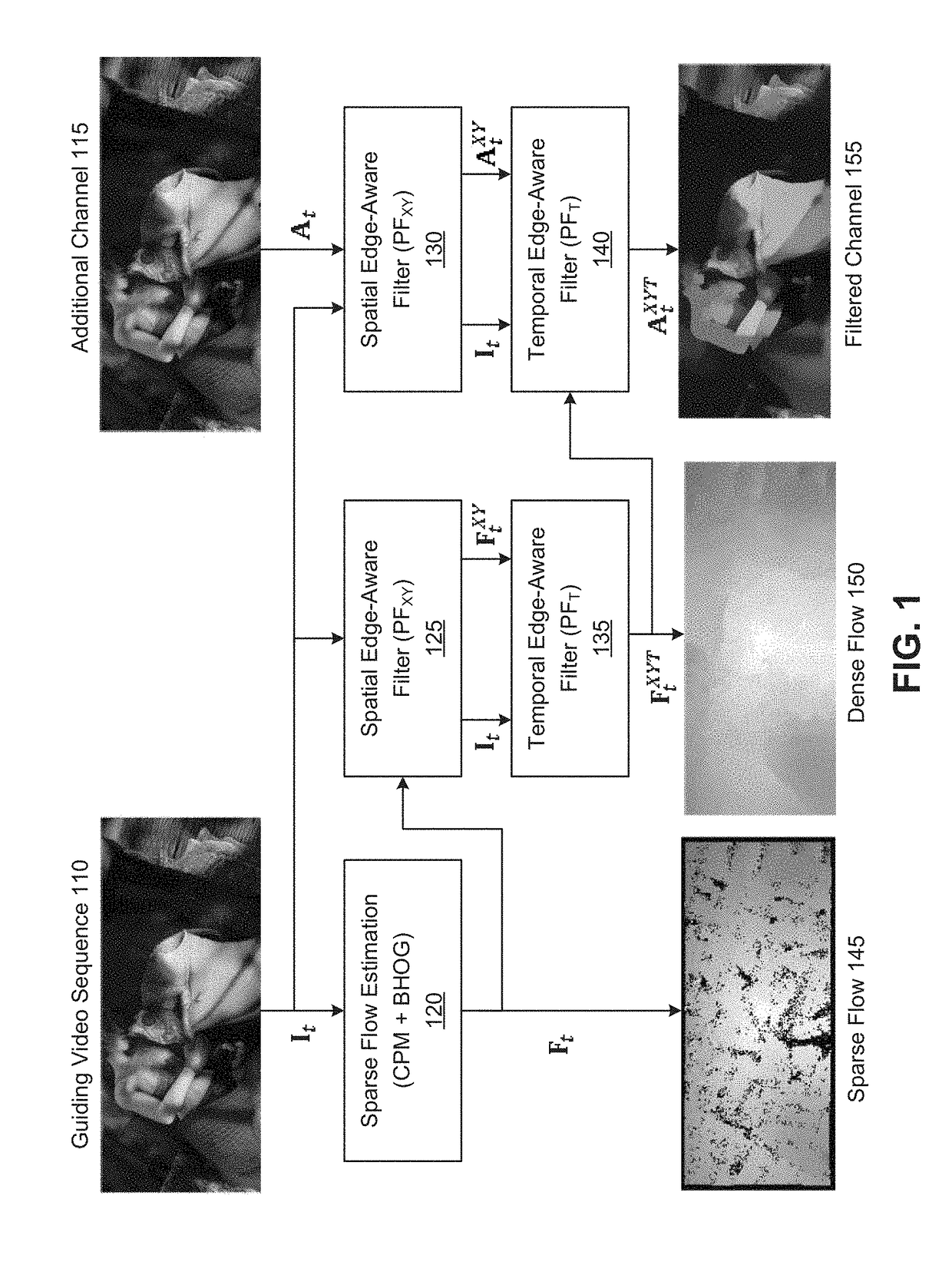

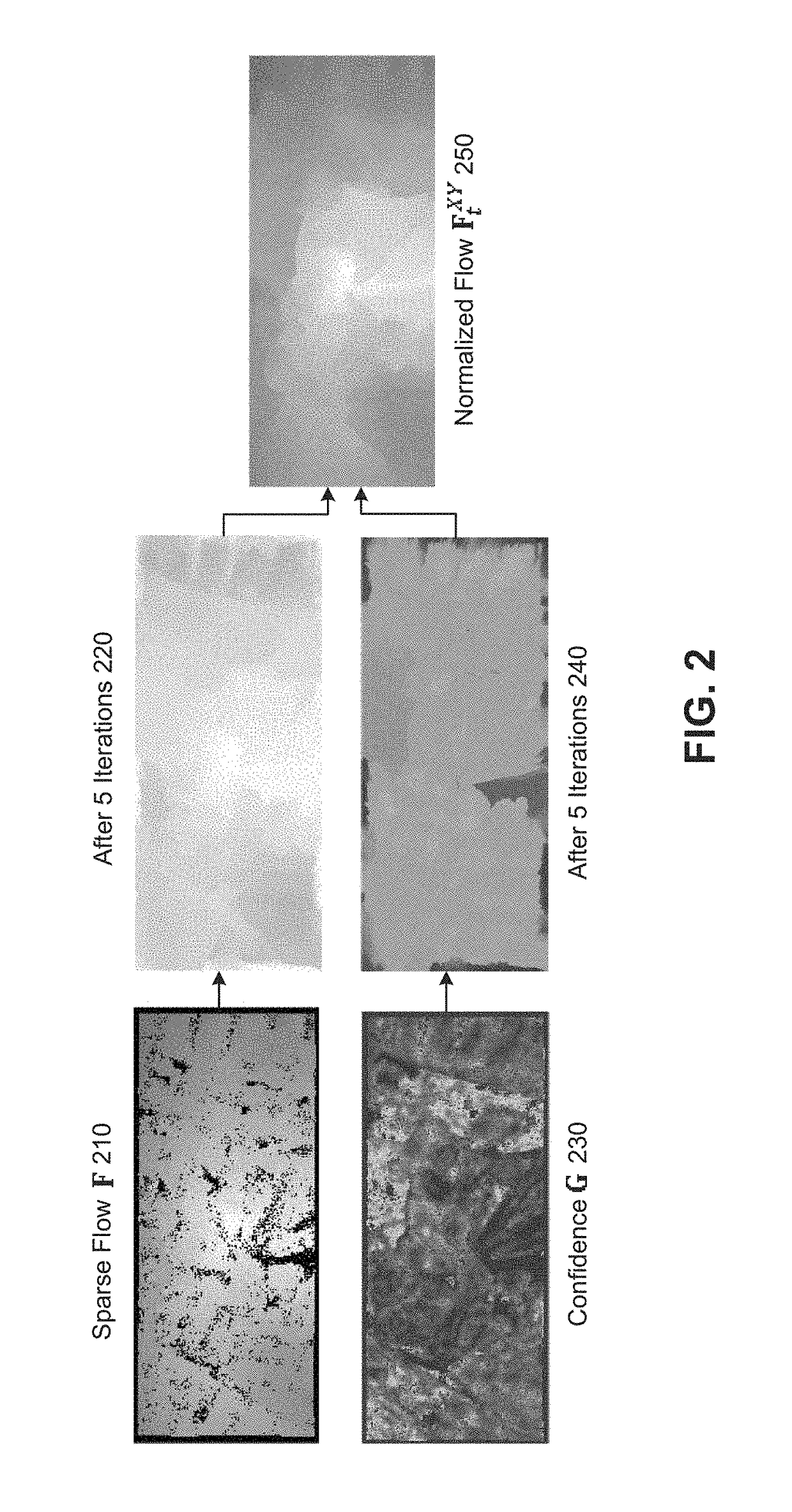

The disclosure provides an approach for edge-aware spatio-temporal filtering. In one embodiment, a filtering application receives as input a guiding video sequence and video sequence(s) from additional channel(s). The filtering application estimates a sparse optical flow from the guiding video sequence using a novel binary feature descriptor integrated into the coarse-to-fine PatchMatch method to compute a quasi-dense nearest neighbor field. The filtering application then performs spatial edge-aware filtering of the sparse optical flow (to obtain a dense flow) and of the additional channel(s), using an efficient evaluation of the permeability filter with only two scan-line passes per iteration. Further, the filtering application performs temporal filtering of the optical flow using an infinite impulse response filter that requires only one filter state, updated with each new frame of the guiding video sequence. The resulting optical flow may then be used in temporal edge-aware filtering of the additional channel(s) using the nonlinear infinite impulse response filter.
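The key property of the temporal filter is that it keeps a single state and updates it with each new frame. A hedged sketch of such a recursive filter for one pixel, assuming a hypothetical edge-aware weight `w = alpha * exp(-|change in guide| / sigma)`: history is kept while the guide intensity is stable and largely discarded at temporal edges. This is not the patent's permeability formulation; class and parameter names are illustrative.

```python
import math


class RecursiveTemporalFilter:
    """First-order IIR filter with a single state, for one pixel or one flow component."""

    def __init__(self, alpha=0.5, sigma=1.0):
        self.alpha = alpha   # maximum weight given to the past
        self.sigma = sigma   # guide change at which the past weight drops to alpha/e
        self.state = None
        self.prev_guide = None

    def update(self, value, guide):
        if self.state is None:   # first frame: nothing to blend with yet
            self.state = value
        else:
            # Hypothetical edge-aware weight: shrinks when the guide intensity jumps.
            w = self.alpha * math.exp(-abs(guide - self.prev_guide) / self.sigma)
            self.state = w * self.state + (1.0 - w) * value
        self.prev_guide = guide
        return self.state


f = RecursiveTemporalFilter()
print([f.update(v, g) for v, g in [(0.0, 100), (2.0, 100), (2.0, 100)]])  # [0.0, 1.0, 1.5]
print(round(f.update(10.0, 200), 6))  # 10.0: the guide jumped, so history is discarded
```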

Owner:ETH ZURICH EIDGENOESSISCHE TECHN HOCHSCHULE ZURICH +1

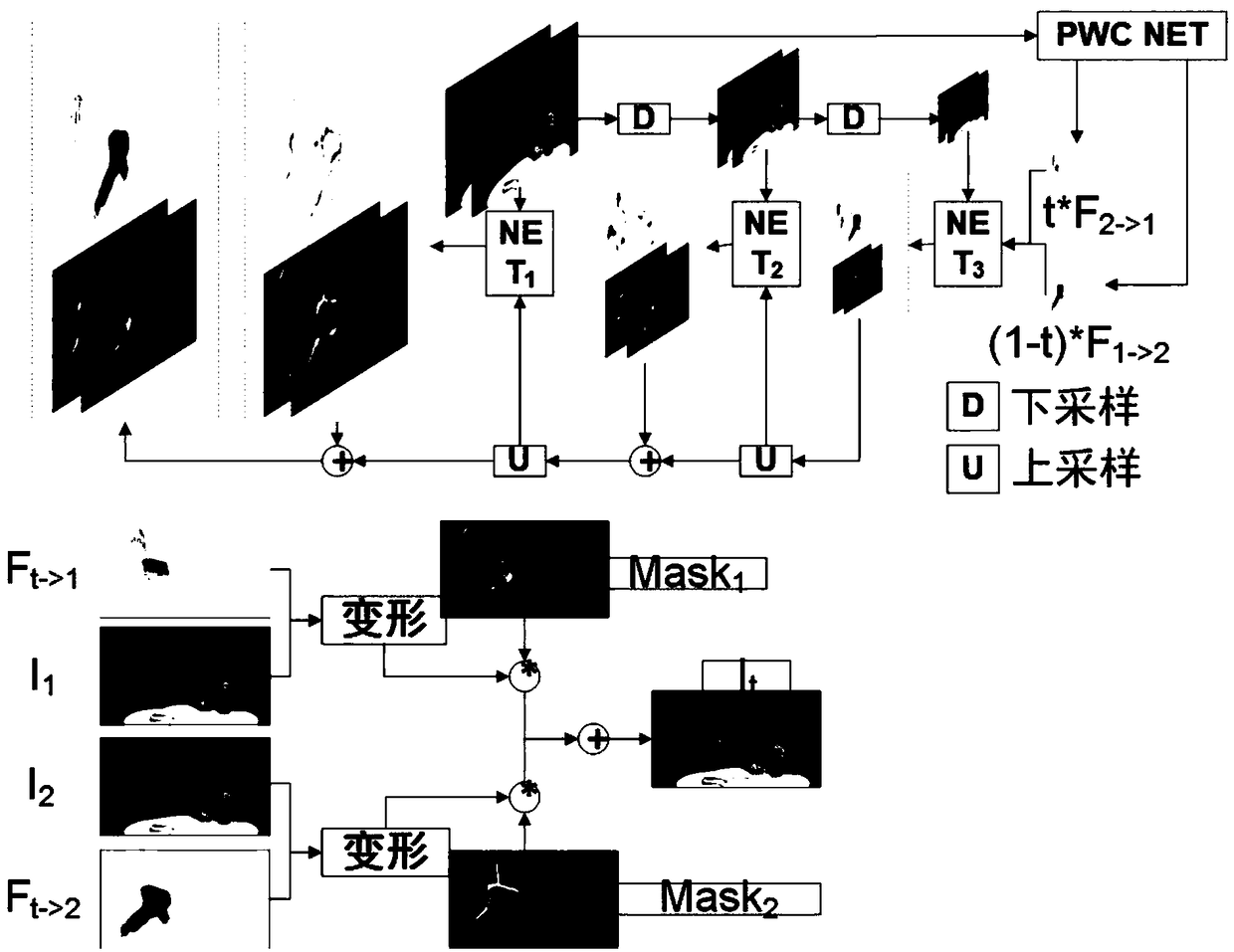

Lightweight video interpolation method based on a feature level optical flow

Active | CN109756690A | High precision | High speed | Television system details | Image analysis | Signal-to-noise ratio (imaging) | Network model

The invention discloses a lightweight video interpolation method based on feature-level optical flow, which addresses the poor practicability of existing lightweight video interpolation methods. First, a multi-scale transformation is applied to two consecutive frames of a given video, and a feature-level optical flow estimation module calculates the forward and backward optical flow between the two frames at the current scale; the two images are warped in temporal order according to the forward and backward optical flow, respectively, to obtain two interpolated images; the interpolated images are combined into a four-dimensional tensor, which is processed by three-dimensional convolution to obtain the interpolated image at that scale; finally, the images at different scales are weighted and averaged to obtain the final interpolated image. By using feature-level optical flow and multi-scale fusion, the invention improves both the precision and the speed of video interpolation. With a 1.03 MB network model, it achieves an average peak signal-to-noise ratio (PSNR) of 32.439 dB and a structural similarity (SSIM) of 0.886.
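For reference, PSNR follows the standard definition `PSNR = 10 * log10(MAX^2 / MSE)`, where MAX is 255 for 8-bit images. The sketch below computes it for single-channel images; the patent's evaluation protocol (test set, color handling) is not given in the abstract, and the function name is illustrative.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in decibels between two equally sized images."""
    squared_error, n = 0.0, 0
    for ref_row, test_row in zip(reference, test):
        for a, b in zip(ref_row, test_row):
            squared_error += (a - b) ** 2
            n += 1
    mse = squared_error / n
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


ref = [[0, 0], [0, 0]]
print(psnr(ref, ref))                         # inf
print(round(psnr(ref, [[1, 1], [1, 1]]), 2))  # 48.13
```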

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Method and system for segment-based optical flow estimation

Inactive | US20070092122A1 | Reduce transmission | Efficiently breaks the spatial coherence over the motion boundaries | Television system details | Image analysis | Occlusion detection | Pyramid

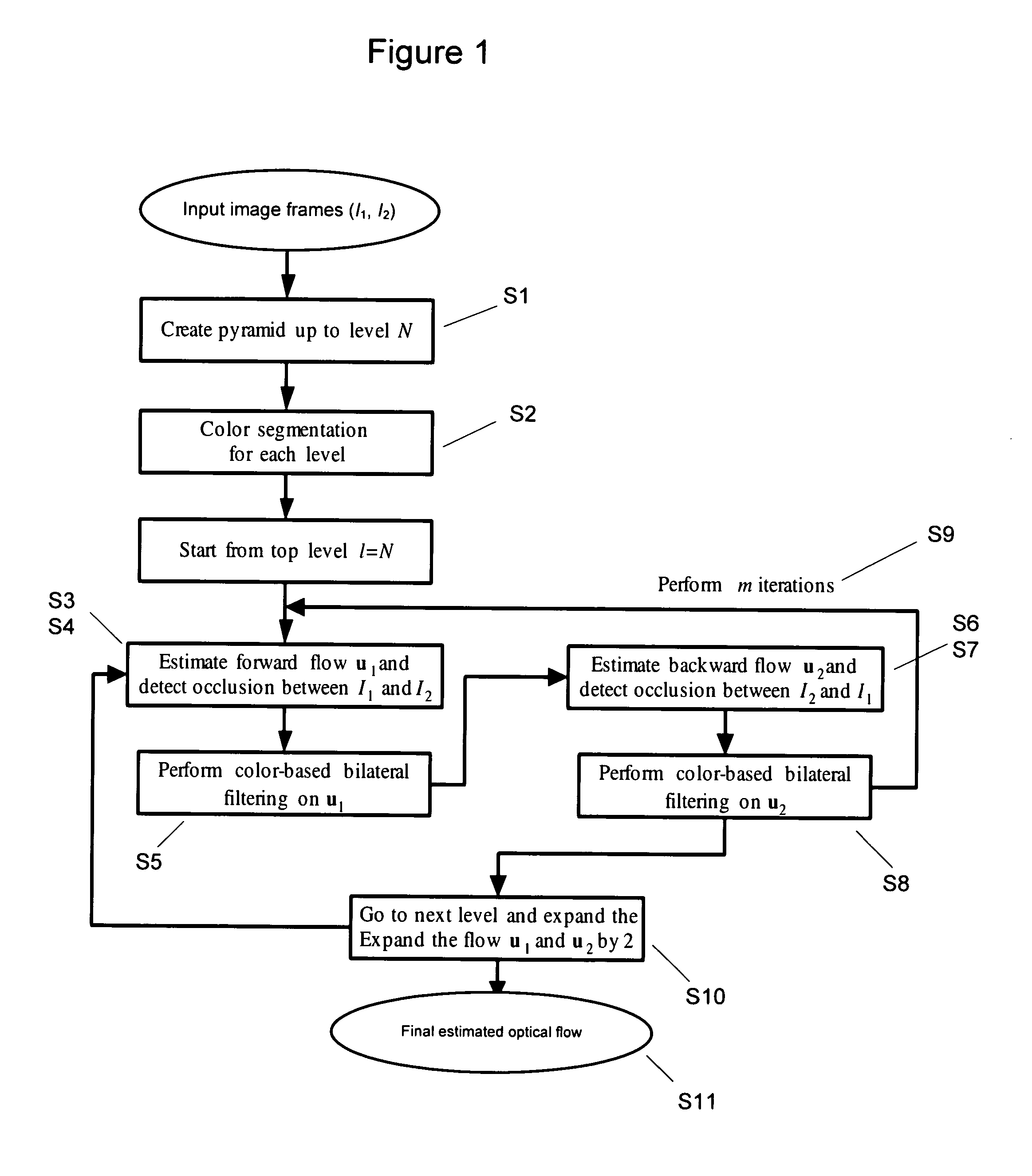

The methods and systems of the present invention estimate optical flow by performing color segmentation and adaptive bilateral filtering to regularize the flow field, which yields a more accurate flow field estimate. After pyramid models are created for two input image frames, color segmentation is performed. Next, starting from the top level of the pyramid, additive flow vectors are iteratively estimated between the reference frames by a process that includes occlusion detection, in which the symmetry of the backward and forward flow is enforced for non-occluded regions. A final estimated optical flow field is then generated by expanding the current pyramid level to the next lower level and repeating the process until the lowest level is reached. This approach not only generates efficient, spatially coherent flow fields, but also accurately locates flow discontinuities along motion boundaries.
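Occlusion detection that relies on forward/backward symmetry is commonly implemented as a forward-backward consistency check: a pixel is treated as non-occluded when following the forward flow and then the backward flow brings it back to (nearly) where it started. The 1-D sketch below assumes that standard check and a 0.5 px tolerance; the patent's exact test may differ.

```python
def occlusion_mask(flow_fw, flow_bw, tol=0.5):
    """1-D forward-backward consistency check.

    flow_fw[x]: displacement of pixel x from frame A to frame B.
    flow_bw[x]: displacement of pixel x from frame B to frame A.
    Returns True where the pixel is flagged as occluded (inconsistent).
    """
    n = len(flow_fw)
    mask = []
    for x, d in enumerate(flow_fw):
        target = int(round(x + d))   # nearest pixel reached in frame B
        if not 0 <= target < n:
            mask.append(True)        # leaves the image: no valid match
        else:
            mask.append(abs(d + flow_bw[target]) > tol)
    return mask


# An object at pixels 0-1 moves 2 px right and covers background pixels 2-3.
fw = [2.0, 2.0, 0.0, 0.0, 0.0, 0.0]
bw = [0.0, 0.0, -2.0, -2.0, 0.0, 0.0]
print(occlusion_mask(fw, bw))  # [False, False, True, True, False, False]
```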

Owner:SRI INTERNATIONAL

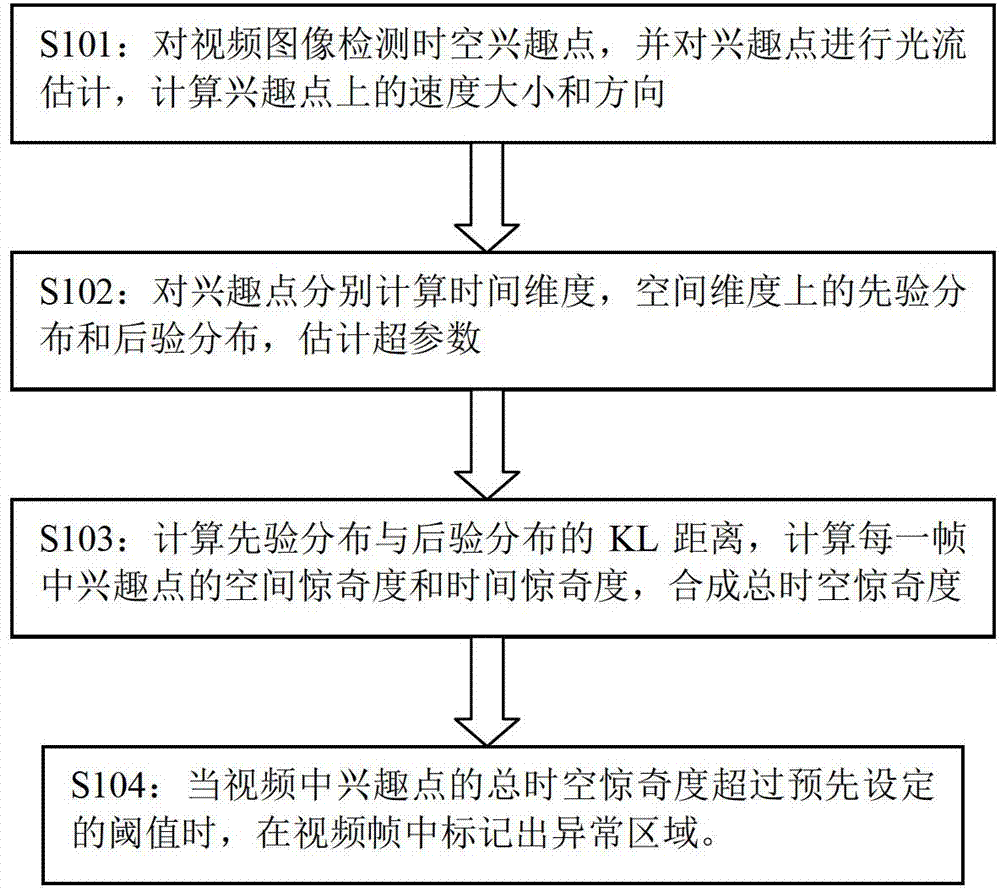

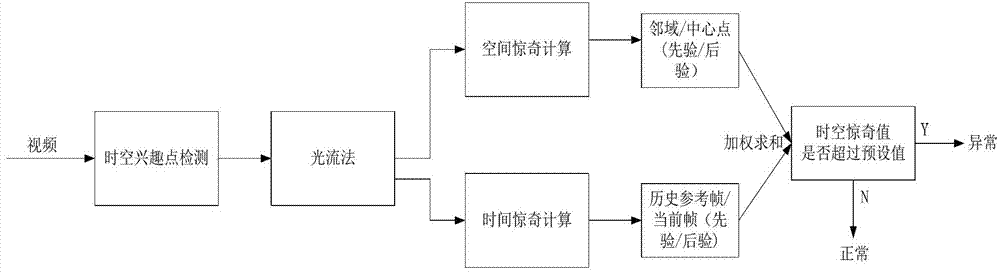

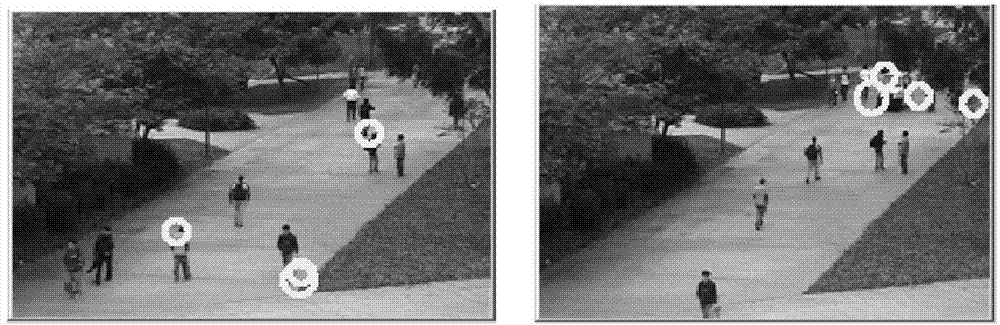

Method and device of video abnormal behavior detection based on Bayes surprise degree calculation

Inactive | CN103198296A | Realize detection | Improve applicability | Character and pattern recognition | Feature extraction | Anomaly detection

The invention provides a method and a device for video abnormal behavior detection based on Bayesian surprise degree calculation. The method comprises the following steps: extracting spatio-temporal interest points (STIPs) in video frames as points to be detected, and using the magnitude and direction of each point's motion velocity, obtained through optical flow estimation, as the feature for calculating surprise; calculating prior and posterior probability distributions over the spatial and temporal dimensions of the video, and calculating a spatial surprise degree and a temporal surprise degree for each point to be detected; combining the temporal and spatial surprise degrees into a total surprise degree; and raising a warning of an abnormal situation when the surprise values of several points to be detected exceed a threshold. The device comprises an STIP detection module, a feature extraction module, a surprise calculation module and an anomaly detection module. The method and device can detect several specific types of sudden, anomalous events, and the anomaly analysis algorithm shows good applicability and high classification accuracy.
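Bayesian surprise is commonly defined as the KL divergence between the posterior and prior beliefs after an observation. In this hypothetical sketch, the belief about a point's motion is a histogram over eight optical-flow direction sectors (with pseudo-counts so no bin is zero), and one new observation updates it; the patent's actual spatial and temporal models, and its use of velocity magnitude, are not reproduced.

```python
import math


def direction_bin(u, v, bins=8):
    """Quantise the direction of a flow vector into one of `bins` sectors."""
    angle = math.atan2(v, u) % (2.0 * math.pi)
    return int(angle / (2.0 * math.pi / bins)) % bins


def kl_divergence(p, q):
    """KL(p || q) in nats for discrete distributions with q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def surprise(counts, observed_bin, weight=1.0):
    """Bayesian surprise of one observation: KL(posterior || prior)."""
    total = sum(counts)
    prior = [c / total for c in counts]
    updated = list(counts)
    updated[observed_bin] += weight
    posterior = [c / (total + weight) for c in updated]
    return kl_divergence(posterior, prior)


# History dominated by rightward motion (bin 0); pseudo-counts keep every bin > 0.
counts = [20.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
usual = surprise(counts, direction_bin(1.0, 0.0))     # rightward again
unusual = surprise(counts, direction_bin(-1.0, 0.0))  # sudden leftward motion
print(unusual > usual)  # True
```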

Owner:UNIV OF SCI & TECH OF CHINA

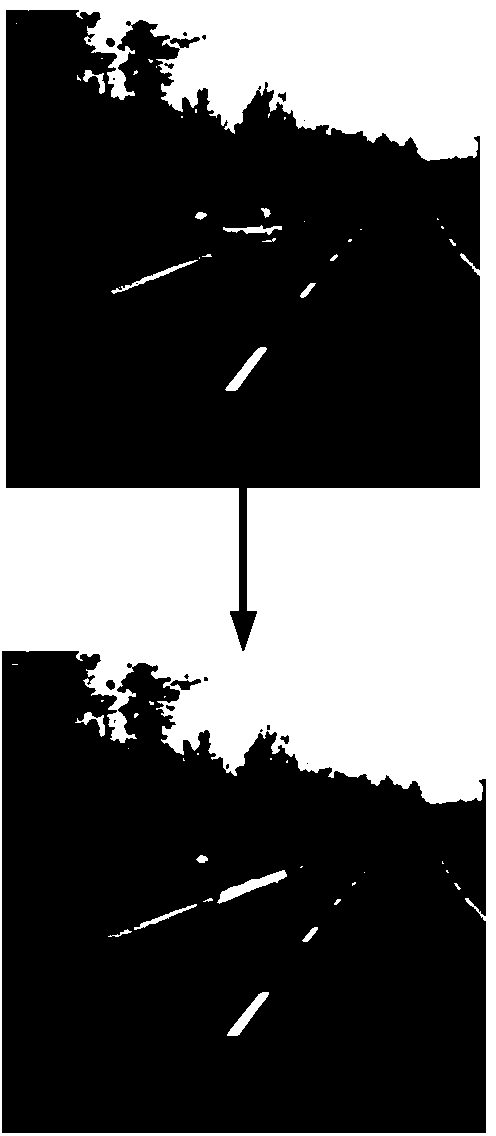

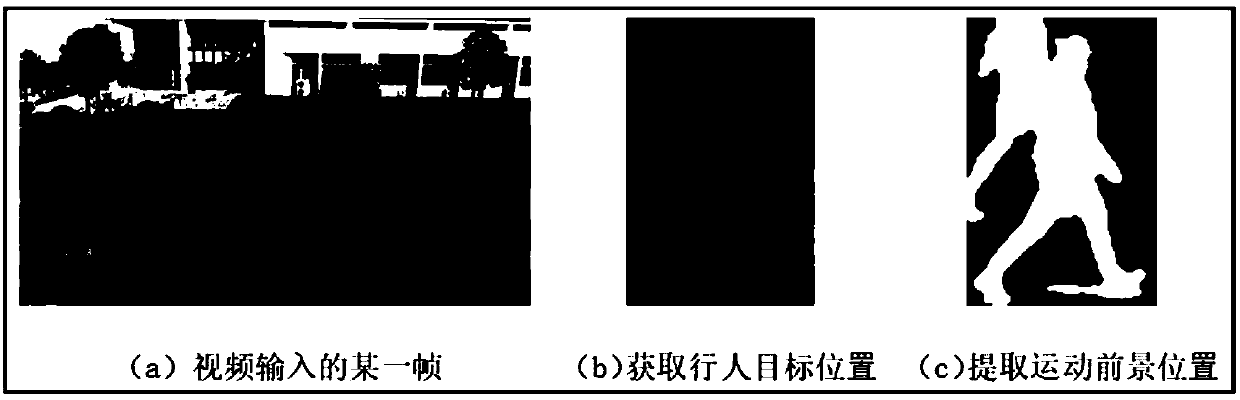

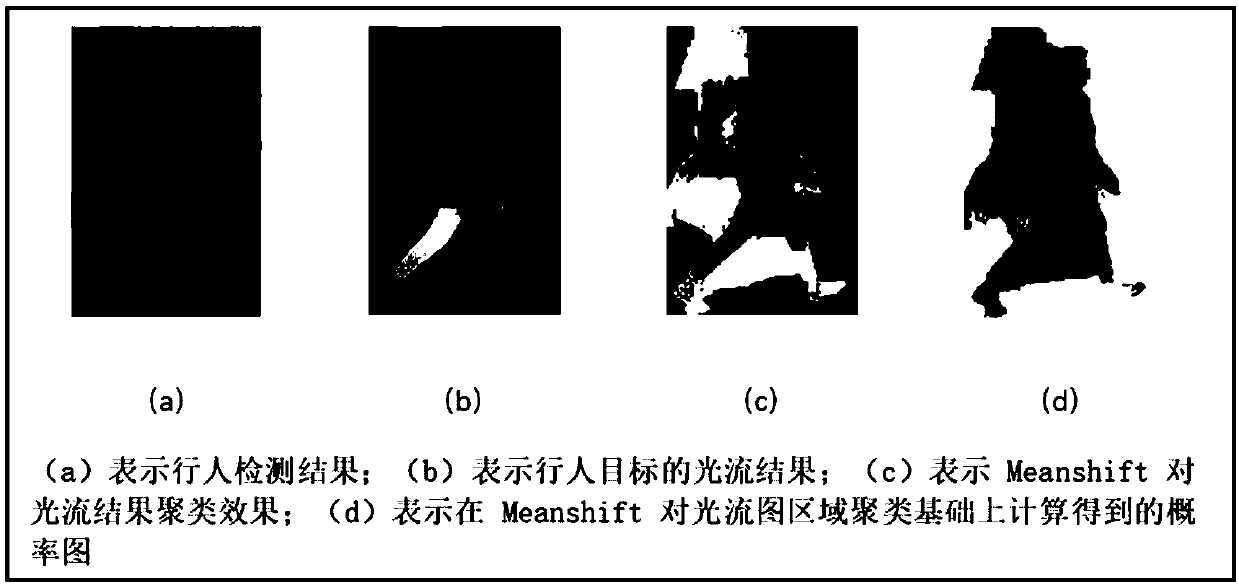

A method suitable for segmenting salient human body instances in video image

To address shortcomings of the prior art, the invention provides a method for segmenting salient human body instances in video images. It introduces two constraints on a moving object in a video sequence, motion persistence and spatio-temporal structural consistency, and on that basis realizes human body instance segmentation that combines optical flow clustering, saliency detection and multi-feature voting. For motion continuity, the probability of foreground targets is calculated from clustered optical flow regions: regions are clustered by their optical flow characteristics, and the foreground probability is calculated with region area as the weight. For spatio-temporal structural consistency, a multi-feature voting strategy based on fused saliency detection and rough contours is proposed; based on saliency detection and the adjacent frame light interest difference, the method optimizes the target foreground with a complete contour at the pixel level, achieving instance segmentation of unoccluded moving pedestrians.

Owner:ANHUI UNIVERSITY
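
The multi-feature voting step can be pictured as a pixel-wise vote over binary foreground cues. The sketch below assumes each cue (for example, a mask from optical flow clustering, a saliency mask and a rough-contour mask) is already available as an H x W binary array; the function name and the strict-majority default are hypothetical, not taken from the patent.

```python
import numpy as np


def vote_foreground(cues, min_votes=None):
    """Pixel-wise vote over binary foreground cues of identical shape.

    cues: list of H x W arrays (0/1 or bool), one per feature.
    min_votes: votes needed for a pixel to be foreground; defaults to a strict majority.
    """
    stack = np.stack([np.asarray(c, dtype=bool) for c in cues])
    if min_votes is None:
        min_votes = stack.shape[0] // 2 + 1  # strict majority by default
    return stack.sum(axis=0) >= min_votes
```

Raising `min_votes` trades recall for precision: a pixel must then be supported by more cues before it is kept in the foreground.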

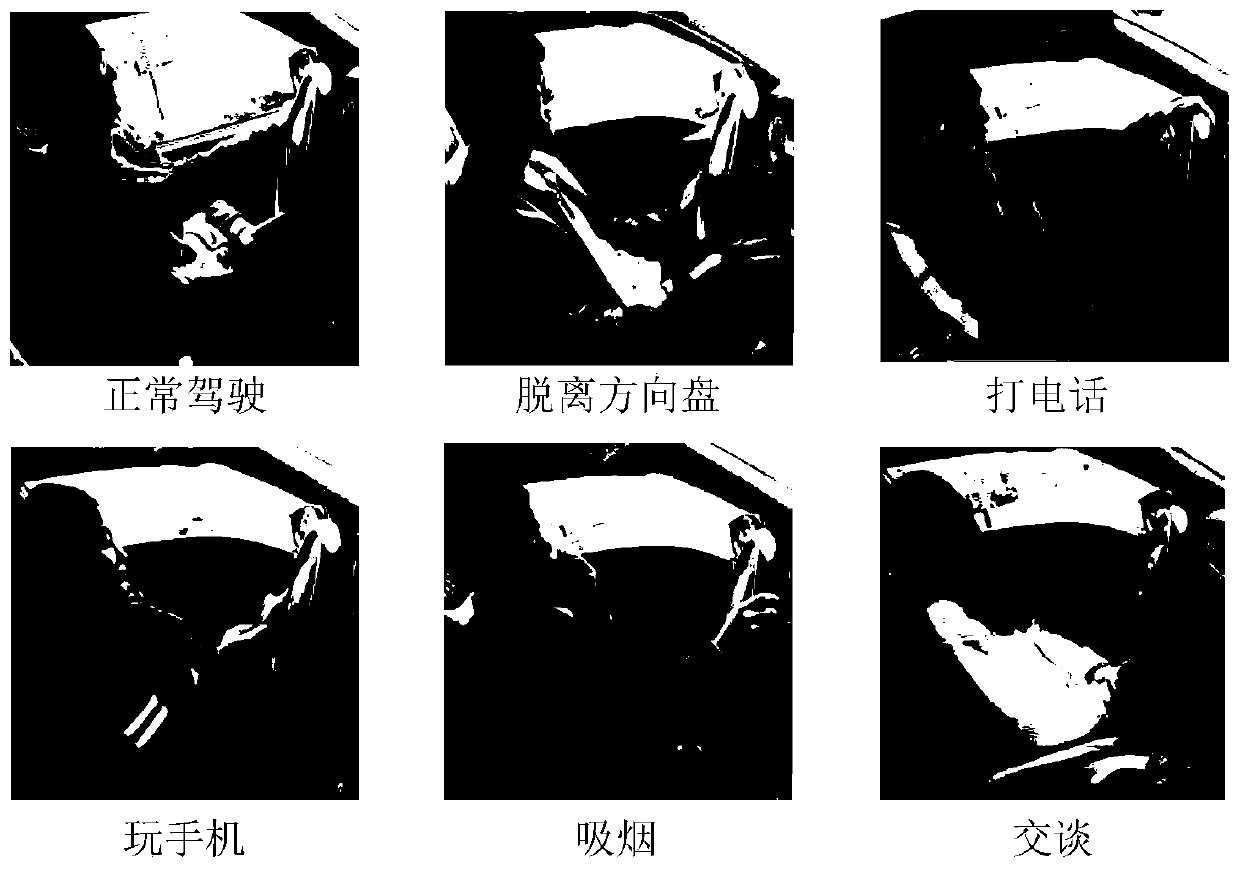

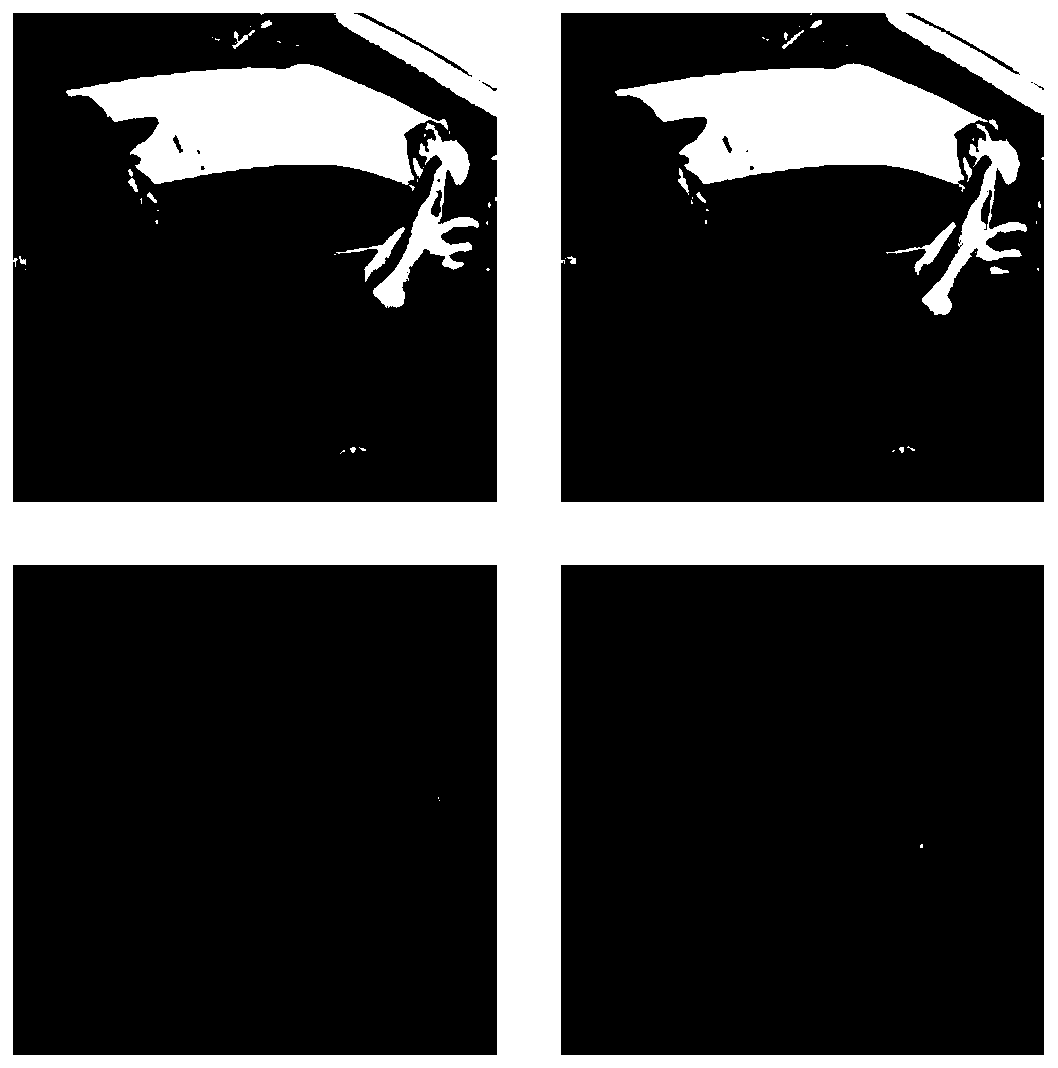

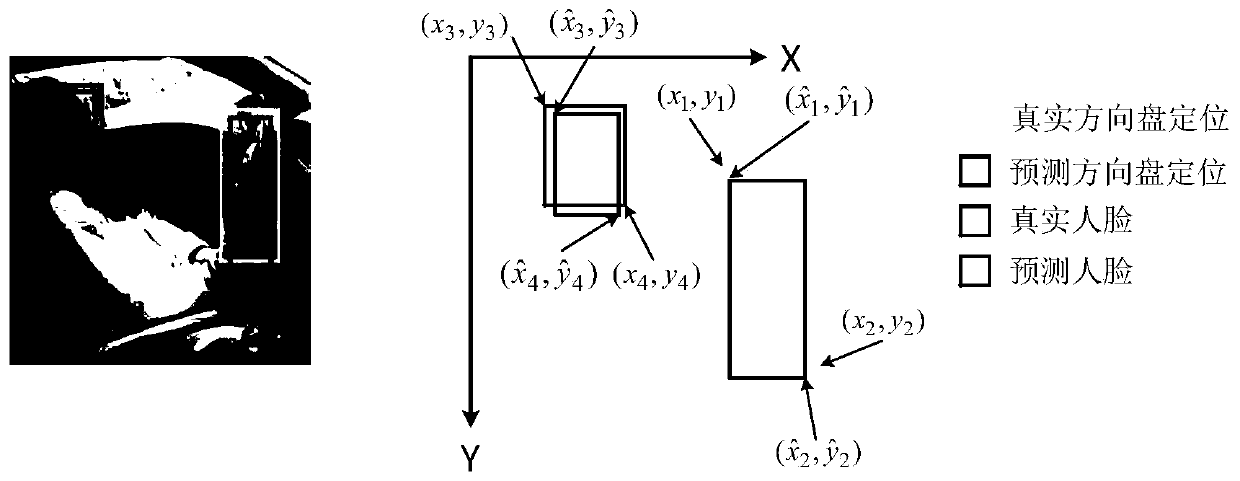

A video driver behavior identification method based on a multi-task space-time convolutional neural network

Active | CN109784150A | Rich in features | Improve accuracy | Character and pattern recognition | Internal combustion piston engines | Simulation | Multi-task learning

The invention provides a video driver behavior identification method based on a multi-task space-time convolutional neural network. A multi-task learning strategy is introduced into the training process of a space-time convolutional neural network and applied to driver behavior identification in surveillance video. Auxiliary driver localization and optical flow estimation tasks are implicitly embedded into the video classification task, which encourages the network to learn richer local spatial features of the driver and temporal motion features, improving driver behavior recognition accuracy. Compared with existing driver identification methods, the multi-task space-time convolutional neural network architecture designed by the invention combines inter-frame information, generalizes well, achieves high identification accuracy, can be used for real-time driver behavior identification in surveillance video, and has important application value in the field of traffic safety.

Owner:SOUTHEAST UNIV
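
One common way to embed auxiliary tasks into training is to add weighted auxiliary losses to the main classification loss. The sketch below assumes cross-entropy for the behavior classes, a smooth L1 loss for the driver-localization box and average endpoint error for the optical flow branch; these loss choices and the weights `w_loc` and `w_flow` are illustrative assumptions, since the abstract does not state them. All inputs are NumPy arrays.

```python
import numpy as np


def cross_entropy(logits, label):
    """Classification loss for one clip; logits is a (num_classes,) array."""
    shifted = logits - logits.max()                     # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[label]


def smooth_l1(pred, target):
    """Localization loss for the auxiliary driver-box branch."""
    diff = np.abs(pred - target)
    return np.where(diff < 1.0, 0.5 * diff ** 2, diff - 0.5).mean()


def endpoint_error(pred_flow, gt_flow):
    """Optical flow loss: mean Euclidean distance between (H, W, 2) flow fields."""
    return np.linalg.norm(pred_flow - gt_flow, axis=-1).mean()


def multitask_loss(logits, label, box_pred, box_gt, flow_pred, flow_gt,
                   w_loc=0.5, w_flow=0.5):
    """Main classification loss plus weighted auxiliary losses (assumed weights)."""
    return (cross_entropy(logits, label)
            + w_loc * smooth_l1(box_pred, box_gt)
            + w_flow * endpoint_error(flow_pred, flow_gt))
```

At inference time only the classification output would be used; the auxiliary terms matter only as extra training signal.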

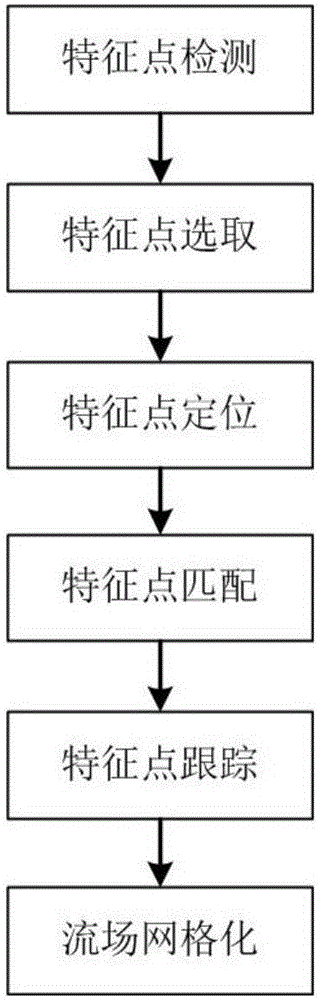

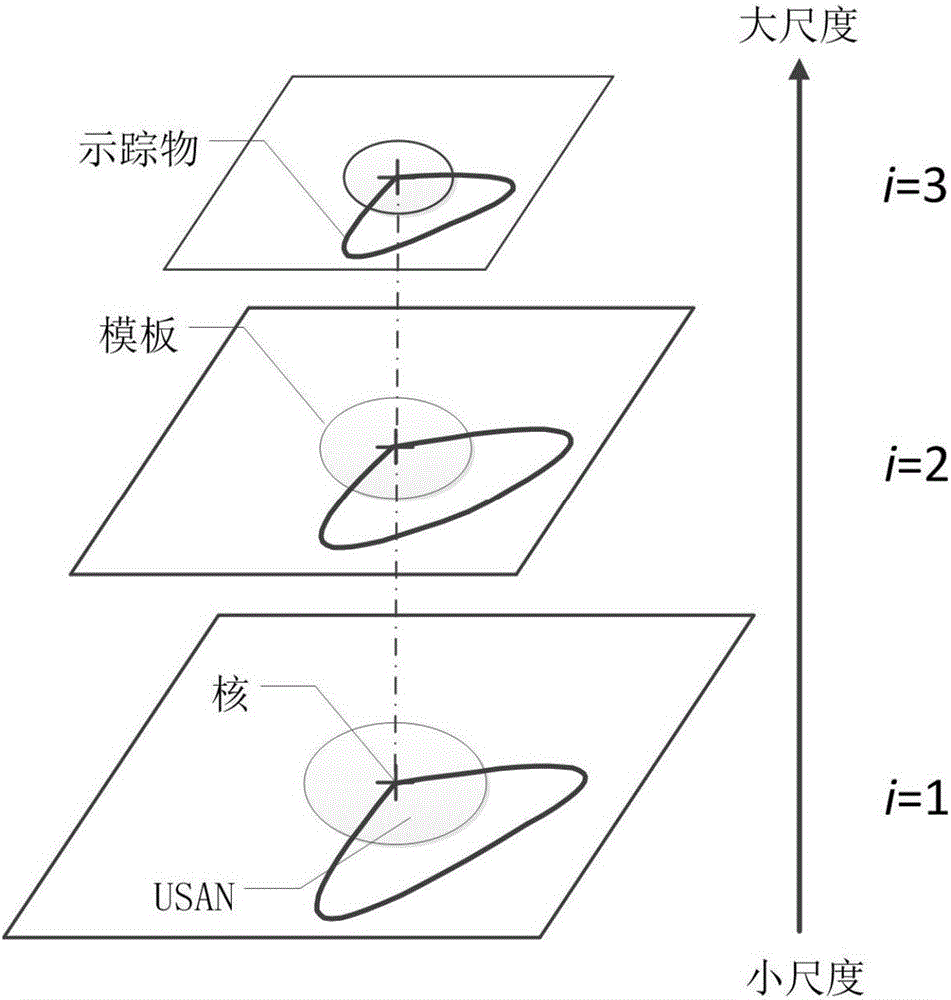

Fluid motion vector estimation method based on feature optical flow

The invention discloses a fluid motion vector estimation method based on feature optical flow, comprising the following steps: first, extracting and sub-pixel-locating feature points in two consecutive frames I1 and I2 of an H.264 video stream at multiple resolutions with a multi-scale SUSAN feature point detection algorithm, yielding feature point sets P1 and P2; then, with a feature optical flow estimation algorithm that uses H.264 compressed-domain priors, taking the motion vector of the macroblock containing each feature point in P1 as prior information for setting the search radius, searching P2 for the matching feature point, and estimating the optical flow motion vector, yielding a sparse feature optical flow field; and finally, gridding the sparse feature optical flow field with a flow field gridding algorithm based on inverse distance weighted interpolation to obtain a uniform two-dimensional flow velocity vector field. The method can be applied to motion vector estimation for water with natural tracers, such as a river surface, and is especially suited to high-spatial-resolution measurement of two-dimensional instantaneous flow fields.

Owner:HOHAI UNIV
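
The two key steps, prior-guided matching and inverse distance weighted gridding, can be sketched as follows. This is a simplified illustration: `match_with_prior` picks the candidate nearest to the position predicted by the macroblock motion vector, whereas the patent presumably also compares the SUSAN features themselves, and the grid step and IDW power of 2 are assumed defaults. Both function names are hypothetical.

```python
import math


def match_with_prior(point, prior_mv, candidates, radius):
    """Return the candidate closest to point + prior_mv within radius, else None."""
    px, py = point[0] + prior_mv[0], point[1] + prior_mv[1]
    best, best_dist = None, radius
    for cand in candidates:
        dist = math.hypot(cand[0] - px, cand[1] - py)
        if dist <= best_dist:
            best, best_dist = cand, dist
    return best


def idw_grid(sparse_flow, width, height, step=1.0, power=2.0, eps=1e-12):
    """Resample sparse (x, y, u, v) vectors onto a regular grid by inverse distance weighting."""
    grid = []
    for j in range(height):
        row = []
        for i in range(width):
            gx, gy = i * step, j * step
            num_u = num_v = den = 0.0
            exact = None
            for x, y, u, v in sparse_flow:
                d2 = (gx - x) ** 2 + (gy - y) ** 2
                if d2 < eps:              # grid node coincides with a feature point
                    exact = (u, v)
                    break
                w = d2 ** (-power / 2.0)  # weight = 1 / distance**power
                num_u += w * u
                num_v += w * v
                den += w
            row.append(exact if exact is not None else (num_u / den, num_v / den))
        grid.append(row)
    return grid
```

The optical flow vector of a matched pair is simply the matched point minus the original point; `idw_grid` then turns those scattered vectors into the uniform field described in the abstract.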

Abnormal behavior detection method, system and device based on clustered optical flow features

Active | CN108052859A | Small amount of calculation | Image analysis | Character and pattern recognition | Pattern recognition | Location area

The invention discloses an abnormal behavior detection method based on clustered optical flow features, comprising the following steps: obtaining target position areas with a deep-learning object detection method; obtaining the optical flow information of the target areas in at least two adjacent frames; building a space-time model from the extracted optical flow information of the target areas; obtaining the average information entropy of the space-time model corresponding to each detected target; clustering the optical flow points in the space-time model to obtain clustered optical flow points and their average kinetic energy; and determining whether a target exhibits abnormal behavior, such as fighting or running, from the average kinetic energy of the cluster points, the information entropy, and their deviation from a pre-trained normal model. The method can detect abnormal video frames and abnormal targets quickly and accurately. The invention also provides an abnormal behavior detection system and device for intelligent video analysis, which have good practical value.

Owner:SHENZHEN UNIV
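
A minimal sketch of the per-cluster features and the decision rule follows, assuming the flow vectors of one cluster are already available. Entropy is computed over a flow-direction histogram, kinetic energy assumes unit mass per flow point, and the "deviation from the normal model" is modeled as a z-score against normal-model statistics; the bin count, the threshold `k` and the dictionary keys are our assumptions, not details from the patent.

```python
import math


def direction_entropy(flows, num_bins=8):
    """Shannon entropy (bits) of the flow-direction histogram of one cluster."""
    counts = [0] * num_bins
    for u, v in flows:
        angle = math.atan2(v, u) % (2 * math.pi)
        counts[int(angle / (2 * math.pi) * num_bins) % num_bins] += 1
    n = len(flows)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def average_kinetic_energy(flows):
    """Mean of 0.5 * |flow|^2 over the cluster's points (unit mass assumed)."""
    return sum(0.5 * (u * u + v * v) for u, v in flows) / len(flows)


def is_abnormal(flows, normal, k=3.0, num_bins=8):
    """Flag a cluster whose entropy or energy deviates by more than k std from normal."""
    z_h = abs(direction_entropy(flows, num_bins) - normal["entropy_mean"]) / normal["entropy_std"]
    z_e = abs(average_kinetic_energy(flows) - normal["energy_mean"]) / normal["energy_std"]
    return max(z_h, z_e) > k
```

Intuitively, running raises kinetic energy while fighting scatters flow directions and raises entropy, so either feature alone can trigger the flag.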

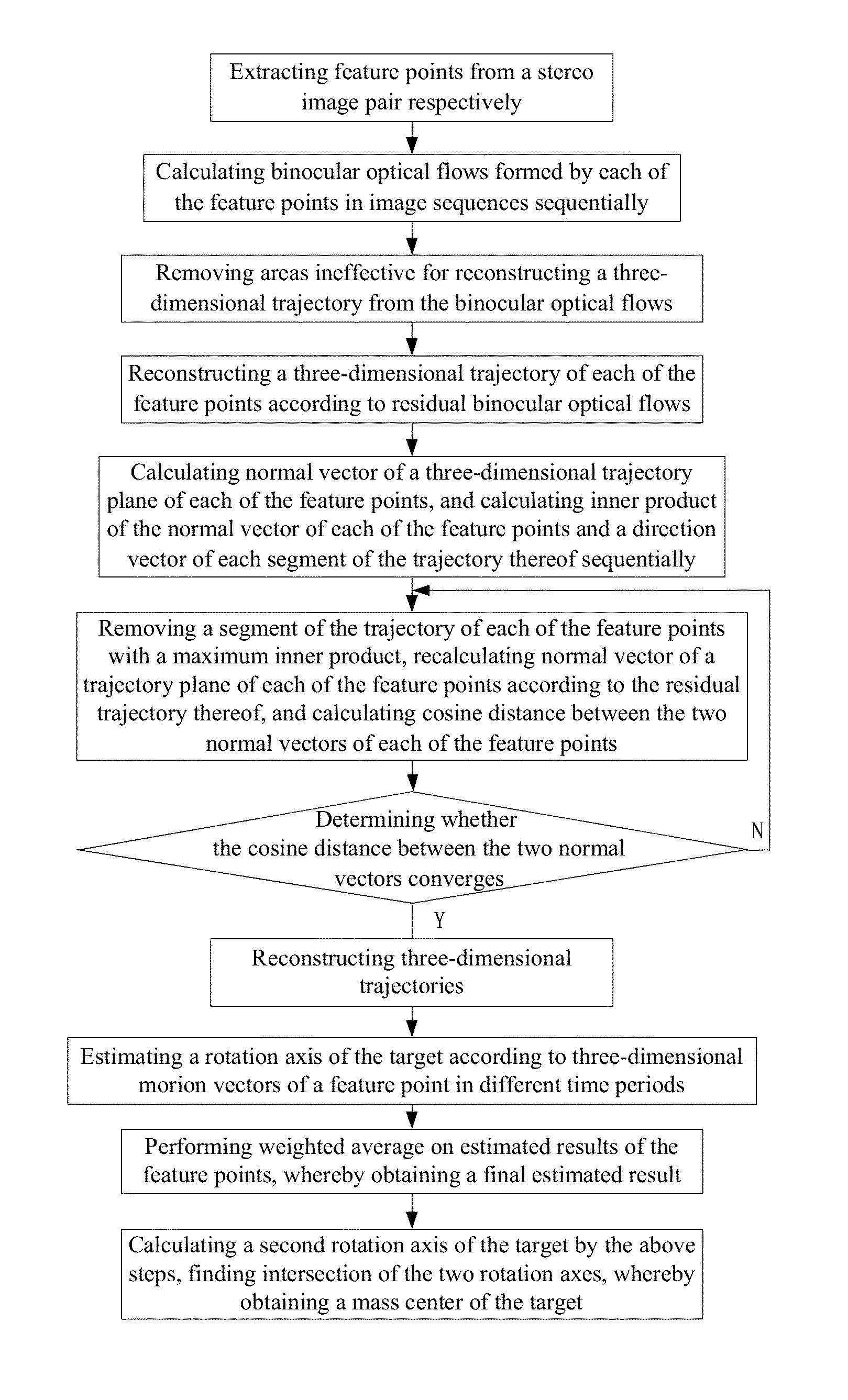

Method for estimating rotation axis and mass center of spatial target based on binocular optical flows

Active | US20150242700A1 | Eliminate area | Accurate spacing | Image enhancement | Image analysis | Errors and residuals | Three dimensional motion

A method for estimating a rotation axis and a mass center of a spatial target based on binocular optical flows. The method includes: extracting feature points sequentially from each of the binocular image sequences and calculating the binocular optical flows they form; removing the areas of the binocular optical flows that are ineffective for reconstructing a three-dimensional motion trajectory, thereby obtaining area-constrained effective binocular optical flows, and reconstructing the three-dimensional motion trajectory of the spatial target; and removing, over multiple iterations, the areas of the optical flows that produce comparatively large errors in the reconstructed three-dimensional motion vectors, estimating a rotation axis from the resulting three-dimensional motion vector sequence of each feature point, obtaining the spatial equation of the estimated rotation axis as a weighted average of the per-feature-point estimates, and obtaining the spatial coordinates of the target's mass center from two estimated rotation axes.

Owner:HUAZHONG UNIV OF SCI & TECH
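
The geometry behind the axis and mass-center estimates can be sketched as below, under assumptions not stated in the abstract: each feature point rotates rigidly about a fixed axis, so cross products of consecutive 3-D motion vectors are parallel to the axis; the circle through three trajectory points has its center on the axis; and the mass center is taken as the point closest to both estimated axes (their intersection when they meet). All function names are hypothetical, and the per-point weights default to uniform.

```python
import numpy as np


def axis_direction(trajectory):
    """Unit axis direction from one feature point's 3-D trajectory (N >= 3 points)."""
    traj = np.asarray(trajectory, dtype=float)
    motion = np.diff(traj, axis=0)                    # 3-D motion vector sequence
    normals = np.cross(motion[:-1], motion[1:])       # each is parallel to the axis
    normals /= np.linalg.norm(normals, axis=1, keepdims=True)
    normals[normals @ normals[0] < 0] *= -1           # make signs consistent
    d = normals.mean(axis=0)
    return d / np.linalg.norm(d)


def circumcenter(a_pt, b_pt, c_pt):
    """Center of the circle through three 3-D points (it lies on the rotation axis)."""
    a_pt, b_pt, c_pt = (np.asarray(p, dtype=float) for p in (a_pt, b_pt, c_pt))
    a, b = a_pt - c_pt, b_pt - c_pt
    axb = np.cross(a, b)
    num = np.cross(np.dot(a, a) * b - np.dot(b, b) * a, axb)
    return c_pt + num / (2.0 * np.dot(axb, axb))


def estimate_axis(trajectories, weights=None):
    """Weighted average of per-feature-point estimates -> (point_on_axis, unit_direction)."""
    dirs = np.array([axis_direction(t) for t in trajectories])
    points = np.array([circumcenter(t[0], t[len(t) // 2], t[-1]) for t in trajectories])
    w = np.ones(len(dirs)) if weights is None else np.asarray(weights, dtype=float)
    dirs[dirs @ dirs[0] < 0] *= -1
    d = (w[:, None] * dirs).sum(axis=0)
    p = (w[:, None] * points).sum(axis=0) / w.sum()
    return p, d / np.linalg.norm(d)


def closest_point_between_lines(p1, d1, p2, d2):
    """Midpoint of the shortest segment joining two (possibly skew) 3-D lines."""
    p1, d1, p2, d2 = (np.asarray(x, dtype=float) for x in (p1, d1, p2, d2))
    w0 = p1 - p2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w0, d2 @ w0
    denom = a * c - b * b                             # zero only for parallel axes
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    return 0.5 * ((p1 + s * d1) + (p2 + t * d2))
```

Taking the midpoint of the common perpendicular keeps the estimate well defined when noise makes the two estimated axes skew instead of intersecting.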

Video depth map estimation method and device with space-time consistency

Active | CN110782490A | Improve relevance | Improve the problem of excessive error before and after | Image enhancement | Image analysis | Network structure | Video sequence

The invention provides a video depth map estimation method and device with space-time consistency. The method comprises the following steps: generating a training set by taking a central frame as the target view and the preceding and following frames as source views, producing multiple sequences; for static objects in the scene, constructing a framework that jointly trains monocular depth and camera pose estimation from unlabeled video sequences, which includes constructing a depth map estimation network structure, a camera pose estimation network structure, and the loss function for this part; for moving objects in the scene, cascading an optical flow network after this framework to model the motion in the scene, which includes establishing an optical flow estimation network structure and the loss function for this part; proposing a deep neural network loss function that tests the space-time consistency of the depth maps; continuously optimizing the model by jointly training monocular depth and camera pose estimation and then training the optical flow network; and estimating depth maps for consecutive video frames with the optimized model.

Owner:WUHAN UNIV
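
Joint depth and pose training from unlabeled video is commonly built on view synthesis: a target pixel is back-projected with its predicted depth, moved by the predicted relative pose and re-projected into a source view. The sketch below shows that projection for one pixel, assuming a pinhole camera with intrinsics `K` and a pose (`R`, `t`) that maps target-camera coordinates into source-camera coordinates; whether the patent uses exactly this formulation is an assumption. `rigid_flow` gives the motion that camera movement alone would produce for a static scene.

```python
import numpy as np


def project_to_source(u, v, depth, K, R, t):
    """Map target pixel (u, v) with predicted depth into the source view."""
    pixel = np.array([u, v, 1.0])
    point_target = depth * (np.linalg.inv(K) @ pixel)   # back-project to 3-D
    point_source = R @ point_target + t                 # apply relative camera pose
    proj = K @ point_source                             # re-project with intrinsics
    return proj[:2] / proj[2]


def rigid_flow(u, v, depth, K, R, t):
    """Pixel displacement induced purely by camera motion over a static scene."""
    return project_to_source(u, v, depth, K, R, t) - np.array([u, v], dtype=float)
```

Sampling the source image at the projected coordinates and comparing it with the target pixel gives the photometric error that such frameworks typically minimize.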

Dense optical flow estimation method and device

Active | CN107527358A | Improve accuracy | High speed | Image enhancement | Image analysis | Reference image | Image pair

The invention discloses a dense optical flow estimation method and device that improve the accuracy and efficiency of dense optical flow estimation. The method includes the following steps: processing an image pair with a preset sparse optical flow estimation algorithm to obtain an initial sparse optical flow for the reference image of the pair, where the image pair consists of a reference image and the frame that follows it; generating a sparse optical flow mask from the initial sparse optical flow; and inputting the reference image, its initial sparse optical flow and its sparse optical flow mask into a pre-trained convolutional neural network for optical flow estimation to obtain a dense optical flow for the reference image.

Owner:BEIJING TUSEN ZHITU TECH CO LTD
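
Preparing the network input can be sketched as stacking the reference image with a sparse flow map and a binary mask marking where sparse flow is known. The channel order, the rounding of point positions to the nearest pixel and the function name are assumptions; the CNN itself is not shown.

```python
import numpy as np


def build_network_input(image, sparse_points):
    """Stack image, sparse flow and its validity mask into one (H, W, C + 3) array.

    image: (H, W, C) array for the reference frame.
    sparse_points: iterable of (x, y, u, v) from the sparse optical flow step.
    """
    h, w = image.shape[:2]
    flow = np.zeros((h, w, 2), dtype=np.float32)
    mask = np.zeros((h, w, 1), dtype=np.float32)
    for x, y, u, v in sparse_points:
        col, row = int(round(x)), int(round(y))
        if 0 <= col < w and 0 <= row < h:             # ignore points outside the frame
            flow[row, col] = (u, v)
            mask[row, col] = 1.0                      # 1 where sparse flow is known
    return np.concatenate([image.astype(np.float32), flow, mask], axis=-1)
```

The mask lets the network distinguish "flow is zero here" from "no sparse estimate here", which a flow map alone cannot express.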

© 2025 PatSnap. All rights reserved.