Motion-feature-fused spatiotemporal saliency detection method

This technology relates to motion features and detection methods and is applied in image data processing, instruments, computing, and related fields. It addresses problems such as the difficulty of obtaining salient regions, the neglect of dynamic and spatial features, and the tendency to highlight only motion features.

Detailed Description of Embodiments

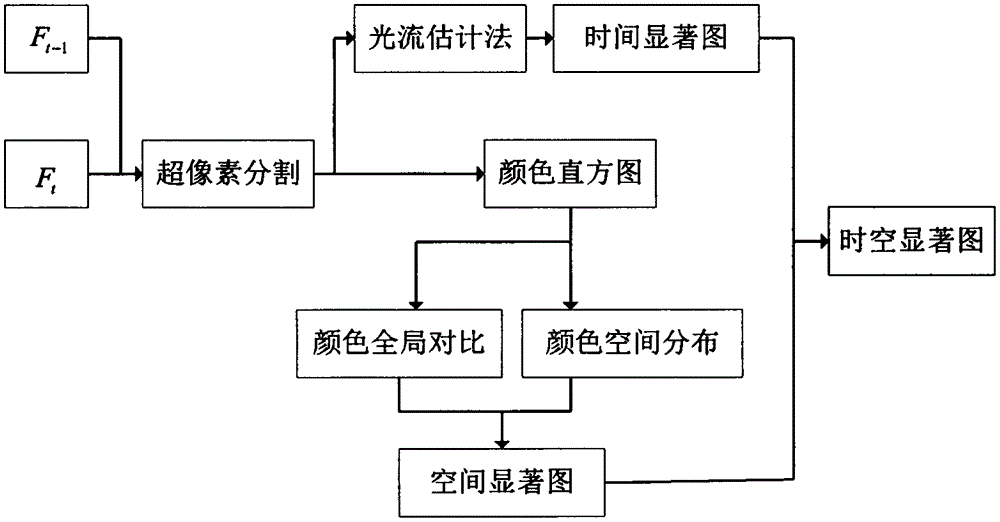

[0029] Figure 1 is the implementation flowchart of the invention. The specific steps are as follows:

[0030] (1) Image preprocessing: Each input frame is segmented by the SLIC superpixel segmentation algorithm into a set of uniform, compact superpixels, which serve as the basic processing units for saliency detection.
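Step (1) can be illustrated with a simplified SLIC sketch in NumPy. It assumes the frame is an H×W×C float array (for example, already converted to Lab), clusters pixels with the usual SLIC distance (squared colour difference plus squared spatial distance weighted by `(compactness / S)**2`, where S is the grid step), and searches only a window of about 2S around each centre. The function name `slic_superpixels` and its parameters are illustrative assumptions, and the connectivity-enforcement post-processing of full SLIC is omitted. A real pipeline would more likely call an existing implementation such as scikit-image's `slic`.

```python
import numpy as np

def slic_superpixels(image, n_segments=100, compactness=10.0, n_iter=10):
    """Simplified SLIC: returns an H x W integer label map.

    image: H x W x C float array (assumed to be in a perceptual space such as Lab).
    Connectivity enforcement from the full SLIC algorithm is omitted.
    """
    h, w, c = image.shape
    step = max(int(np.sqrt(h * w / n_segments)), 1)  # grid interval S

    # Initialise cluster centres [colour..., y, x] on a regular grid.
    centers = np.array(
        [np.concatenate([image[y, x], [y, x]])
         for y in range(step // 2, h, step)
         for x in range(step // 2, w, step)],
        dtype=float,
    )
    yy, xx = np.mgrid[0:h, 0:w]
    labels = -np.ones((h, w), dtype=int)

    for _ in range(n_iter):
        dist = np.full((h, w), np.inf)
        # Assignment: each centre competes only for pixels in a local window.
        for k, ctr in enumerate(centers):
            cy, cx = int(round(ctr[c])), int(round(ctr[c + 1]))
            y0, y1 = max(cy - 2 * step, 0), min(cy + 2 * step + 1, h)
            x0, x1 = max(cx - 2 * step, 0), min(cx + 2 * step + 1, w)
            d_color = np.sum((image[y0:y1, x0:x1] - ctr[:c]) ** 2, axis=2)
            d_space = (yy[y0:y1, x0:x1] - ctr[c]) ** 2 + (xx[y0:y1, x0:x1] - ctr[c + 1]) ** 2
            d = d_color + (compactness / step) ** 2 * d_space
            region = dist[y0:y1, x0:x1]          # view into dist
            closer = d < region
            region[closer] = d[closer]
            labels[y0:y1, x0:x1][closer] = k     # writes through the view
        # Update: move each centre to the mean colour/position of its pixels.
        for k in range(len(centers)):
            m = labels == k
            if m.any():
                centers[k, :c] = image[m].mean(axis=0)
                centers[k, c] = yy[m].mean()
                centers[k, c + 1] = xx[m].mean()
    return labels
```

Because colour difference enters the distance directly, a sharp colour edge keeps superpixels from straddling it, while `compactness` trades boundary adherence against regular shape.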

[0031] (2) Extraction of color features: For each frame, the mean Lab value of all pixels in each superpixel is computed, and these superpixel means are quantized to obtain the color histogram $CH_t$, which is normalized such that
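A minimal sketch of step (2), assuming a superpixel label map from step (1) and a frame already in Lab. The source does not state the quantization scheme or the exact normalization, so the code assumes uniform quantization of each Lab channel into `bins` levels over fixed ranges, one histogram vote per superpixel, and normalization to unit sum. These choices and the helper names `superpixel_mean_lab` and `color_histogram` are hypothetical.

```python
import numpy as np

def superpixel_mean_lab(lab_image, labels):
    """Mean Lab colour of each superpixel; row k corresponds to label k."""
    n = labels.max() + 1
    flat = labels.ravel()
    counts = np.bincount(flat, minlength=n).astype(float)
    means = np.zeros((n, lab_image.shape[2]))
    for ch in range(lab_image.shape[2]):
        sums = np.bincount(flat, weights=lab_image[..., ch].ravel(), minlength=n)
        means[:, ch] = sums / np.maximum(counts, 1)
    return means

def color_histogram(means, bins=8, lo=(0.0, -128.0, -128.0), hi=(100.0, 127.0, 127.0)):
    """Assumed scheme: uniform per-channel quantization, joint bin index,
    one vote per superpixel, histogram normalized to sum to 1.
    Returns (histogram CH_t, bin index of each superpixel)."""
    lo, hi = np.asarray(lo), np.asarray(hi)
    q = np.floor((means - lo) / (hi - lo) * bins).astype(int)
    q = np.clip(q, 0, bins - 1)                      # keep boundary values in range
    idx = (q[:, 0] * bins + q[:, 1]) * bins + q[:, 2]
    hist = np.bincount(idx, minlength=bins ** 3).astype(float)
    return hist / hist.sum(), idx
```

For a frame with one dark superpixel (L = 10) and one bright superpixel (L = 90), both with a = b = 0, the two superpixels fall into different bins and each bin receives weight 0.5.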

[0032] (3) Calculation of temporal saliency: An optical-flow motion estimation method is used to compute the motion vector field $(u_{(x,y)}, v_{(x,y)})$ of frame $F_t$ relative to the previous frame $F_{t-1}$, and the mean magnitude of the motion vectors inside each superpixel is calculated. Then, for each superpixel of the current frame, the block matching method is used to find the best-matching superpixel in the previous frame together with its related superpixel set, and formula (1)...
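The first two sub-steps of step (3) can be sketched as follows. The dense flow field `(u, v)` is taken as given (it could come from any optical-flow method, e.g. OpenCV's Farneback implementation). "Mean vector field size" is read here as the mean of per-pixel flow magnitudes, which is an assumption. Block matching uses a sum-of-absolute-differences (SAD) search around each superpixel's centroid on grayscale frames; the block size, search radius, and helper names are hypothetical. The "related superpixel set" and formula (1) are not specified in the text and are not modelled.

```python
import numpy as np

def mean_flow_magnitude(u, v, labels):
    """Mean optical-flow magnitude inside each superpixel (index = label)."""
    n = labels.max() + 1
    mag = np.hypot(u, v).ravel()
    counts = np.bincount(labels.ravel(), minlength=n)
    sums = np.bincount(labels.ravel(), weights=mag, minlength=n)
    return sums / np.maximum(counts, 1)

def block_match(prev, curr, cy, cx, half=2, radius=3):
    """Displacement (dy, dx) minimising SAD between the block of `curr`
    centred at (cy, cx) and candidate blocks of `prev`.
    The block around (cy, cx) must lie fully inside `curr`."""
    h, w = curr.shape
    block = curr[cy - half:cy + half + 1, cx - half:cx + half + 1]
    best, best_d = np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = cy + dy, cx + dx
            if y - half < 0 or x - half < 0 or y + half >= h or x + half >= w:
                continue  # candidate block would leave the previous frame
            sad = np.abs(block - prev[y - half:y + half + 1, x - half:x + half + 1]).sum()
            if sad < best:
                best, best_d = sad, (dy, dx)
    return best_d

def match_superpixel(prev, curr, prev_labels, curr_labels, k, half=2, radius=3):
    """Label of the previous-frame superpixel matched to superpixel k of the
    current frame, found by block matching at k's (clamped) centroid."""
    ys, xs = np.nonzero(curr_labels == k)
    h, w = curr.shape
    cy = min(max(int(round(ys.mean())), half), h - half - 1)
    cx = min(max(int(round(xs.mean())), half), w - half - 1)
    dy, dx = block_match(prev, curr, cy, cx, half, radius)
    return prev_labels[cy + dy, cx + dx]
```

The centroid-based block is a simplification: a superpixel is irregular, so a fuller implementation might compare the superpixel's own pixel mask rather than a square block.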
