39 results about "Accurate pose estimation" patented technology

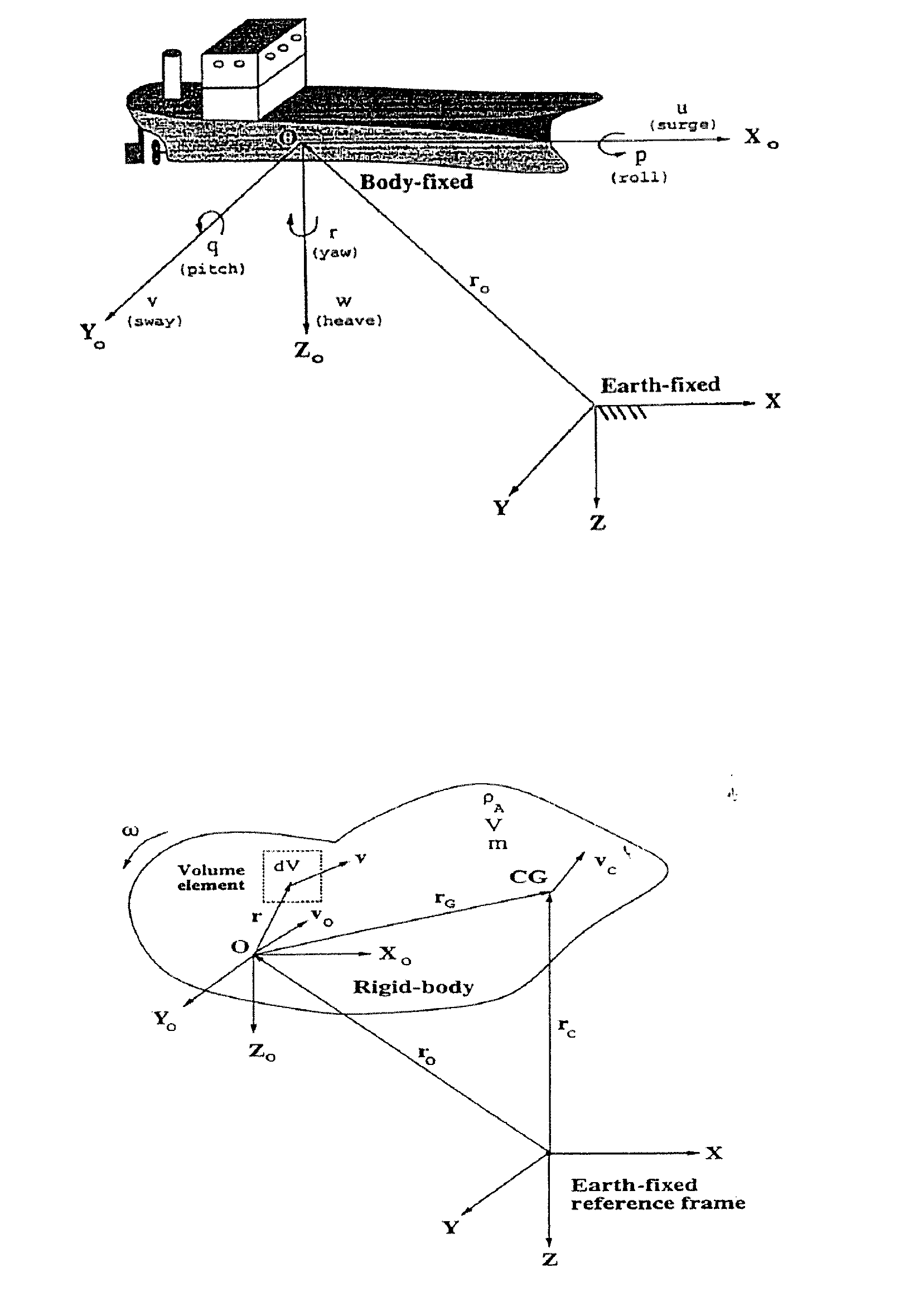

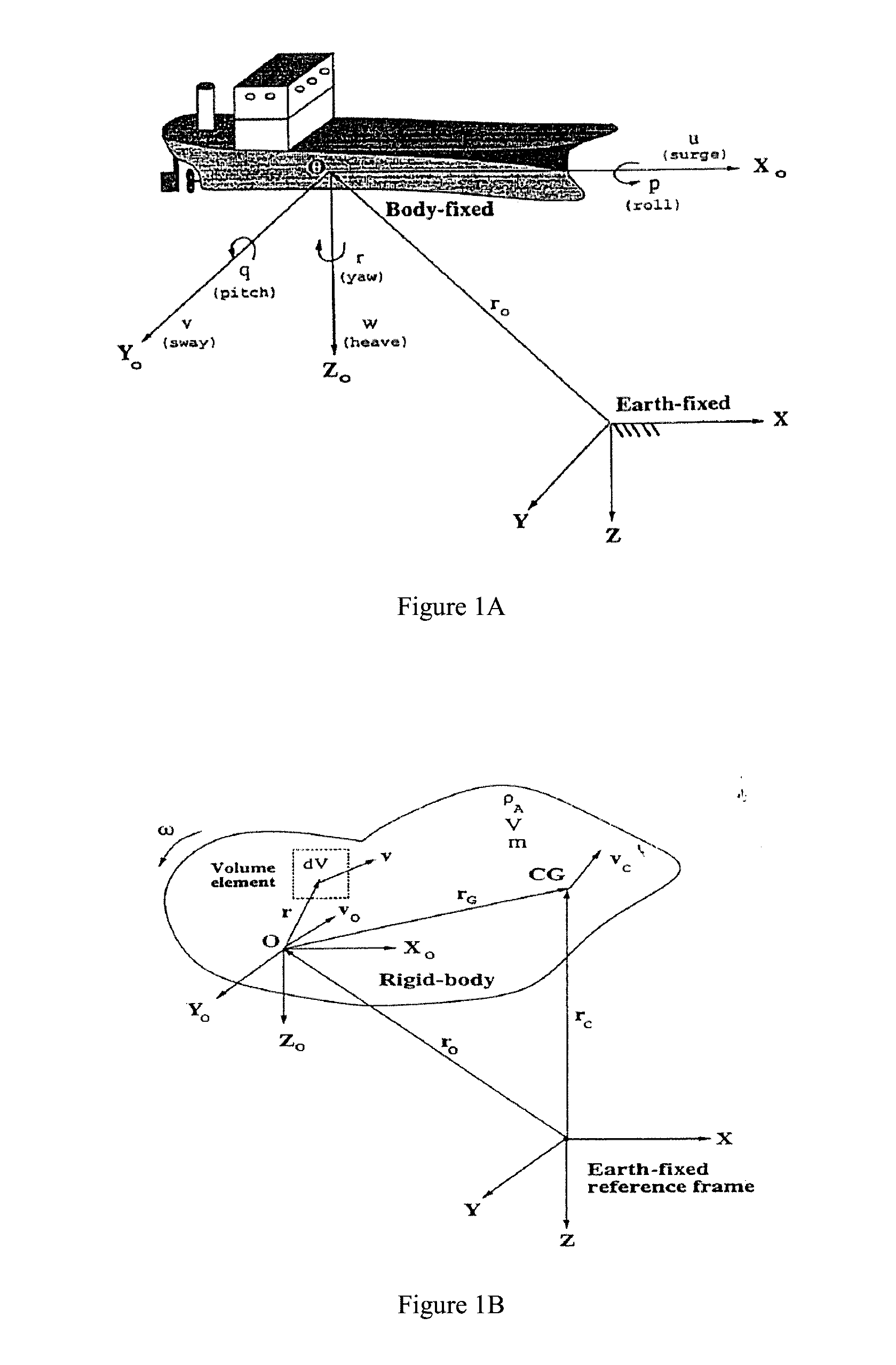

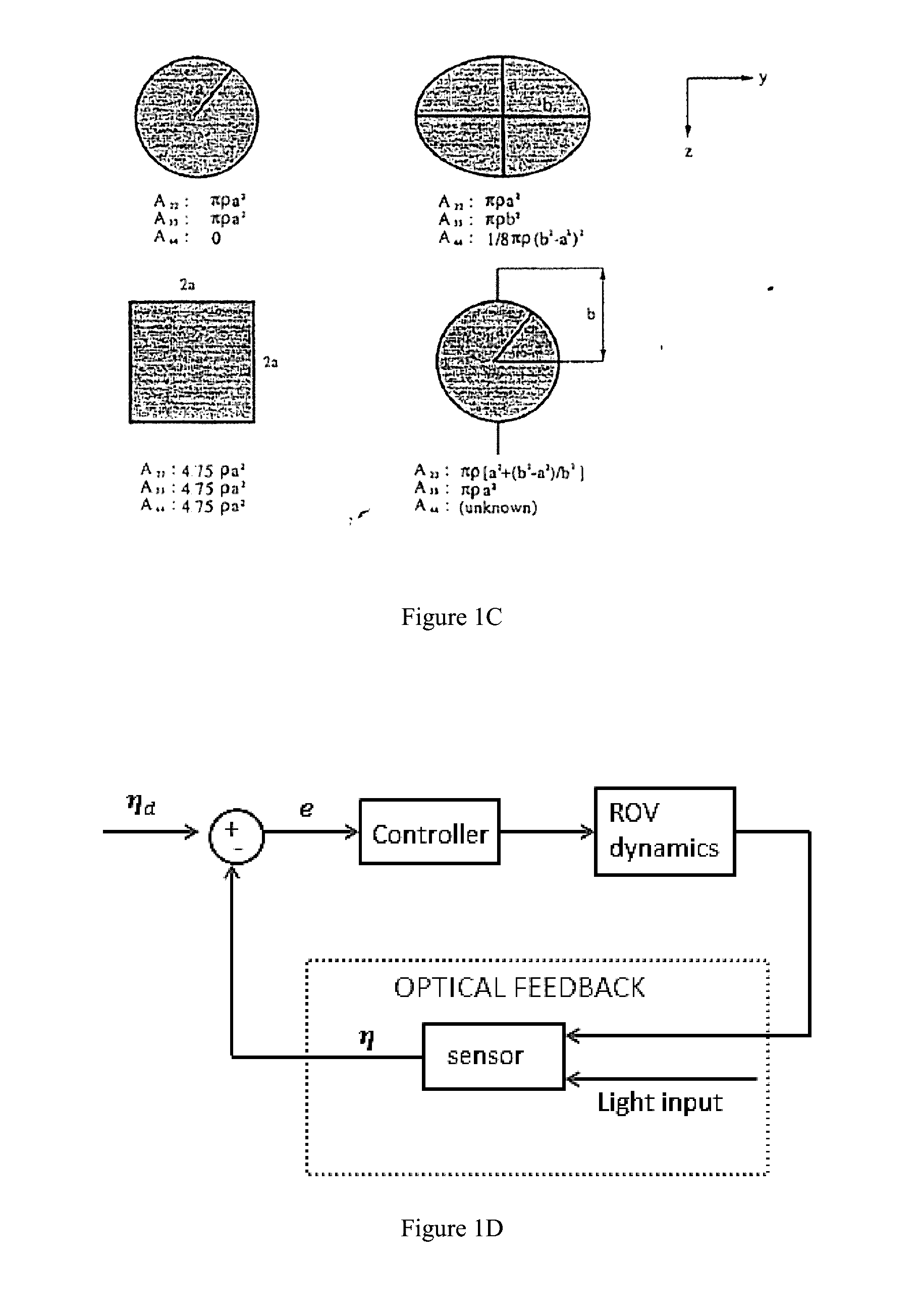

POSE DETECTION AND CONTROL OF UNMANNED UNDERWATER VEHICLES (UUVs) UTILIZING AN OPTICAL DETECTOR ARRAY

Active | US20160334793A1 | Decrease performance | Accurate pose estimation | Navigational calculation instruments | Direction/deviation determining electromagnetic systems | Optical detectors | Optical detector

Optical detectors and methods of optical detection for unmanned underwater vehicles (UUVs) are disclosed. The disclosed optical detectors and methods may be used to dynamically position UUVs in both static-dynamic systems (e.g., a fixed light source as a guiding beacon and a UUV) and dynamic-dynamic systems (e.g., a moving light source mounted on the crest of a leader UUV and a follower UUV).

Owner:UNIVERSITY OF NEW HAMPSHIRE

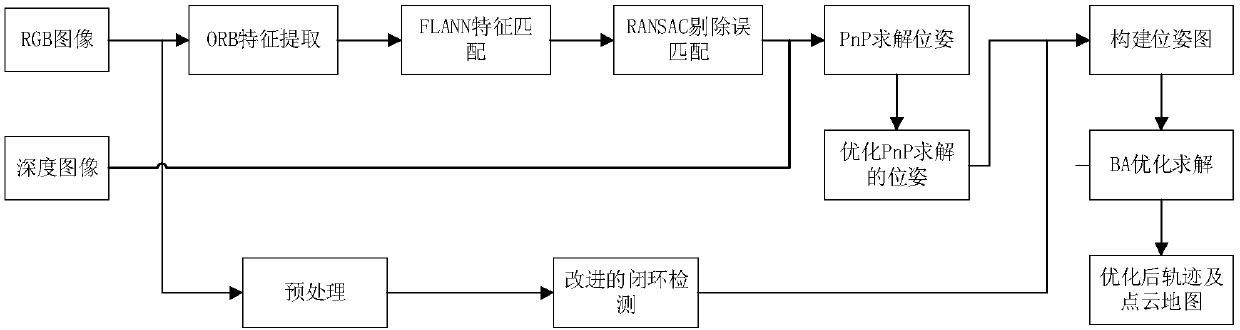

Improved closed-loop detection algorithm-based mobile robot vision SLAM (Simultaneous Localization and Mapping) method

Inactive | CN107680133A | Improve extraction speed | Improve matching speed | Image enhancement | Image analysis | Rgb image | Closed loop

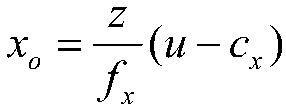

The present invention provides an improved closed-loop detection algorithm-based mobile robot vision SLAM (Simultaneous Localization and Mapping) method. The method includes the following steps: S1, the Kinect is calibrated using Zhang Zhengyou's calibration method; S2, ORB feature extraction is performed on the acquired RGB images, and feature matching is performed using FLANN (Fast Library for Approximate Nearest Neighbors); S3, mismatches are deleted, the space coordinates of the matching points are obtained, and the inter-frame pose transformation (R, t) is estimated using the PnP algorithm; S4, structureless iterative optimization is performed on the pose transformation solved by PnP; S5, the image frames are preprocessed, the images are described using a bag of visual words, and an improved similarity score matching method is used to perform image matching so as to obtain closed-loop candidates, from which correct closed loops are selected; and S6, a graph optimization method centered on bundle adjustment is used to optimize the poses and landmarks, and more accurate camera poses and landmarks are obtained through continuous iterative optimization. With the method of the invention, more accurate pose estimates and better three-dimensional reconstruction can be obtained in indoor environments.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Improved particle filter-based mobile robot positioning method

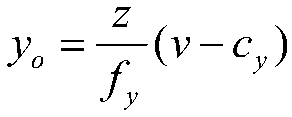

Active | CN103487047A | Accurate pose estimation | Accurate estimate | Navigational calculation instruments | Machine learning | Simulation | Particle swarm optimization

The invention provides an improved particle filter-based mobile robot positioning method. The method comprises the following steps: establishing a motion equation and a landmark calculation equation of a robot; optimizing a particle set by using a multi-agent particle swarm optimization algorithm, wherein the obtained optimal value is the estimate of the pose; estimating the environmental landmarks by using a Kalman filtering algorithm; and updating and normalizing the weights and resampling. The positioning method is accurate and easy to implement; in simulation, both the pose estimation and the environmental landmark estimation of the mobile robot are more accurate.

Owner:DEEPBLUE ROBOTICS (SHANGHAI) CO LTD
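
The last step listed in the CN103487047A abstract (update the weights, normalize, resample) can be illustrated with a minimal sketch. It is not the patented method: the multi-agent particle swarm optimization and the Kalman landmark update are omitted, and the range-only measurement model, the Gaussian likelihood, `sigma`, and systematic resampling are all assumptions chosen for illustration.

```python
import math
import random


def gaussian_likelihood(error, sigma):
    """Unnormalised Gaussian likelihood of a measurement error (assumed model)."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def update_and_resample(particles, weights, measured_range, landmark,
                        sigma=0.5, rng=None):
    """Reweight 2D position particles by a range measurement to one landmark,
    normalise the weights, then draw a new set by systematic resampling."""
    rng = rng or random.Random(0)

    # 1. Weight update: multiply each weight by the measurement likelihood.
    updated = []
    for (x, y), w in zip(particles, weights):
        predicted = math.hypot(landmark[0] - x, landmark[1] - y)
        updated.append(w * gaussian_likelihood(measured_range - predicted, sigma))

    # 2. Normalisation (fall back to uniform weights if all likelihoods vanish).
    n = len(particles)
    total = sum(updated)
    updated = [w / total for w in updated] if total > 0.0 else [1.0 / n] * n

    # 3. Systematic resampling: one random offset, then n evenly spaced targets.
    step = 1.0 / n
    offset = rng.random() * step
    resampled, i, cumulative = [], 0, updated[0]
    for k in range(n):
        target = offset + k * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += updated[i]
        resampled.append(particles[i])
    return resampled, [step] * n
```

With three particles on a line and a landmark 5 m from the middle one, the resampled set collapses onto the middle particle, which is the behaviour resampling is meant to produce.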

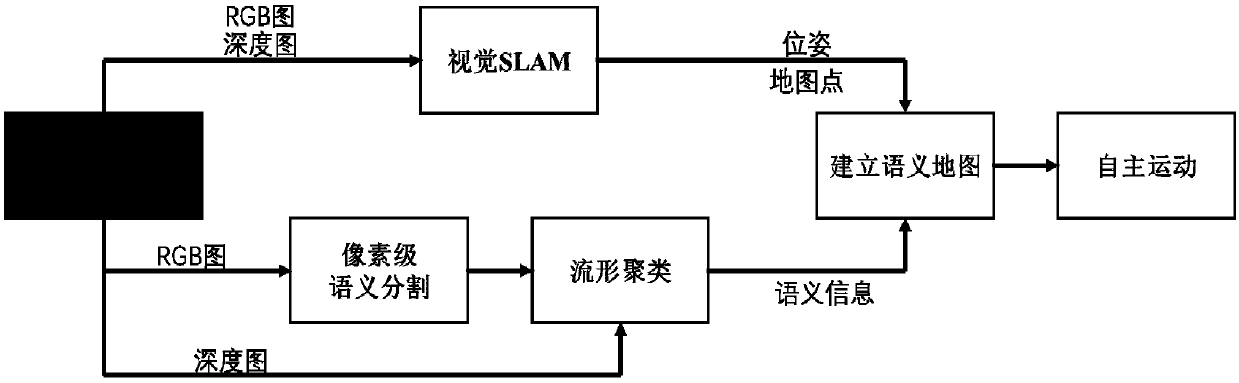

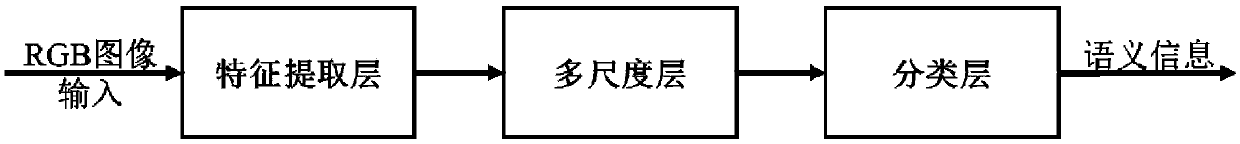

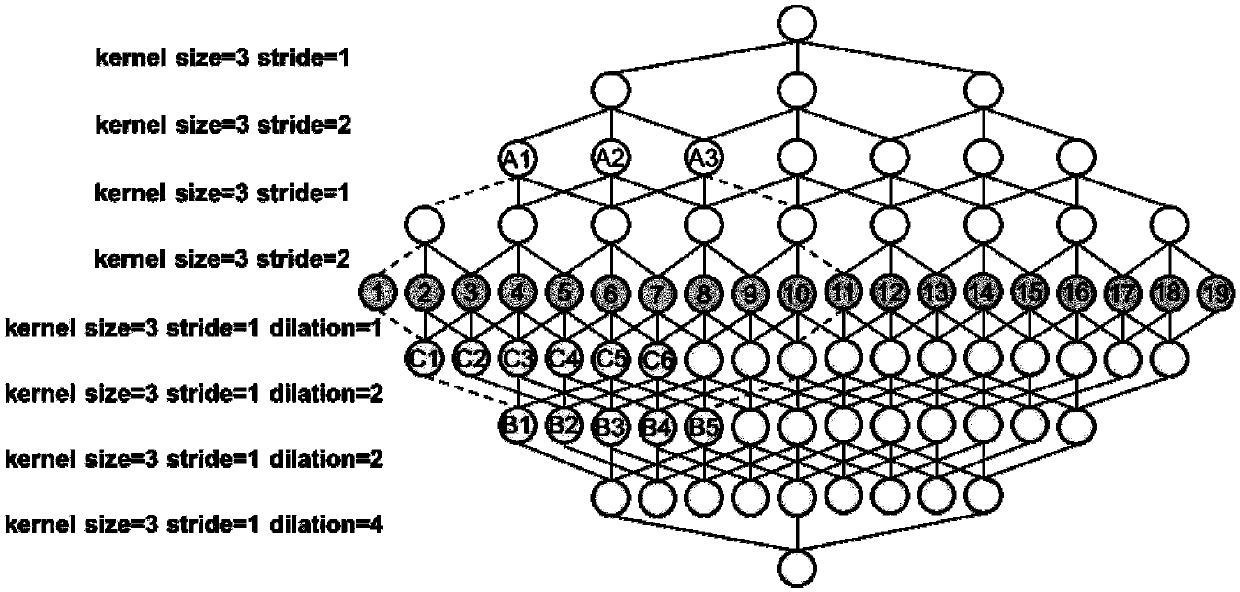

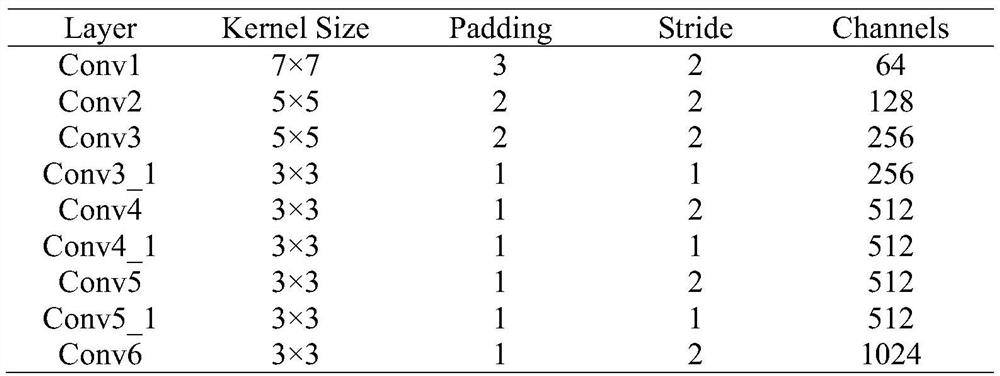

A method and system for realizing a visual SLAM semantic mapping function based on a cavity convolutional deep neural network

Active | CN109559320A | Accurate pose estimation | Eliminate accumulated errors | Image enhancement | Image analysis | Visual perception | Point match

The invention relates to a method for realizing a visual SLAM semantic mapping function based on a cavity (dilated) convolutional deep neural network. The method comprises the following steps: (1) using an embedded development processor to obtain the color information and the depth information of the current environment via an RGB-D camera; (2) obtaining feature point matching pairs from the collected images, carrying out pose estimation, and obtaining scene space point cloud data; (3) carrying out pixel-level semantic segmentation on the images by utilizing deep learning, and giving the spatial points semantic annotation information through the mapping between the image coordinate system and the world coordinate system; (4) eliminating the errors caused by optimized semantic segmentation through manifold clustering; and (5) performing semantic mapping, and splicing the spatial point clouds to obtain a point cloud semantic map composed of dense discrete points. The invention also relates to a system for realizing the visual SLAM semantic mapping function based on the cavity convolutional deep neural network. With the method and the system, the spatial network map carries higher-level semantic information and better meets the use requirements of real-time mapping.

Owner:EAST CHINA UNIV OF SCI & TECH

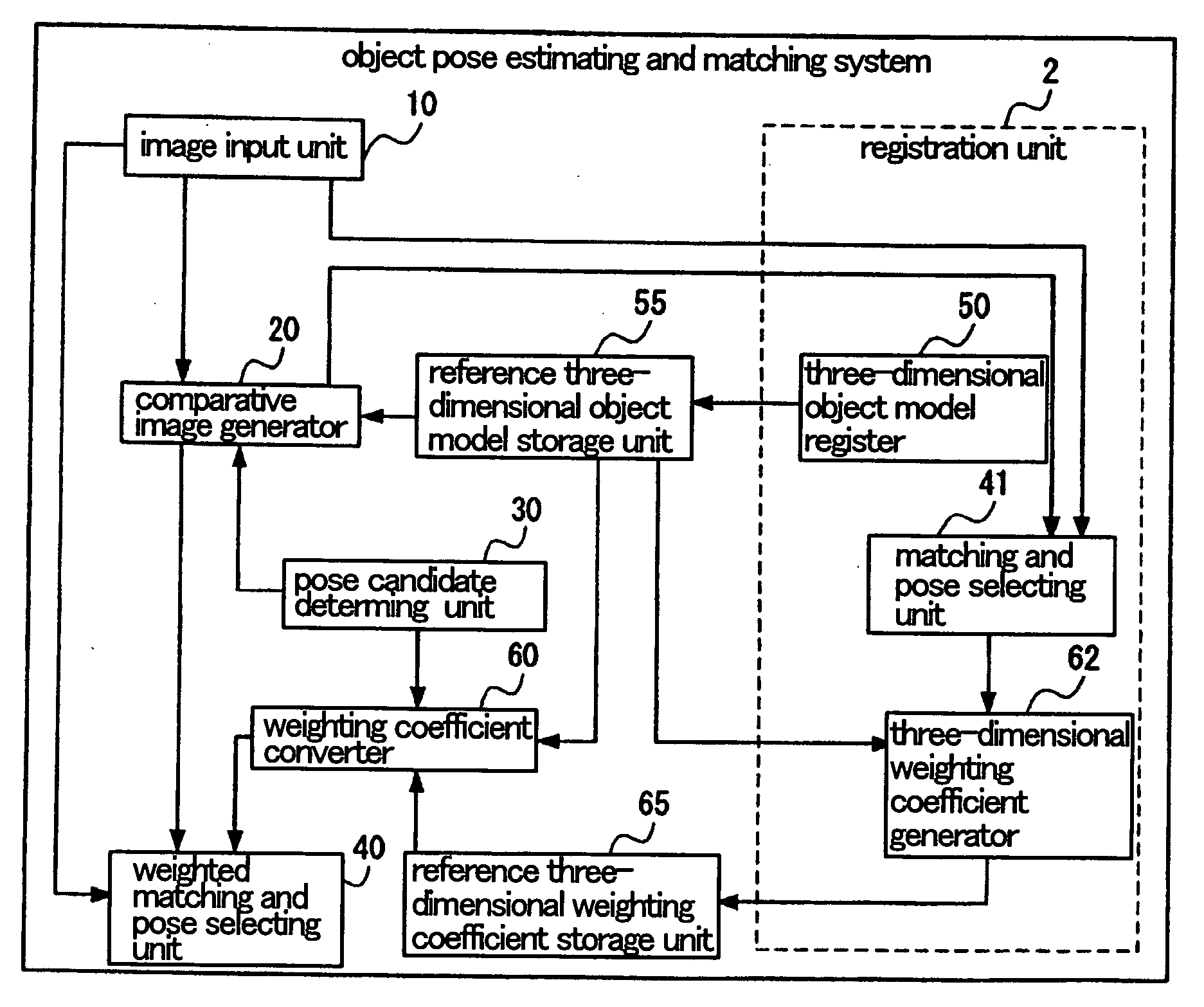

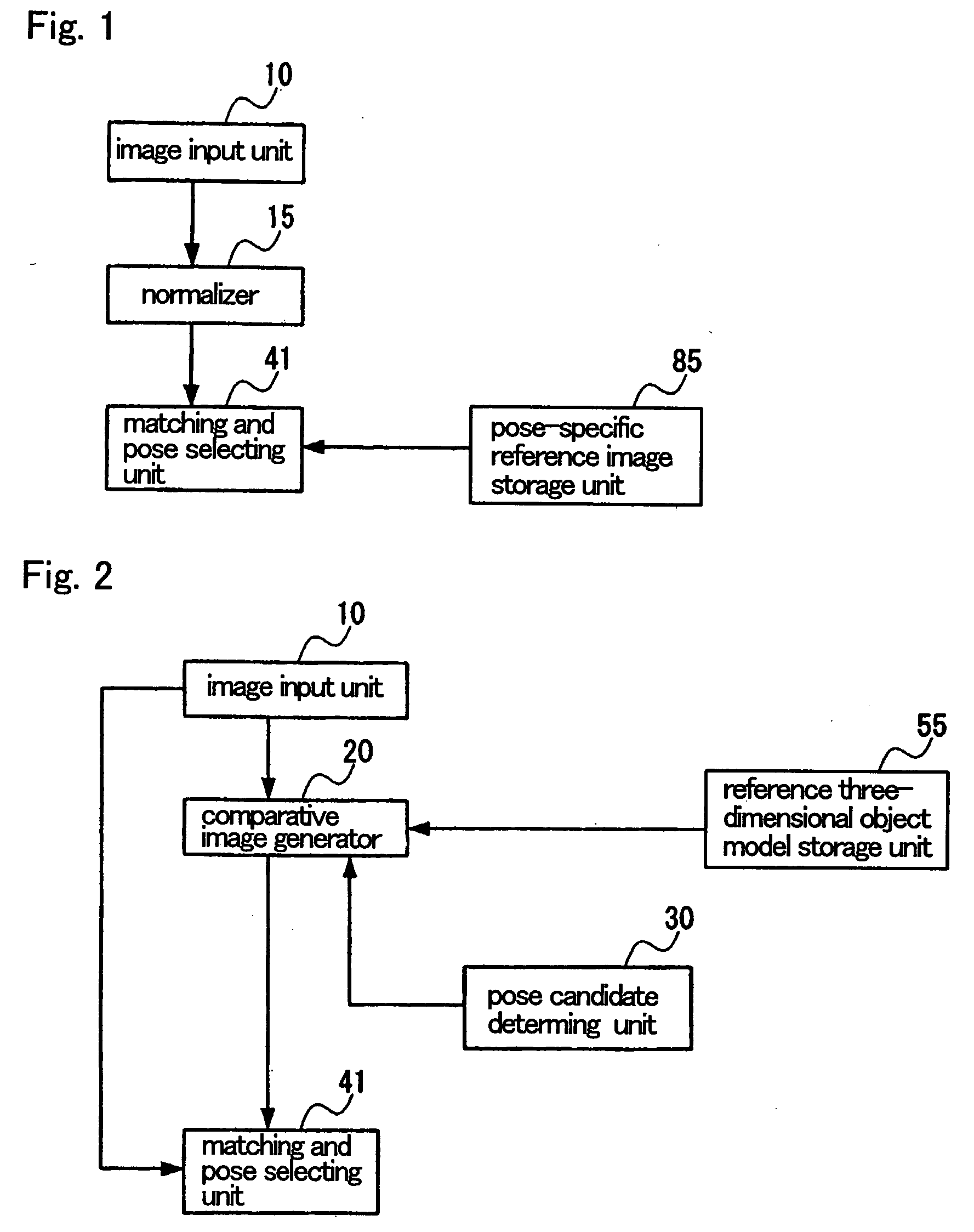

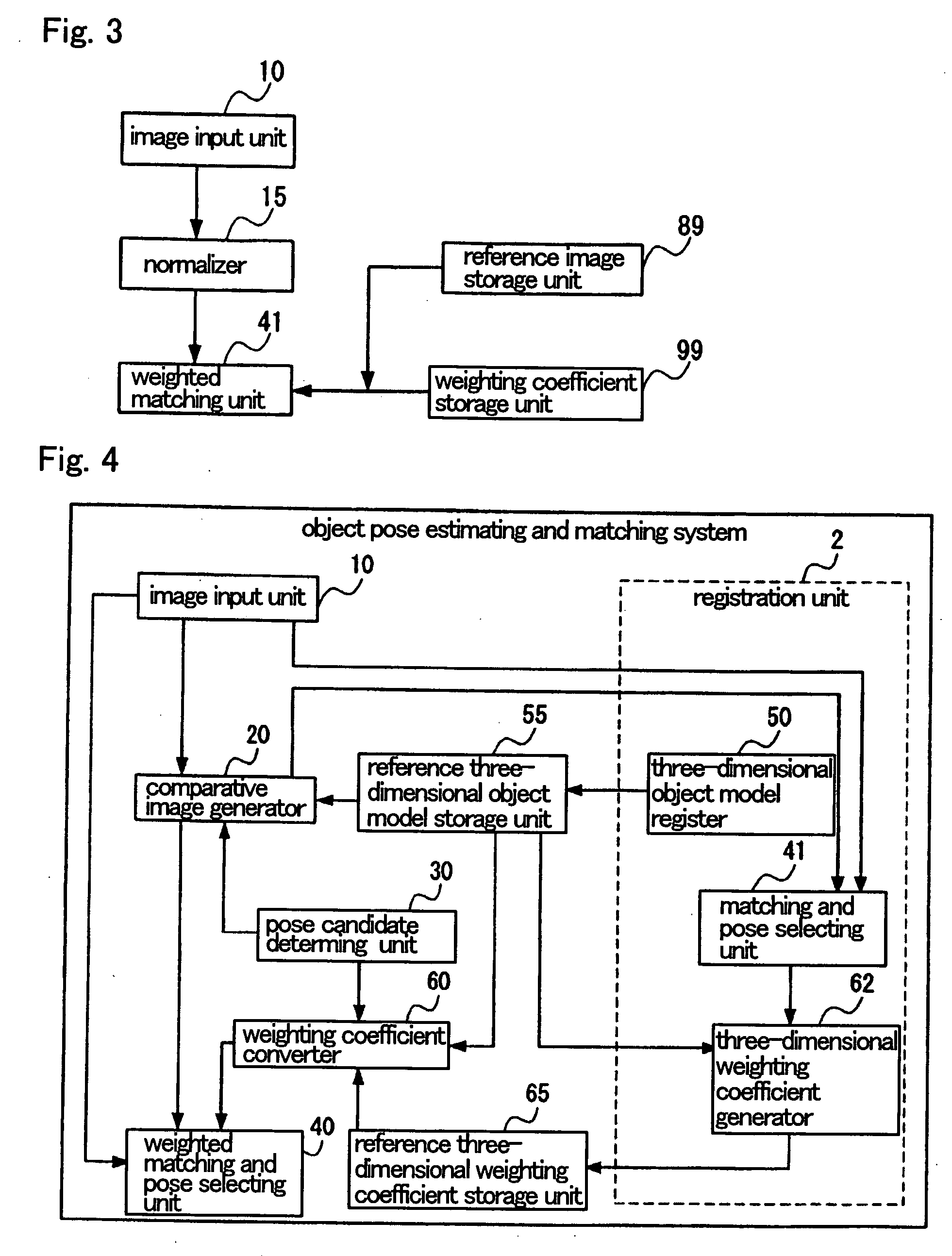

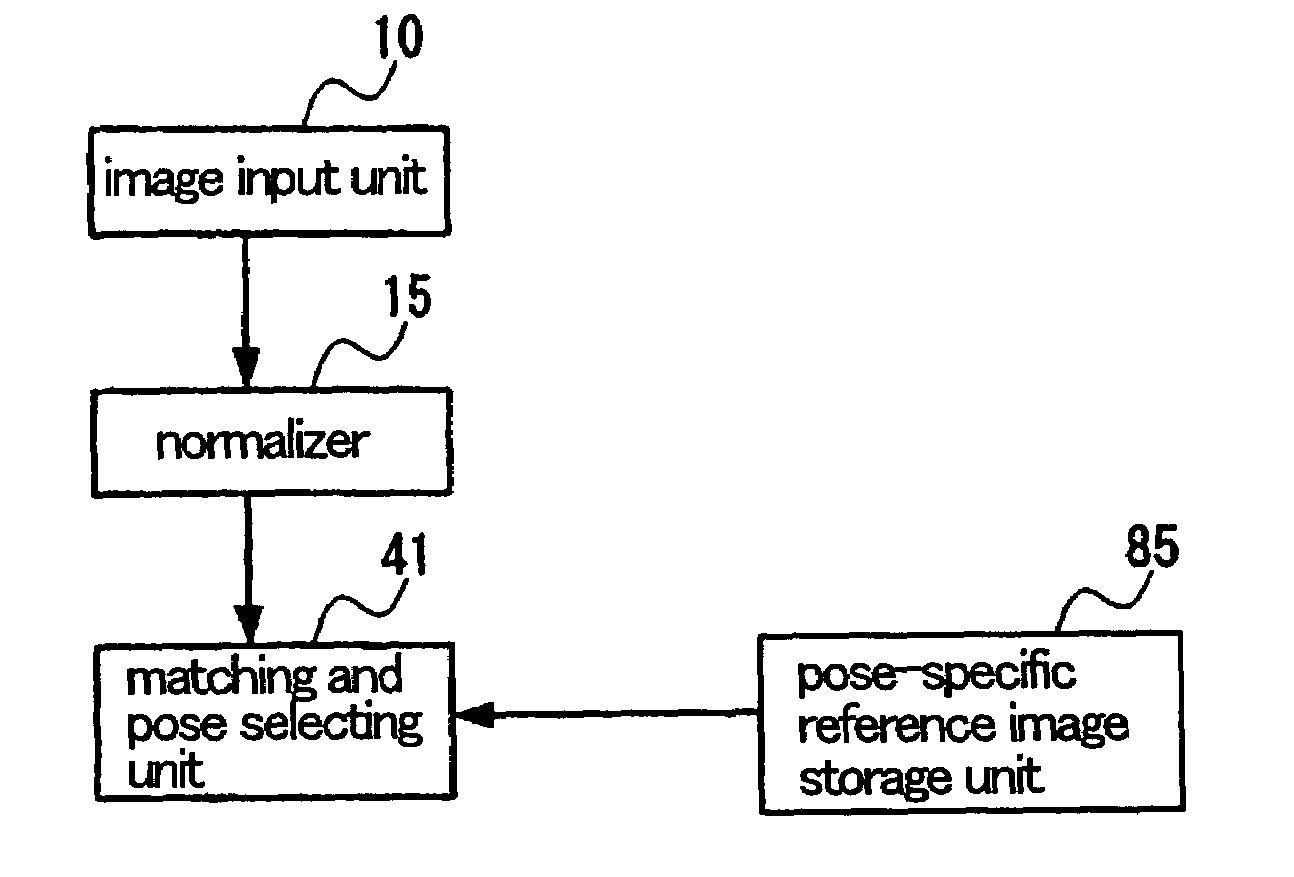

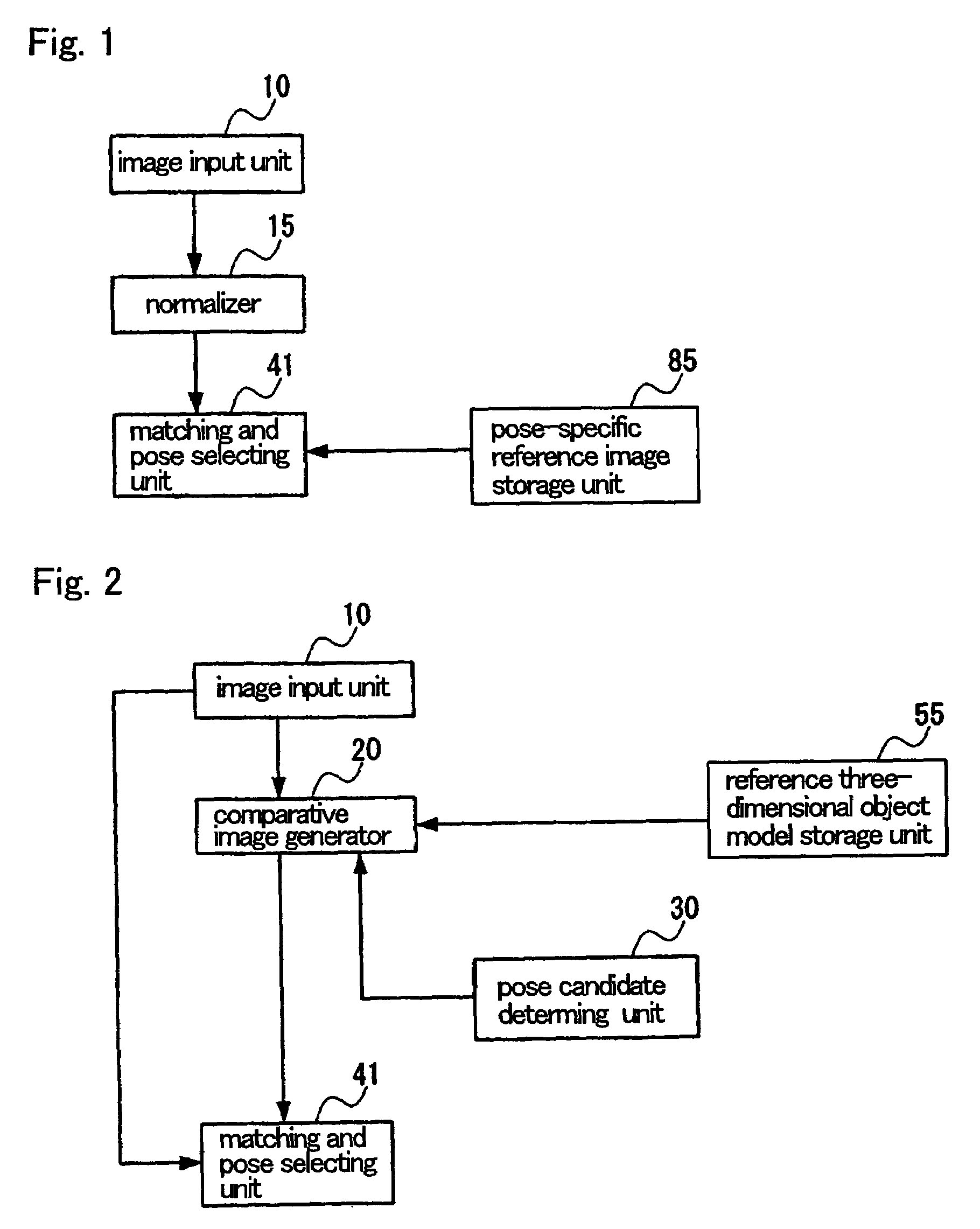

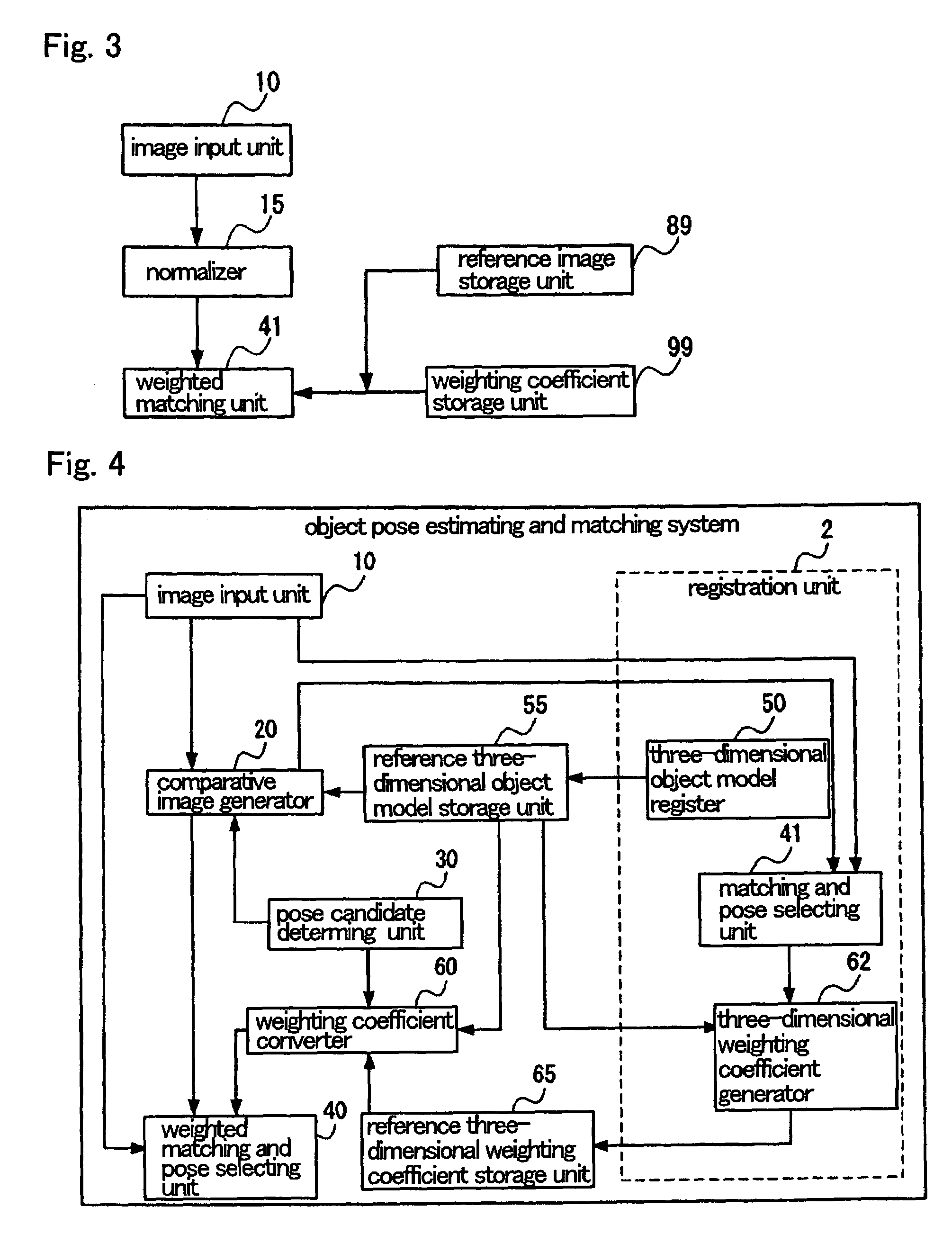

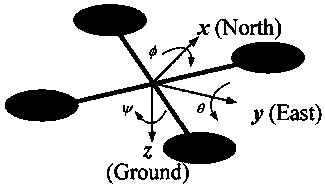

Object posture estimation/correction system using weight information

Active | US20060165293A1 | Exact match | Accurate pose estimation | Image enhancement | Image analysis | Weight coefficient | Dimensional weight

An object pose estimating and matching system is disclosed for estimating and matching the pose of an object highly accurately by establishing suitable weighting coefficients, against images of an object that has been captured under different conditions of pose and illumination. A pose candidate determining unit determines pose candidates for an object. A comparative image generating unit generates comparative images close to an input image depending on the pose candidates, based on the reference three-dimensional object models. A weighting coefficient converting unit determines a coordinate correspondence between the standard three-dimensional weighting coefficients and the reference three-dimensional object models, using the standard three-dimensional basic points and the reference three-dimensional basic points, and converts the standard three-dimensional weighting coefficients into two-dimensional weighting coefficients depending on the pose candidates. A weighted matching and pose selecting unit calculates weighted distance values or similarity degrees between the input image and the comparative images, using the two-dimensional weighting coefficients, and selects the comparative image whose distance value to the object is the smallest or whose similarity degree with respect to the object is the greatest, thereby estimating and matching the pose of the object.

Owner:NEC CORP

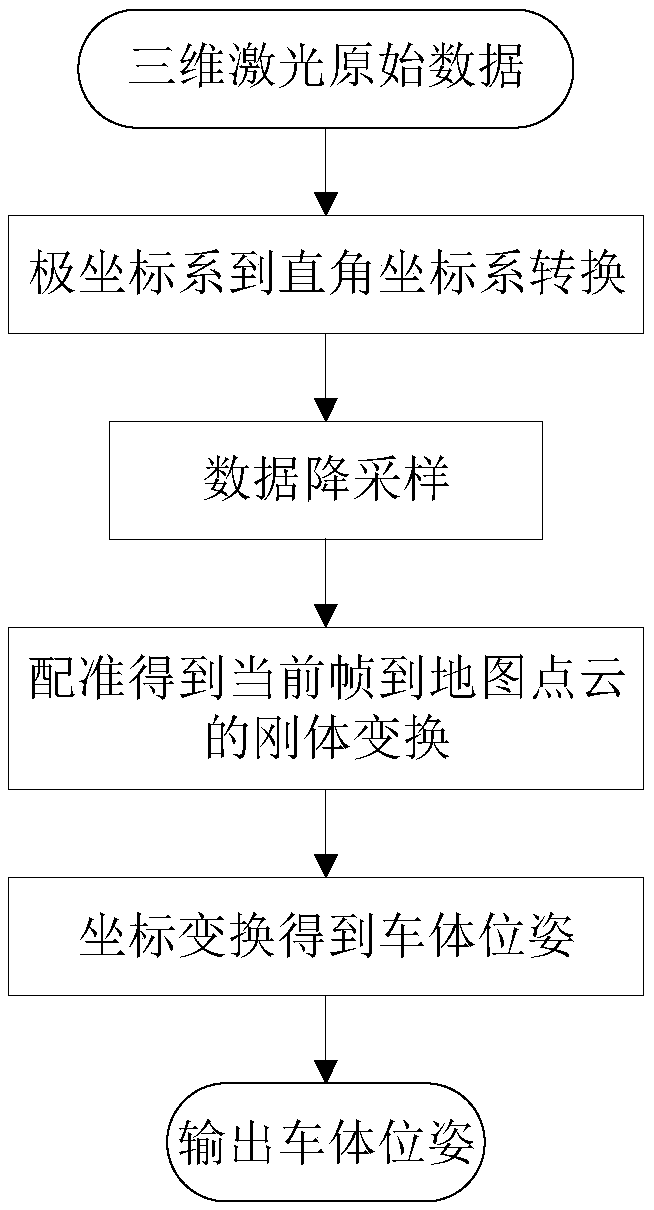

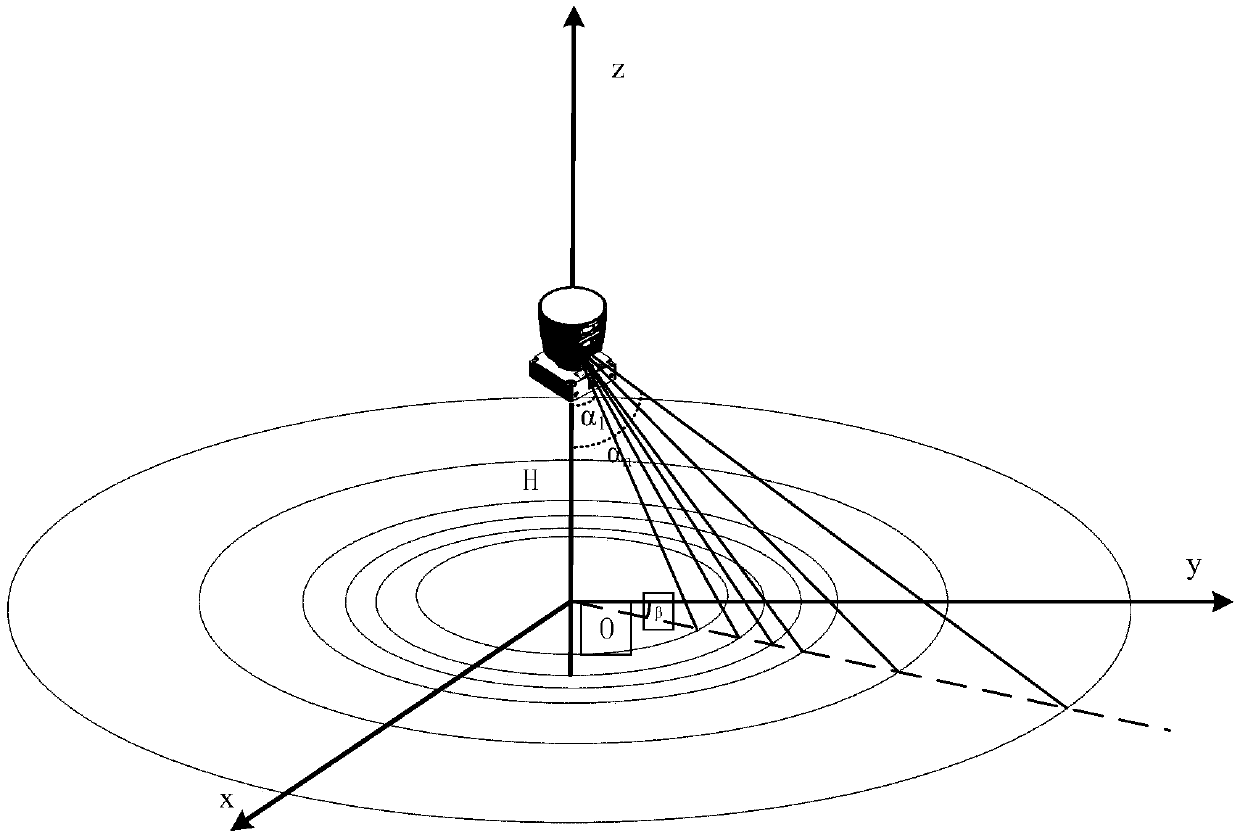

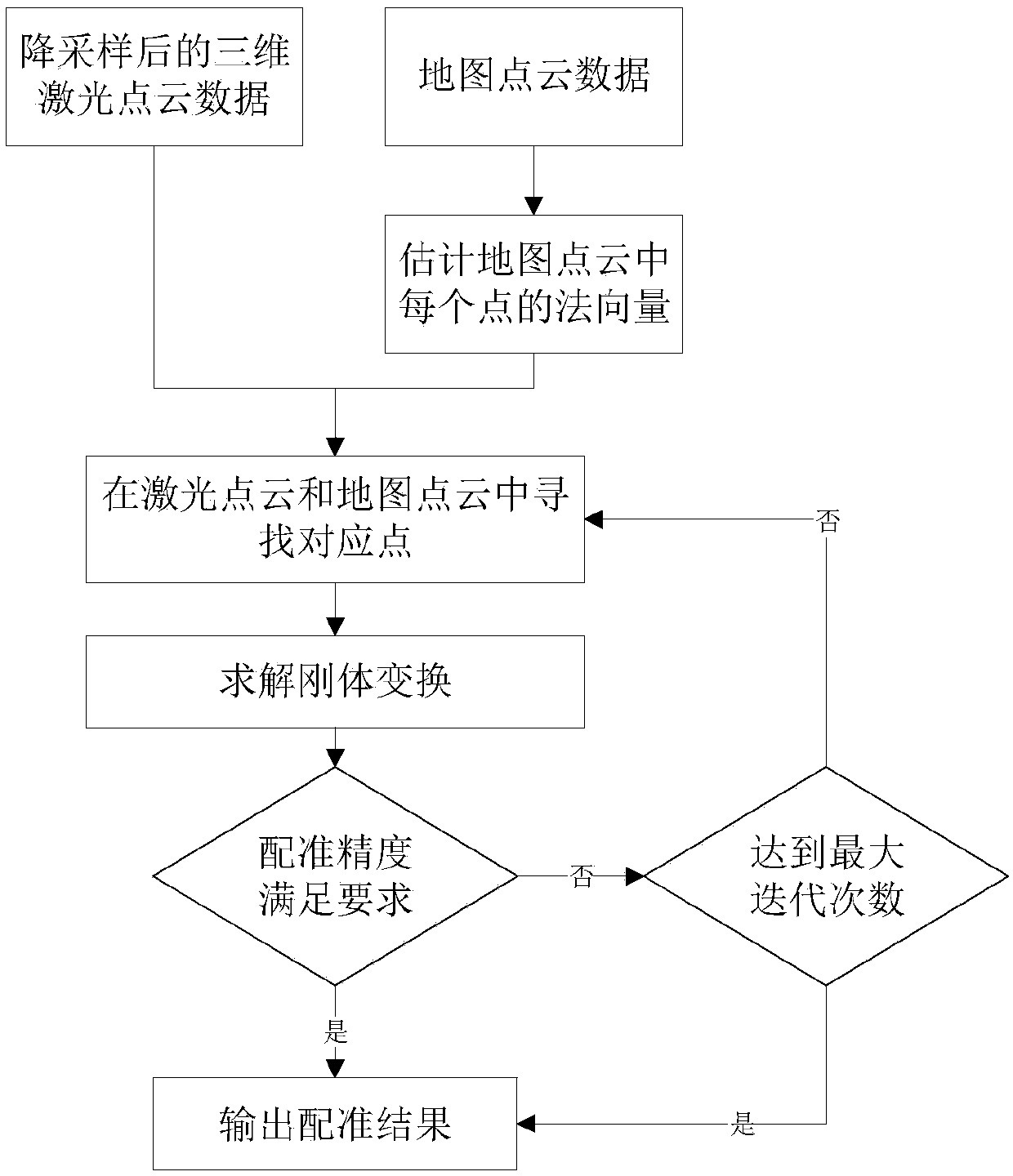

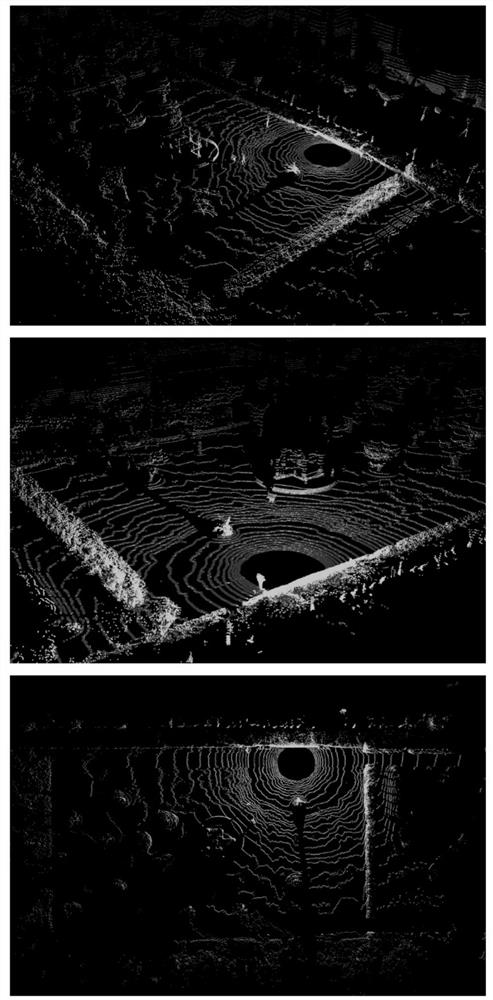

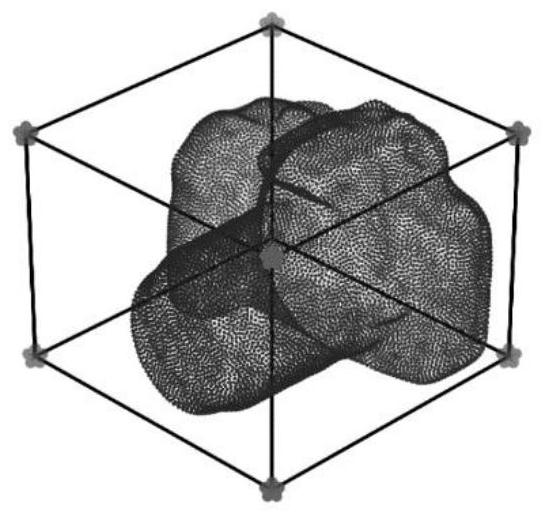

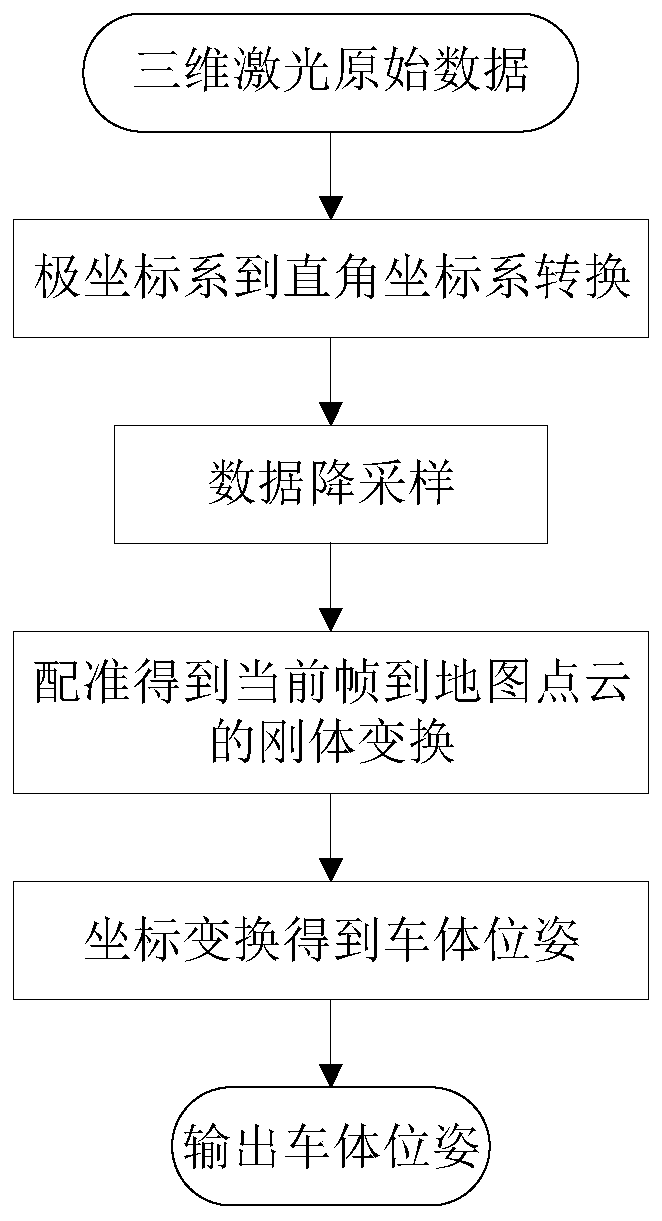

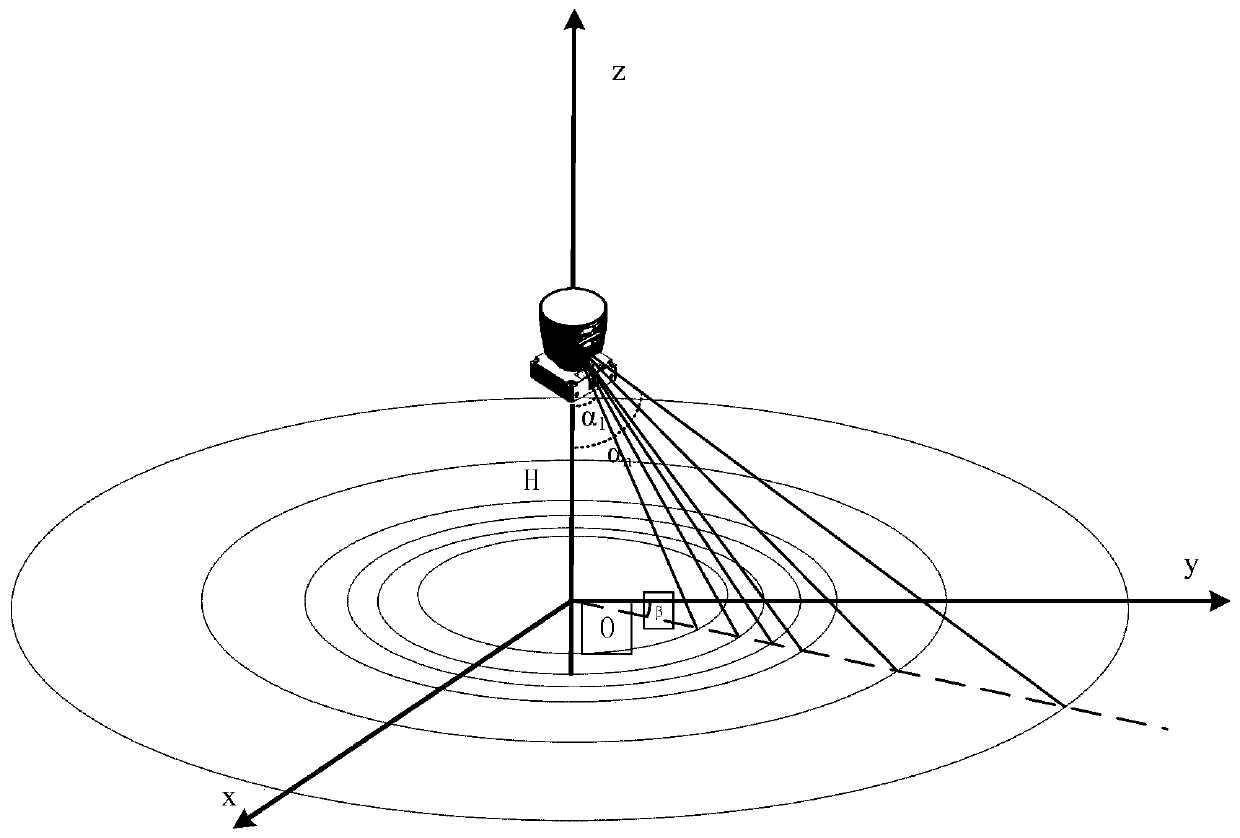

Position and posture estimation method of driverless car based on distance from point to surface and cross correlation entropy rectification

The invention discloses a position and posture estimation method of a driverless car based on a distance from a point to a surface and cross correlation entropy (correntropy) rectification, which comprises the following steps: first, a three-dimensional laser radar is calibrated, and the acquired three-dimensional laser radar data is then subjected to coordinate conversion; point cloud alignment is carried out between the acquired data and the existing map data to obtain the rotation and translation transformation of a rigid body; and the position and posture of the moving body are obtained from that rotation and translation transformation. Using the three-dimensional laser radar as the data source, the invention estimates the position and posture of the driverless car through the steps of coordinate system conversion, data downsampling, point set registration and the like. The method copes well with the influence of weather, light and other environmental factors. Moreover, the error evaluation function based on the distance from the point to the surface and the cross correlation entropy is resistant to noise and outliers, such as mismatches between the scene and the map description and dynamic obstacles, so that accurate and robust estimation of the position and posture of the driverless car can be achieved.

Owner:XI AN JIAOTONG UNIV
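
The robustness claim in the abstract above rests on combining a point-to-plane residual with a correntropy kernel. A minimal sketch of those two ingredients follows; the function names and the kernel bandwidth `sigma` are hypothetical, correspondences and surface normals are assumed to be given, and the iterative solve for rotation and translation is left out.

```python
import math


def point_to_plane_residual(point, surface_point, normal):
    """Signed distance from `point` to the plane through `surface_point`
    with unit normal `normal` (all 3-tuples)."""
    return sum((p - q) * n for p, q, n in zip(point, surface_point, normal))


def correntropy_weights(residuals, sigma):
    """Gaussian-kernel weights exp(-r^2 / (2 sigma^2)).
    Large residuals (outliers) receive weights close to zero."""
    return [math.exp(-(r * r) / (2.0 * sigma * sigma)) for r in residuals]


def weighted_cost(residuals, sigma):
    """Correntropy-weighted squared error, a robust stand-in for plain
    least squares in one registration iteration."""
    weights = correntropy_weights(residuals, sigma)
    return sum(w * r * r for w, r in zip(weights, residuals))
```

A residual of 5 m with `sigma = 0.5` gets a weight of about `exp(-50)`, so a point on a moving obstacle contributes almost nothing to the cost, whereas in ordinary least squares it would dominate.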

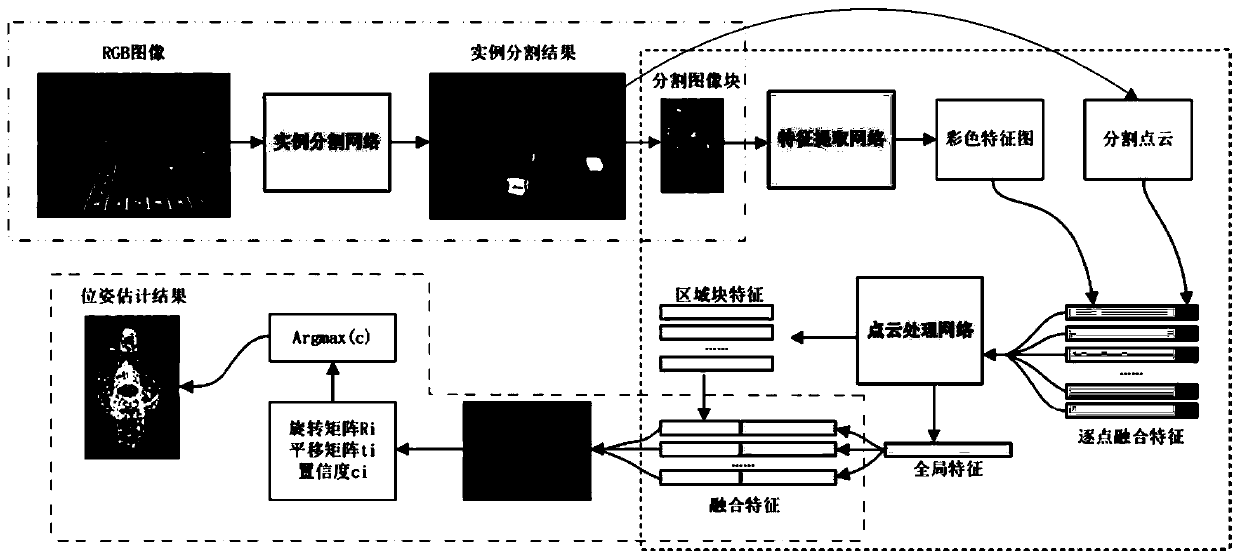

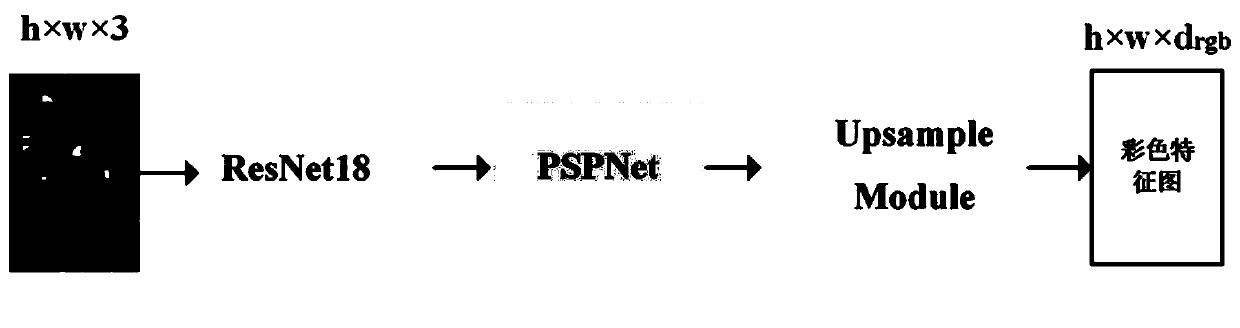

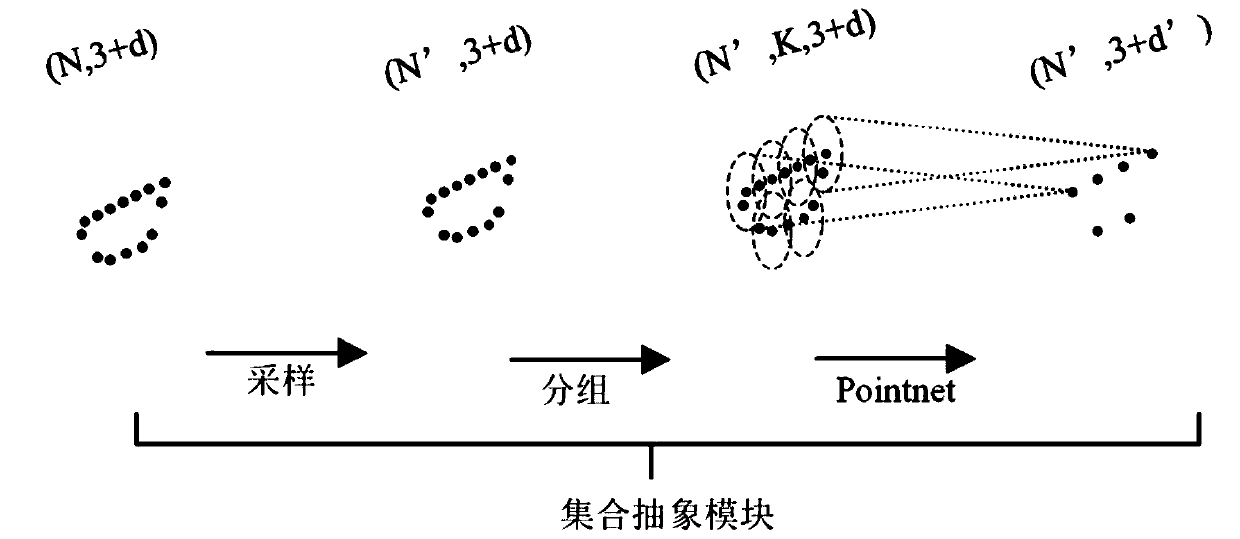

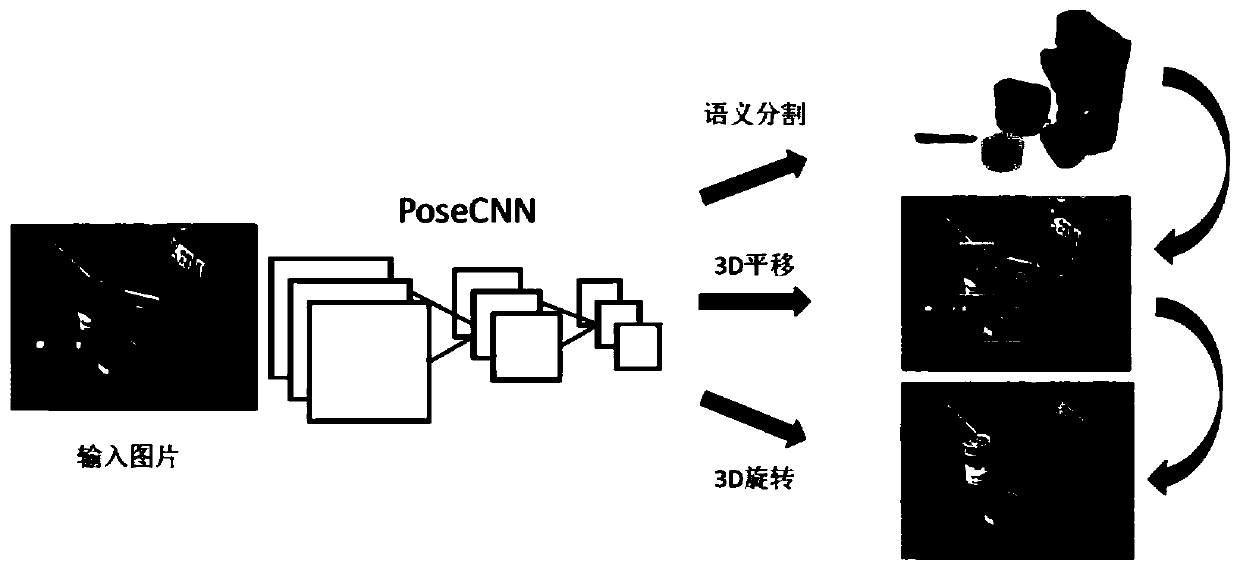

Object six-degree-of-freedom pose estimation method based on color and depth information fusion

Active | CN111179324A | Eliminate the effects of stacking | Accurate pose estimation | Image enhancement | Image analysis | Pattern recognition | Color image

The invention relates to an object six-degree-of-freedom pose estimation method based on color and depth information fusion. The object six-degree-of-freedom pose estimation method comprises the following steps of: acquiring a color image and a depth image of a target object, and carrying out instance segmentation on the color image; cutting a color image block containing a target object from the color image, and acquiring a target object point cloud from the depth image; extracting color features from the color image block, and combining the color features to the target object point cloud at the pixel level; carrying out point cloud processing on the target object point cloud to obtain a plurality of point cloud local region features fusing the color information and the depth information and a global feature, and combining the global feature into the point cloud local region features; and predicting the pose and confidence of one target object by means of each local feature, and taking the pose corresponding to the highest confidence as a final estimation result. Compared with the prior art, color information and depth information are combined, the object pose is predicted by combining the local features and the global features, and the object six-degree-of-freedom pose estimation method has the advantages of being high in robustness, high in accuracy rate and the like.

Owner:TONGJI UNIV

Object posture estimation/correlation system using weight information

Active | US7706601B2 | Accurate pose estimation | Image enhancement | Image analysis | Weight coefficient | Dimensional weight

An object pose estimating and matching system is disclosed for estimating and matching the pose of an object highly accurately by establishing suitable weighting coefficients, against images of an object that has been captured under different conditions of pose and illumination. A pose candidate determining unit determines pose candidates for an object. A comparative image generating unit generates comparative images close to an input image depending on the pose candidates, based on the reference three-dimensional object models. A weighting coefficient converting unit determines a coordinate correspondence between the standard three-dimensional weighting coefficients and the reference three-dimensional object models, using the standard three-dimensional basic points and the reference three-dimensional basic points, and converts the standard three-dimensional weighting coefficients into two-dimensional weighting coefficients depending on the pose candidates. A weighted matching and pose selecting unit calculates weighted distance values or similarity degrees between the input image and the comparative images, using the two-dimensional weighting coefficients, and selects the comparative image whose distance value to the object is the smallest or whose similarity degree with respect to the object is the greatest, thereby estimating and matching the pose of the object.

Owner:NEC CORP

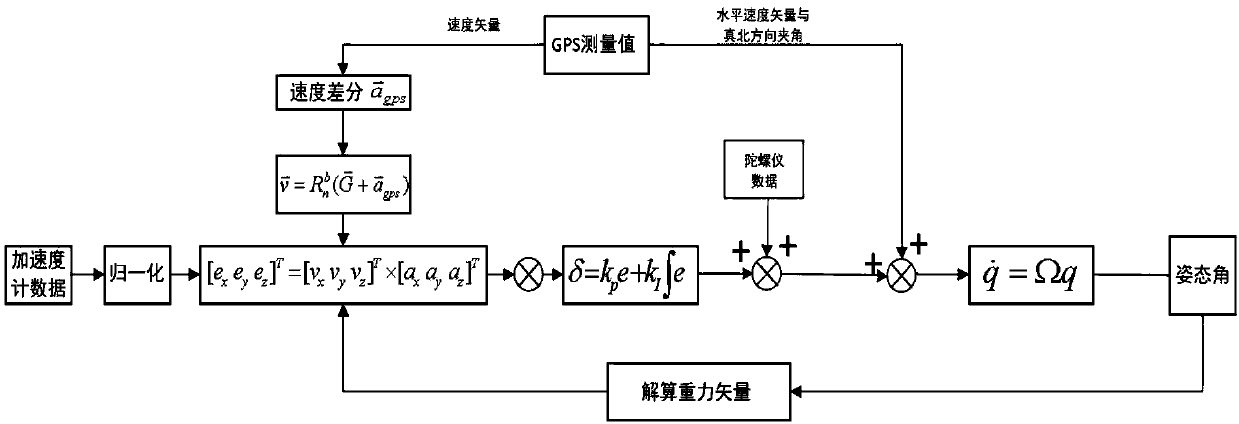

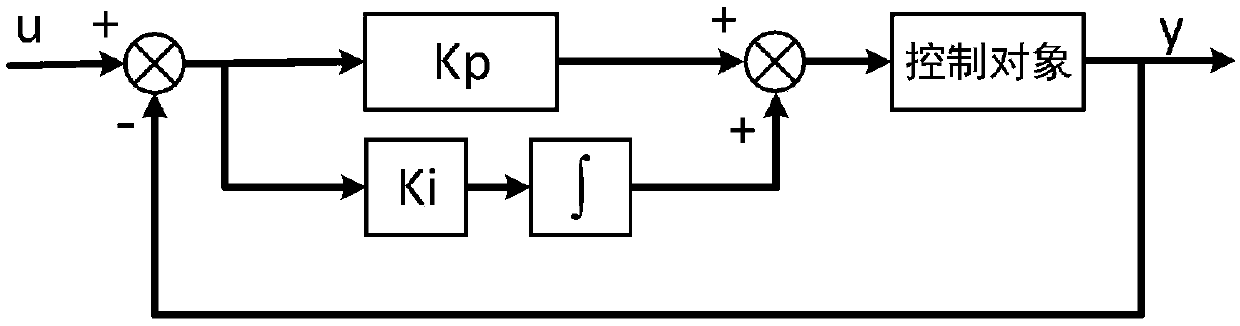

UAV (Unmanned Aerial Vehicle) attitude estimation method based on single antenna GPS and IMU under large maneuvering conditions

Pending | CN110793515A | Accurate Pose Estimation | Make up for the disadvantage | Navigational calculation instruments | Navigation by speed/acceleration measurements | Complementary filter | Uncrewed vehicle

The invention provides an attitude estimation algorithm based on a low-cost MEMS sensor and a single antenna GPS and applied to a UAV (Unmanned Aerial Vehicle) under large maneuvering conditions. The algorithm differentiates the speed values measured by GPS, compensates the linear acceleration measured by the IMU based on the obtained results, corrects the drift of the gyroscope based on the corrected acceleration information by using a complementary filtering fusion algorithm to obtain an accurate estimate of the current attitude angle, uses GPS information in real time to correct the yaw angle during the flight of the UAV using BTT control, and outputs the optimal attitude information that is fused and corrected.

Owner:HARBIN INST OF TECH
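
The core idea of the CN110793515A abstract (differentiate GPS velocity, remove the resulting linear acceleration from the accelerometer reading, then blend with the gyroscope) can be sketched for a single pitch axis. This is an illustration under stated assumptions, not the patented algorithm: the GPS-derived acceleration is assumed to be already expressed in the body frame, the accelerometer is assumed to read `(0, 0, +9.81)` when level and at rest, and the blend factor `alpha` is hypothetical.

```python
import math


def gps_acceleration(v_prev, v_curr, dt):
    """Finite-difference linear acceleration from two GPS velocity samples."""
    return tuple((c - p) / dt for p, c in zip(v_prev, v_curr))


def complementary_pitch(pitch_prev, gyro_rate, accel_body, accel_linear,
                        dt, alpha=0.98):
    """One complementary-filter update of the pitch angle (radians).

    accel_body:   raw accelerometer reading (m/s^2)
    accel_linear: vehicle acceleration from GPS, same frame (assumption)
    """
    # Subtract the manoeuvre acceleration so only the gravity reaction remains.
    gx, gy, gz = (a - l for a, l in zip(accel_body, accel_linear))
    pitch_from_accel = math.atan2(gx, math.hypot(gy, gz))

    # Short-term: integrate the gyro. Long-term: pull towards the accelerometer.
    pitch_from_gyro = pitch_prev + gyro_rate * dt
    return alpha * pitch_from_gyro + (1.0 - alpha) * pitch_from_accel
```

For a level vehicle accelerating forward at 2 m/s², the uncompensated accelerometer alone would report a false nose-up pitch of roughly `atan2(2, 9.81)`; with the GPS term subtracted, the accelerometer-derived pitch is zero again.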

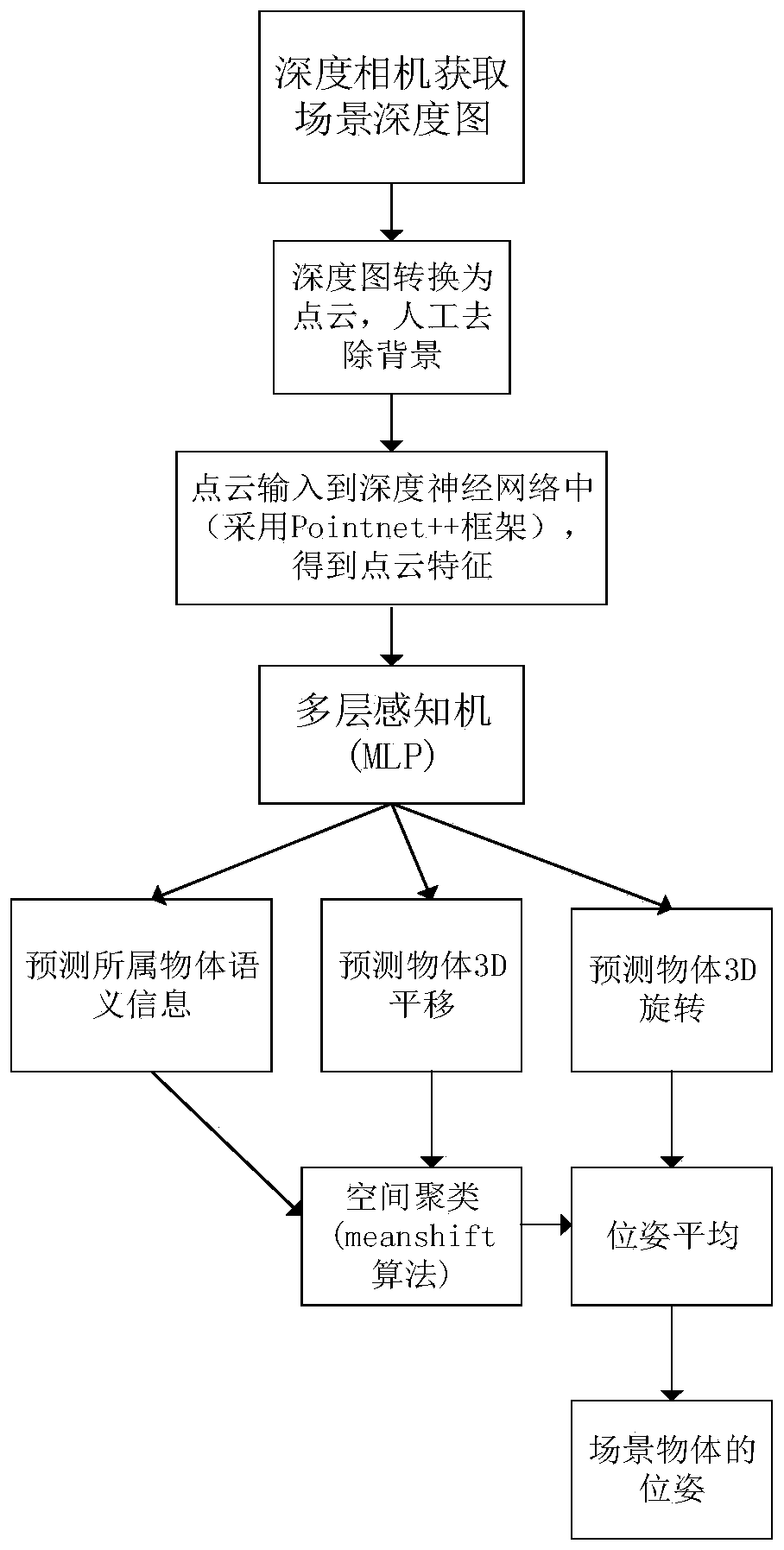

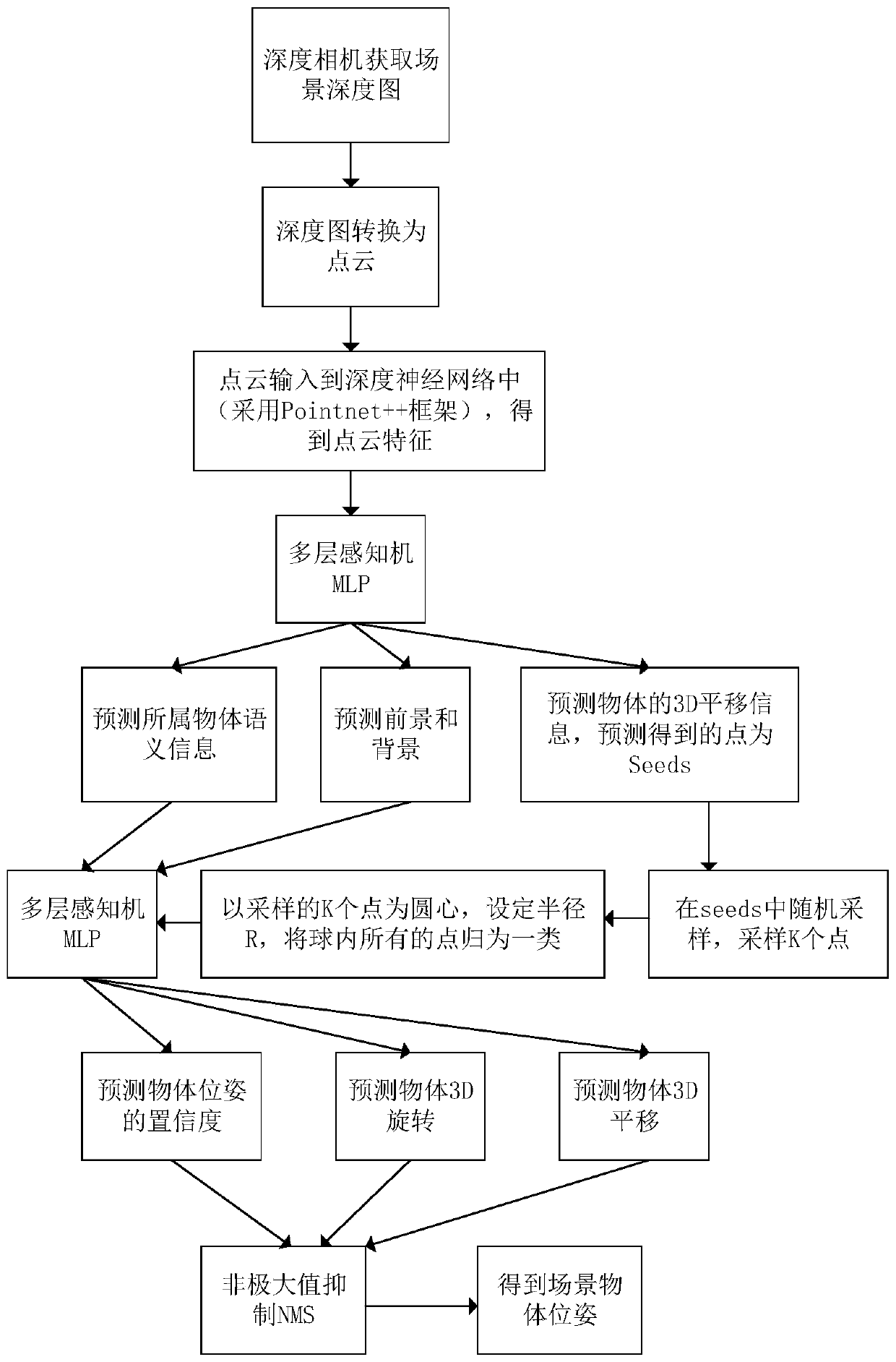

Stacked object 6D pose estimation method and device based on deep learning

Active | CN111259934A | Dealing with occlusion | Small scale | Image analysis | Character and pattern recognition | Pattern recognition | Point cloud

The invention discloses a stacked object 6D pose estimation method and device based on deep learning. The method comprises the steps of: inputting a point cloud of scene depth information into a point cloud deep learning network, and extracting the features of the point cloud; learning, through a multilayer perceptron, the semantic information of the object to which each point cloud feature belongs, the foreground and background of the scene, and the 3D translation information of the object to which each point cloud feature belongs, and performing regression to obtain seed points; randomly sampling K points among the seed points, K being greater than the number of objects to be estimated, and classifying the seed points by taking the K points as central points; predicting the 3D translation, 3D rotation and 6D pose confidence of each object from the features of each class of points through a multilayer perceptron; and, according to the predicted 6D poses and 6D pose confidences, using non-maximum suppression (NMS) to obtain the final scene object poses. The method estimates the poses in a stacked scene end to end: the input is the scene point cloud, the pose of each object in the scene is output directly, and the occlusion problem of stacked objects is handled well.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
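
The final NMS step in the CN111259934A abstract can be sketched as follows. The abstract does not say which overlap criterion is used, so this sketch assumes that two hypotheses describe the same object when their predicted translations lie within a hypothetical `radius`; rotation is ignored.

```python
import math


def pose_nms(hypotheses, radius):
    """Non-maximum suppression over pose hypotheses.

    hypotheses: list of (confidence, (x, y, z)) tuples
    radius:     hypotheses closer than this to an already kept one are dropped
    Returns the kept hypotheses, highest confidence first.
    """
    kept = []
    for conf, center in sorted(hypotheses, key=lambda h: h[0], reverse=True):
        # Keep the hypothesis only if no stronger one sits within `radius`.
        if all(math.dist(center, k[1]) > radius for k in kept):
            kept.append((conf, center))
    return kept
```

Because K is chosen larger than the number of objects, several clusters usually land on the same object; NMS is what reduces them to one pose per object.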

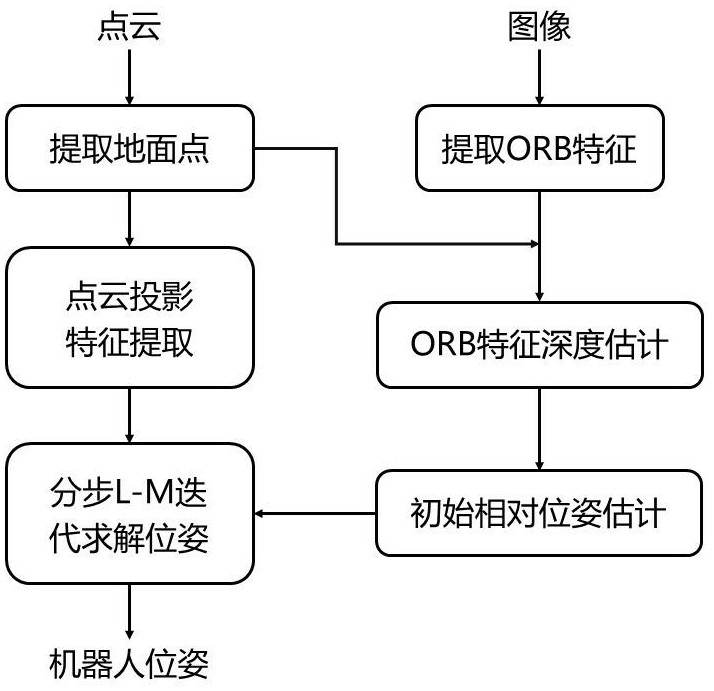

Vision and laser radar fused outdoor mobile robot pose estimation method

Active | CN112396656A | Enough constraints | Accurate pose estimation | Image analysis | Internal combustion piston engines | Pattern recognition | Visual perception

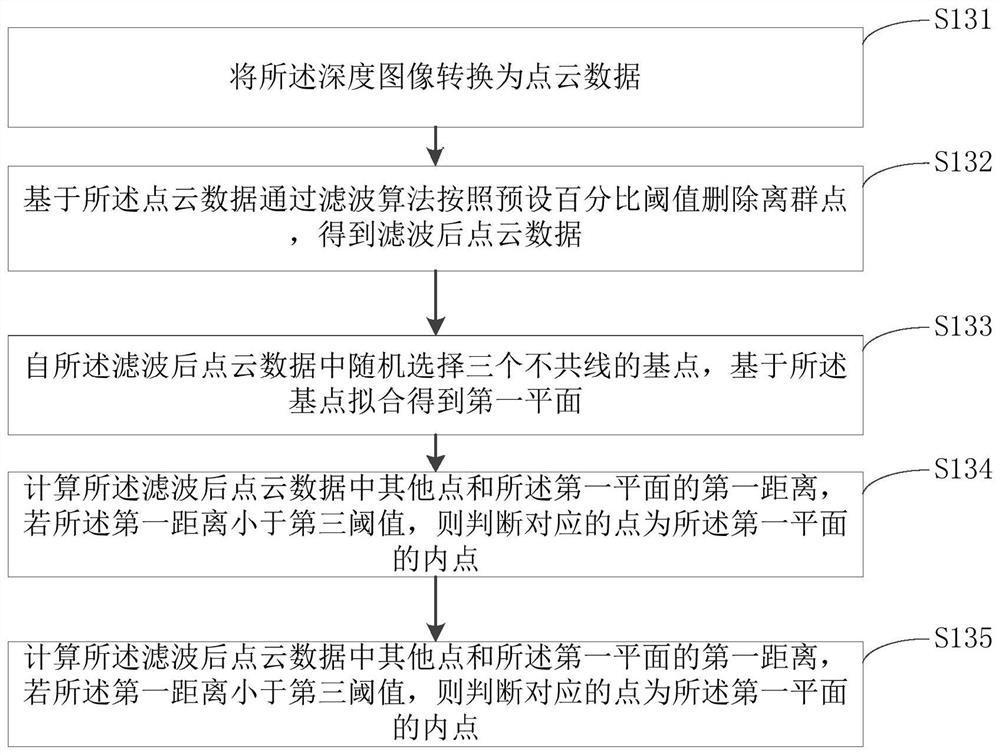

The invention relates to a vision and laser radar fused outdoor mobile robot pose estimation method. The method comprises the following steps: S1, obtaining point cloud data and visual image data; S2, adopting an iterative fitting algorithm to accurately estimate the ground model and extract ground points; S3, extracting ORB feature points from the lower half of the visual image, and estimating the depth of the visual feature points from the ground points; S4, obtaining a depth image formed from the depth information of the point cloud; S5, extracting edge features, plane features and ground features; S6, matching the visual features by using the Hamming distance and a RANSAC algorithm, and preliminarily calculating the relative pose of the mobile robot by using an iterative closest point method; and S7, obtaining the final pose of the robot from the relative pose obtained by vision, the point-surface and normal vector constraints provided by the ground point cloud, and the point-line and point-surface constraints provided by the non-ground point cloud. The invention achieves more precise and more robust pose estimation for a mobile robot in an outdoor environment.

Owner:FUZHOU UNIV
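
Step S6 of the abstract above matches binary ORB descriptors by Hamming distance. A minimal brute-force sketch, assuming descriptors are given as `bytes` objects and using a hypothetical `max_distance` threshold; the RANSAC outlier rejection that follows in the abstract is not shown.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match_descriptors(query, train, max_distance=64):
    """For each query descriptor, find the nearest train descriptor.

    Returns (query_index, train_index, distance) triples for matches whose
    distance does not exceed `max_distance`.
    """
    if not train:
        return []
    matches = []
    for qi, q in enumerate(query):
        distances = [hamming(q, t) for t in train]
        ti = min(range(len(train)), key=distances.__getitem__)
        if distances[ti] <= max_distance:
            matches.append((qi, ti, distances[ti]))
    return matches
```

Real ORB descriptors are 32 bytes long; the same functions work unchanged on them because the distance is accumulated byte by byte.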

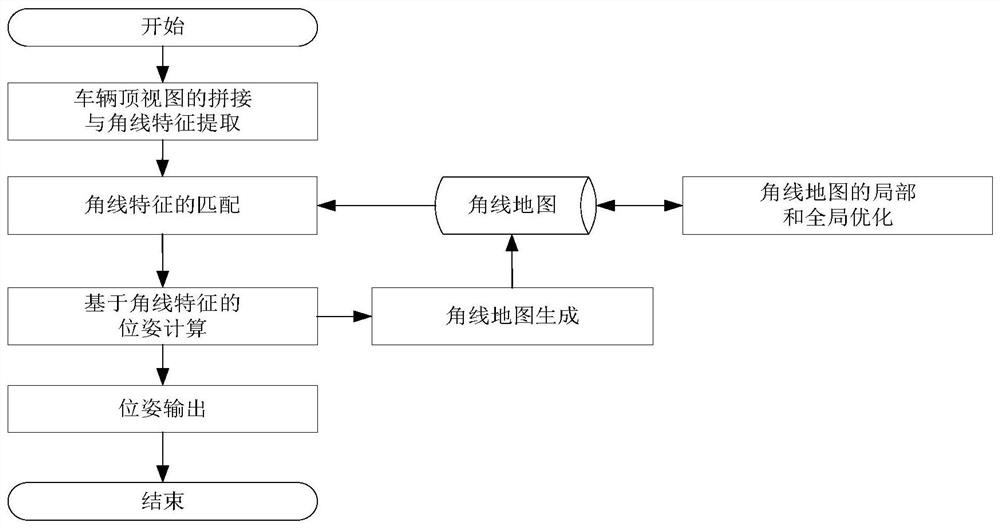

Top view-based parking lot vehicle self-positioning and map construction method

Active | CN111862673A | Solve the scale problem | Small amount of calculation | Image enhancement | Image analysis | View based | Collection system

The invention discloses a top view-based parking lot vehicle self-positioning and map construction method. The method comprises the steps of: taking a look-around system which is composed of a low-cost fisheye camera as an information collection system, generating a top view based on the look-around system, extracting the angular line features of parking lines nearby a vehicle in a robust manner, and carrying out tracking and map construction; and generating an angular line map in real time by utilizing a map matching technology, and carrying out real-time high-precision positioning and map construction on the vehicle by utilizing local map optimization and global map optimization. According to the method, the problem of scale drift of a monocular SLAM is avoided in principle, and real-time and high-precision vehicle self-positioning and map construction work is completed on a low-power-consumption vehicle-mounted processor through a low-cost sensor by means of an existing vehicle-mounted system so as to assist in completing an autonomous parking task. The invention further provides a construction device, a construction system, an automatic driving vehicle and an autonomous parking system.

Owner:CHANGCHUN YIHANG INTELLIGENT TECH CO LTD

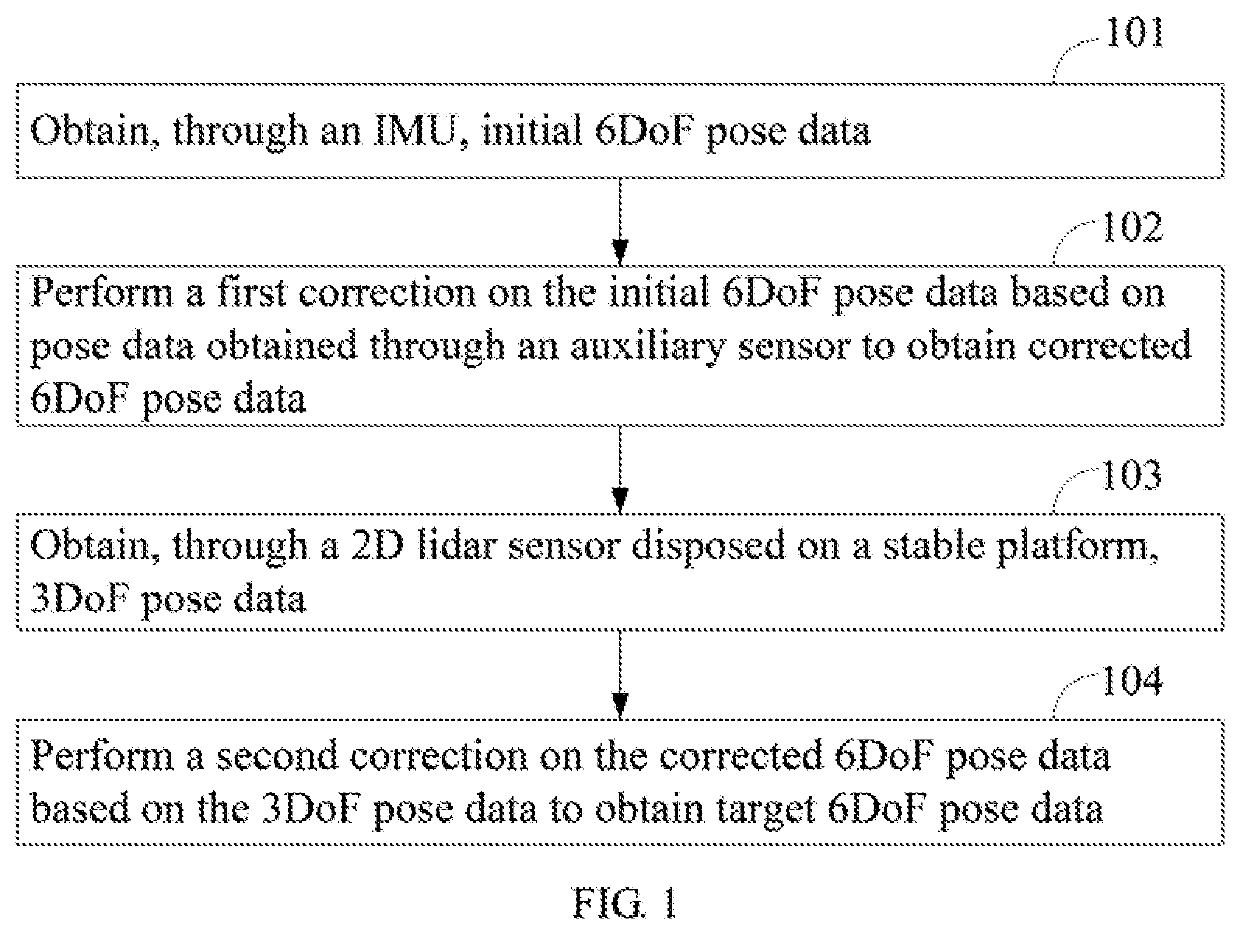

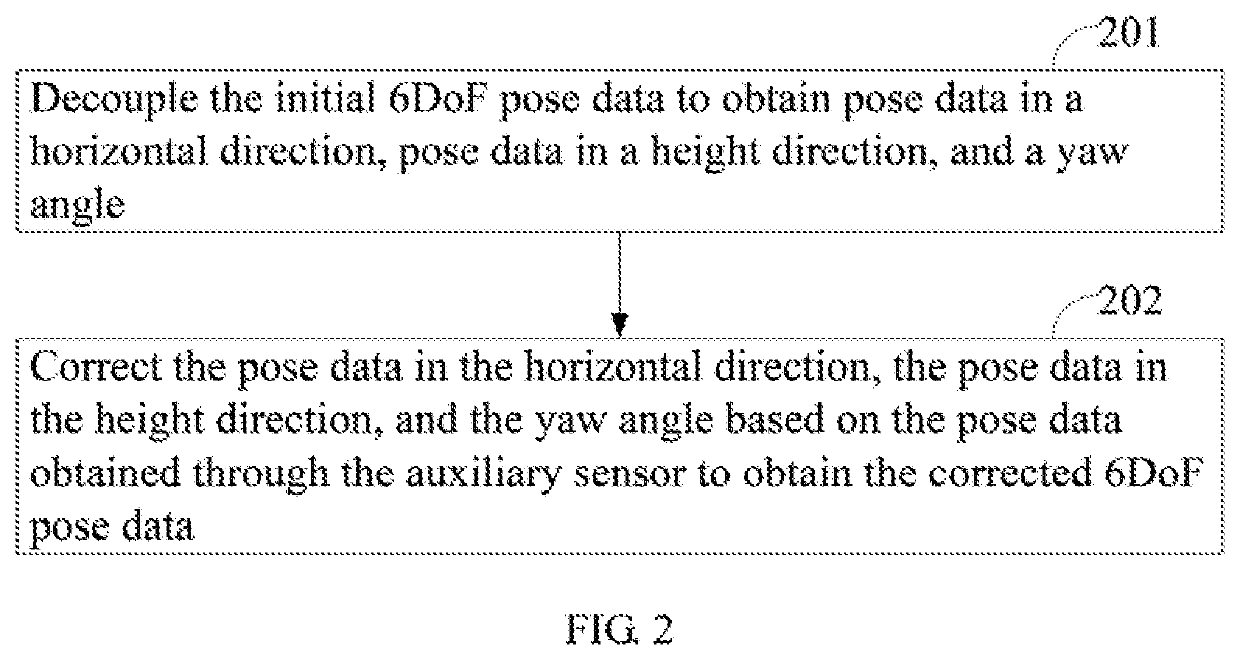

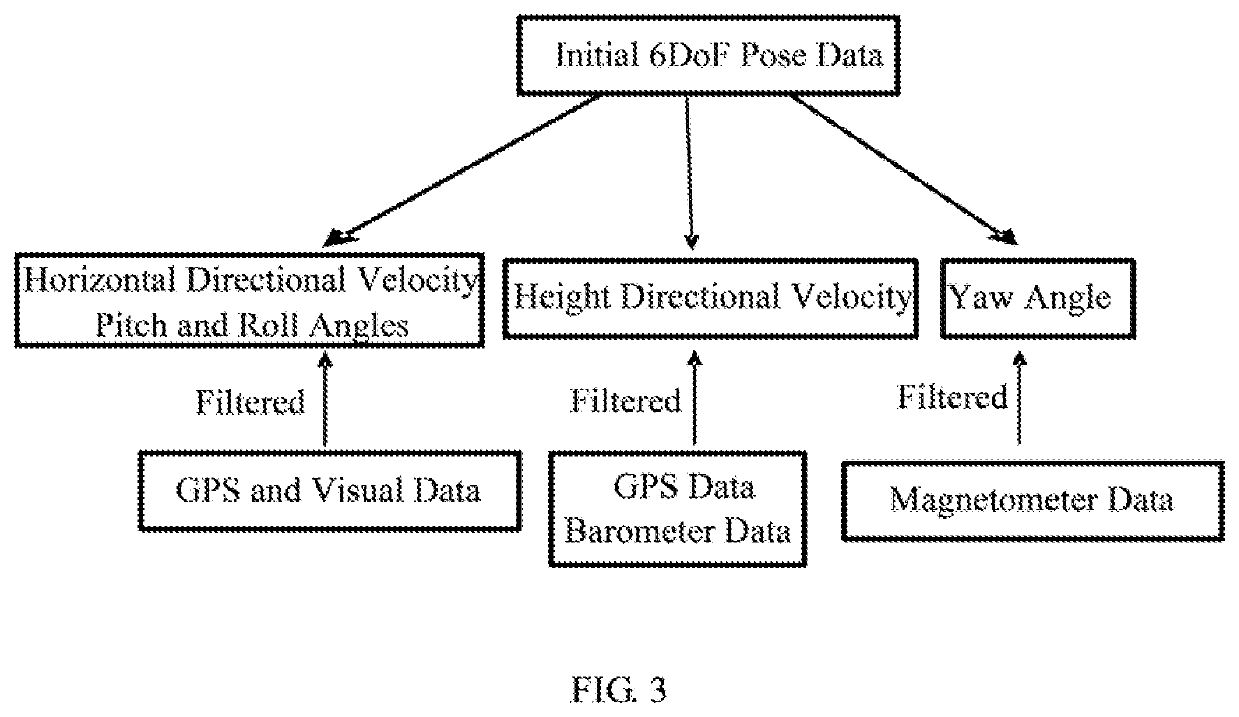

Robot pose estimation method and apparatus and robot using the same

Active | US20200206945A1 | Accurate pose estimation | Programme-controlled manipulator | Navigation instruments | Inertial measurement unit | Pose

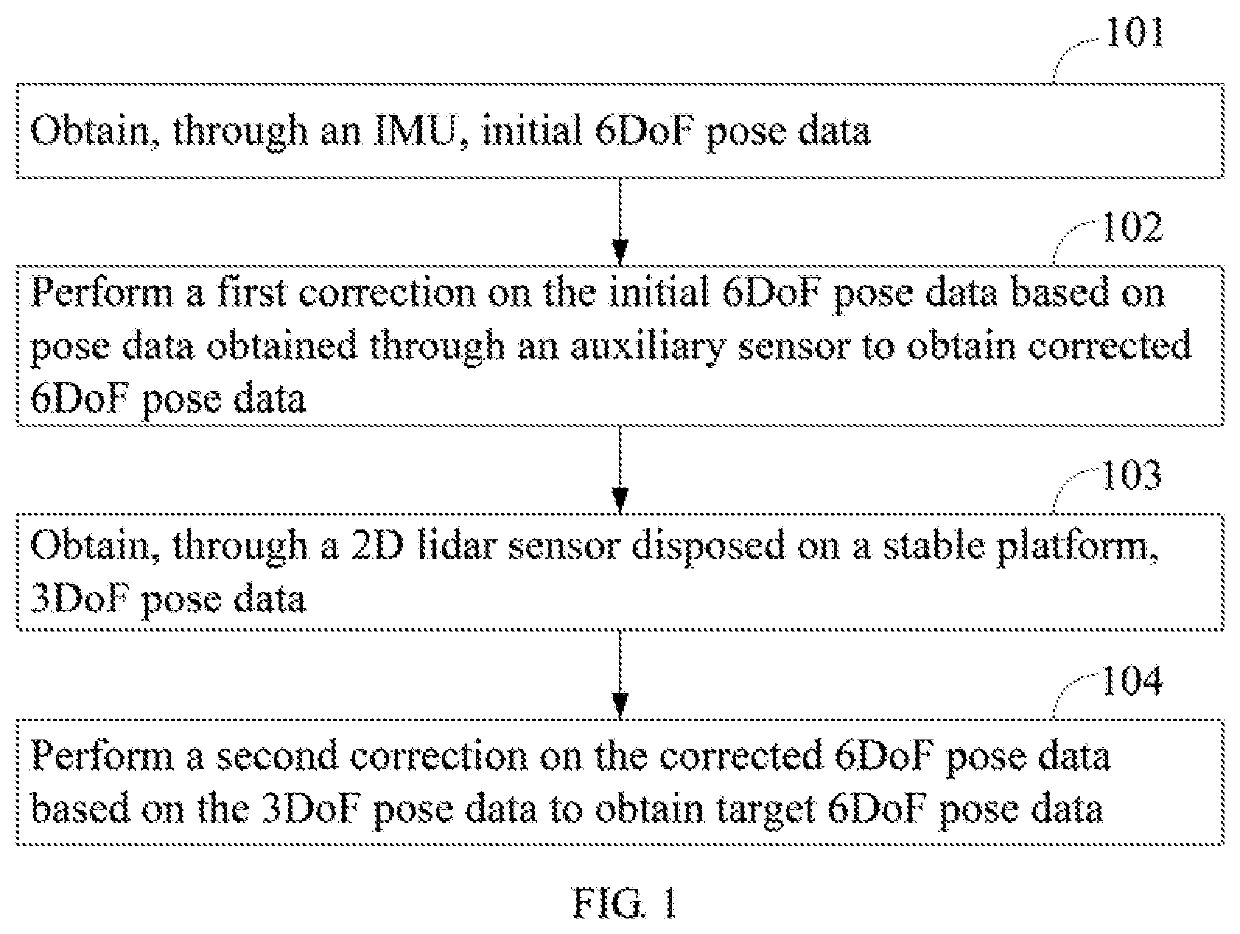

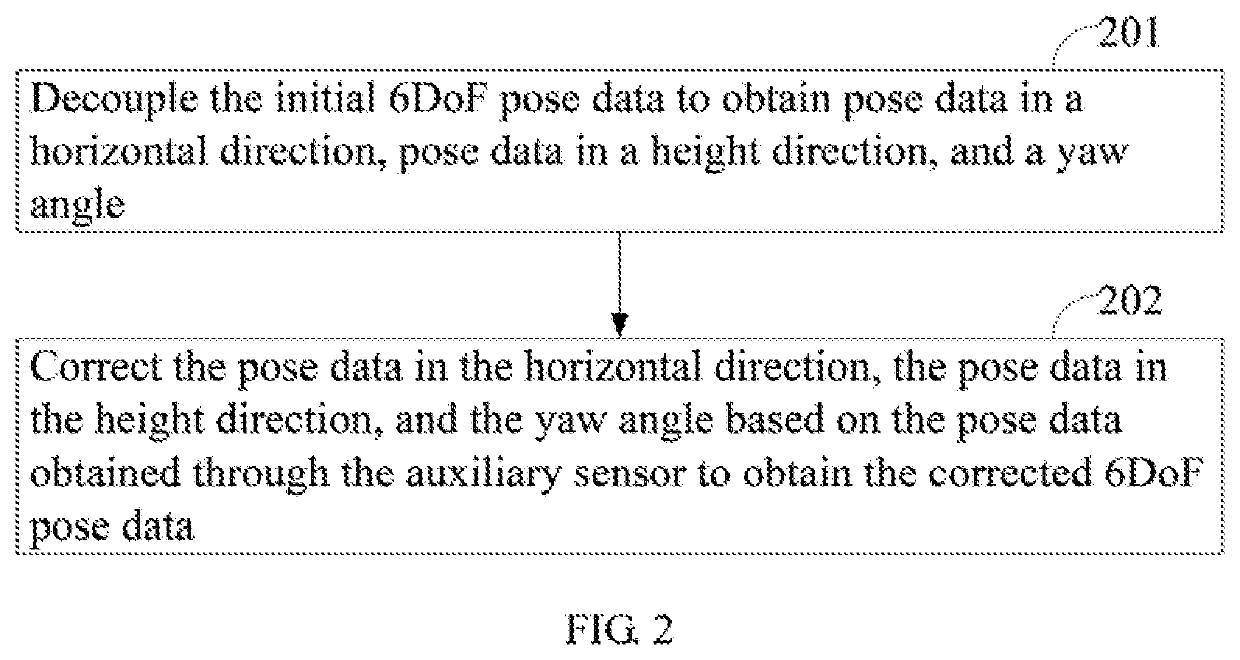

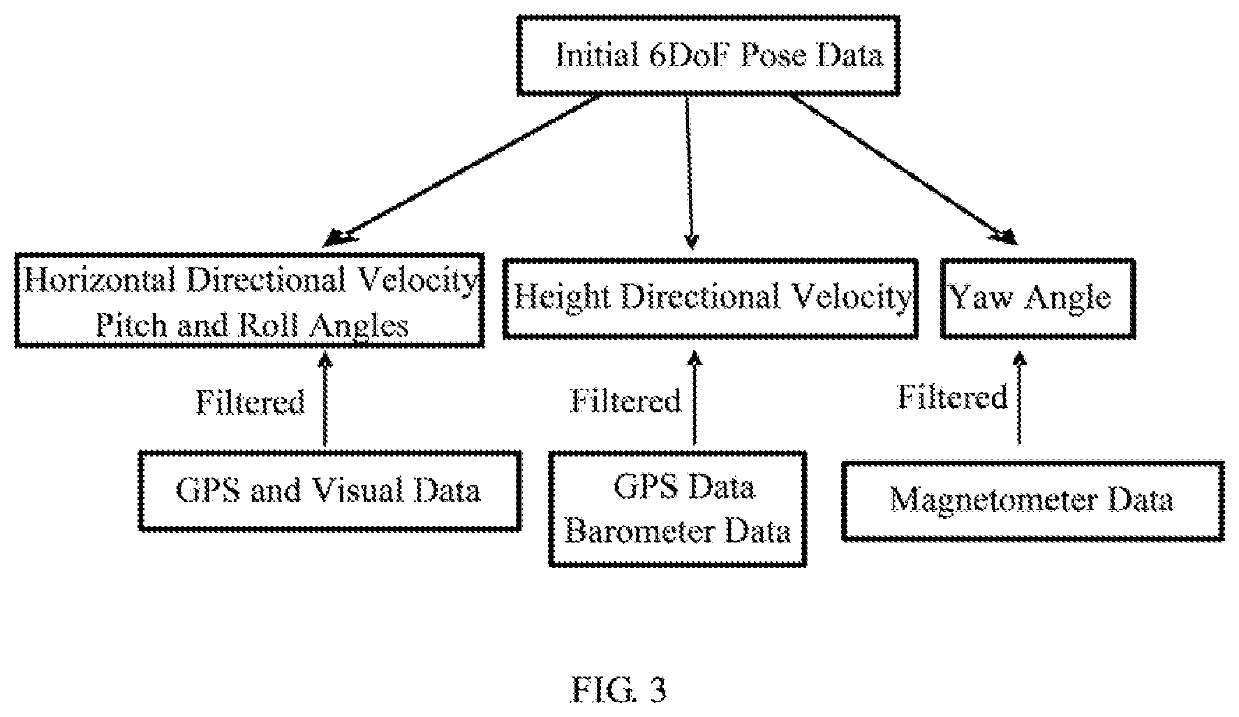

The present disclosure relates to robot technology, which provides a robot pose estimation method as well as an apparatus and a robot using the same. The method includes: obtaining, through an inertial measurement unit, initial 6DoF pose data; performing a first correction on the initial 6DoF pose data based on pose data obtained through an auxiliary sensor to obtain corrected 6DoF pose data; obtaining, through a 2D lidar sensor disposed on a stable platform, 3DoF pose data; and performing a second correction on the corrected 6DoF pose data based on the 3DoF pose data to obtain target 6DoF pose data. In this manner, the accuracy of the pose data of the robot is improved, and the accurate pose estimation of the robot is realized.

Owner:UBTECH ROBOTICS CORP LTD

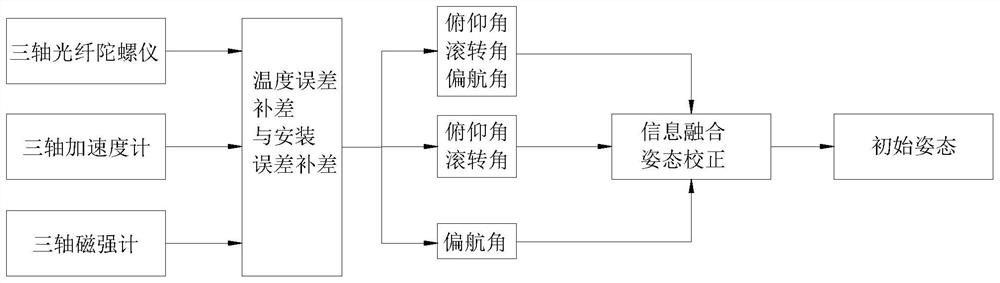

Unmanned aerial vehicle attitude measurement method based on strapdown inertial navigation and Beidou satellite navigation system

Pending | CN112630813A | Accurate pose estimation | Navigation by speed/acceleration measurements | Satellite radio beaconing | Physics | Satellite

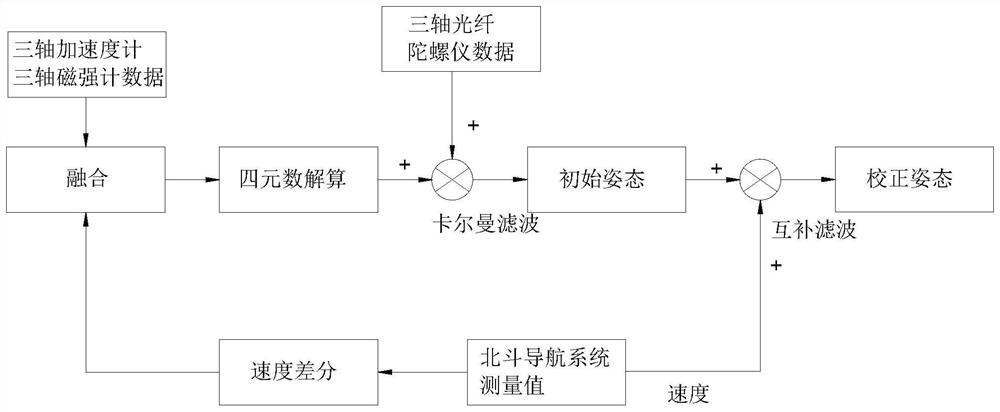

The invention discloses an unmanned aerial vehicle attitude measurement method based on strapdown inertial navigation and a Beidou satellite navigation system, which is used for solving the problem that the measured attitude information error of the existing unmanned aerial vehicle attitude measurement is continuously increased along with time accumulation, and comprises the following steps: based on three sensors in the strapdown inertial navigation system of the unmanned aerial vehicle, measuring the attitude of the unmanned aerial vehicle; respectively measuring the angular velocity, the specific force and the magnetic field intensity of the unmanned aerial vehicle carrier; processing angular velocity data of the three-axis optical fiber gyroscope by using a quaternion Runge-Kutta method, and performing preliminary calculation to obtain estimated values of three attitude angles; and measuring by using the magnetic field values in three directions to obtain another attitude parameter yaw angle. The attitude information measured by the three-axis optical fiber gyroscope is preliminarily corrected by utilizing a Kalman filtering method, and complementary filtering compensation is performed on an inertial device of the unmanned aerial vehicle by introducing speed information measured by a Beidou satellite after difference, so that the unmanned aerial vehicle can still obtain an accurate attitude estimation value under the condition of no GPS navigation information.

Owner:NAT UNIV OF DEFENSE TECH
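
The abstract above integrates gyroscope angular velocity with a quaternion Runge-Kutta method. A minimal fourth-order sketch follows, assuming a scalar-first Hamilton quaternion `(w, x, y, z)`, body-frame rates in rad/s, and a rate that is constant over each step; the Kalman and complementary-filter corrections described in the abstract are not included.

```python
import math


def quat_mul(a, b):
    """Hamilton product of two scalar-first quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_derivative(q, omega):
    """Kinematic equation q_dot = 0.5 * q * (0, omega) for body rates."""
    return tuple(0.5 * c for c in quat_mul(q, (0.0, *omega)))


def rk4_step(q, omega, dt):
    """One classical RK4 step followed by renormalisation to unit length."""
    def shifted(base, k, h):
        return tuple(b + h * ki for b, ki in zip(base, k))

    k1 = quat_derivative(q, omega)
    k2 = quat_derivative(shifted(q, k1, 0.5 * dt), omega)
    k3 = quat_derivative(shifted(q, k2, 0.5 * dt), omega)
    k4 = quat_derivative(shifted(q, k3, dt), omega)
    q_new = tuple(qi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                  for qi, a, b, c, d in zip(q, k1, k2, k3, k4))
    norm = math.sqrt(sum(c * c for c in q_new))
    return tuple(c / norm for c in q_new)


def yaw_from_quat(q):
    """Yaw (rotation about z) extracted from a unit quaternion."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
```

Integrating a constant yaw rate of π/2 rad/s for one second in 100 steps should bring the yaw to about π/2, which is a quick sanity check for sign and ordering conventions.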

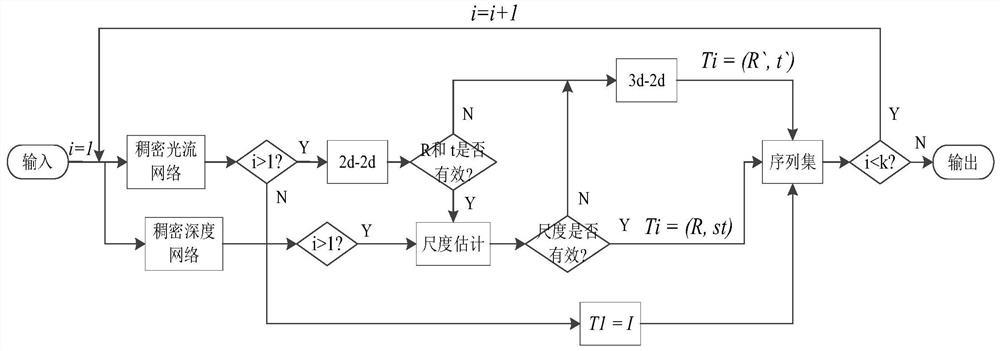

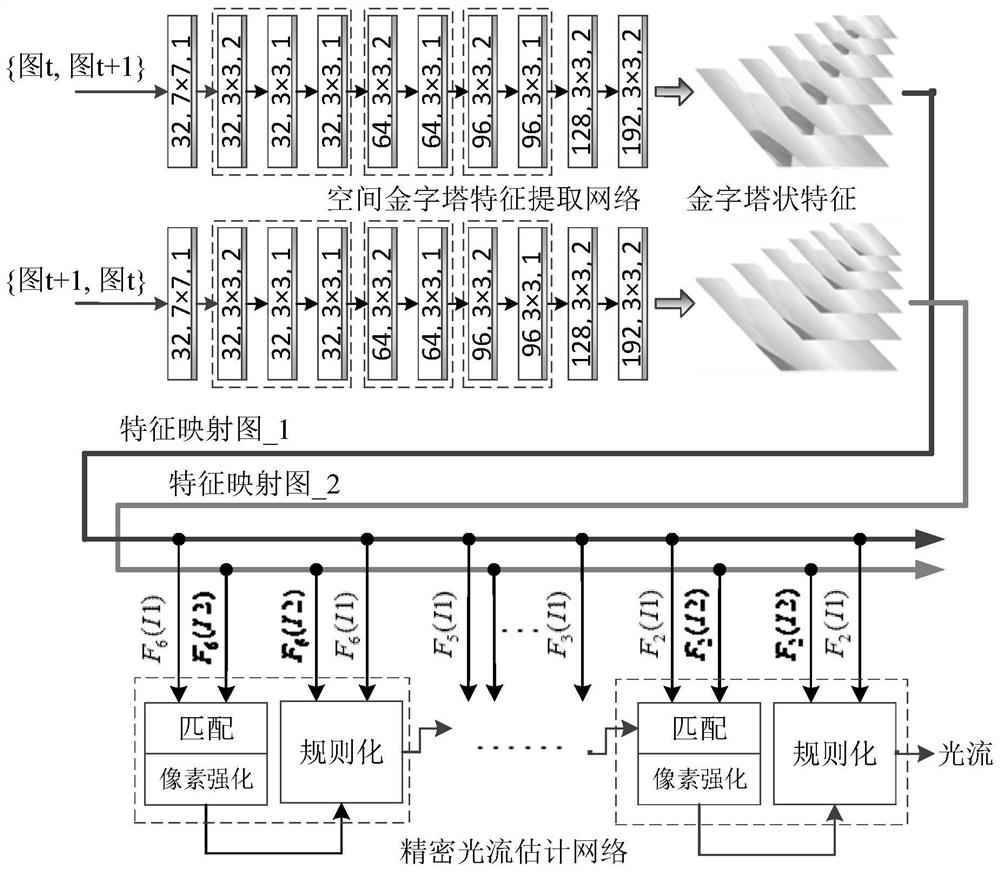

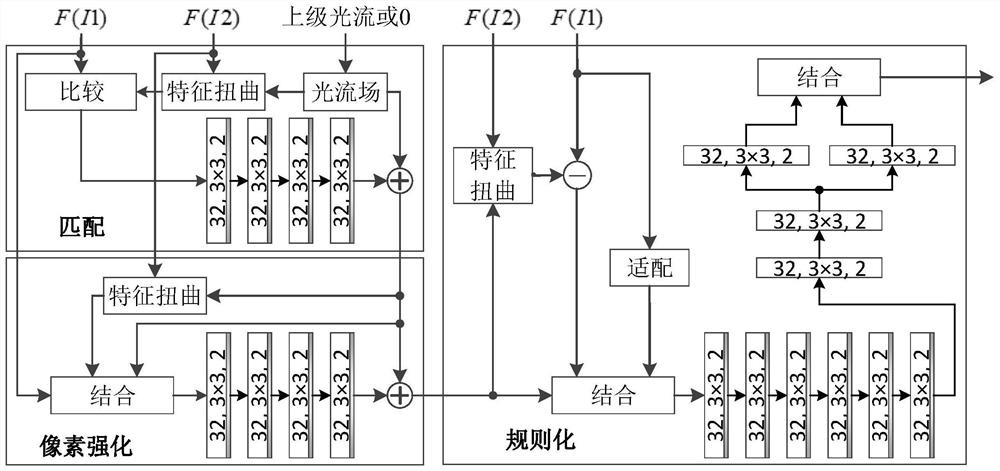

A monocular vision odometer method adopting deep learning and mixed pose estimation

Pending | CN111899280A | Accurate Pose Estimation Results | Fixed an issue where it only worked when the camera was moving slowly | Image enhancement | Image analysis | Pattern recognition | Triangulation

The invention discloses a monocular vision odometer method adopting deep learning and mixed pose estimation. The monocular vision odometer method comprises the following steps: estimating an optical flow field between continuous images by utilizing a deep learning neural network, and extracting key point matching pairs from the optical flow field; taking key point matching pairs as input, and according to a 2d-2d pose estimation principle, calculating a rotation matrix and a translation vector preliminarily by using an epipolar geometry method. A monocular image depth field is estimated by using a deep neural network, a geometric theory triangulation method is combined, and the depth field serves as a reference value, thus calculating by using an RANSAC algorithm to obtain an absolute scale, and converting the pose from a normalized coordinate system to a real coordinate system; and when the 2d-2d pose estimation fails or the absolute scale estimation fails, performing pose estimation by using a PnP algorithm by using a 3d-2d pose estimation principle. According to the invention, accurate pose estimation and absolute scale estimation can be obtained, the robustness is good, and the camera track can be well reproduced in different scene environments.

Owner:HARBIN ENG UNIV
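
The absolute-scale step of the CN111899280A abstract compares triangulated depths (known only up to scale) with network-predicted depths and uses RANSAC to reject bad points. The abstract gives no further detail, so the sketch below is one plausible reading: each point votes with the ratio of the two depths, a one-sample RANSAC finds the largest consensus set, and the scale is the mean of the inliers. `rel_tol`, `iterations` and `seed` are hypothetical parameters.

```python
import random


def ransac_scale(triangulated, predicted, rel_tol=0.1, iterations=50, seed=0):
    """Estimate s such that predicted_depth ~= s * triangulated_depth.

    triangulated: depths from two-view triangulation (arbitrary scale)
    predicted:    depths from a depth network at the same key points
    Returns the mean ratio over the largest consensus set, or None if no
    usable point exists.
    """
    ratios = [p / t for t, p in zip(triangulated, predicted) if t > 0 and p > 0]
    if not ratios:
        return None

    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        candidate = rng.choice(ratios)  # minimal sample: a single ratio
        inliers = [r for r in ratios if abs(r - candidate) <= rel_tol * candidate]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return sum(best_inliers) / len(best_inliers)
```

Multiplying the unit-norm translation from the epipolar solution by the returned scale converts the pose to metric units, which corresponds to the conversion from the normalized to the real coordinate system mentioned in the abstract.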

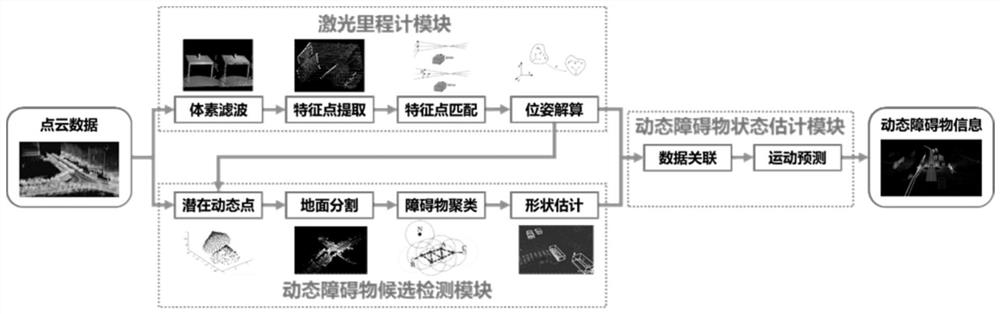

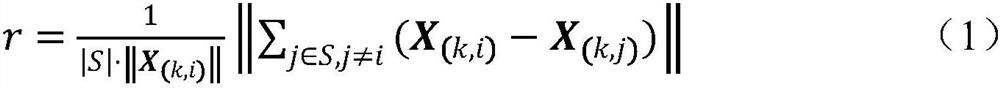

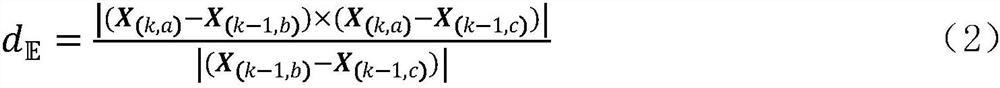

Laser radar dynamic obstacle detection method considering wheeled robot pose estimation

Active | CN113345008A | Efficient system | Accurate pose estimation | Image enhancement | Programme-controlled manipulator | Engineering | Roboty

The invention discloses a laser radar dynamic obstacle detection method considering wheeled robot pose estimation, and the method comprises the steps: 1, extracting a point cloud according with a preset curvature feature as a feature point through the geometric characteristics of laser point cloud data, building a matching relation of the same feature point in two frames of point cloud data at adjacent moments, constructing a cost function, and constructing an ICP problem by taking the pose of the wheeled robot as a variable to obtain pose information of the wheeled robot; 2, detecting candidate dynamic obstacles; and 3, estimating a dynamic obstacle state. Multiple sensors do not need to be combined, the purpose of dynamic obstacle detection is achieved on the premise that a single sensor is used, the system is made to be more efficient, and safety is higher.

Owner:HUNAN UNIV
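
Step 1 of the abstract above poses an ICP problem over matched feature points from two consecutive scans. The inner step of ICP, solving for the rigid transform once correspondences are fixed, has a closed form in 2D. The sketch below shows only that inner step, under the assumptions that the problem is planar (reasonable for a wheeled robot, but not stated in the abstract) and that the correspondences are already known.

```python
import math


def align_2d(source, target):
    """Least-squares rigid transform (theta, (tx, ty)) mapping matched
    2D points `source[i]` onto `target[i]`."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n

    # Accumulate dot and cross products of the centred point pairs.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - tx, qy - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
```

A full ICP loop would alternate this solve with re-matching each source point to its nearest target point until the transform stops changing.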

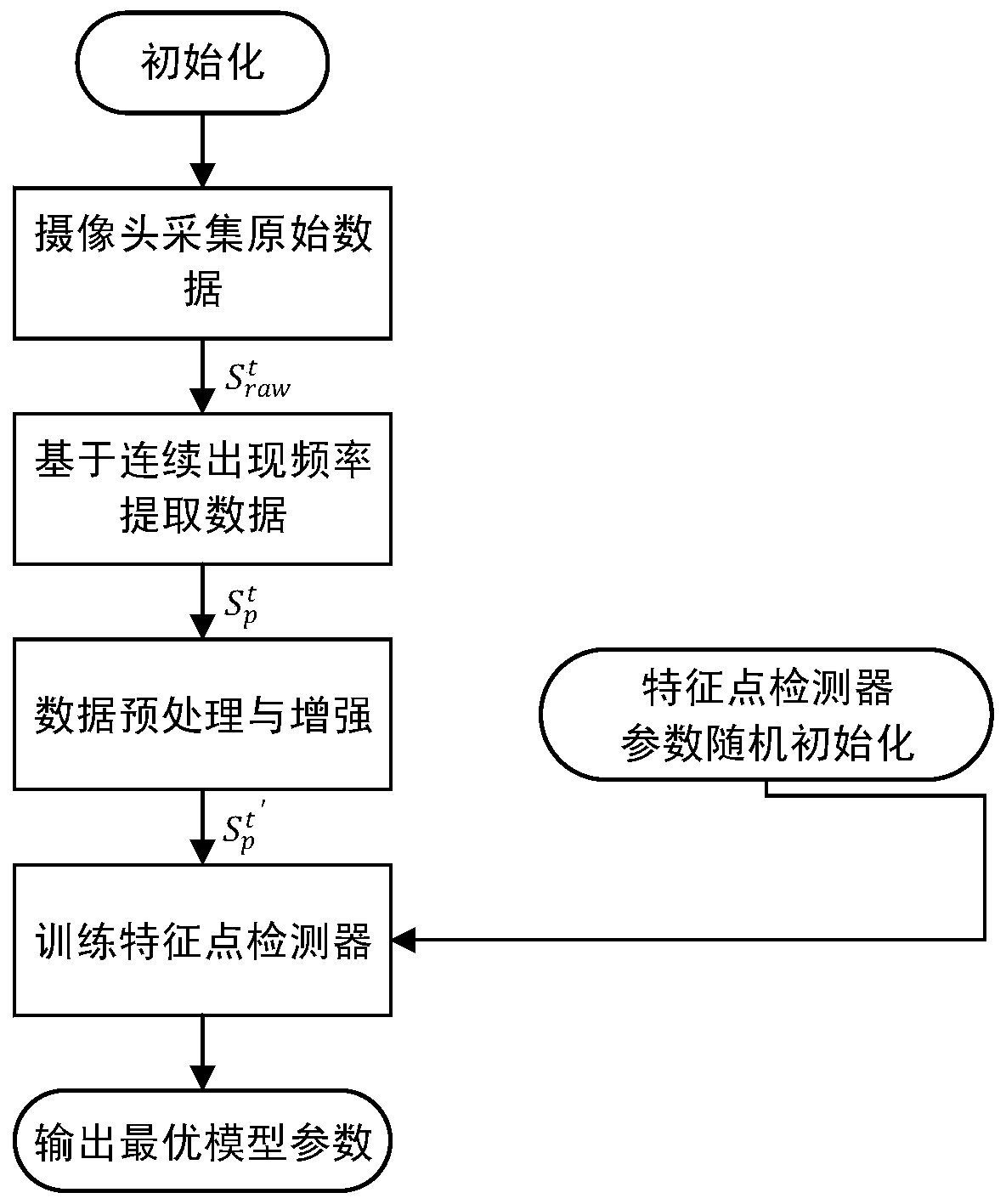

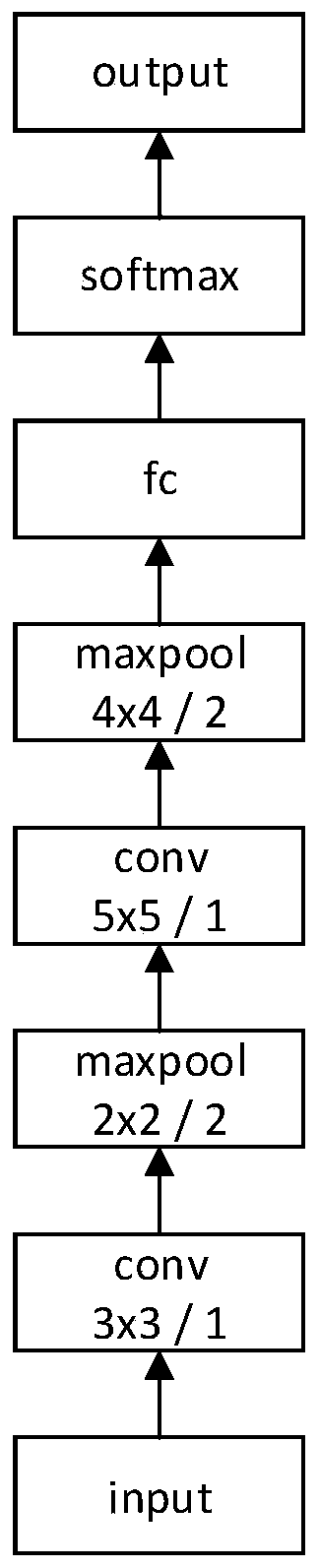

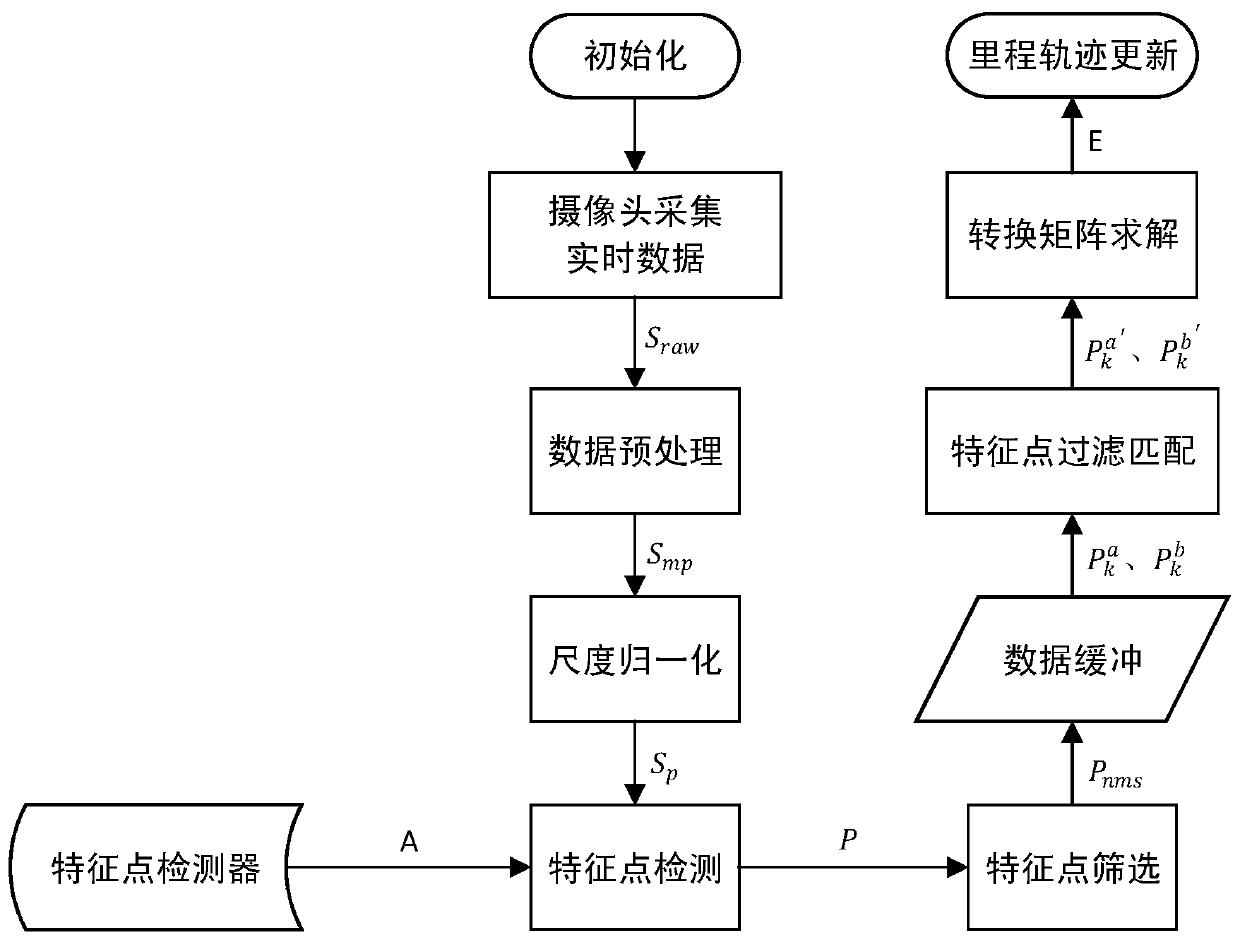

Visual mileometer method based on convolutional neural network

Active | CN109708658A | Robust feature points | Exact mileage | Image analysis | Distance measurement | Point detector | Visual perception

The invention relates to a visual mileometer method based on a convolutional neural network. The method comprises the following steps: S1, original environment data is collected by a camera carried by a mobile robot, and a feature point detector A based on the convolutional neural network is trained; S2, the mobile robot performs the motion whose mileage is to be estimated, and the original data to be estimated is collected by the carried camera; S3, data sampling, trimming and pre-processing operations are performed on the data collected by the camera to obtain the data to be processed; S4, the feature point detector A is used to filter the data to be detected to obtain the feature point information; and S5, the feature point information is used in combination with the epipolar constraint method to solve a motion estimation matrix of the moving subject, and the mileage coordinates are then reckoned. The advantage of the method is that changes in the environment between the frames before and after filtering can be associated to obtain more stable feature points, which improves matching accuracy and thereby reduces the estimation error of the visual mileometer.

Owner:ZHEJIANG UNIV

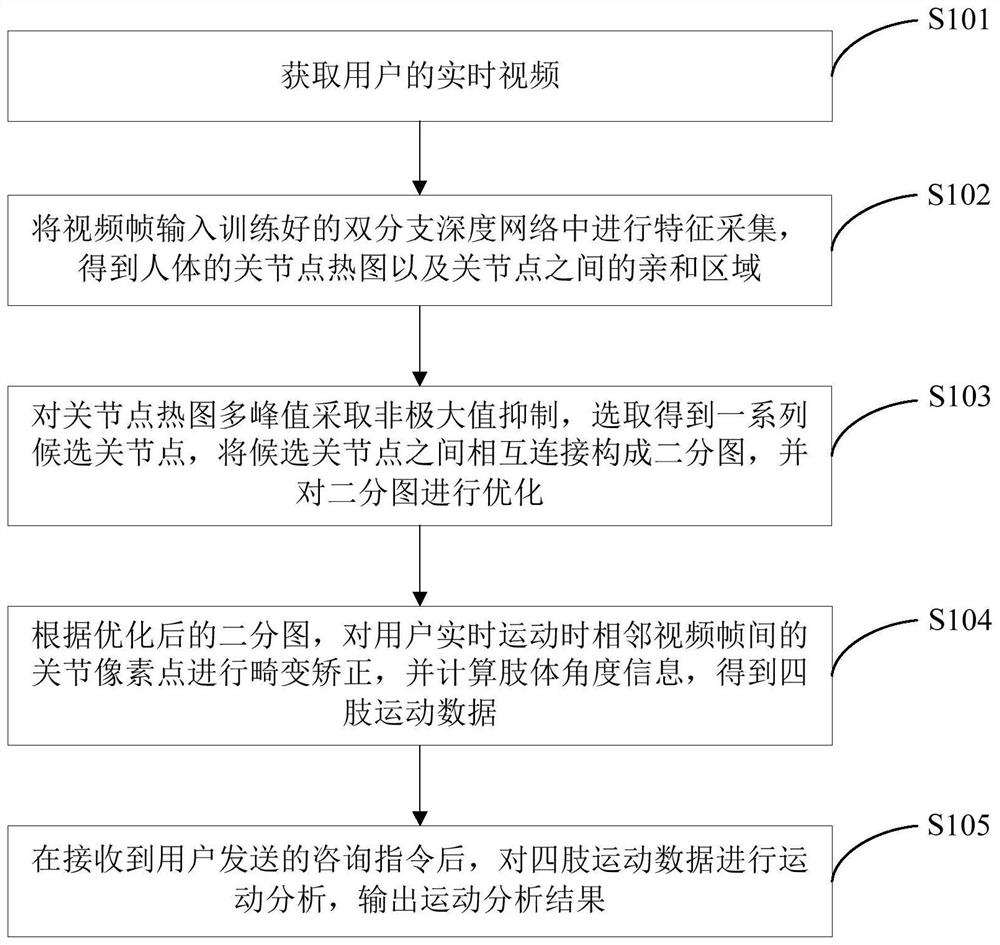

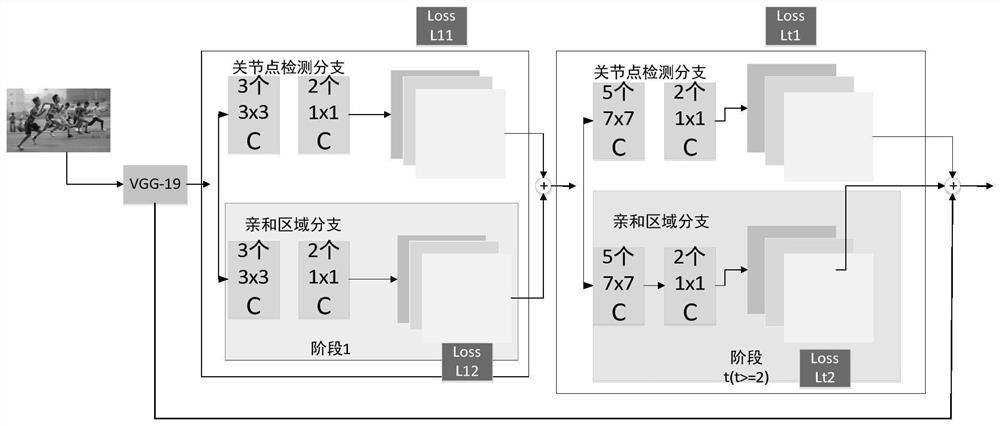

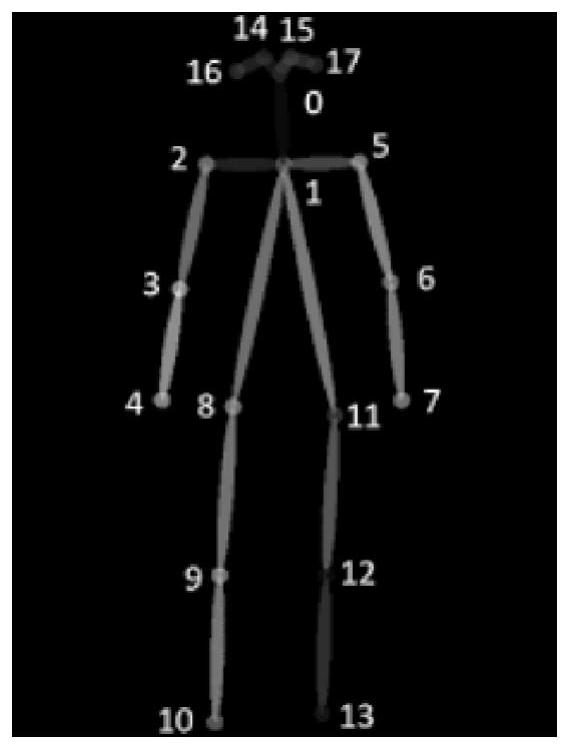

Real-time attitude estimation motion analysis method and system, computer equipment and storage medium

Pending | CN112258555A | Preserve image space features | Eliminate the effects of | Image enhancement | Image analysis | Human body | Heat map

The invention discloses a real-time attitude estimation motion analysis method and system, computer equipment and a storage medium. The method comprises the following steps: acquiring a real-time video of a user; inputting the video frames into a trained double-branch deep network for feature acquisition to obtain a joint point heat map of the human body and the affinity regions between joint points; performing non-maximum suppression on the multiple peak values of the joint point heat map, selecting a series of candidate joint points, connecting the candidate joint points with one another to form a bipartite graph, and optimizing the bipartite graph; according to the optimized bipartite graph, performing distortion correction on the joint pixel points between adjacent video frames while the user moves in real time, calculating limb angle information, and obtaining limb movement data; and, after a consulting instruction sent by the user is received, performing motion analysis on the limb motion data and outputting a motion analysis result. According to the invention, the image space features can be preserved more effectively, and unnecessary influence when finding the optimal connection between the established joint points is eliminated.

Owner:FOSHAN UNIVERSITY
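
The abstract above derives limb angle information from detected joint points. One simple way to compute such an angle, given three 2D joint coordinates, is sketched here; the function name and the choice of image-plane coordinates are assumptions, and the heat-map detection and bipartite matching stages are not shown.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint `b` between segments b->a and b->c.

    Example use: a = shoulder, b = elbow, c = wrist gives the elbow angle.
    Points are (x, y) pixel coordinates.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 of |cross| and dot stays well conditioned near 0 and 180 degrees.
    return math.degrees(math.atan2(abs(cross), dot))
```

Tracking this value over consecutive frames yields the kind of limb movement data that the motion analysis step consumes.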

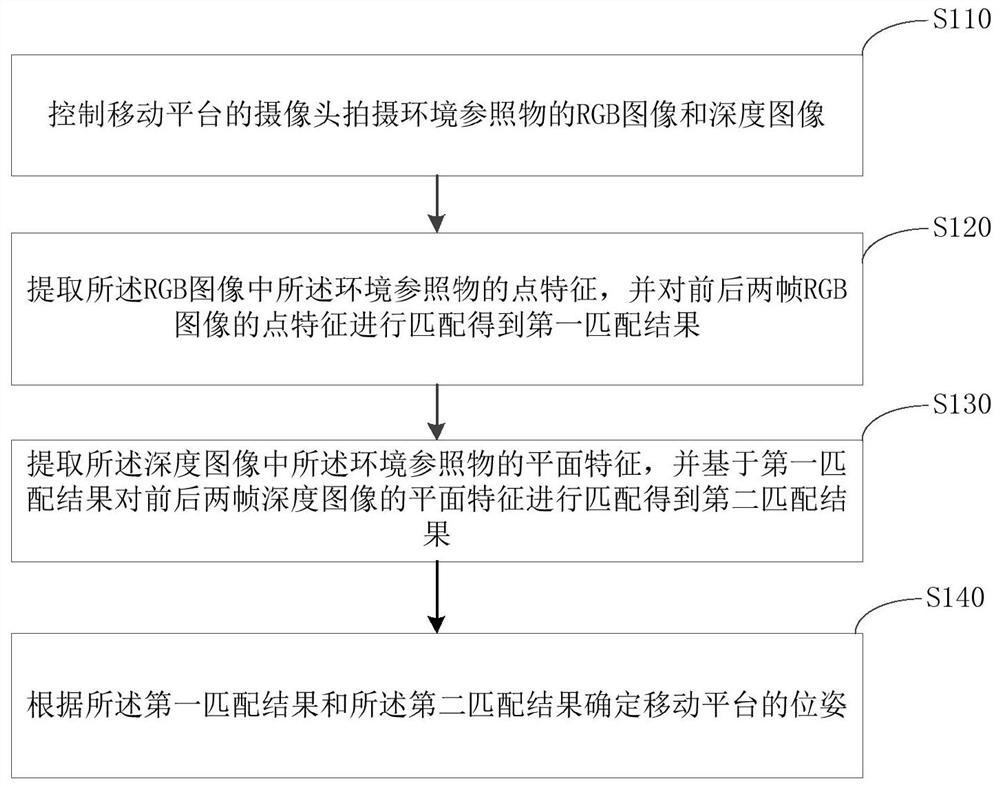

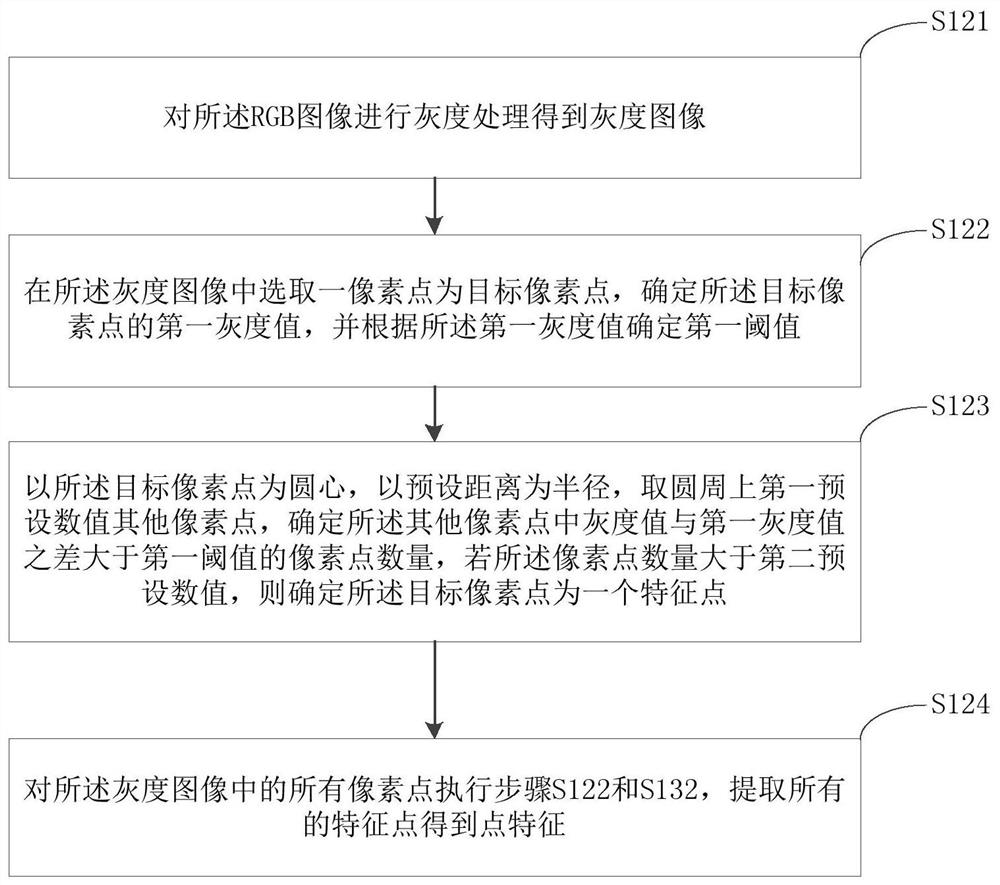

Pose determination method and device of mobile platform, equipment and storage medium

Active | CN112752028A | Improve robustness | Reduce sensitivity | Television system details | Color television details | Rgb image | Engineering

The embodiment of the invention discloses a pose determination method and device of a mobile platform, equipment and a storage medium. The method comprises the following steps: controlling a camera of a mobile platform to shoot an RGB image and a depth image of an environment reference object; extracting point features of the environment reference object in the RGB image, and matching the point features of the front and back two frames of RGB images to obtain a first matching result; extracting plane features of the environment reference object in the depth images, and matching the plane features of the front and rear frames of depth images based on the first matching result to obtain a second matching result; and determining the pose of the mobile platform according to the first matching result and the second matching result. The method is higher in robustness, lower in sensitivity to light change, higher in feature matching accuracy when the framing object point features are deficient or textures are weak, and more accurate in pose estimation.

Owner:SOUTH UNIVERSITY OF SCIENCE AND TECHNOLOGY OF CHINA

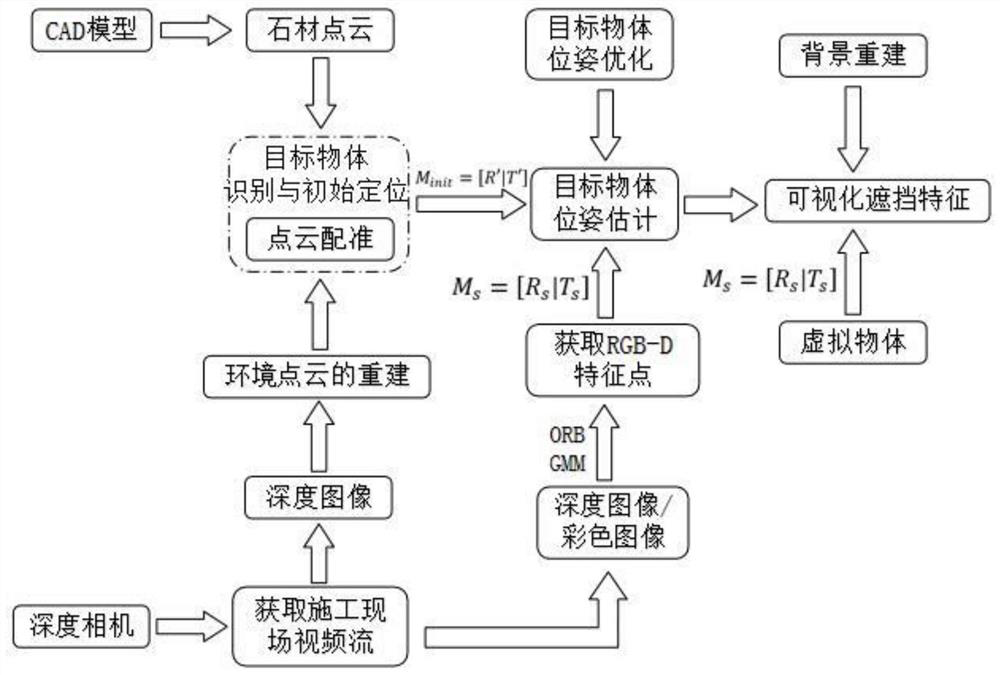

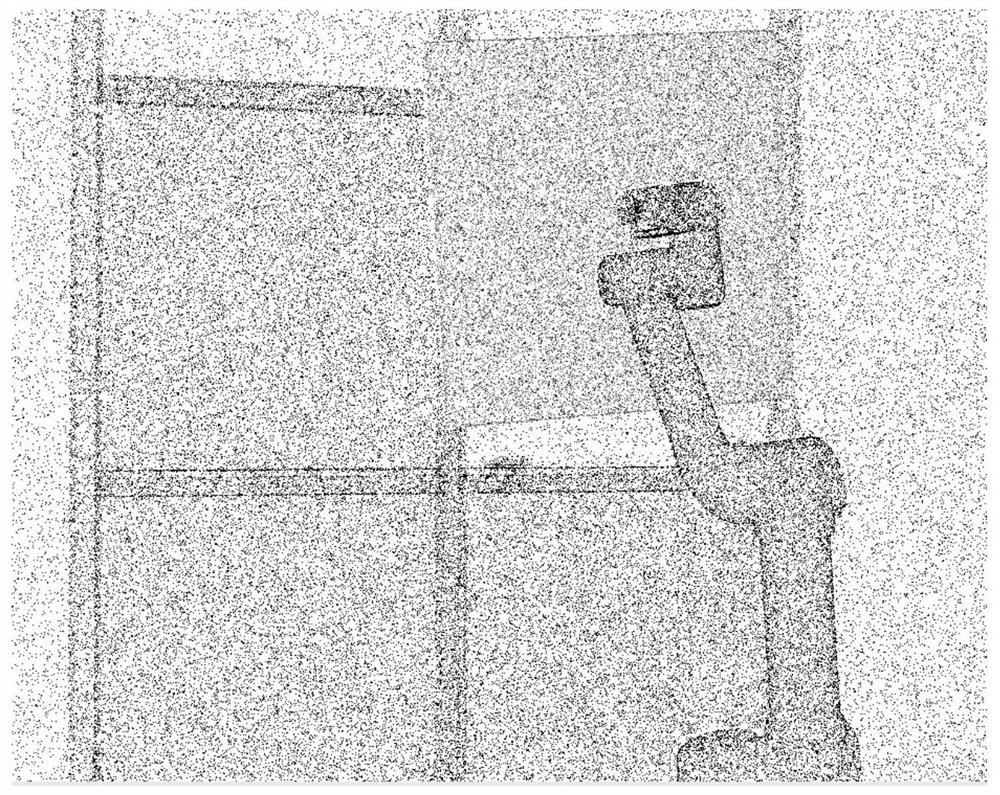

Augmented reality visualization method based on depth camera and application

Active | CN112258658A | Improve visualization | Improve work efficiency | Image enhancement | Image analysis | Point cloud | Key frame

The invention relates to an augmented reality visualization method based on a depth camera and an application. The method comprises the following steps: 1, reconstructing the background point cloud through the depth camera, and obtaining the target object point cloud through three-dimensional modeling; 2, reconstructing an environment point cloud based on the depth camera; 3, extracting key feature points of the target object point cloud and the filtered environment point cloud, carrying out point cloud registration, obtaining the pose of the target object in the depth camera coordinate system, and completing recognition and initial positioning of the target object; 4, performing three-dimensional tracking registration based on RGBD feature points; 5, selecting a key frame according to the relative pose variation of the target object between the current key frame and the next frame for pose optimization of the target object, and completing the update of the target object and the target area; and 6, realizing visualization of the occlusion features. According to the method, the target object can be well recognized in a complex scene, and the real-time performance, robustness and tracking accuracy of the system are improved.

Owner:HEBEI UNIV OF TECH

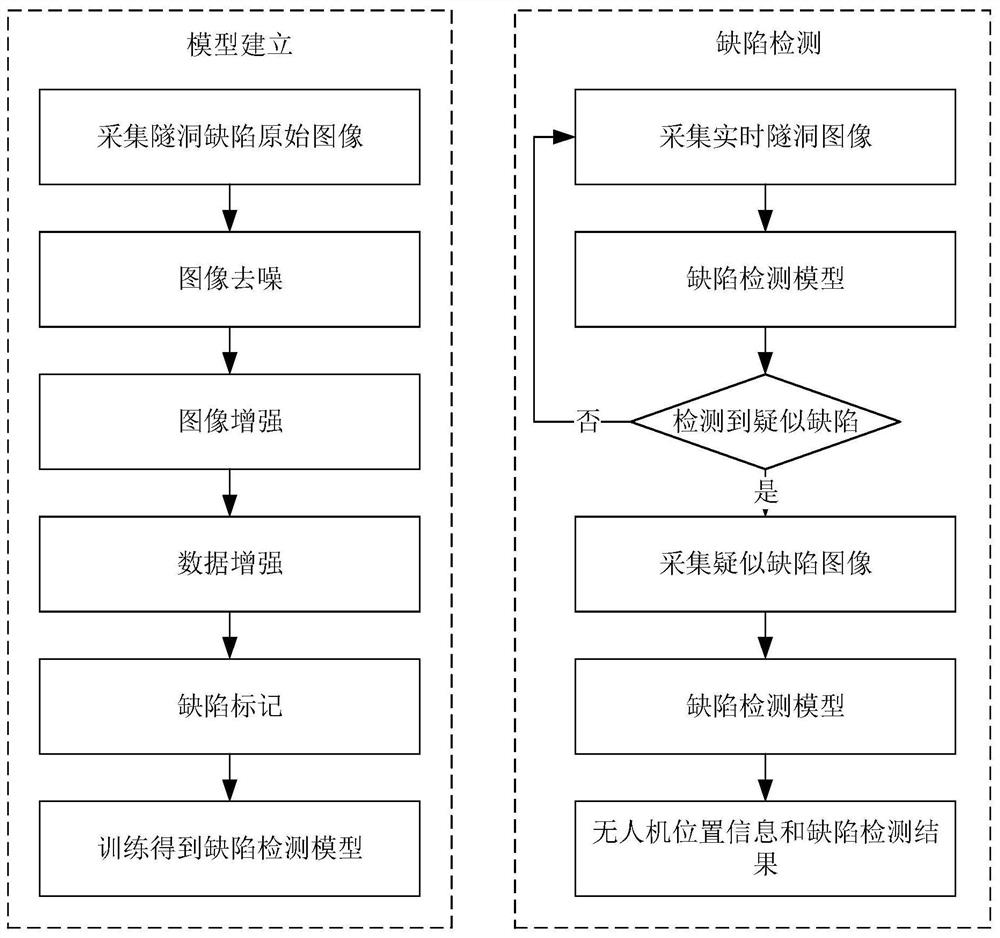

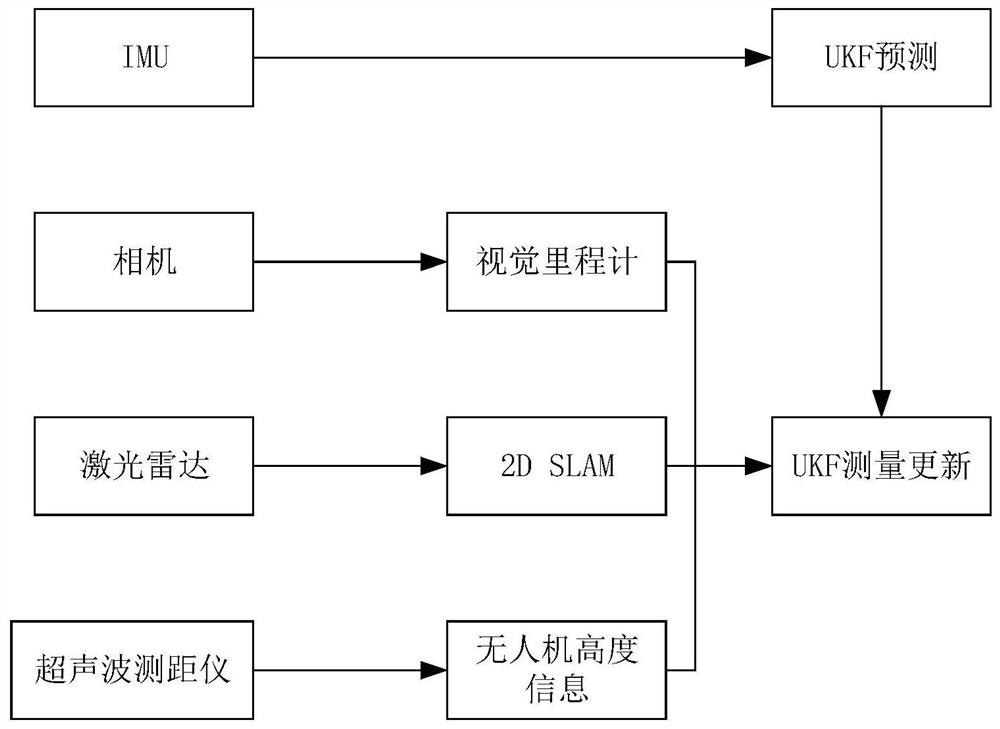

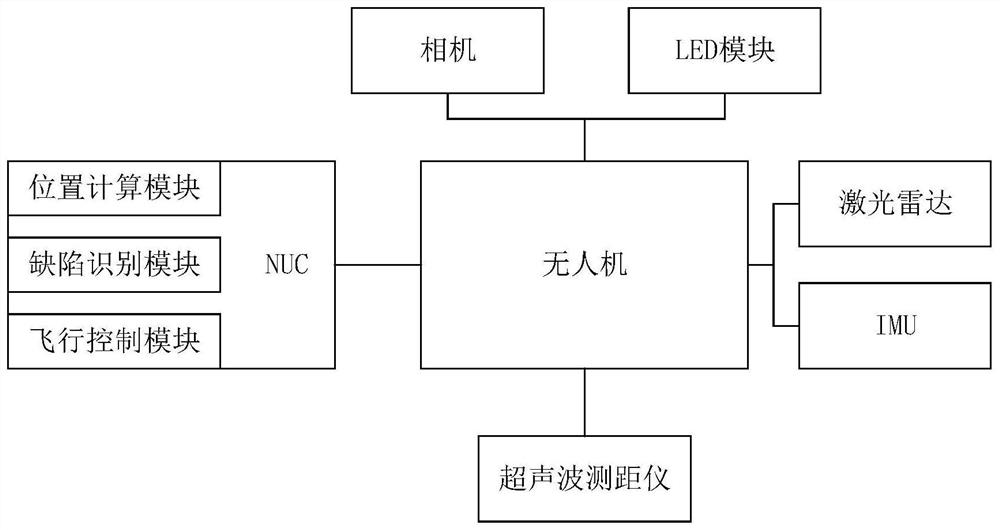

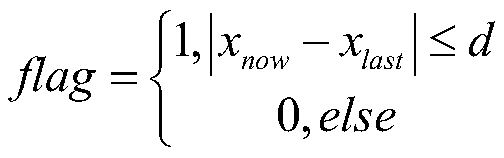

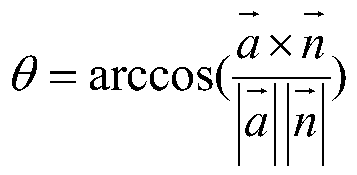

Unmanned aerial vehicle tunnel defect detection method and system

Pending | CN113358665A | Accurate pose estimation | Accurate detection | Image enhancement | Image analysis | Computer vision | Engineering

The invention relates to an unmanned aerial vehicle tunnel defect detection method and system, an unmanned aerial vehicle carries an LED module, a camera, a laser radar, an ultrasonic range finder and an IMU, and the method comprises the following steps: collecting images in a tunnel based on the LED module and the camera to obtain a training image set; training by using the training image set to obtain a defect detection model; collecting real-time tunnel images, performing suspected defect detection on the real-time tunnel images through the defect detection model, obtaining unmanned aerial vehicle pose information based on the camera, the laser radar, the ultrasonic range finder and the IMU, and controlling the unmanned aerial vehicle to hover. Compared with the prior art, the LED module is used for supplementing illumination in the tunnel, the IMU, the camera, the laser radar and the ultrasonic range finder are fused to achieve unmanned aerial vehicle pose estimation, the trained defect detection model is used for detecting whether suspected defects exist or not in real time, hovering is carried out after the suspected defects are found, and defect detection is further carried out. Accurate pose estimation and defect detection can be realized in a tunnel which has no GPS signal and is highly symmetrical inside.

Owner:TONGJI UNIV

Container detection and locking method based on multi-sensor fusion

Active | CN111292261A | The detection position is accurate | Accurate pose estimation | Image enhancement | Image analysis | Principal component analysis | Control engineering

The invention relates to a container detection and locking method based on multi-sensor fusion. In an actual industrial environment, a forklift needs to be used for carrying equipment and containers. During automatic carrying, the positions of the container and its bottom corner fittings first need to be located, the carrying equipment is then operated to lock and lift the container, and the container is finally carried to its destination. The traditional manual detection and locking method has low locking efficiency and high labor cost. According to the method, different environmental information is collected through multiple sensors, the position of the container in the image is detected through a neural network, the front posture of the container is then roughly estimated through principal component analysis until the carrying equipment is close to the container, refined estimation is then conducted on the posture of the container, and finally the container is locked.

Owner:HANGZHOU DIANZI UNIV +1
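The coarse posture step in the abstract above relies on principal component analysis. As a minimal sketch only, assuming the container face has already been reduced to a set of 2D points (the function name `principal_axis_angle` and the point format are hypothetical, not taken from the patent), the dominant axis can be read off the 2x2 covariance matrix:

```python
import math

def principal_axis_angle(points):
    """Return the angle (radians) of the dominant axis of 2D points via PCA."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the 2x2 covariance matrix of the centred points.
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Closed-form direction of the eigenvector with the largest eigenvalue.
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)

# Hypothetical points sampled along an edge tilted by 30 degrees.
c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
edge = [(t * c, t * s) for t in range(-5, 6)]
yaw = principal_axis_angle(edge)
```

The returned angle is only defined up to 180 degrees, which is one reason a refinement stage like the one the abstract describes would still be needed.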

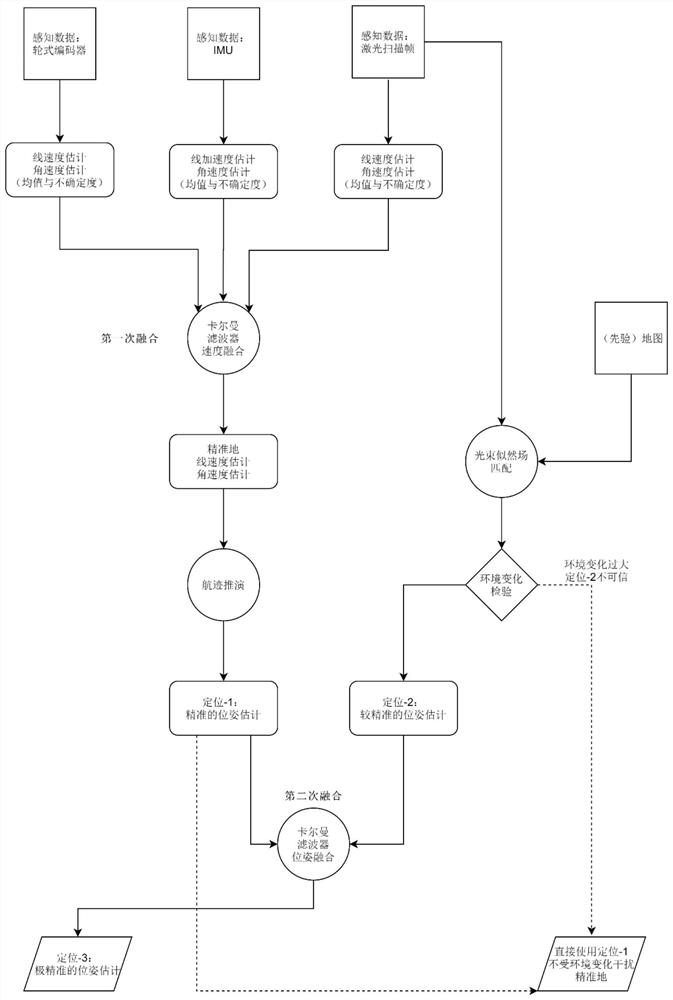

Industrial mobile robot positioning method in high-dynamic environment

Active | CN112066982A | The location information is accurate | Improve adaptability | Navigational calculation instruments | Navigation by speed/acceleration measurements | Sensing data | Laser scanning

The invention discloses an industrial mobile robot positioning method in a high-dynamic environment, which comprises the following steps: respectively acquiring sensing data of a wheel encoder, an inertial measurement unit and a laser scanning unit, and performing speed estimation on each to obtain the speed estimate and its corresponding covariance matrix; fusing the speed estimates and covariance matrices of the wheel encoder, the inertial measurement unit and the laser scanning unit with an extended Kalman filter to obtain an accurate fused speed estimate; performing dead reckoning for the industrial mobile robot, converting the fused speed estimate into a first pose estimate; using a beam likelihood field algorithm to match the sensing data of the laser scanning unit against a map for positioning; computing the laser observation quality of the scan acquired by the laser scanning unit at each moment and checking for environmental changes; obtaining a second pose estimate; and fusing the first pose estimate and the second pose estimate with an extended Kalman filter to obtain an accurate third pose estimate.

Owner:成都睿芯行科技有限公司
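The first two steps of the abstract above (fusing per-sensor speed estimates by their covariances, then dead reckoning) can be sketched as follows. This is a deliberately simplified scalar, linear illustration: a real extended Kalman filter would linearize nonlinear models and use full covariance matrices, and the names `fuse_velocity` and `dead_reckon` as well as the numeric values are assumptions, not the patent's implementation.

```python
import math

def fuse_velocity(estimates):
    """Fuse (velocity, variance) pairs with sequential Kalman measurement updates."""
    v, p = estimates[0]
    for z, r in estimates[1:]:
        k = p / (p + r)        # Kalman gain for a direct measurement (H = 1)
        v = v + k * (z - v)    # pull the estimate toward the new measurement
        p = (1.0 - k) * p      # fused variance shrinks after every update
    return v, p

def dead_reckon(pose, v, omega, dt):
    """Integrate a planar pose (x, y, theta) over dt with a unicycle model."""
    x, y, theta = pose
    return (x + v * math.cos(theta) * dt,
            y + v * math.sin(theta) * dt,
            theta + omega * dt)

# Hypothetical speed estimates from encoder, IMU and laser: (m/s, variance).
v_fused, var_fused = fuse_velocity([(1.0, 0.04), (1.2, 0.04), (1.1, 0.02)])
first_pose = dead_reckon((0.0, 0.0, 0.0), v_fused, 0.0, 2.0)
```

Sensors with smaller variance pull the fused value harder, which is the role the covariance matrices play in the described method.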

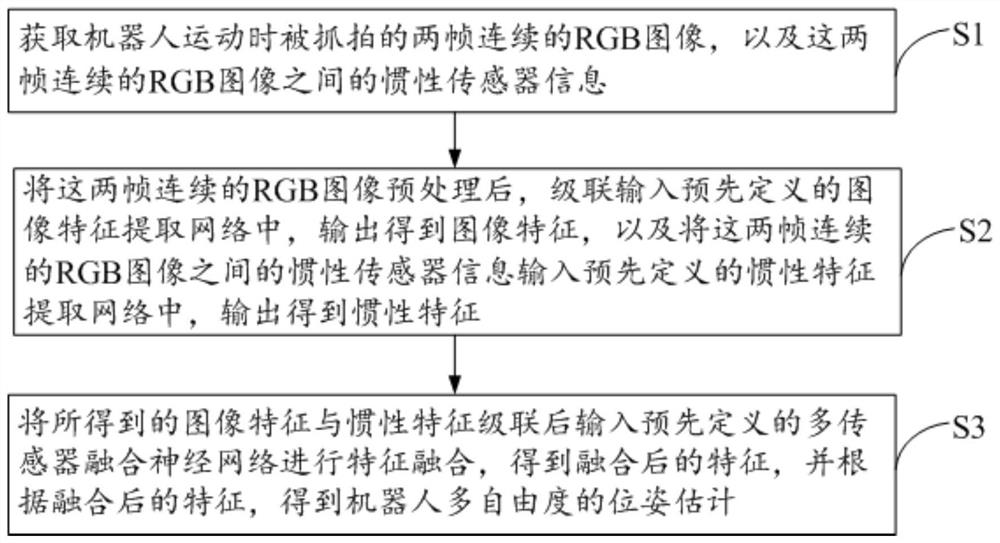

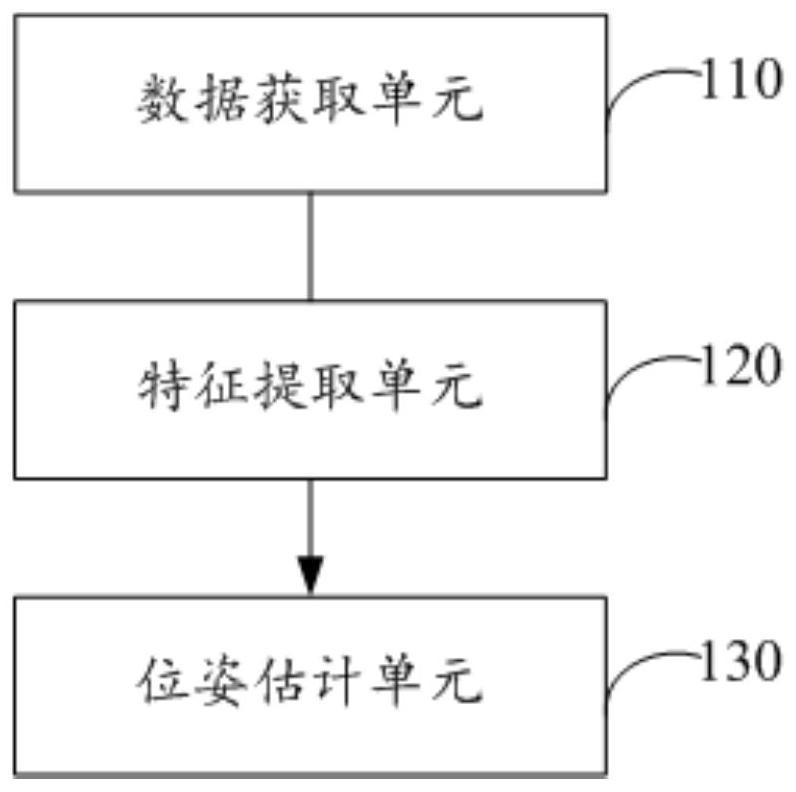

Robot pose estimation method and system based on multi-sensor feature fusion

Pending | CN113920165A | Solve the problem of complex calculation and low efficiency | Efficient Pose Estimation | Image enhancement | Image analysis | Rgb image | Imaging Feature

The invention provides a robot pose estimation method based on multi-sensor feature fusion, and the method comprises the steps: obtaining two frames of continuous RGB images captured when a robot moves, and inertial sensor information between the two frames of continuous RGB images; preprocessing the two frames of continuous RGB images, cascading and inputting the two frames of continuous RGB images into a predefined image feature extraction network, outputting to obtain image features, inputting inertial sensor information between the two frames of continuous RGB images into a predefined inertial feature extraction network, and outputting to obtain inertial features; and cascading the obtained image features and inertial features, inputting the cascaded image features and inertial features into a predefined multi-sensor fusion neural network for feature fusion to obtain fused features, and obtaining multi-degree-of-freedom pose estimation of the robot according to the fused features. By implementing the method, the problems of low accuracy of pose estimation by using a single sensor and complex calculation and low efficiency of a traditional multi-sensor fusion algorithm can be solved.

Owner:SHENZHEN POWER SUPPLY BUREAU

Robot pose estimation method and apparatus and robot using the same

Active | US11279045B2 | Accurate pose estimation | Programme-controlled manipulator | Navigation instruments | Pattern recognition | Computer graphics (images)

The present disclosure relates to robot technology, which provides a robot pose estimation method as well as an apparatus and a robot using the same. The method includes: obtaining, through an inertial measurement unit, initial 6DoF pose data; performing a first correction on the initial 6DoF pose data based on pose data obtained through an auxiliary sensor to obtain corrected 6DoF pose data; obtaining, through a 2D lidar sensor disposed on a stable platform, 3DoF pose data; and performing a second correction on the corrected 6DoF pose data based on the 3DoF pose data to obtain target 6DoF pose data. In this manner, the accuracy of the pose data of the robot is improved, and the accurate pose estimation of the robot is realized.

Owner:UBTECH ROBOTICS CORP LTD

Real-time 6D pose estimation method and computer readable storage medium

Pending | CN114359377A | Improve real-time performance | Efficient integration | Image enhancement | Image analysis | Computer graphics (images) | Engineering

The invention provides a real-time 6D pose estimation method and a computer readable storage medium. The method comprises the steps that a preset key point is selected from a three-dimensional model of a target object, and coordinates of the preset key point in the three-dimensional model based on a three-dimensional model origin coordinate system are obtained; predicting coordinates of the preset key point of the target object in an actual scene based on a camera coordinate system through a network; and performing fitting calculation on the coordinates of the preset key point in the three-dimensional model origin coordinate system and the coordinates of the preset key point in the camera coordinate system to obtain the current pose of the target object. Therefore, the network has excellent real-time performance, and the highest pose estimation precision at the same speed is realized.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
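The final "fitting calculation" in the abstract above aligns key points known in the model frame with the same key points predicted in the camera frame. The abstract does not say which fitting method is used; one common choice is the SVD-based least-squares rigid alignment (Kabsch algorithm), sketched here with NumPy. The function name `fit_rigid_transform` and the sample points are hypothetical.

```python
import numpy as np

def fit_rigid_transform(model_pts, camera_pts):
    """Least-squares R, t such that camera_pts ~= model_pts @ R.T + t (Kabsch)."""
    model_pts = np.asarray(model_pts, dtype=float)
    camera_pts = np.asarray(camera_pts, dtype=float)
    mu_m = model_pts.mean(axis=0)
    mu_c = camera_pts.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (model_pts - mu_m).T @ (camera_pts - mu_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_c - R @ mu_m
    return R, t

# Hypothetical key points in the model frame and their camera-frame positions.
angle = 0.3
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.2, 0.5])
model = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
camera = model @ R_true.T + t_true
R_est, t_est = fit_rigid_transform(model, camera)
```

With noise-free correspondences the recovered rotation and translation match the ones used to generate the data; with network predictions the result is the least-squares best fit.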

Unmanned vehicle pose estimation method based on point-to-plane distance and cross-correlation entropy registration

Active | CN108868268B | Strong resistance | Accurate pose estimation | Navigation by terrestrial means | Parkings | Point cloud | Radar

The invention discloses a position and posture estimation method for a driverless car based on point-to-plane distance and cross-correlation entropy registration, which comprises the following steps: first, a three-dimensional laser radar is calibrated, and the acquired three-dimensional laser radar data are converted between coordinate systems; the acquired data are then registered against the existing map data as point clouds to obtain the rotation and translation of a rigid body; and the position and posture of the moving body are obtained from that rotation and translation. Using the three-dimensional laser radar as the data source, the invention estimates the position and posture of the driverless car through coordinate system conversion, data downsampling, point set registration and related steps. The method is largely unaffected by weather, light and other environmental factors. Moreover, the error evaluation function based on point-to-plane distance and cross-correlation entropy is resistant to noise and outliers, such as partial mismatches between the scene and the map description and dynamic obstacles, so accurate and robust estimation of the position and posture of the driverless car can be achieved.

Owner:XI AN JIAOTONG UNIV
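The abstract above names two ingredients of the error function: a point-to-plane distance and a cross-correlation entropy (correntropy) term that suppresses outliers. The patent's exact objective is not given here, so the following is only a generic sketch of how a Gaussian-kernel weight can down-weight large point-to-plane residuals; all function names and the kernel width `sigma` are assumptions.

```python
import math

def point_to_plane_residual(p, q, n):
    """Signed distance from point p to the plane through q with unit normal n."""
    return sum((pi - qi) * ni for pi, qi, ni in zip(p, q, n))

def correntropy_weights(residuals, sigma):
    """Gaussian-kernel weights: large residuals (outliers) get weights near zero."""
    return [math.exp(-(r * r) / (2.0 * sigma * sigma)) for r in residuals]

def weighted_error(residuals, weights):
    """Weighted mean squared point-to-plane error."""
    return sum(w * r * r for w, r in zip(weights, residuals)) / sum(weights)

# Hypothetical scan points matched to map planes with normal (0, 0, 1).
normal = (0.0, 0.0, 1.0)
residuals = [
    point_to_plane_residual((0.0, 0.0, 1.05), (0.0, 0.0, 1.0), normal),
    point_to_plane_residual((1.0, 2.0, 0.95), (0.0, 0.0, 1.0), normal),
    point_to_plane_residual((3.0, 1.0, 11.0), (0.0, 0.0, 1.0), normal),  # dynamic obstacle
]
weights = correntropy_weights(residuals, sigma=0.5)
robust_err = weighted_error(residuals, weights)
```

In an iterative registration loop such weights would typically be recomputed after each pose update, so that mismatched or moving points contribute almost nothing to the solved rotation and translation.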

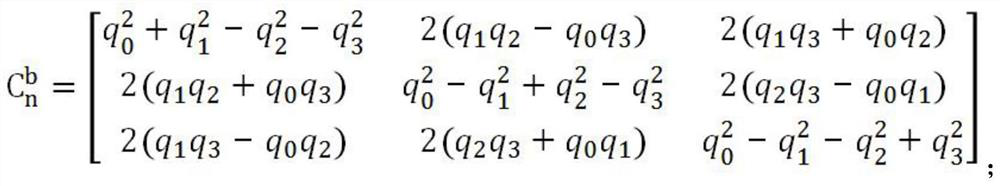

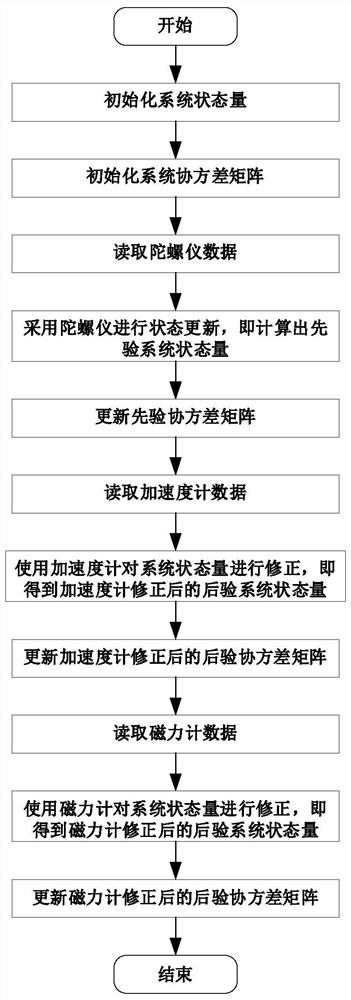

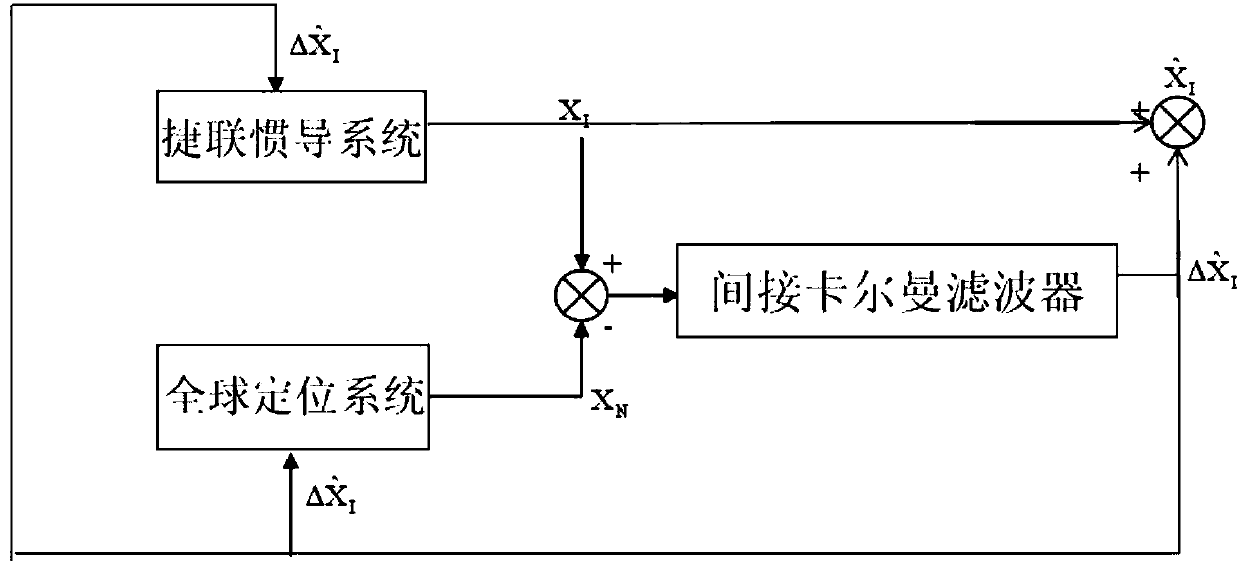

A PTZ Attitude Estimation Method Based on Extended Kalman Filtering

Active | CN113155129B | Small amount of calculation | Accurate pose estimation | Navigational calculation instruments | Navigation by speed/acceleration measurements | Accelerometer | Medicine

The present invention provides a pan-tilt attitude estimation method based on extended Kalman filtering. The method uses a quaternion to represent the current attitude of the object, and the system state includes the quaternion and the offset error of the angle increment. The offset error of the angle increment is corrected by the magnetometer, which makes the attitude estimation more accurate, and the accelerometer correction and the magnetometer correction are split into two stages so that they do not interfere with each other, which improves the attitude estimation accuracy. In the accelerometer correction, the third vector component of the quaternion in the correction amount is set to zero; in the magnetometer correction, the first and second vector components of the quaternion in the correction amount are set to zero. With the present invention, more accurate attitude estimation information can be obtained.

Owner:PEKING UNIV
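The two-stage masking described in the abstract above can be illustrated with a short sketch. It assumes a quaternion stored as (w, x, y, z) and an additive correction followed by renormalization; the abstract does not state how the correction amount is applied, so both the additive form and the name `masked_correction` are assumptions made for illustration.

```python
import math

def masked_correction(q, correction, sensor):
    """Apply a quaternion correction with sensor-specific components zeroed."""
    w, x, y, z = correction
    if sensor == "accel":
        z = 0.0            # accelerometer stage: third vector component zeroed
    elif sensor == "mag":
        x, y = 0.0, 0.0    # magnetometer stage: first and second components zeroed
    else:
        raise ValueError("sensor must be 'accel' or 'mag'")
    updated = (q[0] + w, q[1] + x, q[2] + y, q[3] + z)
    norm = math.sqrt(sum(c * c for c in updated))
    return tuple(c / norm for c in updated)

# Hypothetical correction amounts produced by the two filter stages.
q0 = (1.0, 0.0, 0.0, 0.0)
q_after_accel = masked_correction(q0, (0.0, 0.1, 0.2, 0.3), "accel")
q_after_mag = masked_correction(q0, (0.0, 0.1, 0.2, 0.3), "mag")
```

Zeroing different components in each stage is what keeps the accelerometer from disturbing the component reserved for the magnetometer and vice versa.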

Vehicle self-localization and map construction method in parking lot based on top view

Active | CN111862673B | Solve the scale problem | Small amount of calculation | Image enhancement | Image analysis | In vehicle | Transport engineering

A top-view-based vehicle self-positioning and map construction method for parking lots uses a low-cost fisheye camera surround-view system as the information collection system, generates a top view from the surround-view system, robustly extracts the angle line features of parking spaces near the vehicle, and tracks and maps those features. The angle line map is generated in real time using graph matching, and local and global map optimization are used to perform real-time, high-precision vehicle positioning and map construction. The method avoids the scale drift problem of monocular SLAM in principle, and uses the existing vehicle system to complete real-time, high-precision vehicle self-localization and map construction with low-cost sensors and low-power vehicle processors, assisting the autonomous parking task. The present disclosure also provides a construction device, a construction system, an automatic driving vehicle and an autonomous parking system.

Owner:CHANGCHUN YIHANG INTELLIGENT TECH CO LTD

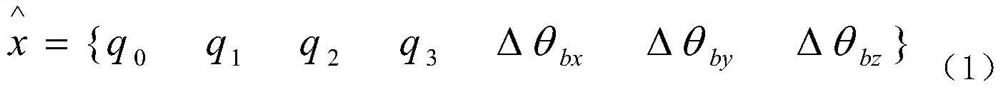

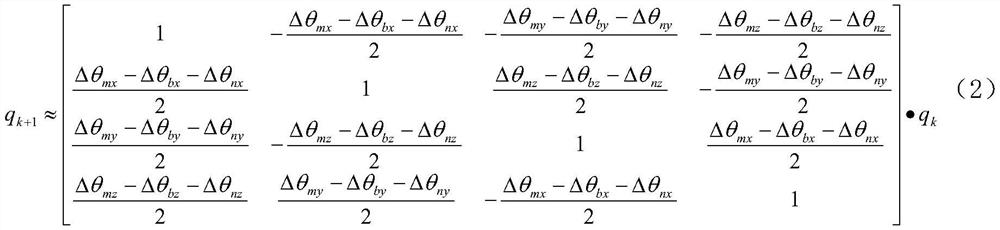

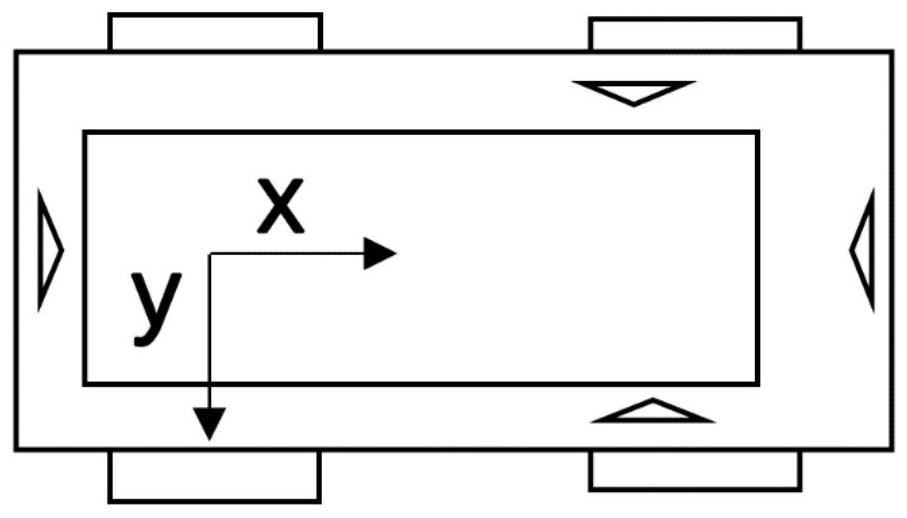

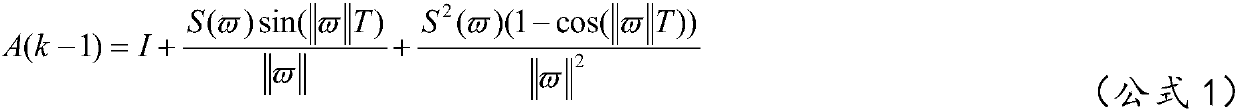

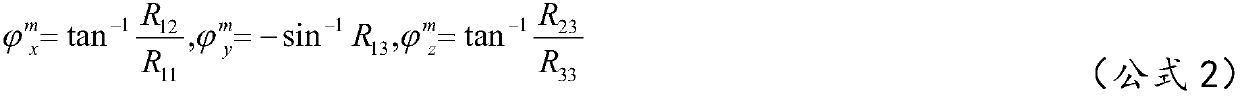

A Pose Estimation Method for Mobile Platform Based on Indirect Kalman Filter

Active | CN105865452B | High precision | The calculation formula is simple | Navigational calculation instruments | Kalman filter | Observation data

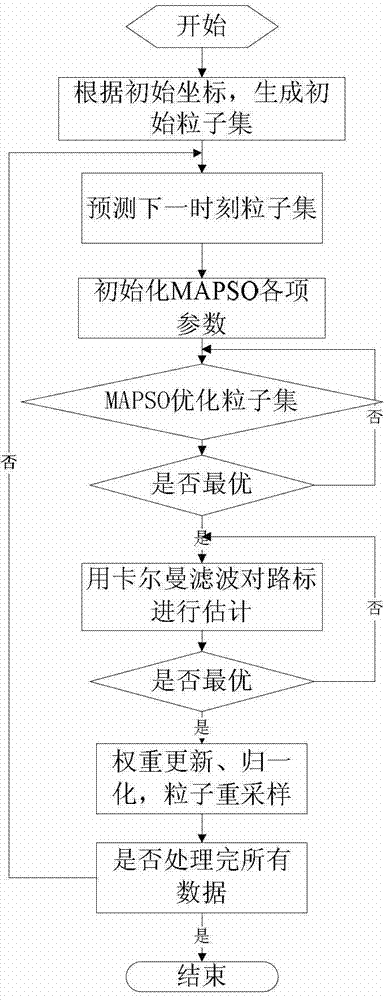

The invention discloses a mobile platform pose estimation method based on indirect Kalman filtering. The method includes the following steps: the difference between the value XI calculated by a strapdown inertial navigation system and the observation data XN of a global positioning system serves as the observation input of an indirect Kalman filter, in which a prediction equation is built; the output of the filter is an estimate of the error; part of the output is fed back to correct the computation parameters of the strapdown inertial navigation system and the global positioning system, and thus the error calculation of the two systems; and the sum of the parameters in XI, such as coordinates and postures, and the estimated error serves as the final system estimate of each quantity (the symbol for this estimate is given in the patent description). Compared with the traditional direct Kalman filtering algorithm, the method gives more accurate pose estimation results and effectively improves the work efficiency of a robot.

Owner:ZHEJIANG GUOZI ROBOT TECH
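The indirect (error-state) structure in the abstract above can be sketched in one dimension: the filter estimates the inertial system's error from the difference between the two systems, and that estimated error is added back to the inertial value. The scalar random-walk error model, the sign convention (GPS minus inertial) and the name `indirect_kf_step` are assumptions for illustration, not the patent's equations.

```python
def indirect_kf_step(err, p, x_ins, x_gps, q, r):
    """One scalar error-state step: returns (new_err, new_p, corrected_position)."""
    # Predict: the error is modelled as a random walk, so only its variance grows.
    p = p + q
    # Observe the difference between the two systems, not the state itself.
    z = x_gps - x_ins
    k = p / (p + r)              # Kalman gain
    err = err + k * (z - err)    # updated estimate of the inertial error
    p = (1.0 - k) * p
    # Final estimate: inertial value plus the estimated error.
    return err, p, x_ins + err

# Hypothetical readings: inertial position 10.5 m, GPS position 10.0 m.
err_hat, p_hat, x_hat = indirect_kf_step(
    err=0.0, p=1.0, x_ins=10.5, x_gps=10.0, q=0.0, r=1.0)
```

With equal prior and measurement variances, the step attributes half of the observed 0.5 m discrepancy to inertial error, so the corrected position lands midway between the two readings.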
