Augmented reality visualization method based on depth camera and application

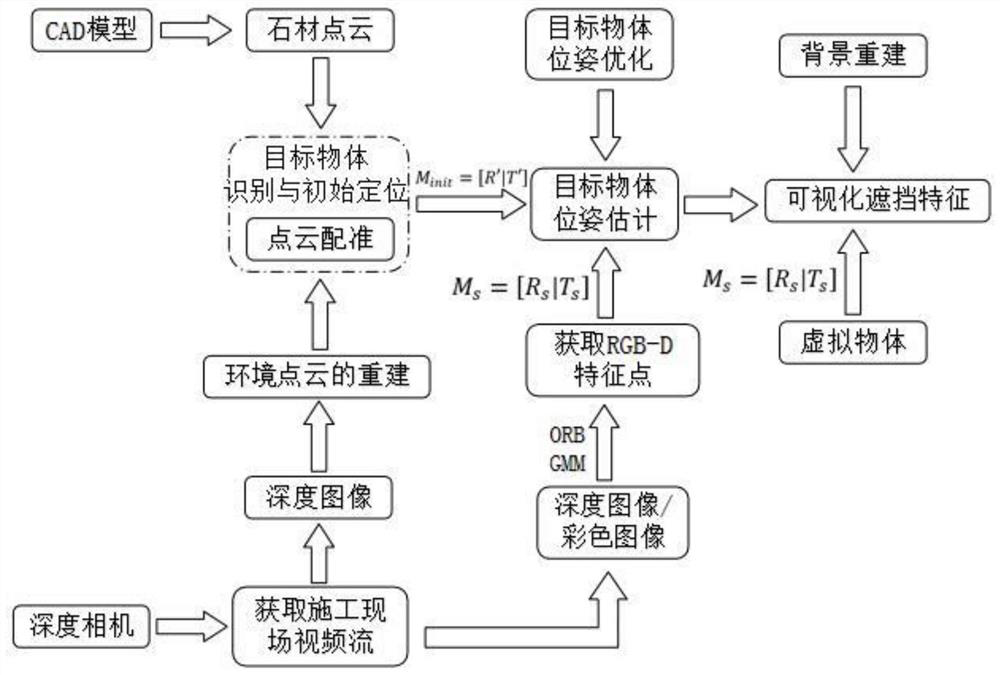

A depth-camera and augmented-reality technology, applied in image enhancement, image data processing, 3D modeling, etc. It addresses several problems: the computational load makes it difficult to guarantee system speed and accuracy, the system must meet high real-time and robustness requirements, and feature points are subject to interference. It enhances the visualization effect, reduces the amount of computation, and reduces acquisition error.

Embodiment Construction

[0028] The present invention will be further described below in conjunction with the embodiments and accompanying drawings, but it is not intended to limit the protection scope of the present application.

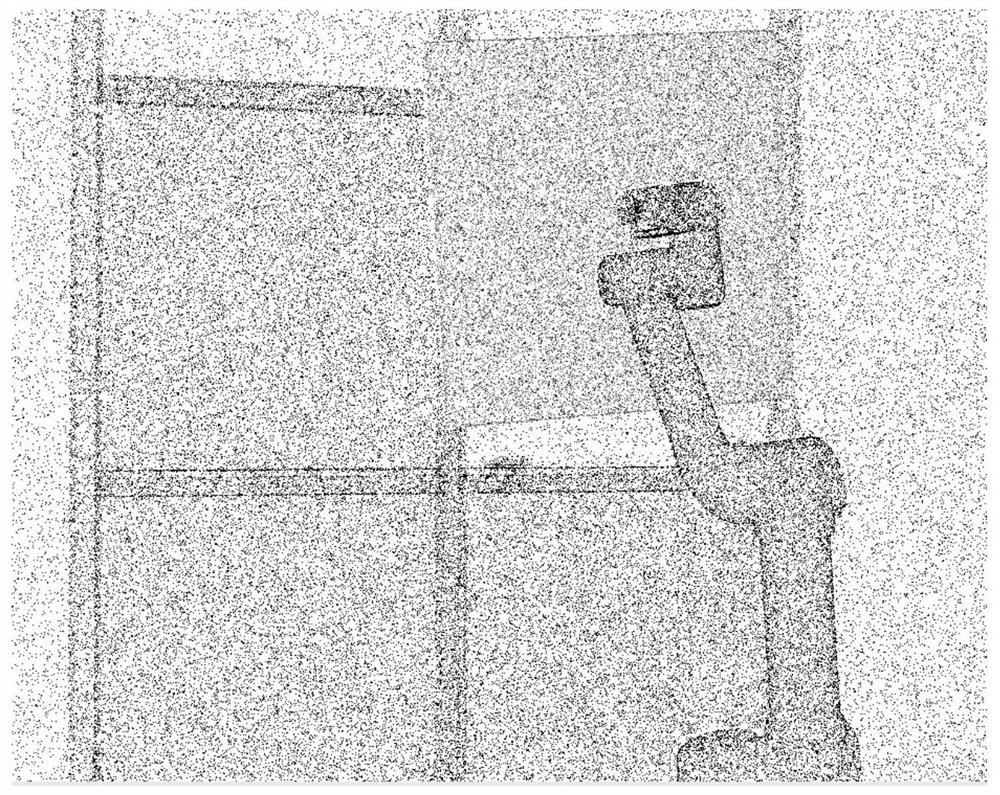

[0029] This embodiment uses the installation of an SE-type dry-hung stone curtain wall as an example. The curtain wall installation site has a complex environment, an uncertain background, and a moving target object, so the visualization benefit of this method is especially pronounced there. A mobile chassis and a UR5 robotic arm pick up the stone by suction and install it. During installation, workers cannot obtain sufficient visual information about key features such as the hangers on the back of the stone being installed and the keel of the curtain wall. They can only rely on personal experience and estimated positions to complete the sequence of construction steps. Because the installation operation is imprecise, the installation time is longer and the co...
